1091 patent results for "Emotion recognition"

Emotion recognition is the process of identifying human emotion, most typically from facial expressions and from verbal expressions. Humans do this automatically, and computational methods have also been developed to do it.

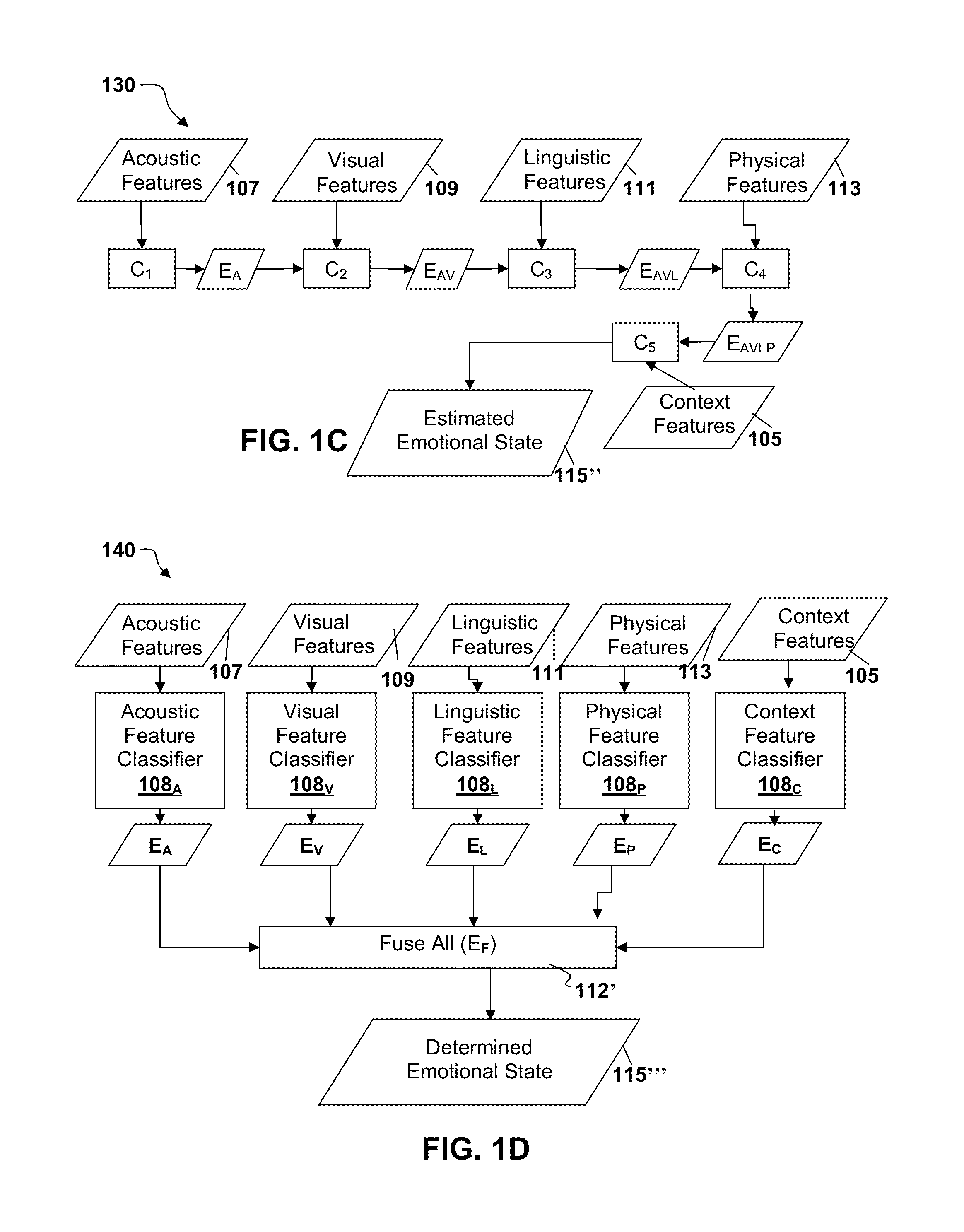

Multi-modal sensor based emotion recognition and emotional interface

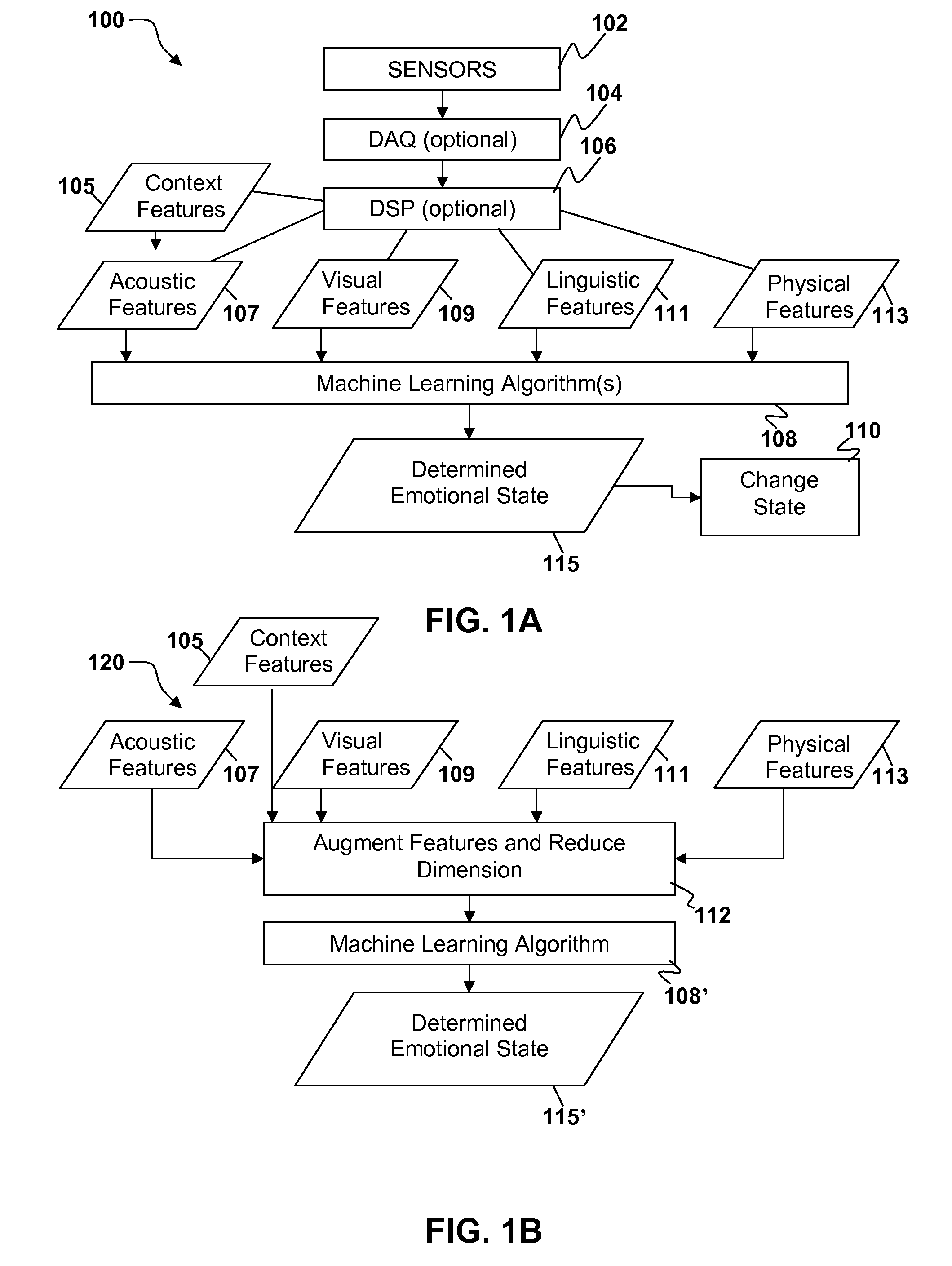

Status: Active | Publication: US20140112556A1 | Tags: Speech recognition, Acquiring/recognising facial features, Pattern recognition, Subject matter

Features, including one or more acoustic features, visual features, linguistic features, and physical features may be extracted from signals obtained by one or more sensors with a processor. The acoustic, visual, linguistic, and physical features may be analyzed with one or more machine learning algorithms and an emotional state of a user may be extracted from analysis of the features. It is emphasized that this abstract is provided to comply with the rules requiring an abstract that will allow a searcher or other reader to quickly ascertain the subject matter of the technical disclosure. It is submitted with the understanding that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:SONY COMPUTER ENTERTAINMENT INC
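
As a rough illustration of the feature-level analysis this abstract describes, the sketch below concatenates per-modality feature vectors (early fusion) and classifies the result with a nearest-centroid rule. The abstract only says "one or more machine learning algorithms", so the nearest-centroid classifier, the emotion labels and all numeric values are hypothetical choices, not Sony's method.

```python
import math

def fuse_features(acoustic, visual, linguistic, physical):
    """Early (feature-level) fusion: concatenate the per-modality feature vectors."""
    return list(acoustic) + list(visual) + list(linguistic) + list(physical)

def nearest_centroid(sample, centroids):
    """Return the label whose centroid is closest to the sample (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(sample, centroids[label]))

# Hypothetical centroids for two emotional states; a real system would learn these from data.
centroids = {
    "calm": [0.1, 0.2, 0.1, 0.0, 0.2, 0.1],
    "excited": [0.9, 0.8, 0.7, 0.9, 0.6, 0.8],
}

# Hypothetical feature values: 2 acoustic, 1 visual, 1 linguistic, 2 physical.
sample = fuse_features([0.8, 0.9], [0.7], [0.8], [0.7, 0.9])
print(nearest_centroid(sample, centroids))  # prints "excited"
```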

Multi-mode based emotion recognition method

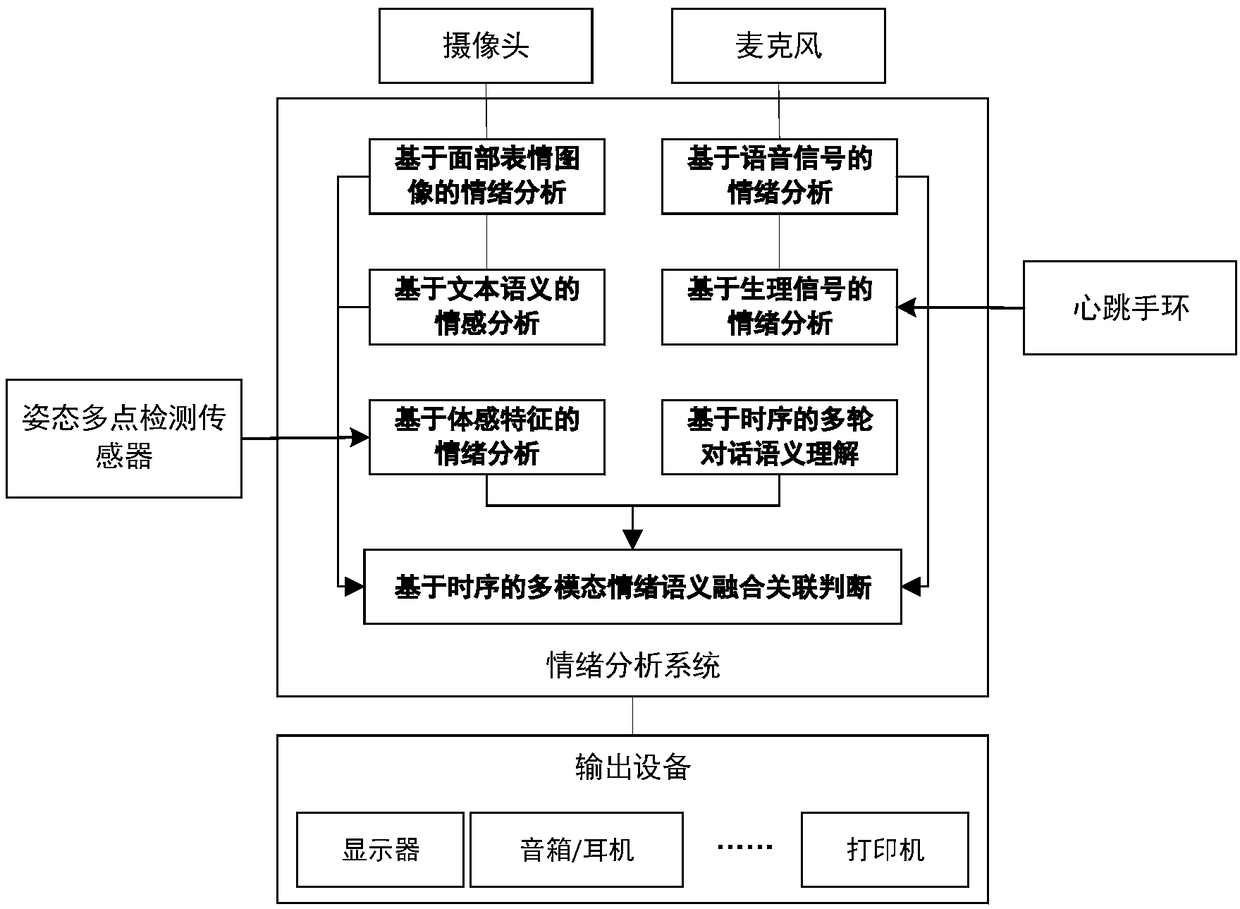

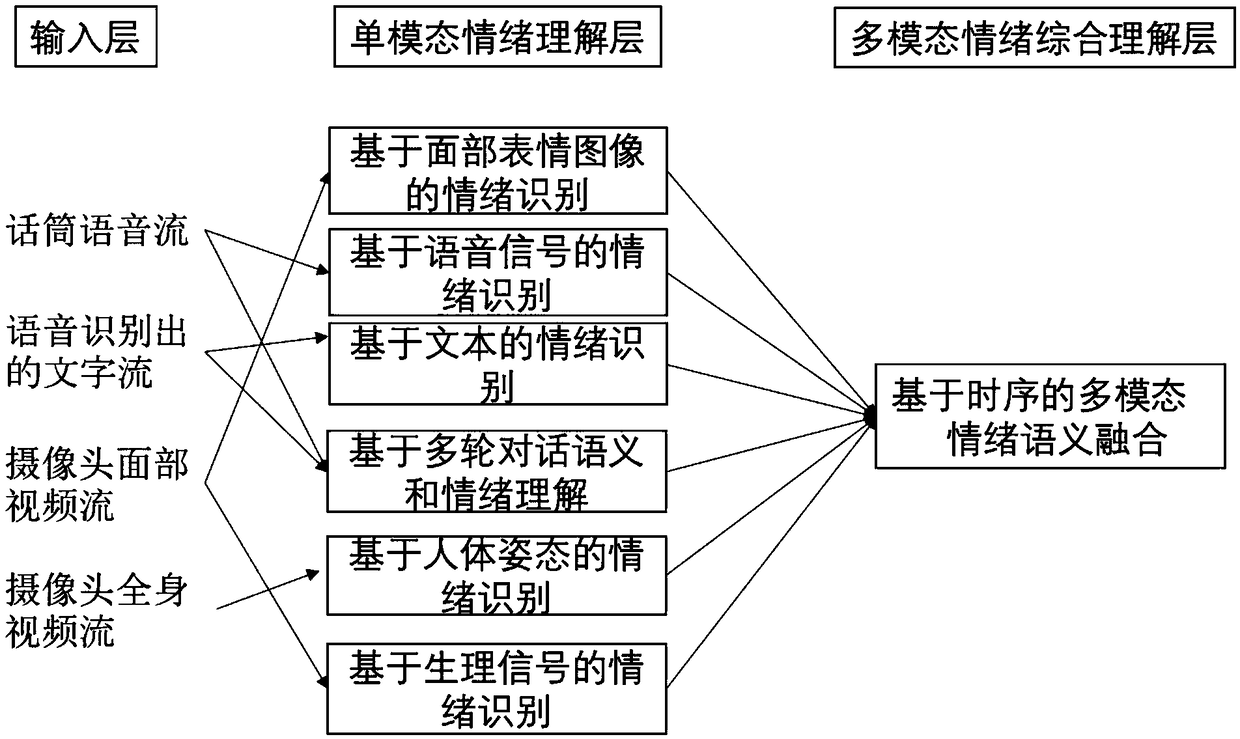

Status: Active | Publication: CN108805089A | Tags: Low cost, Improve ease of use, Semantic analysis, Speech analysis, Pattern recognition, Data acquisition

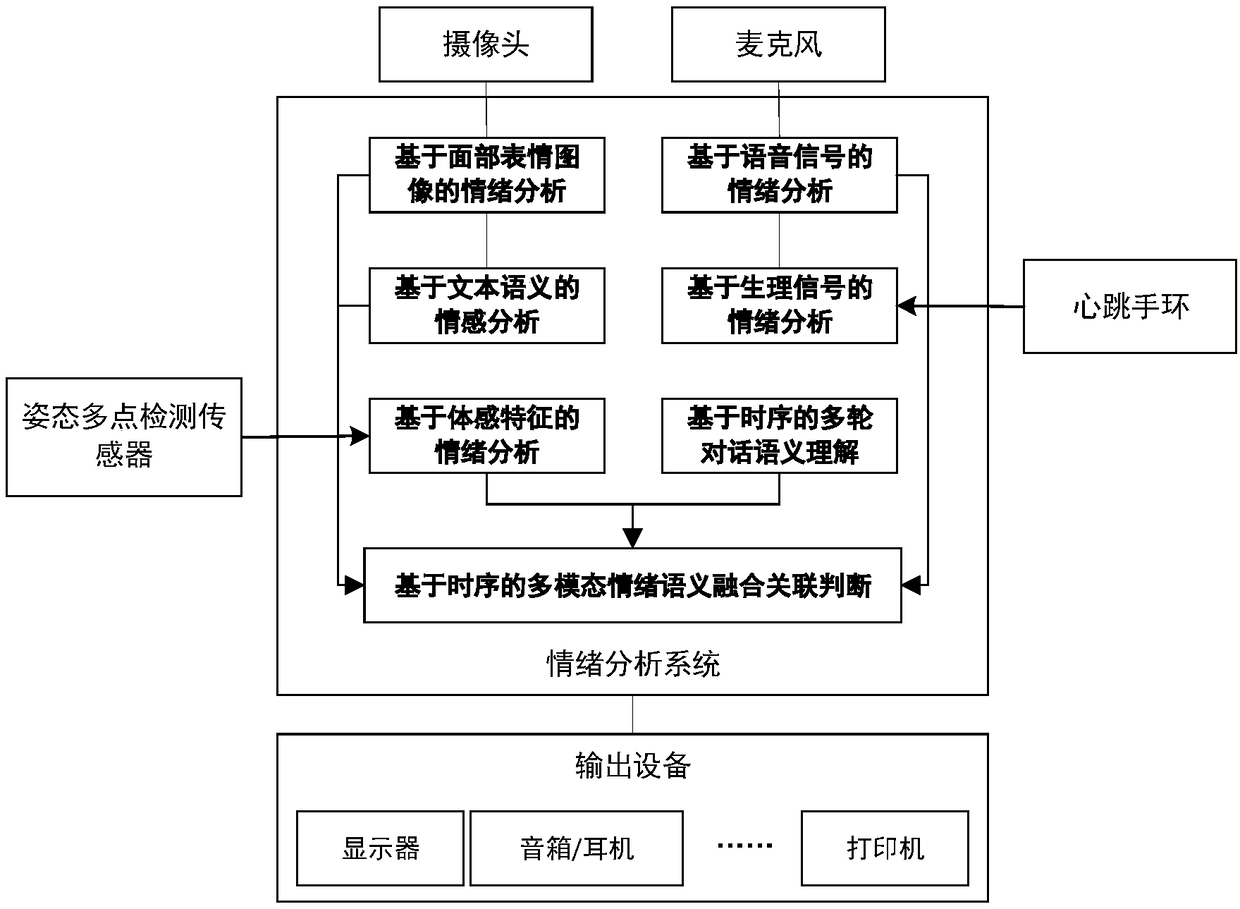

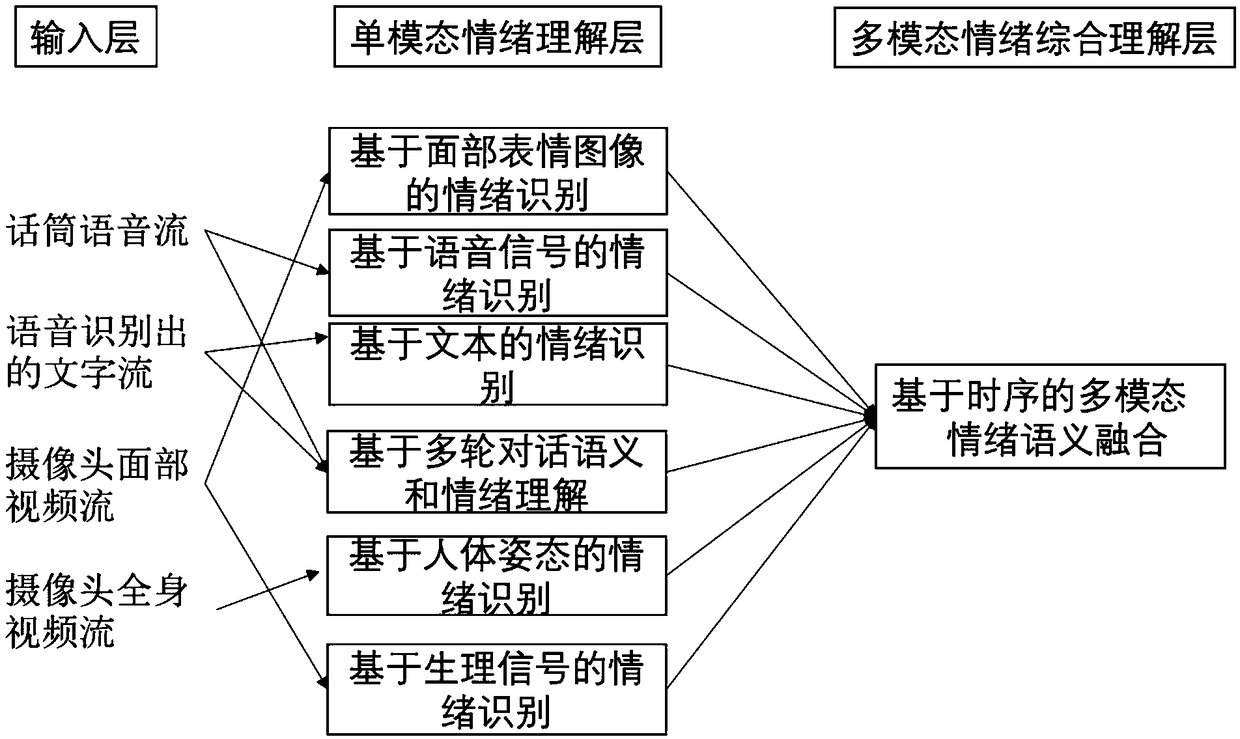

The invention provides a multi-mode emotion recognition method that uses a data collection device, an output device and an emotion analysis software system. The software system comprehensively analyzes and reasons over the data obtained by the data collection device and outputs the result to the output device. The specific steps are: an emotion recognition step based on facial image expressions, an emotion recognition step based on voice signals, an emotion analysis step based on text semantics, an emotion recognition step based on human gestures, an emotion recognition step based on physiological signals, a semantic comprehension step based on multi-round dialogues, and a time-sequence-based multi-mode emotion semantic fusion and association judgment step. The method goes beyond emotion recognition from any of the five single modes. It uses a deep neural network to encode, deeply correlate and understand the information from multiple single modes and then judges comprehensively. This greatly improves accuracy and makes the method suitable for most general inquiry-style interaction scenarios.

Owner:南京云思创智信息科技有限公司 (Nanjing Yunsi Chuangzhi Information Technology Co., Ltd.)

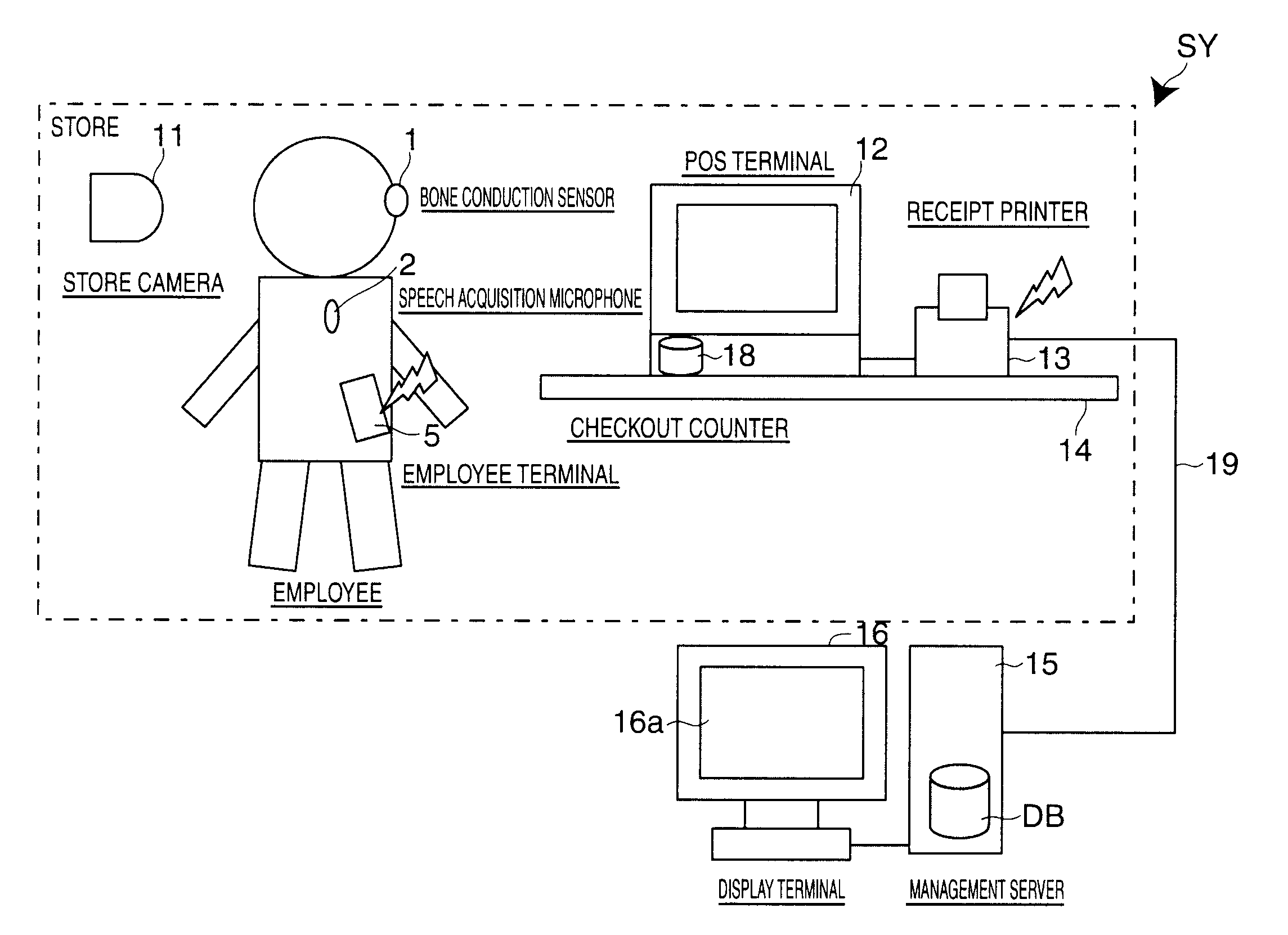

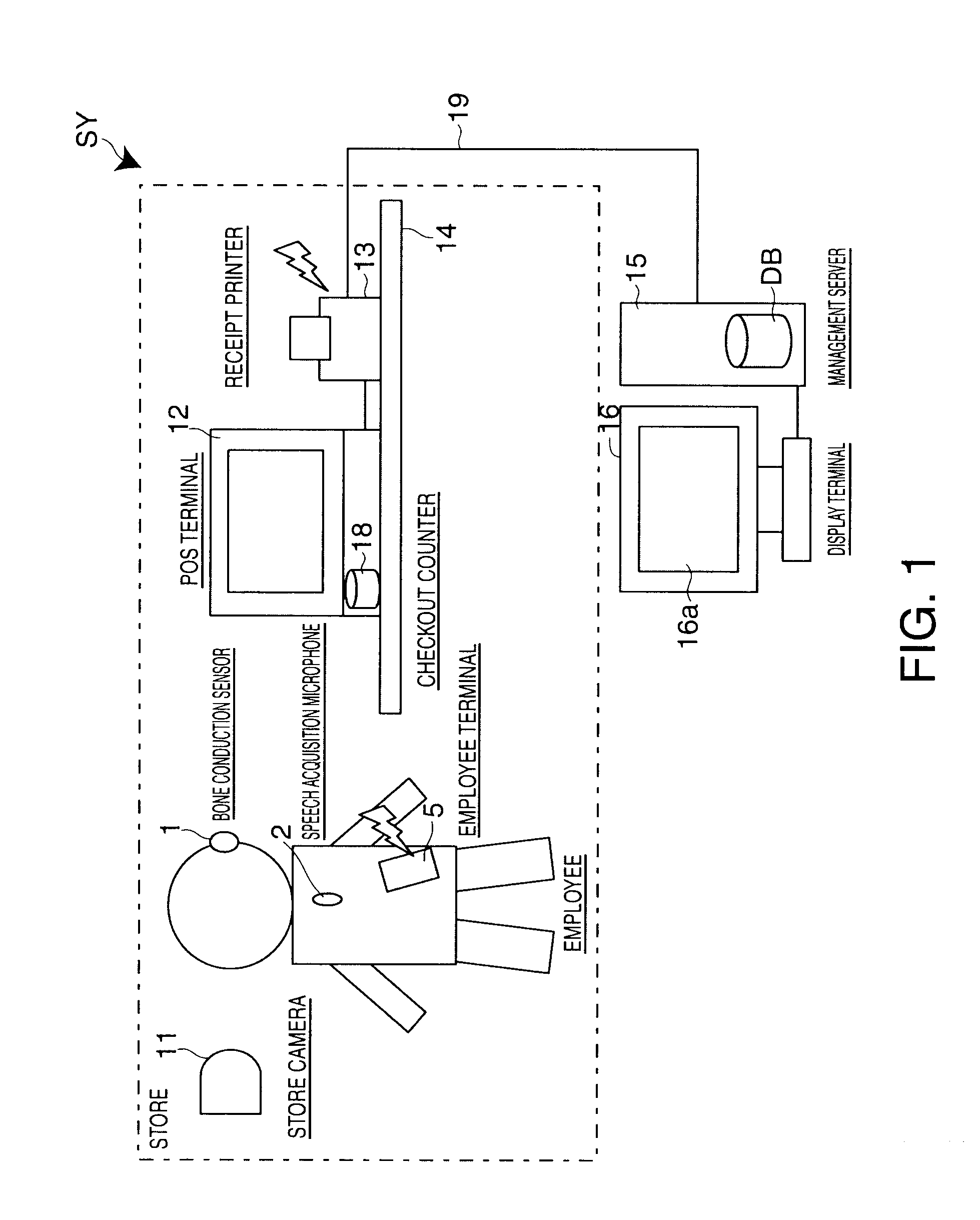

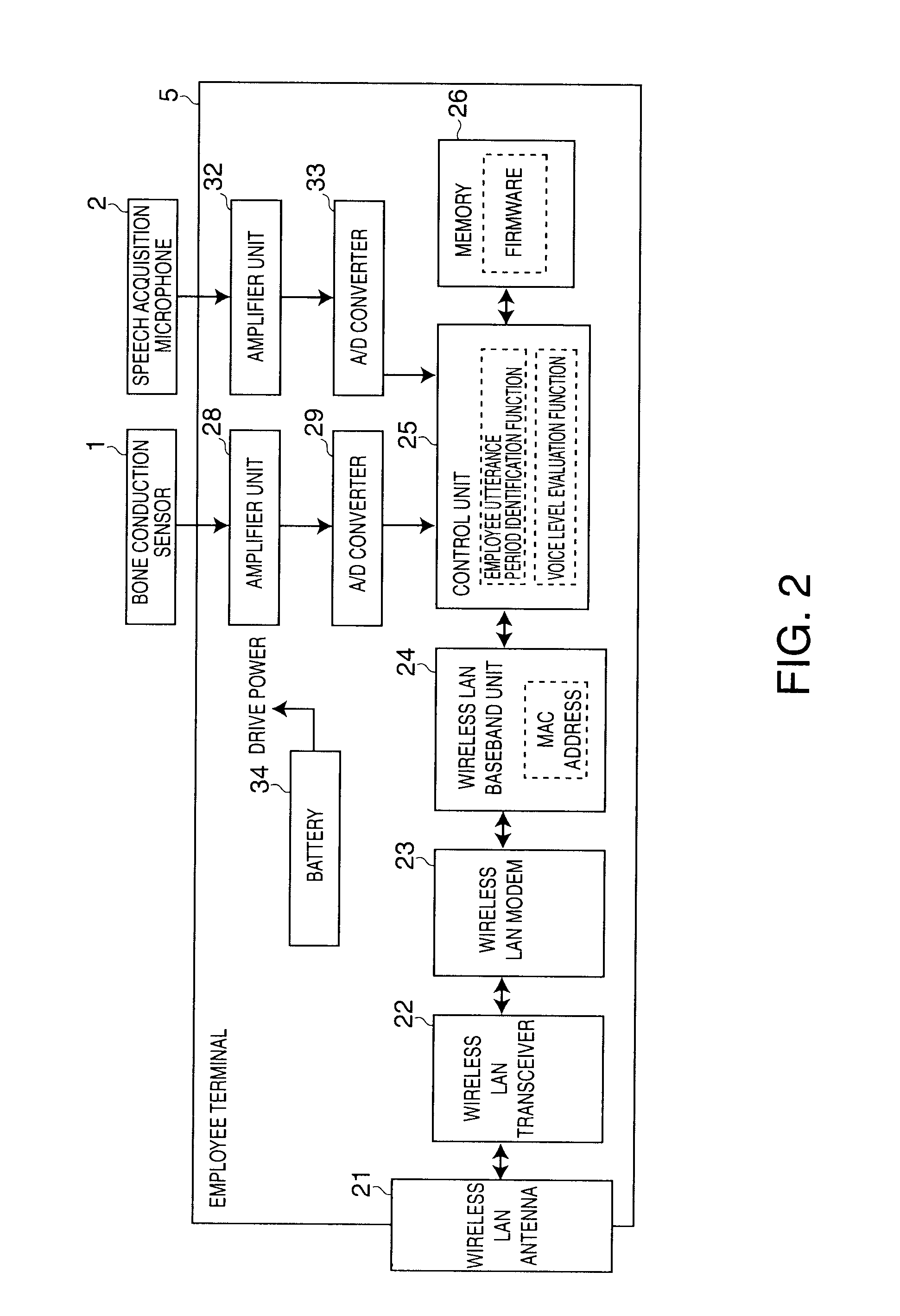

Customer Service Data Recording Device, Customer Service Data Recording Method, and Recording Medium

Status: Inactive | Publication: US20110282662A1 | Tags: Improve conversation skills, Accurately identify employee and customer speech, Speech recognition, Data recording, Speech sound

To enable determining the correlation between customer satisfaction and employee satisfaction, a speech acquisition unit 102 acquires conversations between employees and customers; an emotion recognition unit 155 recognizes employee and customer emotions based on employee and customer speech in the conversation; a satisfaction calculator 156, 157 calculates employee satisfaction and customer satisfaction based on the emotion recognition output from the emotion recognition unit 155; and a customer service data recording unit 159 relates and records employee satisfaction data denoting employee satisfaction and customer satisfaction data denoting customer satisfaction as customer service data in a management server database DB.

Owner:SEIKO EPSON CORP
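
The sketch below shows one way to correlate per-conversation satisfaction scores. It assumes, hypothetically, that satisfaction is the share of utterances recognized as positive; the abstract does not define the calculation used by satisfaction calculators 156 and 157. Pearson correlation is used here as one standard measure of the correlation the abstract mentions, and the conversation records are made up.

```python
import math

def satisfaction(emotions):
    """Hypothetical satisfaction score: share of utterances recognized as positive."""
    return sum(1 for e in emotions if e == "positive") / len(emotions)

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Each record pairs the employee's and the customer's recognized emotions in one conversation.
records = [
    (["positive", "positive", "neutral"], ["positive", "neutral", "positive"]),
    (["negative", "neutral", "neutral"], ["negative", "negative", "neutral"]),
    (["positive", "positive", "positive"], ["positive", "positive", "positive"]),
]
employee = [satisfaction(e) for e, _ in records]
customer = [satisfaction(c) for _, c in records]
print(round(pearson(employee, customer), 3))  # prints 1.0
```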

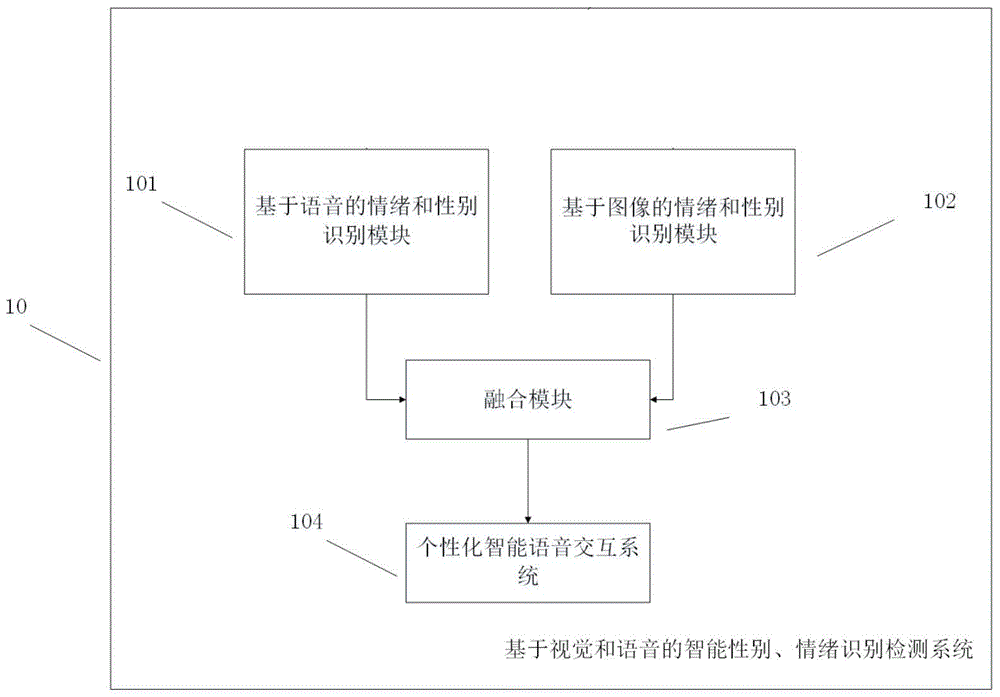

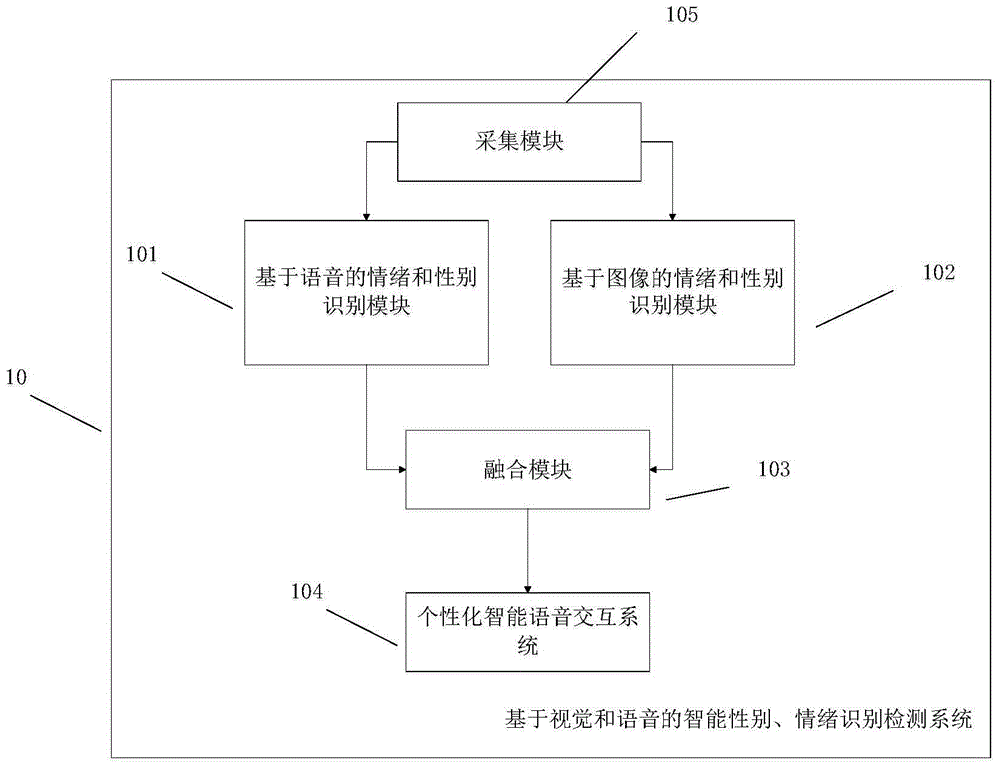

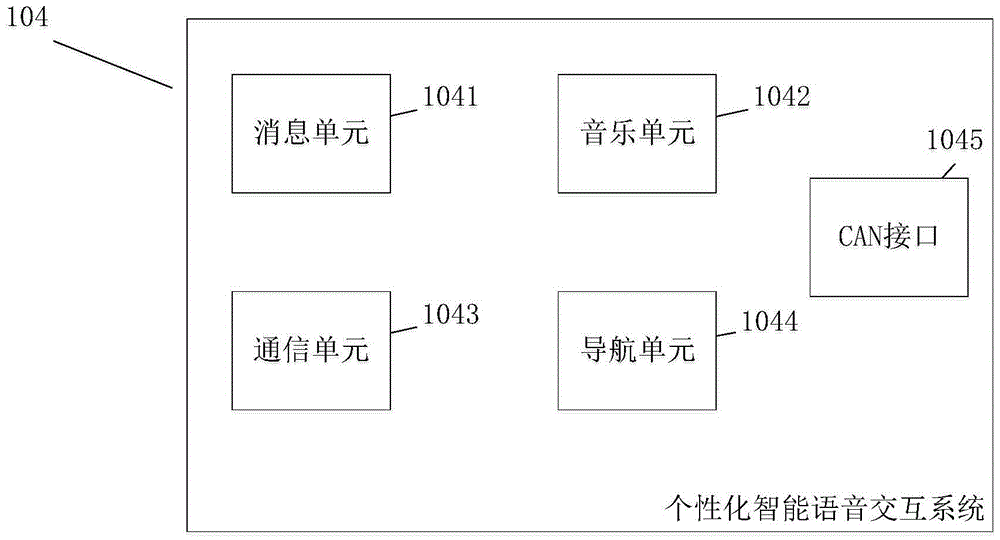

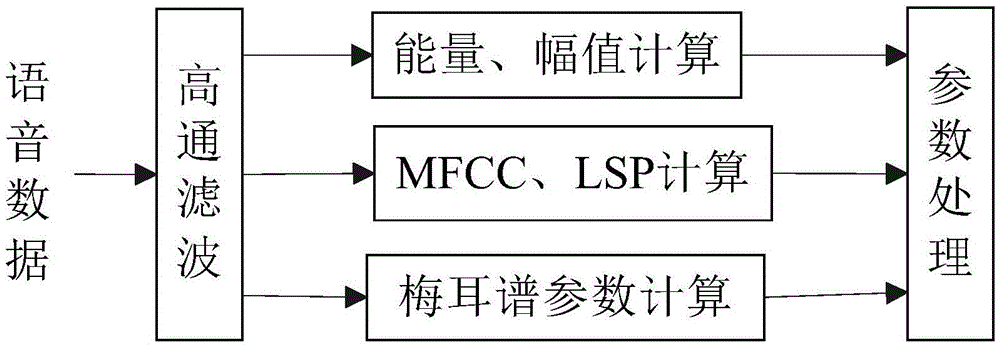

Intelligent gender and emotion recognition detection system and method based on vision and voice

Status: Inactive | Publication: CN105700682A | Tags: Automatic gender/mood detection, High precision, Input/output for user-computer interaction, Character and pattern recognition, Pattern recognition, Interaction systems

The invention discloses an intelligent vision- and voice-based system and method for gender detection and emotion recognition. The system comprises an image-based emotion and gender recognition module, a voice-based emotion and gender recognition module, a fusion module and a personalized intelligent voice interaction system. The image-based module recognizes the emotion of a person in a vehicle from a face image and recognizes the person's gender from the face. The voice-based module recognizes the person's emotion and gender from their voice. The fusion module matches the gender recognition results, fuses the emotion recognition results and sends the results to the personalized intelligent voice interaction system, which carries out voice interaction. Fusing the image and voice recognition results improves gender and emotion recognition accuracy. The personalized voice interaction improves the driving experience and driving safety, and it makes the vehicle-mounted equipment more enjoyable to use and its information services more accurate.

Owner:BEIJING ILEJA TECH CO LTD

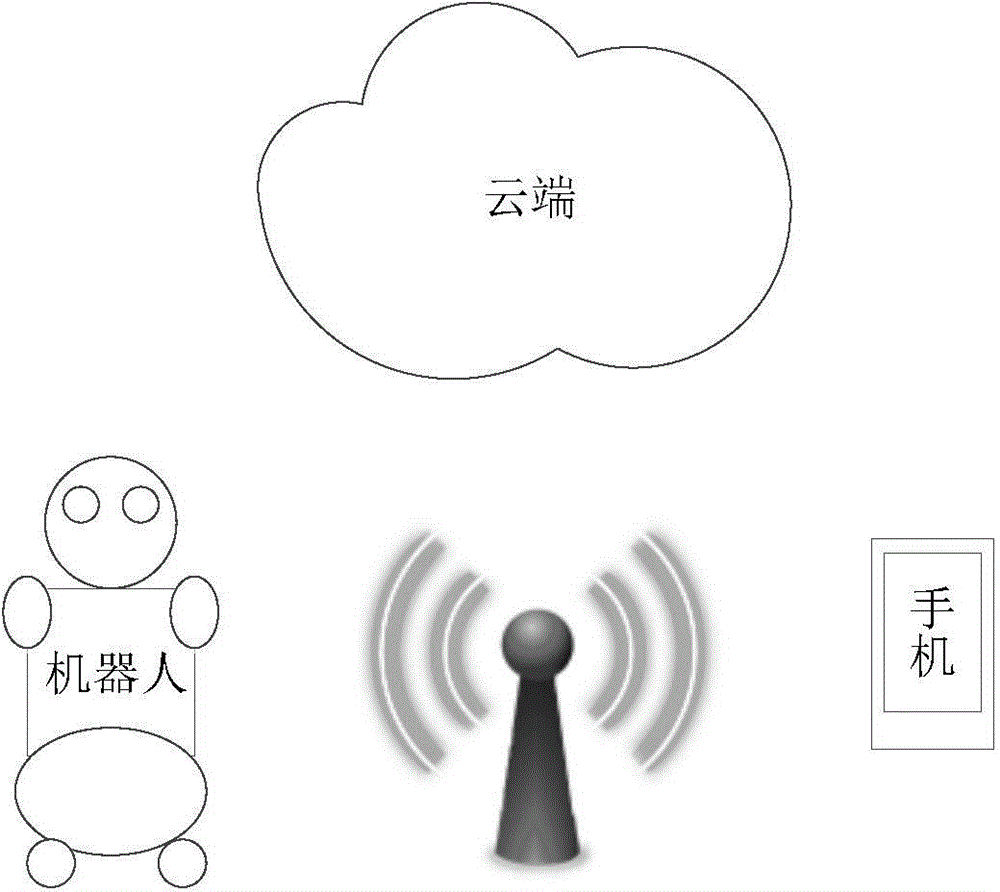

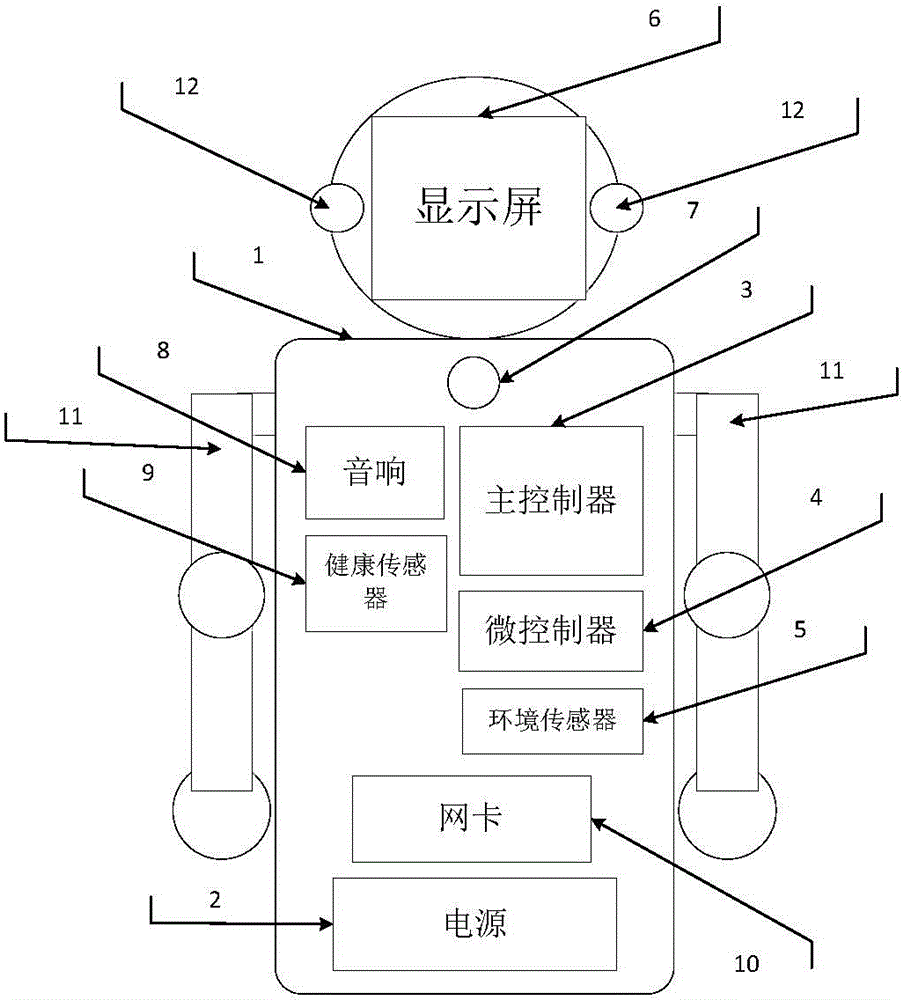

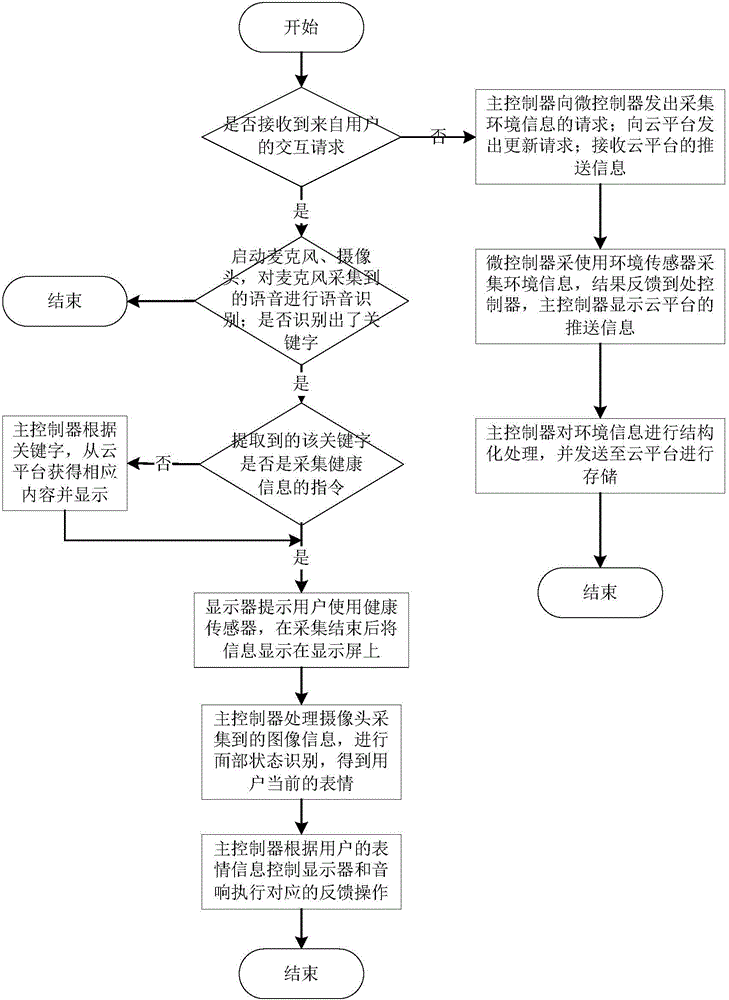

Household information acquisition and user emotion recognition equipment and working method thereof

Status: Inactive | Publication: CN104102346A | Tags: Various functions, Improve scalability, Programme control, Input/output for user-computer interaction, Microcontroller, Audio electronics

The invention discloses household information acquisition and user emotion recognition equipment. It comprises a housing, a power supply, a main controller, a microcontroller, multiple environmental sensors, a screen, a microphone, a speaker, multiple health sensors, a pair of robot arms and a pair of cameras. The microphone is mounted on the housing. The power supply, main controller, microcontroller, environmental sensors, speaker and pair of cameras are arranged symmetrically on the left and right sides of the screen, and the robot arms are mounted on the two sides of the housing. The main controller communicates with the microcontroller and directs it to move the robot arms through the arms' motors. The power supply is connected to the main controller and the microcontroller and mainly supplies them with power. Because the equipment integrates intelligent speech recognition, speech synthesis and facial expression recognition, it is more convenient to use and gives more appropriate feedback.

Owner:HUAZHONG UNIV OF SCI & TECH

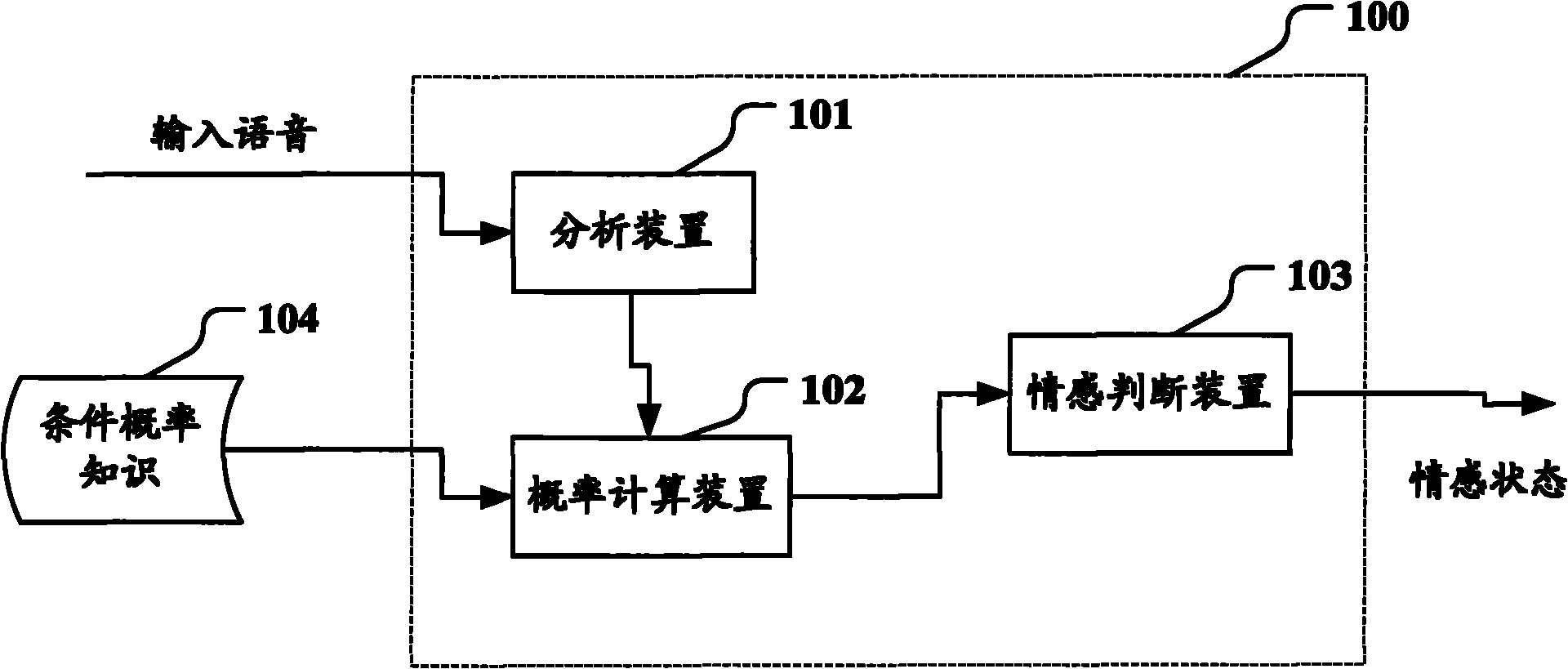

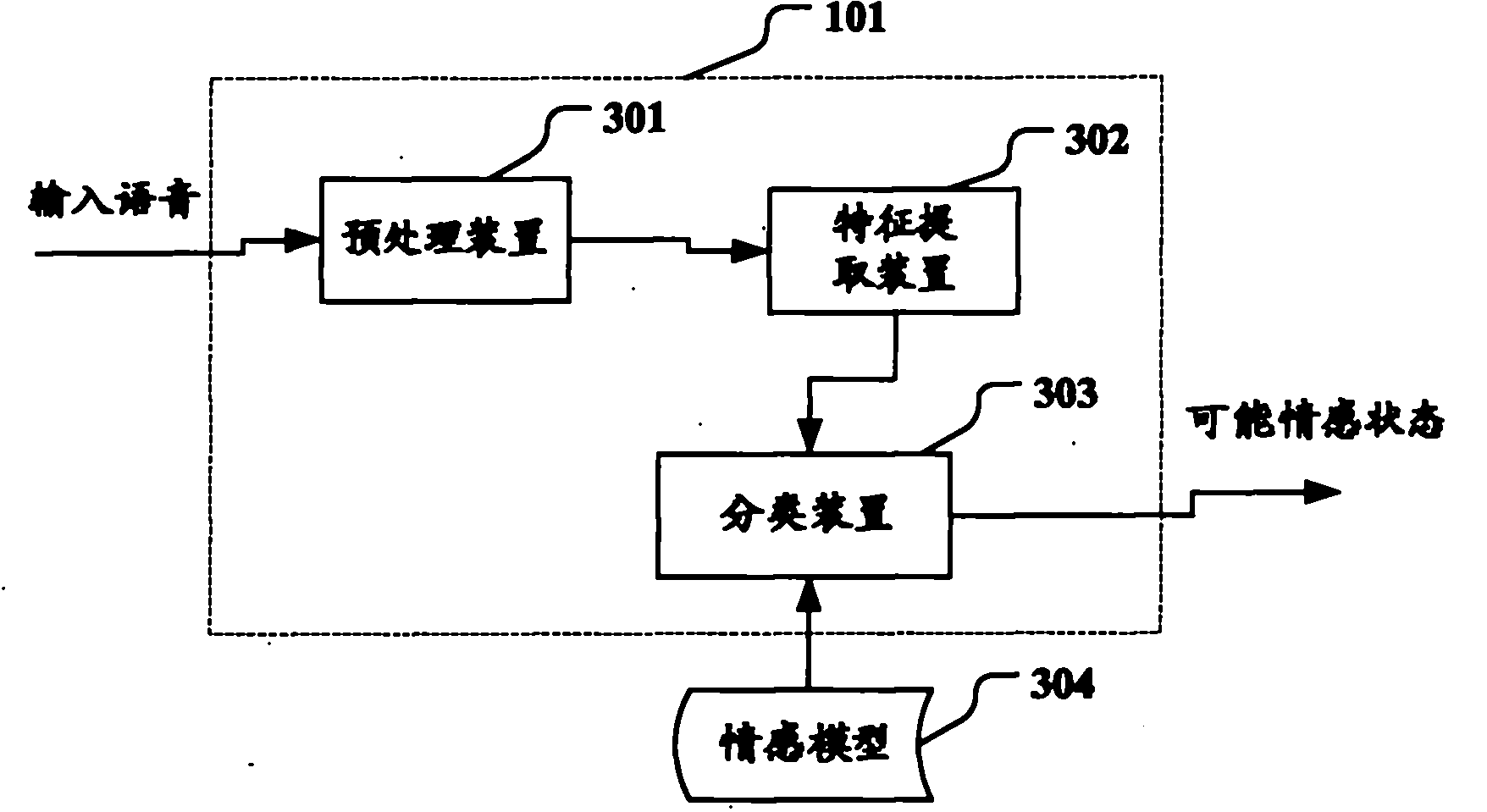

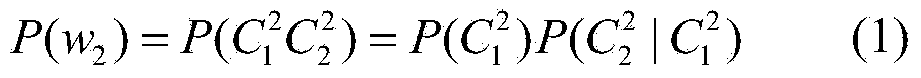

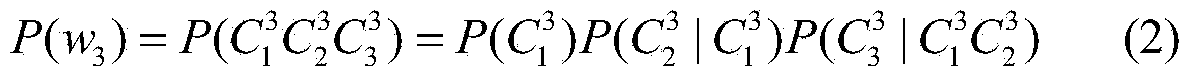

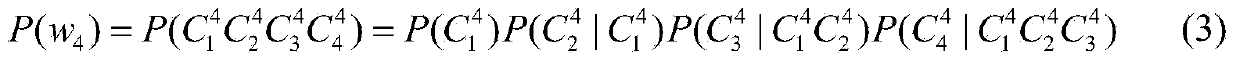

Speech emotion recognition equipment and speech emotion recognition method

The invention relates to speech emotion recognition equipment and a speech emotion recognition method. The equipment comprises an analysis device, a probability calculating device and an emotion judging device. The analysis device matches the emotional features of the input speech against a plurality of emotion models to determine several possible emotional states. The probability calculating device computes a final probability for each possible state, given the speaker's previous emotional states. It does this using knowledge of the conditional probabilities of transitions between emotional states while a speaker talks. The emotion judging device then selects the possible state with the highest final probability as the emotional state of the input speech.

Owner:FUJITSU LTD
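
The decision rule in the Fujitsu abstract can be sketched as follows. Each candidate state's model-matching score is multiplied by the probability of transitioning to it from the speaker's previous state, and the state with the highest normalized product wins. The scores, transition table and emotion labels are made-up illustration values. Treating the "final probability" as this normalized product is an assumption of the sketch.

```python
def recognize(match_scores, transition, previous):
    """Pick the emotional state with the highest final probability.

    match_scores: candidate state -> score from matching the speech against emotion models
    transition:   (previous, candidate) -> P(candidate | previous)
    previous:     the speaker's previous emotional state
    """
    joint = {s: score * transition.get((previous, s), 0.0) for s, score in match_scores.items()}
    total = sum(joint.values())
    # Normalize so the final probabilities sum to 1 (skipped if every product is zero).
    final = {s: v / total for s, v in joint.items()} if total > 0 else joint
    return max(final, key=final.get), final

# Hypothetical values: the acoustic match slightly favors "anger",
# but anger rarely follows a neutral state for this speaker.
scores = {"anger": 0.45, "neutral": 0.40, "joy": 0.15}
transitions = {
    ("neutral", "anger"): 0.1,
    ("neutral", "neutral"): 0.7,
    ("neutral", "joy"): 0.2,
}
state, final = recognize(scores, transitions, previous="neutral")
print(state)  # prints "neutral"
```

The transition term lets context override a marginal acoustic match. That is the point of conditioning on previous states.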

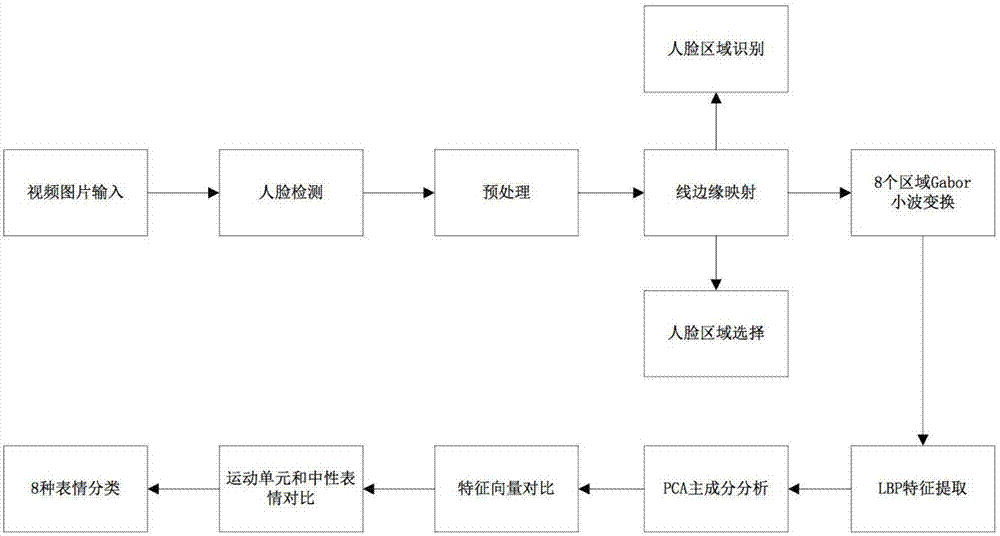

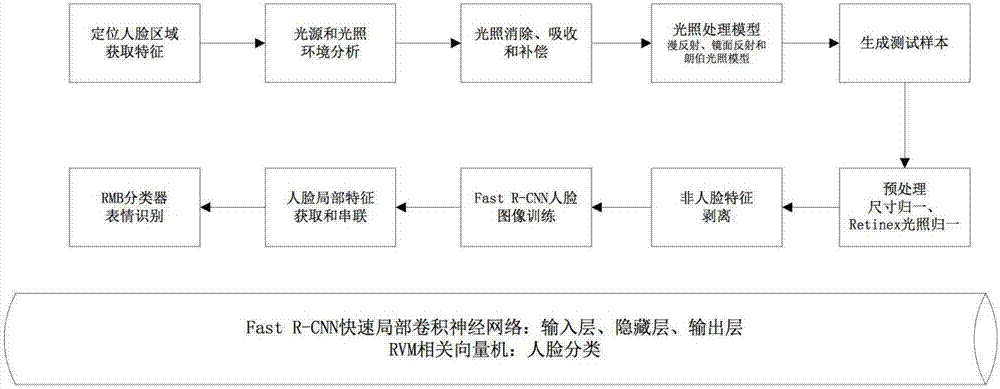

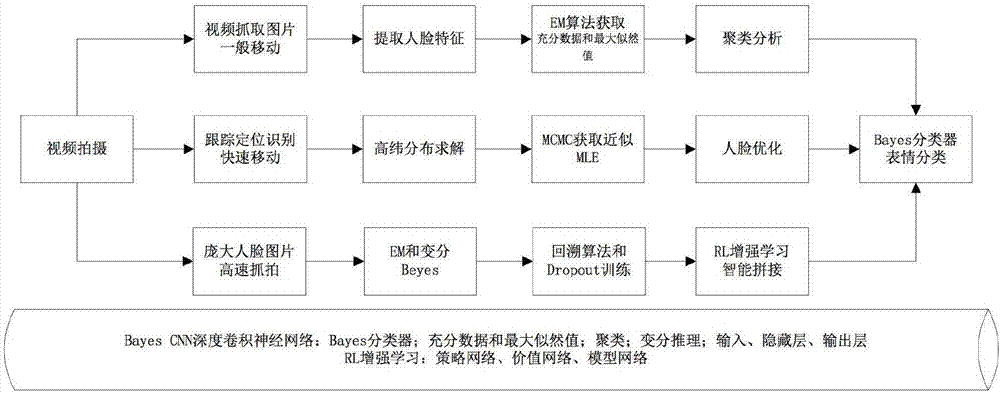

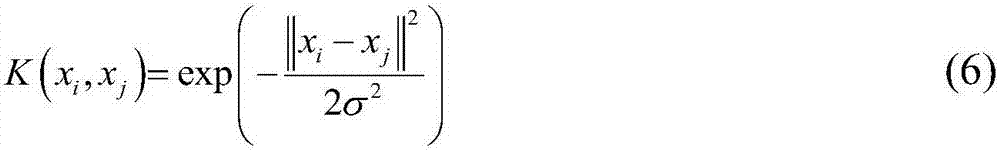

Human face emotion recognition method in complex environment

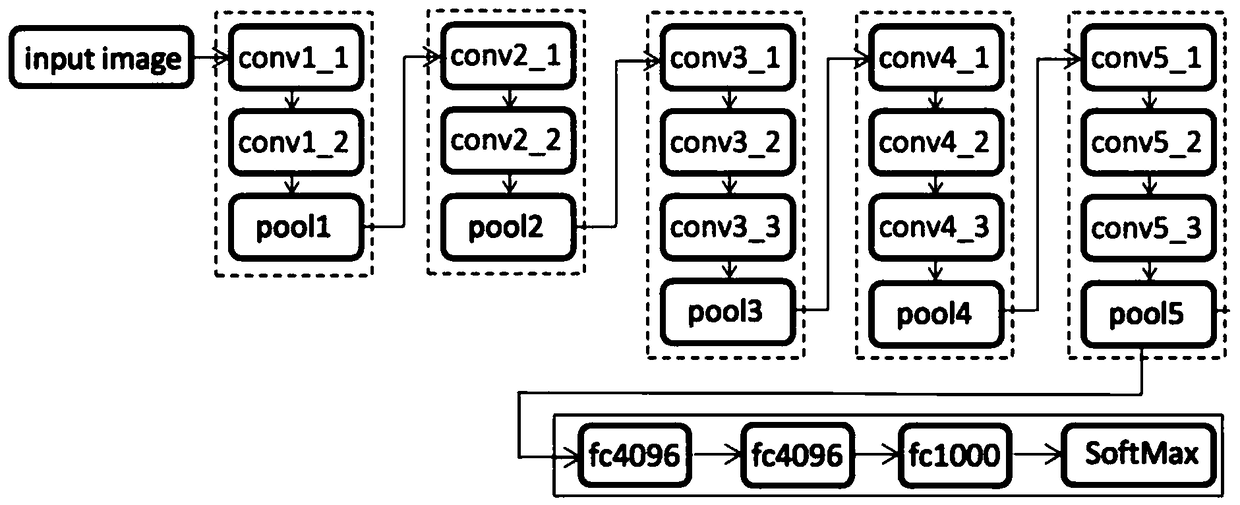

Status: Inactive | Publication: CN107423707A | Tags: Real-time processing, Improve accuracy, Image enhancement, Image analysis, Nerve network, Facial region

The invention discloses a method for recognizing facial emotion in complex mobile and embedded environments. The face is divided into main regions (forehead, eyebrows and eyes, cheeks, nose, mouth and chin), which are further subdivided into 68 feature points. Based on these feature points, the method chooses a technique for each condition so as to achieve a high recognition rate, accuracy and reliability across environments:

- under normal conditions, a face and expression feature classification method;
- under lighting, reflection and shadow, a Faster R-CNN face-region convolutional neural network;
- under motion, jitter, shaking and movement, a combination of a Bayesian network, a Markov chain and variational inference;
- when the face is only partially visible, when several faces are present, or when the background is noisy, a combination of a deep convolutional neural network, a super-resolution generative adversarial network (SRGAN), reinforcement learning, backpropagation and dropout.

This effectively improves the effectiveness, accuracy and reliability of facial expression recognition.

Owner:深圳帕罗人工智能科技有限公司 (Shenzhen Paluo Artificial Intelligence Technology Co., Ltd.)

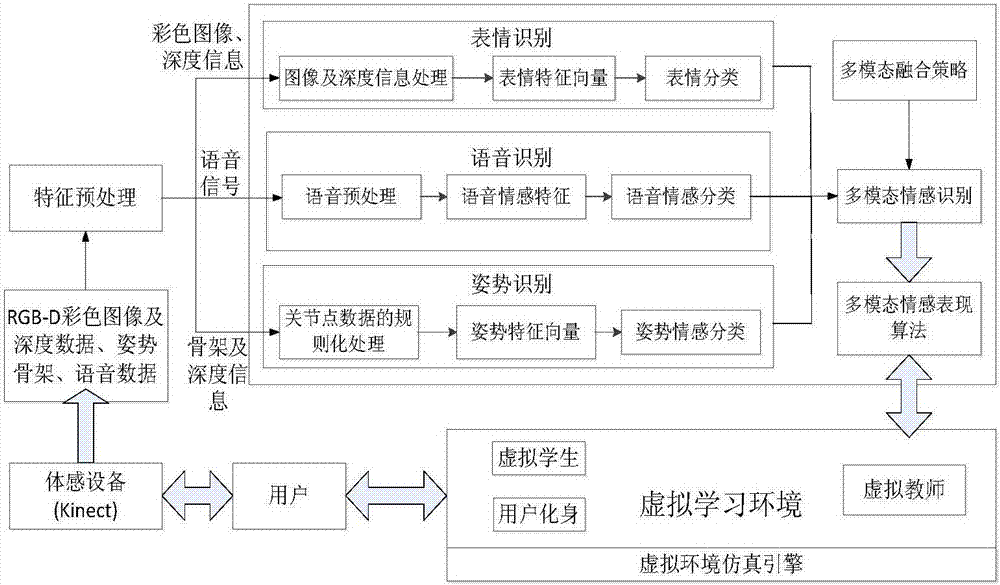

Virtual learning environment natural interaction method based on multimode emotion recognition

Status: Inactive | Publication: CN106919251A | Tags: Accuracy, Improve practicality, Input/output for user-computer interaction, Speech recognition, Human body, Color image

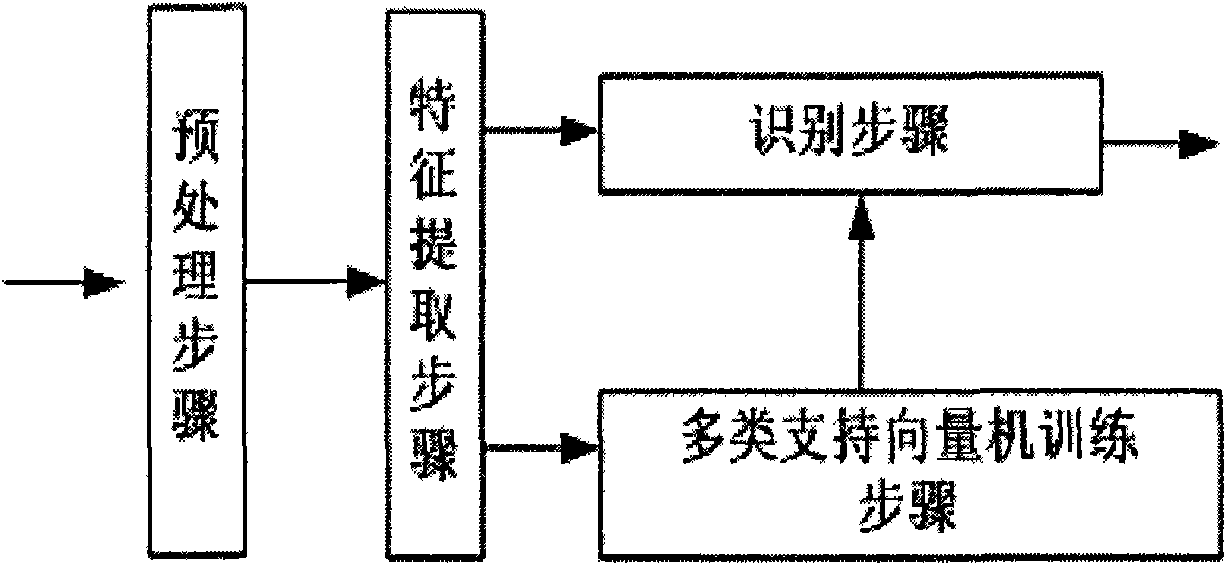

The invention provides a natural interaction method for virtual learning environments based on multimodal emotion recognition. Expression, posture and voice information representing a student's learning state are acquired. Multimodal emotion features are then constructed from a color image, depth information, a voice signal and skeleton information. The method proceeds as follows:

- Facial expression: face detection, preprocessing and feature extraction are performed on the color and depth images, and a support vector machine (SVM) combined with AdaBoost classifies the facial expression.
- Voice: the voice emotion information is preprocessed, emotion features are extracted, and a hidden Markov model recognizes the voice emotion.
- Posture: the skeleton information is regularized to obtain human posture representation vectors, and a multi-class SVM classifies the posture emotion.
- Fusion: a product-rule fusion algorithm combines the three recognition results at the decision level.

The virtual agent's emotional behavior (expression, voice and posture) is generated from the fused result.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
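
Decision-level fusion of the three classifiers can be sketched as below, assuming the fusion rule is the classic product rule. The per-class posteriors from the expression, voice and posture classifiers are multiplied and renormalized. The class labels and probabilities are hypothetical.

```python
def product_rule_fusion(*posteriors):
    """Decision-level fusion: multiply each class's posteriors across classifiers, then renormalize."""
    classes = list(posteriors[0])
    fused = {c: 1.0 for c in classes}
    for p in posteriors:
        for c in classes:
            fused[c] *= p[c]
    total = sum(fused.values())
    return {c: v / total for c, v in fused.items()}

# Hypothetical posteriors from the expression (SVM + AdaBoost), voice (HMM) and posture (SVM) classifiers.
expression = {"happy": 0.6, "neutral": 0.3, "bored": 0.1}
voice = {"happy": 0.3, "neutral": 0.5, "bored": 0.2}
posture = {"happy": 0.5, "neutral": 0.3, "bored": 0.2}

fused = product_rule_fusion(expression, voice, posture)
print(max(fused, key=fused.get))  # prints "happy"
```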

Temporal semantic fusion association determining sub-system based on multimodal emotion recognition system

Status: Active | Publication: CN108805087A | Tags: Lower requirement, Improve accuracy, Semantic analysis, Speech analysis, Data acquisition, Output device

The invention discloses a time-sequence-based semantic fusion and association judgment subsystem for a multimodal emotion recognition system. The system comprises a data collection device and an output device. It is characterized by further comprising an emotion analysis software system, which comprehensively analyzes and reasons over the data obtained by the data collection device and outputs the result to the output device. This software system includes the time-sequence-based semantic fusion and association judgment subsystem. The system goes beyond emotion recognition from any of the five single modes. It uses a deep neural network to encode, deeply associate and understand information from multiple single modes and then judges comprehensively. This greatly improves accuracy and makes the system suitable for most common inquiry-style interaction scenarios.

Owner:南京云思创智信息科技有限公司 (Nanjing Yunsi Chuangzhi Information Technology Co., Ltd.)

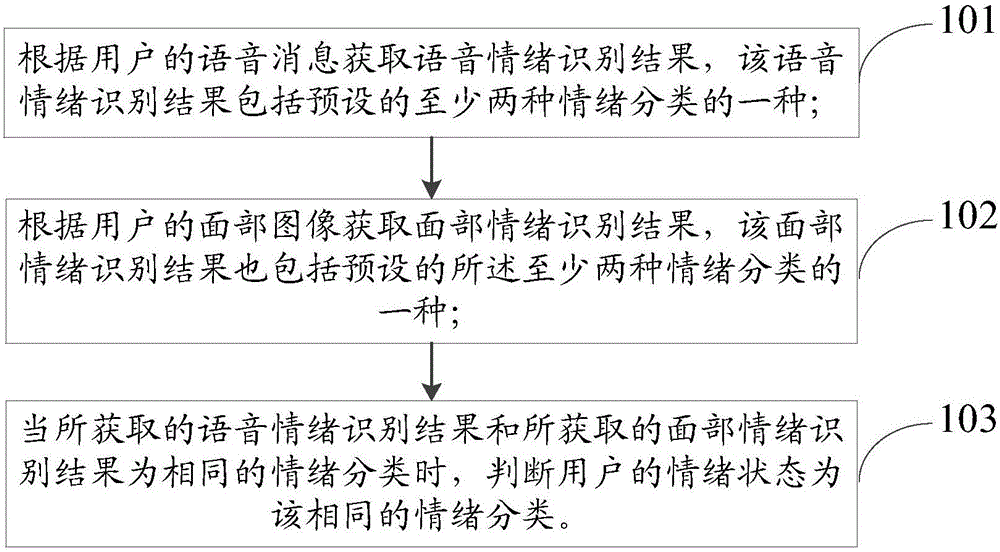

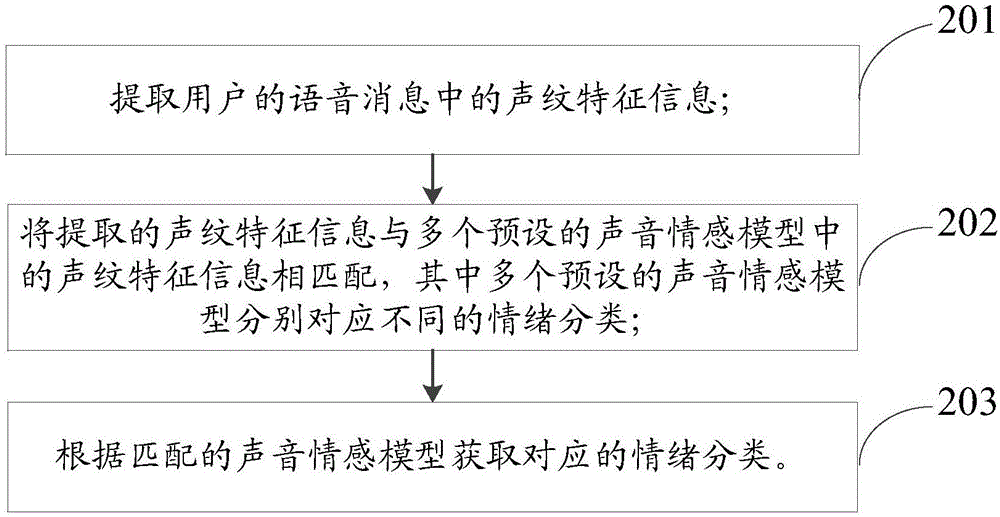

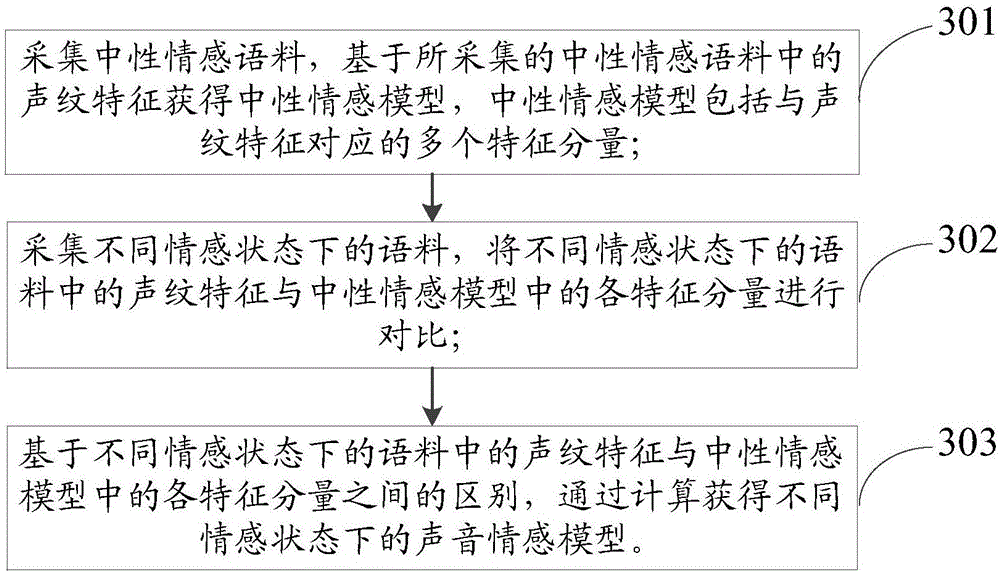

Emotion recognition method and device and intelligent interaction method and device

Status: Active | Publication: CN106570496A | Tags: Improve experience, Smart Interaction Smart and Accurate, Speech analysis, Acquiring/recognising facial features, Pattern recognition, Speech sound

Owner:SHANGHAI XIAOI ROBOT TECH CO LTD

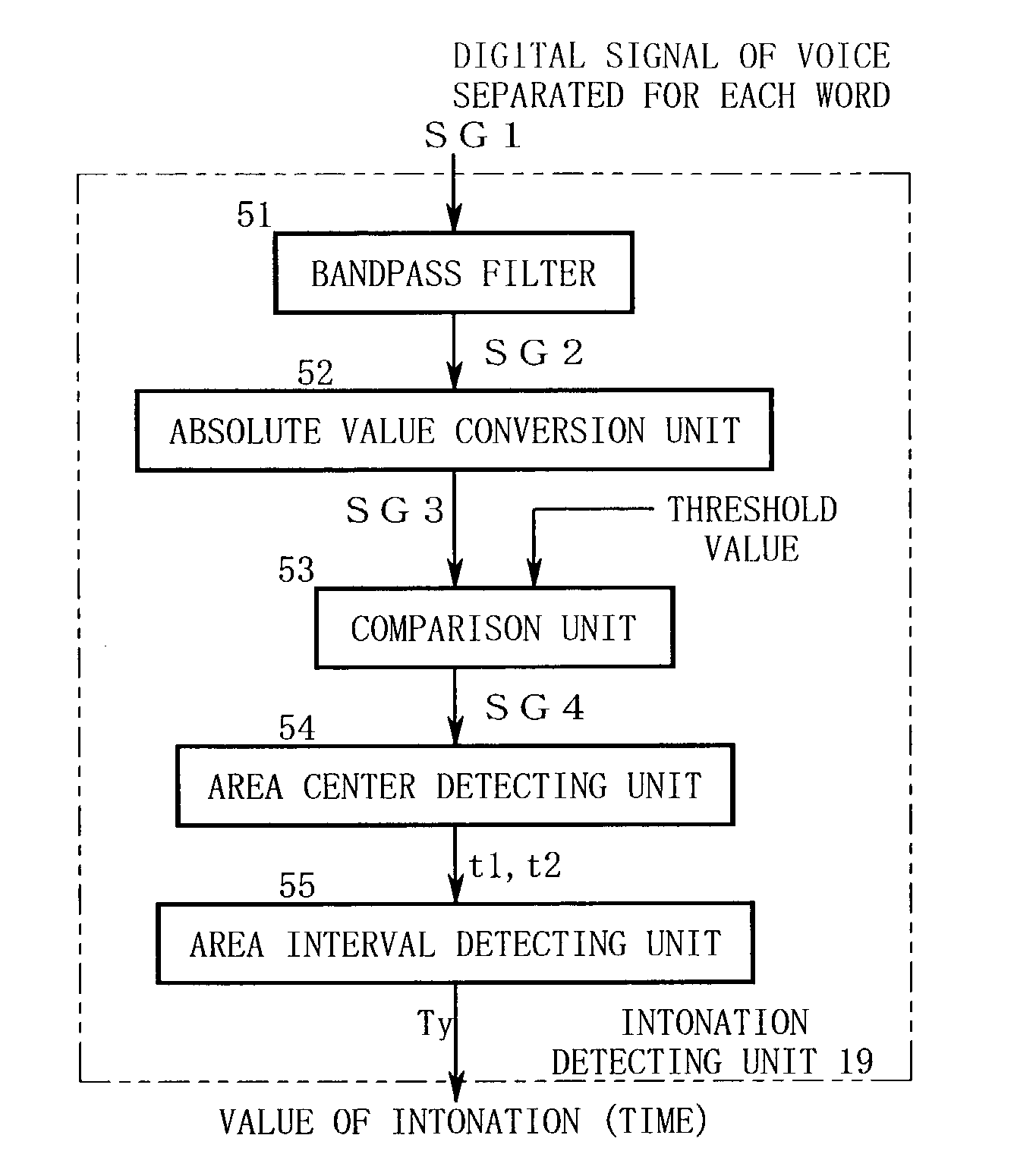

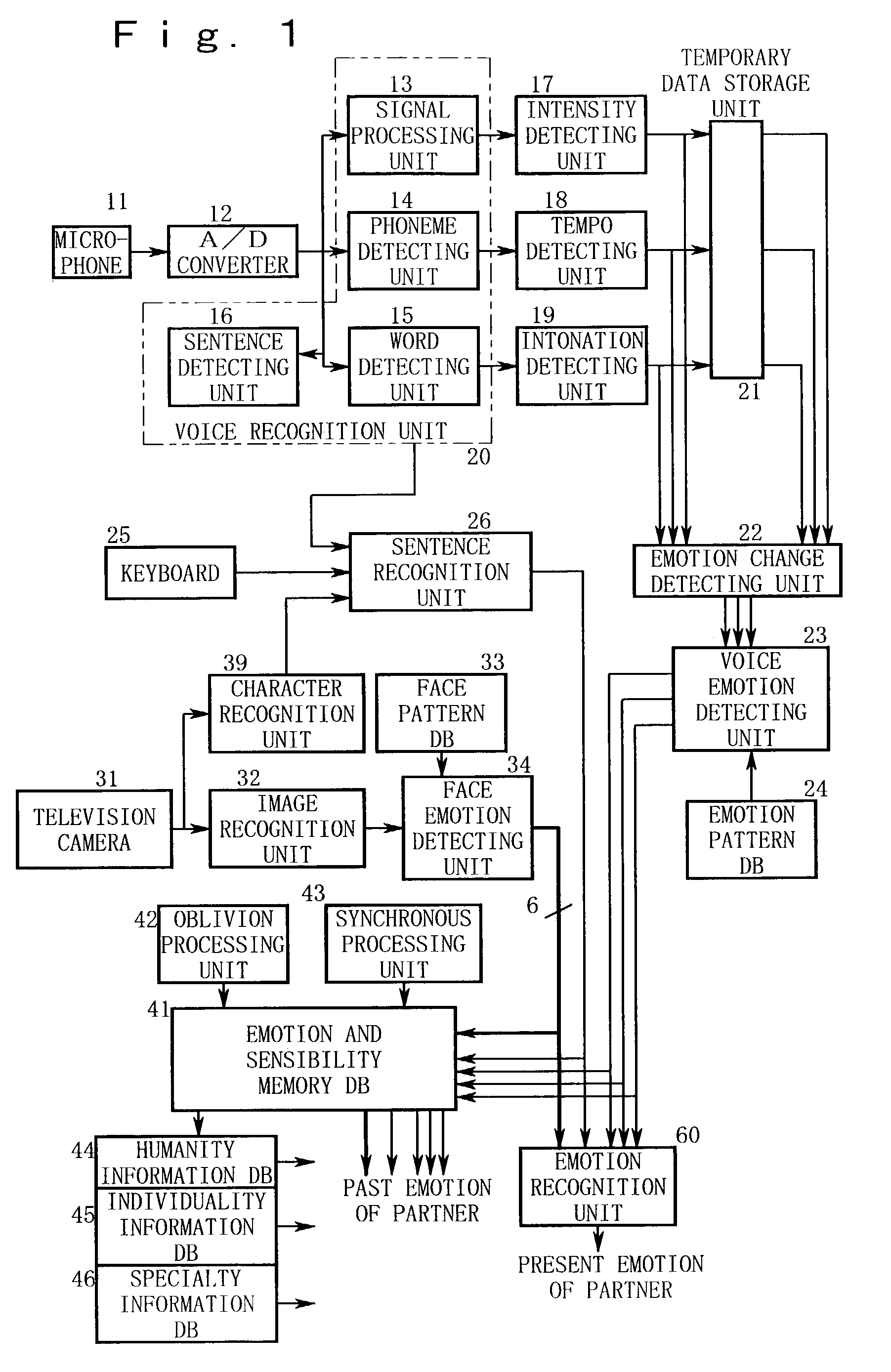

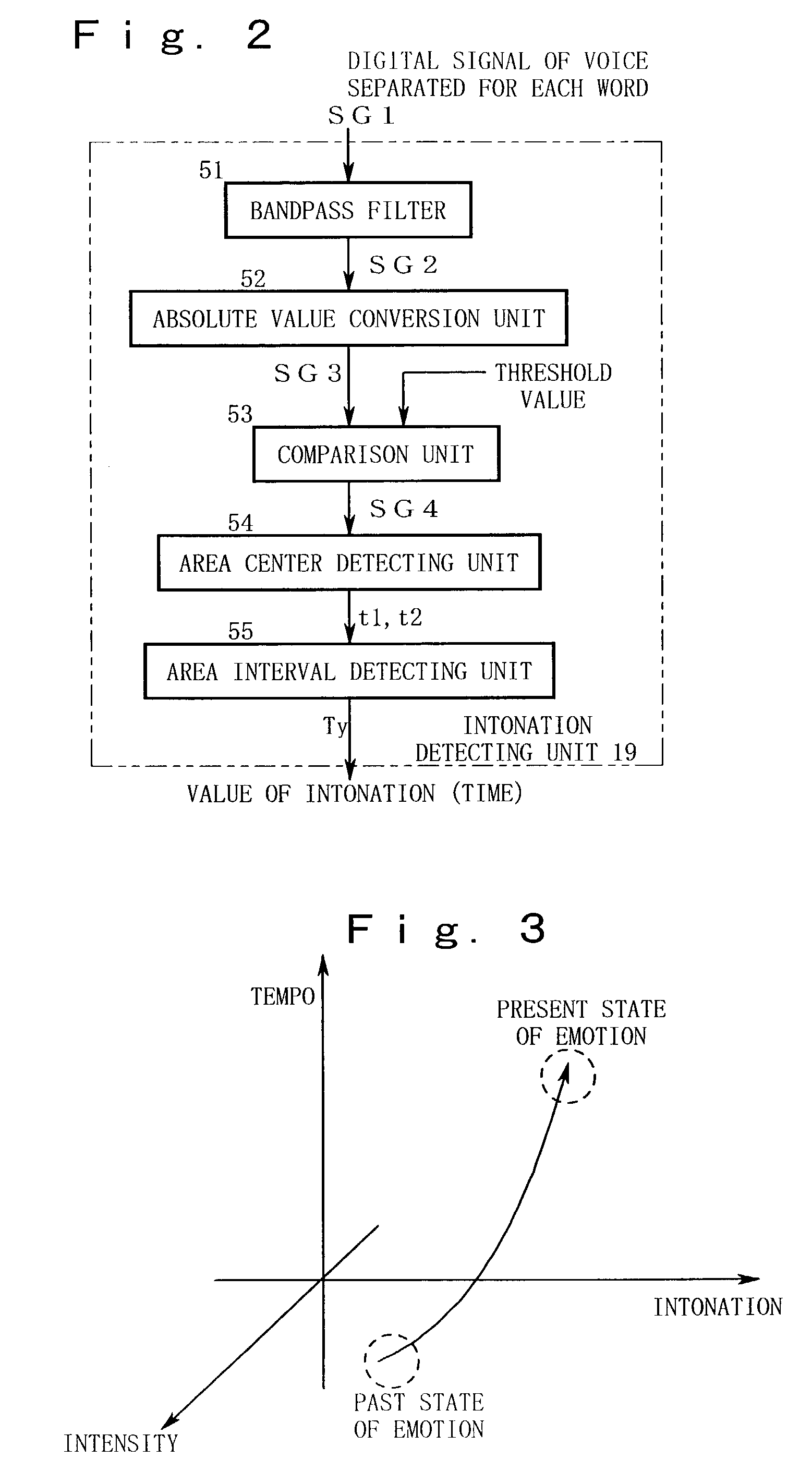

Emotion recognizing method, sensibility creating method, device, and software

Status: Active | Publication: US7340393B2 | Tags: Reduce system capacity, Enhanced information, Indoor games, Digital computer details, Pattern recognition, Sadness

An object of the invention is to provide an emotion detecting method that can detect human emotion accurately, and a sensibility generating method that can output sensibility similar to a human's. The intensity, tempo and intonation of each word in an input voice signal are detected, and the amount of change in each is computed. Signals expressing the emotional states of anger, sadness and pleasure are then generated from those amounts of change. The partner's emotion or situation information is input, and instinctive motivation information is generated from it. Emotion information including the basic emotion parameters of pleasure, anger and sadness is then generated and controlled based on individuality information.

Owner:AGI INC

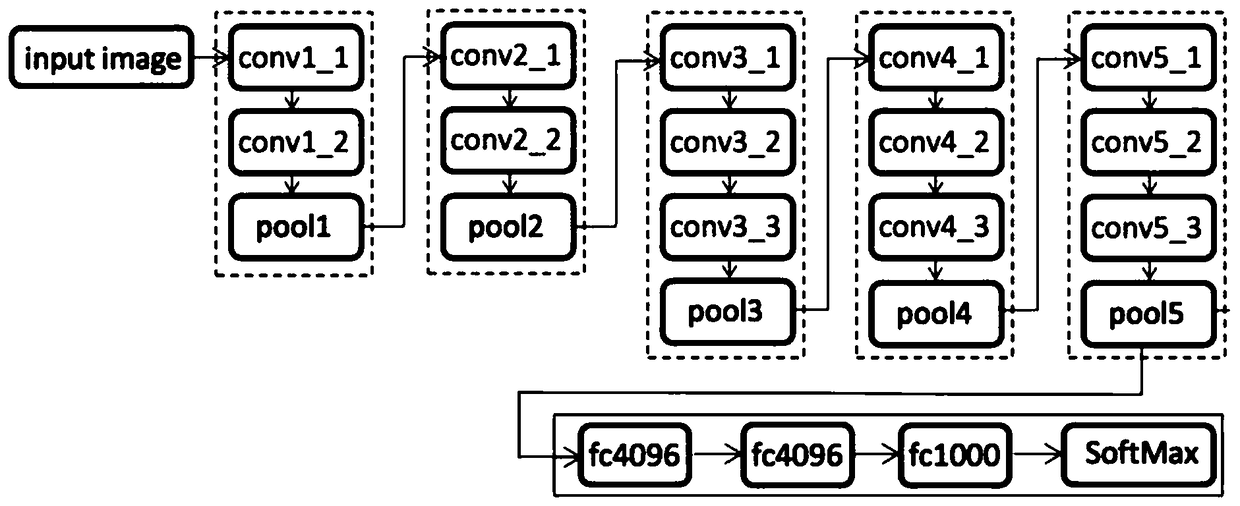

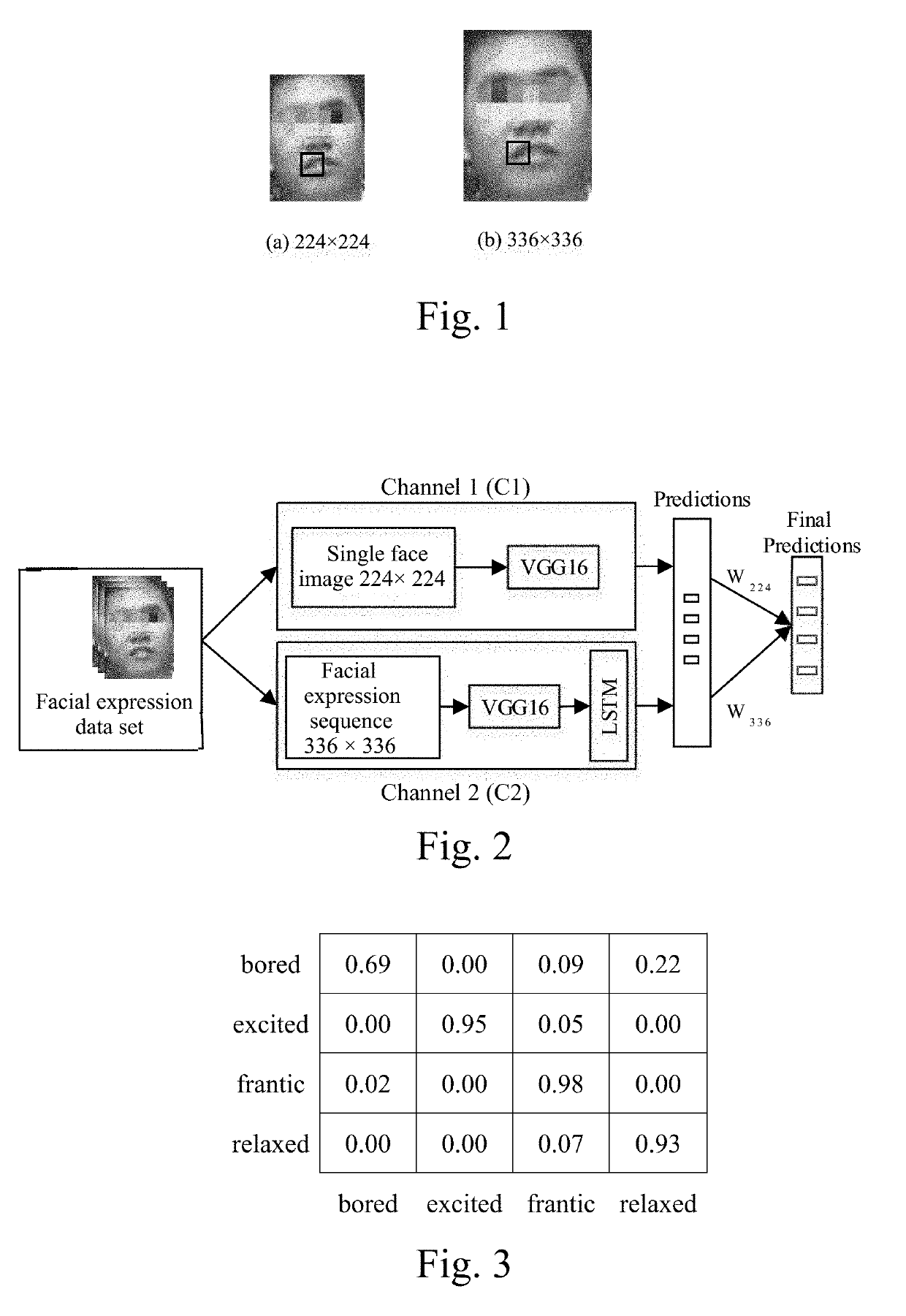

Face emotion recognition method based on dual-stream convolutional neural network

Status: Active | Publication: US20190311188A1 | Tags: Low accuracy, Promote results, Neural architectures, Neural learning methods, Manual extraction, Channel network

A face emotion recognition method based on a dual-stream convolutional neural network applies a multi-scale facial expression recognition network to single-frame face images and to face sequences for learning and classification. The method proceeds as follows:

1. It constructs a multi-scale facial expression recognition network with two channel networks, one at 224×224 resolution and one at 336×336 resolution.
2. It extracts facial expression features at the different resolutions.
3. It trains on a combination of static image features and dynamic features of the expression sequence.
4. It fuses the two channel models.
5. It tests the fused model to obtain the facial expression classification result.

By exploiting deep learning, the method avoids the feature bias and long processing time of manual feature extraction and is more adaptable. It also improves the accuracy and efficiency of expression recognition.

Owner:SICHUAN UNIV
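
One simple way to fuse the two channel models is to average their softmax outputs, as sketched below. The abstract does not say how the 224×224 and 336×336 streams are combined. The equal-weight average, the `weight` parameter, the logits and the four emotion labels are therefore assumptions.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def fuse_streams(logits_224, logits_336, weight=0.5):
    """Late fusion of two channel networks: weighted average of their softmax outputs."""
    p1, p2 = softmax(logits_224), softmax(logits_336)
    return [weight * a + (1 - weight) * b for a, b in zip(p1, p2)]

labels = ["angry", "happy", "neutral", "sad"]  # hypothetical label set
fused = fuse_streams([1.0, 2.5, 0.3, 0.1], [0.8, 1.9, 1.2, 0.2])  # made-up logits
print(labels[fused.index(max(fused))])  # prints "happy"
```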

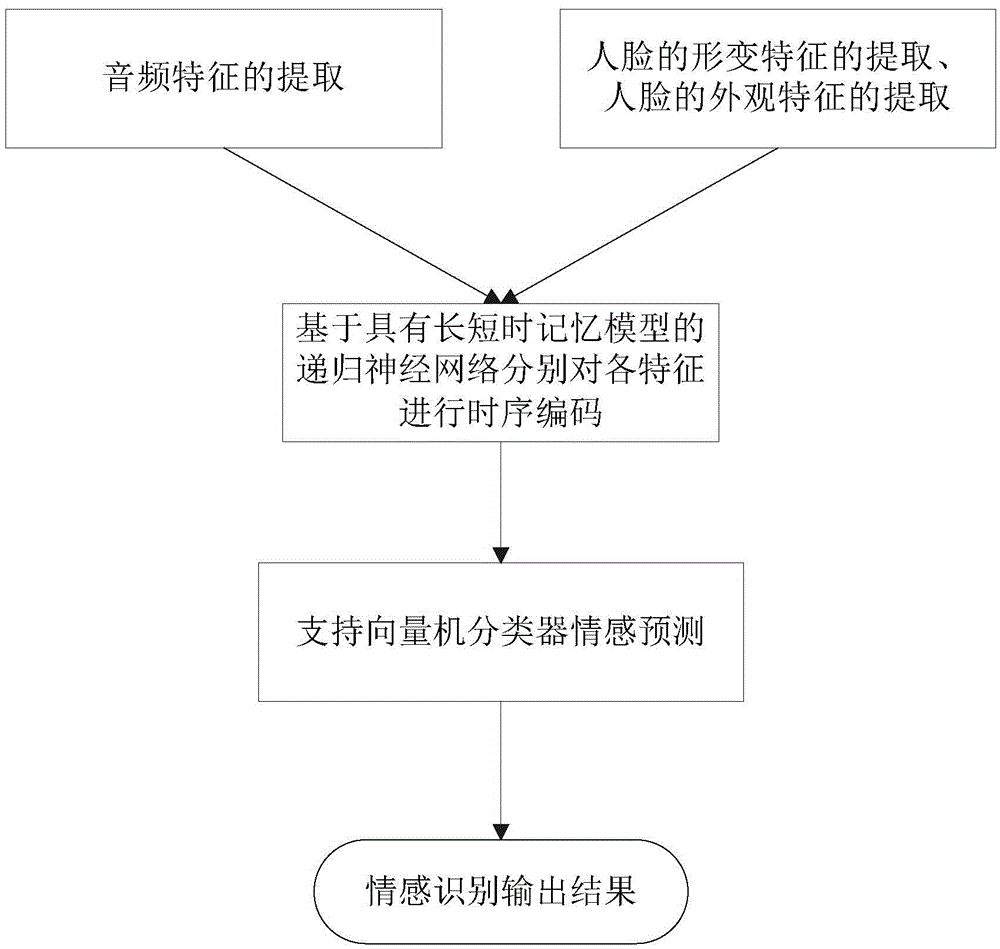

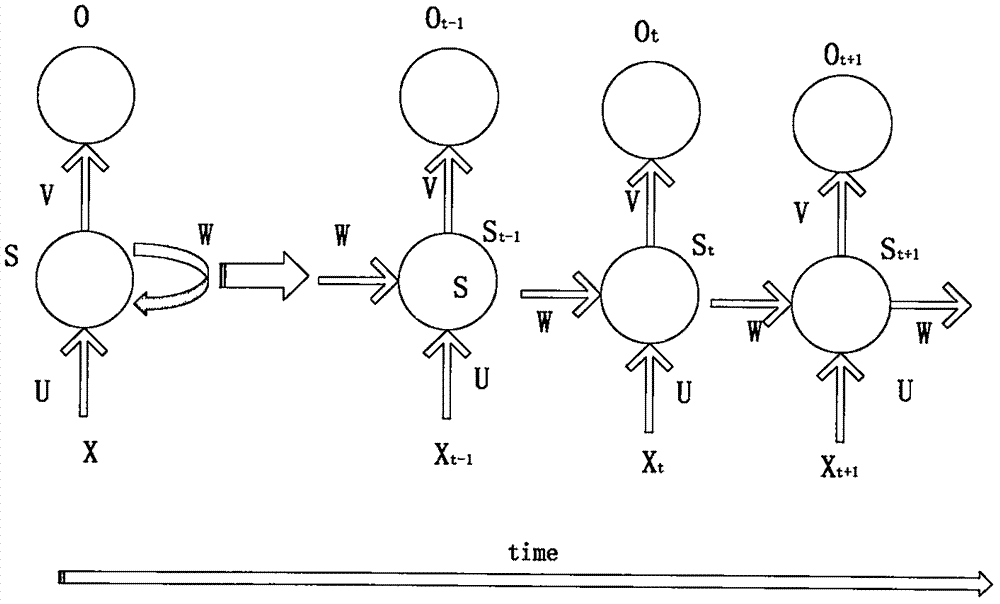

Recurrent neural network-based discrete emotion recognition method

Status: Active | Publication: CN105469065A | Tags: Modeling implementation, Realize precise identification, Character and pattern recognition, Discrete emotions, Support vector machine classifier

The invention provides a recurrent neural network-based discrete emotion recognition method with the following steps:

1. Detect and track faces in the video frames and extract facial key points as the face's deformation features. Then crop the face regions, normalize them to a uniform size and extract the face's appearance features.
2. Window the audio signal of the video, segment it into audio sequence units and extract audio features.
3. Encode each of the three resulting feature sequences with a recurrent neural network using long short-term memory (LSTM) units to obtain fixed-length emotion representation vectors. Concatenate these vectors into the final emotion expression feature.
4. Predict the emotion category from the feature obtained in step 3 with a support vector machine classifier.

The method makes full use of the dynamic information in how emotions are expressed, enabling precise recognition of the emotions of participants in the video.

Owner:北京中科欧科科技有限公司 (Beijing Zhongke Ouke Technology Co., Ltd.)
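
Step 2 of the method above (windowing the audio) is commonly done by cutting the signal into overlapping frames and applying a window function. The sketch below uses a Hamming window. The window type, the 400-sample frame and the 160-sample hop (25 ms and 10 ms at 16 kHz) are common defaults assumed here, not values from the patent.

```python
import math

def frame_signal(samples, frame_len, hop):
    """Split a 1-D signal into overlapping frames and apply a Hamming window to each."""
    window = [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1)) for n in range(frame_len)]
    frames = []
    # Only full frames are kept; a trailing partial frame is dropped.
    for start in range(0, len(samples) - frame_len + 1, hop):
        frames.append([s * w for s, w in zip(samples[start:start + frame_len], window)])
    return frames

# Assumed settings: 16 kHz audio, 25 ms frames (400 samples), 10 ms hop (160 samples).
signal = [1.0] * 1600  # 100 ms of a constant signal
frames = frame_signal(signal, 400, 160)
print(len(frames))  # prints 8
```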

Multi-modal sensor based emotion recognition and emotional interface

Status: Active | Publication: US9031293B2 | Tags: Input/output for user-computer interaction, Speech analysis, Pattern recognition, Subject matter

Features, including one or more acoustic features, visual features, linguistic features, and physical features may be extracted from signals obtained by one or more sensors with a processor. The acoustic, visual, linguistic, and physical features may be analyzed with one or more machine learning algorithms and an emotional state of a user may be extracted from analysis of the features. It is emphasized that this abstract is provided to comply with the rules requiring an abstract that will allow a searcher or other reader to quickly ascertain the subject matter of the technical disclosure. It is submitted with the understanding that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:SONY COMPUTER ENTERTAINMENT INC

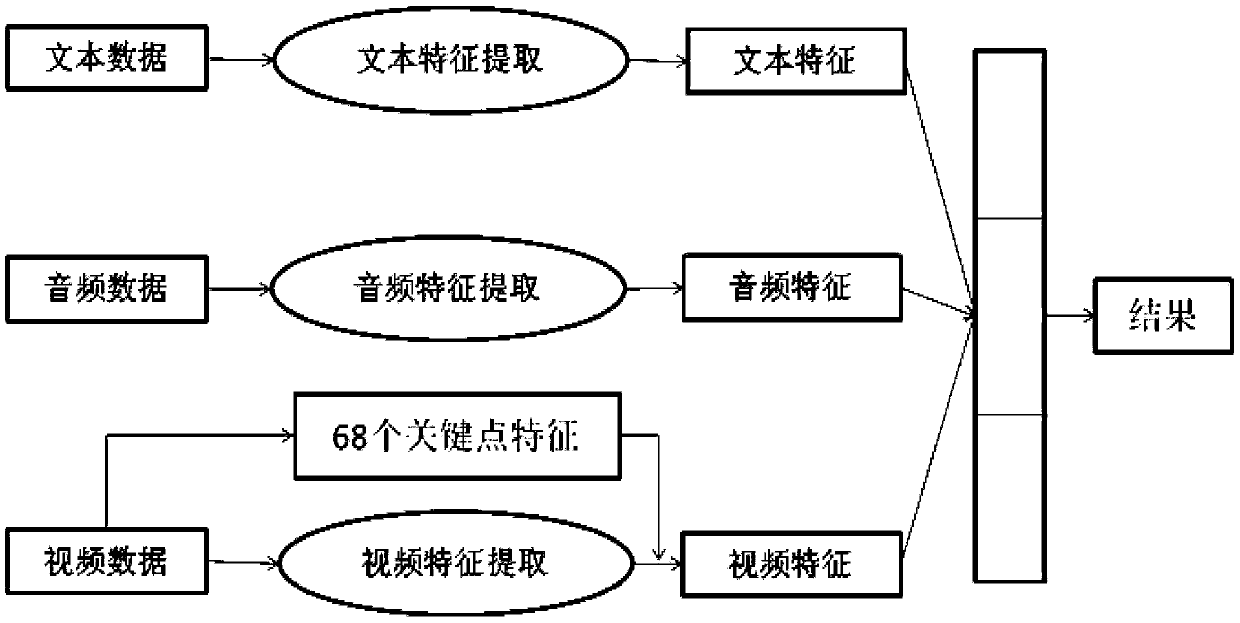

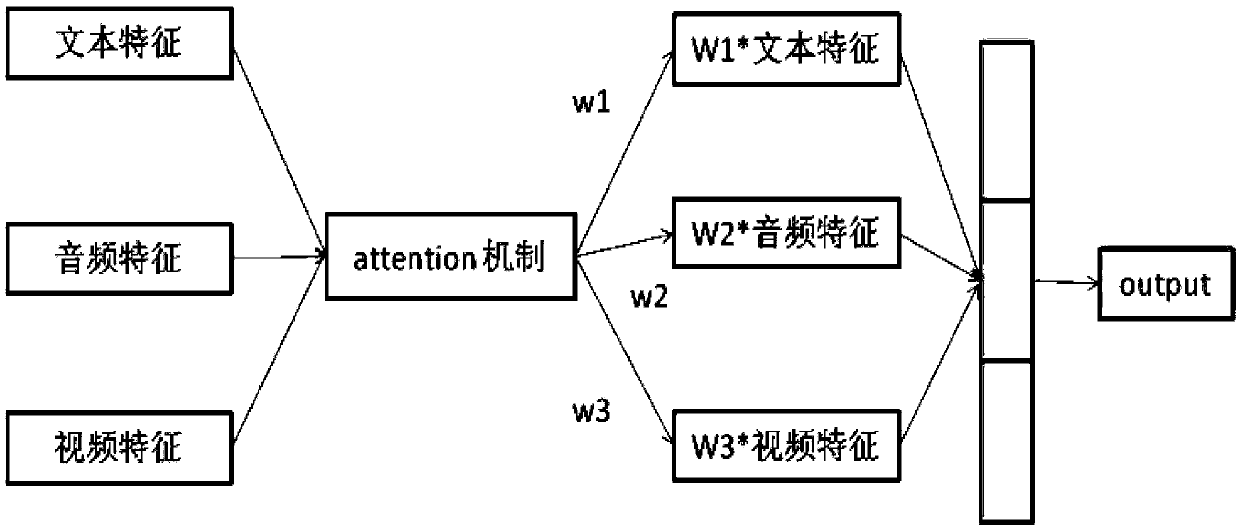

Multi-modal emotion recognition method based on attention feature fusion

Status: Inactive | Publication: CN109614895A | Tags: Maximize Utilization, Fully reflect the degree of influence, Character and pattern recognition, Neural architectures, Speech sound, Computer science

The invention relates to a multimodal emotion recognition method based on attention feature fusion, which performs final emotion recognition mainly from three modalities: text, voice and video. First, features are extracted from each modality: a bidirectional LSTM for text, a convolutional neural network for voice, and a three-dimensional convolutional neural network for video. The features of the three modalities are then fused at the feature layer using attention. Unlike conventional feature-layer fusion, this approach makes full use of the complementary information between modalities by assigning a weight to each modality's features. The weights are learned jointly with the network during training, so the method better fits the overall data distribution and noticeably improves the final recognition performance.

Owner:SHANDONG UNIV
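
The attention-based fusion step can be sketched as a softmax over per-modality relevance scores, followed by a weighted sum of the modality feature vectors. In the patent the weights are learned together with the network. In this sketch the scores are fixed toy inputs, and it assumes that all three feature vectors have already been projected to the same length.

```python
import math

def attention_fuse(features, scores):
    """Attention-weighted feature fusion.

    features: modality -> feature vector (assumed already projected to a common length)
    scores:   modality -> unnormalized relevance score (learned with the network in practice)
    Returns the fused vector and the softmax attention weights.
    """
    m = max(scores.values())
    exps = {k: math.exp(v - m) for k, v in scores.items()}
    total = sum(exps.values())
    weights = {k: v / total for k, v in exps.items()}
    dim = len(next(iter(features.values())))
    fused = [sum(weights[k] * vec[i] for k, vec in features.items()) for i in range(dim)]
    return fused, weights

# Toy inputs standing in for hypothetical text (BiLSTM), voice (CNN) and video (3D CNN) features.
features = {"text": [1.0, 0.0], "voice": [0.0, 1.0], "video": [1.0, 1.0]}
fused, weights = attention_fuse(features, {"text": 2.0, "voice": 0.0, "video": 0.0})
print(max(weights, key=weights.get))  # prints "text"
```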

Remote Chinese language teaching system based on voice affection identification

Status: Inactive | Publication: CN101201980A | Tags: Solve the problem of lack of emotional communication, Electrical appliances, Personalization, Individual study

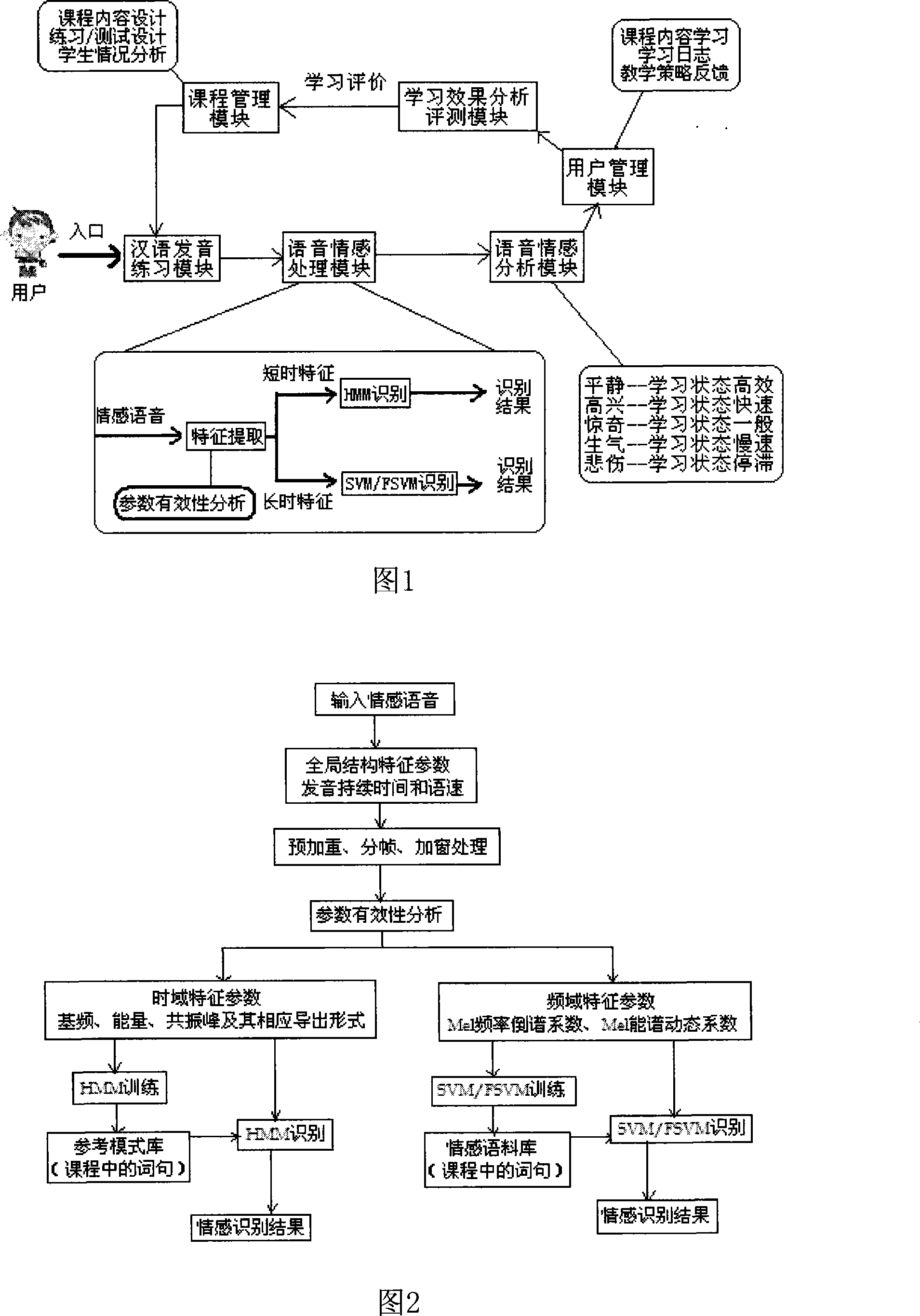

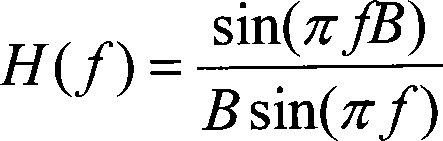

The invention discloses a remote Chinese teaching system based on speech emotion recognition. It consists of six modules: Chinese pronunciation practice, speech emotion processing, speech emotion analysis, user management, learning effect analysis and evaluation, and curriculum management. The speech emotion processing module applies speech signal processing and statistical signal processing theory to the speech users input and recognizes their emotional states. The speech emotion analysis module then analyzes the corresponding mental states. Individualized study based on users' emotions is achieved through dynamic interaction among the learning effect analysis and evaluation module, the curriculum management module and the user management module. The design overturns the traditional one-size-fits-all ("extreme egalitarianism, even distribution") teaching model and truly tailors teaching to the person, the time and the situation. Because teaching plans are designed around users' emotions, they can effectively improve learning efficiency and make learning enjoyable.

Owner:BEIJING JIAOTONG UNIV

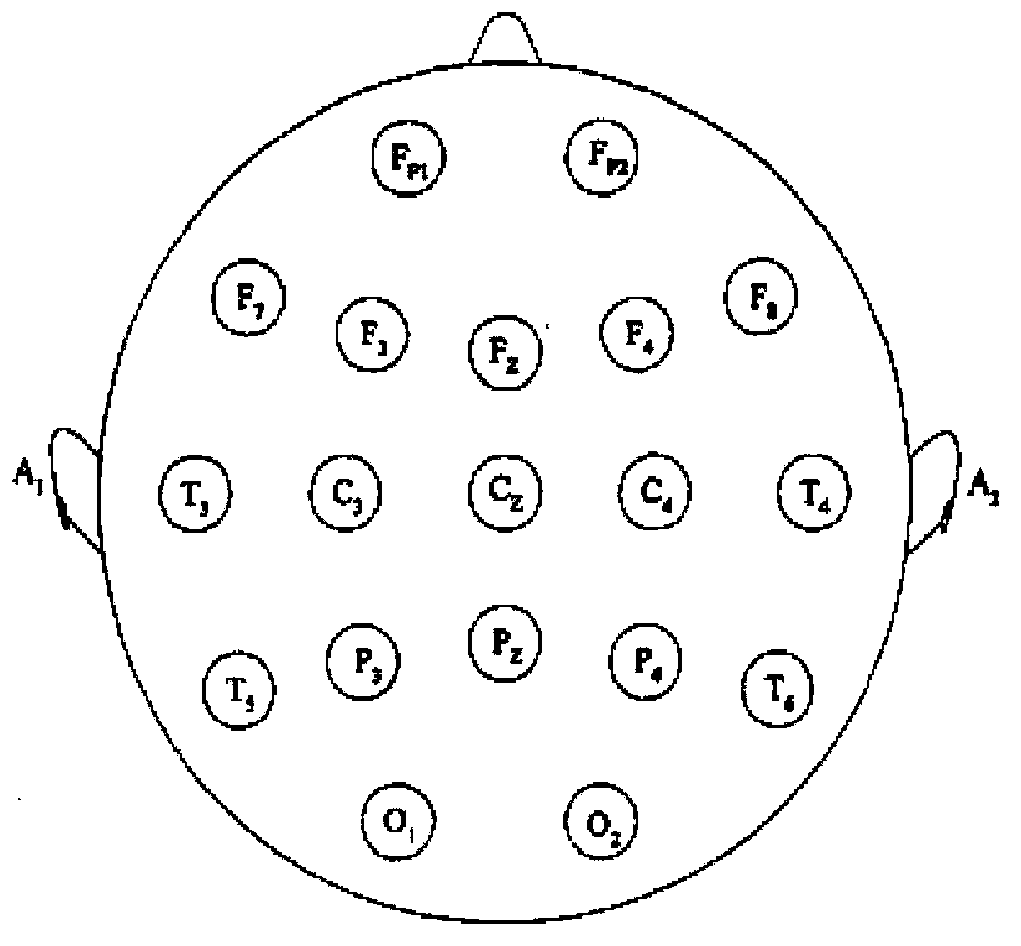

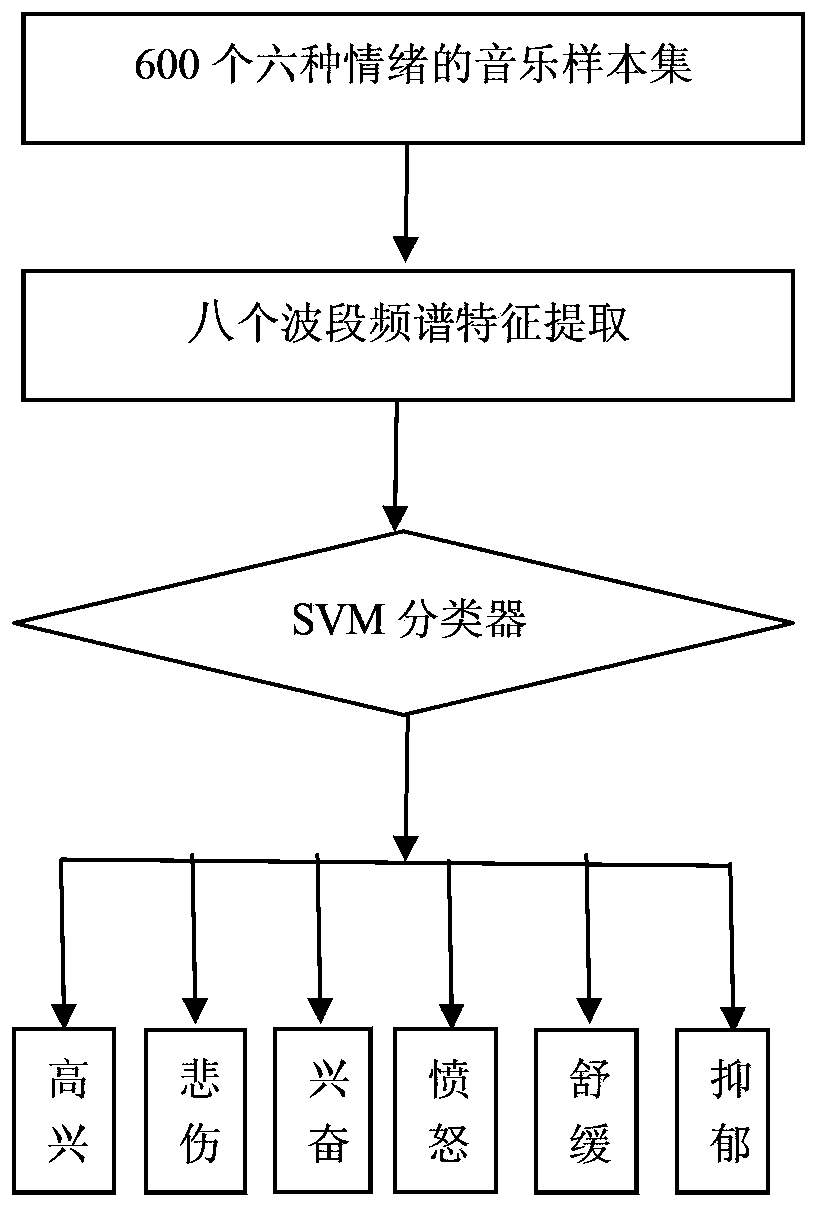

Emotional music recommendation method based on brain-computer interaction

Status: Inactive | Publication: CN103412646A | Tags: Increase flexibility, Input/output for user-computer interaction, Sensors, Sleep treatment, Wavelet decomposition

The invention discloses an emotional music recommendation method based on brain-computer interaction. The user's electroencephalogram (EEG) signals are acquired, and music matching the user's emotions is automatically searched for and recommended. The method works in three stages:

1. The user's EEG signals are collected with an EEG acquisition instrument and decomposed by wavelet decomposition into four bands (alpha, beta, gamma and delta). The energy of each band is taken as a feature, and a trained EEG emotion recognition model, EMSVM, determines the emotion category of the EEG signals.
2. External music signals are evenly divided into eight frequency bands within 20 Hz to 20 kHz, and the energy of each band is taken as a feature. A trained music emotion recognition model, MMSVM, recognizes music emotions, and a music emotion database, MMD, is built.
3. Music with the matching index numbers is recommended to the user according to the emotion category of the EEG signals, yielding an emotion-based music recommendation system.

The method offers a new approach to early-childhood music education, sleep therapy and music search.

Owner:NANJING NORMAL UNIVERSITY
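
The music-side feature extraction (energy in eight bands between 20 Hz and 20 kHz) can be sketched with a naive discrete Fourier transform. Reading "evenly divided" as eight equal-width bands is an assumption, as is the 44.1 kHz sample rate in the usage example. The EEG wavelet step and the EMSVM/MMSVM classifiers are not shown.

```python
import cmath
import math

def band_energies(samples, sample_rate, n_bands=8, f_lo=20.0, f_hi=20000.0):
    """Energy of a signal in n_bands equal-width bands between f_lo and f_hi (naive DFT)."""
    n = len(samples)
    width = (f_hi - f_lo) / n_bands
    energies = [0.0] * n_bands
    for k in range(1, n // 2 + 1):  # positive-frequency bins, skipping DC
        freq = k * sample_rate / n
        if not (f_lo <= freq < f_hi):
            continue
        coeff = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        energies[int((freq - f_lo) // width)] += abs(coeff) ** 2
    return energies

sr = 44100  # assumed sample rate
tone = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(441)]  # 10 ms of a 1 kHz tone
e = band_energies(tone, sr)
print(e.index(max(e)))  # prints 0 (the 20 Hz to 2517.5 Hz band)
```

A naive DFT keeps the sketch dependency-free. A real pipeline would use an FFT.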

Various-information coupling emotion recognition method for human-computer interaction

Status: Active | Publication: CN104200804A | Tags: Reflect emotional tendencies, Strong fault tolerance, Character and pattern recognition, Speech recognition, Feature extraction, Facial expression

The invention discloses a multi-information coupling emotion recognition method for human-computer interaction. It comprises the following steps:

1. Acquire video and audio data of facial expressions.
2. Extract features from the text content to obtain text information features.
3. Extract and couple the prosodic features and overall audio features of the audio data.
4. Couple the text information features, audio information features and expression information features to obtain comprehensive information features.
5. Optimize the comprehensive information features with deep learning, train a classifier on the optimized features, and obtain an emotion recognition model for multi-information coupling emotion recognition.

The method fully combines text, audio and video information and can thereby improve the accuracy of emotional state judgment in human-computer interaction.

Owner:山东心法科技有限公司 (Shandong Xinfa Technology Co., Ltd.)

Recognition method of digital music emotion

Status: Inactive | Publication: CN101599271A | Tags: Improve recognition efficiency, Add nonlinearity, Character and pattern recognition, Speech recognition, Music and emotion, Computer pattern recognition

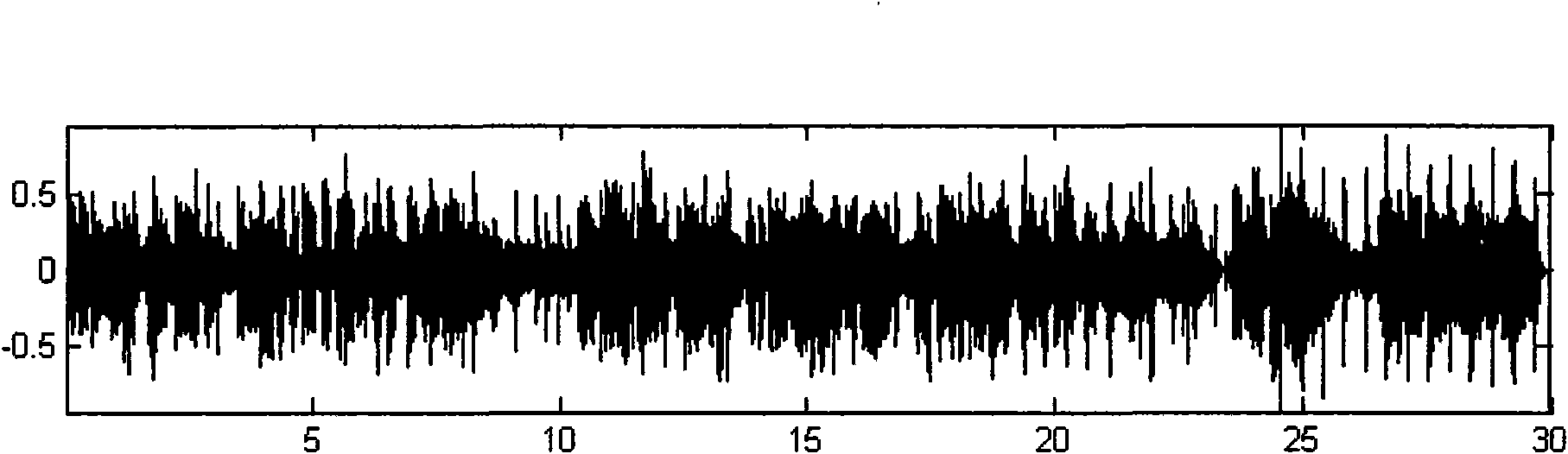

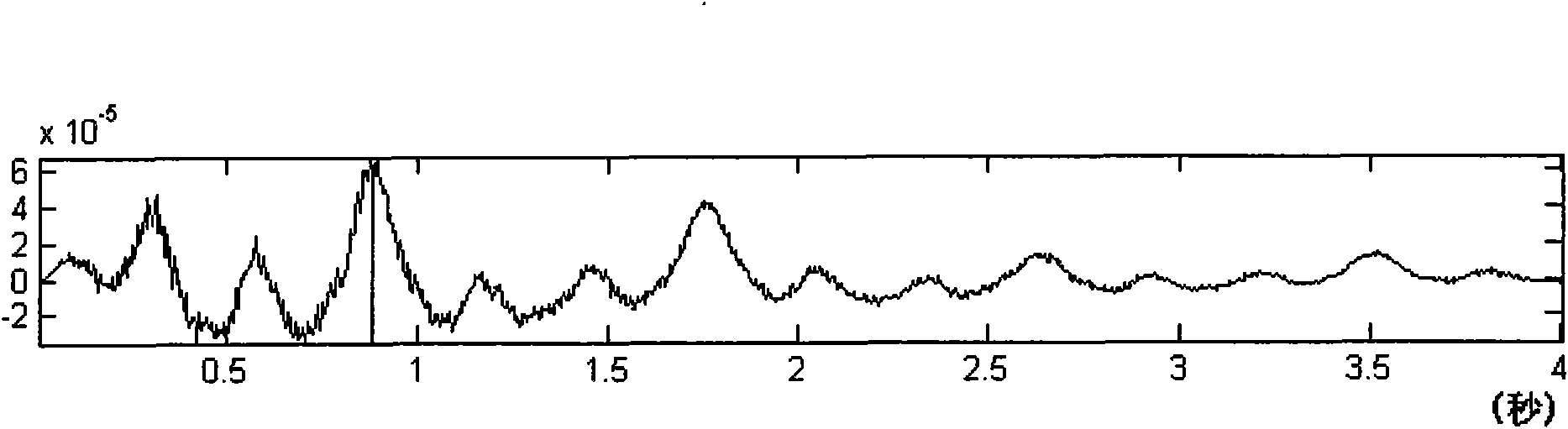

The invention relates to a digital music emotion recognition method in the field of computer pattern recognition. Existing digital music emotion recognition methods cannot handle sample-based digital music formats. This method addresses that limitation by classifying with a multi-class support vector machine and by combining acoustic feature parameters with music-theory feature parameters. The method comprises:

1. preprocessing;
2. feature extraction;
3. training the multi-class support vector machine;
4. recognition.

Music emotion is classified into happiness, excitement, sadness and relaxation, and recognition is performed on sample-based digital music files. The method extracts the acoustic features common in speech recognition, and it also extracts a series of music-theory features based on the theoretical characteristics of music. Because classification uses a support vector machine, learning is fast, classification precision is high and recognition efficiency is improved.

Owner:HUAZHONG UNIV OF SCI & TECH

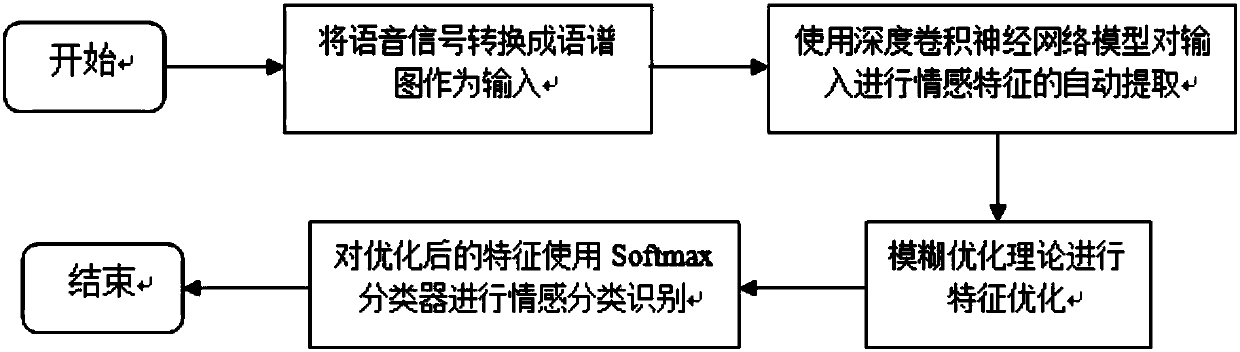

Speech emotion recognition method

Status: Inactive | Publication: CN106847309A | Tags: Improve robustness, Increase salience, Speech recognition, Fuzzy membership function, Speech sound

The invention discloses a speech emotion recognition method with the following steps:

1. The speech signal is converted into a spectrogram that serves as the initial input.
2. A deep convolutional neural network is trained to extract emotion features automatically.
3. A stacked autoencoder is trained for each emotion class, and all the stacked autoencoders are combined to construct the membership functions of an emotion fuzzy set automatically.
4. The features from step 2 are optimized using the fuzzy membership functions built in step 3.
5. A Softmax classifier performs emotion classification.

The method accounts for the abstract, fuzzy nature of speech emotion information. Fuzzy optimization is applied selectively to the extracted emotion features to make them more salient. The fuzzy membership functions are constructed automatically through layer-by-layer deep network training, which solves the difficulty of choosing appropriate membership functions in fuzzy theory.

Owner:SOUTH CHINA UNIV OF TECH

Multi-mode interaction method and system for multi-mode virtual robot

Status: Active | Publication: CN107340859A | Tags: High viscosity, Improve fluency, Input/output for user-computer interaction, Artificial life, Animation, Virtual robot

The invention provides a multimodal interaction method for a multimodal virtual robot. An image of the virtual robot is displayed in a preset display region of a target hardware device, and the constructed virtual robot has preset role attributes. The method comprises the following steps:

1. Obtain a single-modal and/or multimodal interaction instruction from the user.
2. Call the interfaces for semantic comprehension, emotion recognition, visual and cognitive capabilities to generate response data in every modality. The response data is related to the preset role attributes.
3. Fuse the response data of all modalities into multimodal output data.
4. Output the multimodal output data through the image of the virtual robot.

Conversing through a virtual robot has two benefits. First, a character with a visual appearance can be displayed on the human-machine interface using high-polygon 3D modeling. Second, the virtual character's animation can naturally synchronize voice with mouth shapes and expressions with body movements.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

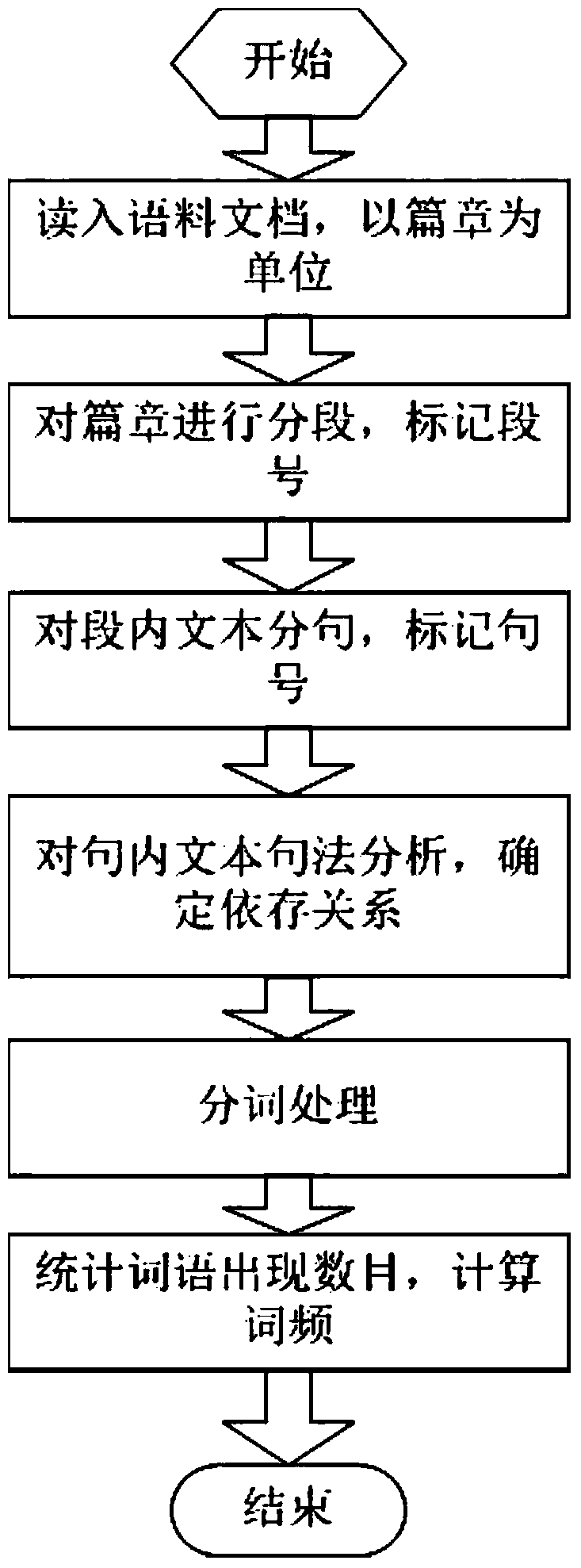

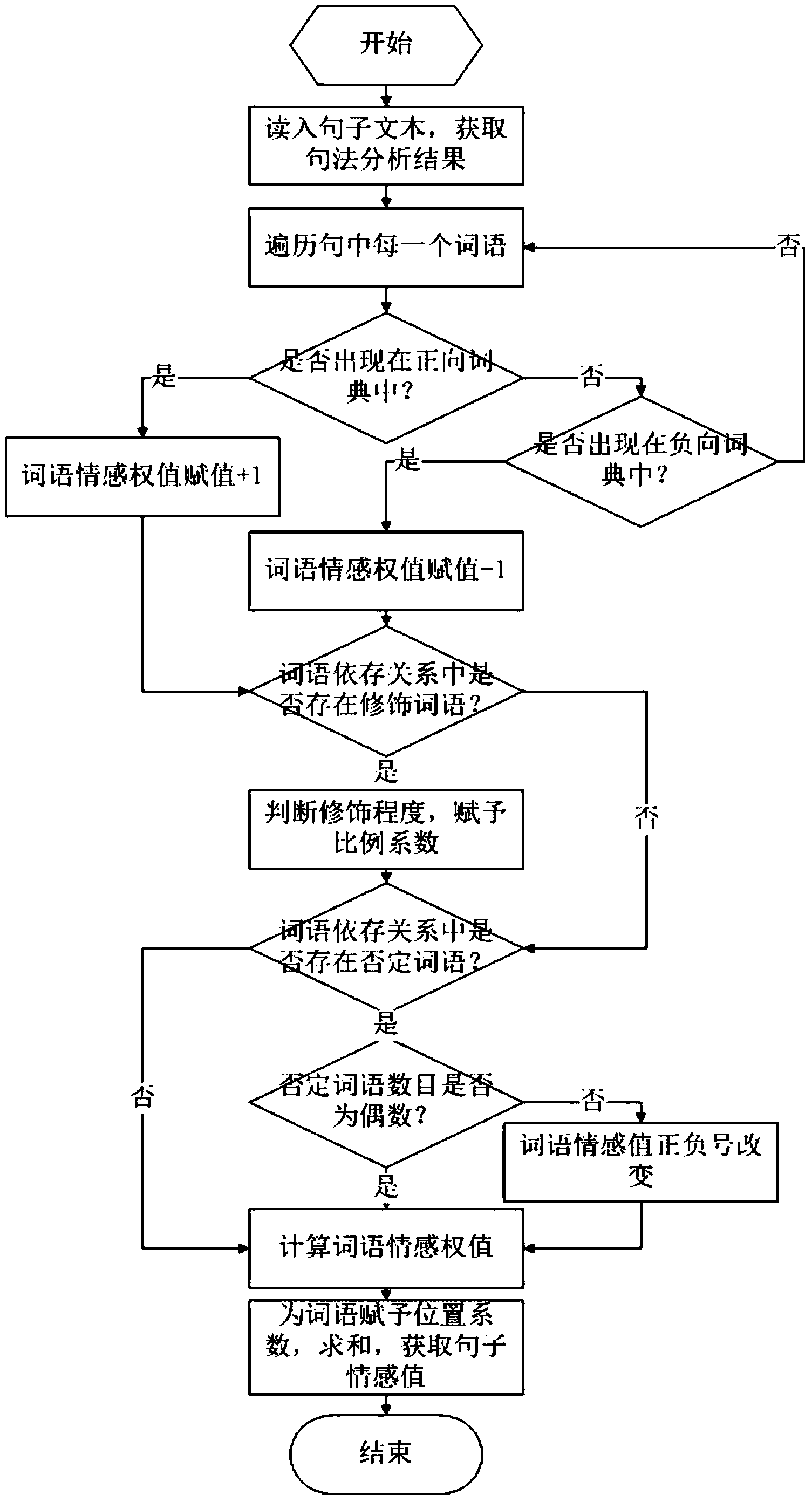

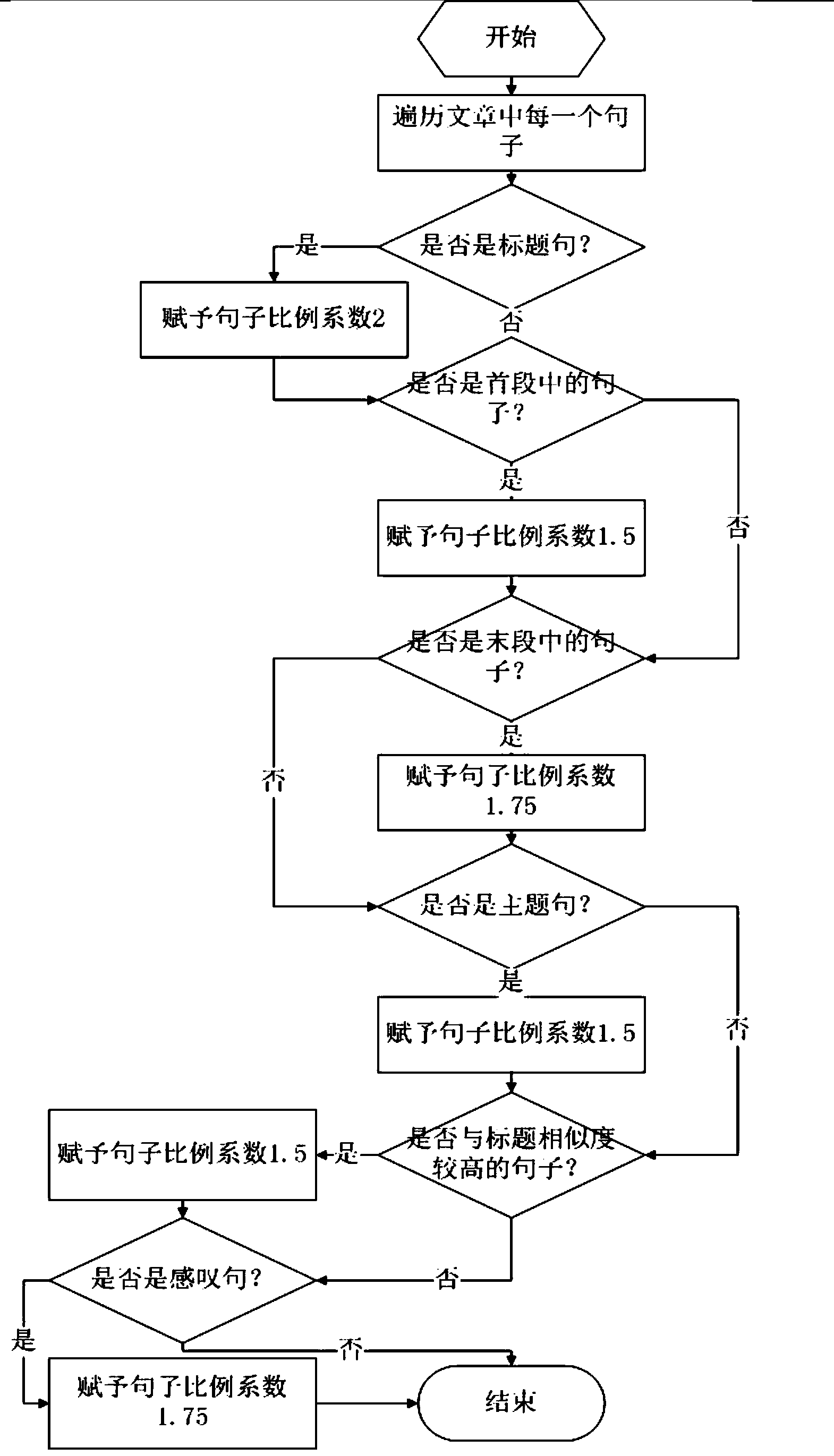

Chinese text emotion recognition method

Status: Inactive | Publication: CN103678278A | Tags: Improve accuracy, Special data processing applications, Lexical frequency, Emotion recognition

The invention discloses a Chinese text emotion recognition method comprising the following steps:

1. Build a commendatory/derogatory (positive/negative) term dictionary, a degree-term dictionary and a negation-term dictionary.
2. Segment the sentences of the Chinese text to be processed into terms, and obtain the terms' dependency relations and term frequencies.
3. Select subject terms by term frequency, and mark the sentences containing them as subject sentences.
4. For each term in a subject sentence:
   - Check whether the term exists in the commendatory/derogatory dictionary to determine its initial emotion value.
   - Identify the degree terms and negation terms that modify it from the dependency relations.
   - Set the term's weight from the modifying degree terms' values in the degree-term dictionary, and its polarity from the number of negation terms, to obtain the term's emotion value.

   Then sum the emotion values of all terms in the subject sentence to obtain the sentence's emotion value.
5. Sum the emotion values of all sentences in the text to obtain the text's emotional state.

The method greatly improves the accuracy of text emotion recognition.

Owner:COMP NETWORK INFORMATION CENT CHINESE ACADEMY OF SCI
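
A simplified sketch of steps 4 and 5 of the Chinese text method follows. It assumes the text is already segmented into terms. Instead of using a dependency parser, it treats the degree and negation terms immediately preceding a sentiment term as that term's modifiers. The subject-sentence selection of step 3 is omitted, and the tiny dictionaries and their weights are hypothetical.

```python
# Hypothetical miniature dictionaries (English glosses in comments).
SENTIMENT = {"好": 1.0, "满意": 1.0, "差": -1.0}  # good, satisfied, bad
DEGREE = {"很": 2.0, "有点": 0.5}                  # very, a little
NEGATION = {"不", "没"}                             # not, have-not

def sentence_score(tokens):
    """Sum of term scores. Degree words scale a sentiment term; an odd number of negations flips it."""
    total = 0.0
    for i, tok in enumerate(tokens):
        if tok not in SENTIMENT:
            continue
        weight, negations = 1.0, 0
        j = i - 1
        # Simplification: scan backwards over adjacent modifier terms only.
        while j >= 0 and (tokens[j] in DEGREE or tokens[j] in NEGATION):
            if tokens[j] in DEGREE:
                weight *= DEGREE[tokens[j]]
            else:
                negations += 1
            j -= 1
        sign = -1 if negations % 2 else 1
        total += sign * weight * SENTIMENT[tok]
    return total

def text_score(sentences):
    """Step 5: the text's emotion value is the sum of its sentences' values."""
    return sum(sentence_score(s) for s in sentences)

# "服务 很 好" = "service very good"; "价格 不 满意" = "price not satisfied".
text = [["服务", "很", "好"], ["价格", "不", "满意"]]
print(text_score(text))  # prints 1.0
```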

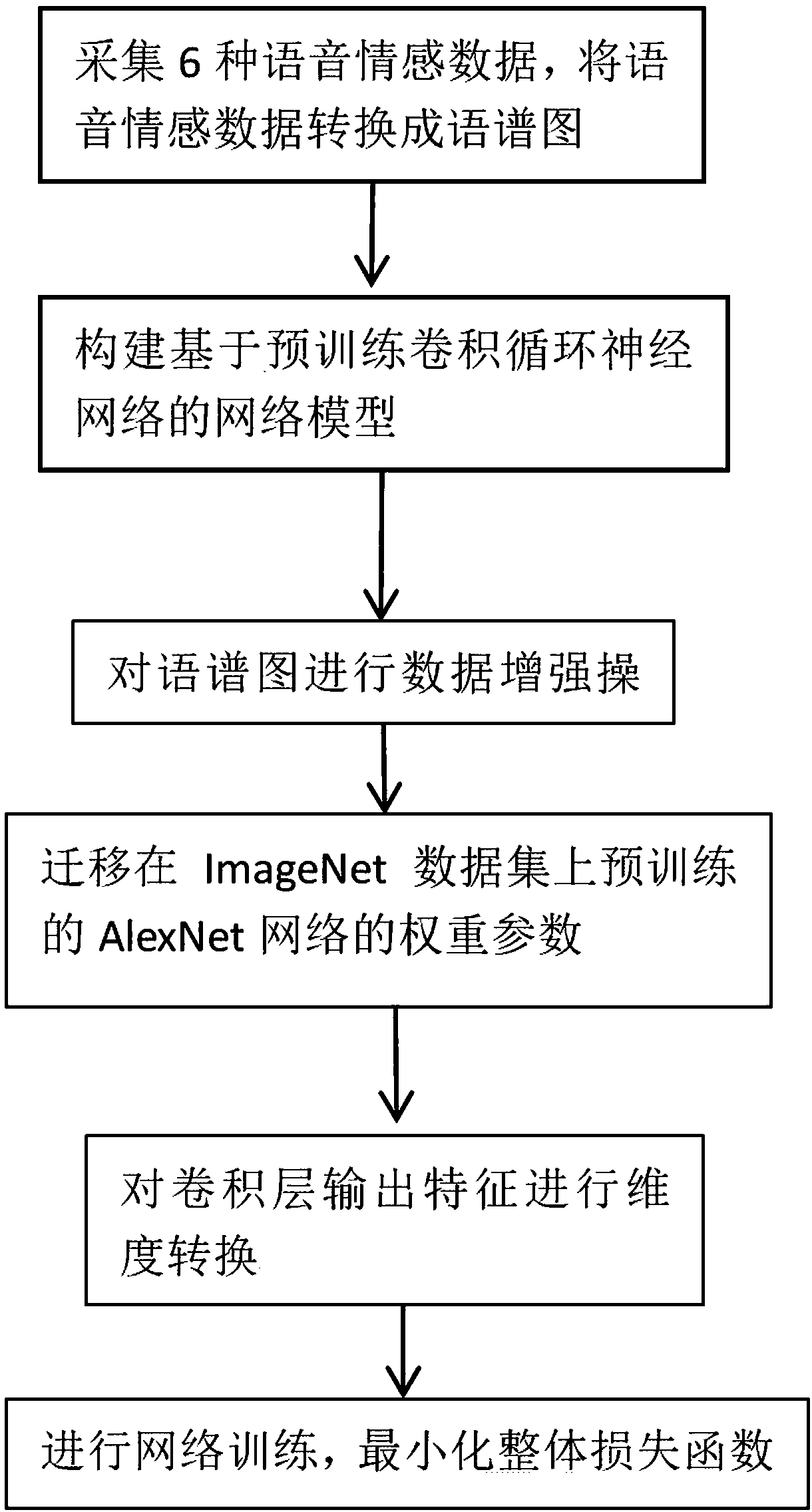

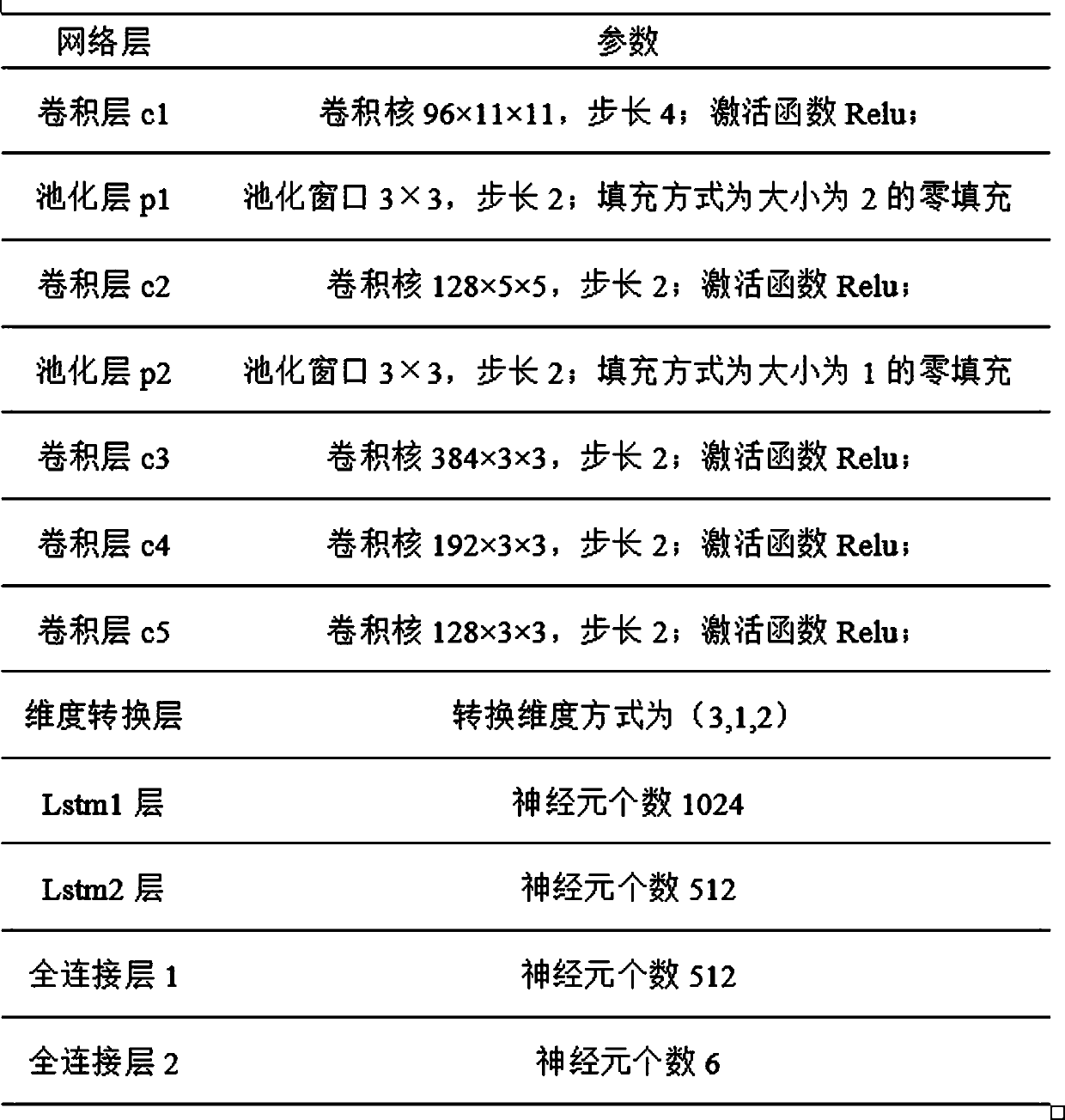

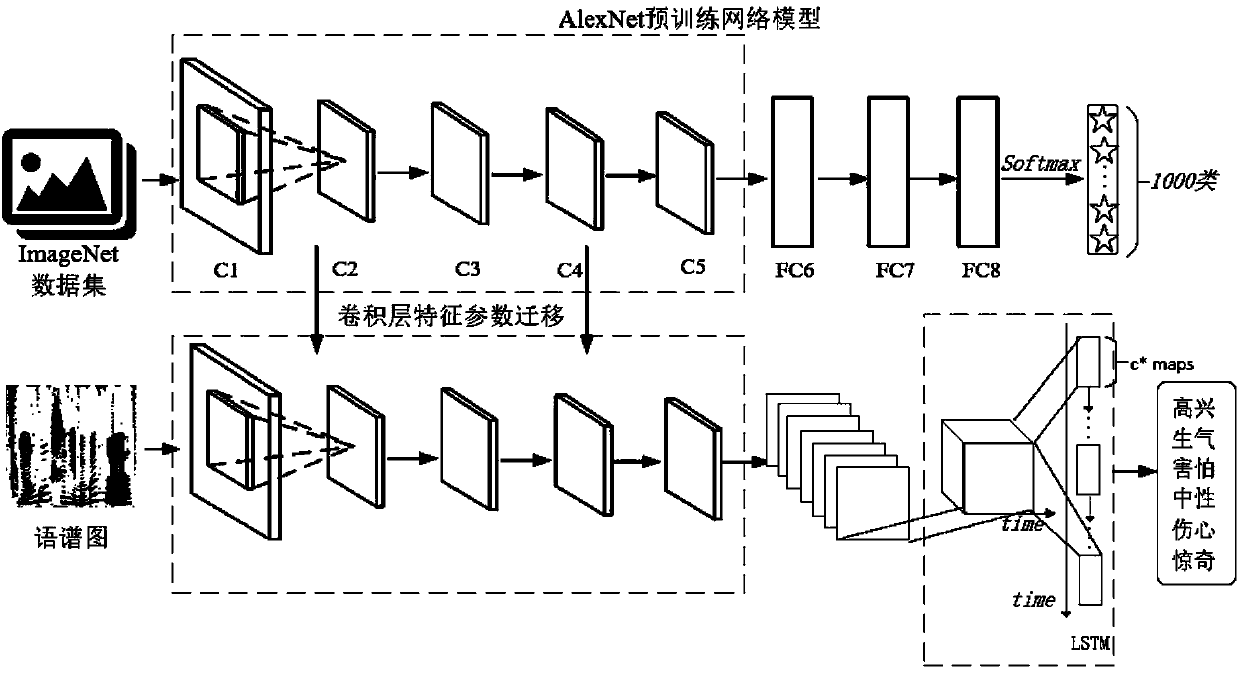

Speech emotion recognition method based on parameter migration and spectrogram

Status: Active | Publication: CN108597539A | Tags: Increase training speed, Improve recognition accuracy, Character and pattern recognition, Speech recognition, Network model, Anger

The invention discloses a speech emotion recognition method based on parameter transfer (parameter migration) and spectrograms. It comprises the following steps:

1. Collect speech emotion data from the Chinese emotion database of the Institute of Automation, Chinese Academy of Sciences, and preprocess it. The data covers six emotions: anger, fear, happiness, neutral, sadness and surprise.
2. Construct a network model based on a pre-trained convolutional recurrent neural network.
3. Perform parameter transfer on the network model from step 2 and train it.

The method extracts emotion features from the spectrogram in both the time and frequency domains, which improves recognition accuracy. By benefiting from pre-training, it also speeds up network training.

Owner:GUILIN UNIV OF ELECTRONIC TECH

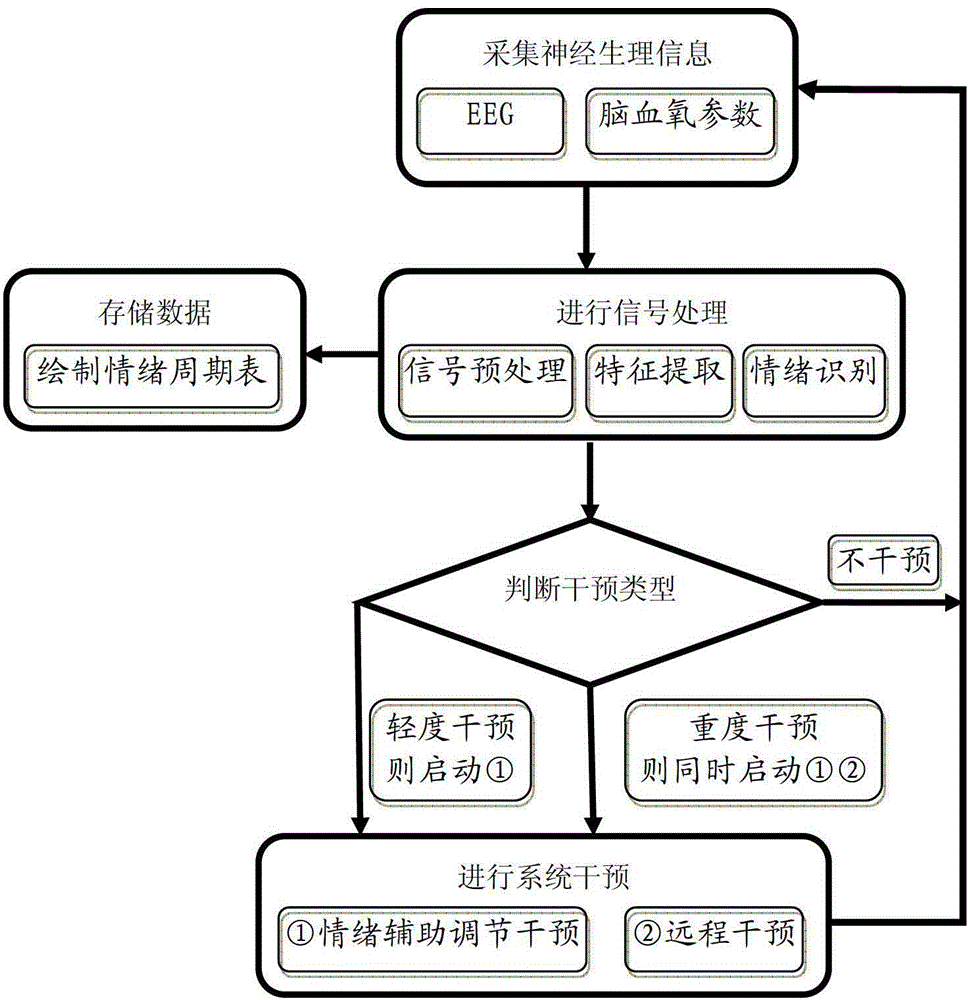

Emotion monitoring method for special people

Status: Inactive | Publication: CN102715902A | Tags: Improve emotional state, Avoid mental deterioration, Diagnostic recording/measuring, Sensors, Crowds, Oxygen

An emotion monitoring method for special populations includes the following phases:

- **Signal acquisition.** Neurophysiological information is acquired, namely electroencephalogram (EEG) signals and cerebral blood oxygen signals.
- **Signal processing.** This includes preprocessing, feature extraction and emotion recognition.
- **Intervention decision.** The intervention type is determined from the recognized emotion. If the user is calm or in a positive mood, the method returns to the start phase and continues monitoring; otherwise it proceeds to the next phase.
- **Systematic intervention.** Depending on the emotion, the method chooses between system-assisted emotion regulation and remote intervention.
- **Data storage.** The data is stored, an emotion chart is drawn for a given period, and the user's mood fluctuations are recorded.

Portable detection of EEG and cerebral blood oxygen signals lets the method recognize the user's emotional state accurately. Users themselves and the people around them can then notice unhealthy emotions in time and take humane soothing measures. This can improve users' emotional states and prevent their mental state from deteriorating. It can also relieve the caregiving burden and psychological stress on the families of patients with emotional disorders.
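The monitoring loop can be sketched as a small state machine. The emotion labels and the intervention table are hypothetical. So is the `classify` callable, which stands in for preprocessing, feature extraction and recognition on EEG and blood-oxygen signals. The sketch shows only the control flow: continue when the user is calm or positive, otherwise pick an intervention, and log every result for the periodic chart.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical label set and intervention table (not specified in the patent).
CALM_OR_POSITIVE = {"calm", "happy"}
INTERVENTIONS = {
    "sad": "system-assisted regulation (soothing audio)",
    "anxious": "system-assisted regulation (guided breathing)",
    "angry": "remote intervention (notify caregiver)",
}
DEFAULT_INTERVENTION = "remote intervention (notify caregiver)"


@dataclass
class EmotionMonitor:
    # Stands in for preprocessing, feature extraction and emotion recognition.
    classify: Callable[[dict], str]
    log: List[Tuple[int, str, str]] = field(default_factory=list)

    def step(self, t: int, sample: dict) -> str:
        """Process one sample taken at time t and return the chosen action."""
        emotion = self.classify(sample)
        if emotion in CALM_OR_POSITIVE:
            action = "continue monitoring"  # return to the start phase
        else:
            action = INTERVENTIONS.get(emotion, DEFAULT_INTERVENTION)
        self.log.append((t, emotion, action))  # stored for the periodic emotion chart
        return action

    def summary(self, start: int, end: int) -> Counter:
        """Emotion counts for start <= t <= end, e.g. to draw the periodic chart."""
        return Counter(e for t, e, _ in self.log if start <= t <= end)
```

In a real system `classify` would wrap a trained model. Here, a lambda that reads a label from the sample is enough to exercise the control flow.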

Owner:TIANJIN UNIV

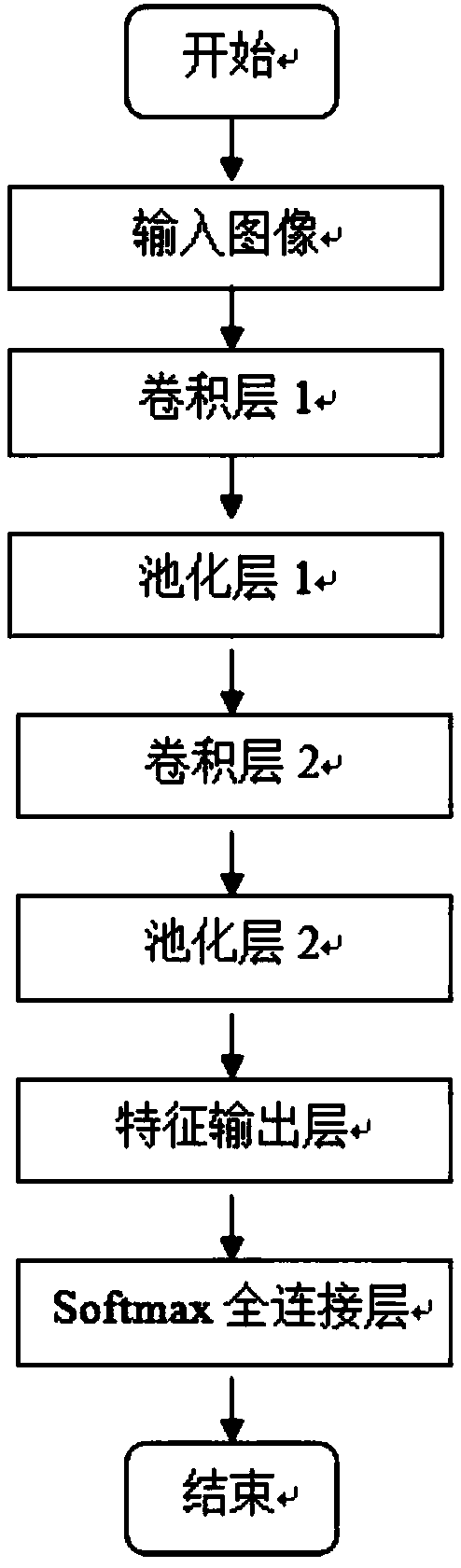

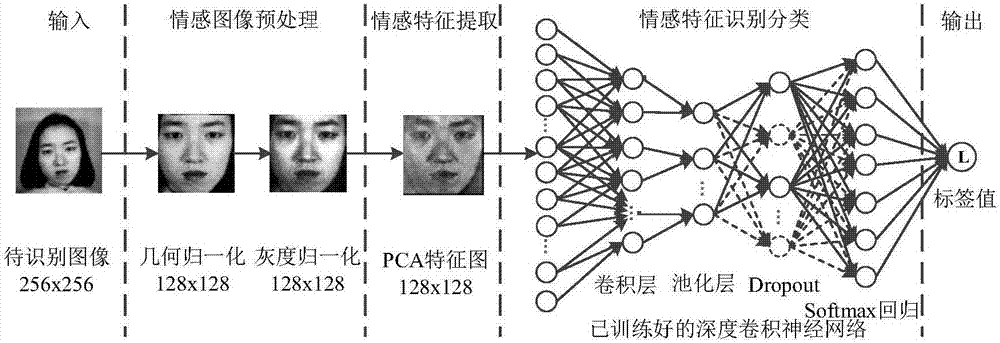

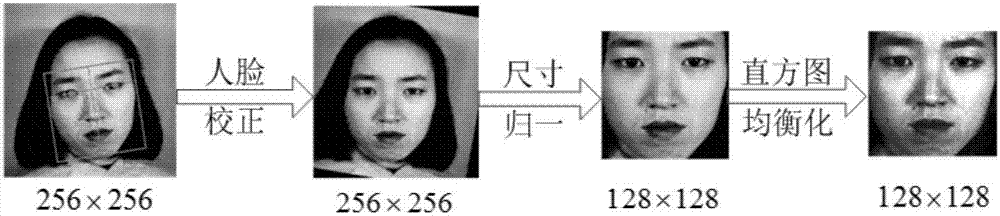

Facial emotion recognition method based on deep sparse convolutional neural network

Inactive | CN107506722A | Simple structure | Improve generalization ability | Physical realisation | Acquiring/recognising facial features | Local optimum | Feature extraction

The invention provides a facial emotion recognition method based on a deep sparse convolutional neural network. The method has three steps: emotion image preprocessing, emotion feature extraction, and emotion feature recognition and classification. The network's weights are optimized with the Nesterov accelerated gradient descent (NAGD) algorithm to optimize the network structure, which improves the generalization of the facial emotion recognition algorithm. NAGD evaluates the gradient at a look-ahead point, so it anticipates the next update and keeps steps from being too large or too small. The patent states that this also improves the algorithm's responsiveness and lets it reach a better local optimum.
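The Nesterov look-ahead can be illustrated on a toy quadratic instead of a CNN. This sketch uses the common momentum formulation, in which the gradient is evaluated at the look-ahead point w + momentum * v before the velocity is updated. The defaults for `lr`, `momentum` and `steps` are illustrative, not the patent's settings.

```python
def nesterov_minimize(grad, w0, lr=0.1, momentum=0.9, steps=100):
    """Minimize a function, given its gradient, using Nesterov accelerated gradient."""
    w = list(w0)
    v = [0.0] * len(w)
    for _ in range(steps):
        # Evaluate the gradient at the look-ahead point w + momentum * v.
        lookahead = [wi + momentum * vi for wi, vi in zip(w, v)]
        g = grad(lookahead)
        # Update the velocity with the look-ahead gradient, then take the step.
        v = [momentum * vi - lr * gi for vi, gi in zip(v, g)]
        w = [wi + vi for wi, vi in zip(w, v)]
    return w
```

For example, minimizing f(w) = (w0 - 3)^2 + 2(w1 + 1)^2 from [0, 0] with `grad=lambda w: [2 * (w[0] - 3), 4 * (w[1] + 1)]` and 200 steps converges to approximately [3, -1]. The only difference from classical momentum is where the gradient is evaluated.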

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

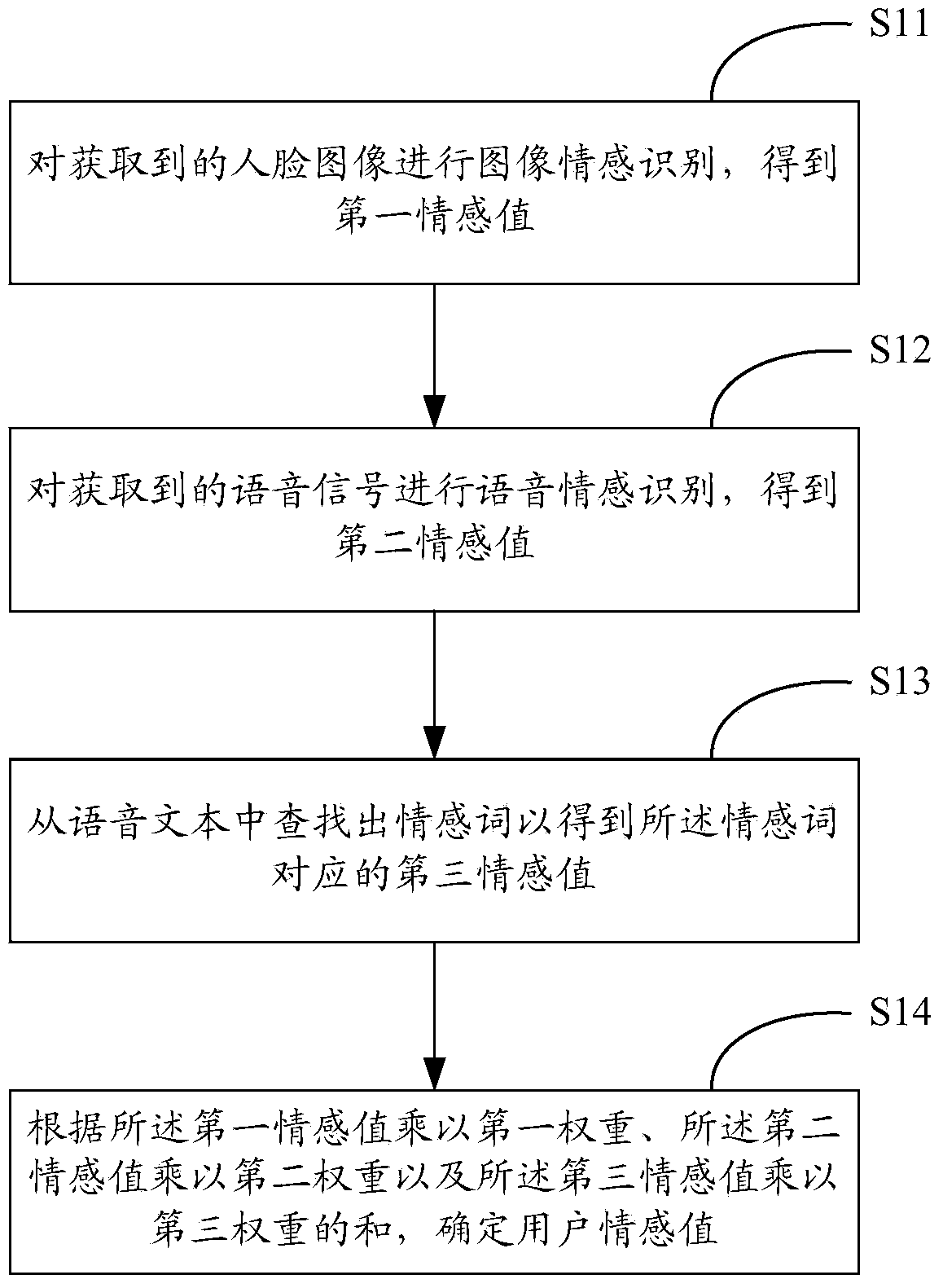

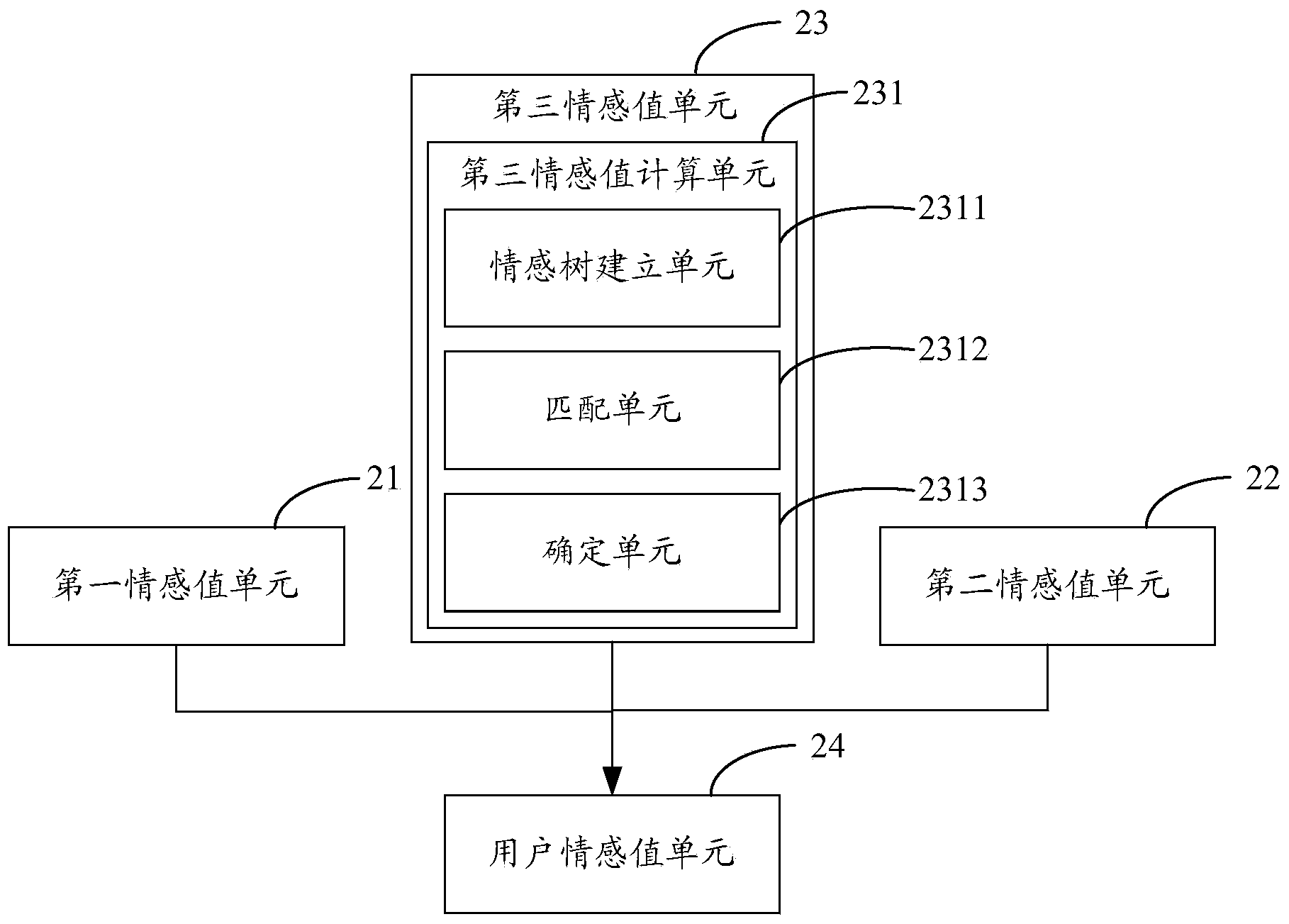

Emotion recognition method and device

The invention is applicable to the field of communications and provides an emotion recognition method and device. A first emotion value is obtained from acquired emotion features, and a second emotion value is obtained from acquired voice signals. At the same time, emotion words are located in the text transcribed from the voice by speech recognition, and a third emotion value corresponding to those words is obtained. The user's emotion value is then the weighted sum of the three: the first emotion value times a first weight, plus the second times a second weight, plus the third times a third weight. From this emotion value, a mobile terminal can judge the user's current mood and execute a preset operation.
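The three-way weighted fusion can be sketched as follows. The emotion-word table, the weights, the threshold and the "preset operations" are hypothetical placeholders. The patent specifies only that the user's emotion value is the weighted sum of the three emotion values. Taking the third value as the mean of the matched emotion words is also an assumption.

```python
# Hypothetical emotion-word lexicon for the transcribed text.
EMOTION_WORDS = {"happy": 1.0, "great": 0.8, "tired": -0.5, "angry": -1.0}


def word_emotion_value(transcript):
    """Third emotion value: mean value of the emotion words found in the transcript."""
    values = [EMOTION_WORDS[w] for w in transcript.lower().split() if w in EMOTION_WORDS]
    return sum(values) / len(values) if values else 0.0


def user_emotion_value(e1, e2, e3, weights=(0.4, 0.3, 0.3)):
    """Weighted sum of the feature-based, voice-based and word-based emotion values."""
    w1, w2, w3 = weights
    return w1 * e1 + w2 * e2 + w3 * e3


def choose_action(value, threshold=0.3):
    """Map the fused value to a hypothetical preset operation on the terminal."""
    if value >= threshold:
        return "play upbeat playlist"
    if value <= -threshold:
        return "suggest a break"
    return "no action"
```

With e1 = -0.5, e2 = -0.2 and the transcript "I feel so tired and angry today" (e3 = -0.75), the fused value is -0.485 and `choose_action` returns "suggest a break".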

Owner:GUANGZHOU SKYWORTH PLANE DISPLAY TECH

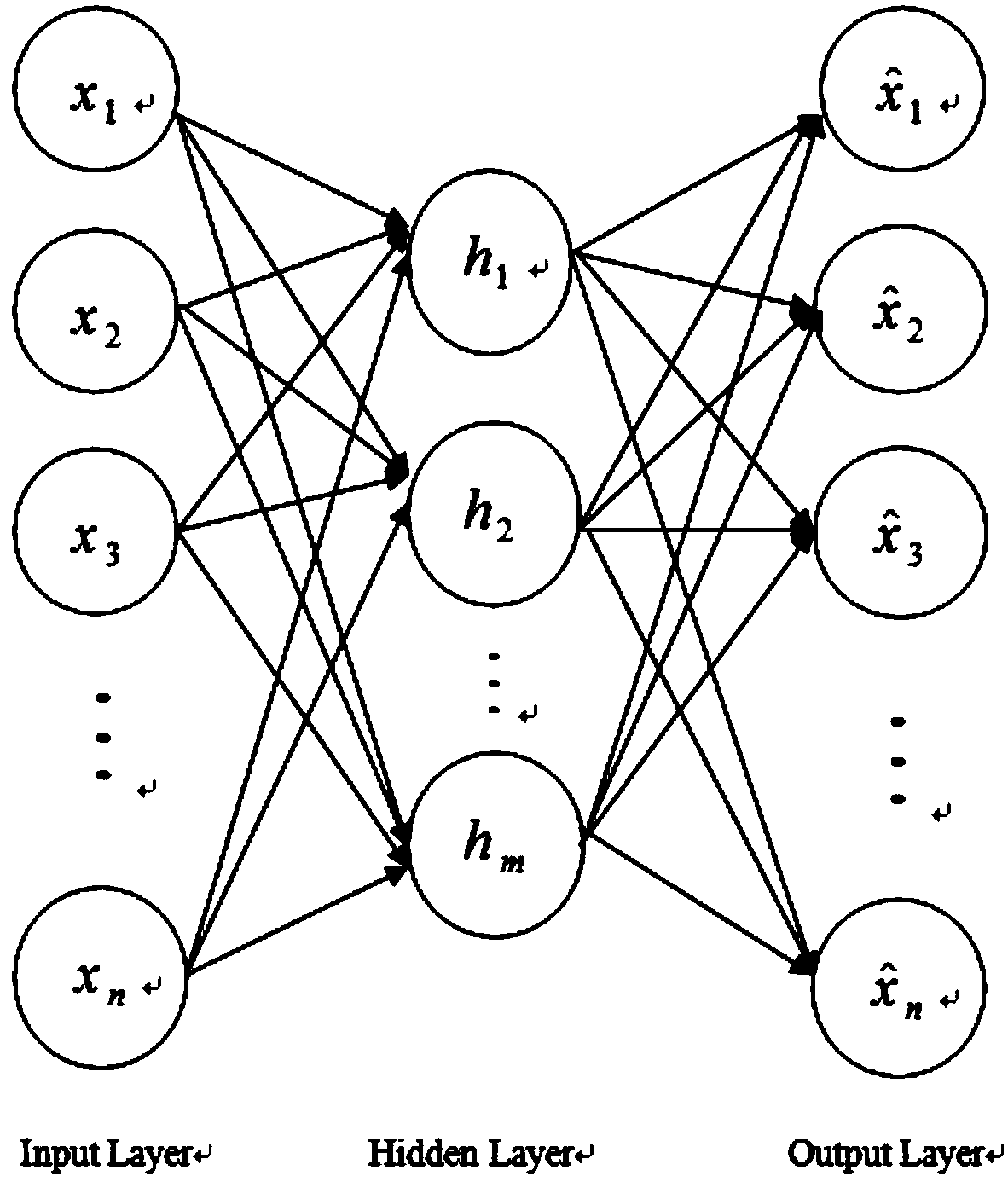

Facial emotion recognition method based on depth sparse self-encoding network

Inactive | CN106503654A | Robust | Increase training speed | Acquiring/recognising facial features | Neural learning methods | NODAL | Pattern recognition

The present invention discloses a facial emotion recognition method based on a deep sparse self-encoding (autoencoder) network. The method comprises the steps of: 1) acquiring and preprocessing data; 2) building a deep sparse autoencoder network; 3) encoding/decoding with the deep sparse autoencoder network; 4) training a Softmax classifier; and 5) fine-tuning the weights of the whole network. Sparsity parameters reduce the number of active neuron nodes, so the network learns a compressed representation of the data, and training and recognition become noticeably faster. The network weights are then fine-tuned with back-propagation and gradient descent for global optimization. According to the patent, this overcomes the local-extremum and gradient-diffusion (vanishing-gradient) problems during training and improves recognition performance.
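The patent does not say which sparsity penalty it uses. This sketch assumes the KL-divergence penalty that is common in sparse autoencoders: beta * sum_j KL(rho || rho_hat_j). Here rho is the target mean activation and rho_hat_j is the observed mean activation of hidden unit j over a batch. The values rho = 0.05 and beta = 3 are illustrative.

```python
import math


def mean_activations(hidden):
    """Mean activation of each hidden unit over a batch (rows = samples, columns = units)."""
    n = len(hidden)
    return [sum(col) / n for col in zip(*hidden)]


def kl_sparsity_penalty(rho_hat, rho=0.05, beta=3.0, eps=1e-8):
    """beta * sum_j KL(rho || rho_hat_j), treating rho and rho_hat_j as Bernoulli means."""
    total = 0.0
    for r in rho_hat:
        r = min(max(r, eps), 1.0 - eps)  # clip to keep the logarithms finite
        total += rho * math.log(rho / r) + (1 - rho) * math.log((1 - rho) / (1 - r))
    return beta * total
```

The penalty is zero when every unit's mean activation equals rho and grows as units become more active. Adding it to the reconstruction loss therefore pushes most hidden units toward near-zero activation.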

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

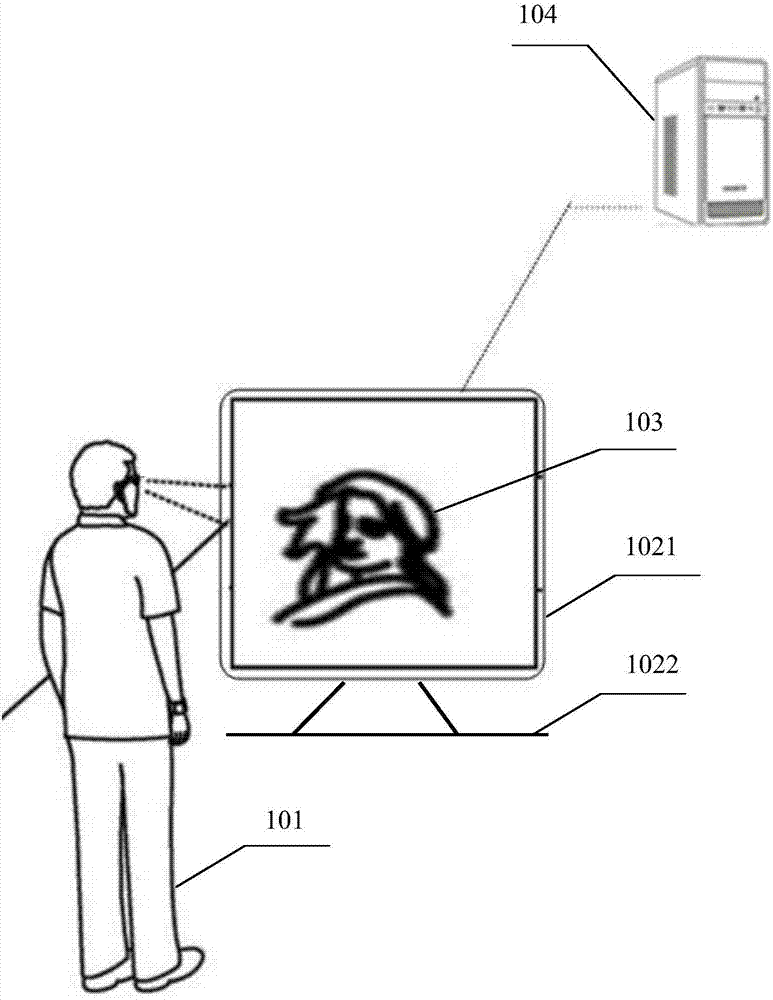

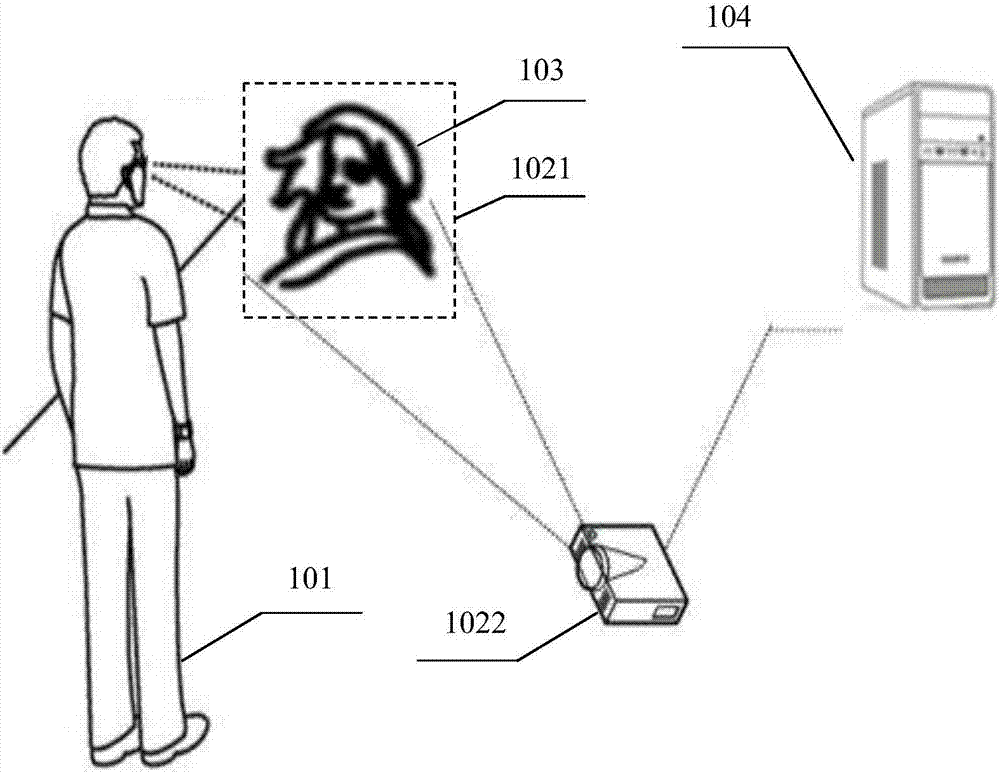

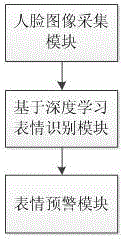

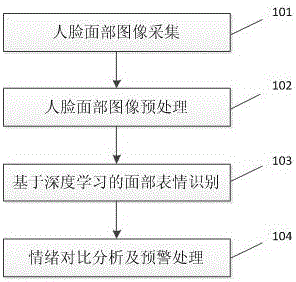

Deep learning-based emotion recognition method and system

Inactive | CN106650621A | Stable working condition | Character and pattern recognition | Learning based | Pattern recognition

The invention provides a deep learning-based emotion recognition method and system. The system includes a face image acquisition module, a deep learning-based facial expression recognition module and a facial expression early-warning module. A facial image is captured each time an employee clocks in. The employee's emotions are analyzed with a deep learning-based facial expression analysis algorithm and compared with their historical emotions. When the emotions are abnormal, the system sends an alert to the relevant personnel. By applying deep learning to analyze employees' emotions, the method and system aim to provide deeper humanistic care for employees.
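The comparison with historical emotions can be sketched as a per-employee anomaly check. The sketch assumes each clock-in yields a negative-emotion score in [0, 1] from the expression classifier. It treats a score as abnormal when it exceeds the employee's historical mean by k standard deviations, with a floor on the deviation so that a very stable history does not trigger on small changes. None of these parameters come from the patent.

```python
from statistics import mean, pstdev


def is_abnormal(neg_score, history, k=2.0, min_history=5, sigma_floor=0.05):
    """Flag a clock-in whose negative-emotion score is unusually high for this employee.

    neg_score: assumed classifier probability of a negative emotion, in [0, 1].
    history: the employee's previous scores (the caller appends neg_score afterwards).
    """
    if len(history) < min_history:  # not enough history to judge
        return False
    threshold = mean(history) + k * max(pstdev(history), sigma_floor)
    return neg_score > threshold
```

With a history of [0.1, 0.2, 0.1, 0.15, 0.1], the threshold is 0.23. A score of 0.9 would trigger an alert and 0.12 would not.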

Owner:GUANGDONG POLYTECHNIC NORMAL UNIV

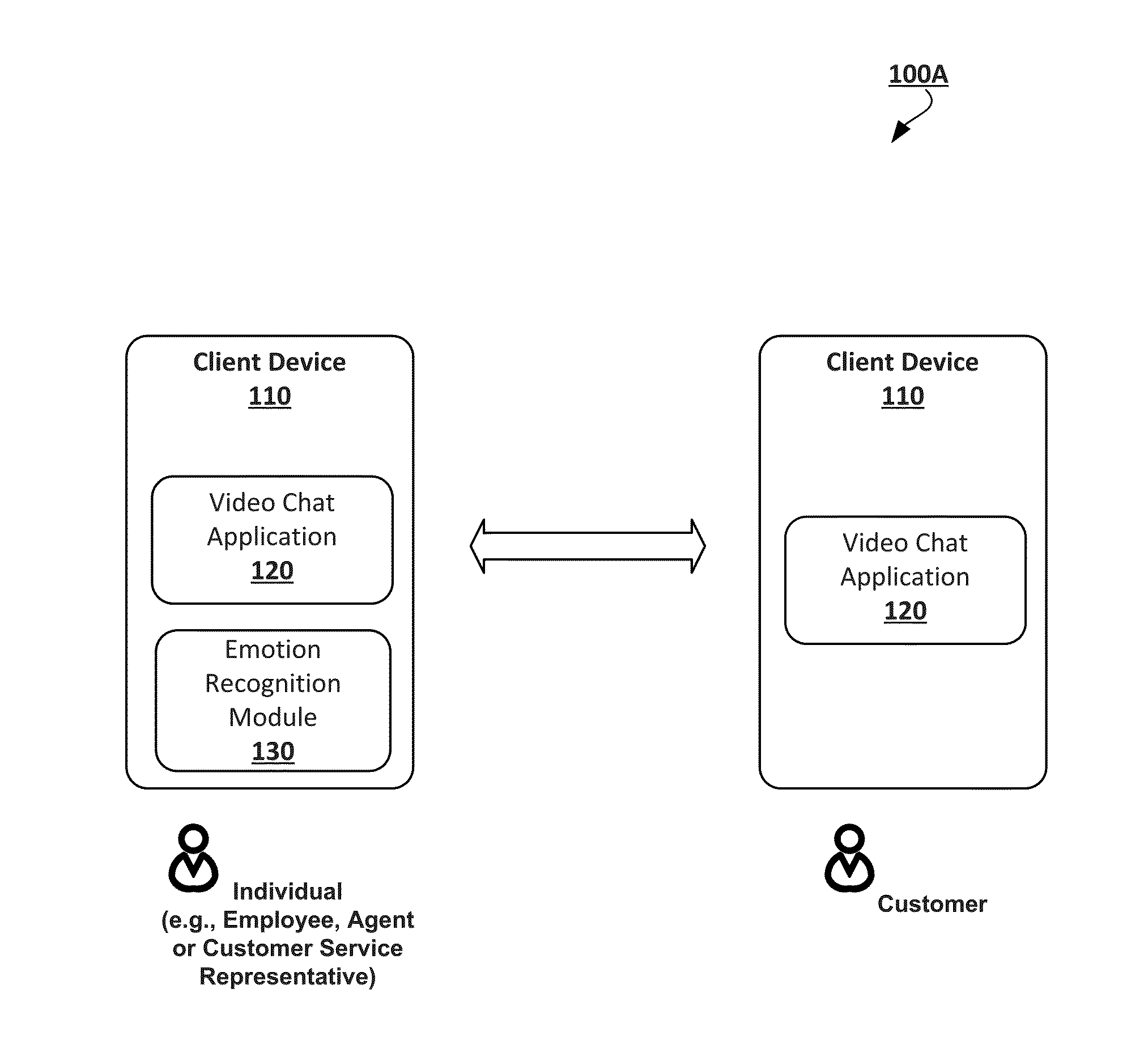

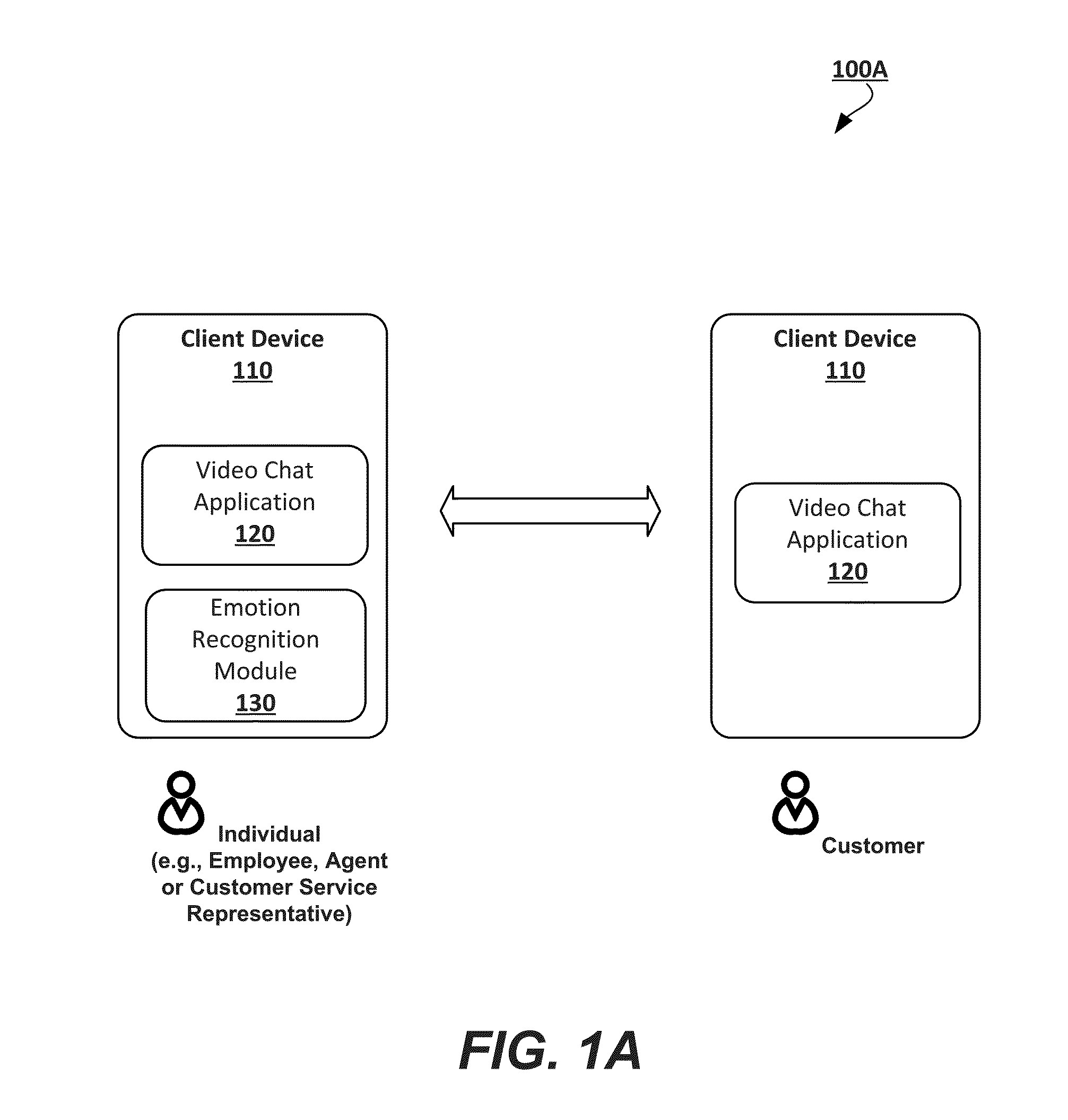

Emotion recognition for workforce analytics

Active | US20150193718A1 | Improve emotion recognition | Negative work | Television conference systems | Two-way working systems | Pattern recognition | Work quality

Methods and systems for videoconferencing include generating work quality metrics based on emotion recognition of an individual such as a call center agent. The work quality metrics allow for workforce optimization. One example method includes the steps of receiving a video including a sequence of images, detecting an individual in one or more of the images, locating feature reference points of the individual, aligning a virtual face mesh to the individual in one or more of the images based at least in part on the feature reference points, dynamically determining over the sequence of images at least one deformation of the virtual face mesh, determining that the at least one deformation refers to at least one facial emotion selected from a plurality of reference facial emotions, and generating quality metrics including at least one work quality parameter associated with the individual based on the at least one facial emotion.
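The last two steps can be sketched with a nearest-neighbour match: map each face-mesh deformation to a reference emotion, then derive a work-quality metric. The three-component deformation vectors, the reference table and the "negative share" metric are all hypothetical. The patent does not disclose how deformations are encoded or which metrics are produced.

```python
import math
from collections import Counter

# Hypothetical deformation features: (mouth-corner lift, brow lowering, eye opening).
REFERENCE_EMOTIONS = {
    "happy": (1.0, 0.0, 0.5),
    "neutral": (0.0, 0.0, 0.5),
    "angry": (-0.3, 1.0, 0.8),
    "sad": (-0.8, 0.3, 0.3),
}


def classify_deformation(deformation):
    """Return the reference emotion closest (Euclidean distance) to the deformation."""
    return min(REFERENCE_EMOTIONS,
               key=lambda e: math.dist(deformation, REFERENCE_EMOTIONS[e]))


def work_quality(deformations, negative=("angry", "sad")):
    """Hypothetical per-call metrics computed from per-frame mesh deformations."""
    labels = [classify_deformation(d) for d in deformations]
    counts = Counter(labels)
    return {
        "frames": len(labels),
        "negative_share": sum(counts[e] for e in negative) / len(labels),
        "dominant_emotion": counts.most_common(1)[0][0],
    }
```

A real system would learn the reference deformations from labelled data rather than hard-coding them. The nearest-neighbour rule just keeps the mapping from "deformation" to "reference facial emotion" explicit.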

Owner:SNAP INC

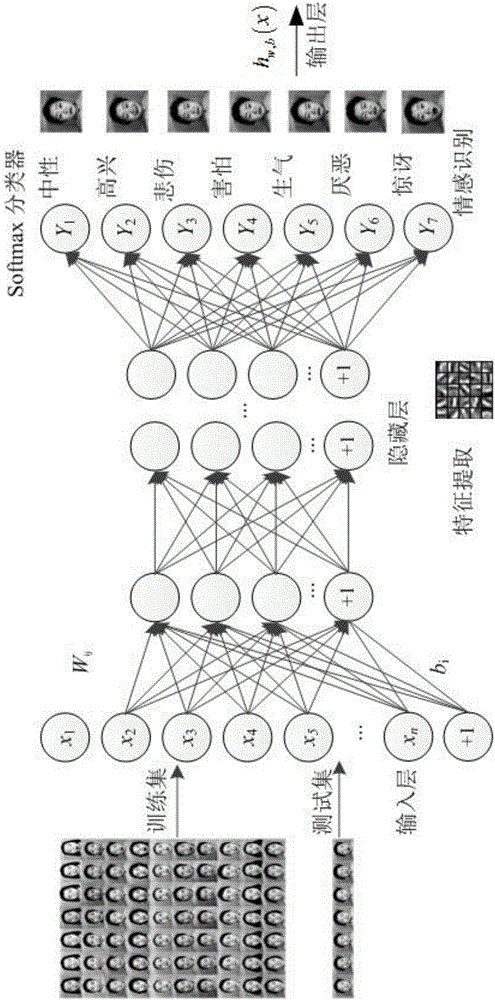

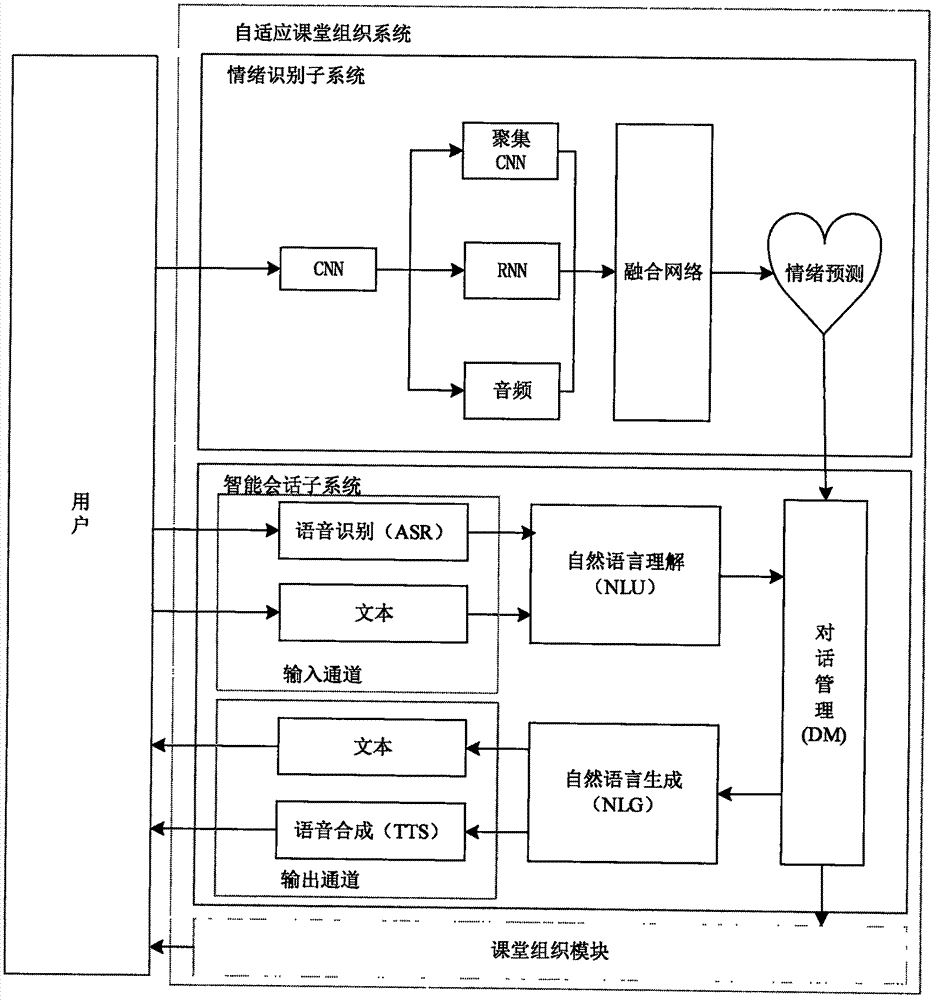

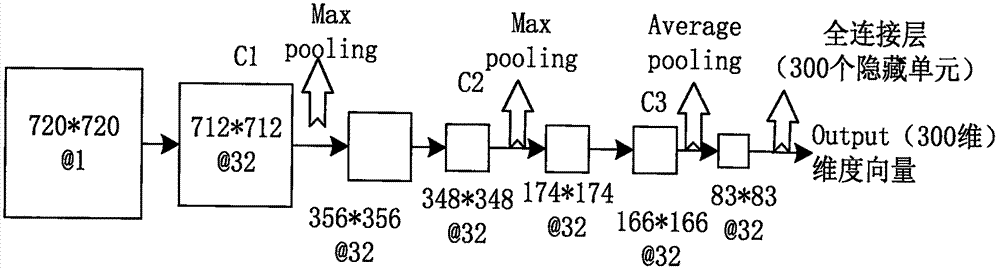

Man-machine interaction method and system for online education based on artificial intelligence

Pending | CN107958433A | Solve the problem of poor learning effect | Data processing applications | Speech recognition | Personalization | Online learning

The invention discloses an artificial intelligence-based man-machine interaction method and system for online education, relating to digital visual and acoustic technology in the field of electronic information. The system comprises a subsystem that recognizes the emotions of the audience and an intelligent conversation subsystem. Combining the two with an online education system allows personalized teaching content to be presented to the audience more effectively. The system aims to make man-machine interaction in online education more vivid. The emotion recognition subsystem judges a user's learning state from the user's facial expressions while they watch a video, and the intelligent conversation subsystem then carries out machine Q&A interaction. The emotion recognition subsystem classifies audience emotions into seven types: anger, disgust, fear, sadness, surprise, neutral, and happiness. The intelligent conversation subsystem adjusts the course content according to the recognized emotion and conducts machine Q&A. In this way, the teacher-student interaction and feedback of a conventional classroom can be reproduced online, making online classes more personalized.
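How the conversation subsystem might react to the seven emotion classes can be sketched as a lookup policy over a short window of recognized labels. The adjustment strings, the window-majority rule and `min_share` are hypothetical. Smoothing over a window is an assumed design choice to avoid reacting to single-frame misclassifications.

```python
from collections import Counter

# The seven classes named in the patent.
EMOTIONS = ("anger", "disgust", "fear", "sadness", "surprise", "neutral", "happiness")

# Hypothetical adjustment policy; the patent does not list concrete actions.
ADJUSTMENTS = {
    "anger": "pause and ask whether the pace is too fast",
    "disgust": "offer an alternative explanation of the topic",
    "fear": "insert an easier practice question",
    "sadness": "show encouragement and a short recap",
    "surprise": "start a short Q&A on the last concept",
    "neutral": "continue",
    "happiness": "continue and offer an extension exercise",
}


def adjust_course(window_labels, min_share=0.5):
    """Pick an adjustment from the labels recognized over a recent window of frames."""
    unknown = set(window_labels) - set(EMOTIONS)
    if not window_labels or unknown:
        raise ValueError(f"empty window or unknown labels: {unknown}")
    label, count = Counter(window_labels).most_common(1)[0]
    if count / len(window_labels) < min_share:  # no clear majority: keep going
        return "continue"
    return ADJUSTMENTS[label]
```

For example, `adjust_course(["fear", "fear", "neutral"])` returns "insert an easier practice question". A window with no majority emotion returns "continue".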

Owner:JILIN UNIV

© 2025 PatSnap. All rights reserved.