Multi-information coupling emotion recognition method for human-computer interaction

An emotion recognition technology that couples multiple kinds of information, applicable to speech recognition, character and pattern recognition, computer components, and related fields. It addresses the problems of high sensitivity to noise, poor recognition performance, and the inability to fully capture the emotion conveyed in human conversation.


Embodiment Construction

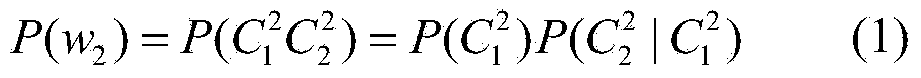

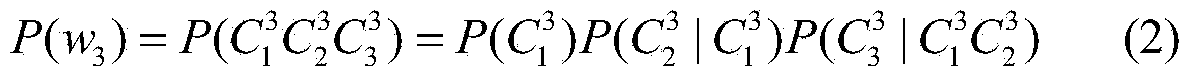

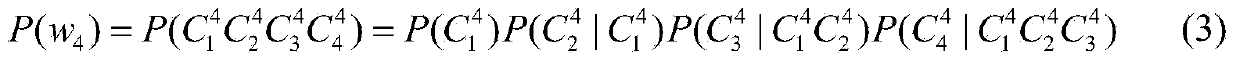

[0070] In this embodiment, a deep-learning-based multi-class information coupling emotion recognition method for human-computer interaction includes the following steps:

[0071] Step 1. Use a camera and a microphone to synchronously capture video data of the speaker's facial expressions and the corresponding voice data. The video must show the speaker's face. Each collected video is labeled with one of six emotion classes: angry, fear, happy, neutral, sad, and surprise, represented by 1, 2, 3, 4, 5, and 6 respectively. The comprehensive emotional features of each video can be represented by a four-tuple Y.

[0072] $Y = (E, V_T, V_S, V_i)$ (1)

[0073] In formula (1), E represents the emotion class of the video; V_T represents the first information feature, that is, the text information feature (TextFeature); V_S indicates that the second infor...
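The labeling scheme in Step 1 and the four-tuple in formula (1) can be sketched as a small data structure. This is only an illustrative sketch, not the patent's implementation: the names `Emotion` and `EmotionSample` are hypothetical, and because the excerpt does not fully define the second and third features, they are treated here as opaque numeric vectors with placeholder values.

```python
from enum import IntEnum
from typing import NamedTuple, Sequence


class Emotion(IntEnum):
    """Six emotion classes from Step 1, numbered 1-6 in the order listed."""
    ANGRY = 1
    FEAR = 2
    HAPPY = 3
    NEUTRAL = 4
    SAD = 5
    SURPRISE = 6


class EmotionSample(NamedTuple):
    """Four-tuple Y = (E, V_T, V_S, V_i) from formula (1).

    Feature shapes and contents are assumptions; the source only names them.
    """
    E: Emotion              # emotion class of the video
    V_T: Sequence[float]    # first information feature (text feature)
    V_S: Sequence[float]    # second information feature (not defined in the excerpt)
    V_i: Sequence[float]    # remaining information feature (not defined in the excerpt)


# Placeholder feature values, for illustration only.
sample = EmotionSample(
    E=Emotion.HAPPY,
    V_T=[0.1, 0.2],
    V_S=[0.3],
    V_i=[0.4, 0.5, 0.6],
)

print(sample.E.name, int(sample.E))
```

Using an integer enum keeps the 1 to 6 numeric codes from the text while still giving each class a readable name.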
