7538 results for "Human machine interaction" patented technology

Human-machine interaction is a multidisciplinary field that draws on human-computer interaction (HCI), human-robot interaction (HRI) and, most important of all for us, artificial intelligence (AI) and interaction design (including product and service design).

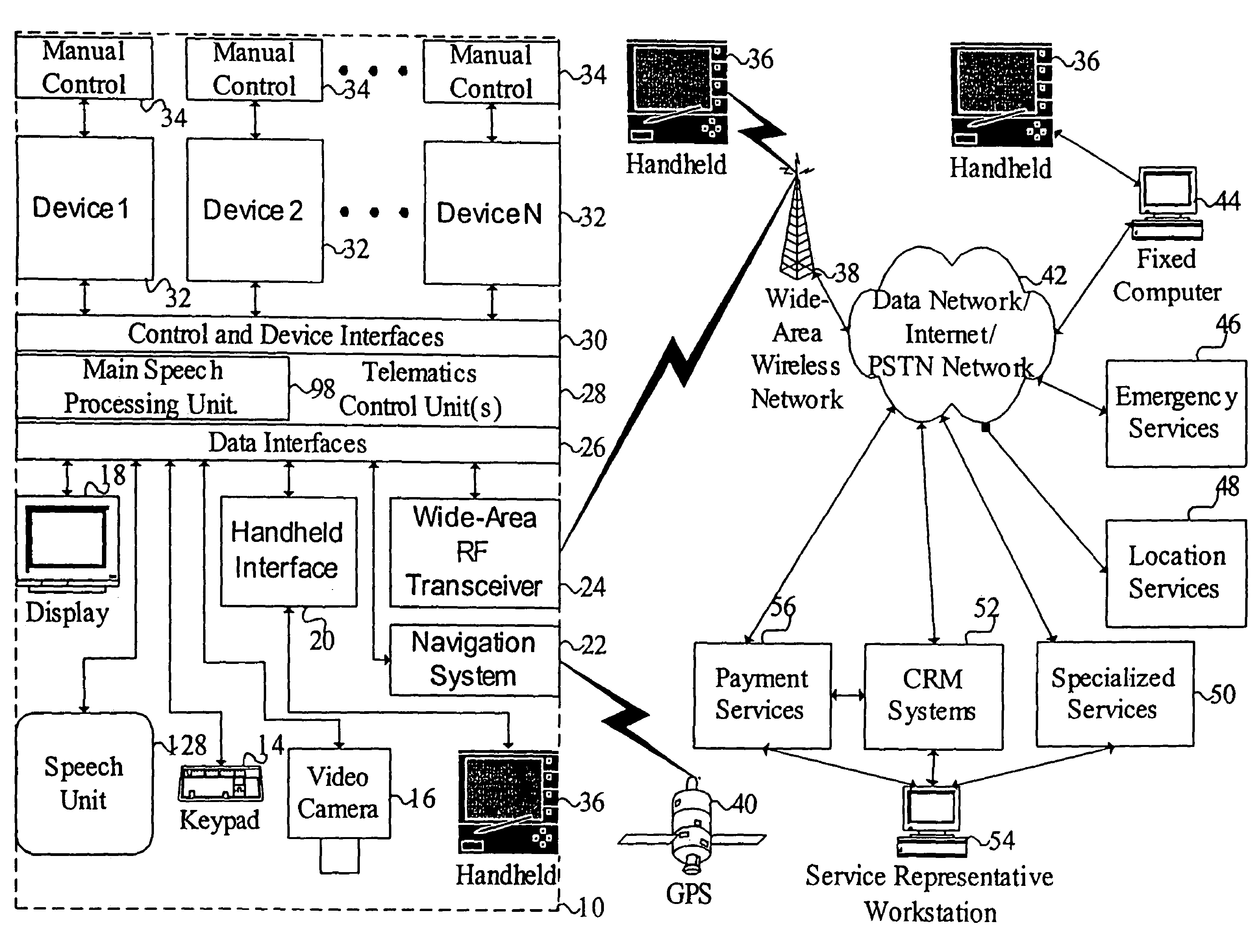

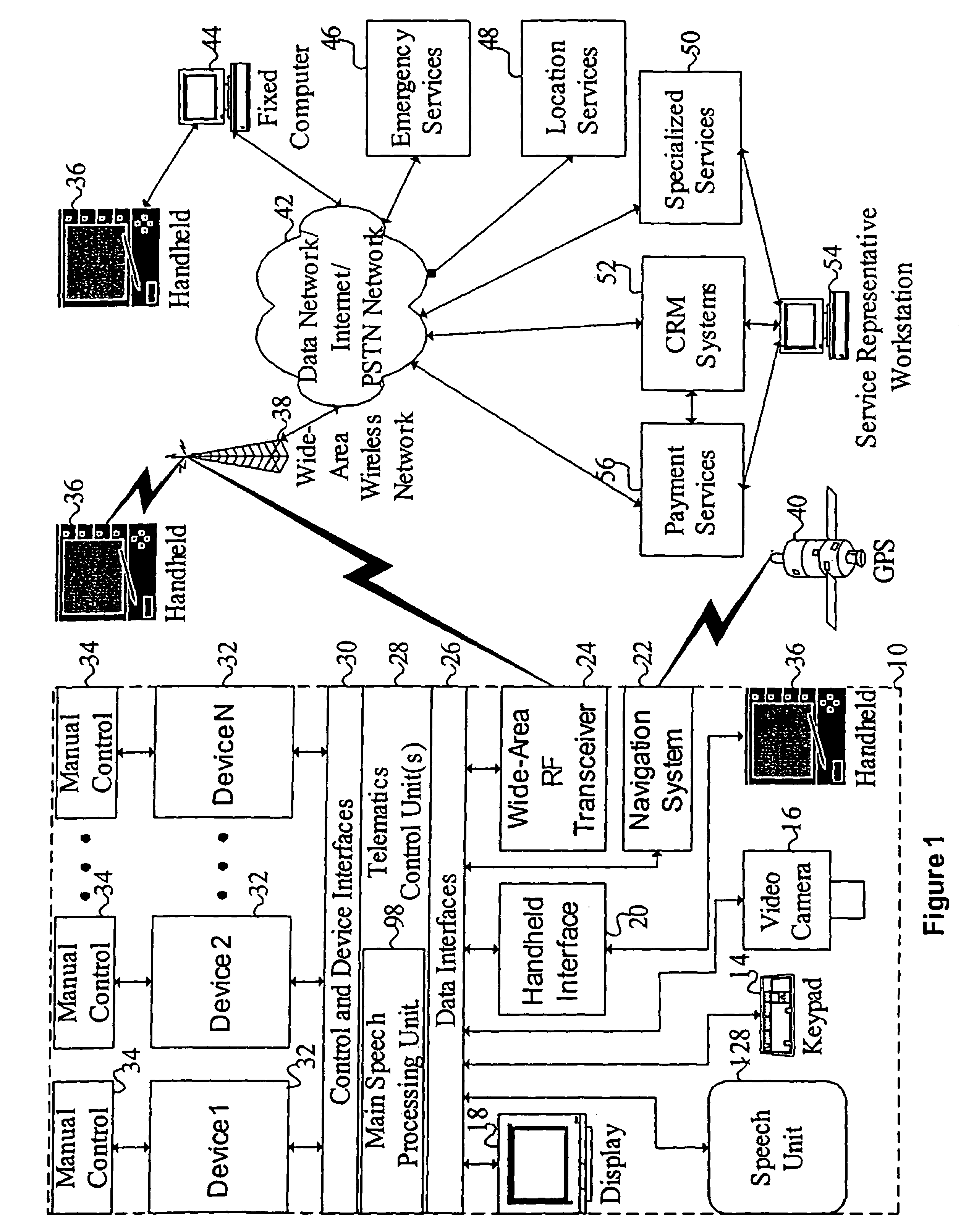

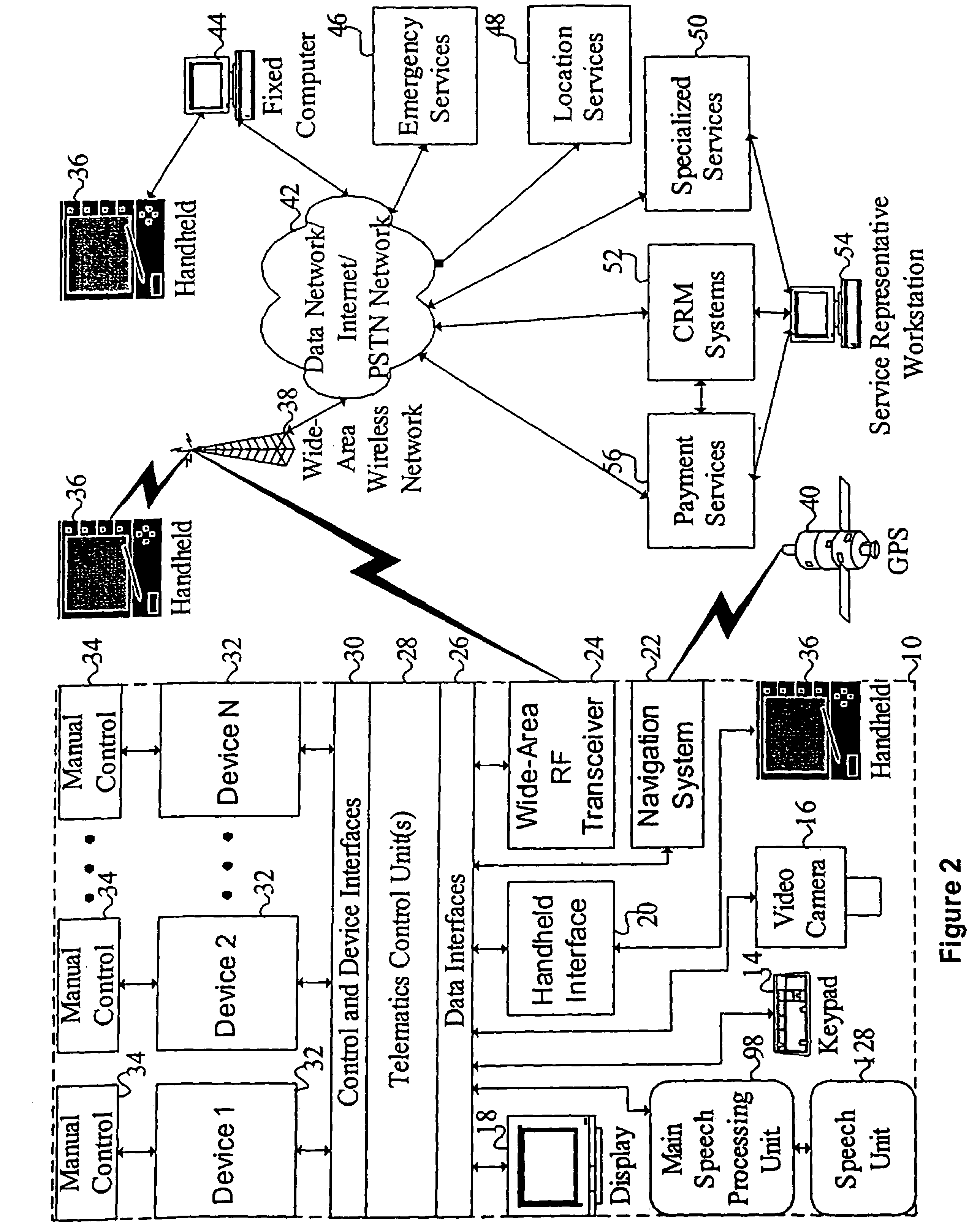

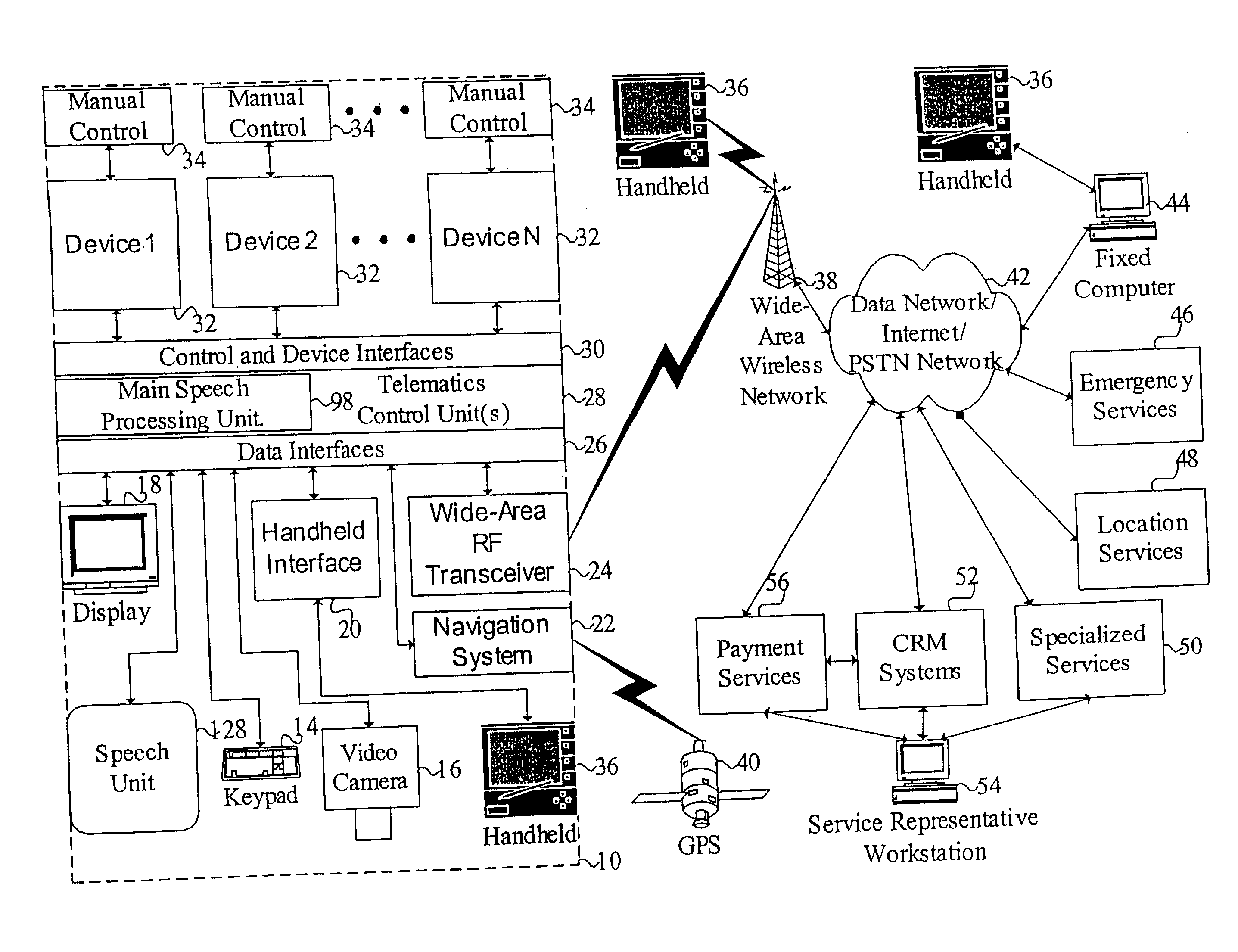

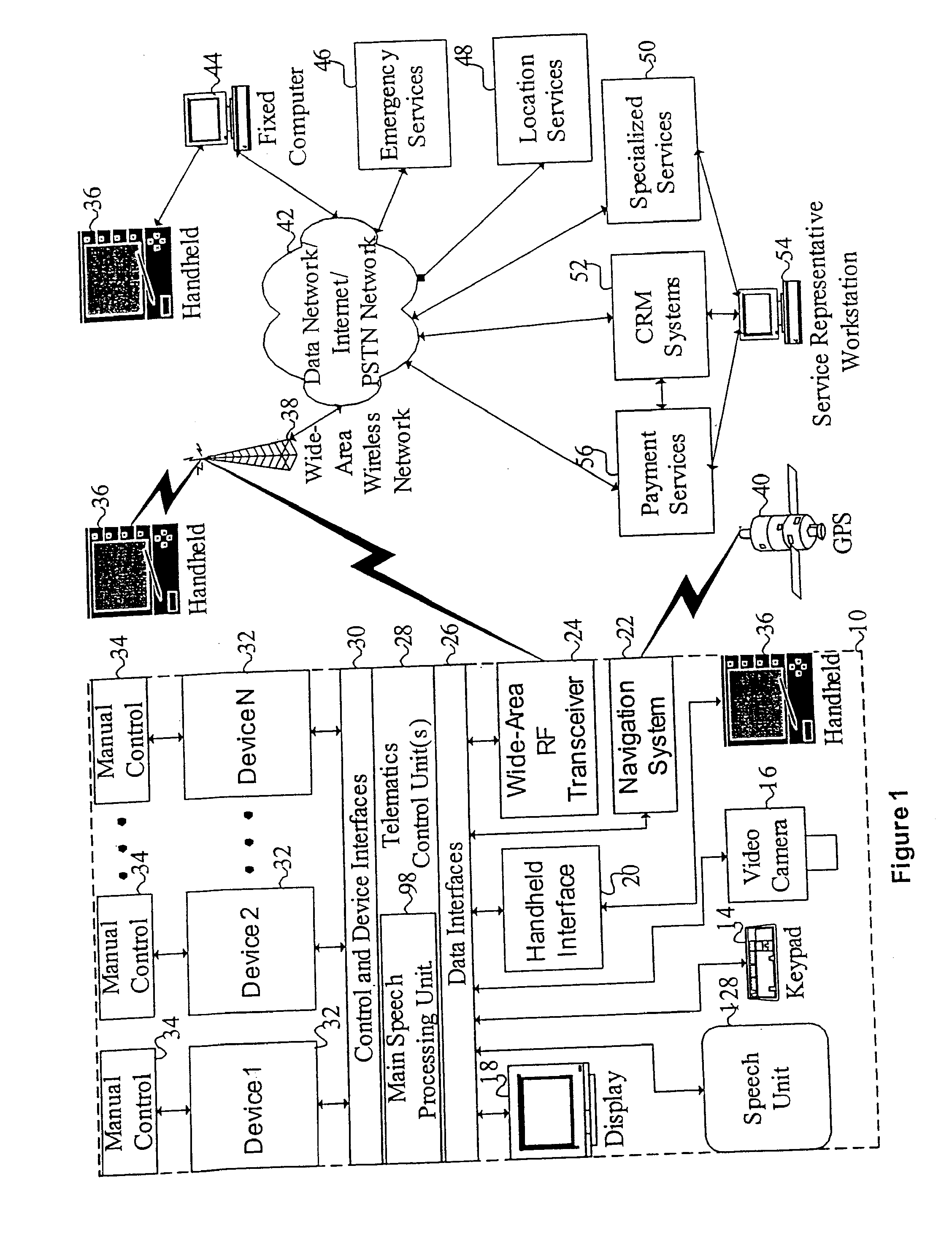

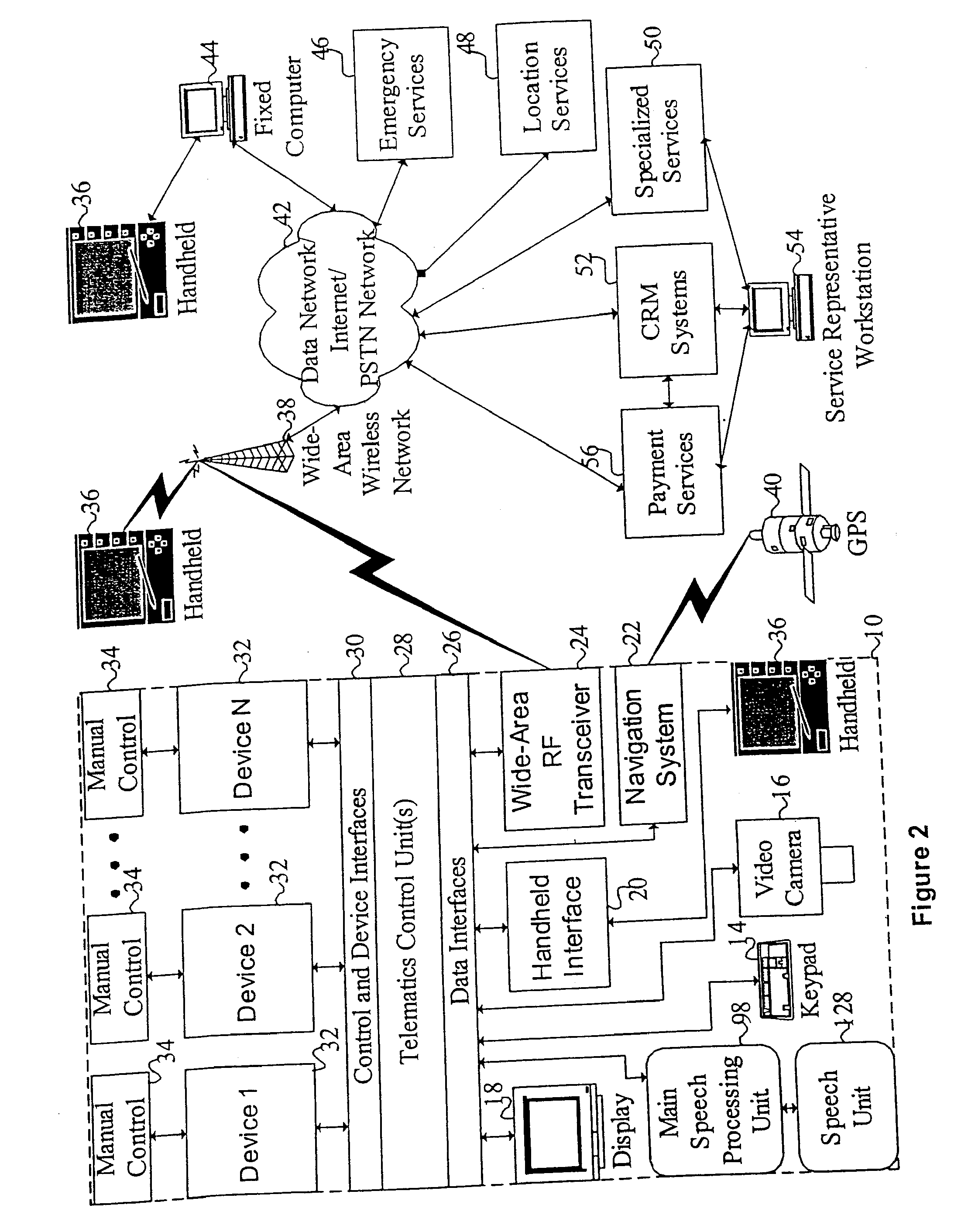

Mobile systems and methods of supporting natural language human-machine interactions

Active | US7949529B2 | Tags: Promotes feeling of natural; Convenient time; Web data indexing; Devices with voice recognition; Telematics; Wide area network

A mobile system is provided that includes speech-based and non-speech-based interfaces for telematics applications. The mobile system identifies and uses context, prior information, domain knowledge, and user-specific profile data to create a natural environment for users who submit requests and/or commands in multiple domains. The invention creates, stores, and uses extensive personal profile information for each user, improving the reliability of determining the context and presenting the expected results for a particular question or command. The invention may organize domain-specific behavior and information into agents that are distributable or updatable over a wide area network.

Owner: DIALECT LLC

Mobile systems and methods of supporting natural language human-machine interactions

Active | US20110231182A1 | Tags: Promotes feeling of natural; Convenient time; Web data indexing; Devices with voice recognition; Engineering; Speech sound

A mobile system is provided that includes speech-based and non-speech-based interfaces for telematics applications. The mobile system identifies and uses context, prior information, domain knowledge, and user-specific profile data to create a natural environment for users who submit requests and/or commands in multiple domains. The invention creates, stores, and uses extensive personal profile information for each user, improving the reliability of determining the context and presenting the expected results for a particular question or command. The invention may organize domain-specific behavior and information into agents that are distributable or updatable over a wide area network.

Owner: DIALECT LLC

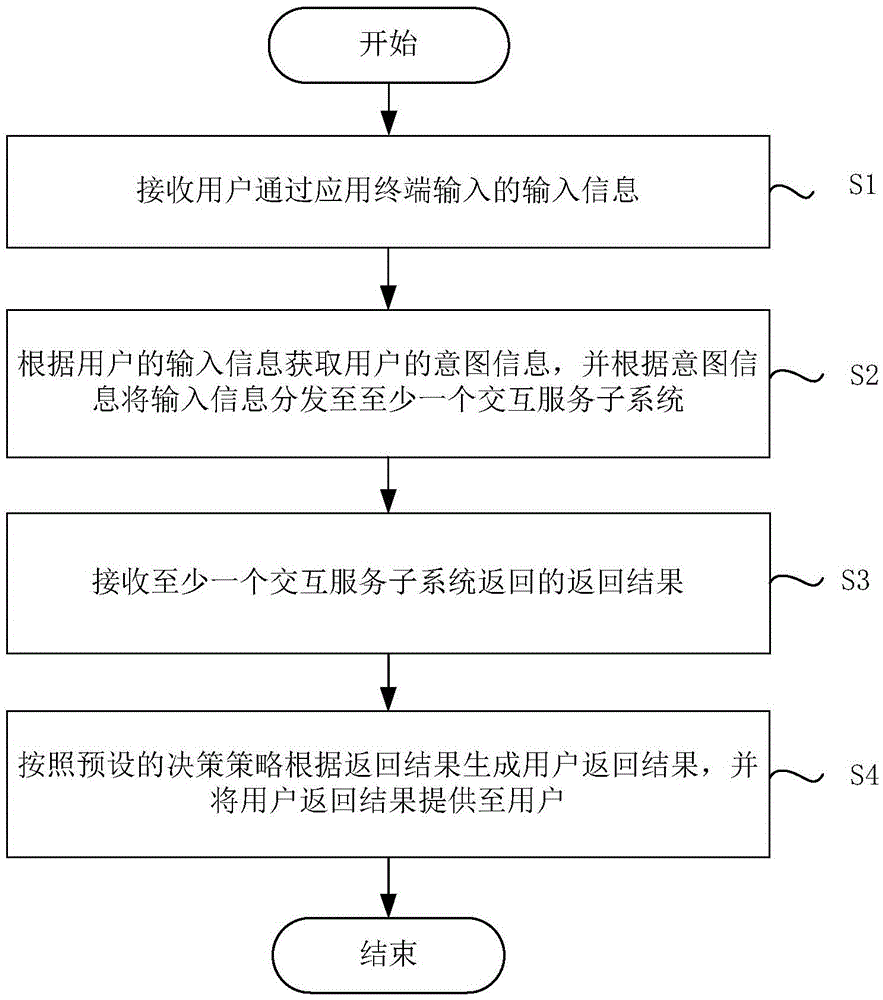

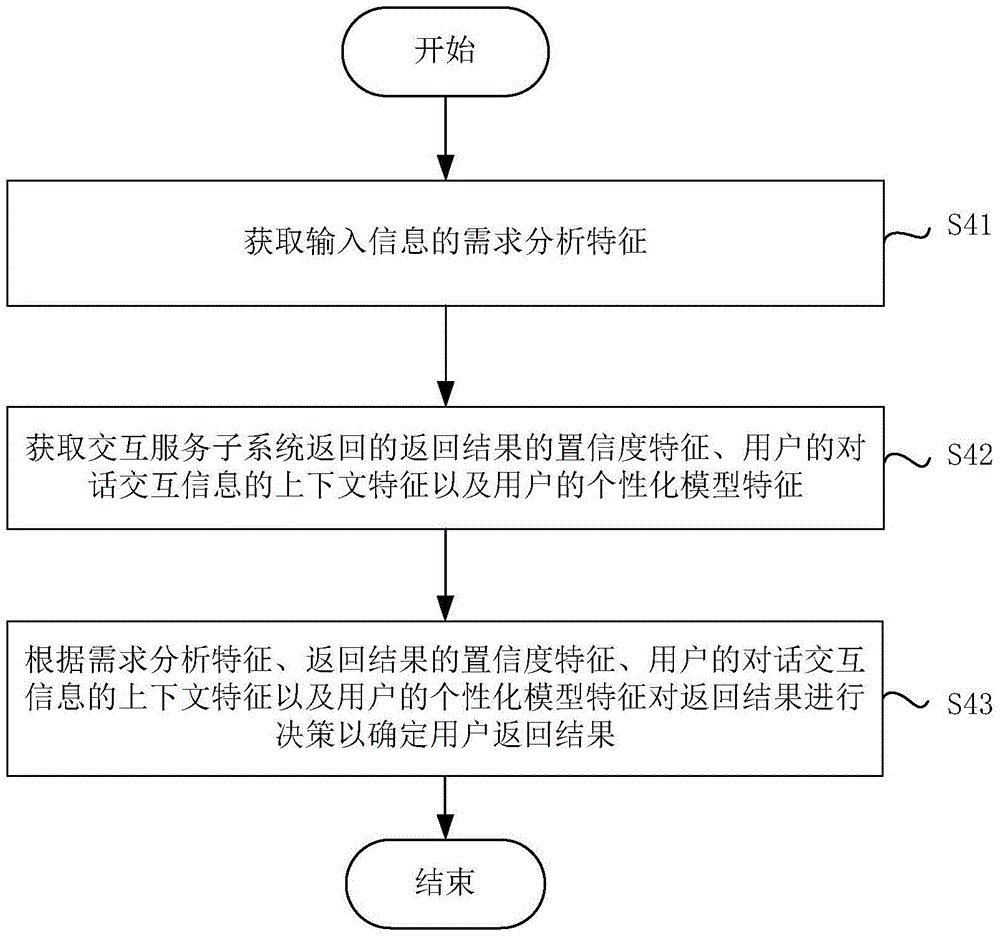

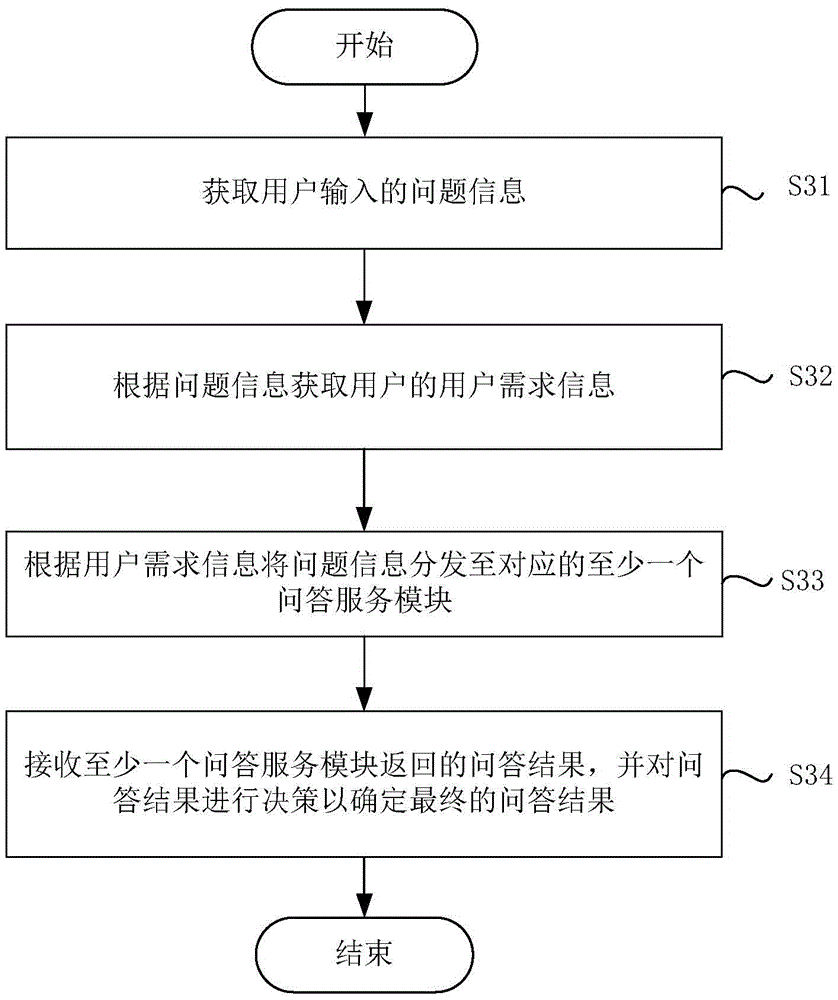

Man-machine interaction method and system based on artificial intelligence

Active | CN105068661A | Tags: Easy and pleasant interactive experience; Input/output for user-computer interaction; Graph reading; Decision strategy; Computer terminal

The invention discloses a man-machine interaction method and system based on artificial intelligence. The method includes the following steps: receiving information input by a user through an application terminal; obtaining the user's intent information from that input; distributing the input information to at least one interaction service subsystem according to the intent information; receiving the results returned by the interaction service subsystems; generating a result for the user from the returned results through a preset decision strategy; and providing that result to the user. With this method and system, the man-machine interaction system is virtualized rather than instrumentalized, and the user can have a relaxed and pleasant interaction experience through chat, search, and other services. Keyword-based search is upgraded to natural-language search, so the user can express needs in flexible, free-form natural language, and the multi-round interaction process comes closer to the experience of interacting with another person.

Owner: BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
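
The abstract above describes a pipeline: recognize intent, fan the input out to matching service subsystems, then pick one answer with a preset decision strategy. The Python sketch below is only an illustration under stated assumptions and is not Baidu's implementation. The keyword lexicon (`INTENT_KEYWORDS`), the two services, and the "highest score wins" strategy are all hypothetical stand-ins for parts the abstract leaves unspecified.

```python
import re
from typing import Callable, Dict, List, Tuple

Result = Tuple[str, float]  # (answer text, confidence score)

# Hypothetical intent lexicon standing in for the unspecified
# intent-recognition step.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "weather": ["weather", "rain", "temperature"],
    "chat": ["hello", "hi", "thanks"],
}


def recognize_intents(text: str) -> List[str]:
    """Return every intent whose keywords occur in the input (default: chat)."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    intents = [name for name, keys in INTENT_KEYWORDS.items()
               if words.intersection(keys)]
    return intents or ["chat"]


# Hypothetical interaction service subsystems, each returning a scored answer.
def weather_service(text: str) -> Result:
    return ("It looks sunny today.", 0.9)


def chat_service(text: str) -> Result:
    return ("Hello! How can I help?", 0.5)


SERVICES: Dict[str, Callable[[str], Result]] = {
    "weather": weather_service,
    "chat": chat_service,
}


def handle(text: str) -> str:
    # Distribute the input to each subsystem matching a recognized intent.
    results = [SERVICES[intent](text) for intent in recognize_intents(text)]
    # Assumed decision strategy: return the highest-scoring answer.
    answer, _score = max(results, key=lambda r: r[1])
    return answer
```

For example, `handle("hello, will it rain?")` triggers both subsystems and returns the weather answer because it carries the higher score. A real system would replace the keyword lookup with a trained intent classifier.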

Human-computer user interaction

Active | US20100261526A1 | Tags: Input/output for user-computer interaction; Electronic switching; Human interaction; Computer users

Methods of and apparatuses for providing human interaction with a computer, including human control of three-dimensional input devices, force feedback, and force input.

Owner: META PLATFORMS INC

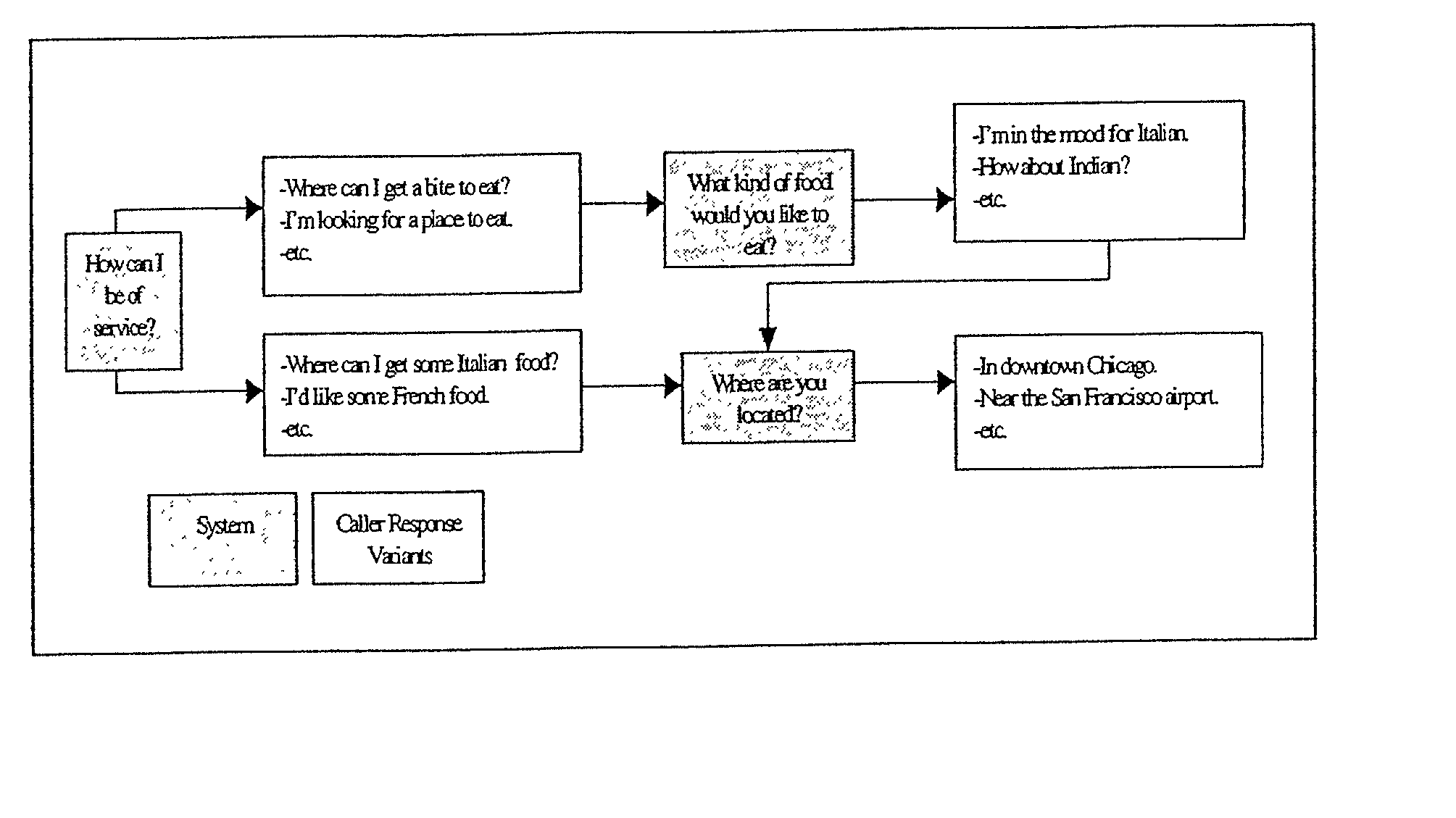

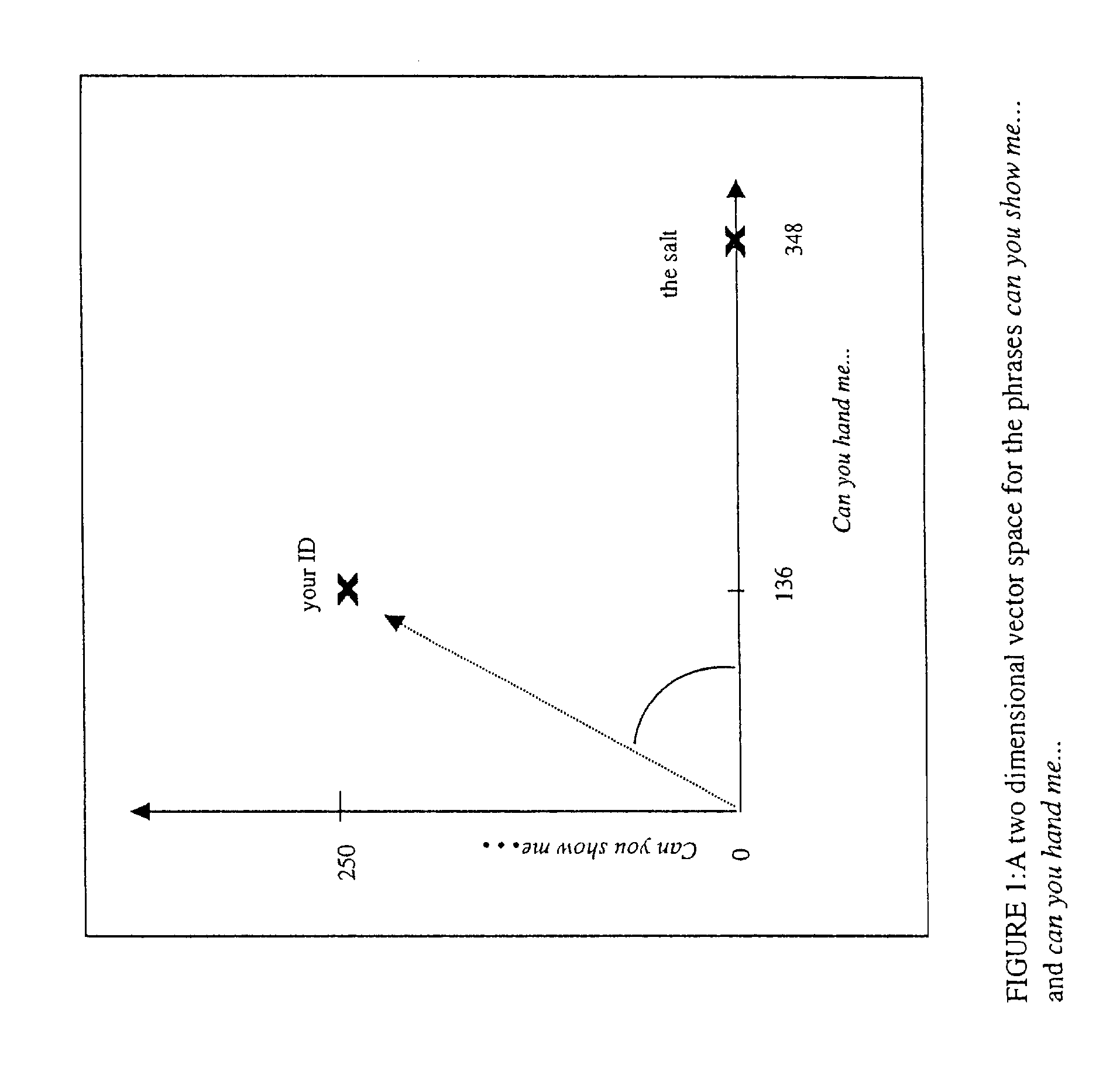

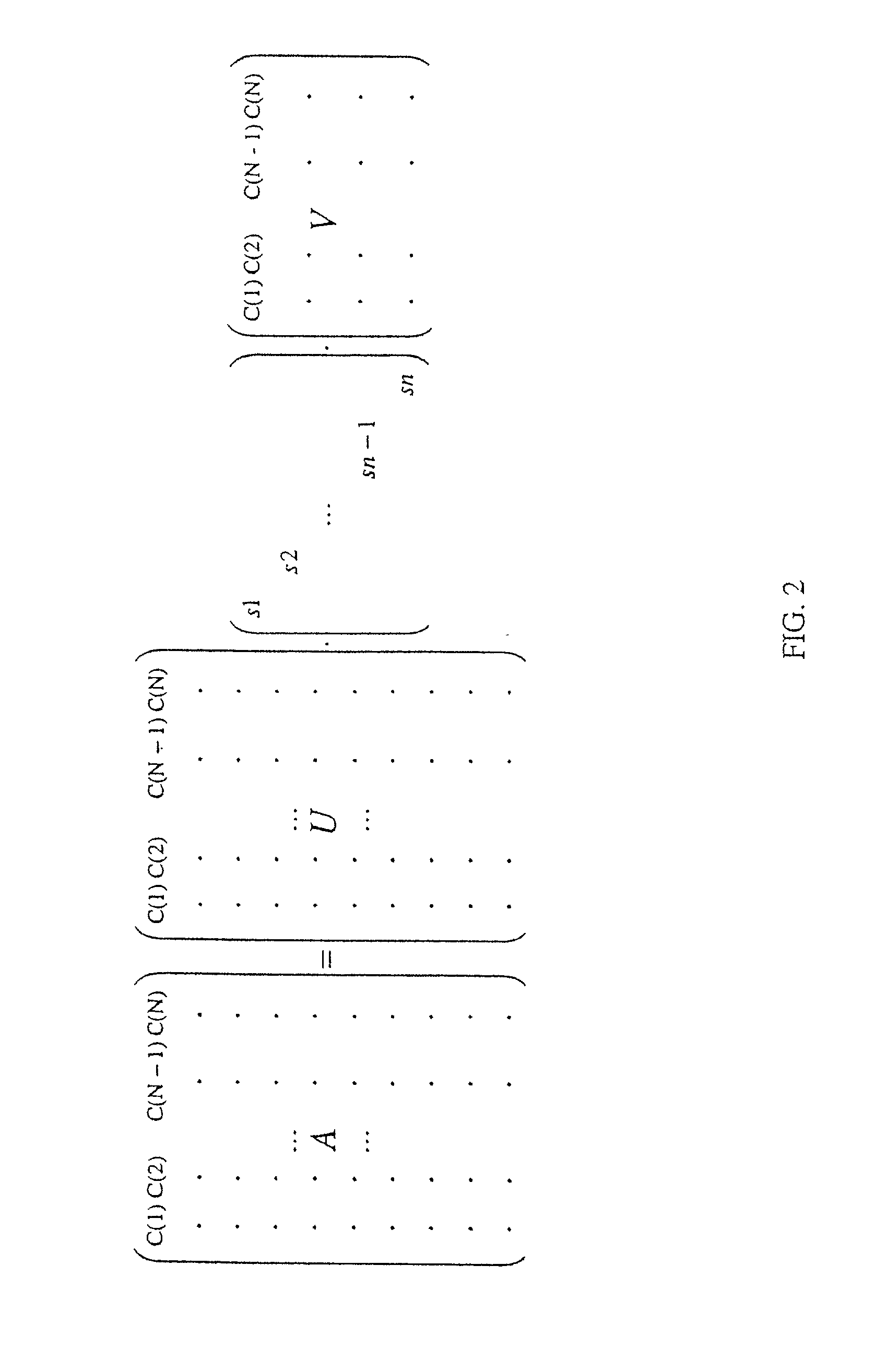

Phrase-based dialogue modeling with particular application to creating recognition grammars for voice-controlled user interfaces

Inactive | US20020128821A1 | Tags: Quick identification; Less time; Natural language data processing; Speech recognition; Speech sound; Human system interaction

The invention enables the creation of grammar networks that can regulate, control, and define the content and scope of human-machine interaction in natural language voice user interfaces (NLVUI). More specifically, the invention concerns phrase-based modeling of generic structures of verbal interaction and the use of these models to automate part of the design of such grammar networks.

Owner: NANT HLDG IP LLC

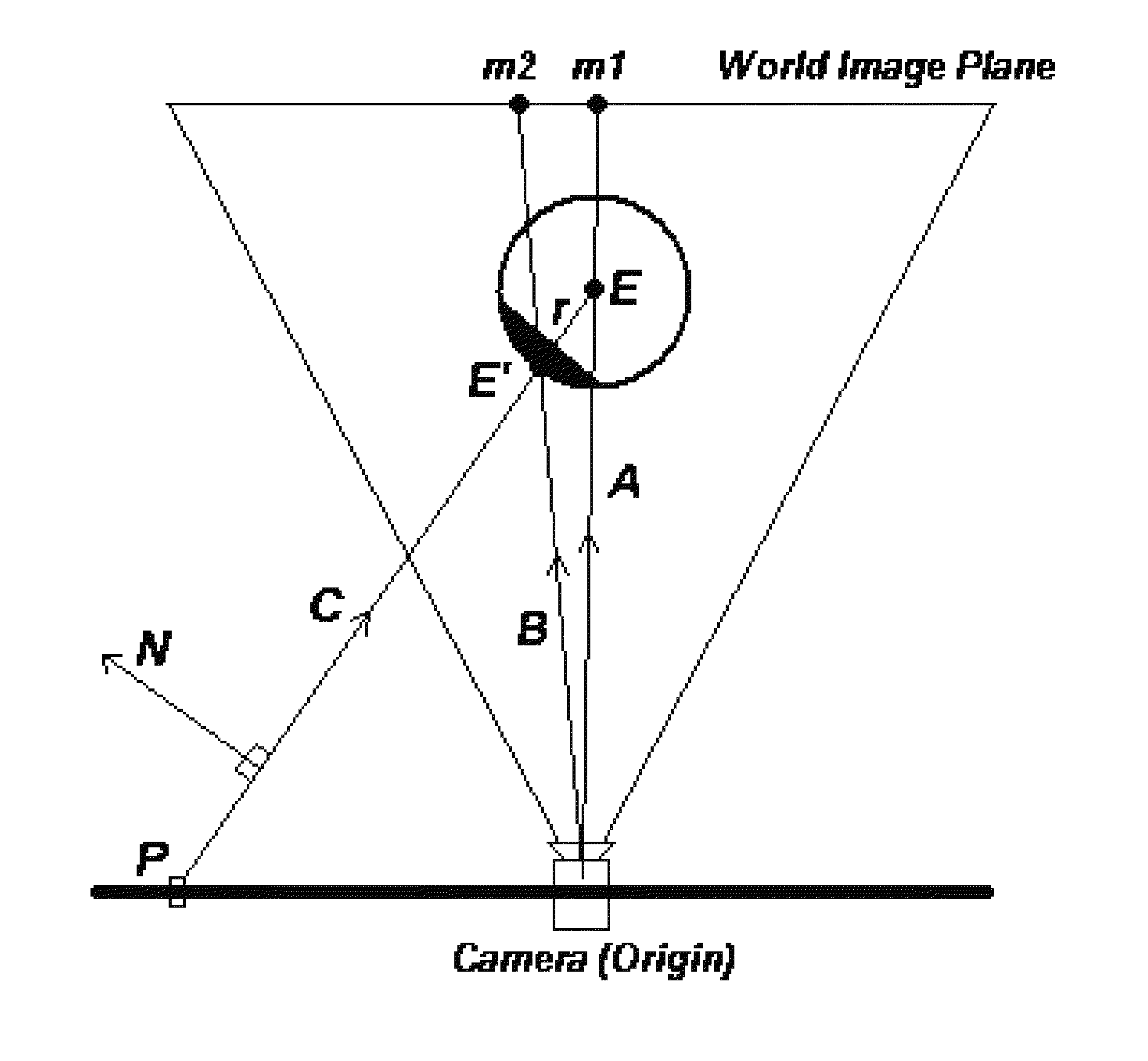

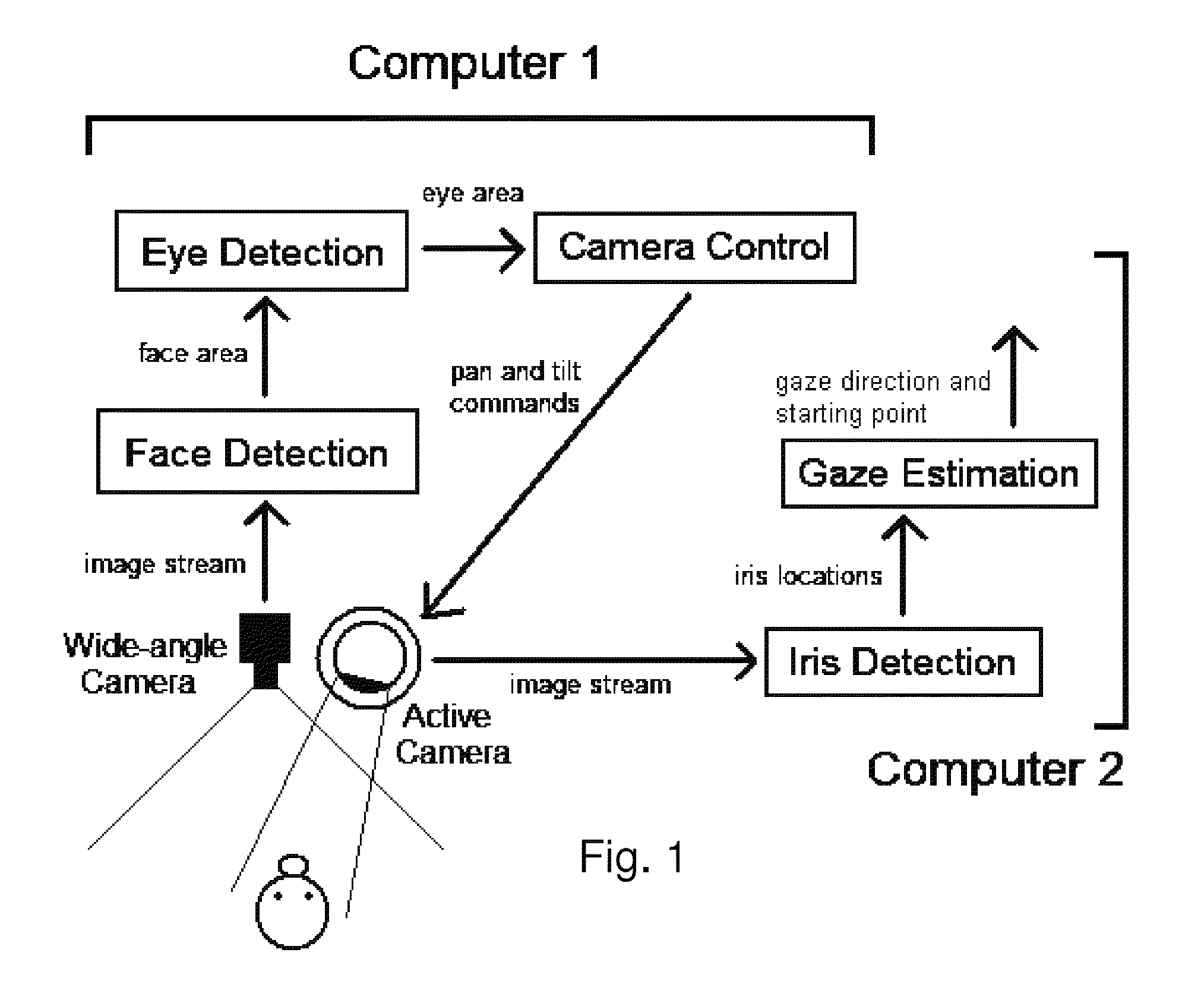

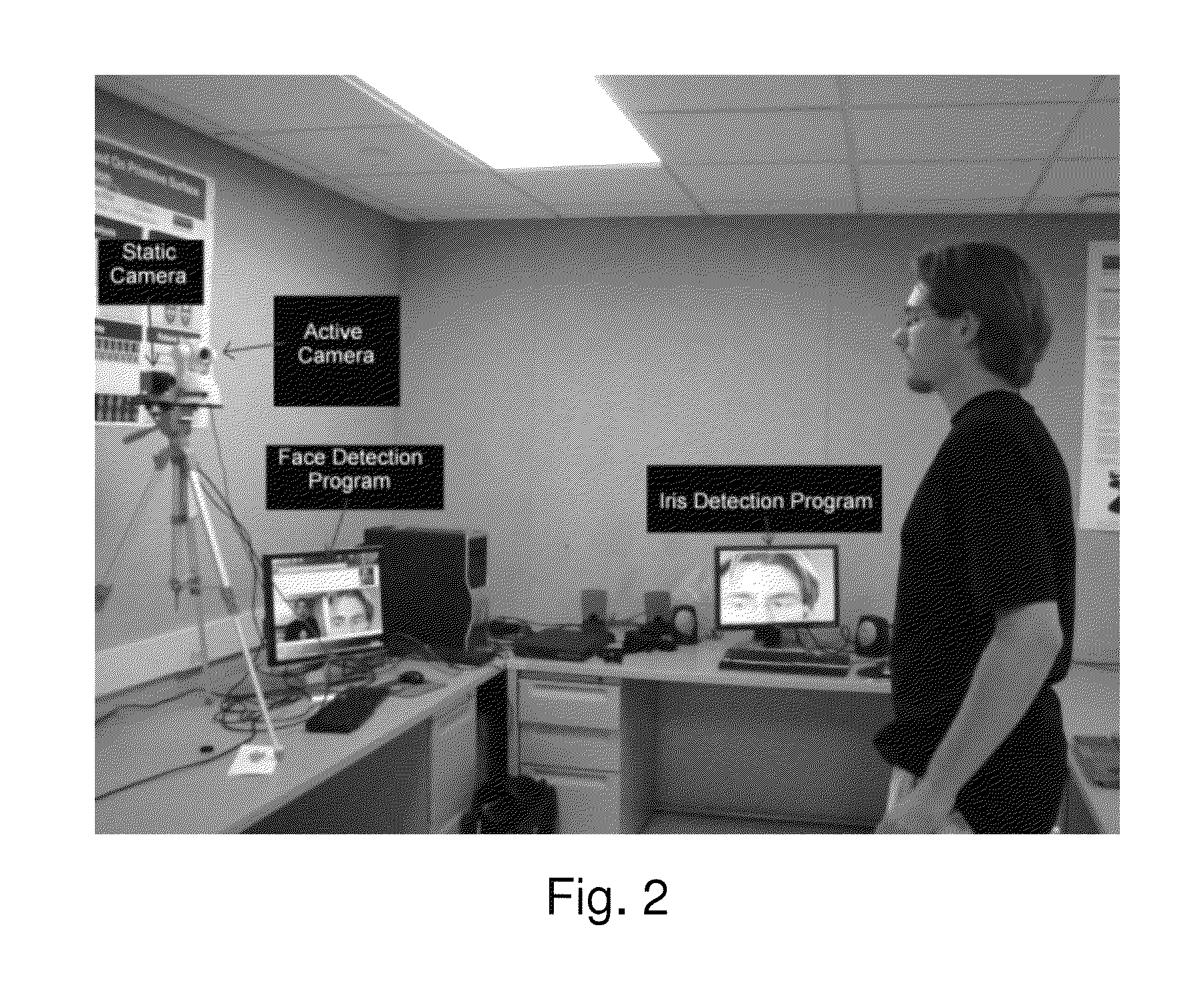

Real time eye tracking for human computer interaction

Active | US8885882B1 | Tags: Improve interactivity; More intelligent behavior; Image enhancement; Image analysis; Optical axis; Gaze directions

A gaze direction determining system and method is provided. A two-camera system may detect the face with a fixed, wide-angle camera, estimate a rough location for the eye region using an eye detector based on topographic features, and direct a second, active pan-tilt-zoom camera to focus on this eye region. An eye gaze estimation approach employs point-of-regard (PoG) tracking on a large viewing screen. To allow greater freedom of head pose, a calibration approach is provided to find the 3D eyeball location, eyeball radius, and fovea position. Both the iris center and the iris contour points are mapped onto the eyeball sphere (creating a 3D iris disk) to obtain the optical axis; the fovea is then rotated accordingly and the final visual-axis gaze direction is computed.

Owner: THE RES FOUND OF STATE UNIV OF NEW YORK

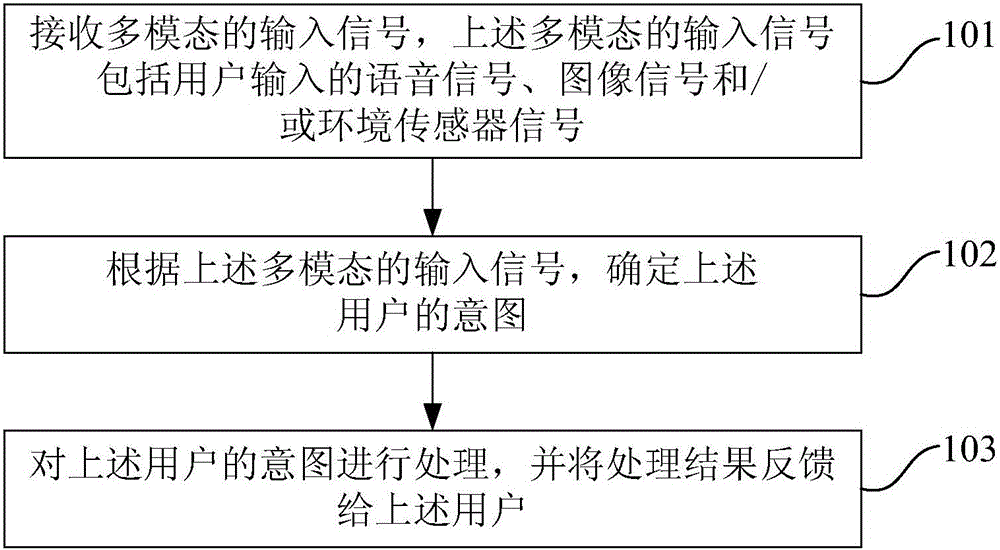

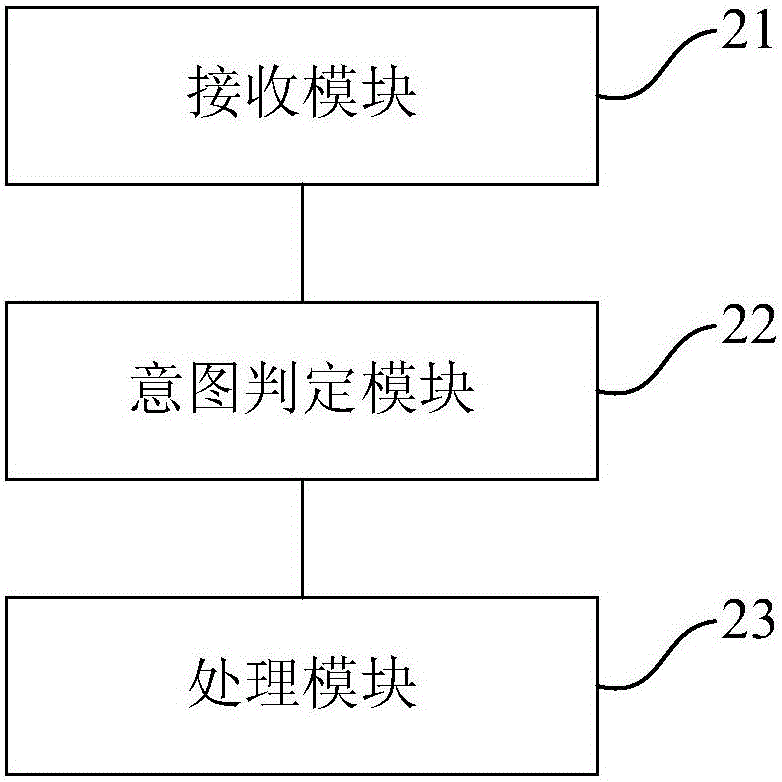

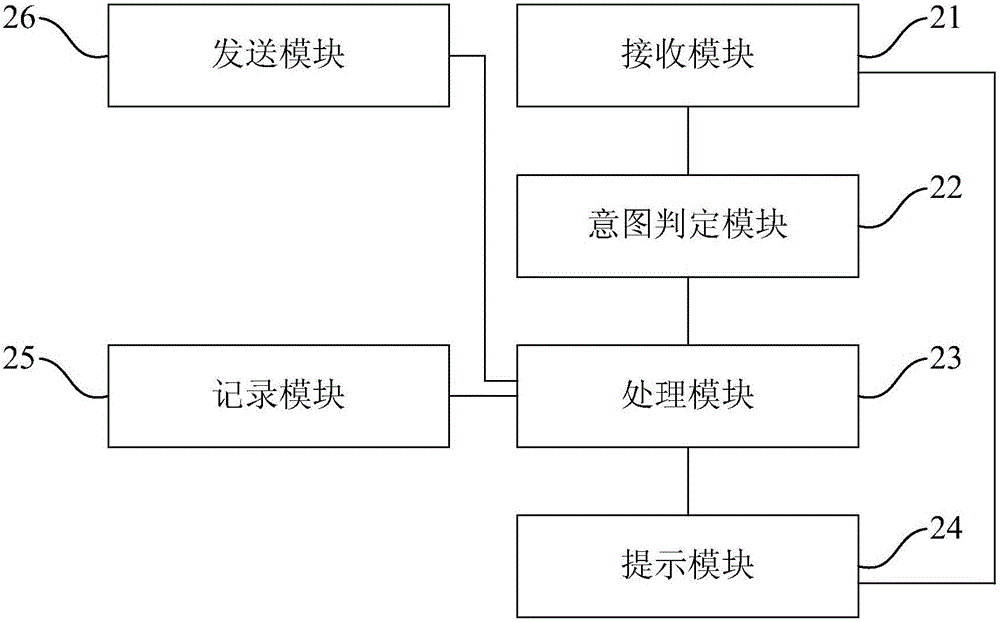

Man-machine interaction method and device based on artificial intelligence and terminal equipment

Inactive | CN104951077A | Tags: Improve experience; Good human-computer interaction function; Input/output for user-computer interaction; Artificial life; User input; Terminal equipment

The invention provides a man-machine interaction method and device based on artificial intelligence, and terminal equipment. The method comprises: receiving multi-modal input signals, which comprise voice signals, image signals, and/or environment sensor signals input by a user; determining the user's intention from the multi-modal input signals; and processing that intention and feeding the processing result back to the user. The method, device, and terminal equipment can provide good man-machine interaction and highly functional, companion-style intelligent man-machine interaction.

Owner: BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

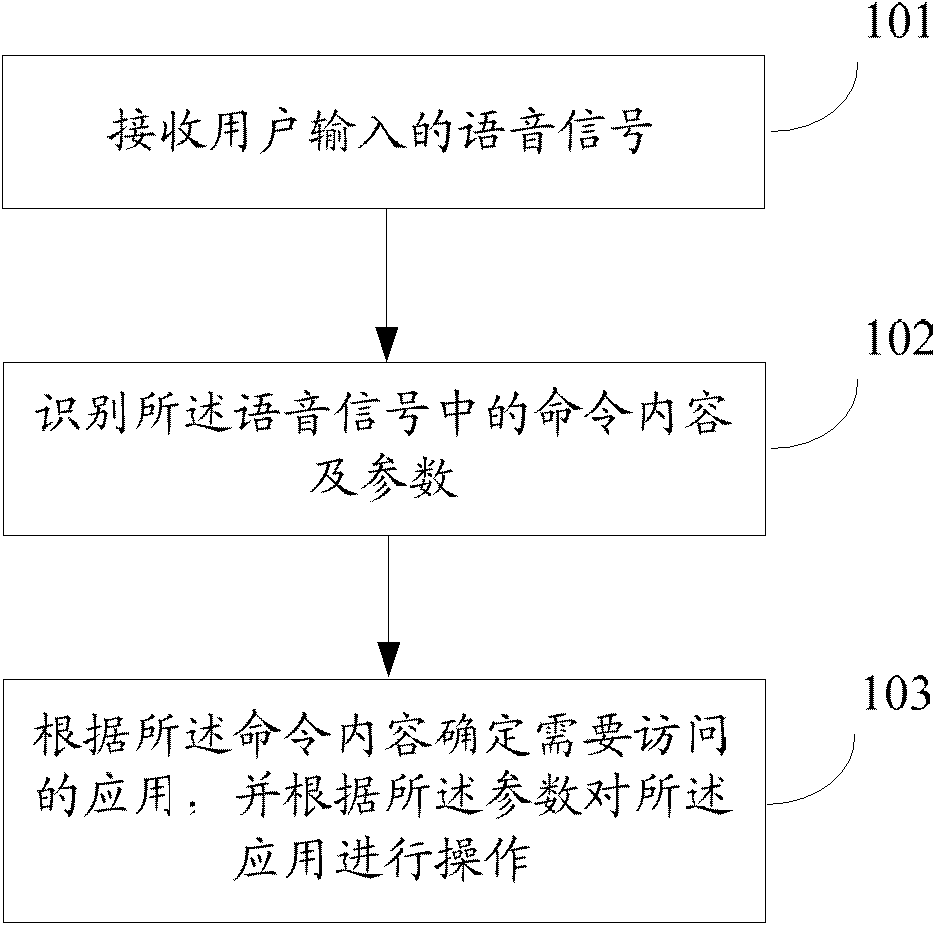

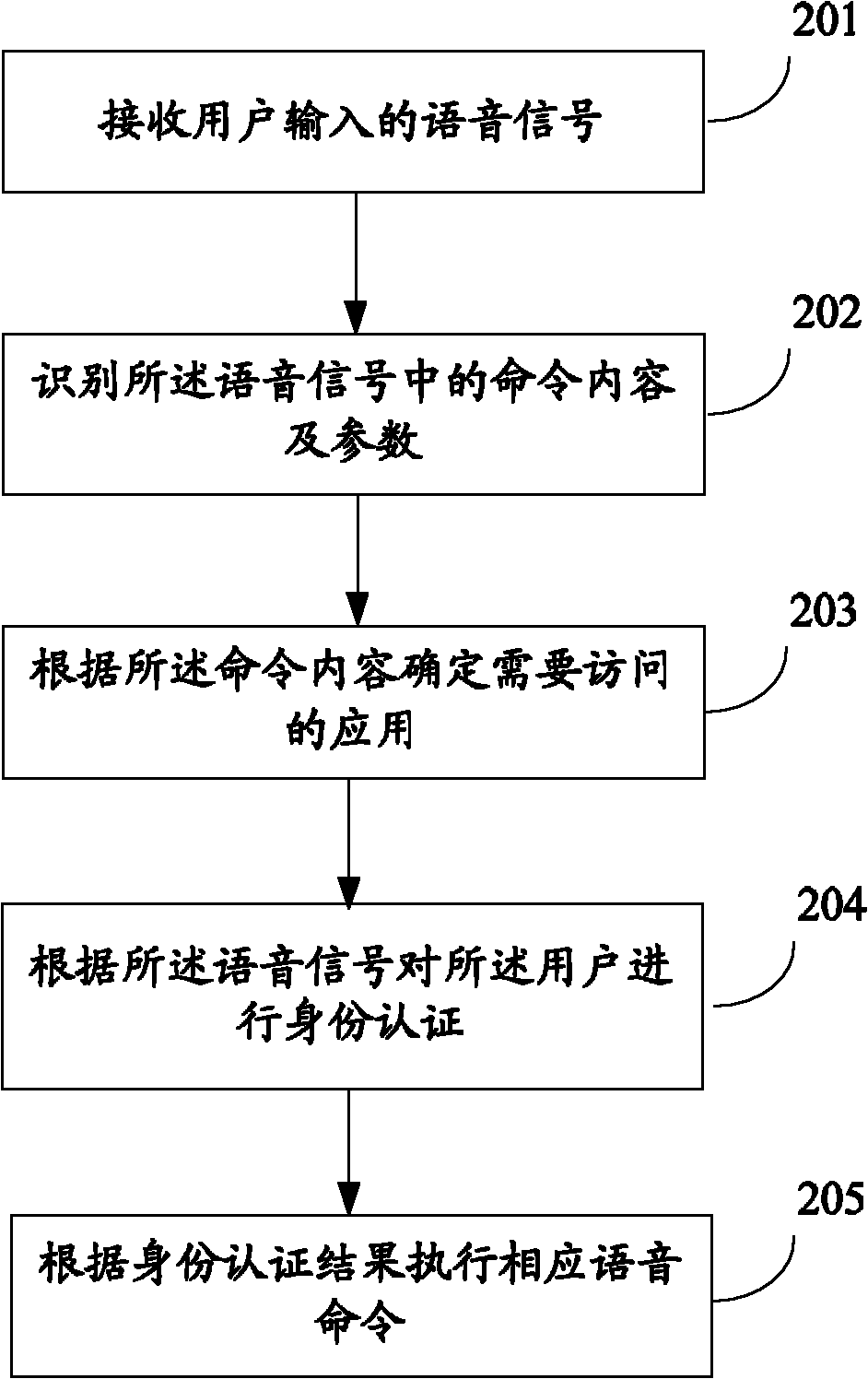

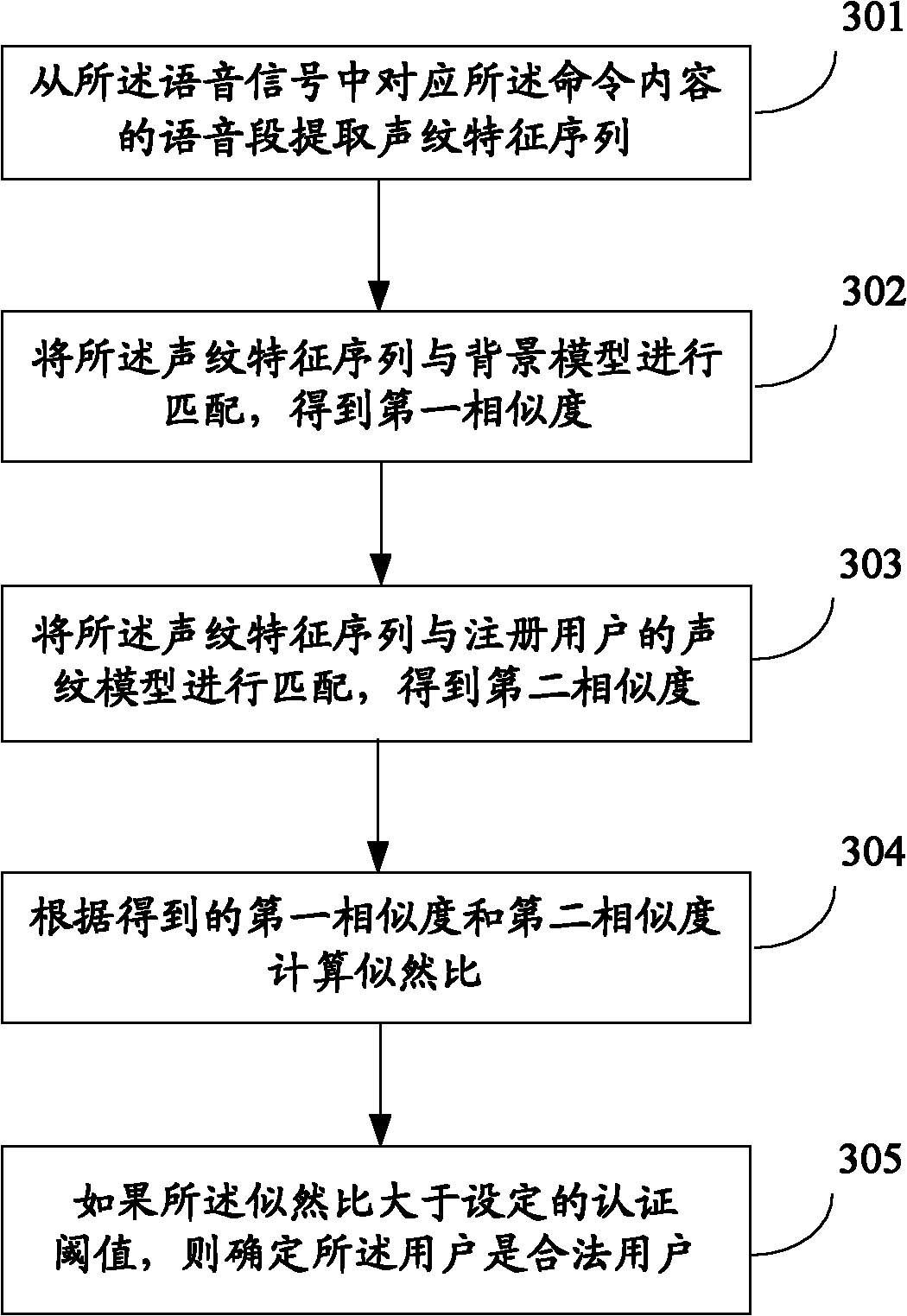

Personal assistant application access method and system

Inactive | CN102510426A | Tags: Realize the function of personal virtual assistant; Efficient shortcut command orientation; Speech analysis; Substation equipment; Access method; User input

The invention relates to the technical field of application access and discloses a personal assistant application access method and system. The method comprises the following steps: receiving a voice signal input by a user; identifying the command content and parameters in the voice signal; determining, from the command content, which application needs to be accessed; and operating that application according to the parameters. The method and system can improve the efficiency of human-computer interaction.

Owner: IFLYTEK CO LTD

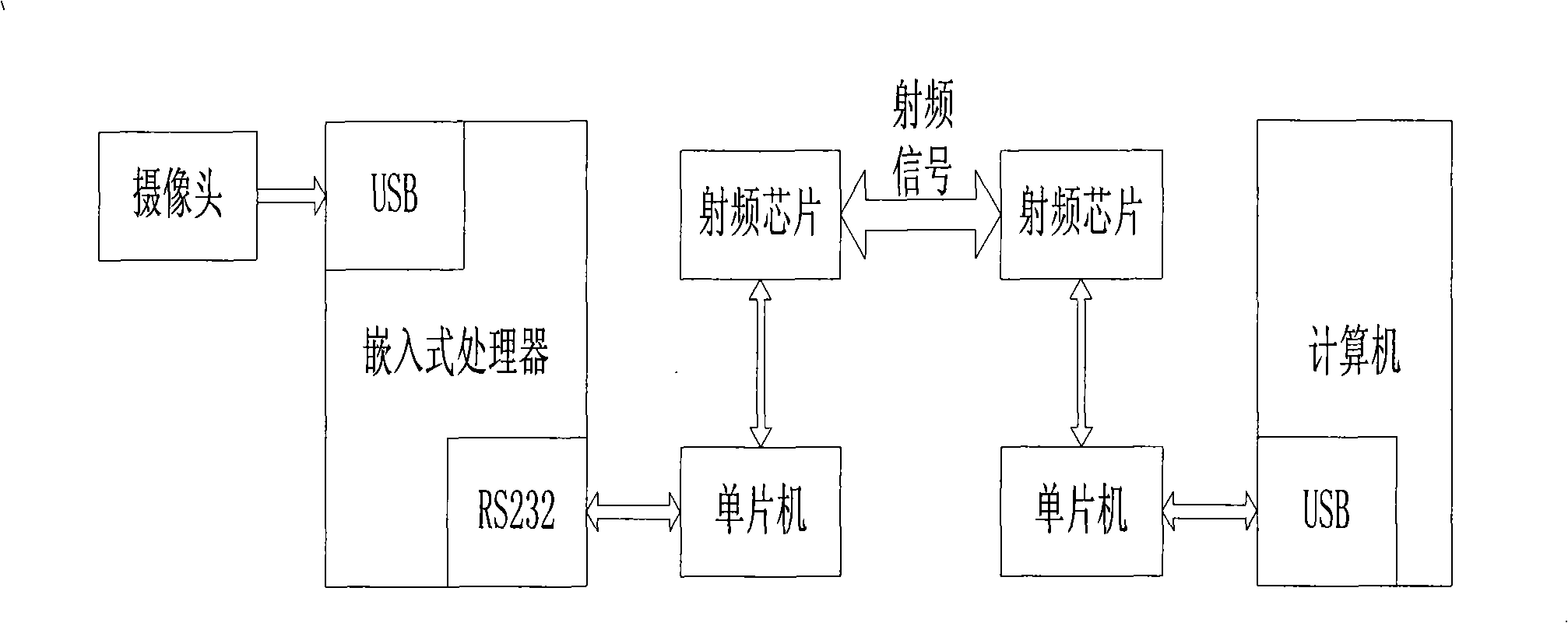

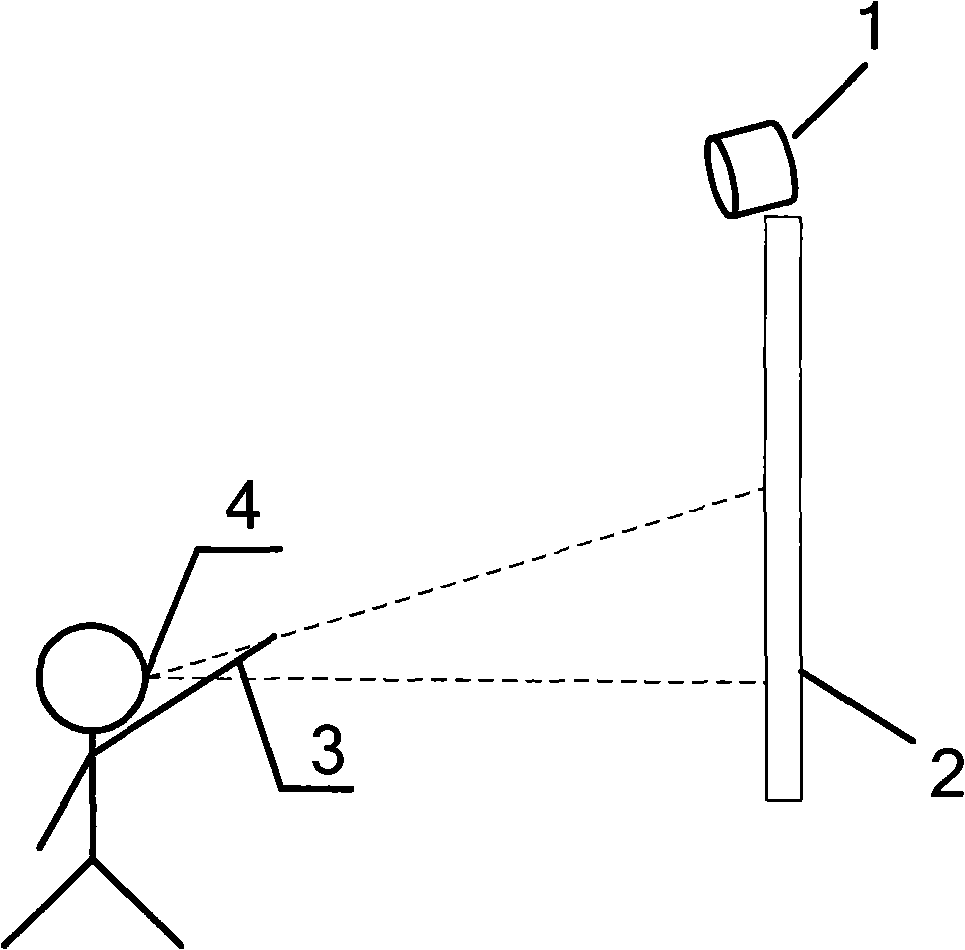

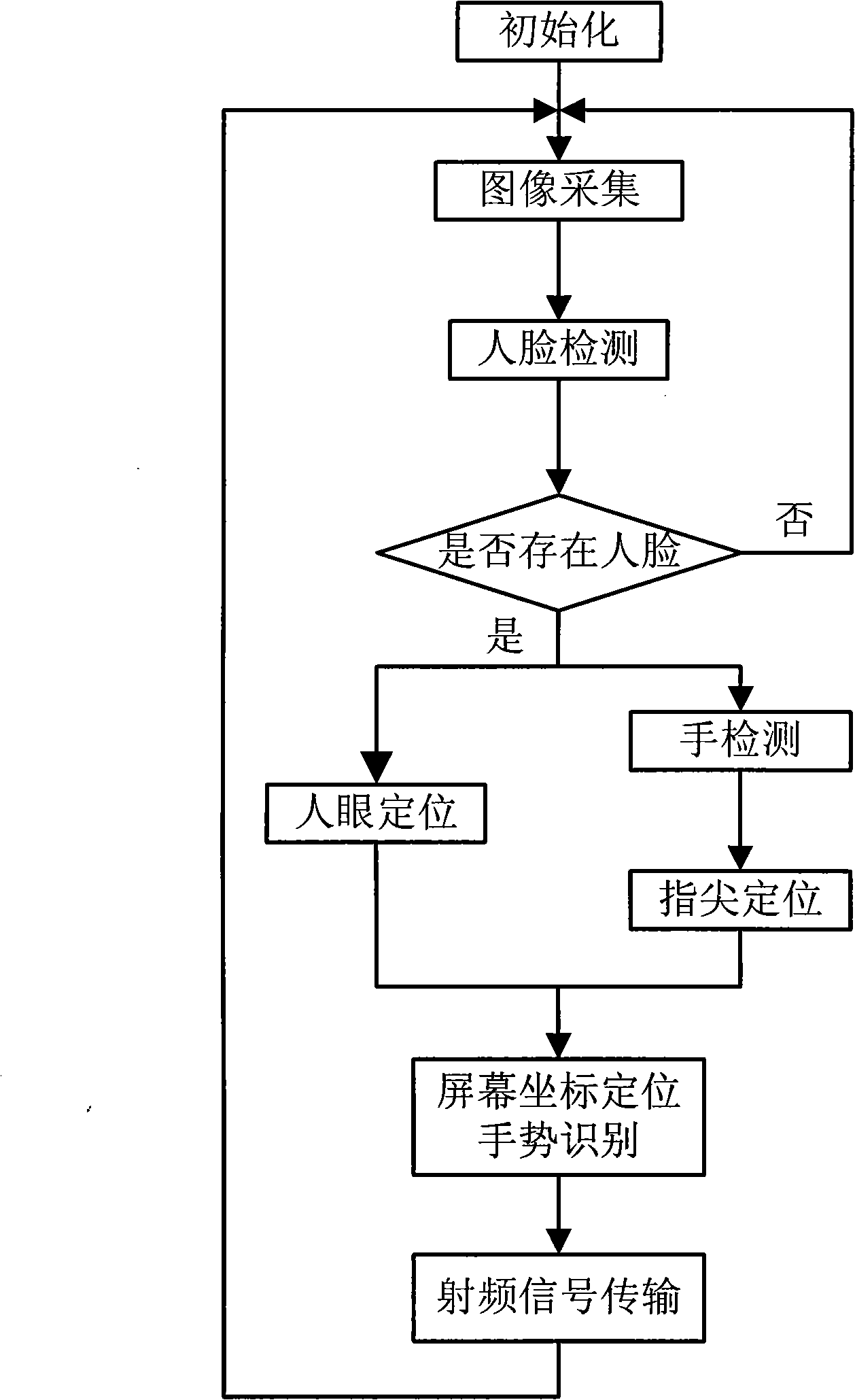

Human-machine interaction method and device based on sight tracing and gesture discriminating

Inactive | CN101344816A | Tags: Easy to control; Solve bugs that limit users' freedom of use; Input/output for user-computer interaction; Character and pattern recognition; Imaging processing; Wireless transmission

The invention discloses a human-computer interaction method and device based on gaze tracking and gesture recognition. The method comprises the following steps: face area detection, hand area detection, eye localization, fingertip localization, screen localization, and gesture recognition. A straight line is determined between an eye and a fingertip; the point where this line intersects the screen is transformed into the logical coordinates of the mouse on the screen, and a mouse click is simulated by detecting the pressing action of the finger. The device comprises an image collection module, an image processing module, and a wireless transmission module. First, images of the user are collected in real time by a camera and analyzed with an image processing algorithm, which transforms the screen position the user points to and the user's gesture changes into on-screen logical coordinates and control commands for the computer; the processing results are then transmitted to the computer through the wireless transmission module. The invention provides a natural, intuitive, and simple human-computer interaction method that enables remote operation of computers.

Owner: SOUTH CHINA UNIV OF TECH
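
The eye-to-fingertip pointing step described above reduces to a line-plane intersection followed by a scale to pixels. The sketch below assumes that the 3D eye and fingertip positions are already known and that the screen lies in the plane z = 0 with its top-left corner at the origin. Both the coordinate frame and the function name are hypothetical and do not come from the patent.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def pointing_to_pixel(eye: Vec3, fingertip: Vec3,
                      screen_w: float, screen_h: float,
                      res_x: int, res_y: int) -> Optional[Tuple[int, int]]:
    """Intersect the eye->fingertip line with the screen plane.

    Assumed frame: the screen lies in the plane z = 0 with its top-left
    corner at the origin, x to the right, y downward, and the user at z > 0.
    All lengths share one unit (e.g. metres).
    """
    dx, dy, dz = (fingertip[0] - eye[0], fingertip[1] - eye[1],
                  fingertip[2] - eye[2])
    if dz >= 0:  # the line moves away from (or parallel to) the screen
        return None
    t = -eye[2] / dz  # line parameter at which z reaches 0
    x, y = eye[0] + t * dx, eye[1] + t * dy
    if not (0 <= x <= screen_w and 0 <= y <= screen_h):
        return None  # pointing outside the screen
    # Convert the physical intersection point to logical mouse coordinates.
    px = min(int(x / screen_w * res_x), res_x - 1)
    py = min(int(y / screen_h * res_y), res_y - 1)
    return px, py
```

With an eye at (0.3, 0.2, 0.6) and a fingertip at (0.3, 0.2, 0.3) in front of a 0.6 m by 0.4 m, 1920 by 1080 screen, the line hits the screen center and the function returns (960, 540).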

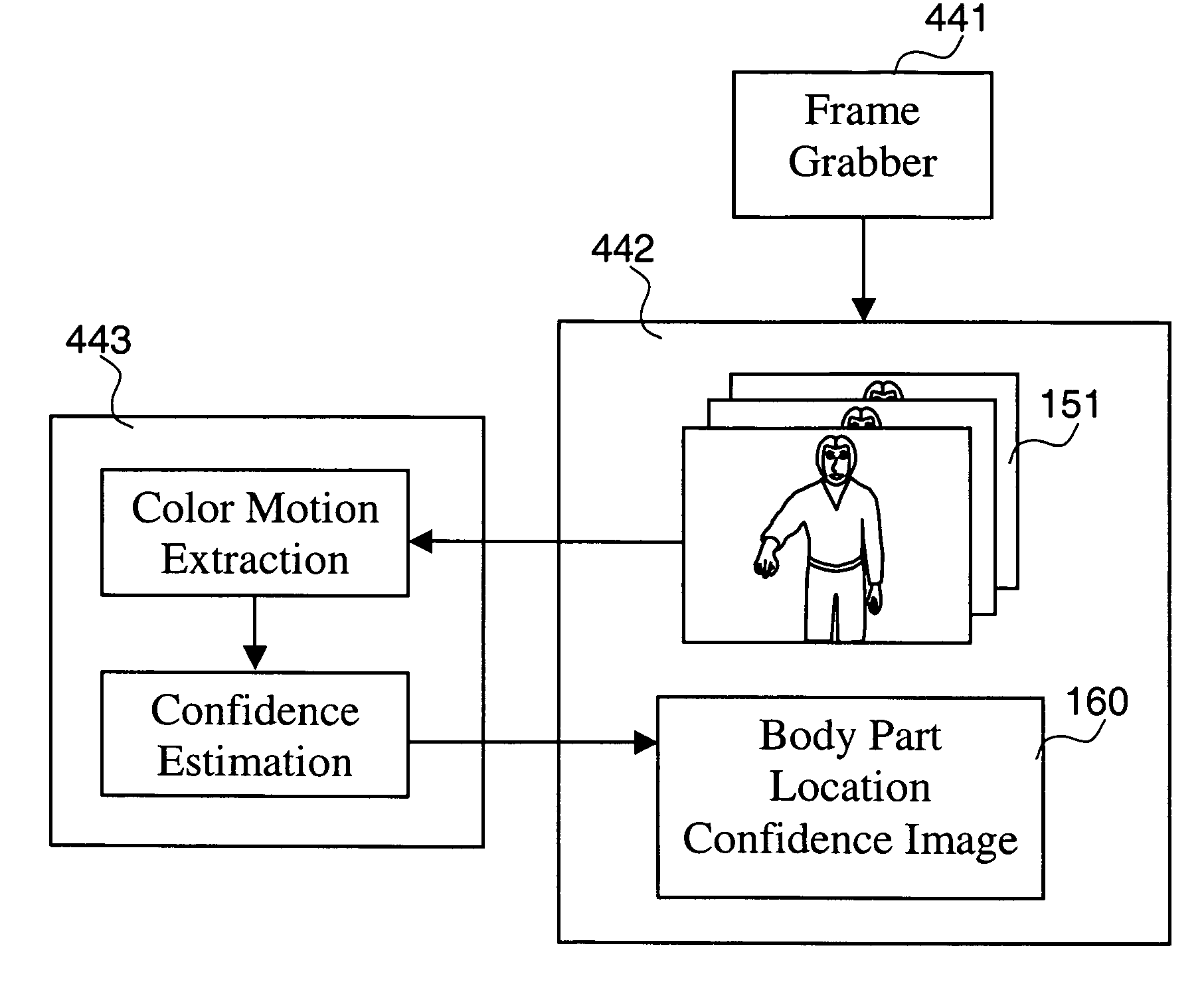

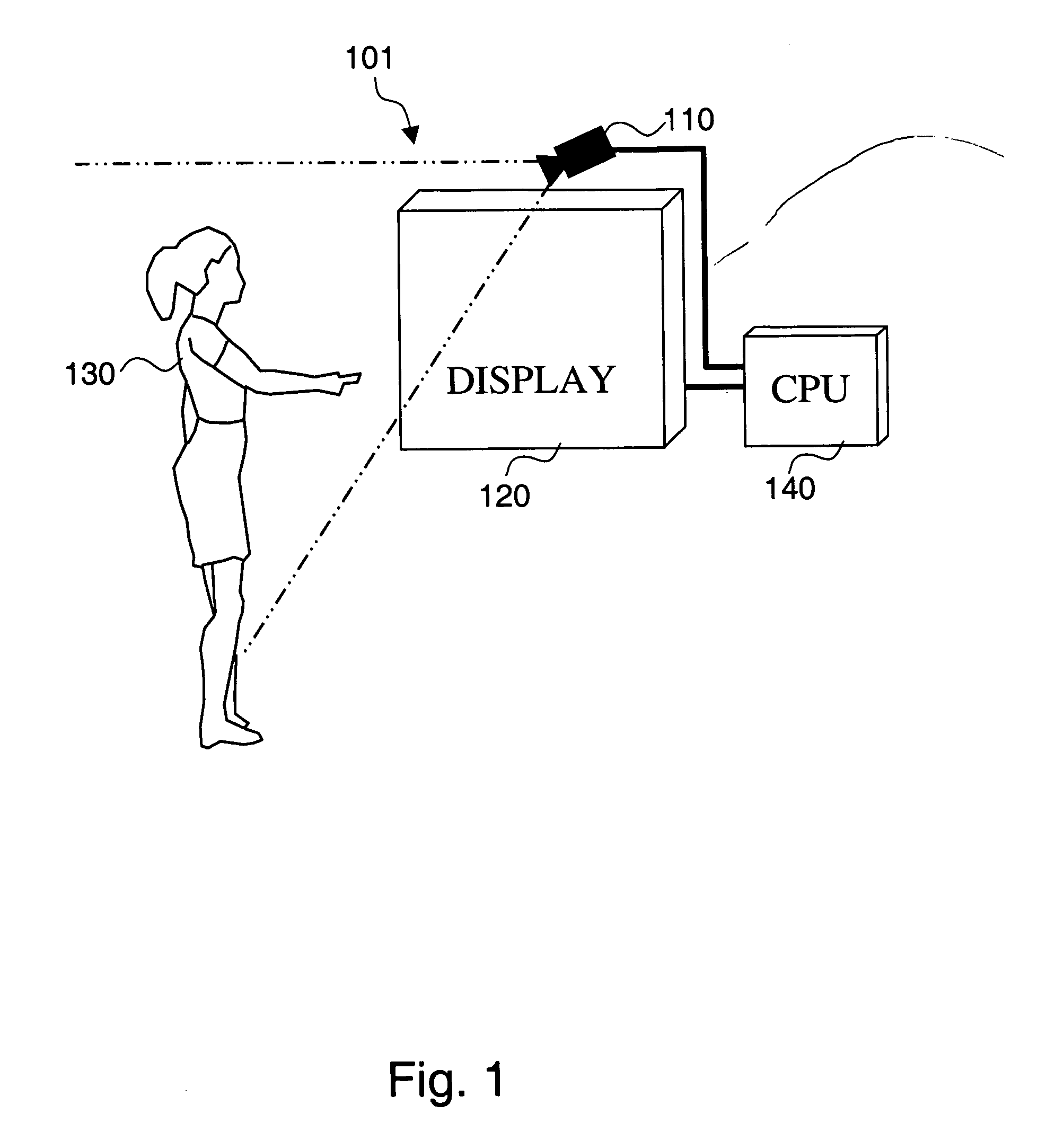

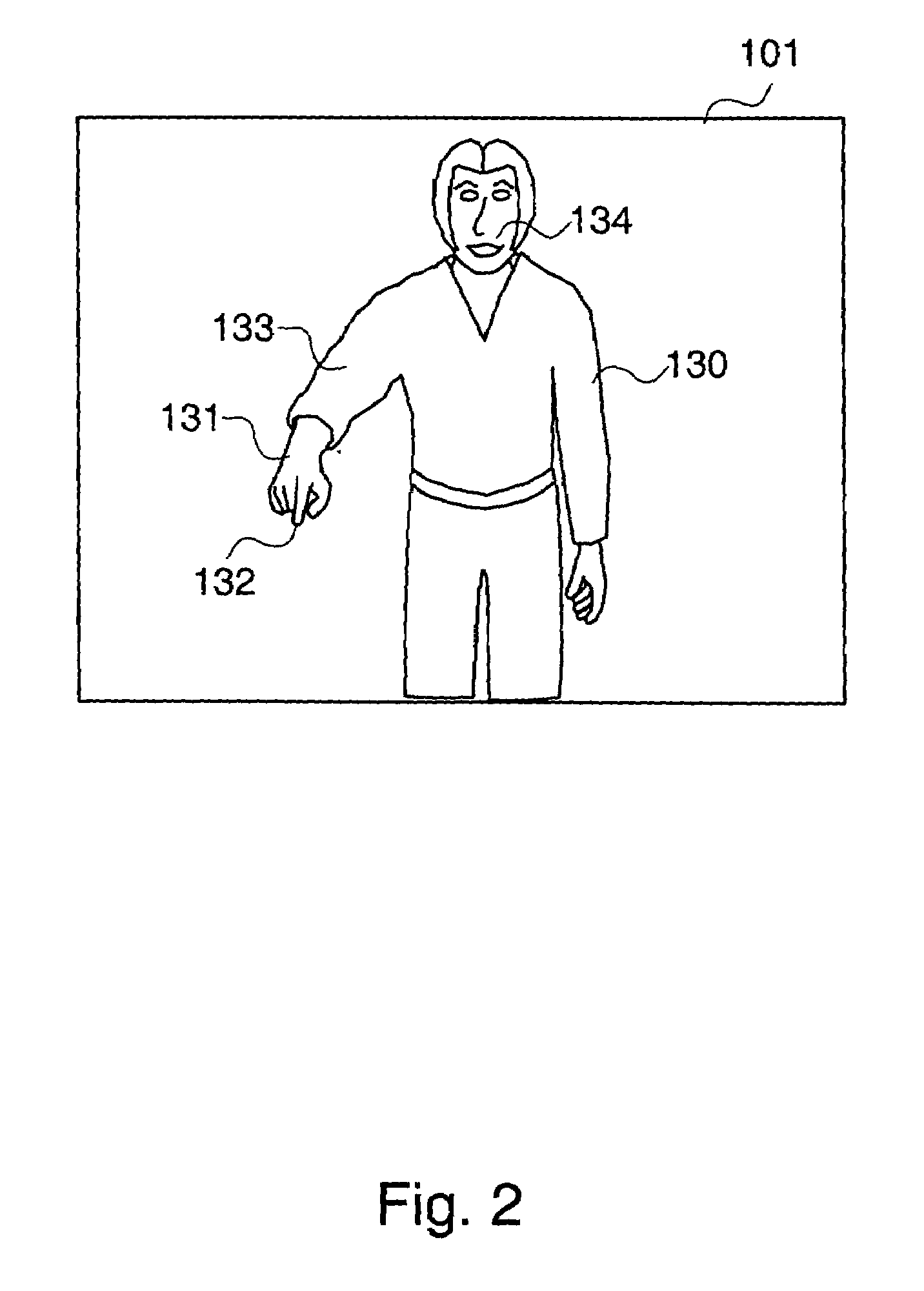

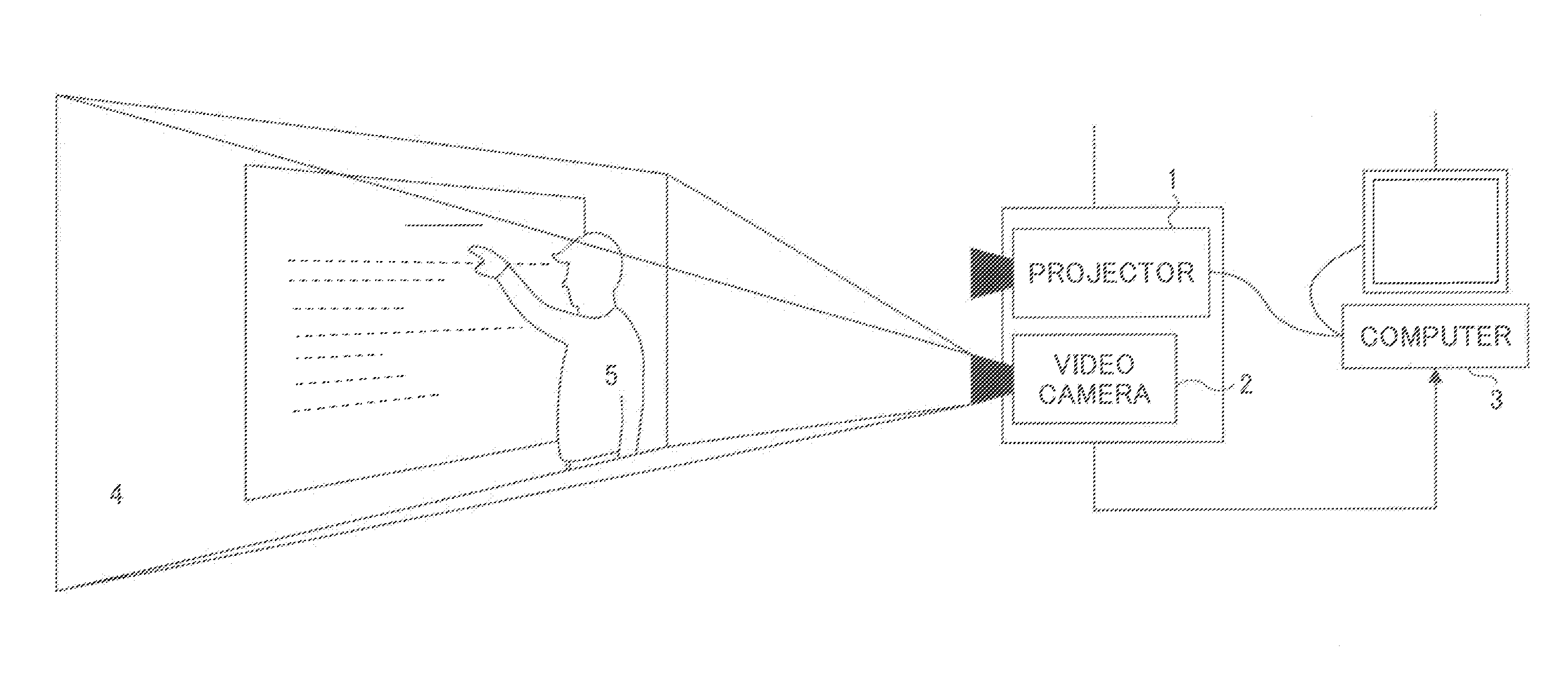

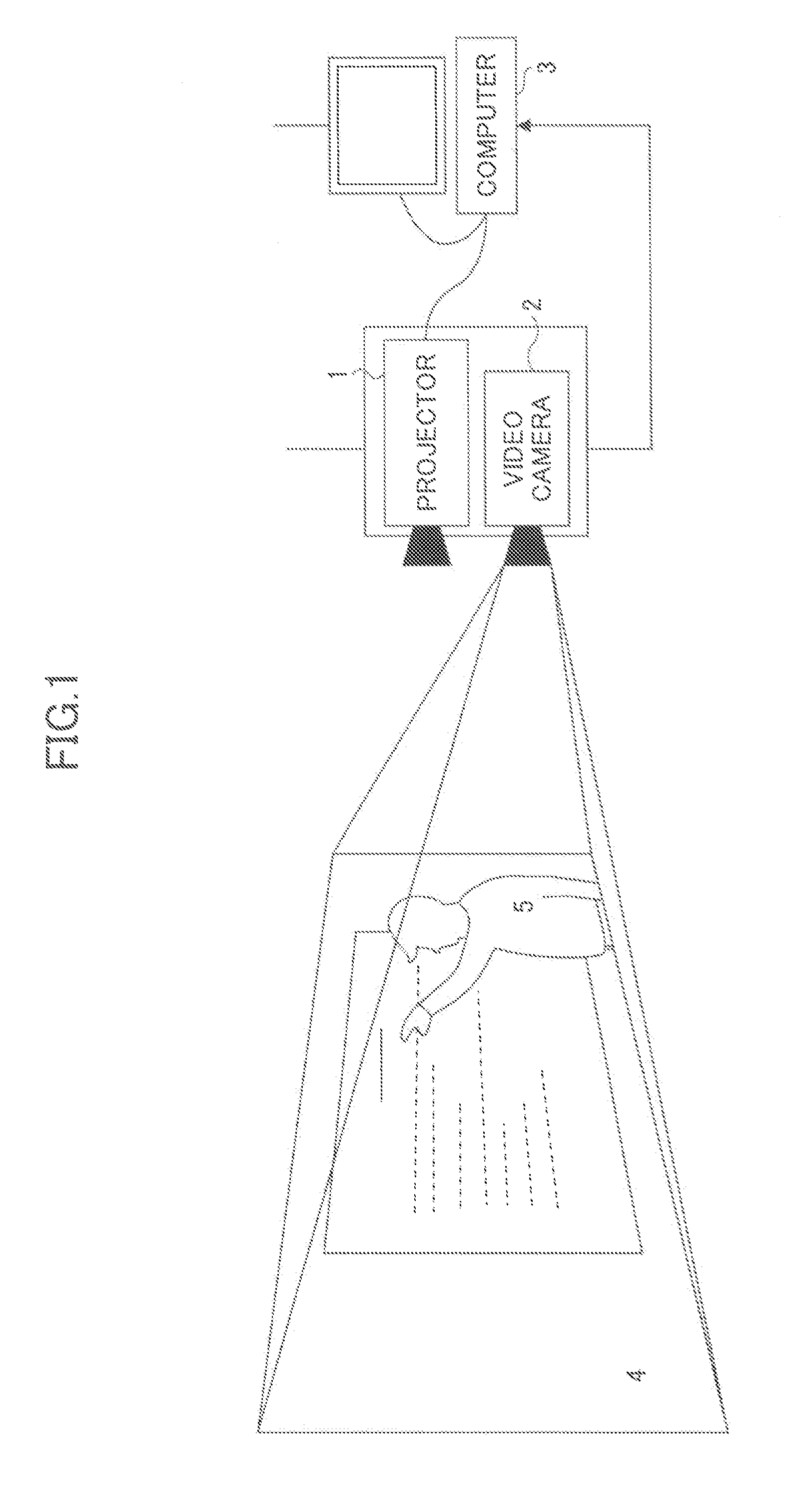

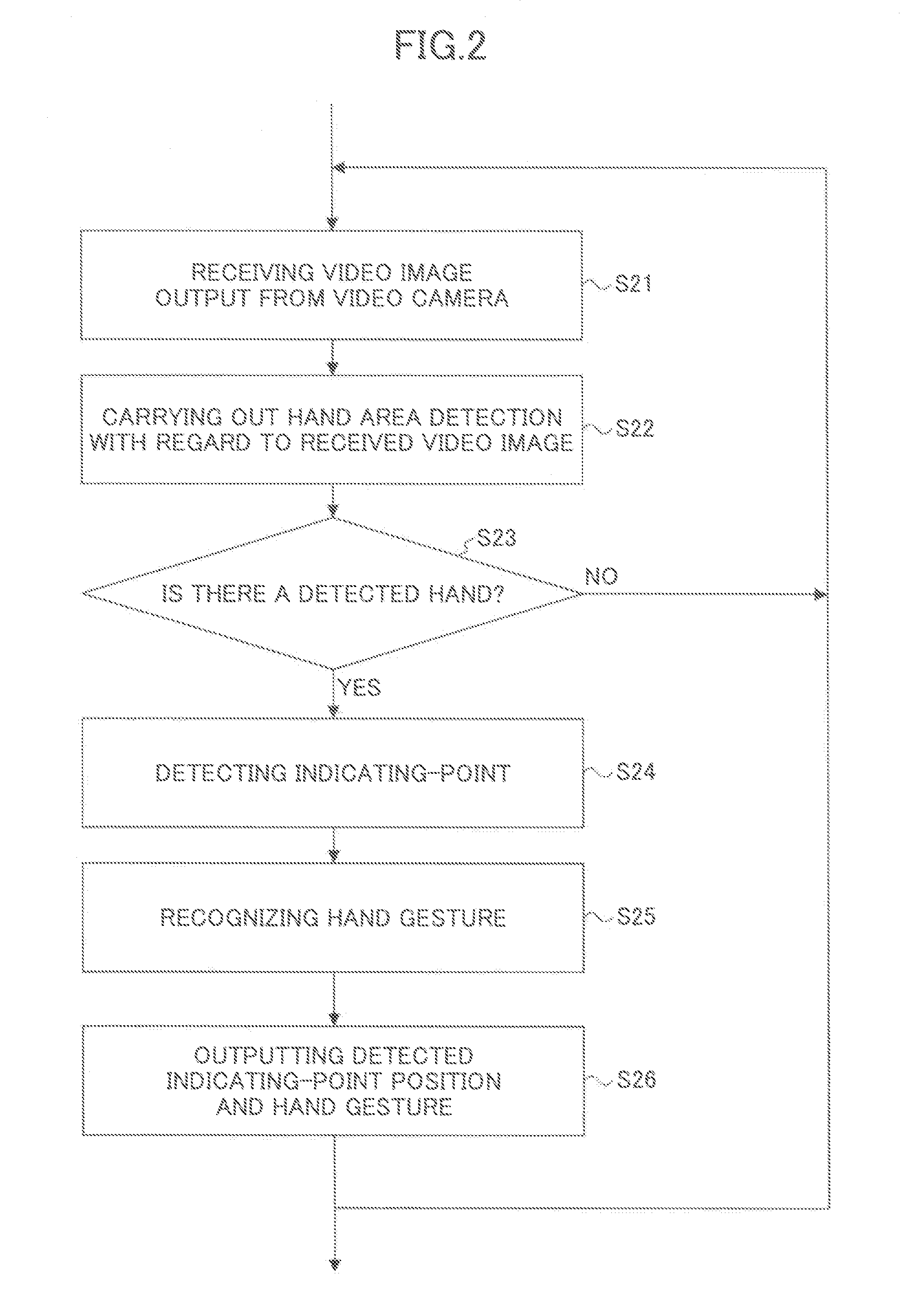

Method and system for detecting conscious hand movement patterns and computer-generated visual feedback for facilitating human-computer interaction

Active | US7274803B1 | Tags: Effective visual feedback; Efficient feedback; Character and pattern recognition; Color television details; Contact free; Public place

The present invention is a system and method for detecting and analyzing the motion patterns of individuals present at a multimedia computer terminal from a stream of video frames generated by a video camera, together with a method of providing visual feedback of the extracted information to aid the interaction between a user and the system. The method allows multiple people to be present in front of the computer terminal while letting one active user make selections on the computer display. The invention can therefore be used as a method for contact-free human-computer interaction in a public place, where the computer terminal can be positioned in a variety of configurations, including behind a transparent glass window or at a height or location where the user cannot physically touch the terminal.

Owner: F POSZAT HU
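
The abstract does not say how motion is extracted from the video stream. A common first step for that kind of analysis is simple frame differencing, which the sketch below uses as a swapped-in technique rather than as the patented method. It thresholds the per-pixel change between two grayscale frames and reports the bounding box of the moving region. The threshold value of 25 is an arbitrary assumption.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale frame, values 0-255


def motion_mask(prev: Frame, curr: Frame, threshold: int = 25) -> List[List[bool]]:
    """Mark pixels whose intensity changed by more than `threshold`."""
    return [[abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev, curr)]


def motion_bbox(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Bounding box (x_min, y_min, x_max, y_max) of moving pixels, or None."""
    xs = [x for row in mask for x, moved in enumerate(row) if moved]
    ys = [y for y, row in enumerate(mask) for moved in row if moved]
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)
```

A real multi-person system would also need to separate individual people and track the active user over time, which this sketch does not attempt.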

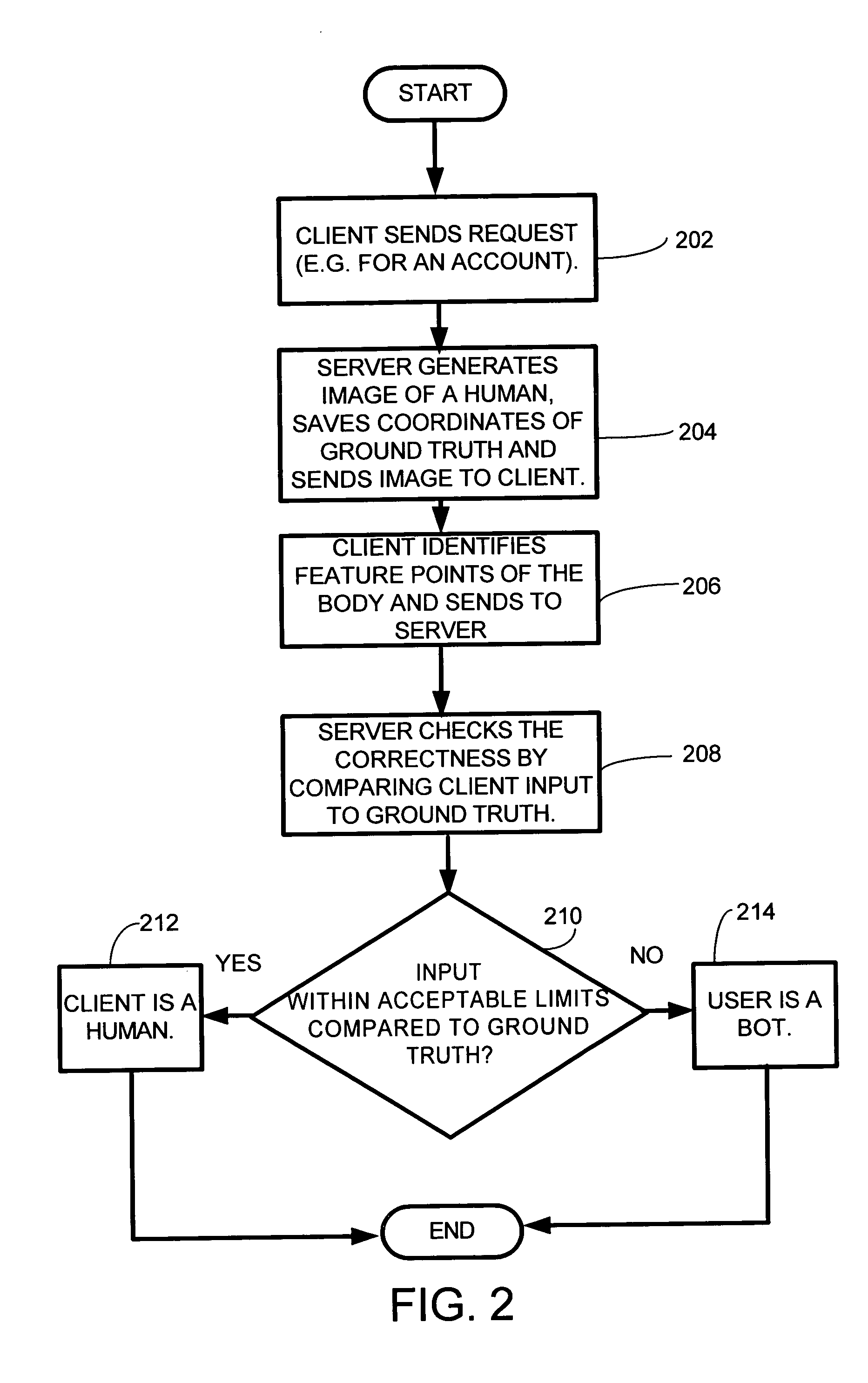

System and method for devising a human interactive proof that determines whether a remote client is a human or a computer program

Inactive | US20050065802A1 | Tags: Character and pattern recognition; Computer security arrangements; Guideline; Usability

A system and method for automatically determining whether a remote client is a human or a computer. A set of human interactive proof (HIP) design guidelines that are important for ensuring the security and usability of a HIP system is described. In addition, one embodiment of the new HIP system and method is based on human face and facial feature detection. Because the human face is the object most familiar to all human users, the embodiment employing a face is possibly the most universal HIP system so far.

Owner: MICROSOFT TECH LICENSING LLC

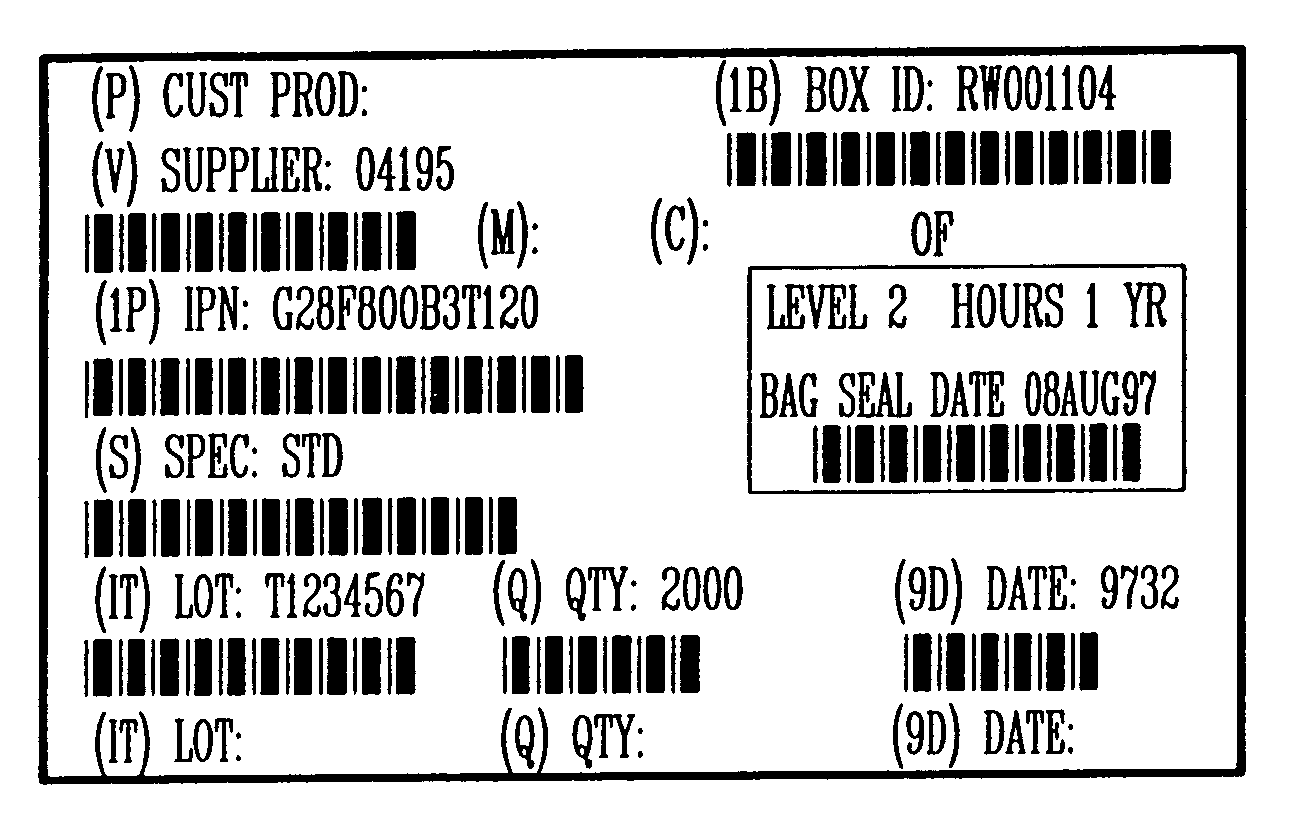

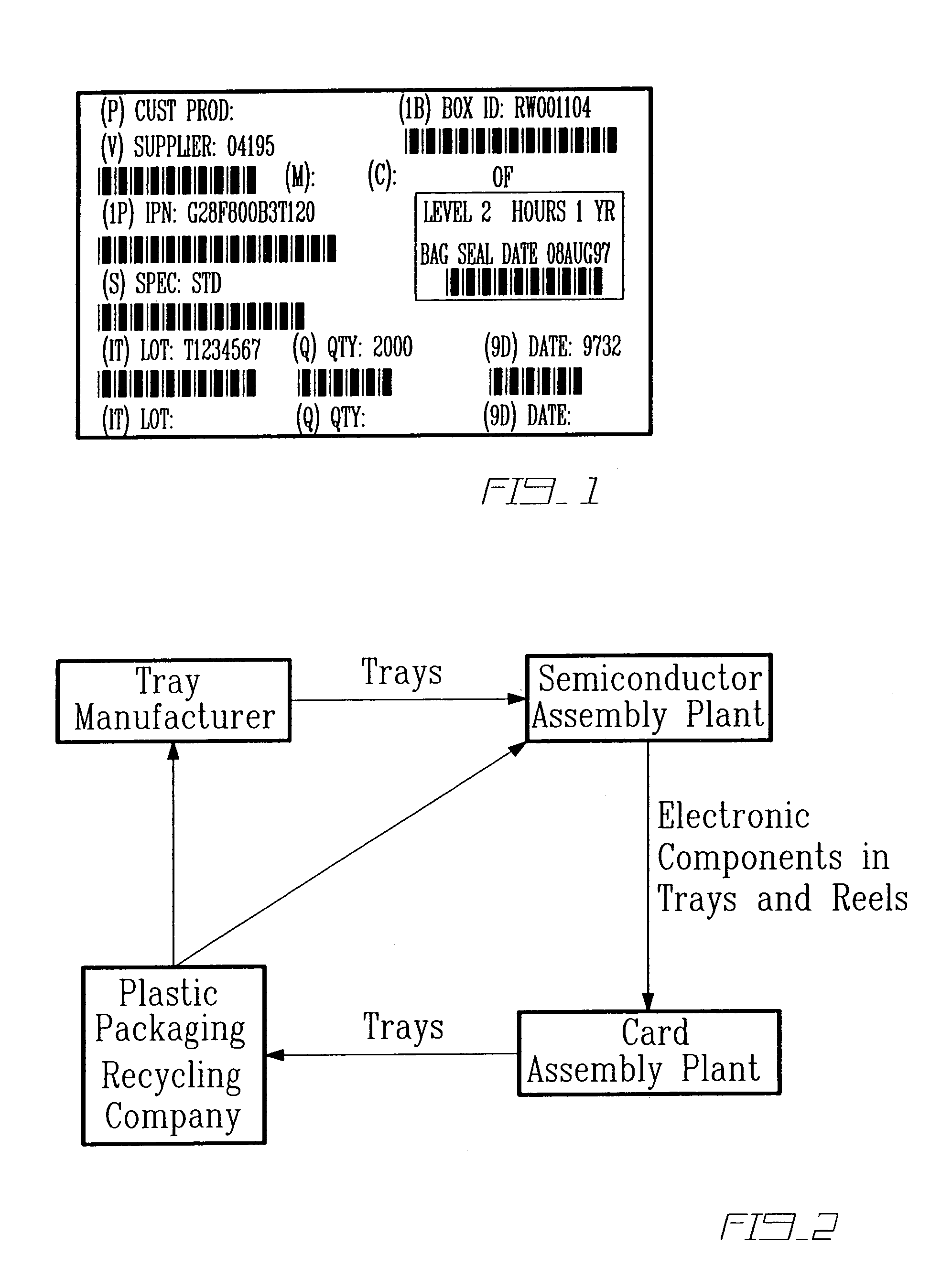

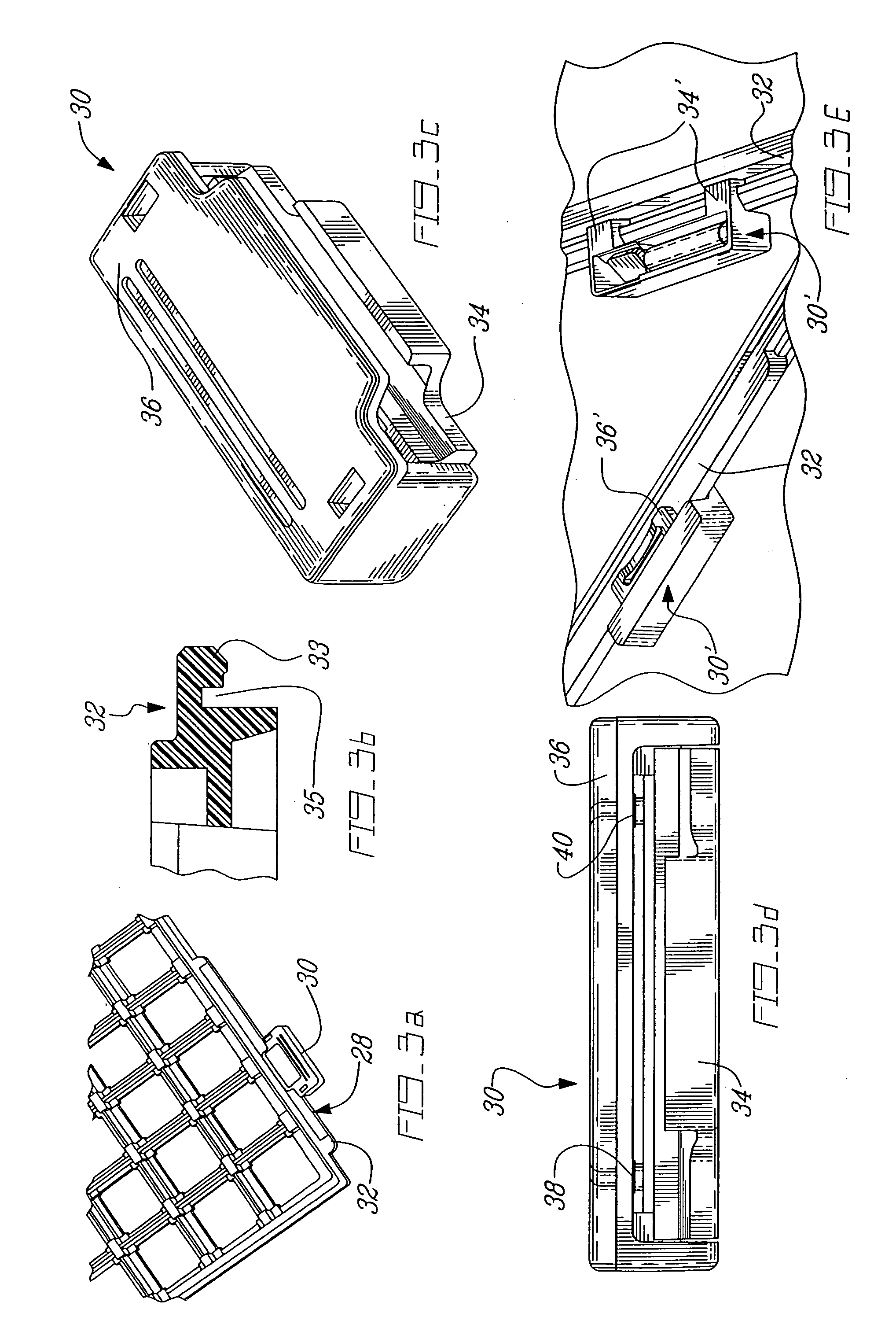

Automated manufacturing control system

Inactive | US7069100B2 | Tags: Reduce human interaction; Digital data processing details; Total factory control; Human interaction; Machine

An automated manufacturing control system is proposed to greatly reduce human interaction in the data transfer, physical verification, and process control associated with the movement of components, tooling, and operators in a manufacturing system. This is achieved by attaching data carriers to the object(s) to be traced. These data carriers (12) can store all the identification, material, and production data required by the various elements, e.g. stations, of the manufacturing system. Readers integrated with controllers and application software are located at strategic points of the production area, including production machines and storage areas, to enable automatic data transfer and physical verification that the right material is at the right place at the right time, using the right tooling.

Owner: COGISCAN

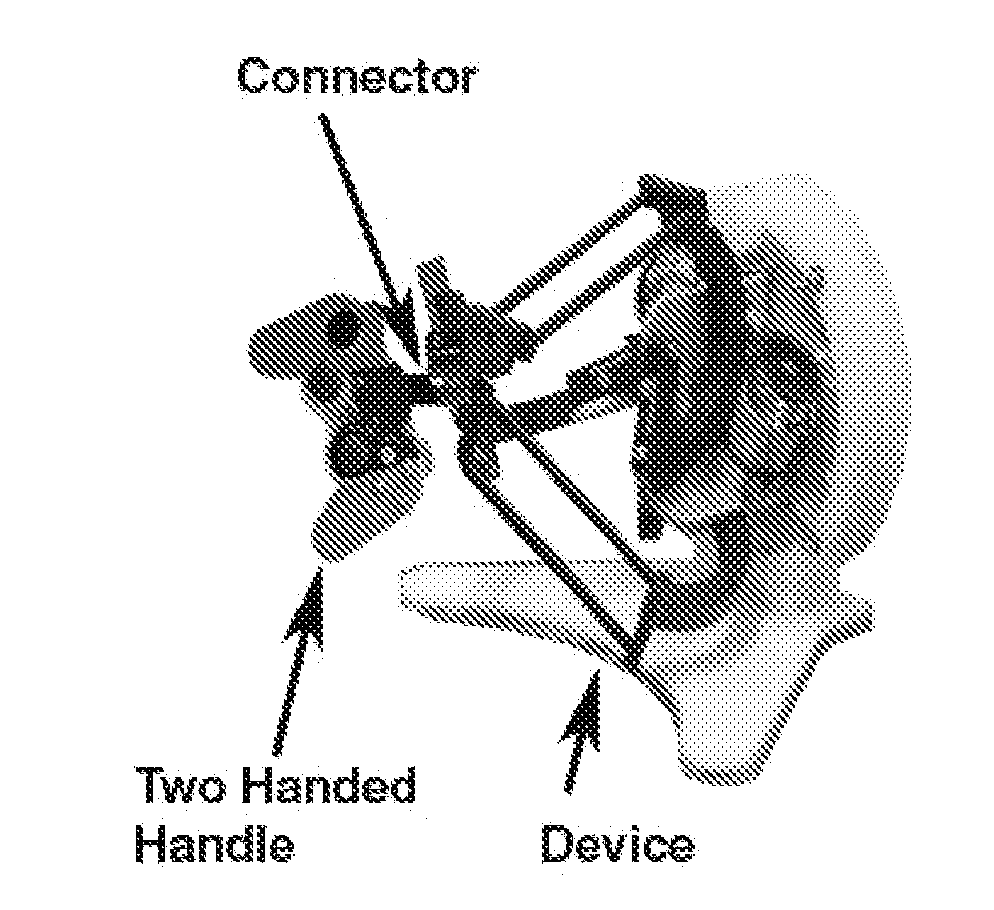

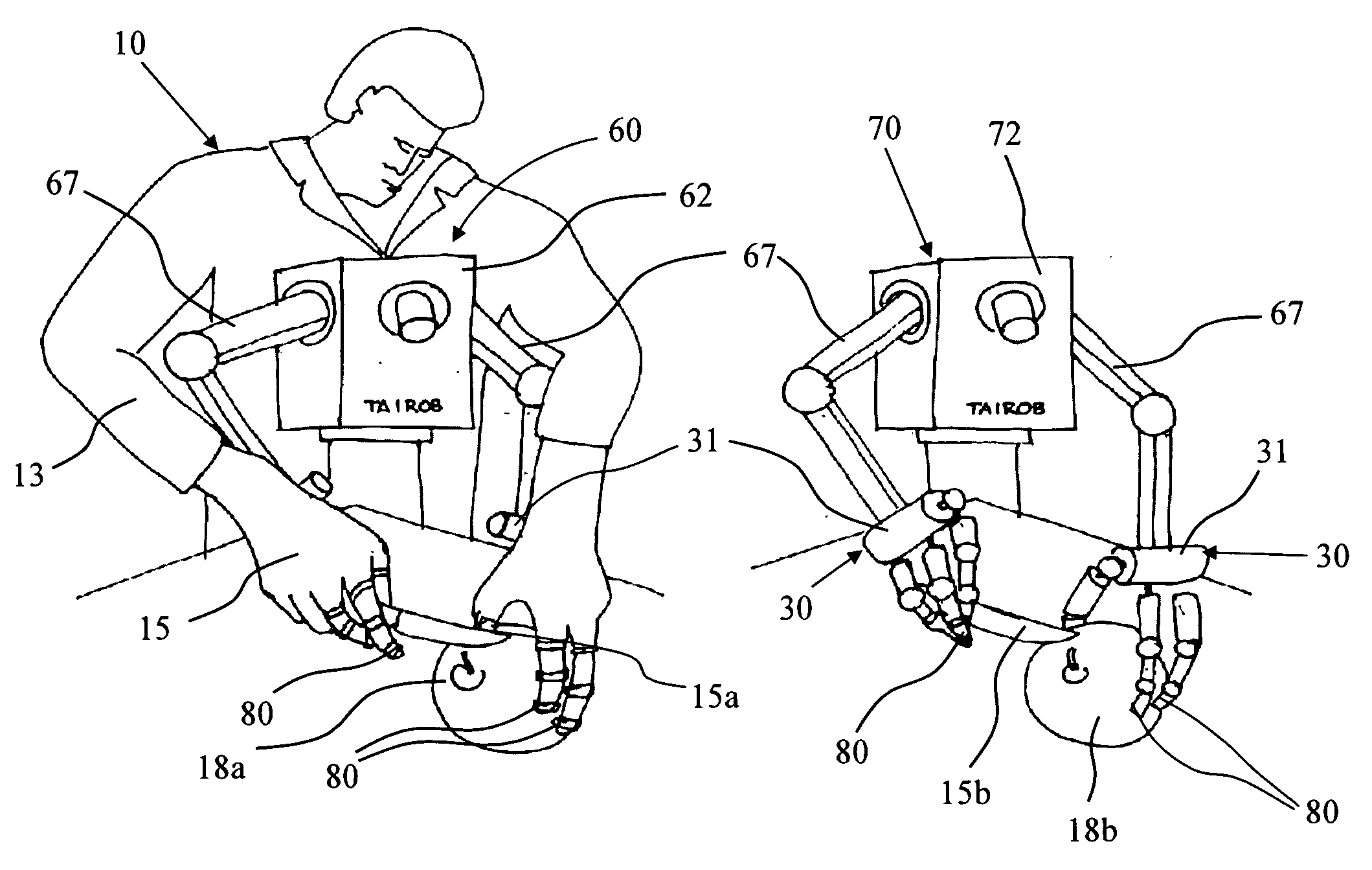

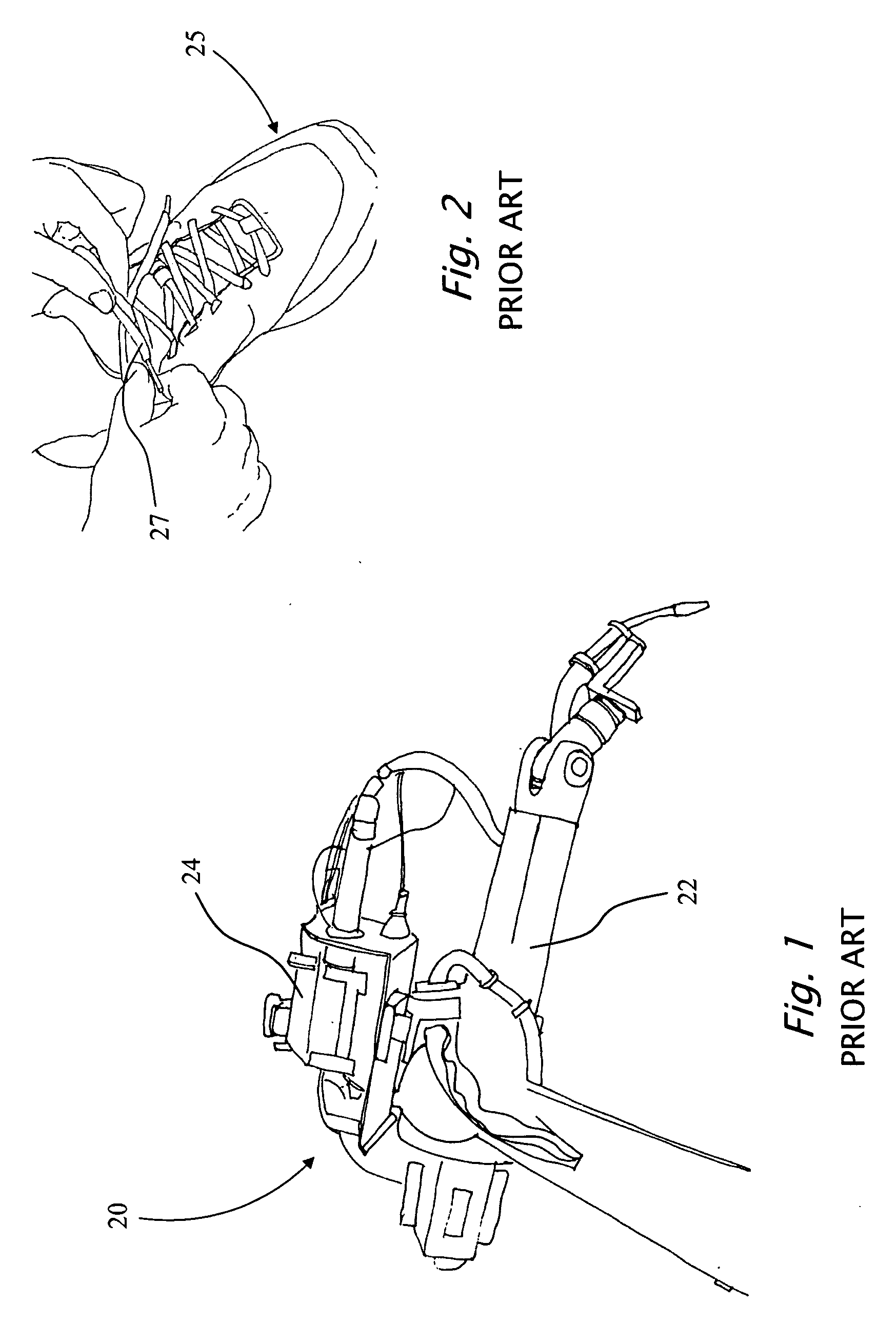

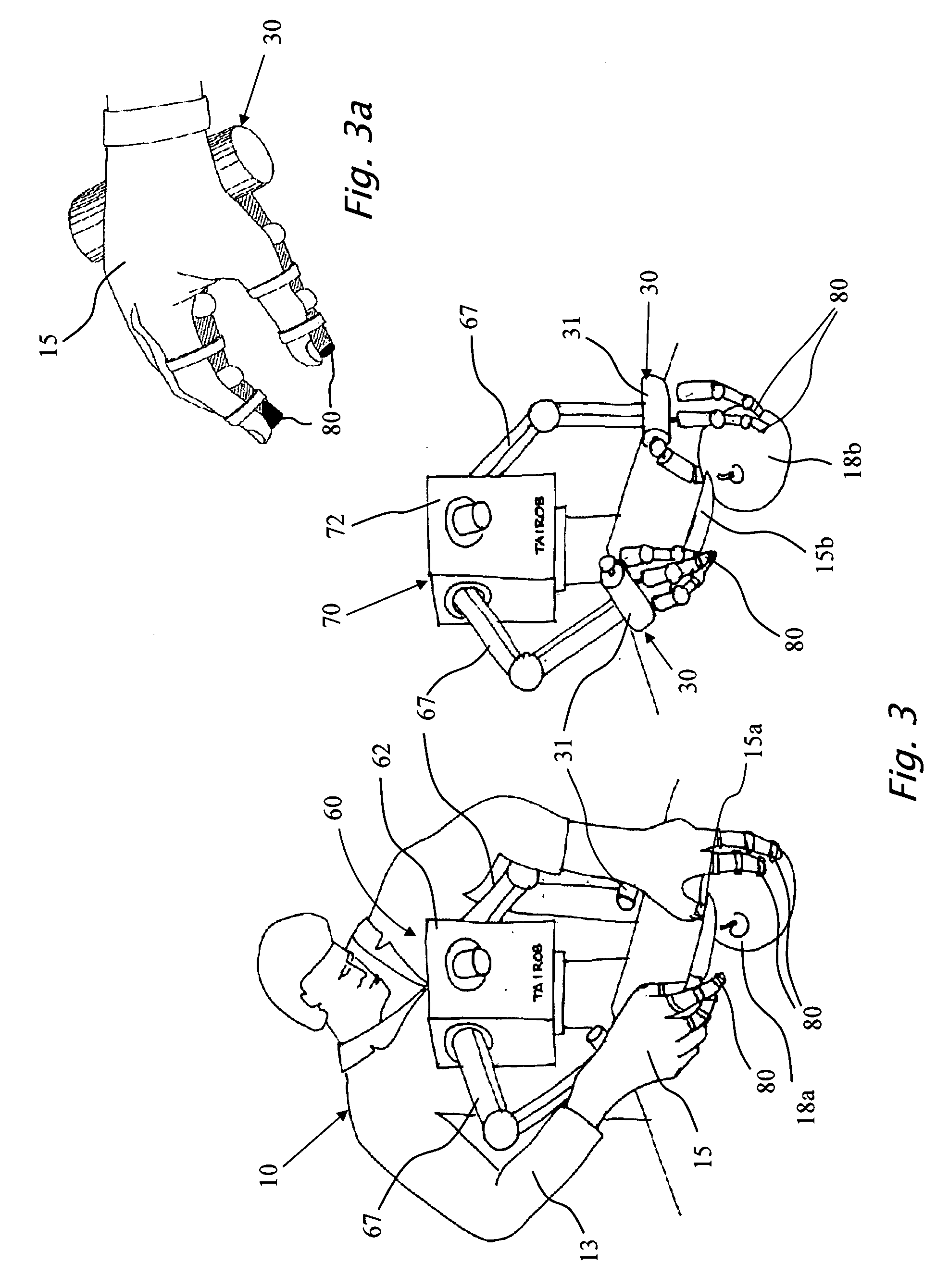

Transfer of knowledge from a human skilled worker to an expert machine - the learning process

Inactive | US20090132088A1 | Tags: Improve operational sensitivity; Computer control; Simulator control; Software engineering; Learning methods

A learning environment and method that is a first milestone toward an expert machine implementing the master-slave robotic concept. The present invention is a learning environment and method for teaching the master expert machine, in which a skilled worker transfers his professional knowledge to the master expert machine in the form of elementary motions and subdivided tasks. The invention further provides a stand-alone learning environment in which a human wearing one or two innovative gloves equipped with 3D tactile sensors transfers task-performing knowledge to a robot through a learning process different from the master-slave learning concept. The 3D force/torque, displacement, velocity/acceleration, and joint forces are recorded during knowledge transfer by a computerized processing unit, which prepares the acquired data for the mathematical transformations used to generate commands for the robot's motors. The objective of the new robotic learning method is a learning process that paves the way to a robot with "human-like" tactile sensitivity, to be applied to material handling or man/machine interaction.

Owner: TAIROB

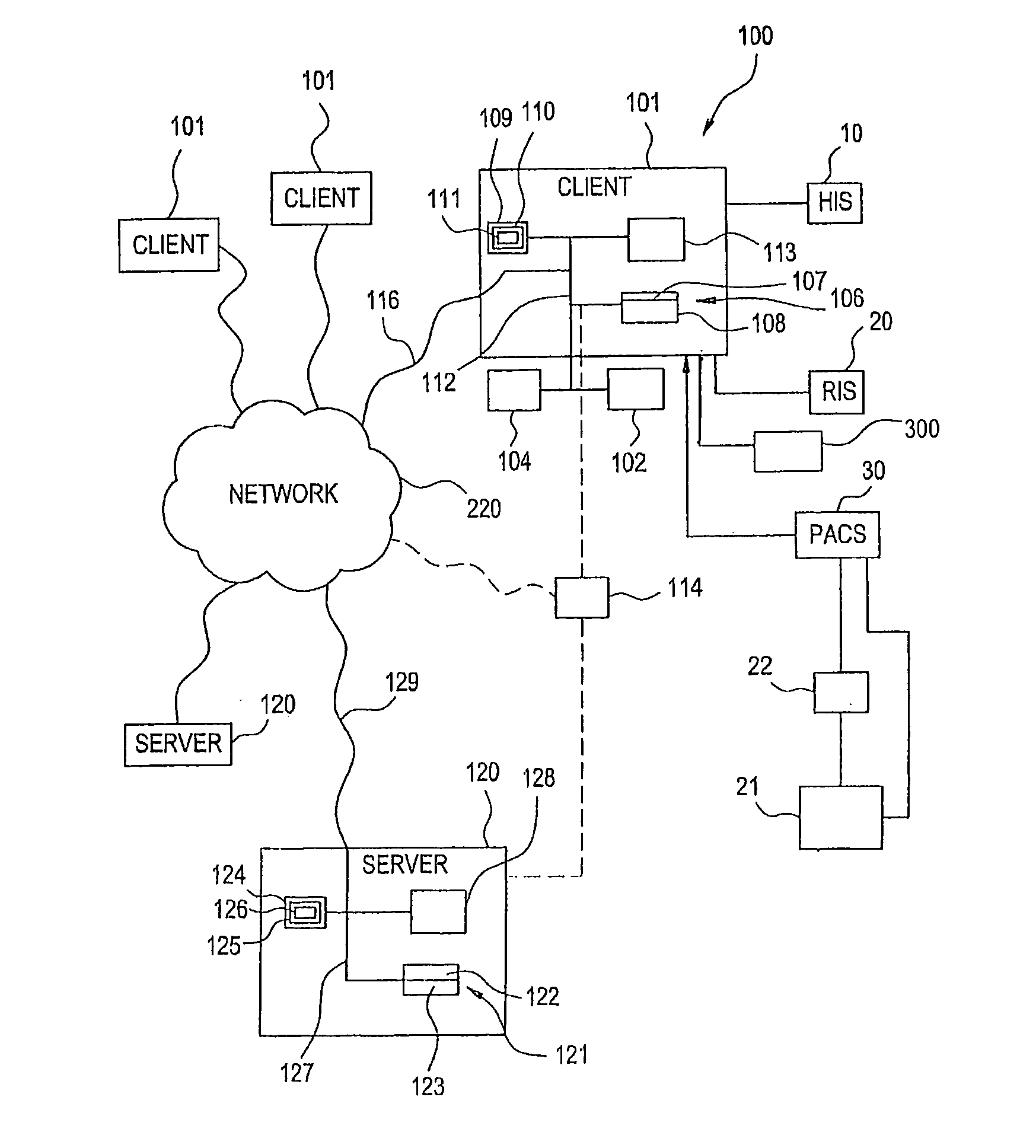

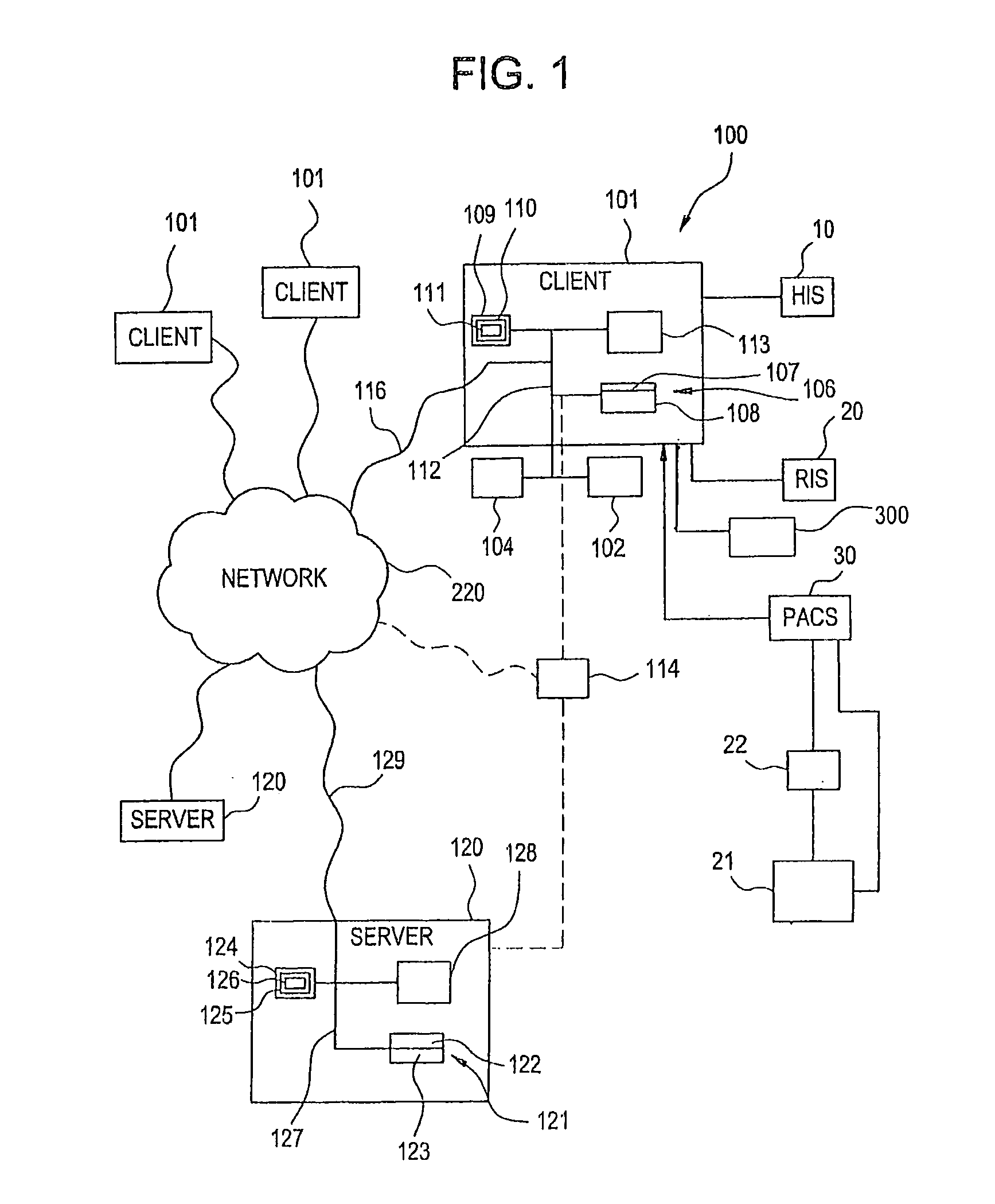

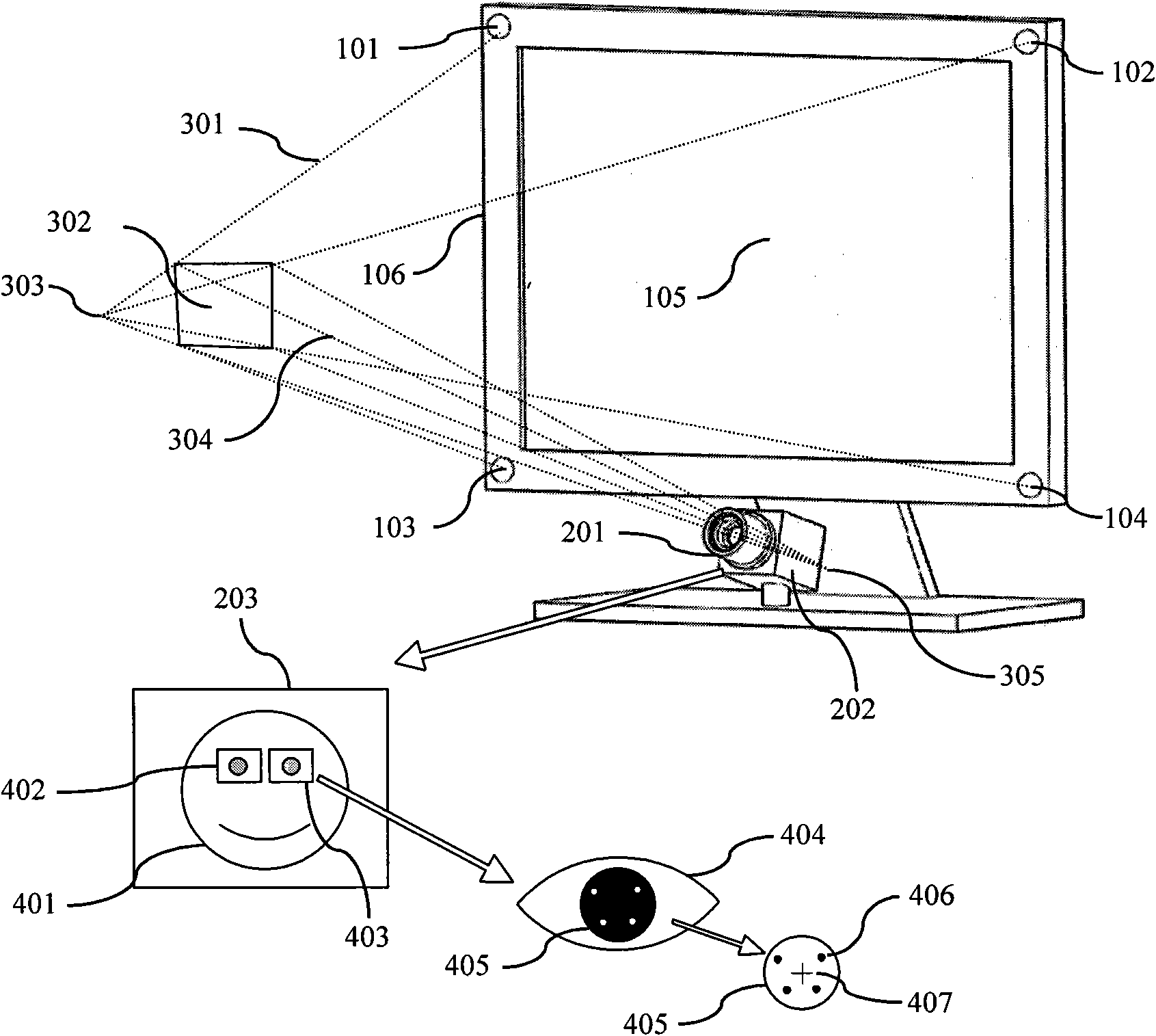

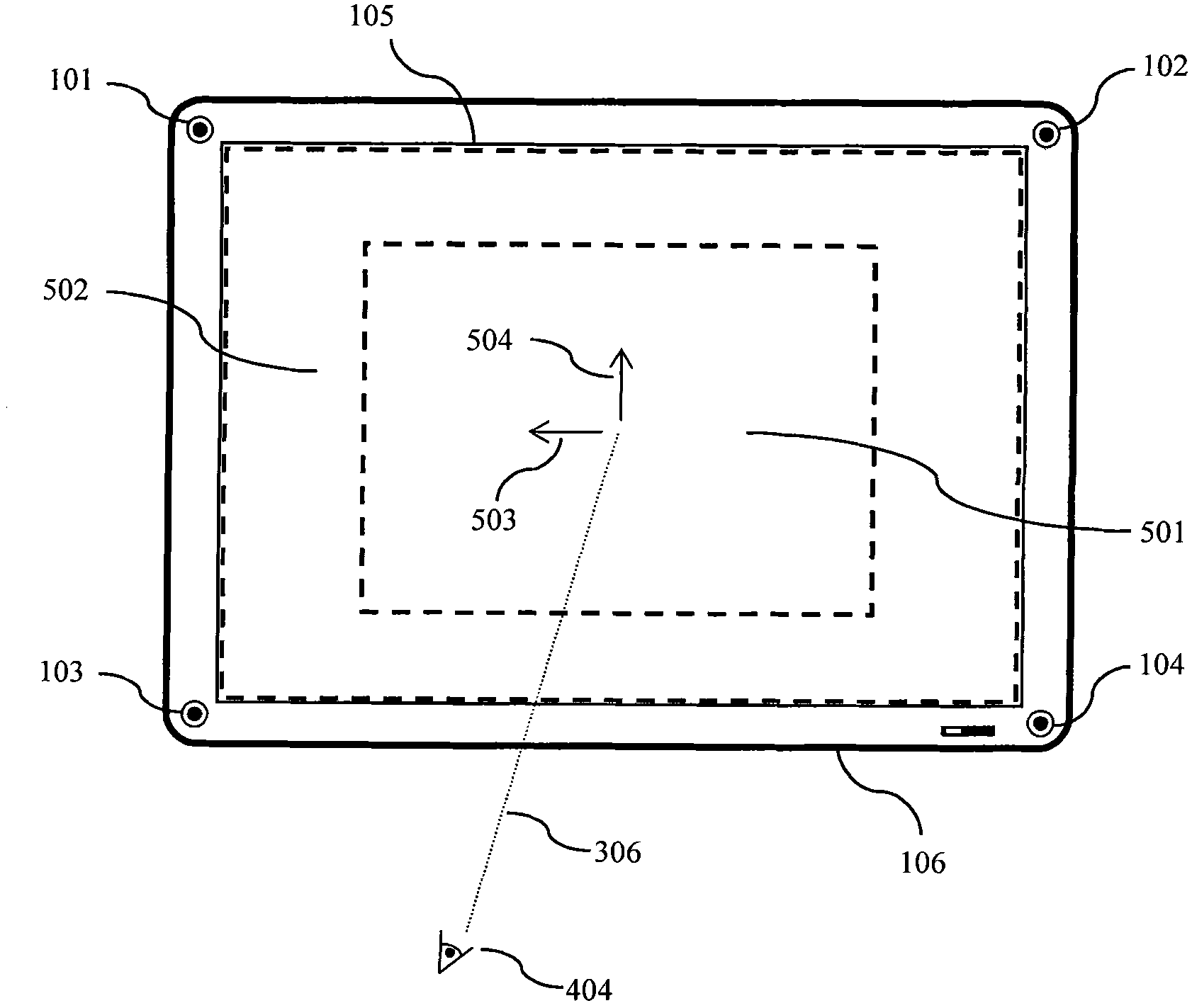

Visually directed human-computer interaction for medical applications

Inactive | US20110270123A1 | Tags: Faster and more intuitive human-computer input; Diagnostic recording/measuring; Sensors; Guideline; Application software

The present invention relates to a method and apparatus for using an eye detection apparatus in a medical application. The method includes: calibrating the eye detection apparatus to a user; performing a predetermined set of visual and cognitive steps using the eye detection apparatus; determining a visual profile of the user's workflow; creating a user-specific database to create an automated visual display protocol of the workflow; storing eye-tracking commands for individual user navigation and computer interactions; storing context-specific medical application eye-tracking commands in a database; performing the medical application using the eye-tracking commands; and storing, in the database, eye-tracking data and the results of analyzing data from the performance of the medical application. The method includes analyzing the database to determine best-practice guidelines based on clinical outcome measures.

Owner: REINER BRUCE

Human-computer interaction device and method adopting eye tracking in video monitoring

Active | CN101866215A | Tags: Reduce the impact; Enhanced interaction; Input/output for user-computer interaction; Television system details; Video monitoring; Human-machine interface

The invention belongs to the technical field of video monitoring and in particular relates to a human-computer interaction device and method that use human eye tracking in video monitoring. The device comprises a non-invasive facial and eye image video acquisition unit, a monitoring screen surrounded by infrared reference light sources, an eye tracking image processing module, and a human-computer interaction interface control module. The eye tracking image processing module separates binocular sub-images of the left and right eyes from a captured facial image, identifies the two sub-images separately, and estimates the gaze position on the monitoring screen. The invention also provides an efficient human-computer interaction mode suited to the characteristics of eye tracking. This unified interaction mode can be used to select function menus with the eyes, switch monitoring video content, adjust the focus and viewing angle of a remote monitoring camera, and so on, improving the efficiency of operating video monitoring equipment and video monitoring systems.

Owner: FUDAN UNIV

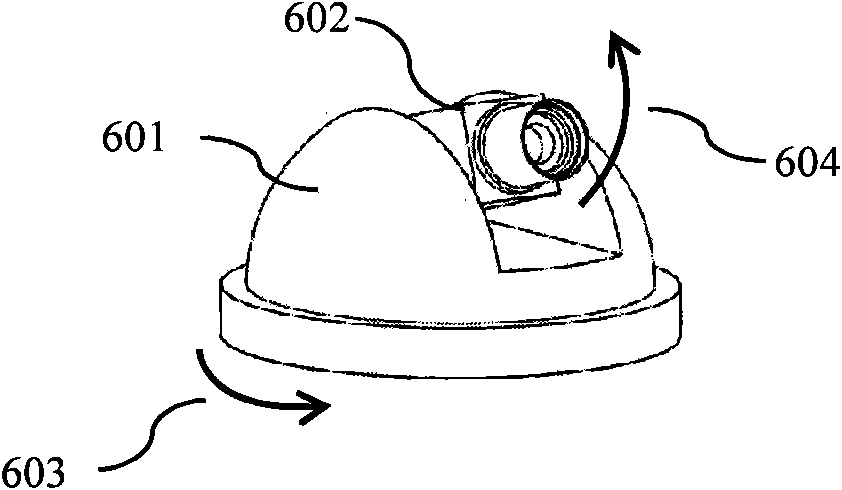

Hand and indicating-point positioning method and hand gesture determining method used in human-computer interaction system

Inactive | US20120062736A1 | Tags: Rapid positioning; Character and pattern recognition; Color television details; Interaction systems; Pattern recognition

Disclosed are a hand positioning method and a human-computer interaction system. The method comprises: continuously capturing the current image to obtain a sequence of video images; extracting a foreground image from each captured video image and binarizing it to obtain a binary foreground image; obtaining the vertex set of the minimum convex hull of the binary foreground image and then creating areas of concern that serve as candidate hand areas; and extracting hand imaging features from each area of concern and determining the hand area among the candidates by pattern recognition on the extracted features.

Owner: RICOH KK
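
The convex-hull step in the abstract above can be illustrated with Andrew's monotone chain algorithm applied to the foreground pixels of a binary mask. This is a sketch, not Ricoh's code. The abstract does not say how areas of concern are built from the hull vertices, so `candidate_regions` simply places a hypothetical square window of arbitrary size on each vertex.

```python
from typing import List, Tuple

Point = Tuple[int, int]  # (x, y) pixel coordinates


def foreground_points(mask: List[List[int]]) -> List[Point]:
    """Coordinates of all non-zero pixels in a binary foreground mask."""
    return [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]


def cross(o: Point, a: Point, b: Point) -> int:
    """z-component of (a - o) x (b - o); > 0 is a left turn in a y-up frame."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone chain: hull vertices without collinear points."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def candidate_regions(hull: List[Point], half: int = 16) -> List[Tuple[int, int, int, int]]:
    """Hypothetical areas of concern: a square window (x0, y0, x1, y1)
    centred on each hull vertex."""
    return [(x - half, y - half, x + half, y + half) for x, y in hull]
```

For a diamond-shaped blob, the hull reduces to its outer corner pixels, and each corner gets one candidate window. A later classifier would then decide which window actually contains a hand.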

Method and system for implicitly resolving pointing ambiguities in human-computer interaction (HCI)

Inactive | US6907581B2 | Tags: Simple design and implementation; Easy access; Input/output processes for data processing; Object based; Ambiguity

Owner: RAMOT AT TEL AVIV UNIV LTD

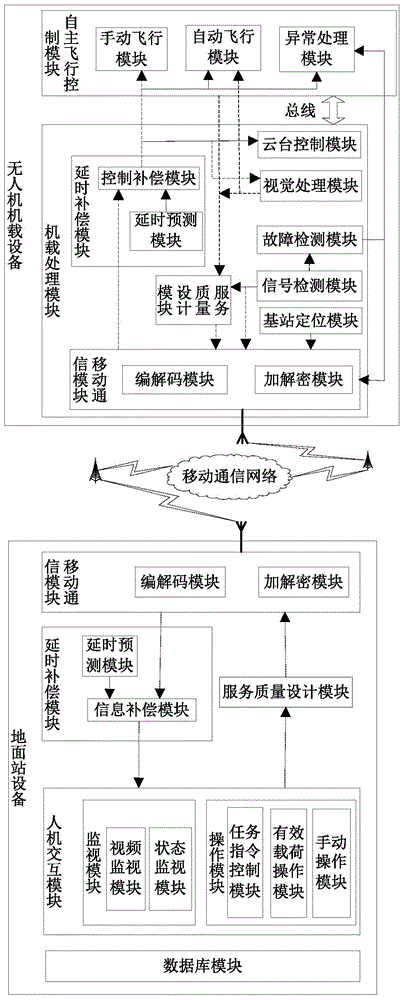

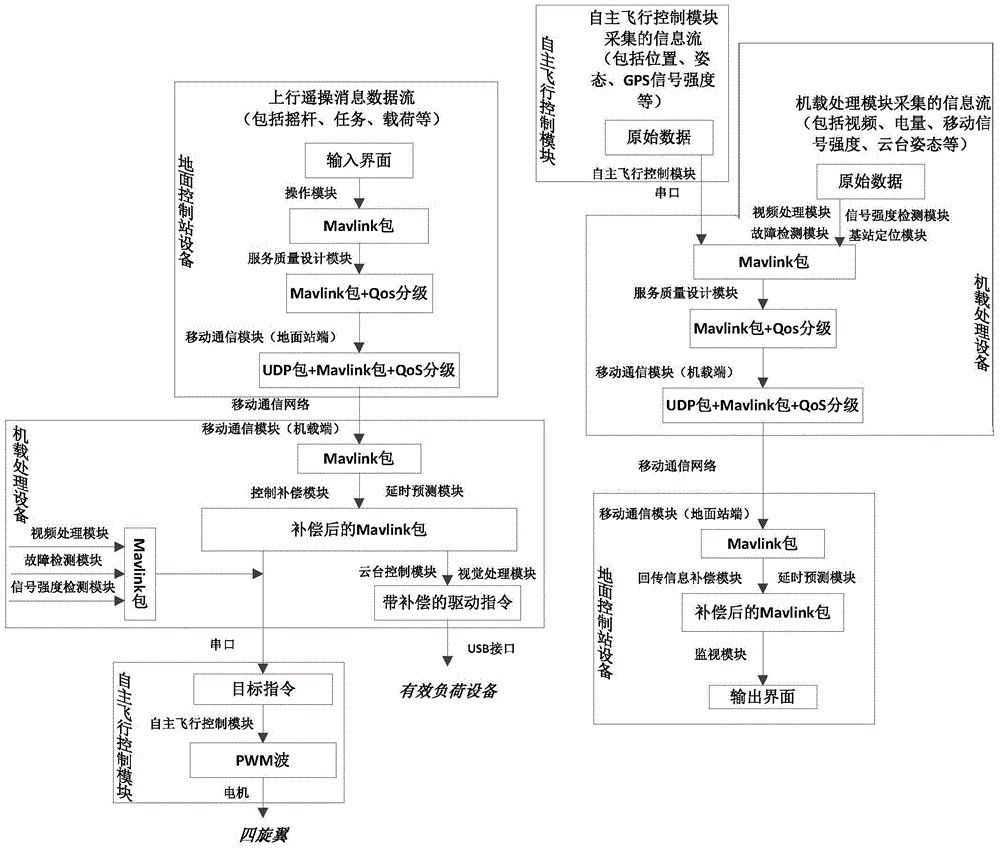

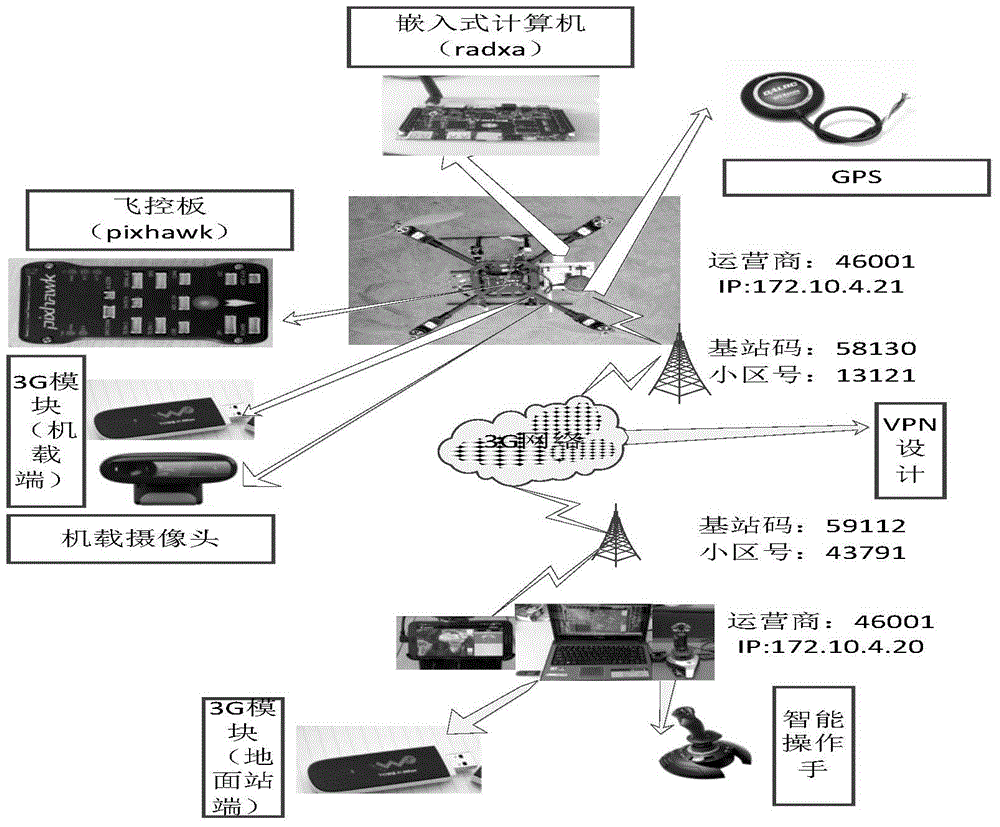

Unmanned aerial vehicle remote measuring and control system and method based on mobile communication network

Inactive | CN104950906A | Tags: Solve the problem of remote measurement and control; Improve real-time performance; Position/course control in three dimensions; Nerve network; Control system

The invention discloses an unmanned aerial vehicle (UAV) telemetry and control system and method based on a mobile communication network, which solves the problem of remote measurement and control of UAVs. The system comprises UAV airborne equipment, ground control station equipment, and the mobile communication network. The airborne equipment comprises an autonomous flight control module and an airborne processing module; the ground control station equipment comprises a mobile communication module, a time delay compensation module, a quality-of-service design module, a man-machine interaction module, and a database module. The airborne equipment is wirelessly connected to the ground control station equipment through the mobile communication network, and the autonomous flight control module is connected to the airborne processing module through a bus. A neural network is used to predict the time delay of the mobile communication network, and the prediction is used to compensate the UAV position; this realizes remote measurement and control of the UAV and improves the overall performance of the system.

Owner: NAT UNIV OF DEFENSE TECH
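
The abstract above pairs a delay predictor with position compensation. In the sketch below, the patent's neural network is replaced by an exponentially weighted moving average, which is a deliberately simpler, swapped-in predictor. Compensation is modeled as constant-velocity extrapolation of a delayed position report. Both choices, and the smoothing factor, are assumptions made for illustration.

```python
from typing import Optional, Sequence, Tuple


class DelayPredictor:
    """Stand-in for the neural-network delay predictor: an exponentially
    weighted moving average of measured link delays (seconds)."""

    def __init__(self, alpha: float = 0.5) -> None:
        self.alpha = alpha  # weight given to the newest measurement
        self.estimate: Optional[float] = None

    def update(self, measured_delay: float) -> None:
        if self.estimate is None:
            self.estimate = measured_delay
        else:
            self.estimate = (self.alpha * measured_delay
                             + (1 - self.alpha) * self.estimate)

    def predict(self) -> float:
        return 0.0 if self.estimate is None else self.estimate


def compensate(position: Sequence[float], velocity: Sequence[float],
               delay: float) -> Tuple[float, ...]:
    """Extrapolate a delayed position report forward by the predicted delay,
    assuming the UAV keeps a constant velocity over that interval."""
    return tuple(p + v * delay for p, v in zip(position, velocity))
```

Usage: after feeding measured delays of 0.1 s and 0.3 s, the predictor estimates 0.2 s, and a report of (0, 0, 100) m at 10 m/s along x is shifted to about (2, 0, 100) m.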

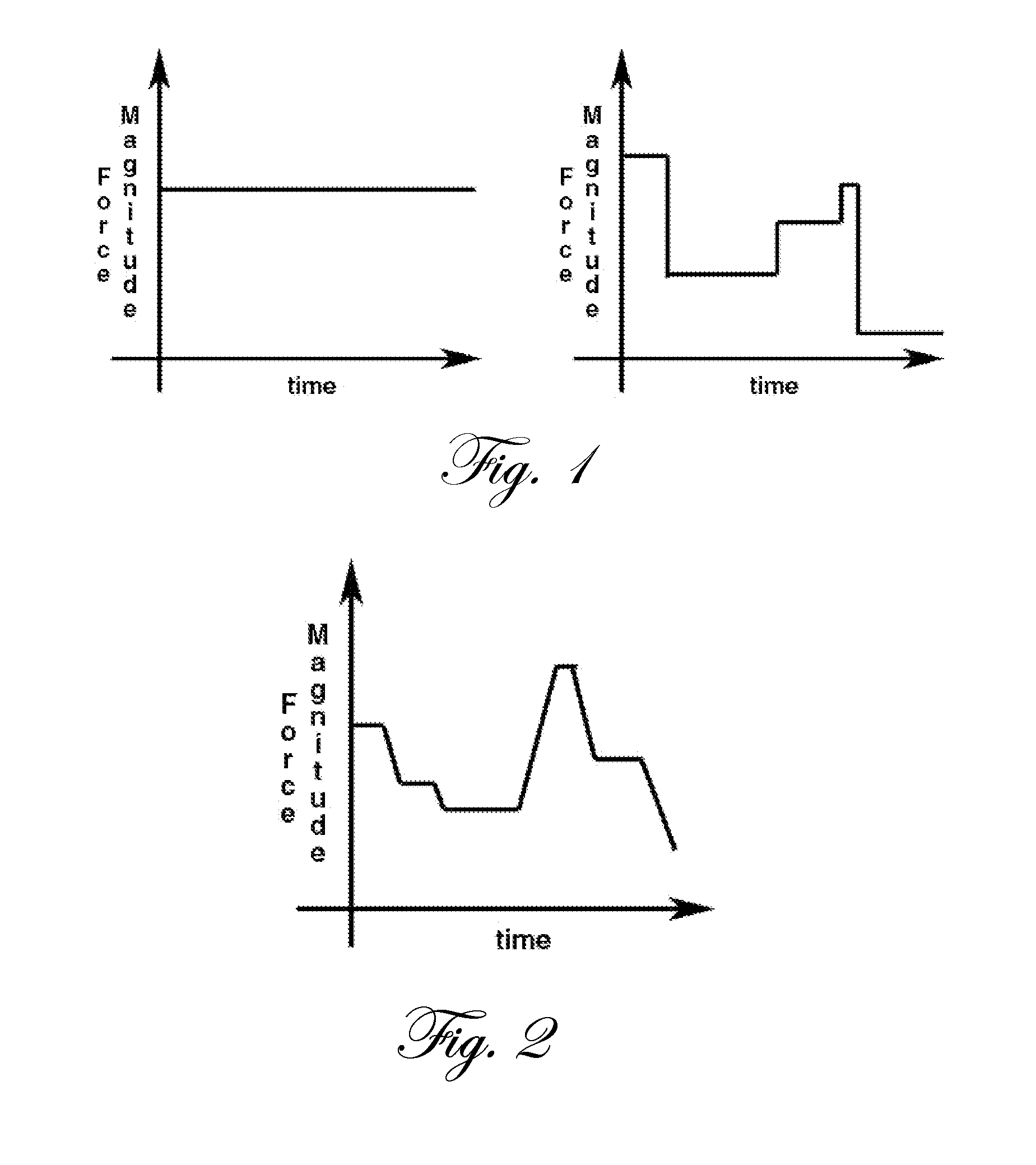

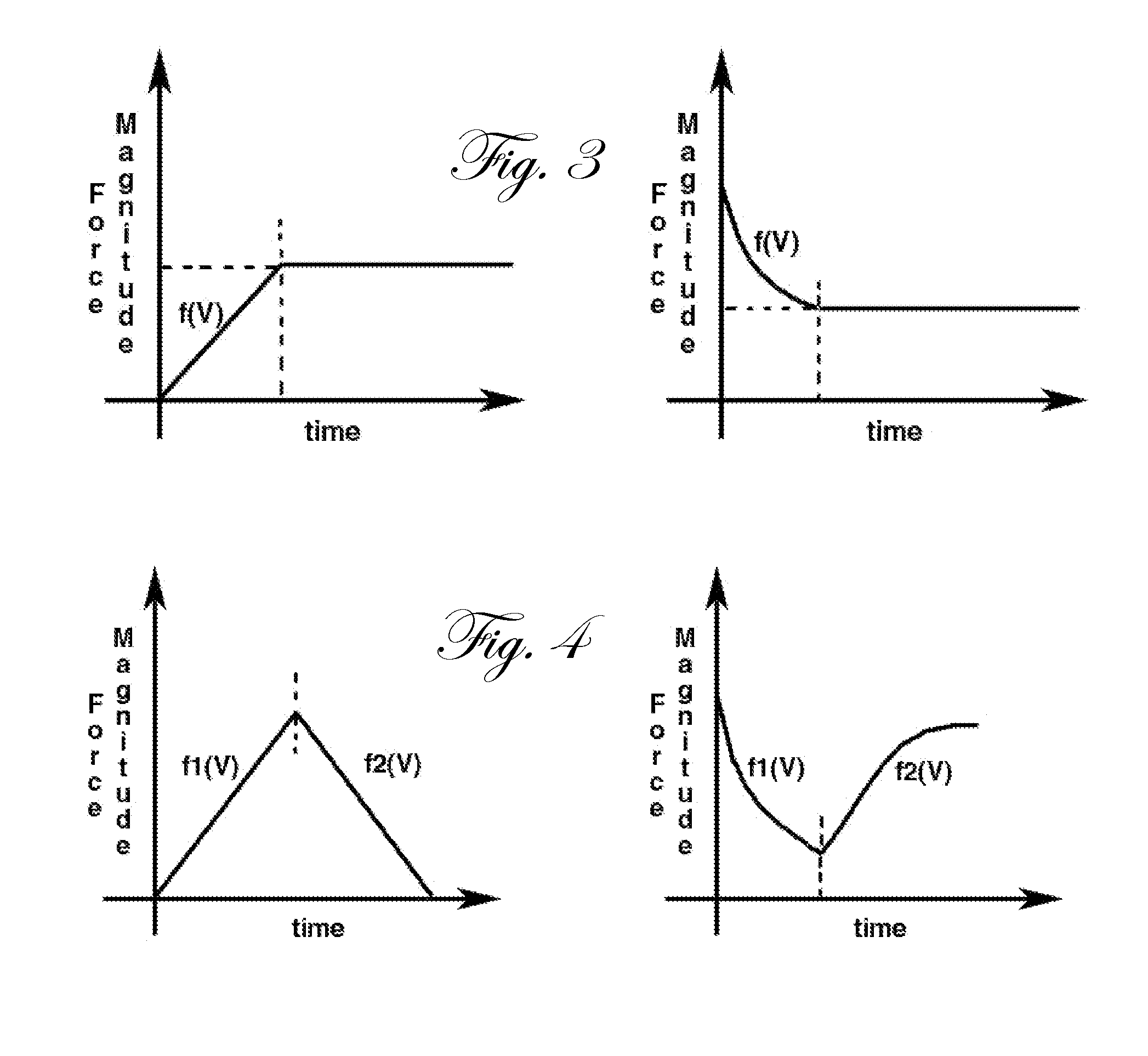

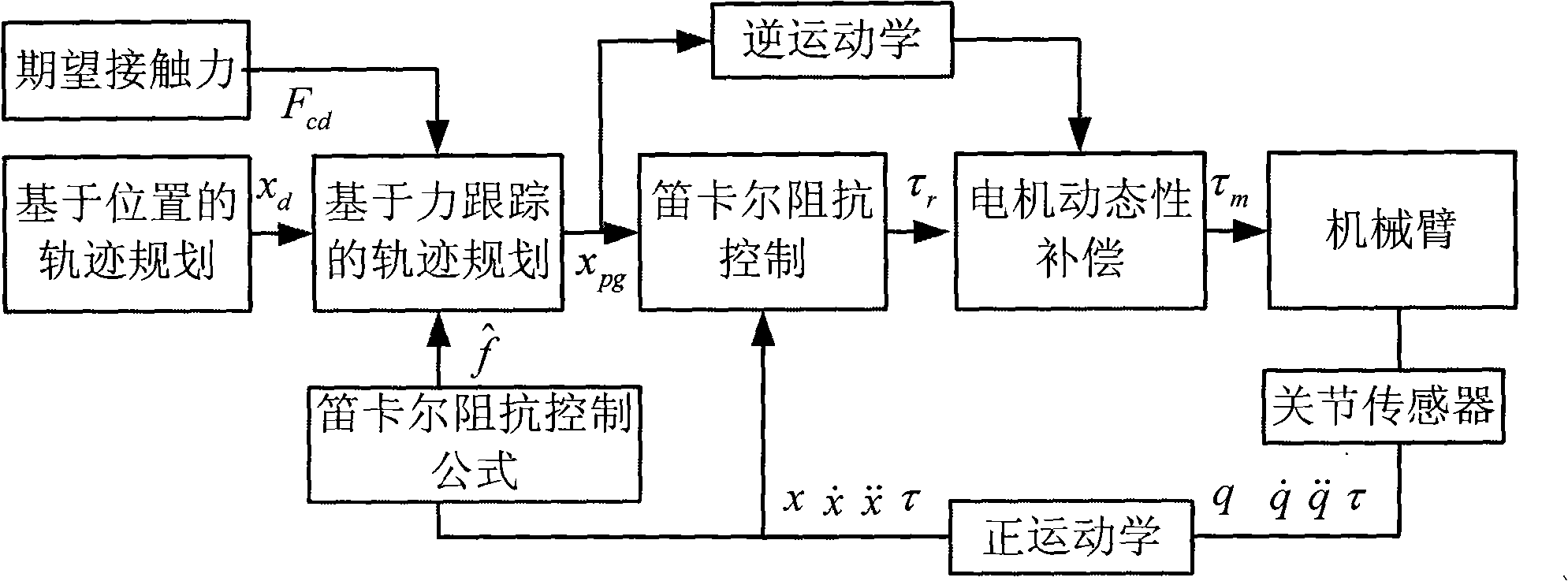

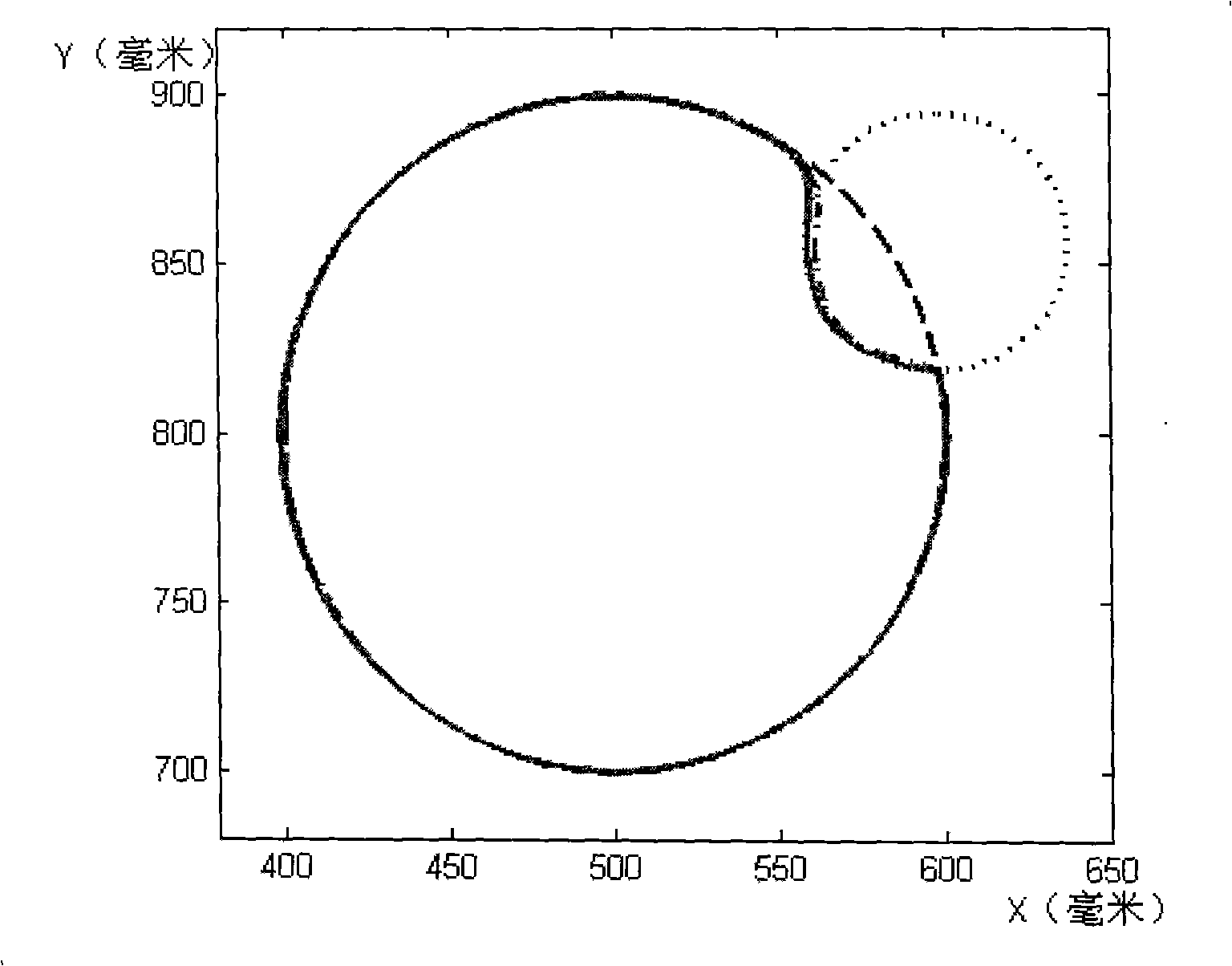

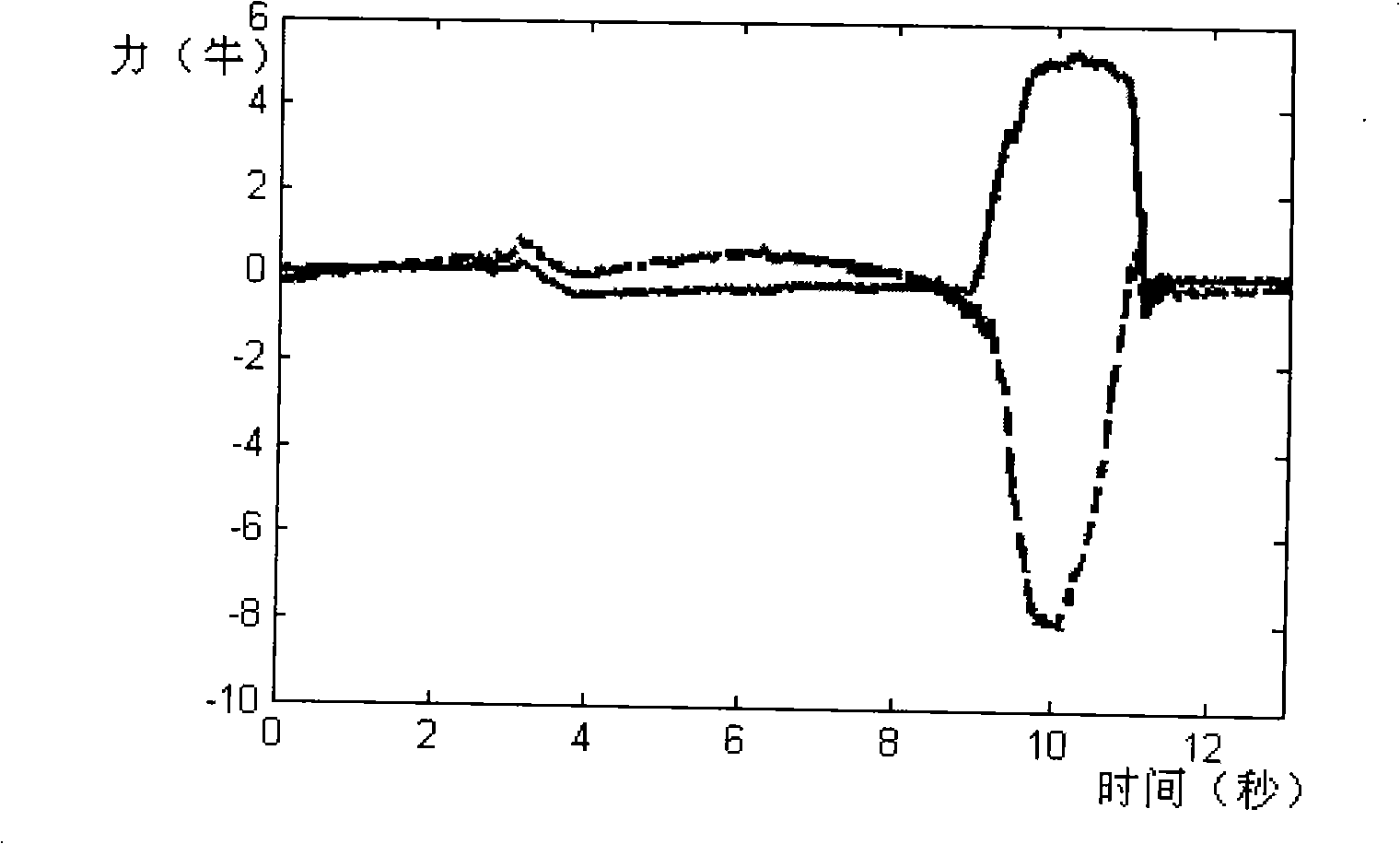

Control method of man machine interaction mechanical arm

Inactive | CN101332604A | Tags: Ensure safety; Soft touch; Programme-controlled manipulator; Kinematics; Electric machinery

The invention provides a control method for a human-machine interaction mechanical arm. It relates to the safe control of a mechanical arm working in an unknown environment and addresses the risk that an operator is accidentally injured when the arm works in close contact with the operator, because existing arms cannot accurately model the working environment. The arm controller collects the joint position q in real time through a joint sensor, transforms it into the Cartesian position x by forward kinematics, and computes a real-time trajectory plan x_pg that incorporates Cartesian force feedback. The controller also collects the joint torque tau in real time through the joint sensor, computes the expected torque tau_r by Cartesian impedance control, and computes the input torque tau_m of the arm joint through dynamic compensation of the motor. The control method can effectively detect the force at each joint of the arm: when contacting an object, the arm makes soft contact, and when a collision occurs, the arm keeps the contact force in every direction within the expected range, ensuring the safety of both the arm and the operator.

Owner: HARBIN INST OF TECH
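
Cartesian impedance control, named in the abstract above, makes the end effector behave like a virtual spring-damper: F = K(x_des - x) - D·ẋ, mapped to joint torques through τ = Jᵀ F. The sketch below applies this to a hypothetical planar two-link arm. The link lengths, gains, and force limit are assumptions, and the motor dynamic-compensation term (tau_m) described in the patent is omitted. Clipping F to ±f_max reflects the abstract's goal of keeping contact forces within an expected range.

```python
import math
from typing import Tuple

LINK1, LINK2 = 0.4, 0.3  # hypothetical link lengths (m) of a planar 2-joint arm

Vec2 = Tuple[float, float]


def forward_kinematics(q: Vec2) -> Vec2:
    """Joint angles (rad) -> Cartesian end-effector position (m)."""
    return (LINK1 * math.cos(q[0]) + LINK2 * math.cos(q[0] + q[1]),
            LINK1 * math.sin(q[0]) + LINK2 * math.sin(q[0] + q[1]))


def jacobian(q: Vec2):
    """2x2 Jacobian d(x, y)/d(q1, q2) of the forward kinematics."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return ((-LINK1 * s1 - LINK2 * s12, -LINK2 * s12),
            (LINK1 * c1 + LINK2 * c12, LINK2 * c12))


def impedance_torque(q: Vec2, qdot: Vec2, x_des: Vec2,
                     stiffness: float = 200.0, damping: float = 20.0,
                     f_max: float = 50.0) -> Vec2:
    J = jacobian(q)
    x = forward_kinematics(q)
    # Cartesian velocity: xdot = J * qdot
    xdot = (J[0][0] * qdot[0] + J[0][1] * qdot[1],
            J[1][0] * qdot[0] + J[1][1] * qdot[1])
    # Virtual spring-damper F = K (x_des - x) - D xdot, clipped to +/- f_max
    force = [max(-f_max, min(f_max,
                             stiffness * (x_des[i] - x[i]) - damping * xdot[i]))
             for i in range(2)]
    # Joint torques: tau = J^T F
    return (J[0][0] * force[0] + J[1][0] * force[1],
            J[0][1] * force[0] + J[1][1] * force[1])
```

With the arm stretched along x (q = (0, 0)) and a target 0.1 m above the tip, the spring force is 20 N upward, which gives torques of about (14, 6) N·m. A target 1 m away would saturate at 50 N instead of demanding 200 N.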

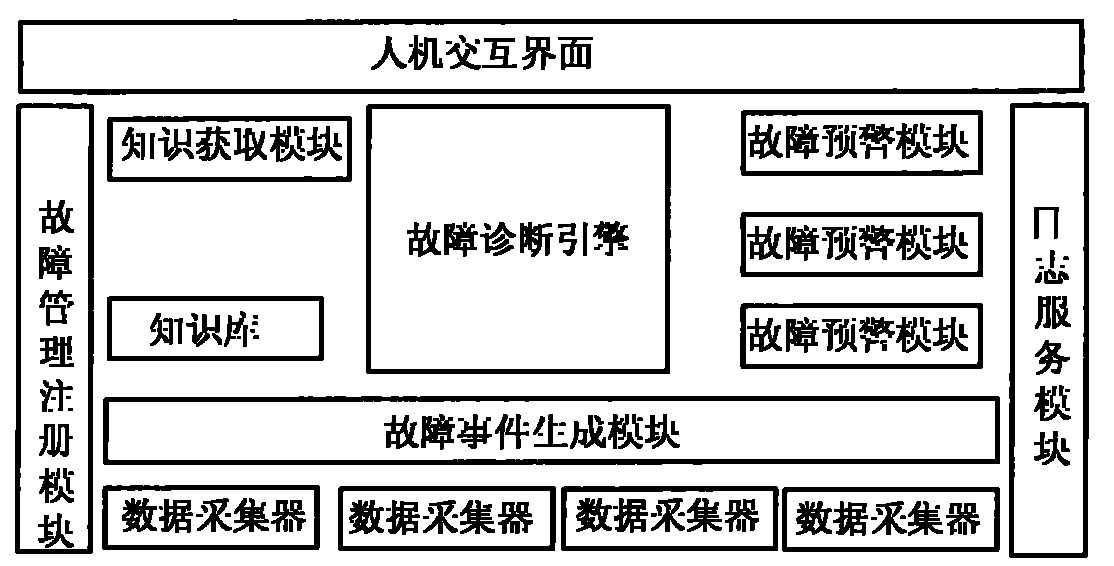

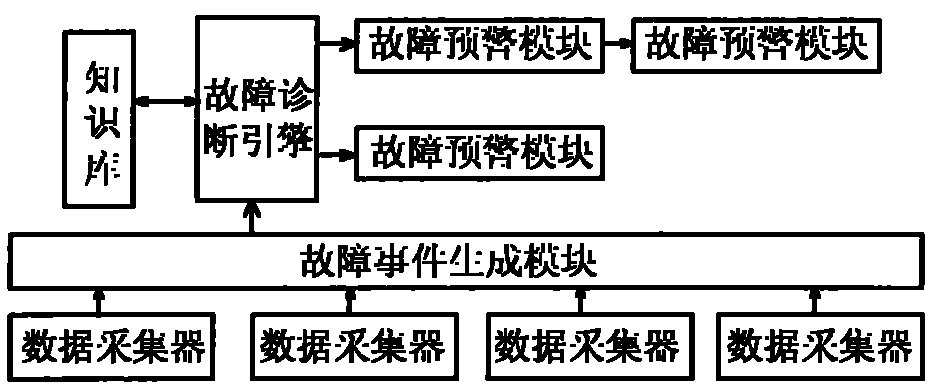

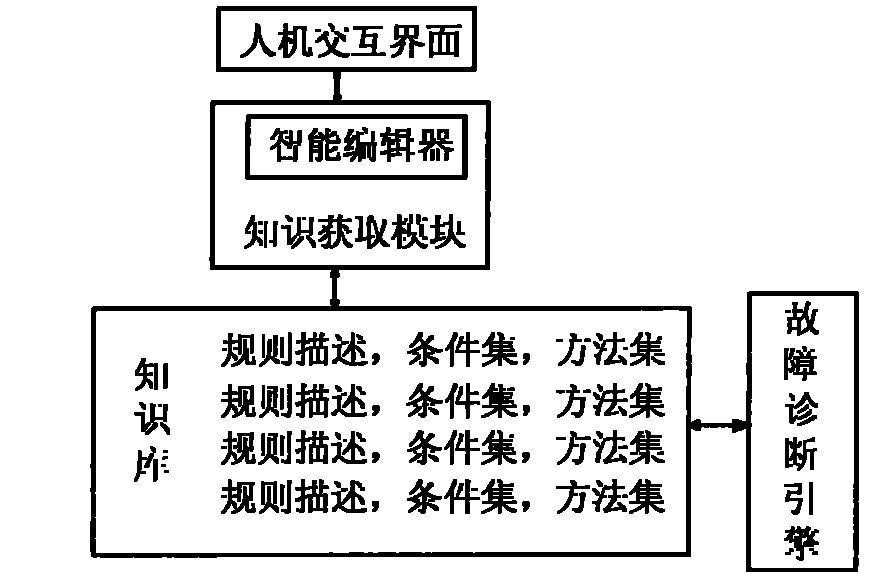

Computer fault management system based on expert system method

Active | CN101833497A | Tags: Well structured design; Make full use of resources; Hardware monitoring; Systems management; Data acquisition

The invention provides a computer fault management system based on an expert system method, comprising a data acquisition unit (1), a fault event generation module (2), a fault diagnosis engine (3), a knowledge base (4), a knowledge acquisition module (5), a fault isolation module (6), a fault recovery module (7), a fault early-warning module (8), a log service module (9), a fault management registration module (10), and a human-computer interaction interface (11). Through the human-computer interaction interface (11), a system administrator monitors and manages the data acquisition unit (1), the fault event generation module (2), the fault diagnosis engine (3), the knowledge base (4), the fault isolation module (6), the fault recovery module (7), the fault early-warning module (8), and the log service module (9), and accesses an intelligent editor provided by the knowledge acquisition module (5).

Owner: LANGCHAO ELECTRONIC INFORMATION IND CO LTD
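
The abstract above names a fault diagnosis engine that works over a knowledge base but does not describe its inference method. Forward chaining over if-then rules is the textbook way an expert-system engine derives conclusions, so the sketch below uses it as an illustrative assumption. The rule contents and fact names are hypothetical.

```python
from typing import FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (conditions that must all hold, conclusion)

# Hypothetical knowledge base; real rules would come from the knowledge
# acquisition module.
KNOWLEDGE_BASE: List[Rule] = [
    (frozenset({"cpu_temp_high", "fan_rpm_zero"}), "fan_failure"),
    (frozenset({"fan_failure"}), "action:isolate_node"),
    (frozenset({"disk_smart_warning"}), "action:early_warning"),
]


def diagnose(events: Set[str], rules: List[Rule] = KNOWLEDGE_BASE) -> Set[str]:
    """Forward chaining: fire rules until no new fact is derived, then
    return only the derived facts (diagnoses and recommended actions)."""
    known = set(events)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known - events
```

Here the fault events {"cpu_temp_high", "fan_rpm_zero"} first yield the diagnosis "fan_failure", which in turn triggers the isolation action. That chain loosely mirrors the flow from the fault event generation module through the diagnosis engine to the isolation module.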

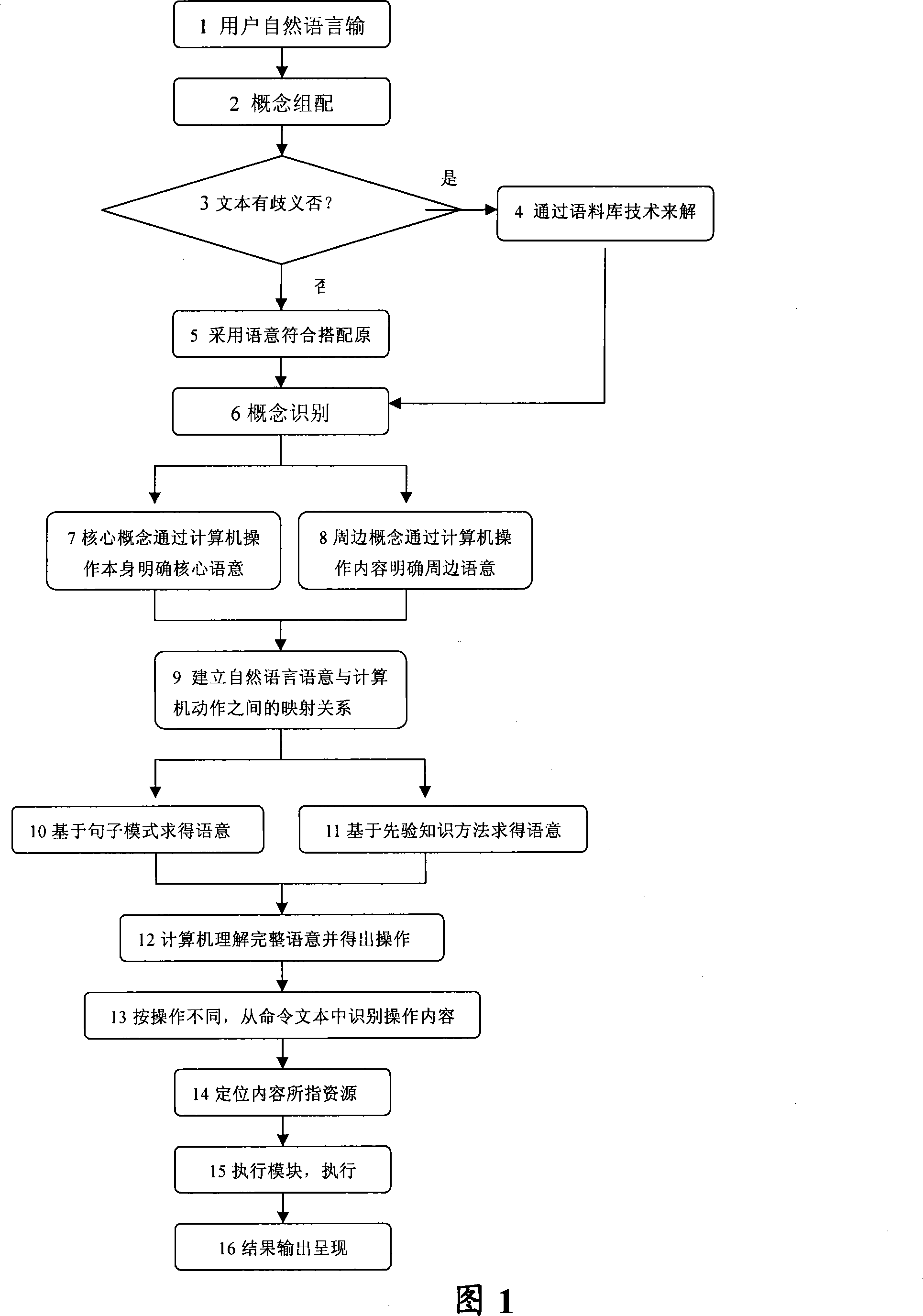

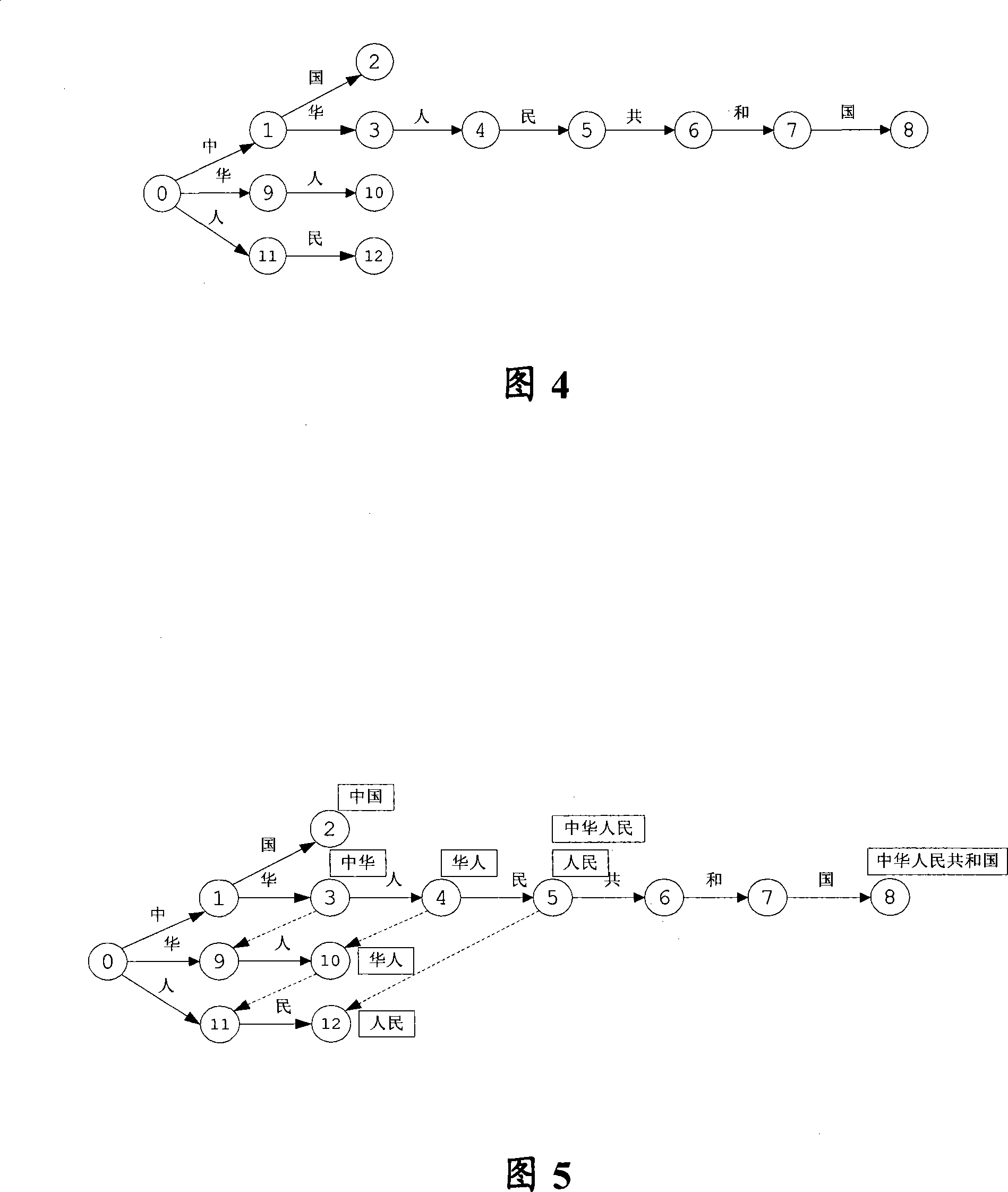

Free-running speech comprehend method and man-machine interactive intelligent system

Inactive | CN101178705A | Tags: Easy to understand; Correct answer; Speech recognition; Special data processing applications; Natural language understanding; Ambiguity

The invention discloses a natural language understanding method comprising the following steps. After natural language input by the customer is received, it is matched with conceptual language symbols, and concepts are associated with those symbols. The concept best suited to the current language content is selected by comparison with a preset concept dictionary, and the method then judges whether that concept is ambiguous: if it is, the concept is obtained from a language database; if it is not, the concept is obtained on the principle of matching the language content; either way, the method proceeds to the next step. A core concept and sub-concepts are obtained by concept reorganization, where the core meaning of the core concept is defined by an operation of the computer and the sub-meaning of each sub-concept is defined by the content of that operation; the complete meaning is obtained by combining the core meaning with the sub-meanings. The invention also provides a human-computer interaction intelligent system based on this method. The invention recognizes the customer's natural speech input more accurately, thereby providing the customer with more intelligent and complete services.

Owner: CHINA TELECOM CORP LTD

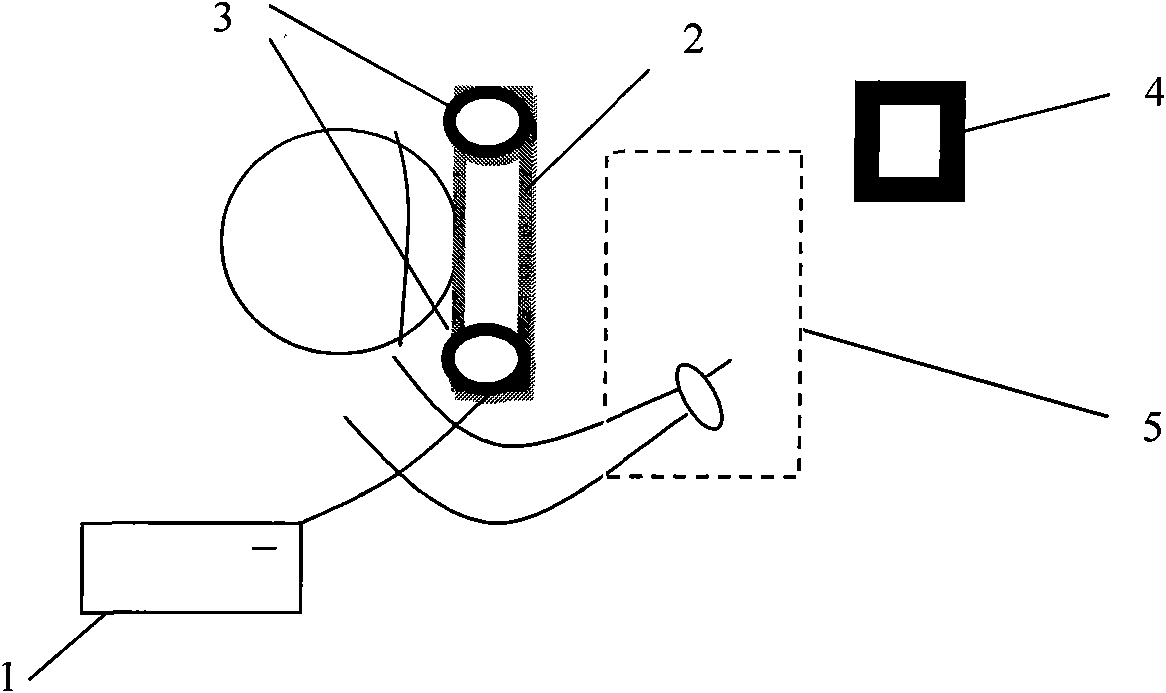

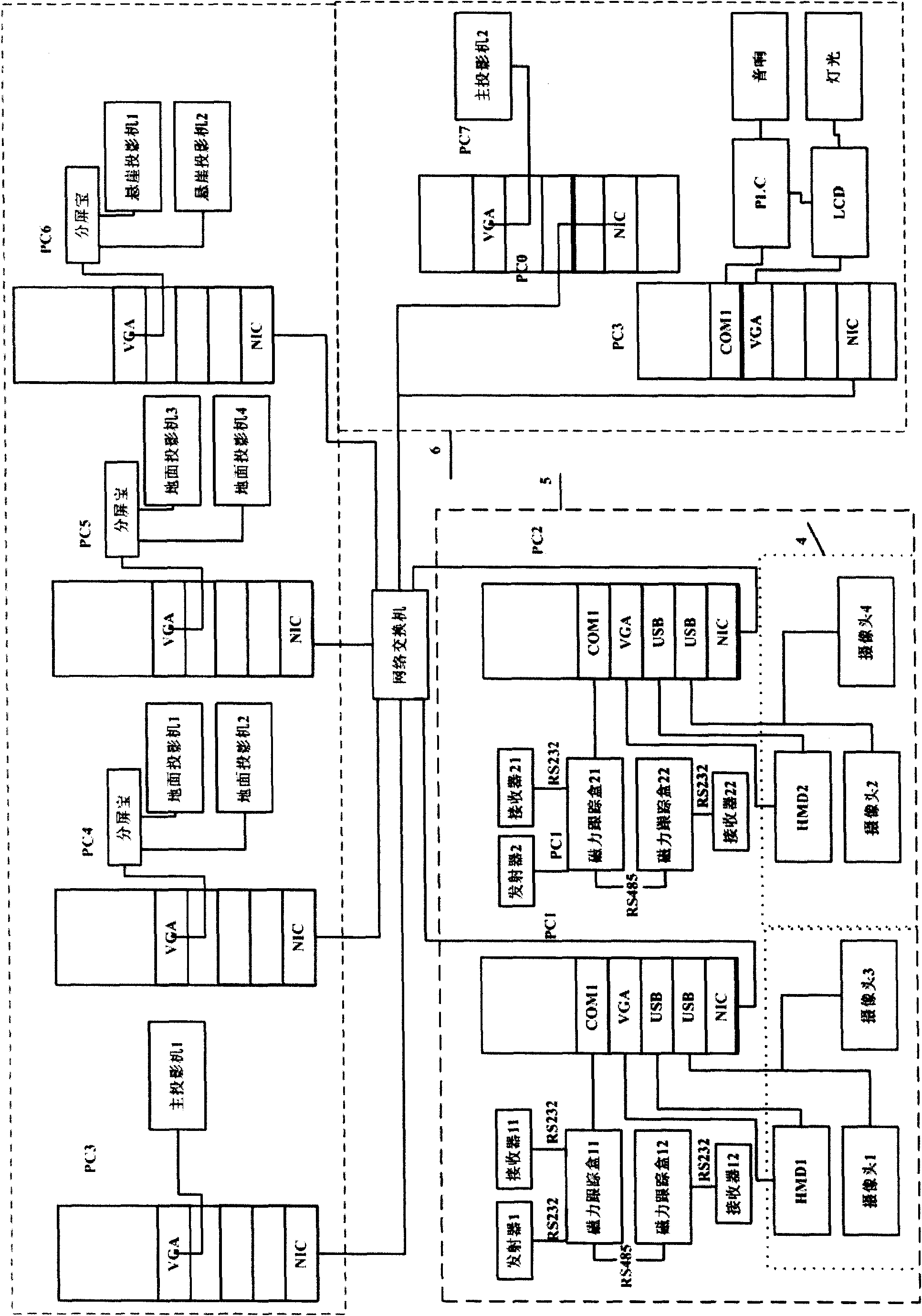

Visual, operable and non-solid touch screen system

Inactive | CN101673161A | Tags: Improve adaptability; Improve portability; Input/output for user-computer interaction; Graph reading; Head worn display; Spatial calibration

The invention belongs to the technical field of human-computer interaction and is a visual, operable, non-solid touch screen system comprising a computer, two network cameras, a head-mounted display, and a calibration reference object used to calibrate a virtual touch screen. Through the head-mounted display, the user sees the real world containing the virtual touch screen and operates the non-solid touch screen directly by hand. The two cameras collect images in real time; from these images the computer performs spatial calibration, identifies fingertip positions and actions, and synthesizes a corresponding image. The head-mounted display presents the image containing the virtual touch screen to the user, who observes the touch screen's feedback directly through it. The invention can be used in the field of human-computer interaction and is particularly suitable as human-computer interaction equipment for a portable computer or a multimedia interactive experience system.

Owner: FUDAN UNIV
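
With two cameras, the fingertip's depth can be recovered by standard stereo triangulation. For rectified pinhole cameras with focal length f (pixels) and baseline B, depth is Z = f·B / (u_left - u_right). The sketch below assumes rectified images and a virtual screen lying in a plane of constant depth. Both assumptions, the touch tolerance, and the function names are hypothetical; the patent's own calibration procedure is not described in the abstract.

```python
from typing import Optional, Tuple

Point3 = Tuple[float, float, float]


def triangulate(u_left: float, u_right: float, v: float,
                focal_px: float, baseline: float,
                cx: float, cy: float) -> Optional[Point3]:
    """Fingertip 3D position from a rectified stereo pair (pinhole model).

    u_left/u_right: fingertip column in the left/right image (pixels);
    v: fingertip row (same in both rectified images); cx, cy: principal point.
    Returns (X, Y, Z) in the left camera frame, in the unit of `baseline`.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        return None  # point at infinity or a bad match
    z = focal_px * baseline / disparity
    return ((u_left - cx) * z / focal_px, (v - cy) * z / focal_px, z)


def is_touching(point: Optional[Point3], screen_depth: float,
                tolerance: float = 0.01) -> bool:
    """Hypothetical touch test: the fingertip lies within `tolerance` of the
    virtual screen, assumed here to be the plane Z = screen_depth."""
    return point is not None and abs(point[2] - screen_depth) <= tolerance
```

For example, with f = 800 px, B = 0.1 m, and an 80 px disparity, the fingertip sits 1.0 m from the cameras. It would register as a touch on a virtual screen placed at 1.005 m.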

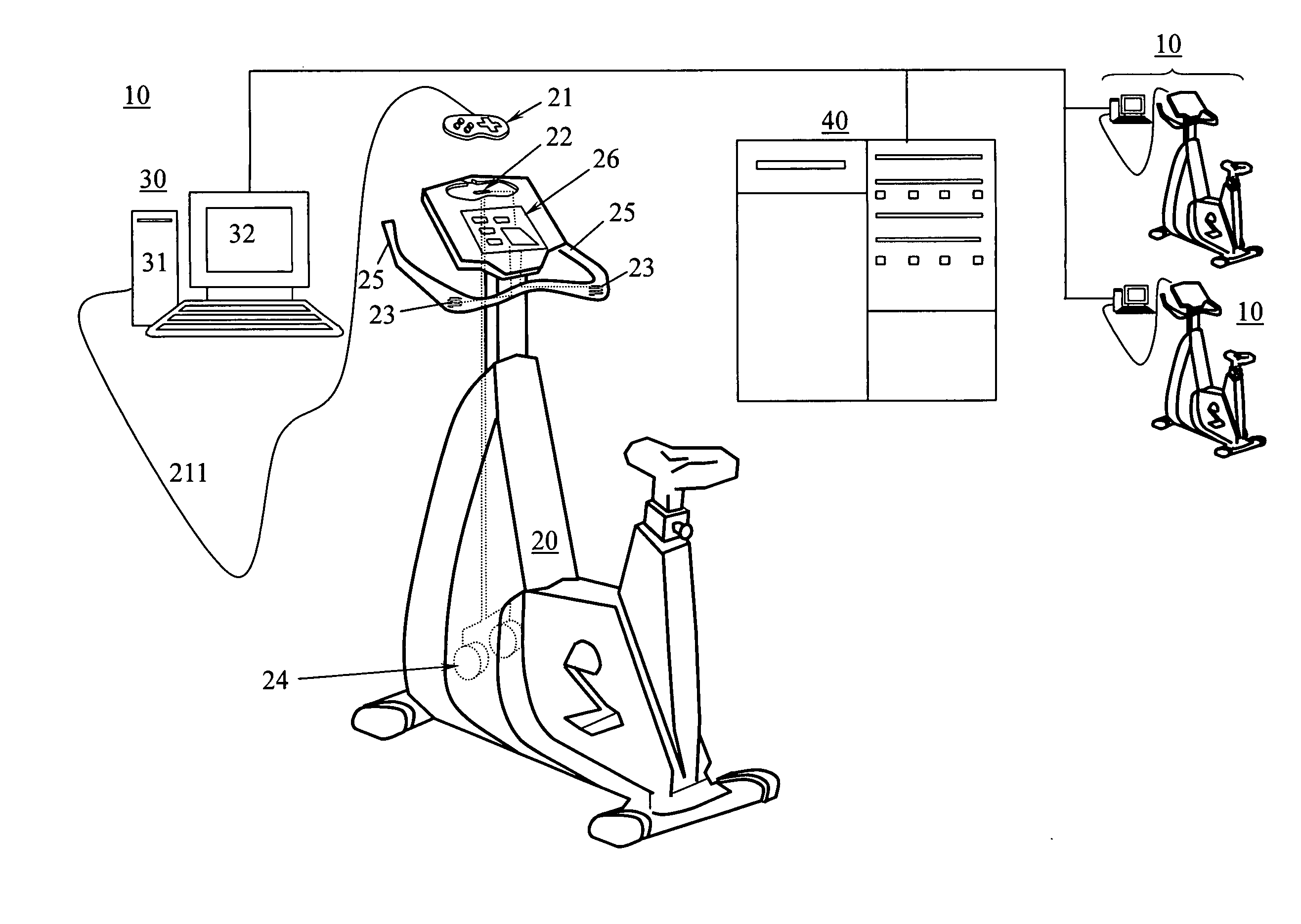

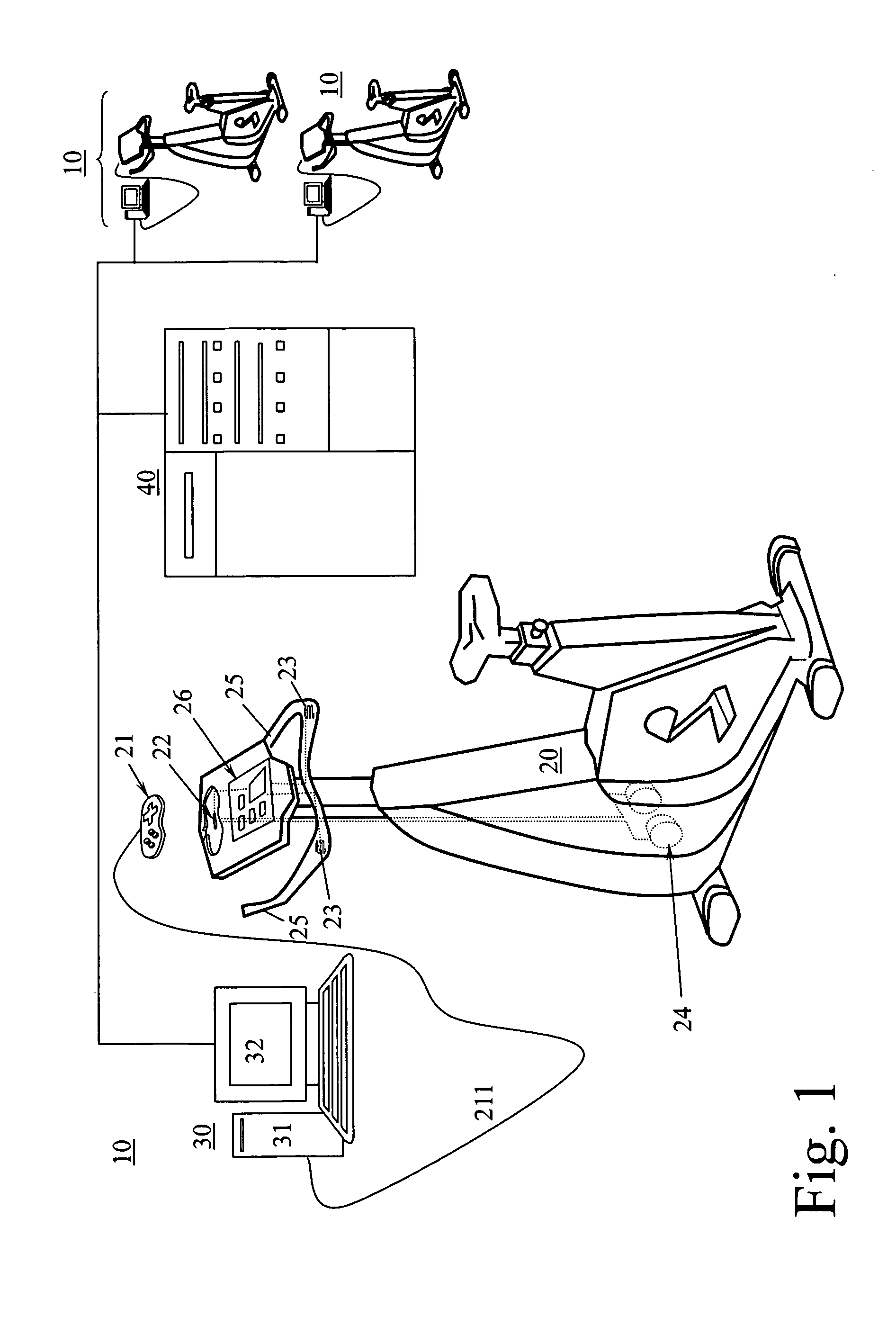

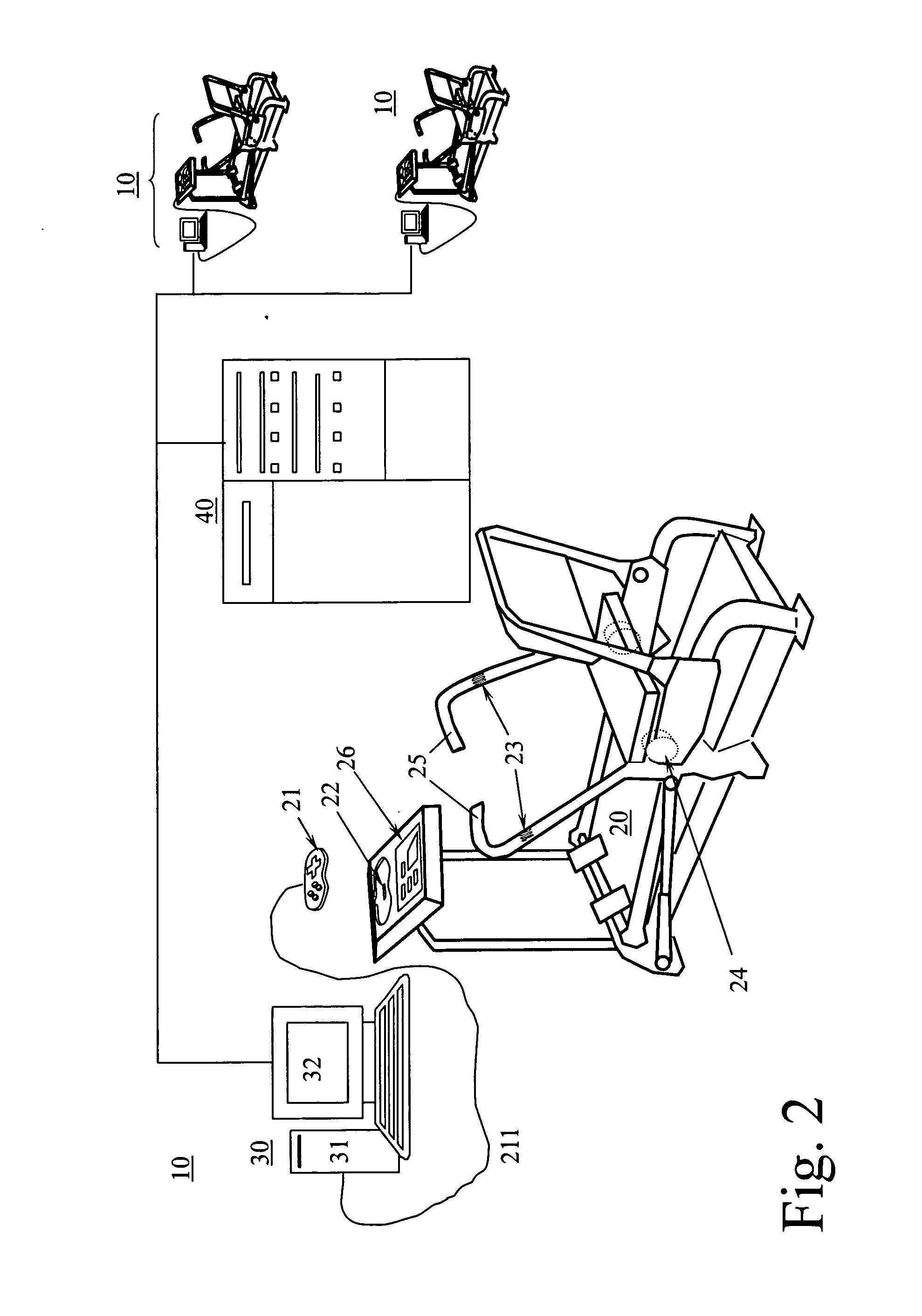

Multifunctional virtual-reality fitness equipment with a detachable interactive manipulator

Inactive | US20060063645A1 | Tags: Manual control with multiple controlled members; Clubs; Microcontroller; The Internet

A multifunctional virtual-reality fitness equipment lets the user choose between exercising alone and joining an online competition game with others over the Internet. It has a detachable interactive manipulator equipped with a single-chip microcontroller, which serves as an interface coupling conventional fitness equipment to a personal computer, turning the conventional equipment into a novel man-machine interaction fitness device with Internet-based 3D virtual simulation. The detachable interactive manipulator communicates with the personal computer through a male-to-female plug-and-socket connection according to the International Standard Communication Protocol. When the manipulator is connected to a personal computer and a fitness device, the personal computer's software program is detected automatically. If the correct message is detected, the fitness equipment is set to a 3D virtual simulation mode that supports Internet 3D virtual simulation, and the user may choose this mode to play an online competition game with others over the Internet; if no personal computer software program is detected, the equipment still works as conventional fitness equipment for exercising alone.

Owner: LAI

Eye tracking human-machine interaction method and apparatus

Inactive | CN101311882A | Tags: Accurate output; Simple protocol for interaction; Input/output for user-computer interaction; Character and pattern recognition; Computer graphics (images); Man machine

The invention relates to a gaze-tracking man-machine interaction method comprising: collecting gaze tracking information and obtaining the gaze focus position from it; collecting facial image information and recognizing facial actions from it; and outputting a control command corresponding to the facial action according to the gaze focus position. The invention also relates to a gaze-tracking man-machine interaction device comprising a gaze tracking processing unit for the gaze tracking operation, a facial action recognition unit for collecting facial image information and recognizing facial actions from it, and a control command output unit, connected to both the gaze tracking processing unit and the facial action recognition unit, for outputting the control command corresponding to the facial action according to the gaze focus position. The embodiments of the invention provide a non-contact gaze-tracking man-machine interaction method and device with interaction protocols.

Owner: HUAWEI TECH CO LTD

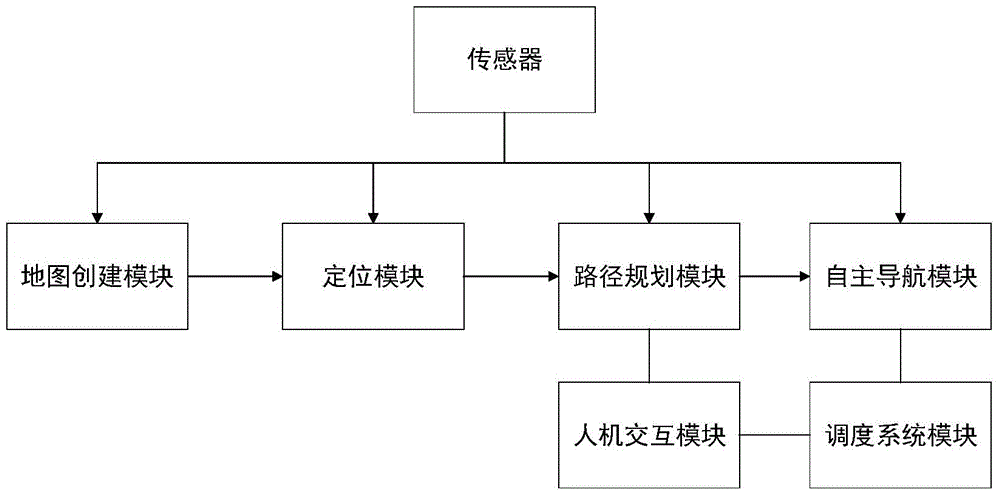

Automatic guided vehicle based on map matching and guide method of automatic guided vehicle

ActiveCN104596533AImprove environmental adaptabilityEasy to changeInstruments for road network navigationOptimum routeAutomated guided vehicle

The invention provides an automatic guided vehicle based on map matching and a guide method of the automatic guided vehicle. The automatic guided vehicle comprises a map creation module, a location module, a human-machine interaction module, a route planning module, an autonomous navigation module and a dispatching system module, wherein the map creation module is used for creating an environmental map; the automatic guided vehicle utilizes the carried location module to match the observed local environmental information with a preliminarily created global map to obtain pose information under the global situation; the human-machine interaction module is used for displaying the information and working state of the automatic guided vehicle in the map in real time; the route planning module is used for planning a feasible optimum route in the global map; the automatic guided vehicle is autonomously navigated by virtue of the autonomous navigation module according to the planned route; the dispatching system module is used for dispatching the automatic guided vehicle closest to a calling site to go to work. By adopting the automatic guided vehicle, no other auxiliary location facility is needed, no alteration is made for the environment, the capability of the automatic guided vehicle is completely depended, the automatic guided vehicle is utterly ignorant to the environment, and no priori information is provided.

Owner:SHANGHAI JIAO TONG UNIV
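The abstract does not name the route planning algorithm. A* search on an occupancy grid is one common choice, and the sketch below uses it both to plan a route and to choose which vehicle to dispatch. The grid encoding (`#` for obstacles), the 4-connected moves and the vehicle IDs are assumptions. "Closest to a calling site" is read here as shortest planned route, not straight-line distance.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]


def astar(grid: list[str], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """A* on a 4-connected grid where '#' is an obstacle; returns the cell path or None."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from: dict[Cell, Cell] = {}
    g_cost = {start: 0}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = [cur]
            while cur in came_from:
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        if g > g_cost[cur]:
            continue  # stale heap entry superseded by a cheaper one
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None


def dispatch(grid: list[str], vehicles: dict[str, Cell], call_site: Cell) -> Optional[str]:
    """Pick the vehicle whose planned route to the calling site is shortest."""
    best, best_len = None, None
    for vid, pos in vehicles.items():
        path = astar(grid, pos, call_site)
        if path is not None and (best_len is None or len(path) < best_len):
            best, best_len = vid, len(path)
    return best


grid = ["....", ".##.", "...."]
print(dispatch(grid, {"agv1": (0, 0), "agv2": (2, 3)}, (0, 3)))  # agv2
```

A real AGV system would run the planner on the map produced by the map creation module. It would probably also use a finer cost model that accounts for turning and clearance.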

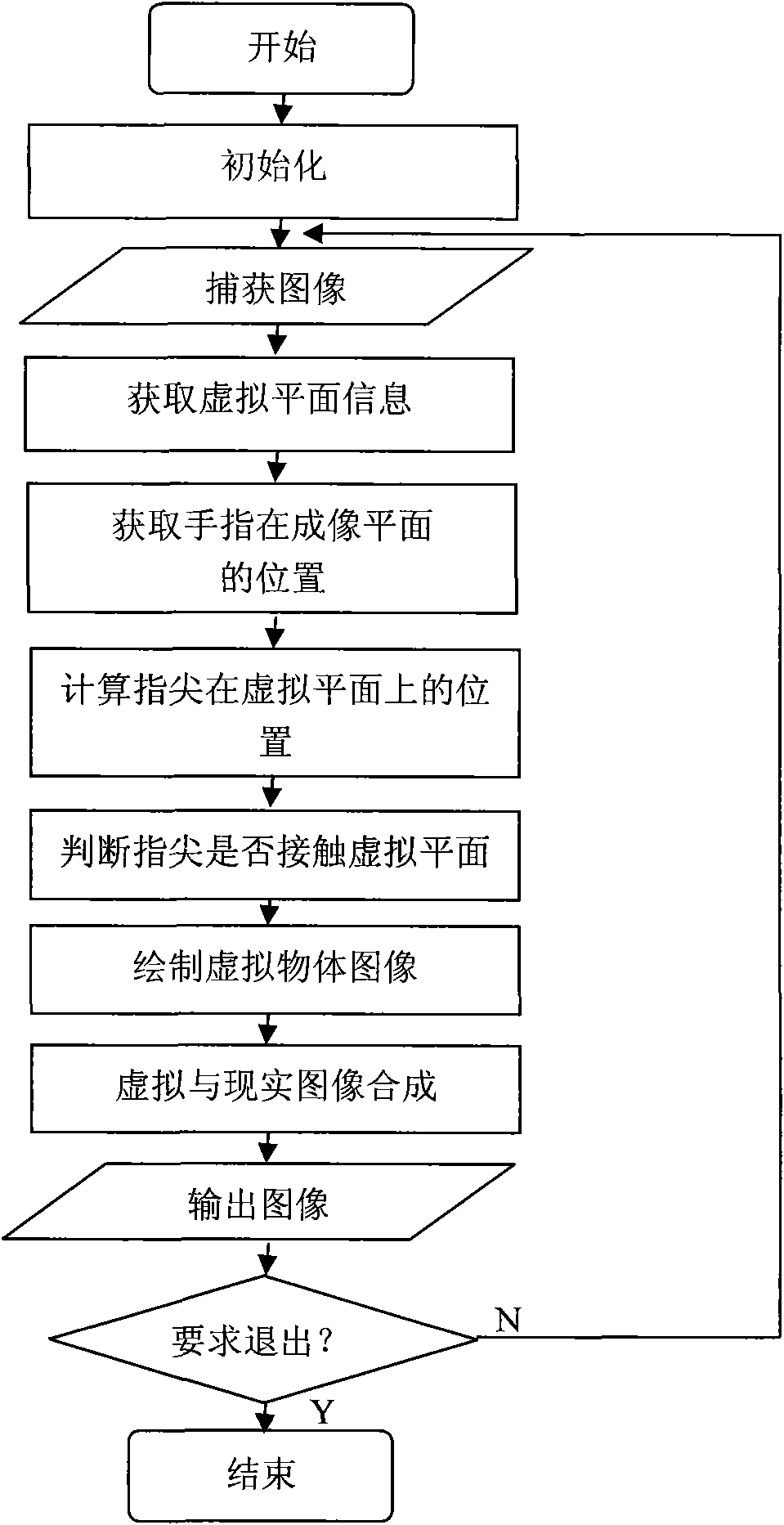

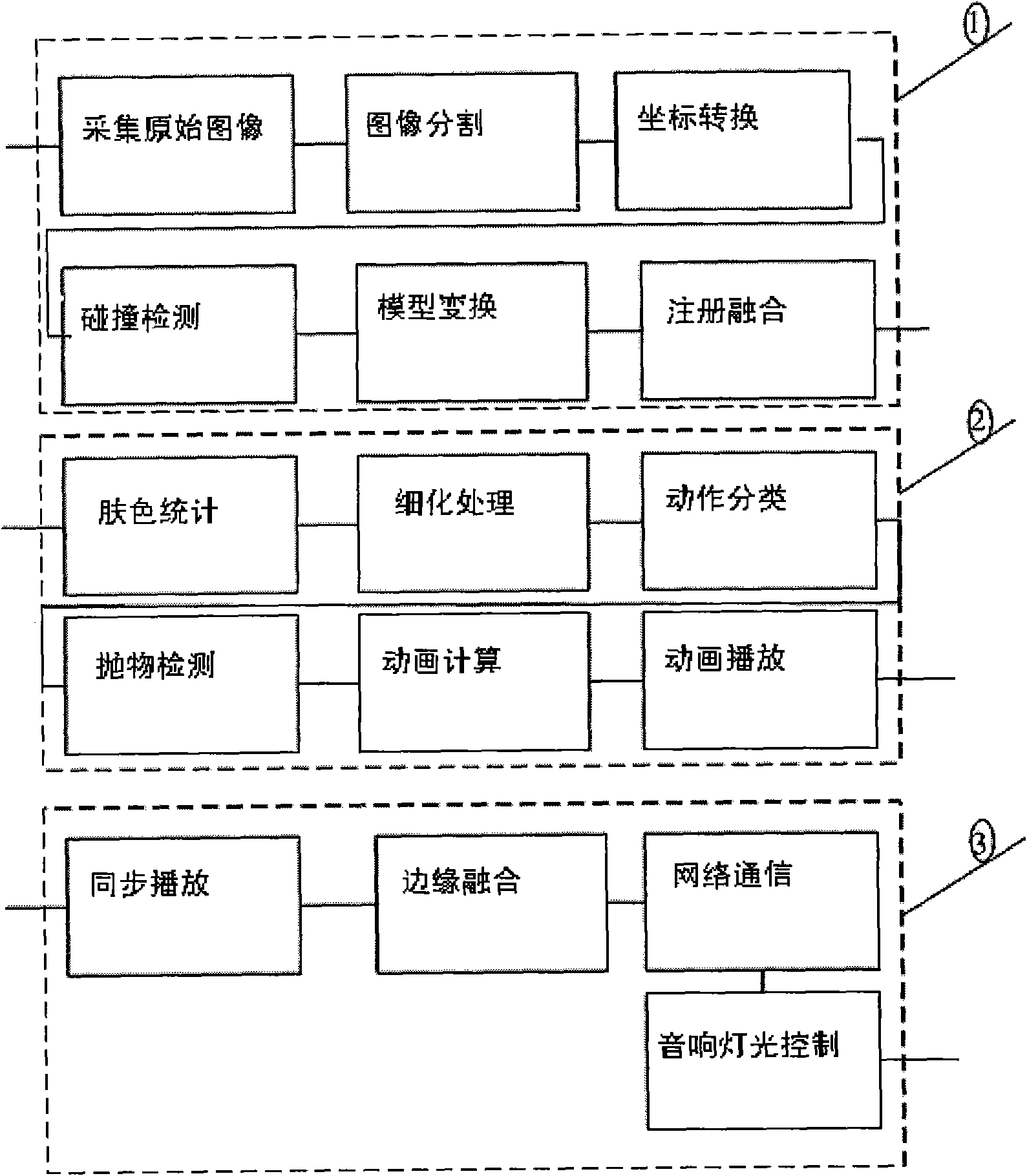

Real time human-machine interaction method and system based on augmented virtual reality and anomalous screen

Inactive | CN101539804A | Strong real-time | Guaranteed to run synchronously | Input/output for user-computer interaction | Animation | Interactive content

The invention provides a real-time human-machine interaction method and system based on augmented virtual reality and irregularly shaped ("anomalous") screens. The method comprises the following steps: 1) modeling the virtual objects to be interacted with and a statistically averaged hand model; 2) capturing video of the hand with N cameras to obtain raw images; 3) processing the images on a computer. The system comprises a subsystem for real-time transparent augmented display; algorithms and a subsystem for real-time detection of virtual and real grasping and throwing (parabolic) motions and for real-time generation of parabolic-trajectory animation; and a real-time interactive system spanning multiple irregularly shaped screens. Combined with video/audio devices, lighting control devices and similar equipment, these form a complete large-scale special-effects installation. It features various virtual-real transitions across large irregular-screen scenes, with the interactive content presented as 3D animation or 3D imagery viewed through stereoscopic glasses.

Owner:SHANGHAI UNIV
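The sketch below illustrates the throw-detection and parabolic-animation idea in its simplest form. It estimates a release velocity from the last two tracked hand positions and then samples an ideal projectile trajectory once per animation frame. The coordinate convention (y up, metres), the two-sample finite difference and the floor-height stopping rule are all assumptions. The abstract does not describe how Shanghai University's system detects a throw or generates the animation.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration in m/s^2; the y axis points up


@dataclass
class Vec3:
    x: float
    y: float
    z: float


def release_velocity(track: list[Vec3], dt: float) -> Vec3:
    """Estimate the release velocity from the last two tracked hand positions."""
    a, b = track[-2], track[-1]
    return Vec3((b.x - a.x) / dt, (b.y - a.y) / dt, (b.z - a.z) / dt)


def parabola_frames(p0: Vec3, v0: Vec3, dt: float, floor_y: float = 0.0) -> list[Vec3]:
    """Sample projectile positions once per frame until the object falls below floor_y."""
    if dt <= 0:
        raise ValueError("frame interval must be positive")
    frames = []
    k = 0
    while True:
        t = k * dt  # use the frame index to avoid accumulating rounding error in t
        y = p0.y + v0.y * t - 0.5 * G * t * t
        if k > 0 and y < floor_y:
            break
        frames.append(Vec3(p0.x + v0.x * t, y, p0.z + v0.z * t))
        k += 1
    return frames
```

For example, `parabola_frames(track[-1], release_velocity(track, 1 / 30), 1 / 30)` would produce one position per frame at an assumed 30 fps camera rate. A production system would smooth the velocity estimate over more than two samples.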

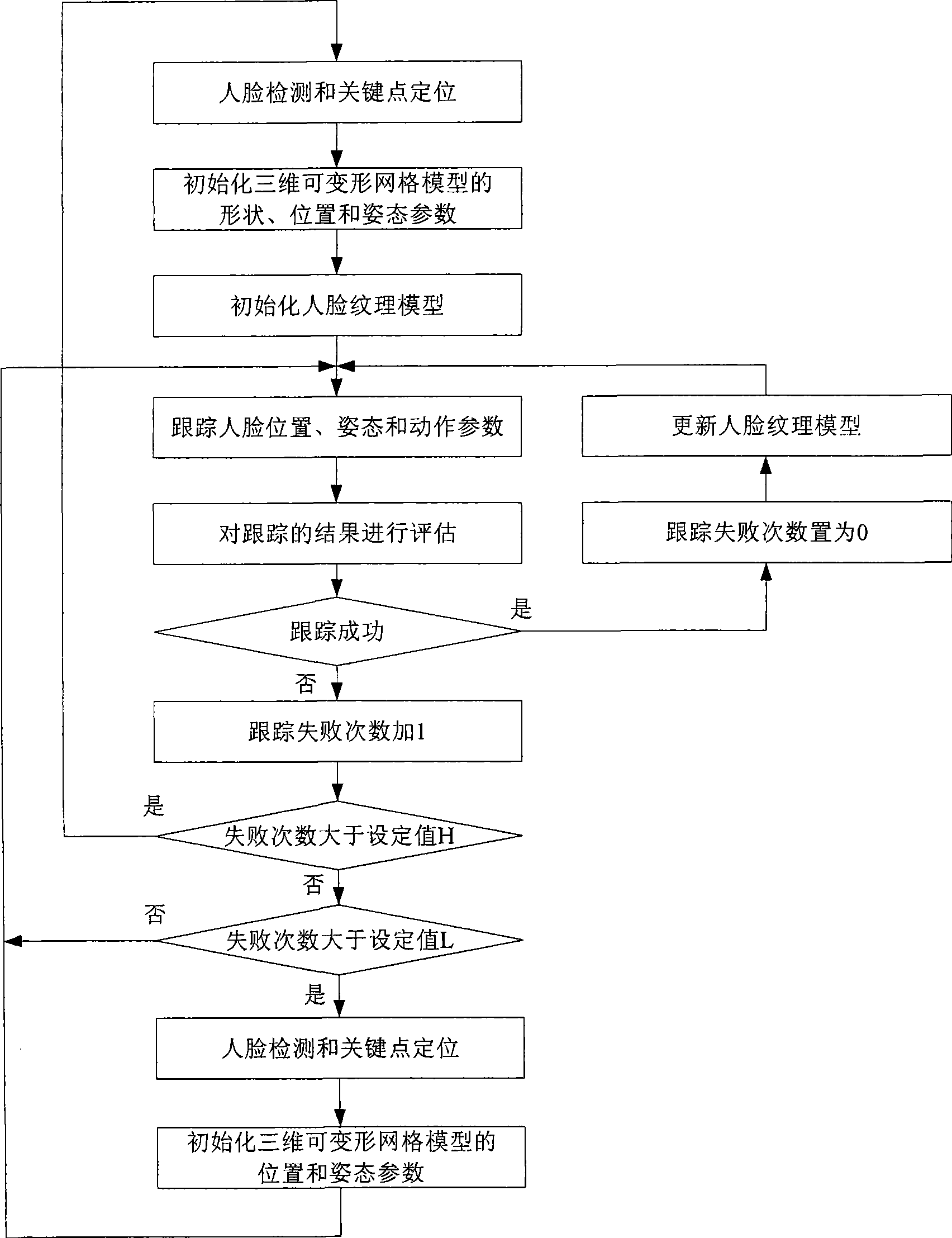

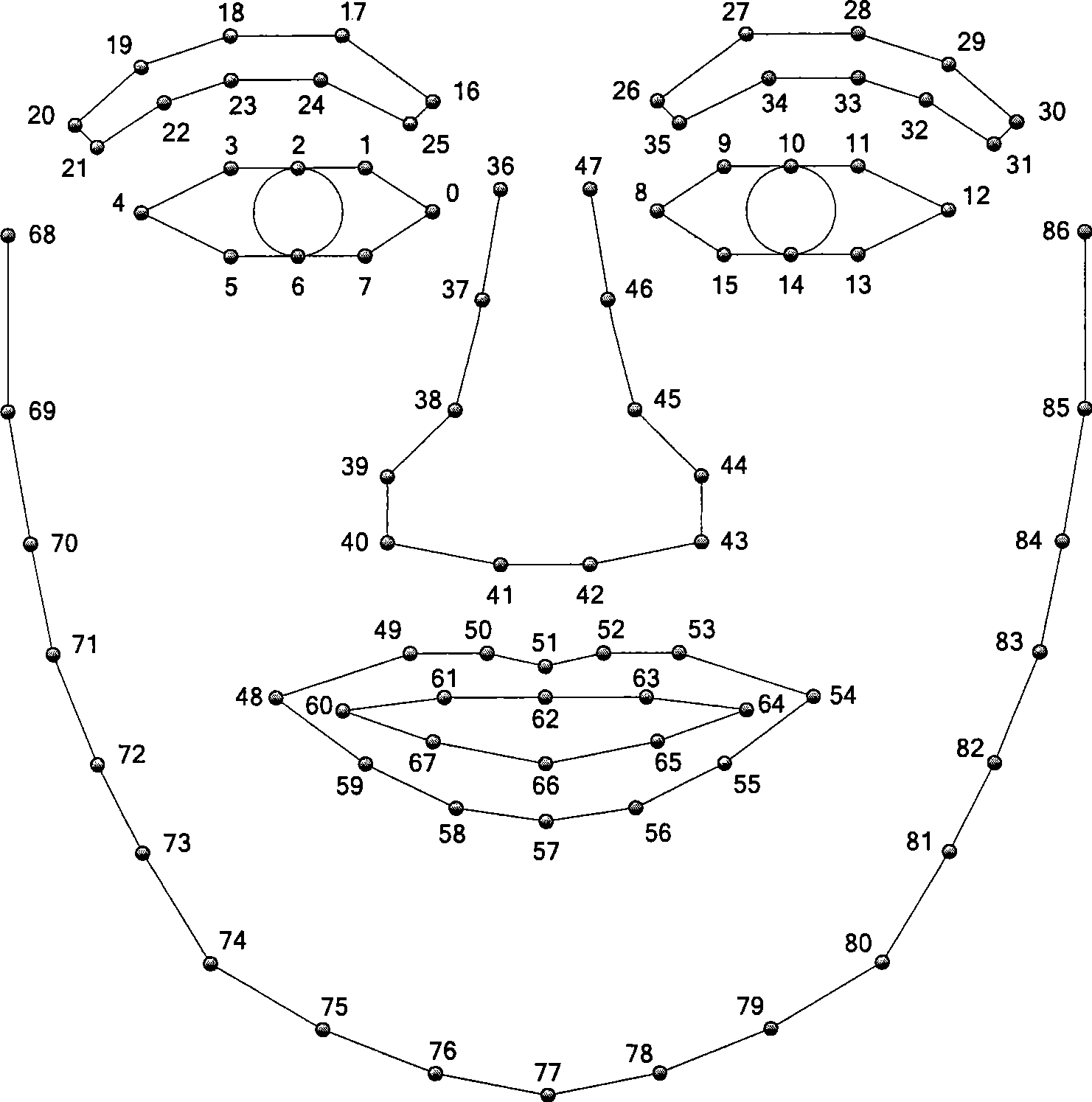

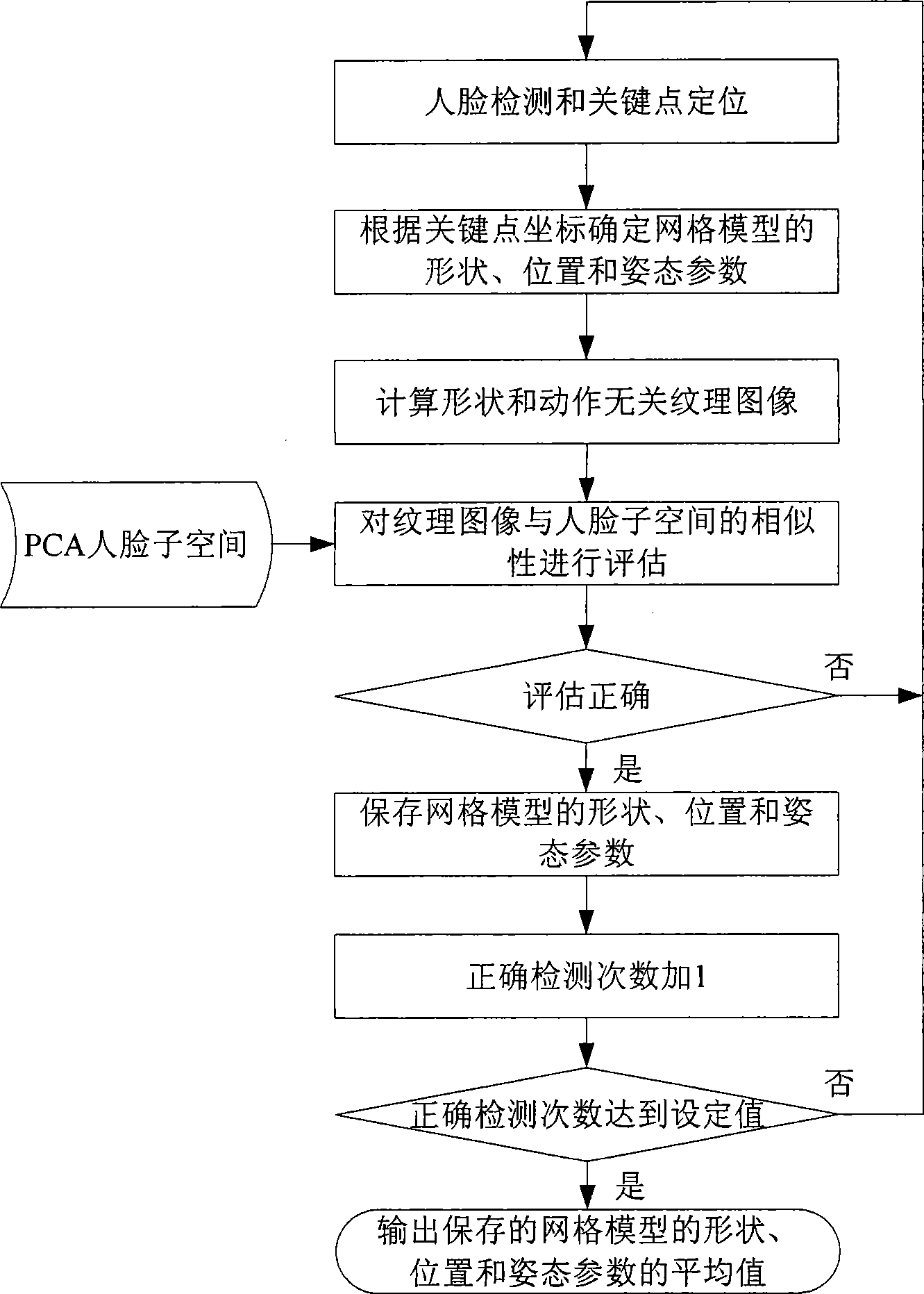

Three-dimensional human face action detecting and tracing method based on video stream

Inactive | CN101499128A | Implement automatic detection | Guaranteed robustness | Character and pattern recognition | Pattern recognition | Crucial point

The invention provides a method for detecting and tracking three-dimensional facial actions in a video stream. The method comprises the following steps: detecting the face and the positions of key points on it; initializing the 3D deformable face mesh model and the face texture model used for tracking; using image registration with these two models to track the face position, head pose and facial actions continuously and in real time in subsequent video frames; and evaluating the detection, localization and tracking results against a PCA face subspace, automatically taking measures to recover tracking if it has been lost. The method requires no user-specific training, supports a wide range of head poses, captures facial action detail accurately, and is reasonably robust to illumination changes and occlusion. It has practical value and broad application prospects in fields such as human-computer interaction, facial expression analysis, and games and entertainment.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1
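One standard way to check tracking results against a PCA face subspace is to measure the "distance from face space". The current face patch is projected onto the top principal components, and the reconstruction error is treated as a score for how face-like the patch is. The NumPy sketch below follows that approach. The number of components `k`, the patch vectorization and the failure threshold are assumptions, and the abstract does not say which evaluation criterion the inventors use.

```python
import numpy as np


class FaceSubspace:
    """PCA subspace learned from flattened, aligned face patches (one patch per row)."""

    def __init__(self, training_patches: np.ndarray, k: int):
        self.mean = training_patches.mean(axis=0)
        centered = training_patches - self.mean
        # Rows of vt are principal directions, ordered by decreasing singular value.
        _, _, vt = np.linalg.svd(centered, full_matrices=False)
        self.basis = vt[:k]  # shape (k, n_pixels), orthonormal rows

    def residual(self, patch: np.ndarray) -> float:
        """Distance from face space: norm of the part of the patch the subspace cannot explain."""
        centered = patch.ravel() - self.mean
        coeffs = self.basis @ centered
        reconstruction = self.basis.T @ coeffs
        return float(np.linalg.norm(centered - reconstruction))


def tracking_ok(space: FaceSubspace, patch: np.ndarray, threshold: float) -> bool:
    """Accept the tracked patch if it looks like a face; otherwise the caller re-detects."""
    return space.residual(patch) <= threshold
```

When `tracking_ok` returns `False`, a tracker built this way would go back to the face and key-point detection step. This matches the automatic recovery the abstract describes, although the patented recovery procedure may differ.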

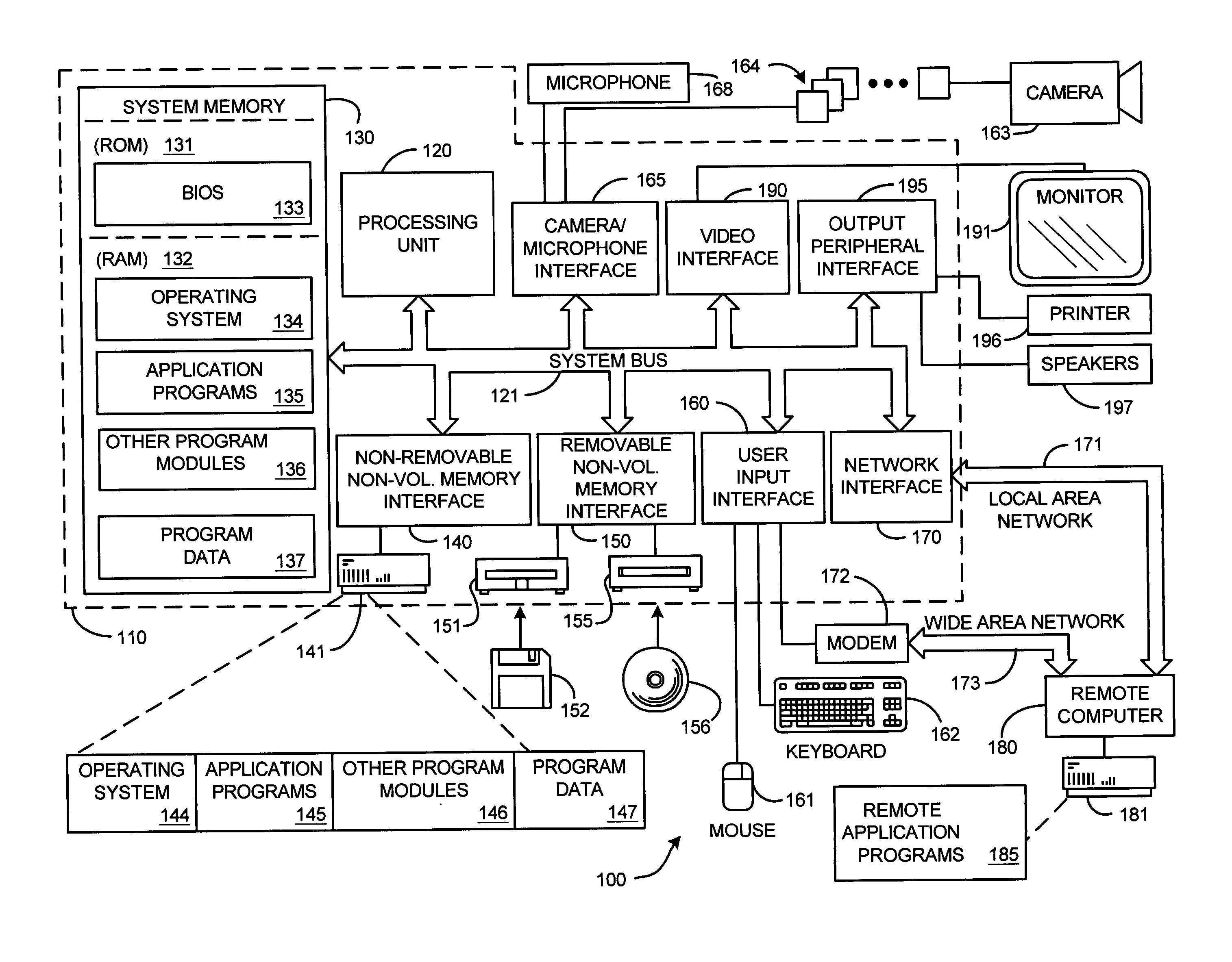

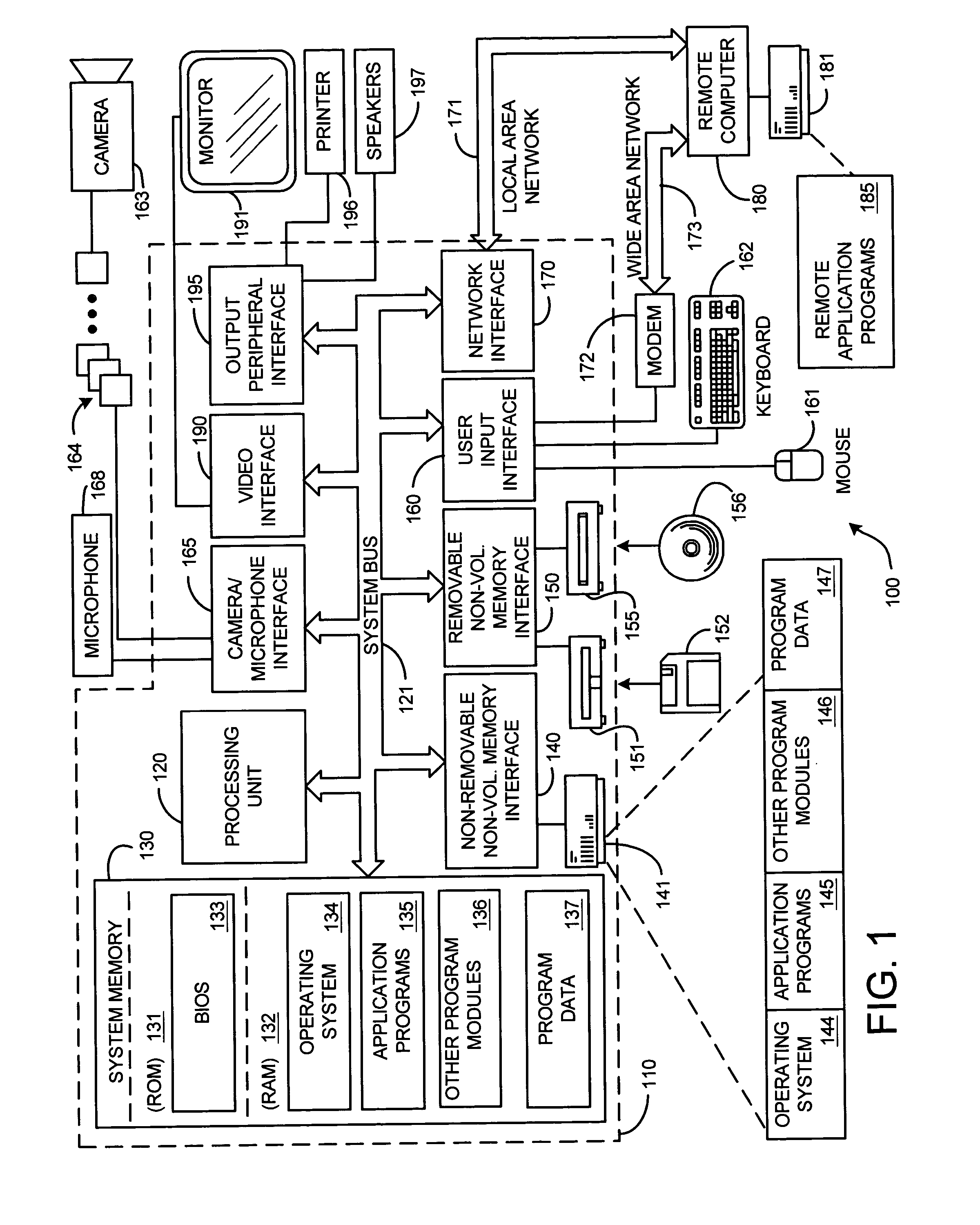

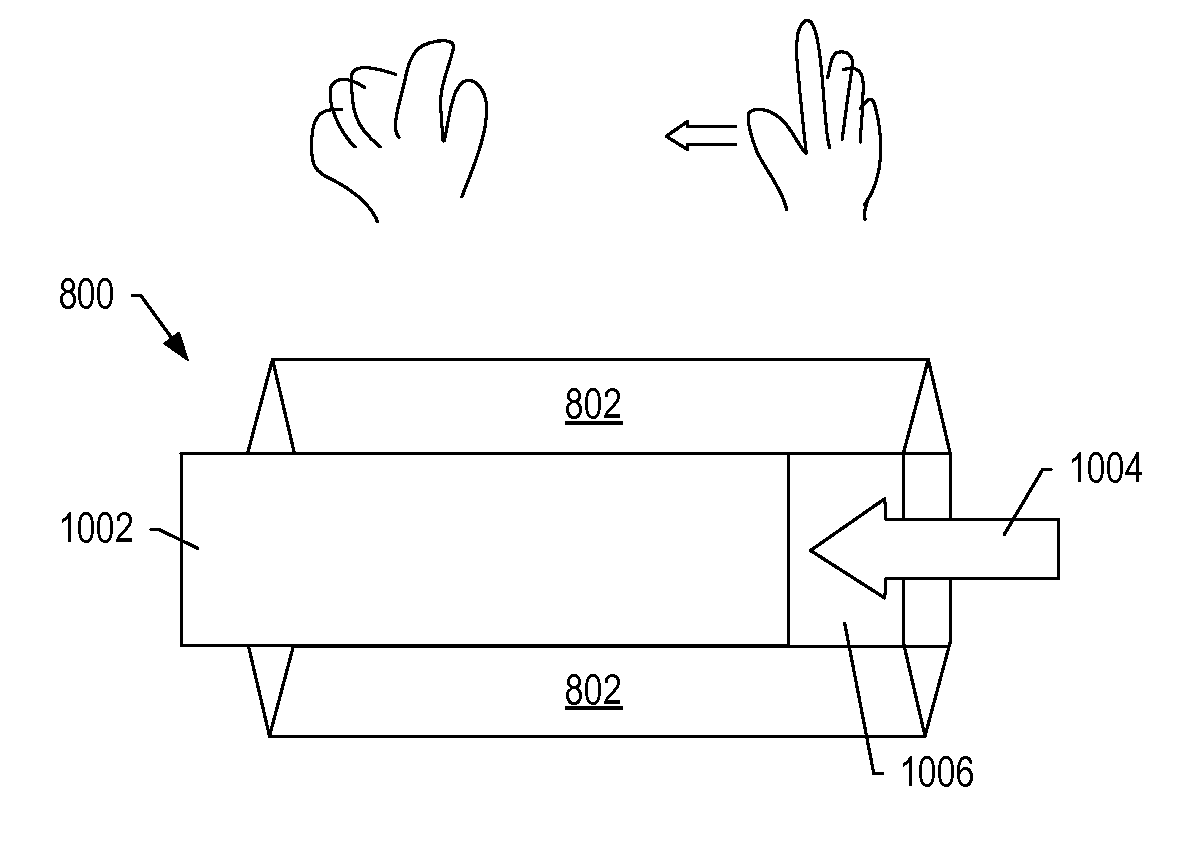

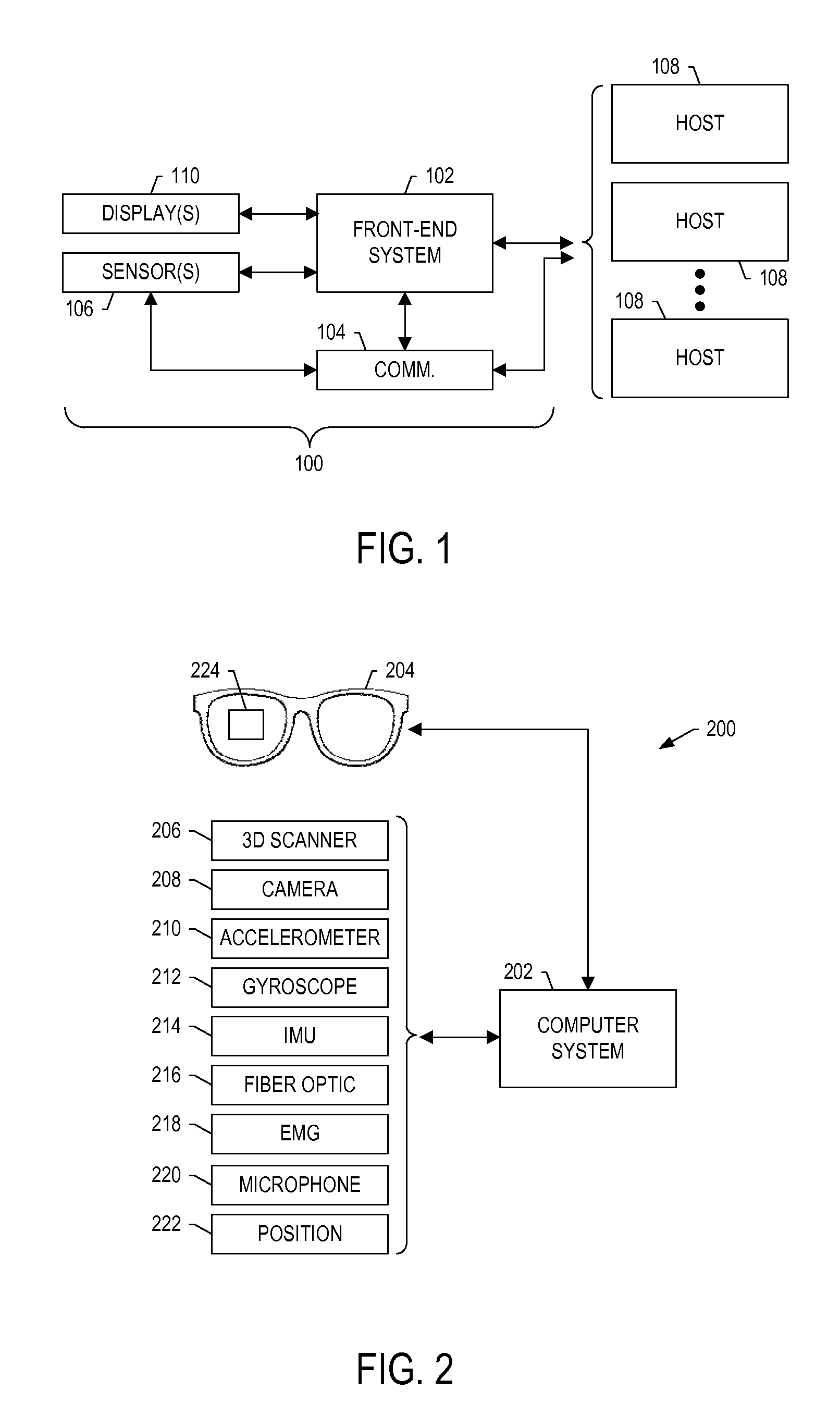

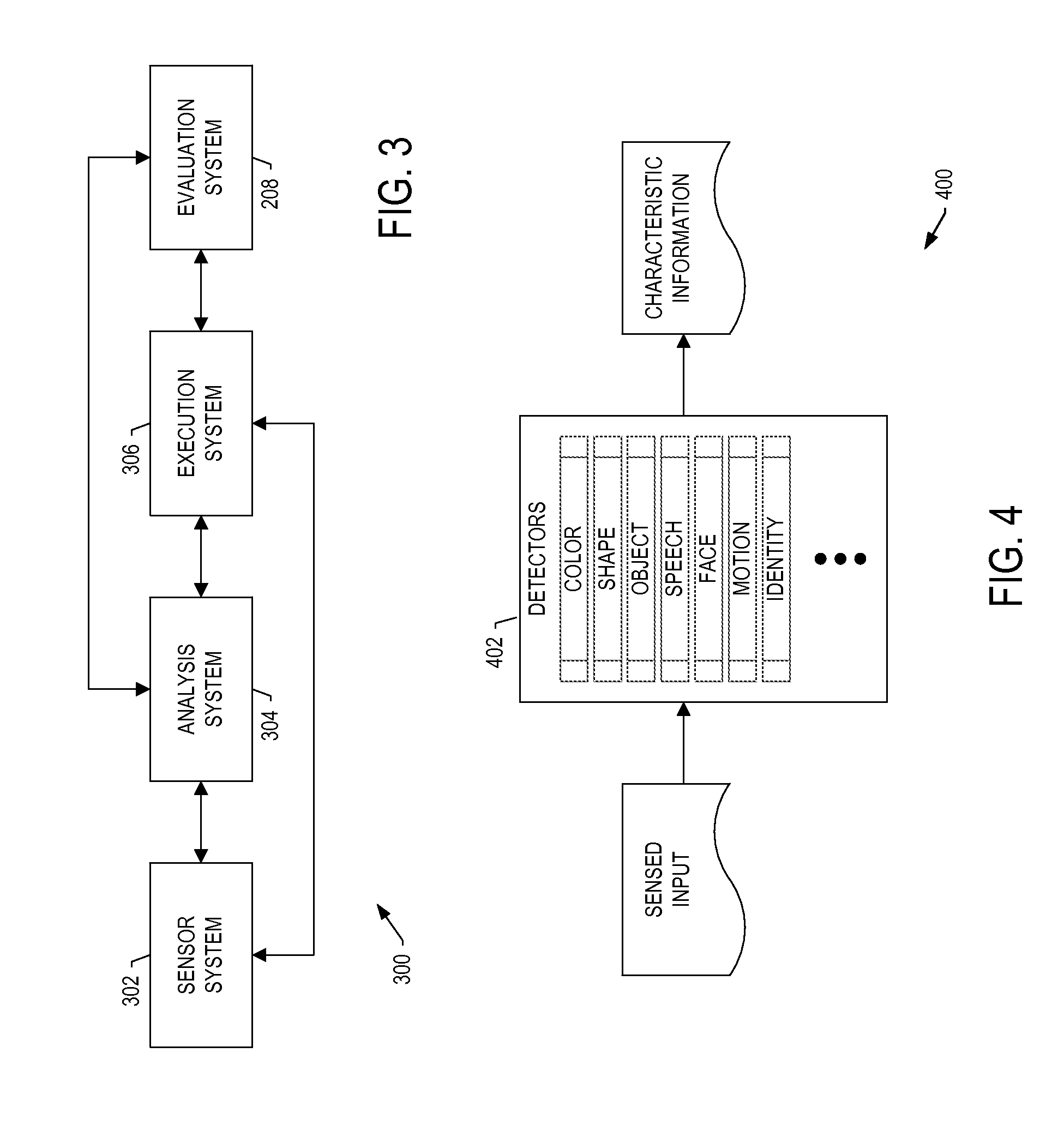

Ubiquitous natural user system for human-machine interaction

Active | US20140359540A1 | Input/output for user-computer interaction | Graph reading | End system | Display device

A system is provided that includes sensor(s) configured to provide sensed input including measurements of motion of a user during performance of a task, and in which the motion may include a gesture performed in one of a plurality of 3D zones in an environment of the user that are defined to accept respective, distinct gestures. A front-end system may receive and process the sensed input including the measurements to identify the gesture and from the gesture, identify operations of an electronic resource. The front-end system may identify the gesture based on the one of the plurality of 3D zones in which the gesture is performed. The front-end system may then form and communicate an input to cause the electronic resource to perform the operations and produce an output. And the front-end system may receive the output from the electronic resource, and communicate the output to a display device.

Owner:THE BOEING CO
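The zone-dependent gesture handling in this abstract can be sketched as a two-step lookup. First, find which 3D zone the hand was in. Then accept the gesture only if that zone is defined to accept it, and map the (zone, gesture) pair to an operation on the electronic resource. The axis-aligned box zones, the gesture labels and the operation table below are hypothetical, and gesture classification from the sensor measurements is assumed to have already happened.

```python
from dataclasses import dataclass
from typing import Optional

Point = tuple[float, float, float]


@dataclass(frozen=True)
class Zone:
    """Axis-aligned 3D box in the user's environment (hypothetical coordinates in metres)."""
    name: str
    lo: Point
    hi: Point
    accepted: frozenset  # gestures this zone is defined to accept

    def contains(self, p: Point) -> bool:
        return all(l <= v < h for l, v, h in zip(self.lo, p, self.hi))


# Hypothetical (zone, gesture) -> operation table for the electronic resource.
OPERATIONS = {
    ("chart", "swipe_left"): "next_page",
    ("chart", "pinch"): "zoom_out",
    ("console", "tap"): "confirm",
}


def identify_operation(zones: list[Zone], hand_pos: Point, gesture: str) -> Optional[str]:
    """Resolve a gesture to an operation using the zone in which it was performed."""
    for zone in zones:
        if zone.contains(hand_pos):
            if gesture not in zone.accepted:
                return None  # gesture is not defined for this zone
            return OPERATIONS.get((zone.name, gesture))
    return None  # hand was outside every zone


zones = [
    Zone("chart", (0.0, 0.0, 0.0), (1.0, 1.0, 1.0), frozenset({"swipe_left", "pinch"})),
    Zone("console", (1.0, 0.0, 0.0), (2.0, 1.0, 1.0), frozenset({"tap"})),
]
print(identify_operation(zones, (0.5, 0.5, 0.5), "pinch"))  # zoom_out
```

Because the zone is resolved first, the front end only needs to tell apart the gestures that are valid in that zone. This is one way zoning can simplify recognition.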

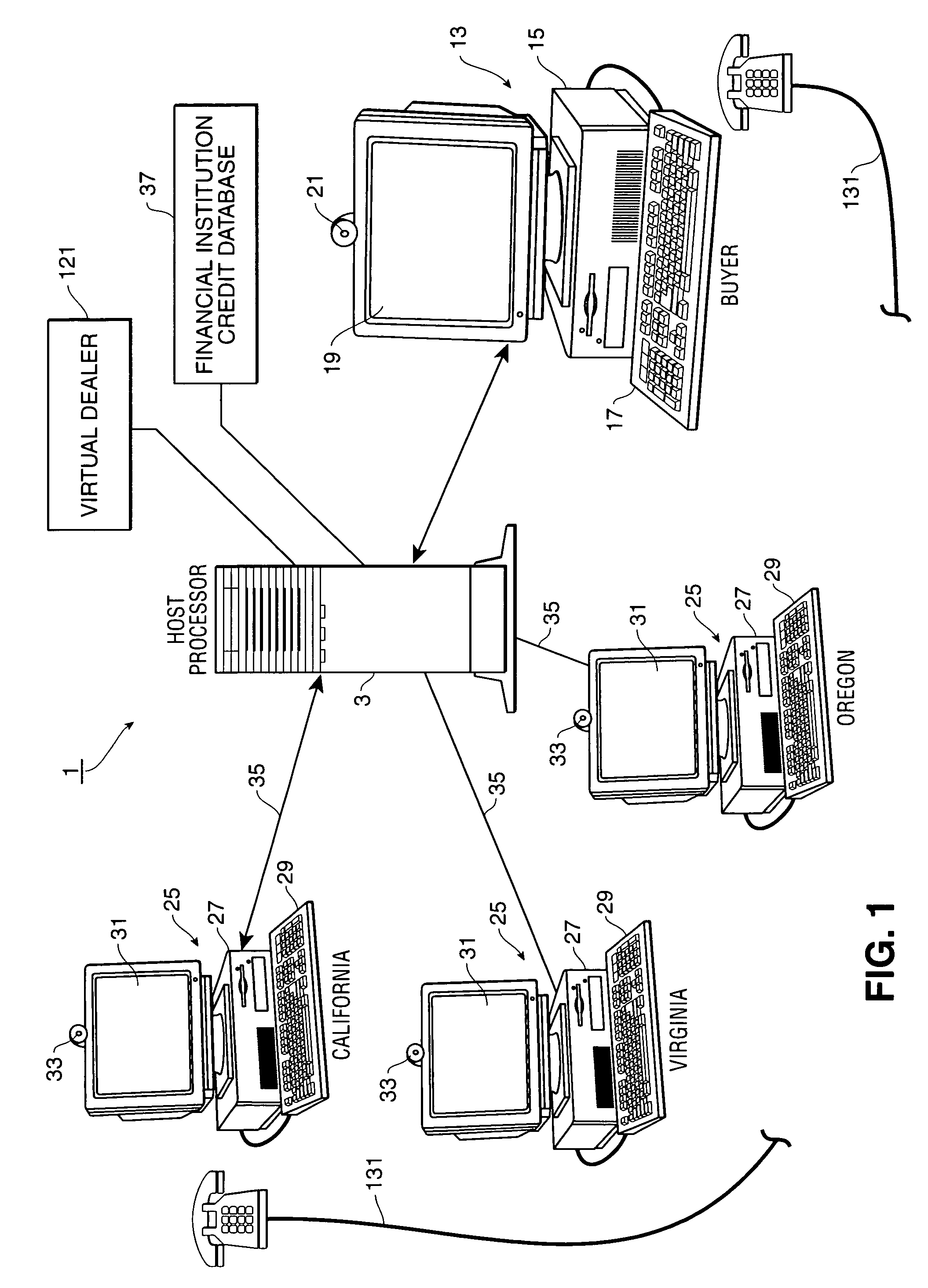

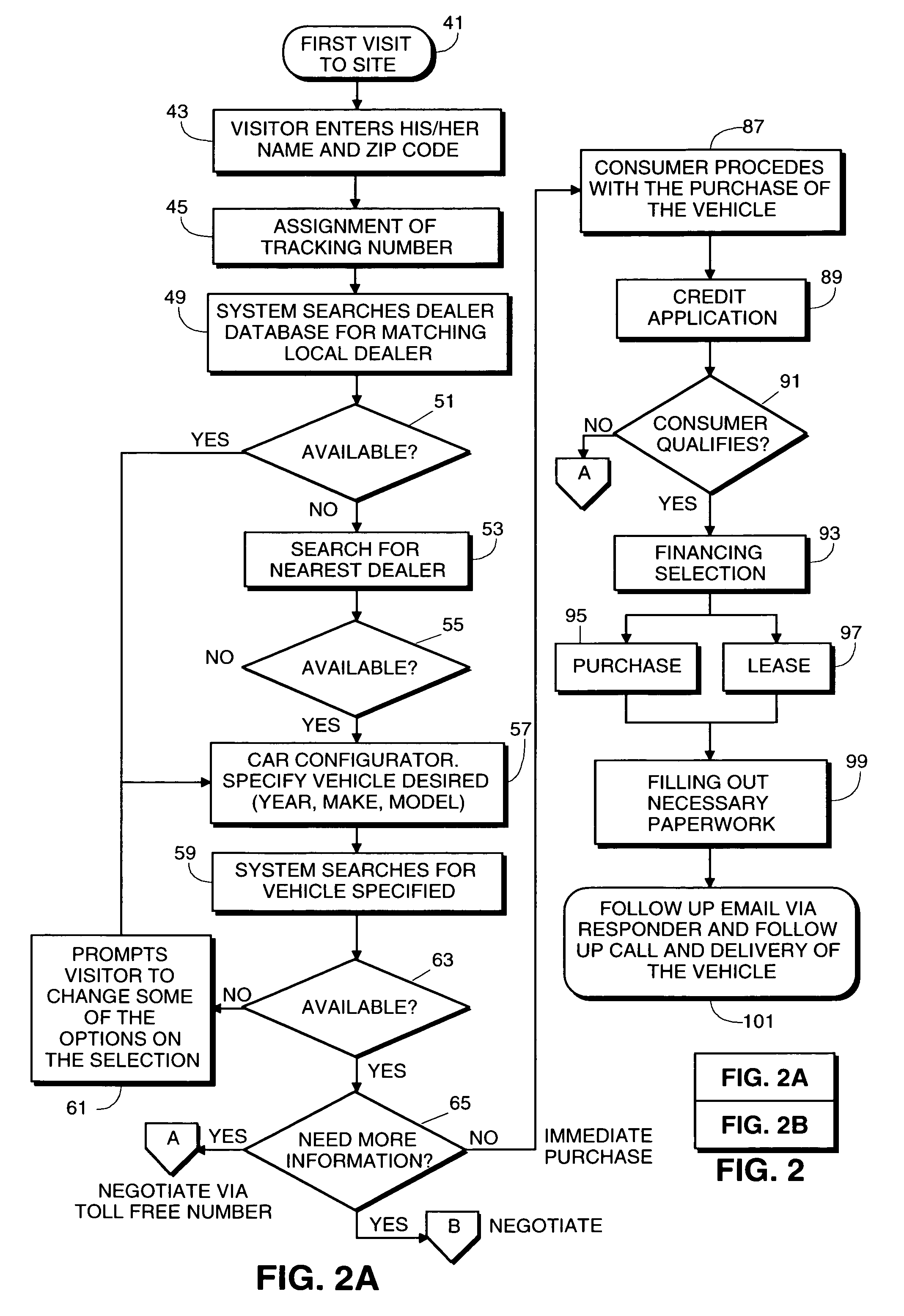

Computer system and method for negotiating the purchase and sale of goods or services using virtual sales

Inactive | US7596509B1 | Improve relationship | Buying/selling/leasing transactions | Real-time data | The Internet

A computer and human interactive system and method for negotiating the purchase and sale of goods or services is provided. The system includes a plurality of primary seller terminals and at least one virtual seller terminal which are connected over a network, preferably the Internet, by a host processor to buyer terminals. The host processor connects buyers to sellers of goods according to information provided by the buyer such as the buyer's location and / or goods or services to be purchased. Where a primary dealer is unavailable to complete purchasing transactions, such as where the seller has closed for the day, a virtual seller is connected to the buyer to complete the purchasing transaction. Once a primary seller or virtual seller has been selected to complete a purchasing transaction with a buyer, the buyer and seller are connected by a real time data communications connection and a real time speech communications connection so that the buyer and seller may negotiate and complete a purchasing transaction.

Owner:NEW HOPE TRUST - ANNA & JEFF BRYSON TRUSTEES
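The host processor's routing rule in this abstract reduces to this: prefer an available primary seller that matches the buyer's location and requested goods, and fall back to a virtual seller otherwise. The sketch below encodes that rule. The `Seller` fields, the use of business hours as the availability test and the example names are hypothetical. The real-time data and speech connections that follow the match are out of scope here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Seller:
    name: str
    region: str
    products: set[str]
    open_hour: int = 0  # hypothetical business hours, 24-hour clock
    close_hour: int = 24
    virtual: bool = False

    def is_open(self, hour: int) -> bool:
        # A virtual seller is always available; a primary seller only during business hours.
        return self.virtual or self.open_hour <= hour < self.close_hour


def route_buyer(sellers: list[Seller], region: str, product: str, hour: int) -> Optional[Seller]:
    """Prefer an open primary seller matching the buyer; otherwise fall back to a virtual seller."""
    candidates = [s for s in sellers if s.region == region and product in s.products]
    for s in candidates:
        if not s.virtual and s.is_open(hour):
            return s
    for s in candidates:
        if s.virtual:
            return s
    return None


dealer = Seller("dealer_a", "Austin", {"sedan"}, open_hour=9, close_hour=18)
backup = Seller("virtual_a", "Austin", {"sedan"}, virtual=True)
print(route_buyer([dealer, backup], "Austin", "sedan", 21).name)  # virtual_a
```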

Hand movement tracking system and tracking method

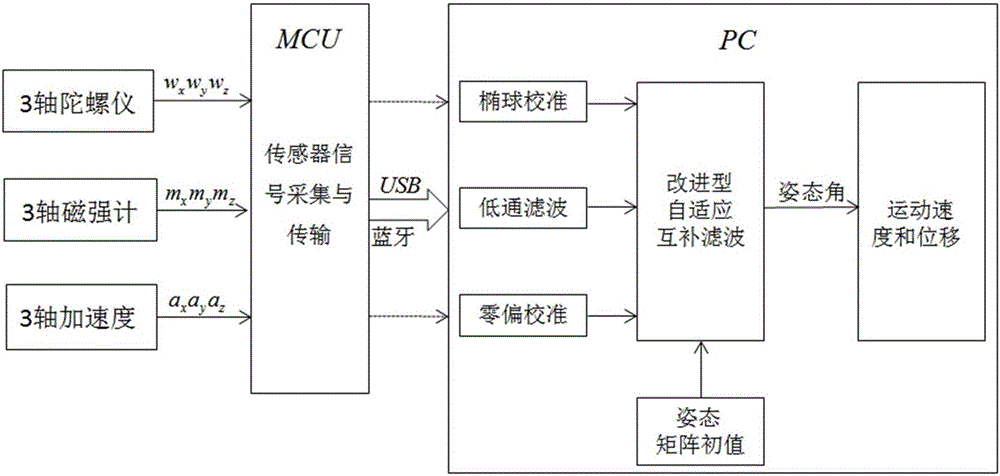

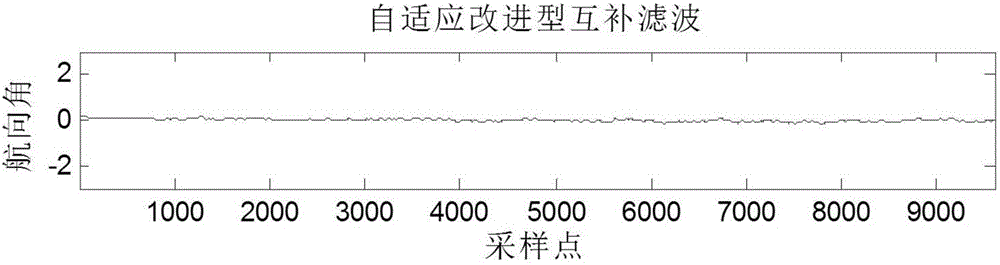

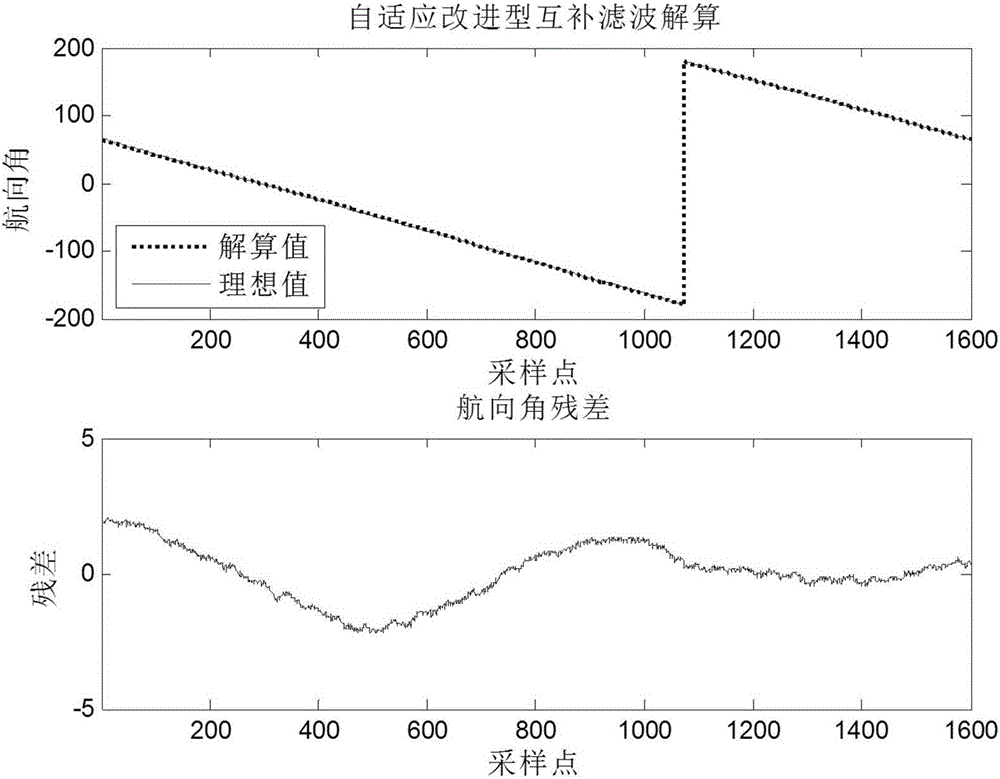

Active | CN106679649A | Attitude Calculation Improvements | Accurate solution | Navigation by terrestrial means | Navigation by speed/acceleration measurements | Hand movements | Low-pass filter

The invention discloses a hand movement tracking system and a hand movement tracking method. It comprises an attitude and heading reference system (AHRS) built from an accelerometer, a gyroscope and a magnetic sensor, together with a hand movement tracking method based on that AHRS. The tracking method proceeds as follows. First, the triaxial acceleration measured by the accelerometer, the triaxial angular velocity measured by the gyroscope and the triaxial magnetic-field components measured by the magnetic sensor are acquired and sent to a host computer. The host then builds a least-squares error model for the magnetic sensor and uses it for error compensation, removes high-frequency noise from the triaxial acceleration with a windowed low-pass filter, and builds an error model for the gyroscope to compensate its random drift. Second, the gyroscope, accelerometer and magnetic sensor data are fused with an improved adaptive complementary filtering algorithm to obtain the attitude angles and the heading angle. Finally, gravity compensation and discrete numerical integration are applied to the acceleration signals to obtain the velocity and trajectory of the hand movement. The disclosed tracking system and method can be applied to human-machine interaction systems, are convenient to operate, and provide a strong user experience.

Owner:ZHEJIANG UNIV
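The three processing stages in this abstract can be sketched in a deliberately reduced form. A moving-average window stands in for the windowed low-pass filter. A fixed-gain complementary filter estimates a single pitch angle from gyroscope rate and accelerometer tilt. Gravity is subtracted from a world-frame vertical acceleration before two trapezoidal integrations produce velocity and position. These are simplifications chosen for illustration: the patent's filter is adaptive and three-axis with magnetometer fusion, and the sketch assumes the acceleration has already been rotated into the world frame using the estimated attitude.

```python
import math

G = 9.81  # m/s^2


def moving_average(xs: list[float], window: int) -> list[float]:
    """Windowed low-pass filter: mean of the last `window` samples (fewer at the start)."""
    out, acc = [], 0.0
    for i, x in enumerate(xs):
        acc += x
        if i >= window:
            acc -= xs[i - window]
        out.append(acc / min(i + 1, window))
    return out


def complementary_pitch(gyro_y: list[float], accels: list[tuple[float, float, float]],
                        dt: float, alpha: float = 0.98) -> list[float]:
    """Fuse gyro rate (rad/s) with accelerometer tilt using a fixed gain, not an adaptive one."""
    pitch, out = 0.0, []
    for rate, (ax, ay, az) in zip(gyro_y, accels):
        accel_pitch = math.atan2(-ax, math.hypot(ay, az))  # tilt implied by gravity
        # Trust the integrated gyro in the short term and the accelerometer in the long term.
        pitch = alpha * (pitch + rate * dt) + (1 - alpha) * accel_pitch
        out.append(pitch)
    return out


def integrate_vertical(world_az: list[float], dt: float) -> tuple[list[float], list[float]]:
    """Remove gravity from world-frame vertical acceleration, then integrate twice (trapezoid rule)."""
    lin = [a - G for a in world_az]
    v, p = 0.0, 0.0
    vel, pos = [0.0], [0.0]
    for k in range(1, len(lin)):
        v_new = v + 0.5 * (lin[k - 1] + lin[k]) * dt
        p += 0.5 * (v + v_new) * dt
        v = v_new
        vel.append(v)
        pos.append(p)
    return vel, pos
```

Double integration amplifies any residual bias, so in practice the drift compensation described in the abstract matters more than the integration scheme itself.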

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com