2242 results about "Action recognition" patented technology

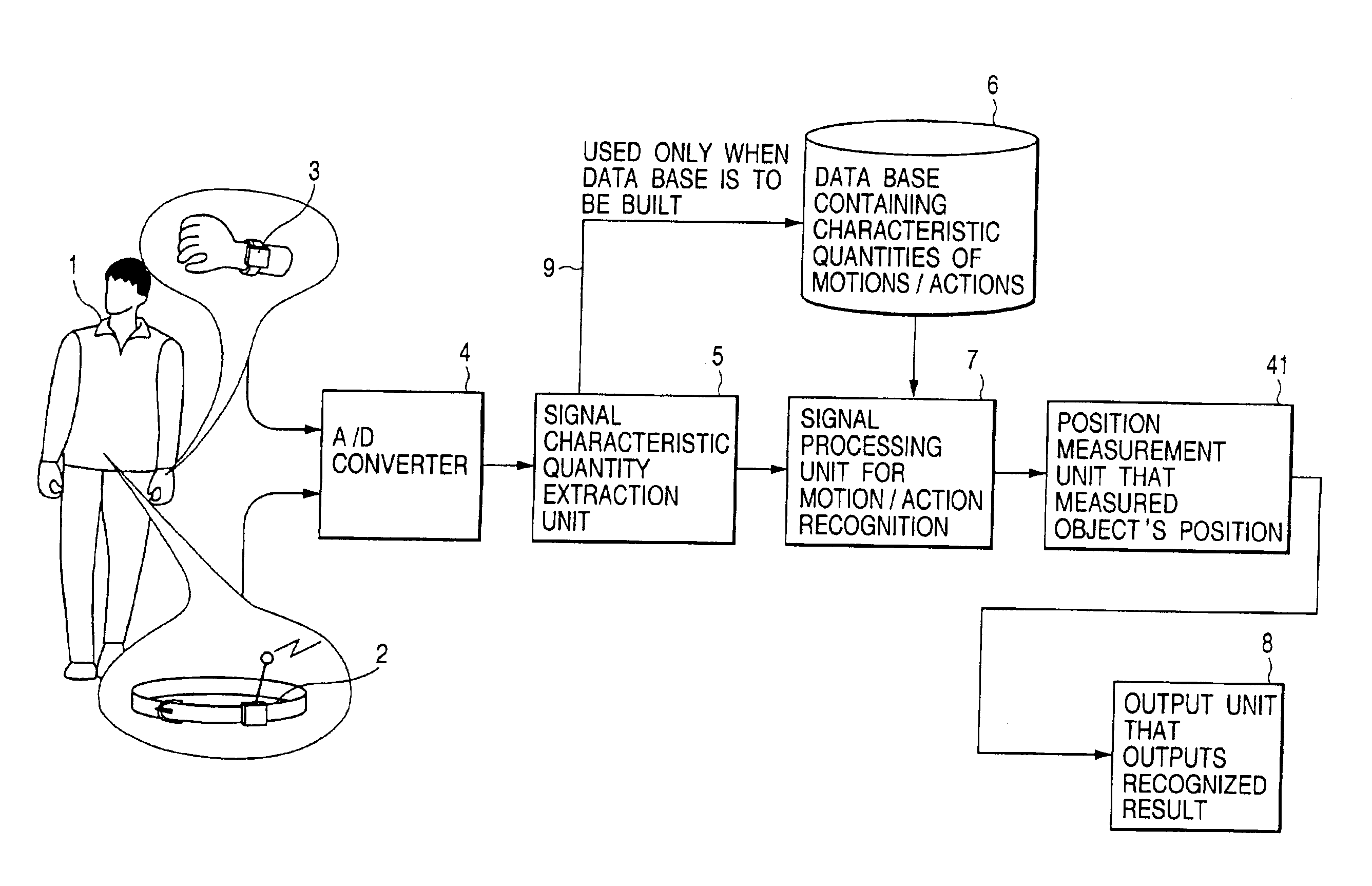

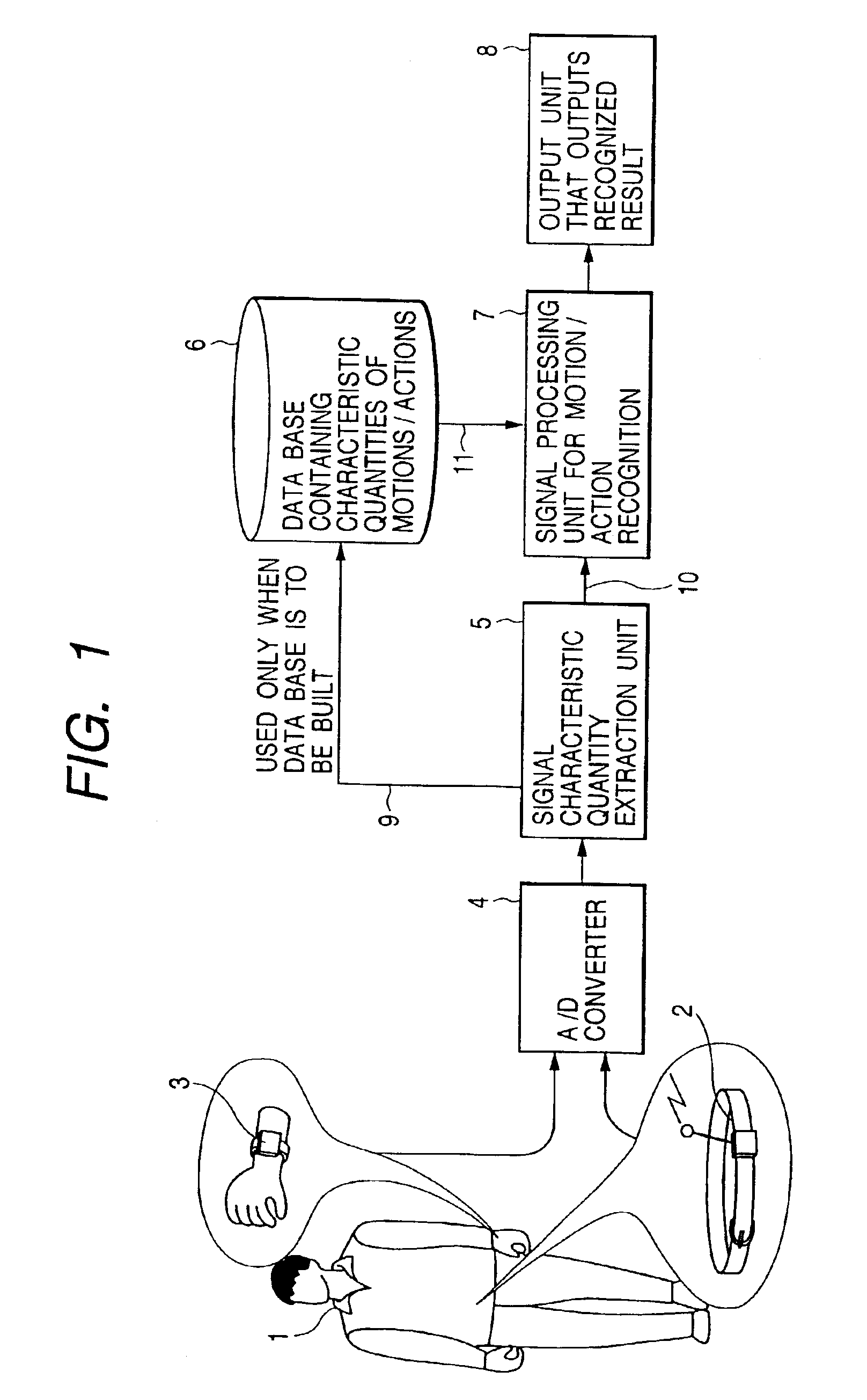

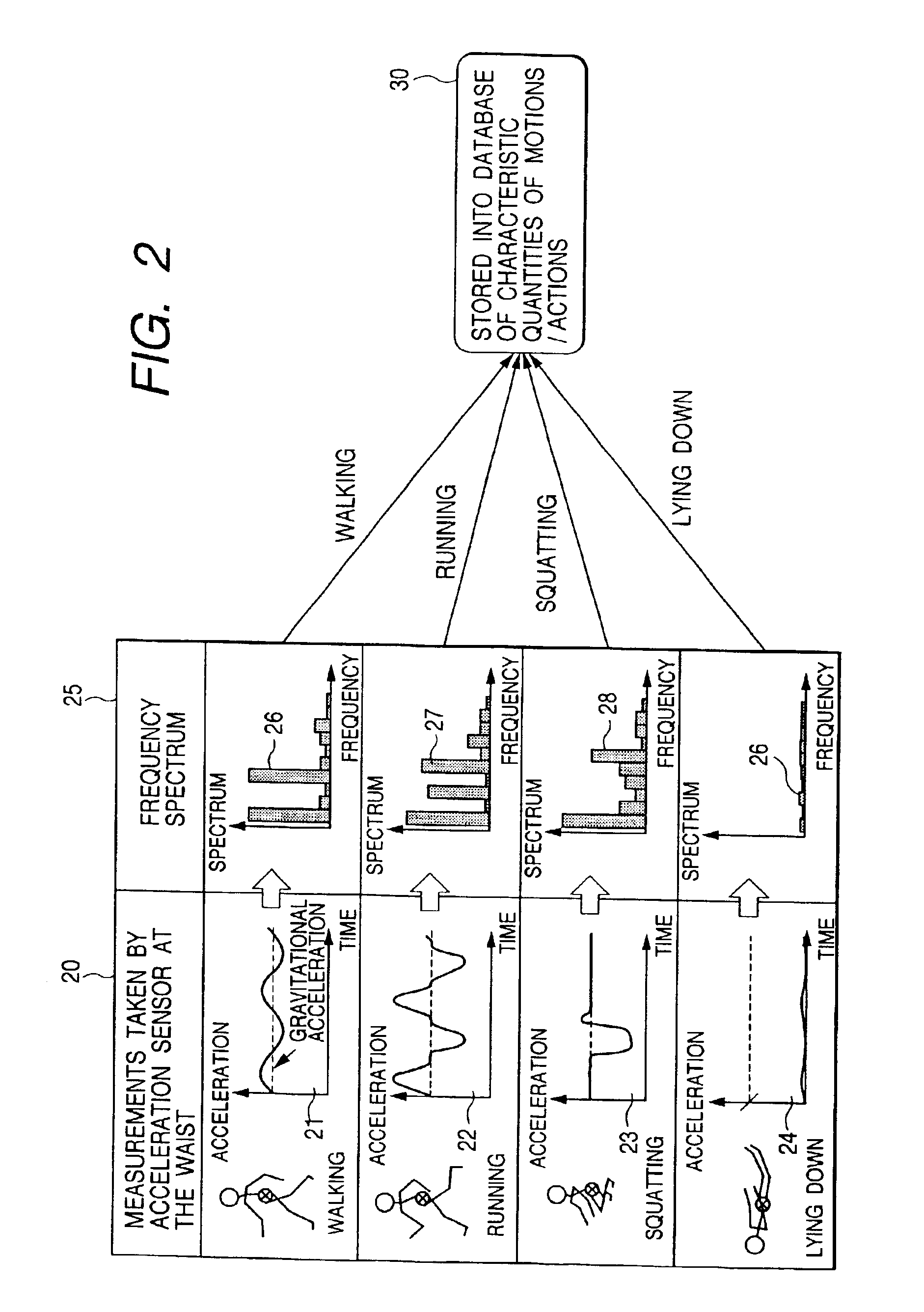

Method, apparatus and system for recognizing actions

Status: Inactive | Publication: US6941239B2 | Tags: More precision; Freedom of movement; Input/output for user-computer interaction; Gymnastic exercising; Physical medicine and rehabilitation; State variation

An object of the present invention is to provide a method, an apparatus and a system for automatically recognizing motions and actions of moving objects such as humans, animals and machines. Measuring instruments are attached to an object under observation. The instruments measure a status change entailing the object's motion or action and issue a signal denoting the measurements. A characteristic quantity extraction unit extracts, from the received measurement signal, a characteristic quantity that represents the motion or action currently performed by the object under observation. A signal processing unit for motion/action recognition correlates the extracted characteristic quantity with reference data in a database containing previously acquired characteristic quantities of motions and actions. The motion or action represented by the characteristic quantity with the highest degree of correlation is recognized and output.

Owner:APPLE INC
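
The matching step in the abstract above (correlate an extracted characteristic quantity against stored reference quantities and output the best match) can be sketched roughly as follows. This is only an illustration, not the patented method: the use of Pearson correlation, the function names `pearson` and `recognize`, and the contents of `REFERENCE_DB` are all assumptions made for the example.

```python
from math import sqrt

def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant signal carries no shape to correlate with
    return cov / sqrt(var_a * var_b)

def recognize(features, reference_db):
    """Return the label whose reference features correlate best with `features`."""
    return max(reference_db, key=lambda label: pearson(features, reference_db[label]))

# Hypothetical reference data: one characteristic-quantity vector per action.
REFERENCE_DB = {
    "walking": [0.2, 0.9, 0.4, 0.1],
    "running": [0.1, 0.3, 0.9, 0.8],
    "sitting": [0.9, 0.1, 0.1, 0.0],
}

measured = [0.15, 0.35, 0.85, 0.7]  # hypothetical extracted quantity
print(recognize(measured, REFERENCE_DB))  # prints "running"
```

A real system would compare many more dimensions and would likely reject matches whose best correlation falls below some minimum.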

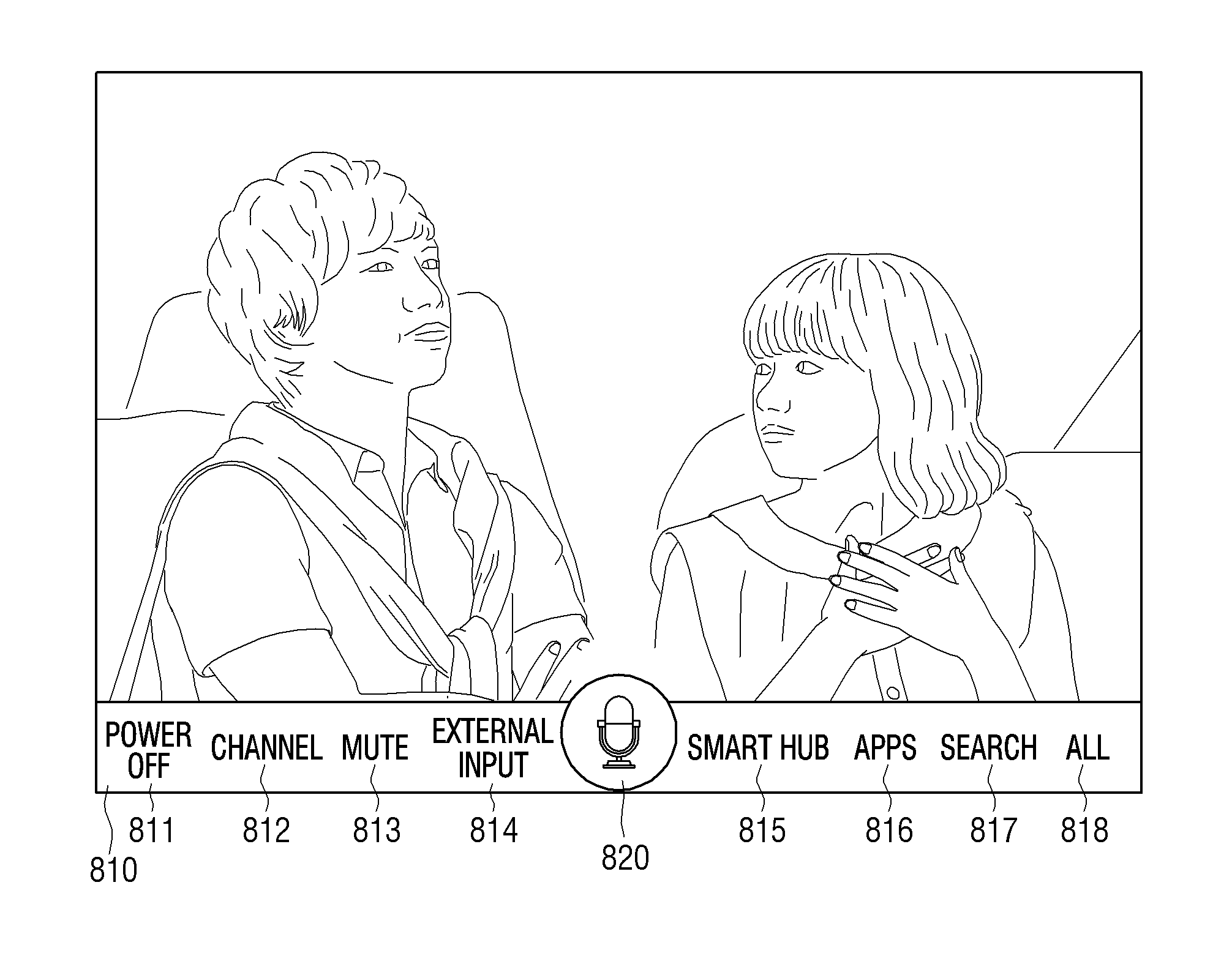

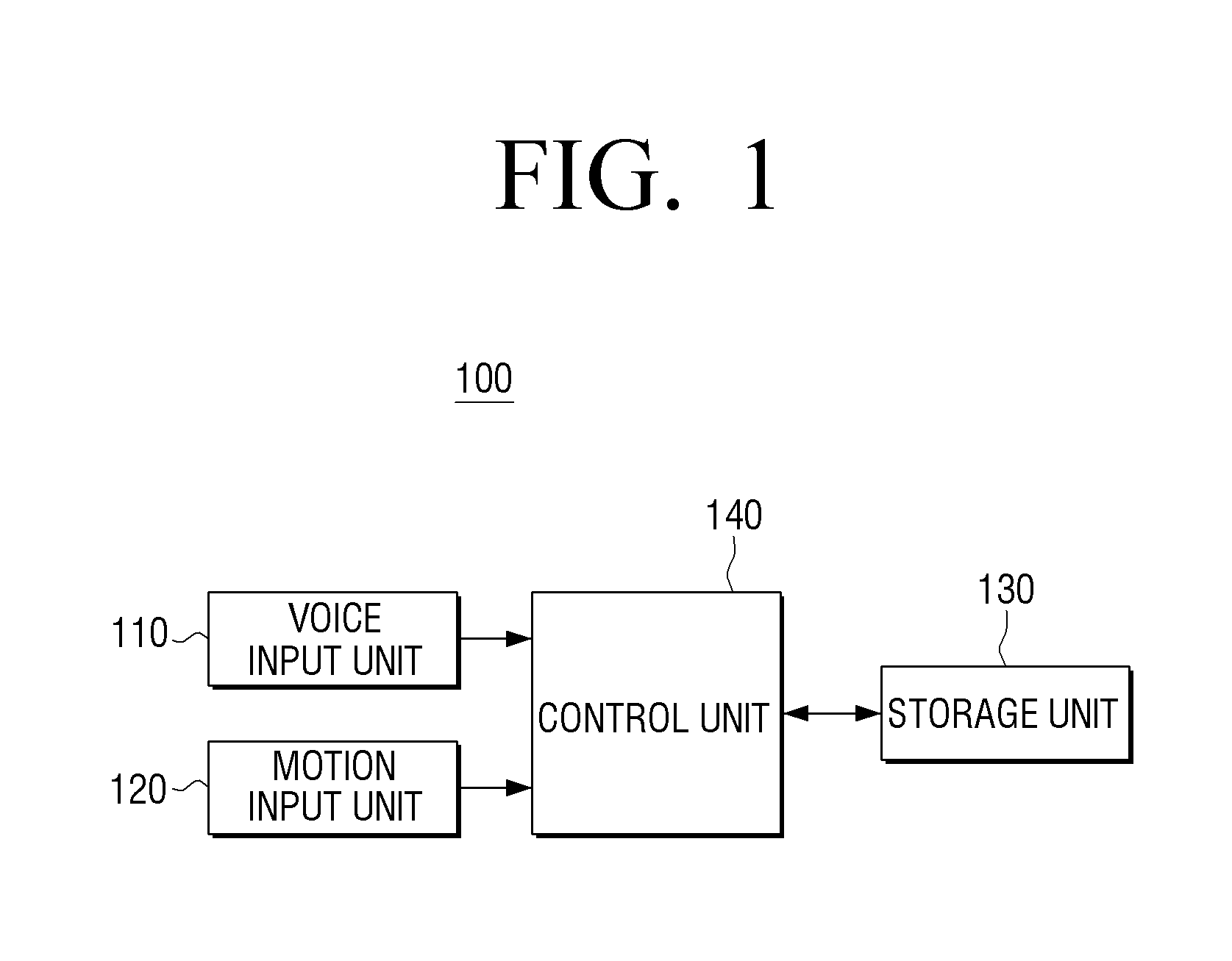

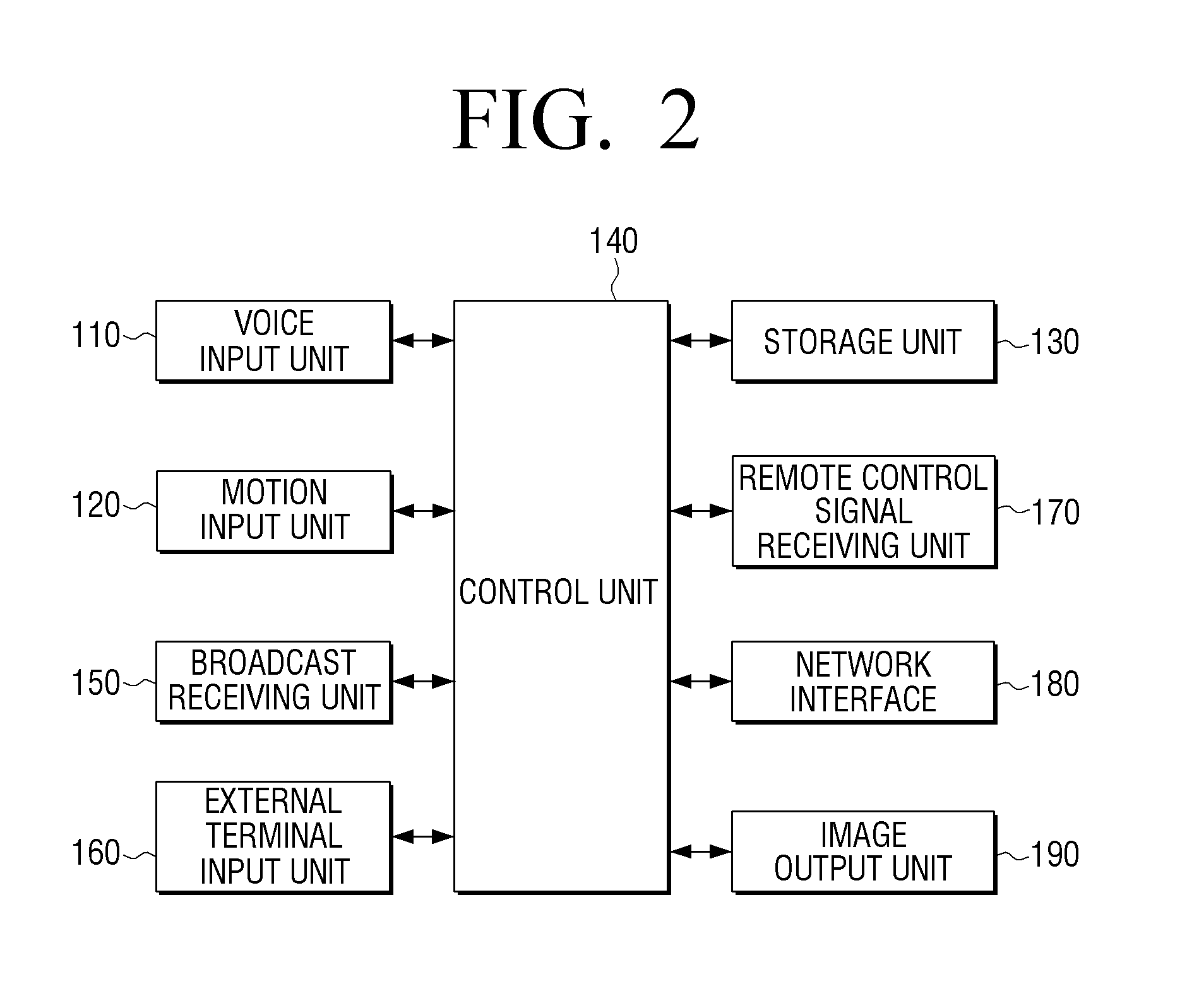

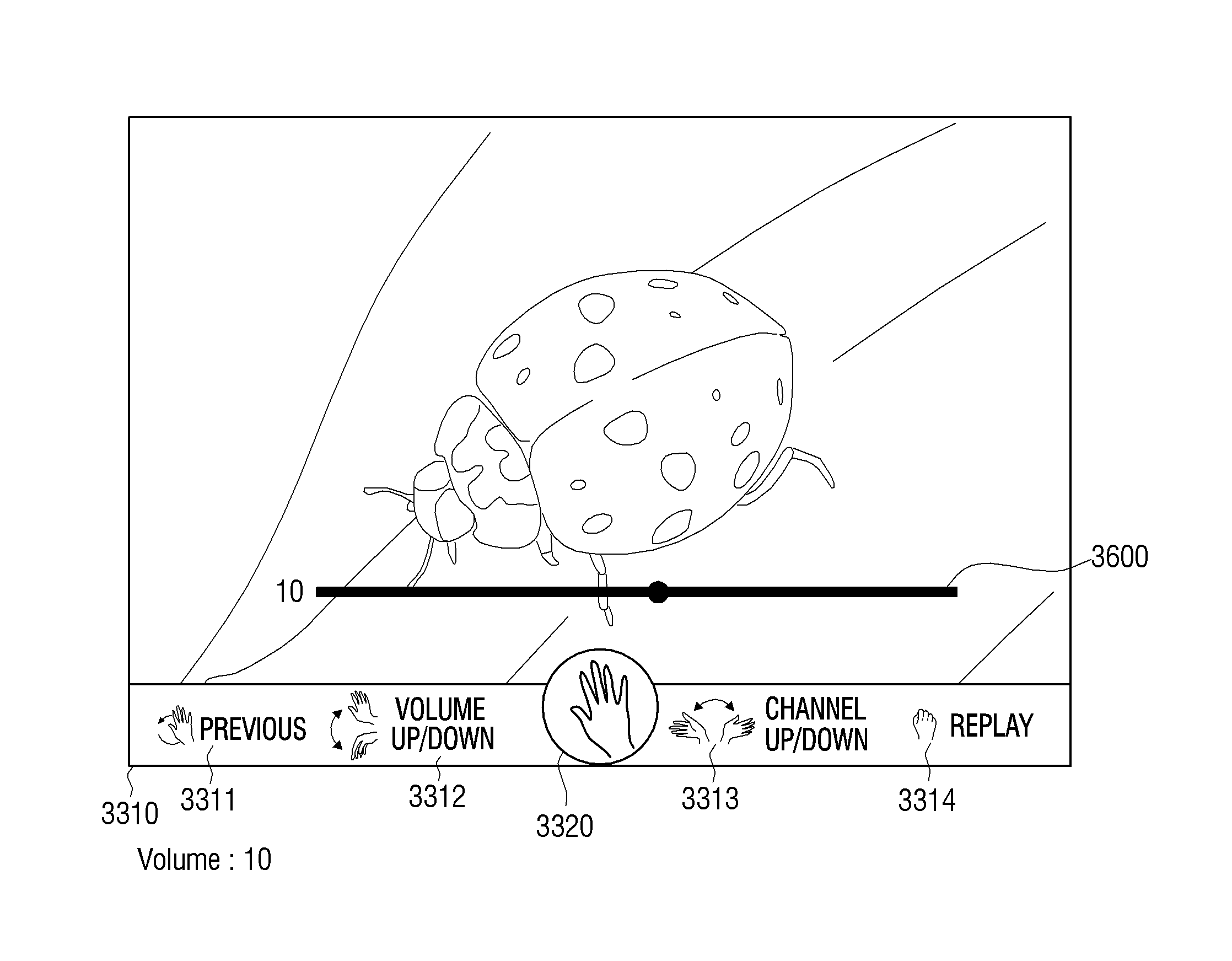

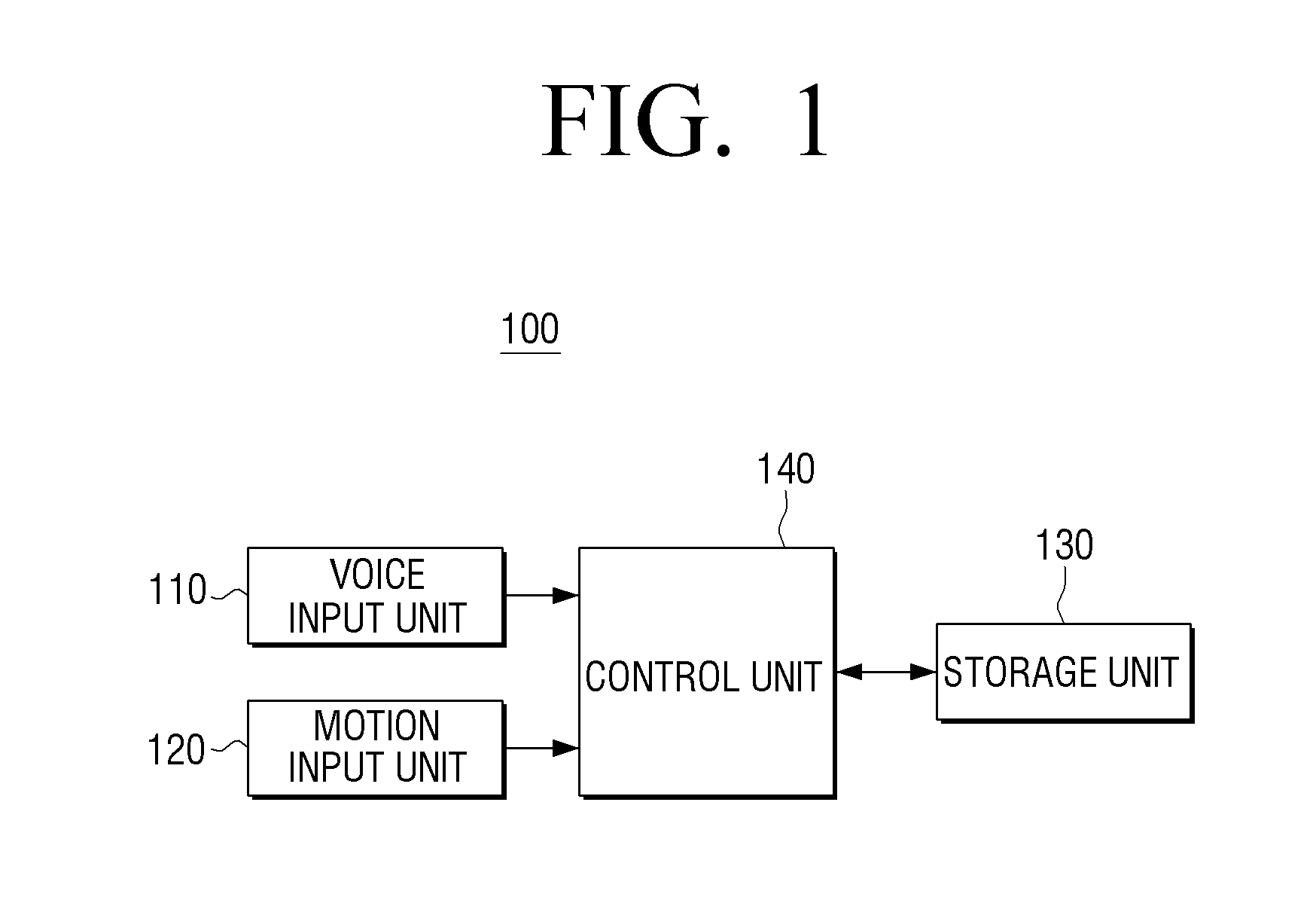

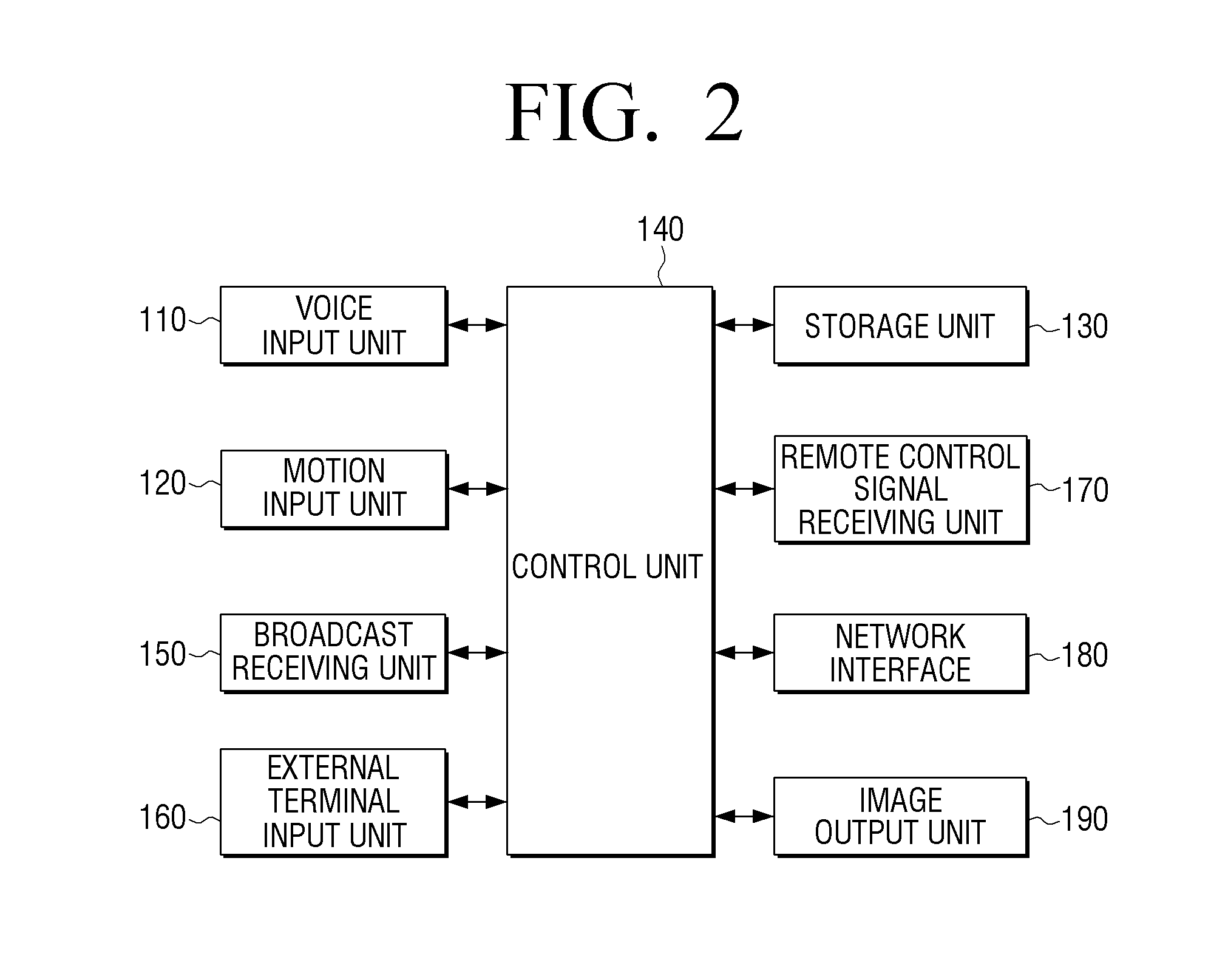

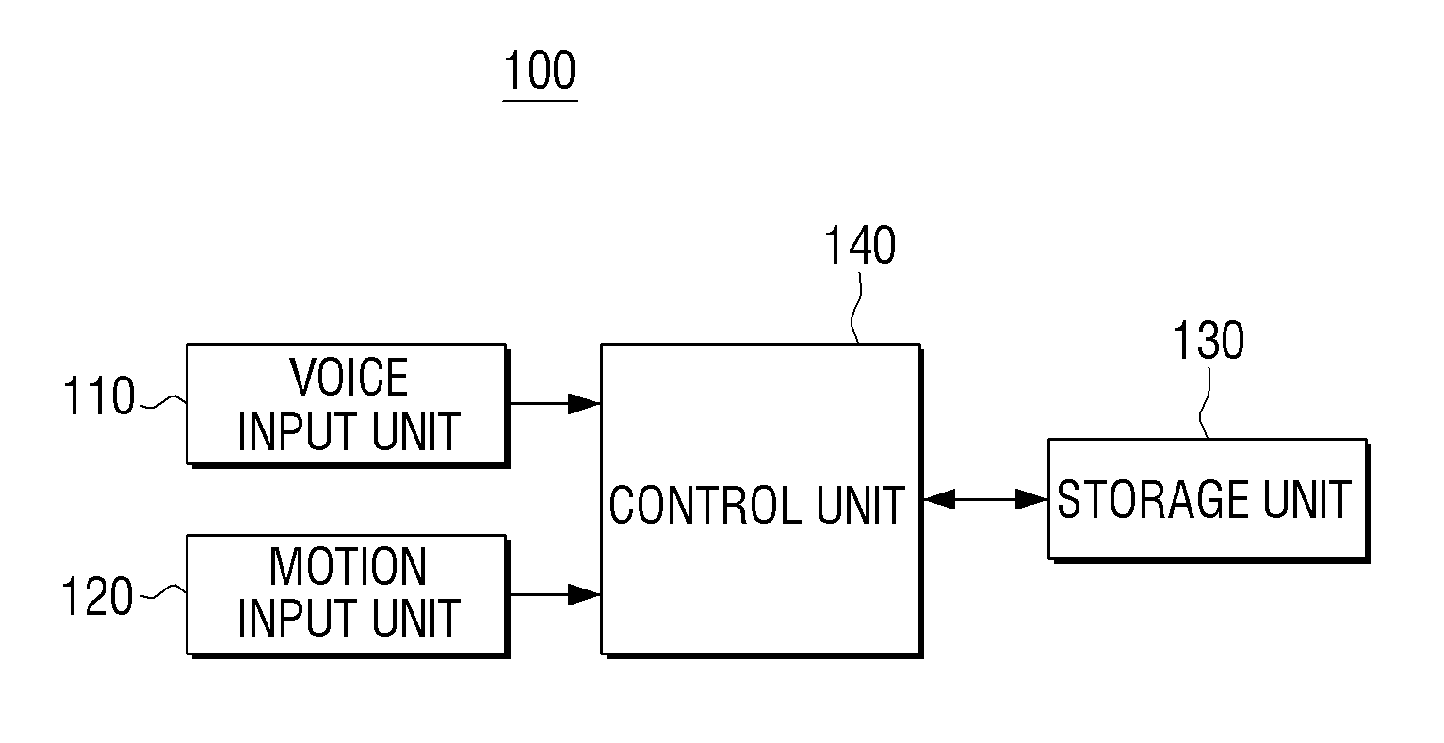

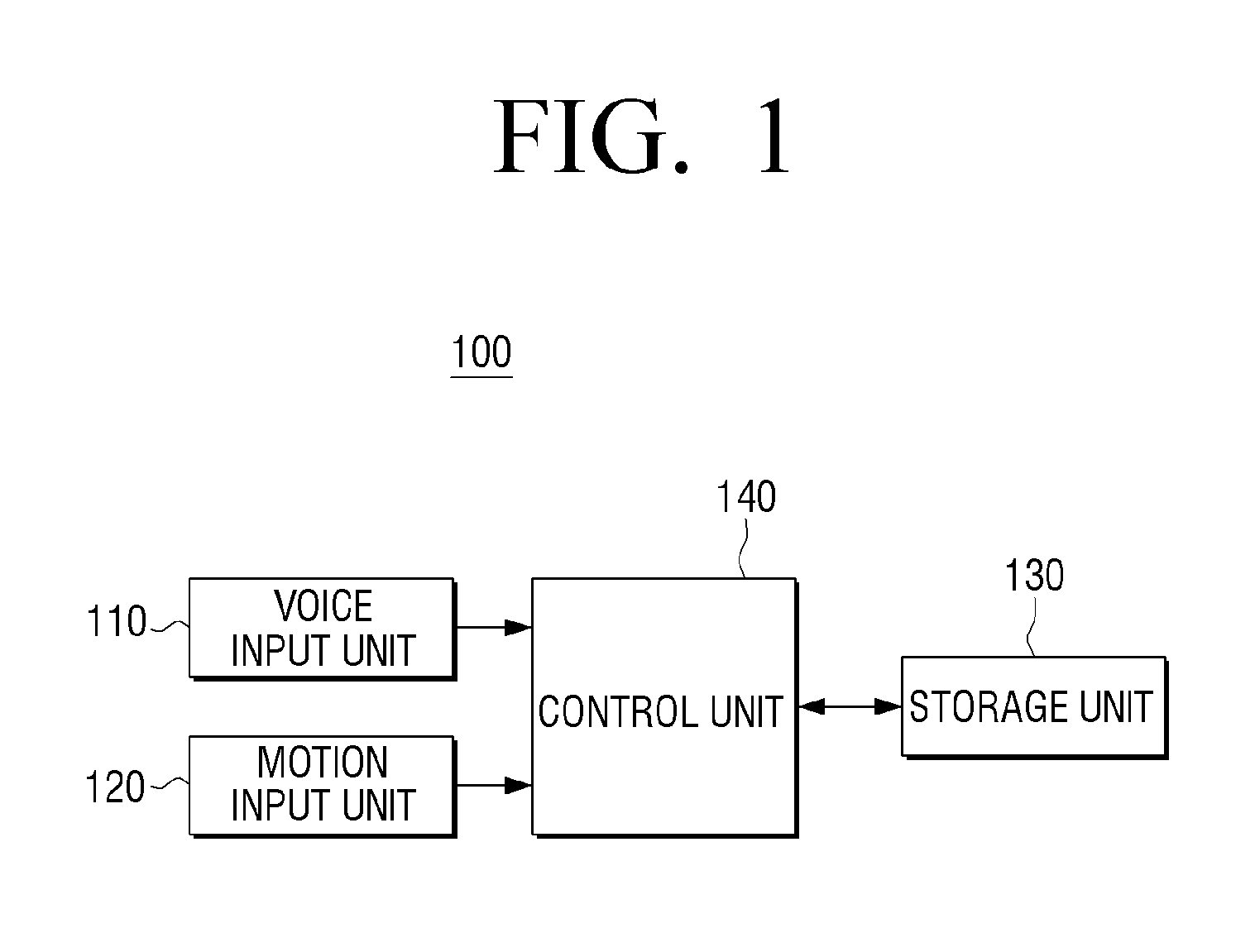

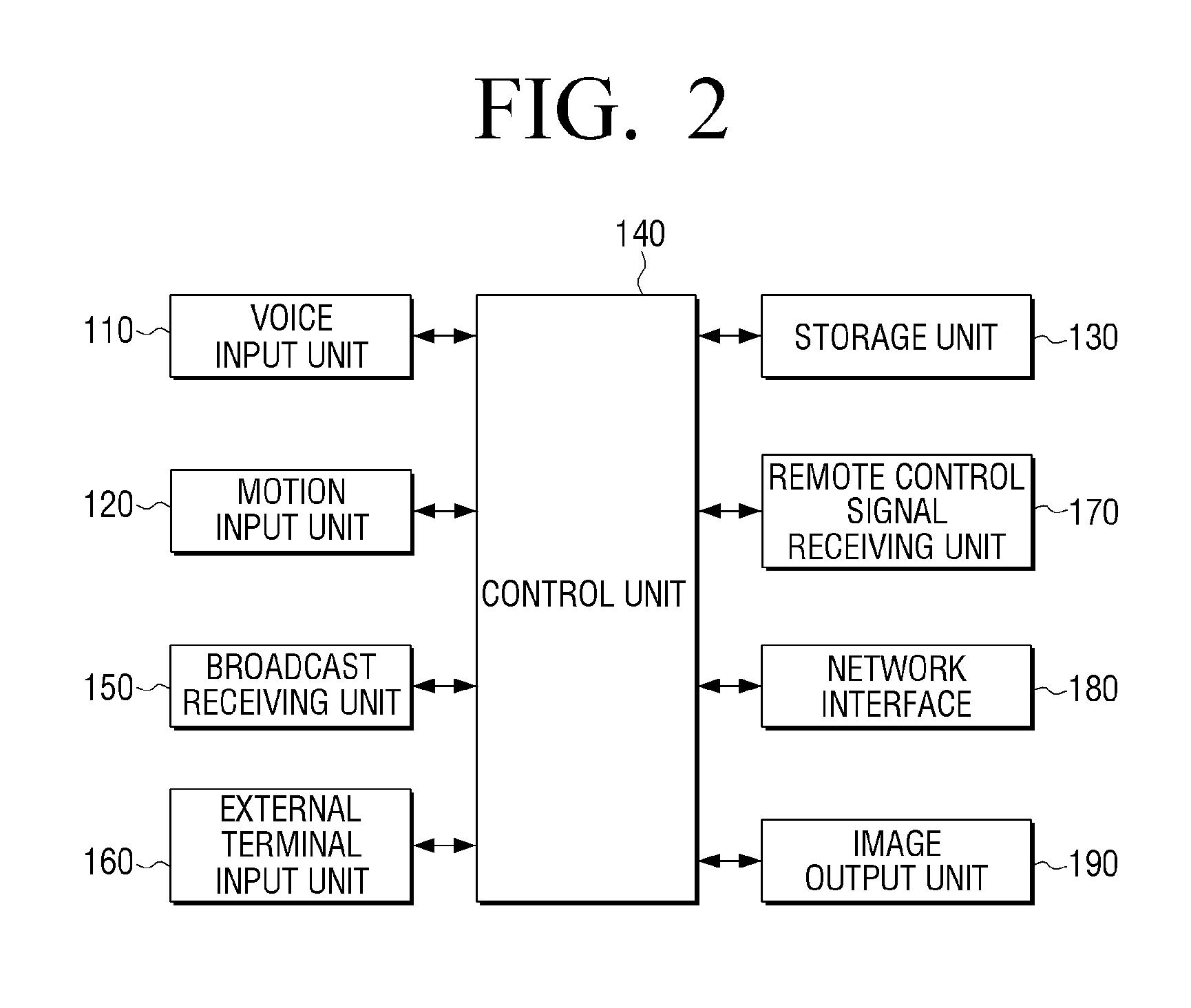

Method for controlling electronic apparatus based on voice recognition and motion recognition, and electronic apparatus applying the same

Status: Active | Publication: US20130033643A1 | Tags: Television system details; Transmission systems; Computer hardware; Motion recognition

A method for controlling an electronic apparatus which uses voice recognition and motion recognition, and an electronic apparatus applying the same are provided. In a voice task mode, in which voice tasks are performed according to recognized voice commands, the electronic apparatus displays voice assistance information to assist in performing the voice tasks. In a motion task mode, in which motion tasks are performed according to recognized motion gestures, the electronic apparatus displays motion assistance information to aid in performing the motion tasks.

Owner:SAMSUNG ELECTRONICS CO LTD

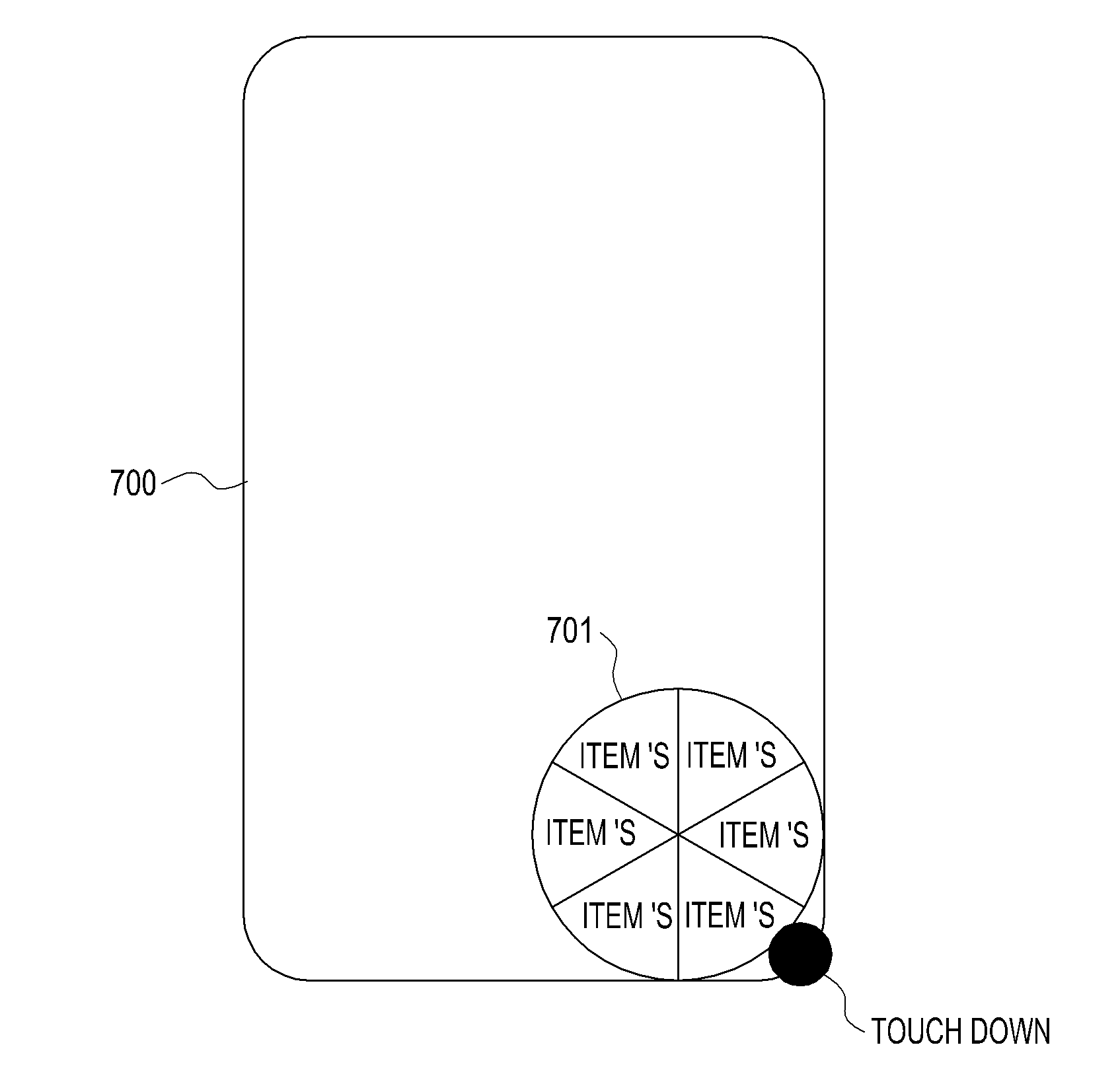

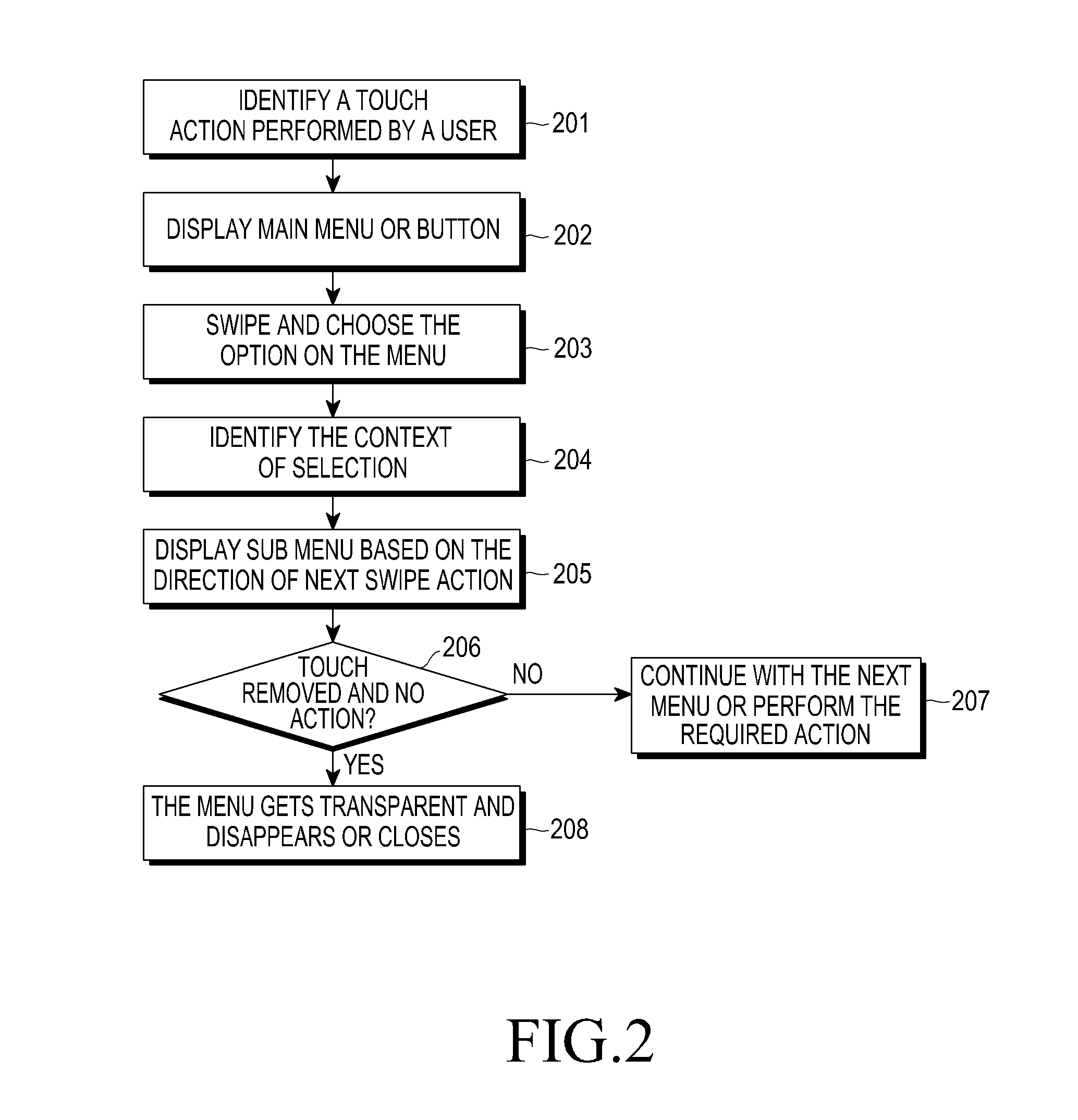

User interface for touch and swipe navigation

Status: Inactive | Publication: US20130212529A1 | Tags: Remove complexity; Rapidly and simply allowing; Input/output for user-computer interaction; Graph reading; Display device; Touchscreen

A method and device to create a user interface for touch and swipe navigation in a touch sensitive mobile device is provided. A touch action performed by a user is identified on the touch screen display, a context related to the touch action is identified, a menu is displayed based on the identified context, and a menu option corresponding to direction of swipe performed onto the menu is selected from among options of the menu, without removing the touch.

Owner:SAMSUNG ELECTRONICS CO LTD
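
As a rough illustration of the flow described in the abstract above (identify the touch context, show a context menu, pick the option that matches the swipe direction), here is a minimal sketch. The context names, the menu contents in `CONTEXT_MENUS`, and both function names are hypothetical; the abstract does not specify any of them.

```python
# Hypothetical context menus: each context maps swipe directions to options.
CONTEXT_MENUS = {
    "image": {"up": "share", "down": "delete", "left": "previous", "right": "next"},
    "text": {"up": "copy", "down": "paste", "left": "undo", "right": "redo"},
}

def swipe_direction(dx, dy):
    """Classify a swipe by its dominant axis (screen y grows downward)."""
    if abs(dx) >= abs(dy):
        return "right" if dx >= 0 else "left"
    return "down" if dy > 0 else "up"

def select_option(context, touch_start, touch_end):
    """Pick the menu option for `context` that lies in the swipe direction."""
    dx = touch_end[0] - touch_start[0]
    dy = touch_end[1] - touch_start[1]
    return CONTEXT_MENUS[context][swipe_direction(dx, dy)]

print(select_option("image", (10, 10), (60, 15)))  # prints "next"
```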

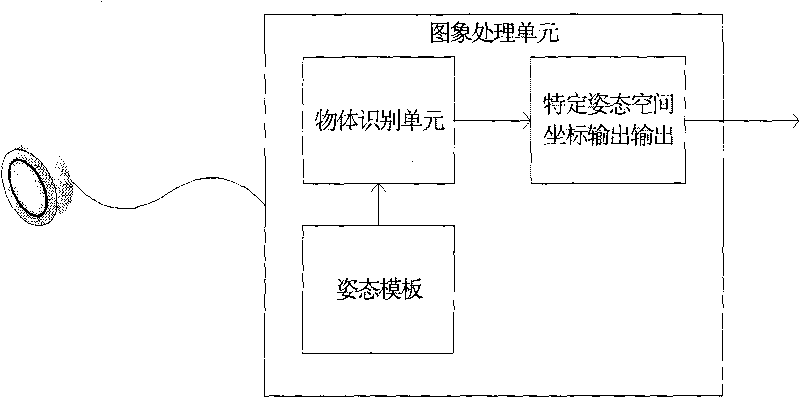

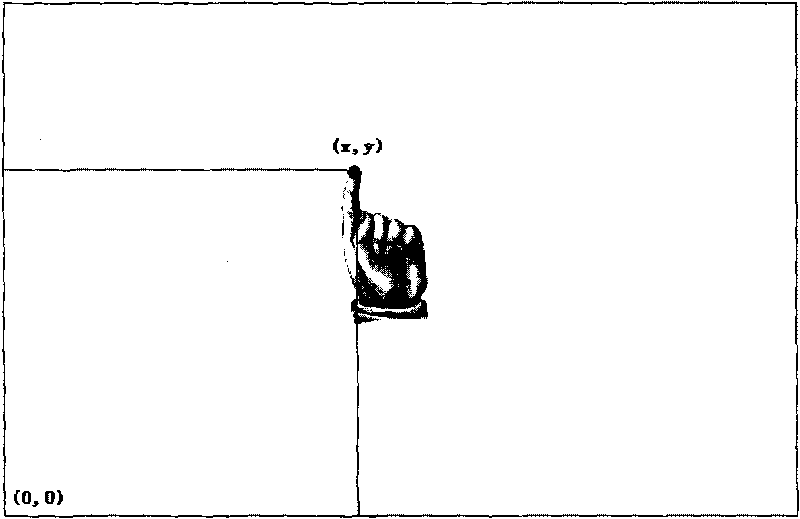

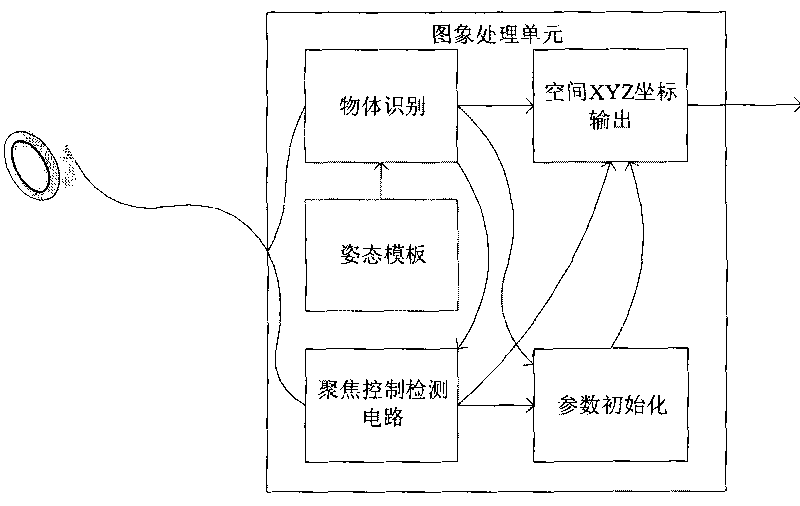

Remote control method for television and system for remotely controlling television by same

Status: Active | Publication: CN101729808A | Tags: Simple and user-friendly remote control operation; Add entertainment function; Television system details; Color television details; Operational system; Remote control

The invention discloses a remote control method for a television and a system for remotely controlling the television by the same method. The remote control method comprises the following steps: an operator presents a specific gesture to a camera; the camera transmits the captured gesture to a three-dimensional motion recognition module in the television for three-dimensional motion and gesture recognition; the module acquires the three-dimensional motion coordinates of the gesture and outputs a control signal; and an executing device in the television executes the corresponding programs according to the control signal. With this method, the television can be remotely controlled by gesture, and a corresponding remote control operating system makes remote control operation simpler and more user-friendly. In particular, entertainment functions originally available only on a computer can be performed on the television without a mouse, a keyboard or other computer peripherals.

Owner:TCL CORPORATION

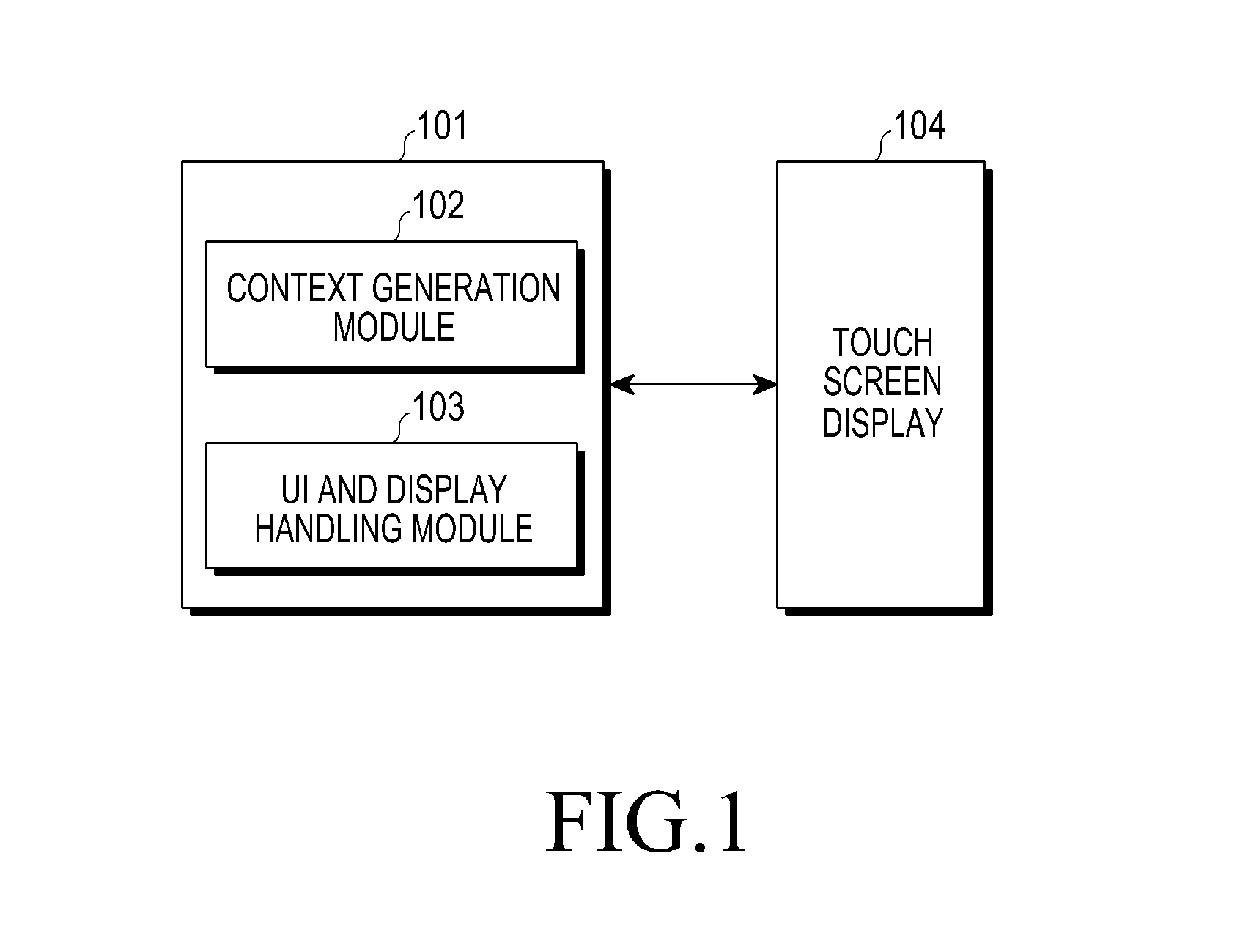

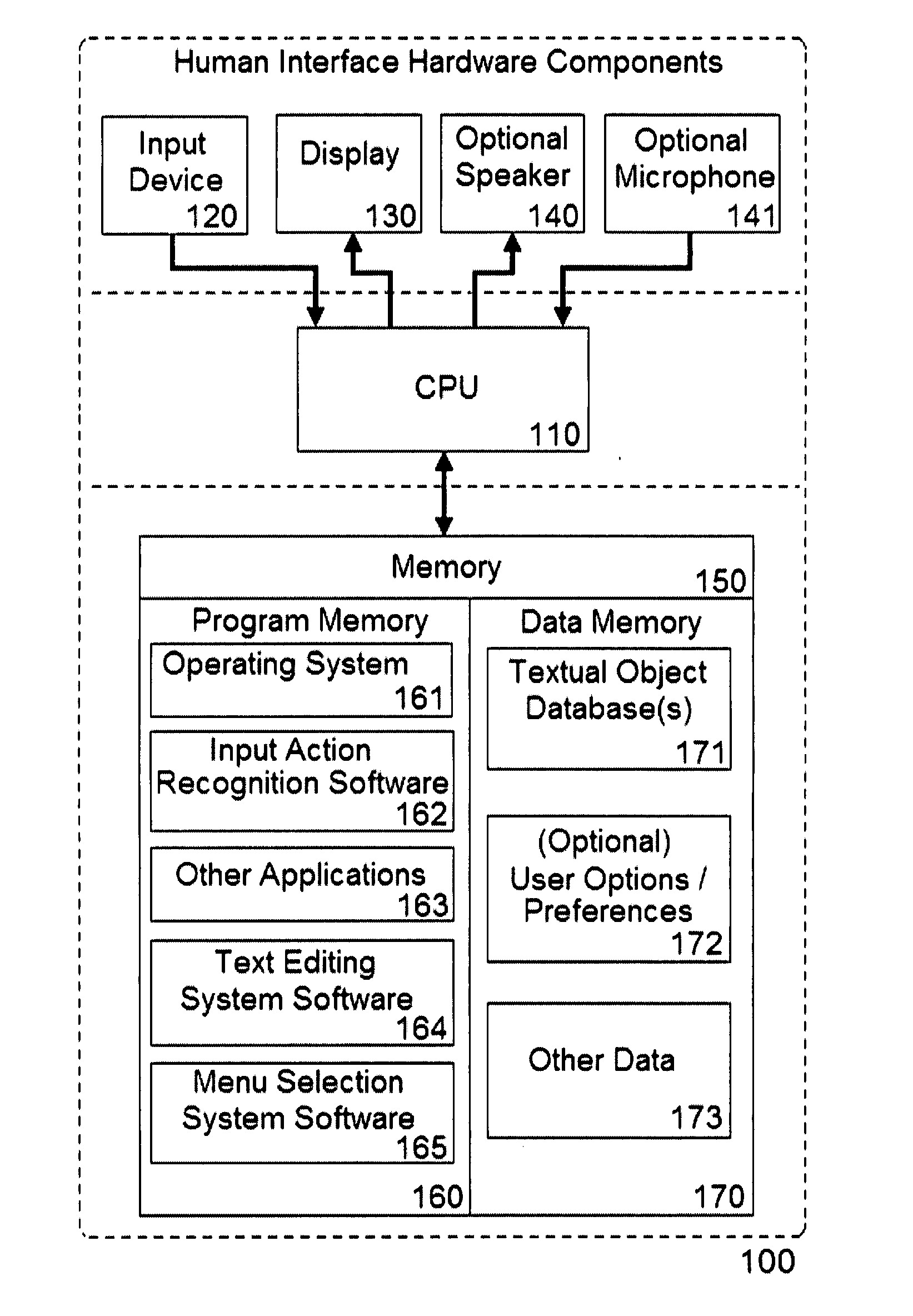

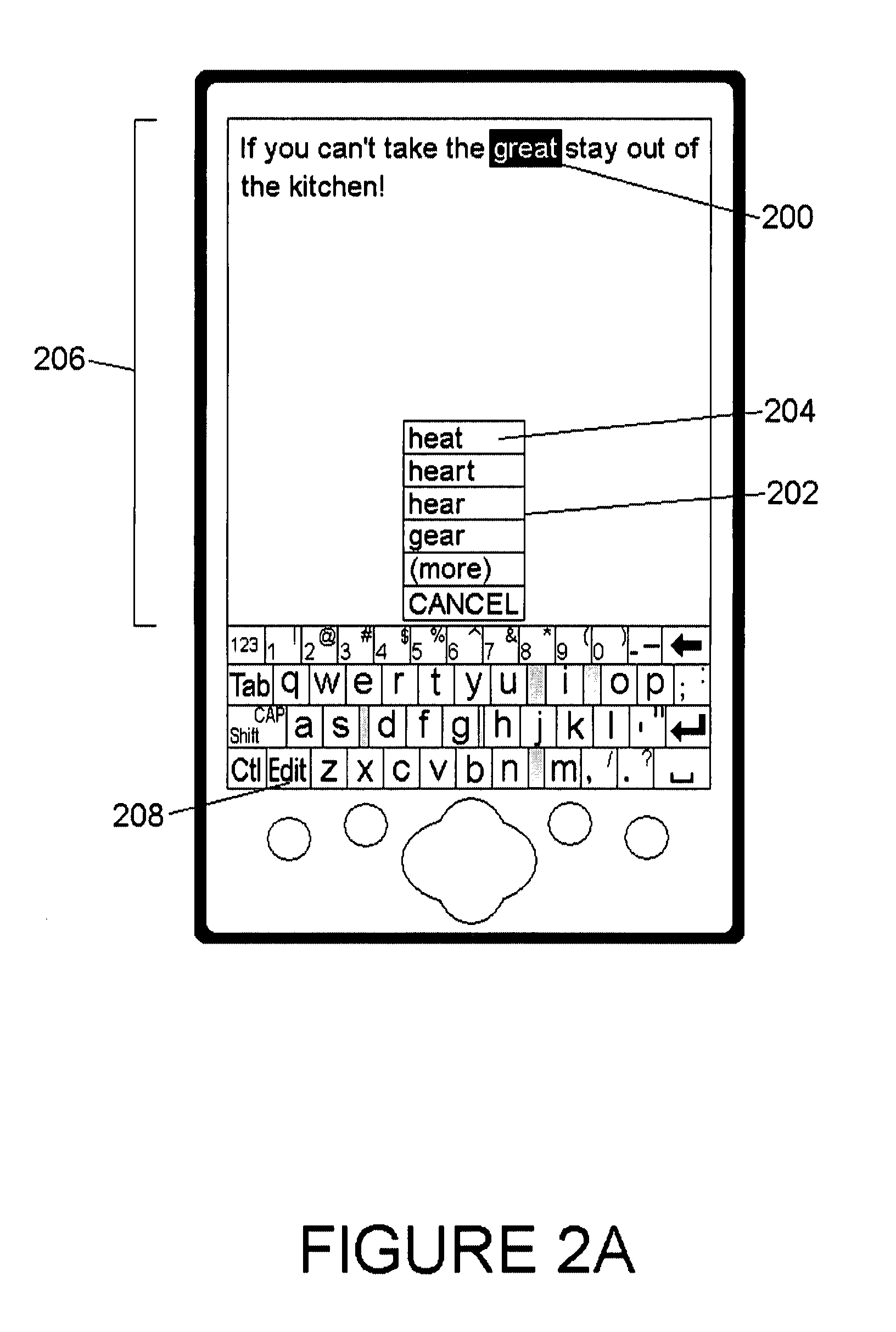

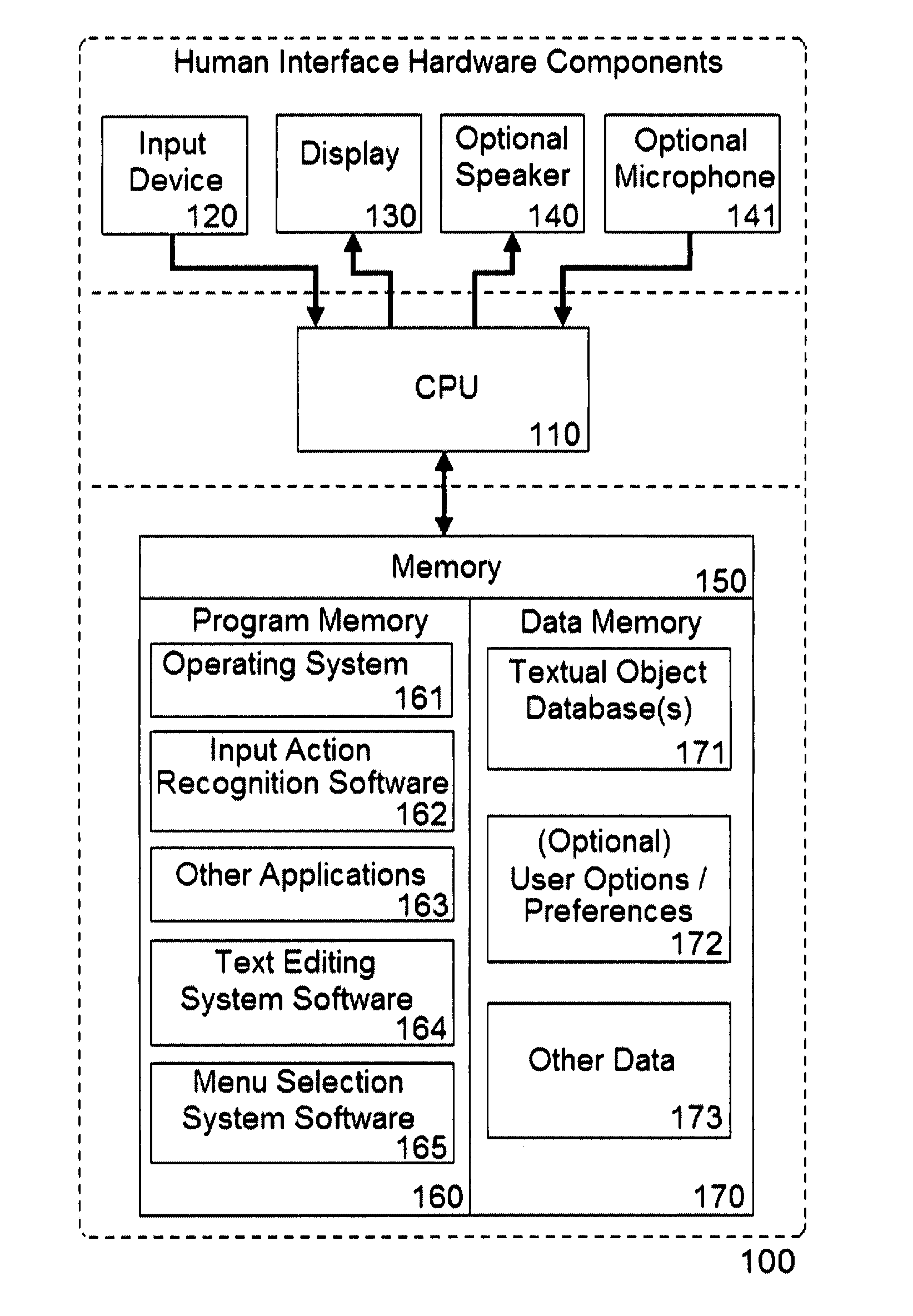

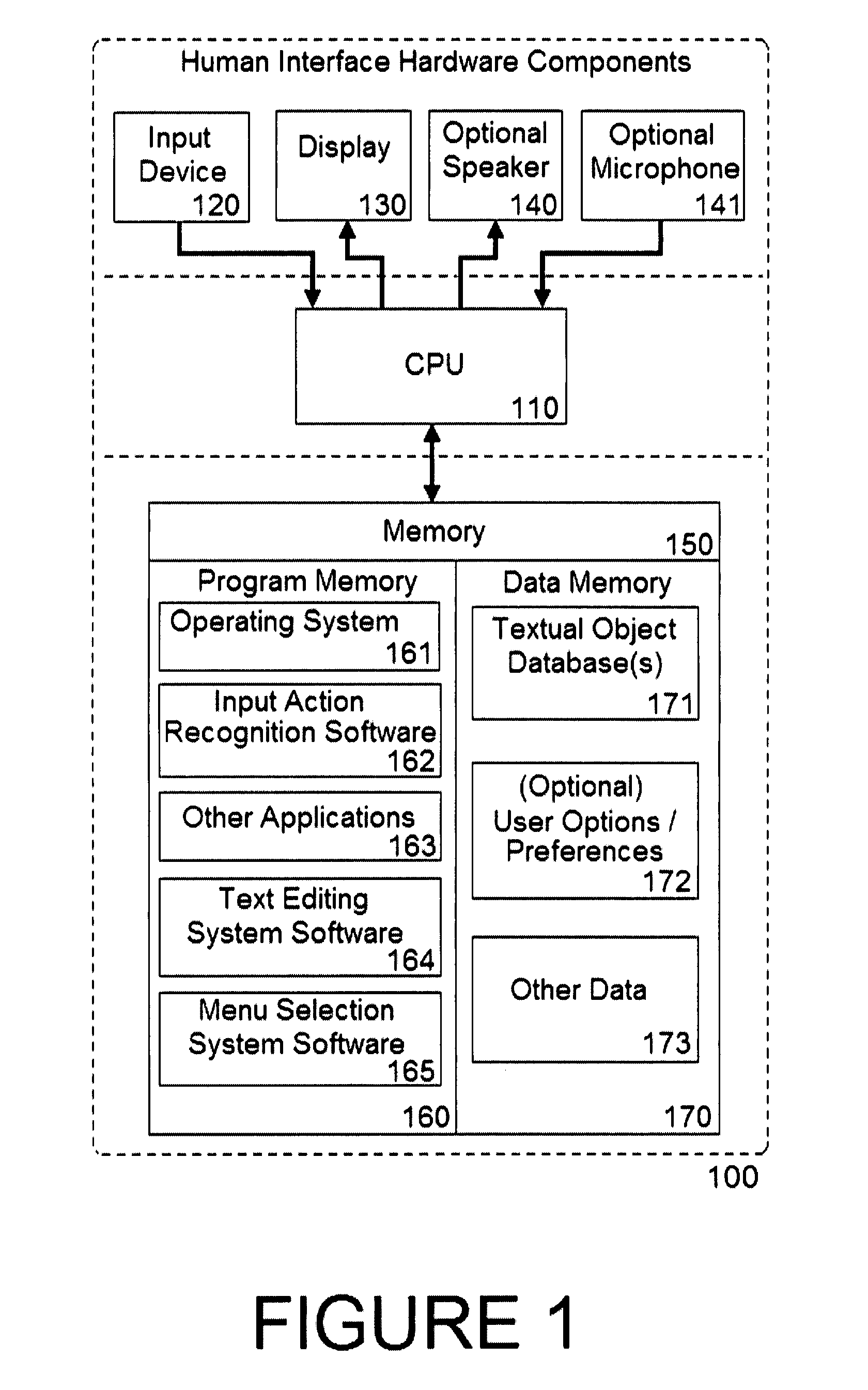

System and method for a user interface for text editing and menu selection

Status: Active | Publication: US20080316212A1 | Tags: Increase speed; Improve ease; Input/output for user-computer interaction; 2D-image generation; Graphics; Text editing

Methods and system to enable a user of an input action recognition text input system to edit any incorrectly recognized text without re-locating the text insertion position to the location of the text to be corrected. The System also automatically maintains correct spacing between textual objects when a textual object is replaced with an object for which automatic spacing is generated in a different manner. The System also enables the graphical presentation of menu choices in a manner that facilitates faster and easier selection of a desired choice by performing a selection gesture requiring less precision than directly contacting the sub-region of the menu associated with the desired choice.

Owner:CERENCE OPERATING CO

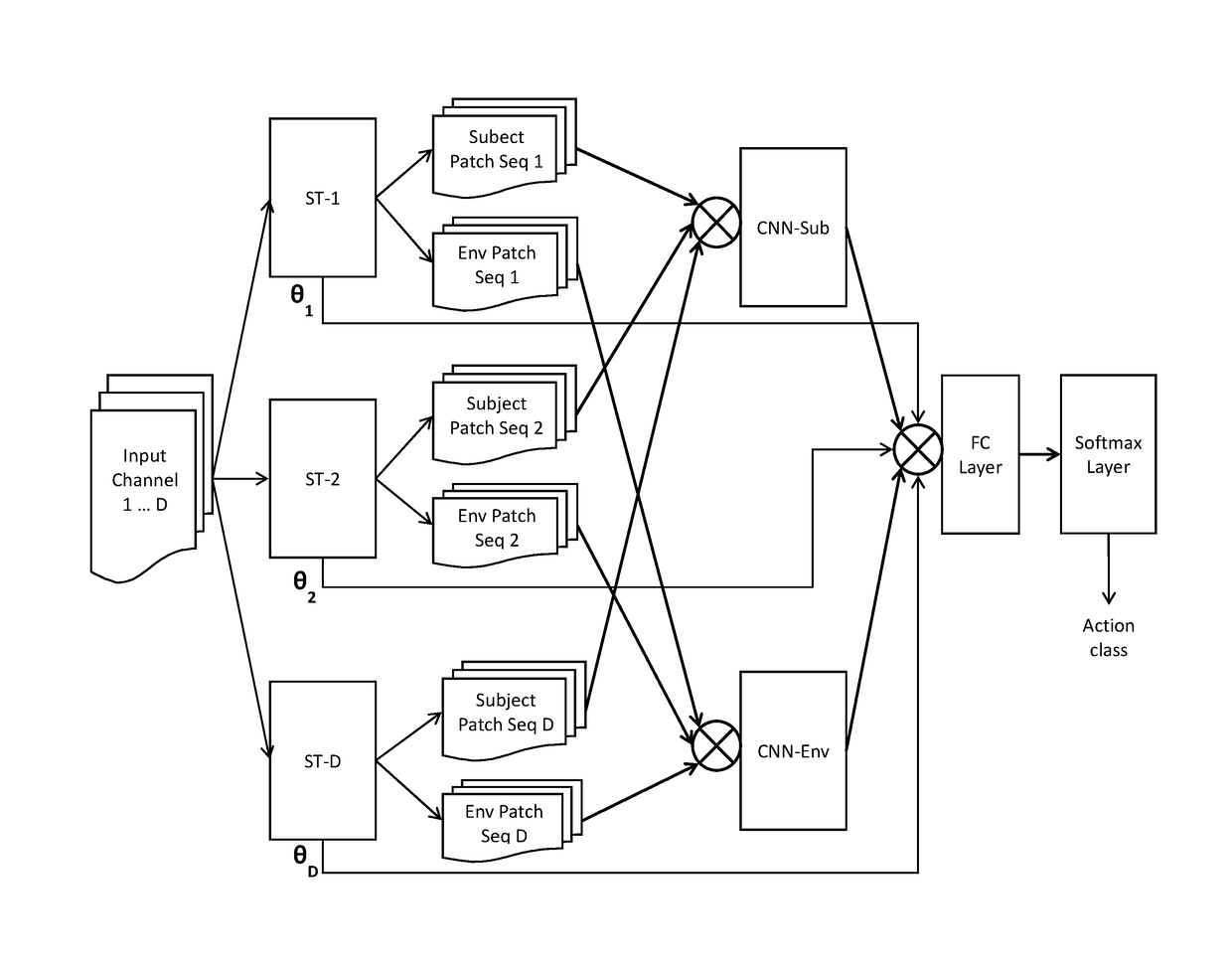

Self-attention deep neural network for action recognition in surveillance videos

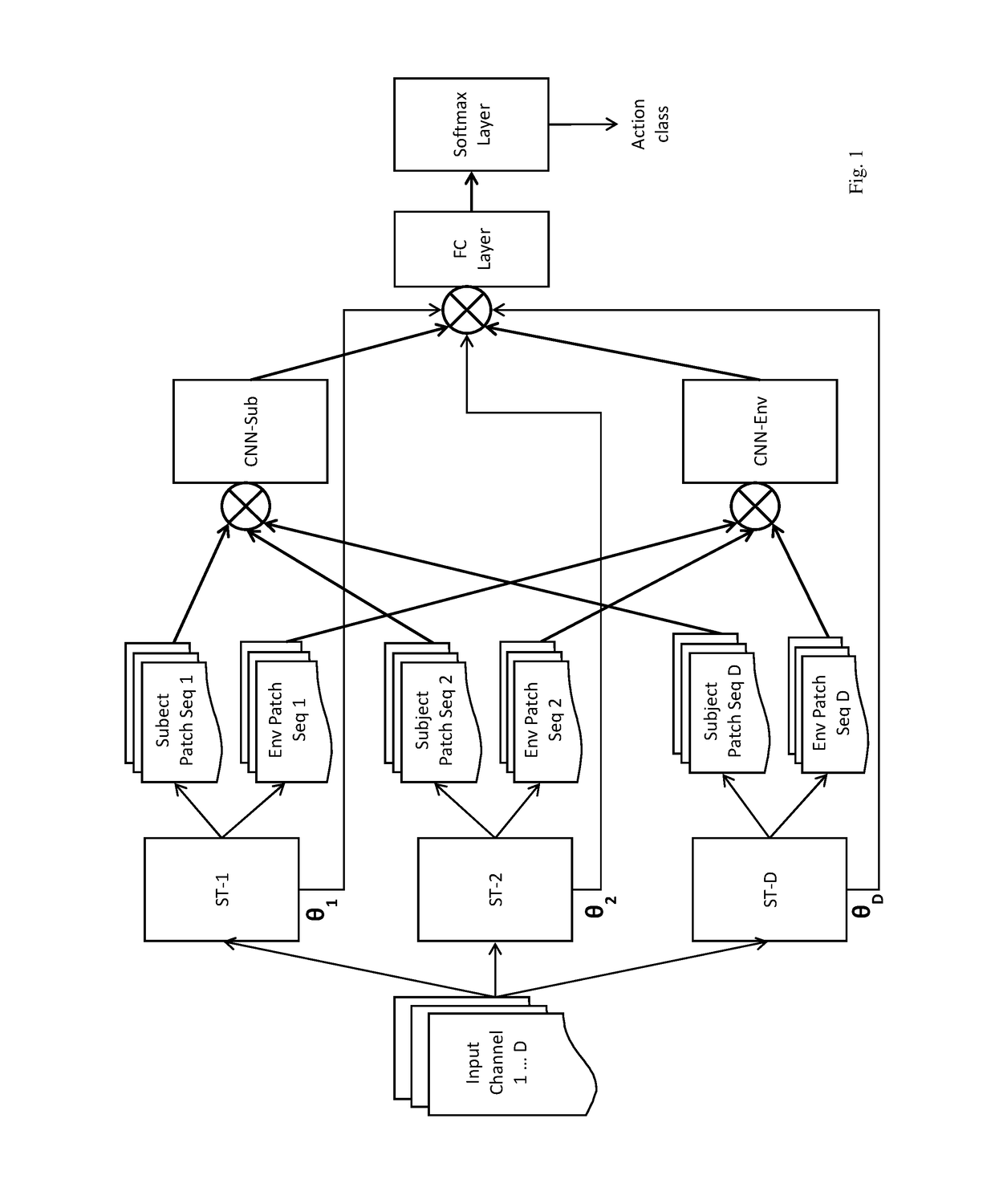

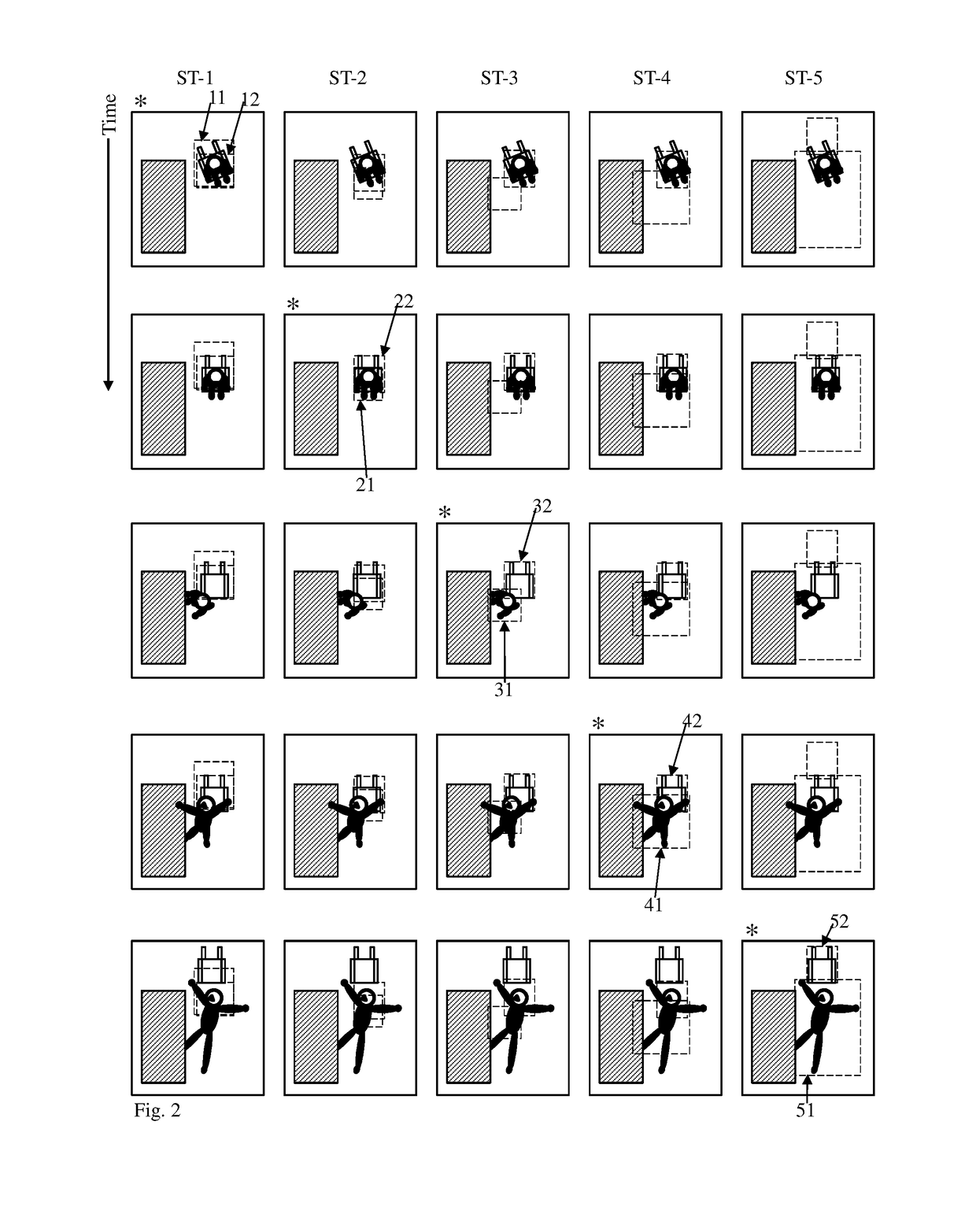

An artificial neural network for analyzing input data, the input data being a 3D tensor having D channels, such as D frames of a video snippet, to recognize an action therein, including: D spatial transformer modules, each generating first and second spatial transformations and corresponding first and second attention windows using only one of the D channels, and transforming first and second regions of each of the D channels corresponding to the first and second attention windows to generate first and second patch sequences; first and second CNNs, respectively processing a concatenation of the D first patch sequences and a concatenation of the D second patch sequences; and a classification network receiving a concatenation of the outputs of the first and second CNNs and the D sets of transformation parameters of the first transformation outputted by the D spatial transformer modules, to generate a predicted action class.

Owner:KONICA MINOLTA LAB U S A INC

Method and apparatus for matching local self-similarities

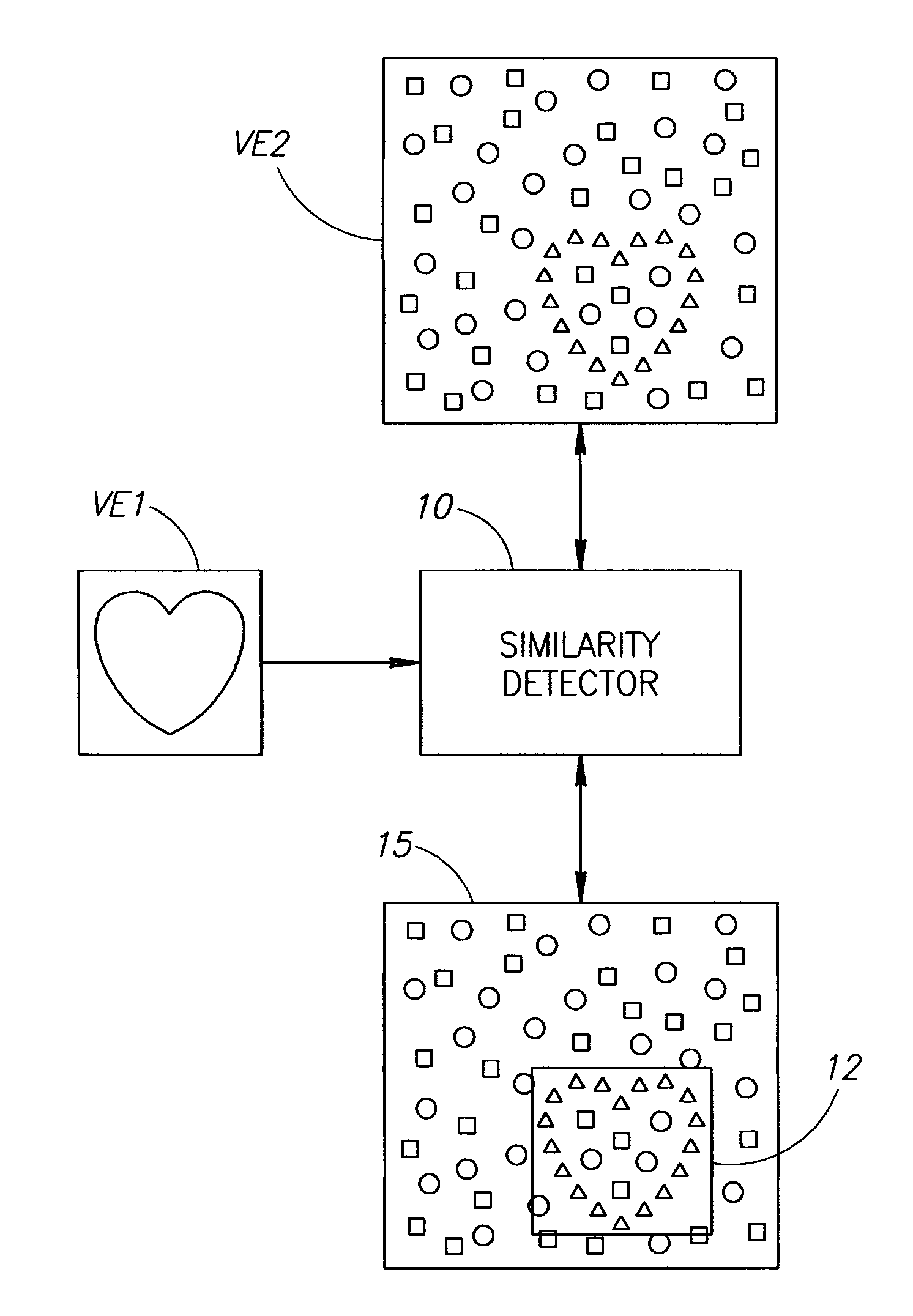

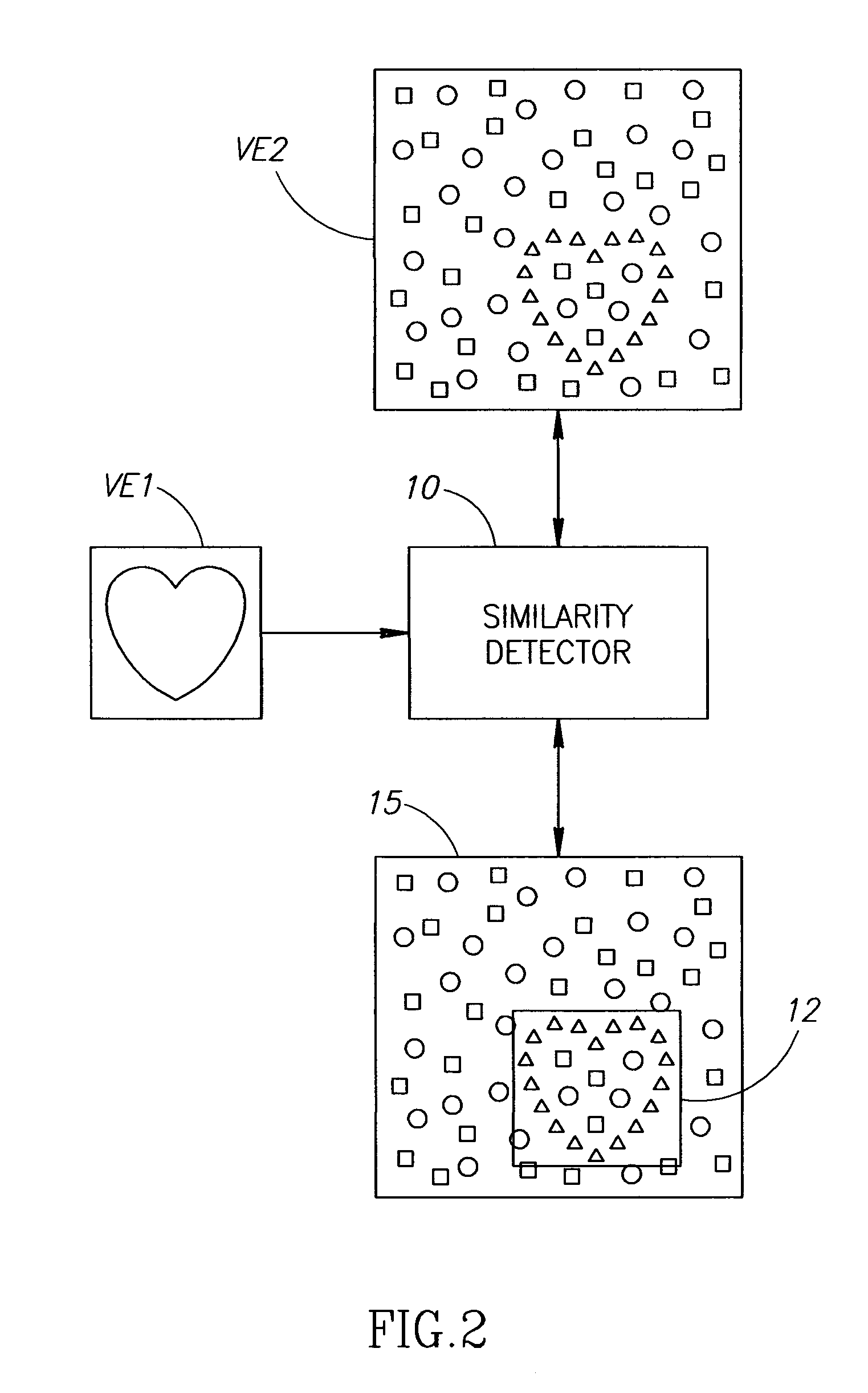

A method includes matching at least portions of first, second signals using local self-similarity descriptors of the signals. The matching includes computing a local self-similarity descriptor for each one of at least a portion of points in the first signal, forming a query ensemble of the descriptors for the first signal and seeking an ensemble of descriptors of the second signal which matches the query ensemble of descriptors. This matching can be used for image categorization, object classification, object recognition, image segmentation, image alignment, video categorization, action recognition, action classification, video segmentation, video alignment, signal alignment, multi-sensor signal alignment, multi-sensor signal matching, optical character recognition, image and video synthesis, correspondence estimation, signal registration and change detection. It may also be used to synthesize a new signal with elements similar to those of a guiding signal synthesized from portions of the reference signal. Apparatus is also included.

Owner:YEDA RES & DEV CO LTD

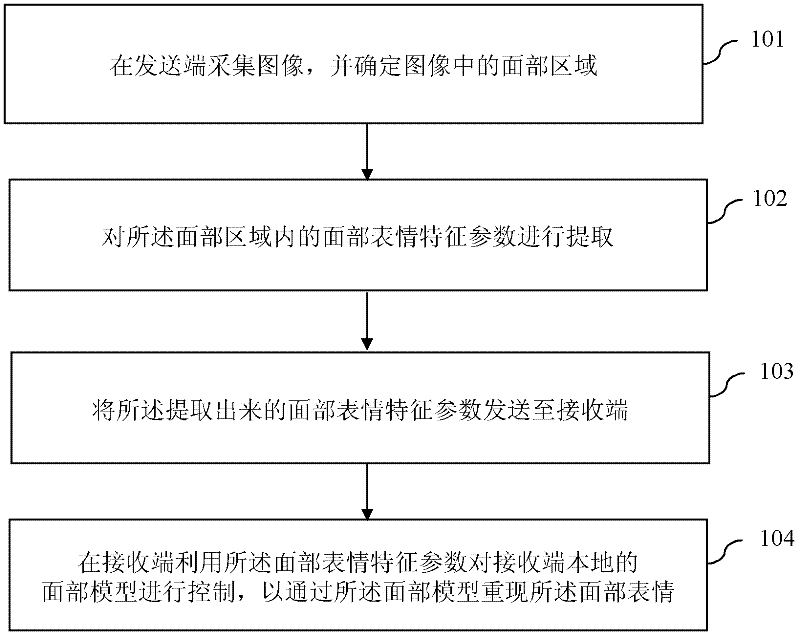

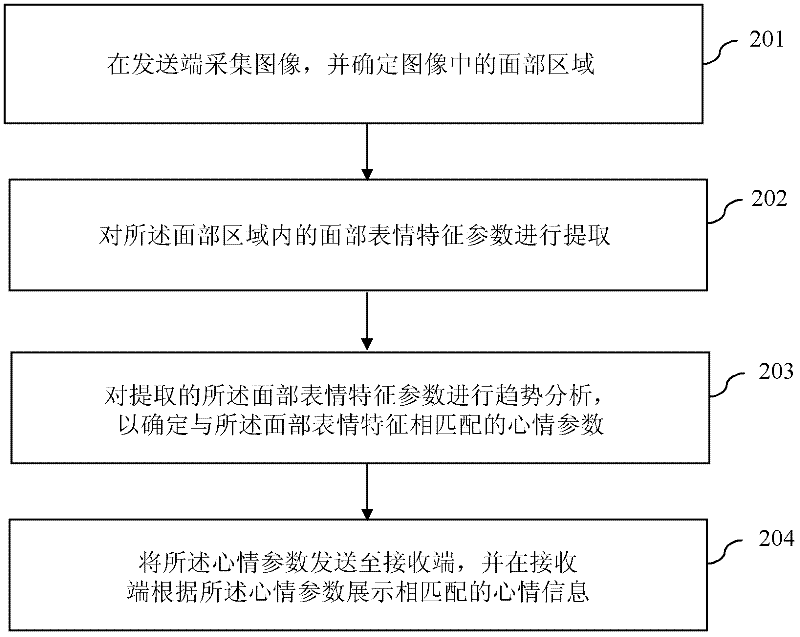

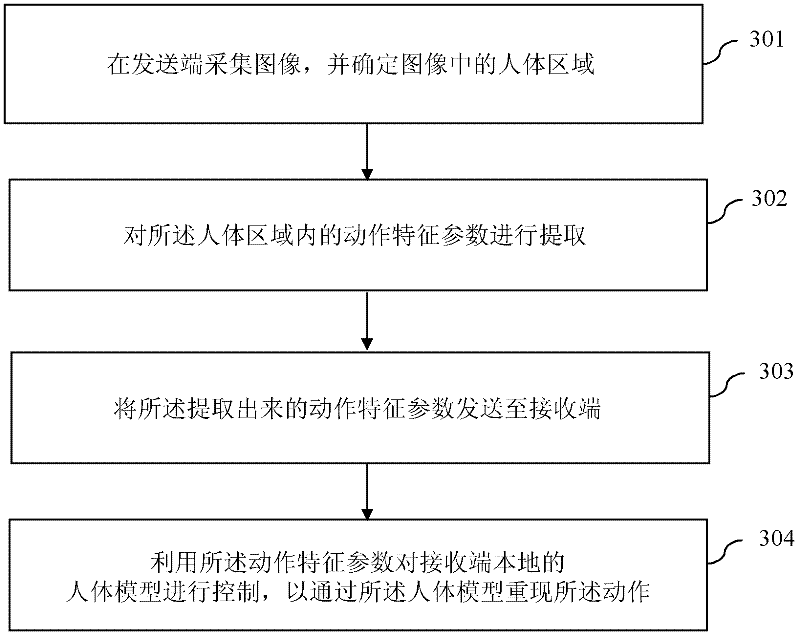

Image communication method and system based on facial expression/action recognition

Status: Inactive | Publication: CN102271241A | Tags: Reduced transfer rate requirements; Television conference systems; Character and pattern recognition; Face model; Feature parameter

The invention discloses an image communication method and system based on facial expression/action recognition. In the image communication method based on facial expression recognition, an image is first collected at the sending end and the facial area in the image is determined; the facial expression feature parameters in that area are extracted; the extracted facial expression feature parameters are then sent to the receiving end; finally, the receiving end uses the facial expression feature parameters to control its local facial model, so that the facial model reproduces the facial expression. The invention can not only enhance user experience but also greatly reduce the information transmission rate required of the video bearer network, enabling more effective video communication. It is especially suitable for wireless communication networks with limited bandwidth and capacity.

Owner:BEIJING UNIV OF POSTS & TELECOMM

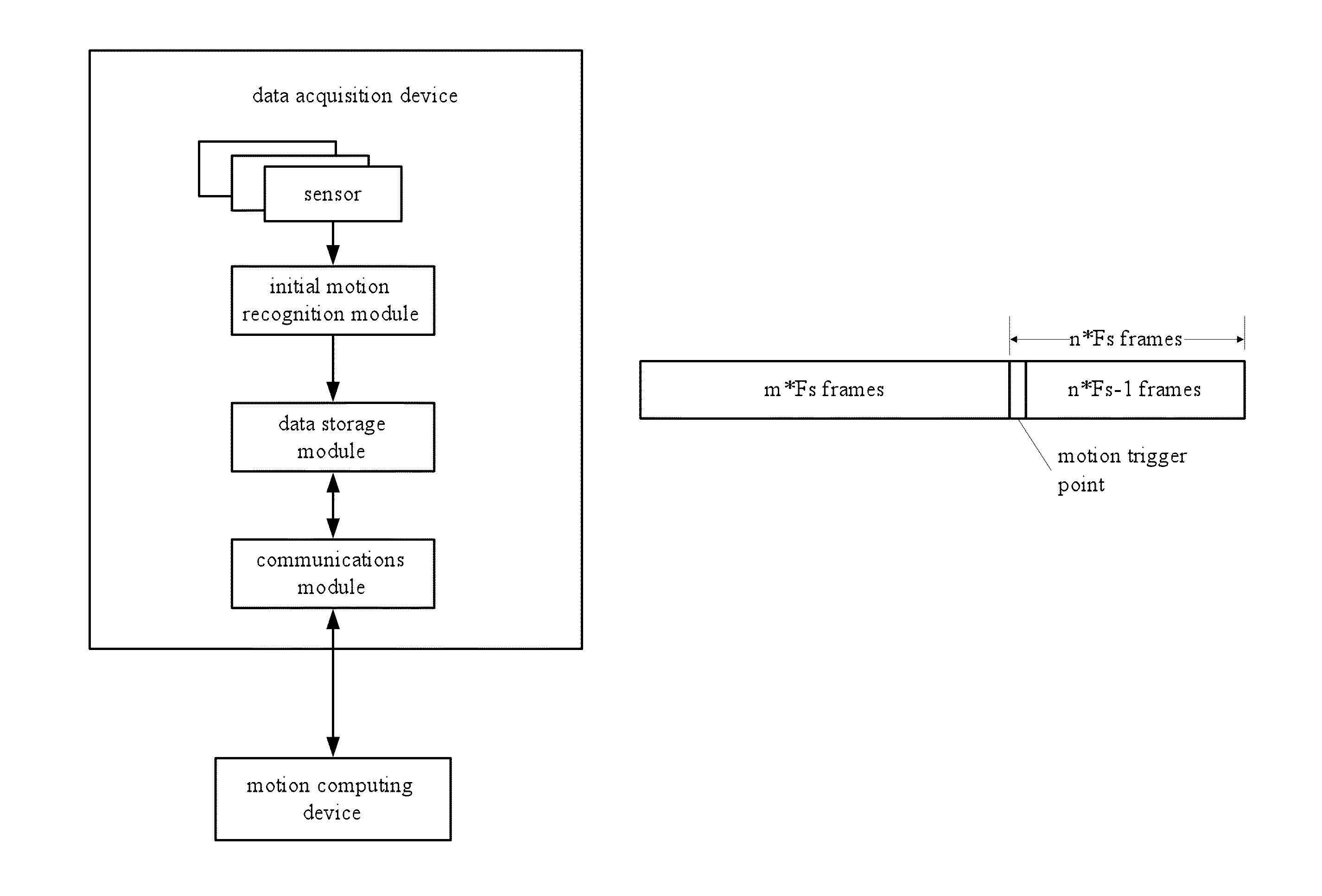

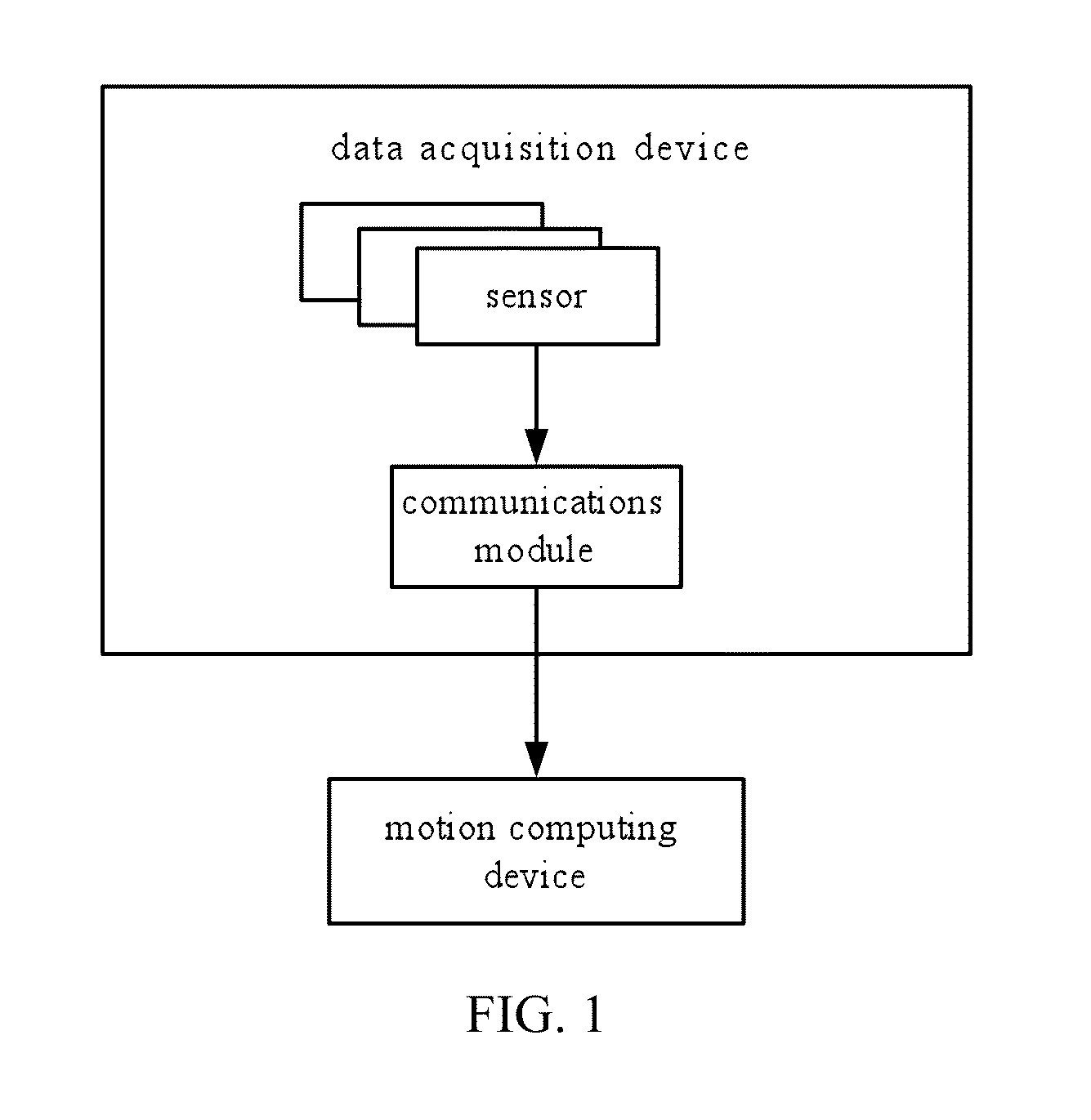

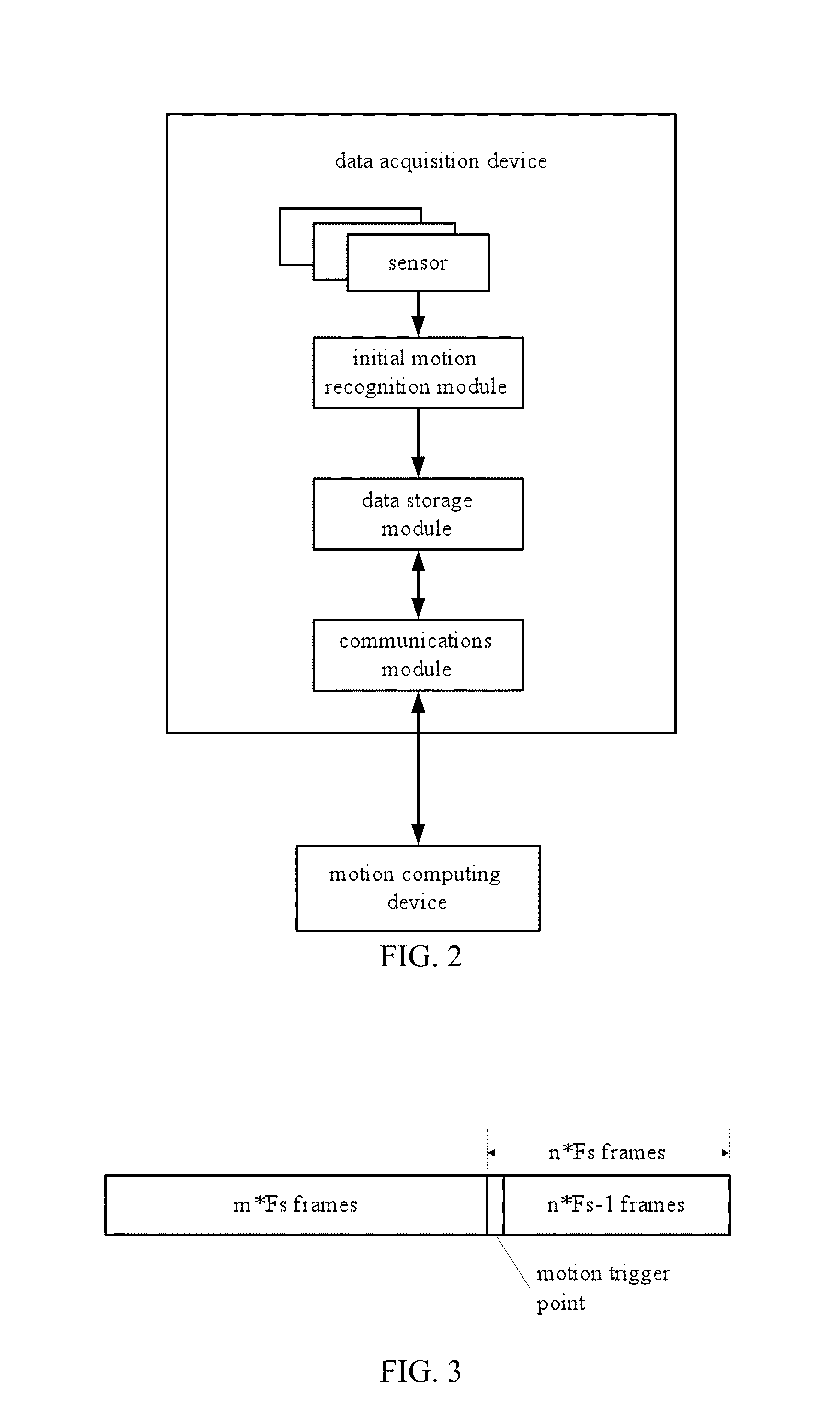

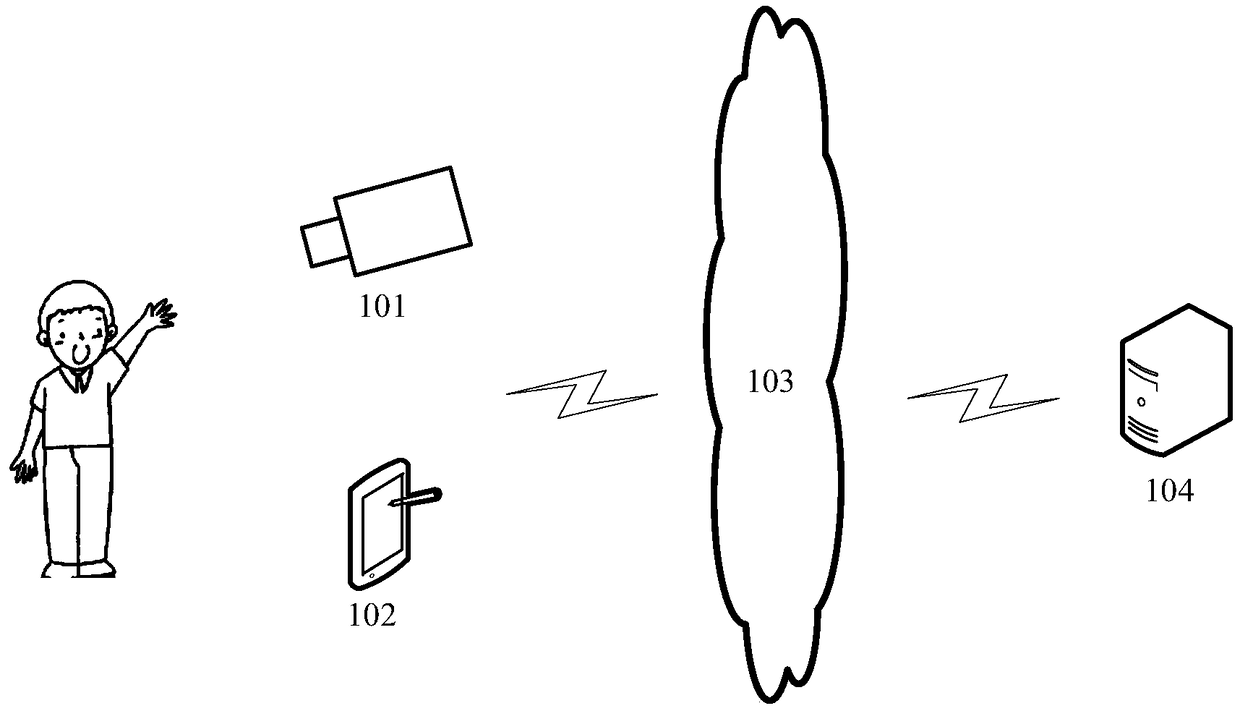

Data acquisition method and device for motion recognition, motion recognition system and computer readable storage medium

Status: Active | Publication: US8989441B2 | Tags: Improve accuracy; Reduce power consumption; Image analysis; Character and pattern recognition; Computer module; Data acquisition

A data acquisition method and device for motion recognition, a motion recognition system and a computer readable storage medium are disclosed. The data acquisition device for motion recognition comprises: an initial motion recognition module adapted to perform an initial recognition with respect to motion data collected by a sensor and provide motion data describing a predefined range around a motion trigger point to a data storage module for storage; a data storage module adapted to store motion data provided from the initial motion recognition module; and a communications module adapted to forward the motion data stored in the data storage module to a motion computing device for motion recognition. The present invention makes an initial selection to the motion data to be transmitted to the motion computing device under the same sampling rate. Consequently, the present invention reduces pressures on wireless channel transmission and wireless power consumption, and provides high accuracy in motion recognition while providing motion data at the same sampling rate.

Owner:BEIJING SHUNYUAN KAIHUA TECH LTD
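
The initial selection described in the abstract above (keep only the motion data in a predefined range around a trigger point, then forward that subset) might look like the sketch below. The threshold-based trigger, the `before`/`after` window sizes, and the function name `select_window` are assumptions for illustration; the abstract does not say how the trigger point is detected.

```python
def select_window(samples, threshold, before, after):
    """Return the samples around the first value whose magnitude exceeds `threshold`.

    Keeps `before` samples ahead of the trigger and `after` samples behind it.
    Returns an empty list when no sample triggers.
    """
    for i, value in enumerate(samples):
        if abs(value) > threshold:
            start = max(0, i - before)
            return samples[start:i + after + 1]
    return []

# Hypothetical accelerometer magnitudes sampled at a fixed rate.
samples = [0.1, 0.0, 0.2, 2.5, 1.8, 0.3, 0.1, 0.0]
window = select_window(samples, threshold=1.0, before=2, after=2)
print(window)  # prints [0.0, 0.2, 2.5, 1.8, 0.3]
```

Only `window` would then be handed to the communications module, which is where the claimed savings in wireless transmission come from: the sampling rate is unchanged, but fewer samples are sent.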

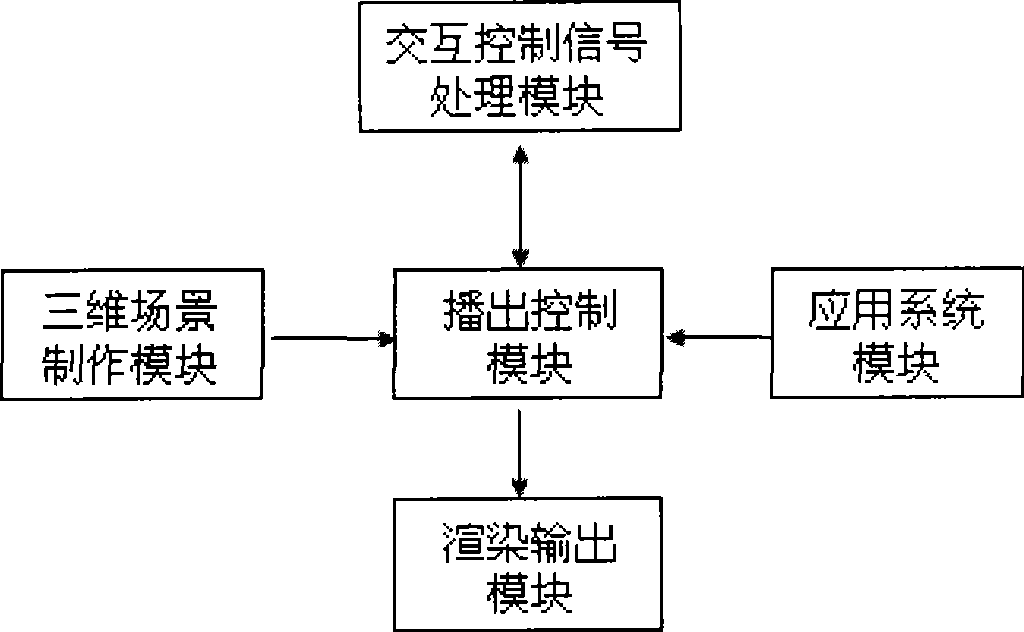

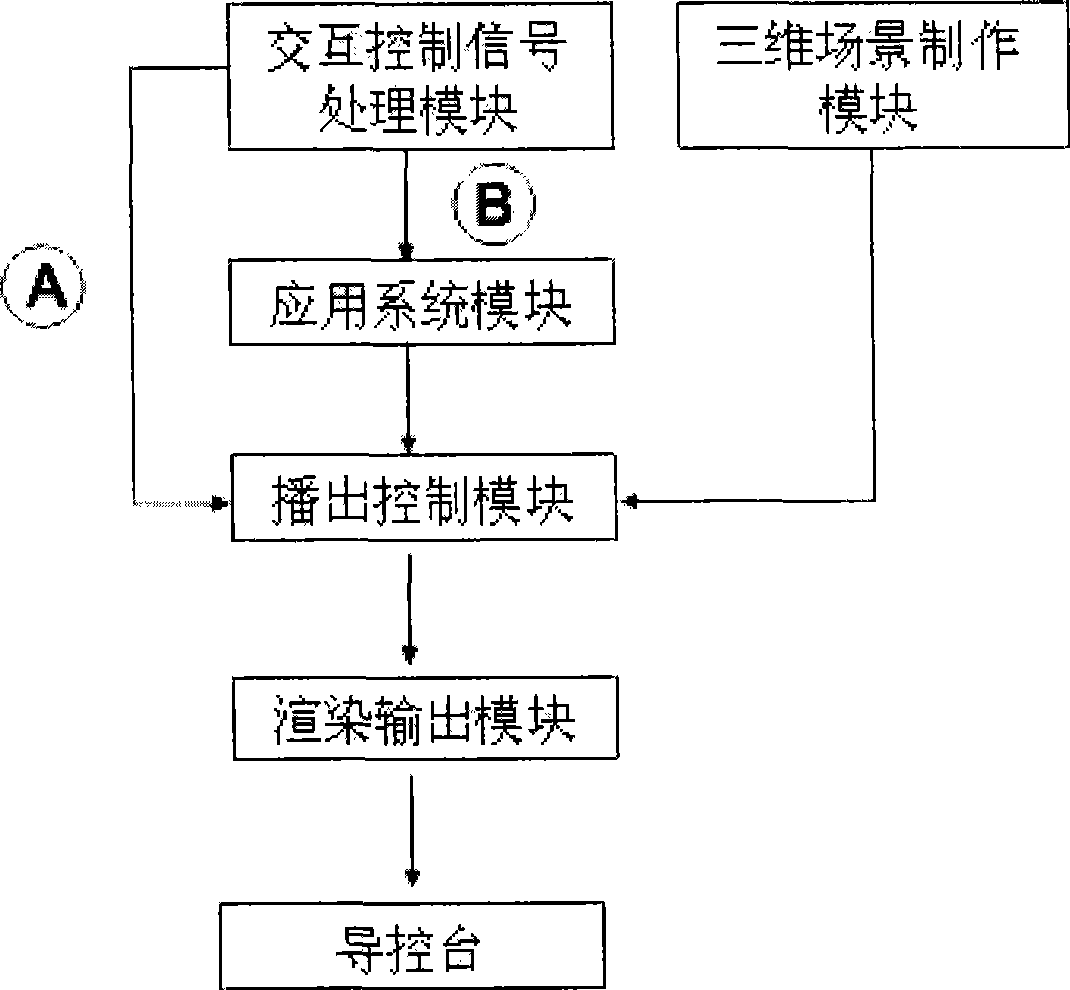

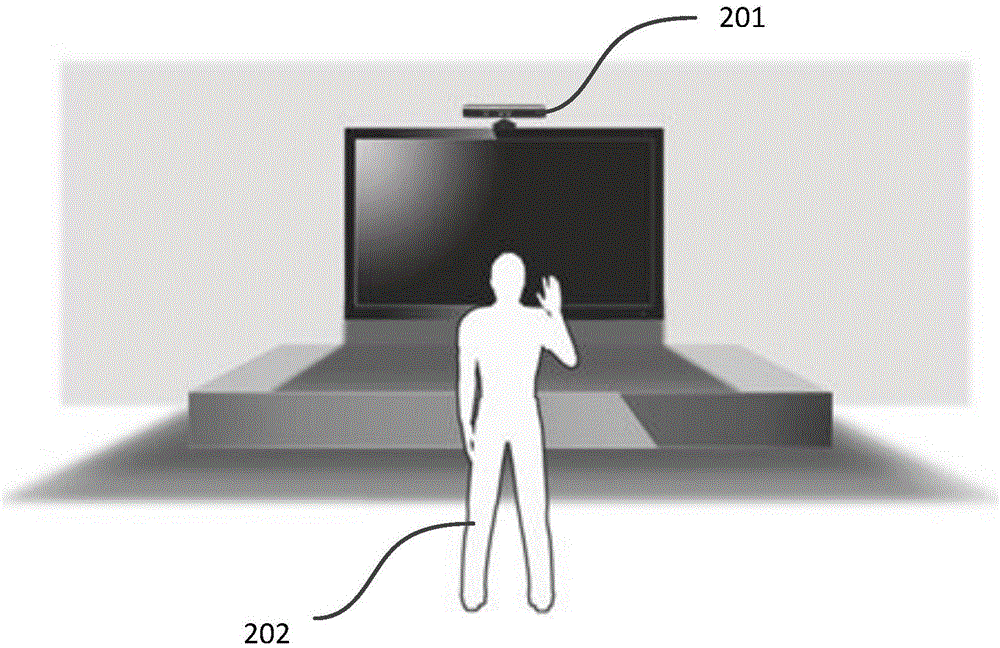

System for implementing remote control interaction in virtual three-dimensional scene

Status: Inactive | Publication: CN101465957A | Tags: To achieve the effect of random selection; Improve the problem that the interaction cannot be realized; Television system details; Color television details; Television station; Human–computer interaction

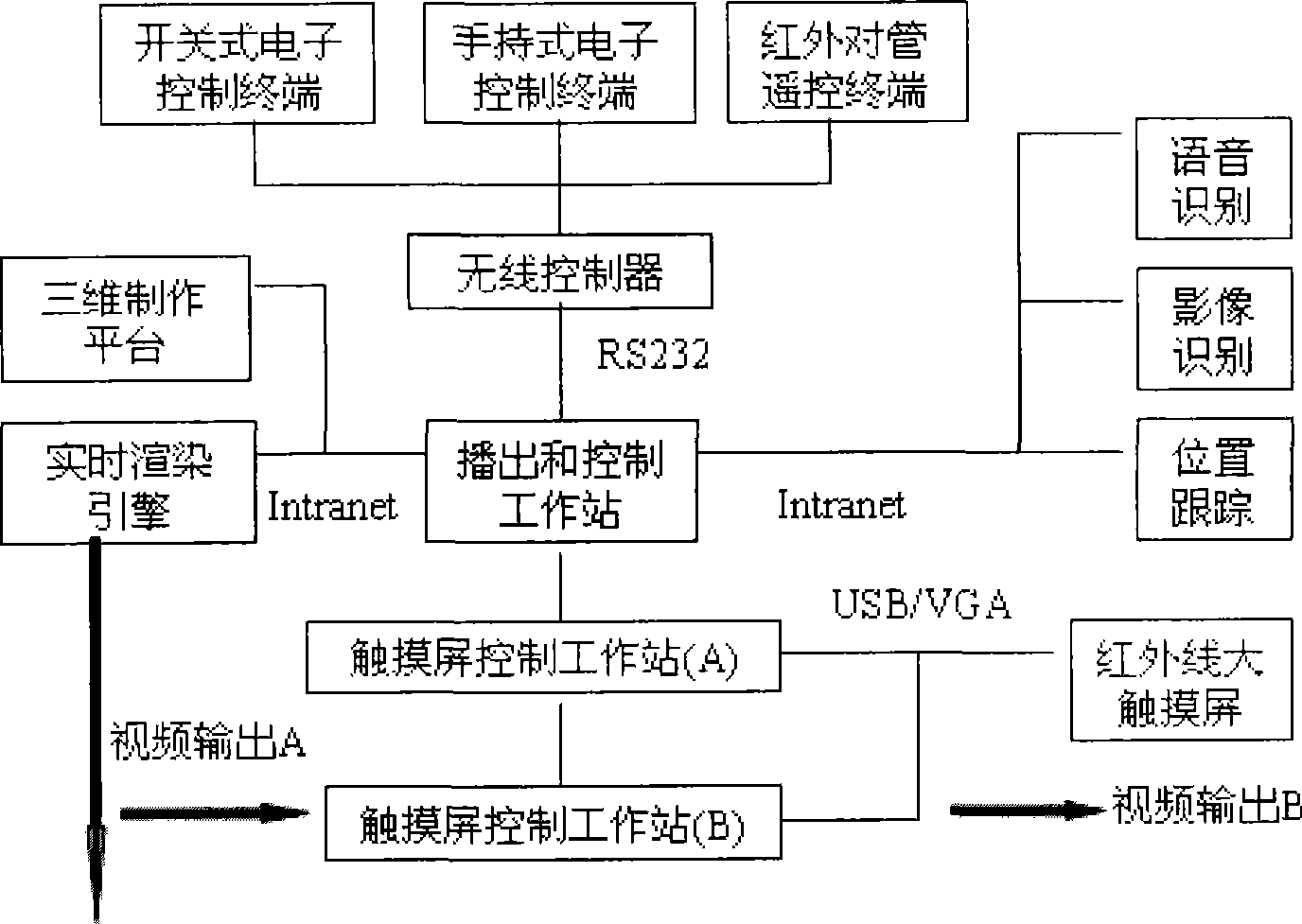

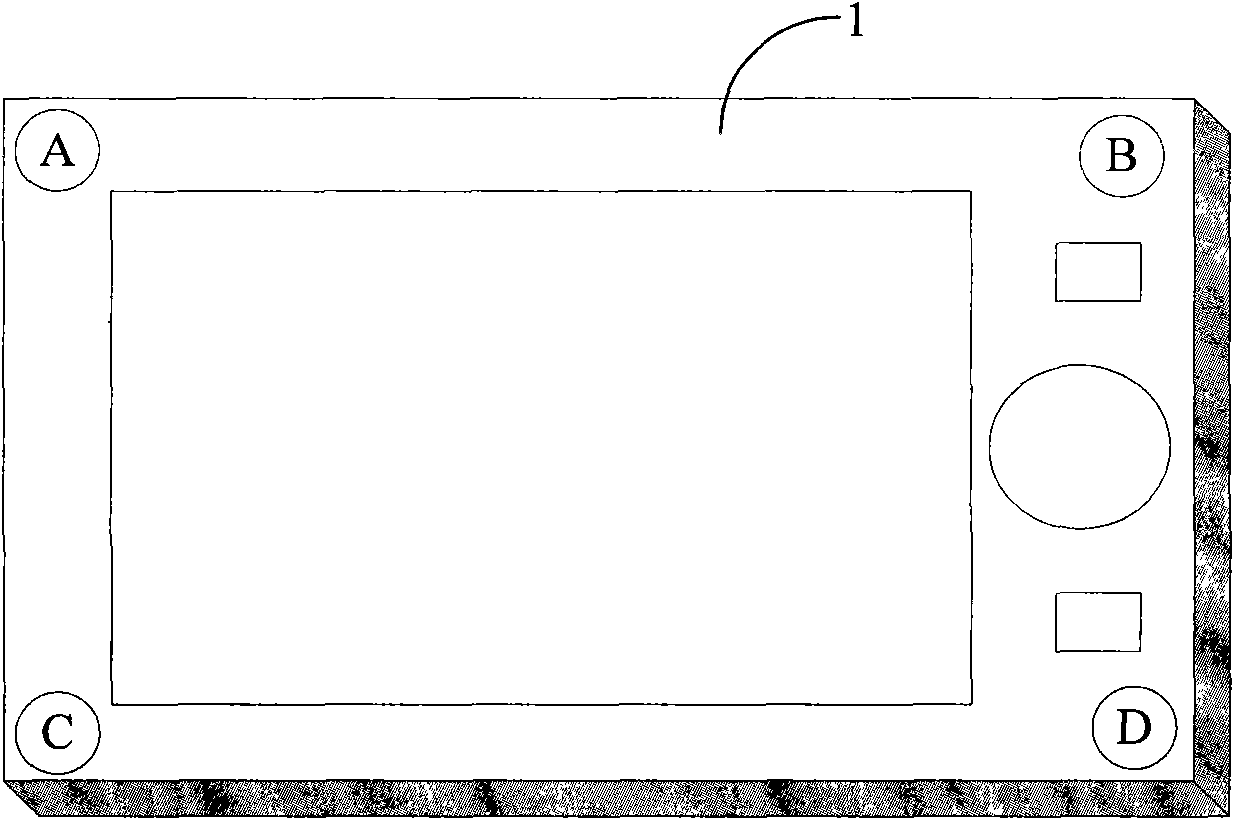

The invention relates to a system and a controlling method for realizing remote control interaction in a virtual three-dimensional scene. An interactive control system solves how to carry out the interaction and control on a virtual studio scene, animation and an object in the scene by an operator. The system mainly comprises three modules: first, a three-dimensional producing and real-time rendering module; second, a playing and controlling module; third, an interactive module. The interactive module includes subsystems such as a wireless remote control subsystem, a voice recognizing subsystem, an image recognizing subsystem, a position tracking subsystem (an action recognizing subsystem), a touch screen subsystem (a visual multimedia playing and controlling interface and a multi-point touch controlling method are provided), and the like. A three-dimensional real-time rendering engine can be driven by the playing and controlling module by the operator through various interactive methods, thus realizing the selectively instant playing of the virtual scene and the controlling of the virtual object. The system can be applied to virtual studios of television stations and other virtual interactive demonstrating occasions.

Owner:应旭峰 +1

Mobile terminal with action recognition function and action recognition method thereof

Status: Active | Publication: CN102055836A | Tags: Simple structure; Improve recognition accuracy; Input/output for user-computer interaction; Substation equipment; Human body; Computer science

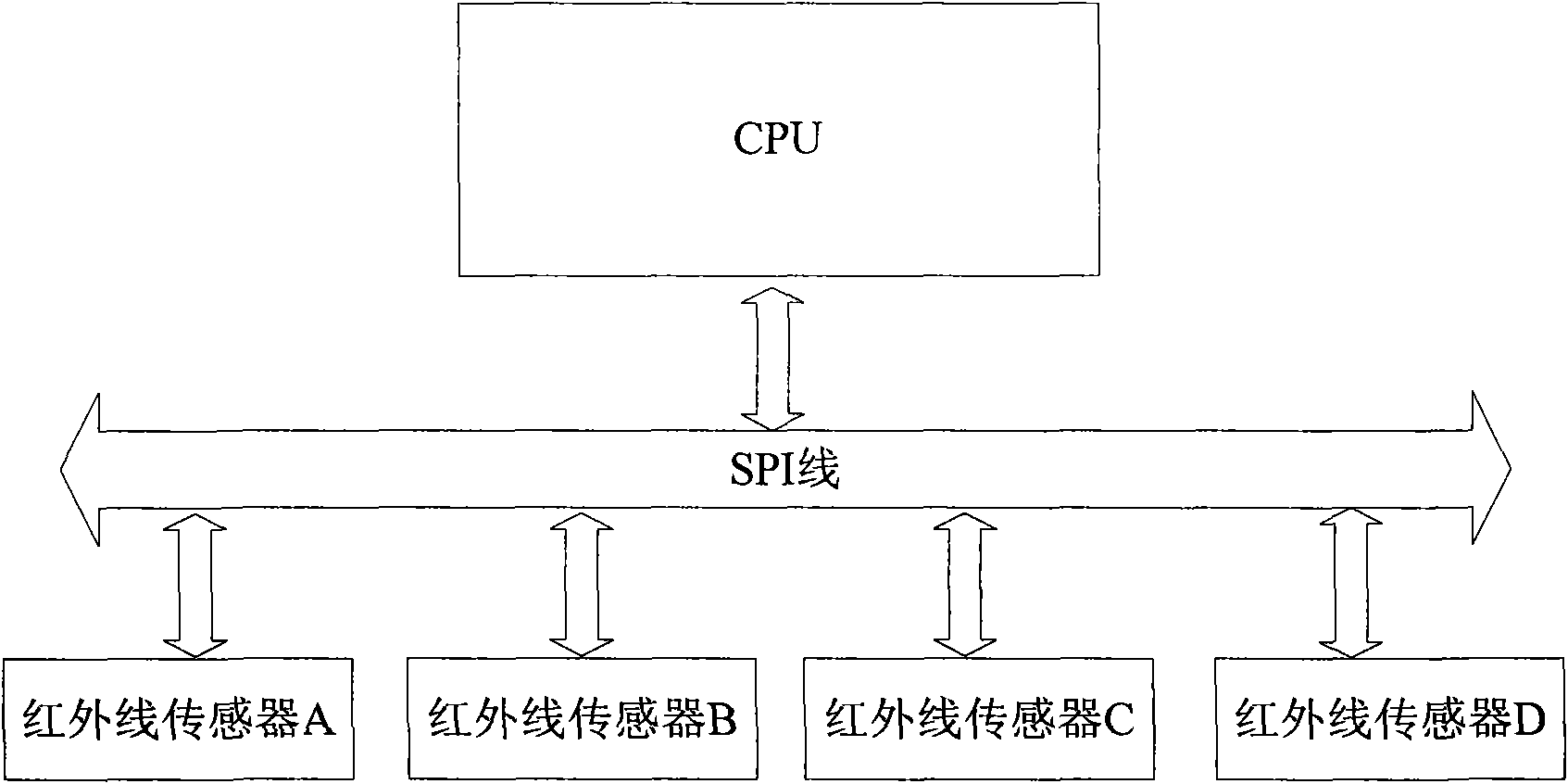

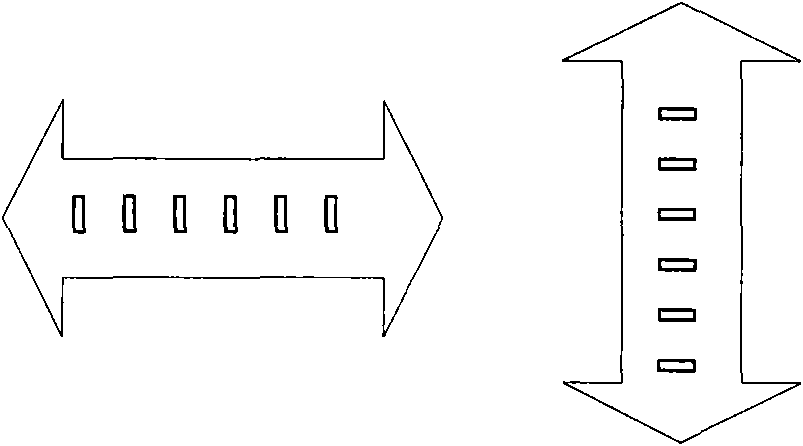

The invention provides a mobile terminal with an action recognition function and an action recognition method thereof. The speed of gesture actions of human bodies is judged by a plurality of infrared sensors arranged on the mobile terminal so as to judge the direction of the reasonable action gesture and make the corresponding response. The mobile terminal has a very simple structure and high recognition accuracy, is low in cost, and is suitable for various mobile terminals such as mobile phones, mobile internet devices (MID) and the like.

Owner:TCL CORPORATION

Eye tracking human-machine interaction method and apparatus

Status: Inactive | Publication: CN101311882A | Tags: Accurate output; Simple protocol for interaction; Input/output for user-computer interaction; Character and pattern recognition; Computer graphics (images); Man machine

The invention relates to a line-of-sight (gaze) tracking human-machine interaction method, including: collecting gaze tracking information and obtaining a gaze focus position from it; collecting facial image information and recognizing a facial action from it; and outputting a control command corresponding to the facial action according to the gaze focus position. The invention also relates to a gaze tracking human-machine interaction device comprising a gaze tracking processing unit for the gaze tracking operation, a facial action recognition unit for collecting the facial image information and recognizing the facial action from it, and a control command output unit that is connected to both the gaze tracking processing unit and the facial action recognition unit and outputs the control command corresponding to the facial action according to the gaze focus position. The embodiment of the invention provides a non-contact gaze tracking human-machine interaction method and device with interaction protocols.

Owner:HUAWEI TECH CO LTD

Method for controlling electronic apparatus based on voice recognition and motion recognition, and electronic apparatus applying the same

Status: Active | Publication: US9002714B2 | Tags: Input/output for user-computer interaction; Transmission systems; Computer hardware; Speech sound

A method for controlling an electronic apparatus which uses voice recognition and motion recognition, and an electronic apparatus applying the same are provided. In a voice task mode, in which voice tasks are performed according to recognized voice commands, the electronic apparatus displays voice assistance information to assist in performing the voice tasks. In a motion task mode, in which motion tasks are performed according to recognized motion gestures, the electronic apparatus displays motion assistance information to aid in performing the motion tasks.

Owner:SAMSUNG ELECTRONICS CO LTD

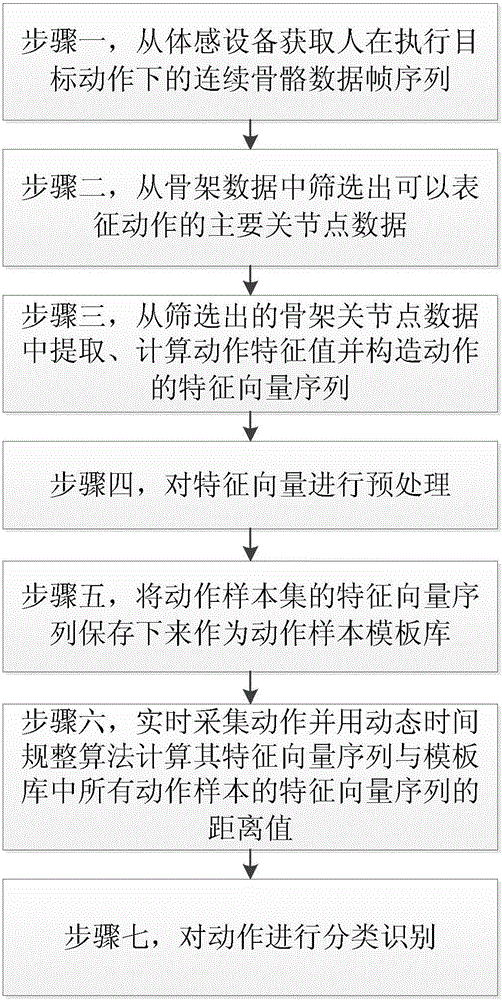

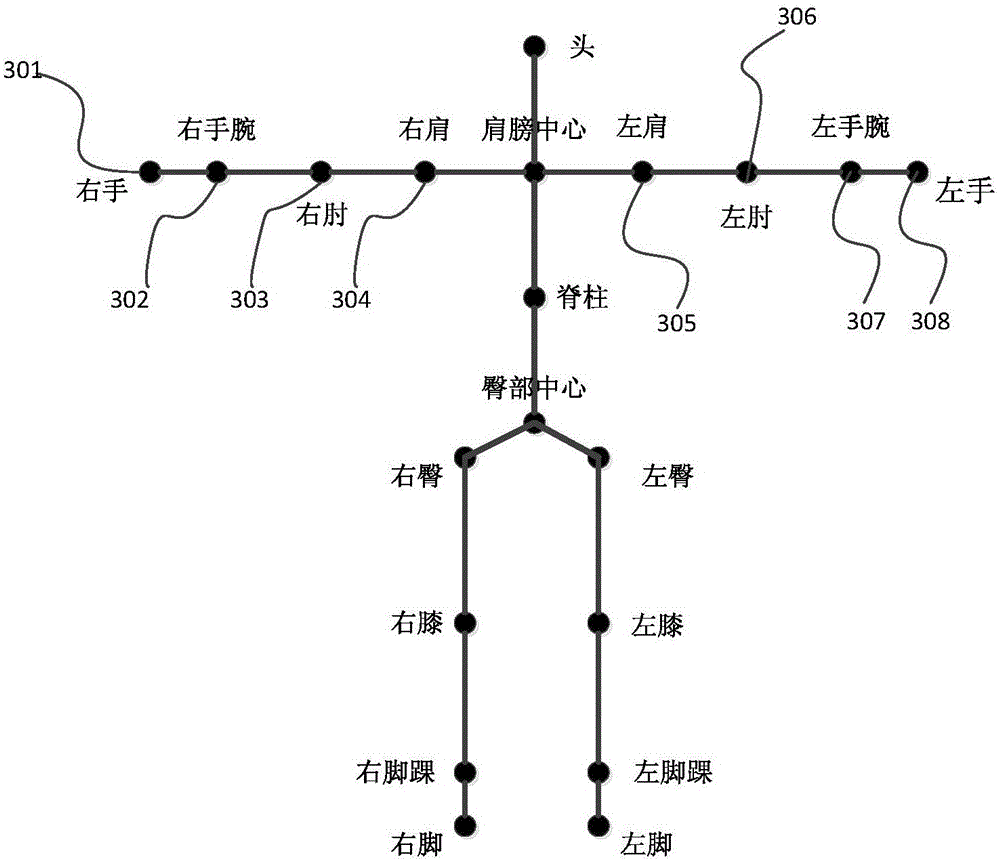

Human body skeleton-based action recognition method

Status: Active | Publication: CN105930767A | Tags: Improve versatility; Eliminate the effects of different relative positions; Character and pattern recognition; Human body; Feature vector

The invention relates to a human body skeleton-based action recognition method. The method is characterized by comprising the following basic steps: step 1, a continuous skeleton data frame sequence of a person who is executing target actions is obtained from a somatosensory device; step 2, main joint point data which can characterize the actions are screened out from the skeleton data; step 3, action feature values are extracted from the main joint point data and are calculated, and a feature vector sequence of the actions is constructed; step 4, the feature vectors are preprocessed; step 5, the feature vector sequence of an action sample set is saved as an action sample template library; step 6, actions are acquired in real time, and the distance between the feature vector sequence of the actions and the feature vector sequence of each action sample in the template library is calculated by using a dynamic time warping algorithm; and step 7, the actions are classified and recognized. The method of the invention has the advantages of high real-time performance, high robustness, high accuracy and simple and reliable implementation, and is suitable for a real-time action recognition system.

Owner:NANJING HUAJIE IMI TECH CO LTD
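
Steps 6 and 7 of the abstract above (dynamic time warping distance against a template library, then classification) can be sketched as follows. This is a textbook DTW with a nearest-template rule, not necessarily the patent's exact variant; the two-dimensional feature vectors and the `TEMPLATES` library are made-up illustrative data.

```python
from math import dist, inf  # math.dist requires Python 3.8+

def dtw_distance(seq_a, seq_b):
    """Classic dynamic time warping distance between two feature vector sequences."""
    n, m = len(seq_a), len(seq_b)
    # cost[i][j] = minimal accumulated cost of aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = dist(seq_a[i - 1], seq_b[j - 1])  # Euclidean frame distance
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

def classify(sequence, templates):
    """Return the label of the template with the smallest DTW distance."""
    return min(templates, key=lambda label: dtw_distance(sequence, templates[label]))

# Hypothetical template library: one feature vector sequence per action.
TEMPLATES = {
    "raise_hand": [(0, 0), (0, 1), (0, 2)],
    "wave": [(0, 0), (1, 0), (0, 0), (1, 0)],
}

observed = [(0, 0), (0, 0.9), (0, 1.1), (0, 2.1)]  # slower, noisier "raise_hand"
print(classify(observed, TEMPLATES))  # prints "raise_hand"
```

DTW suits this task because the same action performed at different speeds yields sequences of different lengths, which a frame-by-frame comparison cannot align.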

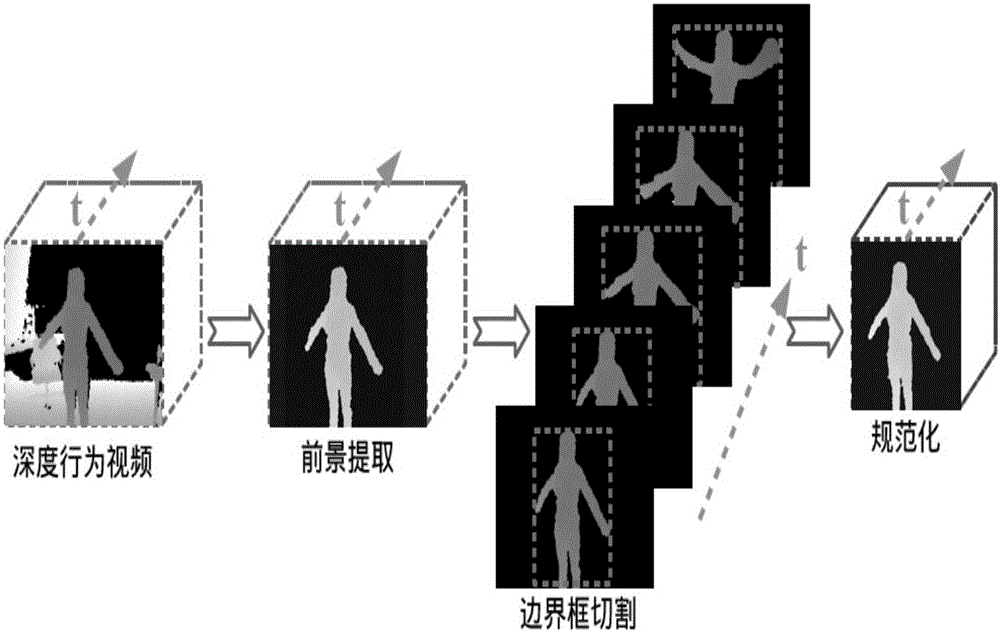

Motion recognition method based on three-dimensional convolution depth neural network and depth video

Status: Inactive | Publication: CN106203283A | Tags: Good distinction; Recognizable; Biometric pattern recognition; Neural architectures; Human body; Data set

The invention discloses a motion recognition method based on a three-dimensional convolution depth neural network and depth video. In the invention, depth video is used as the object of study, a three-dimensional convolution depth neural network is constructed to automatically learn temporal and spatial characteristics of human body behaviors, and a Softmax classifier is used for the classification and recognition of human body behaviors. The proposed method by the invention can effectively extract the potential characteristics of human body behaviors, and can not only obtain good recognition results on an MSR-Action3D data set, but also obtain good recognition results on a UTKinect-Action3D data set.

Owner:CHONGQING UNIV OF TECH

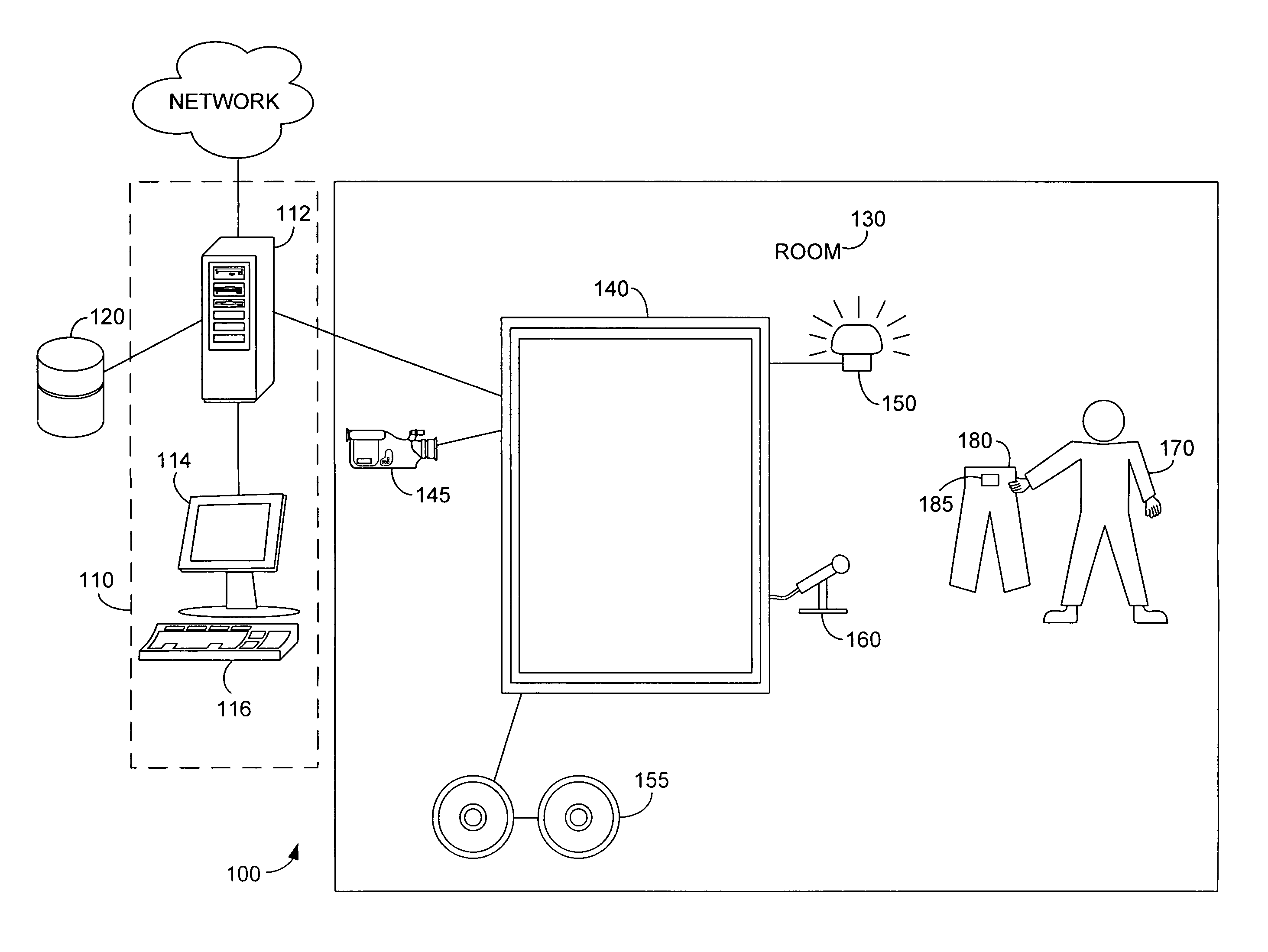

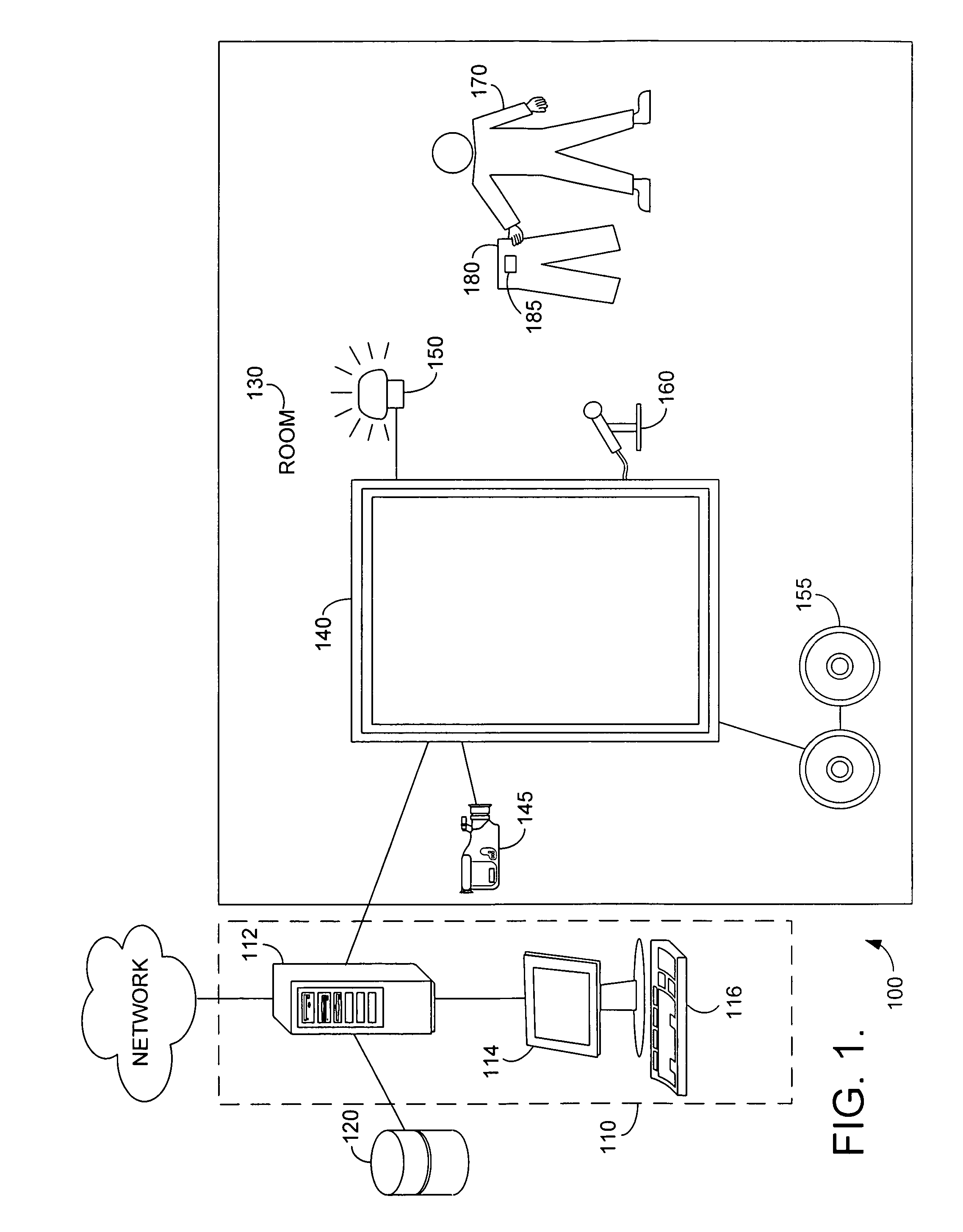

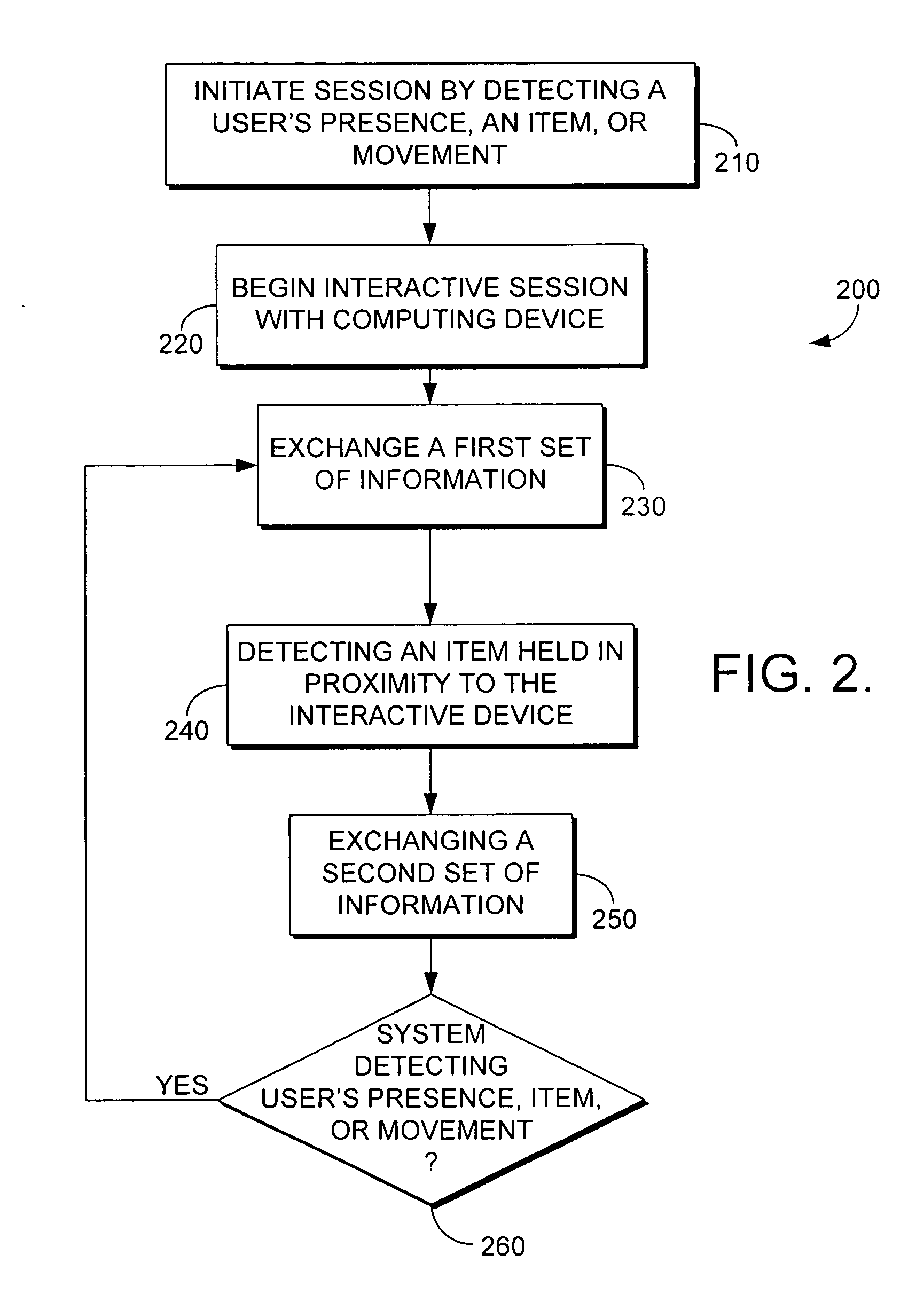

Method and system for collecting and using data

Status: Inactive | Publication: US20060184993A1 | Tags: Easy transfer; Television system details; Analogue secrecy/subscription systems; Display device; Interaction device

The present invention provides at least a method and system for collecting and using data with a computing device for a smart closet. With respect to the present invention, a computing device processes information from the interaction of a user with an interactive device such as a mirror or display. Information is relayed to the user to enable to the user to make informed decisions about the clothing to wear. The user interacts with the computing device using gesture recognition, voice inputs, motion recognition, or touch.

Owner:MICROSOFT TECH LICENSING LLC

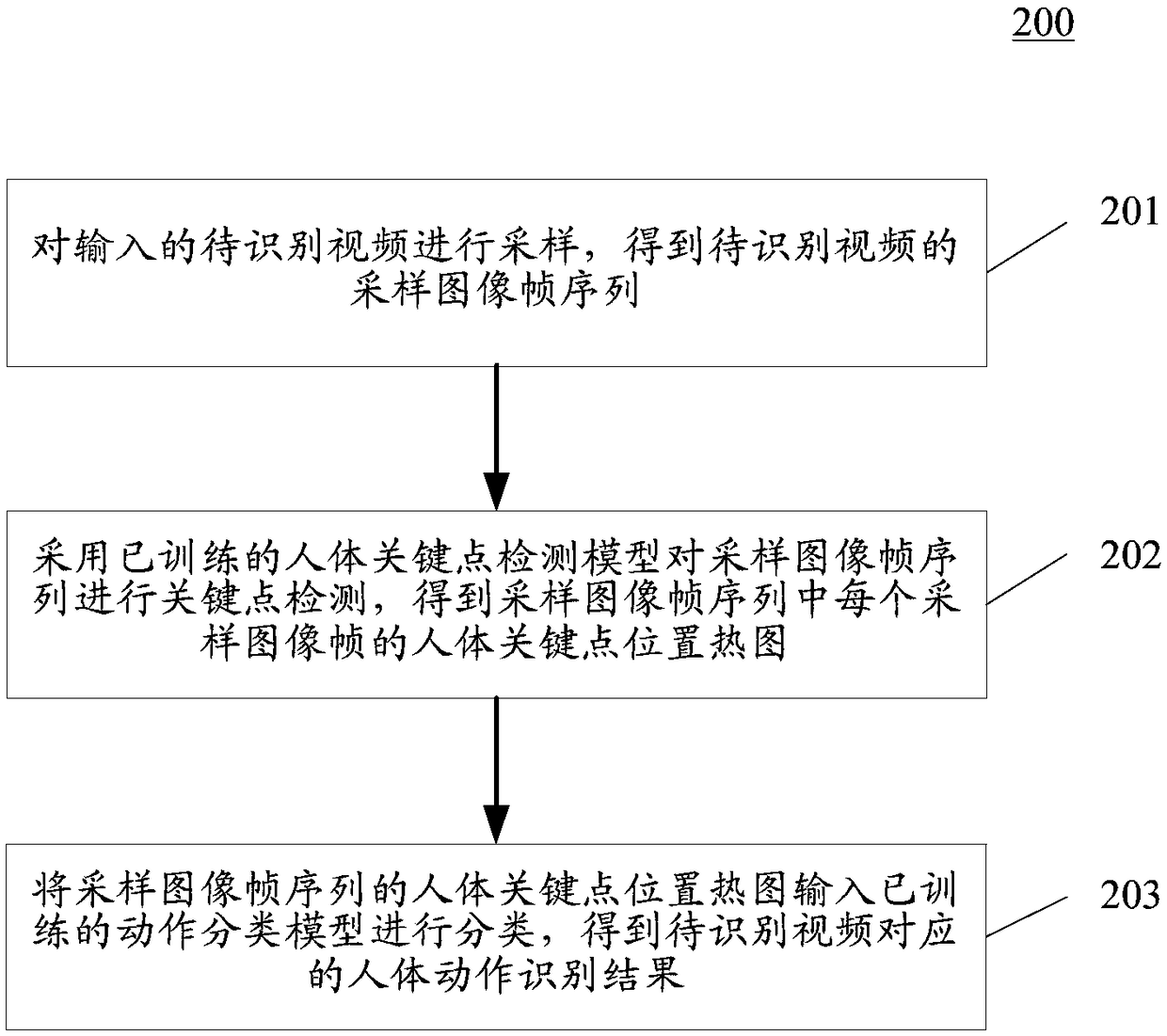

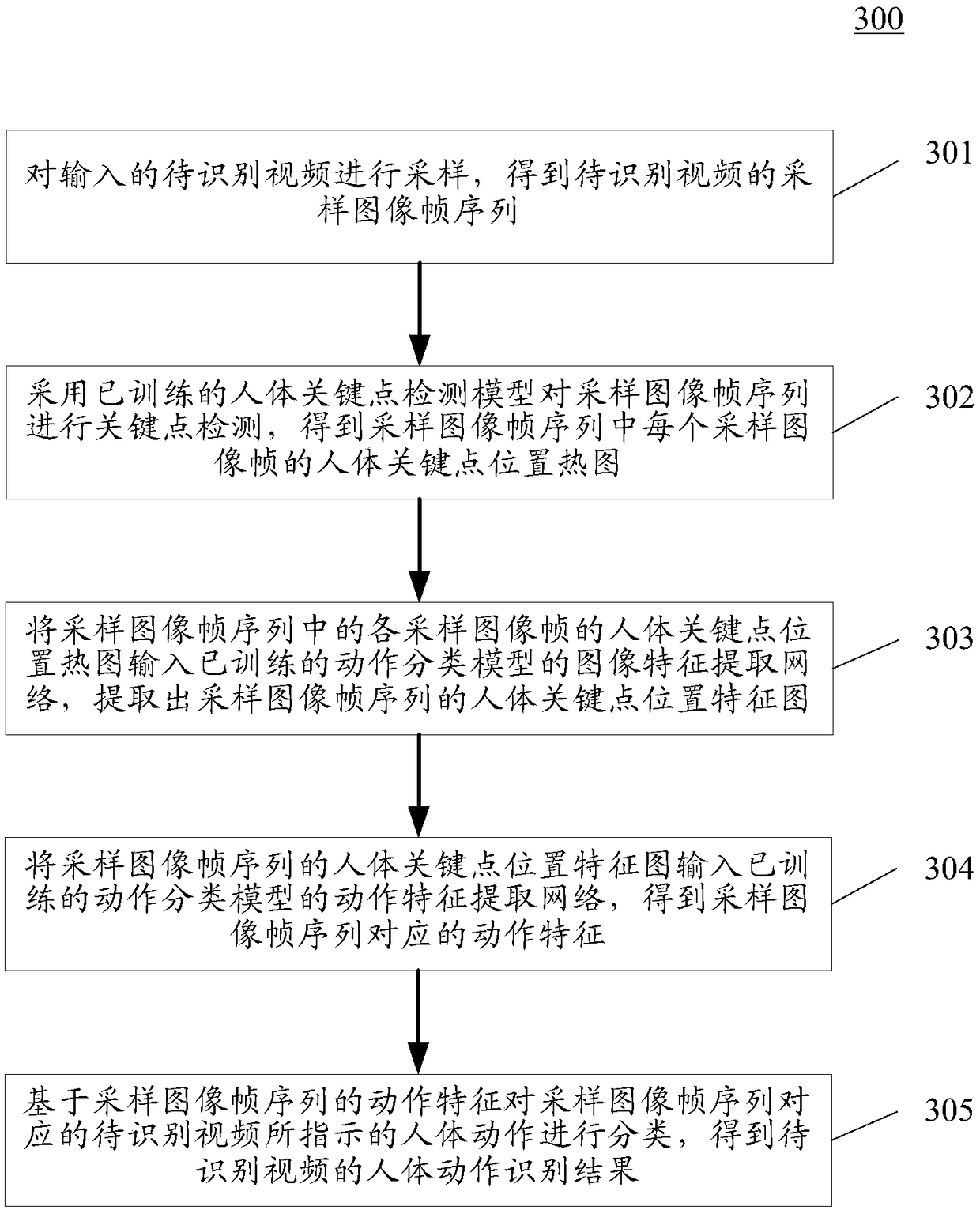

Human motion recognition method and device

Status: Active | Publication: CN108985259A | Tags: Improve recognition accuracy; Character and pattern recognition; Frame sequence; Human motion

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

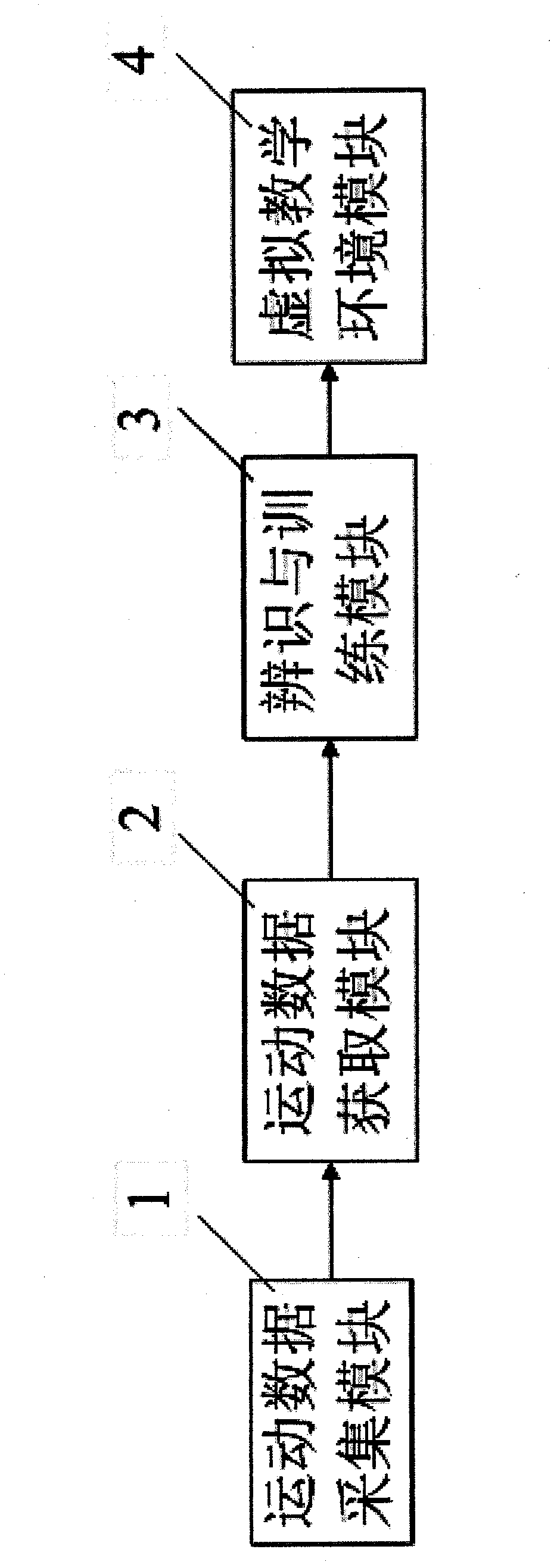

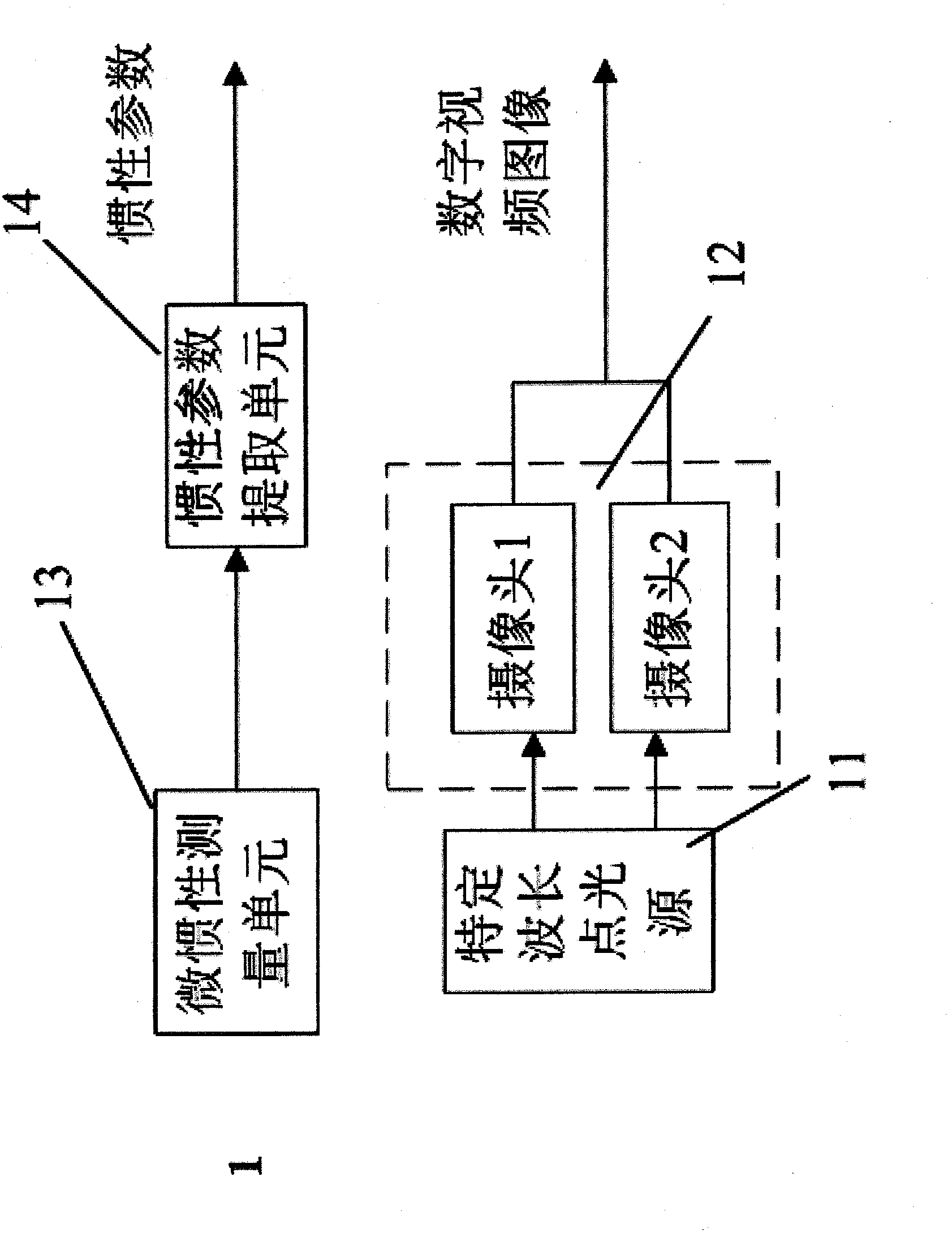

Physical education teaching auxiliary system based on motion identification technology and implementation method of physical education teaching auxiliary system

Status: Inactive | Publication: CN102243687A | Tags: Solve the problem of poor authenticity; Solution range; Character and pattern recognition; Sport apparatus; Data acquisition; Data acquisition module

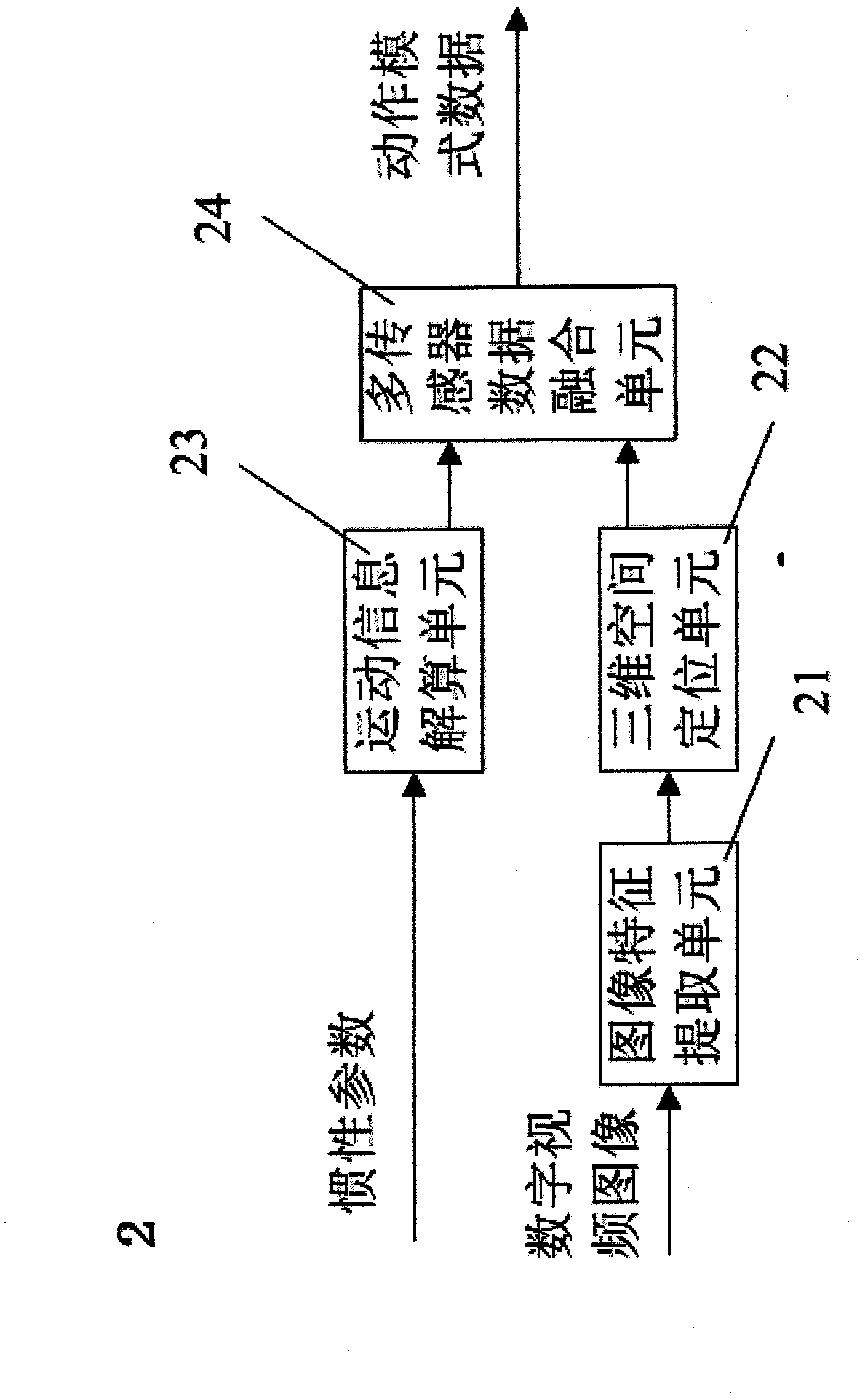

The invention provides a physical education teaching auxiliary system based on a motion identification technology and an implementation method of the physical education teaching auxiliary system. The system is applicable to a personal computer (PC) or an embedded host. The system comprises a movement data collection module, a movement data acquisition module, an identification and training module and a virtual teaching environment module. The device is characterized in that: a micro-inertia measurement unit and an inertia parameter extraction unit are arranged in the movement data collection module; and a movement information resolving unit transmits data which is output by the inertia parameter extraction unit to a multi-sensor data fusion unit for resolving. The movement situation of a target is reflected comprehensively in an inertia tracking mode and an optical tracking mode, so a tracking range is effectively expanded, measurement accuracy is improved, and the problems that the integral information of the target cannot be acquired in the inertia tracking mode, complicated movement identification cannot be performed and sensitivity is poor are solved. A brand new teaching mode is provided for physical education teaching, and a physical education teaching method is digitalized, multi-media and scientifically standardized.

Owner:ANHUI COSWIT INFORMATION TECH

System and method for a user interface for text editing and menu selection

Status: Active | Publication: US7542029B2 | Tags: Reduce speed; Improve efficiency; Input/output for user-computer interaction; 2D-image generation; Graphics; Text editing

Methods and system to enable a user of an input action recognition text input system to edit any incorrectly recognized text without re-locating the text insertion position to the location of the text to be corrected. The System also automatically maintains correct spacing between textual objects when a textual object is replaced with an object for which automatic spacing is generated in a different manner. The System also enables the graphical presentation of menu choices in a manner that facilitates faster and easier selection of a desired choice by performing a selection gesture requiring less precision than directly contacting the sub-region of the menu associated with the desired choice.

Owner:CERENCE OPERATING CO

Method for controlling electronic apparatus based on voice recognition and motion recognition, and electronic apparatus applying the same

Status: Inactive | Publication: US20130035941A1 | Tags: Speech analysis; Selective content distribution; Control electronics; Speech identification

An electronic apparatus and a method for controlling it are provided. The method recognizes either a user voice or a user motion through a voice recognition module or a motion recognition module, respectively. If a user voice is recognized through the voice recognition module, the method performs a voice task corresponding to the recognized user voice; if a user motion is recognized through the motion recognition module, it performs a motion task corresponding to the recognized user motion.

Owner:SAMSUNG ELECTRONICS CO LTD

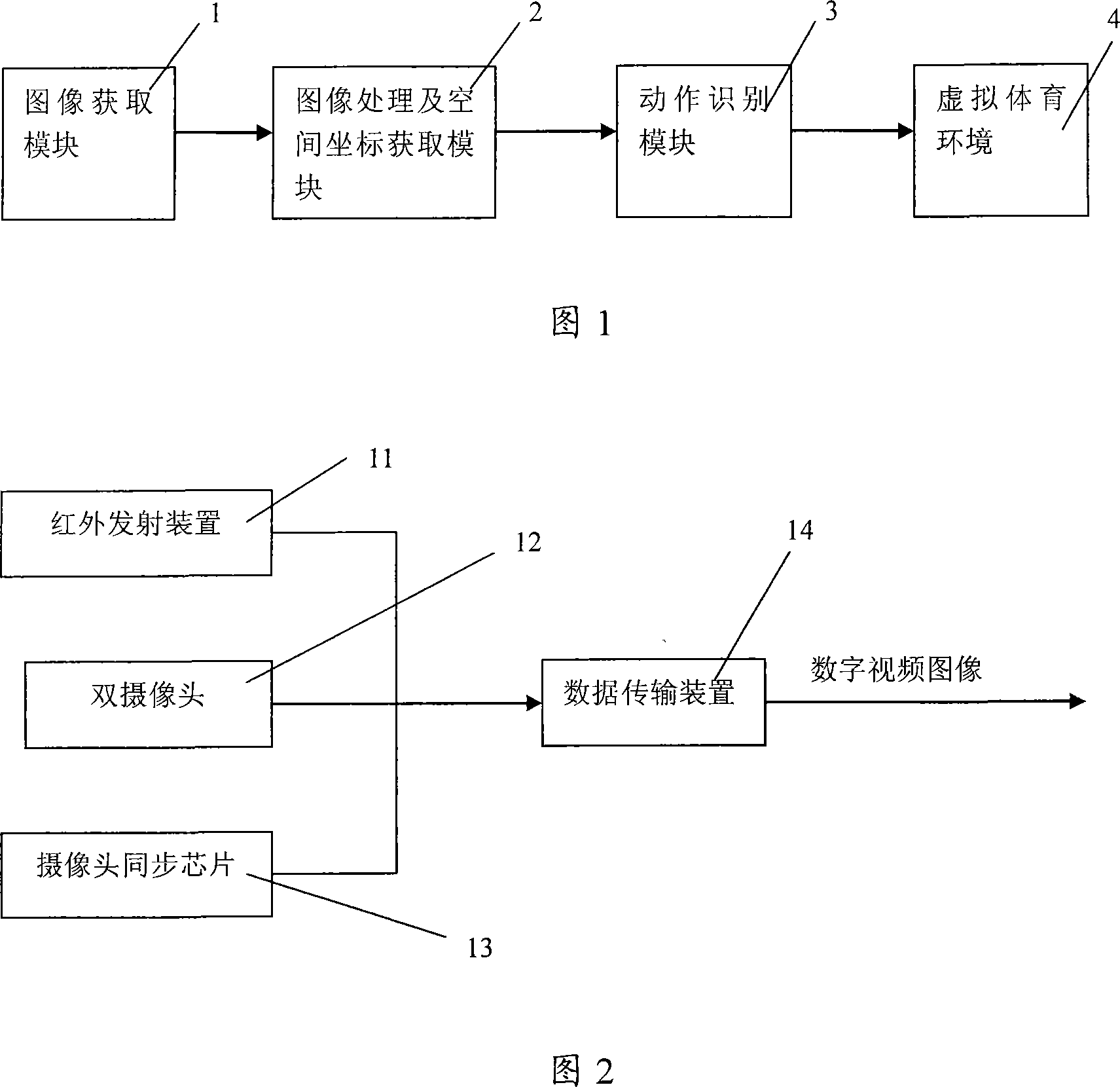

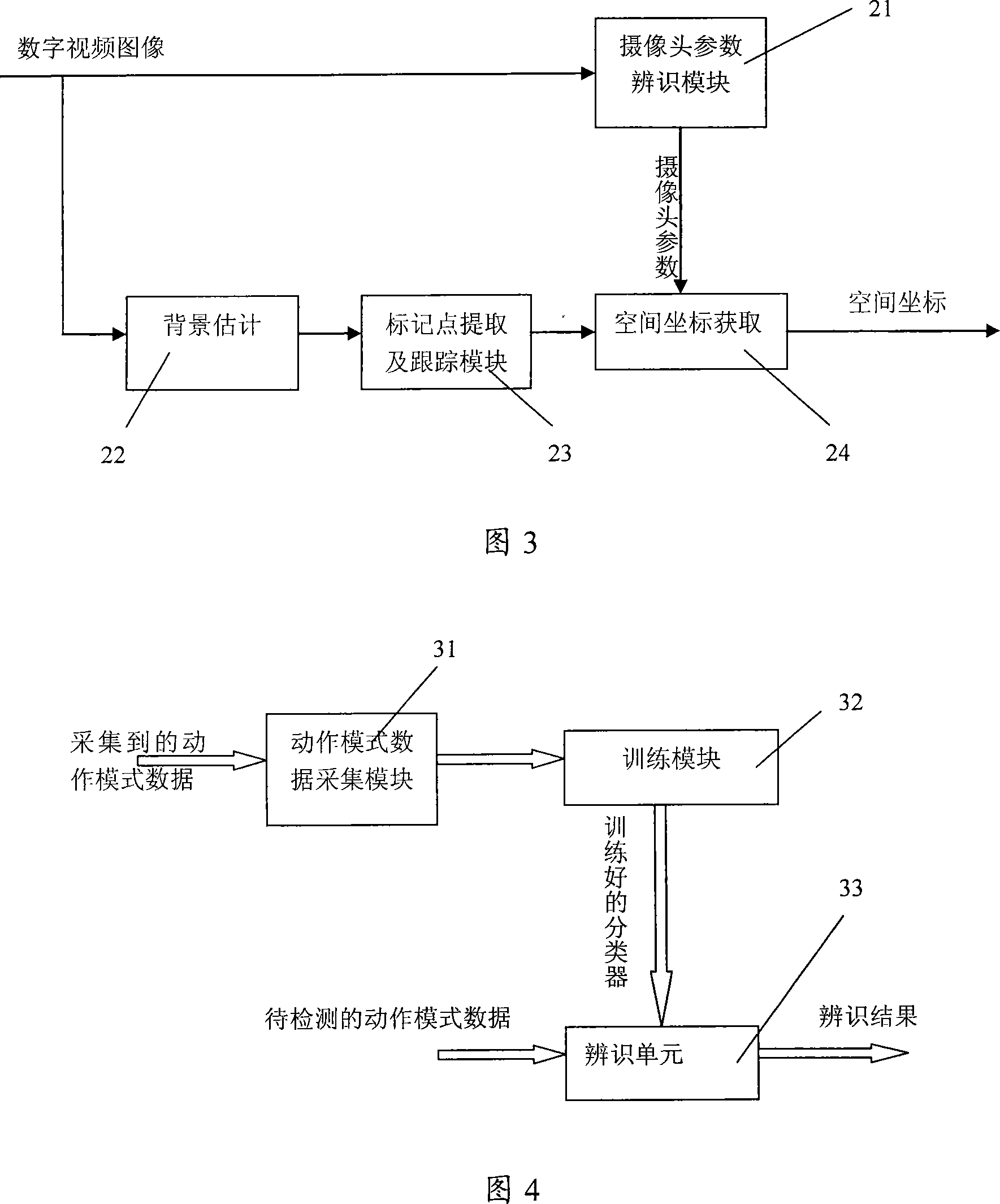

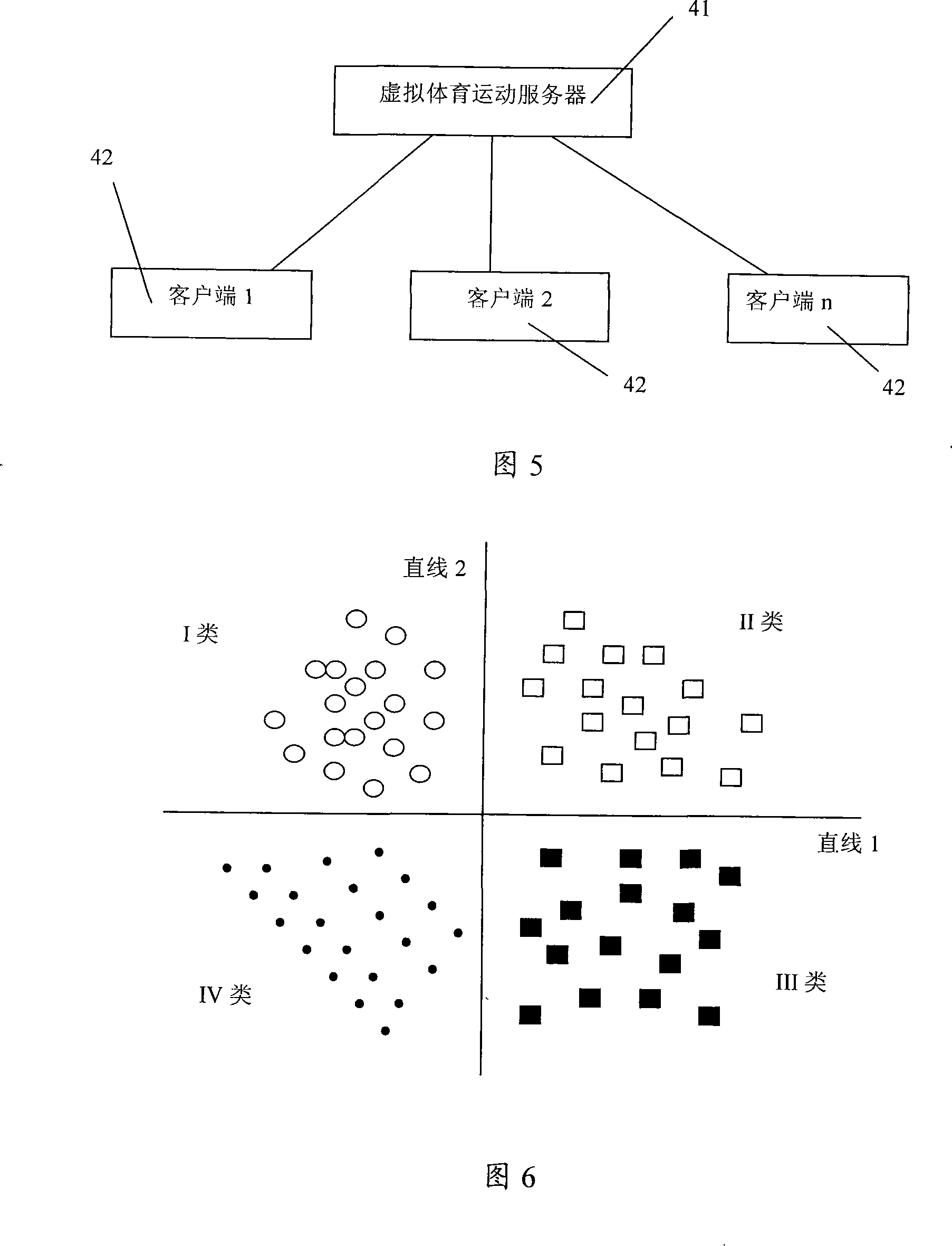

Virtual gym system based on computer visual sense and realize method thereof

Status: Active | Publication: CN101158883A | Tags: Low cost; Improve real-time performance; Input/output for user-computer interaction; Television system details; Digital video; Human body

The invention discloses a computer vision-based virtual sports system and a method of realizing it on a general-purpose computer. The system comprises an image acquisition module for capturing digital video image data in a designated area; an image processing and space coordinate acquisition module for processing the captured digital video image data to obtain the space coordinates of markers on a sports apparatus and a human body; an action recognition module for collecting action trajectory data of various action modes, learning to classify them, and recognizing the class of the action to be recognized; and a virtual sports environment module for processing and displaying the interactive action state in accordance with the recognized action. The system and method can realize the virtual sports process on a general-purpose computer and therefore have a lower implementation cost; the system is intended for sports exercise, can be adopted in ordinary households, and has good real-time performance and expandability.

Owner:SHENZHEN TAISHAN SPORTS TECH CO LTD

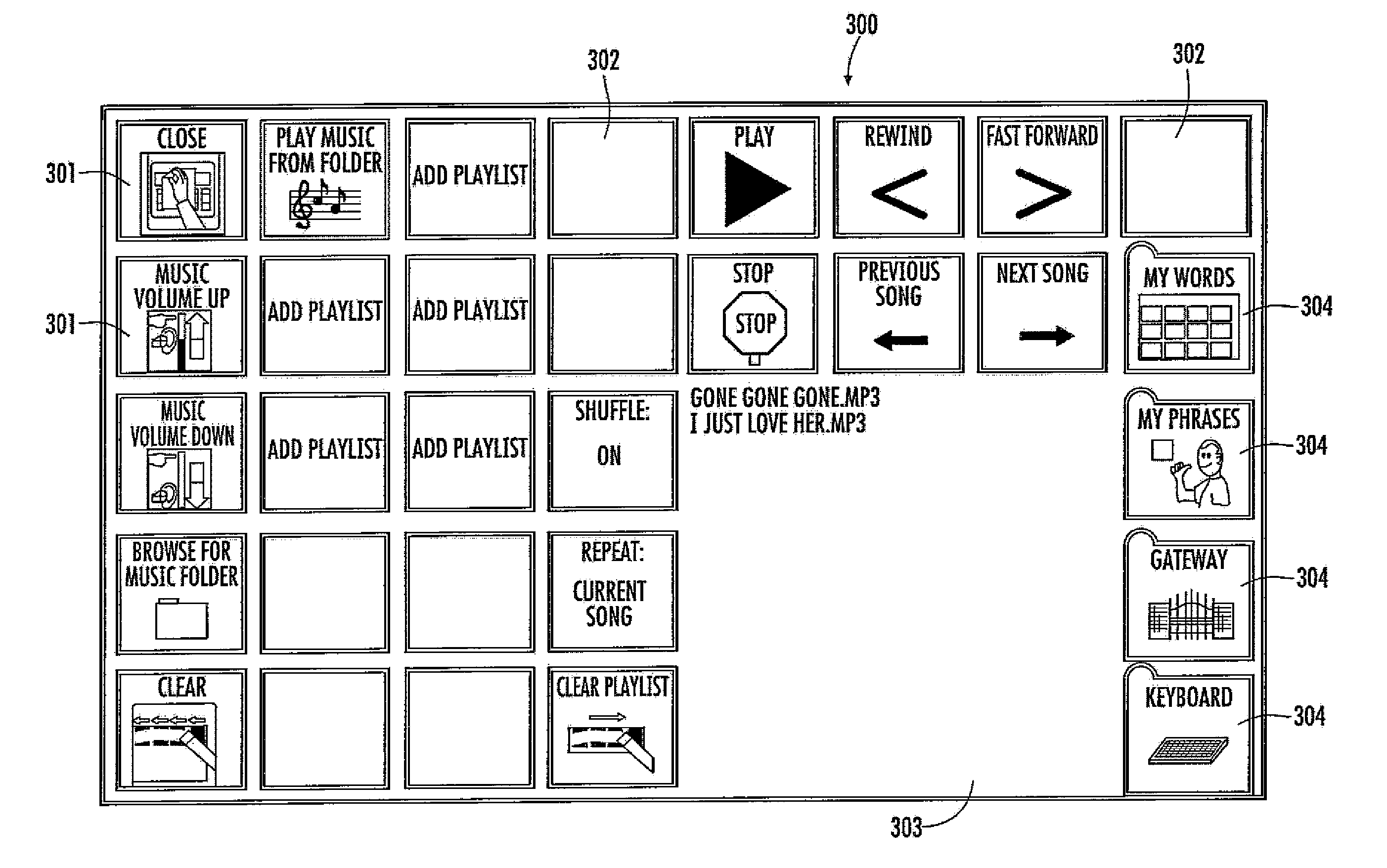

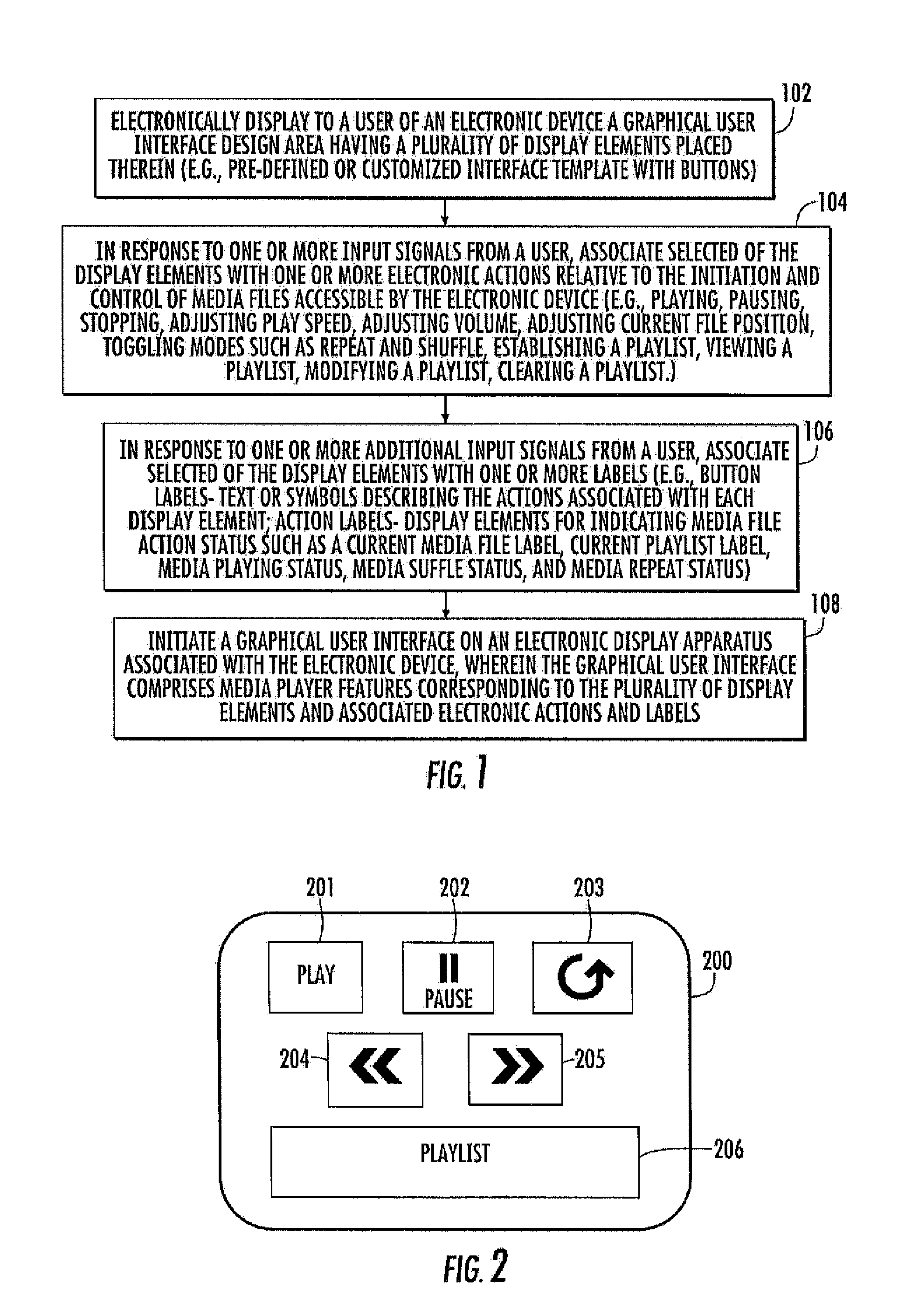

System and method of creating custom media player interface for speech generation device

Status: Inactive | Publication: US20110202842A1 | Tags: Optimization; Input/output for user-computer interaction; Software engineering; Graphics; Graphical user interface

Systems and methods of providing electronic features for creating a customized media player interface for an electronic device include providing a graphical user interface design area having a plurality of display elements. Electronic input signals then may define for association with selected display elements one or more electronic actions relative to the initiation and control of media files accessible by the electronic device (e.g., playing, pausing, stopping, adjusting play speed, adjusting volume, adjusting current file position, toggling modes such as repeat or shuffle, and / or establishing, viewing and / or clearing a playlist.) Additional electronic input signals may define for association with selected display elements labels such as action identification labels or media status labels. A graphical user interface is then initiated on an electronic display associated with an electronic device, wherein the graphical user interface comprises media player features corresponding to the plurality of display elements and associated electronic actions and / or labels.

Owner:DYNAVOX SYST

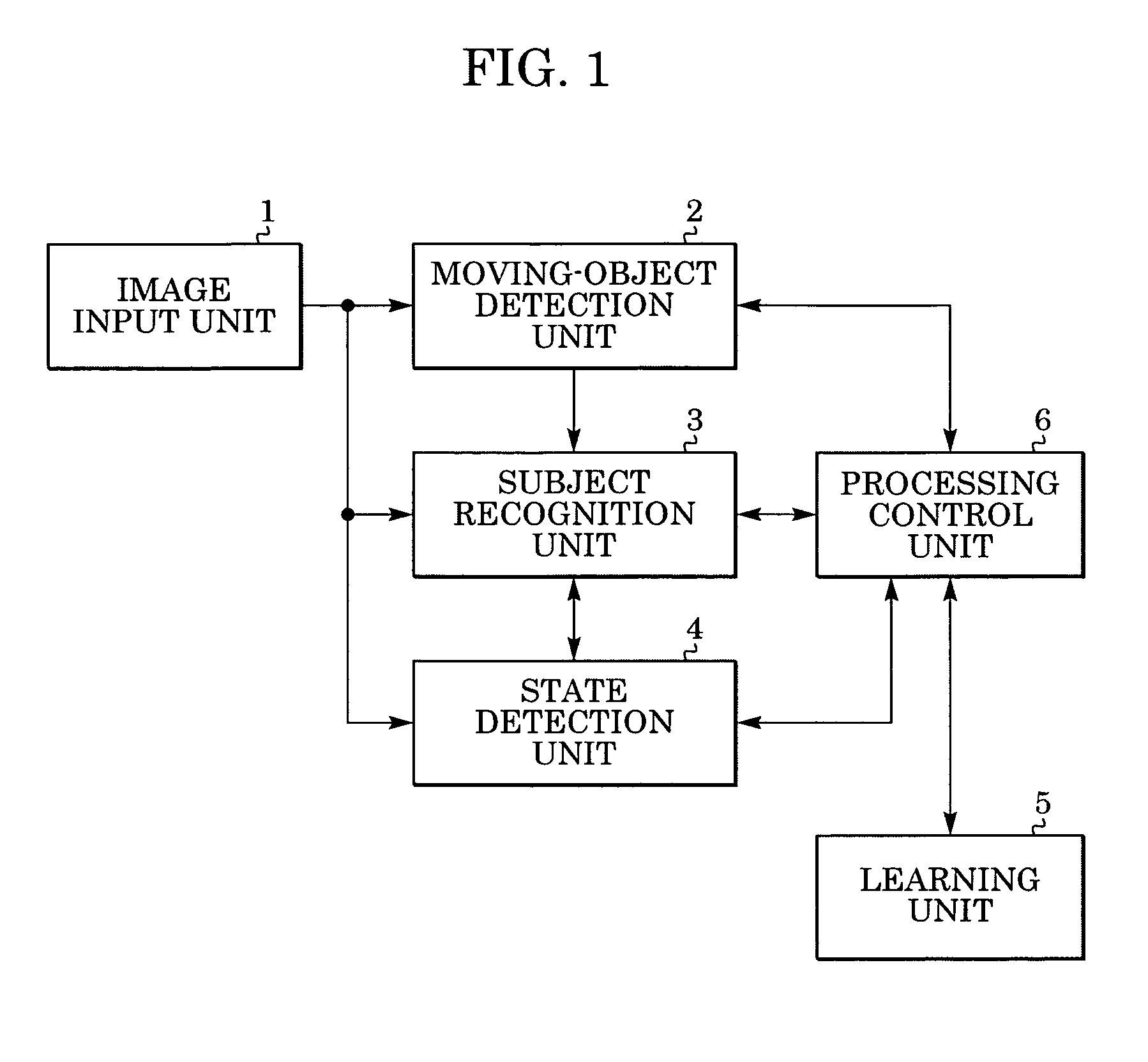

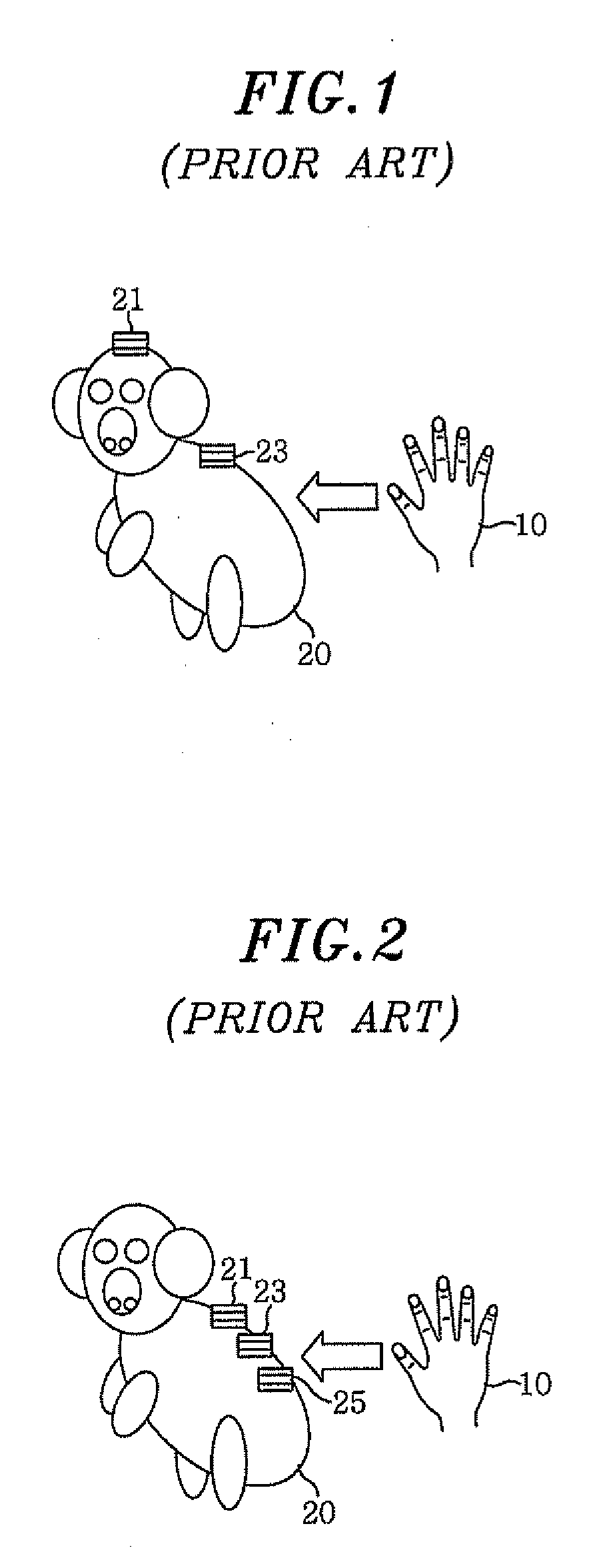

Action recognition apparatus and method, moving-object recognition apparatus and method, device control apparatus and method, and program

An action recognition apparatus includes an input unit for inputting image data, a moving-object detection unit for detecting a moving object from the image data, a moving-object identification unit for identifying the detected moving object based on the image data, a state detection unit for detecting a state or an action of the moving object from the image data, and a learning unit for learning the detected state or action by associating the detected state or action with meaning information specific to the identified moving object.

Owner:CANON KK

Touch action recognition system and method

Status: Inactive | Publication: US20090153499A1 | Tags: Erroneous recognition; Manipulator; Input/output processes for data processing; Human–computer interaction; Signal processing

Owner:ELECTRONICS & TELECOMM RES INST

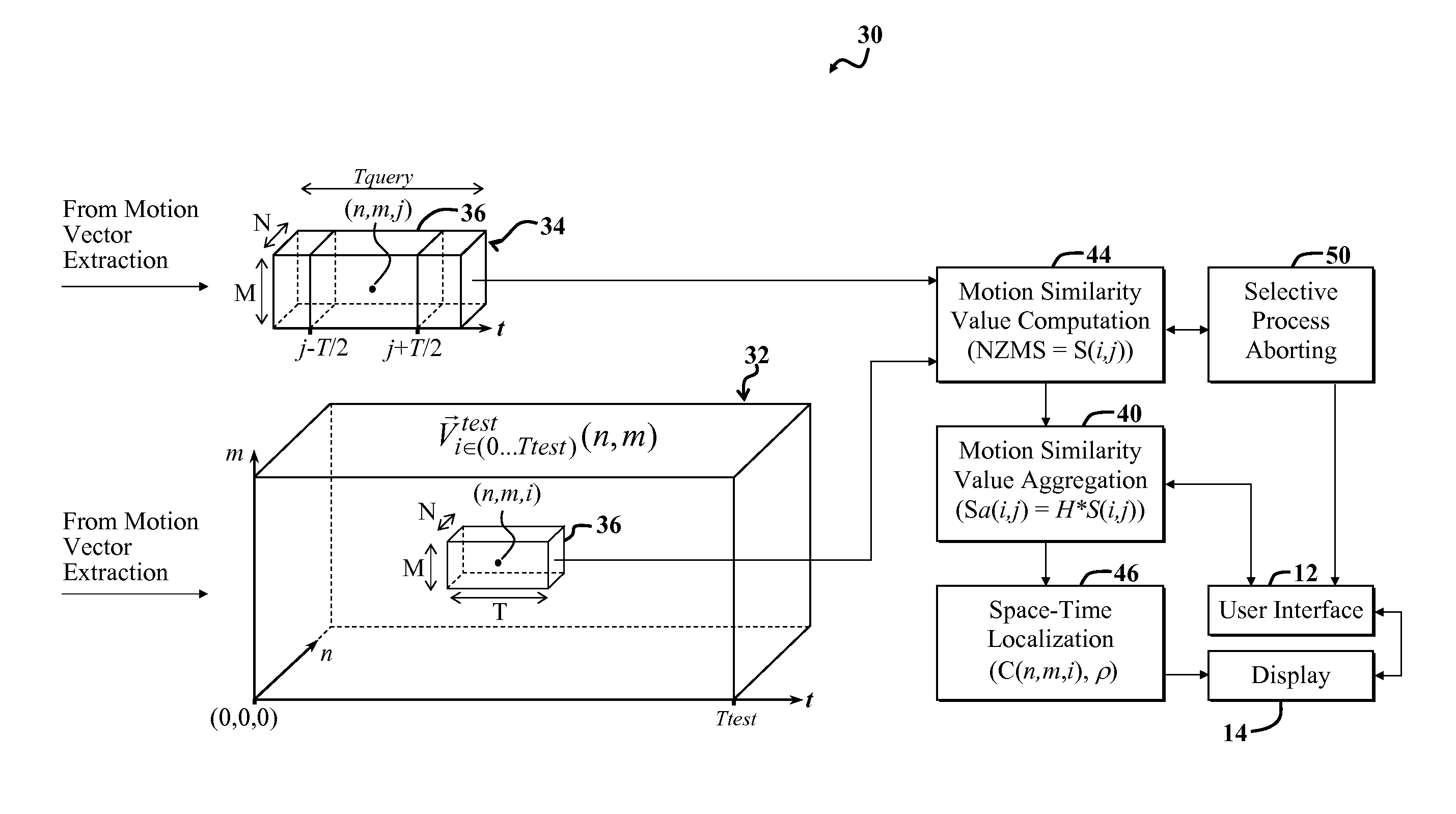

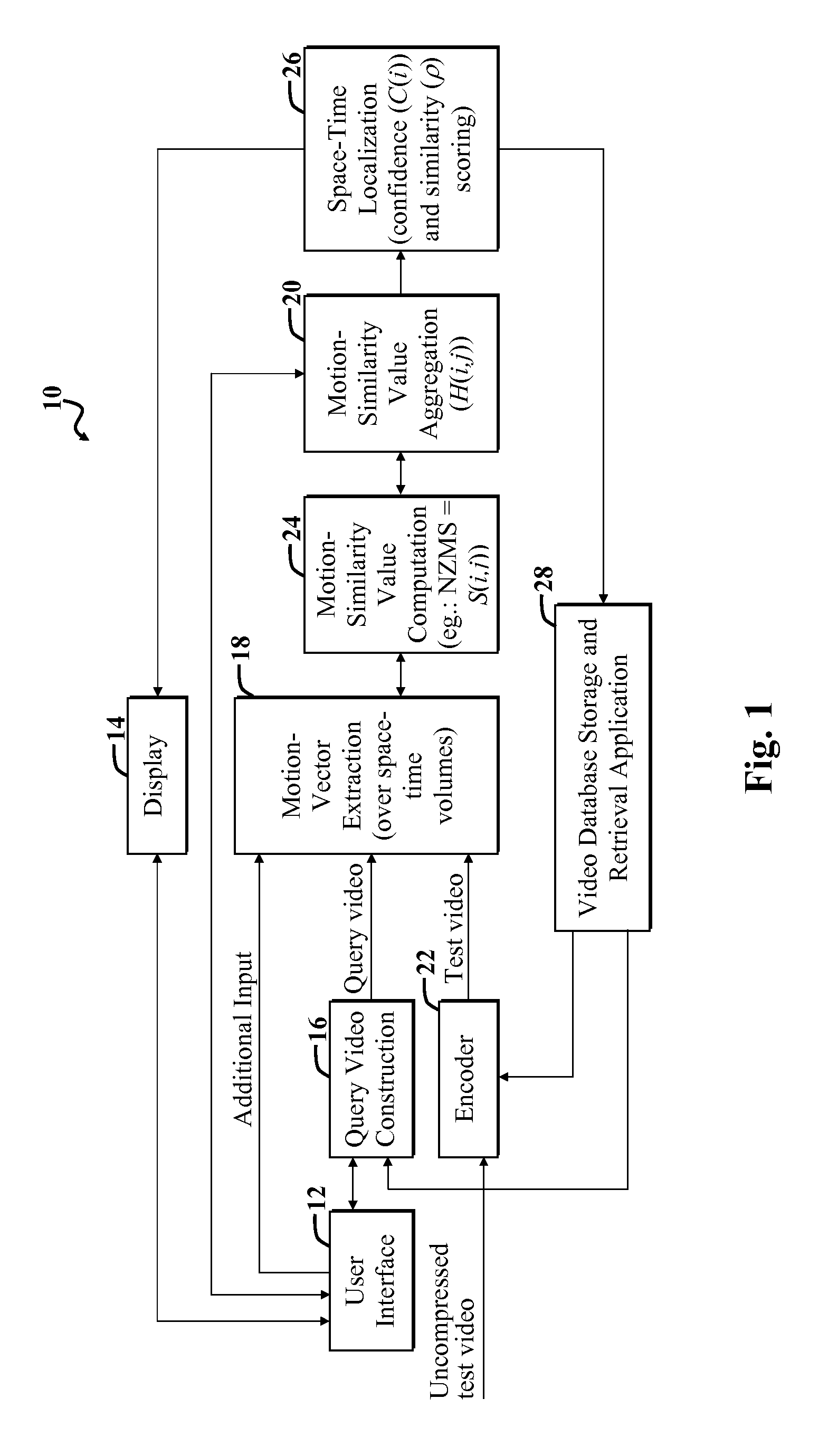

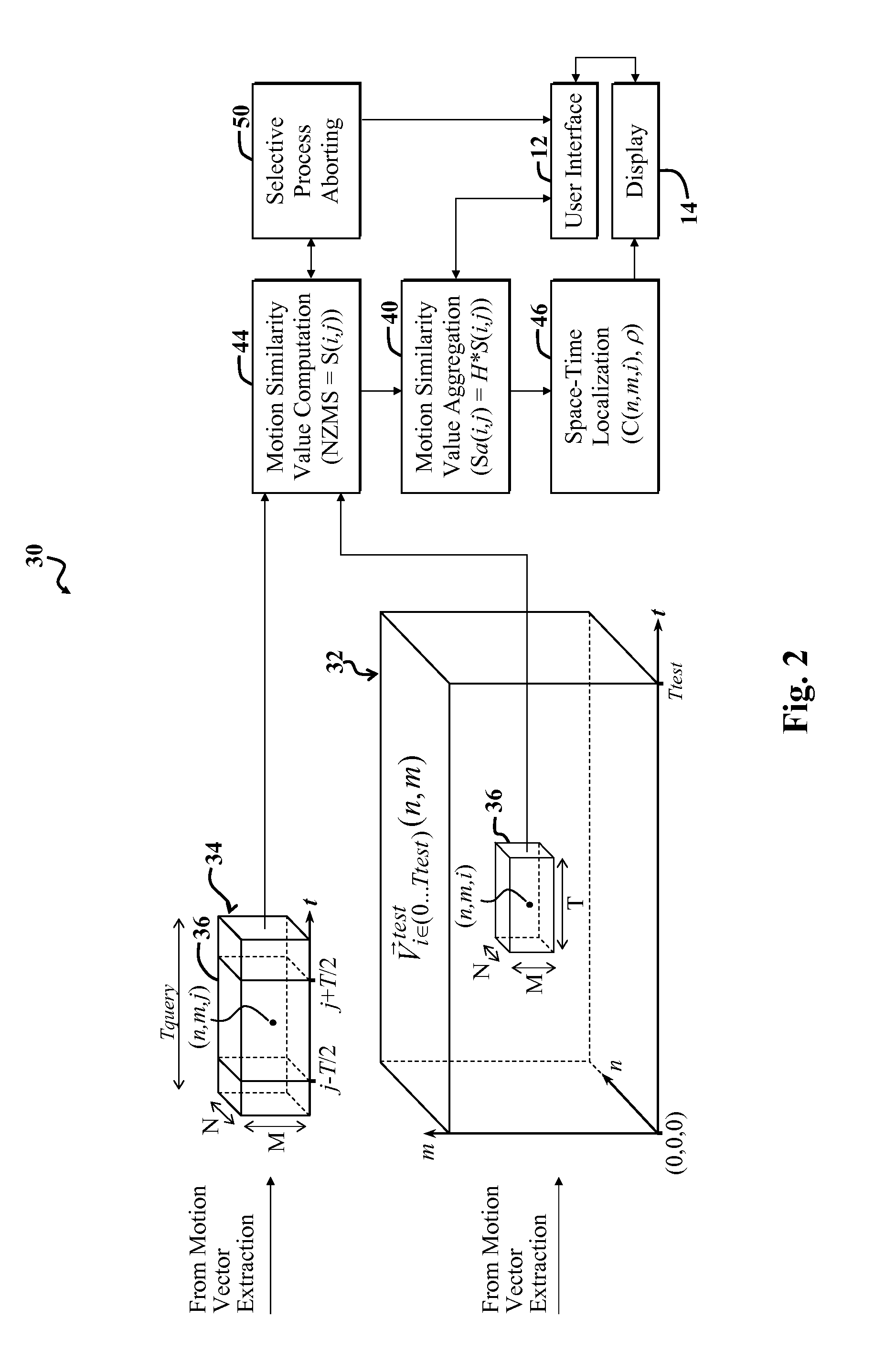

High speed video action recognition and localization

Status: Inactive | Publication: US20080310734A1 | Tags: Facilitates high speed real-time action recognition; Facilitates temporal; Television system details; Picture reproducers using cathode ray tubes; Motion vector; High speed video

Owner:RGT UNIV OF CALIFORNIA

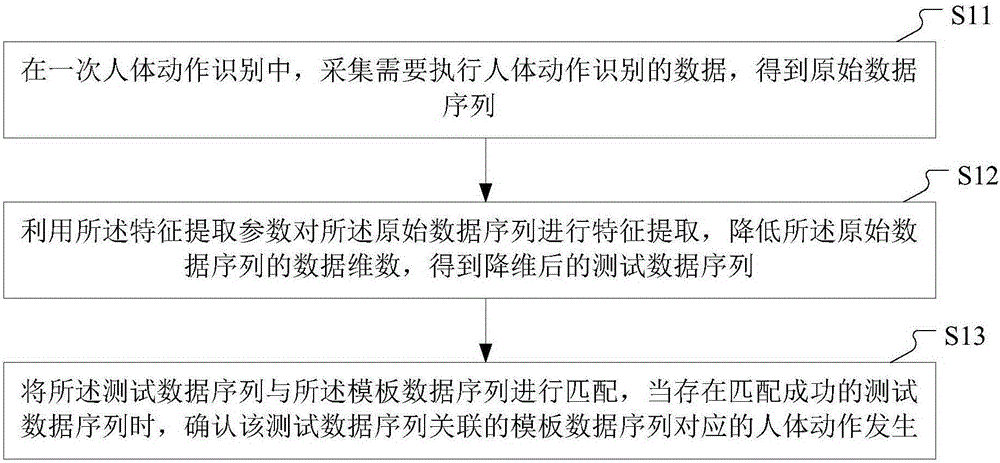

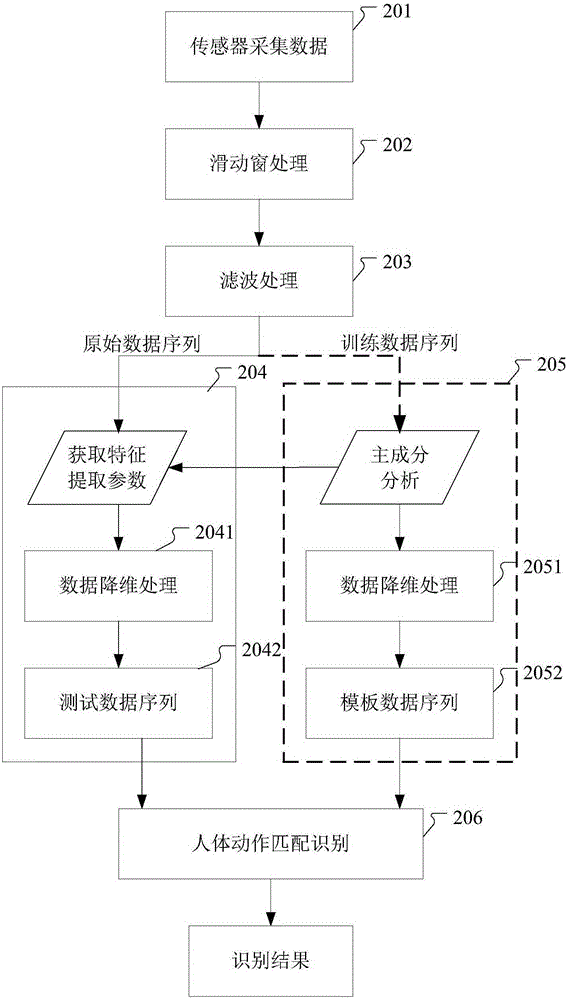

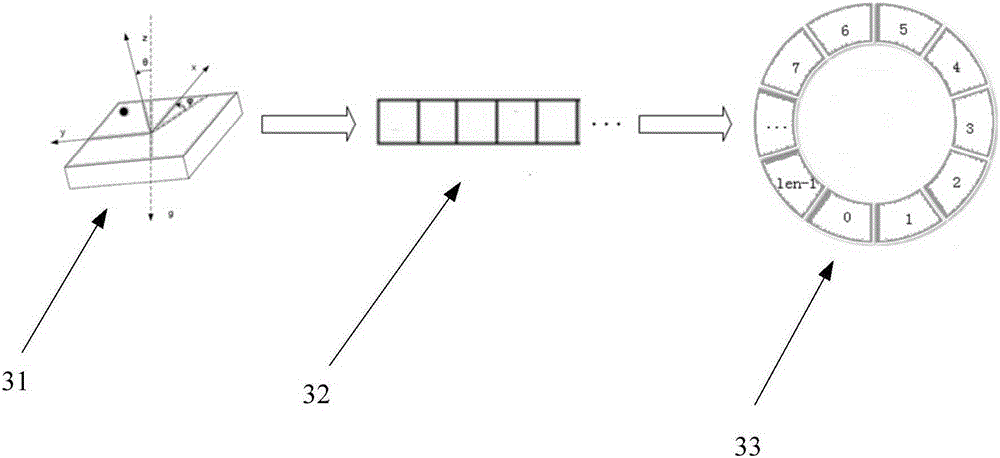

Human body action recognition method and mobile intelligent terminal

Status: Active | Publication: CN105184325A | Tags: Improve experience; Reduce complexity; Character and pattern recognition; Template matching; Computation complexity

The invention discloses a human body action recognition method and a mobile intelligent terminal. The human body action recognition method comprises the steps that human body action data are acquired for training so that feature extraction parameters and template data sequences are obtained, and the data requiring performance of human body action recognition are acquired in one time of human body action recognition so that original data sequences are obtained; feature extraction is performed on the original data sequences by utilizing the feature extraction parameters, and the data dimension of the original data sequences is reduced so that test data sequences after dimension reduction are obtained; and the test data sequences and the template data sequences are matched, and generation of human body actions corresponding to the template data sequences to which the test data sequences are correlated is confirmed when the successfully matched test data sequences exist. Dimension reduction is performed on the test data sequences so that the requirements for the human body action attitudes are reduced, and noise is removed. Then the data after dimension reduction are matched with the templates so that calculation complexity is reduced, accurate human body action recognition is realized and user experience is enhanced.

Owner:GOERTEK INC

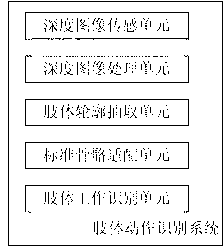

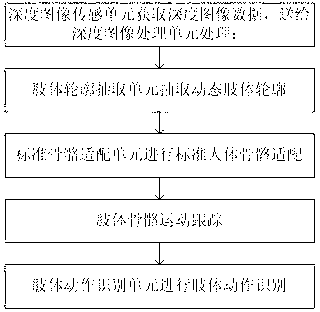

Body action identification method and system based on depth image induction

Status: Inactive | Publication: CN102831380A | Tags: Improve motion recognition efficiency; Improve experience; Input/output for user-computer interaction; Image analysis; Human–robot interaction; Computer science

The invention relates to a body action identification method and a body action identification system based on depth image induction. The body action identification method comprises the following steps: acquiring the depth image information of a user and an environment where the user stands; extracting the body outline of the user from the background of the depth image information; respectively changing the size of each part in the skeletal framework of the human body to be adapted to the body outline of the user, and acquiring the adapted body skeletal framework of the user; tracking and extracting the data which present the movement of the body of the user in a manner adapted to the body skeletal framework; and identifying the body action of the user according to the data which present the movement of the body of the user. According to the invention, the body action of the user is further identified and tracked by establishing the skeletal system of the user, so that the problem existing in the current action induction identification solution is better solved, the body action identification efficiency is improved, and the user experience of human-computer interaction is improved.

Owner:KONKA GROUP

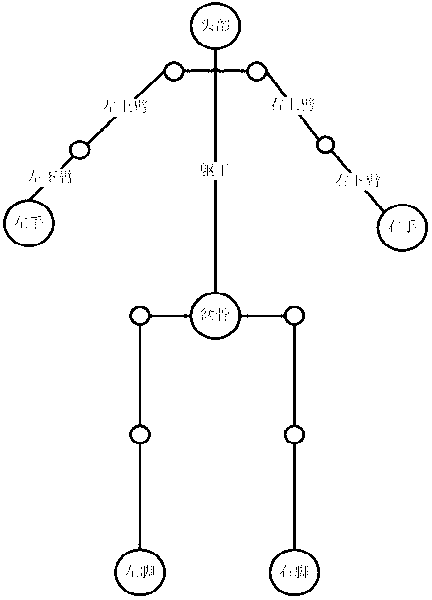

Automatic special effect matching method, system and device in network live streaming

The invention provides an automatic special effect matching method, system and device in network live streaming. The method includes the following steps: binding trigger behaviors and special effect information and then storing the trigger behaviors and the special effect information in a live streaming device; and obtaining live streaming behaviors during live streaming in real time, matching the live streaming behaviors with the stored trigger behaviors, calling the special effect information that is bound with the trigger behaviors if matching succeeds, and enabling the special effect information to be matched in corresponding positions of the live streaming behaviors. The invention relates to an operation of special effect matching on the basis of motion identification and speech recognition or mutual cooperation between motion identification and speech recognition. No manual operation is needed, selection problems in a live streaming actual scene that a host is far away from a hand-held device and it is inconvenient to carry out gesture interaction with the hand-held device are effectively avoided, and the interaction interest is increased.

Owner:1VERGE INTERNET TECH BEIJING
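
The bind-then-match idea in the abstract above can be pictured as a simple lookup table: trigger behaviors are stored together with their special effects, and each recognized live streaming behavior is checked against that table. The sketch below assumes recognition has already produced a behavior label; the labels, effect names, and function names are all hypothetical.

```python
# Hypothetical bindings stored ahead of the stream: (source, behavior) -> effect.
EFFECT_BINDINGS = {}

def bind(source, behavior, effect):
    """Bind a trigger behavior from `source` ("motion" or "speech") to an effect."""
    EFFECT_BINDINGS[(source, behavior.strip().lower())] = effect

def match_effect(source, behavior):
    """Return the bound effect for a recognized behavior, or None if nothing matches."""
    return EFFECT_BINDINGS.get((source, behavior.strip().lower()))

bind("motion", "heart_hands", "floating_hearts")
bind("speech", "happy birthday", "confetti")

print(match_effect("speech", "Happy Birthday"))  # prints "confetti"
print(match_effect("motion", "thumbs_up"))       # prints None
```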

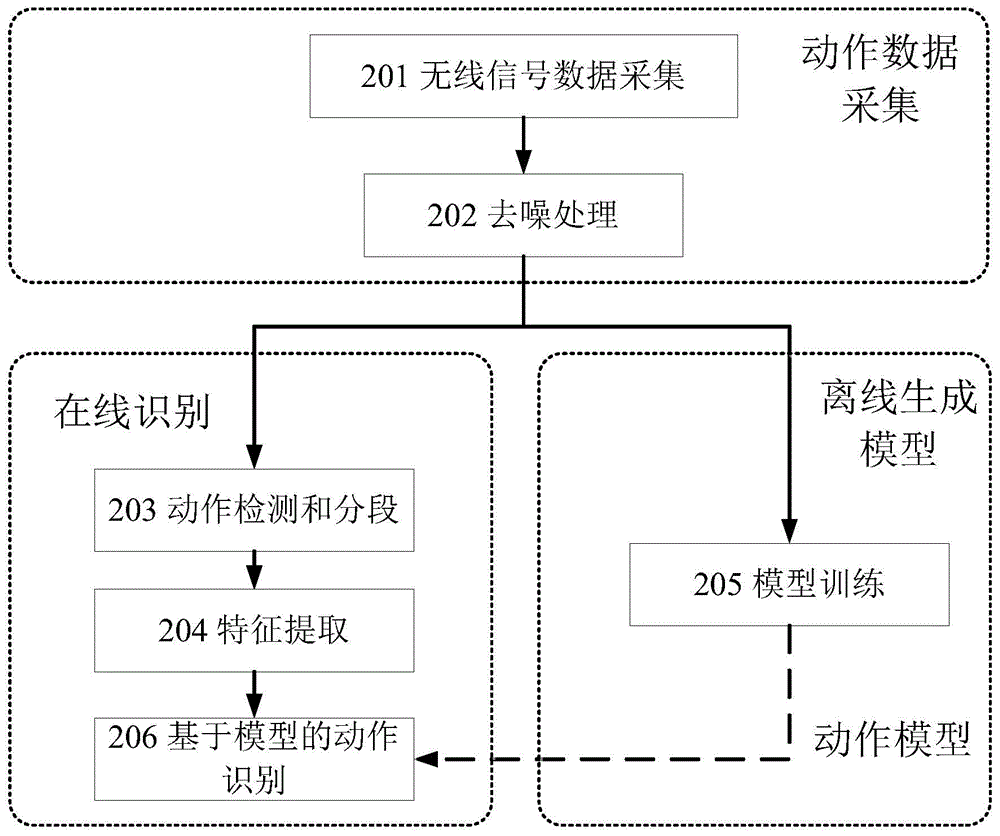

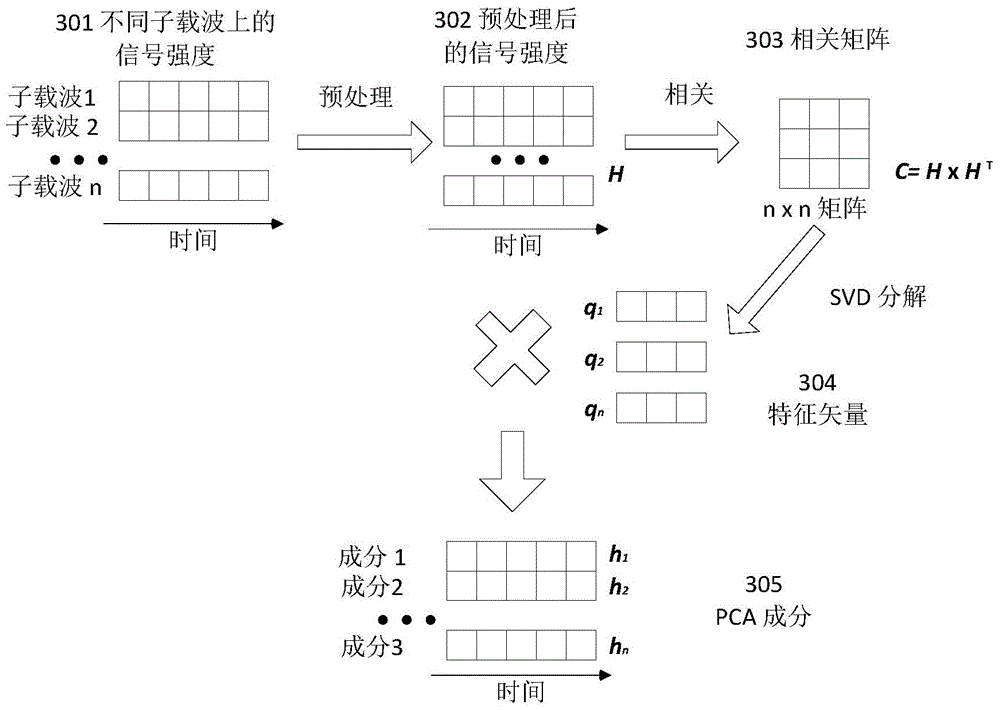

Action detecting and identifying method based on radio signals

Status: Active | Publication: CN104951757A | Tags: Easy to identify; Improve anti-interference ability; Character and pattern recognition; Radio networks; Feature extraction

The invention discloses an action detecting and identifying method based on radio signals and relates to the field of radio network and pervasive computing, in particular to an action detecting and identifying method based on radio signals. Radio signals are interfered by means of human actions, a universal radio device acquires radio signal data, and data is subjected to noise removal and characteristic extraction correlated with action speed to identify action. The radio signal data is acquired via one or more universal radio devices, radio data is subjected to noise removal by means of multichannel radio signal data, and characteristics correlated to human action speed can be extracted from the radio data so as to detect and identify the actions. The action detecting and identifying method includes steps of data acquisition, data denoising, data segmenting, characteristic extraction, model training and action identifying.

Owner:NANJING UNIV

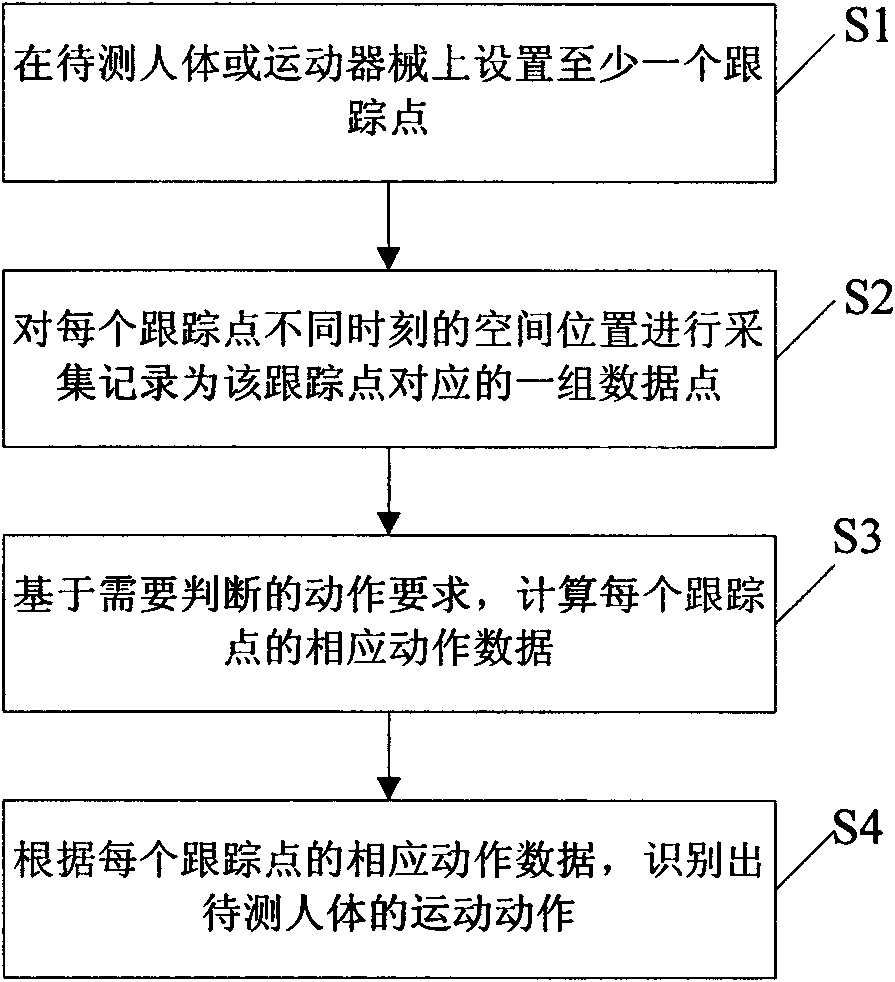

Multiple trace point-based human body action recognition method

Status: Active | Publication: CN101964047A | Tags: Realize multi-target tracking; Simple method; Image analysis; Character and pattern recognition; Physical medicine and rehabilitation; Multi target tracking

The invention relates to a multiple trace point-based human body action recognition method. The method comprises the following steps: based on the action requirement to be judged, setting at least one trace point on the human body or sports apparatus to be tested; acquiring the space positions of each trace point at different moments and recording them as a group of data points corresponding to that trace point; calculating the corresponding action data of each trace point, based on the action requirement to be judged, from the space position data of its group of data points; and recognizing the movement action of the human body to be tested according to the corresponding action data of each trace point. The method can also recognize a human body gesture. The method has the advantages of tracking a plurality of targets and a plurality of positions on the human body to be tested, recording the movement locus of each tracked position, positioning and describing the posture of the human body, and truly reflecting the movement of the human body.

Owner:SHENZHEN TAISHAN SPORTS TECH CO LTD
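
As an illustration of deriving "action data" from the recorded positions of each trace point, the sketch below computes an average speed per trace point from timestamped 3D positions. Average speed is just one plausible quantity; the abstract leaves the action data unspecified, and the track layout, point names, and sample values here are assumptions.

```python
from math import dist  # requires Python 3.8+

def average_speed(track):
    """Average speed along a track given as time-sorted (timestamp, (x, y, z)) pairs."""
    path_length = sum(dist(p0, p1) for (_, p0), (_, p1) in zip(track, track[1:]))
    duration = track[-1][0] - track[0][0]
    return path_length / duration if duration > 0 else 0.0

# Hypothetical trace points: one group of timestamped positions per point.
tracks = {
    "right_wrist": [(0.0, (0, 0, 0)), (1.0, (3, 4, 0)), (2.0, (3, 4, 0))],
    "left_ankle": [(0.0, (1, 1, 0)), (2.0, (1, 1, 0))],
}

speeds = {name: average_speed(track) for name, track in tracks.items()}
print(speeds)  # prints {'right_wrist': 2.5, 'left_ankle': 0.0}
```

A recognizer could then apply rules or a classifier over such per-point quantities, for example treating a fast wrist with a stationary ankle as an arm swing.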
