400 results about "Expression Feature" patented technology

Describes the expression pattern of a gene.

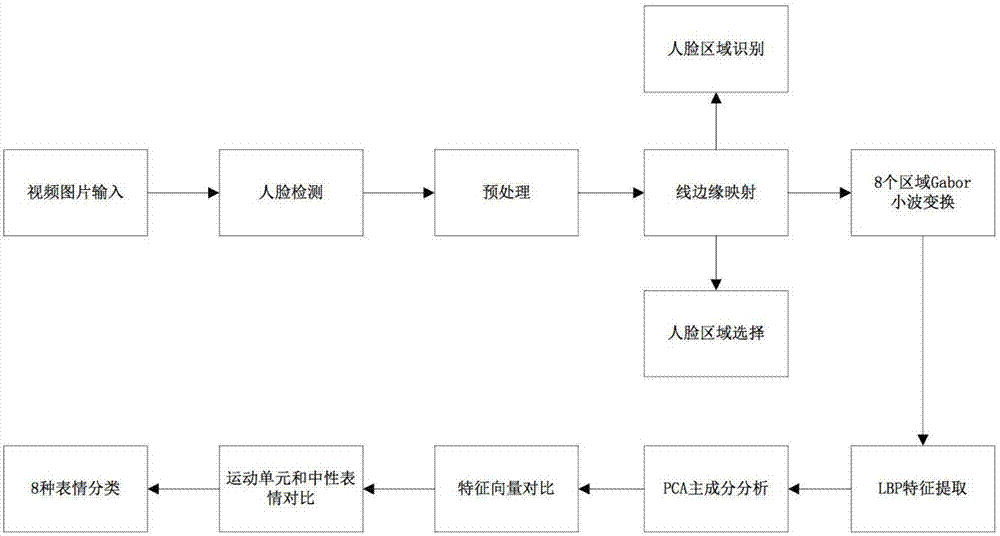

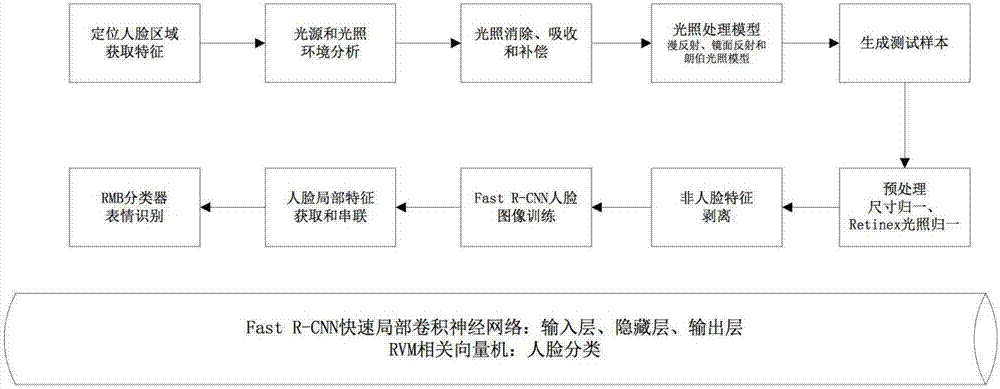

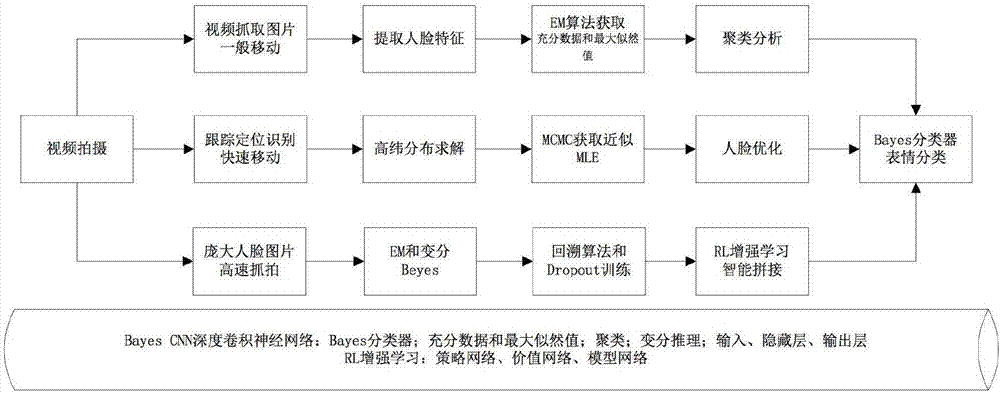

Human face emotion recognition method in complex environment

Inactive | CN107423707A | Real-time processing | Improve accuracy | Image enhancement | Image analysis | Nerve network | Facial region

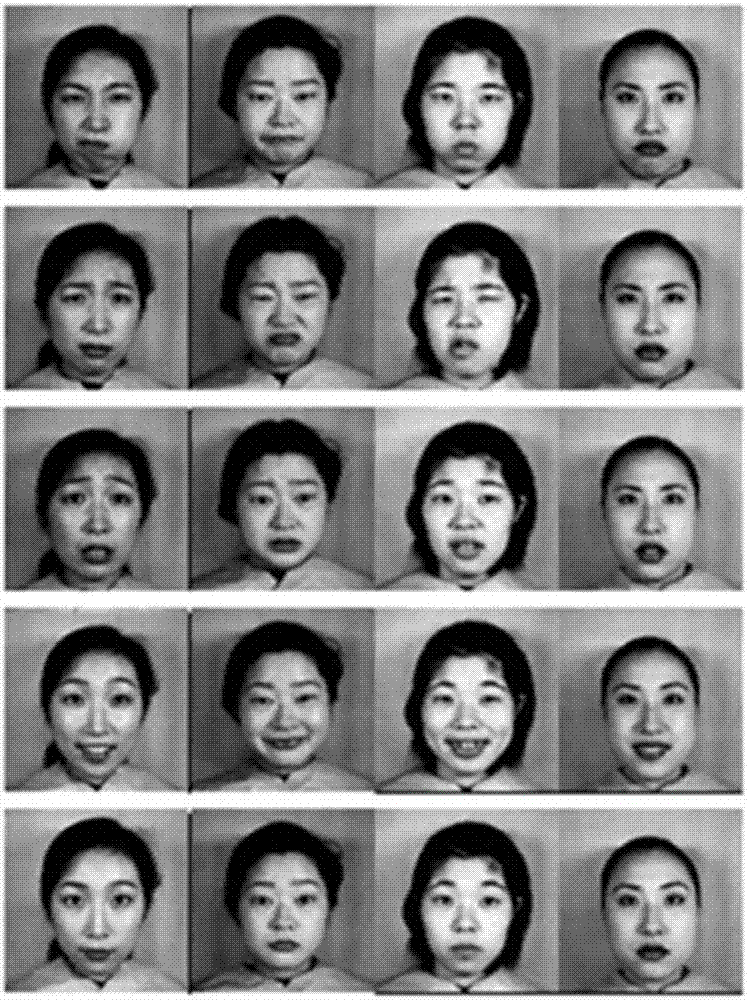

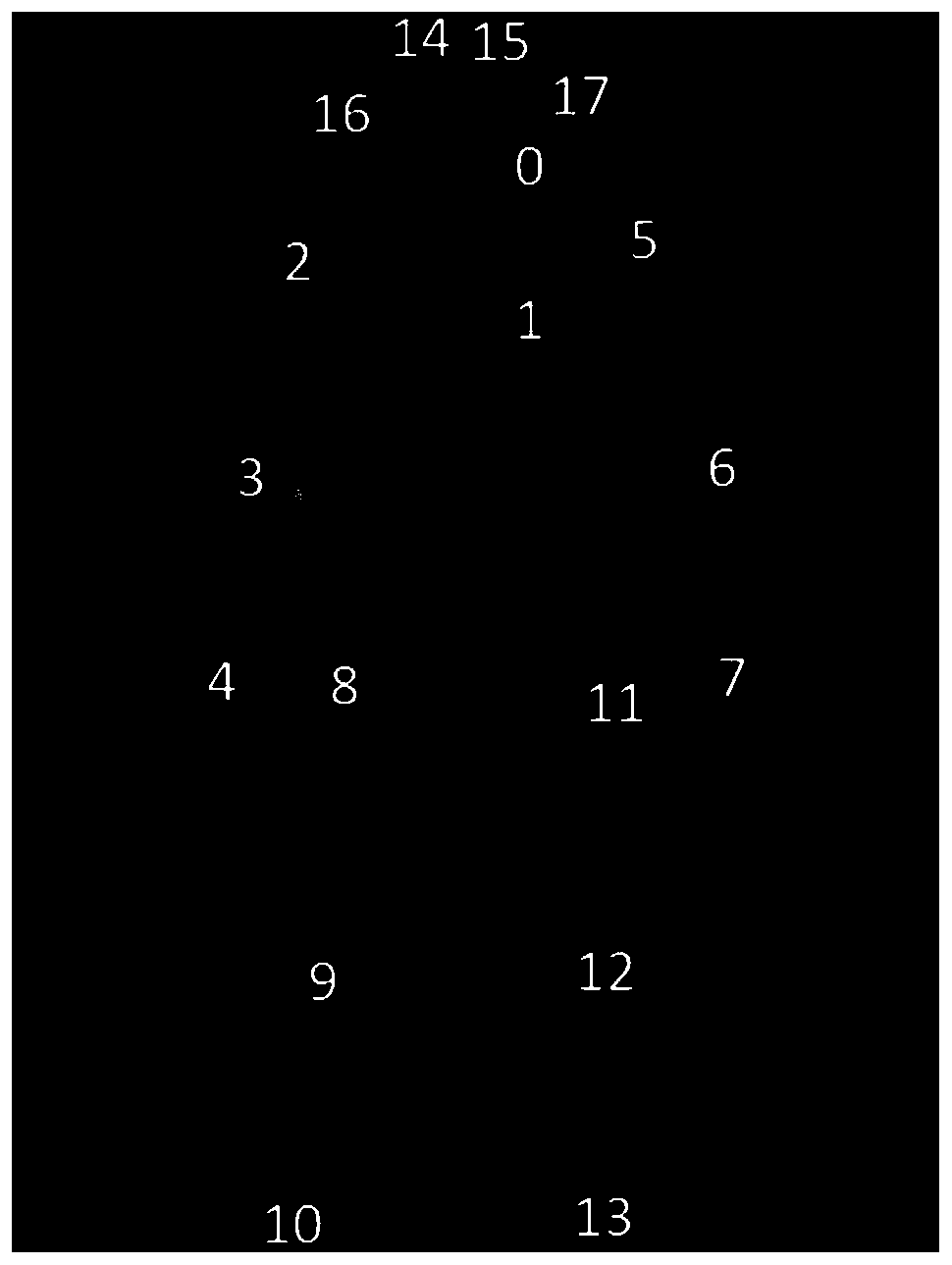

The invention discloses a human face emotion recognition method for mobile embedded devices in complex environments. In the method, a human face is divided into main areas (forehead, eyebrows and eyes, cheeks, nose, mouth and chin), which are further described by 68 feature points. To improve the recognition rate, accuracy and reliability of face emotion recognition across environments, a different technique is used for each condition: a human face and expression feature classification method under normal conditions; a Faster R-CNN face-region convolutional neural network method under difficult lighting, reflection and shadow; a combination of a Bayesian network, a Markov chain and variational inference under motion, jitter, shaking and movement; and a combination of a deep convolutional neural network, a super-resolution generative adversarial network (SRGAN), reinforcement learning, the backpropagation algorithm and dropout when the face is only partly visible, several faces are present, or the background is noisy. The recognition effect, accuracy and reliability of facial expression recognition can thus be improved effectively.

Owner:深圳帕罗人工智能科技有限公司

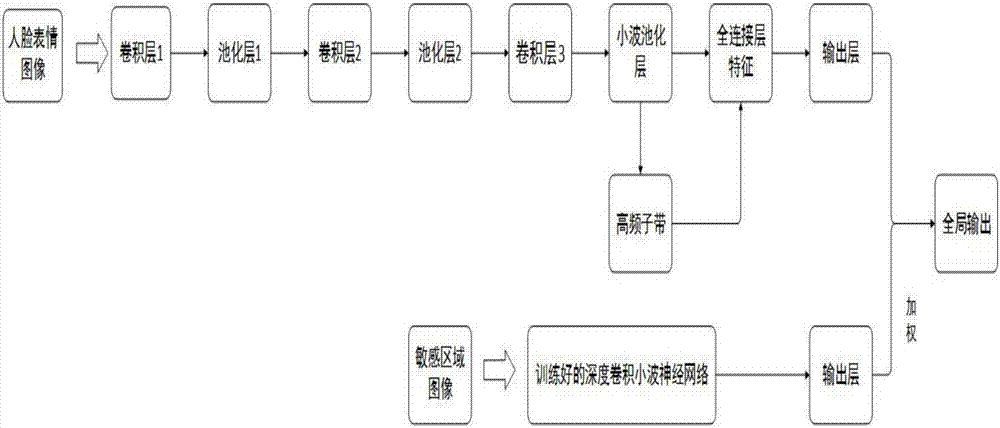

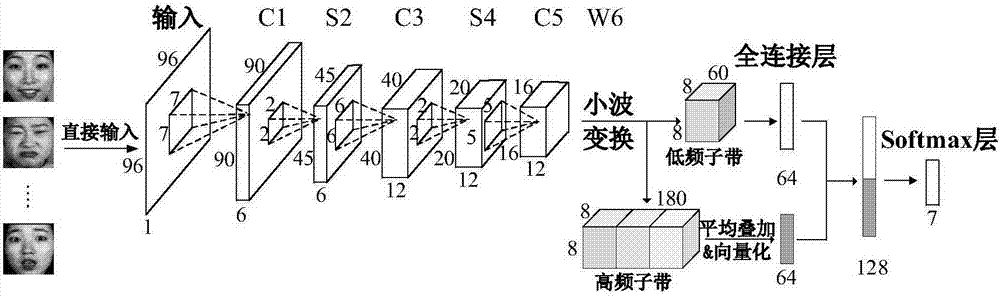

Depth convolution wavelet neural network expression identification method based on auxiliary task

Active | CN107292256A | Improve generalization ability | Efficient complete feature transfer | Neural architectures | Acquiring/recognising facial features | Feature selection | Expression Feature

The invention discloses a depth convolution wavelet neural network expression identification method based on auxiliary tasks. It addresses the problems that existing feature selection operators cannot learn expression features efficiently and cannot extract enough classification features from the expression information in an image. The method comprises: establishing a depth convolution wavelet neural network; establishing a face expression image set and a corresponding expression-sensitive-area image set; inputting a face expression image to the network; training the depth convolution wavelet neural network; backpropagating the network errors; updating each convolution kernel and bias vector of the network; inputting an expression-sensitive-area image to the trained network; learning the weighting proportion of the auxiliary task; obtaining the global classification labels of the network; and computing the identification accuracy from the global labels. The method takes into account both the abstract and the detailed information of expression images, strengthens the influence of the expression-sensitive area on expression feature learning, clearly improves the accuracy of expression identification, and can be applied to expression identification of face expression images.

Owner:XIDIAN UNIV

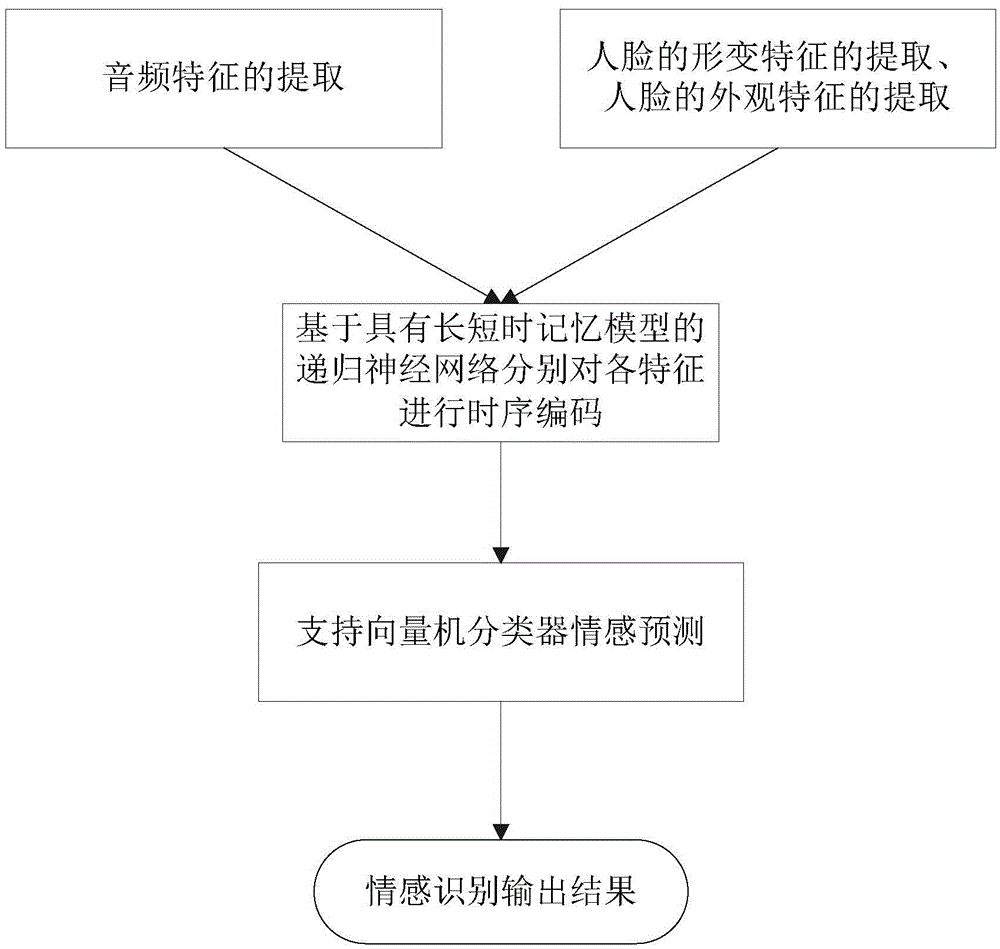

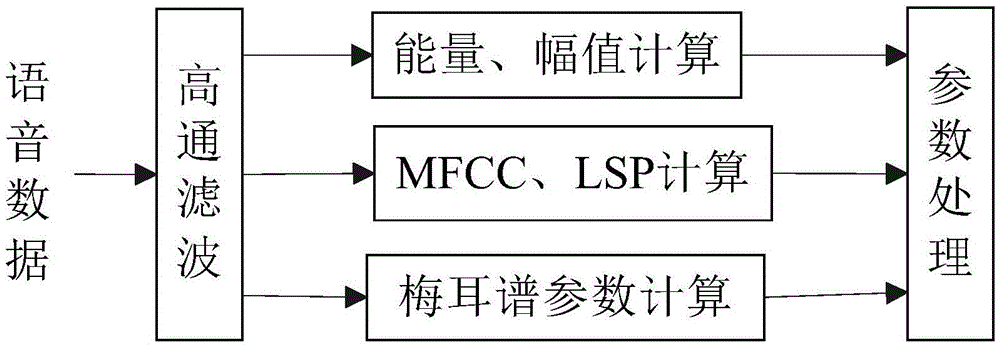

Recurrent neural network-based discrete emotion recognition method

Active | CN105469065A | Modeling implementation | Realize precise identification | Character and pattern recognition | Discrete emotions | Support vector machine classifier

The invention provides a recurrent neural network-based discrete emotion recognition method. The method comprises the following steps: 1, carrying out face detecting and tracking on image signals in a video, extracting key points of faces to serve as deformation features of the faces after obtaining the face regions, clipping the face regions and normalizing to a uniform size and extracting the appearance features of the faces; 2, windowing audio signals in the video, segmenting audio sequence units out and extracting audio features; 3, respectively carrying out sequential coding on the three features obtained by utilizing a recurrent neural network with long short-term memory models to obtain emotion representation vectors with fixed lengths, connecting the vectors in series and obtaining final emotion expression features; and 4, carrying out emotion category prediction by utilizing the final emotion expression features obtained in the step 3 on the basis of a support vector machine classifier. According to the method, dynamic information in the emotion expressing process can be fully utilized, so that the precise recognition of emotions of participators in the video is realized.

Owner:北京中科欧科科技有限公司
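
Steps 2 and 3 of the recurrent-network entry above (windowing the audio signal, then connecting the encoded vectors in series) can be illustrated with a minimal sketch. It assumes fixed-size overlapping windows and plain list concatenation; `split_windows`, `fuse_features`, and the window and hop sizes are hypothetical names and values, and the LSTM encoders are replaced here by precomputed vectors.

```python
def split_windows(signal, window_size, hop_size):
    """Cut a 1-D signal into overlapping windows (audio sequence units)."""
    windows = []
    # Stop early enough that every window has exactly window_size samples.
    for start in range(0, len(signal) - window_size + 1, hop_size):
        windows.append(signal[start:start + window_size])
    return windows


def fuse_features(shape_vec, appearance_vec, audio_vec):
    """Connect the three fixed-length vectors in series (concatenation)."""
    return list(shape_vec) + list(appearance_vec) + list(audio_vec)


# Toy usage: a 10-sample "audio" signal and three tiny stand-in vectors.
units = split_windows(list(range(10)), window_size=4, hop_size=2)
fused = fuse_features([0.1, 0.2], [0.3], [0.4, 0.5])
print(len(units), len(fused))
```

In the described method the fused vector would then be passed to a support vector machine classifier; that stage is omitted here.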

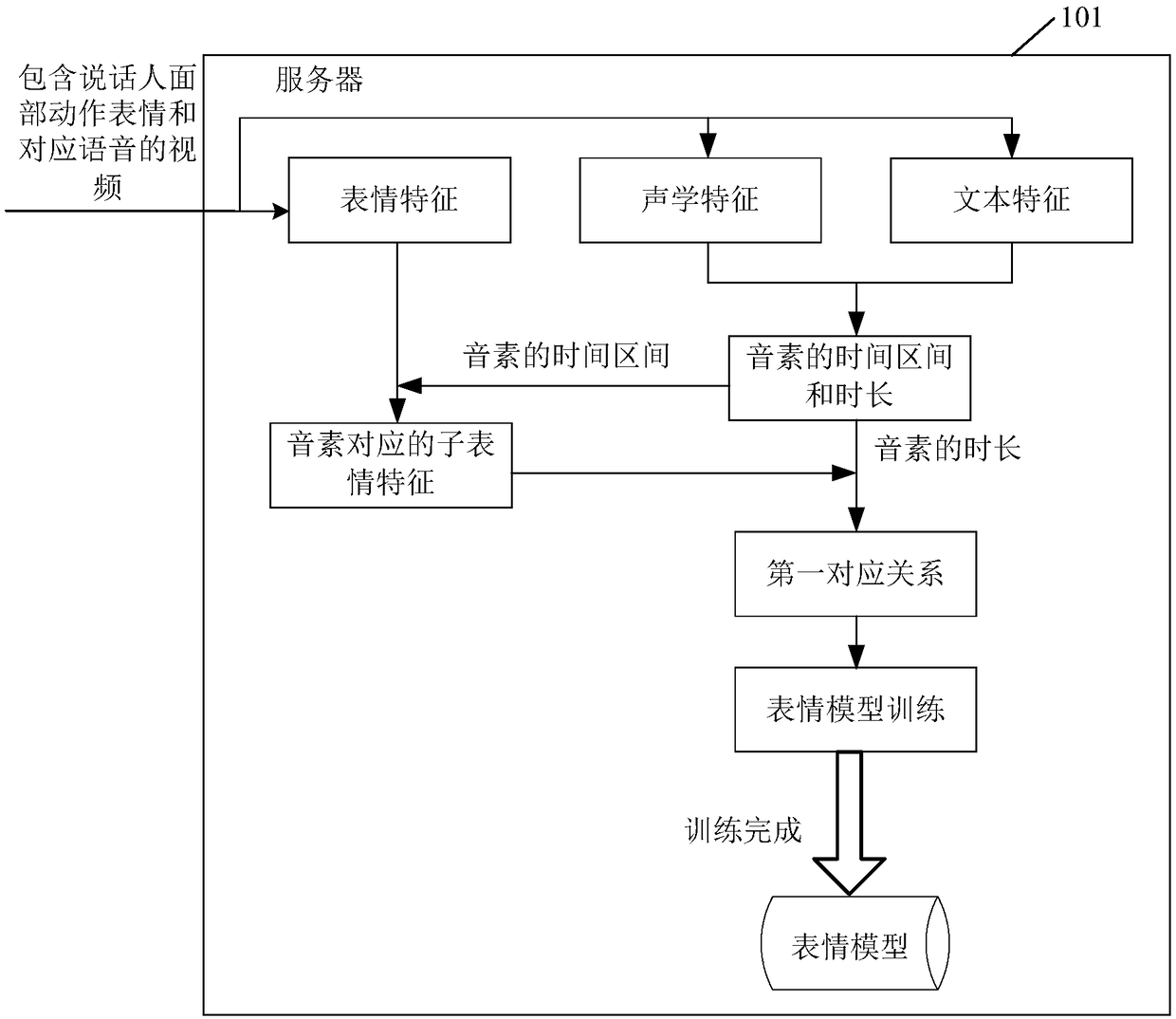

Model training method, method for synthesizing speaking expression and related device

The embodiment of the invention discloses a model training method for synthesizing speaking expressions. Expression characteristics, acoustic characteristics and text characteristics are obtained from videos containing the face action expressions of speakers and the corresponding voices. Because the acoustic feature and the text feature are obtained from the same video, the time interval and the duration of each pronunciation element identified by the text feature can be determined from the acoustic feature. A first corresponding relation is determined from the time interval and duration of the pronunciation elements identified by the text feature and from the expression feature, and an expression model is trained according to this first corresponding relation. The expression model can determine different sub-expression characteristics for the same pronunciation element with different durations in the text characteristics. This adds variety to the change patterns of the speaking expressions, and the speaking expressions are generated according to the target expression characteristics determined by the expression model. Because the speaking expressions have different change patterns for the same pronunciation element, the problem of speaking expressions changing in an excessively unnatural way is improved to a certain degree.

Owner:TENCENT TECH (SHENZHEN) CO LTD

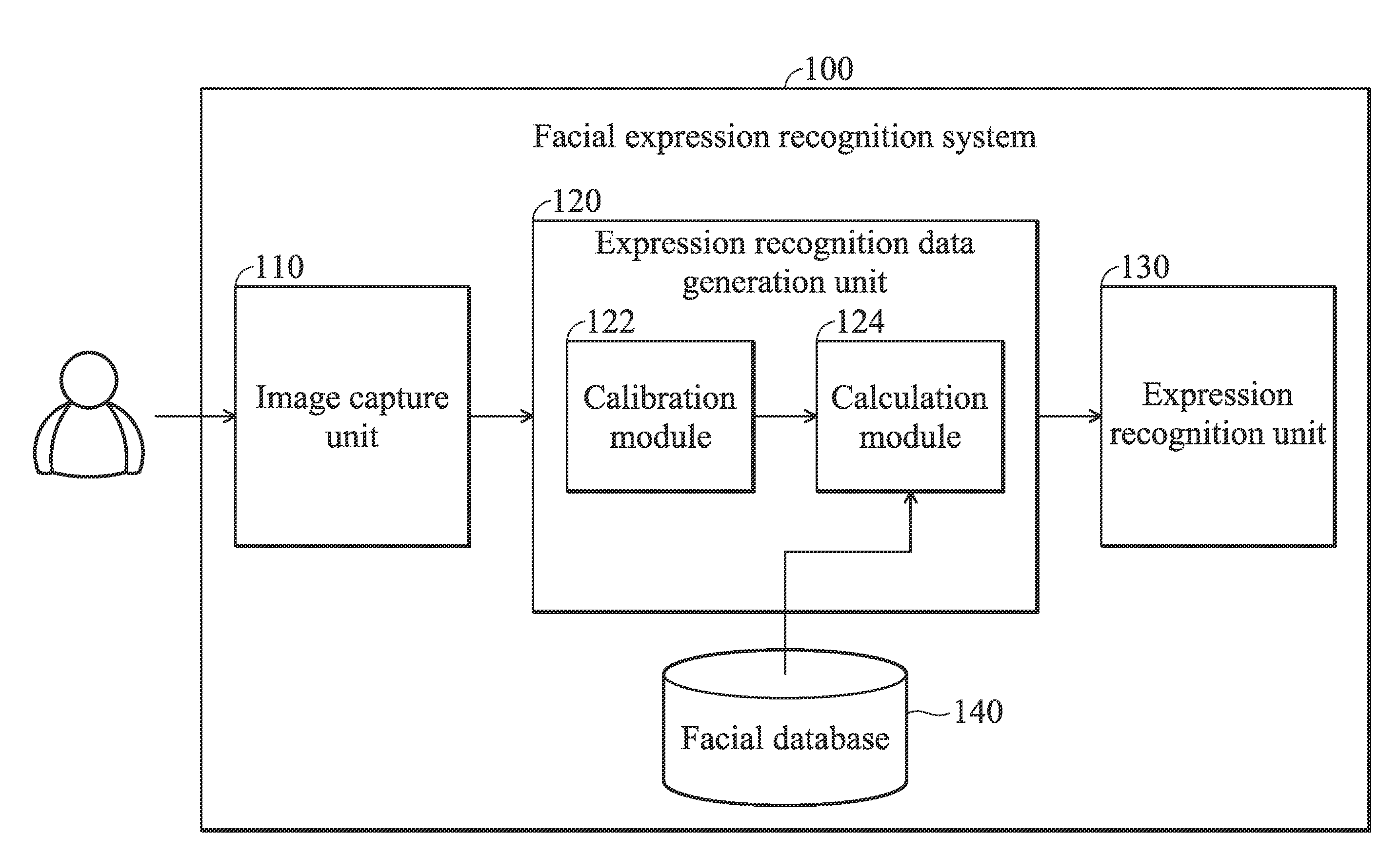

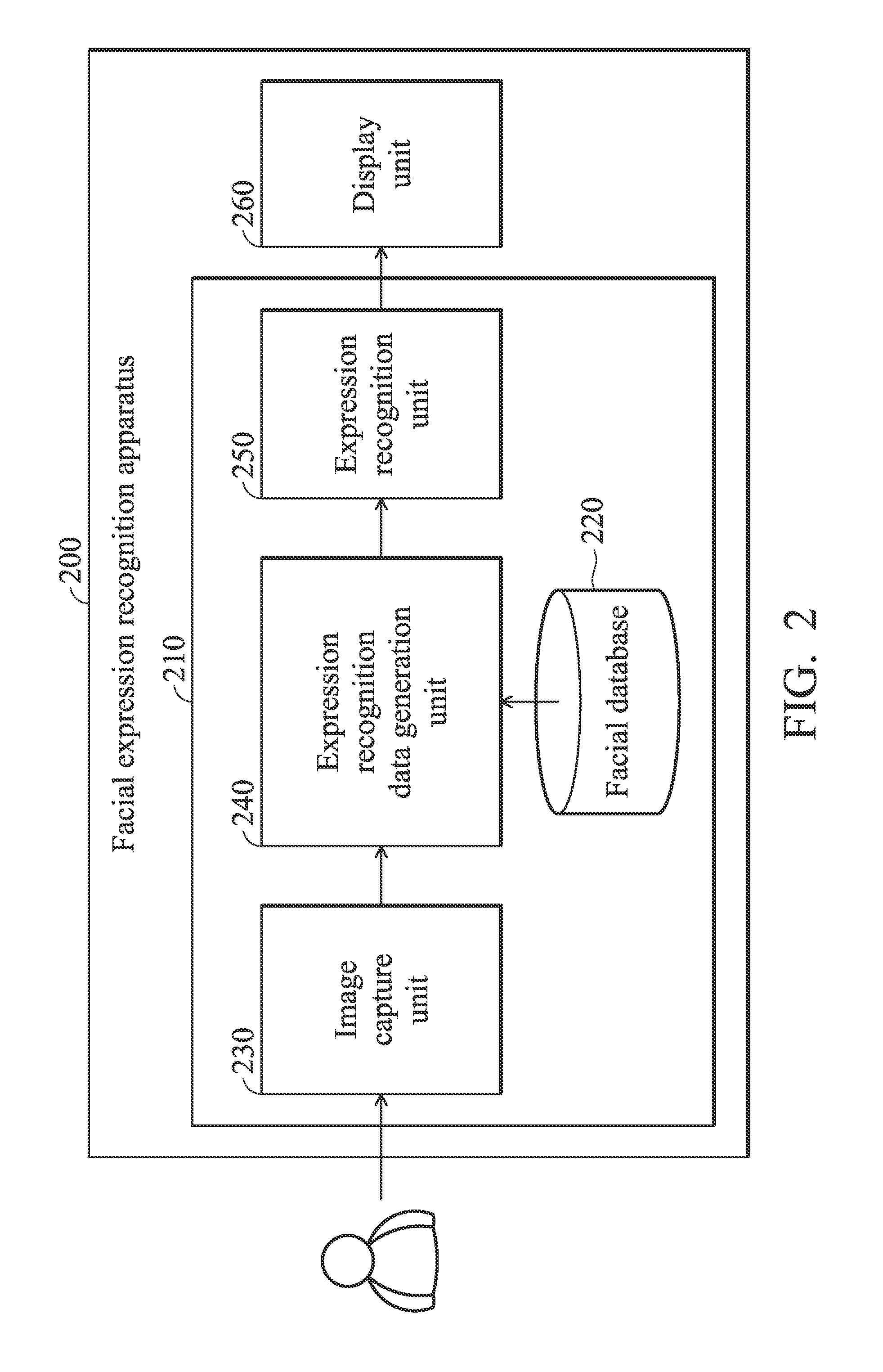

Facial Expression Recognition Systems and Methods and Computer Program Products Thereof

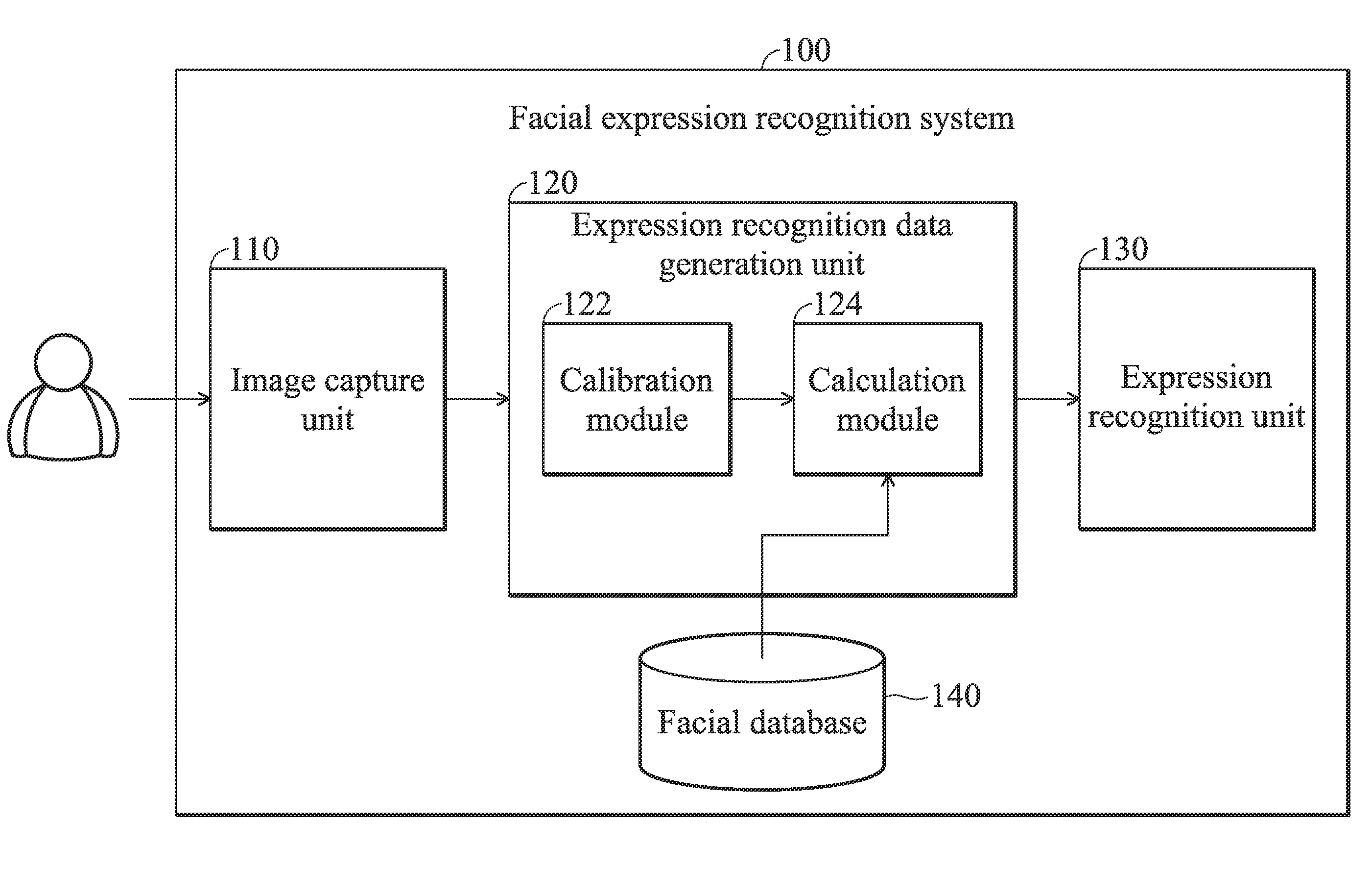

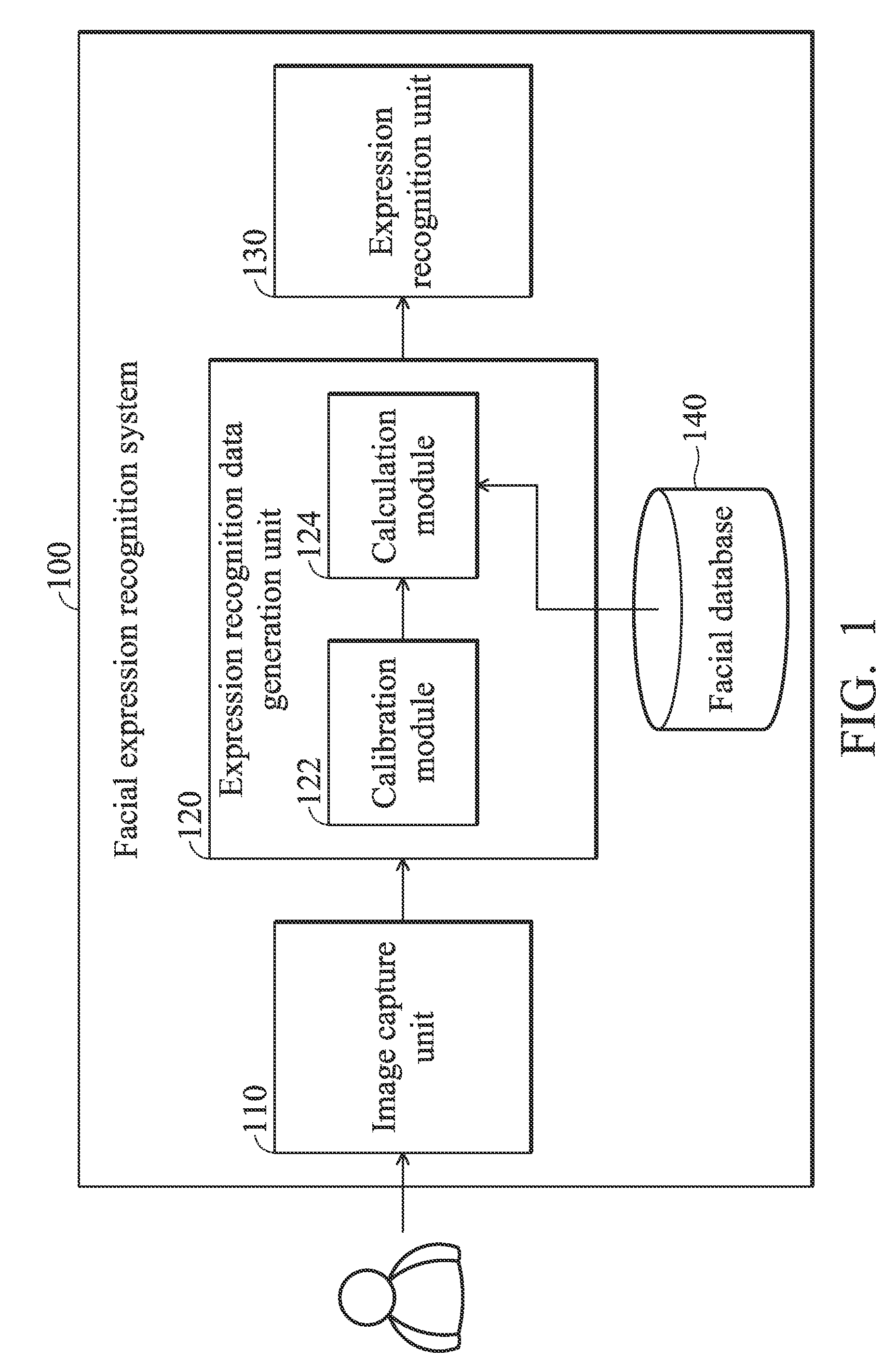

A facial expression recognition system includes a facial database, an image capture unit, an expression recognition data generation unit and an expression recognition unit. The facial database includes a plurality of expression information and expression features of optical flow field, wherein each of the expression features of optical flow field corresponds to one of the expression information. The image capture unit captures a plurality of facial images. The expression recognition data generation unit is coupled to the image capture unit and the facial database for receiving a first facial image and a second facial image from the image capture unit and calculating an expression feature of optical flow field between the first facial image and the second facial image corresponding to each of the expression information. The expression recognition unit is coupled to the expression recognition data generation unit for determining a facial expression corresponding to the first and second facial images according to the calculated expression feature of optical flow field for each of the expression information and the variation features in optical flow in the facial database.

Owner:INSTITUTE FOR INFORMATION INDUSTRY

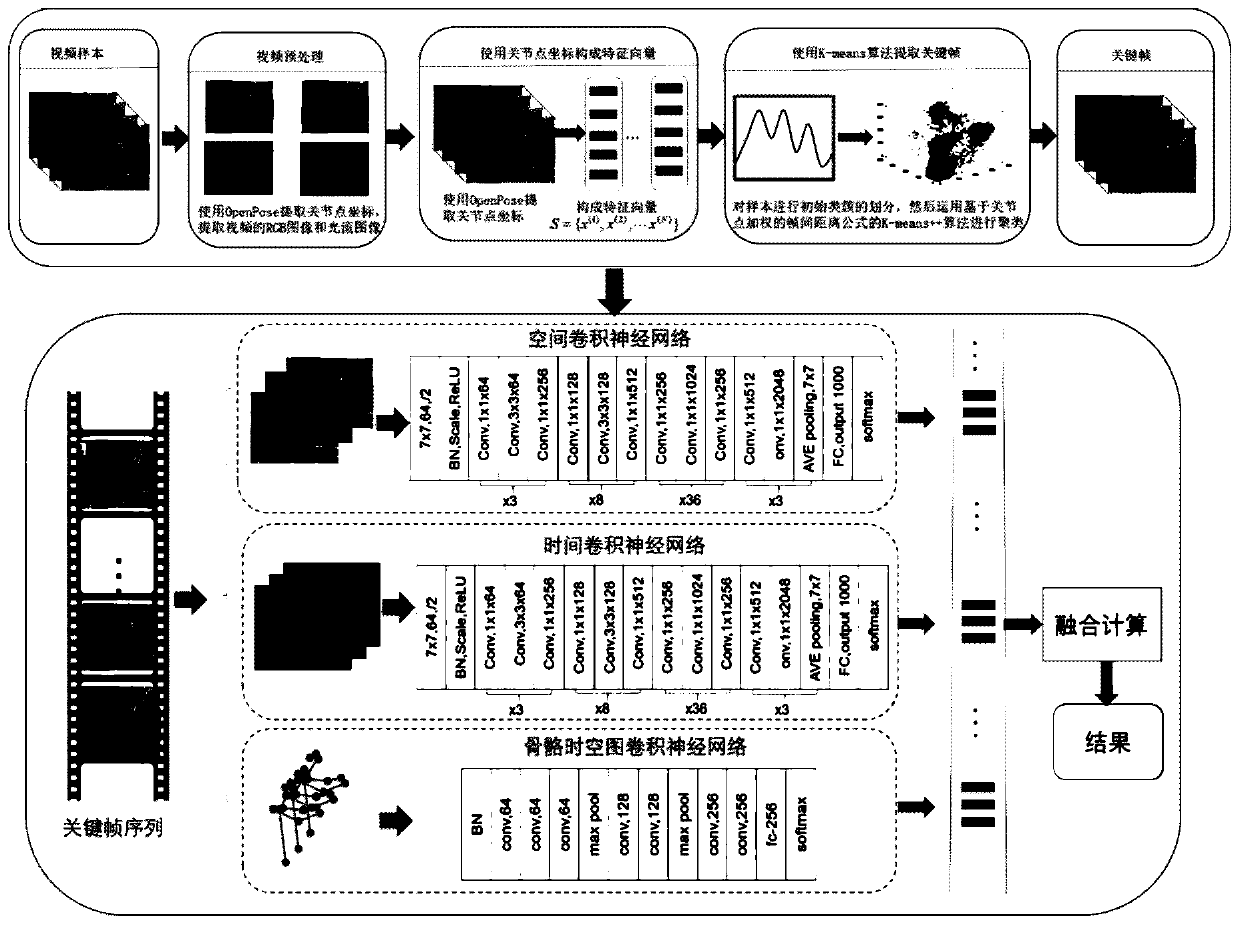

Multi-feature fusion behavior identification method based on key frame

Active | CN110096950A | Refine nuances | Improve accuracy | Character and pattern recognition | Feature vector | Optical flow

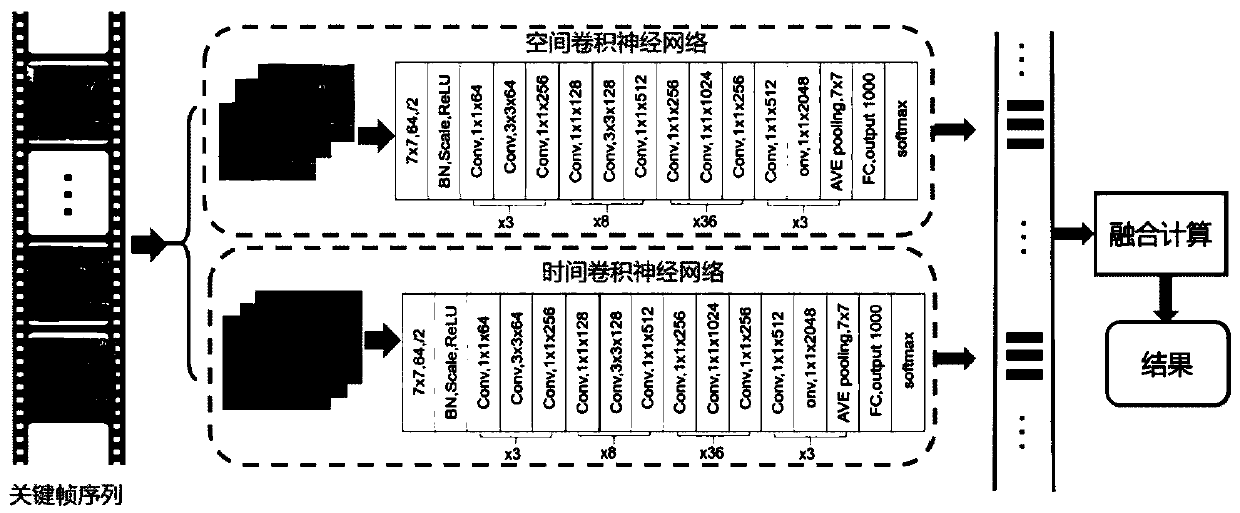

A multi-feature fusion behavior identification method based on key frames comprises the following steps. First, the joint point feature vector x(i) of the human body in each video frame is extracted with the OpenPose human pose extraction library to form a sequence S = {x(1), x(2), ..., x(N)}. Second, a K-means algorithm is used to obtain K final clustering centers c' = {c'_i | i = 1, 2, ..., K}, and the frame closest to each clustering center is extracted as a key frame of the video, giving a key frame sequence F = {F_i | i = 1, 2, ..., K}. Third, the RGB information, optical flow information and skeleton information of the key frames are obtained and processed; the processed RGB and optical flow information is input into a two-stream convolutional network model to obtain higher-level feature expressions, and the skeleton information is input into a spatio-temporal graph convolutional network model to construct the spatio-temporal graph expression features of the skeleton. Finally, the softmax outputs of the networks are fused to obtain the final identification result. This process avoids the time consumption and accuracy loss caused by redundant frames and makes better use of the information in the video to express behaviors, so that recognition accuracy is further improved.

Owner:NORTHWEST UNIV(CN)
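
The key-frame selection step of the entry above (cluster the per-frame joint vectors with K-means, then keep the frame nearest each center) can be sketched as follows. This is a minimal illustration, not the patented implementation: the evenly spaced initial centers, the fixed iteration count, and the function names `kmeans` and `select_key_frames` are assumptions, and the toy 2-D vectors stand in for real pose features.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=20):
    """Plain Lloyd's k-means; initial centers are evenly spaced points (assumption)."""
    step = len(points) / k
    centers = [list(points[int(i * step)]) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: squared_distance(p, centers[j]))
            clusters[nearest].append(p)
        for j, members in enumerate(clusters):
            if members:  # keep the old center if a cluster becomes empty
                centers[j] = [sum(dim) / len(members) for dim in zip(*members)]
    return centers


def select_key_frames(features, k):
    """Return sorted indices of the frames closest to each of the k centers."""
    centers = kmeans(features, k)
    chosen = {
        min(range(len(features)), key=lambda i: squared_distance(features[i], c))
        for c in centers
    }
    return sorted(chosen)


# Toy usage: six frames forming two obvious groups of poses.
frames = [[0.0, 0.0], [0.1, 0.0], [0.2, 0.1], [5.0, 5.0], [5.1, 5.0], [5.2, 5.1]]
print(select_key_frames(frames, k=2))
```

Using a set for the chosen indices means two centers that pick the same frame yield a single key frame, so fewer than K frames can be returned in degenerate cases.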

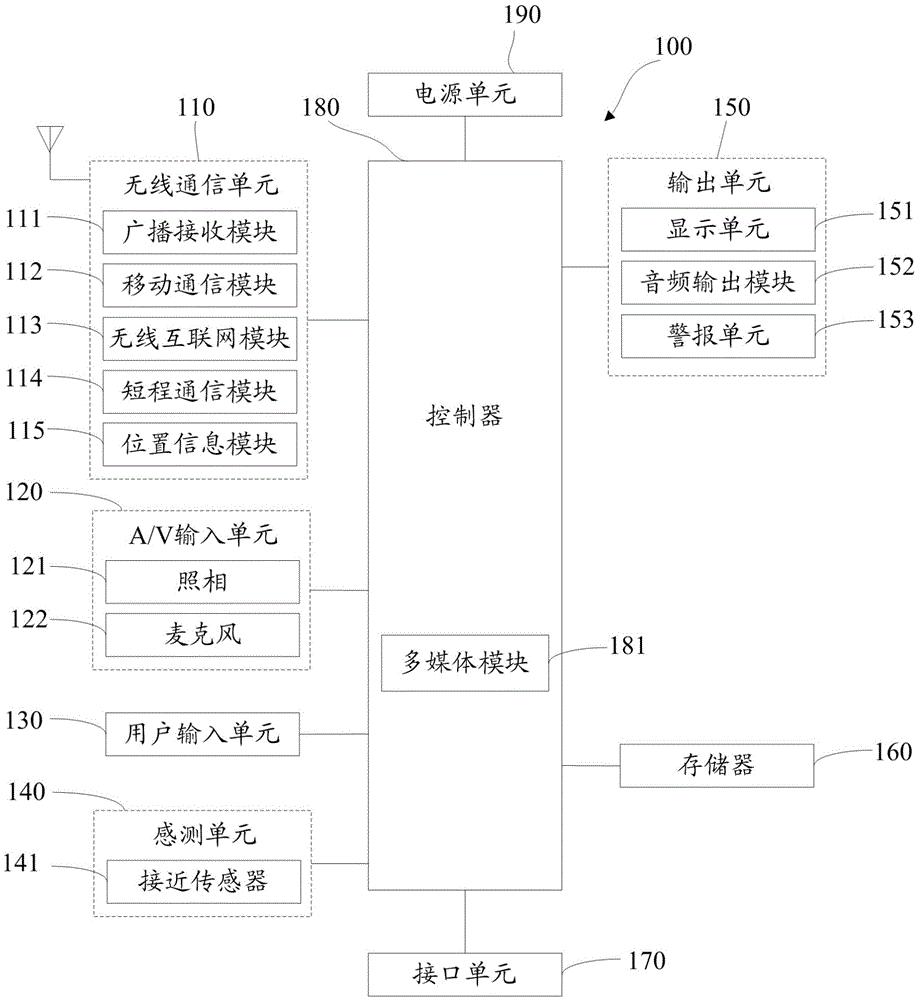

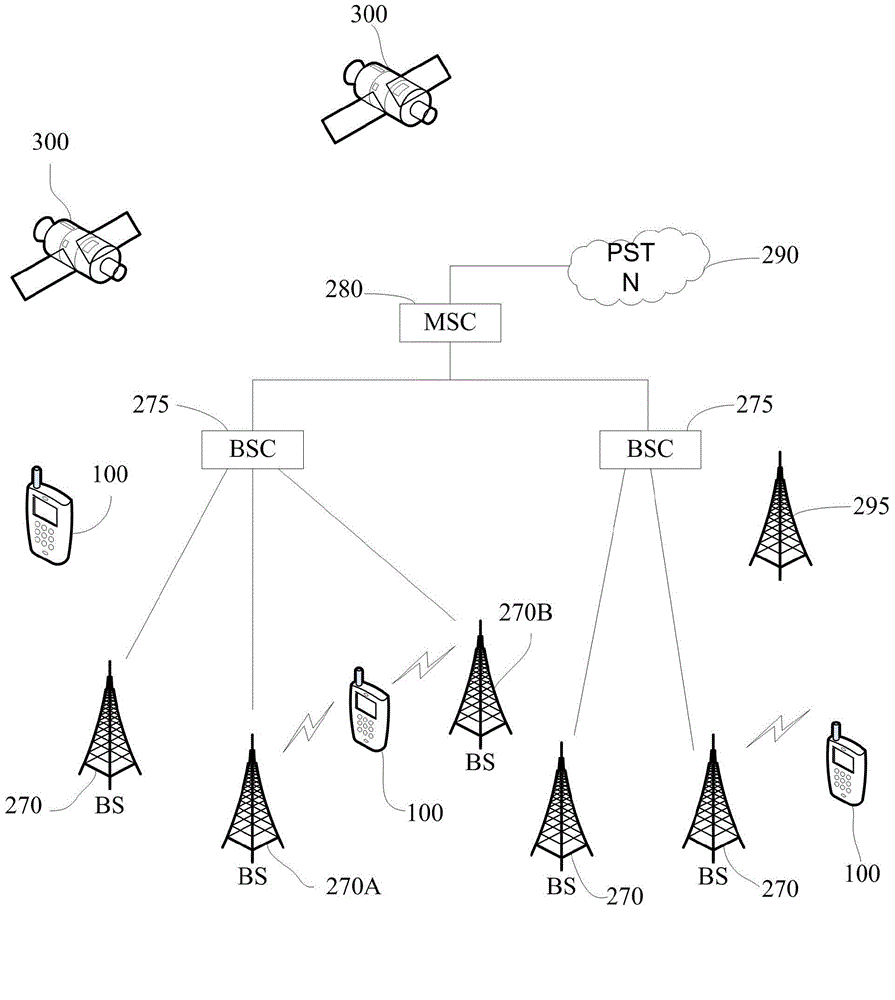

Authentication and authorization method, processor, equipment and mobile terminal

Inactive | CN102509053A | Increase interaction | Improve security | Character and pattern recognition | Digital data authentication | Password | Expression templates

An authentication and authorization method comprises the following steps: acquiring images, identifying the human face information of images, extracting the expression features of the human face information, matching the expression features with expression templates; and confirming the matched authorized information. According to the invention, based on the image identification, some kind of image features, such as human expression, serve as authentication information / password, so not only is the communication manner between the user and the equipment increased, but also new user experience is brought and the safety of the equipment is improved. The invention also discloses a processor, equipment and a mobile terminal.

Owner:唐辉 +3
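
The matching step of the authentication entry above (compare extracted expression features with stored expression templates, then confirm the matched authorization) might look like the sketch below. Cosine similarity, the 0.9 threshold, the template names, and the function `authorize` are all assumptions made for illustration; the source does not say which similarity measure is used.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


def authorize(features, templates, threshold=0.9):
    """Return the name of the best-matching template, or None if no match is close enough."""
    best_name, best_score = None, -1.0
    for name, template in templates.items():
        score = cosine_similarity(features, template)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Hypothetical templates: each expression is tied to one piece of authorized information.
templates = {"smile": [1.0, 0.0, 0.2], "wink": [0.0, 1.0, 0.1]}
print(authorize([0.9, 0.1, 0.2], templates))
print(authorize([0.5, 0.5, 0.0], templates))
```

Returning `None` below the threshold keeps an ambiguous expression from unlocking anything, which is the conservative choice for an authentication path.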

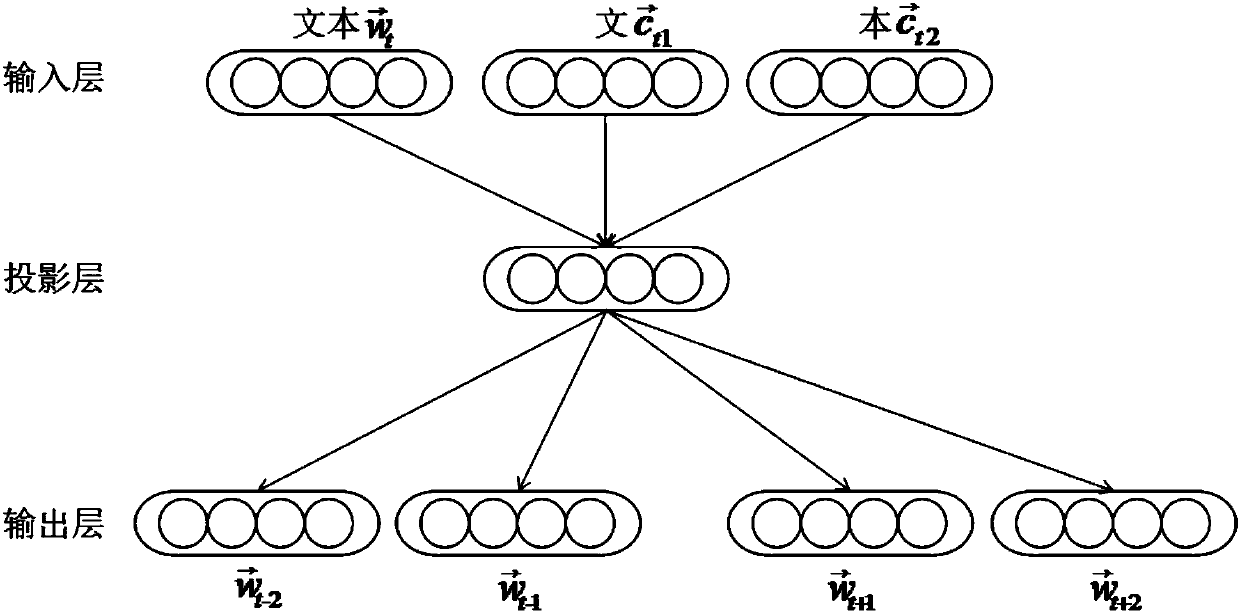

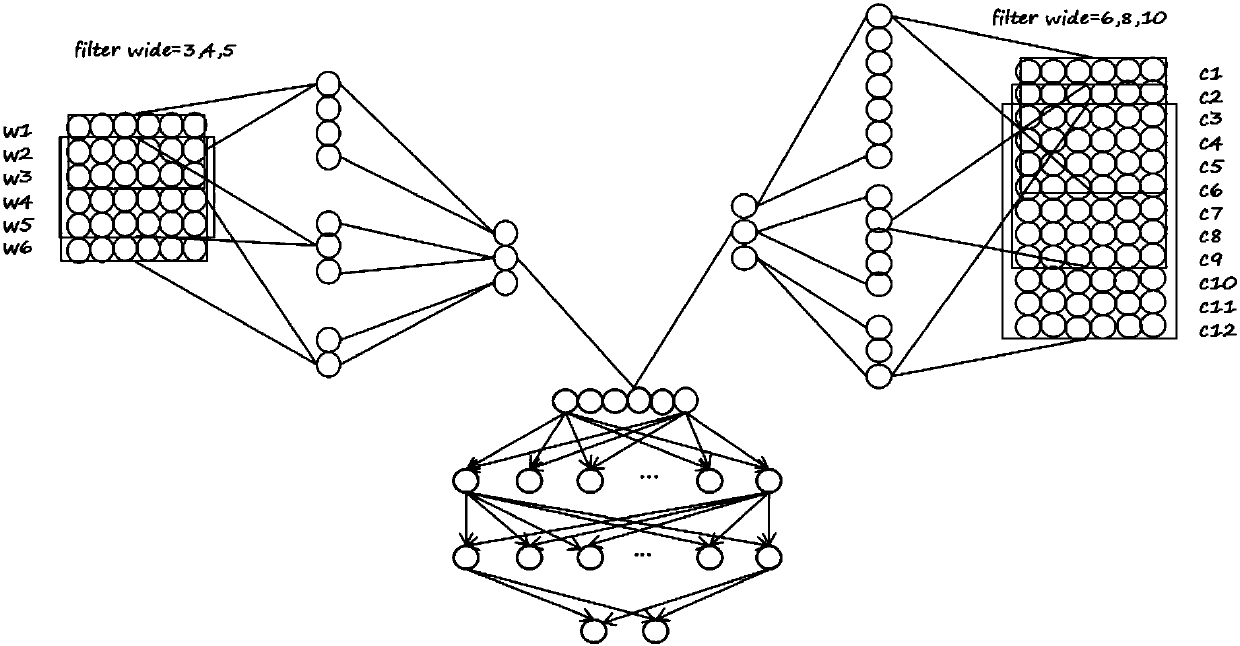

Text classification method based on feature information of characters and terms

Inactive | CN107656990A | Rich semantic information | Little useful information | Special data processing applications | Nerve network | Classification methods

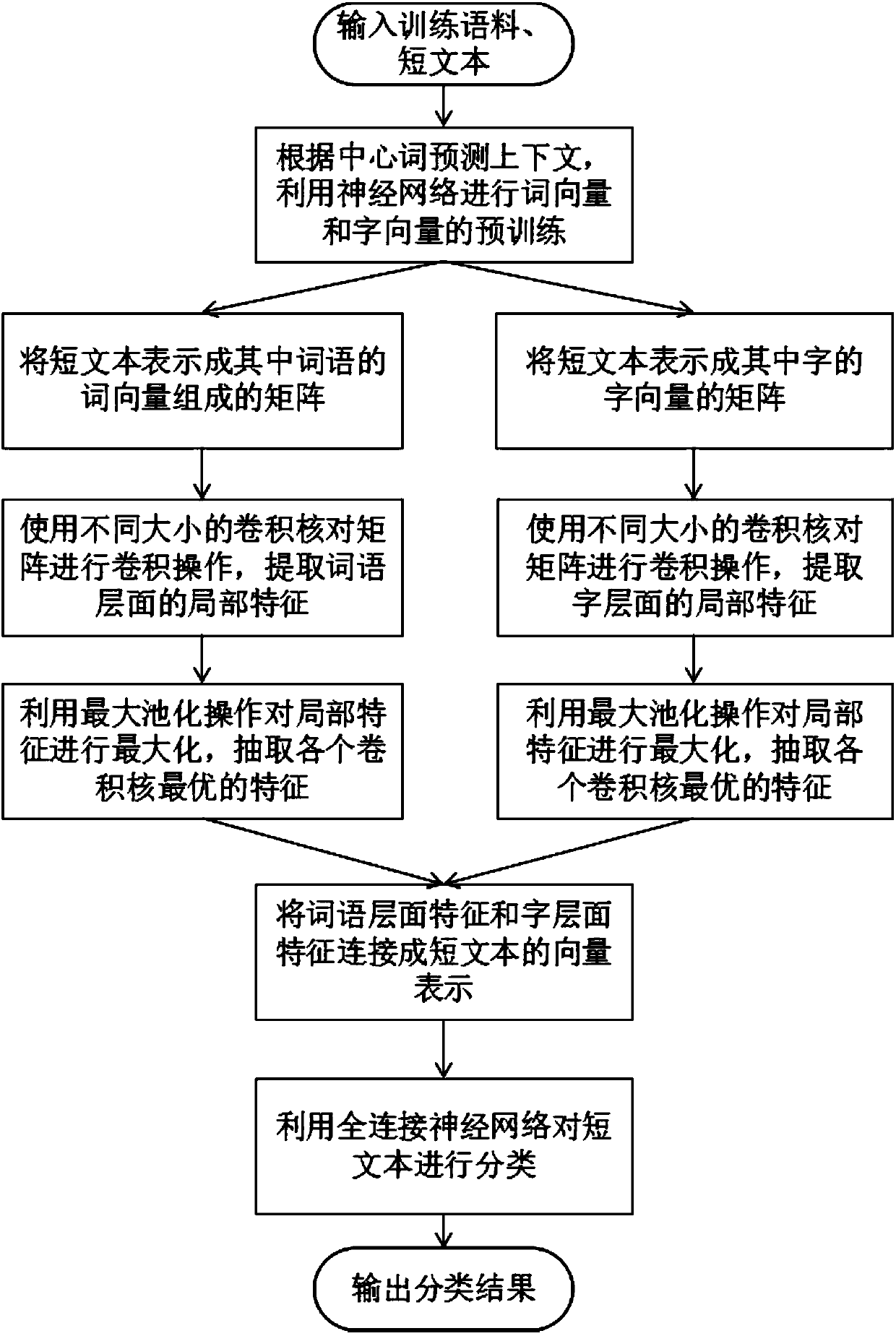

The invention discloses a text classification method based on feature information of characters and terms. The method comprises the following steps: a neural network model is used to jointly pre-train character and term vectors, yielding an initial term vector expression of the terms and an initial character vector expression of the Chinese characters; a short text is expressed as a matrix composed of the term vectors of all terms in the short text, and a convolutional neural network performs feature extraction to obtain term layer features; the short text is expressed as a matrix composed of the character vectors of all Chinese characters in the short text, and the convolutional neural network performs feature extraction to obtain Chinese character layer features; the term layer features and the Chinese character layer features are connected to obtain a feature vector expression of the short text; and a fully connected layer classifies the short text, with model training by stochastic gradient descent, to obtain a classification model. Through the method, both character expression features and term expression features can be extracted, the problem that a short text carries insufficient semantic information is alleviated, the semantic information of the short text is fully mined, and classification of the short text is more accurate.

Owner:SUN YAT SEN UNIV

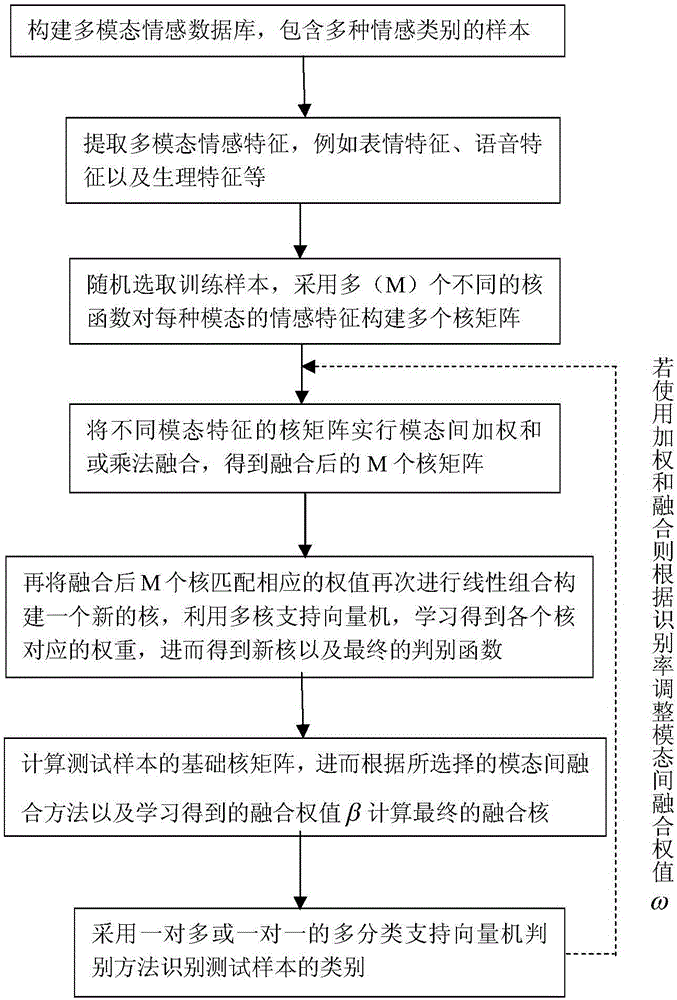

Multi-kernel-learning-based multi-mode emotion identification method

Active | CN106250855A | Easy to identify | Good effect | Character and pattern recognition | Learning based | Matrix group

The invention discloses a multi-kernel-learning-based multi-mode emotion identification method. According to the method, emotion features such as an expression feature, a voice feature and a physiological feature are extracted from the sample data of each modality in a multi-mode emotion database; several different kernel matrices are constructed for each modality; the kernel matrix groups corresponding to the different modalities are fused to obtain a fused multi-mode emotion feature; and a multi-kernel support vector machine is used as the classifier for training and identification. Basic emotions such as anger, disgust, fear, happiness, sadness and surprise can therefore be identified effectively.

Owner:NANJING UNIV OF POSTS & TELECOMM
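
The kernel construction and fusion described in the multi-kernel entry above can be illustrated with a small sketch. It assumes two kernel types (linear and RBF) and a fixed weighted sum as the fusion rule; the source names neither, so the kernels, the weights, and the function names are hypothetical. In practice the weights would be learned by the multi-kernel SVM.

```python
import math


def linear_kernel(a, b):
    """Inner product of two feature vectors."""
    return sum(x * y for x, y in zip(a, b))


def rbf_kernel(a, b, gamma=0.5):
    """Gaussian (RBF) kernel with width parameter gamma."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def kernel_matrix(samples, kernel):
    """n x n matrix of pairwise kernel values for one modality."""
    return [[kernel(a, b) for b in samples] for a in samples]


def fuse_kernels(matrices, weights):
    """Weighted sum of same-sized kernel matrices (assumed fusion rule)."""
    n = len(matrices[0])
    return [
        [sum(w * m[i][j] for w, m in zip(weights, matrices)) for j in range(n)]
        for i in range(n)
    ]


# Toy usage: two samples, one modality, two kernels with equal weights.
expression = [[1.0, 0.0], [0.0, 1.0]]
group = [kernel_matrix(expression, linear_kernel), kernel_matrix(expression, rbf_kernel)]
fused = fuse_kernels(group, weights=[0.5, 0.5])
print(fused)
```

A non-negative weighted sum of valid kernels is itself a valid kernel, which is why this form is a common starting point for multi-kernel learning.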

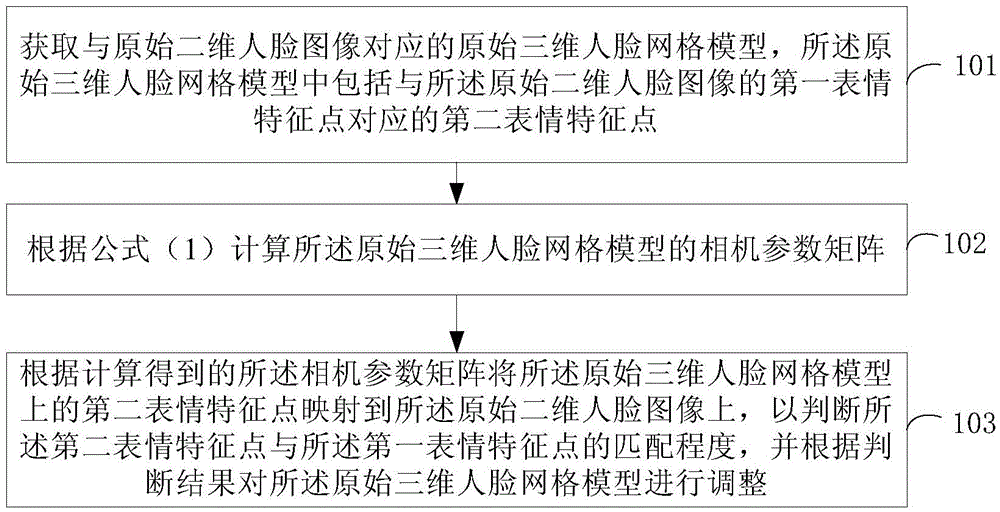

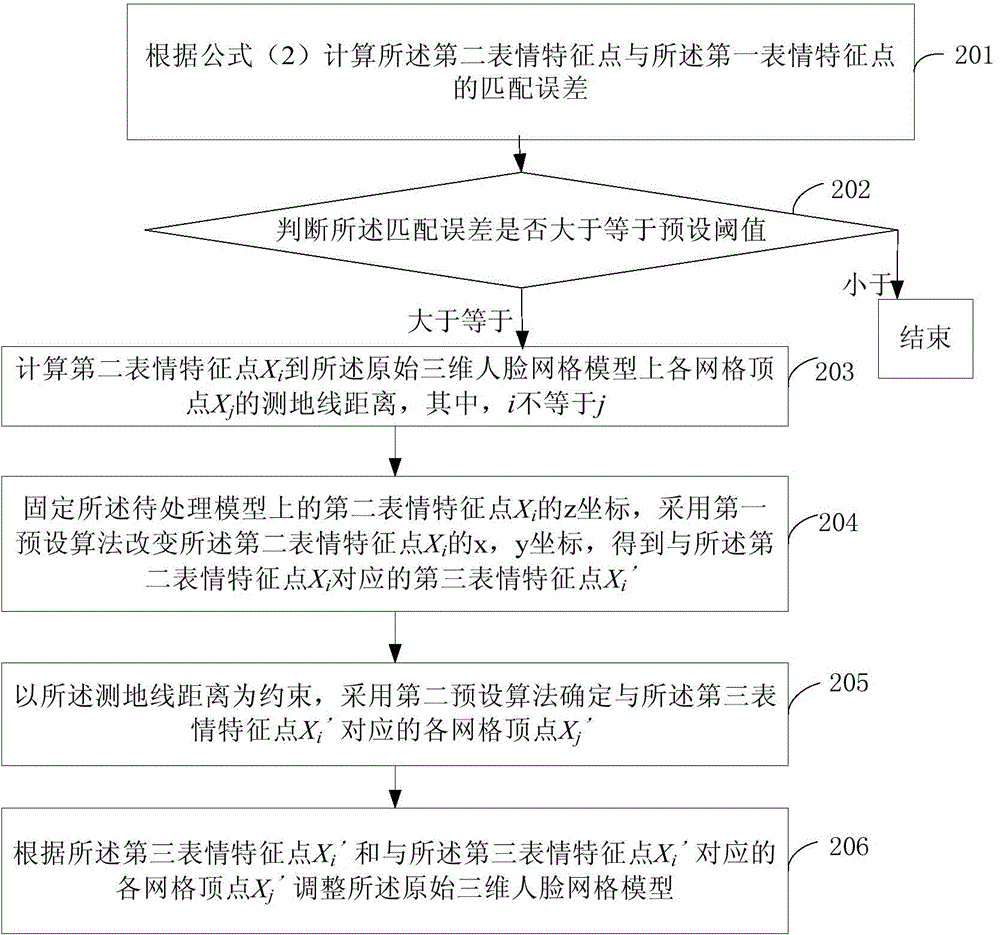

Three-dimensional face mesh model processing method and three-dimensional face mesh model processing equipment

The invention provides a three-dimensional face mesh model processing method and three-dimensional face mesh model processing equipment. The method comprises the following steps: obtaining an original three-dimensional face mesh model corresponding to an original two-dimensional face image, wherein the original three-dimensional face mesh model contains a second expression feature point corresponding to a first expression feature point of the original two-dimensional face image; calculating a camera parameter matrix of the original three-dimensional face mesh model according to a formula (1); and mapping the second expression feature point onto the original two-dimensional face image according to the camera parameter matrix to judge the matching degree between the second expression feature point and the first expression feature point, and adjusting the original three-dimensional mesh model according to the result. Because the matching degree between the original three-dimensional face mesh model and the original two-dimensional face image is judged with the camera parameters, and the model is adjusted when the matching degree is low, the adjusted three-dimensional face mesh model achieves a better match with the original two-dimensional face image.

Owner:广东思理智能科技股份有限公司
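
The mapping and matching step of the mesh-model entry above can be sketched once a camera parameter matrix is available. The sketch assumes a standard 3 x 4 projection matrix supplied by the caller (formula (1), which computes it, is not reproduced in the abstract and is not reconstructed here) and uses mean pixel distance as the matching measure; `project_point` and `matching_error` are hypothetical names.

```python
import math


def project_point(camera_matrix, point3d):
    """Project a 3-D point to 2-D with a 3 x 4 camera matrix (homogeneous coordinates)."""
    x, y, z = point3d
    homogeneous = (x, y, z, 1.0)
    u, v, w = (sum(m * c for m, c in zip(row, homogeneous)) for row in camera_matrix)
    return (u / w, v / w)


def matching_error(camera_matrix, points3d, points2d):
    """Mean distance between projected 3-D feature points and their 2-D counterparts."""
    total = 0.0
    for p3, p2 in zip(points3d, points2d):
        u, v = project_point(camera_matrix, p3)
        total += math.hypot(u - p2[0], v - p2[1])
    return total / len(points3d)


# Toy usage with a simple pinhole-style matrix (assumed, not taken from the patent).
camera = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
print(matching_error(camera, [(2.0, 4.0, 2.0)], [(1.0, 2.0)]))
```

A low error means the projected second feature points land on the first feature points; a caller would adjust the mesh when the error exceeds some chosen tolerance.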

Newborn painful expression recognition method based on deep neural network

Inactive | CN107392109A | Easy to identify | Improve robustness | Neural architectures | Acquiring/recognising facial features | Time domain | Short-term memory

The invention relates to a newborn painful expression recognition method based on a deep neural network. The method applies a deep learning approach based on a convolutional neural network (CNN) and a long short-term memory (LSTM) network to newborn painful expression recognition, so that expressions such as slight pain and acute pain, occurring when a newborn is silent, crying, or undergoing a pain-inducing operation, can be effectively recognized. The deep neural network extracts time domain features and spatial domain features from a video clip. This overcomes the bottleneck of traditional manual design and extraction of explicit expression features, and improves the recognition rate and robustness under complicated conditions such as face occlusion, tilted posture and changing illumination.

Owner:NANJING UNIV OF POSTS & TELECOMM

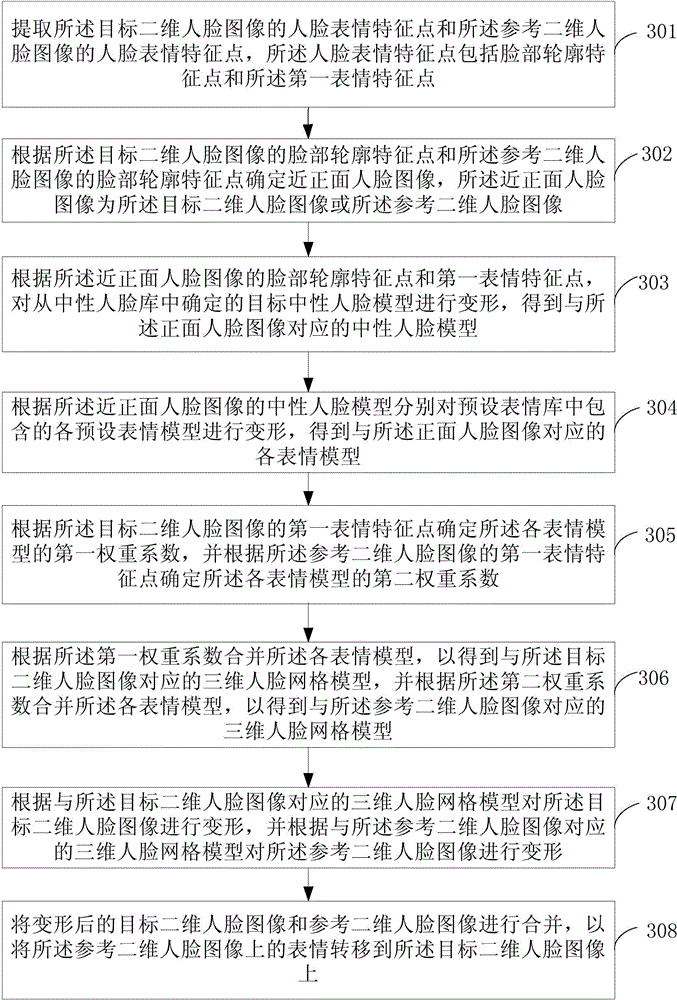

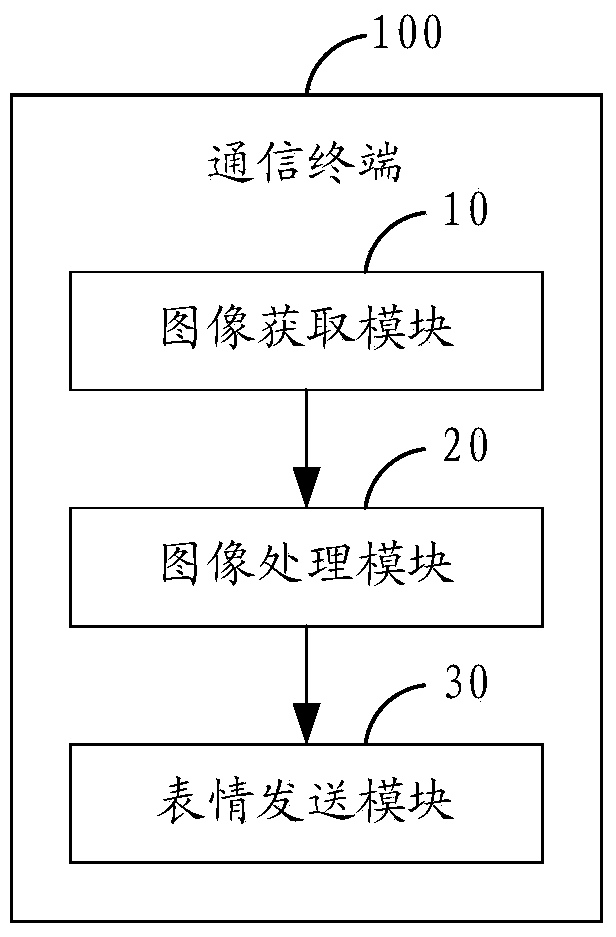

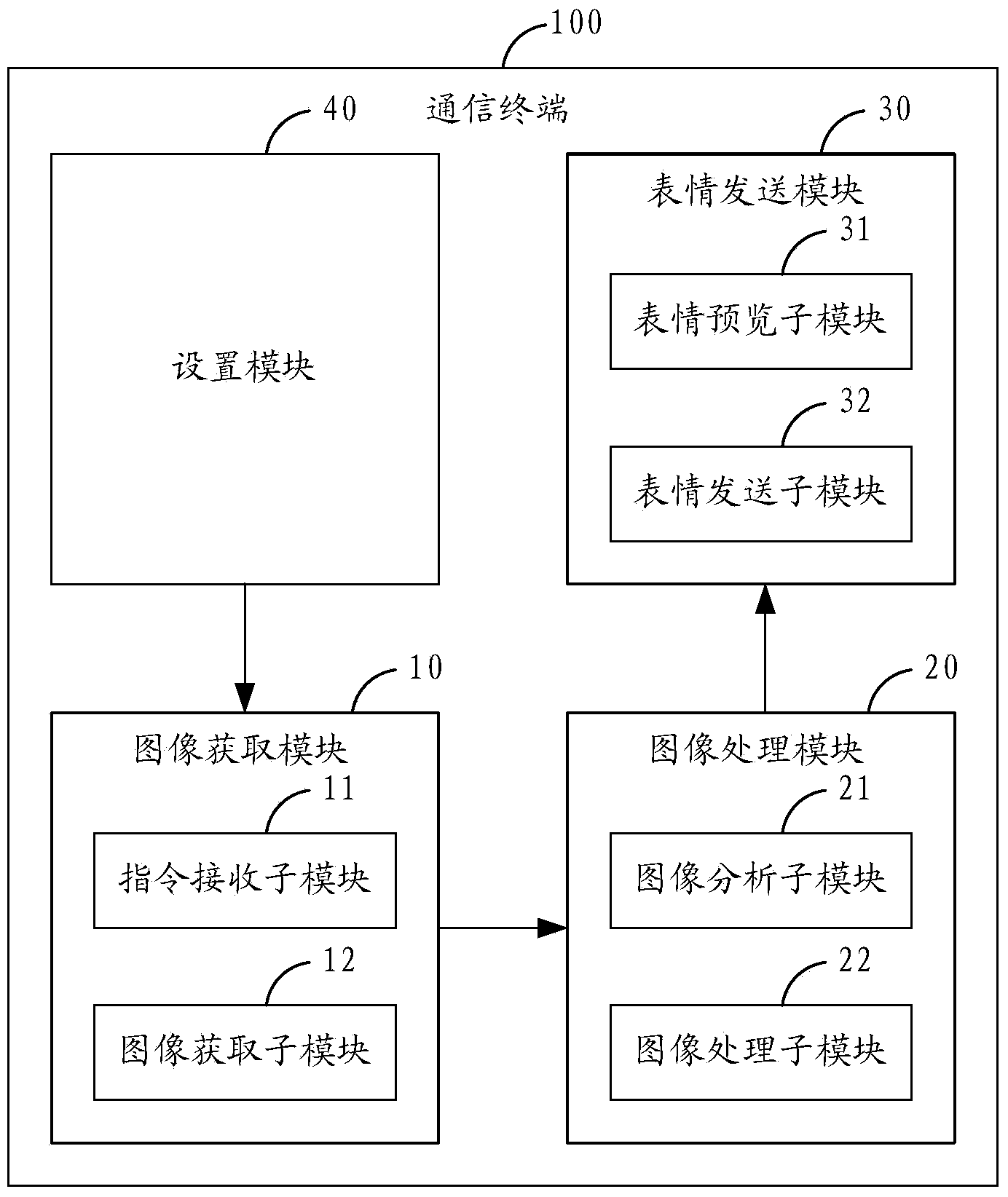

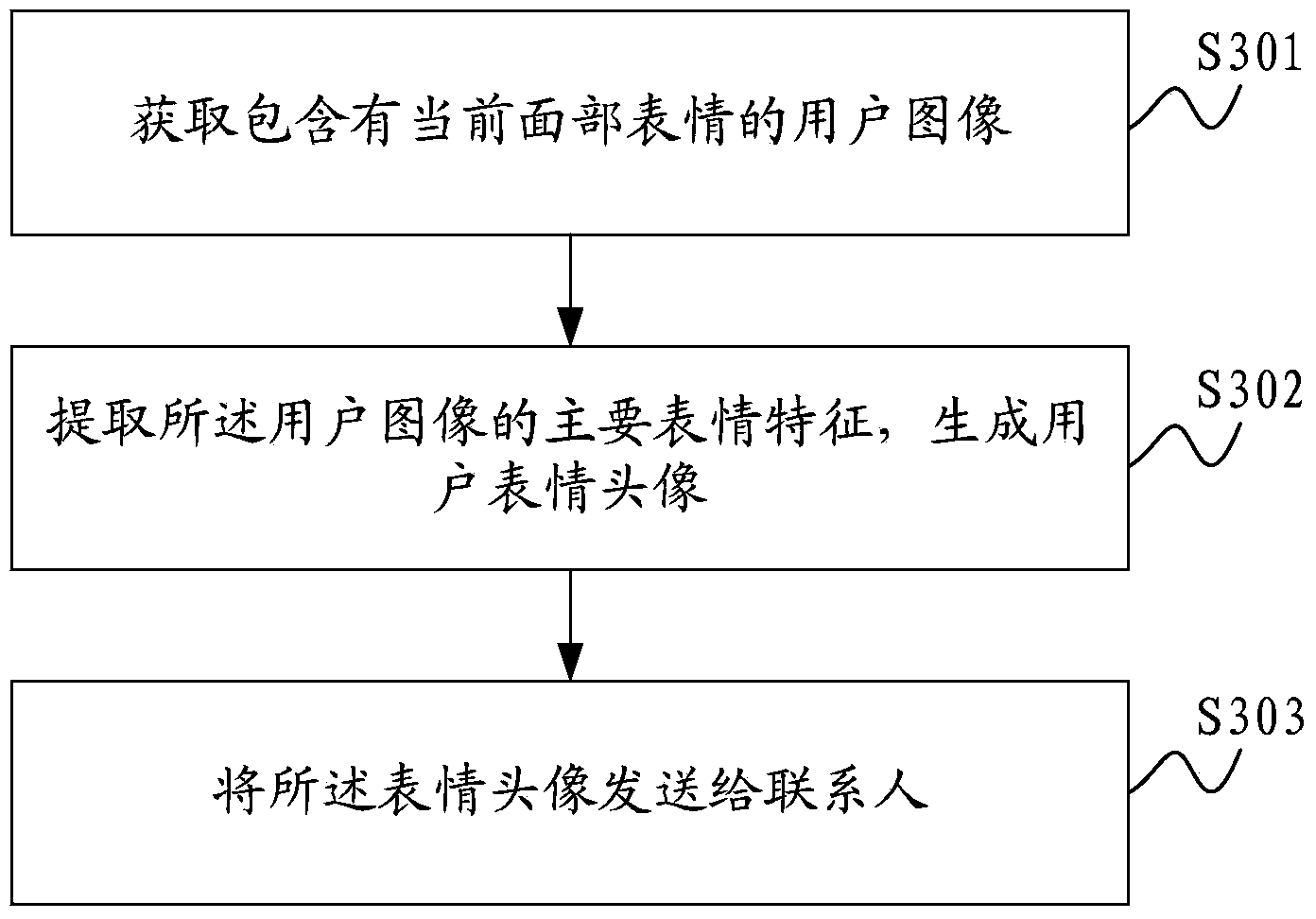

Method for generating user expression head portrait and communication terminal

Inactive | CN103886632A | Increase the fun of use | Animation | Data switching networks | Facial expression | Computer science

The invention is applicable to the communication technical field and provides a method for generating a user expression head portrait. The method comprises a step of obtaining a user image comprising a current facial expression and a step of extracting the main expression feature of the user image and generating a user expression head portrait. Correspondingly, the invention also provides a communication terminal. In this way, the user facial expression as a real-time expression head portrait is sent to a friend, the mood of the user at that time can be visually and vividly expressed, and the interest of use is raised at the same time.

Owner:YULONG COMPUTER TELECOMM SCI (SHENZHEN) CO LTD

Quality classification method for portrait photography image

Active | CN104834898A | Improve accuracy | Highlight the impact of visual aesthetics | Character and pattern recognition | Classification methods | Sample image

The invention discloses a quality classification method for portrait photography images. The method comprises: first, for each quality grade, randomly selecting a plurality of portrait images as sample images of that grade from a sample image library in which images are classified into quality grades according to the quality classification of portrait photography images; using a facial feature point detection algorithm to acquire the feature points of a face, and then extracting face features, including basic facial features, position relation features, face shadow features, face proportion features, face saliency and expression features; combining these with the global features and distinguishing features of the sample images and performing SVM-based learning on the samples to obtain a quality classifier; and calling the trained classifier on the target portrait photography images to perform quality classification. The method digs deeply into face-related features, and the accuracy of the classification is high.

Owner:SOUTH CHINA UNIV OF TECH

Three-dimensional human head and face model reconstruction method based on random face image

Active | CN110443885A | Keep details | Suppress Distortion Details | Character and pattern recognition | Artificial life | Pattern recognition | Point cloud

The invention provides a three-dimensional human head and face model reconstruction method based on a random face image. The method includes: establishing a human face bilinear model and an optimization algorithm by using a three-dimensional human face database; gradually separating, through the two-dimensional feature points, the spatial attitude of the human face, the camera parameters, and the identity features and expression features that determine the geometrical shape of the human face, and adjusting the generated three-dimensional human face model through Laplace deformation correction to obtain a low-resolution three-dimensional human face model; and finally, calculating the face depth and achieving high-precision three-dimensional model reconstruction of the target face through registration of the high-resolution template model and the point cloud model, so that the reconstructed face model conforms to the shape of the target face. The method eliminates face distortion details while keeping the original main details of the face. The reconstruction is more accurate, especially for face details: detail distortion and the influence of expression are effectively reduced, and the generated face model looks more realistic.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

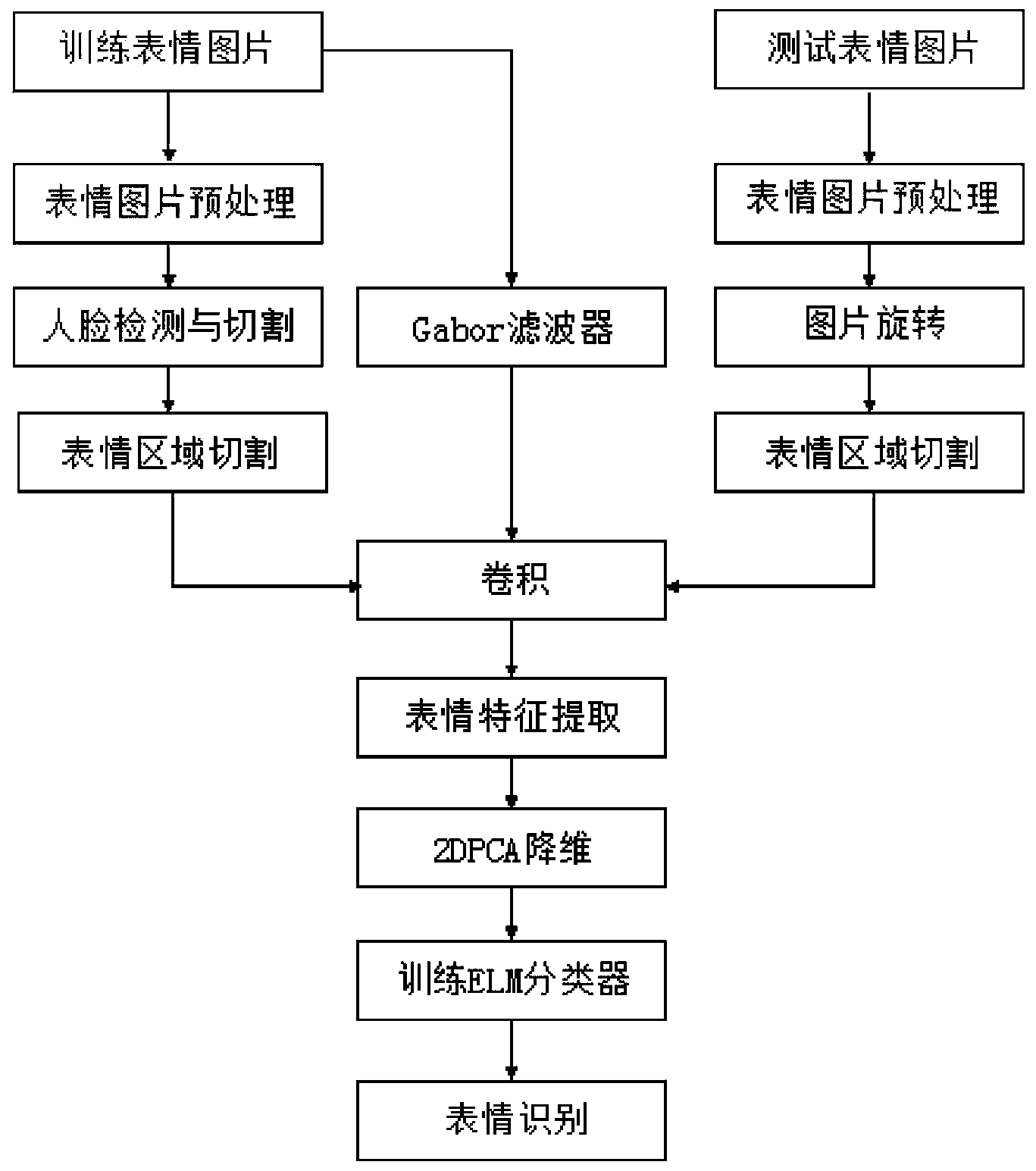

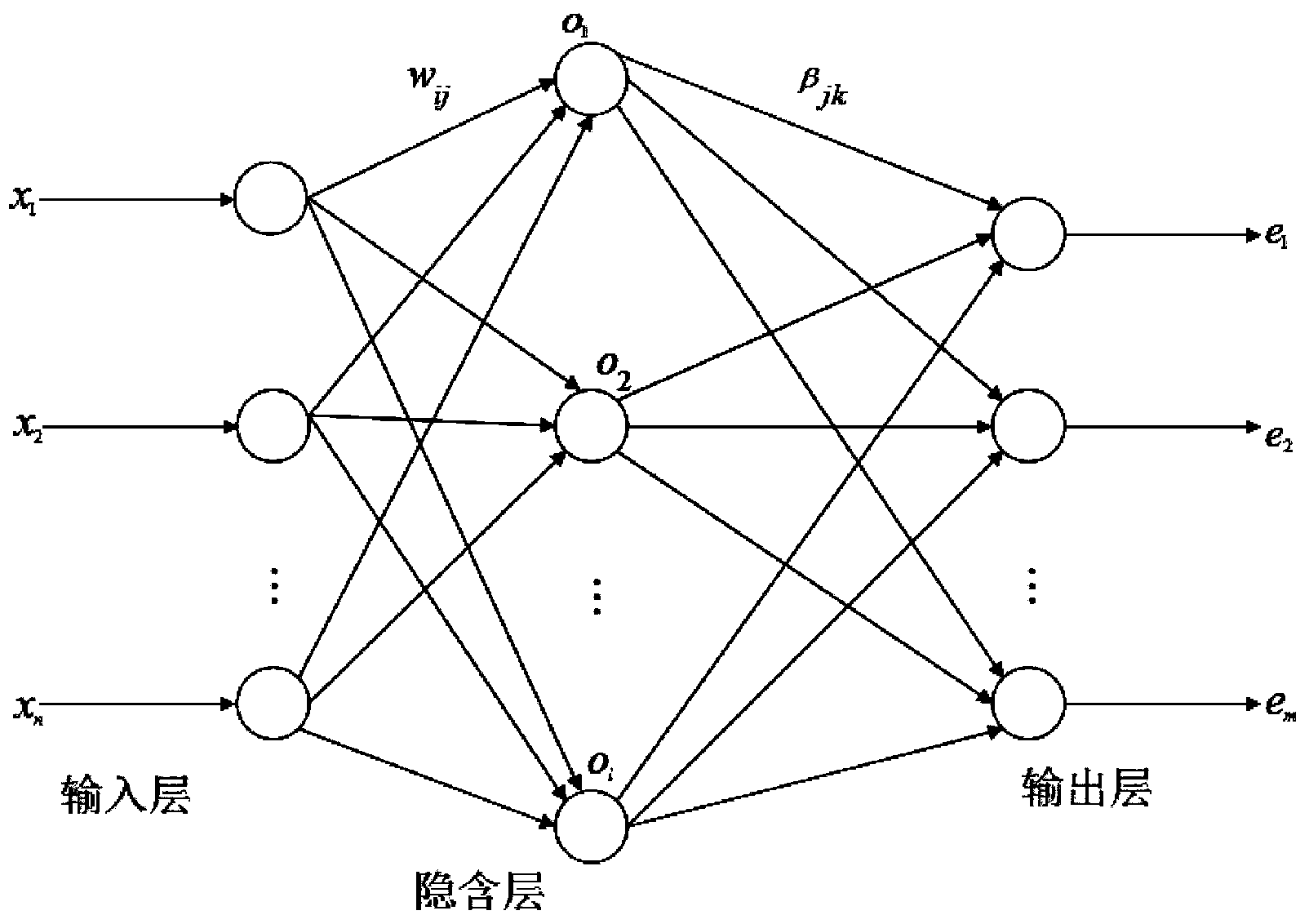

Facial expression recognition method based on ELM

Inactive | CN104318221A | Reduce processing difficulty | Reduce power consumption | Character and pattern recognition | Feature vector | Facial region

The invention discloses a facial expression recognition method based on an ELM. The method comprises the steps that a facial expression image is processed to obtain a facial region, image segmentation is conducted on an expression region containing expression features to obtain an eye expression region body, a nose expression region body and a lip expression region body, then Gabor filters are used for conducting expression feature extraction on the expression region bodies to obtain a total feature vector of each expression, the obtained expression feature vectors are used for training ELM models, the ELM models for all the expressions are combined to form an ELM expression classifier, and at last, the ELM classifier is tested to achieve the purpose of expression classified recognition. The facial expression recognition method based on the ELM increases the expression recognition rate on the condition that illumination influences are avoided.

Owner:CENT SOUTH UNIV
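
The Gabor feature extraction mentioned in the ELM entry above relies on a bank of Gabor filters. A minimal sketch of the standard real-valued Gabor kernel is given below; the kernel size, wavelength, sigma, aspect ratio and number of orientations are illustrative assumptions, not values from the patent, and the ELM training stage is not shown.

```python
import math


def gabor_kernel(size, wavelength, theta, sigma, gamma=0.5, psi=0.0):
    """Real part of a Gabor filter: Gaussian envelope times a cosine carrier.

    size should be odd so the kernel has a well-defined center.
    """
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates by the filter orientation theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * xr / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


def gabor_bank(size, wavelength, sigma, orientations=4):
    """Kernels at evenly spaced orientations in [0, pi)."""
    return [
        gabor_kernel(size, wavelength, k * math.pi / orientations, sigma)
        for k in range(orientations)
    ]


# Toy usage: four 5 x 5 kernels.
bank = gabor_bank(size=5, wavelength=4.0, sigma=2.0)
print(len(bank), len(bank[0]), len(bank[0][0]))
```

Convolving each eye, nose and lip region with every kernel in the bank and concatenating the responses would give the kind of total feature vector the abstract describes.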

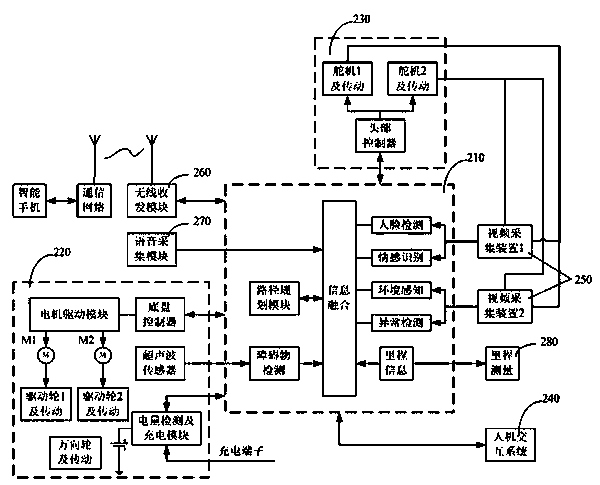

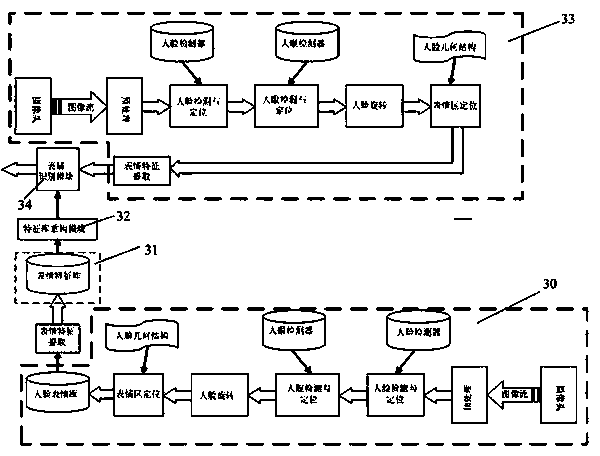

Robot system and method for detecting human face and recognizing emotion

Inactive | CN103679203A | Improve home monitoring | Improve the ability to accompany | Image enhancement | Character and pattern recognition | Face detection | Color image

The invention discloses a robot system and method for detecting a human face and recognizing emotion. The system comprises a human face expression library collecting module, an original expression library building module, a feature library rebuilding module, a field expression feature extracting module and an expression recognizing module. The human face expression library collecting module collects a large number of human face expression color image frames through a video collecting device and processes them to form a human face expression library. The original expression library building module removes redundant image information from the training images in the human face expression library and then extracts expression features to form an original expression feature library. The feature library rebuilding module rebuilds the original expression feature library as a structured hash table through the distance hash method. The field expression feature extracting module collects field human face expression color image frames through the video collecting device and extracts field expression features. The expression recognizing module recognizes the human face expression by applying the k-nearest-neighbor classification algorithm, within the rebuilt feature library, to the field expression features extracted by the field expression feature extracting module.

Owner:江苏久祥汽车电器集团有限公司
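
The final recognition step of the robot entry above (k-nearest-neighbor lookup of a field expression feature in the feature library) can be sketched as below. The sketch searches a flat list and leaves out the distance-hash table, whose construction the abstract does not describe; the Euclidean metric, `k=3`, and the function name `knn_classify` are assumptions.

```python
from collections import Counter


def knn_classify(library, query, k=3):
    """Label a query vector by majority vote among its k nearest library entries.

    library is a list of (feature_vector, label) pairs.
    """
    ranked = sorted(
        library,
        key=lambda item: sum((a - b) ** 2 for a, b in zip(item[0], query)),
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy feature library with two expression classes.
library = [
    ([0.0, 0.0], "happy"), ([0.1, 0.2], "happy"), ([0.2, 0.1], "happy"),
    ([5.0, 5.0], "sad"), ([5.1, 4.9], "sad"), ([4.9, 5.2], "sad"),
]
print(knn_classify(library, [0.2, 0.1]))
```

A hash table keyed on a coarse distance code would only narrow the candidate list before this vote; the voting logic itself stays the same.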

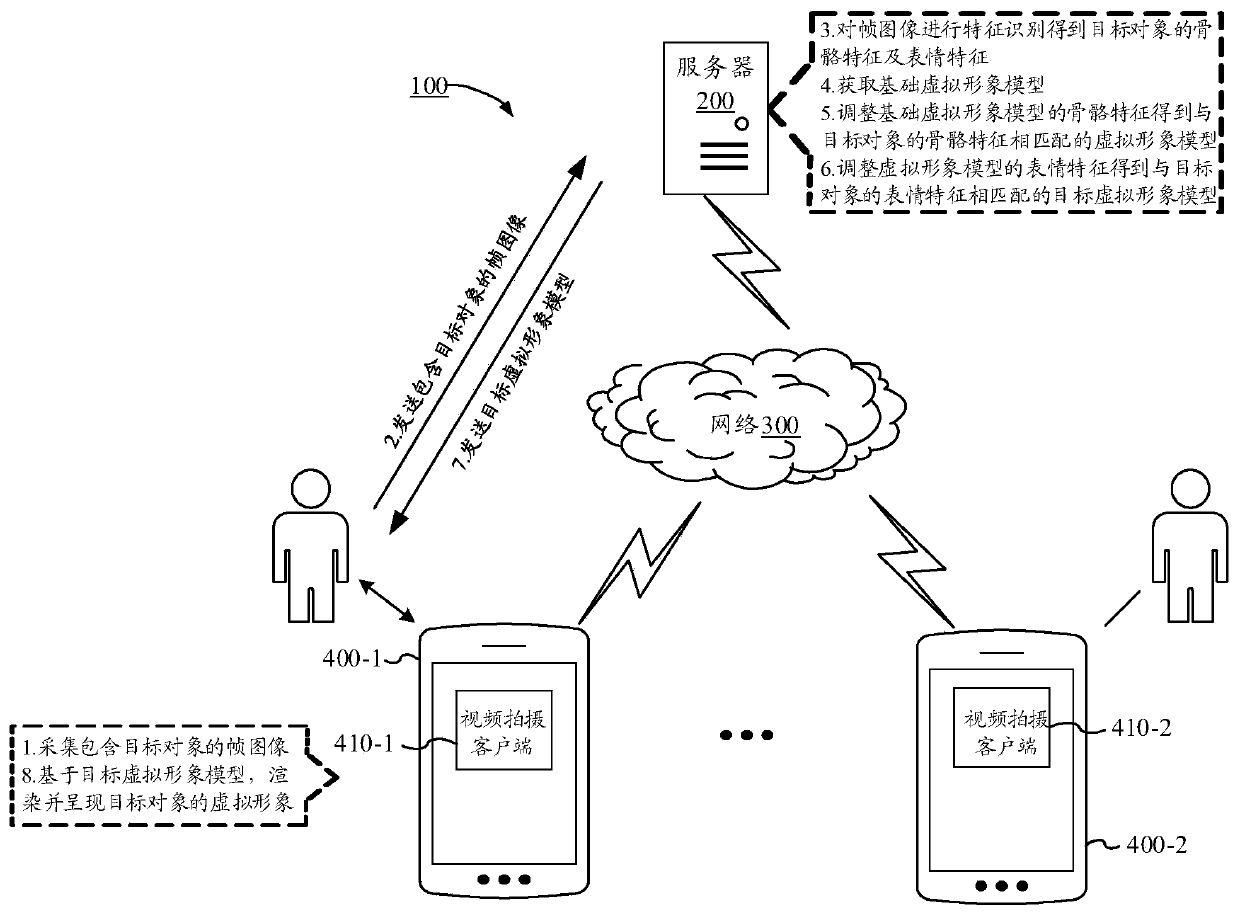

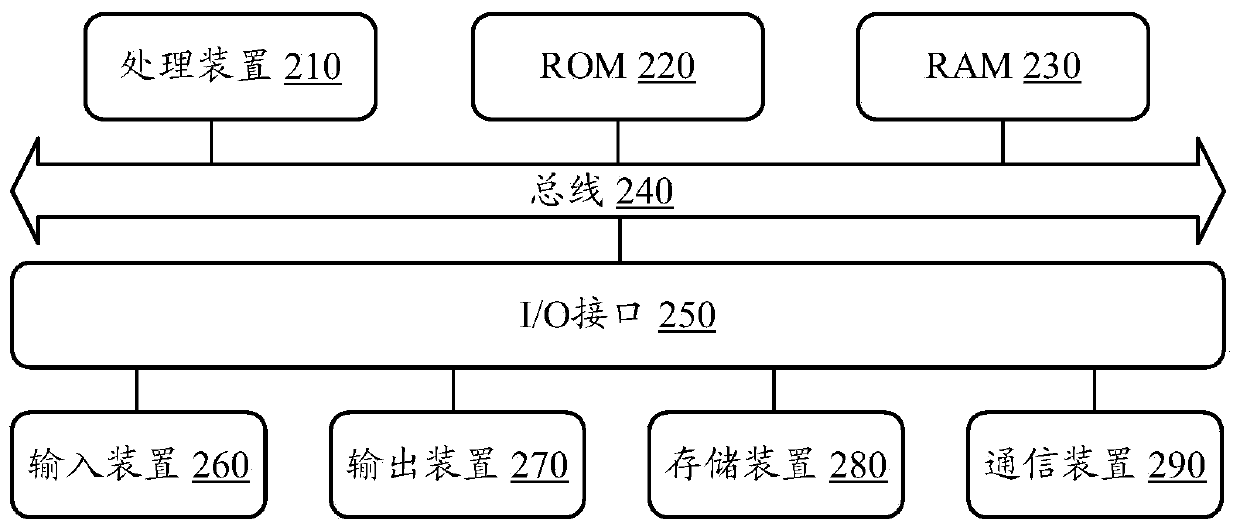

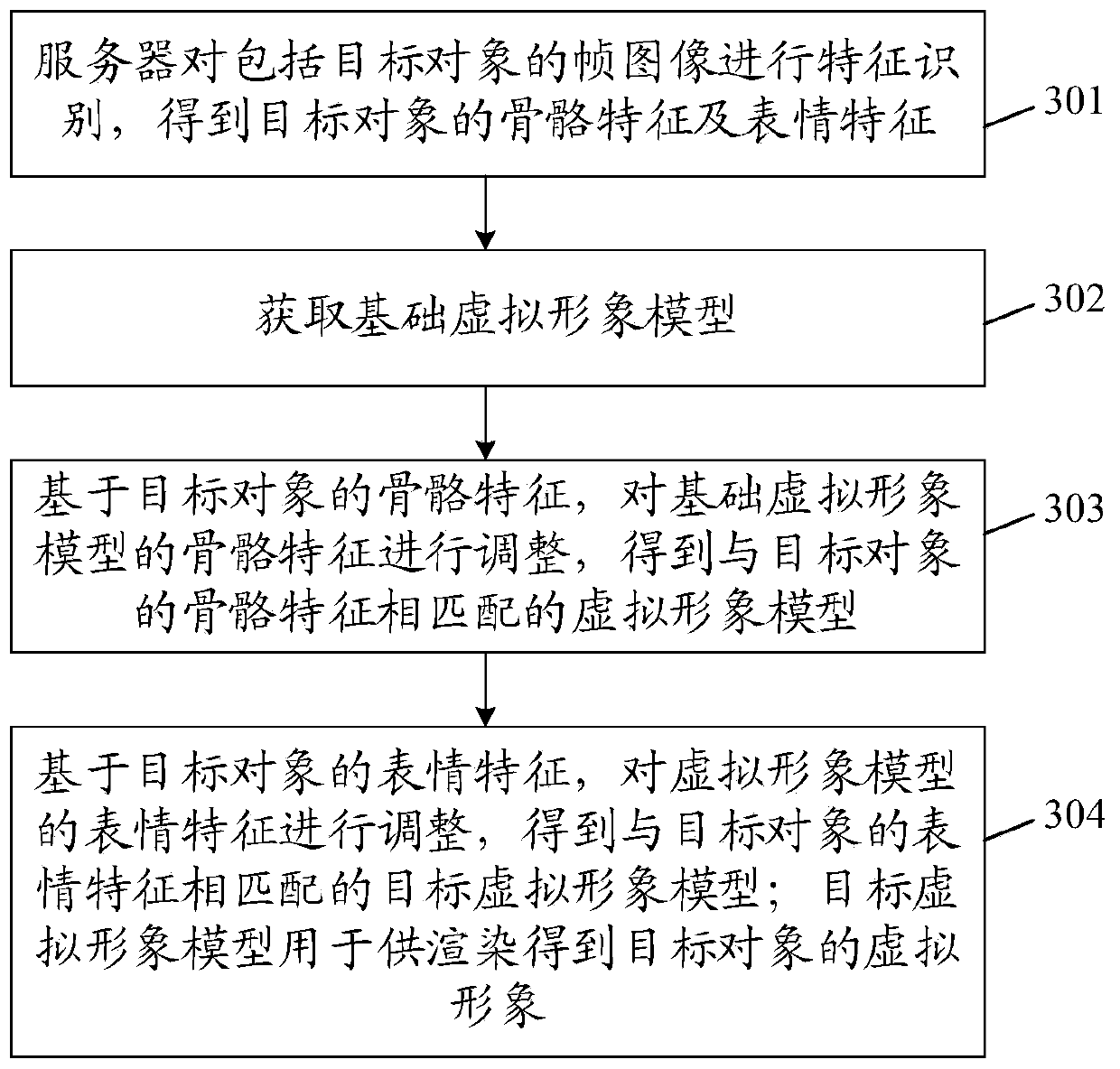

Virtual image generation method and device, electronic equipment and storage medium

Active | CN110766777A | Realize personalized creation | Animation | Multiple biometrics use | Radiology | Expression Feature

The embodiment of the invention provides a virtual image generation method and device, electronic equipment and a storage medium. The method comprises the following steps: performing feature recognition on a frame image comprising a target object to obtain skeleton features and expression features of the target object; obtaining a basic virtual image model; based on the skeleton features of the target object, adjusting the skeleton features of the basic virtual image model to obtain a virtual image model matched with the skeleton features of the target object; based on the expression features of the target object, adjusting the expression features of the virtual image model to obtain a target virtual image model matched with the expression features of the target object; wherein the target virtual image model is used for rendering to obtain a virtual image of the target object. According to the method and the device, the personalized virtual image can be created.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD

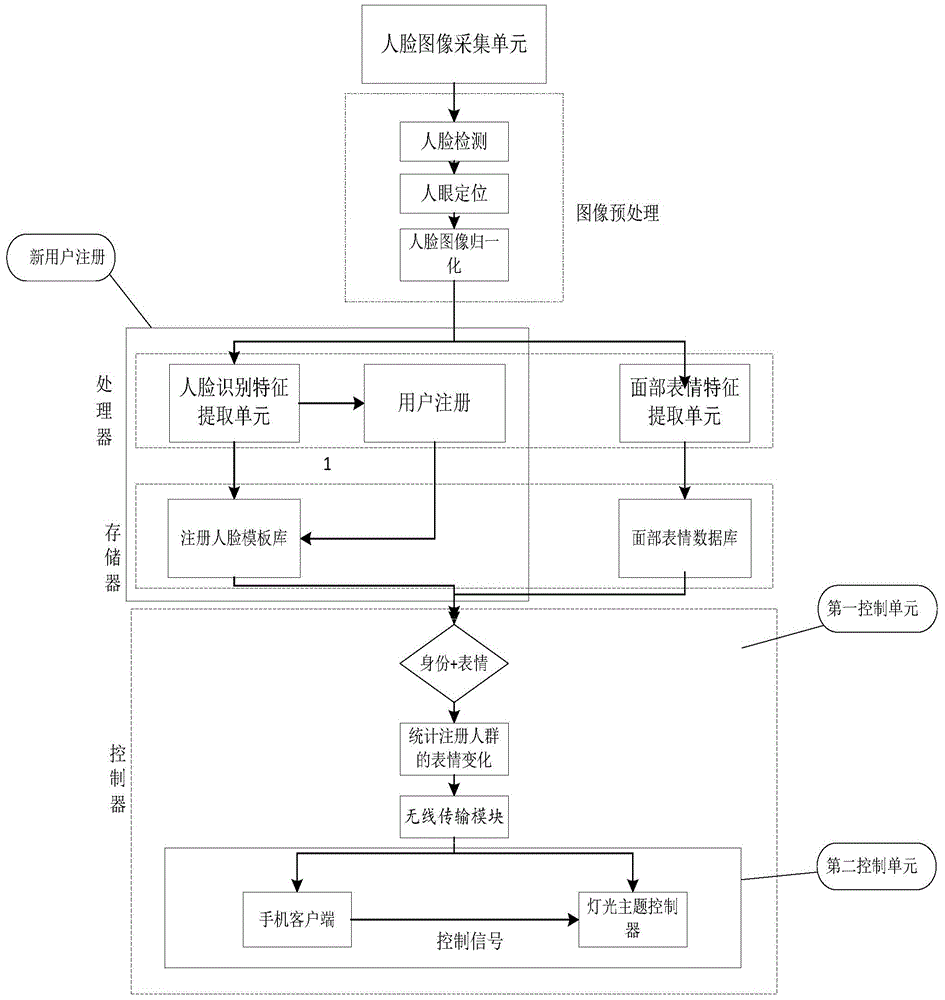

Recording and lamplight control system and method based on face recognition and facial expression recognition

Active | CN104582187A | Improve experience | Rich entertainment life | Character and pattern recognition | Electric light circuit arrangement | Subject specific | Expression Feature

The invention discloses a recording and lamplight control system and method based on face recognition and facial expression recognition. The system comprises a face image collection unit, a storage, an operation processing unit, a wireless transmission module and a lamplight theme controller. The face image collection unit collects face images and converts them into feature data to be stored; the storage stores facial expression features trained offline and a face registration template collected initially; the operation processing unit performs face recognition and facial expression recognition, and sends out corresponding wireless control signals through the wireless transmission module according to the recognition analysis result; the lamplight theme controller stores lamplight themes suitable for different moods, receives the control signals sent by the wireless transmission module, and generates lamplight theme control signals conforming to the corresponding moods and transmits them to the lamplight system. Mood recording and household control based on face recognition and facial expression recognition are thus achieved, which gives the user a good experience and enriches entertainment at home.

Owner:SHANDONG UNIV

Tristimania diagnosis system and method based on attention and emotion information fusion

Inactive | CN105559802A | Comprehensive recognition | Accurate identification | Psychotechnic devices | Pupil diameter | Feature extraction

The invention provides a tristimania (depression) diagnosis system and method based on attention and emotion information fusion. The system comprises an emotion stimulating module, an image collecting module, a data transmission module, a data pre-processing module, a data processing module, a feature extraction module and an identification feedback module. The emotion stimulating module sets a plurality of emotion stimulating tasks and presents them to a subject; the image collecting module collects eye images and face images of the subject while the tasks are performed; the data transmission module obtains and sends the eye images and the face images; the data pre-processing module pre-processes the eye images and the face images; the data processing module calculates the attention point position and the pupil diameter of the subject; the feature extraction module extracts attention-type features and emotion-type features; and the identification feedback module performs tristimania diagnosis and identification on the subject. The advantage of the system and the method is that tristimania can be identified comprehensively, systematically and quantitatively by using the attention point center distance features, the attention deviation score features, the emotion zone width and the face expression features.

Owner:BEIJING UNIV OF TECH +1

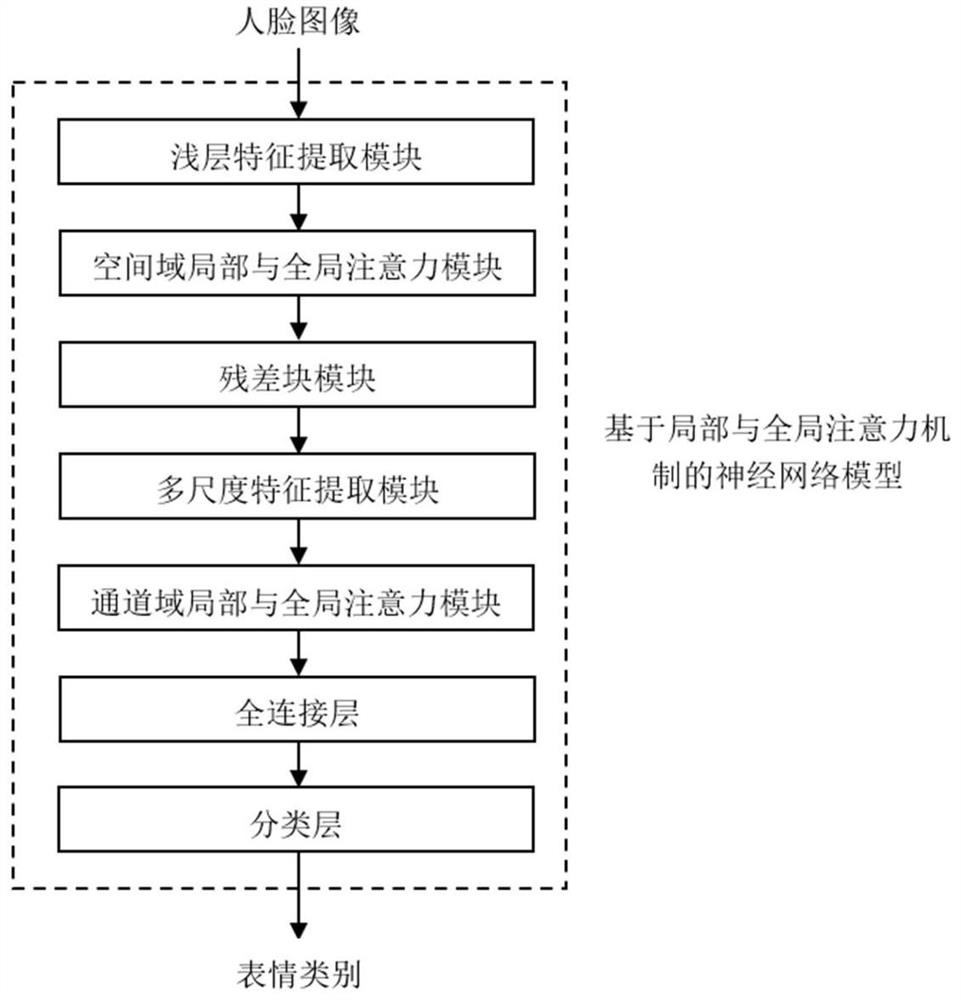

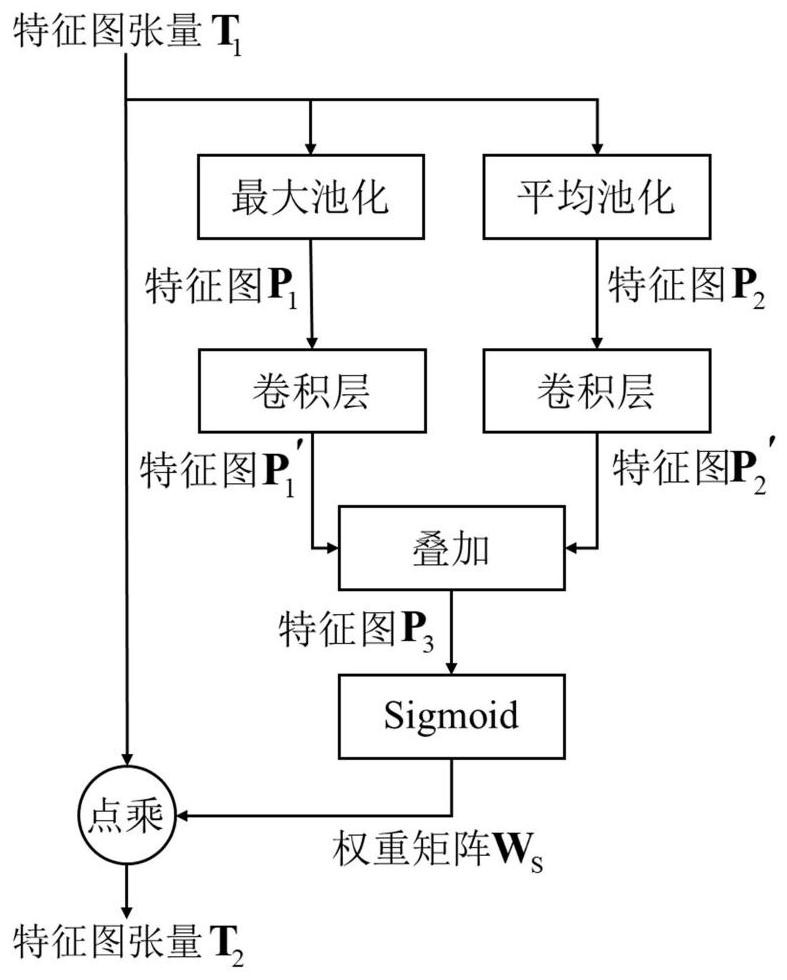

Expression recognition method and system based on local and global attention mechanism

Active | CN112784764A | Improve accuracy | Improve robustness | Neural architectures | Acquiring/recognising facial features | Feature extraction | Sample image

The invention discloses an expression recognition method and system based on local and global attention mechanisms. The method comprises the following steps: firstly, constructing a neural network model based on a local and global attention mechanism, wherein the model is composed of a shallow feature extraction module, a spatial domain local and global attention module, a residual network module, a multi-scale feature extraction module, a channel domain local and global attention module, a full connection layer and a classification layer; training the neural network model by using sample images in the facial expression image library; and finally, inputting a to-be-tested face image into the trained neural network model for expression recognition. According to the invention, a multi-scale feature extraction module is used to extract texture features of different scales in a face image, so that loss of discriminative expression features is avoided; local and global attention modules of a spatial domain and a channel domain are used for enhancing features which play a key role in expression recognition and have higher discriminability, and the accuracy and robustness of expression recognition can be effectively improved.

Owner:NANJING UNIV OF POSTS & TELECOMM

A latent semantic min-Hash-based image retrieval method

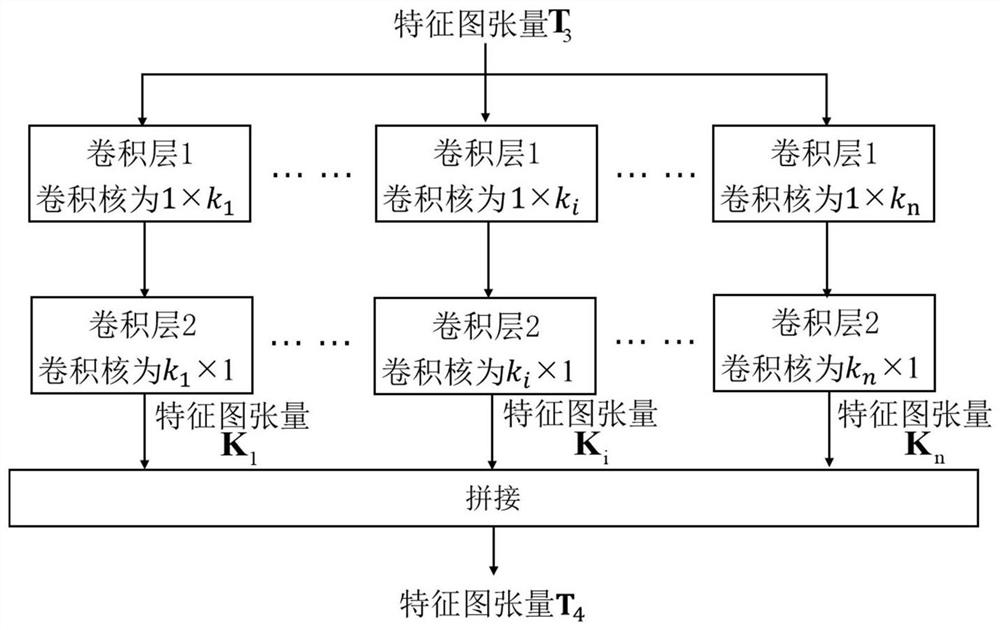

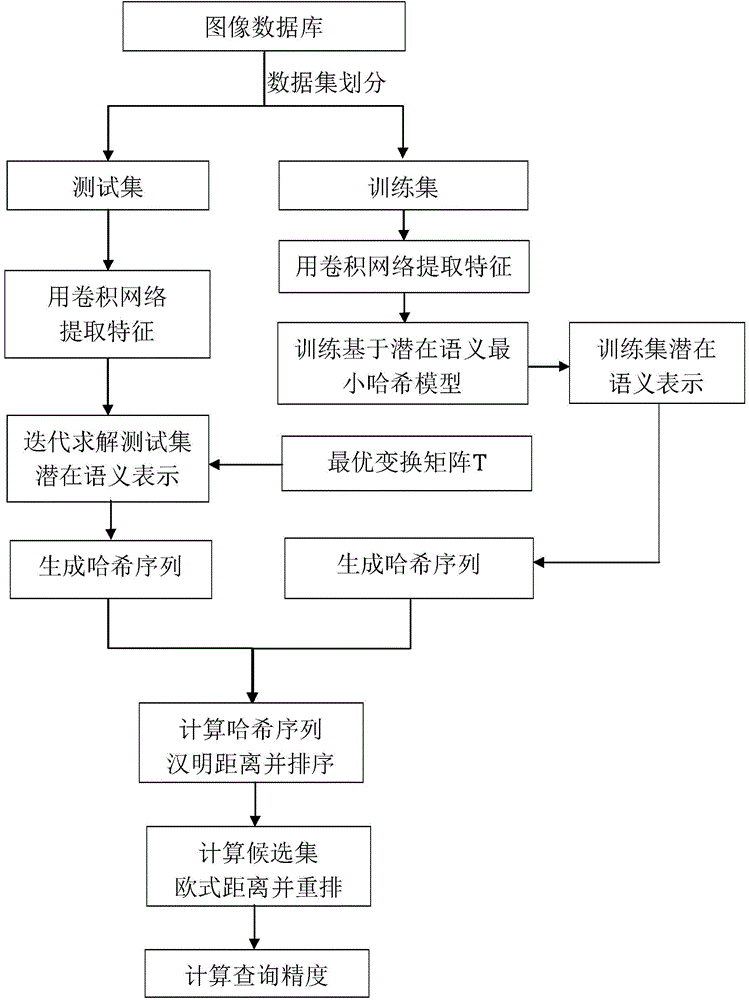

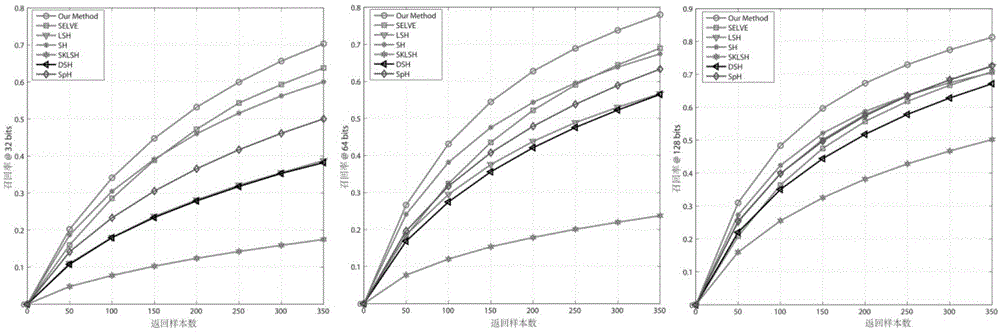

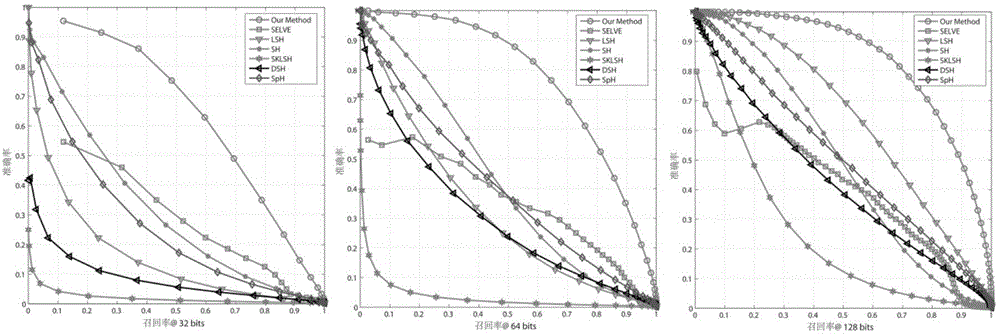

Active | CN106033426A | High precision | Improve efficiency | Character and pattern recognition | Special data processing applications | Matrix decomposition | Imaging processing

The invention relates to the technical field of image processing and in particular relates to a latent semantic min-Hash-based image retrieval method comprising the steps of (1) obtaining datasets through division; (2) establishing a latent semantic min-Hash model; (3) solving a transformation matrix T; (4) performing Hash encoding on testing datasets Xtest; (5) performing image query. Based on the facts that the convolution network has better expression features and latent semantics of primitive characteristics can be extracted by using matrix decomposition, minimizing constraint is performed on quantization errors in an encoding quantization process, so that after the primitive characteristics are encoded, the corresponding Hamming distances in a Hamming space of semantically-similar images are smaller and the corresponding Hamming distances of semantically-dissimilar images are larger. Thus, the image retrieval precision and the indexing efficiency are improved.

Owner:XI'AN INST OF OPTICS & FINE MECHANICS - CHINESE ACAD OF SCI
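
The query step of the min-Hash retrieval entry above ranks images by the Hamming distance between binary hash codes. The sketch below shows only that ranking; the codes are made-up integers, the learning of the transformation matrix T is not shown, and `hamming_distance` and `query_images` are hypothetical names.

```python
def hamming_distance(a, b):
    """Number of bit positions in which two integer hash codes differ."""
    return bin(a ^ b).count("1")


def query_images(database, code, top_k=2):
    """Return the names of the top_k images whose codes are nearest to the query code."""
    ranked = sorted(database, key=lambda name: hamming_distance(database[name], code))
    return ranked[:top_k]


# Toy database: semantically similar images are assumed to have nearby codes.
database = {"cat_1": 0b10110010, "cat_2": 0b10110011, "car_1": 0b01001101}
print(query_images(database, 0b10110010))
```

Because XOR and bit counting are cheap, comparing short binary codes is much faster than comparing the original real-valued features, which is the source of the indexing efficiency the abstract claims.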

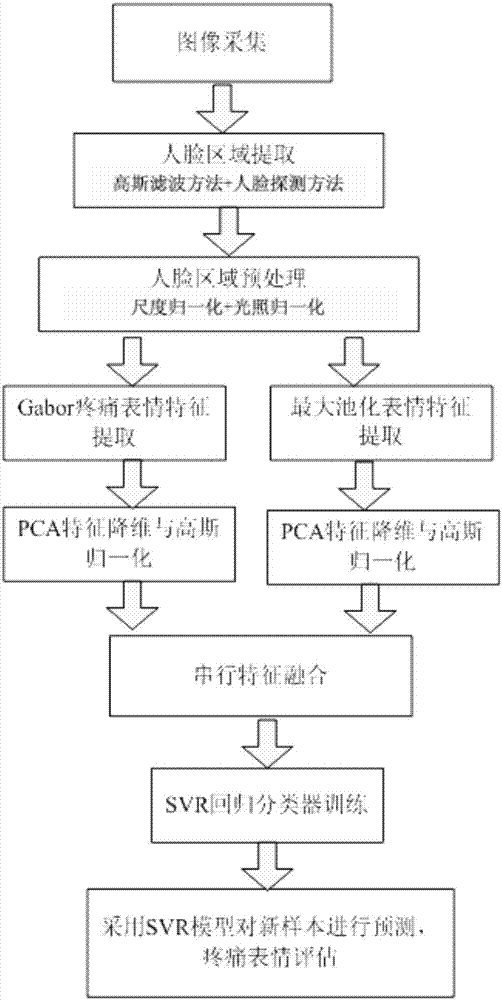

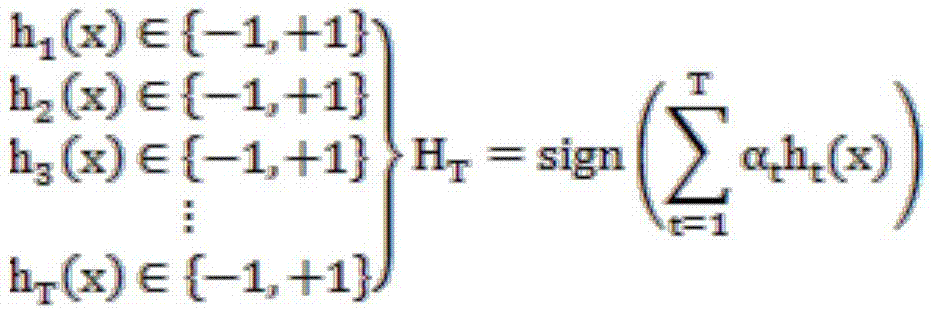

Method for evaluating pain by face expression

Active | CN107358180A | Improve anti-interference ability | Comprehensive pain expression | Acquiring/recognising facial features | Feature vector | Location detection

The invention relates to the technical fields of expression identification and pain evaluation, and in particular relates to a method for evaluating pain by face expression. The method includes the following steps: successively denoising and smoothing an image; detecting the position of the face in the image and normalizing it; extracting Gabor pain expression features with a Gabor filter and max-pooling pain expression features with the max pooling method, and fusing the two sets of features, which maximizes the anti-interference capability of the expression feature vector set and yields more comprehensive expression features, so that the evaluation is more accurate; and finally training on the pain expression feature vector set with support vector regression (SVR) to obtain an SVR model. The method can evaluate the grades of pain expressions effectively, quickly and accurately.

Owner:JIANGSU APON MEDICAL TECHNOLOGY CO LTD
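
The max pooling and feature fusion named in the pain-evaluation entry above can be illustrated as follows. The sketch assumes non-overlapping square pooling windows and fusion by flattening and concatenating; the abstract does not specify either, and `max_pool` and `fuse` are hypothetical names.

```python
def max_pool(feature_map, size):
    """Non-overlapping size x size max pooling over a 2-D list of numbers."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, size):
        pooled.append([
            max(feature_map[i][j] for i in range(r, r + size) for j in range(c, c + size))
            for c in range(0, cols - size + 1, size)
        ])
    return pooled


def fuse(*feature_maps):
    """Flatten each 2-D map row by row and concatenate into one feature vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


# Toy usage: pool a 4 x 4 response map, then fuse it with the pooled result.
response = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
pooled = max_pool(response, 2)
vector = fuse(response, pooled)
print(pooled, len(vector))
```

Keeping only the strongest response in each window makes the features less sensitive to small shifts of the face, which is one plausible reading of the anti-interference claim.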

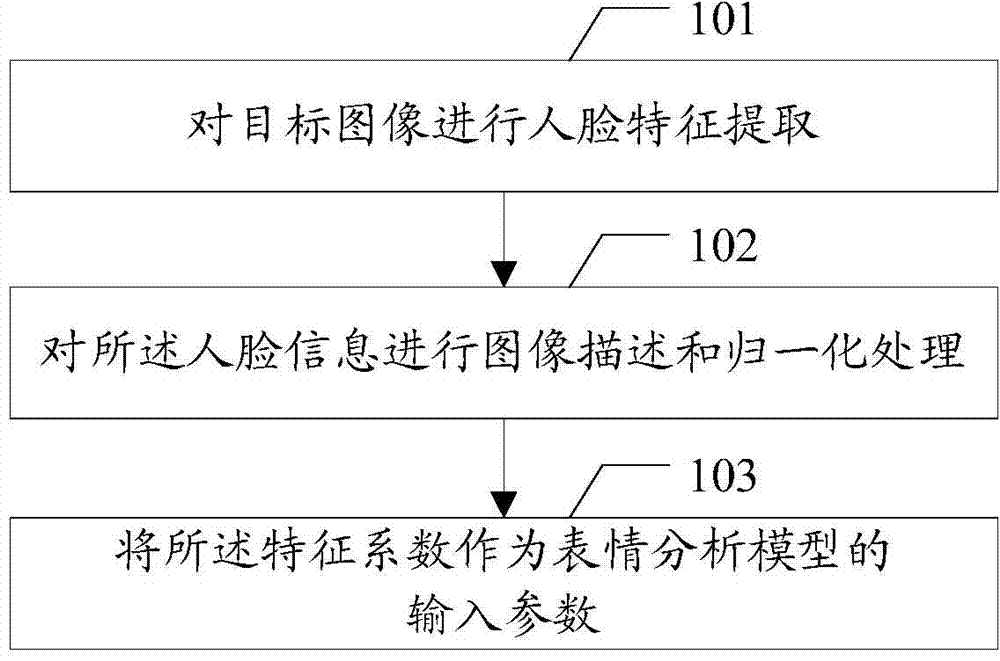

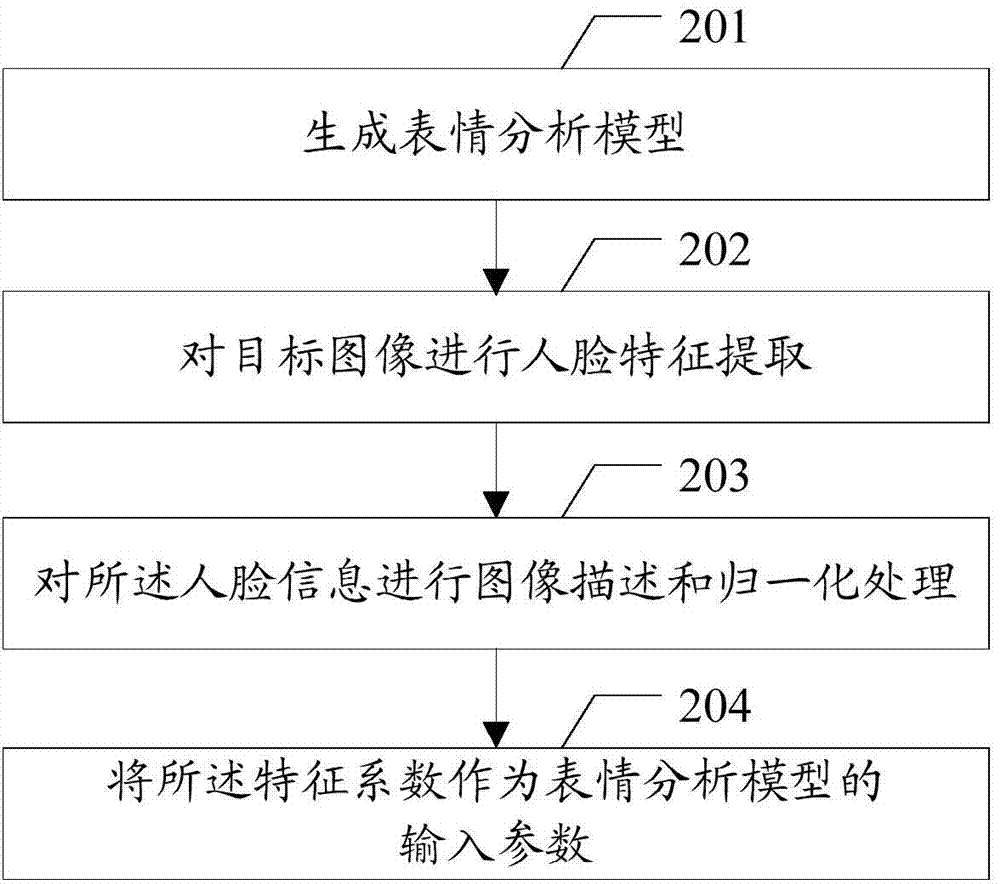

Image recognition method, device and system

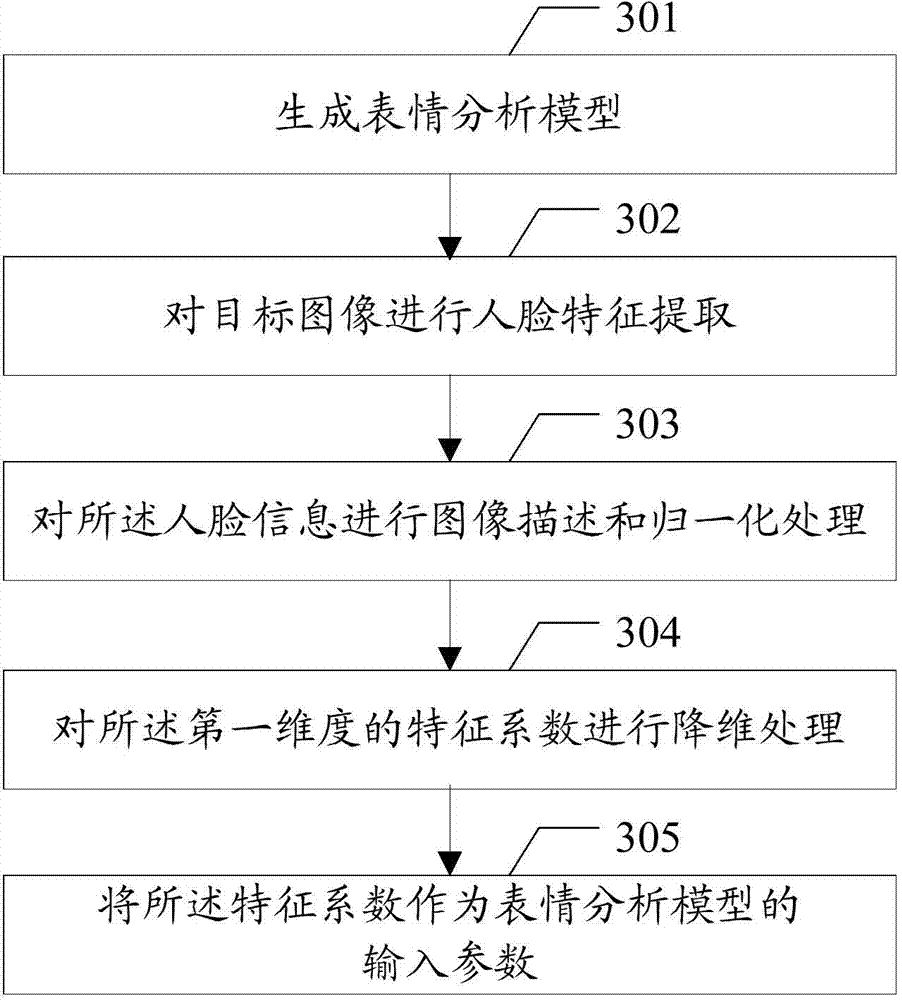

Inactive | CN104766041A | Implement image filtering function | Character and pattern recognition | Analytic model | Feature extraction

The embodiment of the invention discloses an image recognition method and a related device for recognizing a facial expression in an image. The method comprises the following steps: facial feature extraction is performed on a target image to obtain facial information; the facial information is normalized to obtain a first-dimension feature coefficient; and the feature coefficient is used as the input parameter of an expression analysis model, from which an expression analysis result for the target image is obtained. The expression analysis model is a linear fitting model for facial expression features, obtained through expression feature training.

Owner:TENCENT TECH (SHENZHEN) CO LTD
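As a rough illustration of the normalize-then-score flow in the image recognition abstract above, the sketch below applies min-max normalization and a linear model. The normalization scheme, the weights, the bias, and both function names are assumptions; the abstract only says that the model is a linear fit obtained by training.

```python
from typing import List, Sequence


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Scale values into [0, 1]; a constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def linear_expression_score(coeffs: Sequence[float],
                            weights: Sequence[float],
                            bias: float) -> float:
    """Evaluate a linear model: the weighted sum of the coefficients plus a bias."""
    return sum(w * c for w, c in zip(weights, coeffs)) + bias


# Hypothetical raw facial measurements and hand-picked model parameters.
facial_info = [12.0, 30.0, 21.0]
coeffs = min_max_normalize(facial_info)
score = linear_expression_score(coeffs, weights=[0.2, 0.6, 0.4], bias=0.1)
```

In a trained system the weights and bias would come from fitting labeled expression features rather than being set by hand.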

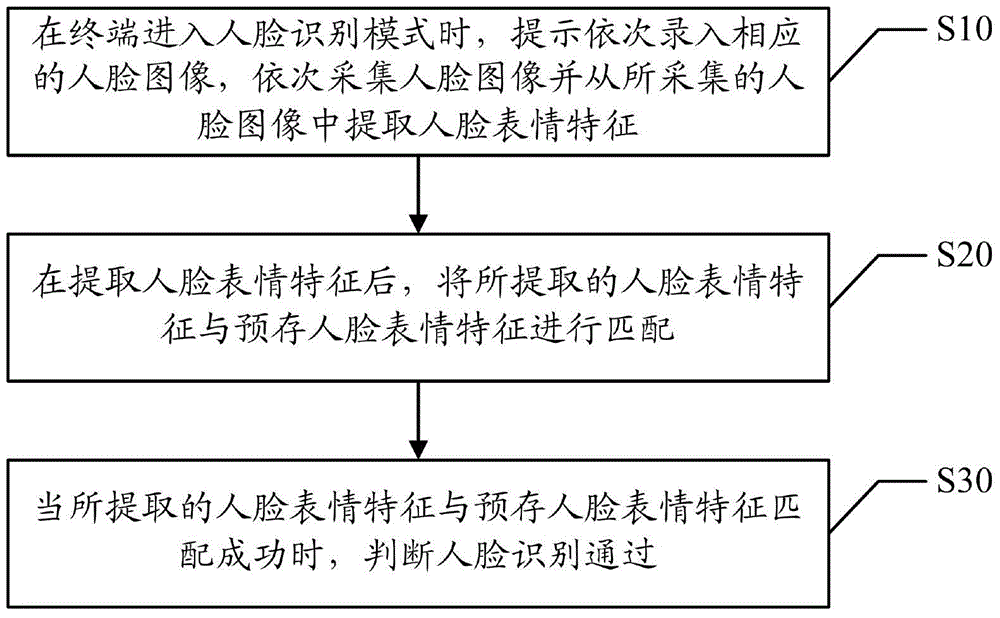

Terminal face recognition method and device

Inactive | CN104636734A | Improve security | Character and pattern recognition | Pattern recognition | Computer terminal

Owner:NUBIA TECHNOLOGY CO LTD

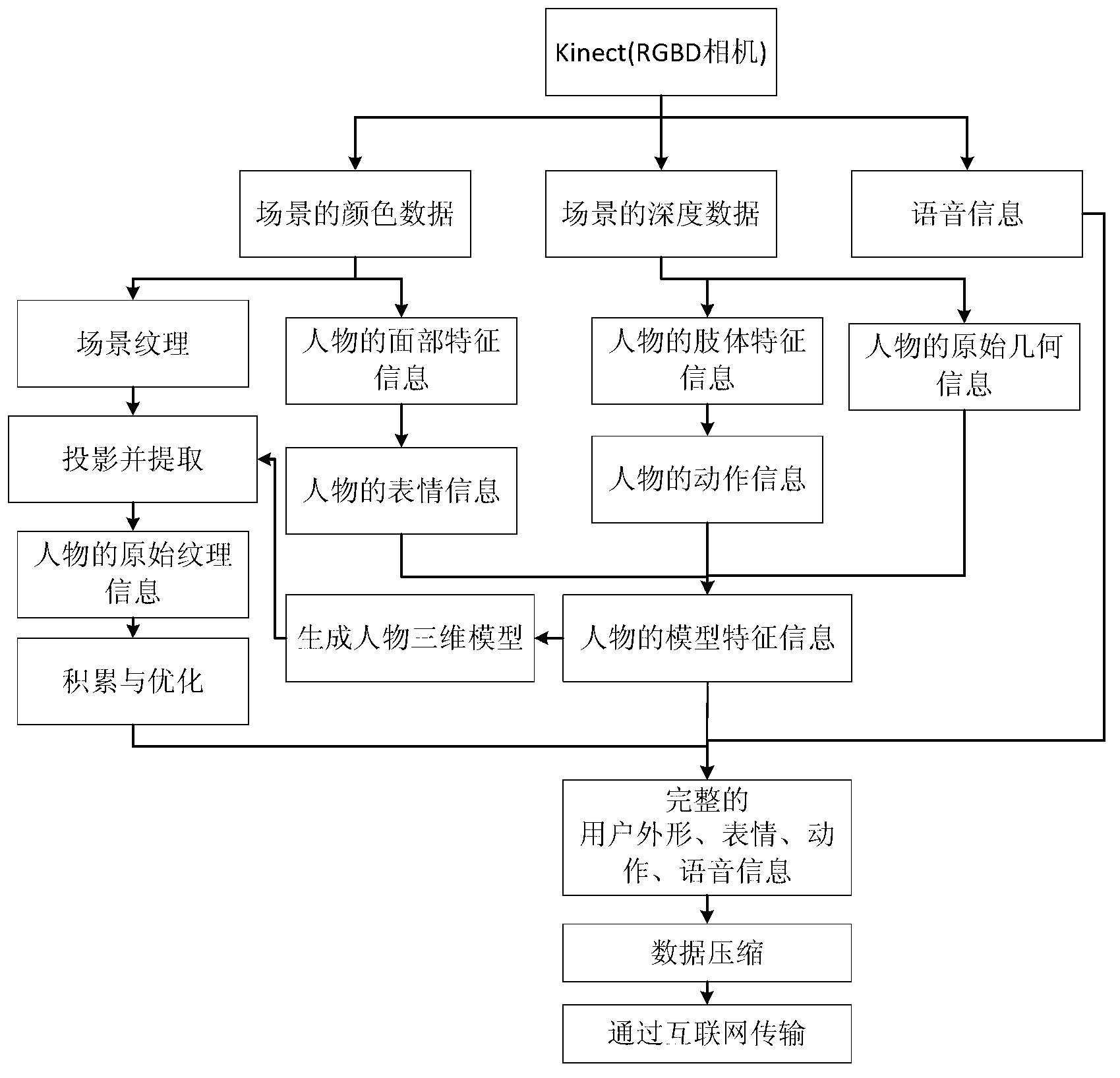

Expandable three-dimensional display remote video communication method

Active | CN103269423A | Low cost | Efficient capture | Two-way working systems | Stereoscopic systems | Point cloud | Data transmission

The invention discloses an expandable three-dimensional display remote video communication method. At the data transmission end, an image of a first user, comprising a texture image and a depth image, is obtained by means of an RGB-D camera. Facial feature information and expression feature information are extracted from the texture image, physical feature information is extracted from the depth image, and point clouds of the first user are reconstructed. All the feature information is optimized by means of the point clouds, and the optimized feature information is used to generate a three-dimensional model A. The three-dimensional model A is projected onto the corresponding texture image, the corresponding texture information is extracted, and the acquired voice information, the optimized feature information and the texture data are sent to a second user. At the data receiving end, the second user receives the data from the first user and extracts the optimized feature information from it to generate a three-dimensional model B. The three-dimensional model B is rendered by means of the texture data, the rendering result is output through a three-dimensional display device, and the voice information is played.

Owner:ZHEJIANG UNIV

A facial expression conversion method based on identity and expression feature conversion

Inactive | CN109934767A | Valid conversion | Enhance the charm | Geometric image transformation | Character and pattern recognition | Personalization | Paired samples

The invention provides a facial expression conversion method based on identity and expression feature conversion, which mainly addresses the problem of personalized facial expression synthesis. Most existing facial expression synthesis work attempts to learn a conversion between expression domains, and therefore needs paired samples and labeled query images. By establishing two encoders, the identity information and expression feature information of an original image can be preserved, and target facial expression features are used as condition tags. The method mainly comprises the following steps. First, facial expression training is carried out: a neutral expression picture and other facial expression pictures are preprocessed, the identity feature parameters of the neutral expression and the target facial expression feature parameters are extracted, and a matching model is established. Second, facial expression conversion is performed: the neutral expression picture is input into a conversion model, and the model output parameters are applied to expression synthesis to produce a target expression image. The method is no longer limited to paired datasets of different expressions with the same identity, the identity information of the original image is effectively retained thanks to the two encoders, and conversion from a neutral expression to different expressions can be achieved.

Owner:CENT SOUTH UNIV

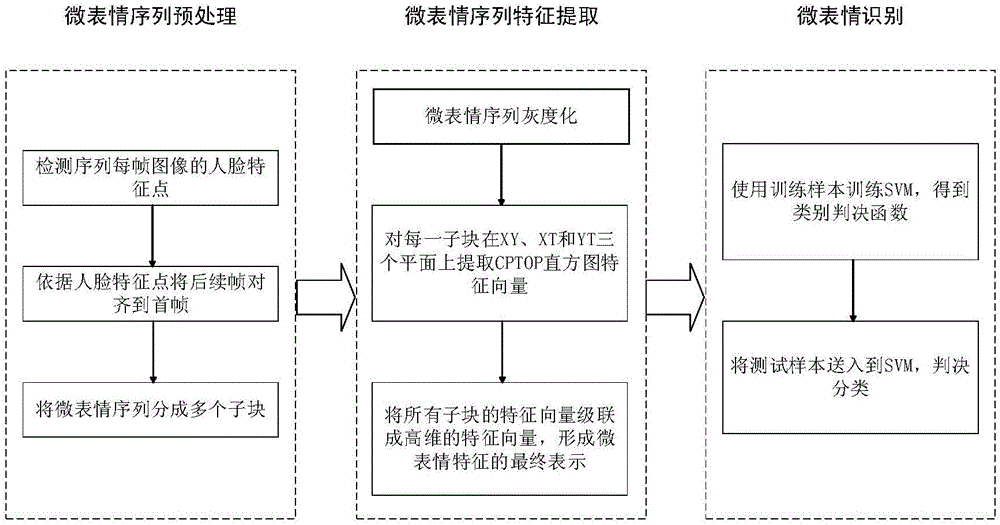

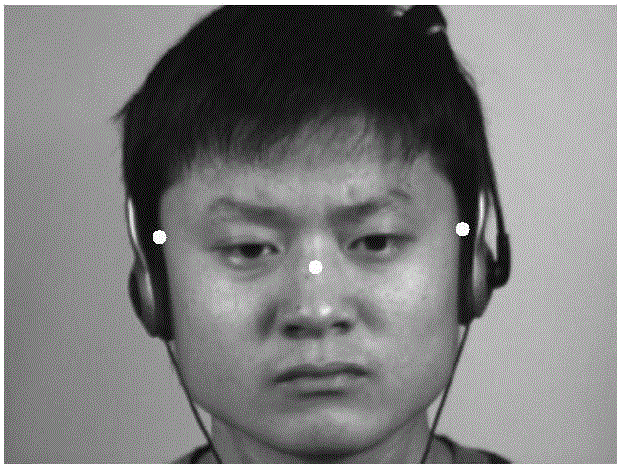

Micro expression automatic identification method based on multiple-dimensioned sampling

Inactive | CN106096537A | Improve robustness | Enhanced description ability | Acquiring/recognising facial features | Pattern recognition | Time complexity

The invention provides a micro-expression automatic identification method based on multiple-dimensioned sampling. The method comprises micro-expression image sequence preprocessing, micro-expression feature extraction and micro-expression identification. To reduce the influence of natural face displacement and ineffective areas on micro-expression identification, a method for automatically aligning a face and effectively partitioning the face area is proposed, which improves the robustness of the identification result. To overcome the shortcomings of existing feature descriptors, the invention puts forward a novel micro-expression feature description operator, CPTOP. The CPTOP operator has the same number of sampling points as the LBP-TOP operator yet employs a multiple-dimensioned sampling strategy, so that better description information is obtained under the same time complexity and space complexity.

Owner:SHANDONG UNIV
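The micro-expression abstract above compares CPTOP with LBP-TOP but does not define CPTOP's sampling pattern, so it cannot be reproduced here. As background only, the sketch below computes the basic 8-neighbor local binary pattern (LBP) on a single image plane; LBP-TOP applies this kind of coding on the XY, XT and YT planes of an image sequence. The neighbor ordering and the function names are assumptions.

```python
from typing import List

Image = List[List[int]]

# Clockwise neighbor offsets starting at the top-left pixel (assumed ordering).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img: Image, r: int, c: int) -> int:
    """Set one bit for each neighbor whose value is at least the center value."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img: Image) -> List[int]:
    """Histogram of LBP codes over all interior pixels; this is the descriptor."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist


patch = [[5, 9, 1],
         [4, 6, 7],
         [2, 6, 3]]
code = lbp_code(patch, 1, 1)   # neighbors 9, 7 and 6 are >= 6: bits 1, 3, 5
hist = lbp_histogram(patch)
```

An operator that keeps the same eight sampling points but places them differently would have the same cost per pixel, which is consistent with the complexity claim in the abstract.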

A shielded expression recognition algorithm combining double dictionaries and an error matrix

Active | CN109711283A | High precision | Improve robustness | Character and pattern recognition | Dictionary learning | Decomposition

The invention discloses a shielded (occluded) expression recognition algorithm combining double dictionaries and an error matrix. First, the expression features and identity features in each class of expression images are separated by low-rank decomposition, and dictionary learning is carried out on the low-rank matrix and the sparse matrix respectively to obtain an intra-class related dictionary and a difference structure dictionary. Second, when occluded images are classified, conventional sparse coding does not consider coding errors and cannot accurately describe the coding errors caused by occlusion. The invention therefore proposes that the errors caused by occlusion be expressed by a single matrix, which can be separated from the feature matrix of the unoccluded training images. A clear image can be recovered by subtracting the error matrix from the test sample. The clear image sample is then decomposed into identity features and expression features in a low-rank manner by using double-dictionary cooperative representation, and classification is finally performed according to the contribution of each class of expression features in the joint sparse representation. The method is robust for recognizing expressions under random occlusion.

Owner:GUANGDONG UNIV OF TECH

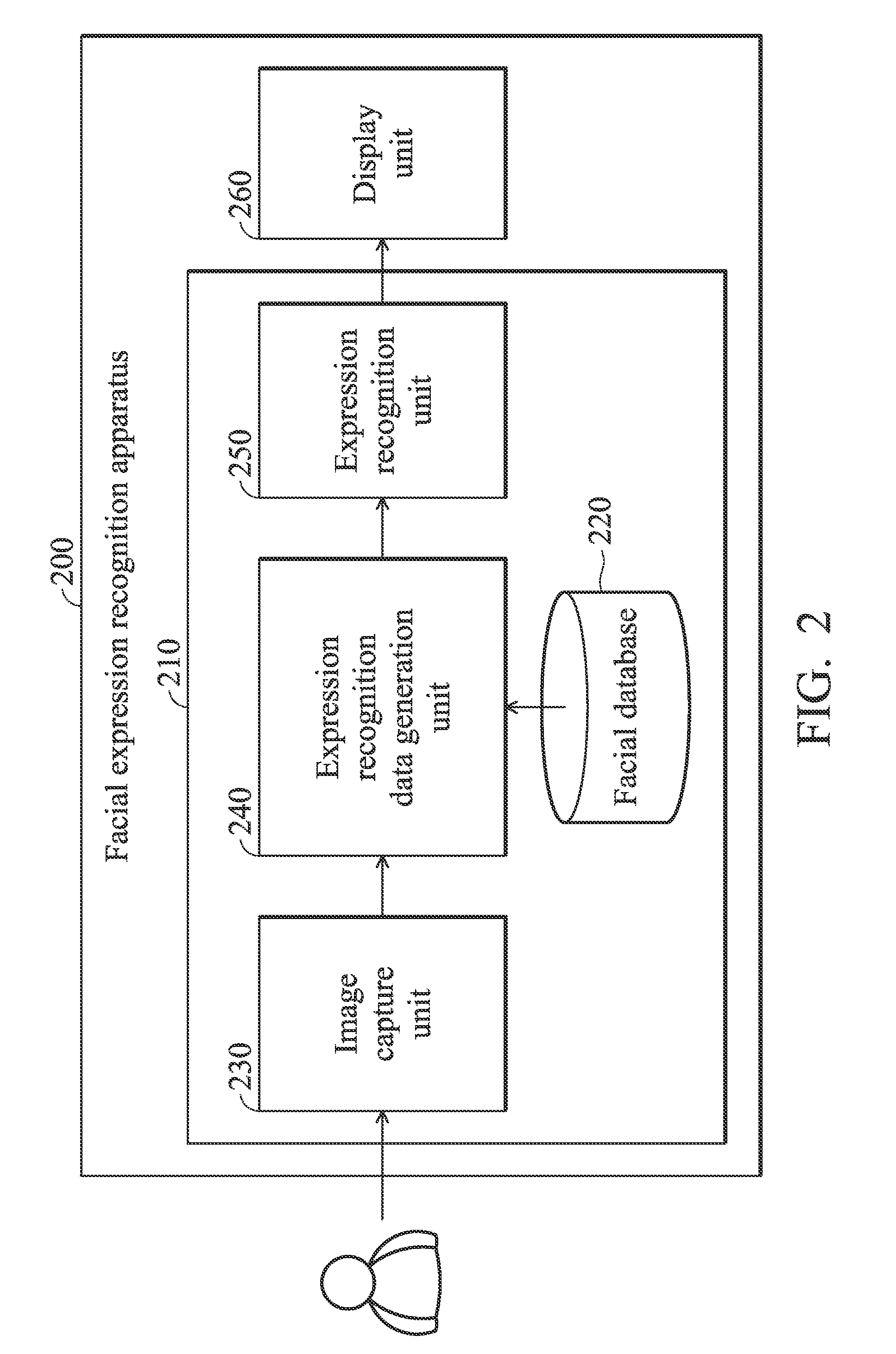

Facial expression recognition systems and methods and computer program products thereof

Owner:INSTITUTE FOR INFORMATION INDUSTRY

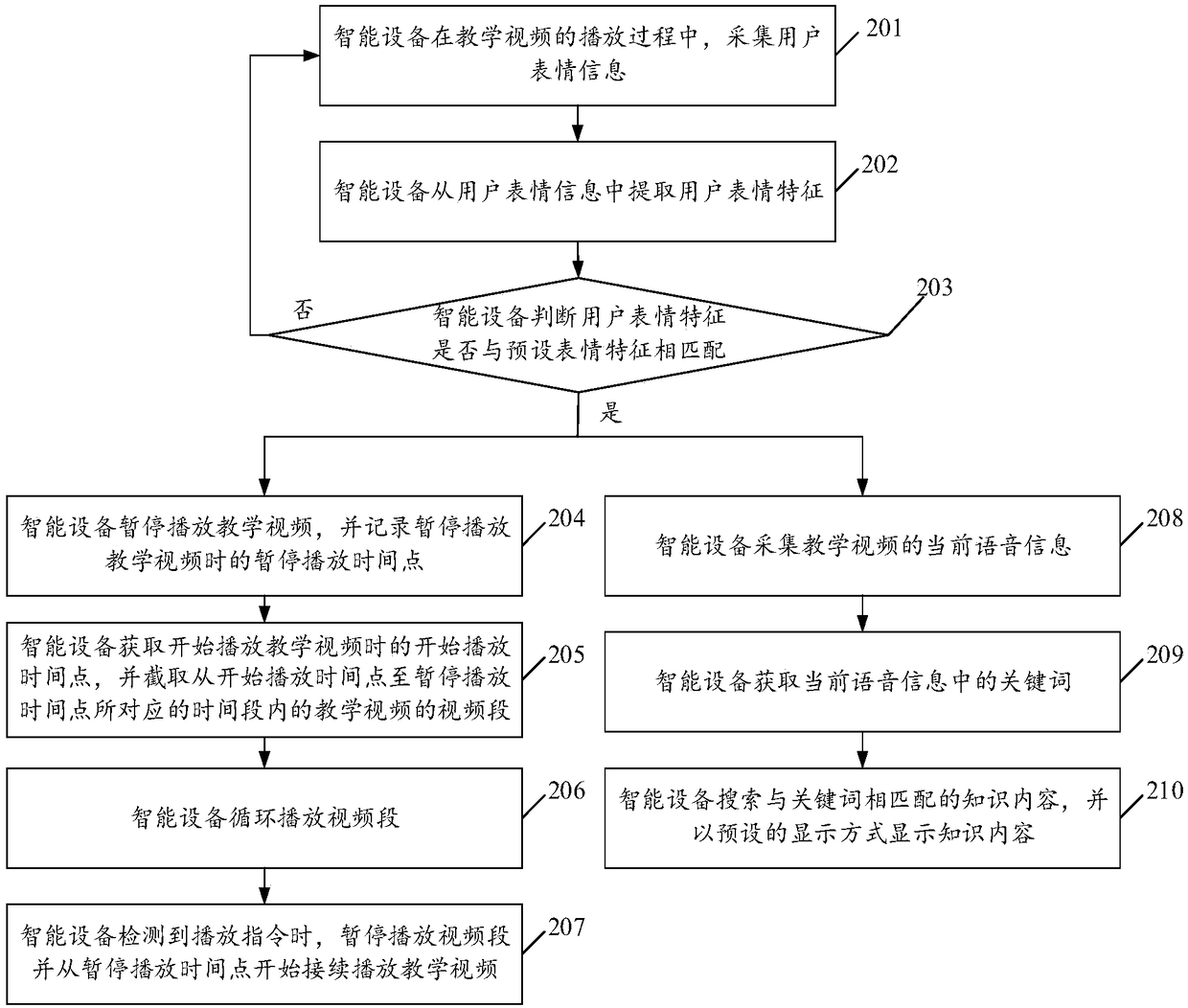

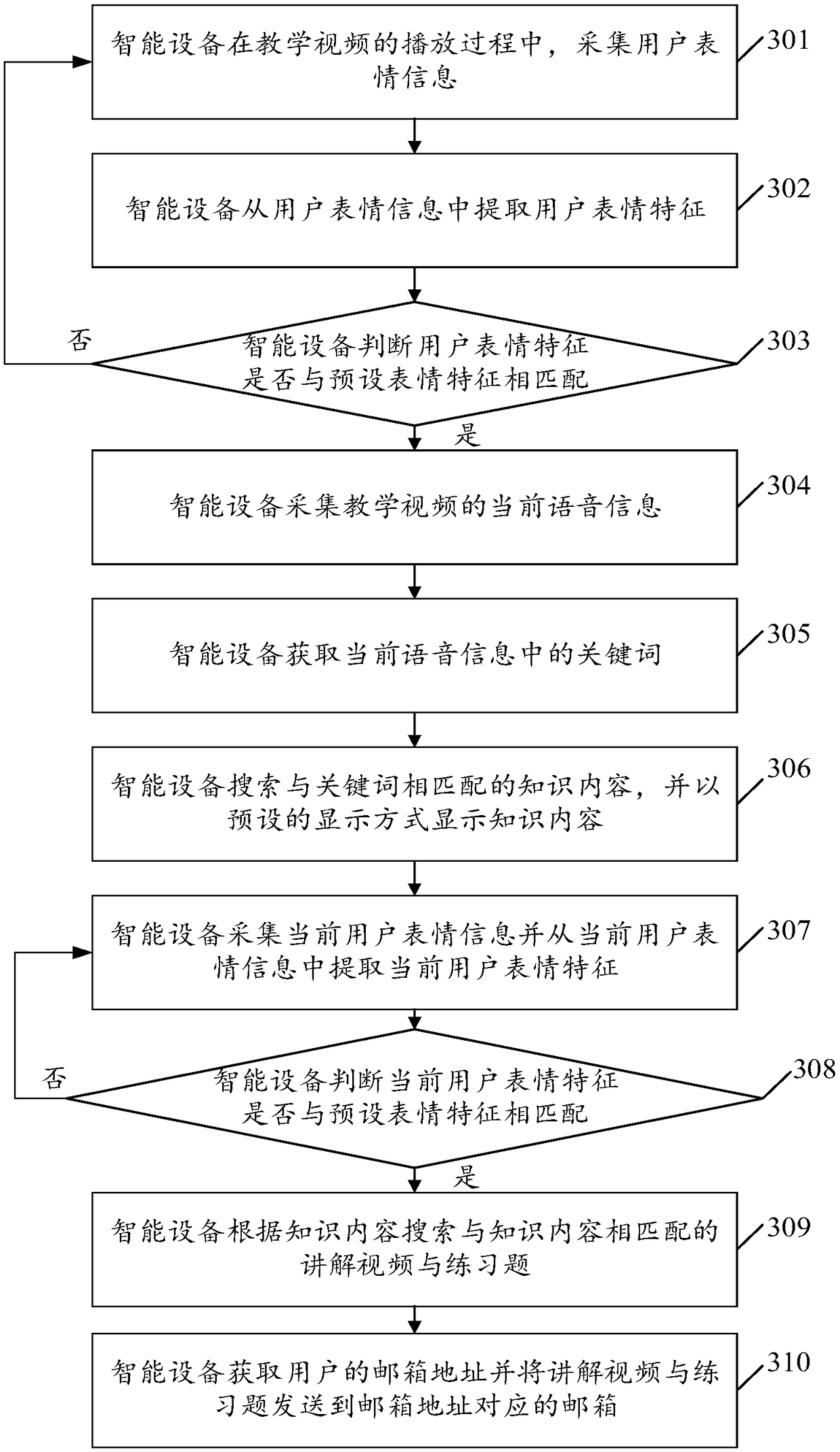

Video teaching auxiliary method and intelligent equipment

Active | CN108924608A | Improve learning efficiency | Improve user experience | Selective content distribution | Electrical appliances | Knowledge content | Intelligent equipment

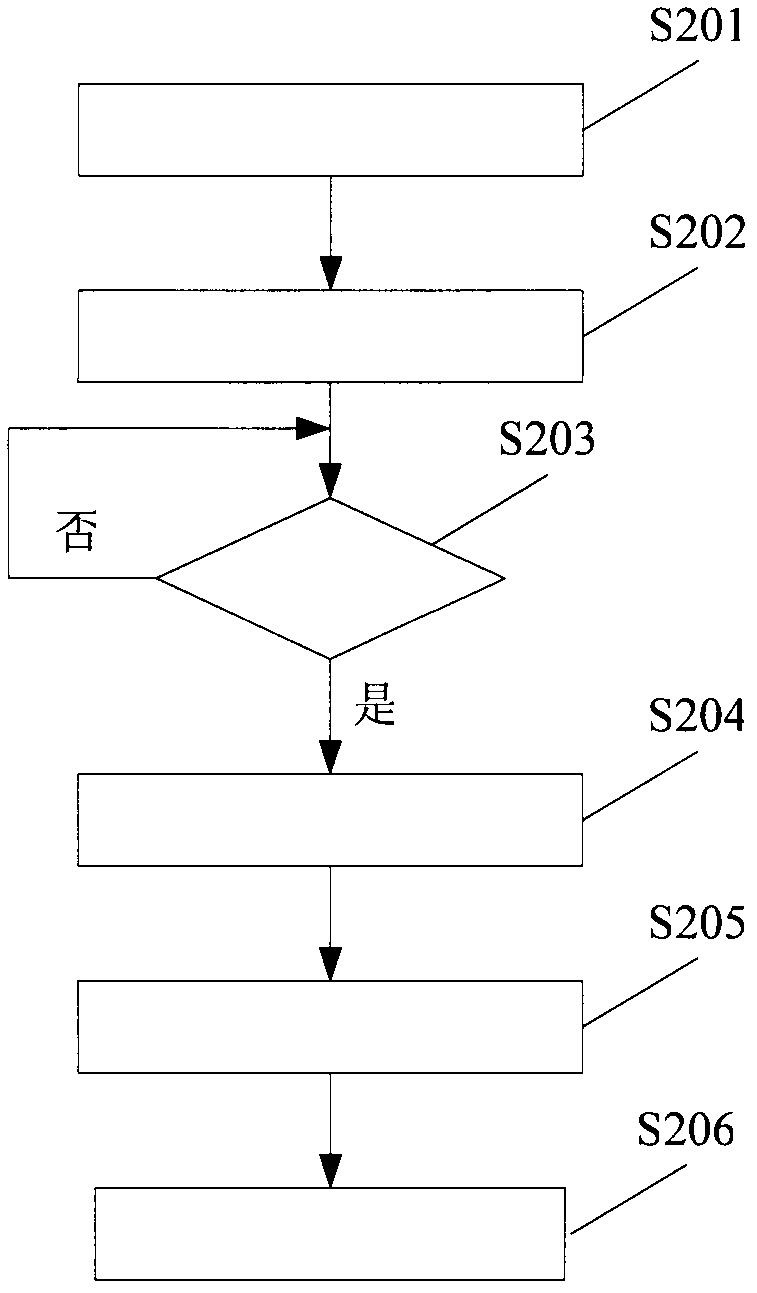

The embodiment of the invention relates to the technical field of intelligent equipment, and discloses a video teaching auxiliary method and intelligent equipment. The method comprises the steps of collecting user expression information while a teaching video is playing; extracting a user expression feature from the user expression information; judging whether the user expression feature matches a preset expression feature and, if so, collecting the current voice information of the teaching video; acquiring a keyword from the current voice information; and searching for knowledge content matching the keyword and displaying it in a preset manner. With the method and equipment provided by the embodiment, the user can be assisted in real time in learning unfamiliar knowledge points in the teaching video, and the efficiency of information interaction during video teaching is improved, which in turn improves the user's learning efficiency and usage experience.

Owner:GUANGDONG XIAOTIANCAI TECH CO LTD
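The control flow of the video teaching abstract above can be sketched as follows. Everything concrete here is an assumption: expression features are treated as numeric vectors compared by cosine similarity against a threshold, the voice information is taken to be already transcribed to text, and the knowledge base is a plain dictionary. The function names are hypothetical.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def assist(user_feature: Sequence[float],
           preset_feature: Sequence[float],
           current_speech: str,
           knowledge_base: Dict[str, str],
           threshold: float = 0.8) -> Optional[Dict[str, str]]:
    """Return knowledge content for spoken keywords when the expression matches."""
    # Step 1: act only if the user's expression matches the preset one.
    if cosine_similarity(user_feature, preset_feature) < threshold:
        return None
    # Step 2: pick keywords out of the transcribed speech (naive word matching).
    keywords = [w for w in current_speech.lower().split() if w in knowledge_base]
    # Step 3: look up the knowledge content to display for each keyword.
    return {w: knowledge_base[w] for w in keywords}


# Hypothetical data: a "confused" preset expression and a one-entry knowledge base.
knowledge = {"derivative": "The instantaneous rate of change of a function."}
shown = assist([0.9, 0.1], [1.0, 0.0], "The derivative measures change", knowledge)
ignored = assist([0.0, 1.0], [1.0, 0.0], "The derivative measures change", knowledge)
```

Gating the lookup on the expression match keeps the device from interrupting playback when the user shows no sign of needing help.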


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com