477 results about "Bottleneck" patented technology

In production and project management, a bottleneck is one process in a chain of processes whose limited capacity reduces the capacity of the whole chain. Bottlenecks result in production stalls, supply overstock, pressure from customers, and low employee morale. Bottlenecks can be short-term or long-term. Short-term bottlenecks are temporary and are not normally a significant problem; an example is a skilled employee taking a few days off. Long-term bottlenecks recur continually and can cumulatively slow production significantly; an example is a machine that is not efficient enough and therefore builds up a long queue.

Methods and systems for detecting, locating and remediating a congested resource or flow in a virtual infrastructure

Inactive | US20140215077A1 | Well formed | Digital computer details | Data switching networks | Remedial action | Distributed computing

Once a potential bottleneck is identified, the system provides a methodology for locating it. The methodology involves checking, in order, the application, transport and network layers of the sender and receiver nodes for performance problems. Conditions are defined for each layer at the sender and receiver nodes that indicate a particular type of bottleneck. When the conditions are met in any one of the layers, the system can be configured to stop the checking process and output a message indicating the presence of a bottleneck in that layer and its location. In addition, the system can be configured to generate and output a remedial action for eliminating the bottleneck, and to perform the action automatically or after receiving confirmation from the user.

Owner:F5 NETWORKS INC
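The layer-by-layer check described in this abstract can be sketched roughly as follows. This is a minimal illustration, not the patented method: the metric names, the thresholds, and the choice to check the sender before the receiver are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

# Layer order taken from the abstract: application, then transport, then network.
LAYERS = ("application", "transport", "network")
# Assumption: the sender is examined before the receiver.
NODES = ("sender", "receiver")


@dataclass(frozen=True)
class Finding:
    node: str
    layer: str


def locate_bottleneck(
    metrics: Dict[str, Dict[str, dict]],
    conditions: Dict[Tuple[str, str], Callable[[dict], bool]],
) -> Optional[Finding]:
    """Return the first (node, layer) whose condition is met, or None."""
    for node in NODES:
        for layer in LAYERS:
            check = conditions.get((node, layer))
            if check is not None and check(metrics[node][layer]):
                # Stop at the first layer whose condition holds.
                return Finding(node, layer)
    return None


# Hypothetical metrics and thresholds, for illustration only.
example_metrics = {
    "sender": {
        "application": {"cpu": 0.30},
        "transport": {"retransmits": 120},
        "network": {"drops": 0},
    },
    "receiver": {
        "application": {"cpu": 0.95},
        "transport": {"retransmits": 0},
        "network": {"drops": 0},
    },
}
example_conditions = {
    ("sender", "transport"): lambda m: m["retransmits"] > 100,
    ("receiver", "application"): lambda m: m["cpu"] > 0.90,
}
```

With the example data, `locate_bottleneck(example_metrics, example_conditions)` stops at the sender's transport layer even though the receiver's application condition would also match, which mirrors the "stop the checking process" behavior the abstract describes.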

Communication device and method of prioritizing transference of time-critical data

Inactive | US20060187836A1 | Raise transfer to | Solve insufficient bandwidth | Error prevention | Transmission systems | Data pack | Time critical

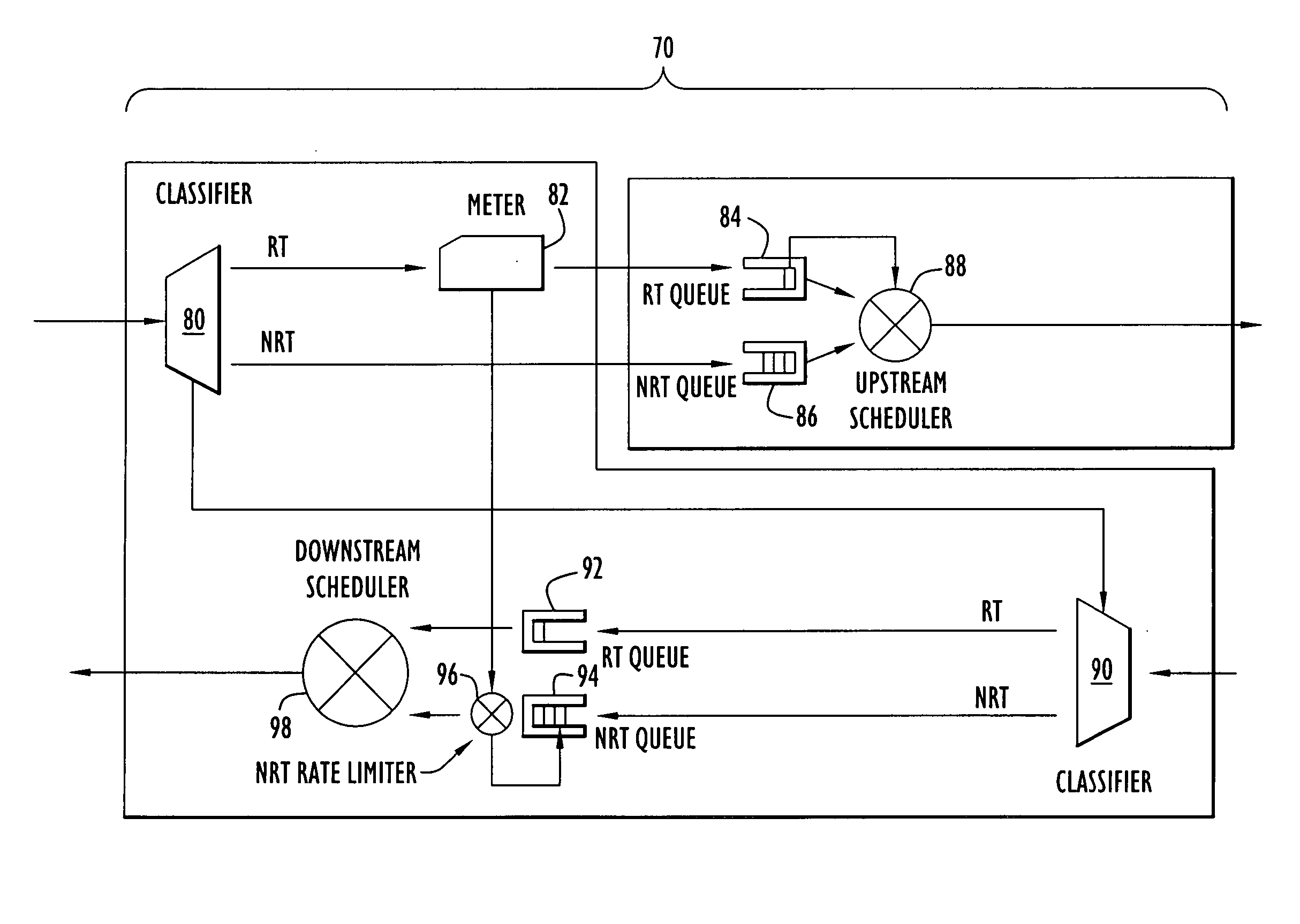

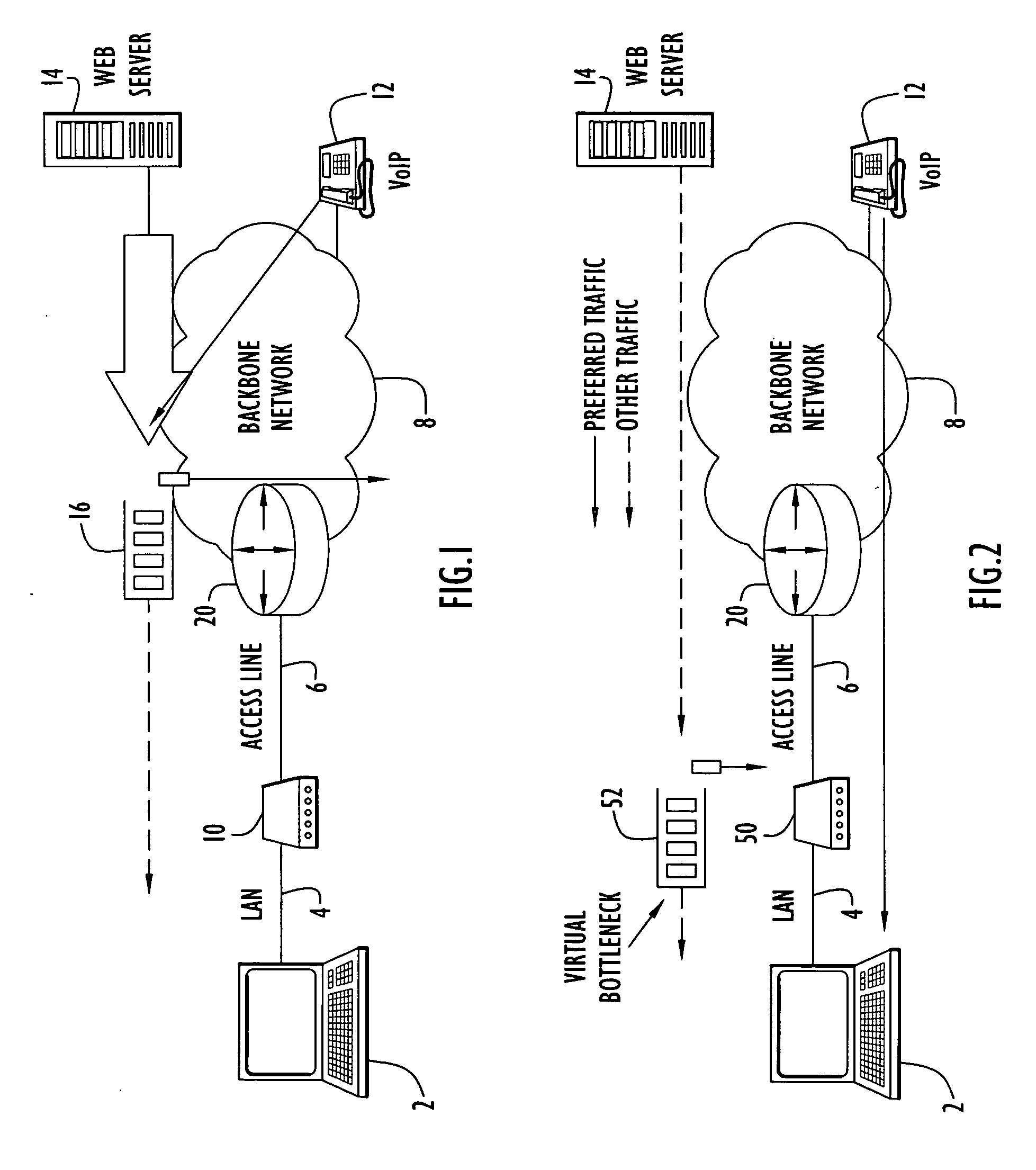

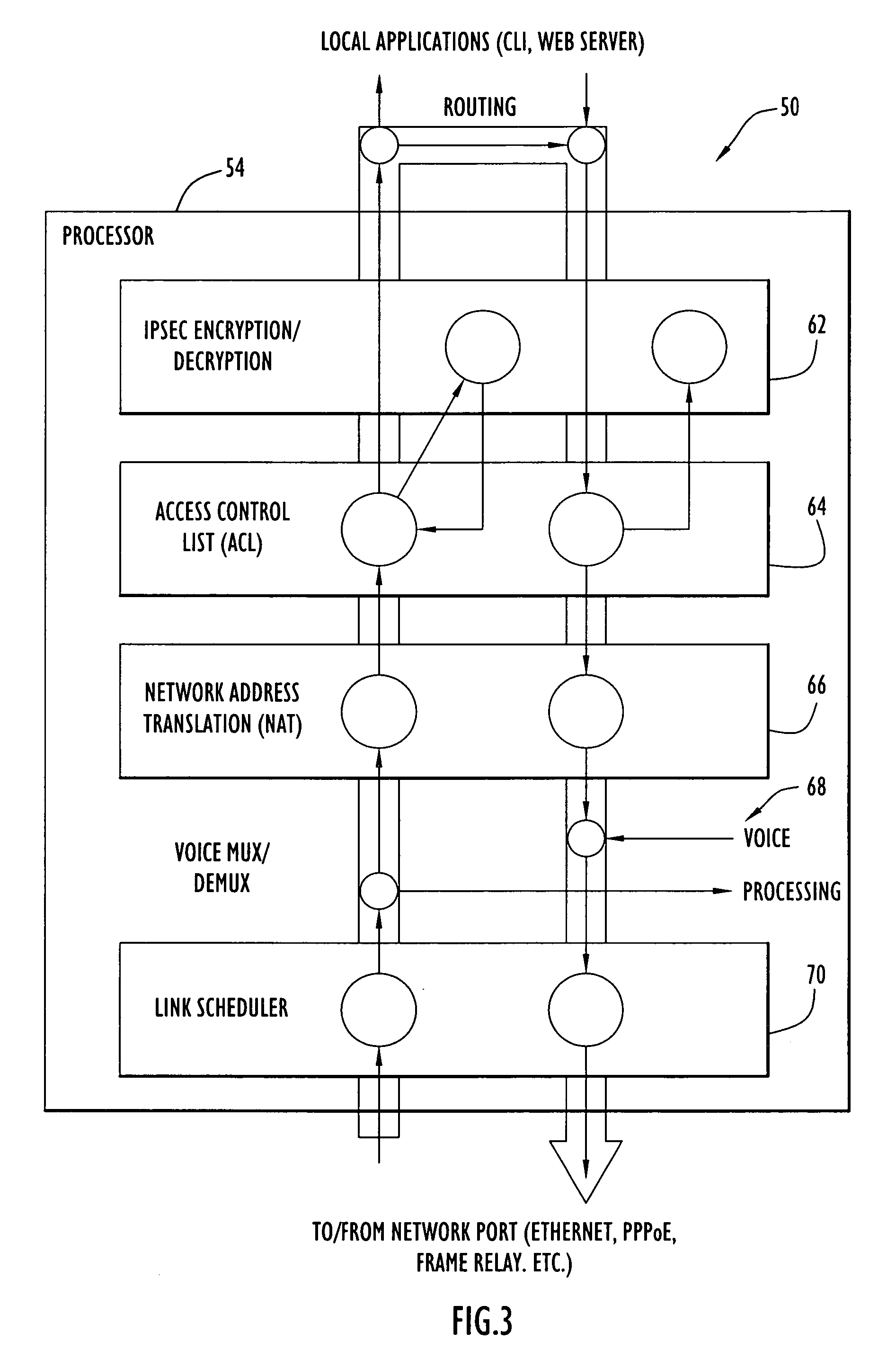

A communication device according to the present invention enhances transfer of time-critical data between one or more LANs and a device (e.g., an edge router) coupled to a backbone network. The communication device introduces a virtual bottleneck, in the form of a queue, at the customer premises or customer end of a backbone network access line where the network congestion or bottleneck resides. The virtual bottleneck delays and/or discards time-insensitive traffic before time-critical or voice traffic is delayed in the edge router. This is accomplished by giving the virtual bottleneck queue a storage capacity or length less than that of the queue used by the edge router. A traffic manager or scheduler controlling the virtual bottleneck dynamically adjusts it based on the bandwidth required for time-critical packets, to ensure sufficient bandwidth is available for those packets.

Owner:PATTON ELECTRONICS
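A rough sketch of the virtual-bottleneck idea in this abstract: a queue deliberately shorter than the edge router's queue, so that time-insensitive packets are dropped at the customer end before time-critical packets can be delayed downstream. The class name, the two-deque layout, and the drop policy are assumptions for illustration; the dynamic bandwidth adjustment mentioned in the abstract is omitted.

```python
from collections import deque


class VirtualBottleneck:
    """Bounded queue that favors time-critical packets (illustrative only)."""

    def __init__(self, capacity: int, router_queue_len: int):
        # The abstract requires the virtual queue to be shorter than the router's.
        if capacity >= router_queue_len:
            raise ValueError("virtual bottleneck must be shorter than the router queue")
        self.capacity = capacity
        self.critical = deque()  # time-critical (e.g. voice) packets
        self.bulk = deque()      # time-insensitive packets

    def enqueue(self, packet, time_critical: bool) -> bool:
        """Return True if the packet was queued, False if it was discarded."""
        full = len(self.critical) + len(self.bulk) >= self.capacity
        if time_critical:
            if full:
                if not self.bulk:
                    return False  # nothing less important to evict
                self.bulk.pop()   # assumption: evict the newest bulk packet
            self.critical.append(packet)
            return True
        if full:
            return False          # time-insensitive traffic is dropped first
        self.bulk.append(packet)
        return True

    def dequeue(self):
        """Send time-critical packets first; return None when empty."""
        if self.critical:
            return self.critical.popleft()
        if self.bulk:
            return self.bulk.popleft()
        return None
```

Evicting the newest bulk packet is only one possible policy; a real scheduler might instead drop from the head or apply weighted queuing.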

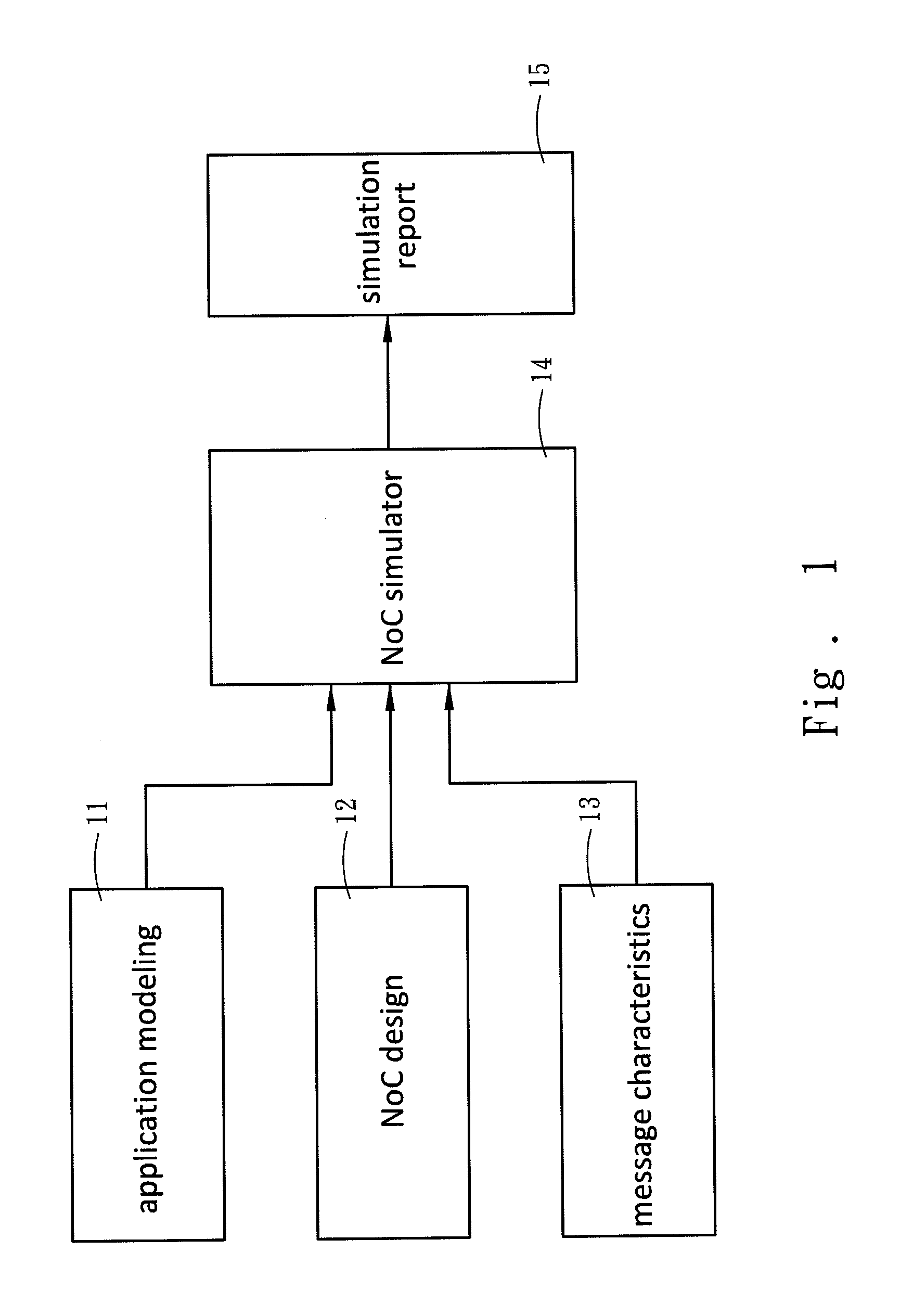

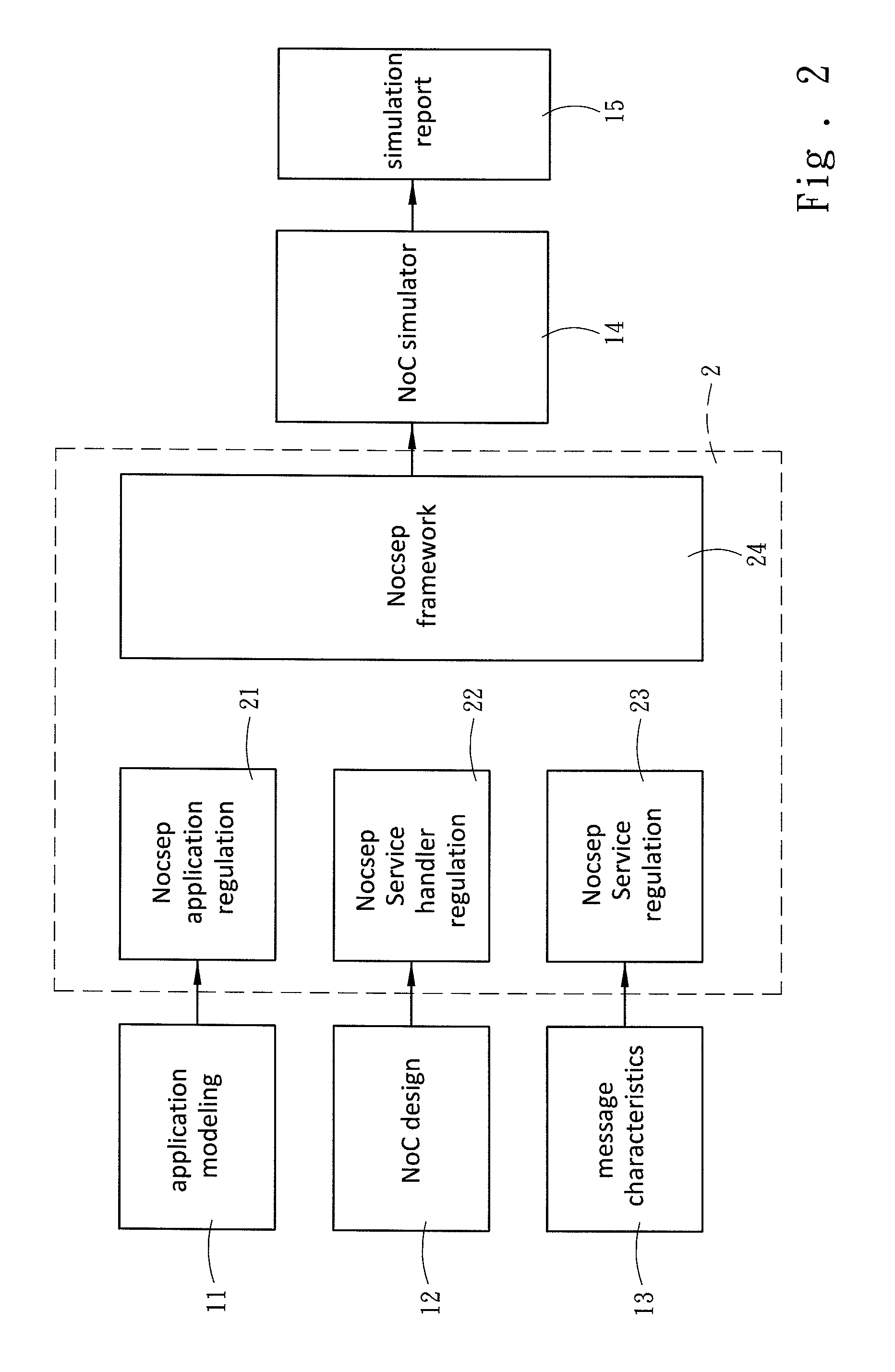

Noc-centric system exploration platform and parallel application communication mechanism description format used by the same

Inactive | US20110191774A1 | Fast simulation | Simplifies some non-critical details | Multiprogramming arrangements | Transmission | Description format | Computer architecture

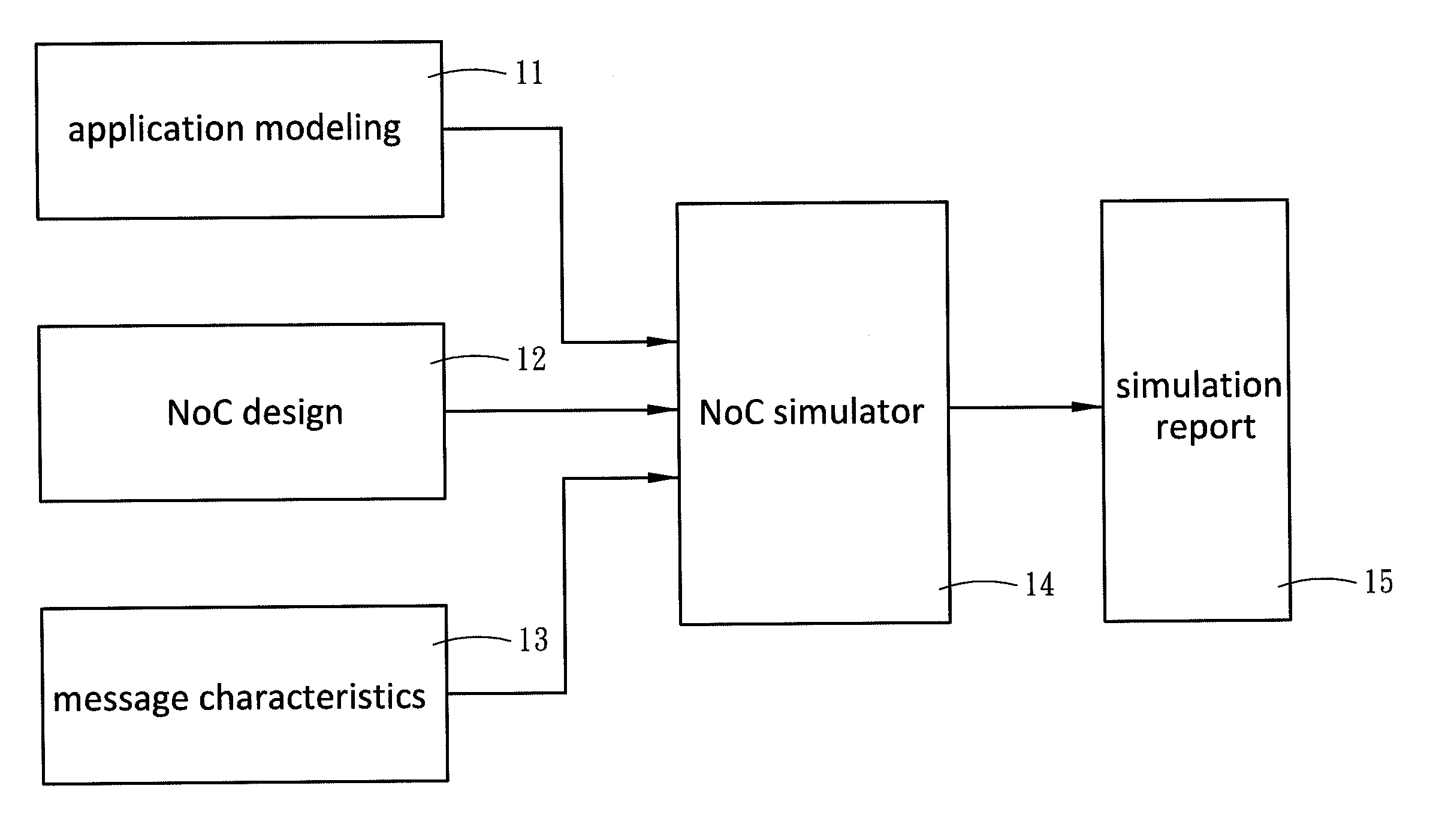

Network-on-Chip (NoC) is intended to solve the communication performance bottleneck in System-on-Chip designs, and the performance of an NoC depends significantly on the application traffic. The present invention establishes a system framework across multiple layers and defines the interface function behaviors and traffic patterns of the layers. It provides an application modeling approach in which the task graph of parallel applications is described in a text format called the Parallel Application Communication Mechanism Description Format. It further provides a system-level NoC simulation framework, called the NoC-centric System Exploration Platform, which defines the service spaces of the layers in order to separate the traffic patterns and enable the layers to be designed independently. Accordingly, a new design can be simulated without modifying the simulator framework or the interface designs. The invention thereby enlarges the design space of NoC simulators and provides a model for evaluating NoC performance.

Owner:NATIONAL TSING HUA UNIVERSITY

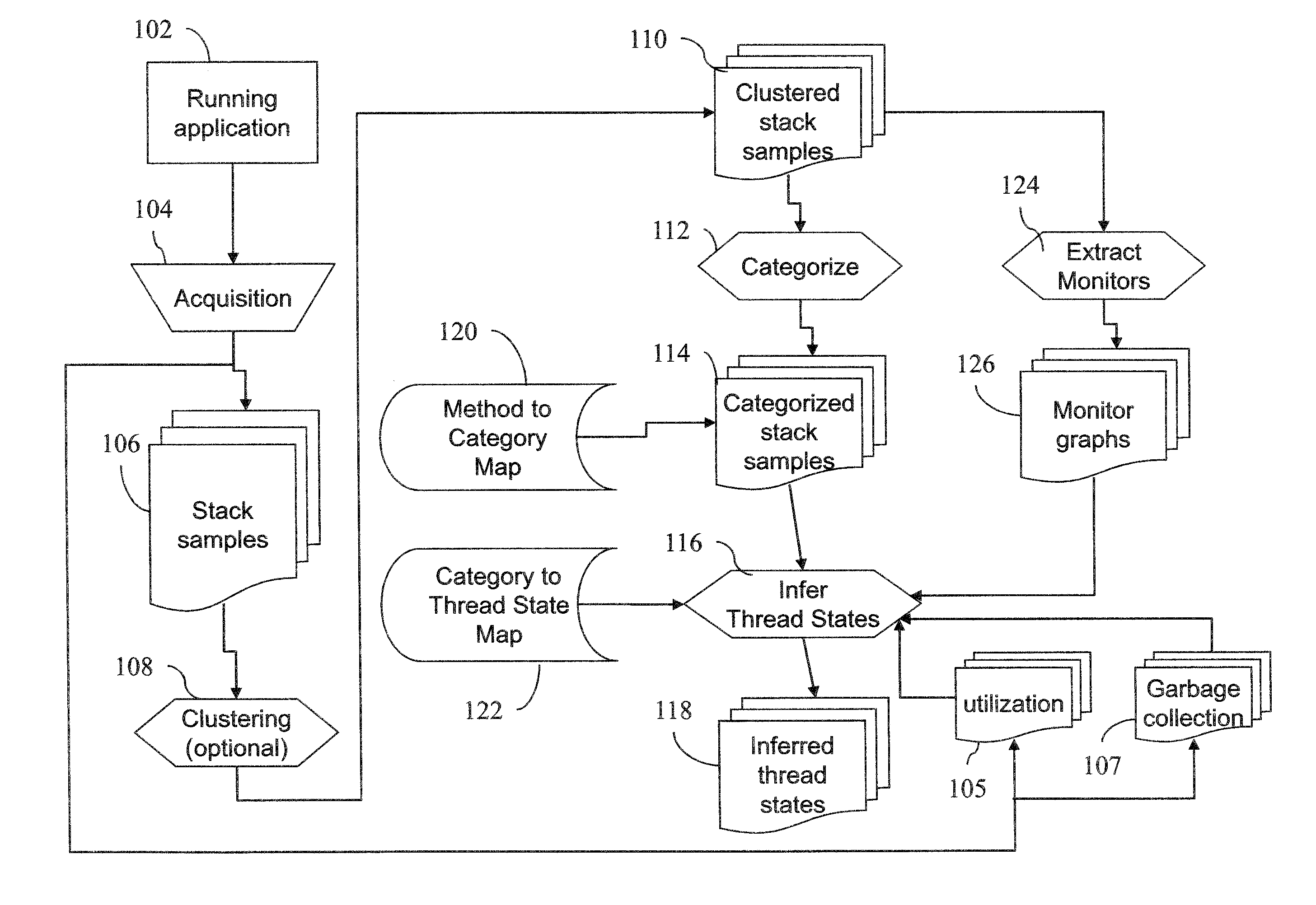

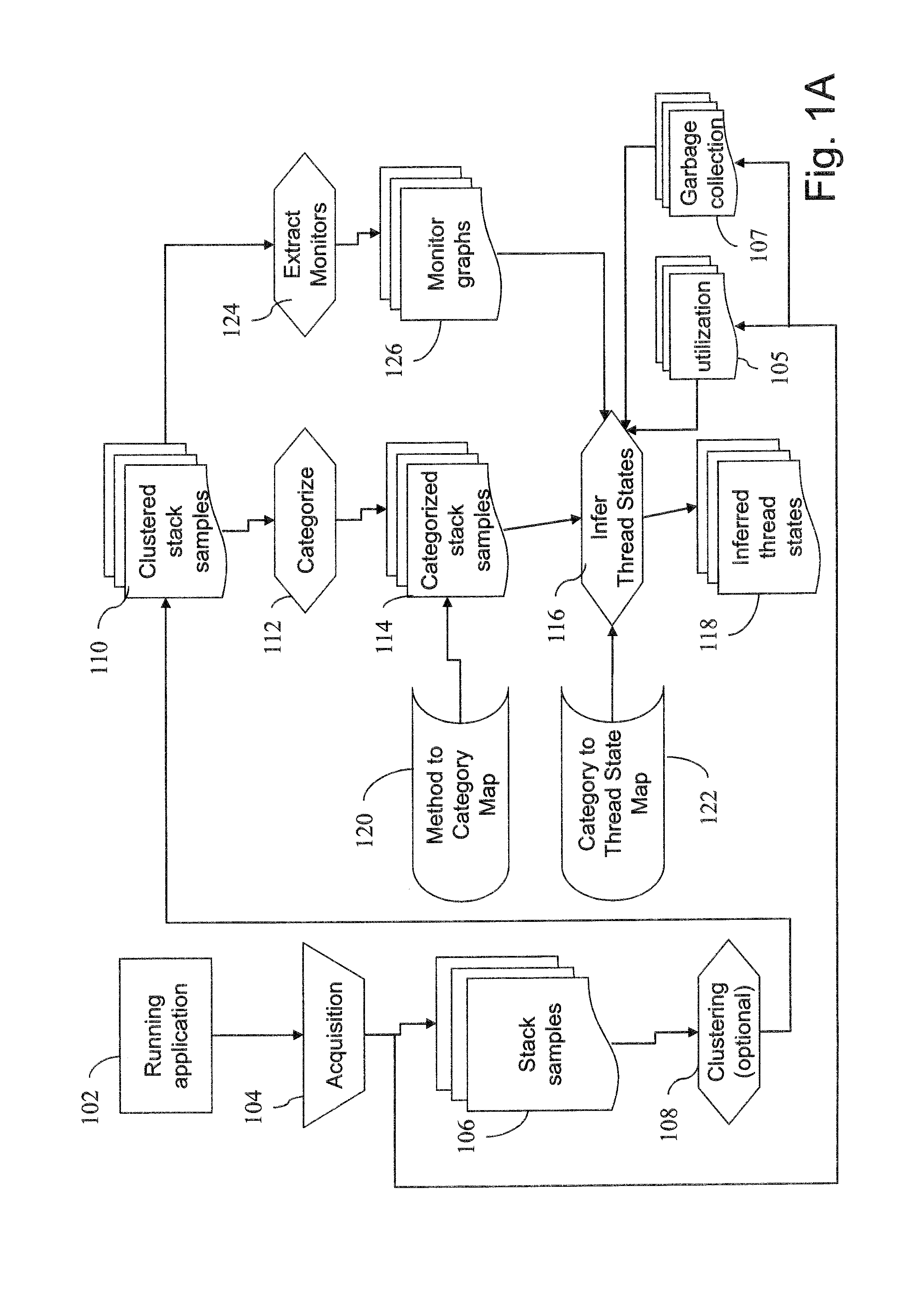

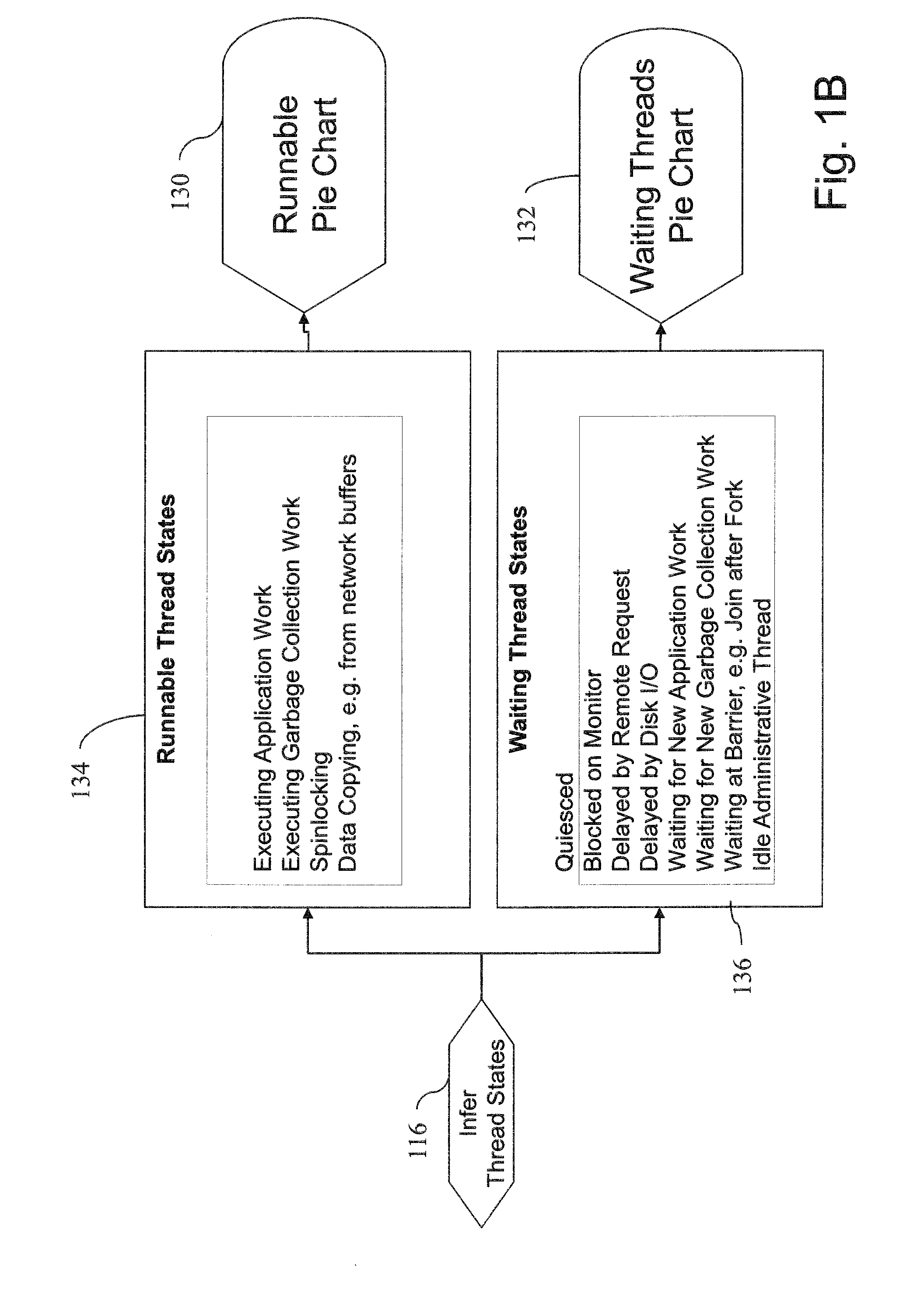

Automatic identification of bottlenecks using rule-based expert knowledge

Inactive | US20120054472A1 | Error detection/correction | Digital computer details | Knowledge level | Operating system

Execution states of tasks are inferred from information collected during runtime execution of a computer system. The collected information may include infrequent samples of executing tasks, and those samples may give inaccurate execution states. One or more tasks may be aggregated by one or more execution states to determine execution time, idle time, system policy violations, or combinations thereof.

Owner:DOORDASH INC

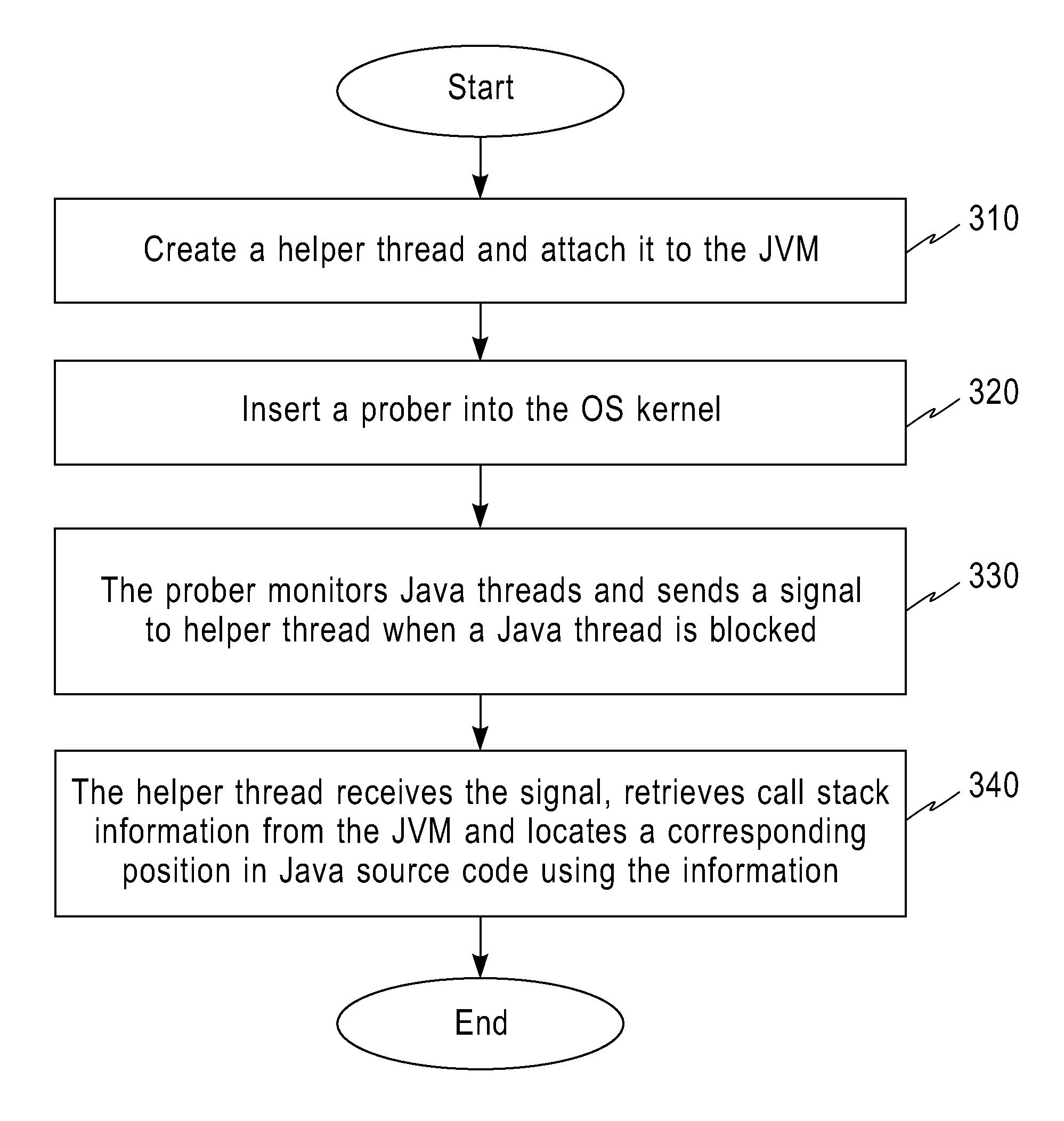

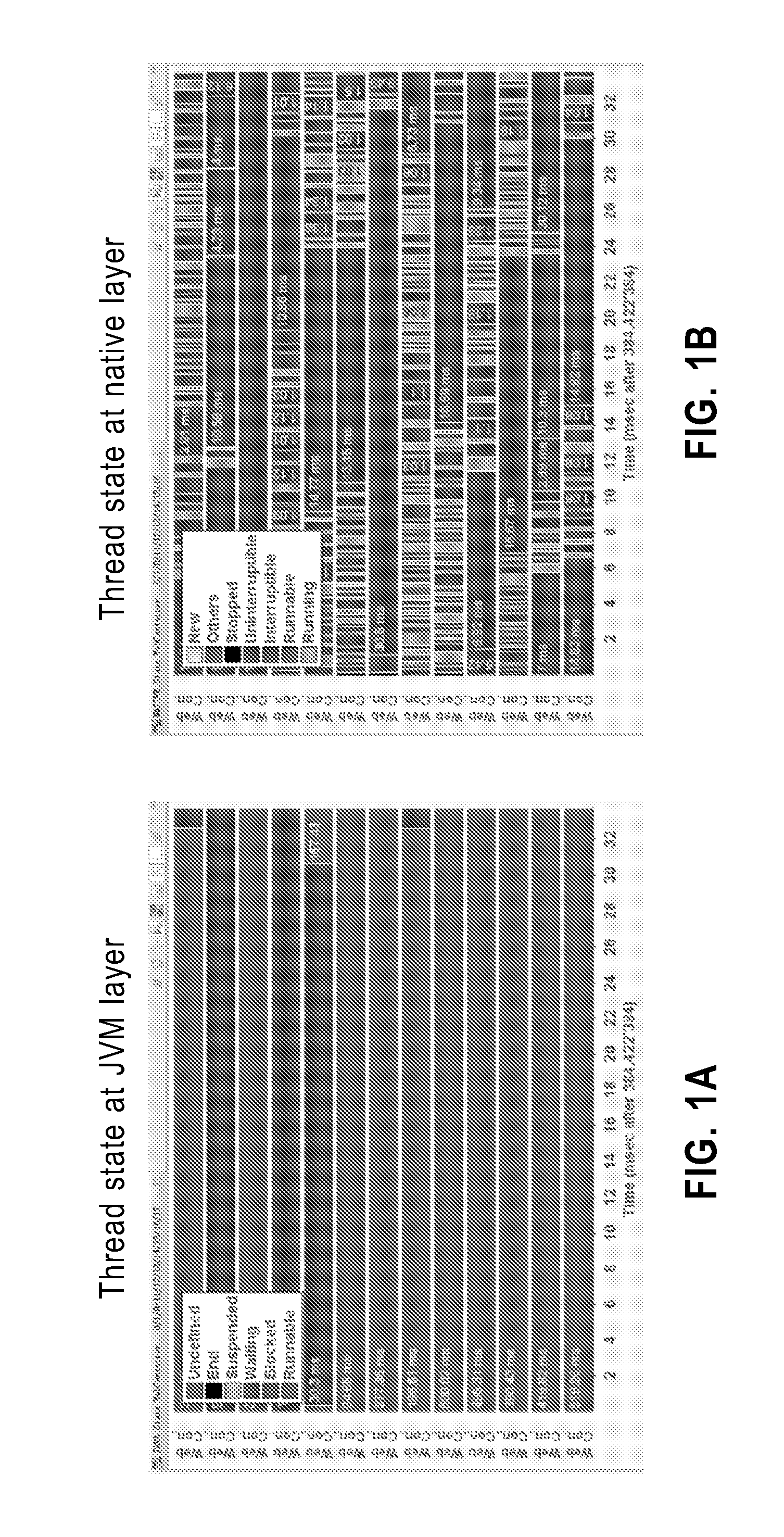

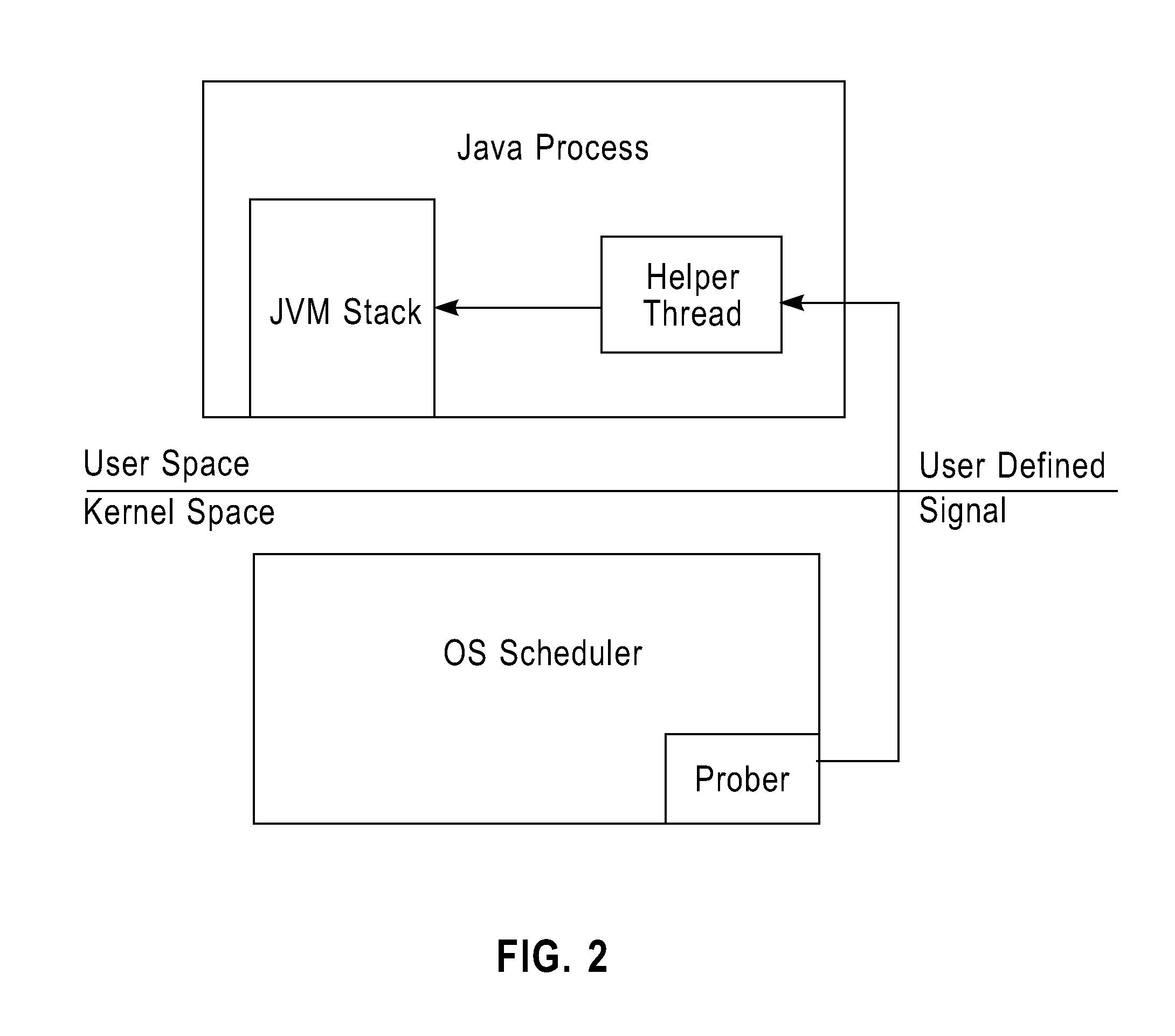

Method and apparatus to locate bottleneck of JAVA program

Inactive | US20110258608A1 | No obvious performance overhead | Linked very accurately | Error detection/correction | Specific program execution arrangements | Call stack | Operational system

A method and an apparatus to locate a bottleneck of a Java program. The method includes the steps of: creating a helper thread in the Java process corresponding to the Java program, and attaching the helper thread to a Java virtual machine (JVM) created in the Java process; inserting a prober into the operating system kernel; monitoring, in the operating system kernel, the states of the Java threads in the Java process, and sending a signal to the helper thread in response to detecting that a Java thread is blocked; and retrieving call stack information from the JVM in response to receiving the signal from the operating system kernel, and using the retrieved call stack information to locate the position in the source code of the Java program that causes the block.

Owner:IBM CORP

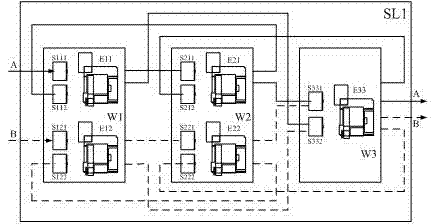

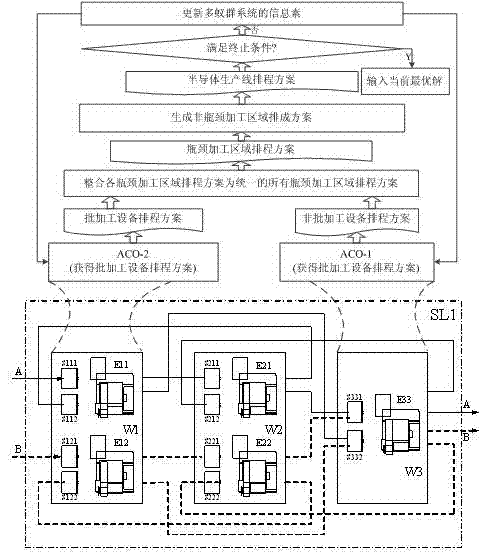

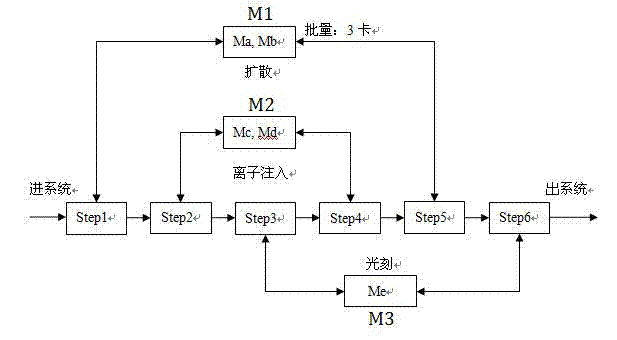

Scheduling method for semiconductor production line based on multi-ant-colony optimization

Inactive | CN102253662A | The scheduling method is stable | Robust and stable | Total factory control | Programme total factory control | Production line | Bottleneck

The invention relates to a scheduling method for a semiconductor production line based on multi-ant-colony optimization. The method comprises the following steps: determining the bottleneck processing areas of the semiconductor production line, where a processing area whose equipment has an average utilization rate above 70 percent is regarded as a bottleneck processing area; setting the number of ant colonies to the number of bottleneck processing areas and initializing a multi-ant-colony system; searching the scheduling schemes of all bottleneck processing areas in parallel, one ant colony system per area; constraining and integrating those schemes into one scheduling scheme for all bottleneck processing areas according to the processing sequence of the procedures, and deriving the scheduling schemes of the non-bottleneck processing areas using that scheme and the processing sequence as constraints, to obtain the scheduling scheme of the whole semiconductor production line; and judging whether the termination conditions are met: if so, outputting the best-performing scheduling scheme; otherwise, updating the pheromones of the ant colonies using the currently best-performing scheduling scheme and starting a new round of search. The method has practical value for solving the optimal dispatching problem of semiconductor production lines and offers guidance for improving the production management of semiconductor enterprises in China.

Owner:TONGJI UNIV
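The first step of this abstract, picking out bottleneck processing areas by the 70 percent utilization rule, can be illustrated with a short sketch. The area names and utilization figures are made up, and the ant-colony search itself is not shown.

```python
from typing import Dict, List


def bottleneck_areas(
    utilization: Dict[str, List[float]], threshold: float = 0.70
) -> List[str]:
    """Return the areas whose average equipment utilization exceeds the threshold.

    `utilization` maps a processing-area name to the utilization rates
    (0.0 to 1.0) of the equipment in that area.
    """
    return sorted(
        area
        for area, rates in utilization.items()
        if rates and sum(rates) / len(rates) > threshold
    )


# Hypothetical utilization data, for illustration only.
example_utilization = {
    "lithography": [0.90, 0.80],  # average 0.85
    "etching": [0.60, 0.70],      # average 0.65
    "implant": [0.75, 0.72],      # average 0.735
}

areas = bottleneck_areas(example_utilization)
# Per the abstract, one ant colony is created per bottleneck area.
number_of_colonies = len(areas)
```

Here `areas` contains the lithography and implant areas, so two colonies would be initialized.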

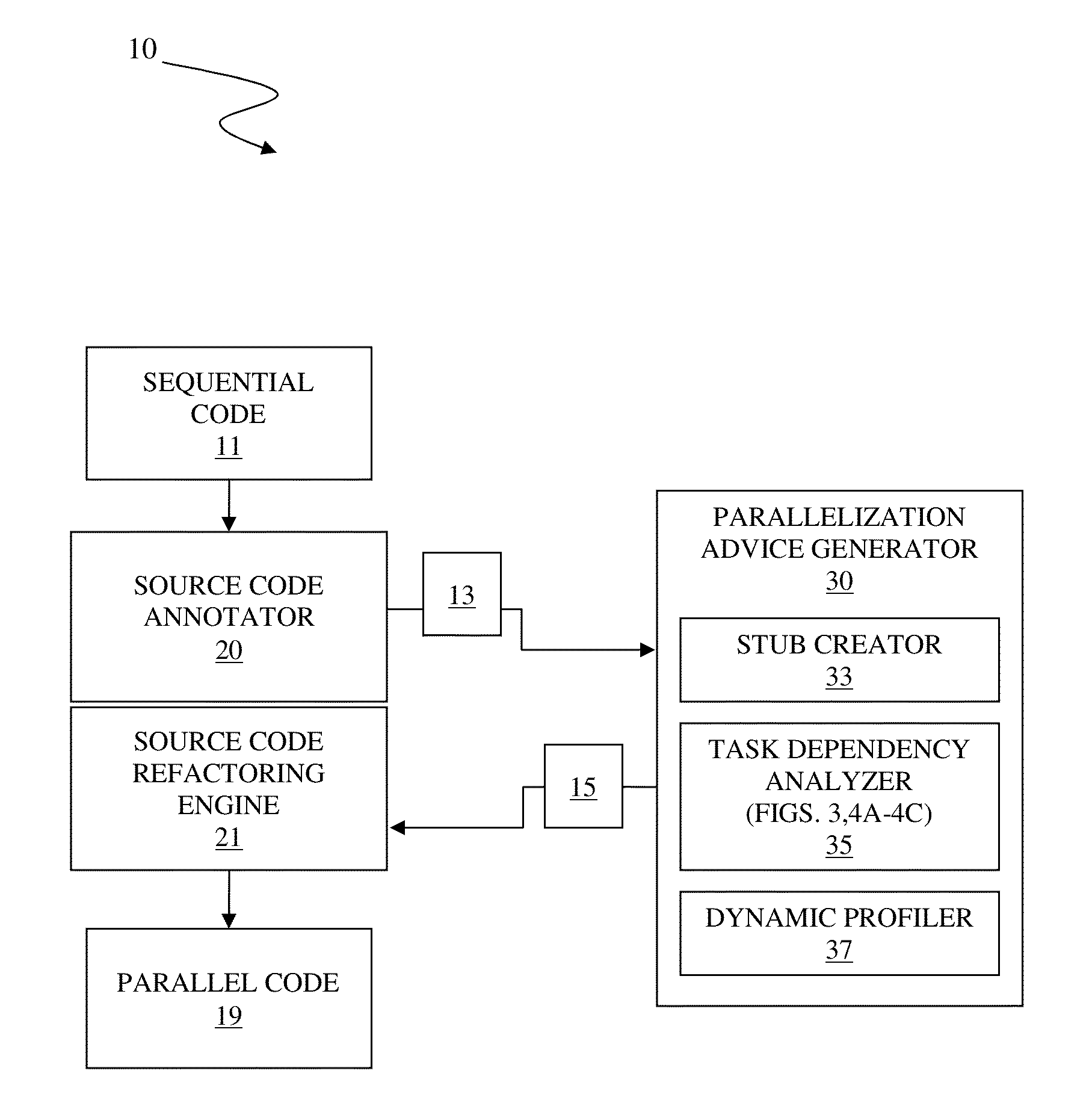

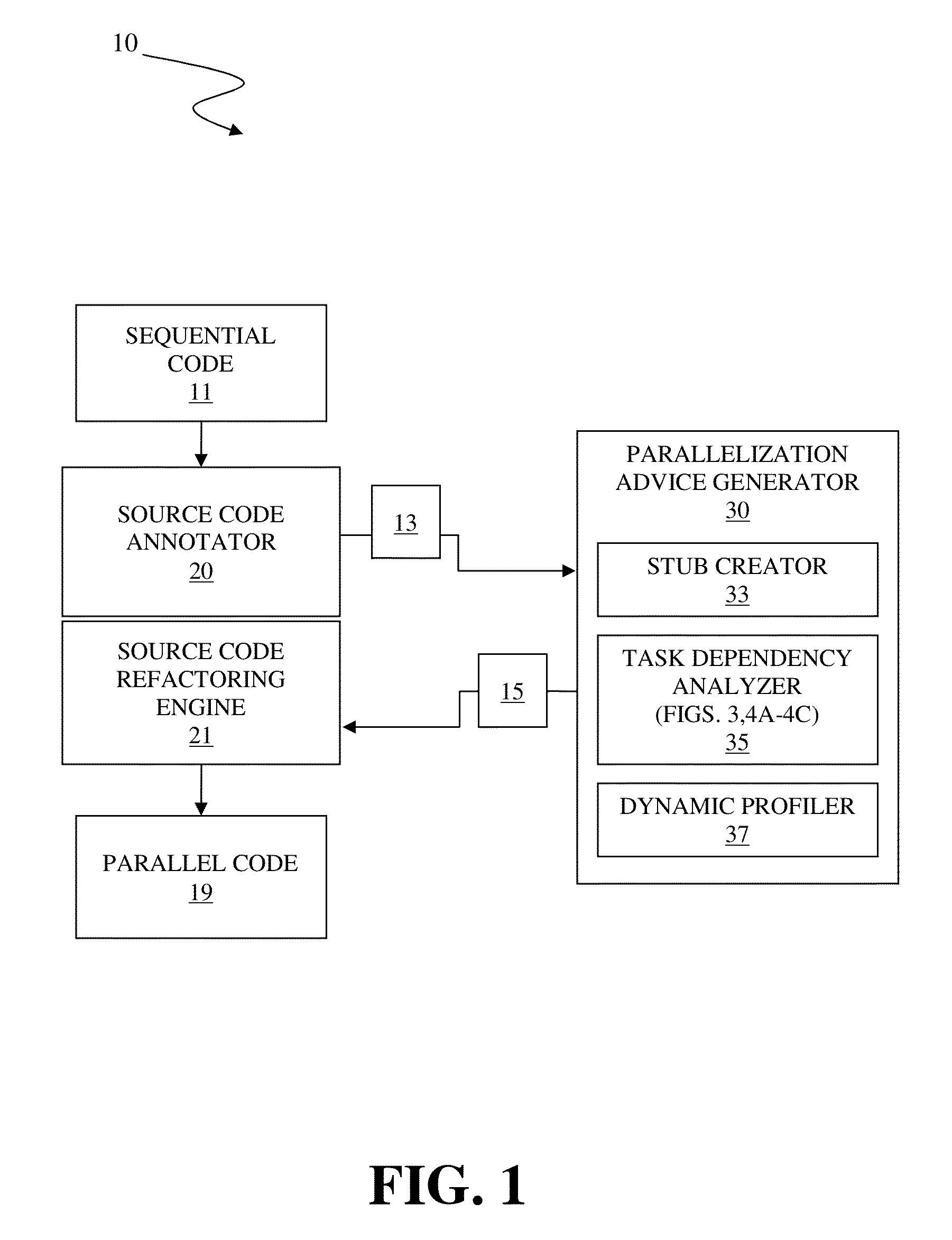

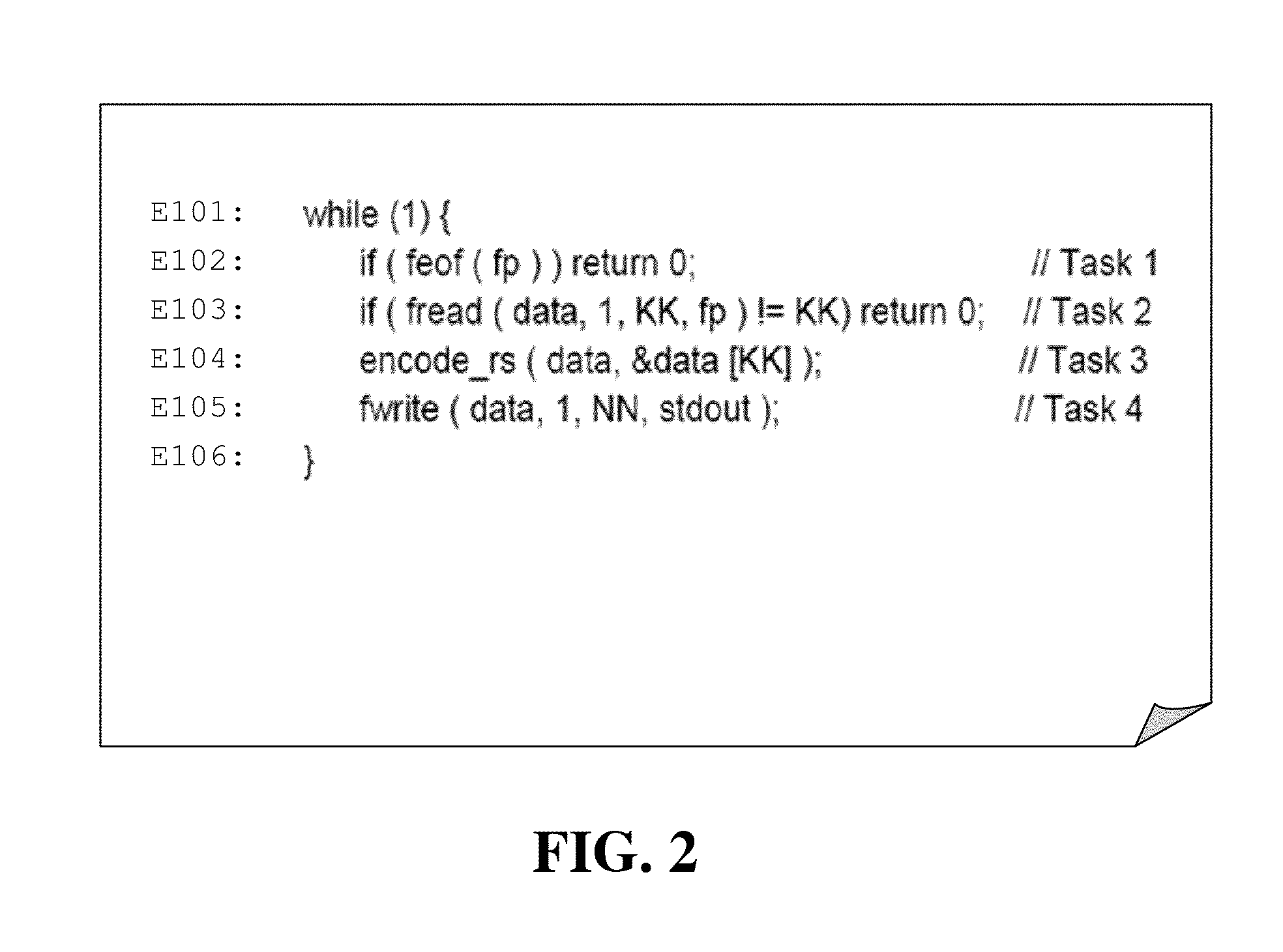

Automatic pipeline parallelization of sequential code

A system and associated method for automatically pipeline parallelizing a nested loop in sequential code over a predefined number of threads. Pursuant to task dependencies of the nested loop, each subloop of the nested loop is allocated to a respective thread. Combinations of stage partitions executing the nested loop are configured for parallel execution of a subloop where permitted. For each combination of stage partitions, a respective bottleneck is calculated, and the combination with the minimum bottleneck is selected for parallelization.

Owner:IBM CORP
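The selection rule in this abstract (compute a bottleneck for each candidate stage partition and keep the smallest) can be sketched under simplifying assumptions: the subloops form a linear chain, each has a known cost, stages are contiguous runs of subloops, and the bottleneck of a partition is the cost of its slowest stage. None of these details come from the abstract, and parallel execution within a stage is not modeled.

```python
from itertools import combinations
from typing import List, Sequence, Tuple


def best_partition(
    costs: Sequence[float], threads: int
) -> Tuple[float, List[List[float]]]:
    """Split `costs` into contiguous stages and minimize the slowest stage.

    Returns (bottleneck, stages). Uses min(threads, len(costs)) stages.
    """
    if not costs or threads < 1:
        raise ValueError("need at least one subloop and one thread")
    n = len(costs)
    stages = min(threads, n)
    best = None
    # Choose stages - 1 cut points between subloops and try every combination.
    for cuts in combinations(range(1, n), stages - 1):
        bounds = (0, *cuts, n)
        parts = [list(costs[a:b]) for a, b in zip(bounds, bounds[1:])]
        bottleneck = max(sum(part) for part in parts)
        if best is None or bottleneck < best[0]:
            best = (bottleneck, parts)
    return best


# Hypothetical per-subloop costs, for illustration only.
bottleneck, stages = best_partition([4, 2, 3, 7, 1], threads=3)
```

Exhaustive enumeration is fine for a handful of subloops; for long chains a dynamic-programming or binary-search formulation would scale better.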

Database all-in-one machine capable of realizing high-speed storage

Inactive | CN103873559A | Improve performance | Fix performance issues | Transmission | Special data processing applications | Engineering | Database application

The invention discloses a database all-in-one machine capable of high-speed storage. The machine comprises a computing cluster, an InfiniBand network cluster, and Fusion-io distributed storage. The computing cluster consists of a plurality of computing servers; the InfiniBand network cluster comprises a plurality of InfiniBand switches used for data exchange; and the Fusion-io distributed storage comprises a plurality of Fusion-io storage servers. Introducing a Fusion-io flash memory product into the storage system effectively solves the I/O (input/output) bottleneck of the database all-in-one machine and brings an exponential improvement in performance indexes such as storage IOPS (input/output operations per second) and throughput. The machine is suited to demanding database application scenarios such as high concurrency and high throughput.

Owner:南京斯坦德云科技股份有限公司

Method and system for test, simulation and concurrence of software performance

Inactive | CN103544103A | Avoid influence | Avoid interference of response time with each other | Software testing/debugging | User input | Software engineering

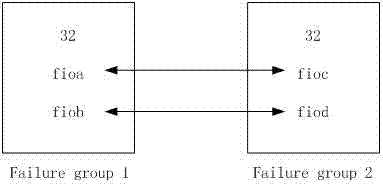

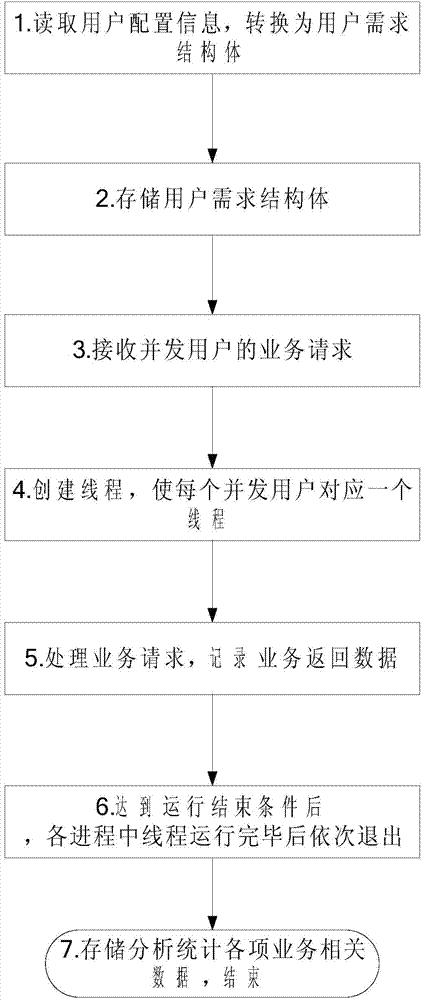

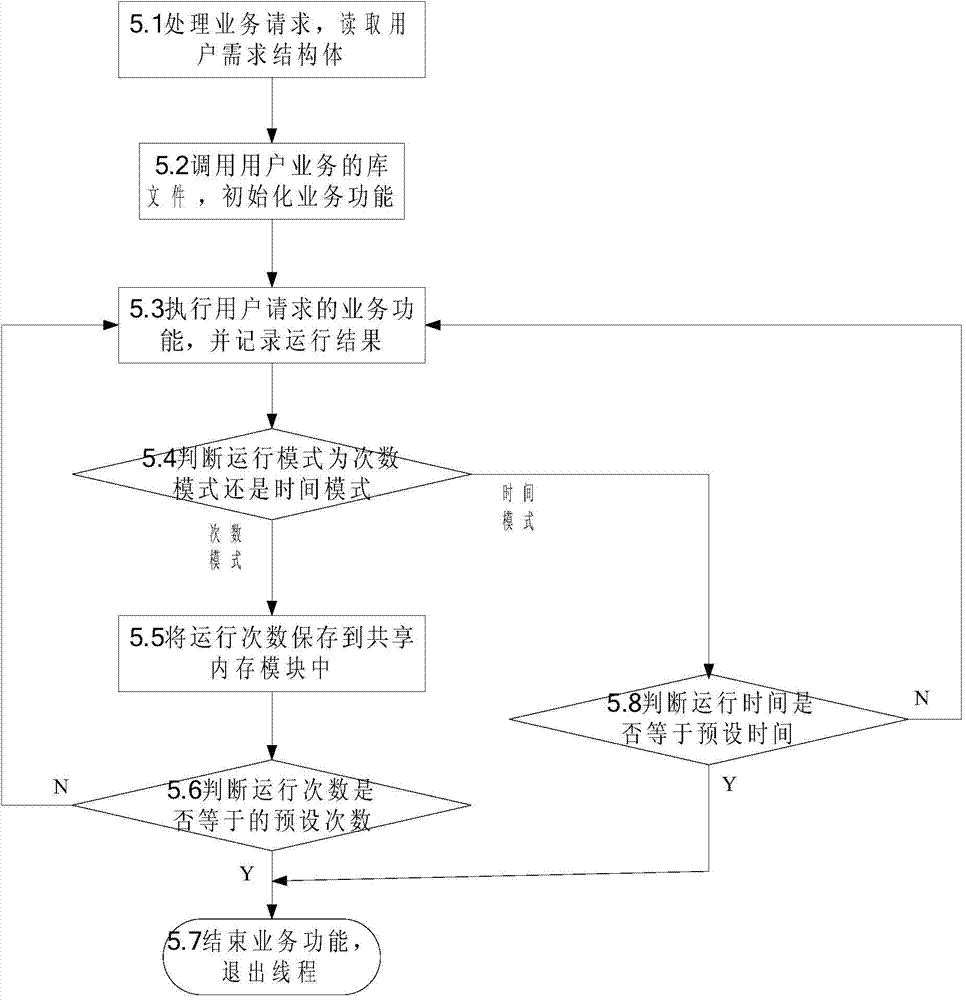

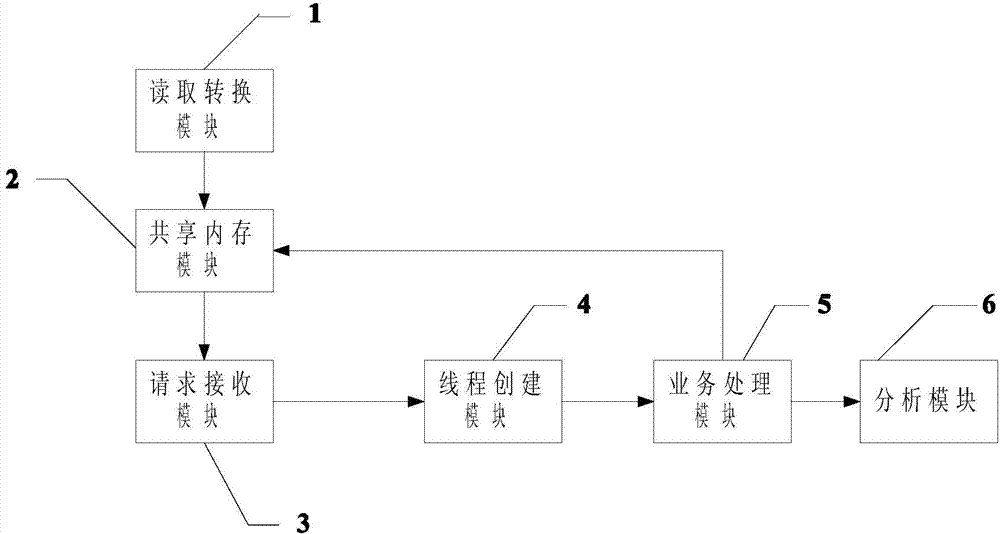

The invention relates to a method for testing software performance by simulating concurrency. The method comprises the following steps: (1) user configuration information input by a user is read; (2) a user requirement structure is stored in a shared memory module and a mapping is established; (3) service requests of concurrent users are received, and at least one test process is established according to the number of concurrent users and the user requirement structure; (4) test threads are established; (5) each test thread processes the service request of a corresponding user and stops when a stopping condition is met; (6) after the test threads in each test process have finished in sequence, the test threads are stopped and operation ends; (7) the relevant data of each service are stored, analyzed and counted, and then all the processes finish. The method and system explain how to simulate user concurrency, prevent bottlenecks from occurring, and achieve a high-concurrency scenario with a small amount of hardware resources; they also guarantee concurrency stability, support different user services, and help with problem location and shortening the development cycle.

Owner:烟台中科网络技术研究所

Management scheduling technology based on hyper-converged framework

Pending | CN112000421A | Improve virtualization capabilities | Improve management ability | Resource allocation | Software simulation/interpretation/emulation | Resource pool | System architecture design

The invention discloses a management scheduling technology based on a hyper-converged framework. The method comprises a hyper-converged system architecture design, integrated resource management based on a hyper-converged architecture, unified computing virtualization oriented to a domestic heterogeneous platform, storage virtualization based on distributed storage, network virtualization based on software definition, and a container dynamic scheduling management technology oriented to a high-mobility environment. The technology improves the virtualization capability and the management capability of the tactical cloud platform and provides key technical support for constructing a full-link ecosystem for the army's mobile tactical cloud. It provides an on-demand, flexible pool of virtualized computing and storage resources and achieves heterogeneous converged computing virtualization; a distributed storage technology is used to construct a storage resource pool and a software-defined technology is used to construct a virtual network, forming a hyper-converged resource pool. Data and network access of application services are thereby localized, the I/O bottleneck of the traditional virtualization deployment mode is solved, and service response performance is improved.

Owner:BEIJING INST OF COMP TECH & APPL

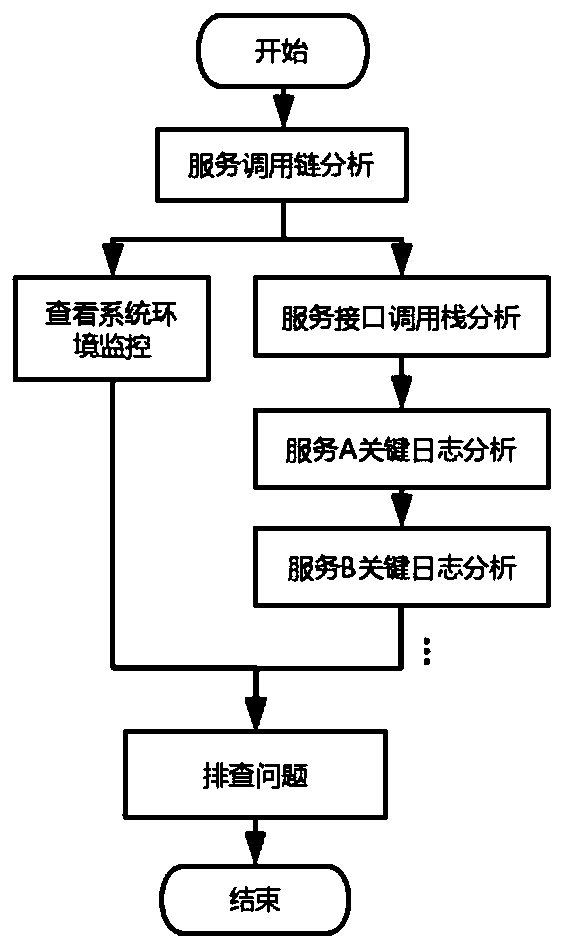

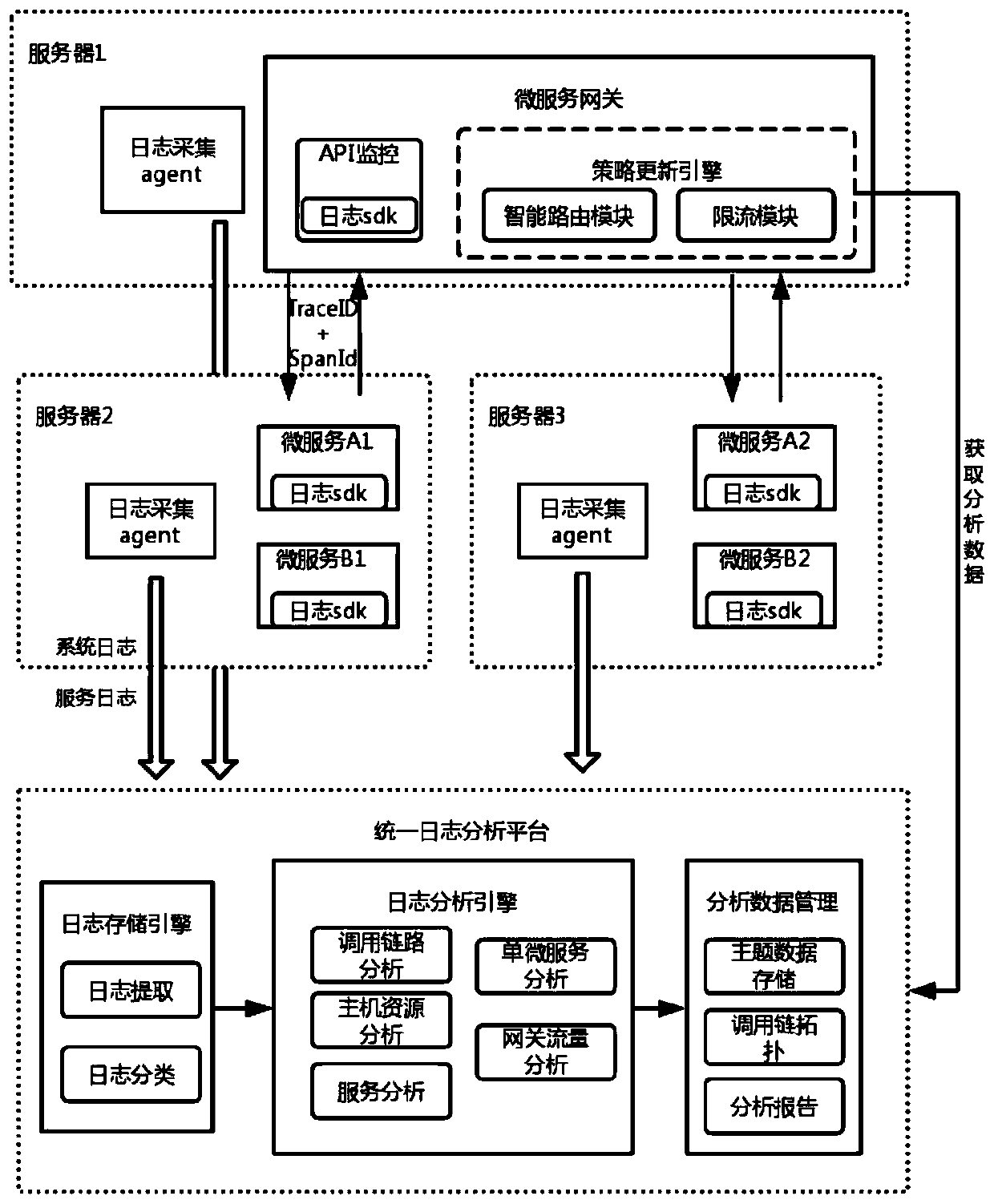

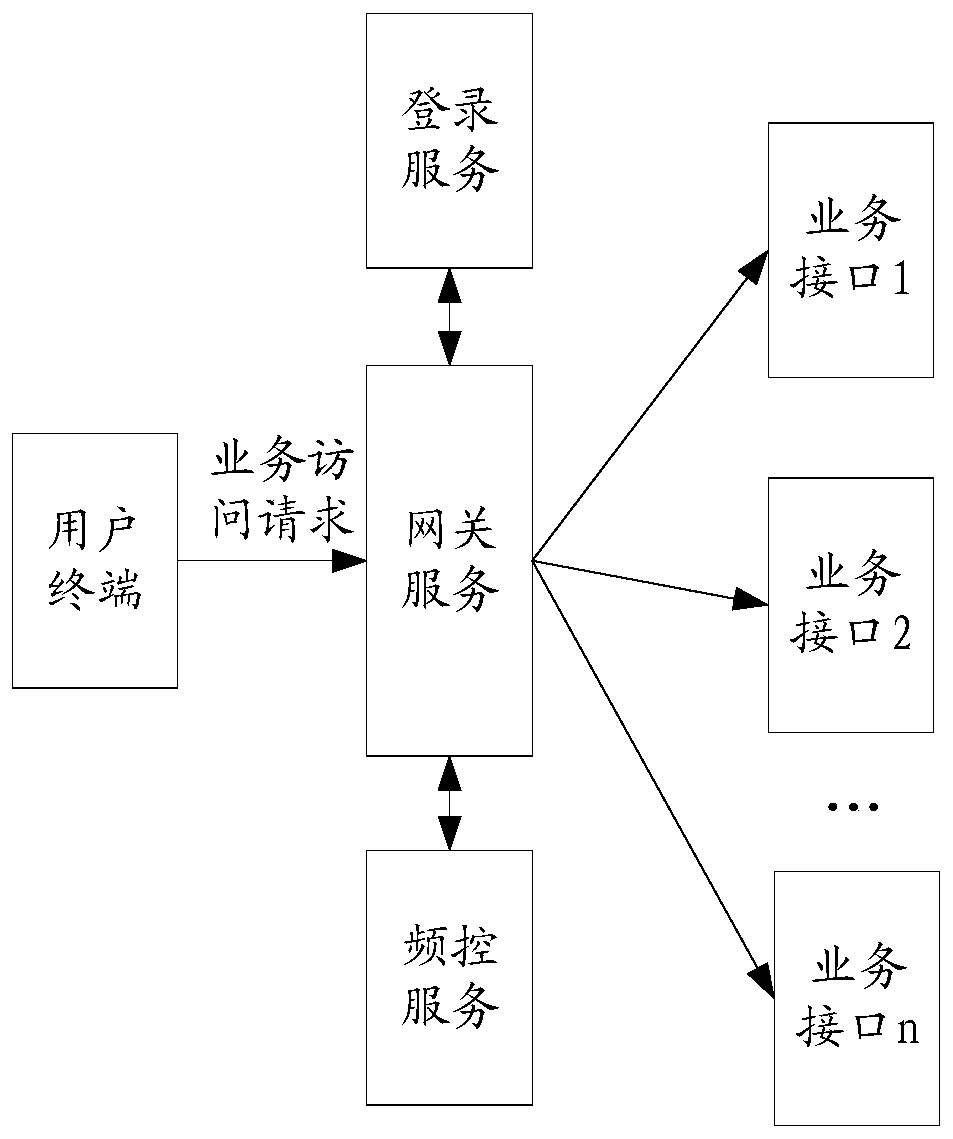

Log analysis-based micro-service performance optimization system and analysis method

Active | CN109756364A | Reduce workload | Quickly identify performance bottlenecks | Hardware monitoring | Data switching networks | Microservices | Service gateway

The invention discloses a micro-service performance optimization method based on log analysis. The method comprises the following steps: a key interface of a micro-service module records an access log of interface calls through a log SDK; the log collection agent module collects performance monitoring information of the service system at regular intervals; the unified log analysis platform extracts and analyzes the access logs to obtain the performance bottleneck points of the system; the micro-service gateway updates the routing strategy of the intelligent routing module at regular intervals using the performance indexes of the micro-service modules; meanwhile, the API monitoring module extracts the external request number and the throughput through the log analysis system, and then derives the external rate-limiting weight of the micro-service gateway from the external request number, the throughput and the performance bottleneck points. Through automatic extraction and analysis of logs, the method generates a complete call-chain topology, uncovers hidden performance problems, quickly finds the performance bottleneck points of the system, and effectively reduces the workload of development and operations staff.

Owner:CHENGDU SEFON SOFTWARE CO LTD

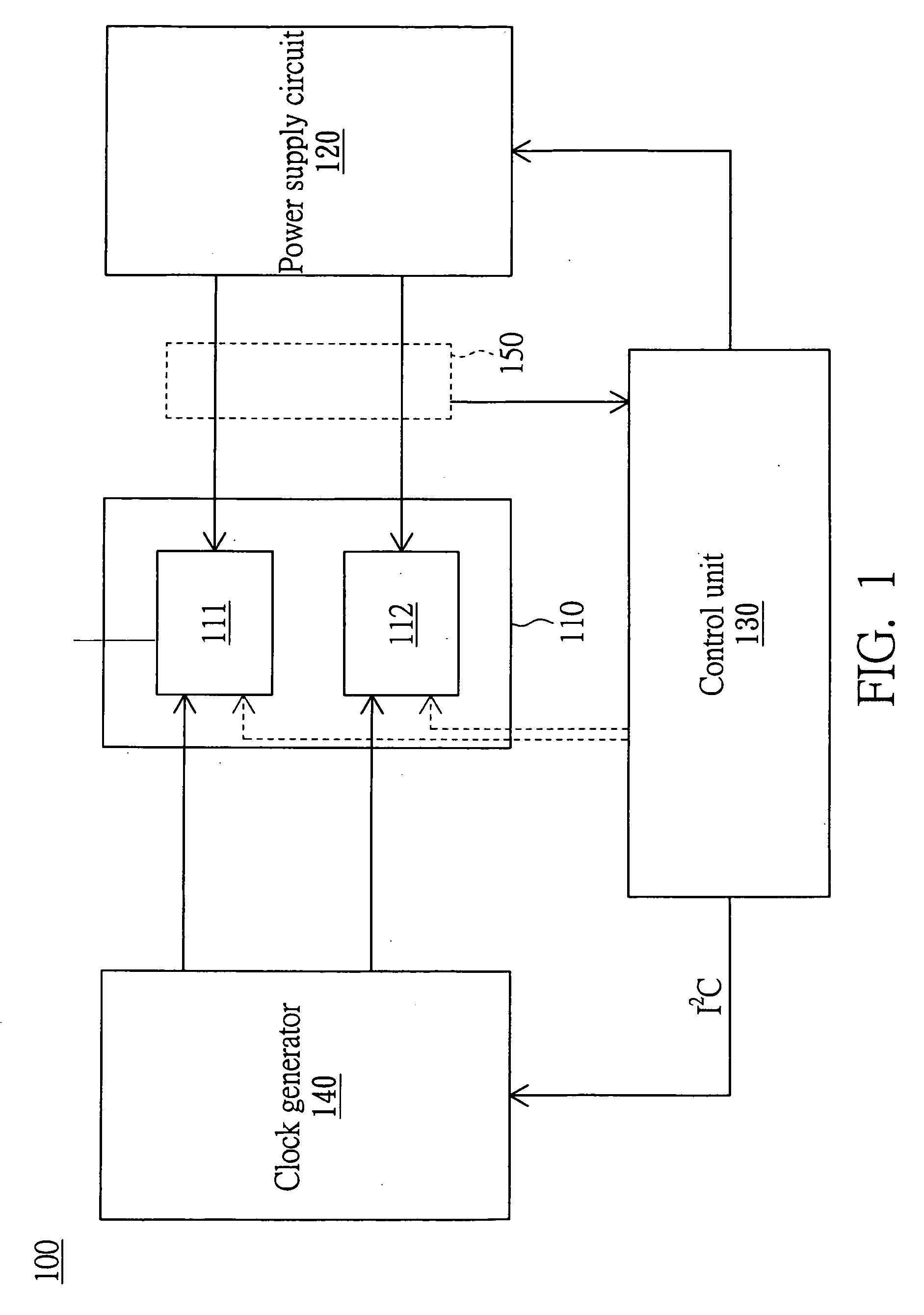

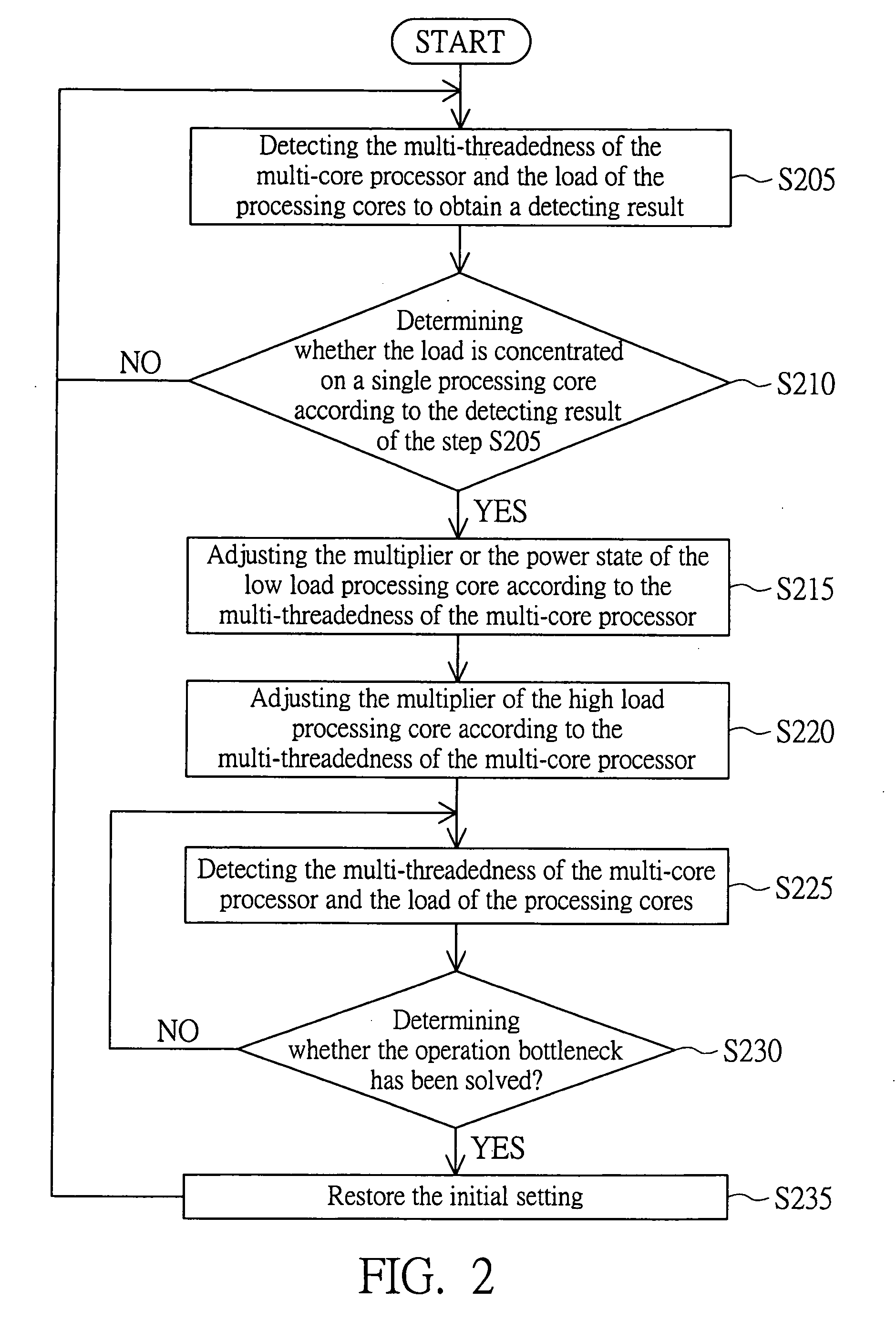

Adjusting performance method for multi-core processor

Inactive | US20080189569A1 | Decrease operation bottleneck | Improve throughput | Multiprogramming arrangements | Generating/distributing signals | Processing core | Multi-core processor

A performance adjustment method for a multi-core processor is provided. The processing cores of the multi-core processor include at least a first processing core and a second processing core. The method includes the steps of: (a) detecting the multi-threadedness of the multi-core processor and the load of the processing cores to obtain a detection result; (b) determining from the detection result whether the operation bottleneck is concentrated on one of the processing cores; and (c) adjusting the operating frequency of the first processing core according to the multi-threadedness of the multi-core processor if the operation bottleneck occurs at the first processing core.

Owner:ASUSTEK COMPUTER INC

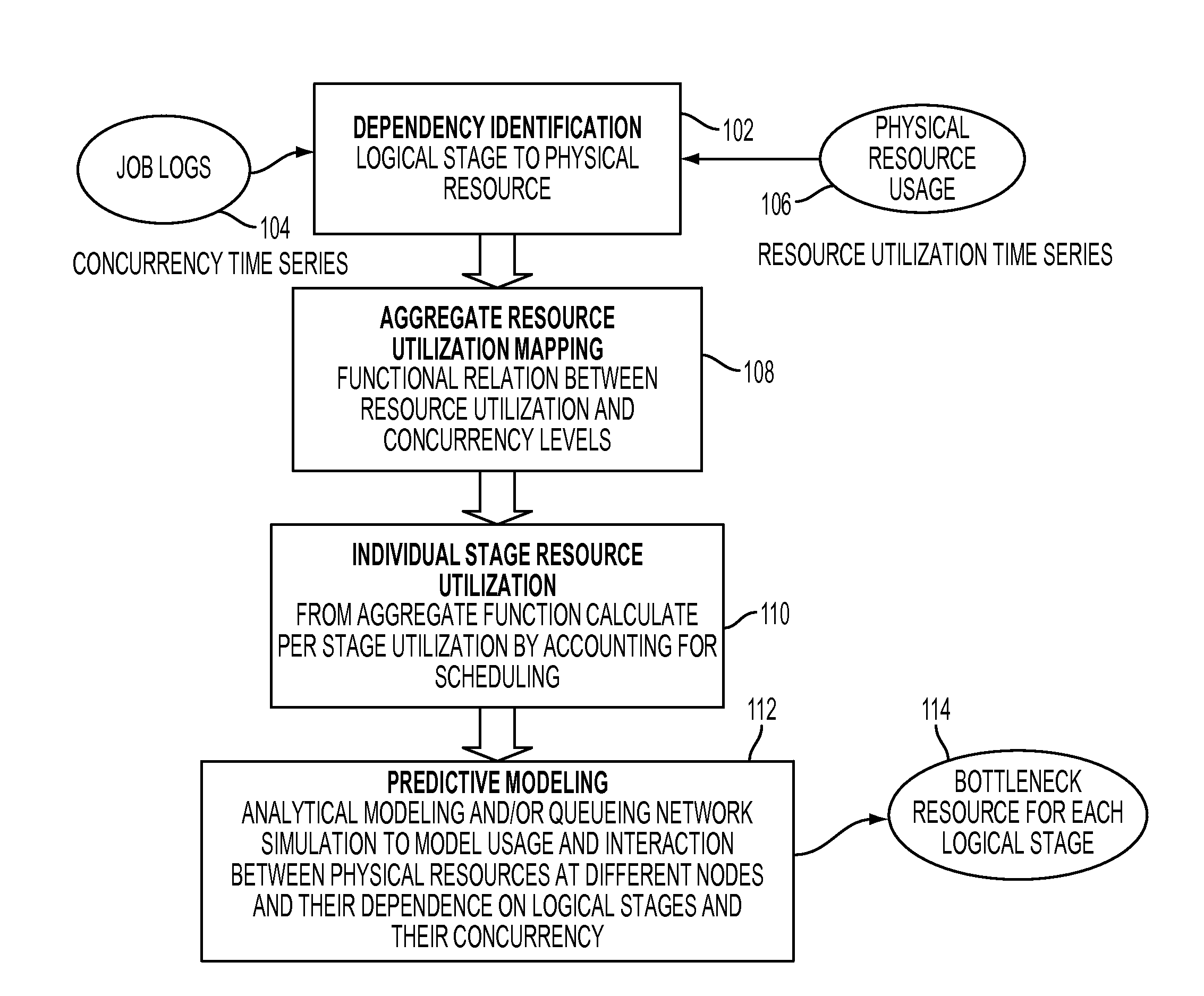

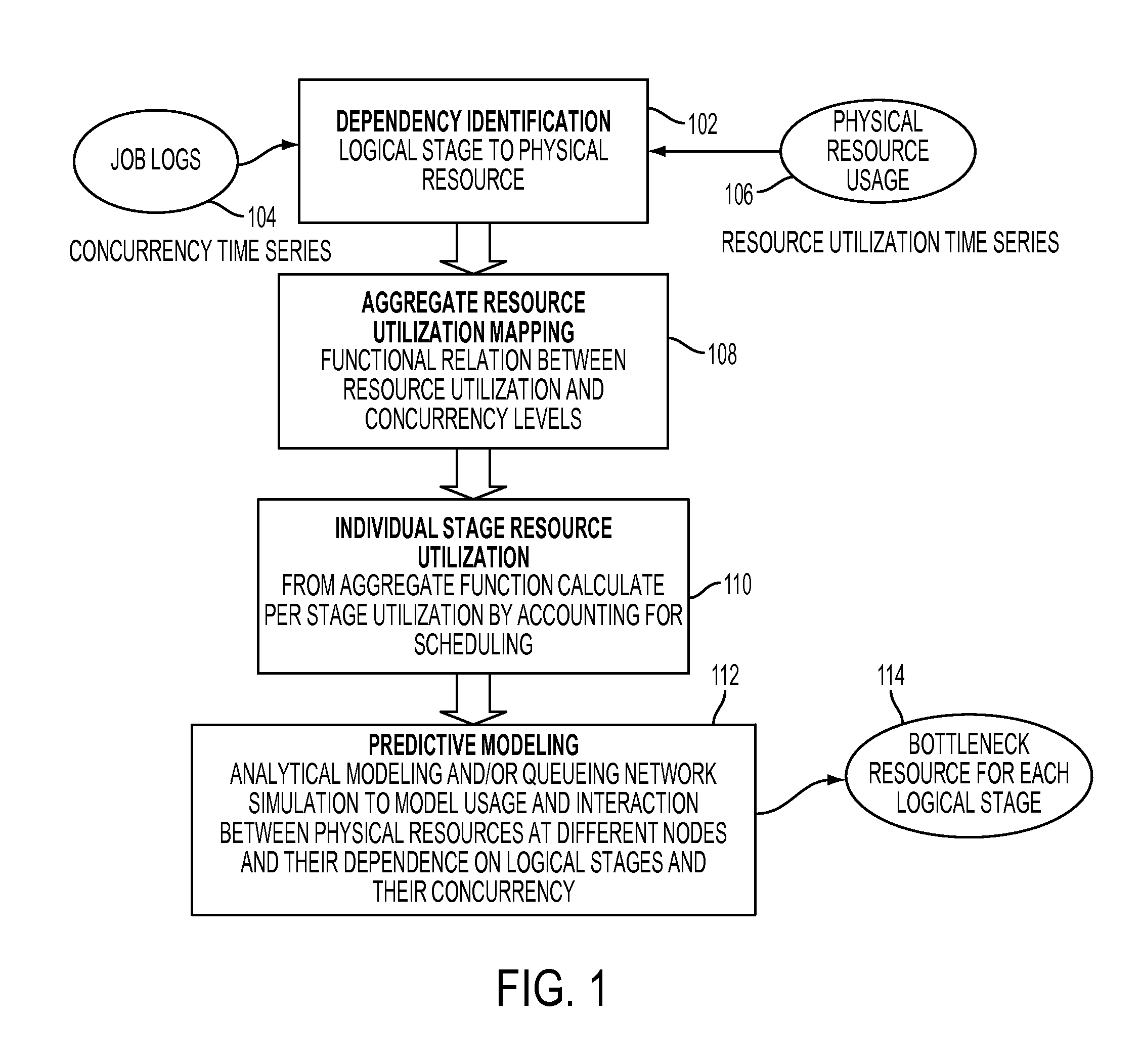

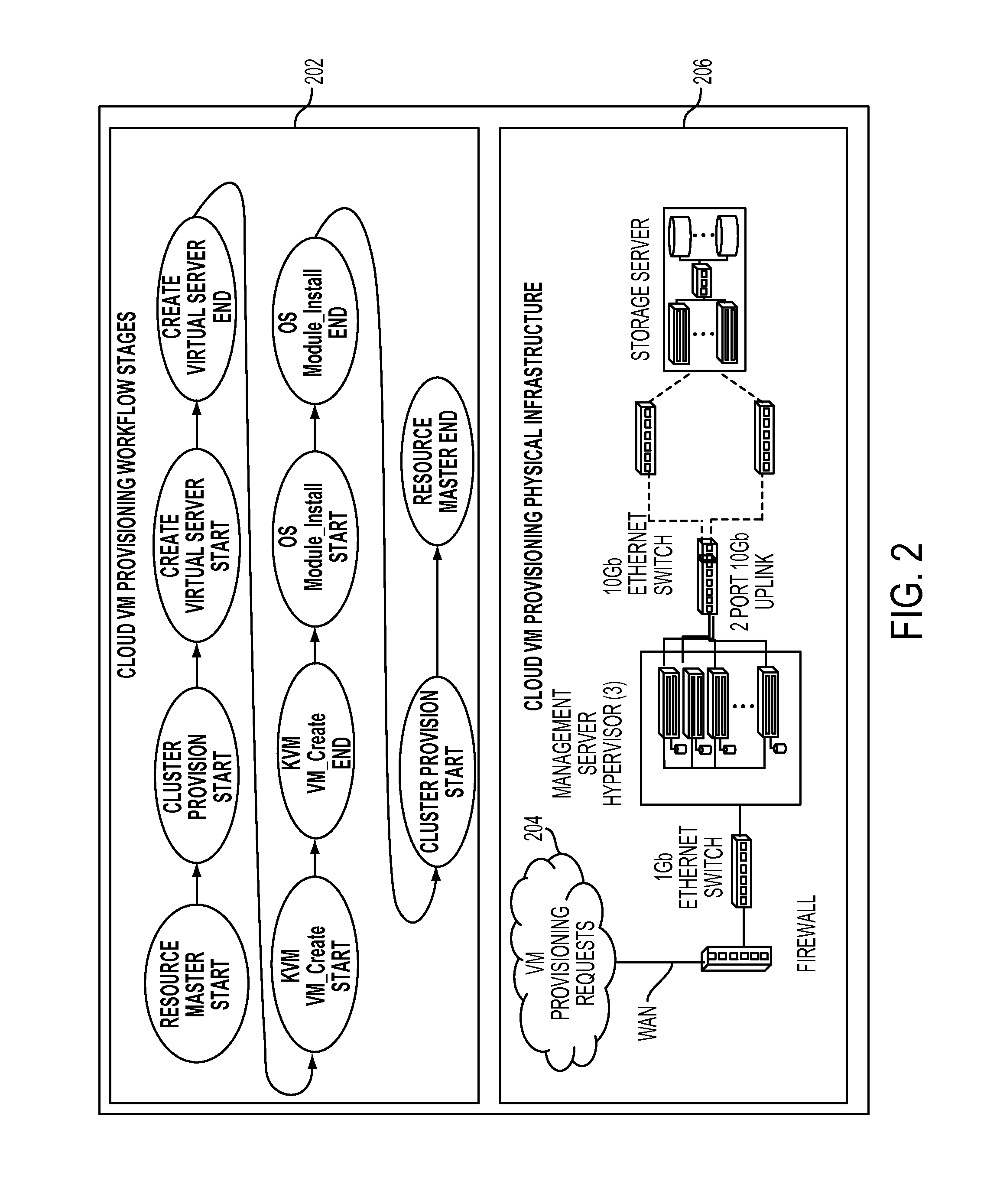

Resource bottleneck identification for multi-stage workflows processing

Inactive | US20150178129A1 | Program initiation/switching | Design optimisation/simulation | Phases of clinical research | Computing systems

Identifying a resource bottleneck in multi-stage workflow processing may include identifying dependencies between logical stages and physical resources in a computing system to determine which logical stage involves which set of resources; for each of the identified dependencies, determining a functional relationship between the usage level of a physical resource and the concurrency level of a logical stage; estimating the consumption of the physical resources by each of the logical stages based on the functional relationship determined for that stage; and performing predictive modeling based on the estimated consumption to determine the concurrency level at which each of the logical stages will become a bottleneck.

Owner:IBM CORP
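As a rough illustration of the predictive step in this abstract, the sketch below assumes the functional relationship between a stage's concurrency level and a resource's usage is linear, fits it by least squares from observed samples, and extrapolates to the concurrency at which the resource would be fully used. The linear model, the sample values, and the function names are all assumptions; the abstract does not specify the form of the relationship.

```python
from typing import List, Optional, Tuple


def fit_line(samples: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares fit of usage = slope * concurrency + intercept."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    slope = sxy / sxx  # requires at least two distinct concurrency levels
    return slope, mean_y - slope * mean_x


def saturation_concurrency(
    samples: List[Tuple[float, float]], capacity: float = 1.0
) -> Optional[float]:
    """Concurrency at which predicted usage reaches `capacity`, or None."""
    slope, intercept = fit_line(samples)
    if slope <= 0:
        return None  # usage does not grow with concurrency
    return (capacity - intercept) / slope


# Hypothetical (concurrency, CPU usage fraction) samples for one stage.
cpu_samples = [(1, 0.15), (2, 0.25), (4, 0.45)]
predicted = saturation_concurrency(cpu_samples)
```

For these samples the fitted line is usage = 0.1 * concurrency + 0.05, so the stage is predicted to saturate the resource at a concurrency of about 9.5. Running the same calculation per stage and per resource, then taking the smallest value, would give the first predicted bottleneck.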

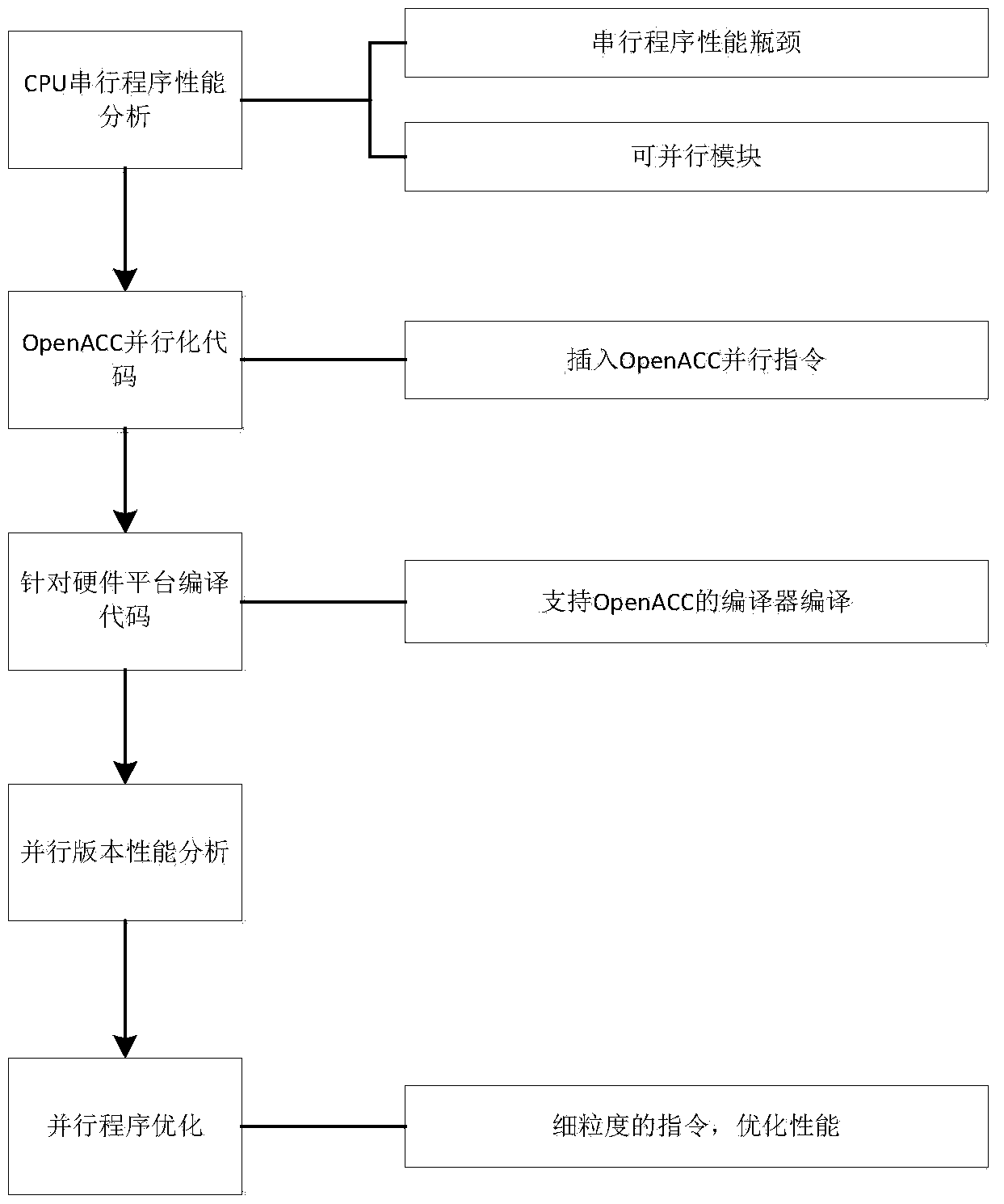

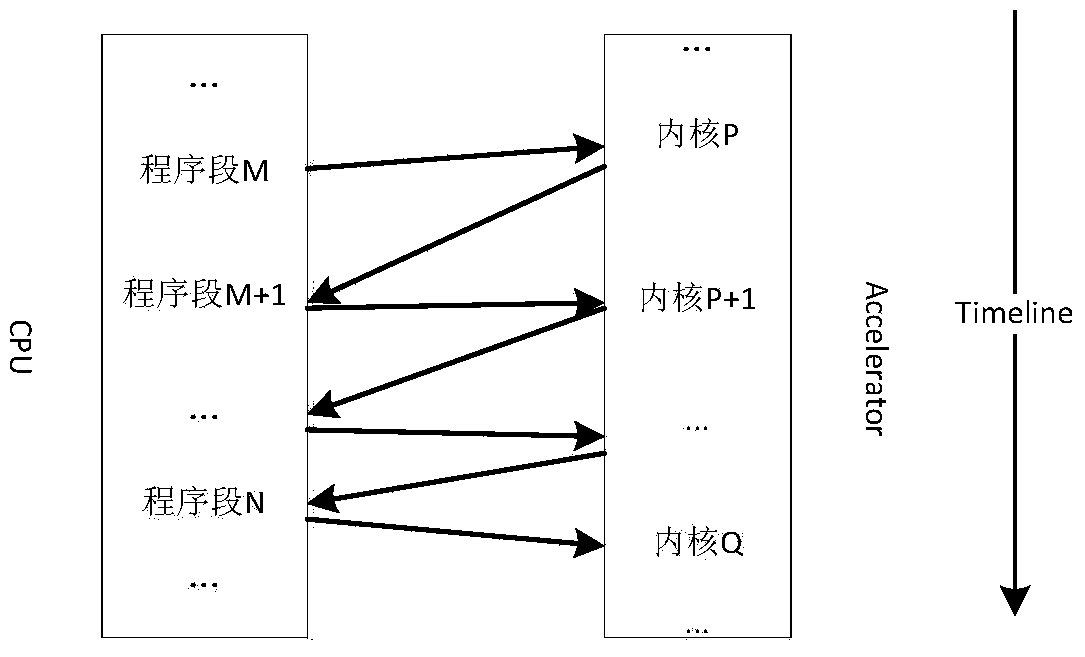

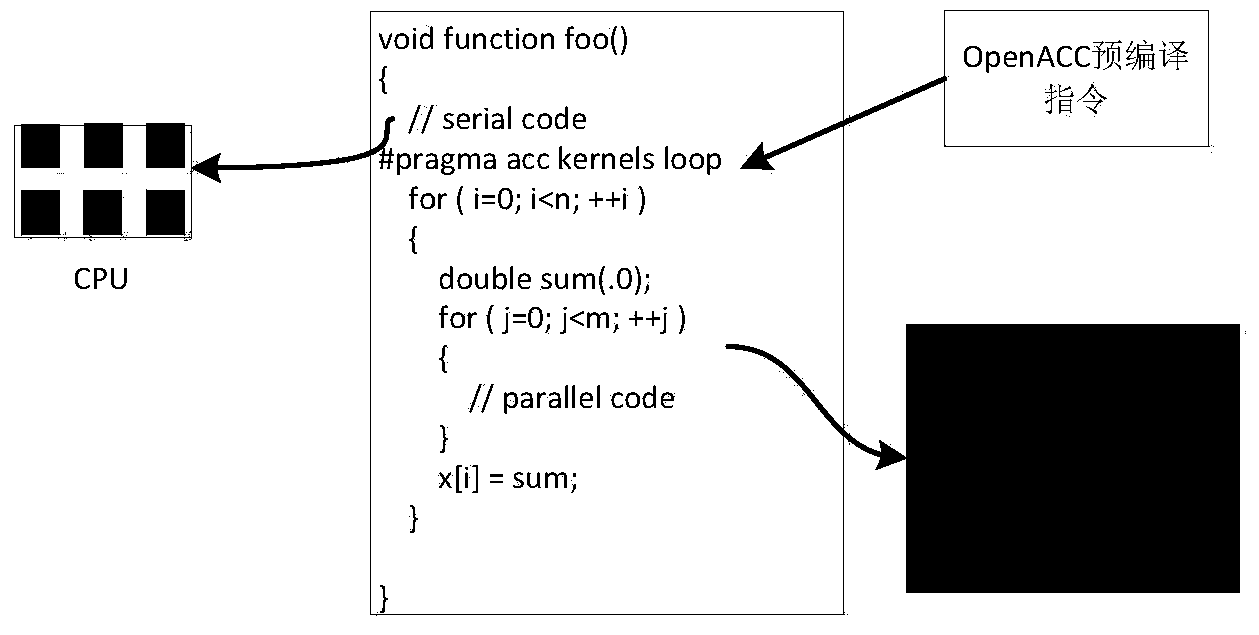

Method for quickly developing heterogeneous parallel program

Active | CN104035781A | Shorten the development cycle | Reduce bug debugging time | Specific program execution arrangements | Software | Transplantation

The invention provides a method for quickly developing a heterogeneous parallel program, and relates to performance analysis of a CPU (central processing unit) serial program and porting to a heterogeneous parallel program. The method includes: firstly, performing performance and algorithm analysis on the CPU serial program to locate its performance bottleneck and determine its parallelizability; secondly, inserting OpenACC precompilation directives into the original code to obtain heterogeneous parallel code that can be executed in a heterogeneous parallel environment; and thirdly, compiling and executing the code with parameters specified for the hardware and software platform, and deciding from the program's results whether further optimization is needed. Compared with the prior art, the method has the following advantages: existing code does not need to be restructured; multiple languages are supported, including C/C++ and FORTRAN; and the method is cross-platform and cross-hardware, supporting operating systems such as Linux, Windows and Mac, and hardware such as Nvidia and AMD GPUs and Intel Xeon Phi. The method is practical and easy to adopt, lets existing programs be parallelized efficiently, and enables them to make full use of the computing power of a heterogeneous system.

Owner:三多(杭州)科技有限公司

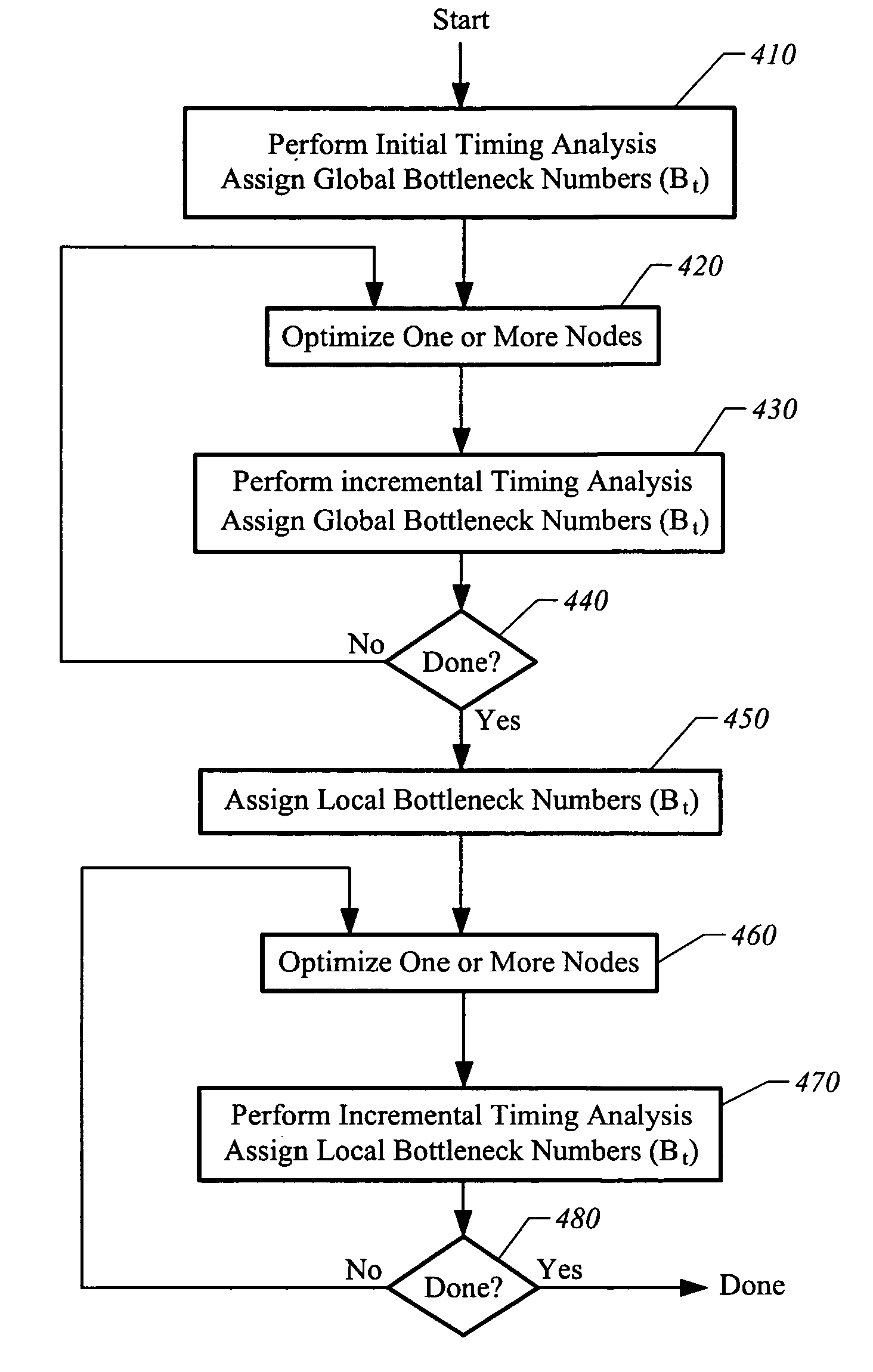

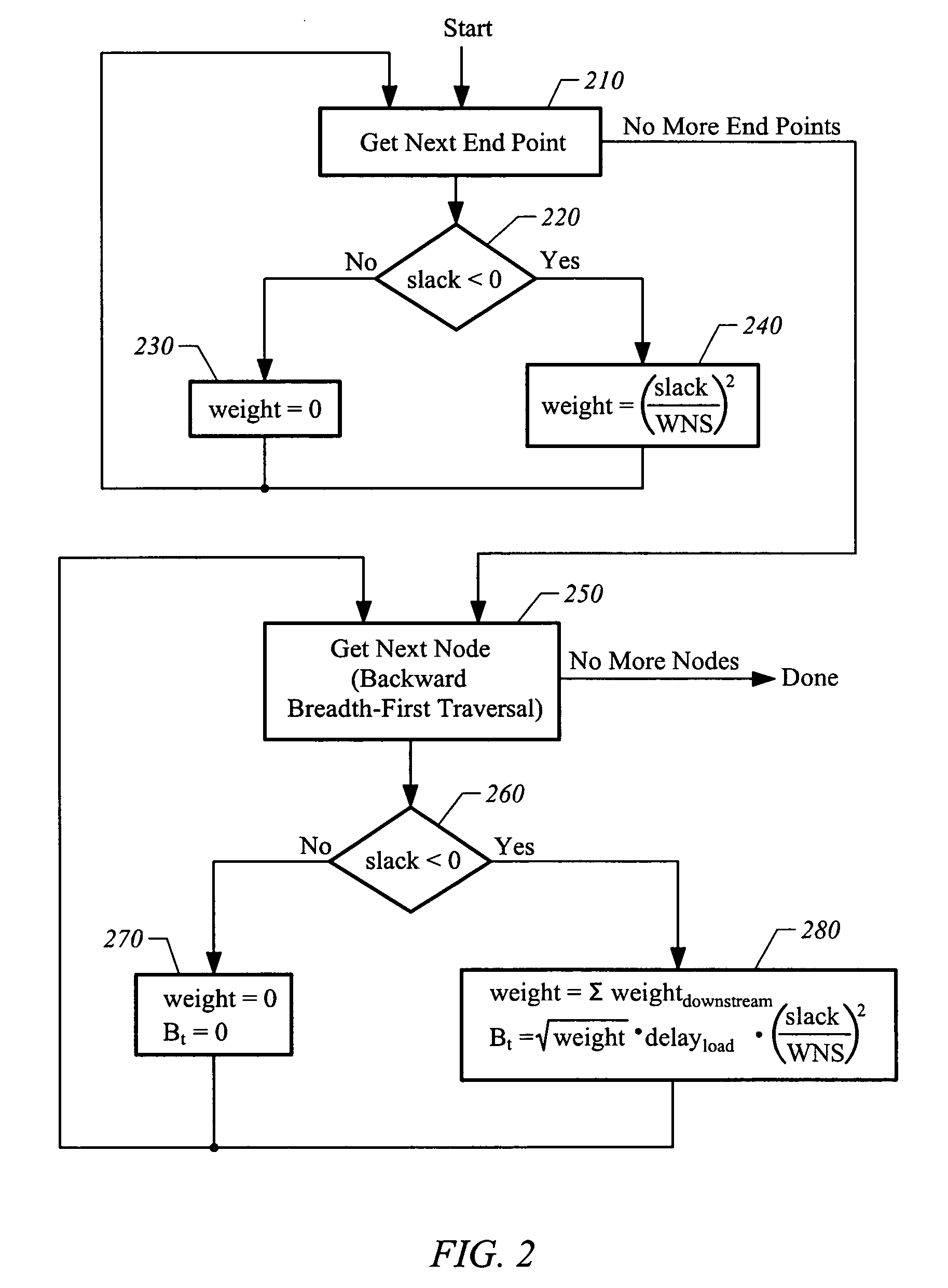

Method and apparatus for optimization of digital integrated circuits using detection of bottlenecks

Active | US7191417B1 | Reduce the amount of calculation | High return on investment | Detecting faulty computer hardware | CAD circuit design | Theoretical computer science | Object function

A method and apparatus are described that allow efficient optimization of integrated circuit designs. By performing a global analysis of the circuit and identifying bottleneck nodes, optimization focuses on the nodes most likely to generate the highest return on investment and those with the most room for improvement. The identification of bottleneck nodes is seamlessly integrated into the timing analysis of the circuit design. Each node is given a bottleneck number, which represents how important it is in meeting the objective function. By optimizing in order of highest bottleneck number, the optimization process converges quickly and does not get side-tracked by paths that cannot be improved.

Owner:SIEMENS PROD LIFECYCLE MANAGEMENT SOFTWARE INC

System and method for processing high-concurrency data request

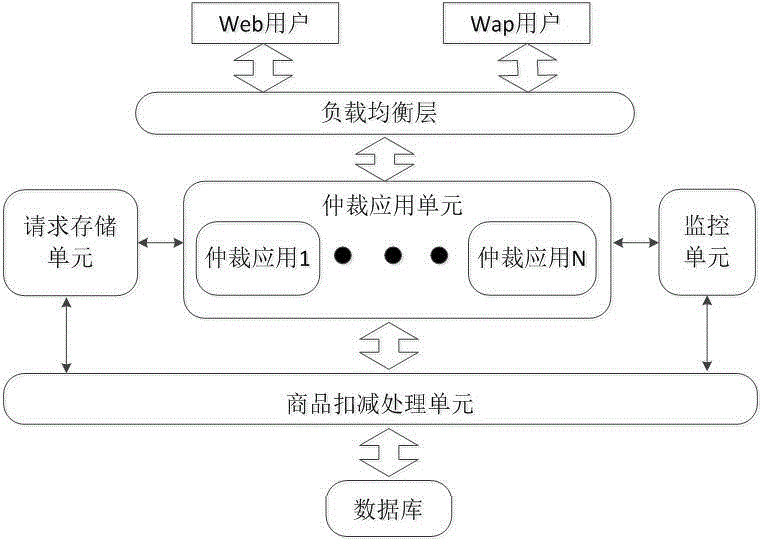

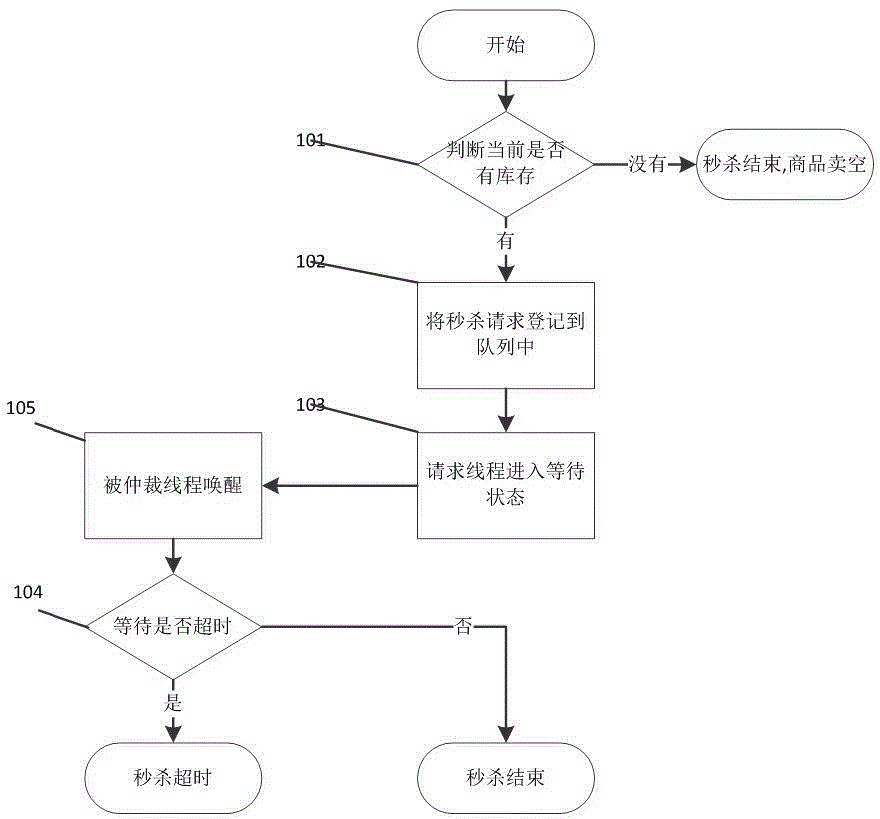

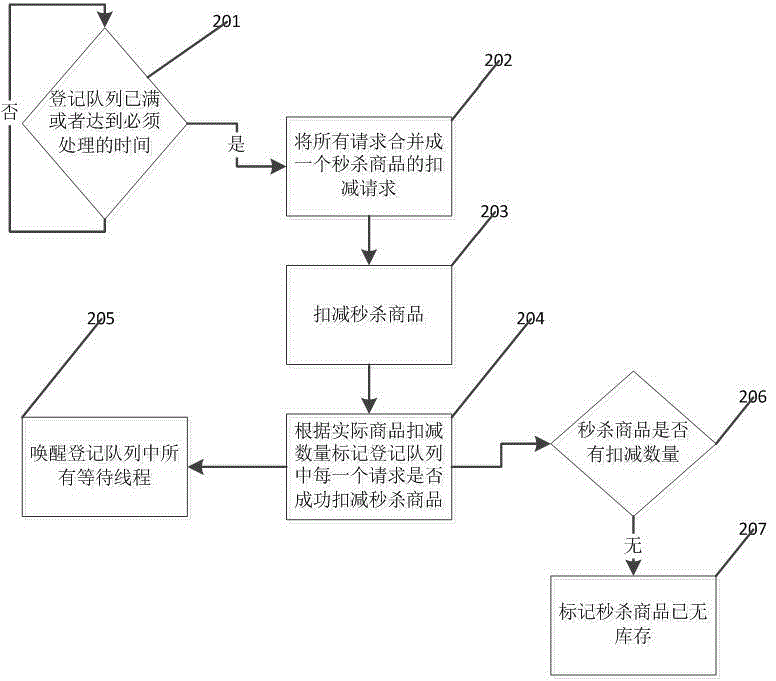

Active | CN104636957A | Solve bottlenecks | Reduce overhead | Interprogram communication | Data switching networks | E-commerce | Distributed computing

The invention belongs to the technical field of electronic commerce, and in particular relates to a system and a method for processing high-concurrency data requests. The system is divided into three main parts: a load balancing layer, an arbitration application unit, and a goods deduction processing unit. The load balancing layer distributes a large number of client requests evenly to the arbitration applications at the back end. The arbitration application unit receives the user requests distributed by the load balancing layer, judges their eligibility, merges and recombines them, and then passes them to the goods deduction processing unit. The goods deduction processing unit receives goods deduction requests from the arbitration applications and deducts the inventory quantity of flash-sale ("second-kill") goods. Processing the requests in single-threaded batches avoids the database bottleneck caused by multi-threaded high concurrency while preserving high business handling capacity and keeping system resource overhead as low as possible.

Owner:SHANGHAI HANDPAL INFORMATION TECH SERVICE
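The single-threaded batch deduction described in this abstract can be sketched as a function that walks one merged batch of requests in order and applies all inventory changes in a single pass, so no two threads ever contend for the same stock row. The request tuple layout and the names are assumptions for illustration, and the load balancing and eligibility checks are not shown.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical request shape: (user_id, item_id, quantity).
Request = Tuple[str, str, int]


def process_batch(
    stock: Dict[str, int], requests: Iterable[Request]
) -> Tuple[Dict[str, int], List[str], List[str]]:
    """Deduct inventory for a batch of requests in arrival order.

    Returns (remaining_stock, accepted_user_ids, rejected_user_ids).
    The input `stock` is not modified; in a real system the remaining
    stock would be written back to the database once per batch.
    """
    remaining = dict(stock)
    accepted: List[str] = []
    rejected: List[str] = []
    for user, item, qty in requests:
        if qty > 0 and remaining.get(item, 0) >= qty:
            remaining[item] -= qty
            accepted.append(user)
        else:
            rejected.append(user)  # sold out, unknown item, or bad quantity
    return remaining, accepted, rejected


# Hypothetical flash-sale batch: three users compete for two units.
example_stock = {"sku-1": 2}
example_batch = [("u1", "sku-1", 1), ("u2", "sku-1", 1), ("u3", "sku-1", 1)]
remaining, accepted, rejected = process_batch(example_stock, example_batch)
```

Because the batch is handled by one thread, the stock check and the deduction never race, and the database sees one write per batch instead of one per request.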

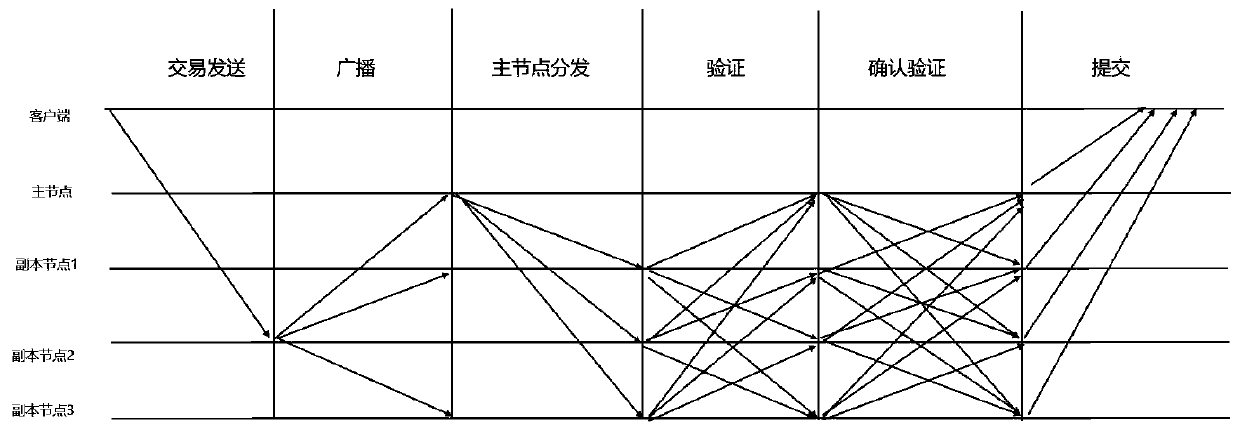

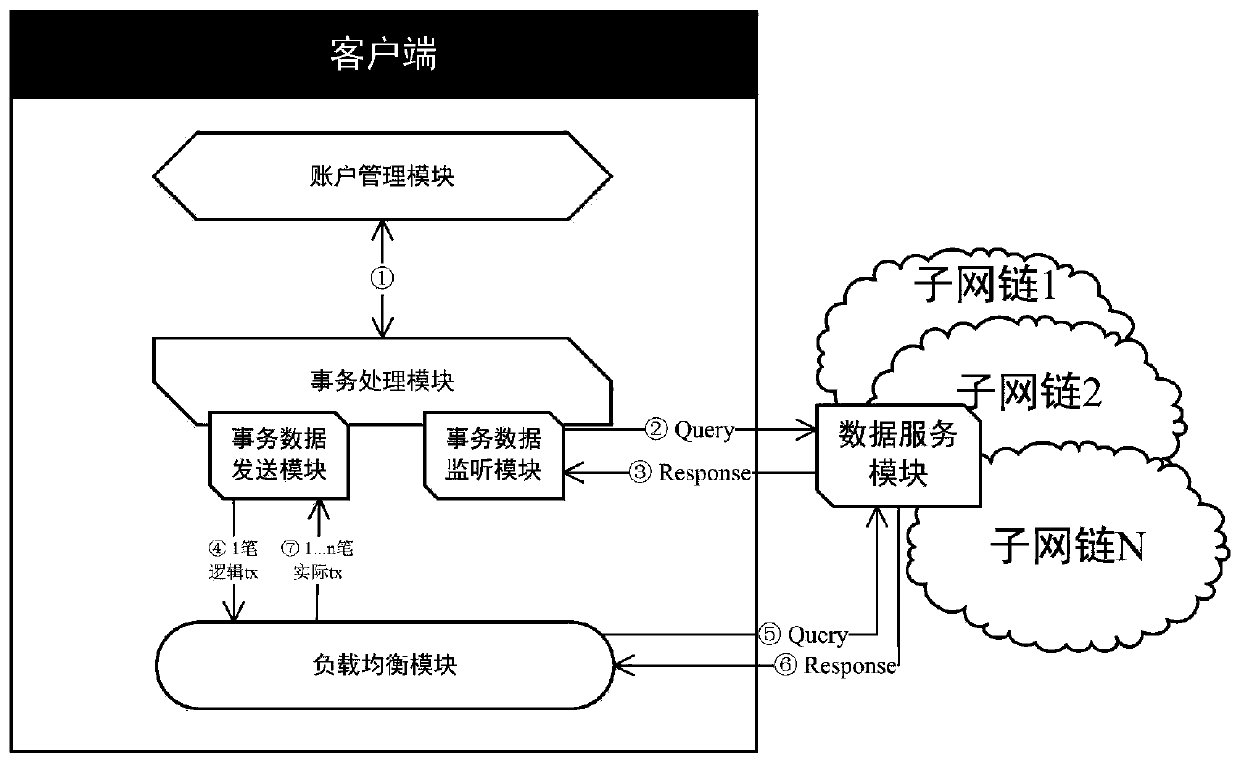

Block chain network node load balancing method based on PBFT

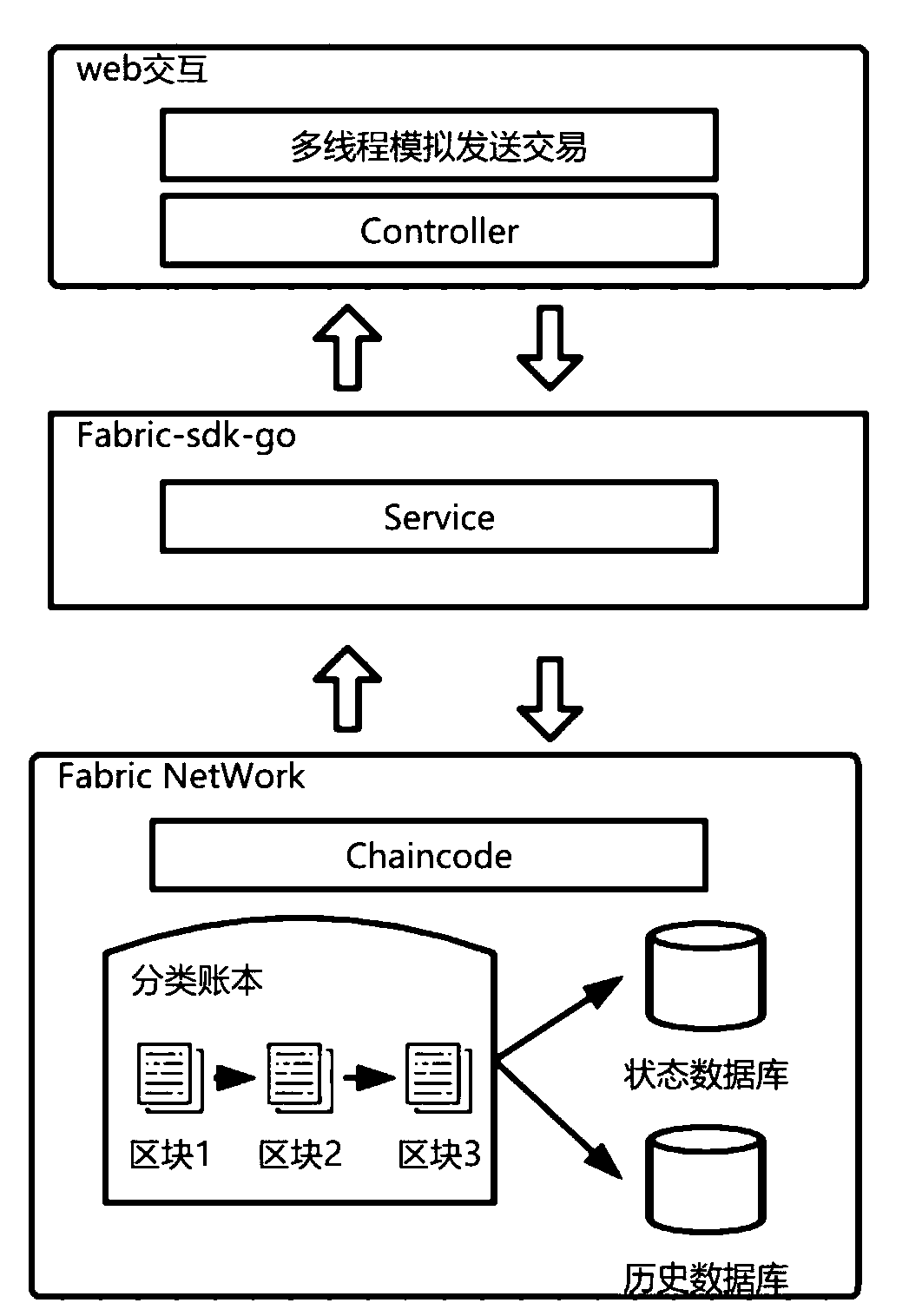

Active | CN110971684A | Guaranteed validity | Guaranteed fault tolerance | Finance | User identity/authority verification | Engineering | Financial transaction

The invention discloses a block chain network node load balancing method based on PBFT. The method is implemented on the consortium-chain platform Hyperledger Fabric, and the system is divided into a web layer, a business layer (Fabric-sdk-go), a smart contract (chain code) layer, and Fabric as the block chain storage layer. A main node carries out transaction legality judgment, verification, hash calculation and the like. When a large number of transactions arrive at the main node at the same time, its workload is very large while the other nodes sit idle, and when block information is broadcast, the large number of transactions can make the main node's outbound block bandwidth too large and its load too heavy, which is an important factor in the performance bottleneck of a block chain system. The load balancing method solves this problem: part of the main node's work is completed by other nodes and the transaction information is compressed before being broadcast, which reduces the outbound network bandwidth and improves the transaction throughput of the system while still ensuring its safety and fault tolerance.

Owner:BEIJING UNIV OF TECH

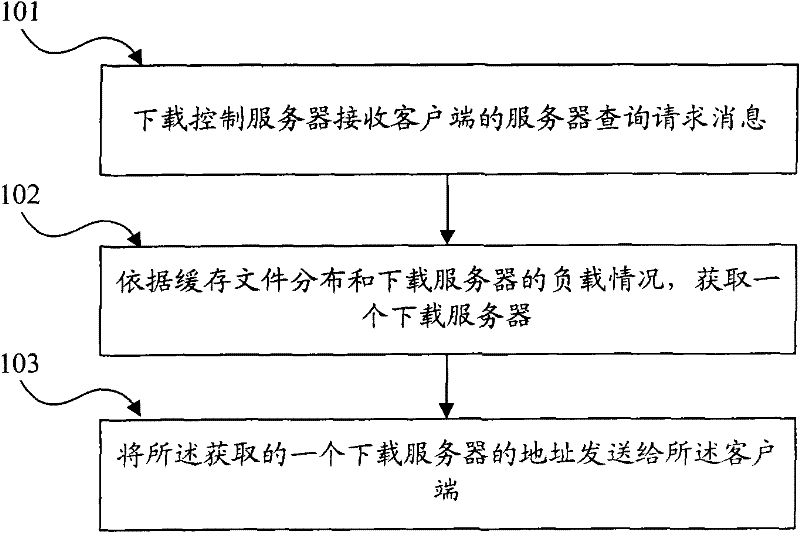

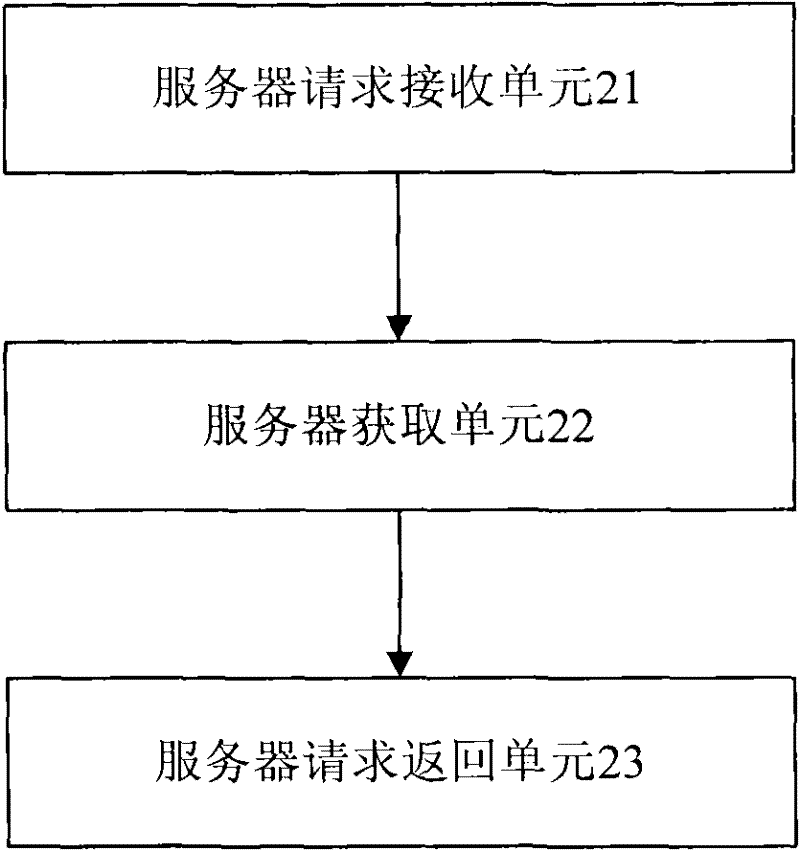

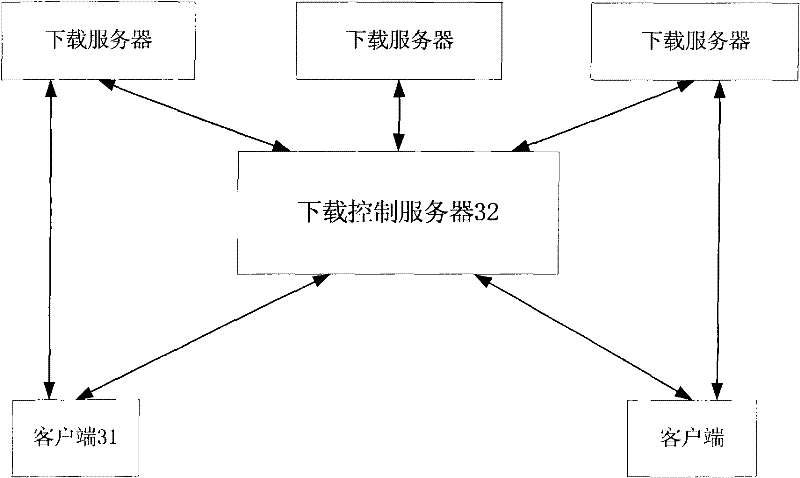

Method, device and system for supporting large quantity of concurrent downloading

The invention discloses a method, a device and a system for supporting a large quantity of concurrent downloads, and relates to the field of computer communication. The invention reduces the download pressure on a server caused by a large quantity of concurrent downloads. The method comprises the following steps: a control server receives a download server query request message from a client; a download server is selected according to the query request message, the cache file distribution, and the load of the download servers; and the address of the selected download server is sent to the client, instructing the client to download the file from the download server at that address. Applying the method in a computer network that provides downloads solves the processing bottleneck of the server under a large quantity of concurrent downloads.

Owner:JUHAOKAN TECH CO LTD

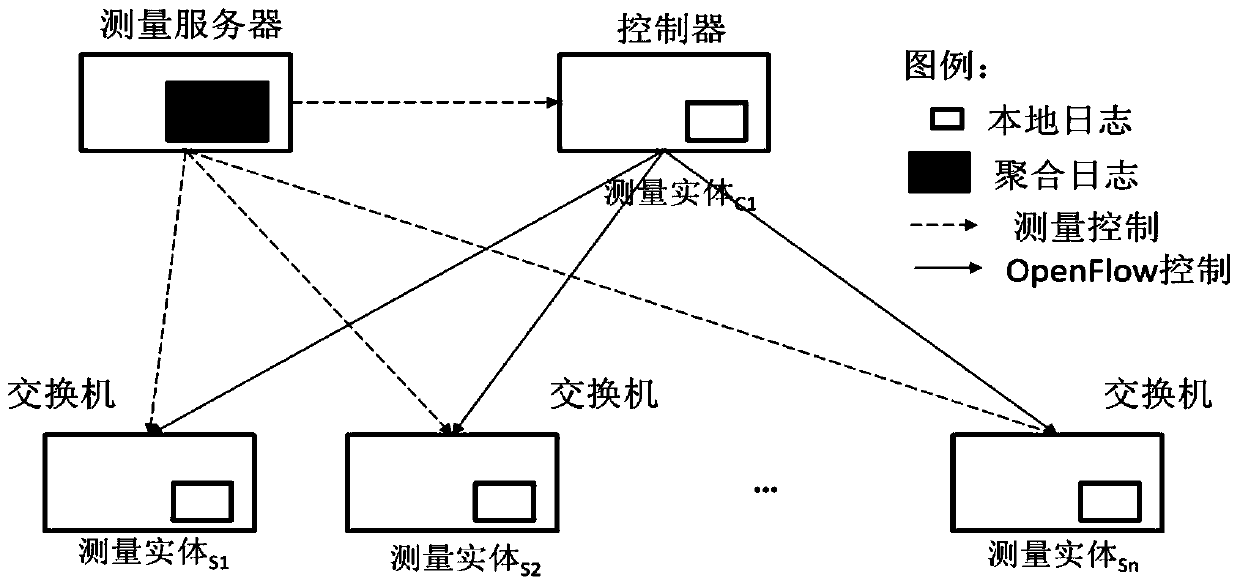

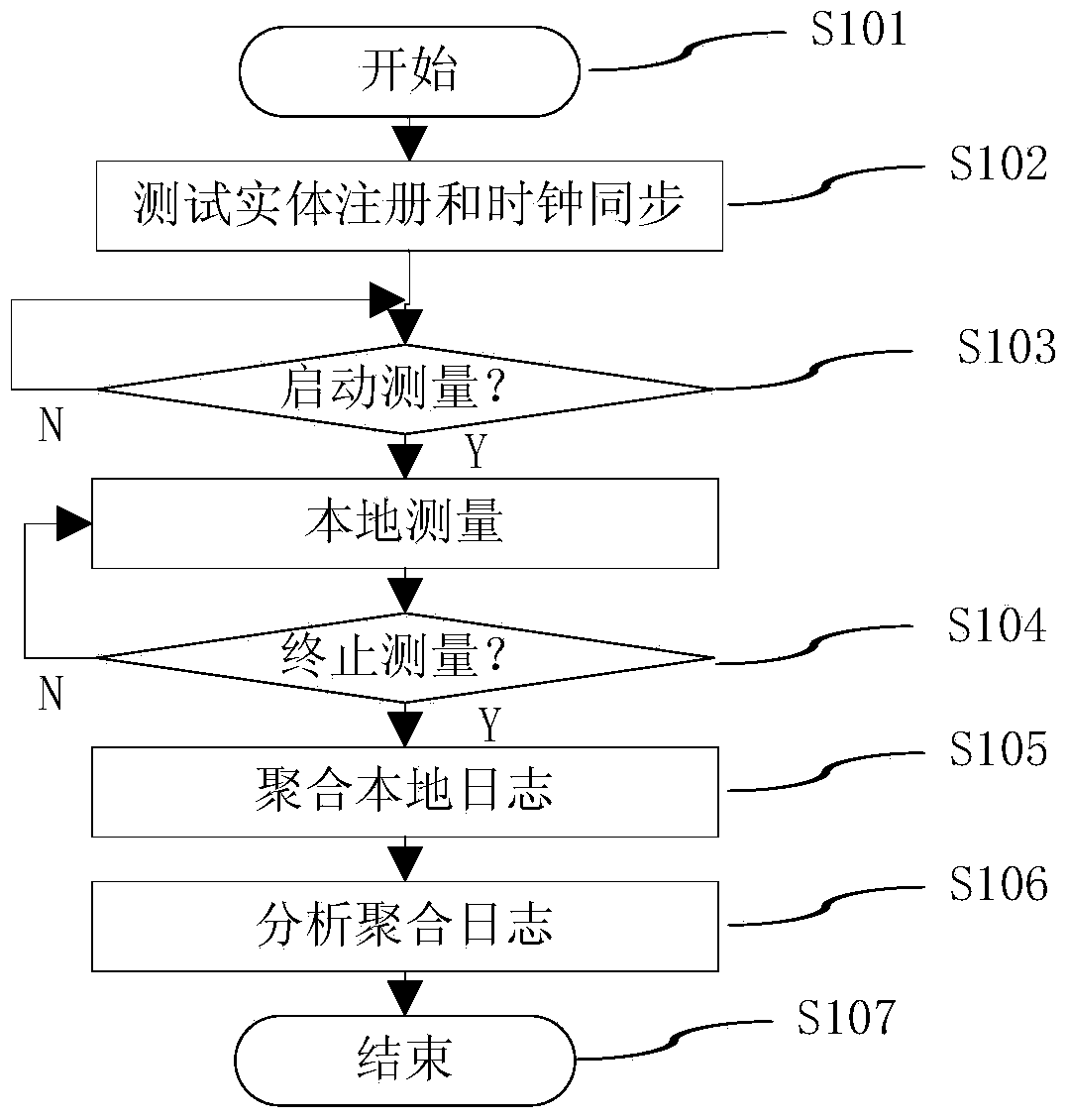

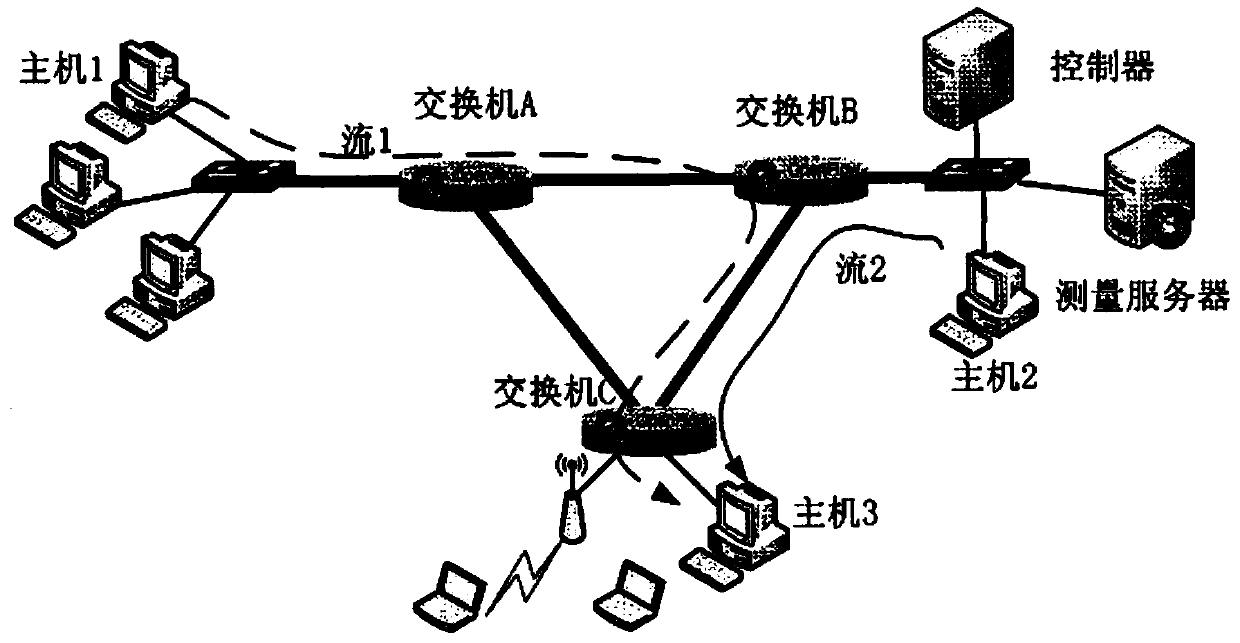

Measuring system and method for supporting analysis of OpenFlow application performance

Inactive | CN103997432A | Overcome the defect that frequent reading of switch flow table data can easily affect network performance | Overcoming defects that can easily affect network performance | Data switching networks | Routing control plane | Real-time computing

The invention discloses a measuring system and method for supporting the analysis of OpenFlow application performance, based on an OpenFlow network and a measuring server. The OpenFlow network comprises a controller and n switches, each connected to the controller and controlled by it through OpenFlow. After being extended with local logging and clock synchronization functions, the controller and the n switches become measuring entities that the measuring server controls centrally. The advantages are that there is no centralized performance bottleneck, measurement interferes little with network applications, information from both the data plane and the control plane can be obtained comprehensively, and the interaction between the control plane and the data plane can be observed.

Owner:PLA UNIV OF SCI & TECH

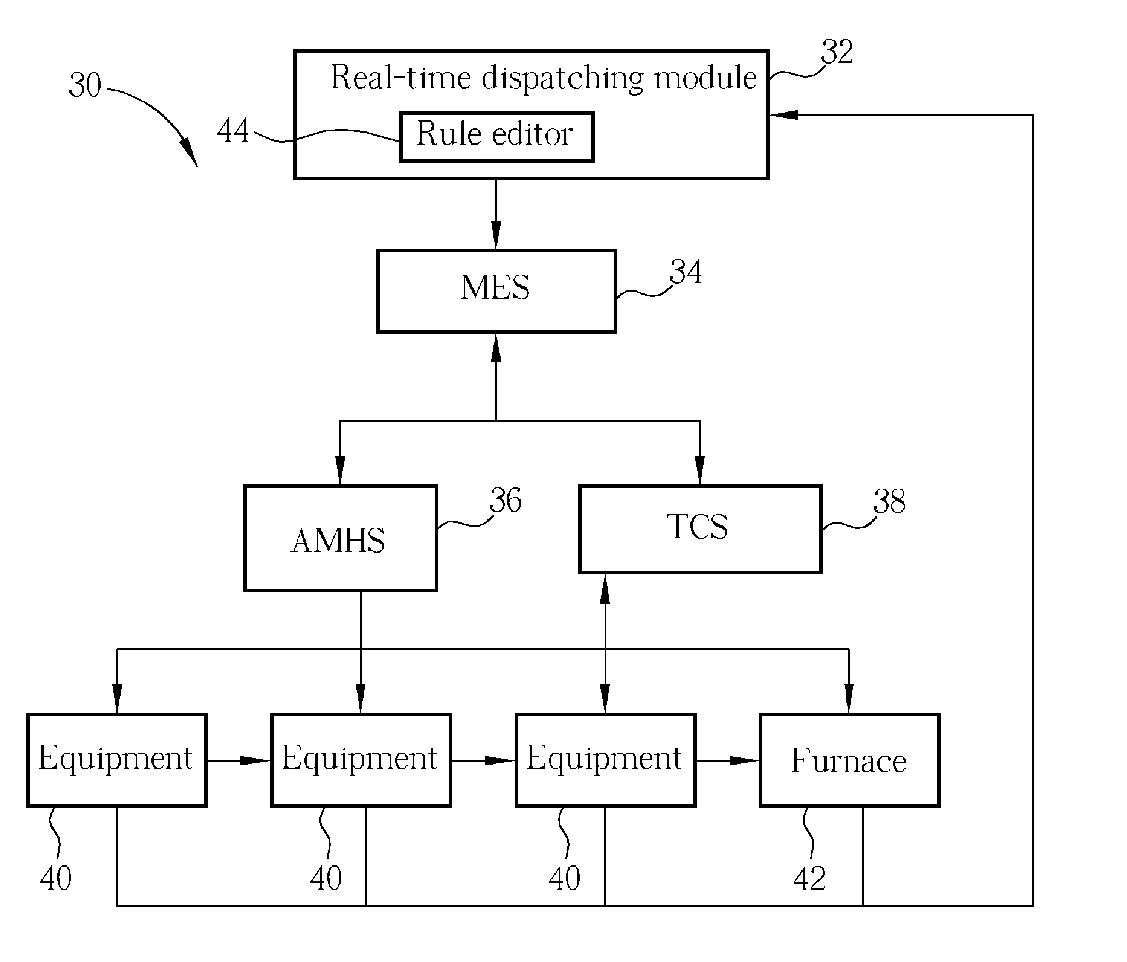

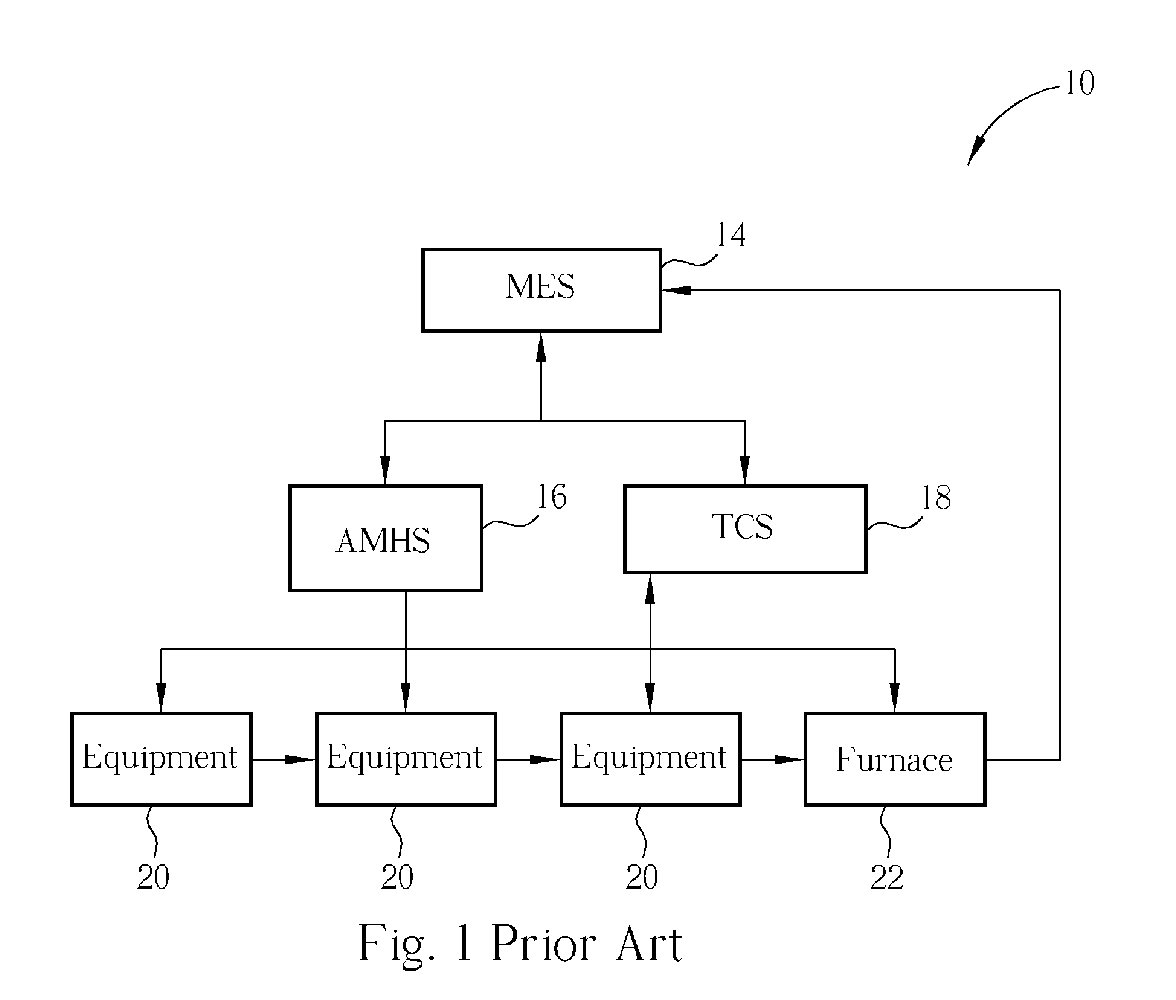

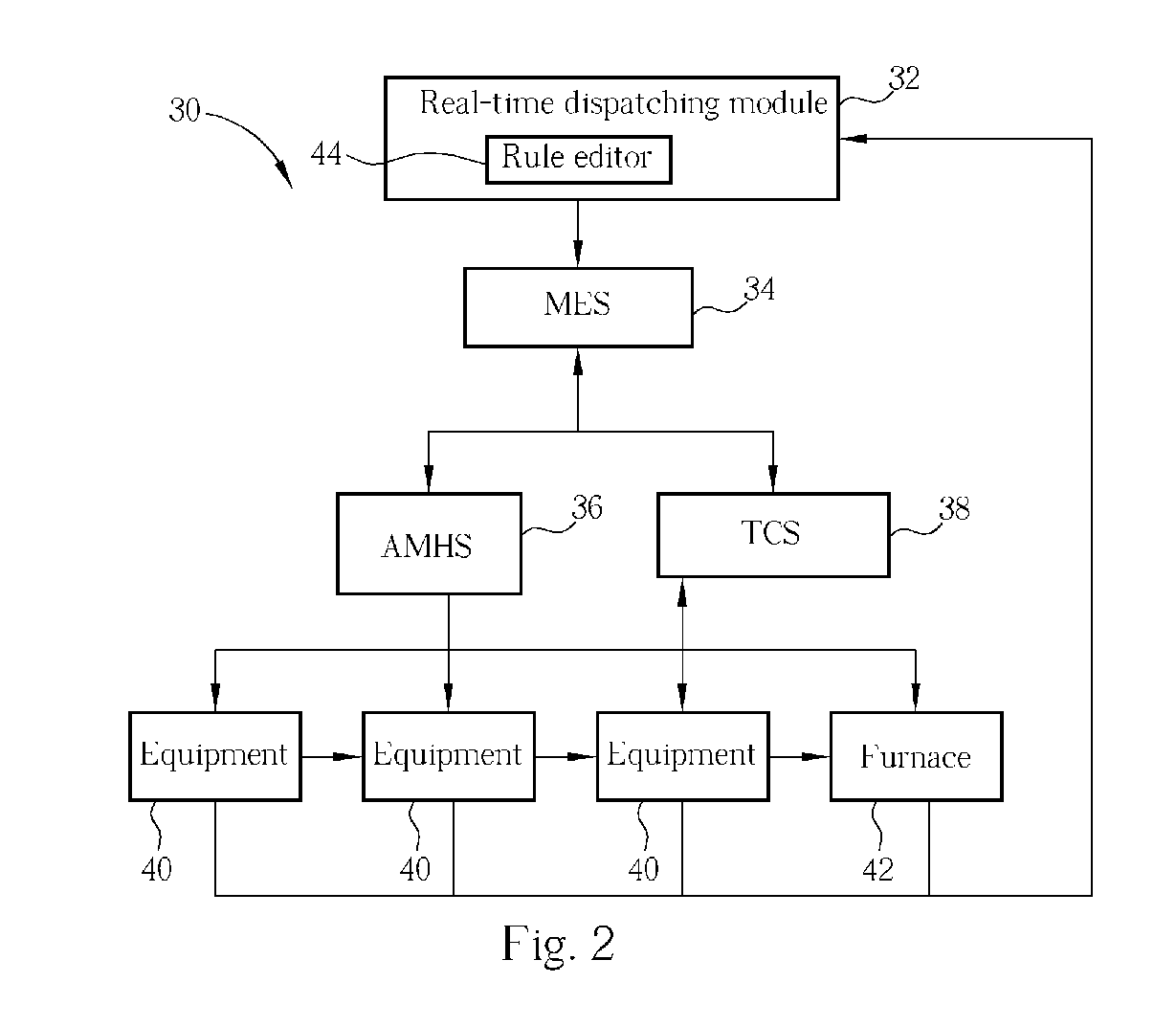

System and method thereof for real-time batch dispatching manufacturing process

Inactive | US20050256599A1 | Reduce standby time | Increase profit | Semiconductor/solid-state device manufacturing | Resources | Computer module | Manufacturing execution system

The present invention provides a system and method for real-time batch dispatching in a manufacturing process. The system includes bottleneck equipment; a real-time dispatching module that calculates the time point at which a batch is formed and decides the lot numbers of the products included in the batch at that time point; and a manufacturing execution system, electronically connected to the bottleneck equipment and the real-time dispatching module, that receives the batch from the real-time dispatching module, selects the products according to the lot numbers, and controls the bottleneck equipment to process those products simultaneously.

Owner:POWERCHIP SEMICON CORP

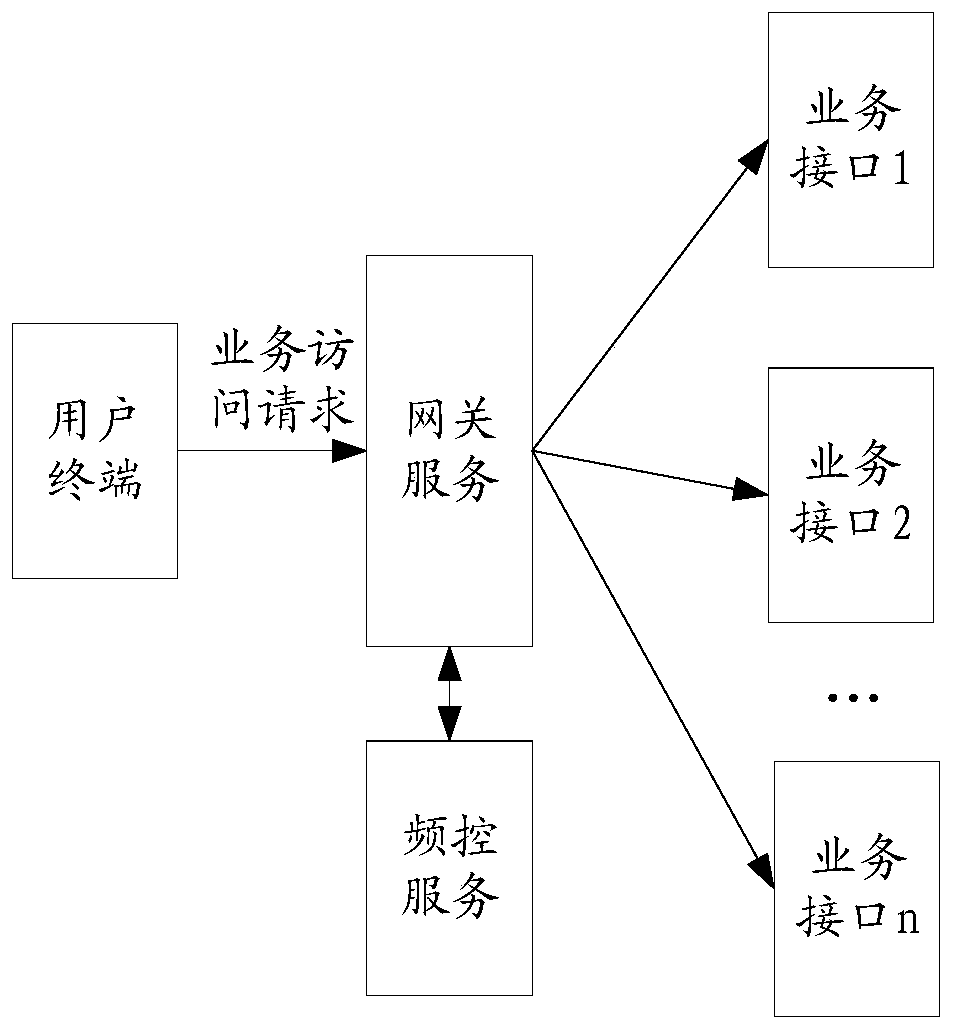

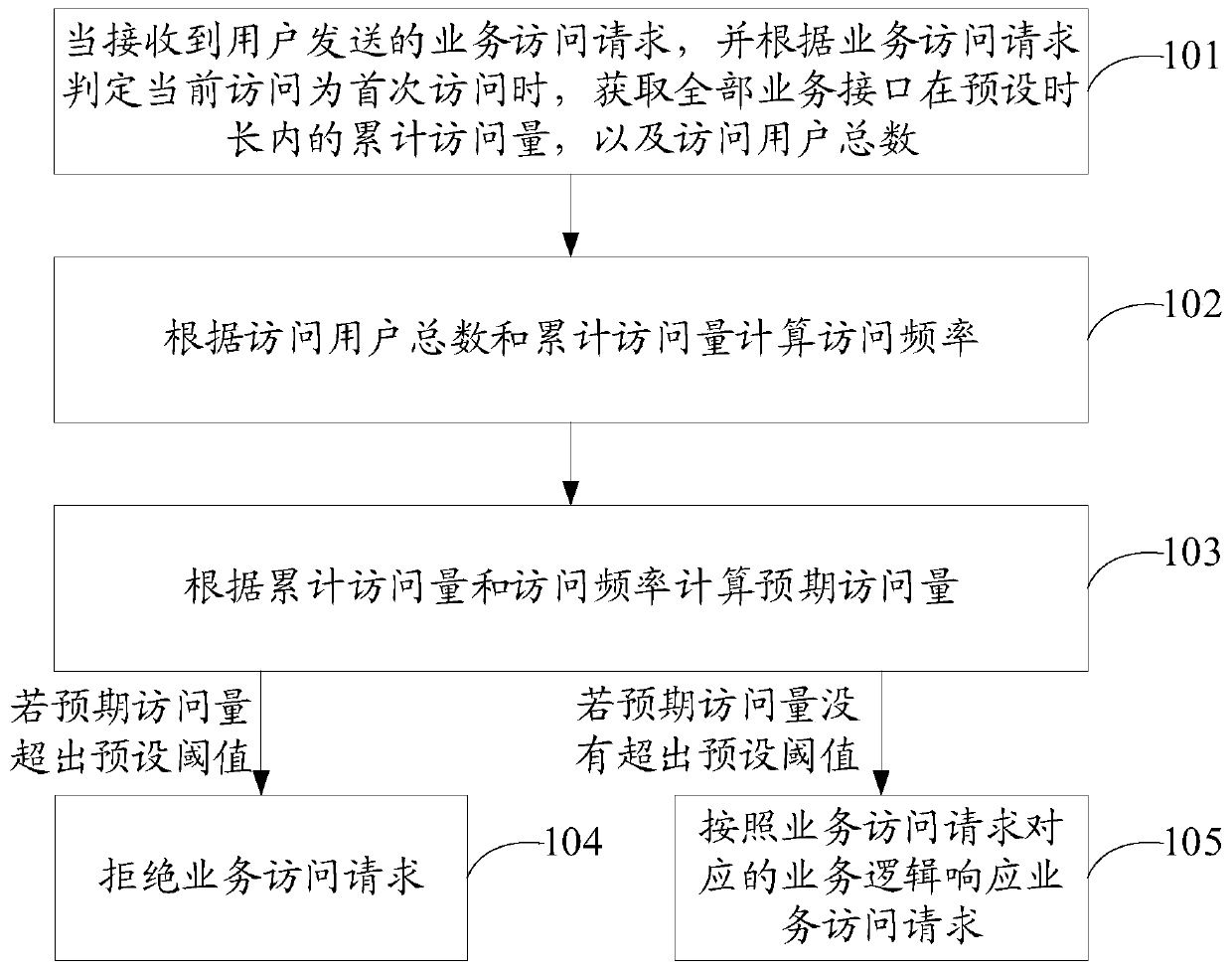

Network access flow limiting control method and device and computer readable storage medium

Active | CN111030936A | Guaranteed uptime | Current limiting implementation | Data switching networks | Page view | Access frequency

The embodiment of the invention discloses a flow limiting control method and device for network access and a computer readable storage medium. The method comprises the steps of: when a service access request sent by a user is received and the current access is judged to be the user's first access, acquiring the accumulated page views of all service interfaces within a preset duration and the total number of access users; calculating the access frequency from the total number of access users and the accumulated page views; calculating the expected page views from the accumulated page views and the access frequency; if the expected page views exceed a preset threshold, refusing the service access request; and if they do not, responding to the service access request according to the service logic corresponding to the request. Based on this scheme, the expected page views are estimated whenever a new request is received. When the page views are judged in advance to be close to the bottleneck of the system, flow limiting is applied and some new user accesses are refused to guarantee normal operation of the system. Users who have already entered the system are not affected by the flow limiting.

Owner:TENCENT CLOUD COMPUTING BEIJING CO LTD
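A minimal sketch of the admission decision in this abstract follows. The abstract does not give the formulas, so two assumptions are made here and should be read as such: access frequency is taken as accumulated page views divided by the number of users, and the expected page views are the accumulated page views plus one more user's worth of views.

```python
def admit_first_access(
    accumulated_views: int, total_users: int, threshold: float
) -> bool:
    """Decide whether to admit a user making their first access.

    Assumed formulas (not from the abstract):
      access_frequency = accumulated_views / total_users
      expected_views   = accumulated_views + access_frequency
    """
    if total_users > 0:
        access_frequency = accumulated_views / total_users
    else:
        access_frequency = 0.0  # no history yet, so nothing to extrapolate
    expected_views = accumulated_views + access_frequency
    # Refuse only when the projected load would exceed the threshold.
    return expected_views <= threshold


# Hypothetical window: 100 users produced 900 views, system limit is 1000.
admitted = admit_first_access(900, 100, 1000)
# Near the limit, one more average user would push the window over 1000.
refused = admit_first_access(995, 100, 1000)
```

The check runs only on a user's first access, so users already admitted keep being served, which matches the abstract's claim that users inside the system are not affected.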

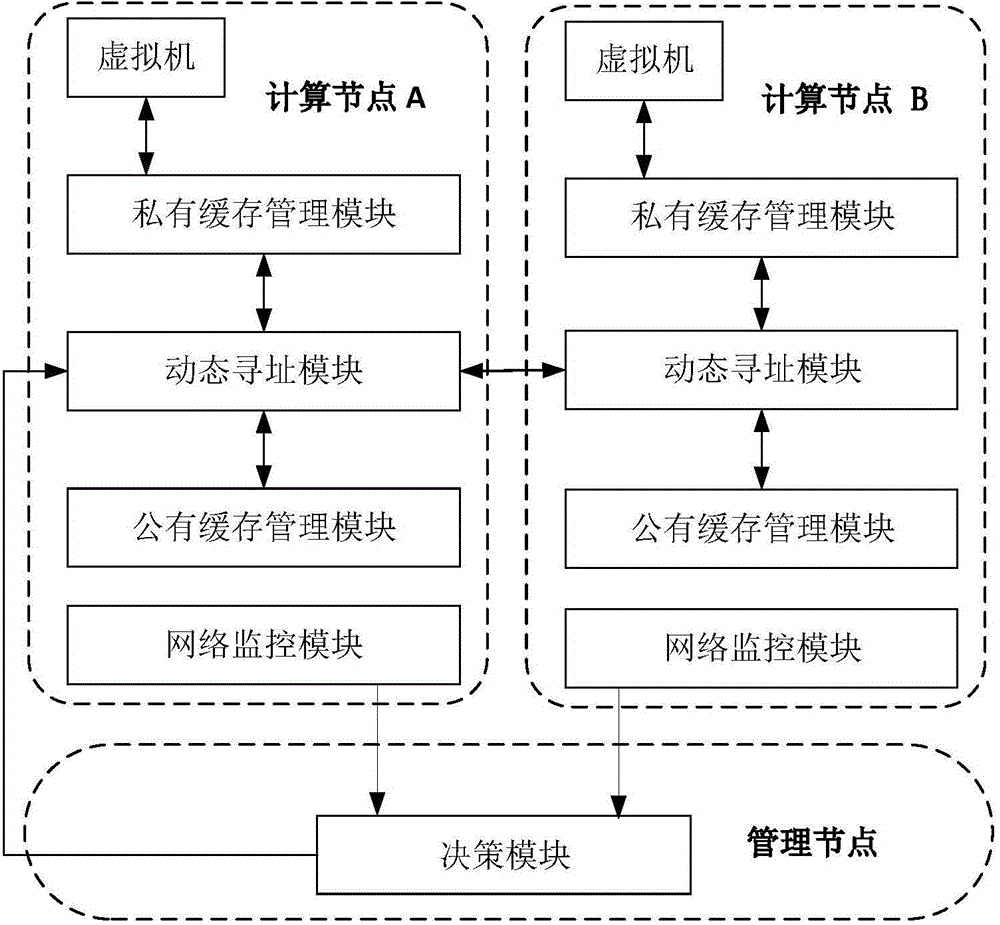

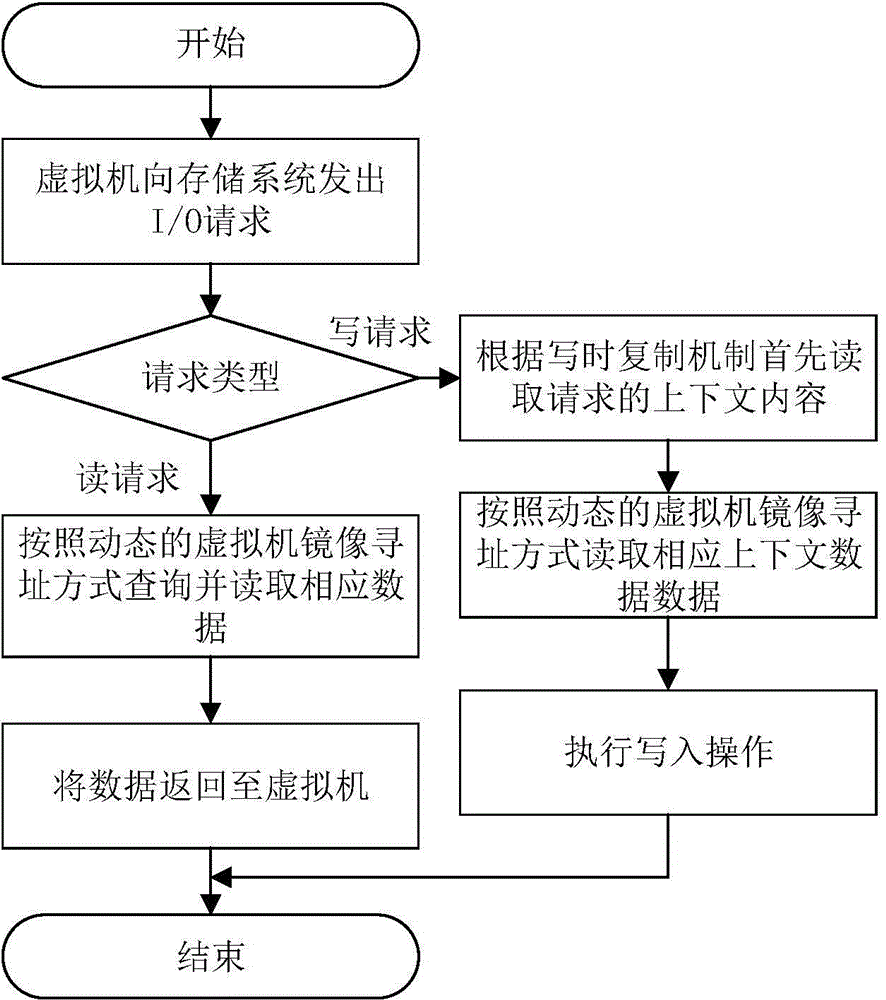

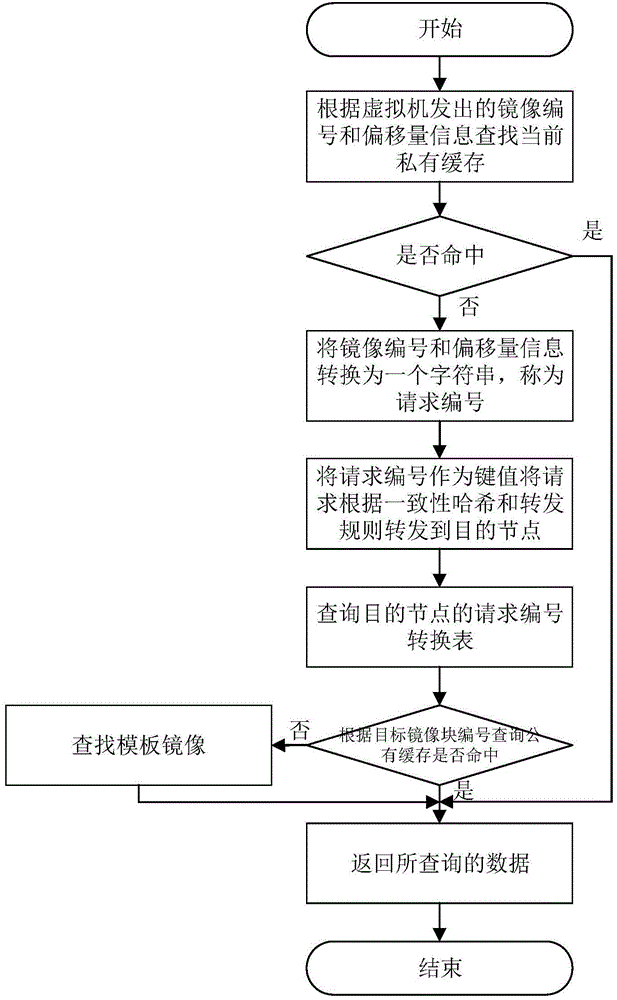

Network-aware virtual machine mirroring storage system and read-write request handling method

The invention discloses a network-aware virtual machine mirroring storage system. The system comprises a private cache management module, a public cache management module, a network monitoring module, a decision module and a dynamic addressing module; the private cache management module, the public cache management module, the network monitoring module and the dynamic addressing module are arranged at a computational node, and the decision module is arranged at a management node. The invention also discloses a read-write request handling method based on this system, which lets nodes with lighter network loads process more mirroring access requests and nodes with heavier network loads process fewer, so that I/O hotspots are avoided and the overall network load is balanced. Appropriate destination nodes can be selected adaptively for every virtual machine according to the communication mode and the current network utilization of that virtual machine, the network load of a data center can be balanced, and the bottleneck in virtual machine reads and writes caused by network congestion is solved.

Owner:HUAZHONG UNIV OF SCI & TECH

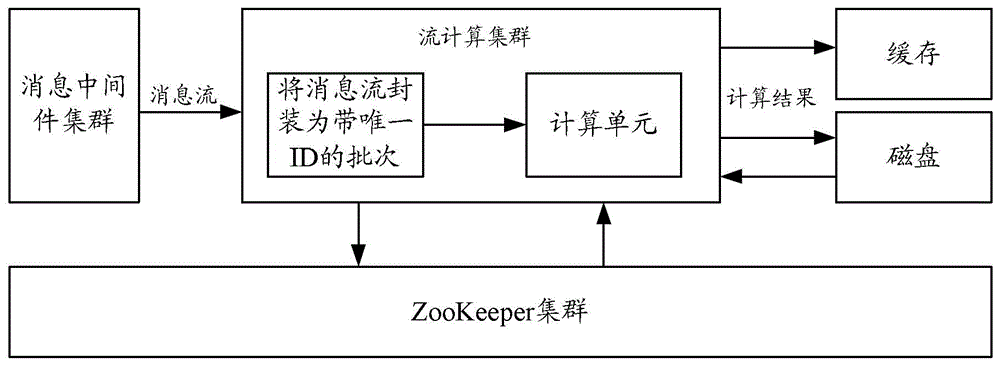

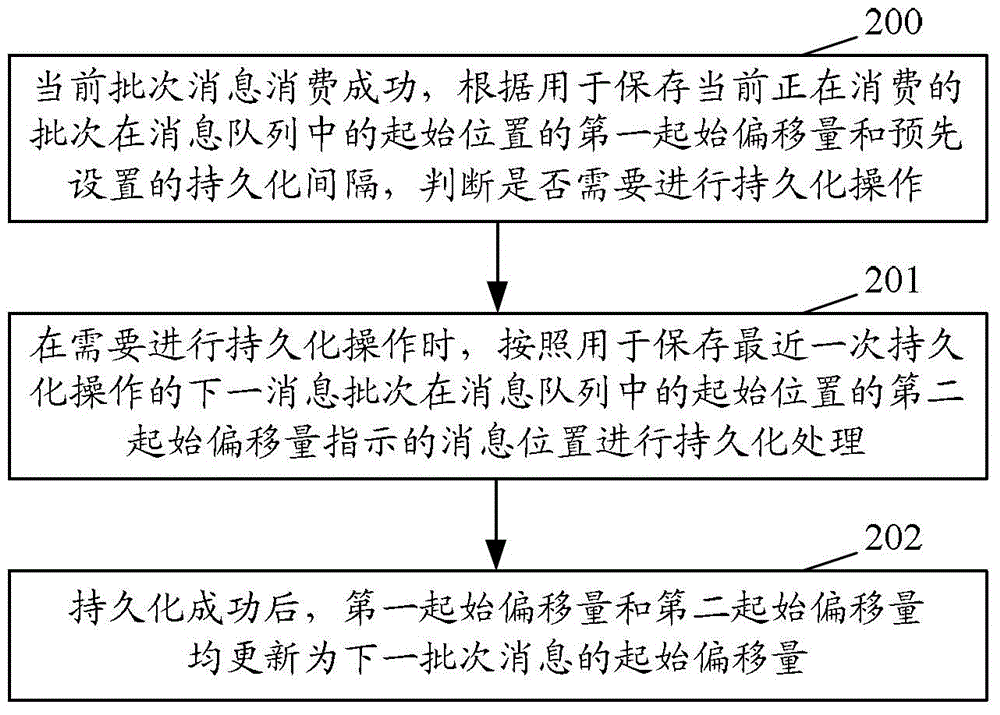

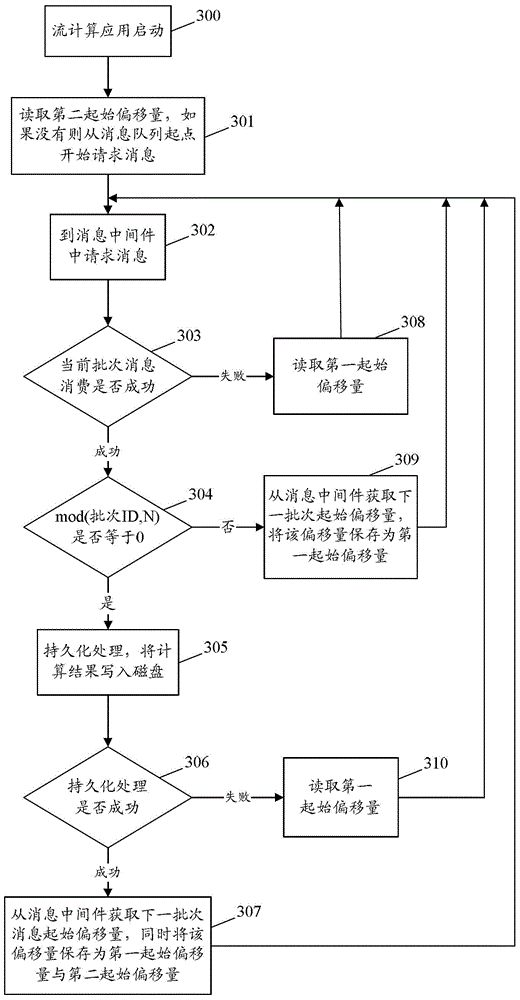

Method and device for realizing persistence in flow calculation application

Active | CN104424186A | Extended time interval | Improve real-time computing efficiency | Special data processing applications | Order of magnitude | Throughput

The invention discloses a method and a device for realizing persistence in a flow calculation (stream computing) application. The method comprises the following steps: when the current batch of messages has been consumed successfully, judging from the first initial offset and the preset persistence interval whether a persistence operation is needed; when it is needed, carrying out persistence according to the message position indicated by the second initial offset; and, after persistence succeeds, updating the first initial offset and the second initial offset to the initial offset of the next batch of messages. Because the persistence operation is performed only after the persistence interval has elapsed, the time between disk persistence operations is lengthened and real-time calculation efficiency is greatly improved. During fault recovery, at most the batches of messages within one persistence interval need to be consumed again. The performance bottleneck caused by frequent disk writes in existing synchronous persistence is avoided, real-time message throughput improves by an order of magnitude, and the delay caused by fault recovery is reduced to the order of seconds, so real-time performance is not affected.

Owner:阿里巴巴华南技术有限公司
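
The interval-based persistence described in the abstract above can be sketched roughly as follows. This is a simplification, not the patented method: it collapses the abstract's first and second initial offsets into a single `checkpoint_offset`, measures the persistence interval in message offsets, and uses an in-memory list in place of disk. The class and method names are hypothetical.

```python
class IntervalCheckpointer:
    """Persist the consumer position only once per persistence interval."""

    def __init__(self, persist_interval):
        self.persist_interval = persist_interval  # assumed unit: message offsets
        self.checkpoint_offset = 0                # offset of the last persisted position
        self.durable = []                         # stands in for disk writes

    def on_batch_consumed(self, next_batch_offset):
        """Call after a batch is consumed successfully.

        Returns True if a persistence operation was performed.
        """
        if next_batch_offset - self.checkpoint_offset < self.persist_interval:
            return False  # interval not yet reached: skip the disk write
        self.durable.append(next_batch_offset)
        self.checkpoint_offset = next_batch_offset
        return True

    def recovery_offset(self):
        """Offset to resume from after a failure."""
        return self.durable[-1] if self.durable else 0


if __name__ == "__main__":
    cp = IntervalCheckpointer(persist_interval=100)
    for offset in (30, 60, 90, 120, 150):
        print(offset, cp.on_batch_consumed(offset))
    print("resume from", cp.recovery_offset())
```

After a crash, consumption restarts at `recovery_offset()`, so the work repeated is bounded by roughly one persistence interval plus the batch that crossed it.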

Method for solving performance bottleneck of network management system in communication industry based on cloud computing technology

Inactive | CN102624558A | Fix performance issues | Solve difficult system performance problems | Data switching networks | Virtualization | Third party

The invention provides a method for solving the performance bottleneck of a network management system in the communication industry based on cloud computing technology. In the method, cloud computing is adopted as the guiding principle for the network management system. The method comprises the following steps: 1) for hardware and third-party software, a mainstream virtualization technology that supports a unified cloud computing implementation mode is adopted; and 2) for system software, a design mode suited to distributed deployment is adopted. Cloud computing technology is applied at three levels (hardware, middleware and application software) to solve the performance problem of the network management system. An application system designed and deployed on a cloud computing architecture can effectively solve system performance problems that are hard to solve in a traditional system. Cloud computing also brings low cost and high scalability, and, from a macroscopic view, large problems can be solved with existing technologies without spending much time on particular technical details.

Owner:INSPUR TIANYUAN COMM INFORMATION SYST CO LTD

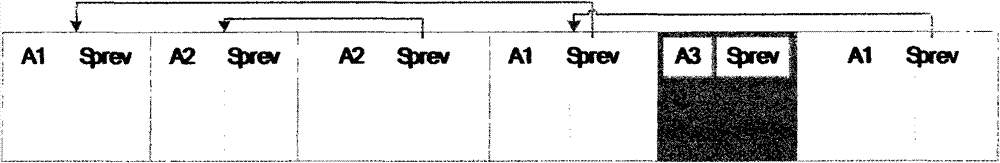

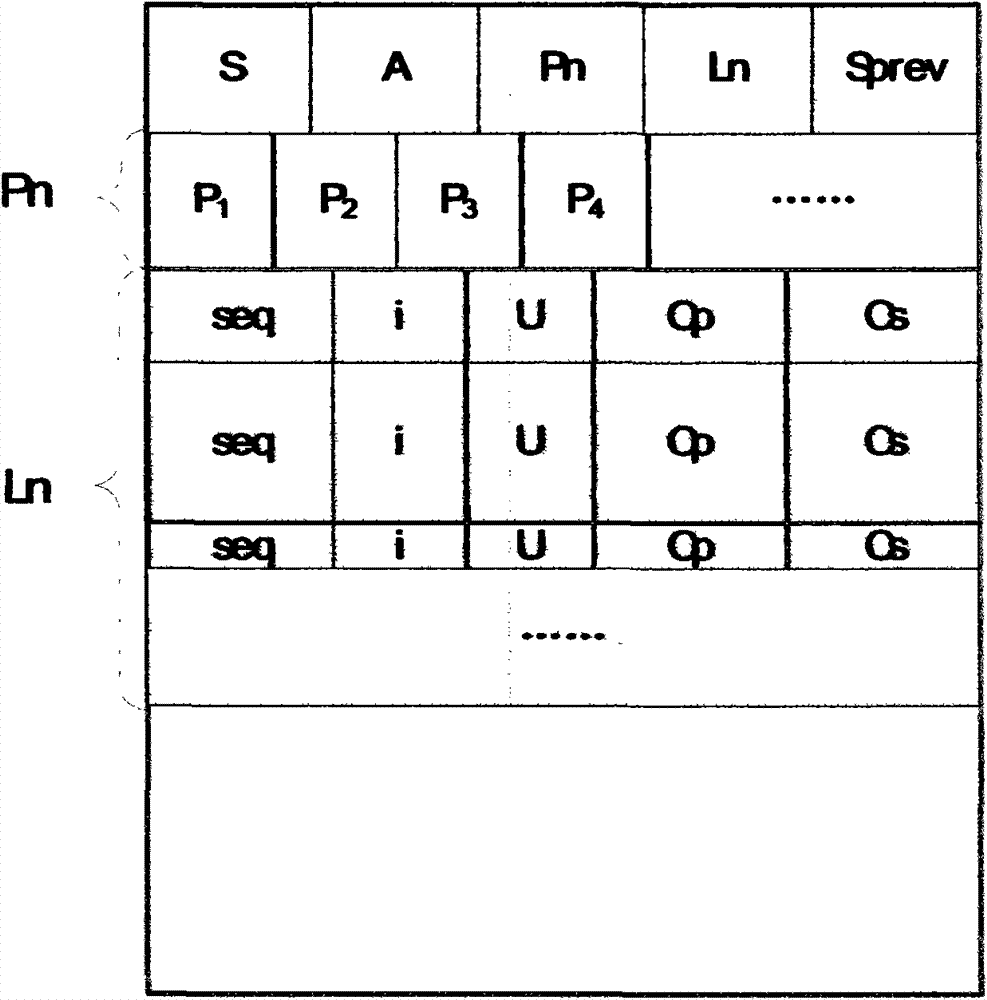

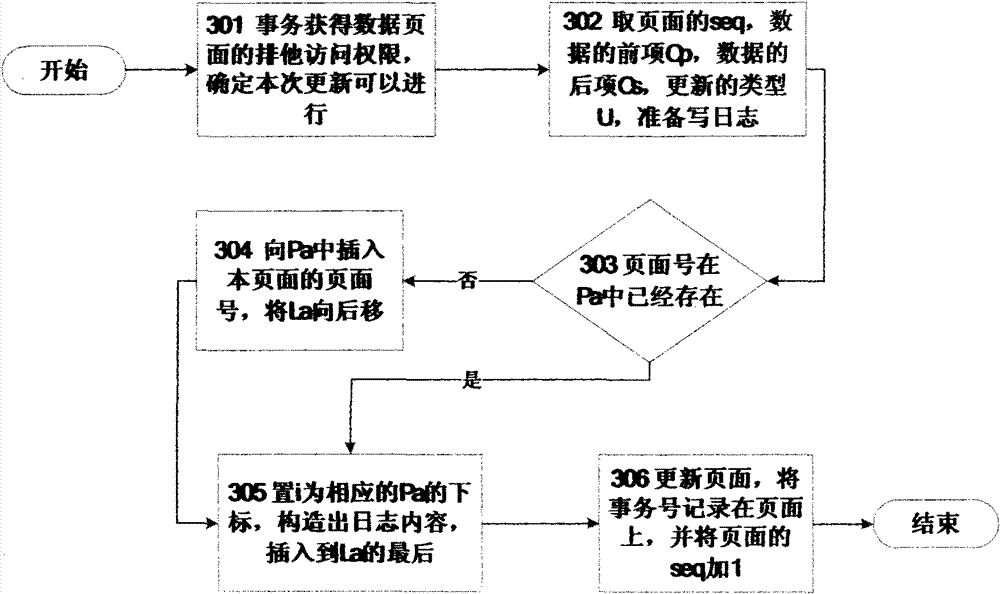

Log organization structure clustered based on transaction aggregation and method for realizing corresponding recovery protocol thereof

Inactive | CN102760161A | Avoid performance bottlenecks | Improve operational efficiency | Hardware monitoring | Special data processing applications | Array data structure | Data management

The invention discloses a log organization structure clustered by transaction aggregation and a recovery protocol based on that structure, which can be applied to the transactional data management system of a large-scale computer. A log file is organized sequentially into a plurality of log fragments; each log fragment stores the log content of a single transaction and holds the transaction number as well as a pointer to the transaction's preceding log fragment. The data page numbers involved in the log entries of a fragment are stored as an array. While the system is running, each transaction writes only its own log fragment, and the fragment is written to the log file when the transaction commits. During recovery, the system is restored to a durable and consistent state by scanning all log fragments for redo and walking back through the log fragments of all active transactions for undo. The bottleneck that arises when writing logs in a traditional transactional data management system is resolved, and the log volume of the system is effectively reduced.

Owner:天津神舟通用数据技术有限公司

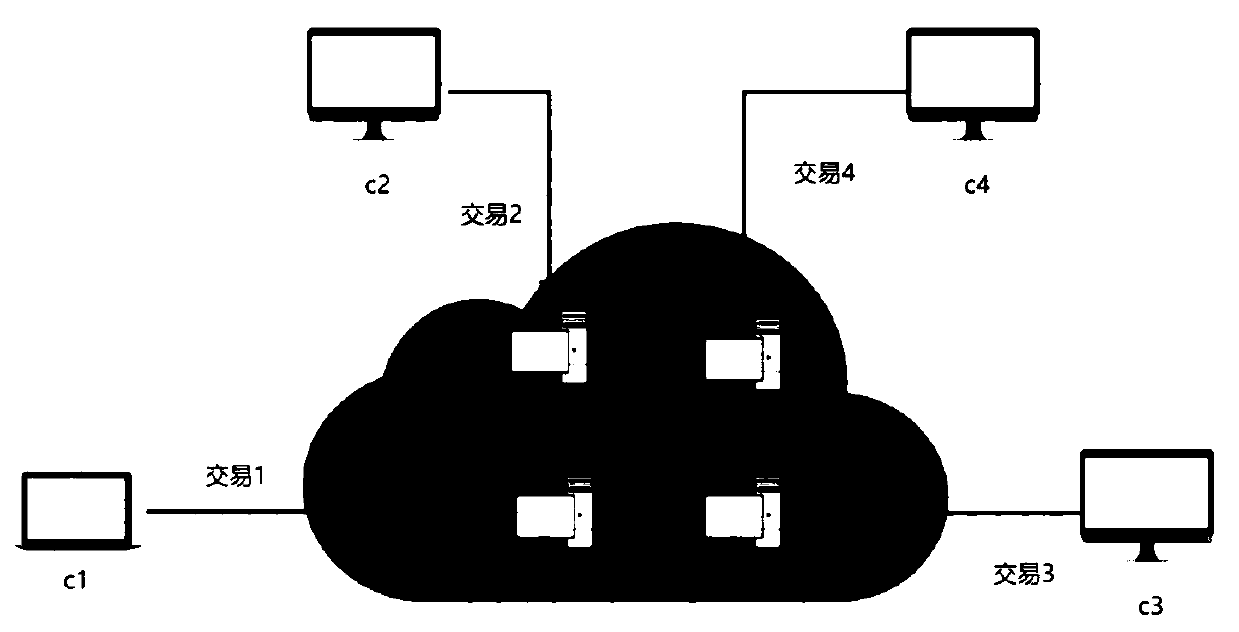

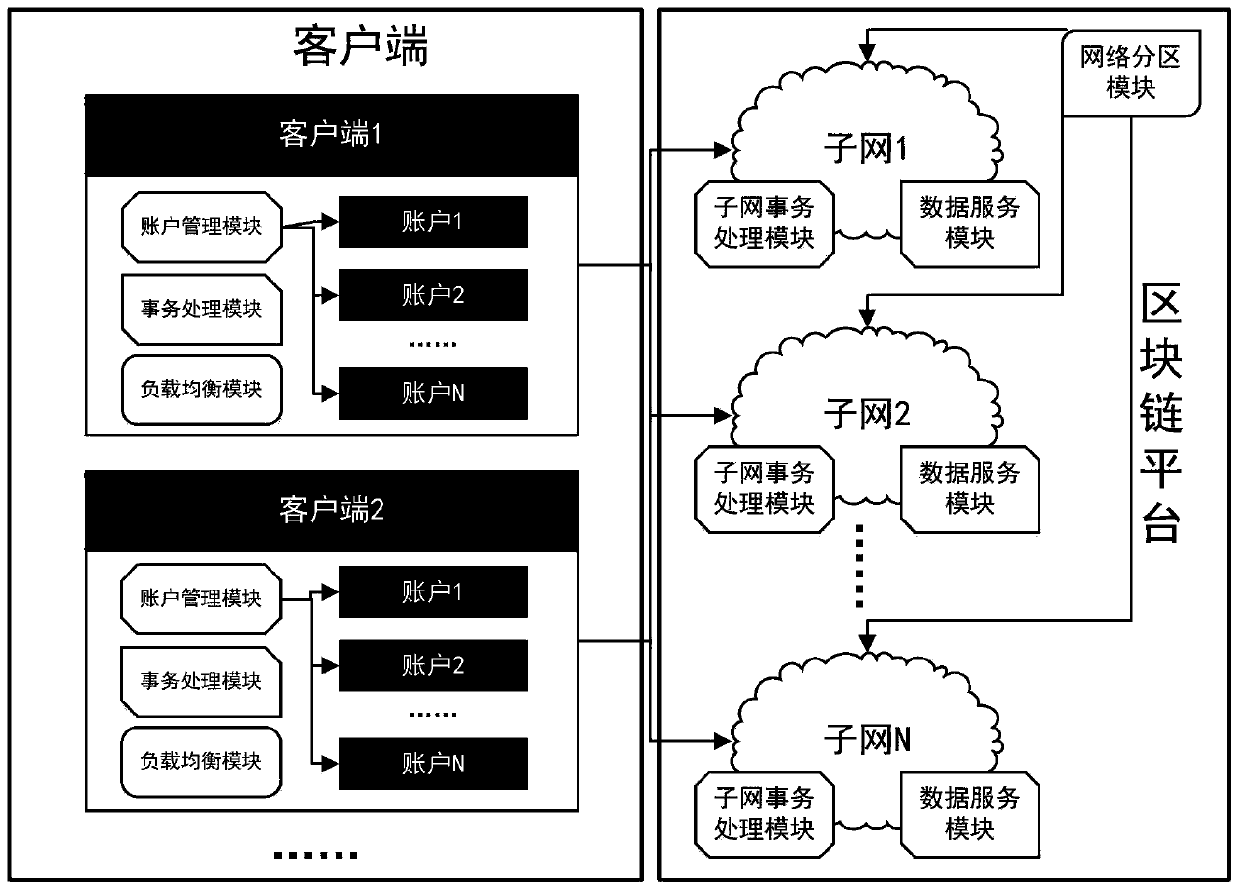

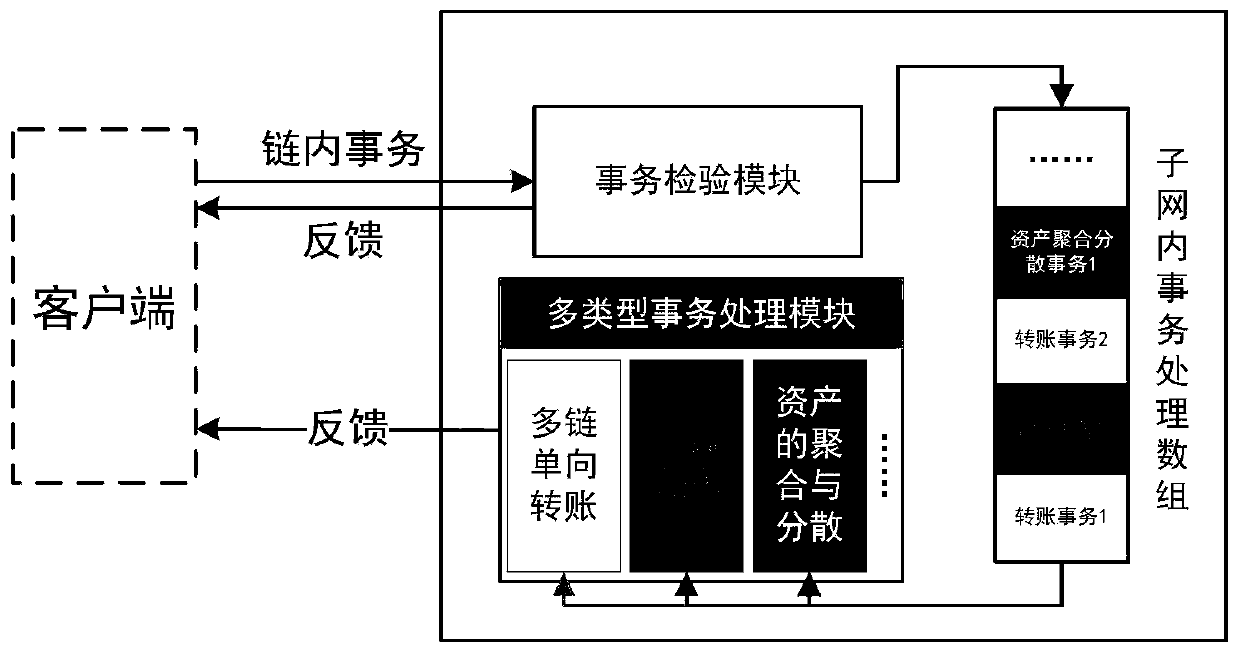
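
The fragment layout and the two-pass recovery in the log organization abstract above can be sketched as below. Several details are assumptions for illustration: the `LogFragment` field names, the `(page, old_value, new_value)` entry format, the `committed` flag used to tell active transactions apart, and the in-memory dictionary standing in for data pages. The sketch also assumes that active transactions do not touch the same page, so the order in which they are undone does not matter.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class LogFragment:
    txn_id: int                            # transaction number
    prev_index: Optional[int]              # preceding fragment of the same transaction
    entries: List[Tuple[int, str, str]]    # (page, old_value, new_value), assumed format
    committed: bool = False                # hypothetical marker on the final fragment

    @property
    def pages(self):
        """Page numbers touched by this fragment, kept as an array."""
        return [page for page, _, _ in self.entries]


def recover(log, pages):
    """Redo every fragment in log order, then undo active transactions."""
    last_fragment = {}
    committed = set()
    for index, frag in enumerate(log):          # redo pass
        for page, _, new in frag.entries:
            pages[page] = new
        last_fragment[frag.txn_id] = index
        if frag.committed:
            committed.add(frag.txn_id)
    for txn_id, index in last_fragment.items():  # undo pass
        if txn_id in committed:
            continue
        while index is not None:                 # follow the backward pointers
            for page, old, _ in reversed(log[index].entries):
                pages[page] = old
            index = log[index].prev_index
    return pages


if __name__ == "__main__":
    log = [
        LogFragment(1, None, [(1, "a", "b")], committed=True),
        LogFragment(2, None, [(2, "x", "y")]),
        LogFragment(2, 1, [(3, "p", "q")]),
    ]
    print(recover(log, {1: "a", 2: "x", 3: "p"}))
```

Because each fragment belongs to one transaction and points to its predecessor, undo only has to follow one chain per active transaction rather than filter a log interleaved across transactions.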

Block chain parallel transaction processing method and system based on isomorphic multi-chain, and terminal

The invention relates to a parallel transaction processing method based on isomorphic multi-chains, which comprises the following steps: constructing one or more sub-network chains, each sub-network chain having the same block chain framework; dividing a logic transaction to be executed into at least one actual transaction; and distributing each actual transaction to the corresponding sub-network chain for parallel transaction processing. Transaction processing mainly comprises one-way asset transfer, Dapp application compatibility, and asset aggregation and dispersion. The overall architecture is divided into two parts, a client and a block chain platform. The client constructs optimized parallel transactions according to statistical information from the block chain platform while taking user requirements into account, improving the overall performance of the system; it also tracks user account information, maintains the related state, and handles off-chain communication. To address the performance problem of a single chain, a parallel execution algorithm for logic transactions is proposed, which resolves the performance bottleneck of the original block chain architecture and raises the upper limit of global transaction throughput.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

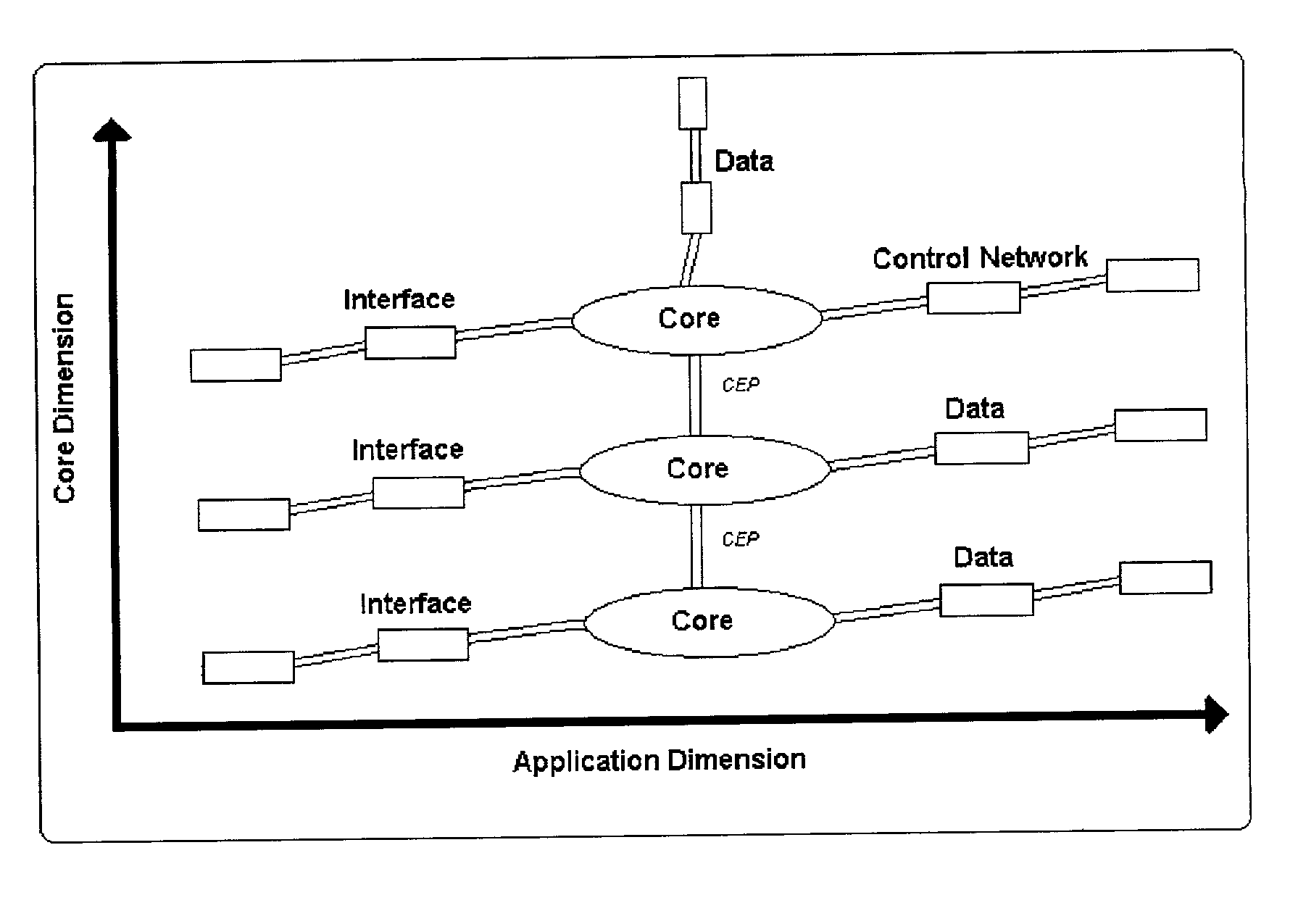

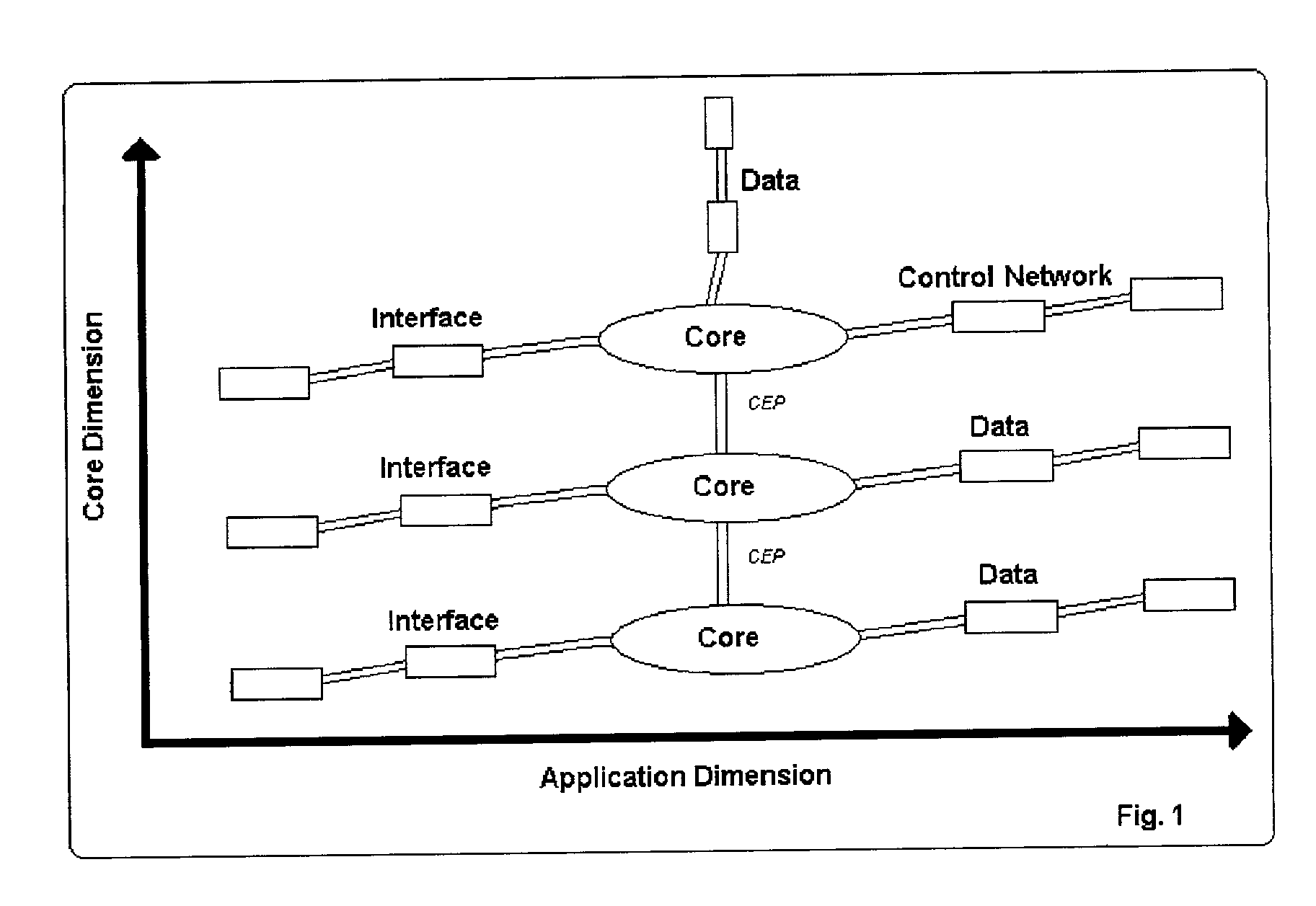

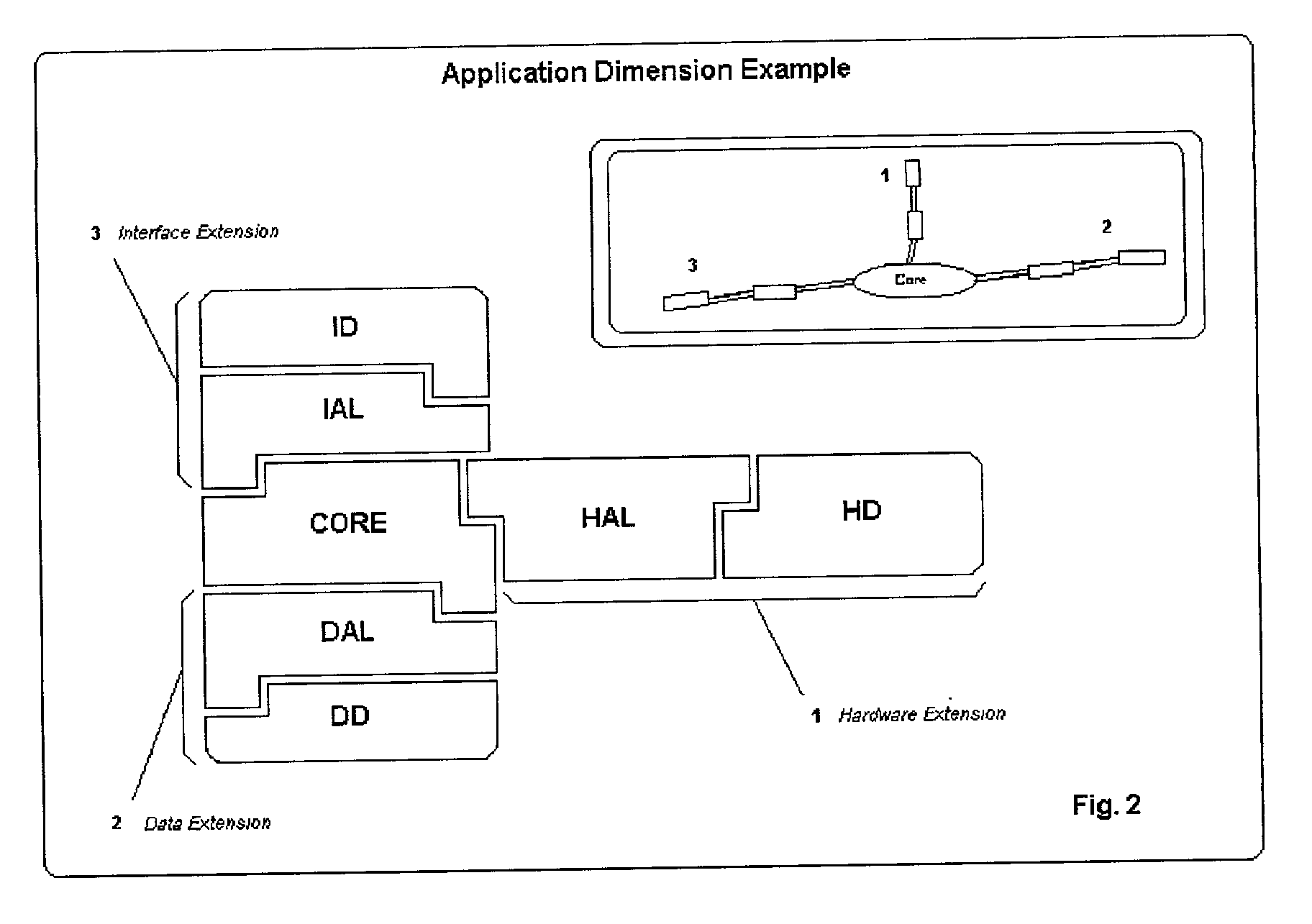
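
One way to picture the step of dividing a logic transaction into actual transactions, as described in the multi-chain abstract above, is a transfer whose funds are spread over several sub-network chains. The sketch below is purely illustrative: the function name `split_transfer`, the per-chain balance dictionary, and the largest-balance-first policy are assumptions, and the abstract does not say how the client actually chooses the split.

```python
def split_transfer(amount, balances):
    """Split one logic transfer into per-chain actual transfers.

    balances maps a sub-chain name to the sender's balance on that chain.
    Hypothetical policy: draw from the largest balances first, which keeps
    the number of actual transactions small.
    """
    if amount <= 0:
        raise ValueError("amount must be positive")
    if amount > sum(balances.values()):
        raise ValueError("insufficient total balance across chains")
    parts = []
    remaining = amount
    ordered = sorted(balances.items(), key=lambda item: item[1], reverse=True)
    for chain, balance in ordered:
        if remaining == 0:
            break
        take = min(balance, remaining)
        if take > 0:
            parts.append((chain, take))
            remaining -= take
    return parts


if __name__ == "__main__":
    # Each (chain, amount) pair could then be submitted to its chain in parallel.
    print(split_transfer(12, {"chain-1": 5, "chain-2": 10, "chain-3": 2}))
```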

Multidimensional advanced adaptive software architecture

Inactive | US20030014560A1 | Improve versatility | Improve performance | Multiprogramming arrangements | Software design | Abstraction layer | Multicore architecture

The present invention is a software architecture that provides high versatility and performance. The architecture has two dimensions: the application dimension and the multicore dimension. The application dimension concerns the different applications built on the conceptual model of abstractions described in this patent. The multicore dimension concerns the application dimension instantiated several times on the same computer (multiple processors) or on several computers. All the cores within the multicore dimension are linked in order to share information and integrate all of the architecture's applications. The multicore architecture avoids bottlenecks in the simultaneous execution of multiple applications on the same computer by means of a large virtual core composed of small interconnected cores. The conceptual model of abstractions is composed of various drivers, abstraction layers and a unique core that acts as a referee between different extensions of an application.

Owner:SMARTMATIC

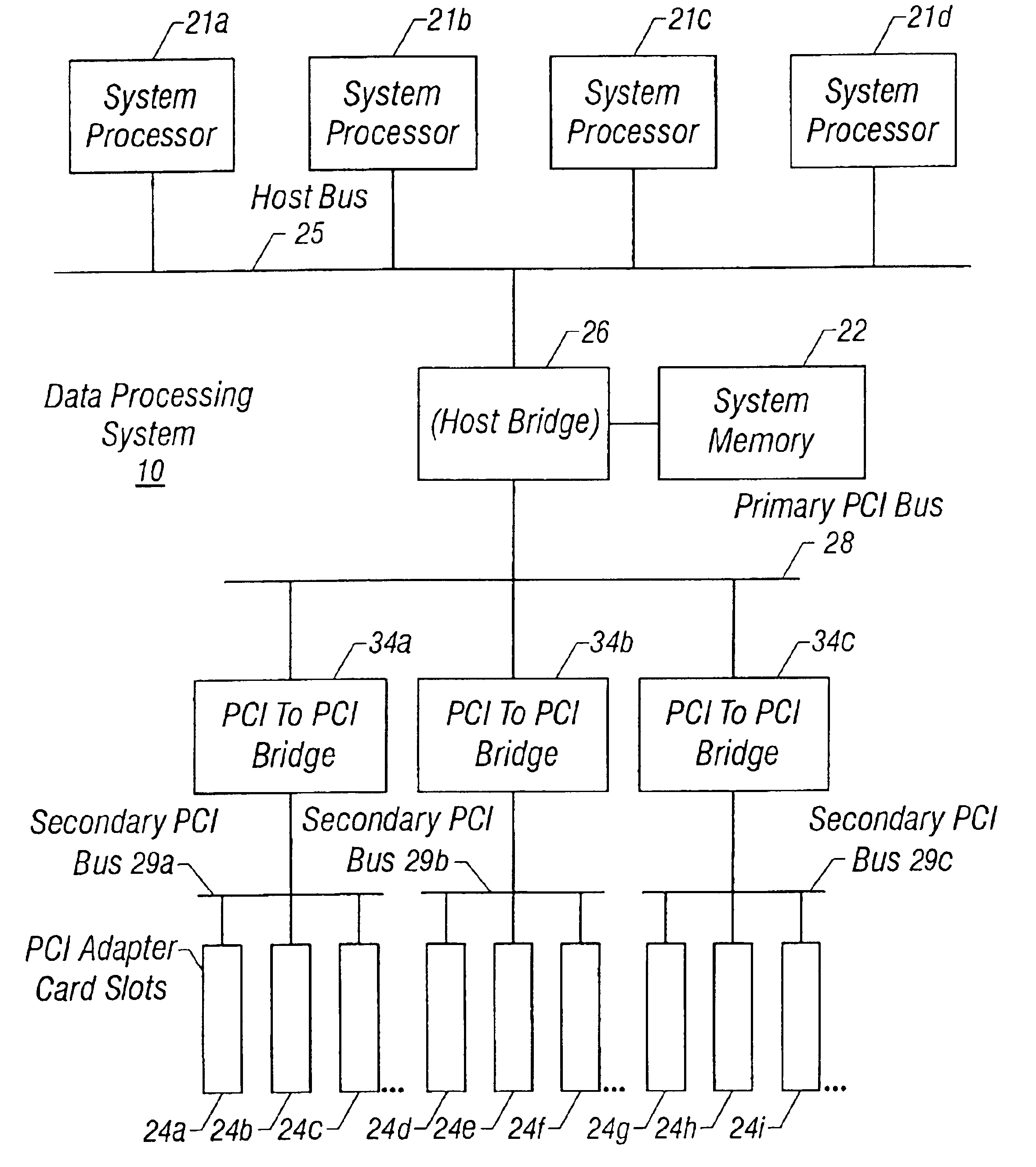

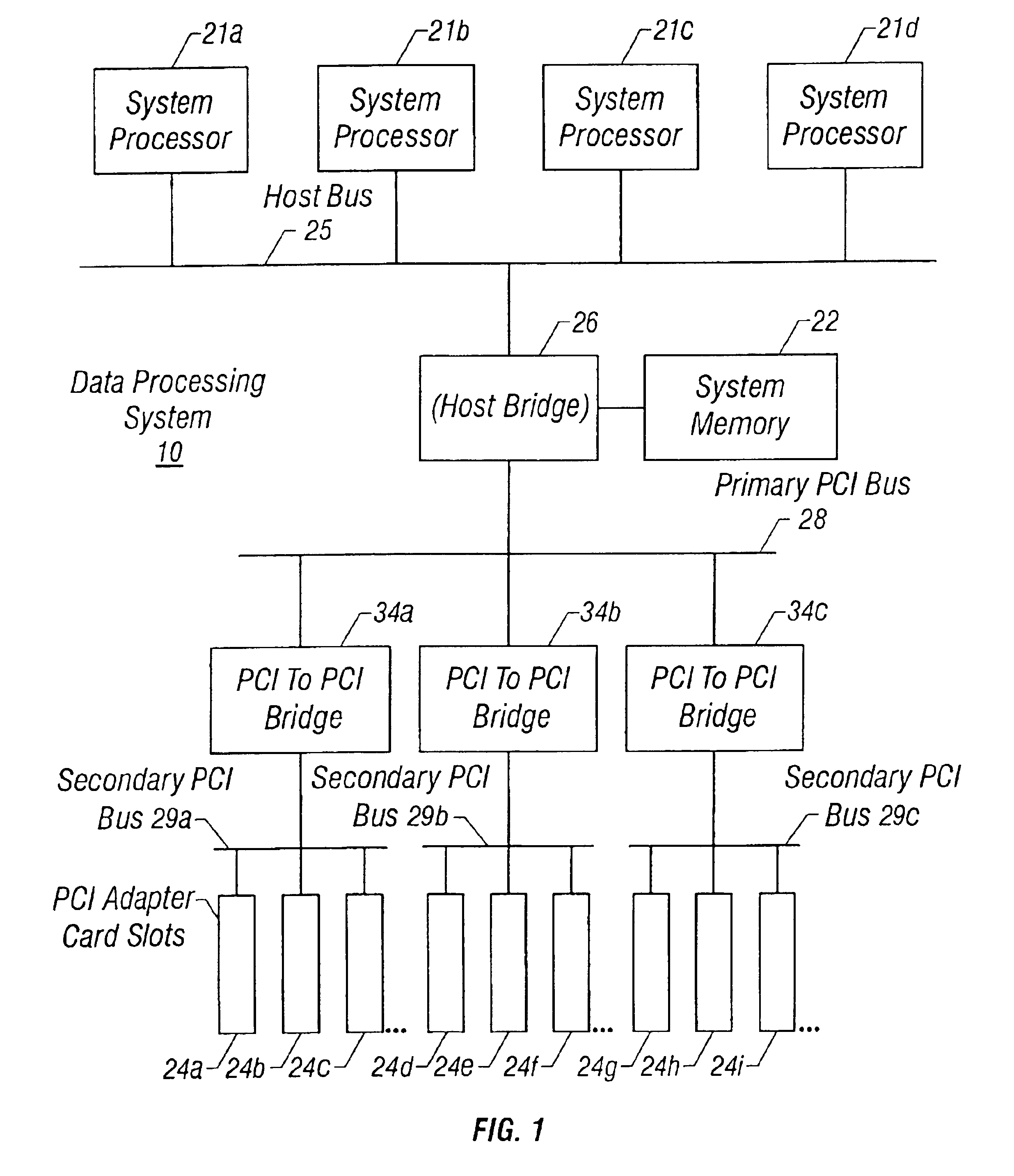

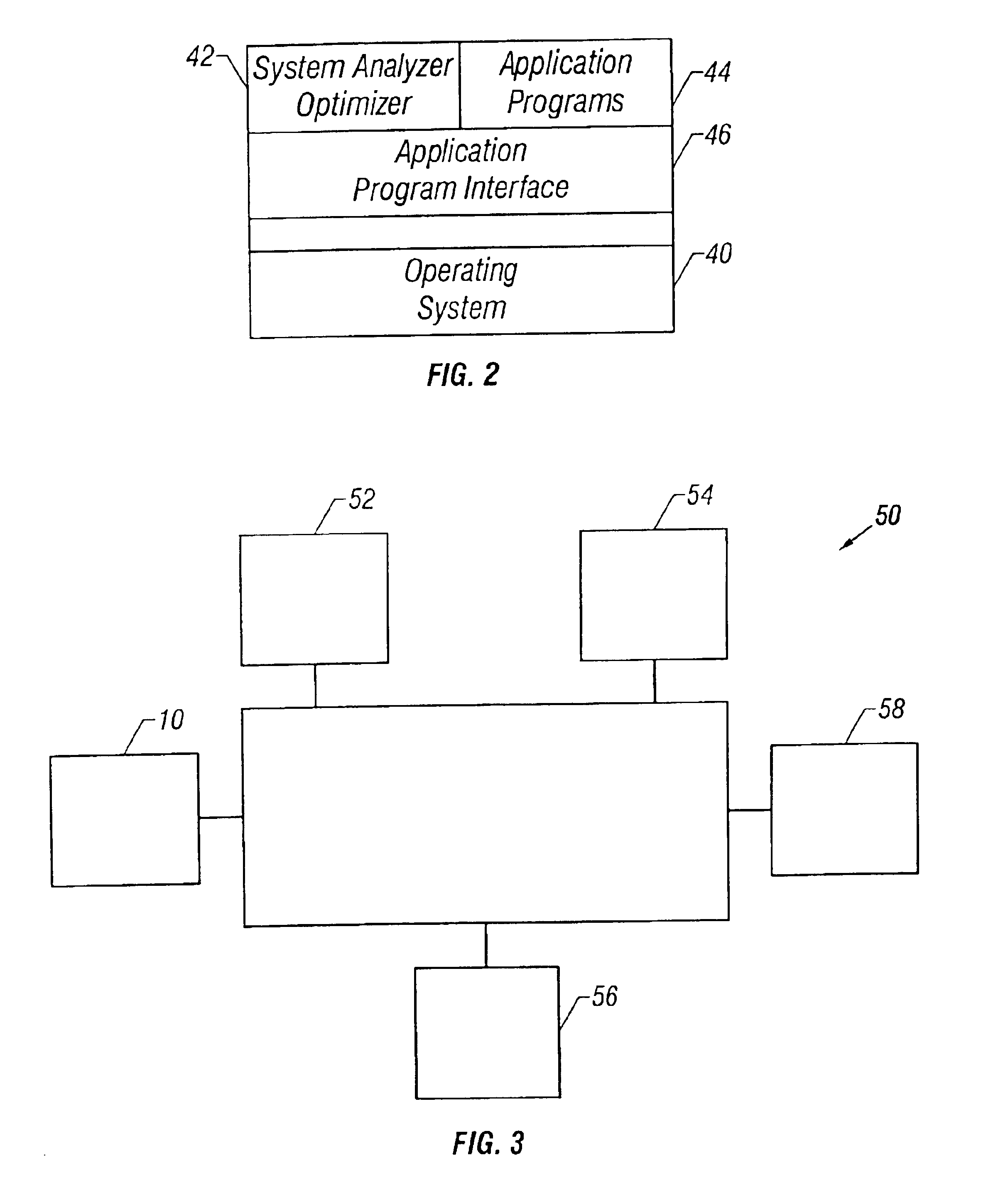

System and method for analyzing and optimizing computer system performance utilizing observed time performance measures

A data processing system and method analyze the performance of its components by obtaining measures of usage of the components over time as well as electrical requirements of those components to recommend an optimal configuration. The location in the system and the time duration that any one or more components is in a performance-limiting or bottleneck condition is determined. Based on the observed bottlenecks, their times of occurrence and their time duration, more optimal configurations of the system are recommended. The present invention is particularly adapted for use in data processing systems where a peripheral component interconnect (PCI) bus is used.

Owner:LENOVO GLOBAL TECH INT LTD

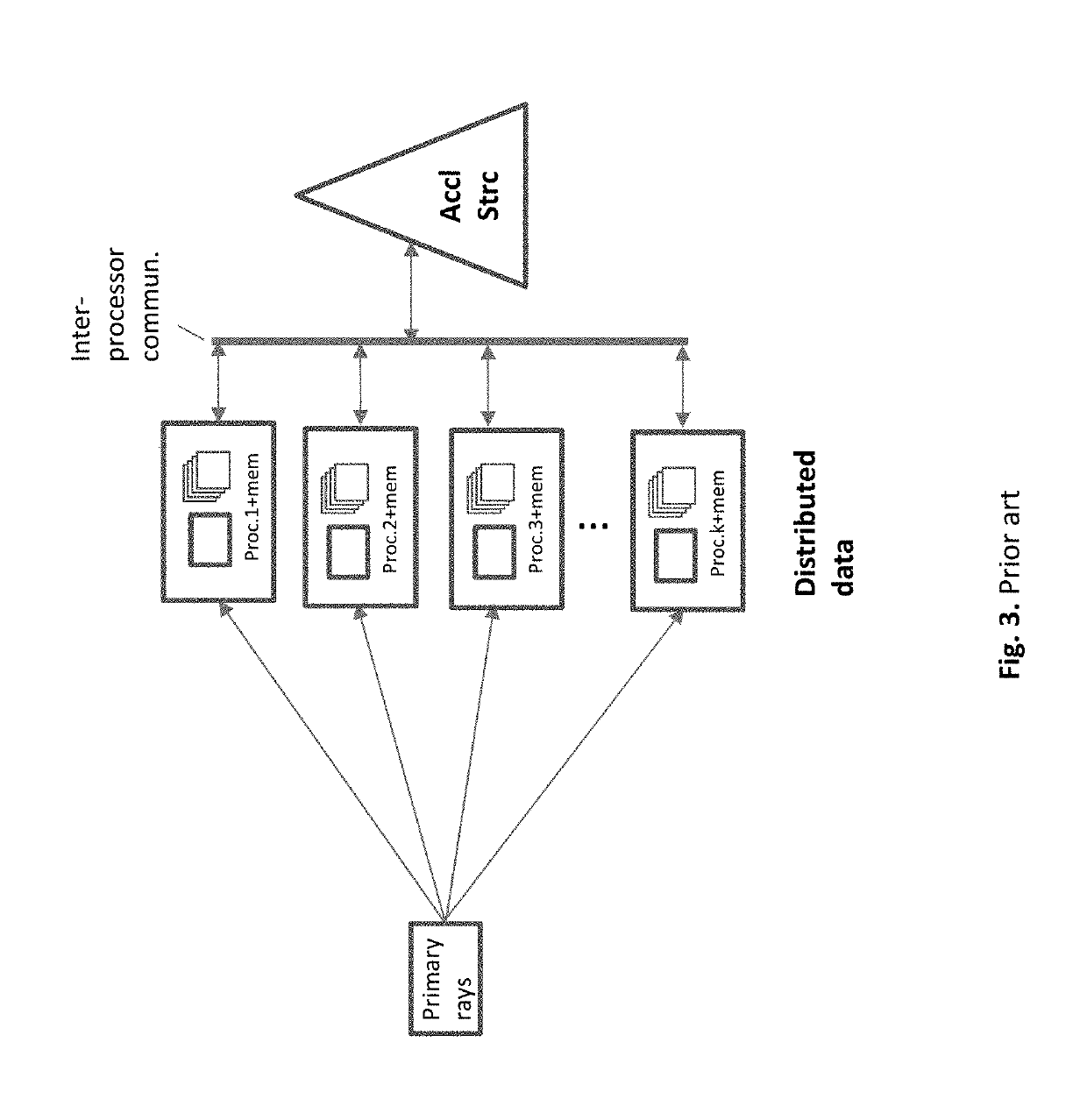
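
The performance-analysis abstract above turns on knowing where, when and for how long a component sits in a bottleneck condition. A minimal sketch of that bookkeeping follows, assuming utilization samples in the range 0 to 1 and a fixed threshold of 0.9; the function name, the sample format and the threshold are hypothetical, and the patent's electrical-requirement measures are not modelled.

```python
def bottleneck_intervals(samples, threshold=0.9):
    """Find the time spans during which each component is at or above threshold.

    samples: list of (time, {component: utilization}) pairs sorted by time.
    Returns {component: [(start, end), ...]}.
    """
    intervals = {}
    open_since = {}   # component -> time at which it entered the bottleneck state
    last_time = None
    for time, usage in samples:
        for component, utilization in usage.items():
            if utilization >= threshold:
                open_since.setdefault(component, time)
            elif component in open_since:
                start = open_since.pop(component)
                intervals.setdefault(component, []).append((start, time))
        last_time = time
    # Close any span that is still open at the final sample.
    for component, start in open_since.items():
        intervals.setdefault(component, []).append((start, last_time))
    return intervals


if __name__ == "__main__":
    samples = [
        (0, {"pci_bus": 0.50, "cpu": 0.95}),
        (1, {"pci_bus": 0.95, "cpu": 0.95}),
        (2, {"pci_bus": 0.97, "cpu": 0.40}),
        (3, {"pci_bus": 0.30, "cpu": 0.20}),
    ]
    print(bottleneck_intervals(samples))
```

Summing the length of each component's intervals gives the kind of per-component bottleneck duration on which a configuration recommendation could be based.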

Path Tracing System Employing Distributed Accelerating Structures

Active | US20190304162A1 | Convenient amount | Reduce communication | Image analysis | Texturing/coloring | Parallel computing | Path tracing

A path tracing system in which the traversal task is divided between a single global acceleration structure, central to the system, and multiple local acceleration structures distributed among cells, each with high locality and autonomous processing. This reduces the system's reliance on one central acceleration structure, lessening bottlenecks and improving parallelism.

Owner:SNAP INC

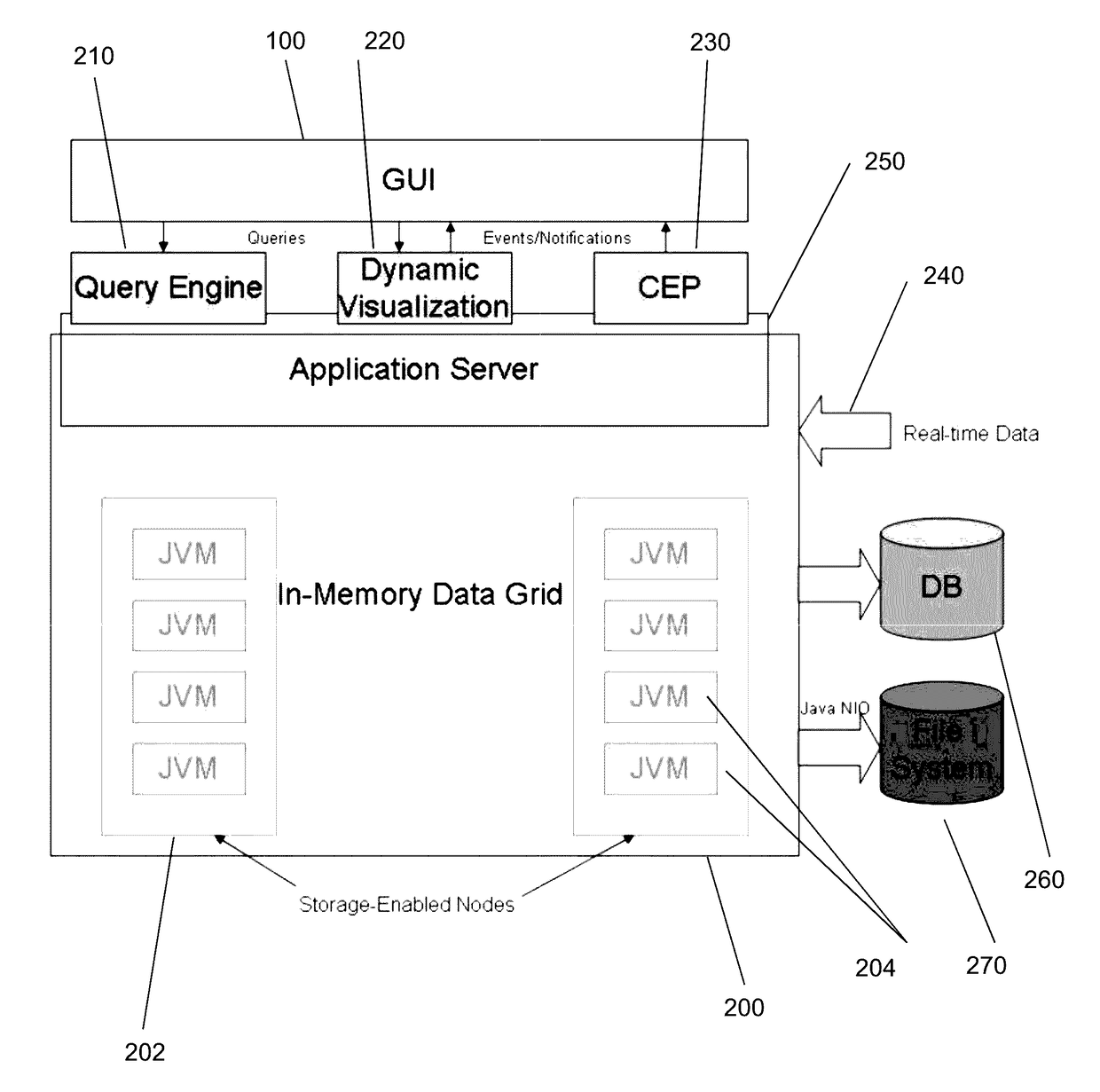

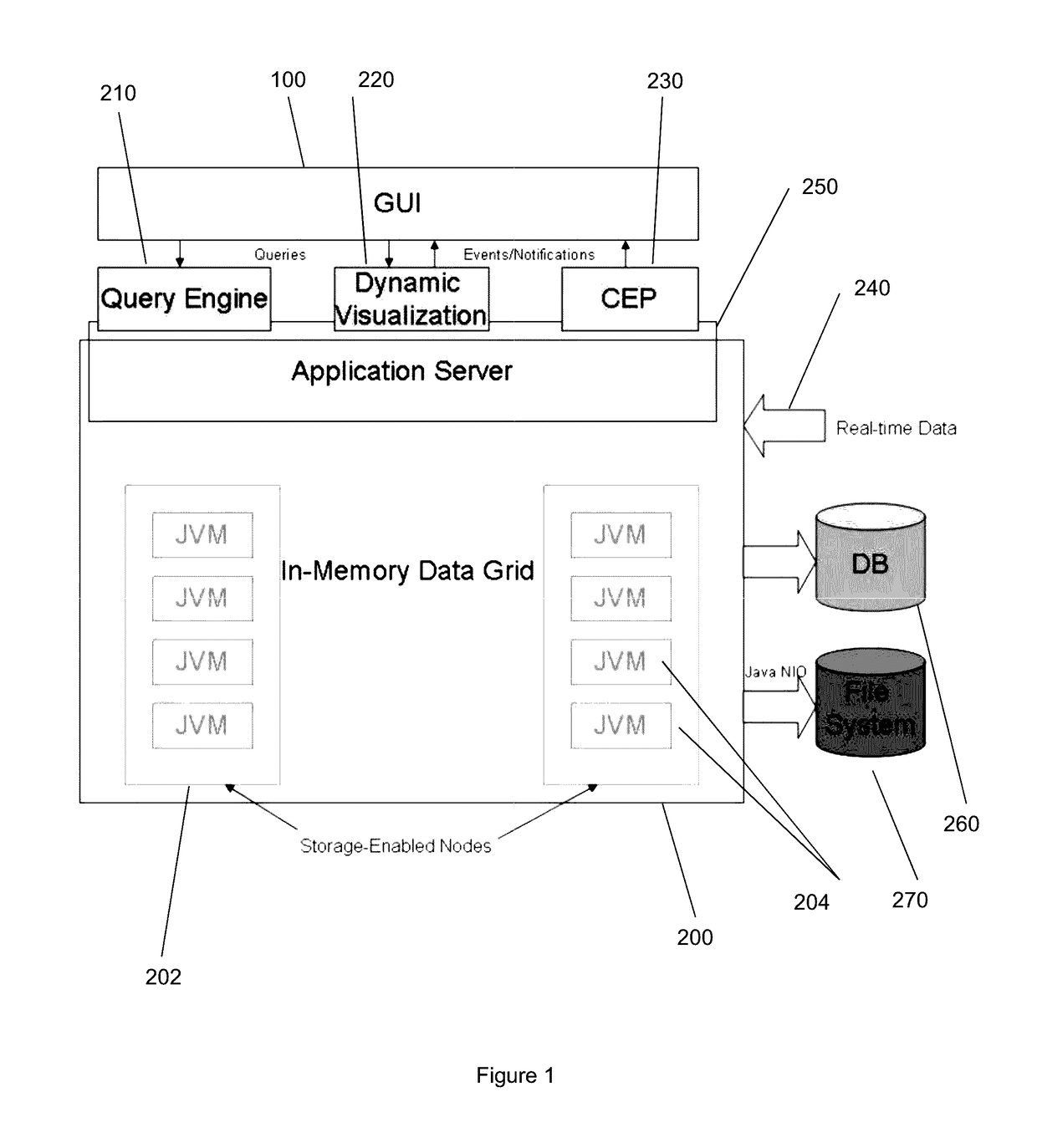

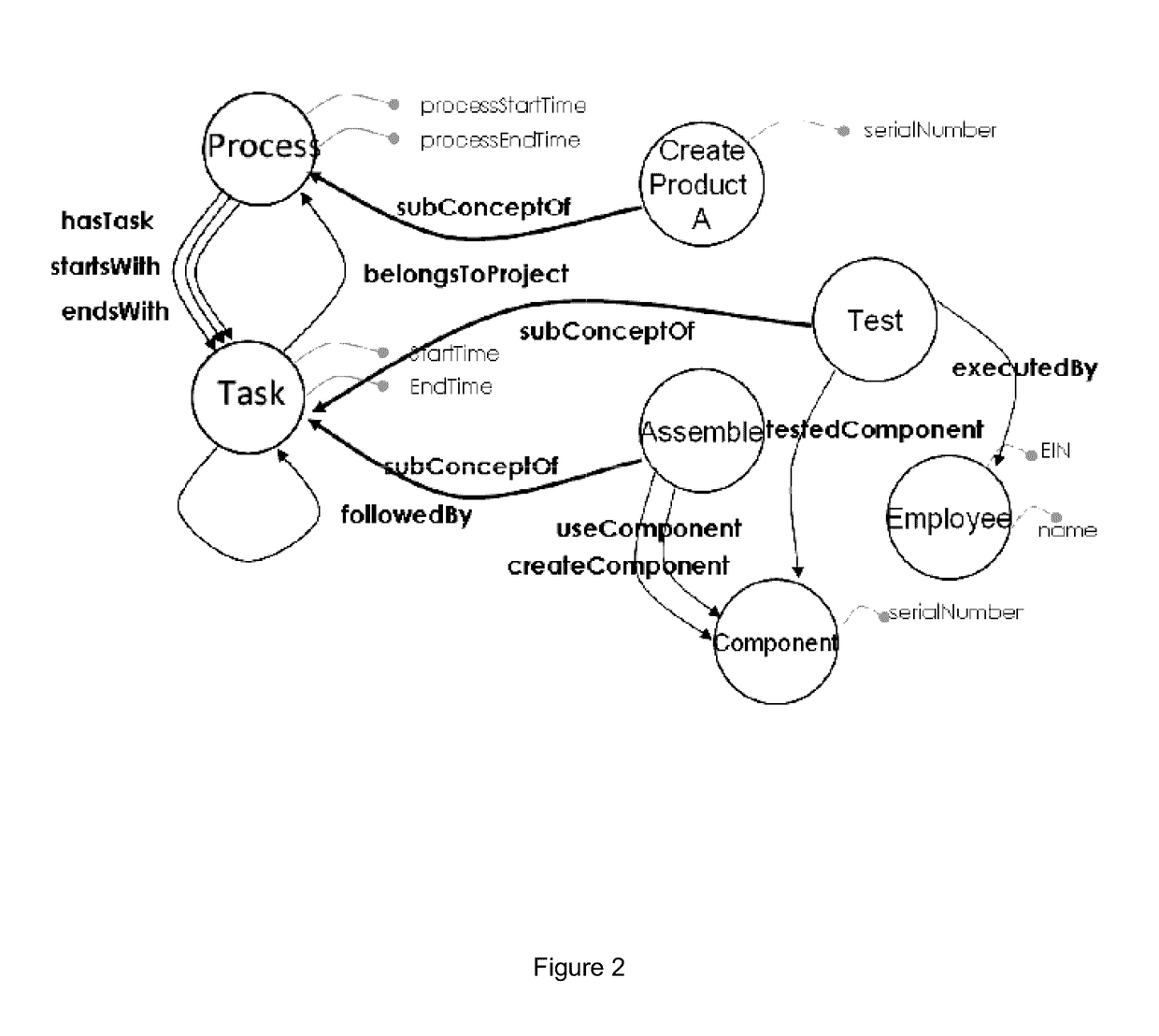

Method and system for processing data queries

Active | US9639575B2 | Digital data information retrieval | Digital data processing details | Granularity | Continuous flow

The invention relates to a method and system that provide a high-performance and extremely scalable triple store within the Resource Description Framework (or alternative data models), with optimized query execution. An embodiment of the invention provides a data storage and analysis system to support scalable monitoring and analysis of business processes along multiple configurable perspectives and levels of granularity. This embodiment analyses data both from processes that have already been executed and from ongoing processes, as a continuous flow of information. It allows processes to be defined and monitored with no initial domain knowledge, so that the process is built only from the incoming flow of information. Another embodiment of the invention provides a grid infrastructure that allows storage of data across many grid nodes and distribution of the workload, avoiding the bottleneck represented by constantly querying a database.

Owner:KHALIFA UNIV OF SCI & TECH +2

© 2025 PatSnap. All rights reserved. Contact us: help@patsnap.com