15571 results about "Granularity" patented technology

Granularity (also called graininess), the condition of existing in granules or grains, refers to the extent to which a material or system is composed of distinguishable pieces or grains. It can either refer to the extent to which a larger entity is subdivided, or the extent to which groups of smaller indistinguishable entities have joined together to become larger distinguishable entities.

Certificate-based search

Inactive | US20080005086A1 | Fine granularity | Improve reusability | Special data processing applications | Securing communication | Granularity | Reusability

The systems and methods disclosed herein provide for authentication of content sources and/or metadata sources so that downstream users of syndicated content can rely on these attributes when searching, citing, and/or redistributing content. To further improve the granularity and reusability of content, globally unique identifiers may be assigned to fragments of each document. This may be particularly useful for indexing documents that contain XML grammar with functional aspects, where atomic functional components can be individually indexed and referenced independent from a document in which they are contained.

Owner:MOORE JAMES F

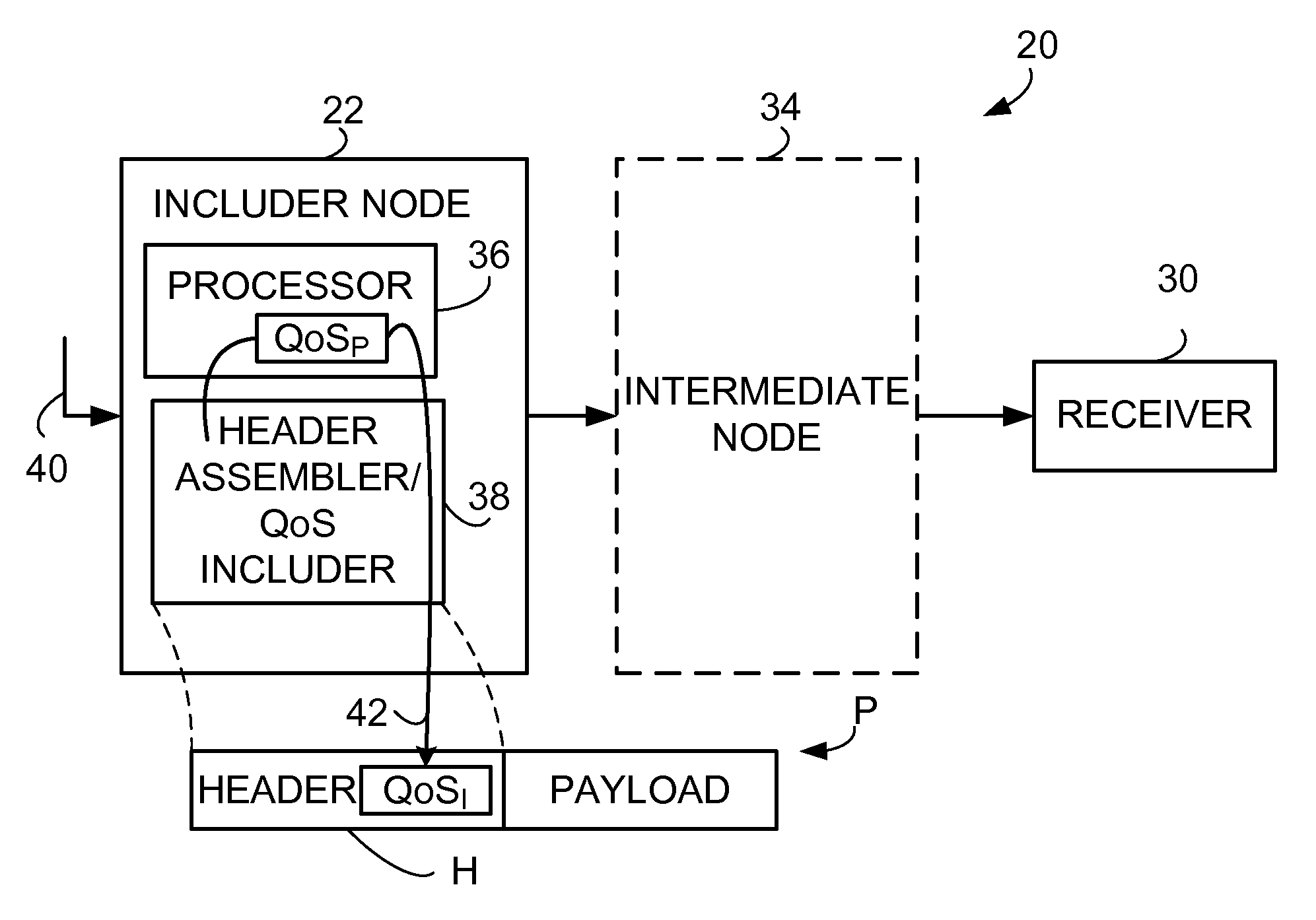

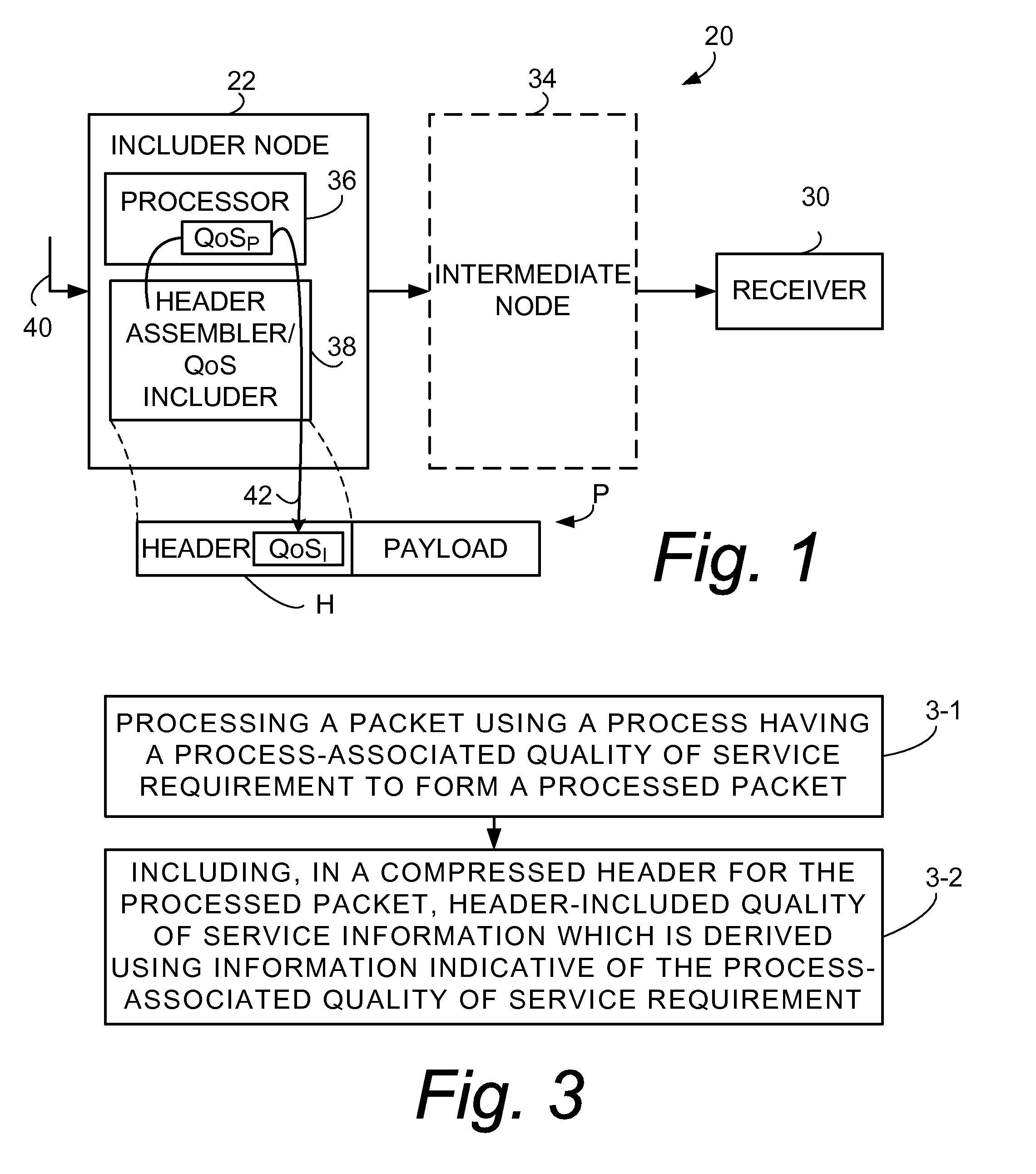

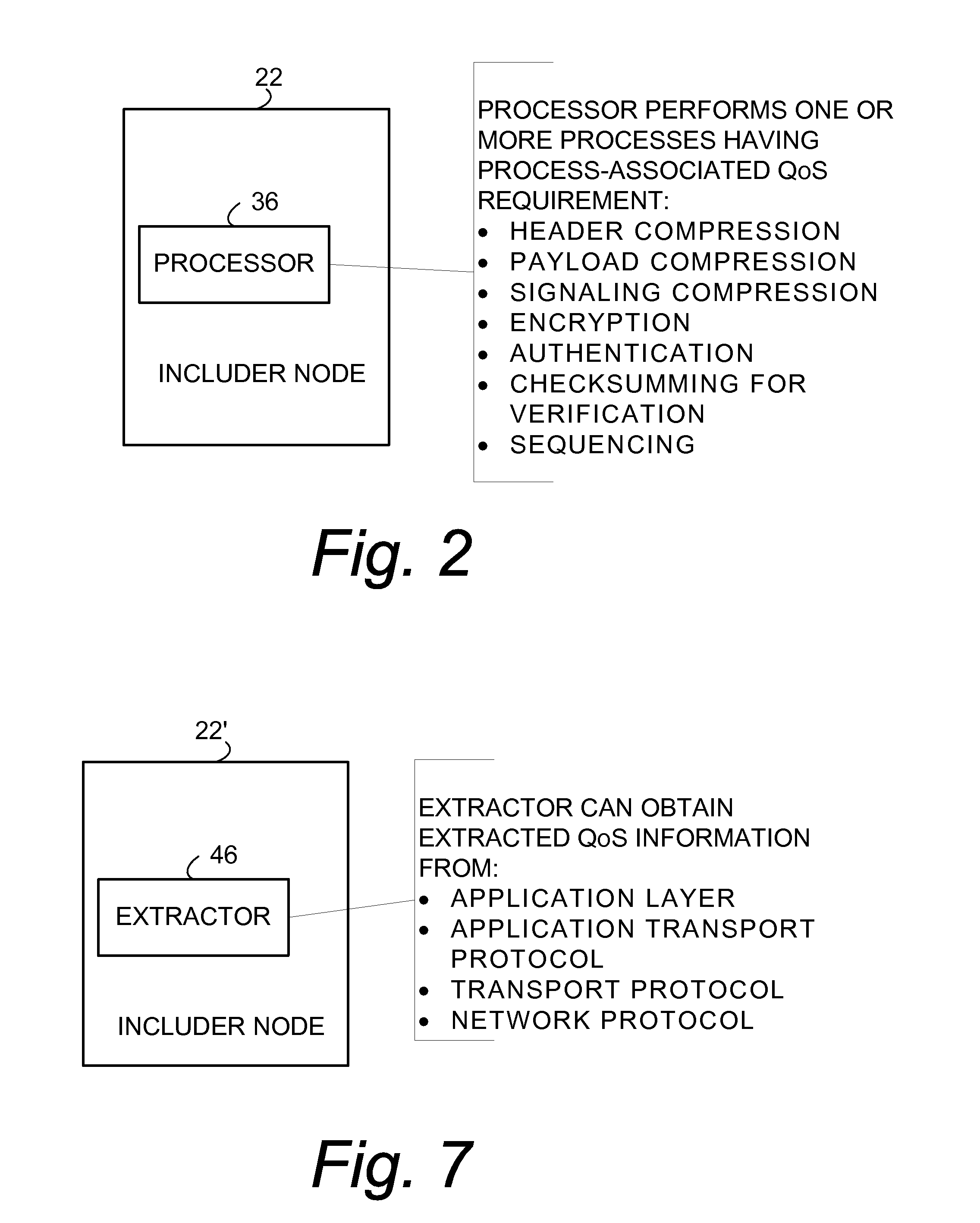

Inclusion of Quality of Service Indication in Header Compression Channel

Quality of service information (QoSI) is included with a compressed header of a data packet and can be utilized by nodes supporting the header compression channel to perform QoS enforcements at a sub-flow granularity level. A basic mode of a method comprises (1) processing a packet using a process having a process-associated quality of service requirement to form a processed packet, and (2) including, with a compressed header for the processed packet, the header-included quality of service information which is derived using information indicative of the process-associated quality of service requirement. In another mode the header-included quality of service information is derived both from the process-associated quality of service requirement and quality of service information extracted from the received packet.

Owner:TELEFON AB LM ERICSSON (PUBL)

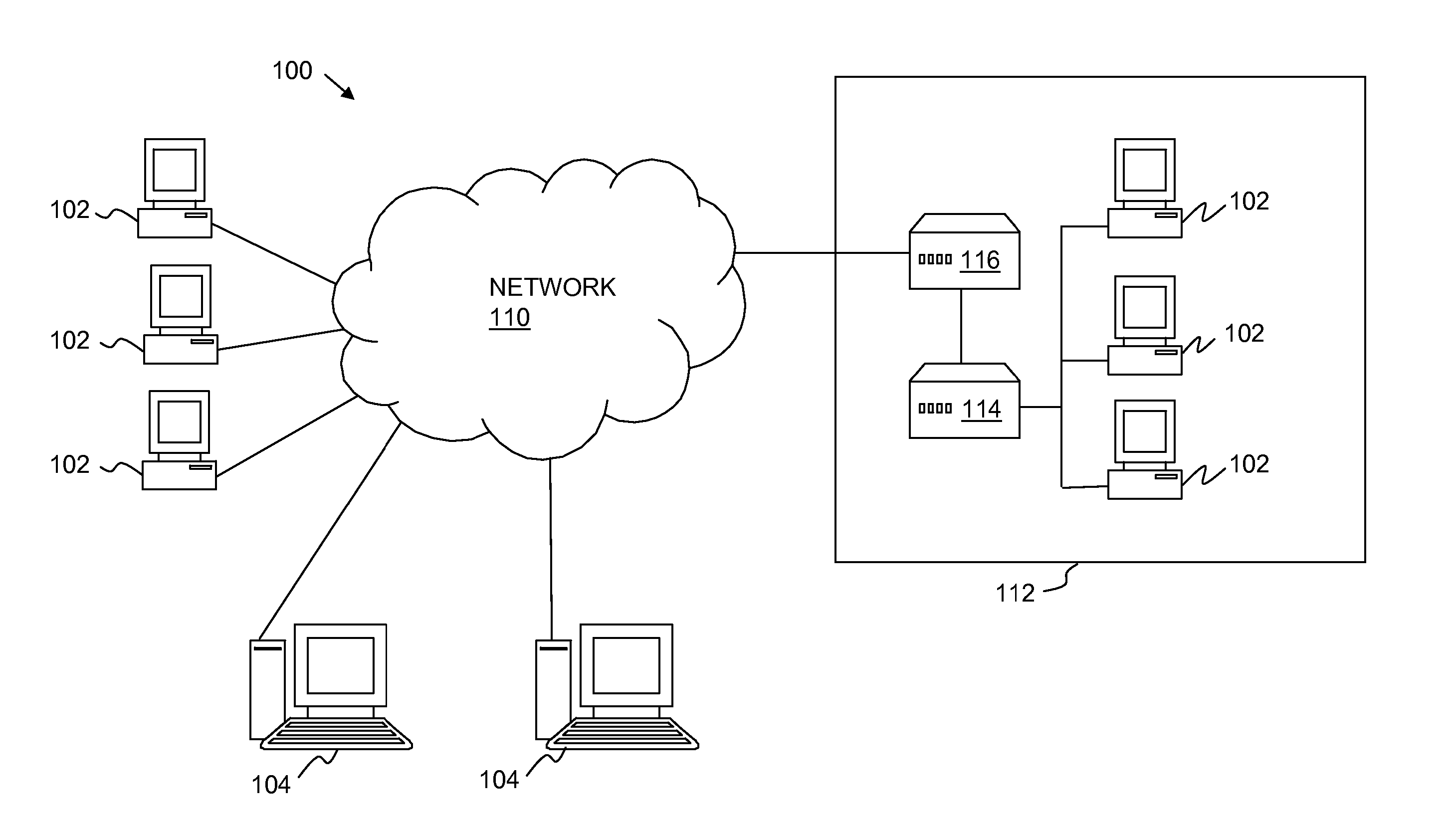

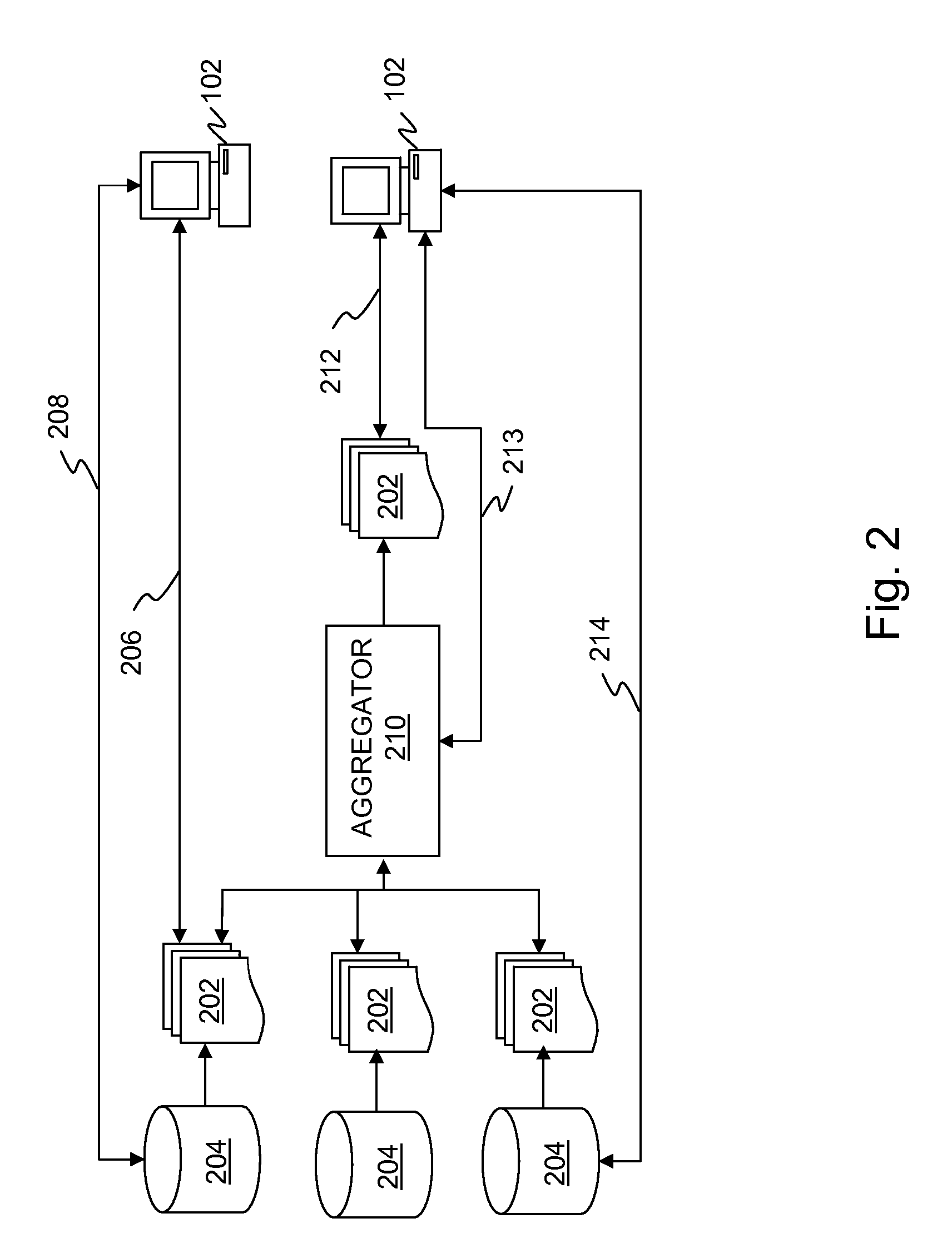

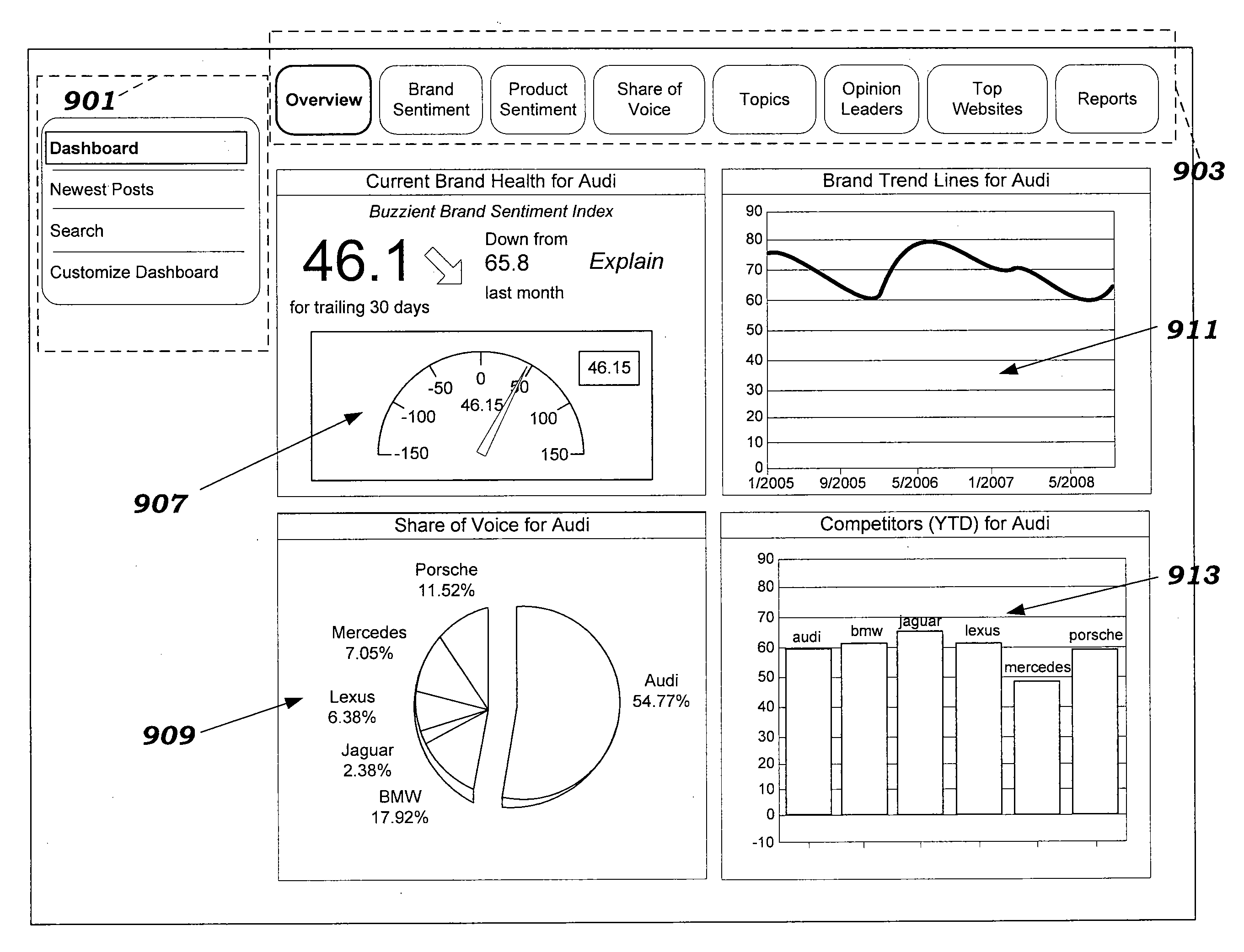

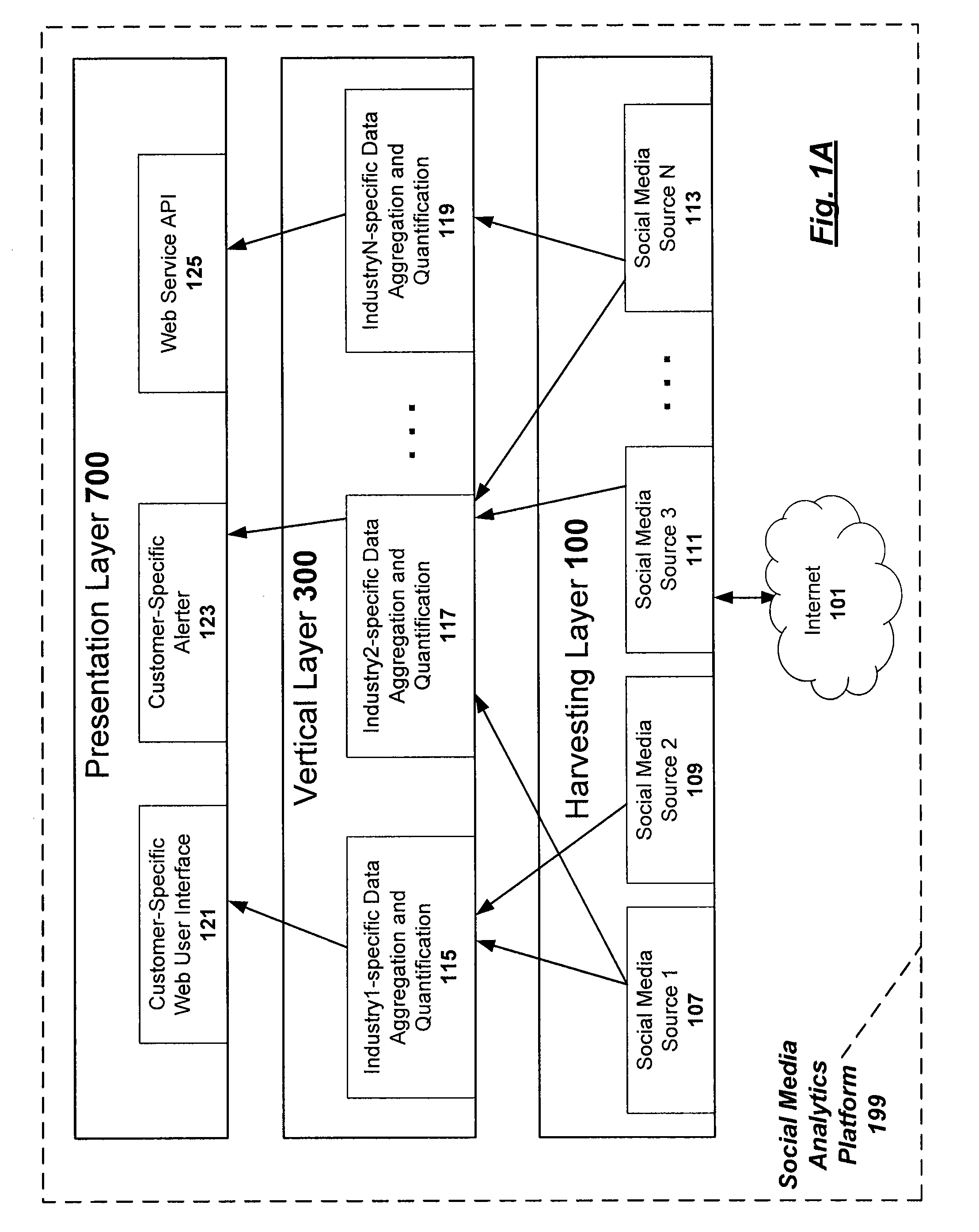

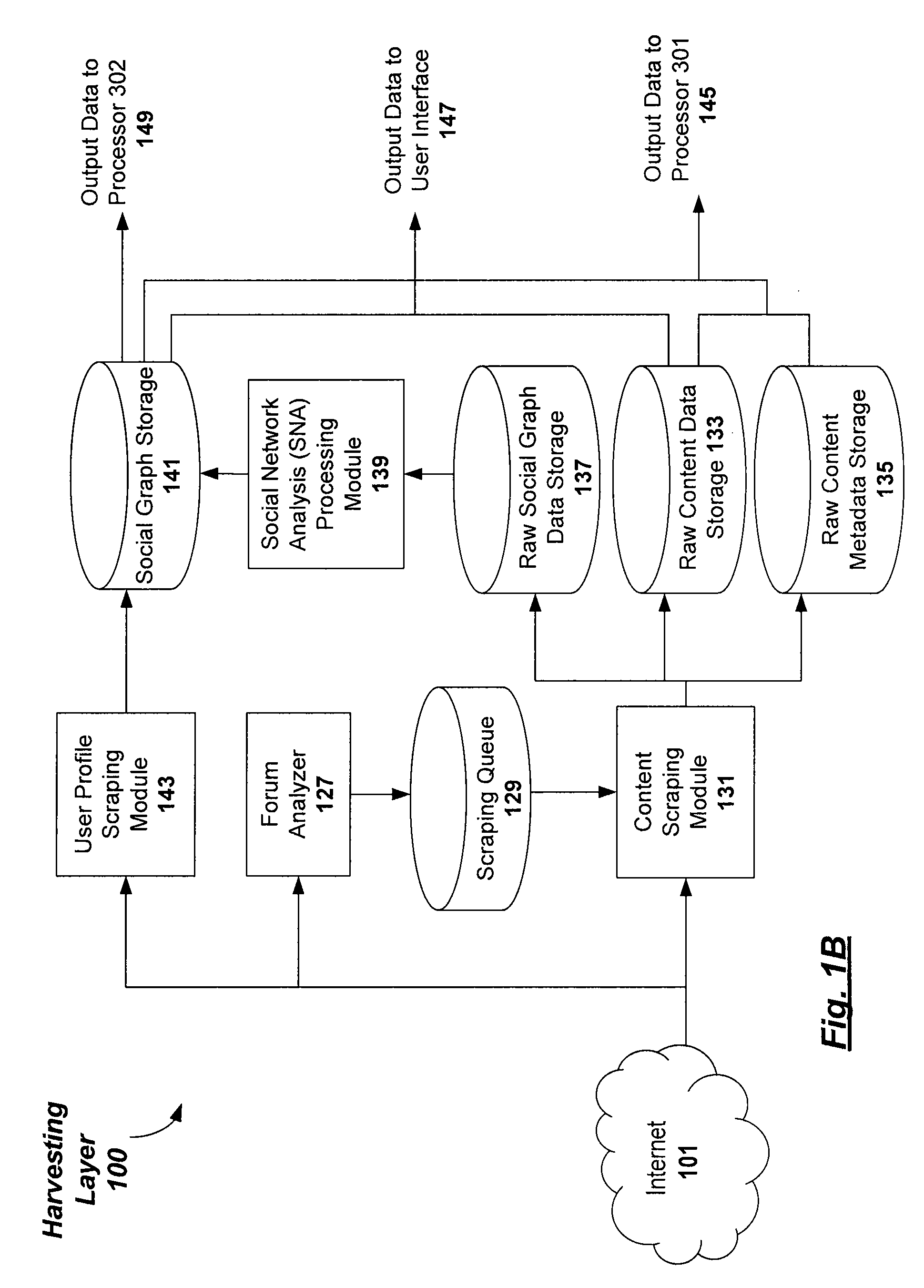

Displaying analytic measurement of online social media content in a graphical user interface

Methods, apparatuses, and computer-readable media for displaying one or more visualizations of quantitative measurements of the sentiment expressed among online social media participants concerning subject matter of interest in a category. Embodiments are configured for displaying how the sentiment among the online social media participants trends over time and how the sentiment trends with respect to social media source or groups of sources. Embodiments display the actionable information in a graphical interface in an intuitive and user-friendly manner at different levels of granularity that enables the quantified online social media content to be grouped and filtered in a variety of default and/or customizable ways.

Owner:BUZZIENT

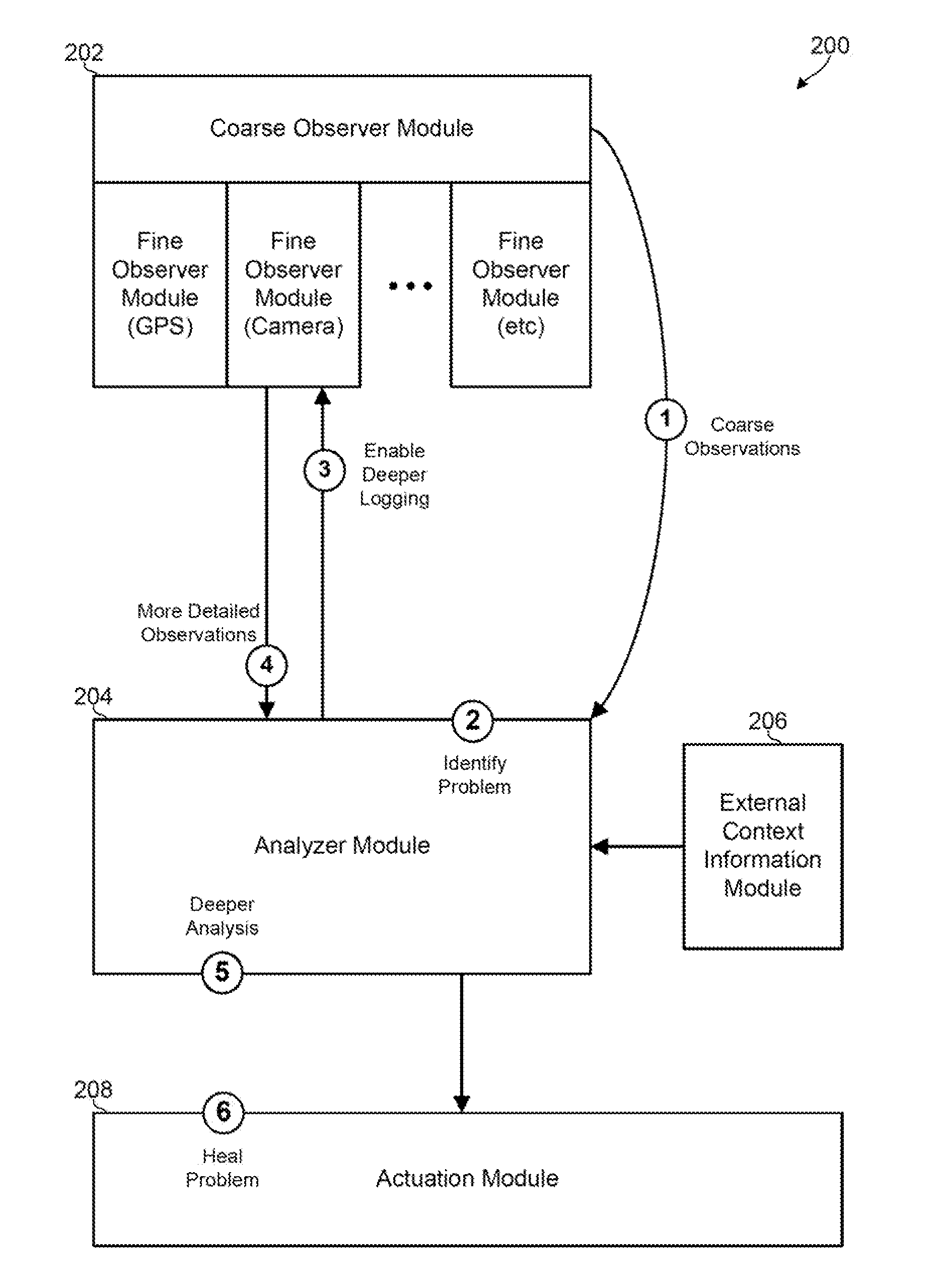

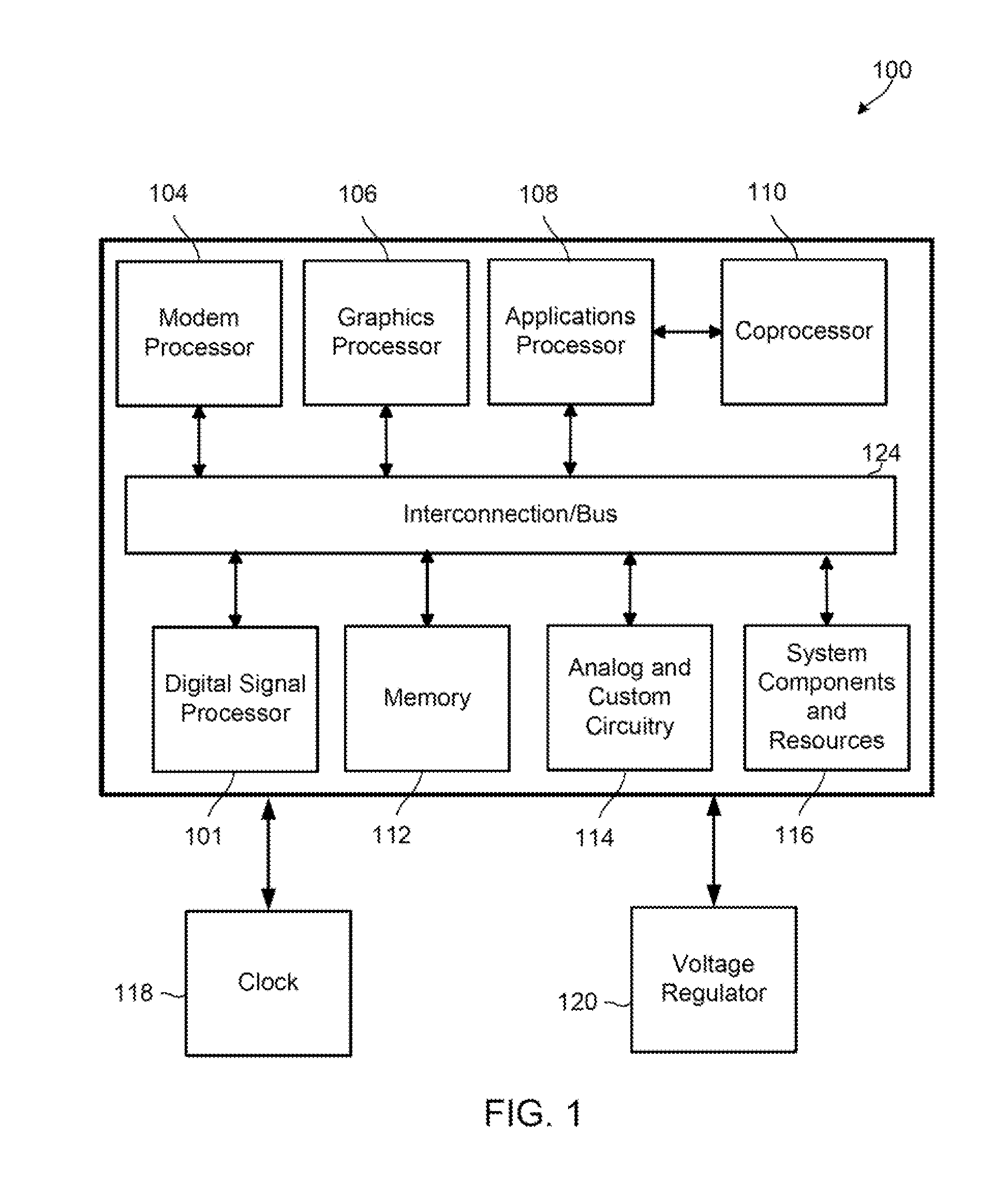

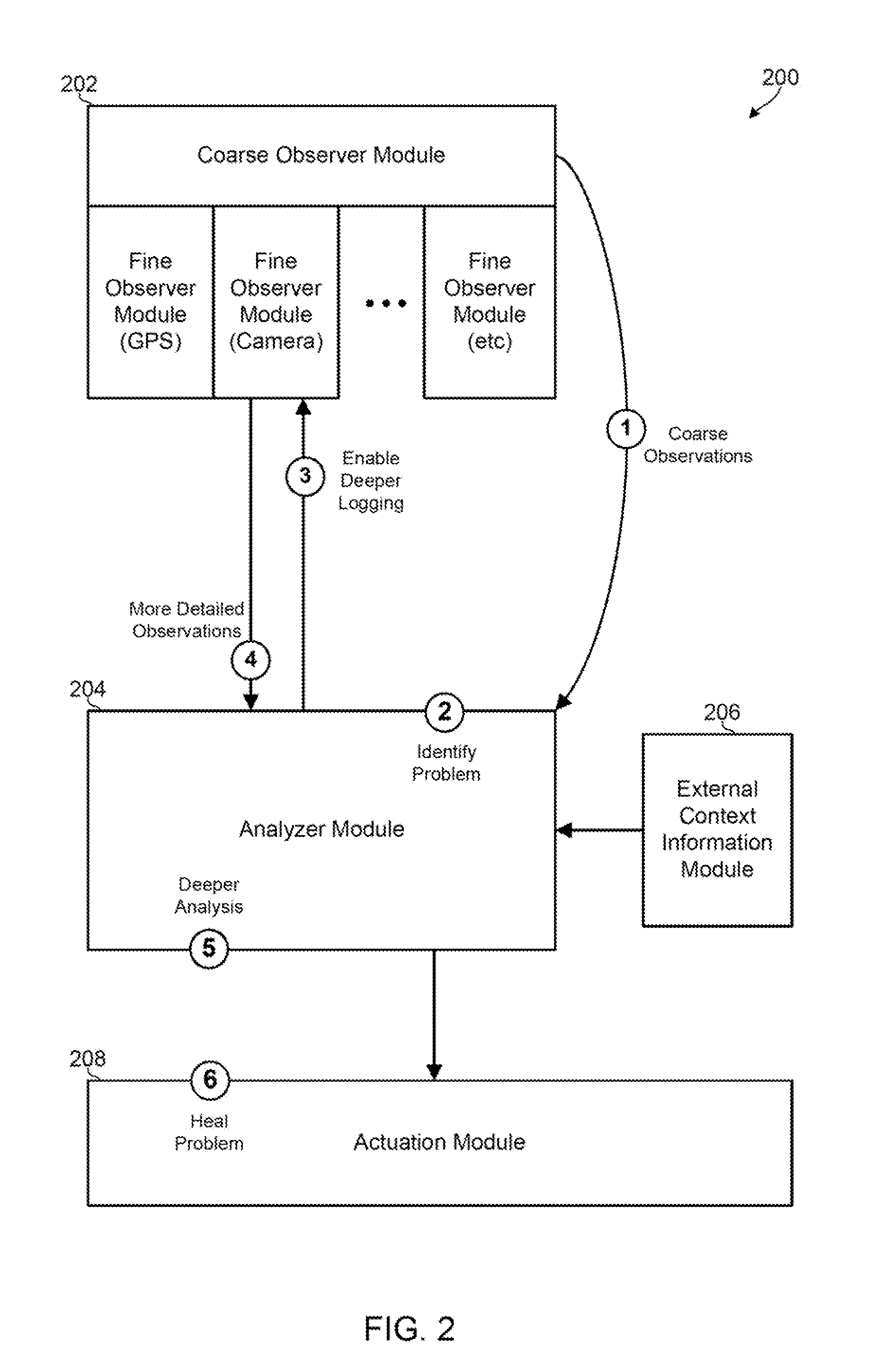

Adaptive Observation of Behavioral Features on a Mobile Device

Methods, devices and systems for detecting suspicious or performance-degrading mobile device behaviors intelligently, dynamically, and/or adaptively determine computing device behaviors that are to be observed, the number of behaviors that are to be observed, and the level of detail or granularity at which the mobile device behaviors are to be observed. The various aspects efficiently identify suspicious or performance-degrading mobile device behaviors without requiring an excessive amount of processing, memory, or energy resources.

Owner:QUALCOMM INC

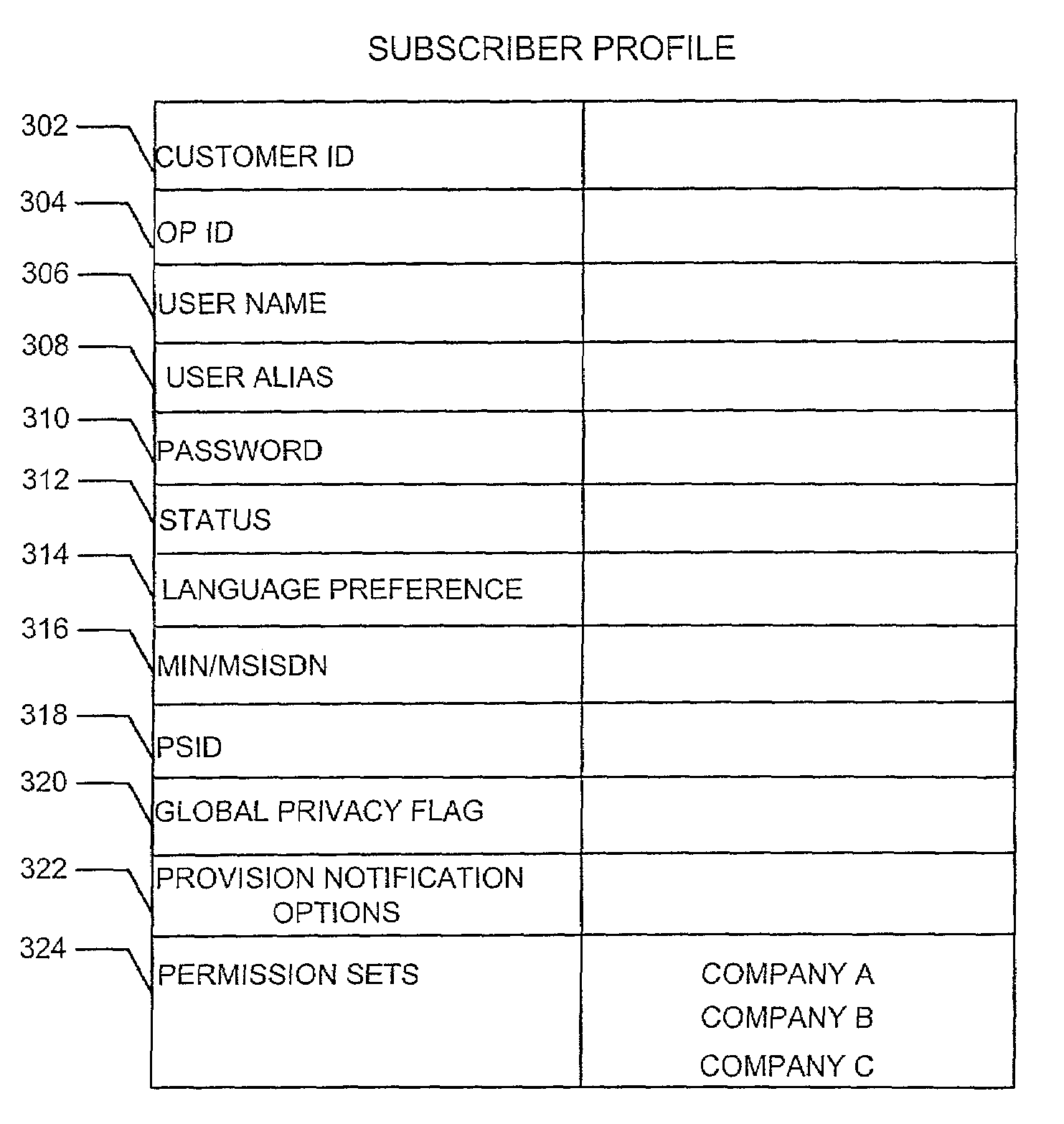

Method and system for managing location information for wireless communications devices

Active | US7203752B2 | Access control | Information format | Special service for subscribers | Granularity | Client-side

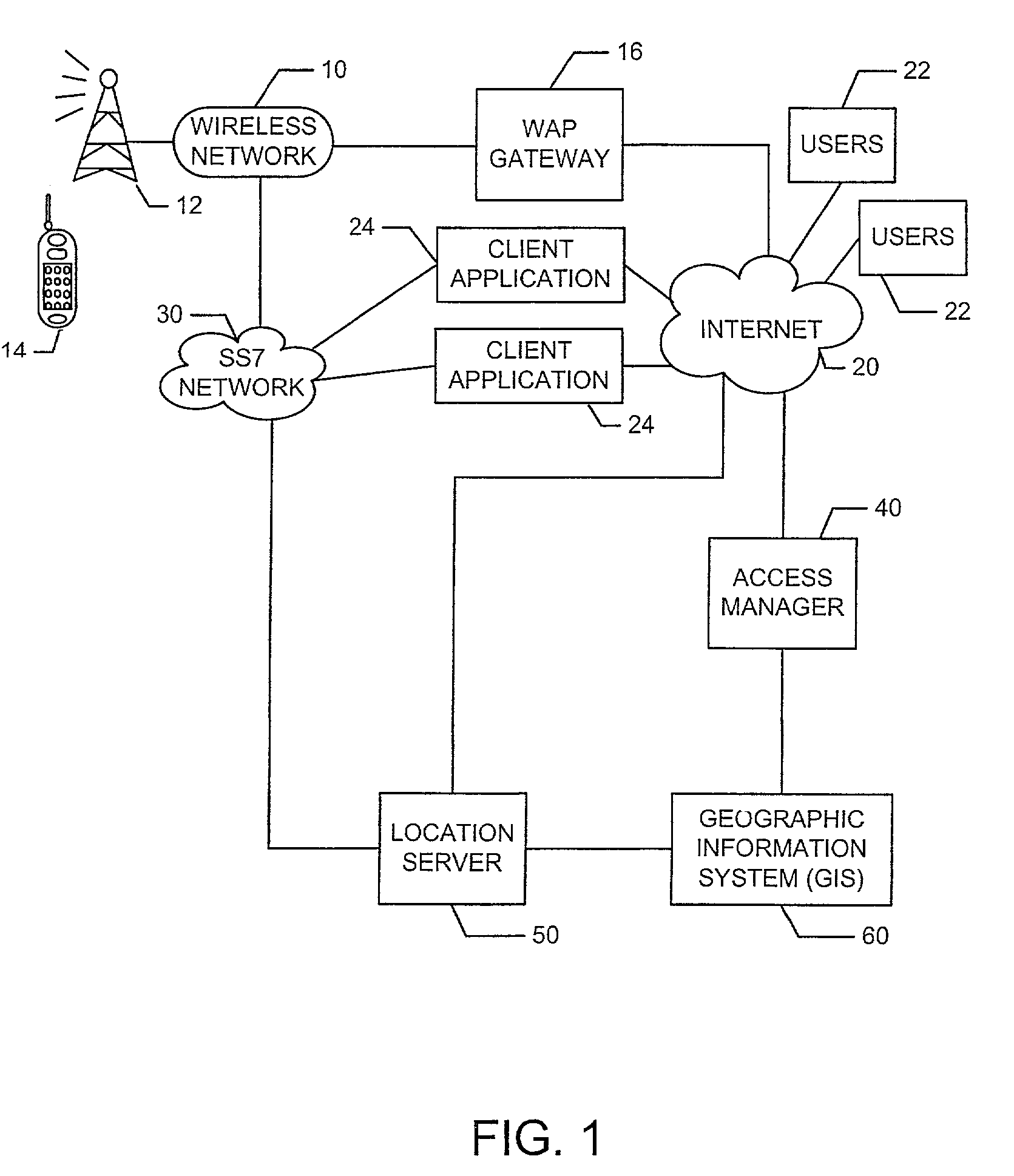

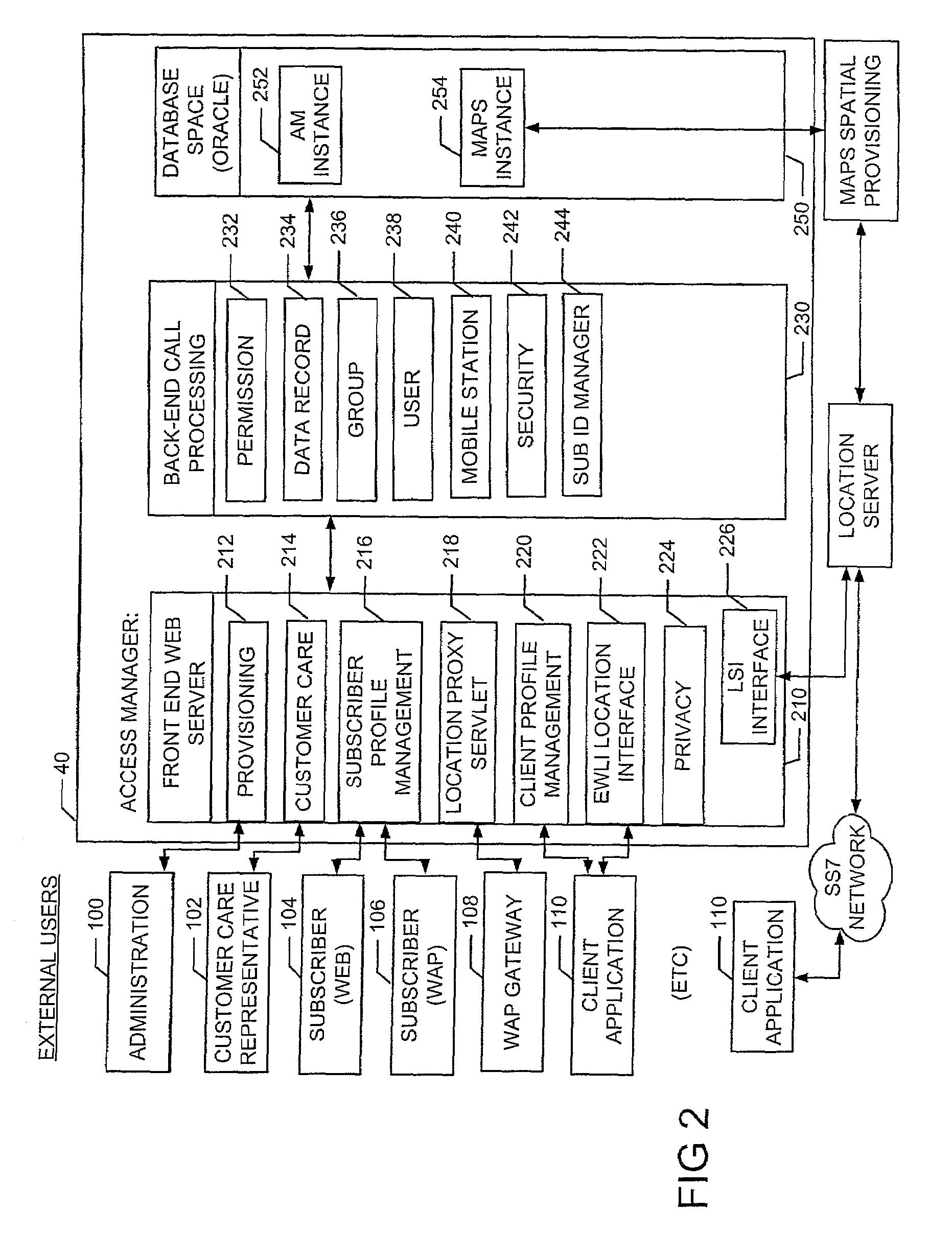

A system and method provide for establishment and use of permission sets for subscribers where client applications in a wireless communication environment are requesting location information for a particular wireless communications device from a provider of such information. The system described herein provides the capability for a wireless communications device operator to establish a profile wherein limitations may be placed on the provision of such location information based on such things as the requesting party, spatial and temporal limitations, as well as granularity. The system described herein may be further configured such that an authentication process is performed for client applications seeking location information, which would require the registration of such client applications with a centralized processing system.

Owner:VIDEOLABS

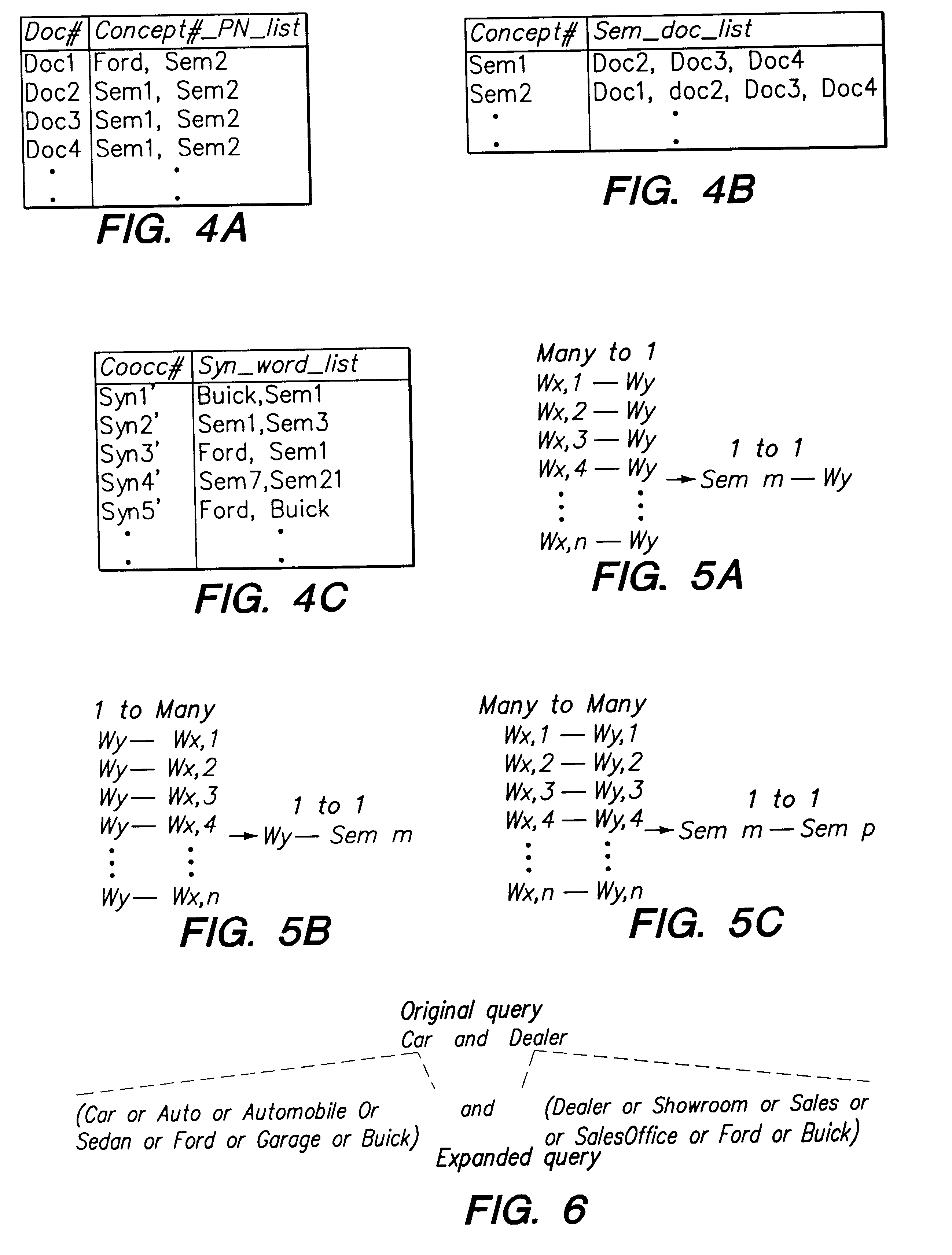

Supporting web-query expansion efficiently using multi-granularity indexing and query processing

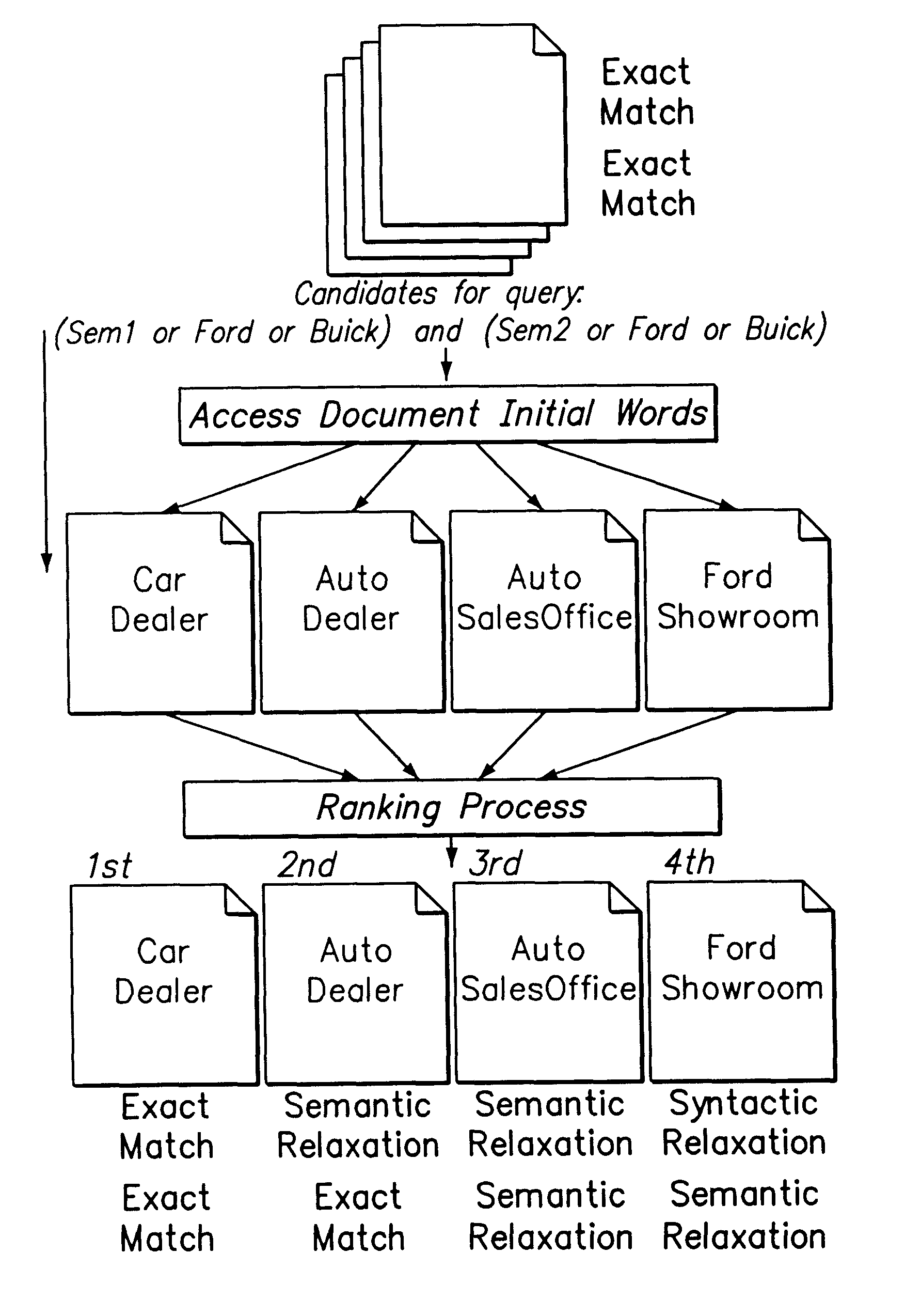

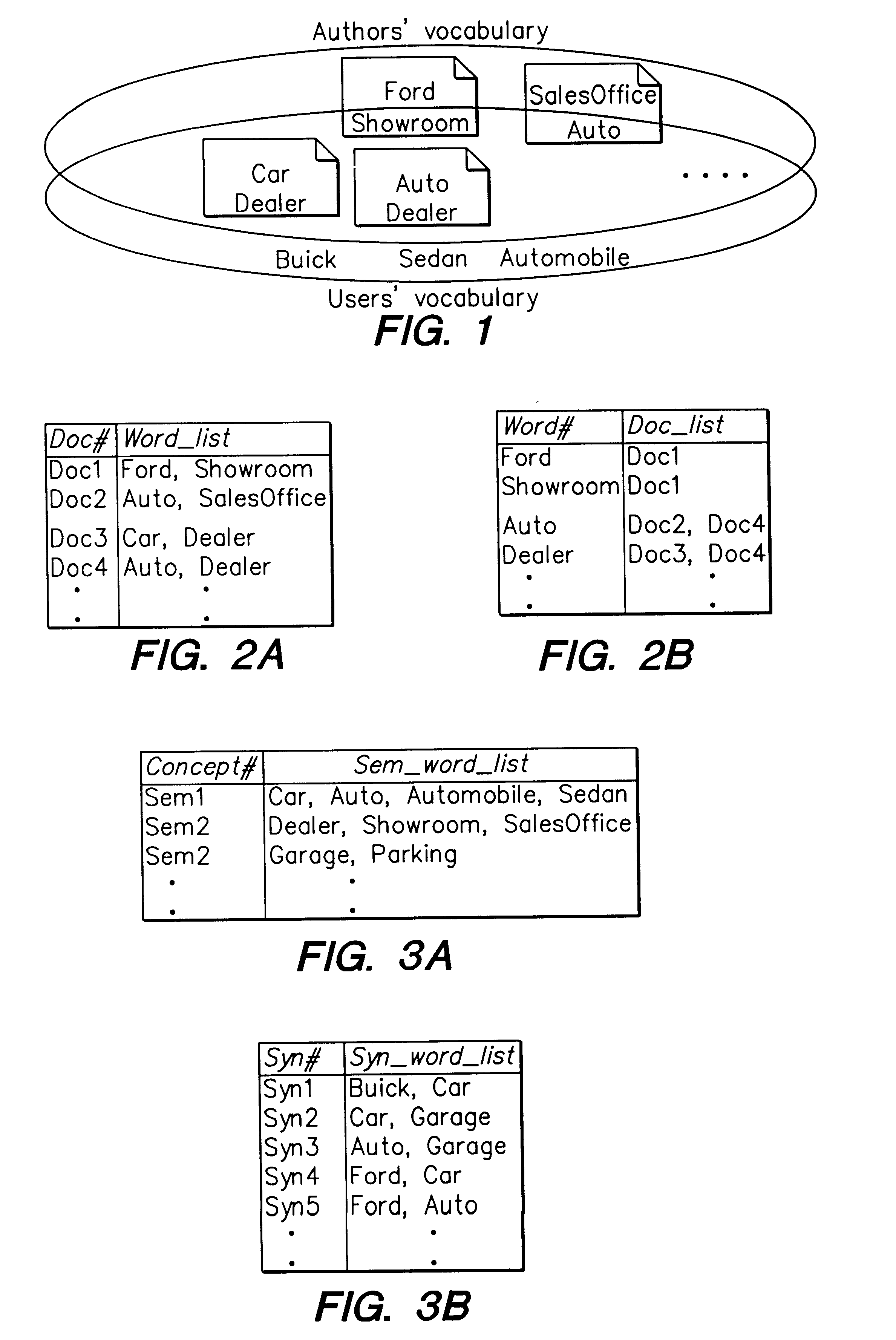

A method and apparatus for efficient query expansion using reduced size indices and for progressive query processing. Queries are expanded conceptually, using semantically similar and syntactically related words to those specified by the user in the query to reduce the chances of missing relevant documents. The notion of a multi-granularity information and processing structure is used to support efficient query expansion, which involves an indexing phase, a query processing phase, and a ranking phase. In the indexing phase, semantically similar words are grouped into a concept, which results in a substantial index size reduction due to the coarser granularity of semantic concepts. During query processing, the words in a query are mapped into their corresponding semantic concepts and syntactic extensions, resulting in a logical expansion of the original query. Additionally, the processing overhead is avoided. The initial query words can then be used to rank the documents in the answer set on the basis of exact, semantic and syntactic matches and also to perform progressive query processing.

Owner:NEC CORP
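
As a rough illustration of the multi-granularity idea in the abstract above, the Python sketch below indexes documents by coarse semantic concept instead of by individual word, expands a query through the same concept table, and then ranks the candidates by exact matches on the original query words. The `WORD_TO_CONCEPT` table, the function names, and the scoring rule are hypothetical and are not taken from the patent; syntactic extensions and progressive processing are left out.

```python
from collections import defaultdict

# Hypothetical concept table: each word maps to one coarse semantic concept.
WORD_TO_CONCEPT = {
    "car": "vehicle",
    "automobile": "vehicle",
    "truck": "vehicle",
    "dog": "animal",
    "puppy": "animal",
}


def build_concept_index(docs):
    """Indexing phase: map each concept (not each word) to the documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in text.lower().split():
            concept = WORD_TO_CONCEPT.get(word)
            if concept is not None:
                index[concept].add(doc_id)
    return index


def search(query, docs, index):
    """Query phase: expand words to concepts, then rank by exact word matches."""
    words = query.lower().split()
    candidates = set()
    for word in words:
        concept = WORD_TO_CONCEPT.get(word)
        if concept is not None:
            candidates |= index.get(concept, set())

    def exact_matches(doc_id):
        doc_words = set(docs[doc_id].lower().split())
        return sum(word in doc_words for word in words)

    # Documents with more exact matches come first; ties are broken by document id.
    return sorted(candidates, key=lambda d: (-exact_matches(d), d))
```

Because several words share one concept entry, the index holds fewer keys than a word-level index would, which is the size reduction the abstract attributes to coarser granularity.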

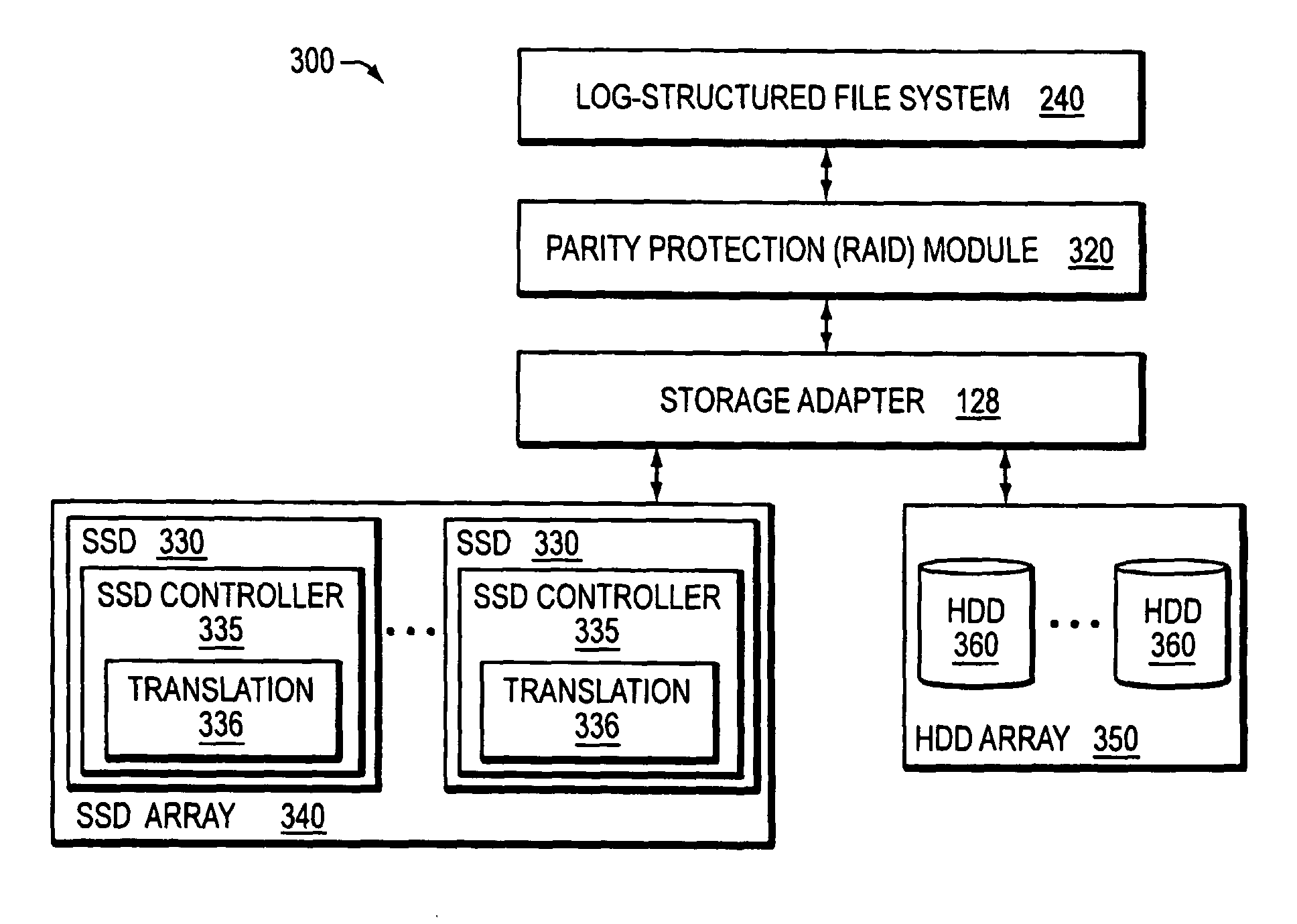

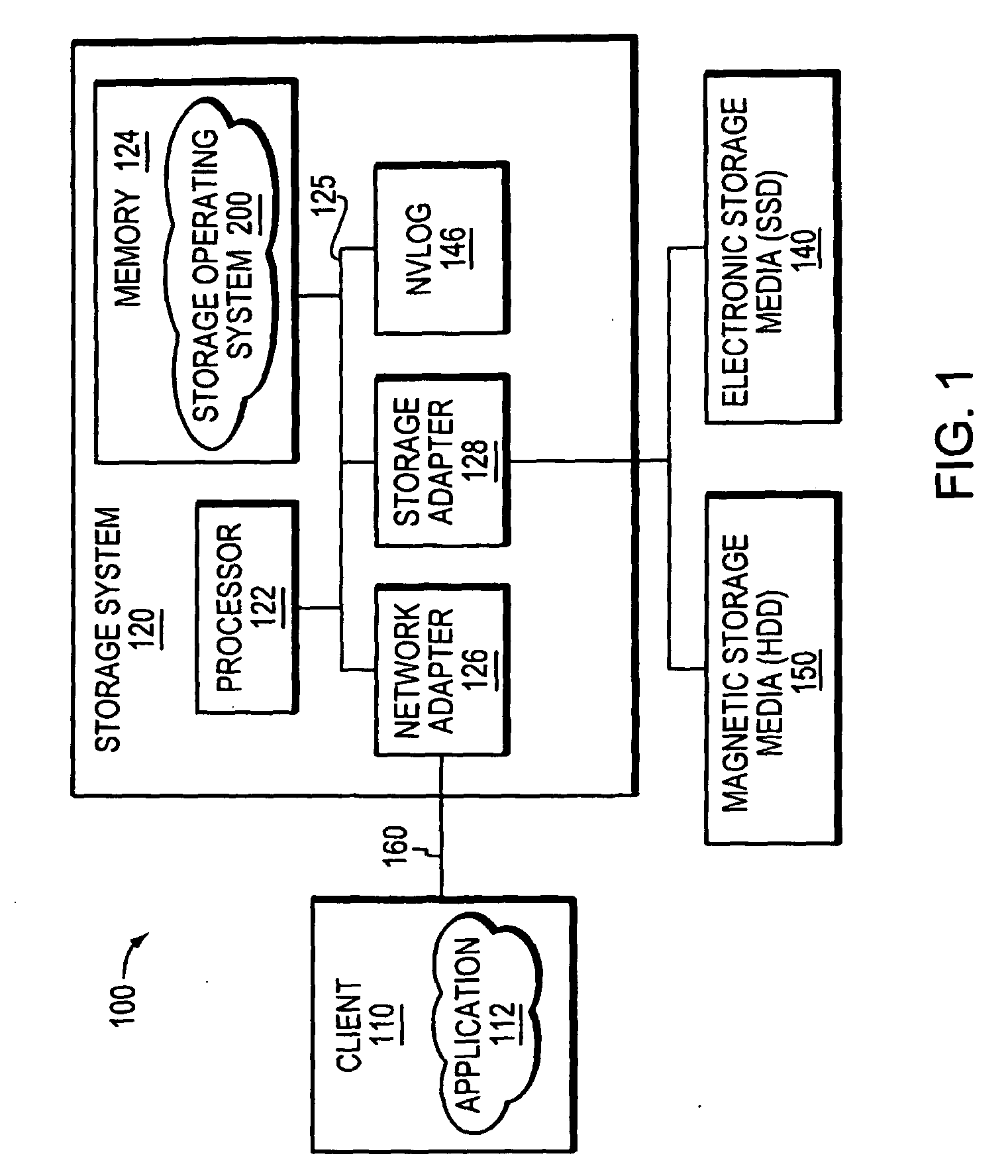

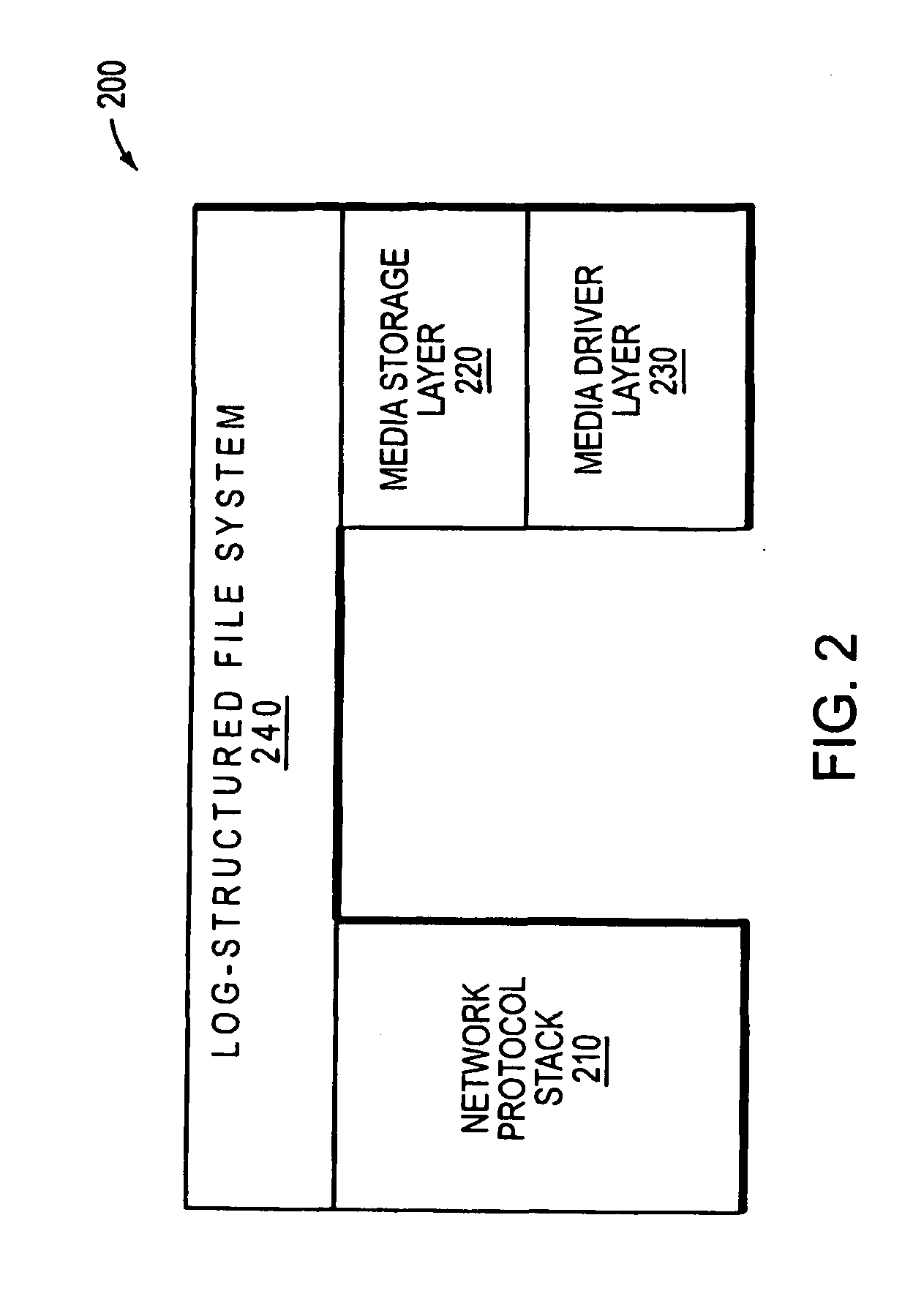

Hybrid media storage system architecture

Active | US20110035548A1 | Improved performance characteristics | Overcome disadvantages | Digital data information retrieval | Error detection/correction | File system | Granularity

Owner:NETWORK APPLIANCE INC

Network analysis sample management process

Inactive | US20050076113A1 | Maintain validity | Digital computer details | Data switching networks | Management process | Granularity

Embodiments of the invention may further provide a method for adjusting the granularity of a network analysis sample while maintaining validity. The method includes calculating states for each device in the network for a first number of predetermined equal intervals within a first sample window, selecting a second sample window that is smaller than the first sample window, selecting a second predetermined number of equal intervals for the second sample window, and determining an interval from the first number of predetermined intervals that immediately precedes an initial interval of the second predetermined number of equal intervals. The method further includes using the calculated state from the preceding interval to calculate a starting state for the initial interval, and calculating state for each device in the network for each of the second predetermined number of equal intervals.

Owner:FINISAR
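
The resampling steps in the abstract above can be sketched in Python under a strong simplifying assumption: a device's state is modeled as a running sum of timestamped deltas, which is a choice made for this example and not something the patent specifies. `interval_states` and `refine` are hypothetical names. The sketch computes states over a coarse window, finds the coarse interval that ends at or before the start of a smaller window, and uses that interval's state to seed the finer calculation.

```python
def interval_states(events, start, end, n, initial_state=0):
    """Return the state at the end of each of n equal intervals in [start, end).

    events is a list of (timestamp, delta) pairs; the state is assumed to be
    initial_state plus the sum of all deltas seen so far.
    """
    width = (end - start) / n
    ordered = sorted(e for e in events if start <= e[0] < end)
    states, state, i = [], initial_state, 0
    for k in range(n):
        boundary = start + (k + 1) * width
        while i < len(ordered) and ordered[i][0] < boundary:
            state += ordered[i][1]
            i += 1
        states.append(state)
    return states


def refine(events, start, end, n, sub_start, sub_end, m):
    """Recompute states at finer granularity for a smaller window inside [start, end)."""
    coarse = interval_states(events, start, end, n)
    width = (end - start) / n
    # Index of the coarse interval that ends at or before sub_start (-1 if none).
    k = int((sub_start - start) // width) - 1
    if k >= 0:
        seed, seed_time = coarse[k], start + (k + 1) * width
    else:
        seed, seed_time = 0, start
    # Replay any events between that coarse boundary and the start of the sub-window.
    seed += sum(d for t, d in events if seed_time <= t < sub_start)
    fine = interval_states(events, sub_start, sub_end, m, initial_state=seed)
    return coarse, fine
```

Seeding from the preceding coarse interval means the finer pass does not have to replay the whole first window to stay consistent with it.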

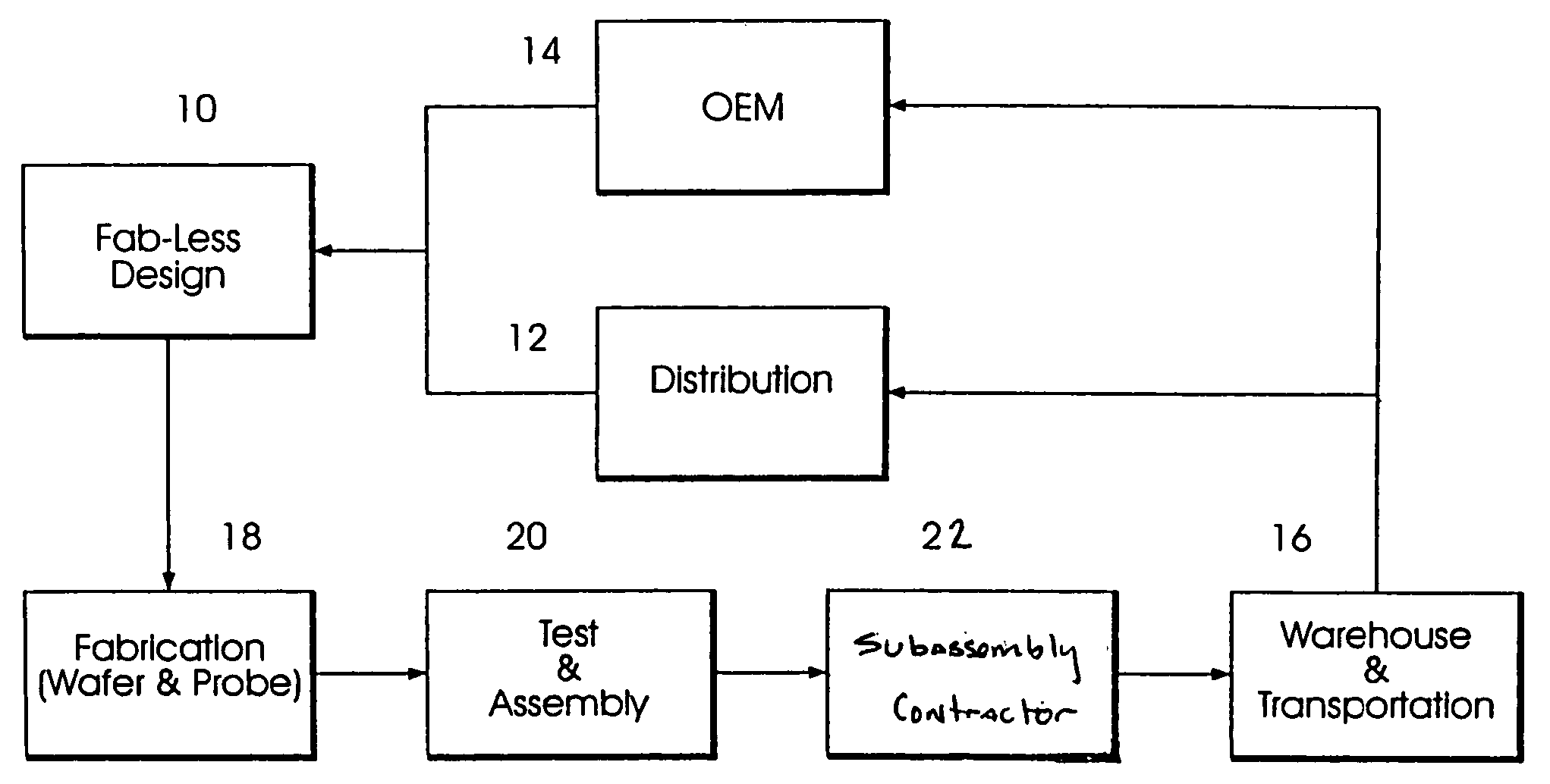

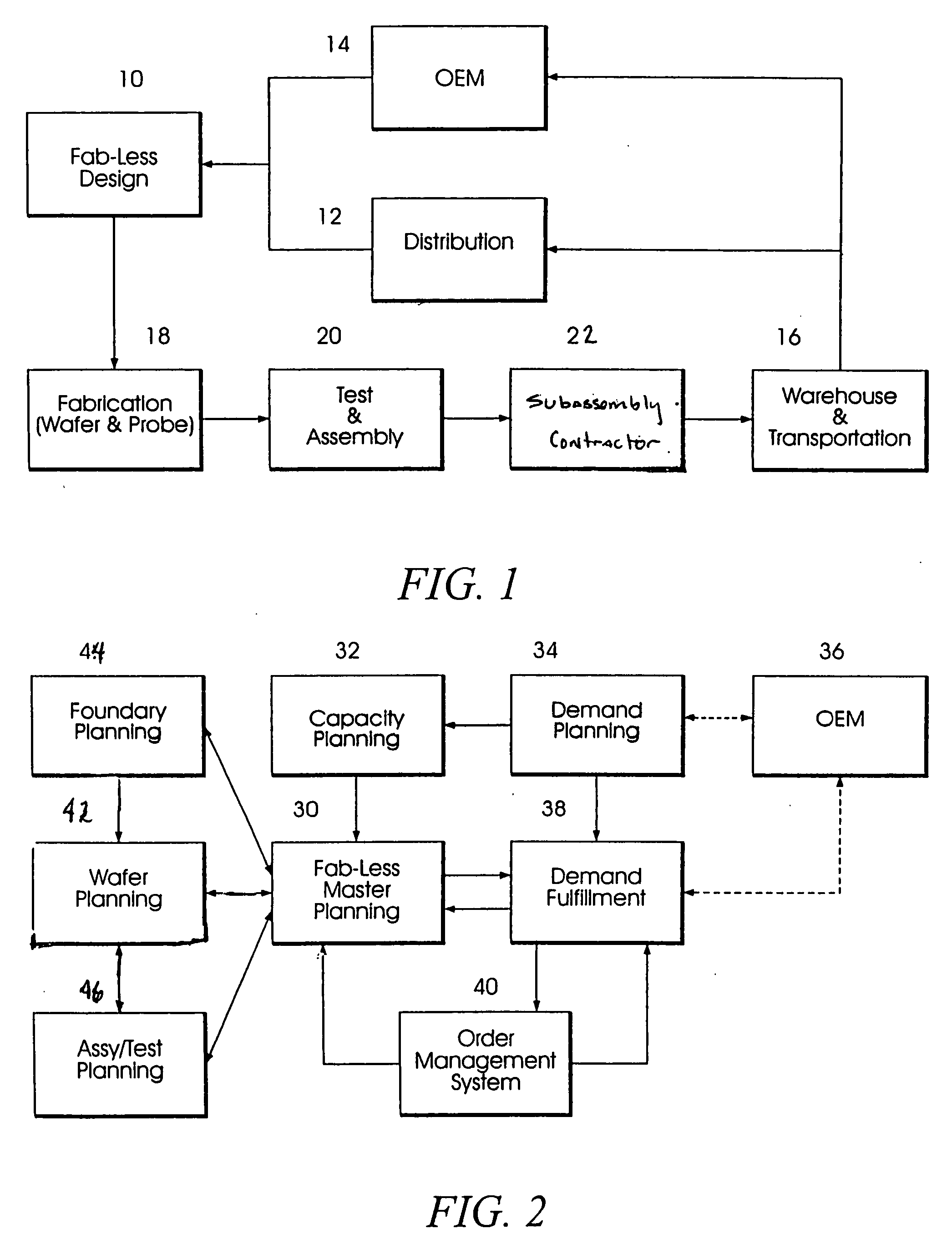

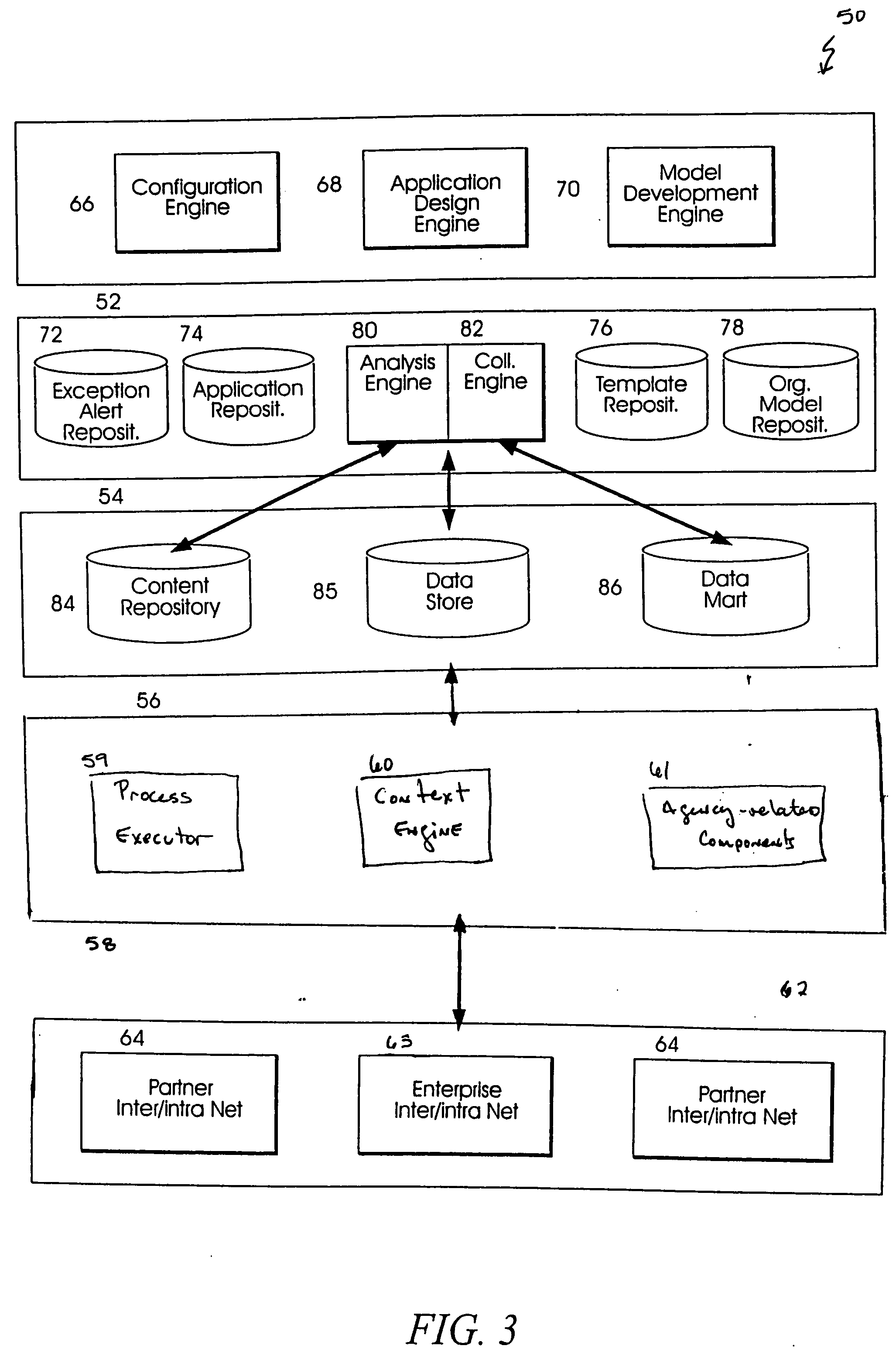

Decision support system for supply chain management

Inactive | US20050209732A1 | Eliminates and reduces disadvantage | Eliminates and reduces and problem | Hand manipulated computer devices | Digital data processing details | Granularity | Engineering

A decision support system for supply chain management is disclosed. In one embodiment, an organizational structure of an enterprise value chain is mock-constructed as a framework model and solutions are logically distributed through the organization in accordance with the model. Product management, demand management and inventory management are performed on an exception basis and these processes are implemented incrementally and organizationally such that enterprise activities may be tracked and monitored, by exception, at multiple levels of granularity. In a general aspect, the invention enables collaborative ordering, forecasting, inventory and replenishment management by implementing such systems through an enterprise organizational model.

Owner:AUDIMOOLAM SRINIVASARAGAVAN +1

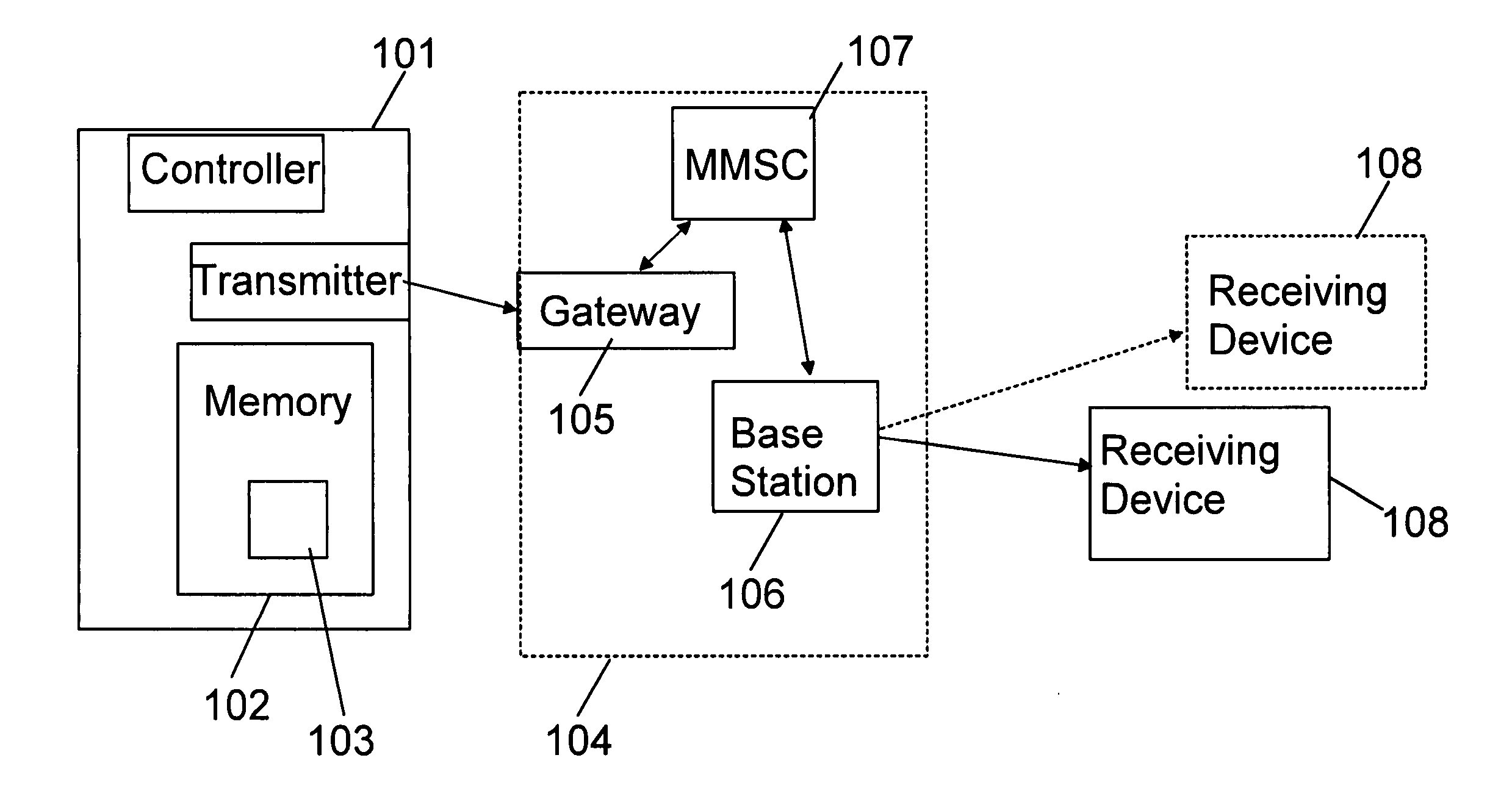

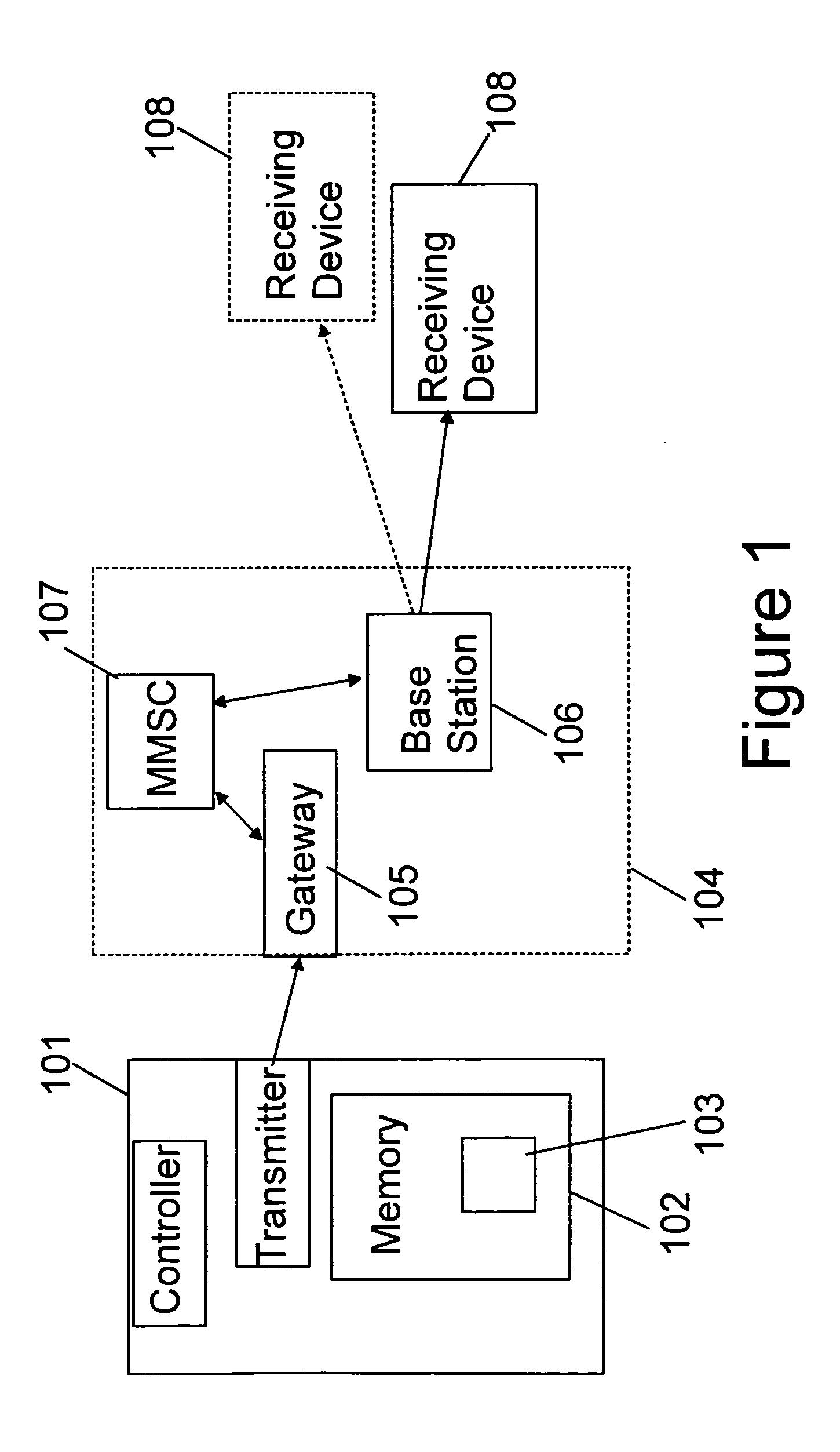

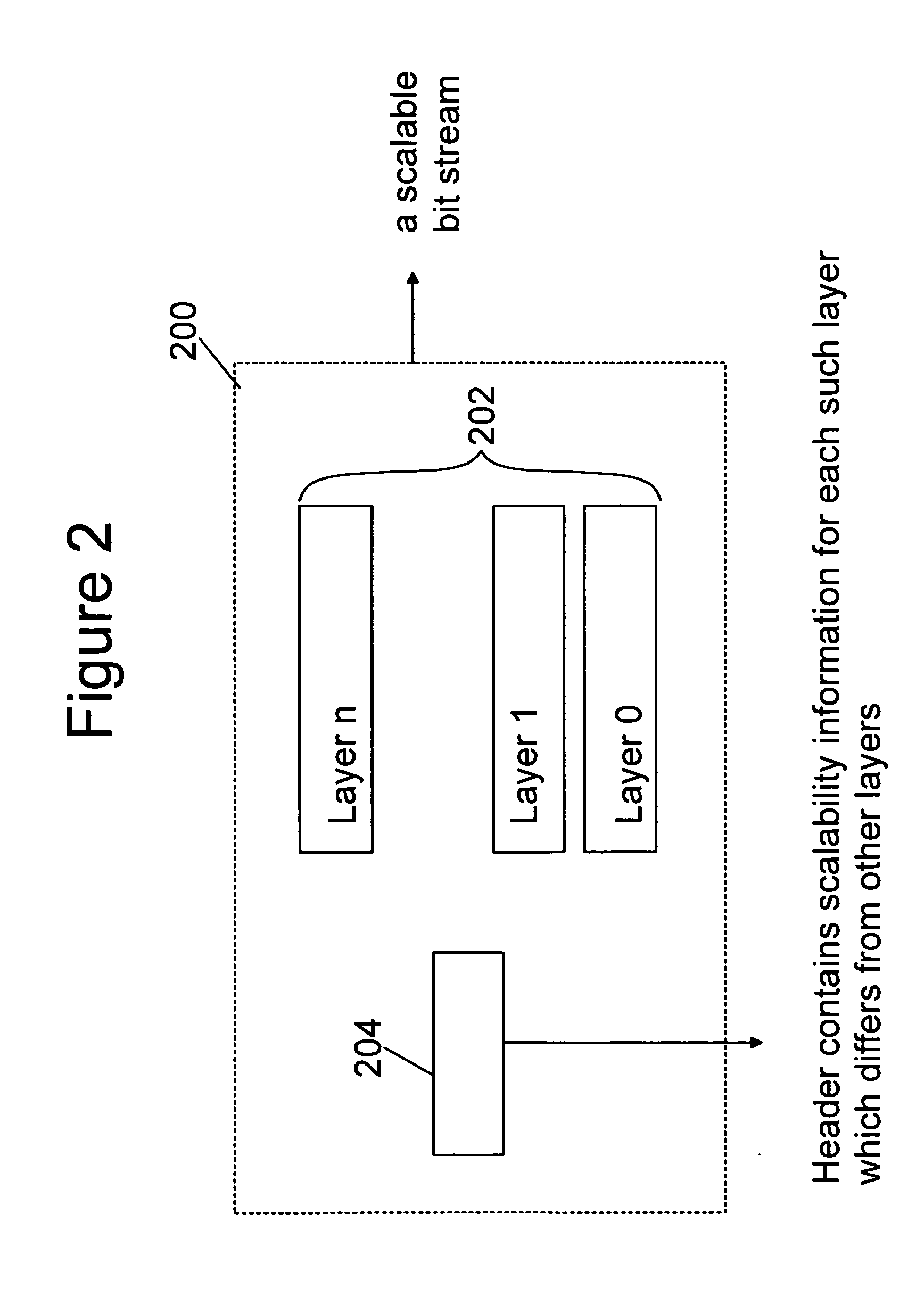

Coding, storage and signalling of scalability information

Active | US20060256851A1 | Reduce computational complexity | Avoid inclusions | Color television with pulse code modulation | Color television with bandwidth reduction | Extensibility | Computer architecture

A method and device for encoding, decoding, storage and transmission of a scalable data stream to include layers having different coding properties including: producing one or more layers of the scalable data stream, wherein the coding properties include at least one of the following: Fine granularity scalability information; Region-of-interest scalability information; Sub-sample scalable layer information; Decoding dependency information; and Initial parameter sets, and signaling the layers with the characterized coding property such that they are readable by a decoder without the need to decode the entire layers. A corresponding method of encoding, decoding, storage, and transmission of a scalable bit stream is also disclosed, wherein at least two scalability layers are present and each layer has a set of at least one property, such as those above identified.

Owner:NOKIA TECHNOLOGIES OY

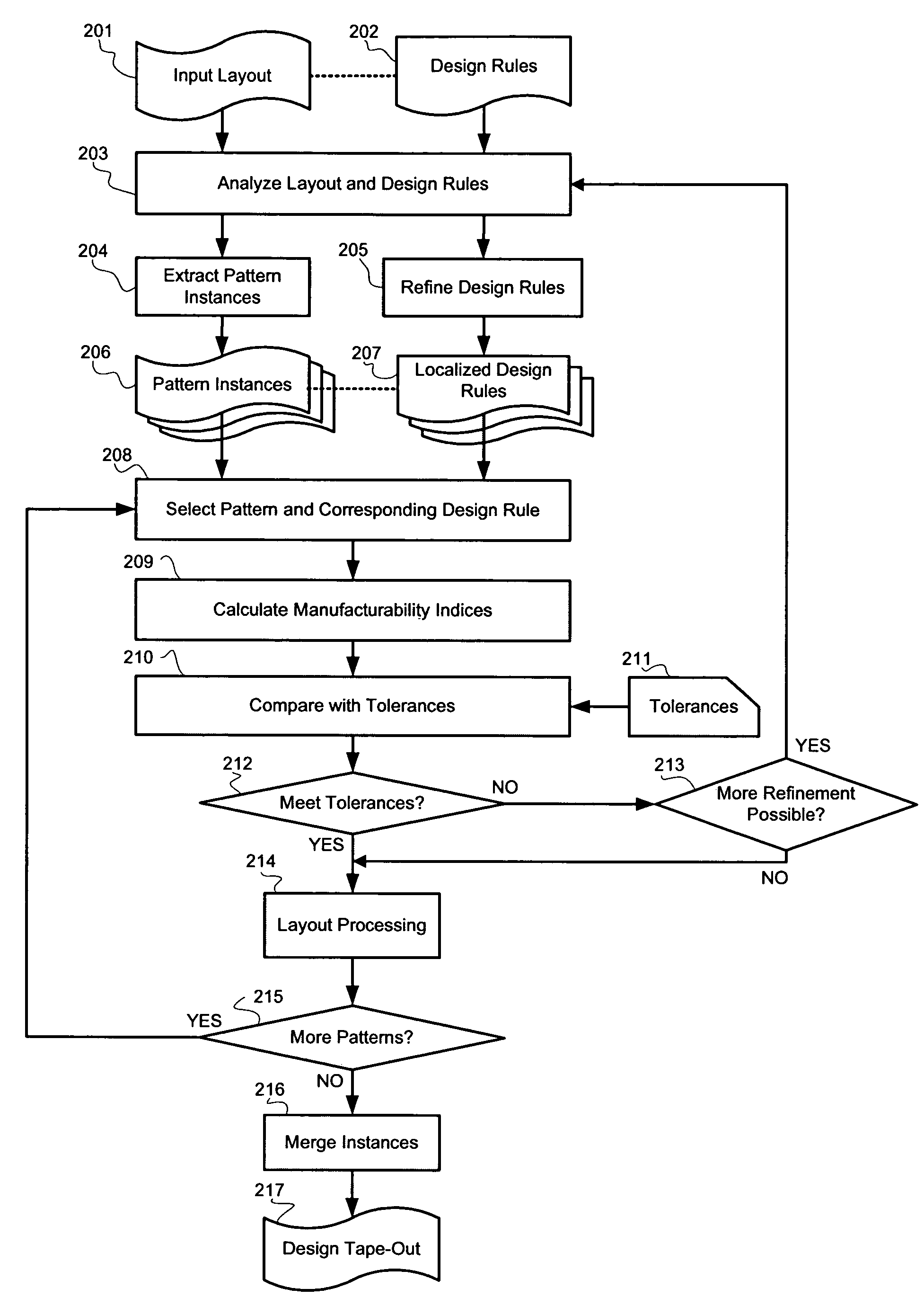

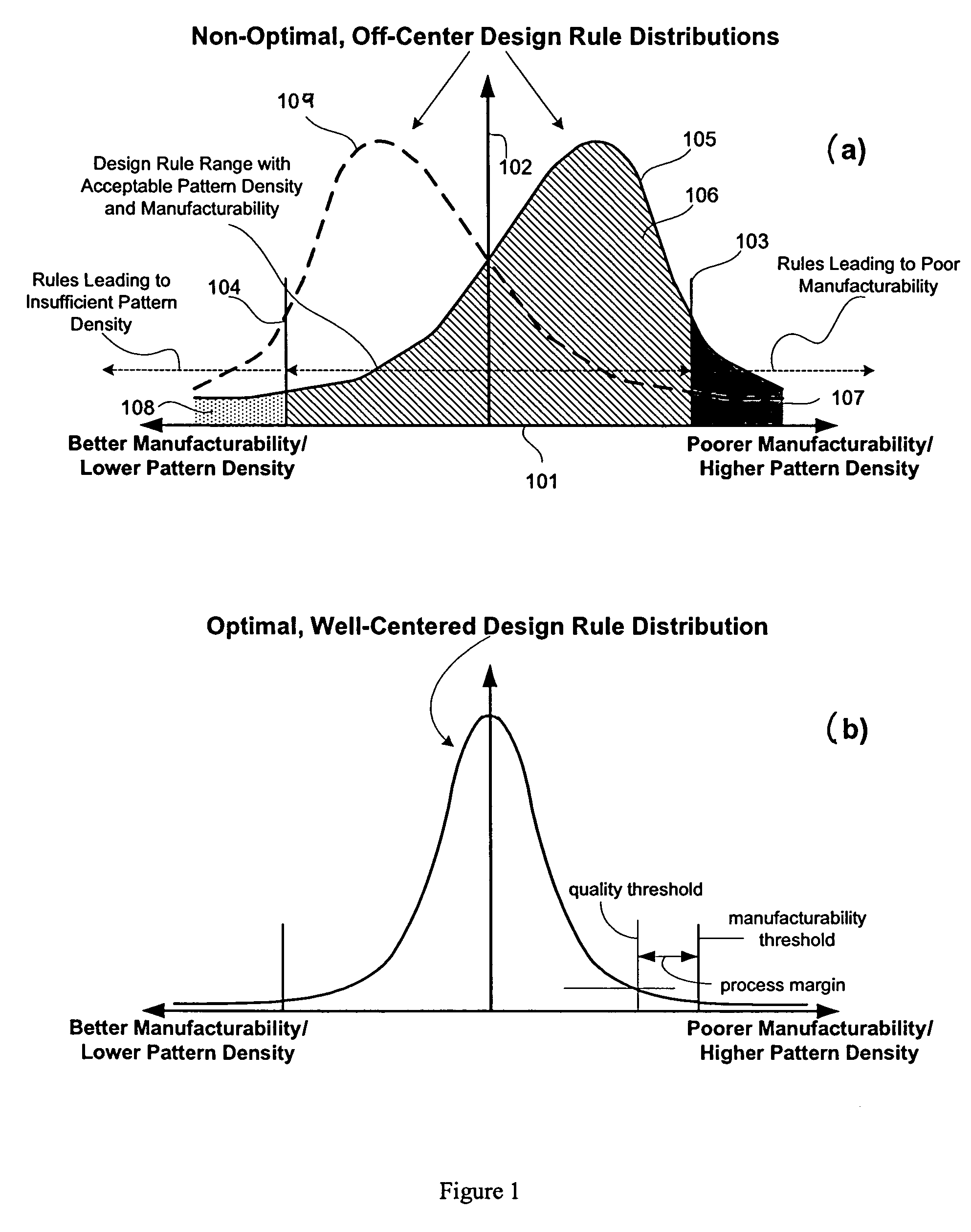

System for designing integrated circuits with enhanced manufacturability

Active | US7523429B2 | Improve manufacturability | Little interference | Pressers | Mattress sewing | Granularity | Engineering

A system and method for integrated circuit design are disclosed to enhance manufacturability of circuit layouts through generation of hierarchical design rules which capture localized layout requirements. In contrast to conventional techniques which apply global design rules, the disclosed IC design system and method partition the original design layout into a desired level of granularity based on specified layout and integrated circuit properties. At that localized level, the design rules are adjusted appropriately to capture the critical aspects from a manufacturability standpoint. These adjusted design rules are then used to perform localized layout manipulation and mask data conversion.

Owner:APPLIED MATERIALS INC

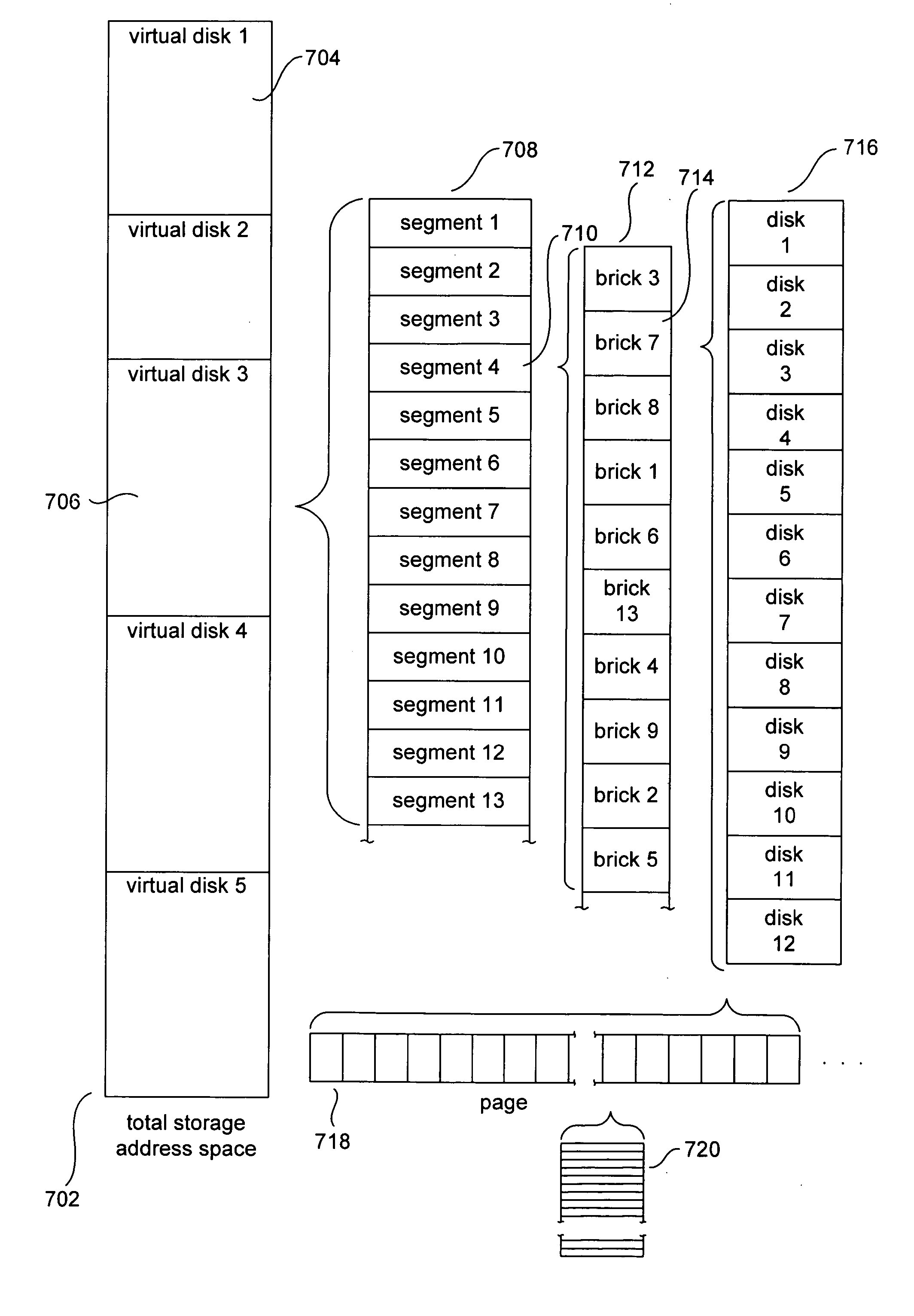

Methods and systems for hierarchical management of distributed data

Various method and system embodiments of the present invention are directed to hierarchical control logic within each component data-storage system of a distributed data-storage system composed of networked component data-storage systems over which virtual disks, optionally replicated as virtual-disk images, composed of data segments in turn composed of data blocks, are distributed at the granularity of segments. Each data segment is distributed according to a configuration. The hierarchical control logic includes, in one embodiment of the present invention, a top-level coordinator, a virtual-disk-image-level coordinator, a segment-configuration-node-level coordinator, a configuration-group-level coordinator, and a configuration-level coordinator.

Owner:HEWLETT PACKARD DEV CO LP

Provision of location information to a call party

Inactive | US7085578B2 | Radio/inductive link selection arrangements | Substation equipment | Telecommunications | Granularity

Apparatus and method provide location information for a calling party to a called party and/or provide a location for called party to a calling party. The information may be provided to a customer on a subscription basis or a customer may query for location information for the party at the other end of a call on an ad hoc basis (413). The customer may select the granularity (303) of the location information as well as the format (309) in which the information is sent. A party may optionally disable (403) the location ID feature so that the other person is not able to receive the party's location.

Owner:SOUND VIEW INNOVATIONS

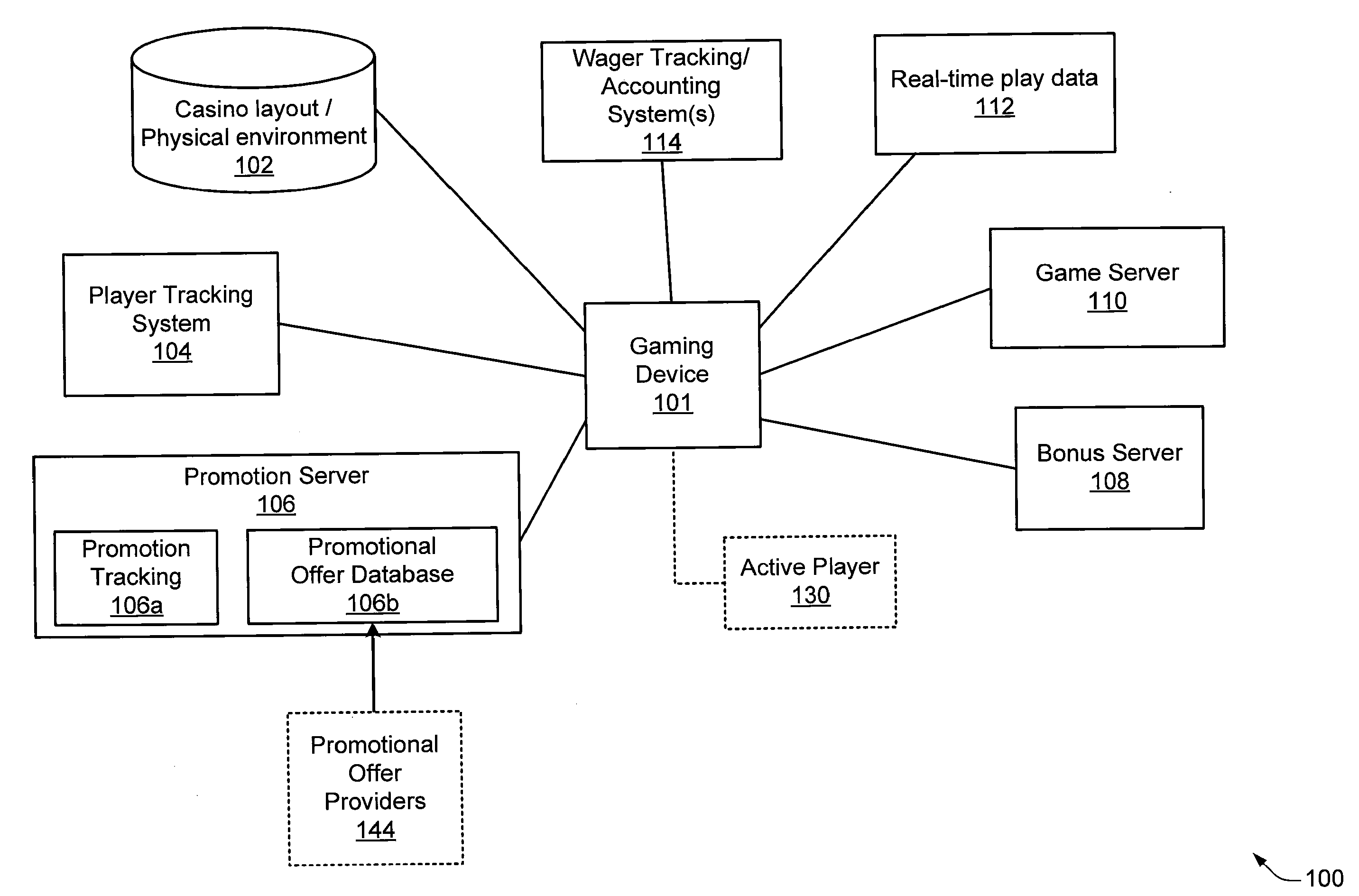

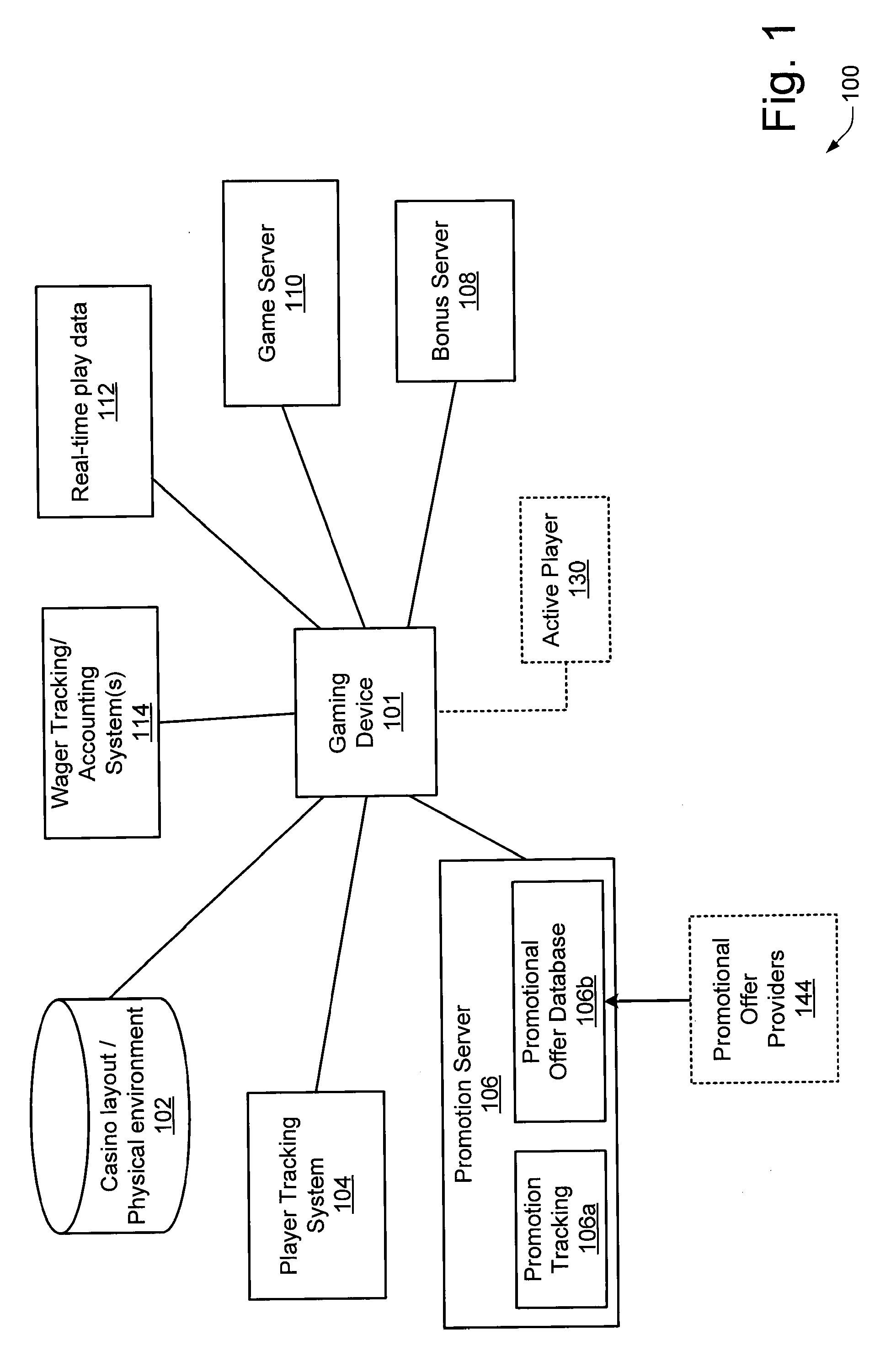

Casino gaming incentives using game themes, game types, paytables, denominations

Various techniques are disclosed for conducting promotional activities in a casino gaming network. In at least one embodiment, various incentives and/or promotions may be associated with selected gaming components which are accessible to a given gaming machine. Such gaming components may include, for example, paytables, denominations, game types, and/or game themes which are accessible to a given gaming machine cabinet. In this way, the lowest level of granularity for targeting promotional offers and incentives may be extended to selected paytables, denominations, game types, game themes, and/or other desired components within (and/or accessible to) selected gaming machine cabinet(s).

Owner:IGT

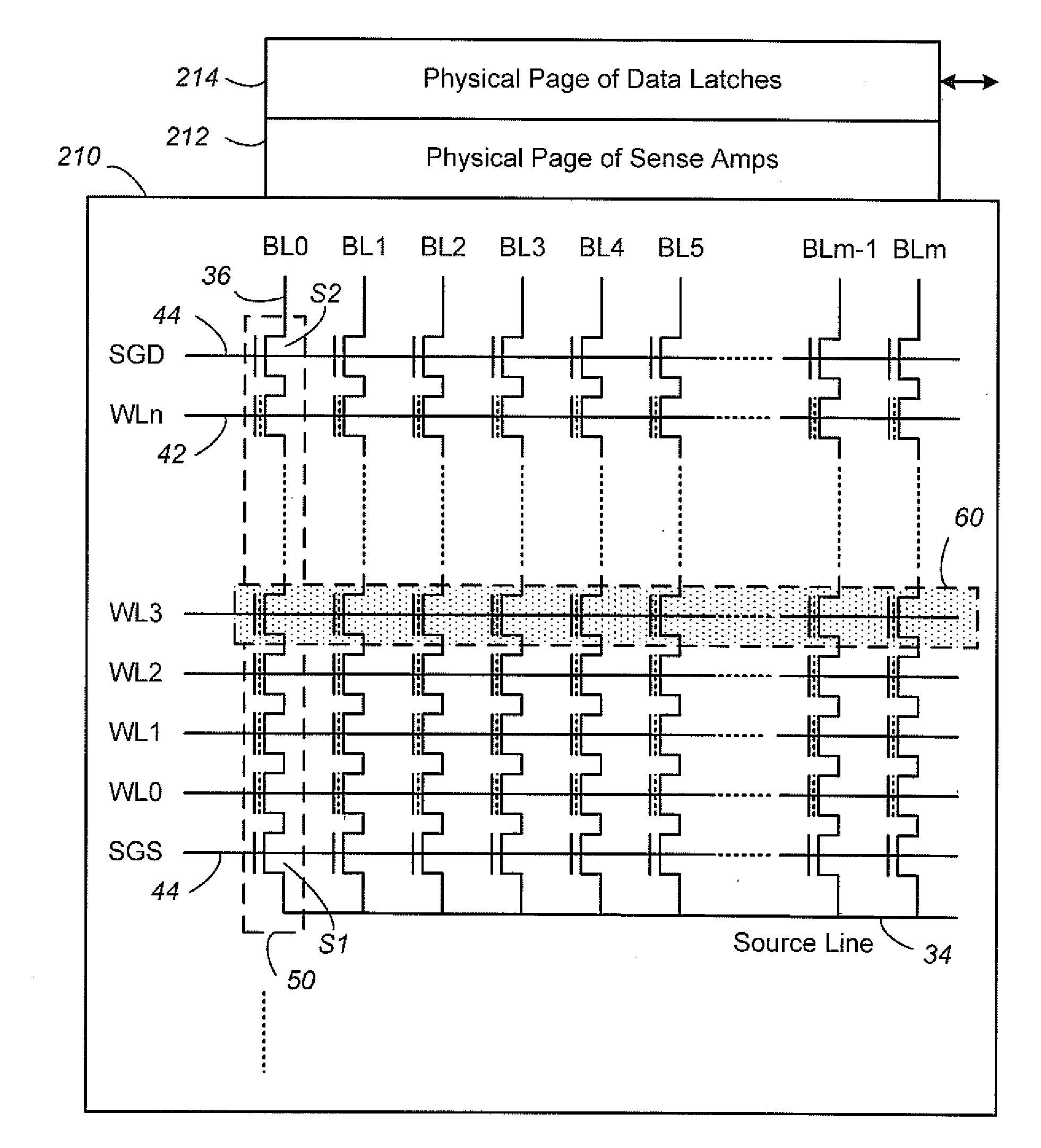

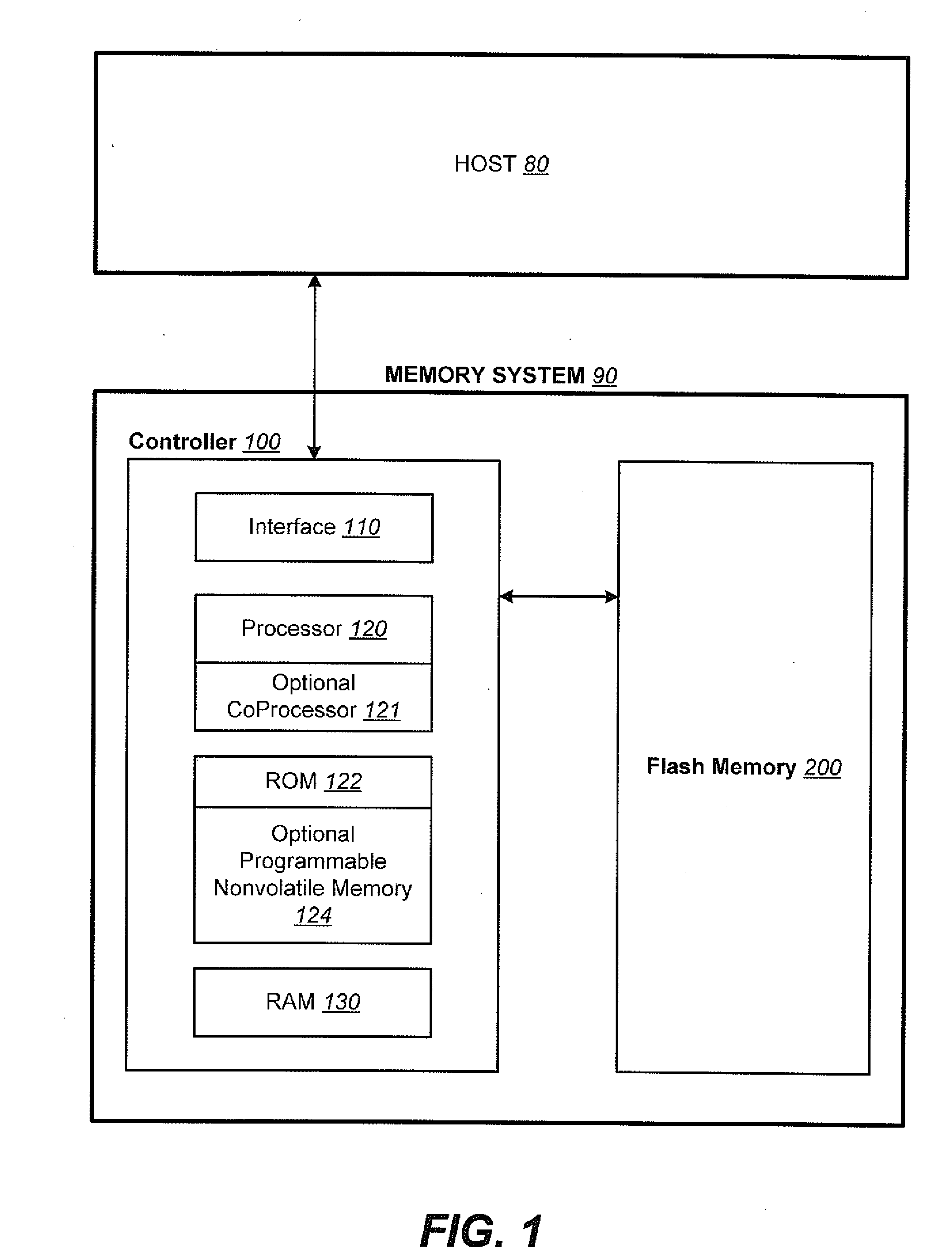

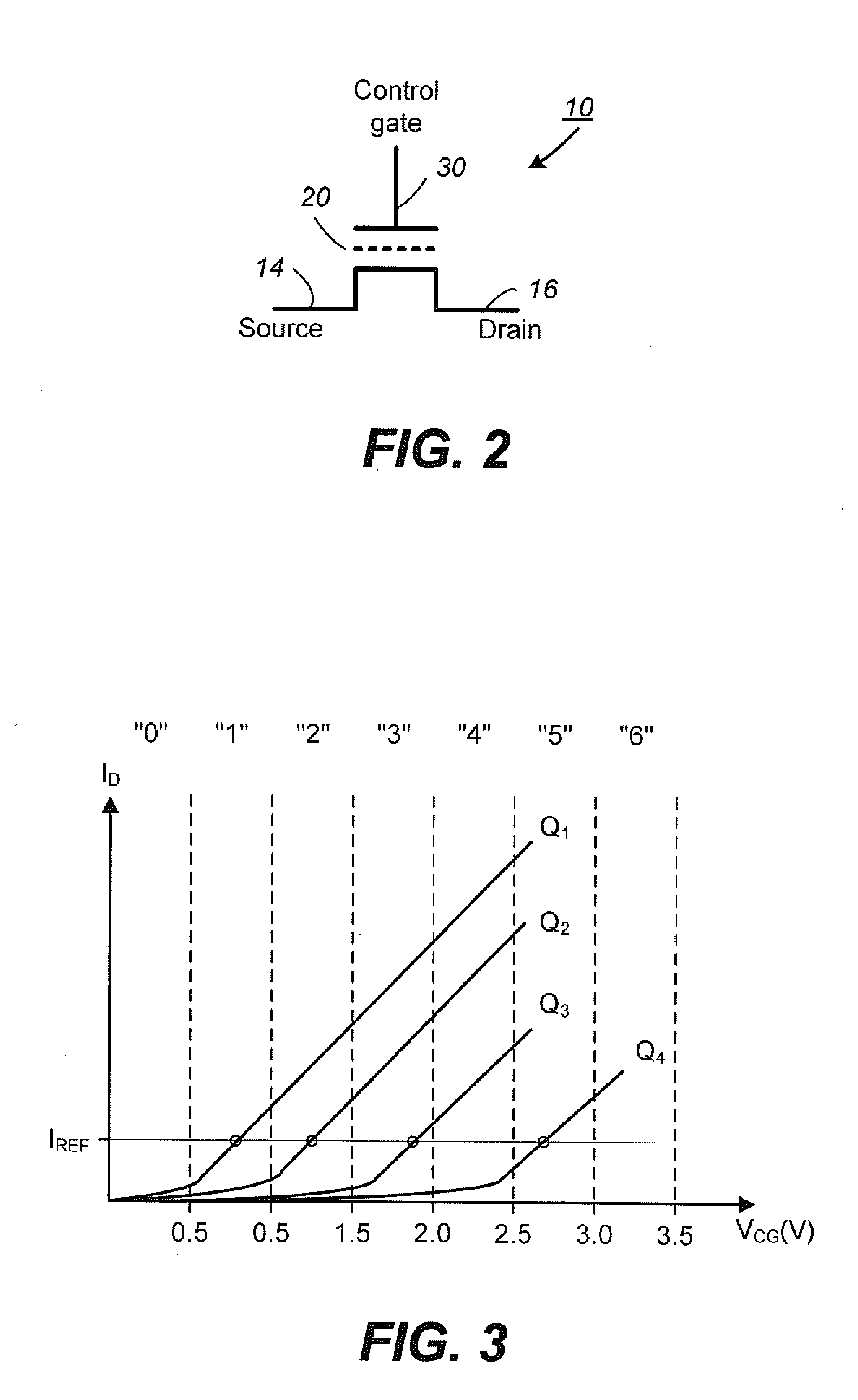

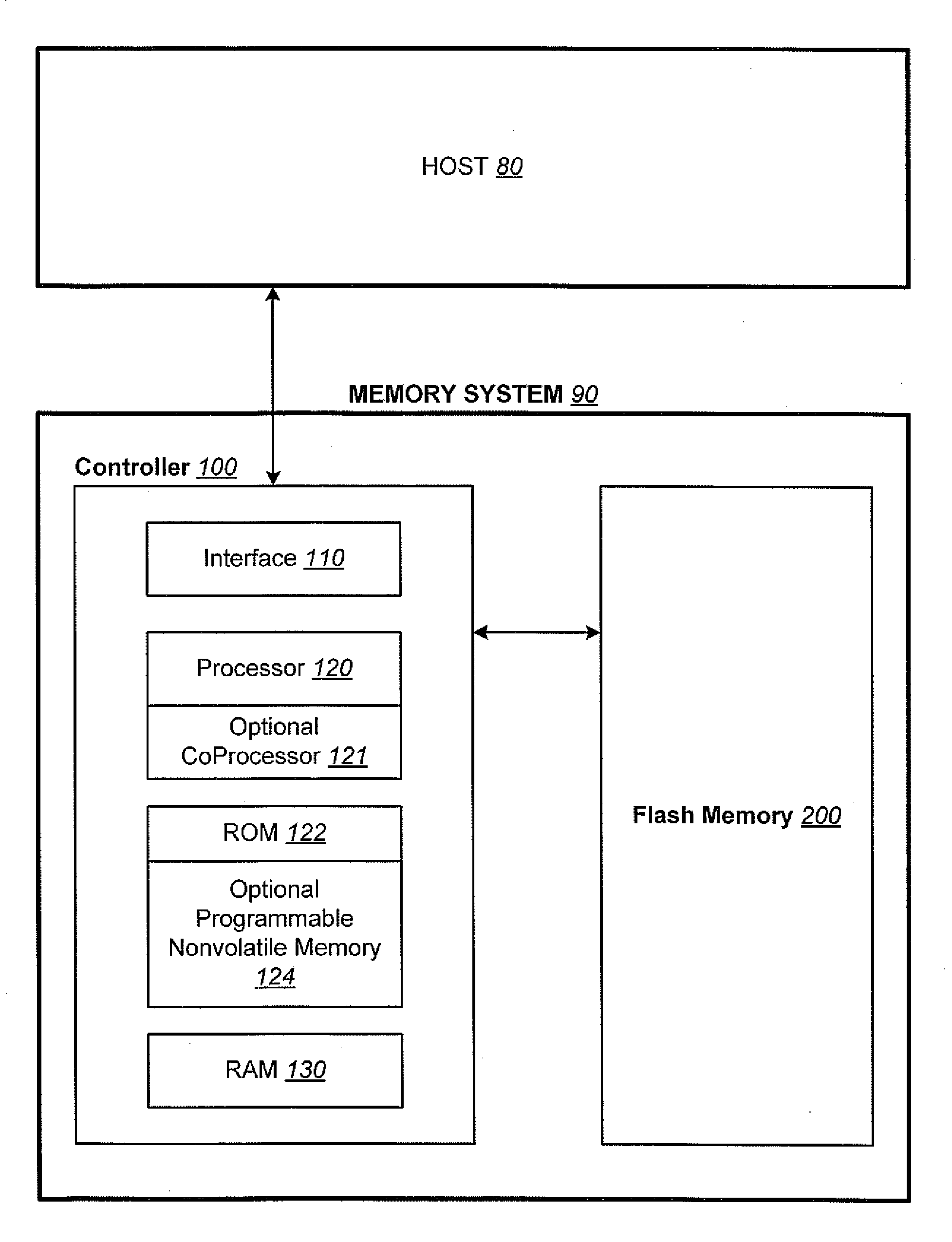

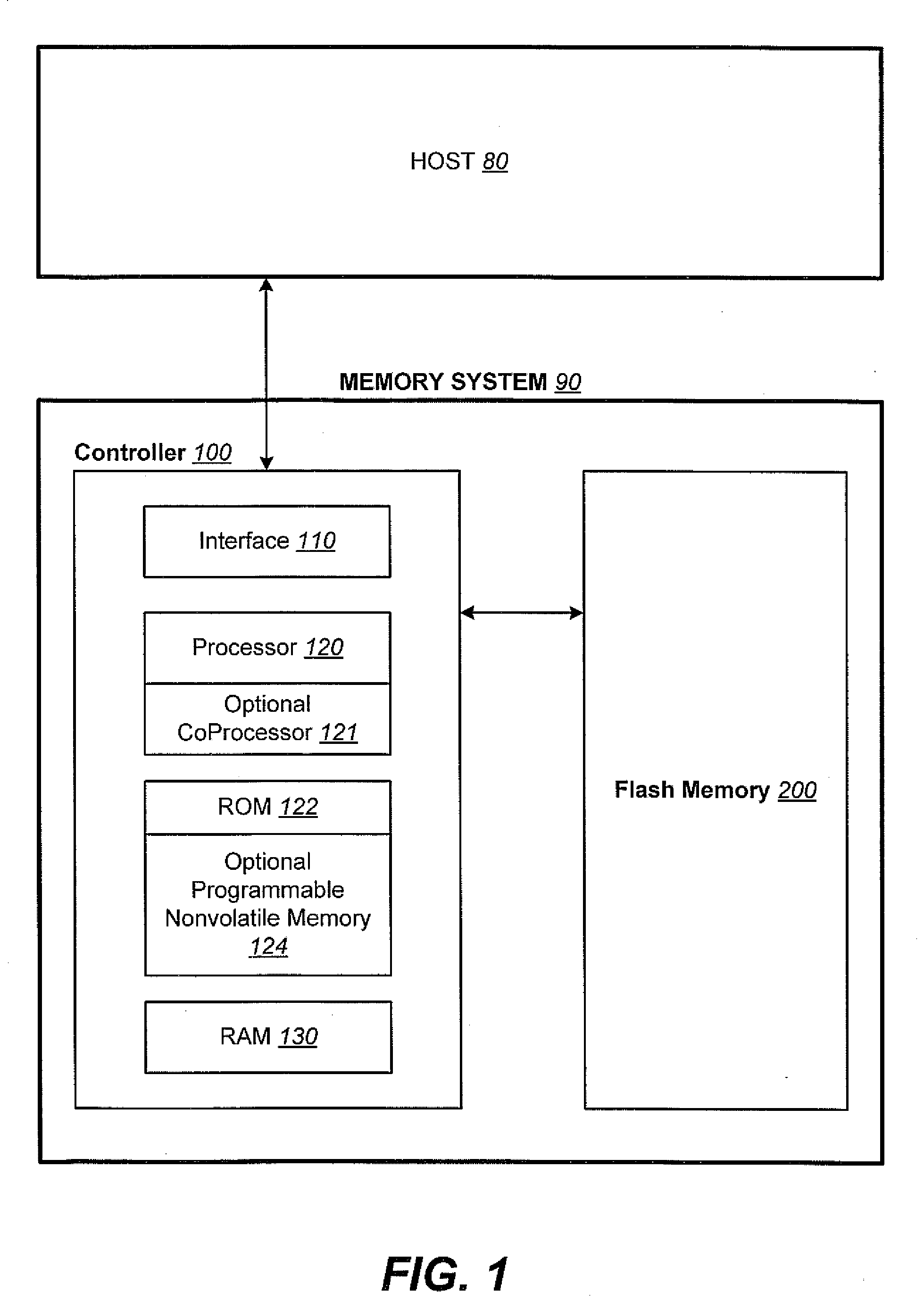

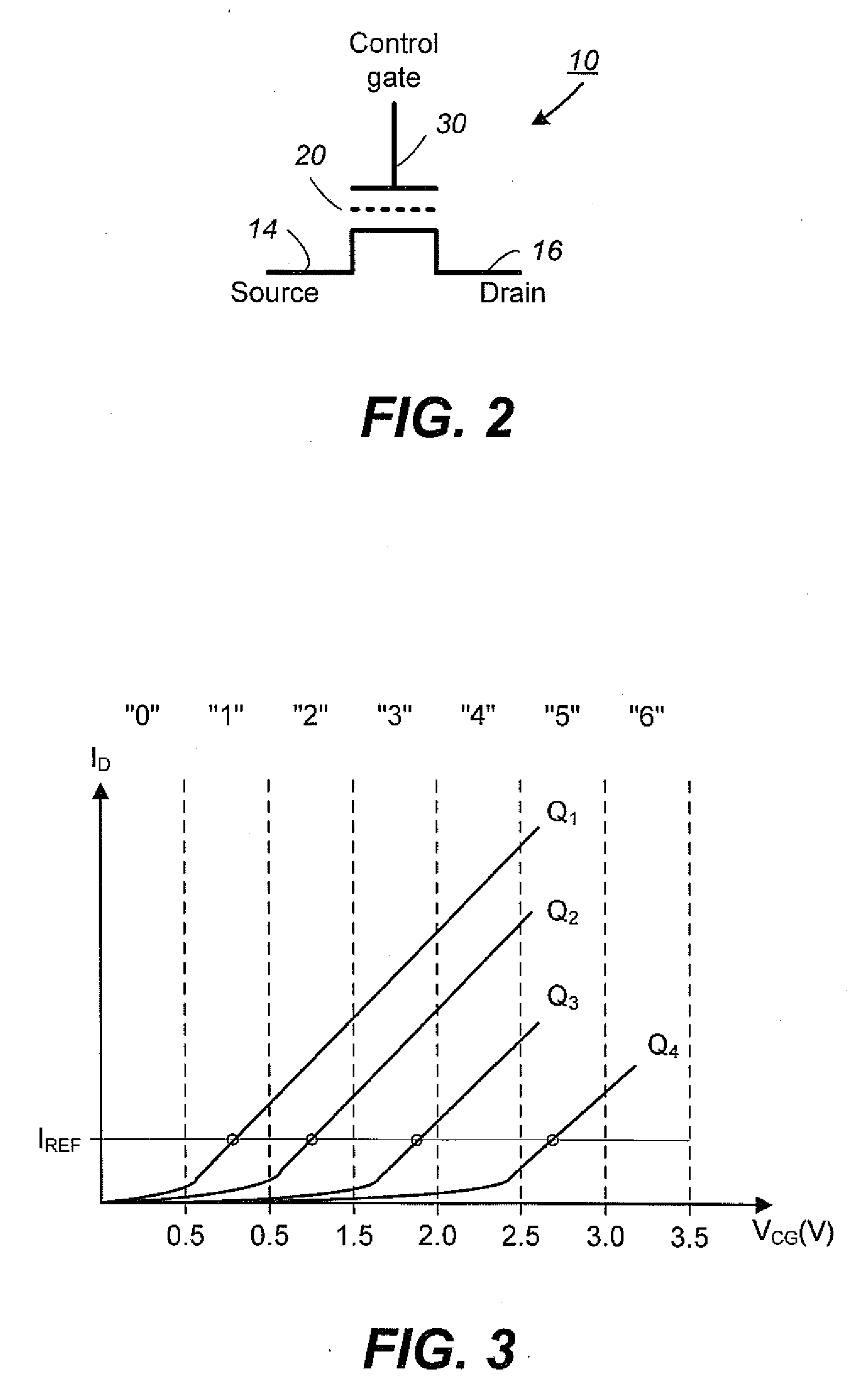

Non-Volatile Memory and Method With Write Cache Partitioning

Active | US20100172180A1 | Faster and robust write and read performance | Increase burst write speed | Memory architecture accessing/allocation | Read-only memories | Multilevel memory | Granularity

A portion of a nonvolatile memory is partitioned from a main multi-level memory array to operate as a cache. The cache memory is configured to store at less capacity per memory cell and finer granularity of write units compared to the main memory. In a block-oriented memory architecture, the cache has multiple functions, not merely to improve access speed, but is an integral part of a sequential update block system. Decisions to write data to the cache memory or directly to the main memory depend on the attributes and characteristics of the data to be written, the state of the blocks in the main memory portion and the state of the blocks in the cache portion.

Owner:SANDISK TECH LLC

Non-Volatile Memory and Method With Write Cache Partition Management Methods

Inactive | US20100174847A1 | Faster and robust write and read performance | Increase burst write speed | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Granularity | Multilevel memory

A portion of a nonvolatile memory is partitioned from a main multi-level memory array to operate as a cache. The cache memory is configured to store at less capacity per memory cell and finer granularity of write units compared to the main memory. In a block-oriented memory architecture, the cache has multiple functions, not merely to improve access speed, but is an integral part of a sequential update block system. The cache memory has a capacity dynamically increased by allocation of blocks from the main memory in response to a demand to increase the capacity. Preferably, a block with an endurance count higher than average is allocated. The logical addresses of data are partitioned into zones to limit the size of the indices for the cache.

Owner:SANDISK TECH LLC

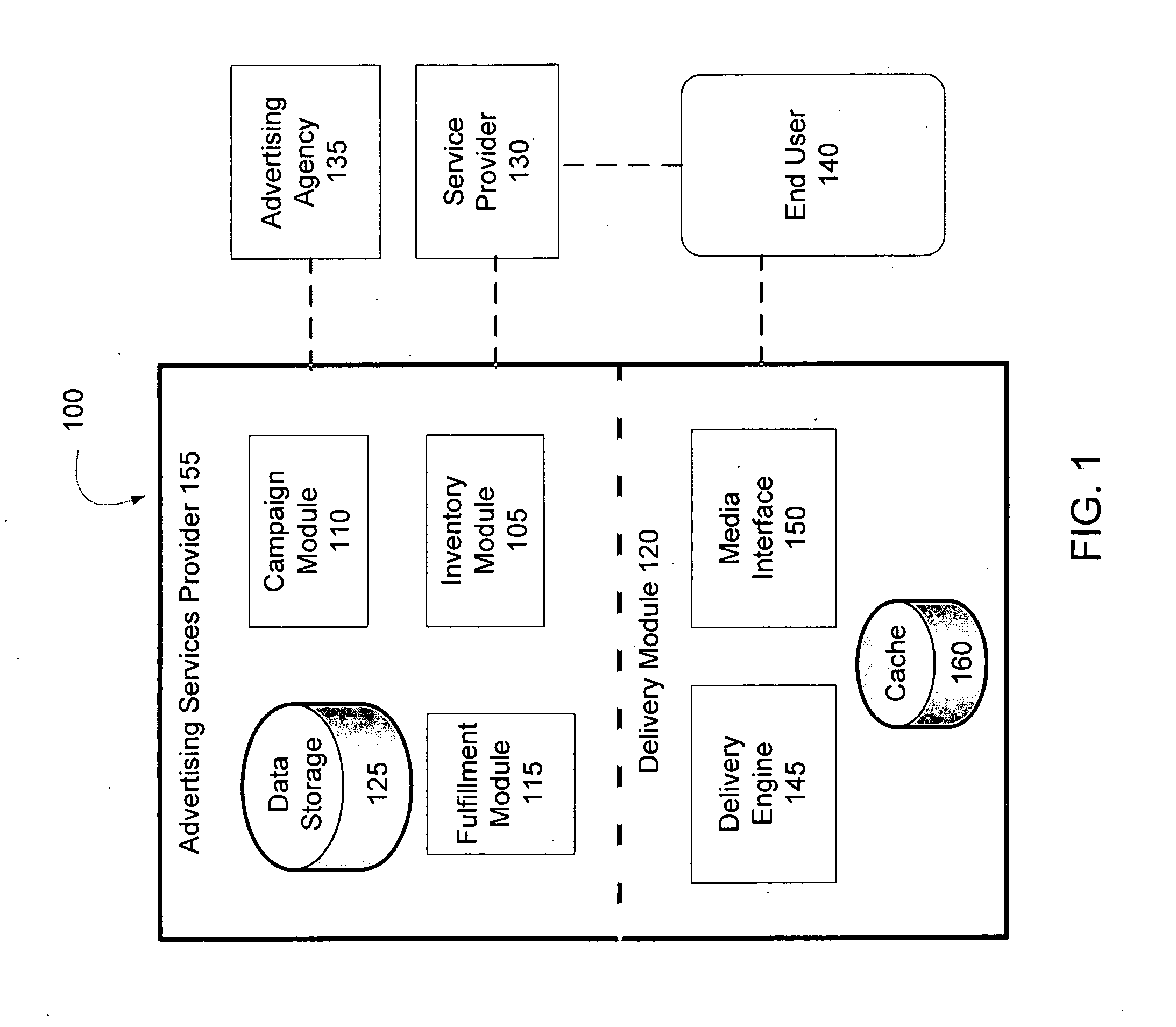

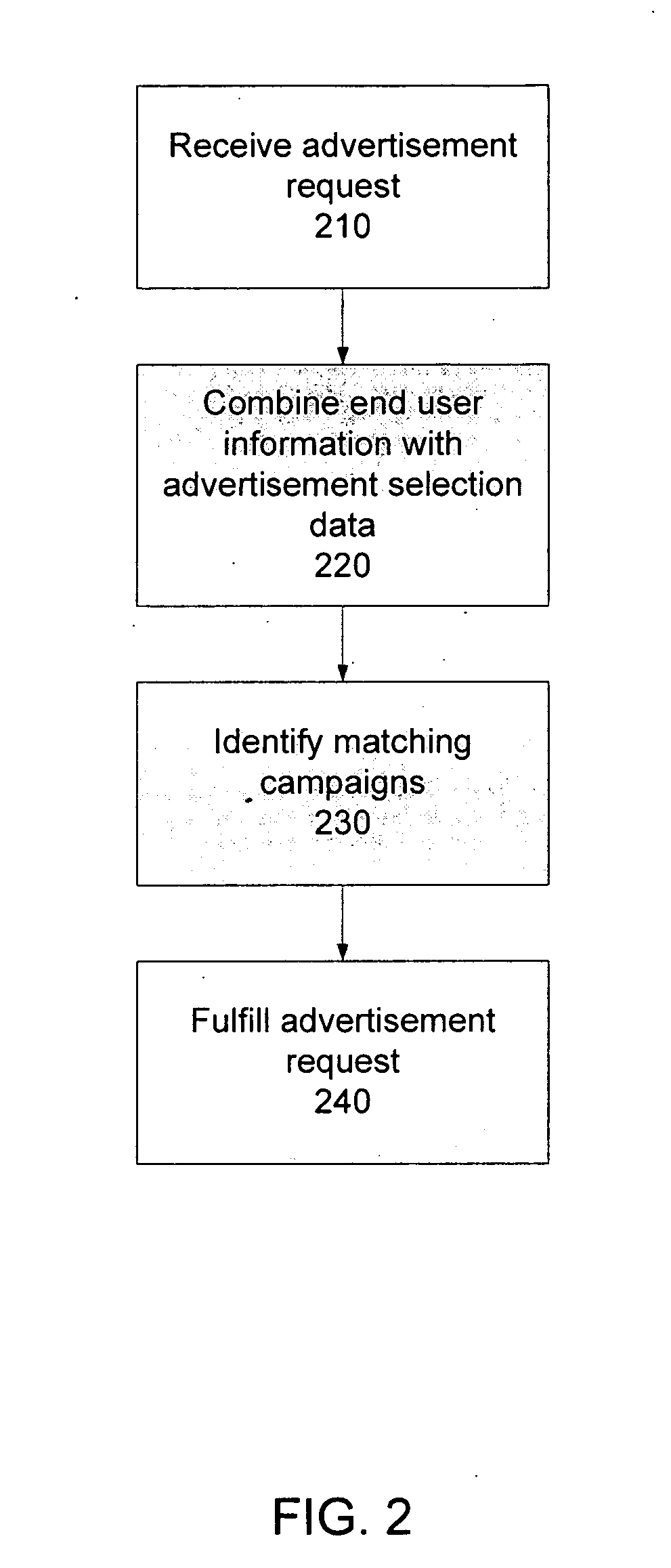

Targeted advertising using verifiable information

A system and a method match advertisement requests with campaigns using targeting attributes, and select campaigns for fulfillment of the advertisement request according to a priority algorithm. The targeting uses end user information that is verifiable, and which the user has granted permission to use, improving the granularity and accuracy of the targeting data. The algorithm includes load balancing and campaign state evaluation on a per campaign, per user basis. The algorithm enables control over the frequency and number of exposures for a campaign, optimizing the advertising both from the perspective of the user and the advertiser.

Owner:RHYTHMONE

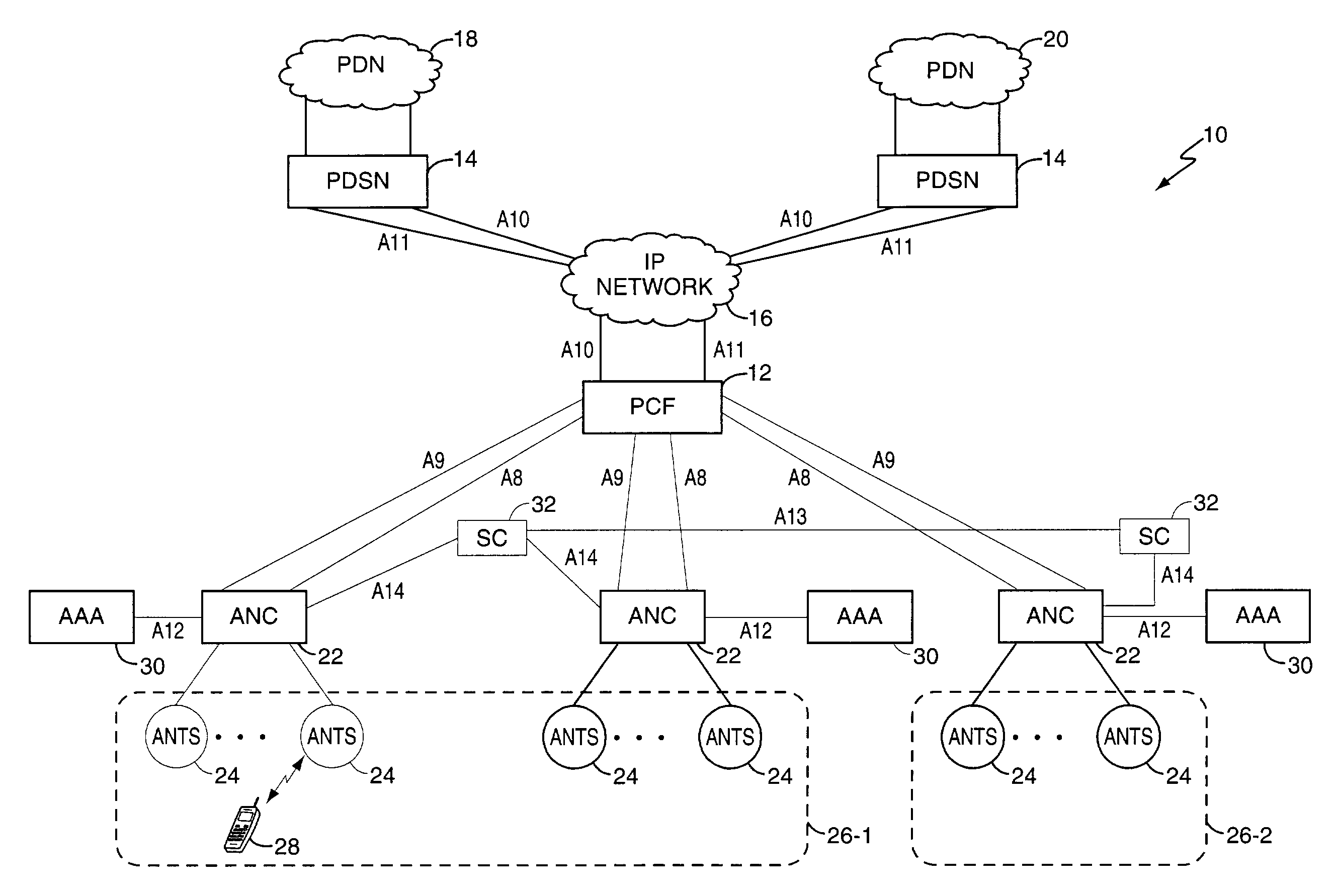

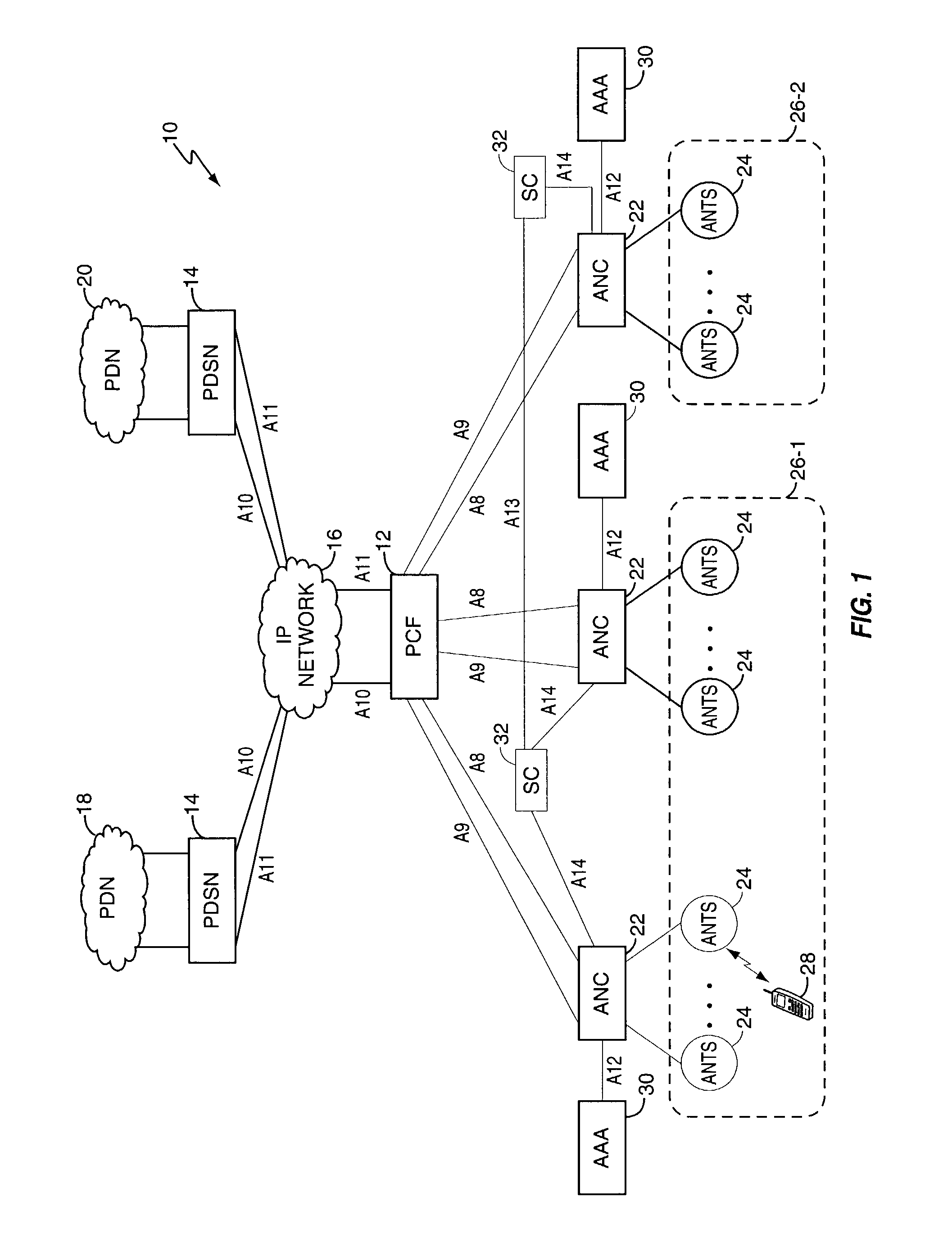

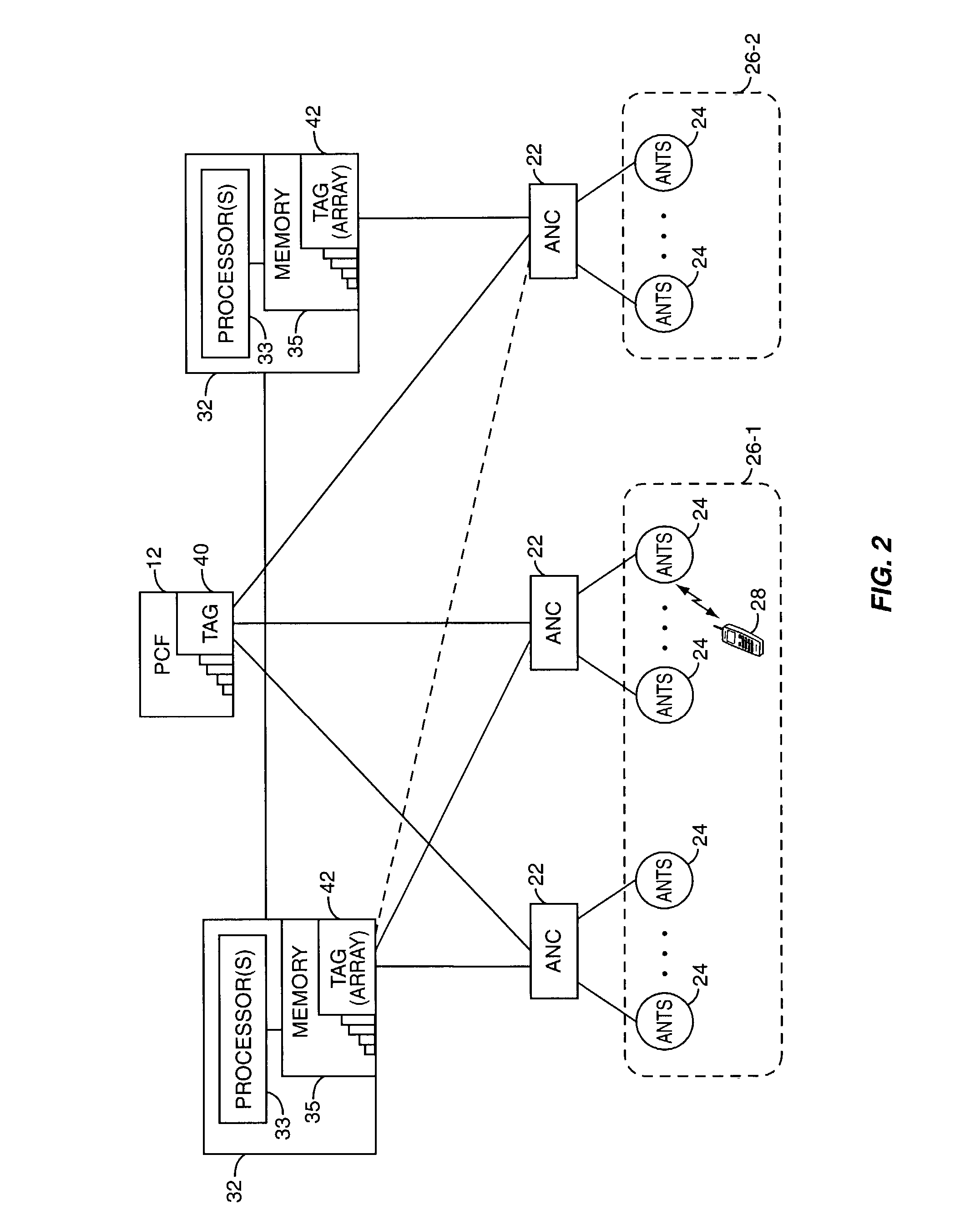

Mobility management entity for high data rate wireless communication networks

Active | US7457265B2 | Reduce amount | Easy to manage | Time-division multiplex | Data switching by path configuration | Session control | Granularity

A session controller (SC) provides mobility management support in a 1xEVDO wireless communication network, such as one configured in accordance with the TIA/EIA/IS-856 standard. Operating as a logical network entity, the SC maintains location (e.g., a pointing tag) and session information at an access network controller (ANC) granularity, thus allowing it to track access terminal (AT) transfer between ANCs but within subnet boundaries, where a network subnet comprises one or more ANCs. This allows a packet control function (PCF) to maintain location information at a packet zone granularity, thereby reducing mobility management overhead at the PCF. The SC provides updated tag and session information to PCFs, ANCs, and other SCs as needed. Information exchange with other SCs arises, for example, when two or more SCs cooperate to maintain or transfer routing and session information across subnets.

Owner:TELEFON AB LM ERICSSON (PUBL)

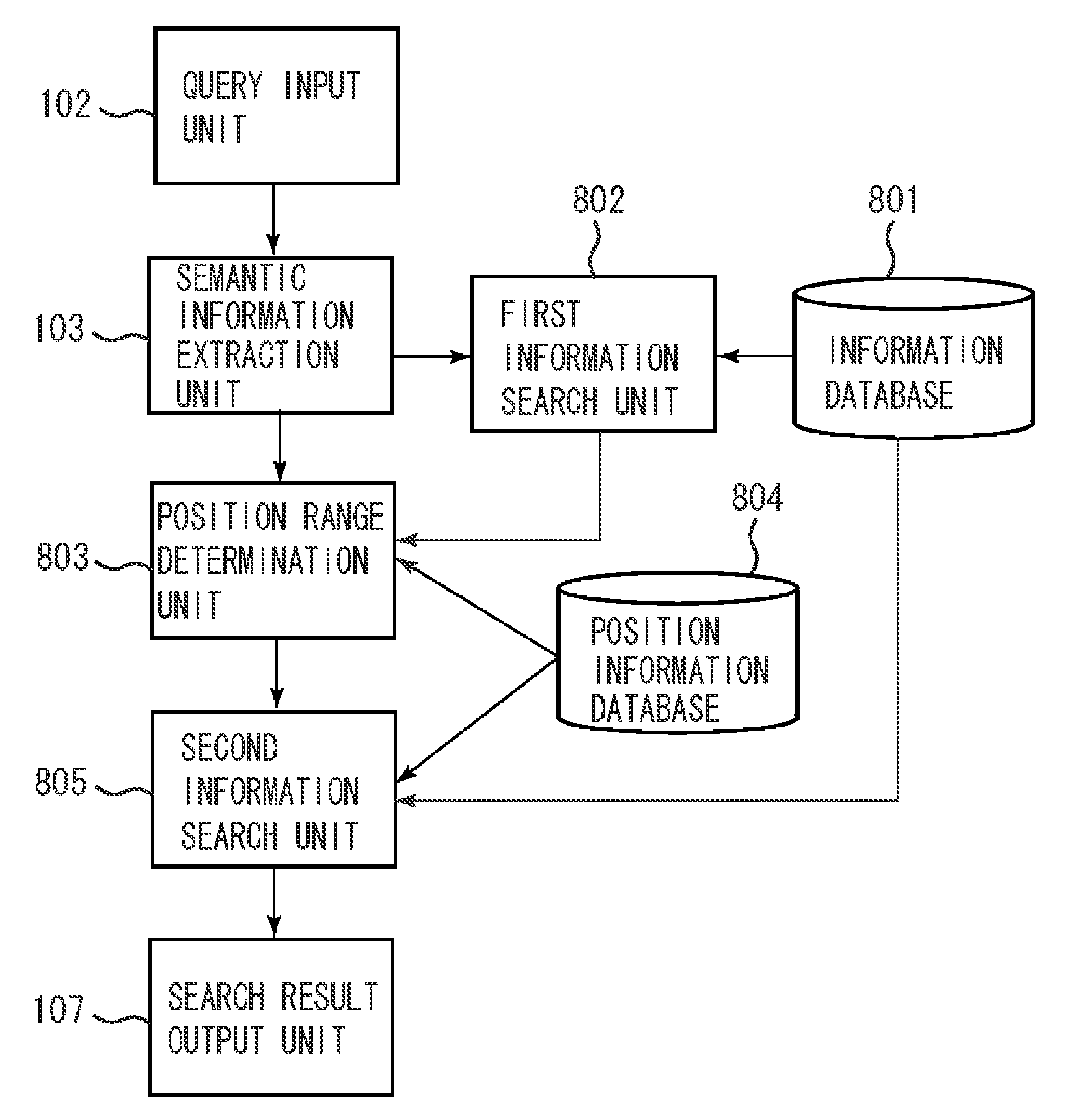

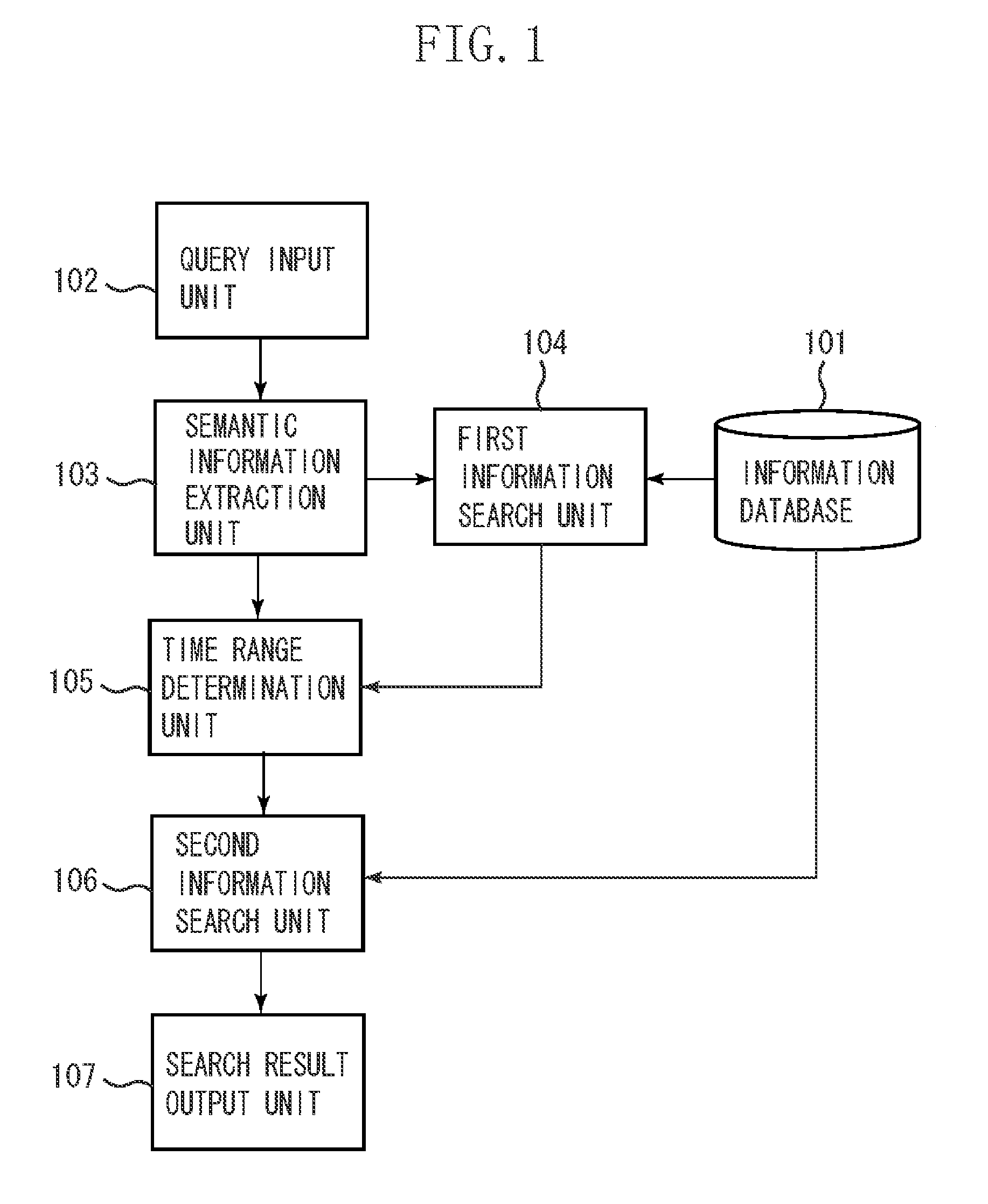

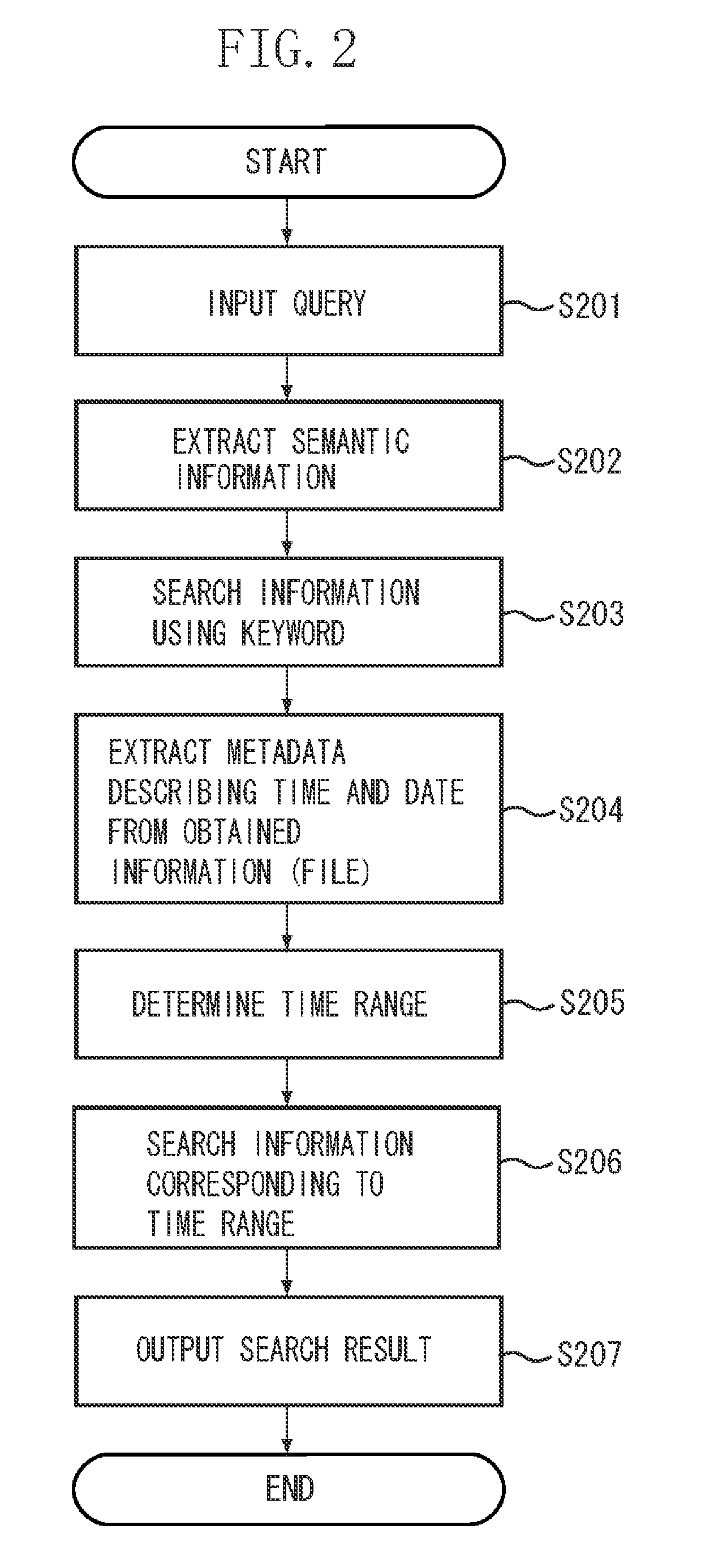

Information search apparatus, information search method, and storage medium

Inactive | US20100114856A1 | Efficient search | Digital data processing details | Still image data indexing | Granularity | Information searching

The present invention provides a technique for determining a search range using metadata that is associated with information (file) of an image as well as determining granularity of the search range based on a unit of numerical information included in a query when a file is searched from a database. More particularly, an information search apparatus searches a plurality of files that include numerical information. As a query for determining the search range, a first numerical value and a keyword are input, a unit of the first numerical value is determined, the second numerical value of the unit that corresponds to the keyword is acquired, and a file included in the search range that is determined based on the first and second numerical values is searched from the plurality of files.

Owner:CANON KK

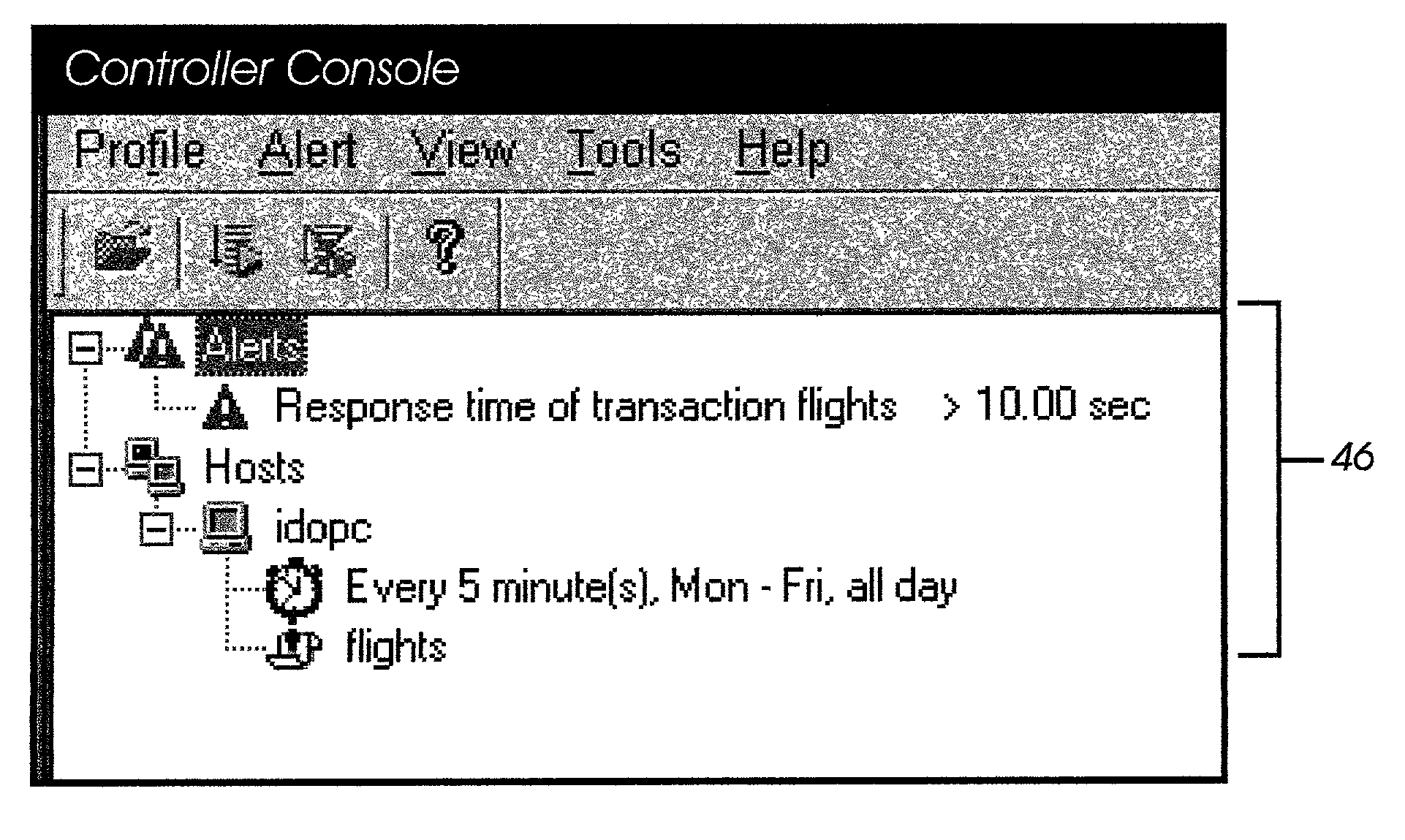

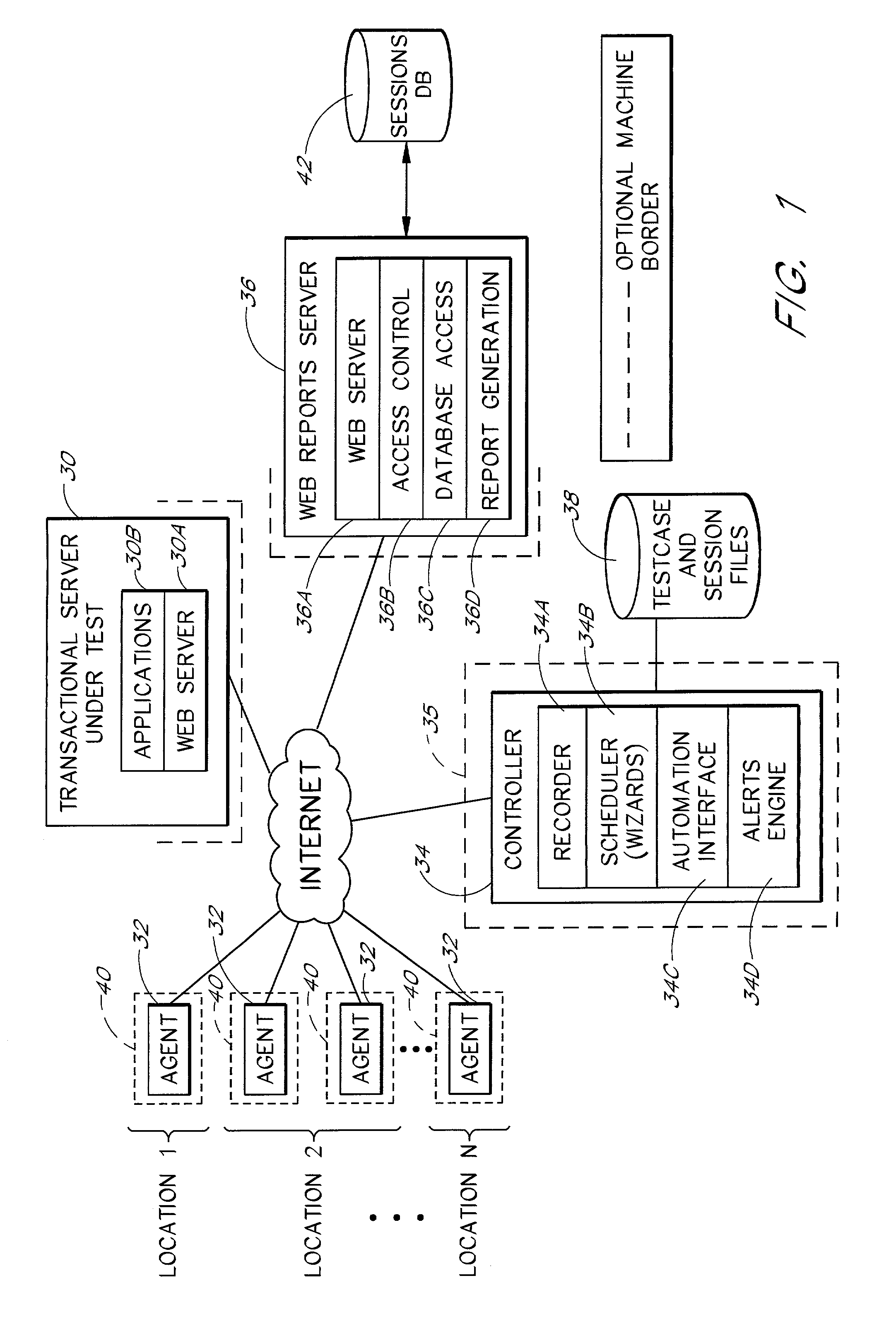

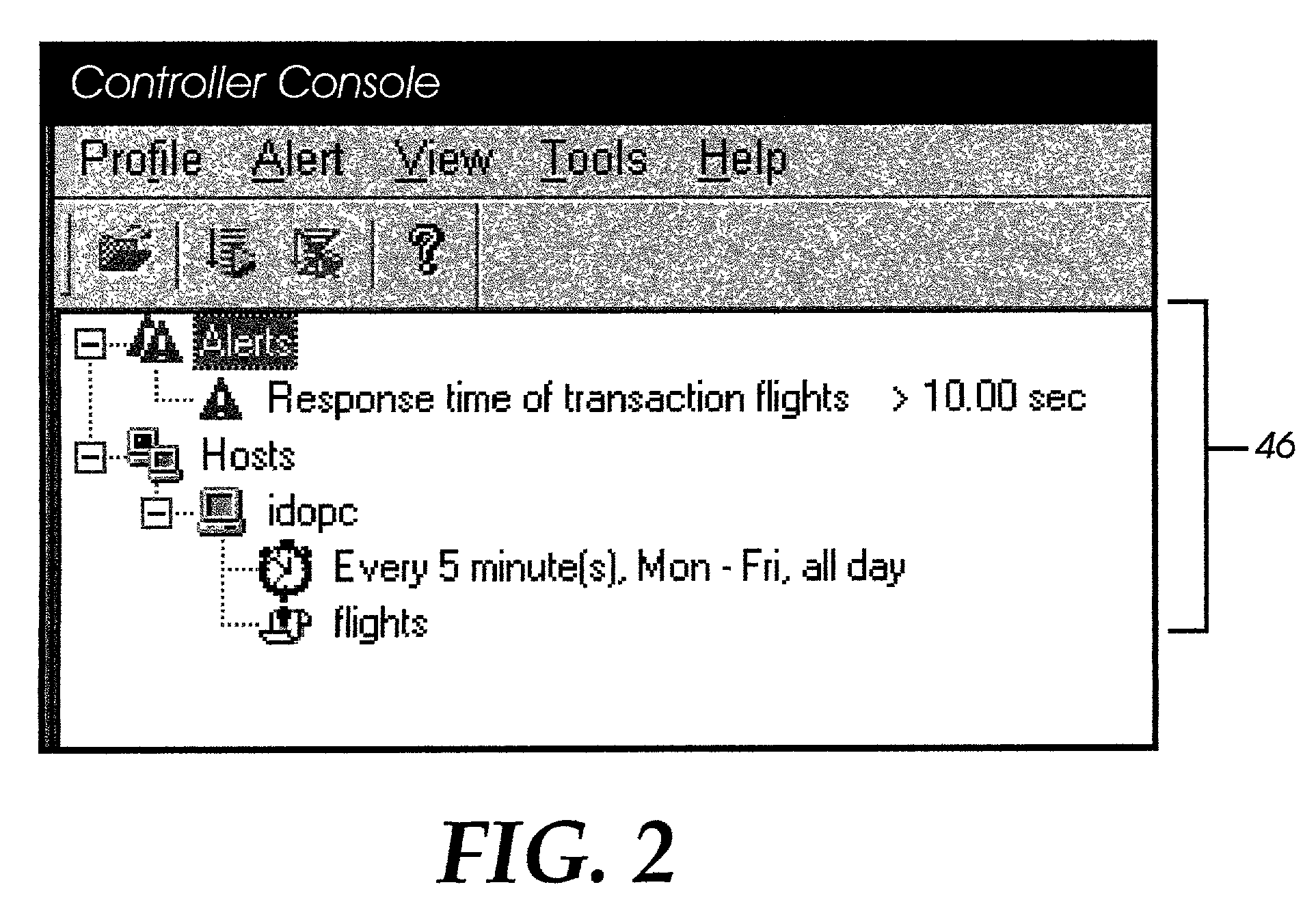

Transaction breakdown feature to facilitate analysis of end user performance of a server system

Inactive | US7197559B2 | Facilitates task | Flexible choice | Hardware monitoring | Multiple digital computer combinations | Geographic site | Granularity

A system for monitoring the post-deployment performance of a web-based or other transactional server is disclosed. The monitoring system includes an agent component that monitors the performance of the transactional server as seen from one or more geographic locations and reports the performance data to a reports server and/or centralized database. The performance data may include, for example, transaction response times, server response times, network response times and measured segment delays along network paths. Using the reported performance data, the system provides a breakdown of time involved in completion of a transaction into multiple time components, including a network time and a server time. Users view the transaction breakdown data via a series of customizable reports, which assist the user in determining the source of the performance problem. Additional features permit the source to be identified with further granularity.

Owner:MICRO FOCUS LLC

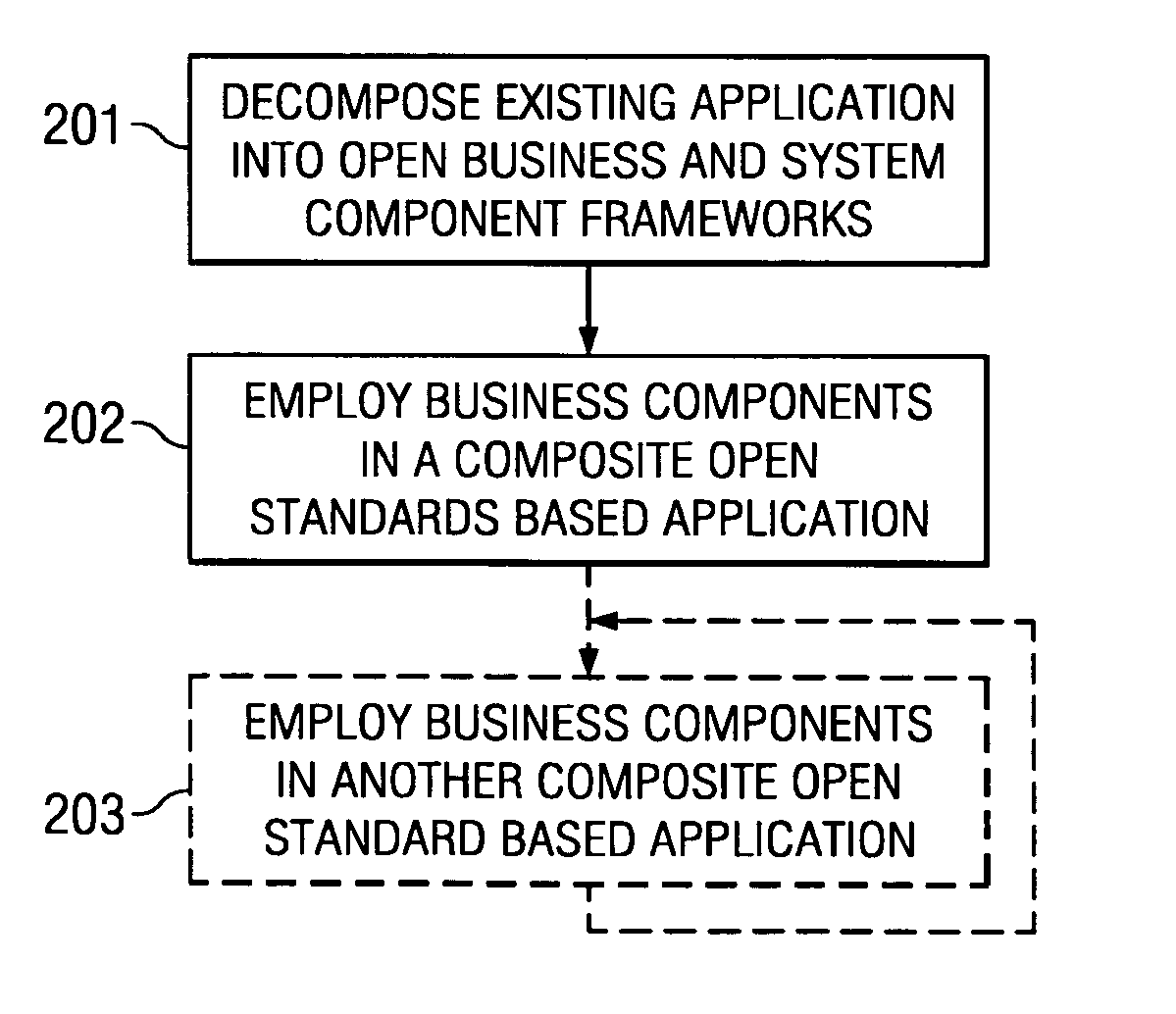

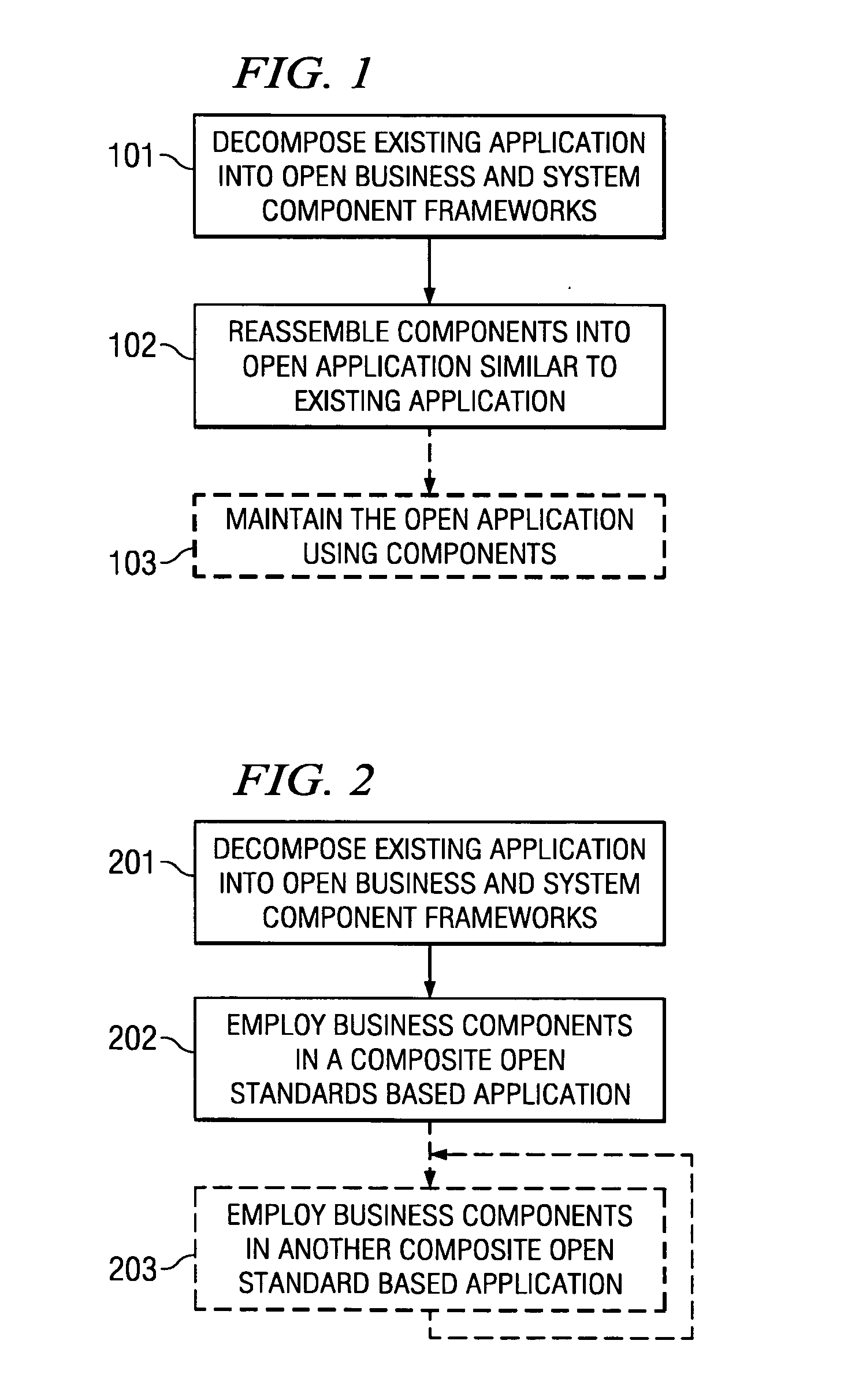

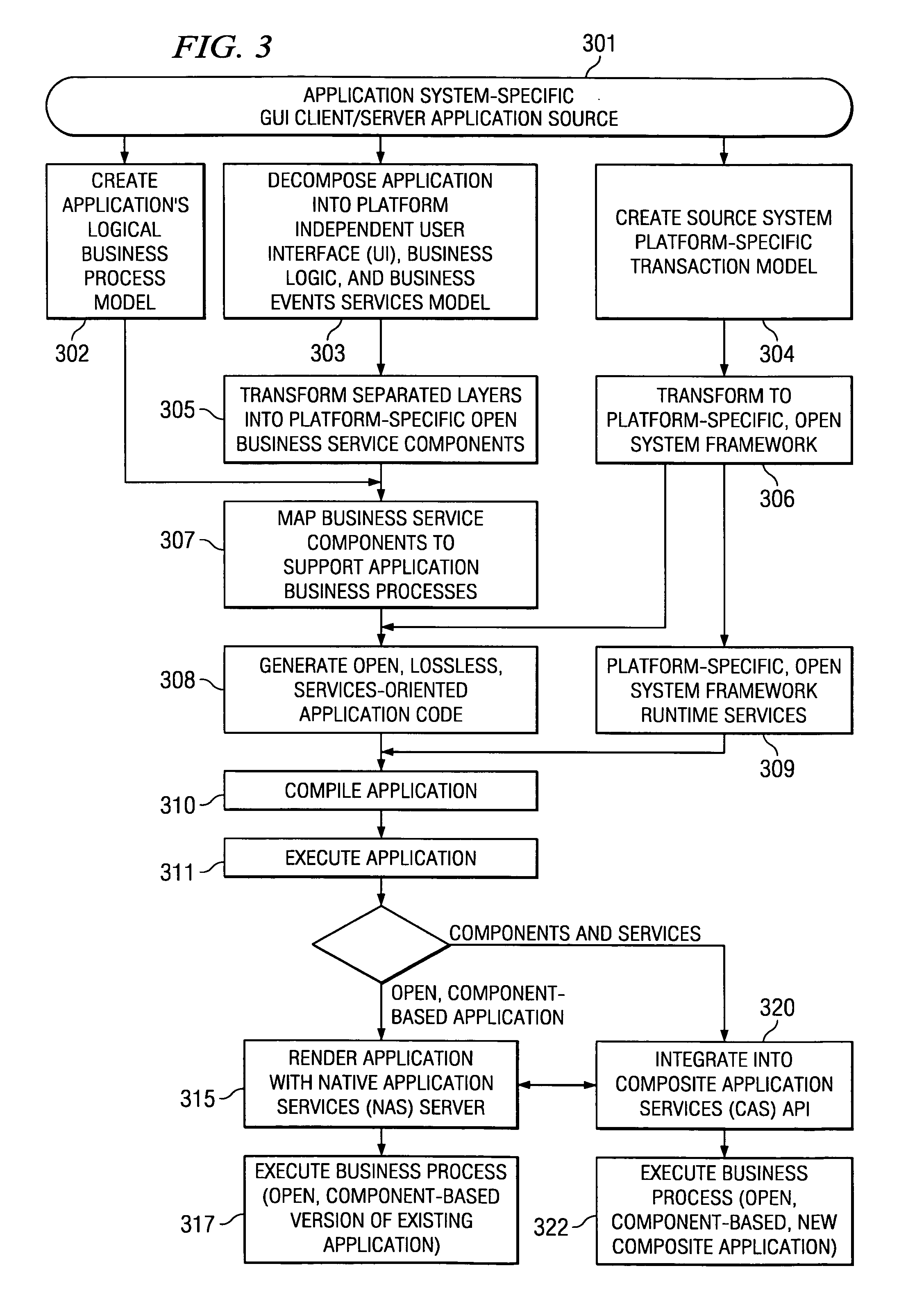

Systems and methods for modeling and generating reusable application component frameworks, and automated assembly of service-oriented applications from existing applications

Inactive | US20050144226A1 | Effective and meaningful support | Lower barrier | Software maintenance/management | Multiple digital computer combinations | Service oriented applications | Business process

Embodiments of systems and methods model and generate open, reusable business components for Service Oriented Architectures (SOAs) from existing client/server applications. Applications are decomposed into business component frameworks with separate user interface, business logic, and event management layers to enable service-oriented development of new enterprise applications. Such layers are re-assembled through an open standards-based, Native Application Services (NAS) to render similar or near identical transactional functionality within a new application on an open platform, without breaking former production code, and without requiring a change in an end-user's business processes and/or user experience. In addition, the same separated layers may form re-usable business components at any desired level of granularity for re-use in external composite applications through industry-standard interfaces, regardless of usage, context, or complexity in the former client/server application.

Owner:SAP AG

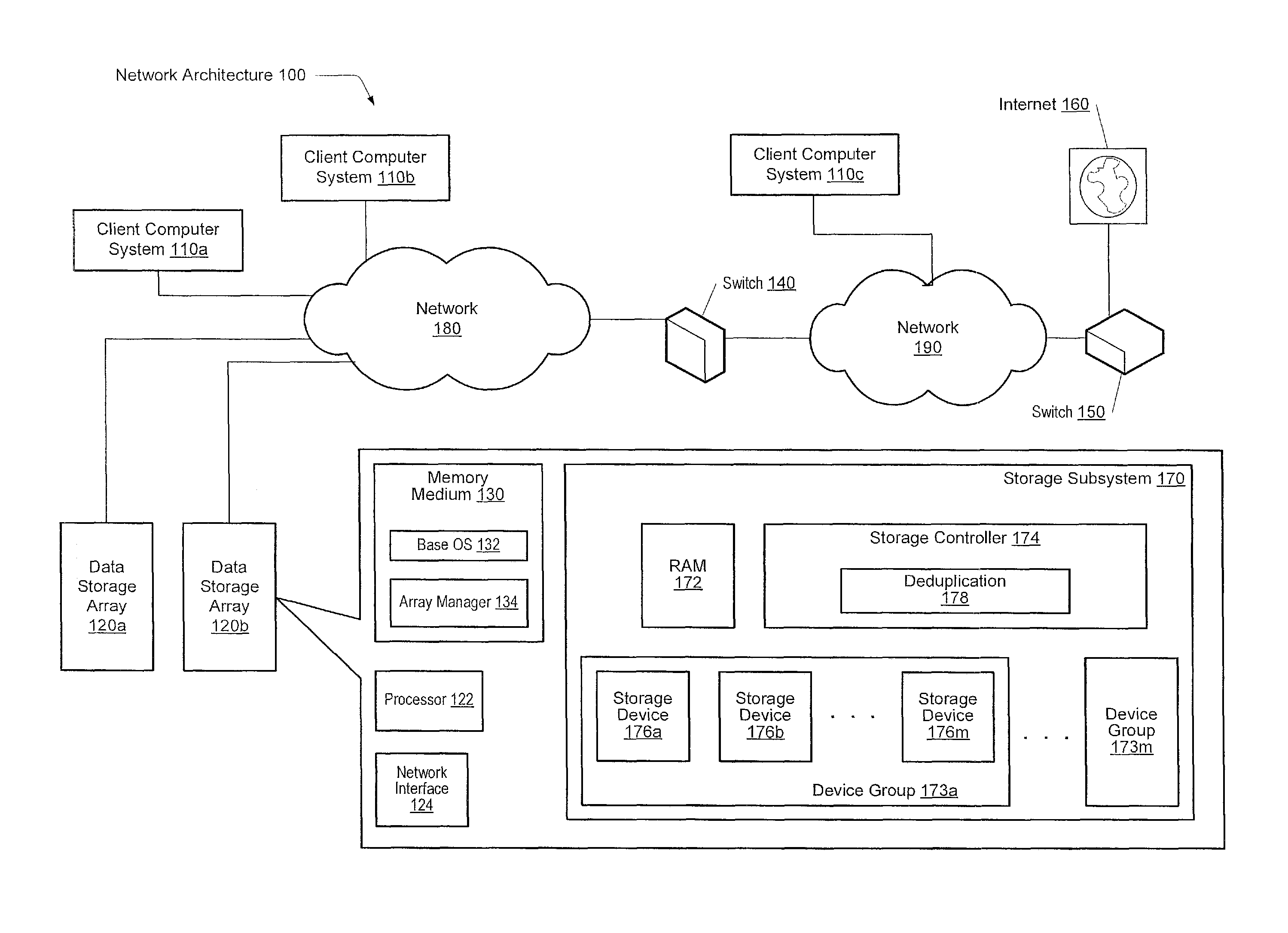

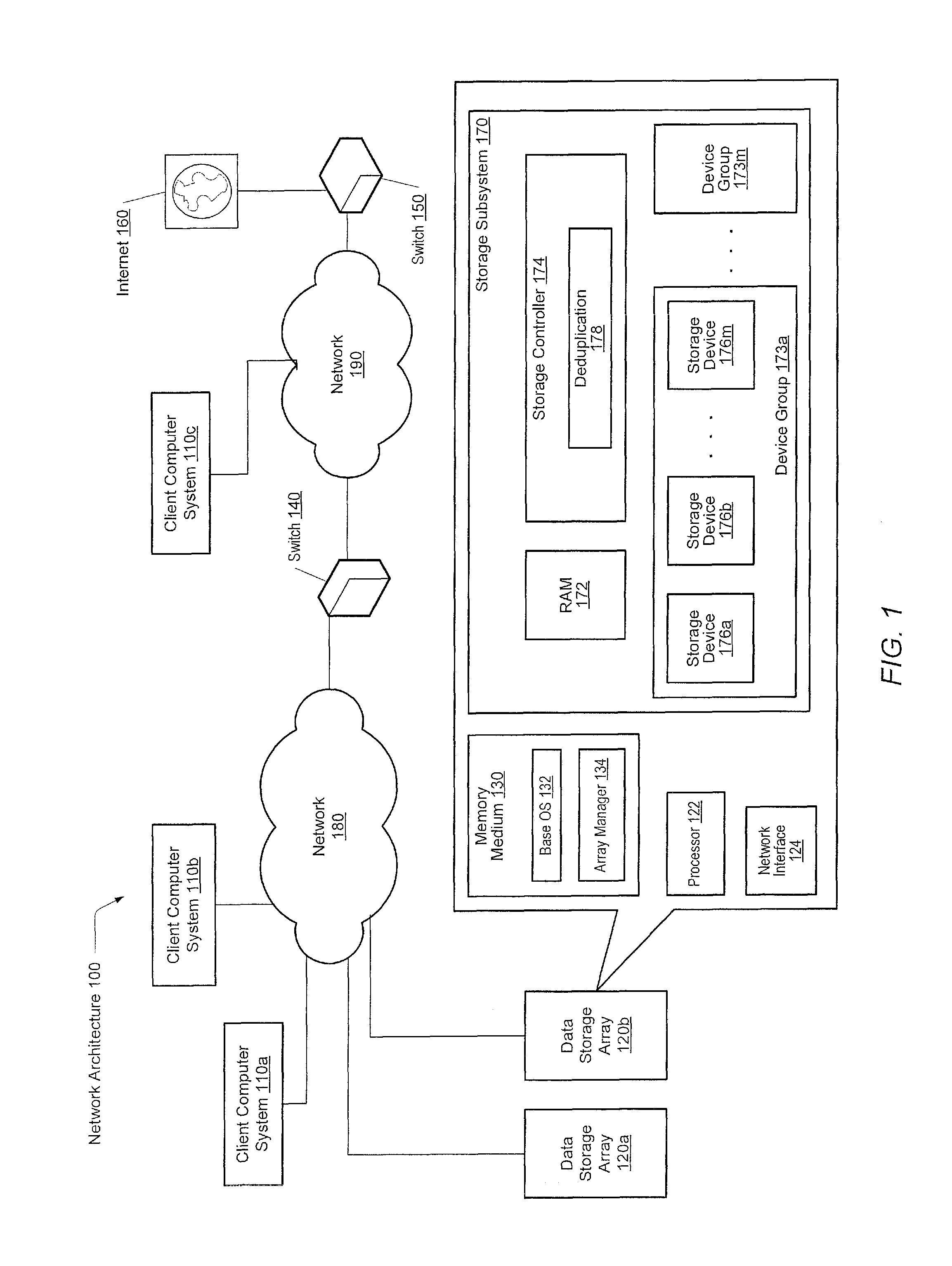

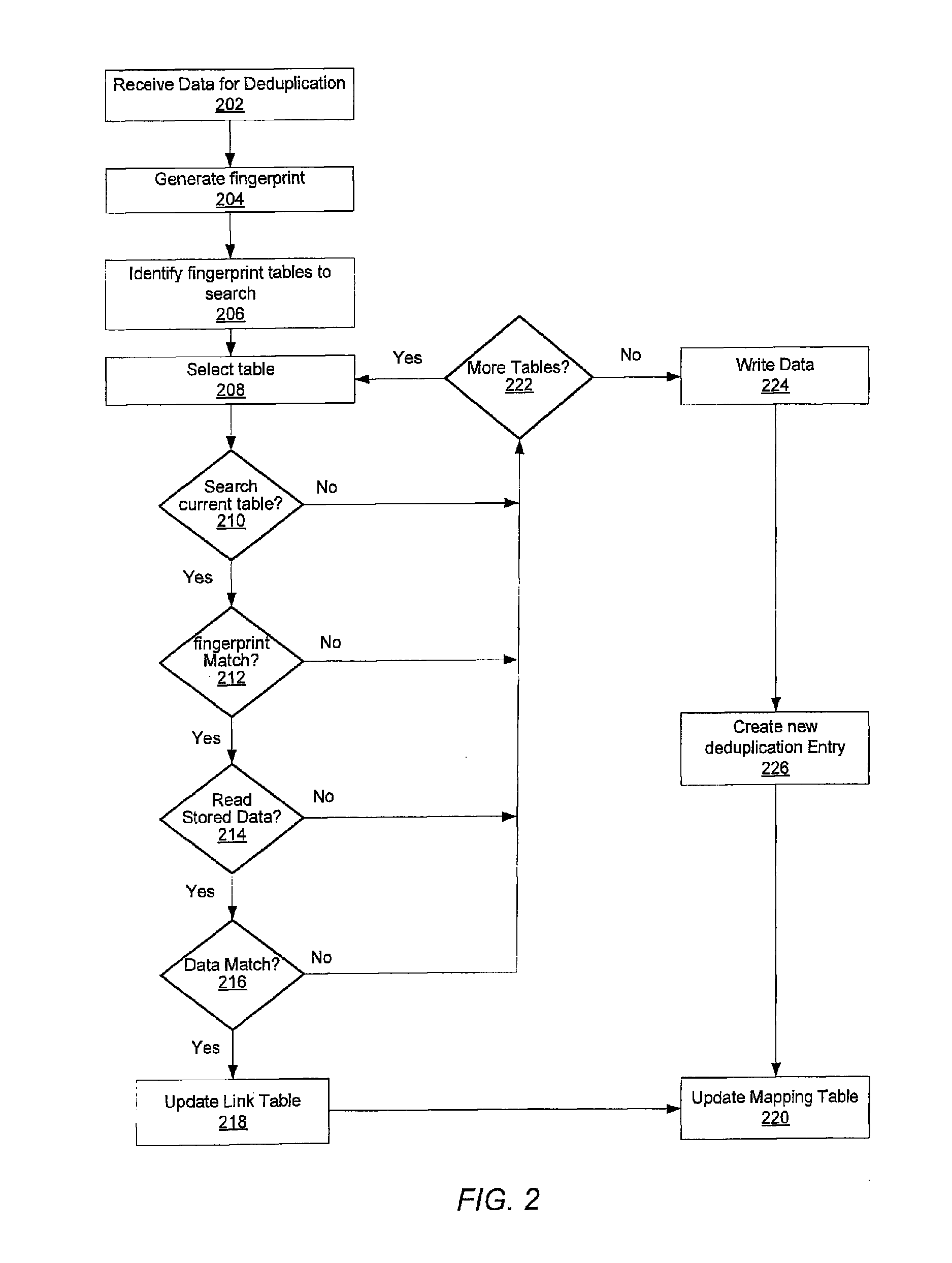

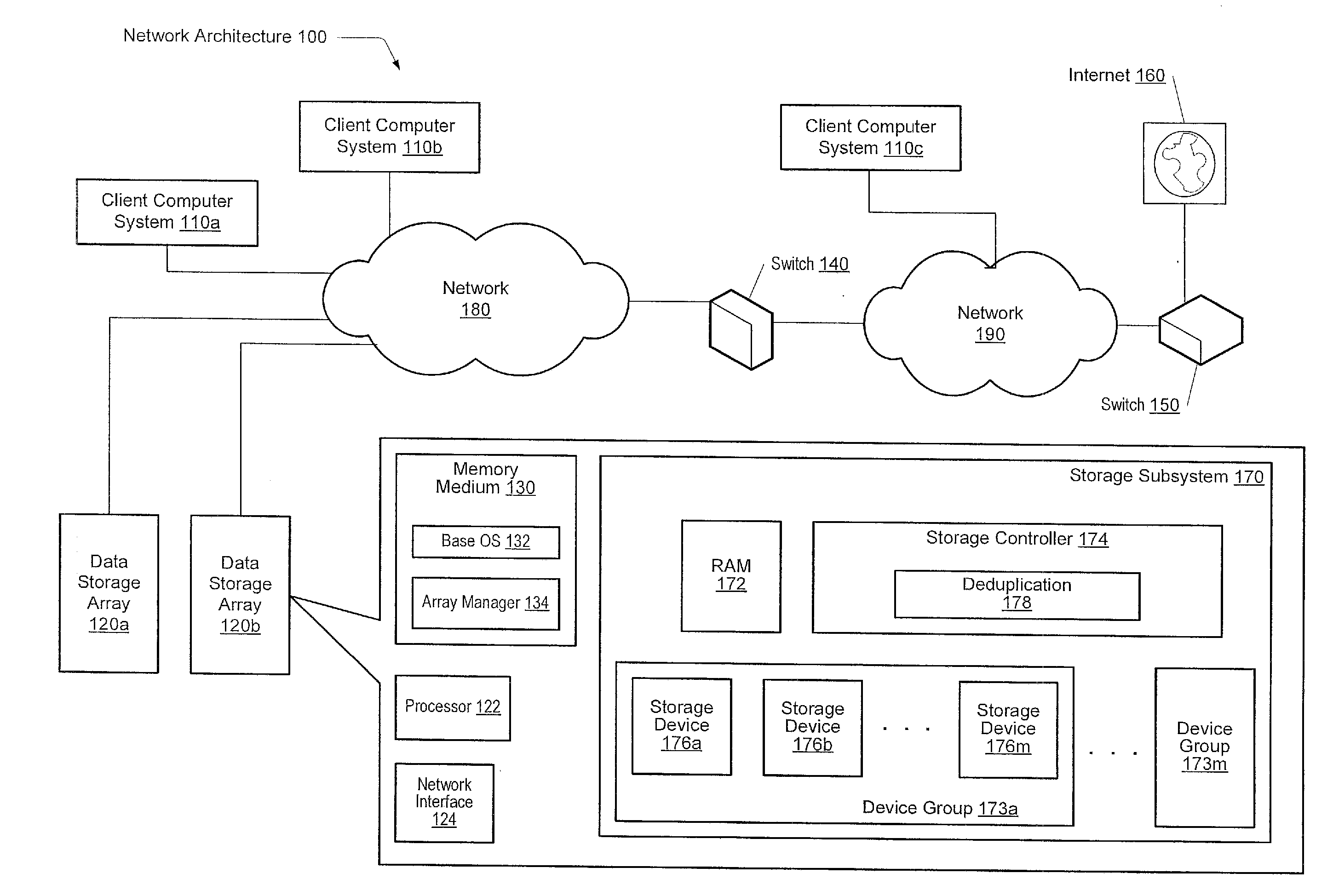

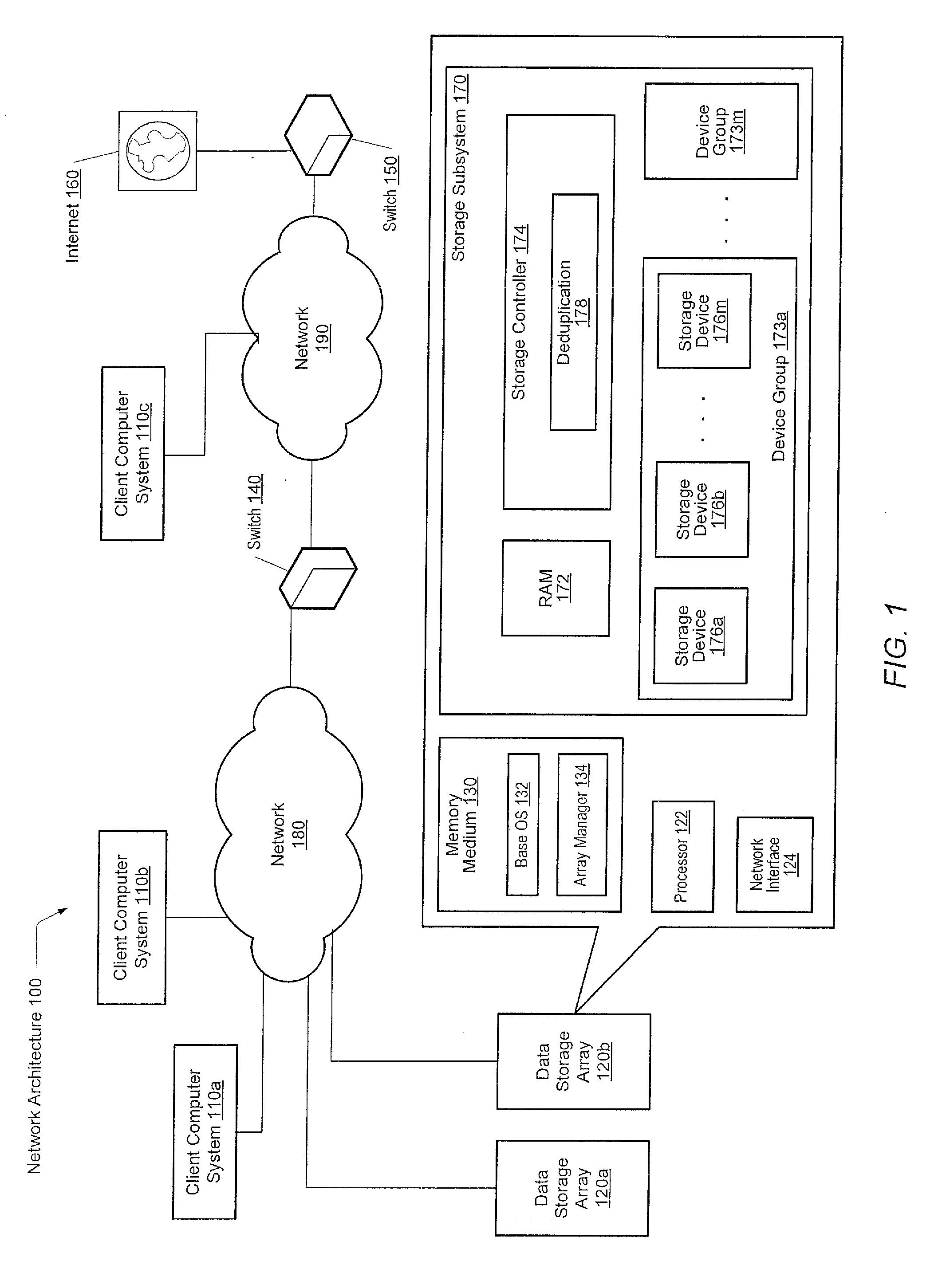

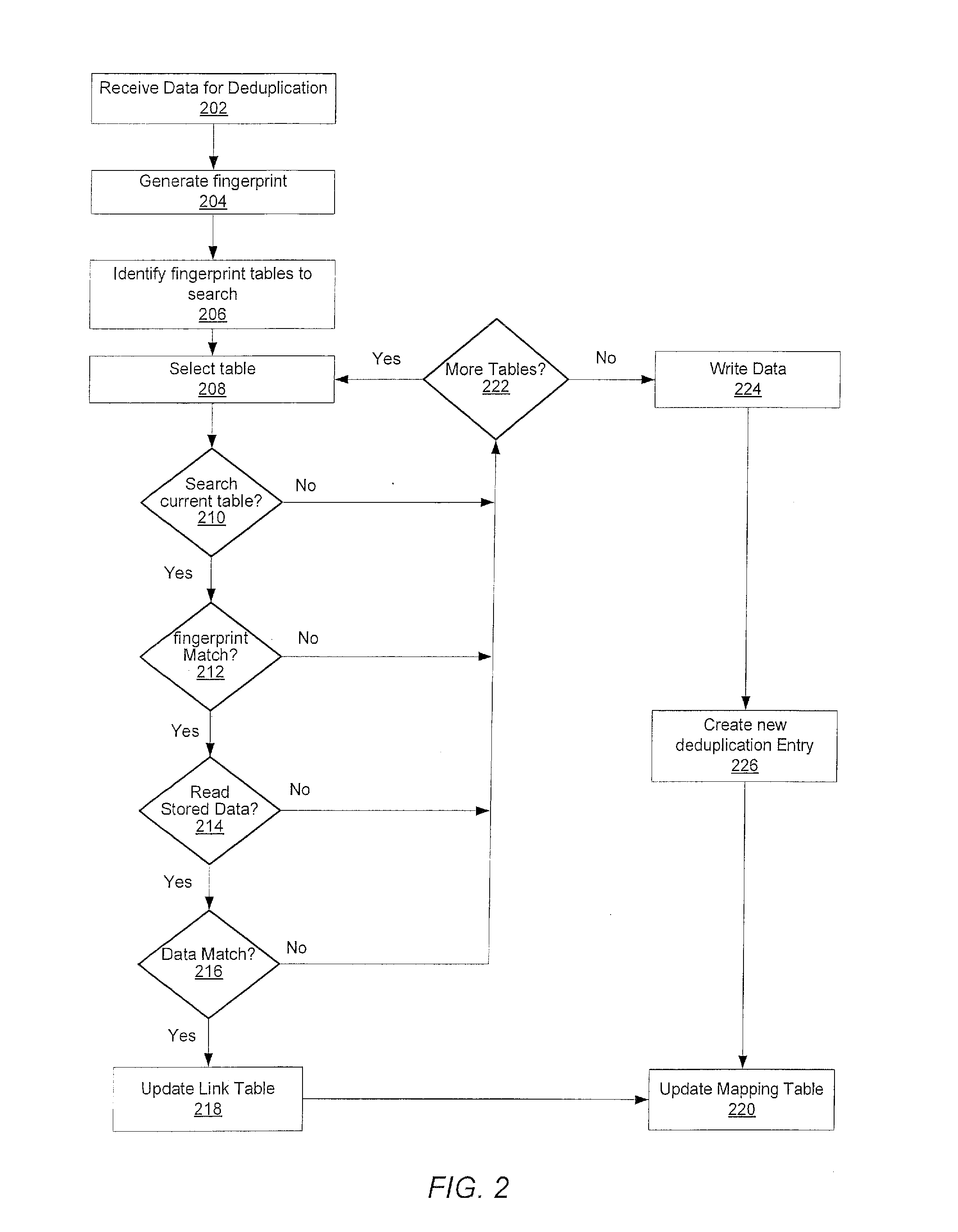

Method for removing duplicate data from a storage array

Active | US8930307B2 | Digital data information retrieval | Input/output to record carriers | Granularity | Data store

A system and method for efficiently removing duplicate data blocks at a fine granularity from a storage array. A data storage subsystem supports multiple deduplication tables. Table entries in one deduplication table have the highest associated probability of being deduplicated. Table entries may move from one deduplication table to another as the probabilities change. Additionally, a table entry may be evicted from all deduplication tables if a corresponding estimated probability falls below a given threshold. The probabilities are based on attributes associated with a data component and attributes associated with a virtual address corresponding to a received storage access request. A strategy for searches of the multiple deduplication tables may also be determined by the attributes associated with a given storage access request.

Owner:PURE STORAGE
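
A minimal Python sketch of the tiering behaviour described in the abstract above, assuming just two tables and a deduplication probability that is supplied by the caller. The class name, the thresholds, and the use of SHA-256 fingerprints are assumptions for illustration; how the probability is estimated from data and address attributes is outside this sketch.

```python
import hashlib


class TieredDedupIndex:
    """Two deduplication tables: 'hot' for likely duplicates, 'cold' for the rest."""

    def __init__(self, hot_threshold=0.5, evict_threshold=0.1):
        # Both thresholds are hypothetical tuning values.
        self.hot_threshold = hot_threshold
        self.evict_threshold = evict_threshold
        self.hot = {}   # fingerprint -> block address
        self.cold = {}  # fingerprint -> block address

    @staticmethod
    def fingerprint(data):
        return hashlib.sha256(data).hexdigest()

    def place(self, data, address, probability):
        """Insert or move an entry according to its estimated dedup probability."""
        fp = self.fingerprint(data)
        self.hot.pop(fp, None)
        self.cold.pop(fp, None)
        if probability < self.evict_threshold:
            return "evicted"  # dropped from every table
        if probability >= self.hot_threshold:
            self.hot[fp] = address
            return "hot"
        self.cold[fp] = address
        return "cold"

    def lookup(self, data):
        """Search the hot table first, then the cold table."""
        fp = self.fingerprint(data)
        for table in (self.hot, self.cold):
            if fp in table:
                return table[fp]
        return None
```

Calling `place` again with a new probability is how an entry migrates between tables or is evicted in this sketch.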

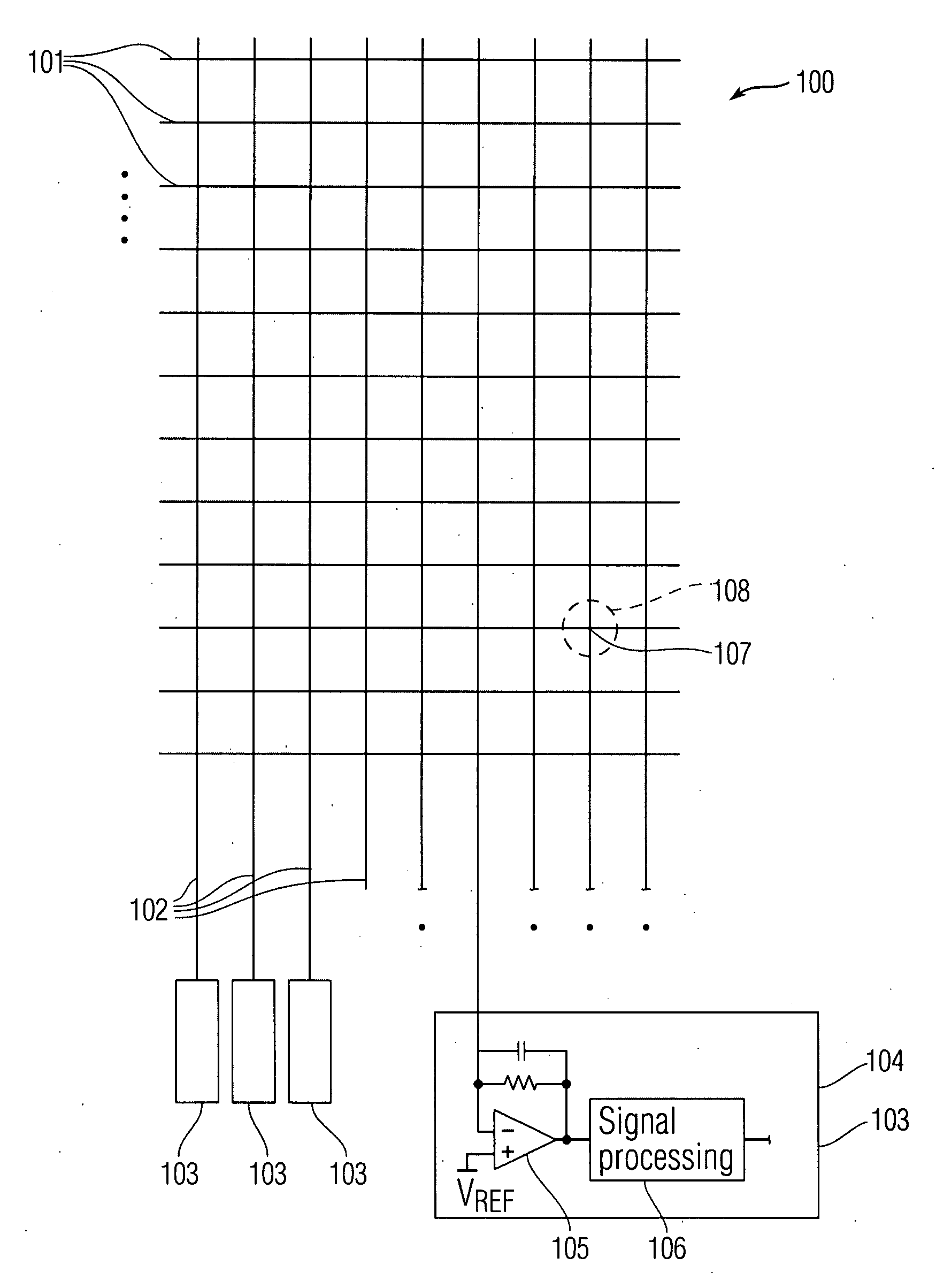

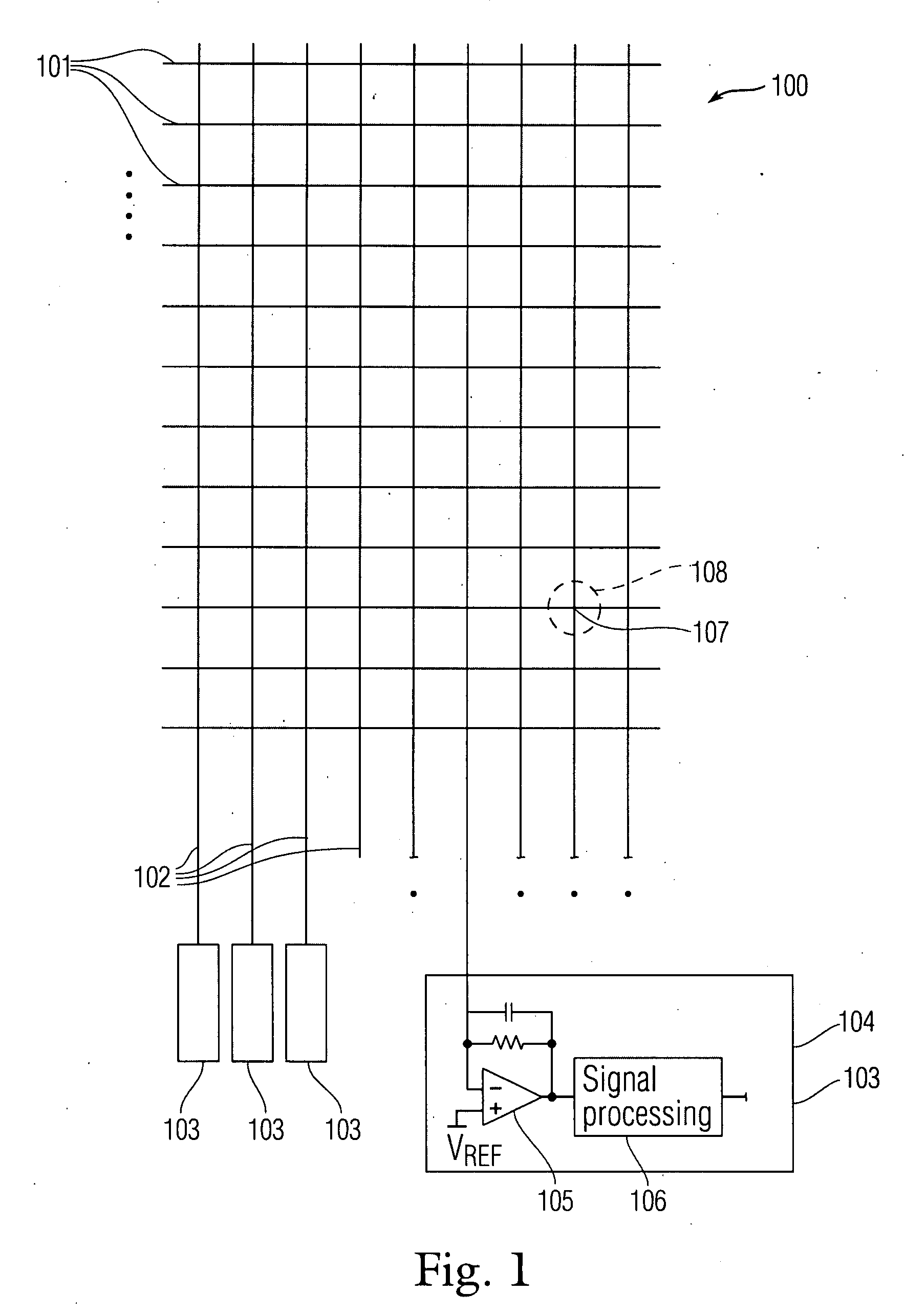

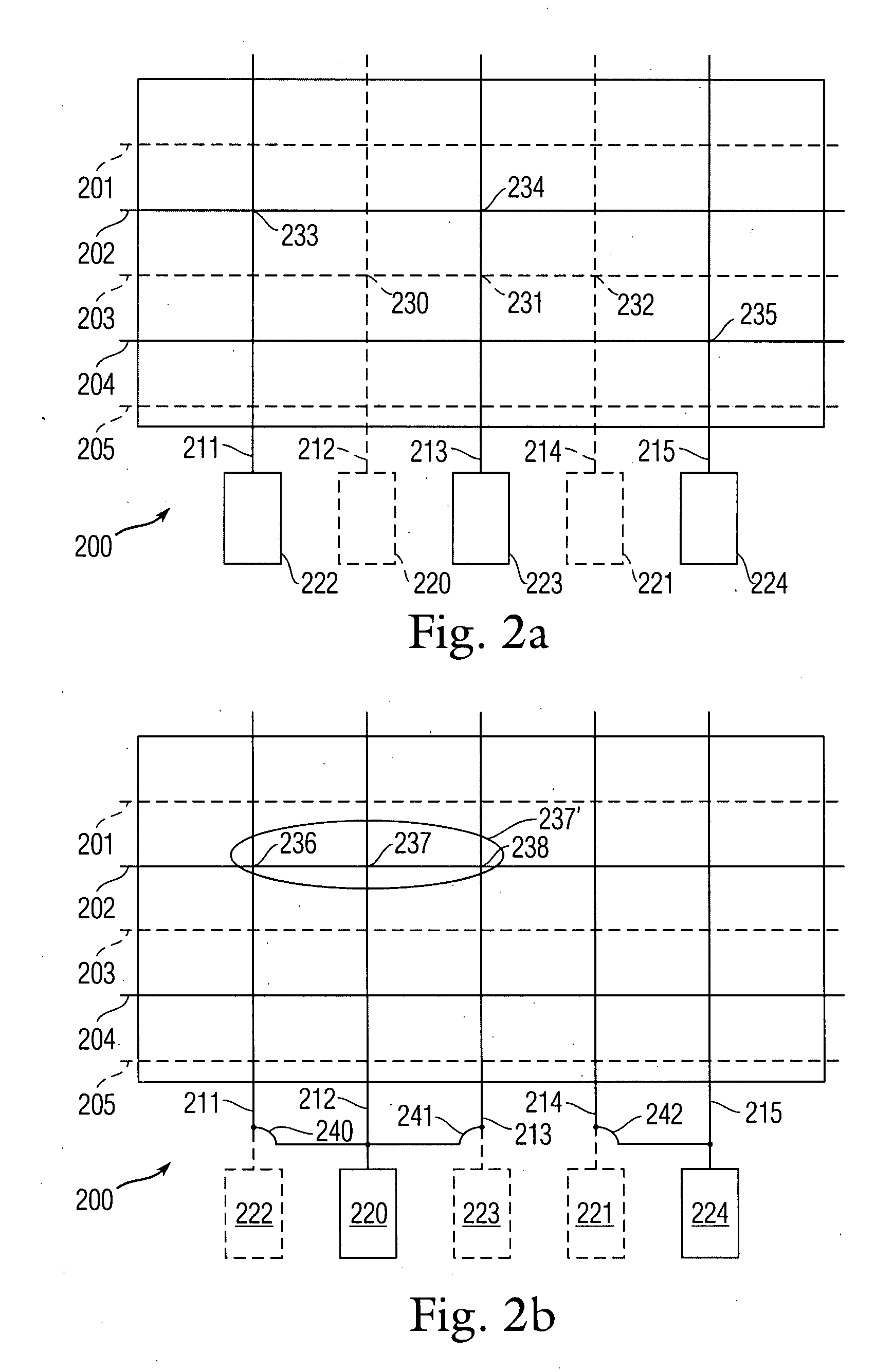

Integrated multi-touch surface having varying sensor granularity

Active | US20080309631A1 | Easy input | Fine granularity | Digital data processing details | Substation equipment | Granularity | Computer science

This relates to an event sensing device that includes an event sensing panel and is able to dynamically change the granularity of the panel according to present needs. Thus, the granularity of the panel can differ at different times of operation. Furthermore, the granularity of specific areas of the panel can also be dynamically changed, so that different areas feature different granularities at a given time. This also relates to panels that feature different inherent granularities in different portions thereof. These panels can be designed, for example, by placing more stimulus and/or data lines in different portions of the panel, thus ensuring different densities of pixels in the different portions. Optionally, these embodiments can also include the dynamic granularity changing features noted above.

Owner:APPLE INC

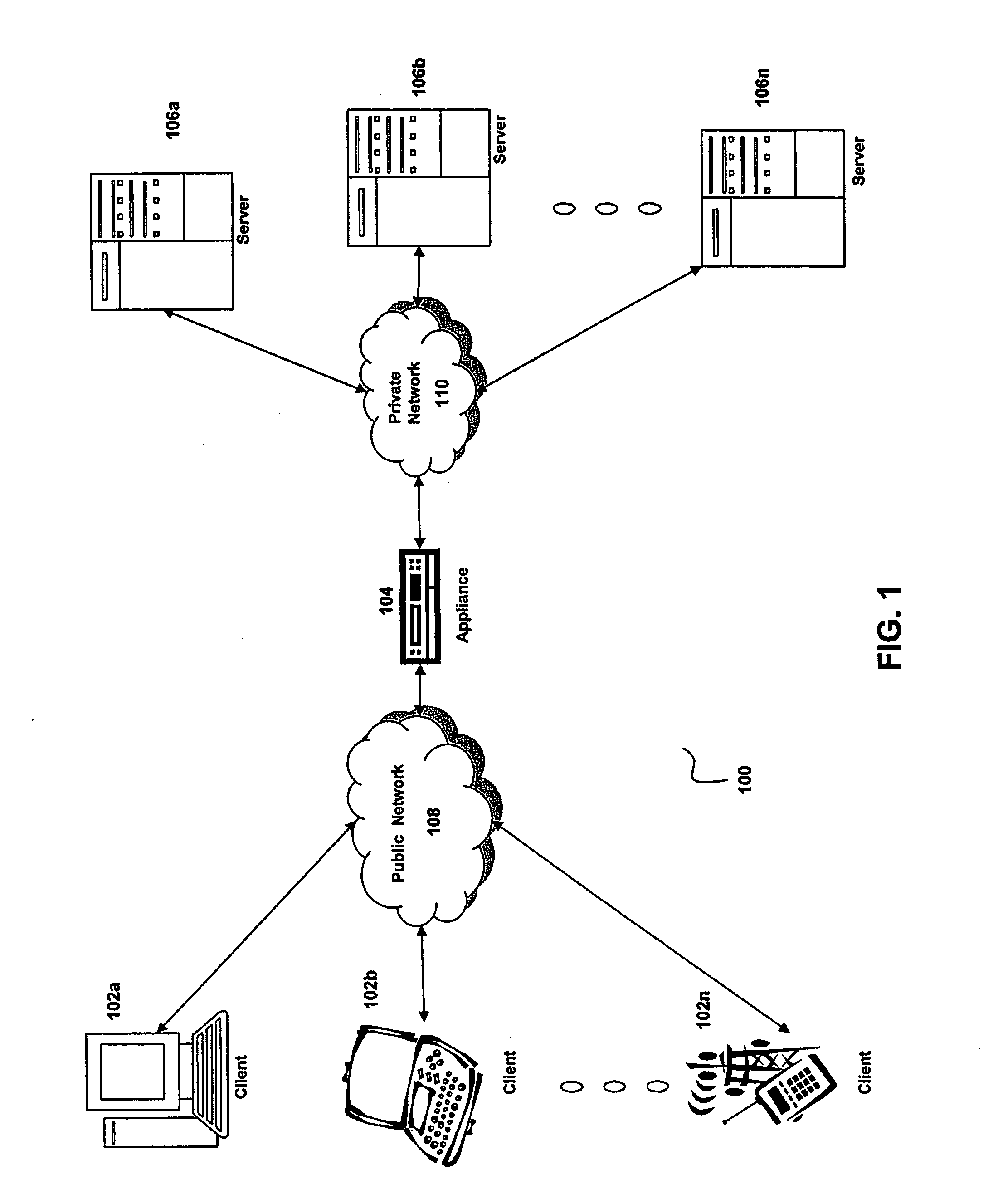

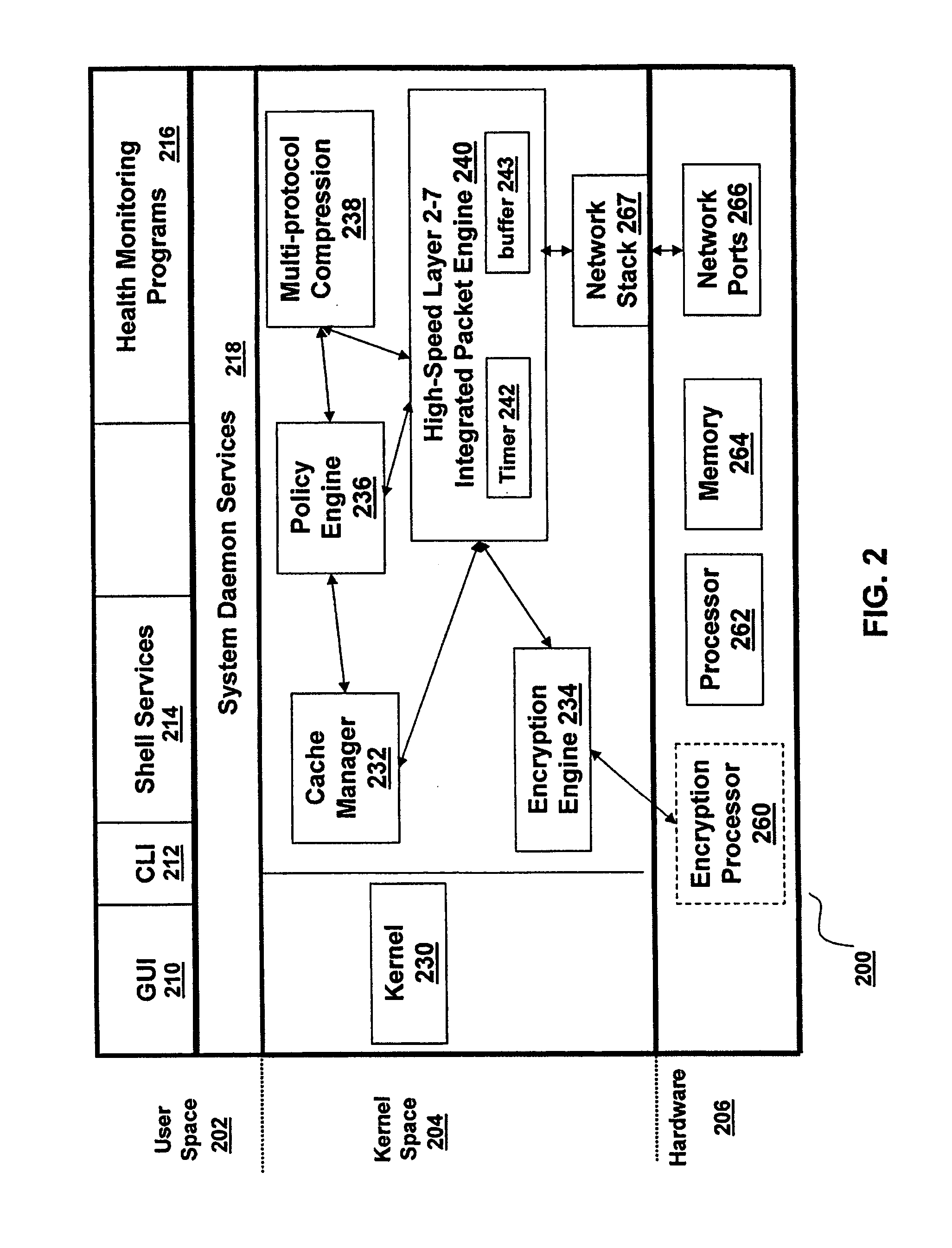

System and method for performing granular invalidation of cached dynamically generated objects in a data communication network

Active | US20070156966A1 | Great time interval | Memory architecture accessing/allocation | Memory systems | Expiration Time | Granularity

The present invention is directed towards a method and system for providing granular timed invalidation of dynamically generated objects stored in a cache. The techniques of the present invention incorporate the ability to configure the expiration time of objects stored by the cache to fine granular time intervals, such as the granularity of time intervals provided by a packet processing timer of a packet processing engine. As such, the present invention can cache objects with expiry times down to very small intervals of time. This characteristic is referred to as “invalidation granularity.” By providing this fine granularity in expiry time, the cache of the present invention can cache and serve objects that frequently change, sometimes even many times within a second. One technique is to leverage the packet processing timers used by the device of the present invention that are able to operate at time increments on the order of milliseconds to permit invalidation or expiry granularity down to 10 ms or less.

Owner:CITRIX SYST INC
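
To make "invalidation granularity" concrete, here is a small Python sketch of a cache whose entries expire after a time-to-live given in milliseconds. It is only an illustration: the `TimedCache` class and its injectable `clock` parameter are hypothetical, and the packet processing timer mentioned in the abstract is replaced by a plain monotonic clock checked on each read.

```python
import time


class TimedCache:
    """Cache whose entries expire after a per-entry TTL expressed in milliseconds."""

    def __init__(self, clock=time.monotonic):
        # The clock is injectable so expiry can be exercised without sleeping.
        self._clock = clock
        self._entries = {}  # key -> (value, expiry time in seconds)

    def put(self, key, value, ttl_ms):
        self._entries[key] = (value, self._clock() + ttl_ms / 1000.0)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Entry has reached its expiry time: invalidate it lazily on read.
            del self._entries[key]
            return None
        return value
```

With a TTL of 10 ms, an object that changes many times per second can still be served from the cache between changes, which is the use case the abstract describes.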

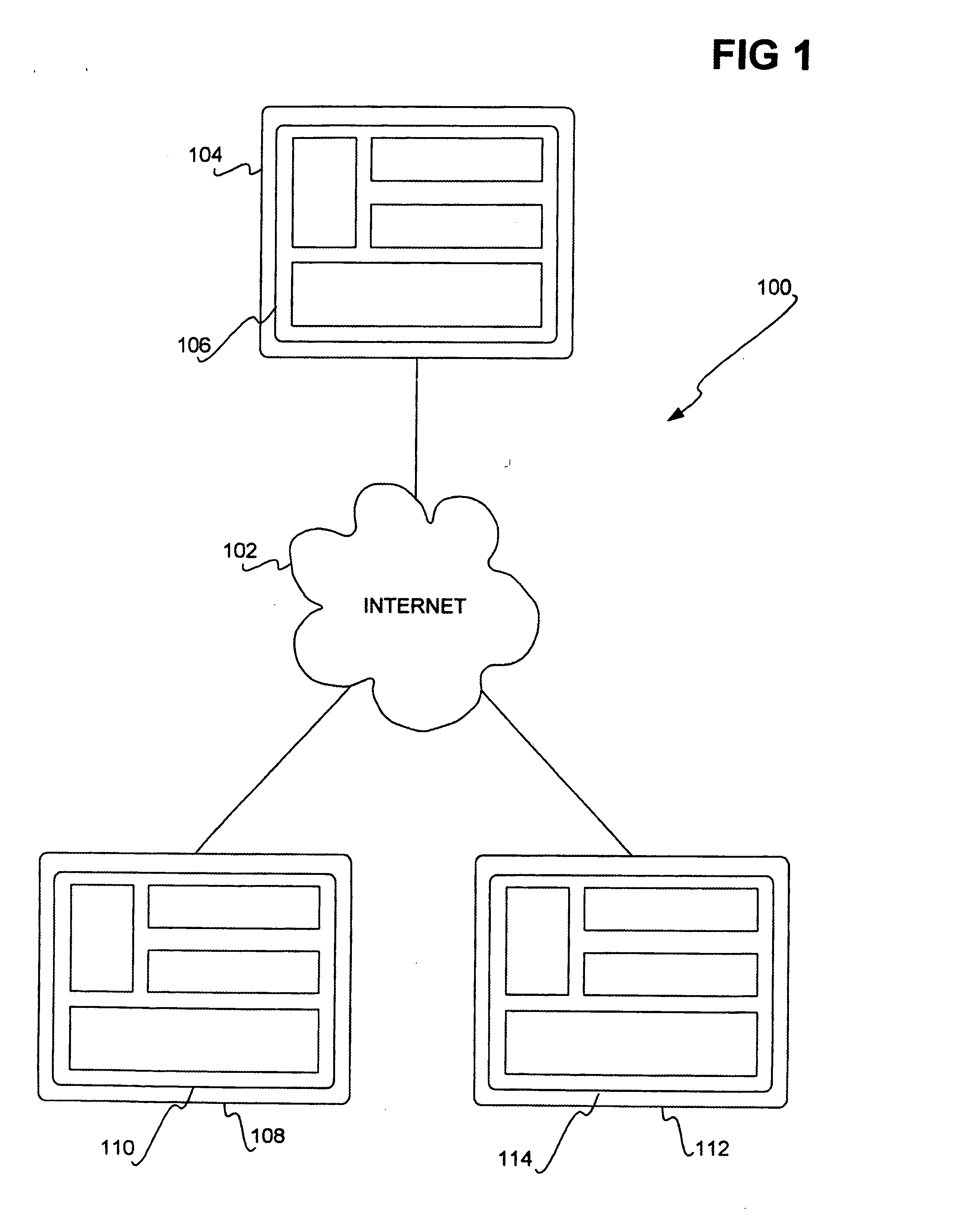

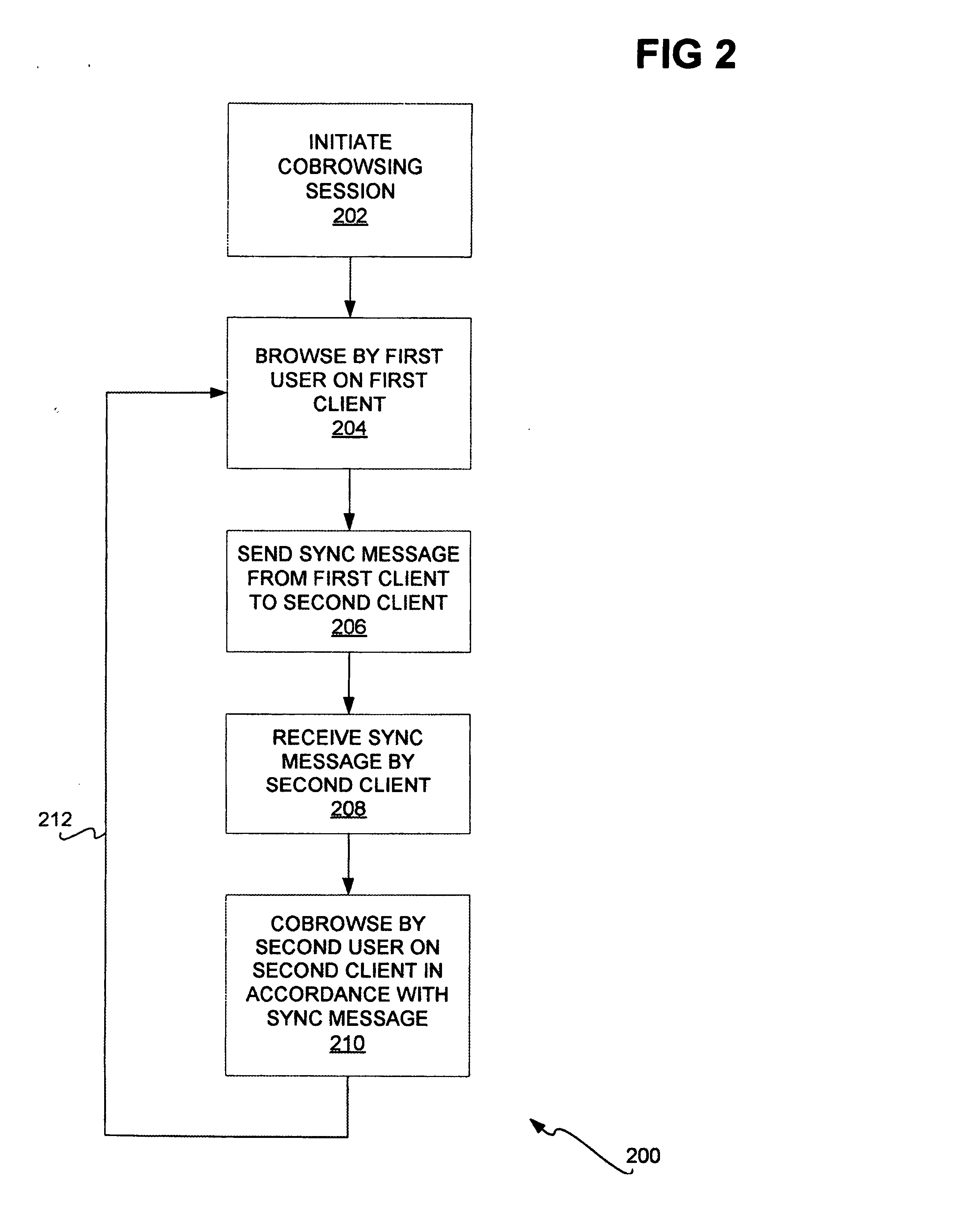

Web site cobrowsing

Inactive | US20050091572A1 | Synchronised browsing | Multiple digital computer combinations | Web site | Granularity

Cobrowsing web sites by two or more users is disclosed. For a cobrowsing session between a first client of a first user and a second client of a second user, the cobrowsing session is first initiated. The first user browses a web site on the first client. The first client sends to the second client a synchronization message. The synchronization message indicates one or more commands reflecting the browsing performed by the first user. The second client receives the synchronization message, and cobrowses the web site in accordance with the message and its included commands. Cobrowsing continues until the cobrowsing session is terminated. The commands of the synchronization message allow for fine granularity of cobrowsing.

Owner:MICROSOFT TECH LICENSING LLC

Method for removing duplicate data from a storage array

Active | US20130086006A1 | Digital data processing details | Special data processing applications | Granularity | Computer science

A system and method for efficiently removing duplicate data blocks at a fine granularity from a storage array. A data storage subsystem supports multiple deduplication tables. Table entries in one deduplication table have the highest associated probability of being deduplicated. Table entries may move from one deduplication table to another as the probabilities change. Additionally, a table entry may be evicted from all deduplication tables if a corresponding estimated probability falls below a given threshold. The probabilities are based on attributes associated with a data component and attributes associated with a virtual address corresponding to a received storage access request. A strategy for searches of the multiple deduplication tables may also be determined by the attributes associated with a given storage access request.

Owner:PURE STORAGE

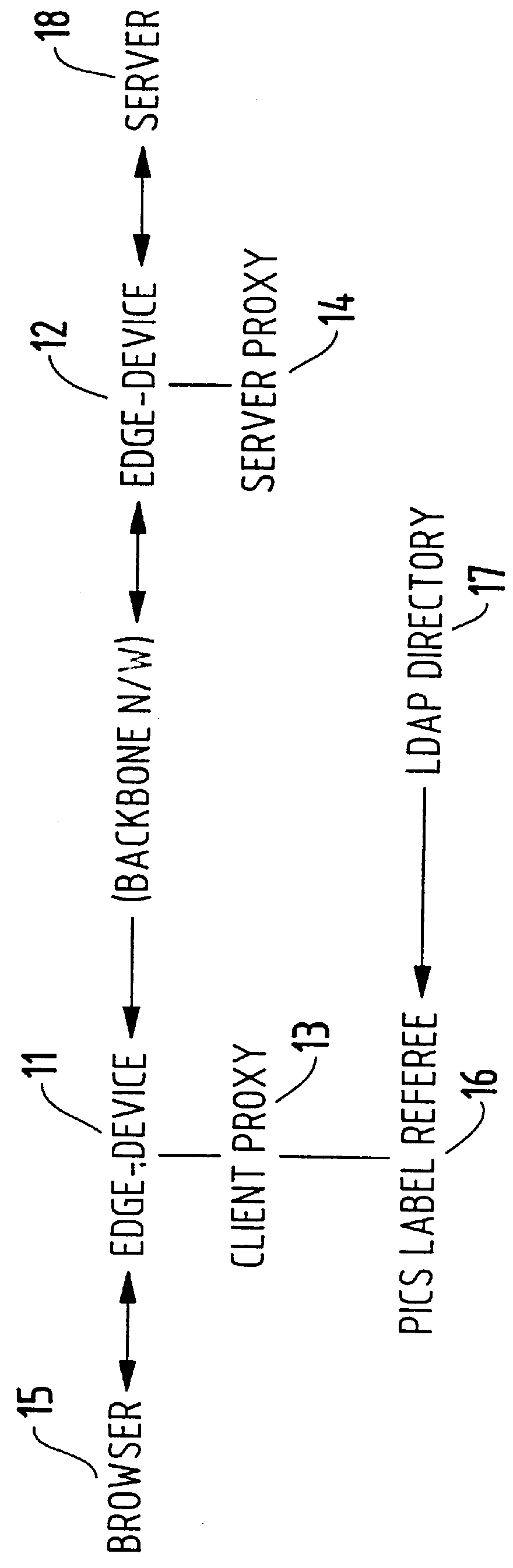

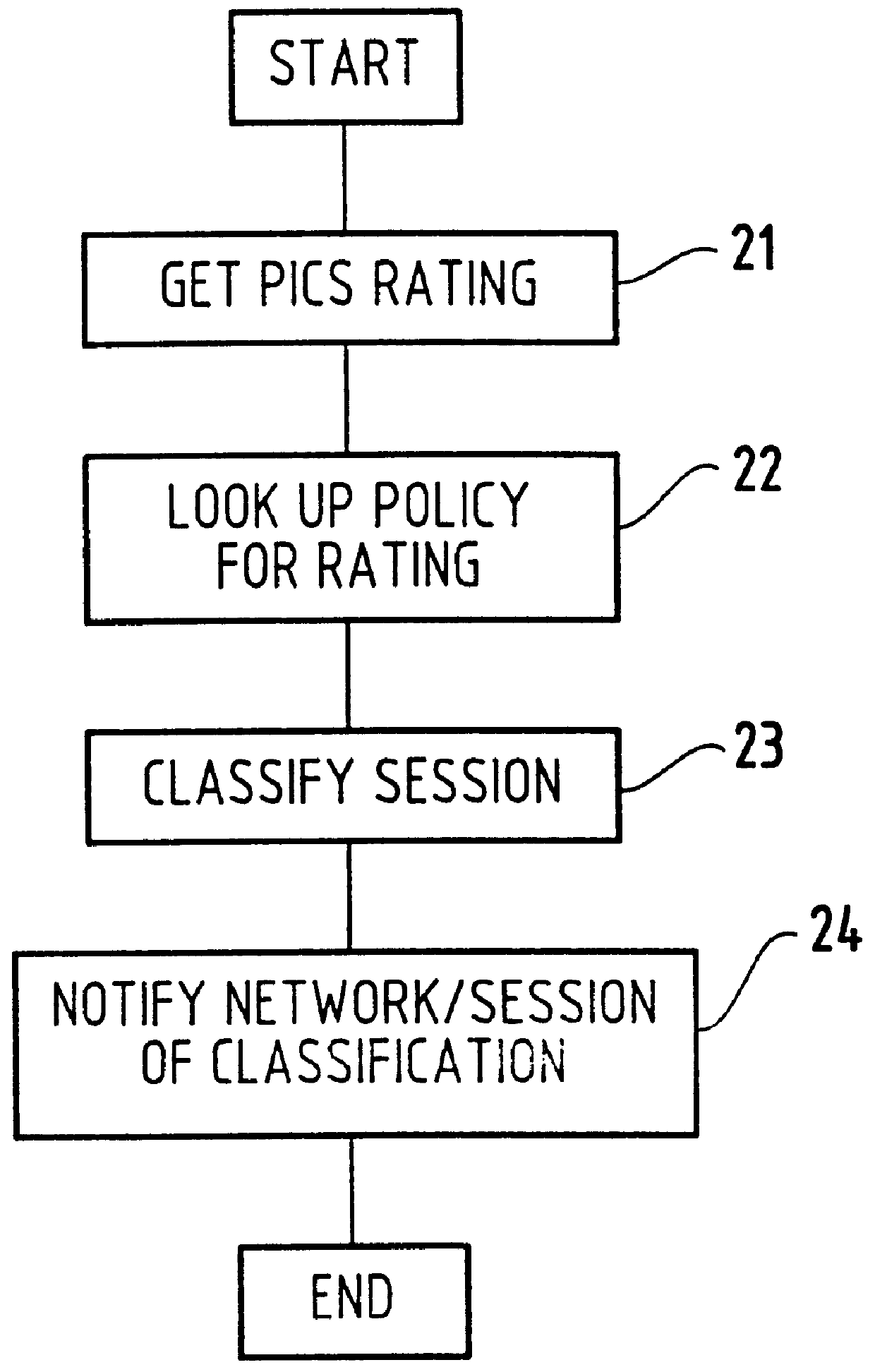

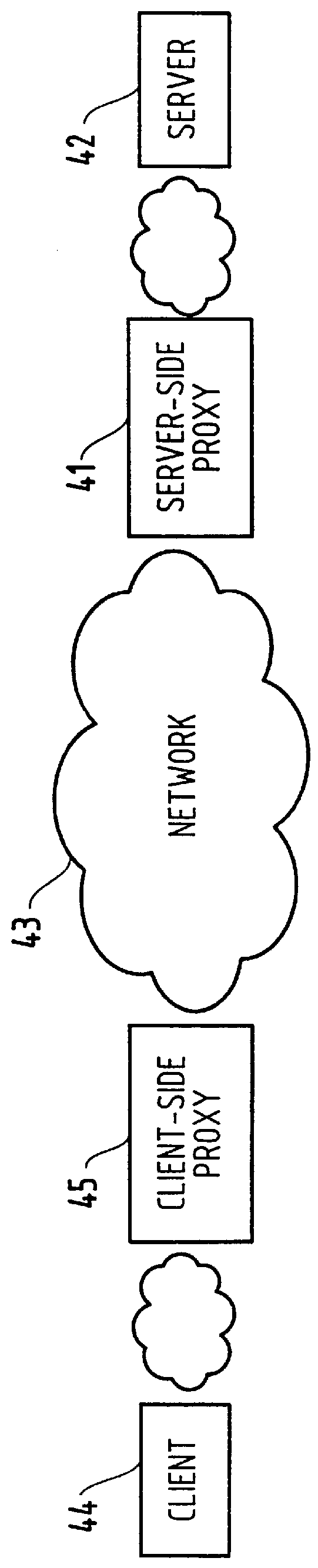

Method for supporting different service levels in a network using web page content information

A method for classifying different pages accessed by a web-browser into different service-levels on a granularity finer than that of the connection. The method augments each edge device with two applications, a Client-Proxy and a Server-Proxy. The Client-Proxy obtains identifying information from the client's request, and then obtains PICS labelling information from a label referee. This information is used to obtain a service level from an LDAP based SLA directory, and this service level information is then embedded along with a unique identifier for the network operator organization in the HTTP header request which is transferred to the Server-Proxy. The Server-Proxy then strips the header containing the PICS information from the request and forwards the request to the server. When the Server-Proxy gets a response, it uses the PICS information to mark the packets.

Owner:IBM CORP

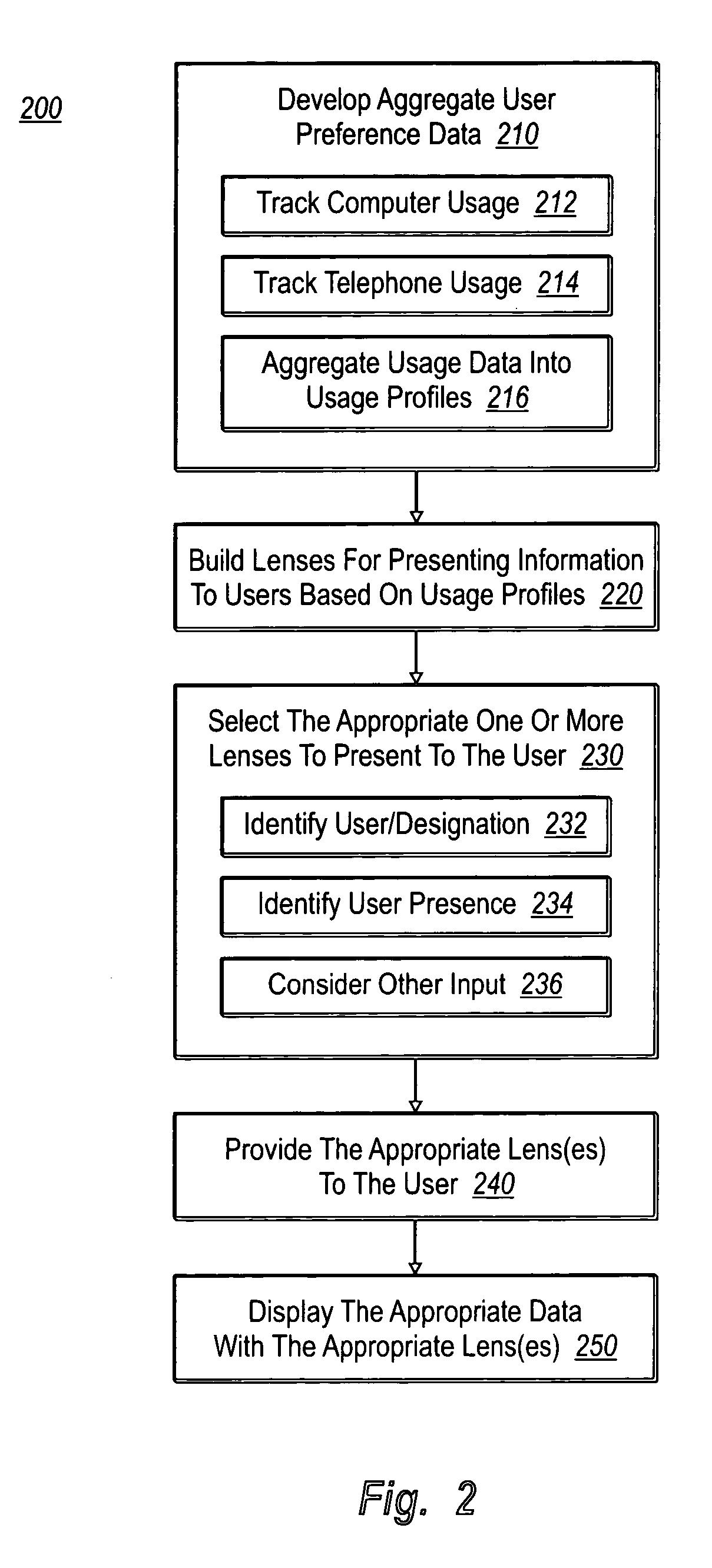

Mobile information services

Inactive | US20060019716A1 | Increase speed | Improve usability | Digital data information retrieval | Special service for subscribers | Relevant information | Granularity

Mobile communications devices display contextually relevant information based on the presence, status, and identification of a user. Lens templates control how the information is displayed and can be customized and designed for specific usage profiles. The lenses that are used can be updated at any time to accommodate changes in a user's presence. The granularity of the lenses and corresponding information can also vary to accommodate different needs and preferences. Lenses can also be specialized for different events or venues. The lenses allow a user to access contextually relevant information from a mobile communications device having limited display and/or browse capabilities without requiring a user to navigate through undesired information, wasting valuable resources in the process.

Owner:MICROSOFT TECH LICENSING LLC

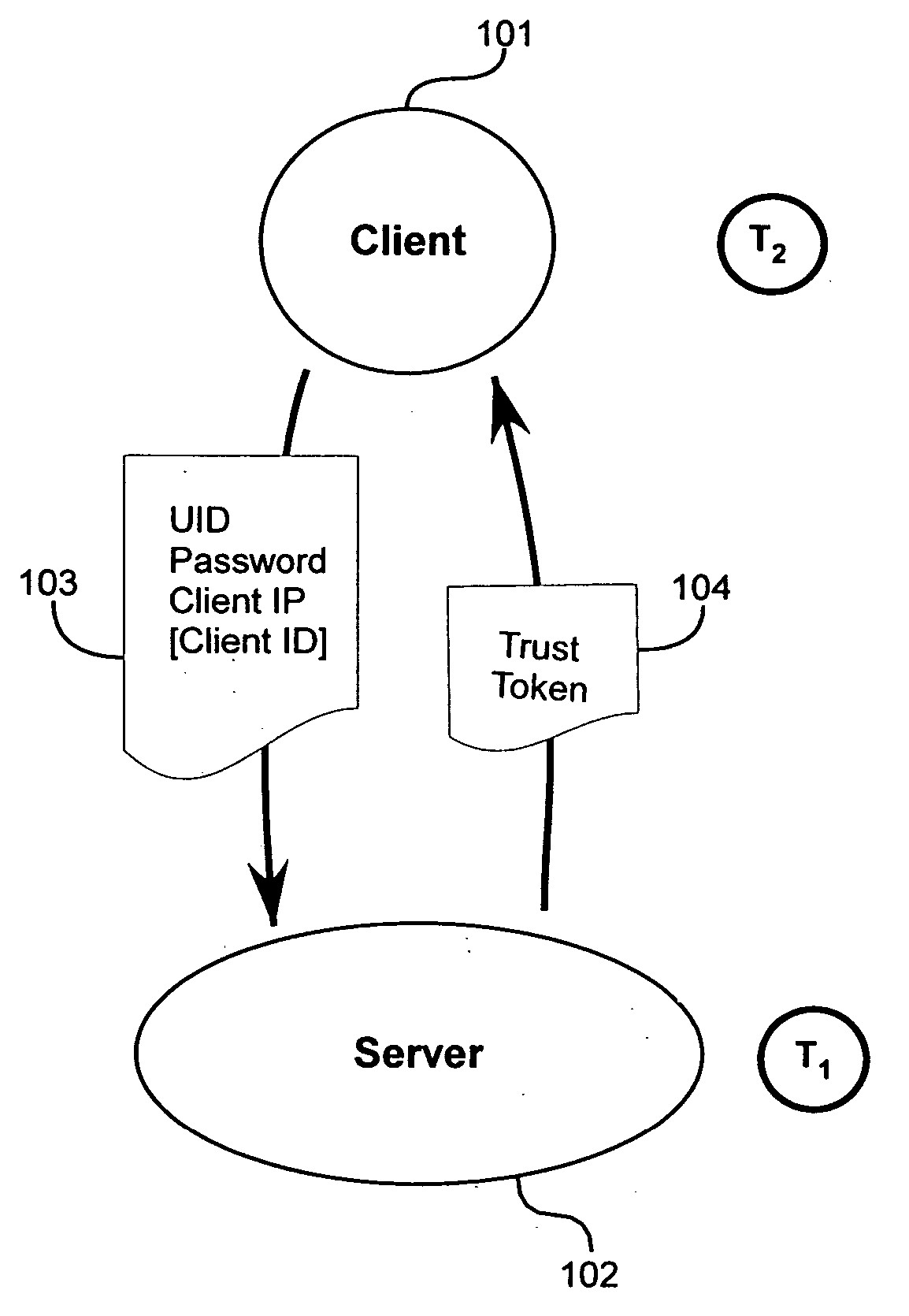

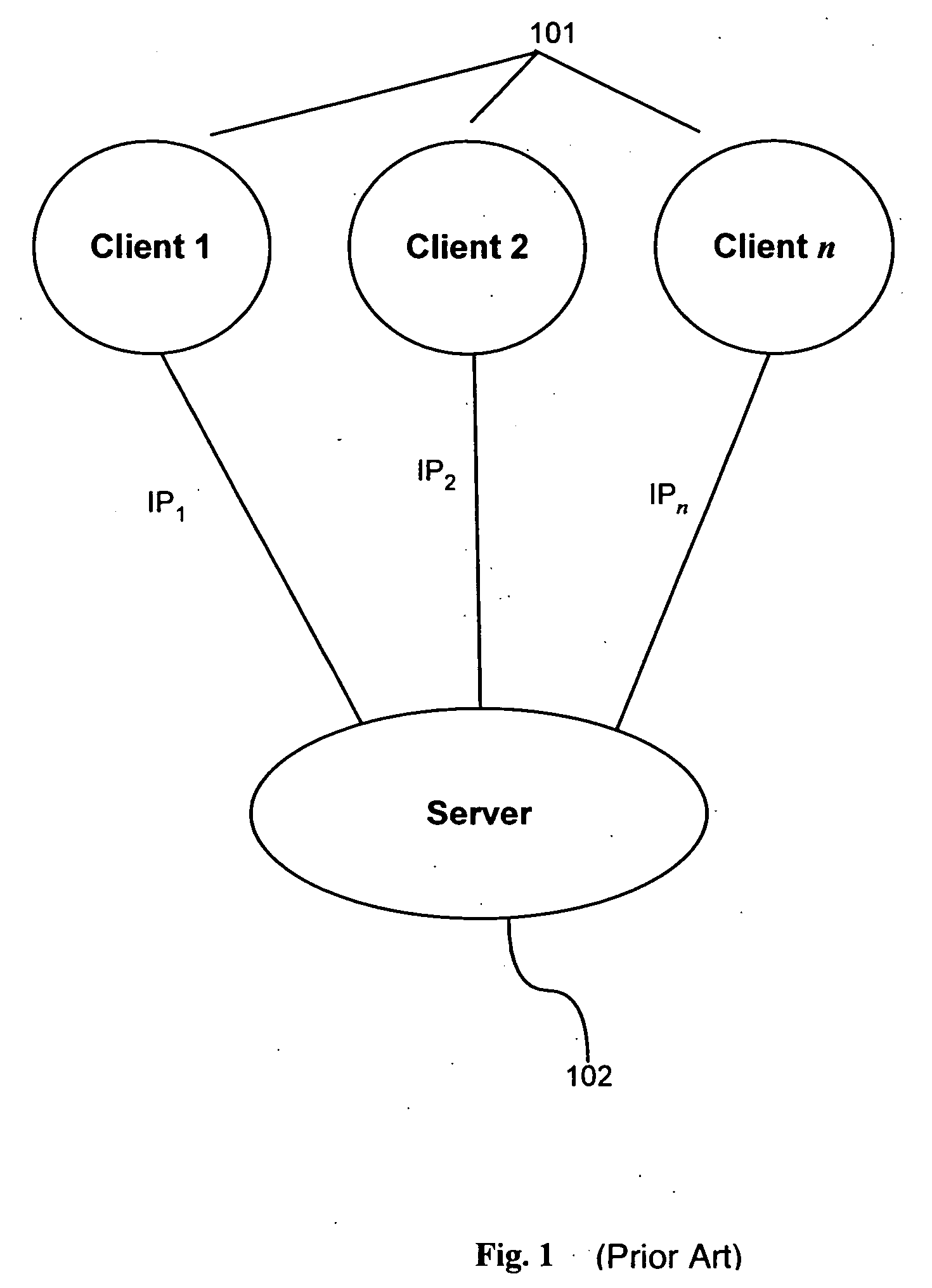

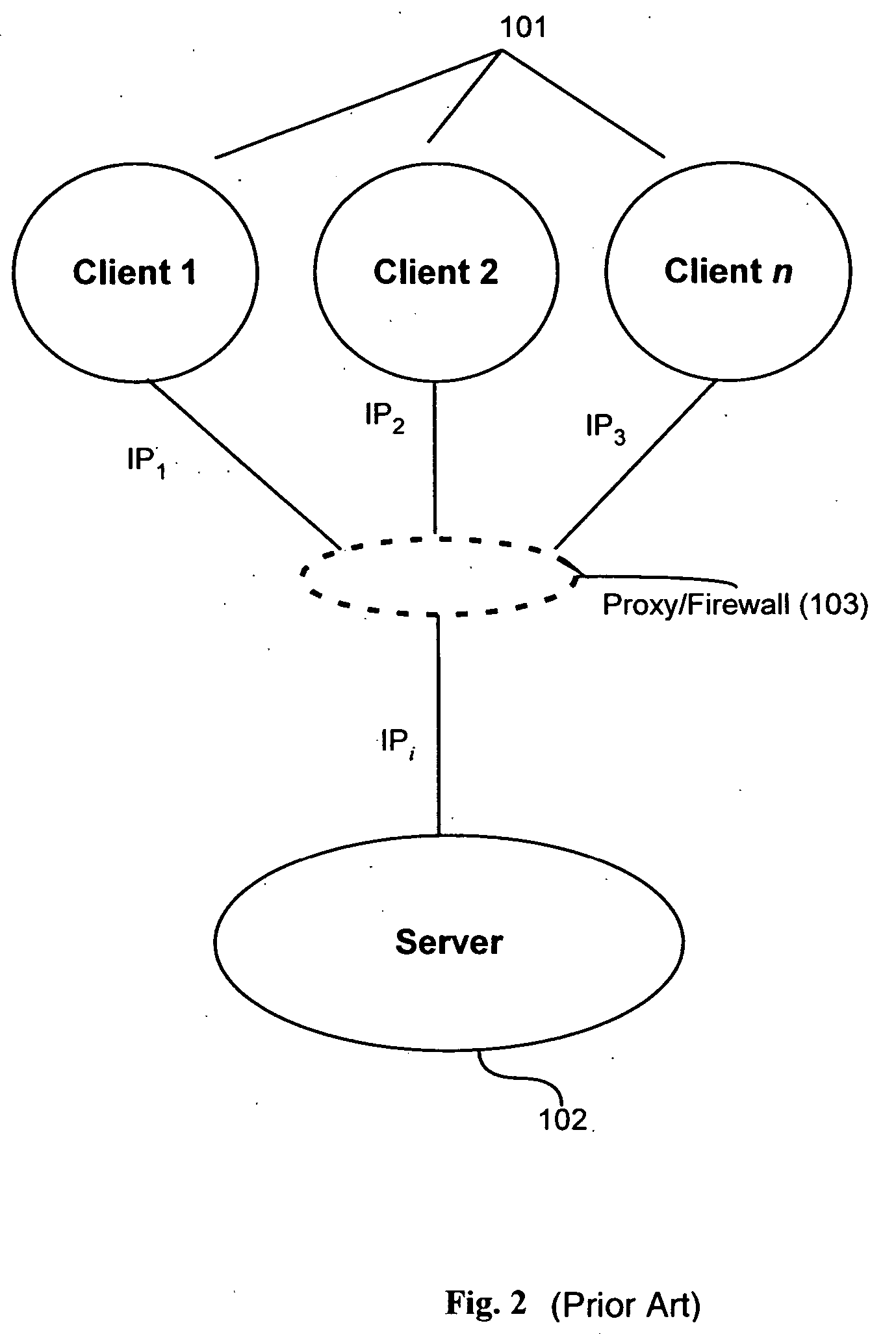

Method and apparatus for trust-based, fine-grained rate limiting of network requests

Active | US20050108551A1 | Digital data processing details | User identity/authority verification | Rate limiting | Internet traffic

A method and apparatus for fine-grained, trust-based rate limiting of network requests distinguishes trusted network traffic from untrusted network traffic at the granularity of an individual user/machine combination, so that network traffic policing measures are readily implemented against untrusted and potentially hostile traffic without compromising service to trusted users. A server establishes a user/client pair as trusted by issuing a trust token to the client when successfully authenticating to the server for the first time. Subsequently, the client provides the trust token at login. At the server, rate policies apportion bandwidth according to type of traffic: network requests that include a valid trust token are granted highest priority. Rate policies further specify bandwidth restrictions imposed for untrusted network traffic. This scheme enables the server to throttle untrusted password-guessing requests from crackers without penalizing most friendly logins and only slightly penalizing the relatively few untrusted friendly logins.

Owner:META PLATFORMS INC
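
The trust-token scheme in the abstract above can be approximated with a short Python sketch. It is a simplification under stated assumptions: the token is an HMAC over a user and client identifier (the patent does not say how tokens are built), `SERVER_SECRET` and all names are hypothetical, and bandwidth apportioning is reduced to a fixed per-window request budget for untrusted logins.

```python
import hashlib
import hmac

SERVER_SECRET = b"demo-secret"  # hypothetical key; a real server would keep this private


def issue_trust_token(user, client_id):
    """Token bound to one user/client pair, issued after a first successful login."""
    message = f"{user}|{client_id}".encode()
    return hmac.new(SERVER_SECRET, message, hashlib.sha256).hexdigest()


def is_trusted(user, client_id, token):
    if token is None:
        return False
    expected = issue_trust_token(user, client_id)
    return hmac.compare_digest(token.encode(), expected.encode())


class LoginRateLimiter:
    """Trusted requests always pass; untrusted ones share a small per-window budget."""

    def __init__(self, untrusted_budget):
        self.untrusted_budget = untrusted_budget
        self.untrusted_used = 0

    def allow(self, user, client_id, token=None):
        if is_trusted(user, client_id, token):
            return True
        if self.untrusted_used < self.untrusted_budget:
            self.untrusted_used += 1
            return True
        return False

    def start_new_window(self):
        # Called by the server at the start of each rate-limiting window.
        self.untrusted_used = 0
```

Because the token is checked per user/client pair, a token copied to a different machine falls back into the untrusted budget in this sketch.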

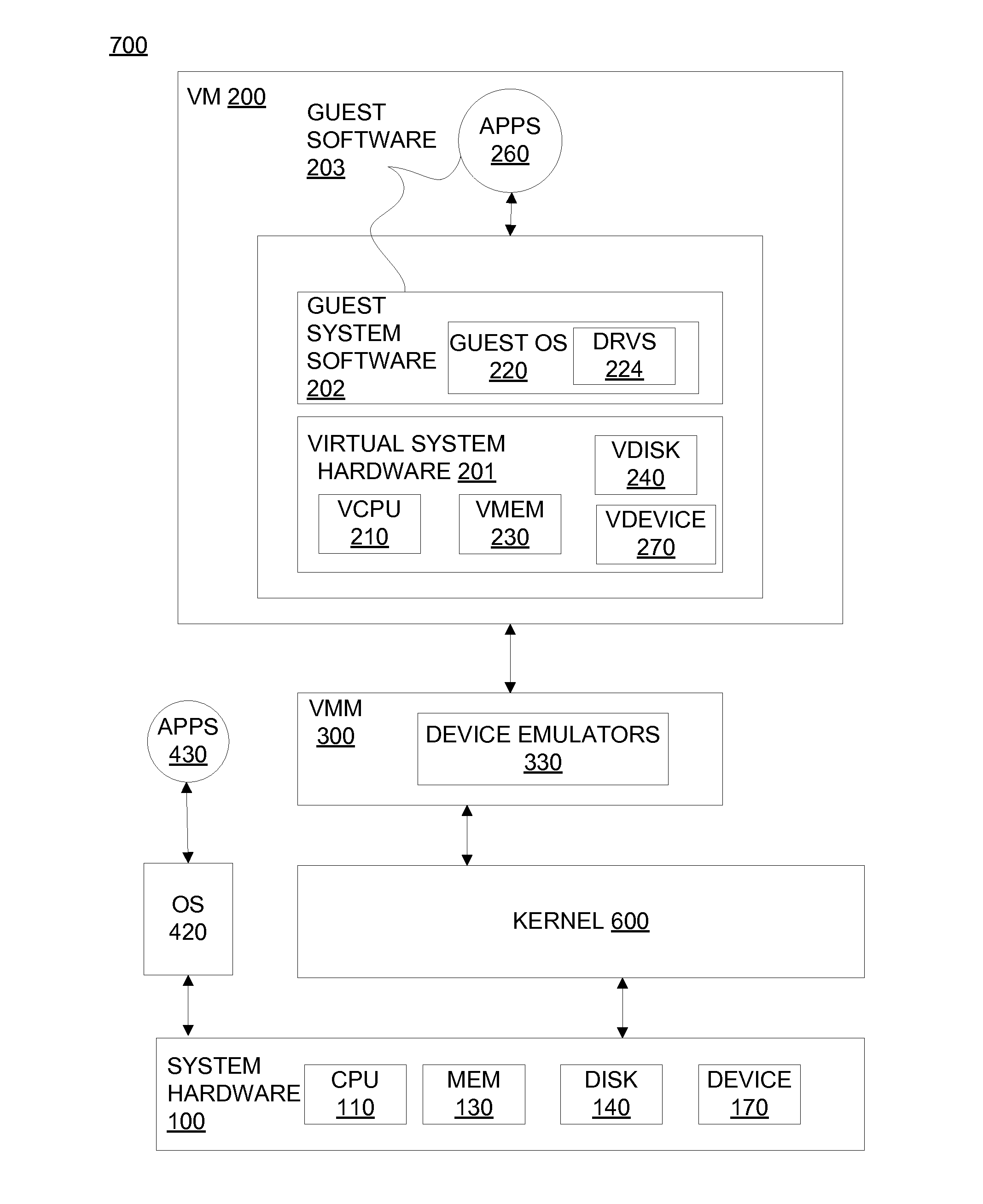

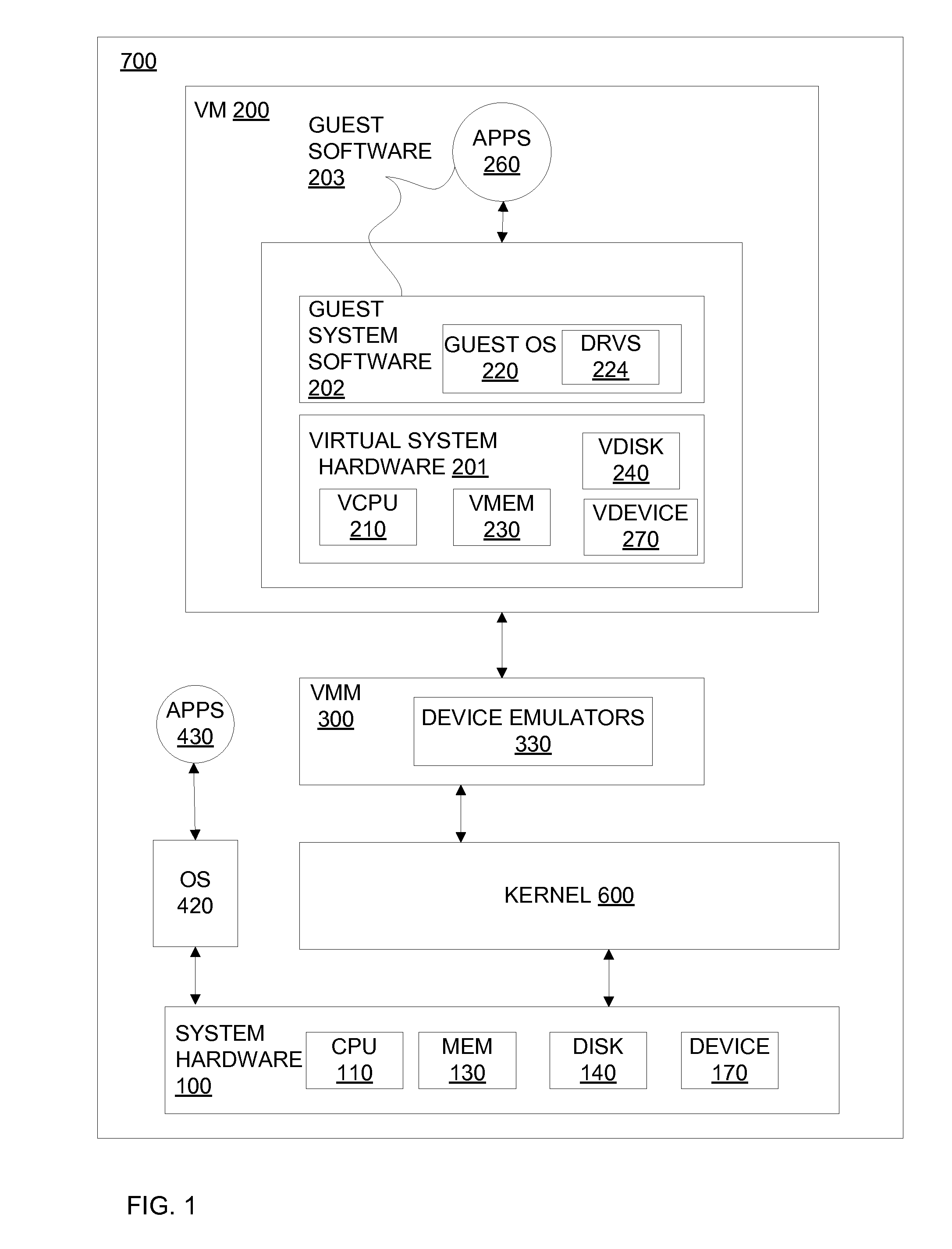

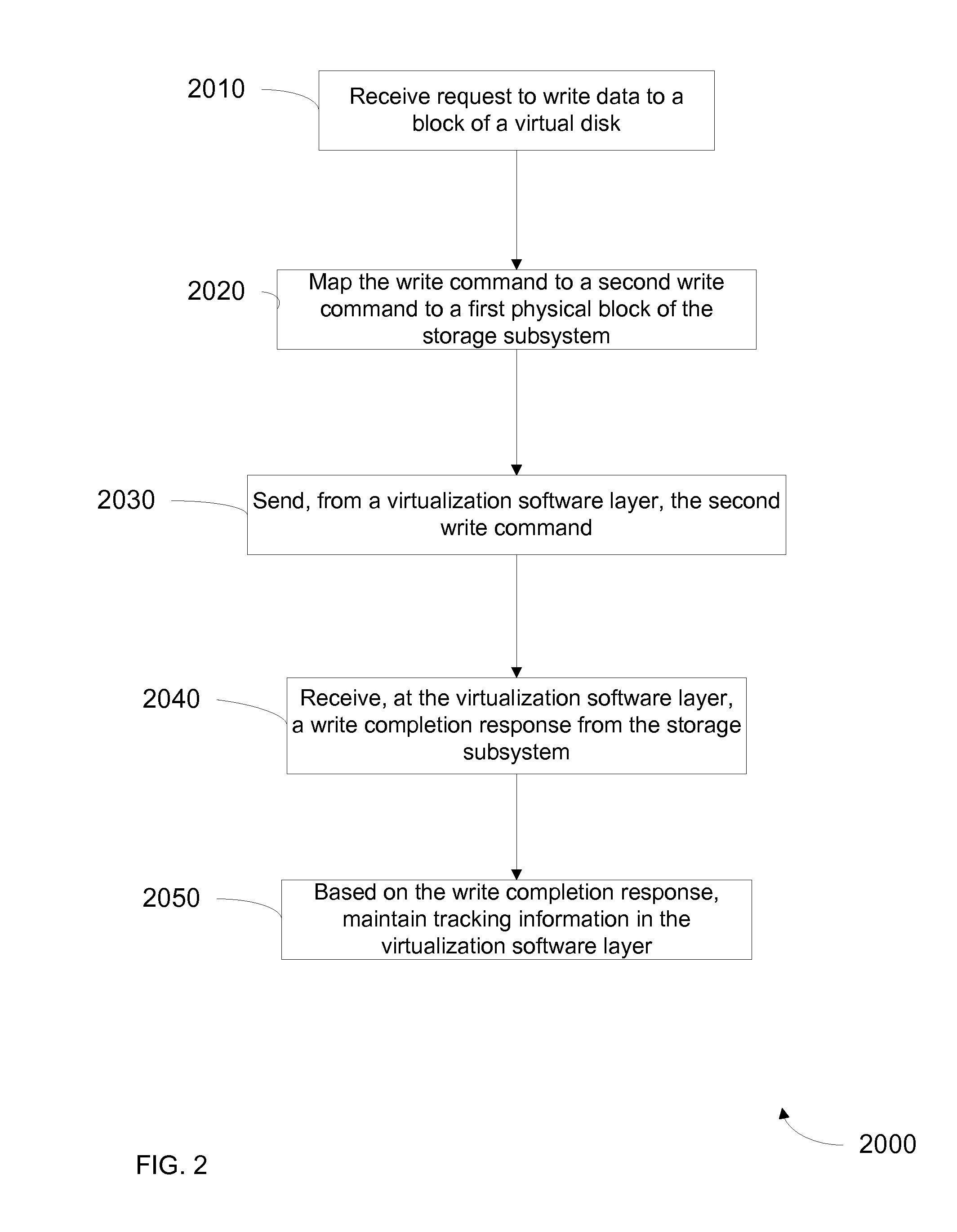

Method for tracking changes in virtual disks

Active | US20100228913A1 | Reduce the impact | More efficient | Memory loss protection | Error detection/correction | Virtualization | Granularity

Systems and methods for tracking changes and performing backups to a storage device are provided. For virtual disks of a virtual machine, changes are tracked from outside the virtual machine in the kernel of a virtualization layer. The changes can be tracked in a lightweight fashion with a bitmap, with a finer granularity stored and tracked at intermittent intervals in persistent storage. Multiple backup applications can be allowed to accurately and efficiently back up a storage device. Each backup application can determine which block of the storage device has been updated since the last backup of a respective application. This change log is efficiently stored as a counter value for each block, where the counter is incremented when a backup is performed. The change log can be maintained with little impact on I/O by using a coarse bitmap to update the finer grained change log.

Owner:VMWARE INC
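
One way to read the per-block counter described in the abstract above is as an epoch stamp, sketched here in Python. This is an interpretation, not the patented design: the `ChangeTracker` class is hypothetical, the coarse in-memory bitmap is omitted, and a first backup by any application is treated as a full backup.

```python
class ChangeTracker:
    """Per-block epoch stamps that let several backup applications find changed blocks."""

    def __init__(self, num_blocks):
        self.epoch = 1                        # incremented each time a backup runs
        self.block_epoch = [0] * num_blocks   # epoch in which each block was last written
        self.last_backup_epoch = {}           # app name -> epoch in effect after its last backup

    def write(self, block):
        self.block_epoch[block] = self.epoch

    def backup(self, app):
        """Return the blocks this app must copy, then advance the epoch."""
        since = self.last_backup_epoch.get(app)
        if since is None:
            # Assumption: an app's first backup copies every block.
            changed = list(range(len(self.block_epoch)))
        else:
            changed = [b for b, e in enumerate(self.block_epoch) if e >= since]
        self.epoch += 1
        self.last_backup_epoch[app] = self.epoch
        return changed
```

Each application only stores one number, so adding another backup application does not require a separate change log per application.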

© 2025 PatSnap. All rights reserved.