91 patent results for "Affective computing"

Affective computing (sometimes called artificial emotional intelligence, or emotion AI) is the study and development of systems and devices that can recognize, interpret, process, and simulate human affects. It is an interdisciplinary field spanning computer science, psychology, and cognitive science. While the origins of the field can be traced as far back as early philosophical inquiries into emotion, the more modern branch of computer science originated with Rosalind Picard's 1995 paper on affective computing. One motivation for the research is the ability to simulate empathy: the machine should interpret the emotional state of humans and adapt its behavior to them, giving an appropriate response to those emotions.

Text orientation analysis method and product review orientation discriminator based on the same

Inactive | CN103455562A | Improve accuracy; Reduce uncertainty; Special data processing applications; Marketing; Discriminator; Pattern recognition

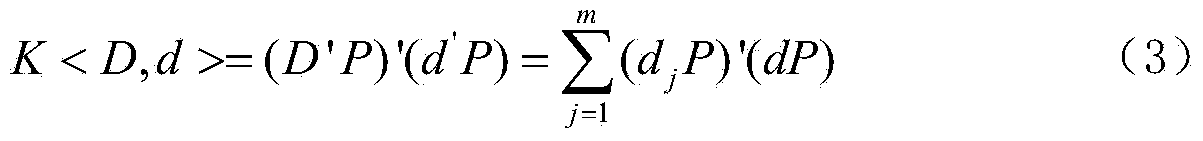

The invention discloses a text orientation analysis method comprising the following steps: preprocessing a review text; identifying the dependency structure of the Chinese syntax; calculating the polarity values of sentiment words; extracting (evaluated object, evaluation word) pairs and determining the subordinate relations between evaluated objects; weighting and summing the orientation values of the sentiment words to obtain the orientation value of a sentence, thereby discriminating orientation at the sentence level; discriminating the evaluative orientation of the review from the positive and negative sentence-level polarity values; and discriminating the intensity of the evaluative sentiment in the review from the absolute value of the polarity. A product review orientation discriminator comprises an acquisition module, a preprocessing module, a syntactic analysis module, a sentiment calculation engine, a two-tuple mining engine, a content controller and a sentiment discriminator. The invention uses a combined sentiment dictionary and adds a domain ontology to text orientation analysis, which improves the accuracy of sentiment word polarity calculation and of (evaluated object, evaluation word) pair extraction, and enables orientation analysis of product reviews in forums.

Owner: XI'AN UNIVERSITY OF ARCHITECTURE AND TECHNOLOGY
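
The sentence-level step above (weighting and summing the orientation values of sentiment words, then reading polarity from the sign and intensity from the absolute value) can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the lexicon `LEXICON`, the per-word `weights`, and the functions `sentence_orientation` and `review_orientation` are hypothetical, and the patent's dependency parsing, combined dictionary and domain ontology are not modeled.

```python
from dataclasses import dataclass

# Hypothetical sentiment lexicon: word -> orientation value in [-1, 1].
LEXICON = {"good": 0.8, "fast": 0.5, "noisy": -0.6, "broken": -0.9}


@dataclass
class Orientation:
    score: float      # signed orientation value
    polarity: str     # sign of the score: "positive", "negative" or "neutral"
    intensity: float  # absolute value of the score


def classify(score):
    """Polarity comes from the sign of the score, intensity from its absolute value."""
    if score > 0:
        polarity = "positive"
    elif score < 0:
        polarity = "negative"
    else:
        polarity = "neutral"
    return Orientation(score, polarity, abs(score))


def sentence_orientation(tokens, weights=None):
    """Weighted sum of the orientation values of the sentiment words in one sentence.

    `weights` maps a token to a hypothetical importance weight (default 1.0); it
    stands in for whatever weighting the patent derives from the syntax.
    """
    weights = weights or {}
    score = sum(LEXICON[t] * weights.get(t, 1.0) for t in tokens if t in LEXICON)
    return classify(score)


def review_orientation(sentences):
    """Review-level orientation: the sum of the sentence-level scores."""
    return classify(sum(sentence_orientation(s).score for s in sentences))
```

Because polarity and intensity both come from one signed score, a review whose sentences disagree ends up with a small absolute value, i.e. a weakly held overall orientation.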

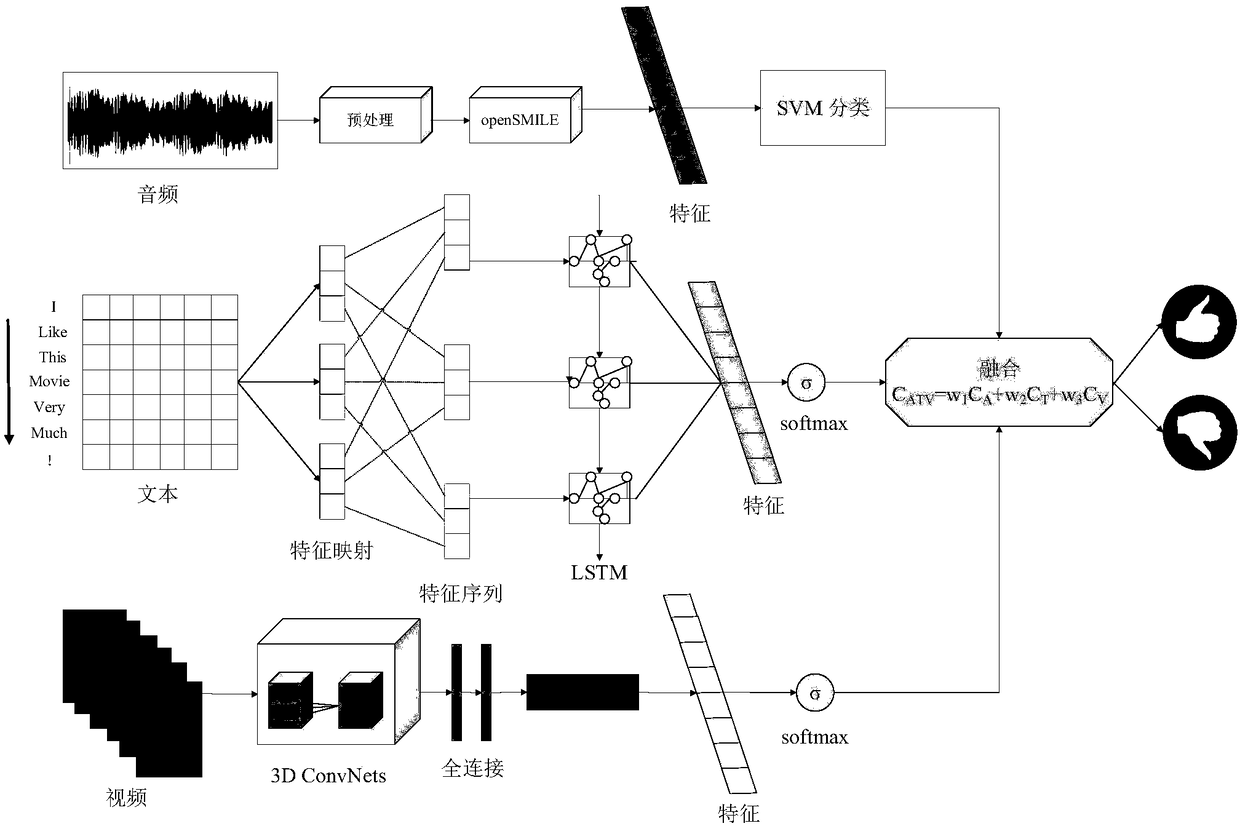

A socio-emotional classification method based on multimodal fusion

Inactive | CN109508375A | Efficient extraction; Improve accuracy; Semantic analysis; Neural architectures; Short-term memory; Classification methods

The invention provides a social emotion classification method based on multimodal fusion, covering information in audio, visual and text form. Most affective computing research extracts affective information by analyzing a single modality, ignoring the relationships between information sources. For video information, the invention proposes a 3D CNN ConvLSTM model that builds spatio-temporal representations for emotion recognition by cascading a three-dimensional convolutional neural network (C3D) with a convolutional long short-term memory recurrent neural network (ConvLSTM). For text, a CNN-RNN hybrid model is used to classify emotion. Vision, audio and text are fused heterogeneously at the decision level. The deep spatio-temporal features learned by the invention effectively model visual appearance and motion information, and fusing them with the text and audio information effectively improves the accuracy of emotion analysis.

Owner: CHONGQING UNIV OF POSTS & TELECOMM
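
The abstract states only that vision, audio and text are fused at the decision level. A common late-fusion rule, assumed here rather than taken from the patent, is a weighted average of each modality's class probabilities. The label set `EMOTIONS` and the function `decision_level_fusion` are illustrative names, not part of the patent.

```python
# Hypothetical label set shared by all modality classifiers.
EMOTIONS = ("negative", "neutral", "positive")


def decision_level_fusion(modality_probs, modality_weights=None):
    """Late (decision-level) fusion by a weighted average of class probabilities.

    modality_probs: modality name -> probabilities ordered like EMOTIONS.
    modality_weights: modality name -> non-negative weight (default: equal weights).
    Returns the winning label and the fused probability vector.
    """
    if modality_weights is None:
        modality_weights = {name: 1.0 for name in modality_probs}
    total_weight = sum(modality_weights[name] for name in modality_probs)
    fused = [0.0] * len(EMOTIONS)
    for name, probs in modality_probs.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{name}: expected {len(EMOTIONS)} probabilities")
        share = modality_weights[name] / total_weight
        for i, p in enumerate(probs):
            fused[i] += share * p
    best = max(range(len(EMOTIONS)), key=lambda i: fused[i])
    return EMOTIONS[best], fused
```

Raising one modality's weight, for example `{"visual": 1.0, "audio": 4.0, "text": 1.0}`, lets a classifier believed to be more reliable dominate the fused decision.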

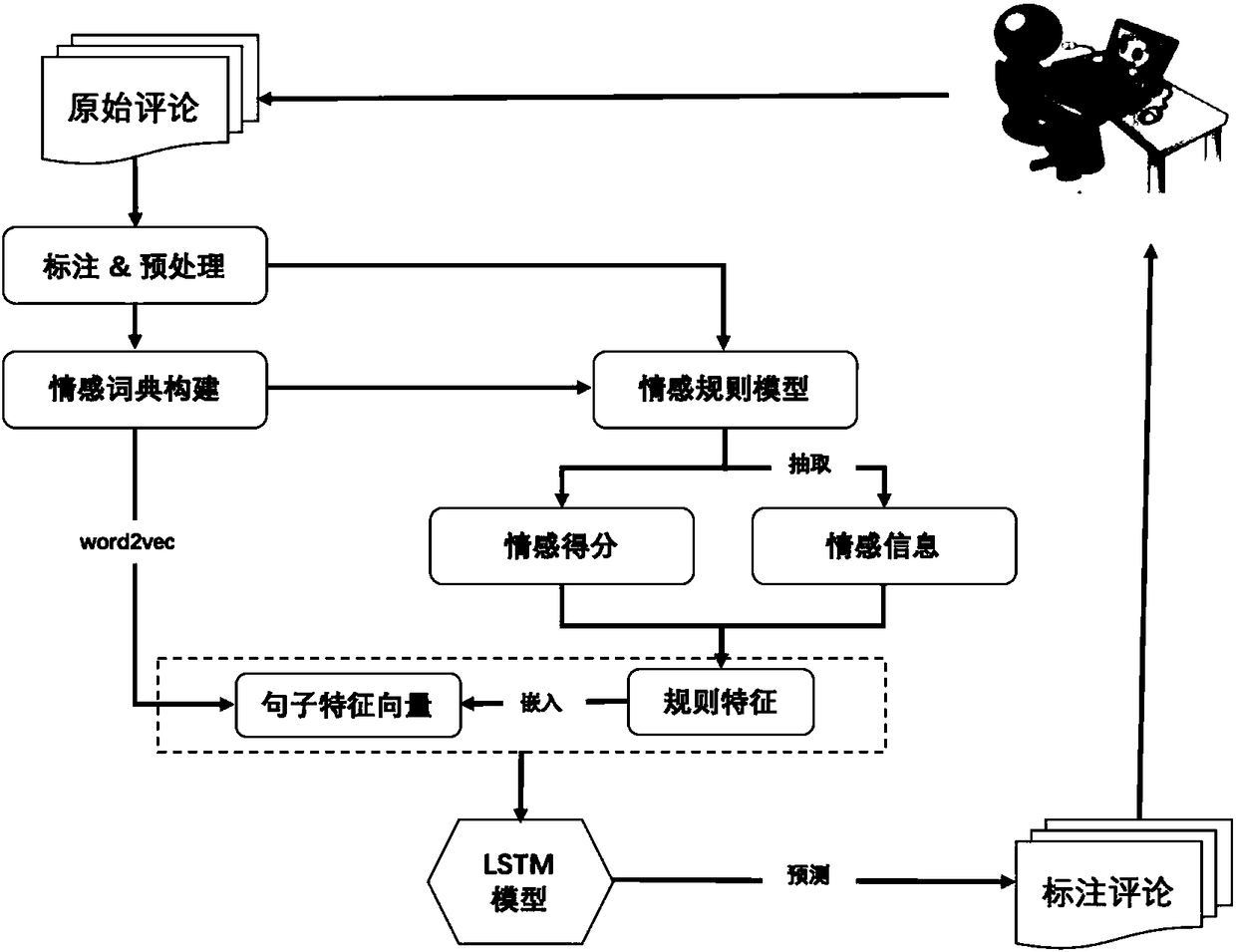

Emotion analysis method based on rule and data network fusion

Inactive | CN108108433A | High precision; Meet the needs of the characteristics; Semantic analysis; Special data processing applications; Pattern recognition; Part of speech

The invention discloses an emotion analysis method based on rule and neural network fusion. The method comprises the following steps: 1) acquiring a certain quantity of structured comments about a target object to form a corpus to be analyzed, and generalizing an authoritative emotion ontology base through semantic analysis in combination with the corpus to obtain an emotion ontology base containing emotion limits and emotion degrees; 2) preprocessing by emotion word matching and emotion word relation analysis: word segmentation, text analysis and ontology base matching are performed on the corpus, the emotion words in the comment sentences are identified, and their emotion information and context-dependent dependency relations are annotated, where the emotion information comprises the emotion strength, emotion polarity and part of speech of each emotion word; and 3) emotion calculation, feature fusion and emotion tendency judgment. The method classifies emotion more precisely according to context.

Owner: HANGZHOU DIANZI UNIV

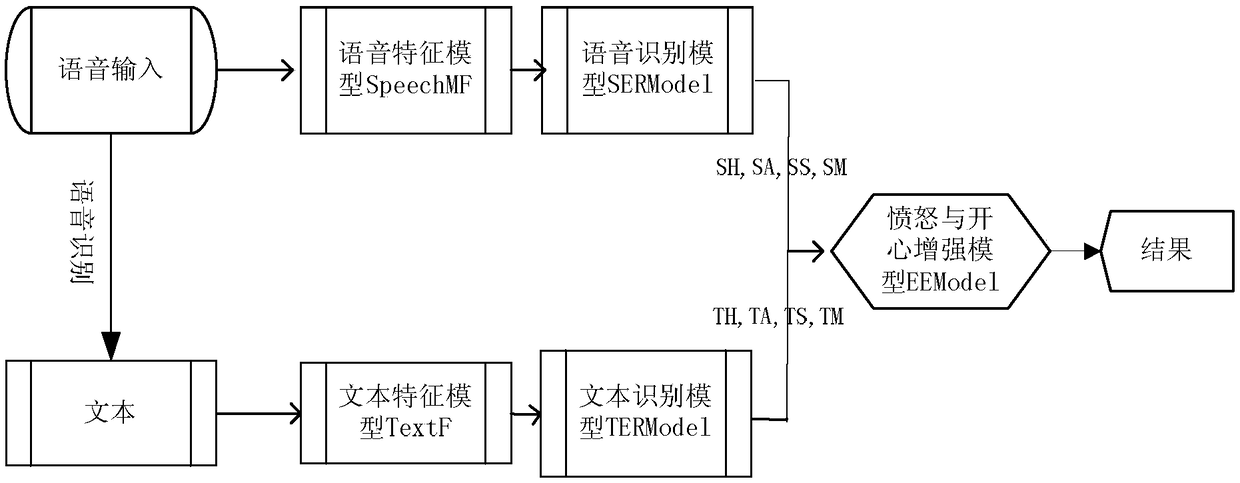

Speech emotion recognition method and system for enhancing anger and happiness recognition

Active | CN108597541A | Improve anger; Improve happy misjudgment problem; Speech recognition; Feature vector; Anger

The invention provides a speech emotion recognition method and system for enhancing anger and happiness recognition. The method comprises the steps of: receiving a user voice signal and extracting acoustic feature vectors of the speech; converting the voice signal into text and acquiring text feature vectors; inputting the acoustic feature vectors and the text feature vectors into a speech emotion recognition model and a text emotion recognition model, respectively, to obtain probability values for the different emotions; and reducing and enhancing the resulting anger and happiness probability values to obtain the final emotion judgment and recognition result. The method can support applications such as affective computing and human-computer interaction.

Owner: NANJING NORMAL UNIVERSITY

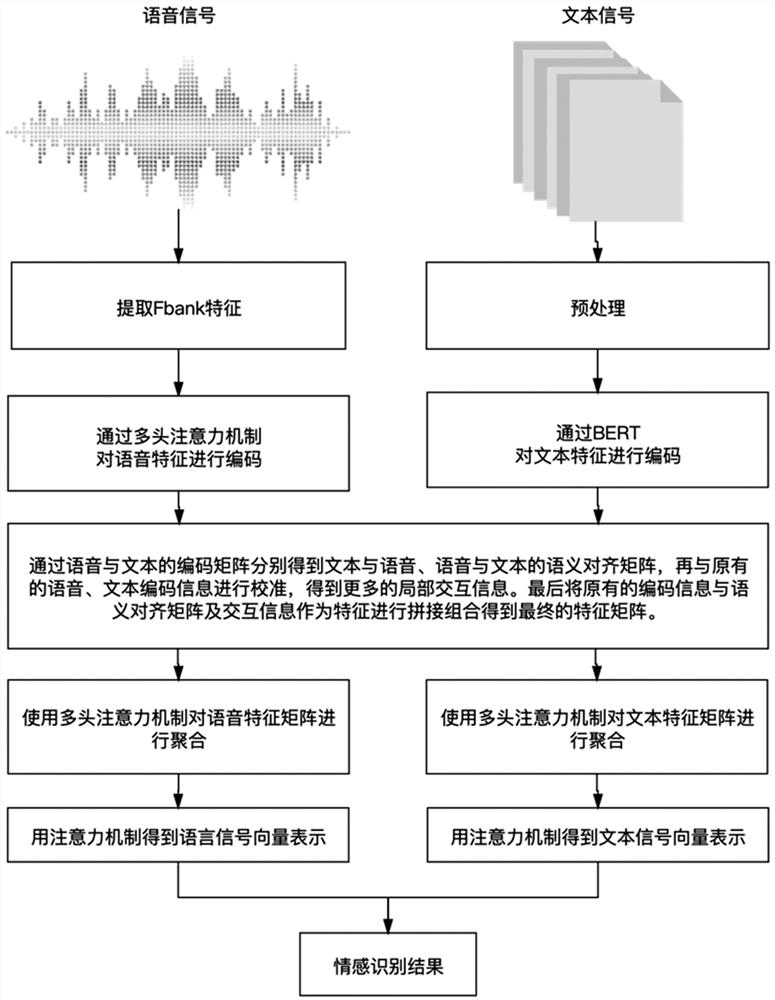

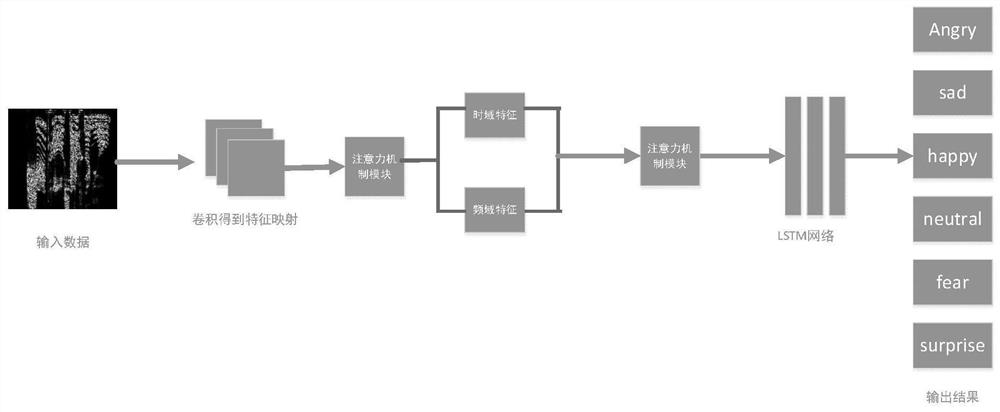

Multi-modal emotion recognition method based on attention enhancing mechanism

Active | CN112489635A | Improve accuracy; Solve the problem of easy overfitting; Natural language data processing; Speech recognition; Semantic alignment; Emotion classification

The invention belongs to the technical field of affective computing and relates to a multi-modal emotion recognition method based on an attention enhancement mechanism. The method comprises: obtaining a speech encoding matrix through a multi-head attention mechanism and a text encoding matrix through a pre-trained BERT model; computing dot products between the speech and text encoding matrices to obtain speech and text alignment matrices, and calibrating the alignment matrices against the original modal encodings to obtain more local interaction information; concatenating, for each modality, the encoding information, the semantic alignment matrix and the interaction information as features to obtain a feature matrix per modality; aggregating the speech and text feature matrices with a multi-head attention mechanism; converting each aggregated feature matrix into a vector representation through an attention mechanism; and finally concatenating the speech and text vector representations and obtaining the emotion classification result with a fully connected network. The method addresses the problem of multimodal interaction and improves the accuracy of multi-modal emotion recognition.

Owner: HANGZHOU DIANZI UNIV
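
The alignment step (dot products between the speech and text encoding matrices) can be illustrated with plain Python lists. The softmax normalization, the function name `dot_product_alignment` and the toy matrices are assumptions; the patent's calibration against the original modal encodings and its multi-head aggregation are not shown.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_alignment(query, keys):
    """Align each row of `query` with the rows of `keys` by dot product.

    query: n x d matrix (e.g. speech frame encodings); keys: m x d matrix
    (e.g. BERT token encodings). Returns (weights, aligned), where weights[i][j]
    is the softmax-normalised dot product of query[i] and keys[j], and
    aligned[i] is the correspondingly weighted sum of the key rows.
    """
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) for k_row in keys] for q_row in query]
    weights = [softmax(row) for row in scores]
    dim = len(keys[0])
    aligned = [
        [sum(w * keys[j][c] for j, w in enumerate(w_row)) for c in range(dim)]
        for w_row in weights
    ]
    return weights, aligned
```

Calling `dot_product_alignment(speech, text)` gives the speech-to-text direction and `dot_product_alignment(text, speech)` the text-to-speech direction, which matches the abstract's "alignment matrixes of the voice and the text".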

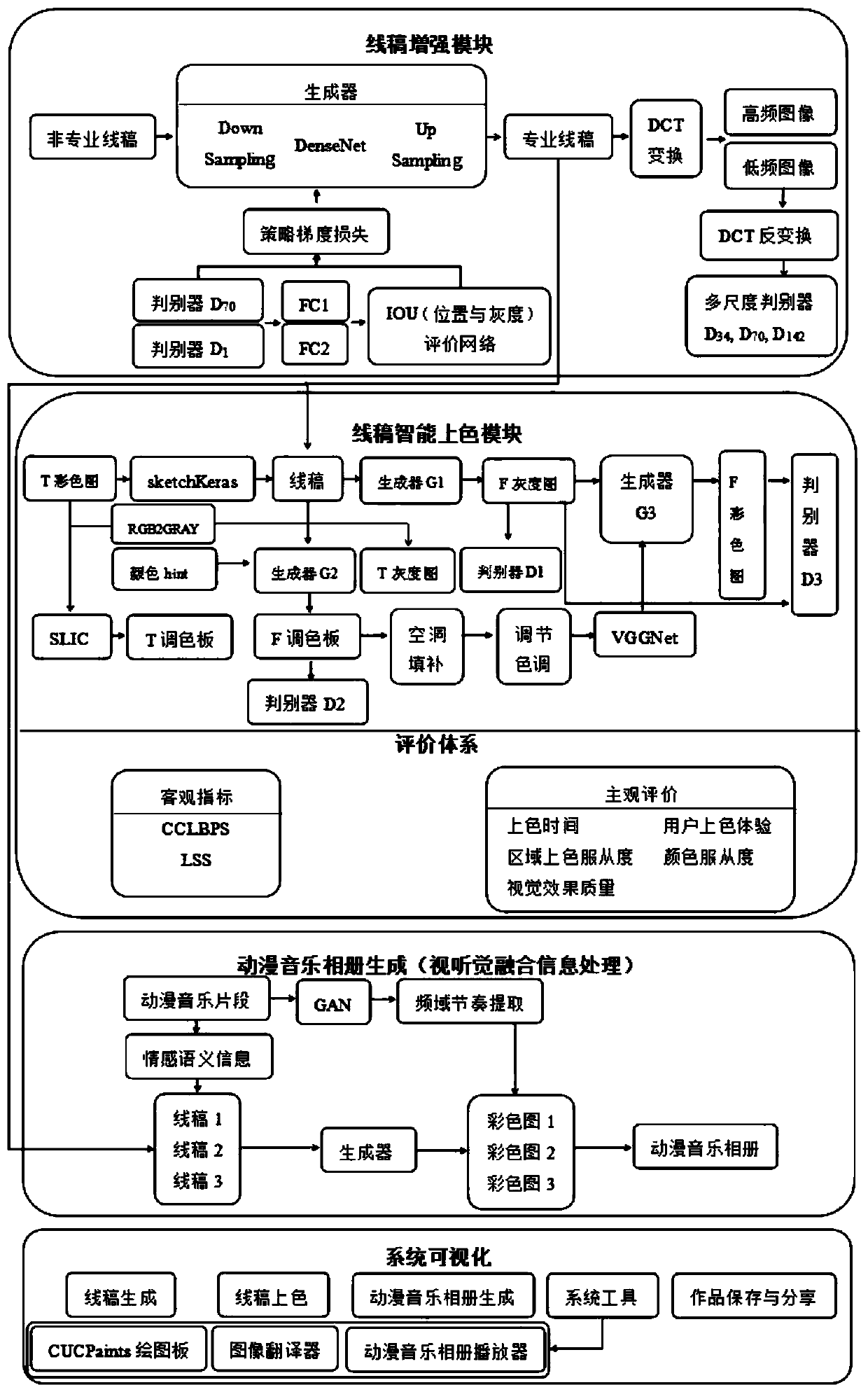

Animation drawing auxiliary creation method based on GAN

Active | CN110378985A | Improve experience; Improve artistic aesthetics; Animation; Neural architectures; Animation; Generative adversarial network

The invention discloses a GAN-based method for assisting animation drawing creation. An integrated cartoon drawing assistance system is built from professional line-draft enhancement technology based on generative adversarial networks and reinforcement learning, line-draft coloring technology based on related genus learning and color clustering, cartoon music album generation technology based on audio-visual association and affective computing, and similar technologies, improving users' creative experience and artistic aesthetic appreciation.

Owner: COMMUNICATION UNIVERSITY OF CHINA

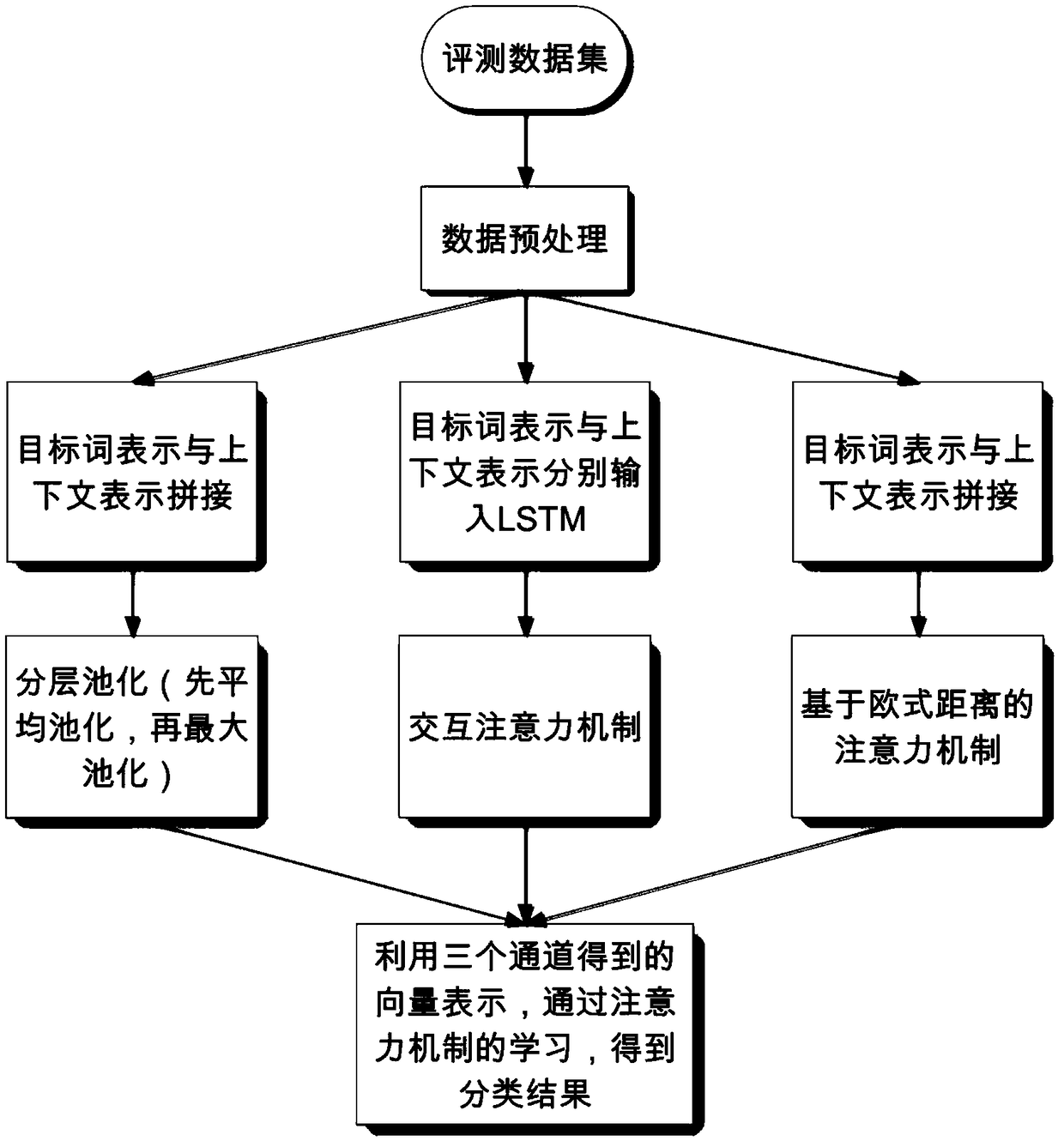

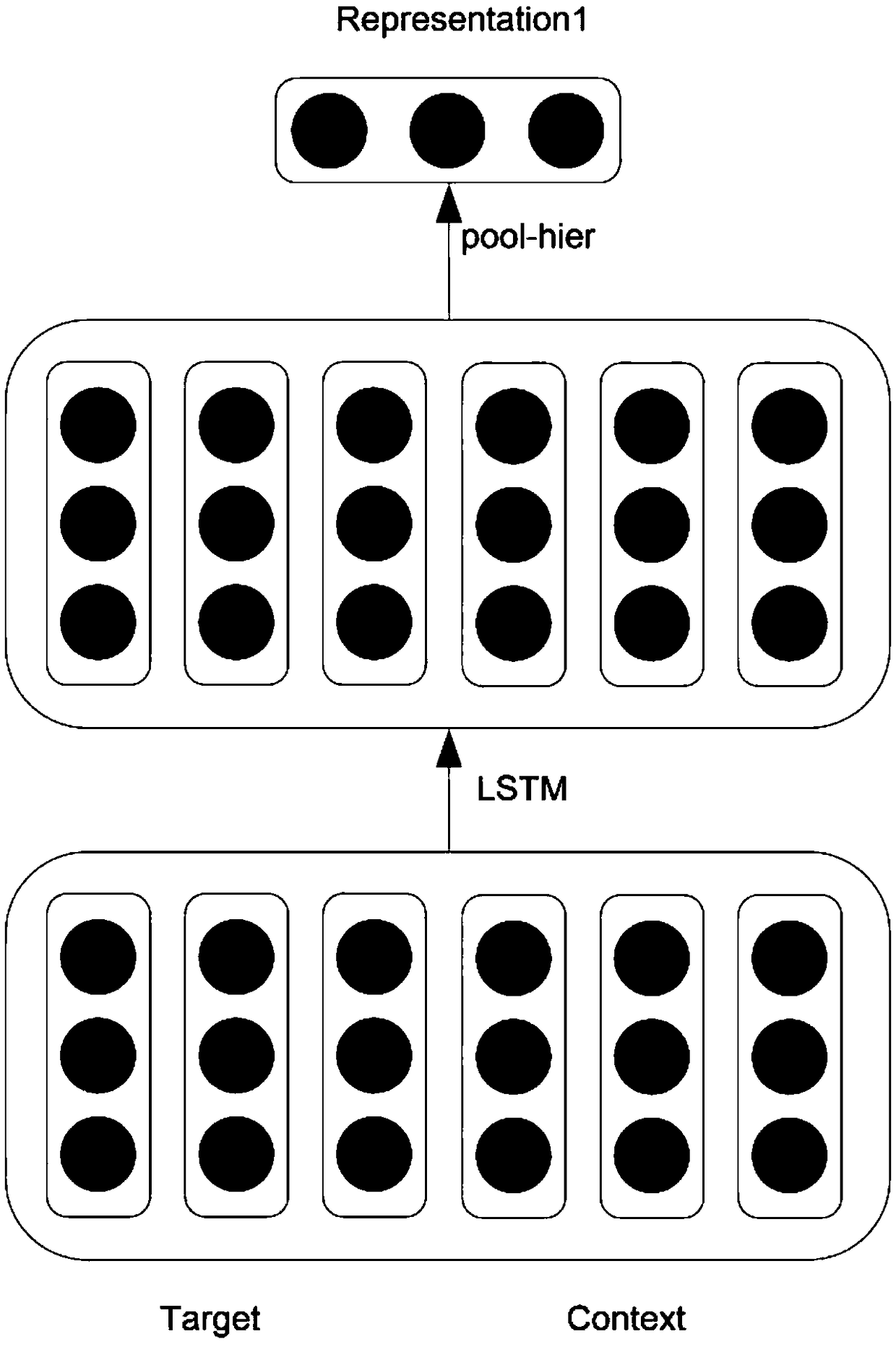

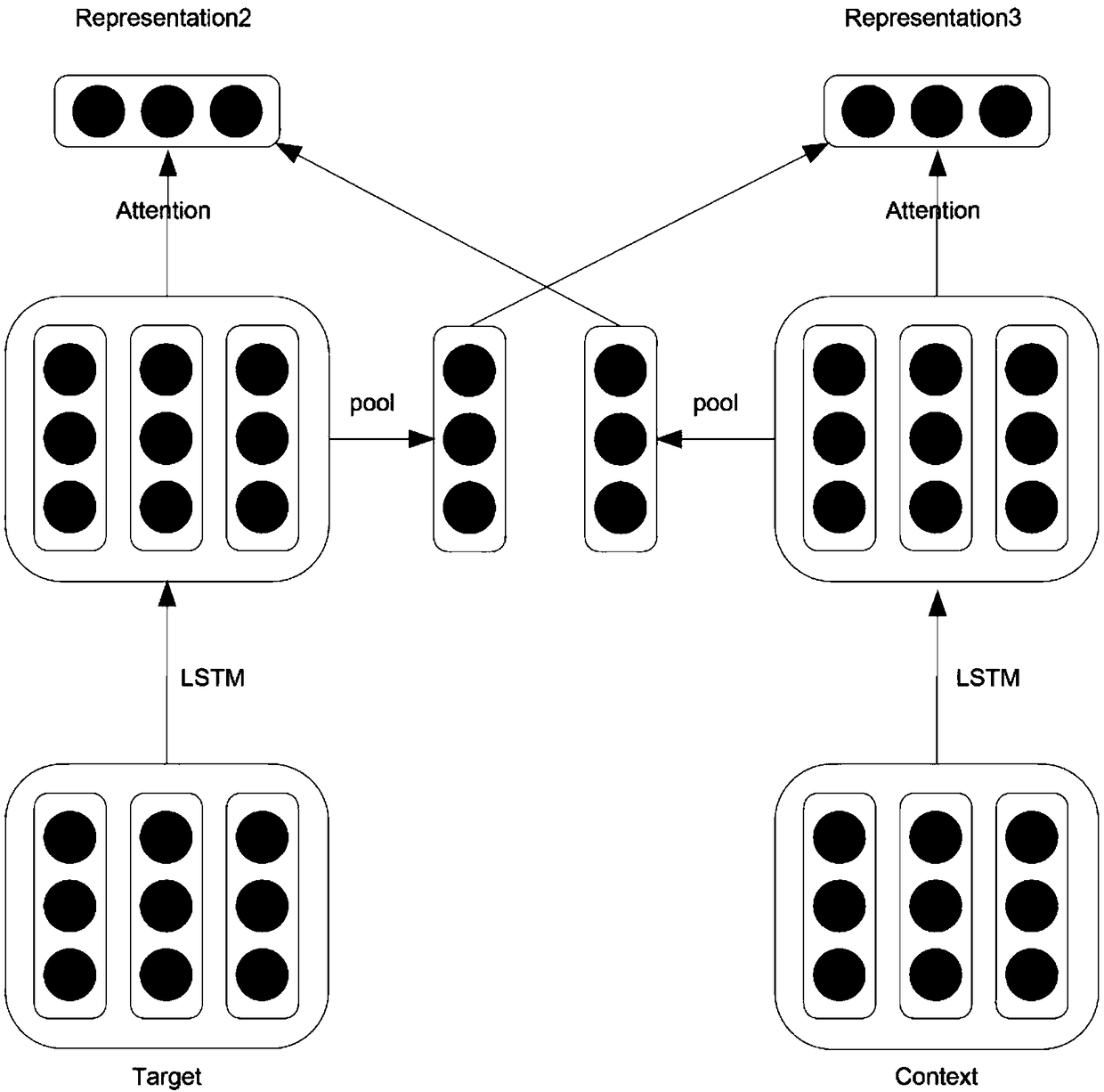

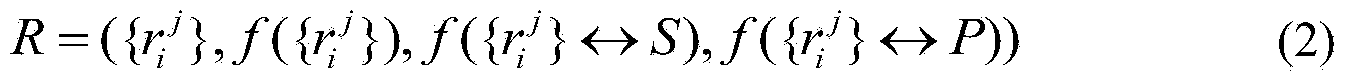

A specific target emotion analysis method based on a multi-channel model

Active | CN109408823A | Understand opinion; High practical value; Market predictions; Semantic analysis; Data set; Feature extraction

The invention discloses a target-specific emotion analysis method based on a multi-channel model. The method makes full use of target words and their contexts through three channels, which obtain representations of the target words and contexts using a hierarchical pooling mechanism, an interactive attention mechanism and an attention mechanism based on Euclidean distance. Through these three channels, the target words and contexts learn representations that help sentiment classification. The technical scheme is as follows: (1) input the SemEval-2014 data set, preprocess it and divide it into a training set and a test set; (2) feed the preprocessed data into the three channels and perform feature extraction to obtain vectors r1, r2, r3, r4 and r5; (3) obtain a classification result by learning an attention mechanism over vectors r1 to r5; (4) use the trained model to classify the sentiment toward the specific target of each comment text in the test set, and compare the predictions with the test set labels to calculate classification accuracy. The invention belongs to the fields of natural language processing and affective computing.

Owner: 南京智慧橙网络科技有限公司 (Nanjing Zhihuicheng Network Technology Co., Ltd.)
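
Of the three channels, the Euclidean-distance attention is the easiest to show in isolation. The sketch below assumes that the target is represented by the mean of its word vectors and that context words are scored by their negative Euclidean distance to it before a softmax; both choices, and the function `euclidean_attention`, are illustrative assumptions rather than the patent's formulation.

```python
import math


def mean_vector(vectors):
    """Average equal-length vectors (e.g. the embeddings of a multi-word target)."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def euclidean_attention(context, target_words):
    """Attention over context word vectors scored by negative Euclidean distance to the target.

    Closer context words get larger weights: alpha_i = softmax(-||c_i - t||).
    Returns the weights and the attention-weighted context representation.
    """
    target = mean_vector(target_words)
    scores = [-math.dist(c, target) for c in context]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    representation = [
        sum(a * c[k] for a, c in zip(alphas, context)) for k in range(len(target))
    ]
    return alphas, representation
```

Unlike dot-product attention, this scoring does not reward context vectors merely for having a large norm; only proximity to the target matters.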

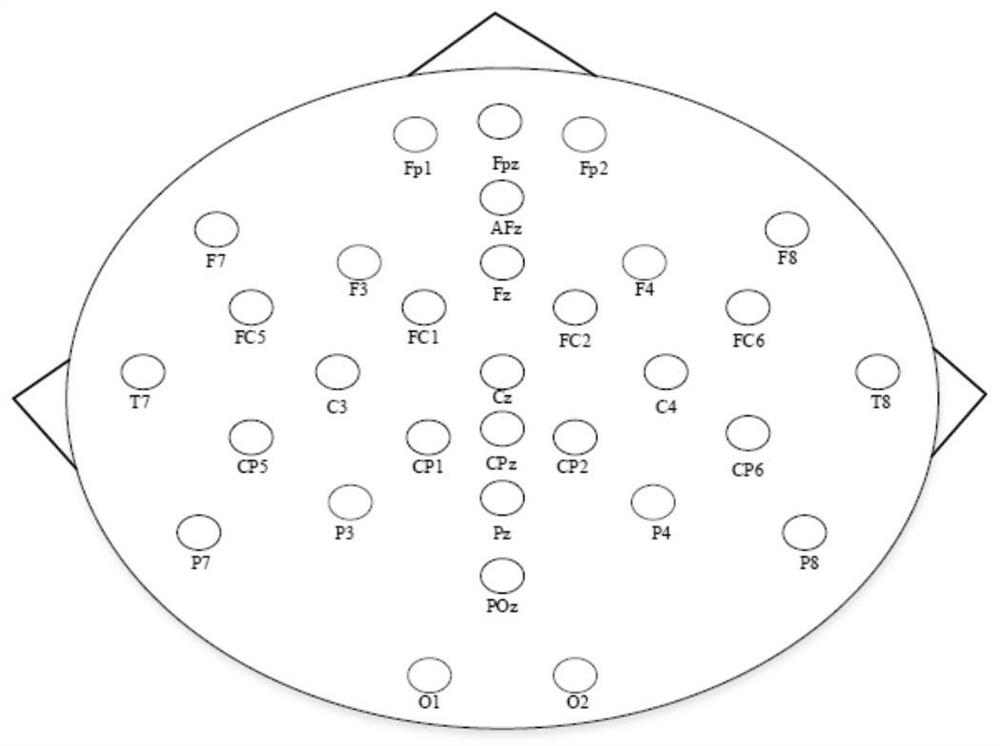

Fine-grained visualization system and method for emotional electroencephalography (EEG)

Active | CN110169770A | Solve fine-grained visualization problems; Rich and detailed expression; Sensors; Psychotechnic devices; EEG data; Brain computer interfacing

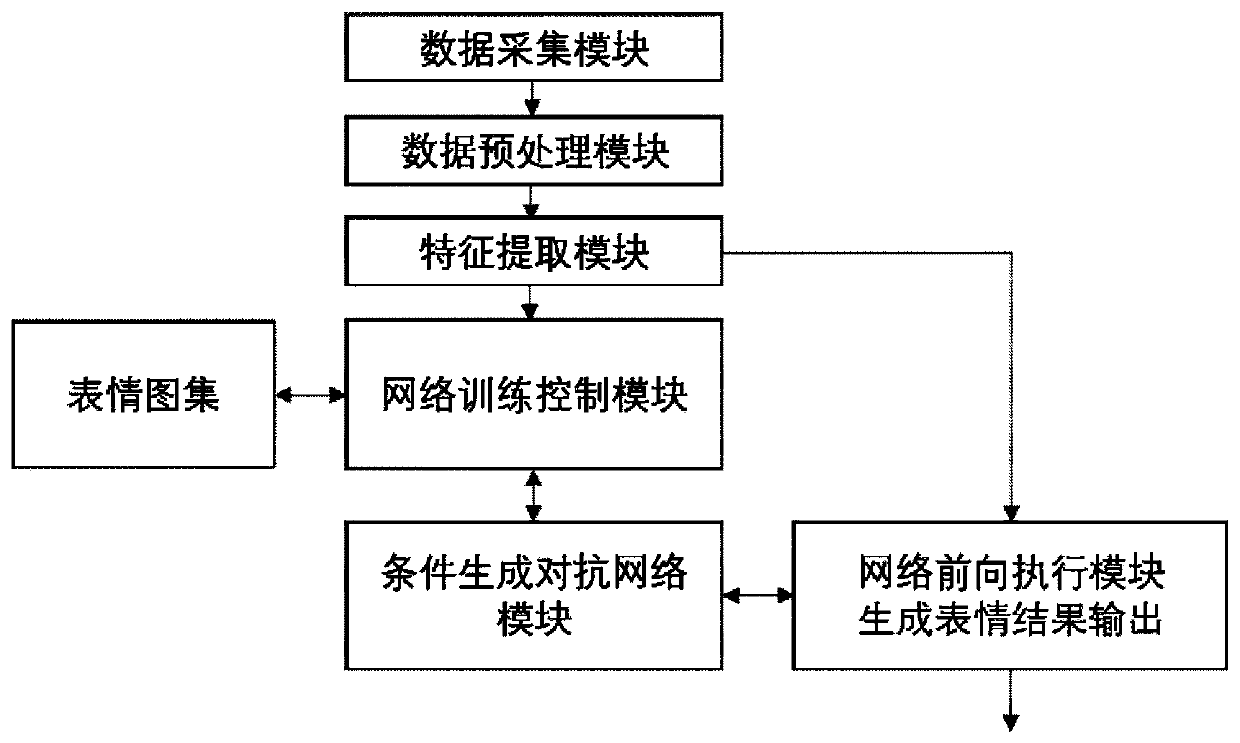

The invention discloses a fine-grained visualization system and method for emotional electroencephalography (EEG), addressing the technical problem of how to display fine-grained information in emotional EEG. The system comprises a data acquisition module, a data preprocessing module, a feature extraction module and a network training control module connected in sequence; an expression atlas provides target images; the network training control module and an affective computing generative adversarial network (AC-GAN) module complete the training of the AC-GAN; and a network forward execution module controls the generation of fine-grained expressions. The method comprises the steps of collecting emotional EEG data, preprocessing the EEG data, extracting EEG features, constructing the AC-GAN, preparing the expression atlas, training the AC-GAN, and obtaining the fine-grained facial expression generation result. Emotional EEG is thus visualized directly as facial expressions whose fine-grained information is directly recognizable, and the visualization system can be used for interaction enhancement and experience optimization in rehabilitation equipment, emotional robots, VR devices and other equipment with a brain-computer interface.

Owner: XIDIAN UNIV

Hybrid-strategy emotional comforting system for chatbots

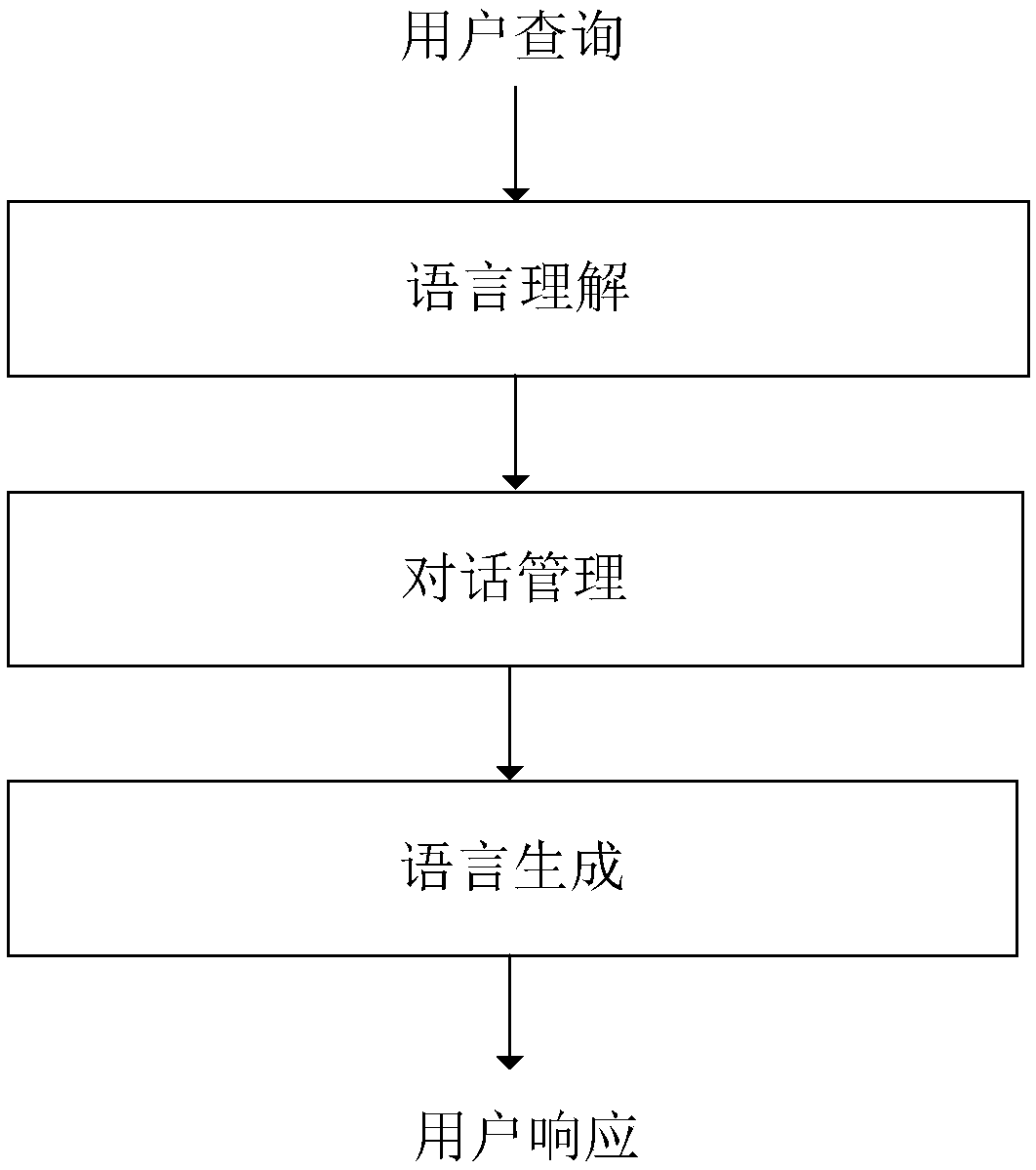

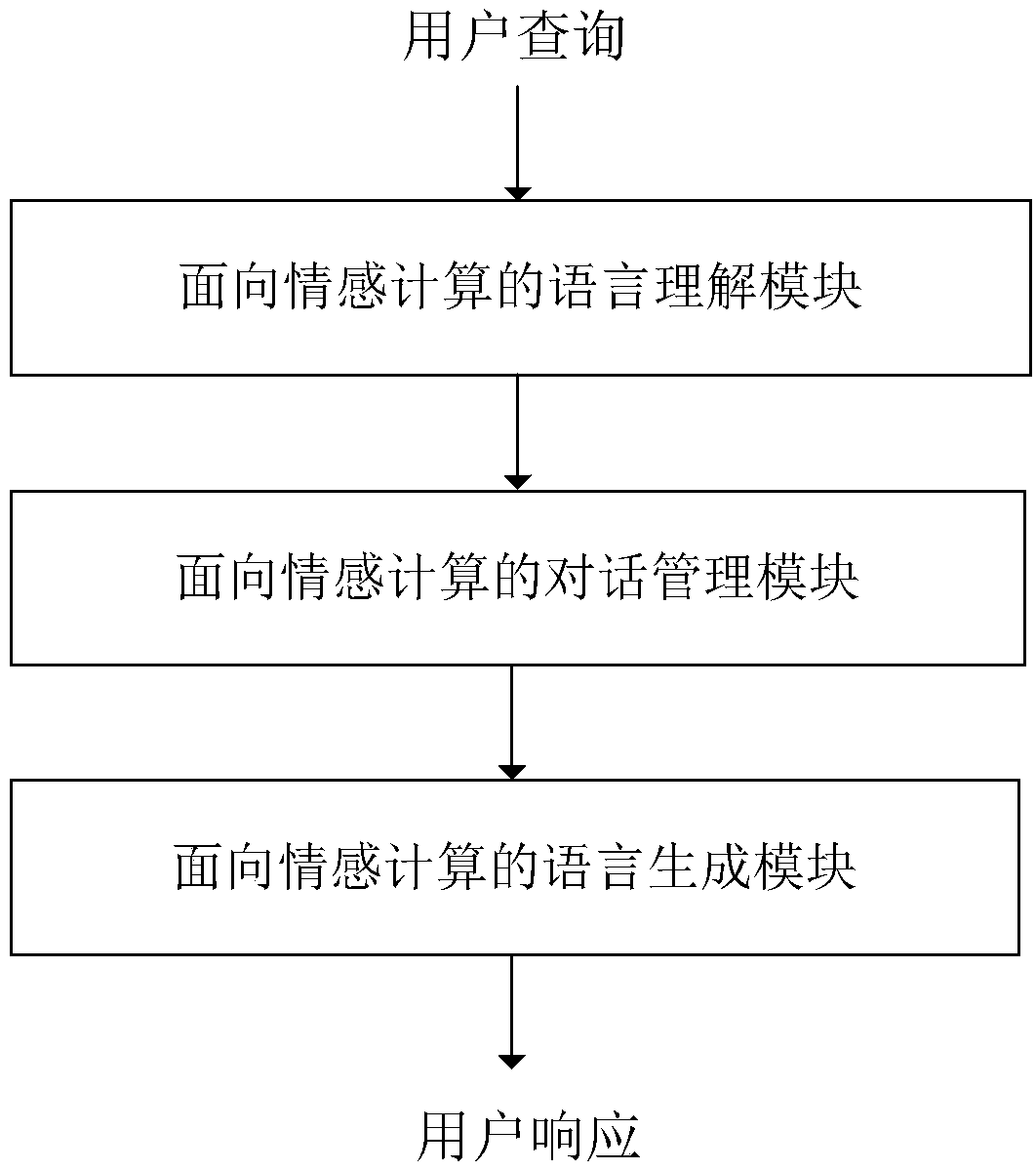

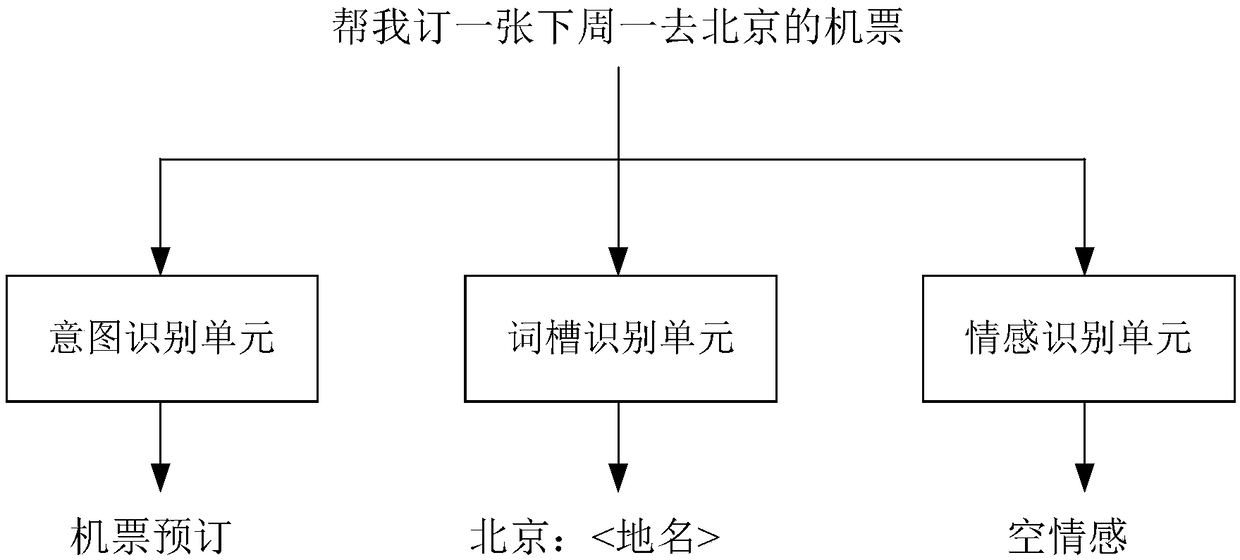

Inactive | CN108960402A | Add emotional computing module; Capture emotional needs; Biological neural network models; Character and pattern recognition; Language understanding; Question answer

The invention discloses a hybrid-strategy emotional comforting system and method for chatbots. The system takes affective computing as its core, also supports task completion and automatic question answering, and addresses shortcomings of chatbots currently on the market. The system comprises: an affective-computing-oriented language understanding module that identifies the intent label, keyword slots and any emotion category contained in the current user's query; an affective-computing-oriented dialogue management module that generates candidate responses, re-ranks the candidate emotional responses according to the dialogue state determined over multiple rounds of dialogue, and outputs the re-ranked responses; and an affective-computing-oriented language generation module that generates the final response from the re-ranked responses output by the dialogue management module, according to the robot persona set by the user.

Owner: 上海乐言科技股份有限公司 (Shanghai Leyan Technology Co., Ltd.)

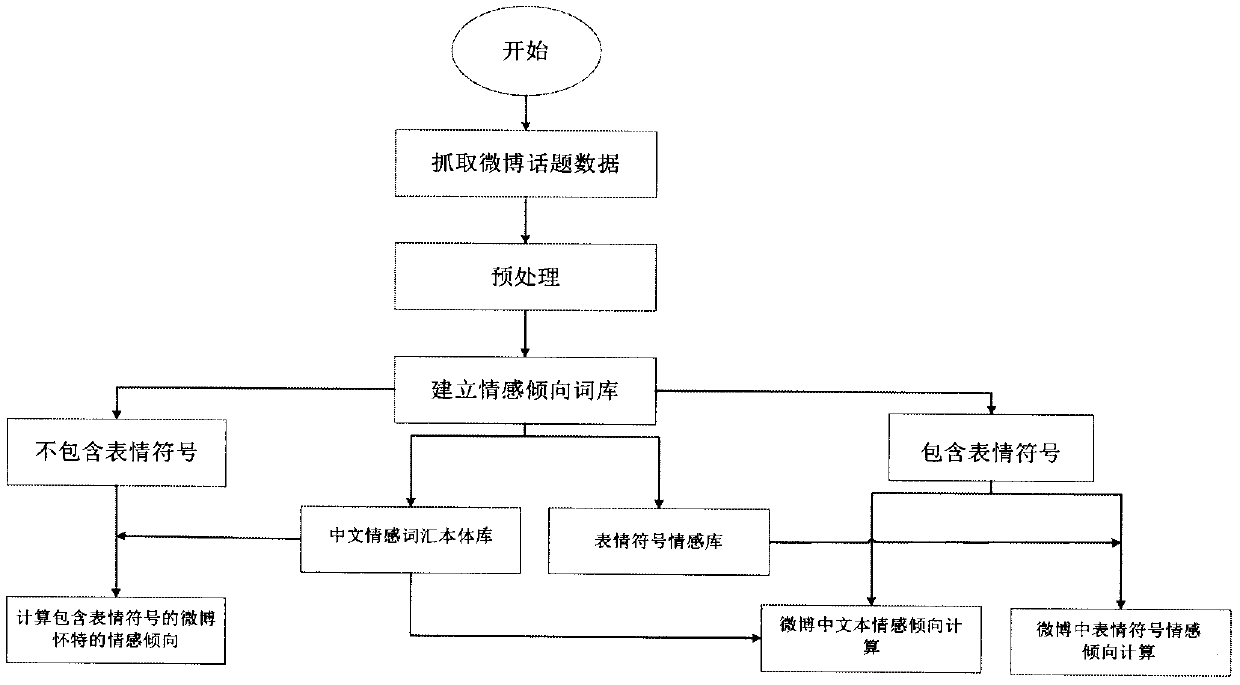

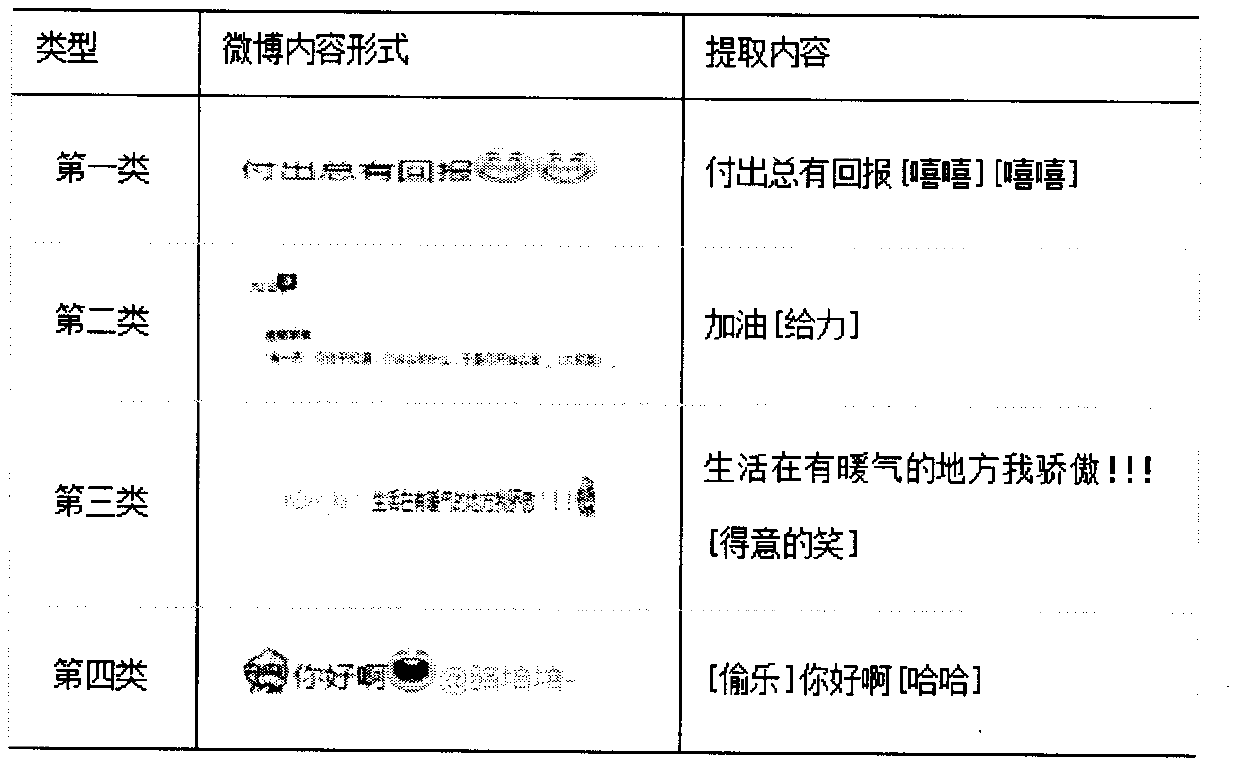

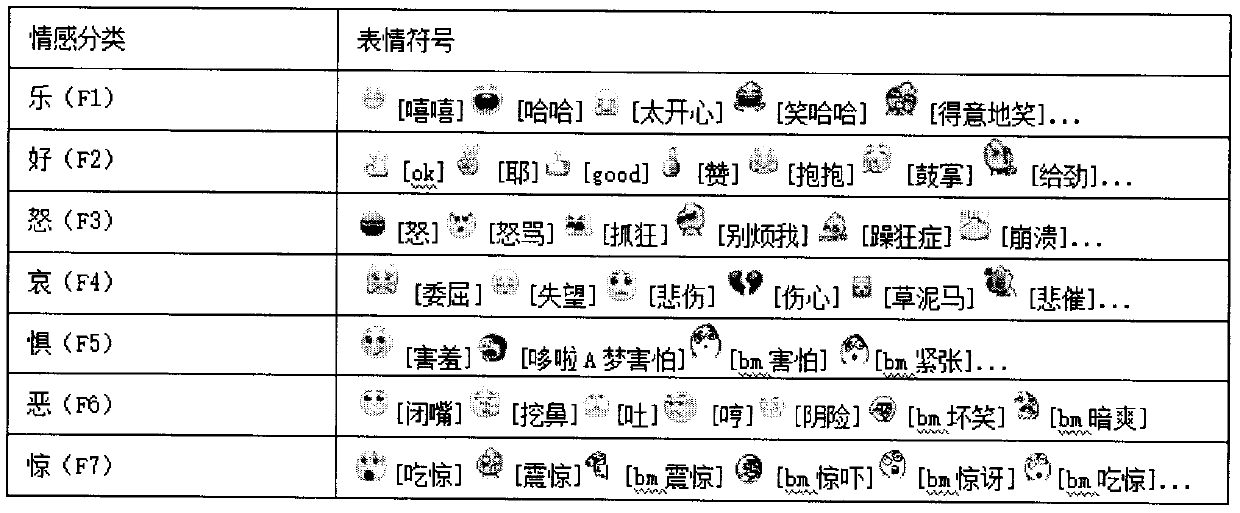

Method for calculating and analyzing microblog topic public opinions

Inactive | CN107943800A | Improve effectiveness; Data processing applications; Semantic analysis; Microblogging; Opinion analysis

The invention discloses a method for calculating and analyzing public opinion on microblog topics. The method comprises the following steps: S1: capturing microblog data with crawler software and preprocessing the captured data; S2: building the text sentiment lexicon and the emoticon sentiment lexicon required for sentiment calculation; S3: calculating the degree of diffusion of a microblog topic from the number of likes, comments and reposts, and using it as one factor in calculating topic public opinion; S4: calculating the sentiment tendency of the microblog topic: for microblog content without emoticons, the text sentiment lexicon is used directly as the sentiment dictionary and Naive Bayes performs the calculation; for microblogs containing emoticons, the text sentiment tendency and the emoticon sentiment tendency are calculated separately and then combined; and S5: analyzing microblog public opinion by combining the degree of diffusion of the topic with the topic sentiment tendency. The method makes the calculated microblog topic public opinion more accurate.

Owner: ZHENGZHOU UNIV
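
Steps S3 and S4 can be sketched as a multinomial Naive Bayes classifier (the classifier family the abstract names) combined with a separate emoticon tendency, plus a diffusion degree built from engagement counts. The emoticon lexicon `EMOTICONS`, the 50/50 `text_weight`, the mapping P(pos) - P(neg) to a tendency, and the `diffusion` weights are hypothetical; how the patent's text lexicon feeds the classifier is not modeled.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesSentiment:
    """Multinomial Naive Bayes text classifier with add-one (Laplace) smoothing."""

    def __init__(self):
        self.class_counts = Counter()            # label -> number of training posts
        self.word_counts = defaultdict(Counter)  # label -> token frequencies
        self.vocab = set()

    def fit(self, docs, labels):
        for tokens, label in zip(docs, labels):
            self.class_counts[label] += 1
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict_proba(self, tokens):
        n_docs = sum(self.class_counts.values())
        v = len(self.vocab)
        log_scores = {}
        for label, count in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + v
            score = math.log(count / n_docs)
            for t in tokens:
                if t in self.vocab:  # tokens unseen in training are ignored
                    score += math.log((counts[t] + 1) / denom)
            log_scores[label] = score
        peak = max(log_scores.values())
        exps = {label: math.exp(s - peak) for label, s in log_scores.items()}
        total = sum(exps.values())
        return {label: e / total for label, e in exps.items()}


# Hypothetical emoticon lexicon: emoticon code -> tendency in [-1, 1].
EMOTICONS = {"[smile]": 1.0, "[cry]": -1.0, "[angry]": -1.0}


def post_tendency(model, tokens, emoticons=(), text_weight=0.5):
    """Text tendency P(pos) - P(neg), averaged with the emoticon tendency if emoticons occur."""
    proba = model.predict_proba(tokens)
    text_t = proba.get("pos", 0.0) - proba.get("neg", 0.0)
    scored = [EMOTICONS[e] for e in emoticons if e in EMOTICONS]
    if not scored:
        return text_t
    emoticon_t = sum(scored) / len(scored)
    return text_weight * text_t + (1.0 - text_weight) * emoticon_t


def diffusion(likes, comments, reposts, weights=(1.0, 2.0, 3.0)):
    """Hypothetical weighted sum of engagement counts used as the topic's diffusion degree."""
    return weights[0] * likes + weights[1] * comments + weights[2] * reposts
```

After `model = NaiveBayesSentiment().fit(docs, labels)`, a call such as `post_tendency(model, tokens, ["[cry]"])` pulls a mildly positive text tendency toward negative, which is the separate-then-combine behavior the abstract describes for posts with emoticons.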

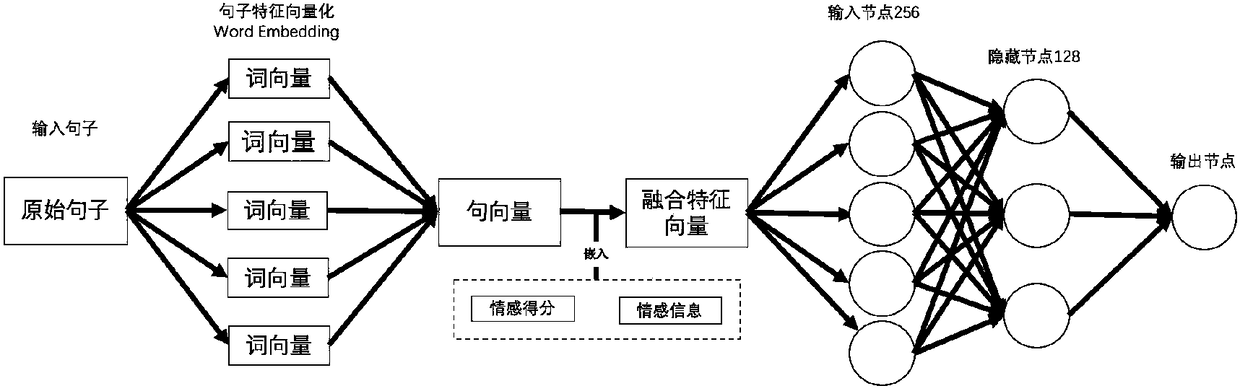

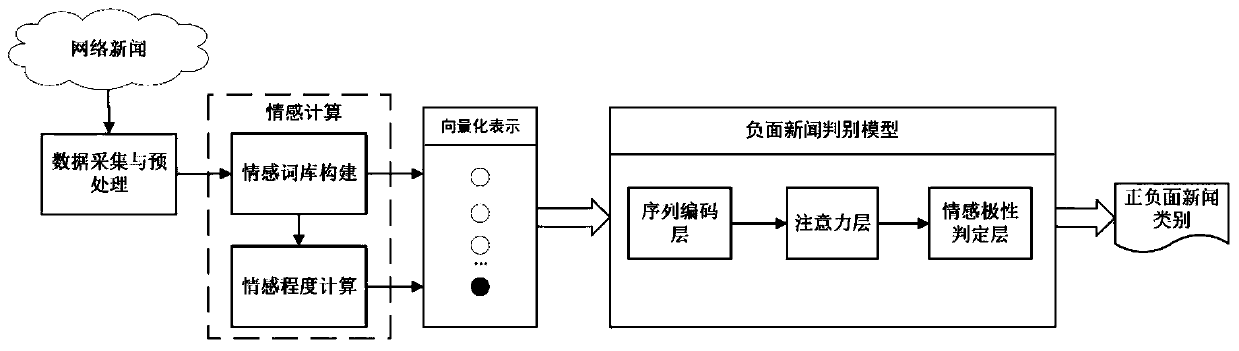

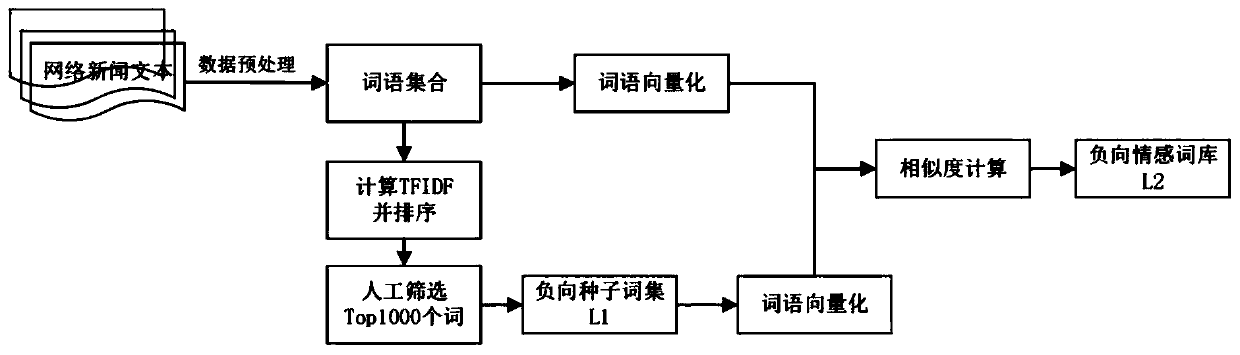

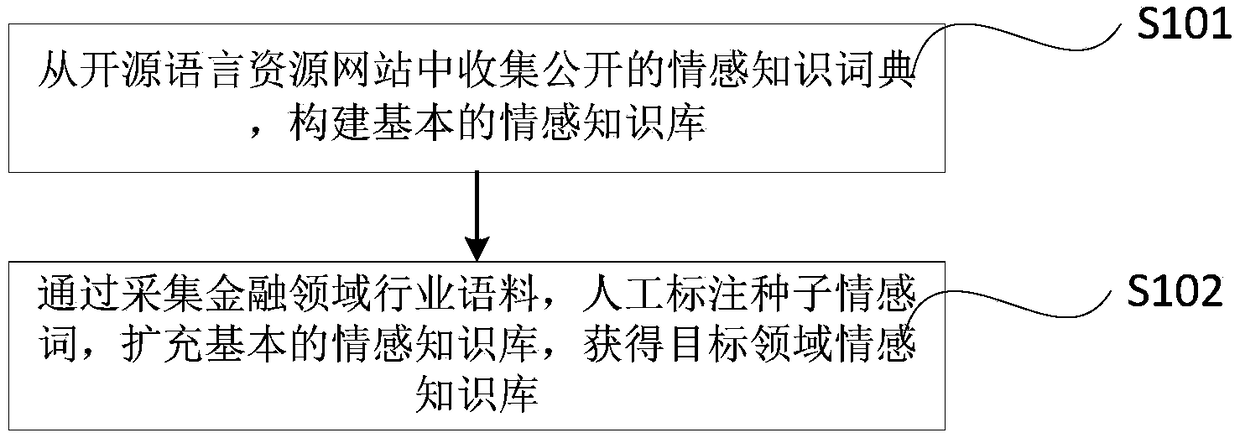

Negative news recognition method based on emotion calculation and multi-head attention mechanism

The invention discloses a negative news recognition method based on emotion calculation and a multi-head attention mechanism. It relates to the technical field of online public opinion monitoring and addresses the difficulty of identifying objective negative news. The method comprises the following steps: (1) collecting and preprocessing online news text data; (2) building and expanding a negative emotion seed word bank and calculating the susceptibility; (3) producing vectorized representations and determining the input of the discrimination model; (4) building a negative news discrimination model; and (5) recognizing negative news. The method effectively addresses the difficulty of identifying negative news and performs well in terms of recognition accuracy and effectiveness on negative news texts.

Owner: BEIJING INFORMATION SCI & TECH UNIV

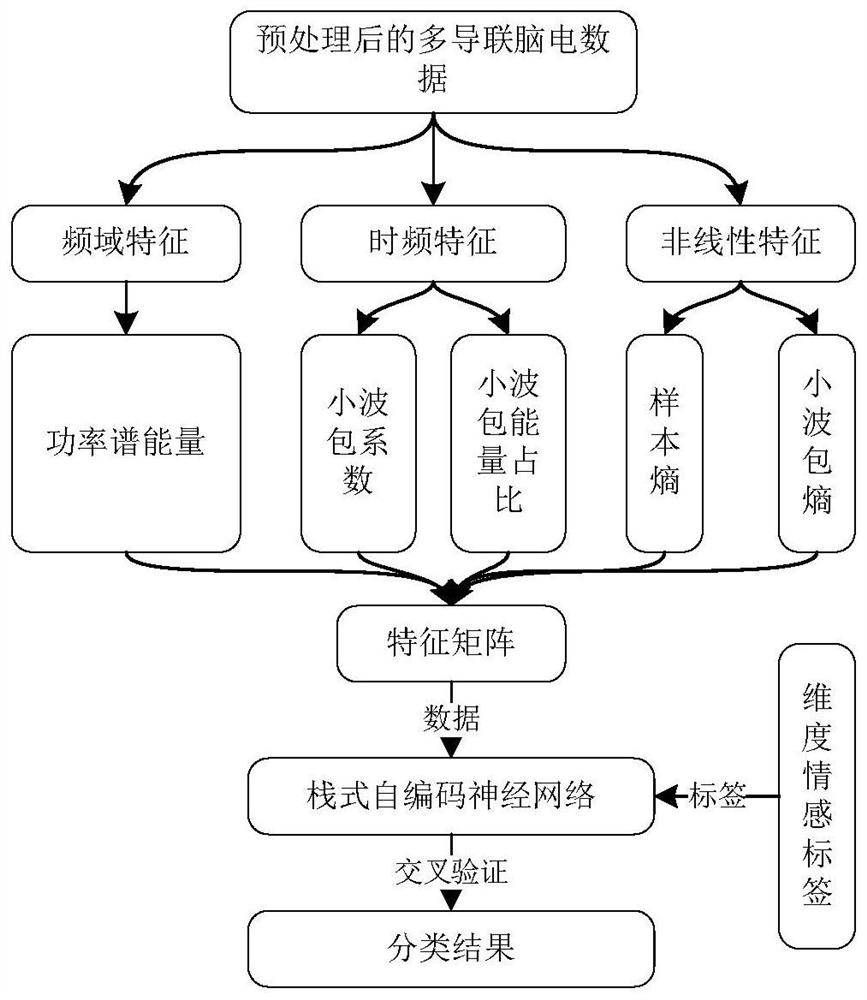

Electroencephalogram signal emotion recognition method based on dimension model

Active | CN112656427A | Achieve estimates; Improve accuracy; Sensors; Psychotechnic devices; Pattern recognition; Data balancing

The invention belongs to the technical field of affective computing and emotion recognition, and relates to an electroencephalogram (EEG) signal emotion recognition method based on a dimensional model. To address the low accuracy of positive and negative emotion classification under current dimensional models, the method comprises the following steps: (1) EEG preprocessing; (2) extracting frequency-domain, time-frequency and nonlinear features from the preprocessed multi-lead EEG signals; and (3) classifying emotion with a stacked autoencoder neural network. The classification method is stable and reliable, explains how data balance, feature combination and the emotion label threshold affect EEG emotion recognition, and improves the accuracy of positive and negative EEG emotion classification under the dimensional model.

Owner: SHANXI UNIV
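
The frequency-domain part of step (2) is often computed as band power. The sketch below uses a naive DFT so that it stays dependency-free (in practice a library FFT such as `numpy.fft.rfft` would replace it). The band edges in `BANDS` are conventional values assumed here, and the time-frequency and nonlinear features, as well as the stacked autoencoder classifier, are not shown.

```python
import cmath
import math

# Conventional EEG band edges in Hz (an assumption; definitions vary between studies).
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def power_spectrum(signal, fs):
    """One-sided power spectrum of a real signal via a naive O(N^2) DFT."""
    n = len(signal)
    freqs, powers = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        freqs.append(k * fs / n)
        powers.append(abs(coeff) ** 2 / n)
    return freqs, powers


def band_powers(signal, fs):
    """Total spectral power in each band, using half-open intervals [low, high)."""
    freqs, powers = power_spectrum(signal, fs)
    return {
        name: sum(p for f, p in zip(freqs, powers) if low <= f < high)
        for name, (low, high) in BANDS.items()
    }
```

Applied per lead and per epoch, the resulting band-power dictionary gives one small frequency-domain feature vector for each channel of a multi-lead recording.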

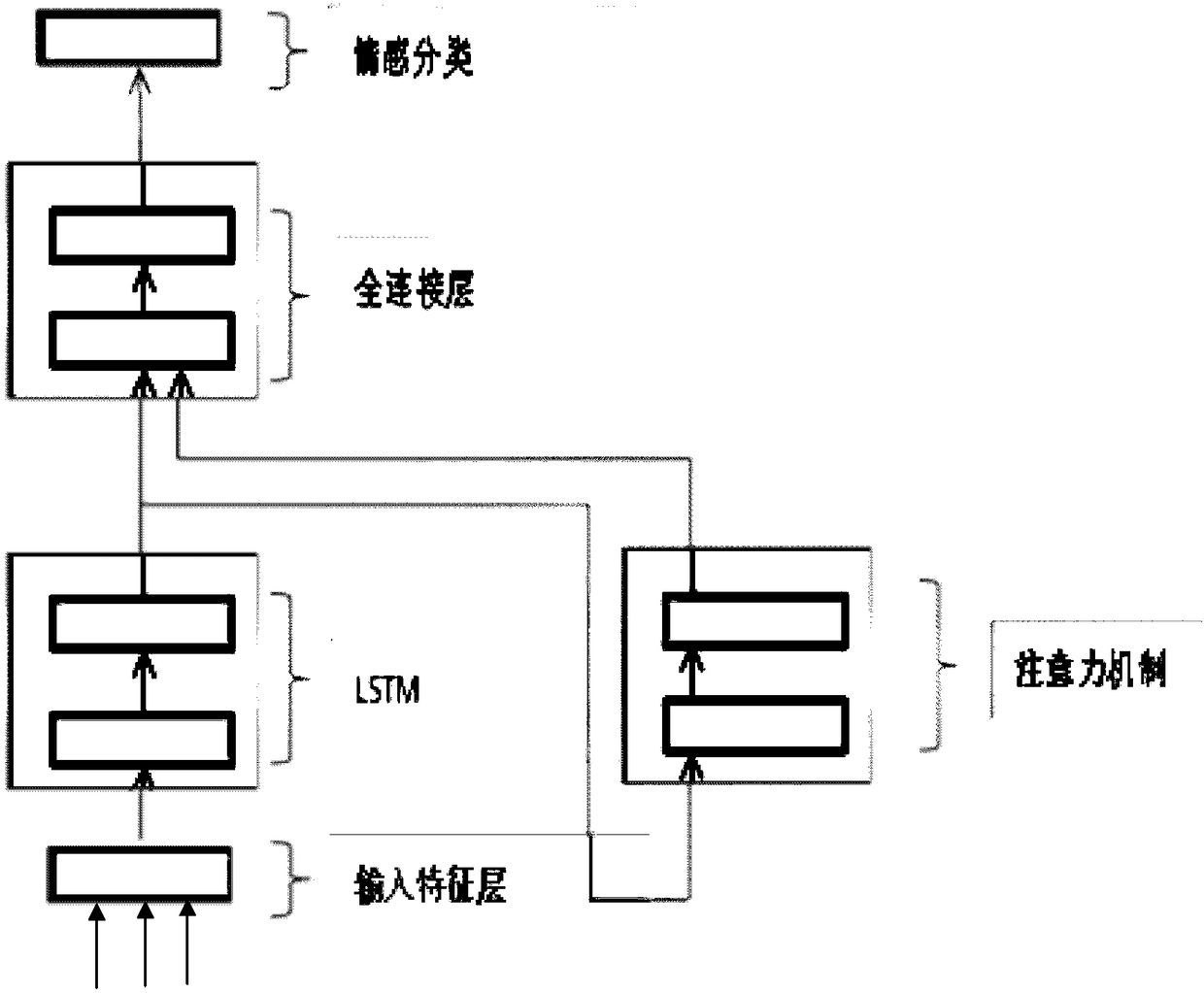

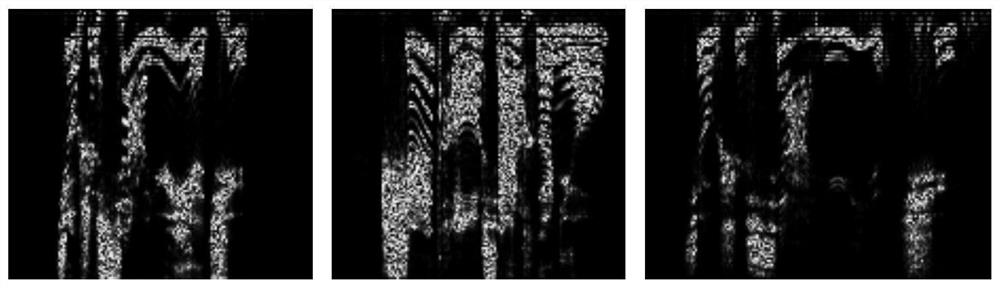

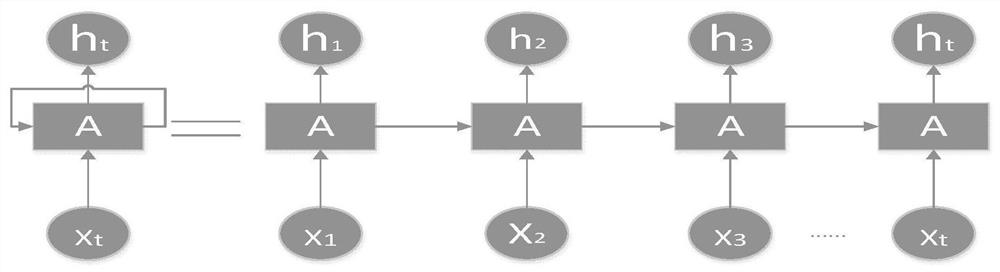

Speech emotion recognition method based on speech spectrum

Active | CN112581979A | Efficient learning process; Improve accuracy; Speech analysis; Attention model; Residual neural network

The invention belongs to the fields of artificial intelligence, speech processing and affective computing, and relates to a speech emotion recognition method based on a speech spectrum (spectrogram). The method comprises: acquiring a speech signal in real time and converting it into a speech spectrum; and inputting the speech spectrum into a trained speech emotion recognition model, which comprises an attention-based residual neural network and a long short-term memory (LSTM) neural network, to recognize the speech emotion. By combining the neural network with the attention model, the method learns the effective features of the speech energy values in the speech spectrum more efficiently and improves the accuracy of speech emotion recognition.

Owner: CHONGQING UNIV OF POSTS & TELECOMM

Domain-self-adaptive facial expression analysis method

Inactive | CN104616005A | Increase the scope of application; Solve the training data; Character and pattern recognition; Applicability domain; Object based

The invention relates to a domain-adaptive facial expression analysis method in the fields of computer vision and affective computing. It targets the problem that differences between the training and test data domains limit prediction precision in automatic expression analysis, a setting closer to practical needs. The invention provides a domain-adaptive expression analysis method based on subject domains, comprising the following steps: defining a data domain for each test subject; defining the distance between subject domains by constructing an auxiliary prediction problem; selecting from the source data set a group of subjects whose data characteristics are similar to those of the test subject to form a training set; and, on that training set, using part of the test subject's data directly in model training through weighted co-training, so that the prediction model moves closer to the test subject's domain. The method resolves the isolation between training and test data, adapts the prediction model to the test data domain, is robust to domain differences and has a wide range of application.

Owner: 南京宜开数据分析技术有限公司 (Nanjing Yikai Data Analysis Technology Co., Ltd.)
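
The subject-selection and training-set steps of the domain-adaptive method above can be sketched as follows. The patent measures subject-domain distance through an auxiliary prediction problem and trains with weighted co-training; this sketch swaps in a much simpler stand-in, the Euclidean distance between subjects' mean feature vectors, and a fixed sample weight for the test subject's labeled data. All names (`select_similar_subjects`, `build_training_set`, `target_weight`) are hypothetical.

```python
import math


def mean_features(samples):
    """Mean feature vector of a list of (features, label) samples."""
    features = [f for f, _ in samples]
    return [sum(column) / len(features) for column in zip(*features)]


def select_similar_subjects(source, target_features, k):
    """Ids of the k source subjects whose mean features lie closest to the test subject's.

    Simplified stand-in for the patent's auxiliary-prediction-based domain distance.
    """
    target_mean = [sum(column) / len(target_features) for column in zip(*target_features)]
    return sorted(source, key=lambda sid: math.dist(mean_features(source[sid]), target_mean))[:k]


def build_training_set(source, target_features, target_labeled, k, target_weight=2.0):
    """Pool the k closest subjects' samples (weight 1.0) with labeled test-subject samples.

    Returns the chosen subject ids and a list of (features, label, sample_weight).
    """
    chosen = select_similar_subjects(source, target_features, k)
    pooled = [(f, y, 1.0) for sid in chosen for f, y in source[sid]]
    pooled += [(f, y, target_weight) for f, y in target_labeled]
    return chosen, pooled
```

Any learner that accepts per-sample weights can then be trained on `pooled`; raising `target_weight` pulls the model further toward the test subject's domain.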

Robot cloud system

The invention discloses a robot cloud system comprising a server side, a robot cluster and a robot cloud system interaction interface. The server side comprises an advanced intelligent data resource and capability pool, an emotion computing and emotion creation module, and a data transmission module; the robot cluster covers the robot collection, interaction among robots, interaction between the robots and the server side, and interaction between the robots and users. The robot cloud system is suited to large-scale robot service tasks, incorporates robot emotion services, and improves the quality, efficiency and extensibility of robot services while preserving the ability of robot groups to cooperate.

Owner: HEFEI UNIV OF TECH

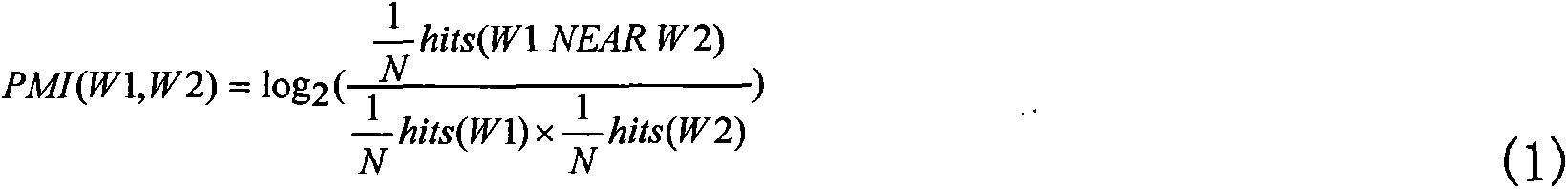

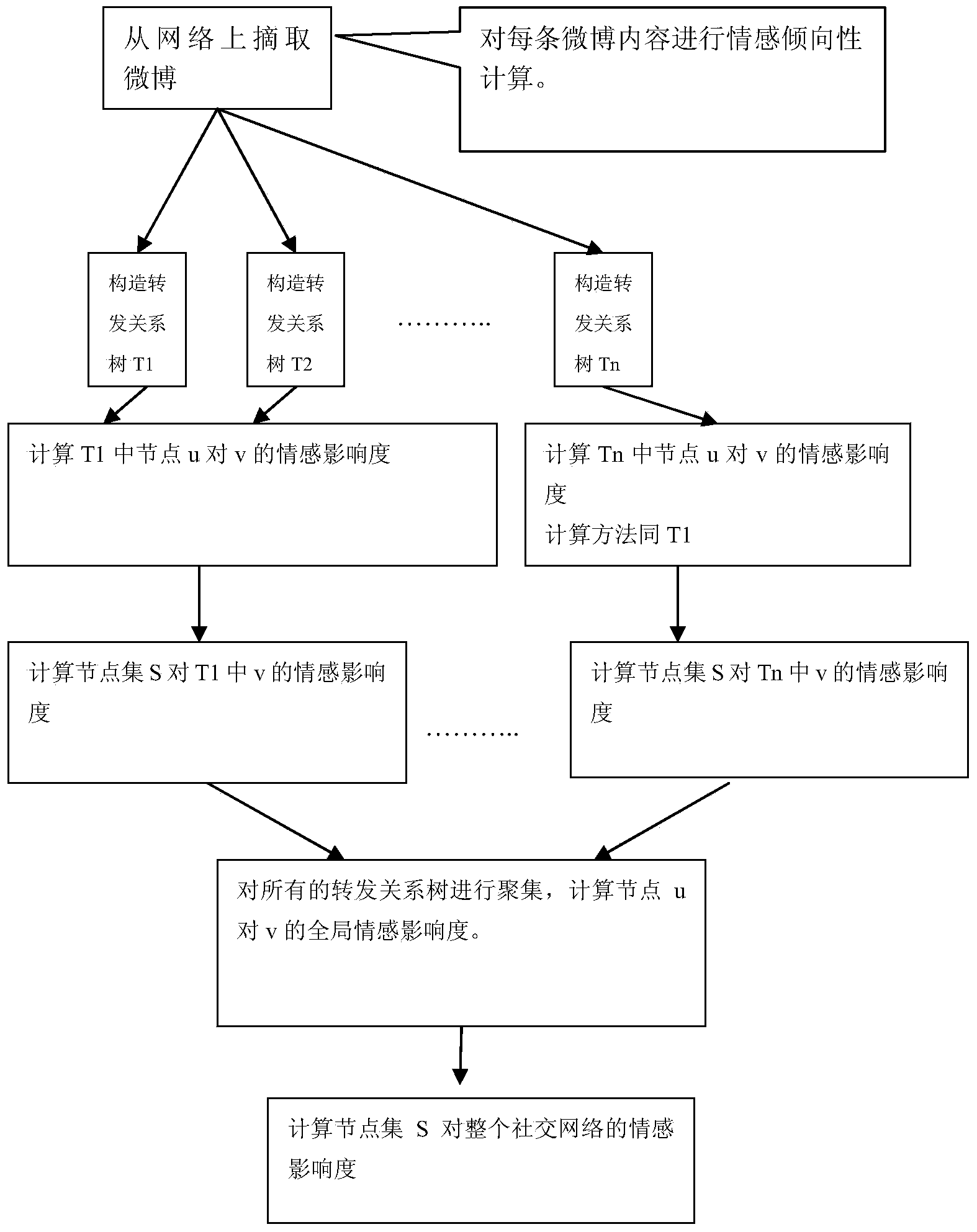

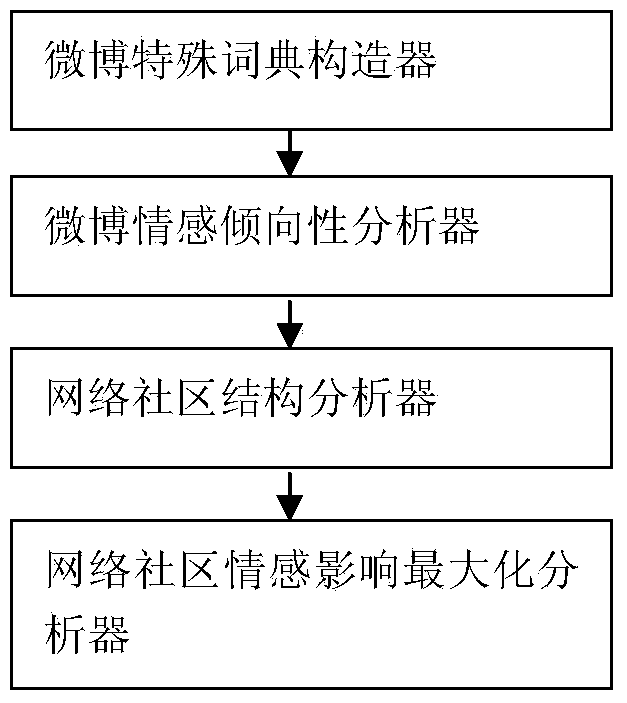

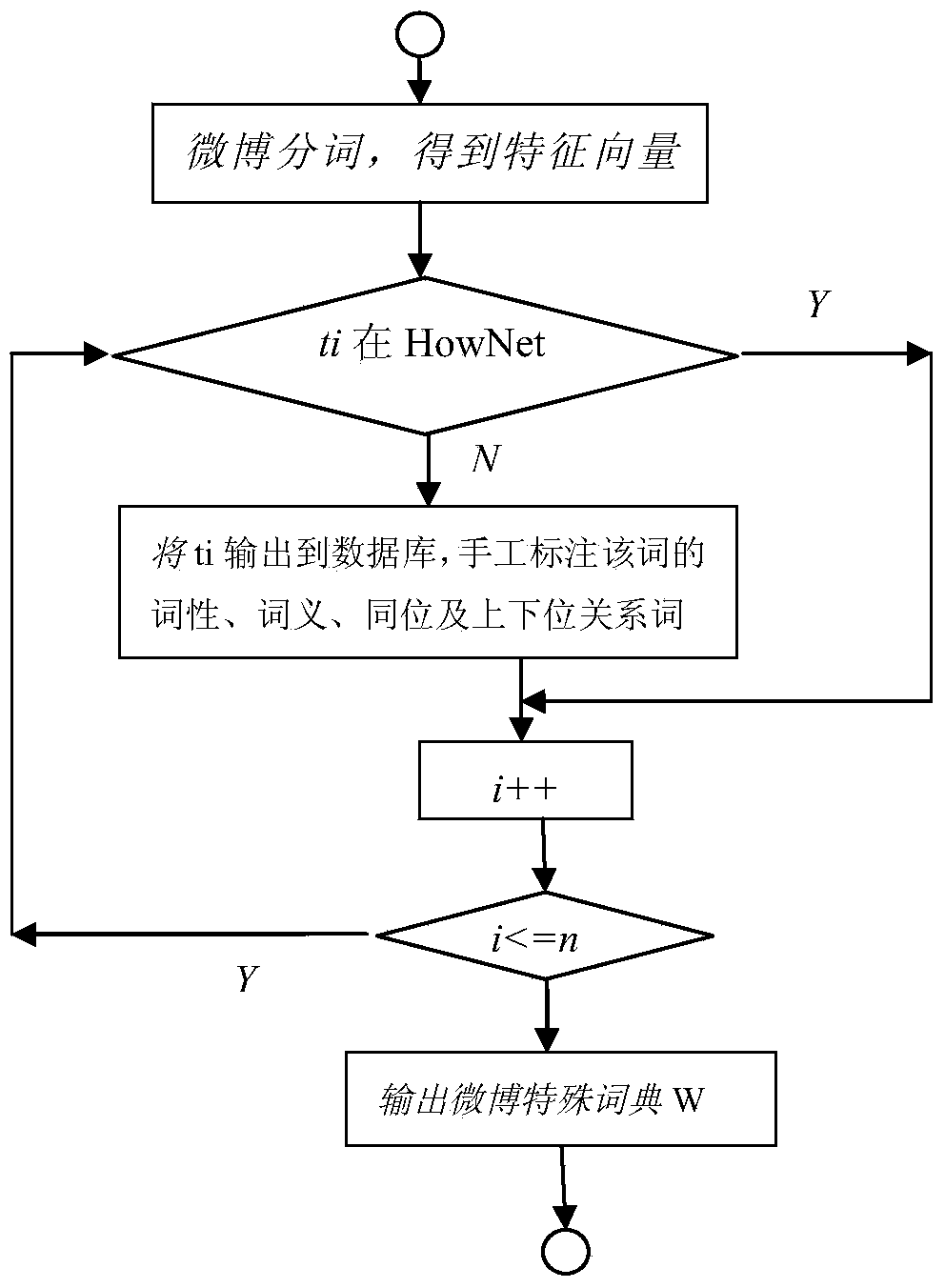

Network society influence maximization algorithm based on microblog text affective computing

Inactive | CN103530360A | Accurate sentiment analysis; Statistical Science of Emotional Tendency; Web data indexing; Natural language data processing; Structure extraction; Microblogging

The invention discloses a network social influence maximization algorithm based on affective computing over microblog texts. It relates to text affective computing, social network computing and influence maximization, in particular to a method for calculating the emotional tendency of microblogs and a method for extracting network community relationship structures. First, a dedicated microblog dictionary is built from the anagrams, neologisms and new senses of existing words (such as 'watch man') that appear on microblogs, and the emotional tendency of microblog texts is analyzed with the HowNet dictionary. Next, a user relationship tree for the network community is built from the interaction relationships among users. Finally, emotional influence maximization is computed from the emotional tendency of the microblog texts and the user relationship tree. This addresses the overly simple modeling of user relationships in network community structures and incomplete influence maximization calculation, so that microblog emotional tendency can be calculated more accurately and a set of users maximizing emotional influence that better reflects reality can be obtained.

Owner: GUANGXI TEACHERS EDUCATION UNIV

Highly anthropomorphic voice interaction algorithm and emotion interaction algorithm for a robot, and the robot

Inactive | CN109741746A | Immune to noise; Interactive nature; Speech recognition; Manipulator; Algorithm; Simulation

The invention discloses a highly anthropomorphic voice interaction algorithm and emotion interaction algorithm for a robot, and a robot using them, addressing the problem that interaction with traditional robots is not natural and smooth. The robot has a continuous voice listening and answering mode in which the user can either talk to the robot or chat with other people; the robot uses the algorithm to determine whether the user is talking to it, and answers accordingly. A positioning algorithm and a microphone array algorithm collect speech from the user's direction and reduce the influence of surrounding noise, making the robot robust to noise. Following an emotion computing method for its answers, the robot looks at the user and answers with emotion and anthropomorphic expressions; as in interpersonal communication, it keeps looking at its interlocutor and shows corresponding expressions and emotions. With this highly anthropomorphic human-machine voice interaction technology, interaction between humans and the robot becomes as natural as interpersonal communication, making the robot more intelligent and convenient to use.

Owner: SHANGHAI YUANQU INFORMATION TECH

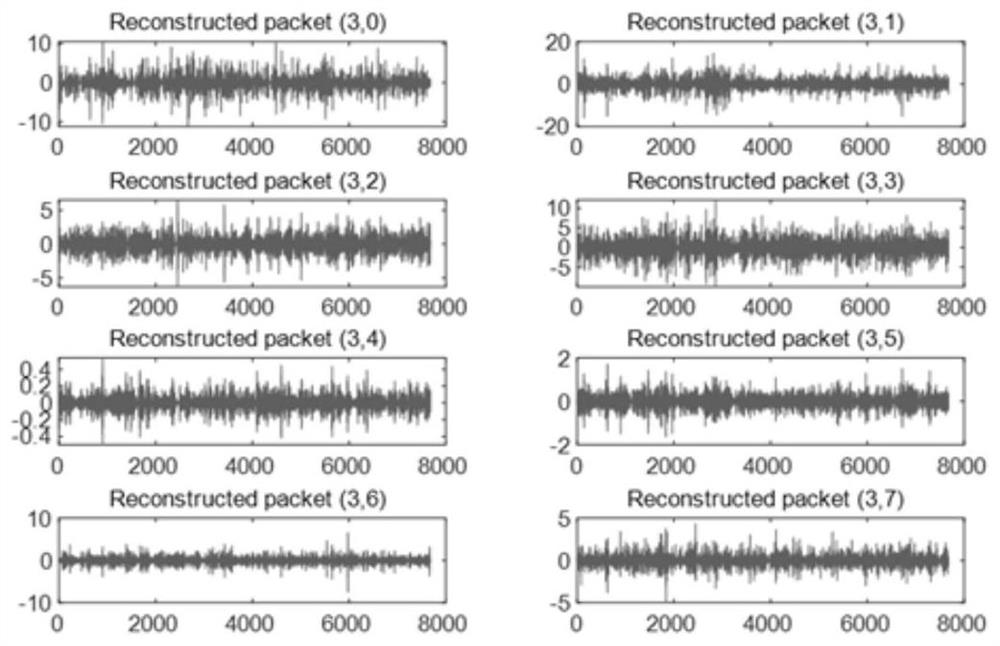

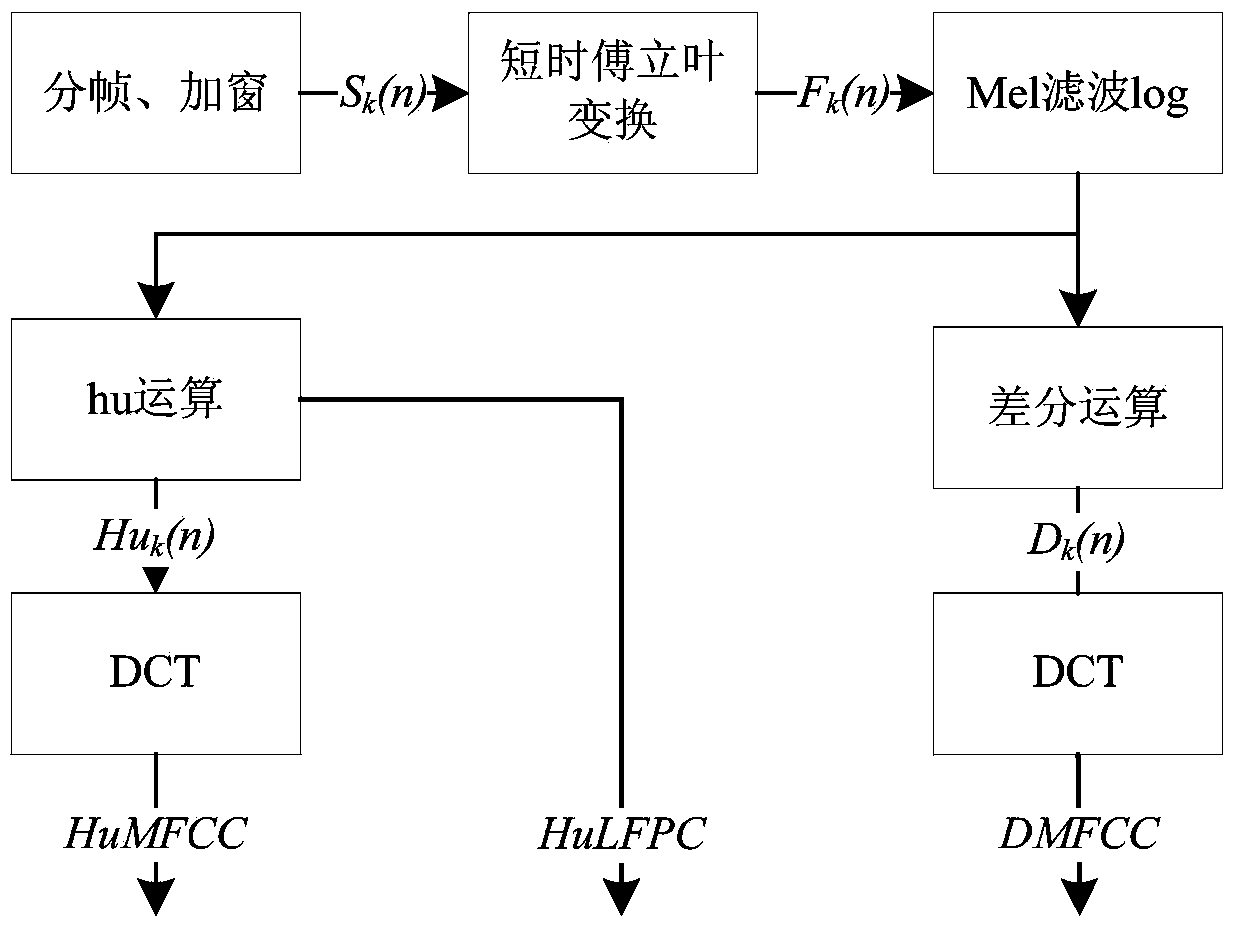

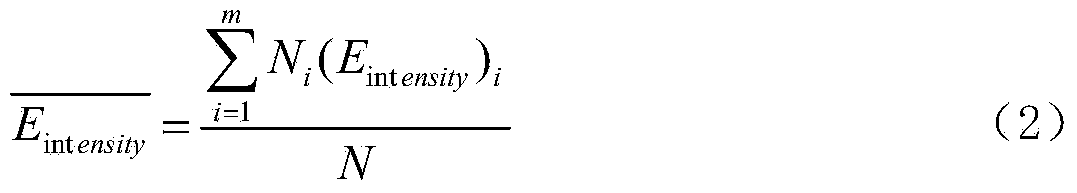

Voice affective characteristic extraction method capable of combining local information and global information

Active | CN103531206A | Simple method; Simple Feature Extraction Framework; Speech analysis; Affective computing; Speech identification

The invention discloses a speech affective feature extraction method that combines local and global information and extracts three features; it belongs to the technical fields of speech signal processing and pattern recognition. The method comprises the following steps: (1) framing the speech signal; (2) applying a Fourier transform to each frame; (3) filtering the Fourier transform result with a Mel filter, computing the energy of the filtered result and taking its logarithm; (4) applying a local Hu operation to the log result to obtain the first feature; (5) applying a discrete cosine transform to each frame after the local Hu operation to obtain the second feature; and (6) applying a difference operation to the log result of step (3) and then a discrete cosine transform to each frame of the difference result to obtain the third feature. The method represents the speech of each emotion quickly and effectively, and its applications include speech retrieval, speech recognition and affective computing.

Owner: SOUTH CHINA UNIV OF TECH
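
Steps (1), (2), (3), (5) and (6) of the feature extraction method above follow the familiar MFCC-style pipeline and can be sketched in pure Python. The local Hu operation of step (4) is not specified in the abstract and is omitted, so the plain cepstral output below only stands in for the second feature. The frame length, hop, filter count, the HTK-style mel formula, the absence of a window function, and reading the step (6) difference operation as a frame-to-frame difference are all assumptions.

```python
import cmath
import math


def frames(signal, frame_len, hop):
    """Step (1): split the signal into overlapping frames."""
    return [signal[i:i + frame_len] for i in range(0, len(signal) - frame_len + 1, hop)]


def power_spectrum(frame):
    """Step (2): one-sided power spectrum via a naive DFT (no window function applied)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))) ** 2 / n
        for k in range(n // 2 + 1)
    ]


def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, fs):
    """Triangular filters spaced evenly on the mel scale between 0 Hz and fs / 2."""
    top = hz_to_mel(fs / 2)
    mels = [i * top / (n_filters + 1) for i in range(n_filters + 2)]
    bins = [int((n_fft + 1) * mel_to_hz(m) / fs) for m in mels]
    bank = []
    for j in range(1, n_filters + 1):
        left, centre, right = bins[j - 1], bins[j], bins[j + 1]
        weights = [0.0] * (n_fft // 2 + 1)
        for k in range(left, centre):
            weights[k] = (k - left) / (centre - left)
        for k in range(centre, right):
            weights[k] = (right - k) / (right - centre)
        bank.append(weights)
    return bank


def dct_ii(values, n_coeffs):
    """Unnormalised DCT-II, keeping the first n_coeffs coefficients."""
    n = len(values)
    return [
        sum(v * math.cos(math.pi * k * (i + 0.5) / n) for i, v in enumerate(values))
        for k in range(n_coeffs)
    ]


def log_mel_energies(signal, fs, frame_len=256, hop=128, n_filters=20):
    """Step (3): mel filter energies per frame, then the logarithm."""
    bank = mel_filterbank(n_filters, frame_len, fs)
    rows = []
    for frame in frames(signal, frame_len, hop):
        spectrum = power_spectrum(frame)
        rows.append([math.log(sum(w * p for w, p in zip(filt, spectrum)) + 1e-10) for filt in bank])
    return rows


def frame_difference(rows):
    """Assumed reading of step (6): difference between consecutive frames."""
    return [[b - a for a, b in zip(prev, cur)] for prev, cur in zip(rows, rows[1:])]


def features(signal, fs, n_coeffs=13):
    log_mel = log_mel_energies(signal, fs)
    # Stand-in for the second feature: DCT without the (omitted) local Hu step.
    cepstral = [dct_ii(row, n_coeffs) for row in log_mel]
    # Third feature: DCT of the frame-to-frame difference of the log-mel energies.
    delta_cepstral = [dct_ii(row, n_coeffs) for row in frame_difference(log_mel)]
    return cepstral, delta_cepstral
```

Because the third feature is computed from differences of the log energies, it captures how the spectrum changes over time, complementing the per-frame (local) second feature.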

Emotion dictionary building and emotion calculation method

Active | CN104090864A | Quality assurance; Quality improvement; Special data processing applications; Pattern recognition; The Internet

The invention discloses a method for building an emotion dictionary and calculating emotion. The high-quality, manually annotated Chinese corpus Ren-CECps supplies the initial seed emotion words; emotion synonyms are then expanded using Chinese thesauri together with unannotated Chinese Internet text, and a kernel function method is applied in the emotion calculation. This addresses the long training time and low accuracy of the emotion calculation process.

Owner: HEFEI UNIV OF TECH
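
The synonym-expansion step of the emotion dictionary method above can be pictured as a breadth-first search from the seed words through a thesaurus. The English stand-in words, the dictionary-based thesaurus, the `max_depth` limit and the rule that a new word inherits the category of the word it was reached from are assumptions; the kernel-function emotion calculation is not shown.

```python
from collections import deque


def expand_lexicon(seeds, synonyms, max_depth=2):
    """Breadth-first expansion of a seed emotion lexicon through a synonym thesaurus.

    seeds: word -> emotion category; synonyms: word -> list of synonyms (hypothetical format).
    A newly reached word inherits the category of the word it was reached from;
    words already in the lexicon keep the category assigned when first reached.
    Expansion stops max_depth synonym hops away from the seeds.
    """
    lexicon = dict(seeds)
    queue = deque((word, 0) for word in seeds)
    while queue:
        word, depth = queue.popleft()
        if depth == max_depth:
            continue
        for synonym in synonyms.get(word, []):
            if synonym not in lexicon:
                lexicon[synonym] = lexicon[word]
                queue.append((synonym, depth + 1))
    return lexicon
```

Limiting the depth matters because synonym chains drift: a few hops from a seed word, a thesaurus can reach words with a different or neutral emotional meaning.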

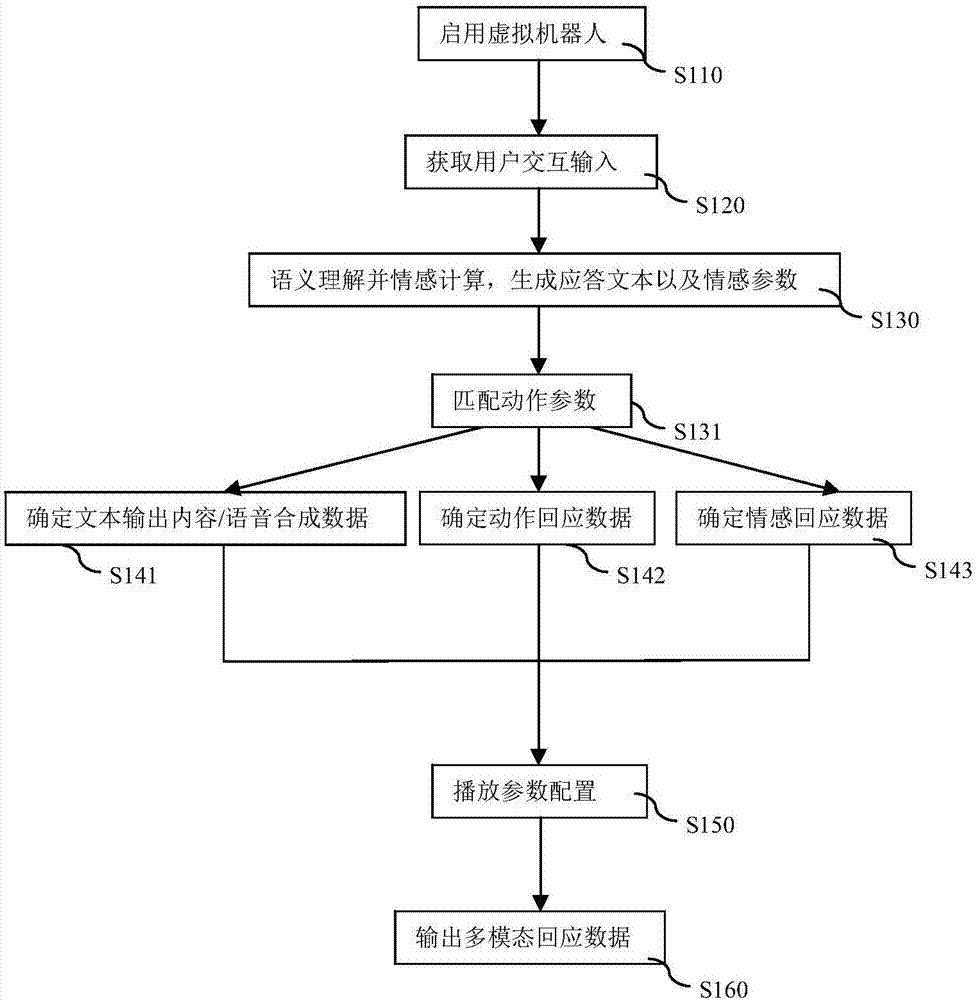

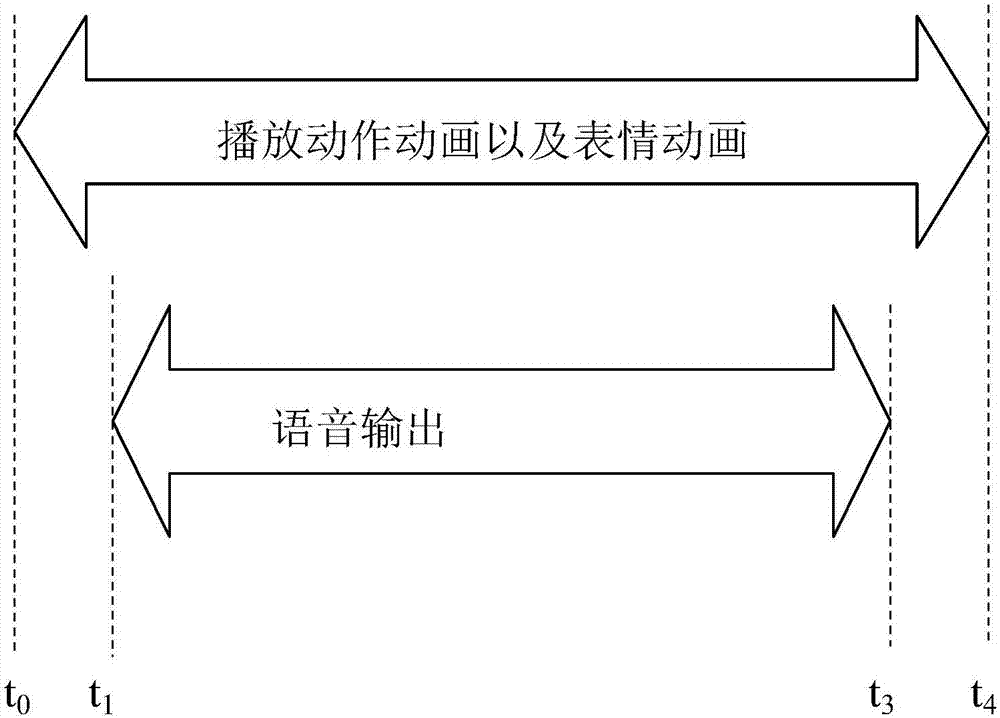

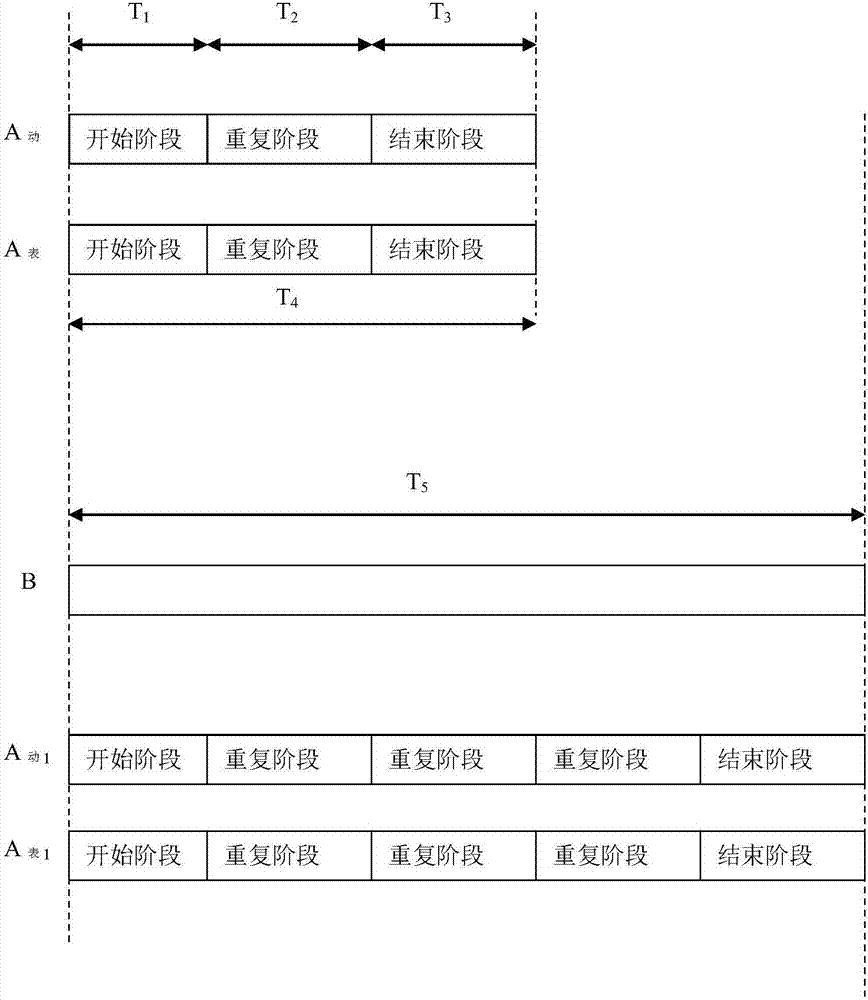

Virtual robot-oriented interaction output method and system

Active | CN107577661A | Reduce jerk; High degree of anthropomorphism; Special data processing applications; Virtual robot; Human–computer interaction

The invention discloses an interaction output method and system for virtual robots. The method comprises the following steps: obtaining user interaction input and performing semantic understanding and emotional computation on it to generate a response text and a corresponding emotional parameter, and matching an action parameter; determining the corresponding text output data and/or synthesized speech data from the response text; determining the virtual robot's action response data and emotional response data from the action parameter and the emotional parameter; configuring output parameters for the action response data and the emotional response data; and outputting the text output data and/or synthesized speech data, the action response data and the emotional response data according to the configured output parameters. The method lets virtual robots coordinate their output actions, expressions and speech or text.

Owner: BEIJING GUANGNIAN WUXIAN SCI & TECH

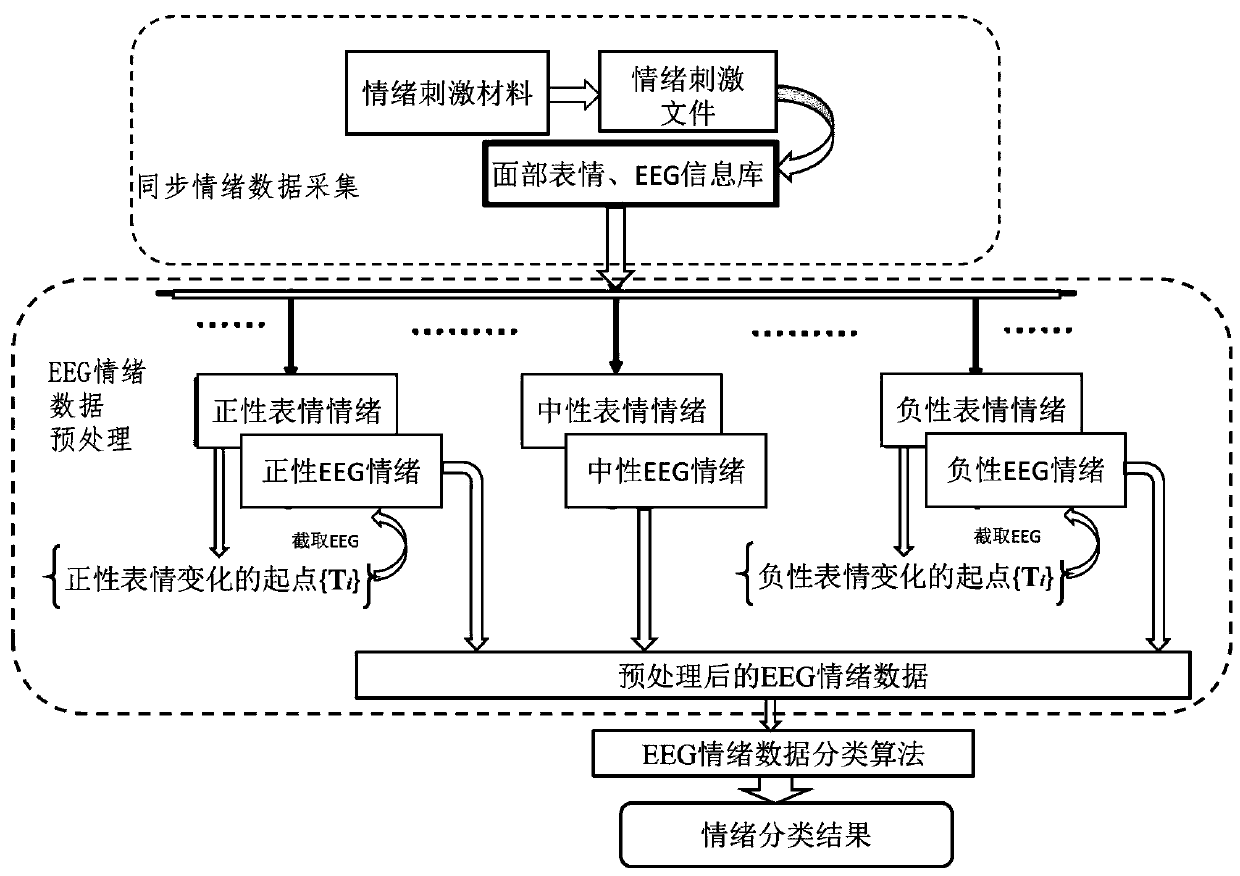

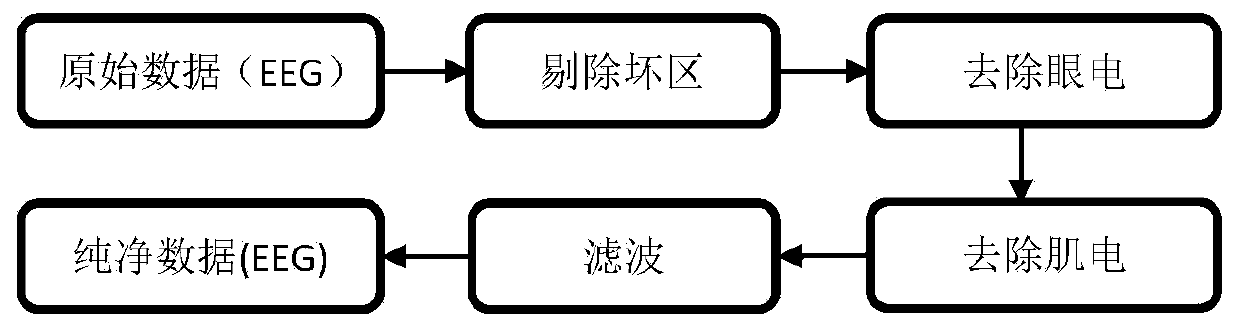

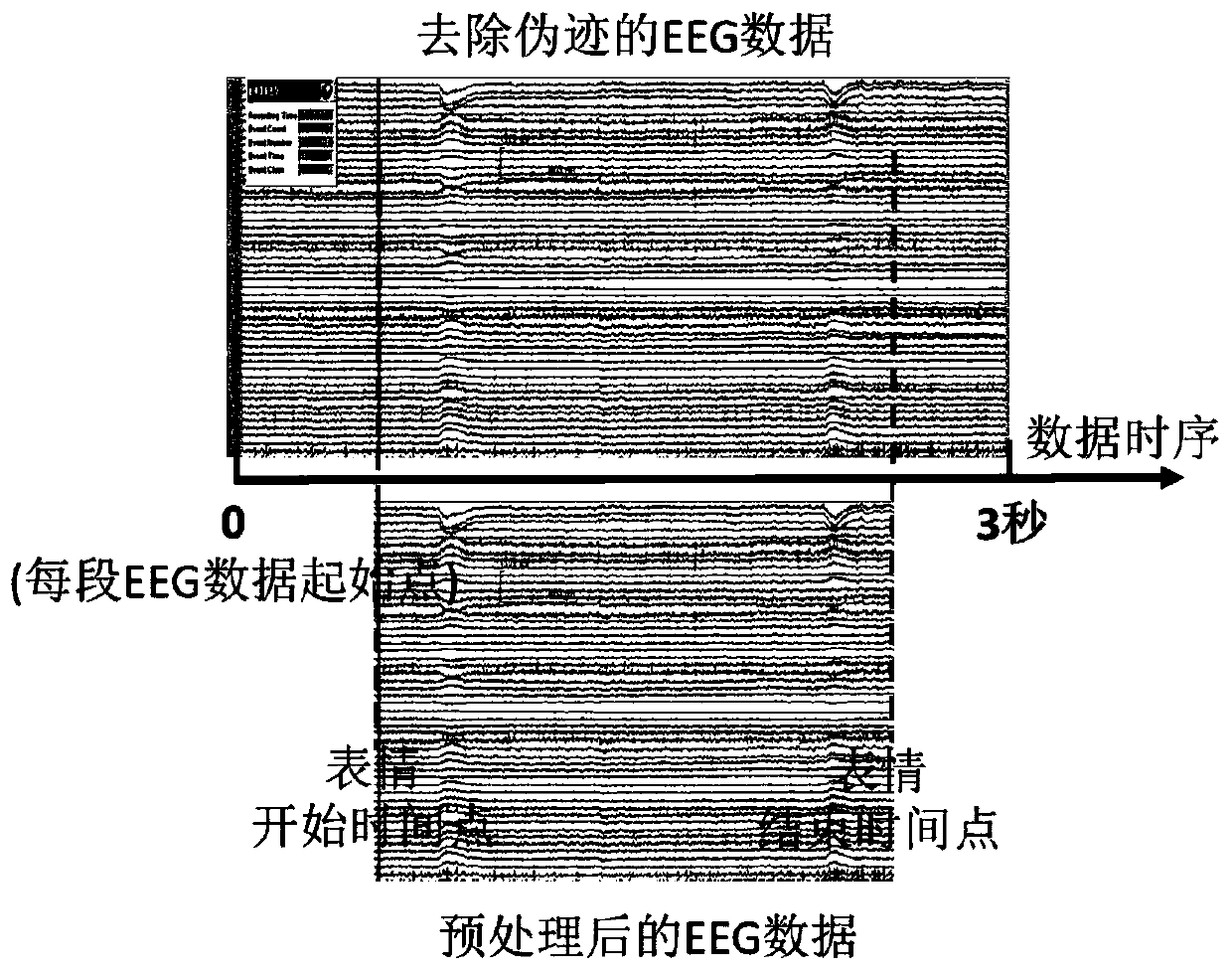

Emotion classification method based on facial expression and EEG

Inactive | CN110037693A | Mood-enhancing ingredients; Improve accuracy; Character and pattern recognition; Sensors; EEG data; After treatment

The invention relates to an emotion classification method based on facial expression and EEG, in the technical field of pattern recognition. The method first collects facial expression and electroencephalogram (EEG) data synchronously, then uses changes in facial expression to cut out the EEG segments that carry emotion information, and finally classifies emotion from the processed EEG information with an emotion classification algorithm. Compared with the emotion calculation methods commonly used at present, the method adds facial expression recognition to the classification process to preprocess the EEG data, enriching the emotion components in the EEG data and thereby increasing classification accuracy.

Owner: MINZU UNIVERSITY OF CHINA

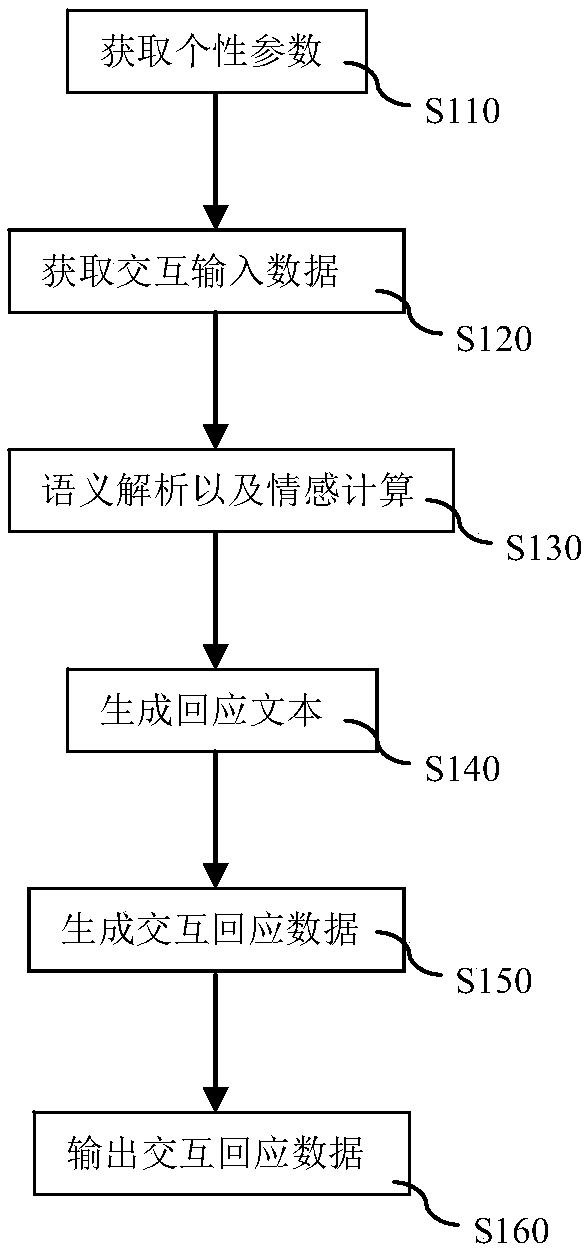

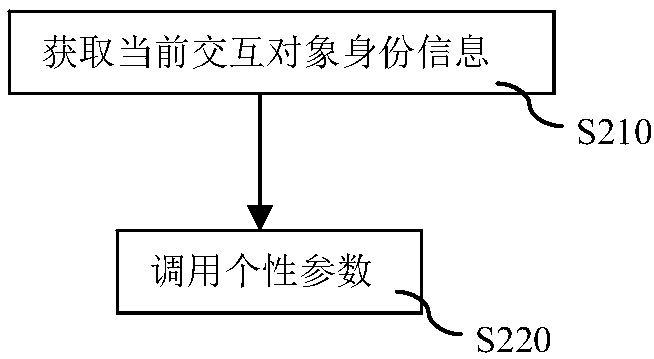

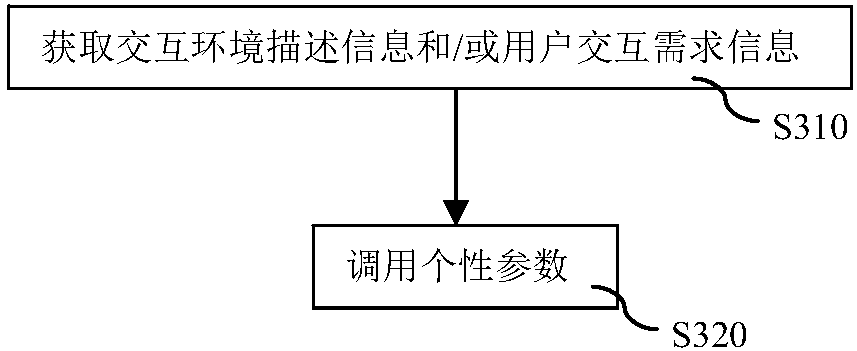

Interactive output method and system for intelligent robots

Active | CN107807734A | Raise the level of anthropomorphism; Improve experience; Input/output for user-computer interaction; Graph reading; Interaction object; Computer science

The invention discloses an interactive output method and system for intelligent robots. The method comprises the following steps: obtaining a personality parameter of the intelligent robot; obtaining interactive input data from the current interaction object; performing semantic analysis and emotional calculation on the interactive input data to obtain a semantic analysis result and an emotional analysis result; generating a corresponding response text from these two results; and generating and outputting interactive response data comprising an expression image and/or the response text, where the type of the expression image matches the personality parameter and its meaning matches the response text.

Owner: BEIJING GUANGNIAN WUXIAN SCI & TECH

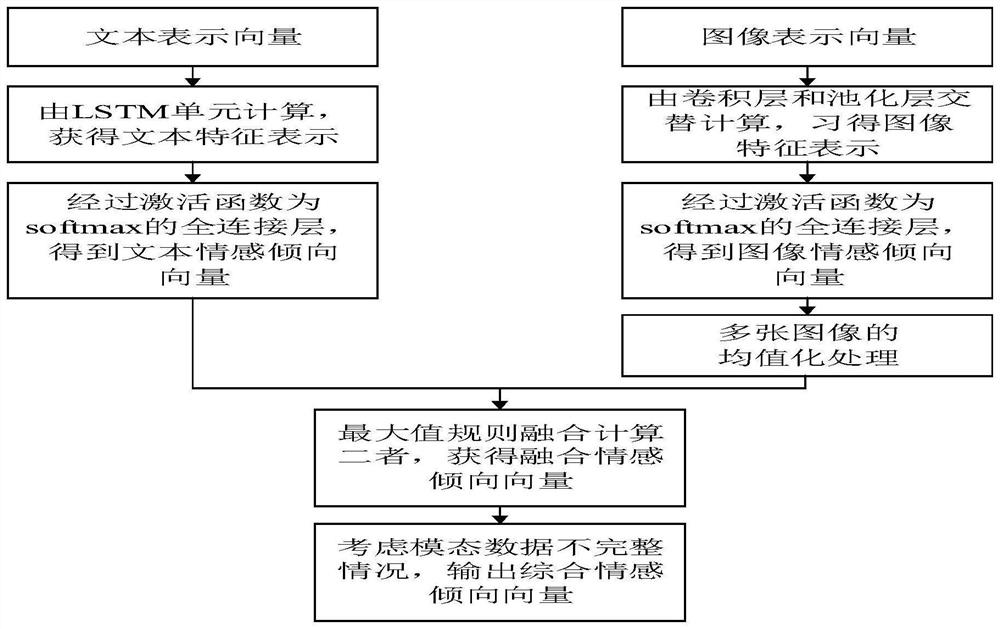

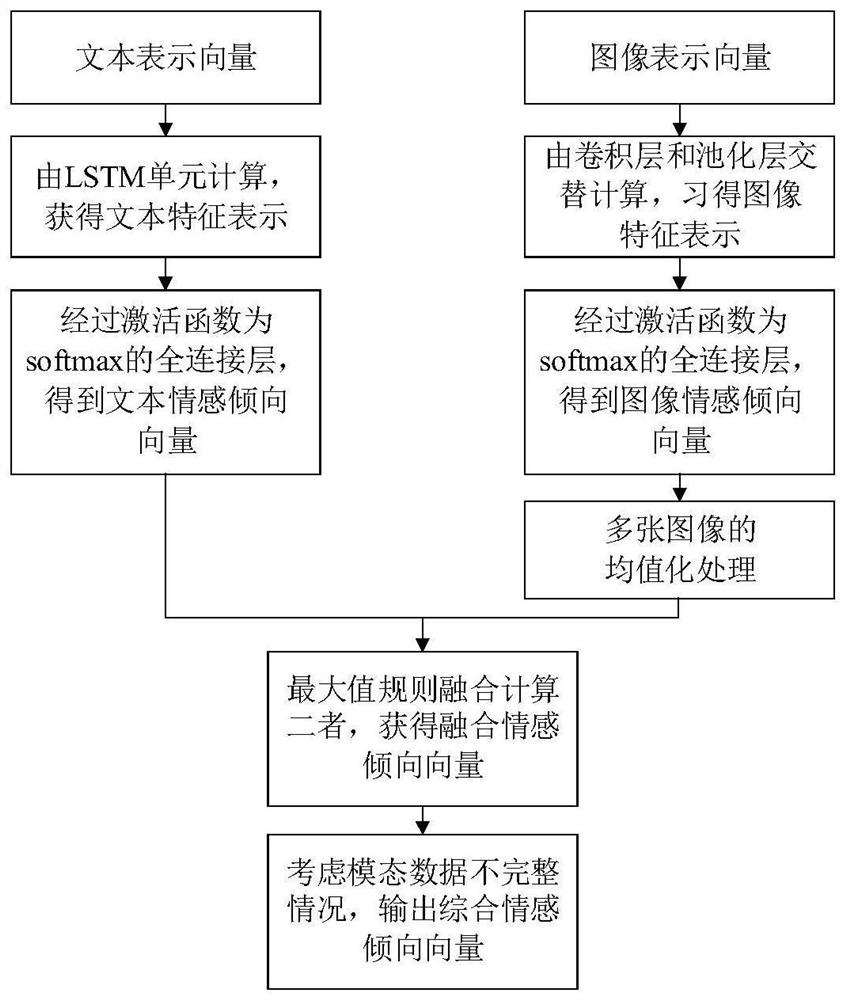

Psychological crisis early warning method based on text and image information joint calculation

Active | CN112687374A | Resolve ambiguity; Accurate prediction; Medical simulation; Mental therapies; Psychological health; Machine learning

The invention discloses a psychological crisis early warning method based on joint calculation over text and image information, comprising the following steps: S1: building and training an automatic psychological health assessment model; S2: selecting the online content data of a student under assessment from step S1, preprocessing each text and its corresponding image in turn, and obtaining a text representation matrix and an image representation matrix; S3: feeding the text and image representation matrices, row by row, into a text sentiment calculation model and an image sentiment calculation model to obtain a text sentiment tendency matrix and an image sentiment tendency matrix, then combining the two matrices row by row with a maximum decision rule to obtain the student's comprehensive emotional tendency vector sequence; and S4: feeding this vector sequence into the automatic psychological health assessment model and judging the student's psychological health grade from the output, completing the automatic assessment. The method can recognize students' psychological health levels quickly and accurately.

Owner:HUNAN NORMAL UNIVERSITY

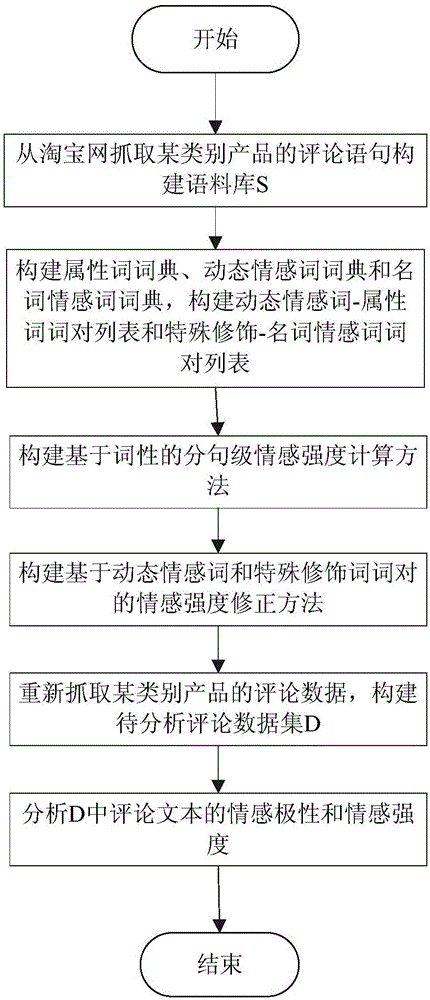

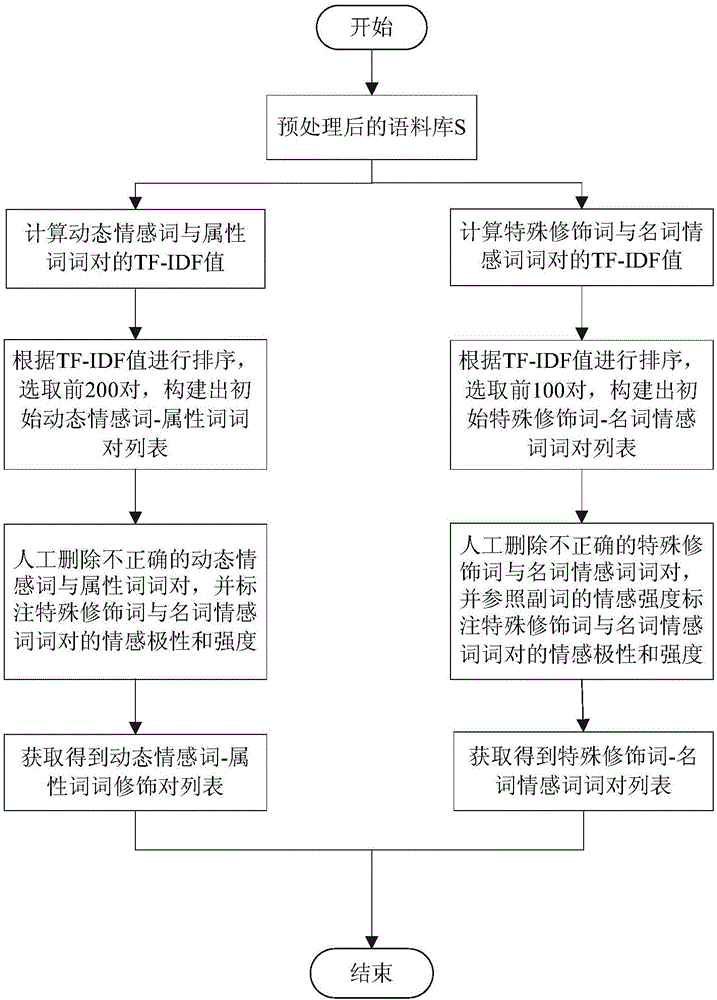

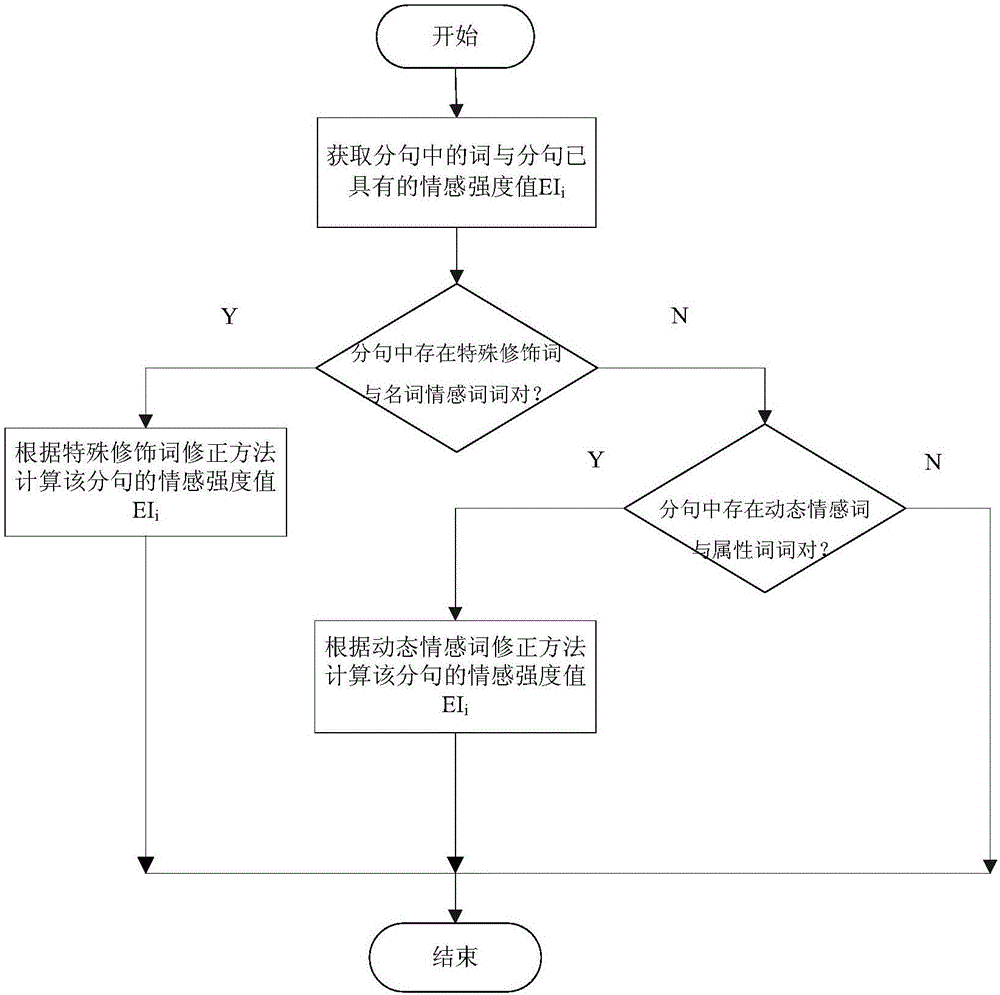

Dynamic sentiment word and special adjunct word-based text sentiment analysis method

Inactive | CN106528533A | Sentiment analysis results in line with | Semantic analysis | Special data processing applications | Part of speech | Correction method

The invention discloses a text sentiment analysis method based on dynamic sentiment words and special adjunct words. The method comprises the following steps: (1) constructing a list of dynamic sentiment word-attribute word pairs and a list of special adjunct word-noun sentiment word pairs, and manually labelling the sentiment polarity and intensity of every pair in both lists; (2) constructing a part-of-speech-based method for computing clause sentiment intensity; and (3) constructing a sentiment intensity correction method based on dynamic sentiment words and special adjunct words, which, during sentiment analysis, corrects the computed sentiment according to the modification relationships between dynamic sentiment words and attribute words and between special adjunct words and noun sentiment words. Because the method fully accounts for the roles of dynamic sentiment words and special adjunct words, its sentiment analysis results better match real-world usage.
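The correction step can be sketched as a table lookup that overrides a context-free lexicon whenever a labelled (modifier, head) pair is found. This is a sketch under stated assumptions: the pair lists, intensities, and base lexicon below are hypothetical English stand-ins for the manually labelled Chinese lists, and the part-of-speech rules of step (2) are omitted.

```python
from typing import Dict, List, Tuple

# Hypothetical hand-labelled pair lists: (modifier, head) -> signed intensity.
# A dynamic sentiment word flips polarity depending on the attribute it describes.
DYNAMIC_PAIRS: Dict[Tuple[str, str], float] = {
    ("high", "price"): -1.0,
    ("high", "quality"): 1.0,
    ("long", "battery life"): 1.0,
    ("long", "queue"): -1.0,
}
# Special adjunct words that turn a noun into a sentiment expression
# (e.g. Chinese 很绅士, literally "very gentleman").
ADJUNCT_PAIRS: Dict[Tuple[str, str], float] = {
    ("very", "gentleman"): 1.5,
}
# Hypothetical context-free base lexicon.
BASE_LEXICON: Dict[str, float] = {"good": 1.0, "bad": -1.0, "high": 0.5}


def clause_intensity(pairs: List[Tuple[str, str]]) -> float:
    """Sum the signed intensities of the (modifier, head) pairs in one clause.

    A pair found in either labelled list overrides the base lexicon (the
    correction step); otherwise each word falls back to its base score.
    """
    total = 0.0
    for pair in pairs:
        if pair in DYNAMIC_PAIRS:
            total += DYNAMIC_PAIRS[pair]
        elif pair in ADJUNCT_PAIRS:
            total += ADJUNCT_PAIRS[pair]
        else:
            modifier, head = pair
            total += BASE_LEXICON.get(modifier, 0.0) + BASE_LEXICON.get(head, 0.0)
    return total
```

Here "high" scores +0.5 on its own but -1.0 when it modifies "price", which is the behaviour the pair lists exist to capture.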

Owner:ZHEJIANG SCI-TECH UNIV

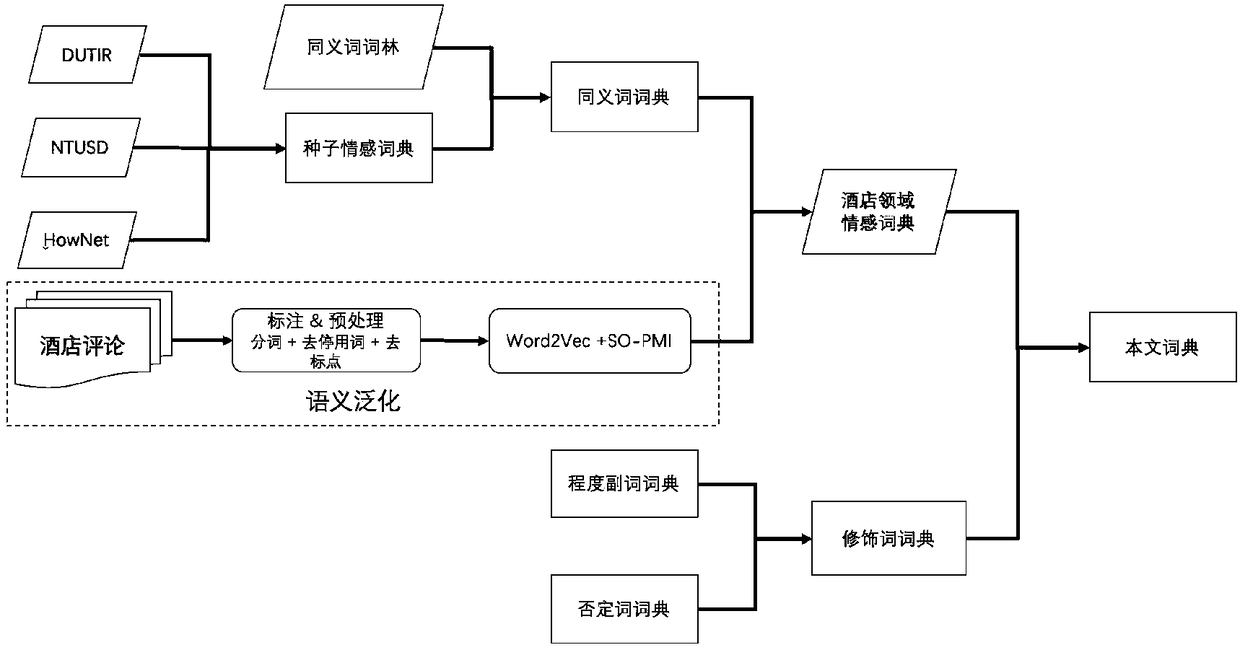

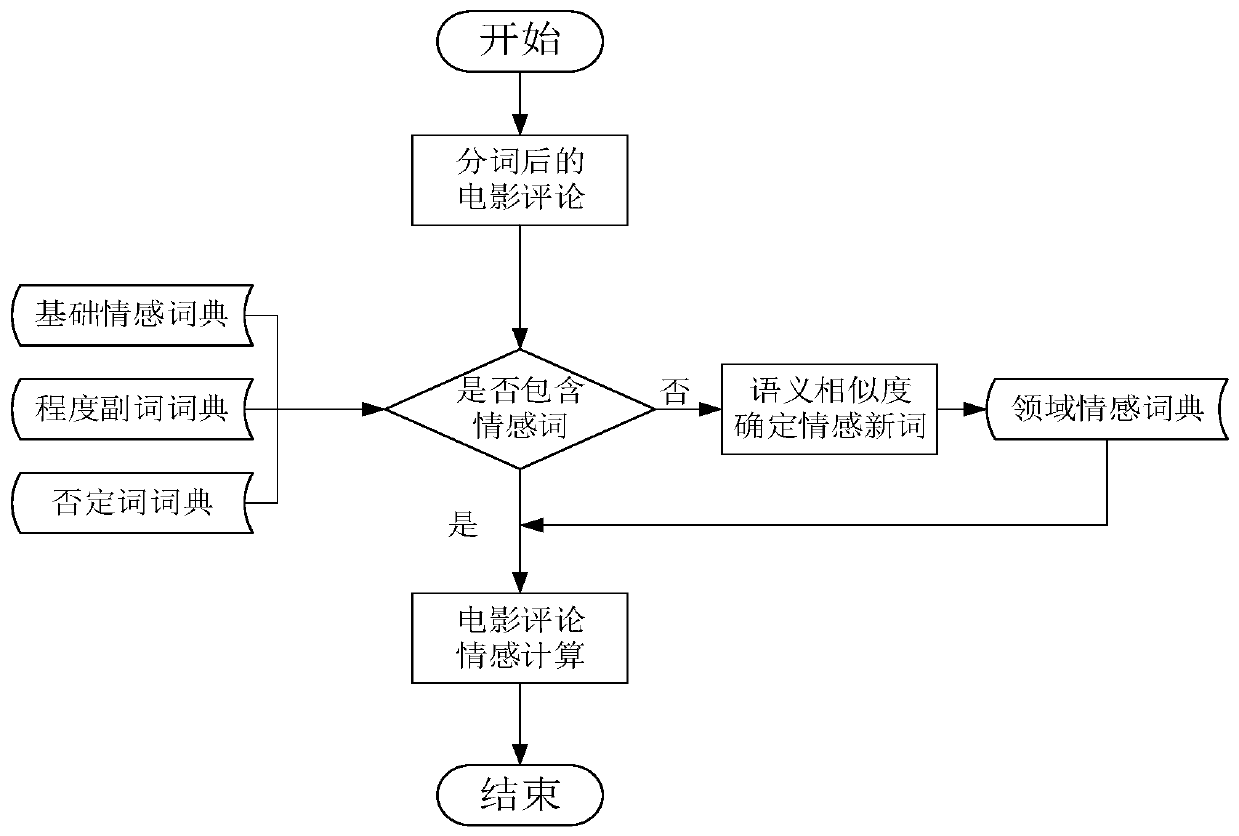

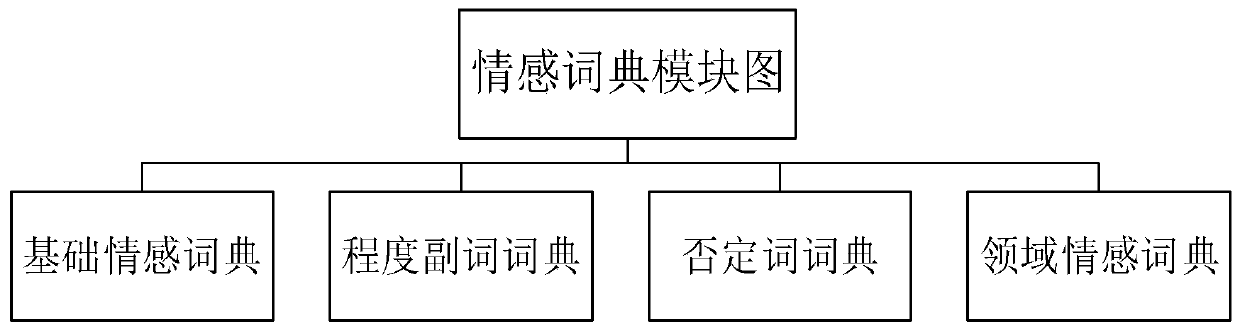

Emotion analysis method for Douban network movie comments

Pending | CN110598219A | Overcome limitations | Improve accuracy | Metadata text retrieval | Data processing applications | Pattern recognition | Part of speech

The invention relates to an emotion analysis method for movie comments on Douban, used mainly to analyse the emotion of Chinese-language movie comments on the site. The method comprises the following steps: first, crawling movie comments from Douban and preprocessing the data, including stop-word removal, word segmentation, and part-of-speech tagging; second, constructing the four dictionaries required for movie-comment sentiment analysis, namely a basic sentiment dictionary, a negation word dictionary, a degree adverb dictionary, and a movie-domain sentiment dictionary; then computing the emotion of each comment with a purpose-designed emotion computation method to determine its polarity; and finally judging each comment's polarity a second time using the weak labels provided by users' ratings. If the polarity obtained by emotion computation agrees with the polarity given by the weak label, that polarity is taken as the comment's polarity; if the two disagree, the comment's polarity is determined by the emotion computation.
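The dictionary-based computation and the rating check can be sketched as follows. The four miniature dictionaries are hypothetical English stand-ins for the Chinese entries, the negation/degree handling (each modifier applies only to the next sentiment word) is an assumed design, and so is the mapping from a 1 to 5 star rating to a weak label. As in the abstract, the final polarity comes from the emotion computation in both branches; the agreement flag is only recorded.

```python
from typing import List, Optional, Tuple

# Hypothetical miniature versions of the four dictionaries.
BASIC_SENTIMENT = {"good": 1.0, "boring": -2.0}
NEGATIONS = {"not", "never"}
DEGREE_ADVERBS = {"very": 1.5, "slightly": 0.5}
MOVIE_SENTIMENT = {"gripping": 2.0, "draggy": -1.5}


def lexicon_score(tokens: List[str]) -> float:
    """Score a segmented, stop-word-free comment.

    Negations flip and degree adverbs scale the next sentiment word only.
    The movie-domain dictionary takes precedence over the basic one.
    """
    score, weight = 0.0, 1.0
    for tok in tokens:
        if tok in NEGATIONS:
            weight *= -1.0
        elif tok in DEGREE_ADVERBS:
            weight *= DEGREE_ADVERBS[tok]
        else:
            value = MOVIE_SENTIMENT.get(tok, BASIC_SENTIMENT.get(tok))
            if value is not None:
                score += weight * value
                weight = 1.0  # reset modifiers after they are consumed
    return score


def weak_label(stars: int) -> Optional[int]:
    """Hypothetical mapping from a 1-5 star rating to a weak polarity label."""
    if stars >= 4:
        return 1
    if stars <= 2:
        return -1
    return None  # 3 stars: treated as uninformative


def comment_polarity(tokens: List[str], stars: int) -> Tuple[int, bool]:
    """Return (polarity, agrees_with_rating); polarity is -1, 0, or 1."""
    score = lexicon_score(tokens)
    polarity = (score > 0) - (score < 0)
    return polarity, weak_label(stars) == polarity
```

Logging the agreement flag makes it easy to collect the comments where the lexicon and the users' ratings disagree, for example to expand the domain dictionary.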

Owner:ANHUI UNIV OF SCI & TECH

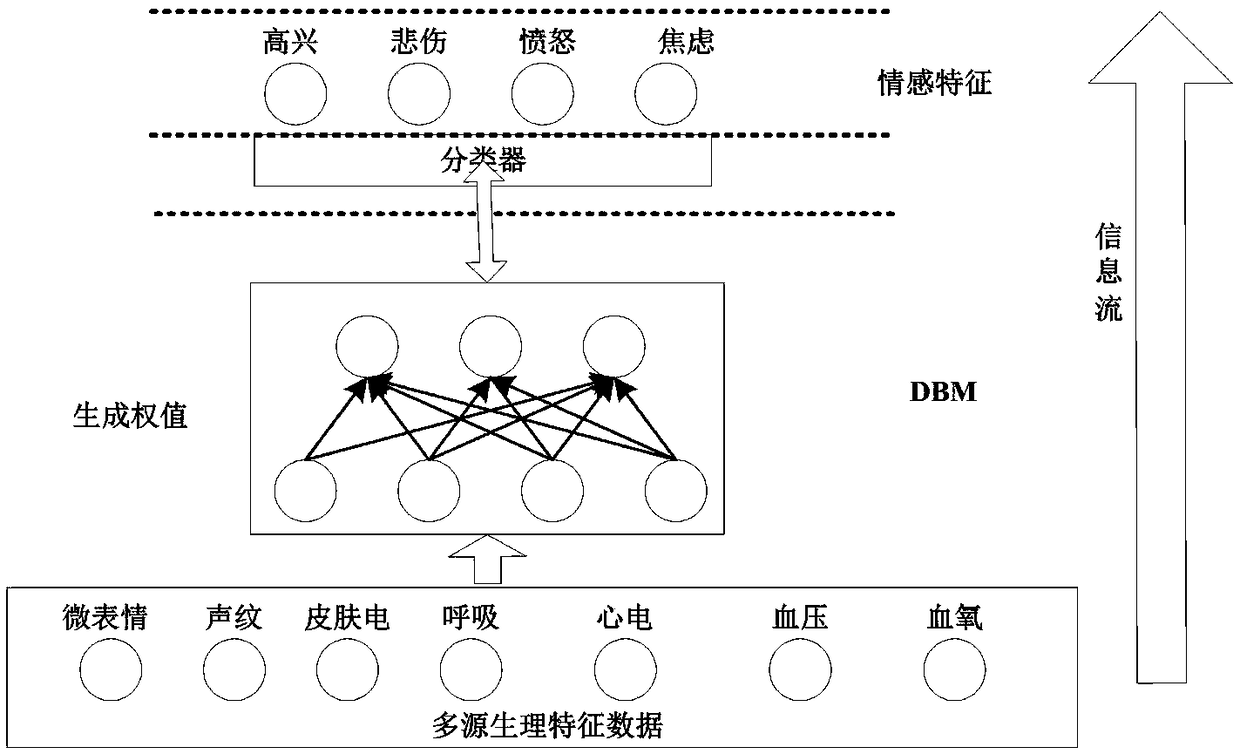

Deep neural network multi-source data fusion method using micro-expression multi-input information

Inactive | CN109190550A | Improve fault tolerance | Stable and reliable output | Biometric pattern recognition | Neural architectures | Multi input | Algorithm

The invention discloses a deep neural network multi-source data fusion method using micro-expression multi-input information. It uses a deep neural network learning architecture that combines micro-expressions with multi-input information: before affective computation, the multi-source data are trained with an unsupervised, greedy, layer-by-layer method to reconstruct the feature weights of a specific affective analysis model, and the resulting values are passed to the hidden layer to reconstruct the original input data. To obtain a true representation of the emotional features, the bidirectional data layer and the hidden layer are iterated many times. Fusing the top-level feature vectors then yields high-precision, heterogeneous, multi-dimensional fused target information. The invention also provides a sparse coding scheme based on an SAE structure, which applies bottom-up sparse coding to reduce the dimension of high-dimensional physiological feature vectors, so that a smaller set of over-complete basis vectors for the physiological feature data can accurately represent the original high-dimensional features.
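Greedy layer-by-layer unsupervised training can be sketched with a small sparse autoencoder in pure Python. The abstract names the training scheme and an SAE structure but gives no layer sizes, loss, or update rule, so this sketch assumes a sigmoid encoder, a linear decoder, squared reconstruction error plus an L1 penalty on the hidden code, and plain per-sample gradient descent. Each new layer is trained on the codes produced by the layer below it.

```python
import math
import random
from typing import List

Vector = List[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class SparseAutoencoder:
    """One layer: sigmoid encoder, linear decoder, L1 penalty on the code."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.W = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b = [0.0] * n_hidden
        self.V = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_in)]
        self.c = [0.0] * n_in

    def encode(self, x: Vector) -> Vector:
        return [sigmoid(sum(w * xk for w, xk in zip(row, x)) + bj)
                for row, bj in zip(self.W, self.b)]

    def decode(self, h: Vector) -> Vector:
        return [sum(v * hj for v, hj in zip(row, h)) + ci
                for row, ci in zip(self.V, self.c)]

    def loss(self, data: List[Vector], sparsity: float) -> float:
        """Mean of 0.5 * squared reconstruction error + sparsity * sum(code)."""
        total = 0.0
        for x in data:
            h = self.encode(x)
            r = self.decode(h)
            total += 0.5 * sum((ri - xi) ** 2 for ri, xi in zip(r, x)) + sparsity * sum(h)
        return total / len(data)

    def train(self, data: List[Vector], epochs: int = 200, lr: float = 0.1,
              sparsity: float = 0.01) -> None:
        """Per-sample gradient descent on the loss above."""
        for _ in range(epochs):
            for x in data:
                h = self.encode(x)
                e = [ri - xi for ri, xi in zip(self.decode(h), x)]
                # Gradient w.r.t. encoder pre-activations, computed before V changes.
                dz = [(sum(e[i] * self.V[i][j] for i in range(len(e))) + sparsity)
                      * h[j] * (1.0 - h[j]) for j in range(len(h))]
                for i, ei in enumerate(e):
                    for j, hj in enumerate(h):
                        self.V[i][j] -= lr * ei * hj
                    self.c[i] -= lr * ei
                for j, dzj in enumerate(dz):
                    for k, xk in enumerate(x):
                        self.W[j][k] -= lr * dzj * xk
                    self.b[j] -= lr * dzj


def greedy_pretrain(data: List[Vector], hidden_sizes: List[int],
                    **train_kwargs: float) -> List[SparseAutoencoder]:
    """Train each layer, unsupervised, on the codes of the layer below."""
    layers: List[SparseAutoencoder] = []
    current = data
    for depth, n_hidden in enumerate(hidden_sizes):
        layer = SparseAutoencoder(len(current[0]), n_hidden, seed=depth)
        layer.train(current, **train_kwargs)
        layers.append(layer)
        current = [layer.encode(x) for x in current]
    return layers
```

For multi-source fusion, one option is to pretrain a stack per source and concatenate the top-level codes; the abstract does not say which fusion operator it uses.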

Owner:SHENYANG CONTAIN ELECTRONICS SCI & TECH CO LTD

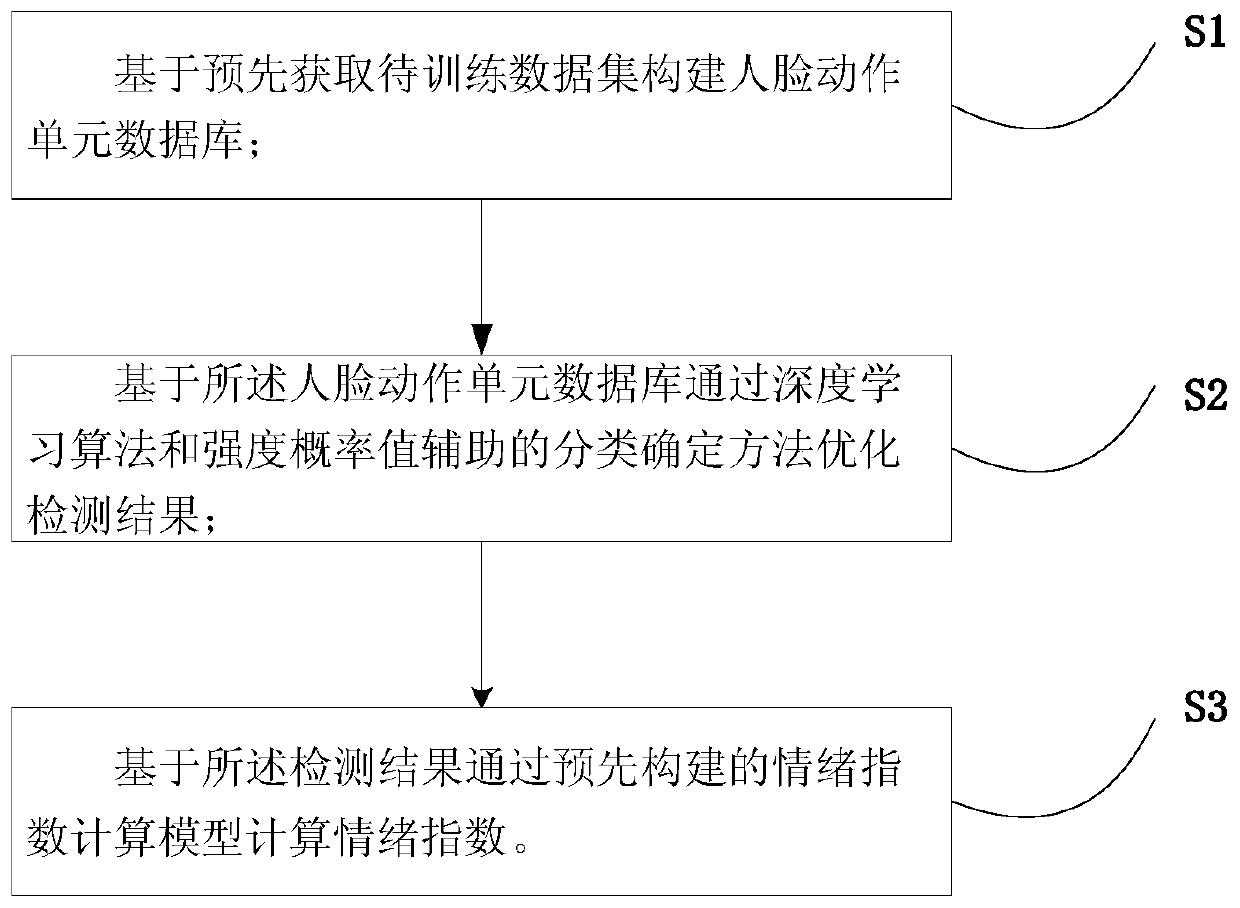

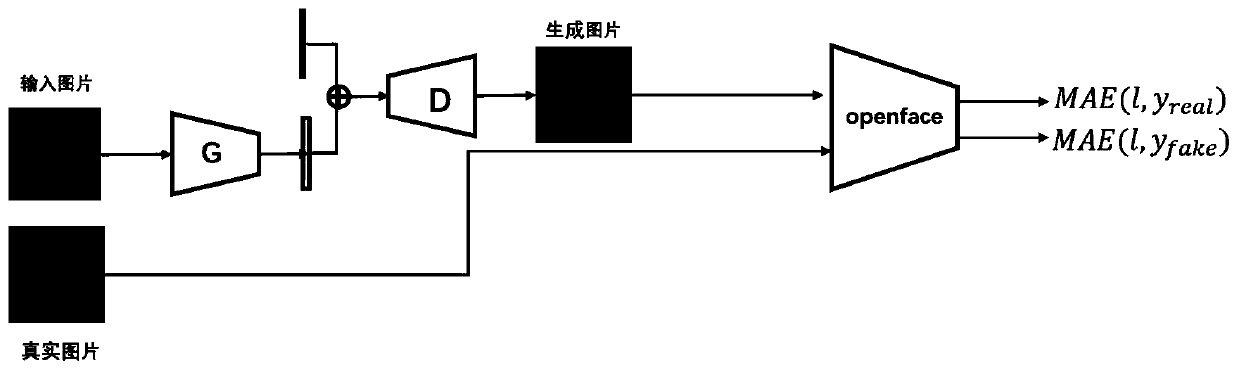

Emotion index calculation method based on face action unit detection

Inactive | CN110147822A | Accurate and objective communication | The calculation principle is simple | Character and pattern recognition | Pattern recognition | Data set

The invention belongs to the technical field of face recognition and affective computing, and specifically relates to an emotion index calculation method based on facial action unit (AU) detection. The method comprises the following steps: building a training data set to form a facial action unit database; detecting the action intensity of each facial action unit; and calculating an emotion index. The method captures the emotional process expressed through facial expressions, conveys detailed information more accurately and objectively, compensates for deeper information that language cannot describe or convey, and offers a practical approach for scenarios where language expression is impaired or verbal information cannot be obtained normally.
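The final step, turning detected AU intensities into a single index, can be sketched as a weighted combination. The AU meanings in the comments follow the Facial Action Coding System; everything else is assumed for illustration: the choice of AUs, their weights, the 0 to 5 intensity scale, and the rule "positive activation minus negative activation", which keeps the index in [-1, 1]. The abstract does not give the patent's actual formula.

```python
from typing import Dict

MAX_INTENSITY = 5.0  # assumed intensity scale (FACS levels A-E mapped to 1-5)

# Hypothetical weights. AU06 (cheek raiser) and AU12 (lip corner puller) are
# typical of smiling; AU04 (brow lowerer) and AU15 (lip corner depressor) of
# negative expressions.
POSITIVE_AUS: Dict[str, float] = {"AU06": 1.0, "AU12": 1.0}
NEGATIVE_AUS: Dict[str, float] = {"AU04": 1.0, "AU15": 1.0}


def _weighted_activation(intensities: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of clipped intensities, scaled to [0, 1]."""
    total = sum(w * min(max(intensities.get(au, 0.0), 0.0), MAX_INTENSITY)
                for au, w in weights.items())
    return total / (sum(weights.values()) * MAX_INTENSITY)


def emotion_index(intensities: Dict[str, float]) -> float:
    """Emotion index in [-1, 1]: positive activation minus negative activation."""
    return (_weighted_activation(intensities, POSITIVE_AUS)
            - _weighted_activation(intensities, NEGATIVE_AUS))
```

Clipping guards against detector outputs outside the assumed scale, so a noisy AU estimate cannot push the index beyond its range.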

Owner:BEIJING NORMAL UNIVERSITY

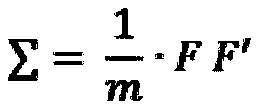

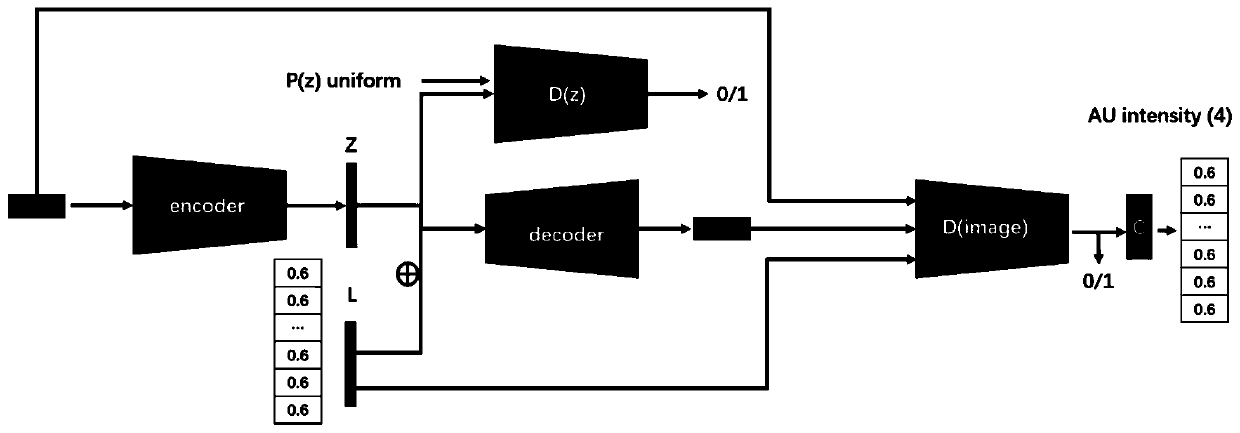

Facial expression action unit adversarial synthesis method based on local attention model

Pending | CN110458003A | Effective mapping | AU strength changes | Image enhancement | Image analysis | Attention model | Data set

The invention relates to computer vision, affective computing, and related fields. To improve the diversity and generality of AU expression data sets, the invention adopts a facial expression action unit adversarial synthesis method based on a local attention model. The method comprises: extracting features of the facial action unit (AU) region with the local attention model, performing adversarial generation of the local region, and finally evaluating the AU intensity of the augmented data to check the quality of the generated images. The method is mainly applied to image processing, face recognition, and similar scenarios.
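One common way to confine adversarial generation to a local region, used for example by attention-based expression generators such as GANimation, is to blend the generator's output into the original face through an attention mask. Whether this patent composes images this way is an assumption; the sketch below shows only that blending step, on grayscale images stored as nested lists, with a hard rectangular mask as a hypothetical stand-in for a learned attention map.

```python
from typing import List

Image = List[List[float]]  # grayscale, values in [0, 1]


def region_mask(height: int, width: int, top: int, left: int,
                bottom: int, right: int) -> Image:
    """Attention mask that is 1.0 inside the AU region box and 0.0 elsewhere."""
    return [[1.0 if top <= y < bottom and left <= x < right else 0.0
             for x in range(width)] for y in range(height)]


def blend(original: Image, generated: Image, attention: Image) -> Image:
    """Per pixel: attention * generated + (1 - attention) * original.

    Keeps the generator's changes inside the attended AU region and copies
    the original face everywhere else; soft masks give partial blending.
    """
    return [[a * g + (1.0 - a) * o for o, g, a in zip(o_row, g_row, a_row)]
            for o_row, g_row, a_row in zip(original, generated, attention)]
```

Because pixels outside the mask are copied unchanged, identity and background are preserved while only the targeted AU region varies, which suits data augmentation.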

Owner:TIANJIN UNIV

Expression recognition method based on brain-computer collaborative intelligence

Active | CN110135244A | Avoid fit problems | Avoid the modeling process | Multiple biometrics use | Acquiring/recognising facial features | Data acquisition | Collaborative intelligence

The invention relates to an expression recognition method based on brain-computer collaborative intelligence. The method uses a two-layer convolutional neural network to extract visual features from images of facial expressions and several gated recurrent units (GRUs) to extract the electroencephalogram (EEG) emotion features evoked while the expressions are being viewed. A random forest regression model establishes a mapping between the two kinds of features, and a k-nearest-neighbor classifier finally classifies the expression from the EEG emotion features predicted by the regression model. The method comprises data acquisition, data preprocessing, image visual feature extraction, EEG emotion feature extraction, feature mapping, and expression classification. Classification results show that the predicted EEG emotion features yield good classification performance. Compared with traditional image-vision methods, this brain-computer collaborative approach is a promising affective computing method.
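The last two stages (feature mapping, then k-nearest-neighbor classification of the predicted EEG features) can be sketched with the standard library. The trained random forest is replaced by any callable `image_to_eeg` (in practice this could be something like the `predict` method of a fitted scikit-learn `RandomForestRegressor`); Euclidean distance, majority voting, and the function names are assumptions for illustration.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence

Vector = Sequence[float]


def knn_classify(query: Vector, train: List[Vector], labels: List[str], k: int = 3) -> str:
    """Majority vote among the k nearest training vectors (Euclidean distance).

    Counter.most_common keeps first-seen order for equal counts, and neighbours
    are counted nearest first, so ties go to the label of the nearer neighbour.
    """
    order = sorted(range(len(train)), key=lambda i: math.dist(query, train[i]))
    votes = Counter(labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def classify_expression(image_features: Vector,
                        image_to_eeg: Callable[[Vector], Vector],
                        eeg_train: List[Vector], eeg_labels: List[str],
                        k: int = 3) -> str:
    """Predict EEG emotion features from image features, then classify them."""
    predicted_eeg = image_to_eeg(image_features)
    return knn_classify(predicted_eeg, eeg_train, eeg_labels, k)
```

At recognition time only the image is needed: the regressor supplies the EEG-like features, and the classifier is fitted on real EEG features recorded during training.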

Owner:HANGZHOU DIANZI UNIV

Industry text emotion obtaining method, device and storage medium

Pending | CN109284499A | Expanding the Emotional Knowledge Base | Increase reflection | Natural language data processing | Special data processing applications | Target text | Lexicon

The invention discloses an industry text emotion obtaining method, device, and storage medium, relating to the technical field of natural language processing and big data analysis. The method recognizes emotional sentences among clauses using an emotion knowledge base for the target field, forming an emotional sentence set; determines, from a constructed affective dependency tree, a method for scoring affective dependencies; and segments long sentences into clauses, obtaining emotion scores for the clauses, long sentences, and paragraphs of the target text with that scoring method. In practical application tests, the industry text emotion calculation method better reflects the emotion of industry texts, reaching 85% accuracy for long-text, chapter-level emotion calculation. For texts from different fields, the invention can quickly construct a domain emotion thesaurus, enabling fast adaptation of emotion calculation to different industries.
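The clause, long-sentence, and paragraph scoring can be sketched as follows, assuming each clause arrives as dependency-parsed (token, head index) pairs. In this sketch a sentiment word's value from a hypothetical domain lexicon is scaled by every modifier attached directly to it in the dependency tree, and higher levels are plain means of the level below. The lexicon, modifier weights, and mean aggregation are all assumptions; the abstract does not specify them.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple

# Hypothetical domain lexicon for one industry; values are illustrative only.
DOMAIN_SENTIMENT: Dict[str, float] = {"growth": 1.0, "loss": -1.0, "default": -2.0}
MODIFIERS: Dict[str, float] = {"not": -1.0, "sharp": 1.5, "slight": 0.5}

# A clause is a list of (token, head_index) pairs from a dependency parse;
# head_index is None for the root.
Clause = List[Tuple[str, Optional[int]]]


def clause_score(clause: Clause) -> float:
    """Sum sentiment words, each scaled by the modifiers that depend on it."""
    score = 0.0
    for idx, (token, _) in enumerate(clause):
        if token not in DOMAIN_SENTIMENT:
            continue
        weight = 1.0
        for dep_token, head in clause:
            if head == idx and dep_token in MODIFIERS:
                weight *= MODIFIERS[dep_token]
        score += weight * DOMAIN_SENTIMENT[token]
    return score


def sentence_score(clauses: List[Clause]) -> float:
    """Long-sentence score: mean of its clause scores (assumed aggregation)."""
    return mean(clause_score(c) for c in clauses) if clauses else 0.0


def paragraph_score(sentences: List[List[Clause]]) -> float:
    """Paragraph score: mean of its long-sentence scores (assumed aggregation)."""
    return mean(sentence_score(s) for s in sentences) if sentences else 0.0
```

Swapping in a different industry only requires replacing `DOMAIN_SENTIMENT`, which mirrors the abstract's point about building a domain thesaurus per field.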

Owner:数地工场(南京)科技有限公司

© 2025 PatSnap. All rights reserved.