Speech emotion feature extraction method combining local information and global information

A speech emotion feature extraction method that combines local and global information, applied in fields such as speech analysis and instruments. It addresses problems such as noise sensitivity and offers a simple, easy-to-implement feature extraction framework.

Embodiment

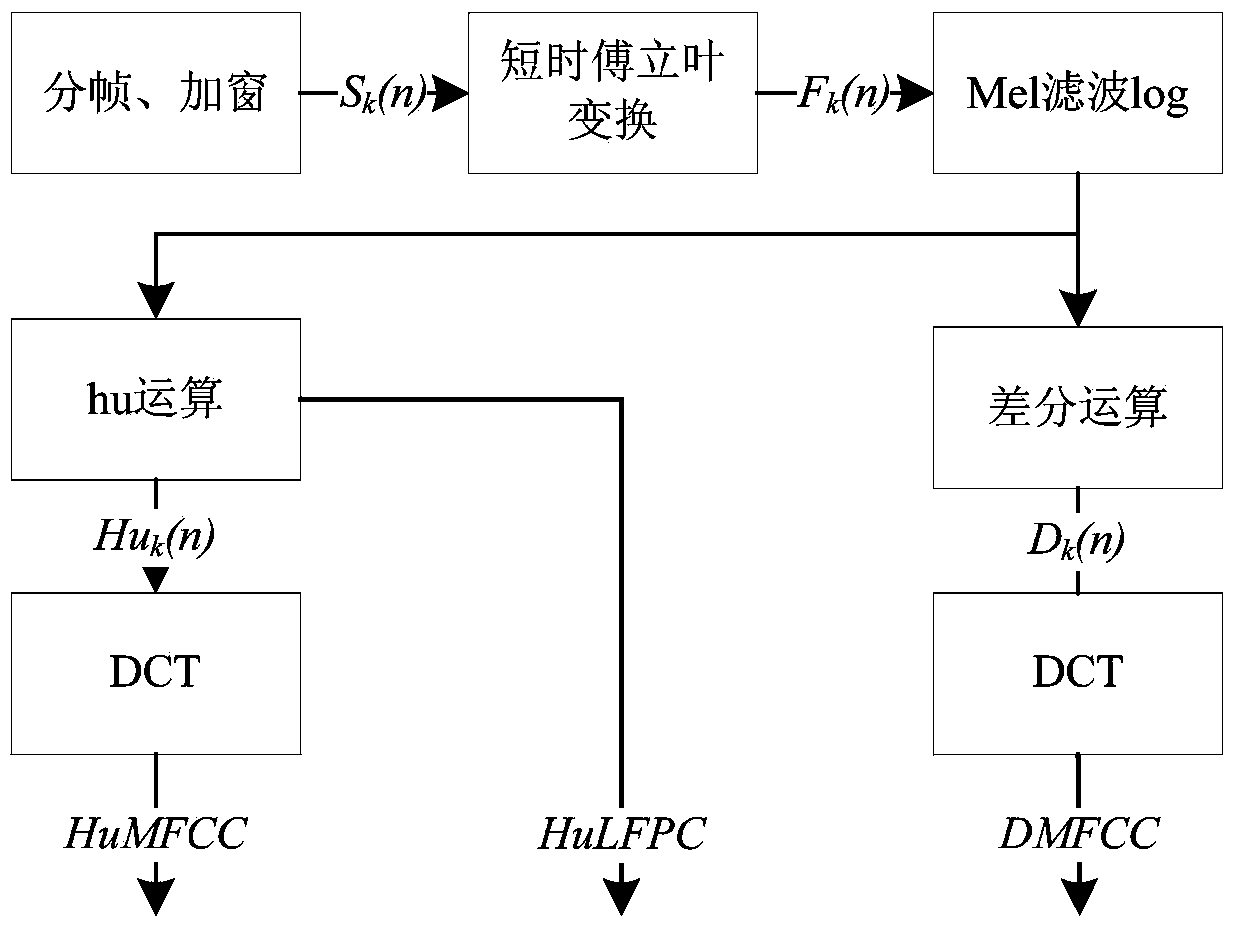

[0041] As shown in Figure 1, a speech emotion feature extraction method combining local and global information includes the following steps:

[0042] Step 1: Frame and window the speech signal to obtain S_k(N). Framing uses the two formulas below, where N is the frame length, inc is the frame shift (the number of sampling points by which the next frame is offset), fix(x) returns the integer nearest to x, fs is the sampling rate of the speech signal (determined by the speech data), bw is the frequency resolution of the spectrogram (taken as 60 Hz in the present invention), and k denotes the k-th frame. The window function is the Hamming window.

[0043] N=fix(1.81*fs / bw), (1)

[0044] inc=1.81 / (4*bw), (2);
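The framing and windowing of Step 1 can be sketched roughly as follows. This is an illustrative sketch, not the patent's implementation: the function names `frame_params` and `frame_and_window` are hypothetical, `fix` is assumed to truncate toward zero (as MATLAB's `fix` does), and because formula (2) as printed yields a duration in seconds while the text defines inc as a number of sampling points, the sketch assumes inc is meant to be multiplied by fs and truncated.

```python
import numpy as np


def frame_params(fs, bw=60.0):
    """Frame length N and frame shift inc, both in samples.

    N follows formula (1). For inc, formula (2) is multiplied by fs and
    truncated; that scaling is an ASSUMPTION made so that inc is a sample
    count, as the surrounding text describes it.
    """
    n = int(1.81 * fs / bw)            # int() truncates toward zero
    inc = int(1.81 * fs / (4.0 * bw))  # assumed sample-domain version of (2)
    return n, inc


def frame_and_window(signal, n, inc):
    """Split a 1-D signal into overlapping frames and apply a Hamming window."""
    signal = np.asarray(signal, dtype=float)
    if signal.size < n:
        raise ValueError("signal is shorter than one frame")
    num_frames = 1 + (signal.size - n) // inc
    # Row k holds the sample indices of frame k.
    idx = np.arange(n)[None, :] + inc * np.arange(num_frames)[:, None]
    return signal[idx] * np.hamming(n)


if __name__ == "__main__":
    fs = 16000  # hypothetical sampling rate
    n, inc = frame_params(fs)
    frames = frame_and_window(np.random.randn(fs), n, inc)
    print(n, inc, frames.shape)
```

With bw = 60 Hz the frame shift is about a quarter of the frame length, so consecutive frames overlap by roughly 75%.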

[0045] Step 2: Apply a short-time Fourier transform to S_k(N) to obtain F_k(N), then apply formula (3) to F_k(N) to obtain the Mel-frequency representation G_k(N).

[0046] Mel(f)=2595*lg(1+f / 700), (3);
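As a rough illustration of Step 2, the sketch below takes already windowed frames, computes a per-frame magnitude spectrum, and maps each bin's frequency to the Mel scale with formula (3), reading lg as the base-10 logarithm. The names `hz_to_mel` and `frame_spectra` are hypothetical, and the text does not specify exactly how F_k(N) is turned into G_k(N), so this shows only the frequency-axis conversion under that assumption.

```python
import numpy as np


def hz_to_mel(f_hz):
    """Formula (3): Mel(f) = 2595 * lg(1 + f / 700), with lg = log10."""
    return 2595.0 * np.log10(1.0 + np.asarray(f_hz, dtype=float) / 700.0)


def frame_spectra(frames, fs):
    """Magnitude spectrum of each windowed frame plus its frequency axes.

    frames: 2-D array with one windowed frame per row.
    Returns (magnitudes, bin frequencies in Hz, bin frequencies in Mel).
    """
    frames = np.asarray(frames, dtype=float)
    n = frames.shape[1]
    magnitudes = np.abs(np.fft.rfft(frames, axis=1))  # one-sided FFT per frame
    freqs_hz = np.fft.rfftfreq(n, d=1.0 / fs)
    return magnitudes, freqs_hz, hz_to_mel(freqs_hz)


if __name__ == "__main__":
    fs = 16000  # hypothetical sampling rate
    demo_frames = np.random.randn(3, 482) * np.hamming(482)
    mags, hz, mel = frame_spectra(demo_frames, fs)
    print(mags.shape, hz[-1], mel[-1])
```

The Mel mapping is close to linear below about 1 kHz and logarithmic above it, so 1000 Hz maps to roughly 1000 Mel.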

[0047] Step 3: First use formula (4) to define a filte...
