Domain-self-adaptive facial expression analysis method

A facial expression analysis method, applied in the fields of computer vision and affective computing research. It addresses the mismatch between the training and test data domains, which hinders prediction accuracy, and achieves good robustness and a wider scope of practical application.

Embodiment Construction

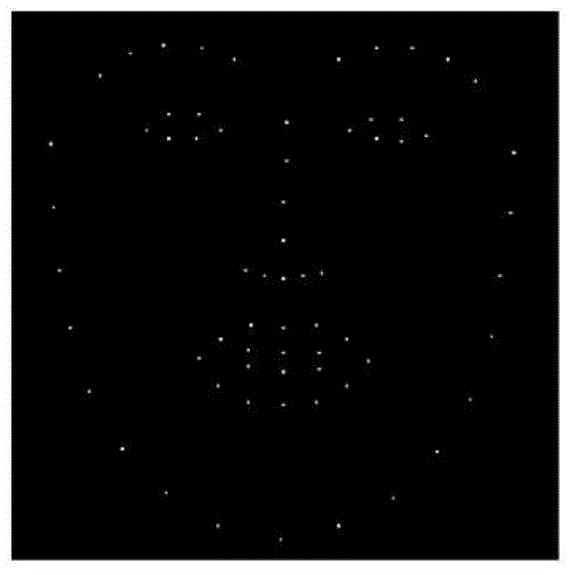

[0012] The invention is an automatic human facial expression analysis method with domain self-adaptive capability. The present invention takes the facial action units (Action Unit, AU) defined in the Facial Action Coding System (FACS) as the targets of expression analysis. AUs are a set of action units defined in terms of facial muscle movements. For example, AU 12 means that the corners of the mouth are raised, which is semantically close to the action of "smiling". By making full use of the correlation and complementarity between the two types of face image features, the method proposed by the present invention can automatically analyze a video of the test subject and output, for each frame, a label indicating whether a specific AU appears.
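The per-frame output described above can be illustrated with a minimal sketch. It is not the method of the invention: the names `label_frames` and `toy_score`, the feature vectors, and the 0.5 threshold are all hypothetical, and the scoring function stands in for whatever AU detector is actually used. The sketch only shows the shape of the result, namely one binary label per video frame for a single AU.

```python
from typing import Callable, List, Sequence

# Hypothetical per-frame feature vector (the source does not specify a format).
Frame = Sequence[float]


def label_frames(
    frames: Sequence[Frame],
    score_fn: Callable[[Frame], float],
    threshold: float = 0.5,  # assumed decision threshold, not from the source
) -> List[int]:
    """Return one binary label per frame: 1 if the AU is judged present, else 0."""
    return [1 if score_fn(frame) >= threshold else 0 for frame in frames]


def toy_score(frame: Frame) -> float:
    """Placeholder scorer (mean of the features); stands in for a real AU detector."""
    return sum(frame) / len(frame)


# Three made-up frames, each with two made-up feature values.
frames = [[0.1, 0.2], [0.7, 0.9], [0.5, 0.5]]
labels = label_frames(frames, toy_score)
print(labels)
```

Each entry of `labels` corresponds to one frame, so the output sequence has the same length as the input video.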

[0013] Face landmarks can be detected using existing techniques. We use the SDM (Supervised Descent Method) technique to detect facial feature points in each frame of the face video. The detection results of the facial feature points are shown in Figure 1.

[0014] In ad...
