Natural interaction method for a virtual learning environment based on multimodal emotion recognition

A natural interaction method for virtual learning environments, based on emotion recognition. It addresses the difficulty of accurately conveying students' true emotions and the lack of effective research in this area. It achieves high practicability, engaging and natural interaction, and good motion recognition.


Specific Embodiments

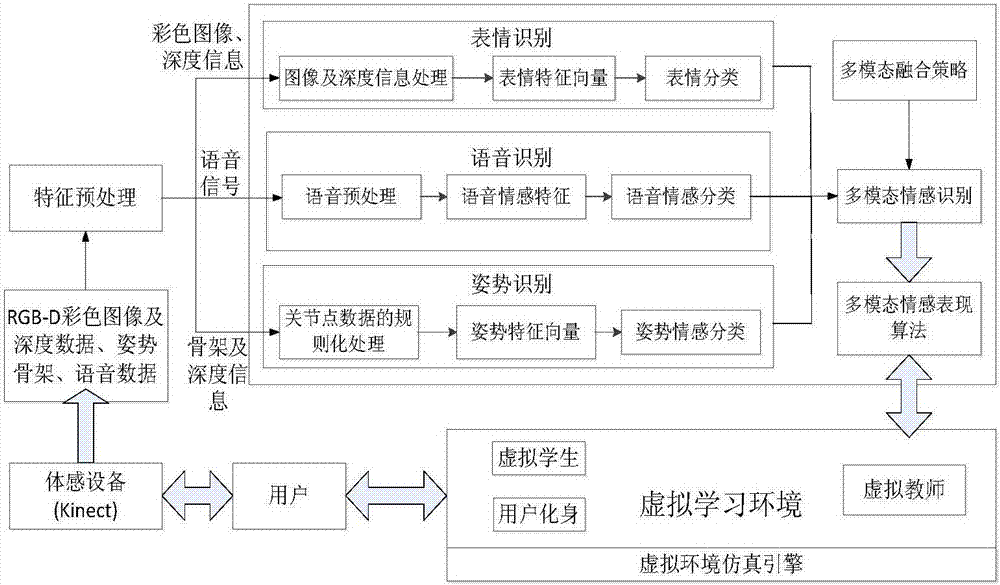

[0038] Figure 1 is a flow chart of the natural interaction method for a virtual learning environment based on multimodal emotion recognition proposed by the present invention. Emotion features are extracted from the student's expression, voice and posture, then classified and recognized. The three emotion recognition results are fused through the quadrature rule algorithm, and the fused result drives the decision-making module of the virtual teacher in the virtual learning environment. That module selects the corresponding teaching strategy and behavior, and generates the virtual agent's emotional expression (facial expressions, voice and gestures), which is displayed in the virtual learning environment. The specific implementation is as follows:

[0039] Step 1: Obtain color image information, depth information, voice signal and skeleton information representing the student's expression, voice and posture.

[0040] Step 101: The pre...
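The fusion and decision stage described in [0038] can be illustrated with a minimal Python sketch. It assumes that the "quadrature rule algorithm" refers to the product rule for combining classifier outputs, in which the per-class probabilities from each modality are multiplied and renormalized. The excerpt does not confirm this reading. The emotion labels, the probability values and the strategy table are hypothetical placeholders, not taken from the patent.

```python
from math import prod

# Hypothetical emotion categories; the excerpt does not list the actual ones.
EMOTIONS = ["happy", "neutral", "confused", "bored"]

# Hypothetical mapping from a recognized emotion to a virtual-teacher strategy.
STRATEGIES = {
    "happy": "continue at the current pace",
    "neutral": "continue and add an interactive question",
    "confused": "re-explain the concept with an example",
    "bored": "switch to a more engaging activity",
}


def product_rule_fusion(posteriors):
    """Fuse per-modality class probabilities with the product rule.

    posteriors: list of dicts (label -> probability), one per modality,
    for example expression, voice and posture.
    Returns a dict of fused probabilities that sums to 1.
    """
    fused = {label: prod(p[label] for p in posteriors) for label in EMOTIONS}
    total = sum(fused.values())
    if total == 0:
        # Every product vanished; fall back to a uniform distribution.
        return {label: 1.0 / len(EMOTIONS) for label in EMOTIONS}
    return {label: value / total for label, value in fused.items()}


def choose_strategy(fused):
    """Pick the most probable emotion and look up its teaching strategy."""
    label = max(fused, key=fused.get)
    return label, STRATEGIES[label]


# Illustrative classifier outputs for one student at one moment (made up).
expression = {"happy": 0.1, "neutral": 0.2, "confused": 0.6, "bored": 0.1}
voice = {"happy": 0.2, "neutral": 0.3, "confused": 0.4, "bored": 0.1}
posture = {"happy": 0.1, "neutral": 0.3, "confused": 0.3, "bored": 0.3}

fused = product_rule_fusion([expression, voice, posture])
label, strategy = choose_strategy(fused)
print(label, strategy)
```

The product rule rewards agreement between modalities: a class keeps a high fused score only if no single modality assigns it a very low probability. If the patent intends a different combination rule, only `product_rule_fusion` would need to change.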
