8663 results about "Physical realisation" patented technology

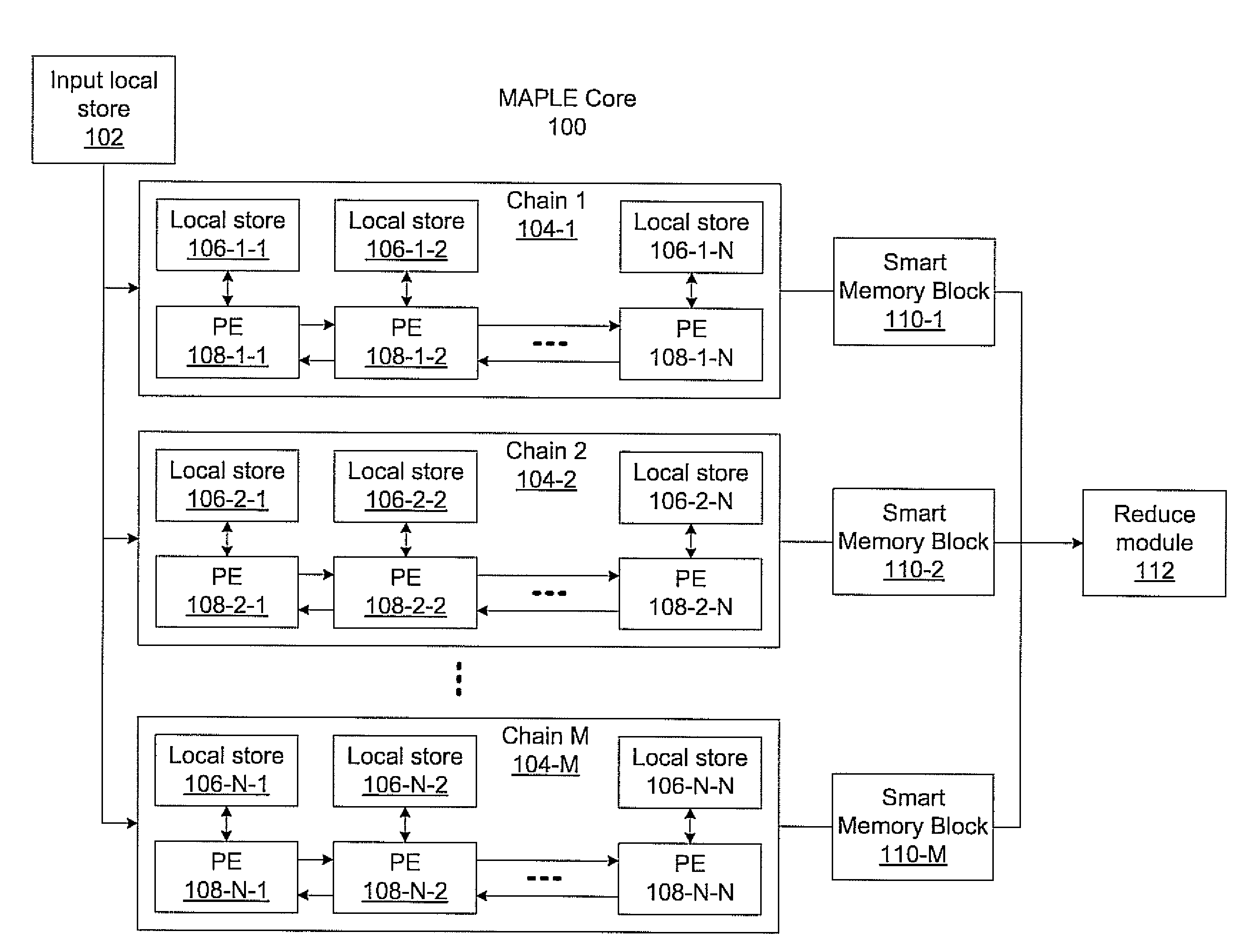

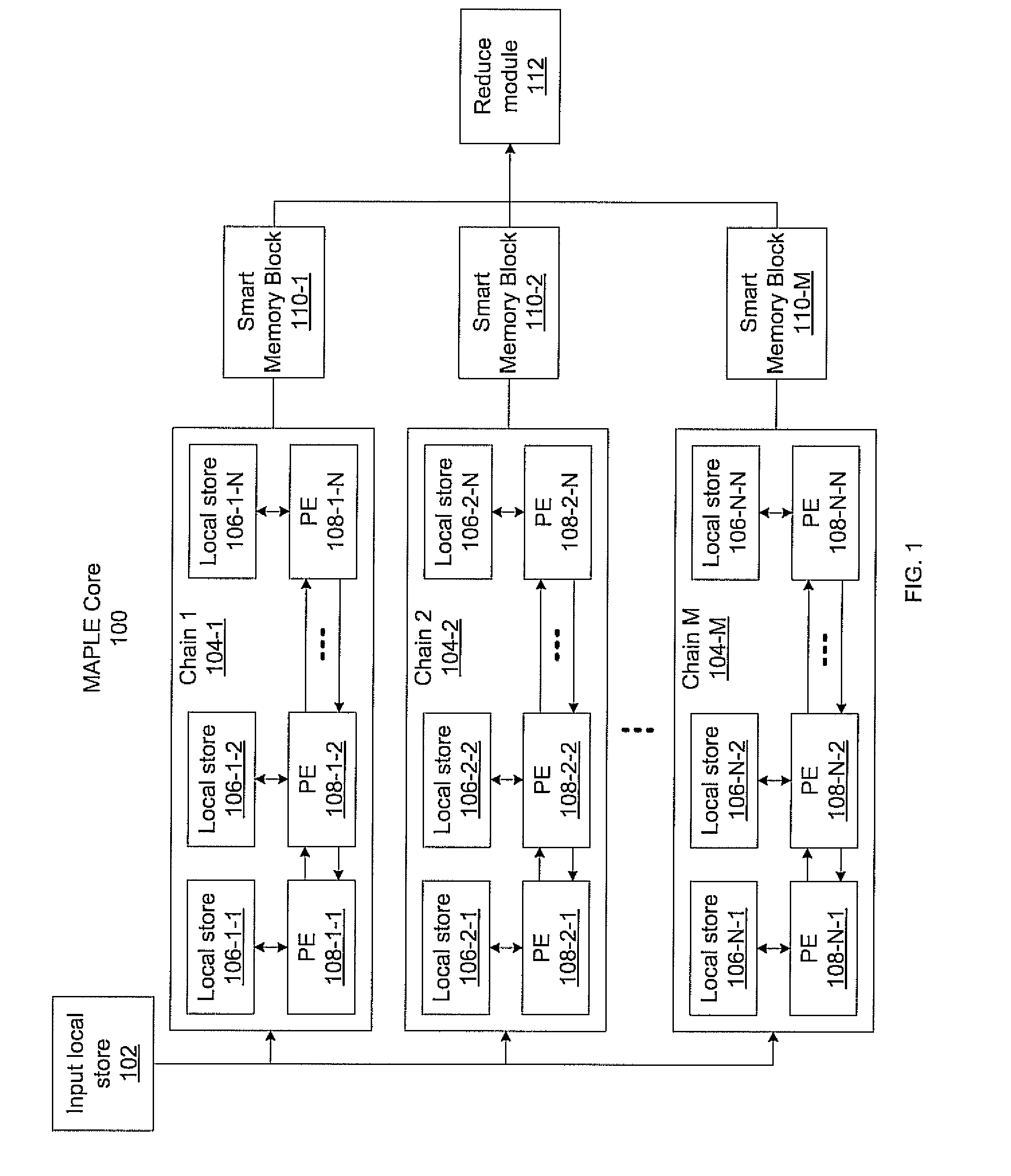

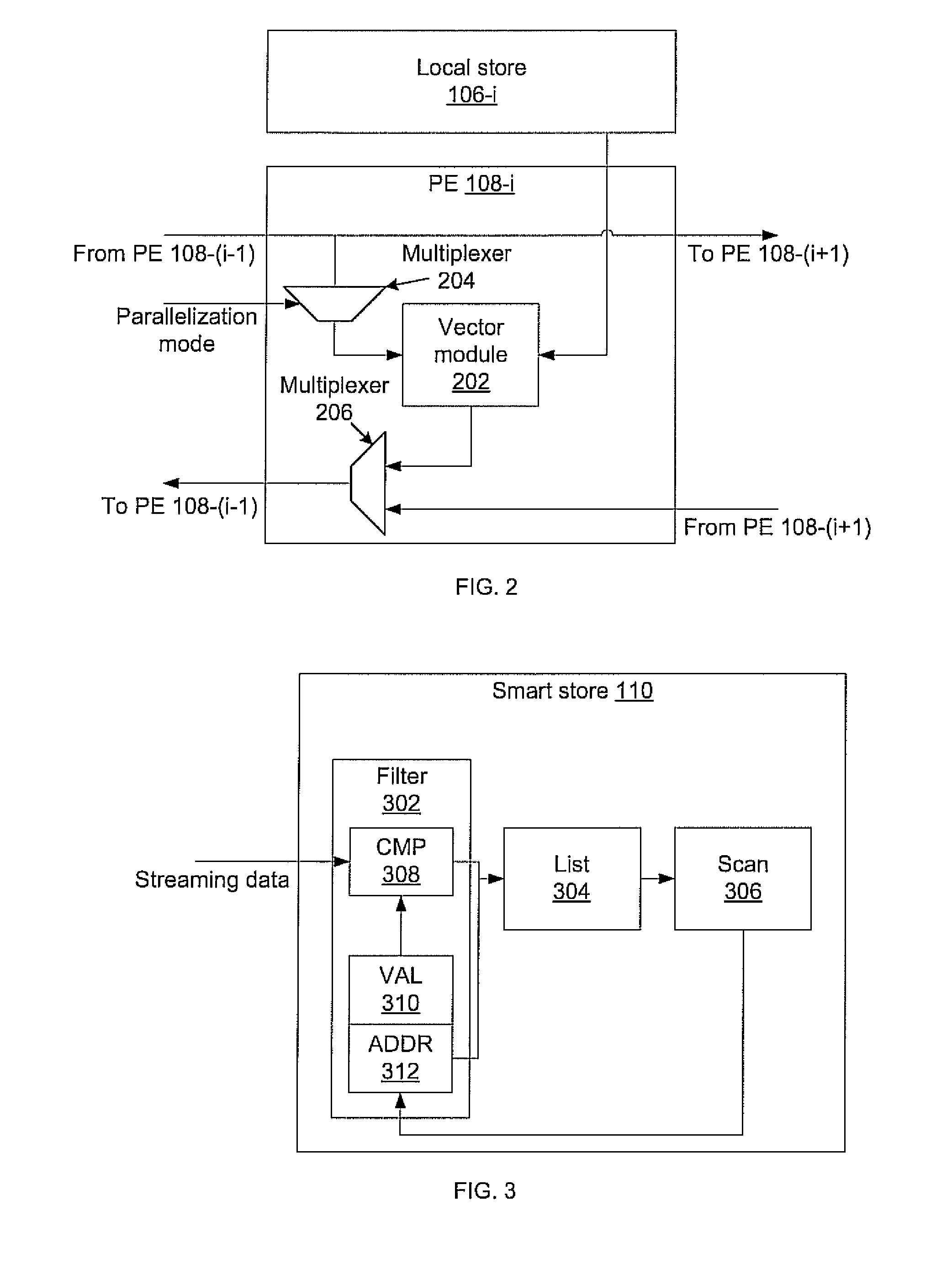

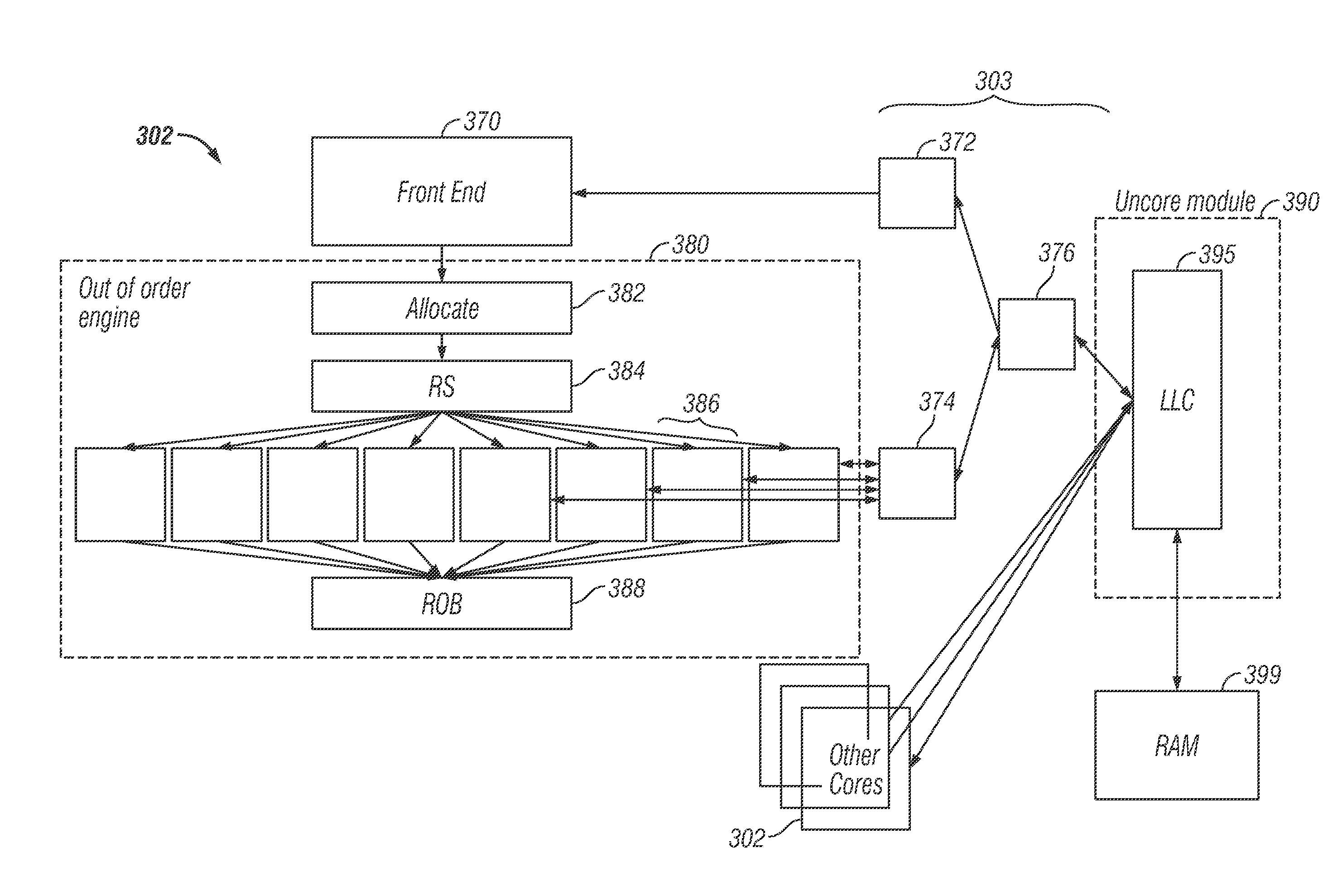

Massively parallel, smart memory based accelerator

Systems and methods for massively parallel processing on an accelerator that includes a plurality of processing cores. Each processing core includes multiple processing chains configured to perform parallel computations, each of which includes a plurality of interconnected processing elements. The cores further include multiple smart memory blocks configured to store and process data, each memory block accepting the output of one of the plurality of processing chains. The cores communicate with at least one off-chip memory bank.

Owner:NEC CORP

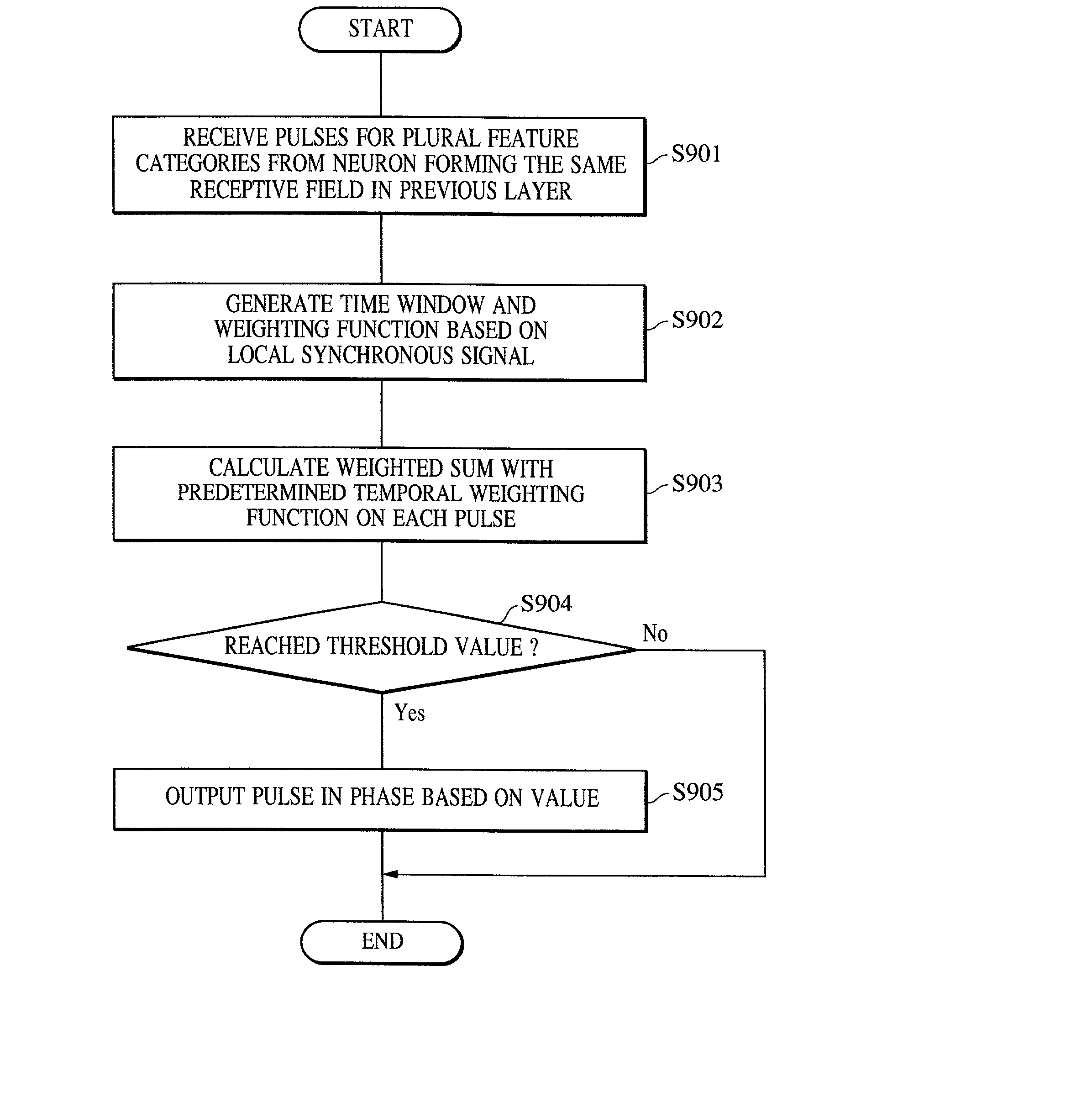

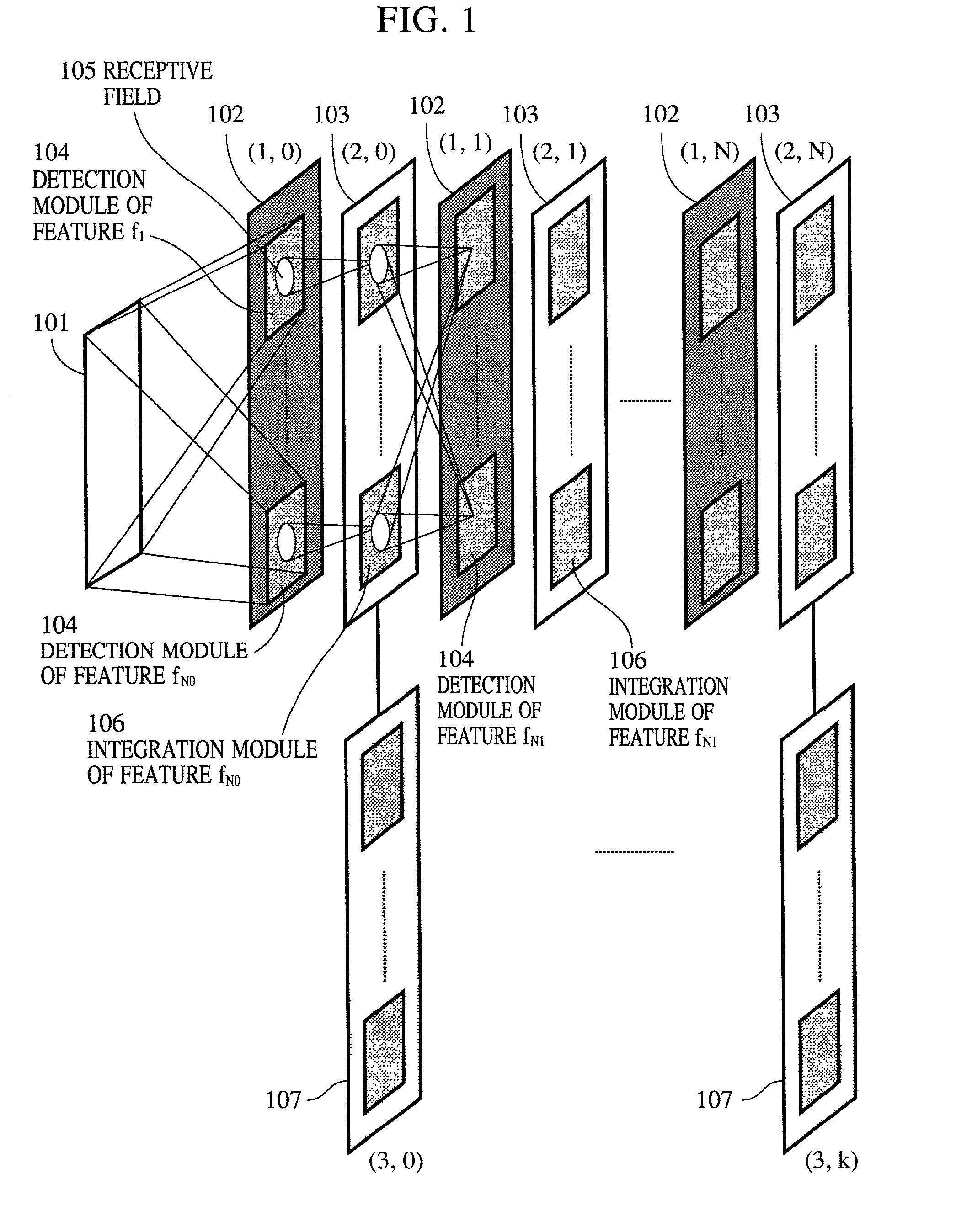

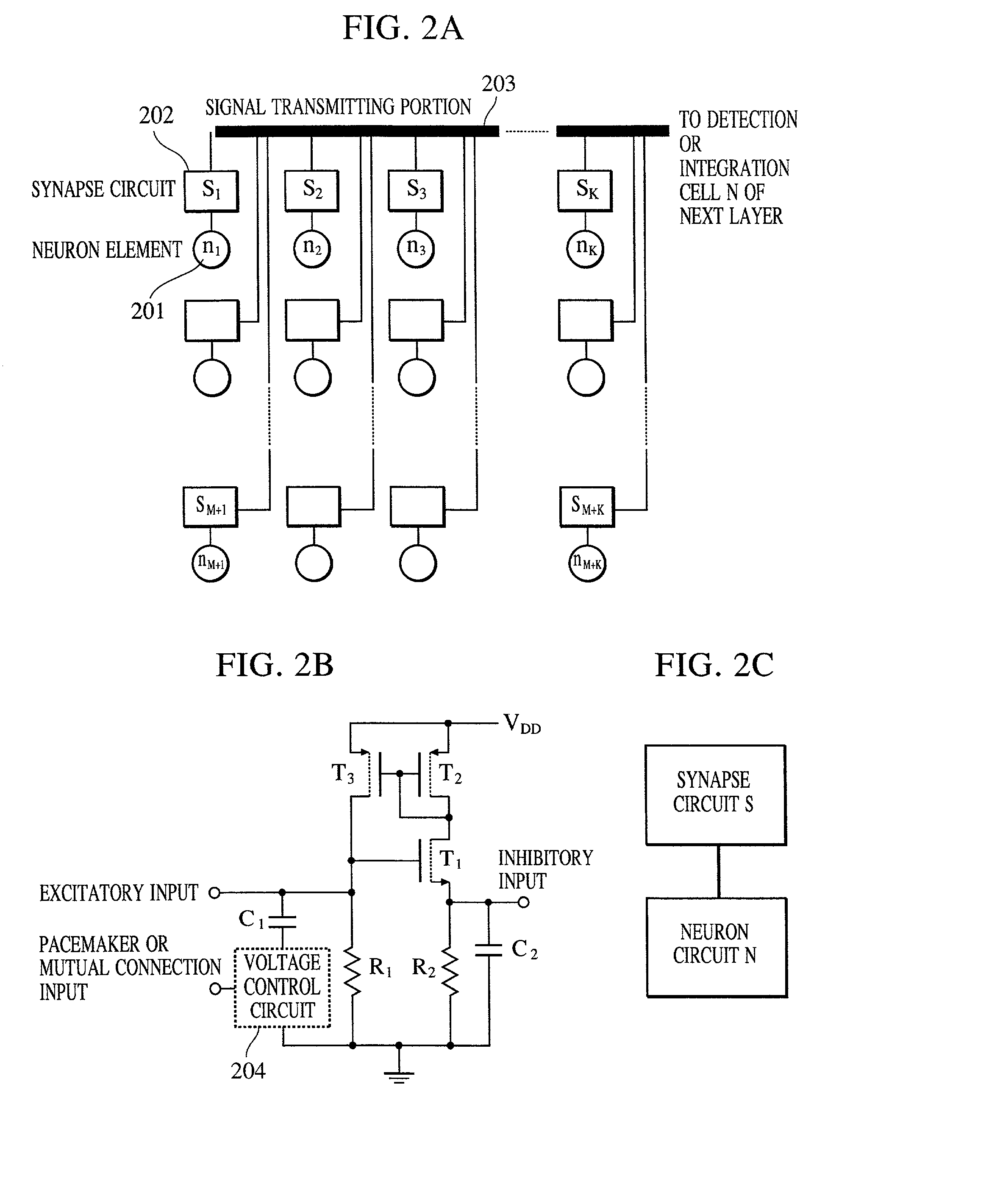

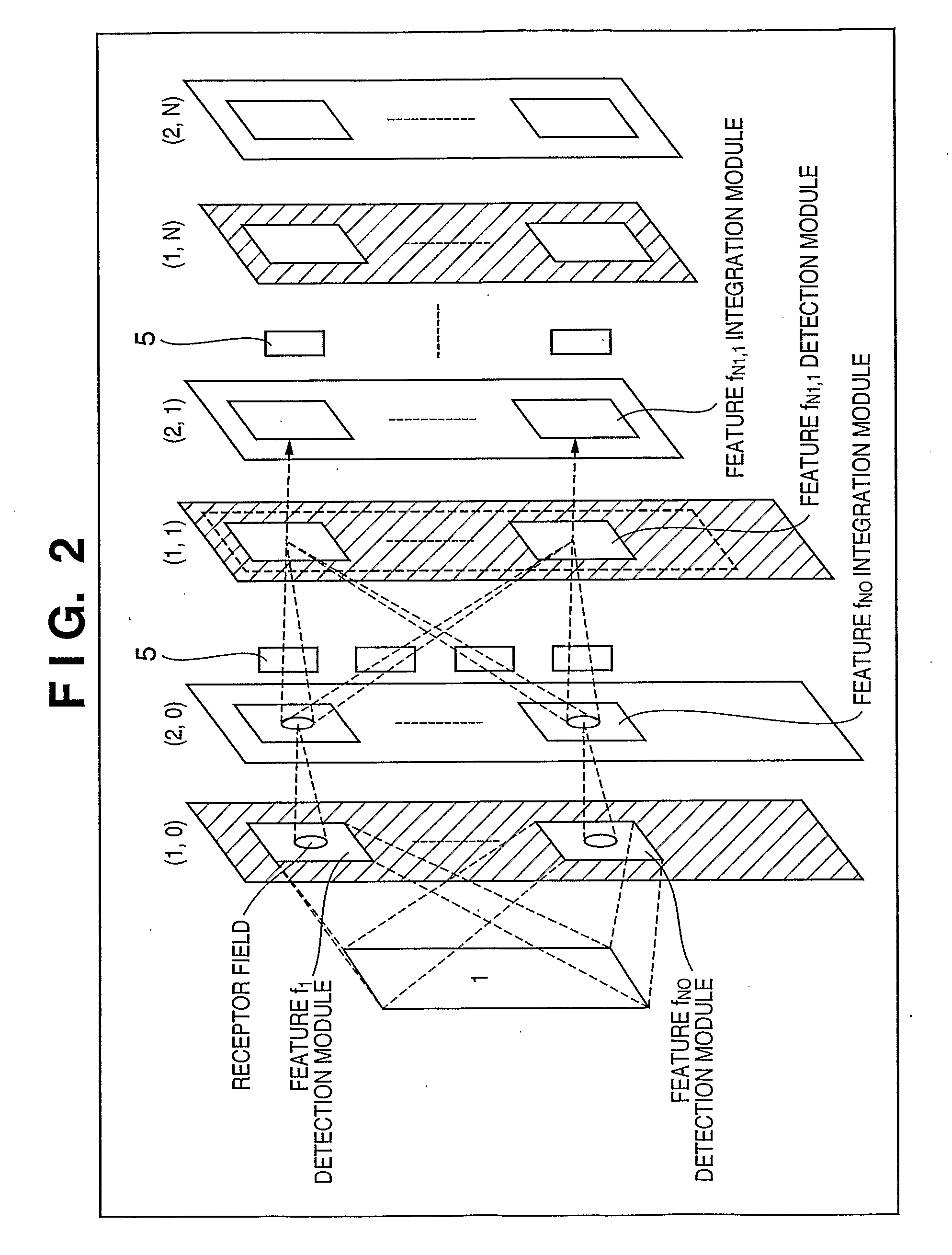

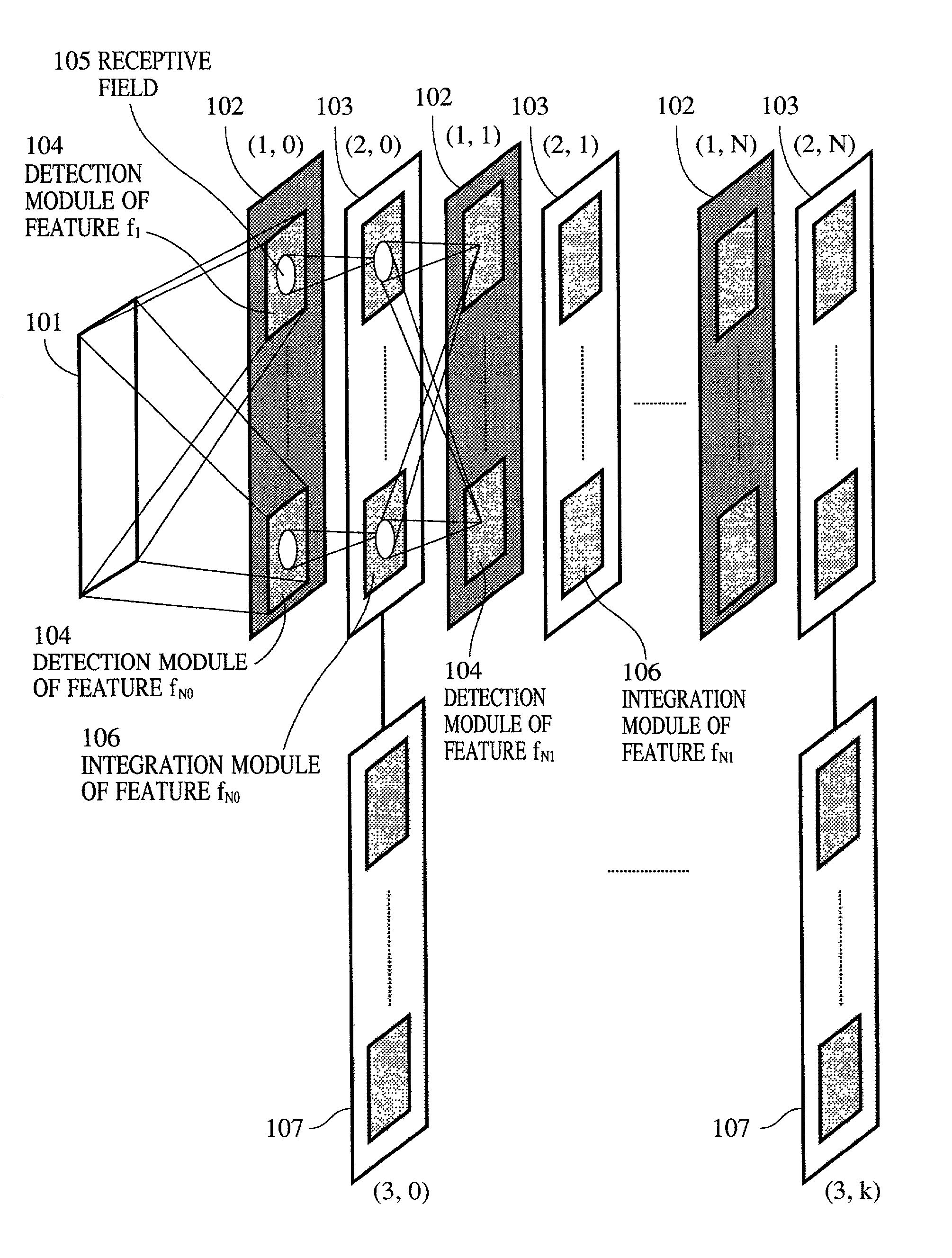

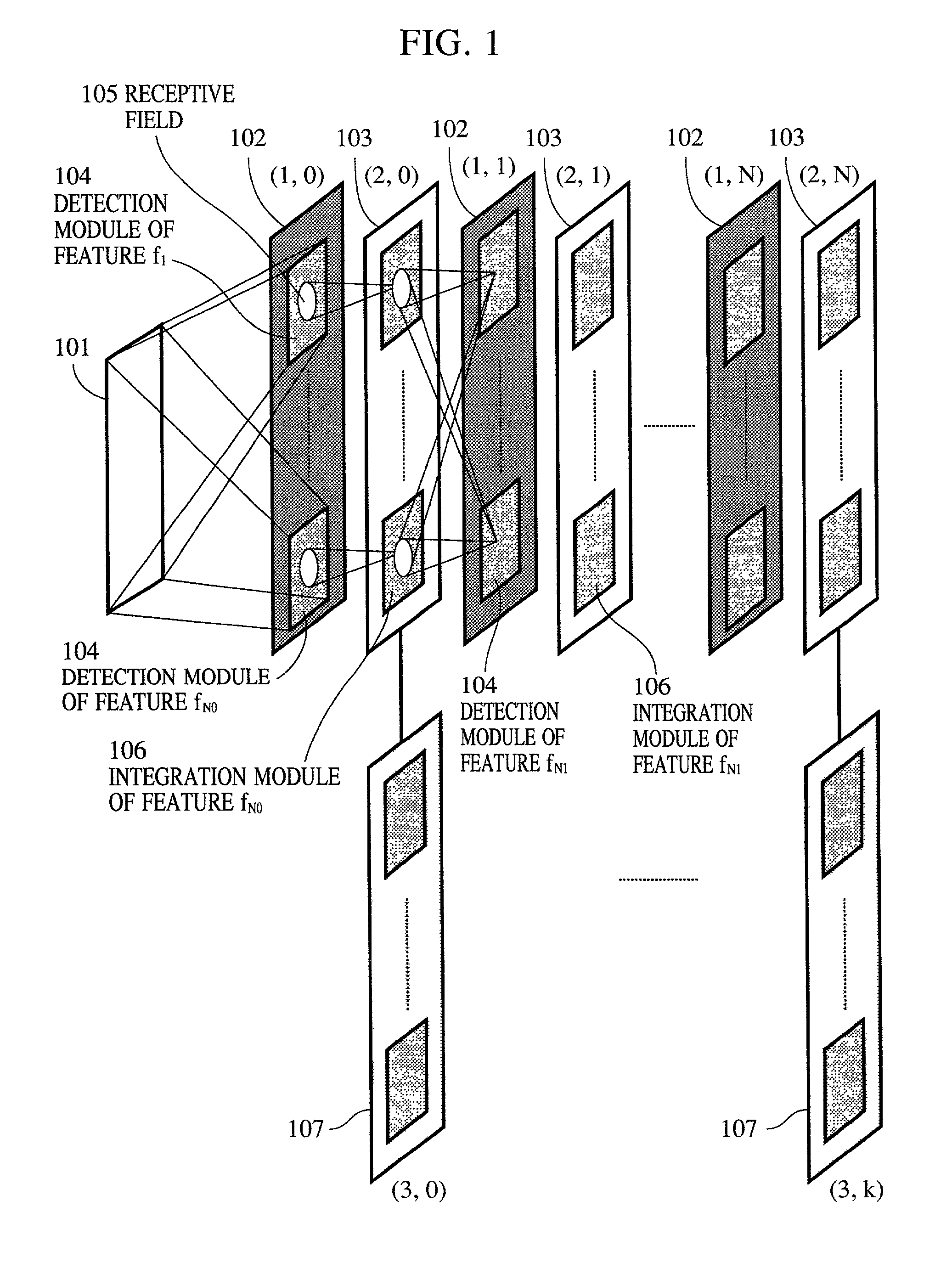

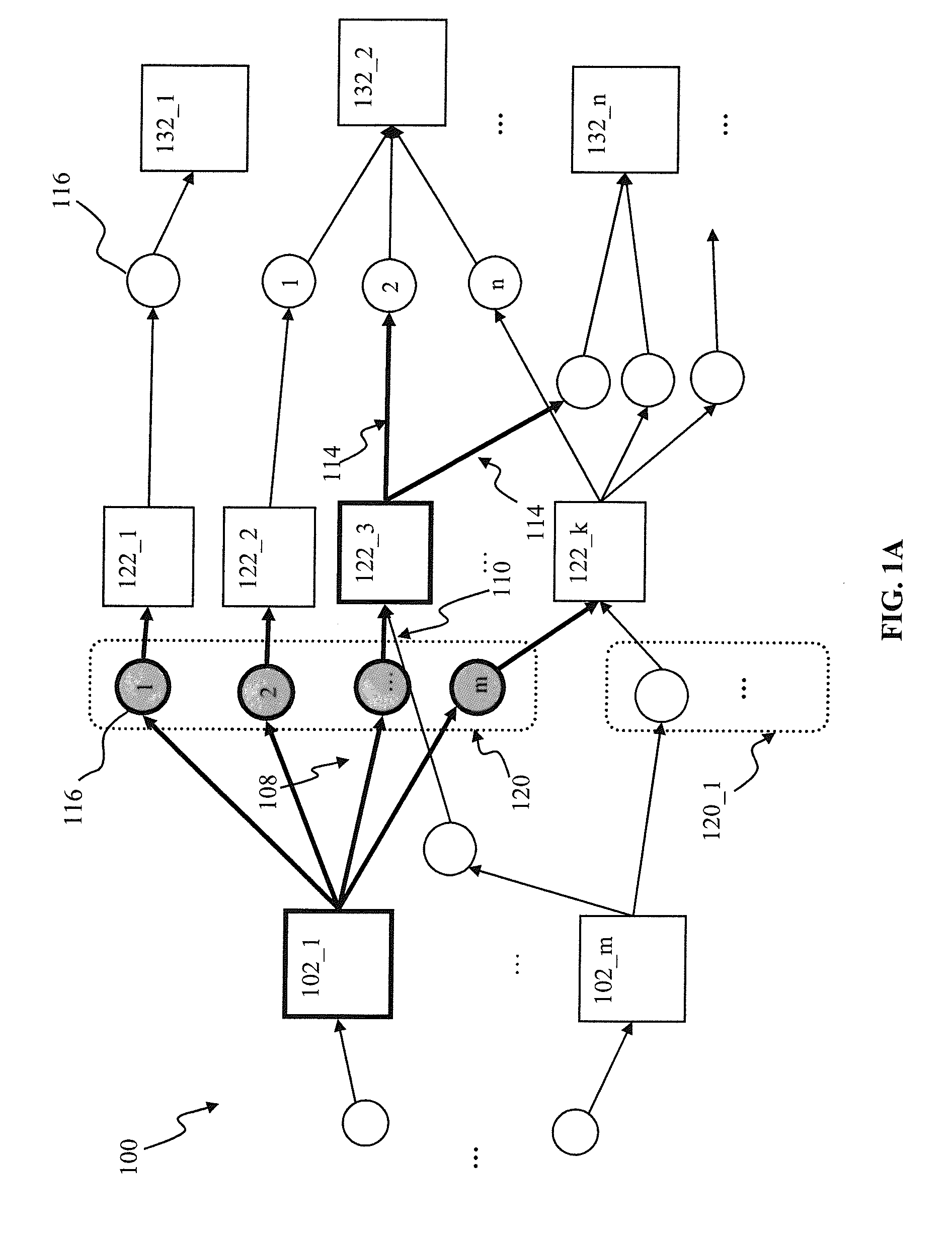

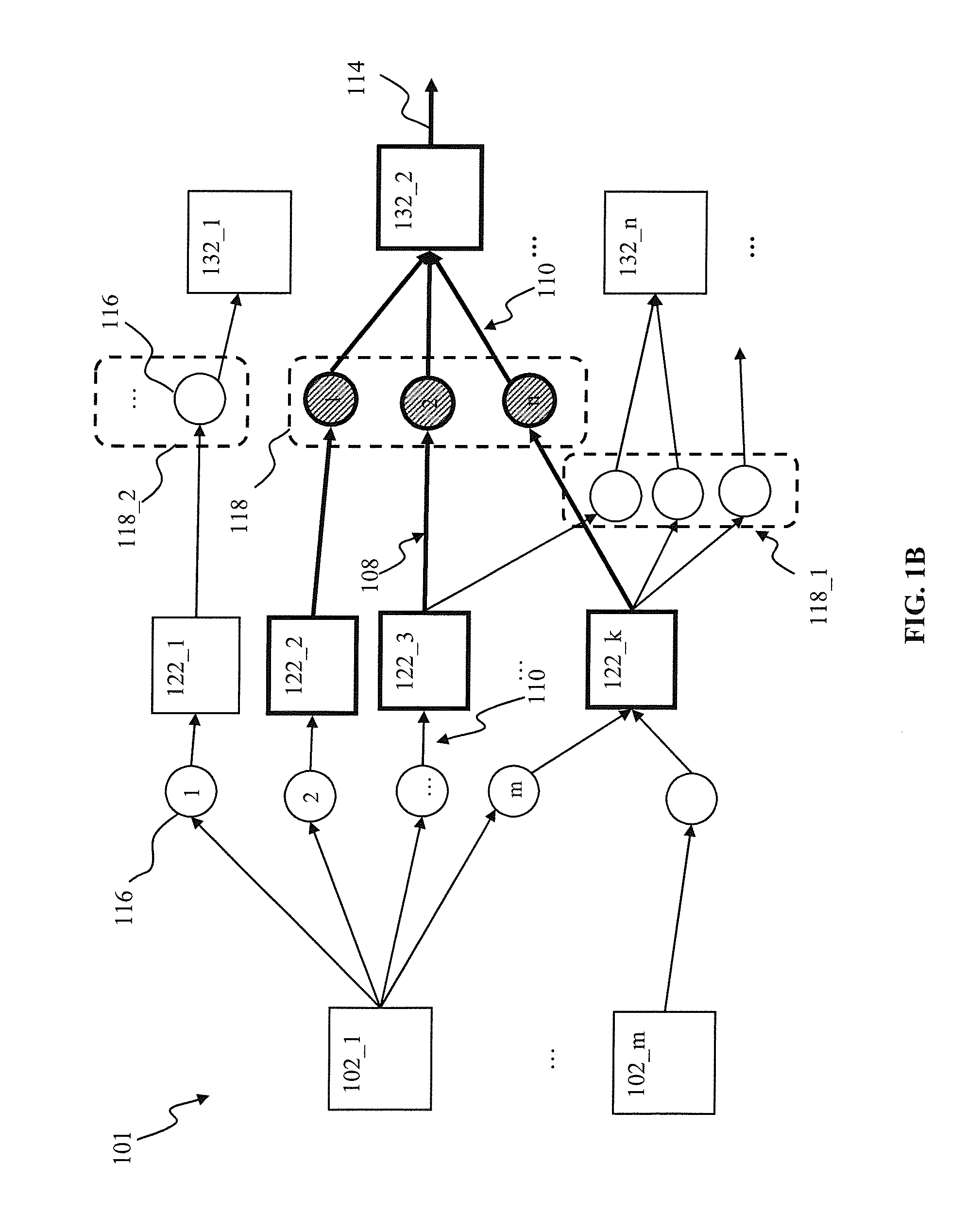

Apparatus and method for detecting or recognizing pattern by employing a plurality of feature detecting elements

Inactive | US20020038294A1 | Easy construction | Reduce in quantity | Digital computer details | Character and pattern recognition | Synapse | Pattern detection

A pattern detecting apparatus has a plurality of hierarchized neuron elements to detect a predetermined pattern included in input patterns. Pulse signals output from the plurality of neuron elements are given specific delays by synapse circuits associated with the individual elements. This makes it possible to transmit the pulse signals to the neuron elements of the succeeding layer through a common bus line so that they can be identified on a time base. The neuron elements of the succeeding layer output the pulse signals at output levels based on an arrival time pattern of the plurality of pulse signals received from the plurality of neuron elements of the preceding layer within a predetermined time window. Thus, the reliability of pattern detection can be improved, and the number of wires interconnecting the elements can be reduced by the use of the common bus line, leading to a smaller circuit scale and reduced power consumption.

Owner:CANON KK

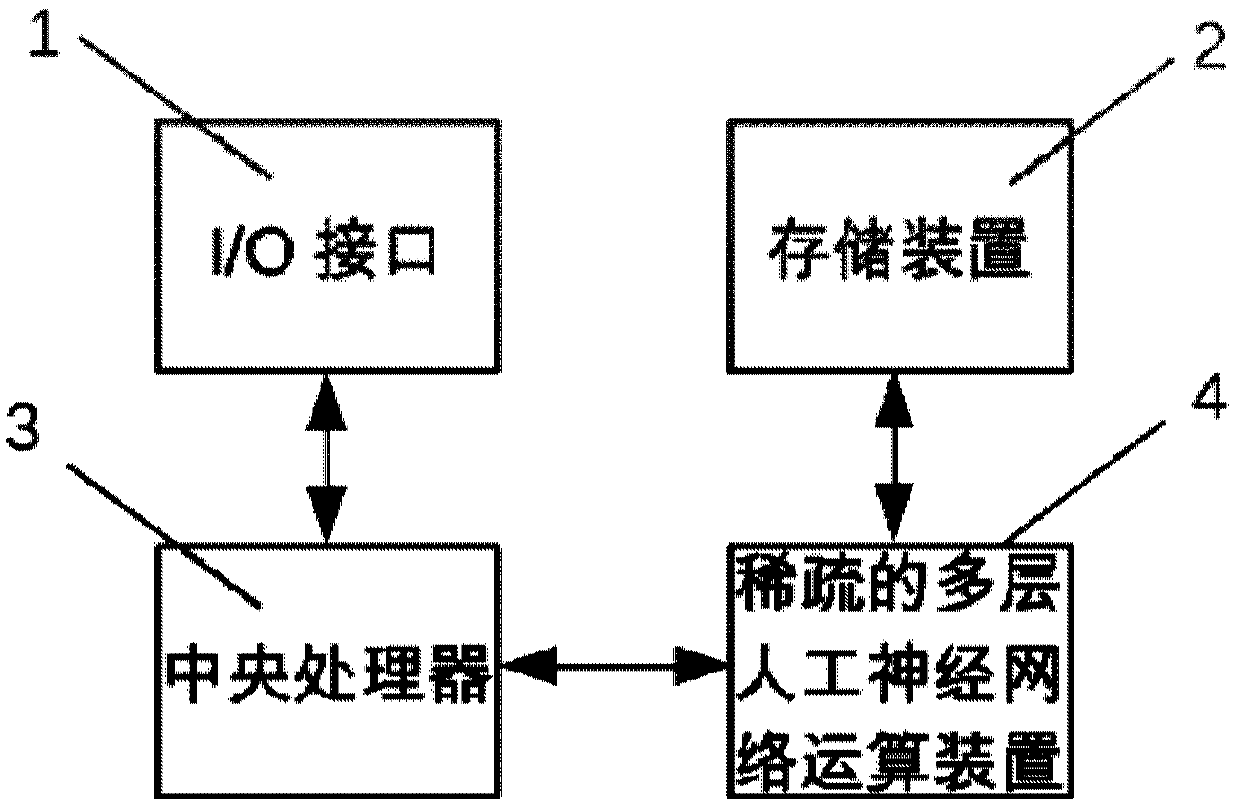

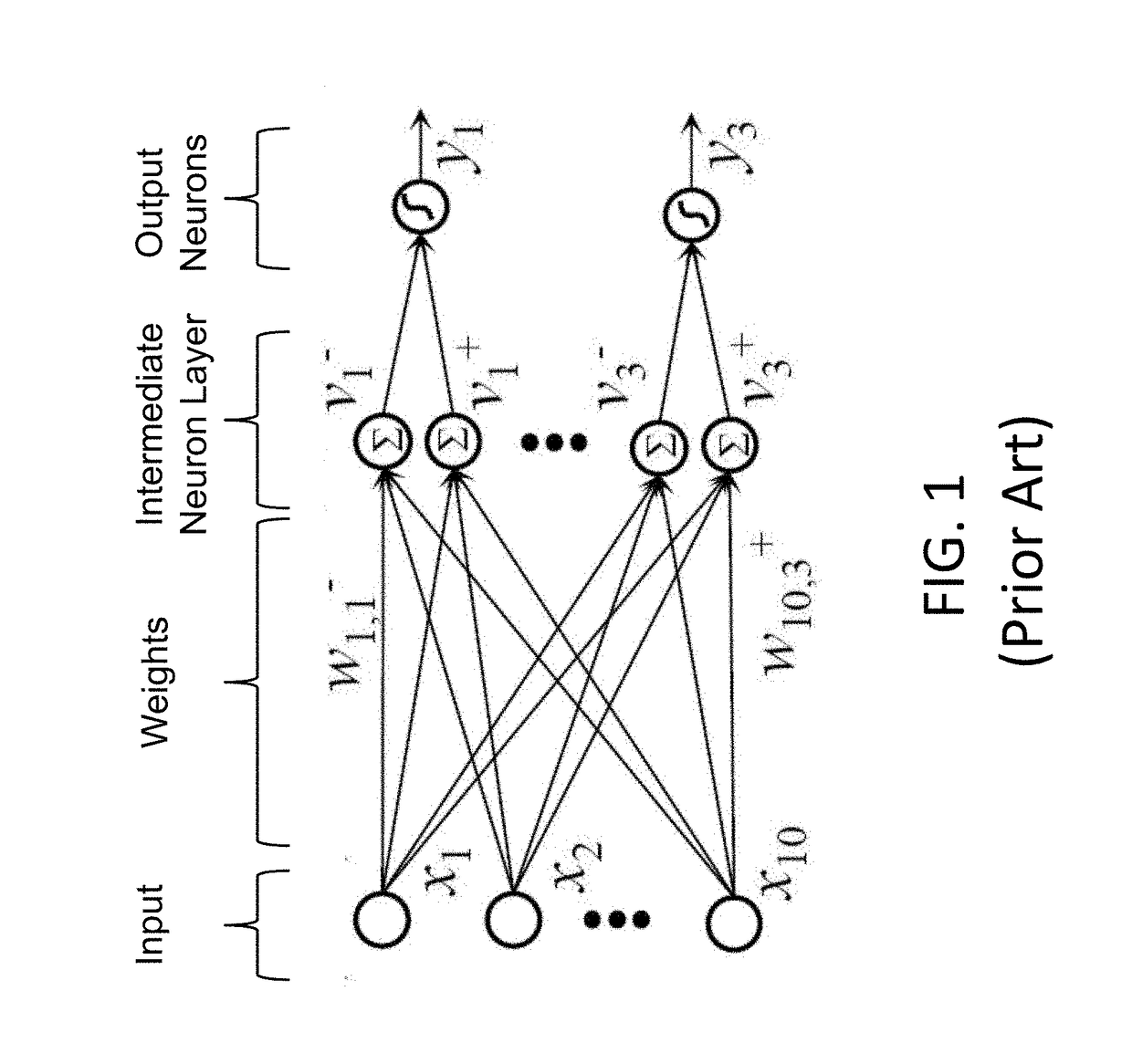

Artificial neural network calculating device and method for sparse connection

Active | CN105512723A | Solve the problem of insufficient computing performance and high front-end decoding overhead | Add support | Memory architecture accessing/allocation | Digital data processing details | Activation function | Memory bandwidth

An artificial neural network calculating device for sparse connection comprises a mapping unit that converts input data into a storage format in which input neurons and weight values correspond one to one, a storage unit that stores data and instructions, and an operation unit that executes the corresponding operations on the data according to the instructions. The operation unit mainly executes three steps. In the first step, the input neurons are multiplied by the weight data. In the second step, an adder-tree operation is executed: the weighted output neurons from the first step are added level by level through an adder tree, or a bias is added to the output neurons to obtain biased output neurons. In the third step, the activation function is applied to obtain the final output neurons. The device addresses the insufficient computing performance of CPUs and GPUs and the high front-end decoding overhead, effectively improves support for multi-layer artificial neural network algorithms, and addresses the problem that memory bandwidth becomes a bottleneck for multi-layer artificial neural network computation and for the performance of its training algorithms.

Owner:CAMBRICON TECH CO LTD
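The three-step operation described in the sparse-connection abstract above can be sketched in software as follows. This is a minimal illustration, not the patented hardware: the function names, the boolean connection mask used for the mapping step, and the choice of `tanh` as the default activation are all assumptions made for the example.

```python
import math

def map_sparse(inputs, weights, connections):
    """Mapping step (assumed format): keep only connected inputs so that
    each remaining input neuron pairs one-to-one with a stored weight."""
    # connections[i] is True when input i is connected to the output neuron;
    # weights holds only the weights of connected inputs, in the same order.
    selected = [x for x, c in zip(inputs, connections) if c]
    if len(selected) != len(weights):
        raise ValueError("one weight is required per connected input")
    return list(zip(selected, weights))

def adder_tree(values):
    """Sum values level by level, pairing neighbours like an adder tree."""
    if not values:
        return 0.0
    level = list(values)
    while len(level) > 1:
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:  # odd element passes through to the next level
            nxt.append(level[-1])
        level = nxt
    return level[0]

def sparse_neuron(inputs, weights, connections, bias=0.0, activation=math.tanh):
    pairs = map_sparse(inputs, weights, connections)
    products = [x * w for x, w in pairs]   # step 1: multiply
    total = adder_tree(products) + bias    # step 2: adder tree, then bias
    return activation(total)               # step 3: activation function
```

Storing only the connected weights means the multiply stage does no work for absent connections, which is the property a sparse format is meant to provide.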

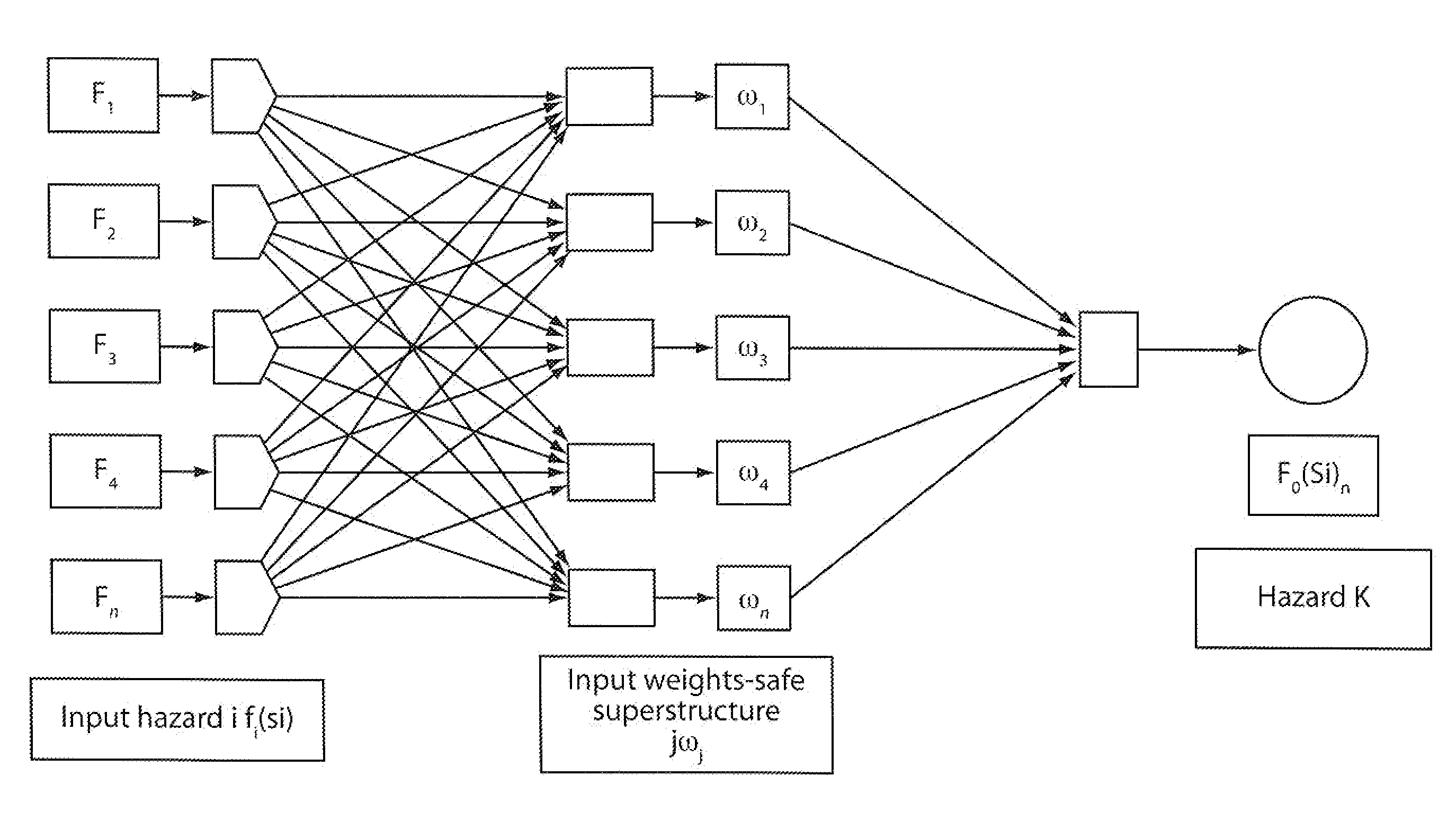

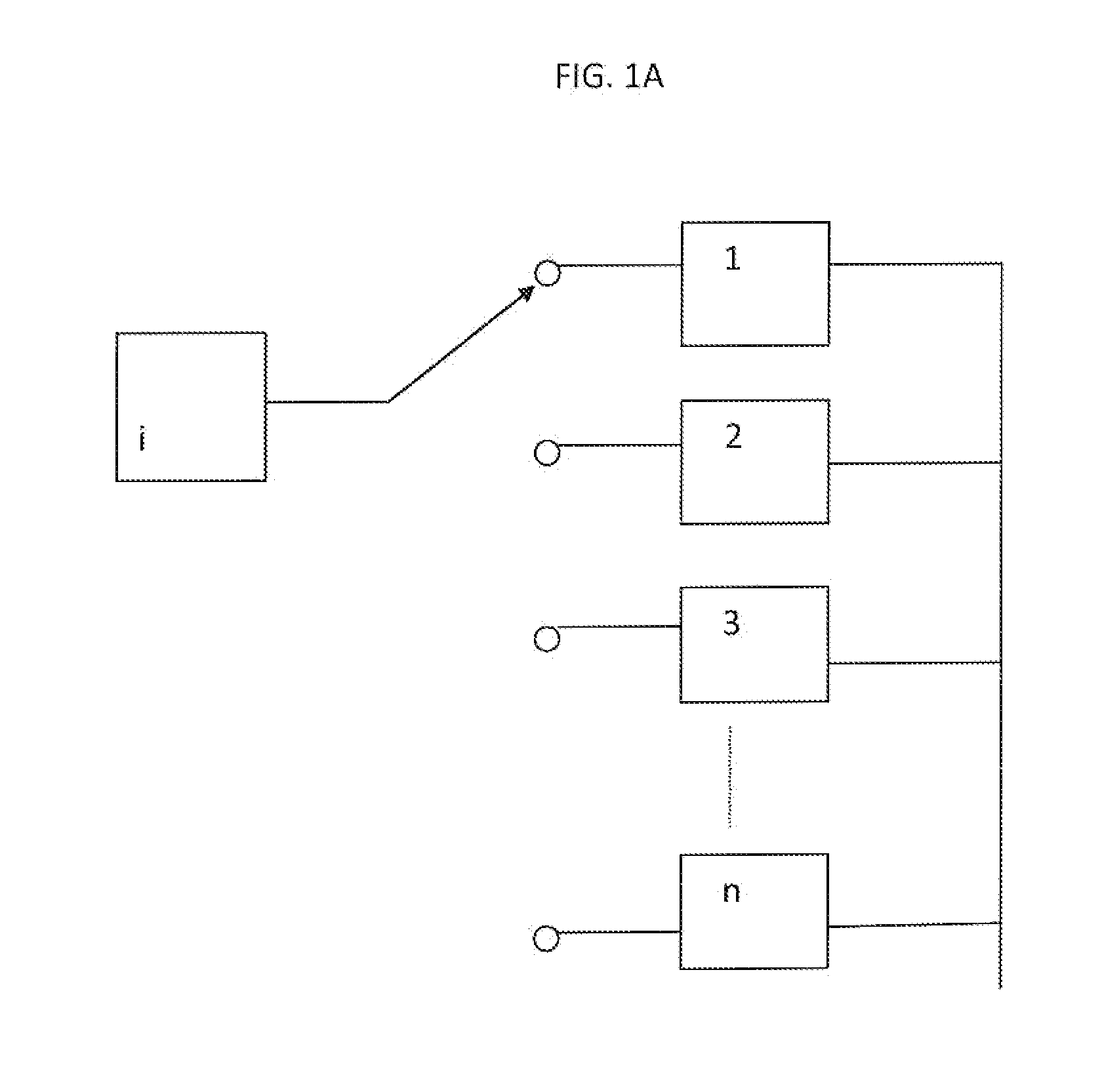

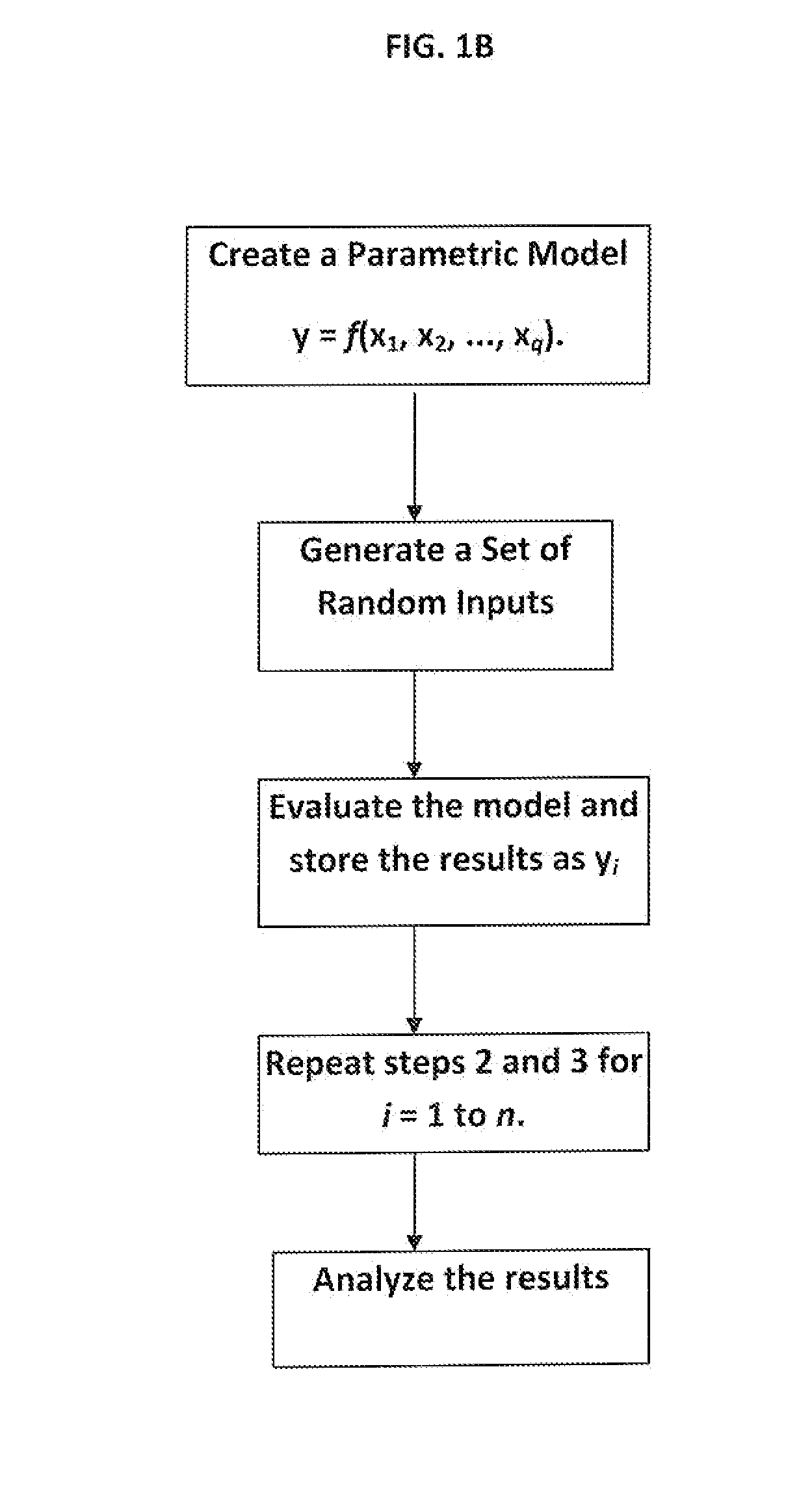

Design of computer based risk and safety management system of complex production and multifunctional process facilities-application to fpso's

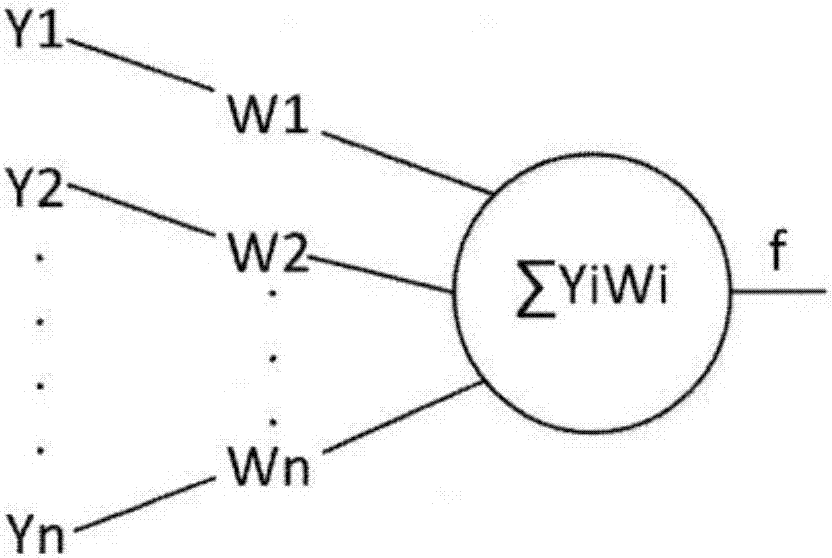

Inactive | US20120317058A1 | Strong robust attribute | Strong robust attributes | Digital computer details | Fuzzy logic based systems | Process systems | Nerve network

A method for predicting risk and designing safety management systems for complex production and process systems, which has been applied to an FPSO system operating in deep waters. The design methods were derived by including a weight index in a fuzzy class belief variable in the risk model, which assigns the relative numerical value or importance that a safety device or system has in containing risk hazards within the barrier. The weight index distributes the relative importance of risk events in series or parallel across several interacting risk and safety device systems. The fault tree, the FMECA and the Bow Tie now contain weights in fuzzy belief classes for implementing safety management programs critical to the process systems. The technique uses the results of neural networks derived from fuzzy belief systems of the weight index to implement the safety design systems, thereby limiting the use of experience-based procedures and benchmarks. The weight index incorporates Safety Factor sets SFri {0, 0.1, 0.2 . . . 1} and a Markov Chain Network, making it possible to evaluate the impact of different risks or the reliability of multifunctional systems in transient-state processes. The application of this technique and simulation results for typical FPSO / Riser systems are discussed in this invention.

Owner:ABHULIMEN KINGSLEY E

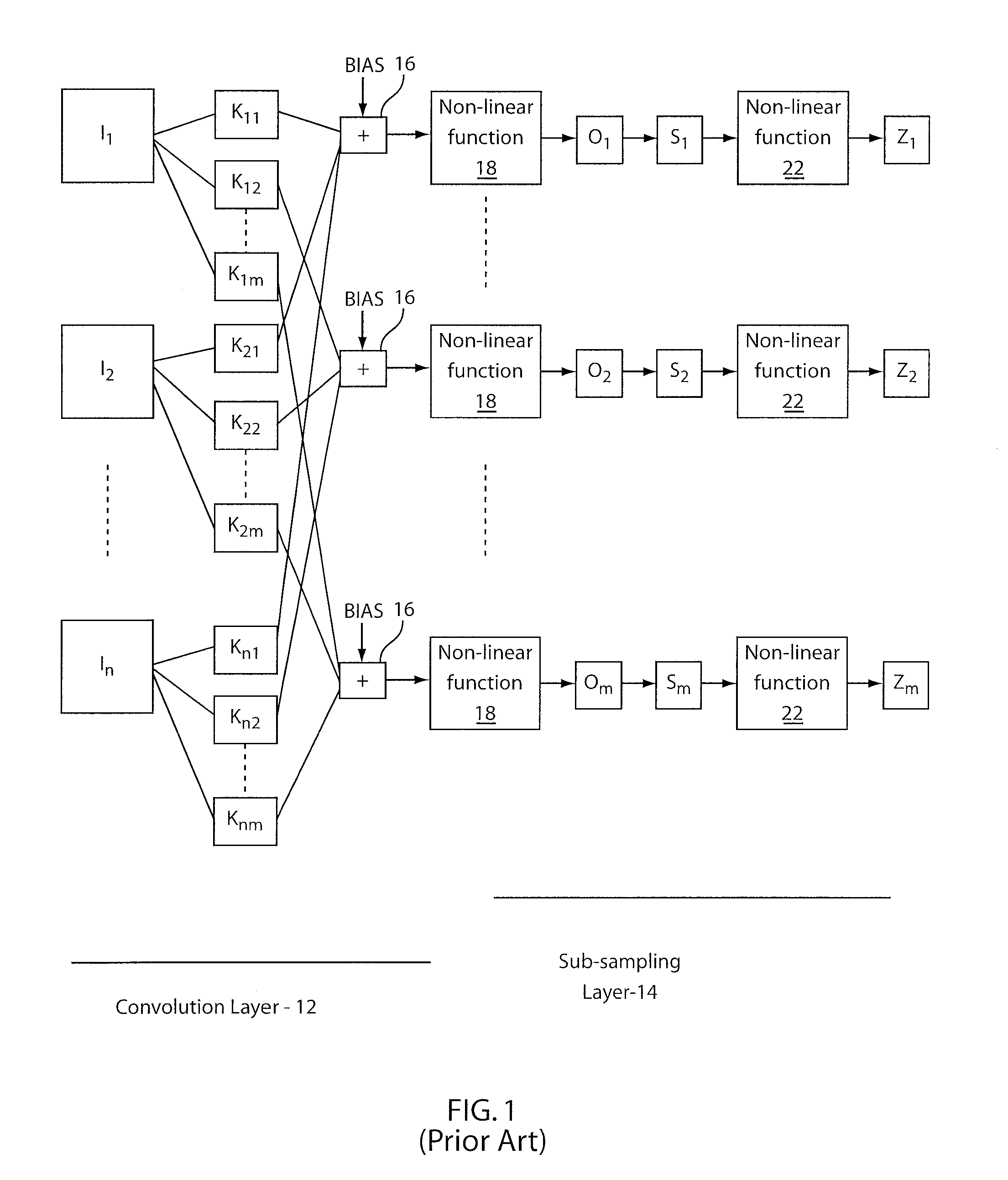

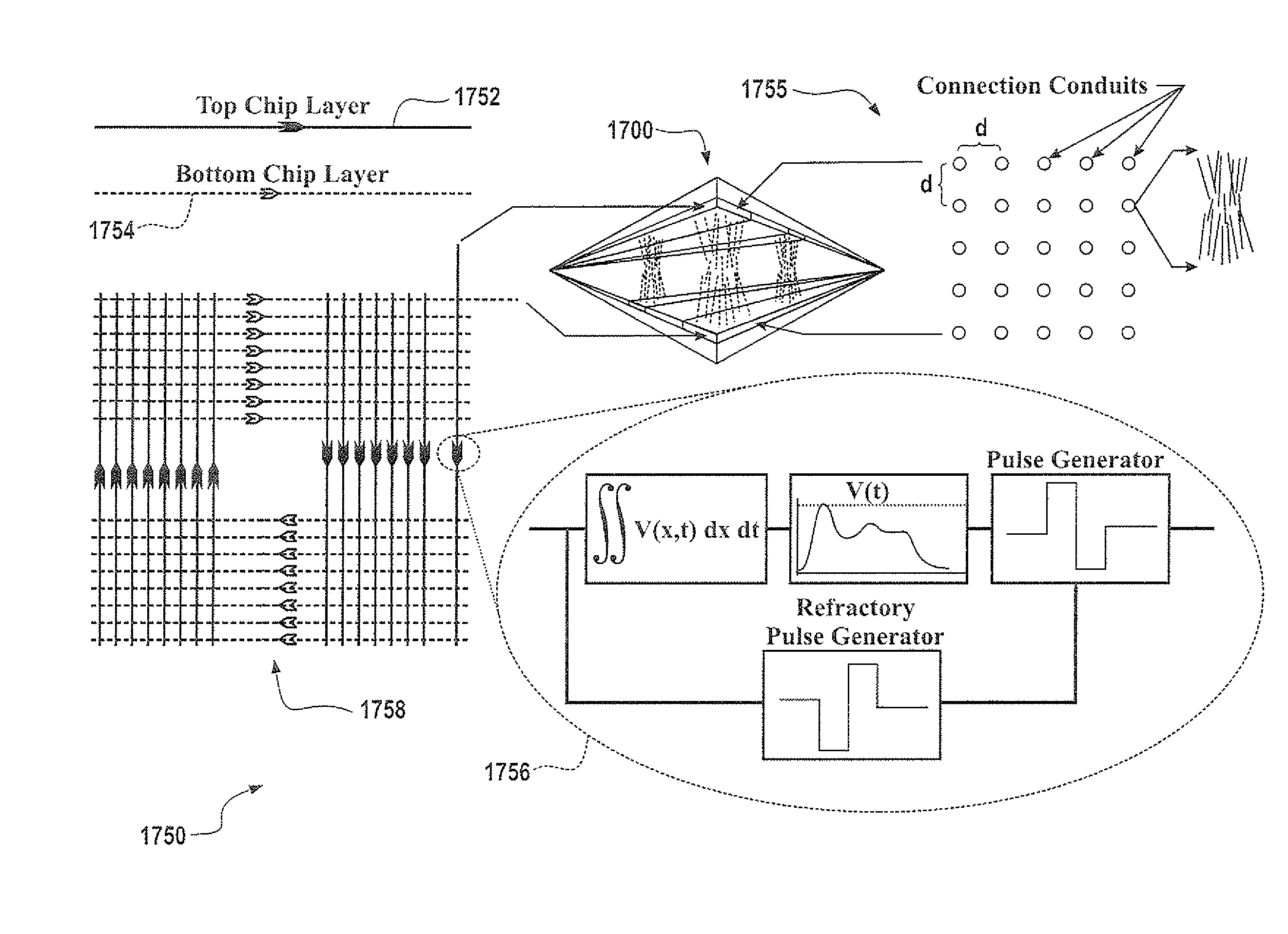

Apparatus comprising artificial neuronal assembly

Active | US20100241601A1 | Augment sensory awareness | Digital computer details | Digital data | Visual cortex | Retina

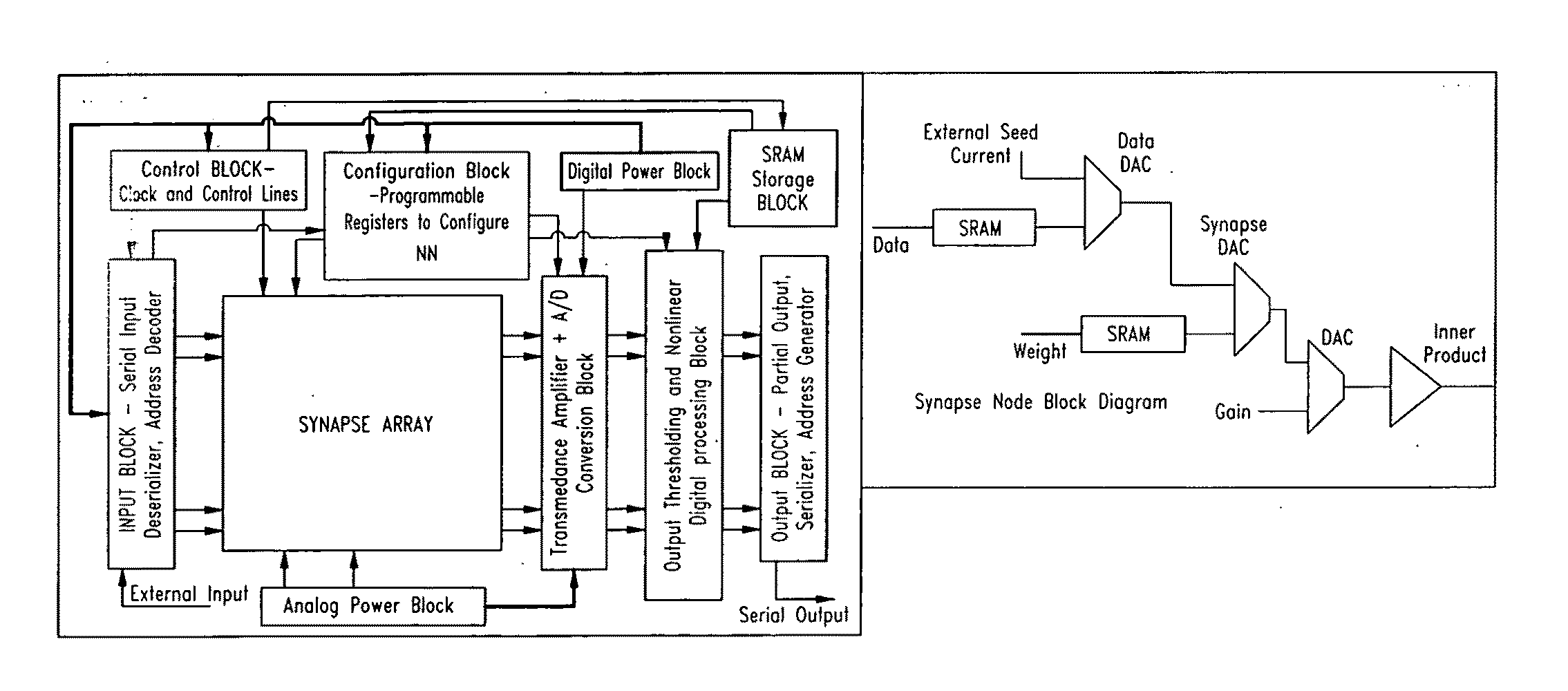

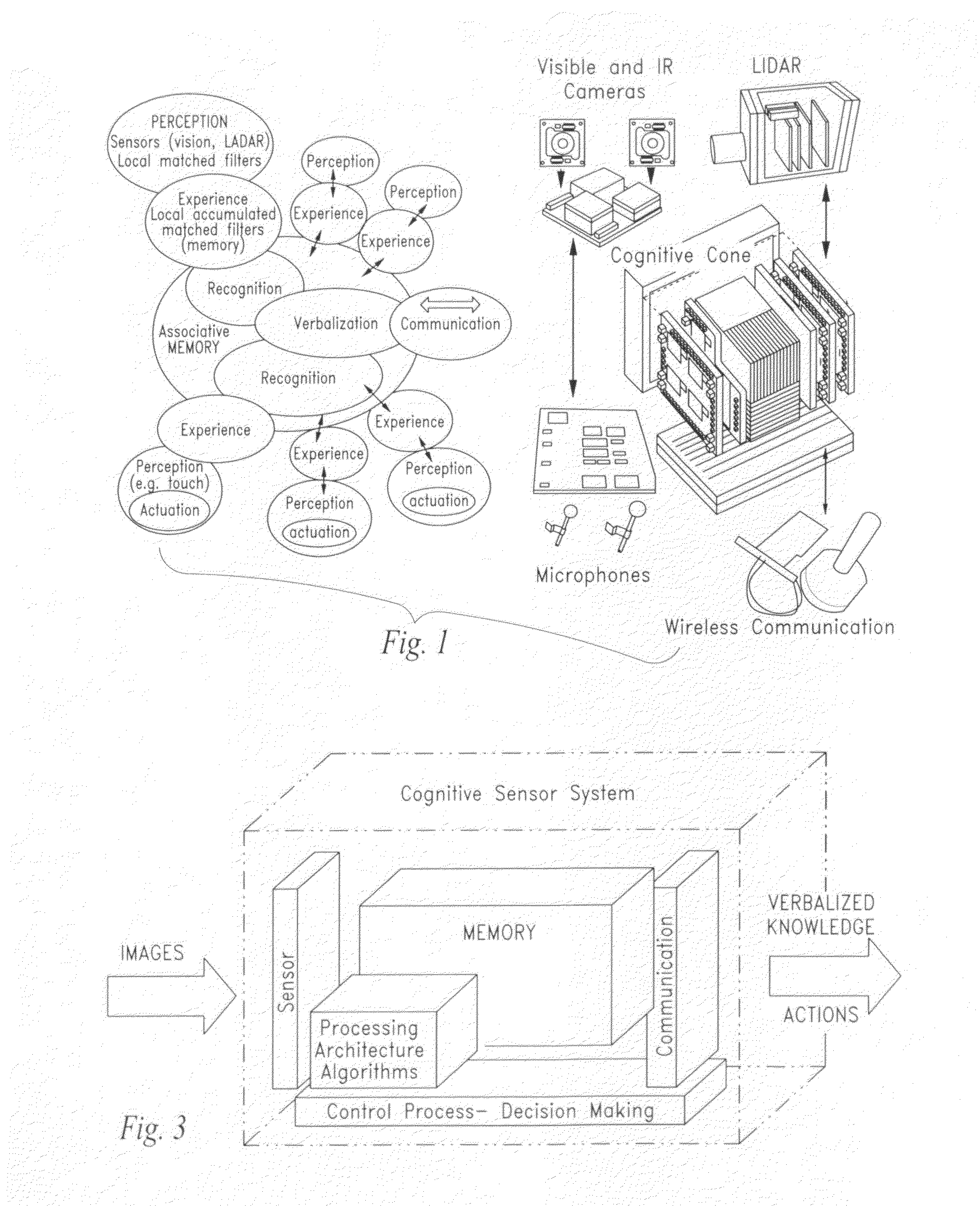

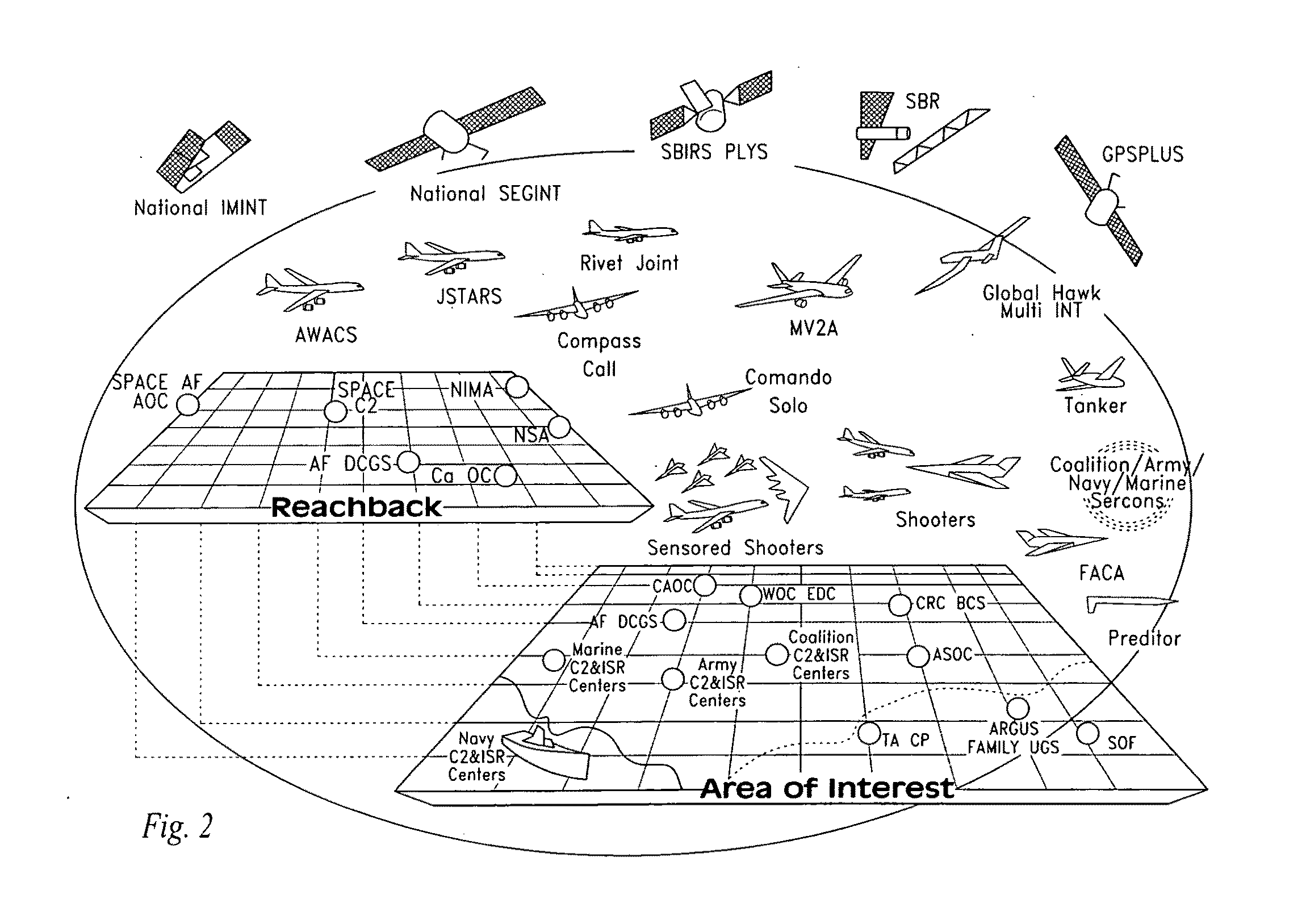

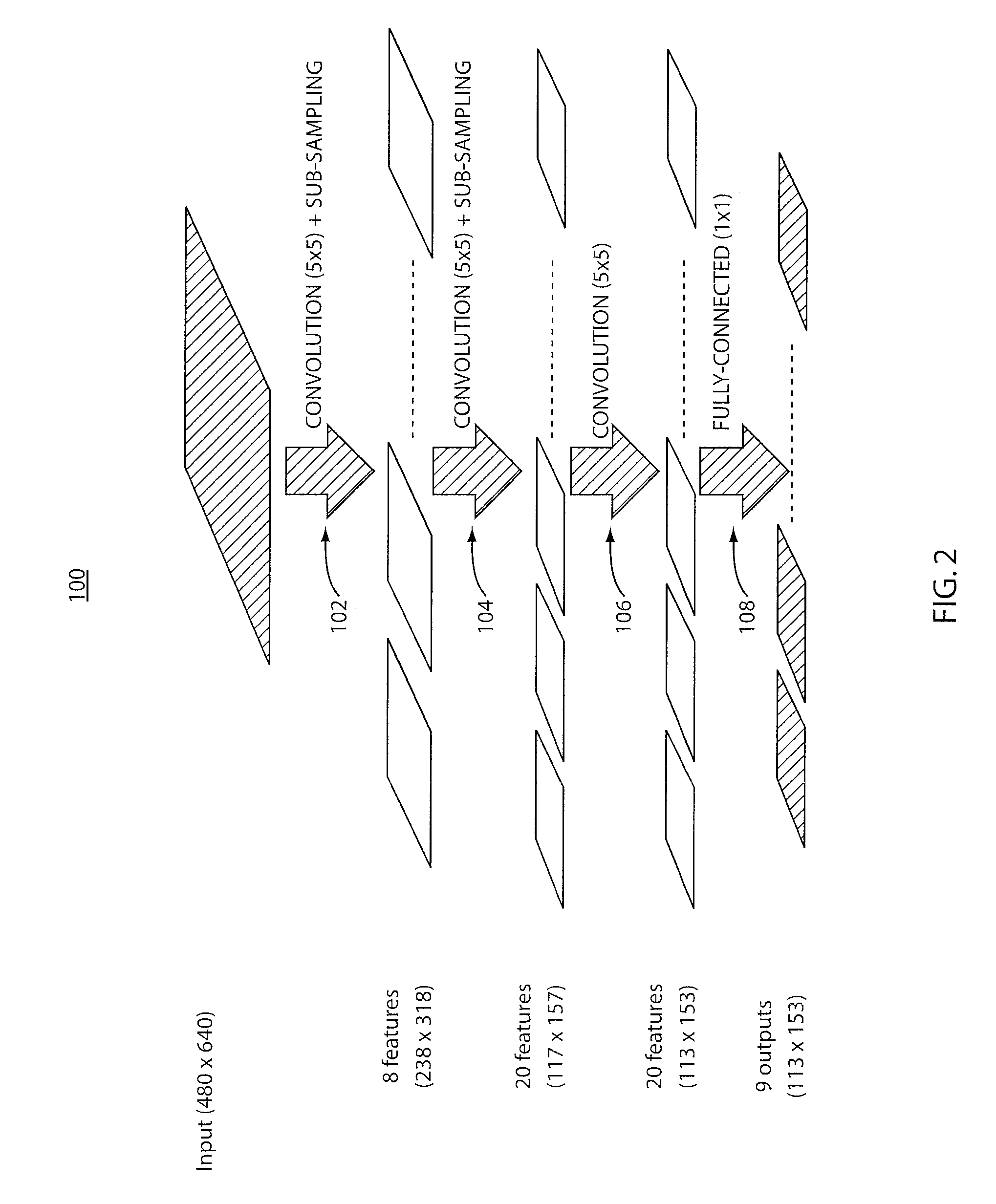

An artificial synapse array and virtual neural space are disclosed. More specifically, a cognitive sensor system and method are disclosed comprising a massively parallel convolution processor capable of, for instance, situationally dependent identification of salient features in a scene of interest by emulating the cortical hierarchy found in the human retina and visual cortex.

Owner:PFG IP +1

Deep Learning Neural Network Classifier Using Non-volatile Memory Array

Active | US20170337466A1 | Input/output to record carriers | Read-only memories | Synapse | Neural network classifier

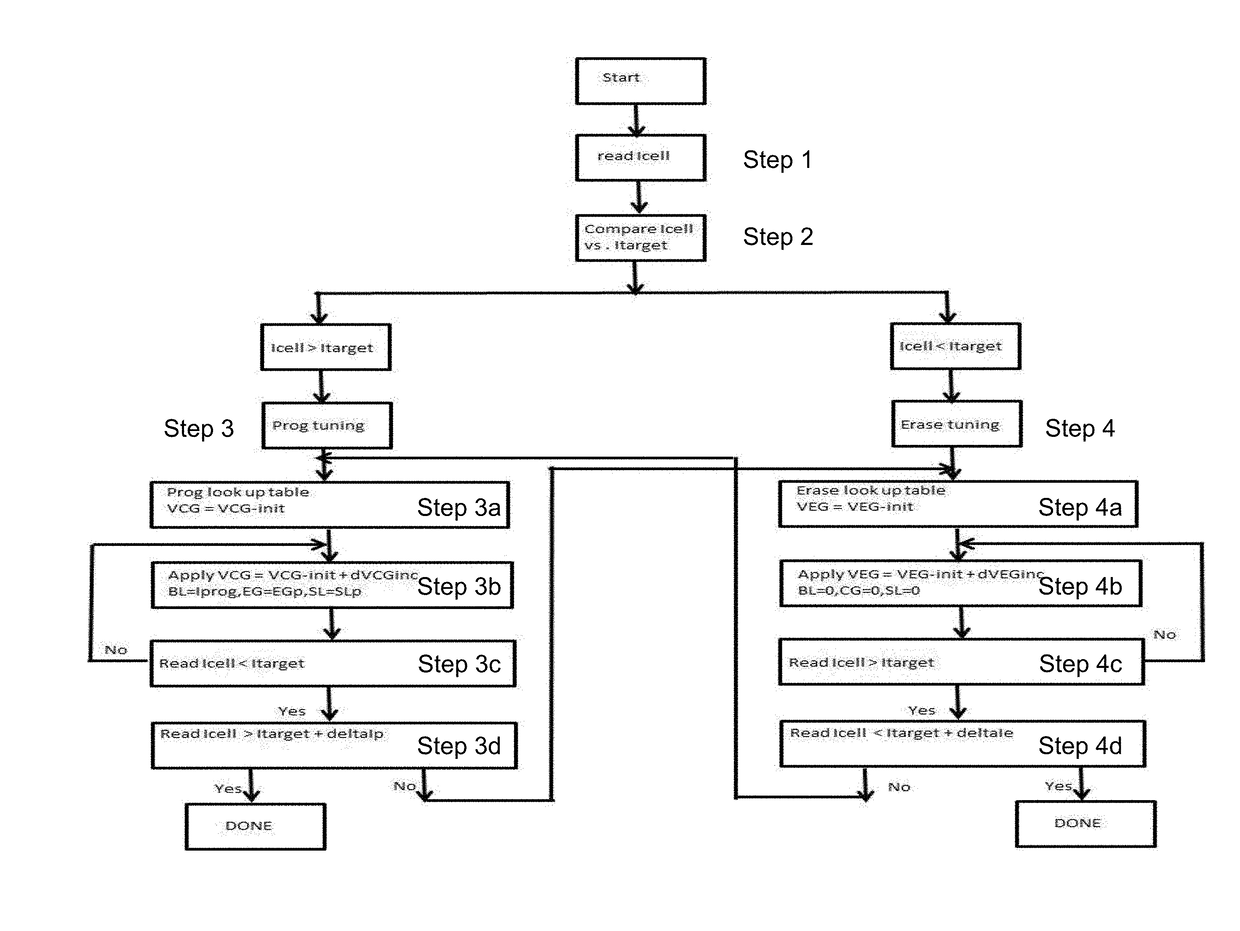

An artificial neural network device that utilizes one or more non-volatile memory arrays as the synapses. The synapses are configured to receive inputs and to generate therefrom outputs. Neurons are configured to receive the outputs. The synapses include a plurality of memory cells, wherein each of the memory cells includes spaced apart source and drain regions formed in a semiconductor substrate with a channel region extending there between, a floating gate disposed over and insulated from a first portion of the channel region and a non-floating gate disposed over and insulated from a second portion of the channel region. Each of the plurality of memory cells is configured to store a weight value corresponding to a number of electrons on the floating gate. The plurality of memory cells are configured to multiply the inputs by the stored weight values to generate the outputs.

Owner:RGT UNIV OF CALIFORNIA +1

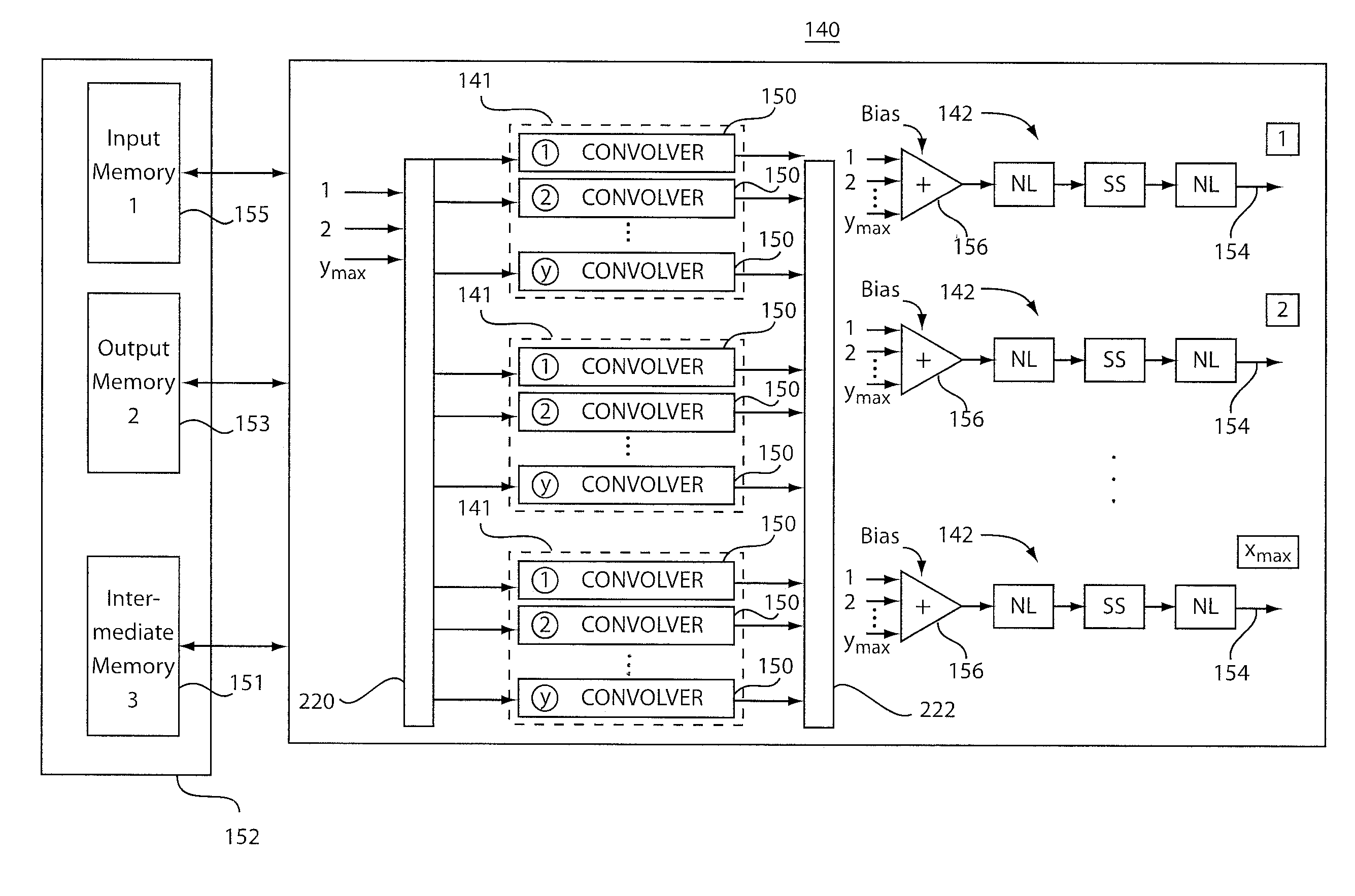

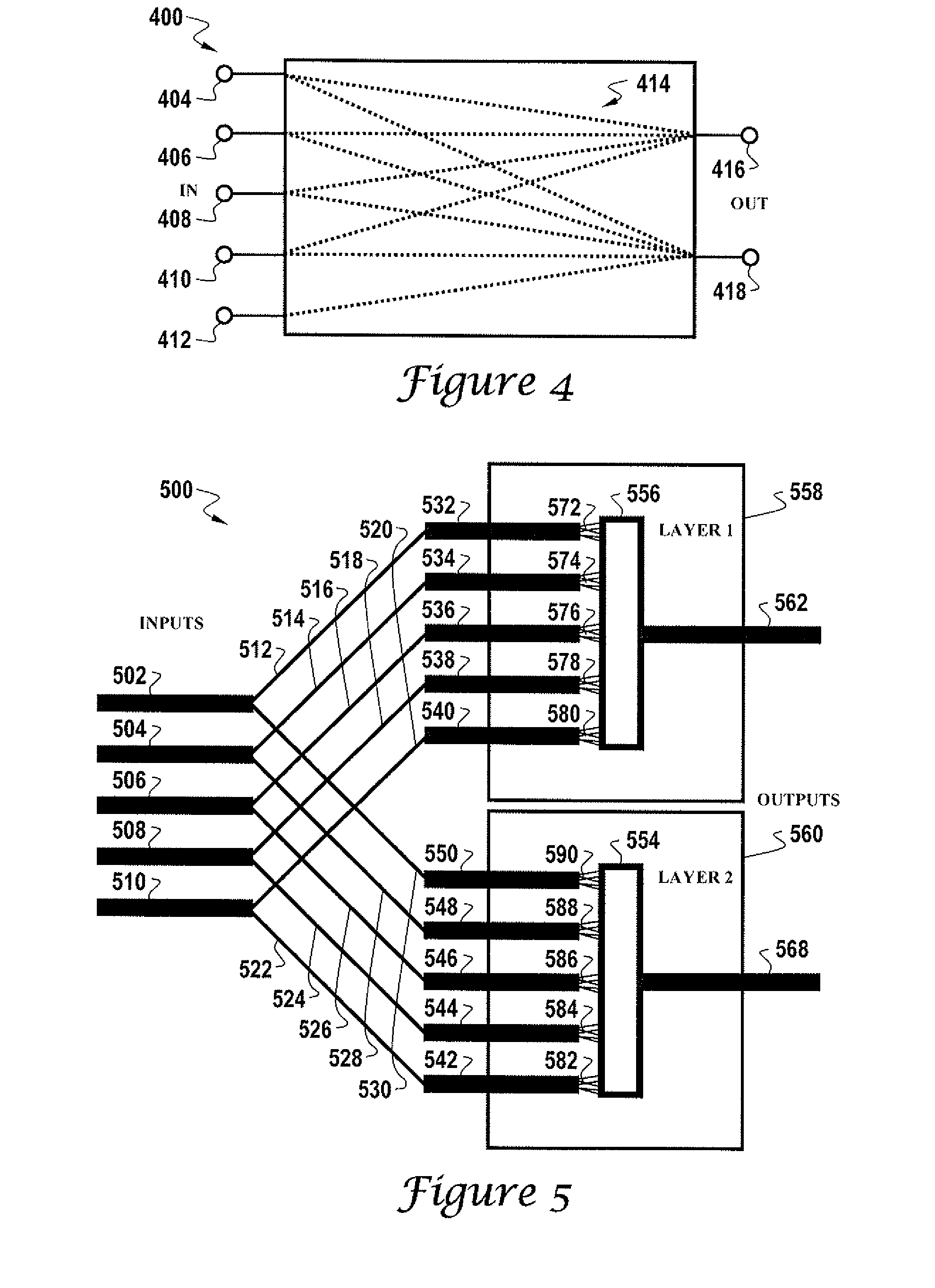

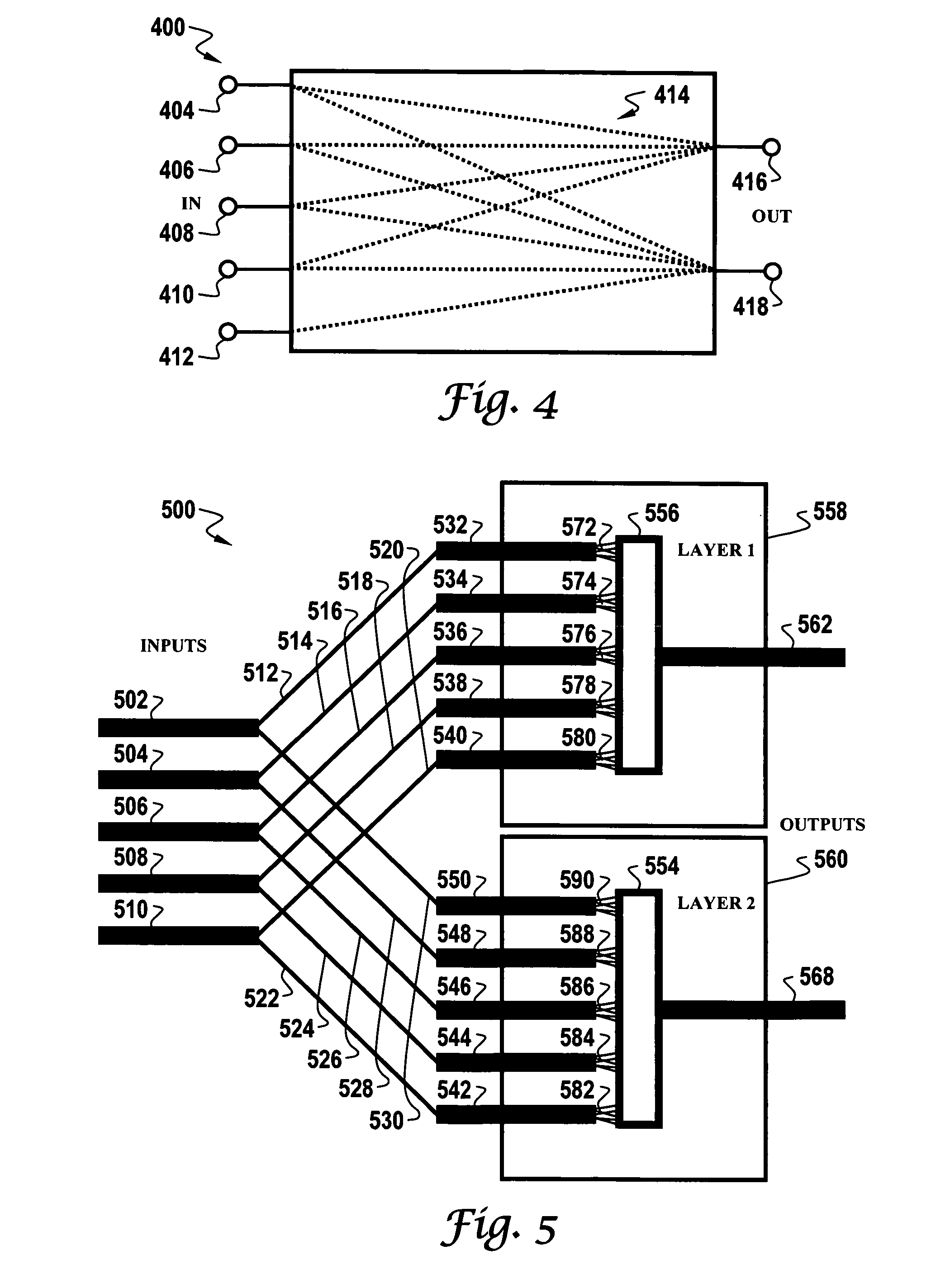

Dynamically configurable, multi-ported co-processor for convolutional neural networks

Active | US20110029471A1 | Improve feed-forward processing speed | General purpose stored program computer | Digital data | Coprocessor | Control signal

A coprocessor and method for processing convolutional neural networks includes a configurable input switch coupled to an input. A plurality of convolver elements are enabled in accordance with the input switch. An output switch is configured to receive outputs from the set of convolver elements to provide data to output branches. A controller is configured to provide control signals to the input switch and the output switch such that the set of convolver elements are rendered active and a number of output branches are selected for a given cycle in accordance with the control signals.

Owner:NEC CORP

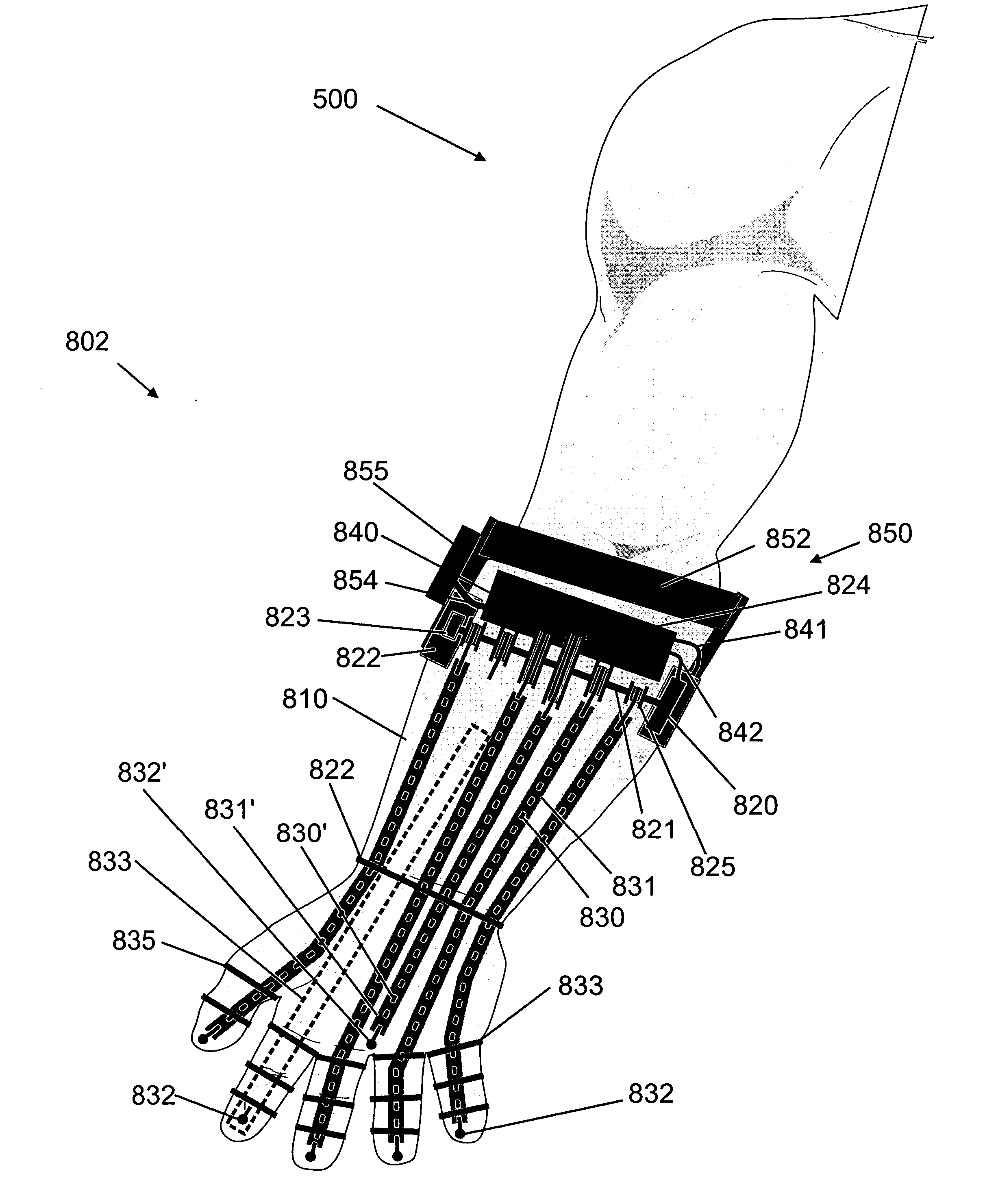

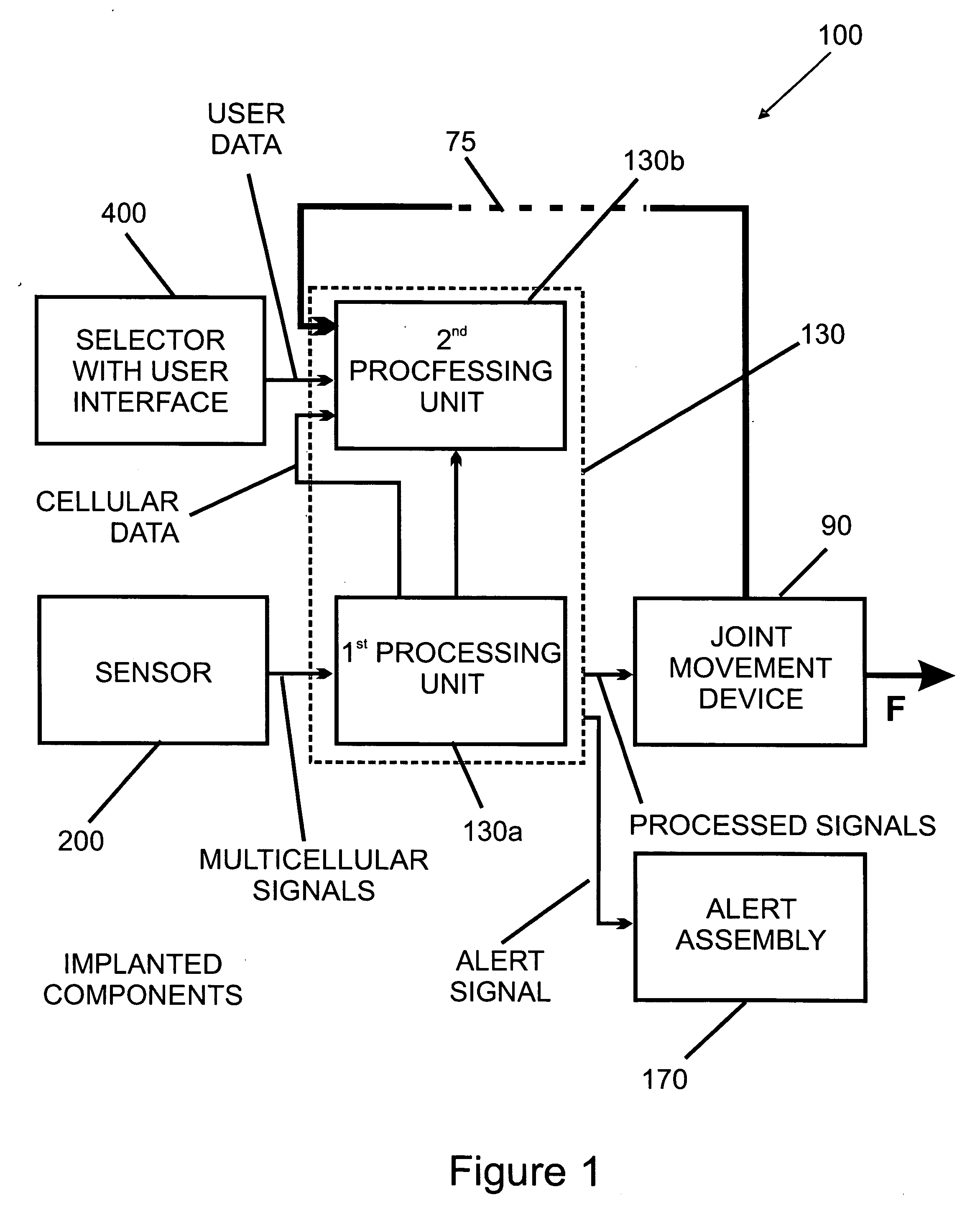

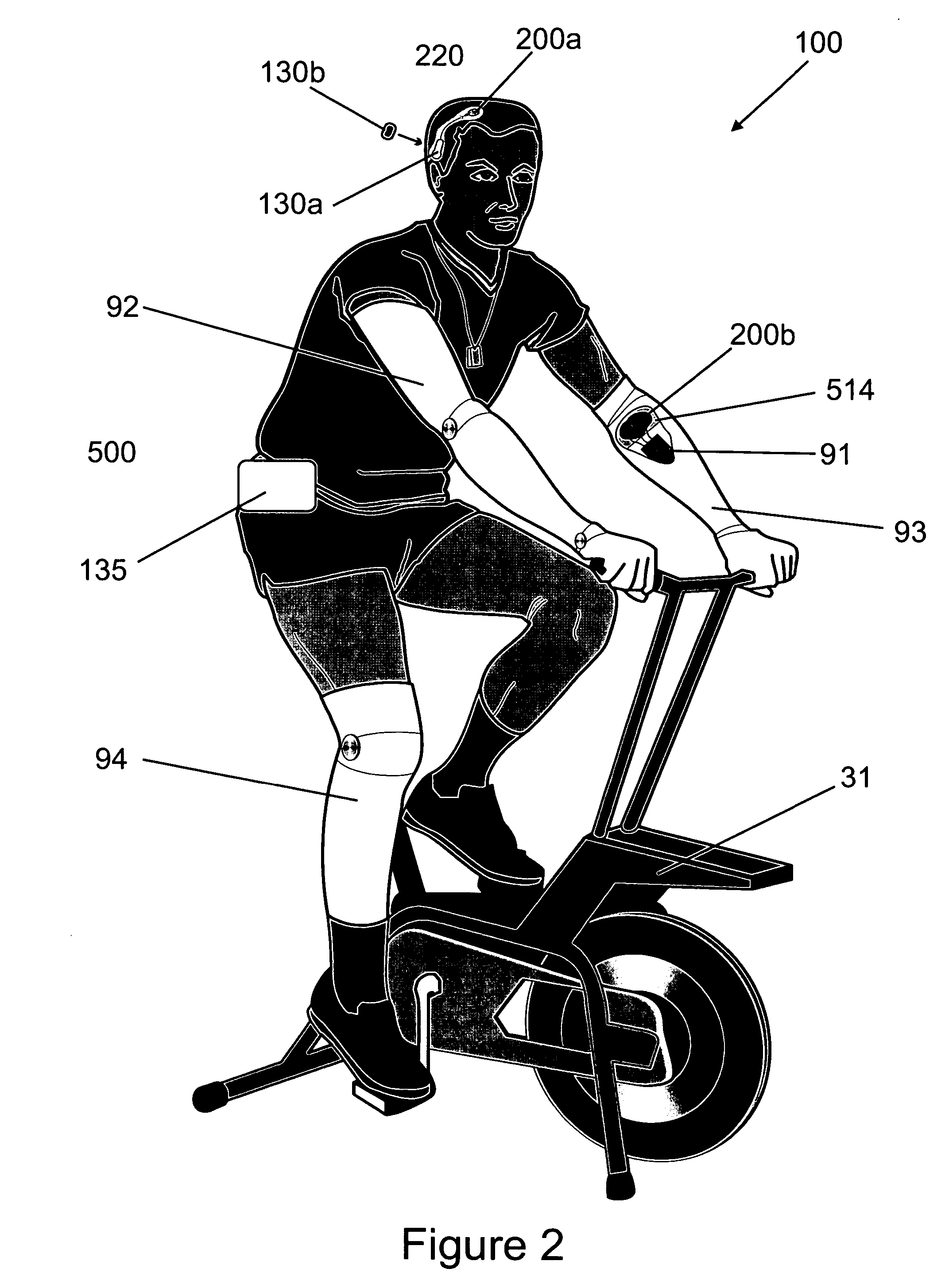

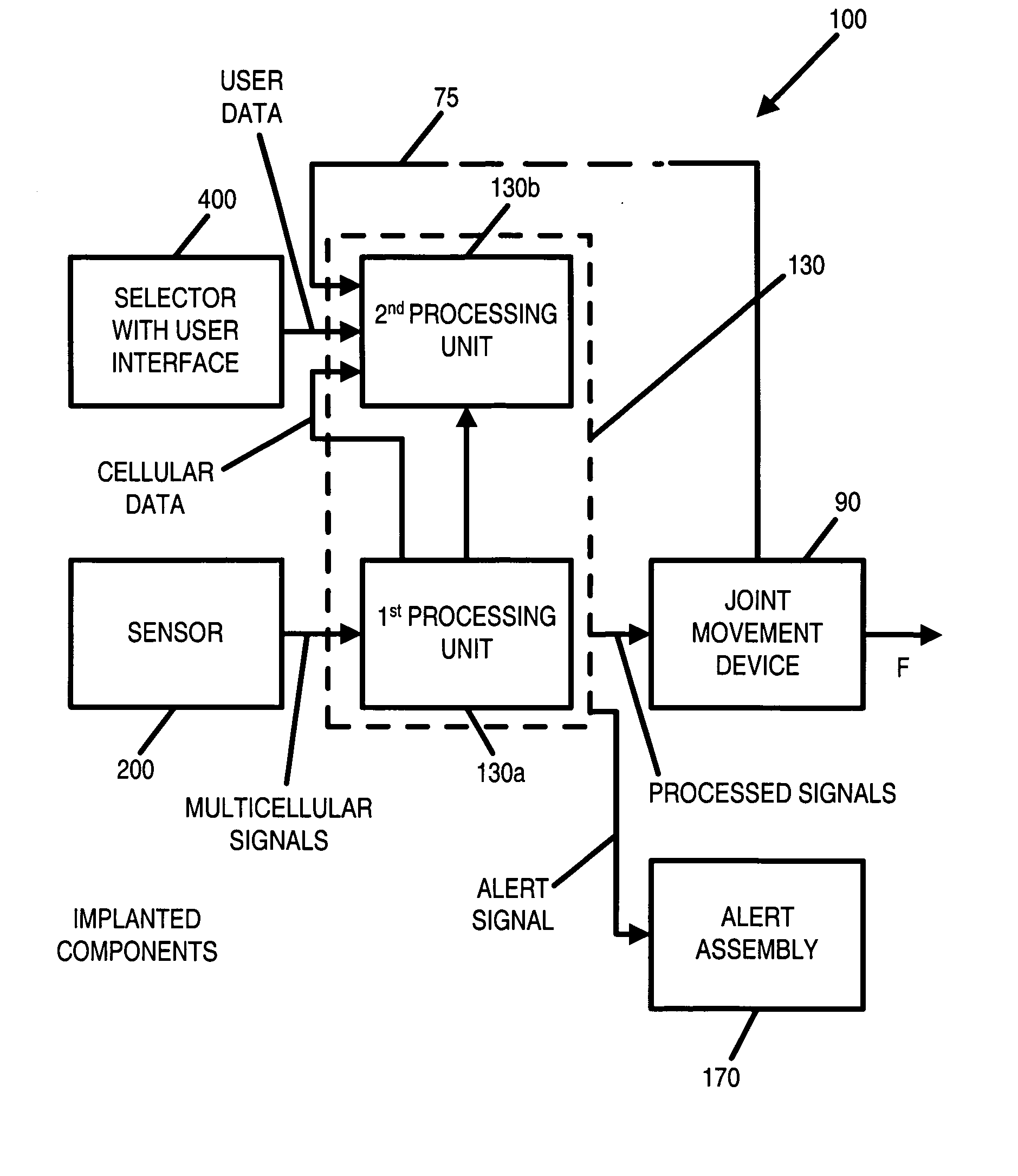

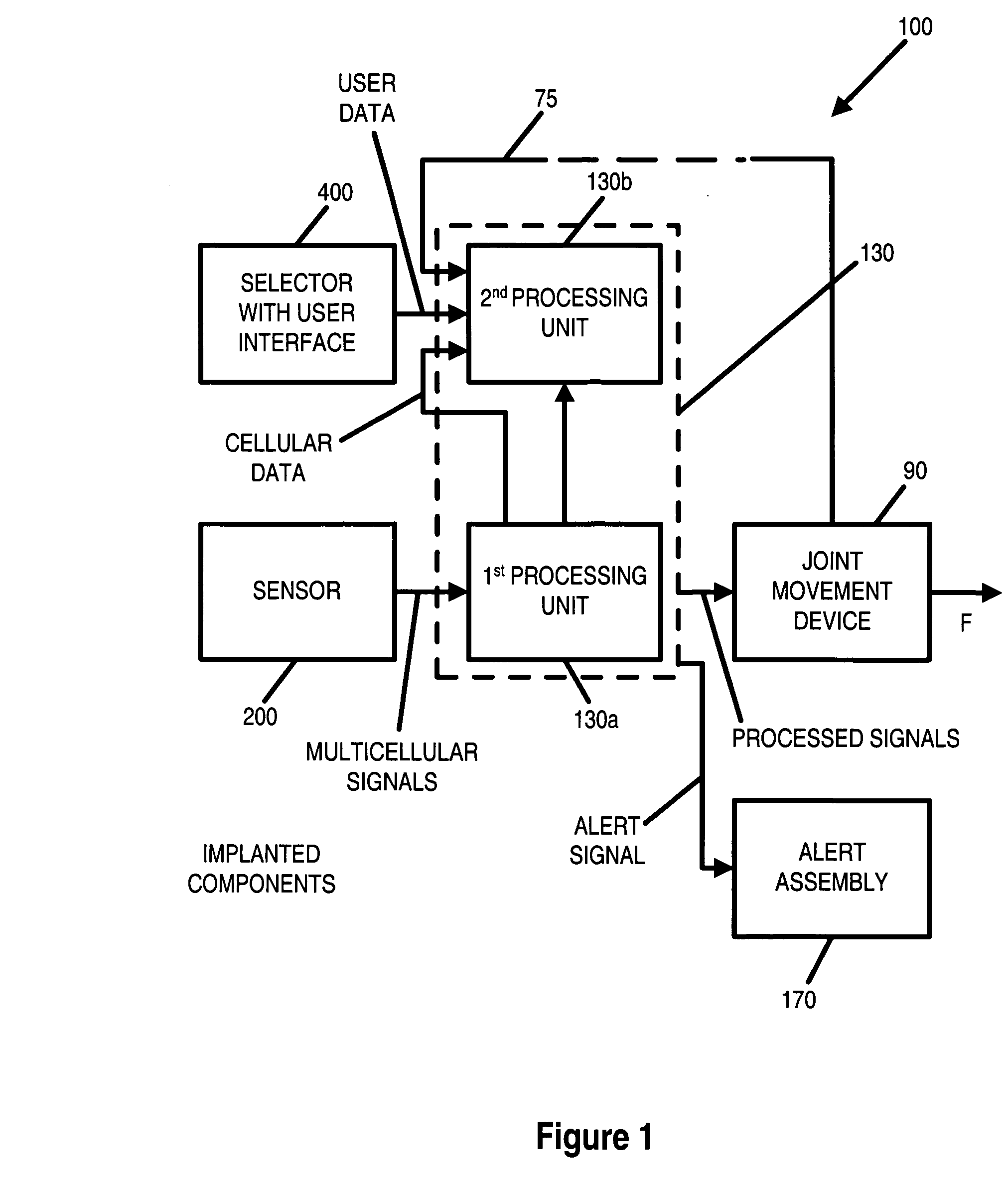

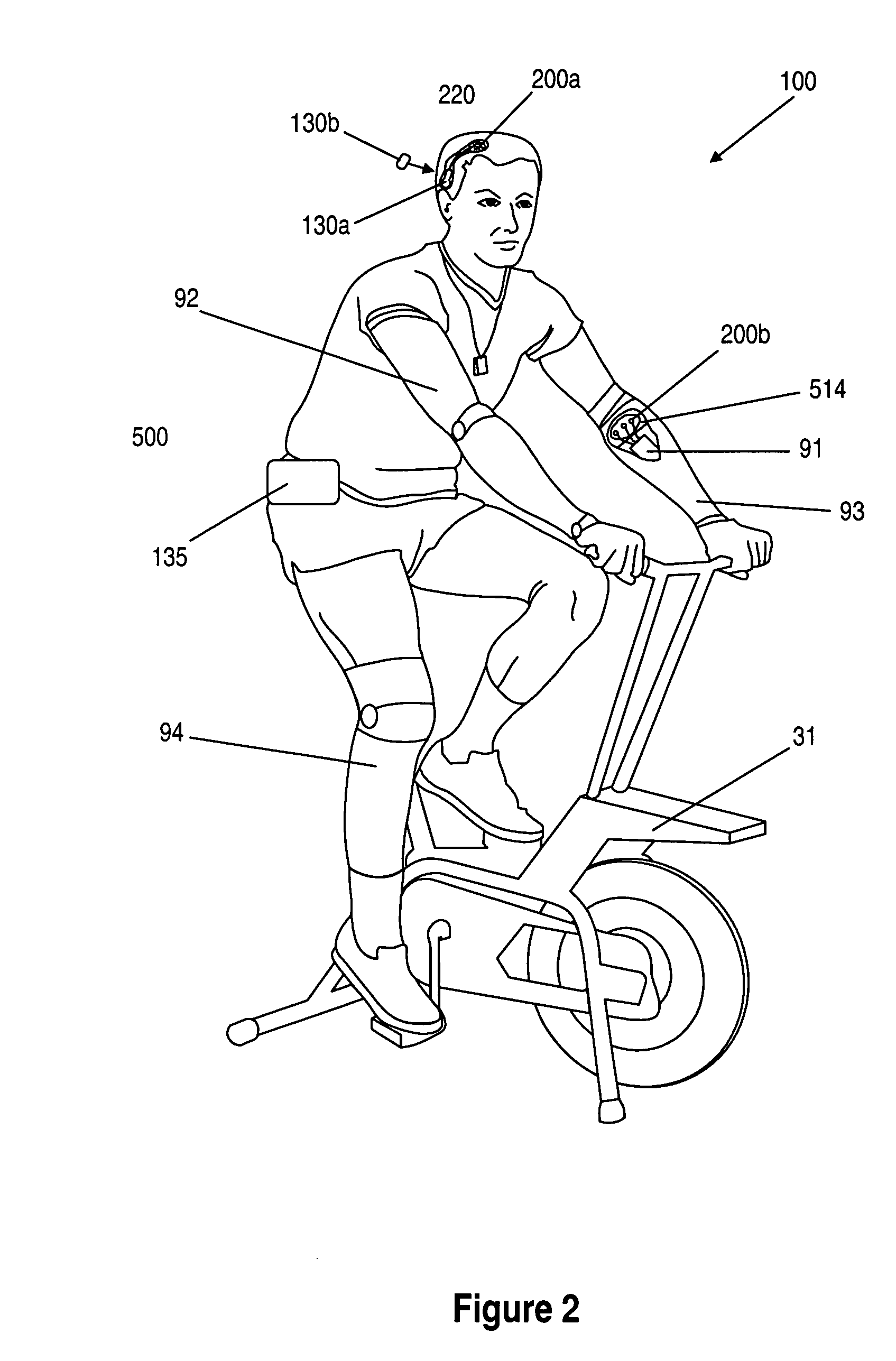

Limb and digit movement system

Systems, methods and devices for restoring or enhancing one or more motor functions of a patient are disclosed. The system comprises a biological interface apparatus and a joint movement device such as an exoskeleton device or FES device. The biological interface apparatus includes a sensor that detects the multicellular signals and a processing unit for producing a control signal based on the multicellular signals. Data from the joint movement device is transmitted to the processing unit for determining a value of a configuration parameter of the system. Also disclosed is a joint movement device including a flexible structure for applying force to one or more patient joints, and controlled cables that produce the forces required.

Owner:CYBERKINETICS NEUROTECH SYST

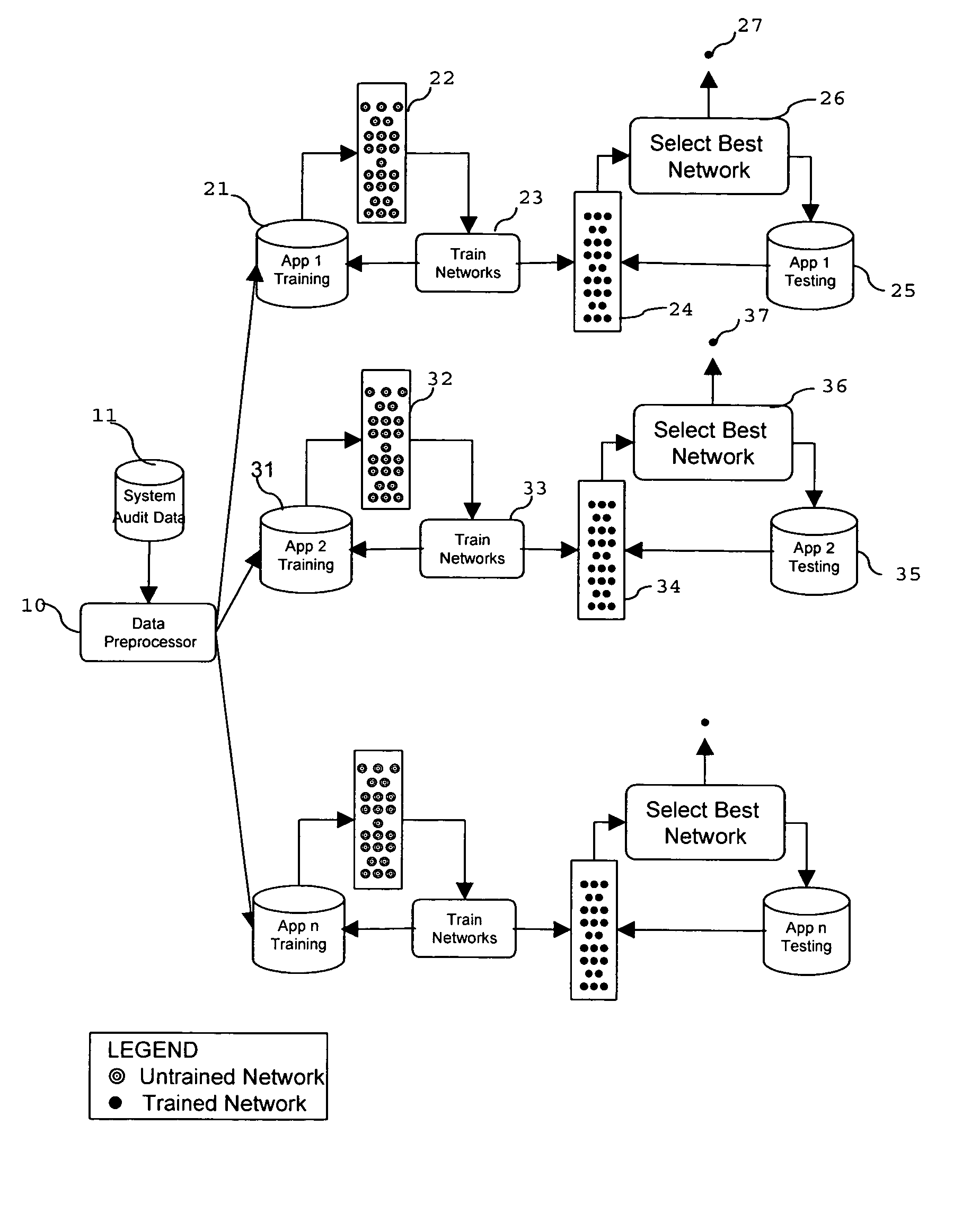

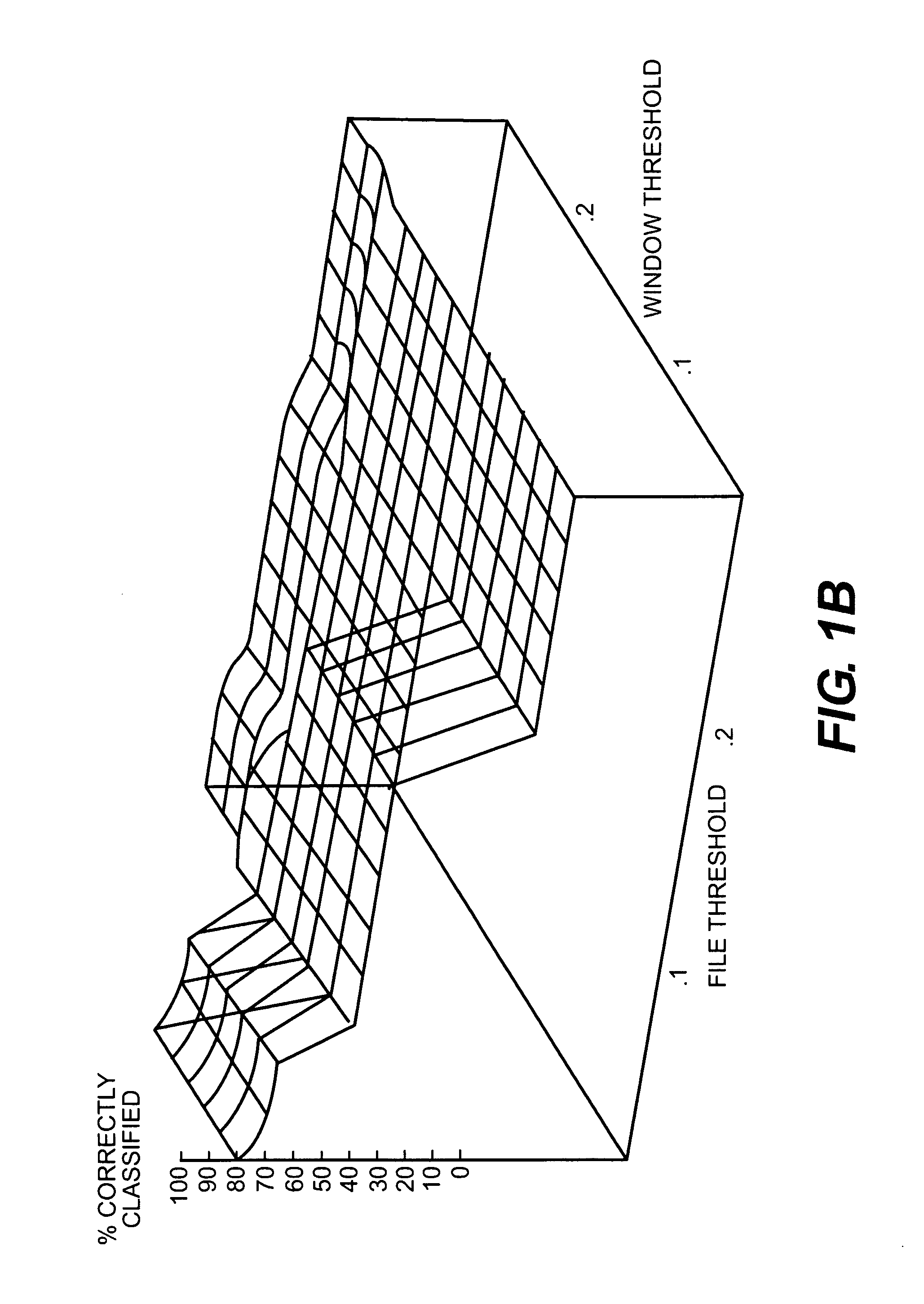

Computer intrusion detection system and method based on application monitoring

Inactive | US7181768B1 | Reduced false positive false | Reduce false negative rate | Memory loss protection | Error detection/correction | Nerve network | Prediction algorithms

An intrusion detection system (IDS) that uses application monitors for detecting application-based attacks against computer systems. The IDS implements application monitors in the form of a software program to learn and monitor the behavior of system programs in order to detect attacks against computer hosts. The application monitors implement machine learning algorithms to provide a mechanism for learning from previously observed behavior in order to recognize future attacks that they have not seen before. The application monitors include temporal locality algorithms to increase the accuracy of the IDS. The IDS of the present invention may comprise a string-matching program, a neural network, or a time series prediction algorithm for learning normal application behavior and for detecting anomalies.

Owner:SYNOPSYS INC

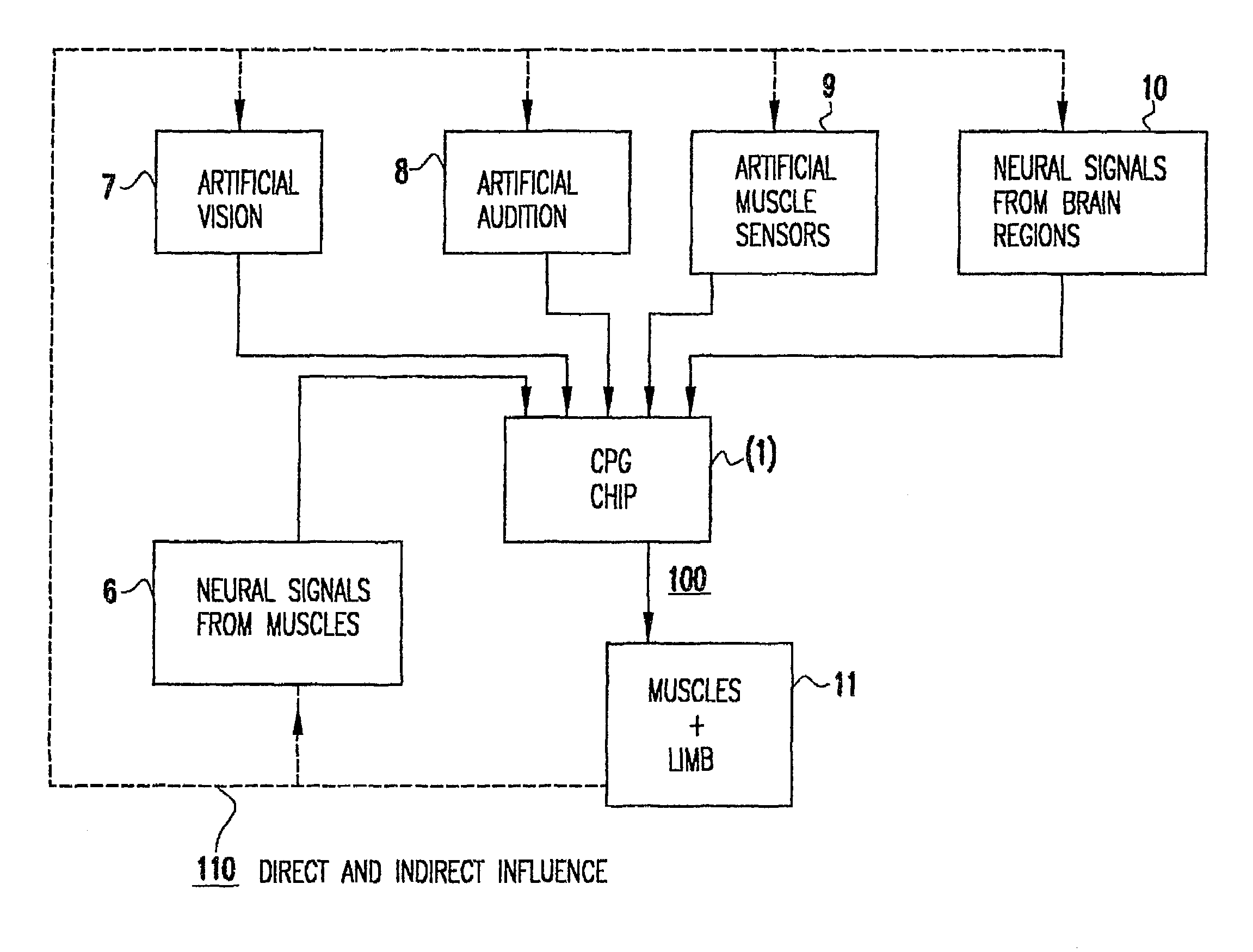

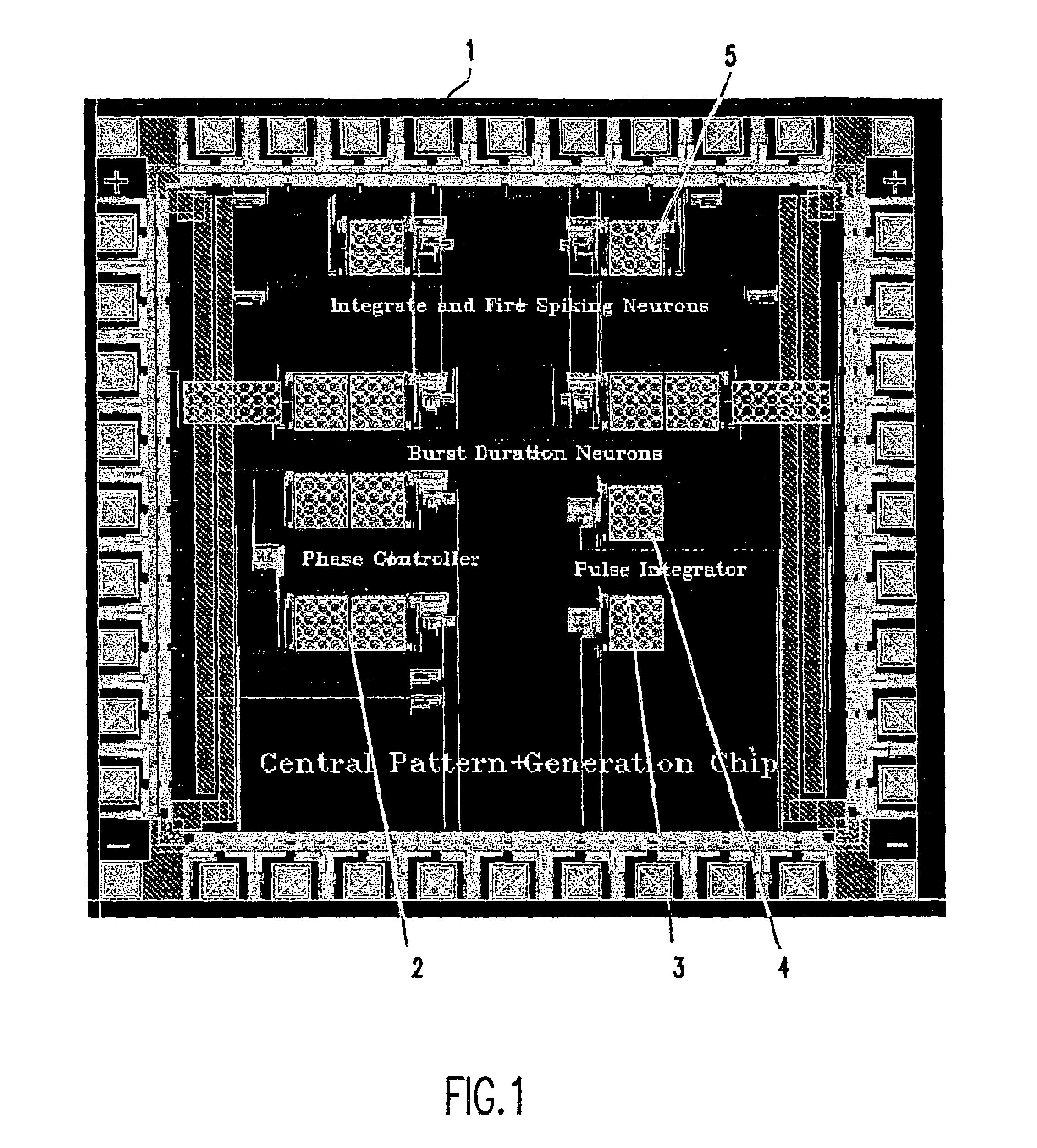

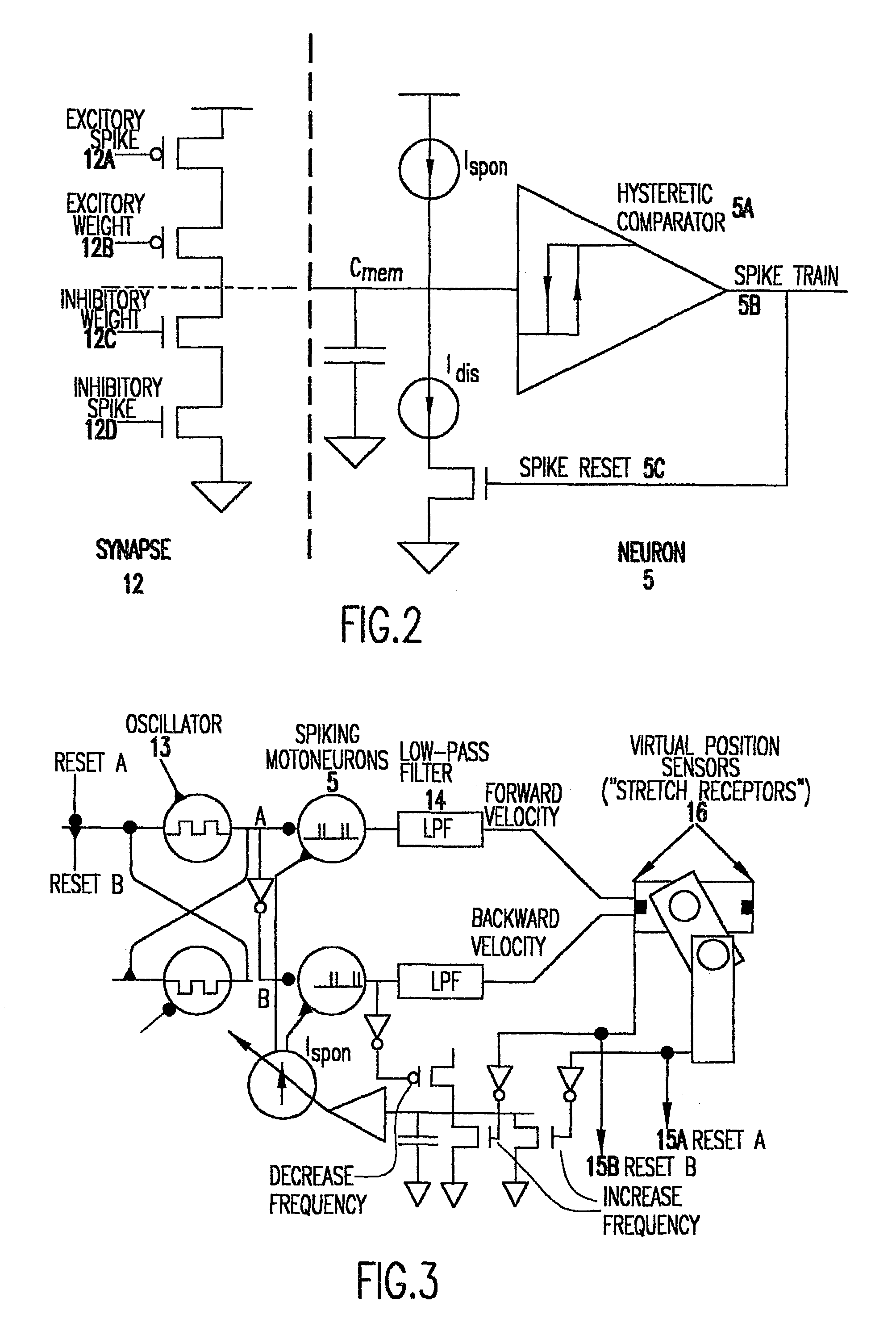

Biomorphic rhythmic movement controller

Inactive | US7164967B2 | Sophisticated adaptability | Sophisticated responsiveness | Programme-controlled manipulator | Electrotherapy | Engineering | Self adaptive

An artificial Central Pattern Generator (CPG) based on the naturally occurring central pattern generator locomotor controller for walking, running, swimming, and flying animals may be constructed to be self-adaptive, by providing for the artificial CPG, which may be a chip, to tune its behavior based on sensory feedback. It is believed that this is the first instance of an adaptive CPG chip. Such a sensory feedback-using system with an artificial CPG may be used in mechanical applications such as a running robotic leg, in walking, flying and swimming machines, and in miniature and larger robots, and also in biological systems, such as a surrogate neural system for patients with spinal damage.

Owner:IGUANA ROBOTICS

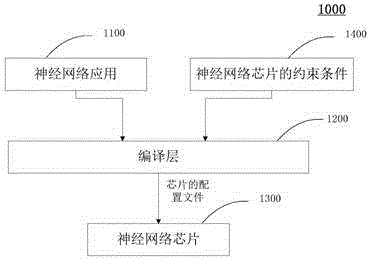

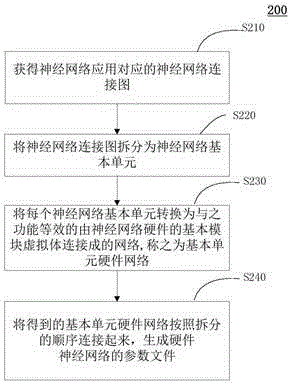

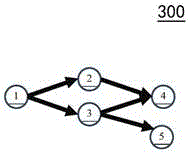

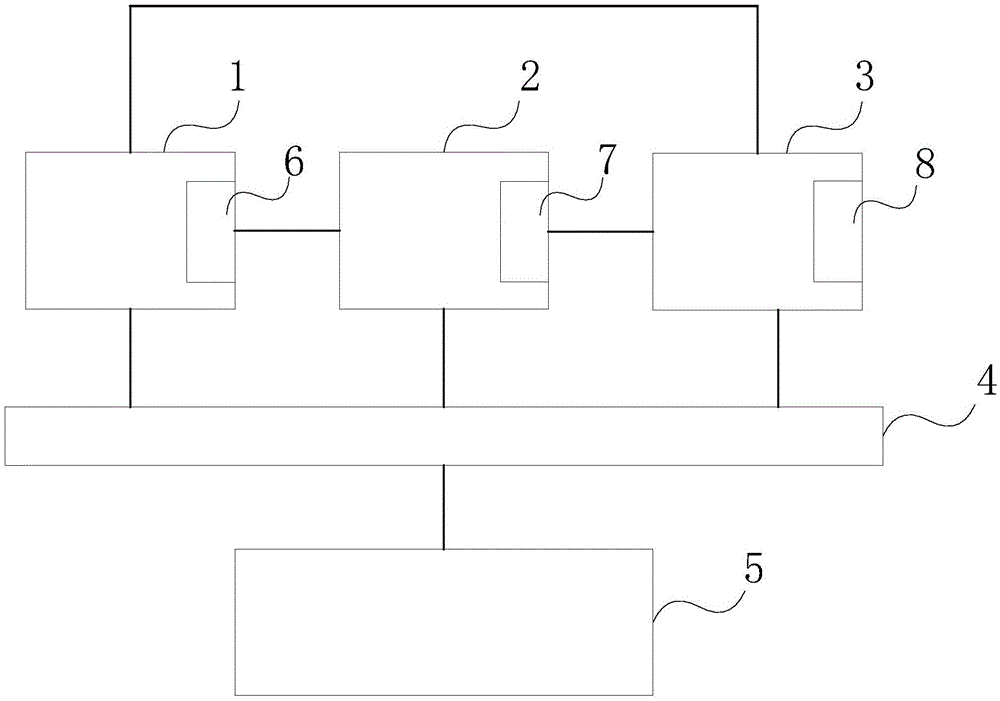

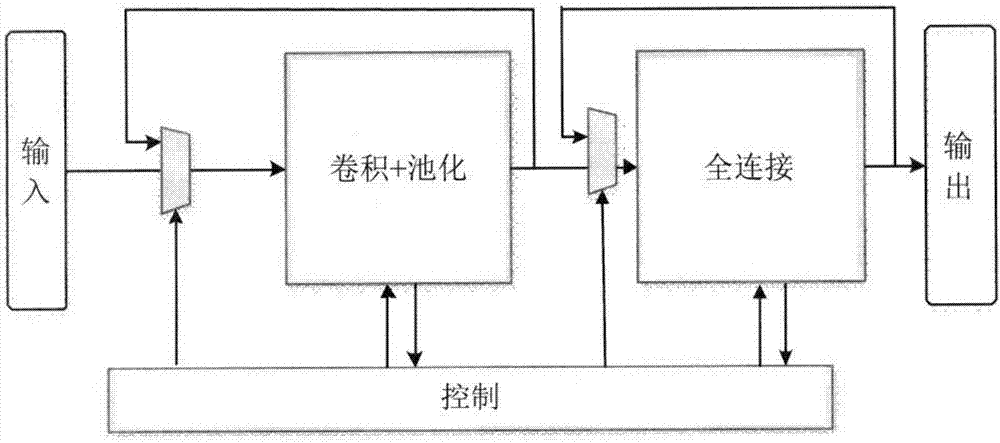

Hardware neural network conversion method, computing device, compiling method and neural network software and hardware collaboration system

Active | CN106650922A | Solve adaptation problems | Easy to operate | Physical realisation | Network connection | Neural network hardware

The invention provides a hardware neural network conversion method which converts a neural network application into a hardware neural network meeting the hardware constraint condition, a computing device, a compiling method and a neural network software and hardware collaboration system. The method comprises the steps that a neural network connection diagram corresponding to the neural network application is acquired; the neural network connection diagram is split into neural network basic units; each neural network basic unit is converted into a network which has the equivalent function with the neural network basic unit and is formed by connection of basic module virtual bodies of neural network hardware; and the obtained basic unit hardware networks are connected according to the splitting sequence so as to generate the parameter file of the hardware neural network. A brand-new neural network and quasi-brain computation software and hardware system is provided, and an intermediate compiling layer is additionally arranged between the neural network application and a neural network chip so that the problem of adaptation between the neural network application and the neural network application chip can be solved, and development of the application and the chip can also be decoupled.

Owner:TSINGHUA UNIV

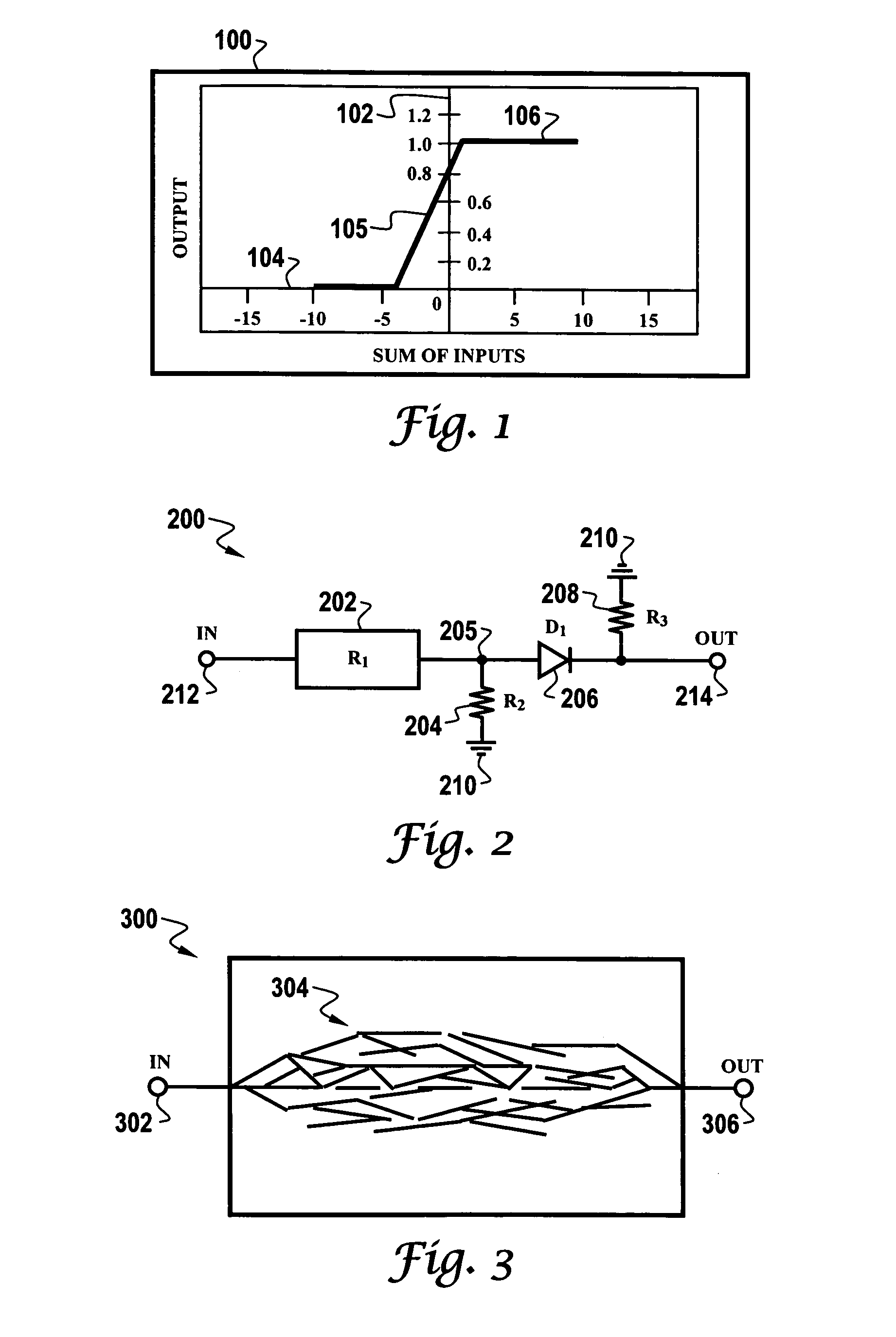

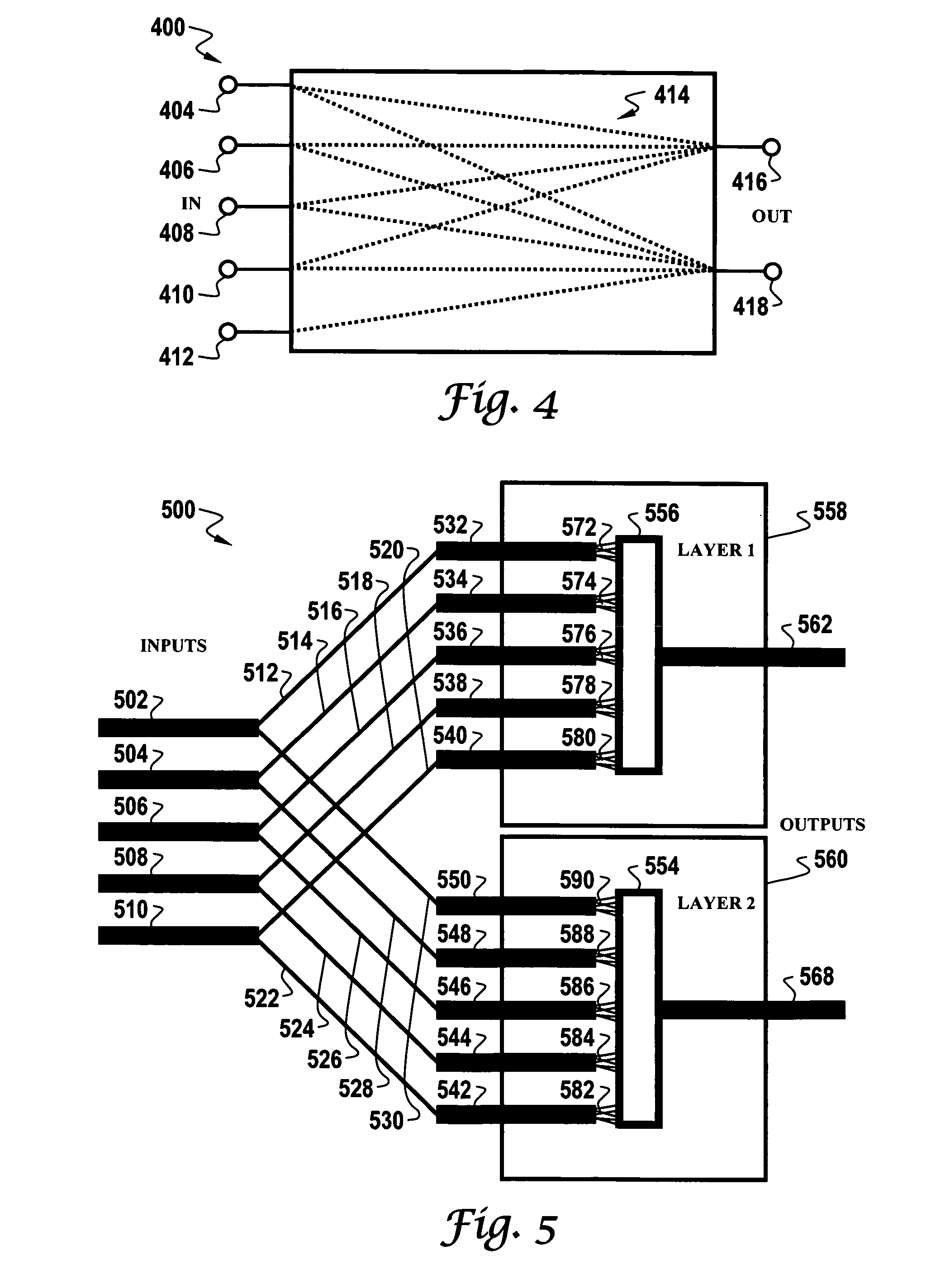

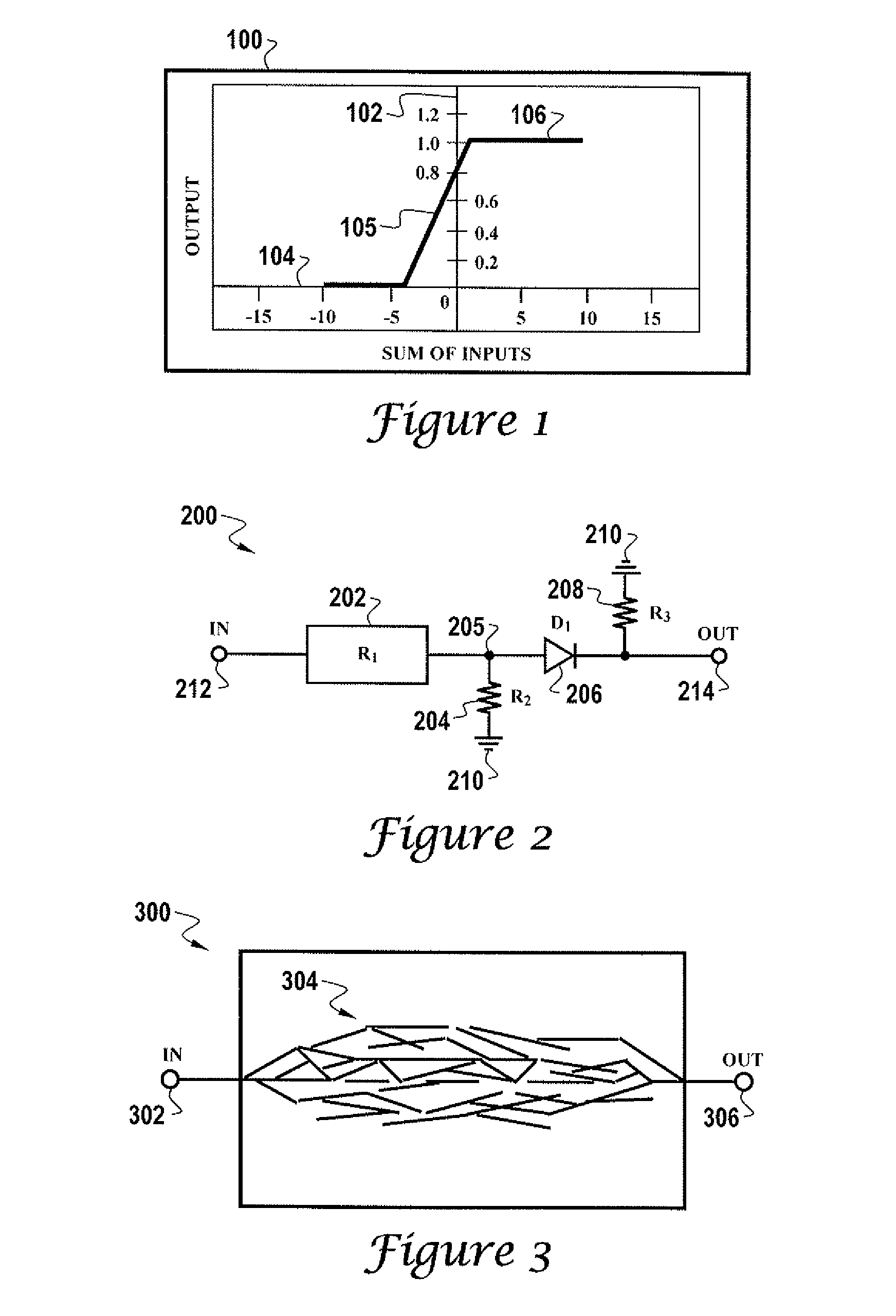

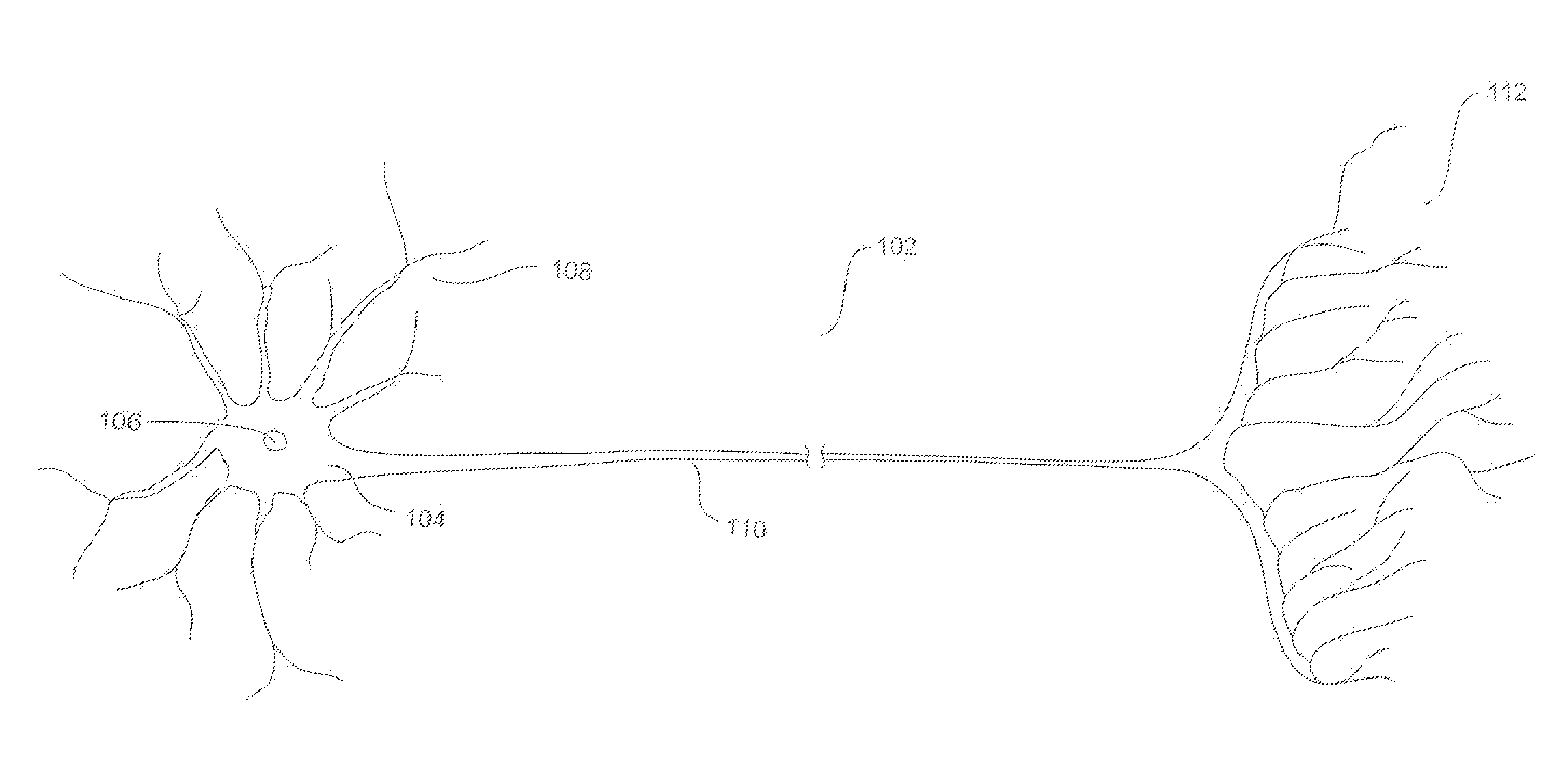

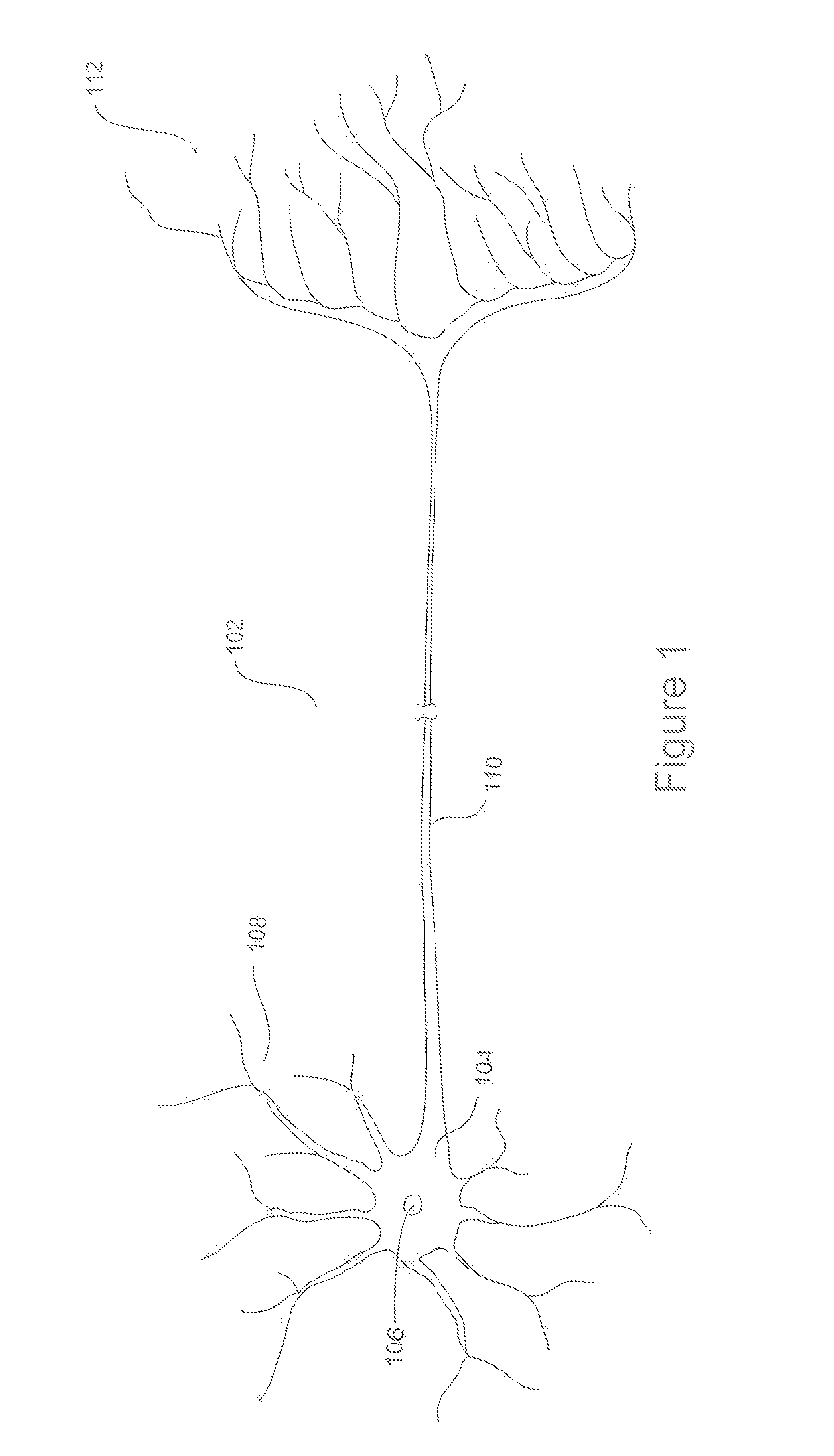

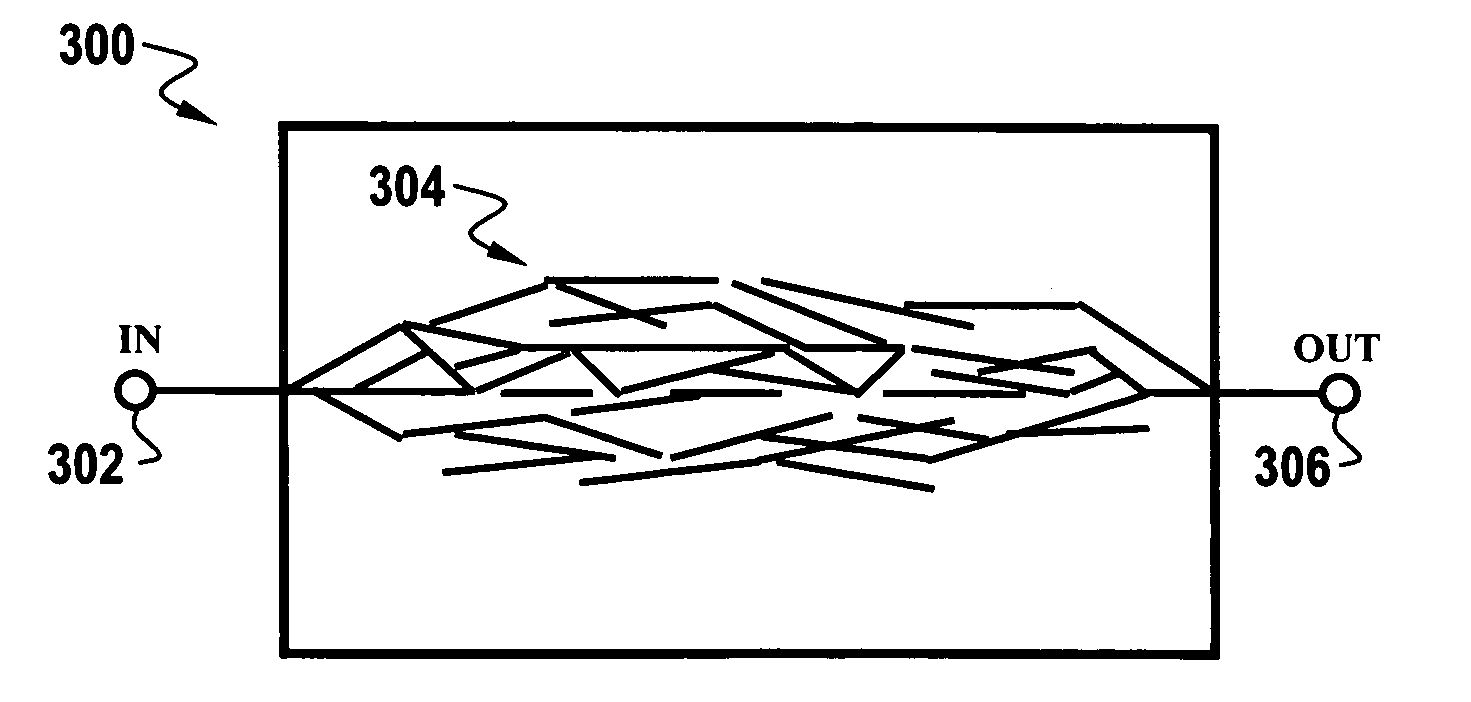

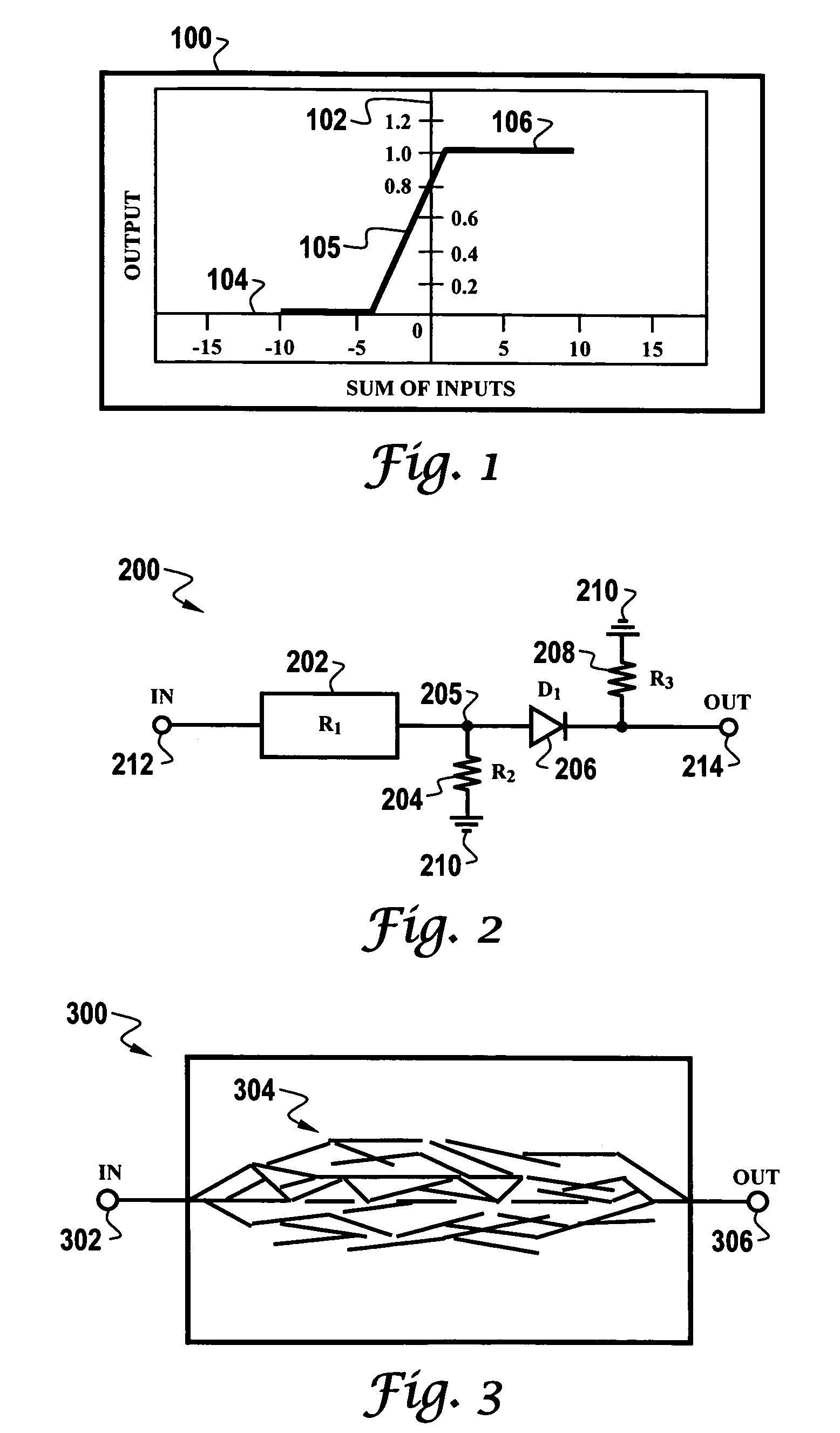

Nanotechnology neural network methods and systems

A physical neural network is disclosed, which includes a connection network comprising a plurality of molecular conducting connections suspended within a connection gap formed between one or more input electrodes and one or more output electrodes. One or more molecular connections of the molecular conducting connections can be strengthened or weakened according to an application of an electric field across said connection gap. Thus, a plurality of physical neurons can be formed from said molecular conducting connections of said connection network. Additionally, a gate can be located adjacent said connection gap and which comes into contact with said connection network. The gate can be connected to logic circuitry which can activate or deactivate individual physical neurons among said plurality of physical neurons.

Owner:KNOWM TECH

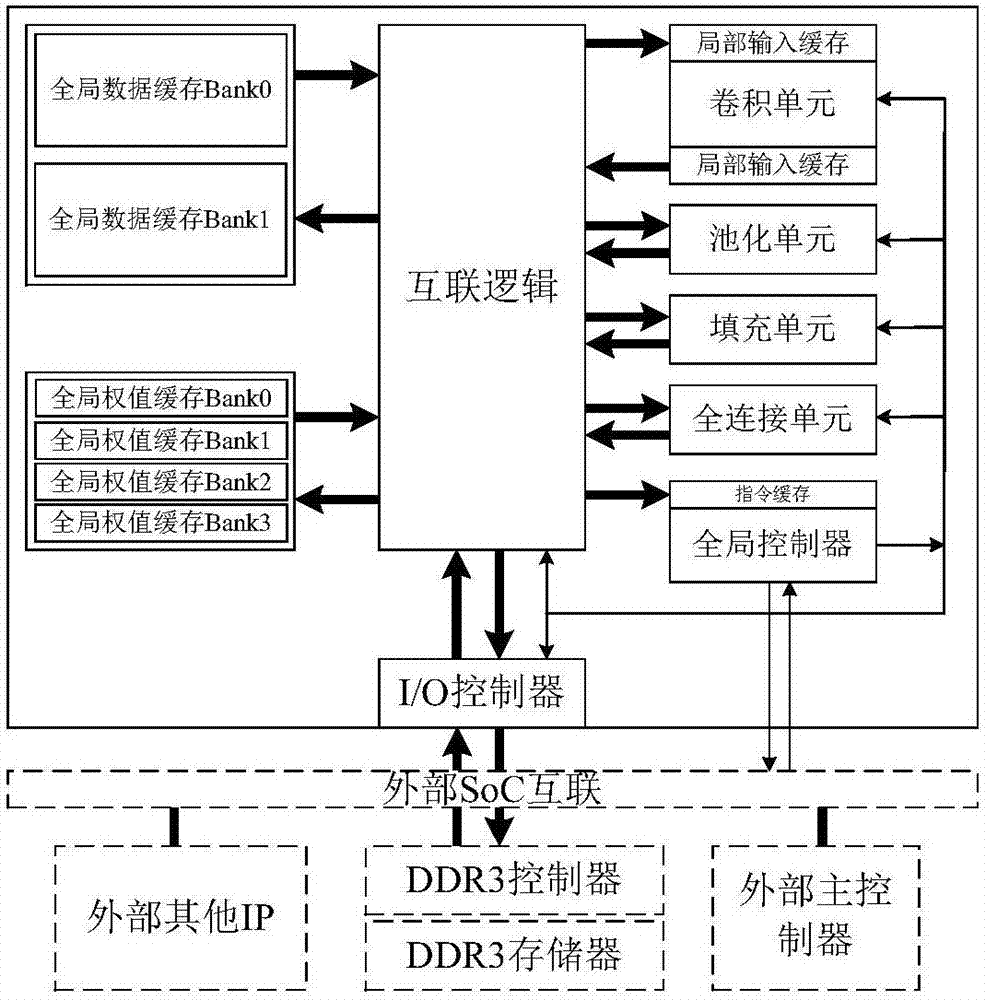

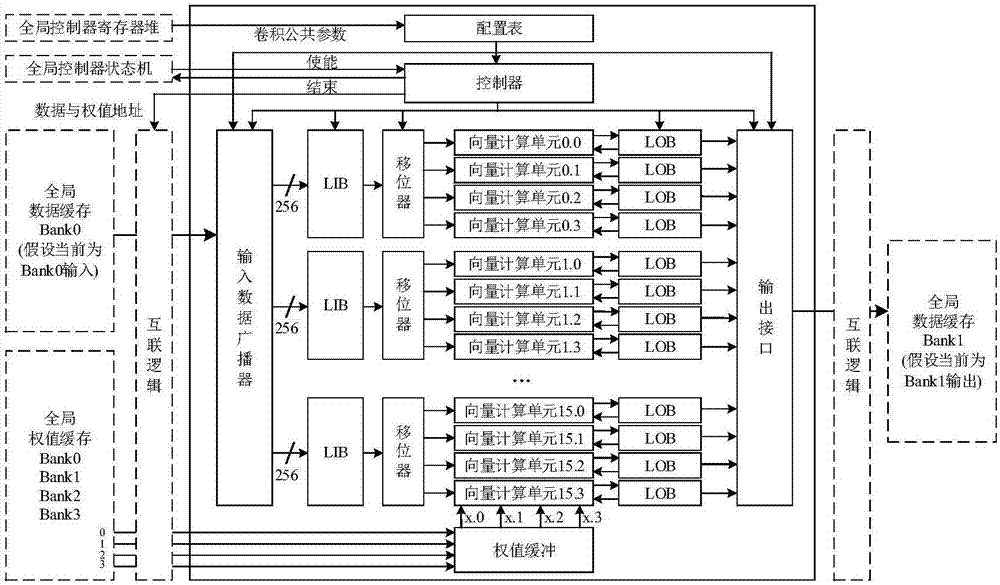

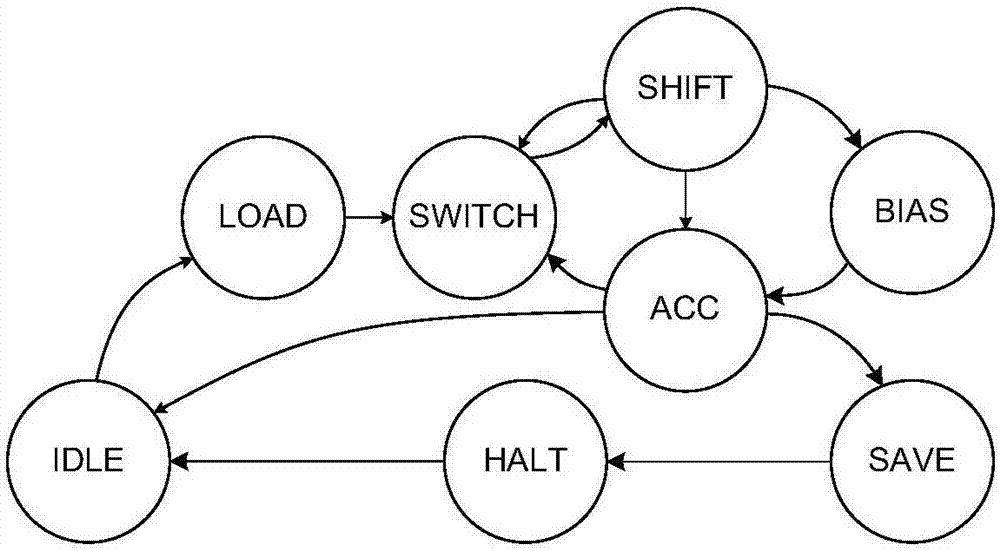

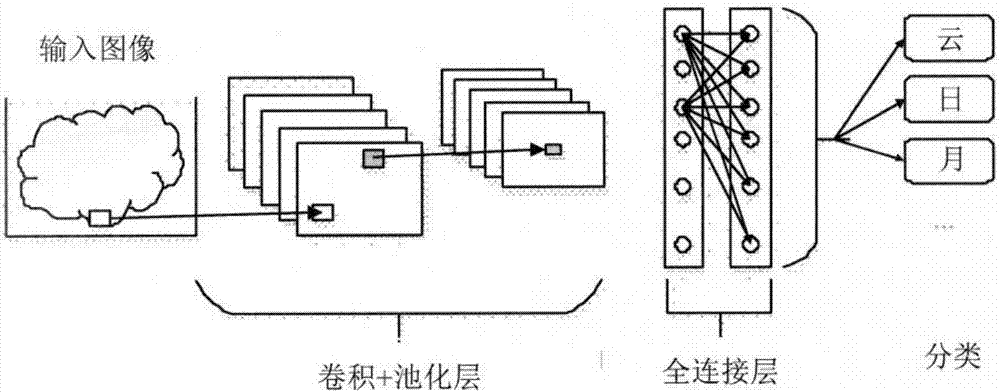

Co-processor IP core of programmable convolutional neural network

Active | CN106940815A | Reduce frequency | Reduce bandwidth pressure | Neural architectures | Physical realisation | Hardware structure | Instruction set design

The present invention discloses a co-processor IP core of a programmable convolutional neural network. The invention aims to realize the arithmetic acceleration of the convolutional neural network on a digital chip (FPGA or ASIC). The co-processor IP core specifically comprises a global controller, an I / O controller, a multi-level cache system, a convolution unit, a pooling unit, a filling unit, a full-connection unit, an internal interconnection logical unit, and an instruction set designed for the co-processor IP. The proposed hardware structure supports the complete flows of convolutional neural networks diversified in scale. The hardware-level parallelism is fully utilized and the multi-level cache system is designed. As a result, the characteristics of high performance, low power consumption and the like are realized. The operation flow is controlled through instructions, so that the programmability and the configurability are realized. The co-processor IP core can be easily applied to different application scenes.

Owner:XI AN JIAOTONG UNIV

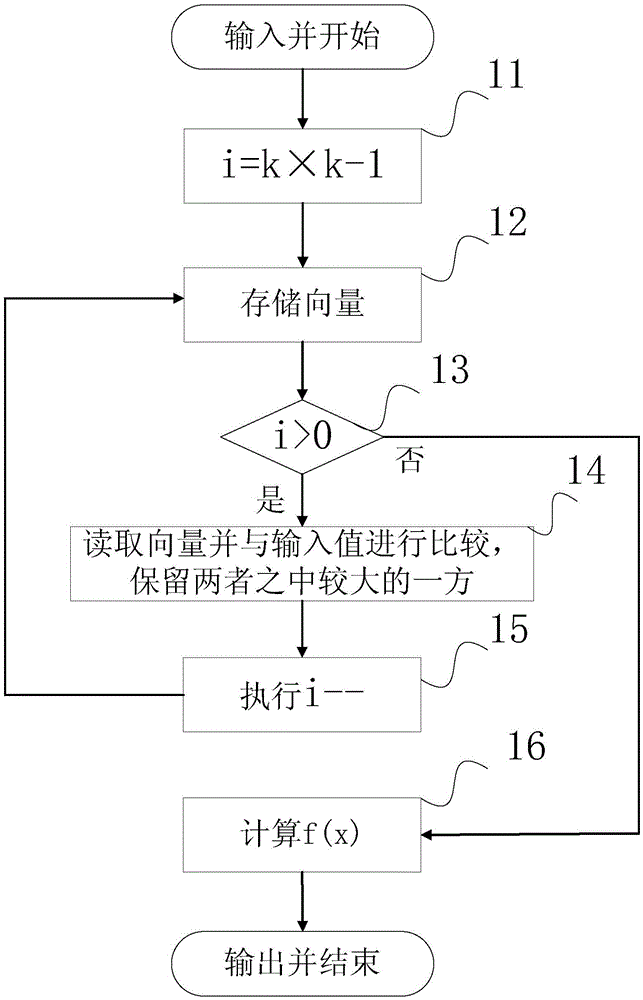

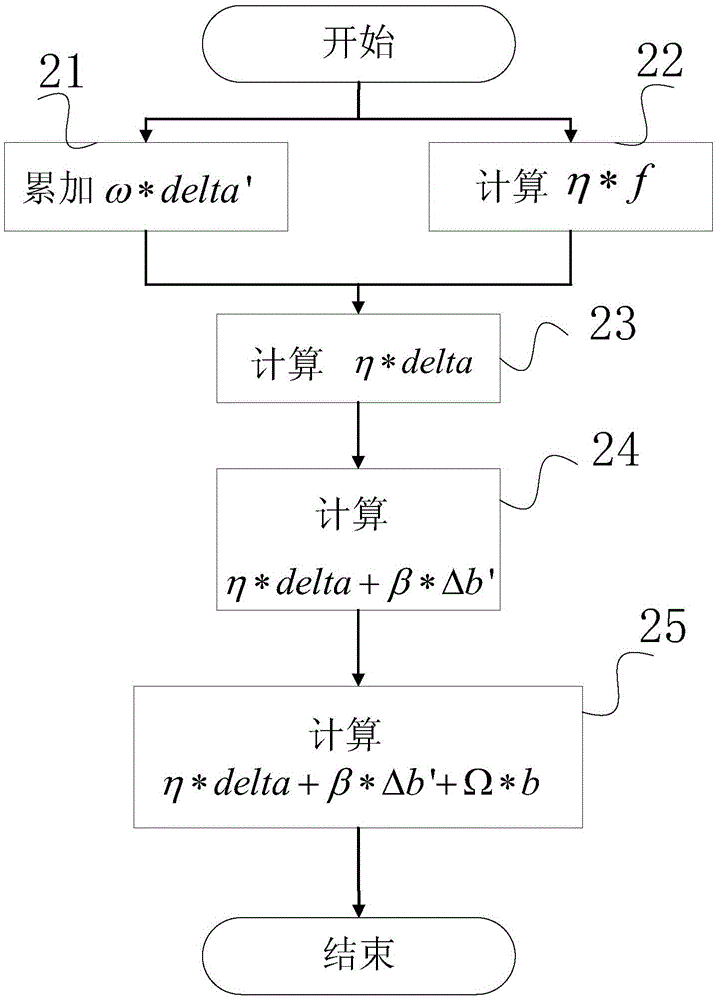

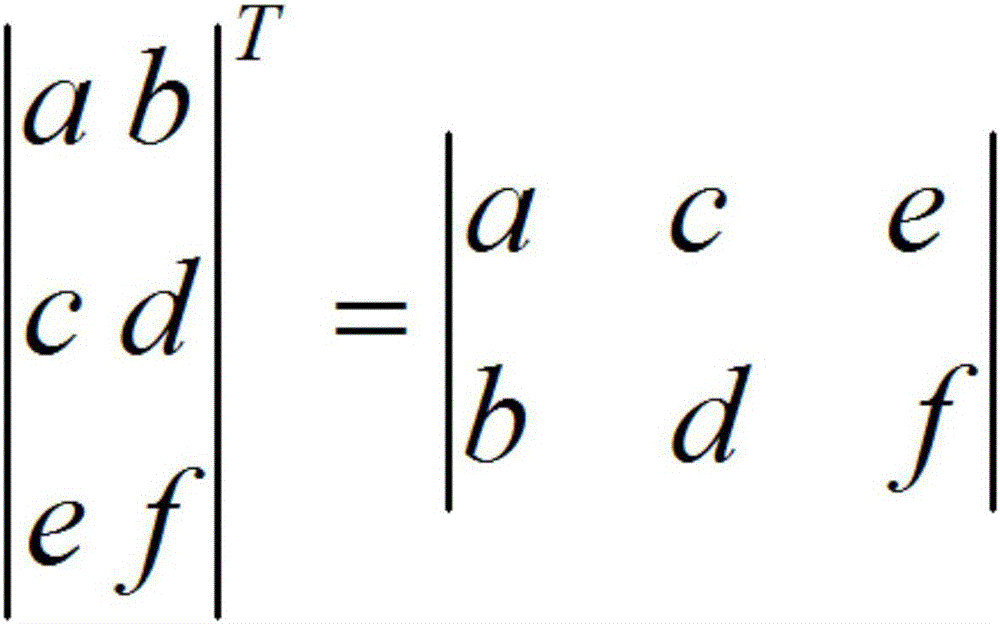

Calculation apparatus and method for accelerator chip accelerating deep neural network algorithm

Inactive | CN105488565A | Extended waiting time | Consume more | Physical realisation | Neural learning methods | Synaptic weight | Nerve network

The invention provides a calculation apparatus and method for an accelerator chip accelerating a deep neural network algorithm. The apparatus comprises a vector addition processor module, a vector function value calculator module and a vector multiplier-adder module, wherein the vector addition processor module performs vector addition or subtraction and / or vectorized operation of a pooling layer algorithm in the deep neural network algorithm; the vector function value calculator module performs vectorized operation of a nonlinear value in the deep neural network algorithm; the vector multiplier-adder module performs vector multiplication and addition operations; the three modules execute programmable instructions and interact to calculate a neuron value and a network output result of a neural network and a synaptic weight variation representing the effect intensity of input layer neurons to output layer neurons; and an intermediate value storage region is arranged in each of the three modules and a main memory is subjected to reading and writing operations. Therefore, the intermediate value reading and writing frequencies of the main memory can be reduced, the energy consumption of the accelerator chip can be reduced, and the problems of data missing and replacement in a data processing process can be avoided.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

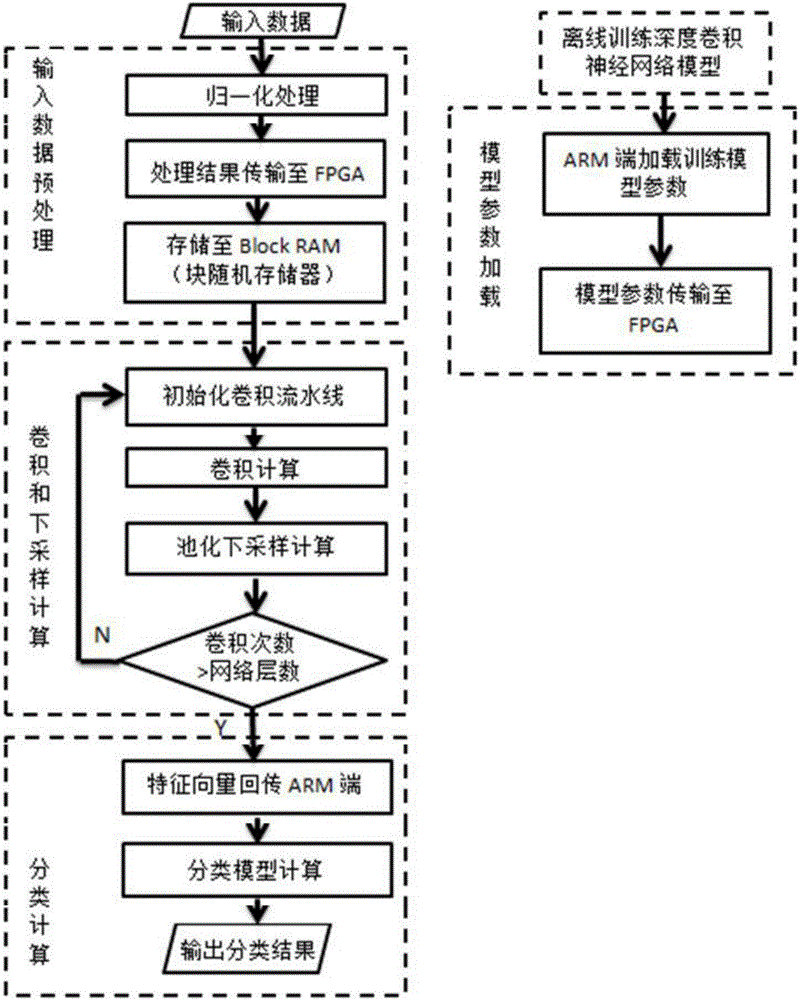

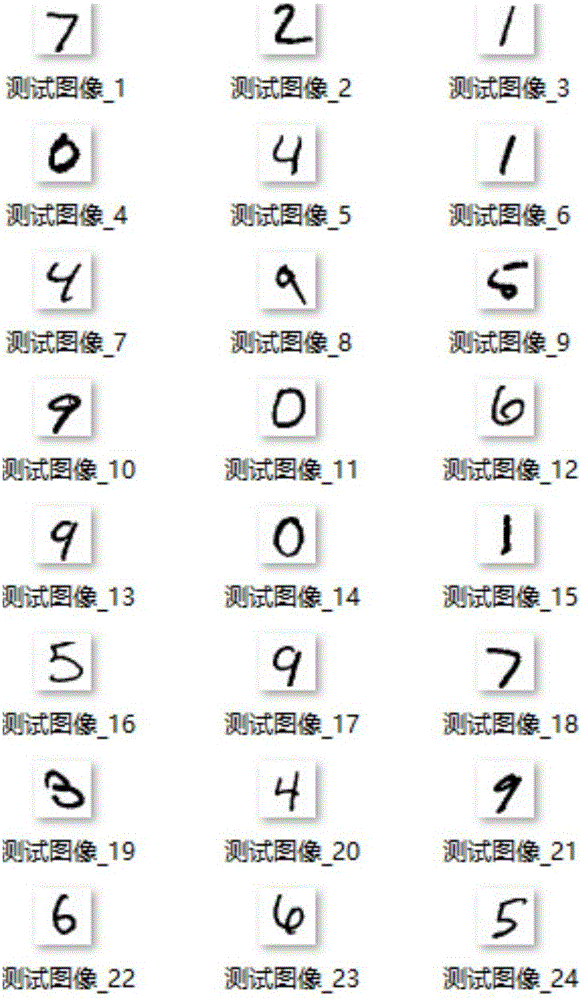

FPGA-based deep convolution neural network realizing method

Active | CN106228240A | Simple design | Reduce resource consumption | Character and pattern recognition | Speech recognition | Feature vector | Algorithm

The invention belongs to the technical field of digital image processing and pattern recognition, and specifically relates to an FPGA-based method for implementing a deep convolutional neural network. The hardware platform for the method is a Xilinx ZYNQ-7030 programmable system-on-chip (SoC), which integrates an FPGA and an ARM Cortex A9 processor. Trained network model parameters are loaded onto the FPGA side, input data is preprocessed on the ARM side, and the result is transmitted to the FPGA side. The convolution and down-sampling of the deep convolutional neural network are performed on the FPGA side to form data feature vectors, which are transmitted to the ARM side to complete the feature classification. The fast parallel processing and low-power, high-performance computing characteristics of the FPGA are used to implement the convolution, which has the highest complexity in a deep convolutional neural network model. Algorithm efficiency is greatly improved, and power consumption is reduced while the accuracy of the algorithm is maintained.

Owner:FUDAN UNIV

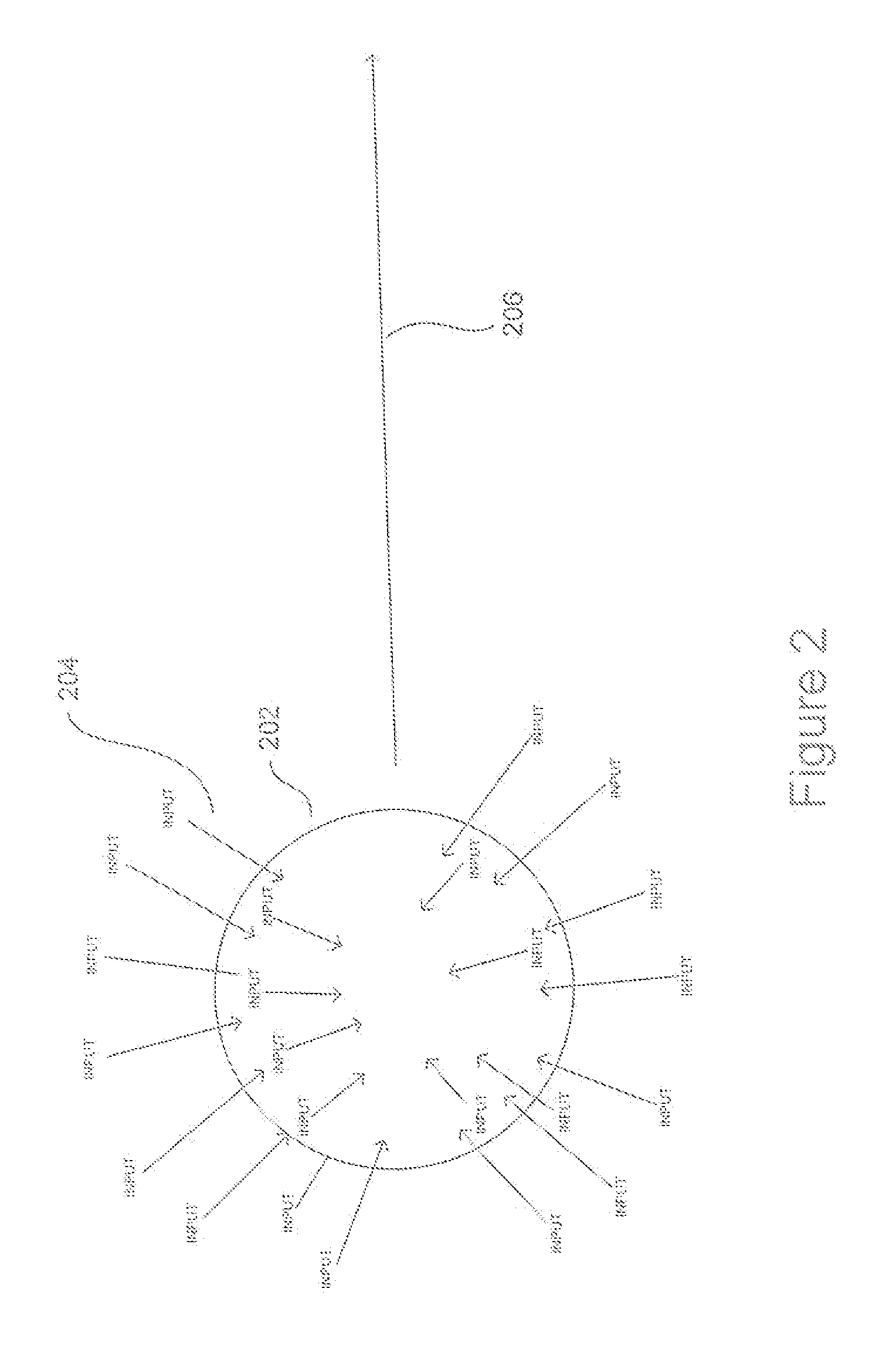

Adaptive neural network utilizing nanotechnology-based components

Methods and systems for modifying at least one synapse of a physical / electromechanical neural network. A physical / electromechanical neural network implemented as an adaptive neural network can be provided, which includes one or more neurons and one or more synapses thereof, wherein the neurons and synapses are formed from a plurality of nanoparticles disposed within a dielectric solution in association with one or more pre-synaptic electrodes and one or more post-synaptic electrodes and an applied electric field. At least one pulse can be generated from one or more of the neurons to one or more of the pre-synaptic electrodes of a succeeding neuron and one or more post-synaptic electrodes of one or more of the neurons of the physical / electromechanical neural network, thereby strengthening at least one nanoparticle of a plurality of nanoparticles disposed within the dielectric solution and at least one synapse thereof.

Owner:KNOWM TECH

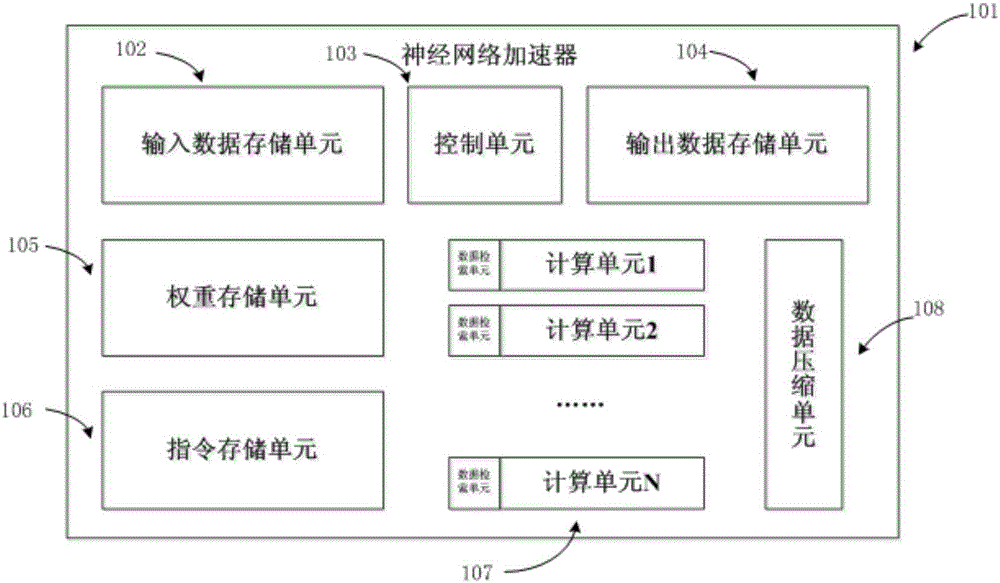

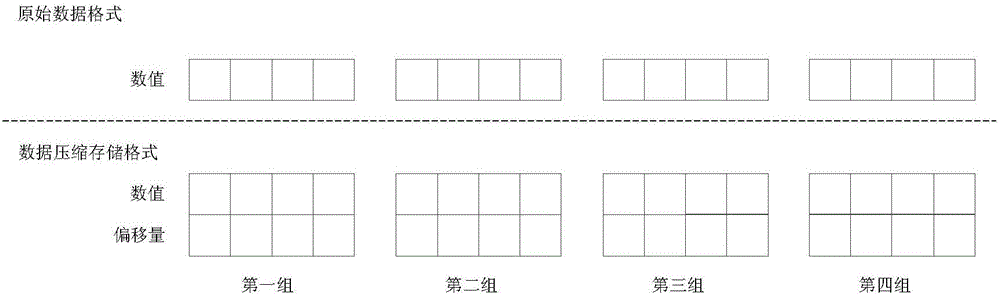

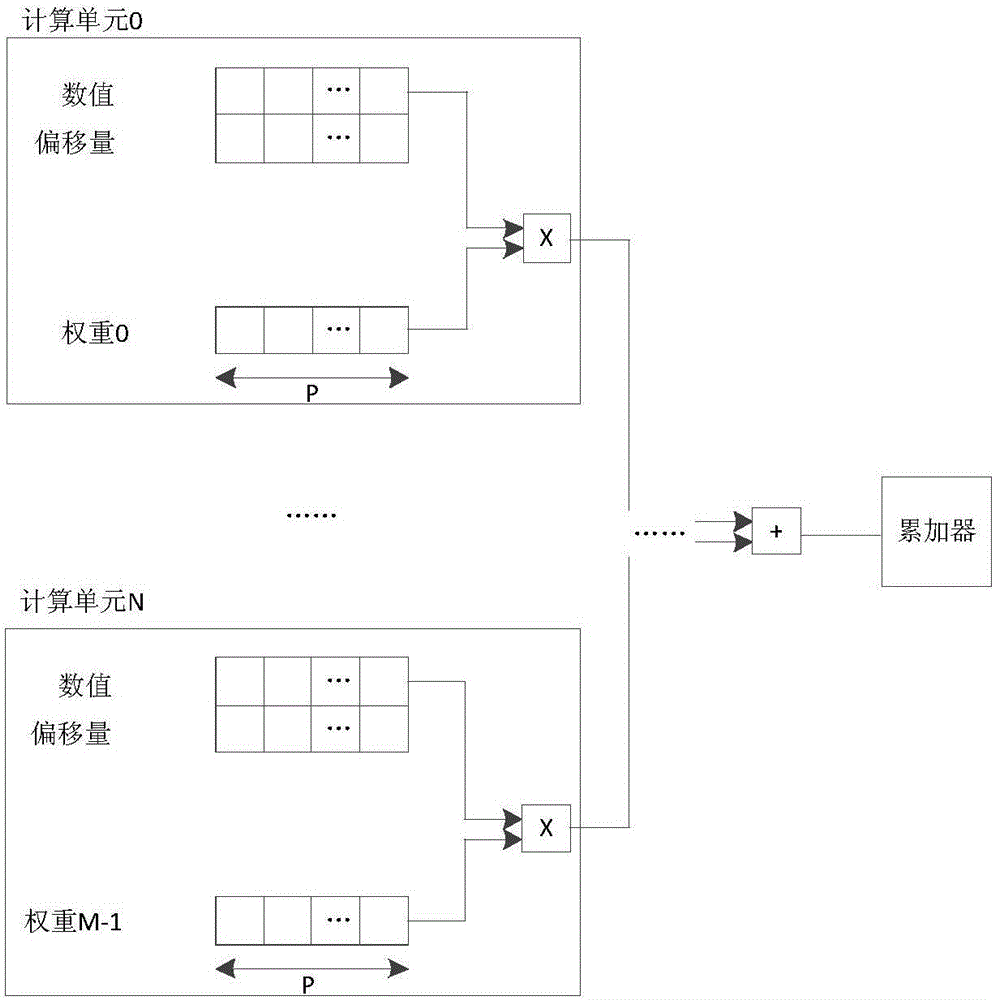

Neural network processor based on data compression, design method and chip

Active | CN106447034A | Increase computing speed | Improve computing efficiency | Physical realisation | Energy efficient computing | Operating instruction | Data compression

The invention provides a neural network processor based on data compression, a design method and a chip. The processor comprises at least one storage unit for storing operating instructions and the data participating in calculation, at least one storage unit controller for controlling the storage unit, at least one calculation unit for executing the calculations of a neural network, a control unit connected to the storage unit controllers and the calculation units, which acquires the instructions stored in the storage unit through the storage unit controllers and parses them to control the calculation units, and at least one data compression unit for compressing the data participating in calculation according to a data compression storage format. Each data compression unit is connected to the corresponding calculation unit. The occupancy of data resources in the neural network processor is reduced, the operating rate is increased, and energy efficiency is improved.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

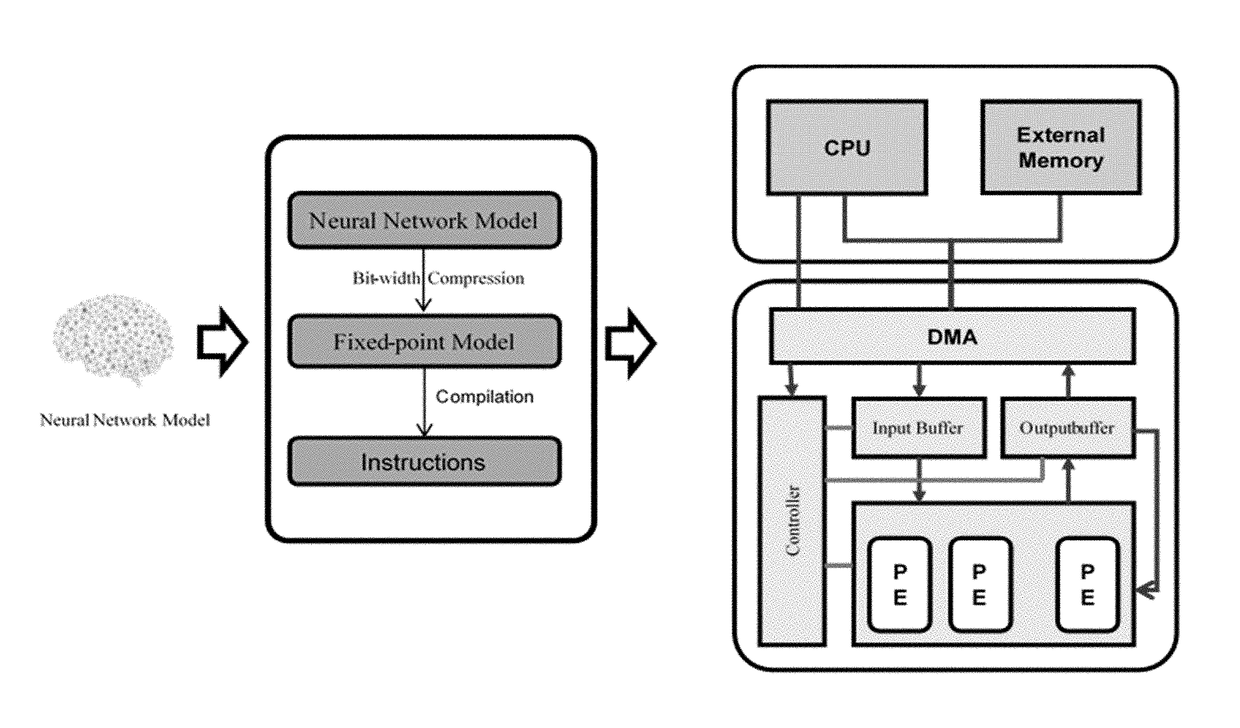

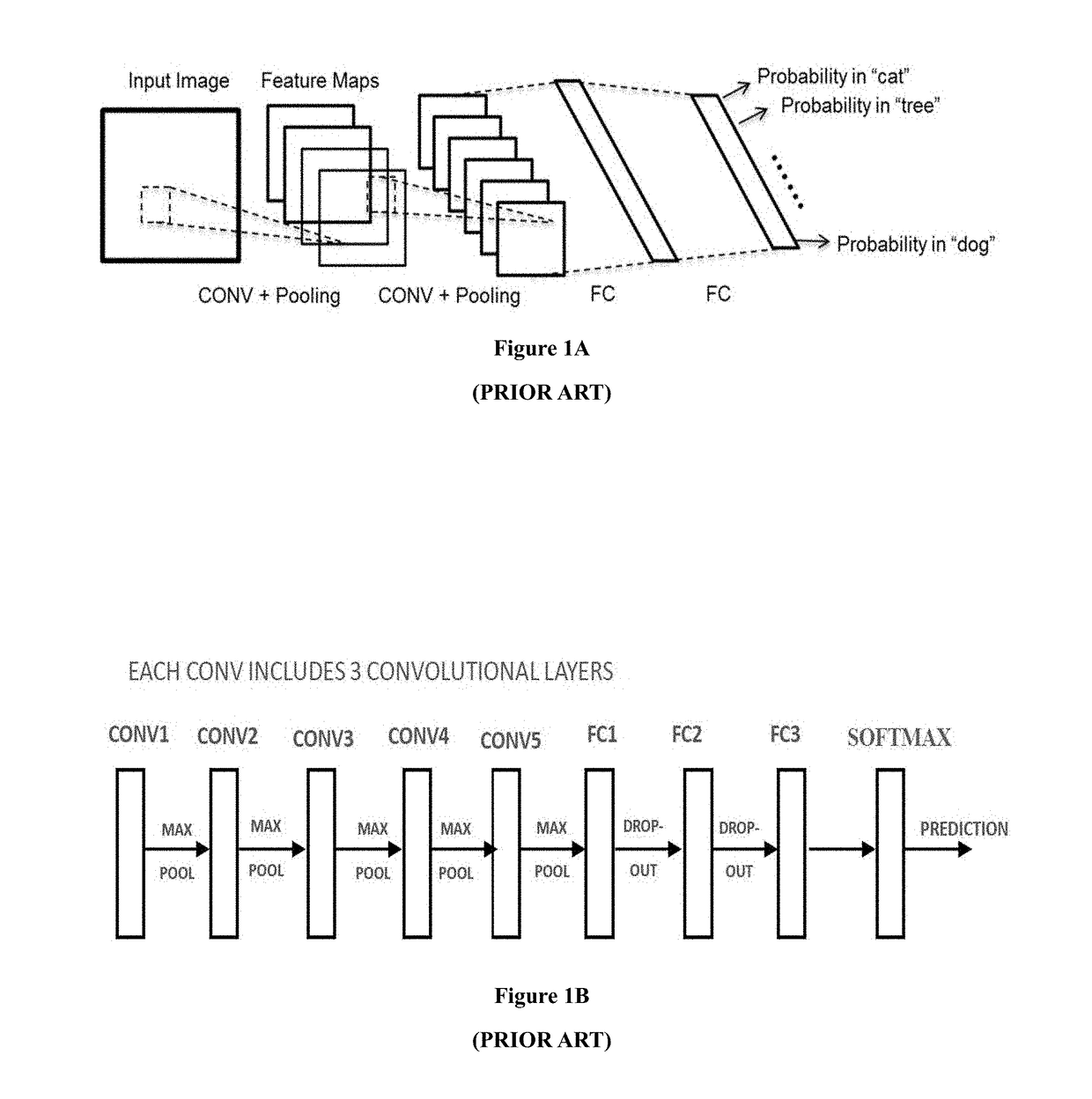

Method for optimizing an artificial neural network (ANN)

Active | US20180046894A1 | Digital data processing details | Speech analysis | Network model | Artificial neural network

The present invention relates to artificial neural networks, for example convolutional neural networks. In particular, it relates to how to implement and optimize a convolutional neural network on an embedded FPGA. Specifically, it proposes an overall design process of compressing, fixed-point quantizing and compiling the neural network model.

Owner:XILINX TECH BEIJING LTD

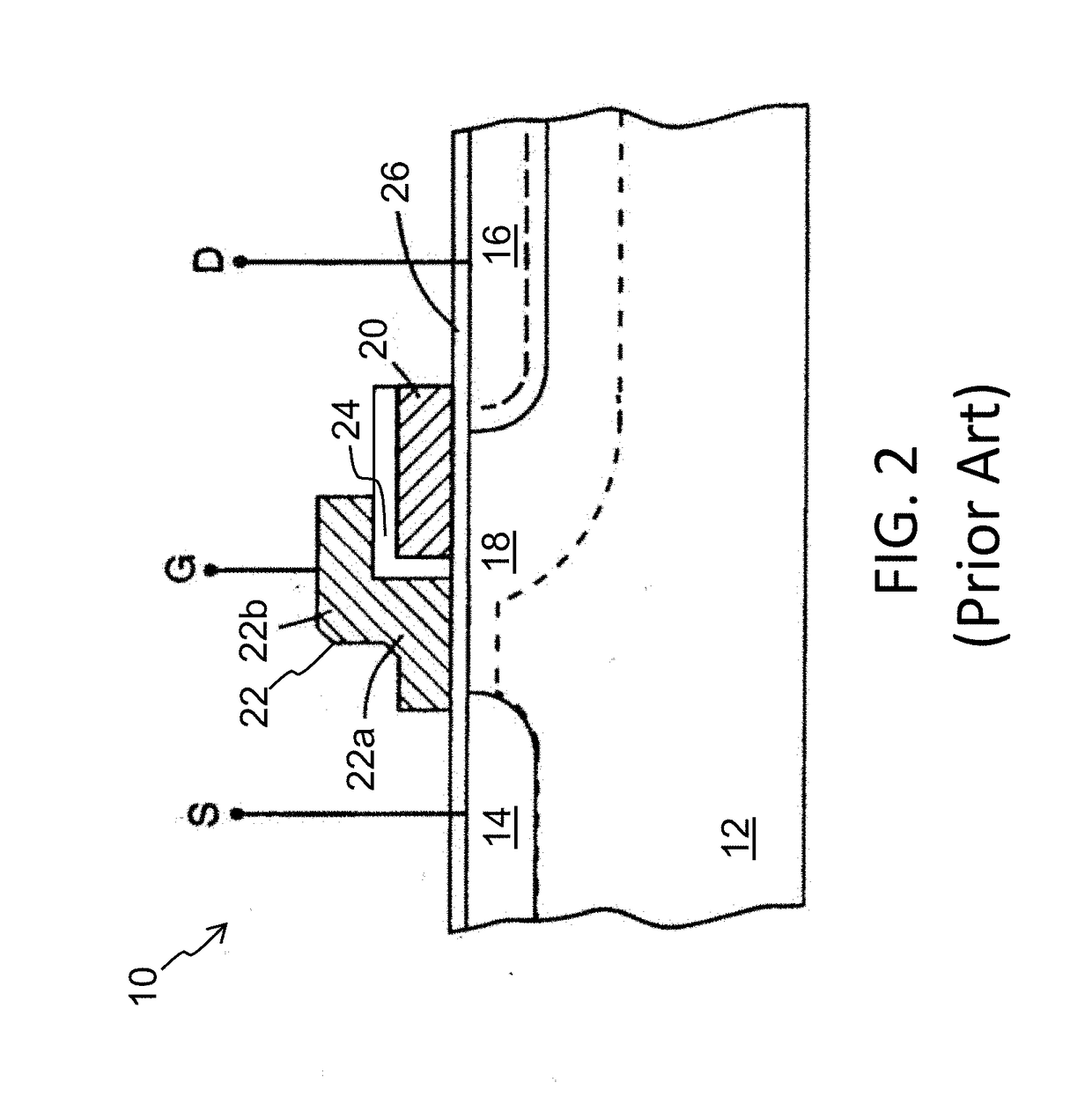

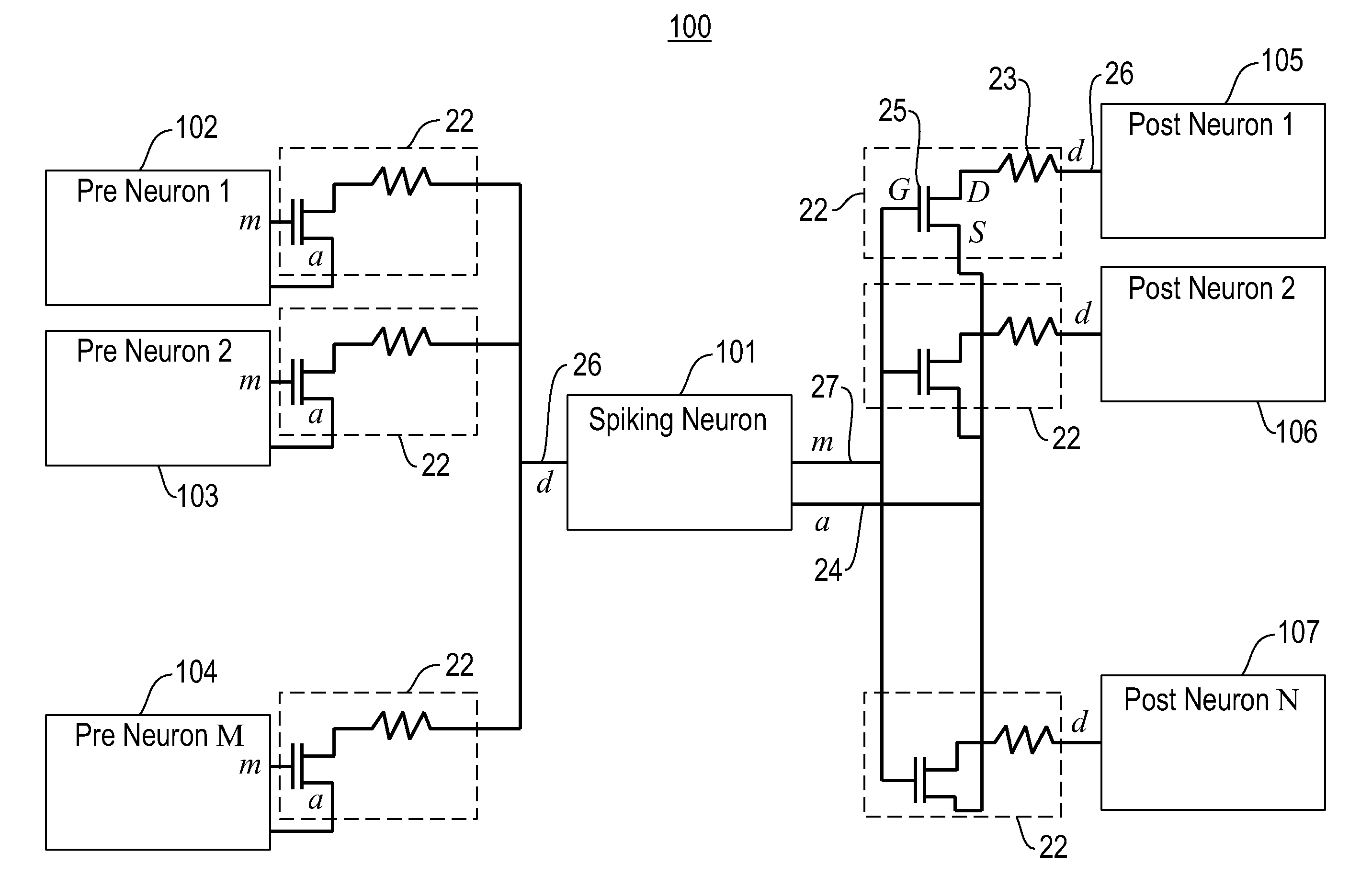

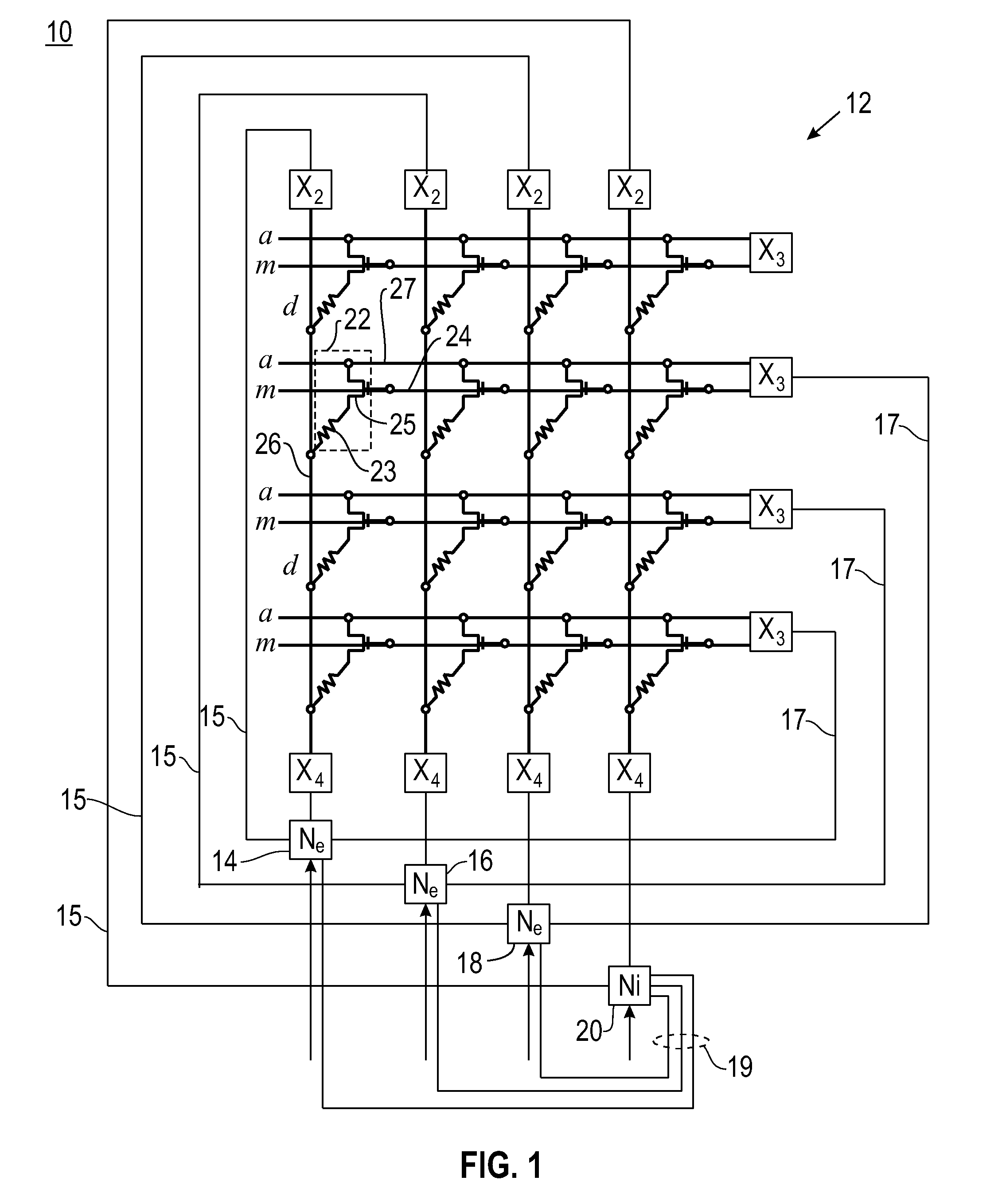

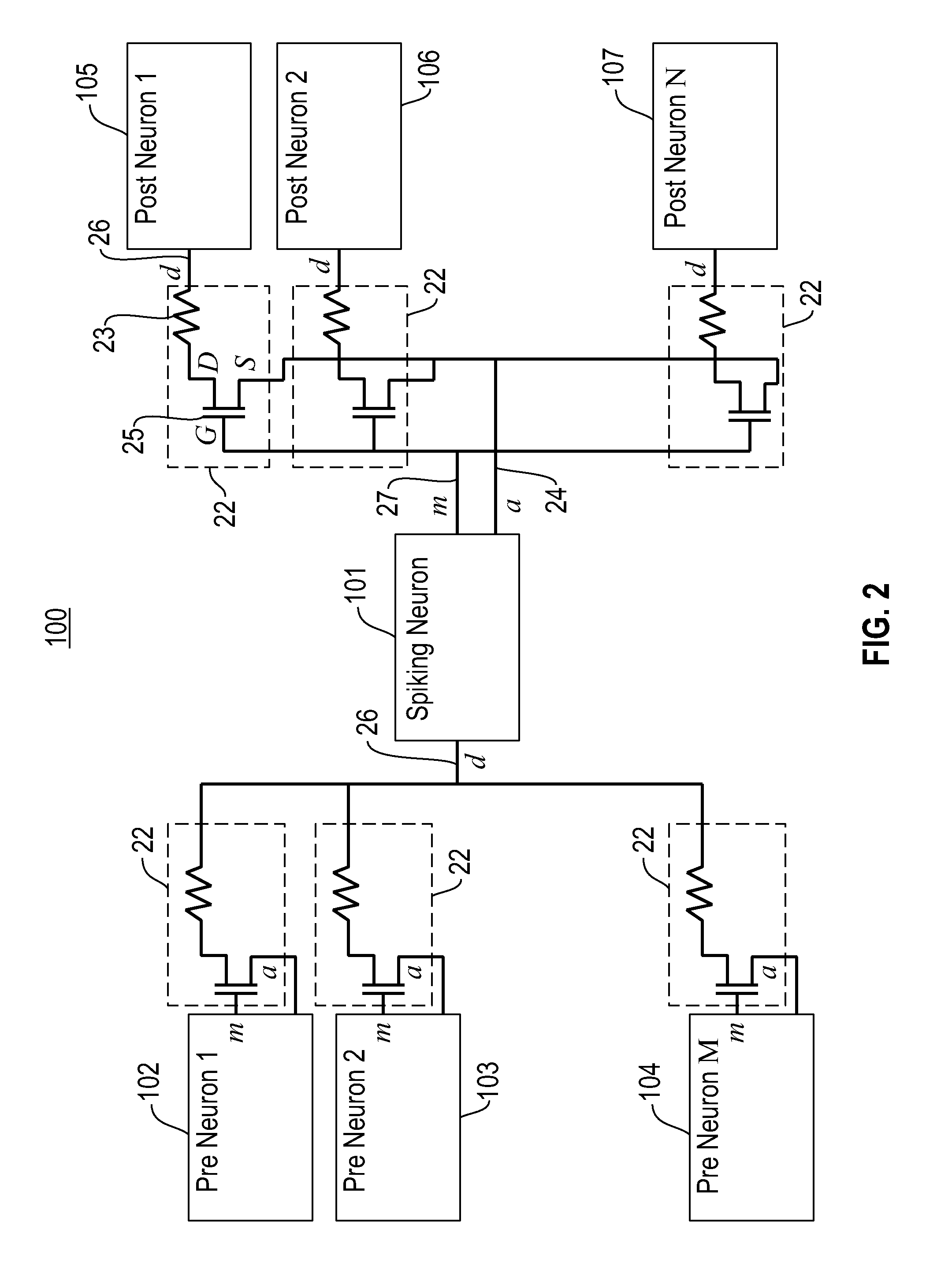

Producing spike-timing dependent plasticity in a neuromorphic network utilizing phase change synaptic devices

Active | US20120084241A1 | Digital computer details | Digital storage | Synaptic device | Spike-timing-dependent plasticity

Embodiments of the invention relate to a neuromorphic network for producing spike-timing dependent plasticity. The neuromorphic network includes a plurality of electronic neurons and an interconnect circuit coupled for interconnecting the plurality of electronic neurons. The interconnect circuit includes plural synaptic devices for interconnecting the electronic neurons via axon paths, dendrite paths and membrane paths. Each synaptic device includes a variable state resistor and a transistor device with a gate terminal, a source terminal and a drain terminal, wherein the drain terminal is connected in series with a first terminal of the variable state resistor. The source terminal of the transistor device is connected to an axon path, the gate terminal of the transistor device is connected to a membrane path and a second terminal of the variable state resistor is connected to a dendrite path, such that each synaptic device is coupled between a first axon path and a first dendrite path, and between a first membrane path and said first dendrite path.

Owner:IBM CORP

Apparatus and method for realizing accelerator of sparse convolutional neural network

Inactive | CN107239824A | Improve computing power | Reduce response latency | Digital data processing details | Neural architectures | Algorithm | Broadband

The invention provides an apparatus and method for realizing an accelerator of a sparse convolutional neural network. The apparatus includes a convolution and pooling unit, a fully connected unit and a control unit. The method includes the following steps: on the basis of control information, reading convolution parameter information, input data and intermediate computing data, and reading position information of the fully connected layer weight matrix; in accordance with the convolution parameter information, performing convolution and pooling on the input data for a first number of iterations; then, on the basis of the position information of the fully connected layer weight matrix, performing the fully connected computation for a second number of iterations. Each input data item is divided into a plurality of sub-blocks, and the convolution and pooling unit and the fully connected unit each operate on the plurality of sub-blocks in parallel. The apparatus uses a dedicated circuit, supports sparse convolutional neural networks with fully connected layers, uses a parallel ping-pong buffer design and a pipeline design, effectively balances I/O bandwidth and computing efficiency, and achieves a better performance-to-power ratio.

Owner:XILINX INC

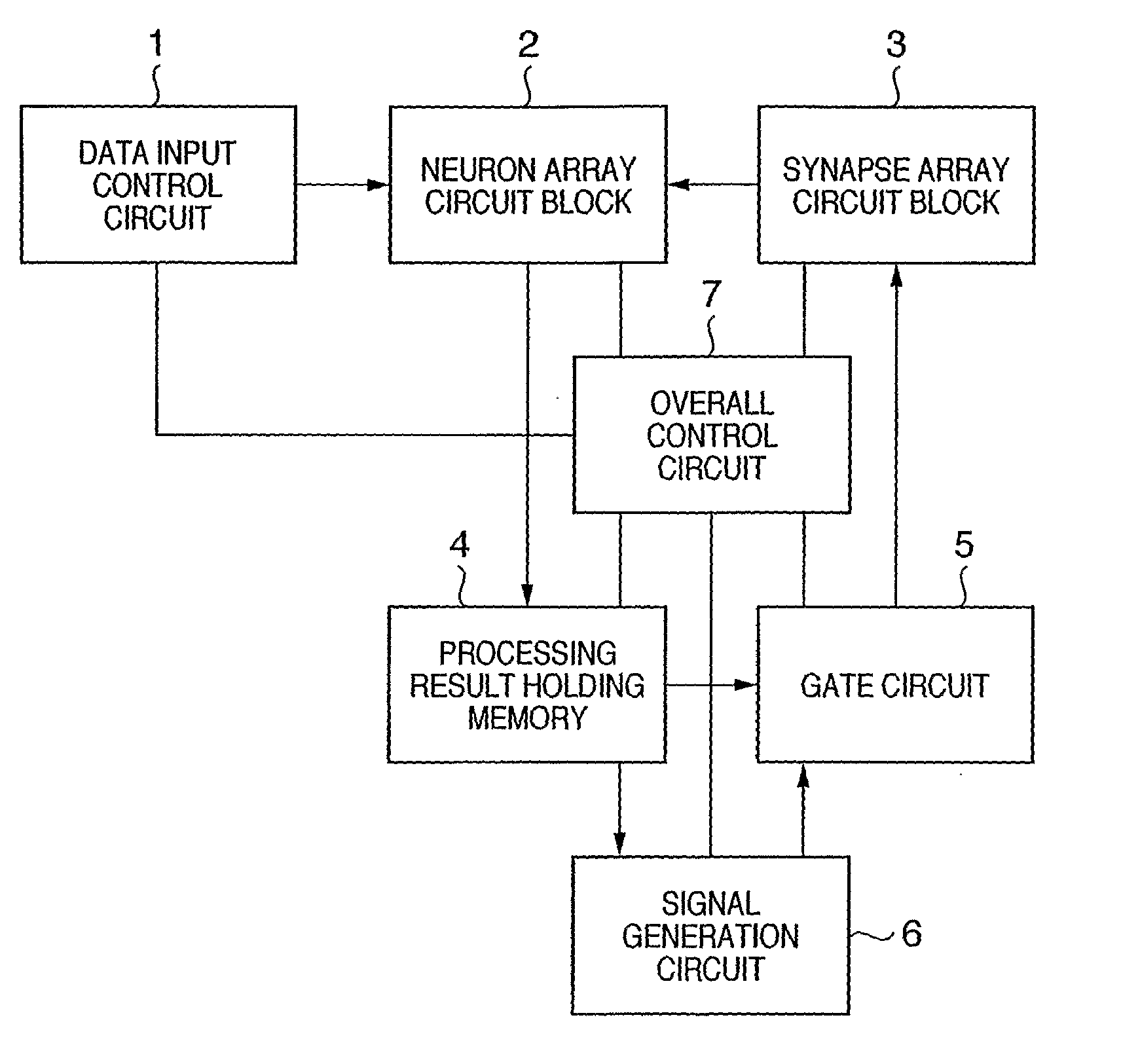

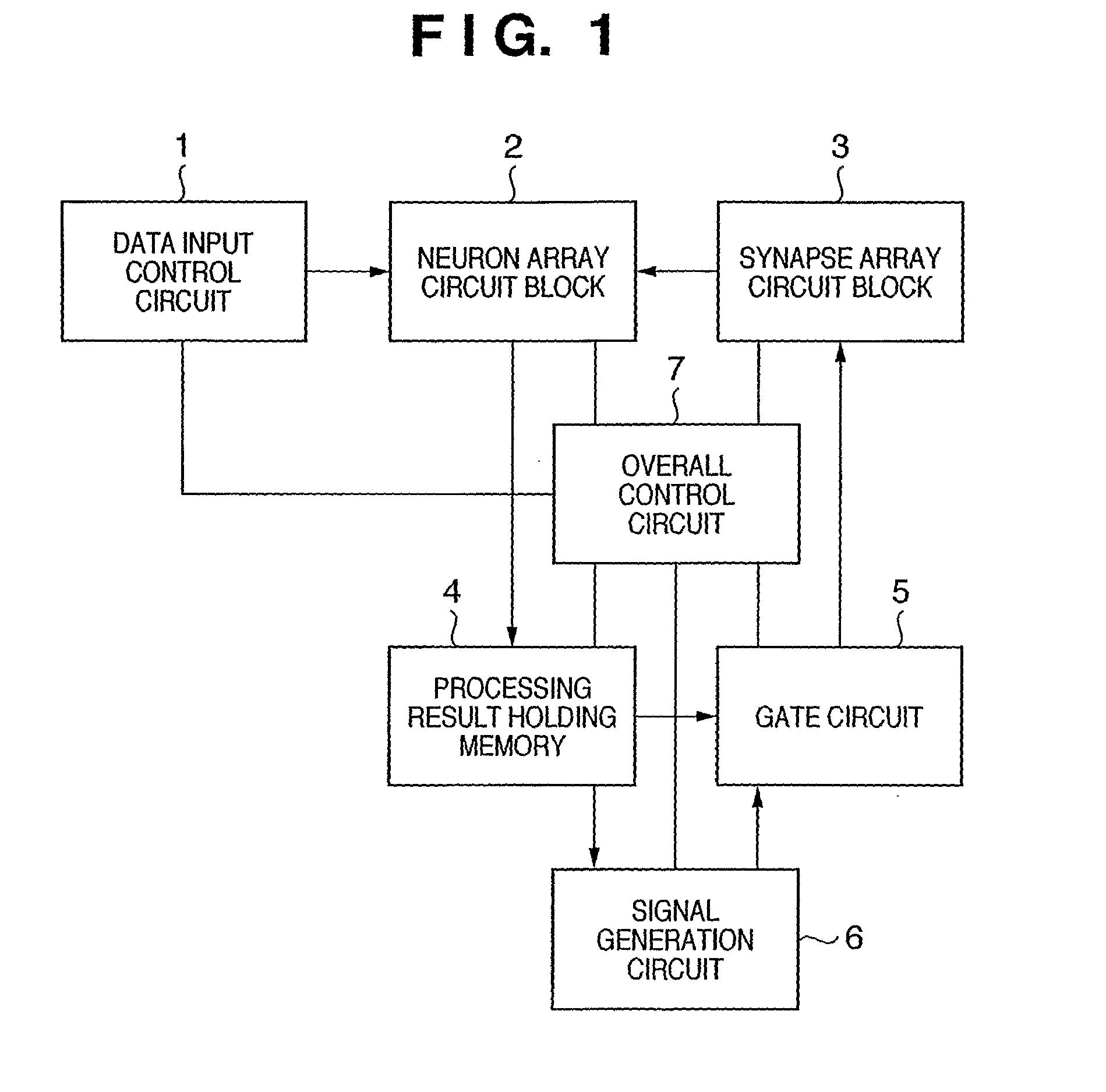

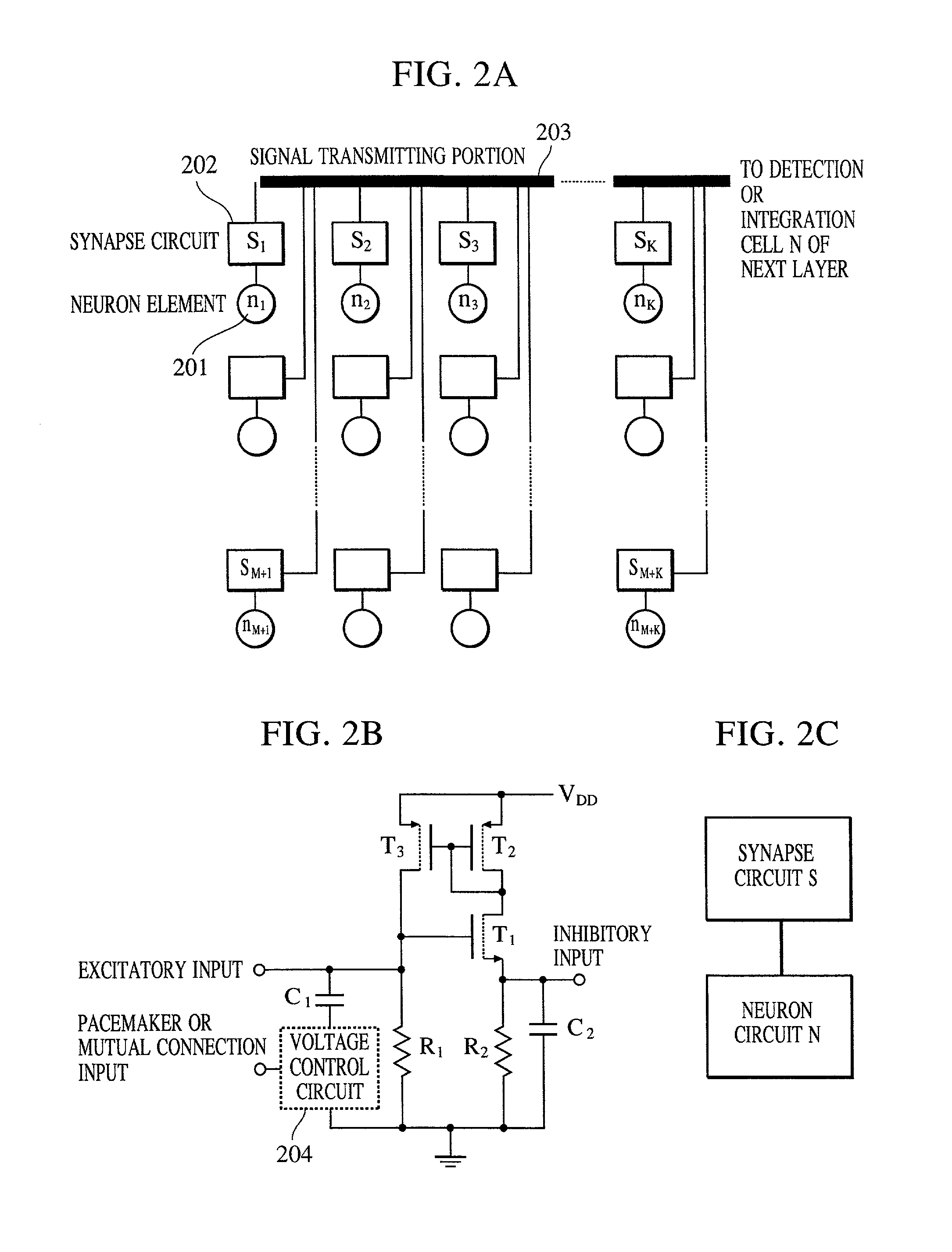

Parallel Pulse Signal Processing Apparatus, Pattern Recognition Apparatus, And Image Input Apparatus

Active | US20070208678A1 | Reduce power consumption | Reduce scale | Digital computer details | Character and pattern recognition | Signal on | Signal processing

In a parallel pulse signal processing apparatus including a plurality of pulse output arithmetic elements (2), a plurality of connection elements (3) which parallelly connect predetermined arithmetic elements, and a gate circuit (5) which selectively passes pulse signals from the plurality of connection elements, the arithmetic element inputs a plurality of time series pulse signals, executes predetermined modulation processing on the basis of the plurality of time series pulse signals which are input, and outputs a pulse signal on the basis of a result of modulation processing, wherein the gate circuit selectively passes, of the signals from the plurality of connection elements, a finite number of pulse signals corresponding to predetermined upper output levels.

Owner:CANON KK

Neuromorphic Circuit

Embodiments of the present invention are directed to neuromorphic circuits containing two or more internal neuron computational units. Each internal neuron computational unit includes a synchronization-signal input for receiving a synchronizing signal, at least one input for receiving input signals, and at least one output for transmitting an output signal. A memristive synapse connects an output signal line carrying output signals from a first set of one or more internal neurons to an input signal line that carries signals to a second set of one or more internal neurons.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Joint movement apparatus

Systems, methods and devices for restoring or enhancing one or more motor functions of a patient are disclosed. The system comprises a biological interface apparatus and a joint movement device such as an exoskeleton device or FES device. The biological interface apparatus includes a sensor that detects the multicellular signals and a processing unit for producing a control signal based on the multicellular signals. Data from the joint movement device is transmitted to the processing unit for determining a value of a configuration parameter of the system. Also disclosed is a joint movement device including a flexible structure for applying force to one or more patient joints, and controlled cables that produce the forces required.

Owner:CYBERKINETICS NEUROTECH SYST

Weight-shifting mechanism for convolutional neural networks

A processor includes a processor core and a calculation circuit. The processor core includes logic to determine a set of weights for use in a convolutional neural network (CNN) calculation and to scale up the weights using a scale value. The calculation circuit includes logic to receive the scale value, the set of weights, and a set of input values, wherein each input value and its associated weight are of the same fixed size. The calculation circuit also includes logic to determine results from CNN calculations based upon the set of weights applied to the set of input values, scale down the results using the scale value, truncate the scaled-down results to the fixed size, and communicatively couple the truncated results to an output for a layer of the CNN.

Owner:INTEL CORP
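The scale-up, compute, scale-down, truncate sequence in the weight-shifting abstract above can be illustrated with a small fixed-point sketch. Everything specific here is an assumption for illustration: the power-of-two scale (`scale_bits`), the 8-bit width, the use of a single dot product to stand in for a CNN calculation, and the reading of "truncate" as keeping the low bits in two's complement.

```python
def truncate_signed(value, width):
    """Keep the low `width` bits of value, read as a two's-complement integer."""
    mask = (1 << width) - 1
    low = value & mask
    return low - (1 << width) if low >= 1 << (width - 1) else low

def weight_shifted_dot(weights, inputs, scale_bits=4, width=8):
    """Dot product with weights scaled up before, and results scaled down after."""
    scale = 1 << scale_bits
    # Processor-core side (assumed): scale fractional weights up to integers.
    scaled_weights = [round(w * scale) for w in weights]
    # Calculation-circuit side: accumulate integer products at full precision.
    acc = sum(w * x for w, x in zip(scaled_weights, inputs))
    # Scale the result back down by the same scale value, then truncate.
    return truncate_signed(acc >> scale_bits, width)

# Weights 0.25, -0.5, 0.75 become 4, -8, 12 at a scale of 16.
result = weight_shifted_dot([0.25, -0.5, 0.75], [10, 20, 30])
```

Scaling up lets small fractional weights use more of the fixed-size integer range before the multiply, so less precision is lost than if the weights were rounded to integers directly.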

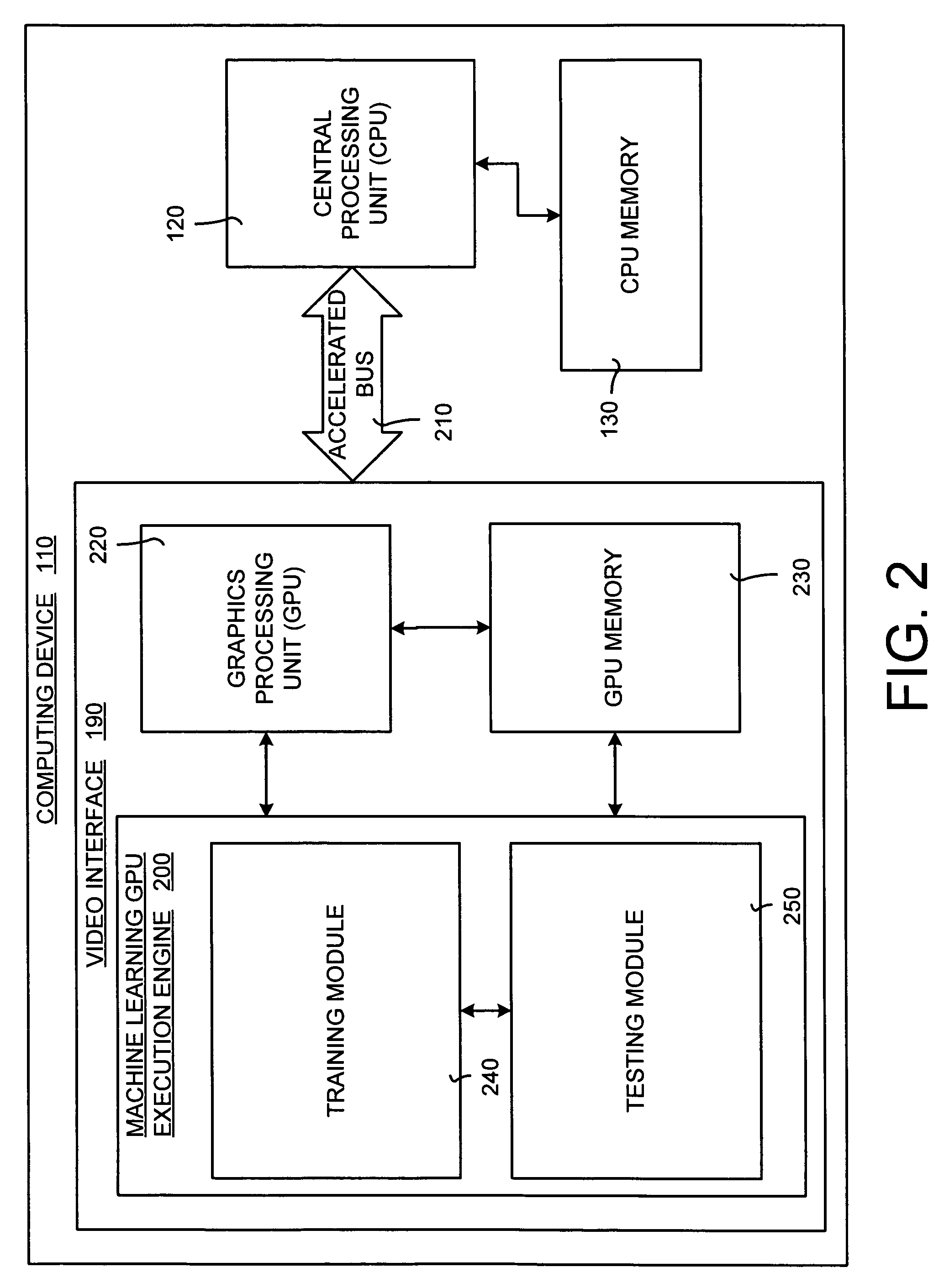

System and method for accelerating and optimizing the processing of machine learning techniques using a graphics processing unit

Active | US7219085B2 | Alleviates computational limitation | More computation | Character and pattern recognition | Knowledge representation | Graphics | Theoretical computer science

A system and method for processing machine learning techniques (such as neural networks) and other non-graphics applications using a graphics processing unit (GPU) to accelerate and optimize the processing. The system and method transfer an architecture that can be used for a wide variety of machine learning techniques from the CPU to the GPU. The transfer of processing to the GPU is accomplished using several novel techniques that overcome the limitations of, and work well within, the framework of the GPU architecture. With these limitations overcome, machine learning techniques are particularly well suited for processing on the GPU because the GPU is typically much more powerful than the typical CPU. Moreover, similar to graphics processing, processing of machine learning techniques involves problems with non-trivial solutions and large amounts of data.

Owner:MICROSOFT TECH LICENSING LLC

Apparatus and method for detecting or recognizing pattern by employing a plurality of feature detecting elements

Inactive | US7054850B2 | High-precision detection | Improve process capability | Digital computer details | Character and pattern recognition | Synapse | Pattern detection

Owner:CANON KK

Training of a physical neural network

Owner:KNOWM TECH

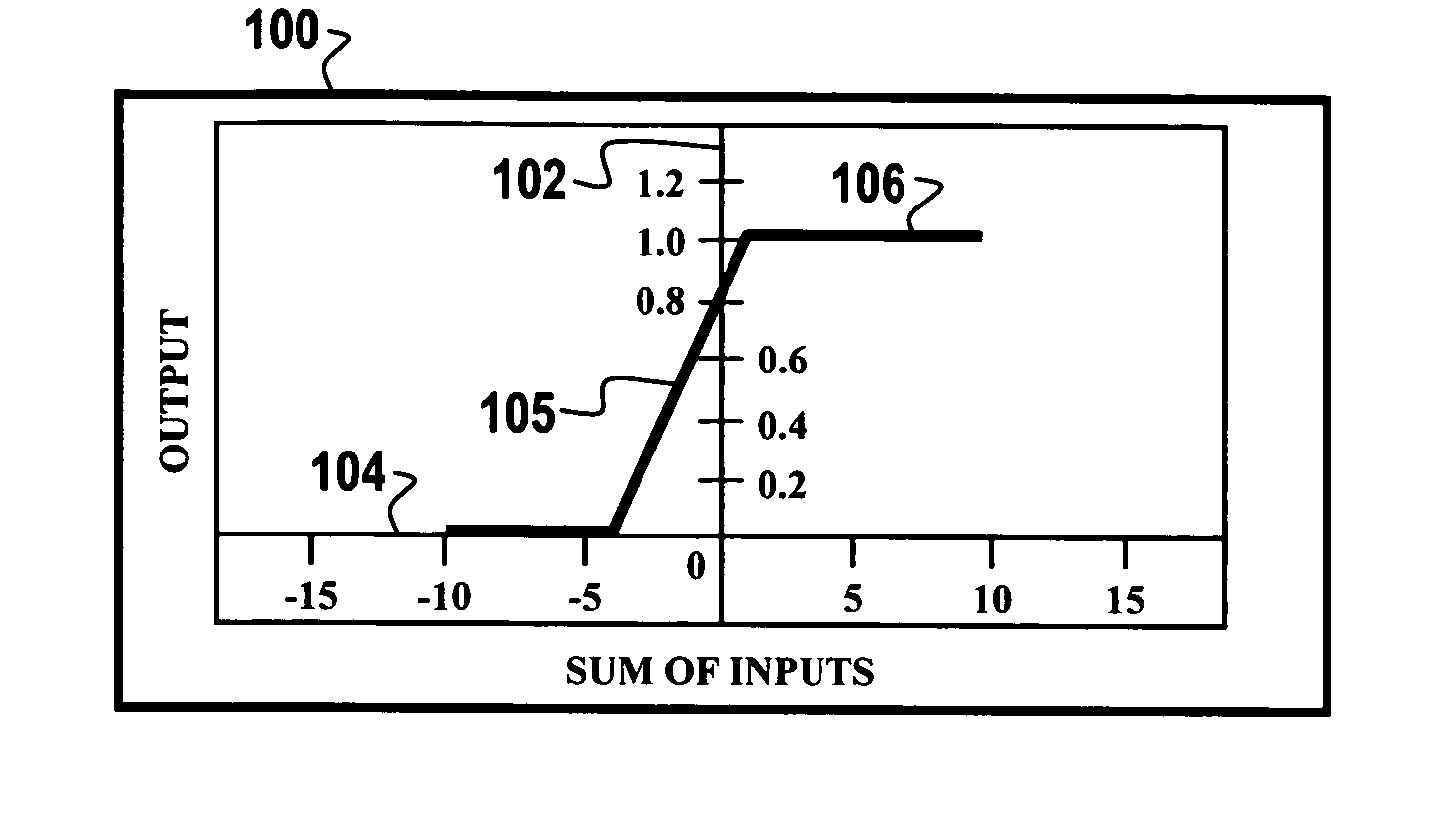

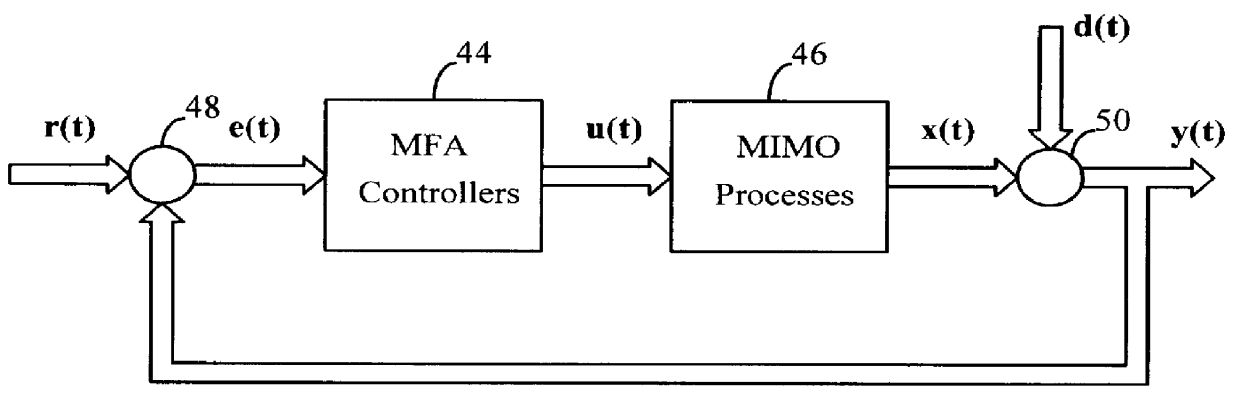

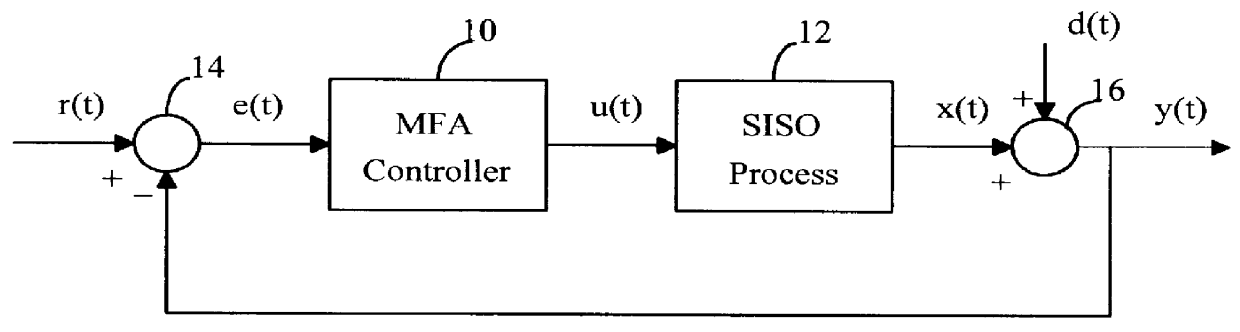

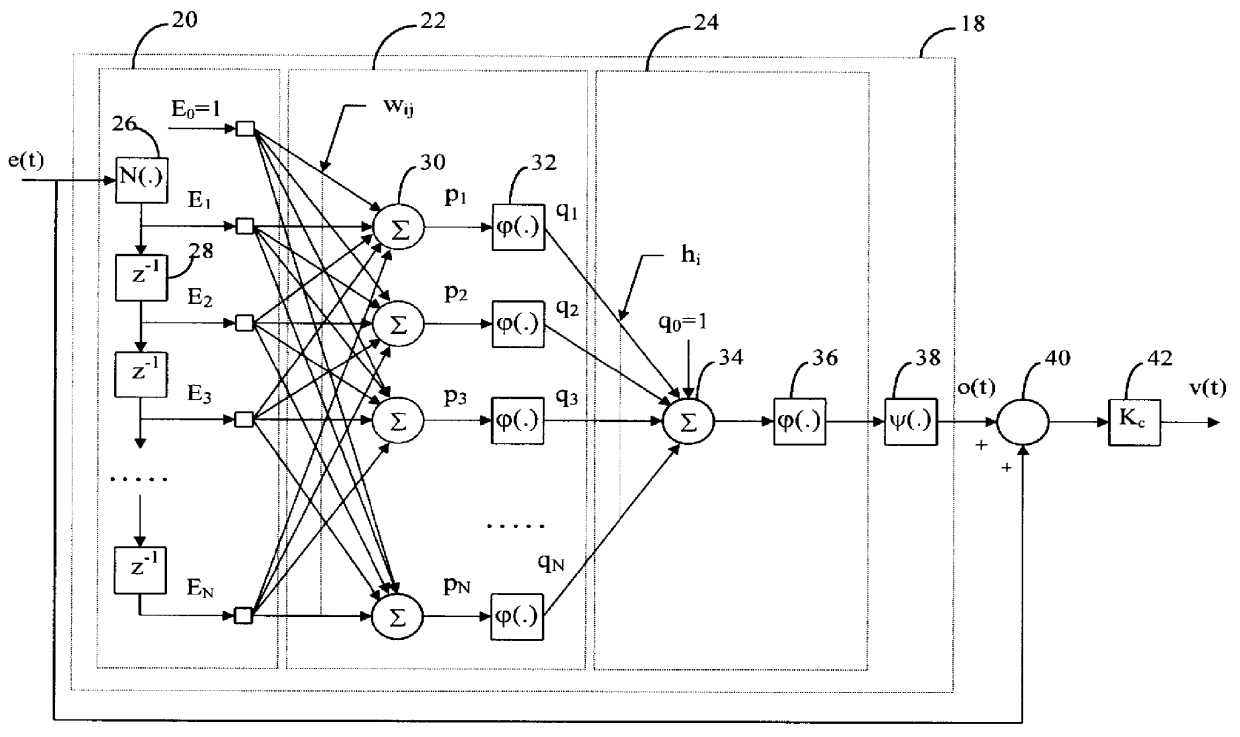

Model-free adaptive process control

Inactive | US6055524A | Long response delay | Overcome limitations | Digital computer details | Digital data | Data mining | Self adaptive

A model-free adaptive controller is disclosed, which uses a dynamic artificial neural network with a learning algorithm to control any single-variable or multivariable open-loop stable, controllable, and consistently direct-acting or reverse-acting industrial process without requiring any manual tuning, quantitative knowledge of the process, or process identifiers. The need for process knowledge is avoided by substituting 1 for the actual sensitivity function $\partial y(t) / \partial u(t)$ of the process.

Owner:GEN CYBERNATION GROUP
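One way to read the substitution described in the model-free adaptive control abstract above, offered as a hedged sketch: assume the controller's network weights $w$ are updated by gradient descent on a squared-error objective, with setpoint $r(t)$, process output $y(t)$, controller output $u(t)$, and learning rate $\eta$. The objective, the symbols $r$, $w$, $\eta$, and the update rule are illustrative assumptions; the abstract only states that the sensitivity function is replaced by 1.

```latex
\begin{align}
E(t) &= \tfrac{1}{2}\, e(t)^2, \qquad e(t) = r(t) - y(t) \\
\frac{\partial E(t)}{\partial w}
  &= -\, e(t)\, \frac{\partial y(t)}{\partial u(t)}\, \frac{\partial u(t)}{\partial w} \\
\Delta w &= -\eta\, \frac{\partial E(t)}{\partial w}
  \;\approx\; \eta\, e(t)\, \frac{\partial u(t)}{\partial w}
  \qquad \text{with } \frac{\partial y(t)}{\partial u(t)} \text{ replaced by } 1
\end{align}
```

Under this reading, only the magnitude of the process sensitivity is discarded, so its sign must stay constant for the update direction to remain valid, which plausibly relates to the abstract's requirement that the process be consistently direct-acting or reverse-acting.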

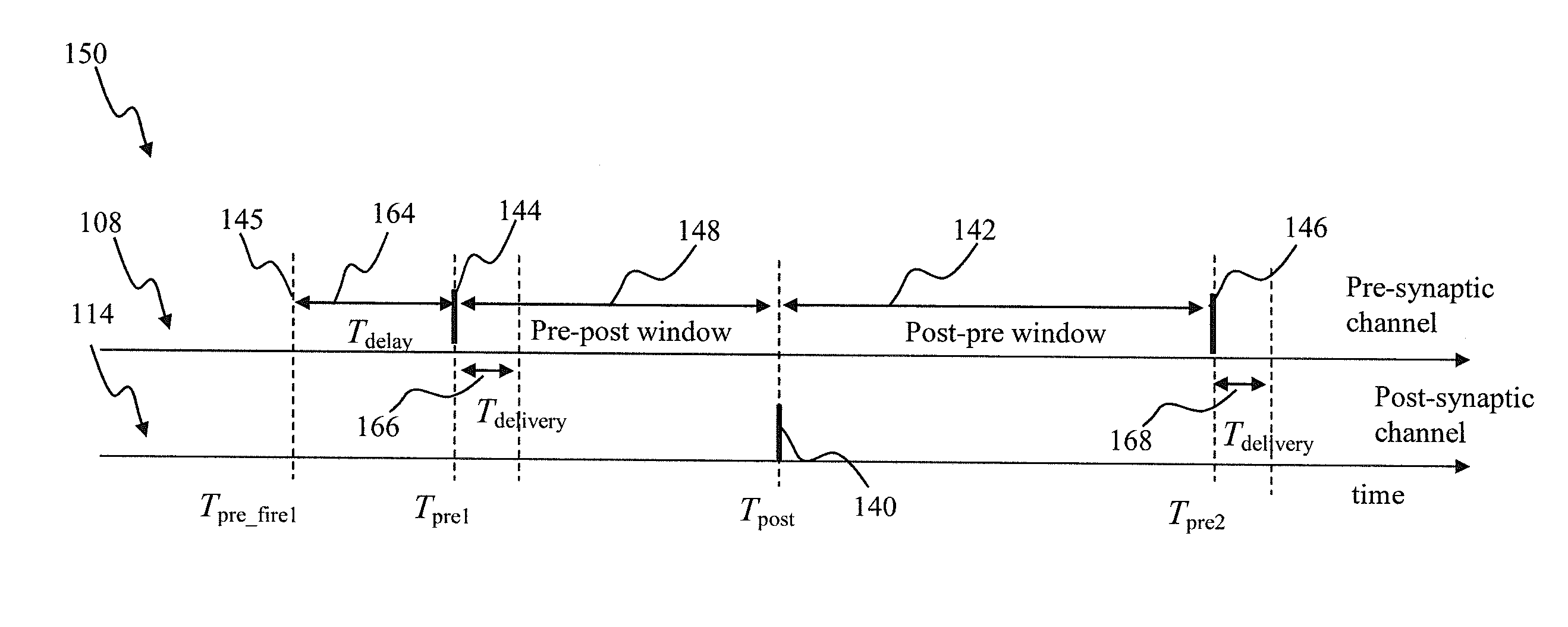

Apparatus and method for partial evaluation of synaptic updates based on system events

Apparatus and methods for partial evaluation of synaptic updates in neural networks. In one embodiment, a pre-synaptic unit is connected to several post-synaptic units via communication channels. Information related to a plurality of post-synaptic pulses generated by the post-synaptic units is stored by the network in response to a system event. Synaptic channel updates are performed by the network using the time intervals between a pre-synaptic pulse, which is generated prior to the system event, and at least a portion of the plurality of post-synaptic pulses. The system event enables removal of the information related to that portion of the post-synaptic pulses from the storage device. A shared memory block within the storage device is used to store data related to post-synaptic pulses generated by different post-synaptic nodes. This configuration enables memory use optimization for post-synaptic units with different firing rates.

Owner:QUALCOMM INC

© 2025 PatSnap. All rights reserved.