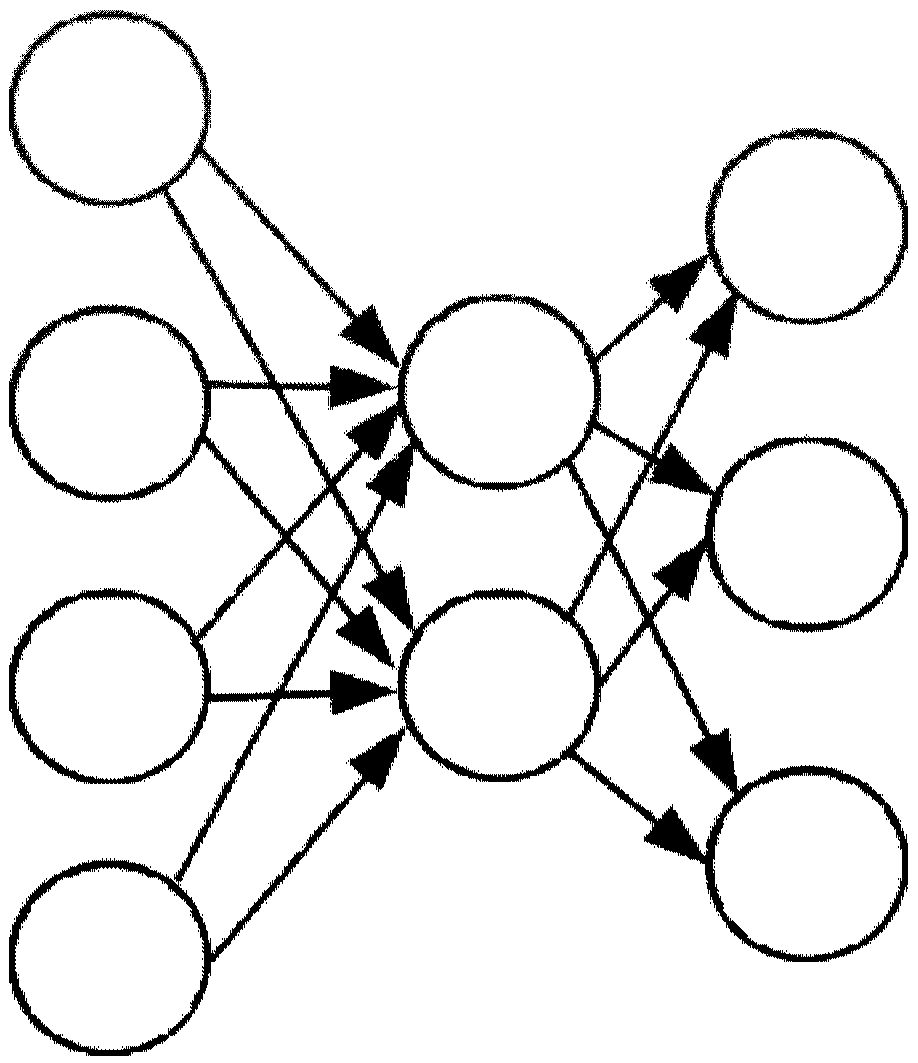

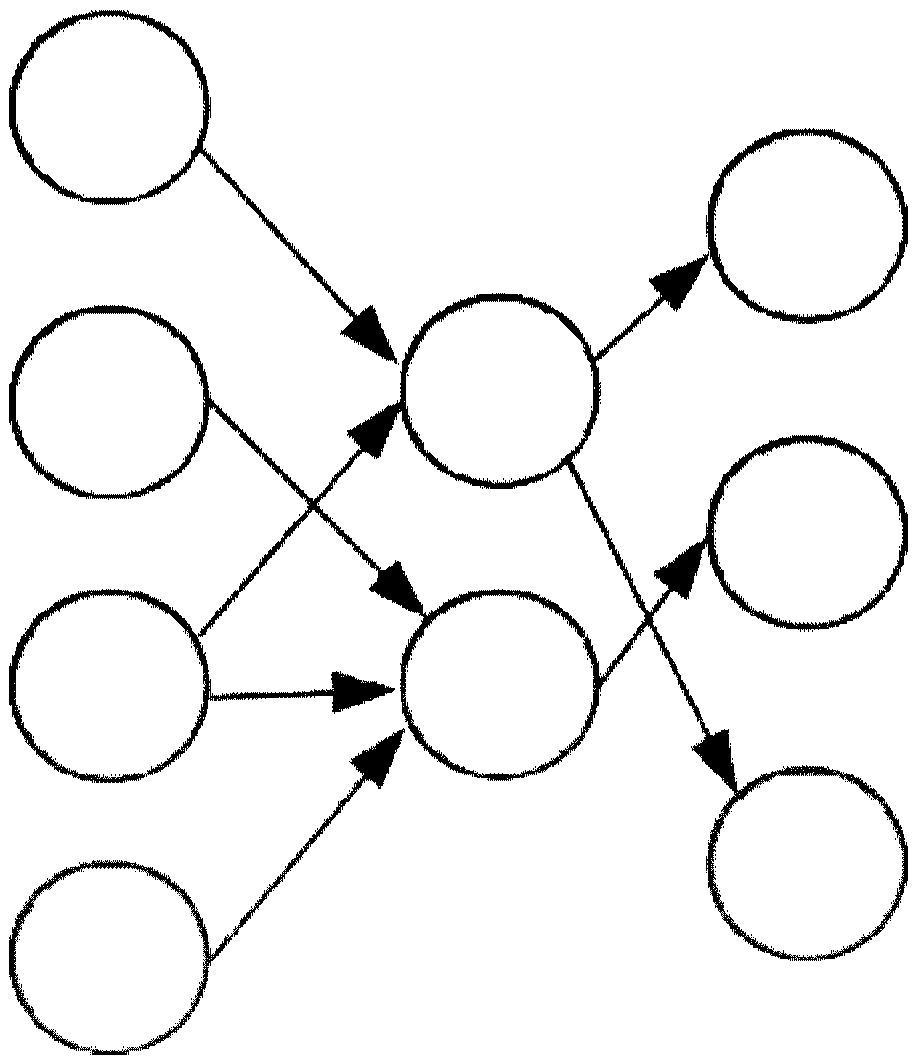

Artificial neural network calculating device and method for sparse connection

An artificial neural network computing device technology, applied in neural learning methods, biological neural network models, and computation. It addresses problems such as performance bottlenecks and high power consumption overhead, with the stated effects of improving support, avoiding performance bottlenecks, and reducing memory access bandwidth.

Embodiment Construction

[0050] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

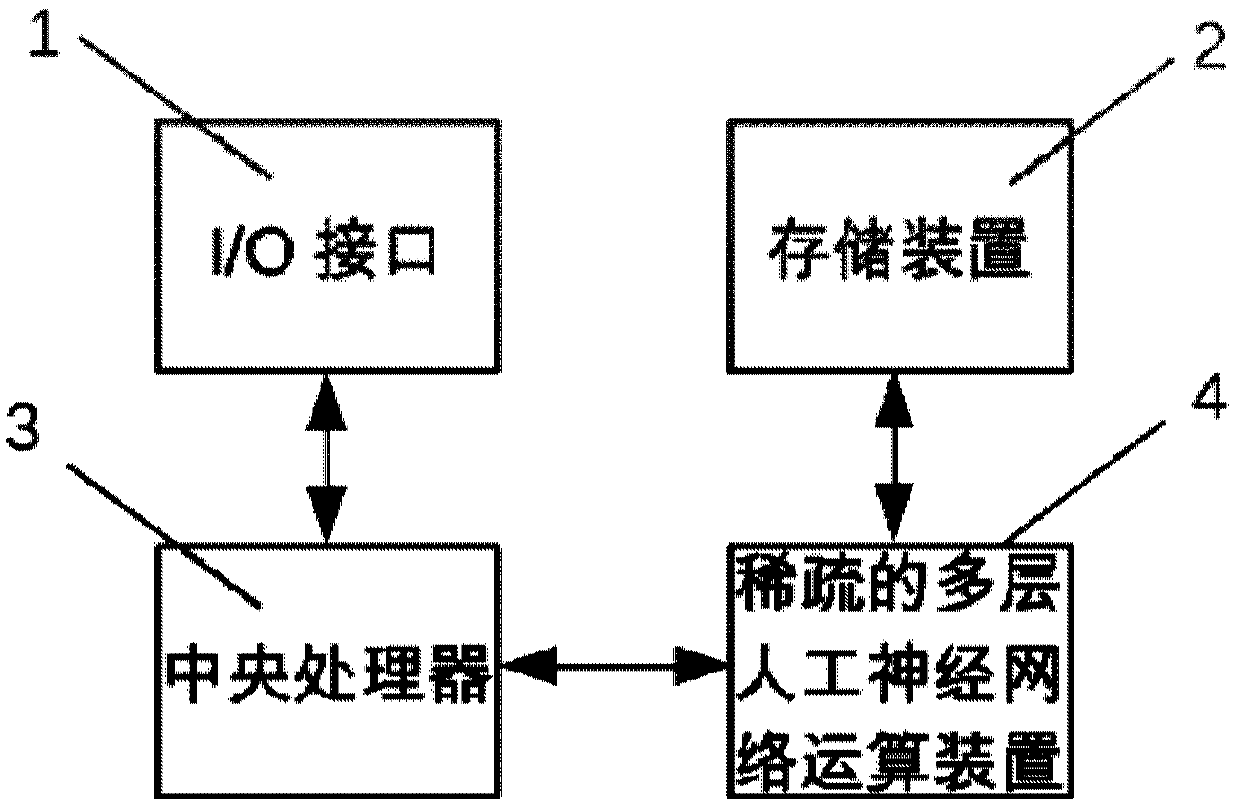

[0051] The invention discloses an artificial neural network computing device for sparse connections, comprising:

[0052] A mapping unit, configured to convert the input data into a storage format in which input neurons and weights correspond one-to-one, and to store them in a storage device or a cache;

[0053] A storage device, configured to store data and instructions;

[0054] An operation unit, configured to perform corresponding operations on the data according to instructions stored in the storage device; the operation unit mainly performs a three-step operation: the first step multiplies the input neurons by the weight data; the second step performs an adder tree operation, which is used to add the weighted output neuron...
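The mapping unit and the first two operation steps described above can be illustrated with a minimal Python sketch. This is not the patented hardware design: the boolean `connected` mask used to represent sparse connections, and the names `map_sparse`, `adder_tree`, and `weighted_sum`, are assumptions made for illustration only. The third operation step is cut off in the text above, so it is not shown.

```python
from typing import List, Sequence, Tuple


def map_sparse(inputs: Sequence[float],
               weights: Sequence[float],
               connected: Sequence[bool]) -> List[Tuple[float, float]]:
    """Hypothetical mapping step: keep only connected (input neuron, weight)
    pairs, so that each stored input neuron has exactly one stored weight."""
    return [(x, w) for x, w, c in zip(inputs, weights, connected) if c]


def adder_tree(values: Sequence[float]) -> float:
    """Sum values by pairwise reduction, level by level, like an adder tree."""
    level = list(values)
    if not level:
        return 0.0
    while len(level) > 1:
        # Add neighbouring pairs; an unpaired last element passes through.
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])
        level = nxt
    return level[0]


def weighted_sum(pairs: Sequence[Tuple[float, float]]) -> float:
    """Step 1: multiply each input neuron by its weight.
    Step 2: reduce the products with the adder tree."""
    products = [x * w for x, w in pairs]
    return adder_tree(products)


# Assumed example: four input neurons, one of which is not connected.
pairs = map_sparse([1.0, 2.0, 3.0, 4.0],
                   [0.5, 0.0, 2.0, 1.0],
                   [True, False, True, True])
result = weighted_sum(pairs)
```

Because the unconnected pair is dropped before any arithmetic, only the connected inputs and weights need to be fetched and multiplied, which is consistent with the stated goal of reducing memory access bandwidth.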
