Device and method for executing batch normalization operation

The device is built from computing modules and computing units and is applied in the field of artificial neural networks. It addresses the performance bottleneck caused by limited off-chip bandwidth: by reducing memory access bandwidth it avoids that bottleneck, and it handles both the serial and the parallel operations involved in normalization.

Embodiment Construction

[0017] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

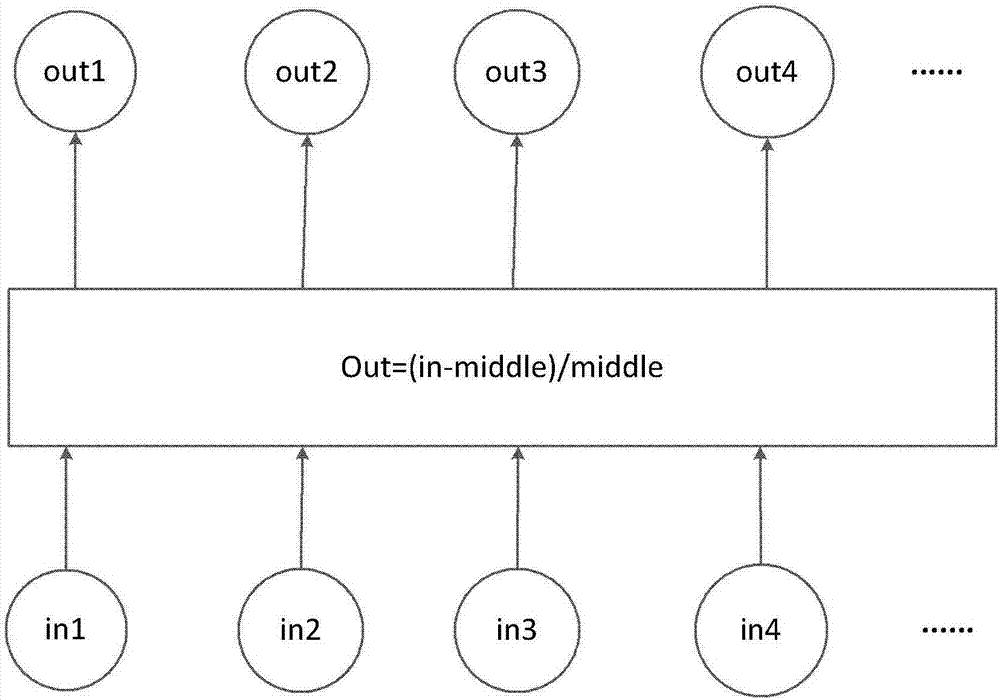

[0018] The batch normalization operation includes a forward part and a reverse part. Both parts are needed while the artificial neural network is being trained, whereas only the forward part is performed when the trained network is used. When the network is used, the parameters obtained during training are applied, so data such as the mean and variance in the batch normalization operation do not need to be recalculated.
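The difference between the forward operation during training and during use can be illustrated with a minimal software sketch. It is not the device described here; it only assumes the commonly used formula y = gamma * (x - mean) / sqrt(var + eps) + beta. The function names, the `EPS` value, and the choice to store the mean and variance of a single training batch are assumptions made for illustration.

```python
import math

EPS = 1e-5  # small constant for numerical stability (assumed value)


def batch_stats(batch):
    """Return the mean and (biased) variance of a list of scalars."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((x - mean) ** 2 for x in batch) / n
    return mean, var


def normalize(batch, mean, var, gamma=1.0, beta=0.0):
    """Apply gamma * (x - mean) / sqrt(var + EPS) + beta to each element."""
    inv_std = 1.0 / math.sqrt(var + EPS)
    return [gamma * (x - mean) * inv_std + beta for x in batch]


def forward_training(batch, gamma=1.0, beta=0.0):
    """Training: compute the statistics from the batch and return them."""
    mean, var = batch_stats(batch)
    return normalize(batch, mean, var, gamma, beta), (mean, var)


def forward_inference(batch, stored, gamma=1.0, beta=0.0):
    """Use: reuse the stored statistics instead of recomputing them."""
    mean, var = stored
    return normalize(batch, mean, var, gamma, beta)


# Hypothetical data: statistics come from the training batch only.
train_out, stored = forward_training([1.0, 2.0, 3.0, 4.0])
infer_out = forward_inference([2.5, 5.0], stored)
```

In this sketch `forward_inference` never calls `batch_stats`, which mirrors the statement that the mean and variance are not recalculated when the network is used.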

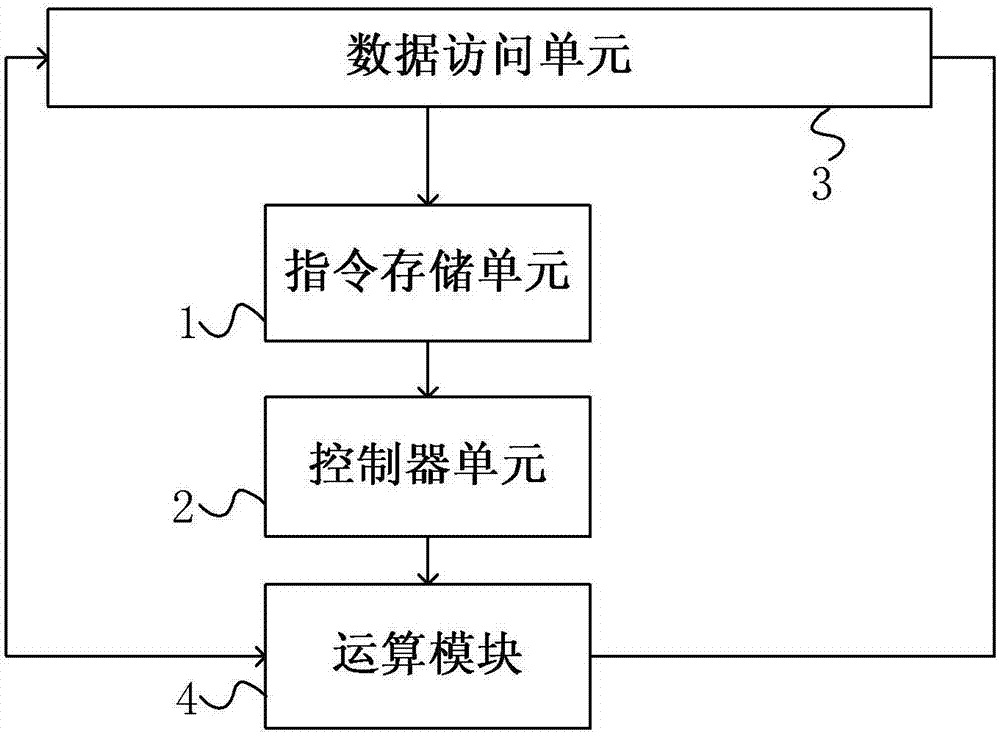

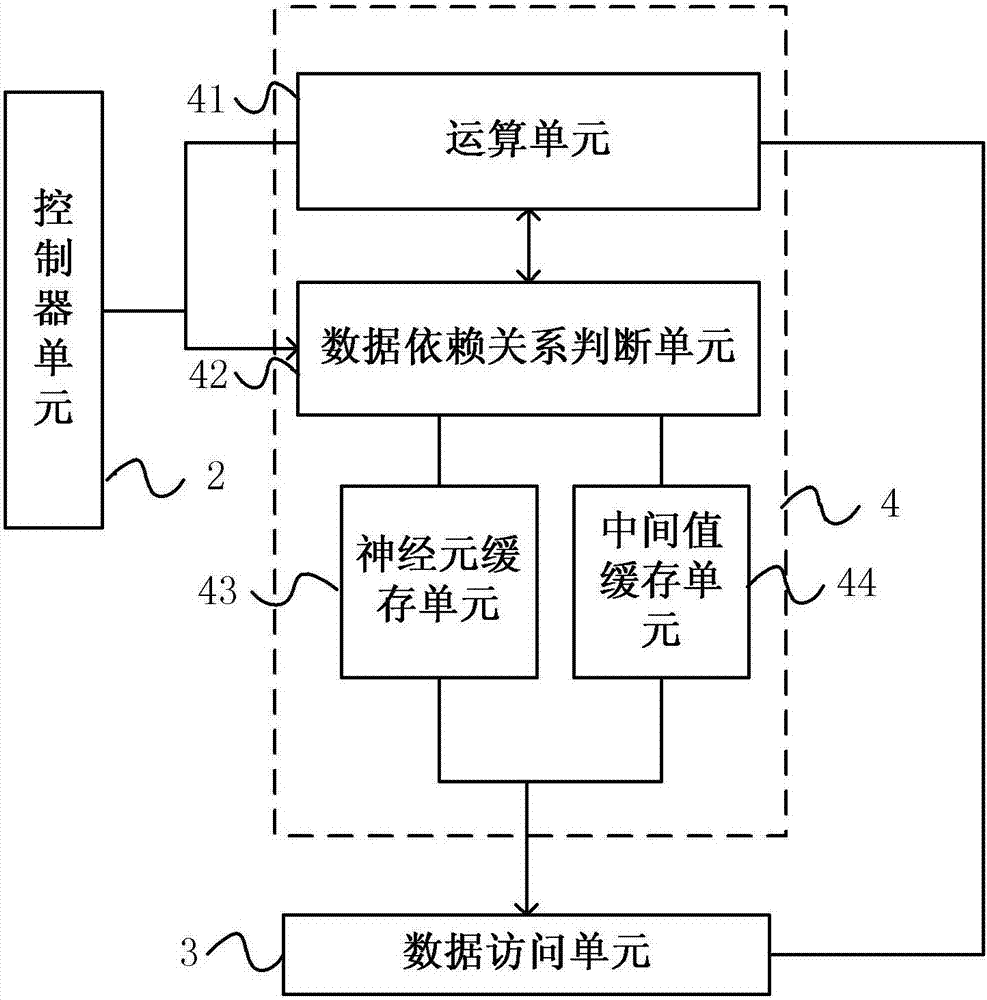

[0019] Figure 1 shows the overall structure diagram of the device for performing artificial neural network batch normalization operatio...
