7705 results about "Parallel processing" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

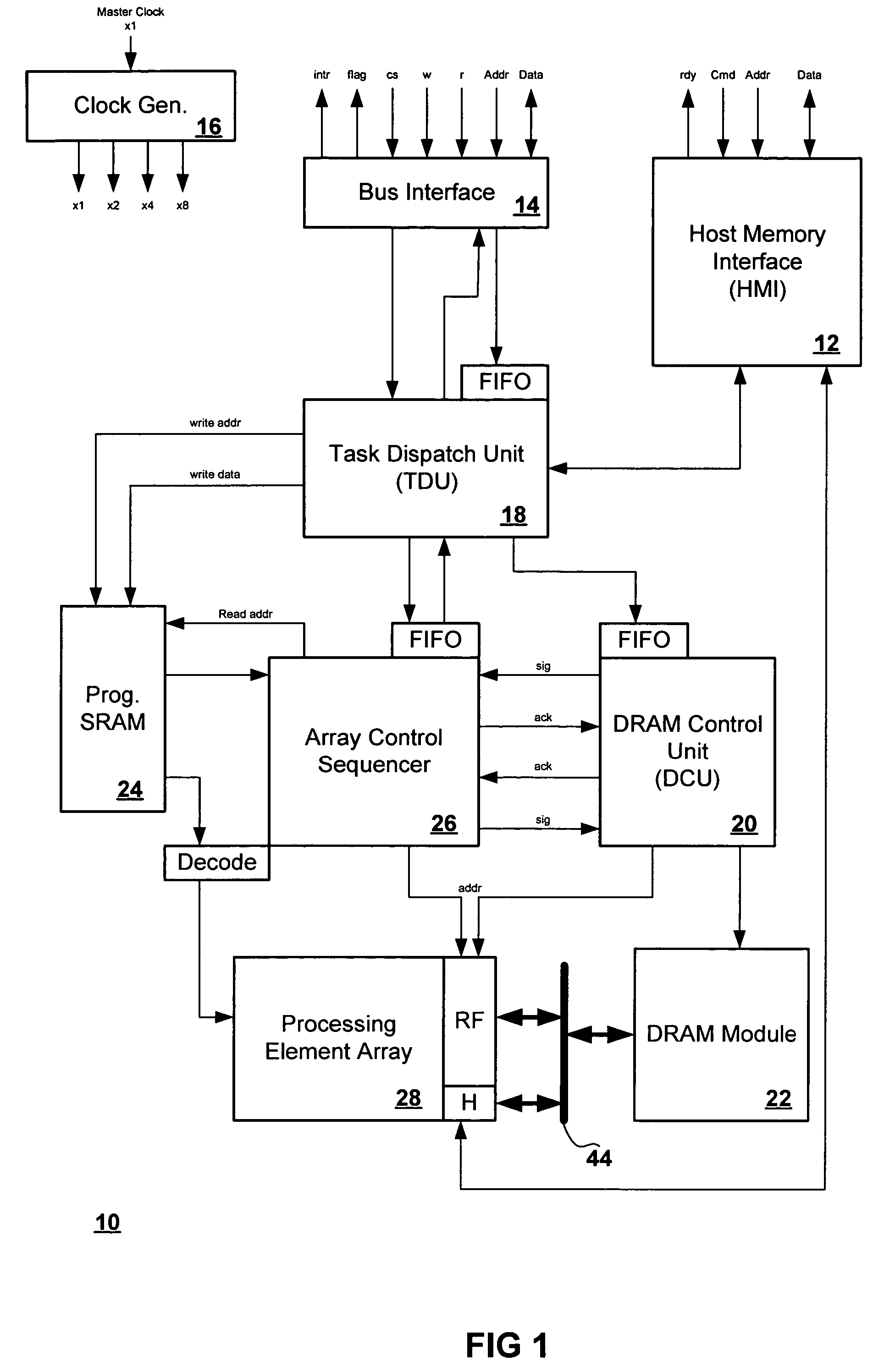

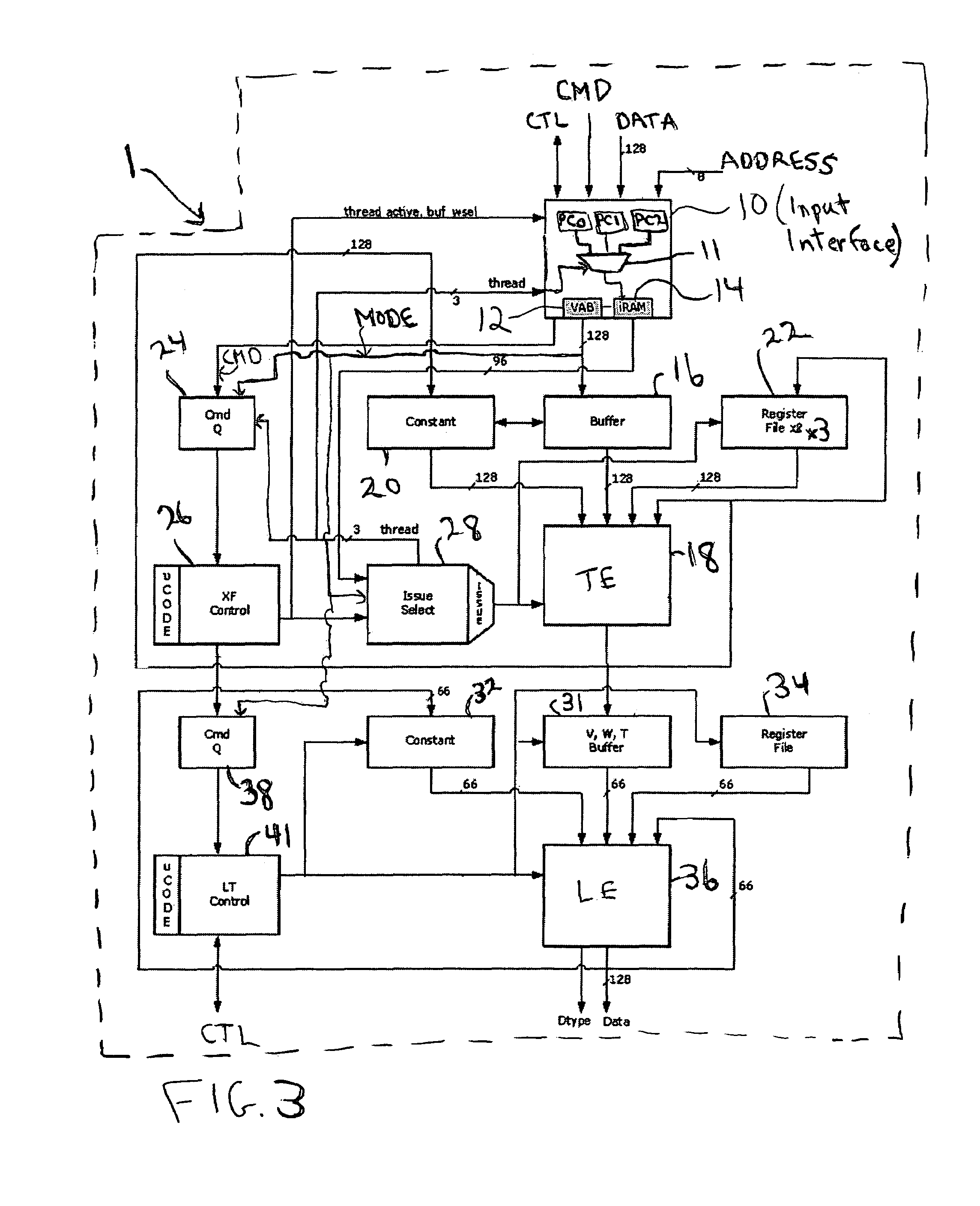

Runtime adaptable search processor

Active | US20060136570A1 | Reduce stacking process | Improving host CPU performance | Web data indexing | Multiple digital computer combinations | Data pack | Internal memory

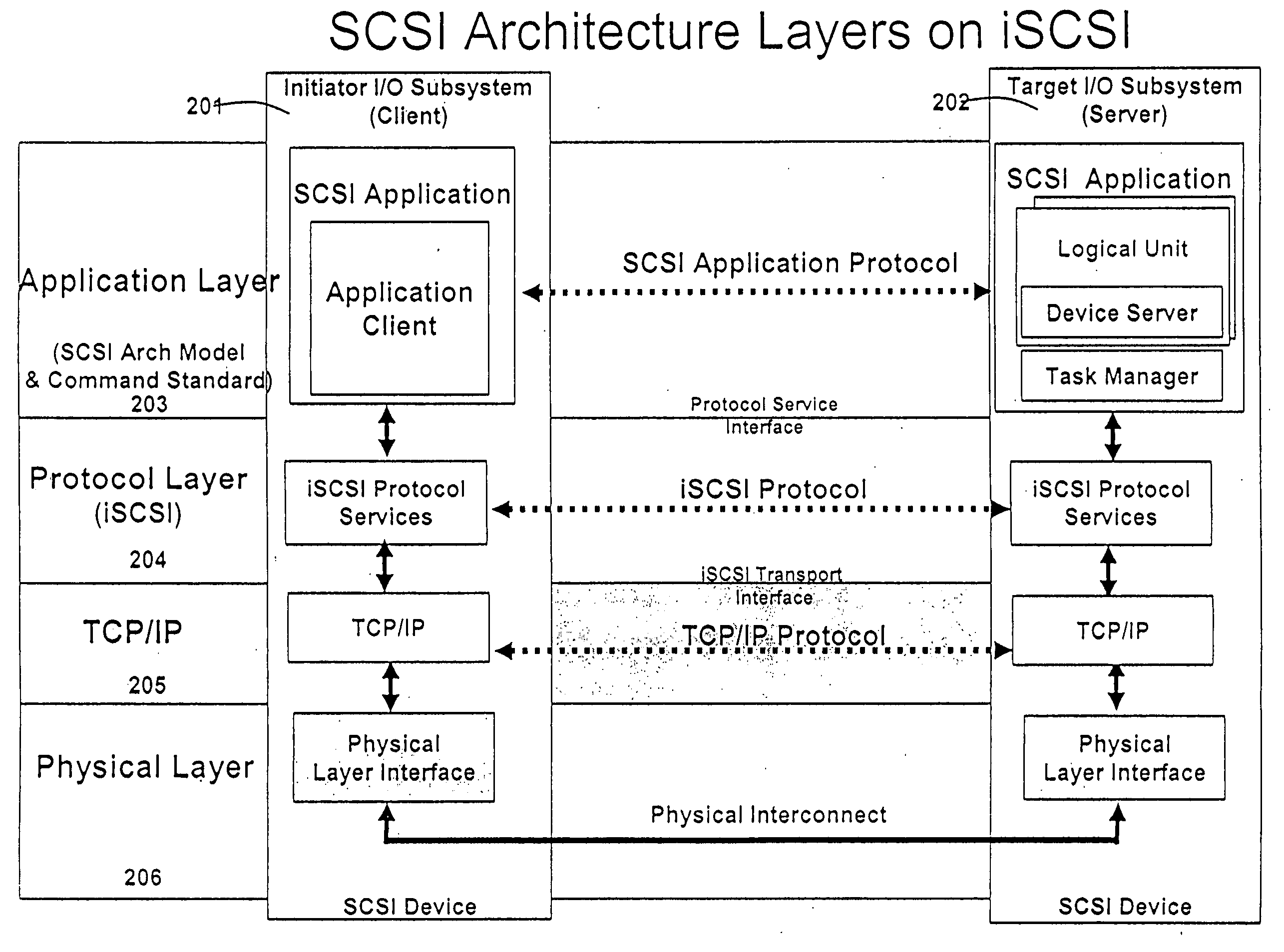

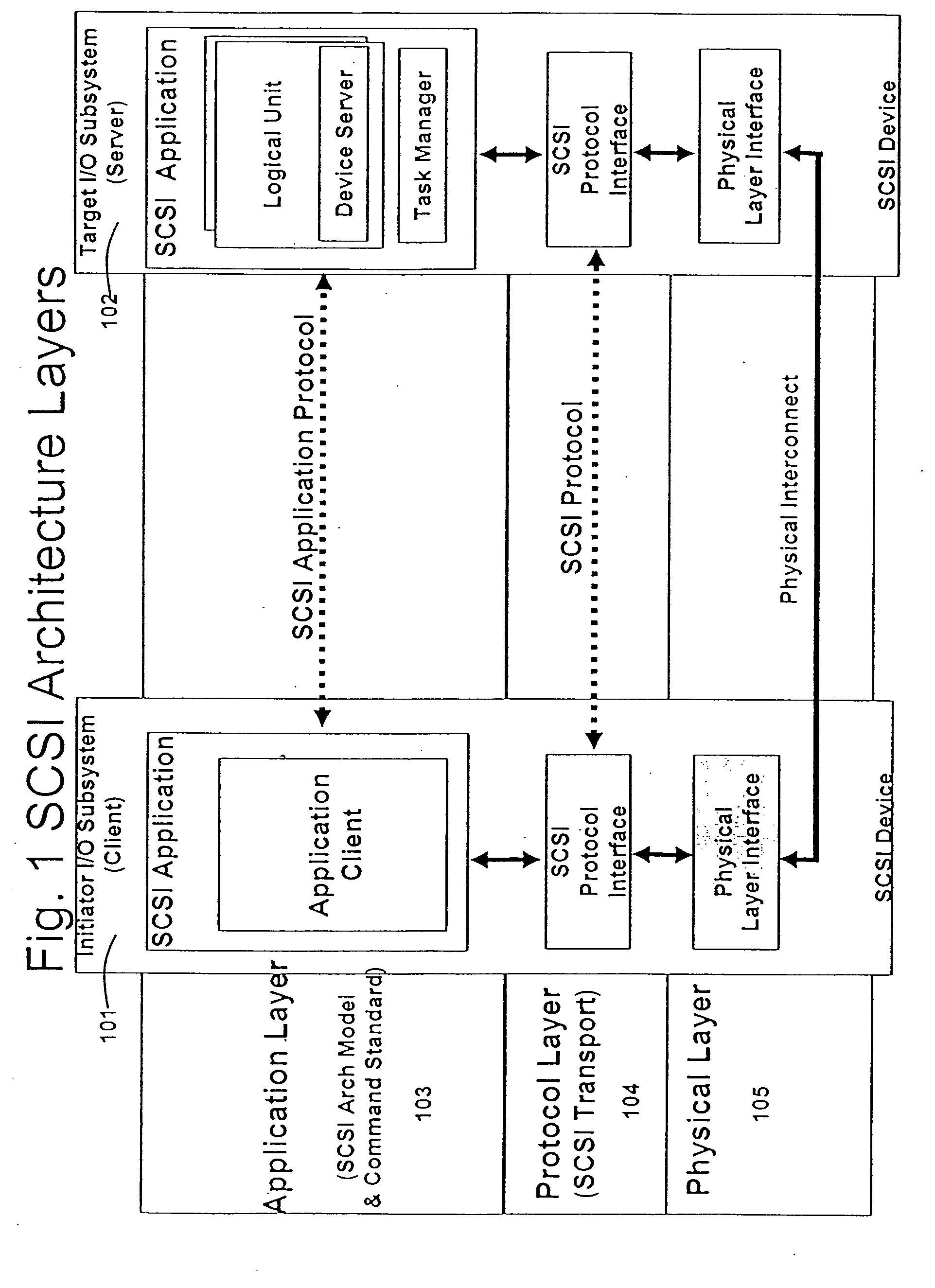

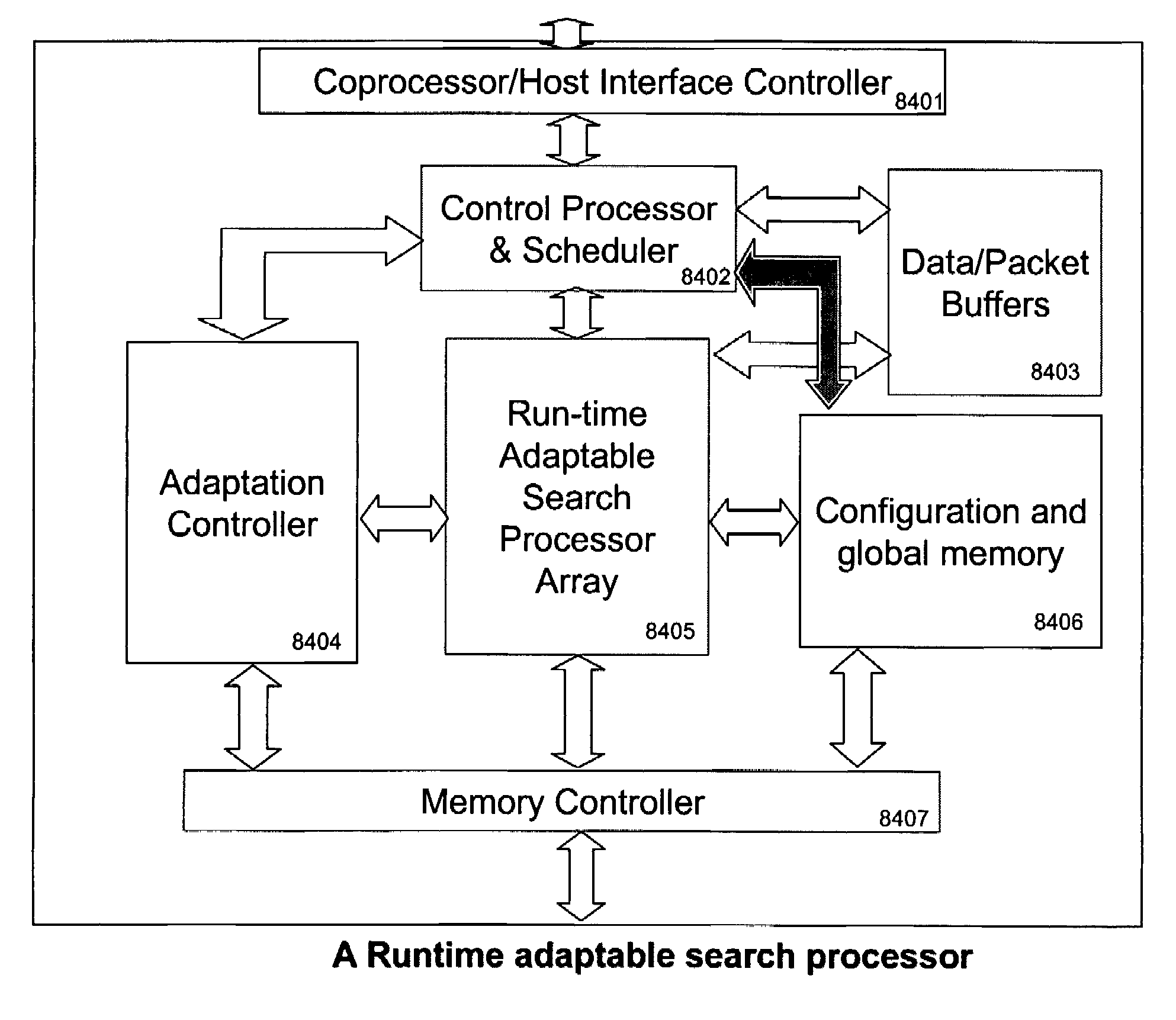

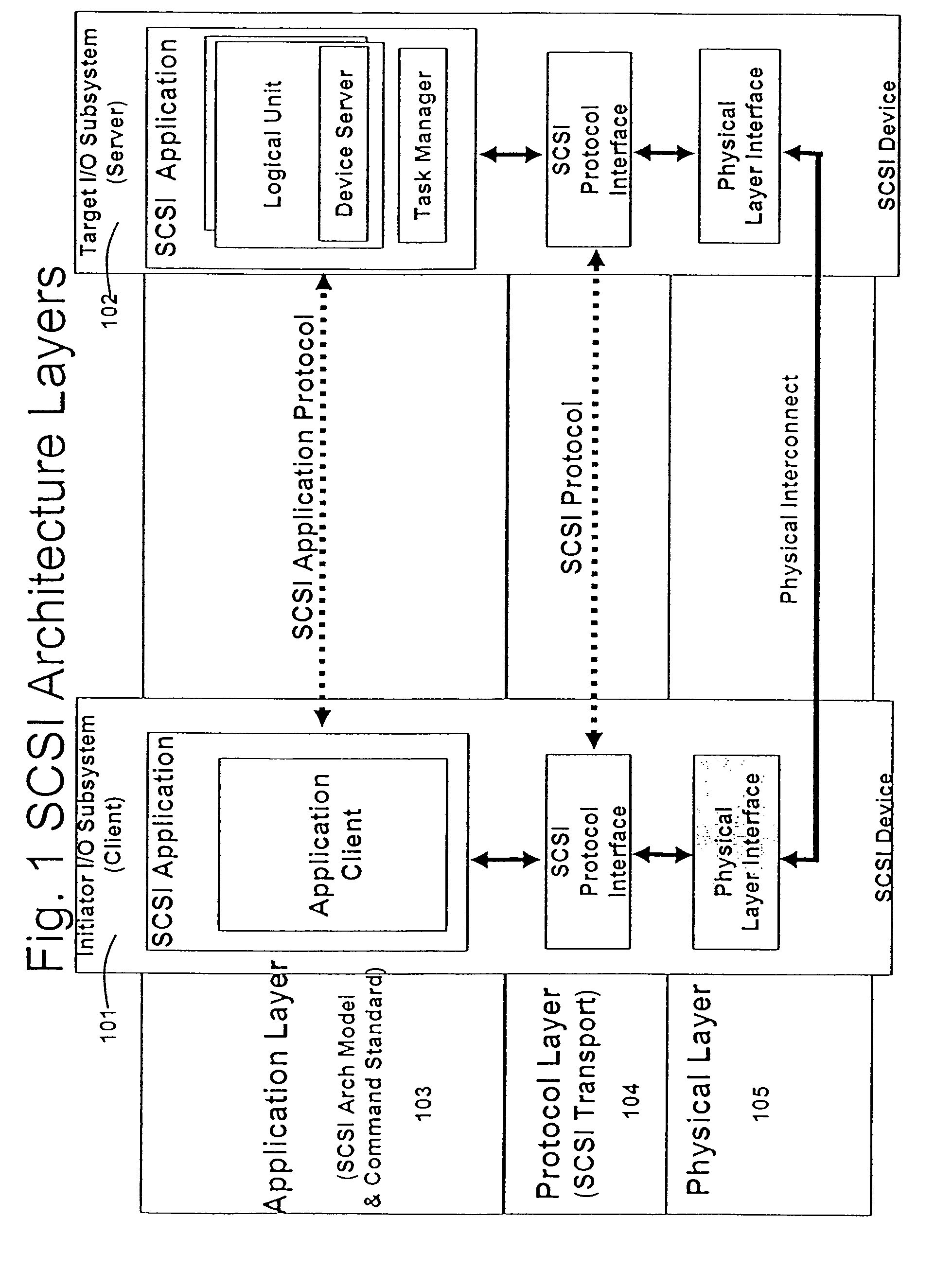

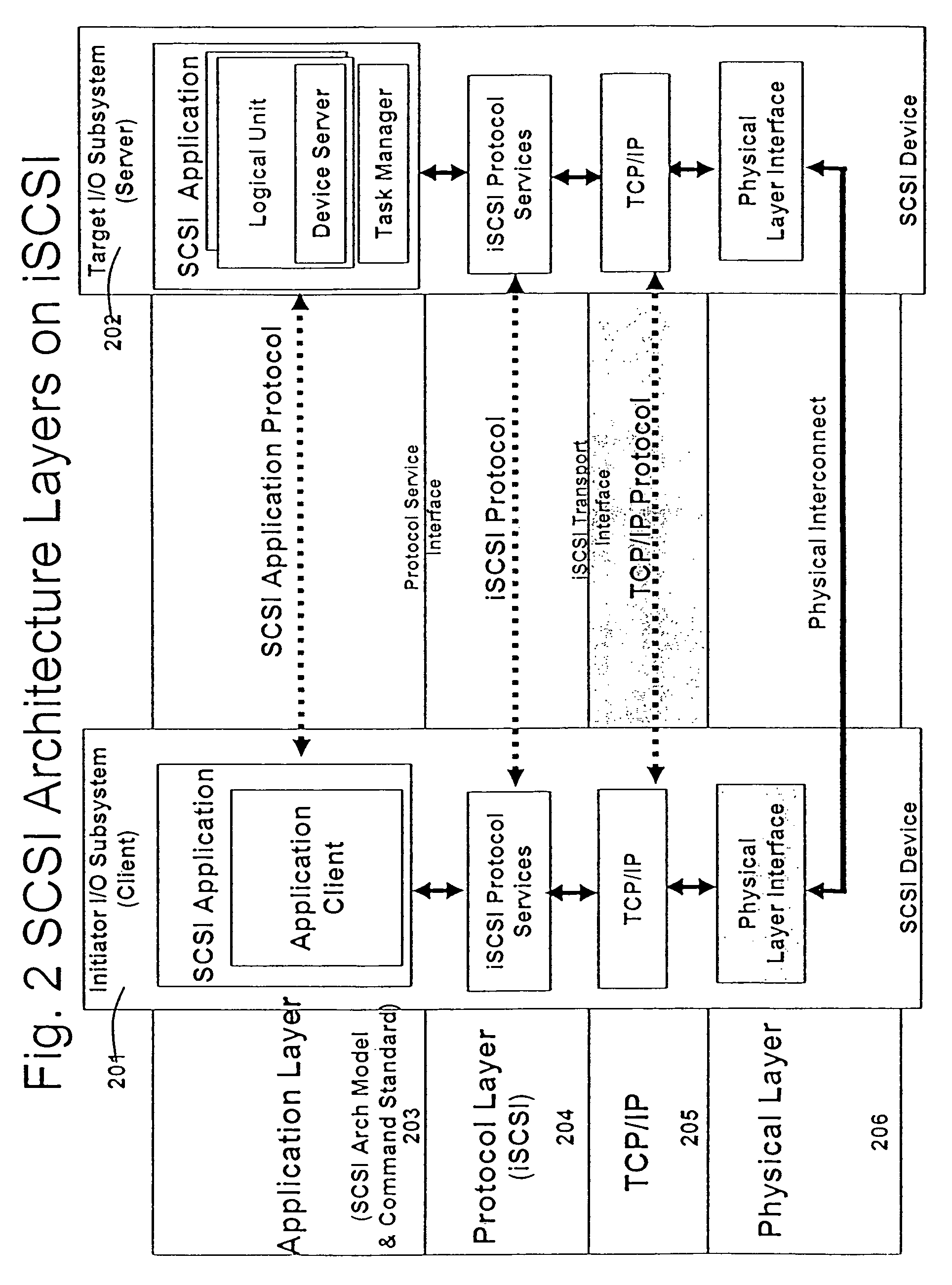

A runtime adaptable search processor is disclosed. The search processor provides high speed content search capability to meet the performance needs of network line rates growing to 1 Gbps, 10 Gbps and higher. The search processor provides a unique combination of NFA and DFA based search engines that can process incoming data in parallel to perform the search against the specific rules programmed in the search engines. The processor architecture also provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through the transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and/or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and/or security processing, enabling packet streaming through the architecture at nearly the full line rate. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored to and retrieved from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.
A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

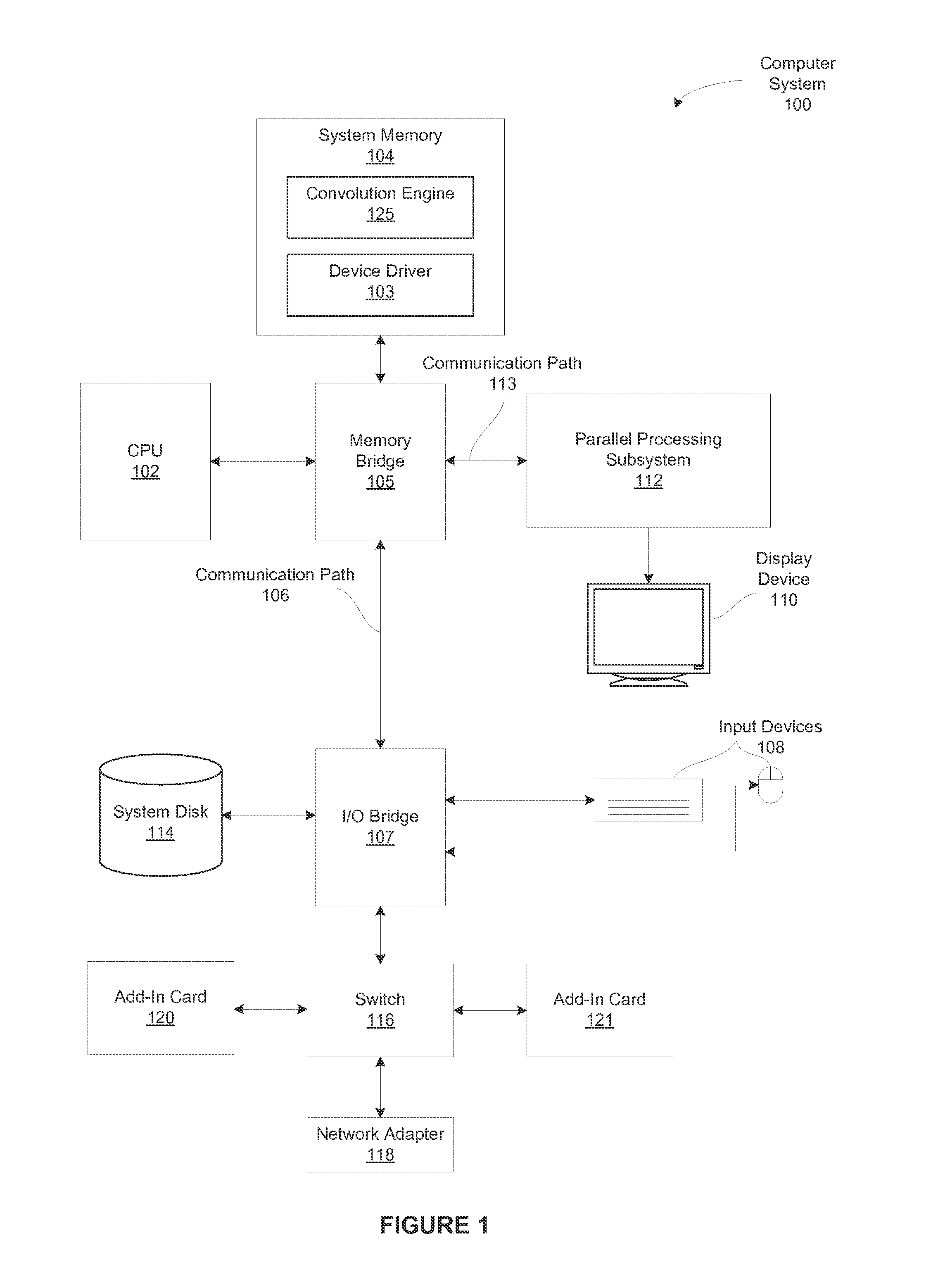

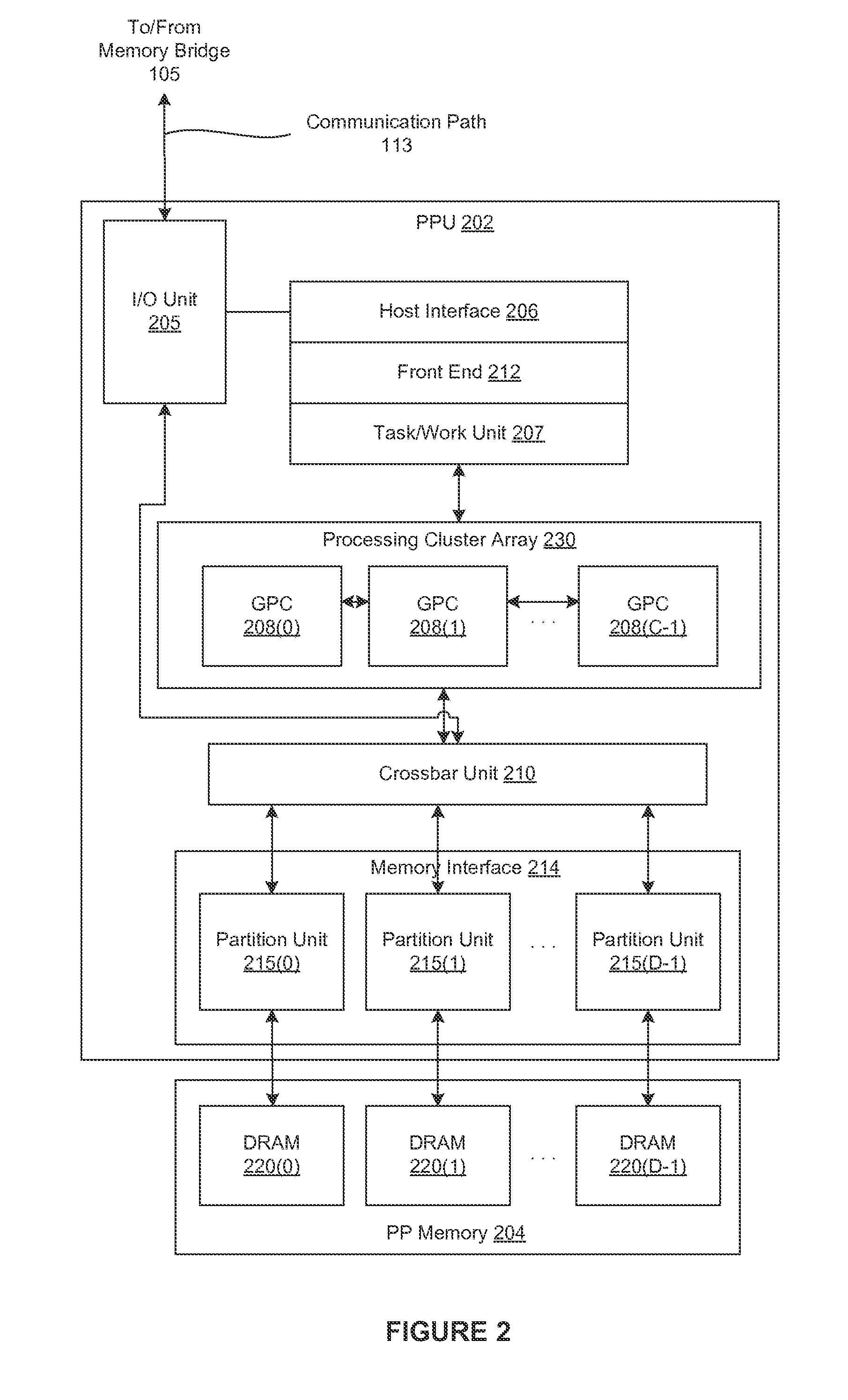

Performing multi-convolution operations in a parallel processing system

Active | US20160062947A1 | Easy to operate | Optimizing on-chip memory usage | Biological models | Complex mathematical operations | Line tubing | Parallel processing

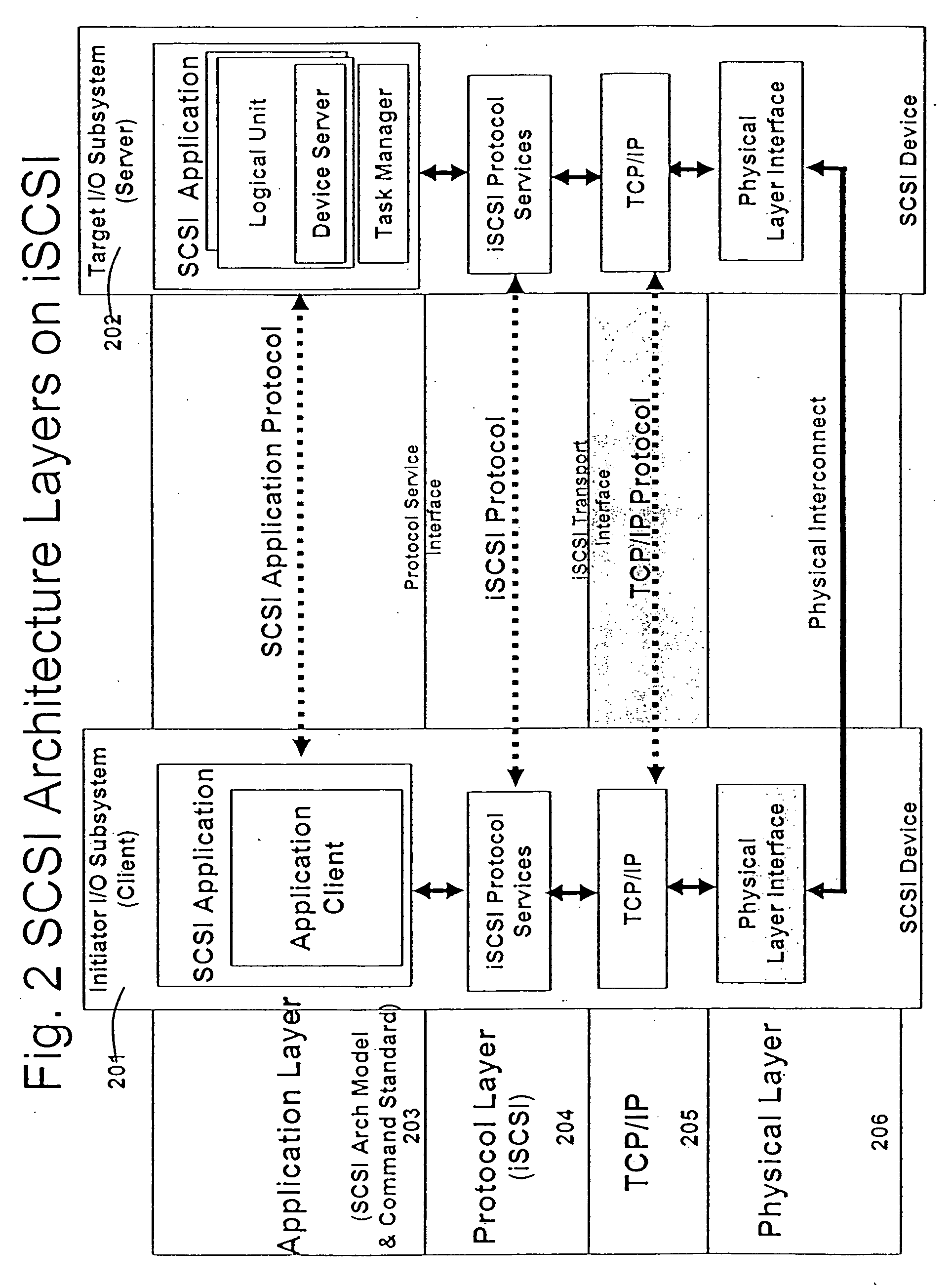

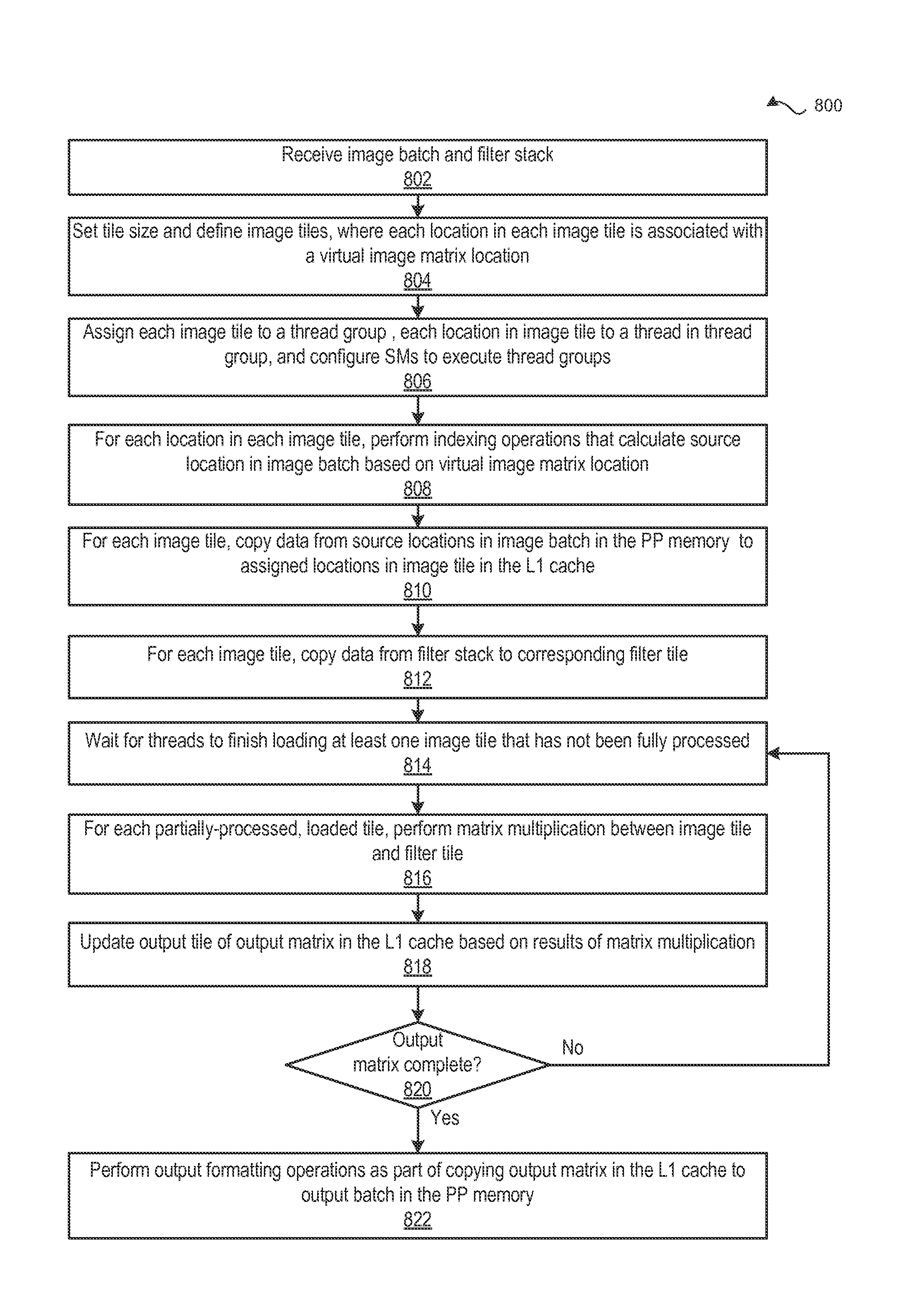

In one embodiment of the present invention a convolution engine configures a parallel processing pipeline to perform multi-convolution operations. More specifically, the convolution engine configures the parallel processing pipeline to independently generate and process individual image tiles. In operation, for each image tile, the pipeline calculates source locations included in an input image batch. Notably, the source locations reflect the contribution of the image tile to an output tile of an output matrix—the result of the multi-convolution operation. Subsequently, the pipeline copies data from the source locations to the image tile. Similarly, the pipeline copies data from a filter stack to a filter tile. The pipeline then performs matrix multiplication operations between the image tile and the filter tile to generate data included in the corresponding output tile. To optimize both on-chip memory usage and execution time, the pipeline creates each image tile in on-chip memory as-needed.

Owner:NVIDIA CORP
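The tile-and-multiply idea in the abstract above can be illustrated with a minimal sketch. It assumes a single-channel 2D image, unit stride, and no padding. Unlike the patented pipeline, which creates each image tile in on-chip memory as needed, it builds the whole image matrix at once. The function names `image_tile`, `filter_tile`, and `matmul` are hypothetical and do not come from the patent.

```python
def image_tile(image, r, s):
    """Copy every r x s patch of a 2D image into one row of a matrix."""
    h, w = len(image), len(image[0])
    rows = []
    for y in range(h - r + 1):
        for x in range(w - s + 1):
            # Source locations for the output element at (y, x).
            rows.append([image[y + dy][x + dx] for dy in range(r) for dx in range(s)])
    return rows


def filter_tile(filters):
    """Flatten each 2D filter into one column of the filter matrix."""
    flat = [[v for row in f for v in row] for f in filters]
    return [list(col) for col in zip(*flat)]


def matmul(a, b):
    """Plain matrix product of two lists of lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
filters = [[[1, 0], [0, 1]], [[1, 1], [1, 1]]]
output = matmul(image_tile(image, 2, 2), filter_tile(filters))
```

Each row of `output` holds the responses of all filters at one output position, so applying many filters reduces to a single matrix multiplication.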

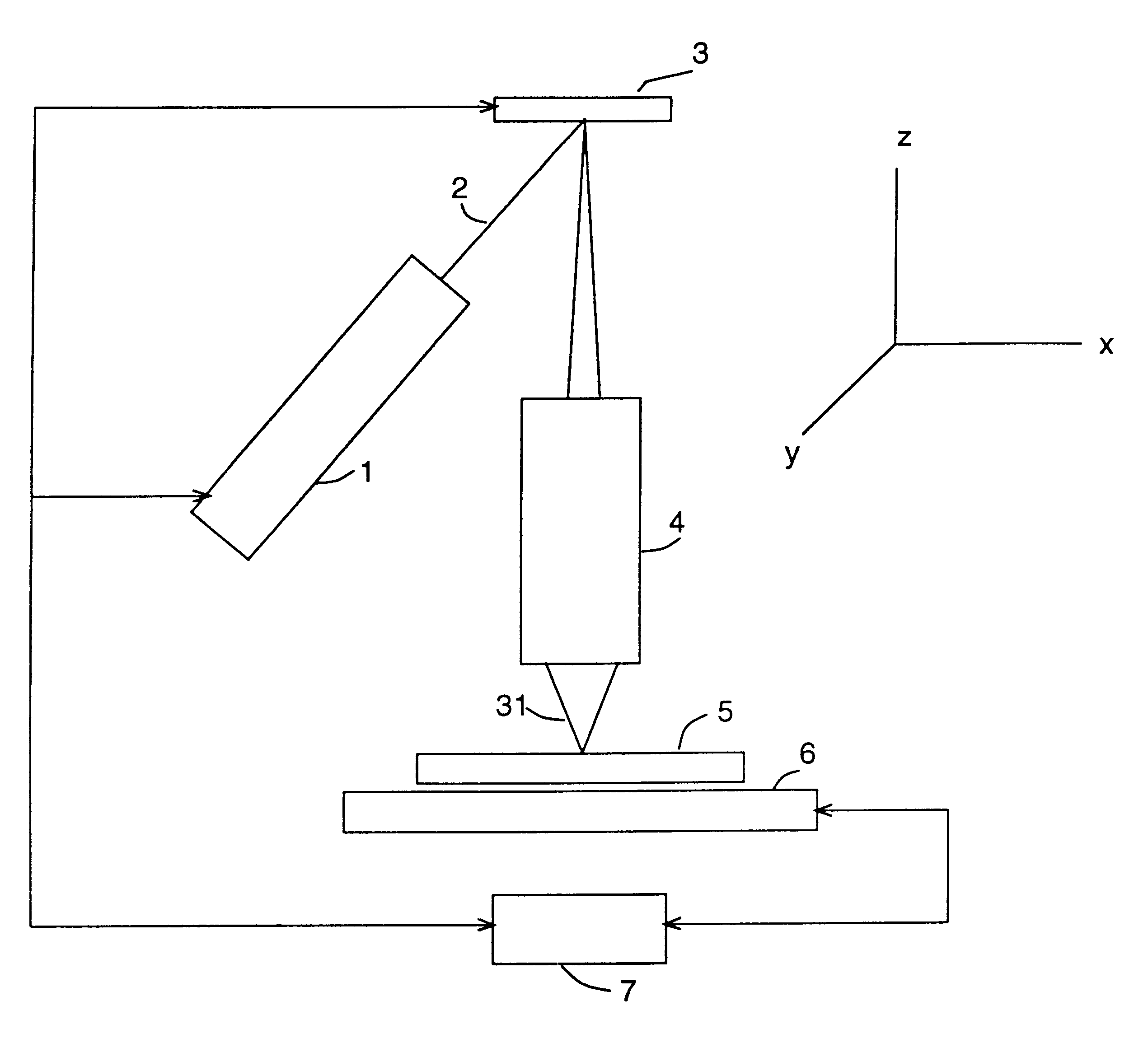

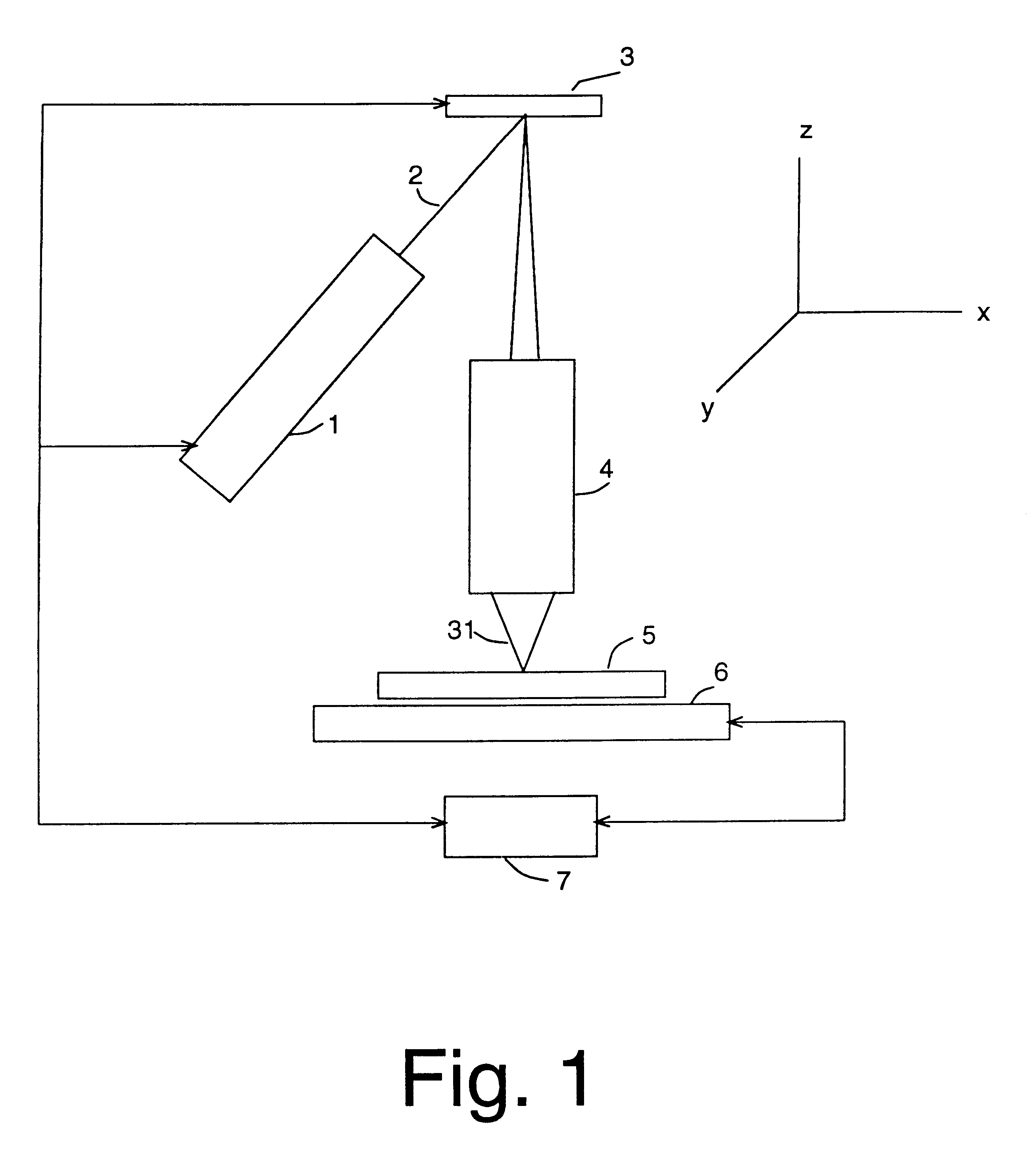

Seamless, maskless lithography system using spatial light modulator

Inactive | US6312134B1 | Eliminate need | Improve processing throughput | Mirrors | Photomechanical exposure apparatus | Radiation Dosages | Spatial light modulator

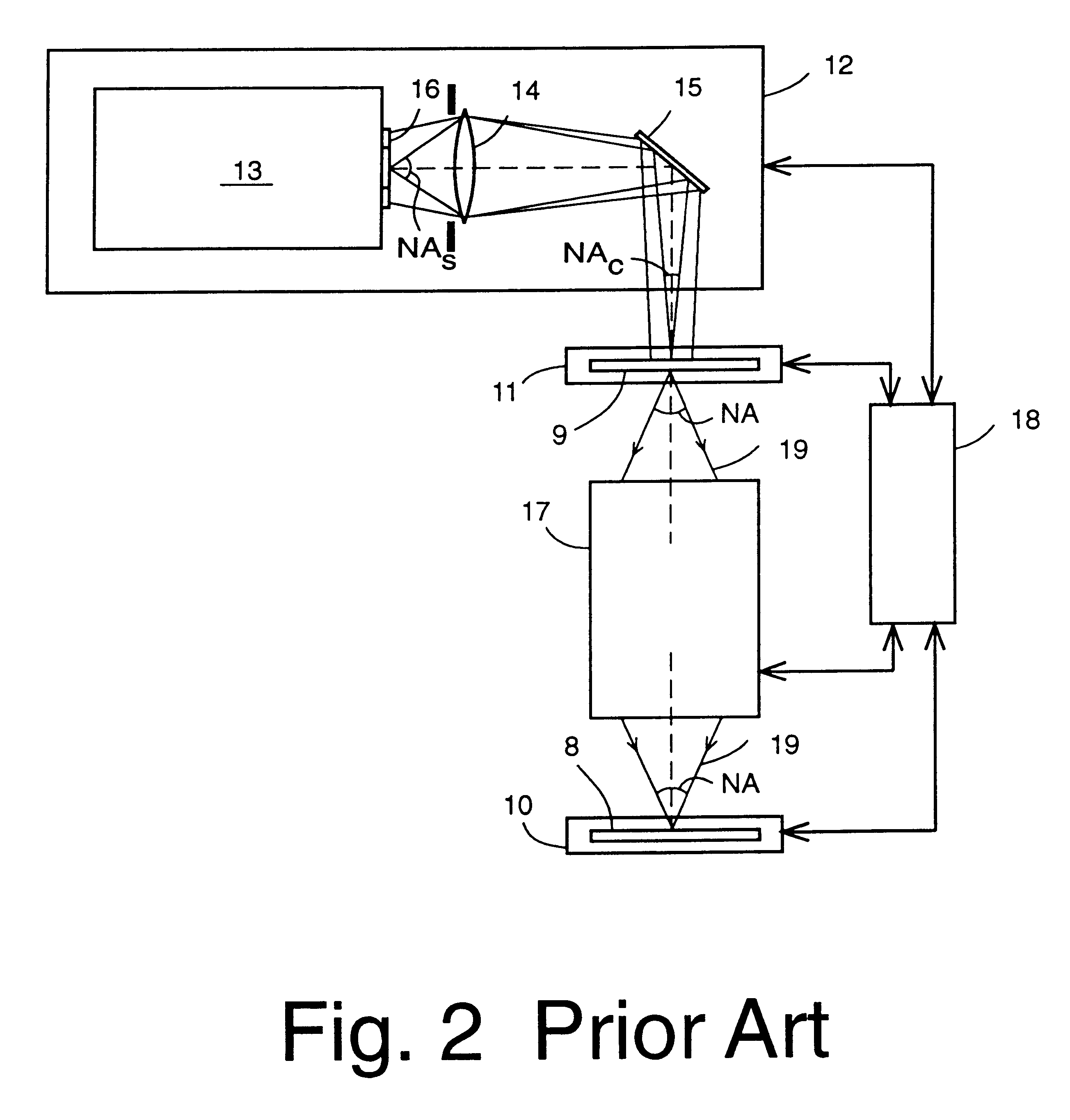

The invention is a seamless projection lithography system that eliminates the need for masks through the use of a programmable Spatial Light Modulator (SLM) with high parallel processing power. Illuminating the SLM with a radiation source (1), which while preferably a pulsed laser may be a shuttered lamp or multiple lasers with alternating synchronization, provides a patterning image of many pixels via a projection system (4) onto a substrate (5). The preferred SLM is a Deformable Micromirror Device (3) for reflective pixel selection using a synchronized pulse laser. An alternative SLM is a Liquid Crystal Light Valve (LCLV) (45) for pass-through pixel selection. Electronic programming enables pixel selection control for error correction of faulty pixel elements. Pixel selection control also provides for negative and positive imaging and for complementary overlapping polygon development for seamless uniform dosage. The invention provides seamless scanning by complementary overlapping scans to equalize radiation dosage, to expose a pattern on a large area substrate (5). The invention is suitable for rapid prototyping, flexible manufacturing, and even mask making.

Owner:ANVIK CORP

Runtime adaptable search processor

Active | US7685254B2 | Improve application performance | Large capacity | Web data indexing | Memory addressing/allocation/relocation | Packet scheduling | Schema for Object-Oriented XML

A runtime adaptable search processor is disclosed. The search processor provides high speed content search capability to meet the performance needs of network line rates growing to 1 Gbps, 10 Gbps and higher. The search processor provides a unique combination of NFA and DFA based search engines that can process incoming data in parallel to perform the search against the specific rules programmed in the search engines. The processor architecture also provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through the transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and/or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and/or security processing, enabling packet streaming through the architecture at nearly the full line rate. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored to and retrieved from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.
A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

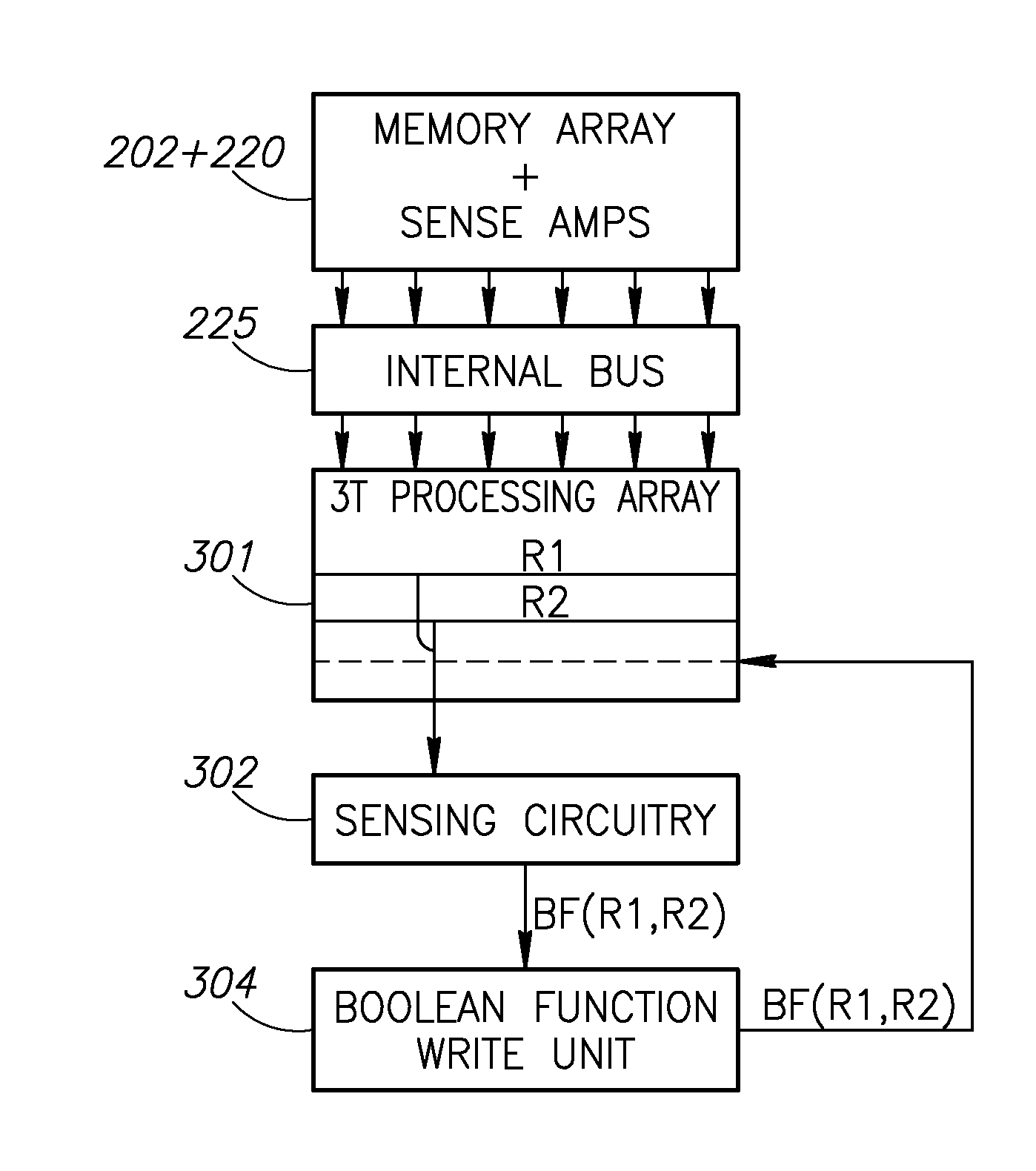

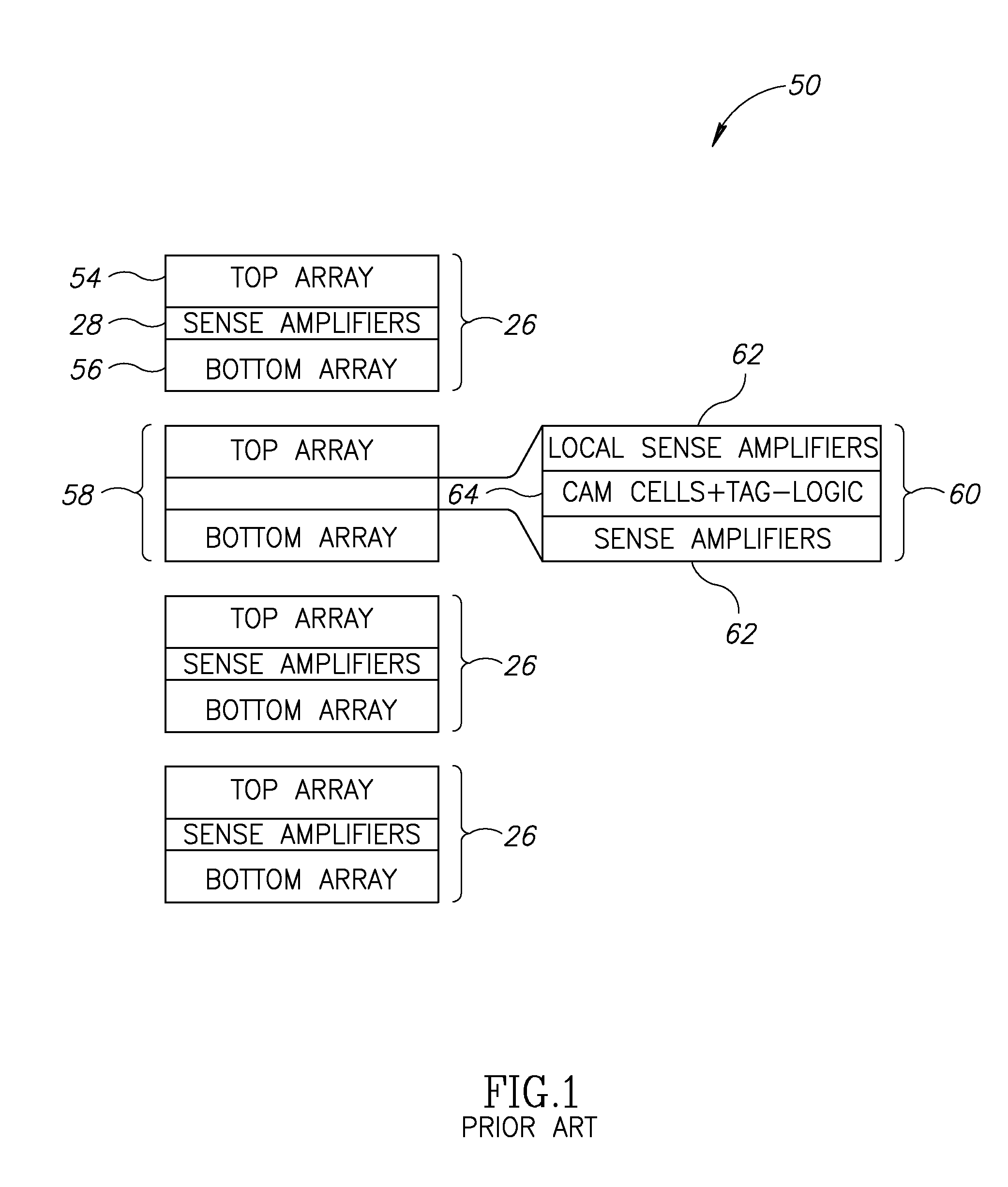

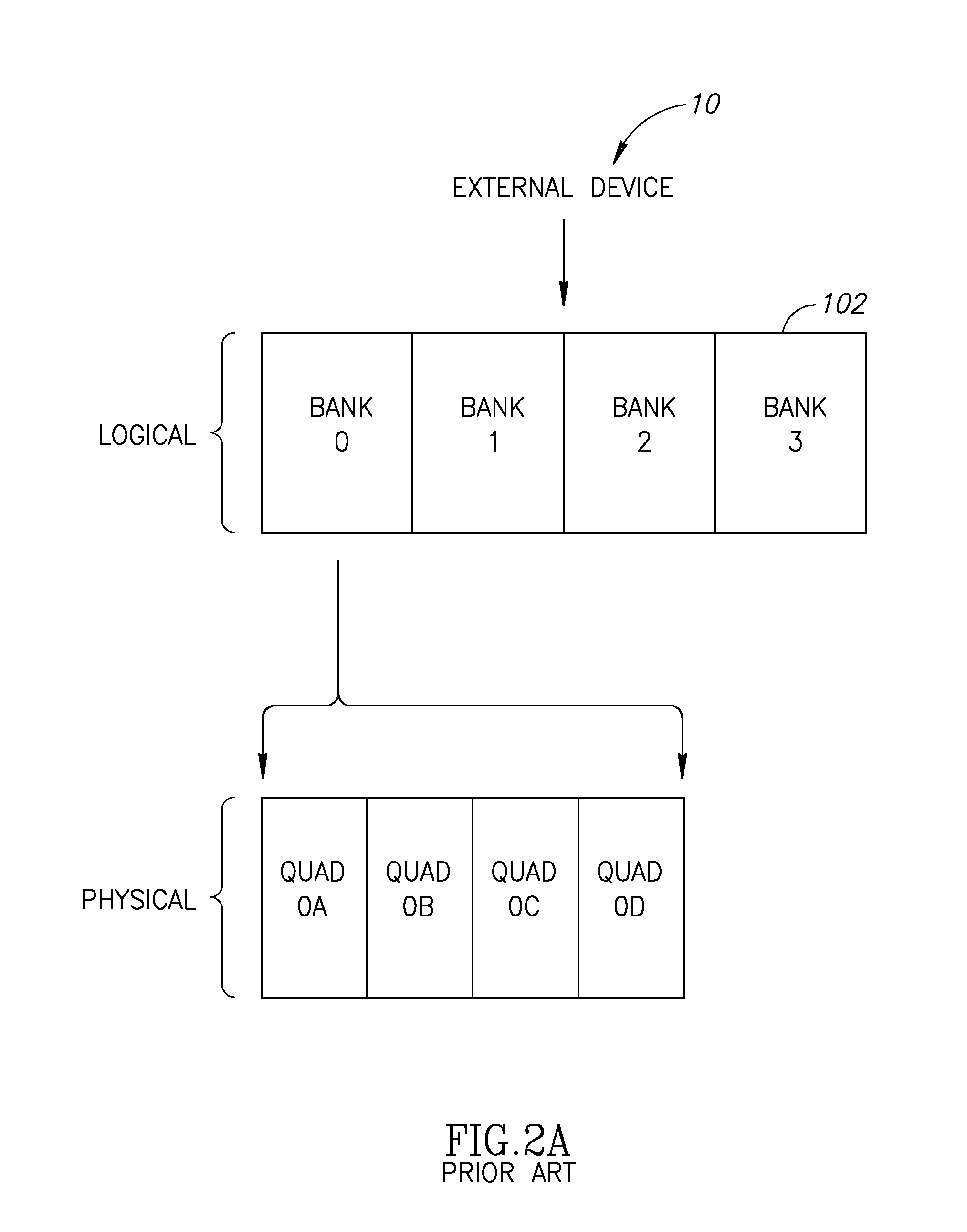

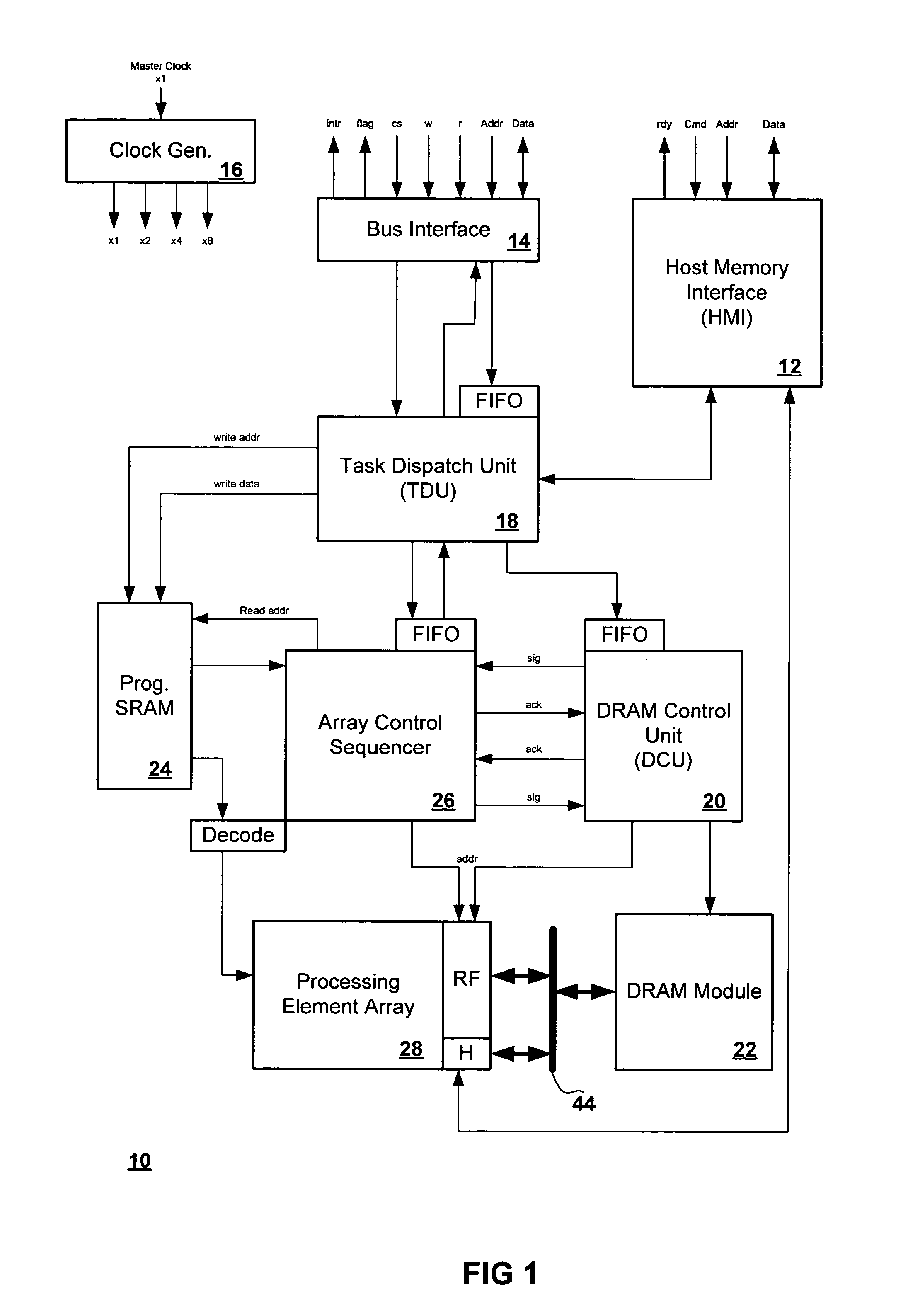

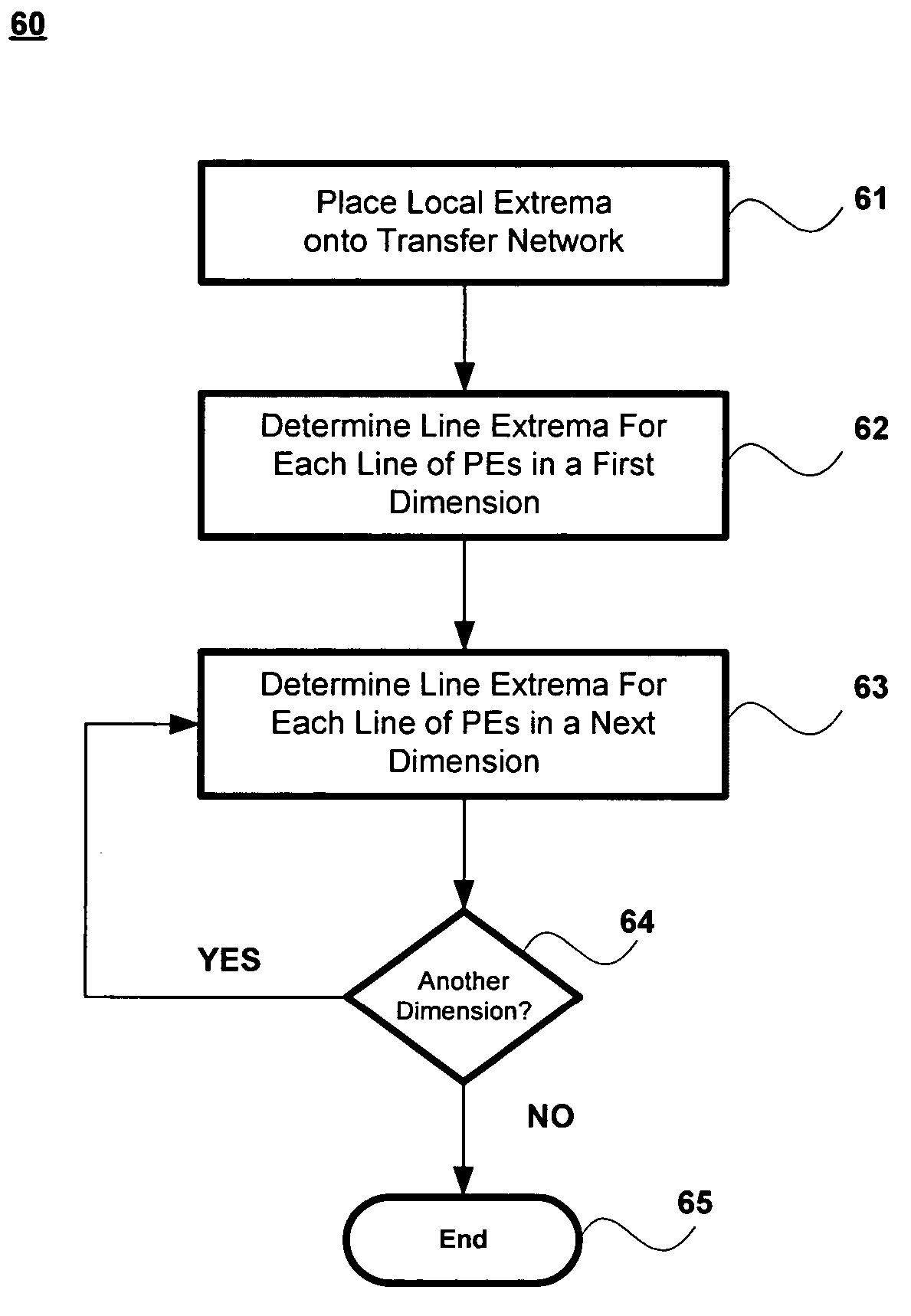

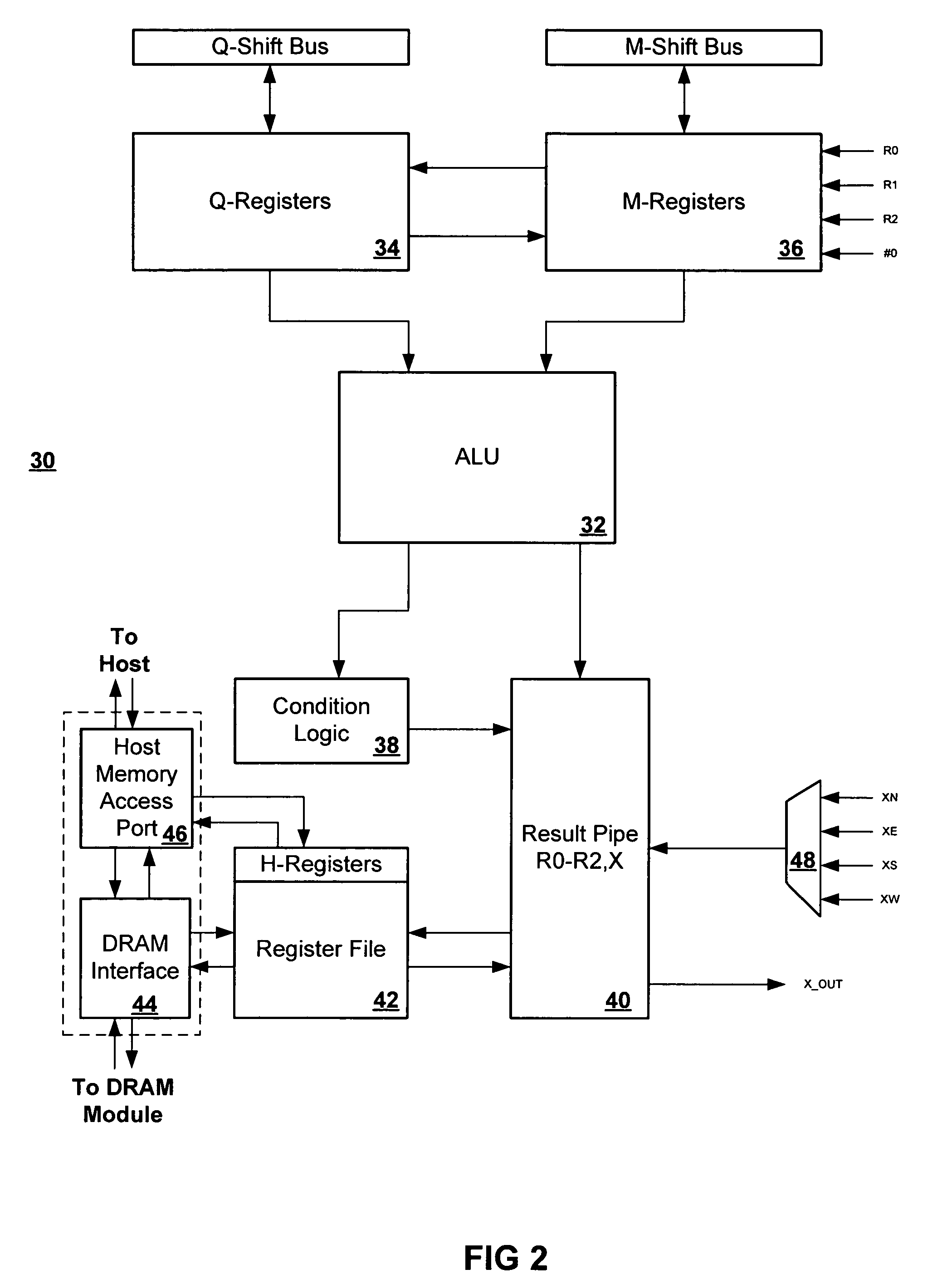

Neighborhood operations for parallel processing

Inactive | US20120246380A1 | Memory addressing/allocation/relocation | Digital storage | Processing element | Parallel processing

A memory device includes a plurality of storage units in which to store data of a bank, wherein the data has a logical order prior to storage and a physical order different than the logical order within the plurality of storage units and a within-device reordering unit to reorder the data of a bank into the logical order prior to performing on-chip processing. In another embodiment, the memory device includes an external device interface connectable to an external device communicating with the memory device, an internal processing element to process data stored on the device and multiple banks of storage. Each bank includes a plurality of storage units and each storage unit has two ports, an external port connectable to the external device interface and an internal port connected to the internal processing element.

Owner:GSI TECH

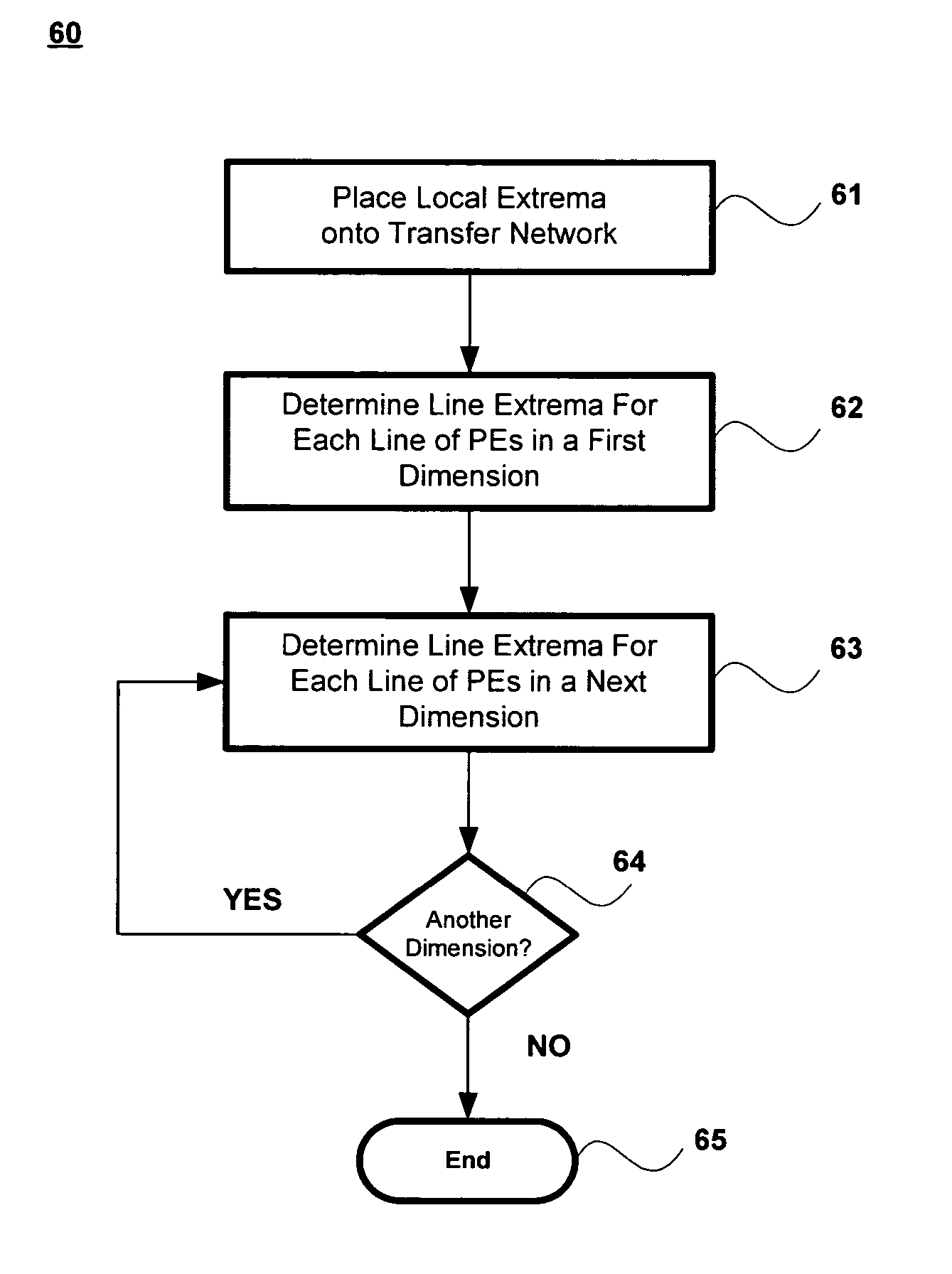

Method for finding global extrema of a set of shorts distributed across an array of parallel processing elements

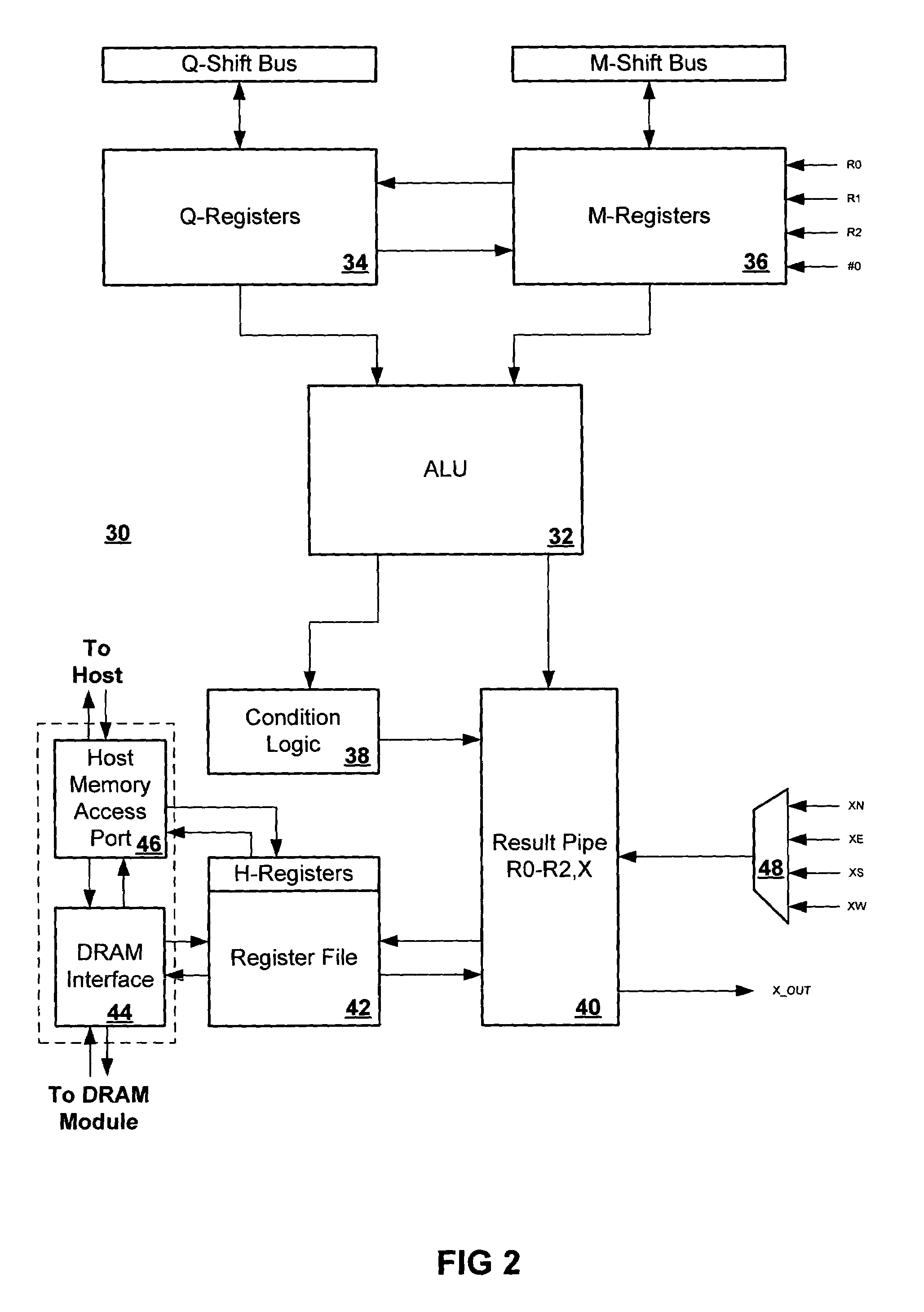

Active | US7574466B2 | Single instruction multiple data multiprocessors | Digital data processing details | Processing element | Parallel processing

A method for finding an extrema for an n-dimensional array having a plurality of processing elements, the method includes determining within each processing element a first dimensional extrema for a first dimension, wherein the first dimensional extrema is related to the local extrema of the processing elements in the first dimension and wherein the first dimensional extrema has a most significant byte and a least significant byte, determining within each processing element a next dimensional extrema for a next dimension of the n-dimensional array, wherein the next dimensional extrema is related to the first dimensional extrema and wherein the next dimensional extrema has a most significant byte and a least significant byte; and repeating the determining within each processing element a next dimensional extrema for each of the n-dimensions, wherein each of the next dimensional extrema is related to a dimensional extrema from a previously selected dimension.

Owner:MICRON TECH INC

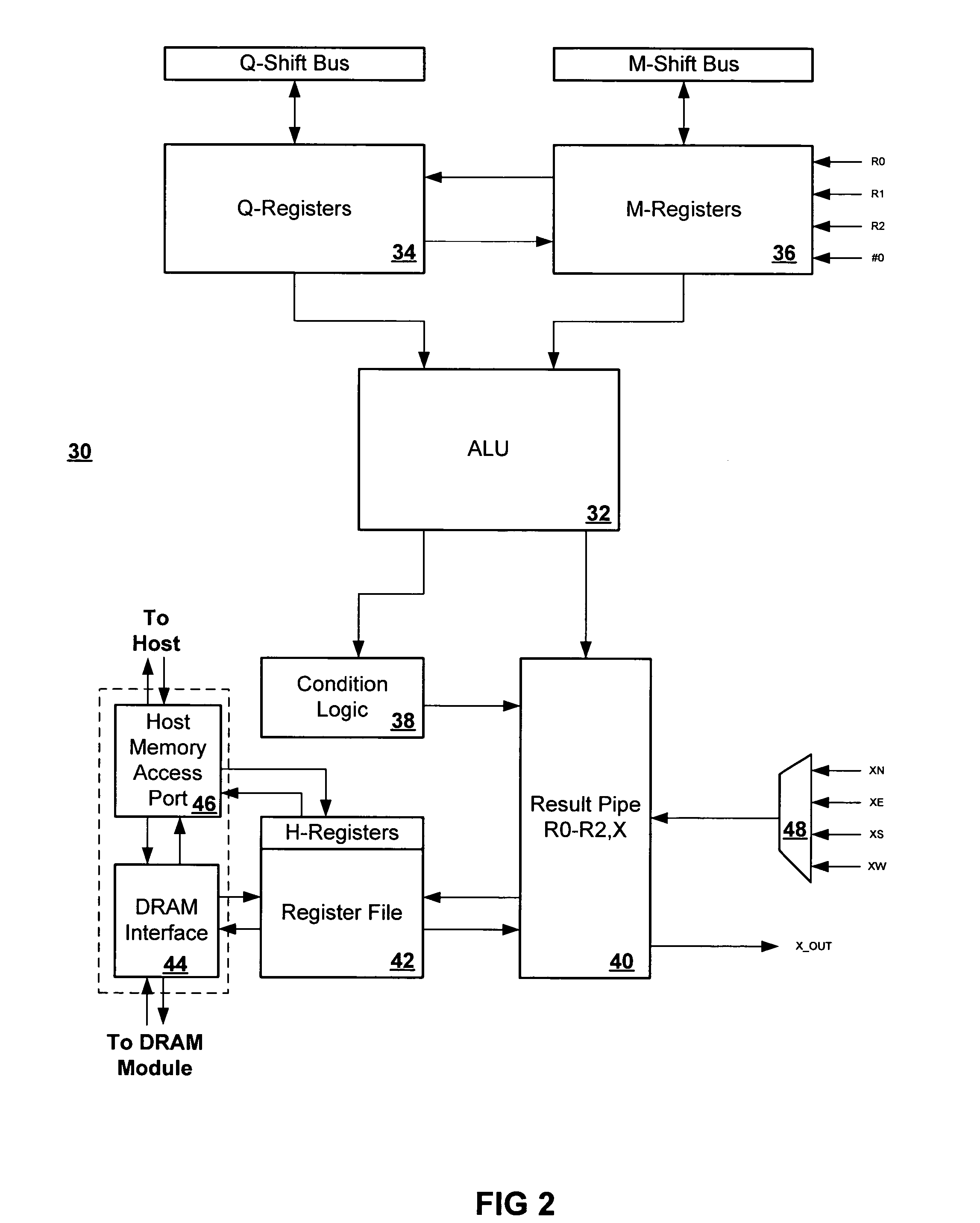

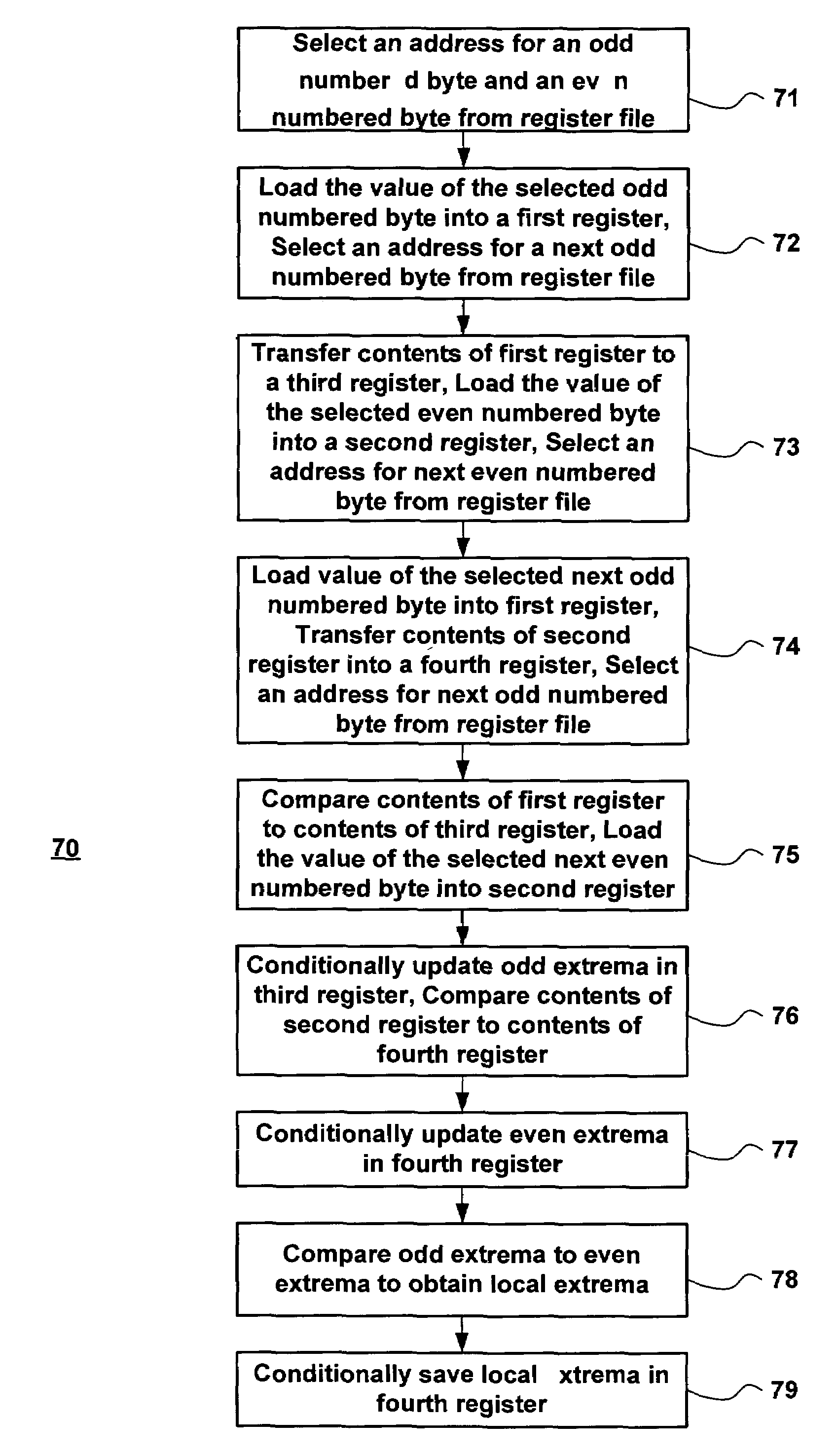

Method for finding local extrema of a set of values for a parallel processing element

Active | US7454451B2 | Digital data processing details | Digital computer details | Theoretical computer science | Processing element

A method for finding a local extrema for a single processing element having a set of values associated therewith includes separating the set of values into an odd set of values and an even set of values, determining a first extrema from the odd set of values, determining a second extrema from the even set of values, and determining the local extrema from the first extrema and the second extrema. The first extrema is found by comparing each odd-numbered value in the set to each other odd-numbered value in the set and the second extrema is found by comparing each even-numbered value in the set to each other even-numbered value in the set.

Owner:MICRON TECH INC
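The odd/even split described in the abstract above can be sketched as follows. The sketch assumes the extremum sought is a maximum and that values are numbered from 1. It uses Python's built-in `max` in place of the pairwise comparisons the abstract describes, and `local_max` is a hypothetical name.

```python
def local_max(values):
    """Find the maximum of one processing element's values via an odd/even split."""
    if not values:
        raise ValueError("values must be non-empty")
    odd = values[0::2]   # 1st, 3rd, 5th, ... values
    even = values[1::2]  # 2nd, 4th, 6th, ... values
    first = max(odd)
    if not even:
        # A single value has no even-numbered partner to compare against.
        return first
    second = max(even)
    # The local extremum is the larger of the two partial results.
    return max(first, second)
```

The two partial extrema are independent of each other, which is what lets a hardware implementation evaluate them side by side.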

Method for finding global extrema of a set of bytes distributed across an array of parallel processing elements

Active | US7447720B2 | Digital data processing details | Digital computer details | Processing element | Parallel processing

A method for finding an extrema for an n-dimensional array having a plurality of processing elements, the method includes determining within each of the processing elements a dimensional extrema for a first dimension of the n-dimensional array, wherein the dimensional extrema is related to one or more local extrema of the processing elements in the first dimension, determining within each of the processing elements a next dimensional extrema for a next dimension of the n-dimensional array, wherein the next dimensional extrema is related to one or more of the first dimensional extrema, and repeating the determining within each of the processing elements a next dimensional extrema for each of the n-dimensions, wherein each of the next dimensional extrema is related to a dimensional extrema from a previously selected dimension.

Owner:MICRON TECH INC
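A minimal sketch of the dimension-by-dimension reduction in the abstract above, assuming a two-dimensional rectangular array of processing elements, a maximum as the extremum, and that each element already holds its local maximum. The name `global_max` and the list-of-lists layout are illustrative assumptions.

```python
def global_max(grid):
    """grid[r][c] is the local maximum held by the element at row r, column c.

    Returns a grid of the same shape in which every element holds the global maximum.
    """
    # First dimension: every element in a row takes that row's maximum.
    row_max = [[max(row)] * len(row) for row in grid]
    # Next dimension: every element in a column takes the maximum of the
    # values produced by the previous step.
    n_rows, n_cols = len(row_max), len(row_max[0])
    col_max = [max(row_max[r][c] for r in range(n_rows)) for c in range(n_cols)]
    return [[col_max[c] for c in range(n_cols)] for _ in range(n_rows)]
```

For more dimensions the same step would be repeated once per dimension, each time working from the results of the previous one.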

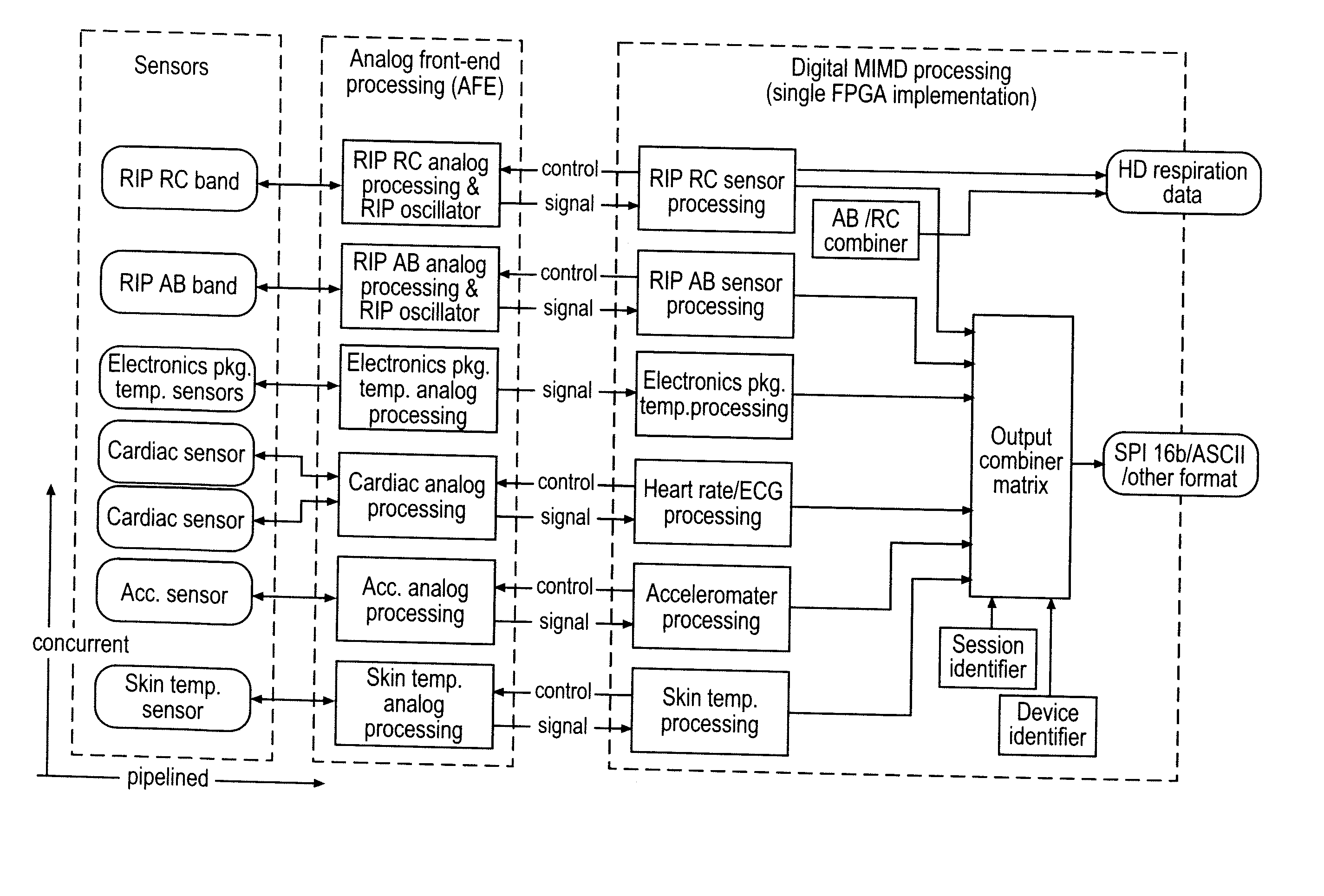

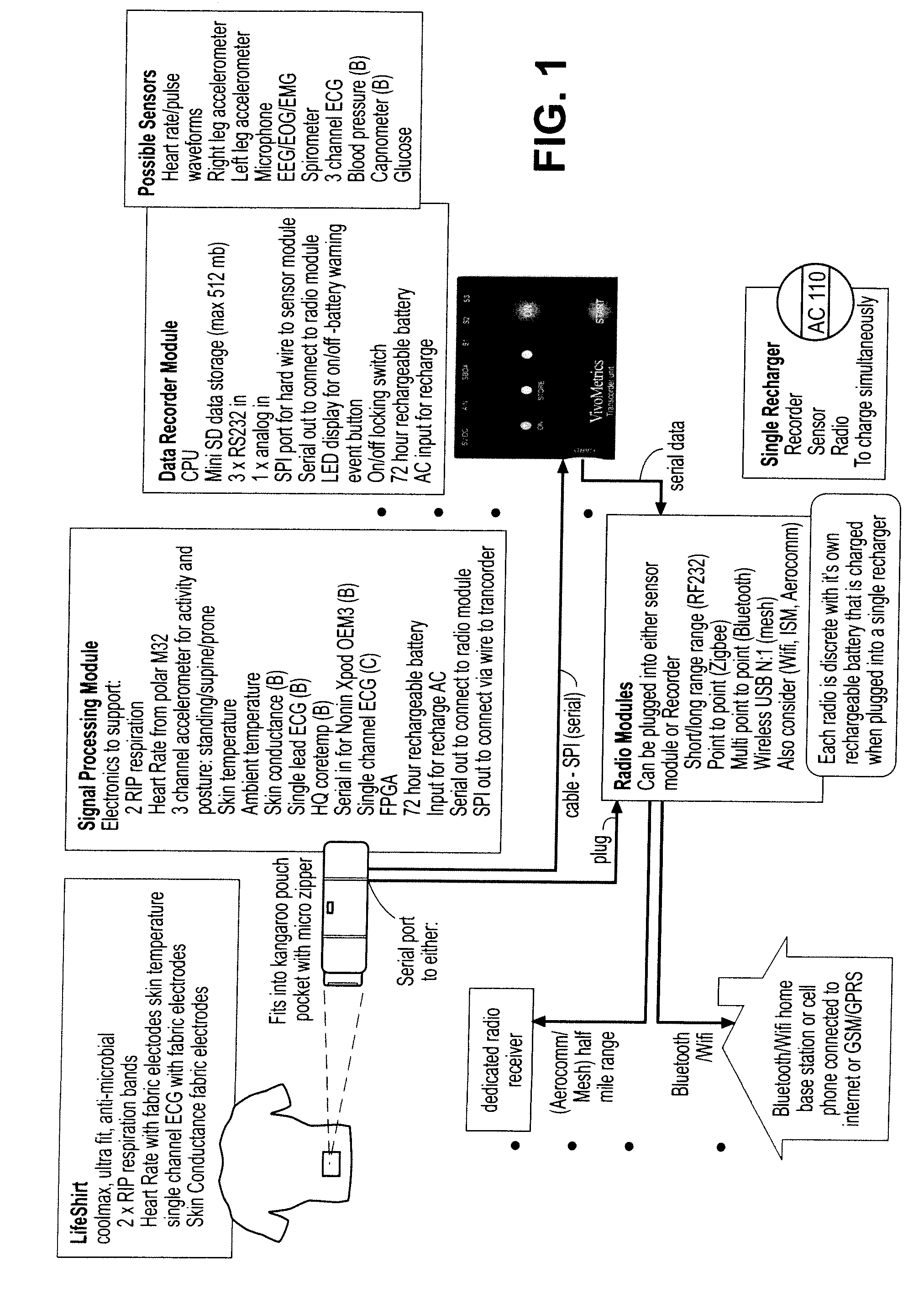

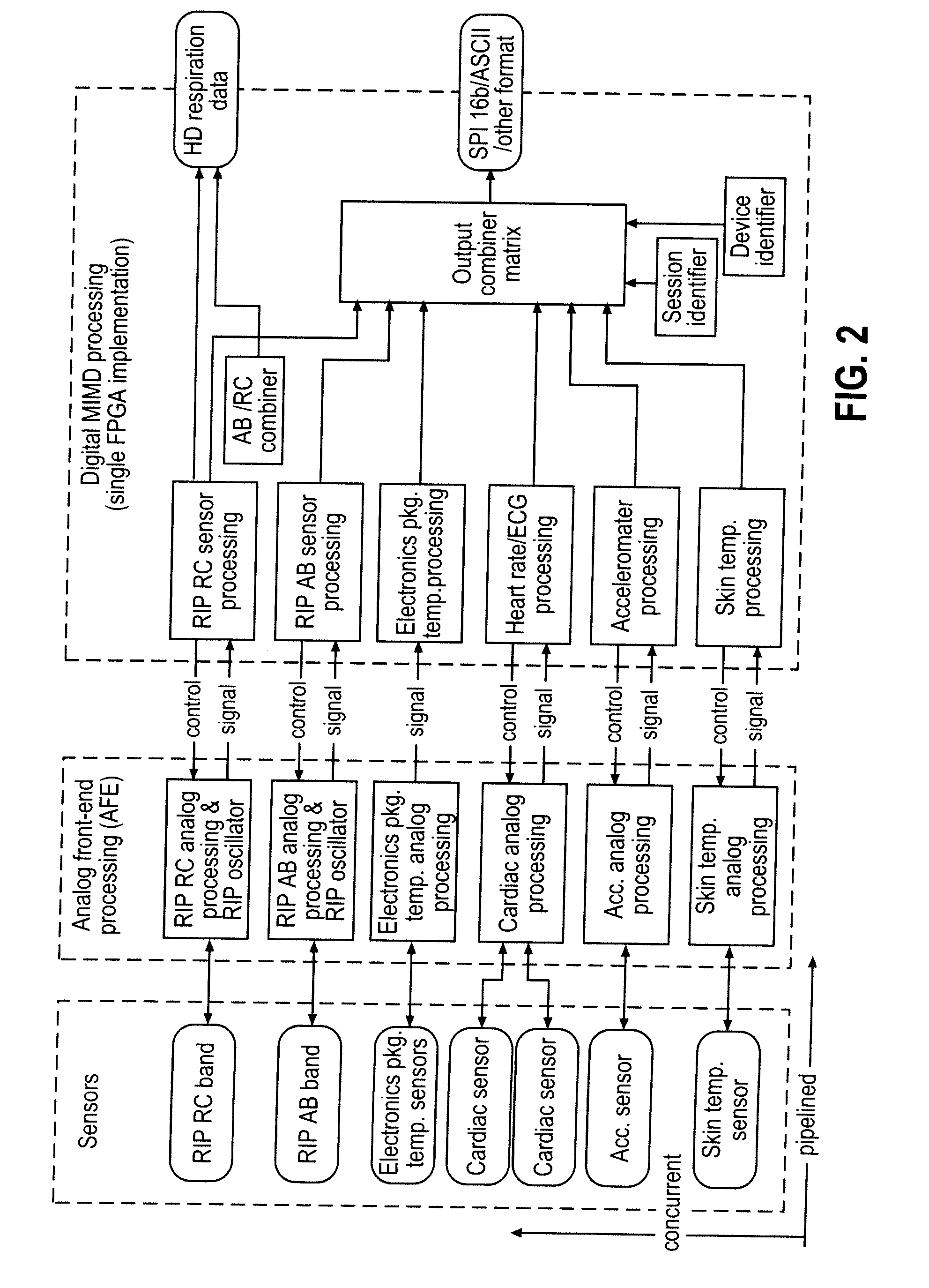

Physiological signal processing devices and associated processing methods

Inactive | US20070270671A1 | Speed up the process | Easy to integrate | Sensors | Telemetric patient monitoring | Physiological monitoring | Parallel processing

The invention provides improved devices for processing data from one or more physiological sensors based on parallel processing. The provided devices are small, low power, and readily configurable for use in most physiological monitoring applications. In a preferred embodiment, the provided devices are used for ambulatory monitoring of a subject's cardio-respiratory systems, and in particular, process data from one or more respiratory inductive plethysmographic sensors.

Owner:ADIDAS

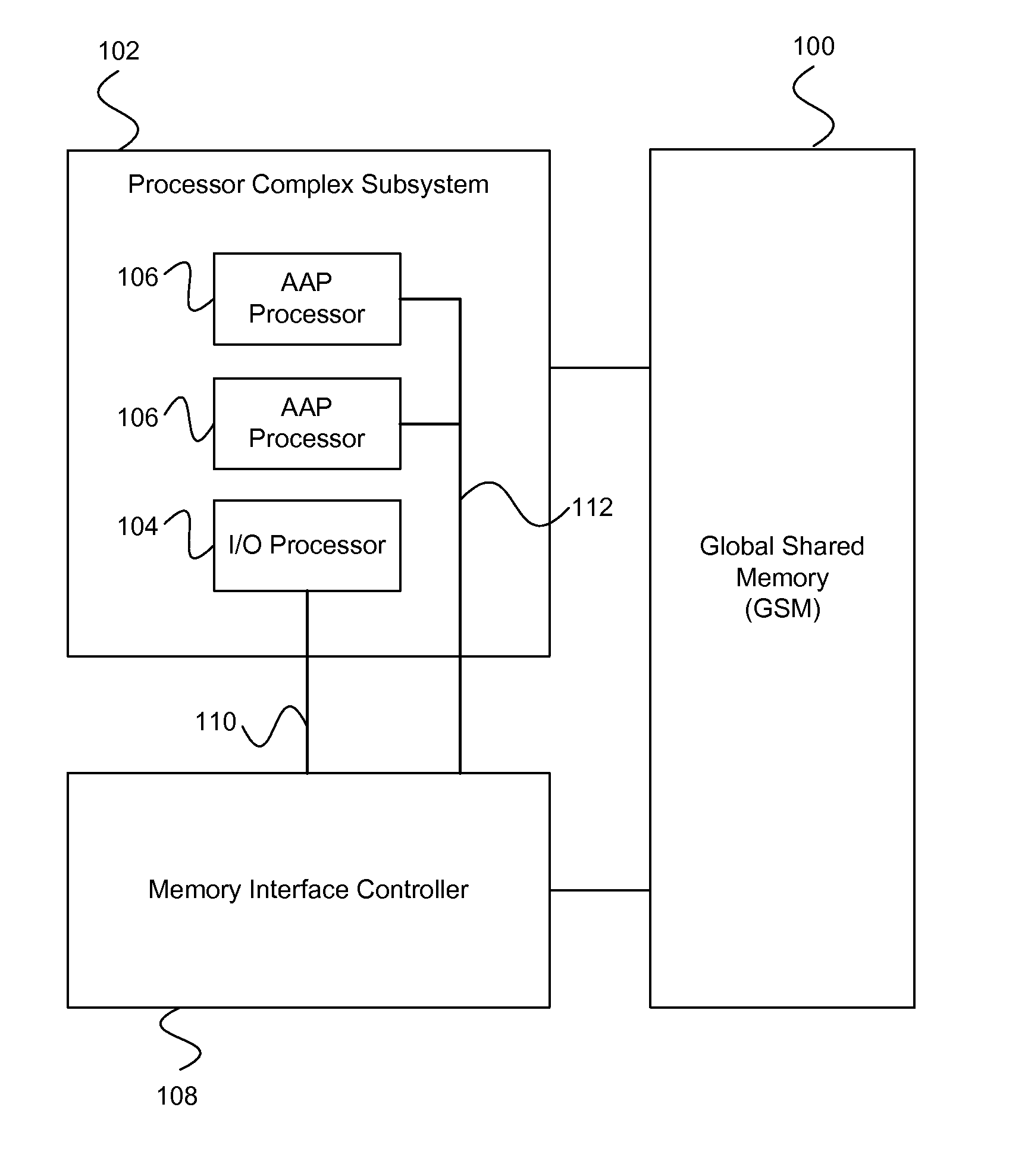

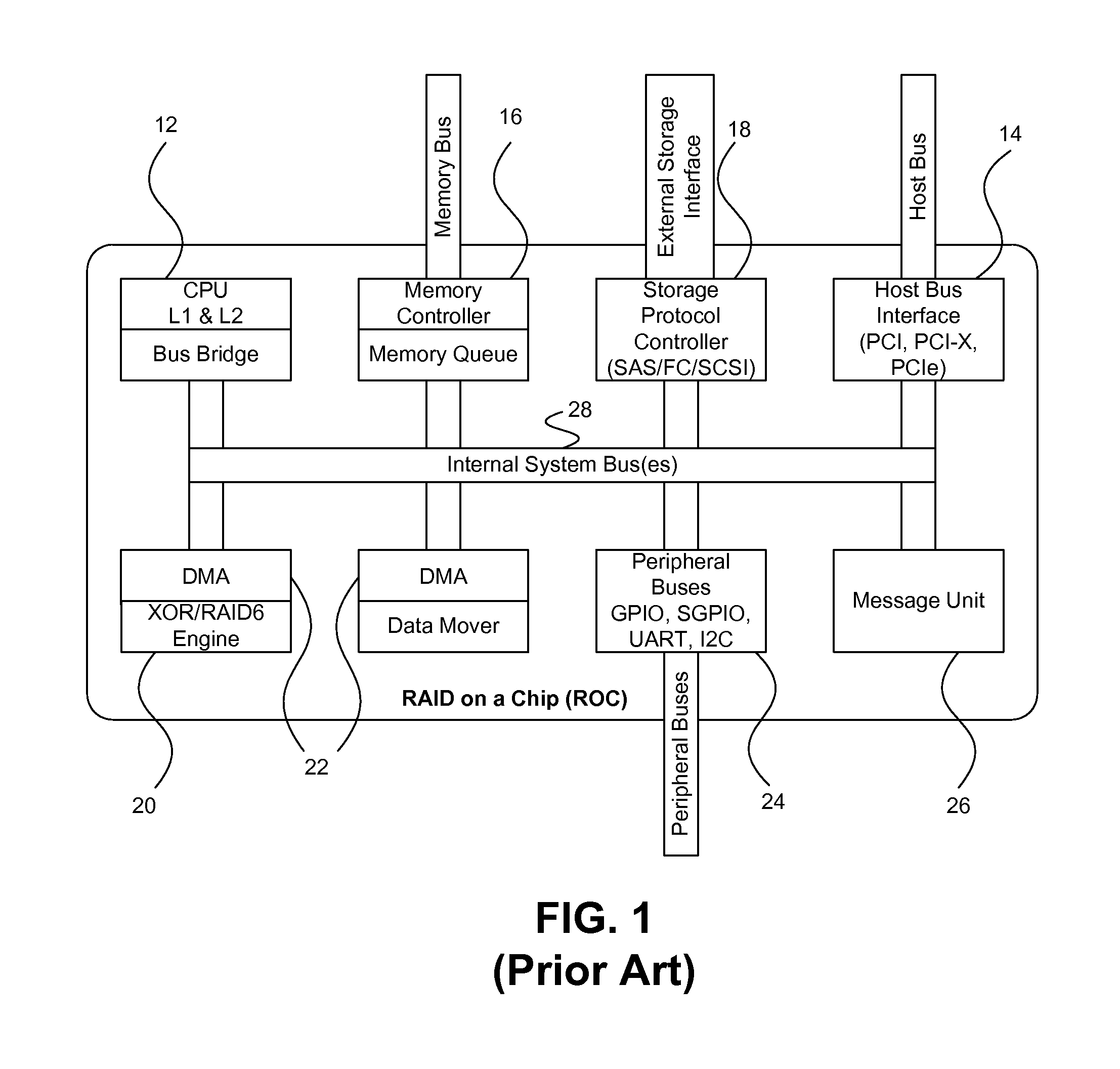

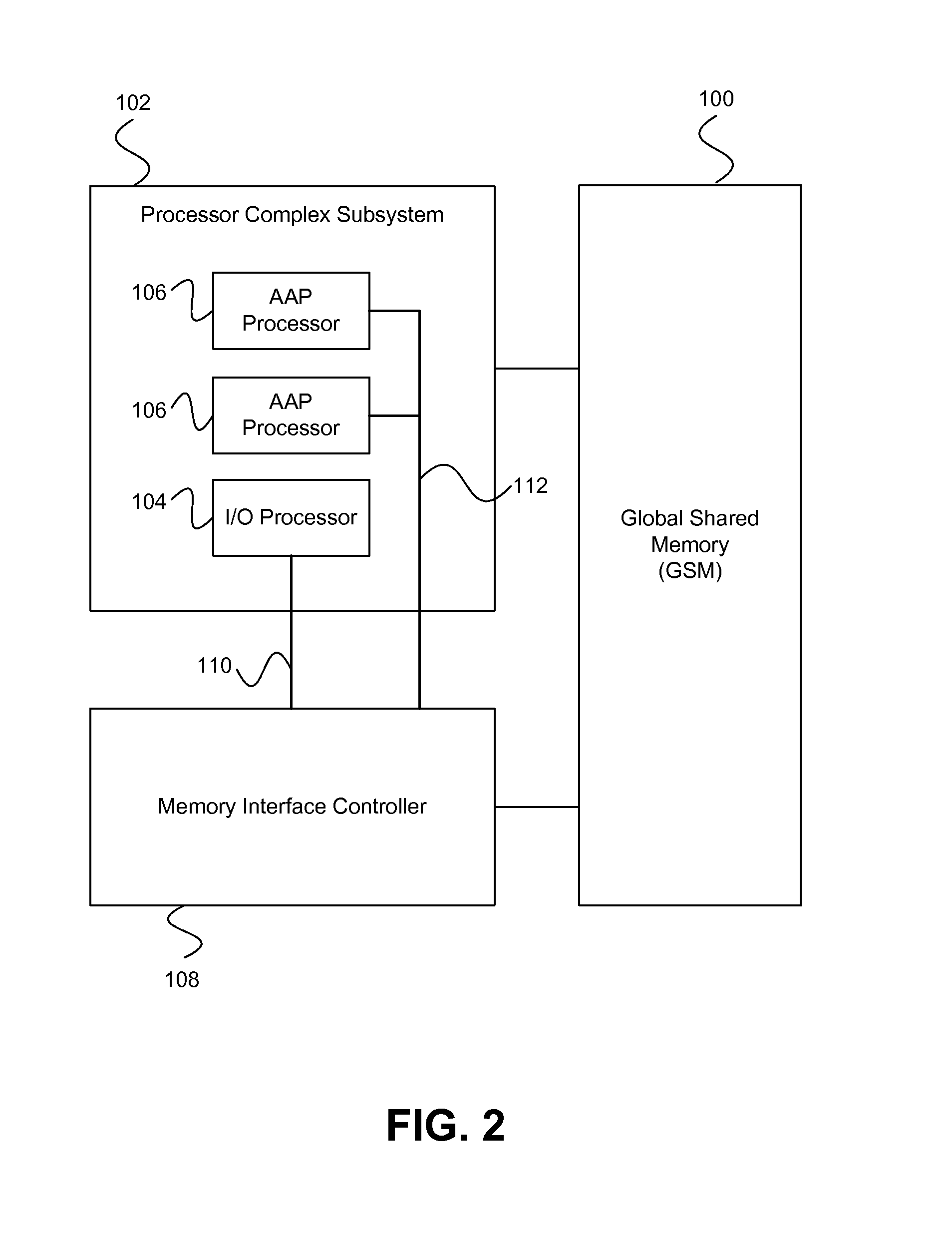

On-chip shared memory based device architecture

Active | US7743191B1 | Reduce disadvantages | Low cost | Redundant array of inexpensive disk systems | Record information storage | Extensibility | RAID

A method and architecture are provided for SOC (System on a Chip) devices for RAID processing, which is commonly referred as RAID-on-a-Chip (ROC). The architecture utilizes a shared memory structure as interconnect mechanism among hardware components, CPUs and software entities. The shared memory structure provides a common scratchpad buffer space for holding data that is processed by the various entities, provides interconnection for process / engine communications, and provides a queue for message passing using a common communication method that is agnostic to whether the engines are implemented in hardware or software. A plurality of hardware engines are supported as masters of the shared memory. The architectures provide superior throughput performance, flexibility in software / hardware co-design, scalability of both functionality and performance, and support a very simple abstracted parallel programming model for parallel processing.

Owner:MICROSEMI STORAGE SOLUTIONS

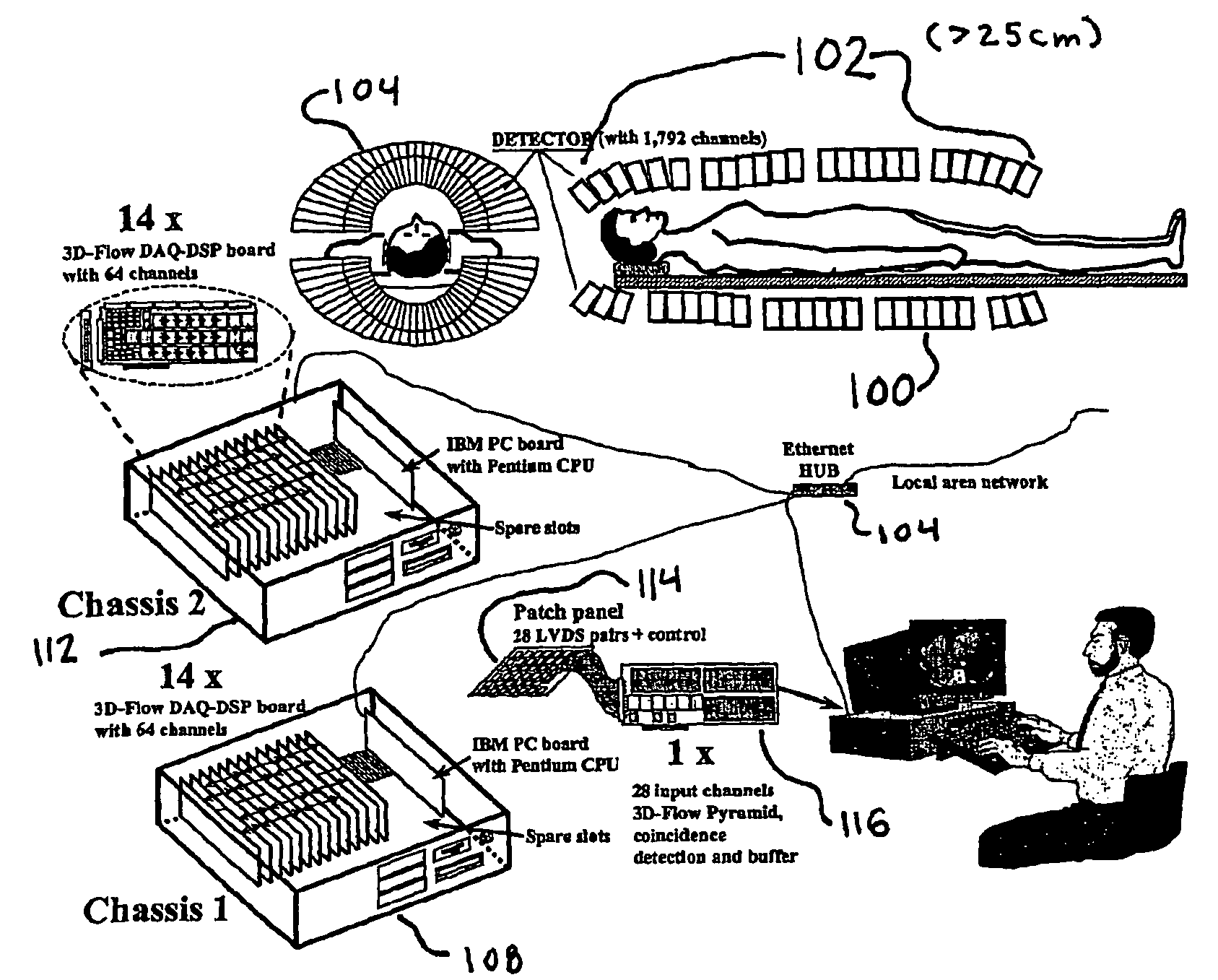

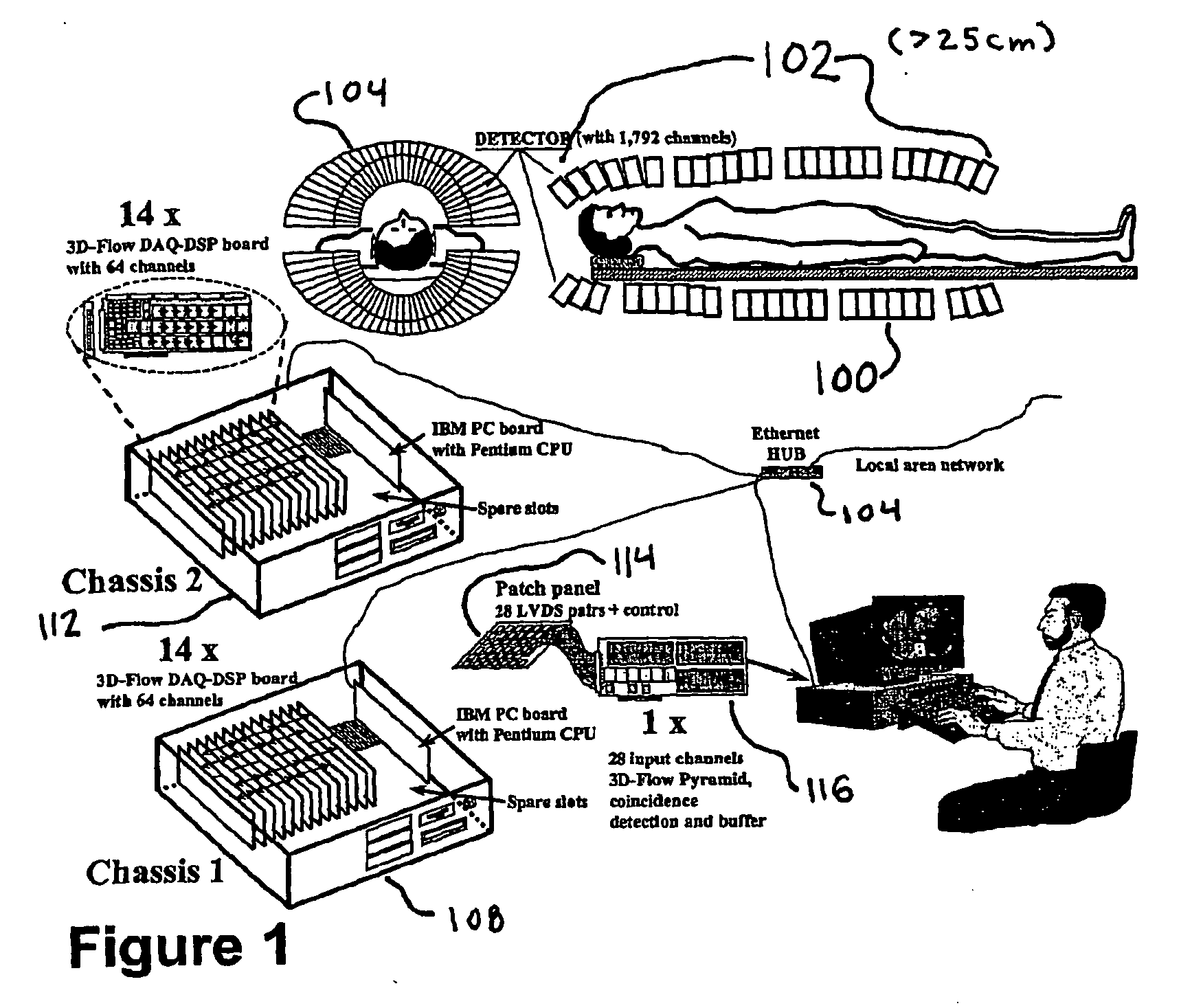

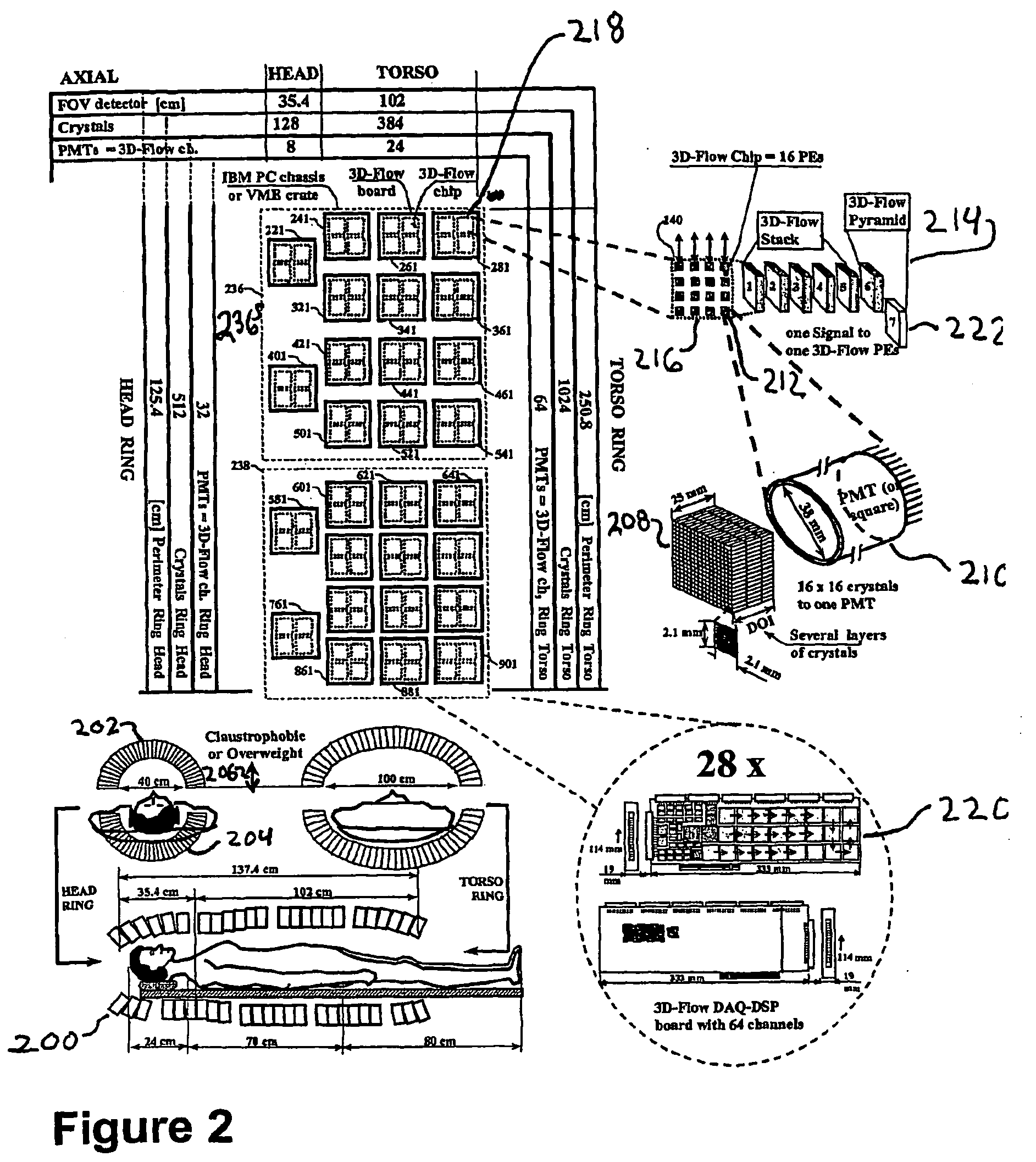

Method and apparatus for anatomical and functional medical imaging

Inactive | US20040195512A1 | Increase the length | Distance minimization | Material analysis using wave/particle radiation | Radiation/particle handling | Medical imaging | Functional imaging

A body scanning system includes a CT transmitter and a PET configured to radiate along a significant portion of the body and a plurality of sensors (202, 204) configured to detect photons along the same portion of the body. In order to facilitate the efficient collection of photons and to process the data on a real time basis, the body scanning system includes a new data processing pipeline that includes a sequentially implemented parallel processor (212) that is operable to create images in real time notwithstanding the significant amounts of data generated by the CT and PET radiating devices.

Owner:CROSETTO DARIO B

Parallel processing of continuous queries on data streams

Inactive | US20110314019A1 | Improve throughput | Increase the number of | Digital data information retrieval | Digital data processing details | Timestamp | Complex event processing

A continuous query parallel engine on data streams provides scalability and increases the throughput by the addition of new nodes. The parallel processing can be applied to data stream processing and complex event processing. The continuous query parallel engine receives the query to be deployed and splits the original query into subqueries, obtaining at least one subquery; each subquery is executed in at least one node. Tuples produced by each operator of each subquery are labeled with timestamps. A load balancer is interposed at the output of each node that executes each one of the instances of the source subquery and an input merger is interposed in each node that executes each one of the instances of a destination subquery. After checks are performed, further load balancers or input managers may be added.

Owner:UNIV MADRID POLITECNICA
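The load balancer and input merger pairing in the abstract above might look roughly like the sketch below. The round-robin policy is an assumption, since the abstract does not say how tuples are balanced. The function names are hypothetical, and tuples are modeled as `(timestamp, value)` pairs.

```python
import heapq
from itertools import cycle


def load_balance(tuples, n_instances):
    """Distribute timestamped tuples round-robin over downstream subquery instances."""
    outputs = [[] for _ in range(n_instances)]
    for target, item in zip(cycle(range(n_instances)), tuples):
        outputs[target].append(item)
    return outputs


def input_merge(streams):
    """Merge streams that are each ordered by timestamp into one ordered stream."""
    return list(heapq.merge(*streams, key=lambda t: t[0]))


source = [(1, "a"), (2, "b"), (3, "c"), (4, "d"), (5, "e")]
branches = load_balance(source, 2)
merged = input_merge(branches)
```

The timestamps are what let the merger restore a single consistent order after the tuples have travelled through different nodes.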

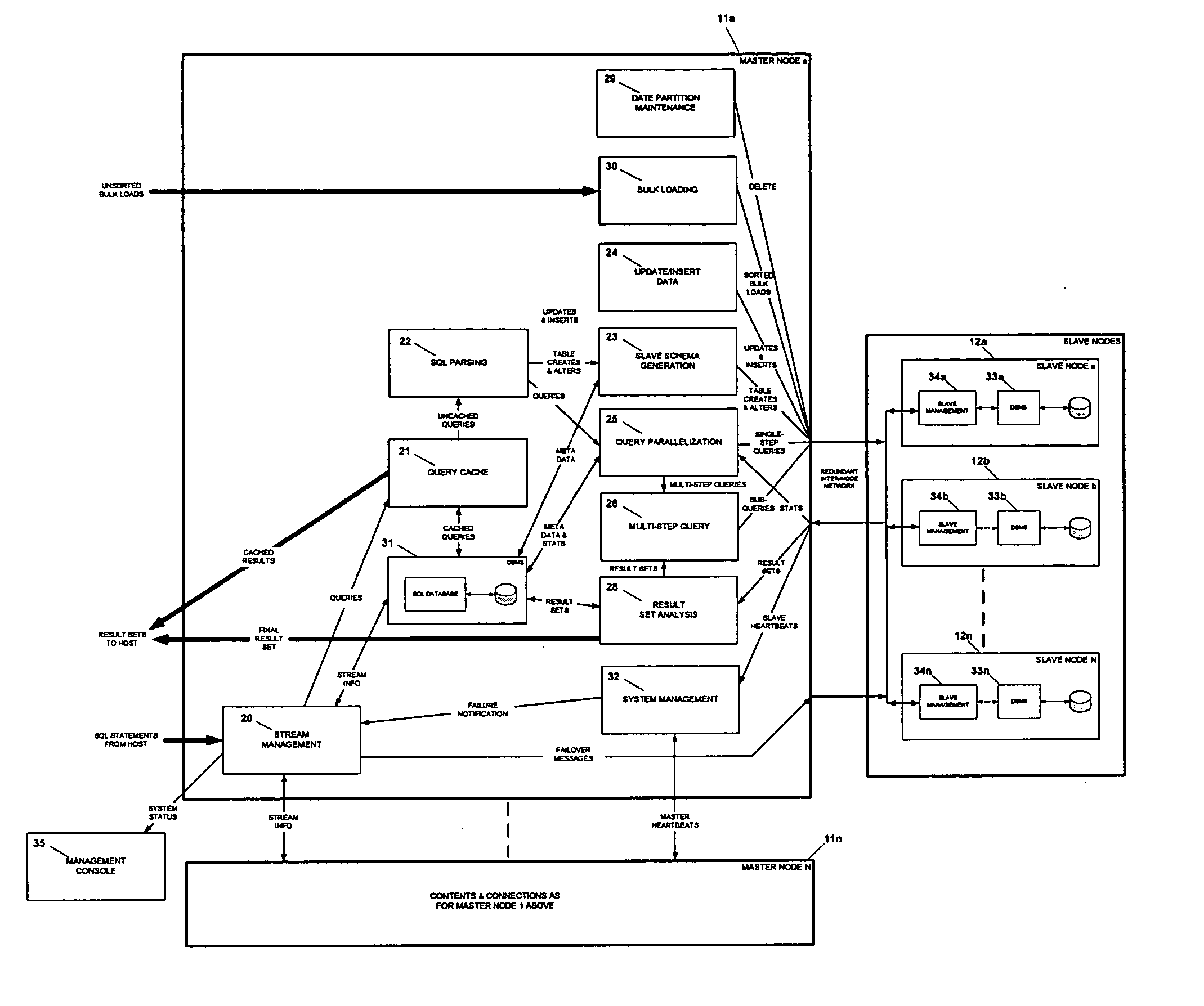

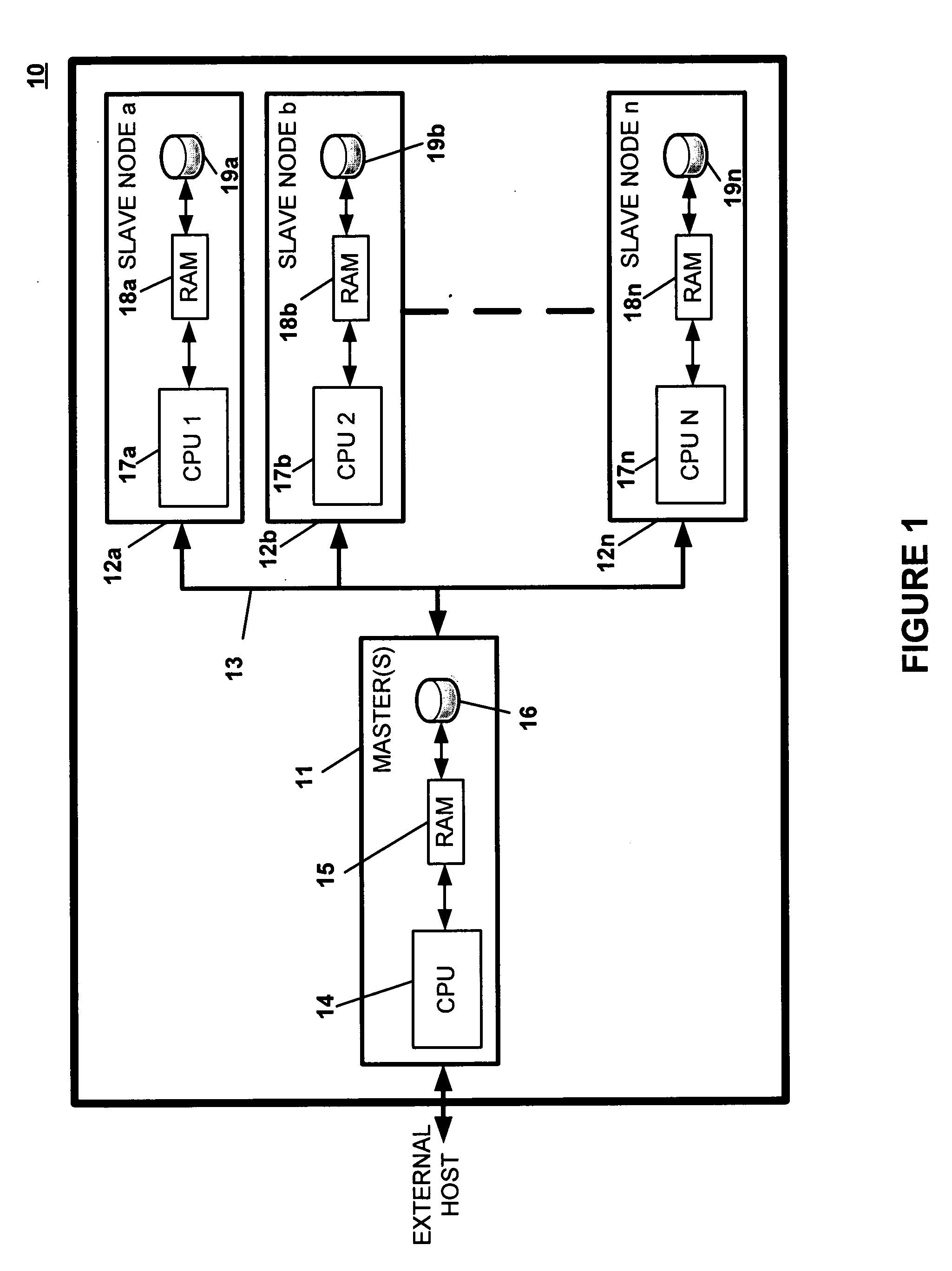

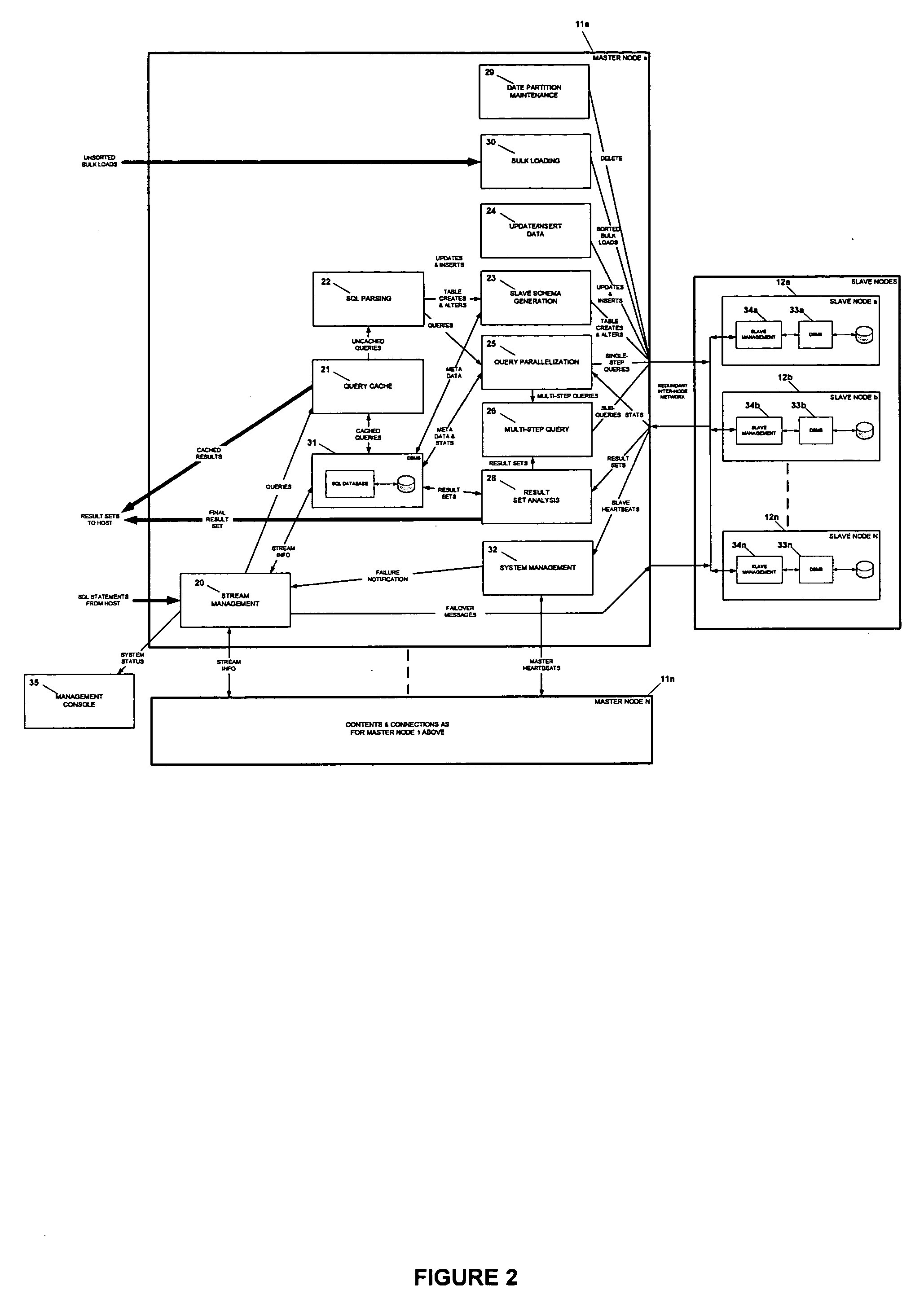

Ultra-shared-nothing parallel database

Active | US20050187977A1 | Reduce process | Efficient and reliable | Digital data information retrieval | Digital data processing details | Data mining | Parallel processing

An ultra-shared-nothing parallel database system includes at least one master node and multiple slave nodes. A database consisting of at least one fact table and multiple dimension tables is partitioned and distributed across the slave nodes of the database system so that queries are processed in parallel without requiring the transfer of data between the slave nodes. The fact table and a first dimension table of the database are partitioned across the slave nodes. The other dimension tables of the database are duplicated on each of the slave nodes and at least one of these other dimension tables is partitioned across the slave nodes.

Owner:MICROSOFT TECH LICENSING LLC
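As a rough illustration of the layout in the abstract above, the sketch below partitions a fact table and one dimension table on the same key and copies a second dimension table to every node, so that each node can join its rows without contacting another node. The table and column names (`customer_id`, `product_id`, `region`, `category`, `amount`) are invented for the example. The sketch does not cover the case, also mentioned in the abstract, where a replicated dimension table is additionally partitioned.

```python
def partition(rows, key, n_nodes):
    """Hash-partition rows (dicts) across n_nodes by the value stored under key."""
    parts = [[] for _ in range(n_nodes)]
    for row in rows:
        parts[hash(row[key]) % n_nodes].append(row)
    return parts


def local_join(fact_part, customer_part, products):
    """Join one node's fact rows using only data stored on that node."""
    cust = {c["customer_id"]: c for c in customer_part}
    prod = {p["product_id"]: p for p in products}
    out = []
    for f in fact_part:
        c = cust[f["customer_id"]]  # local because of co-partitioning
        p = prod[f["product_id"]]   # local because of replication
        out.append((c["region"], p["category"], f["amount"]))
    return out


def run_query(facts, customers, products, n_nodes=3):
    fact_parts = partition(facts, "customer_id", n_nodes)
    cust_parts = partition(customers, "customer_id", n_nodes)
    results = []
    for node in range(n_nodes):  # each iteration stands in for one slave node
        results.extend(local_join(fact_parts[node], cust_parts[node], products))
    return sorted(results)
```

Because the fact table and the partitioned dimension table share a partitioning key, the lookup in `cust` never misses, which is the property that removes inter-node transfers.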

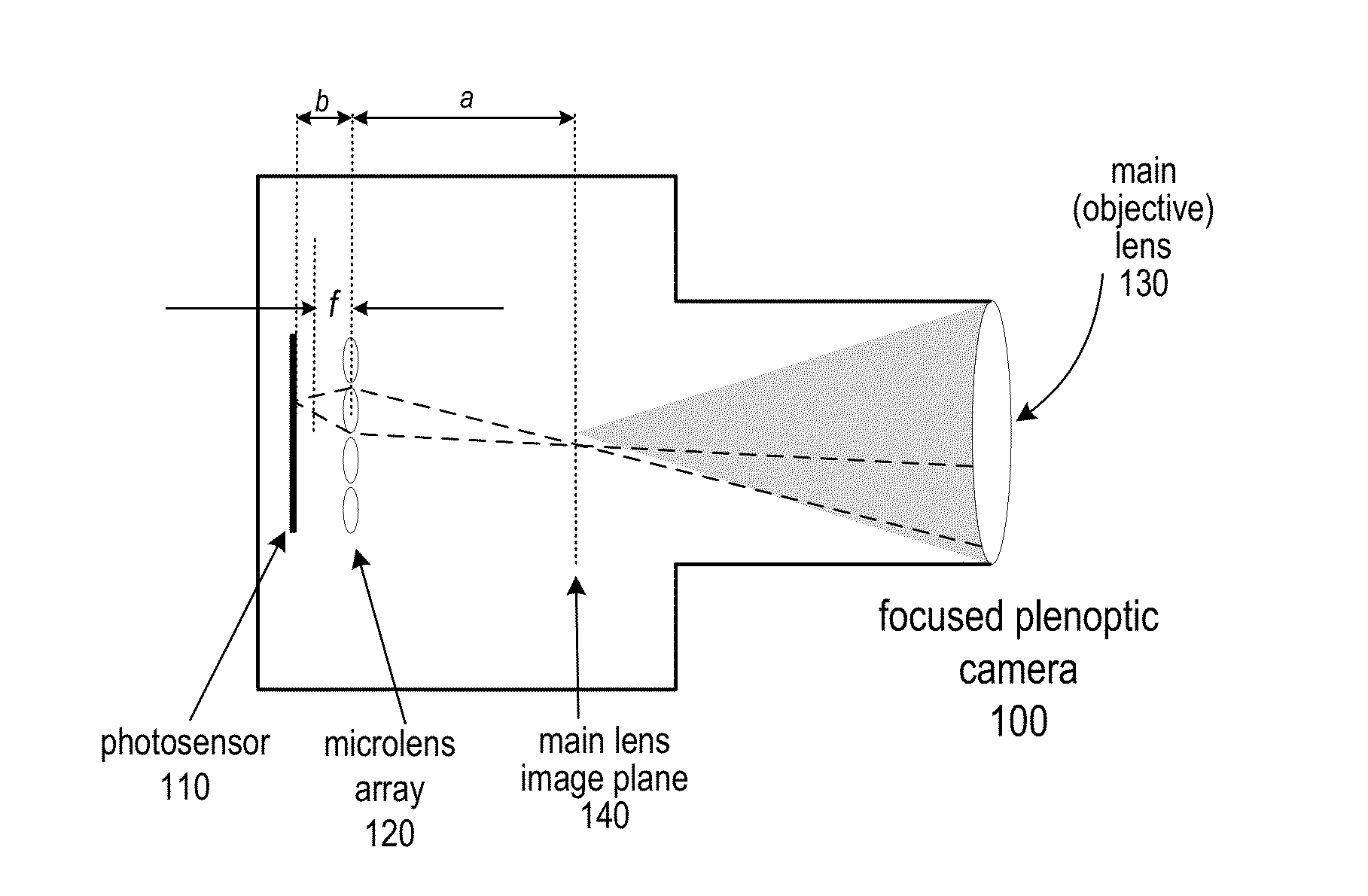

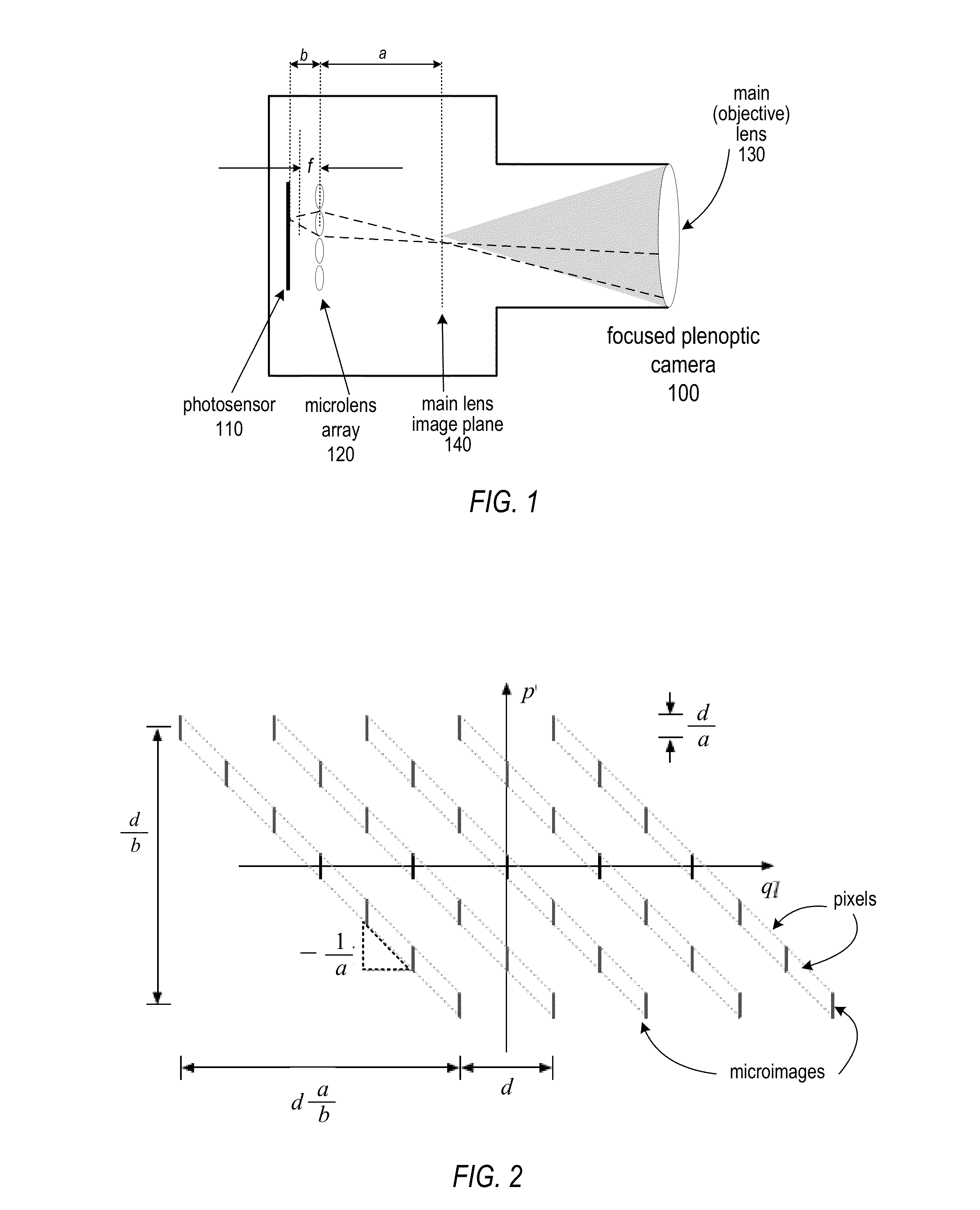

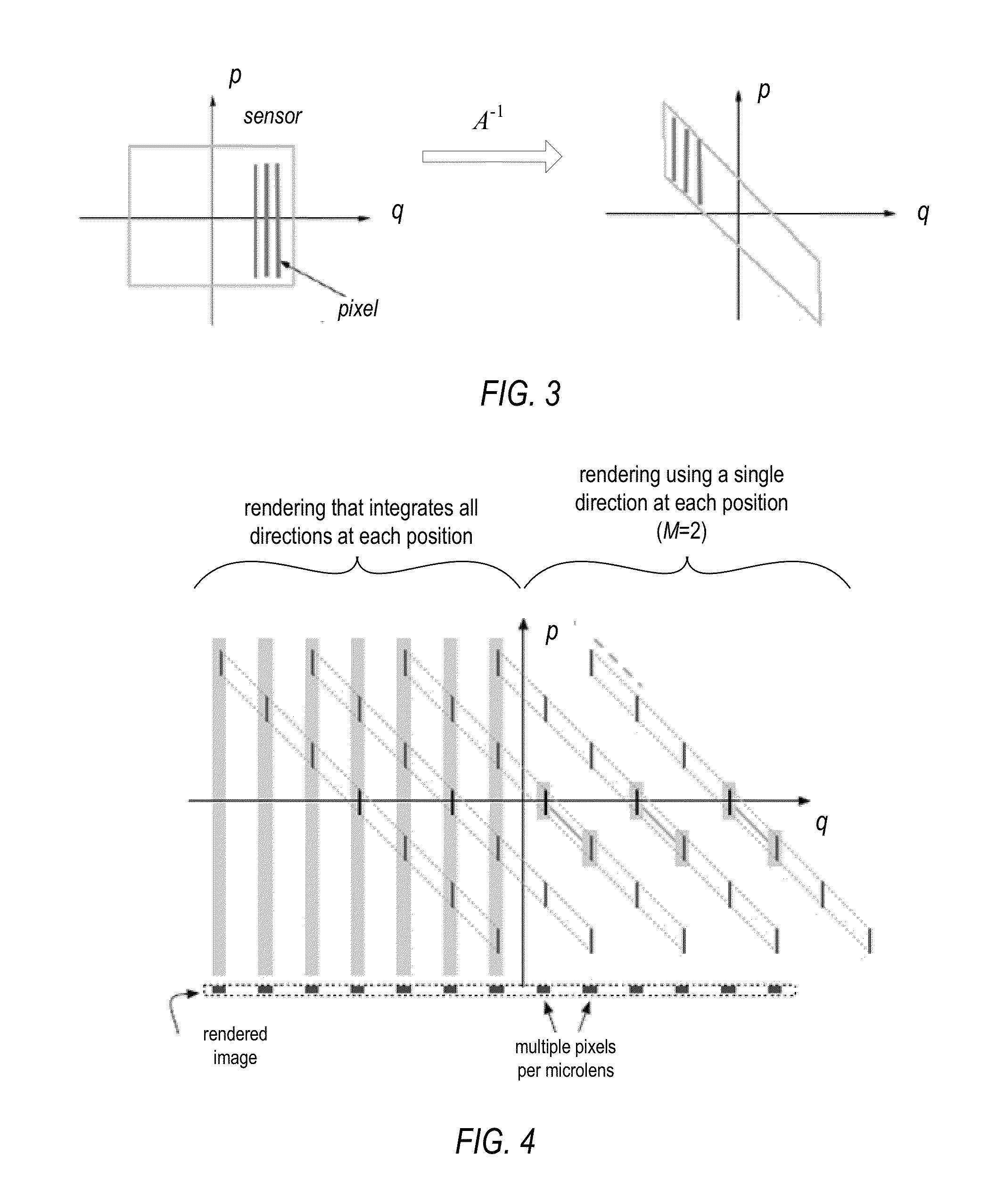

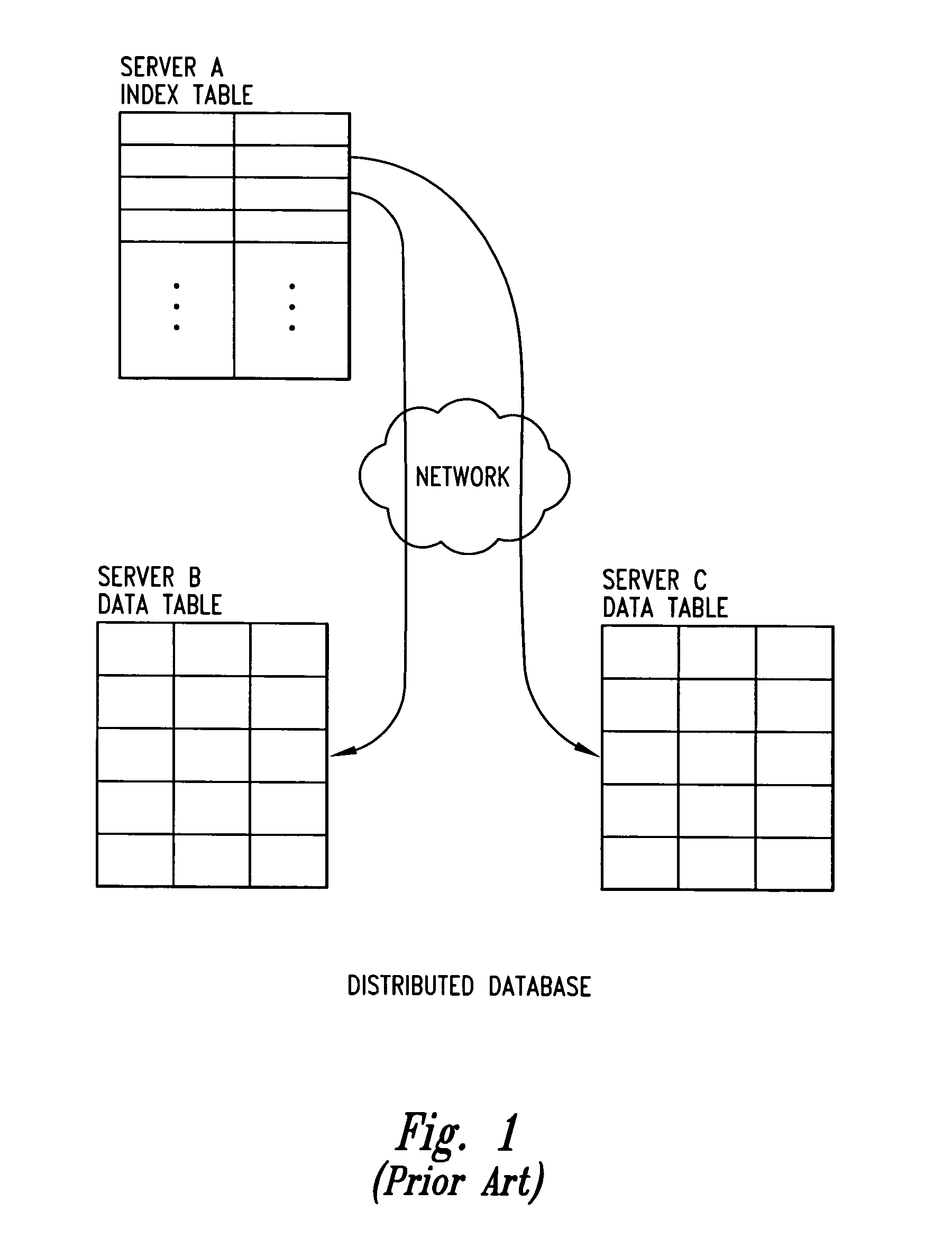

Methods, Apparatus, and Computer-Readable Storage Media for Blended Rendering of Focused Plenoptic Camera Data

Active | US20130120605A1 | High resolution | Television system details | Color television details | Graphics | Multiple point

Methods, apparatus, and computer-readable storage media for rendering focused plenoptic camera data. A rendering with blending technique is described that blends values from positions in multiple microimages and assigns the blended value to a given point in the output image. A rendering technique that combines depth-based rendering and rendering with blending is also described. Depth-based rendering estimates depth at each microimage and then applies that depth to determine a position in the input flat from which to read a value to be assigned to a given point in the output image. The techniques may be implemented according to parallel processing technology that renders multiple points of the output image in parallel. In at least some embodiments, the parallel processing technology is graphical processing unit (GPU) technology.

Owner:ADOBE SYST INC

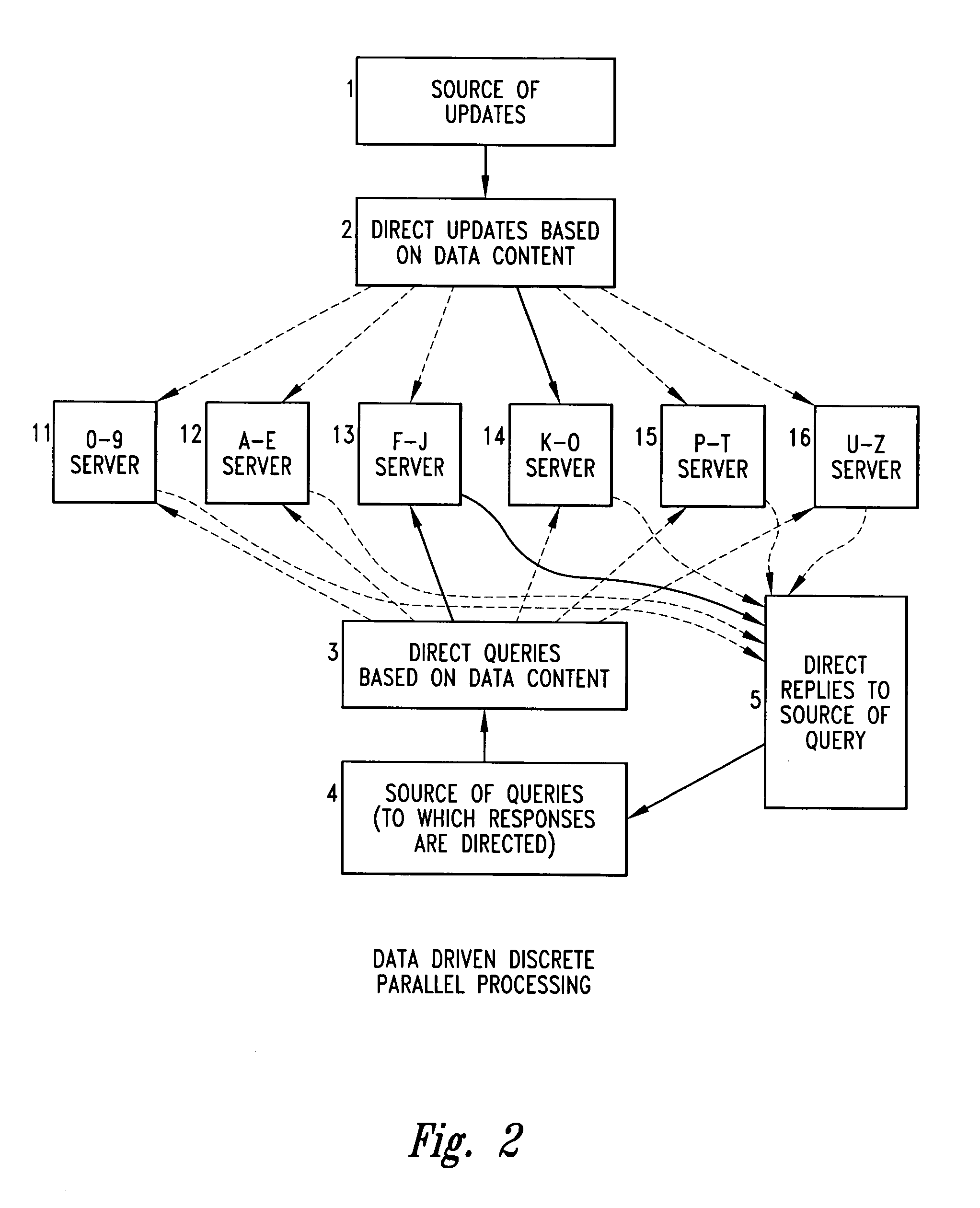

System for discrete parallel processing of queries and updates

Inactive | US6983322B1 | Reduce system overhead | Avoid the need | Data processing applications | Web data indexing | Data-driven | Parallel processing

A data driven discrete parallel processing computing system for searches with a key-ordered list of data objects distributed over a plurality of servers. The invention is a data-driven architecture for distributed segmented databases consisting of lists of objects. The database is divided into segments based on content and distributed over a multiplicity of servers. Updates and queries are data driven and determine the segment and server to which they must be directed avoiding broadcasting. This is effective for systems such as search engines. Each object in the list of data objects must have a key on which the objects can be sorted relative to each other. Each segment is self-contained and doesn't rely on a schema. Multiple simultaneous queries and simultaneous updates and queries on different segments on different servers result in parallel processing on the database taken as a whole.

Owner:MEC MANAGEMENT LLC
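The data-driven routing described in the abstract above can be sketched with a sorted list of segment boundaries, so that the key itself determines which segment (and hence which server) handles a query or update, with no broadcast. The class `SegmentedIndex` and its layout are assumptions for illustration. Each segment is modeled as an in-process dict rather than a separate server.

```python
import bisect


class SegmentedIndex:
    def __init__(self, boundaries):
        # boundaries[i] is the smallest key held by segment i + 1; must be sorted.
        self.boundaries = list(boundaries)
        self.segments = [dict() for _ in range(len(self.boundaries) + 1)]

    def segment_for(self, key):
        """Return the index of the only segment that can hold this key."""
        return bisect.bisect_right(self.boundaries, key)

    def update(self, key, value):
        self.segments[self.segment_for(key)][key] = value

    def query(self, key):
        return self.segments[self.segment_for(key)].get(key)


index = SegmentedIndex(["h", "q"])
index.update("apple", 1)
index.update("hat", 2)
index.update("zebra", 3)
```

Operations on keys that fall in different segments touch disjoint state, which is what allows them to proceed in parallel on different servers.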

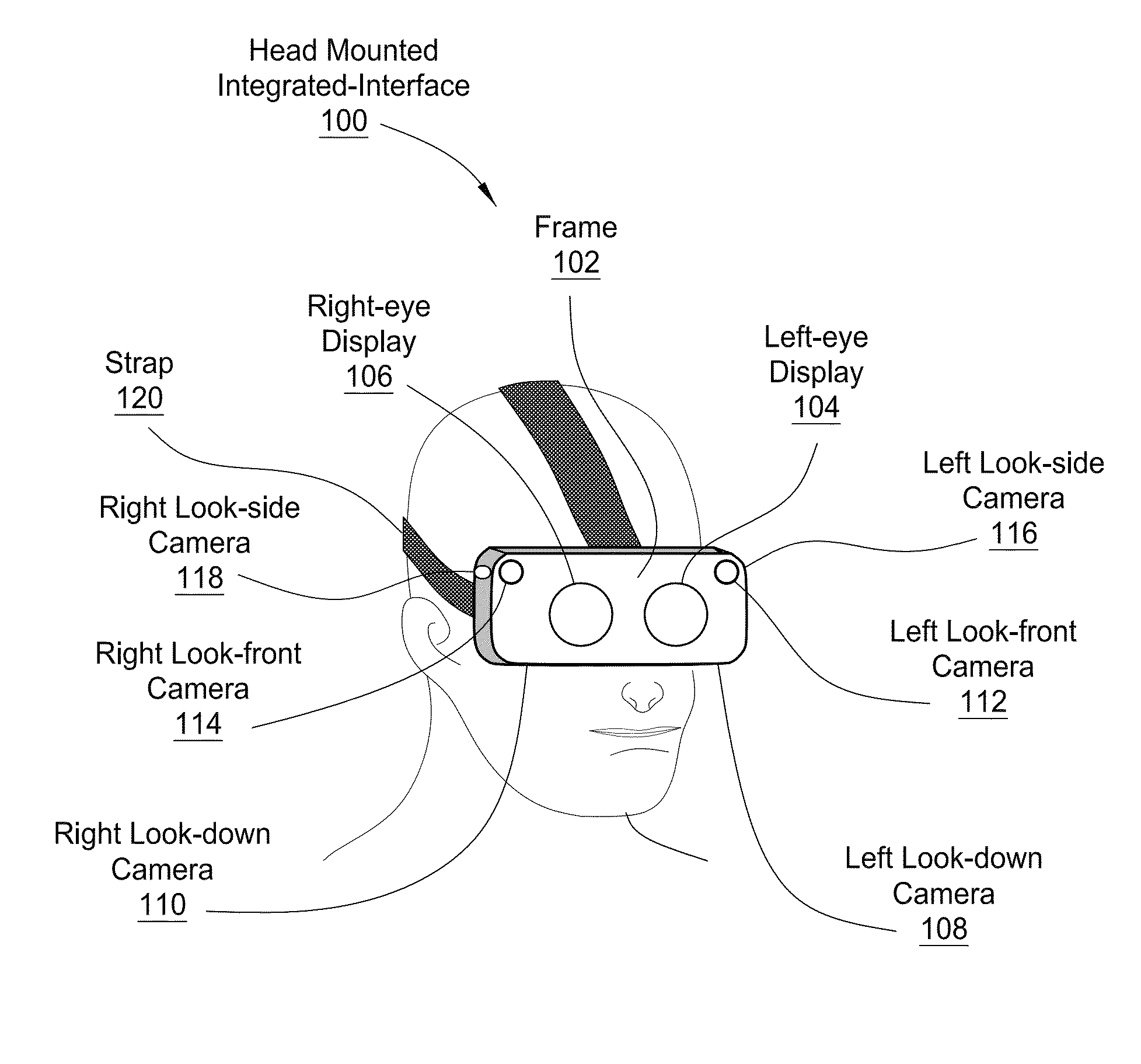

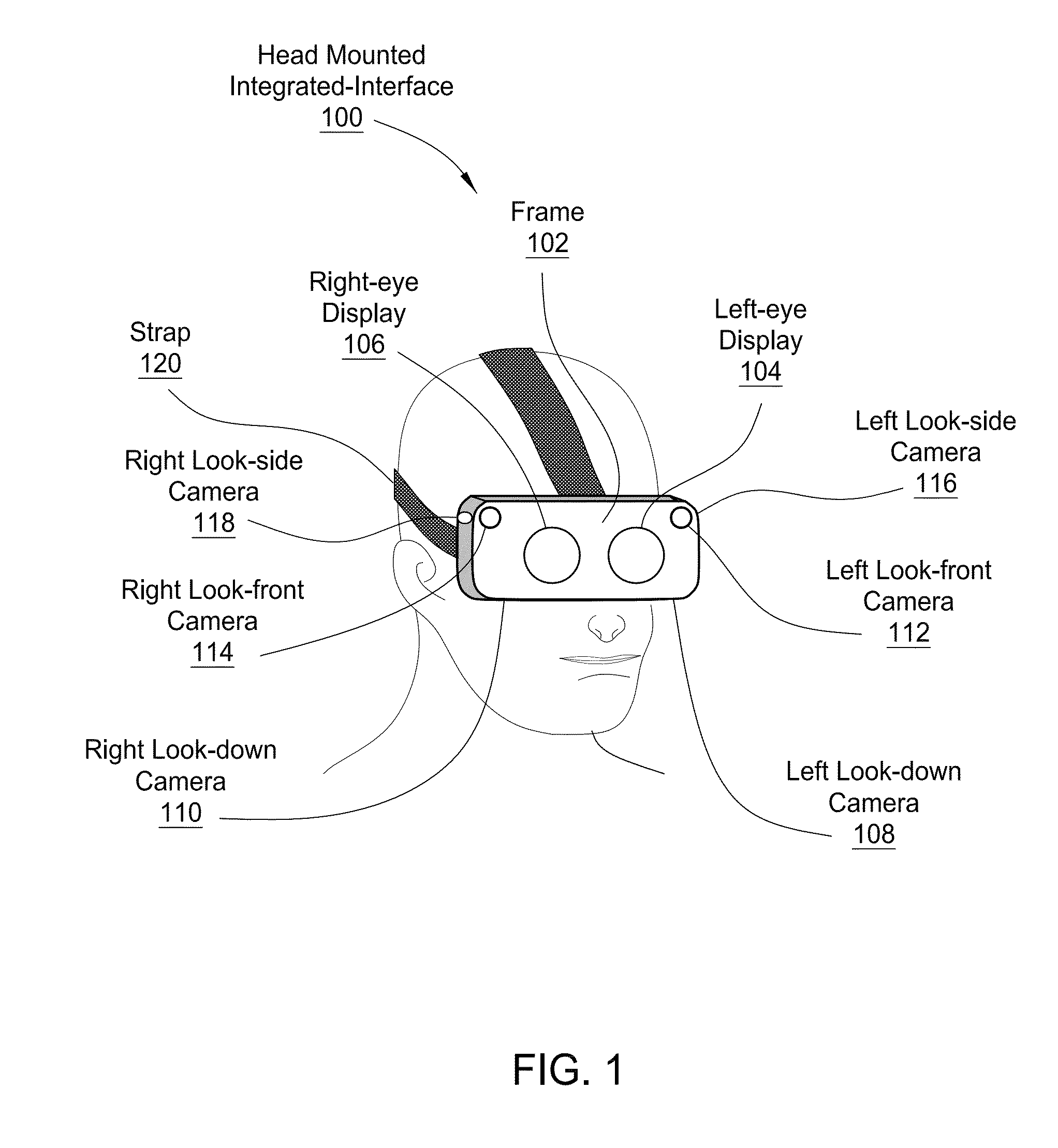

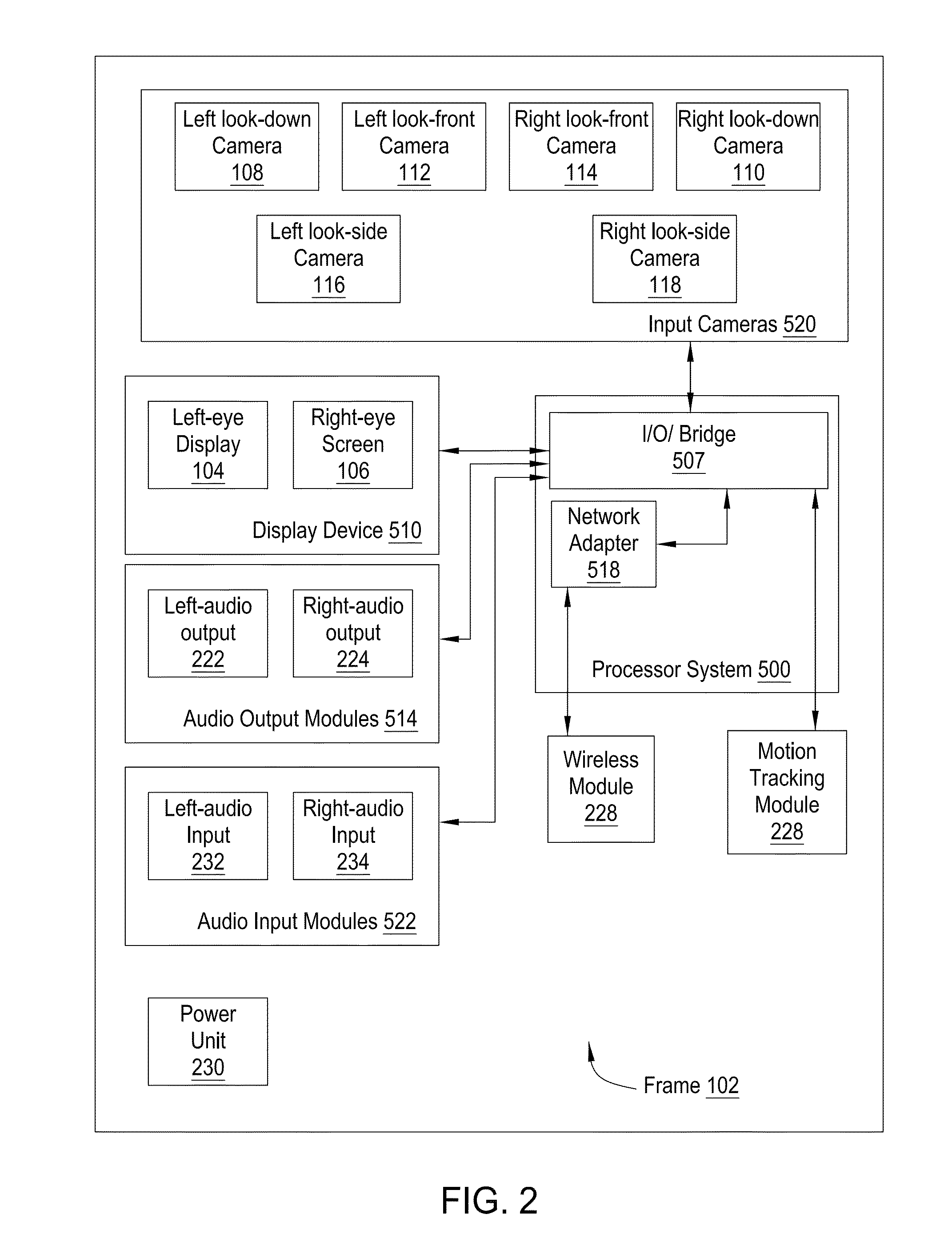

Head-mounted integrated interface

Inactive | US20150138065A1 | Improve versatility | Improve portability | Input/output for user-computer interaction | Cathode-ray tube indicators | Graphics | Large screen

A head mounted integrated interface (HMII) is presented that may include a wearable head-mounted display unit supporting two compact high resolution screens for outputting a right eye and left eye image in support of stereoscopic viewing, wireless communication circuits, three-dimensional positioning and motion sensors, and a processing system which is capable of independent software processing and/or processing streamed output from a remote server. The HMII may also include a graphics processing unit capable of also functioning as a general parallel processing system and cameras positioned to track hand gestures. The HMII may function as an independent computing system or as an interface to remote computer systems, external GPU clusters, or subscription computational services. The HMII is also capable of linking and streaming to a remote display such as a large screen monitor.

Owner:NVIDIA CORP

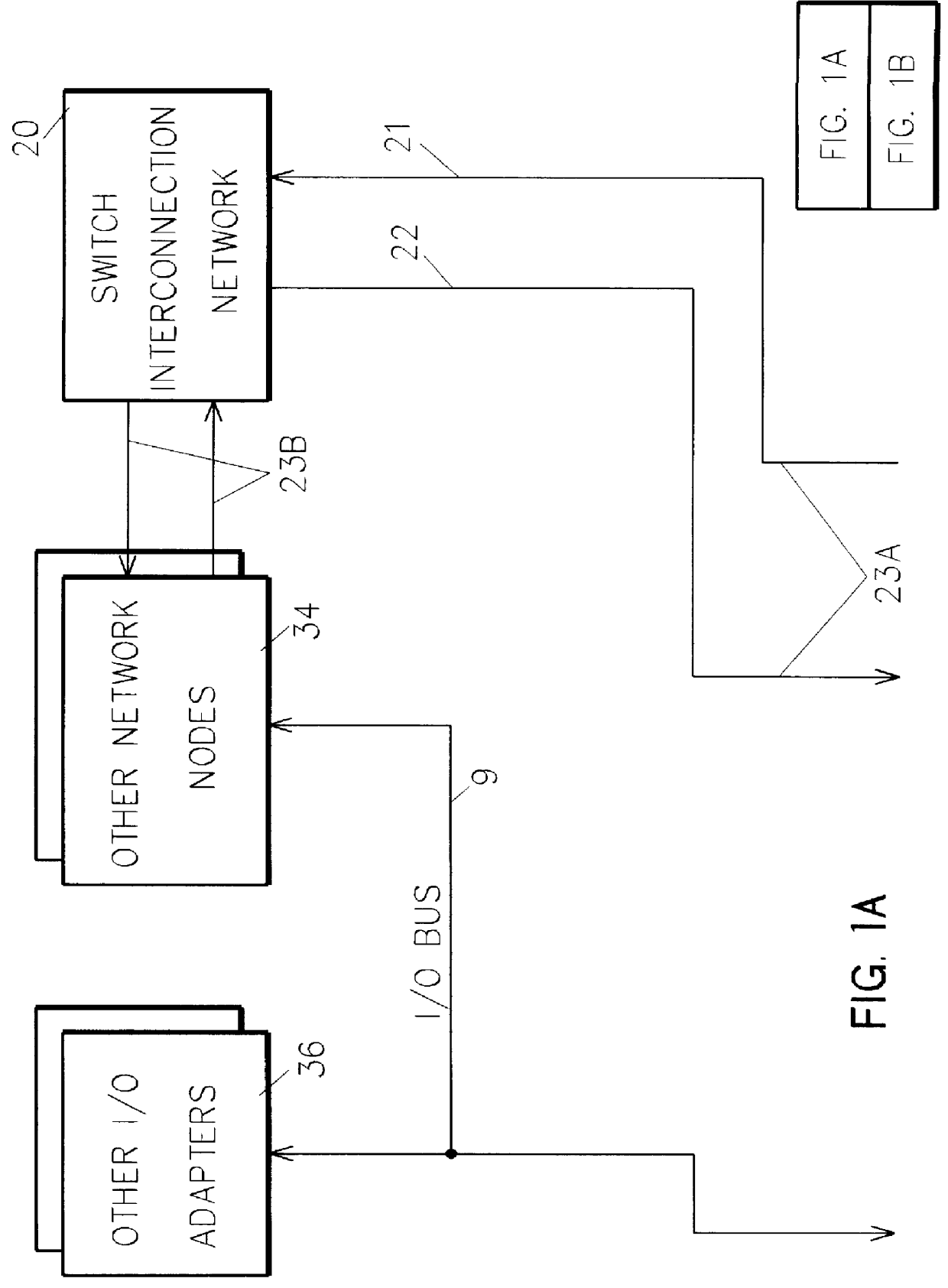

Memory controller for controlling memory accesses across networks in distributed shared memory processing systems

Inactive | US6044438A | More efficient cache coherent system | Data processing applications | Memory addressing/allocation/relocation | Remote memory access | Remote direct memory access

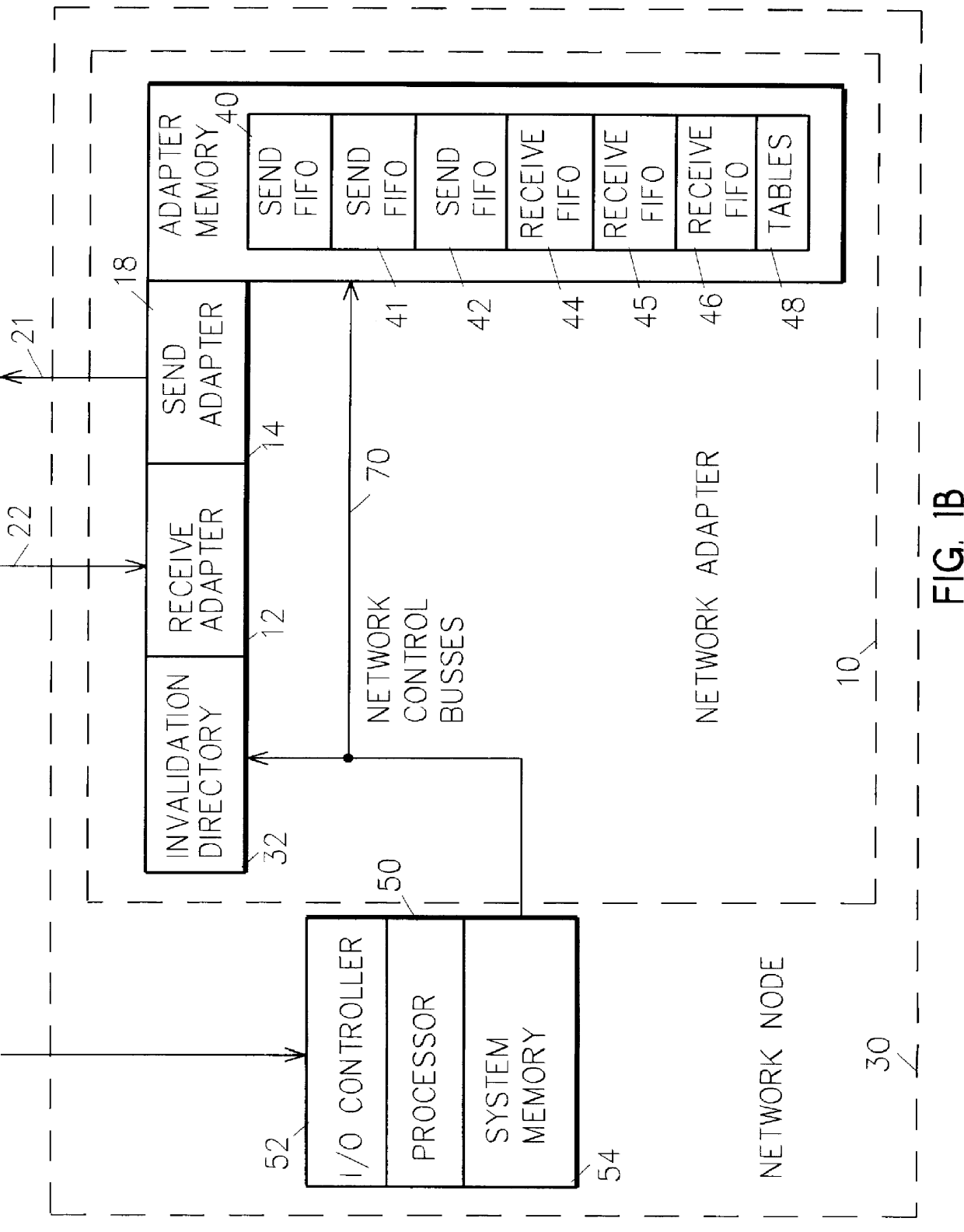

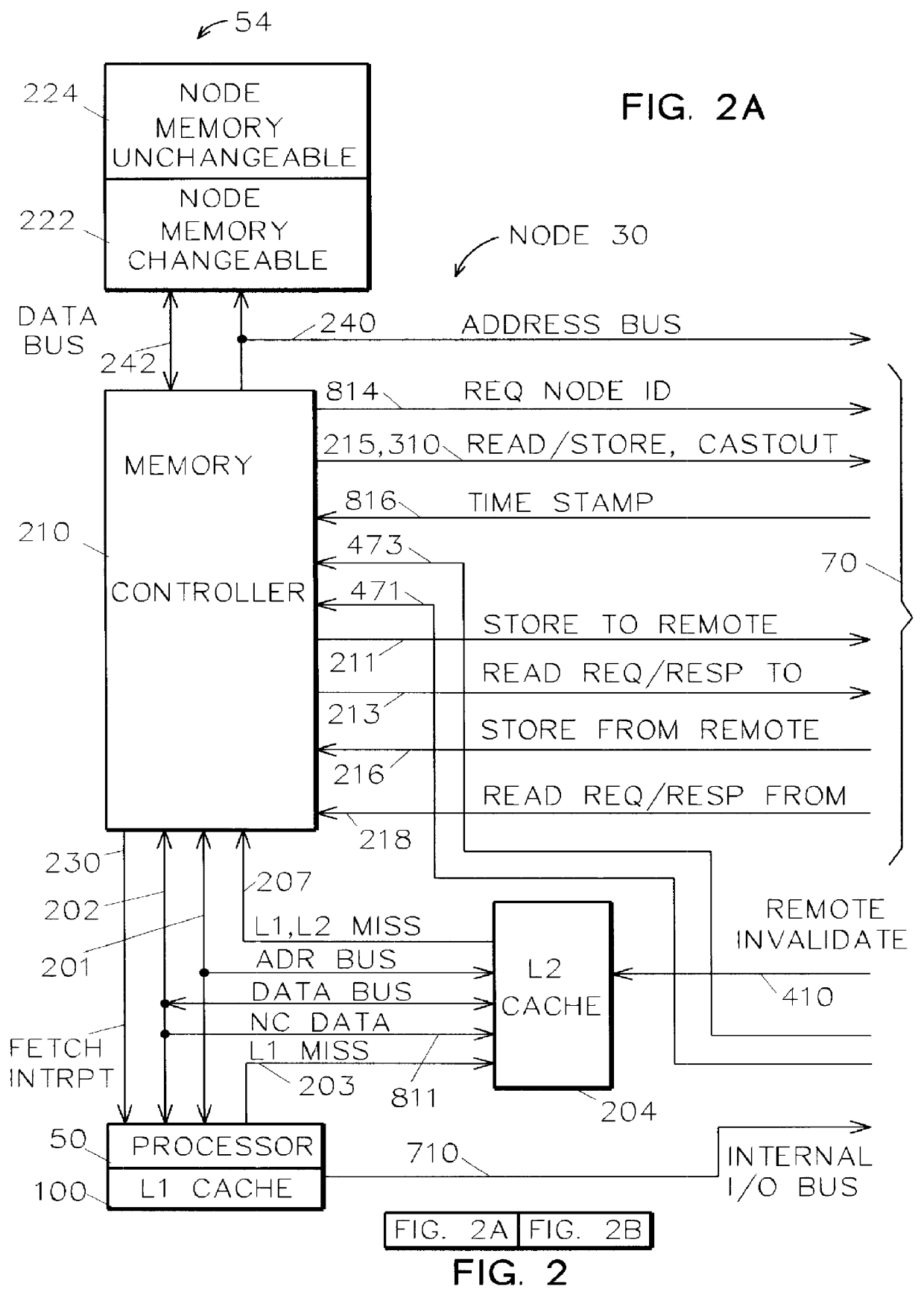

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network, while controlling cache coherency efficiently across the network. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and efficiently handle invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner:IBM CORP

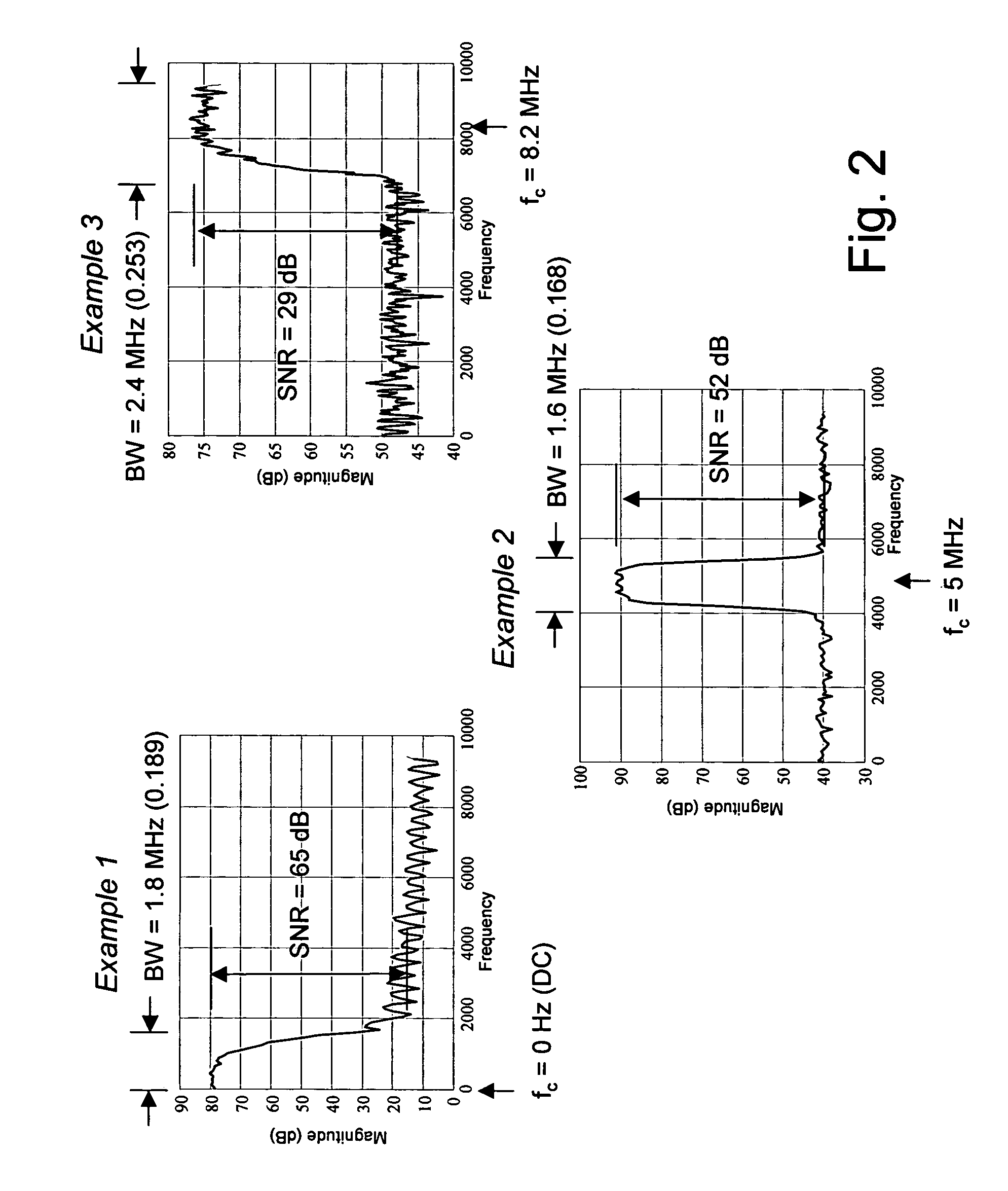

Adaptive compression and decompression of bandlimited signals

Inactive | US7009533B1 | Less bandwidth | Less storage | Code conversion | Pictorial communication | Adaptive compression | Spectrum analyzer

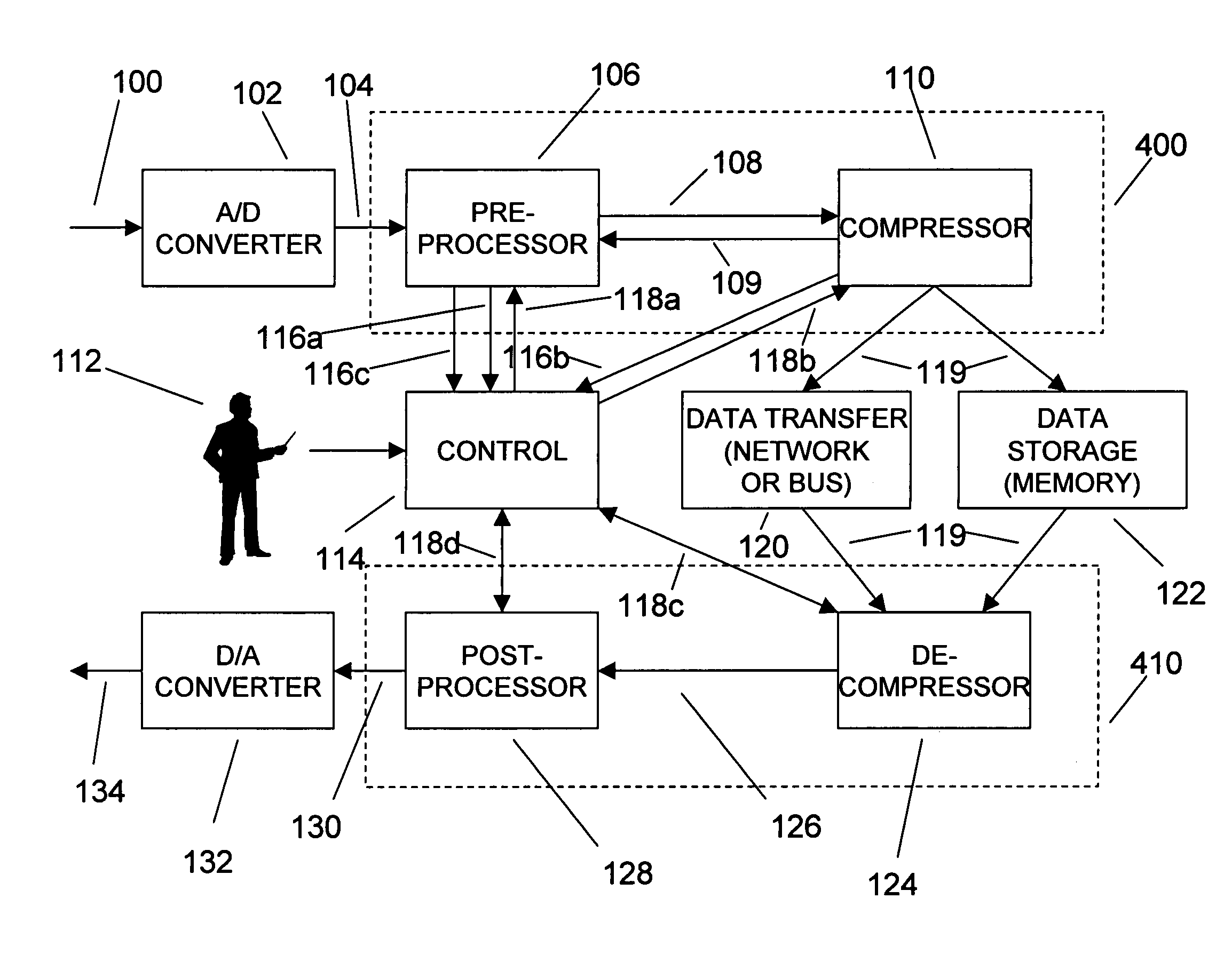

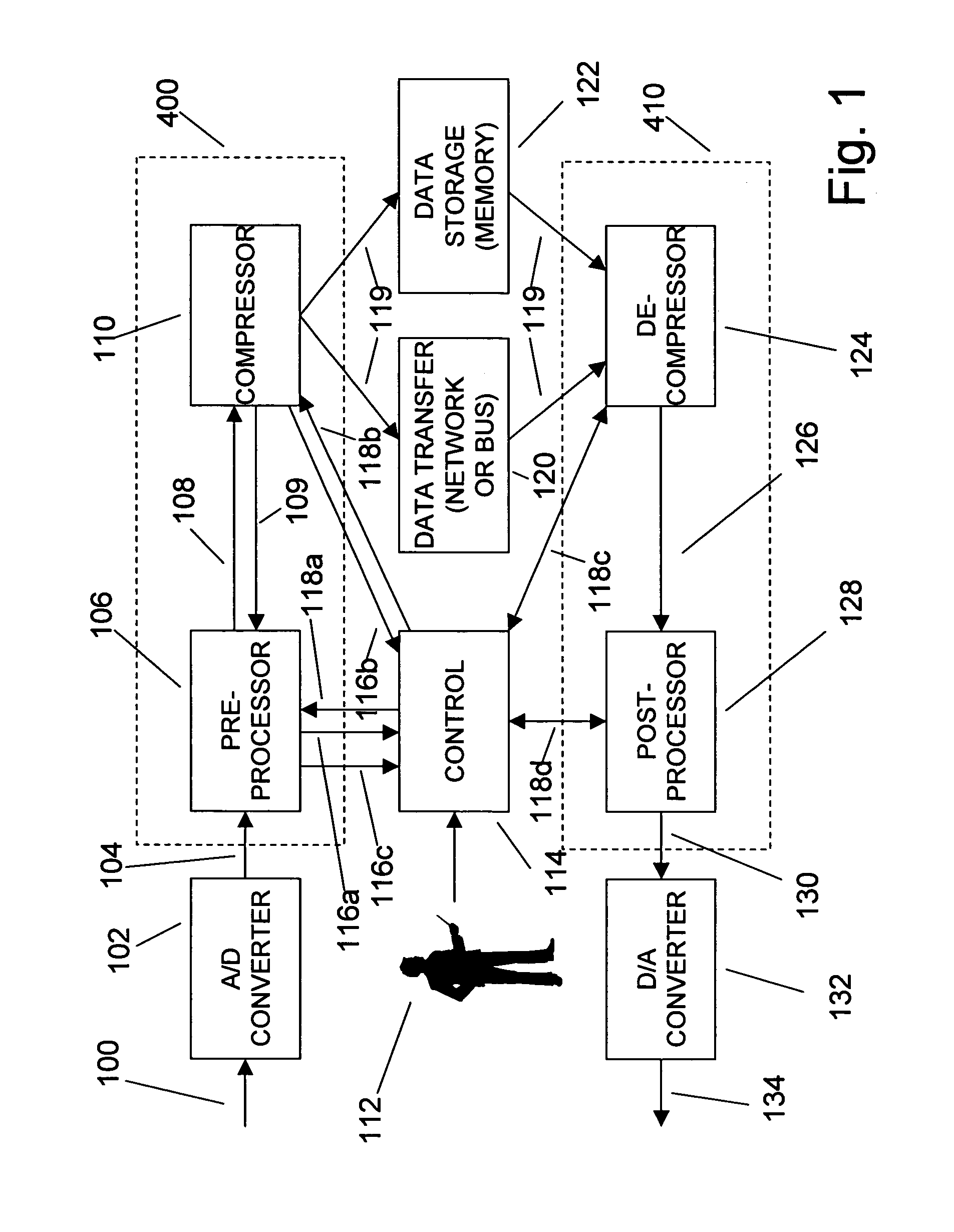

An efficient method for compressing sampled analog signals in real time, without loss, or at a user-specified rate or distortion level, is described. The present invention is particularly effective for compressing and decompressing high-speed, bandlimited analog signals that are not appropriately or effectively compressed by prior art speech, audio, image, and video compression algorithms due to various limitations of such prior art compression solutions. The present invention's preprocessor apparatus measures one or more signal parameters and, under program control, appropriately modifies the preprocessor input signal to create one or more preprocessor output signals that are more effectively compressed by a follow-on compressor. In many instances, the follow-on compressor operates most effectively when its input signal is at baseband. The compressor creates a stream of compressed data tokens and compression control parameters that represent the original sampled input signal using fewer bits. The decompression subsystem uses a decompressor to decompress the stream of compressed data tokens and compression control parameters. After decompression, the decompressor output signal is processed by a post-processor, which reverses the operations of the preprocessor during compression, generating a postprocessed signal that exactly matches (during lossless compression) or approximates (during lossy compression) the original sampled input signal. Parallel processing implementations of both the compression and decompression subsystems are described that can operate at higher sampling rates when compared to the sampling rates of a single compression or decompression subsystem. 
In addition to providing the benefits of real-time compression and decompression to a new, general class of sampled data users who previously could not obtain benefits from compression, the present invention also enhances the performance of test and measurement equipment (oscilloscopes, signal generators, spectrum analyzers, logic analyzers, etc.), busses and networks carrying sampled data, and data converters (A / D and D / A converters).

Owner:TAHOE RES LTD

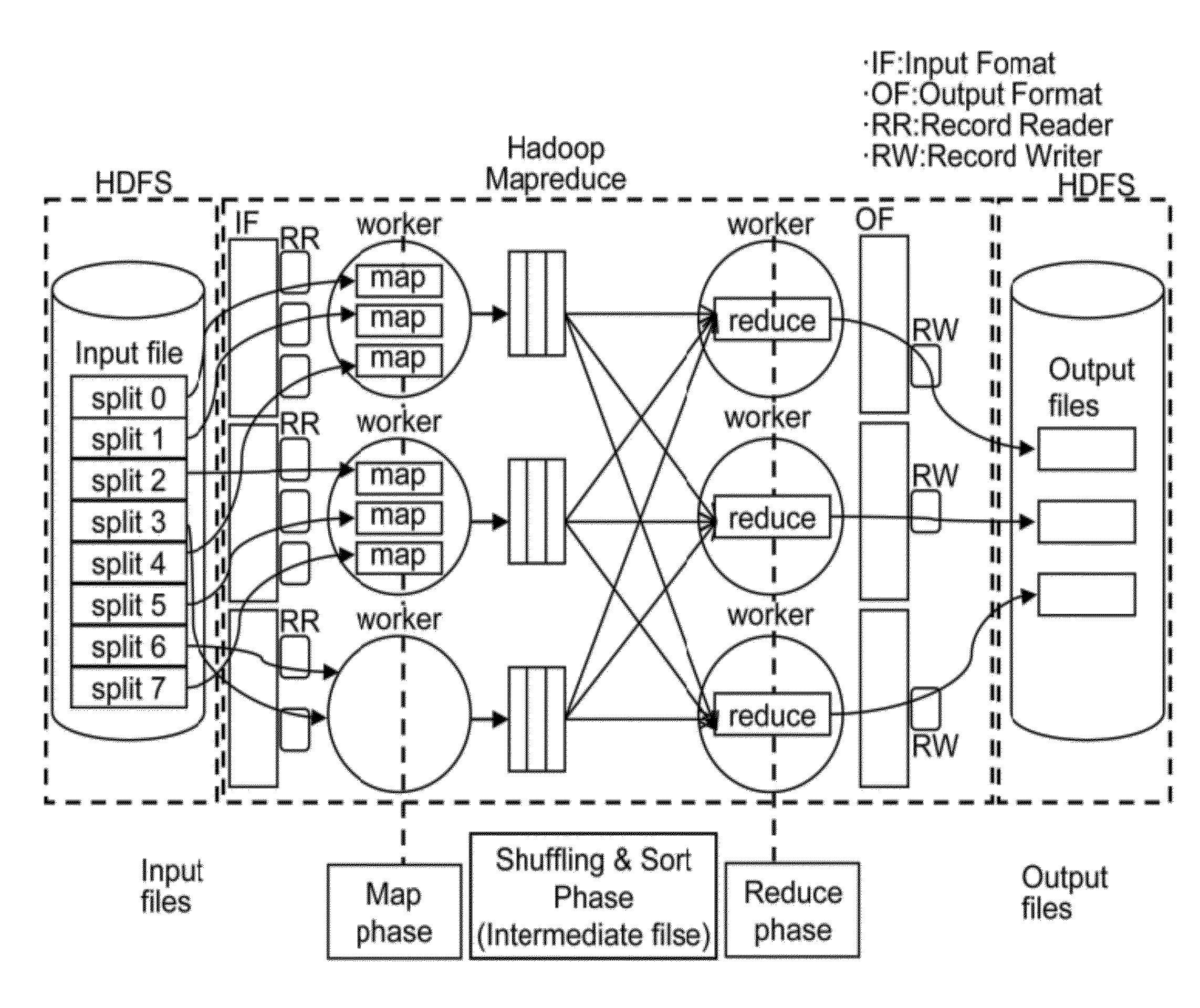

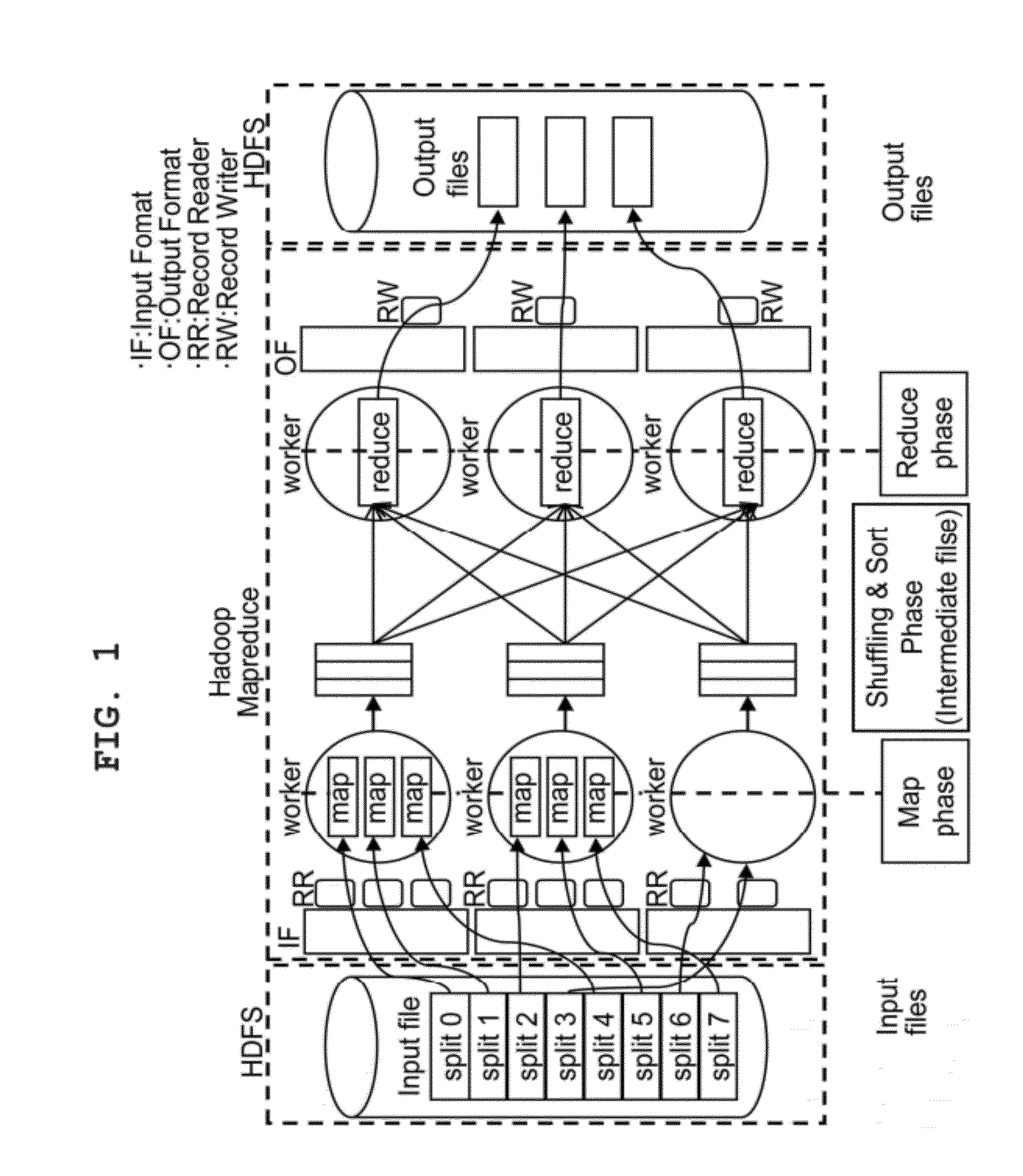

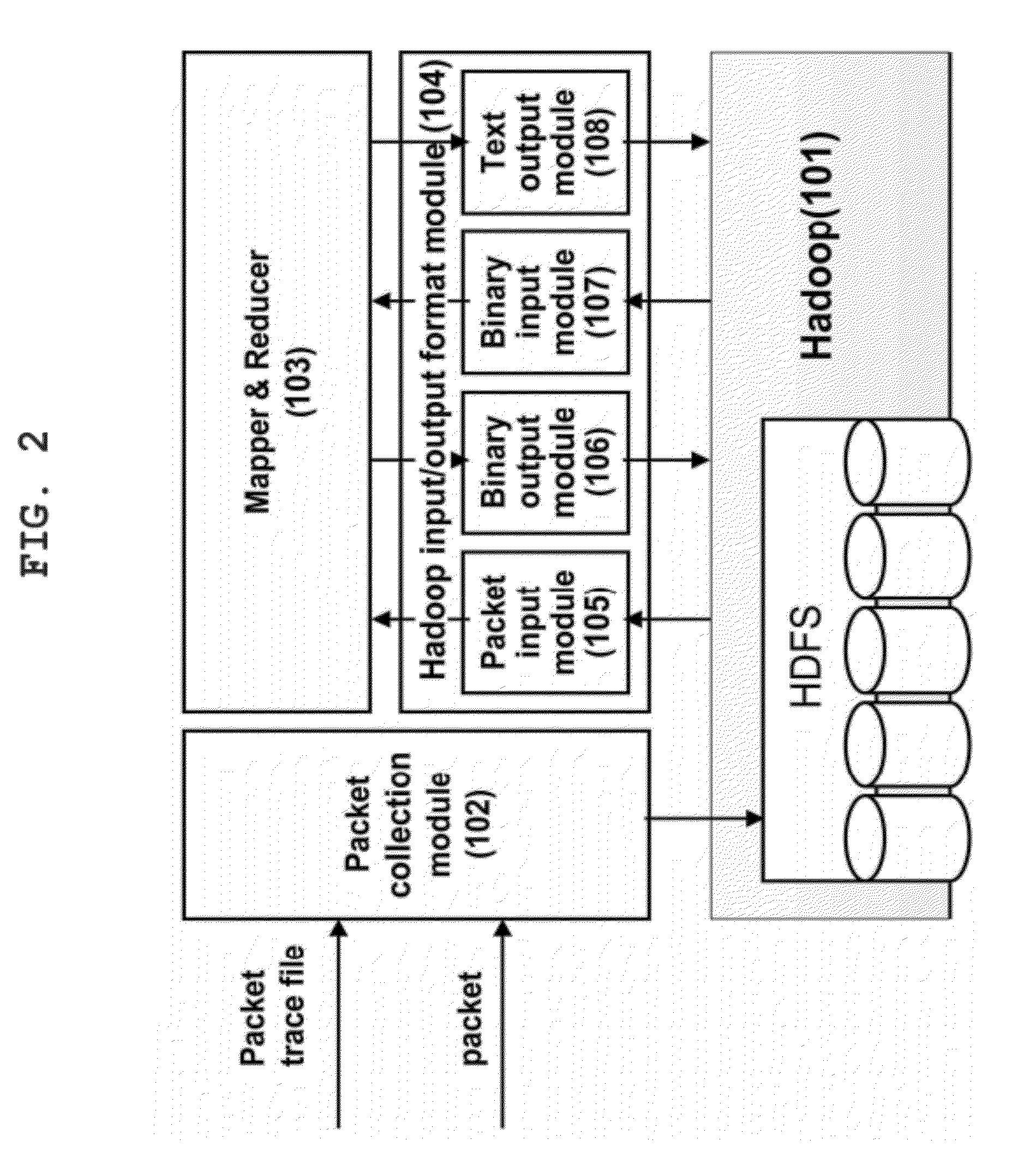

Packet analysis system and method using hadoop based parallel computation

Inactive | US20120182891A1 | Optimize data processing | Error prevention | Transmission systems | Distributed File System | Open source

The present invention relates to a packet analysis system and method, which enables cluster nodes to process in parallel a large quantity of packets collected in a network in an open source distribution system called Hadoop. The packet analysis system based on a Hadoop framework includes a first module for distributing and storing packet traces in a distributed file system, a second module for distributing and processing the packet traces stored in the distributed file system in a cluster of nodes executing Hadoop using a MapReduce method, and a third module for transferring the packet traces, stored in the distributed file system, to the second module so that the packet traces can be processed using the MapReduce method and outputting a result of analysis, calculated by the second module using the MapReduce method, to the distributed file system.

Owner:THE IND & ACADEMIC COOP IN CHUNGNAM NAT UNIV (IAC)
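The MapReduce step in the abstract above can be illustrated in plain Python without the Hadoop API. The sketch assumes each packet record is a `(source_ip, byte_count)` pair and that the analysis is total bytes per source address. Both are illustrative choices, not details from the patent, and the function names are hypothetical.

```python
from collections import defaultdict


def map_packet(packet):
    """Map phase: emit one (key, value) pair per packet record."""
    source_ip, byte_count = packet
    yield source_ip, byte_count


def reduce_bytes(key, values):
    """Reduce phase: total the byte counts seen for one key."""
    return key, sum(values)


def map_reduce(splits):
    groups = defaultdict(list)
    for split in splits:  # each split would be processed by a different node
        for packet in split:
            for key, value in map_packet(packet):
                groups[key].append(value)  # shuffle: group values by key
    return dict(reduce_bytes(k, vs) for k, vs in groups.items())


splits = [[("10.0.0.1", 100), ("10.0.0.2", 40)], [("10.0.0.1", 60)]]
totals = map_reduce(splits)
```

In a real cluster the splits would be blocks of the packet trace stored in the distributed file system, and the map calls would run concurrently on the nodes holding those blocks.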

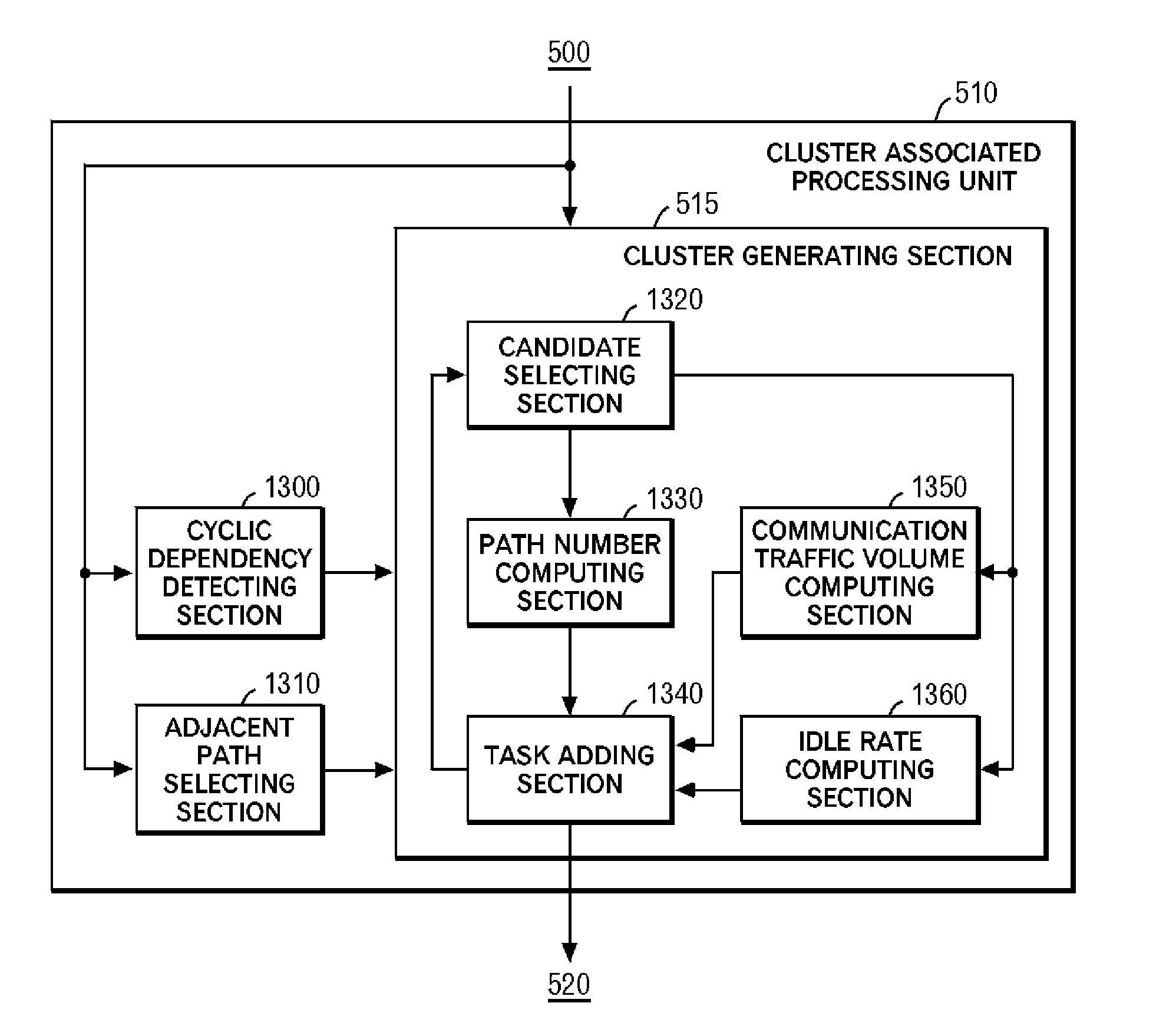

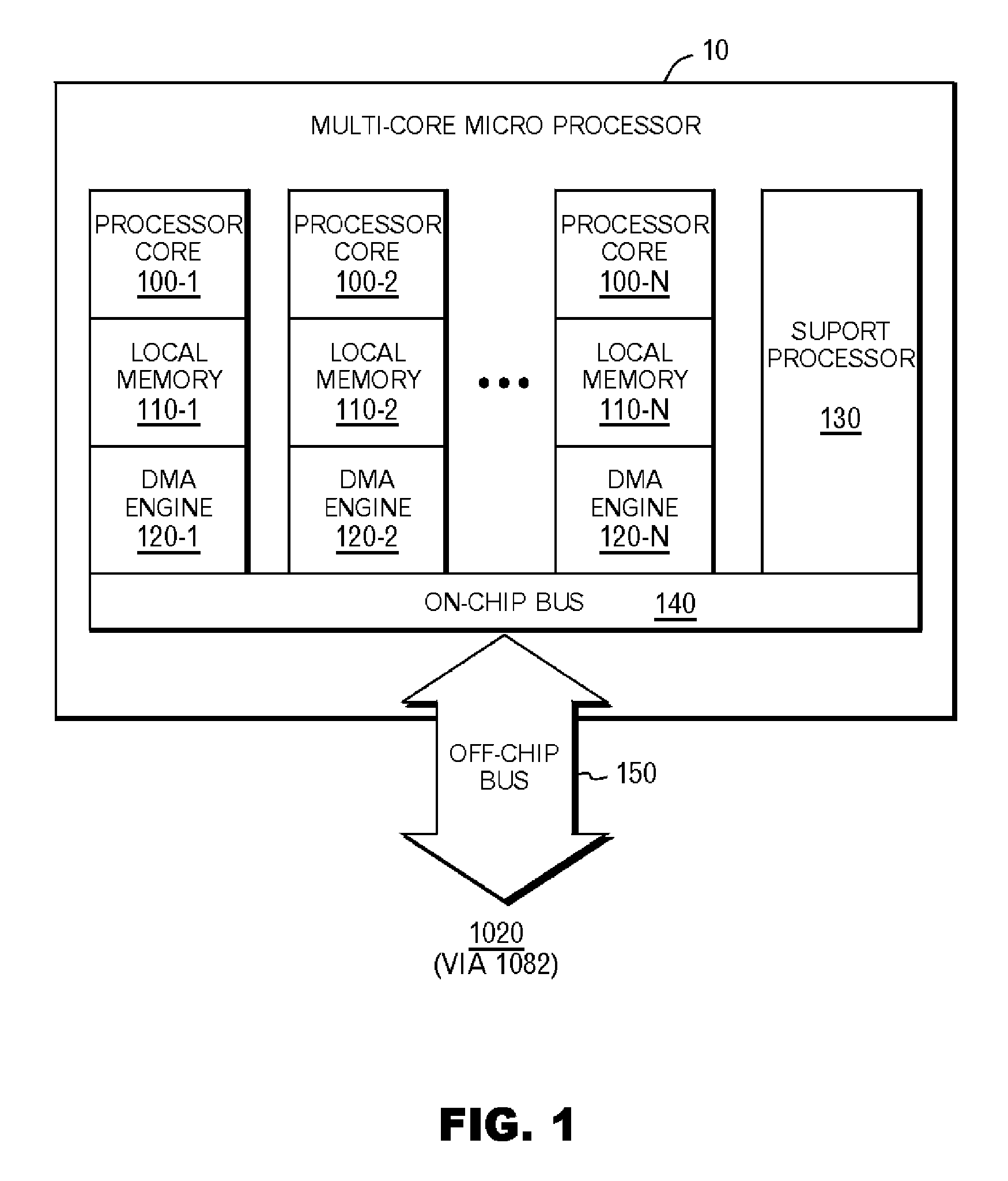

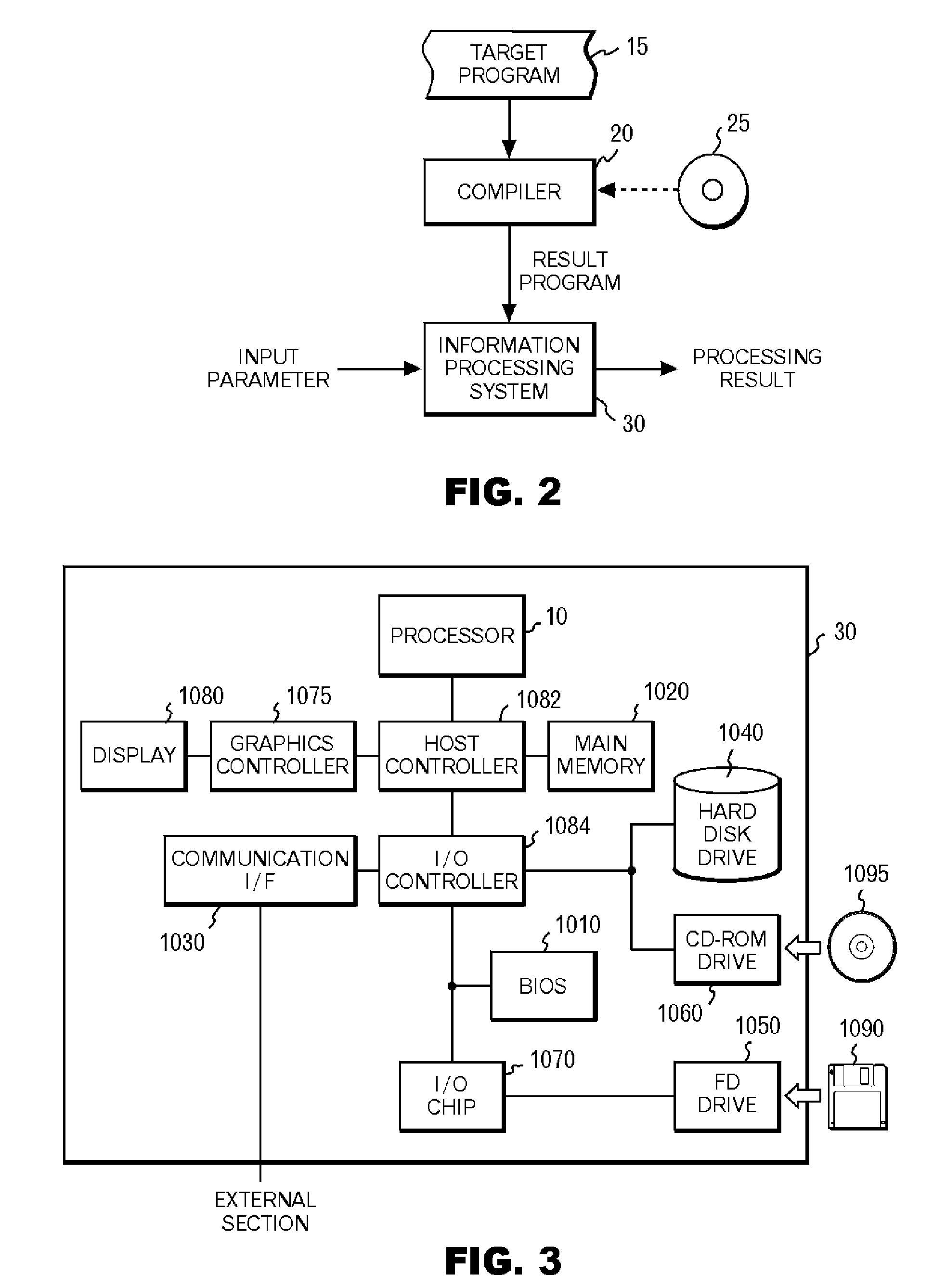

Preprocessor to improve the performance of message-passing-based parallel programs on virtualized multi-core processors

Inactive | US20070038987A1 | Reduced execution time | Easy to implement | Multiprogramming arrangements | Memory systems | Cluster based | Parallel processing

Provided is a compiler which optimizes parallel processing. The compiler records the number of execution cores, which is the number of processor cores that execute a target program. First, the compiler detects a dominant path, which is a candidate of an execution path to be consecutively executed by a single processor core, from a target program. Subsequently, the compiler selects dominant paths with the number not larger than the number of execution cores, and generates clusters of tasks to be executed by a multi-core processor in parallel or consecutively. After that, the compiler computes an execution time for which each of the generated clusters is executed by the processor cores with the number equal to one or each of a plurality of natural numbers selected from the natural numbers not larger than the number of execution cores. Then, the compiler selects the number of processor cores to be assigned for execution of each of the clusters based on the computed execution time.

Owner:IBM CORP

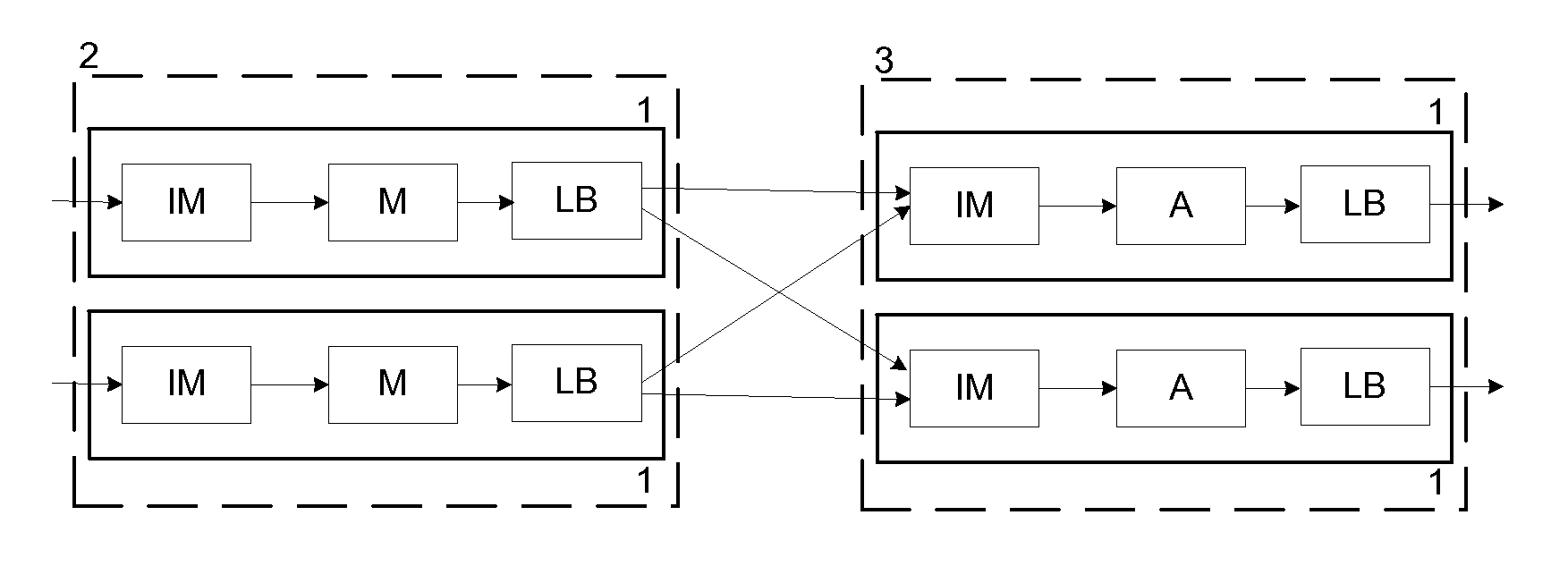

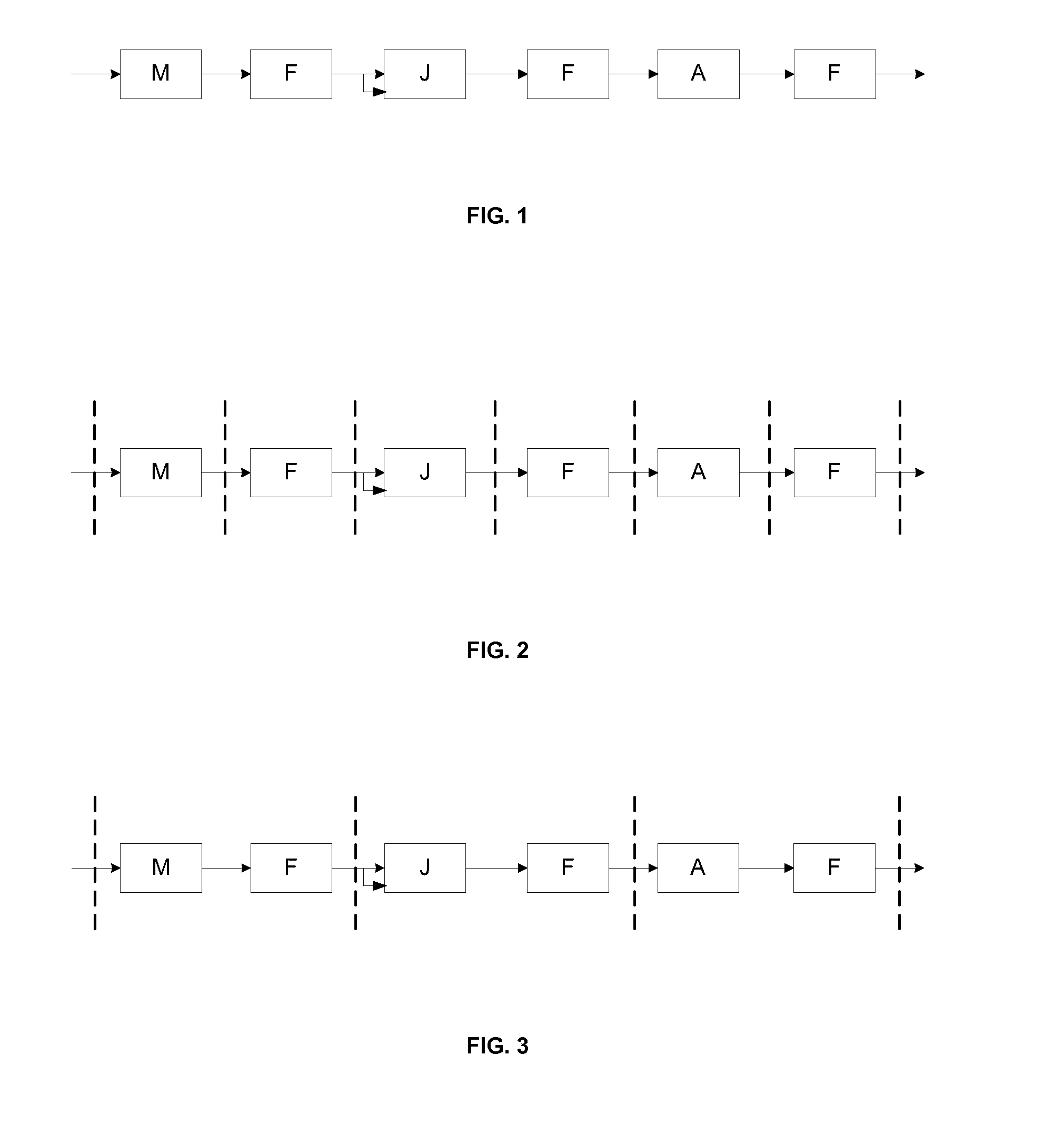

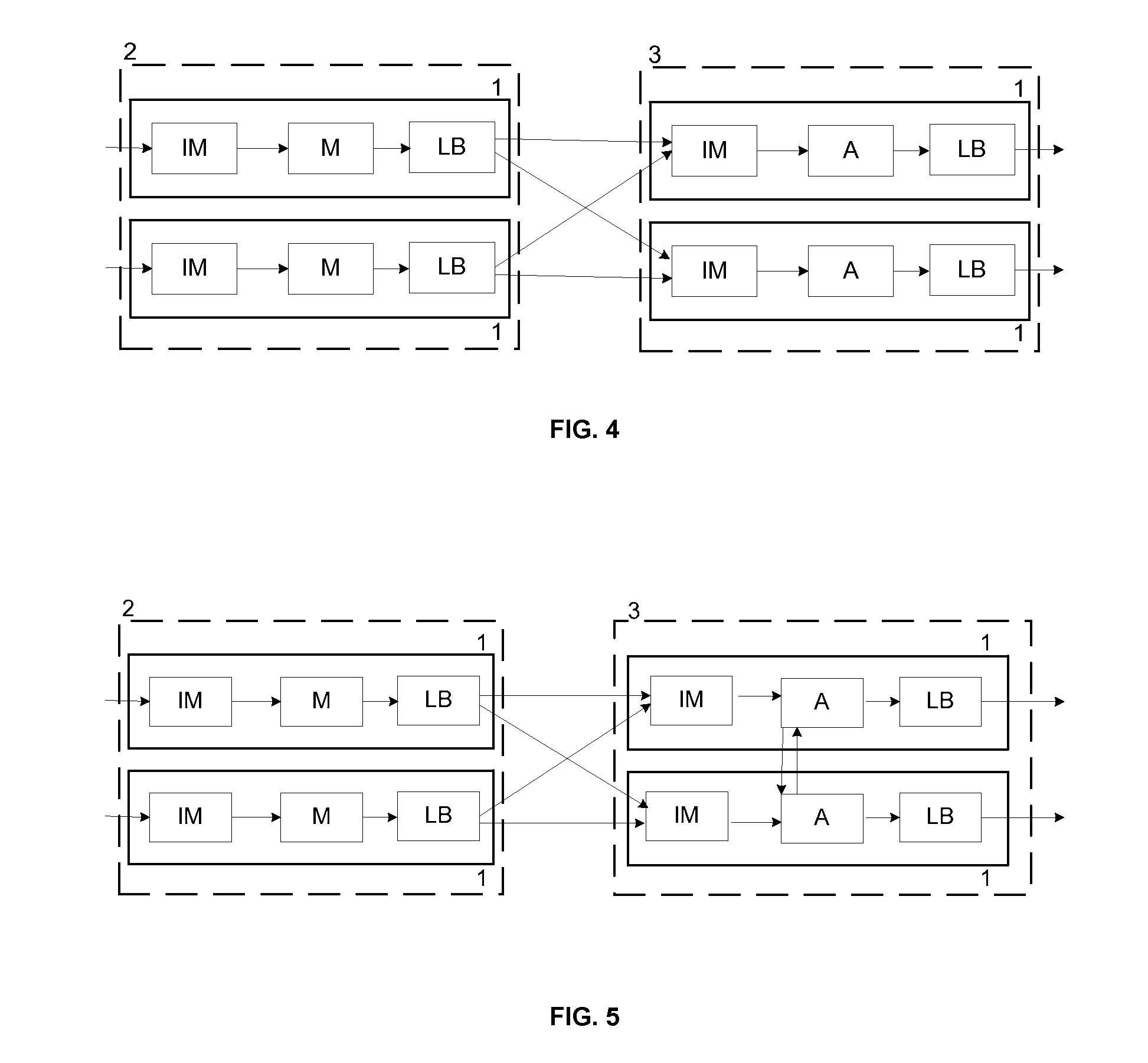

System and Methodology for Parallel Stream Processing

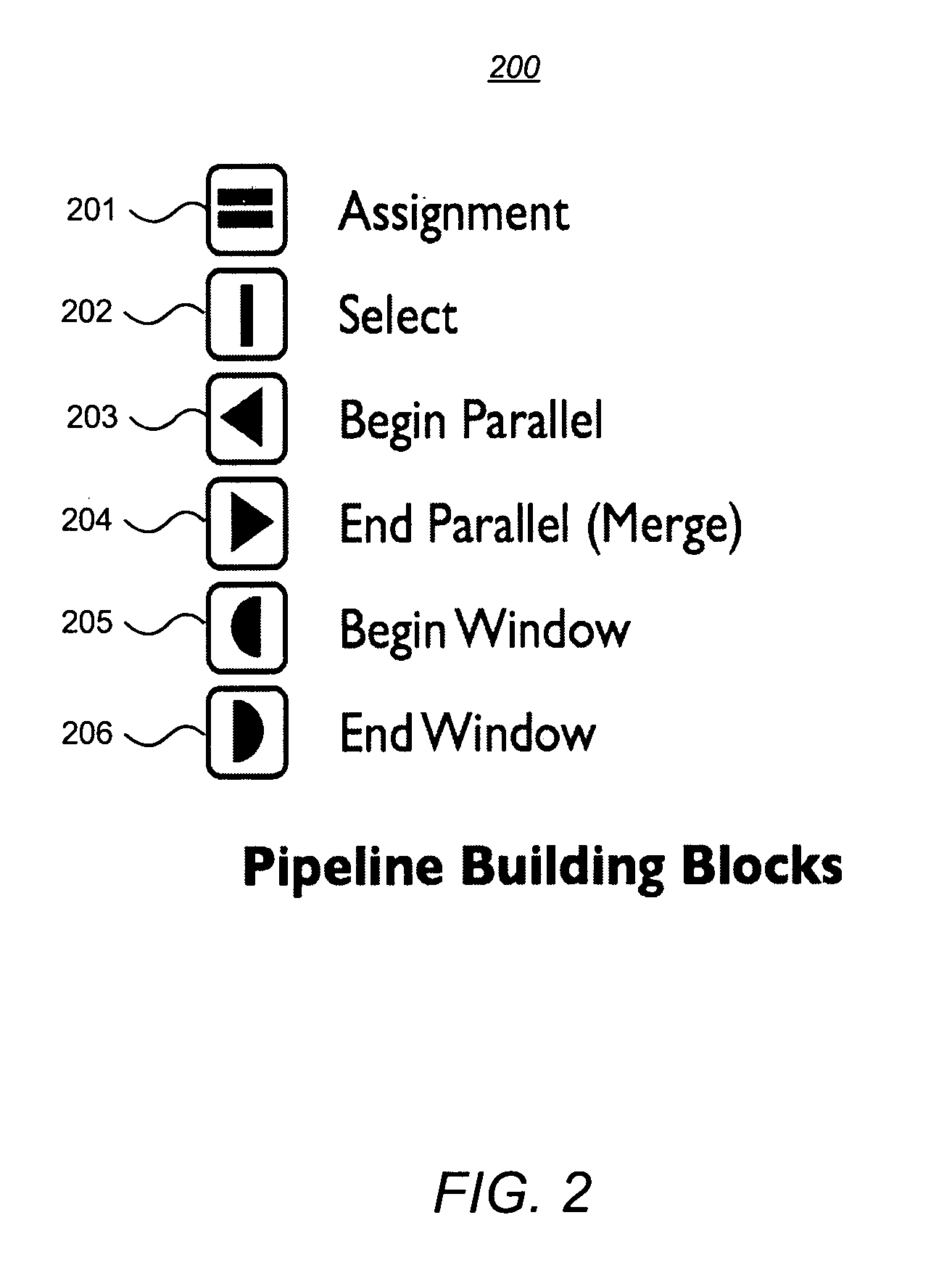

Inactive | US20090171999A1 | More form | High level | Digital data information retrieval | Digital data processing details | Data stream | Linear sequence

A system and methodology for parallel processing of continuous data streams. In one embodiment, a system for parallel processing of data streams comprises: a converter receiving input streams of data in a plurality of formats and transforming the streams into a standardized data stream format comprising rows and columns in which values in a given column are of a homogeneous type; a storage system that continuously maintains a finite interval of each stream subject to specified space limits for the stream; an interface enabling a user to construct parallel stream programs for processing streams in the standardized data stream format, wherein a parallel stream program comprises a linear sequence of program building blocks for performing operations on a data stream; and a runtime computing system running multiple parallel stream programs continuously on the streams as they flow through the storage system.

Owner:CLOUSLE
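The notion of a stream program as a linear sequence of building blocks, from the abstract above, can be sketched as a list of functions applied in order to a stream of rows. The block names `select` and `project` and the dict-per-row representation are assumptions for illustration and are not taken from the patent.

```python
def select(predicate):
    """Building block that keeps only rows for which predicate(row) is true."""
    return lambda rows: (r for r in rows if predicate(r))


def project(*columns):
    """Building block that keeps only the named columns of each row."""
    return lambda rows: ({c: r[c] for c in columns} for r in rows)


def run_program(blocks, rows):
    """Apply the blocks one after another, each consuming the previous output."""
    for block in blocks:
        rows = block(rows)
    return list(rows)


stream = [
    {"src": "a", "bytes": 10, "port": 80},
    {"src": "b", "bytes": 500, "port": 22},
]
program = [select(lambda r: r["bytes"] > 100), project("src", "bytes")]
result = run_program(program, stream)
```

Because each block is a generator, rows flow through the sequence one at a time, which loosely mirrors running a program continuously over a stream rather than over a stored table.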

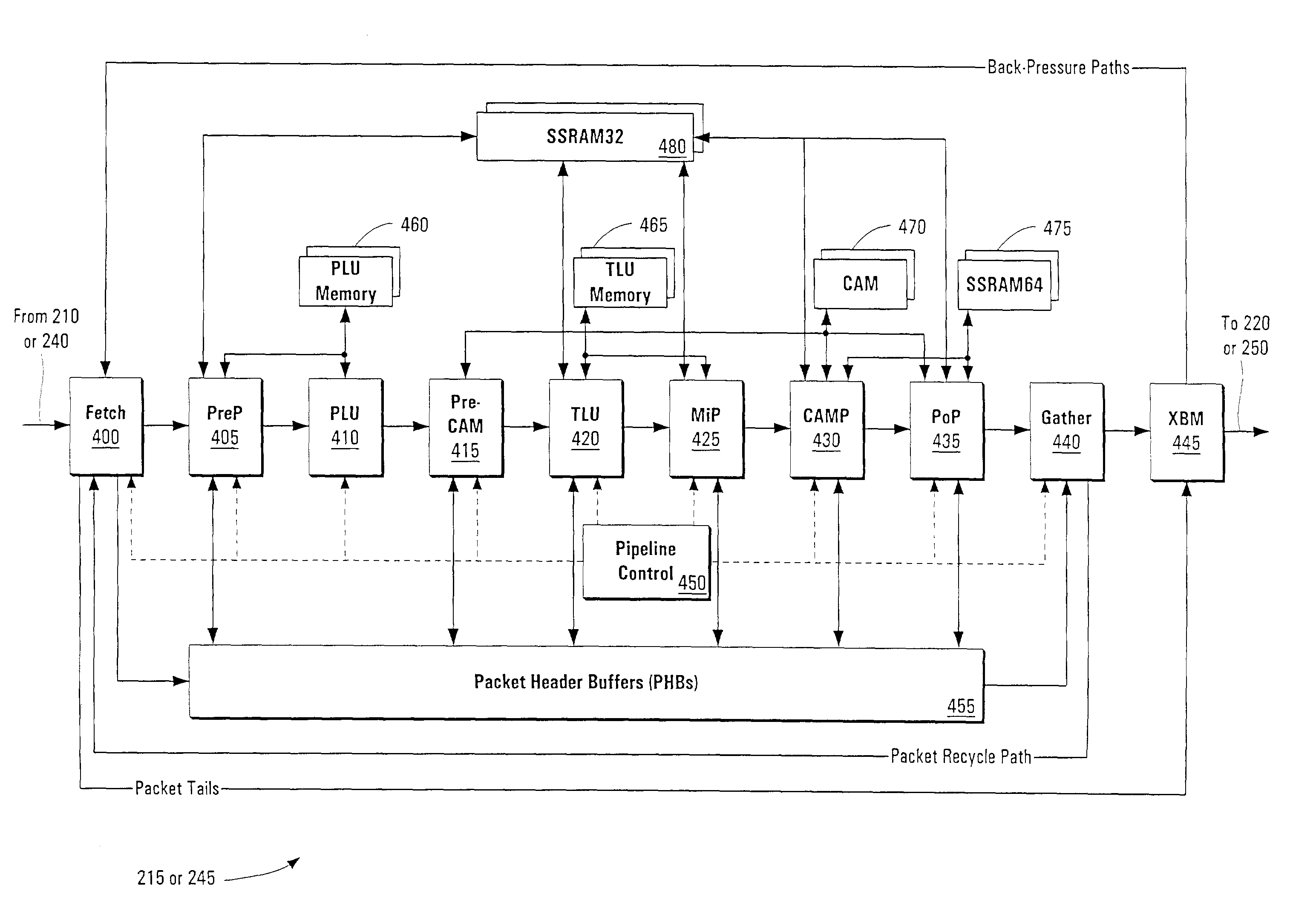

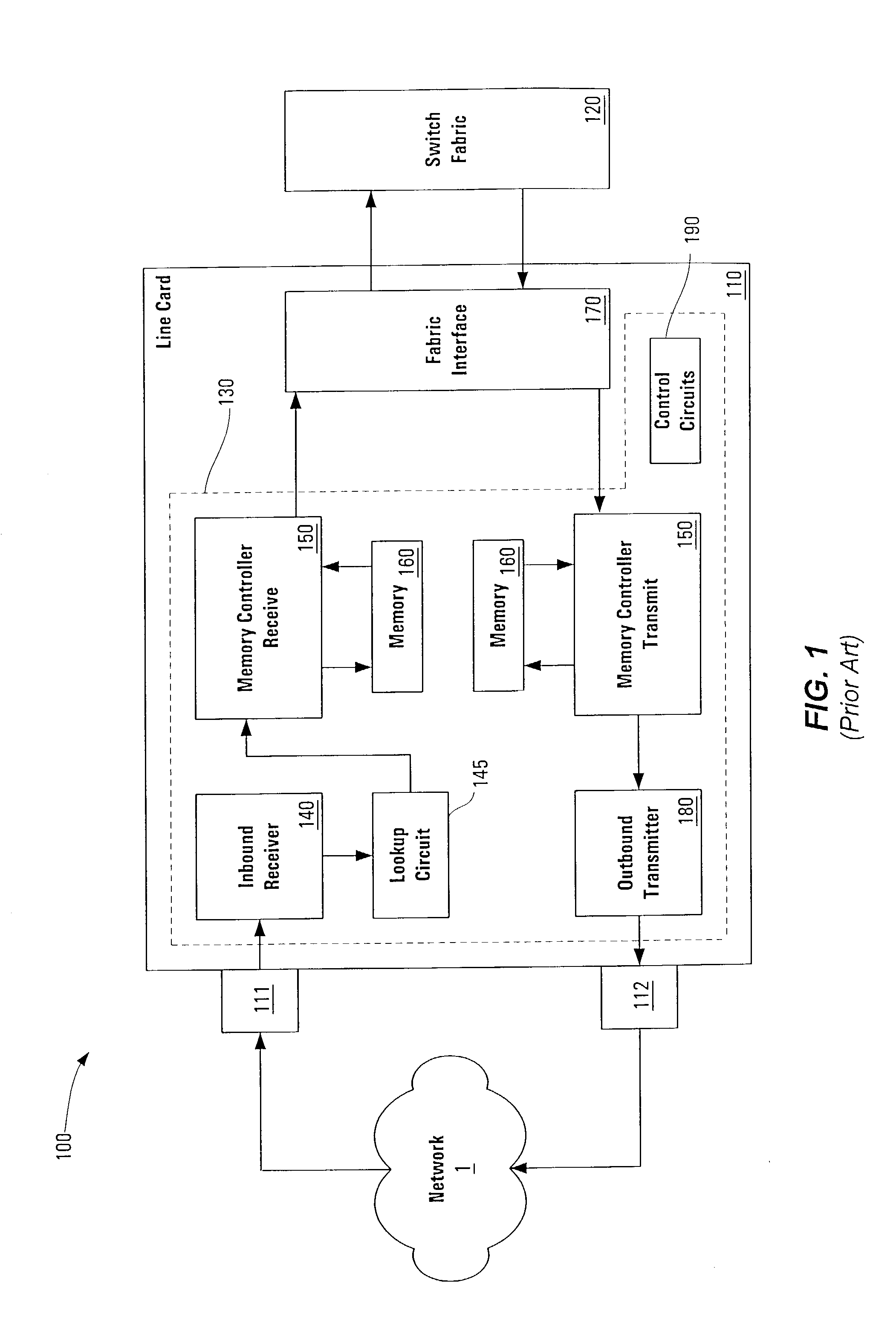

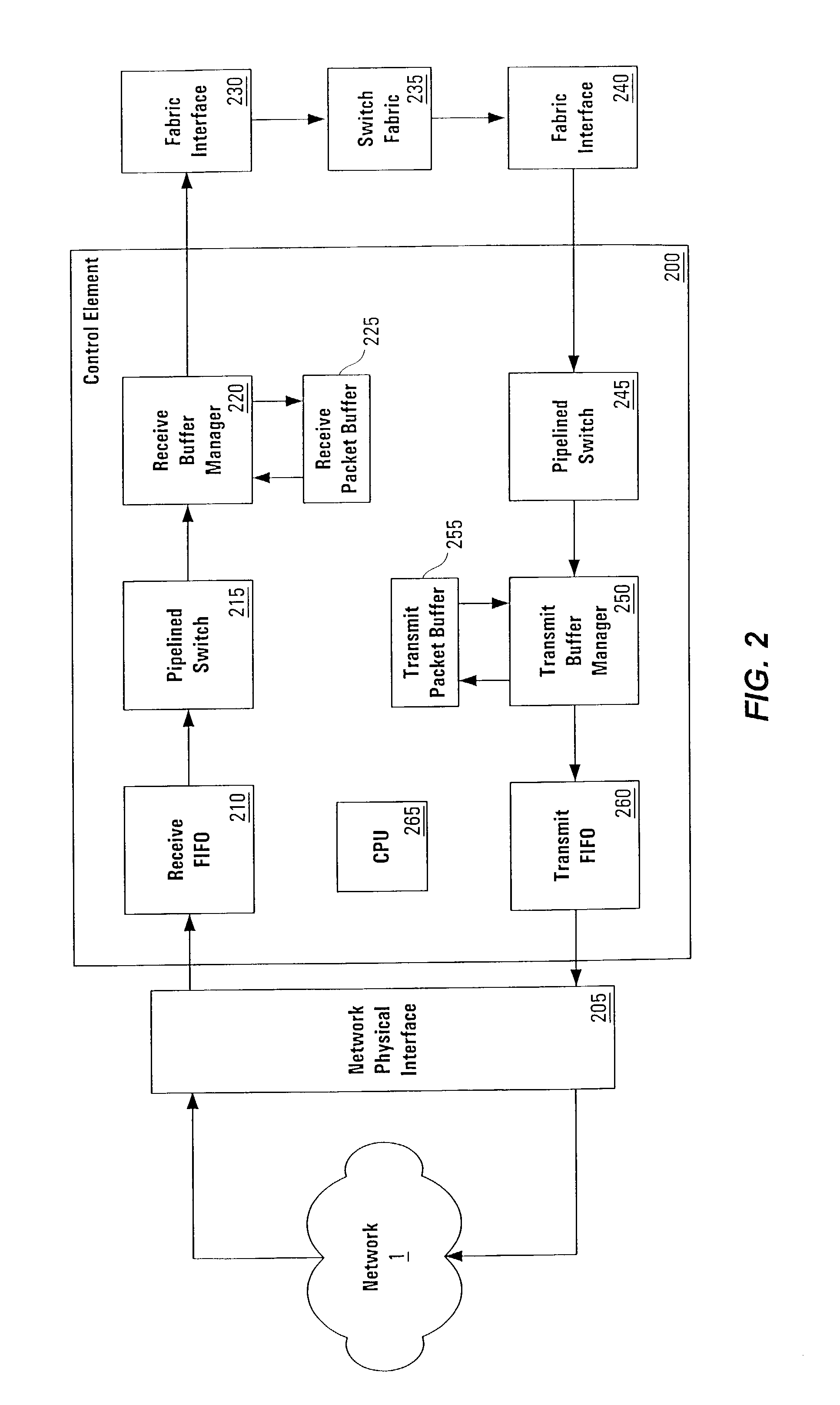

Pipelined packet switching and queuing architecture

Inactive | US6980552B1 | Quick implementation | Improve throughput | Data switching by path configuration | Parallel processing | Bandwidth management

A pipelined linecard architecture for receiving, modifying, switching, buffering, queuing and dequeuing packets for transmission in a communications network. The linecard has two paths: the receive path, which carries packets into the switch device from the network, and the transmit path, which carries packets from the switch to the network. In the receive path, received packets are processed and switched in a multi-stage pipeline utilizing programmable data structures for fast table lookup and linked list traversal. The pipelined switch operates on several packets in parallel while determining each packet's routing destination. Once that determination is made, each packet is modified to contain new routing information as well as additional header data to help speed it through the switch. Using bandwidth management techniques, each packet is then buffered and enqueued for transmission over the switching fabric to the linecard attached to the proper destination port. The destination linecard may be the same physical linecard as that receiving the inbound packet or a different physical linecard. The transmit path includes a buffer / queuing circuit similar to that used in the receive path and can include another pipelined switch. Both enqueuing and dequeuing of packets is accomplished using CoS-based decision making apparatus, congestion avoidance, and bandwidth management hardware.

Owner:CISCO TECH INC

Atomic memory operators in a parallel processor

Active | US7627723B1 | Direct performance | Lower performance requirements | Cathode-ray tube indicators | Processor architectures/configuration | Machine instruction | Parallel processing

Owner:NVIDIA CORP

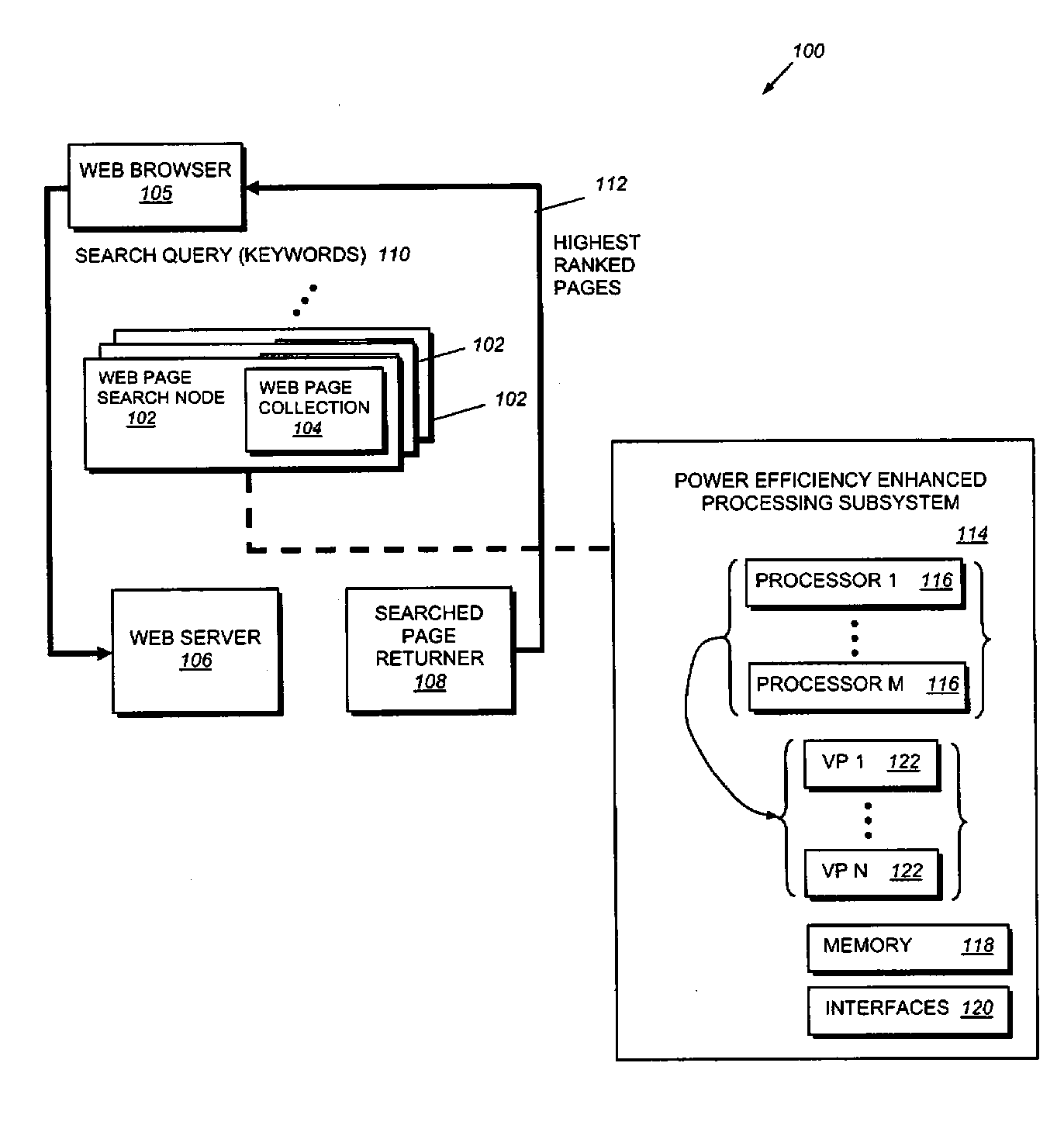

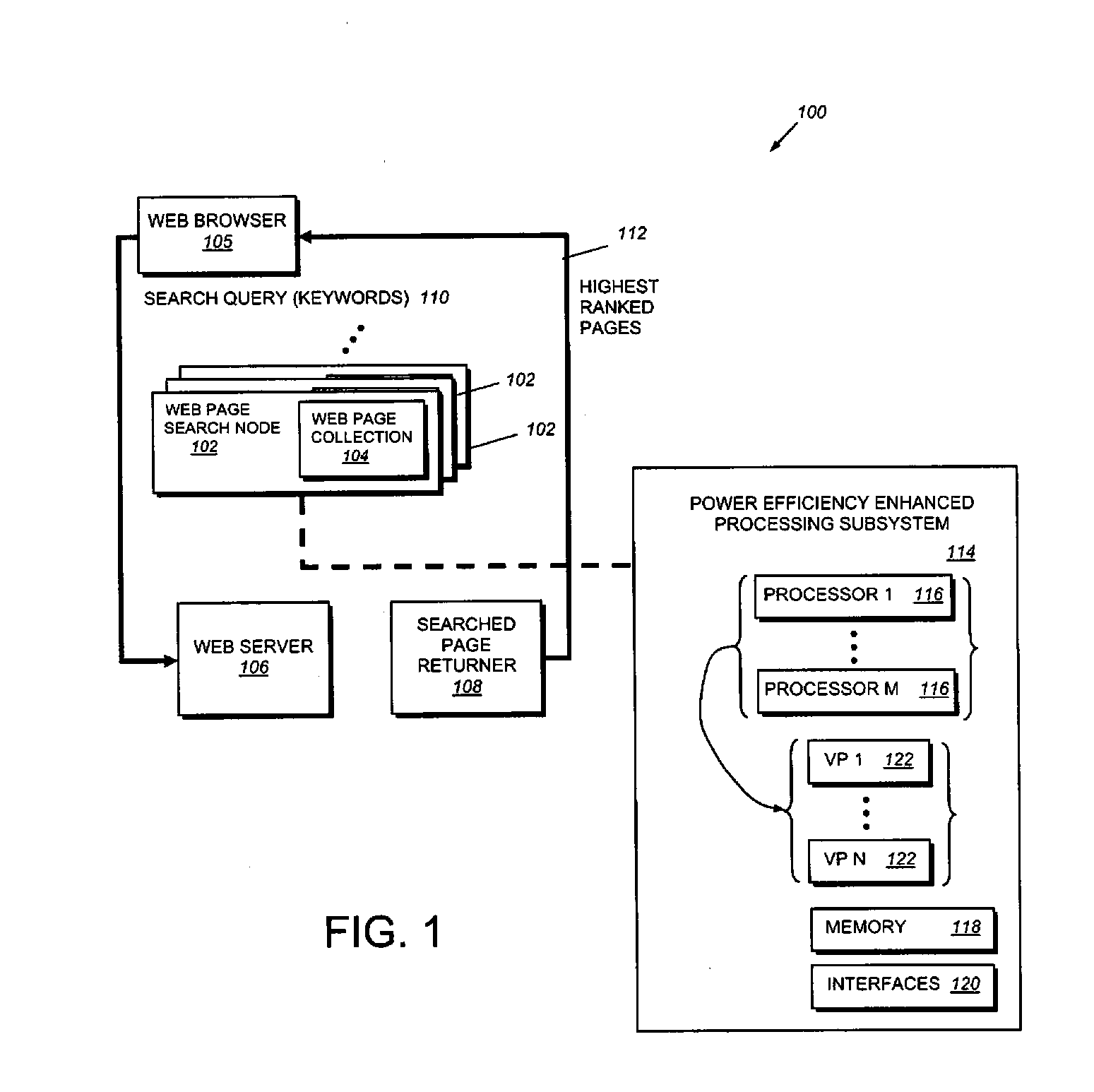

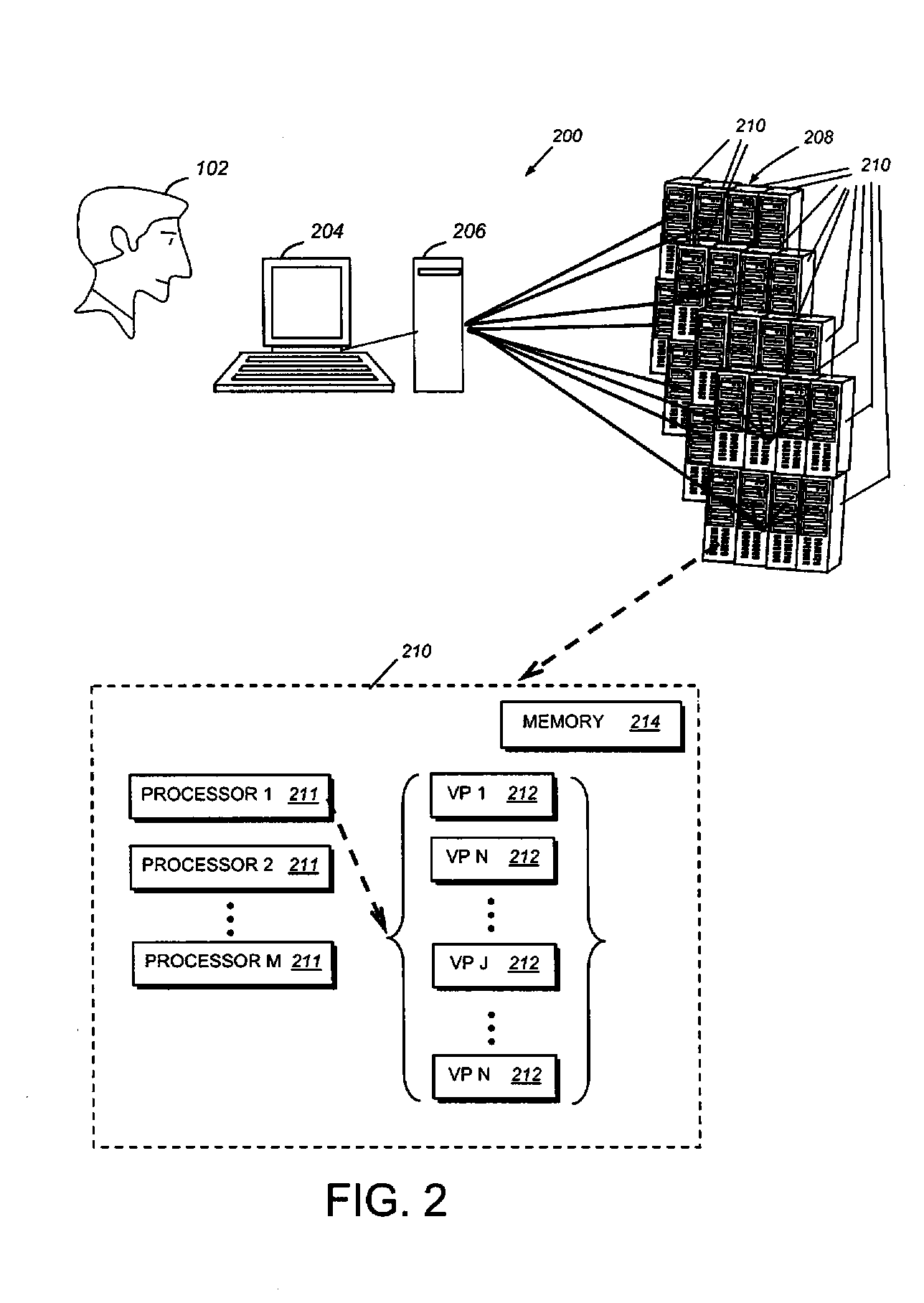

Parallel processing computer systems with reduced power consumption and methods for providing the same

Active | US20090083263A1 | Reduce power consumption | Energy efficient ICT | Web data indexing | Web browser | Web service

This invention provides a computer system architecture and method for providing the same which can include a web page search node including a web page collection. The system and method can also include a web server configured to receive, from a given user via a web browser, a search query including keywords. The node is caused to search pages in its own collection that best match the search query. A search page returner may be provided which is configured to return, to the user, high ranked pages. The node may include a power-efficiency-enhanced processing subsystem, which includes M processors. The M processors are configured to emulate N virtual processors, and they are configured to limit a virtual processor memory access rate at which each of the N virtual processors accesses memory. The memory accessed by each of the N virtual processors may be RAM. In select embodiments, the memory accessed by each of the N virtual processors includes DRAM having a high capacity yet lower power consumption than SRAM.

Owner:GRANGER RICHARD

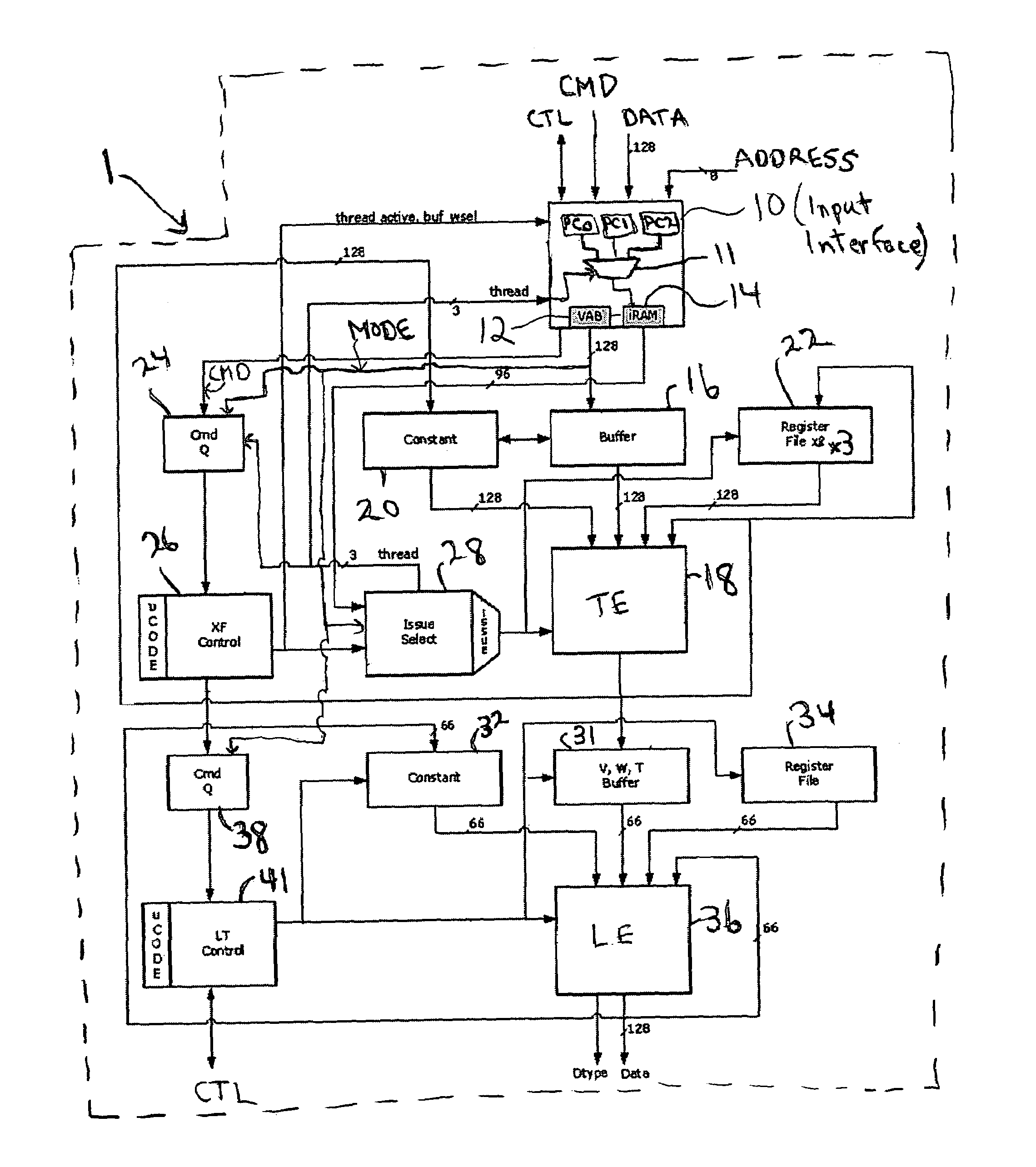

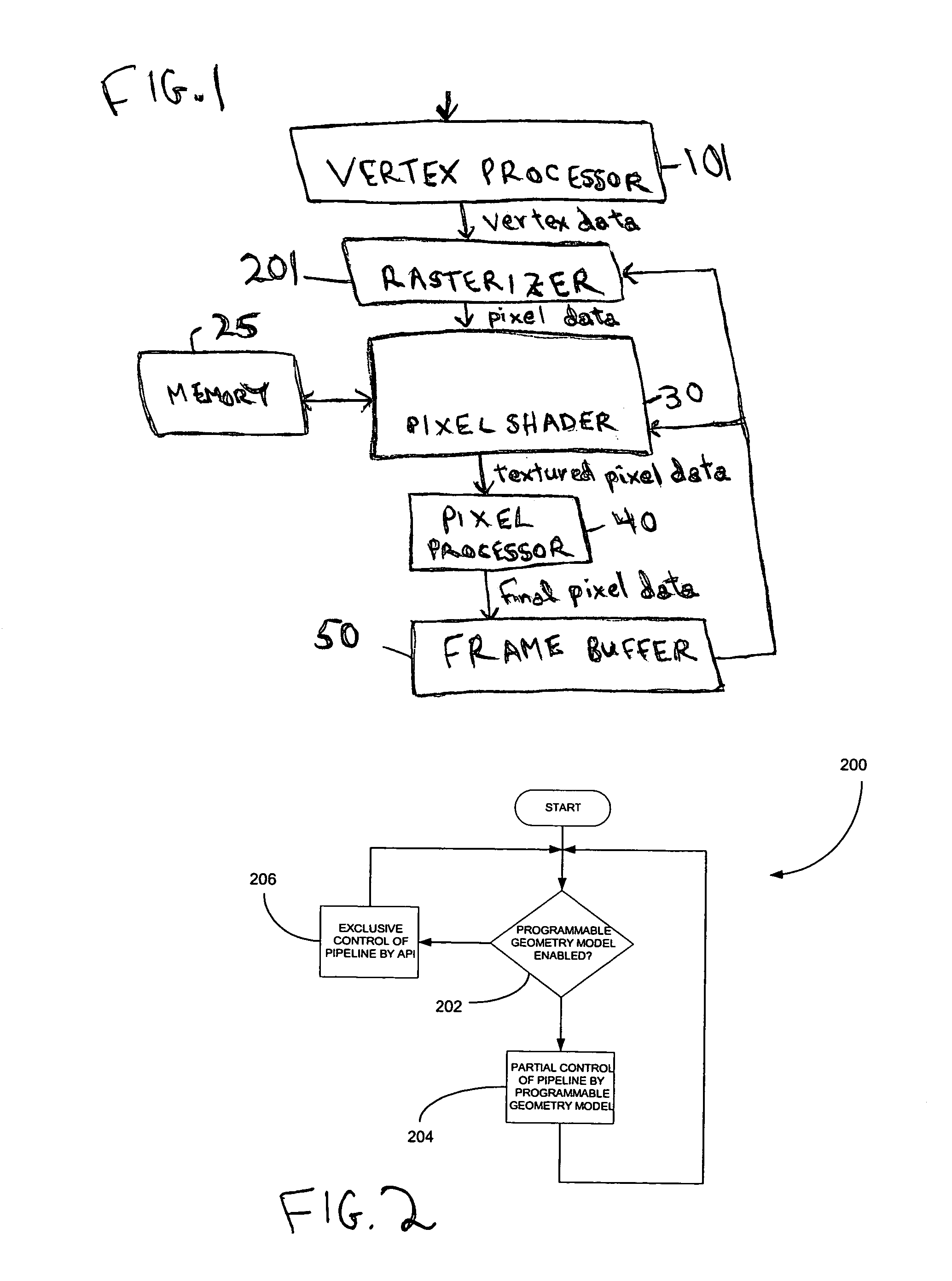

Method and system for programmable pipelined graphics processing with branching instructions

Inactive | US6947047B1 | Avoid conflict | Multiple digital computer combinations | Processor architectures/configuration | Graphics | Mode control

A programmable, pipelined graphics processor (e.g., a vertex processor) having at least two processing pipelines, a graphics processing system including such a processor, and a pipelined graphics data processing method allowing parallel processing and also handling branching instructions and preventing conflicts among pipelines. Preferably, each pipeline processes data in accordance with a program including by executing branch instructions, and the processor is operable in any one of a parallel processing mode in which at least two data values to be processed in parallel in accordance with the same program are launched simultaneously into multiple pipelines, and a serialized mode in which only one pipeline at a time receives input data values to be processed in accordance with the program (and operation of each other pipeline is frozen). During parallel processing mode operation, mode control circuitry recognizes and resolves branch instructions to be executed (before processing of data in accordance with each branch instruction starts) and causes the processor to operate in the serialized mode when (and preferably only for as long as) necessary to prevent any conflict between the pipelines due to branching. In other embodiments, the processor is operable in any one of a parallel processing mode and a limited serialized mode in which operation of each of a sequence of pipelines (or pipeline sets) pauses for a limited number of clock cycles. The processor enters the limited serialized mode in response to detecting a conflict-causing instruction that could cause a conflict between resources shared by the pipelines during parallel processing mode operation.

Owner:NVIDIA CORP
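
The mode switch described in the abstract above can be illustrated with a small software model. This is only a sketch under a simplifying assumption made here: a conflict is taken to mean that the values in a group resolve the same branch differently. The function `run_group` and its arguments are hypothetical and do not reflect the patented circuitry.

```python
def run_group(values, predicate, if_true, if_false):
    """Process one group of values and report which mode was used.

    Returns (mode, issue_slots, results). In the assumed model, a group whose
    values all resolve the branch the same way runs in parallel in one issue
    slot; otherwise the values are handled one at a time.
    """
    # Resolve the branch for every value before any processing starts.
    outcomes = [predicate(v) for v in values]
    results = [if_true(v) if taken else if_false(v)
               for v, taken in zip(values, outcomes)]
    if all(outcomes) or not any(outcomes):
        return "parallel", 1, results
    return "serialized", len(values), results


def is_positive(v):
    return v > 0


def double(v):
    return v * 2


def negate(v):
    return -v


uniform = run_group([1, 2, 3], is_positive, double, negate)
divergent = run_group([-1, 2], is_positive, double, negate)
```

The results are identical in both modes; only the number of issue slots changes, which mirrors the abstract's point that serialization is used only for as long as it is needed.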

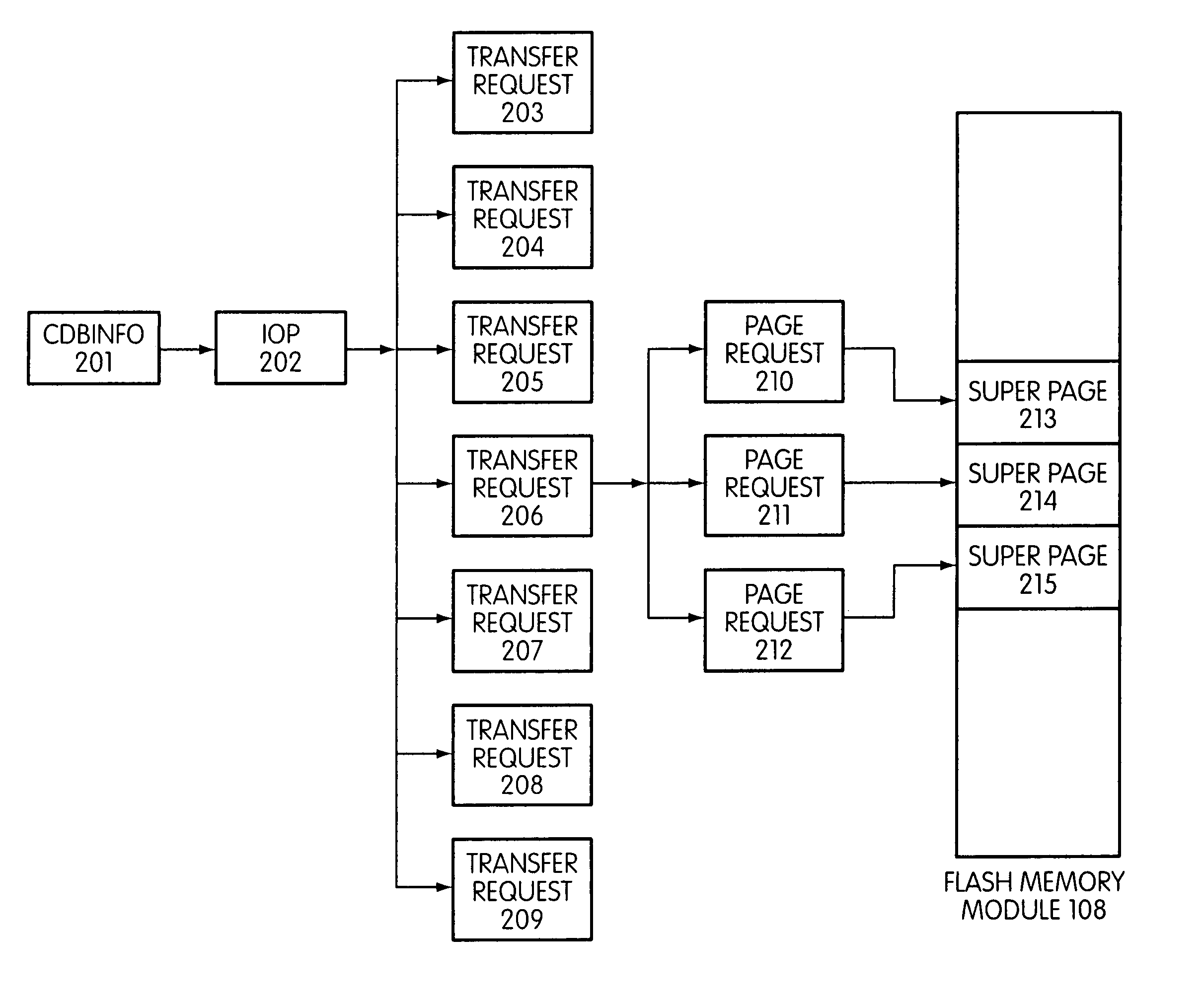

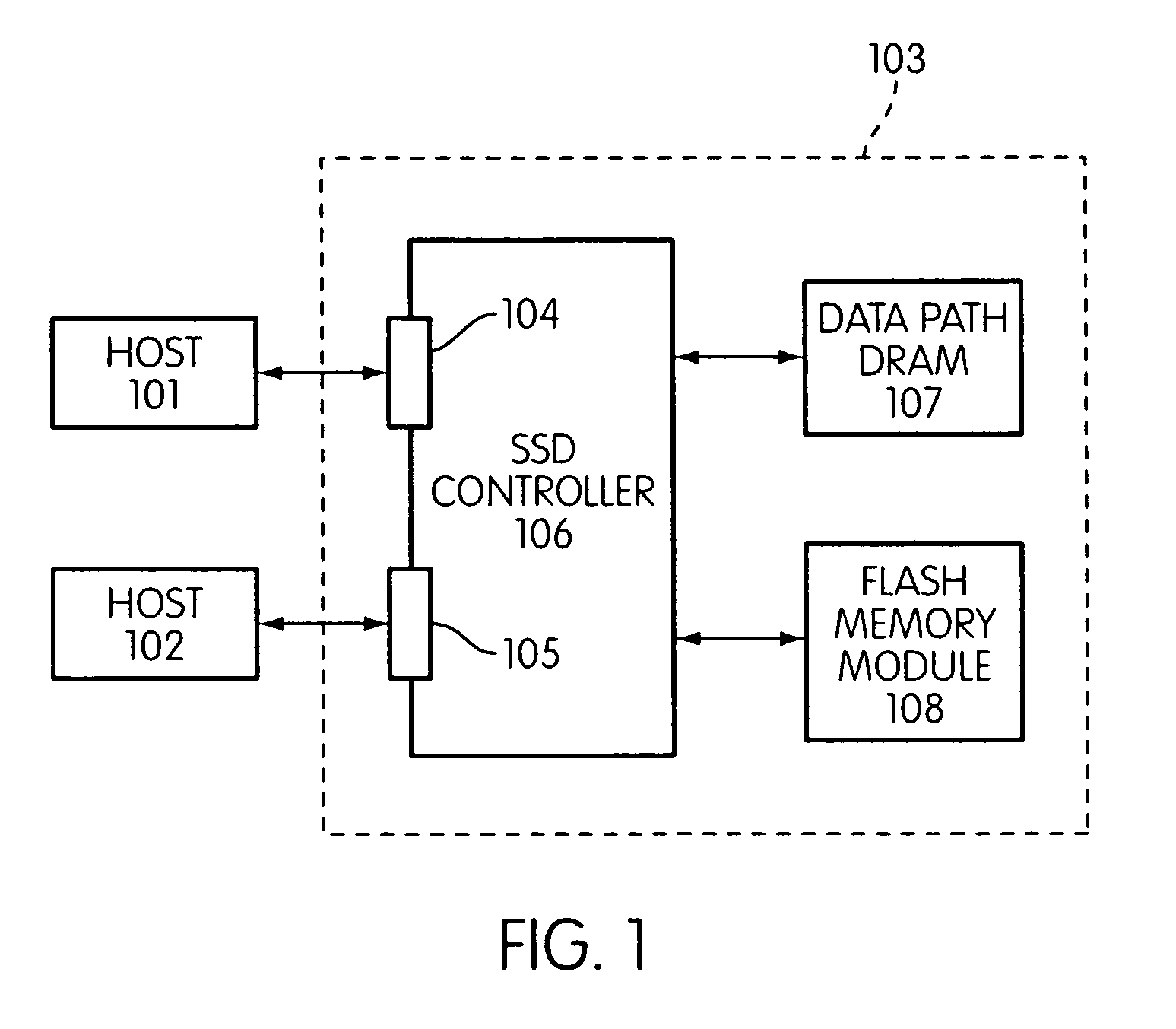

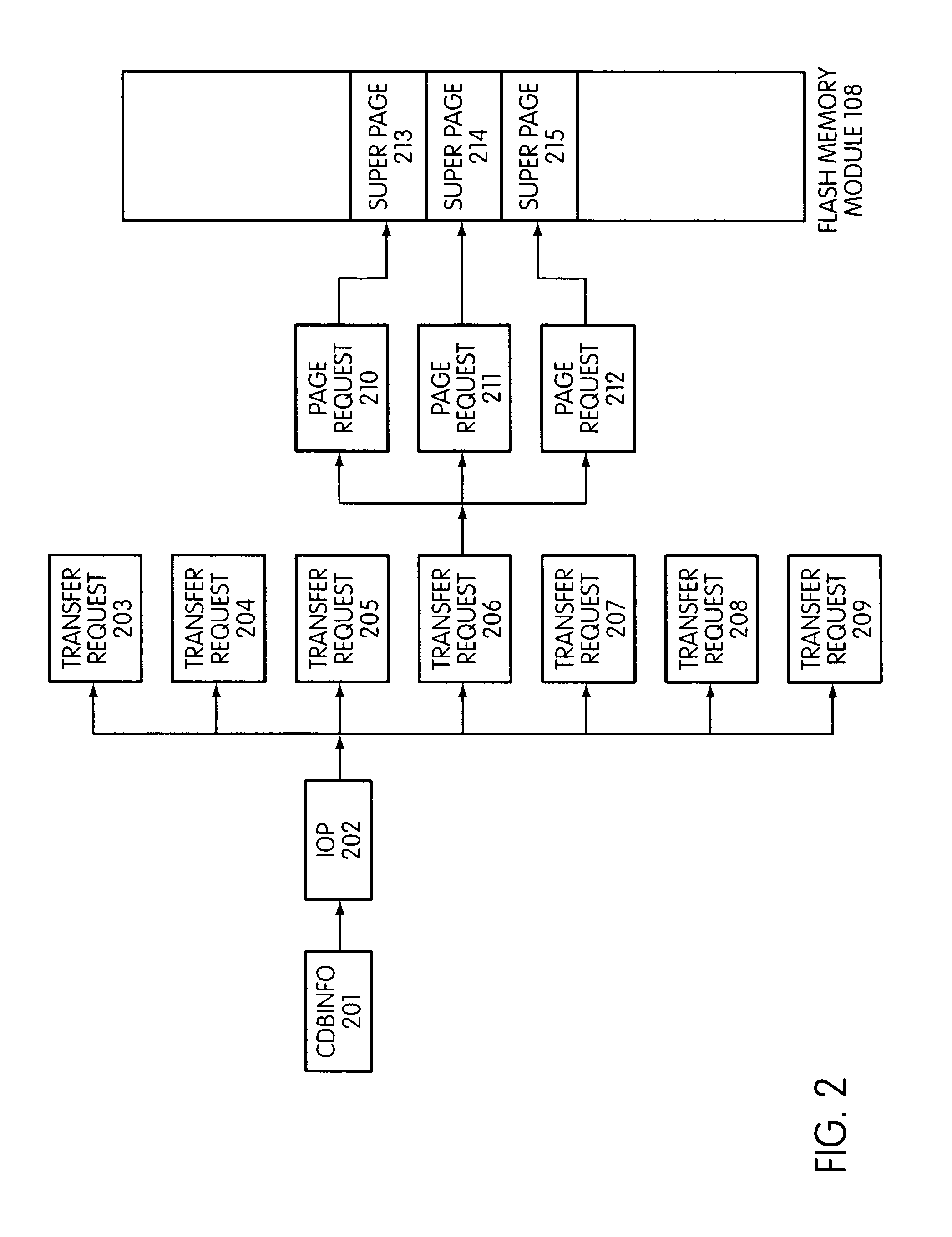

System and method for performing host initiated mass storage commands using a hierarchy of data structures

Active | US7934052B2 | Maximize use | Improve performance | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Flash memory controller | Parallel processing

Disclosed is a mass storage system and method for breaking a host command into a hierarchy of data structures. Different types of data structures are designed to handle different phases of tasks required by the host command, and multiple data structures may be used to handle portions of the host command in parallel, thereby allowing increased performance. The disclosed embodiments include a flash memory controller designed to allow a high degree of pipelining and parallelism.

Owner:SANDISK TECH LLC

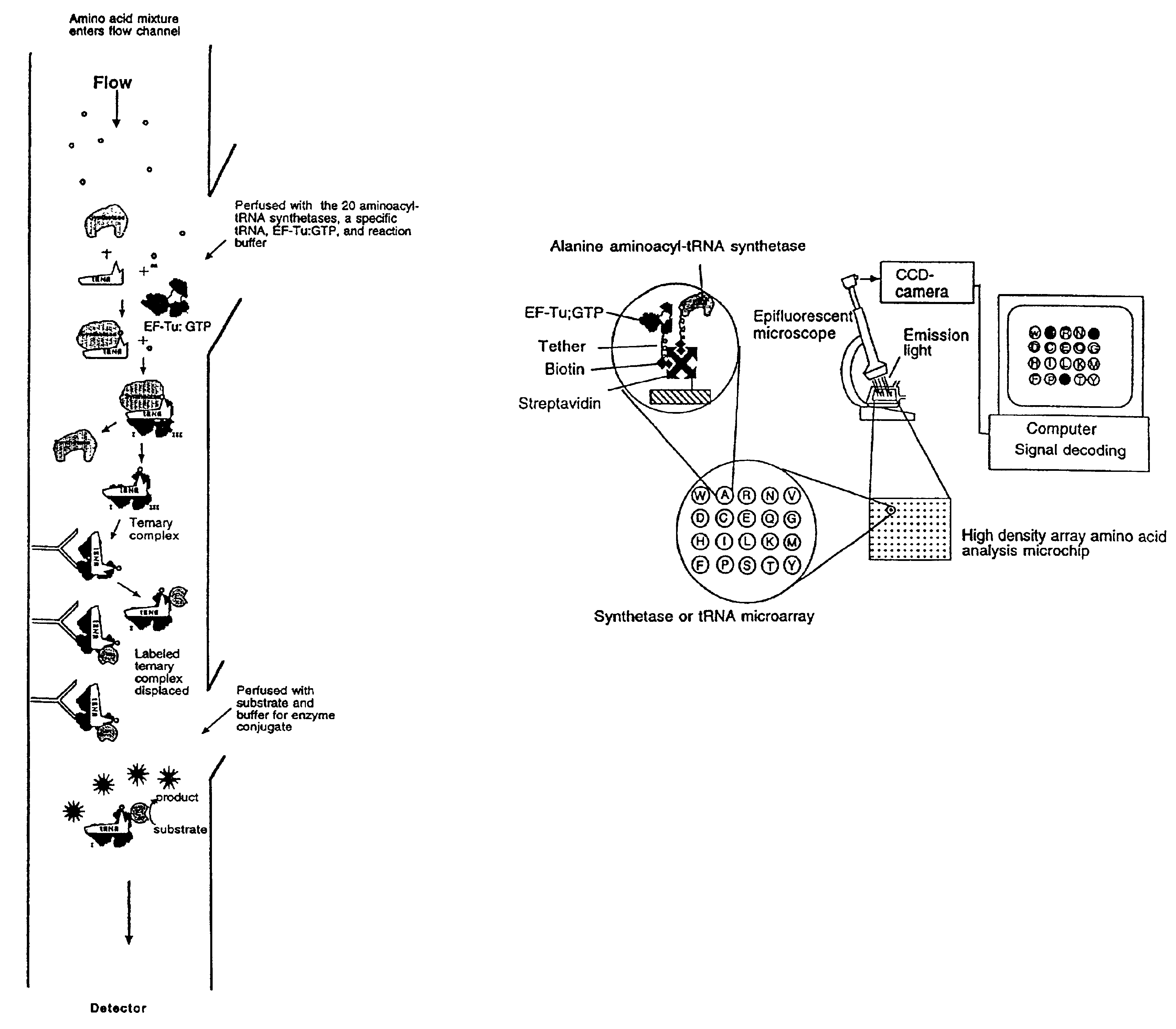

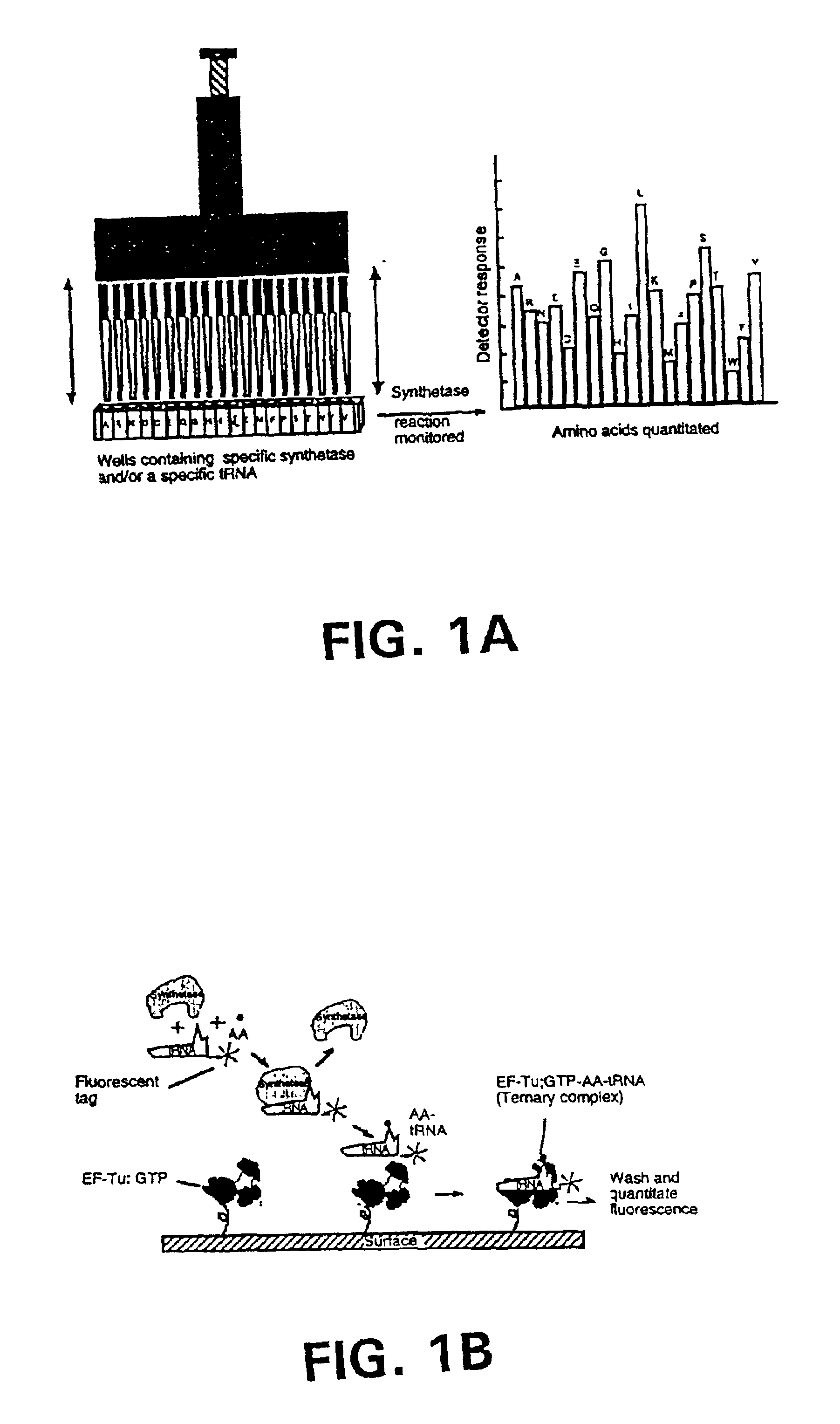

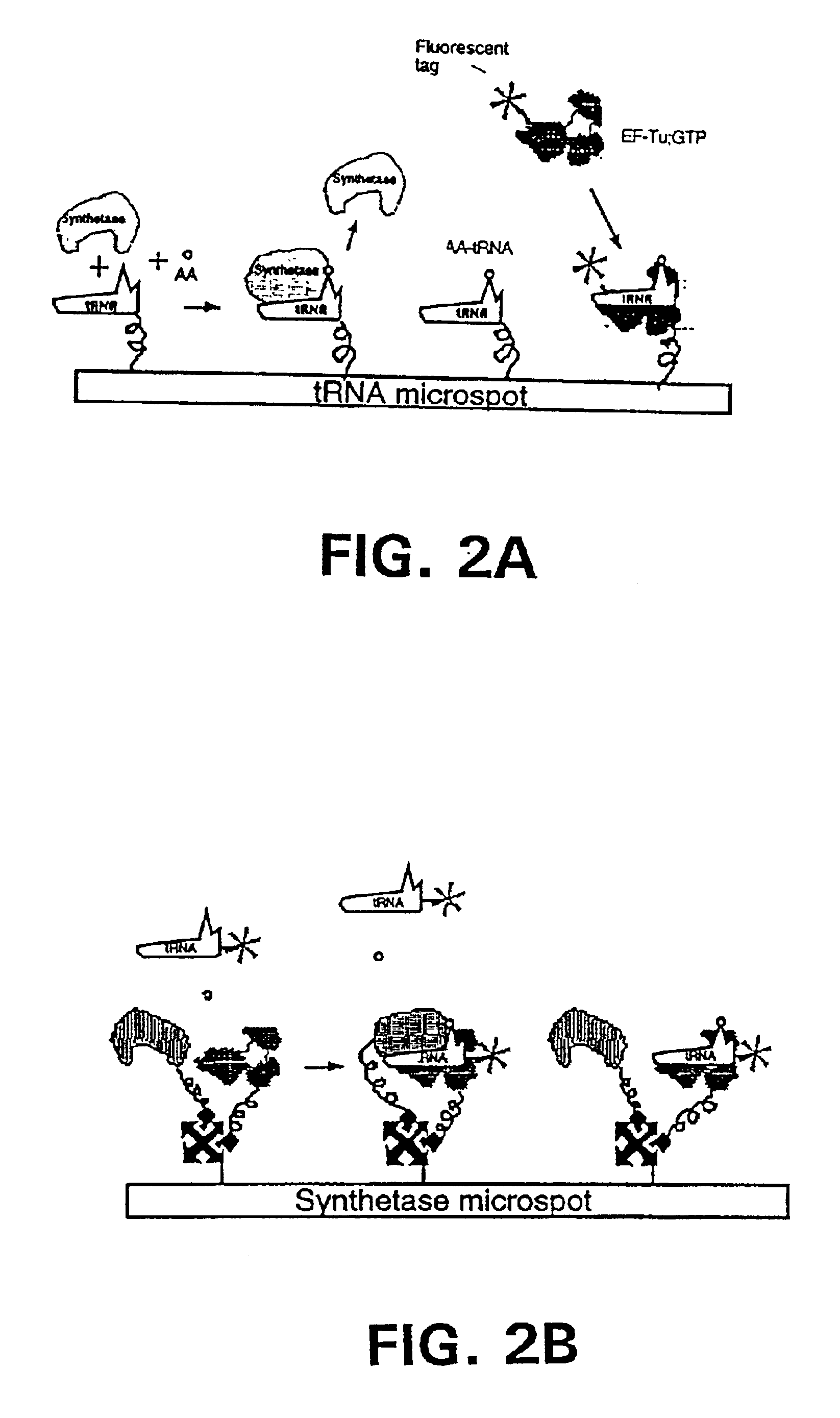

Method and system for rapid biomolecular recognition of amino acids and protein sequencing

Inactive | US6846638B2 | Low cost | High sensitivity | Bioreactor/fermenter combinations | Biological substance pretreatments | Protein Sequence Determination | Digestion

Methods, compositions, kits, and apparatus are provided wherein the aminoacyl-tRNA synthetase system is used to analyze amino acids. The method allows very small devices for quantitative or semi-quantitative analysis of the amino acids in samples or in sequential or complete proteolytic digestions. The methods can be readily applied to the detection and / or quantitation of one or more primary amino acids by using cognate aminoacyl-tRNA synthetase and cognate tRNA. The basis of the method is that each of the 20 synthetases and / or a tRNA specific for a different amino acid is separated spatially or differentially labeled. The reactions catalyzed by all 20 synthetases may be monitored simultaneously, or nearly simultaneously, or in parallel. Each separately positioned synthetase or tRNA will signal its cognate amino acid. The synthetase reactions can be monitored using continuous spectroscopic assays. Alternatively, since elongation factor Tu:GTP (EF-Tu:GTP) specifically binds all AA-tRNAs, the aminoacylation reactions catalyzed by the synthetases can be monitored using ligand assays. Microarrays and microsensors for amino acid analysis are provided. Additionally, amino acid analysis devices are integrated with protease digestions to produce miniaturized enzymatic sequenators capable of generating either N- or C-terminal sequence and composition data for a protein or peptide. The possibility of parallel processing of many samples in an automated manner is discussed.

Owner:NANOBIODYNAMICS

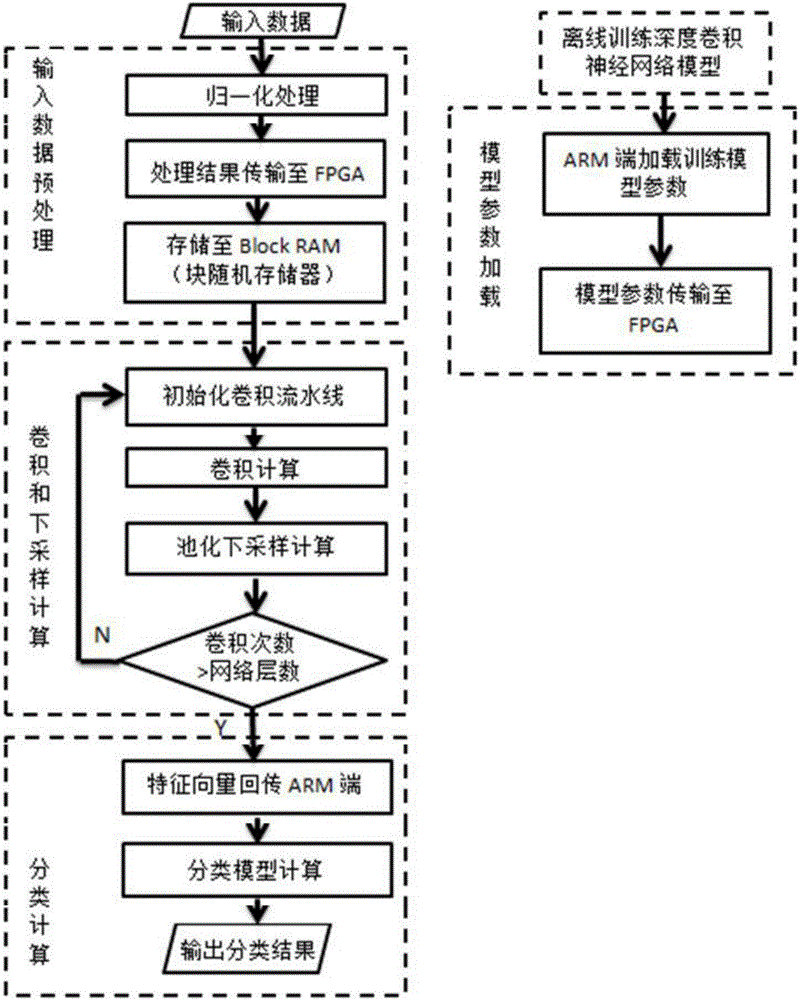

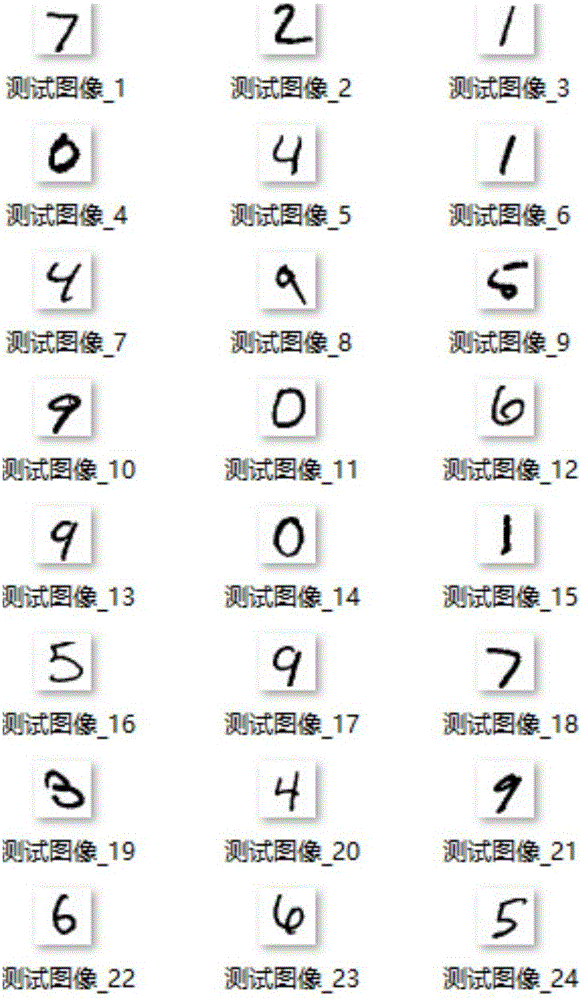

FPGA-based deep convolution neural network realizing method

Active | CN106228240A | Simple design | Reduce resource consumption | Character and pattern recognition | Speech recognition | Feature vector | Algorithm

The invention belongs to the technical field of digital image processing and pattern recognition, and specifically relates to a method for implementing a deep convolutional neural network on an FPGA. The hardware platform is the Xilinx ZYNQ-7030 programmable system-on-chip (SoC), which integrates an FPGA and an ARM Cortex-A9 processor. Trained network model parameters are loaded onto the FPGA side, the input data is preprocessed on the ARM side, and the result is transmitted to the FPGA side. The convolution and down-sampling computations of the deep convolutional neural network are performed on the FPGA side to form feature vectors, which are transmitted to the ARM side to complete the feature classification computation. The fast parallel processing and very low-power, high-performance computing characteristics of the FPGA are used to implement the convolution computation, which has the highest complexity in a deep convolutional neural network model. Algorithm efficiency is greatly improved, and power consumption is reduced while the accuracy of the algorithm is maintained.

Owner:FUDAN UNIV
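
To make the FPGA-side stage in the abstract above concrete, here is a minimal pure-Python sketch of one convolution followed by down-sampling that yields a feature vector. The abstract does not specify the down-sampling operator or the layer sizes; 2x2 max pooling, the 5x5 input, and the 2x2 kernel are assumptions chosen for illustration, and all function names are hypothetical.

```python
def conv2d_valid(image, kernel):
    """Valid (no padding) cross-correlation of a 2-D list with a 2-D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool_2x2(fmap):
    """Down-sample by taking the maximum of each non-overlapping 2x2 window."""
    return [
        [max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
         for c in range(0, len(fmap[0]) - 1, 2)]
        for r in range(0, len(fmap) - 1, 2)
    ]


def feature_vector(image, kernel):
    """Convolve, down-sample, then flatten the result into a feature vector."""
    pooled = max_pool_2x2(conv2d_valid(image, kernel))
    return [v for row in pooled for v in row]


image = [
    [1, 2, 3, 4, 5],
    [6, 7, 8, 9, 10],
    [11, 12, 13, 14, 15],
    [16, 17, 18, 19, 20],
    [21, 22, 23, 24, 25],
]
kernel = [[1, 0], [0, 1]]
features = feature_vector(image, kernel)  # [20, 24, 40, 44]
```

Every output element of the convolution depends only on its own input window, which is why this stage maps naturally onto parallel hardware, while the flattened vector is what would be handed to the classifier on the processor side.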

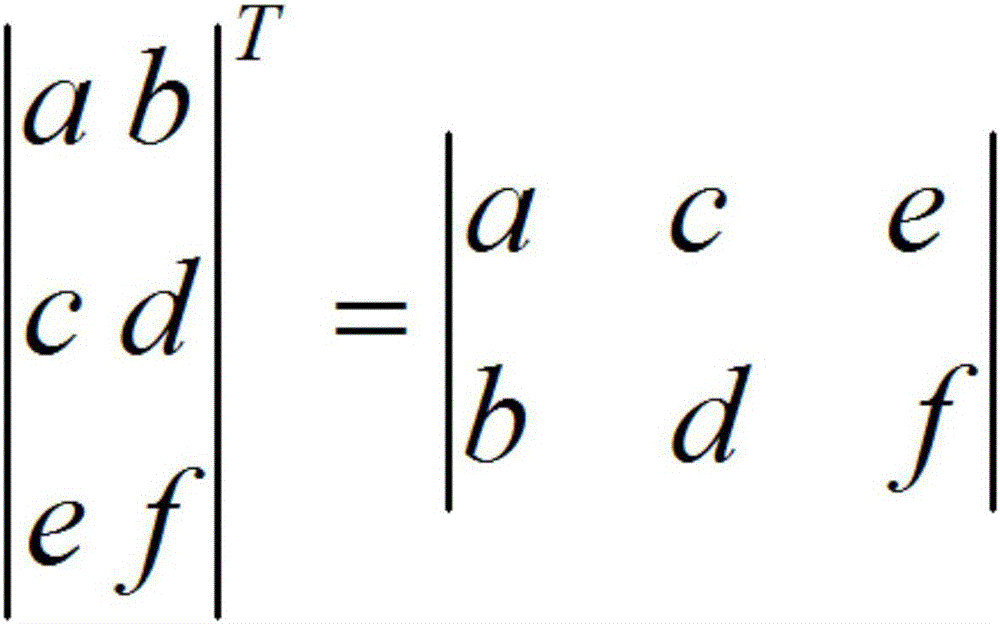

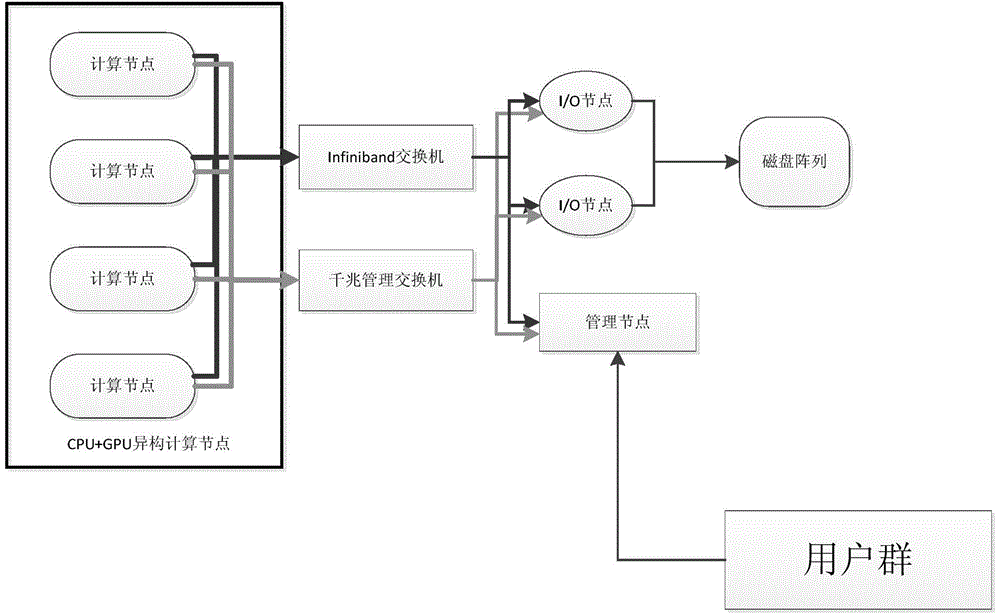

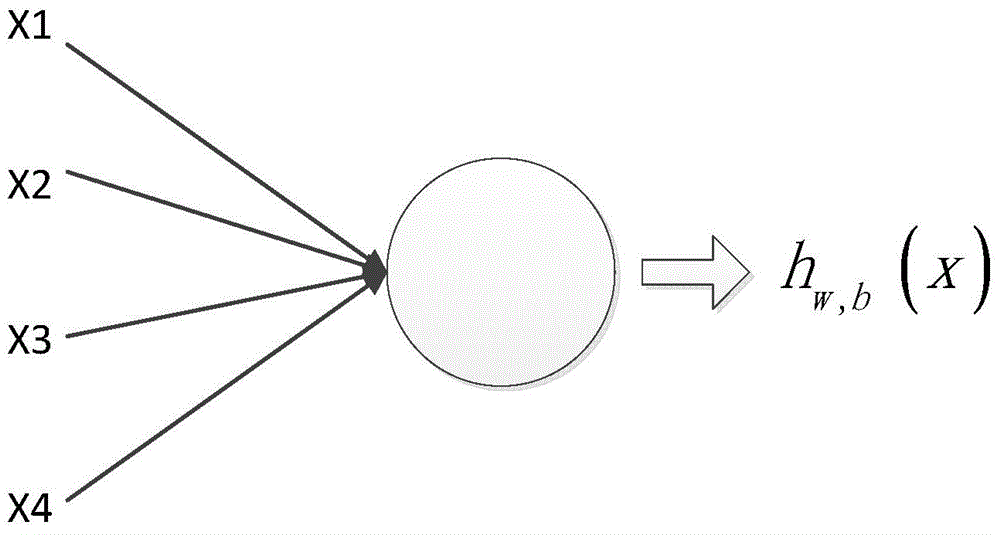

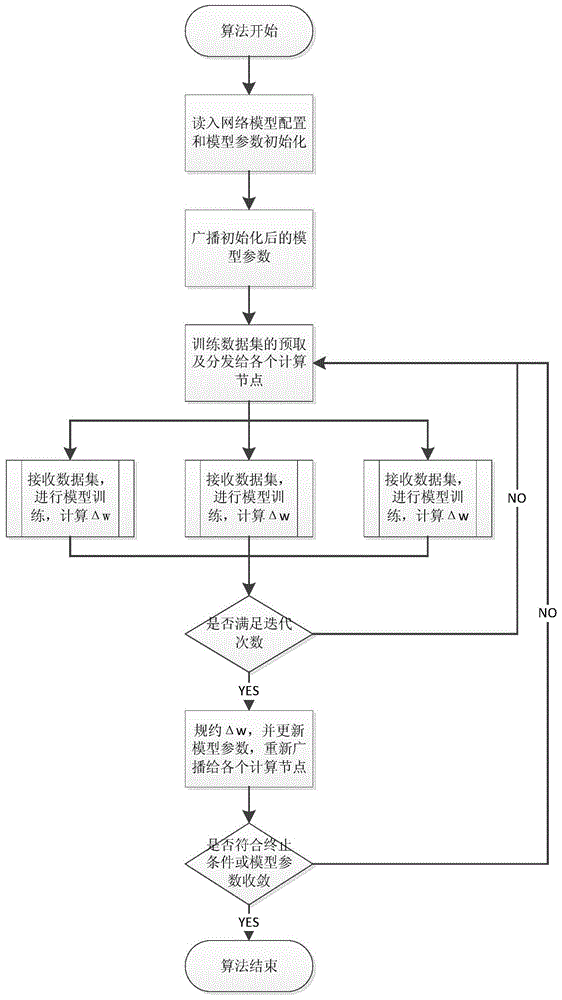

Convolution neural network parallel processing method based on large-scale high-performance cluster

Inactive | CN104463324A | Reduce training time | Improve computing efficiency | Biological neural network models | Concurrent instruction execution | NODAL | Algorithm

The invention discloses a convolution neural network parallel processing method based on a large-scale high-performance cluster. The method comprises the following steps: (1) multiple copies of the network model to be trained are constructed, with identical model parameters in every copy; the number of copies equals the number of nodes in the high-performance cluster, each node holds one model copy, and one node is selected as the main node, which is responsible for broadcasting and collecting the model parameters; (2) the training set is divided into multiple subsets, and in each round the training subsets are issued to the sub nodes (all nodes other than the main node), which compute parameter gradients together; the gradient values are accumulated, the accumulated value is used to update the model parameters of the main node, and the updated model parameters are broadcast to all the sub nodes, until model training ends. The method has the advantages of enabling parallelization, improving the efficiency of model training, and shortening the training time.

Owner:CHANGSHA MASHA ELECTRONICS TECH
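
The two steps in the abstract above follow the general pattern of synchronous data-parallel training. The sketch below simulates that pattern in a single process, assuming a one-parameter linear model `y = w * x` in place of a convolutional network and running the sub nodes sequentially; the model, learning rate, and function names are all illustrative assumptions.

```python
def gradient(w, shard):
    """Gradient of the summed squared error of y = w * x over one shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard)


def train(data, num_workers=4, lr=0.01, epochs=200):
    """Simulate a main node coordinating num_workers sub nodes."""
    # Divide the training set into one subset per sub node.
    shards = [data[i::num_workers] for i in range(num_workers)]
    main_w = 0.0  # parameter held by the main node
    for _ in range(epochs):
        # Broadcast: every sub node starts the round with the same parameter.
        worker_w = [main_w] * num_workers
        # Each sub node computes a gradient on its own subset.
        grads = [gradient(w, s) for w, s in zip(worker_w, shards)]
        # The main node accumulates the gradients and updates its parameter.
        main_w -= lr * sum(grads) / len(data)
    return main_w


# Synthetic data generated from y = 3x, so training should recover w close to 3.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
w = train(data)
```

Because the accumulated gradient equals the gradient over the whole training set, the update matches single-node training, while the per-subset gradient computations are independent and could run on separate nodes.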

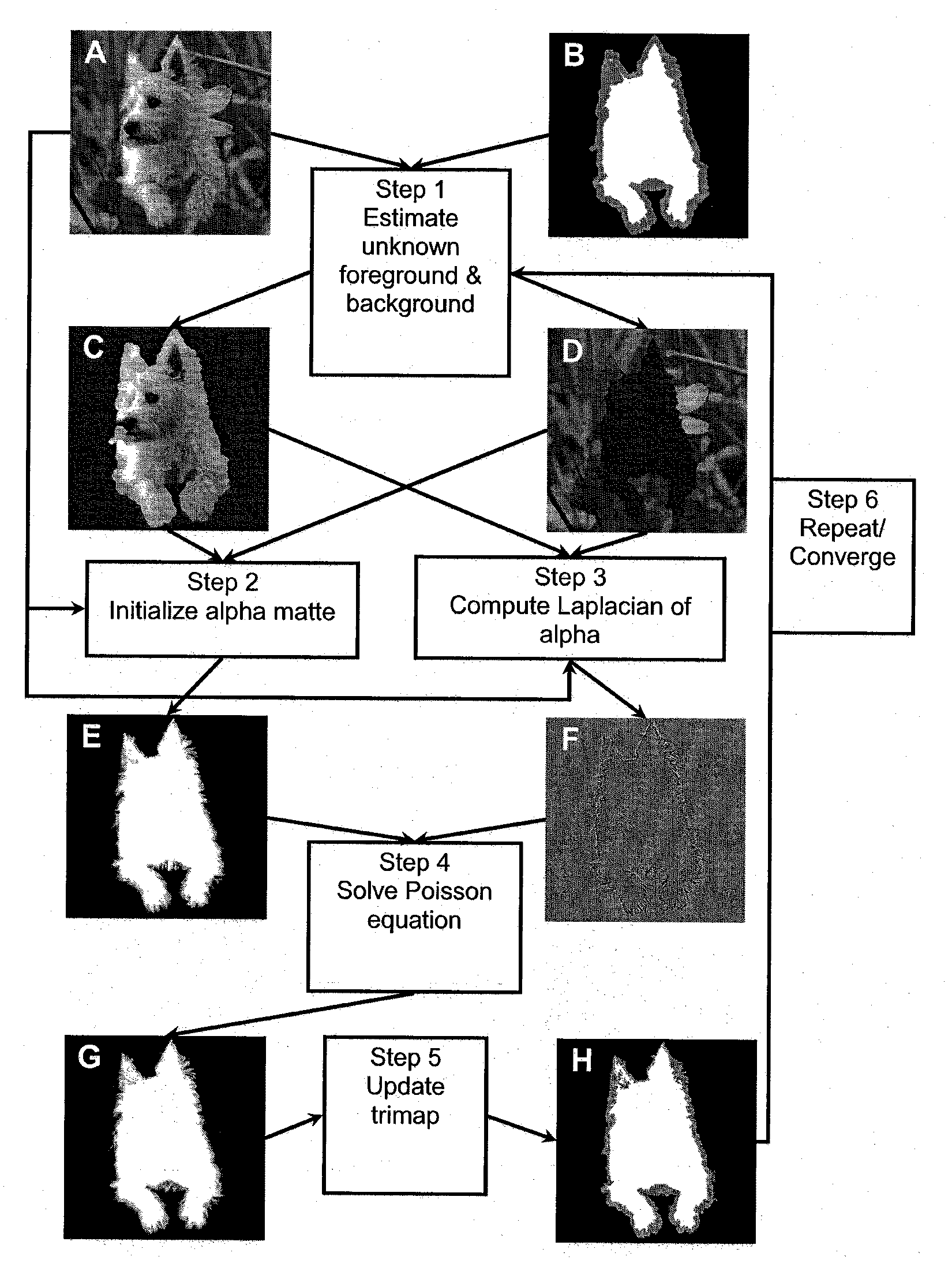

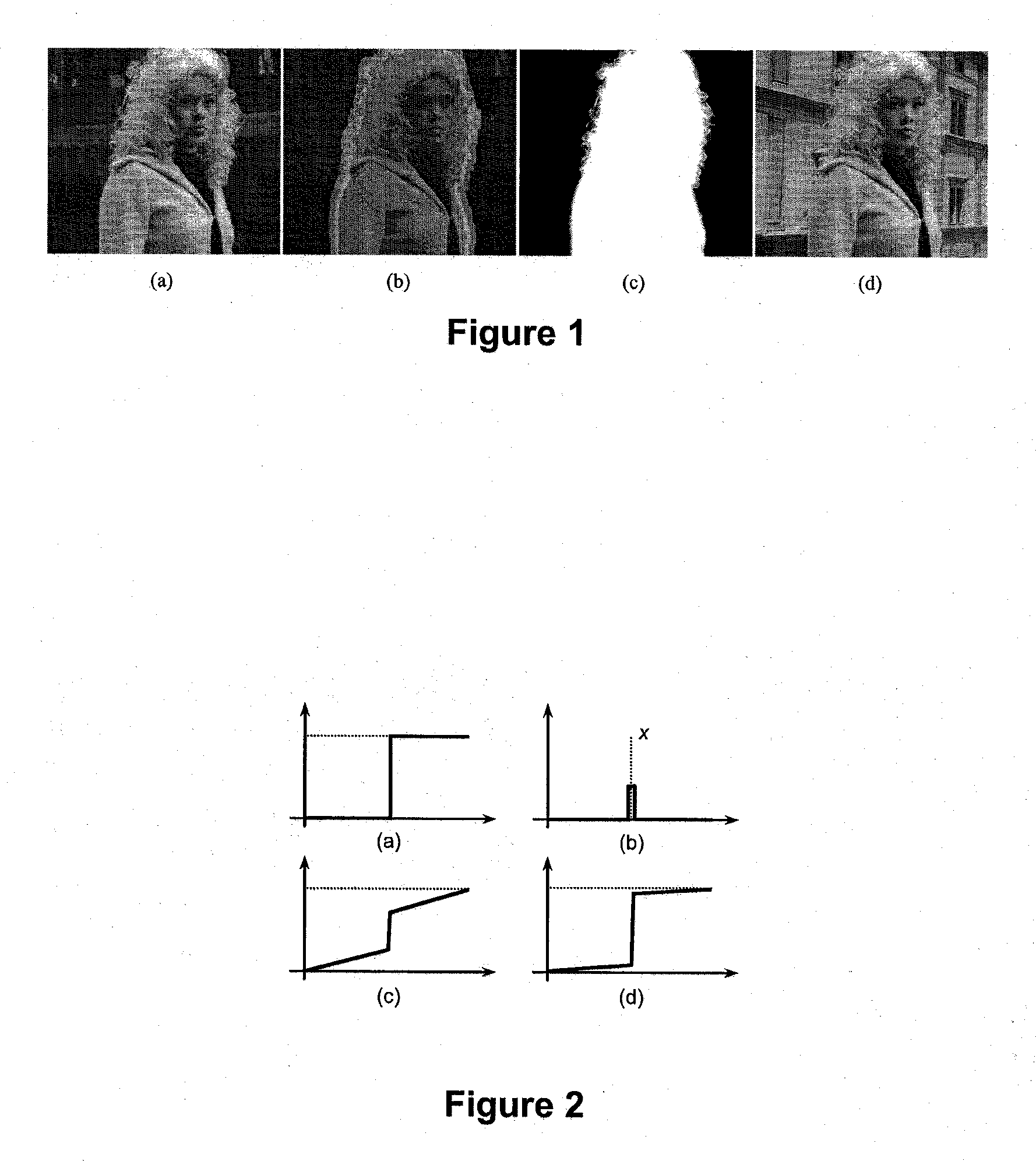

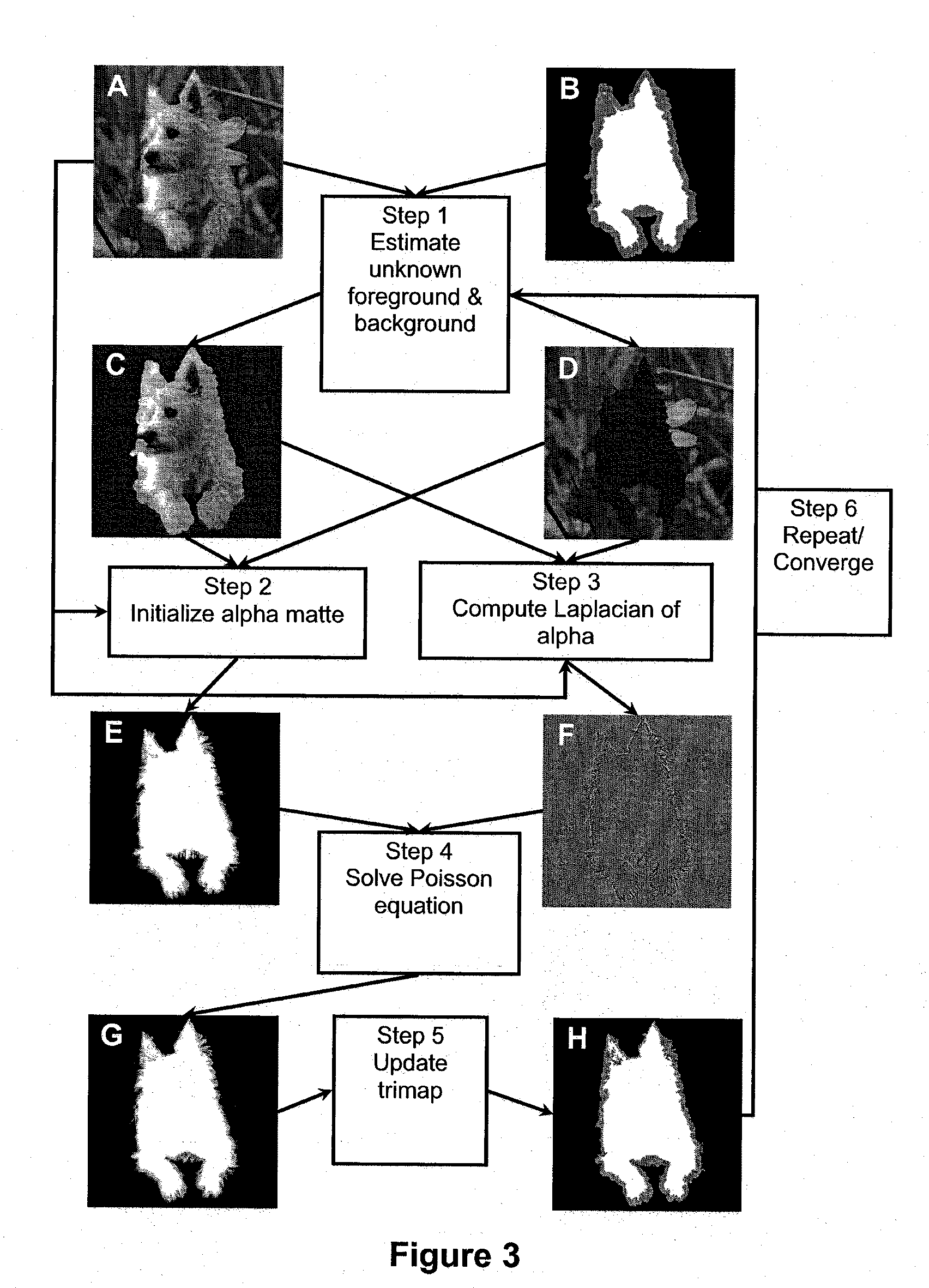

Real-time image and video matting

Inactive | US20110038536A1 | Reduce errors | Improve robustness | Image enhancement | Image analysis | Pattern recognition | Color vector

A system and method implemented as a software tool for generating alpha matte sequences in real-time for the purposes of background or foreground substitution in digital images and video. The system and method are based on a set of modified Poisson equations that are derived for handling multichannel color vectors. Greater robustness is achieved by computing an initial alpha matte in color space. Real-time processing speed is achieved through optimizing the algorithm for parallel processing on GPUs. For online video matting, a modified background cut algorithm is implemented to separate foreground and background, which guides the automatic trimap generation. Quantitative evaluation on still images shows that the alpha mattes extracted using the present invention have improved accuracy over existing state-of-the-art offline image matting techniques.

Owner:GENESIS GROUP

© 2025 PatSnap. All rights reserved.