174 results about "Database caching" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

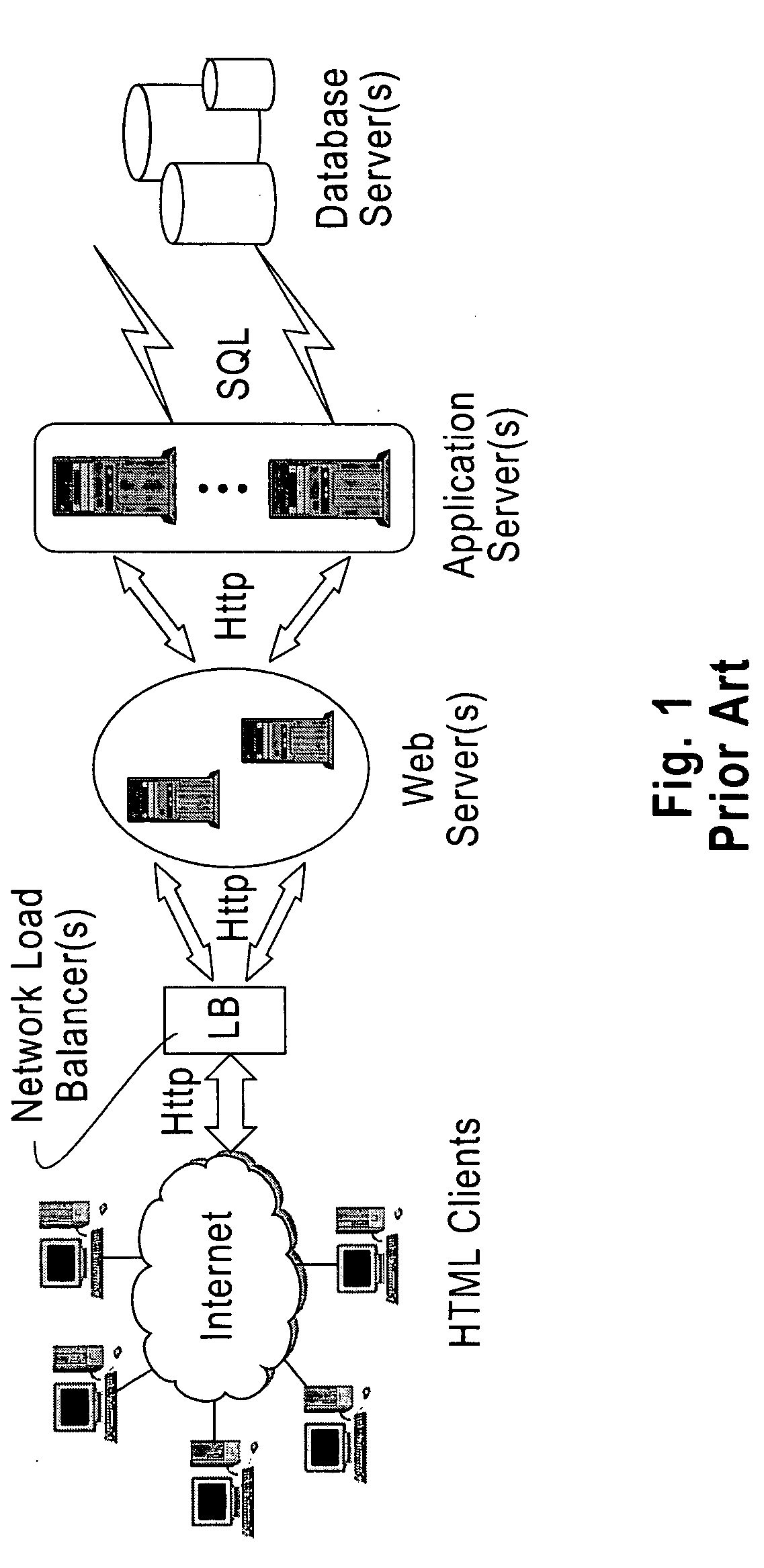

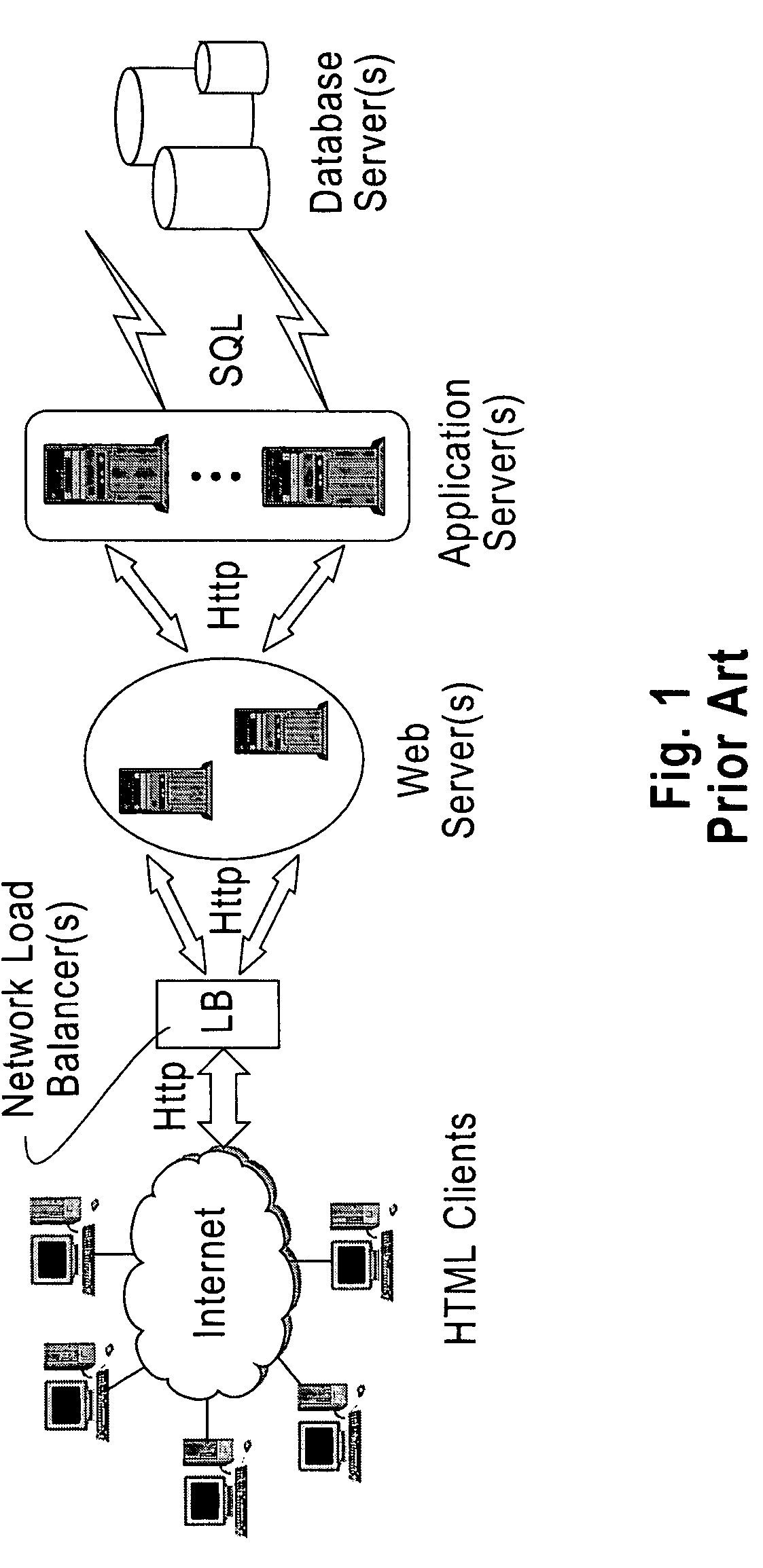

Inventor

Database caching is a process included in the design of computer applications which generate web pages on-demand (dynamically) by accessing backend databases. When these applications are deployed on multi-tier environments that involve browser-based clients, web application servers and backend databases, middle-tier database caching is used to achieve high scalability and performance.

Runtime adaptable search processor

Active | US20060136570A1 | Reduce stacking process | Improving host CPU performance | Web data indexing | Multiple digital computer combinations | Data pack | Internal memory

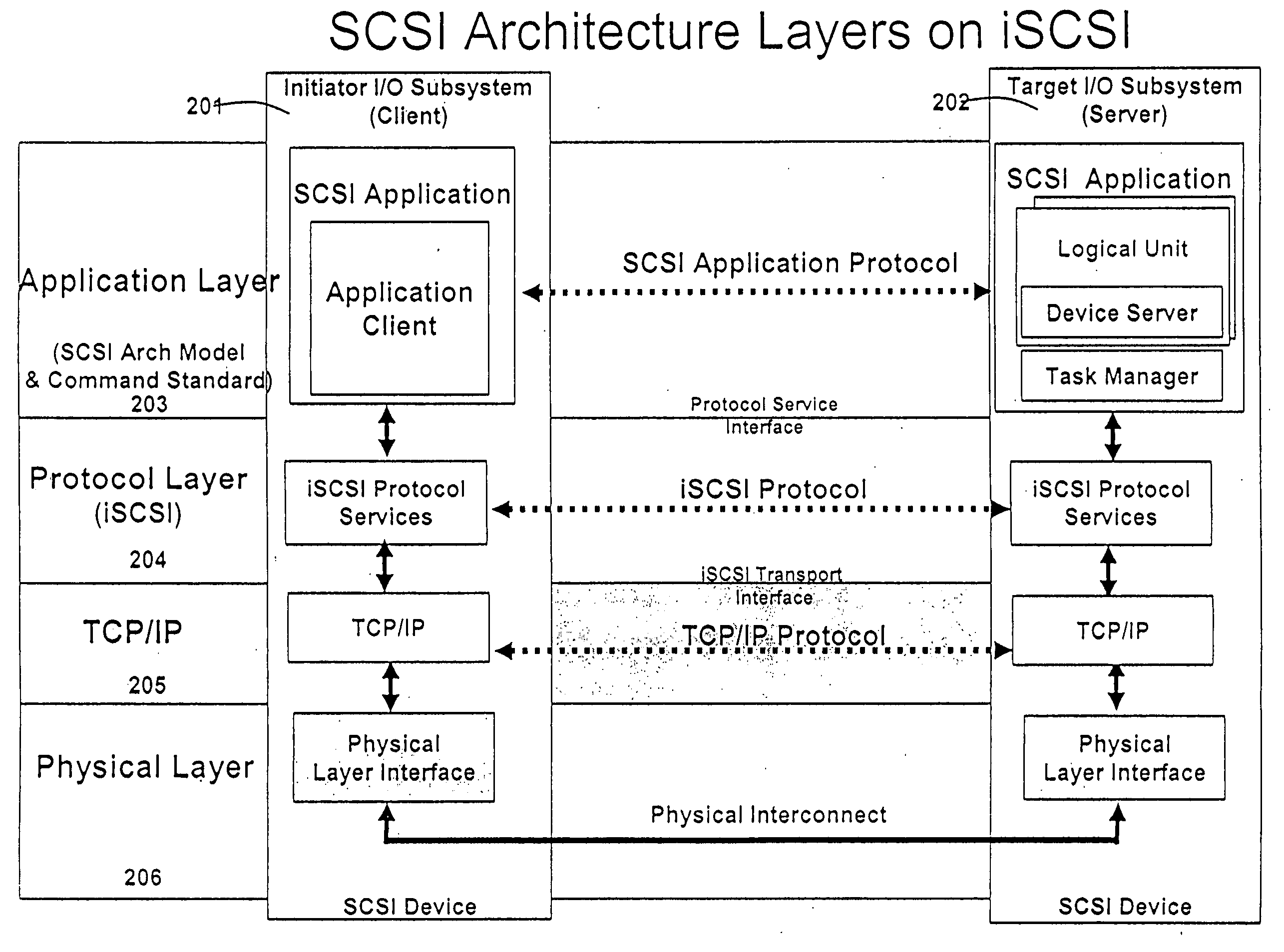

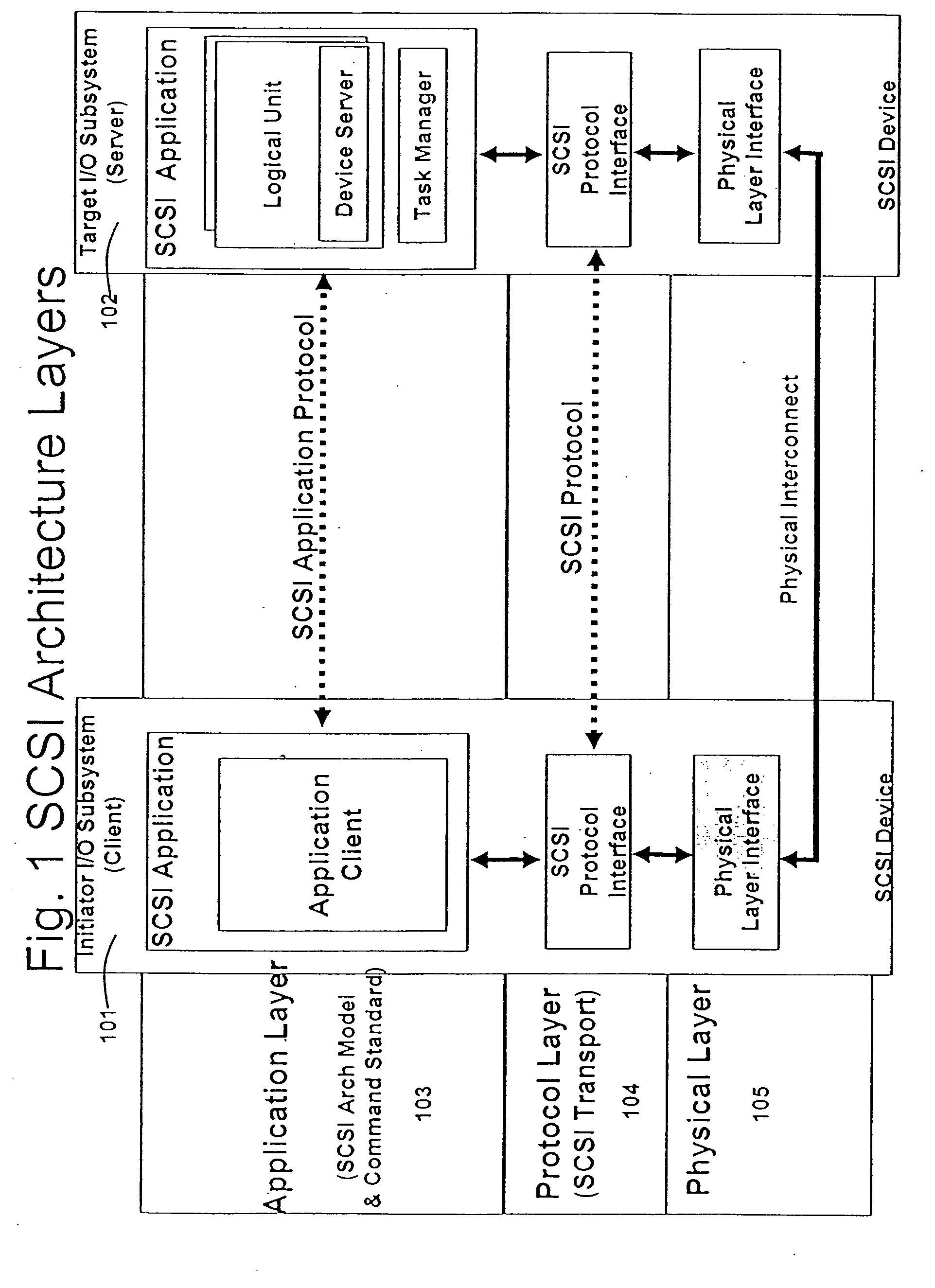

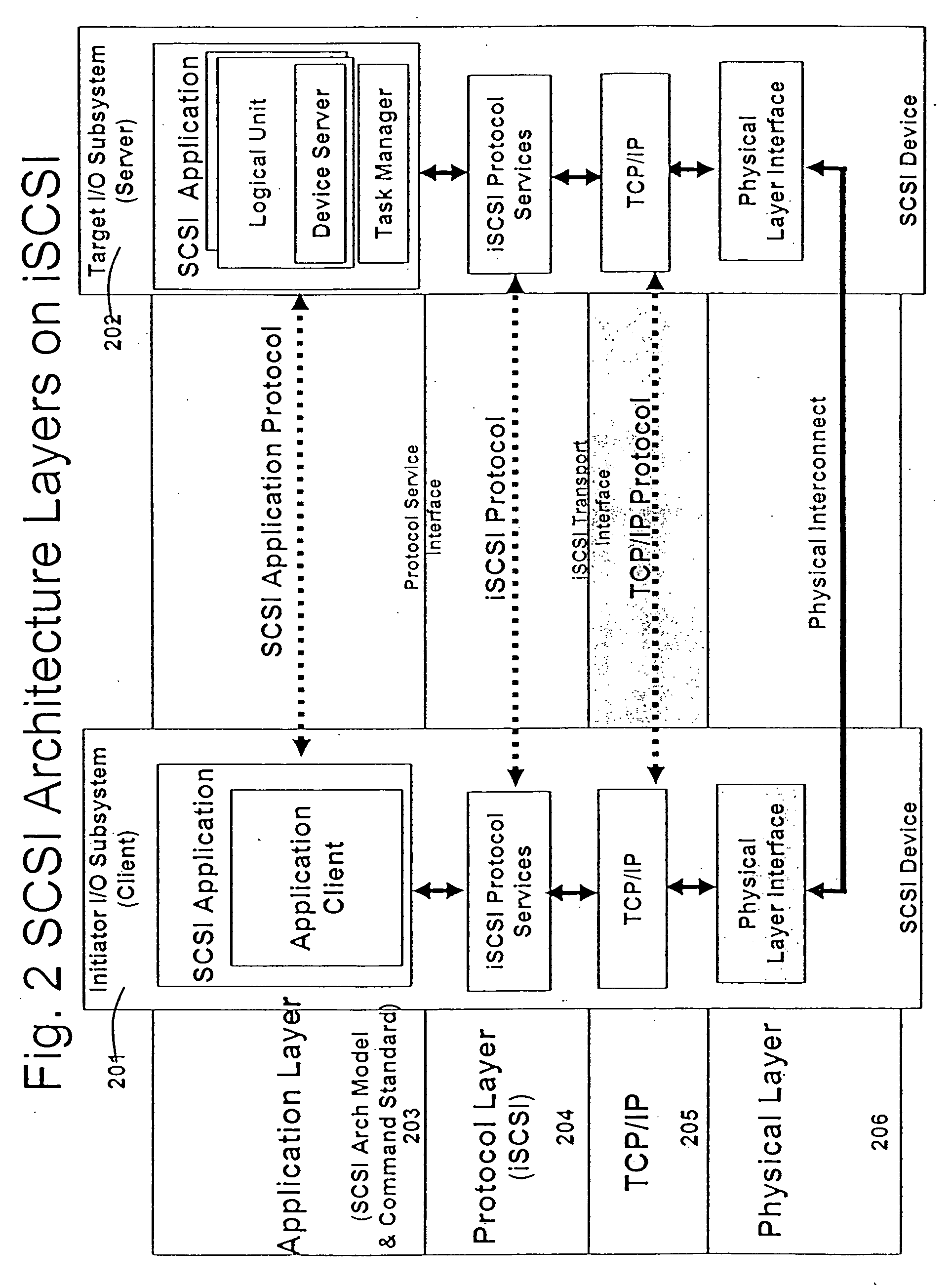

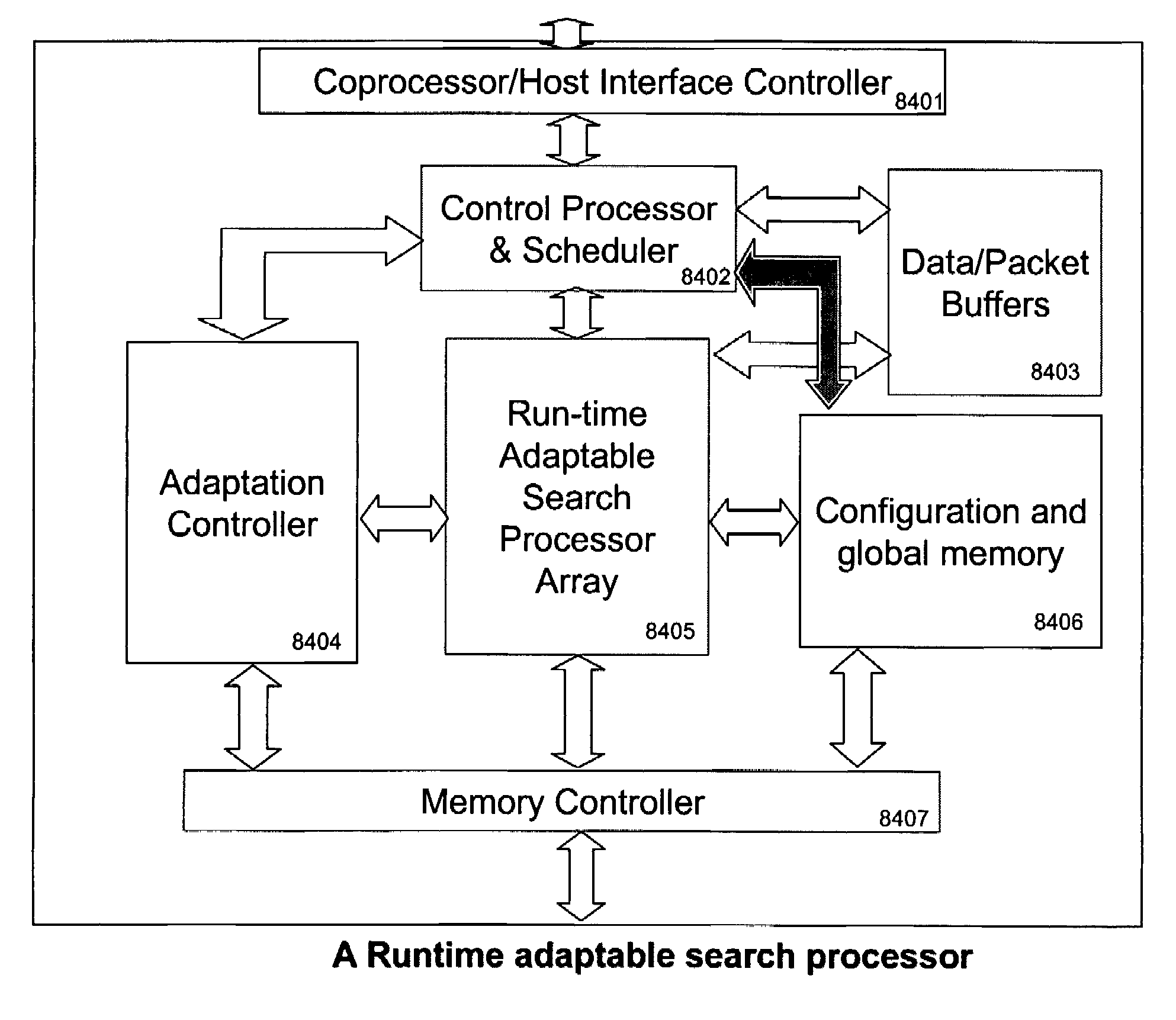

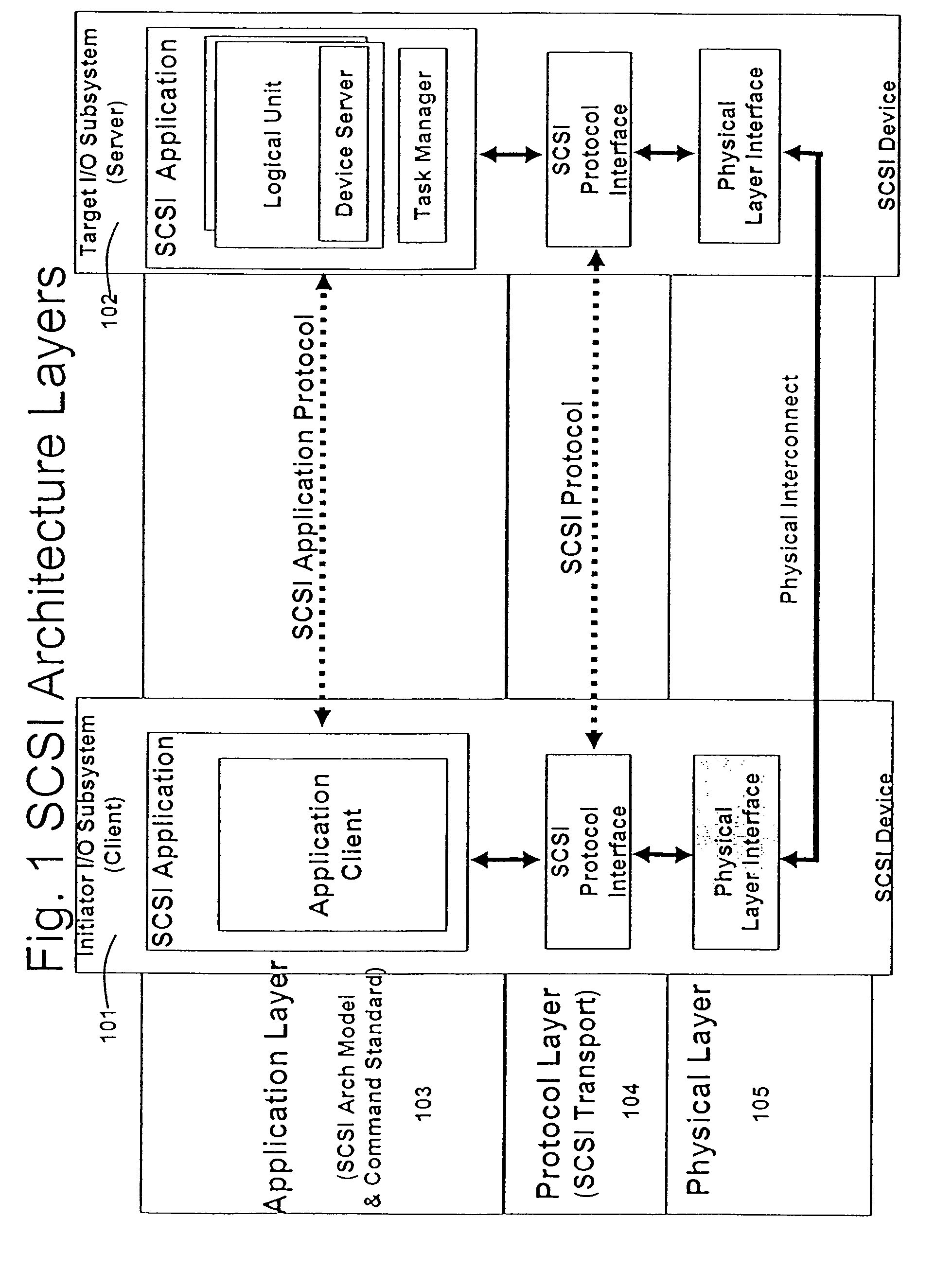

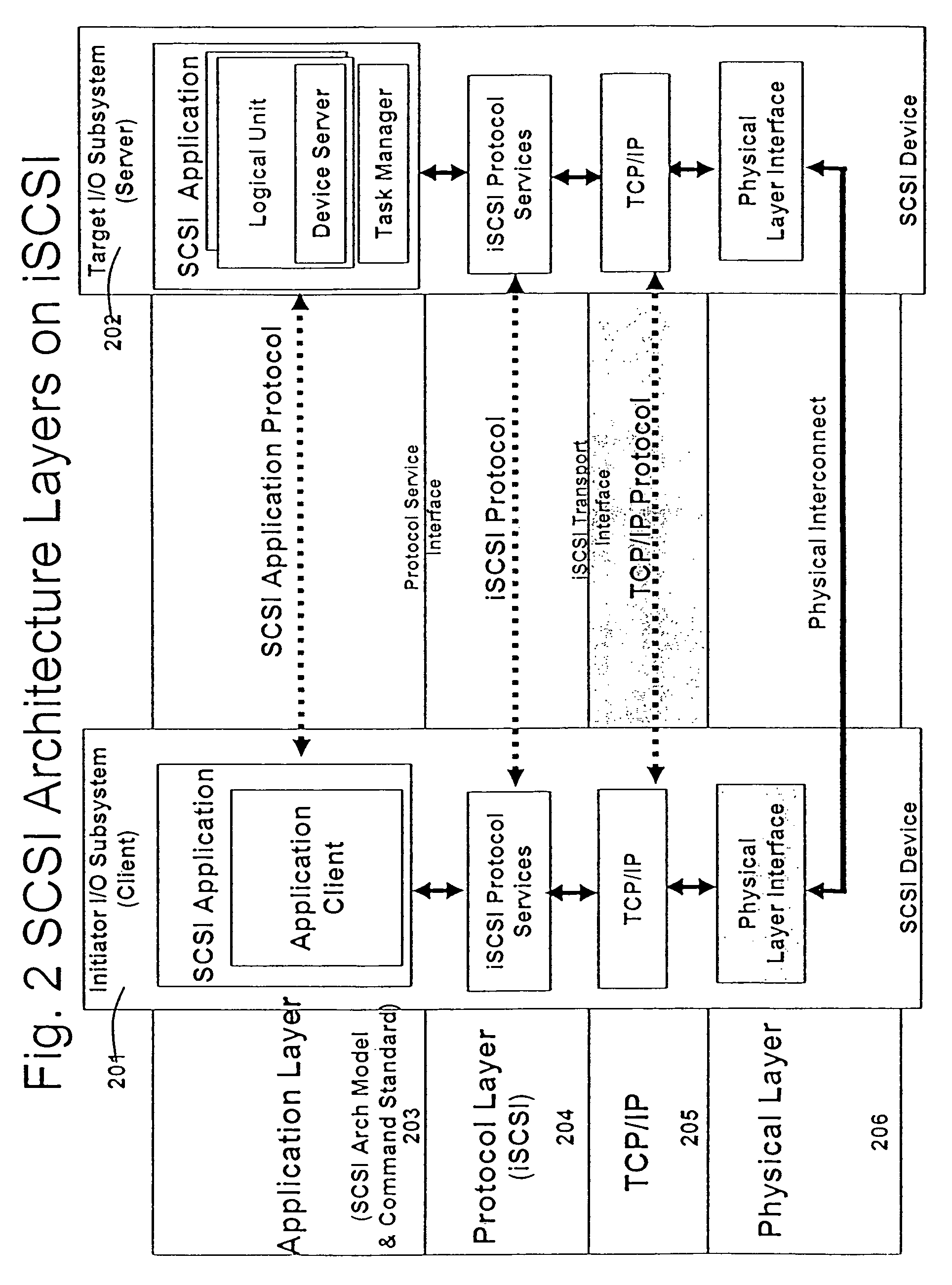

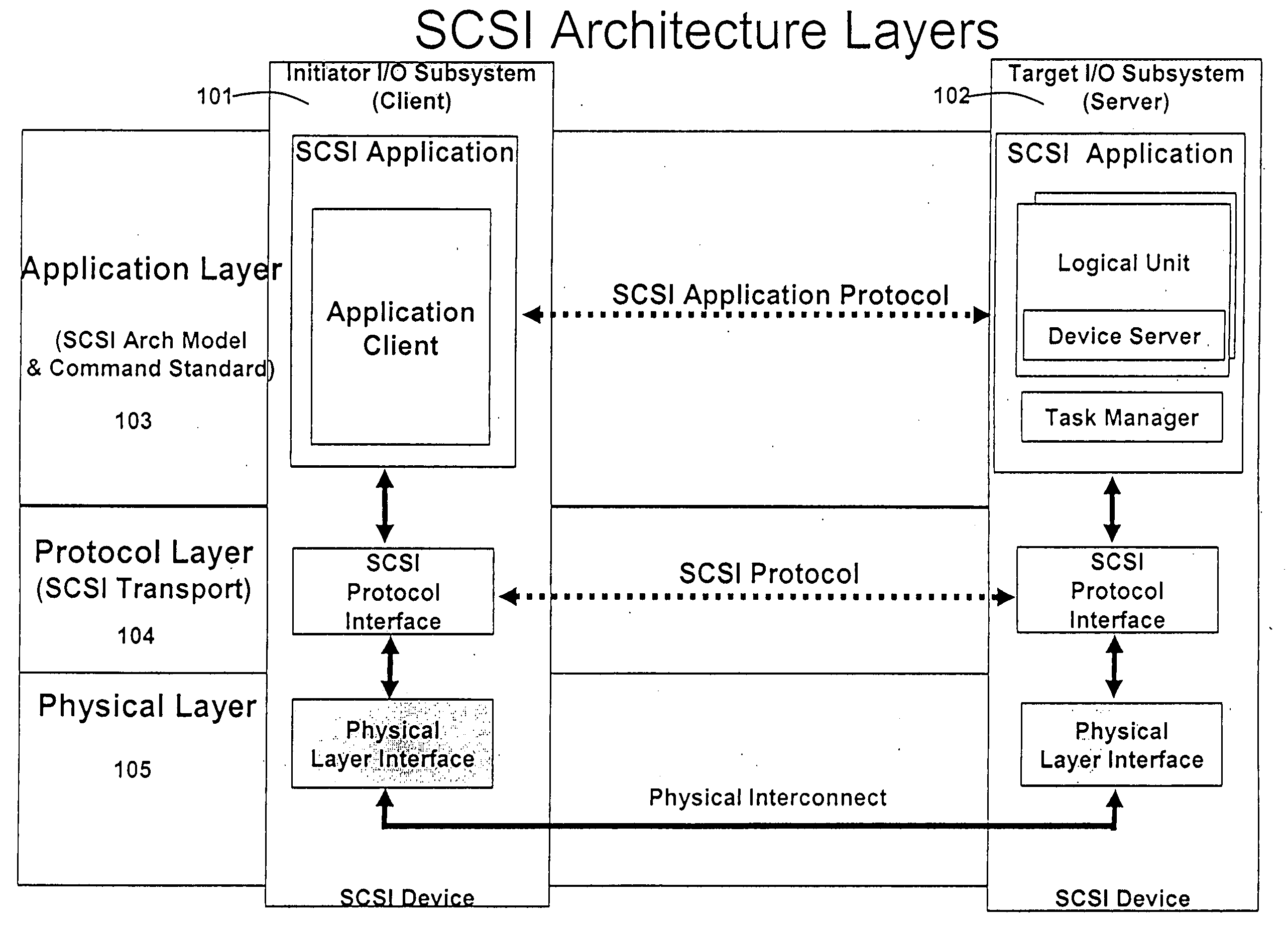

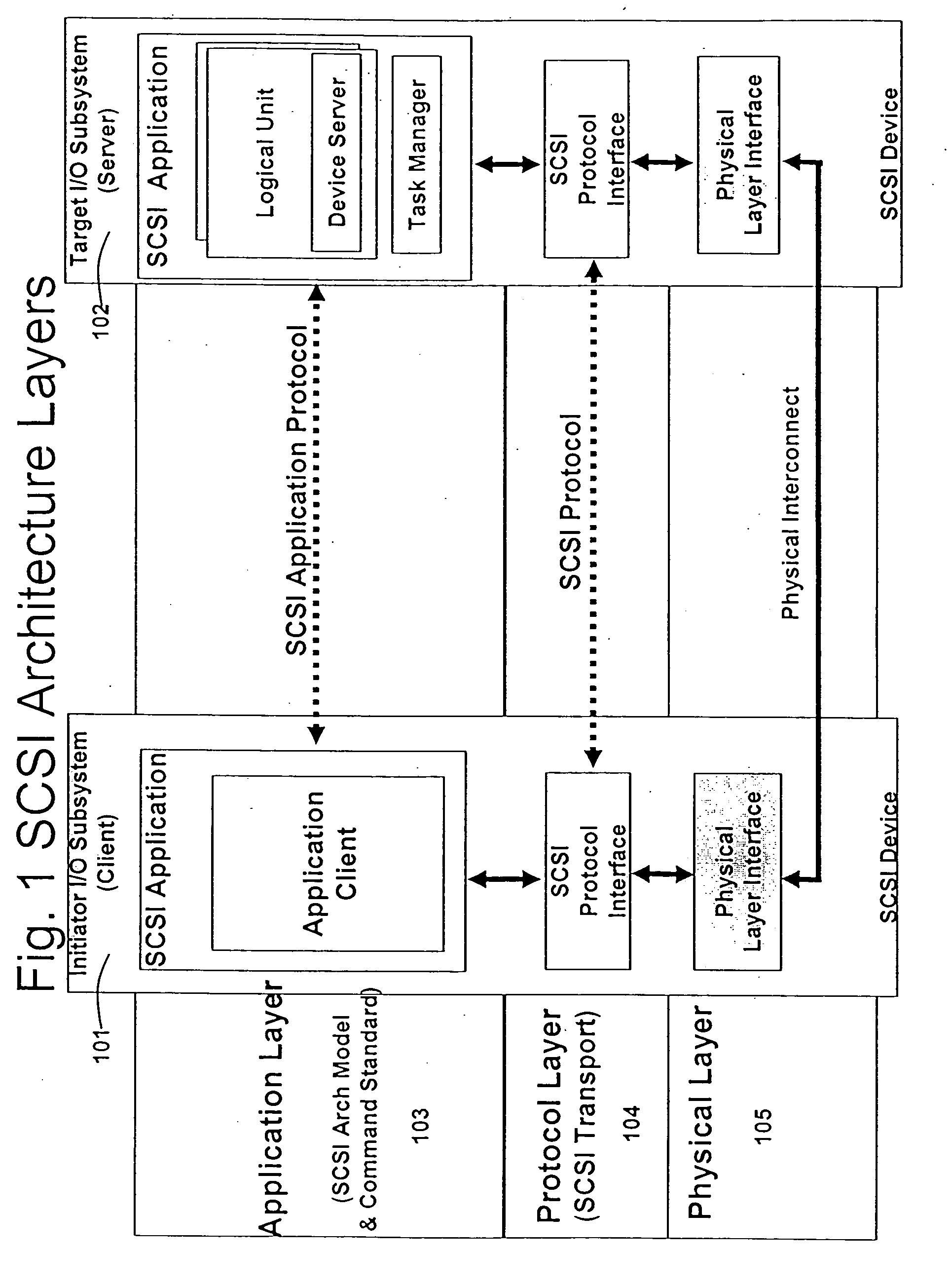

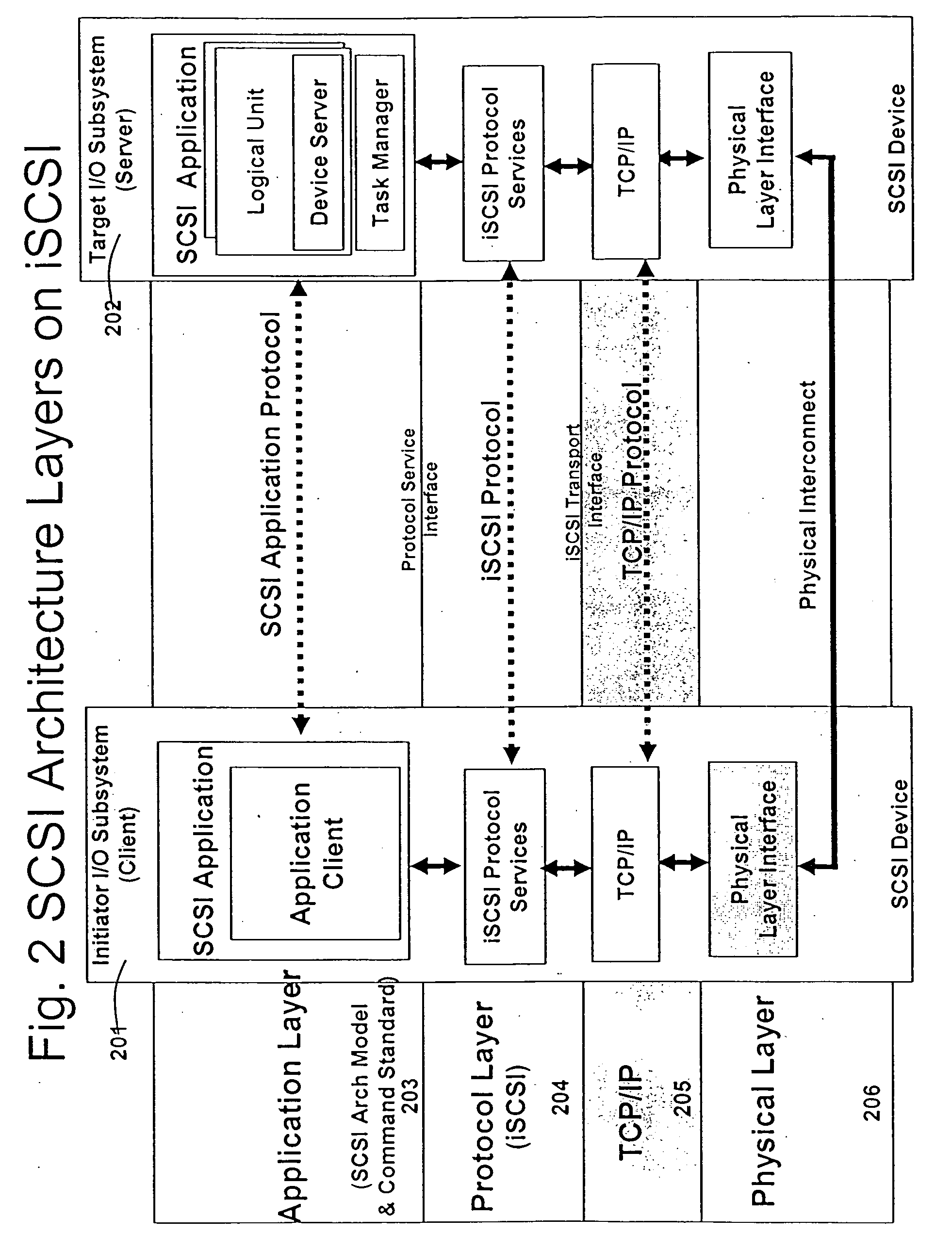

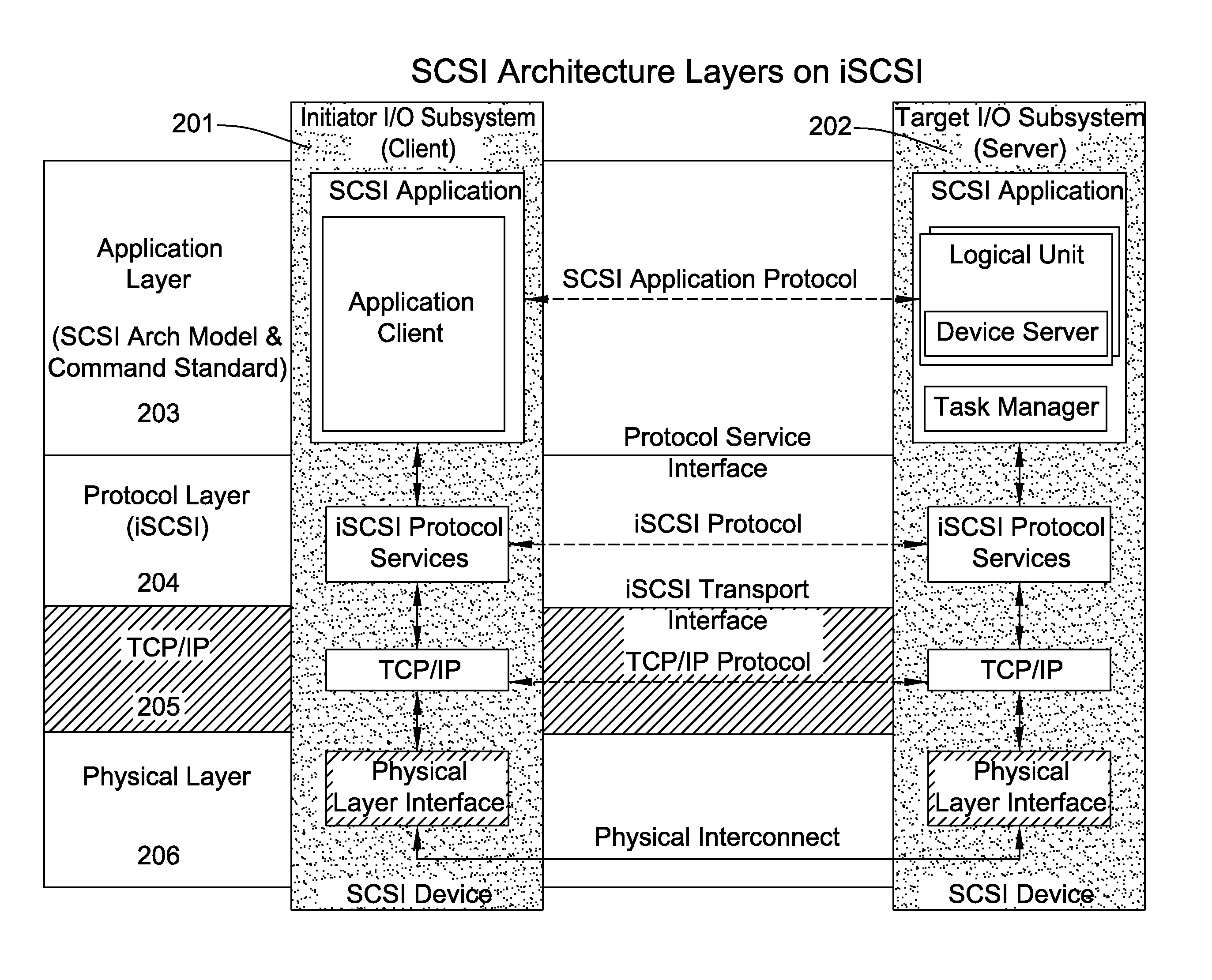

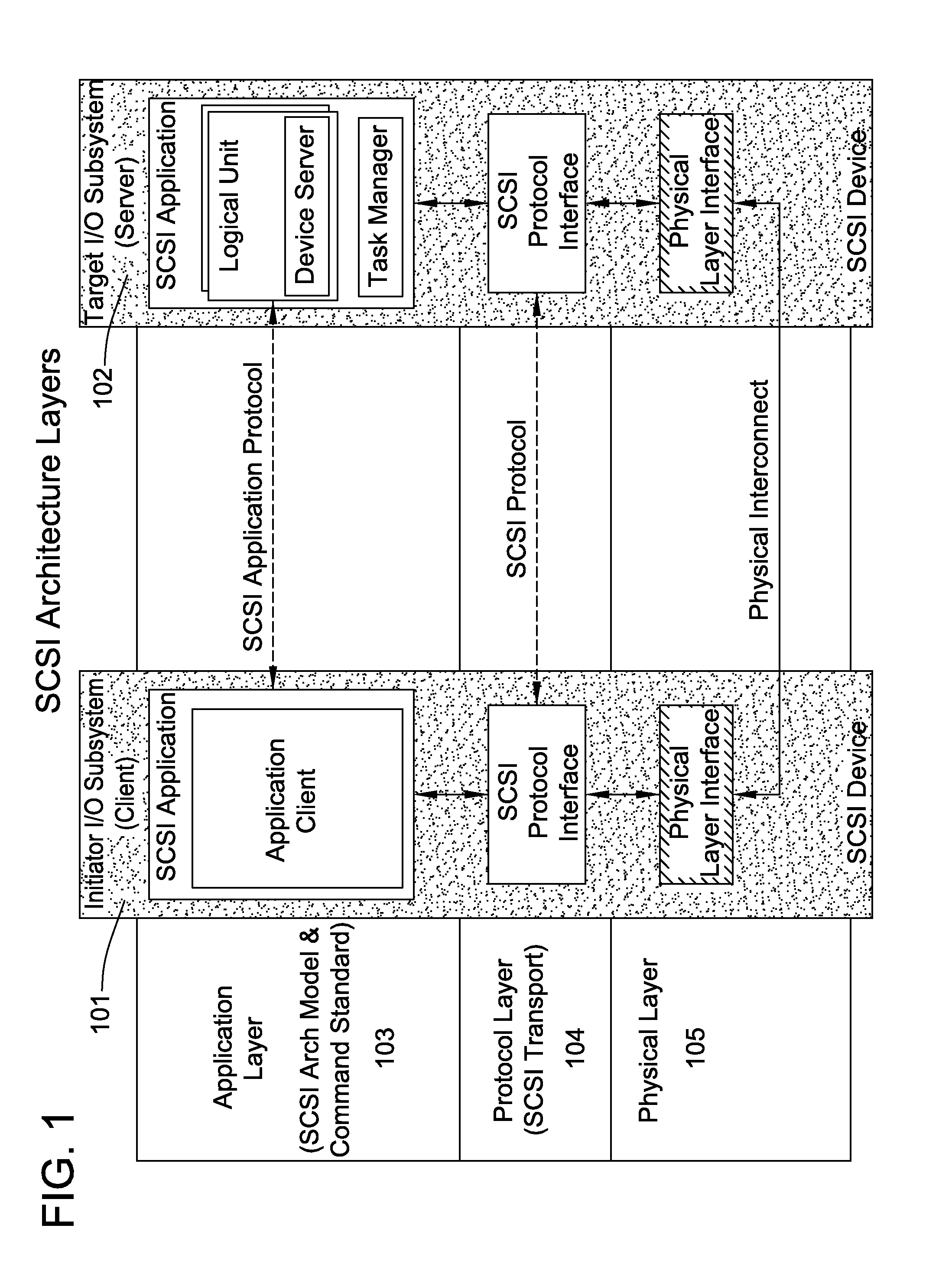

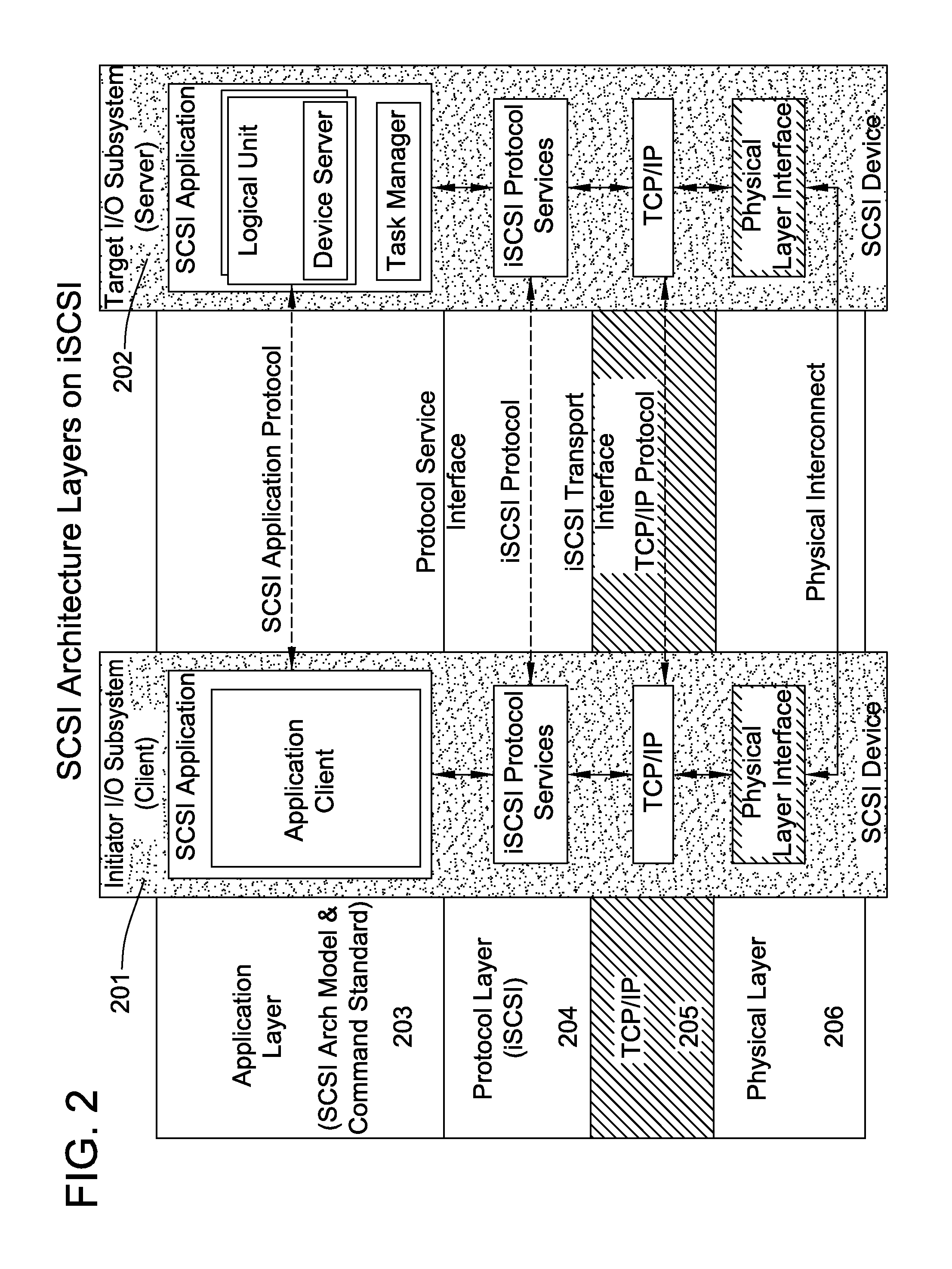

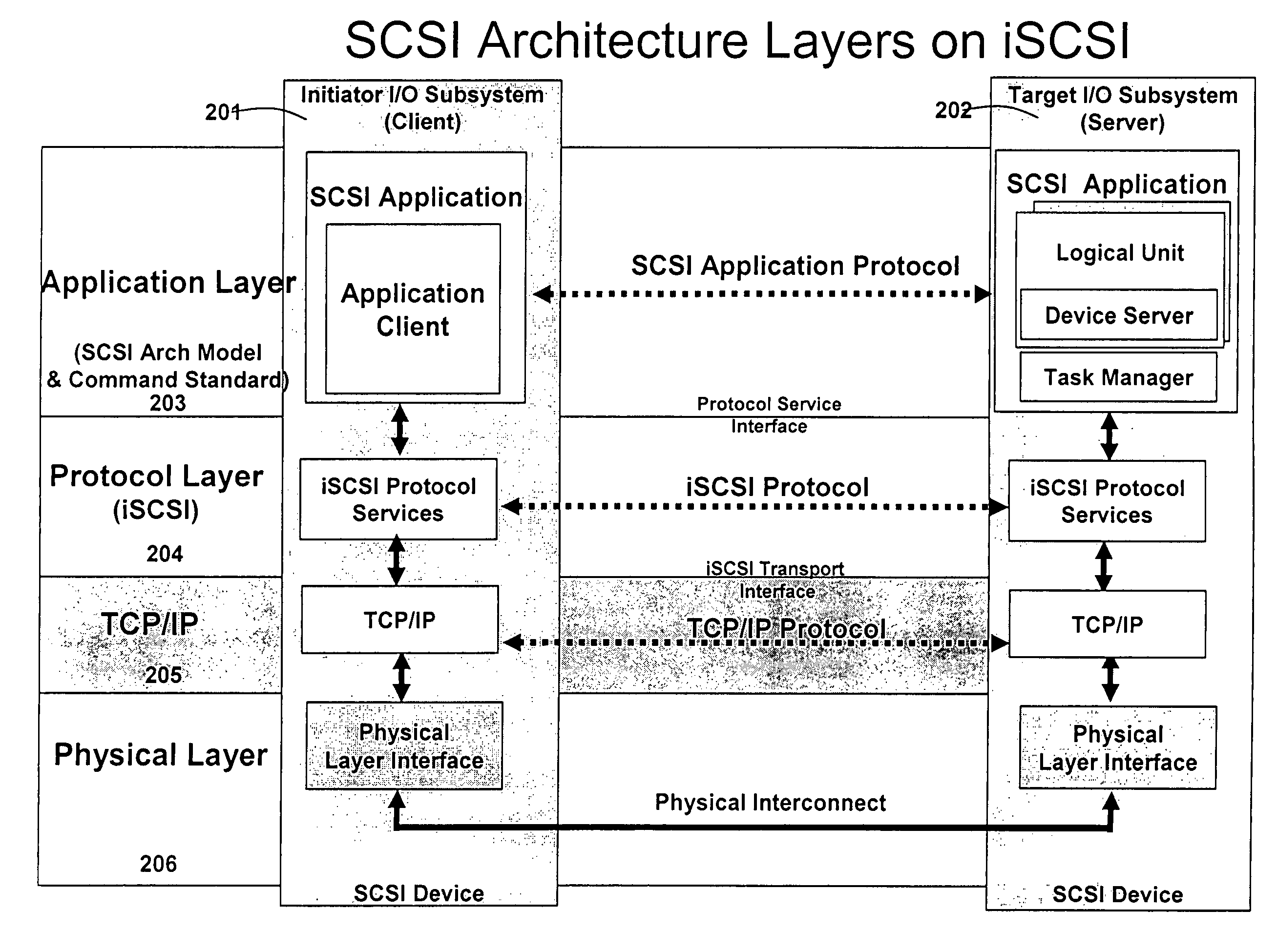

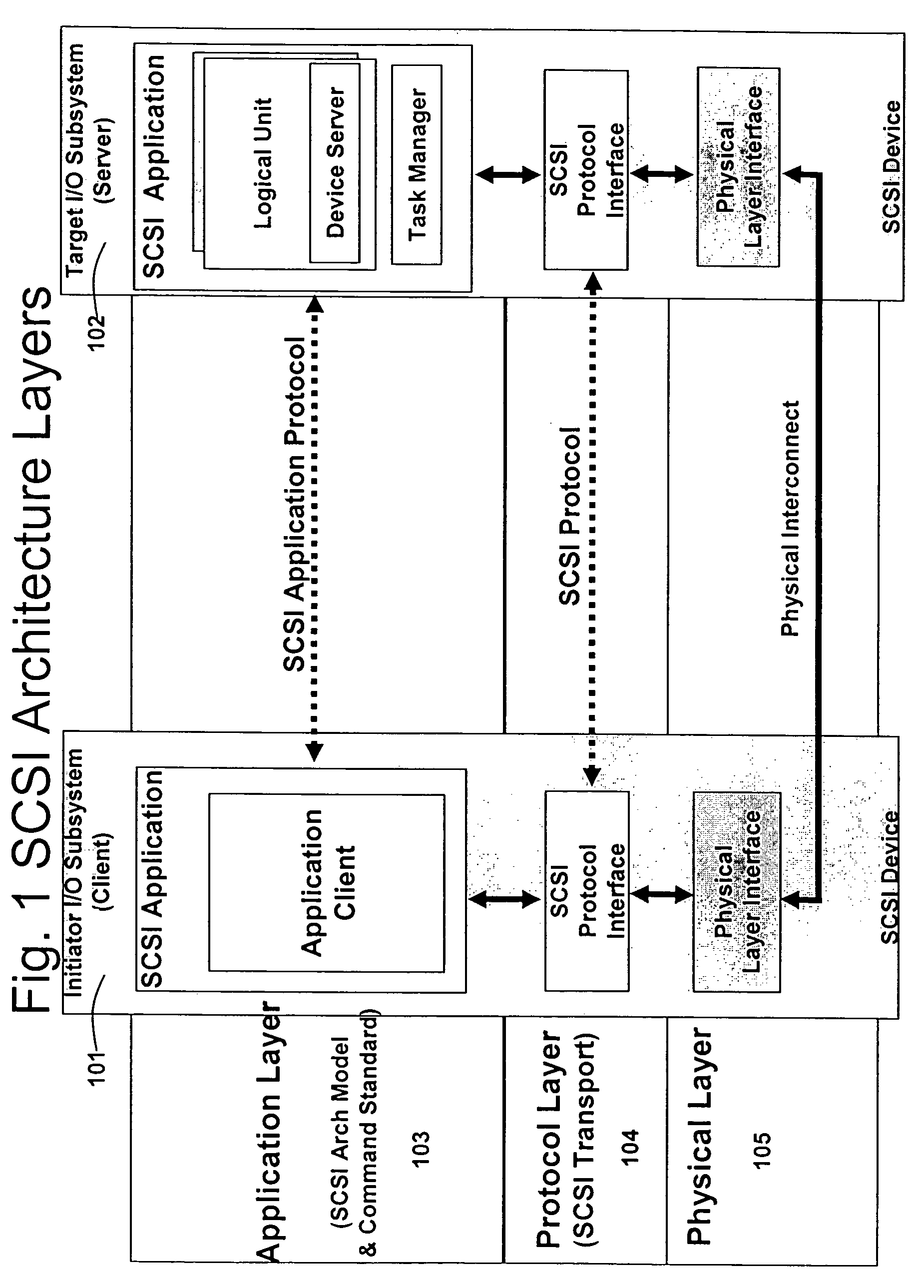

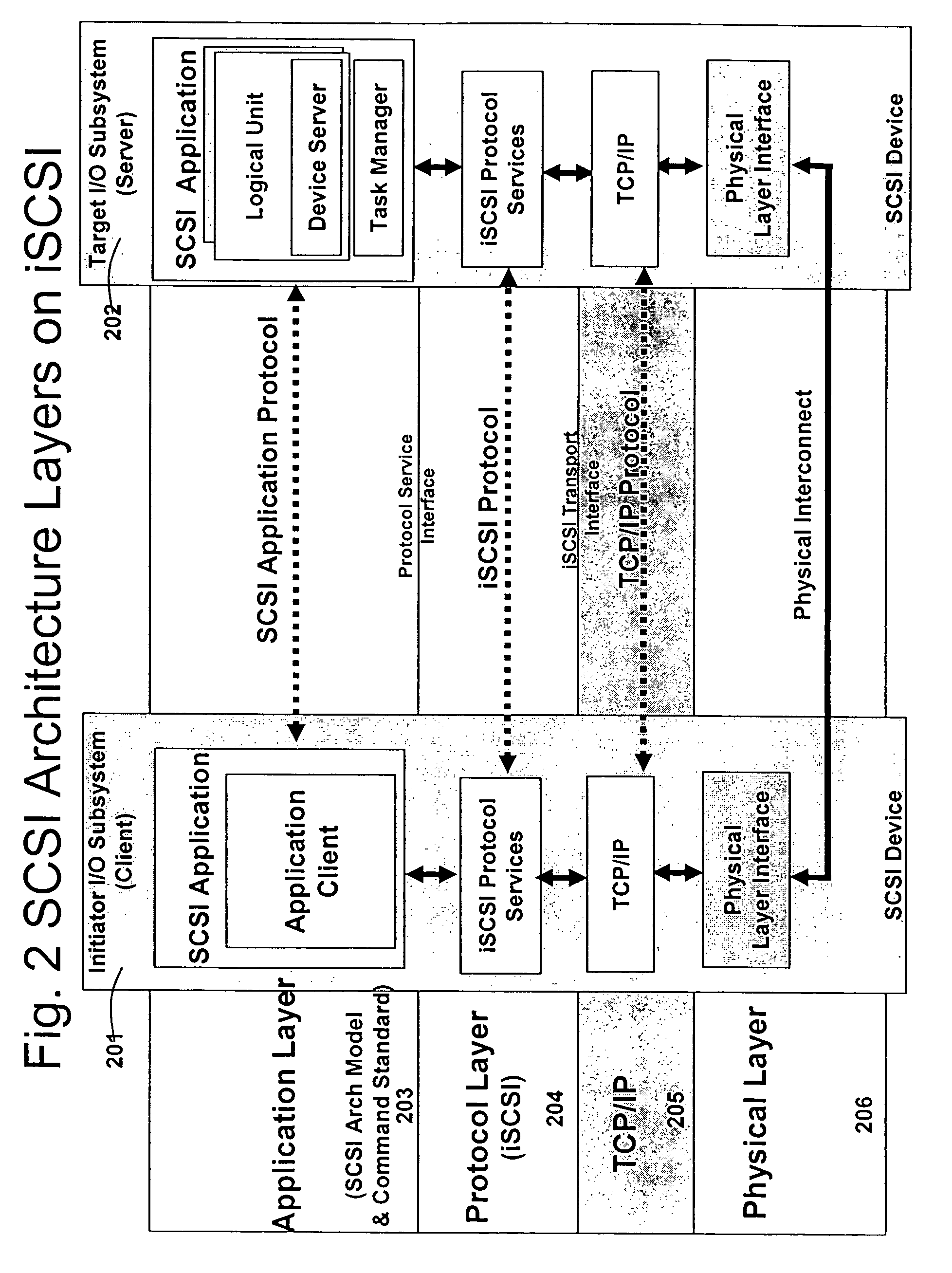

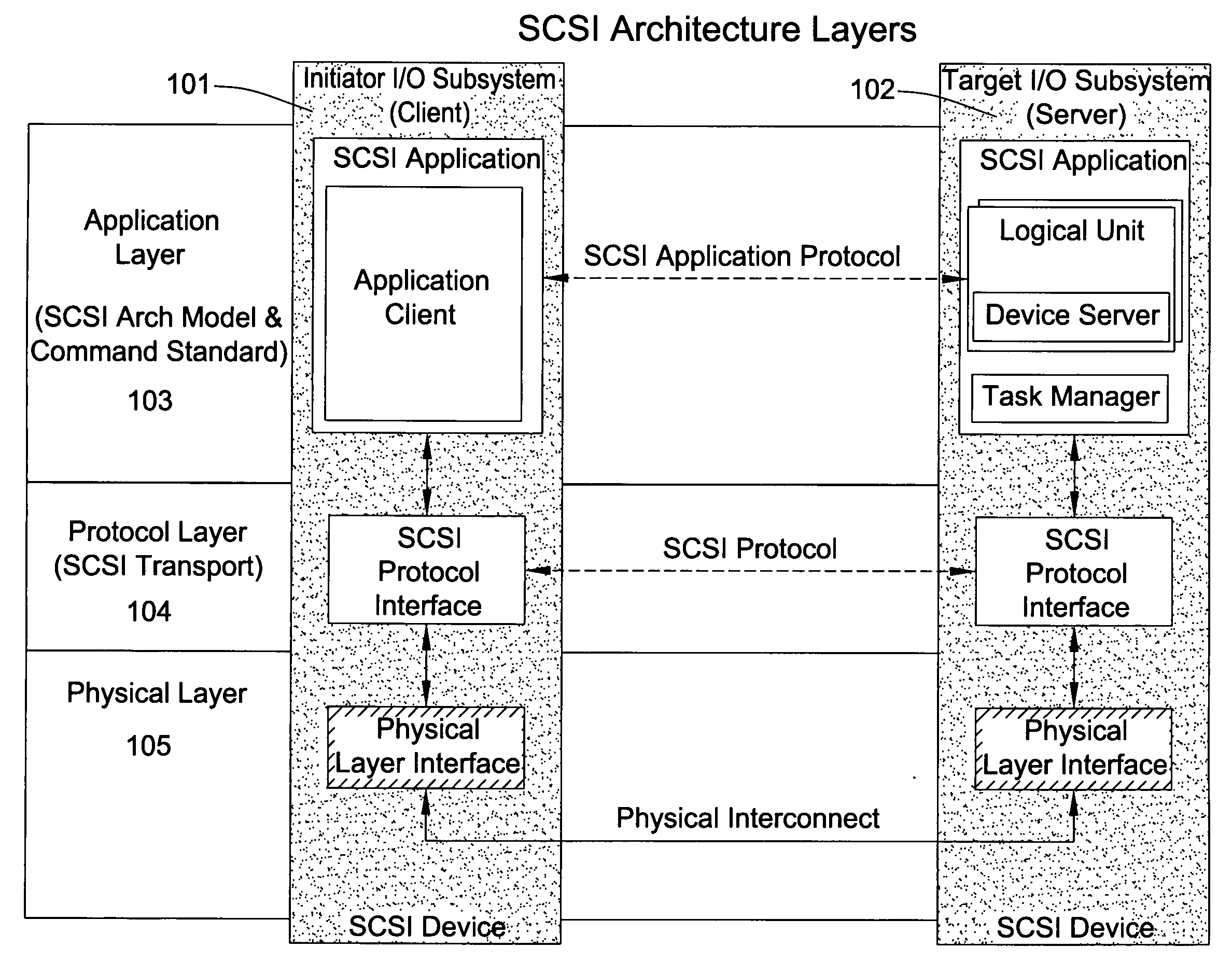

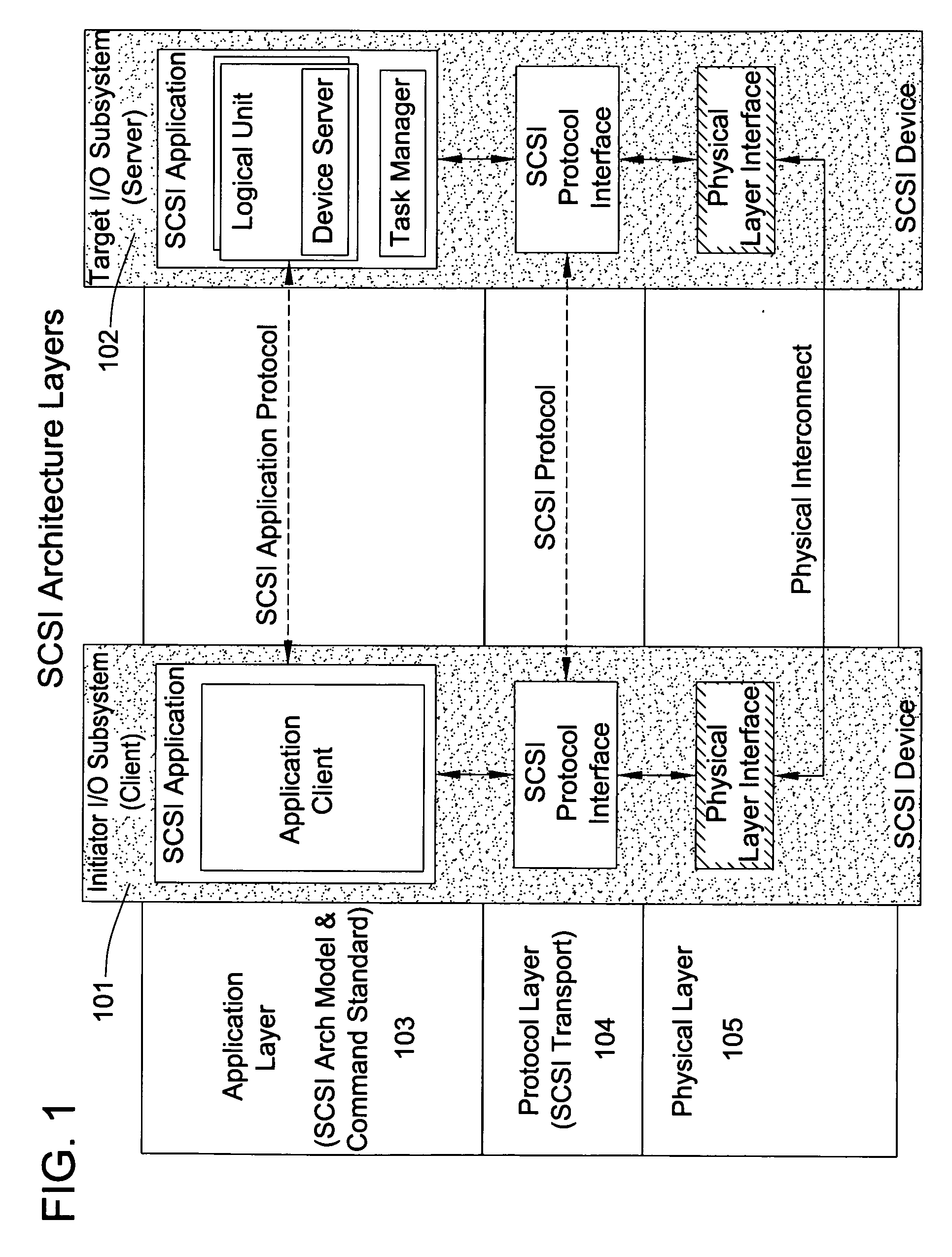

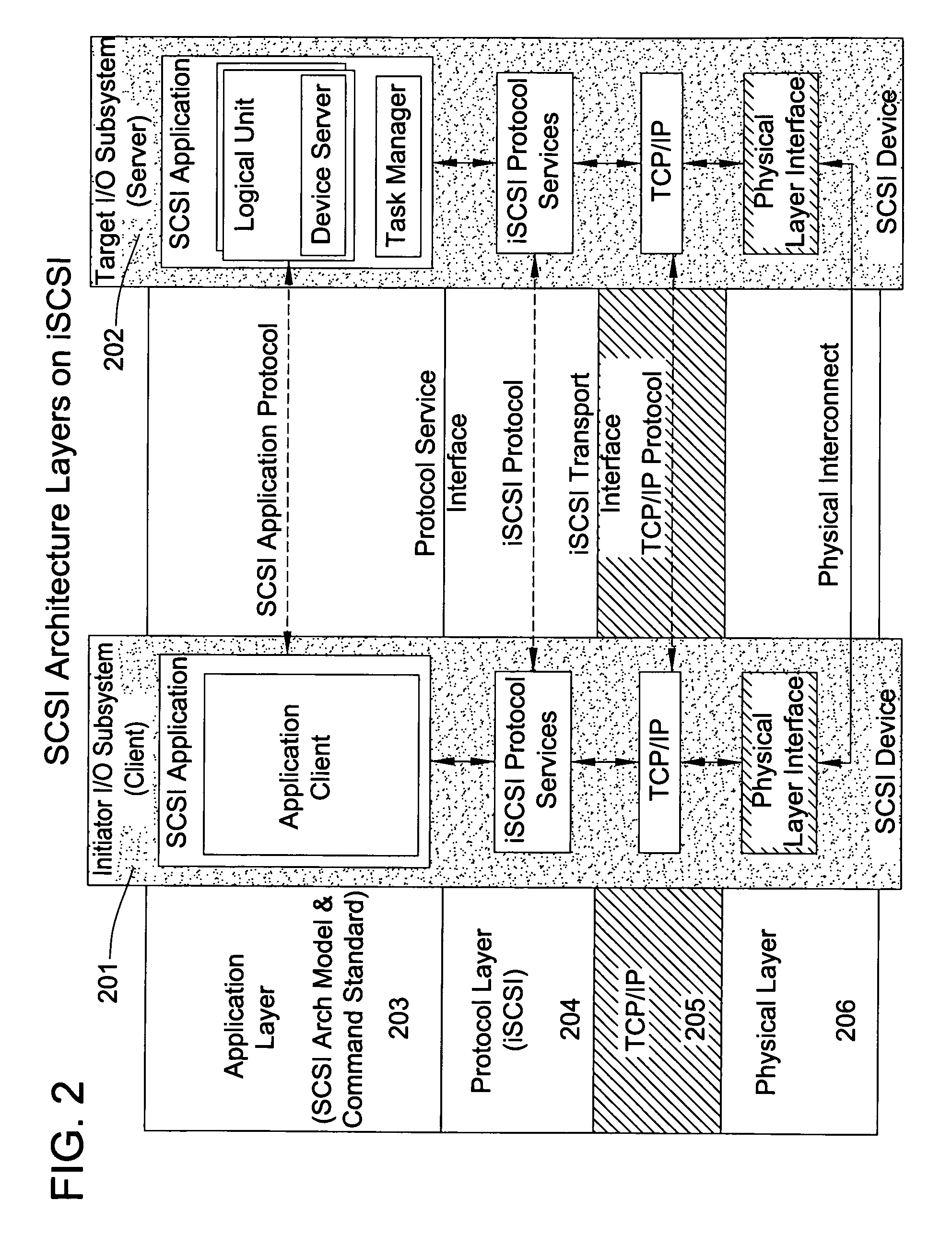

A runtime adaptable search processor is disclosed. The search processor provides high speed content search capability to meet the performance need of network line rates growing to 1 Gbps, 10 Gbps and higher. The search processor provides a unique combination of NFA and DFA based search engines that can process incoming data in parallel to perform the search against the specific rules programmed in the search engines. The processor architecture also provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and/or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and/or security processing enabling packet streaming through the architecture at nearly the full line rate. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored and retrieved to/from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.
A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

Runtime adaptable search processor

Active | US7685254B2 | Improve application performance | Large capacity | Web data indexing | Memory addressing/allocation/relocation | Packet scheduling | Schema for Object-Oriented XML

A runtime adaptable search processor is disclosed. The search processor provides high speed content search capability to meet the performance need of network line rates growing to 1 Gbps, 10 Gbps and higher. The search processor provides a unique combination of NFA and DFA based search engines that can process incoming data in parallel to perform the search against the specific rules programmed in the search engines. The processor architecture also provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and/or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and/or security processing enabling packet streaming through the architecture at nearly the full line rate. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored and retrieved to/from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.
A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

Runtime adaptable security processor

Inactive | US20050108518A1 | Improve performance | Reduce overhead | Securing communication | Internal memory | Packet scheduling

A runtime adaptable security processor is disclosed. The processor architecture provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and / or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and / or security processing enabling packet streaming through the architecture at nearly the full line rate. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored and retrieved to / from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer. A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

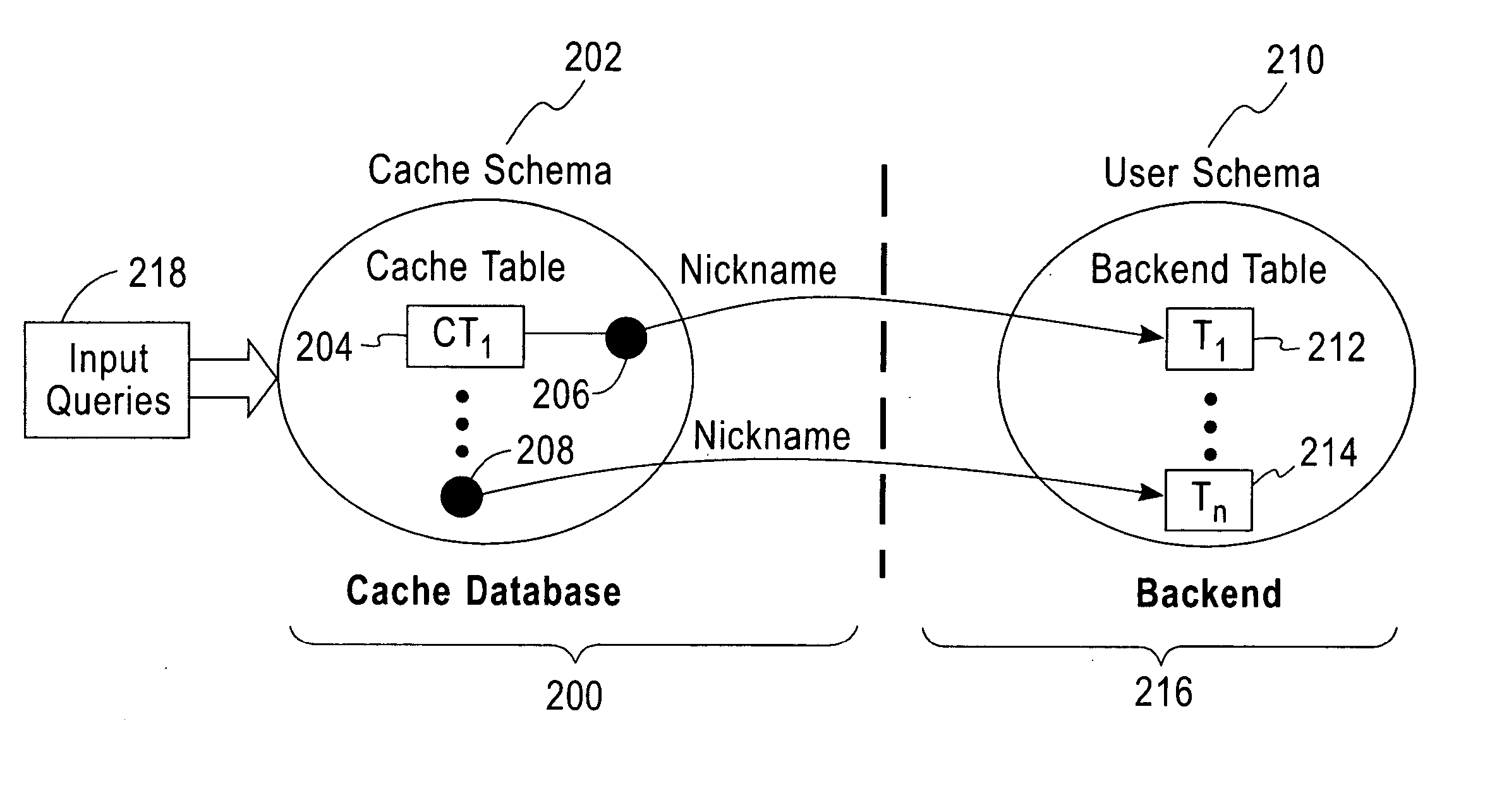

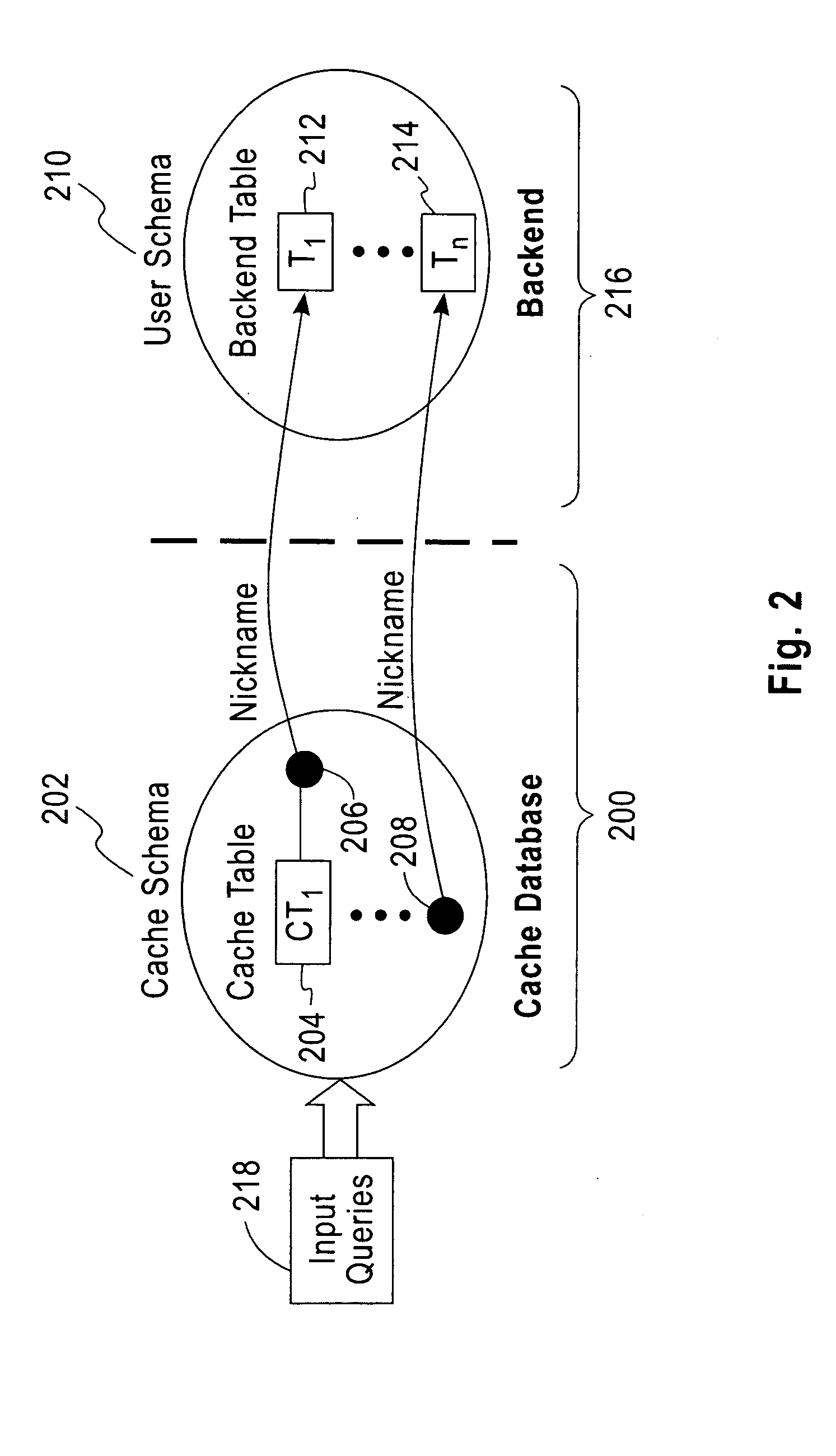

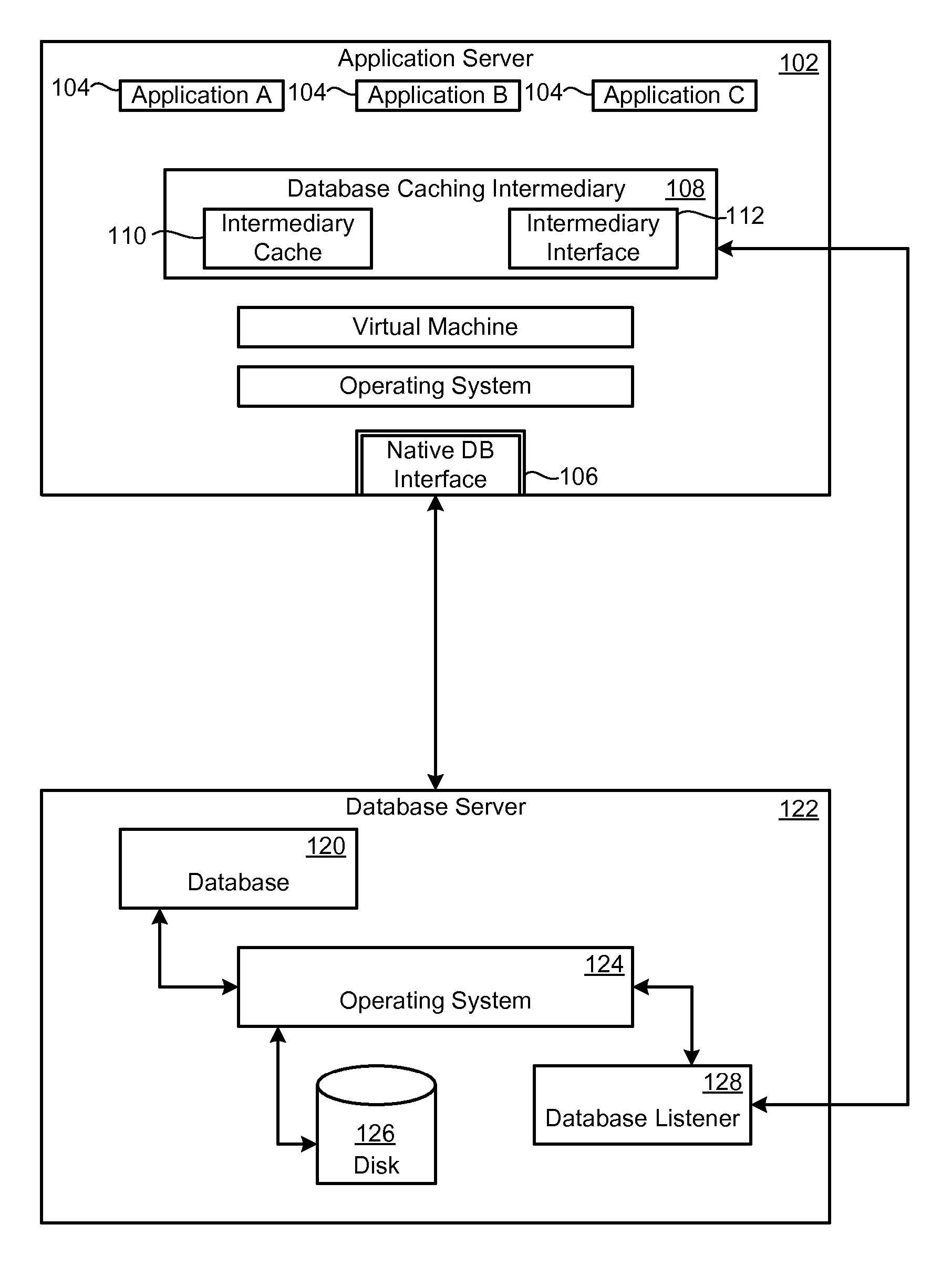

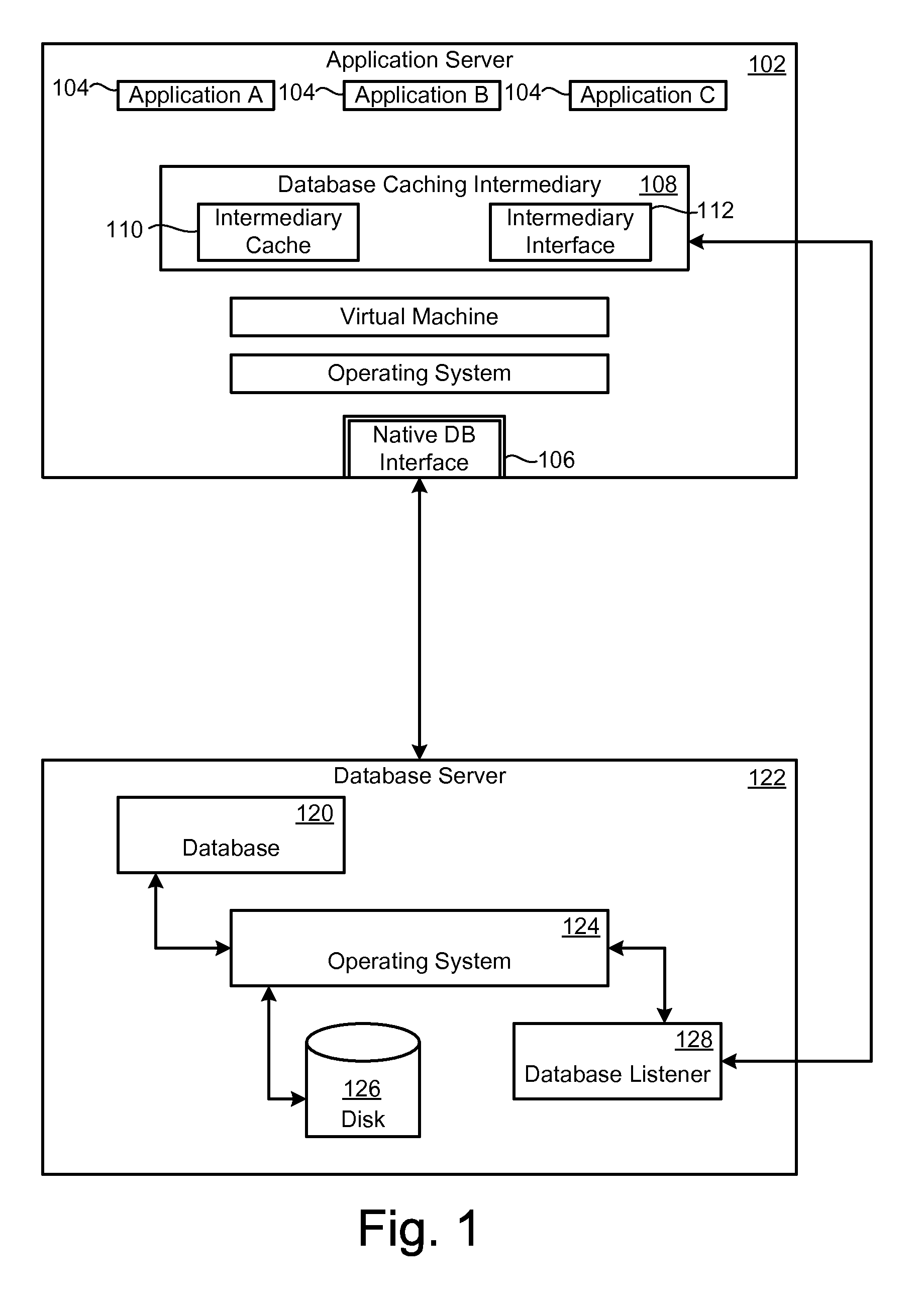

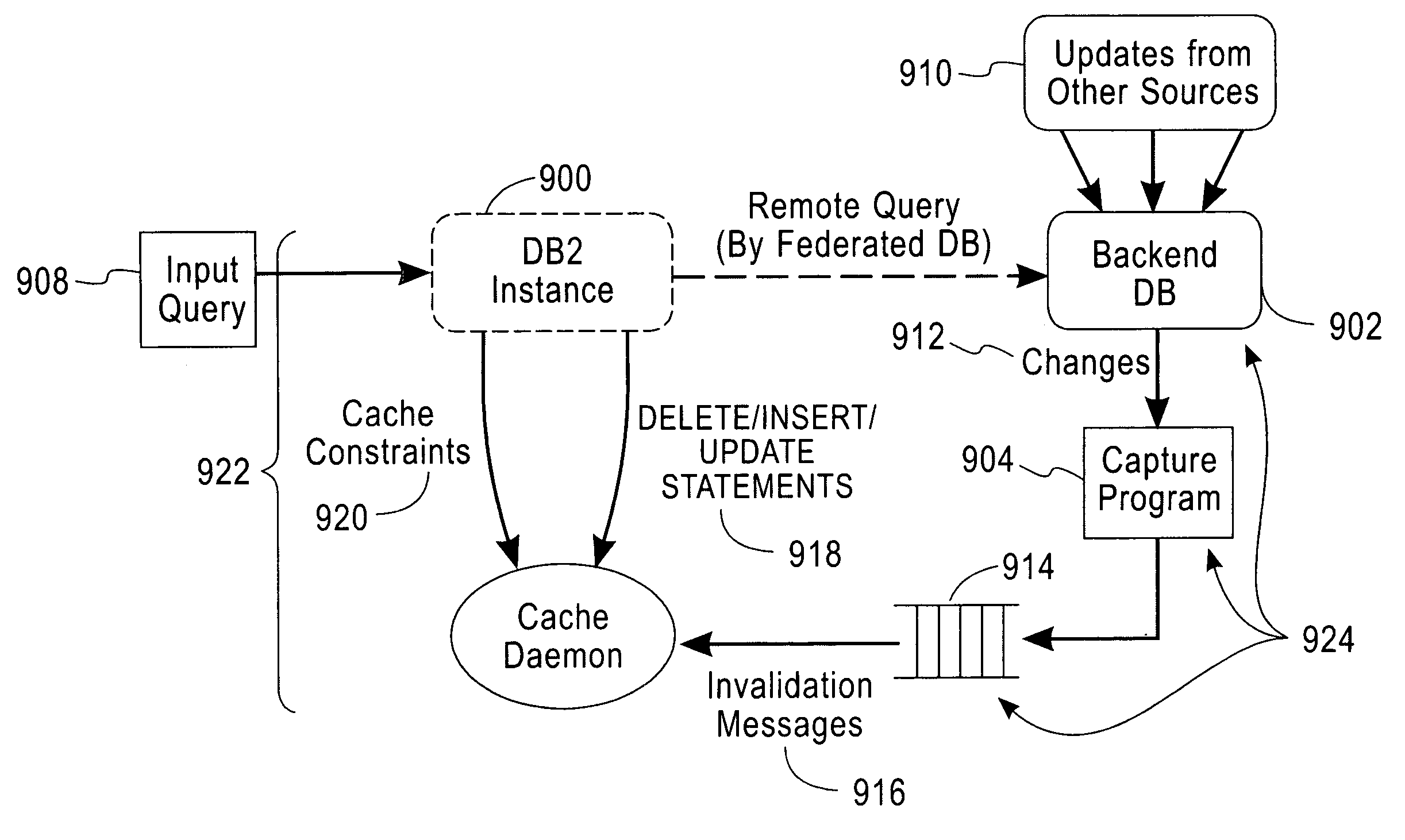

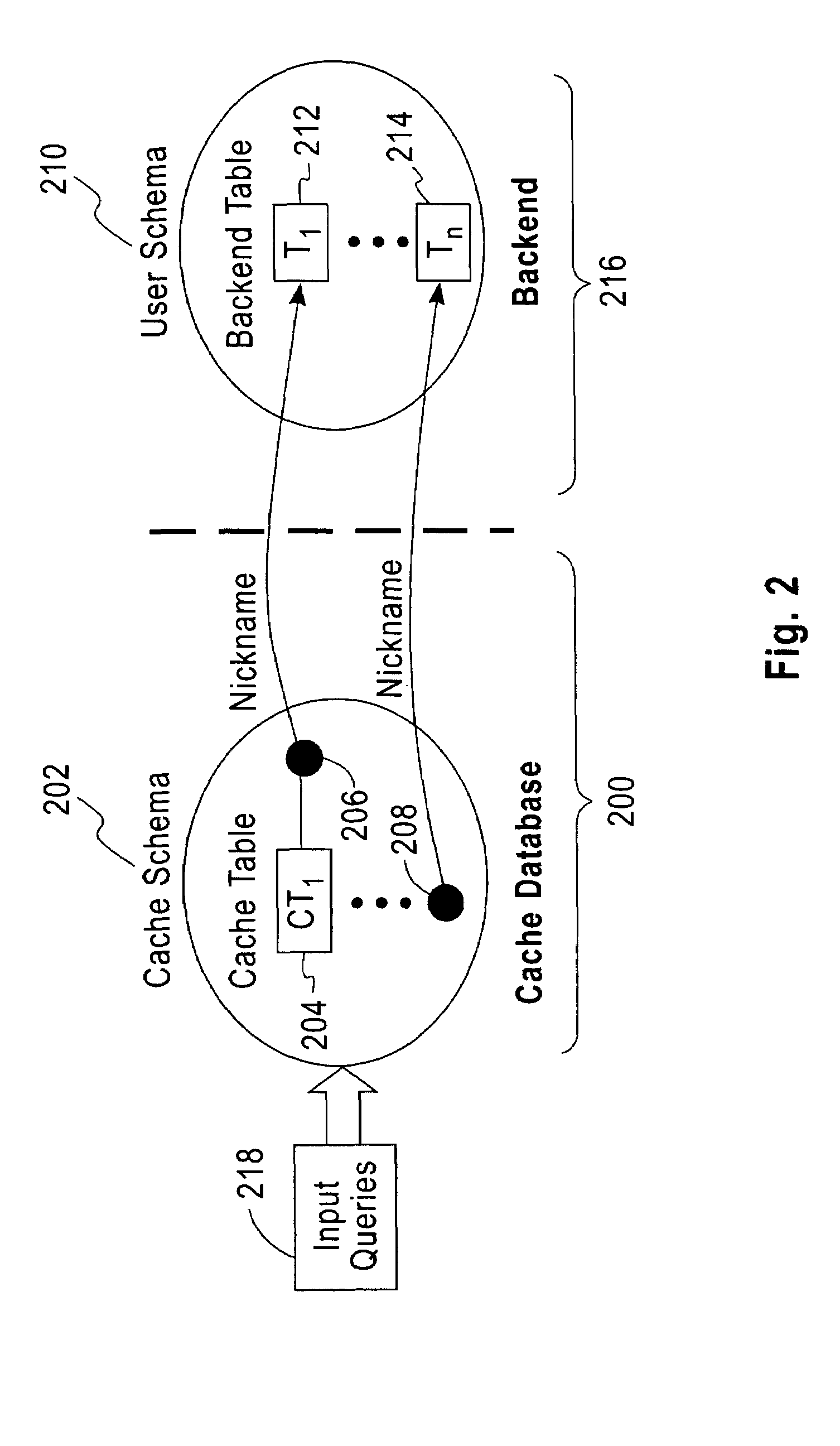

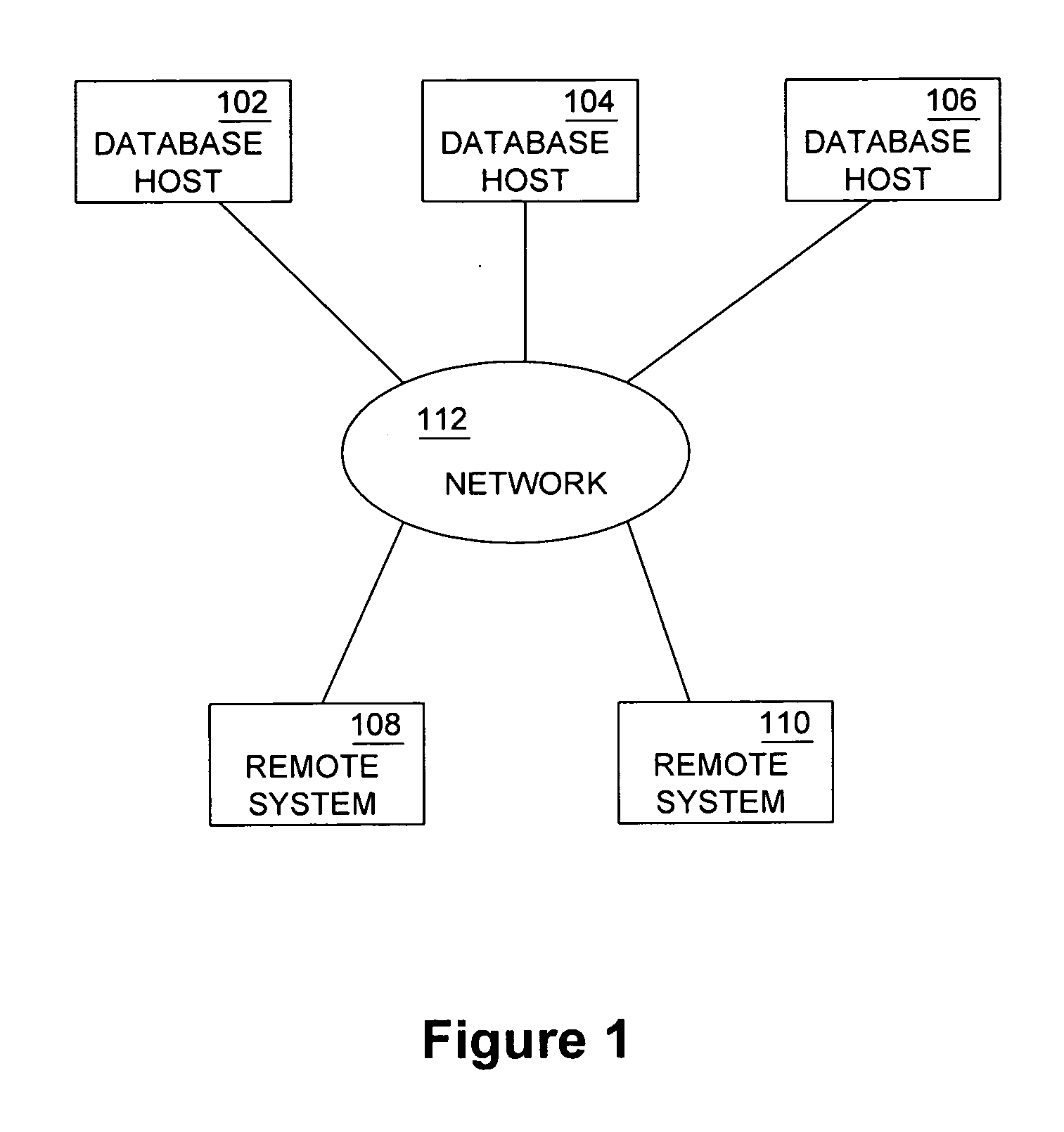

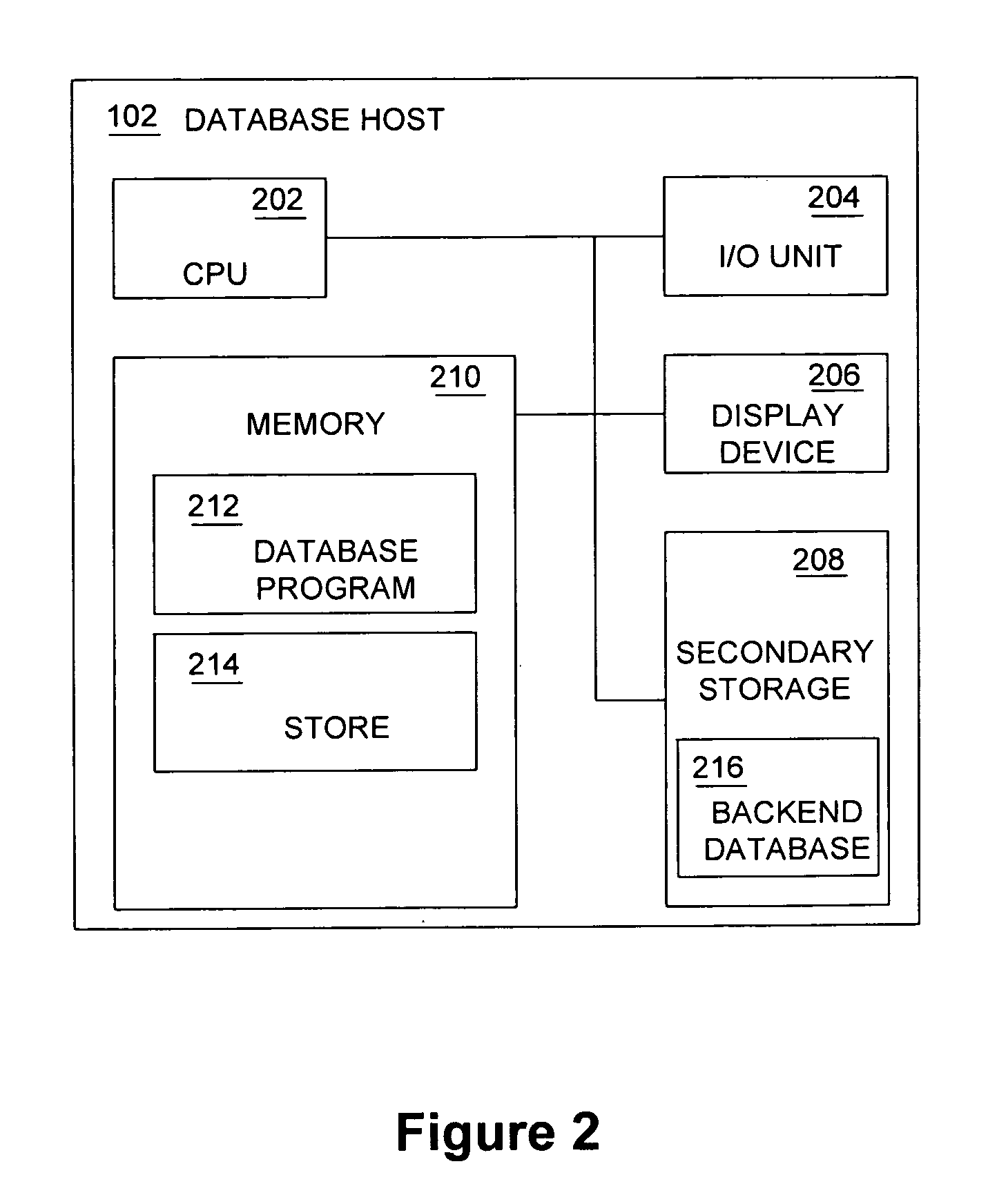

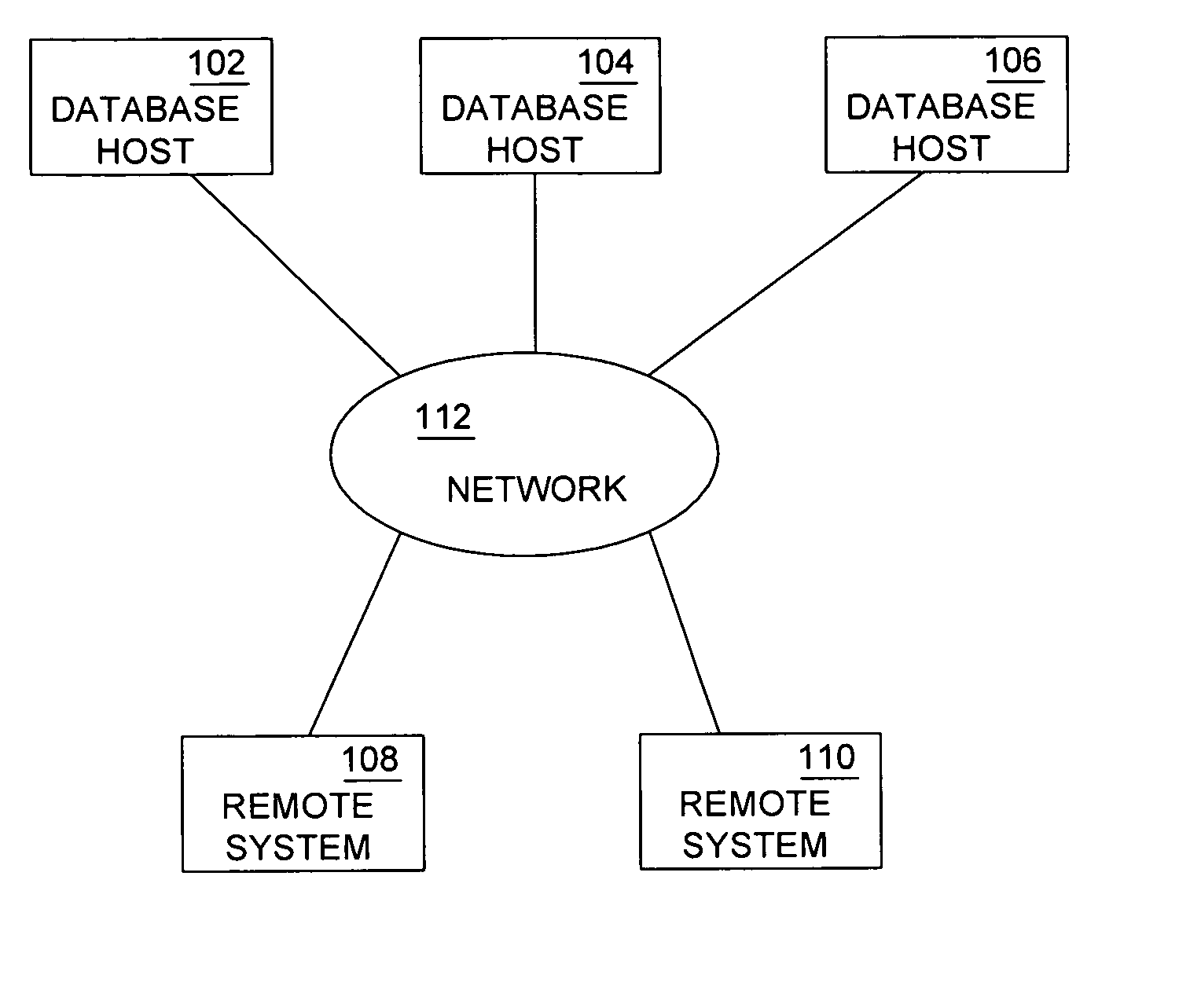

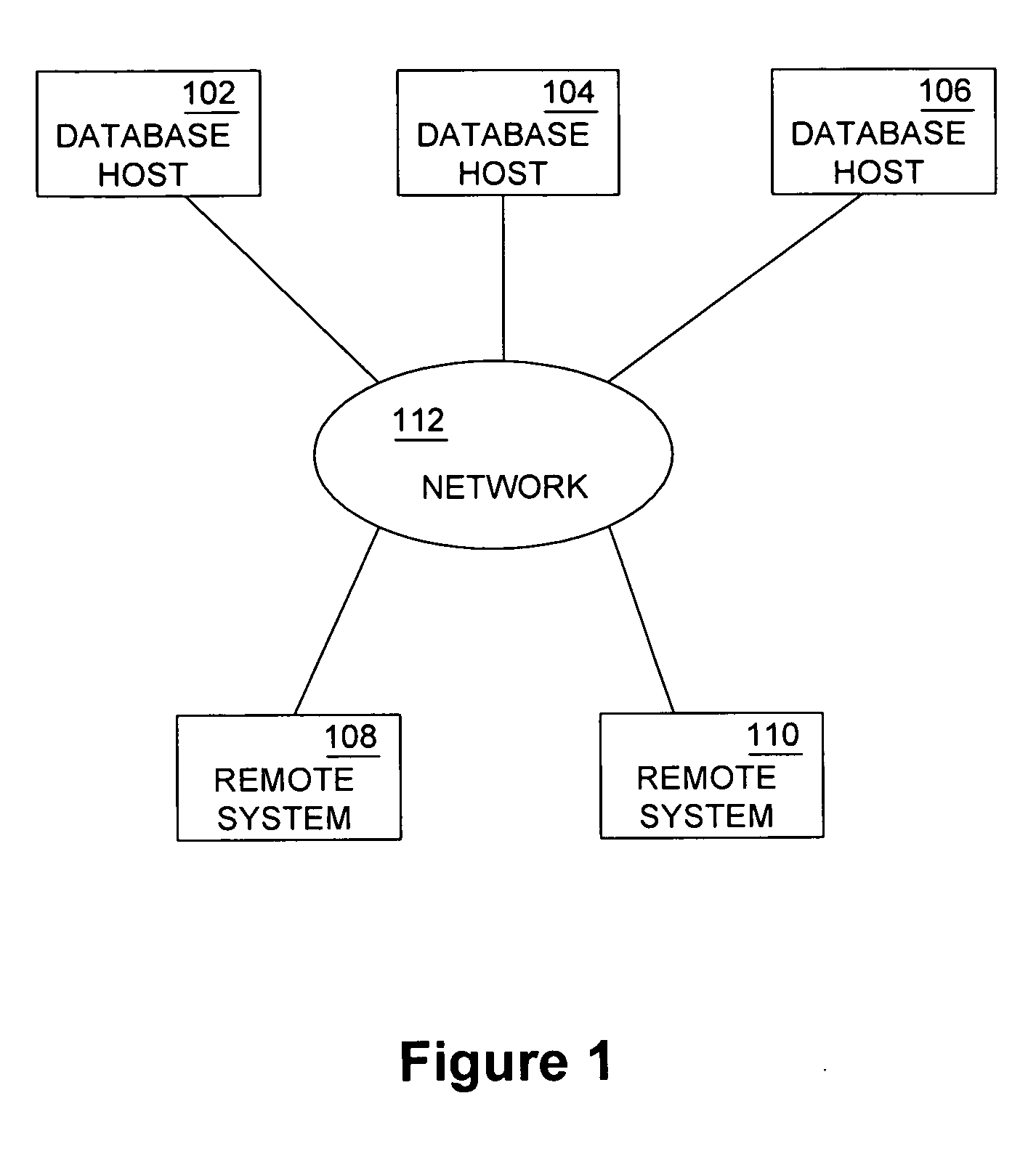

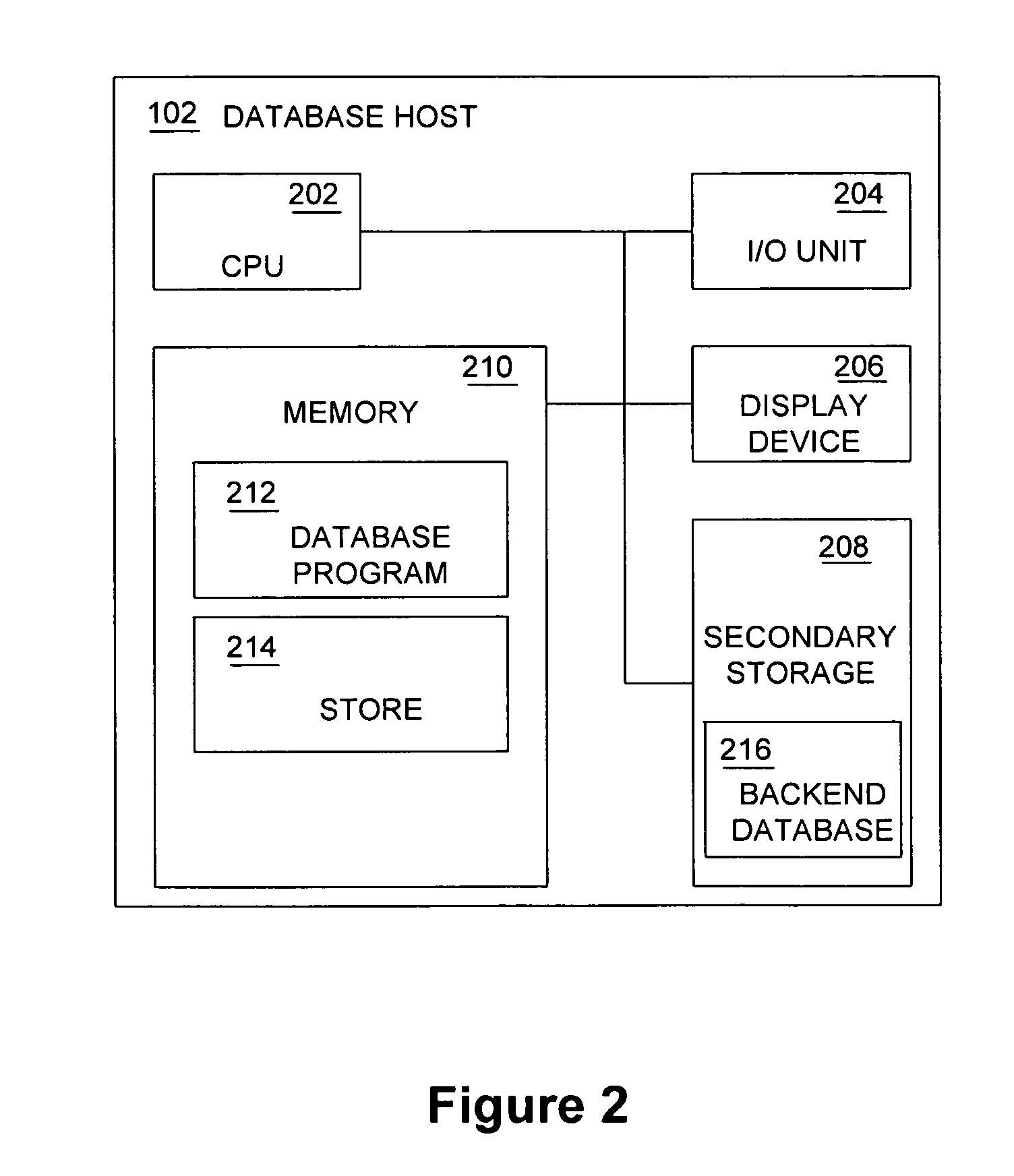

System and method for adaptive database caching

Active | US20060026154A1 | Data processing applications | Digital data information retrieval | Query plan | User input

A local database cache enabling persistent, adaptive caching of either full or partial content of a remote database is provided. Content of tables comprising a local cache database is defined on a per-table basis. A table is either defined declaratively and populated in advance of query execution, or determined dynamically and asynchronously populated on demand during query execution. Based on a user input query originally issued against a remote DBMS and referential cache constraints between tables in a local database cache, a Janus query plan comprising local, remote, and probe query portions is determined. The probe query portion of the Janus query plan is executed to determine whether up-to-date results can be delivered by executing the local query portion against the local database cache, or whether it is necessary to retrieve results from the remote database by executing the remote query portion of the Janus query plan.

Owner:IBM CORP
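
The probe/local/remote split described in this abstract can be illustrated with a minimal sketch. It is not the patented implementation: the function name `run_janus_plan`, the set of cached keys standing in for the probe query, and the inline cache population (the abstract describes asynchronous population) are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Set, Tuple

Row = Tuple[int, str]


def run_janus_plan(
    key: int,
    cached_keys: Set[int],
    local_table: Dict[int, str],
    remote_query: Callable[[int], List[Row]],
) -> Tuple[str, List[Row]]:
    """Run the probe portion, then either the local or the remote portion."""
    # Probe portion (assumed form): is this key covered by the local cache?
    if key in cached_keys:
        # Local portion: answer from the local cache database.
        return "local", [(key, local_table[key])]
    # Remote portion: fall back to the remote DBMS.
    rows = remote_query(key)
    # Populate the cache inline; a real system might do this asynchronously.
    for row_key, value in rows:
        local_table[row_key] = value
        cached_keys.add(row_key)
    return "remote", rows
```

The first lookup of a key goes to the remote side; later lookups of the same key are answered locally because the probe succeeds.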

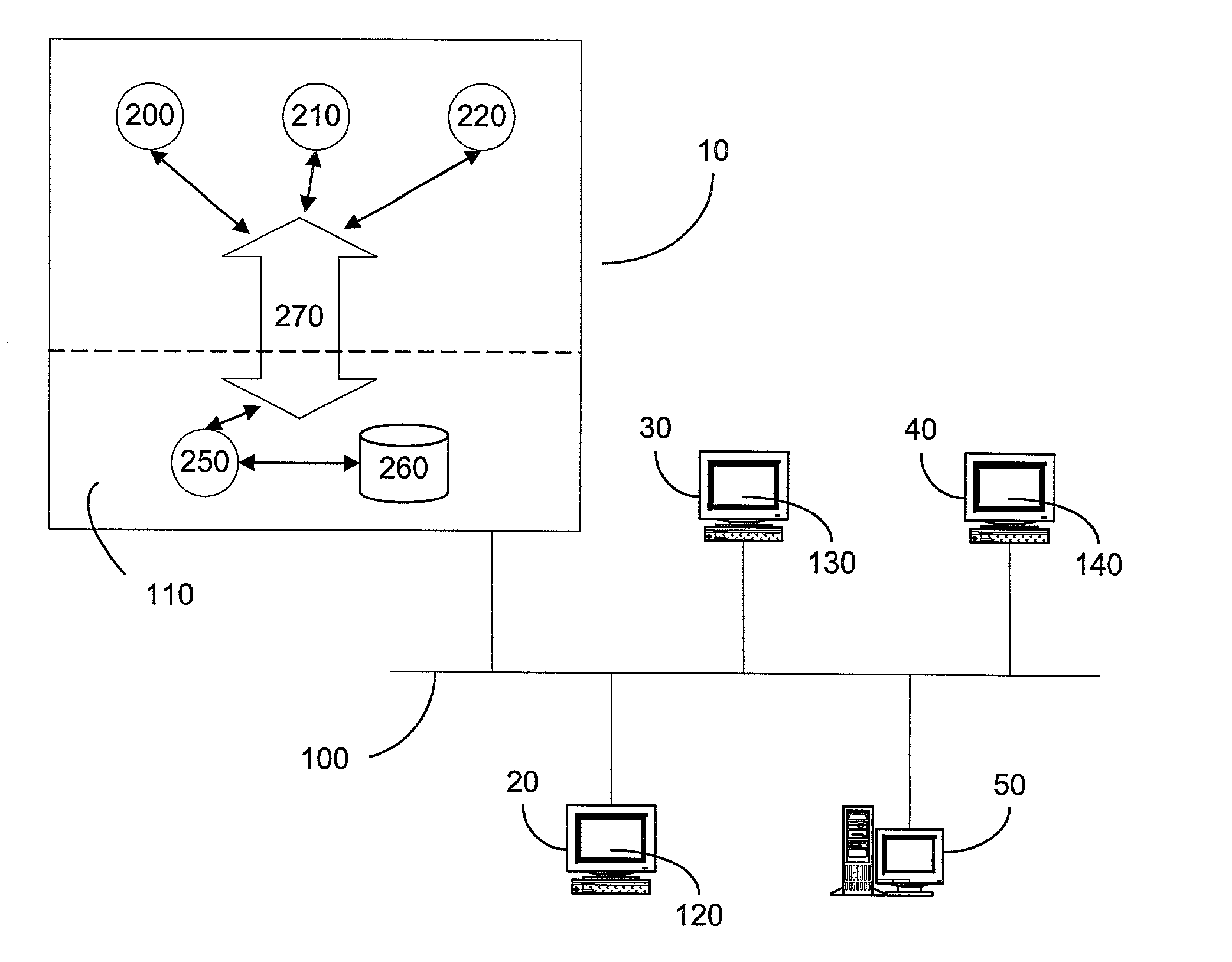

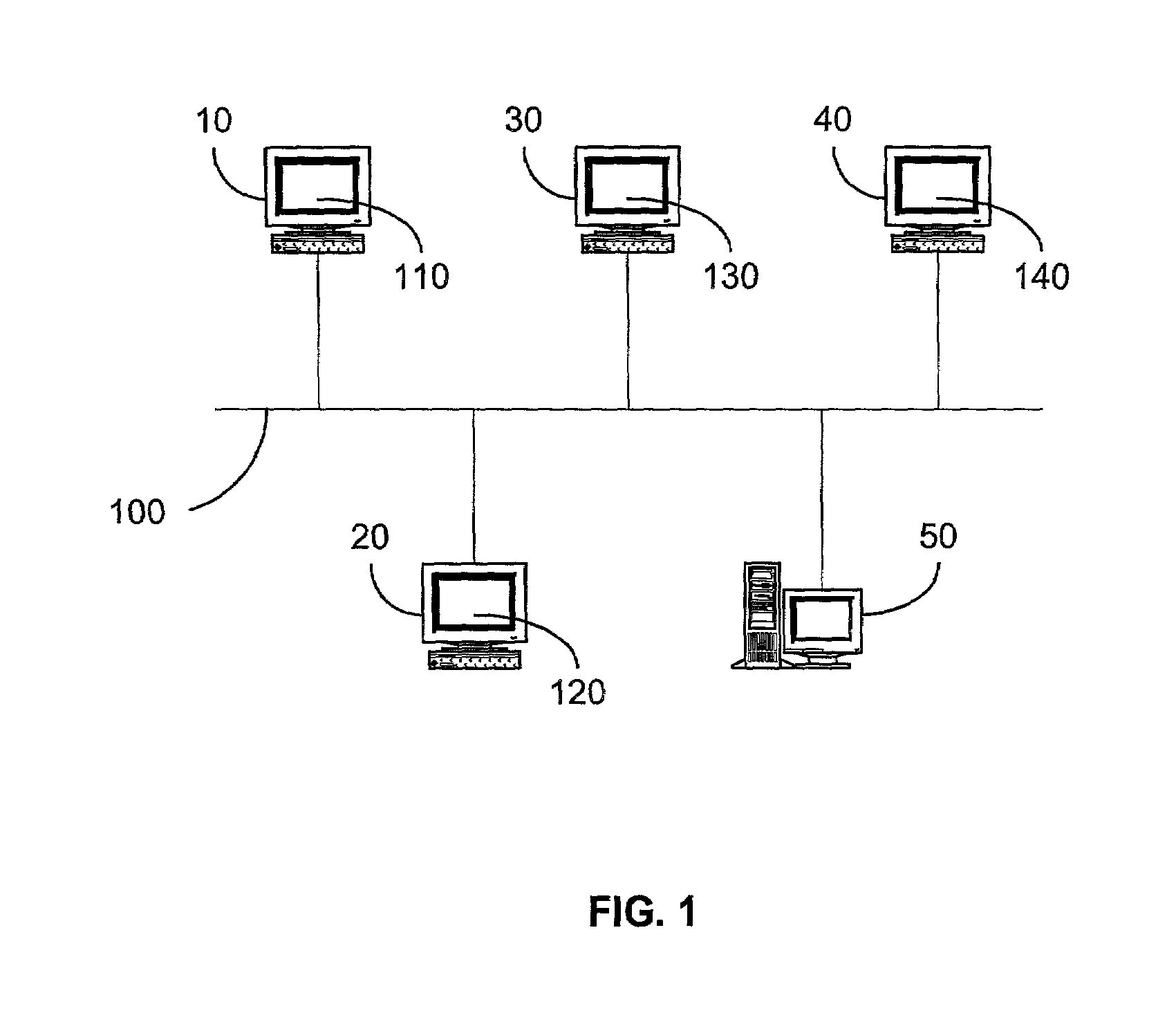

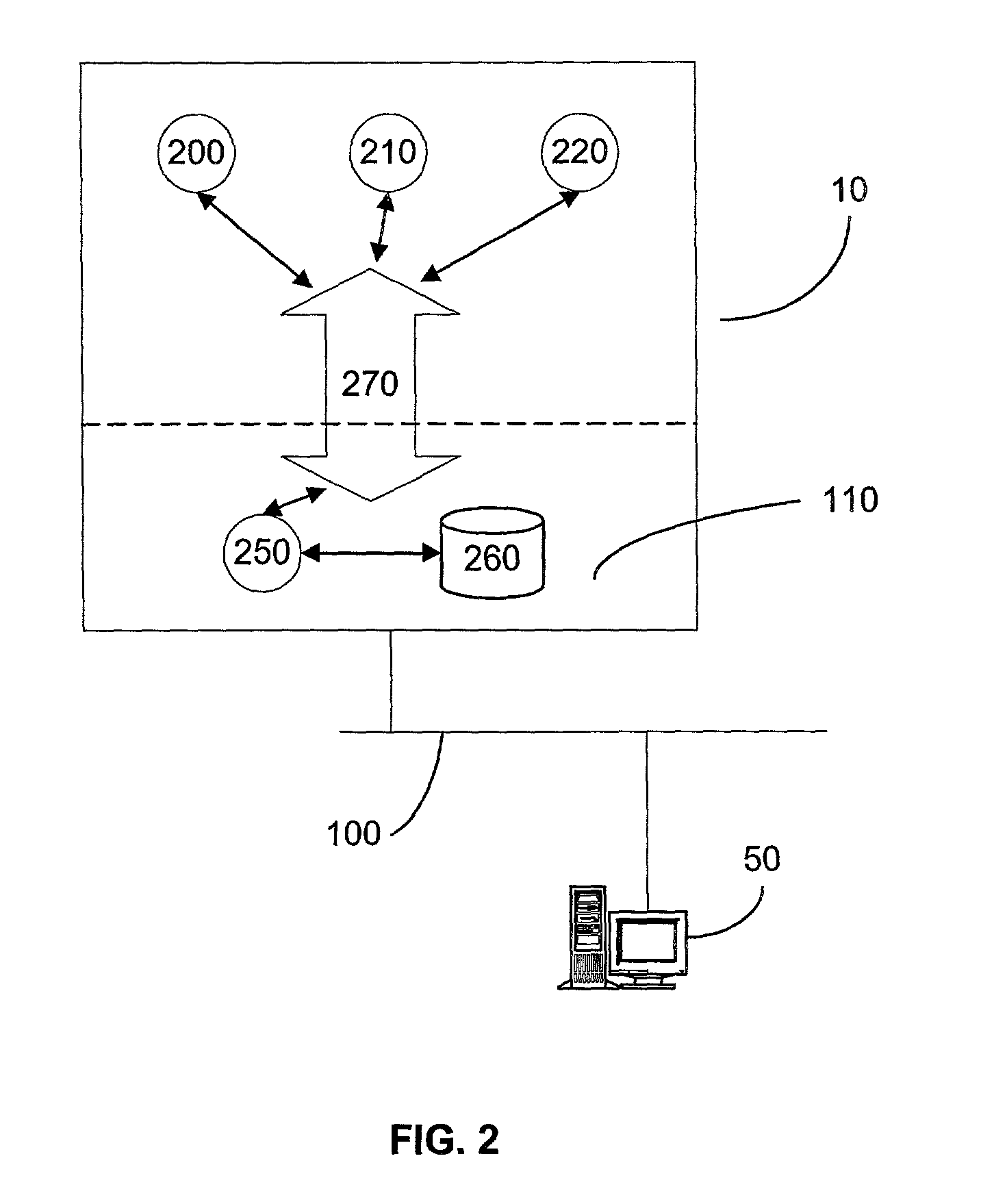

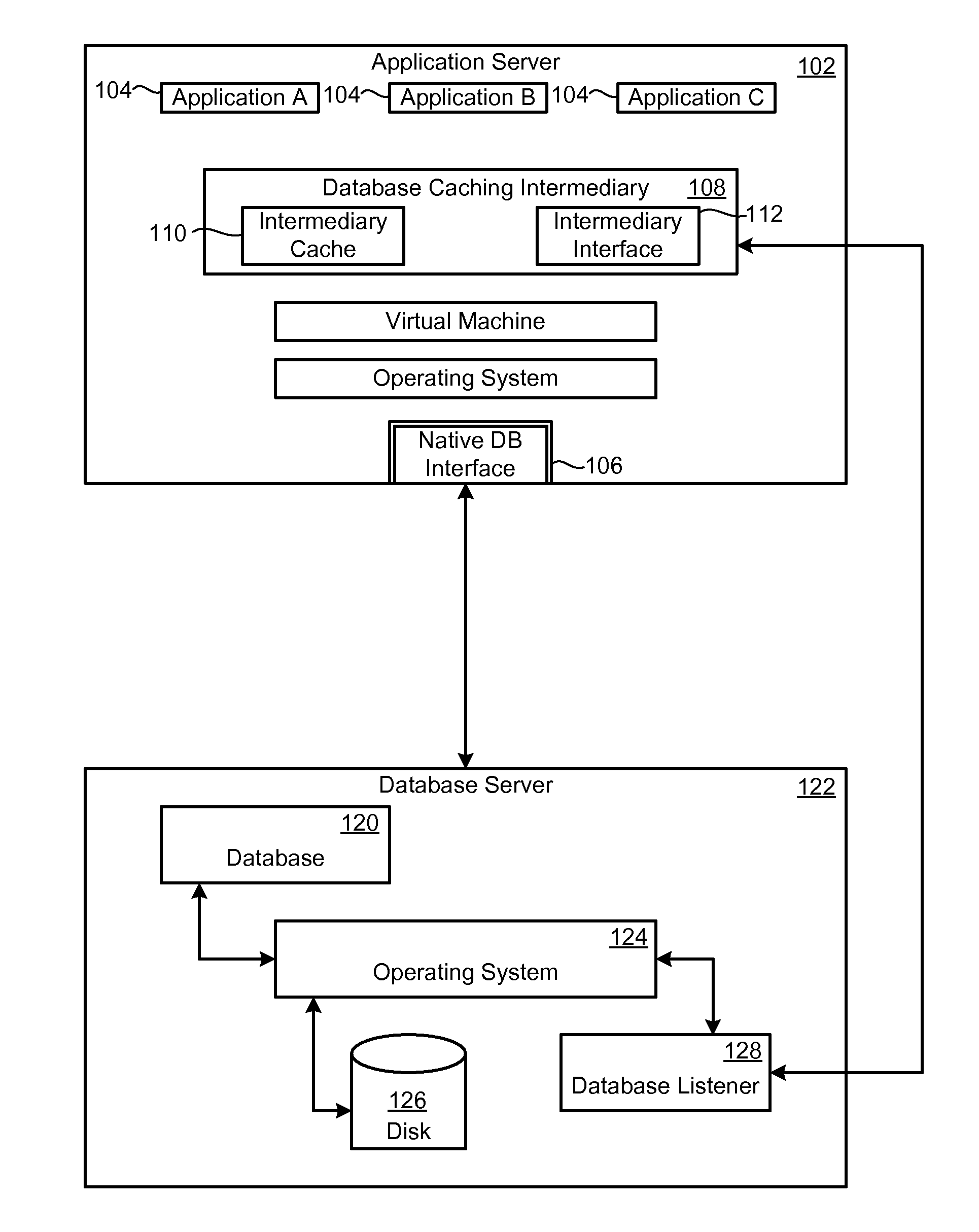

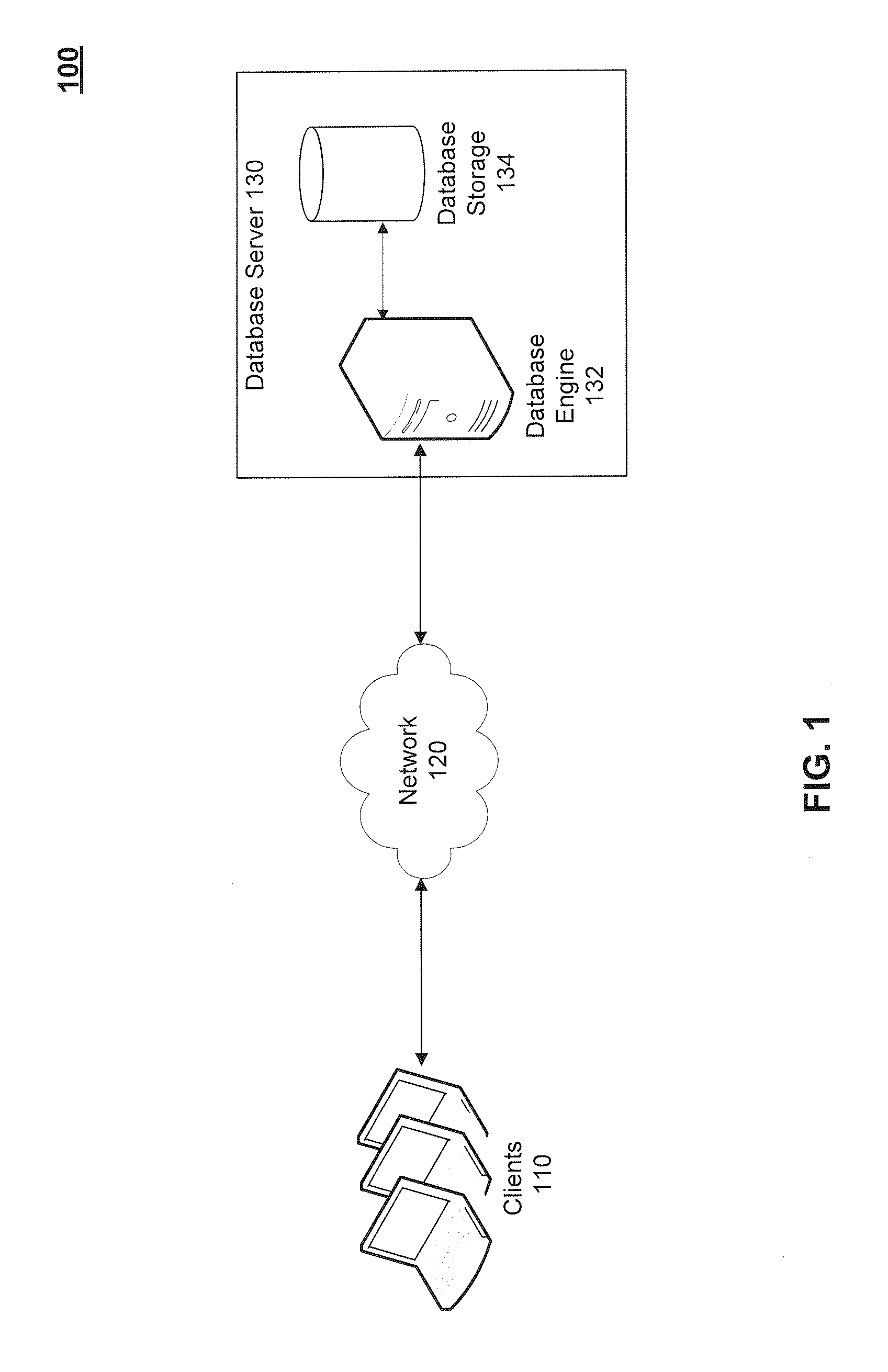

Method and apparatus for efficient SQL processing in an n-tier architecture

Active | US7580971B1 | Efficient processing | Free of Charge | Digital data information retrieval | Multiple digital computer combinations | Data set | Cache server

A method and apparatus for efficiently processing data requests in a network oriented n-tier database environment is presented. According to one embodiment of the invention, some or all data from the tables of a database server device can be maintained in tables on the client device in a client side database cache server system. This local cache allows the network oriented n-tier database system to eliminate the expense of repetitive network transmissions to respond to duplicate queries for the same information. Additionally, the local client device may also keep track of what data is cached on peer network nodes. This allows the client to request that data from a peer database cache server and offload that burden from the database server device. Moreover, the local client may also keep statistics regarding the frequency of requested data in order to optimize the data set maintained in the local database cache server.

Owner:ORACLE INT CORP
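
As a rough sketch of the lookup order this abstract describes (local cache, then a peer cache, then the database server) combined with frequency statistics: the class name `ClientCacheNode`, the `peer_directory` mapping, and the evict-the-least-requested policy are hypothetical choices, not details taken from the patent. The sketch assumes `capacity` is at least 1.

```python
from collections import Counter
from typing import Callable, Dict, Tuple


class ClientCacheNode:
    """Client-side cache that prefers local data, then a peer, then the server."""

    def __init__(self, capacity: int, server: Callable[[str], str]) -> None:
        self.capacity = capacity  # assumed to be >= 1
        self.server = server
        self.local: Dict[str, str] = {}
        # Hypothetical directory: query text -> peer believed to cache it.
        self.peer_directory: Dict[str, "ClientCacheNode"] = {}
        self.hits: Counter = Counter()  # request frequency per query

    def query(self, sql: str) -> Tuple[str, str]:
        self.hits[sql] += 1
        if sql in self.local:
            return self.local[sql], "local"
        peer = self.peer_directory.get(sql)
        if peer is not None and sql in peer.local:
            result, origin = peer.local[sql], "peer"
        else:
            result, origin = self.server(sql), "server"
        self._store(sql, result)
        return result, origin

    def _store(self, sql: str, result: str) -> None:
        # When full, drop the least frequently requested entry.
        if len(self.local) >= self.capacity:
            coldest = min(self.local, key=lambda q: self.hits[q])
            del self.local[coldest]
        self.local[sql] = result
```

Using request counts to pick the eviction victim is one simple way to keep the frequently requested data set local; other policies would fit the abstract equally well.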

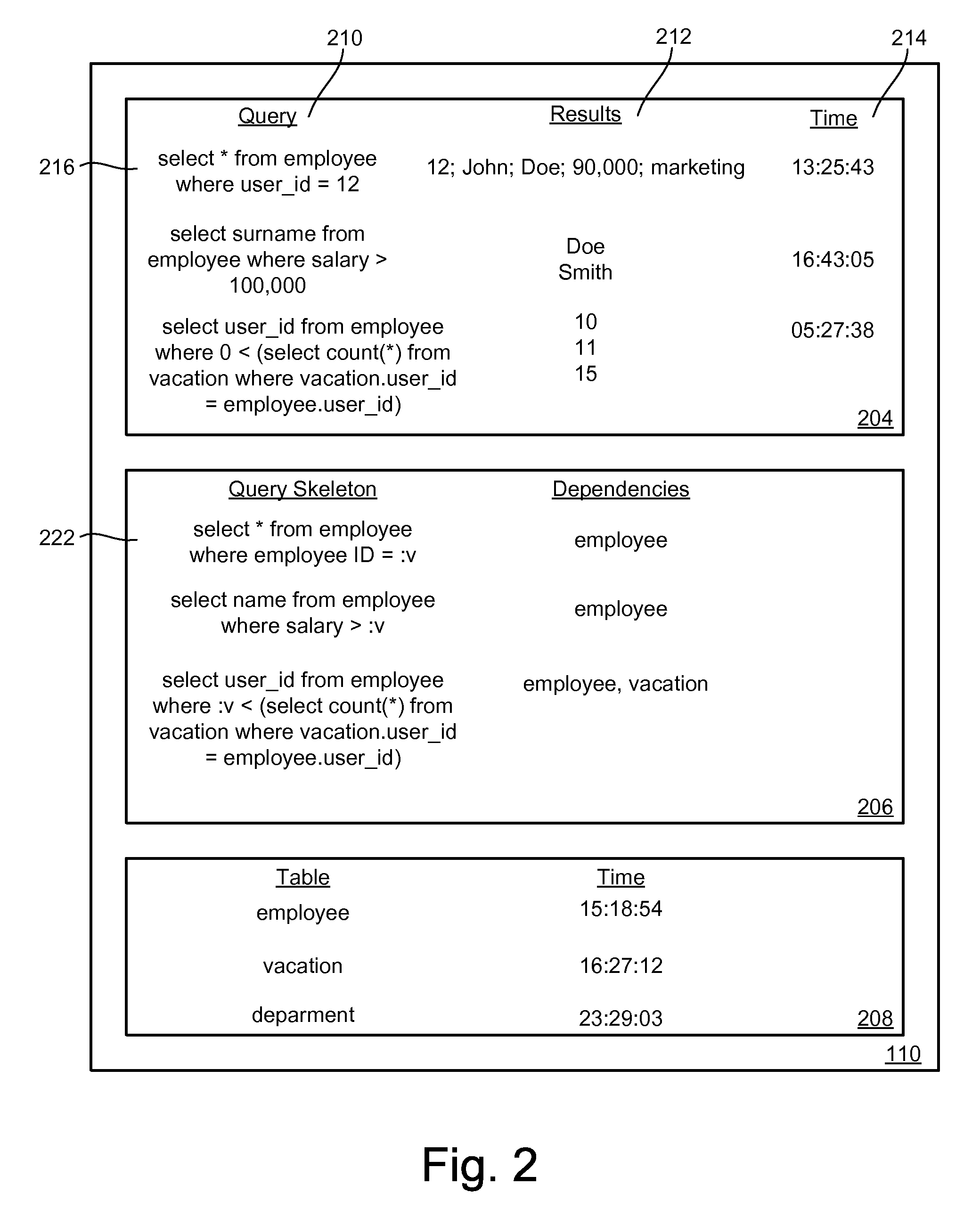

Database Caching and Invalidation using Database Provided Facilities for Query Dependency Analysis

Inactive | US20060271510A1 | Sure easy | Digital data information retrieval | Program synchronisation | Database caching | Database

Database data is maintained reliably and invalidated based on actual changes to data in the database. Updates or changes to data are detected without parsing queries submitted to the database. The dependencies of a query can be determined by submitting a version of the received query to the database through a native facility provided by the database to analyze how query structures are processed. The caching system can access the results of the facility to determine the tables, rows, or other partitions of data a received query is dependent upon or modifies. An abstracted form of the query can be cached with an indication of the tables, rows, etc. that queries of that structure access or modify. The tables a write or update query modifies can be cached with a time of last modification. When a query is received for which the results are cached, the system can readily determine dependency information for the query, the last time the dependencies were modified, and compare this time with the time indicated for when the cached results were retrieved. By passing versions of write queries to the database, updates to the database can be detected.

Owner:TERRACOTTA
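
The timestamp comparison in this abstract (cached result time versus last modification time of the tables it depends on) might look roughly like the sketch below. The class `DependencyCache` is hypothetical; the dependency set is passed in by the caller rather than derived from a database facility, and a logical counter replaces wall-clock time so the behaviour is deterministic.

```python
import itertools
from typing import Any, Callable, Dict, FrozenSet, Tuple


class DependencyCache:
    """Caches query results and validates them against table modification times."""

    def __init__(self) -> None:
        self._clock = itertools.count(1)  # logical clock, an assumption
        self._table_modified: Dict[str, int] = {}  # table -> last modification
        self._results: Dict[str, Tuple[int, FrozenSet[str], Any]] = {}

    def record_write(self, table: str) -> None:
        """Note that a write or update query modified this table."""
        self._table_modified[table] = next(self._clock)

    def get(self, query: str, tables: FrozenSet[str], run: Callable[[], Any]) -> Any:
        entry = self._results.get(query)
        if entry is not None:
            fetched_at, deps, value = entry
            # Valid only if every dependency was last modified before the fetch.
            if all(self._table_modified.get(t, 0) < fetched_at for t in deps):
                return value
        value = run()
        self._results[query] = (next(self._clock), tables, value)
        return value
```

A write to an unrelated table leaves the cached result usable; a write to a table in the dependency set forces the query to run again.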

TCP/IP processor and engine using RDMA

Active | US7376755B2 | Sharply reduces TCP/IP protocol stack overhead | Improve performance | Multiplex system selection arrangements | Memory addressing/allocation/relocation | Transmission protocol | Internal memory

A TCP/IP processor and data processing engines for use in the TCP/IP processor are disclosed. The TCP/IP processor can transport data payloads of Internet Protocol (IP) data packets using an architecture that provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. The engines may perform pass-through packet classification, policy processing and/or security processing enabling packet streaming through the architecture at nearly the full line rate. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a TCP/IP session information database and may also store a storage information session database for a certain number of active sessions. The session information that is not in the internal memory is stored and retrieved to/from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.

Owner:MEMORY ACCESS TECH LLC

Runtime adaptable security processor

Inactive | US20120117610A1 | Sharply reduces TCP/IP protocol stack overhead | Improve performance | Computer security arrangements | Special data processing applications | Internal memory | Application software

A runtime adaptable security processor is disclosed. The processor architecture provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored and retrieved to / from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.

Owner:MEMORY ACCESS TECH LLC

Runtime adaptable protocol processor

Active | US7631107B2 | Sharply reduces TCP/IP protocol stack overhead | Improve performance | Computer control | Time-division multiplex | Internal memory | Data pack

A runtime adaptable protocol processor is disclosed. The processor architecture provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and / or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and / or security processing enabling packet streaming through the architecture at nearly the full line rate. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored and retrieved to / from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer. A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

Database Caching and Invalidation Based on Detected Database Updates

Inactive | US20060271557A1 | Sure easy | Digital data information retrieval | Program synchronisation | Database caching | Database

Database data is reliably maintained and invalidated based on actual changes to data in the database. The dependencies of a received query can be determined by submitting a version of the received query to the database through a native facility provided by the database to analyze how query structures are processed. The caching system can access the results of the facility to determine the tables, rows, or other partitions of data a received query is dependent upon or modifies. An abstracted form of the query can be cached with an indication of the tables, rows, etc. that queries of that structure access or modify. The tables a write or update query modifies can be cached with a time of last modification. A component can be implemented at or on the system of the database to directly detect changes to the database data. This component can monitor transactional information maintained by the database itself to determine when changes to the database occur. This component can communicate with the cache to provide notification of changes to the database.

Owner:TERRACOTTA

TCP/IP processor and engine using RDMA

Inactive | US20080253395A1 | Sharply reduces TCP/IP protocol stack overhead | Improve performance | Digital computer details | Time-division multiplex | Internal memory | Transmission protocol

A TCP/IP processor and data processing engines for use in the TCP/IP processor are disclosed. The TCP/IP processor can transport data payloads of Internet Protocol (IP) data packets using an architecture that provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. The engines may perform pass-through packet classification, policy processing and/or security processing enabling packet streaming through the architecture at nearly the full line rate. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a TCP/IP session information database and may also store a storage information session database for a certain number of active sessions. The session information that is not in the internal memory is stored and retrieved to/from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.

Owner:MEMORY ACCESS TECH LLC

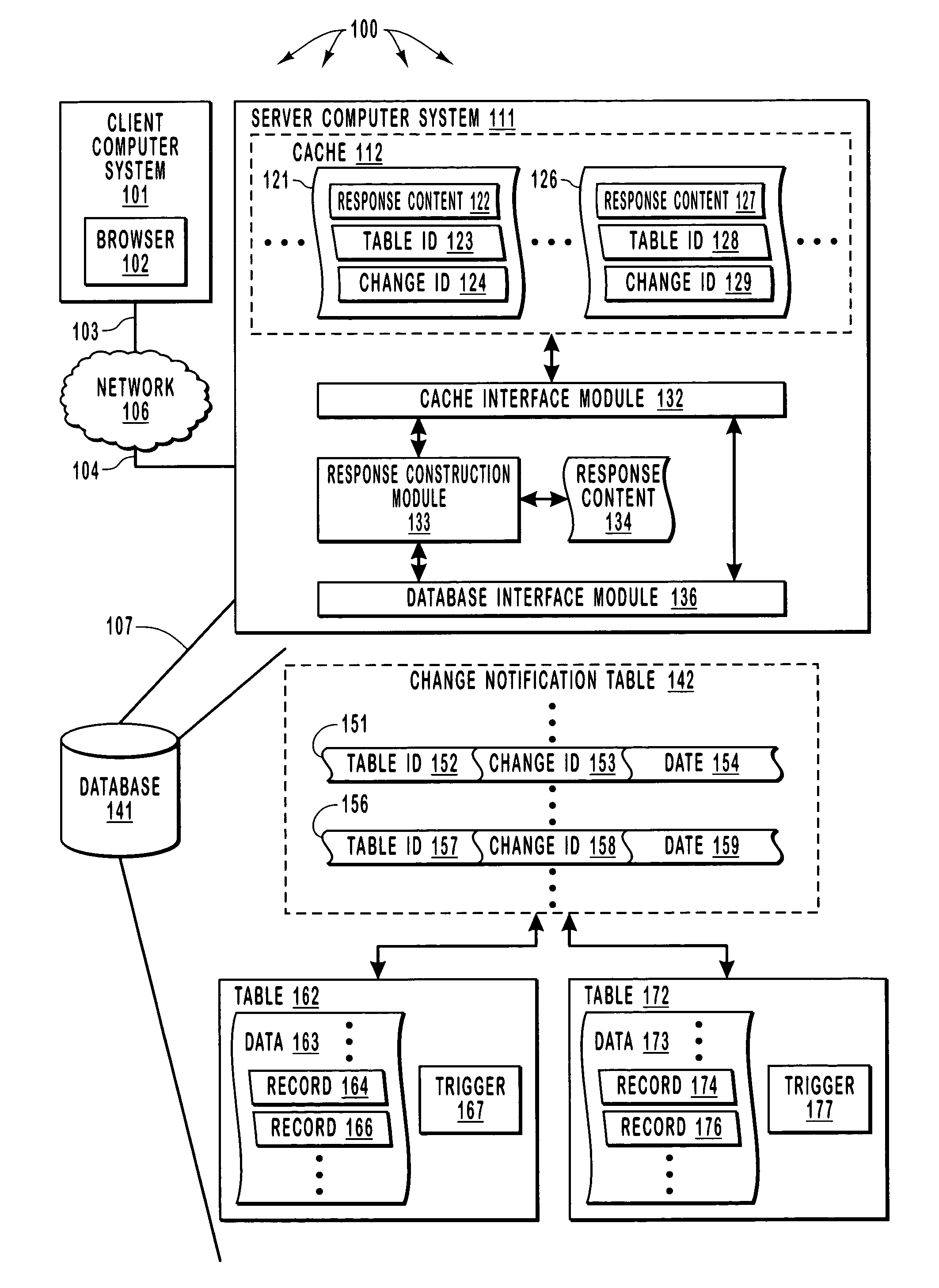

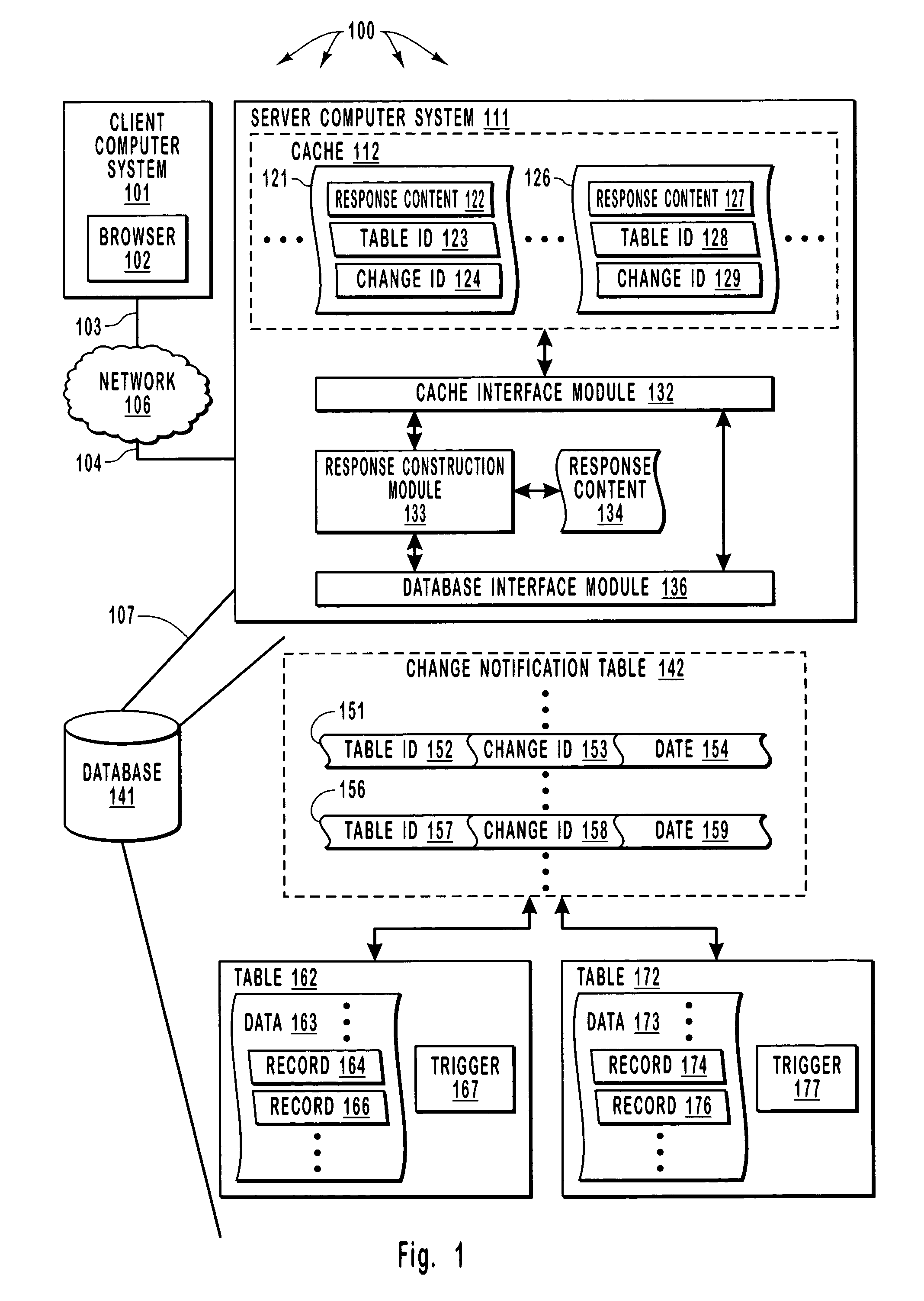

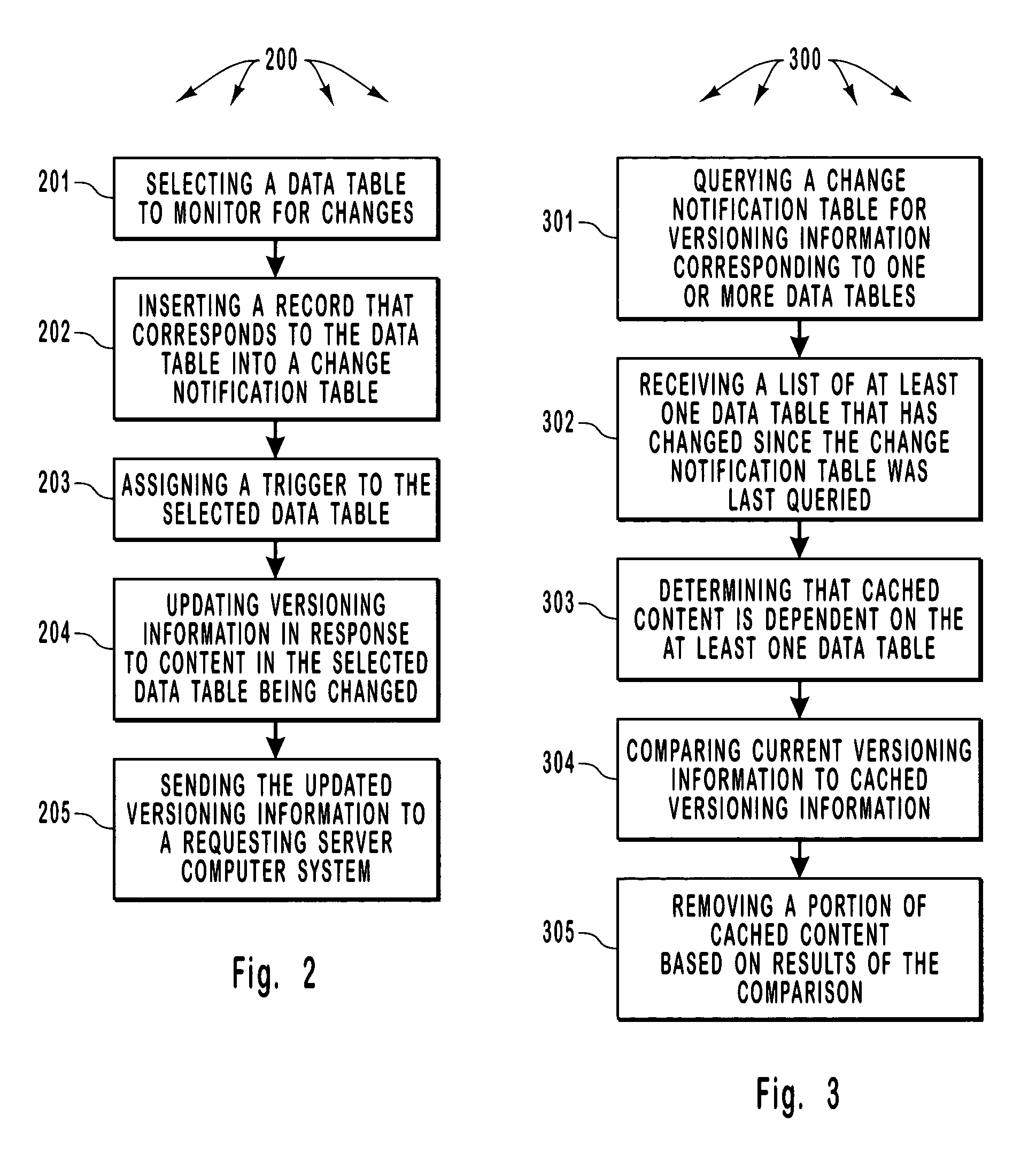

Registering for and retrieving database table change information that can be used to invalidate cache entries

Inactive | US7624126B2 | Improve efficiency | Reduce the possibility | Digital data information retrieval | Data processing applications | Client-side | Database caching

A server provides Web responses that can include content from data tables in a database. The server maintains a cache (e.g., in system memory) that can store content (including content from data tables) so as to increase the efficiency of subsequently providing the same content to satisfy client Web requests. The server monitors data tables for changes and, when a change in a particular data table occurs, invalidates cached entries that depend on a particular data table. Further, in response to a client Web request for a Web response, the server assigns a database cache dependency to at least a portion of a constructed Web response (e.g., to content retrieved from a data table) based on commands executed during construction of the Web response. The at least a portion of the constructed Web response is subsequently cached in a cache location at the server.

Owner:MICROSOFT TECH LICENSING LLC

System and method for adaptive database caching

A local database cache enabling persistent, adaptive caching of either full or partial content of a remote database is provided. Content of tables comprising a local cache database is defined on a per-table basis. A table is either defined declaratively and populated in advance of query execution, or determined dynamically and asynchronously populated on demand during query execution. Based on a user input query originally issued against a remote DBMS and referential cache constraints between tables in a local database cache, a Janus query plan comprising local, remote, and probe query portions is determined. The probe query portion of the Janus query plan is executed to determine whether up-to-date results can be delivered by executing the local query portion against the local database cache, or whether it is necessary to retrieve results from the remote database by executing the remote query portion of the Janus query plan.

Owner:INT BUSINESS MACHINES CORP

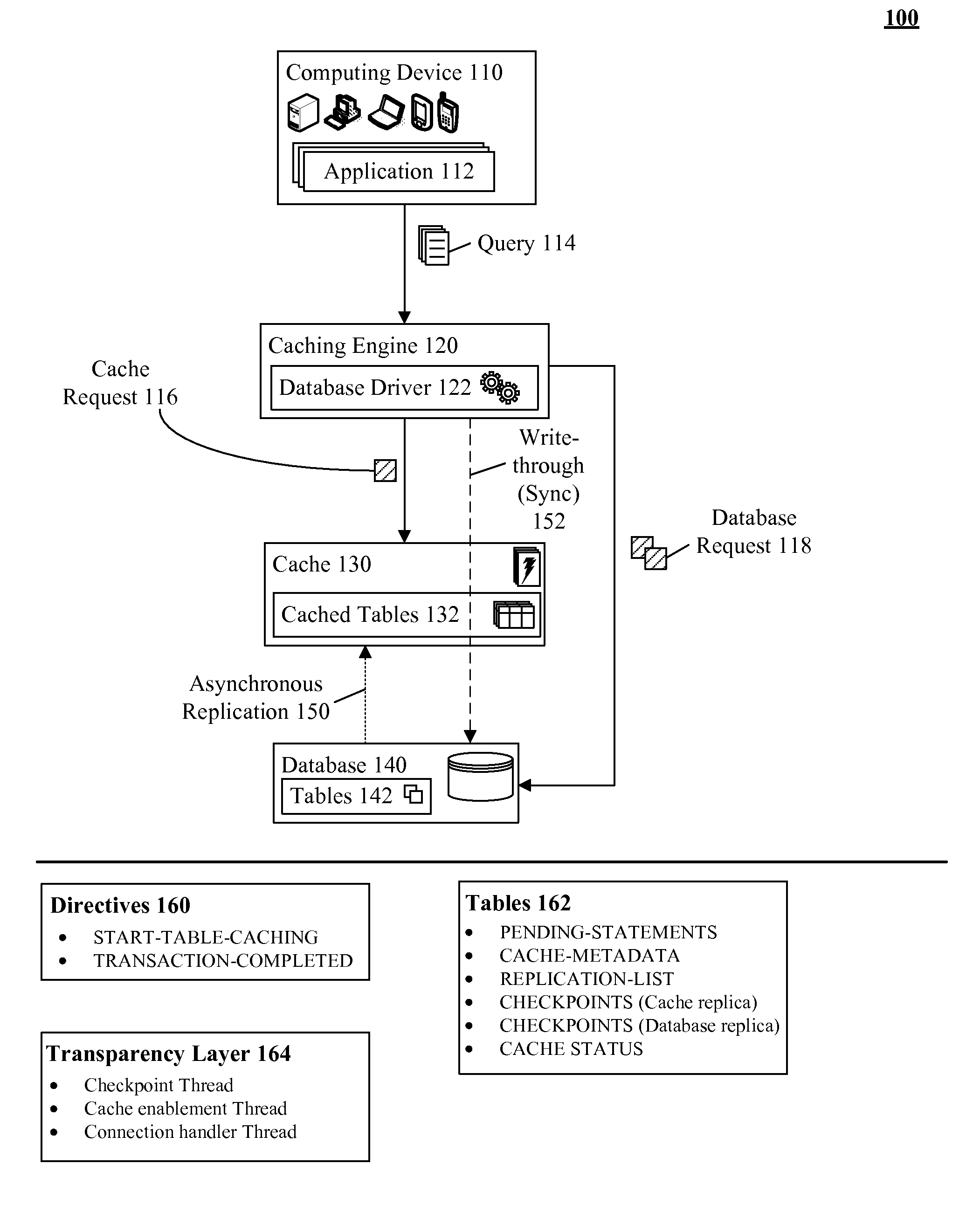

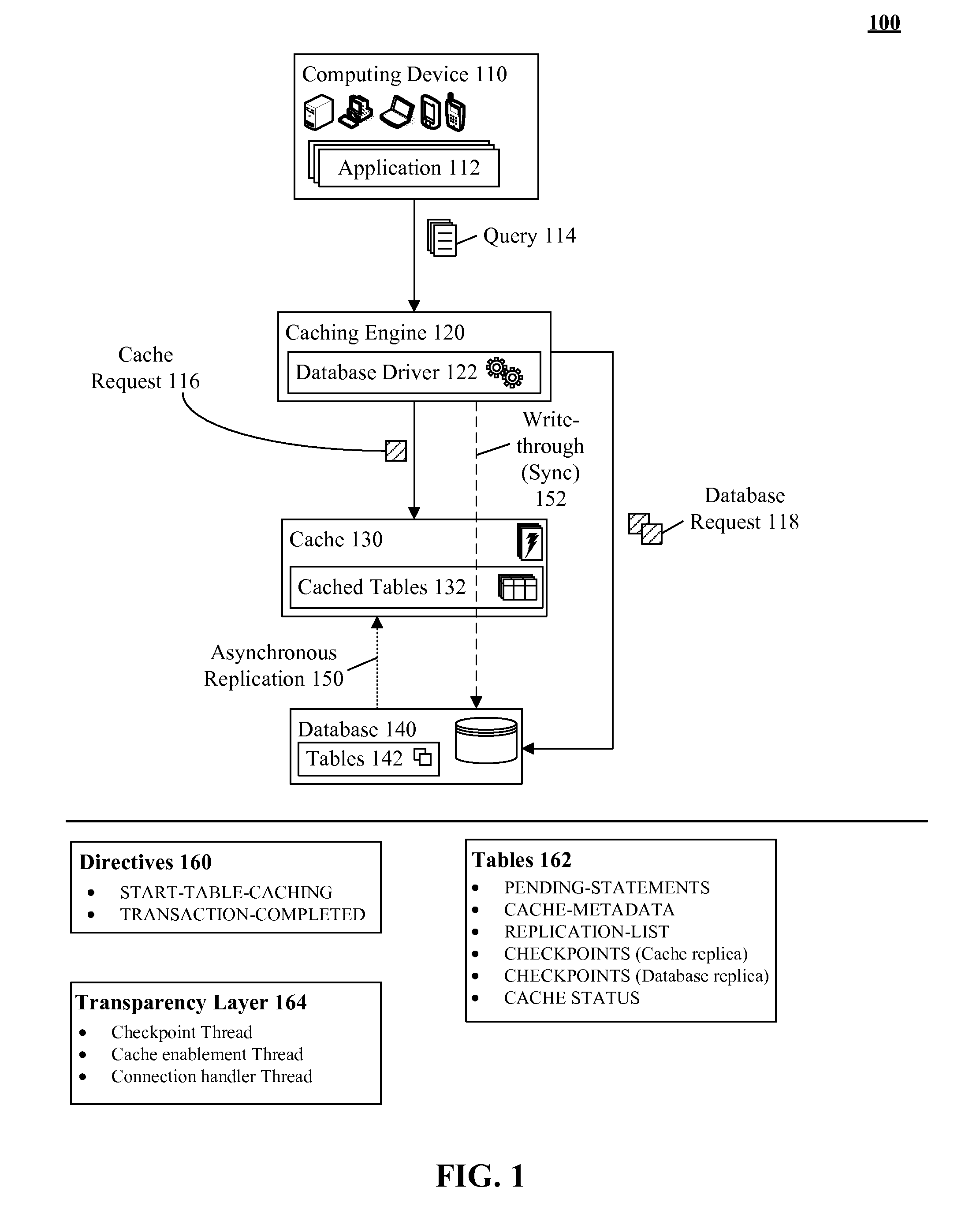

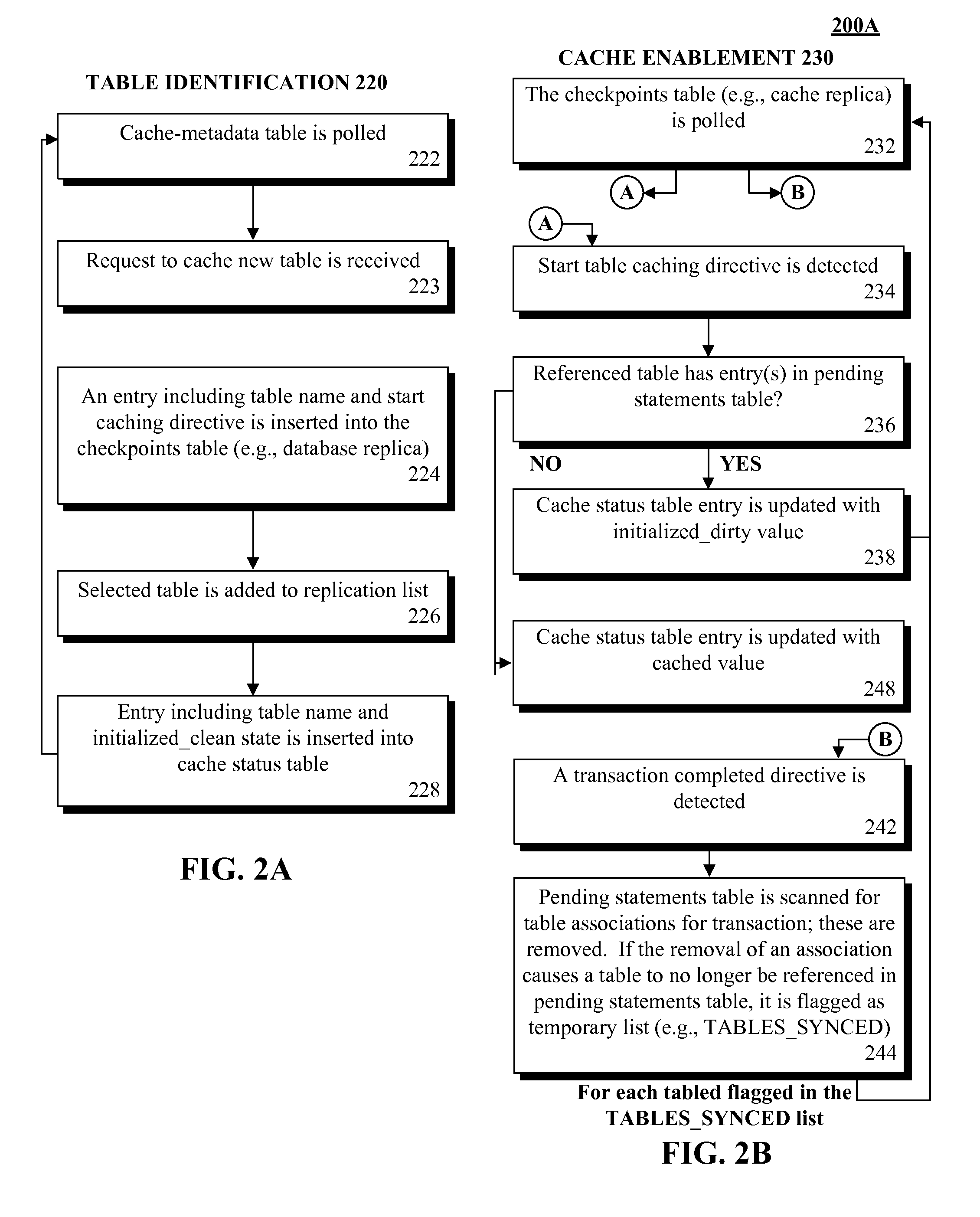

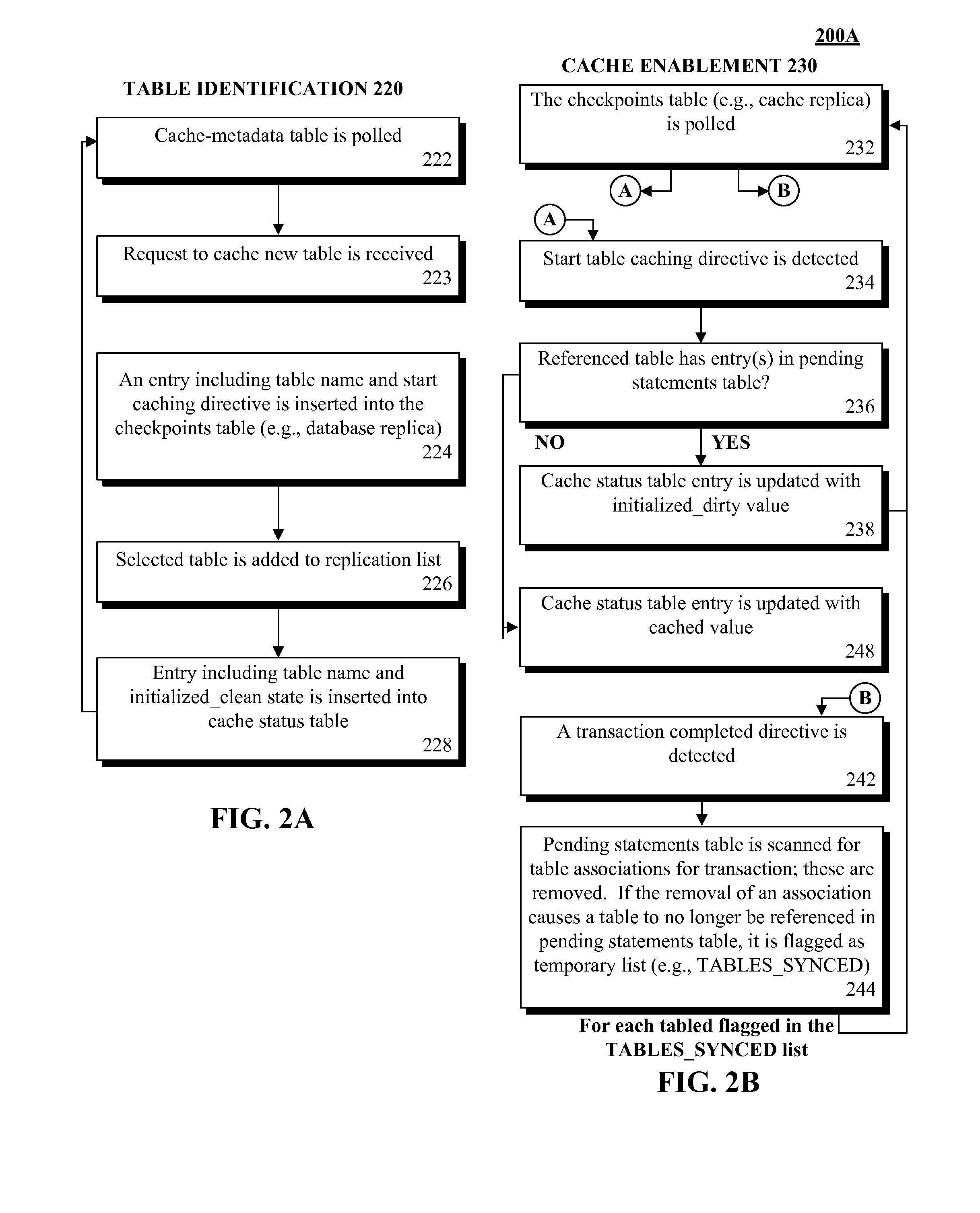

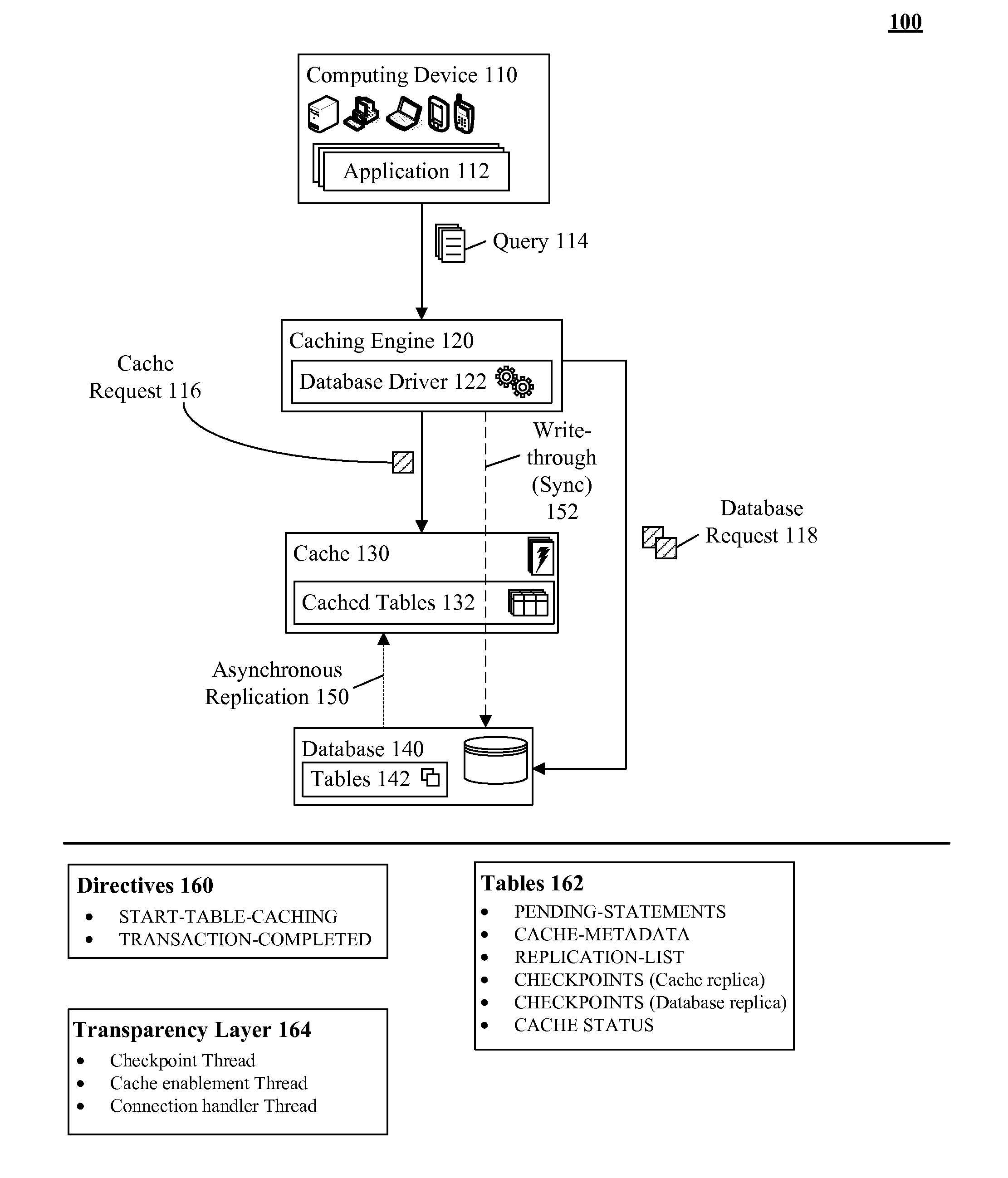

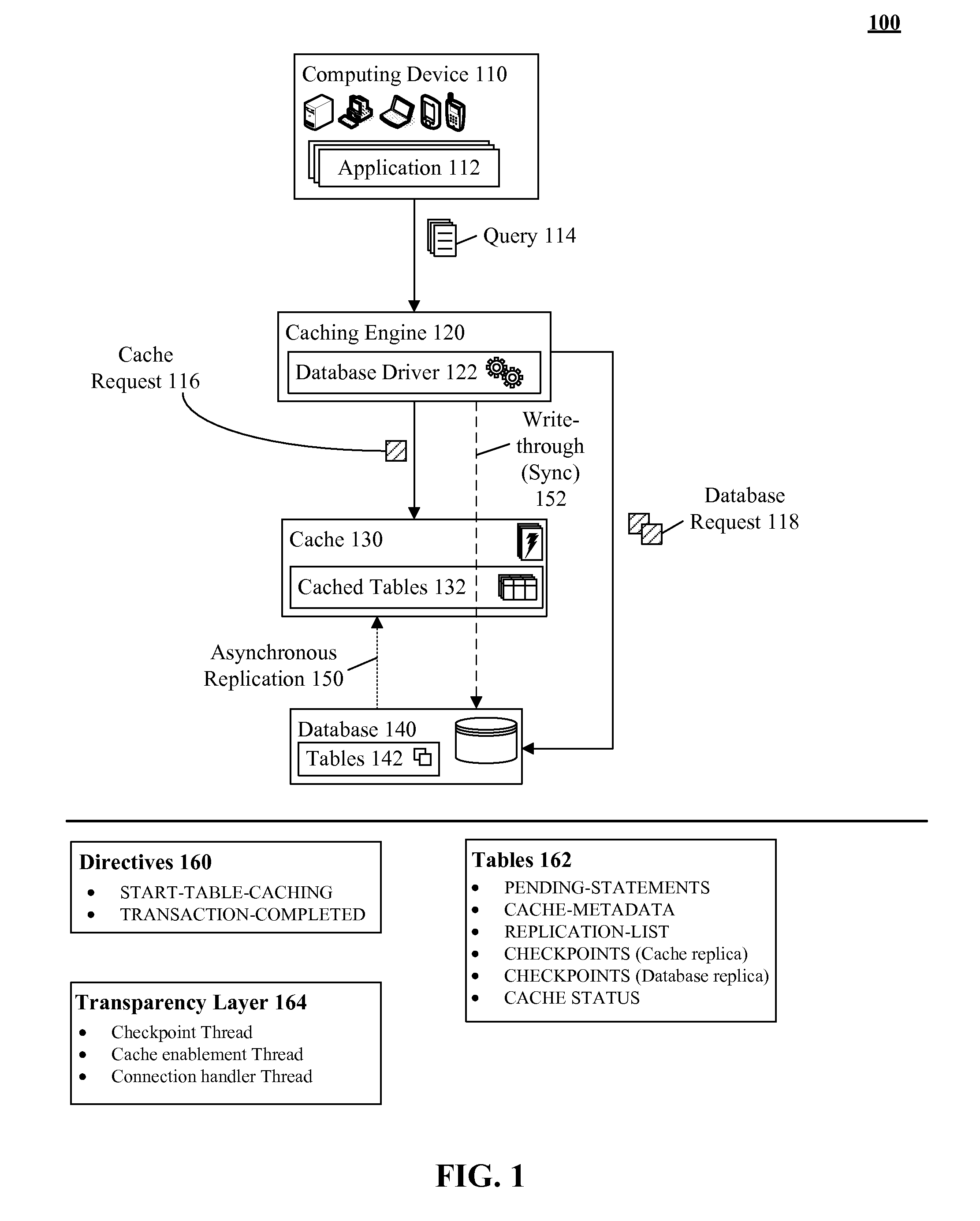

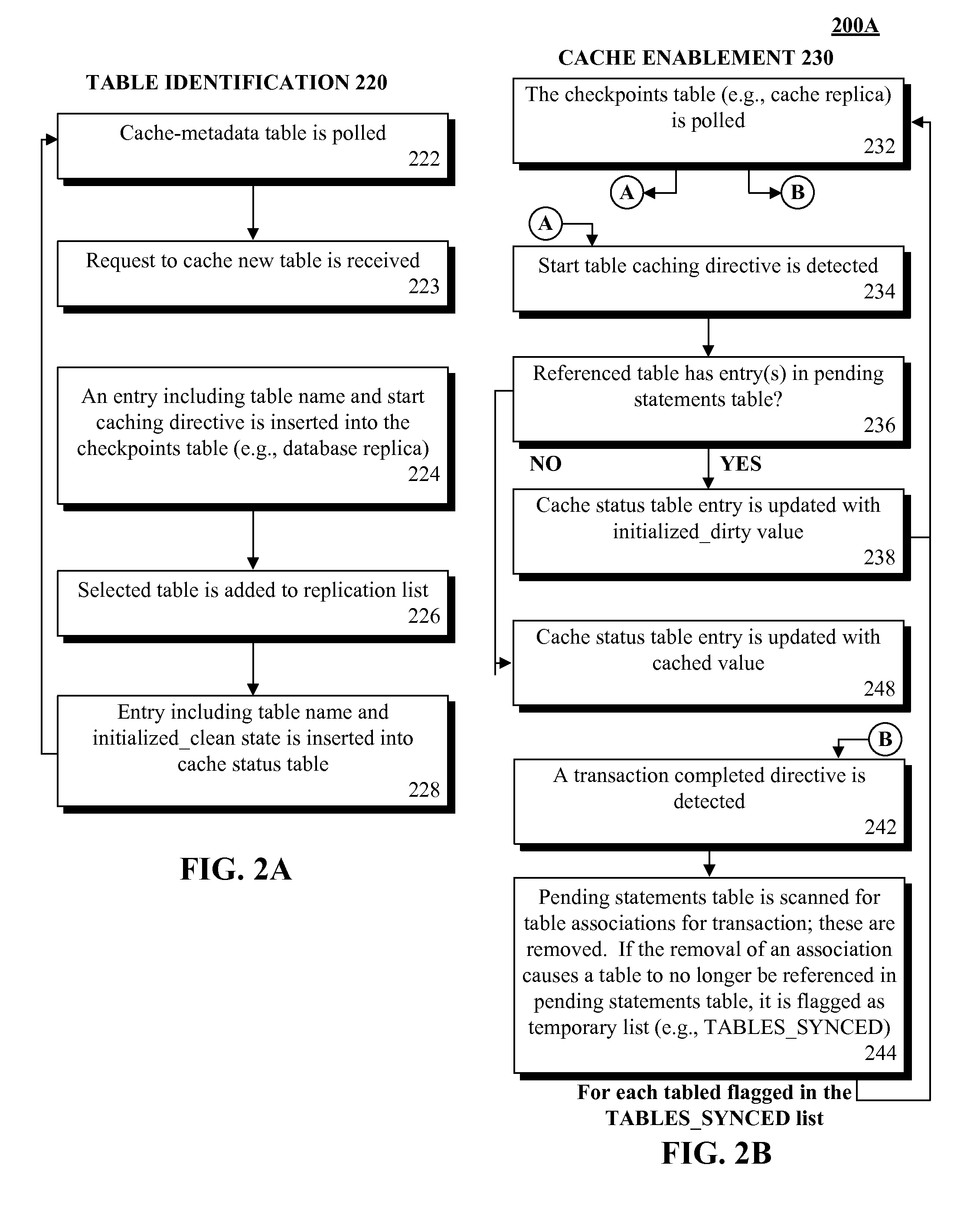

Database caching utilizing asynchronous log-based replication

Active | US8548945B2 | Digital data information retrieval | Digital data processing details | Relational database management system | Database caching

A database table within a database can be identified to persist within a cache as a cached table. The database can be a relational database management system (RDBMS) or an object oriented database management system (OODBMS). The cache can be a database cache. Database transactions can be logged within a log table, and the cached table within the cache can be flagged as not cached during runtime. An asynchronous replication of the database table to the cached table can be performed. The replication can execute the database transactions within the log table upon the cached table. The cached table can be flagged as cached when the replication is completed.

Owner:INT BUSINESS MACHINES CORP
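
The flag-and-replay idea in this abstract can be sketched as follows. The names `CachedTable`, `replicate`, and `read` are hypothetical, the log entry shape is an assumption, and the replay runs synchronously here for clarity where the abstract describes asynchronous replication.

```python
from typing import Dict, List, Optional, Tuple

LogEntry = Tuple[str, int, str]  # (operation, key, value); assumed shape


class CachedTable:
    def __init__(self) -> None:
        self.rows: Dict[int, str] = {}
        self.is_cached = False  # flagged "not cached" until replication completes


def replicate(log: List[LogEntry], cached: CachedTable) -> None:
    """Replay logged transactions onto the cached table, then flag it as cached."""
    cached.is_cached = False
    for op, key, value in log:
        if op == "delete":
            cached.rows.pop(key, None)
        else:  # "insert" or "update"
            cached.rows[key] = value
    log.clear()
    cached.is_cached = True


def read(key: int, source: Dict[int, str], cached: CachedTable) -> Optional[str]:
    """Serve from the cached table only while it is flagged as cached."""
    table = cached.rows if cached.is_cached else source
    return table.get(key)
```

While the flag is off, reads fall through to the source table, so callers never see a half-replayed cache.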

Database caching utilizing asynchronous log-based replication

Active | US20130080388A1 | Digital data information retrieval | Digital data processing details | Relational database management system | Database caching

A database table within a database can be identified to persist within a cache as a cached table. The database can be a relational database management system (RDBMS) or an object oriented database management system (OODBMS). The cache can be a database cache. Database transactions can be logged within a log table, and the cached table within the cache can be flagged as not cached during runtime. An asynchronous replication of the database table to the cached table can be performed. The replication can execute the database transactions within the log table upon the cached table. The cached table can be flagged as cached when the replication is completed.

Owner:IBM CORP

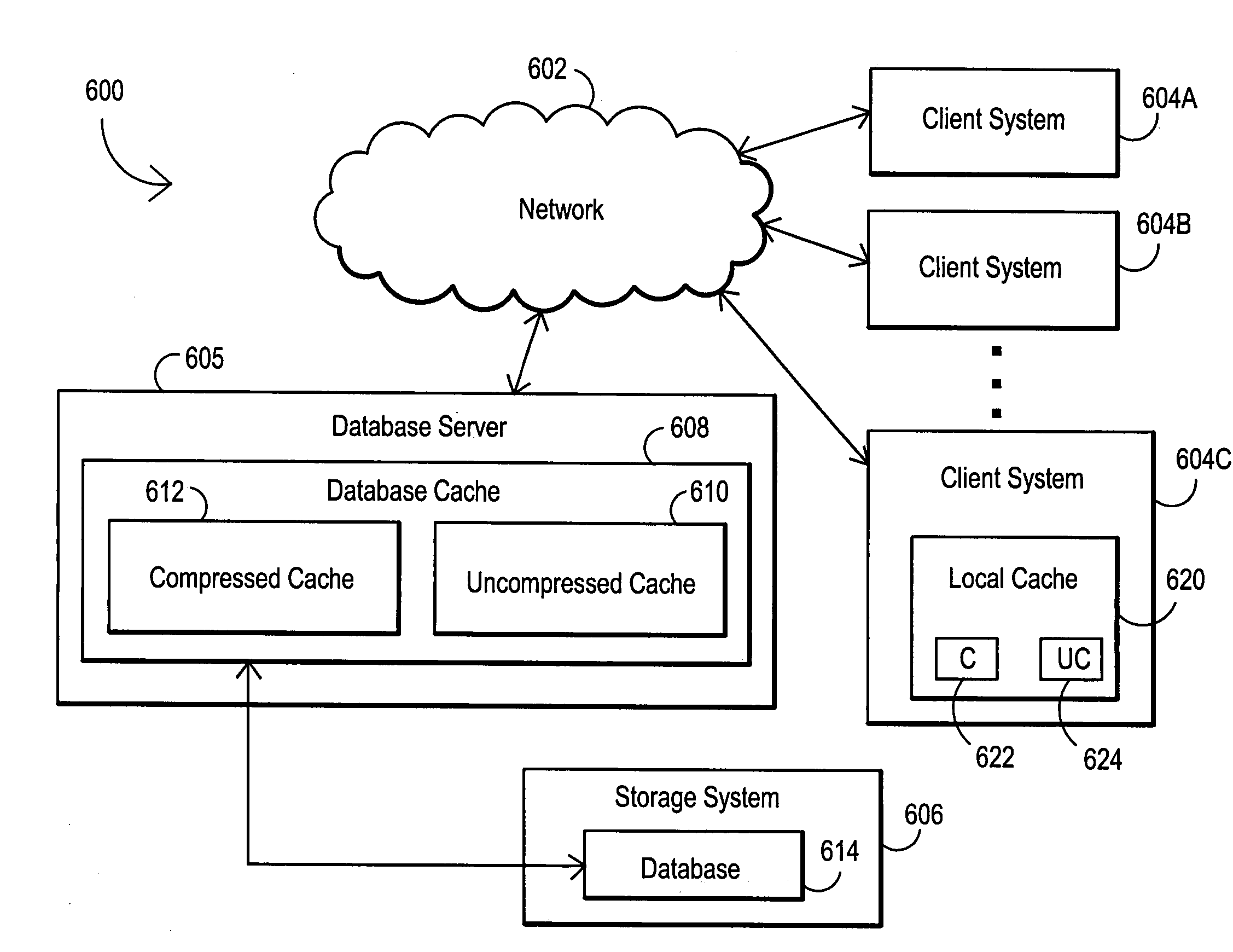

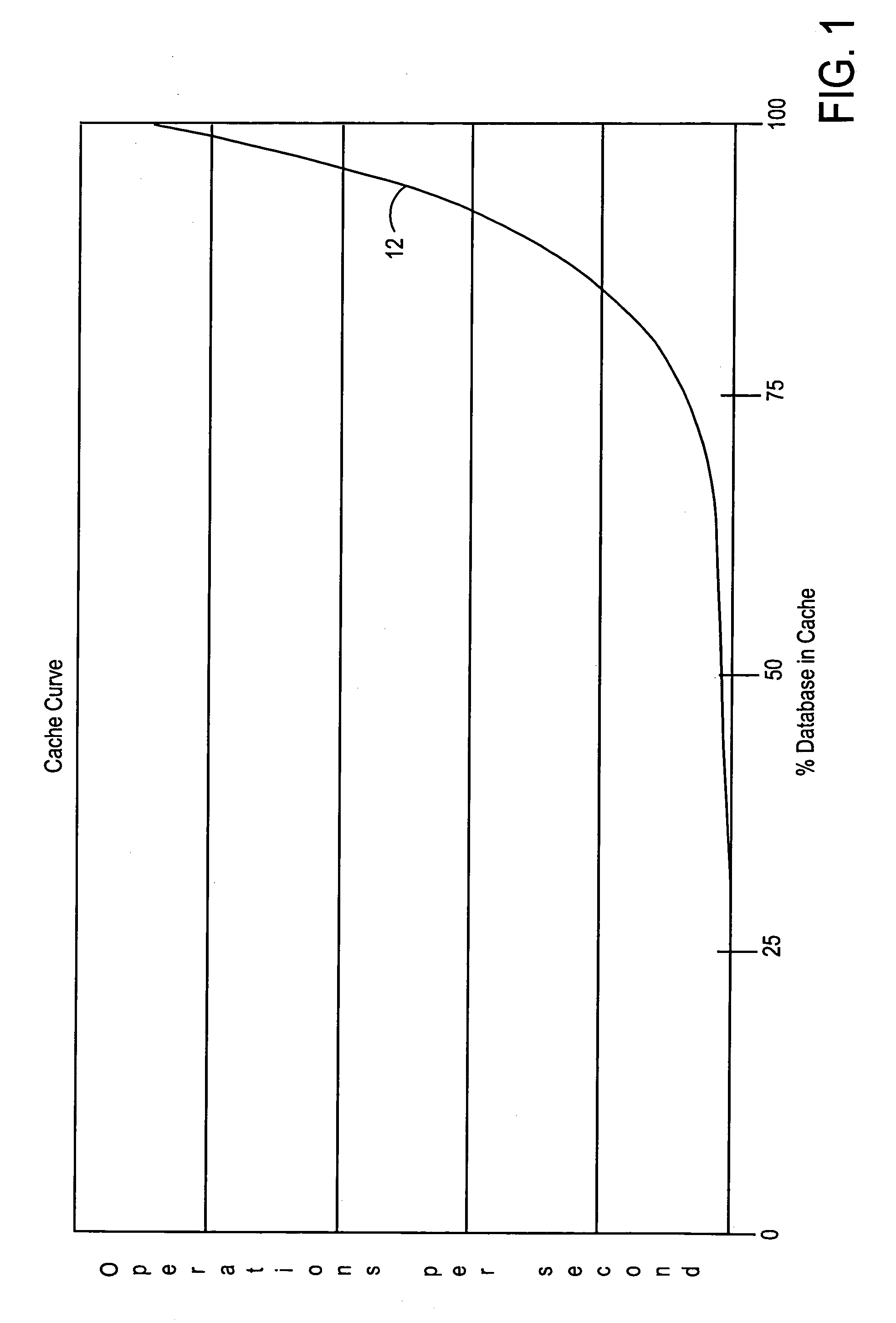

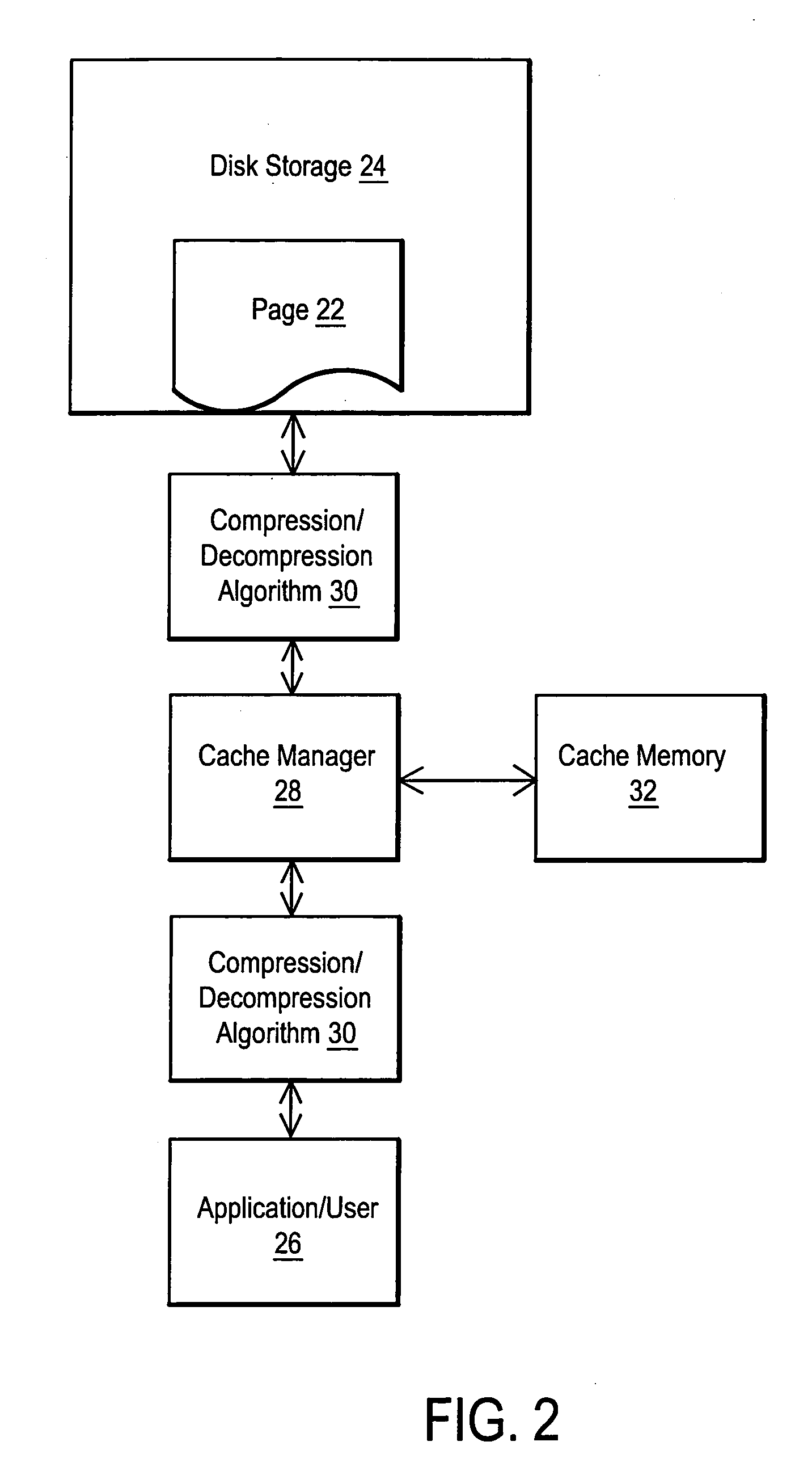

System and method for utilizing compression in database caches to facilitate access to database information

Active | US7181457B2 | Easy access | Enhance operations-per-second capability | Memory architecture accessing/allocation | Data processing applications | Access time | Data access

A system and method are disclosed for utilizing compression in database caches to facilitate access to database information. In contrast with applying compression to the database that is stored on disk, the present invention achieves performance advantages by using compression within the main memory database cache used by a database management system to manage data transfers to and from a physical database file stored on a storage system or stored on a networked attached device or node. The disclosed system and method thereby provide a significant technical advantage by increasing the effective database cache size. And this effective increase in database cache size can greatly enhance the operations-per-second capability of a database management system by reducing unnecessary disk or network accesses thereby reducing data access times.

Owner:ACTIAN CORP
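
To make the "effective cache size" argument concrete, here is a minimal sketch of an in-memory page cache that stores pages compressed under a fixed byte budget. The class `CompressedPageCache`, the use of `zlib`, and the LRU eviction policy are illustrative assumptions; the abstract does not name a compression algorithm or eviction scheme.

```python
import zlib
from collections import OrderedDict
from typing import Optional


class CompressedPageCache:
    """LRU cache with a byte budget that keeps pages in compressed form."""

    def __init__(self, budget_bytes: int) -> None:
        self.budget = budget_bytes
        self.used = 0
        self._pages: "OrderedDict[int, bytes]" = OrderedDict()

    def put(self, page_id: int, data: bytes) -> None:
        blob = zlib.compress(data)
        if page_id in self._pages:
            self.used -= len(self._pages.pop(page_id))
        self._pages[page_id] = blob
        self.used += len(blob)
        # Evict least recently used pages until the budget is respected.
        while self.used > self.budget and self._pages:
            _, evicted = self._pages.popitem(last=False)
            self.used -= len(evicted)

    def get(self, page_id: int) -> Optional[bytes]:
        blob = self._pages.get(page_id)
        if blob is None:
            return None
        self._pages.move_to_end(page_id)  # mark as recently used
        return zlib.decompress(blob)
```

With compressible pages, the same byte budget holds more pages than it would uncompressed, at the cost of CPU time on each `put` and `get`.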

Database caching utilizing asynchronous log-based replication

Active | US20130080386A1 | Digital data information retrieval | Digital data processing details | Relational database management system | Database caching

A database table within a database can be identified to persist within a cache as a cached table. The database can be a relational database management system (RDBMS) or an object oriented database management system (OODBMS). The cache can be a database cache. Database transactions can be logged within a log table, and the cached table within the cache can be flagged as not cached during runtime. An asynchronous replication of the database table to the cached table can be performed. The replication can execute the database transactions within the log table upon the cached table. The cached table can be flagged as cached when the replication is completed.

Owner:IBM CORP

Systems and methods for a distributed in-memory database and distributed cache

Inactive | US20070239661A1 | Fast read | Quick update | Digital data information retrieval | Special data processing applications | Data processing system | In-memory database

Methods, systems, and articles of manufacture consistent with the present invention provide for managing a distributed in-memory database and a database cache. A database cache is provided. An in-memory database is provided. The in-memory database is distributed over at least two sub data processing systems in memory.

Owner:SUN MICROSYSTEMS INC

Systems and methods for a distributed cache

Active | US20070239791A1 | Fast read | Avoid overhead | Digital data processing details | Database distribution/replication | Data processing system | Parallel computing

Methods, systems, and articles of manufacture consistent with the present invention provide for managing a distributed database cache. A database cache is provided. The database cache is distributed over at least two data processing systems.

Owner:ORACLE INT CORP

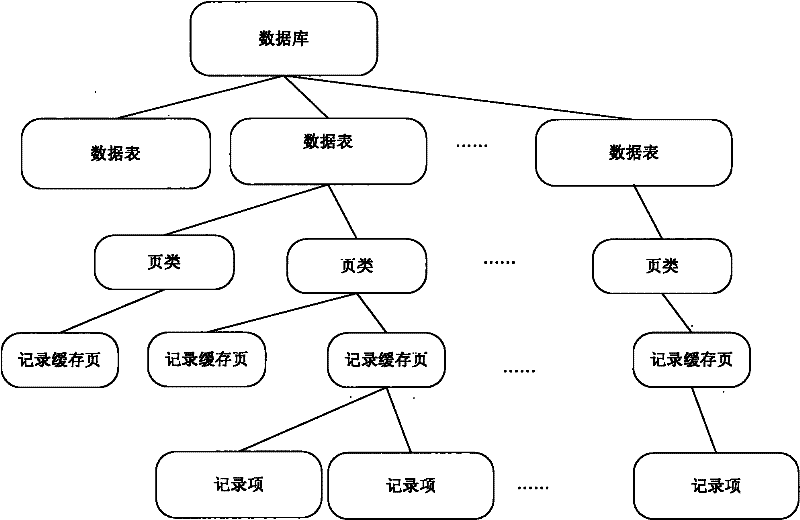

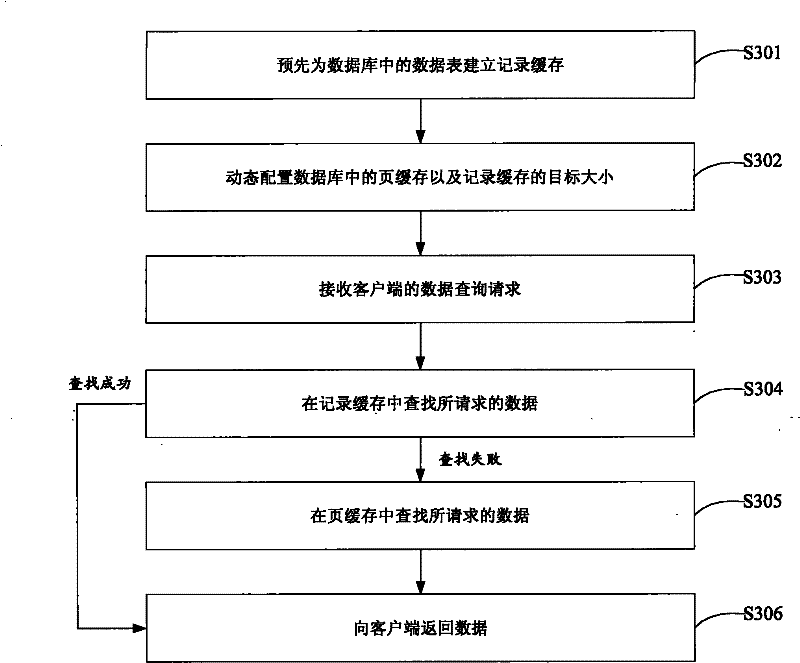

Database cache management method and database server

Active | CN102331986A | Increase profit | Reduce update frequency | Special data processing applications | Database server | Database caching

The embodiment of the invention discloses a database cache management method and a database server. The method comprises the following steps: pre-establishing a record cache for a data table in a database, where the record cache reads and writes data in units of data rows; on receiving a data query request from a client, searching for the requested data in the record cache; if that search fails, searching for the requested data in a page cache of the database; and returning the data found in the record cache or the page cache to the client. With this scheme, the same database server contains two caches, and the record cache reads and writes data row by row. When only a small amount of hotspot data changes, only the record cache needs to be updated, so the cache utilization rate of the database server increases and the cache update frequency decreases.

Owner:TAOBAO CHINA SOFTWARE
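
The lookup order in this abstract (record cache first, page cache second) can be sketched as below. The class `TwoLevelCache`, the `PAGE_SIZE` value, and the promotion of a row into the record cache after a page hit are assumptions for illustration, not details stated in the abstract.

```python
from typing import Dict, Optional, Tuple

PAGE_SIZE = 4  # rows per page; hypothetical value


class TwoLevelCache:
    """Row-granular record cache in front of a page-granular cache."""

    def __init__(self, page_cache: Dict[int, Dict[int, str]]) -> None:
        self.page_cache = page_cache  # page number -> {row id -> value}
        self.record_cache: Dict[int, str] = {}

    def read(self, row_id: int) -> Tuple[Optional[str], str]:
        # Step 1: search the record cache, which works in units of rows.
        if row_id in self.record_cache:
            return self.record_cache[row_id], "record"
        # Step 2: on failure, search the page cache.
        page = self.page_cache.get(row_id // PAGE_SIZE, {})
        if row_id in page:
            value = page[row_id]
            self.record_cache[row_id] = value  # promote the row (assumption)
            return value, "page"
        return None, "miss"

    def write(self, row_id: int, value: str) -> None:
        # A hot-row change touches only the record cache entry.
        self.record_cache[row_id] = value
```

Because a write replaces a single row entry, a change to one hot row does not require refreshing the whole page that contains it.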

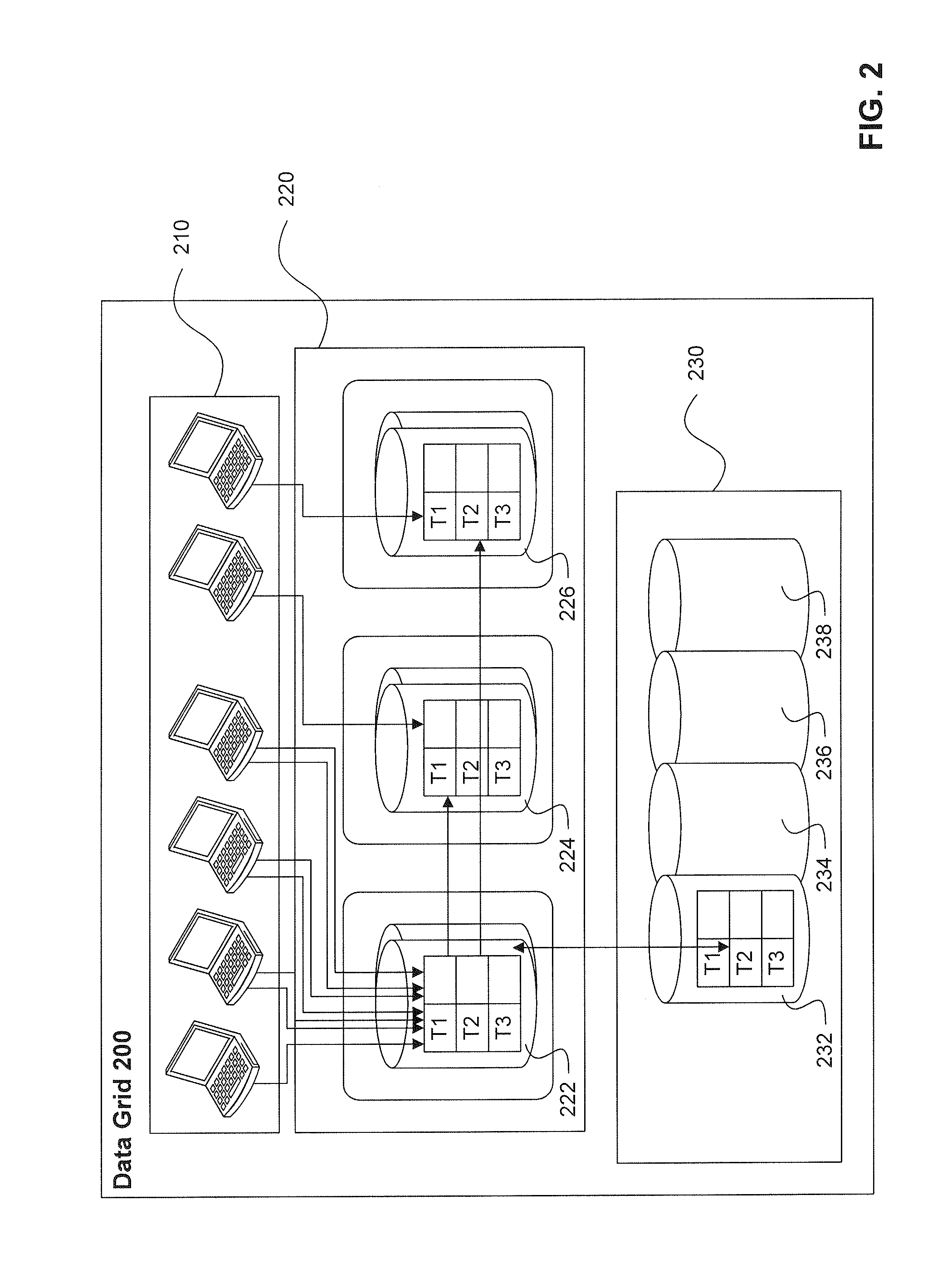

Data Grid Advisor

Active | US20120158723A1 | Improve performance | Digital data information retrieval | Digital data processing details | In-memory database | Database application

A system and method to generate an improved layout of a data grid in a database environment is provided. The data grid is a clustered in-memory database cache comprising one or more data fabrics, where each data fabric includes multiple in-memory database cache nodes. A data grid advisor capability can be used by application developers and database administrators to evaluate and design the data grid layout so as to optimize performance based on resource constraints and the needs of particular database applications.

Owner:SYBASE INC

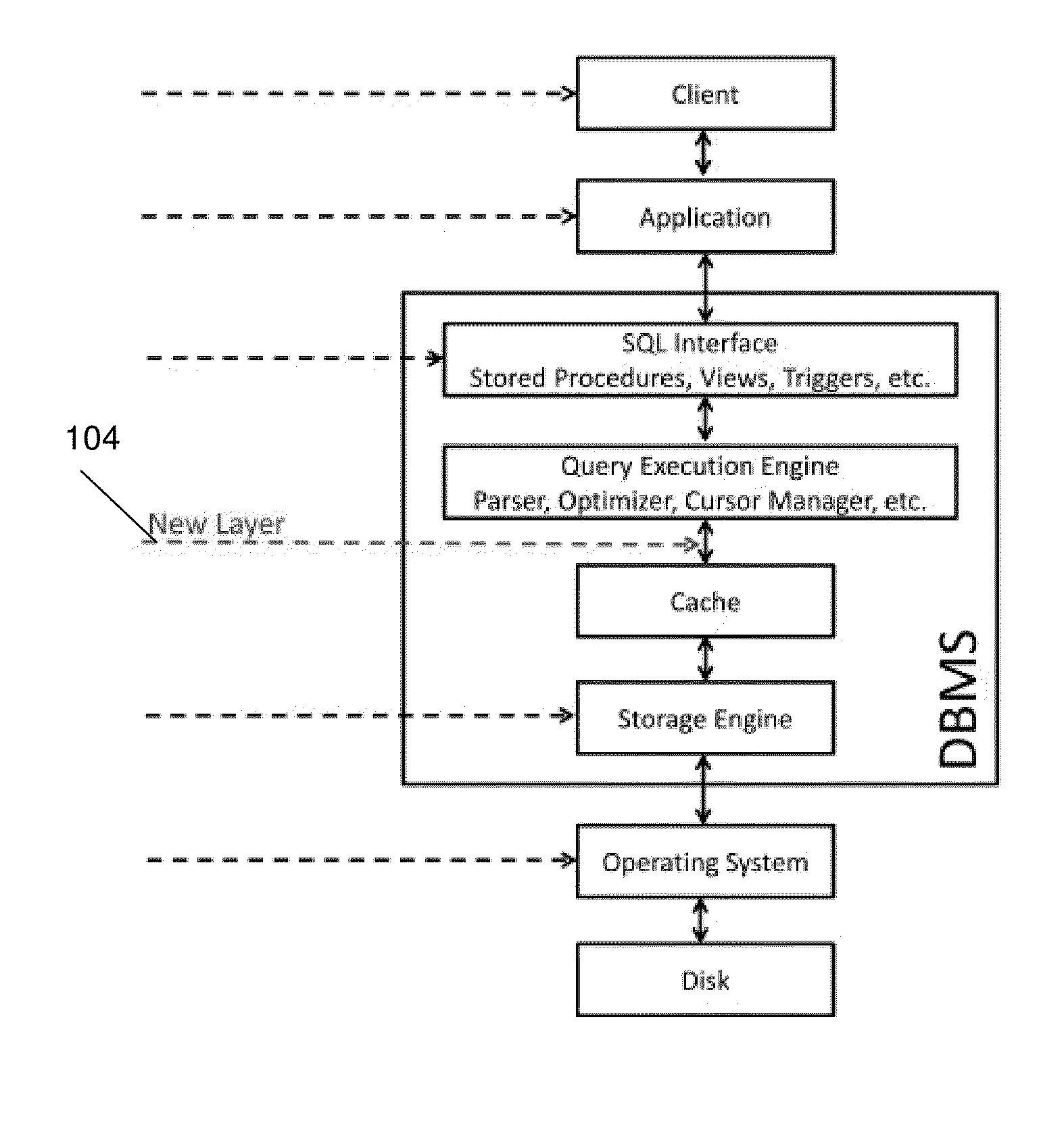

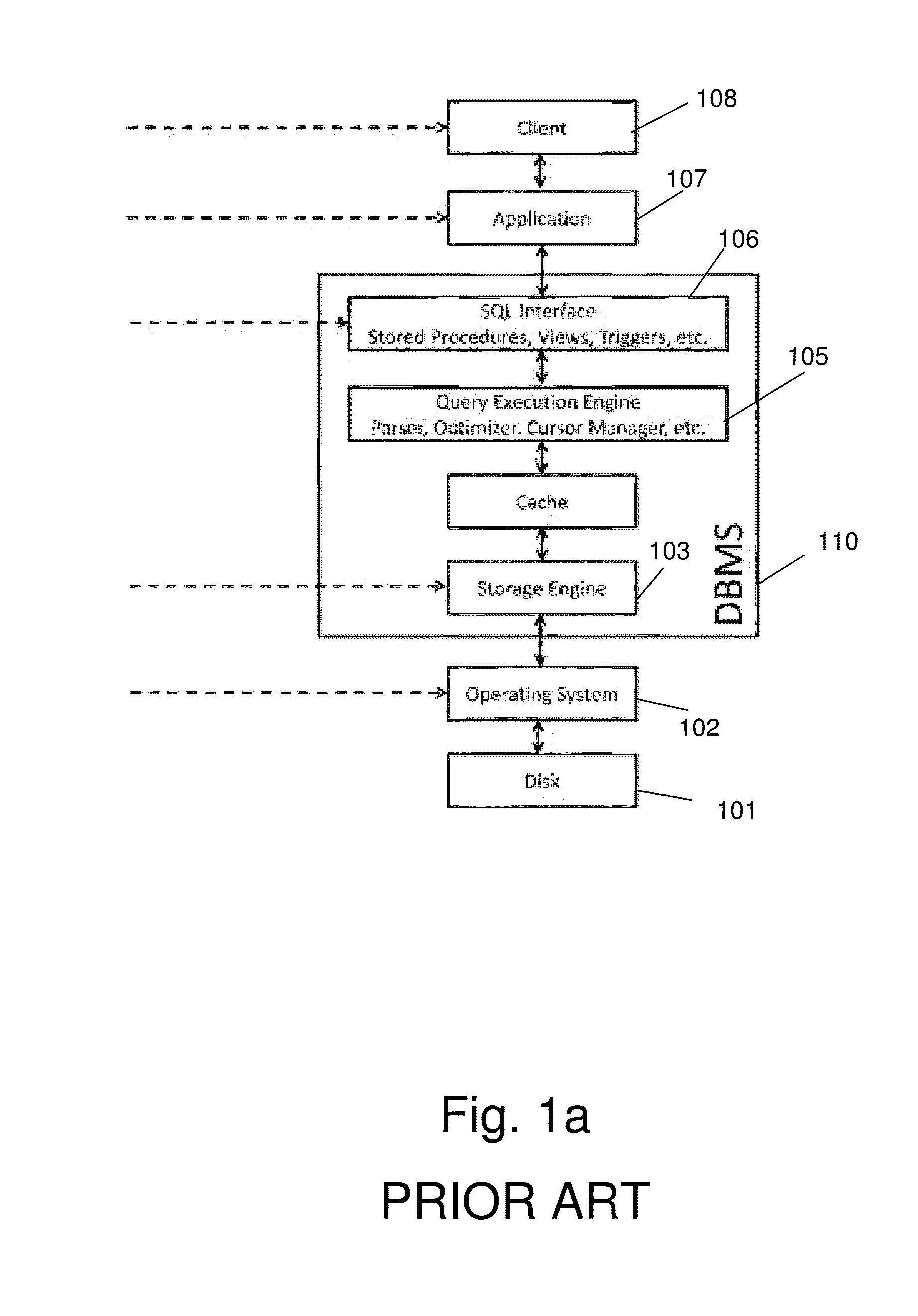

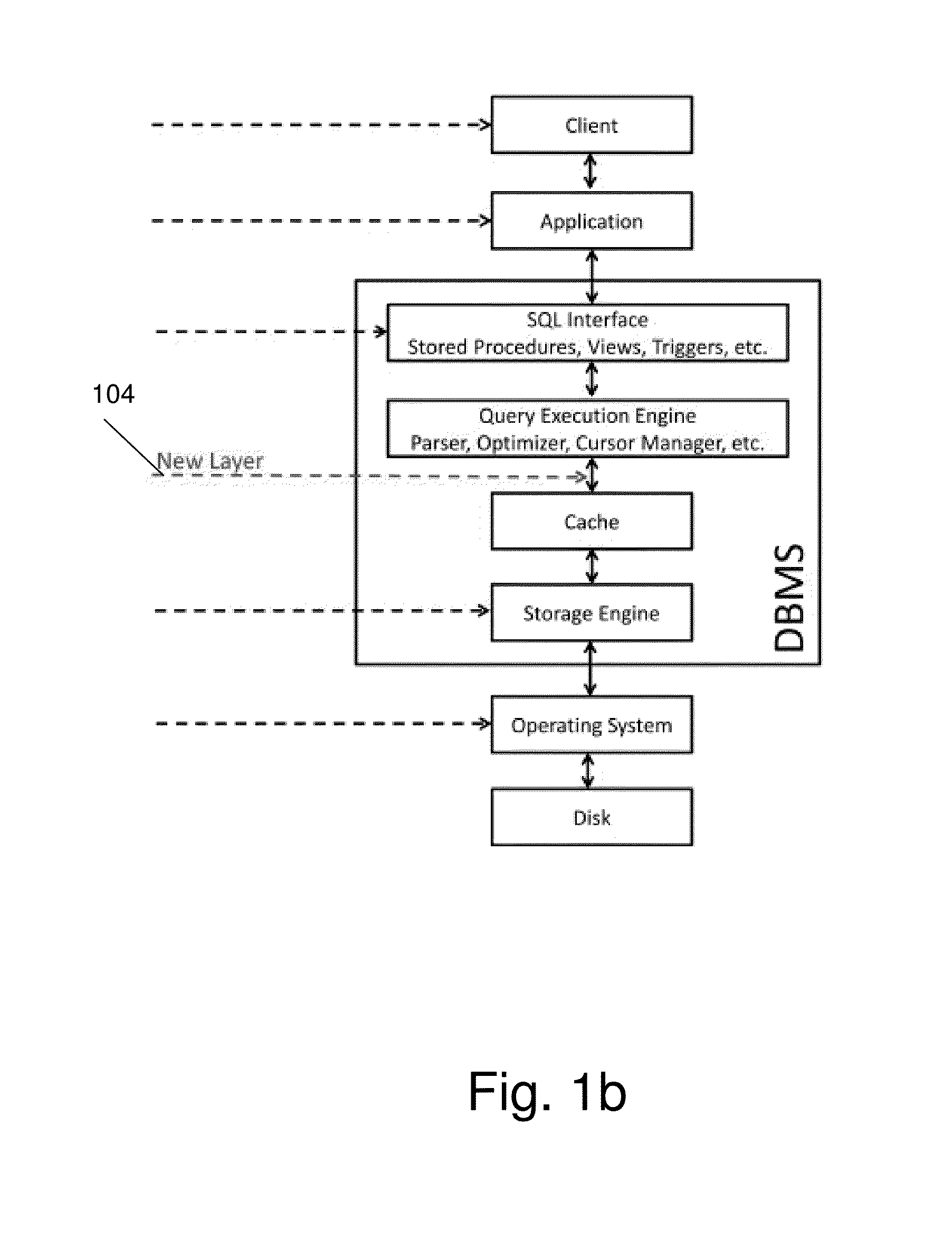

Method and system for database encryption

Active | US20150178506A1 | Unauthorized memory use protection | Hardware monitoring | Database encryption | Database caching

A method for encrypting a database, according to which a dedicated encryption module is placed inside the Database Management Software above a database cache. Each value of the database is encrypted together with the coordinates of said value using a dedicated encryption method, which assumes the existence of an internal cell identifier that can be queried but not tampered with.

Owner:BEN GURION UNIVERSITY OF THE NEGEV

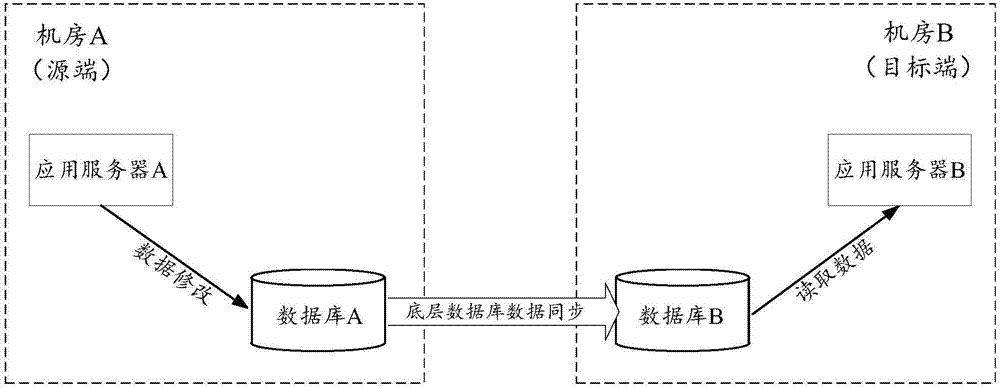

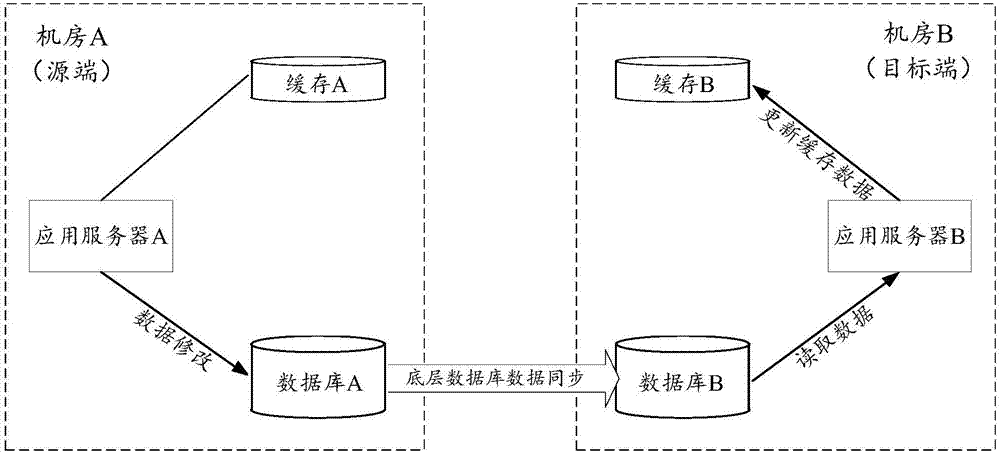

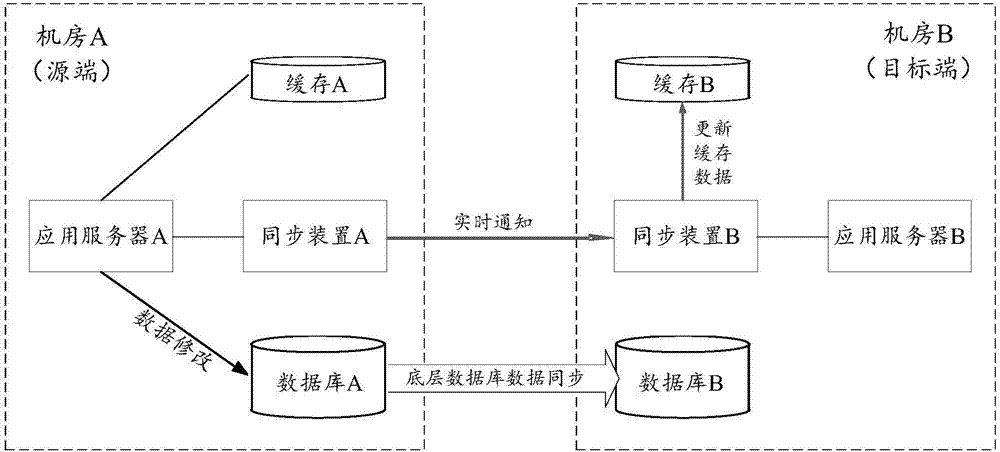

Data synchronization method, apparatus and system

Active | CN106980625A | Reduce latency | Database distribution/replication | Special data processing applications | Data synchronization | Time delays

The invention discloses a data synchronization method, apparatus and system. In the method, a data synchronization source end, after determining that data in a source end database has been modified, generates a real-time notification for the modification and sends it to a data synchronization target end. On receiving the real-time notification, the target end parses it to obtain information about the data modification and updates the cache of a target end database according to the parsing result. With this scheme, the target end can update the cache of the local database directly from the information carried in the real-time notification, or start monitoring the local database so that cached data is updated immediately after the local data is synchronized, thereby shortening the delay of synchronous cache updates.

Owner:ADVANCED NEW TECH CO LTD

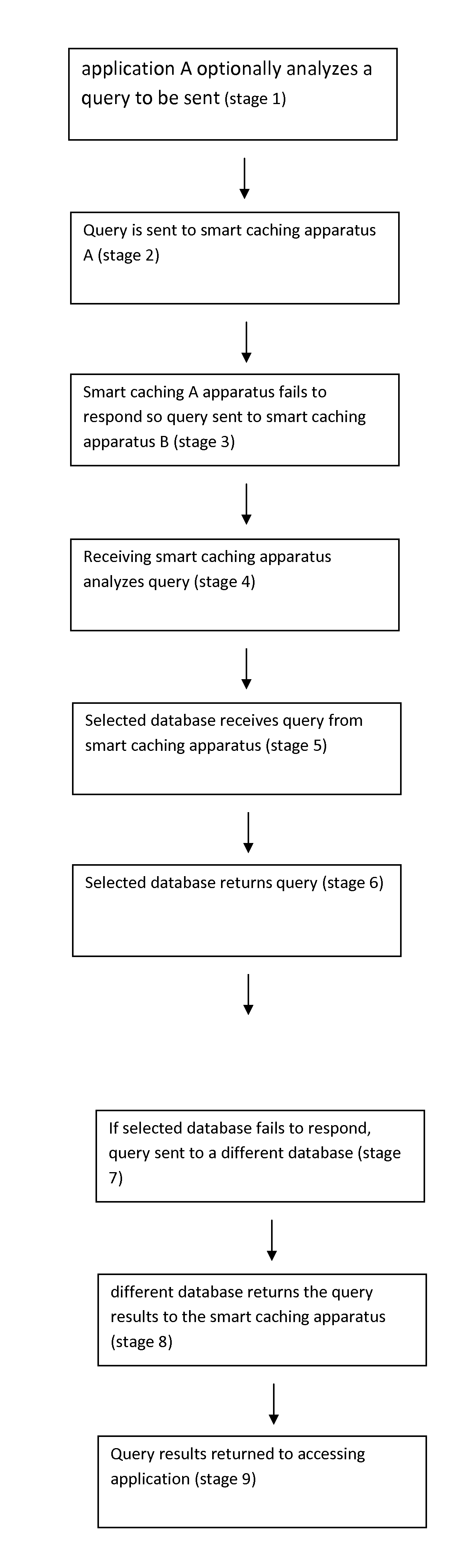

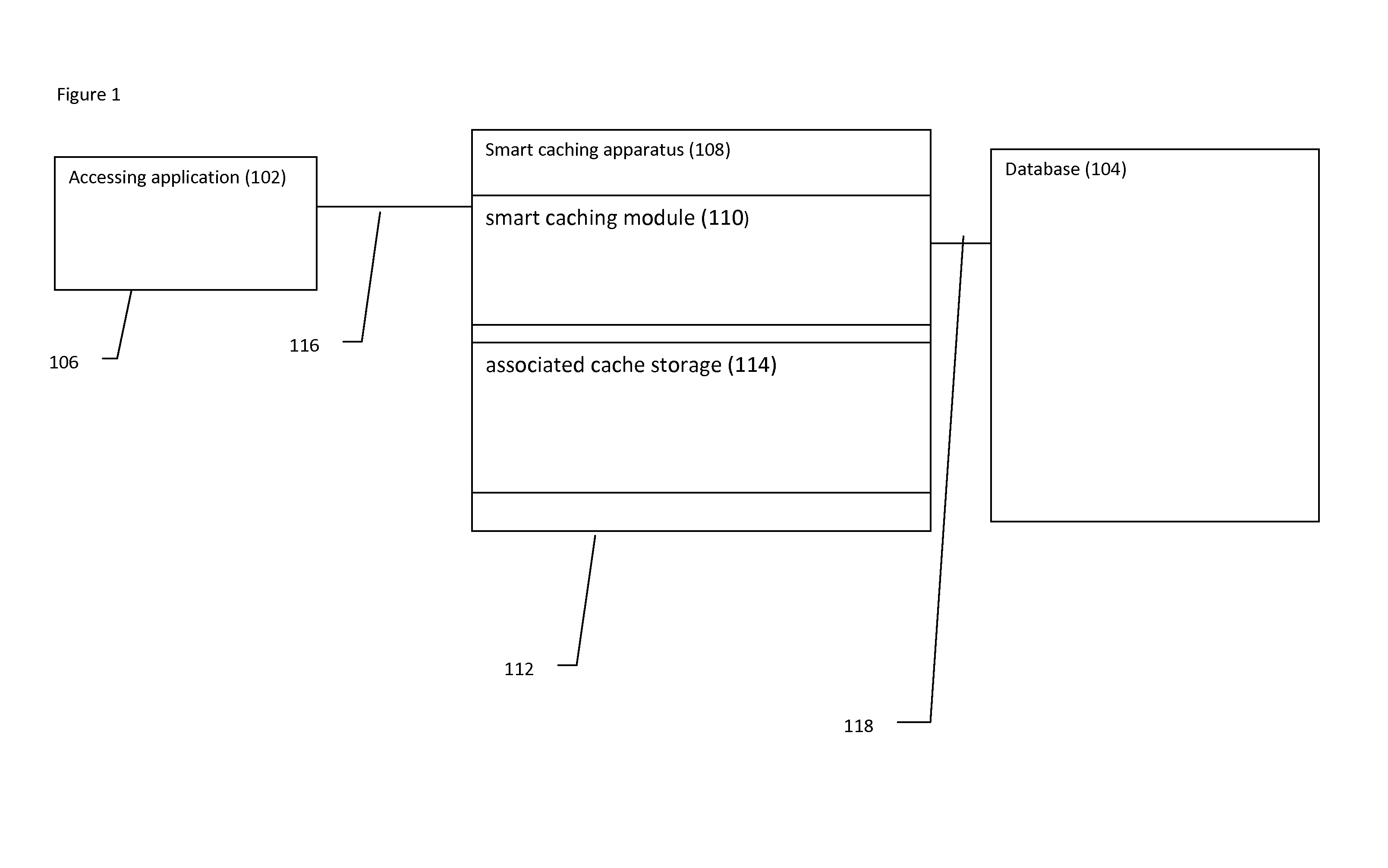

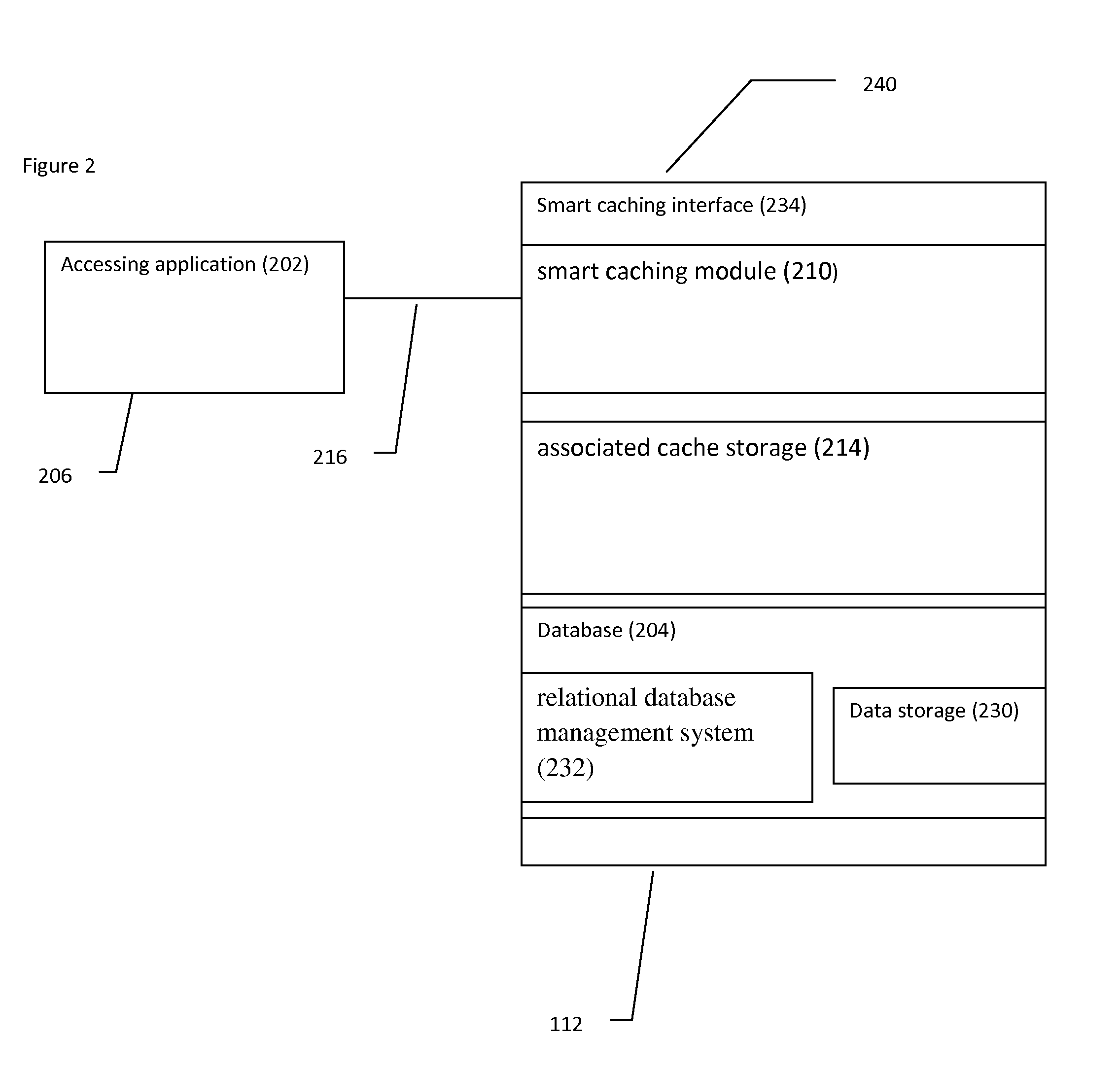

Smart database caching

Inactive | US20130060810A1 | Improve efficiency | Increase speed | Digital data information retrieval | Digital data processing details | Engineering | Database caching

A system and method for smart caching, in which caching is performed according to one or more functional criteria, in which the functional criteria include at least time elapsed since a query was received for the data. Preferably at least data is cached.

Owner:HEXATIER LTD

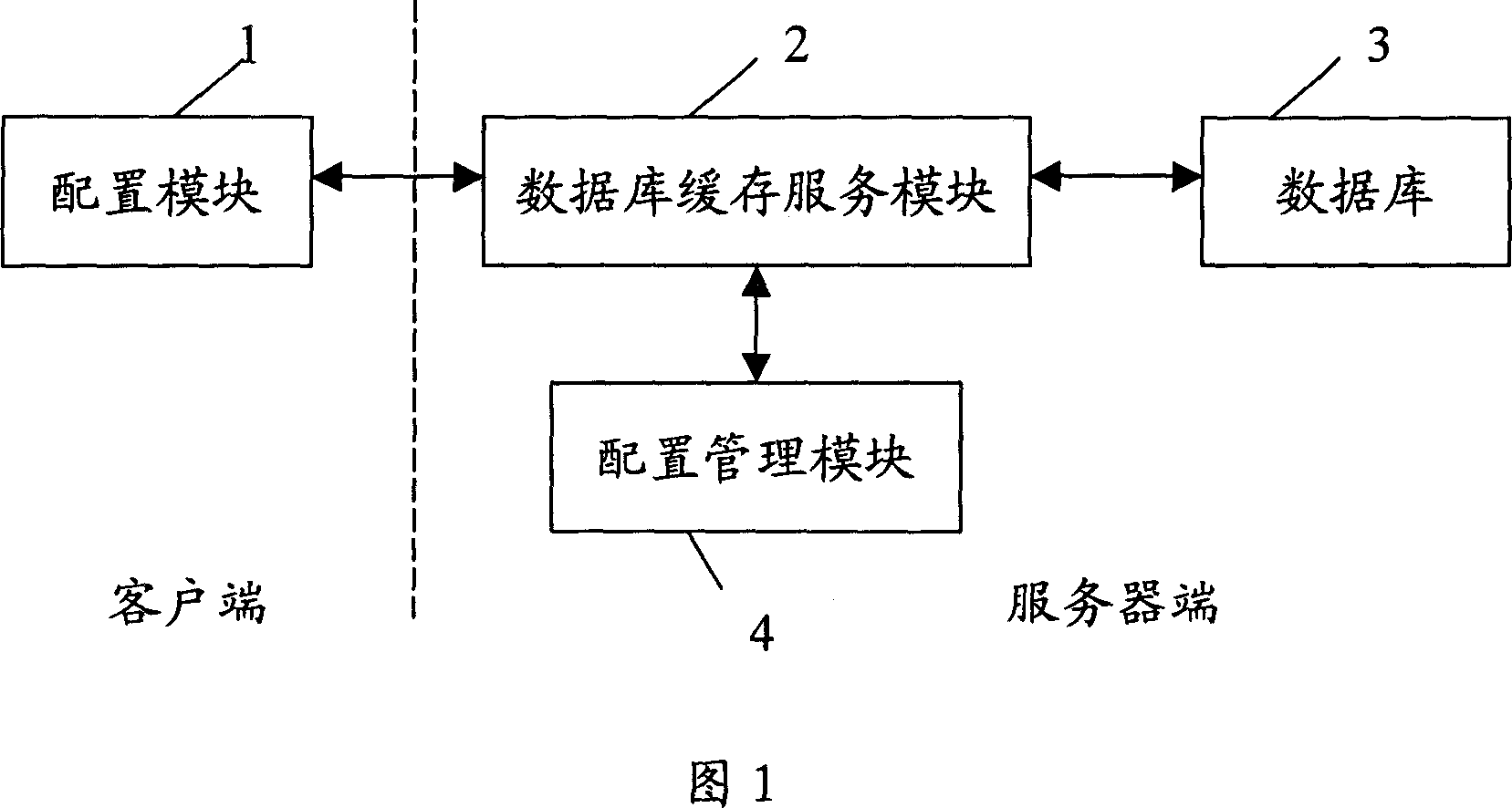

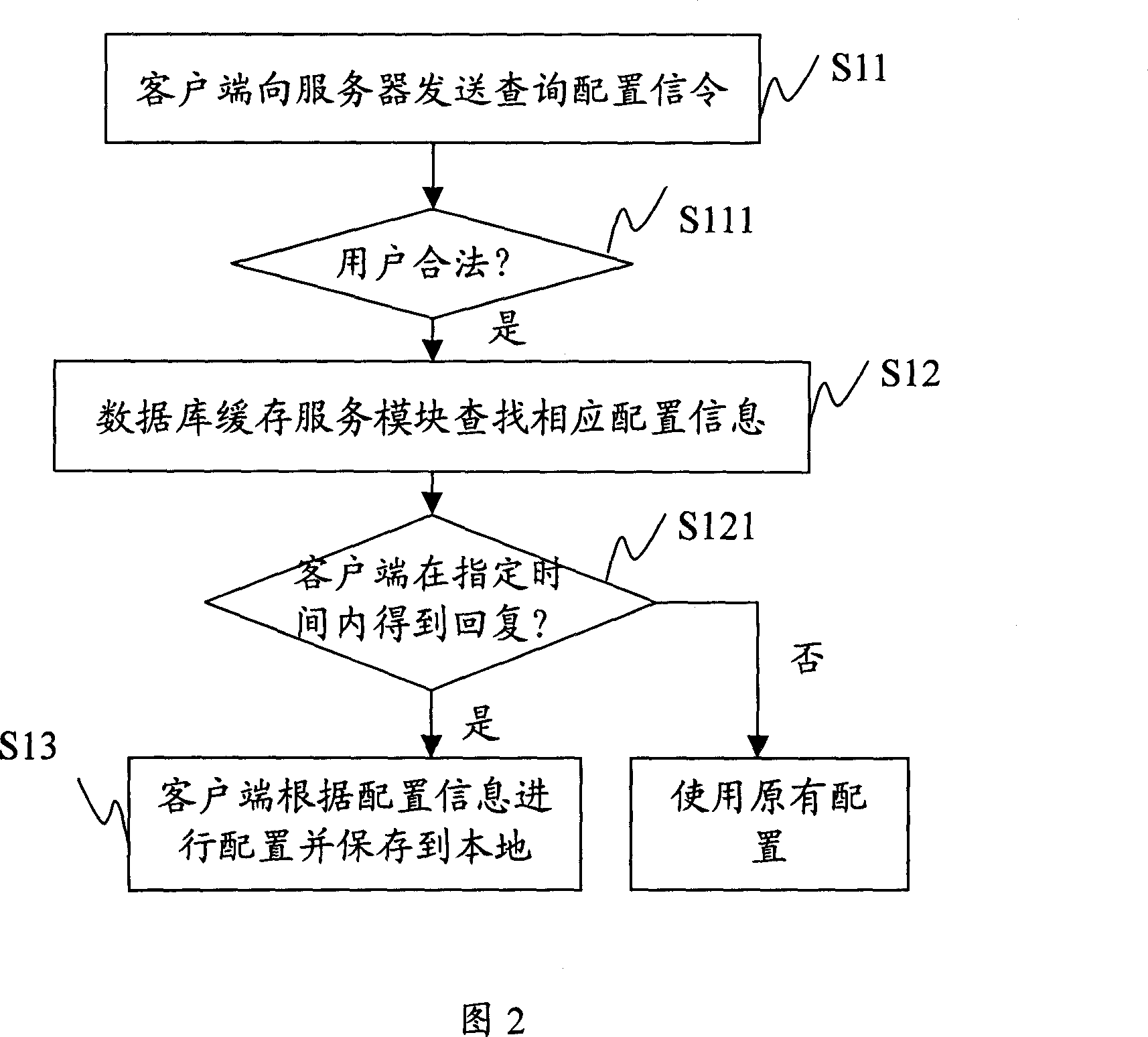

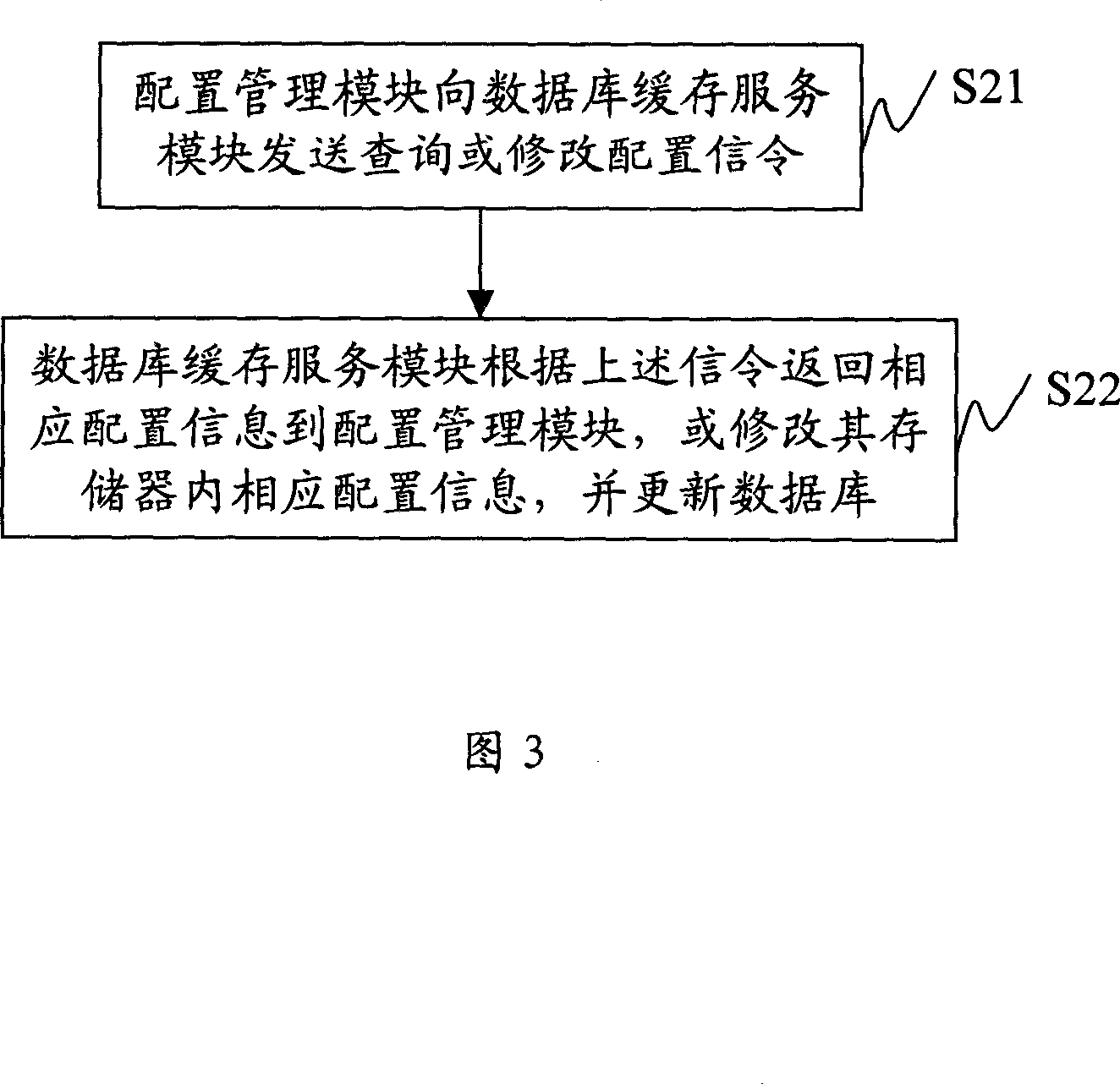

A remote configuration and management system and method of servers

Inactive | CN101079763A | Reduce maintenance costs | Reduce development costs | Data switching by path configuration | System maintenance | Service mode

The invention discloses a method and system for remote configuration and management of servers. The system consists of a server and a client with a configuration module, where the client connects to the server over a network. The server contains a database cache service module and a database. The configuration module sends a configuration query command to the server and receives the configuration information the server returns. The database cache service module receives the configuration query command, obtains the corresponding configuration information from memory or from the database according to the command, and returns it to the configuration module. The invention provides uniform and transparent remote configuration management, which greatly reduces maintenance cost while offering high reliability and fast operation.

Owner:TENCENT TECH (SHENZHEN) CO LTD

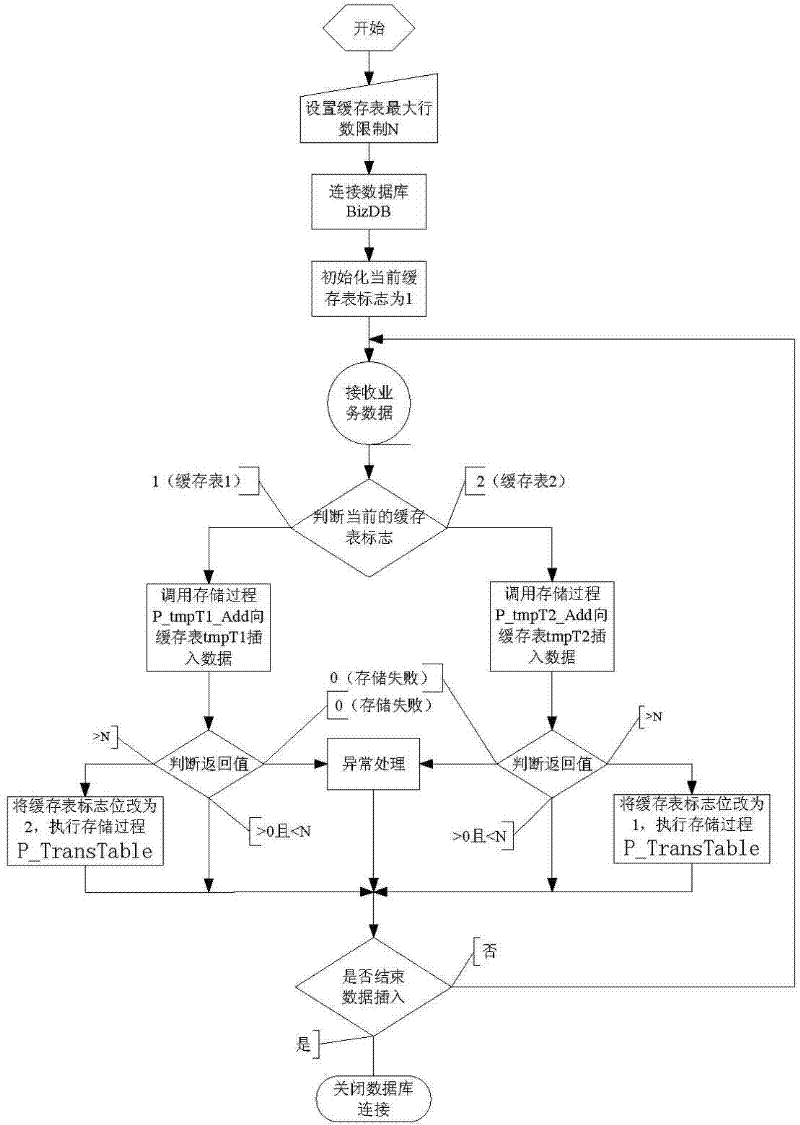

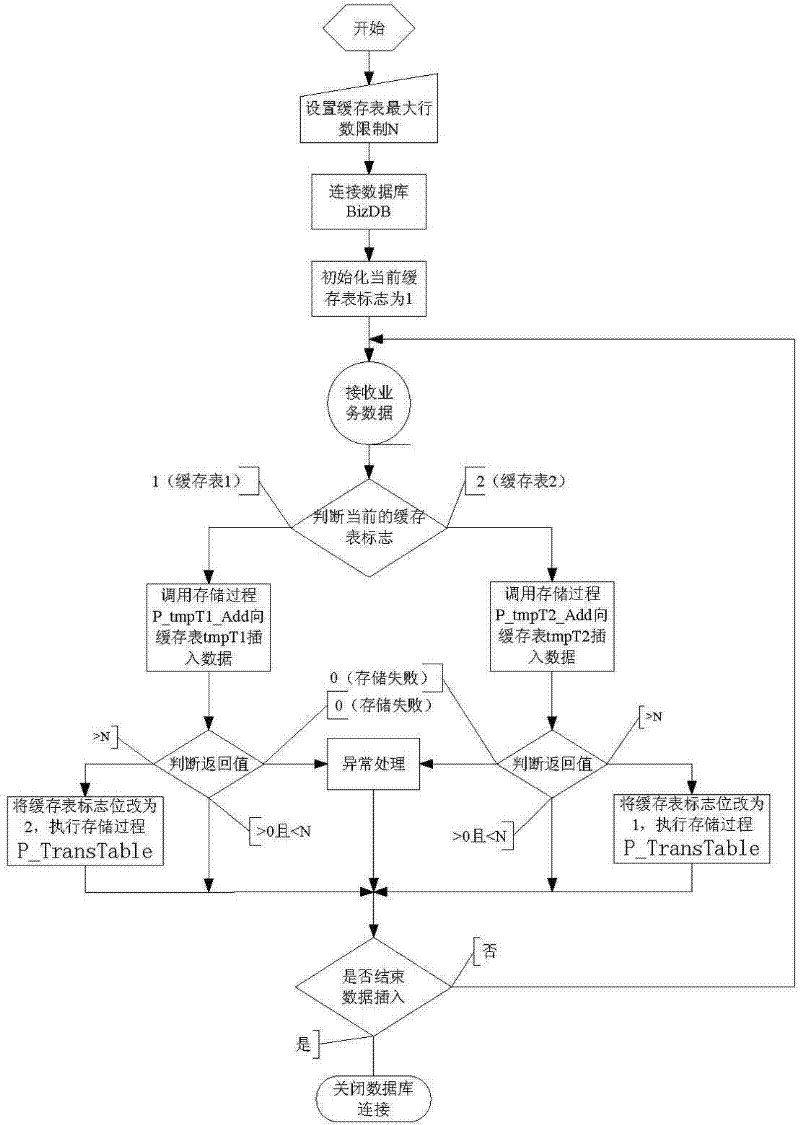

Method utilizing cache tables to improve insertion performance of data in database

Active | CN102542054A | Large capacity | Special data processing applications | Internal memory | Parallel computing

The invention relates to a method that uses cache tables to improve the insertion performance of data in a database. First, two in-memory database cache tables are built with the same structure as the target data table into which the data will finally be inserted. Second, data is inserted into the first cache table; once the amount of data in the first cache table exceeds the maximum count threshold, its data is imported into the target data table. If new data needs to be inserted into the database during the import, it is inserted into the second cache table, and the data of the second cache table is in turn imported into the target data table once it exceeds the maximum count threshold. With this method, insertion performance can be improved greatly without upgrading hardware and without the database locking up.
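The two-table scheme resembles double buffering. Below is a single-threaded sketch under stated assumptions: Python lists stand in for the in-memory cache tables and the target table, and `DoubleBufferedInserter` and `max_rows` are names invented for the example. In a real system the import would run concurrently with new inserts, which is the point of having a second table.

```python
class DoubleBufferedInserter:
    """Buffers rows in two alternating cache tables before a bulk import."""

    def __init__(self, target, max_rows):
        self.target = target        # list standing in for the target data table
        self.max_rows = max_rows    # maximum count threshold per cache table
        self.buffers = [[], []]     # the two cache tables
        self.active = 0             # index of the table receiving new rows

    def insert(self, row):
        buf = self.buffers[self.active]
        buf.append(row)
        if len(buf) > self.max_rows:
            self._flush()

    def _flush(self):
        full = self.buffers[self.active]
        # Switch first, so rows arriving during the import land in the other table.
        self.active = 1 - self.active
        self.target.extend(full)    # bulk import into the target table
        full.clear()
```

Batching the import this way replaces many single-row inserts on the target table with one bulk operation per threshold crossing.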

Owner:XIAMEN YAXON NETWORKS CO LTD

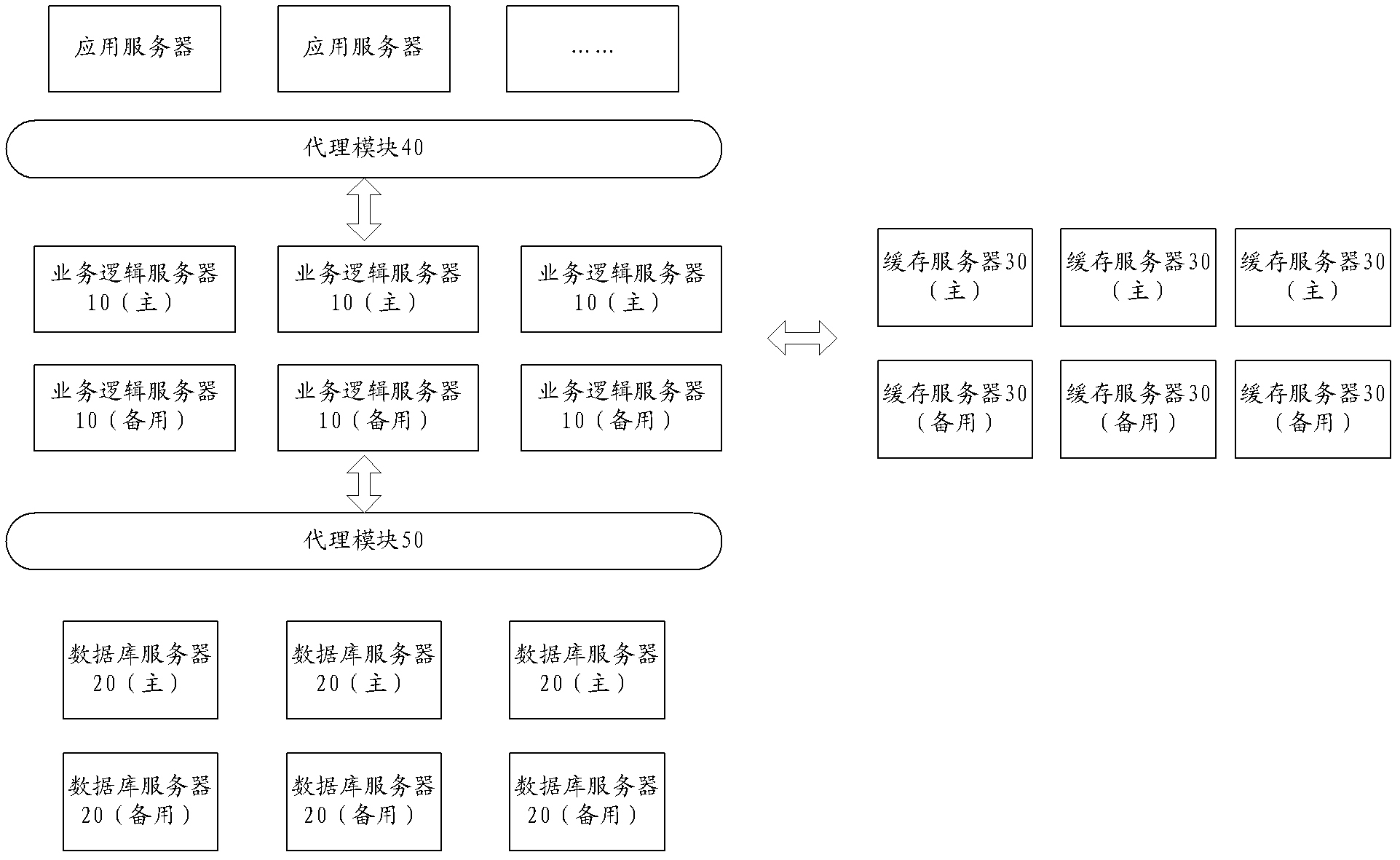

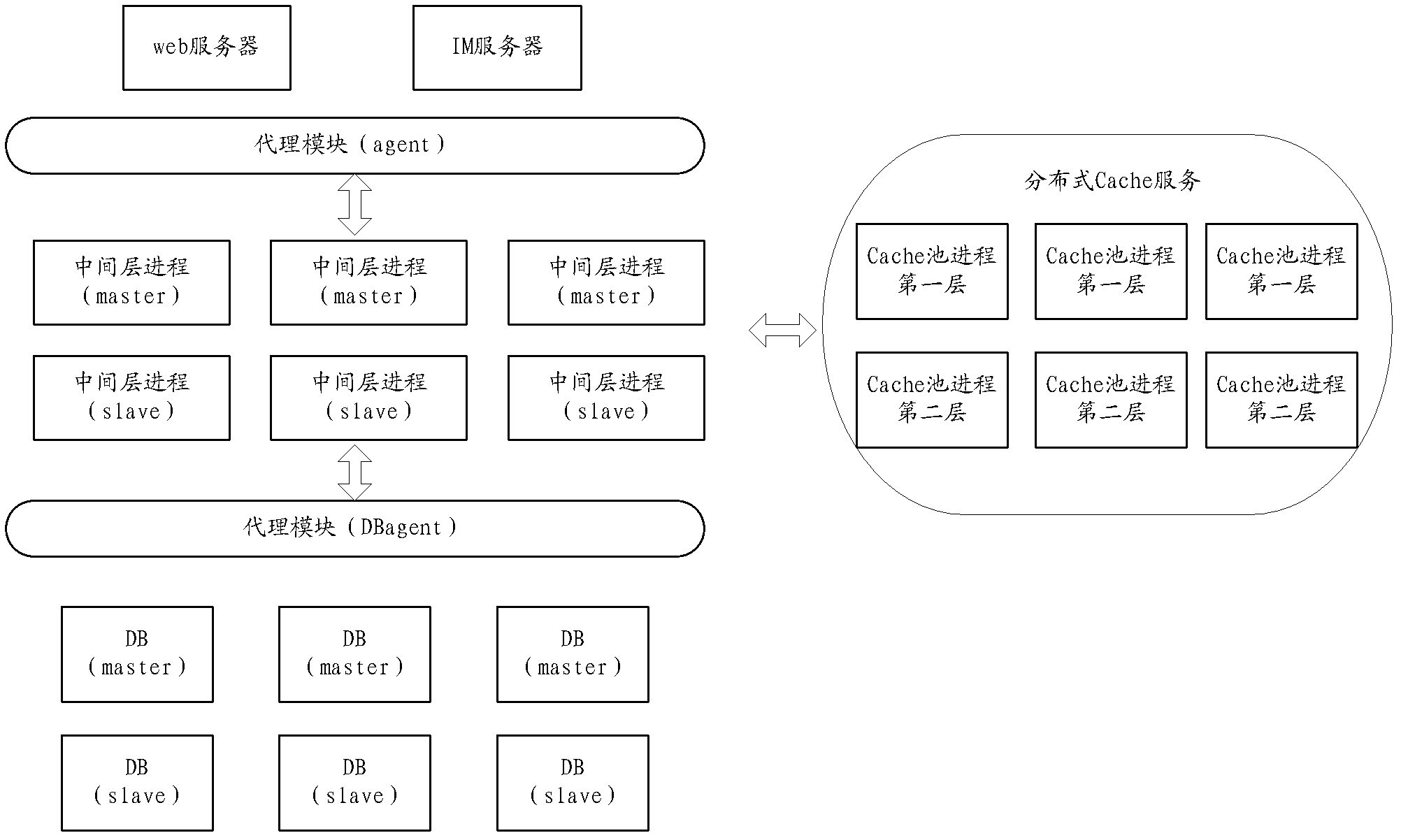

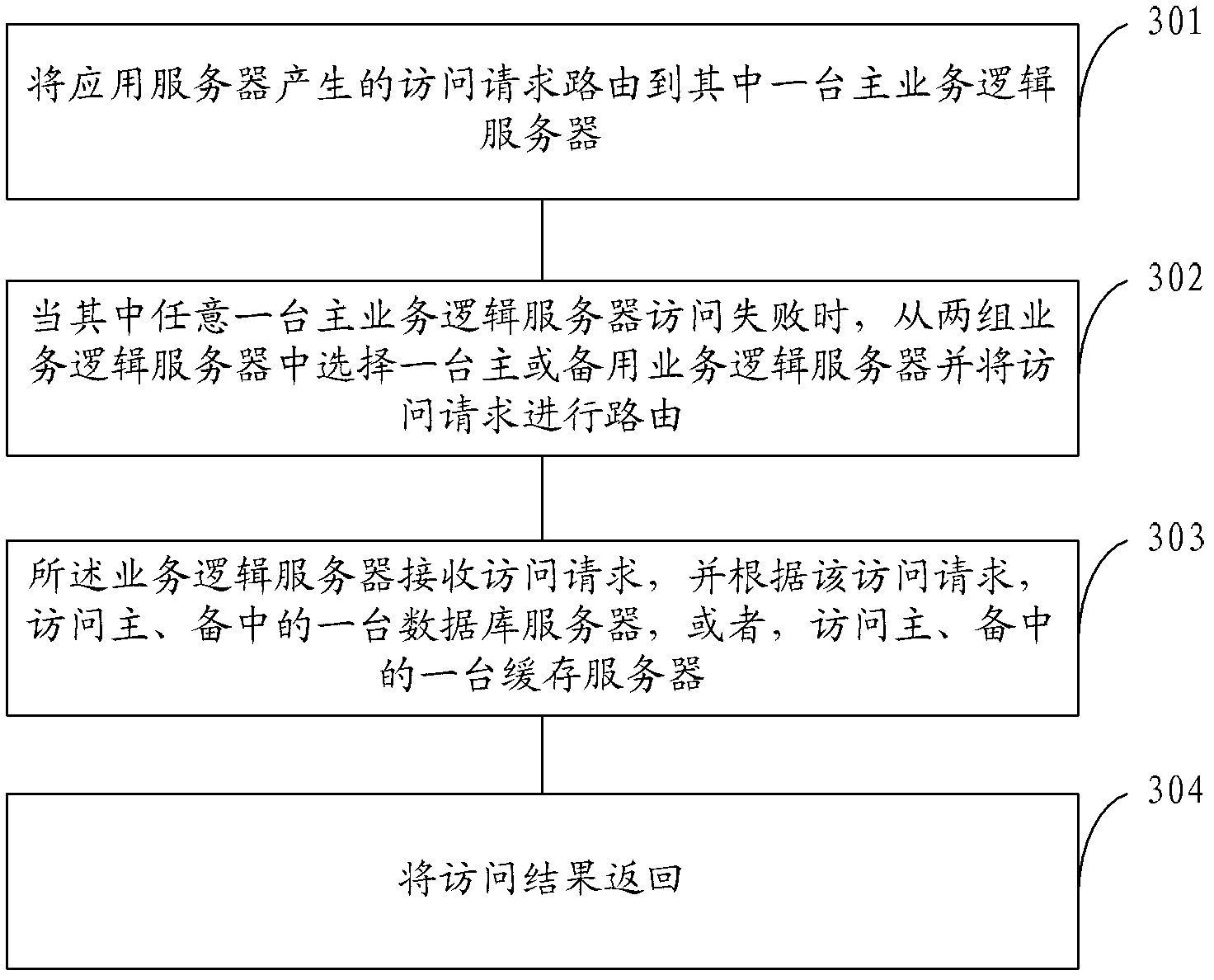

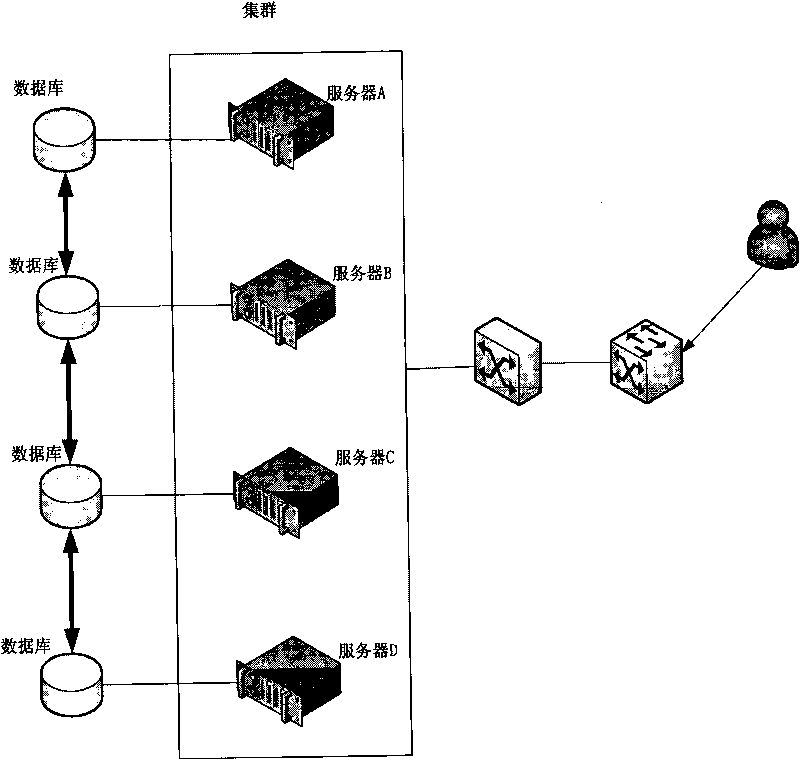

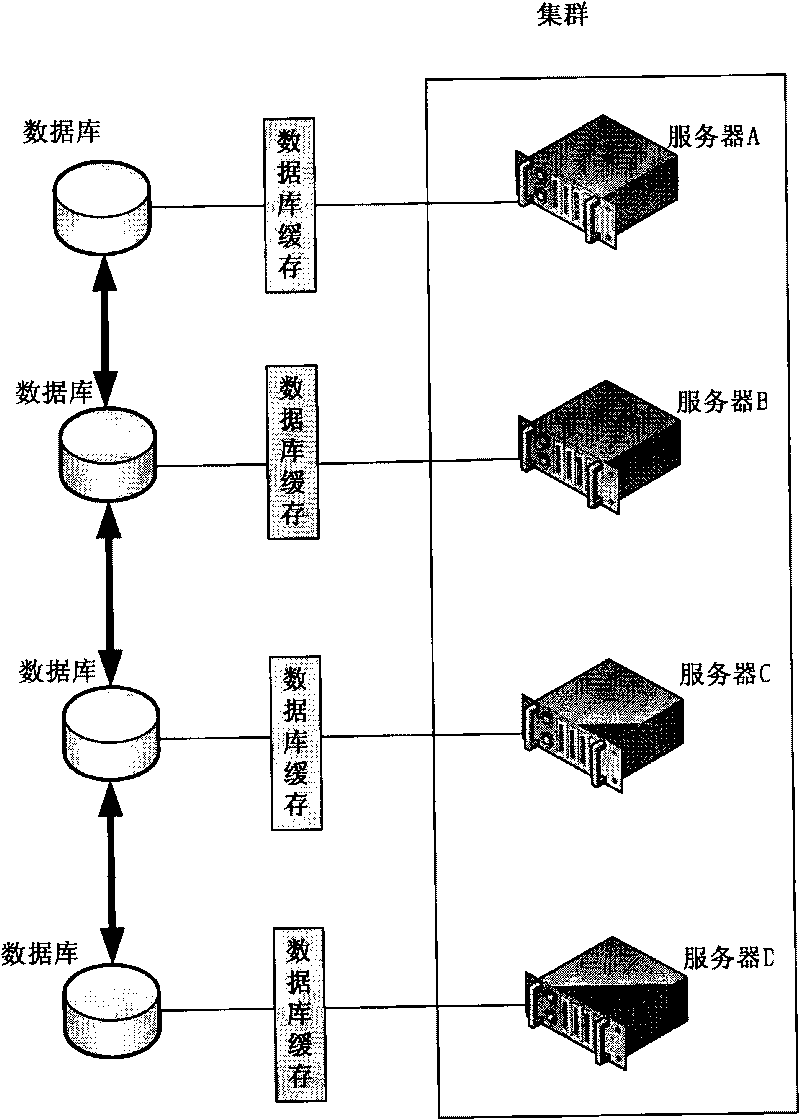

System and method for disaster recovery in internet application

Inactive | CN102629903A | Guaranteed service availability | Ensure safety | Data switching networks | Redundant operation error correction | Data access | The Internet

The application provides a system and a method for disaster recovery in internet applications, addressing the problem of data access in large-scale internet applications. The disaster recovery system is a complete solution that applies tiered disaster recovery to an internet application system: real-time active-standby synchronization is carried out separately at each level, including the database, the cache, and service access. As a result, when the database has a problem, a standby machine can be switched in immediately; when cache data is invalidated, data can be loaded from a standby cache file; and when access to a service logic server fails, access can be carried out from the standby machine. Because the disaster recovery system as a whole is not limited to protecting a single point, it adapts well to data access in large-scale applications, maximizing service availability for users and further ensuring the security and success rate of large-scale internet data access.
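The per-level active-standby behavior can be illustrated with a small fallback wrapper. This is only a sketch: `with_failover`, `primary_db`, and `standby_db` are hypothetical names, and the example covers the switch-over itself, not the real-time synchronization that keeps the standby current.

```python
def with_failover(primary, standby, errors=(ConnectionError,)):
    """Return a callable that tries `primary` and falls back to `standby`."""
    def call(*args):
        try:
            return primary(*args)
        except errors:
            # The primary at this level failed, so switch to the standby.
            return standby(*args)
    return call


# Hypothetical stand-ins for the database level.
def primary_db(key):
    raise ConnectionError("primary database unreachable")


def standby_db(key):
    return {"user:1": "Ada"}[key]


read_db = with_failover(primary_db, standby_db)
```

The same wrapper could be applied independently at the cache level and the service-access level, which mirrors the idea of recovering at every tier instead of at a single point.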

Owner:QIZHI SOFTWARE (BEIJING) CO LTD

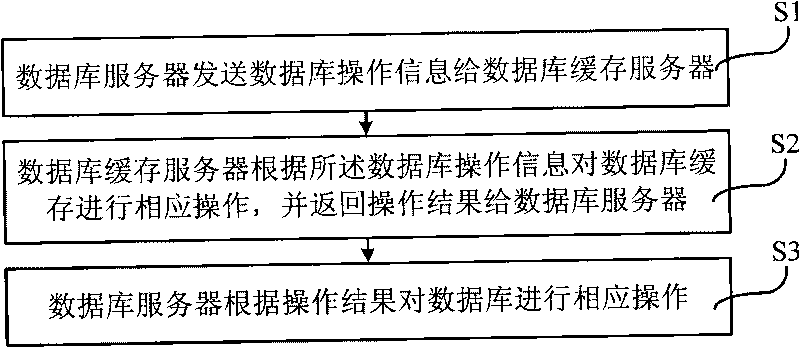

Method and system for centralized management of database caches

Active | CN101706781A | Avoid synchronization | Save resources | Transmission | Special data processing applications | Database server | Centralized management

The invention provides a method and a system for the centralized management of database caches. The method comprises the following steps: in step S1, a database server sends database operation information to a database cache server; in step S2, the database cache server performs the corresponding operations on the database caches according to that information and returns the operation result to the database server; and in step S3, the database server performs the corresponding operations on the database according to the operation result. By managing the database caches centrally, the method and system deploy one unified database cache server for a plurality of database servers, so there is no need to maintain a separate database cache on each database server. Because the cache operations are performed before the database is operated on, there is no need to synchronize multiple database caches whenever the databases are synchronized, and system resources are saved.
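Steps S1 to S3 can be sketched as follows. The message format, the `CacheServer` and `DatabaseServer` classes, and the read and write handling are assumptions made for illustration; the abstract does not specify them.

```python
class CacheServer:
    """Unified cache shared by several database servers."""

    def __init__(self):
        self.cache = {}

    def apply(self, op):
        # S2: operate on the cache, then report the result to the caller.
        if op["type"] == "read":
            return {"hit": op["key"] in self.cache,
                    "value": self.cache.get(op["key"])}
        if op["type"] == "write":
            self.cache[op["key"]] = op["value"]
            return {"hit": False, "value": op["value"]}
        raise ValueError(f"unknown operation: {op['type']}")


class DatabaseServer:
    def __init__(self, cache_server, database):
        self.cache_server = cache_server
        self.database = database   # dict standing in for the database

    def read(self, key):
        # S1: send the operation information to the cache server.
        result = self.cache_server.apply({"type": "read", "key": key})
        if result["hit"]:
            return result["value"]
        # S3: on a miss, operate on the database and populate the cache.
        value = self.database.get(key)
        if value is not None:
            self.cache_server.apply({"type": "write", "key": key, "value": value})
        return value

    def write(self, key, value):
        # The cache is updated before the database, as in the abstract.
        self.cache_server.apply({"type": "write", "key": key, "value": value})
        self.database[key] = value
```

Because every database server talks to the same `CacheServer`, a write through one server is immediately visible to reads through another, with no cache-to-cache synchronization.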

Owner:广东北斗南方科技有限公司

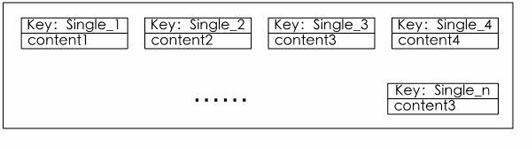

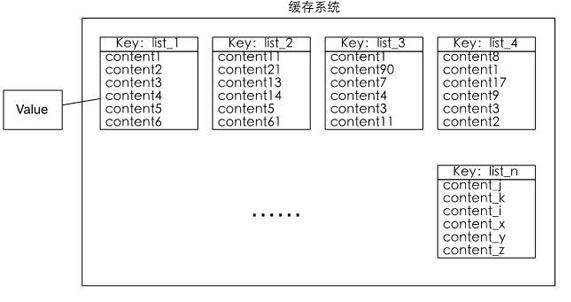

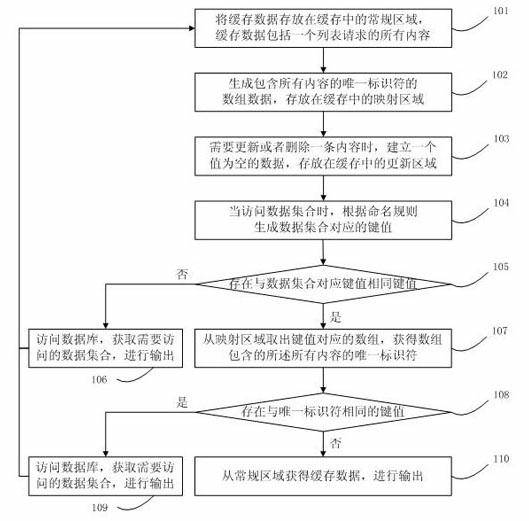

Data base caching method

Inactive | CN102117338A | Solve difficult technical problems | Special data processing applications | Large scale data | Database caching

The invention discloses a database caching method. In addition to the original cache, two new cache areas are constructed, and a cache-based data mapping relationship is built across the three cache areas. In a large-scale data system that continues to rely on a caching system, this allows the content that needs a timely update to be located quickly within a very large cache of aggregated data, and all cached data related to that content to be updated.
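The abstract does not say what the two additional cache areas contain. One plausible reading, sketched below purely as an assumption, is a forward index (content ID to cache keys) and a reverse index (cache key to content IDs) kept alongside the original cache. The `MappedCache` class and its method names are hypothetical, and "updating" is simplified here to invalidating the affected entries.

```python
from collections import defaultdict


class MappedCache:
    def __init__(self):
        self.data = {}                            # area 1: cache key -> cached aggregate
        self.keys_by_content = defaultdict(set)   # area 2 (assumed): content id -> cache keys
        self.content_by_key = defaultdict(set)    # area 3 (assumed): cache key -> content ids

    def put(self, key, value, content_ids):
        self.remove(key)                          # drop any stale mappings first
        self.data[key] = value
        for cid in content_ids:
            self.keys_by_content[cid].add(key)
            self.content_by_key[key].add(cid)

    def remove(self, key):
        self.data.pop(key, None)
        for cid in self.content_by_key.pop(key, set()):
            self.keys_by_content[cid].discard(key)

    def invalidate_content(self, content_id):
        """Drop every cached aggregate that includes the given content."""
        keys = set(self.keys_by_content.get(content_id, ()))
        for key in keys:
            self.remove(key)
        return keys
```

With the forward index, finding every aggregate that contains a changed item is a single lookup, with no scan over the whole cache.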

Owner:TVMINING BEIJING MEDIA TECH

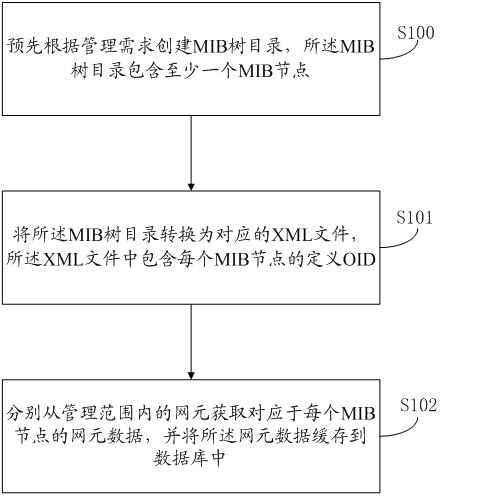

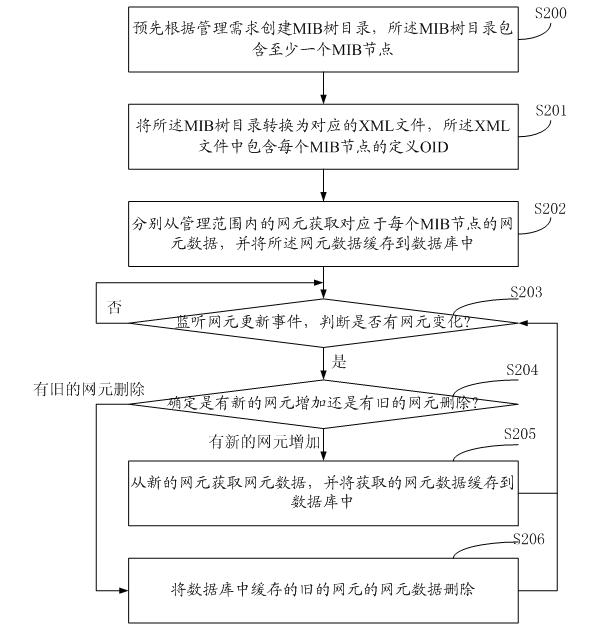

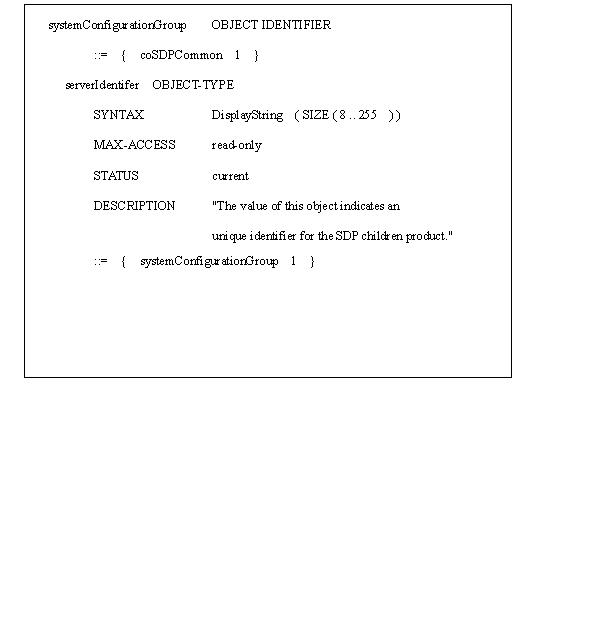

Method and system for network element data management

Inactive | CN102571420A | Avoid overhead | Improve performance | Data switching networks | Special data processing applications | Management information base | Metadata management

An embodiment of the invention discloses a method for network element data management, which includes the following steps: a management information base (MIB) tree directory is established in advance according to management requirements, the MIB tree directory comprising at least one MIB node; the MIB tree directory is converted into a corresponding Extensible Markup Language (XML) file, which contains the defined object identifier (OID) of each MIB node; and the network element data corresponding to each MIB node is obtained from each network element within the management range and saved in a database through a cache. The embodiment of the invention further discloses a system for network element data management. With this system, when an MIB node changes, the change can be reflected simply by regenerating the XML file, so that unnecessary object overhead is avoided. In addition, because most network element data remains unchanged, it can be queried directly on the network management side through the database cache mechanism, which greatly speeds up queries and improves network management performance.
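The MIB-tree-to-XML conversion step might look roughly like the sketch below. The dictionary layout, the `node` element name, and the `to_xml` helper are assumptions for illustration; the OIDs shown are the standard MIB-II `system` group values, used only as sample data.

```python
import xml.etree.ElementTree as ET

# A tiny MIB tree directory; the layout of this dict is hypothetical.
mib_tree = {
    "name": "system", "oid": "1.3.6.1.2.1.1", "children": [
        {"name": "sysDescr", "oid": "1.3.6.1.2.1.1.1", "children": []},
        {"name": "sysUpTime", "oid": "1.3.6.1.2.1.1.3", "children": []},
    ],
}


def to_xml(node):
    """Convert one MIB node and its subtree into an XML element carrying its OID."""
    elem = ET.Element("node", name=node["name"], oid=node["oid"])
    for child in node["children"]:
        elem.append(to_xml(child))
    return elem


# Regenerating this text after a MIB change is all that is needed to publish it.
xml_text = ET.tostring(to_xml(mib_tree), encoding="unicode")

# The management side can read the OIDs back without any per-node objects.
oids = [n.get("oid") for n in ET.fromstring(xml_text).iter("node")]
```

Each OID read back this way could then be used to fetch the corresponding network element data, which the abstract says is saved in the database through a cache.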

Owner:SHENZHEN COSHIP ELECTRONICS CO LTD


© 2025 PatSnap. All rights reserved.