
975 patent results for the technology topic "In-memory database"


An in-memory database (IMDB; also main memory database system, MMDB, or memory-resident database) is a database management system that primarily relies on main memory for computer data storage. It is contrasted with database management systems that employ a disk storage mechanism. In-memory databases are faster than disk-optimized databases for two reasons: memory access is faster than disk access, and their internal optimization algorithms are simpler and execute fewer CPU instructions. Accessing data in memory eliminates seek time when querying the data, which provides faster and more predictable performance than disk.
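
The following is a small illustration of the storage model only. It does not describe any of the patents below. SQLite is a general-purpose embedded database rather than a dedicated IMDB, but it keeps an entire database in RAM when opened with the special name `:memory:`. The table name `kv` and its values are made up for the example.

```python
import sqlite3


def in_memory_lookup() -> int:
    # ":memory:" keeps the whole database in RAM; it is discarded on close.
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE kv (k TEXT PRIMARY KEY, v INTEGER)")
        conn.executemany("INSERT INTO kv VALUES (?, ?)", [("a", 1), ("b", 2)])
        # There is no database file on disk, so the lookup never seeks.
        (value,) = conn.execute("SELECT v FROM kv WHERE k = ?", ("b",)).fetchone()
        return value
    finally:
        conn.close()


print(in_memory_lookup())  # prints 2
```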

Virtual data center that allocates and manages system resources across multiple nodes

Active | US20070067435A1 | Improve security | Excessive removal | Error detection/correction | Memory addressing/allocation/relocation | Operational system | Data center

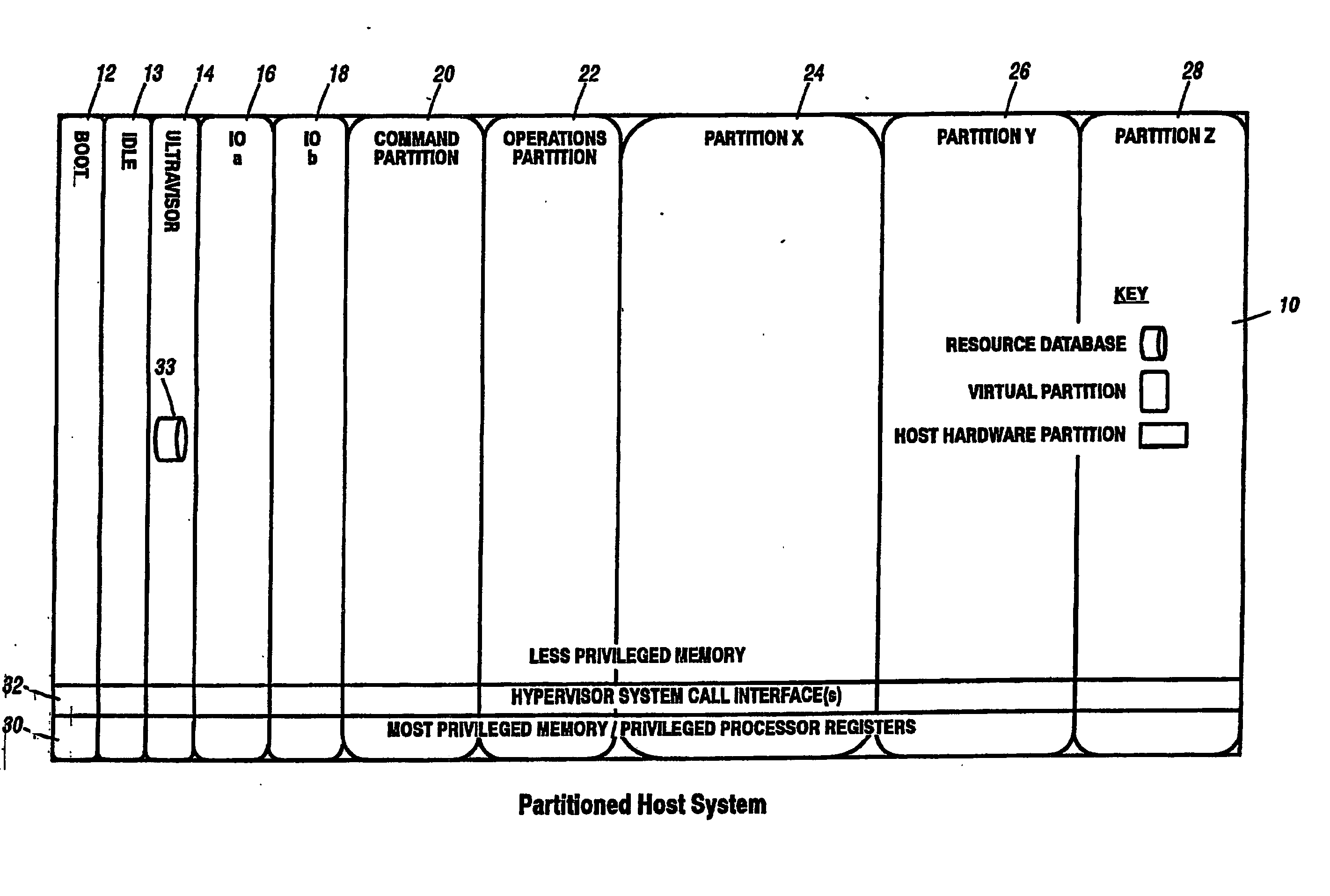

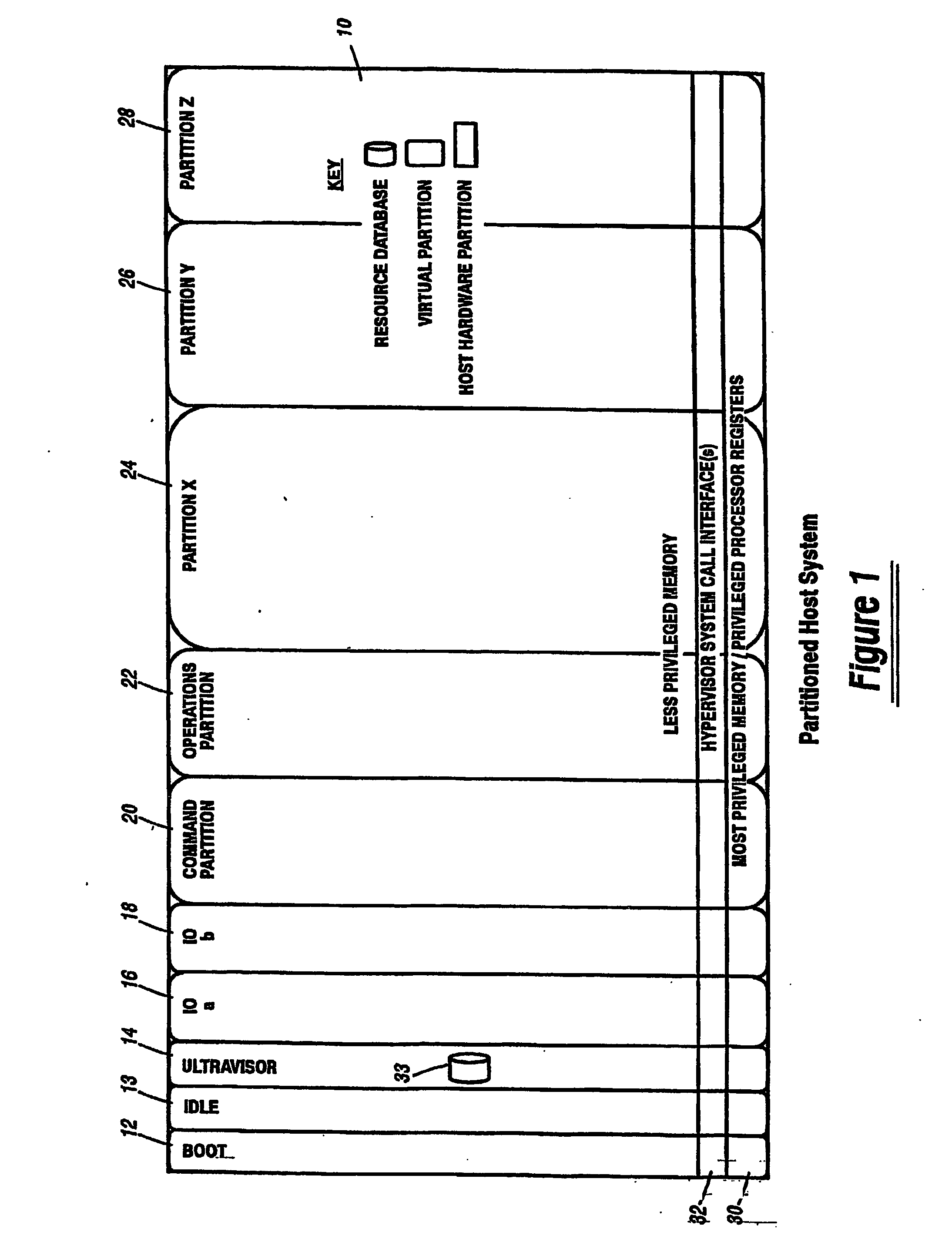

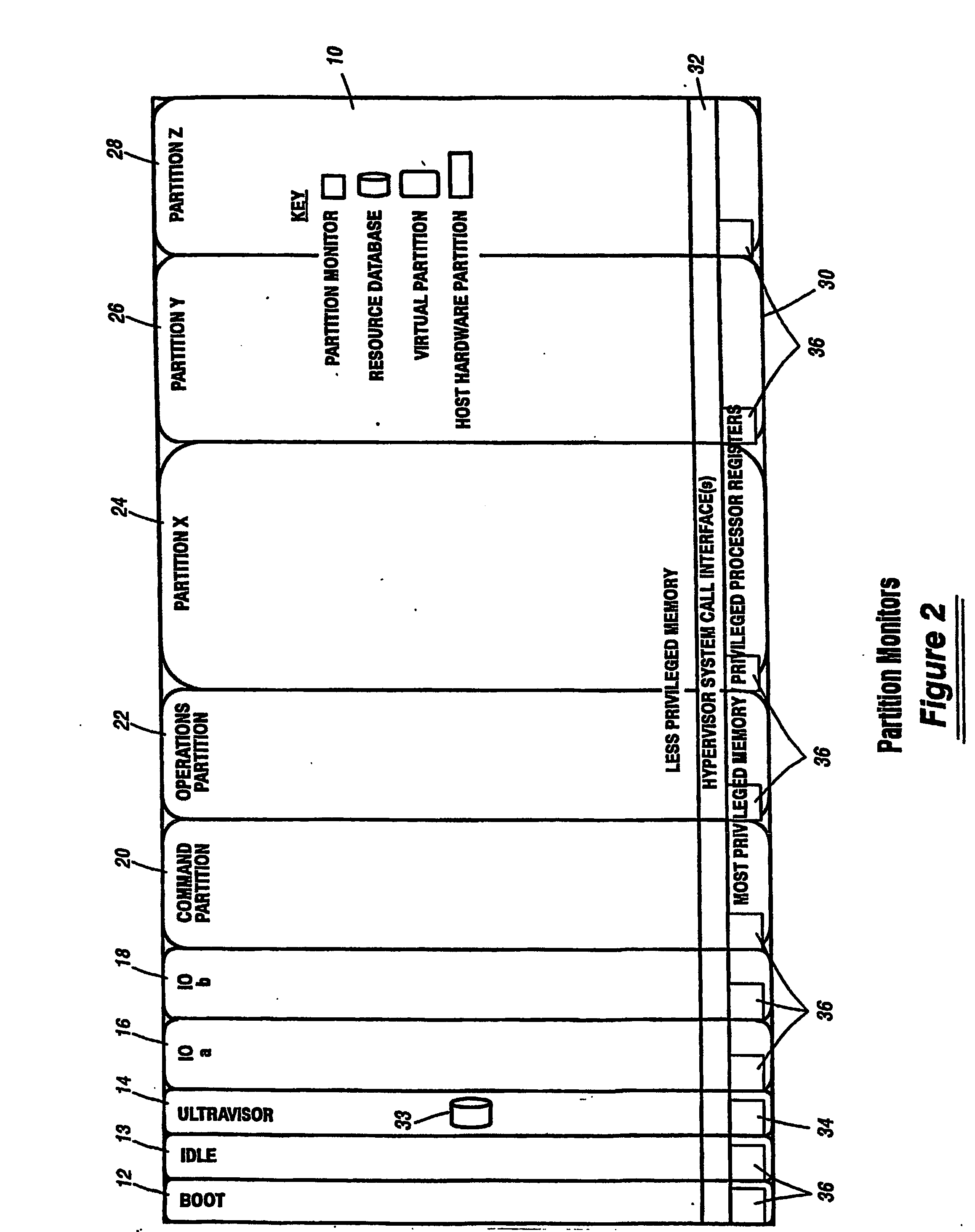

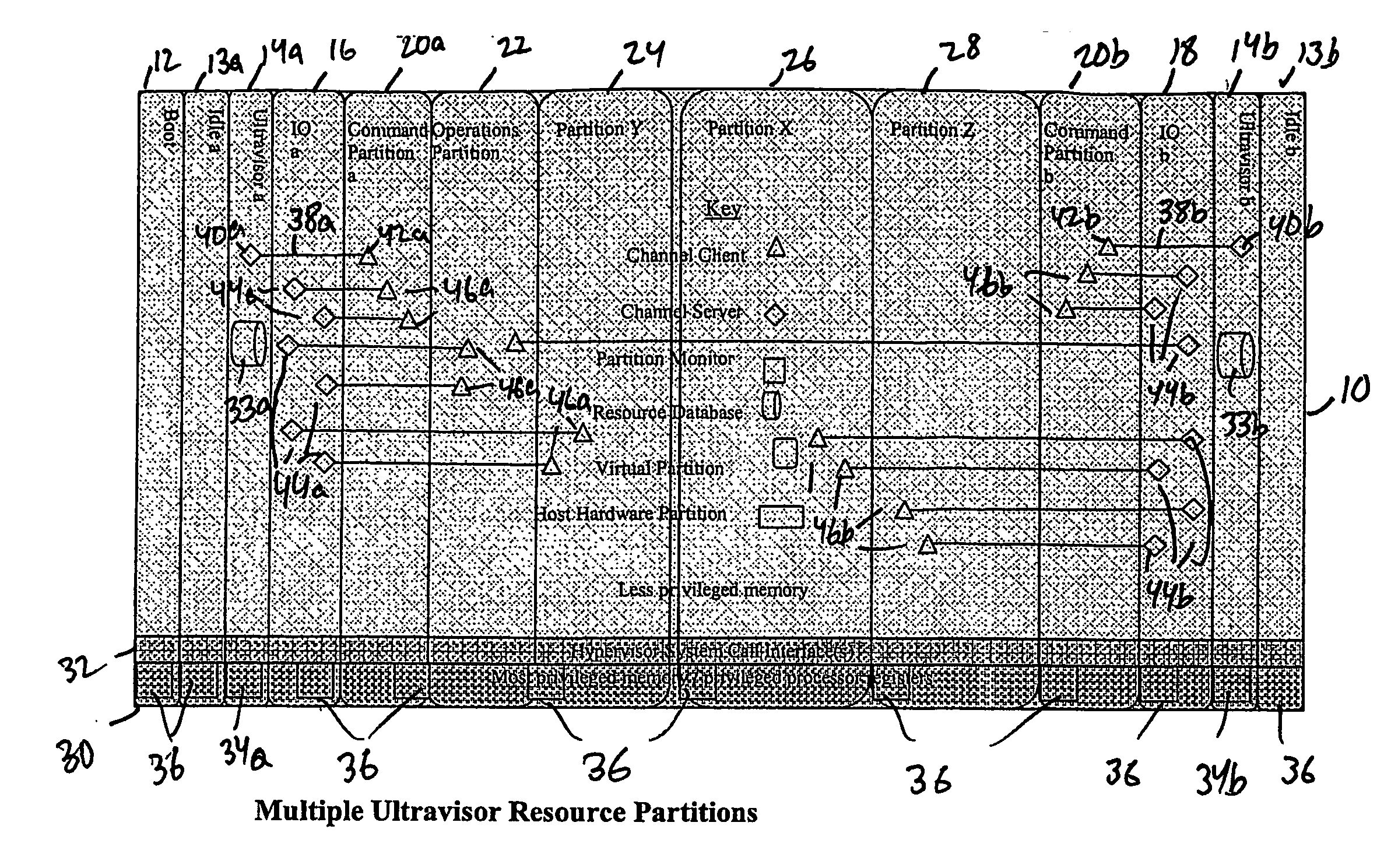

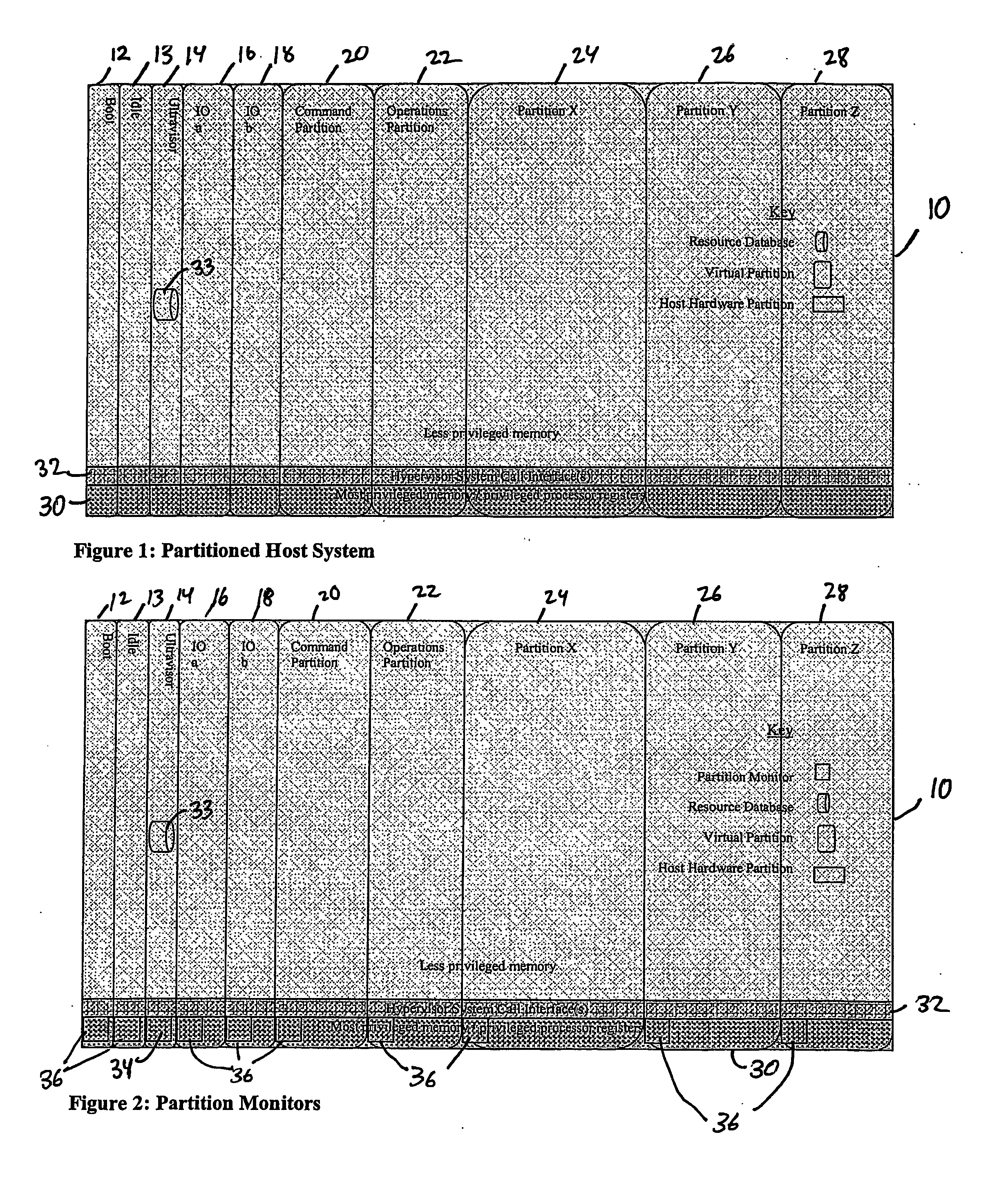

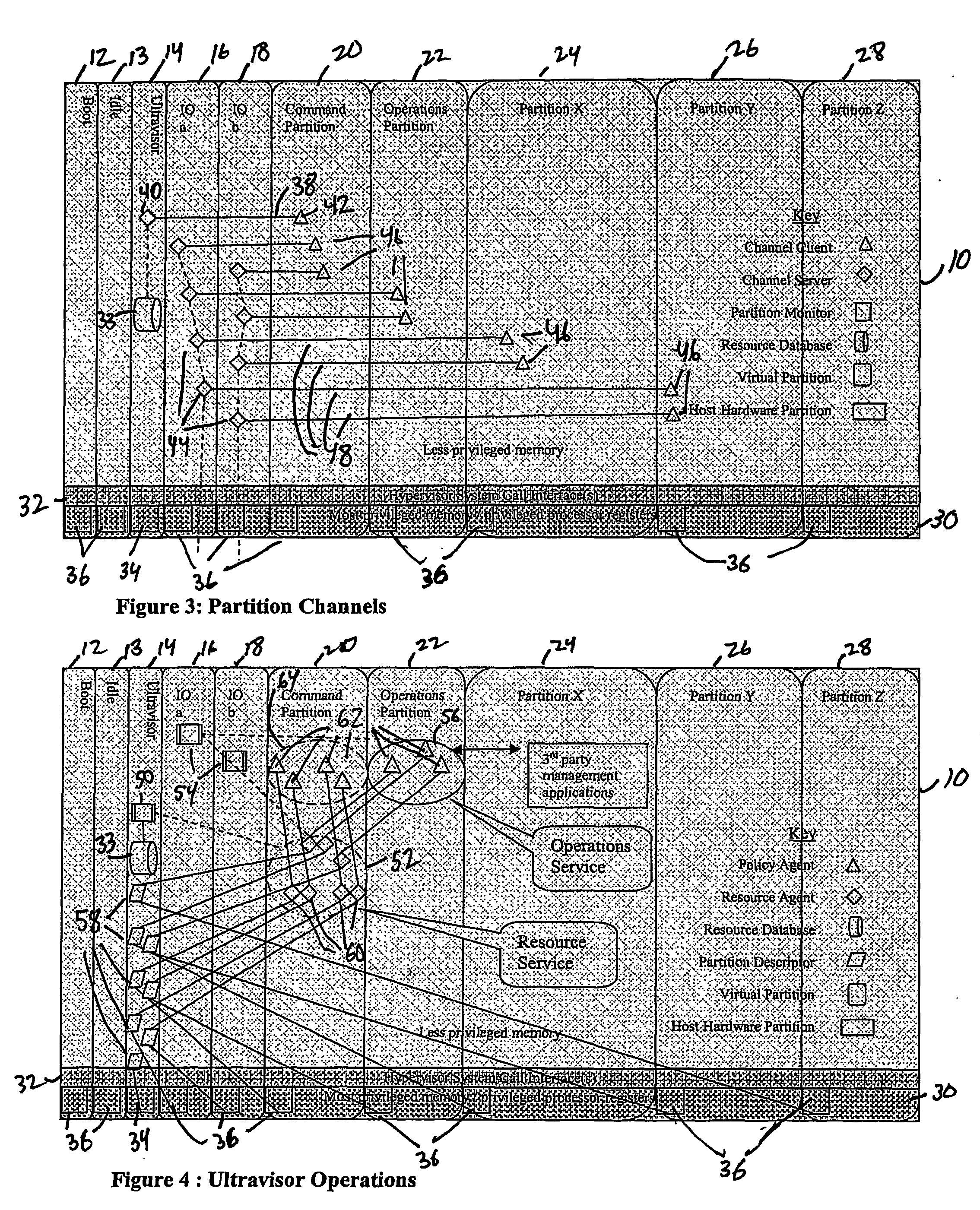

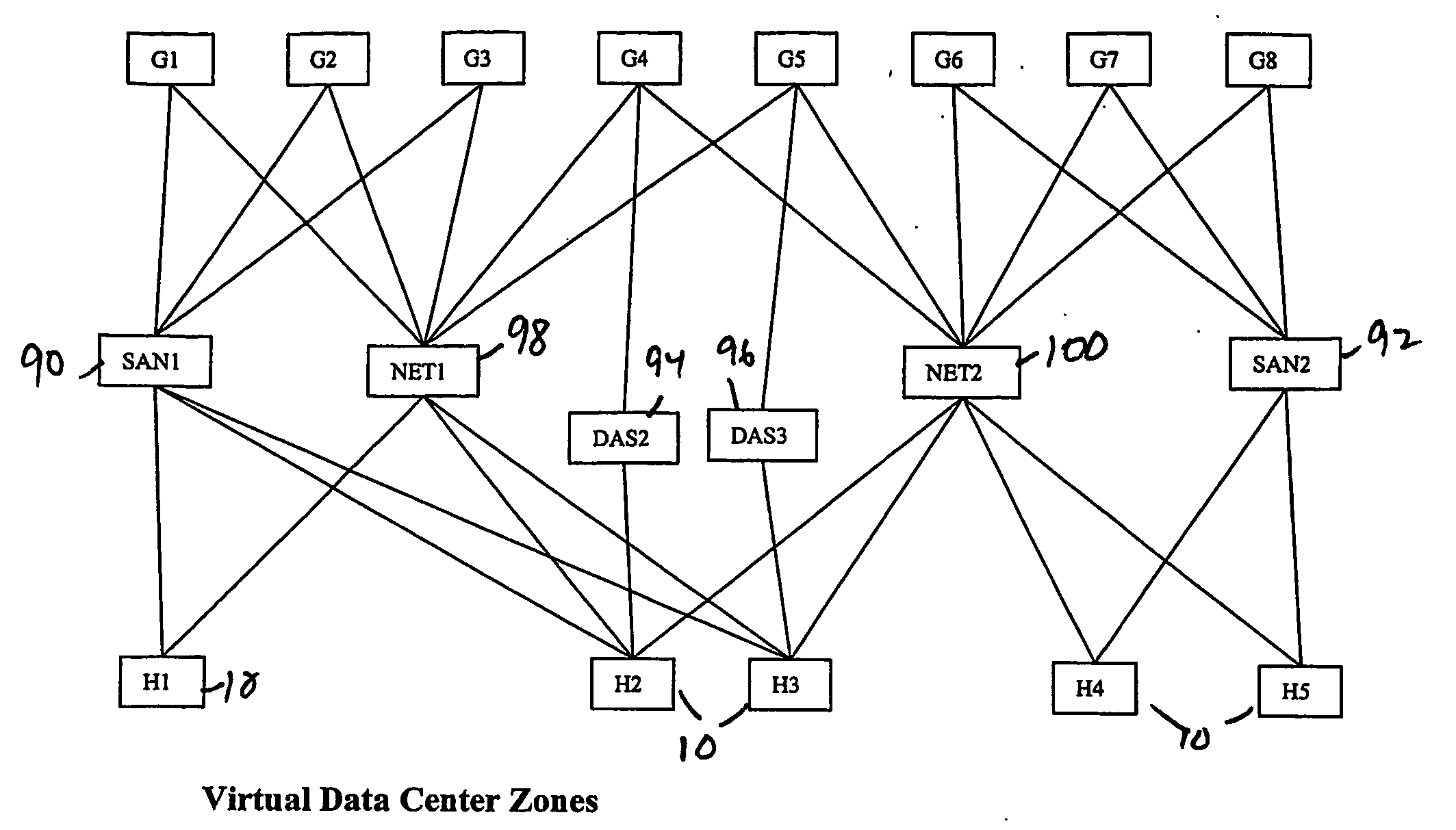

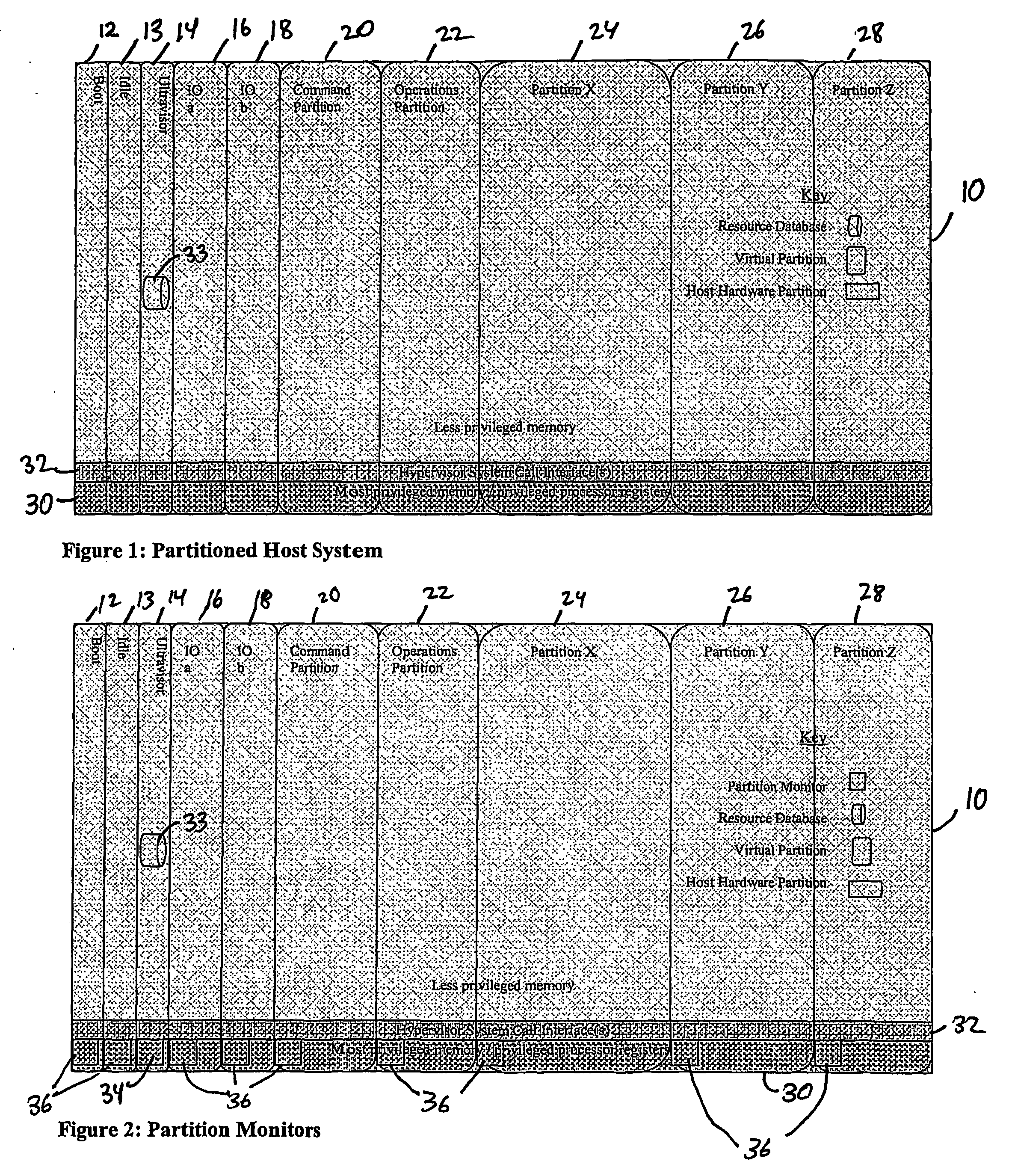

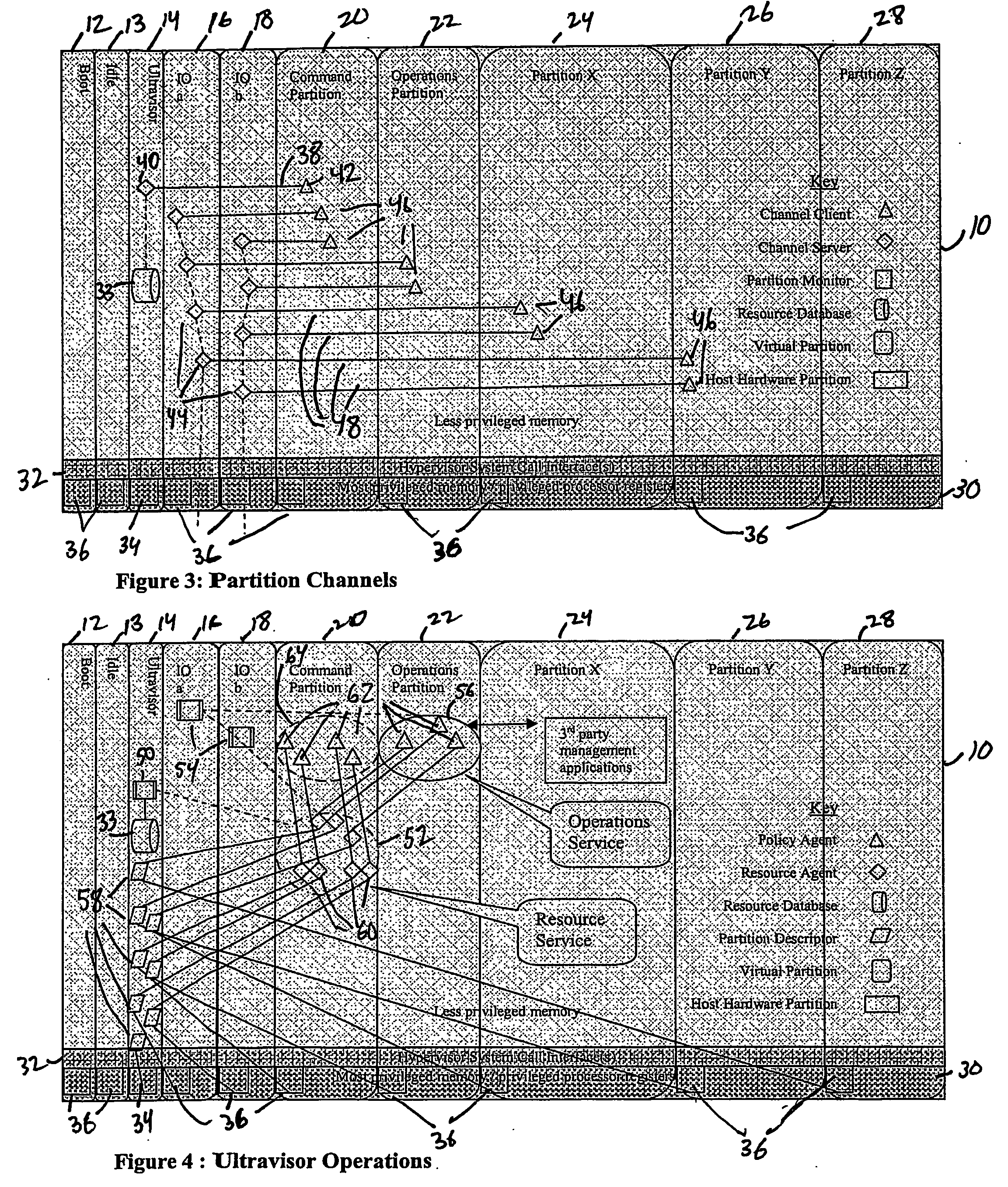

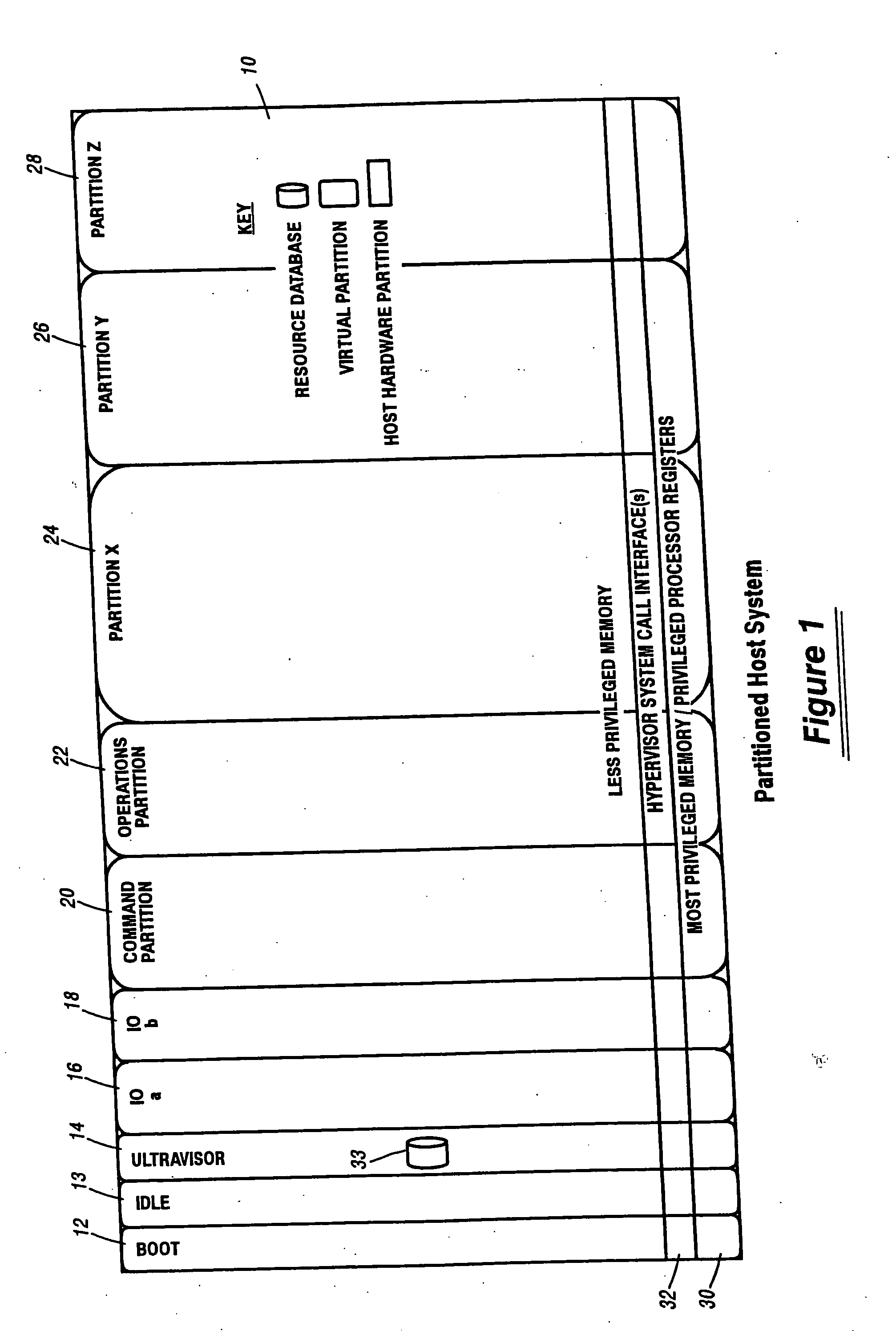

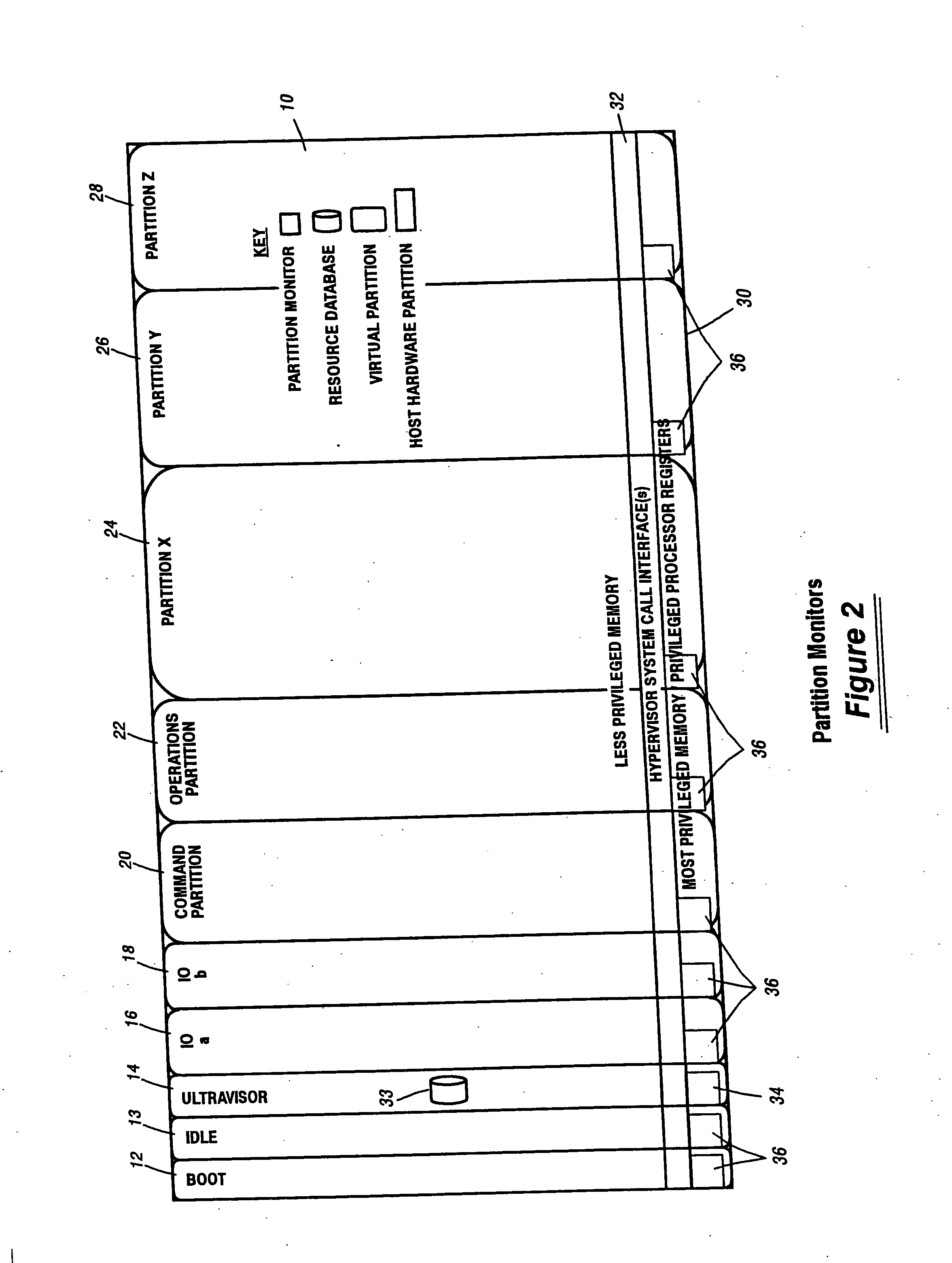

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. Operating systems in other logical or virtual partitions communicate with the I/O partitions via memory channels established by the ultravisor partition. The guest operating systems in the respective logical or virtual partitions are modified to access monitors that implement a system call interface through which the ultravisor, I/O, and any other special infrastructure partitions may initiate communications with each other and with the respective guest partitions. The guest operating systems are modified so that they do not attempt to use the “broken” instructions in the x86 system that complete virtualization systems must resolve by inserting traps. System resources are separated into zones that are managed by a separate partition containing resource management policies that may be implemented across nodes to implement a virtual data center.

Owner:UNISYS CORP
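
The abstract describes a master in-memory database of resource allocations that is fed through a command channel of transactional requests and that exposes read-only per-partition views. The following is a minimal sketch of that idea, assuming an all-or-nothing semantics for each request. The class `ResourceDatabase`, its methods, and the resource names are hypothetical illustrations, not Unisys's implementation.

```python
from typing import Dict, FrozenSet, Iterable, List, Optional, Tuple

Command = Tuple[str, str, List[str]]  # (operation, partition, resource ids)


class ResourceDatabase:
    """Hypothetical master table mapping each hardware resource to its owner."""

    def __init__(self, resources: Iterable[str]) -> None:
        self._owner: Dict[str, Optional[str]] = {r: None for r in resources}

    def assign(self, partition: str, resources: List[str]) -> bool:
        # All-or-nothing: reject the request if any resource is unknown or taken.
        for r in resources:
            if r not in self._owner or self._owner[r] is not None:
                return False  # state is left unchanged
        for r in resources:
            self._owner[r] = partition
        return True

    def release(self, partition: str, resources: List[str]) -> bool:
        # Only the owning partition may release, and again all-or-nothing.
        if any(self._owner.get(r) != partition for r in resources):
            return False
        for r in resources:
            self._owner[r] = None
        return True

    def view(self, partition: str) -> FrozenSet[str]:
        # Read-only snapshot handed to that partition's monitor.
        return frozenset(r for r, p in self._owner.items() if p == partition)

    def process(self, channel: Iterable[Command]) -> List[bool]:
        # Requests arriving on the command channel are applied one at a time.
        handlers = {"assign": self.assign, "release": self.release}
        return [handlers[op](part, res) for op, part, res in channel]


db = ResourceDatabase(["cpu0", "cpu1", "mem0", "mem1"])
results = db.process([
    ("assign", "guestA", ["cpu0", "mem0"]),
    ("assign", "guestB", ["cpu0", "cpu1"]),  # rejected: cpu0 is already taken
    ("assign", "guestB", ["cpu1", "mem1"]),
])
print(results)                    # [True, False, True]
print(sorted(db.view("guestA")))  # ['cpu0', 'mem0']
```

Because the rejected request leaves `cpu1` untouched, the third request can still claim it.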

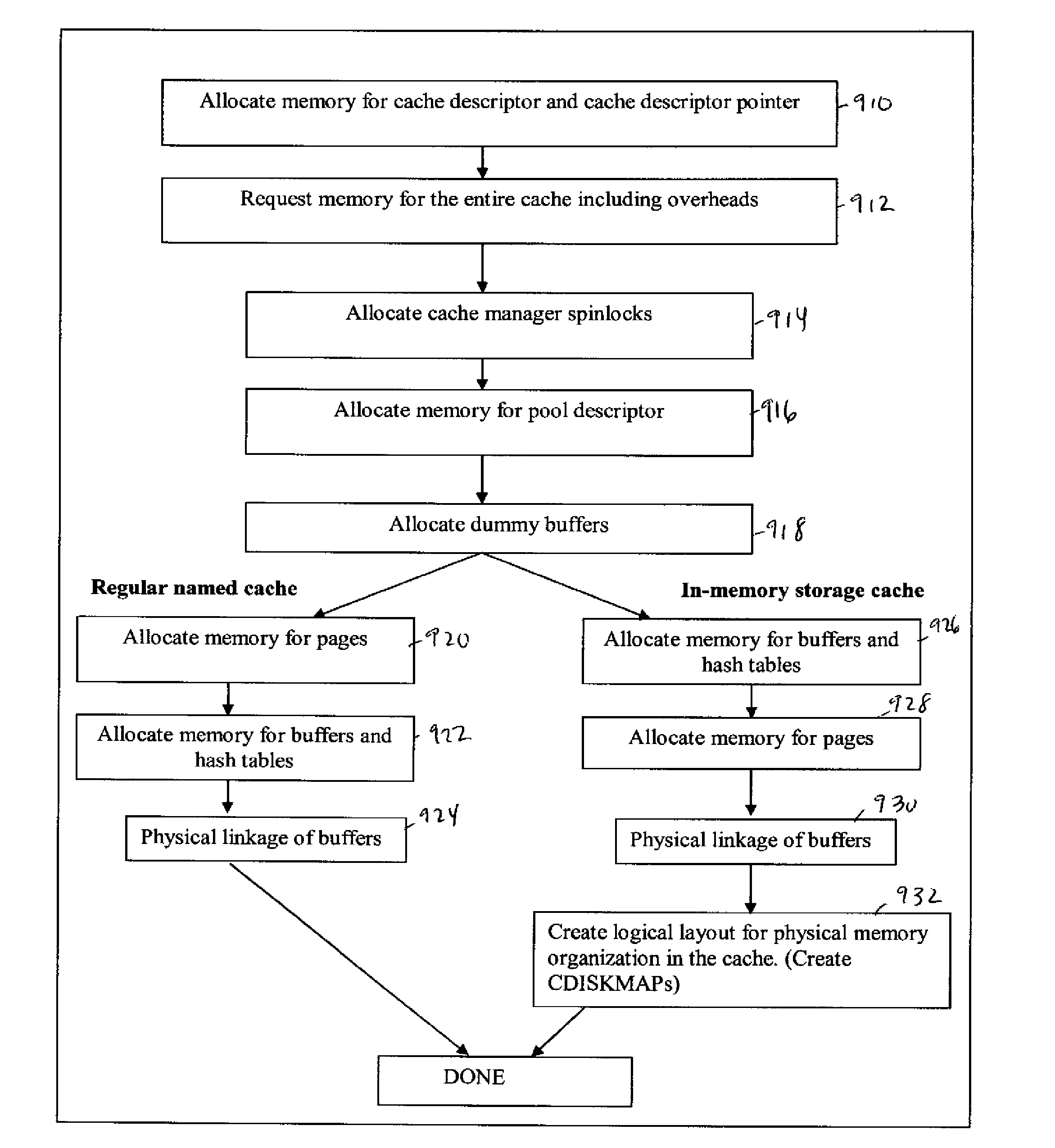

Computer system para-virtualization using a hypervisor that is implemented in a partition of the host system

Active | US20070028244A1 | Improve security | Excessive removal | Error detection/correction | Memory addressing/allocation/relocation | Operational system | System call

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. Operating systems in other logical or virtual partitions communicate with the I/O partitions via memory channels established by the ultravisor partition. The guest operating systems in the respective logical or virtual partitions are modified to access monitors that implement a system call interface through which the ultravisor, I/O, and any other special infrastructure partitions may initiate communications with each other and with the respective guest partitions. The guest operating systems are modified so that they do not attempt to use the “broken” instructions in the x86 system that complete virtualization systems must resolve by inserting traps.

Owner:UNISYS CORP

Para-virtualized computer system with I/O server partitions that map physical host hardware for access by guest partitions

Inactive | US20070061441A1 | Improve efficiency | Improve security | Error detection/correction | Digital computer details | Operational system | System call

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. Operating systems in other logical or virtual partitions communicate with the I/O partitions via memory channels established by the ultravisor partition. The guest operating systems in the respective logical or virtual partitions are modified to access monitors that implement a system call interface through which the ultravisor, I/O, and any other special infrastructure partitions may initiate communications with each other and with the respective guest partitions. The guest operating systems are modified so that they do not attempt to use the “broken” instructions in the x86 system that complete virtualization systems must resolve by inserting traps.

Owner:UNISYS CORP

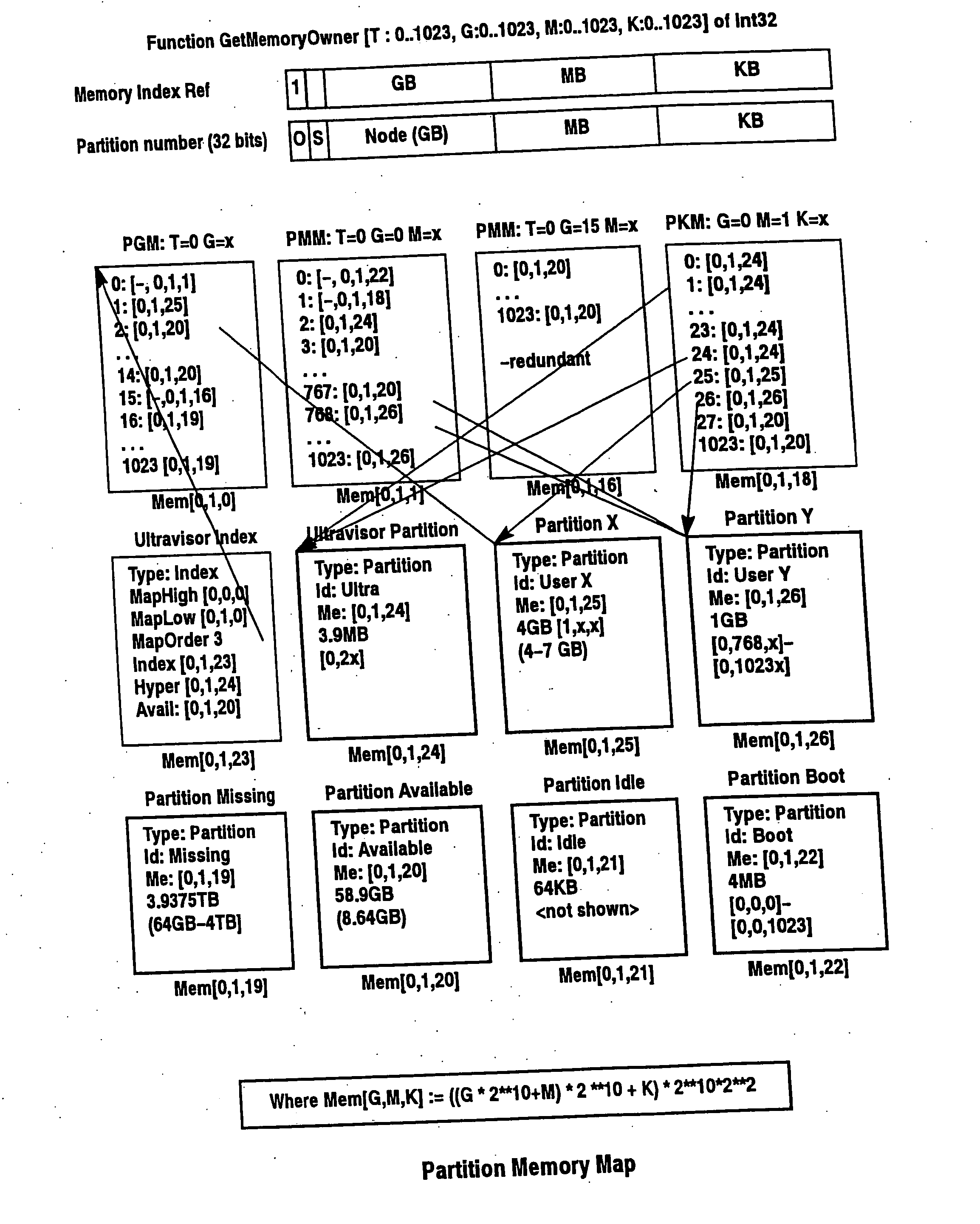

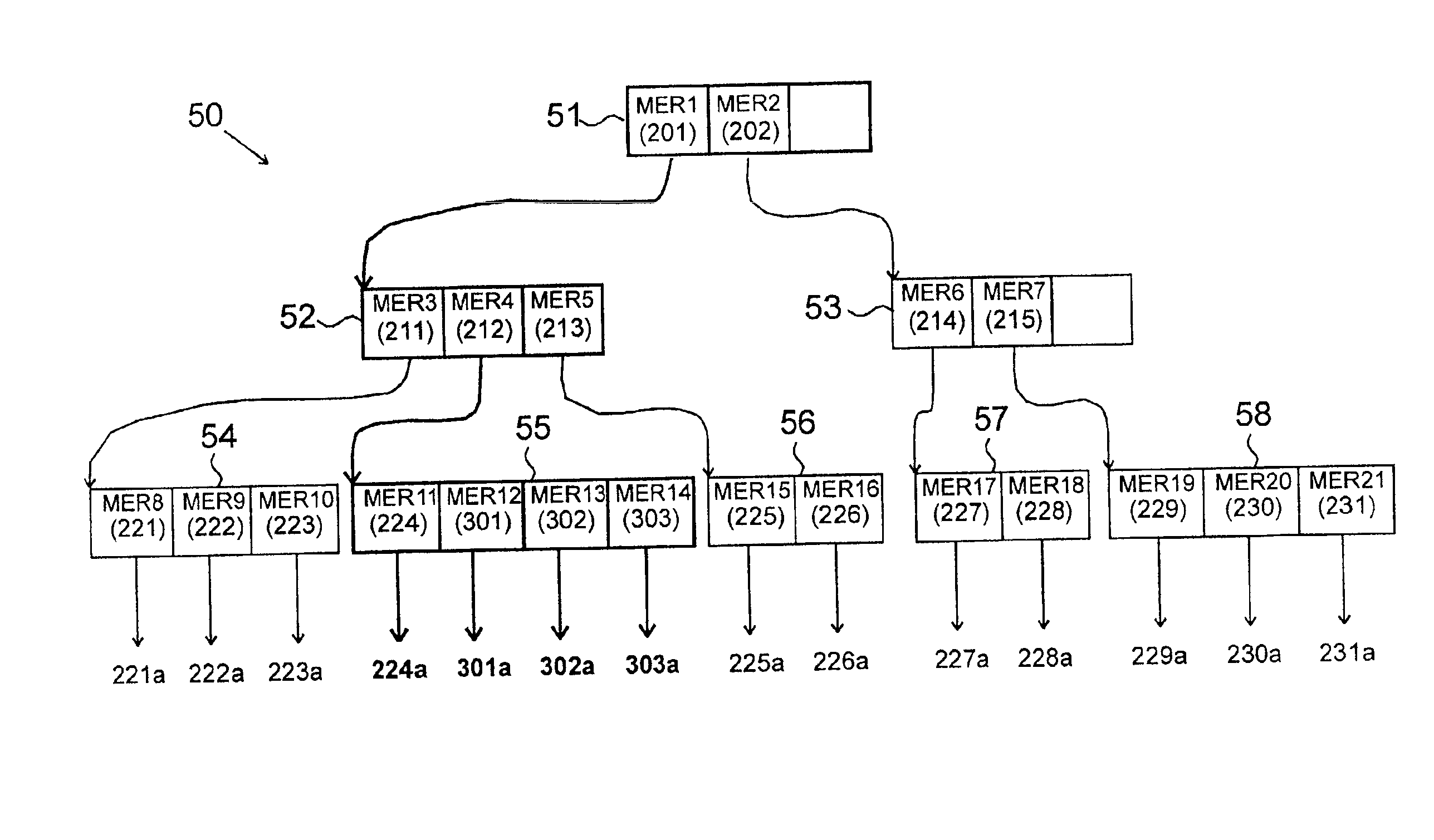

Scalable partition memory mapping system

Inactive | US20070067366A1 | Improve efficiency | Improve security | Memory architecture accessing/allocation | Software simulation/interpretation/emulation | In-memory database | Operational system

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. A scalable partition memory mapping system is implemented in the ultravisor partition so that the virtualized system is scalable to a virtually unlimited number of pages. A log (210) based allocation allows the virtual partition memory sizes to grow over multiple generations without increasing the overhead of managing the memory allocations. Each page of memory is assigned to one partition descriptor in the page hierarchy and is managed by the ultravisor partition.

Owner:UNISYS CORP

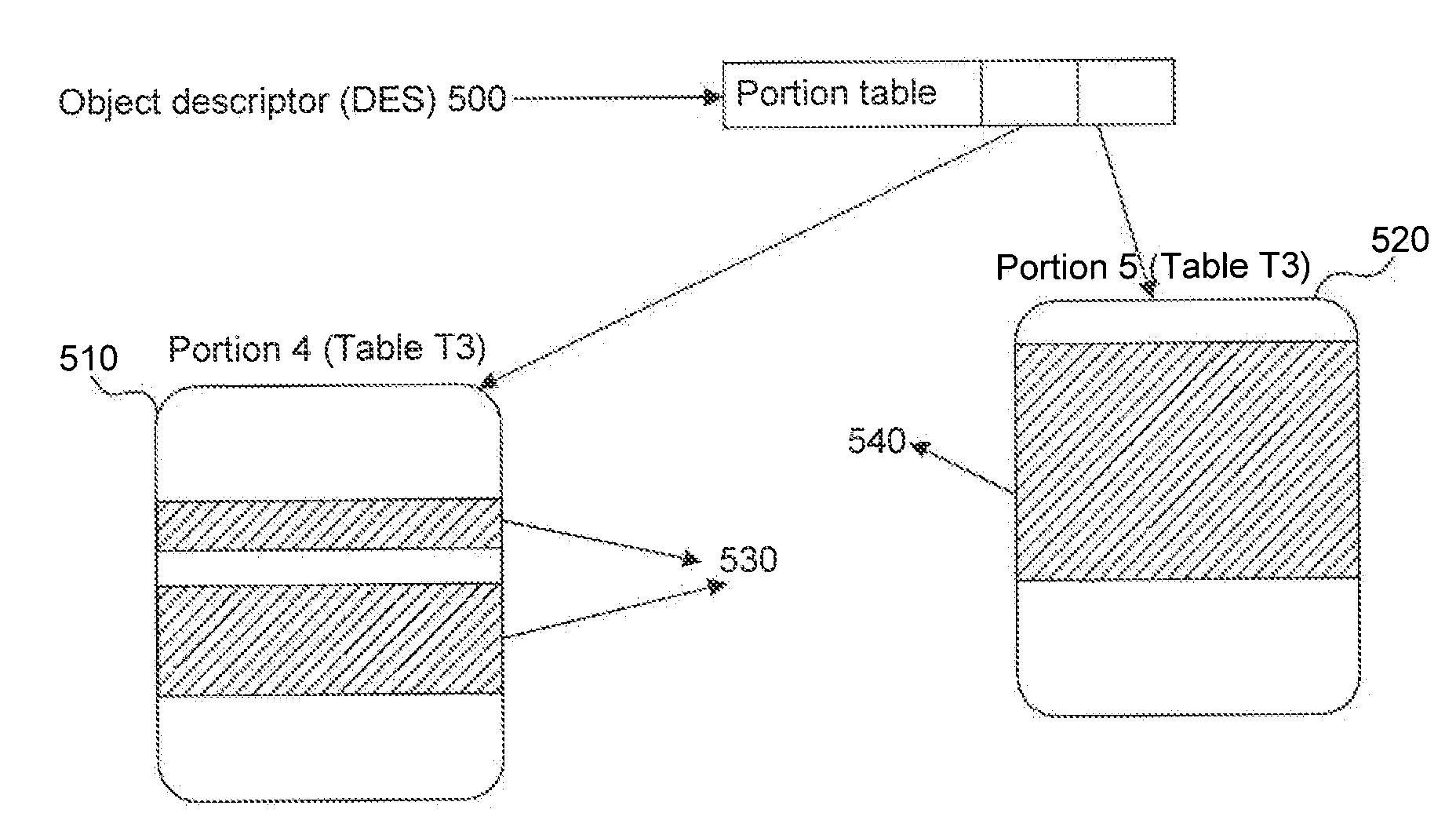

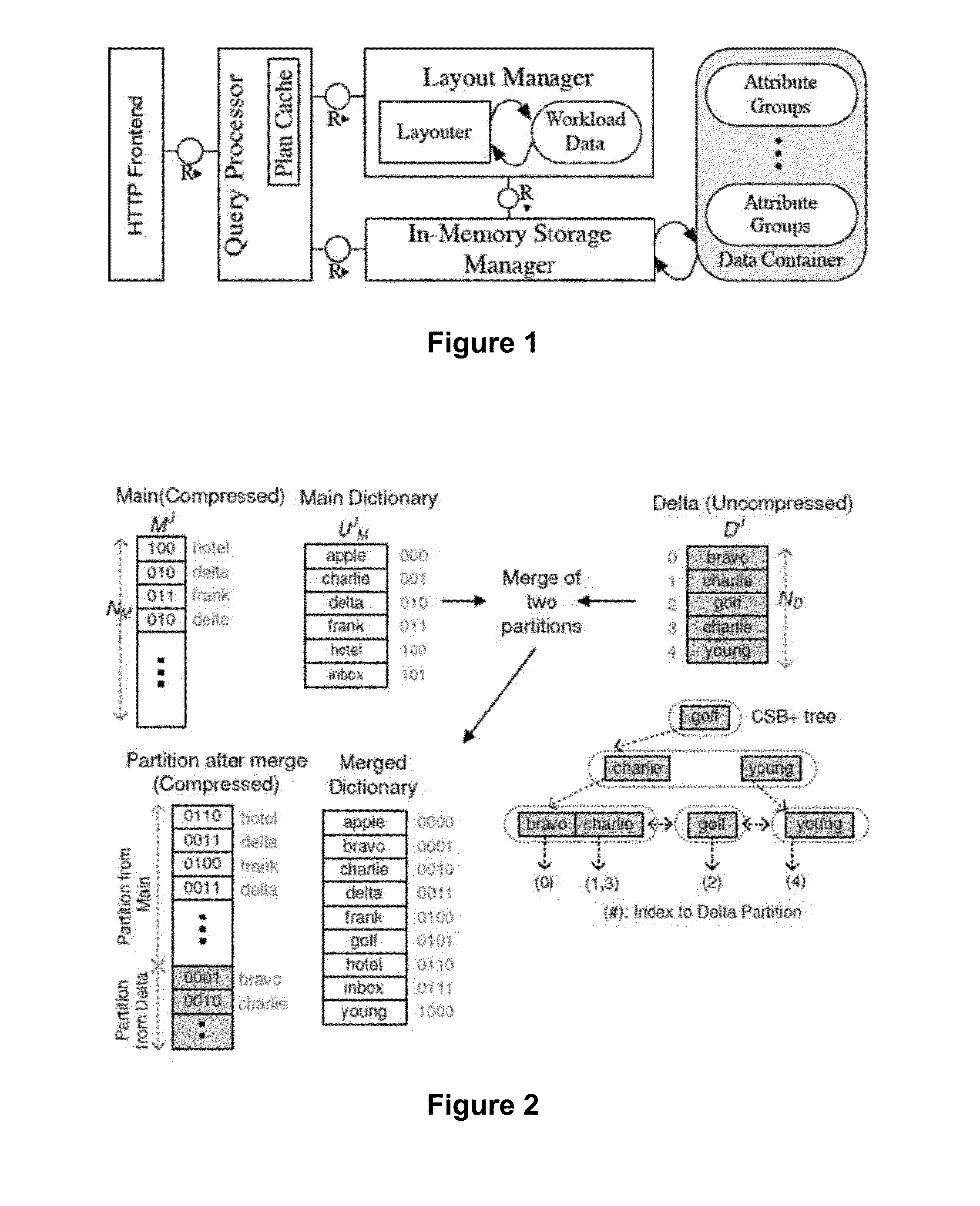

Optimizing data storage and access of an in-memory database

Inactive | US20120323971A1 | Digital data processing details | Special data processing applications | In-memory database | Portion size

System, method, computer program product embodiments and combinations and sub-combinations thereof are provided for optimizing data storage and access of an in-memory database in a database management system. Embodiments include utilizing in-memory storage for hosting an entire database, and storing objects of the database individually in separate portions of the in-memory storage, wherein a portion size is based upon an object element size.

Owner:SYBASE INC

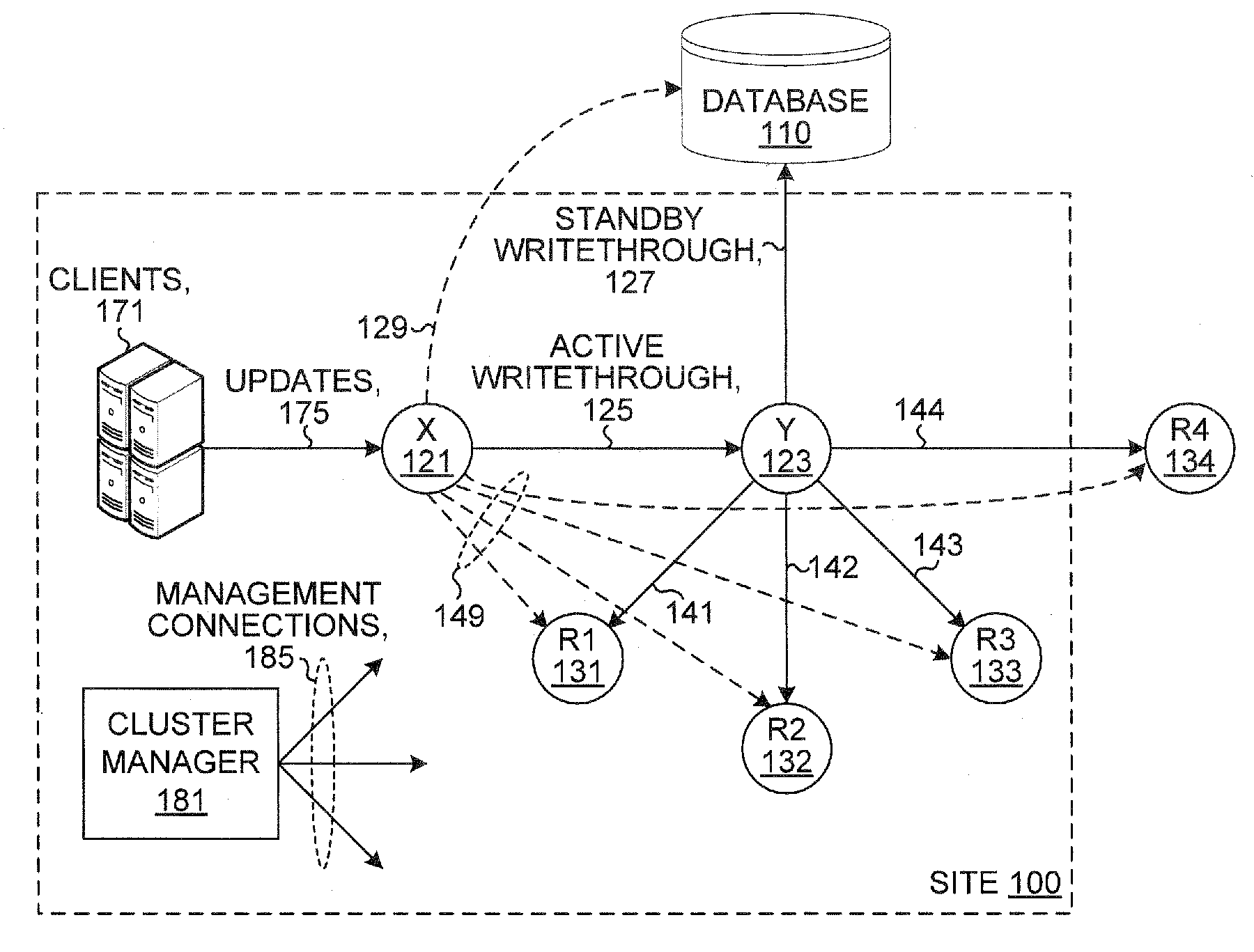

Database system with active standby and nodes

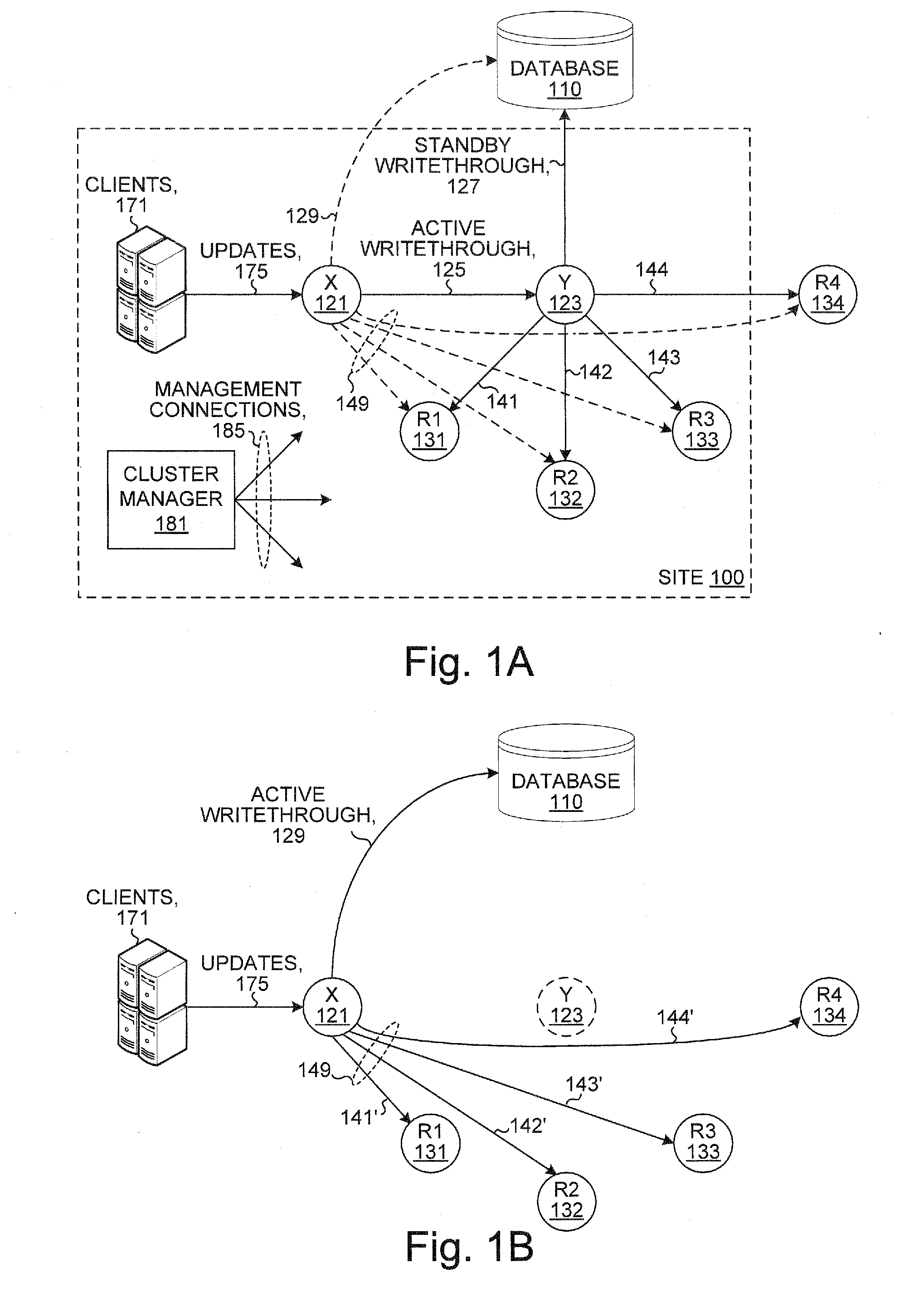

Active | US20080222159A1 | Special data processing applications | Database design/maintenance | In-memory database | Transaction log

A system includes an active node and a standby node and zero or more replica nodes. Each of the nodes includes a database system, such as an in-memory database system. Client updates applied to the active node are written through to the standby node, and the standby node writes the updates through to a primary database and updates the replica nodes. Commit ticket numbers tag entries in transaction logs and are used to facilitate recovery if either of the active node or the standby node fails. Updates applied to the primary database are autorefreshed to the active node and written through by the active node to the standby node which propagates the updates to the replica nodes. Bookmarks are used to track updated records of the primary database and are used to facilitate recovery if either of the active node or the standby node fails.

Owner:ORACLE INT CORP
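
The write-through chain and the use of commit ticket numbers during recovery can be sketched as follows. This is a minimal sketch with several assumptions. Updates carry a monotonically increasing ticket. A recovering node asks its upstream node for every log entry with a higher ticket than the last one it holds. The primary-database autorefresh path and the bookmarks are not modeled. All class and function names here are hypothetical.

```python
from typing import Dict, List, Tuple

LogEntry = Tuple[int, str, str]  # (commit ticket number, key, value)


class Node:
    def __init__(self, name: str) -> None:
        self.name = name
        self.data: Dict[str, str] = {}
        self.log: List[LogEntry] = []  # transaction log tagged with tickets

    def apply(self, entry: LogEntry) -> None:
        _, key, value = entry
        self.data[key] = value
        self.log.append(entry)

    def last_ticket(self) -> int:
        return self.log[-1][0] if self.log else 0


def catch_up(target: Node, source: Node) -> int:
    """Re-send every source log entry whose ticket the target has not seen."""
    seen = target.last_ticket()
    missing = [e for e in source.log if e[0] > seen]
    for entry in missing:
        target.apply(entry)
    return len(missing)


class Cluster:
    def __init__(self, n_replicas: int = 2) -> None:
        self.active = Node("active")
        self.standby = Node("standby")
        self.replicas = [Node(f"replica{i}") for i in range(n_replicas)]
        self.next_ticket = 1
        self.standby_up = True

    def client_update(self, key: str, value: str) -> int:
        entry = (self.next_ticket, key, value)
        self.next_ticket += 1
        self.active.apply(entry)            # commit on the active node
        if self.standby_up:
            self.standby.apply(entry)       # write-through to the standby
            for replica in self.replicas:   # the standby propagates to replicas
                replica.apply(entry)
        return entry[0]

    def standby_recovered(self) -> None:
        self.standby_up = True
        catch_up(self.standby, self.active)
        for replica in self.replicas:
            catch_up(replica, self.standby)


c = Cluster()
c.client_update("x", "1")
c.standby_up = False  # simulate a standby failure
c.client_update("y", "2")
c.client_update("x", "3")
c.standby_recovered()
print(c.standby.data)  # {'x': '3', 'y': '2'}
```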

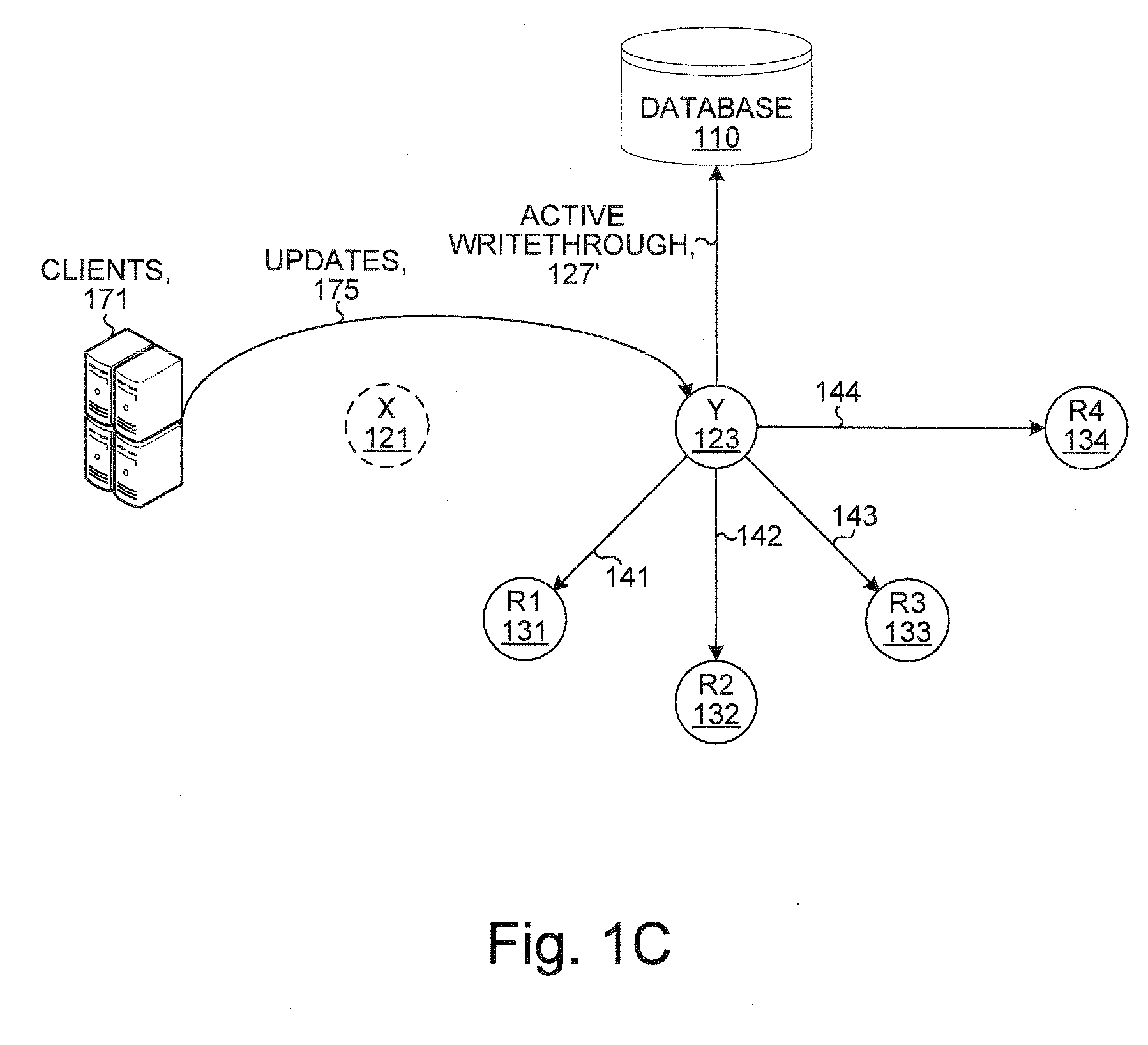

Managing Data Storage as an In-Memory Database in a Database Management System

Active | US20110138123A1 | Digital data processing details | Memory addressing/allocation/relocation | In-memory database | Relational database management system

System, method, computer program product embodiments and combinations and sub-combinations thereof for managing data storage as an in-memory database in a database management system (DBMS) are provided. In an embodiment, a specialized database type is provided as a parameter of a native DBMS command. A database hosted entirely in-memory of the DBMS is formed when the specialized database type is specified.

Owner:SYBASE INC

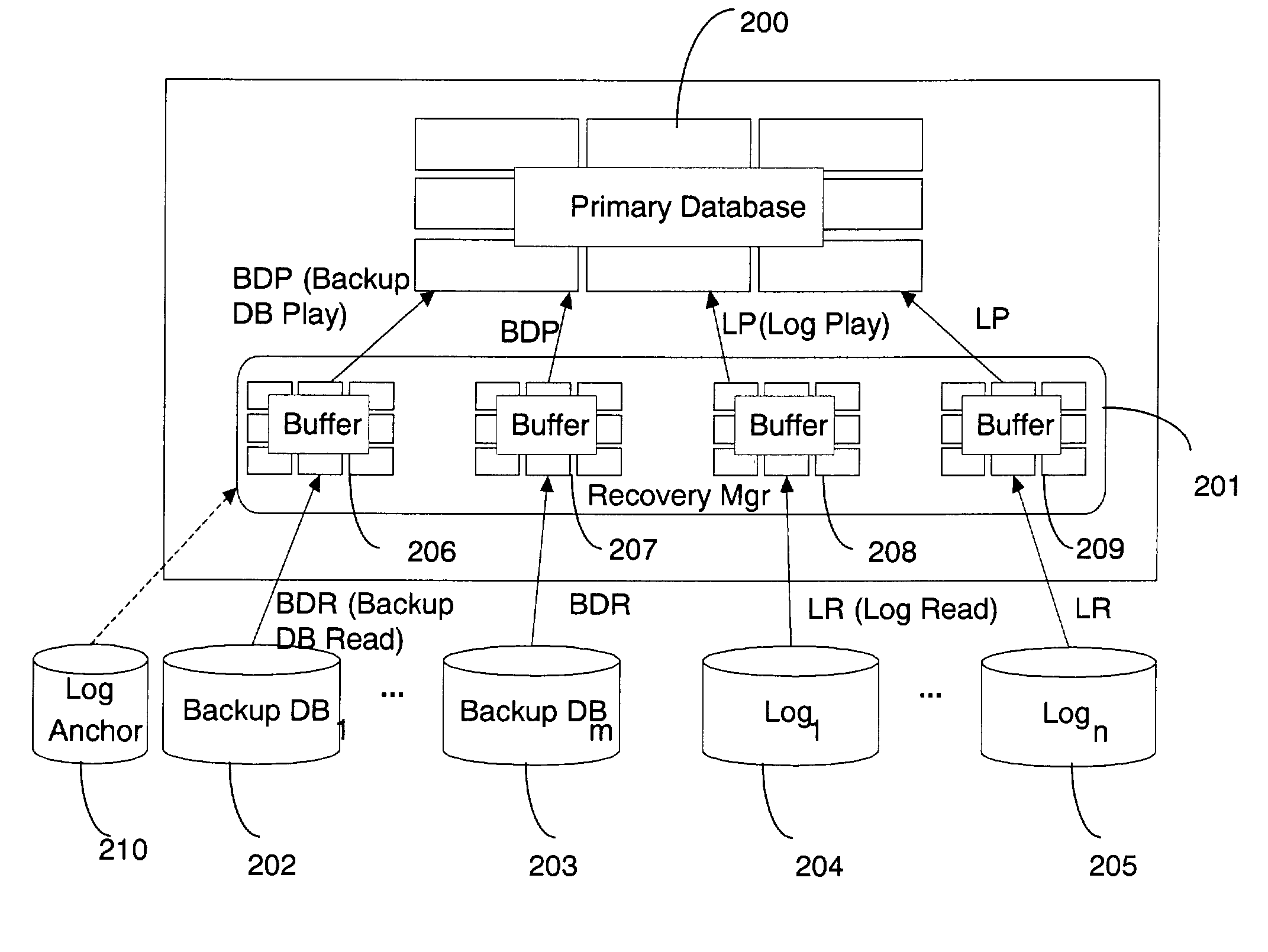

Parallelized redo-only logging and recovery for highly available main memory database systems

Inactive | US7305421B2 | Easy construction | Data processing applications | Light protection screens | In-memory database | High availability

A parallel logging and recovery scheme for highly available main-memory database systems is presented. A preferred embodiment called parallel redo-only logging (“PROL”) combines physical logging and selective replay of redo-only log records. During physical logging, log records are generated with an update sequence number representing the sequence of database update. The log records are replayed selectively during recovery based on the update sequence number. Since the order of replaying log records doesn't matter in physical logging, PROL makes parallel operations possible. Since the physical logging does not depend on the state of the object to which the log records are applied, the present invention also makes it easy to construct a log-based hot standby system.

Owner:TRANSACT & MEMORY
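
The key property claimed for PROL is that physical redo records tagged with an update sequence number (USN) can be replayed in any order. The rule is that a record's image is installed only if its USN is newer than the one already installed for that page. The following is a minimal sketch of that rule, assuming whole-page after-images. The names `RedoRecord`, `replay`, `merge`, and `parallel_replay` are hypothetical. The thread pool simply demonstrates that arbitrarily split chunks converge to the same state.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

State = Dict[int, Tuple[int, bytes]]  # page id -> (USN of installed image, image)


@dataclass(frozen=True)
class RedoRecord:
    usn: int      # update sequence number assigned when the record is logged
    page: int     # object/page that the physical image belongs to
    image: bytes  # physical after-image (redo only, no undo information)


def replay(records: Iterable[RedoRecord]) -> State:
    """Install an image only if it is newer than what is already installed."""
    state: State = {}
    for rec in records:
        current = state.get(rec.page)
        if current is None or rec.usn > current[0]:
            state[rec.page] = (rec.usn, rec.image)
    return state


def merge(partials: Iterable[State]) -> State:
    # The same "highest USN wins" rule combines per-worker results.
    merged: State = {}
    for part in partials:
        for page, (usn, image) in part.items():
            if page not in merged or usn > merged[page][0]:
                merged[page] = (usn, image)
    return merged


def parallel_replay(log: List[RedoRecord], workers: int = 3) -> Dict[int, bytes]:
    chunks = [log[i::workers] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        state = merge(pool.map(replay, chunks))
    return {page: image for page, (_, image) in state.items()}


log = [RedoRecord(1, 0, b"a"), RedoRecord(2, 1, b"x"), RedoRecord(3, 0, b"b"),
       RedoRecord(4, 1, b"y"), RedoRecord(5, 0, b"c")]
shuffled = log[:]
random.Random(7).shuffle(shuffled)  # replay order deliberately scrambled
print(sorted(parallel_replay(shuffled).items()))  # [(0, b'c'), (1, b'y')]
```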

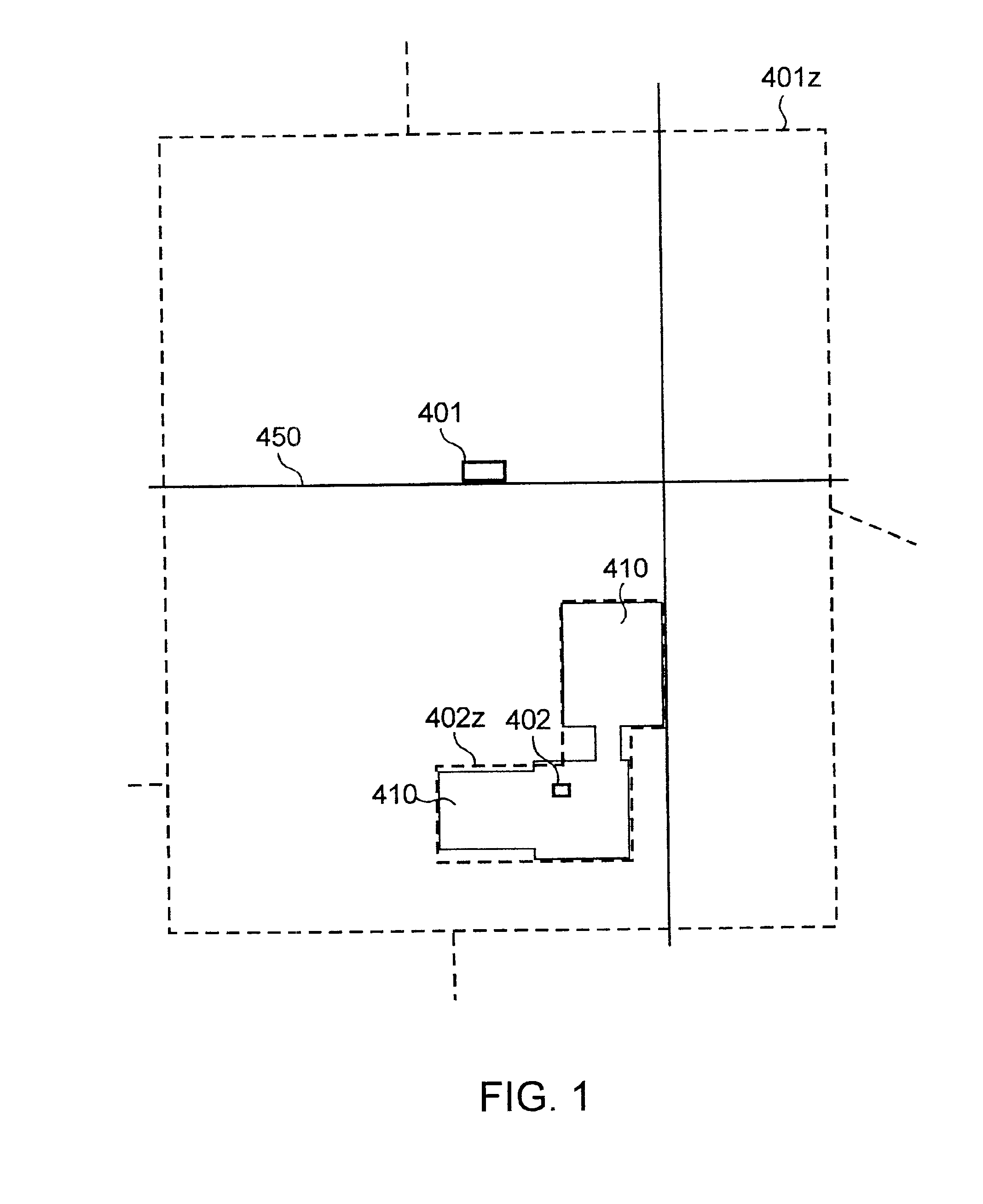

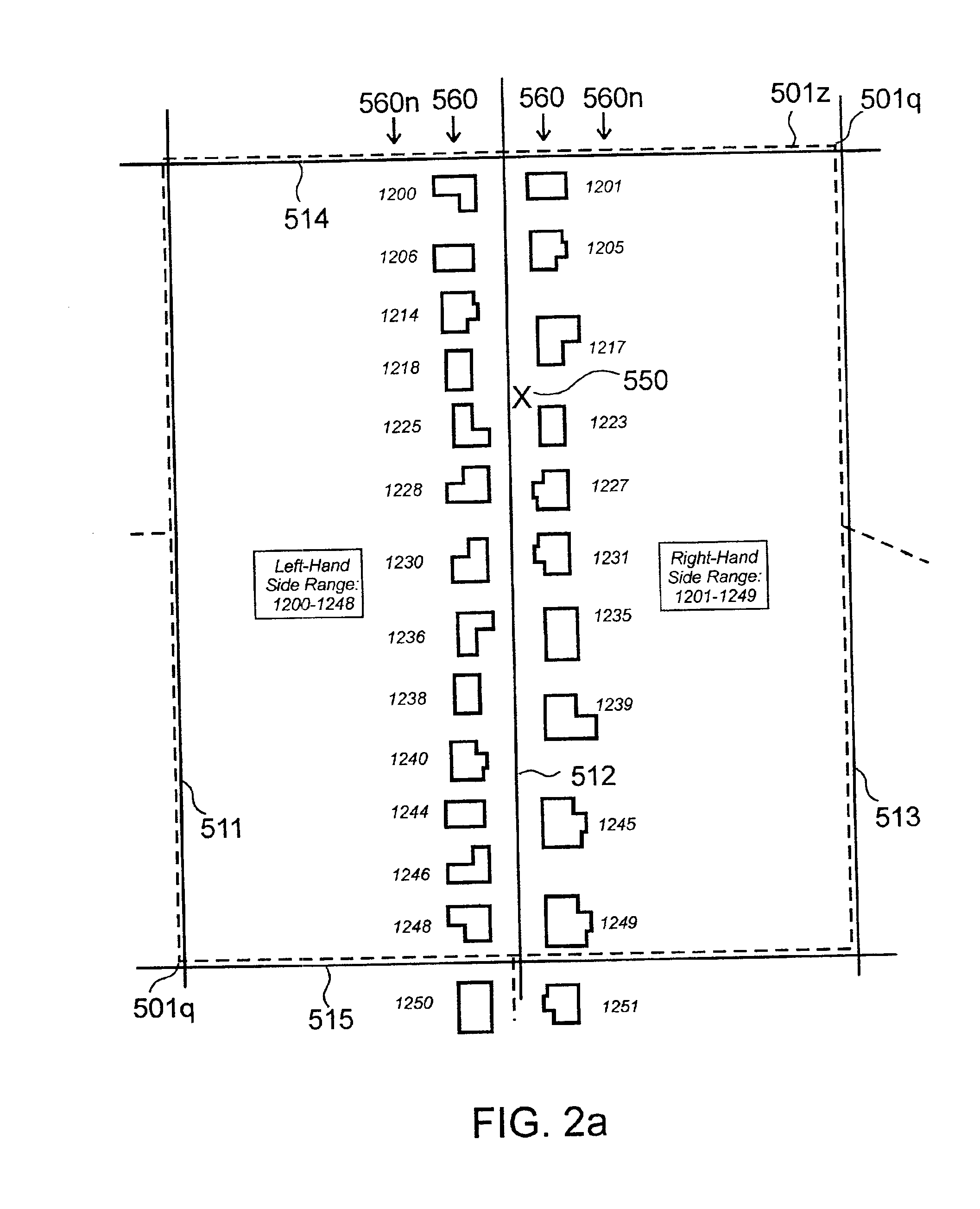

High-performance location management platform

An apparatus and method for rapid translation of geographic latitude and longitude into any of a number of application-specific location designations or location classifications, including street address, nearest intersection, PSAP (Public Safety Answering Point) zone, telephone rate zone, franchise zone, or other geographic, administrative, governmental or commercial division of territory. The speed of translation meets call-setup requirements for call-processing applications such as PSAP determination, and meets caller response expectations for caller queries such as the location of the nearest commercial establishment of a given type. To complete its translation process in a timely manner, a memory-stored spatial database is used to eliminate mass-storage accesses during operation, a spatial indexing scheme such as an R-tree over the spatial database is used to locate a caller within a specific rectangular area, and an optimized set of point-in-polygon algorithms is used to narrow the caller's location to a specific zone identified in the database. Additional validation processing is supplied to verify intersections or street addresses returned for a given latitude and longitude. Automatic conversion of latitude-longitude into coordinates in different map projection systems is provided. The memory-stored database is built in a compact and optimized form from a relational spatial database as required. The R-tree spatial indexing of the memory-stored database allows for substantially unlimited scalability of database size without degradation of response time. Maximum performance for database retrievals is assured by isolating the retrieval process from all updating and maintenance processes. Hot update of the in-memory database is provided without degradation of response time.

Owner:PRECISELY SOFTWARE INC +1
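
The two-stage lookup (a rectangle filter, then an exact point-in-polygon test) can be sketched as follows. This is a minimal sketch under stated assumptions. A plain linear scan over bounding boxes stands in for the R-tree, which keeps the example short but gives up the scalability the abstract claims. Coordinates are treated as planar x/y values. The class `ZoneIndex`, its functions, and the zone names are hypothetical. The polygon test is standard even-odd ray casting.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # (x, y), e.g. projected longitude/latitude
Polygon = List[Point]


def bounding_box(poly: Polygon) -> Tuple[float, float, float, float]:
    xs = [x for x, _ in poly]
    ys = [y for _, y in poly]
    return min(xs), min(ys), max(xs), max(ys)


def point_in_polygon(pt: Point, poly: Polygon) -> bool:
    """Even-odd ray casting: count edge crossings of a ray towards +x."""
    x, y = pt
    inside = False
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's y, so y1 != y2
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


class ZoneIndex:
    """In-memory zone table; a linear bounding-box scan stands in for an R-tree."""

    def __init__(self, zones: Dict[str, Polygon]) -> None:
        self._entries = [(name, bounding_box(p), p) for name, p in zones.items()]

    def locate(self, pt: Point) -> Optional[str]:
        x, y = pt
        for name, (min_x, min_y, max_x, max_y), poly in self._entries:
            # Cheap rectangle filter first, exact polygon test second.
            if min_x <= x <= max_x and min_y <= y <= max_y and point_in_polygon(pt, poly):
                return name
        return None


zones = ZoneIndex({
    "zone_a": [(0, 0), (2, 0), (2, 2), (0, 2)],
    "zone_b": [(2, 0), (4, 0), (2, 2)],
})
print(zones.locate((1, 1)))      # zone_a
print(zones.locate((3, 0.5)))    # zone_b
print(zones.locate((3.5, 1.5)))  # None: inside zone_b's box but outside the triangle
```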

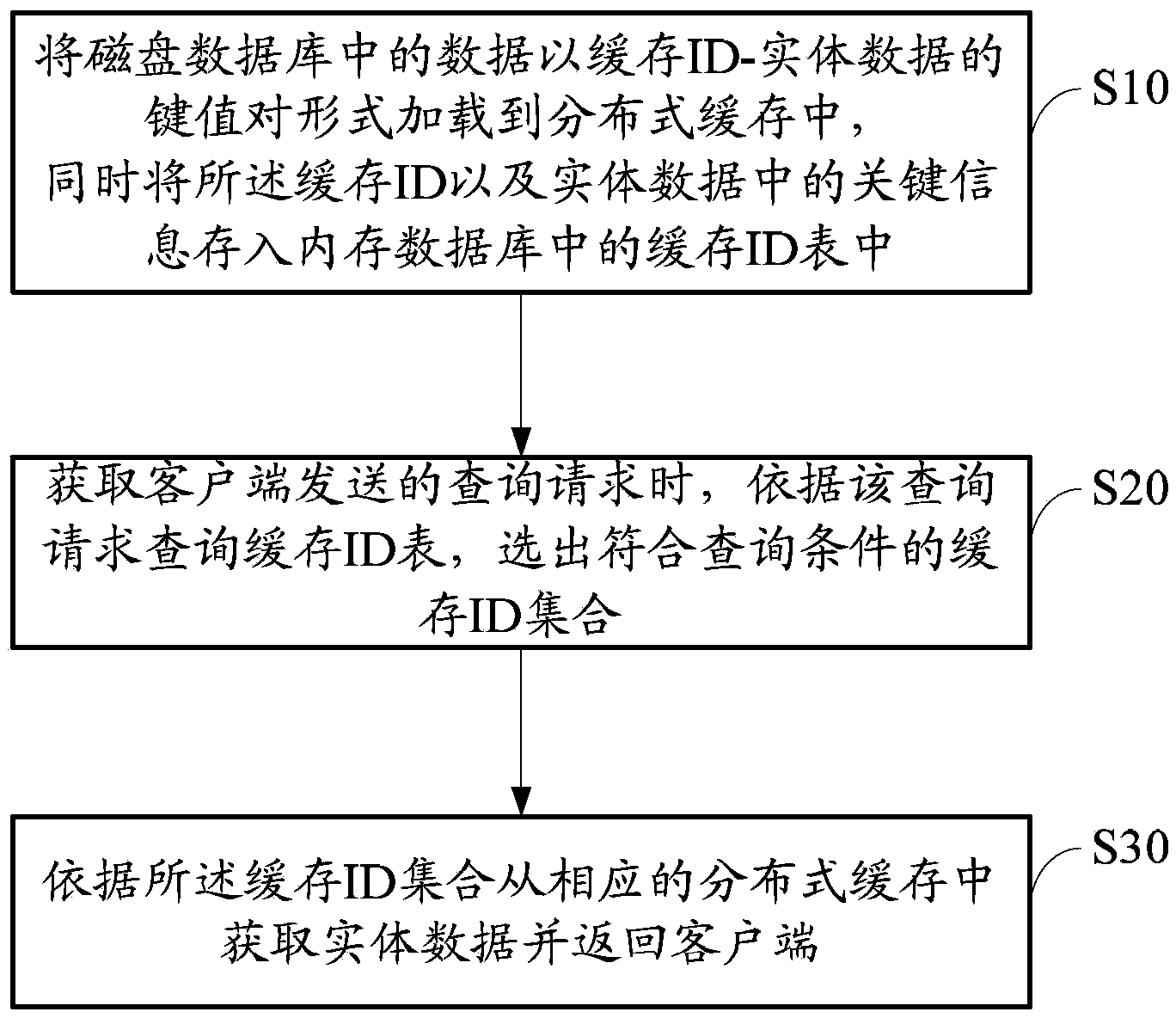

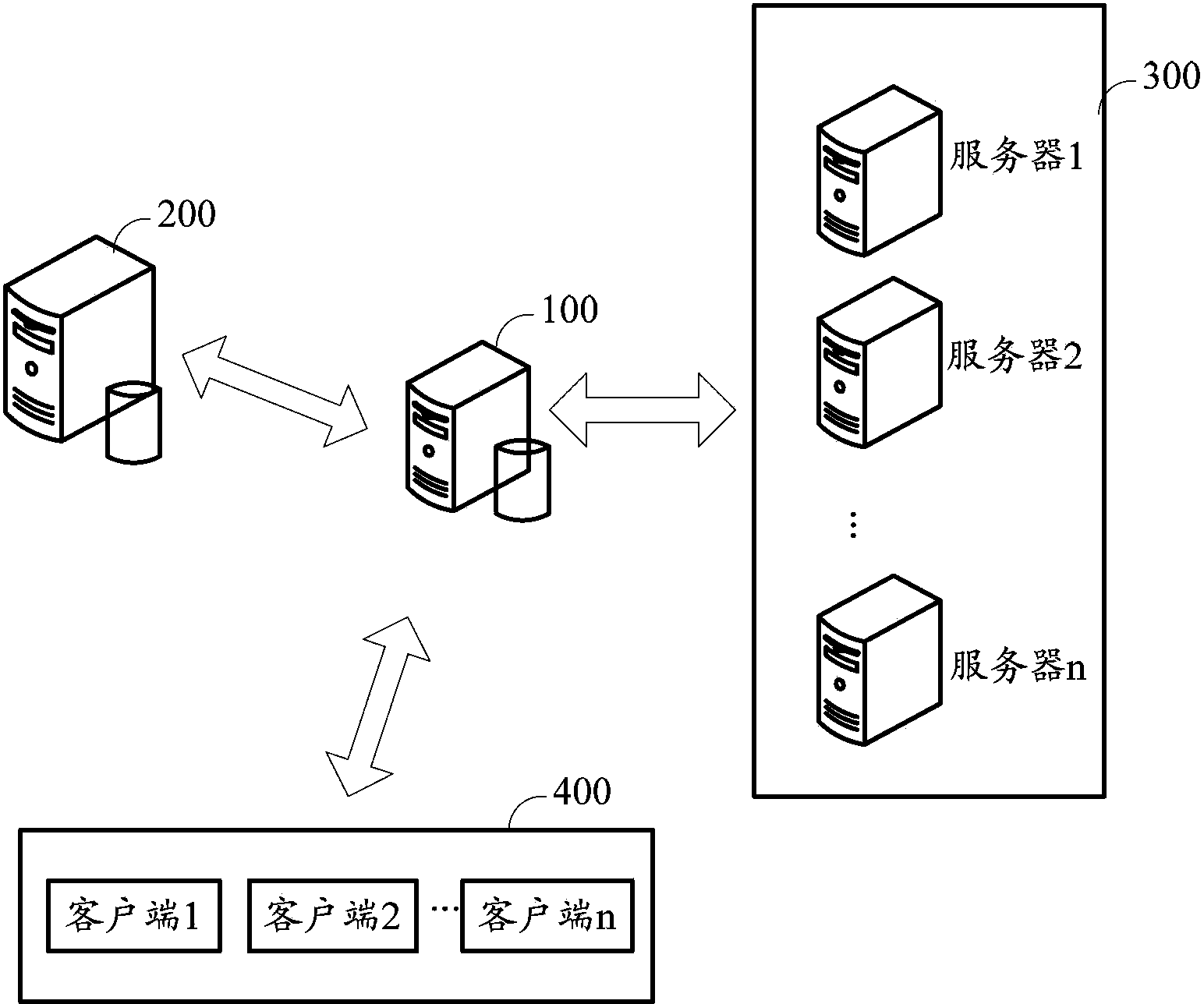

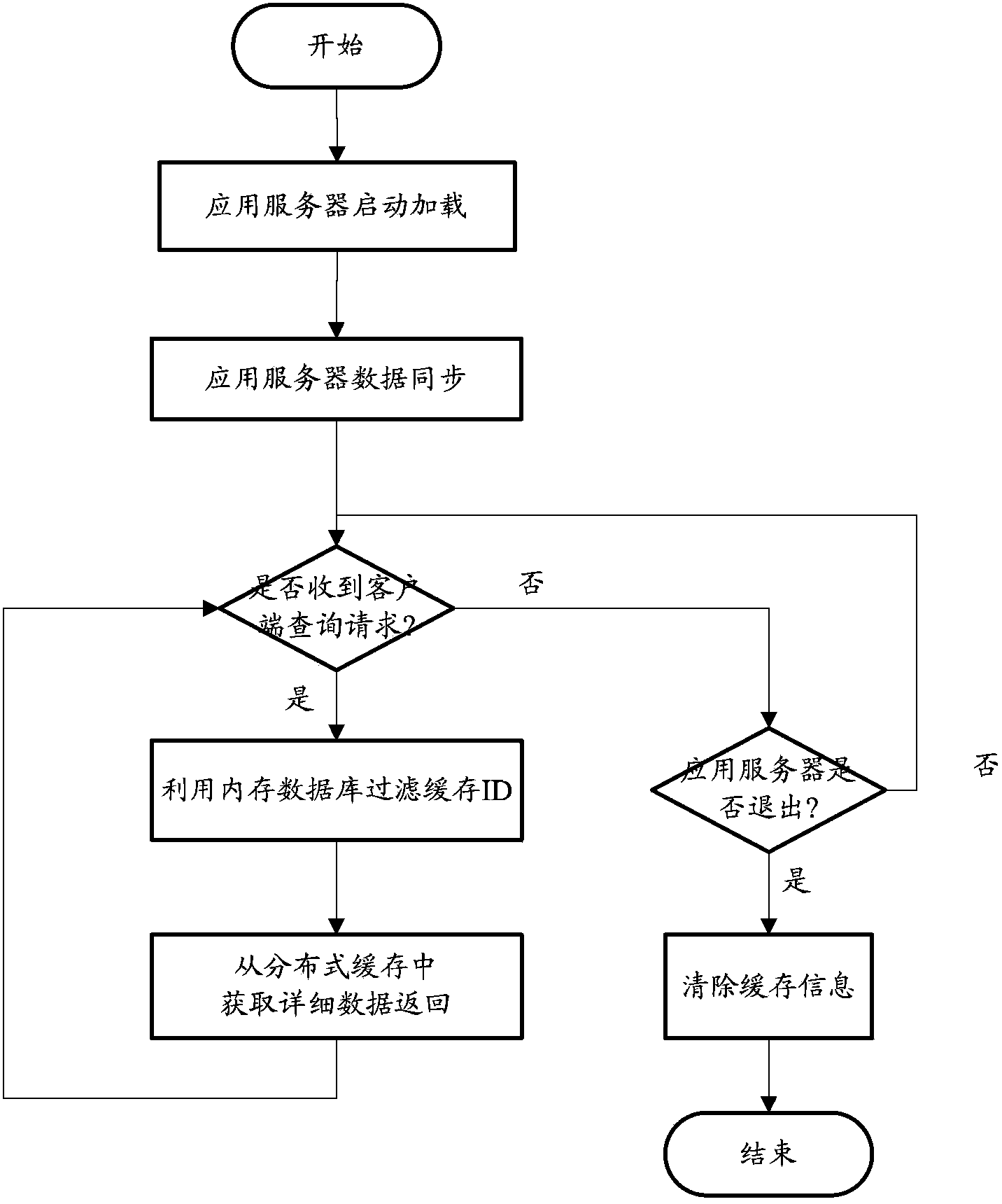

Method and system for improving large data volume query performance

Active | CN103853727A | Reduce load | Easy to handle | Memory addressing/allocation/relocation | Database distribution/replication | In-memory database | Distributed cache

The invention discloses a method and a system for improving query performance on large data volumes, in the technical field of large-data-volume querying. The method comprises: A, loading data from a disk database into distributed caches as key-value pairs of cache ID and entity data, and at the same time storing the cache ID and the key information of the entity data in a cache ID table of an in-memory database; B, when a query request sent by a client is received, querying the cache ID table according to the request to select the set of IDs that satisfy it; C, obtaining the entity data from the corresponding distributed caches according to the cache ID set and returning the entity data to the client. With this system and method, the load on the disk database is effectively reduced and big-data query performance is improved.

Owner:SHENZHEN ZTE NETVIEW TECH
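
Steps A to C can be sketched as follows. The sketch assumes a few stand-ins. Each "distributed cache" is a Python dict shard chosen by a CRC32 hash of the cache ID. The cache ID table is a dict holding only the key fields. The `entity:<id>` cache-ID format, the class `CacheQuerySystem`, and the sample rows are all hypothetical.

```python
import zlib
from typing import Dict, Iterable, List, Sequence


class CacheQuerySystem:
    """Hypothetical sketch: dict shards stand in for distributed cache servers."""

    def __init__(self, n_shards: int) -> None:
        self.shards: List[Dict[str, dict]] = [{} for _ in range(n_shards)]
        # Cache ID table held in memory: cache ID -> key fields of the entity.
        self.id_table: Dict[str, dict] = {}

    def _shard_for(self, cache_id: str) -> Dict[str, dict]:
        return self.shards[zlib.crc32(cache_id.encode()) % len(self.shards)]

    def load(self, rows: Iterable[dict], key_fields: Sequence[str]) -> None:
        # Step A: store each entity as a cache-ID -> entity pair in a cache
        # and record its cache ID plus key information in the ID table.
        for row in rows:
            cache_id = f"entity:{row['id']}"
            self._shard_for(cache_id)[cache_id] = row
            self.id_table[cache_id] = {f: row[f] for f in key_fields}

    def query(self, **criteria) -> List[dict]:
        # Step B: filter the small ID table instead of the full entity data.
        ids = [cid for cid, keys in self.id_table.items()
               if all(keys.get(f) == v for f, v in criteria.items())]
        # Step C: fetch the matching entities from their caches.
        return [self._shard_for(cid)[cid] for cid in ids]


system = CacheQuerySystem(n_shards=3)
system.load([
    {"id": 1, "city": "Shenzhen", "name": "A"},
    {"id": 2, "city": "Beijing", "name": "B"},
    {"id": 3, "city": "Shenzhen", "name": "C"},
], key_fields=["city"])
print([r["name"] for r in system.query(city="Shenzhen")])  # ['A', 'C']
```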

High-performance location management platform

Inactive | US6868410B2 | Quick translation | Eliminate mass-storage accesses | Data processing applications | Digital computer details | Mass storage | Longitude

An apparatus and method for rapid translation of geographic latitude and longitude into any of a number of application-specific location designations or location classifications, including street address, nearest intersection, PSAP (Public Safety Answering Point) zone, telephone rate zone, franchise zone, or other geographic, administrative, governmental or commercial division of territory. The speed of translation meets call-setup requirements for call-processing applications such as PSAP determination, and meets caller response expectations for caller queries such as the location of the nearest commercial establishment of a given type. To complete its translation process in a timely manner, a memory-stored spatial database is used to eliminate mass-storage accesses during operation, a spatial indexing scheme such as an R-tree over the spatial database is used to locate a caller within a specific rectangular area, and an optimized set of point-in-polygon algorithms is used to narrow the caller's location to a specific zone identified in the database. Additional validation processing is supplied to verify intersections or street addresses returned for a given latitude and longitude. Automatic conversion of latitude-longitude into coordinates in different map projection systems is provided. The memory-stored database is built in a compact and optimized form from a relational spatial database as required. The R-tree spatial indexing of the memory-stored database allows for substantially unlimited scalability of database size without degradation of response time. Maximum performance for database retrievals is assured by isolating the retrieval process from all updating and maintenance processes. Hot update of the in-memory database is provided without degradation of response time.

Owner:PRECISELY SOFTWARE INC +1

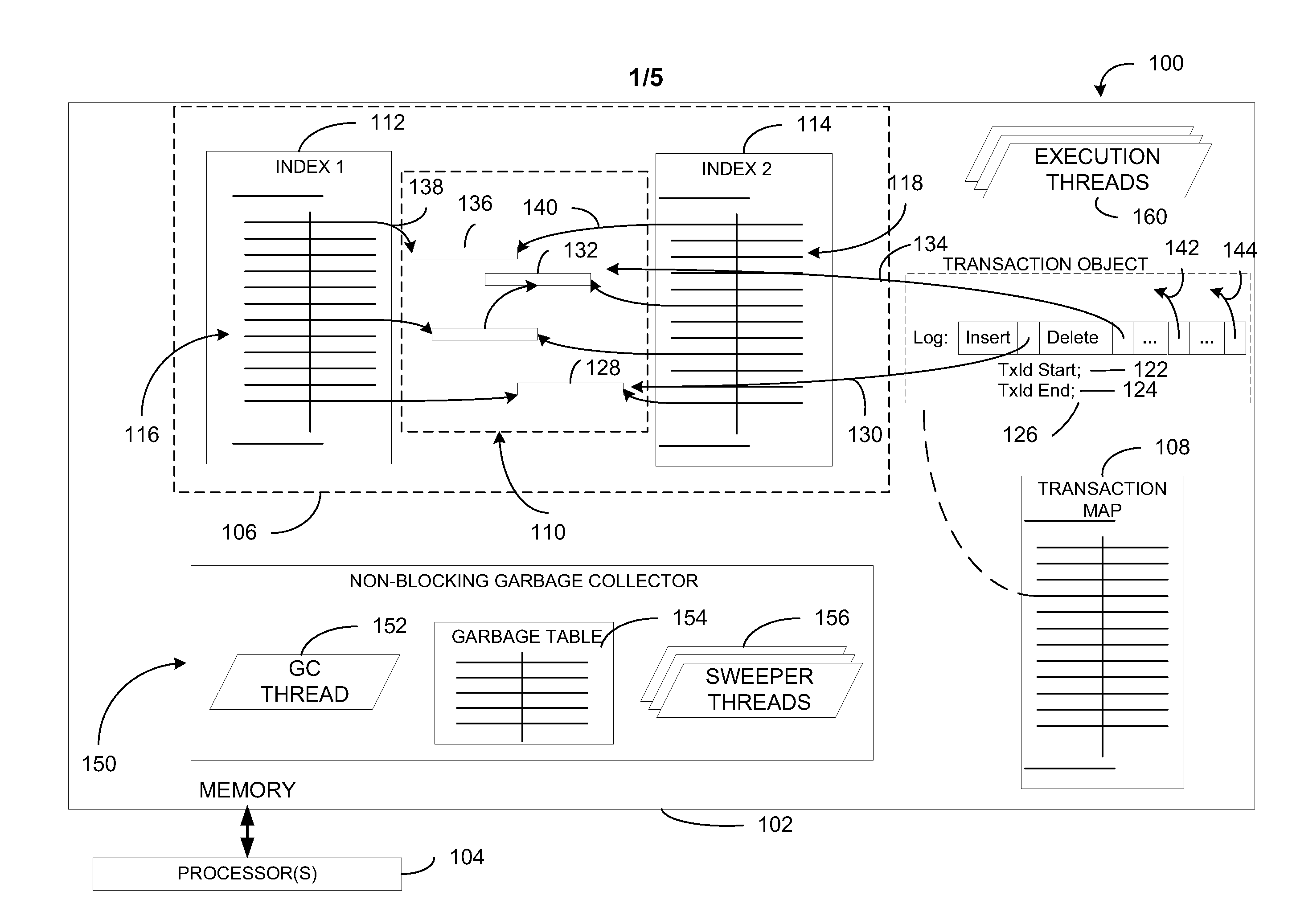

In-memory database system

Active | US20110252000A1 | Effectively scaled | Meet growth needs | Memory architecture accessing/allocation | Digital data processing details | In-memory database | Computerized system

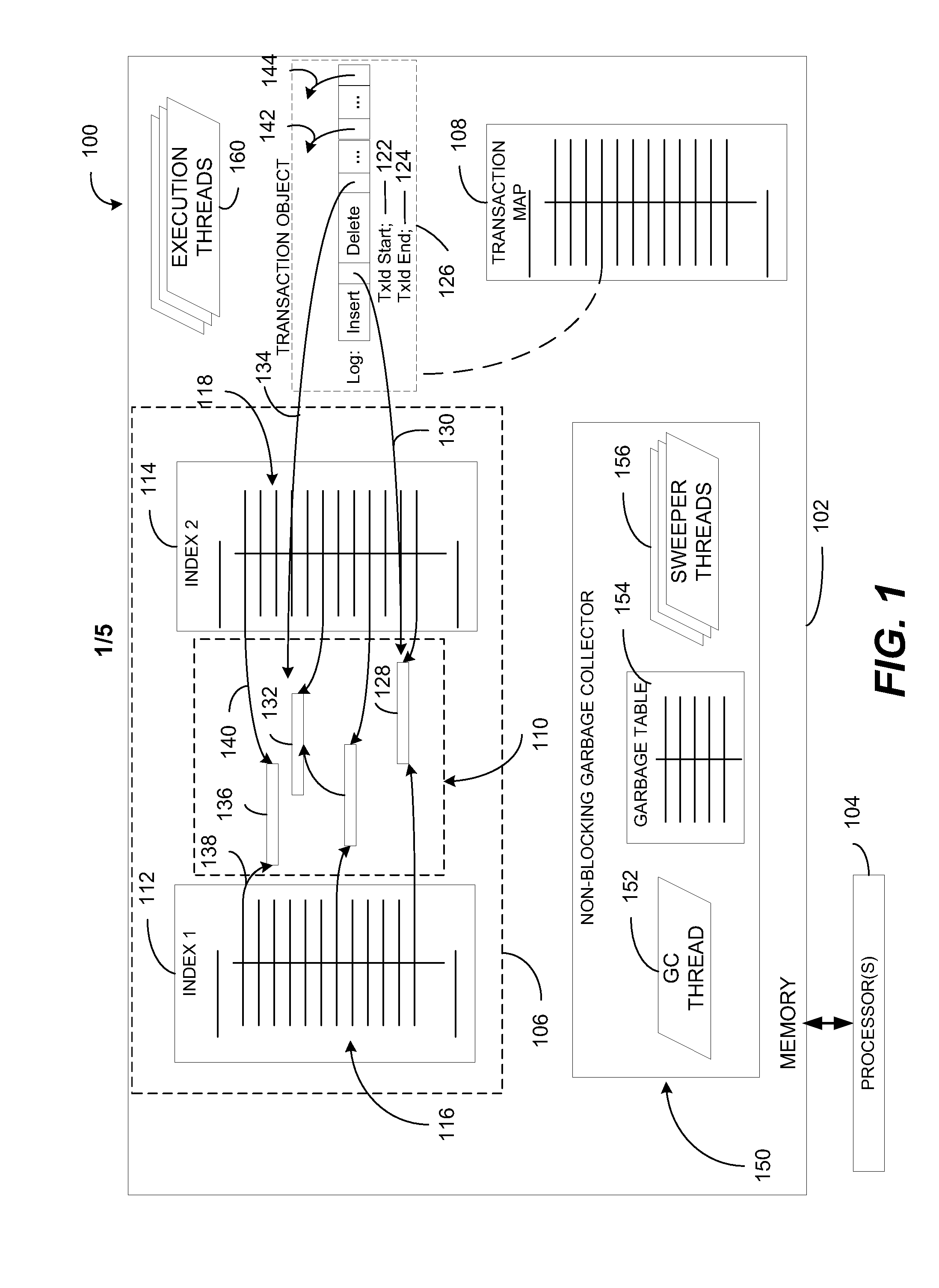

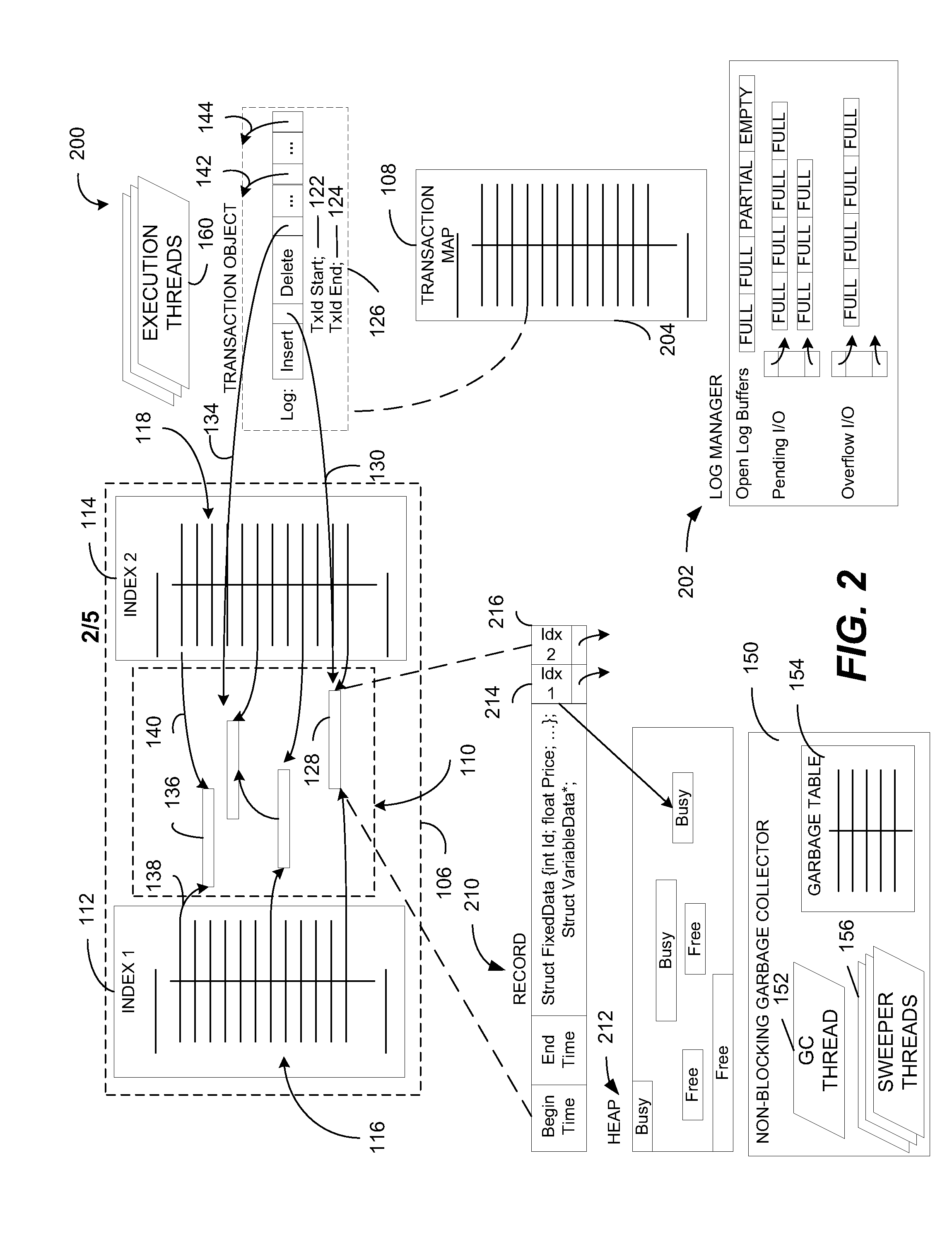

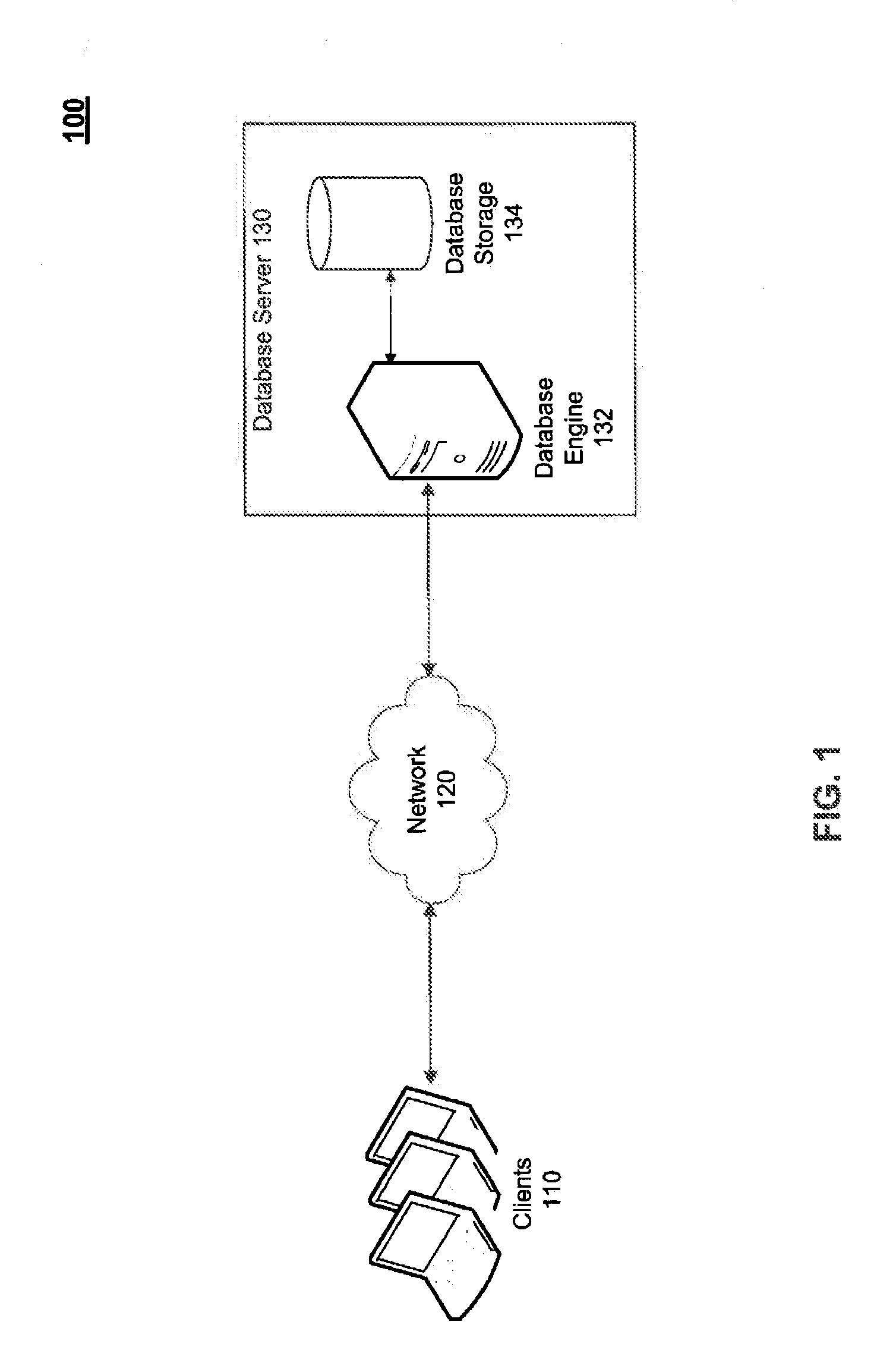

A computer system includes a memory and a processor coupled to the memory. The processor is configured to execute instructions that cause execution of an in-memory database system that includes one or more database tables. Each database table includes a plurality of rows, where data representing each row is stored in the memory. The in-memory database system also includes a plurality of indexes associated with the one or more database tables, where each index is implemented by a lock-free data structure. Update logic at the in-memory database system is configured to update a first version of a particular row to create a second version of the particular row. The in-memory database system includes a non-blocking garbage collector configured to identify data representing outdated versions of rows.

Owner:MICROSOFT TECH LICENSING LLC
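
The versioning scheme in the abstract works as follows: an update creates a second version instead of overwriting the first, and a collector later identifies outdated versions. A minimal single-threaded sketch follows. It assumes each version carries begin/end timestamps and that a version can be discarded once it ended at or before the oldest active snapshot. The lock-free indexes and the non-blocking property of the patent's collector are not modeled. `VersionedTable`, `Version`, and `collect_garbage` are hypothetical names.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List, Optional

INFINITY = float("inf")


@dataclass
class Version:
    value: str
    begin: int              # timestamp at which this version became visible
    end: float = INFINITY   # timestamp at which a newer version replaced it


class VersionedTable:
    """Single-threaded sketch; lock-free indexes are not modeled."""

    def __init__(self) -> None:
        self._clock = itertools.count(1)
        self.rows: Dict[str, List[Version]] = {}

    def update(self, key: str, value: str) -> int:
        ts = next(self._clock)
        chain = self.rows.setdefault(key, [])
        if chain:
            chain[-1].end = ts  # close the old version instead of overwriting it
        chain.append(Version(value, ts))
        return ts

    def read(self, key: str, snapshot_ts: int) -> Optional[str]:
        for version in reversed(self.rows.get(key, [])):
            if version.begin <= snapshot_ts < version.end:
                return version.value
        return None

    def collect_garbage(self, oldest_active_ts: int) -> int:
        """Drop versions that ended at or before the oldest active snapshot."""
        removed = 0
        for chain in self.rows.values():
            live = [v for v in chain if v.end > oldest_active_ts]
            removed += len(chain) - len(live)
            chain[:] = live
        return removed


table = VersionedTable()
table.update("row1", "v1")  # timestamp 1
table.update("row1", "v2")  # timestamp 2
print(table.read("row1", 1), table.read("row1", 2))  # v1 v2
print(table.collect_garbage(oldest_active_ts=2))     # 1
```

Readers holding an old snapshot keep seeing the first version until no such reader remains, which is what allows the collector to reclaim it safely.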

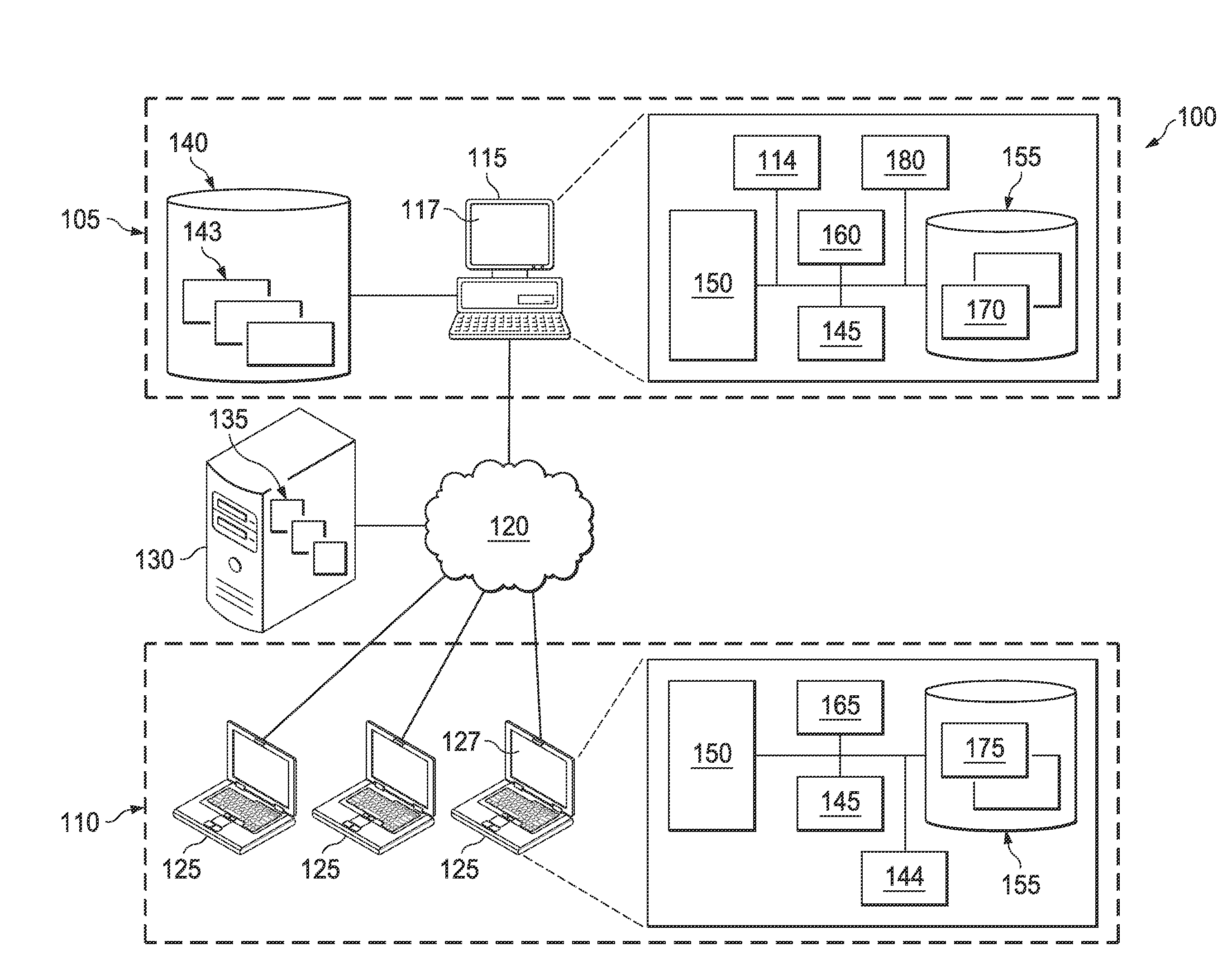

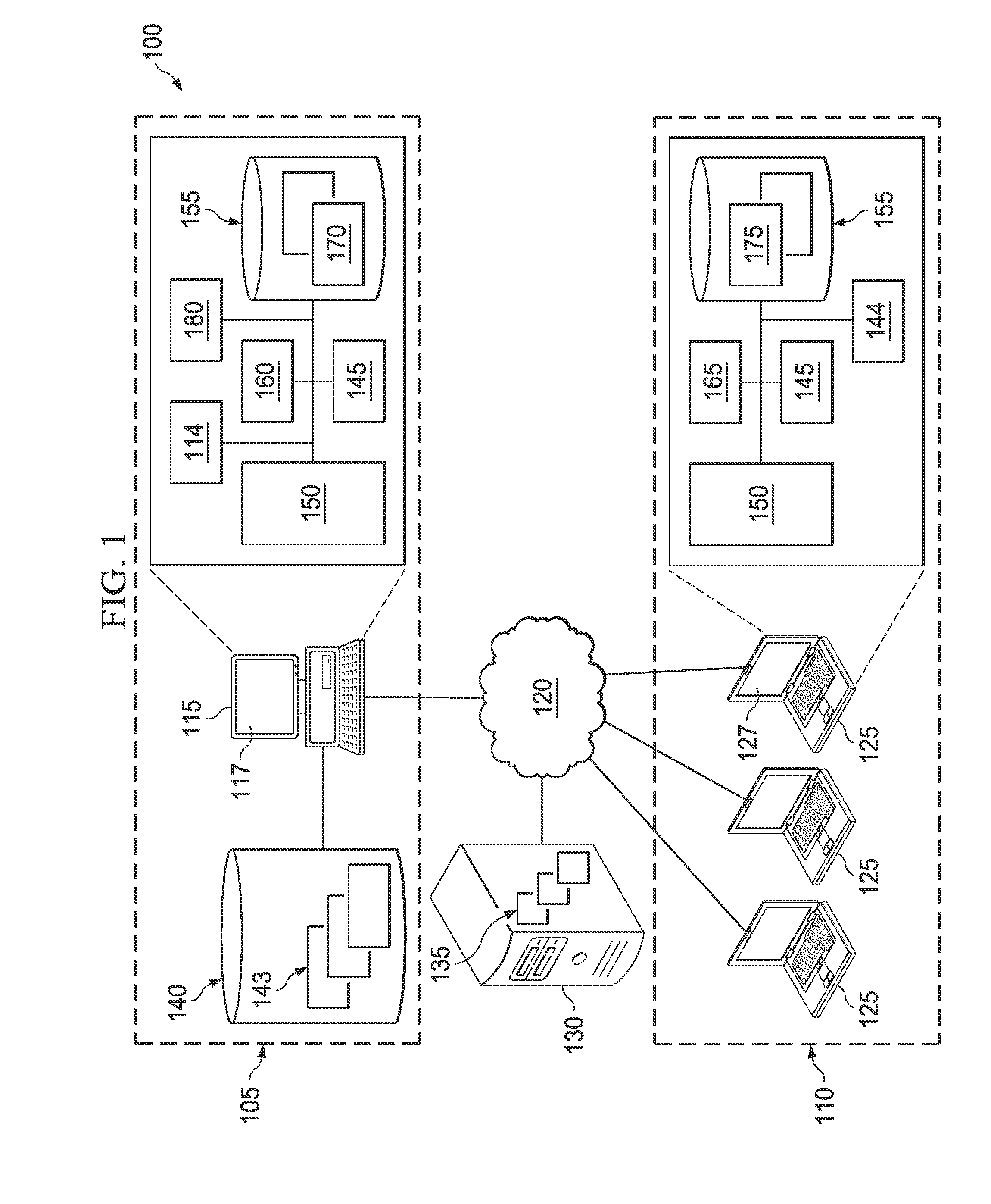

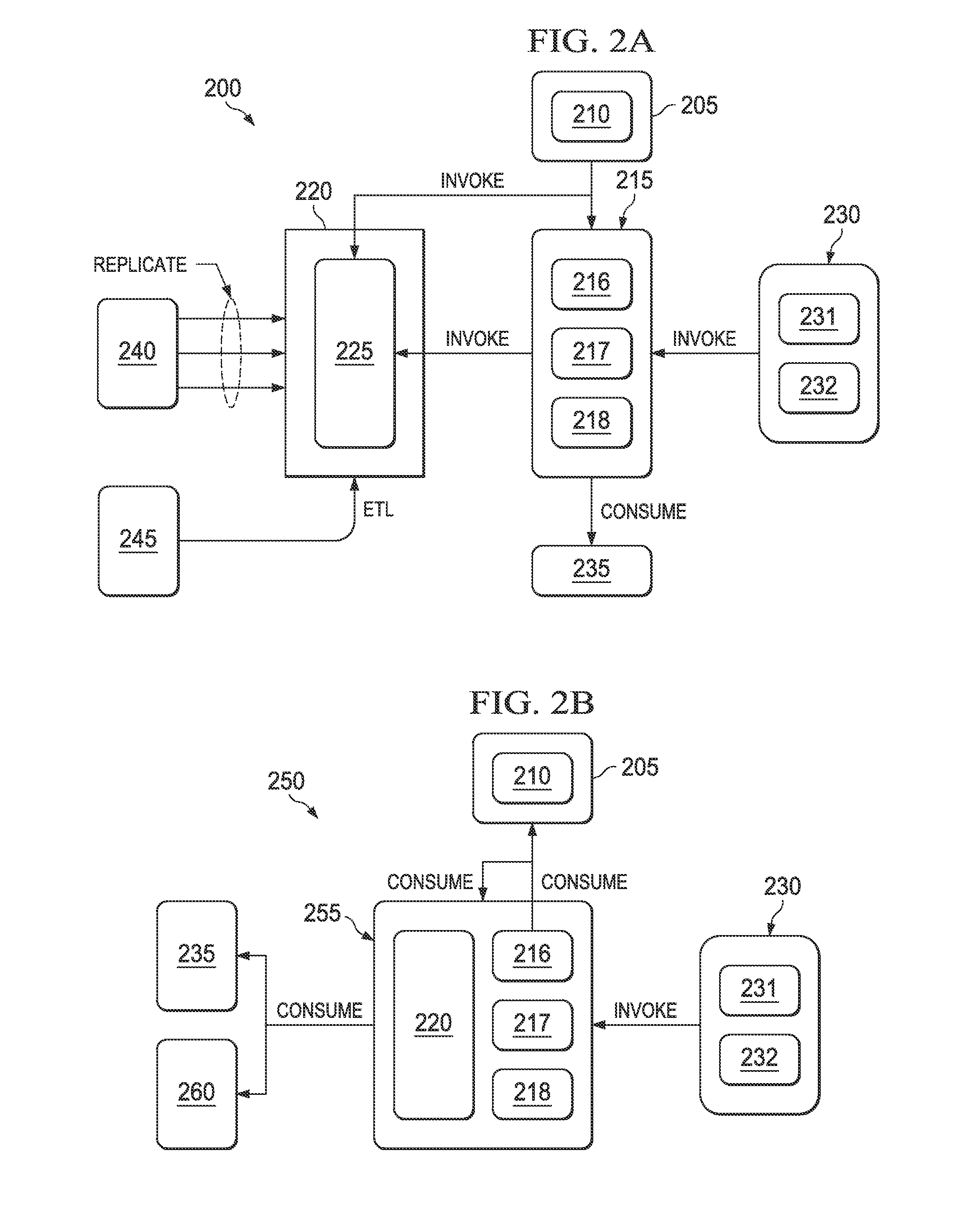

Distributed data cache database architecture

Inactive | US20120158650A1 | Digital data processing details | Database distribution/replication | In-memory database | Granularity

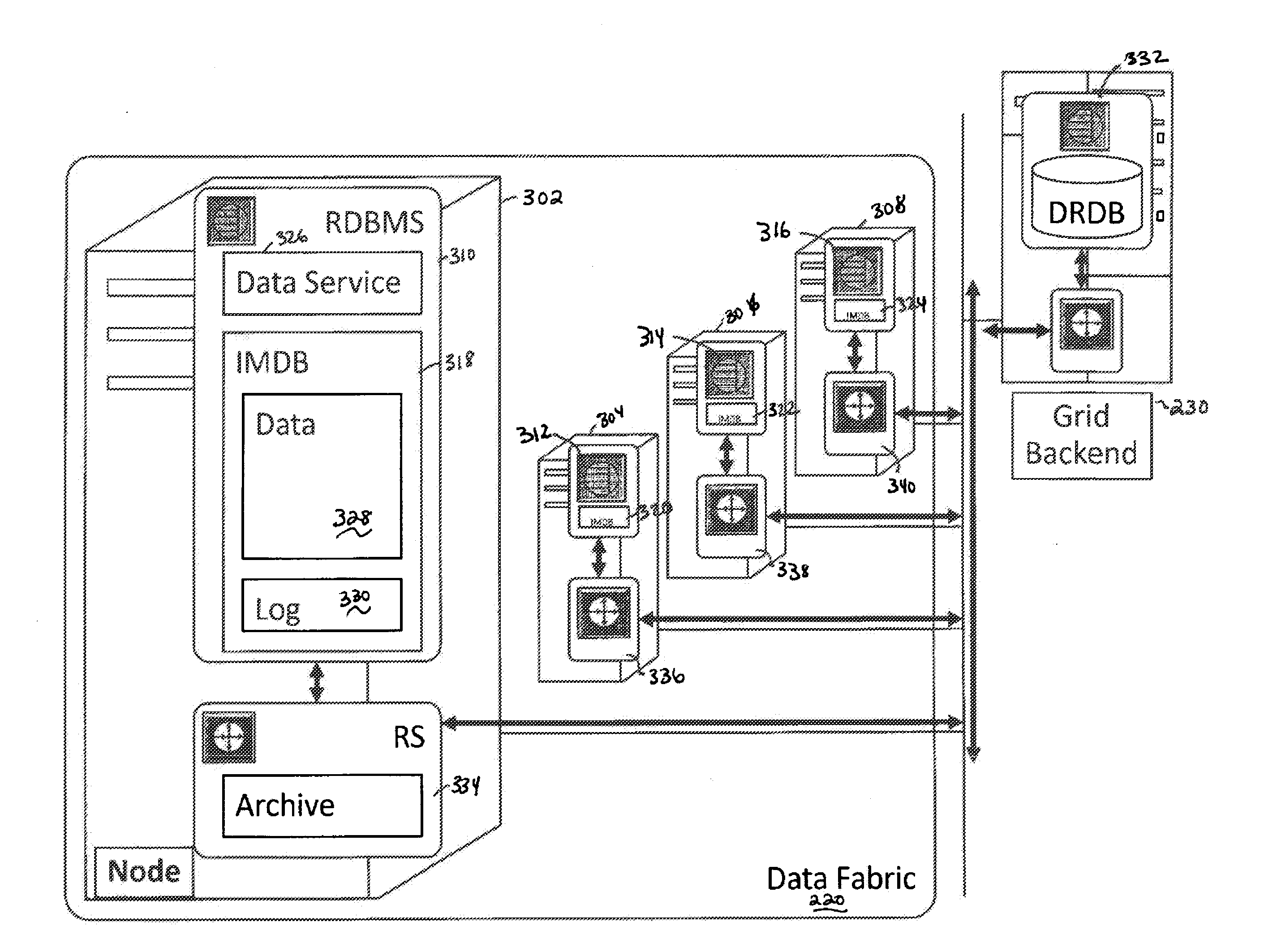

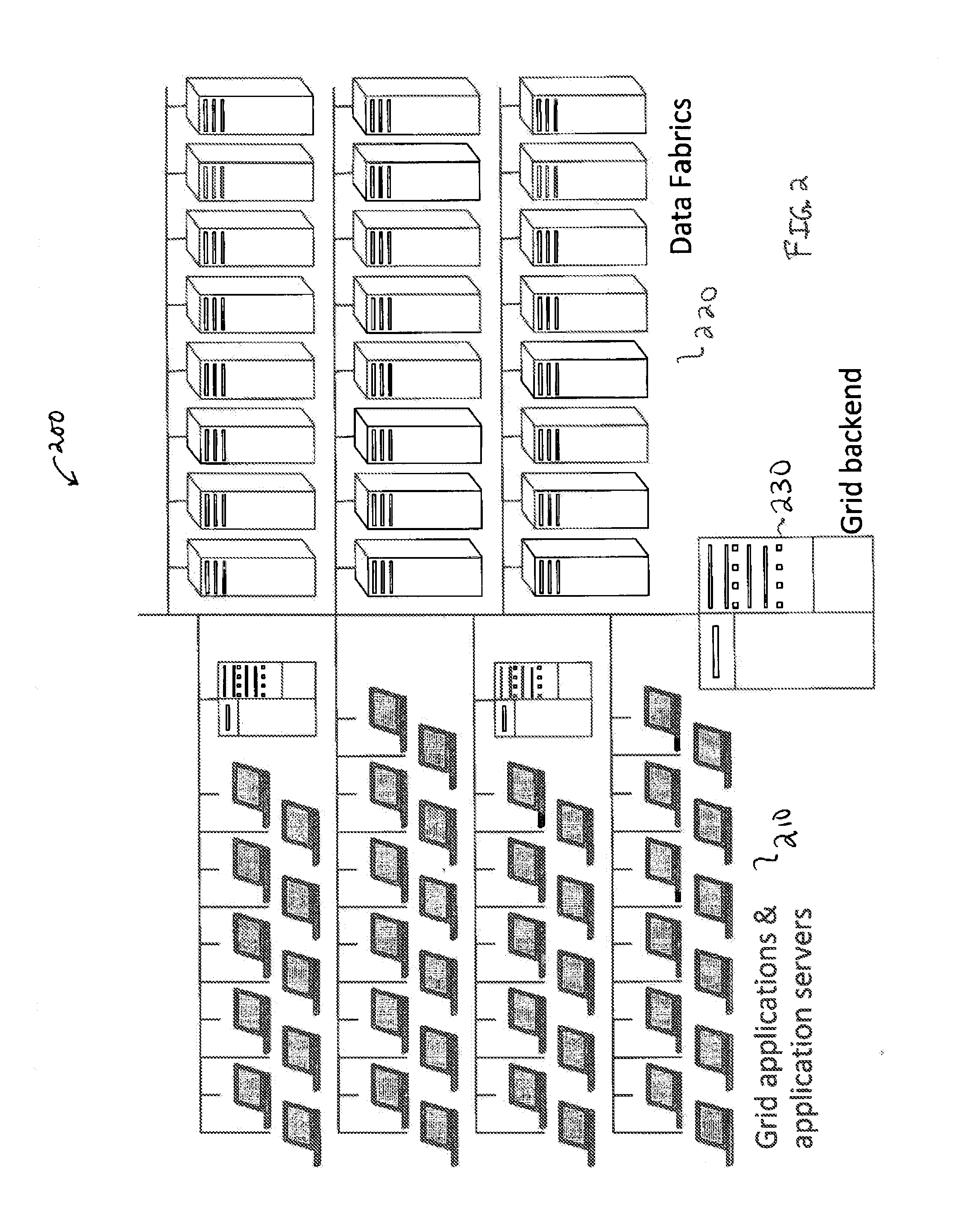

System, method, computer program product embodiments and combinations and sub-combinations thereof for a distributed data cache database architecture are provided. An embodiment includes providing a scalable distribution of in-memory database (IMDB) system nodes organized as one or more data fabrics. Further included is providing a plurality of data granularity types for storing data within the one or more data fabrics. Database executions are managed via the one or more data fabrics for a plurality of applications compatible with at least one data granularity type.

Owner:SYBASE INC

WEB-Based Task Management System and Method

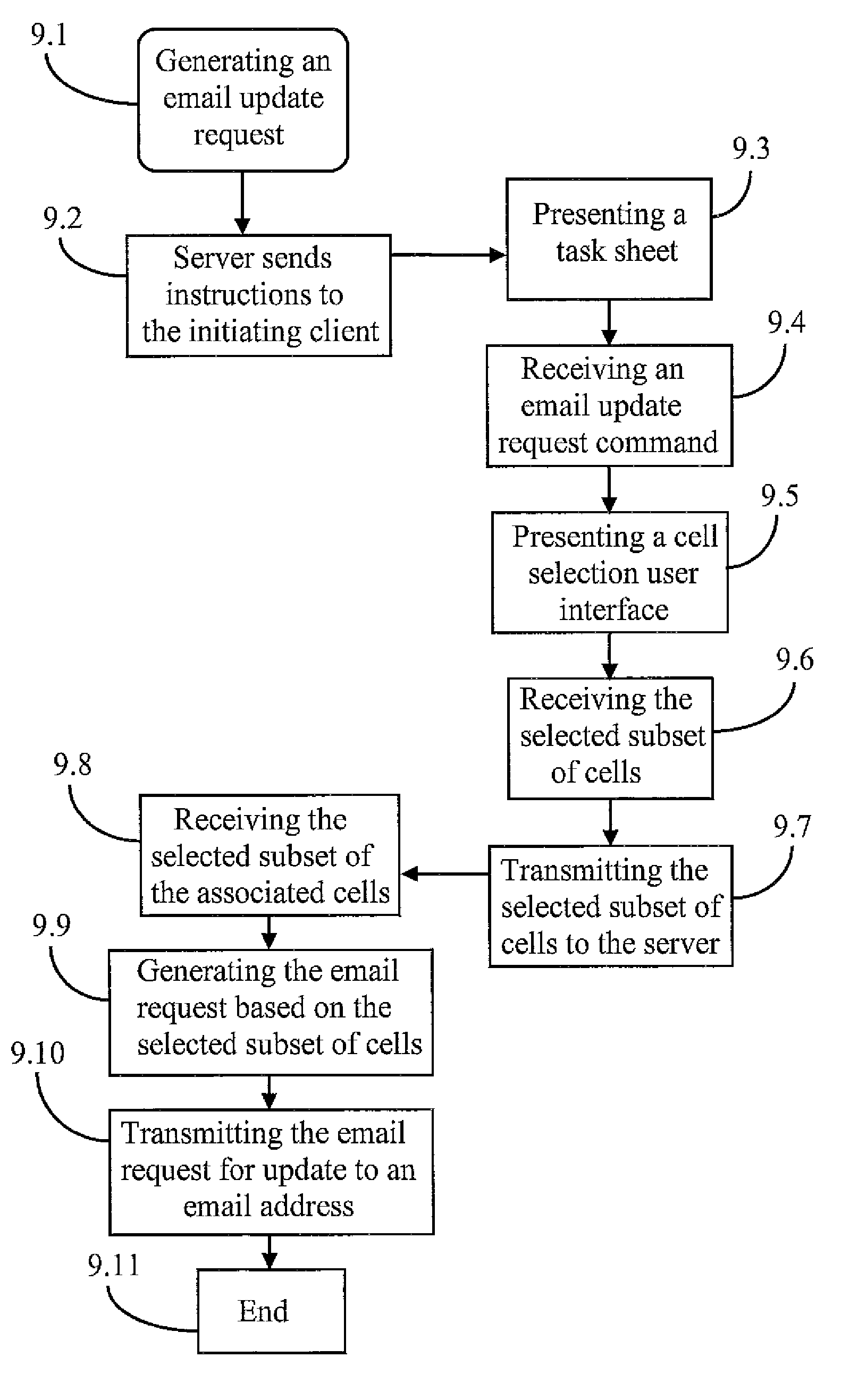

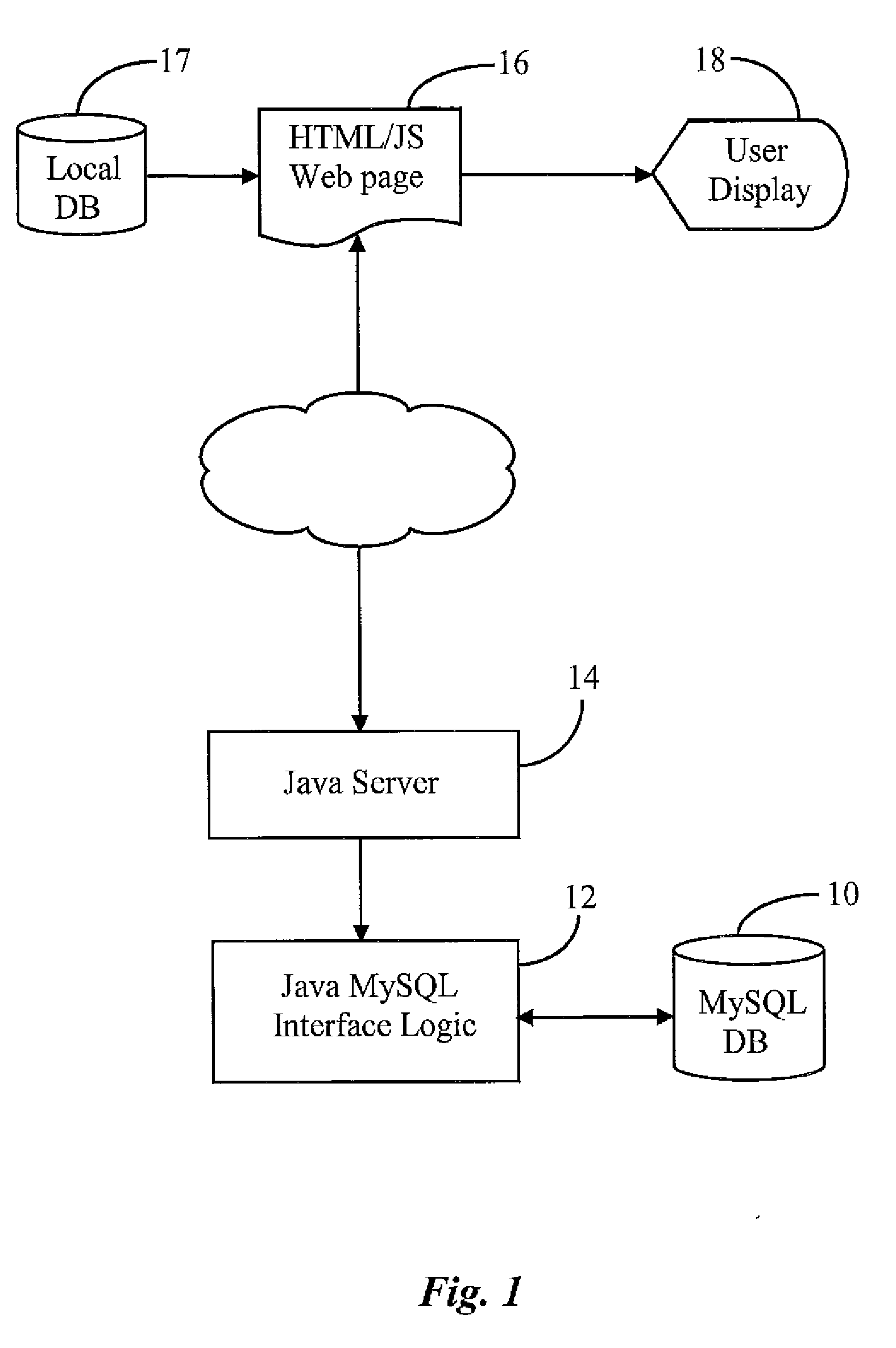

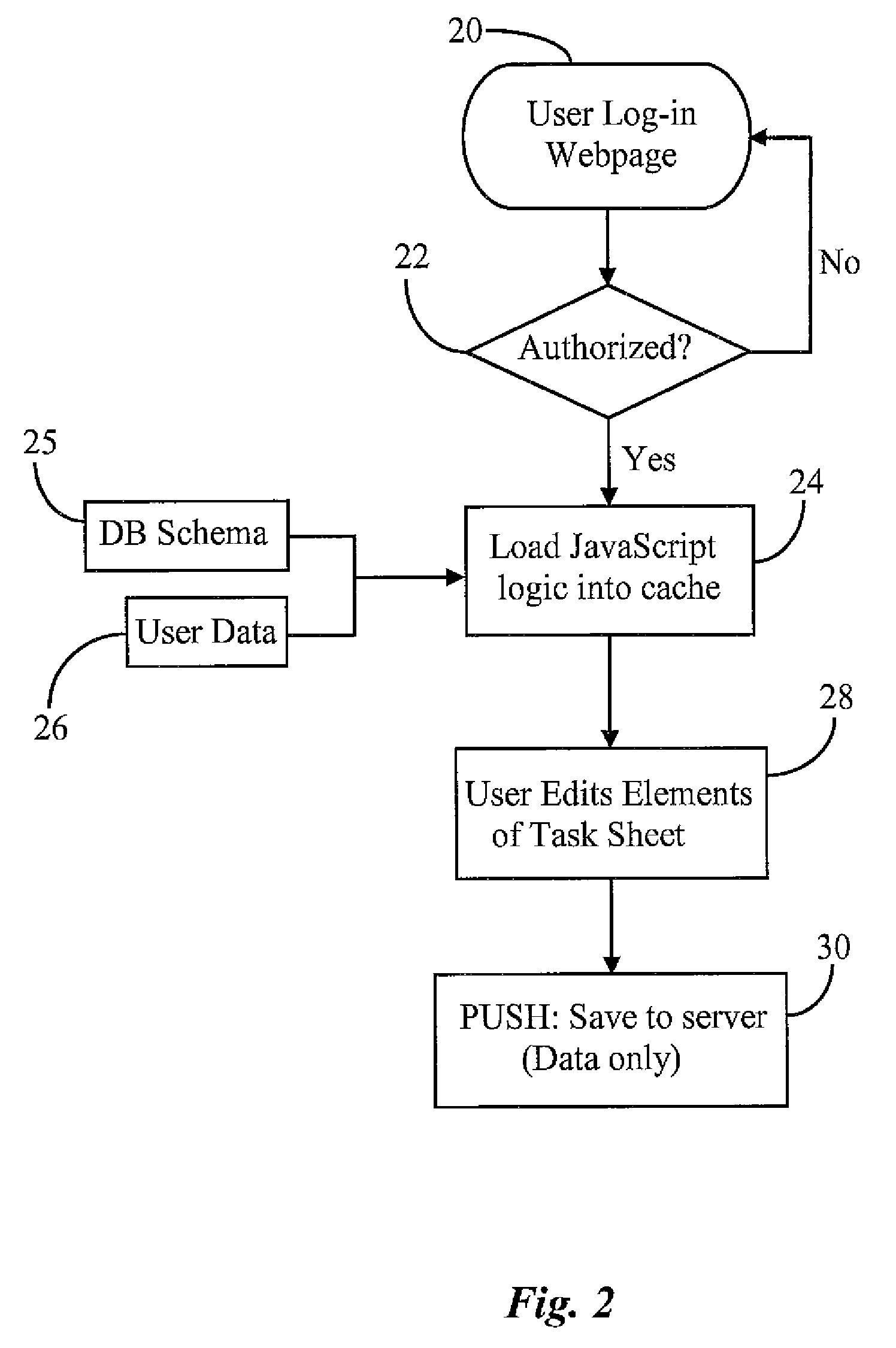

Inactive | US20080244582A1 | Interprogram communication | Data switching networks | In-memory database | Web browser

A task management system and method integrates rich functionality into a web-browser-based application. An efficient request for an update enables a user to quickly generate a completely customizable email message to the intended recipient(s). By introducing a client-side, in-memory database, the client component becomes less susceptible to network connectivity glitches and can redraw user interfaces without server interaction. The task management system and method also provides flexibility by enabling tasks to be grouped and organized. Specifically, a task may be associated with multiple task sheets and a task sheet may include multiple tasks, in a many-to-many manner. Templates may also be created that enable a user to start with a base template and to add (or remove) one or more columns. Further, the task management system allows multiple users to access and manipulate task data concurrently. In addition, the task management system provides a means for viewing the change history of task data within a task sheet by highlighting task data that has been changed by another user of the system.

Owner:SMARTSHEET INC

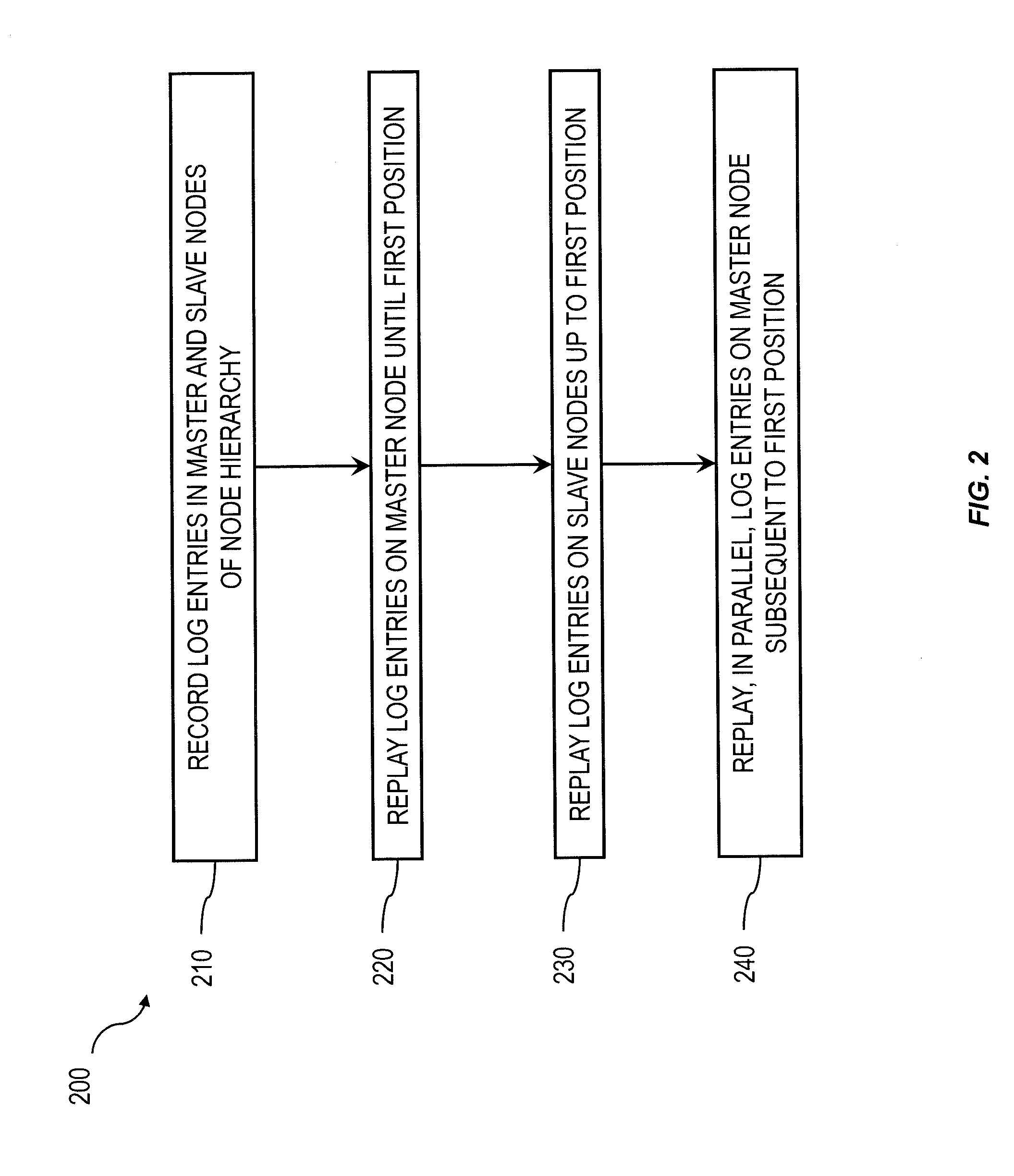

Distributed Database Log Recovery

Active | US20130117237A1 | Digital data processing details | Error detection/correction | In-memory database | Application software

Log entries are recorded in a data storage application (such as an in-memory database) for a plurality of transactions among nodes in a node hierarchy. The node hierarchy comprises a master node having a plurality of slave nodes. Thereafter, at least a portion of the master node's log entries are replayed until a first replay position is reached. Next, for each slave node, at least a portion of its respective log entries are replayed until the first replay position is reached (or an error occurs). Subsequently, the master node initiates replay of at least a portion of its log entries that follow the first replay position, in parallel with at least a portion of the replaying by the slave nodes. Related apparatus, systems, techniques and articles are also described.

Owner:SAP AG
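
The three replay phases can be sketched as follows. This is a minimal sketch, assuming each node's log is a list of `(position, key, value)` entries sorted by position, with positions comparable across nodes. Error handling is omitted. One thread per slave stands in for the separate nodes. `Node`, `replay_until`, and `recover` are hypothetical names.

```python
import threading
from typing import Dict, List, Optional, Tuple

Entry = Tuple[int, str, int]  # (log position, key, value), sorted by position


class Node:
    def __init__(self, name: str, log: List[Entry]) -> None:
        self.name = name
        self.log = log
        self.state: Dict[str, int] = {}
        self.cursor = 0  # index of the next log entry to replay

    def replay_until(self, stop_pos: Optional[int] = None) -> None:
        """Replay entries up to and including stop_pos (or to the end if None)."""
        while self.cursor < len(self.log):
            pos, key, value = self.log[self.cursor]
            if stop_pos is not None and pos > stop_pos:
                break
            self.state[key] = value
            self.cursor += 1


def recover(master: Node, slaves: List[Node], first_replay_pos: int) -> None:
    # 1. The master replays up to the first replay position.
    master.replay_until(first_replay_pos)
    # 2. Each slave replays its own log up to the same position...
    workers = [threading.Thread(target=s.replay_until, args=(first_replay_pos,))
               for s in slaves]
    for w in workers:
        w.start()
    # 3. ...while the master continues past that position in parallel.
    master.replay_until()
    for w in workers:
        w.join()


master = Node("master", [(1, "a", 1), (2, "b", 2), (5, "c", 3)])
slave = Node("slave1", [(1, "x", 1), (3, "y", 2), (6, "z", 3)])
recover(master, [slave], first_replay_pos=3)
print(master.state, slave.state)  # {'a': 1, 'b': 2, 'c': 3} {'x': 1, 'y': 2}
```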

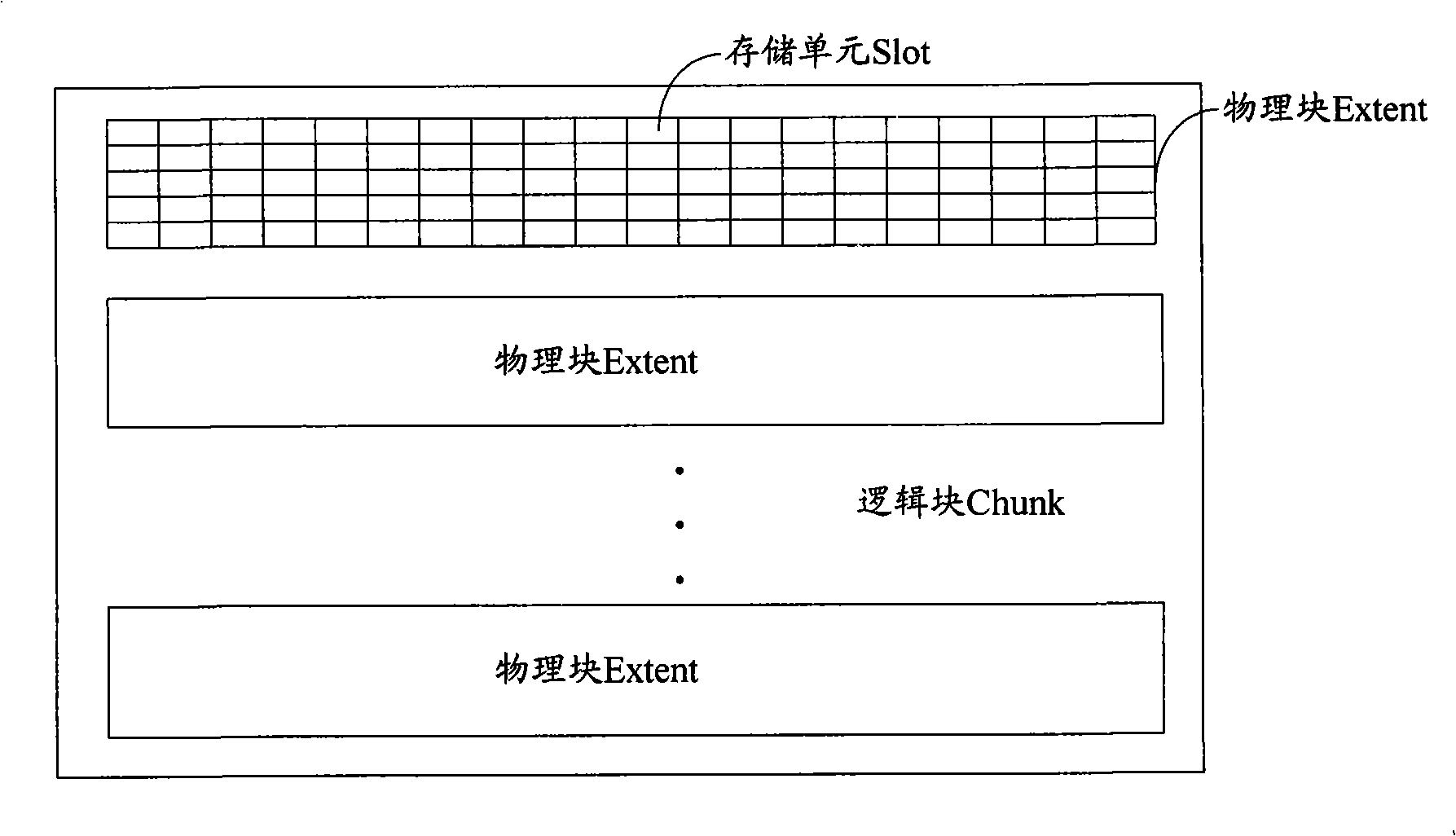

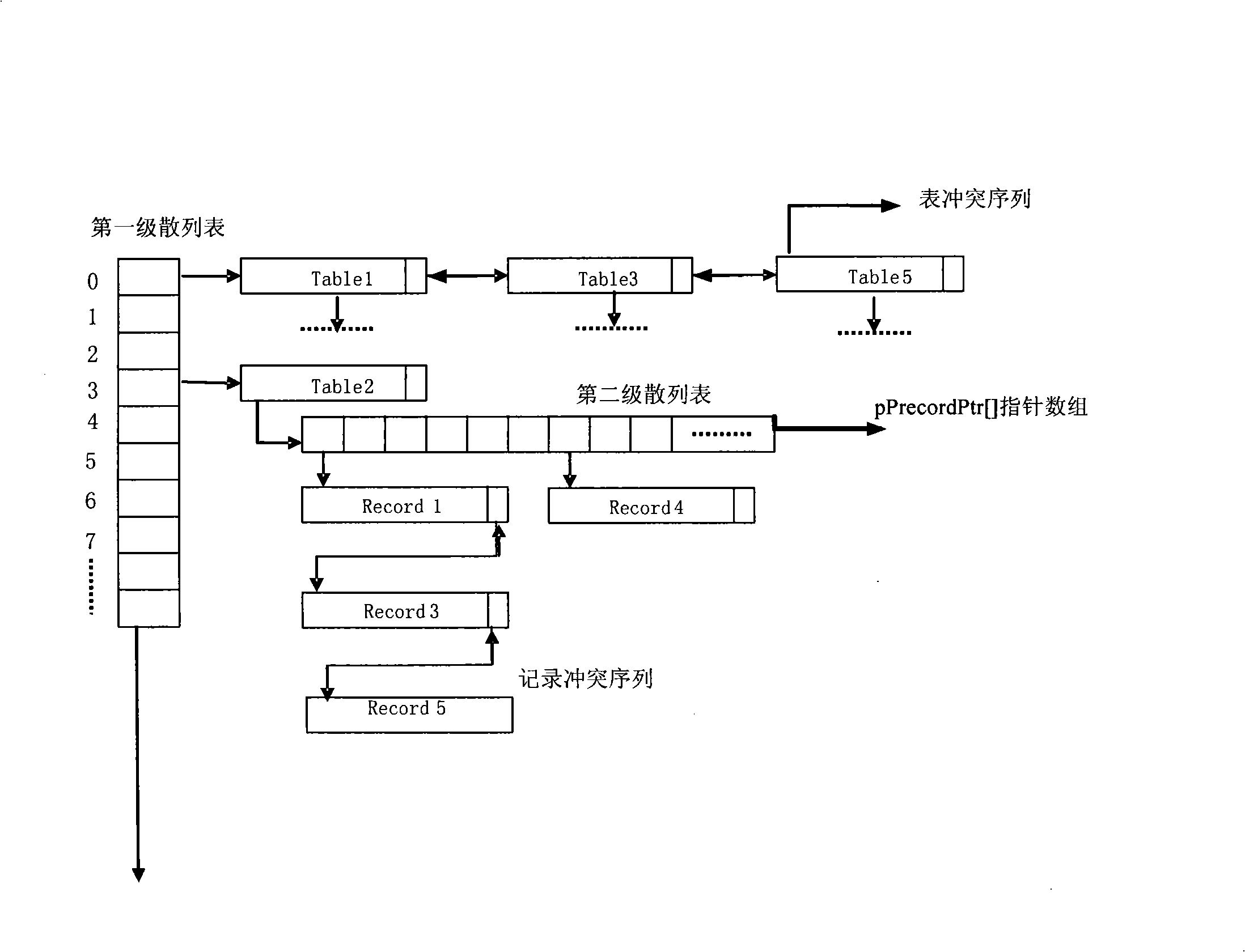

In-memory database system, and method and device for implementing an in-memory database

Inactive | CN101315628A | Reduce resource usage | Achieve loose coupling | Special data processing applications | Internal memory | Communication interface

The invention discloses an in-memory database system comprising a communication interface, an establishing device, a write operation device, a query operation device and a relief operation device. The establishing device is used to establish, in shared memory, a first storage area for the description information of the in-memory database, a second storage area for the index information used to locate table records, and a third storage area for storing the table records, and to store the description information of the in-memory database in the first storage area. The third storage area comprises storage units whose size matches the table records, each storage unit storing one table record of the database; storage units of the same size that are contiguous in physical space form a physical block, and a logical block is formed by linking one or more physical blocks of the same kind. The invention also discloses a method for establishing the in-memory database, and a method and device for establishing multiple indexes. The invention achieves loose coupling between the table structure and the data storage structure of the in-memory database, and allows the in-memory database to be established and managed flexibly.

Owner:HUAWEI TECH CO LTD
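
The third storage area (fixed-size storage units grouped into physical blocks, which are then chained into a logical block per table) can be sketched as follows. This is a minimal sketch under several assumptions. A plain `bytearray` stands in for shared memory. Each physical block keeps a stack of free unit indexes. The description and index areas are not modeled. `PhysicalBlock`, `LogicalBlock`, and the block size of two units are hypothetical choices for illustration.

```python
from typing import List, Tuple

Location = Tuple[int, int]  # (physical block index, unit index within the block)


class PhysicalBlock:
    """Contiguous run of equally sized storage units in one buffer."""

    def __init__(self, unit_size: int, units: int) -> None:
        self.buf = bytearray(unit_size * units)
        self.free: List[int] = list(range(units - 1, -1, -1))  # pop() yields 0 first


class LogicalBlock:
    """Chain of physical blocks whose units all match one table's record size."""

    def __init__(self, record_size: int, units_per_block: int = 4) -> None:
        self.record_size = record_size
        self.units_per_block = units_per_block
        self.blocks: List[PhysicalBlock] = []

    def insert(self, record: bytes) -> Location:
        if len(record) != self.record_size:
            raise ValueError("record does not match the unit size")
        for index, block in enumerate(self.blocks):
            if block.free:
                break
        else:  # no block has a free unit: link a new physical block
            block = PhysicalBlock(self.record_size, self.units_per_block)
            self.blocks.append(block)
            index = len(self.blocks) - 1
        unit = block.free.pop()
        start = unit * self.record_size
        block.buf[start:start + self.record_size] = record
        return index, unit

    def read(self, loc: Location) -> bytes:
        index, unit = loc
        start = unit * self.record_size
        return bytes(self.blocks[index].buf[start:start + self.record_size])

    def delete(self, loc: Location) -> None:
        index, unit = loc
        self.blocks[index].free.append(unit)  # the unit becomes reusable


table = LogicalBlock(record_size=8, units_per_block=2)
locs = [table.insert(f"rec{i:05d}".encode()) for i in range(3)]
print(locs)                       # [(0, 0), (0, 1), (1, 0)]
print(table.read(locs[2]))        # b'rec00002'
table.delete(locs[1])
print(table.insert(b"rec00009"))  # (0, 1): the freed unit is reused
```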

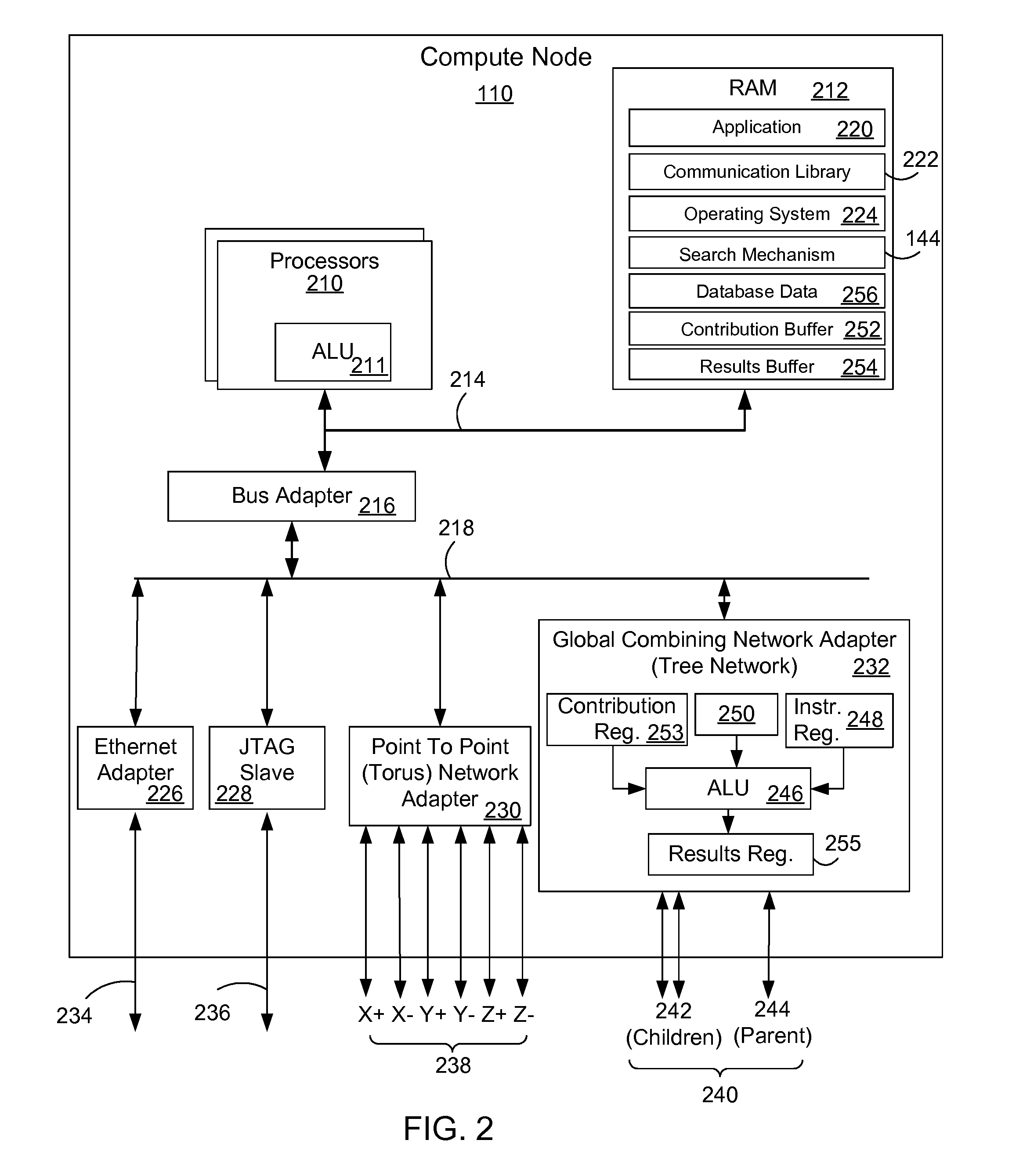

Database retrieval with a non-unique key on a parallel computer system

Inactive | US20090037377A1 | Efficiently and quickly search | Digital data information retrieval | Digital data processing details | In-memory database | Computerized system

An apparatus and method retrieves a database record from an in-memory database of a parallel computer system using a non-unique key. The parallel computer system performs a simultaneous search on each node of the computer system using the non-unique key and then utilizes a global combining network to combine the local results from the searches of each node to efficiently and quickly search the entire database.

Owner:IBM CORP
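
The search pattern (every node scans its own partition for the non-unique key, and the local hit lists are then combined) can be sketched as follows. This is a minimal sketch that substitutes a thread pool for the compute nodes and simple list concatenation for the hardware global combining network. The partitions, the `key` field, and the function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

Record = Dict[str, object]


def local_search(partition: List[Record], key: object) -> List[Record]:
    # Each node scans only its own slice of the in-memory database.
    return [rec for rec in partition if rec["key"] == key]


def global_search(partitions: List[List[Record]], key: object) -> List[Record]:
    # All nodes search simultaneously; the combine step concatenates the hits.
    with ThreadPoolExecutor(max_workers=max(1, len(partitions))) as pool:
        local_results = list(pool.map(local_search, partitions, [key] * len(partitions)))
    combined: List[Record] = []
    for hits in local_results:
        combined.extend(hits)
    return combined


partitions = [
    [{"key": "red", "id": 1}, {"key": "blue", "id": 2}],
    [{"key": "red", "id": 3}],
    [{"key": "green", "id": 4}, {"key": "red", "id": 5}],
]
print([r["id"] for r in global_search(partitions, "red")])  # [1, 3, 5]
```

Because the key is non-unique, every node must be searched even after a first match is found. This is why the work is spread across all nodes at once rather than stopping early.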

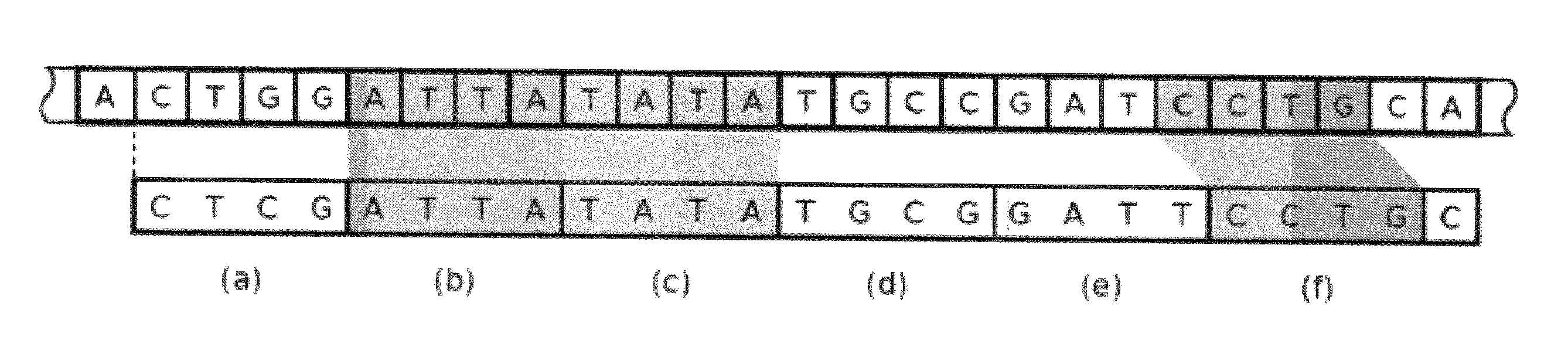

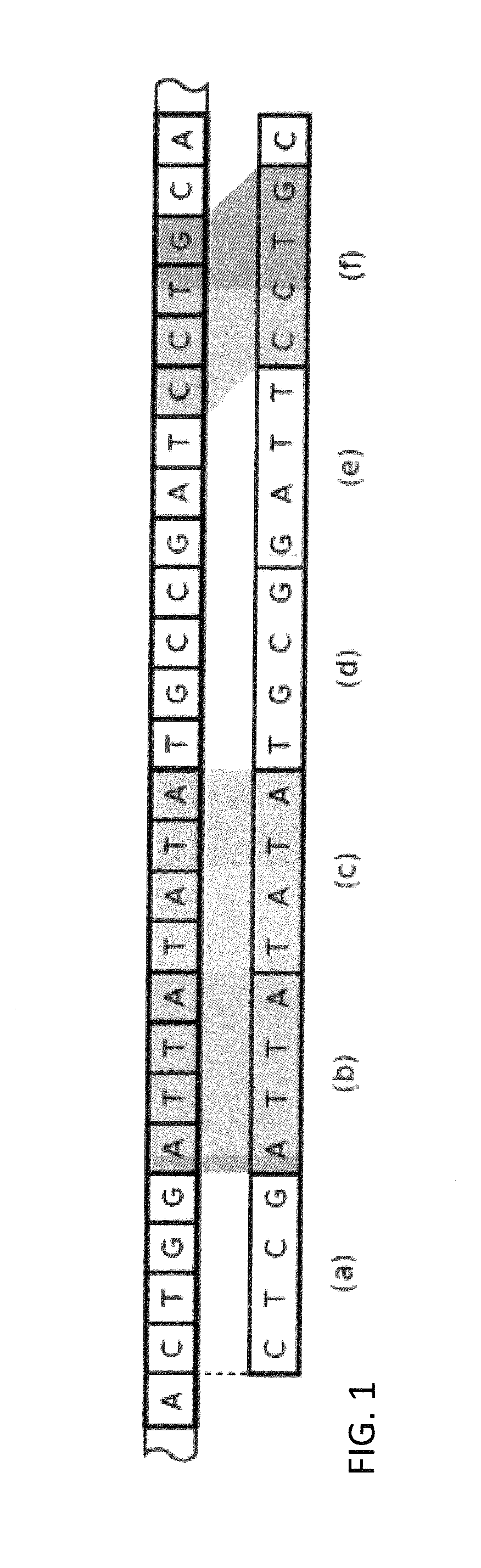

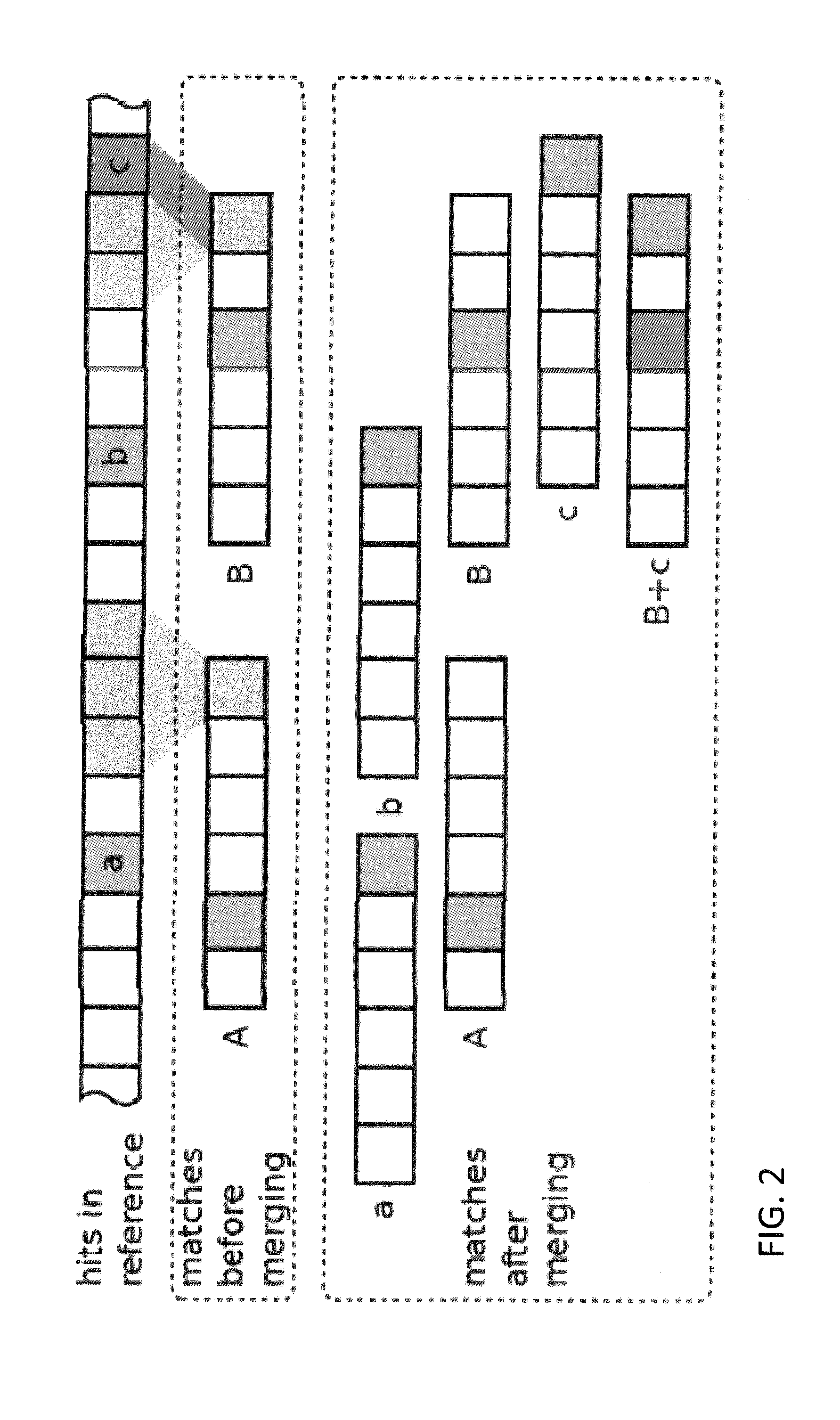

Efficient genomic read alignment in an in-memory database

Active | US20140214334A1 | Great processing time | Convenient time | Data visualisation | Biological testing | In-memory database | Algorithm

A high performance, low-cost, gapped read alignment algorithm is disclosed that produces high quality alignments of a complete human genome in a few minutes. Additionally, the algorithm is more than an order of magnitude faster than previous approaches using a low-cost workstation. The results are obtained via careful algorithm engineering of the seeding based approach. The use of non-hashed seeds in combination with techniques from search engine ranking achieves fast cache-efficient processing. The algorithm can also be efficiently parallelized. Integration into an in-memory database infrastructure (IMDB) leads to low overhead for data management and further analysis.

Owner:HASSO PLATTNER INSTITUT FUR SOFTWARESYSTTECHN

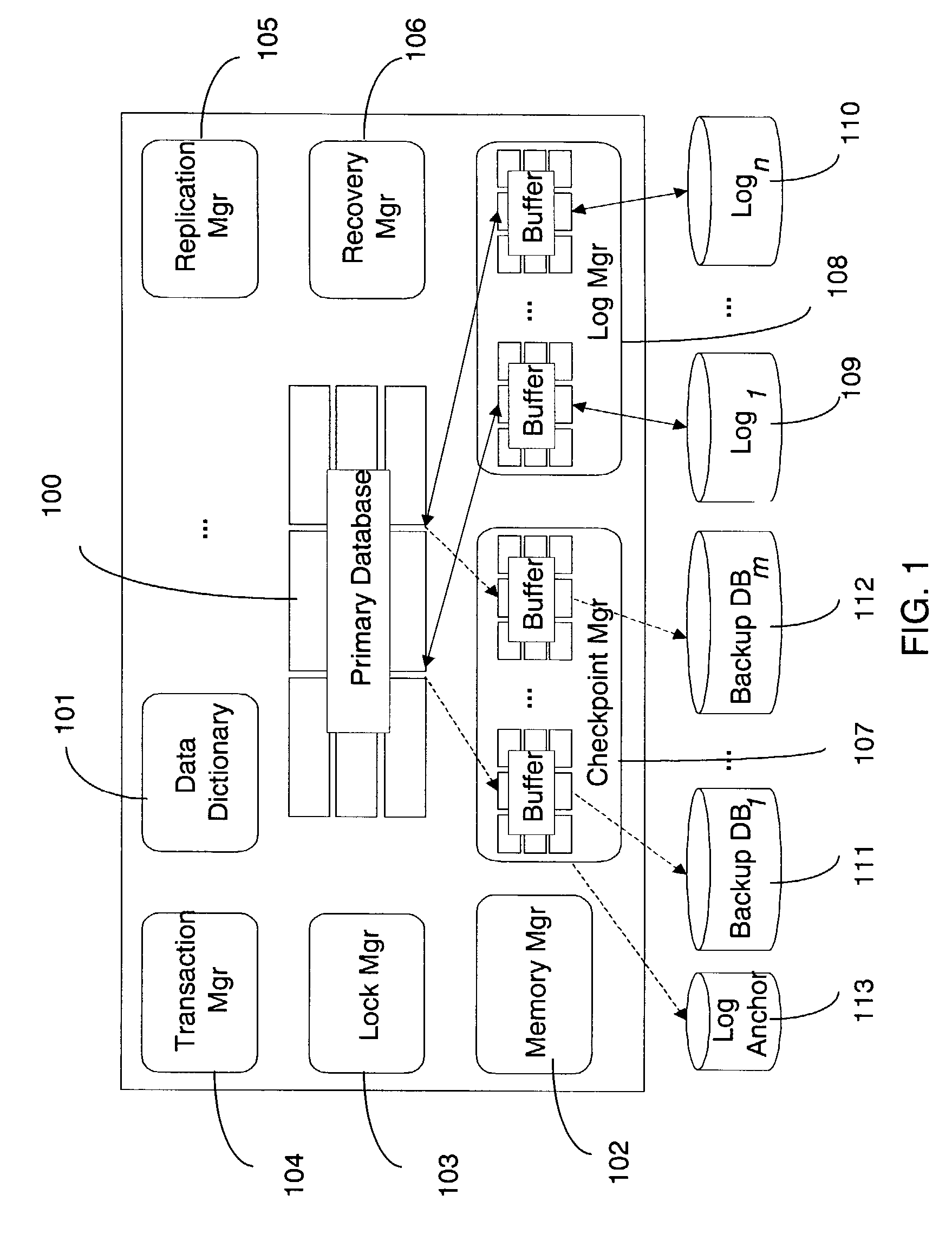

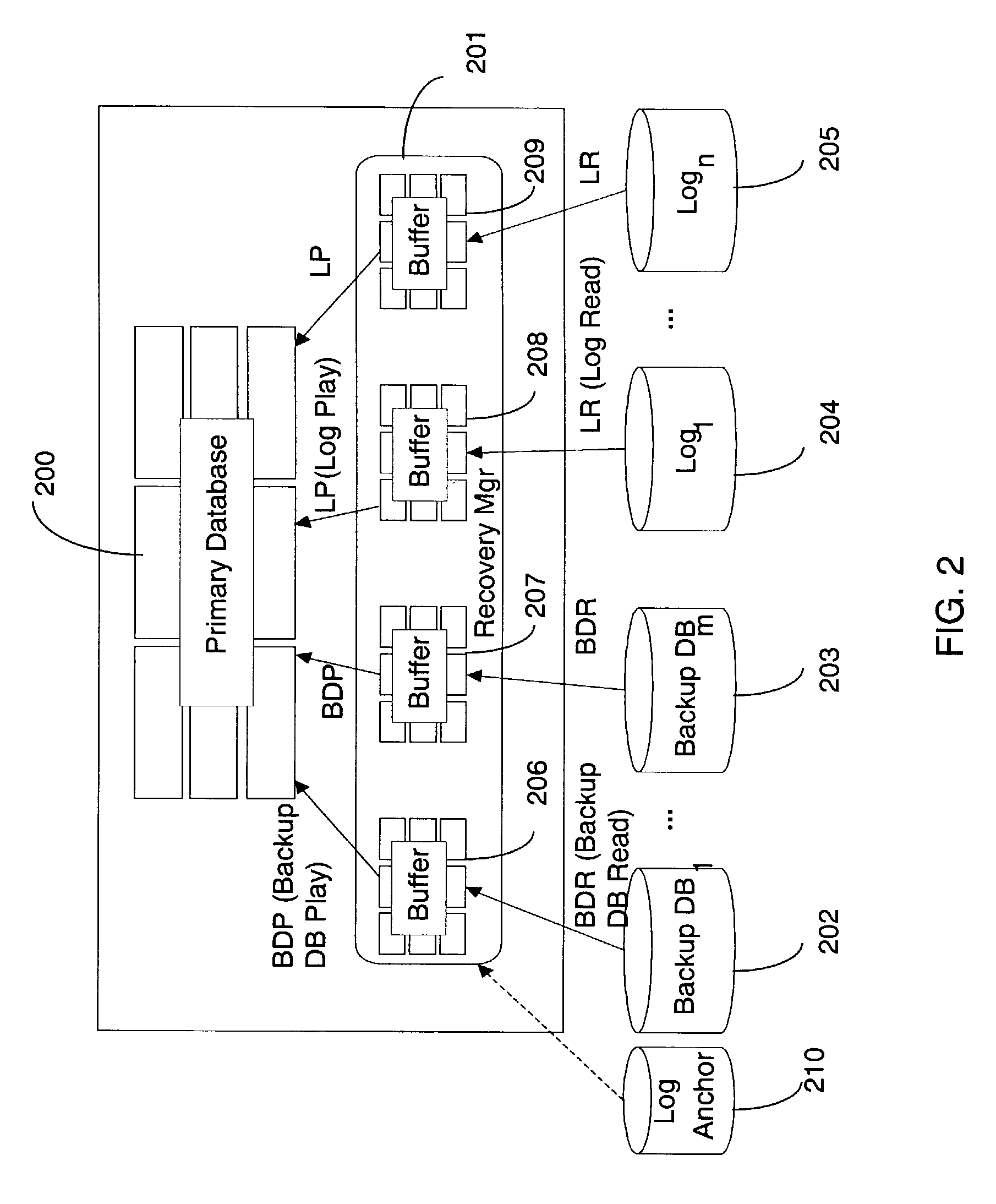

Managing data backup of an in-memory database in a database management system

Active | US8433684B2 | Digital data processing details | Error detection/correction | In-memory database | Database server

System, method, computer program product embodiments and combinations and sub-combinations thereof for backing up an in-memory database are provided. In an embodiment, a backup server is provided to perform backup operations of a database on behalf of a database server. A determination is made as to whether the database is an in-memory database. When it is, database server connections are used for data access during the backup operations.

Owner:SYBASE INC

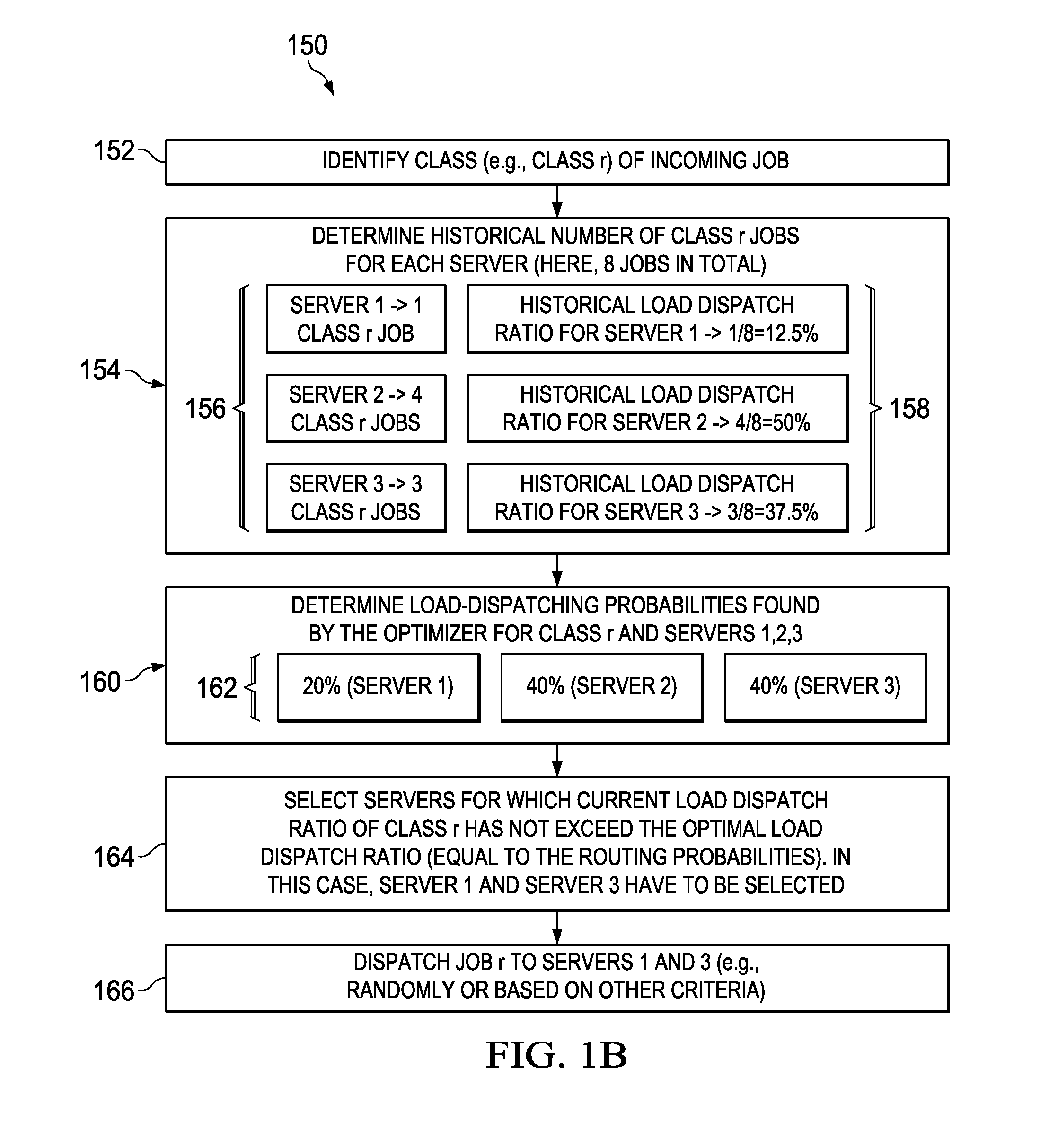

Optimizing workloads in a workload placement system

Inactive | US20160328273A1 | Reduce in quantity | Reducing total cost of ownership (TCO) | Ultrasonic/sonic/infrasonic diagnostics | Program initiation/switching | In-memory database | Parallel computing

The disclosure generally describes computer-implemented methods, software, and systems, including a method for creating an optimization solution and incorporating it into a workload placement system. An optimization model is defined for a workload placement system. The optimization model includes information for optimizing workflows and resource usage for in-memory database clusters. Parameters are identified for the optimization model. Using the identified parameters, an optimization solution is created for optimizing the placement of workloads in the workload placement system. The creation uses a multi-start approach that includes plural initial conditions. The created optimization solution is refined using at least the multi-start approach. The optimization solution is incorporated into the workload placement system.

Owner:SAP AG
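
A multi-start approach runs a local search from several random initial conditions and keeps the best result, because a single local search can get stuck in a local optimum. The following is a minimal sketch of that idea applied to workload placement. The objective (the sum of squared node loads, which rewards balanced placements), the single-move local search, and all function names are assumptions for illustration. The patent's actual optimization model is not described in the abstract.

```python
import random
from typing import List, Optional, Tuple


def cost(assign: List[int], sizes: List[int], n_nodes: int) -> int:
    # Hypothetical objective: sum of squared node loads (lower = more balanced).
    loads = [0] * n_nodes
    for workload, node in enumerate(assign):
        loads[node] += sizes[workload]
    return sum(load * load for load in loads)


def local_search(assign: List[int], sizes: List[int], n_nodes: int) -> Tuple[List[int], int]:
    assign = list(assign)
    best = cost(assign, sizes, n_nodes)
    improved = True
    while improved:  # stop at a local optimum: no single move helps
        improved = False
        for w in range(len(assign)):
            for node in range(n_nodes):
                if node == assign[w]:
                    continue
                previous = assign[w]
                assign[w] = node
                candidate = cost(assign, sizes, n_nodes)
                if candidate < best:
                    best, improved = candidate, True
                else:
                    assign[w] = previous
    return assign, best


def multi_start(sizes: List[int], n_nodes: int, starts: int = 10,
                seed: int = 0) -> Tuple[Optional[List[int]], Optional[int]]:
    rng = random.Random(seed)
    best_assign, best_cost = None, None
    for _ in range(starts):
        initial = [rng.randrange(n_nodes) for _ in sizes]  # random initial condition
        assign, c = local_search(initial, sizes, n_nodes)
        if best_cost is None or c < best_cost:
            best_assign, best_cost = assign, c
    return best_assign, best_cost


sizes = [5, 4, 3, 3, 2, 1]
placement, score = multi_start(sizes, n_nodes=2)
print(placement, score)
```

With these sizes, single moves can stall at node loads of 10 and 8 (cost 164), even though a 9/9 split (cost 162) exists. Restarting from several initial conditions gives the search more chances to reach the better optimum.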

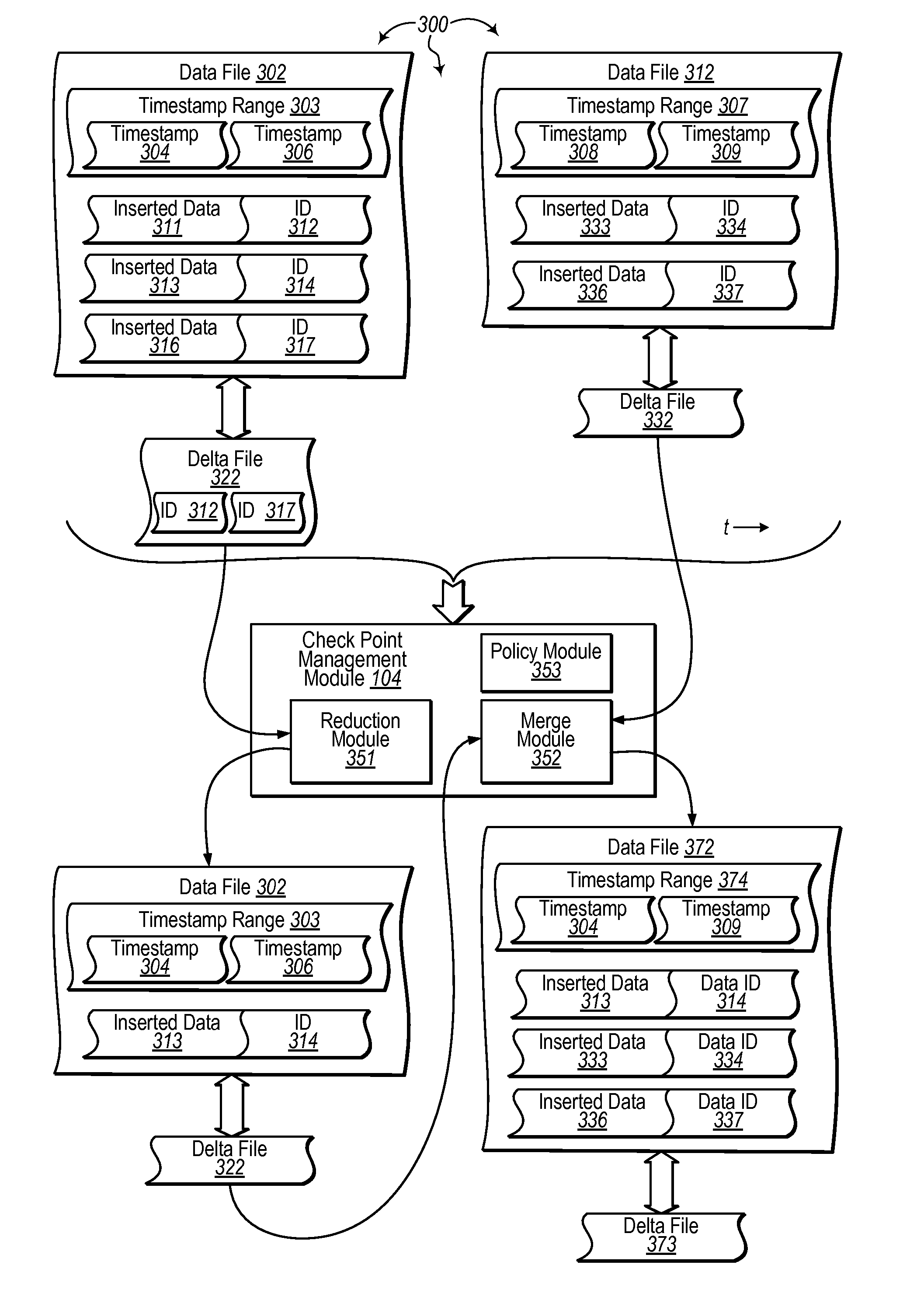

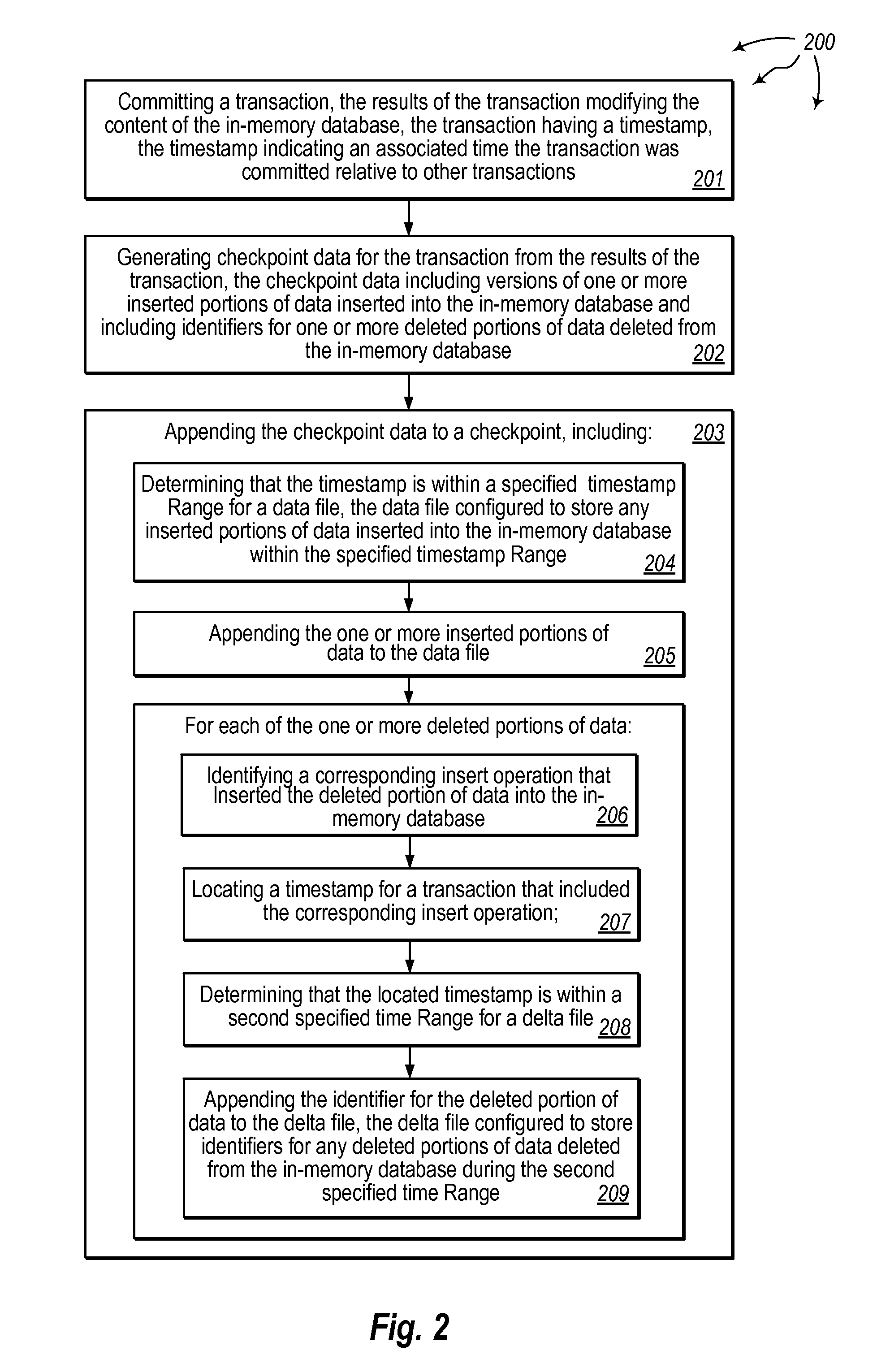

Main-memory database checkpointing

Active | US20140172803A1 | Well formed | Digital data information retrieval | Digital data processing details | In-memory database | Transaction log

The present invention extends to methods, systems, and computer program products for main-memory database checkpointing. Embodiments of the invention use a transaction log as an interface between online threads and a checkpoint subsystem. Using the transaction log as an interface reduces synchronization overhead between threads and the checkpoint subsystem. Transactions can be assigned to files and storage space can be reserved in a lock-free manner to reduce the overhead of checkpointing online transactions. Meta-data independent data files and delta files can be collapsed and merged to reduce storage overhead. Checkpoints can be updated incrementally, so that only the changes made since the last checkpoint (not all data) are flushed to disk. Checkpoint I/O is sequential, which helps ensure higher performance of the physical I/O layers. During recovery, checkpoint files can be loaded into memory in parallel for multiple devices.

Owner:MICROSOFT TECH LICENSING LLC
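
The following is a minimal sketch of incremental checkpointing driven by the transaction log, assuming a very simple file model. Each checkpoint reads only the log records written since the previous one. It emits a "data file" of inserted or updated rows and a "delta file" of row ids whose older versions must be hidden. A dict and a set stand in for the on-disk files. The class `CheckpointedTable`, its methods, and this exact data/delta format are hypothetical illustrations, not the patented file layout.

```python
from typing import Dict, List, Optional, Set, Tuple

LogRecord = Tuple[str, int, Optional[str]]  # ("put" | "del", row id, value)


class CheckpointedTable:
    def __init__(self) -> None:
        self.rows: Dict[int, str] = {}   # the in-memory table itself
        self.log: List[LogRecord] = []   # transaction log written by online work
        self.checkpointed_upto = 0       # log index already covered by checkpoints
        # Each checkpoint is a (data file, delta file) pair; dicts/sets stand in for files.
        self.checkpoints: List[Tuple[Dict[int, str], Set[int]]] = []

    def put(self, rid: int, value: str) -> None:
        self.rows[rid] = value
        self.log.append(("put", rid, value))

    def delete(self, rid: int) -> None:
        self.rows.pop(rid, None)
        self.log.append(("del", rid, None))

    def checkpoint(self) -> None:
        """Incremental: read only log records written since the last checkpoint."""
        data: Dict[int, str] = {}
        delta: Set[int] = set()
        for op, rid, value in self.log[self.checkpointed_upto:]:
            if op == "put":
                data[rid] = value
            else:
                data.pop(rid, None)
                delta.add(rid)  # hides older versions in earlier data files
        self.checkpoints.append((data, delta))
        self.checkpointed_upto = len(self.log)

    def _load_checkpoints(self) -> Dict[int, str]:
        rows: Dict[int, str] = {}
        for data, delta in self.checkpoints:
            for rid in delta:
                rows.pop(rid, None)
            rows.update(data)
        return rows

    def merge_checkpoints(self) -> None:
        # Collapse all pairs into one data file with an empty delta file.
        self.checkpoints = [(self._load_checkpoints(), set())]

    def recover(self) -> Dict[int, str]:
        # Load the checkpoints, then replay the log tail written after the last one.
        rows = self._load_checkpoints()
        for op, rid, value in self.log[self.checkpointed_upto:]:
            if op == "put":
                rows[rid] = value
            else:
                rows.pop(rid, None)
        return rows


t = CheckpointedTable()
t.put(1, "a"); t.put(2, "b"); t.checkpoint()
t.put(2, "b2"); t.delete(1); t.checkpoint()
t.put(3, "c")                 # not yet checkpointed
print(t.checkpoints[1])       # ({2: 'b2'}, {1})
print(t.recover() == t.rows)  # True
```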

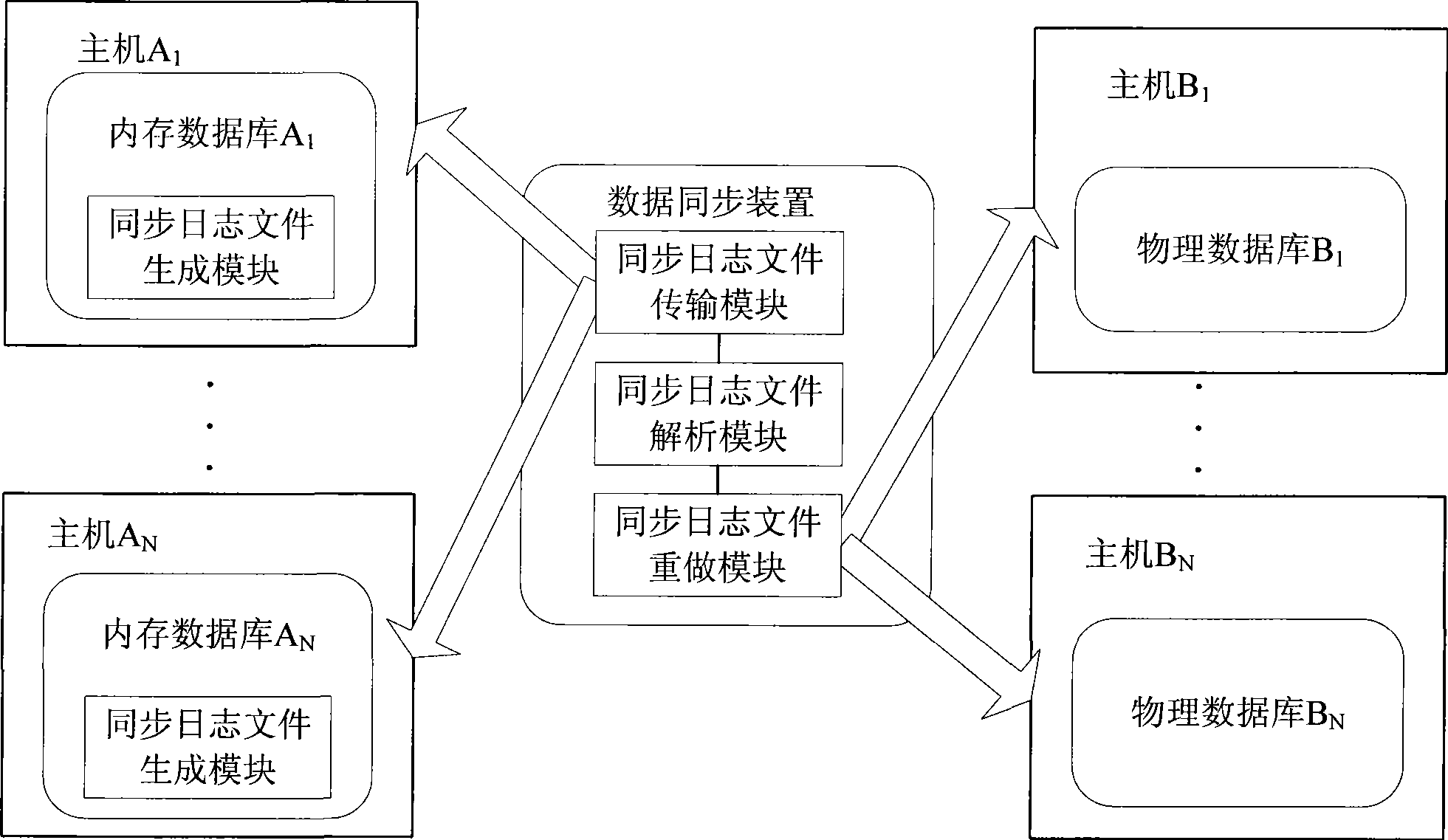

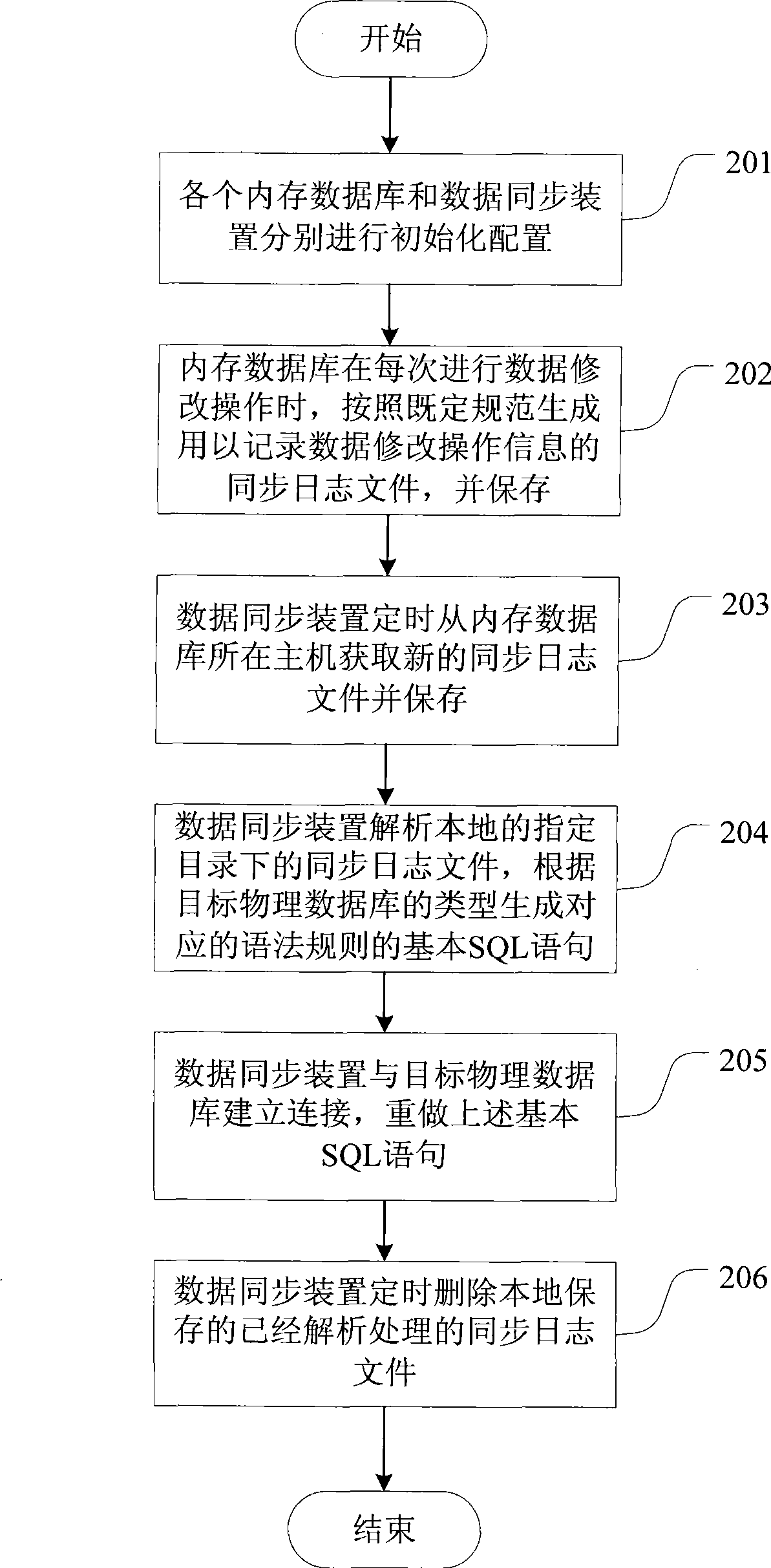

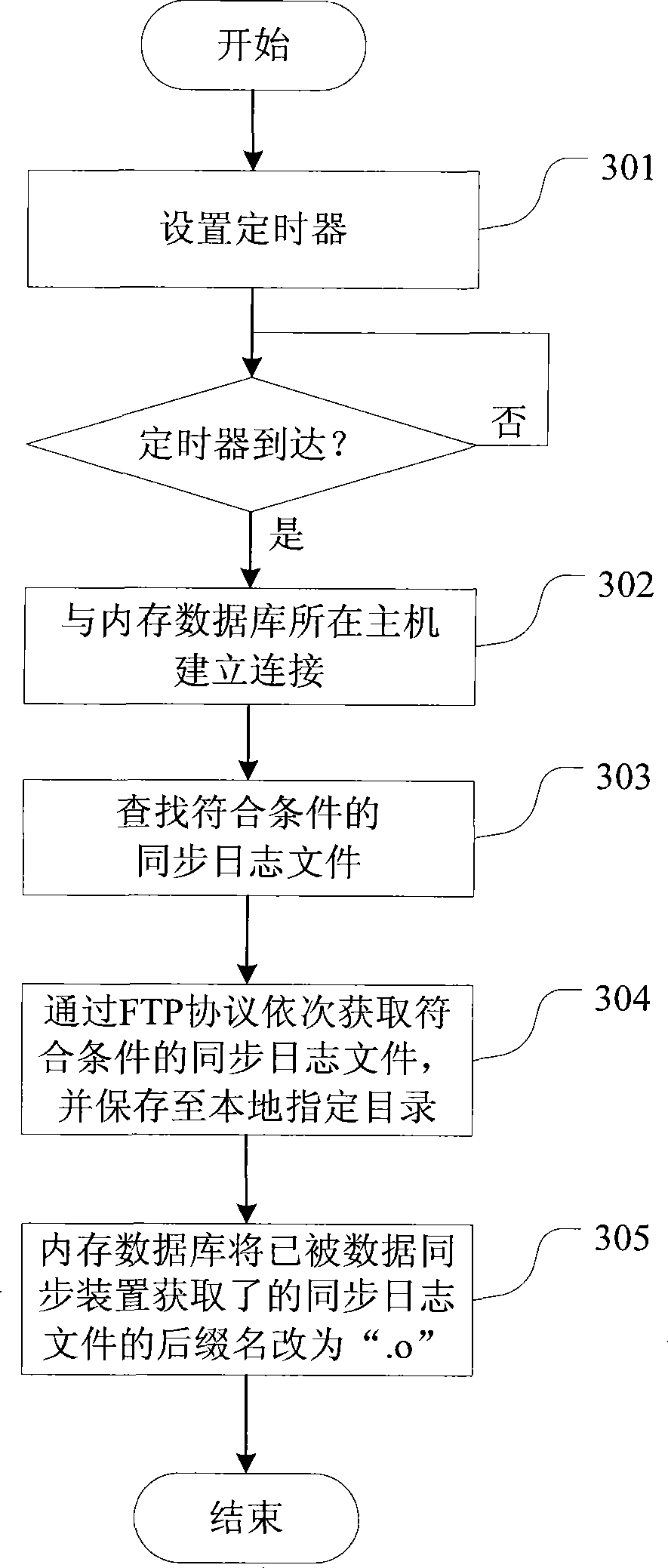

Data synchronization method and system between an in-memory database and a physical database

Inactive | CN101369283A | Solve data synchronization | Fix security issues | Special data processing applications | Data synchronization | In-memory database

The invention relates to a method and system for synchronizing data between a storage (in-memory) database and a physical database; the system comprises the storage database, the physical database and a data synchronization unit. In the method, when the storage database performs a data modification, it generates, according to established criteria, a synchronization log file recording the data modification operations, and stores the file on the host computer of the storage database. The data synchronization unit promptly obtains newly generated synchronization log files from that host and stores them locally in an established list. It then parses each file, transforms it into basic SQL statements that match the syntax rules of the target physical database, connects to the target physical database, and executes those statements. The invention not only ensures real-time synchronization but also effectively controls the volume of synchronized data and speeds up synchronization, and it can be used on a variety of operating system platforms.

Owner:ZTE CORP
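
The parse-and-translate step performed by the data synchronization unit can be sketched as follows. This is a minimal sketch with several assumptions. The sync log is JSON lines with `op`, `table`, and `row` fields. Every table has an `id` primary key. An in-memory SQLite connection stands in for the target physical database, purely so the example is self-contained. The log format and the functions `to_sql` and `apply_sync_log` are hypothetical. Table and column names are interpolated into the SQL, so they must come from a trusted log.

```python
import json
import sqlite3
from typing import Any, List, Tuple


def to_sql(entry: dict) -> Tuple[str, List[Any]]:
    """Translate one sync-log entry into a parameterized SQL statement."""
    op, table, row = entry["op"], entry["table"], entry["row"]
    if op == "insert":
        cols = list(row)
        placeholders = ", ".join(["?"] * len(cols))
        return (f"INSERT INTO {table} ({', '.join(cols)}) VALUES ({placeholders})",
                [row[c] for c in cols])
    if op == "update":
        cols = [c for c in row if c != "id"]
        assignments = ", ".join(f"{c} = ?" for c in cols)
        return (f"UPDATE {table} SET {assignments} WHERE id = ?",
                [row[c] for c in cols] + [row["id"]])
    if op == "delete":
        return f"DELETE FROM {table} WHERE id = ?", [row["id"]]
    raise ValueError(f"unknown operation: {op}")


def apply_sync_log(conn: sqlite3.Connection, log_text: str) -> int:
    """Parse a JSON-lines sync log and replay it in one transaction."""
    statements = [to_sql(json.loads(line)) for line in log_text.splitlines() if line.strip()]
    with conn:  # commits on success, rolls back if any statement fails
        for sql, params in statements:
            conn.execute(sql, params)
    return len(statements)


target = sqlite3.connect(":memory:")  # stand-in for the physical database
target.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
log_text = "\n".join([
    '{"op": "insert", "table": "users", "row": {"id": 1, "name": "Li"}}',
    '{"op": "insert", "table": "users", "row": {"id": 2, "name": "Wang"}}',
    '{"op": "update", "table": "users", "row": {"id": 1, "name": "Zhang"}}',
    '{"op": "delete", "table": "users", "row": {"id": 2}}',
])
print(apply_sync_log(target, log_text))                  # 4
print(target.execute("SELECT * FROM users").fetchall())  # [(1, 'Zhang')]
```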

System and method for processing data records in a mediation system

Inactive | US20090030943A1 | Fast execution | Reliable execution | Transmission | Special data processing applications | In-memory database | Database interface

A mediation method and system utilizes a subsystem (300) for processing event records that at least potentially have a mutual relation. The subsystem comprises an in-memory database (410) capable of storing data, database interface layer (310) providing an interface to the database, a basic functionality layer (320) comprising at least one module capable of performing basic functions, and a mediation functionality layer (330, 340) comprising at least two modules each capable of performing at least one mediation function on the data in the in-memory database via the modules at the database interface layer and/or basic functionality layer.

Owner:COMPTEL CORP

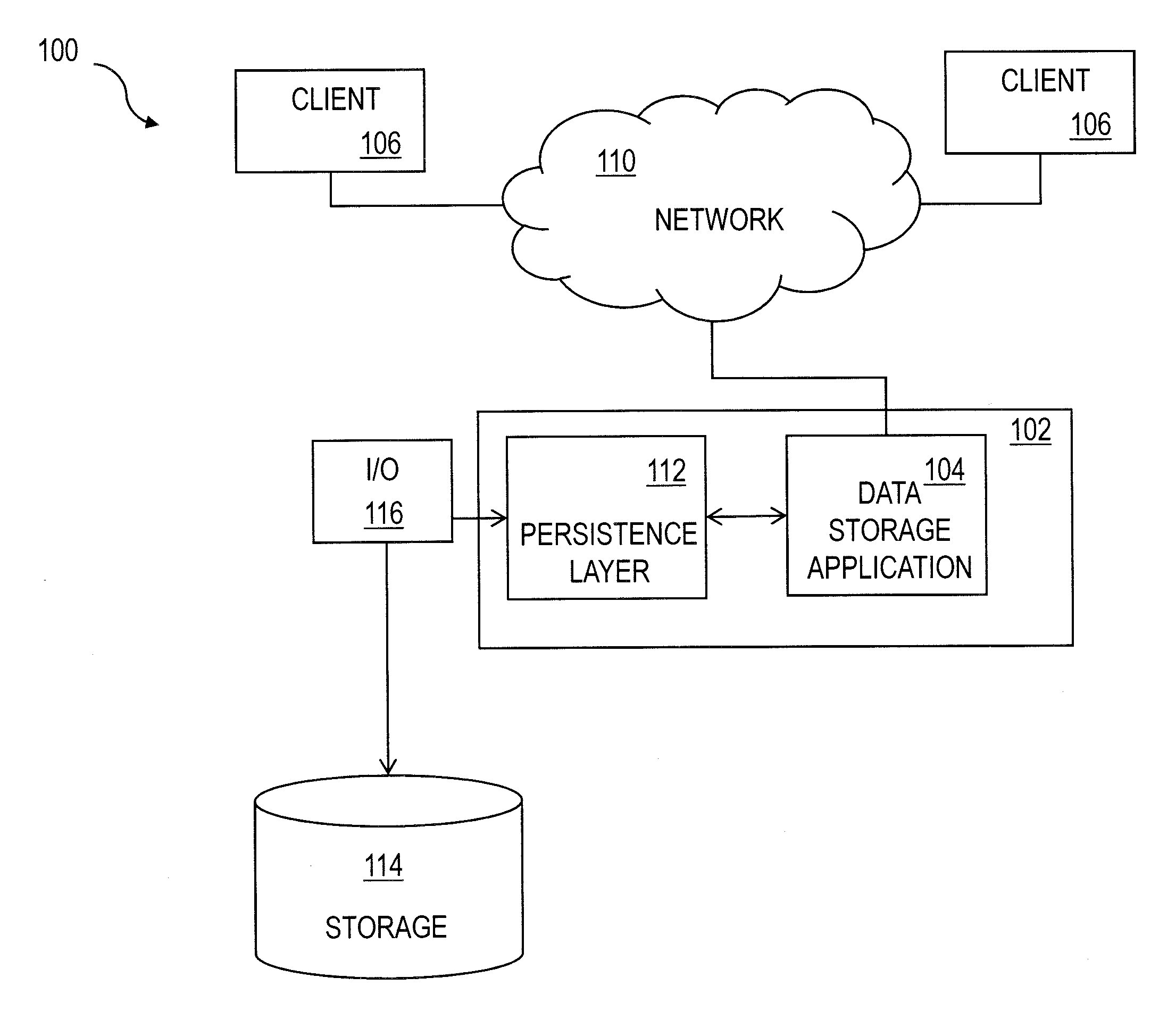

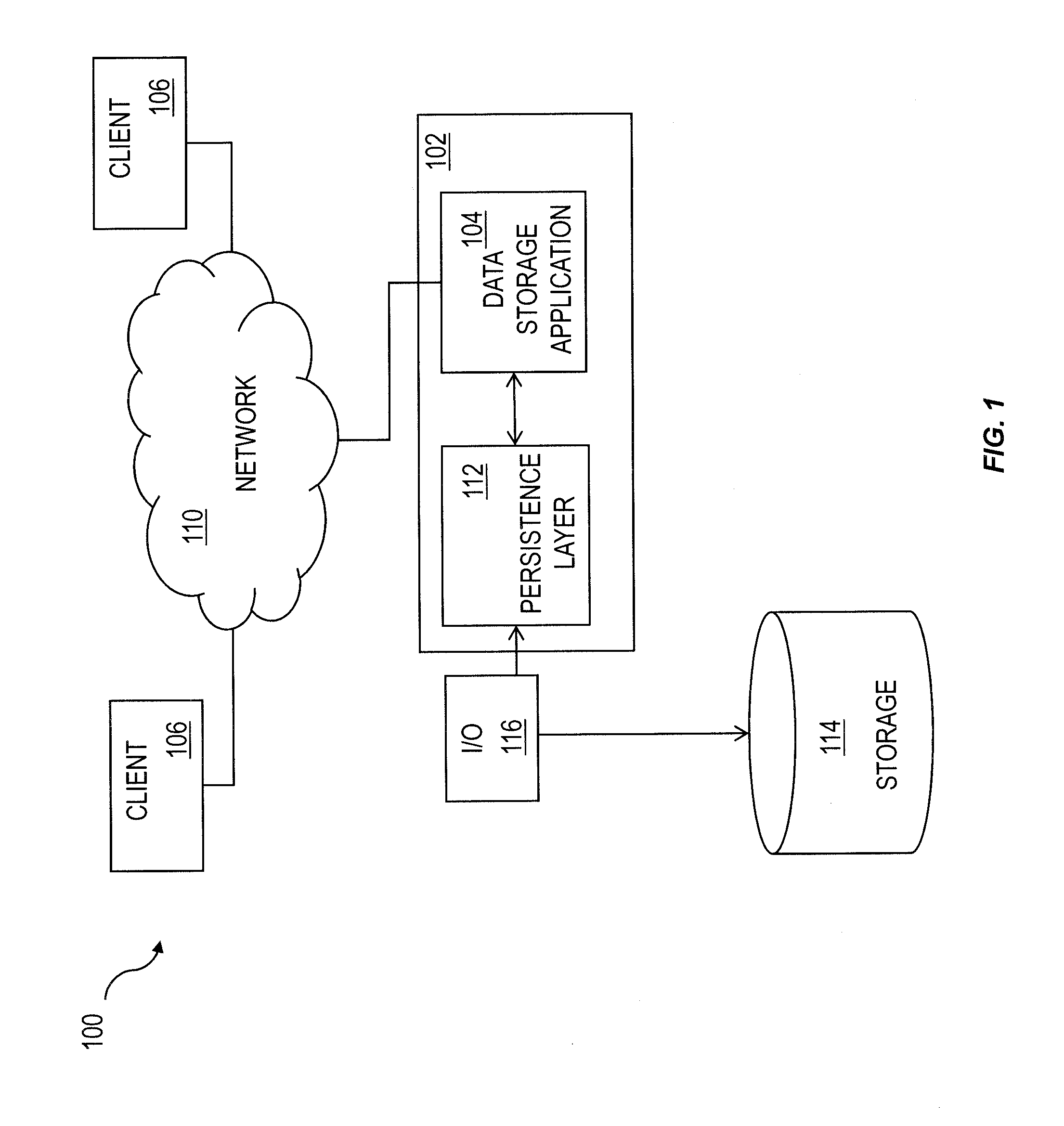

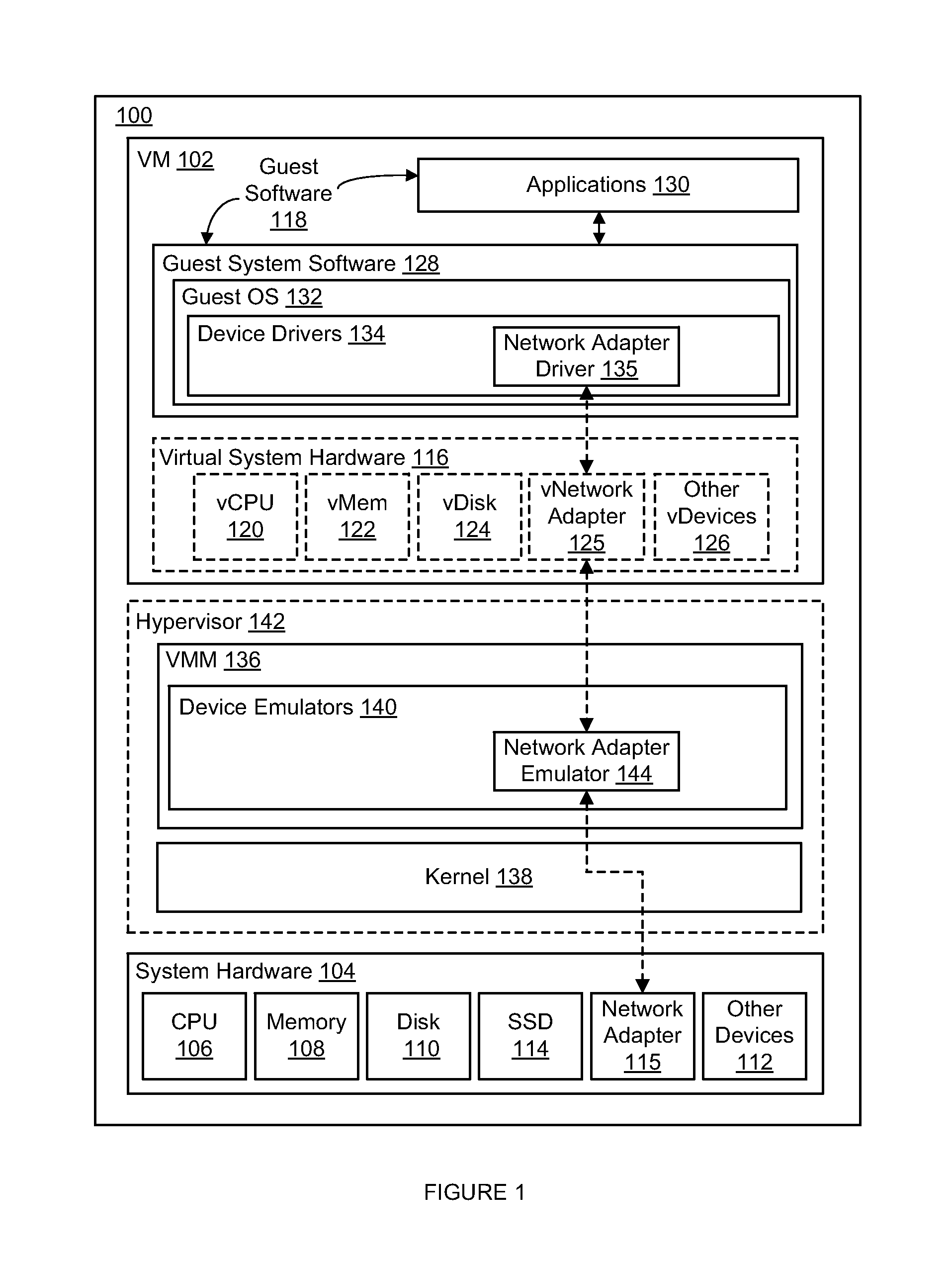

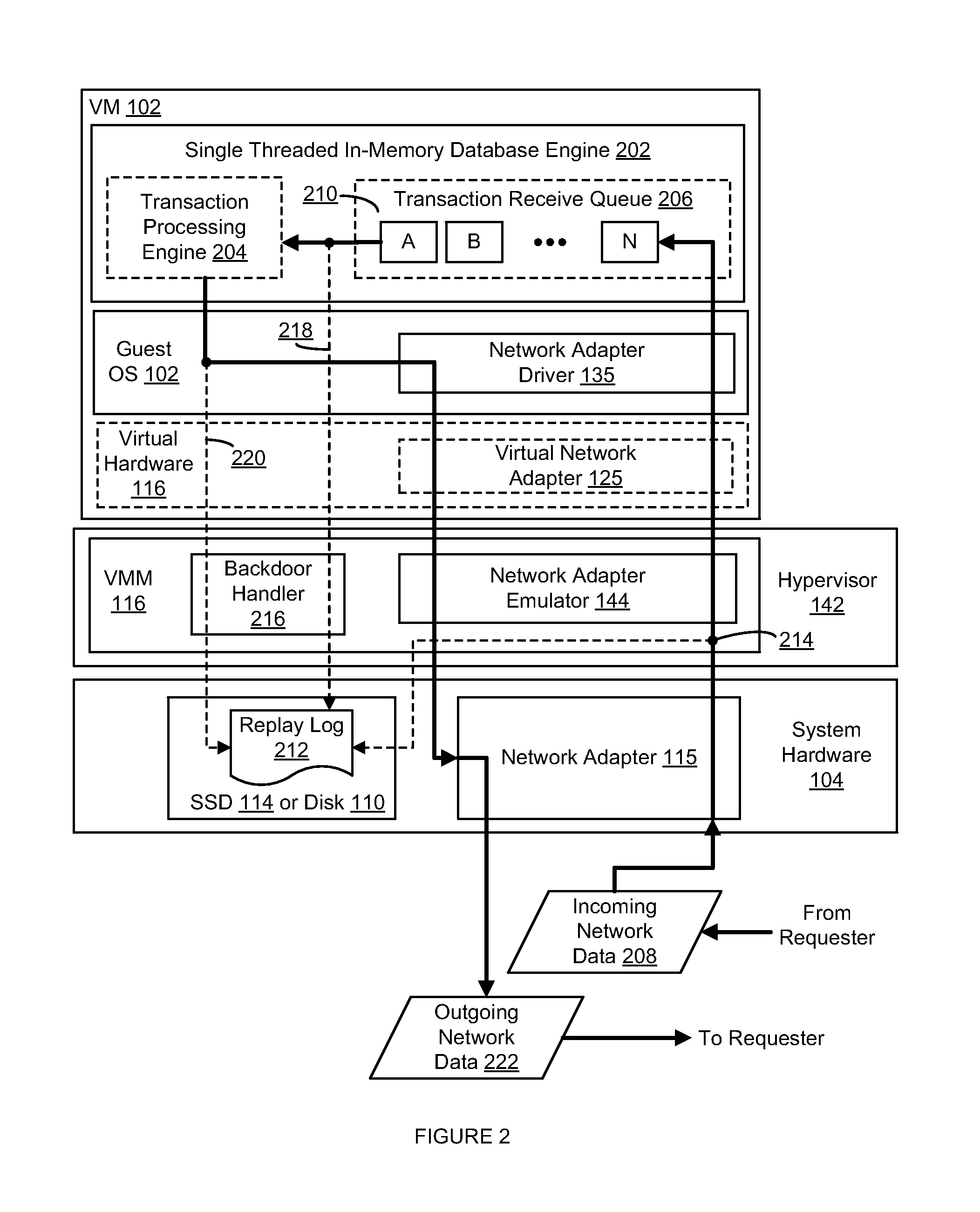

Synchronously logging to disk for main-memory database systems through record and replay

Active | US8826273B1 | Improve performance | Slow performance | Error detection/correction | Software simulation/interpretation/emulation | In-memory database | Management system

An in-memory database management system (DBMS) in a virtual machine (VM) preserves the durability property of the ACID model for database management without significantly slowing performance due to accesses to disk. Input data relating to a database transaction is recorded into a replay log and forwarded to the VM for processing by the DBMS. An indication of a start of processing by the DBMS of the database transaction is received after receipt of the input data by the VM and an indication of completion of processing of the database transaction by the DBMS is subsequently received, upon which outgoing output data received from the VM subsequent to the receipt of the completion indication is delayed. The delayed outgoing output data is ultimately released upon a confirmation that all input data received prior to the receipt of the start indication has been successfully stored into the replay log, thereby preserving durability for the database transaction.

Owner: VMWARE INC
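The rule for delaying output resembles the output-commit rule used in record/replay systems. Output leaves only after the input it depends on is safely logged. The following is a minimal Python sketch of that gating logic alone. The class, its method names and the sequence-number model are hypothetical. The VM and the DBMS are abstracted away, and the actual replay-log write is elided.

```python
from collections import deque
from typing import Any, Deque, Dict, List, Tuple

class DurabilityGate:
    """Hold outgoing output until the replay log is durable up to a watermark.

    Simplified model (assumption): each logged input gets a sequence number,
    and each flush makes a prefix of the replay log durable.
    """

    def __init__(self) -> None:
        self.next_seq = 0                    # sequence number for the next logged input
        self.durable_seq = -1                # highest sequence number known to be on disk
        self.pending: Deque[Tuple[int, Any]] = deque()  # (required_seq, output)
        self.released: List[Any] = []        # outputs already sent to clients
        self.watermark: Dict[str, int] = {}  # txn id -> last input seq before its start

    def record_input(self, data: Any) -> int:
        """Assign a log position to an input (the log write itself is elided)."""
        seq = self.next_seq
        self.next_seq += 1
        return seq

    def on_txn_start(self, txn: str) -> None:
        # Everything logged before this point must be durable before the txn's output leaves.
        self.watermark[txn] = self.next_seq - 1

    def on_output_after_completion(self, txn: str, output: Any) -> None:
        required = self.watermark[txn]
        if not self.pending and required <= self.durable_seq:
            self.released.append(output)     # already durable and nothing queued ahead
        else:
            self.pending.append((required, output))  # FIFO keeps output order intact

    def on_log_flushed(self, up_to_seq: int) -> None:
        """Mark the log durable through up_to_seq and release output that is now safe."""
        self.durable_seq = max(self.durable_seq, up_to_seq)
        while self.pending and self.pending[0][0] <= self.durable_seq:
            self.released.append(self.pending.popleft()[1])
```

Queued output is released strictly in arrival order. This is conservative: an output whose inputs are already durable can wait behind an earlier one. The benefit is that clients never see responses reordered.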

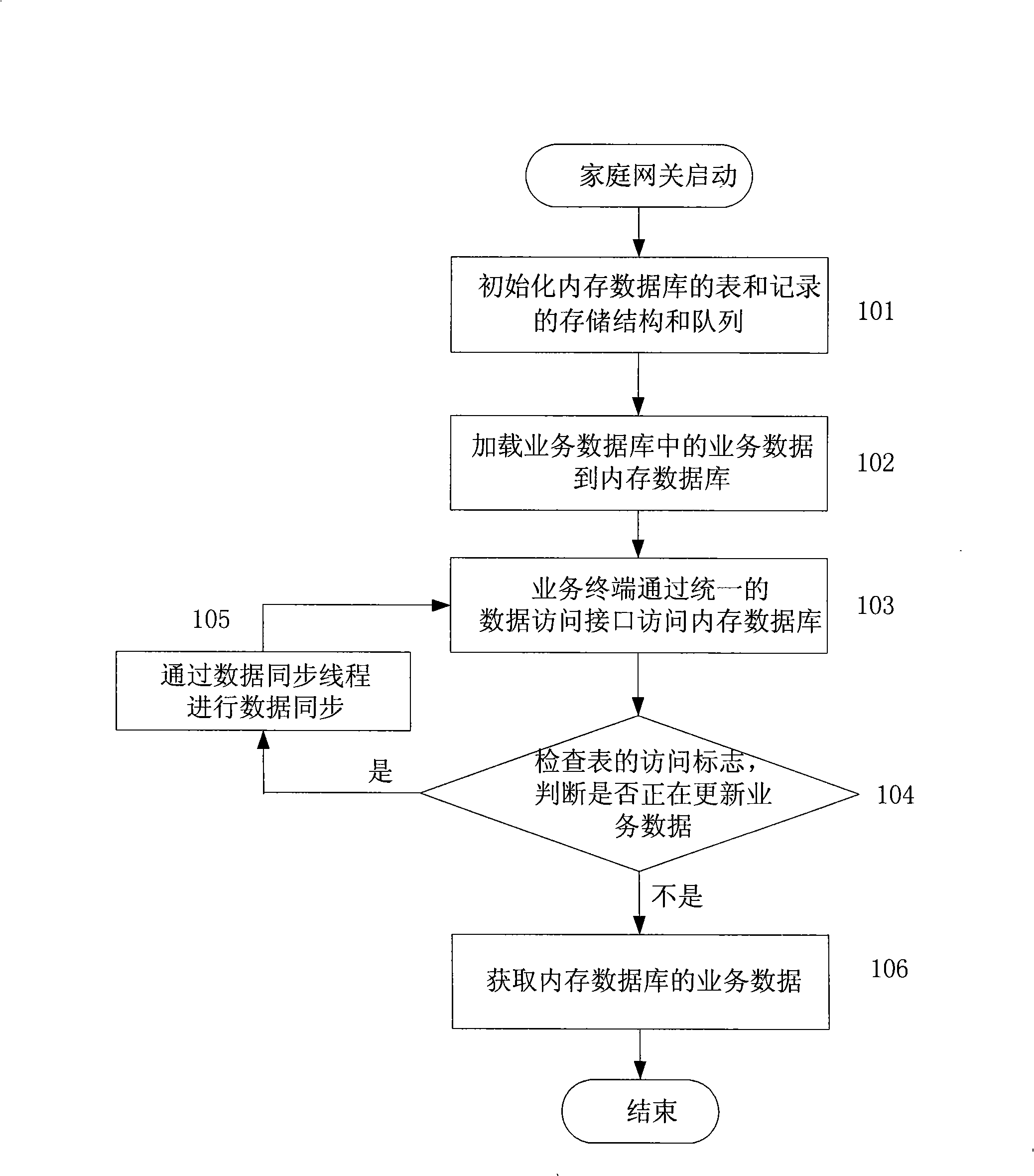

Method for implementing a memory database on a home gateway

Inactive | CN101329685A | Fast access | Ensure data security | Special data processing applications | Data synchronization | In-memory database

The invention discloses a method for implementing a memory database on a home gateway. The method comprises the following steps:

1. Initialize the storage structures and queues for the data tables and records in the memory database.
2. Once the storage structure of the memory database is established, load business data from a business database into the memory database on the home gateway, filtering the business data before loading.
3. Data synchronization: when data in the business database change, back up the affected data in the memory database and synchronize them through a synchronization module. If synchronization succeeds, clear the backup data.
4. Data access: provide a unified access interface to the memory database for querying, adding, deleting, modifying and similar operations.

Compared with existing memory database implementations, the method requires few resources and stores data efficiently. It is especially suitable for home-gateway business applications with strict real-time data requirements.

Owner: FENGHUO COMM SCI & TECH CO LTD
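Step 3 is a backup-then-synchronize pattern. The following is a minimal Python sketch of it for a single keyed table. The `sync` callable is a hypothetical stand-in for the patent's synchronization module that reports success or failure. Restoring the backup when synchronization fails is an assumption: the abstract states only that the backup is cleared on success.

```python
from typing import Callable, Dict, Optional

Record = Dict[str, object]

class GatewayMemoryDB:
    """Toy in-memory table keyed by record id (illustrative only)."""

    def __init__(self) -> None:
        self.rows: Dict[str, Record] = {}
        self.backup: Dict[str, Optional[Record]] = {}

    def sync_change(self, key: str, new_row: Optional[Record],
                    sync: Callable[[str, Optional[Record]], bool]) -> bool:
        """Apply a change from the business database; new_row=None deletes the key."""
        # 1. Back up the current value (None means the row did not exist).
        old = self.rows.get(key)
        self.backup[key] = dict(old) if old is not None else None
        # 2. Apply the change to the in-memory table.
        if new_row is None:
            self.rows.pop(key, None)
        else:
            self.rows[key] = dict(new_row)
        # 3. Run the (hypothetical) synchronization step.
        ok = sync(key, new_row)
        saved = self.backup.pop(key)   # the backup entry is cleared either way...
        if not ok:                     # ...but restored first on failure (assumption)
            if saved is None:
                self.rows.pop(key, None)
            else:
                self.rows[key] = saved
        return ok

    def get(self, key: str) -> Optional[Record]:
        """Part of the unified access interface: query a record by key."""
        return self.rows.get(key)
```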

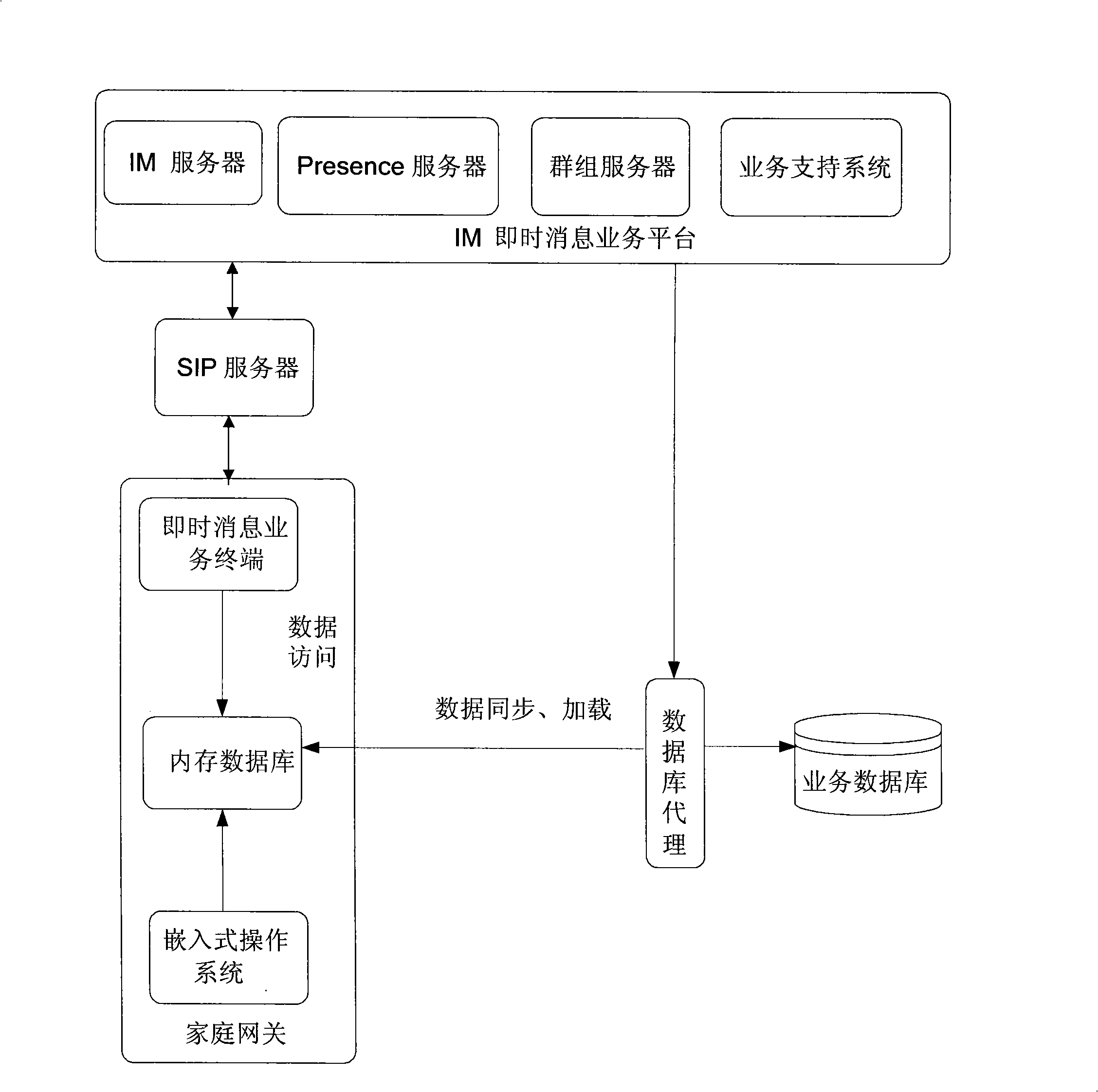

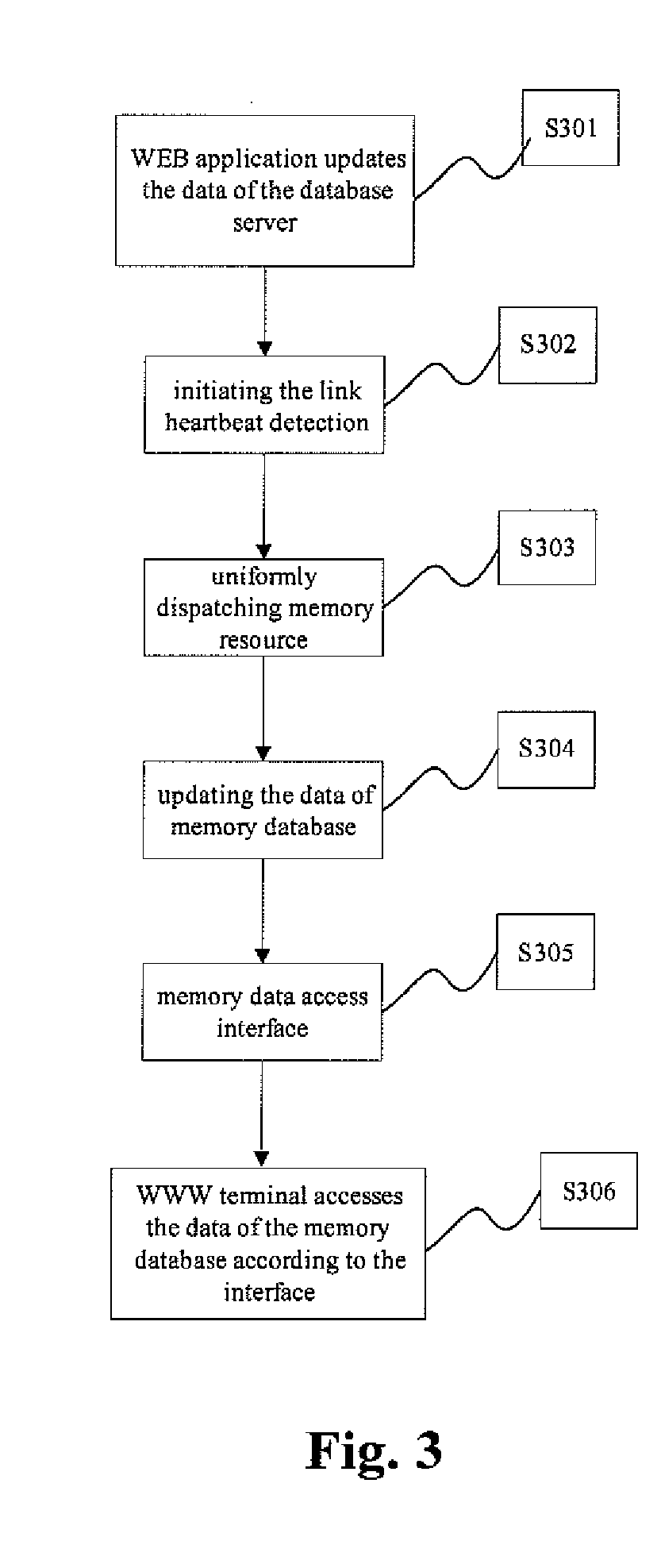

Database system based on a web application and data management method thereof

Inactive | US20110246420A1 | Lower capability requirements | Lower performance requirements | Digital data processing details | Website content management | Data synchronization | In-memory database

A database system based on a web application includes a disk-based database server (103) and a distributed memory database server (11). The distributed memory database server synchronizes its data with data read from the database server through a data synchronizing module (111). It further includes a memory database manager server (104) and more than one memory database agent server (105). The manager server schedules memory resources centrally, synchronizes data between the database server and the distributed memory database, and provides the web application server (102) with a data access interface. The agent servers store the specific data.

Owner: ZTE CORP
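The following is a minimal Python sketch of the manager/agent split. The abstract does not say how the manager dispatches memory. The stable hash placement used here (`zlib.crc32` modulo the number of agents) is an assumption, and so are the load-on-miss and write-through policies toward the disk database. The `Manager` and `Agent` classes are hypothetical illustrations, not the patented design.

```python
import zlib
from typing import Callable, Dict, List, Optional

class Agent:
    """Memory database agent server: holds one shard of the data."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.store: Dict[str, str] = {}

class Manager:
    """Memory database manager server: places keys on agents and serves access."""

    def __init__(self, agents: List[Agent],
                 load_from_disk: Callable[[str], Optional[str]]) -> None:
        if not agents:
            raise ValueError("at least one agent is required")
        self.agents = agents
        self.load_from_disk = load_from_disk  # stands in for the disk database server

    def agent_for(self, key: str) -> Agent:
        # Stable hash placement (assumption; the patent does not specify the policy).
        return self.agents[zlib.crc32(key.encode("utf-8")) % len(self.agents)]

    def get(self, key: str) -> Optional[str]:
        agent = self.agent_for(key)
        if key not in agent.store:
            value = self.load_from_disk(key)  # synchronize from disk on first access
            if value is None:
                return None
            agent.store[key] = value
        return agent.store[key]

    def put(self, key: str, value: str,
            write_to_disk: Callable[[str, str], None]) -> None:
        write_to_disk(key, value)             # keep the disk database authoritative
        self.agent_for(key).store[key] = value
```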

Real-Time Social Networking

Active | US20130144957A1 | Less overall consumption | Low cost | Digital data processing details | Multiple digital computer combinations | In-memory database | Data element

Techniques for exploring social connections in an in-memory database include identifying an attribute in a user profile associated with a first user; executing a query against a data element stored in an in-memory database, the query including the attribute in the user profile; identifying a second user from results of the query, the second user associated with the data element based on a relationship between the second user and the first user defined by the attribute; and generating displayable information associated with the second user.

Owner: SAP PORTALS ISRAEL
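The following Python sketch shows the query flow in the abstract, reduced to its simplest form. It takes an attribute from the first user's profile, finds other users who share it, and formats displayable information for them. The profile layout (a dict of dicts) and both function names are hypothetical. A linear scan stands in for whatever query the in-memory database actually runs.

```python
from typing import Dict, List

Profiles = Dict[str, Dict[str, str]]  # user id -> attribute name -> value (assumed layout)

def related_users(profiles: Profiles, user: str, attribute: str) -> List[str]:
    """Return other users whose profile shares `user`'s value for `attribute`."""
    value = profiles[user].get(attribute)
    if value is None:
        return []
    return sorted(other for other, p in profiles.items()
                  if other != user and p.get(attribute) == value)

def connection_cards(profiles: Profiles, user: str, attribute: str) -> List[str]:
    """Build one display string per related user."""
    value = profiles[user].get(attribute)
    return [f"{other} ({attribute}: {value})"
            for other in related_users(profiles, user, attribute)]
```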

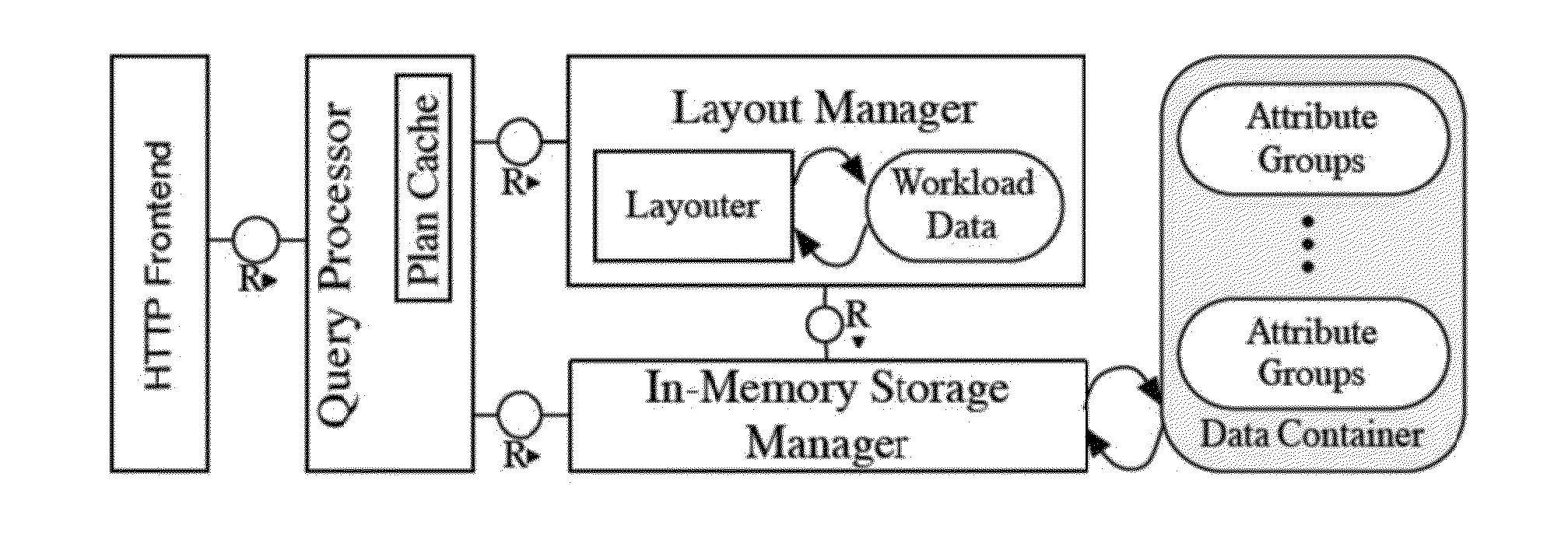

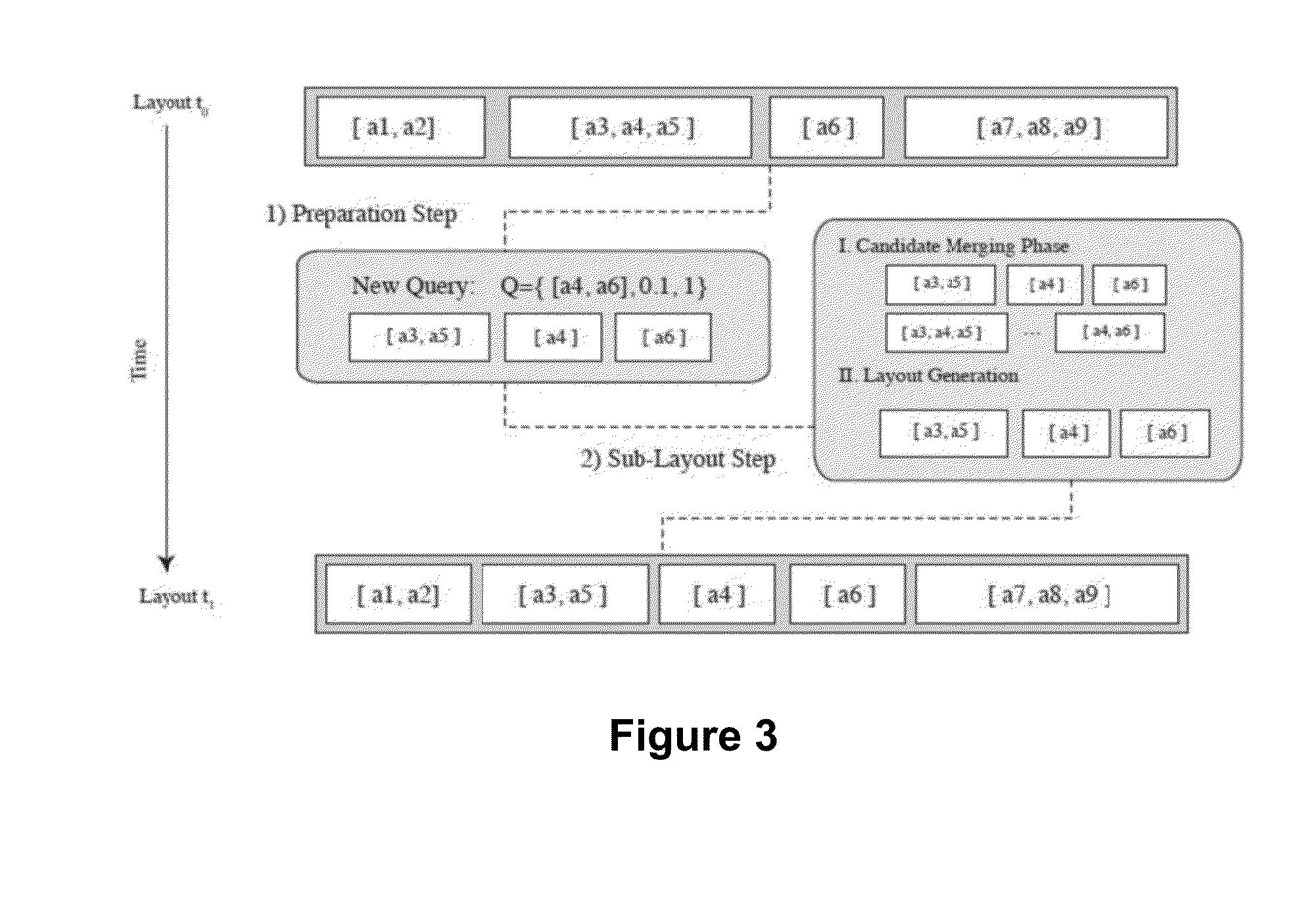

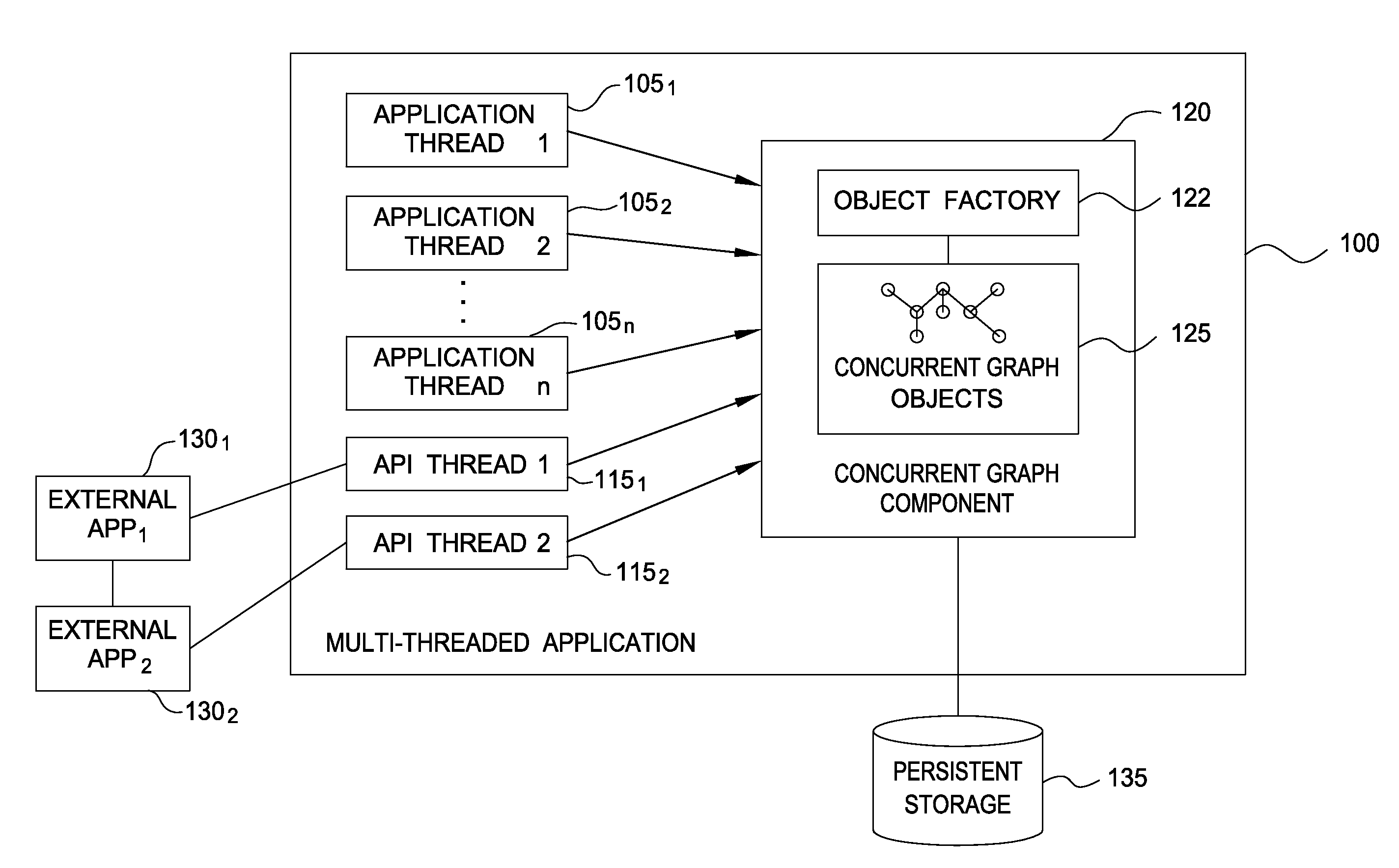

Online Reorganization of Hybrid In-Memory Databases

Active | US20130232176A1 | Improve efficiency | Great traction | Digital data processing details | Special data processing applications | In-memory database | Parallel computing

A system and method that dynamically adapt to workload changes and adopt the best possible physical layout on the fly, while still allowing simultaneous updates to the table. A process continuously and incrementally computes the optimal physical layout as the workload changes and determines whether switching to this new layout would be beneficial. The system can perform online reorganization of hybrid main-memory databases with negligible overhead. It yields performance gains of up to three orders of magnitude when determining the optimal layout for dynamic workloads, and it provides guarantees on its own worst-case performance.

Owner: HASSO PLATTNER INSTITUT FUR SOFTWARESYSTTECHN
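The abstract describes a decision step: switch to a new layout only when doing so would be beneficial. The following Python sketch illustrates that step under a deliberately toy cost model, which is an assumption since the abstract does not describe the actual model. In this model, a query pays for the full width of every column group it touches. A switch happens only when the projected savings exceed a one-off reorganization cost. Layouts are partitions of a table's columns into groups: a row store is one group, and a column store is one group per column.

```python
from typing import Dict, FrozenSet, List, Sequence

Layout = List[FrozenSet[str]]  # partition of a table's columns into column groups

def scan_cost(layout: Layout, widths: Dict[str, int],
              query_cols: FrozenSet[str]) -> int:
    """Toy cost: bytes read per row = total width of every group the query touches."""
    return sum(sum(widths[c] for c in group)
               for group in layout if group & query_cols)

def workload_cost(layout: Layout, widths: Dict[str, int],
                  workload: Sequence[FrozenSet[str]]) -> int:
    """Sum the toy cost over every query in the observed workload."""
    return sum(scan_cost(layout, widths, q) for q in workload)

def should_switch(current: Layout, candidate: Layout, widths: Dict[str, int],
                  workload: Sequence[FrozenSet[str]],
                  reorg_cost: int, horizon: int) -> bool:
    """Switch only if savings over `horizon` repetitions of the workload beat the reorg cost."""
    saving = (workload_cost(current, widths, workload)
              - workload_cost(candidate, widths, workload))
    return saving * horizon > reorg_cost
```

With a 44-byte row and a workload of ten queries that read only an 8-byte `price` column, the row layout costs 440 units per workload and the columnar layout 80. The switch therefore pays off once the 360-unit saving, multiplied by the horizon, exceeds the reorganization cost.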

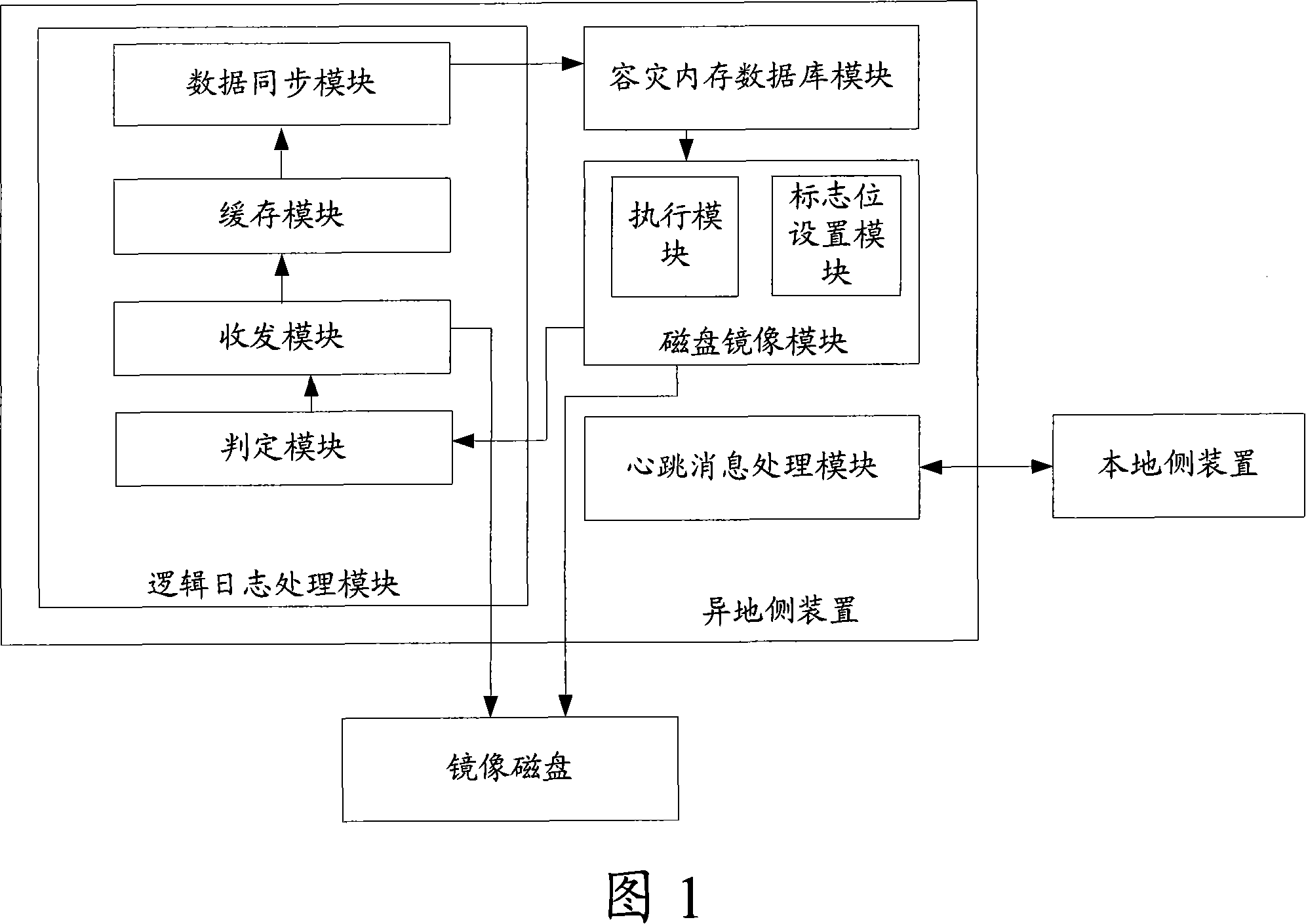

Method and system for accessing a domain-specific in-memory database management system

Inactive | US20130097136A1 | Digital data processing details | Software design | In-memory database | Theoretical computer science

A concurrent graph DBMS represents graph data structures in memory using familiar Java object navigation. At the same time, it provides the atomicity, consistency and transaction isolation properties of a DBMS, including concurrent access to and modification of the data structure from multiple application threads. The concurrent graph DBMS acts as a "traffic cop" between application threads. It prevents them from seeing unfinished or inconsistent changes made by other threads and ensures that changes are atomic. It automatically detects deadlocks and correctly rolls back a thread's incomplete transaction when an exception or deadlock occurs. The concurrent graph DBMS may be generated from a schema description that specifies objects and the relationships between them.

Owner: PIE DIGITAL
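Two mechanisms named in the abstract are deadlock detection and rollback of an incomplete transaction. They can be illustrated with standard techniques: cycle detection in a wait-for graph, and an undo log. The following sketch is in Python for consistency with the other examples here, although the patented system targets Java. The function and class names are hypothetical, and the patent may detect deadlocks differently.

```python
from typing import Dict, List, Optional, Set, Tuple

def find_deadlock(waits_for: Dict[str, Set[str]]) -> Optional[List[str]]:
    """Return one cycle in the wait-for graph as a list of thread ids, or None."""
    WHITE, GREY, BLACK = 0, 1, 2          # unvisited, on current path, finished
    color: Dict[str, int] = {}
    stack: List[str] = []

    def visit(node: str) -> Optional[List[str]]:
        color[node] = GREY
        stack.append(node)
        for nxt in waits_for.get(node, ()):
            state = color.get(nxt, WHITE)
            if state == GREY:             # back edge: nxt is waiting on this path
                return stack[stack.index(nxt):]
            if state == WHITE:
                cycle = visit(nxt)
                if cycle:
                    return cycle
        stack.pop()
        color[node] = BLACK
        return None

    for node in list(waits_for):
        if color.get(node, WHITE) == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None

class Transaction:
    """Undo log: remember prior values so an aborted transaction can be rolled back."""

    def __init__(self, data: Dict[str, object]) -> None:
        self.data = data
        self.undo: List[Tuple[str, bool, object]] = []  # (key, existed, old value)

    def write(self, key: str, value: object) -> None:
        self.undo.append((key, key in self.data, self.data.get(key)))
        self.data[key] = value

    def rollback(self) -> None:
        for key, existed, old in reversed(self.undo):  # undo newest writes first
            if existed:
                self.data[key] = old
            else:
                self.data.pop(key, None)
        self.undo.clear()
```

A detector would pick one thread in the returned cycle as the victim and call `rollback()` on its transaction, freeing the locks the others are waiting for.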

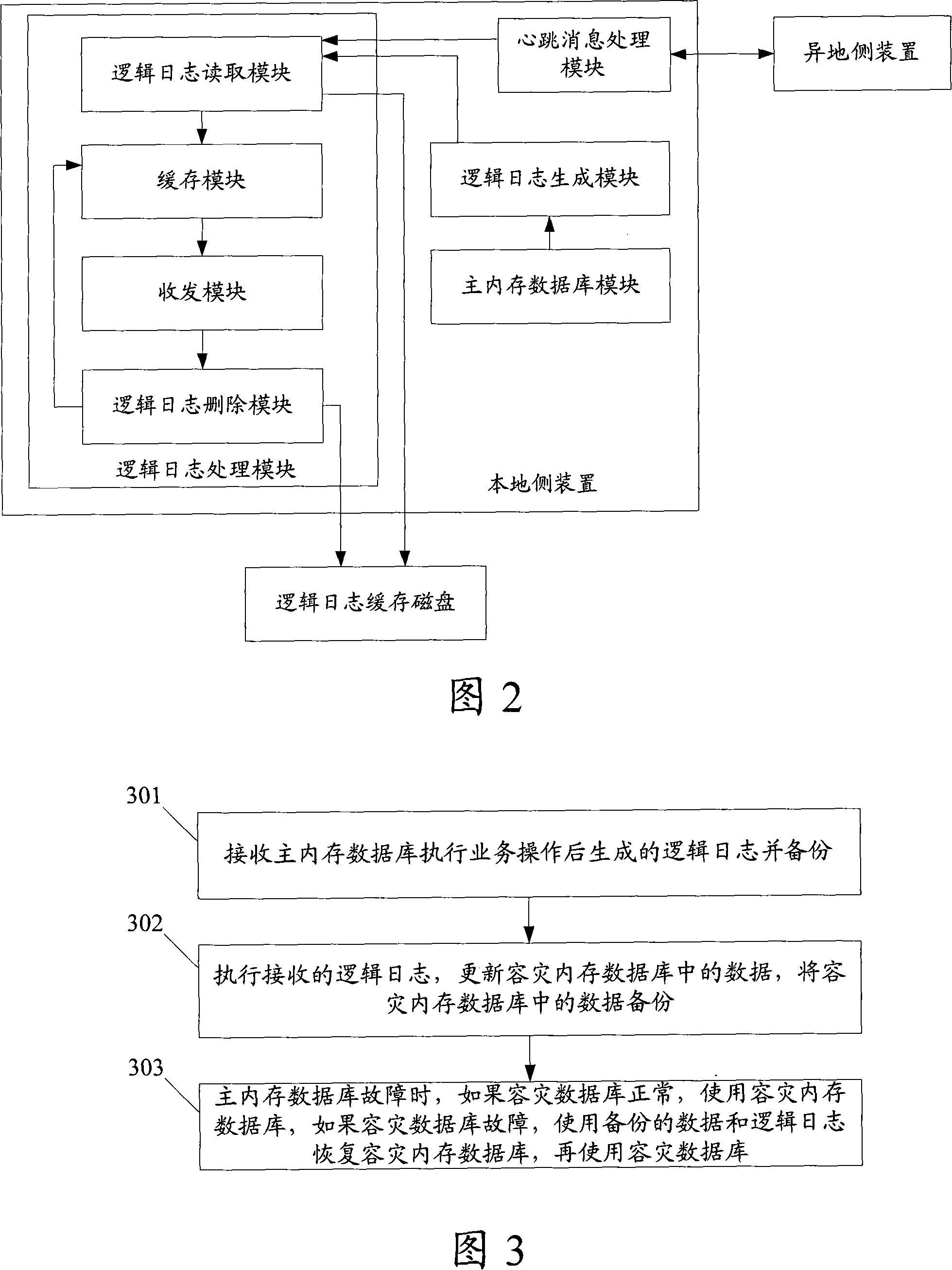

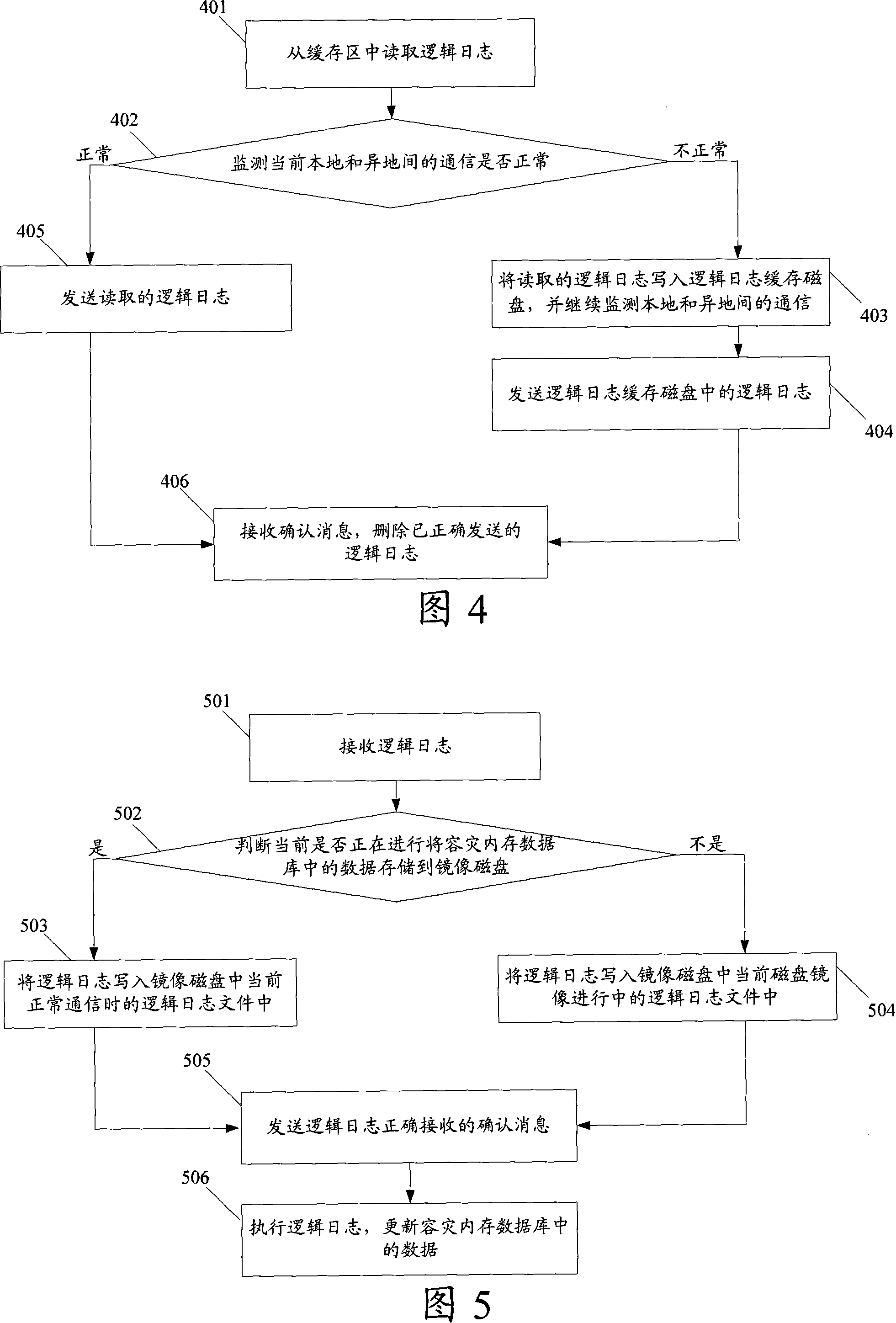

Method, device and system for remote disaster tolerance of an in-memory database

Active | CN101118509A | Special data processing applications | Redundant operation error correction | In-memory database | Data storing

The present invention discloses a remote disaster-tolerance method for an in-memory database. The method includes:

- receiving the logical log generated when the primary in-memory database executes business operations, and backing it up;
- executing the logical log to refresh the data in the disaster-tolerance database;
- backing up the data in the disaster-tolerance database.

When the primary in-memory database fails but the disaster-tolerance database is normal, the disaster-tolerance database can be used. When the disaster-tolerance database fails, it can be restored from the backup data and the logical log and then used again. The invention also discloses a remote-side device, a local-side device and a system for remote disaster tolerance of an in-memory database. The invention can use memory as the main data store while still achieving remote disaster tolerance for the in-memory database.

Owner: HUAWEI TECH CO LTD
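The following Python sketch covers the standby side of this scheme. It backs up each received logical-log entry, applies the entry to refresh the standby data, takes periodic snapshots, and rebuilds from the last snapshot plus later log entries after a failure. The entry format is an assumption: a log sequence number (LSN) with a `set` or `del` operation on a key. The class and function names are hypothetical.

```python
from typing import Dict, List, Tuple

LogEntry = Tuple[int, str, str, object]  # (lsn, op, key, value); op is "set" or "del"

def apply(db: Dict[str, object], entry: LogEntry) -> None:
    """Apply one logical-log entry to a key-value store."""
    _, op, key, value = entry
    if op == "set":
        db[key] = value
    elif op == "del":
        db.pop(key, None)
    else:
        raise ValueError(f"unknown op {op!r}")

class StandbySite:
    """Remote disaster-tolerance side: replays the primary's logical log."""

    def __init__(self) -> None:
        self.db: Dict[str, object] = {}
        self.log: List[LogEntry] = []          # backed-up copy of every received entry
        self.snapshot: Dict[str, object] = {}  # periodic backup of the standby data
        self.snapshot_lsn = 0                  # last LSN included in the snapshot

    def receive(self, entry: LogEntry) -> None:
        self.log.append(entry)                 # back up the log entry first
        apply(self.db, entry)                  # then refresh the standby data

    def take_snapshot(self) -> None:
        self.snapshot = dict(self.db)
        self.snapshot_lsn = self.log[-1][0] if self.log else 0

    def recover(self) -> None:
        """Rebuild the standby database from the snapshot plus later log entries."""
        self.db = dict(self.snapshot)
        for entry in self.log:
            if entry[0] > self.snapshot_lsn:
                apply(self.db, entry)
```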


© 2025 PatSnap. All rights reserved.