853 results about "Distributed cache" patented technology

In computing, a distributed cache is an extension of the traditional concept of a cache used in a single locale. A distributed cache may span multiple servers so that it can grow in size and in transactional capacity. It is mainly used to store application data residing in databases and web session data. Distributed caching became feasible because main memory became cheap and network cards became fast, with 1 Gbit standard and 10 Gbit gaining traction. A distributed cache also works well on the lower-cost machines usually employed as web servers, as opposed to database servers, which require expensive hardware. An emerging internet architecture known as information-centric networking (ICN) is one of the best examples of a distributed cache network. Because ICN is a network-level solution, existing distributed network cache management schemes are not well suited to it. In the supercomputer environment, a distributed cache is typically implemented in the form of a burst buffer.

Distributed cache for state transfer operations

Active · US20020138551A1 · Computer security arrangements, Multiple digital computer combinations, Distributed cache, Client-side

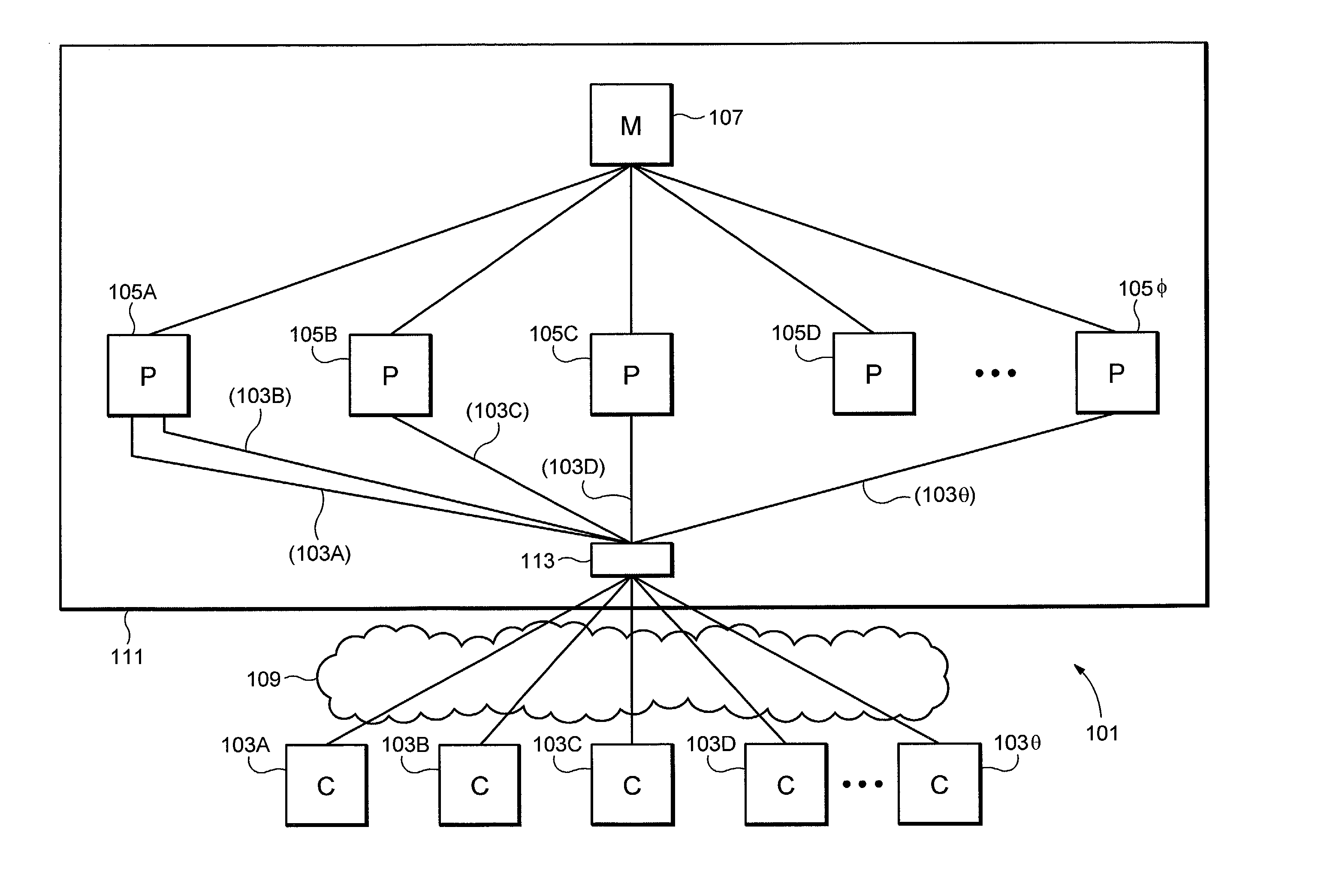

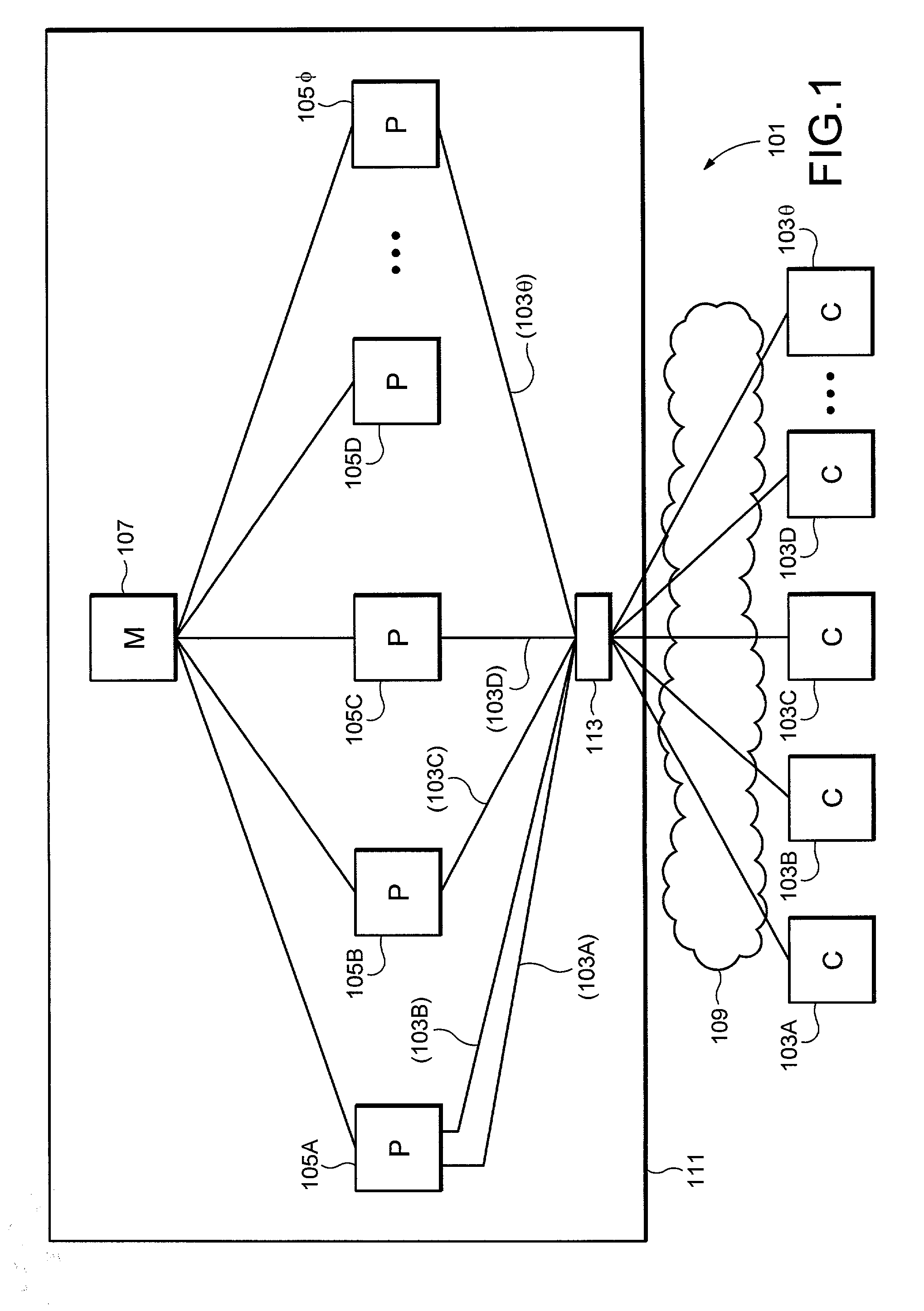

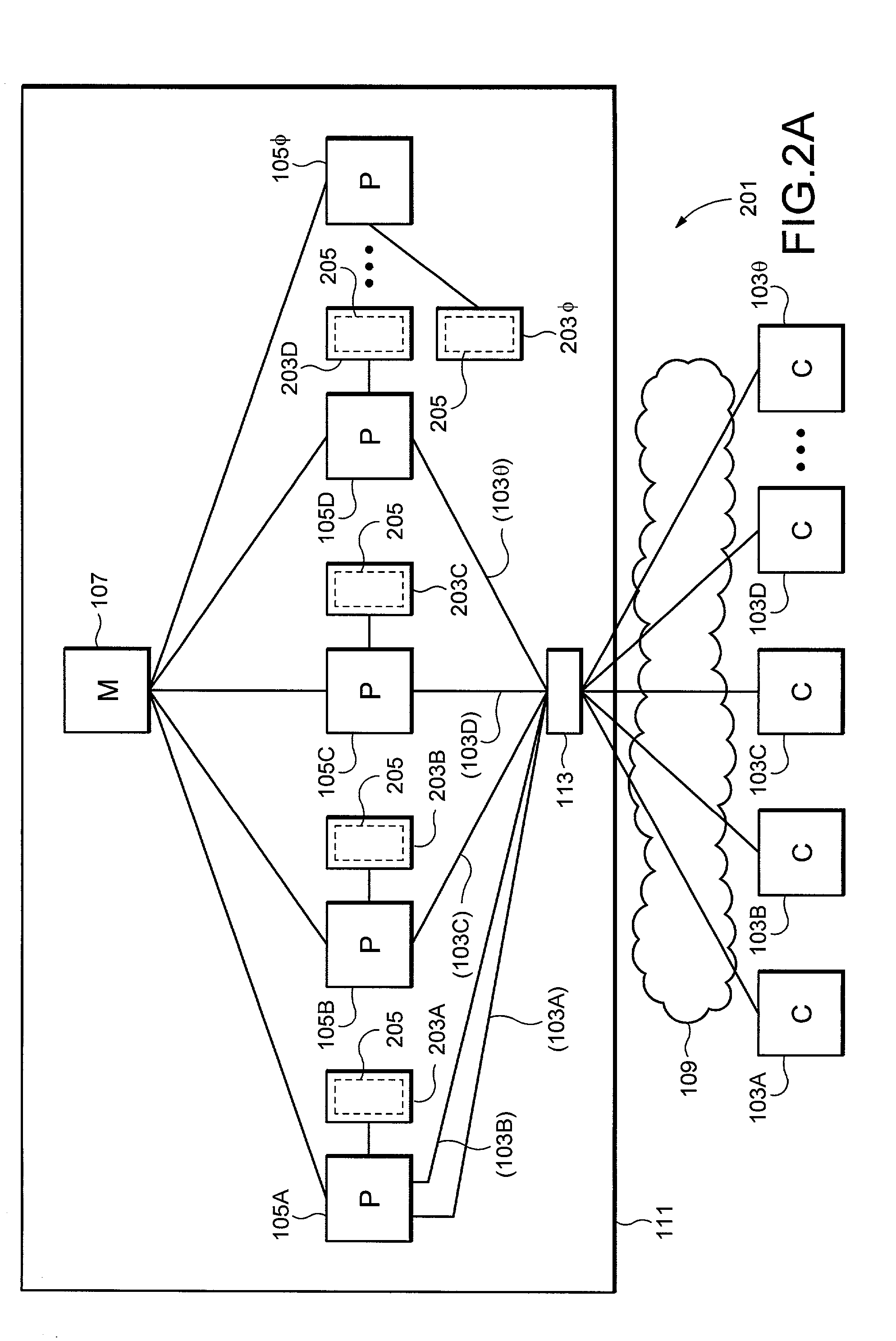

A network arrangement that employs a cache having copies distributed among a plurality of different locations. The cache stores state information for a session with any of the server devices so that it is accessible to at least one other server device. Using this arrangement, when a client device switches from a connection with a first server device to a connection with a second server device, the second server device can retrieve state information from the cache corresponding to the session between the client device and the first server device. The second server device can then use the retrieved state information to accept a session with the client device.

Owner:DELL PROD LP
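
A minimal Python sketch of the state-transfer idea, assuming in-process dictionaries stand in for the cache copies at different locations. `SharedStateCache`, `Server`, `open_session` and `accept_transfer` are hypothetical names, not taken from the patent; a real deployment would replicate over the network and keep the copies consistent.

```python
from dataclasses import dataclass, field


@dataclass
class SharedStateCache:
    """Hypothetical session-state cache with copies at several locations."""
    replicas: list = field(default_factory=lambda: [{}, {}])

    def put(self, session_id: str, state: dict) -> None:
        # Write the state to every copy so any server can read it later.
        for replica in self.replicas:
            replica[session_id] = dict(state)

    def get(self, session_id: str):
        # Read from the first copy that holds the session.
        for replica in self.replicas:
            if session_id in replica:
                return dict(replica[session_id])
        return None


class Server:
    def __init__(self, name: str, cache: SharedStateCache):
        self.name = name
        self.cache = cache
        self.sessions = {}

    def open_session(self, session_id: str, state: dict) -> None:
        self.sessions[session_id] = state
        self.cache.put(session_id, state)

    def accept_transfer(self, session_id: str) -> bool:
        # Resume a session that started on another server, using cached state.
        state = self.cache.get(session_id)
        if state is None:
            return False  # no cached state: a full session setup would be needed
        self.sessions[session_id] = state
        return True


cache = SharedStateCache()
first = Server("server-1", cache)
second = Server("server-2", cache)
first.open_session("client-42", {"cipher": "aes128", "seq": 7})
print(second.accept_transfer("client-42"), second.sessions["client-42"])
# True {'cipher': 'aes128', 'seq': 7}
```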

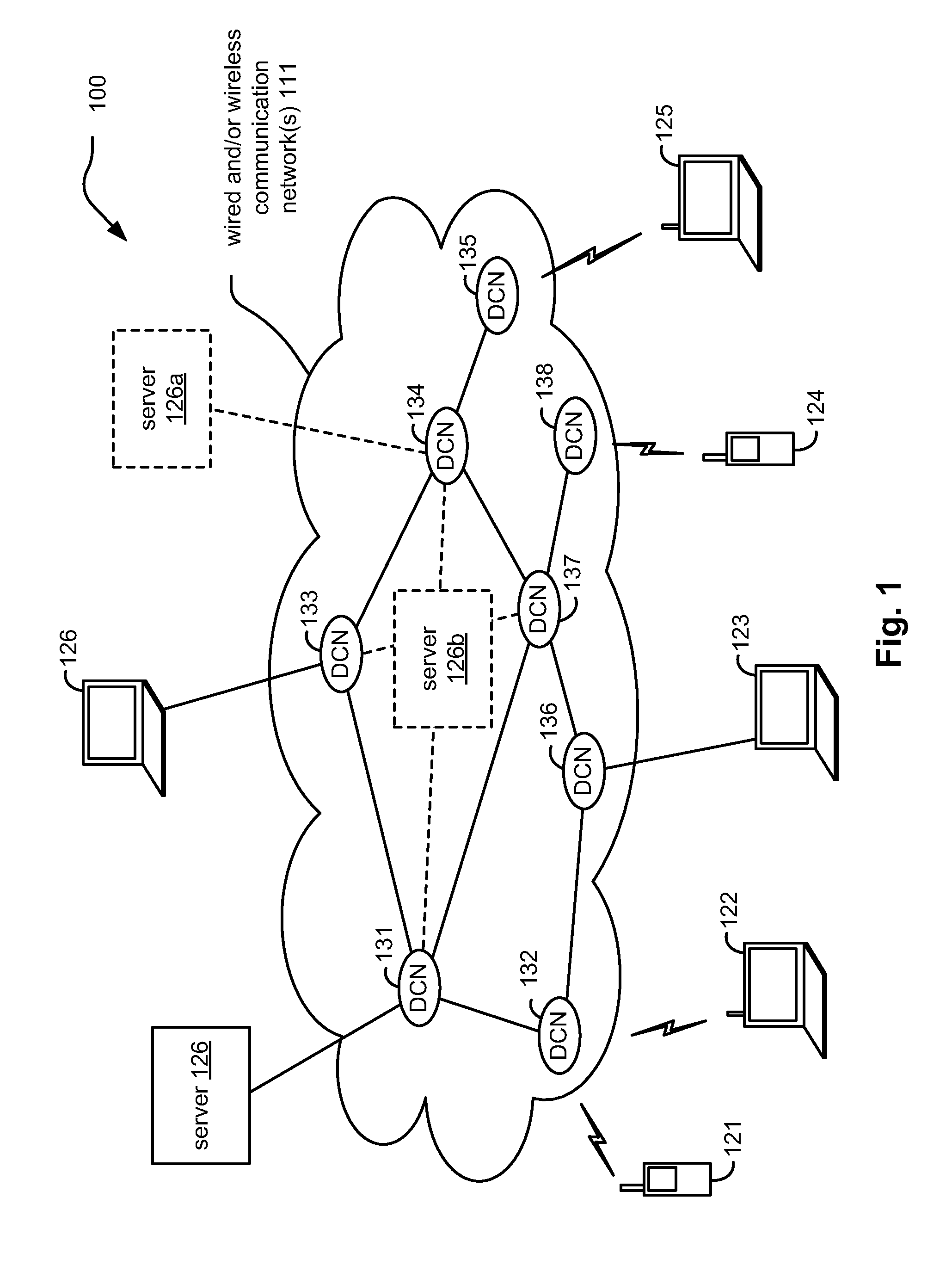

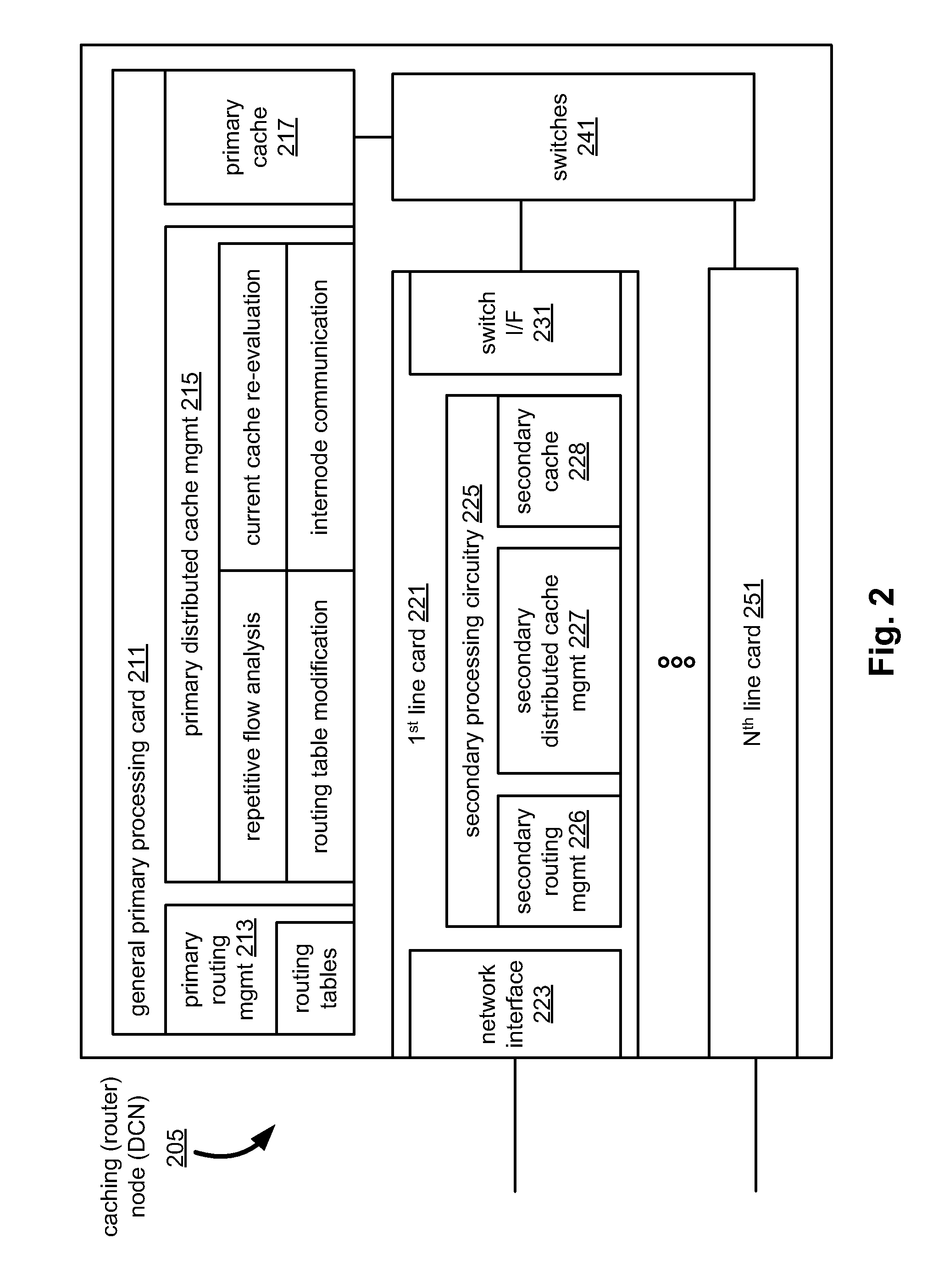

Distributed Internet caching via multiple node caching management

Inactive · US20110040893A1 · Digital computer details, Transmission, Communications system, Telecommunications link

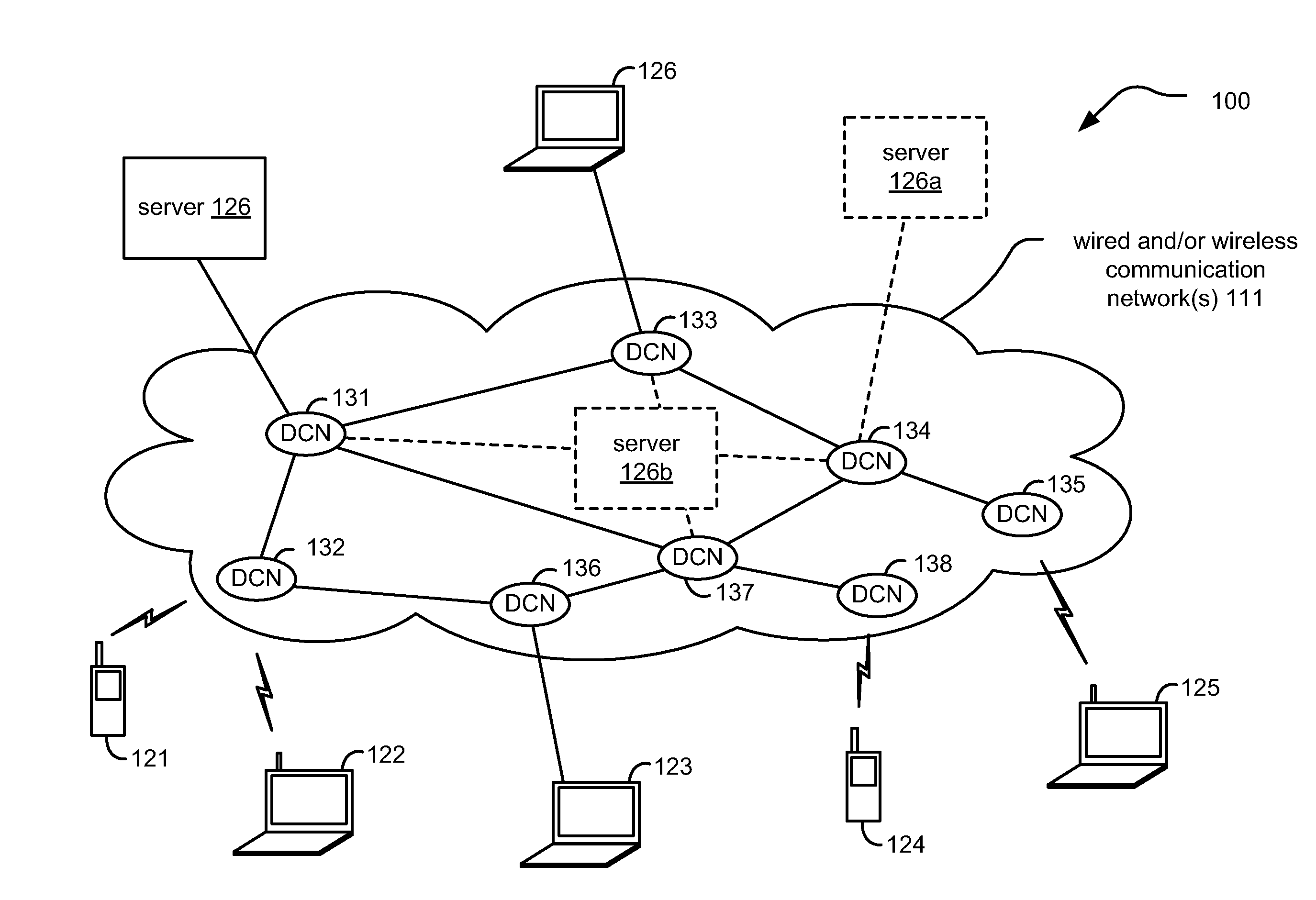

Distributed Internet caching via multiple node caching management. Caching decisions and management are performed based on information corresponding to more than one caching node device (sometimes referred to as a distributed caching node device, distributed Internet caching node device, and/or DCN) within a communication system. The communication system may be composed of one or multiple types of communication networks that are communicatively coupled to communicate with one another, and these may include any one or a combination of types of communication links (wired, wireless, optical, satellite, etc.). In some instances, more than one of these DCNs operate cooperatively to make caching decisions and direct management of content to be stored among the DCNs. In an alternative embodiment, a managing DCN makes caching decisions and directs management of content within more than one DCN of a communication system.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

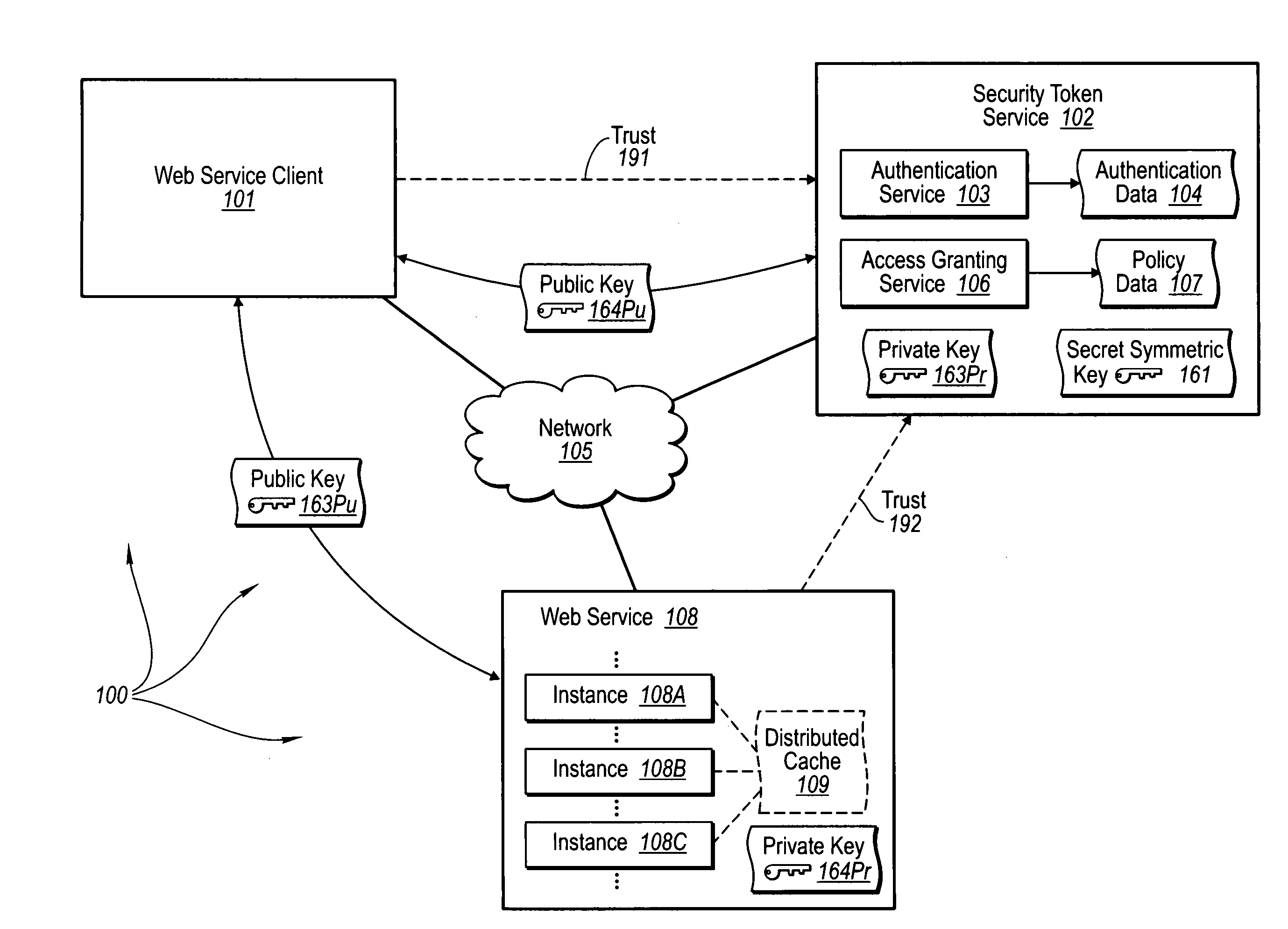

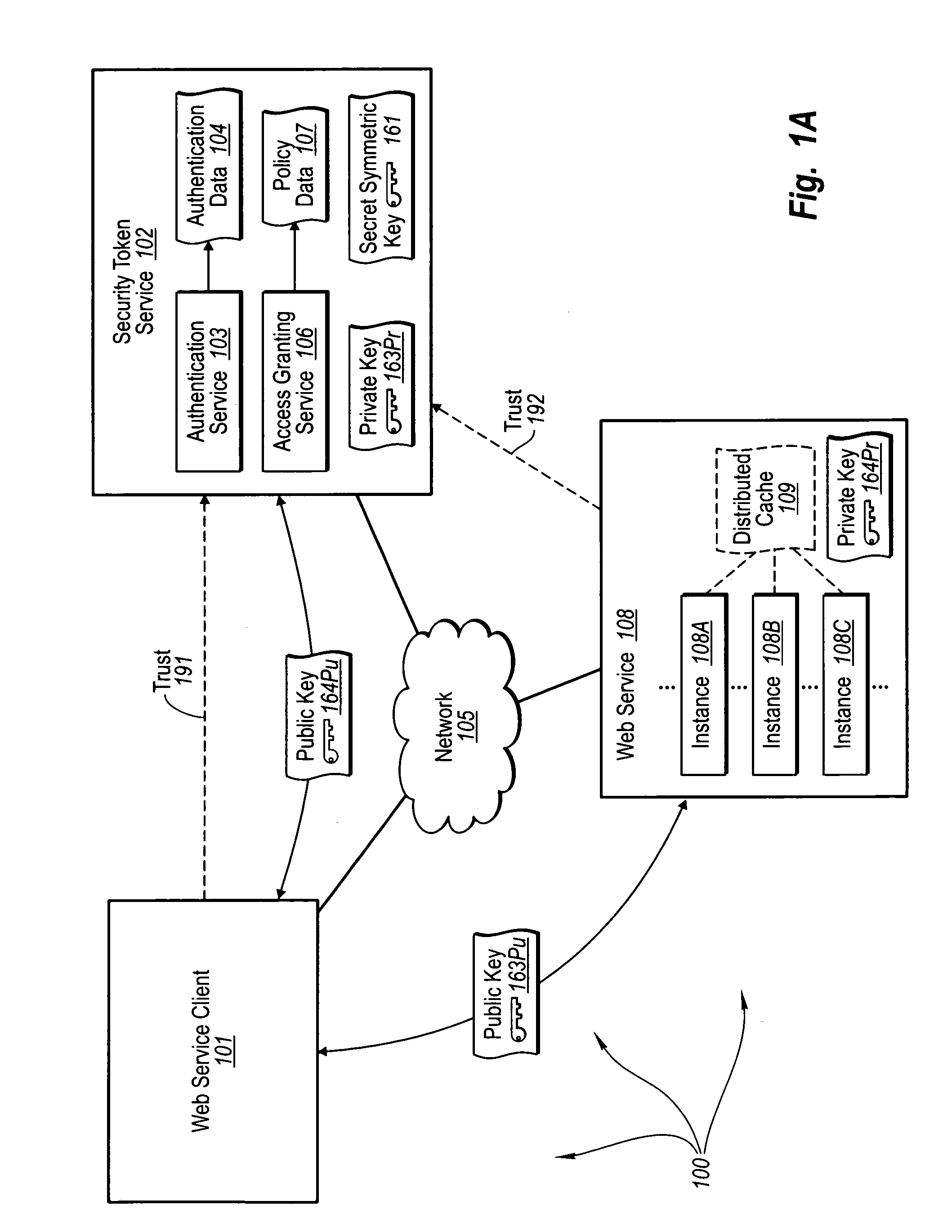

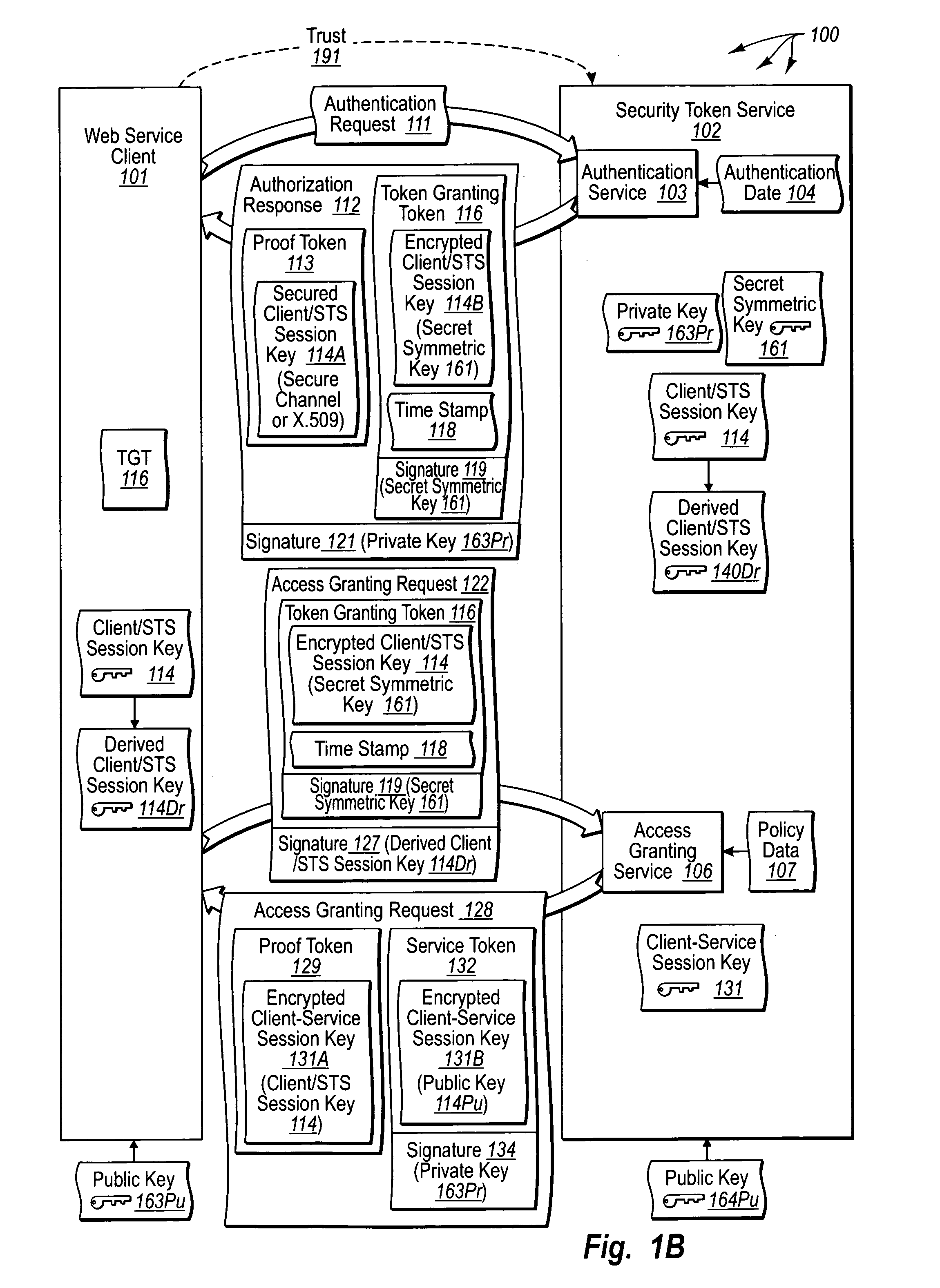

Trusted third party authentication for web services

Inactive · US20060206932A1 · Communication security, Digital data processing details, User identity/authority verification, Web service, Internet privacy

The present invention extends to trusted third party authentication for Web services. Web services trust and delegate user authentication responsibility to a trusted third party that acts as an identity provider for the trusting Web services. The trusted third party authenticates users through common authentication mechanisms, such as username/password and X.509 certificates, and uses the initial user authentication to bootstrap subsequent secure sessions with Web services. Web services construct user identity context using a service session token issued by the trusted third party and reconstruct security states without needing a service-side distributed cache.

Owner:MICROSOFT TECH LICENSING LLC

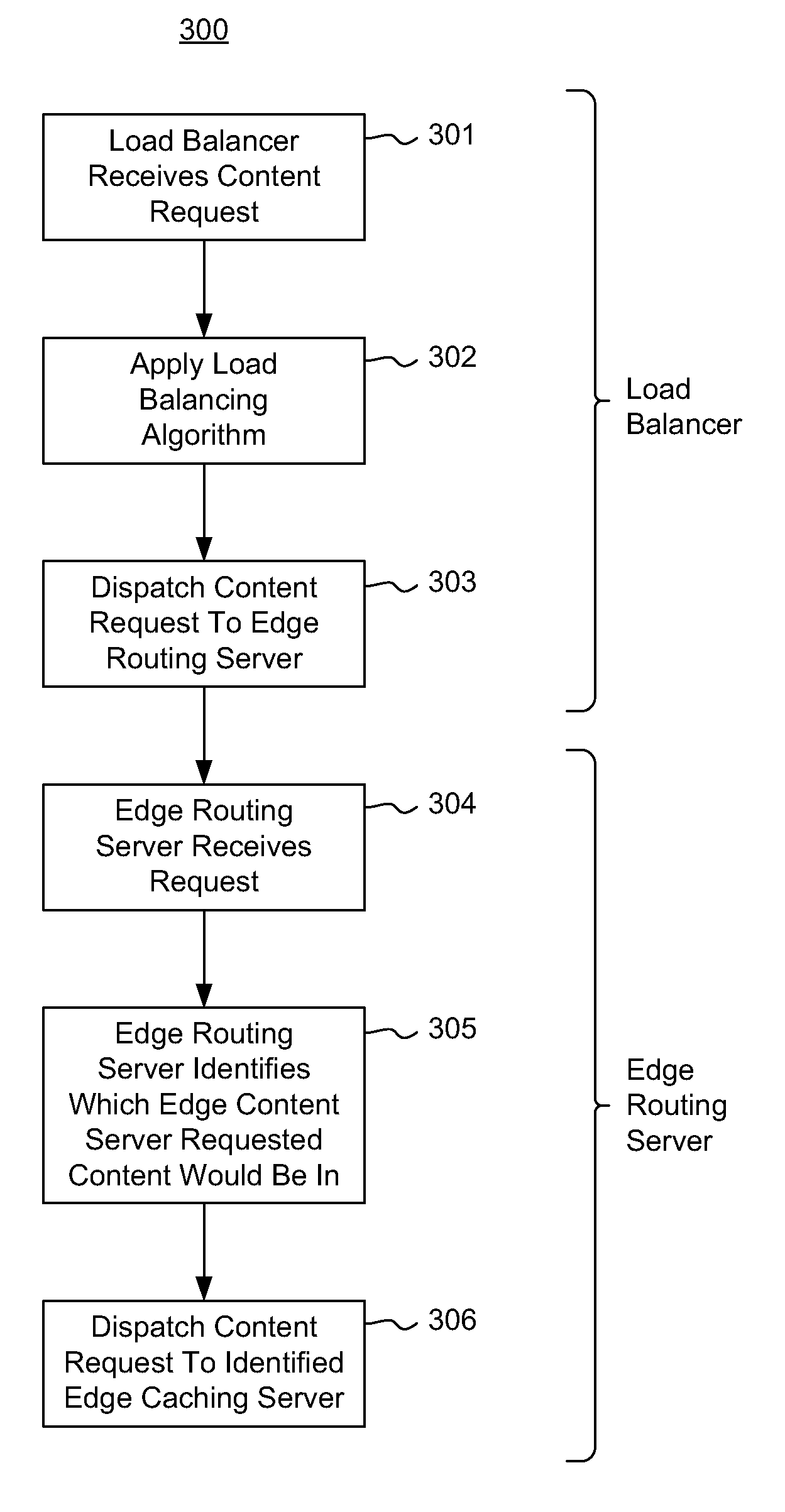

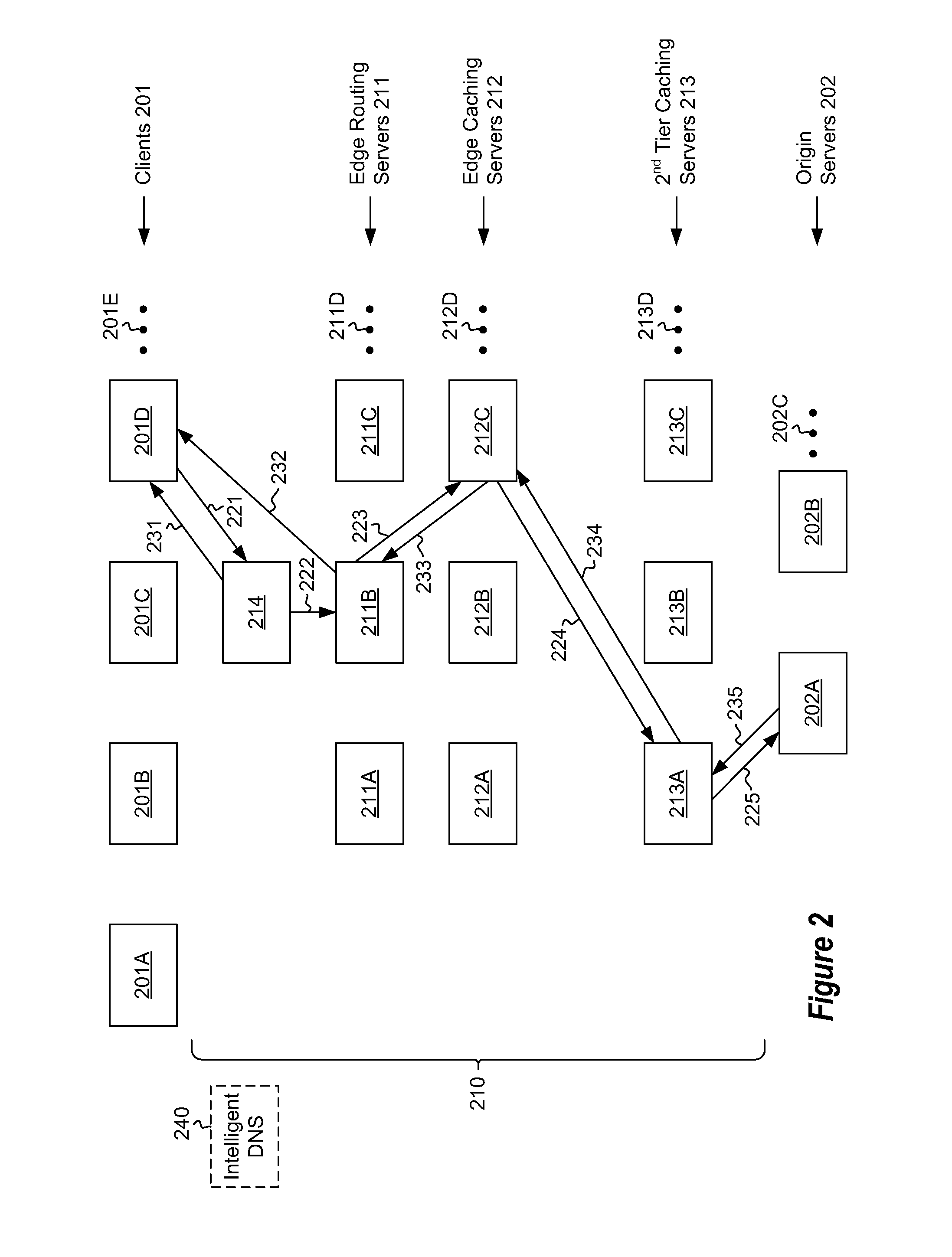

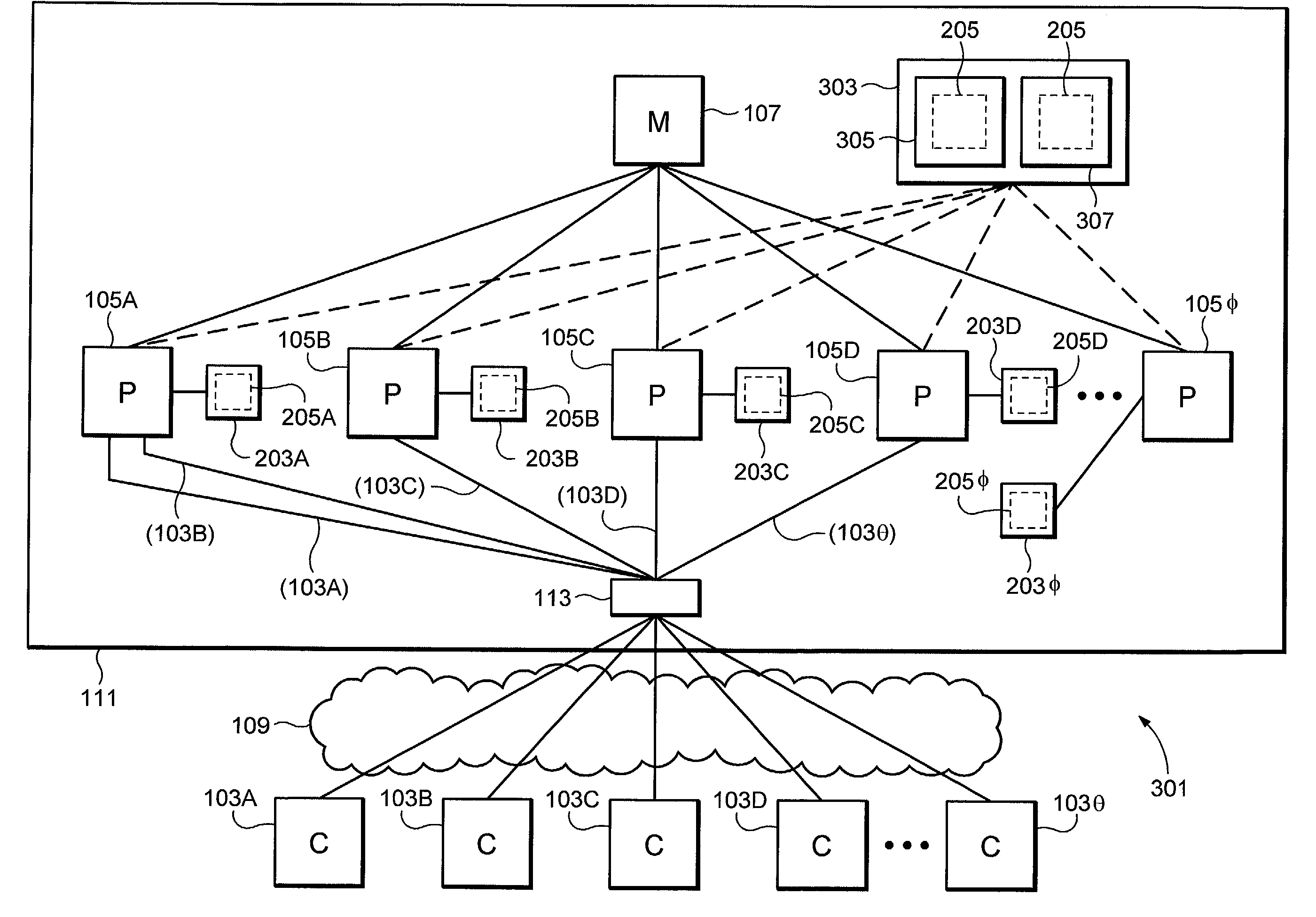

Proxy-based cache content distribution and affinity

Active · US8612550B2 · Maximize likelihood, Multiple digital computer combinations, Transmission, Content distribution, Multiple edges

A distributed caching hierarchy that includes multiple edge routing servers, at least some of which receive content requests from client computing systems via a load balancer. On receiving a content request, an edge routing server identifies which of the edge caching servers would hold the requested content if that content were cached, and distributes the request to the identified edge caching server in a deterministic and predictable manner, increasing the likelihood of a cache hit and thus the cache-hit ratio.

Owner:MICROSOFT TECH LICENSING LLC
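
The deterministic routing step can be sketched as follows. The abstract only requires that every edge routing server map a request to the same edge caching server; this sketch assumes rendezvous (highest-random-weight) hashing for that mapping, which is a swapped-in technique, and the cache names and the `hits` helper are hypothetical.

```python
import hashlib
import itertools

EDGE_CACHES = ["cache-a", "cache-b", "cache-c"]  # hypothetical edge caching servers


def owner_cache(url: str, caches=EDGE_CACHES) -> str:
    """Return the cache that would hold `url` if it were cached (rendezvous hashing)."""
    def score(cache: str) -> int:
        digest = hashlib.sha256(f"{cache}|{url}".encode()).digest()
        return int.from_bytes(digest[:8], "big")
    return max(caches, key=score)


class EdgeRouter:
    """Every router computes the same owner, so a URL always reaches one cache."""
    def route(self, url: str) -> str:
        return owner_cache(url)


def hits(requests, choose) -> int:
    """Count cache hits when each request goes to the cache picked by `choose`."""
    stored = {cache: set() for cache in EDGE_CACHES}
    count = 0
    for url in requests:
        cache = choose(url)
        if url in stored[cache]:
            count += 1
        else:
            stored[cache].add(url)  # miss: fetch from origin and keep a copy
    return count


# Two routers behind a load balancer take turns handling requests.
routers = itertools.cycle([EdgeRouter(), EdgeRouter()])
print(hits(["/a", "/b", "/a", "/b", "/a"], lambda url: next(routers).route(url)))  # 3
```

Because the owner depends only on the URL and the cache list, alternating between routers does not scatter copies of the same object across caches.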

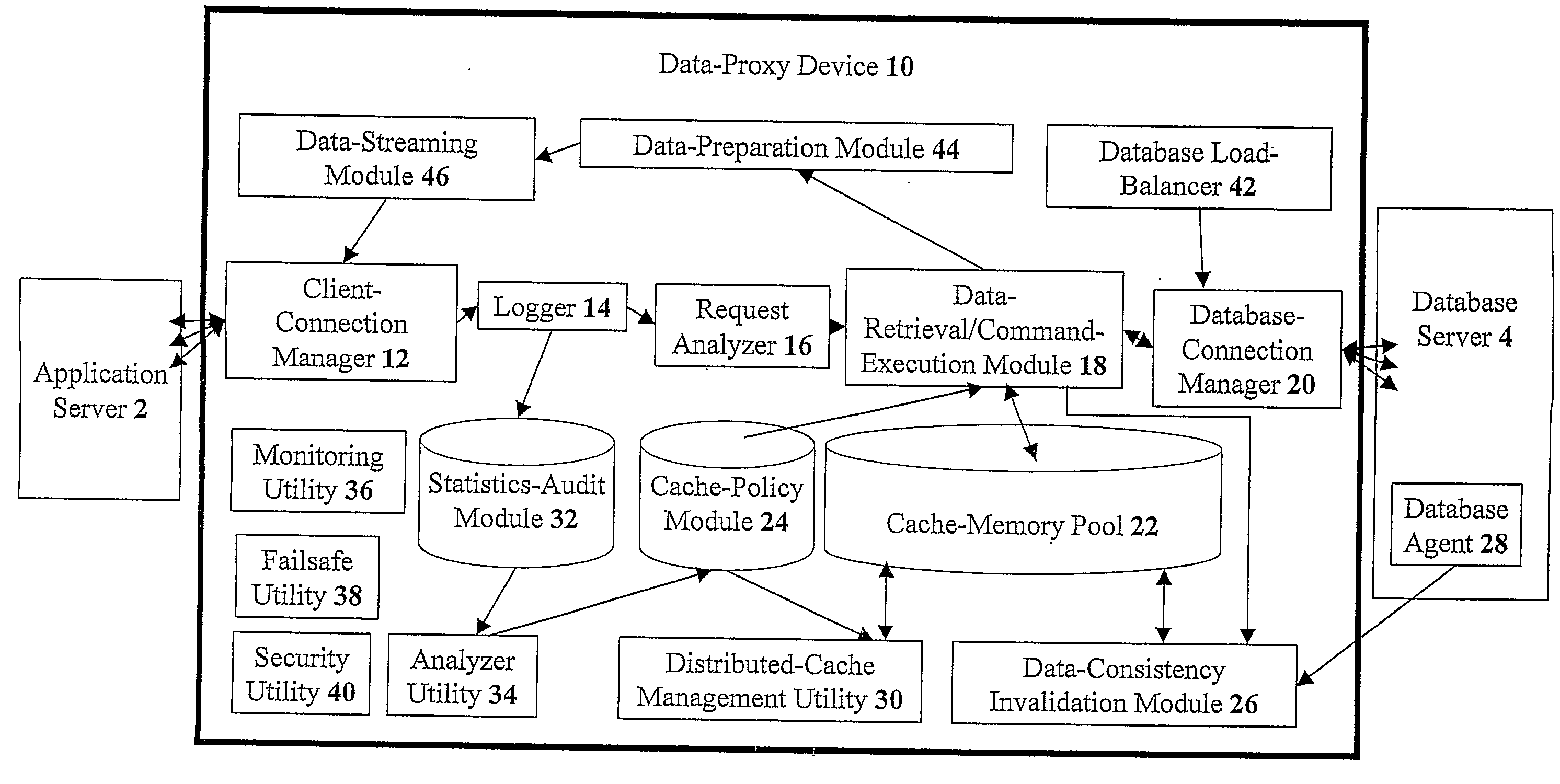

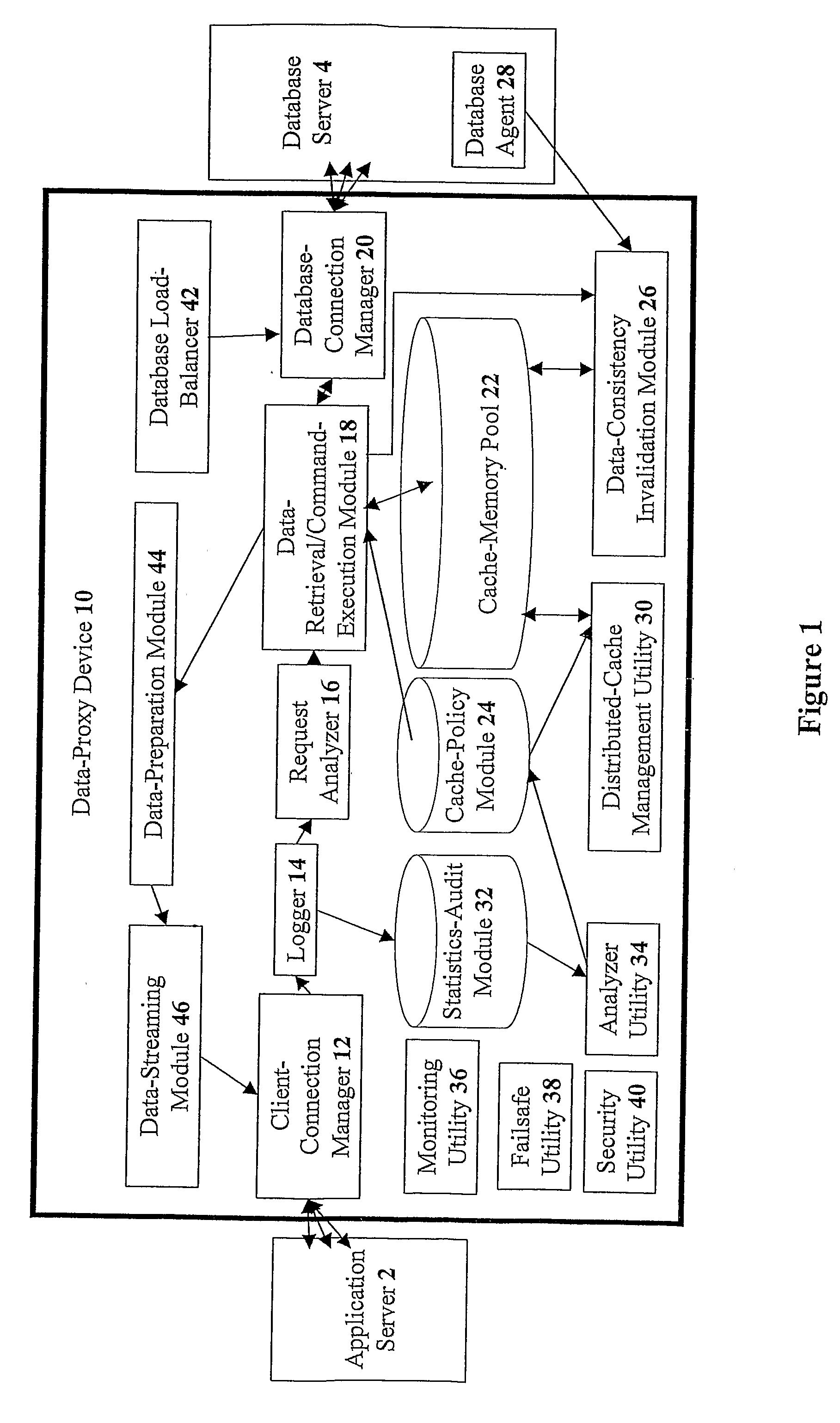

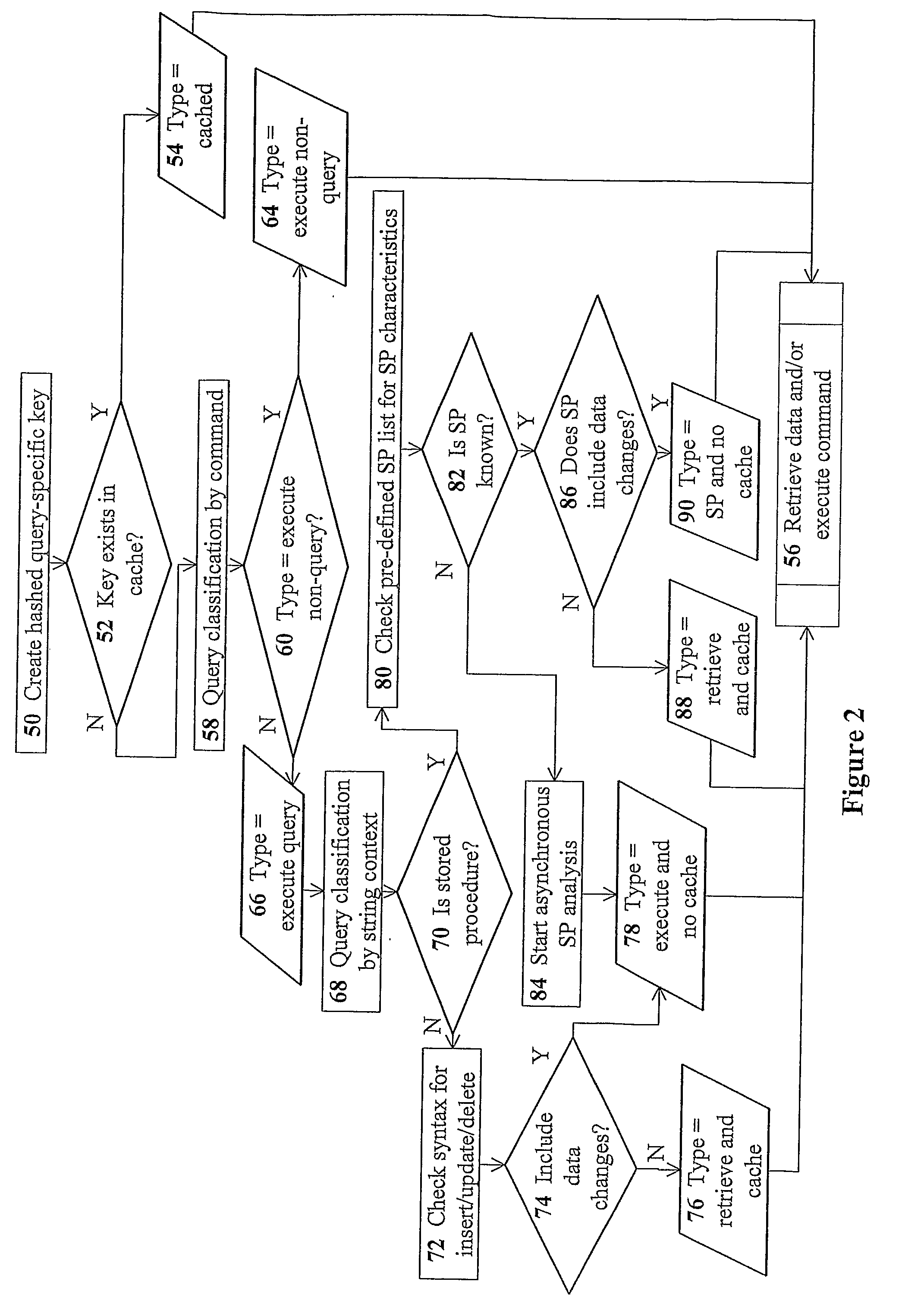

Devices for providing distributable middleware data proxy between application servers and database servers

Inactive · US20100174939A1 · Shorten the time, Save resources, Digital data information retrieval, Resource allocation, Client data, Term memory

The present invention discloses devices including: a transparent client-connection manager for exchanging client data between an application server and the device; a request analyzer for analyzing query requests from at least one application server; a data-retrieval/command-execution module for executing query requests; a database connection manager for exchanging database data between at least one database server and the device; a cache-memory pool for storing data items from at least one database server; a cache-policy module for determining cache criteria for storing the data items in the cache-memory pool; and a data-consistency invalidation module for identifying, based on invalidation criteria, invalidated data items to be removed from the cache-memory pool. The cache-memory pool is configured to use memory modules residing in data proxy devices together with a distributed cache management utility, so that the combined memory capacity can be used as a cluster to balance workloads.

Owner:DCF TECH

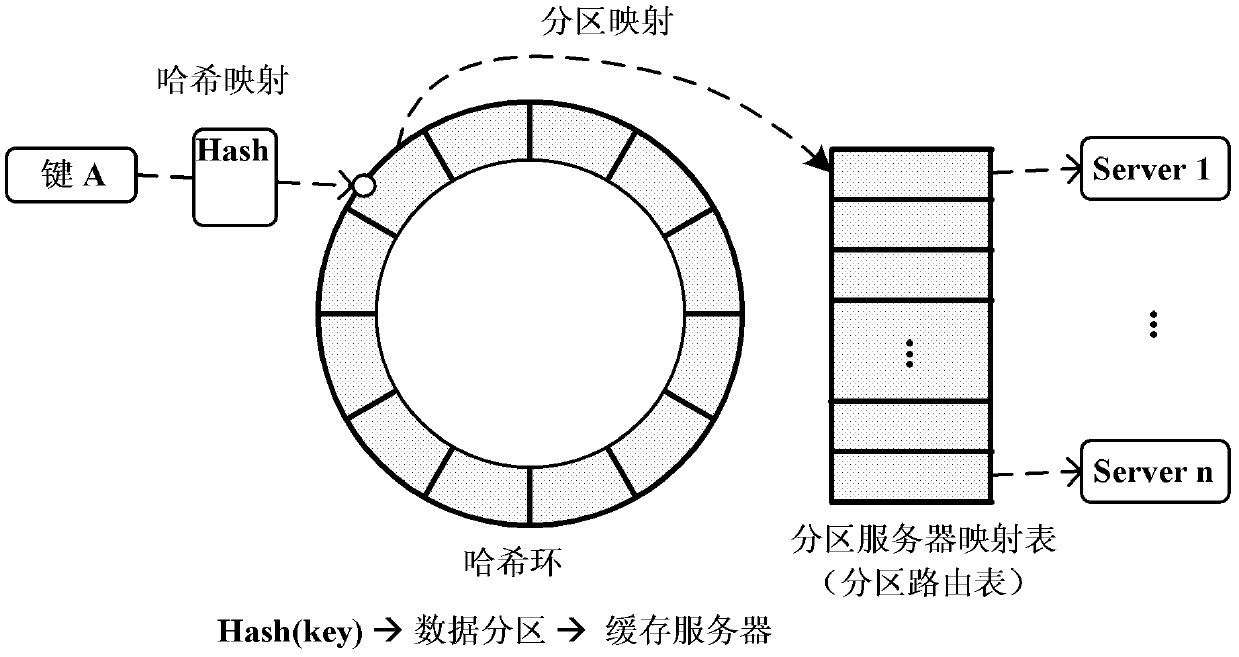

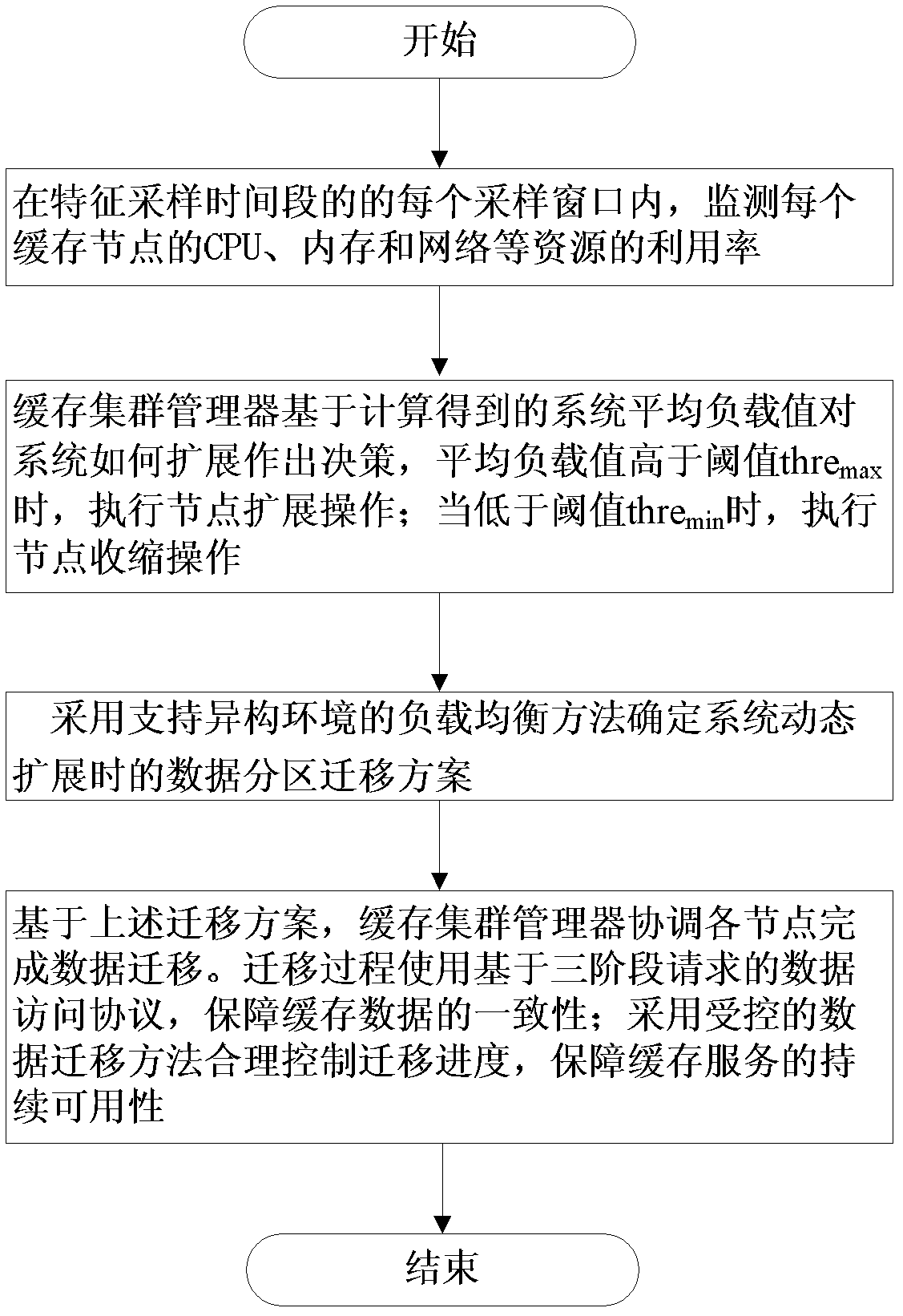

Distributed type dynamic cache expanding method and system supporting load balancing

Inactive · CN102244685A · Reduce overhead, Improve performance, Data switching networks, Traffic capacity, Cache server

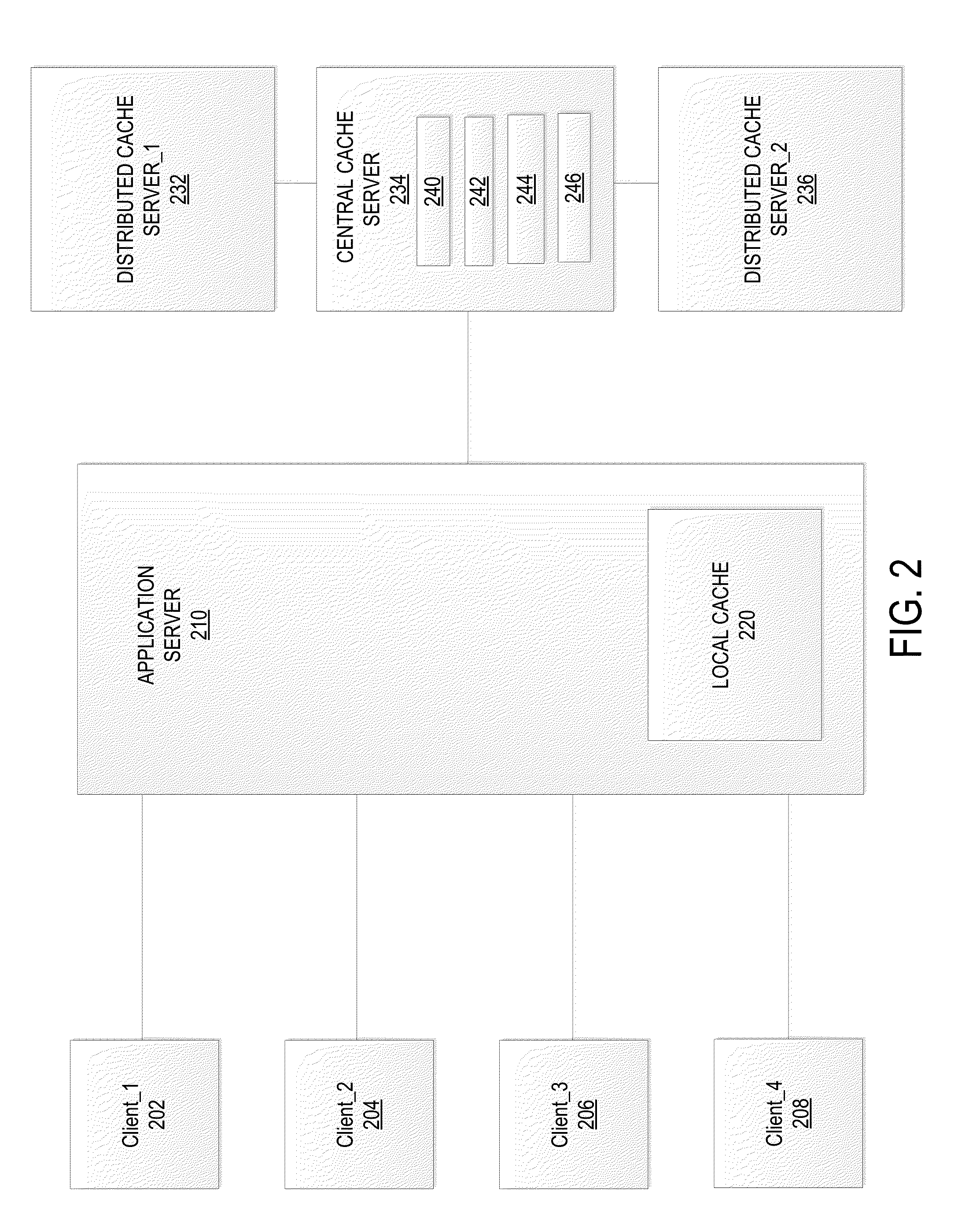

The invention discloses a distributed dynamic cache expansion method and system supporting load balancing, in the technical field of software. The method comprises the following steps: 1) each cache server monitors its own resource utilization at regular intervals; 2) each cache server calculates its weighted load value Li from the currently monitored resource utilization and sends Li to a cache cluster manager; 3) the cache cluster manager calculates the current average load of the distributed cache system from the values Li, executes an expansion operation when the average load is higher than a threshold thremax, and executes a shrink operation when it is lower than a threshold thremin. The system comprises the cache servers, a cache client, and the cache cluster manager, with the cache servers connected to the cache client and the cache cluster manager over the network. The invention distributes network traffic uniformly among the cache nodes, optimizes the utilization of system resources, and addresses the problems of ensuring data consistency and continuous availability of services.

Owner:Jinan Jun'antai Investment Group Co., Ltd. (济南君安泰投资集团有限公司)
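
The threshold rule in steps 2 and 3 can be sketched as below. The weights that combine resource utilization into Li and the default values for thremax and thremin are assumptions for illustration; the abstract does not give them.

```python
# Hypothetical weights: the abstract does not say how Li is computed.
WEIGHTS = {"cpu": 0.5, "memory": 0.3, "network": 0.2}


def weighted_load(utilization: dict) -> float:
    """Li for one cache server, from utilization ratios in [0, 1]."""
    return sum(WEIGHTS[resource] * utilization[resource] for resource in WEIGHTS)


def scaling_decision(loads: list, thre_min: float = 0.3, thre_max: float = 0.8) -> str:
    """Cluster manager: compare the average of all Li against both thresholds."""
    average = sum(loads) / len(loads)
    if average > thre_max:
        return "expand"  # add a cache server and rebalance
    if average < thre_min:
        return "shrink"  # remove a cache server and migrate its data
    return "hold"


reports = [
    {"cpu": 0.9, "memory": 0.8, "network": 0.7},
    {"cpu": 0.95, "memory": 0.9, "network": 0.8},
]
print(scaling_decision([weighted_load(r) for r in reports]))  # expand
```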

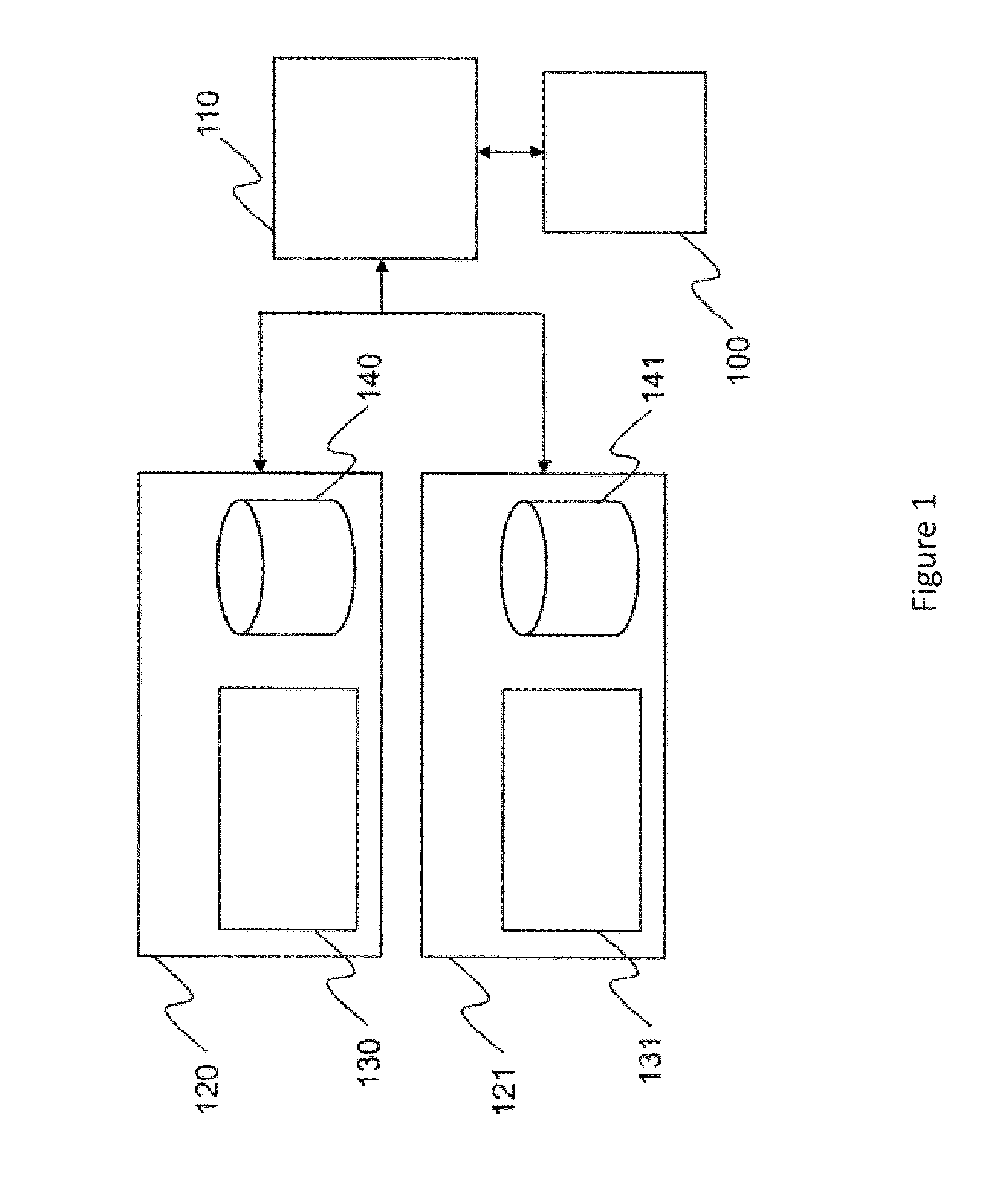

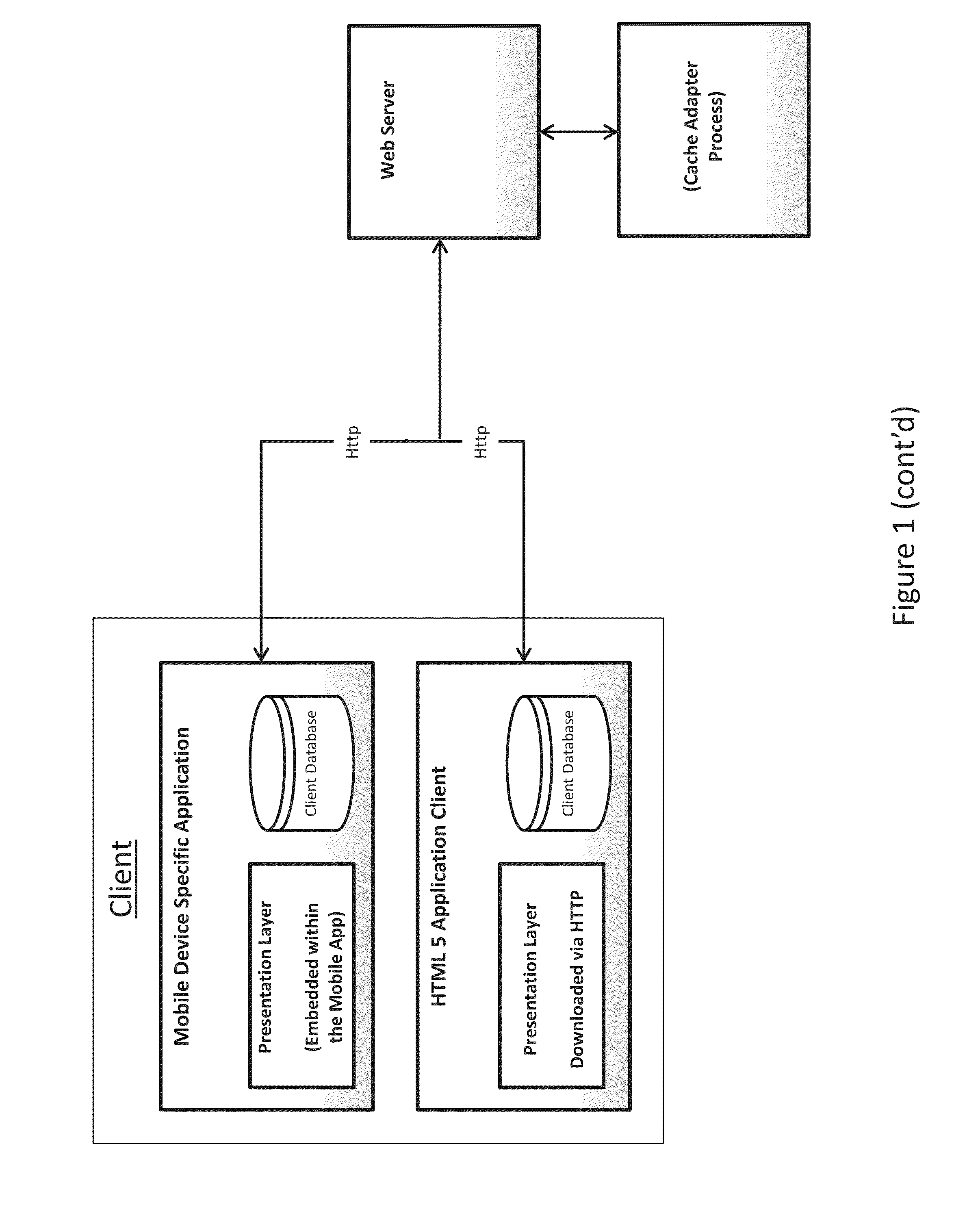

Cloud-based distributed persistence and cache data model

Active · US20130110961A1 · Improve scalability, Lower latency, Digital computer details, Transmission, Distributed File System, Web service

A distributed cache data system includes a cache adapter configured to reserve a designated portion of memory in at least one node in a networked cluster of machines as a contiguous space. The designated portion of memory forms a cache and includes data cells configured to store data. The cache adapter is configured to interface with the data and a distributed file system, and is further configured to provide an interface for external clients to access the data. The cache adapter is configured to communicate with clients via a web server, and is further configured to direct data access requests to the appropriate data. A related process of distributing cache data is disclosed as well.

Owner:JADHAV AJAY

Distribution-based mass log collection system

Inactive · CN104036025A · Processing in near real time, Deal effectively with data collection issues, Database management systems, Hardware monitoring, Collection system, Distributed cache

The invention discloses a distributed mass log collection system comprising a data source layer, a distributed cache layer, a distributed storage and computing layer, a business processing layer, a visual display layer, and a unified scheduling and management module. The system effectively solves the problems of log collection and high-speed storage; because it adopts distributed storage and search engine technology, query and retrieval are faster, and massive logs can be collected and analyzed accurately and at high speed.

Owner:Bluedon Information Security Technologies Co., Ltd. (蓝盾信息安全技术有限公司)

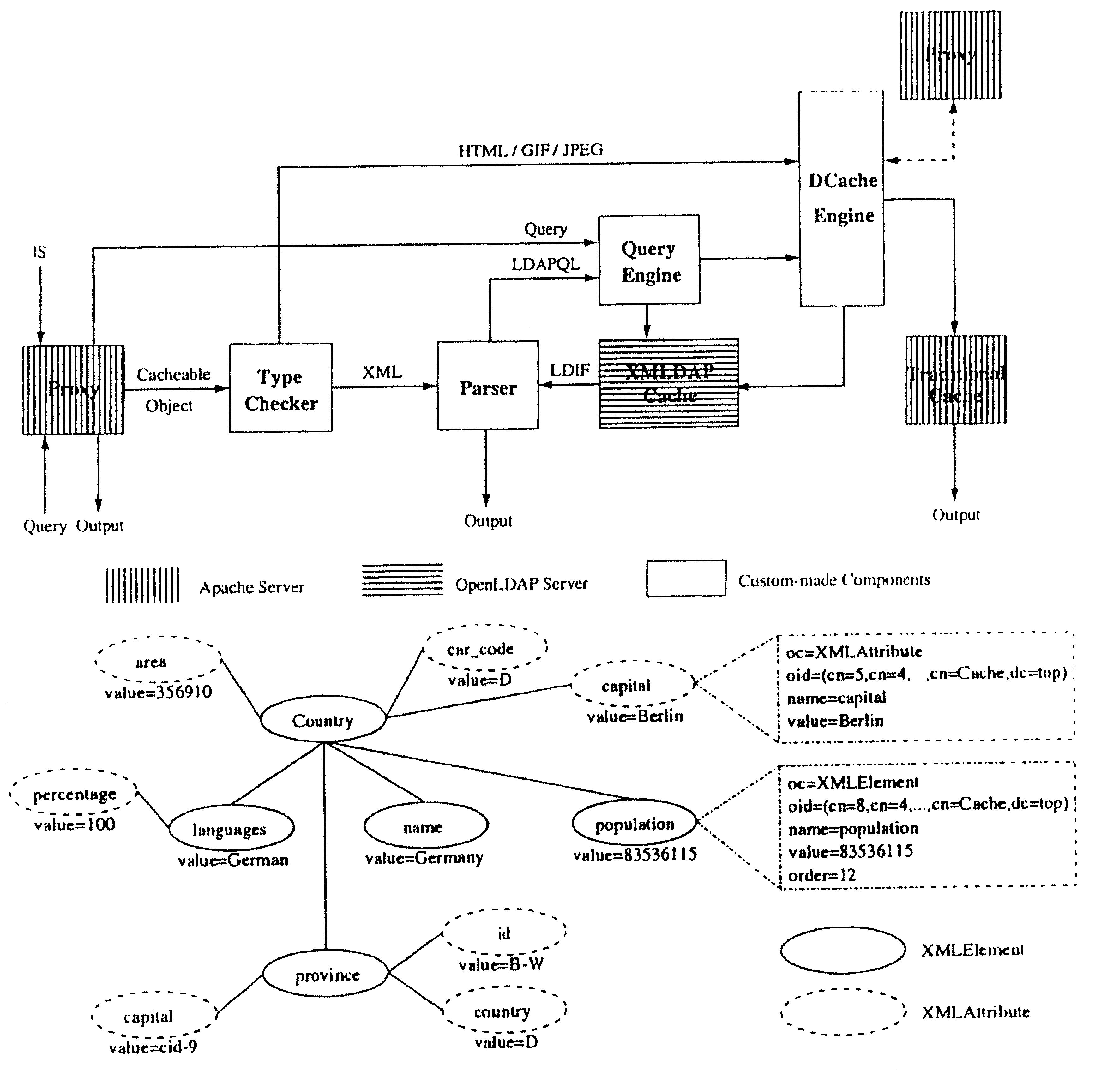

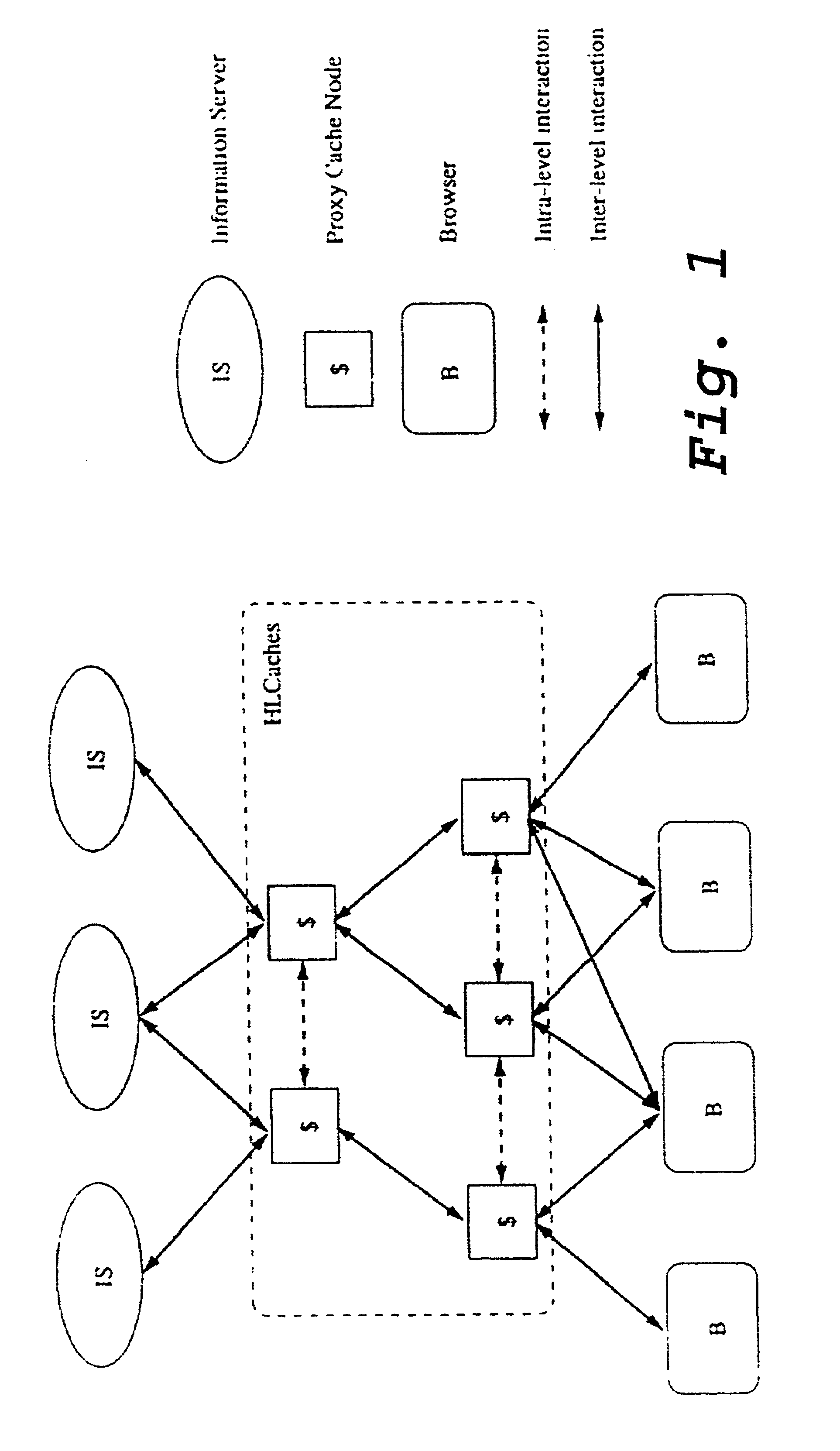

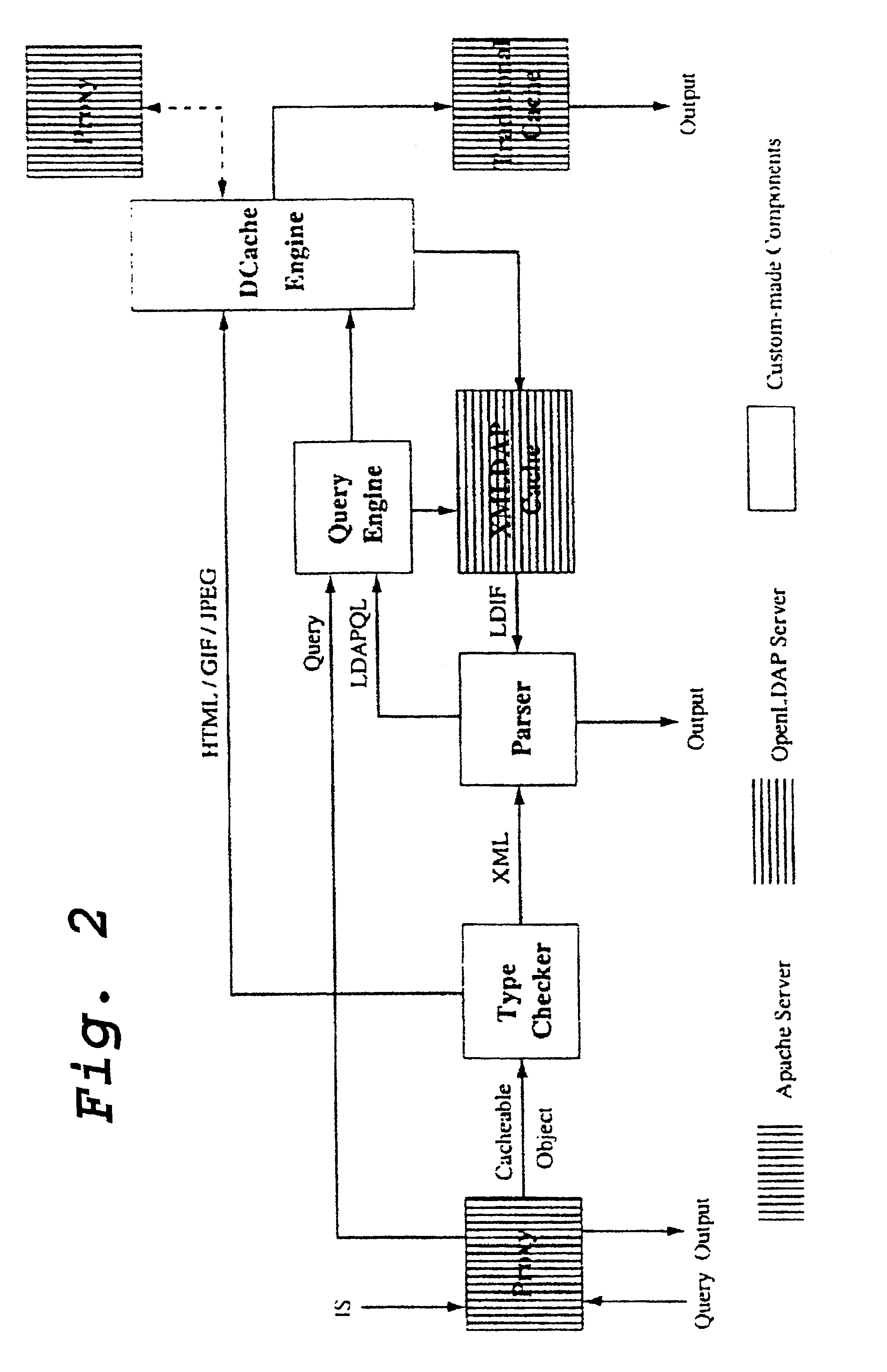

LDAP-based distributed cache technology for XML

Inactive · US6901410B2 · Simple algorithm, Fast results, Data processing applications, Special data processing applications, Semi-structured data, Transformation algorithm

The invention presents the design, internal data representation, and query model of a hierarchical distributed caching system for semi-structured documents based on LDAP technology. The system brings the semi-structured data model and the LDAP data model together, giving it characteristics well suited to efficient processing of XPath queries over XML documents. Transformation algorithms and experimental results are also presented that demonstrate the feasibility of the invention as a distributed caching system tailored to semi-structured data.

Owner:MARRON PEDRO JOSE +1

Distributed cache for state transfer operations

Active · US7383329B2 · Computer security arrangements, Multiple digital computer combinations, Distributed cache, Client-side

A network arrangement that employs a cache having copies distributed among a plurality of different locations. The cache stores state information for a session with any of the server devices so that it is accessible to at least one other server device. Using this arrangement, when a client device switches from a connection with a first server device to a connection with a second server device, the second server device can retrieve state information from the cache corresponding to the session between the client device and the first server device. The second server device can then use the retrieved state information to accept a session with the client device.

Owner:DELL PROD LP

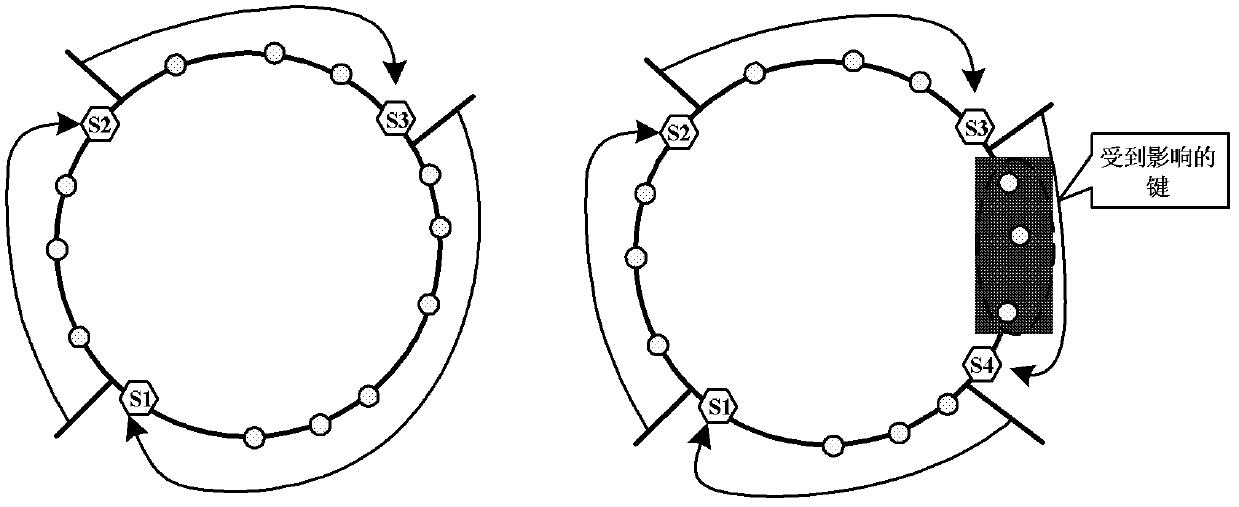

Method, System and Server of Removing a Distributed Caching Object

Active · US20130145099A1 · Waste of resource, Improve performance, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Cache server, Distributed cache

The present disclosure discloses a method, a system, and a server for removing a distributed caching object. In one embodiment, the method receives a removal request that includes an identifier of an object. The method may further apply consistent hashing to the identifier to obtain a hash value, locate the corresponding cache server based on that hash value, and designate it as the present cache server. In some embodiments, the method determines whether the present cache server is in an active status and has an active period greater than an expiration period associated with the object. If so, the method removes the object from the present cache server. By comparing the active period of the located cache server with the expiration period associated with the object, the exemplary embodiments precisely locate the cache server that holds the object to be removed and perform the removal there, sparing the other cache servers from wasting resources on removal operations and thereby improving the overall performance of the distributed cache system.

Owner:ALIBABA GRP HLDG LTD
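
A sketch of the removal check, assuming a plain consistent-hash ring without virtual nodes and an `active_period` measured in the same unit as the object's expiration period. The abstract does not say what happens when the check fails; here `remove_object` simply reports that nothing was removed. All class and function names are hypothetical.

```python
import bisect
import hashlib
from dataclasses import dataclass, field


def ring_hash(value: str) -> int:
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")


@dataclass
class CacheServer:
    name: str
    active: bool = True
    active_period: float = 0.0  # how long the server has been active (assumed seconds)
    store: dict = field(default_factory=dict)


class Ring:
    """Minimal consistent-hash ring (no virtual nodes)."""
    def __init__(self, servers):
        self.by_point = {ring_hash(s.name): s for s in servers}
        self.points = sorted(self.by_point)

    def locate(self, object_id: str) -> CacheServer:
        # First server clockwise from the object's hash, wrapping around the ring.
        i = bisect.bisect(self.points, ring_hash(object_id)) % len(self.points)
        return self.by_point[self.points[i]]


def remove_object(ring: Ring, object_id: str, expiration: float) -> bool:
    server = ring.locate(object_id)  # the "present cache server"
    if server.active and server.active_period > expiration:
        server.store.pop(object_id, None)
        return True
    return False  # check failed; the abstract does not describe this branch


servers = [CacheServer(f"s{i}", active_period=3600) for i in range(3)]
ring = Ring(servers)
target = ring.locate("user:1")
target.store["user:1"] = "profile"
print(remove_object(ring, "user:1", expiration=600), target.store)  # True {}
```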

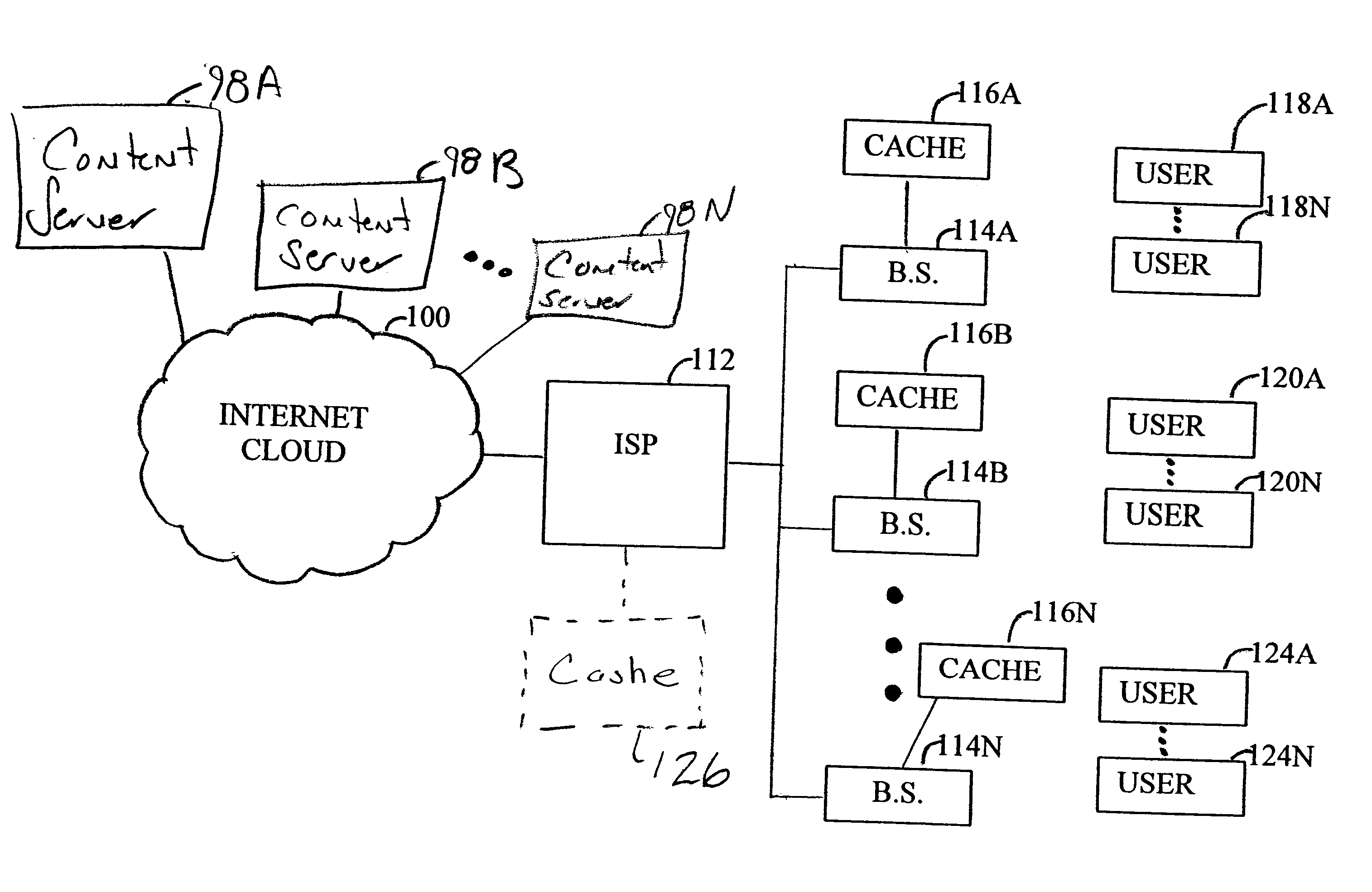

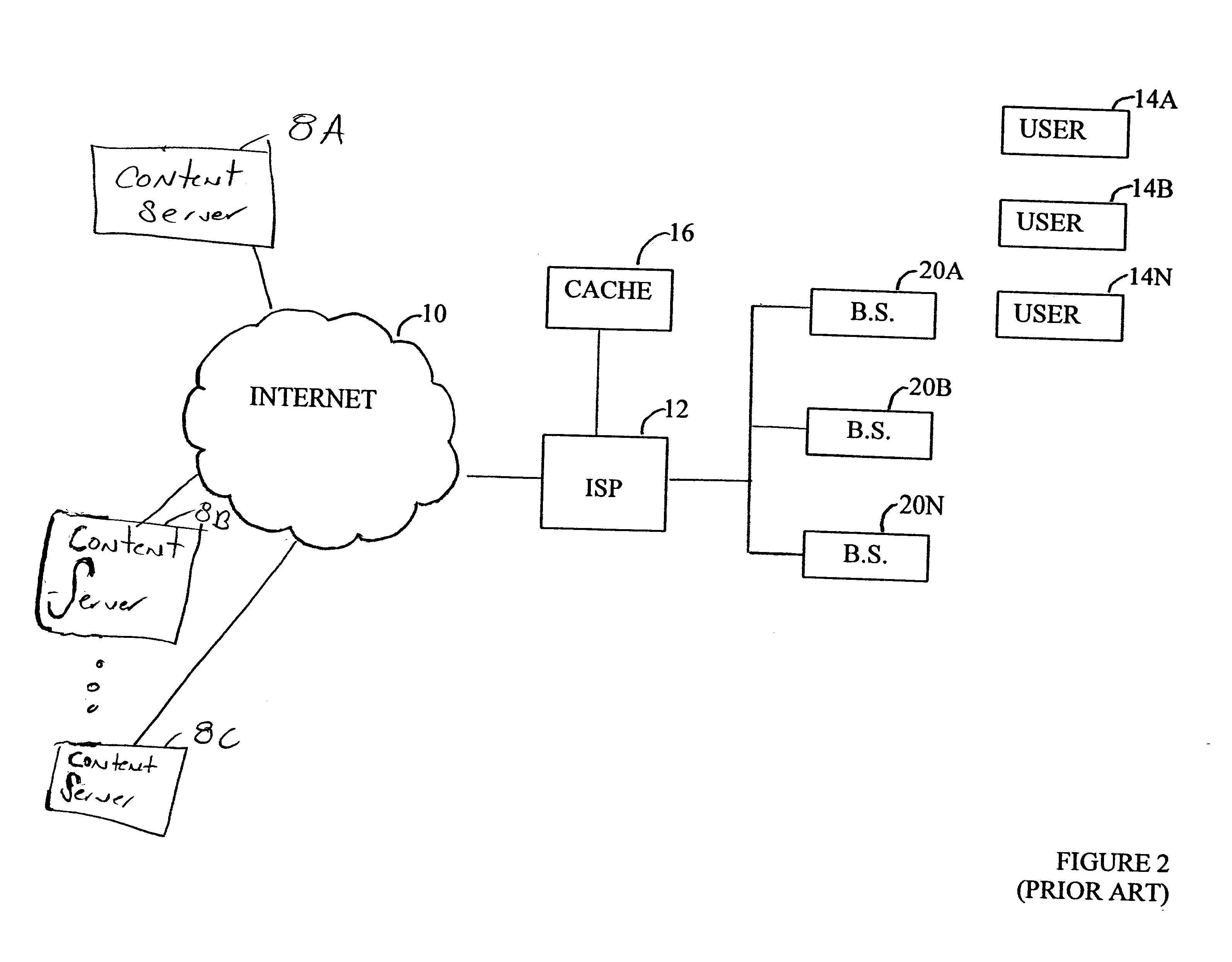

Distributed cache for a wireless communication system

Inactive · US6941338B1 · Short response time, Digital data information retrieval, Network traffic/resource management, Communications system, Distributed cache

In a wireless system comprising a plurality of radio base stations, each base station serves a portion of the system. A cache is associated with each base station and stores files regularly requested by remote units within the coverage area of that base station. When a base station receives a message from a remote unit, it parses the message to determine whether it contains a file request. If so, the base station checks whether the file is available in the cache. If it is, the base station responds by forwarding the requested file from the cache. If not, the base station forwards the message to a central controller, which retrieves the file via the Internet from the appropriate content server and provides it to the base station.

Owner:SAMSUNG ELECTRONICS CO LTD
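
The base-station lookup path can be sketched as follows. A dictionary stands in for the Internet, and the base station caches every file it fetches; the abstract only says the cache holds regularly requested files, so this admission policy is an assumption, as are the class names and the message format.

```python
class CentralController:
    """Stand-in for the controller that fetches files from content servers."""
    def __init__(self, origin: dict):
        self.origin = origin  # hypothetical: URL -> bytes, simulating the Internet
        self.fetches = 0

    def fetch(self, url: str) -> bytes:
        self.fetches += 1
        return self.origin[url]


class BaseStation:
    def __init__(self, controller: CentralController):
        self.controller = controller
        self.cache = {}

    def handle(self, message: dict):
        # Parse the message; only file requests are served through the cache.
        if message.get("type") != "file_request":
            return None
        url = message["url"]
        if url in self.cache:
            return self.cache[url]  # hit: answer from the local cache
        data = self.controller.fetch(url)  # miss: forward to the central controller
        self.cache[url] = data  # assumed admission policy: keep every fetched file
        return data


controller = CentralController({"/news": b"headlines"})
station = BaseStation(controller)
station.handle({"type": "file_request", "url": "/news"})
station.handle({"type": "file_request", "url": "/news"})
print(controller.fetches)  # 1
```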

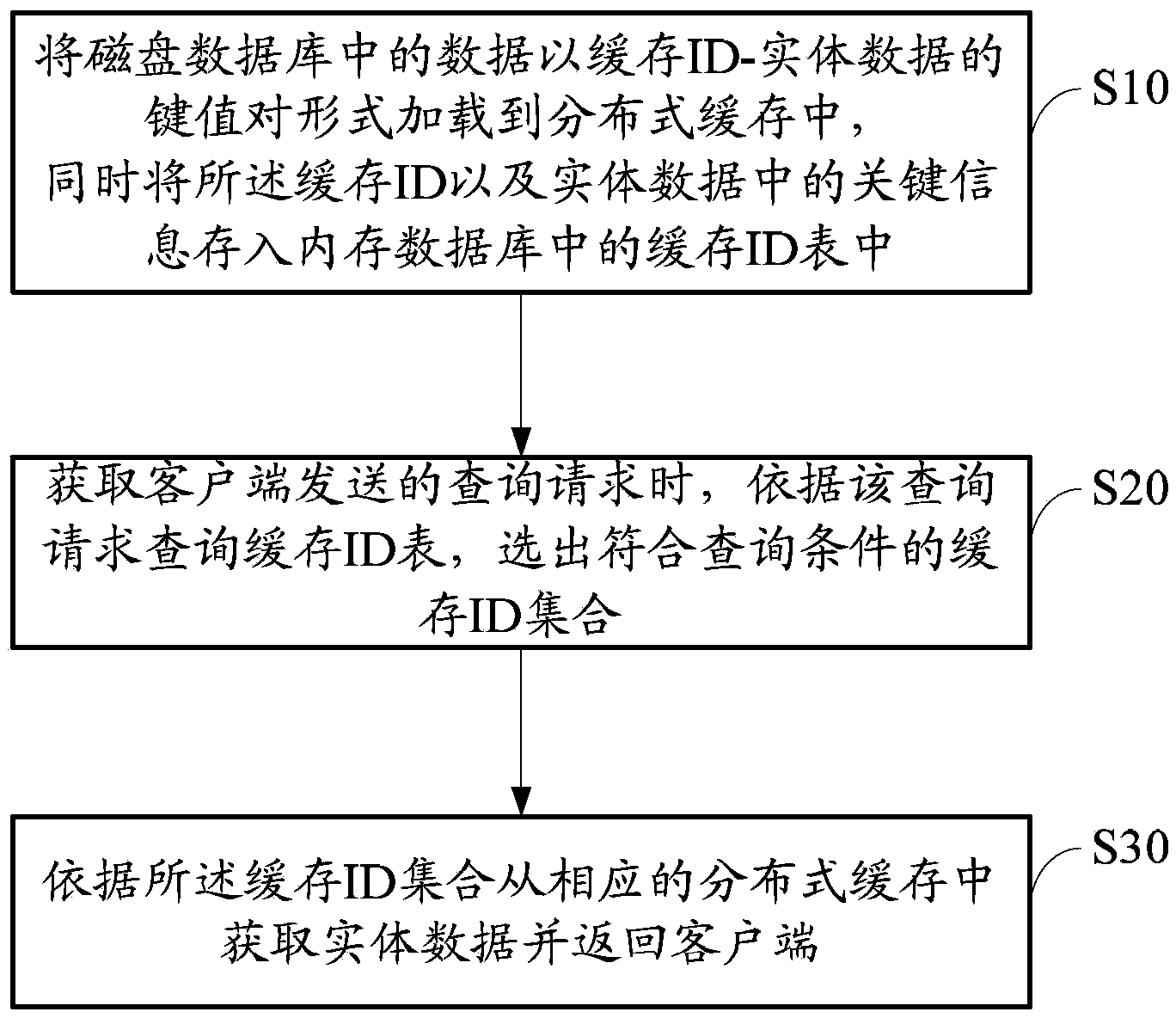

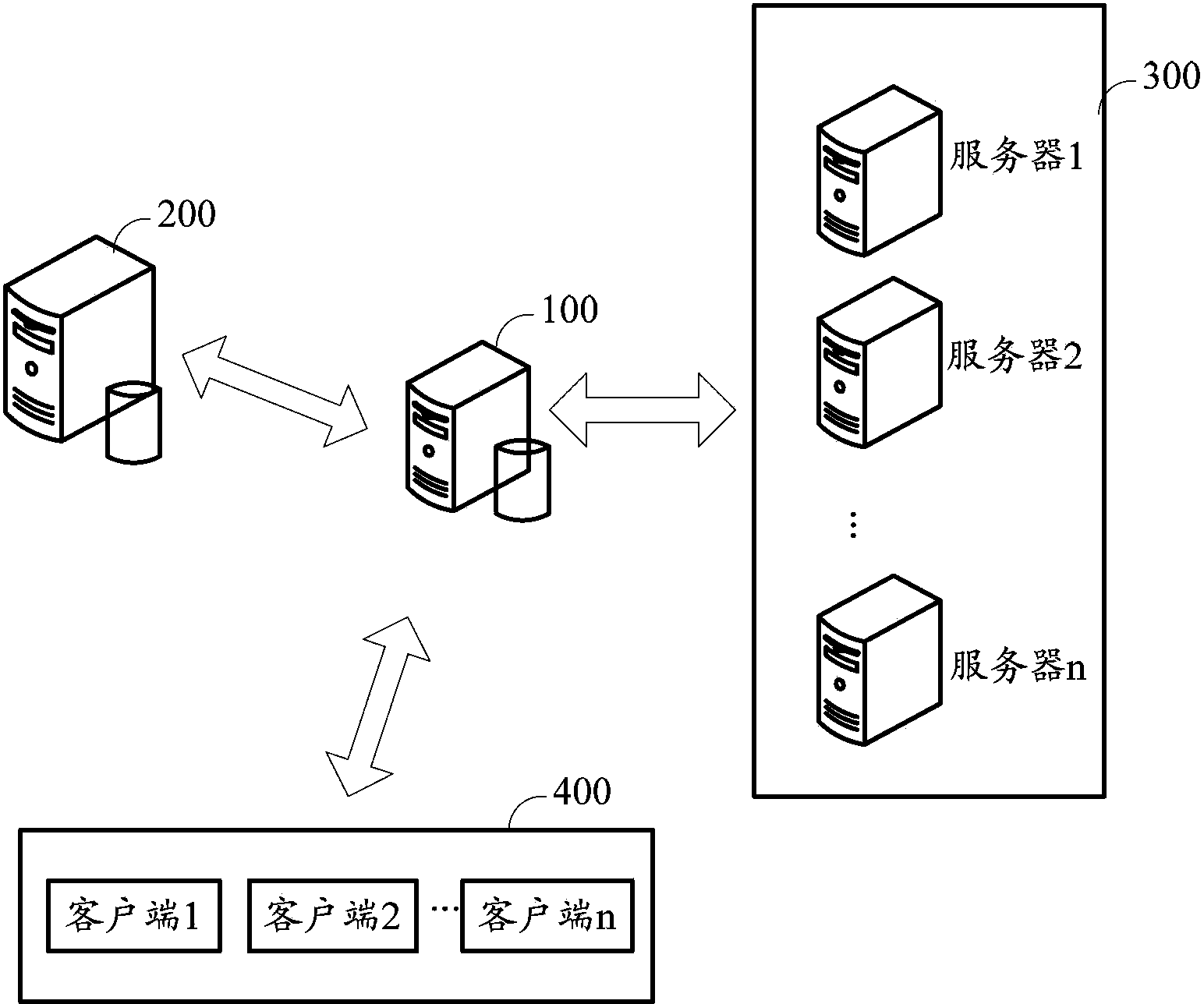

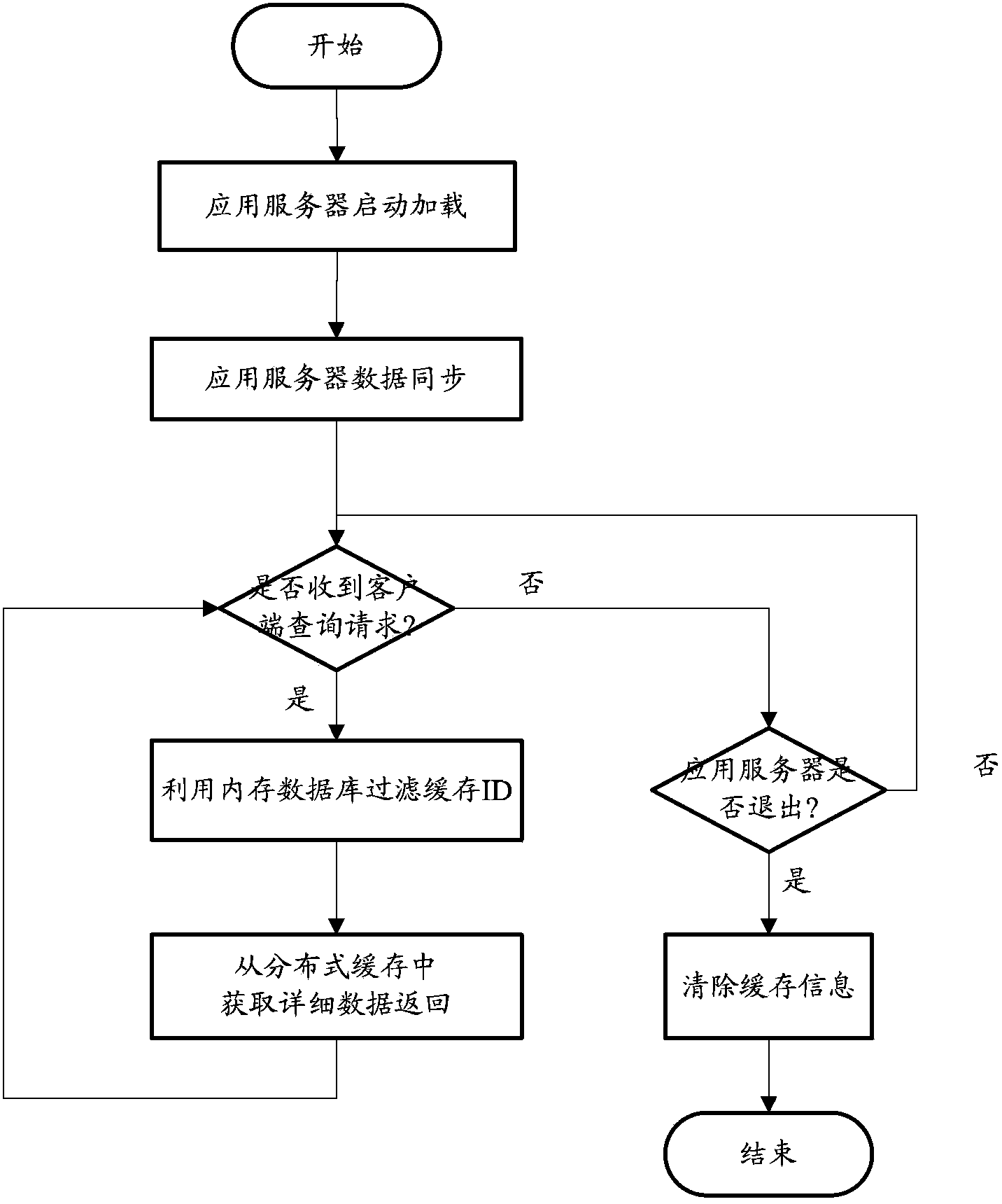

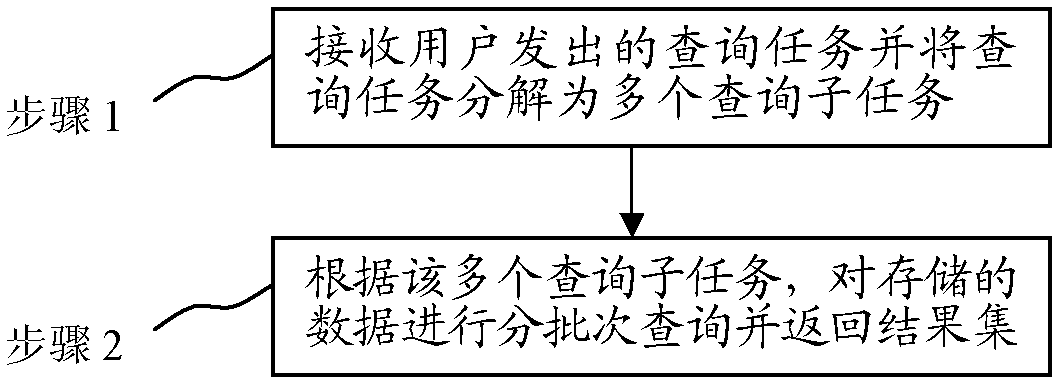

Method and system for improving large data volume query performance

Active · CN103853727A · Reduce load, Easy to handle, Memory addressing/allocation/relocation, Database distribution/replication, In-memory database, Distributed cache

The invention discloses a method and a system for improving query performance on large data volumes, in the technical field of large-data-volume querying. The method comprises: A) loading data from a disk database into distributed caches as cache-ID/entity-data key-value pairs, while storing the cache ID and key information of the entity data in a cache ID table of an in-memory database; B) when a query request from a client is received, querying the cache ID table according to the request to select the set of IDs that satisfy it; C) obtaining the entity data from the corresponding distributed caches according to the cache ID set and returning it to the client. By means of the system and method, the load on the disk database is effectively reduced and big-data query performance is improved.

Owner:SHENZHEN ZTE NETVIEW TECH
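
Steps A to C can be sketched with Python's built-in `sqlite3` in-memory database as the cache ID table and plain dictionaries as the distributed cache nodes. The schema (a single `city` key column), the `entity:<id>` cache ID format, and the modulo placement are assumptions for illustration.

```python
import sqlite3

# Step A: load rows from the "disk database" into cache nodes as cache-ID -> entity
# pairs, and record each cache ID with its key field in an in-memory table.
disk_rows = [
    {"id": 1, "city": "Shenzhen", "name": "alpha"},
    {"id": 2, "city": "Beijing", "name": "beta"},
    {"id": 3, "city": "Shenzhen", "name": "gamma"},
]
caches = [{}, {}]  # two cache nodes, stand-ins for a real distributed cache
index = sqlite3.connect(":memory:")  # the in-memory cache ID table
index.execute("CREATE TABLE cache_ids (cache_id TEXT PRIMARY KEY, city TEXT)")

for row in disk_rows:
    cache_id = f"entity:{row['id']}"
    caches[row["id"] % len(caches)][cache_id] = row
    index.execute("INSERT INTO cache_ids VALUES (?, ?)", (cache_id, row["city"]))


def node_for(cache_id: str) -> dict:
    # Same placement rule as in step A.
    return caches[int(cache_id.split(":")[1]) % len(caches)]


def query(city: str) -> list:
    # Step B: select the cache IDs that satisfy the request.
    ids = [r[0] for r in index.execute(
        "SELECT cache_id FROM cache_ids WHERE city = ? ORDER BY cache_id", (city,))]
    # Step C: fetch the entities from the cache nodes that hold them.
    return [node_for(cid)[cid] for cid in ids]


print([row["name"] for row in query("Shenzhen")])  # ['alpha', 'gamma']
```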

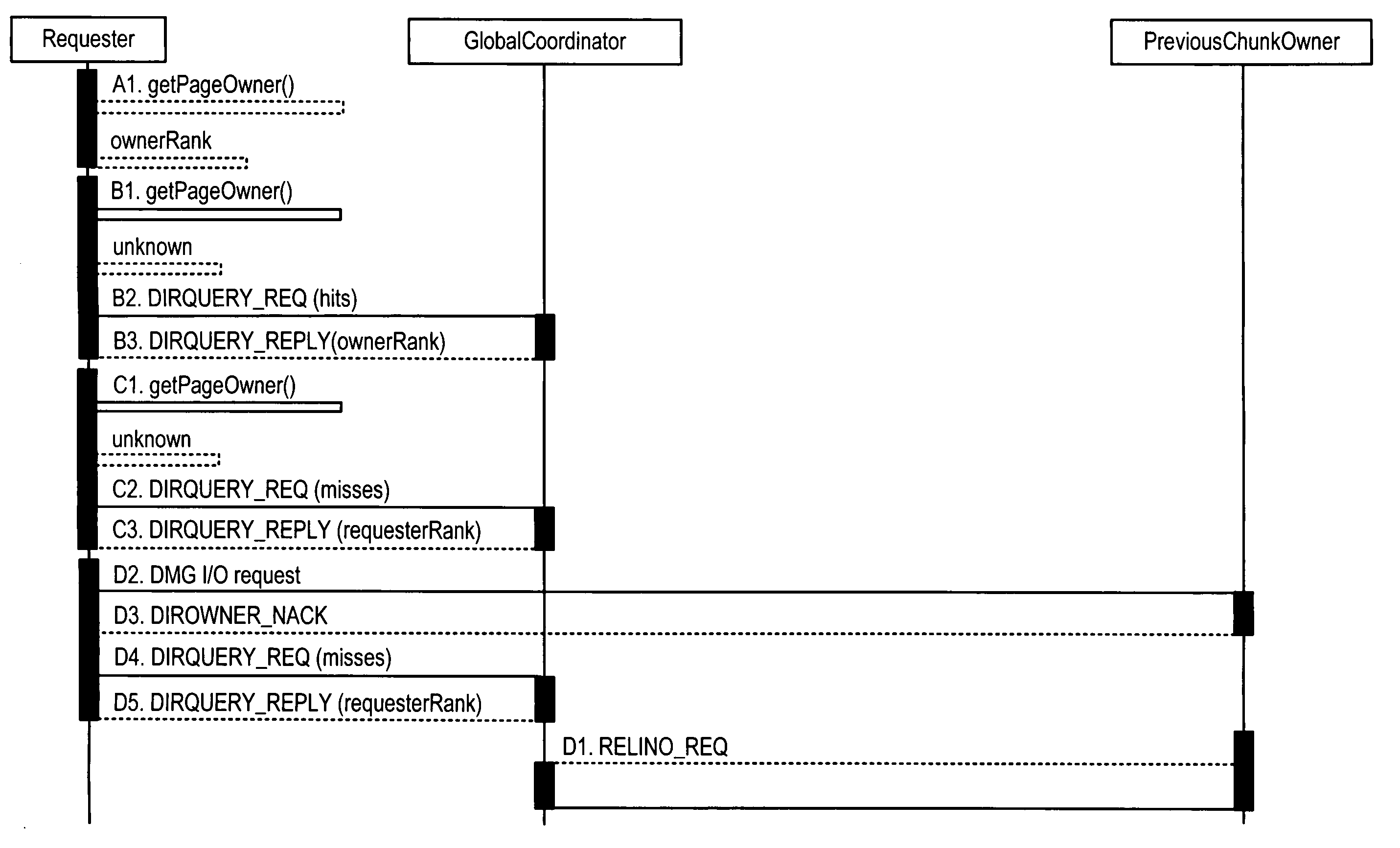

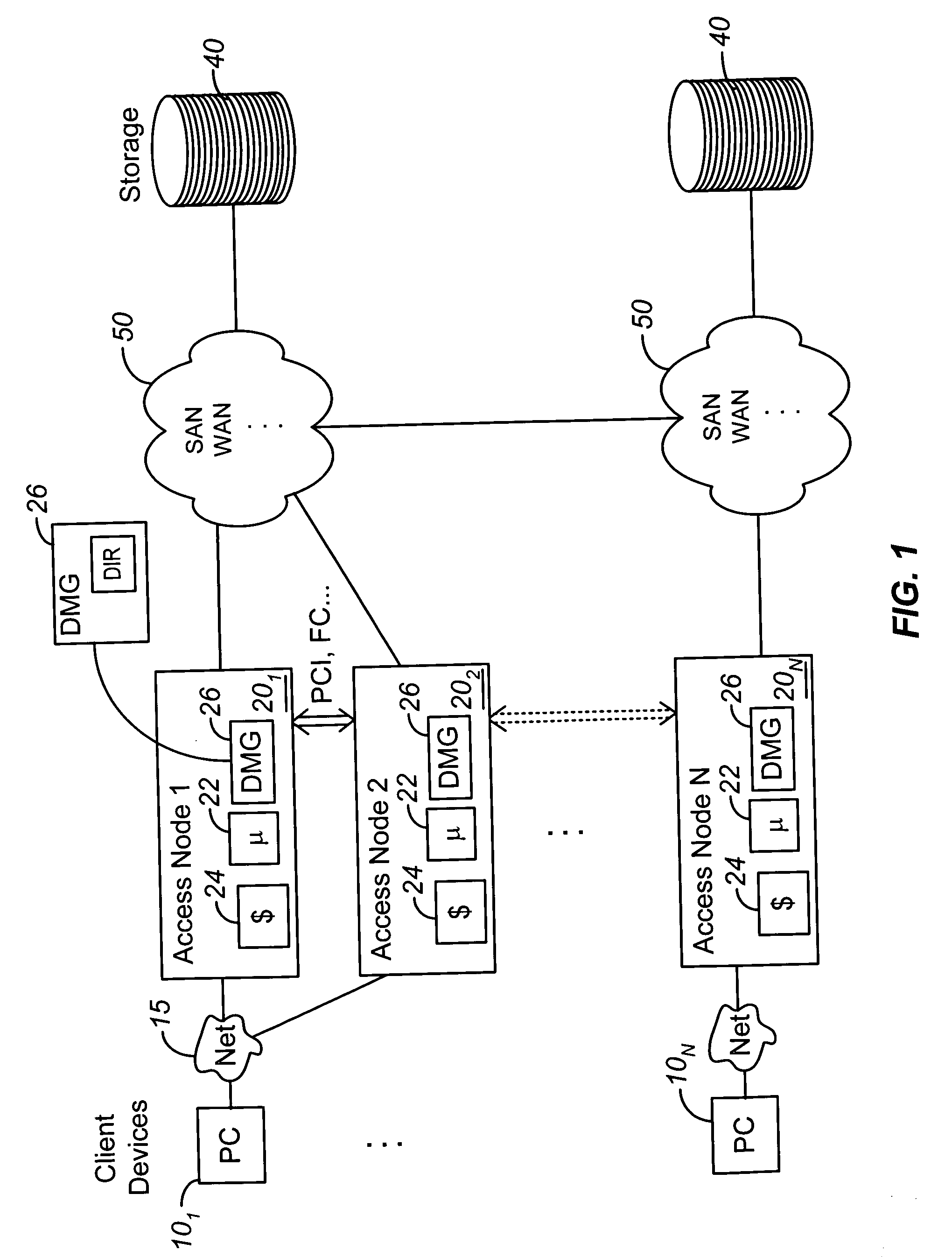

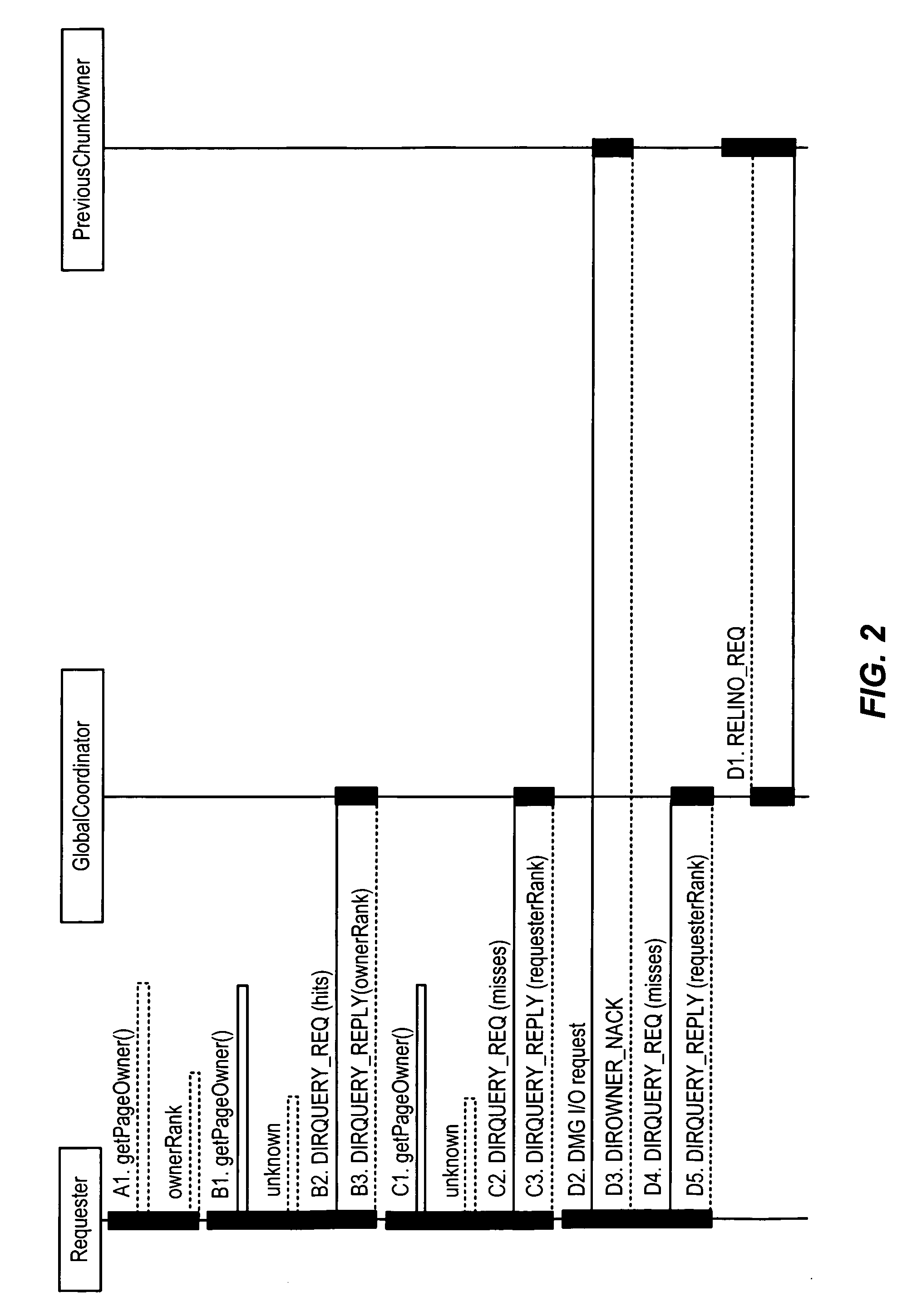

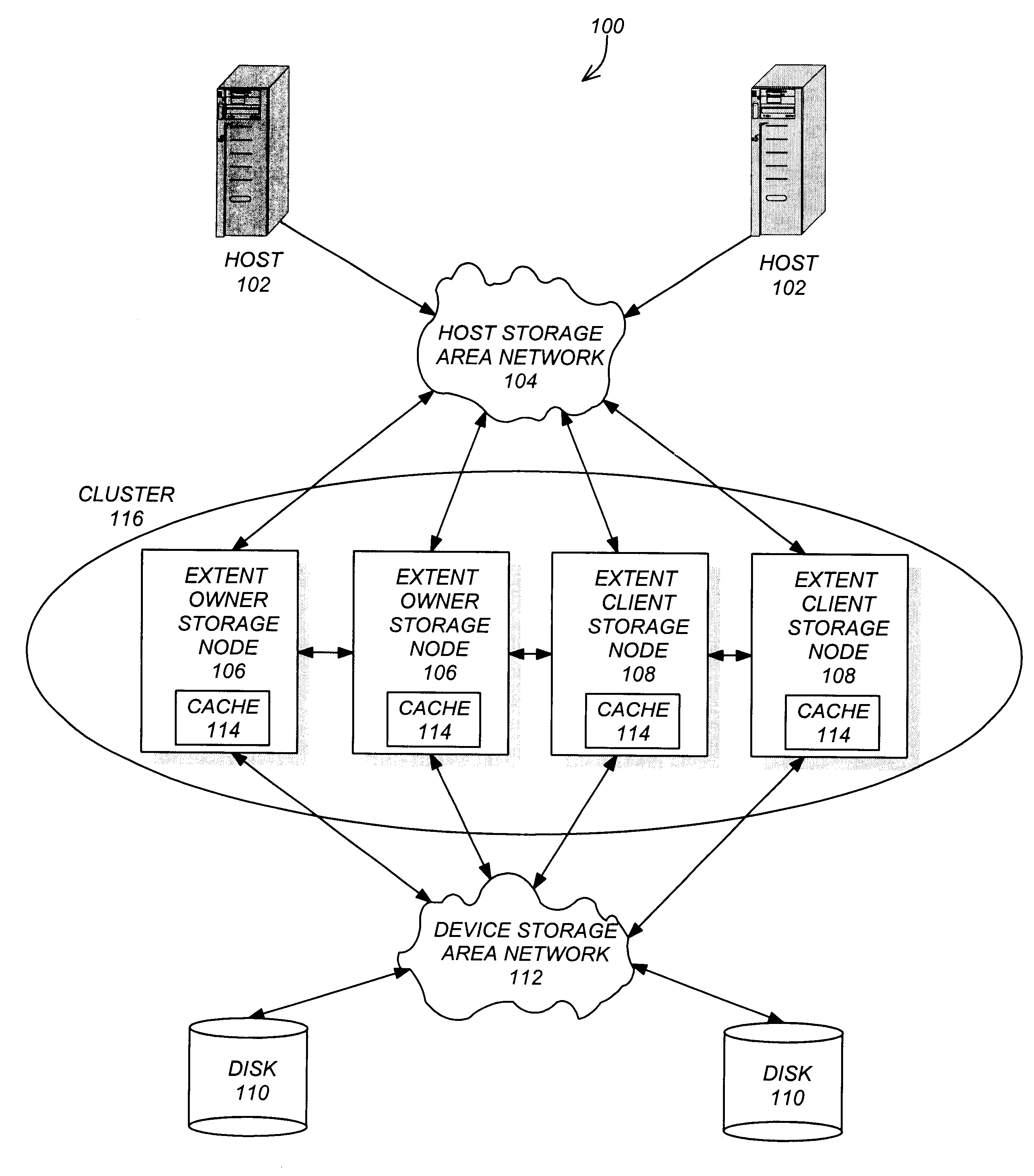

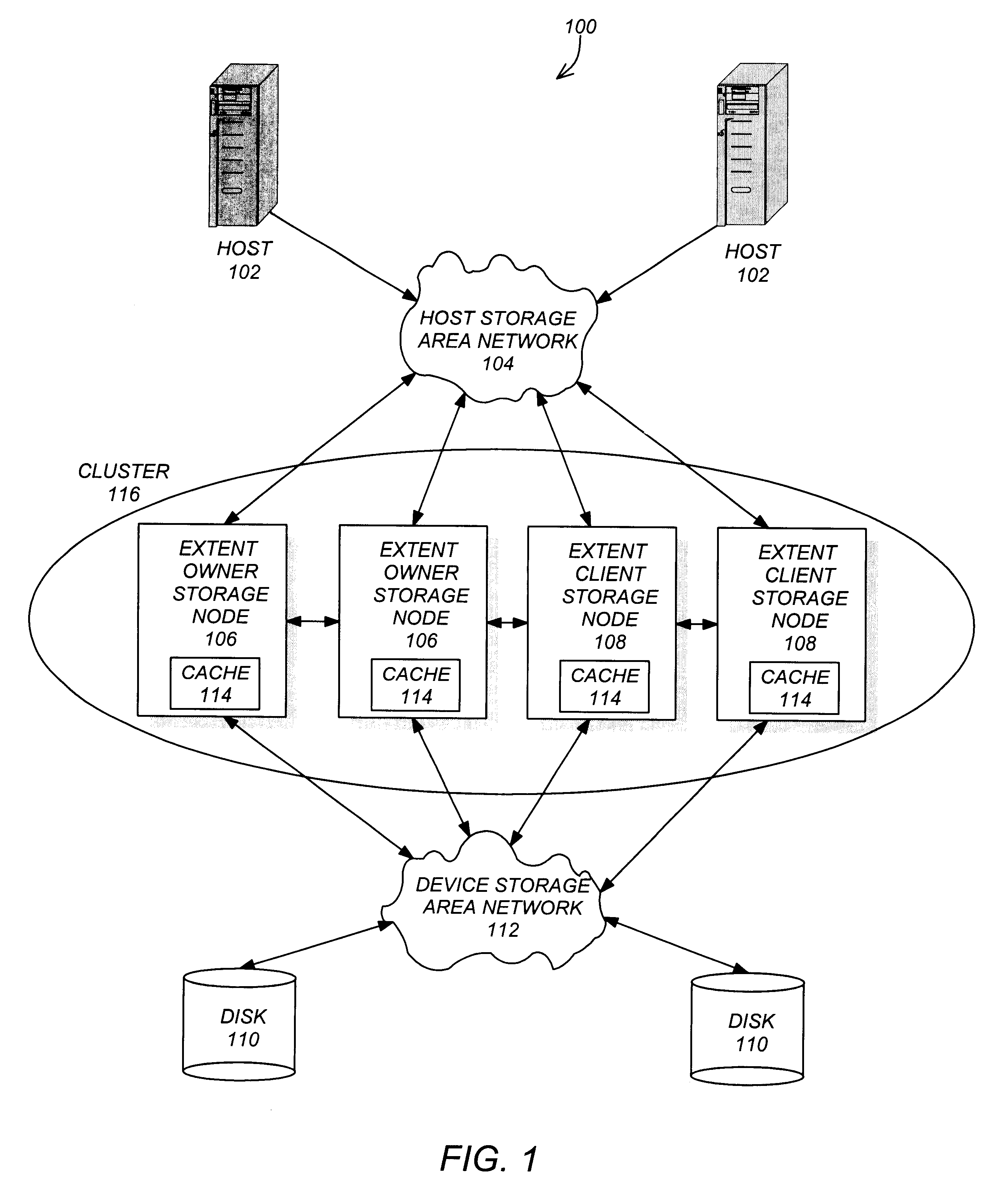

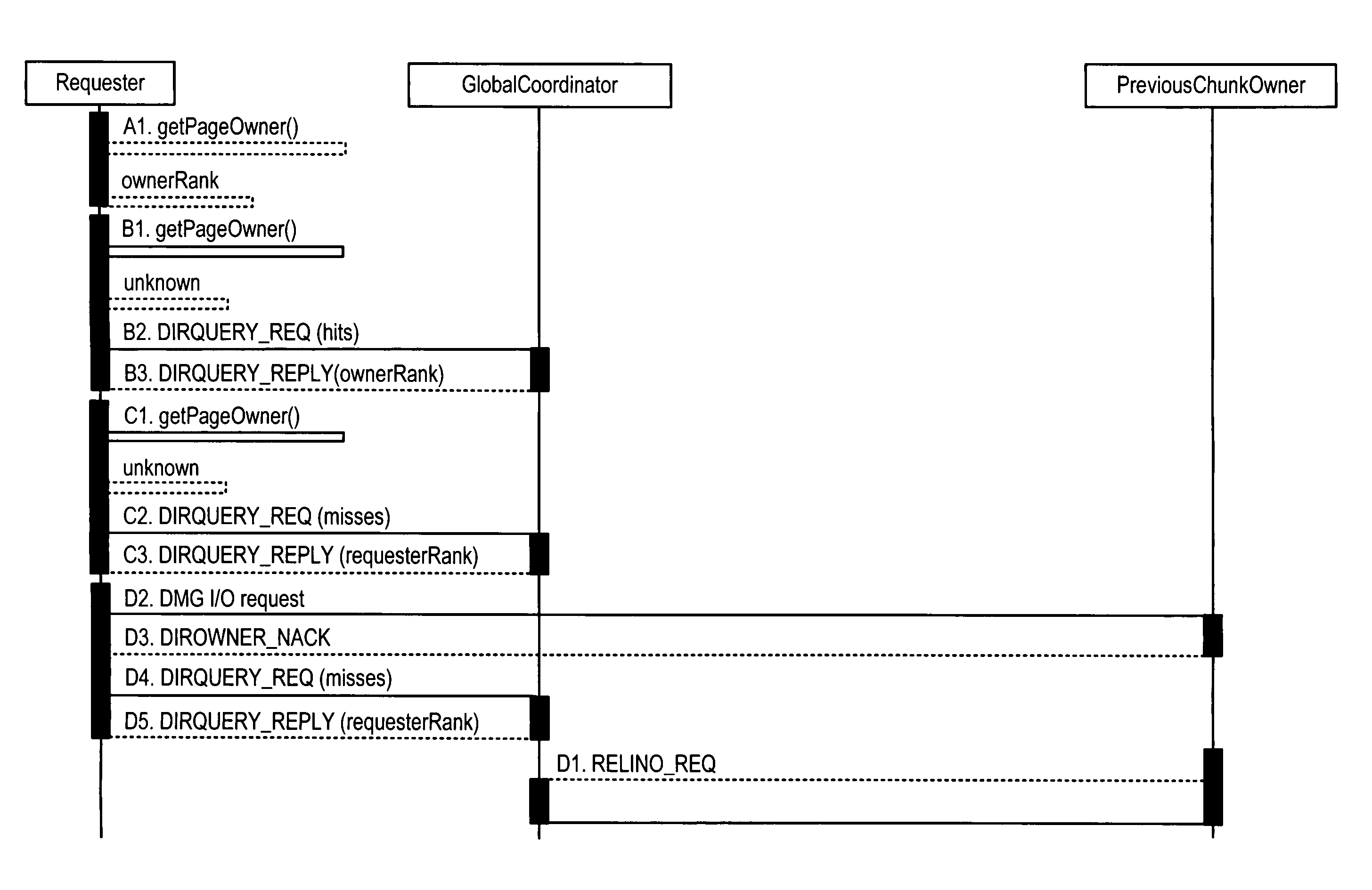

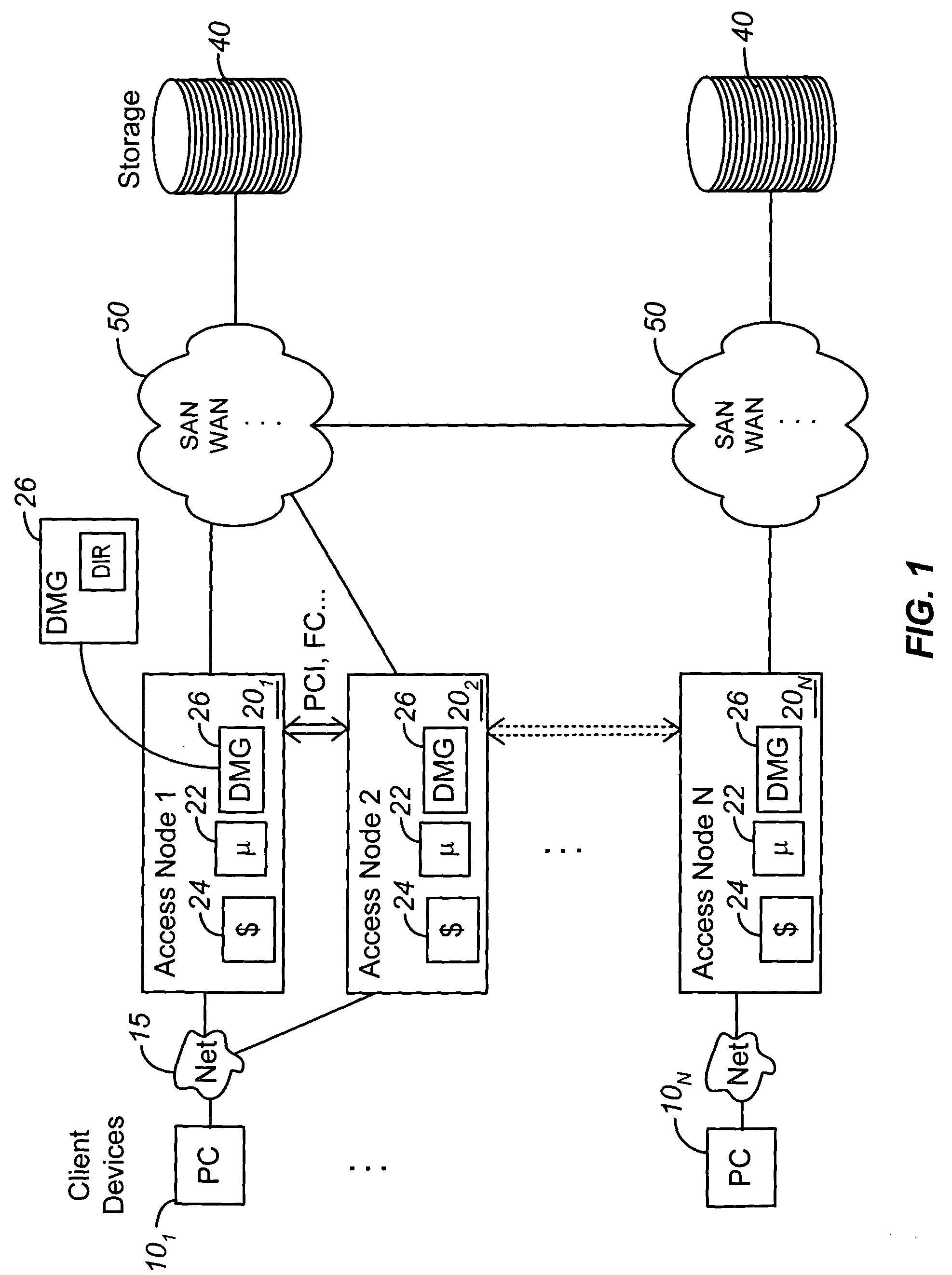

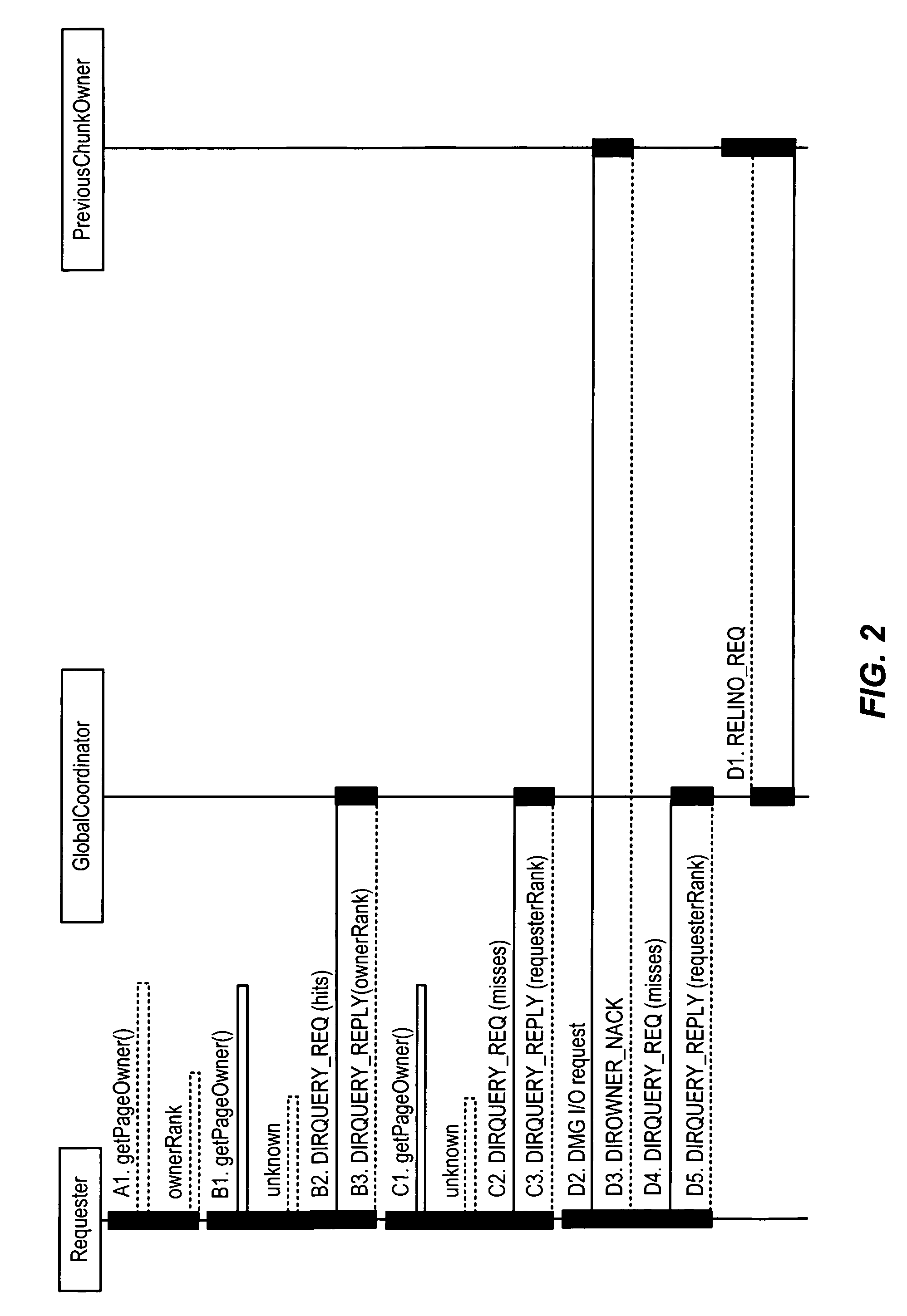

Systems and methods for providing distributed cache coherence

Active · US20060031450A1 · Reduce bandwidth requirements, Maintain traffic scalability, Digital computer details, Transmission, Distributed cache, Bandwidth requirement

A plurality of access nodes sharing access to data on a storage network implement a directory-based cache ownership scheme. One node, designated as a global coordinator, maintains a directory (e.g., a table or other data structure) storing information about I/O operations by the access nodes. The other nodes send requests to the global coordinator when an I/O operation is to be performed on identified data. Ownership of that data in the directory is given to the first requesting node. Ownership may transfer to another node if the directory entry is unused or quiescent. The distributed directory-based cache coherency reduces bandwidth requirements between geographically separated access nodes by allowing localized (cached) access to remote data.

Owner:EMC IP HLDG CO LLC
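
The ownership scheme can be sketched as a directory held by the global coordinator. The abstract says ownership may move when an entry is "unused or quiescent"; this sketch assumes an explicit `release` call marks an entry quiescent, and the class and method names are hypothetical.

```python
class GlobalCoordinator:
    """Directory mapping a data identifier to the node that currently owns it."""
    def __init__(self):
        self.directory = {}  # data_id -> {"owner": node, "in_use": bool}

    def request(self, node: str, data_id: str) -> bool:
        entry = self.directory.get(data_id)
        if entry is None:
            # The first requester becomes the owner.
            self.directory[data_id] = {"owner": node, "in_use": True}
            return True
        if entry["owner"] == node:
            entry["in_use"] = True
            return True
        if not entry["in_use"]:
            # Quiescent entry: ownership moves to the new requester.
            entry.update(owner=node, in_use=True)
            return True
        return False  # still in use by the owner; the caller must wait or retry

    def release(self, node: str, data_id: str) -> None:
        entry = self.directory.get(data_id)
        if entry and entry["owner"] == node:
            entry["in_use"] = False


coord = GlobalCoordinator()
print(coord.request("node-A", "block-7"))  # True: first requester owns it
print(coord.request("node-B", "block-7"))  # False: node-A is still using it
coord.release("node-A", "block-7")
print(coord.request("node-B", "block-7"))  # True: the quiescent entry moves
```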

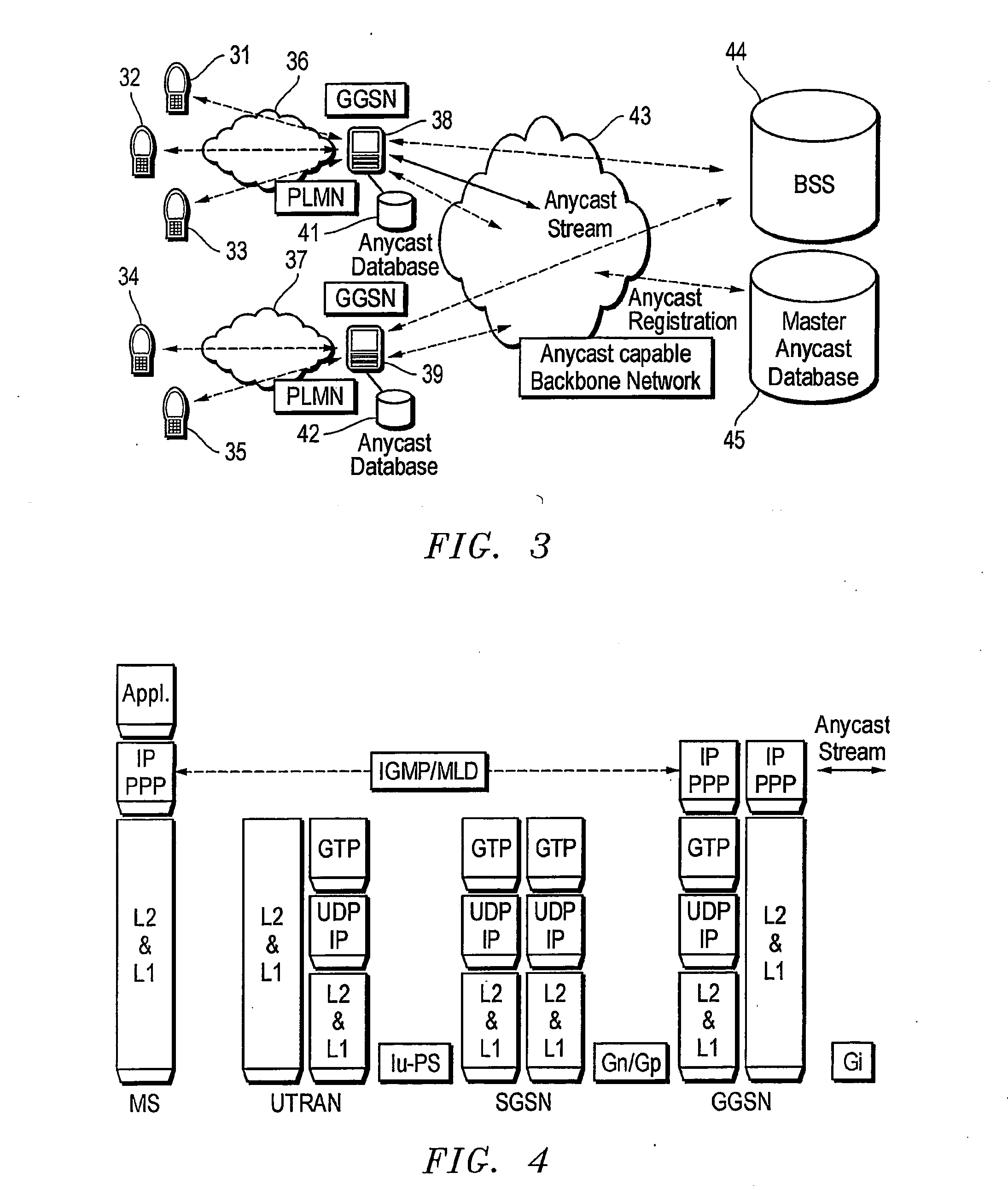

Distributed Caching and Redistribution System and Method in a Wireless Data Network

Inactive · US20070243821A1 · Broadcast with distribution, Special service for subscribers, Quality of service, Distributed cache

A distributed caching and redistribution system, method, and mobile client for caching and redistributing data content in a wireless data network. The system enables mobile clients to cache data content that has been distributed by means of broadcast or multicast, and to redistribute the content from the cache to other users. Using anycast techniques applied to the PLMN, a user who wants to retrieve data that was distributed earlier locates the nearest user that has the content available, and asks the nearest user to forward the content. The “nearest” user may be the closest user, but may also be the optimal user from a Quality of Service (QoS) point of view.

Owner:TELEFON AB LM ERICSSON (PUBL)

Method and apparatus for cache synchronization in a clustered environment

Inactive · US6587921B2 · Memory architecture accessing/allocation, Data processing applications, Data synchronization, High availability

Owner:GOOGLE LLC

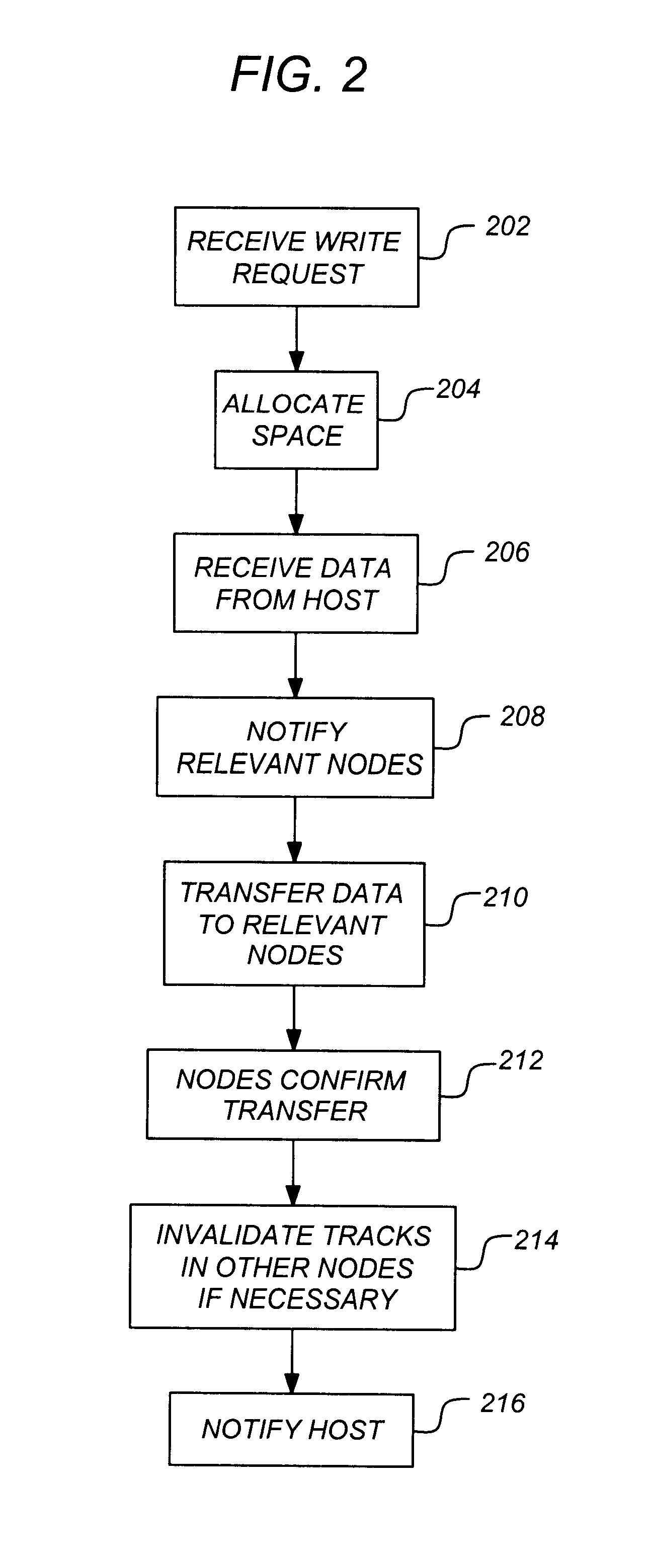

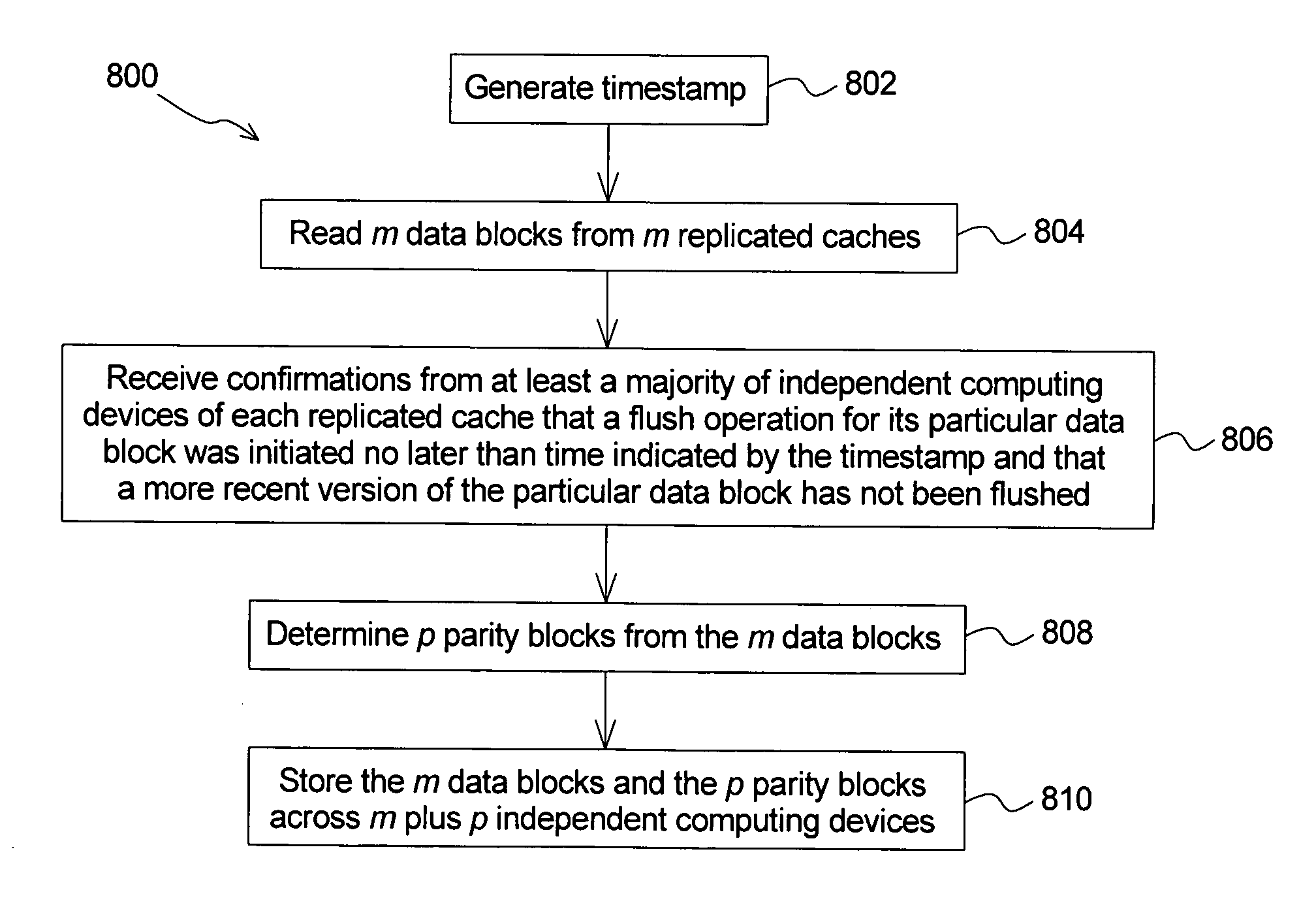

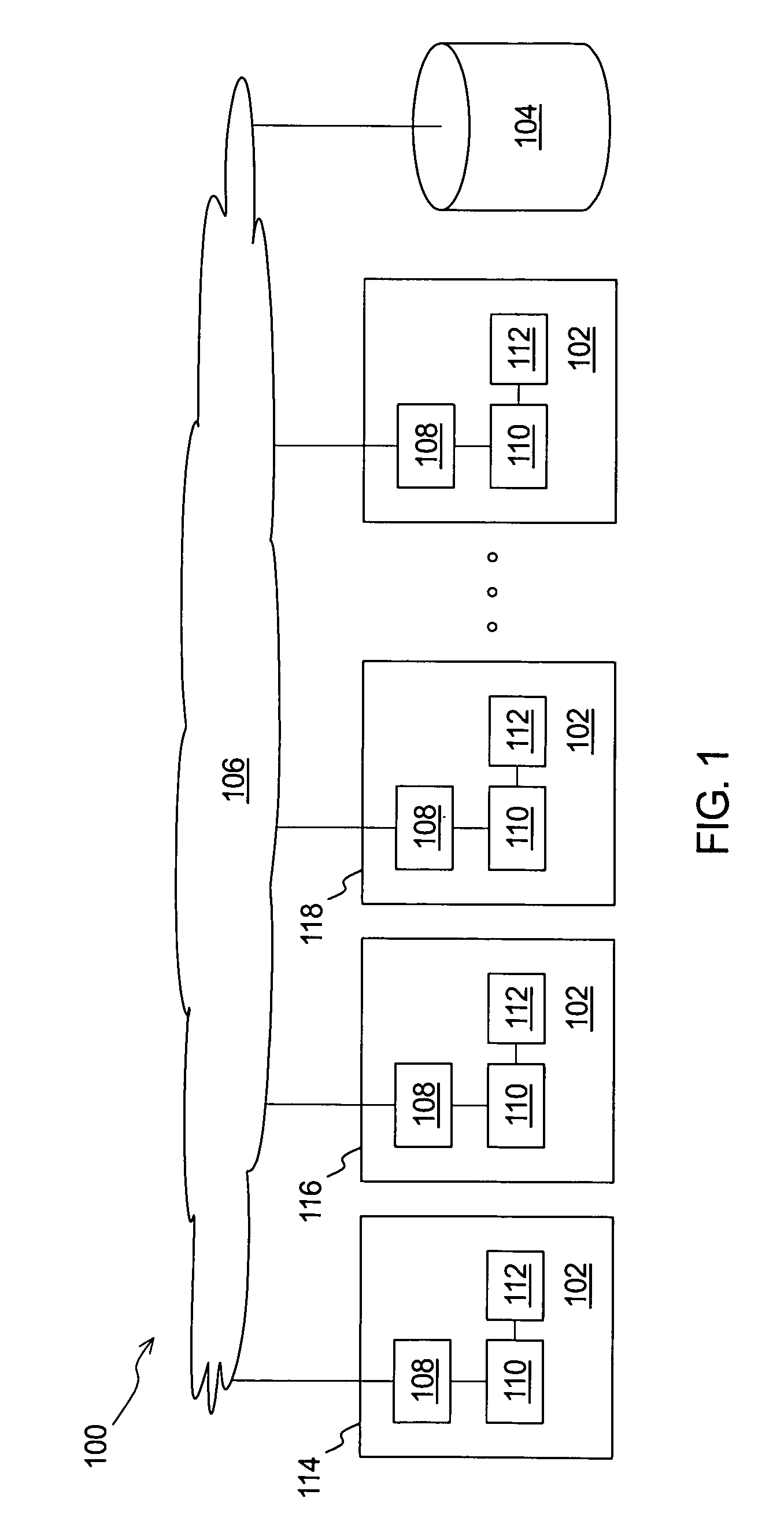

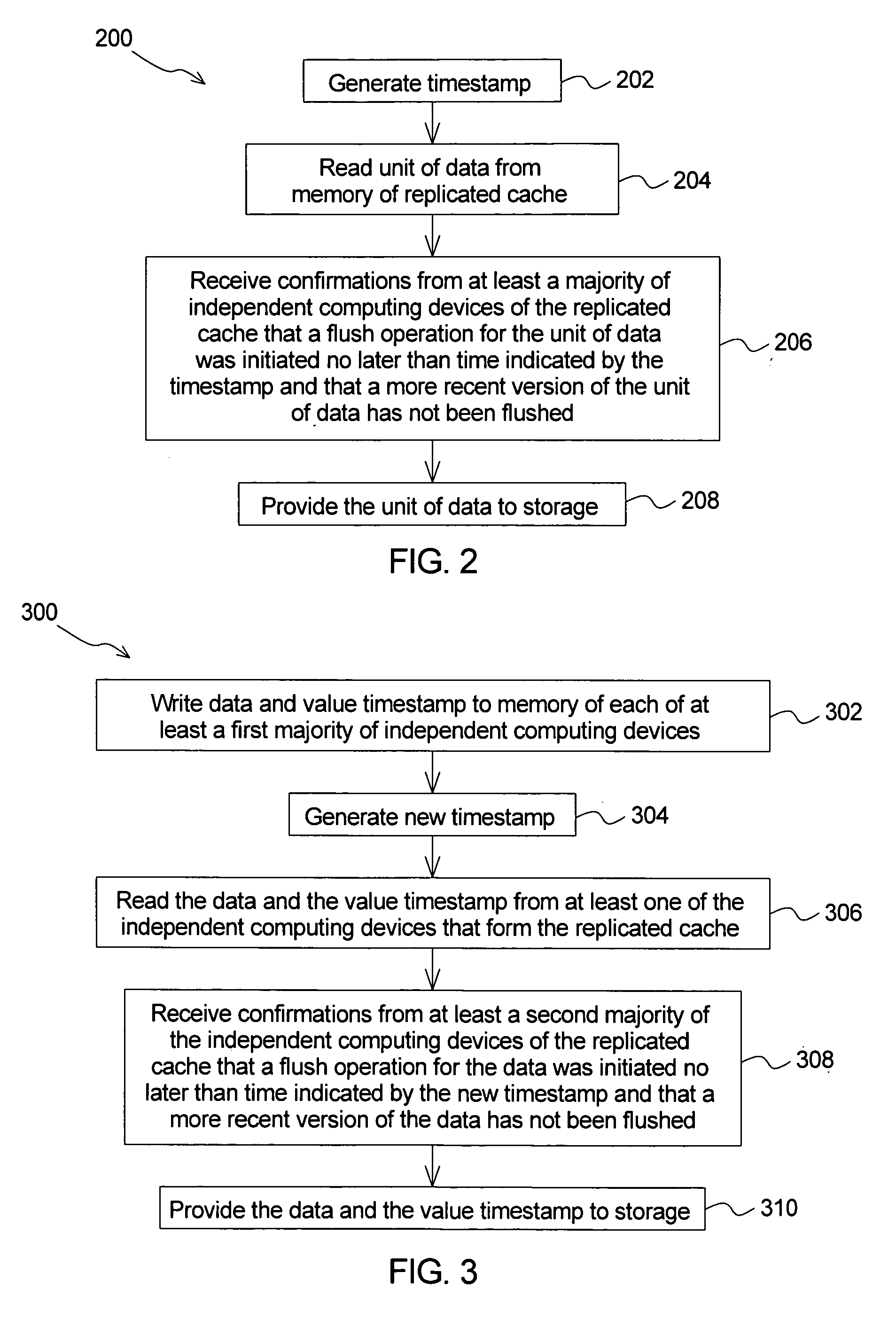

Method of operating distributed storage system

Active · US20070192542A1 · Error detection/correction, Digital data processing details, Computer hardware, Distributed cache

An embodiment of a method of operating a distributed storage system includes reading m data blocks from a distributed cache. The distributed cache comprises memory of a plurality of independent computing devices that include redundancy for the m data blocks. The m data blocks and p parity blocks are stored across m plus p independent computing devices. Each of the m plus p independent computing devices stores a single block selected from the m data blocks and the p parity blocks.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
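
A sketch of the m + p layout for the special case p = 1, using XOR parity (the RAID-5 style code); the abstract allows general p, which would need an erasure code such as Reed-Solomon. Device names and helper functions are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks: list) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def stripe(data_blocks: list) -> dict:
    """Place m data blocks plus one XOR parity block on m + 1 devices, one block each."""
    parity = xor_blocks(data_blocks)
    return {f"device-{i}": block for i, block in enumerate(data_blocks + [parity])}


def read_data(devices: dict, m: int, failed=None) -> list:
    """Return the m data blocks, rebuilding at most one unavailable block from parity."""
    blocks = [None if f"device-{i}" == failed else devices[f"device-{i}"]
              for i in range(m + 1)]
    if None in blocks:
        lost = blocks.index(None)
        blocks[lost] = xor_blocks([b for b in blocks if b is not None])
    return blocks[:m]


data = [b"abcd", b"efgh", b"ijkl"]  # m = 3 equal-size data blocks
devices = stripe(data)  # 4 devices, one block each
print(read_data(devices, 3, failed="device-1") == data)  # True
```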

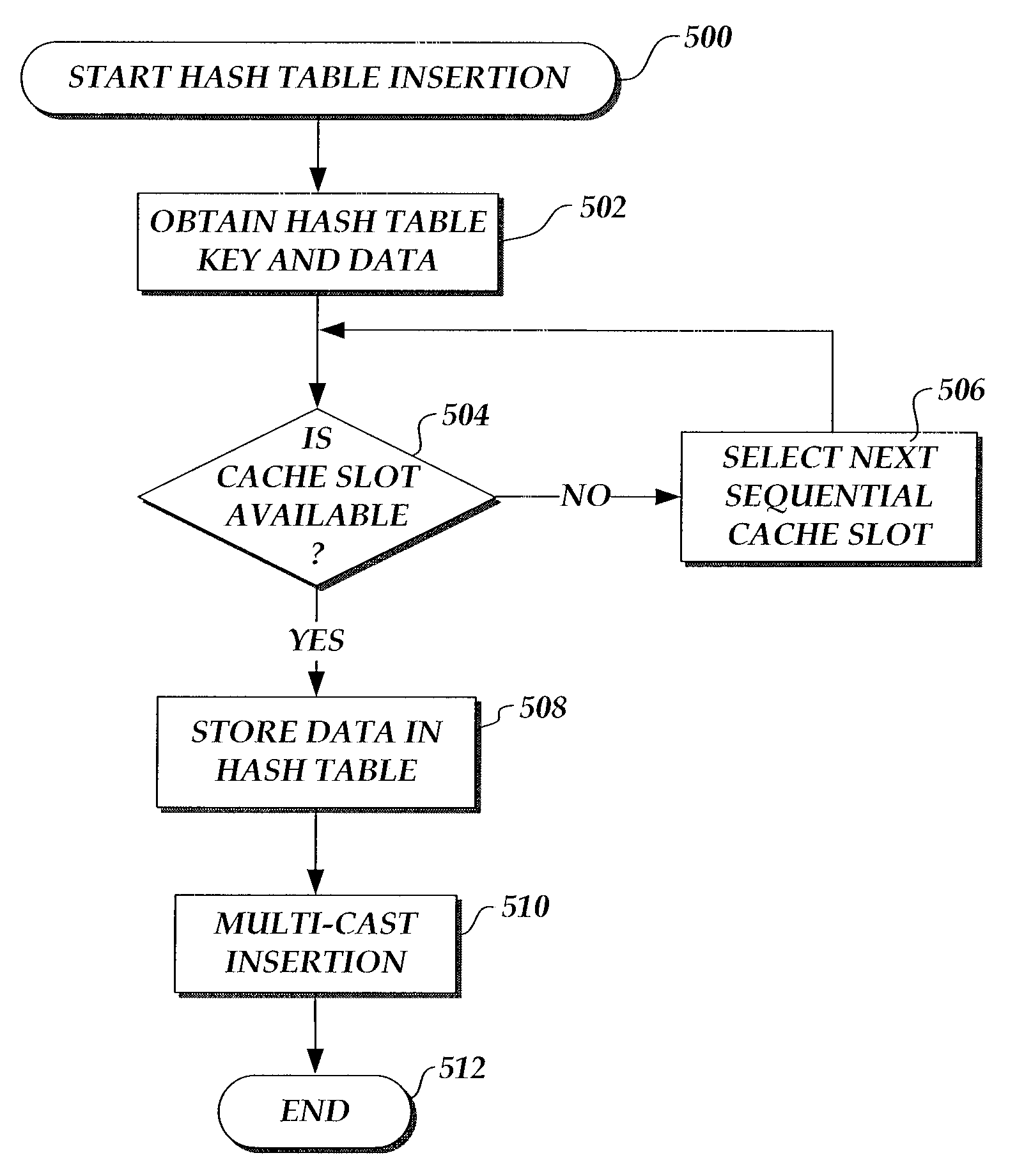

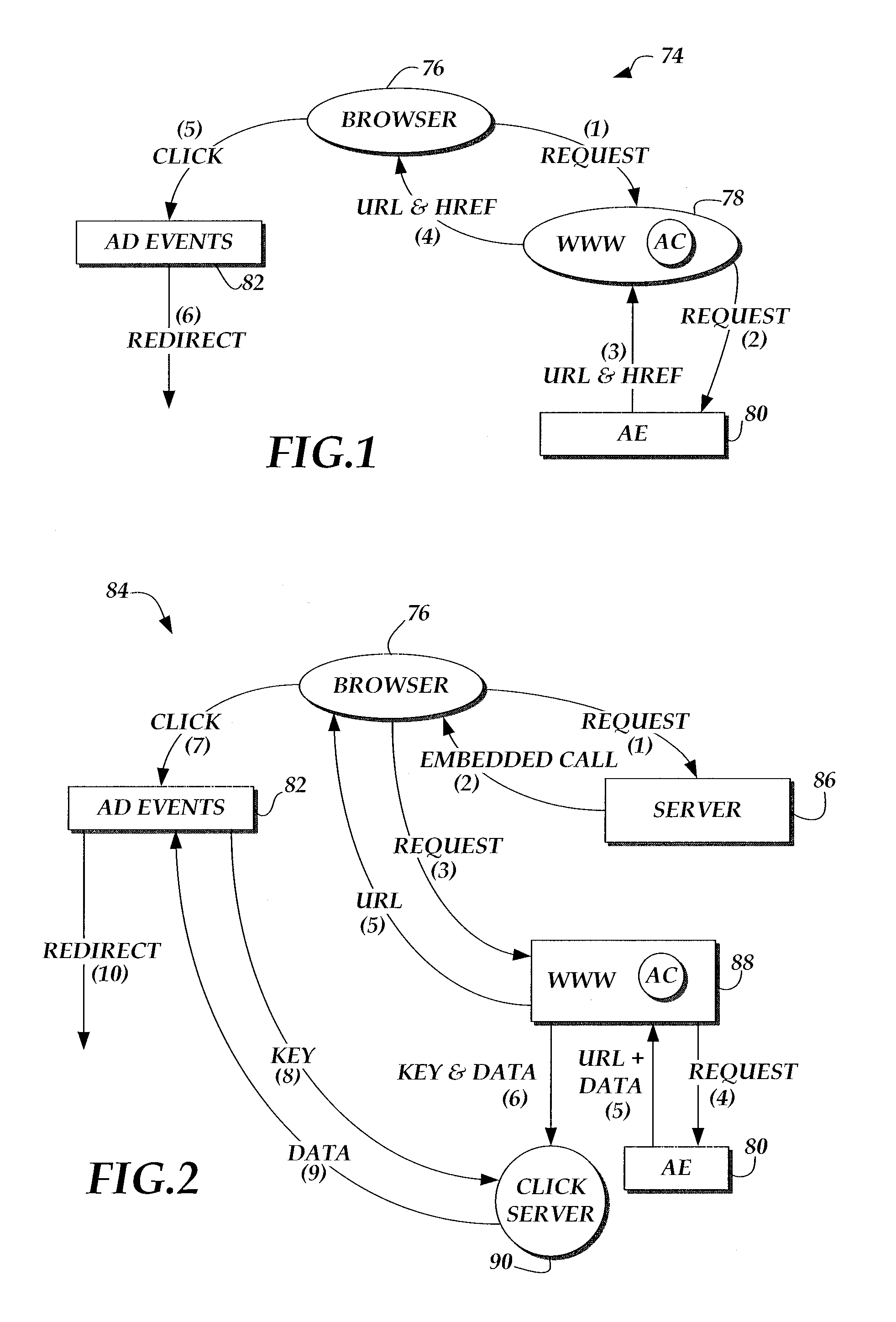

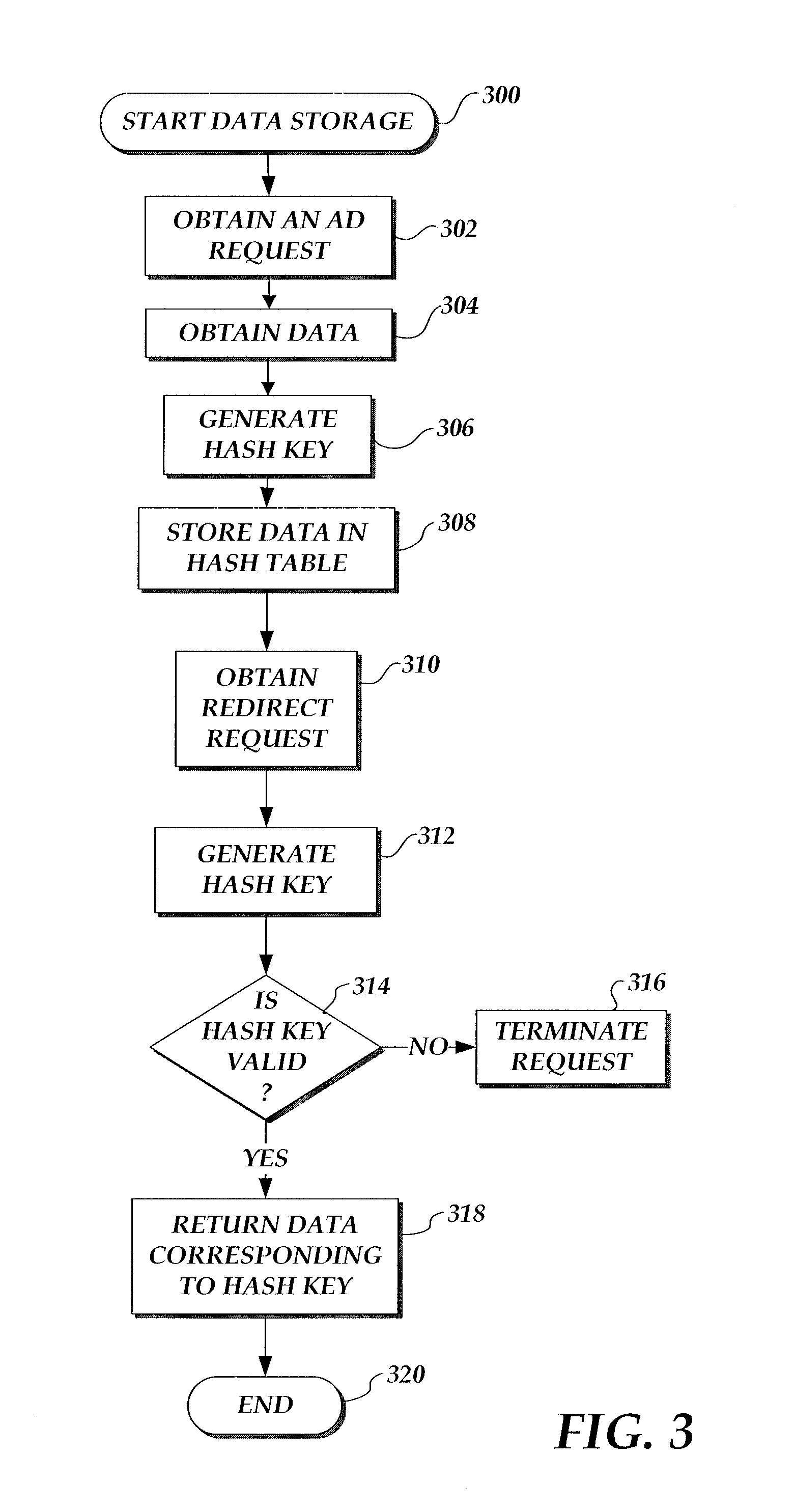

System and method for distributed caching using multicast replication

A system and method for transferring multiple portions of data using a distributed cache are disclosed. A content server obtains a request for content data and associates a first identifier with the request. The content server returns a first portion of the data in response to the request and stores a second portion of the data in a cache according to the first identifier. Thereafter, the content server receives a request for the remaining portion of the data and associates a second identifier with this second request. If the second identifier matches the first identifier, the content server returns the data stored according to the first identifier. Additionally, the content server implements and uses a click server having multiple cache servers, in which multi-cache replication stores identical contents in each cache server.

Owner:MICROSOFT TECH LICENSING LLC
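
The two-part transfer can be sketched as below. The abstract does not say how the identifier is derived; this sketch assumes it is built from a client name and the resource name, and it omits the multicast replication across cache servers. All names are hypothetical.

```python
class ContentServer:
    def __init__(self, content: dict, first_size: int):
        self.content = content  # hypothetical: resource name -> bytes
        self.first_size = first_size
        self.pending = {}  # identifier -> cached second portion

    @staticmethod
    def identify(client: str, resource: str) -> str:
        # Assumed identifier scheme; the abstract does not specify one.
        return f"{client}:{resource}"

    def first_request(self, client: str, resource: str) -> bytes:
        data = self.content[resource]
        ident = self.identify(client, resource)
        self.pending[ident] = data[self.first_size:]  # cache the second portion
        return data[:self.first_size]

    def second_request(self, client: str, resource: str):
        # Return the cached remainder only when the identifiers match.
        return self.pending.pop(self.identify(client, resource), None)


server = ContentServer({"page": b"HEADERBODY"}, first_size=6)
first = server.first_request("client-1", "page")
rest = server.second_request("client-1", "page")
print(first + rest)  # b'HEADERBODY'
```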

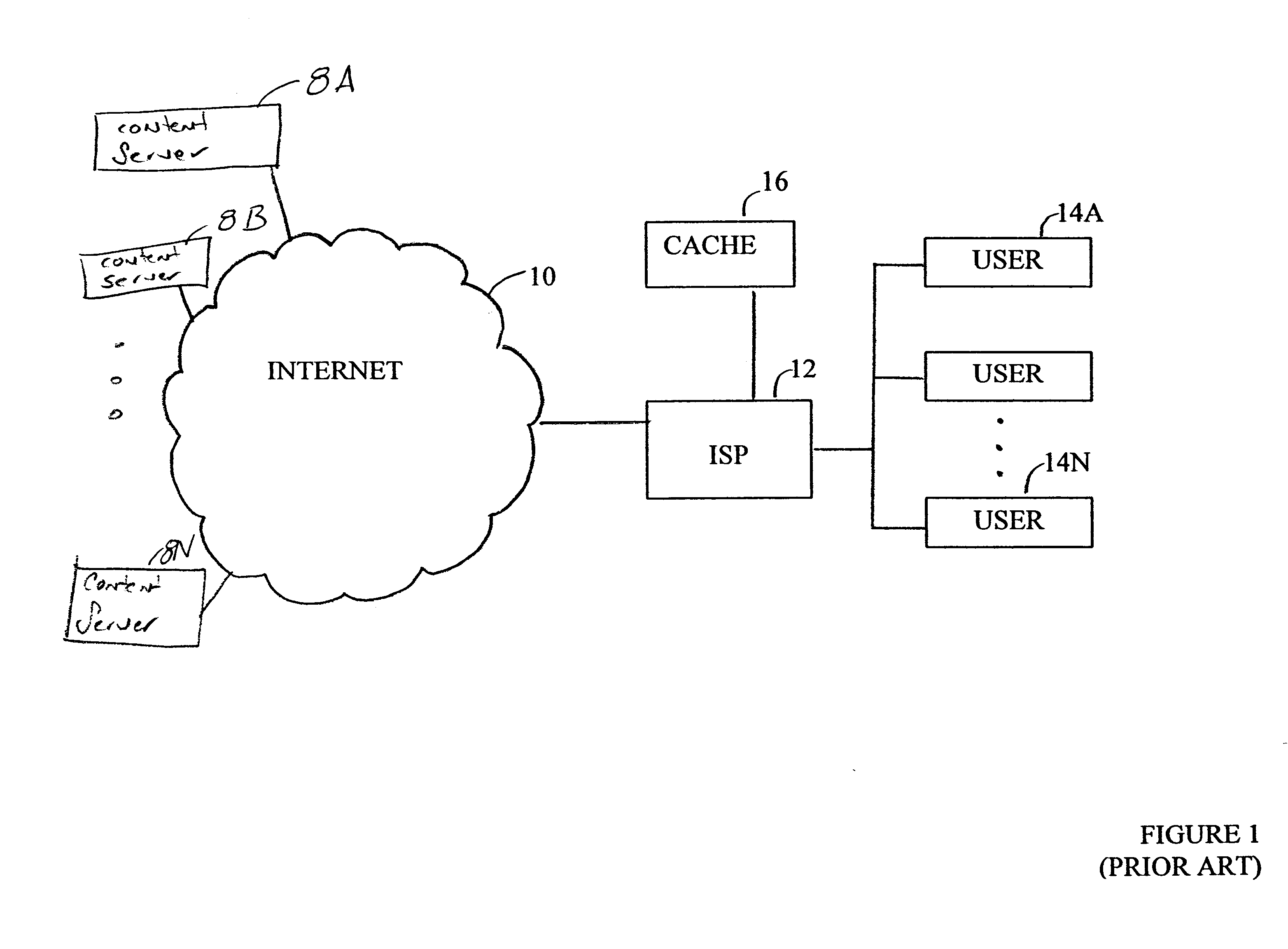

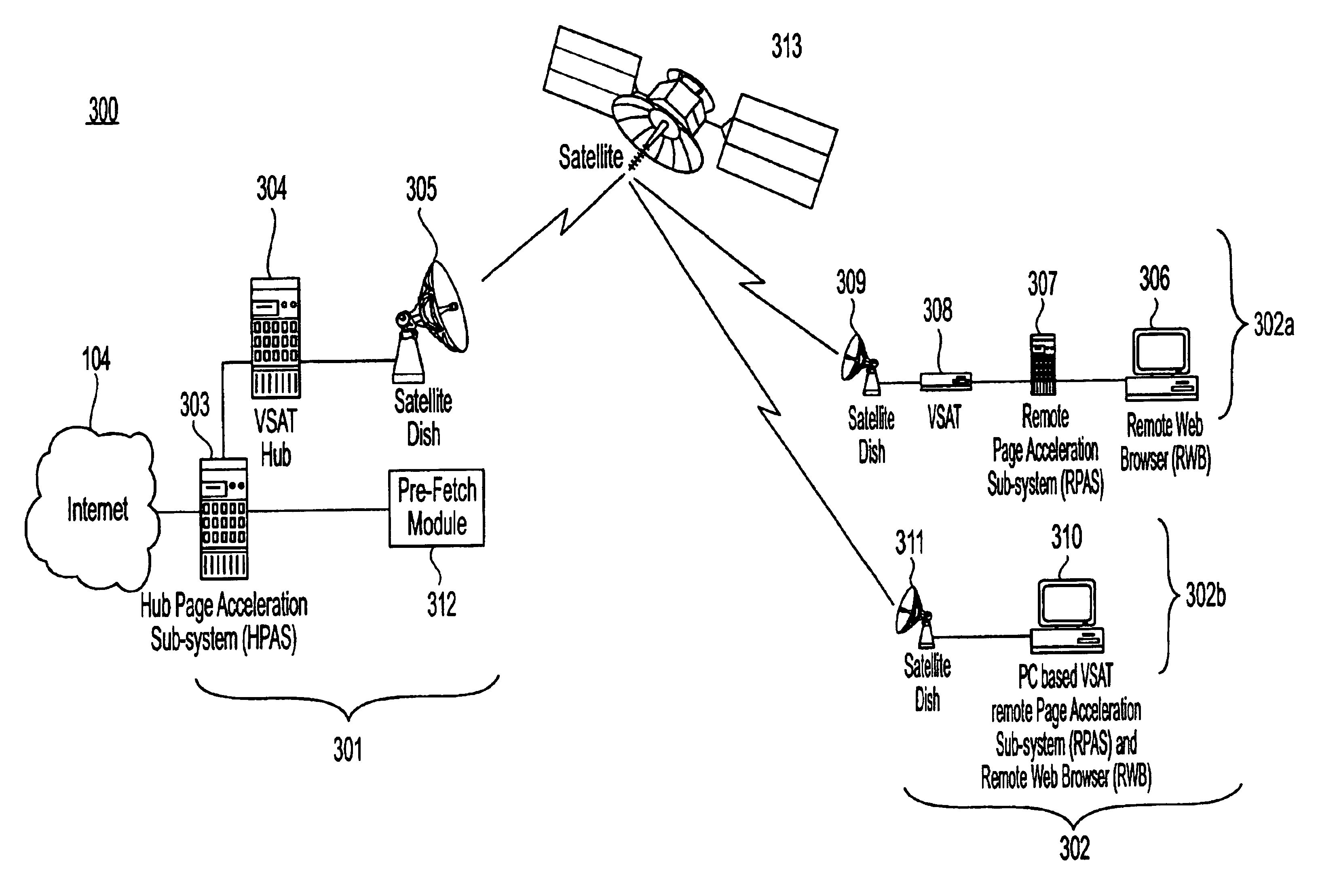

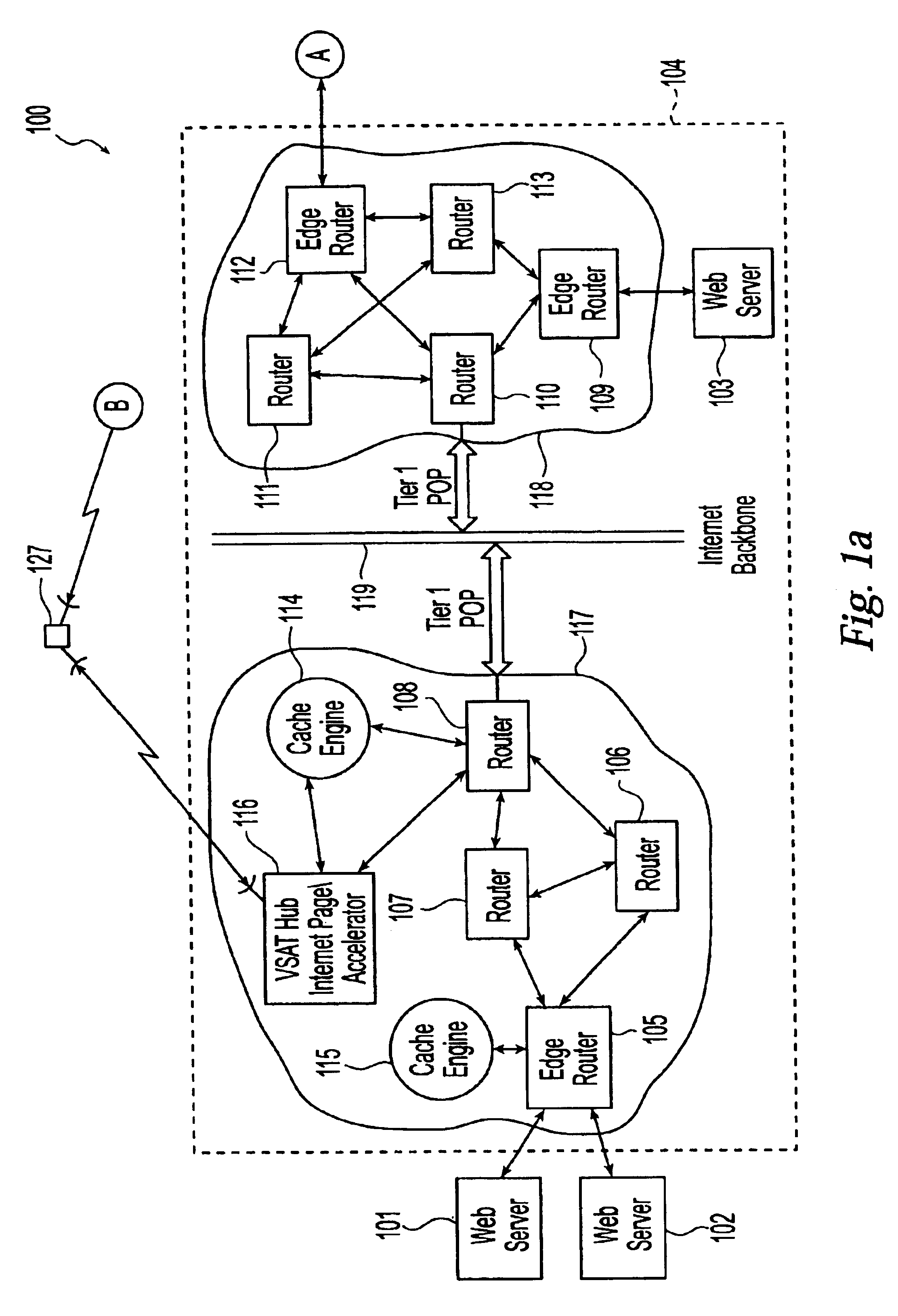

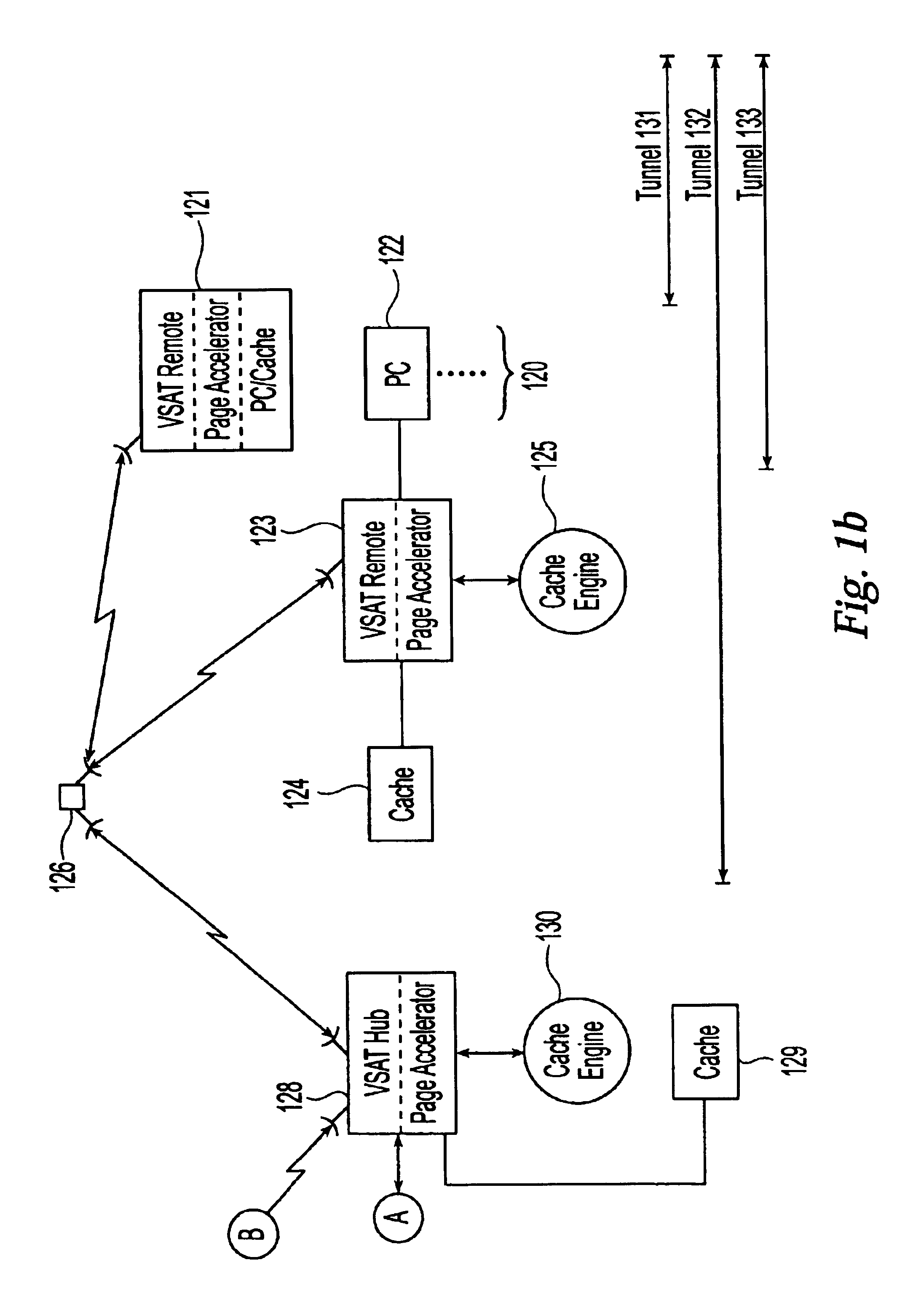

System and method for internet page acceleration including multicast transmissions

Inactive · US6947440B2 · Reducing latency experienced, Low experience requirement, Special service provision for substation, Network topologies, Distributed cache, The Internet

A broadband communication system with improved latency is disclosed. The system employs distributed caching techniques to assemble data objects at locations proximate to a source to avoid latency over delayed links. The assembled data objects are then multicast to one or more remote terminals in response to a request for the objects, thus reducing unnecessary data flow across the satellite link.

Owner:GILAT SATELLITE NETWORKS

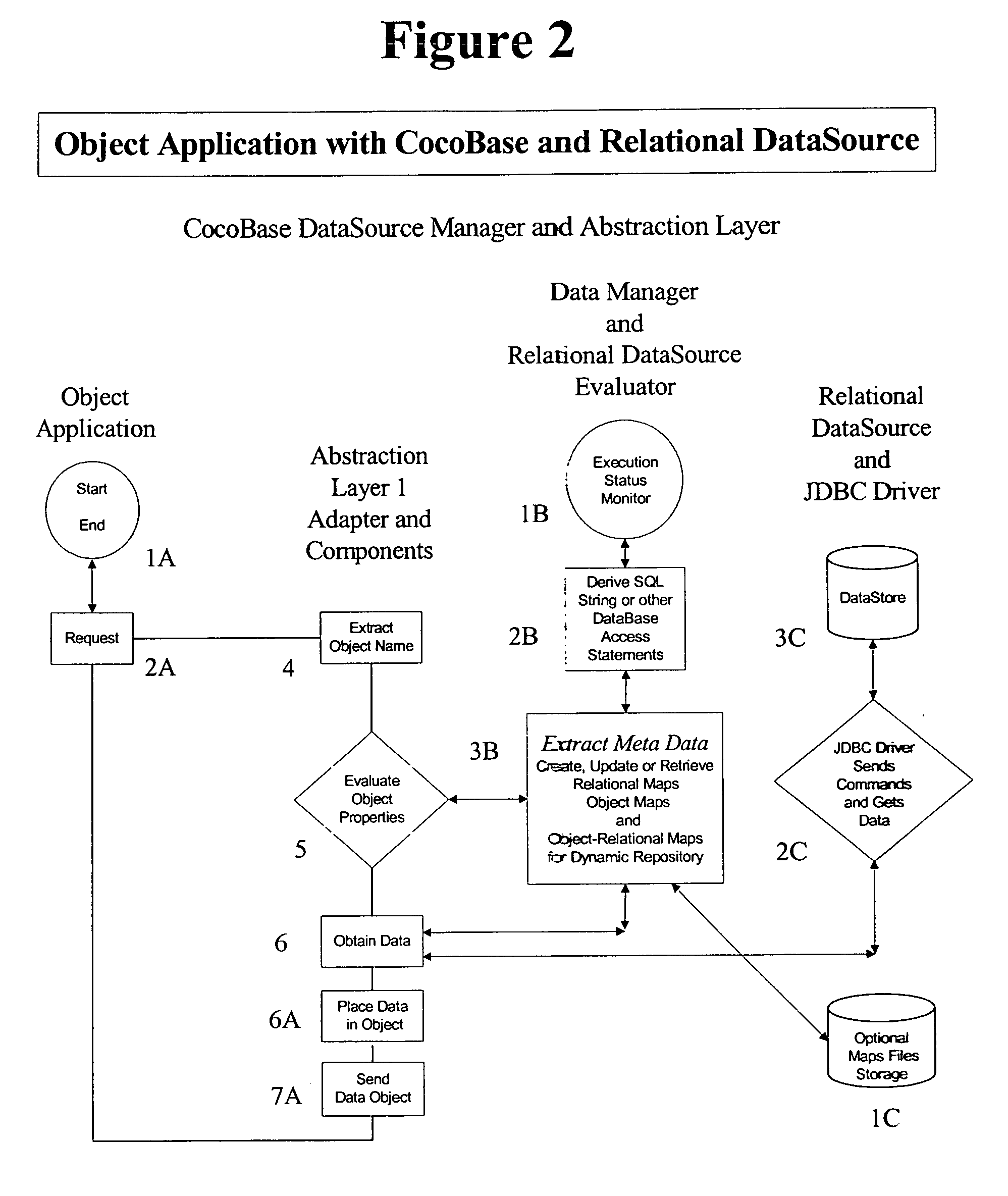

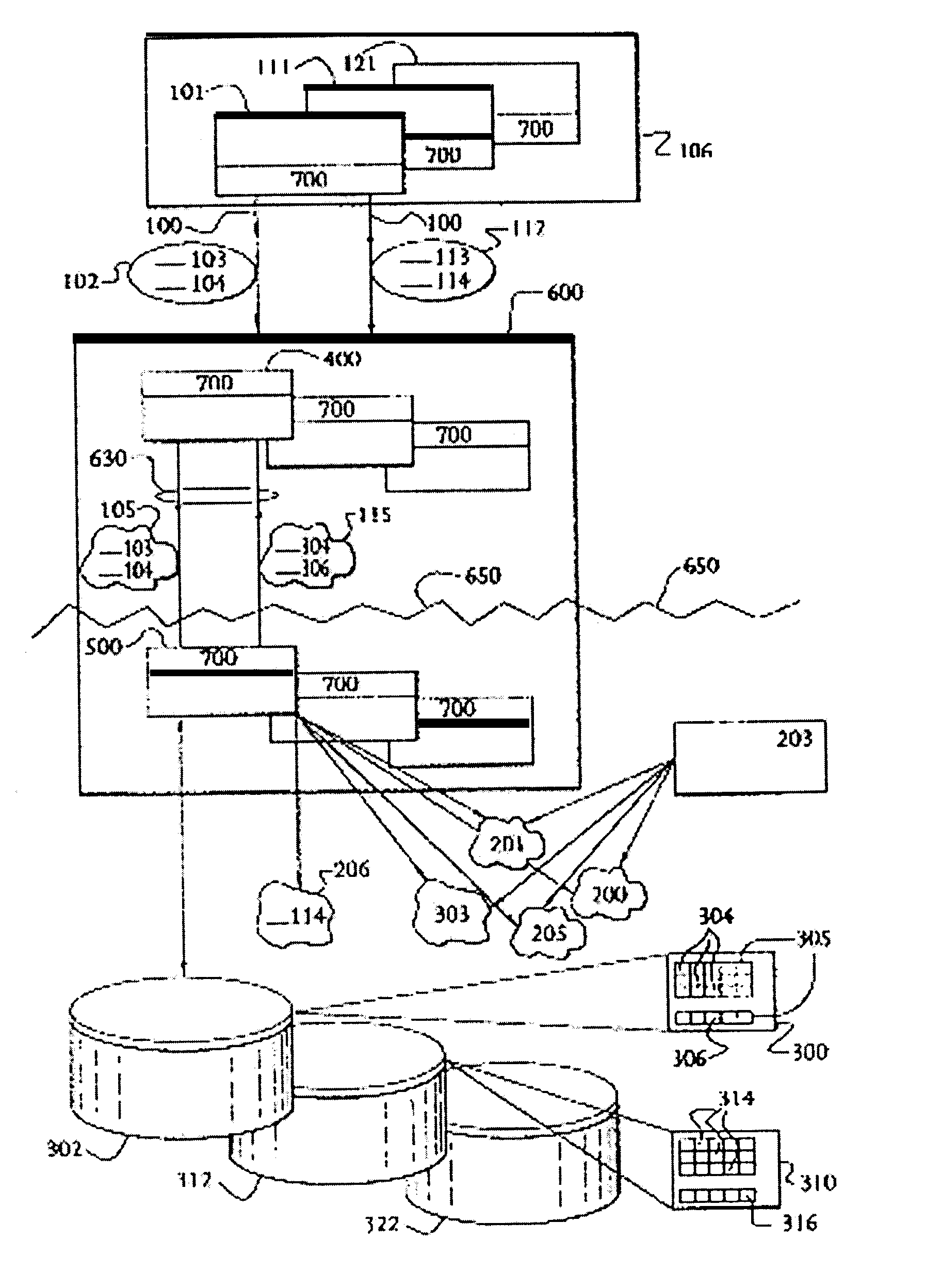

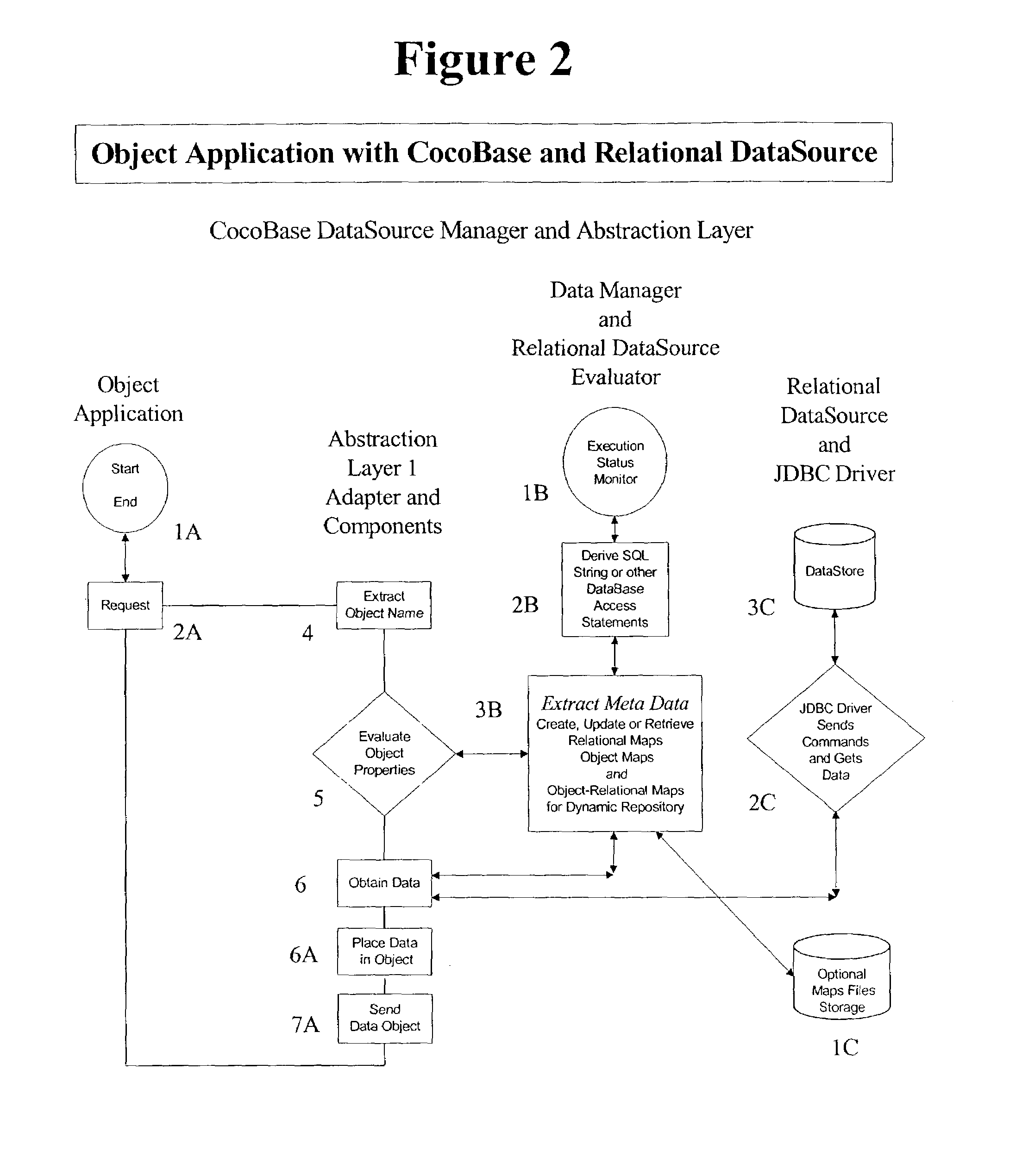

Dynamic class inheritance and distributed caching with object <-> relational mapping and Cartesian model support in a database manipulation and mapping system

Inactive · US20070055647A1 · Flexibility and dynamic operation, Data processing applications, Object oriented databases, Theoretical computer science, Data file

The present invention provides a system and method for dynamic object-driven database manipulation and mapping system which relates in general to correlating or translating one type of database to another type of database or to an object programming application. Correlating or translating involves relational to object translation, object to object translation, relational to relational, or a combination of the above. Thus, the present invention is directed to dynamic mapping of databases to selected objects. Also provided are systems and methods that optionally include caching components, security features, data migration facilities, and components for reading, writing, interpreting and manipulating XML and XMI data files.

Owner:MULLINS WARD +1

Dynamic class inheritance and distributed caching with object relational mapping and cartesian model support in a database manipulation and mapping system

Inactive · US7149730B2 · Flexibility and dynamic operation, Data processing applications, Object oriented databases, Distributed cache, Data file

The present invention provides a system and method for dynamic object-driven database manipulation and mapping system which relates in general to correlating or translating one type of database to another type of database or to an object programming application. Correlating or translating involves relational to object translation, object to object translation, relational to relational, or a combination of the above. Thus, the present invention is directed to dynamic mapping of databases to selected objects. Also provided are systems and methods that optionally include caching components, security features, data migration facilities, and components for reading, writing, interpreting and manipulating XML and XMI data files.

Owner:THOUGHT

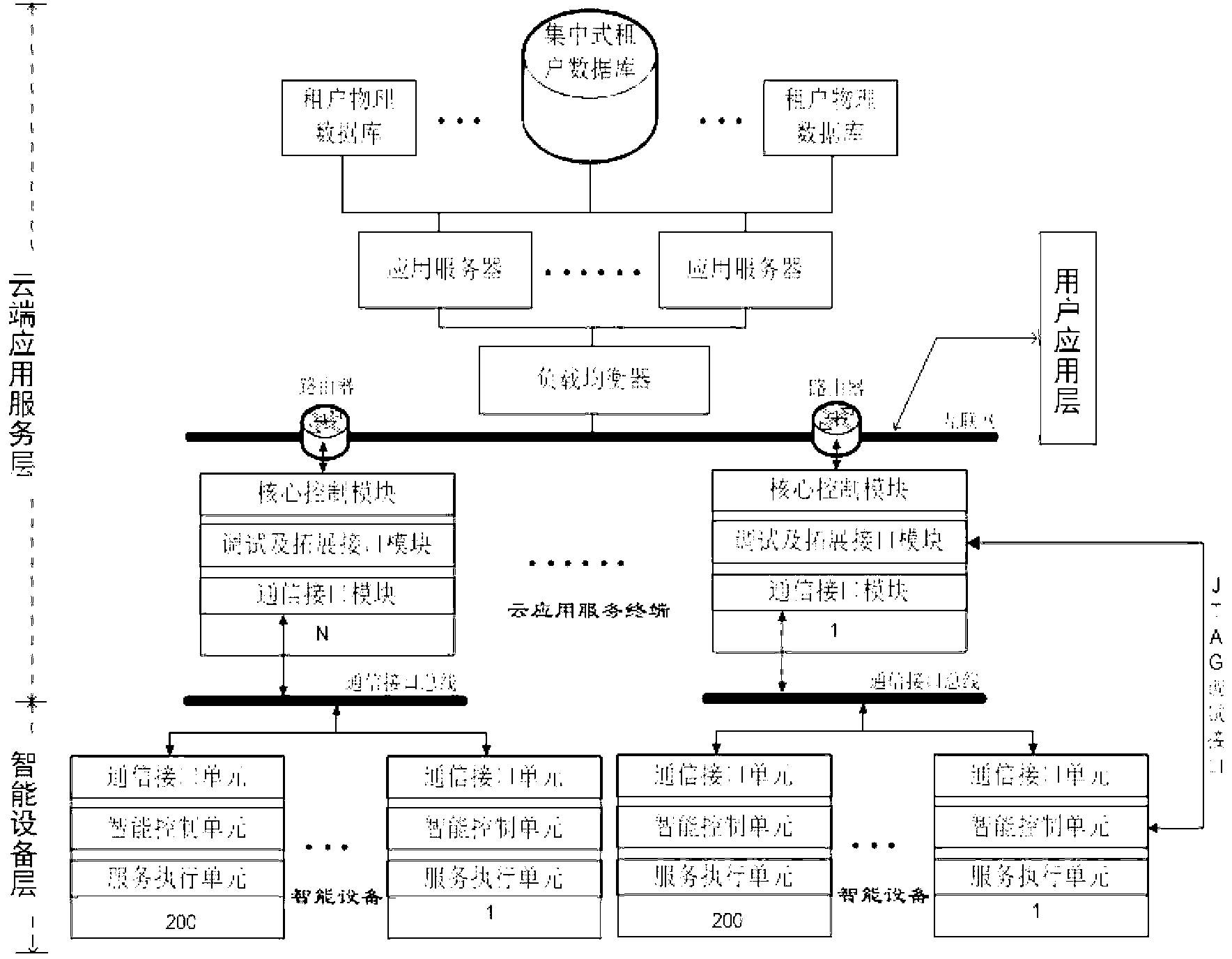

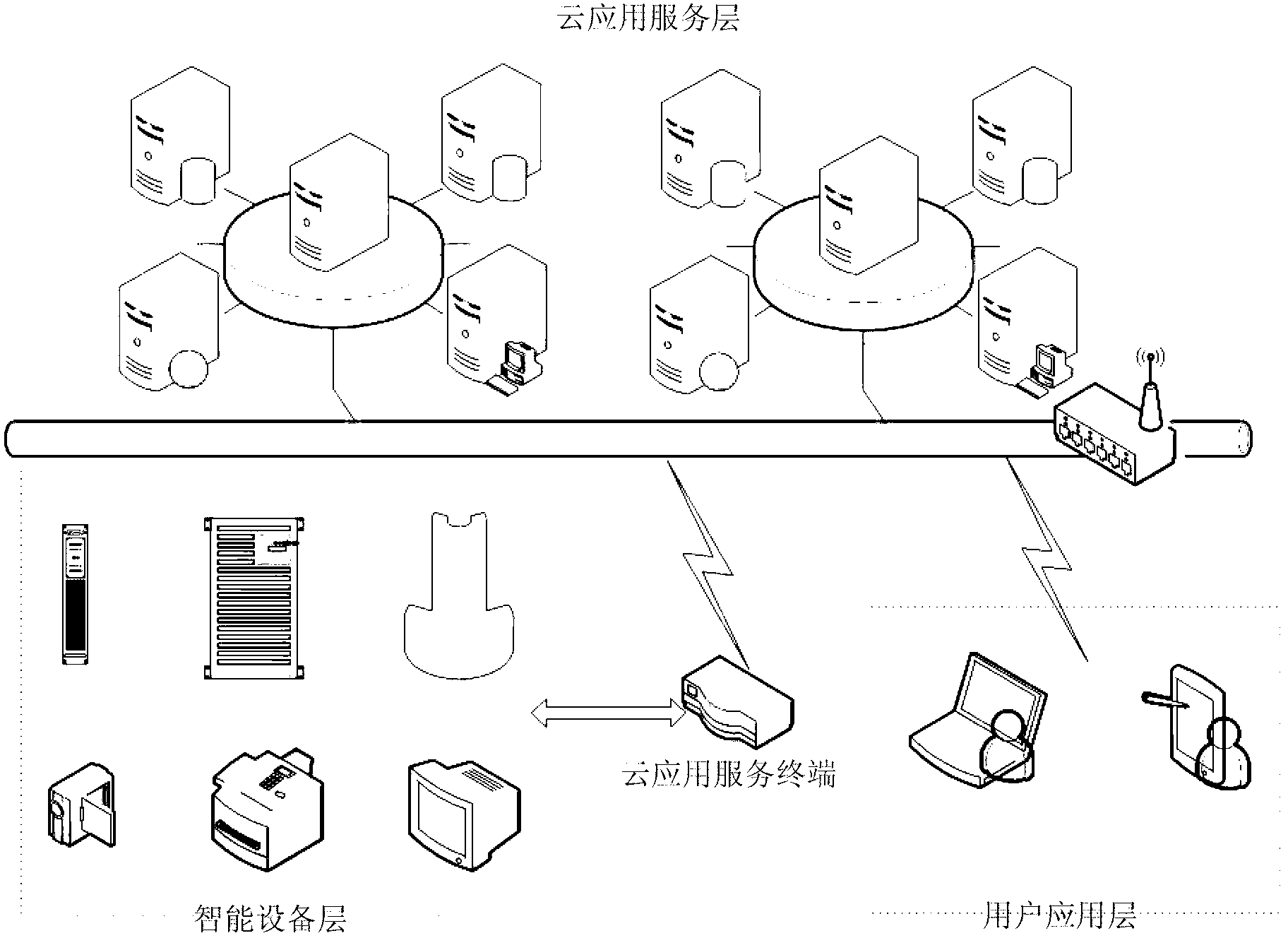

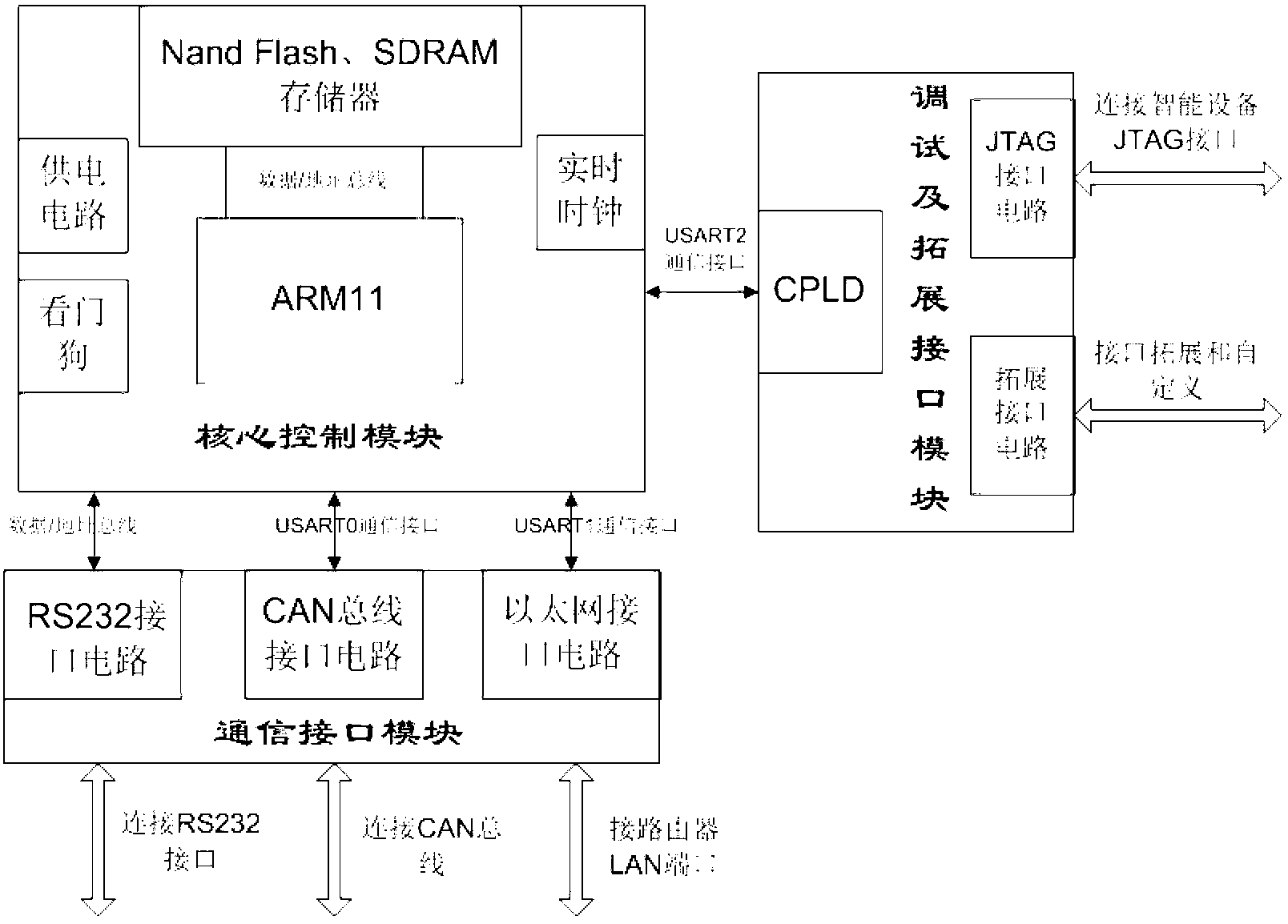

Intelligent device monitoring and managing system based on software as a service (SaaS)

Active · CN102981440A · Solve the problems caused by scale, Take advantage of, Programme control, Computer control, Network service, Information integration

The invention relates to an intelligent device monitoring and management system based on software as a service (SaaS). The system comprises an intelligent device layer, a cloud application and service layer, and a user application layer. The intelligent device layer is the set of monitored and managed objects of the same category; the cloud application and service layer is a network server cluster; and the user application layer comprises any device or user able to access the Internet with a web browser. A user can use a web browser as a monitoring terminal to request monitoring, management, or other services for the intelligent devices from the cloud application and service layer. The cloud application and service layer adopts a SaaS multi-tenant, distributed application framework, processes data in a distributed cache according to a user-customized monitoring service strategy, and writes the data to a database at regular intervals. The cloud application and service layer drives a cloud application and service terminal and controls the intelligent devices to perform the corresponding service actions. The system provides application services such as remote control, management, and information integration of intelligent devices on a SaaS platform, effectively handles the problems caused by large numbers of monitored objects, allows servers and information-carrying capacity to be provisioned dynamically according to the number of users, and makes full use of server hardware resources, thereby reducing operating costs.

Owner:WUHAN UNIV OF TECH

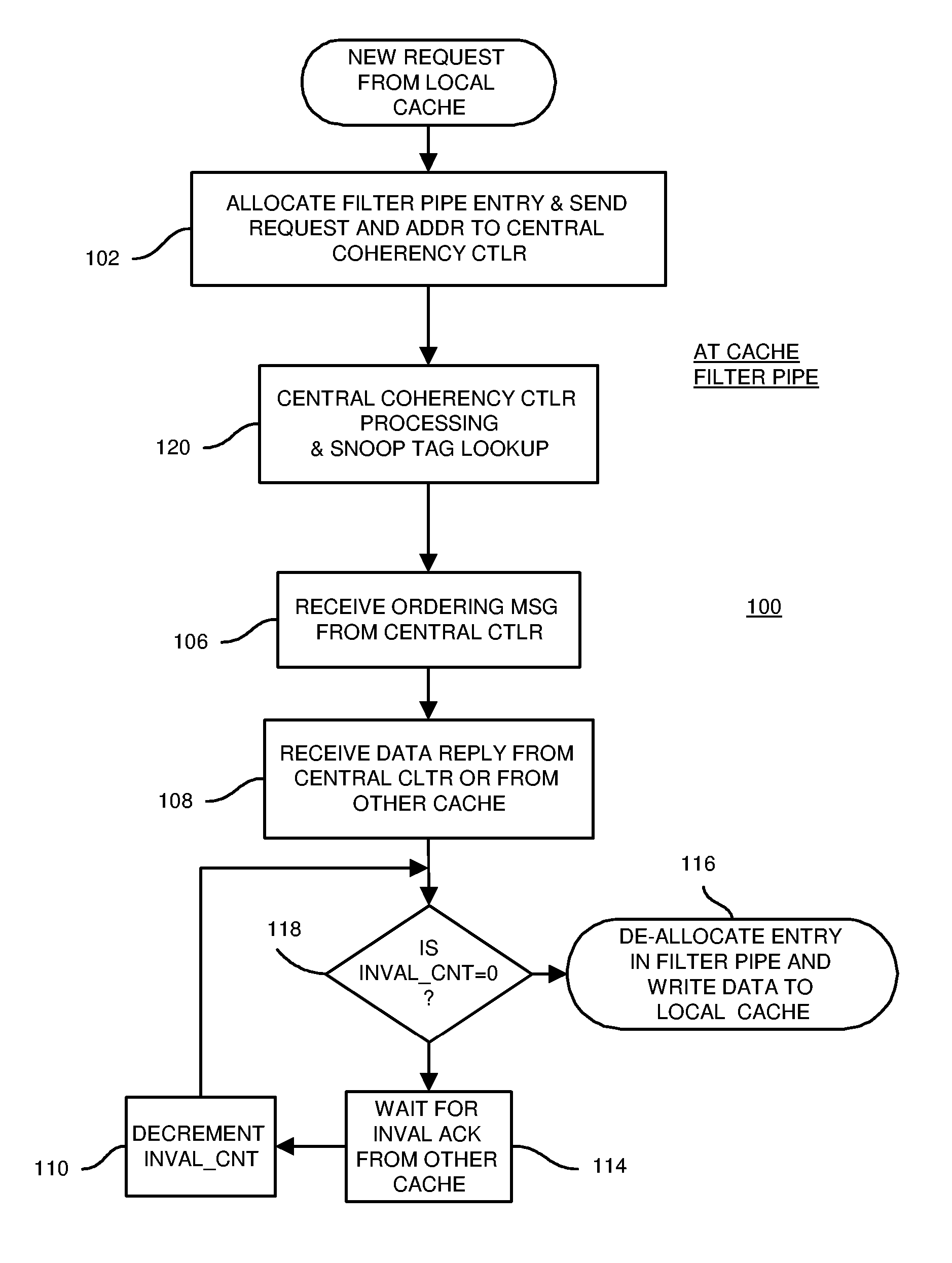

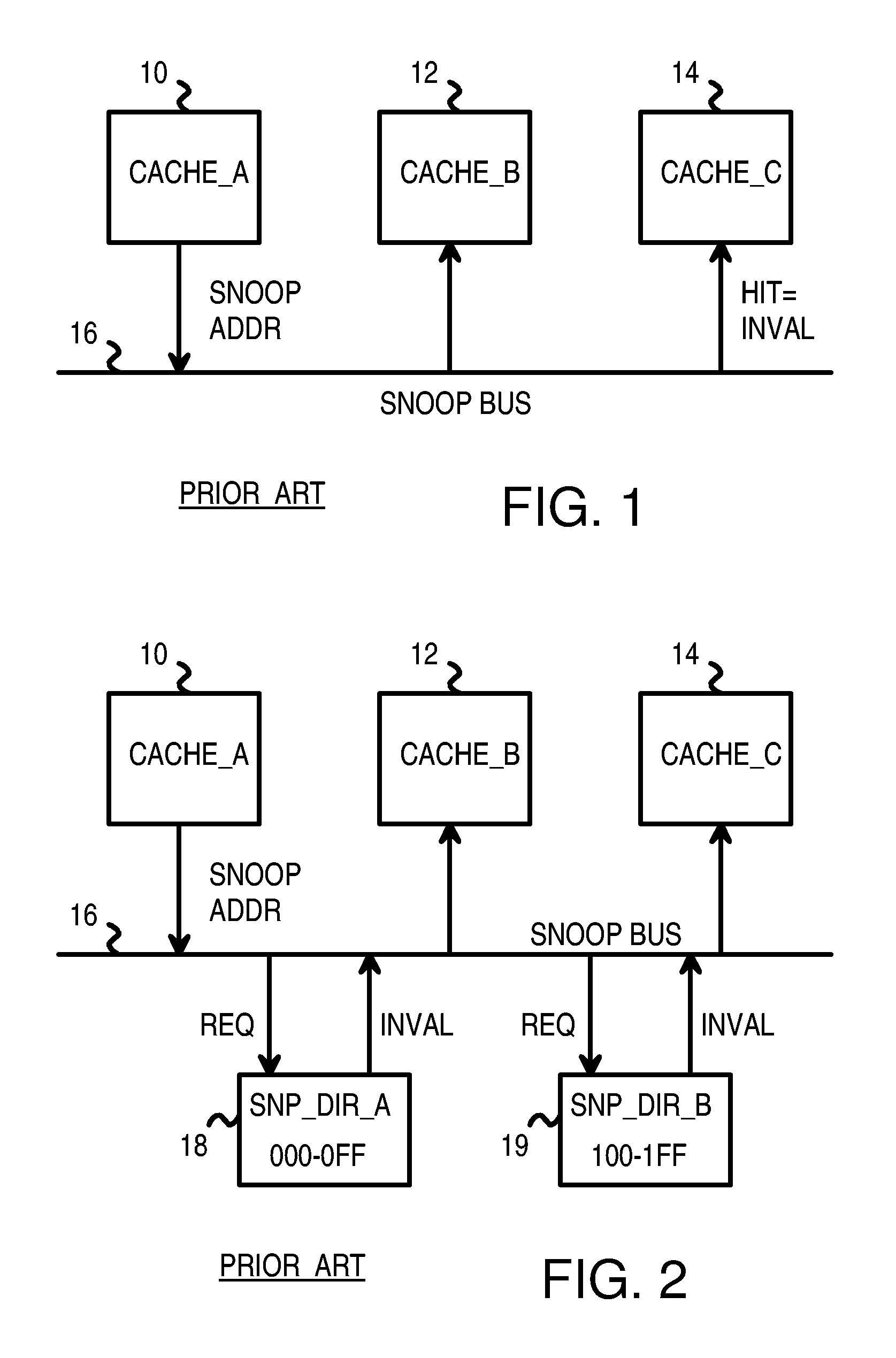

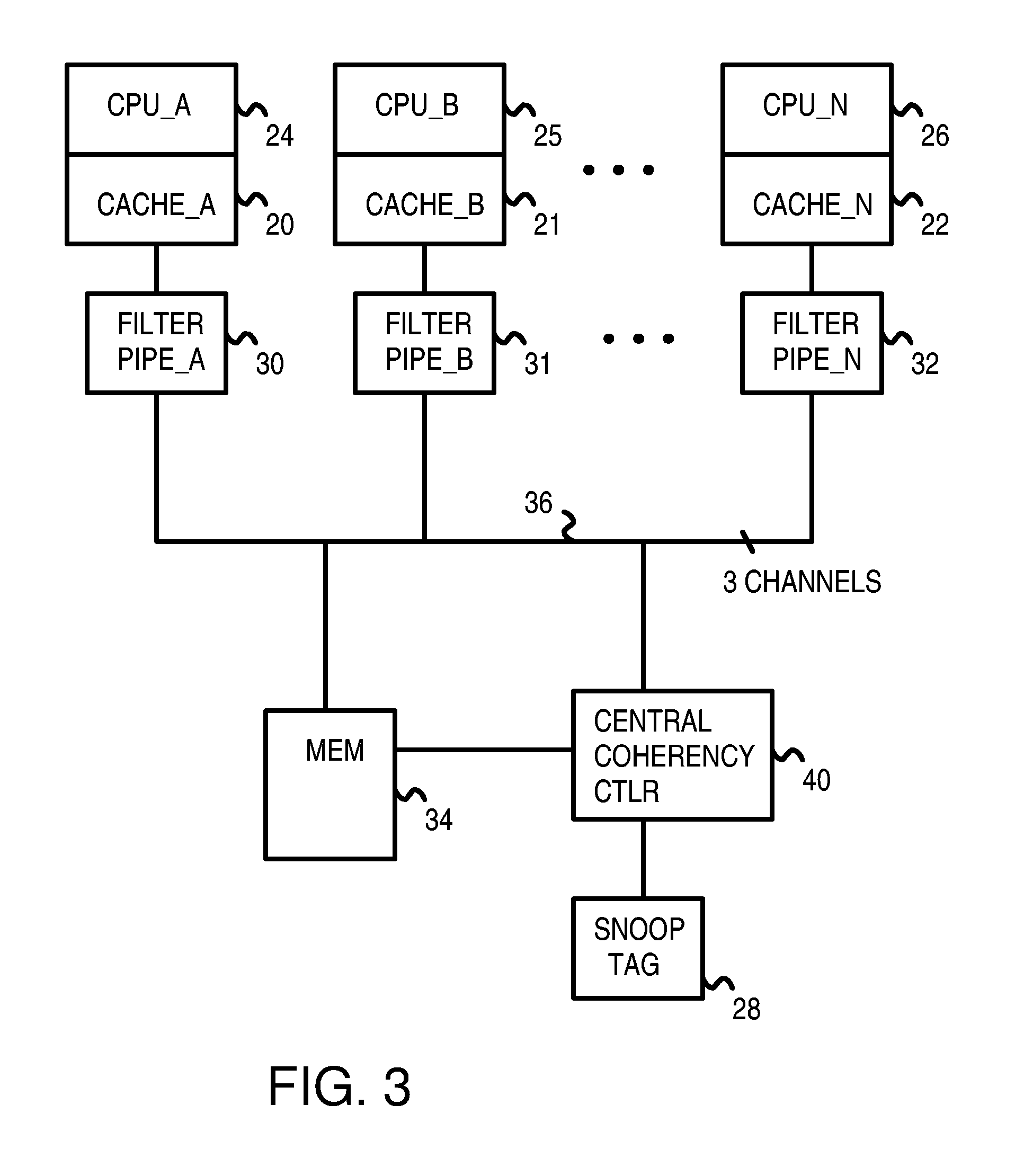

Distributed Cache Coherence at Scalable Requestor Filter Pipes that Accumulate Invalidation Acknowledgements from other Requestor Filter Pipes Using Ordering Messages from Central Snoop Tag

A multi-processor, multi-cache system has filter pipes that store entries for request messages sent to a central coherency controller. The central coherency controller orders requests from filter pipes using coherency rules but does not track completion of invalidations. The central coherency controller reads snoop tags to identify sharing caches having a copy of a requested cache line. The central coherency controller sends an ordering message to the requesting filter pipe. The ordering message has an invalidate count indicating the number of sharing caches. Each sharing cache receives an invalidation message from the central coherency controller, invalidates its copy of the cache line, and sends an invalidation acknowledgement message to the requesting filter pipe. The requesting filter pipe decrements the invalidate count until all sharing caches have acknowledged invalidation. All ordering, data, and invalidation acknowledgement messages must be received by the requesting filter pipe before loading the data into its cache.

Owner:AZUL SYSTEMS
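
The requester-side bookkeeping can be sketched as a counter that each acknowledgement decrements. This sketch assumes acknowledgements may arrive before the ordering message, so the counter is allowed to go negative until the ordering message adds the invalidate count; the class and method names are hypothetical.

```python
class RequestorFilterPipe:
    """Tracks one outstanding request until ordering, data and all acks arrive."""
    def __init__(self):
        self.outstanding = 0  # invalidations still to be acknowledged
        self.ordered = False  # ordering message received?
        self.data = None

    def on_ordering(self, invalidate_count: int) -> None:
        self.ordered = True
        self.outstanding += invalidate_count

    def on_ack(self) -> None:
        self.outstanding -= 1  # decrement once per invalidation acknowledgement

    def on_data(self, data: bytes) -> None:
        self.data = data

    def can_load(self) -> bool:
        # Load the line into the cache only when everything has arrived.
        return self.ordered and self.data is not None and self.outstanding == 0


pipe = RequestorFilterPipe()
pipe.on_ack()  # an ack may overtake the ordering message
pipe.on_ordering(2)  # two sharing caches must invalidate
pipe.on_data(b"line")
print(pipe.can_load())  # False: one ack is still missing
pipe.on_ack()
print(pipe.can_load())  # True
```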

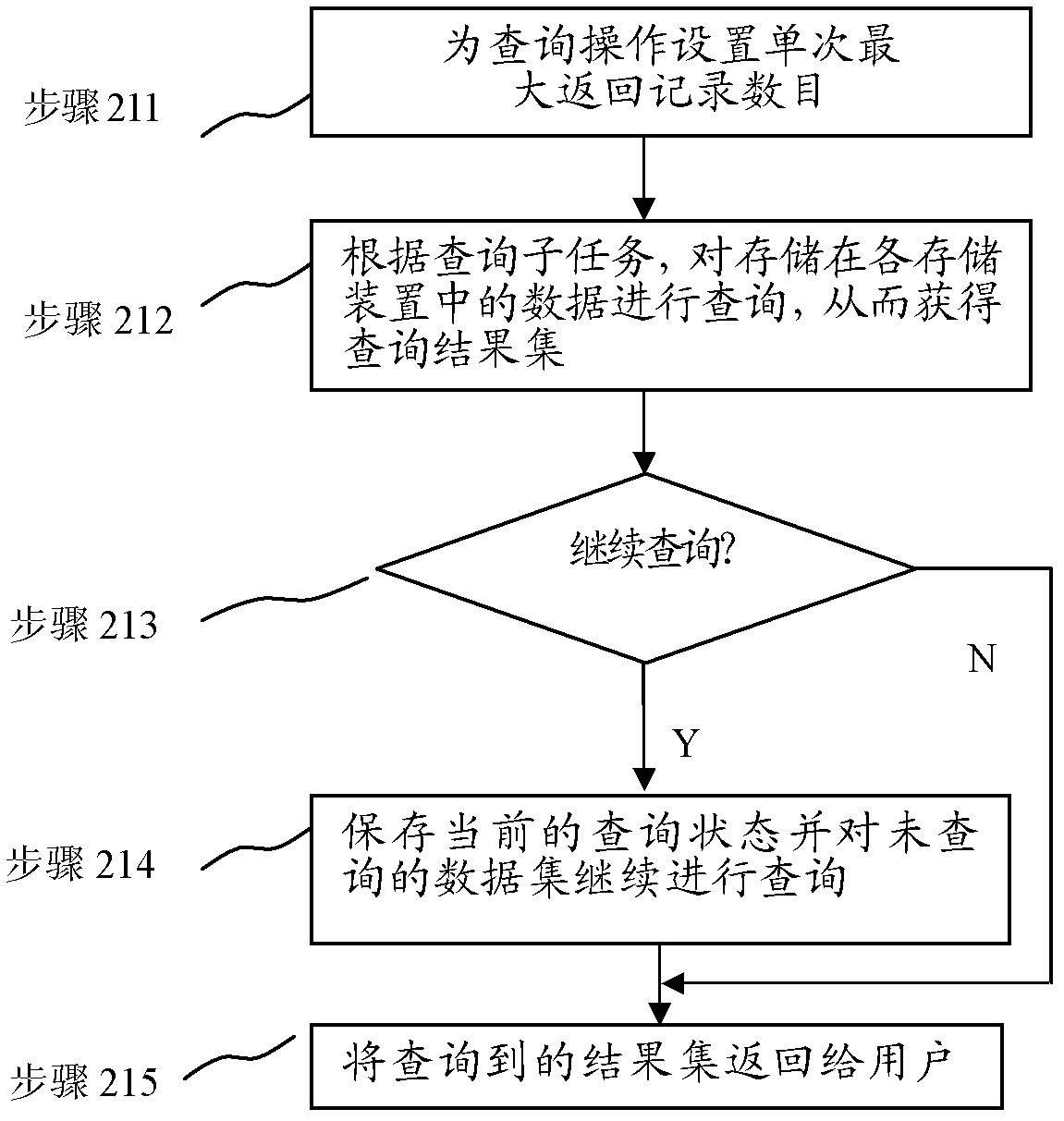

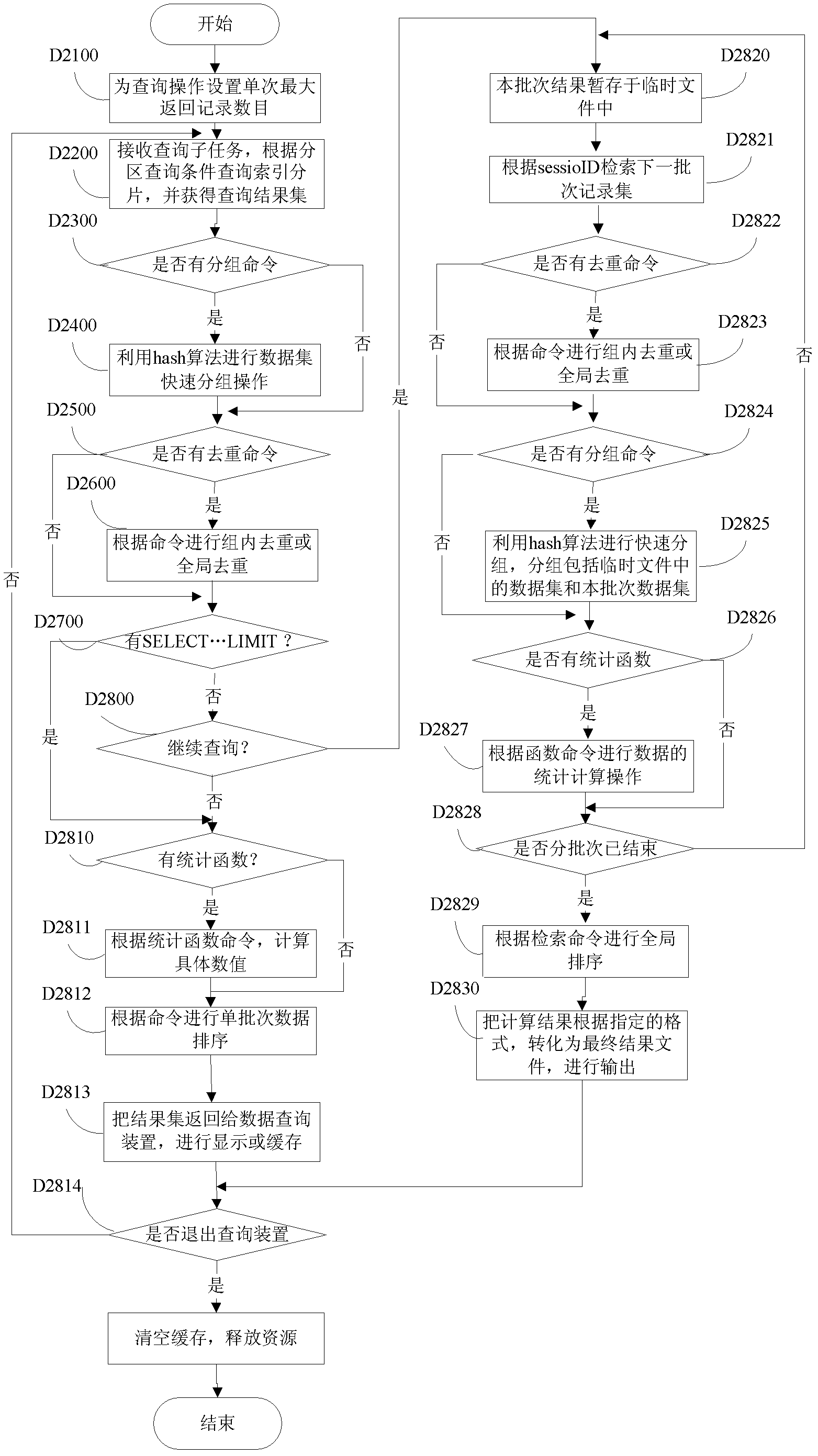

Massive structured data storage and query methods and systems supporting high-speed loading

Active · CN102521405A · Implement caching, Taking into account statistical needs, Special data processing applications, Streaming data, Slide window

The invention provides a massive structured data storage method, a massive structured data query method, a massive structured data storage system, and a massive structured data query system, all of which support high-speed loading. The distributed storage method for massive structured data comprises the following steps: receiving data loaded at high speed from a user; caching the loaded data in a distributed way using a double-sliding-window structure; and storing the cached data in a distributed way after a fixed period. Because newly loaded data is cached, query efficiency improves for applications, such as streaming data, whose subsequent queries frequently use recently loaded data.

Owner:Guoxin Electronic Bill Platform Information Service Co., Ltd. (国信电子票据平台信息服务有限公司)

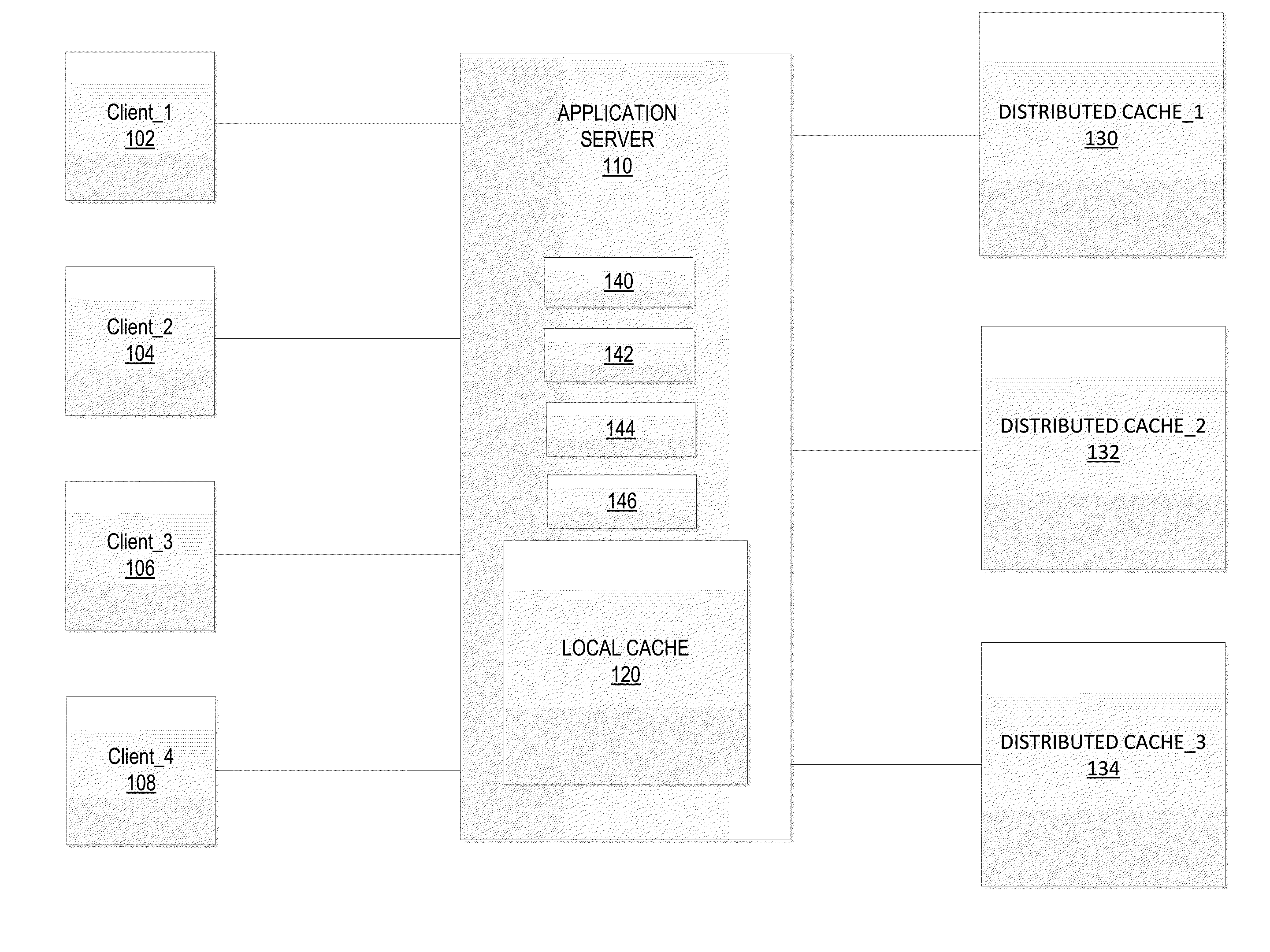

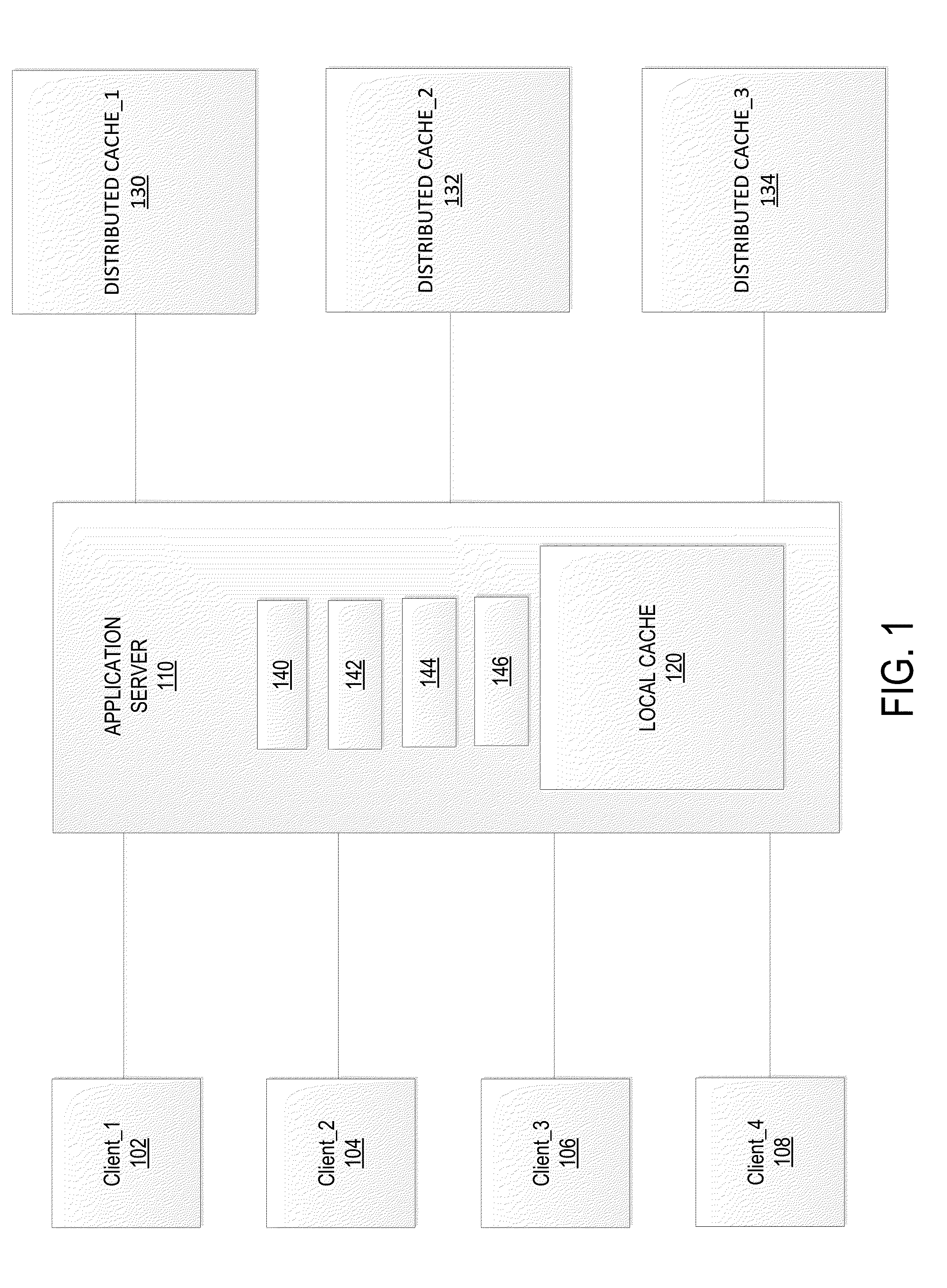

Distributed Cache System

Various embodiments of the present disclosure provide improved systems and techniques for intelligently allocating cache requests to caches based upon the nature of the cache objects associated with the cache requests and/or the condition of the caches in the distributed cache system, to improve cache system utilization and performance. In some embodiments, cache requests may be allocated in response to detection of a problem at one of a group of cache servers. For example, in some embodiments, a particular cache server may be placed into a safe mode of operation when its ability to service cache requests is impaired. In other embodiments, cache requests may be allocated based on a cache object data type associated with the cache requests.

Owner:SUCCESSFACTORS
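
A sketch of type-based allocation that skips caches in safe mode. The type-to-cache policy, the byte-sum spreading of keys within an eligible group, and returning `None` when no cache is eligible are assumptions; the abstract does not describe how requests are spread within a group.

```python
from dataclasses import dataclass


@dataclass
class Cache:
    name: str
    safe_mode: bool = False


# Hypothetical policy: which caches serve which cache object data types.
TYPE_POLICY = {"session": ["c1", "c2"], "report": ["c3"]}


def allocate(object_type: str, key: str, caches: dict):
    """Pick a cache for a request by object data type, skipping safe-mode caches."""
    names = TYPE_POLICY.get(object_type, list(caches))
    eligible = [caches[n] for n in names if not caches[n].safe_mode]
    if not eligible:
        return None  # assumed: the caller falls back to the backing store
    # Deterministic spread within the eligible group (byte sum of the key).
    return eligible[sum(key.encode()) % len(eligible)]


caches = {name: Cache(name) for name in ("c1", "c2", "c3")}
caches["c1"].safe_mode = True  # c1 is impaired, so it stops taking requests
print(allocate("session", "s-17", caches).name)  # c2
```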

Systems and methods for providing distributed cache coherence

Active · US7975018B2 · Reduce bandwidth requirements, Reduce in quantity, Digital computer details, Transmission, Computer network, Cache access

A plurality of access nodes sharing access to data on a storage network implement a directory-based cache ownership scheme. One node, designated as a global coordinator, maintains a directory (e.g., a table or other data structure) storing information about I/O operations by the access nodes. The other nodes send requests to the global coordinator when an I/O operation is to be performed on identified data. Ownership of that data in the directory is given to the first requesting node. Ownership may transfer to another node if the directory entry is unused or quiescent. The distributed directory-based cache coherency reduces bandwidth requirements between geographically separated access nodes by allowing localized (cached) access to remote data.

Owner:EMC IP HLDG CO LLC

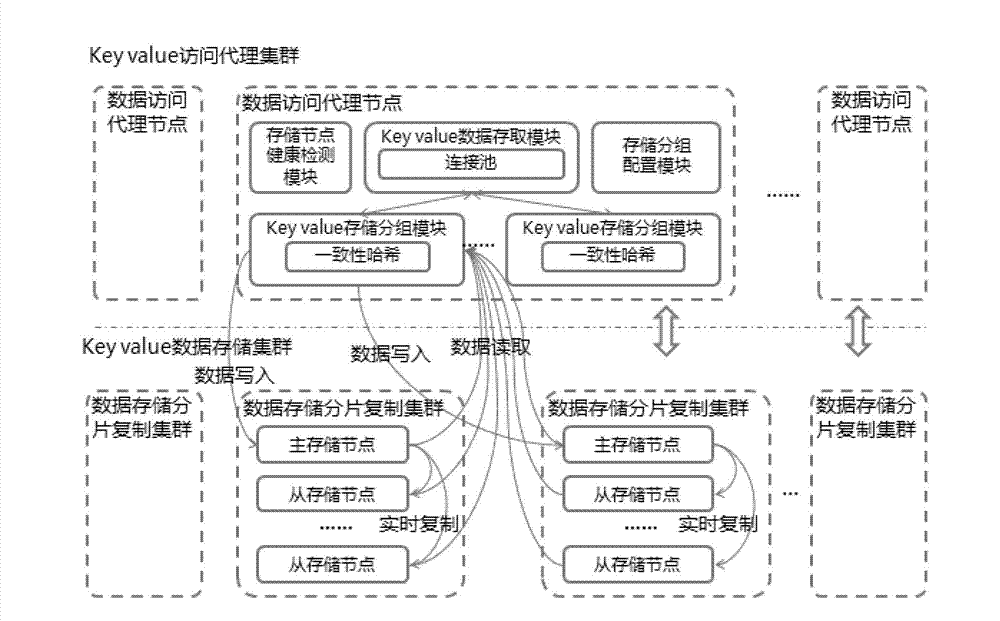

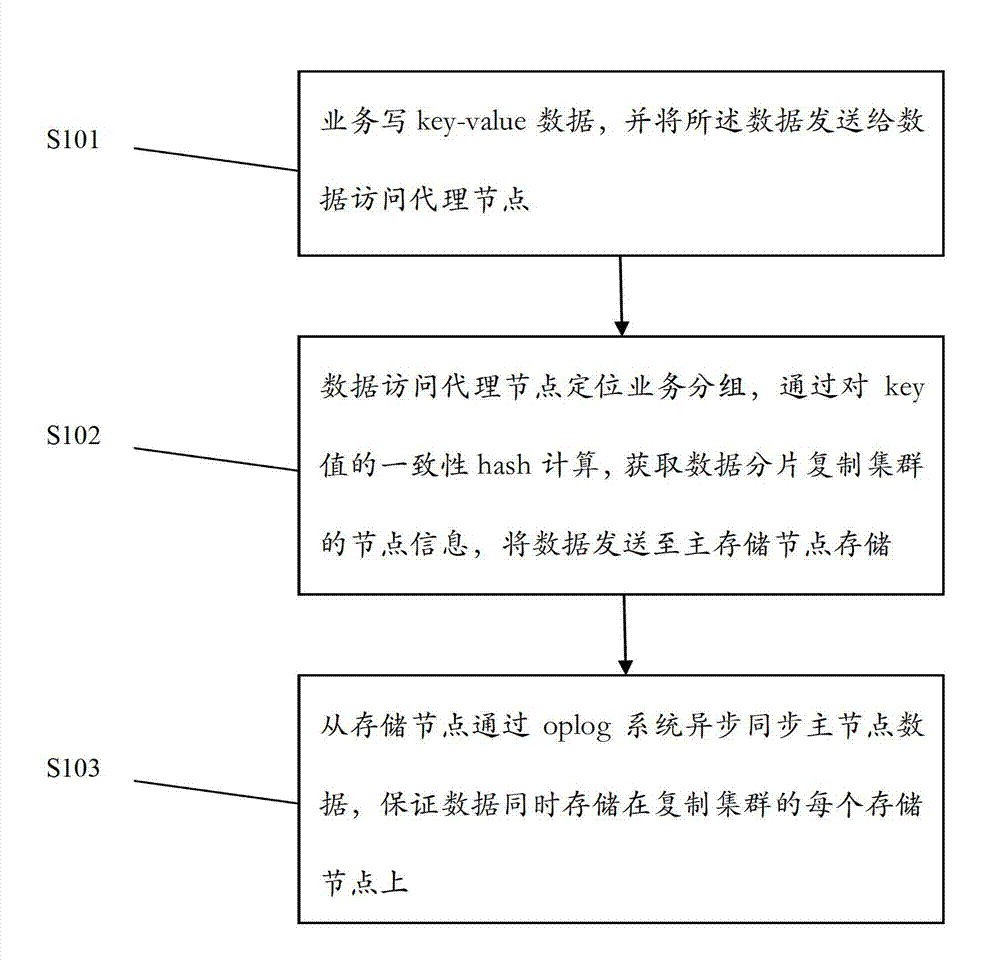

Key-value data distributed caching system and method thereof

Active · CN103078927A · Reduce migration costs, Improve reliability, Transmission, Special data processing applications, Distributed cache, Data access

The invention discloses a key-value data distributed caching system and method. The system comprises a key-value access proxy cluster and a key-value data storage cluster. The access proxy cluster consists of multiple data access proxy nodes, and the storage cluster consists of multiple data-shard replication clusters. The data access proxy nodes send node business storage requests, route data read and write requests to the target data storage node, and store or read business data. Within each data-shard replication cluster, a master-slave replication mechanism ensures that the data has no single point of failure, and reads are served from multiple slave nodes so that read load is balanced. With this technical scheme, key-value data is automatically sharded by a consistent hashing algorithm, and the data storage capacity of the system can be scaled horizontally.

Owner:ALIBABA (CHINA) CO LTD
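
The proxy and storage clusters can be sketched as a consistent-hash ring with virtual nodes that maps each key to a replication group, where writes go to the master and reads rotate over the slaves. Synchronous replication, the virtual-node count, and all names are assumptions for illustration.

```python
import bisect
import hashlib
import itertools


def h(value: str) -> int:
    return int.from_bytes(hashlib.sha1(value.encode()).digest()[:8], "big")


class ShardGroup:
    """One replication group: writes go to the master, reads rotate over slaves."""
    def __init__(self, name: str, slaves: int = 2):
        self.name = name
        self.master = {}
        self.slaves = [{} for _ in range(slaves)]
        self._next = itertools.cycle(range(slaves))

    def write(self, key, value):
        self.master[key] = value
        for slave in self.slaves:  # assumed synchronous replication
            slave[key] = value

    def read(self, key):
        return self.slaves[next(self._next)].get(key)  # round-robin read balancing


class AccessProxy:
    """Routes each key to a shard group using a consistent-hash ring."""
    def __init__(self, groups, vnodes: int = 50):
        self.groups = {g.name: g for g in groups}
        self.ring = sorted((h(f"{g.name}#{i}"), g.name)
                           for g in groups for i in range(vnodes))
        self.points = [point for point, _ in self.ring]

    def group_for(self, key: str) -> ShardGroup:
        i = bisect.bisect(self.points, h(key)) % len(self.ring)
        return self.groups[self.ring[i][1]]

    def put(self, key, value):
        self.group_for(key).write(key, value)

    def get(self, key):
        return self.group_for(key).read(key)


groups = [ShardGroup(f"shard-{i}") for i in range(3)]
proxy = AccessProxy(groups)
for n in range(100):
    proxy.put(f"key-{n}", n)
print(proxy.get("key-42"))  # 42
```

The virtual nodes smooth the key distribution, and adding a group only moves keys onto the new group, which is what makes horizontal expansion cheap.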

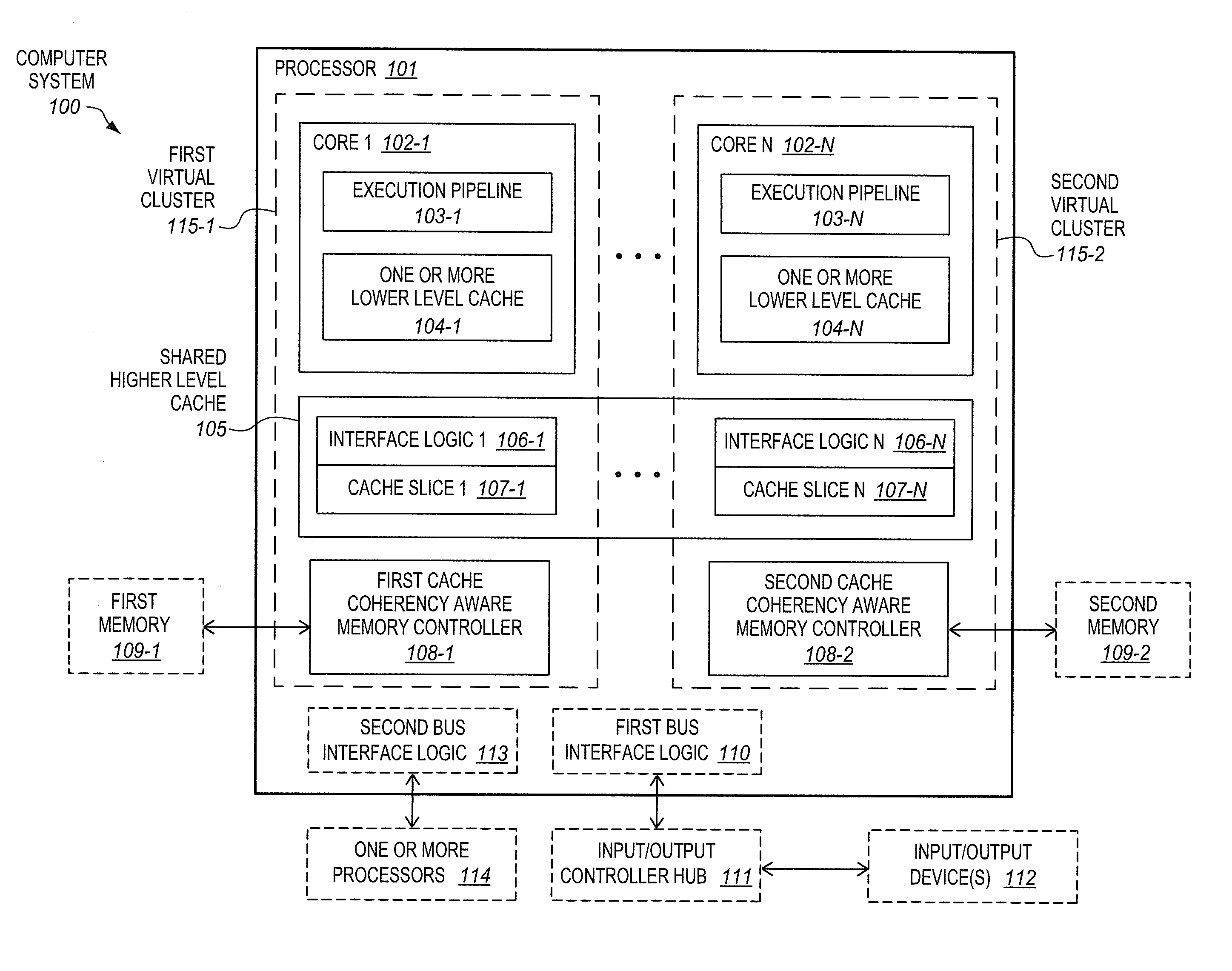

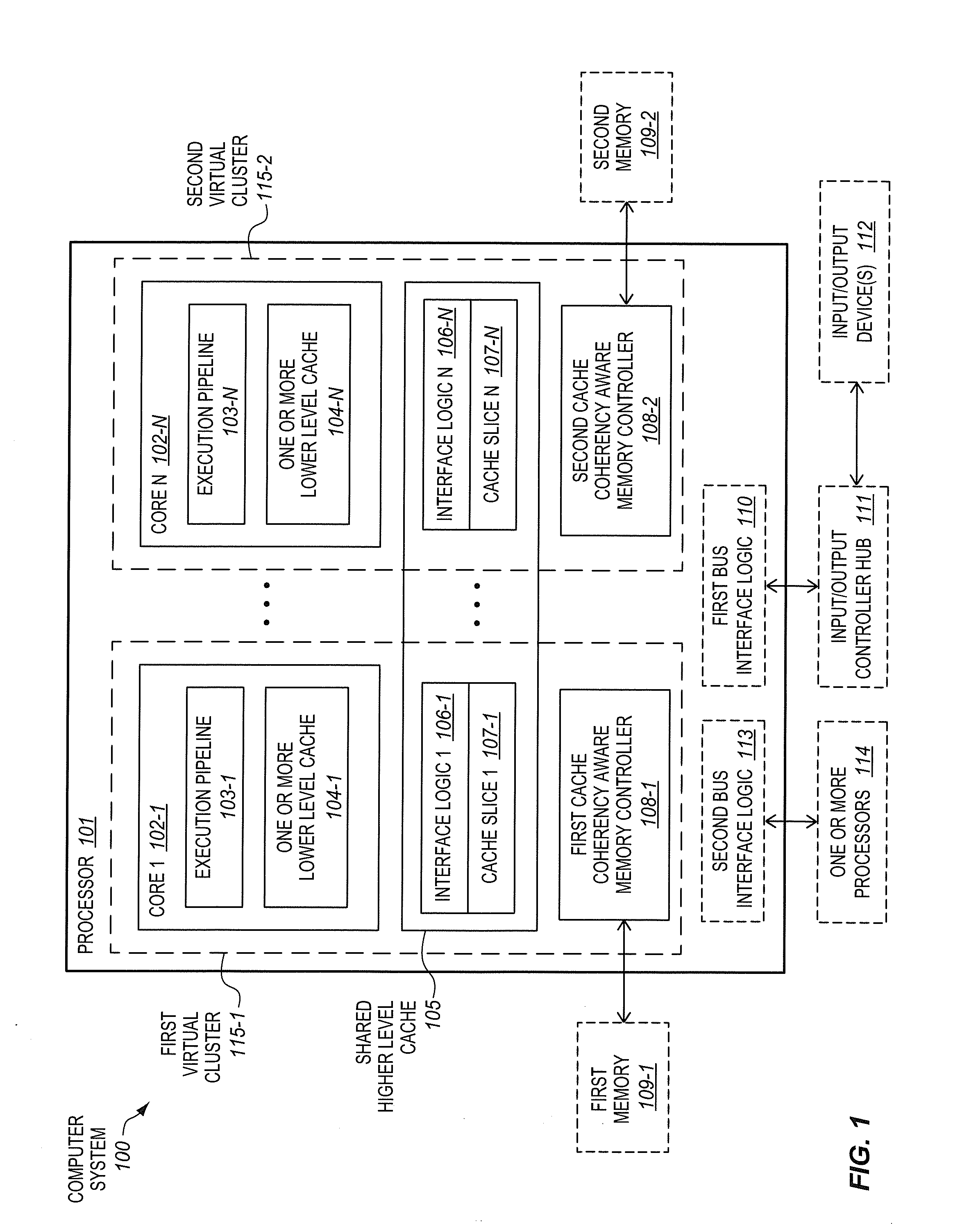

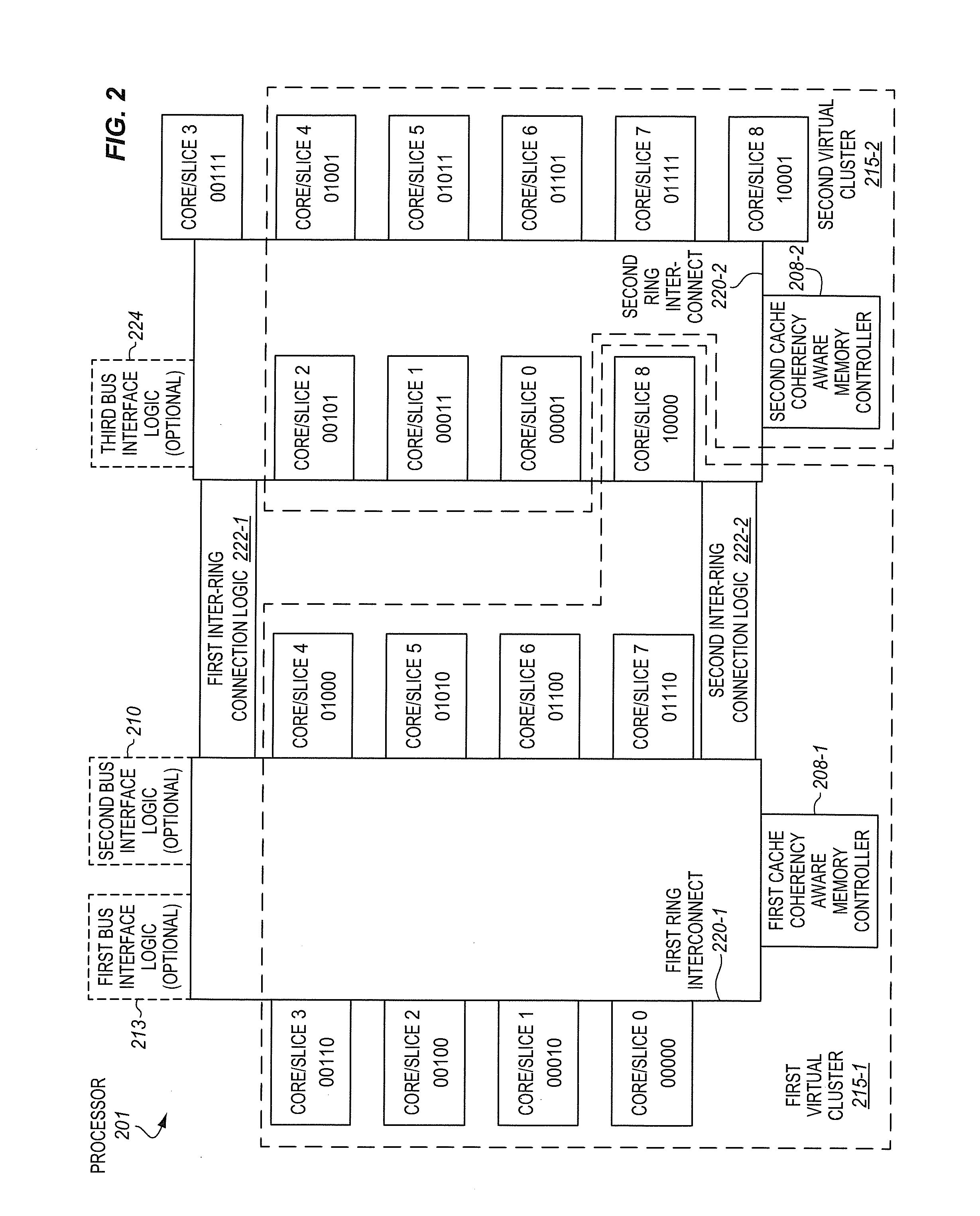

Processors having virtually clustered cores and cache slices

A processor of an aspect includes a plurality of logical processors each having one or more corresponding lower level caches. A shared higher level cache is shared by the plurality of logical processors. The shared higher level cache includes a distributed cache slice for each of the logical processors. The processor includes logic to direct an access that misses in one or more lower level caches of a corresponding logical processor to a subset of the distributed cache slices in a virtual cluster that corresponds to the logical processor. Other processors, methods, and systems are also disclosed.

Owner:DAEDALUS PRIME LLC

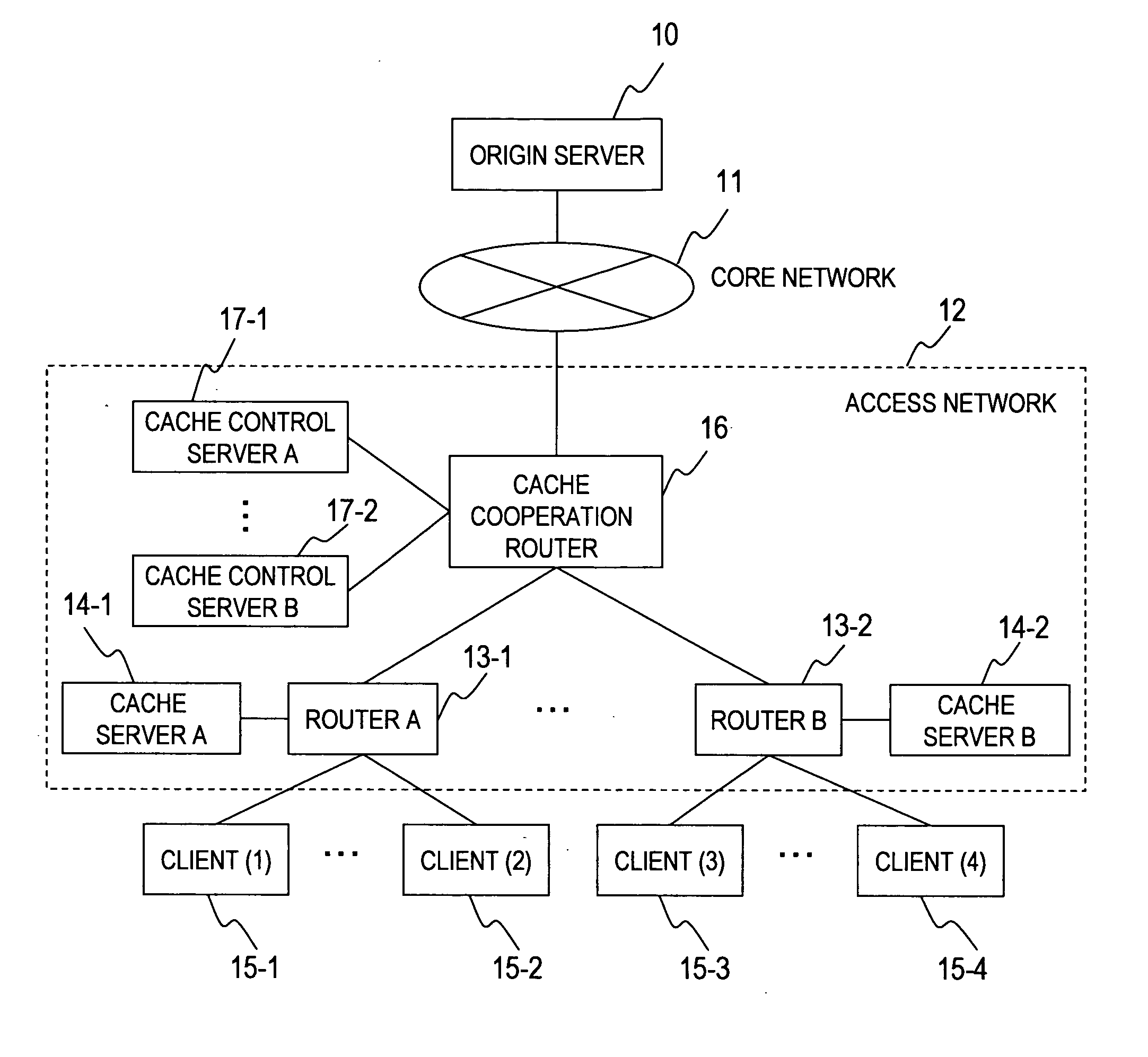

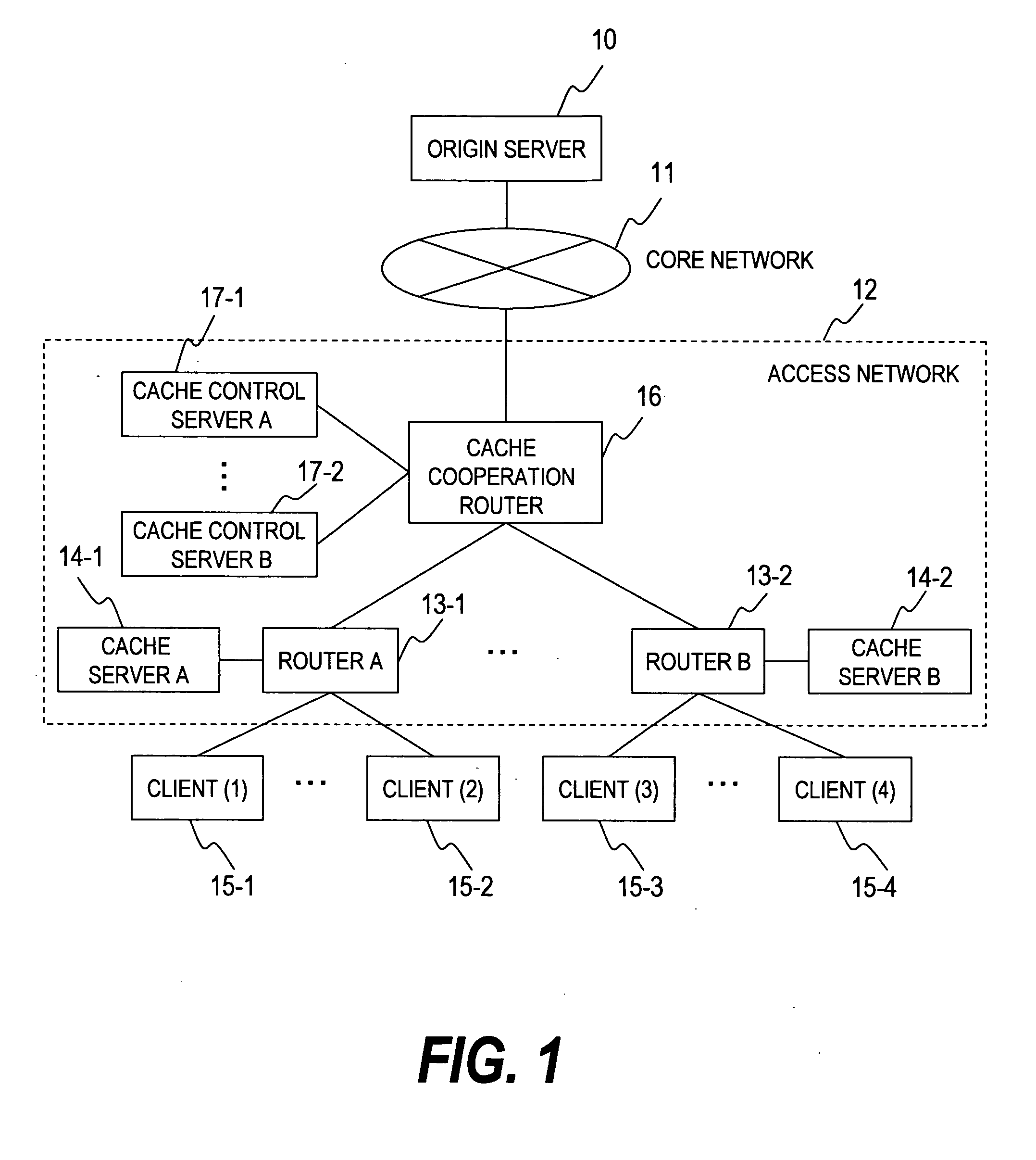

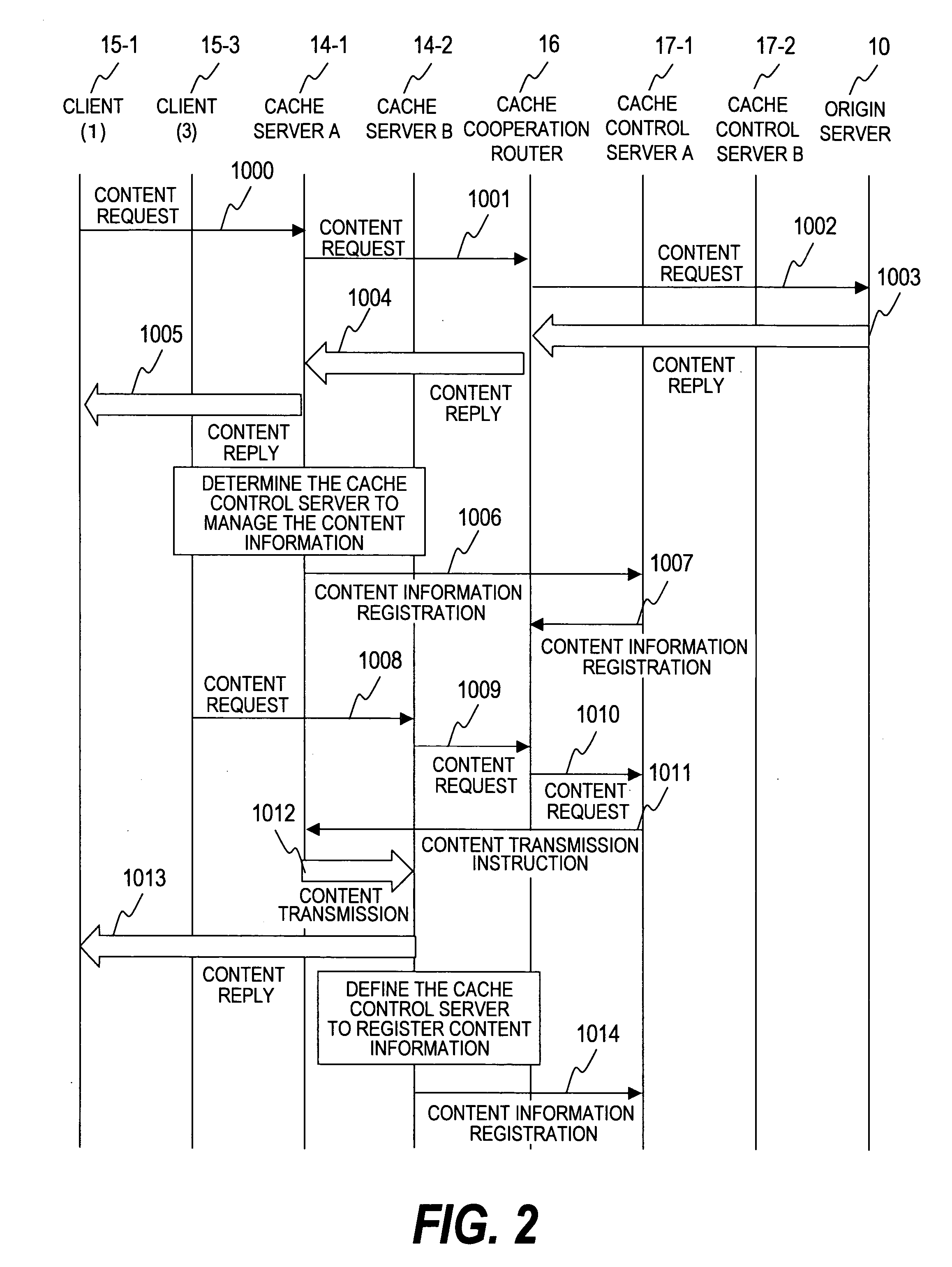

Cache system

Inactive · US20070050491A1 · Easily adapted to large-scale network, Digital computer details, Transmission, Cache server, Parallel computing

The purpose of the invention is to provide a distributed cache system applicable to a large-scale network having multiple cache servers. In a distributed cache system including multiple cache control servers, the content information is divided and managed by each cache control server. When content requested from a client is stored in the distributed cache system, a cache cooperation router forwards the content request to the cache control server which manages the information of the requested content. A cache control server has a function to notify its own address to the distributed cache system when the cache control server is added to the distributed cache system. When a cache control server receives the notification, it sends content information to the new cache control server and synchronizes the content information. Thus, a cache control server can be added to the system with ease.

Owner:HITACHI LTD

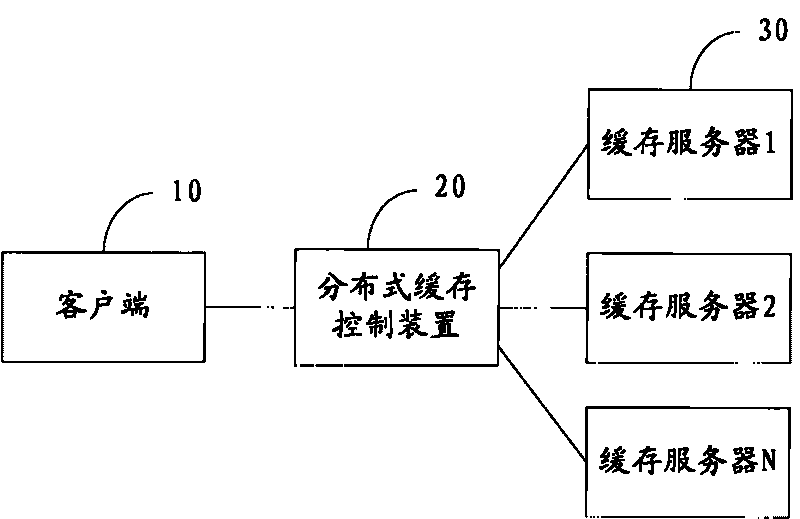

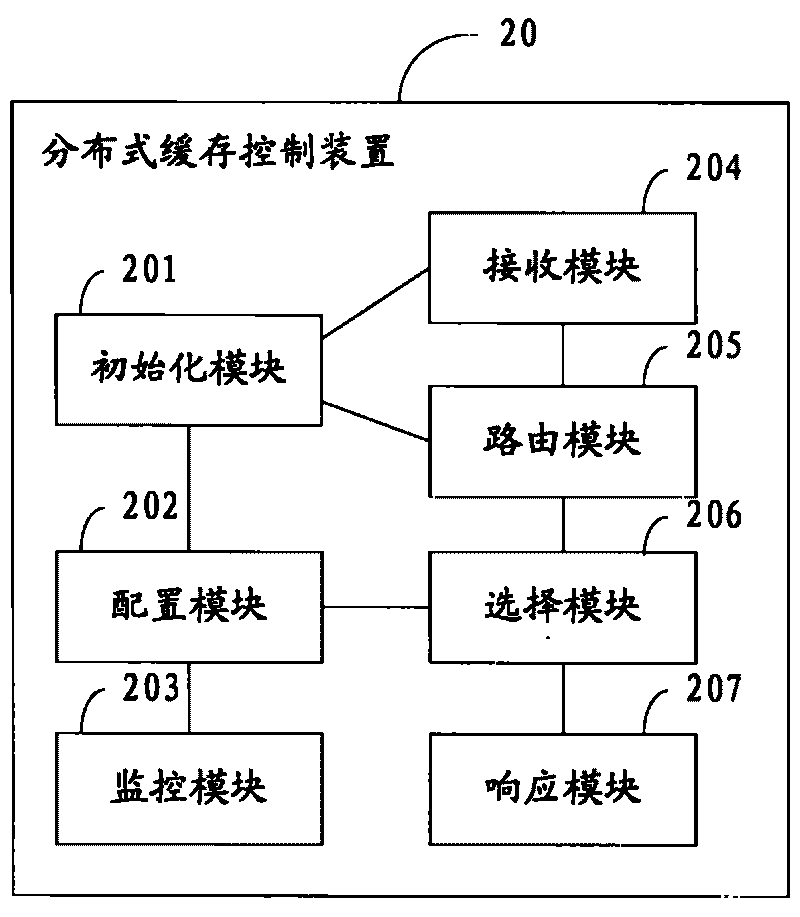

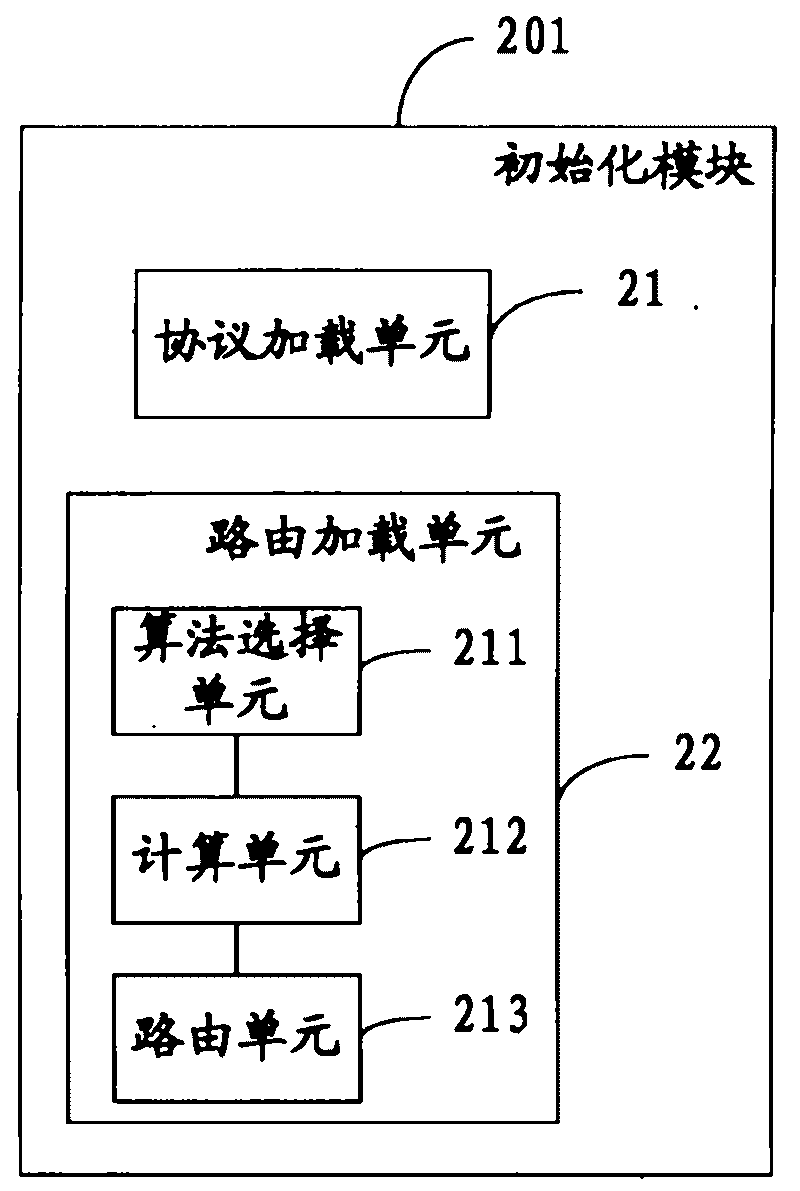

Distributed cache control method, device and system

Inactive · CN101764824A · Flexible control, Flexible switching, Data switching networks, Cache server, Distributed cache

The invention discloses a distributed cache control method comprising the following steps: a data access protocol and routing information are pre-loaded according to configuration information; a client's data access request is received according to the pre-loaded data access protocol; at least one cache server holding the requested data is found according to the pre-loaded routing information; one of the found cache servers is selected as the target cache server according to their status information; and the data access request is forwarded to the target cache server according to the pre-loaded data access protocol, so that the target responds to it. Correspondingly, the invention also discloses a distributed cache control device and system. The invention supports various data access protocols, switches flexibly between cache servers according to their status information, and provides flexible control of the distributed cache.

Owner:SHENZHEN LONG VISION
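
The control flow can be sketched as follows. The configuration format, the prefix-based routing table, and the "lowest load among healthy servers" selection rule are assumptions; the abstract only says the target is chosen from the status information of the candidate servers.

```python
# Hypothetical configuration: the abstract names a protocol and routing
# information but not their format.
CONFIG = {
    "protocol": "memcached-text",
    "routes": {"user:": ["cache-1", "cache-2"], "item:": ["cache-3"]},
}

STATUS = {
    "cache-1": {"up": True, "load": 0.7},
    "cache-2": {"up": True, "load": 0.2},
    "cache-3": {"up": False, "load": 0.1},
}


class CacheController:
    def __init__(self, config: dict):
        # Pre-load the data access protocol and the routing information.
        self.protocol = config["protocol"]
        self.routes = config["routes"]

    def candidates(self, key: str) -> list:
        # Cache servers that, per the routing table, hold data for this key.
        for prefix, servers in self.routes.items():
            if key.startswith(prefix):
                return servers
        return []

    def target(self, key: str, status: dict):
        # Assumed rule: the healthy candidate with the lowest reported load.
        healthy = [s for s in self.candidates(key) if status[s]["up"]]
        return min(healthy, key=lambda s: status[s]["load"]) if healthy else None

    def forward(self, key: str, status: dict):
        server = self.target(key, status)
        # A real controller would send the request to `server` over `self.protocol`.
        return (self.protocol, server) if server else None


controller = CacheController(CONFIG)
print(controller.forward("user:9", STATUS))  # ('memcached-text', 'cache-2')
```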