3185 results about "Multi processor" patented technology

Method and apparatus for providing virtual computing services

Inactive | US20050044301A1 | Easy to manage | Low cost | Resource allocation | Memory addressing/allocation/relocation | Virtualization | Operational system

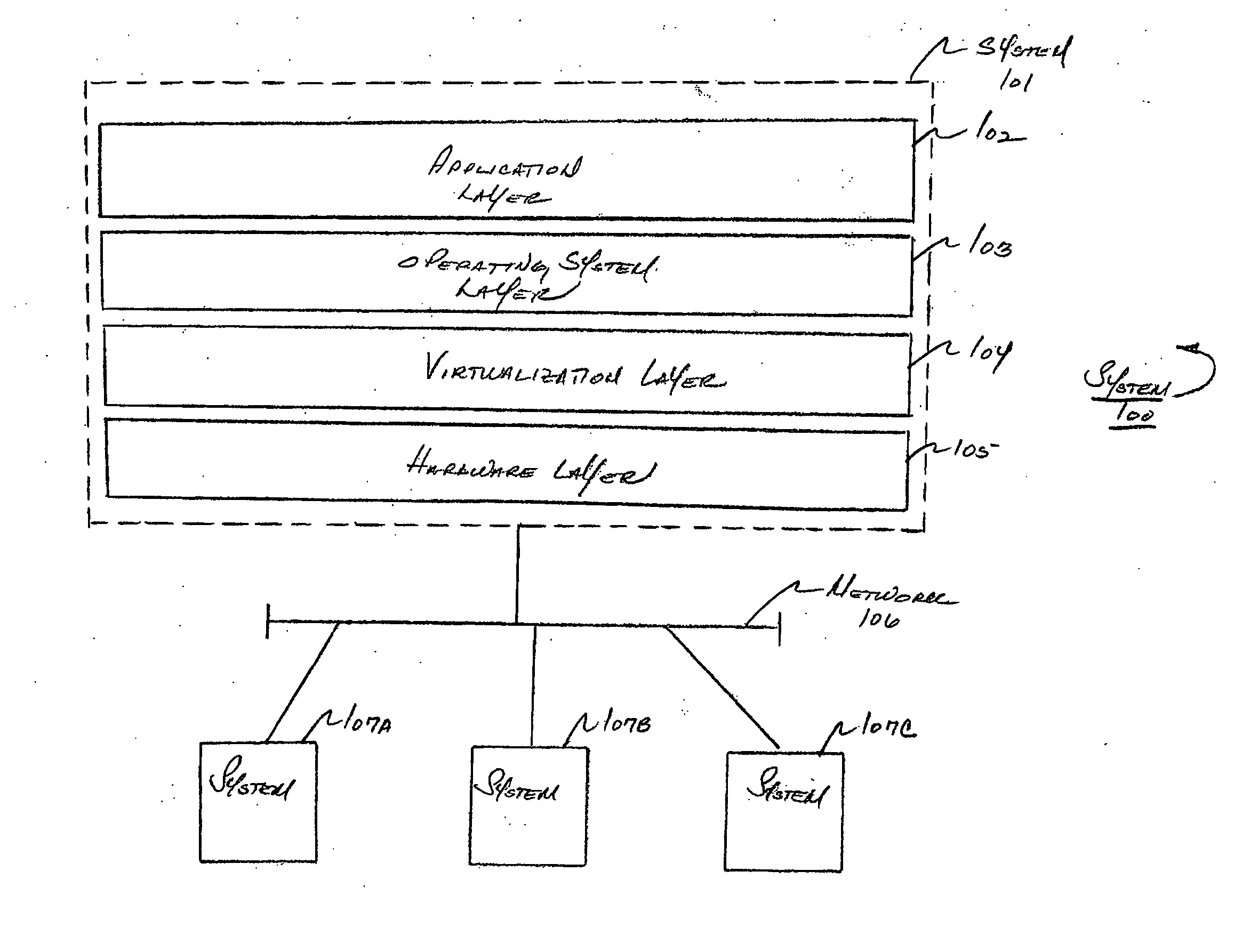

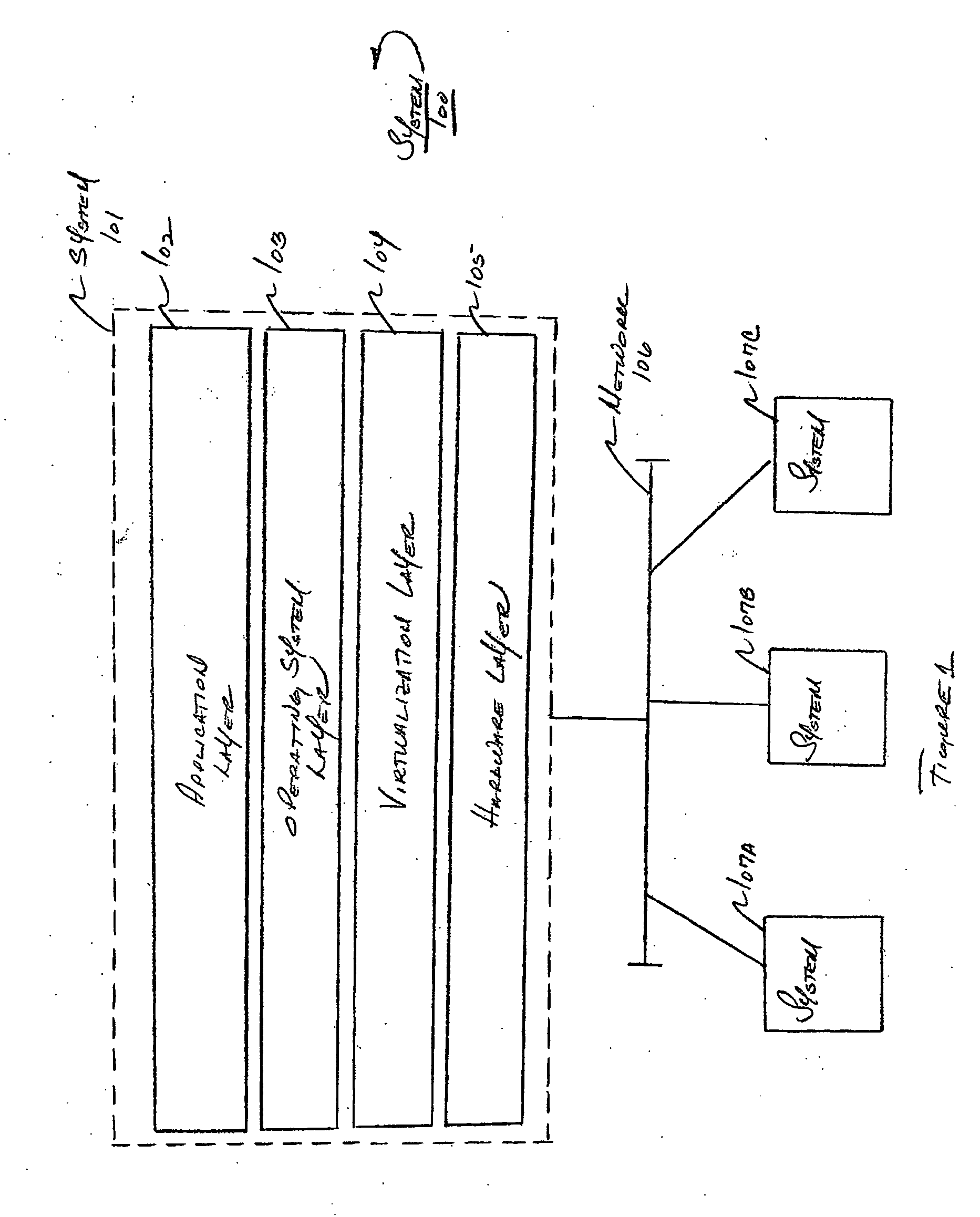

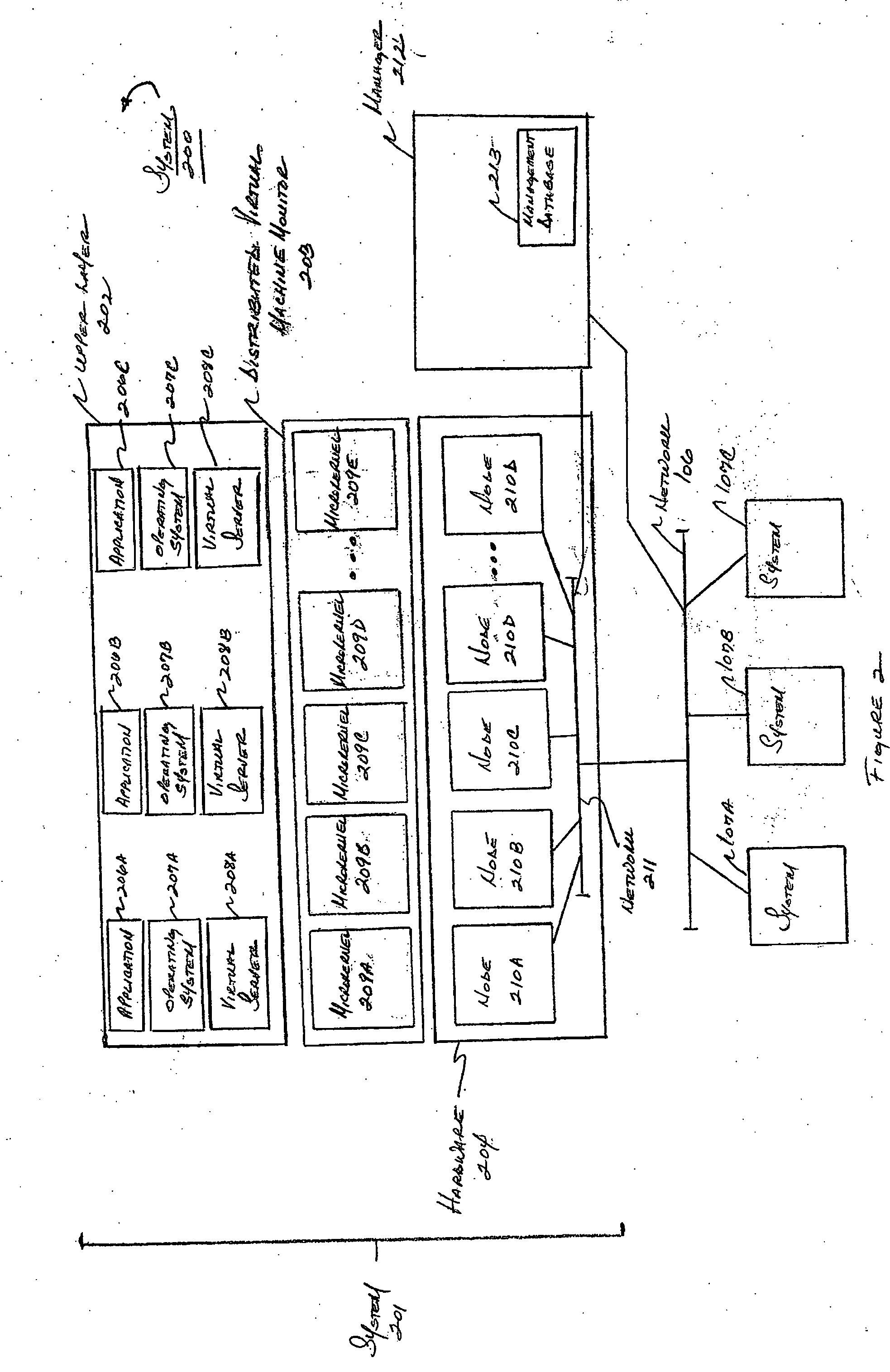

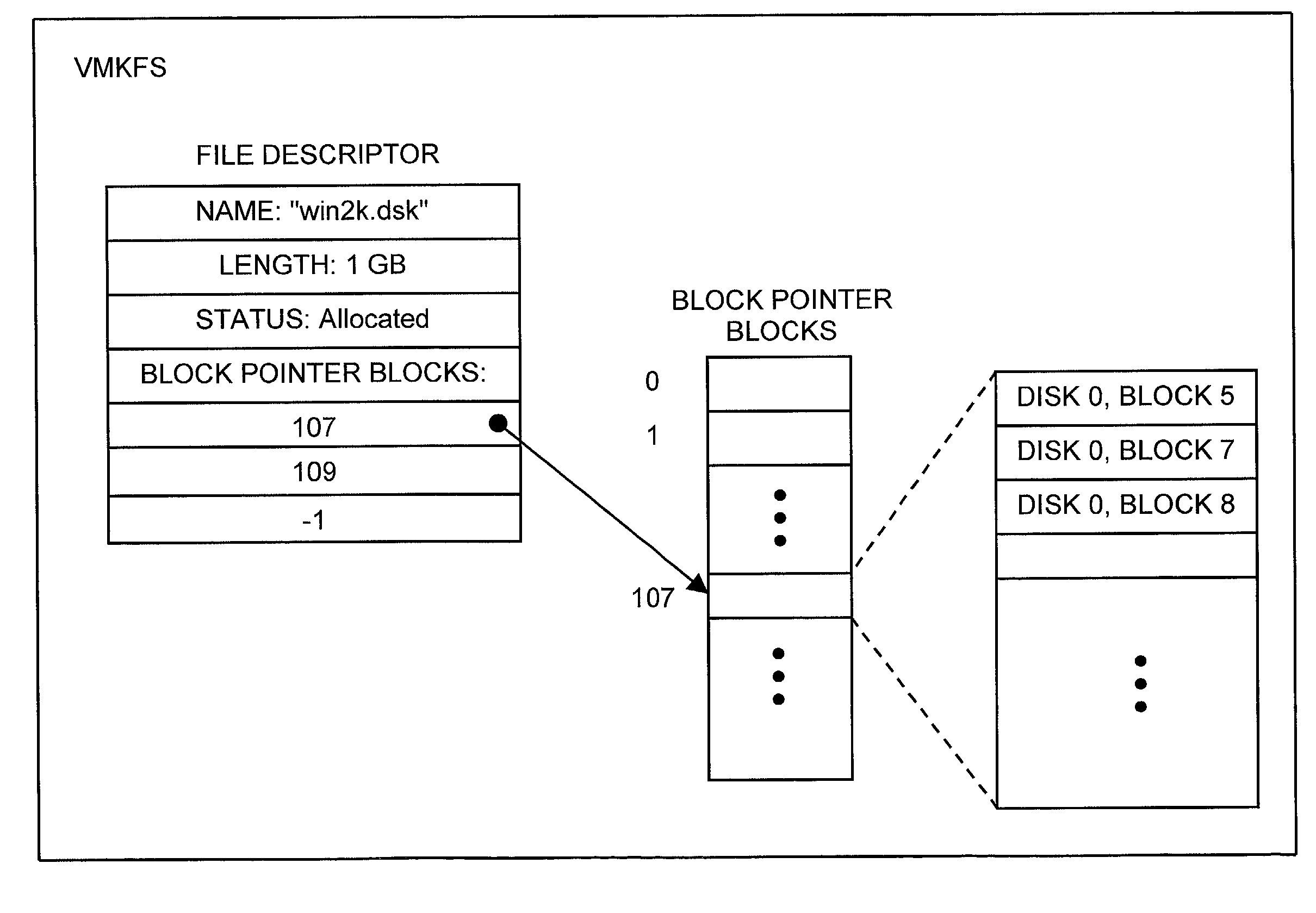

A level of abstraction is created between a set of physical processors and a set of virtual multiprocessors to form a virtualized data center. This virtualized data center comprises a set of virtual, isolated systems separated by a boundary referred to as a partition. Each of these systems appears as a unique, independent virtual multiprocessor computer capable of running a traditional operating system and its applications. In one embodiment, the system implements this multi-layered abstraction via a group of microkernels, each of which communicates with one or more peer microkernels over a high-speed, low-latency interconnect and forms a distributed virtual machine monitor. Functionally, a virtual data center is provided, including the ability to take a collection of servers and execute a collection of business applications over a compute fabric comprising commodity processors coupled by an interconnect. Processor, memory and I/O are virtualized across this fabric, providing a single system, scalability and manageability. According to one embodiment, this virtualization is transparent to the application, and therefore, applications may be scaled to increasing resource demands without modifying the application.

Owner:ORACLE INT CORP

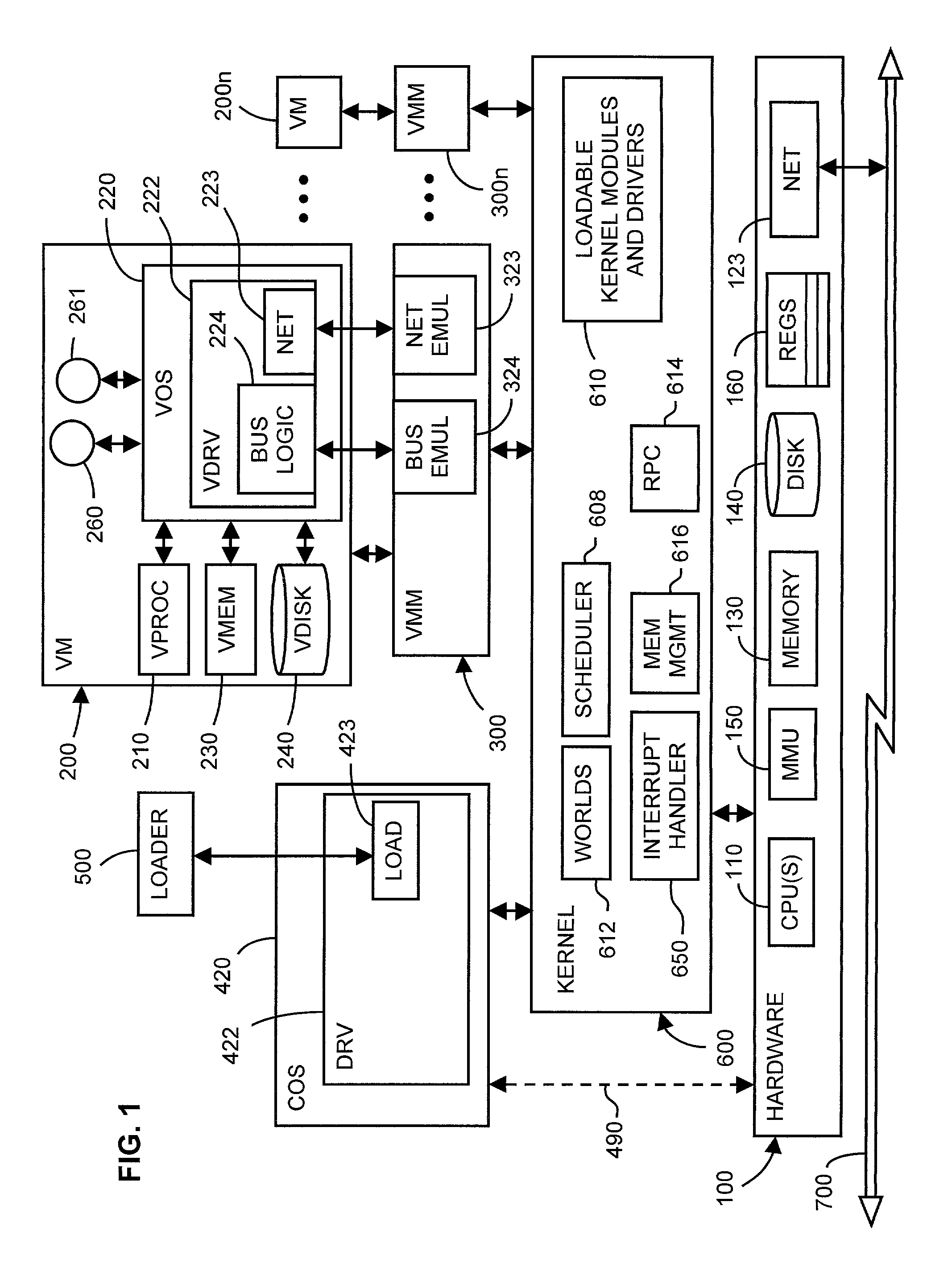

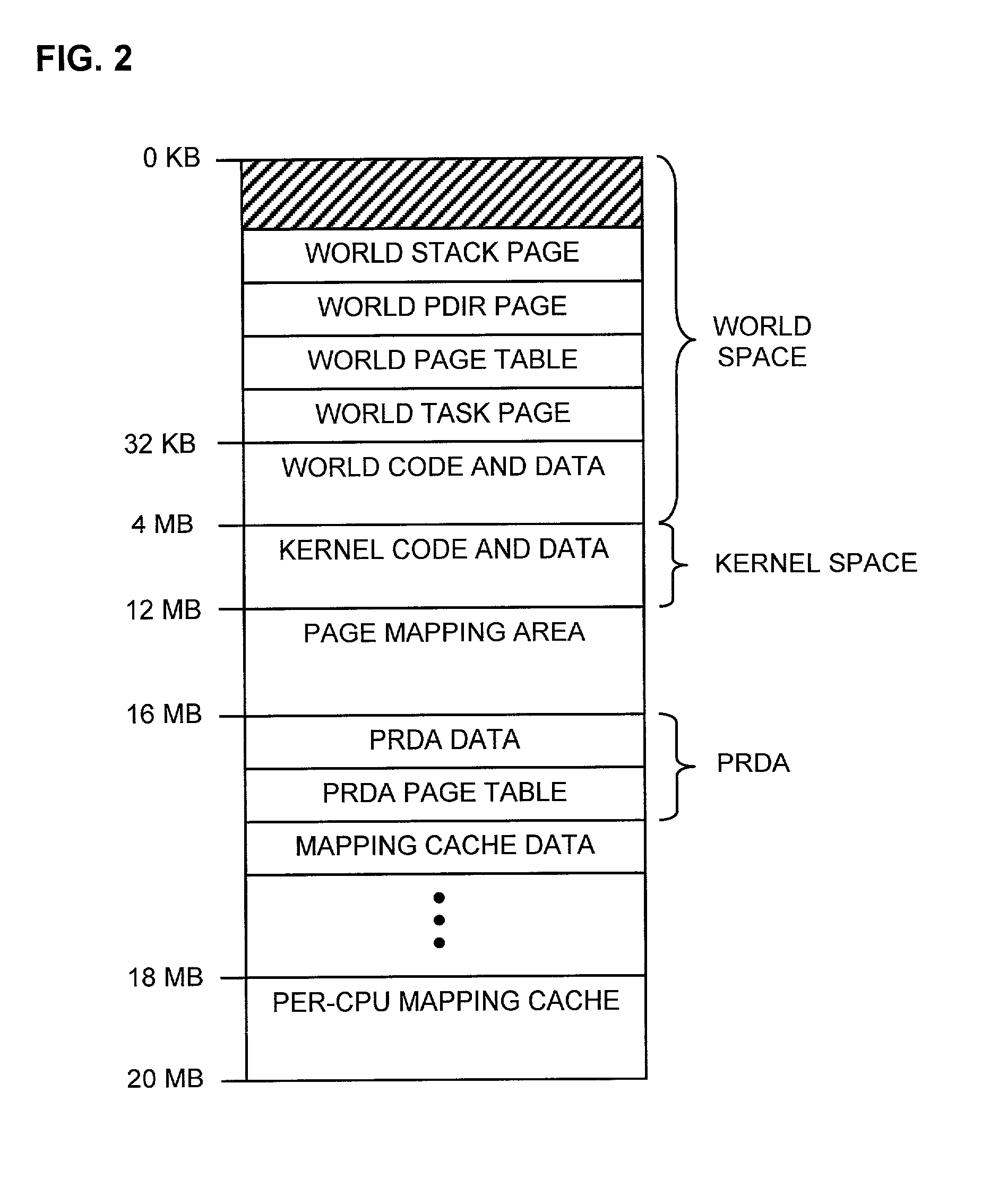

Computer configuration for resource management in systems including a virtual machine

Inactive | US6961941B1 | Avoiding multiple recurrence | Bootstrapping | Software simulation/interpretation/emulation | Operational system | Computer configuration

A computer architecture includes a first operating system (COS), which may be a commodity operating system, and a kernel, which acts as a second operating system. The COS is used to boot the system as a whole. After booting, the kernel is loaded and displaces the COS from the system level, meaning that the kernel itself directly accesses predetermined physical resources of the computer. All requests for use of system resources then pass via the kernel. System resources are divided into those that, in order to maximize speed, are controlled exclusively by the kernel, those that the kernel allows the COS to handle exclusively, and those for which control is shared by the kernel and COS. In the preferred embodiment of the invention, at least one virtual machine (VM) runs via a virtual machine monitor, which is installed to run on the kernel. Each VM, the COS, and even each processor in a multiprocessor embodiment, are treated as separately schedulable entities that are scheduled by the kernel. Mechanisms for high-speed I/O between VMs and I/O devices are also included.

Owner:VMWARE INC

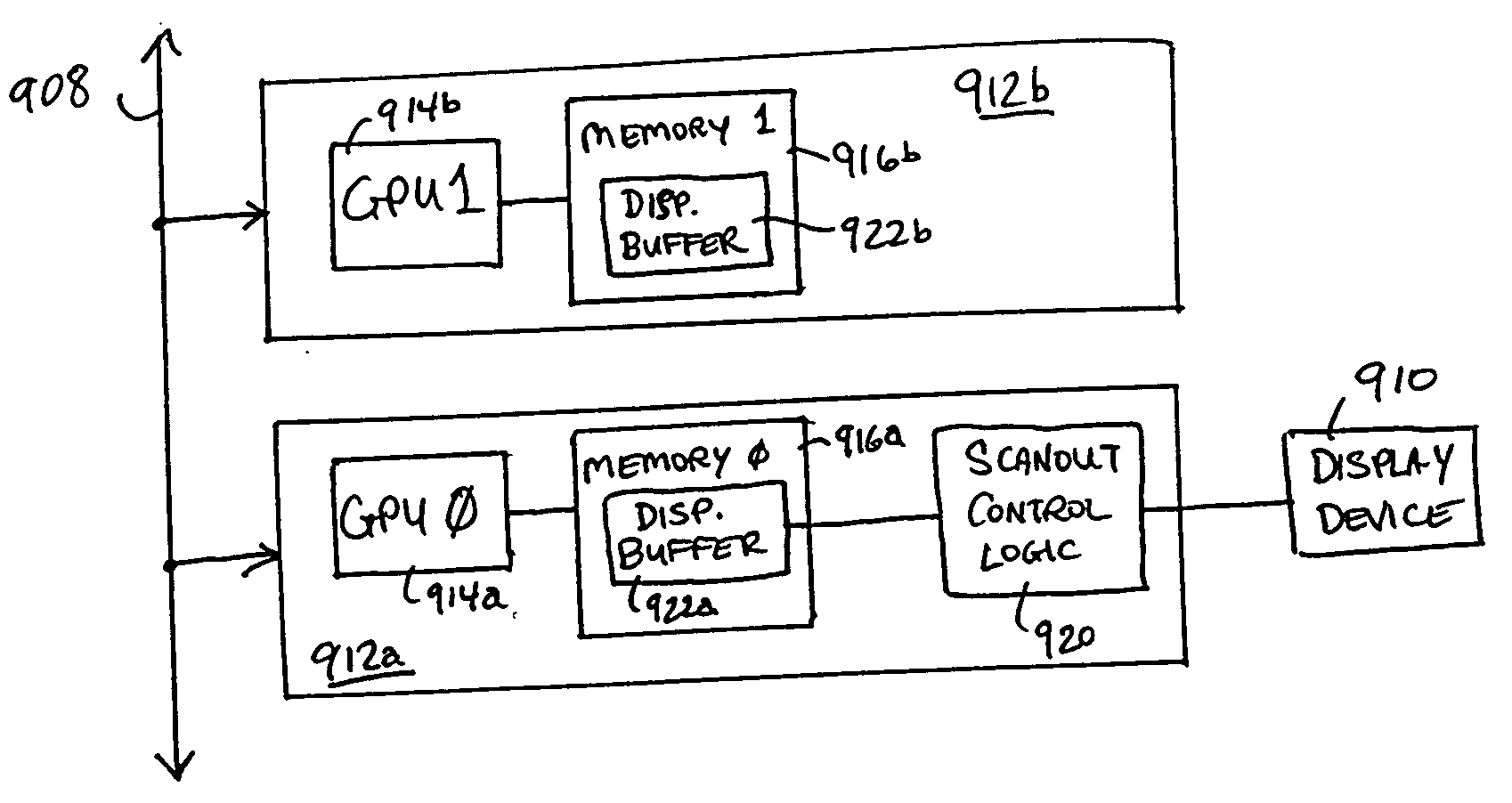

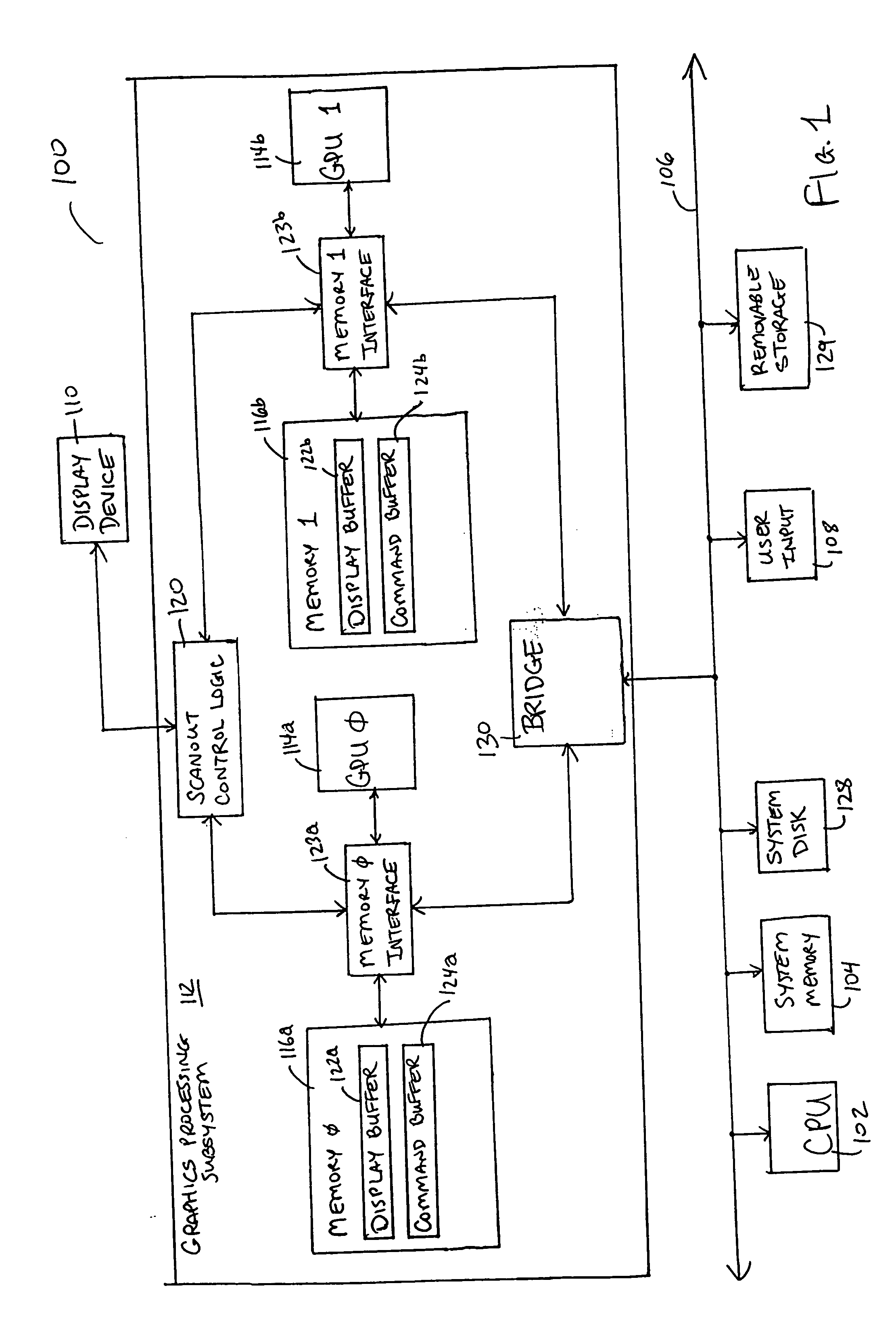

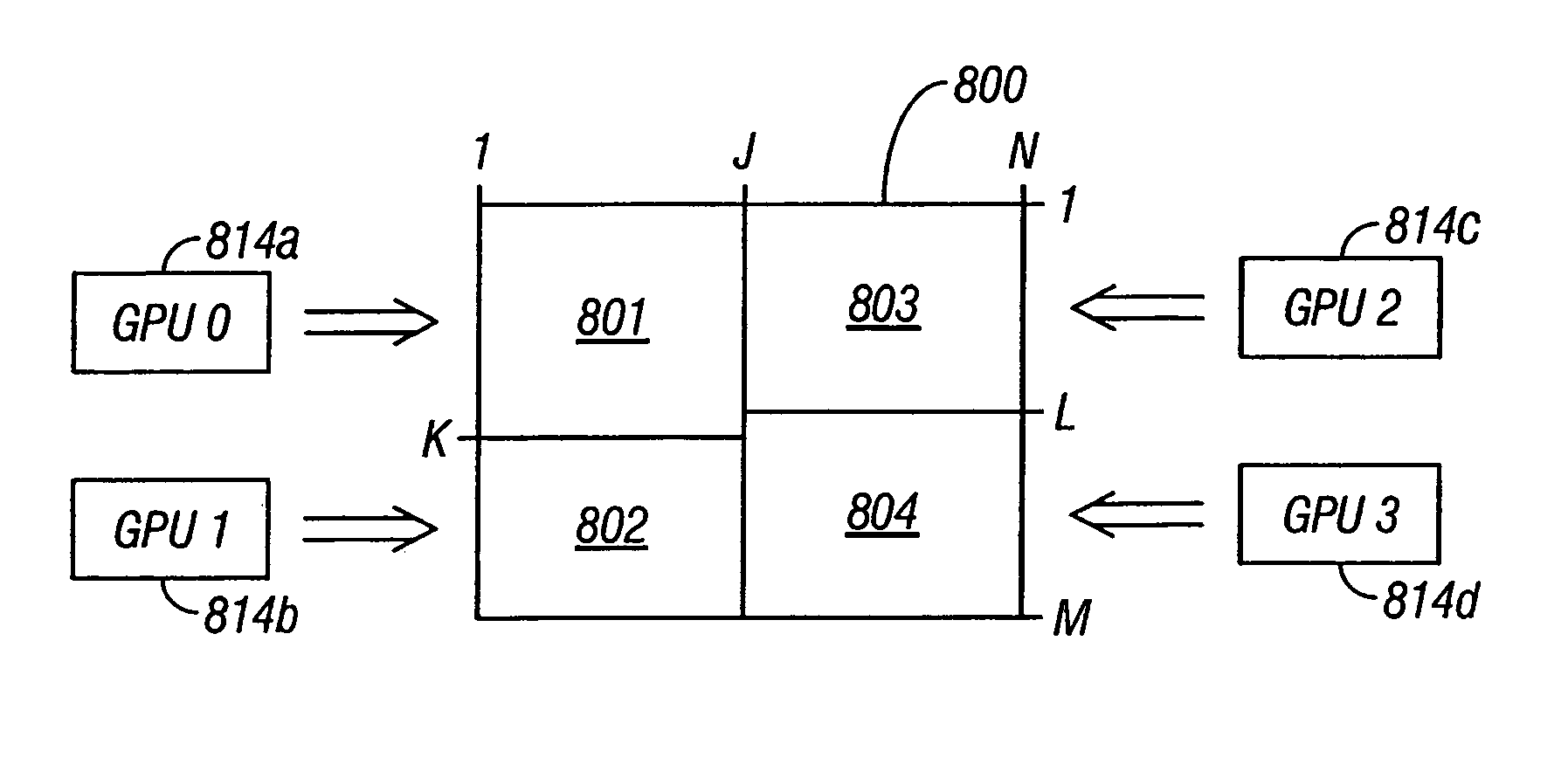

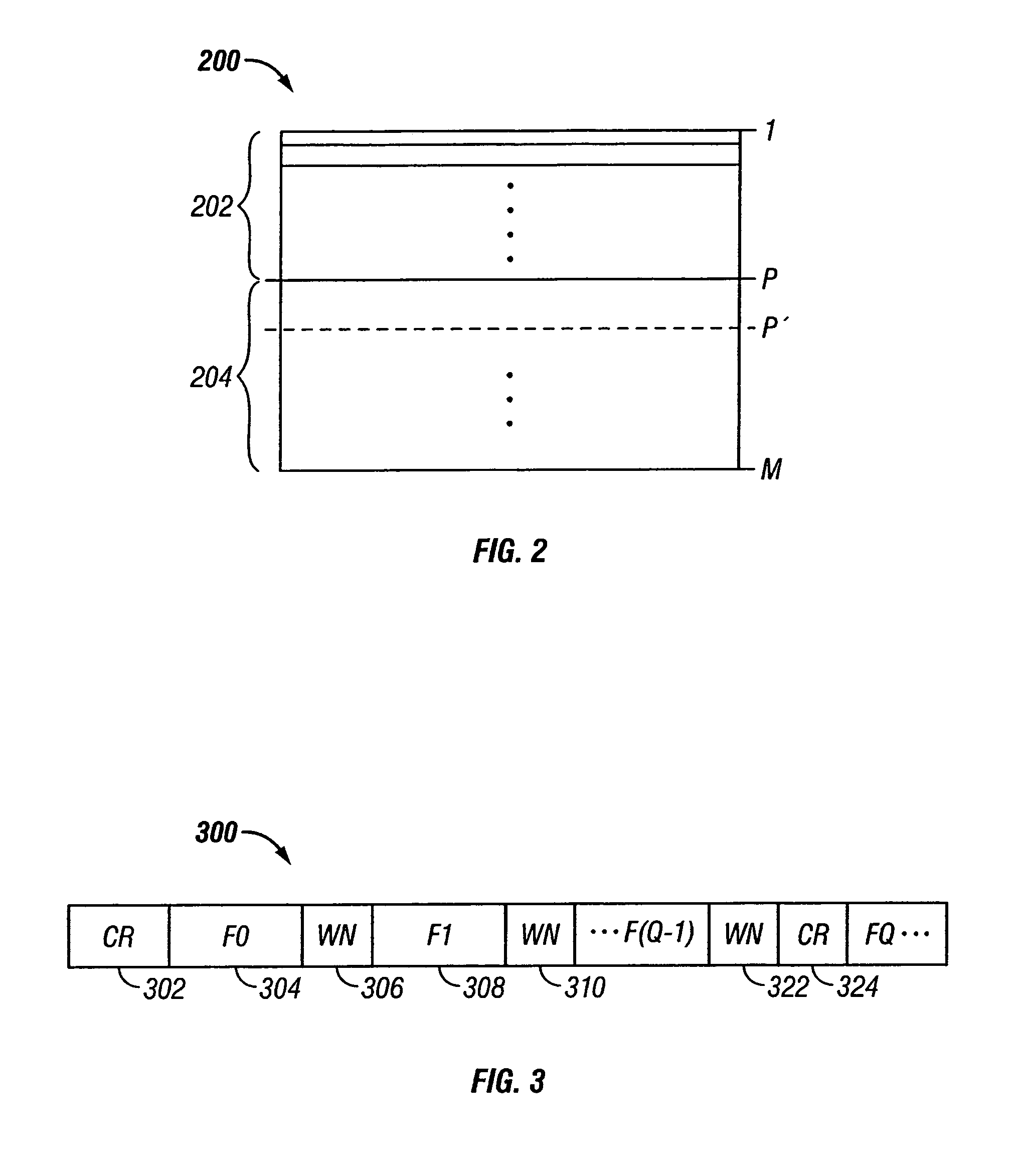

Adaptive load balancing in a multi-processor graphics processing system

Active | US20050041031A1 | Increase in size | Small size | Cathode-ray tube indicators | Multiple digital computer combinations | Graphics | Multi processor

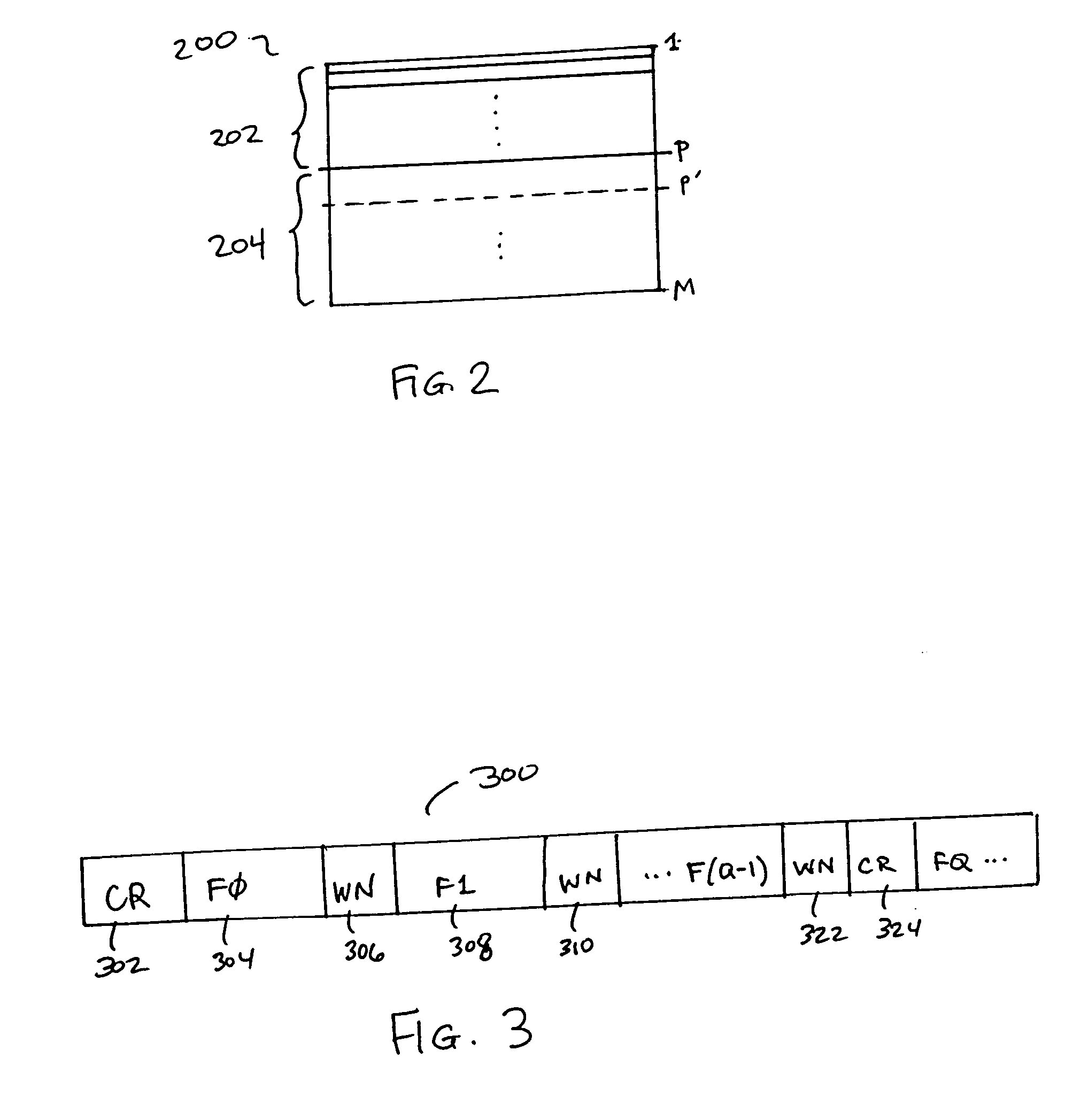

Systems and methods for balancing a load among multiple graphics processors that render different portions of a frame. A display area is partitioned into portions for each of two (or more) graphics processors. The graphics processors render their respective portions of a frame and return feedback data indicating completion of the rendering. Based on the feedback data, an imbalance can be detected between respective loads of two of the graphics processors. In the event that an imbalance exists, the display area is re-partitioned to increase a size of the portion assigned to the less heavily loaded processor and to decrease a size of the portion assigned to the more heavily loaded processor.
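The re-partitioning step described in this abstract can be illustrated with a minimal sketch. It assumes two graphics processors that split the display area at a single horizontal boundary and that the feedback data can be reduced to a per-processor render time. The function name `rebalance` and the parameters `step` and `tolerance` are hypothetical and do not come from the patent.

```python
def rebalance(split, time_top, time_bottom, height, step=8, tolerance=0.05):
    """Return a new boundary row between two GPUs (hypothetical sketch).

    split       -- rows currently assigned to GPU 0 (the top portion)
    time_top    -- feedback for GPU 0, assumed here to be its render time
    time_bottom -- feedback for GPU 1, assumed here to be its render time
    height      -- total number of rows in the display area
    """
    total = time_top + time_bottom
    if total == 0:
        return split  # no feedback yet, keep the current partition
    imbalance = (time_top - time_bottom) / total
    if abs(imbalance) <= tolerance:
        return split  # loads are close enough, no re-partitioning
    if imbalance > 0:
        new_split = split - step  # GPU 0 is busier: shrink its portion
    else:
        new_split = split + step  # GPU 1 is busier: grow GPU 0's portion
    # Always leave at least `step` rows for each processor.
    return max(step, min(height - step, new_split))
```

Moving the boundary by a fixed step per frame is one simple design choice; a proportional adjustment would converge faster but can oscillate when the load changes from frame to frame.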

Owner:NVIDIA CORP

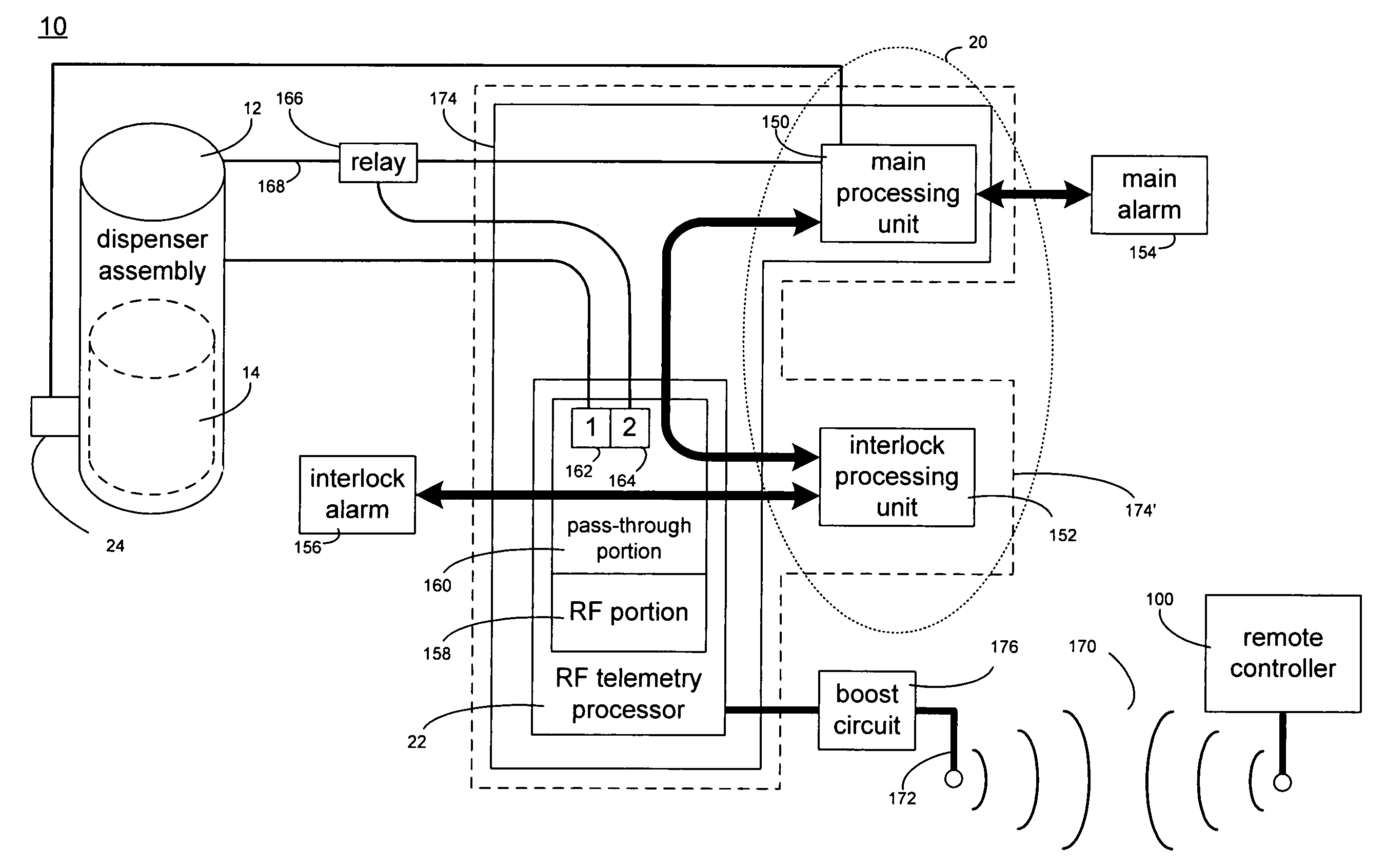

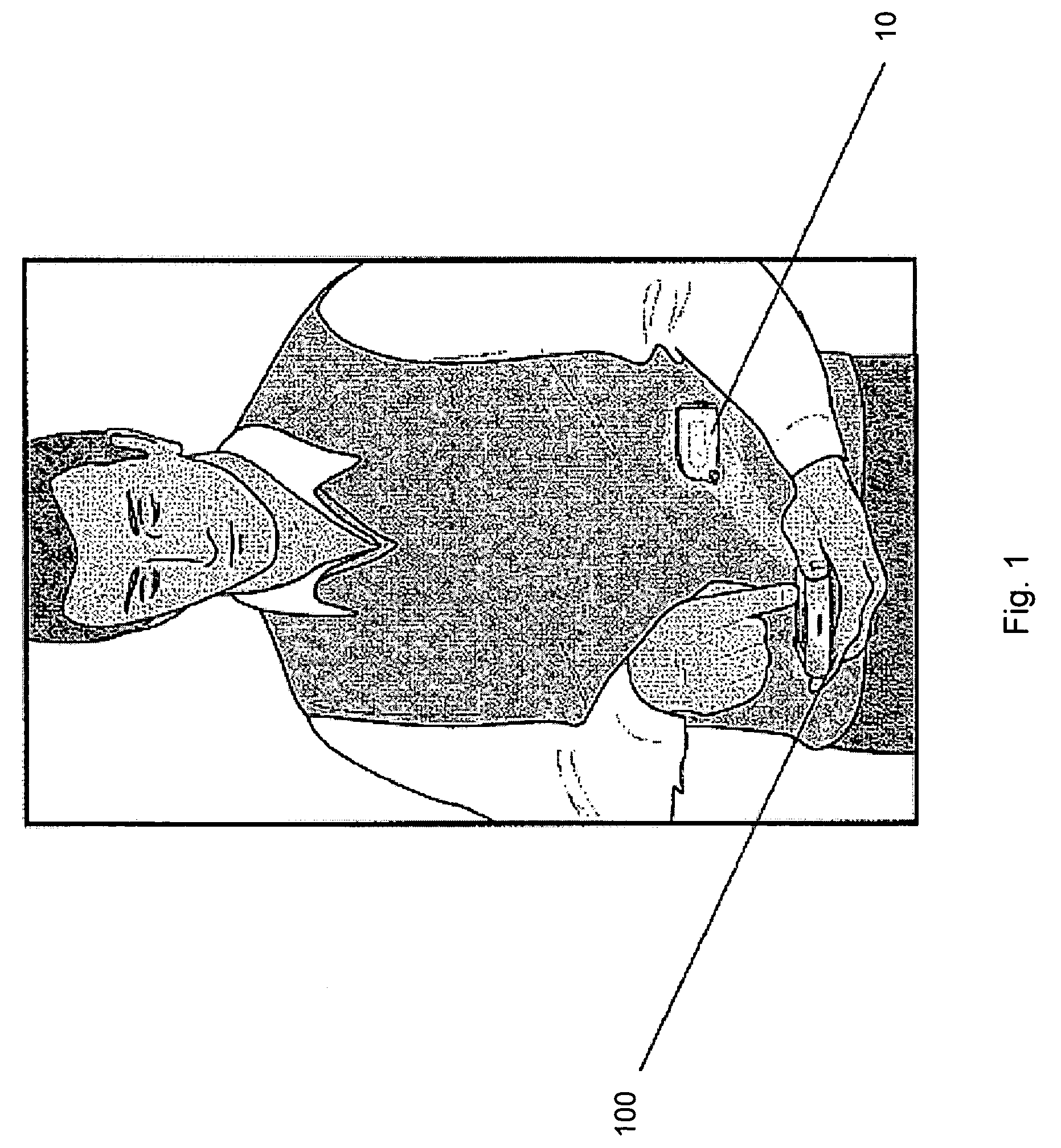

Multi-processor medical device

A system for delivering a fluid to a patient includes a remote controller and an infusion pump. The infusion pump includes a dispenser for dispensing the fluid. An operationally-independent RF telemetry portion is configured to receive an RF data signal from the remote controller. An operationally-independent processing portion is configured to process the RF data signal received by the operationally-independent RF telemetry portion, and control the dispenser in accordance with the RF data signal received by the operationally-independent RF telemetry portion.

Owner:INSULET CORP

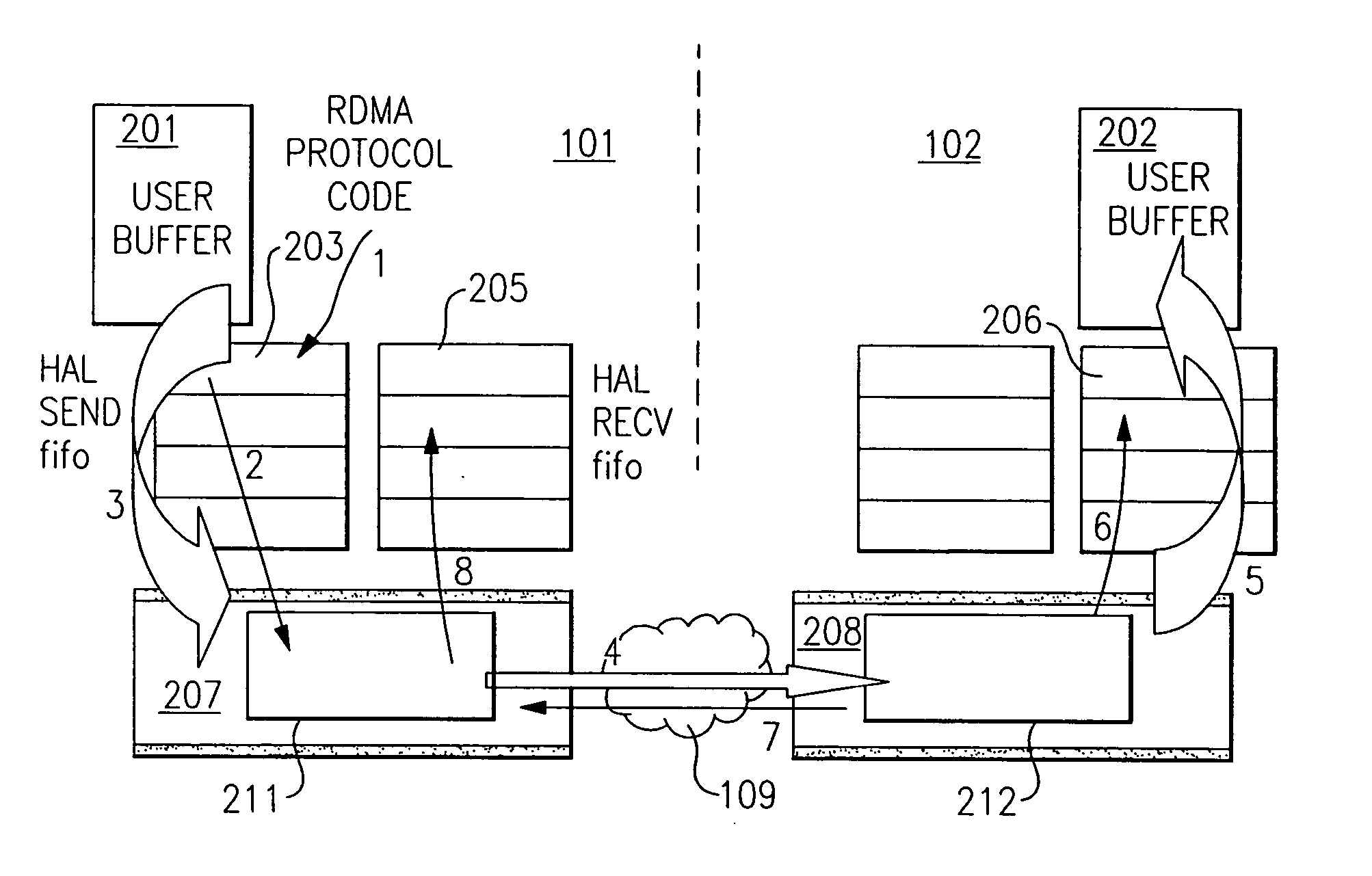

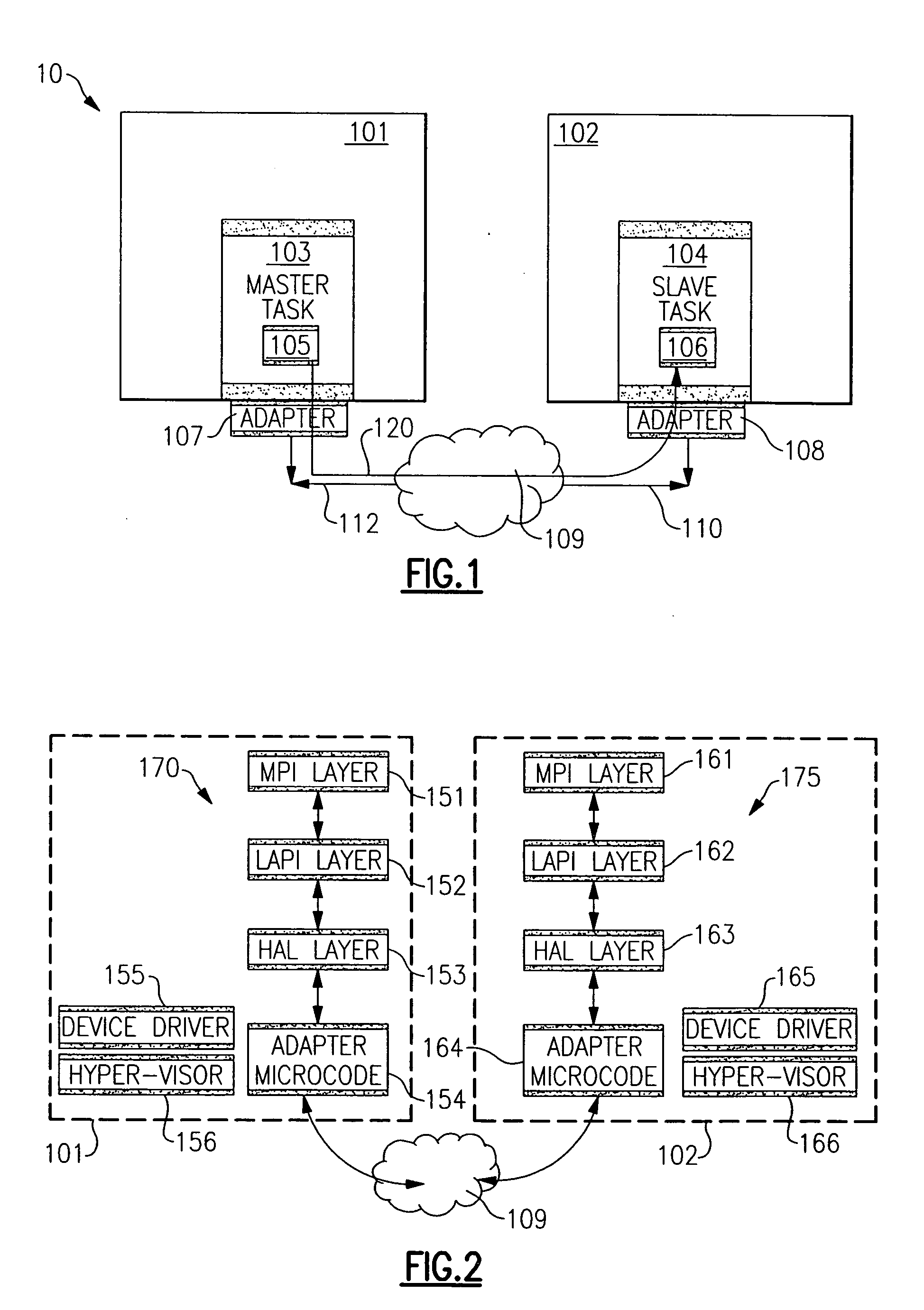

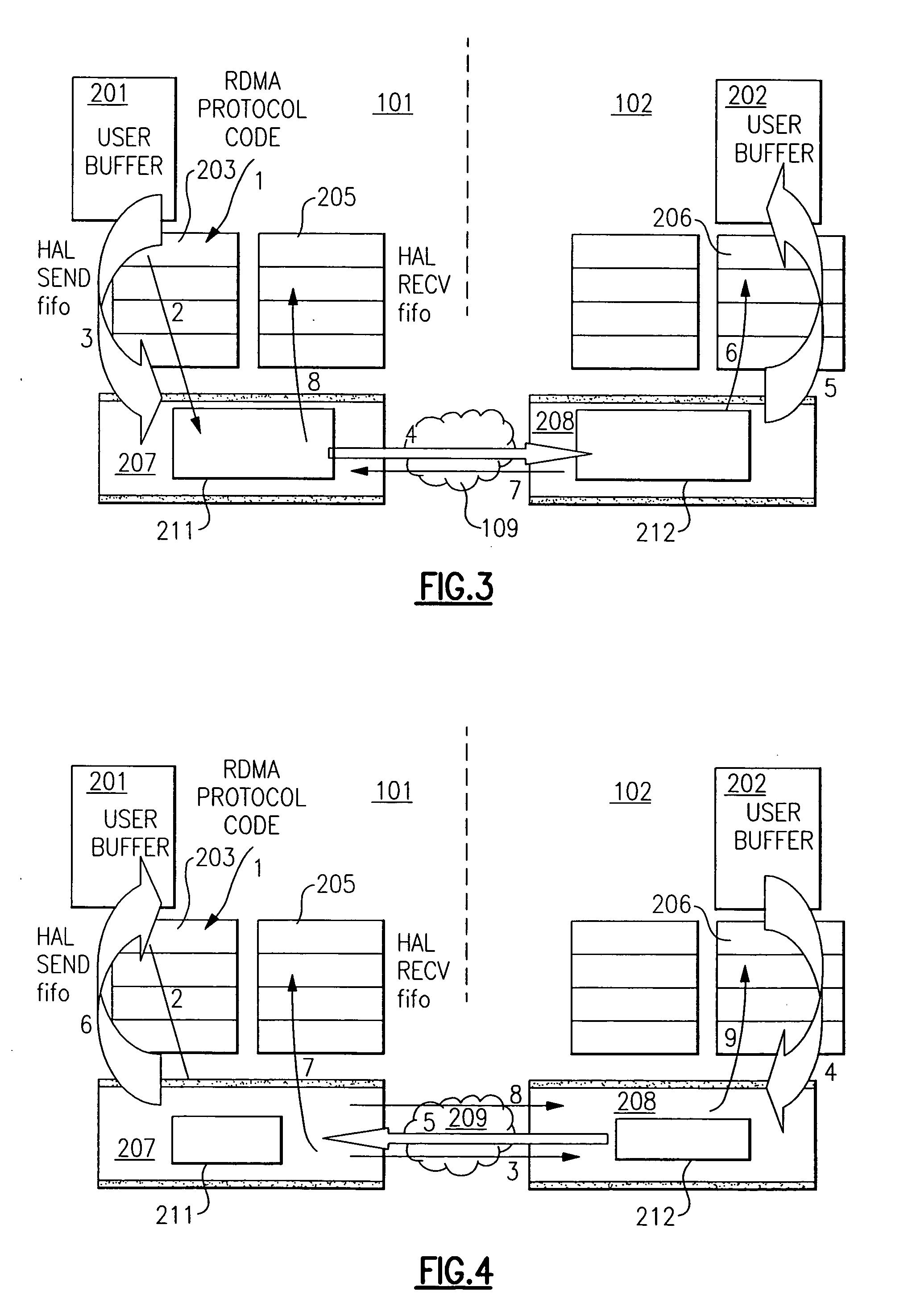

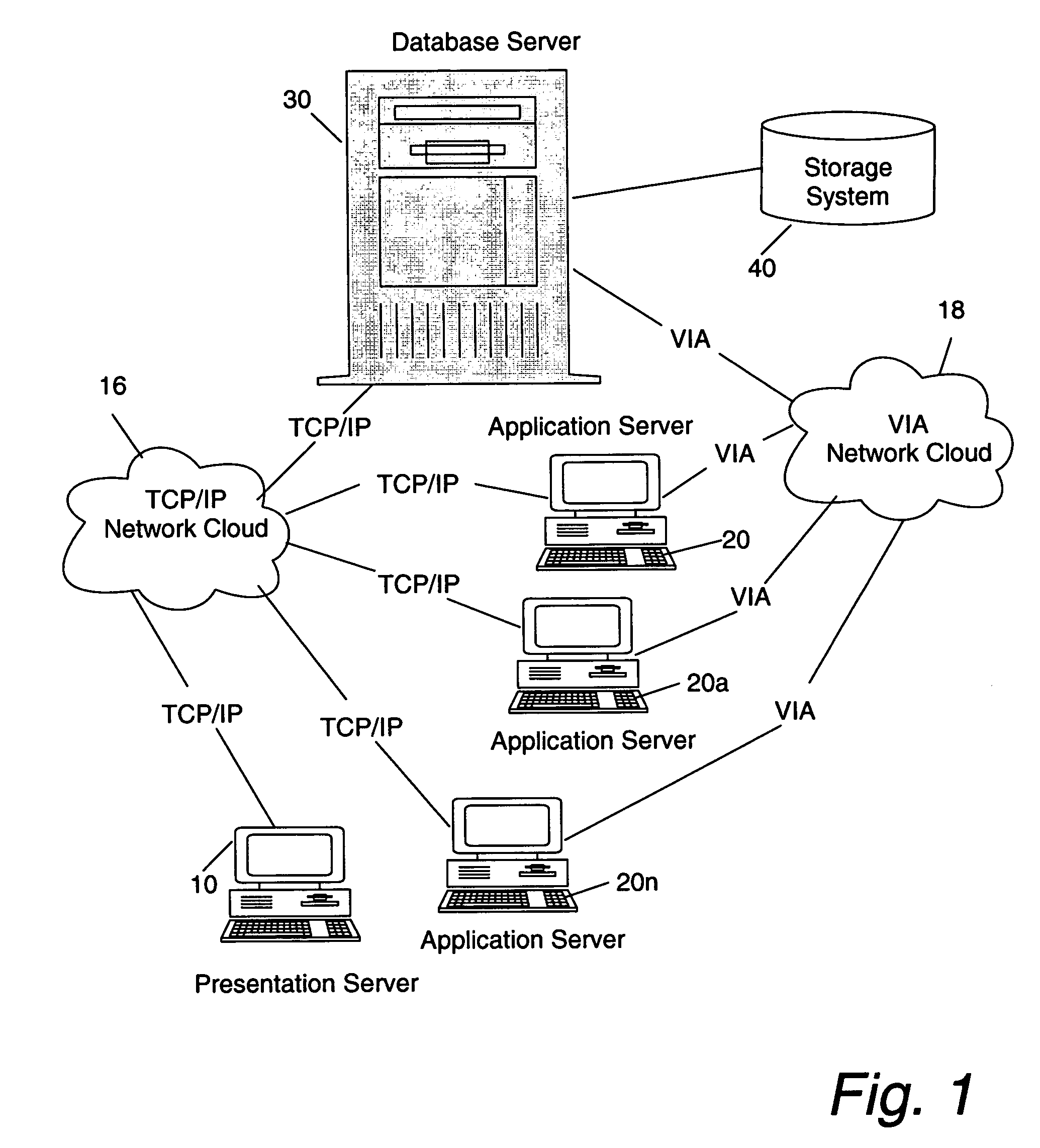

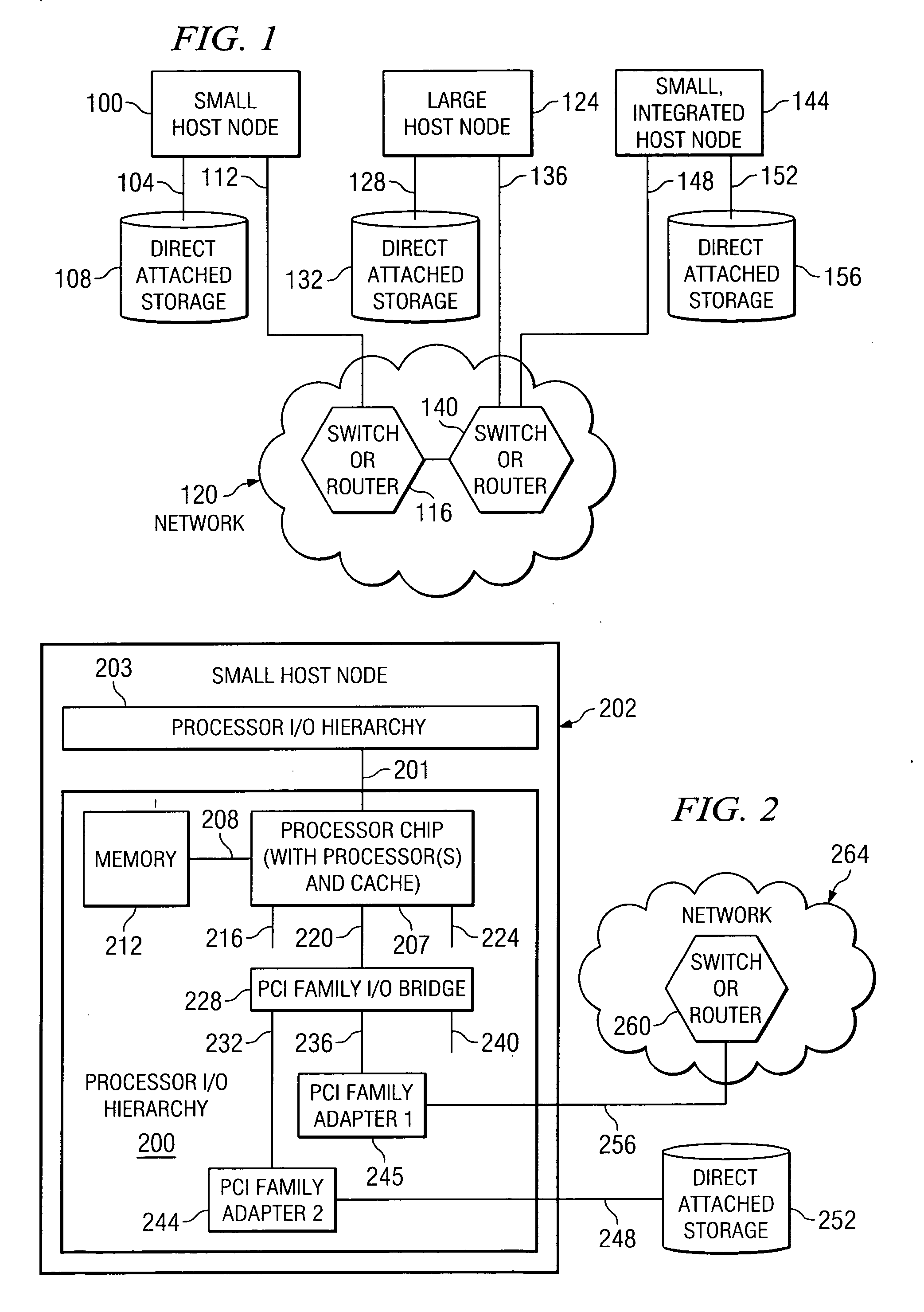

Remote direct memory access system and method

Inactive | US20060075057A1 | Digital computer details | Transmission | Multi processor | Remote direct memory access

A remote direct memory access (RDMA) system is provided in which data is transferred over a network by DMA between a memory of a first node of a multi-processor system having a plurality of nodes connected by a network and a memory of a second node of the multi-processor system. The system includes a first network adapter at the first node, operable to transmit data stored in the memory of the first node to a second node in a plurality of portions in fulfillment of a DMA request. The first network adapter is operable to transmit each portion together with identifying information and information identifying a location for storing the transmitted portion in the memory of the second node, such that each portion is capable of being received independently by the second node according to the identifying information. Each portion is further capable of being stored in the memory of the second node at the location identified by the location identifying information.
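The idea that each portion carries its own identifier and destination location, so that portions can arrive and be stored in any order, can be sketched as follows. This is an illustration only: the `Portion` type, its field names, and the use of a `bytearray` to model the second node's memory are assumptions, not details from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Portion:
    request_id: int  # identifying information for the DMA request (assumed form)
    offset: int      # location in the destination memory for this portion
    payload: bytes


def split_into_portions(request_id, data, dest_offset, portion_size):
    """Split `data` into self-describing portions for one DMA request."""
    return [
        Portion(request_id, dest_offset + i, data[i:i + portion_size])
        for i in range(0, len(data), portion_size)
    ]


def store_portion(memory, portion):
    """Store one portion at the location it names, independent of the others."""
    end = portion.offset + len(portion.payload)
    memory[portion.offset:end] = portion.payload
```

Because every portion names its own destination, the receiver needs no reordering buffer: storing the portions in reverse order yields the same memory contents as storing them in order.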

Owner:IBM CORP

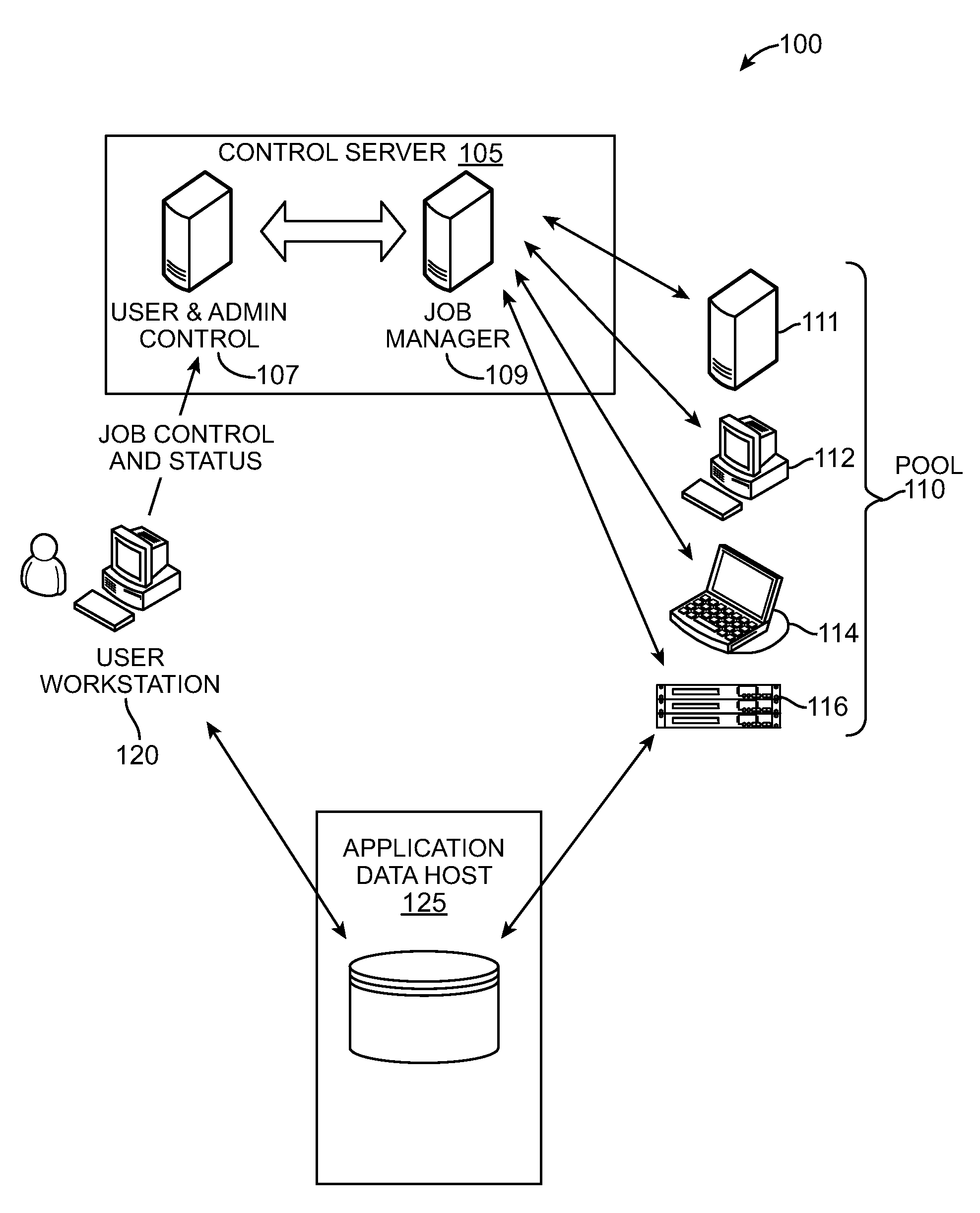

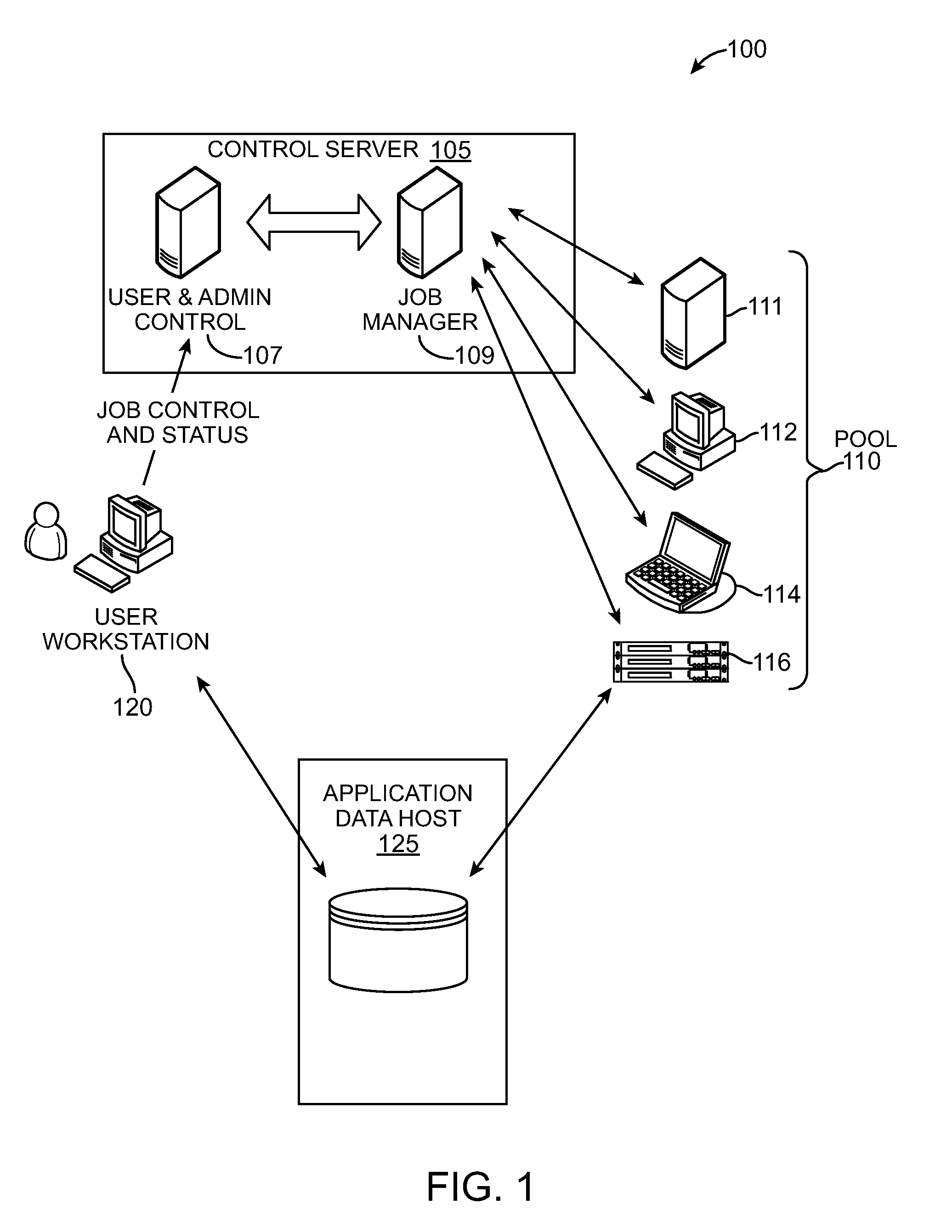

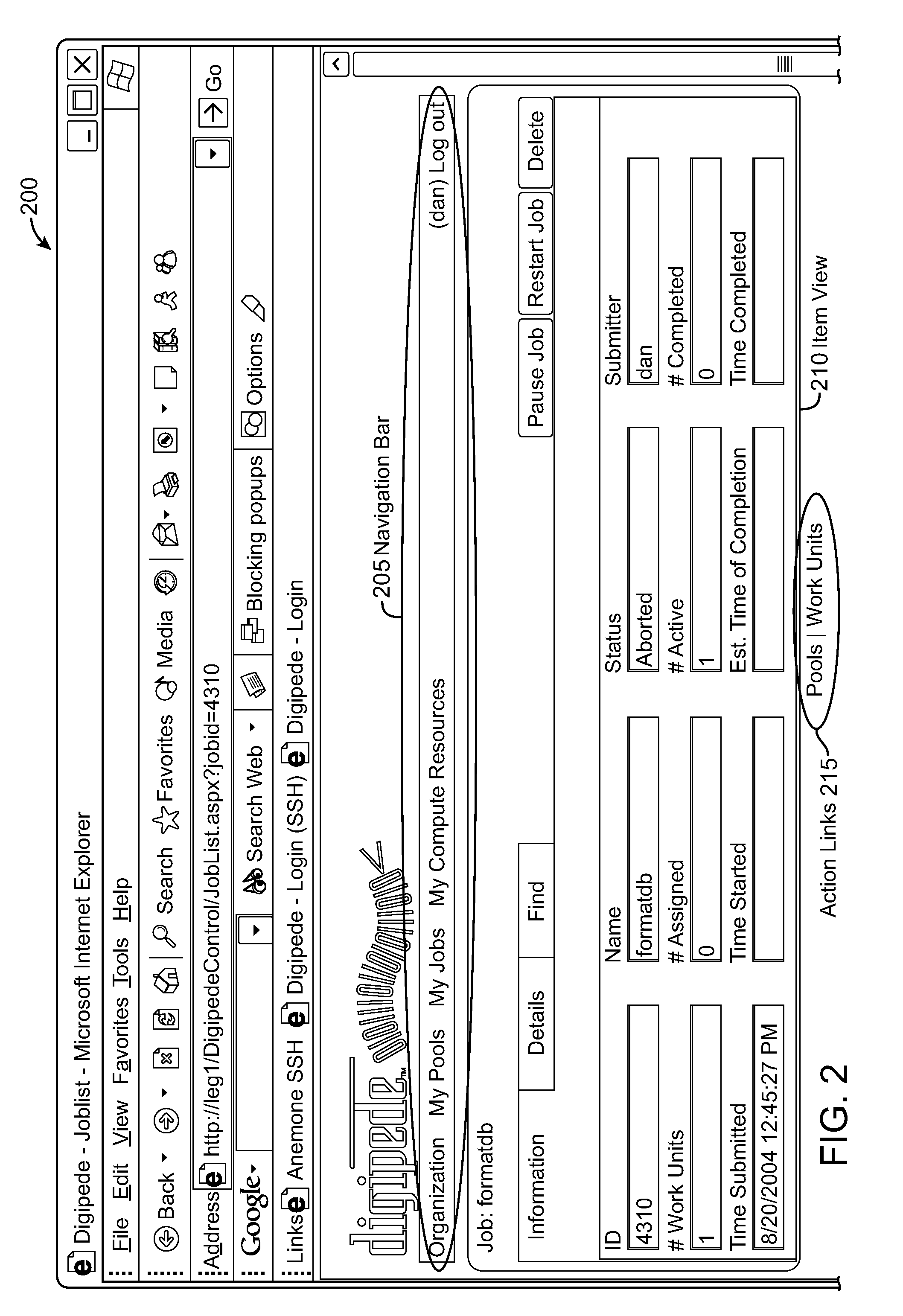

Multicore Distributed Processing System

Active | US20090049443A1 | Improve application performance | Faster and relatively lightweight | General purpose stored program computer | Multiprogramming arrangements | Multi processor | Work unit

A distributed processing system delegates the allocation and control of computing work units to agent applications running on computing resources including multi-processor and multi-core systems. The distributed processing system includes at least one agent associated with at least one computing resource. The distributed processing system creates work units corresponding with execution phases of applications. Work units can be associated with concurrency data that specifies how applications are executed on multiple processors and/or processor cores. The agent collects information about its associated computing resources and requests work units from the server using this information and the concurrency data. An agent can monitor the performance of executing work units to better select subsequent work units. The distributed processing system may also be implemented within a single computing resource to improve processor core utilization of applications. Additional computing resources can augment the single computing resource and execute pending work units at any time.

Owner:DIGIPEDE TECH LLC

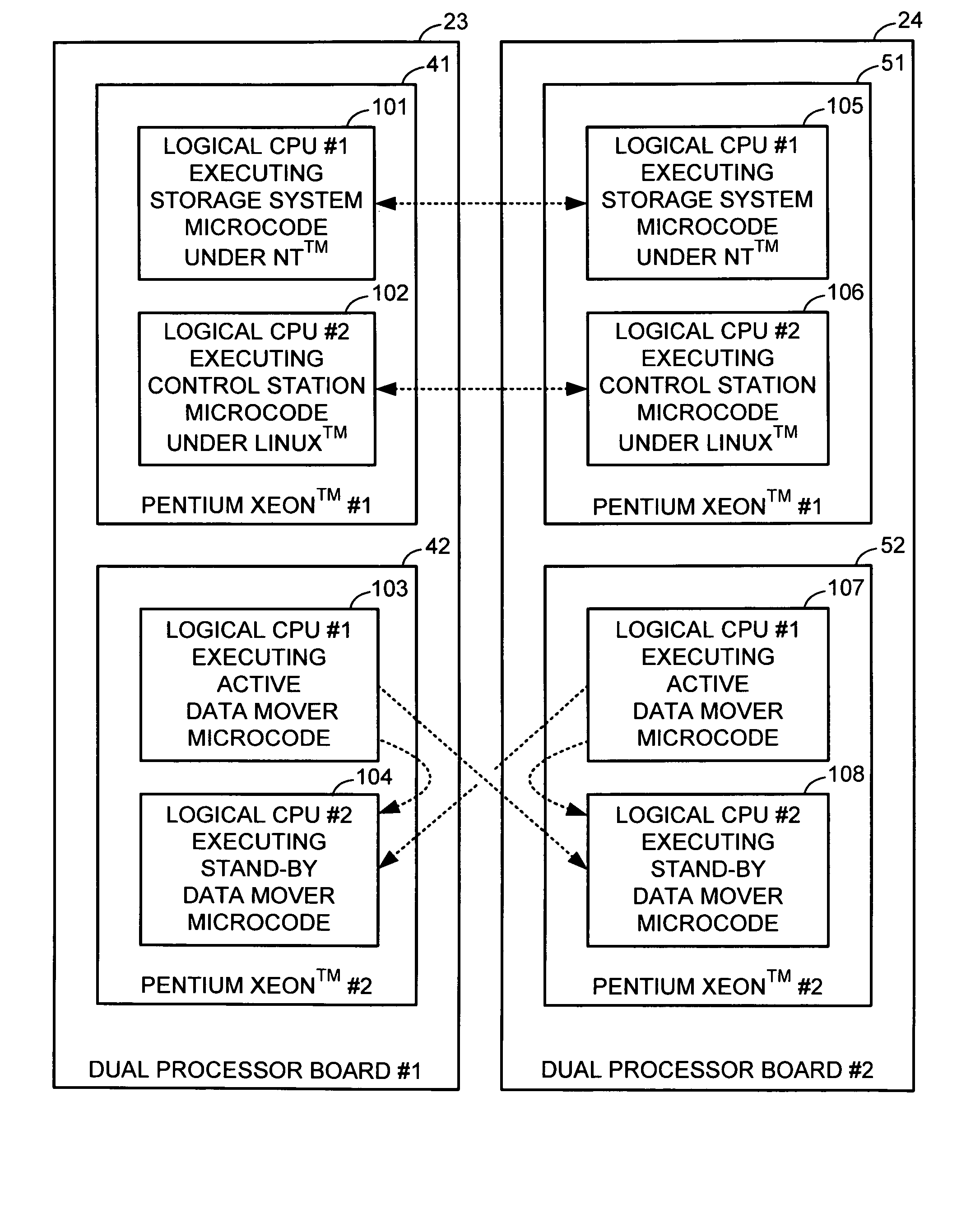

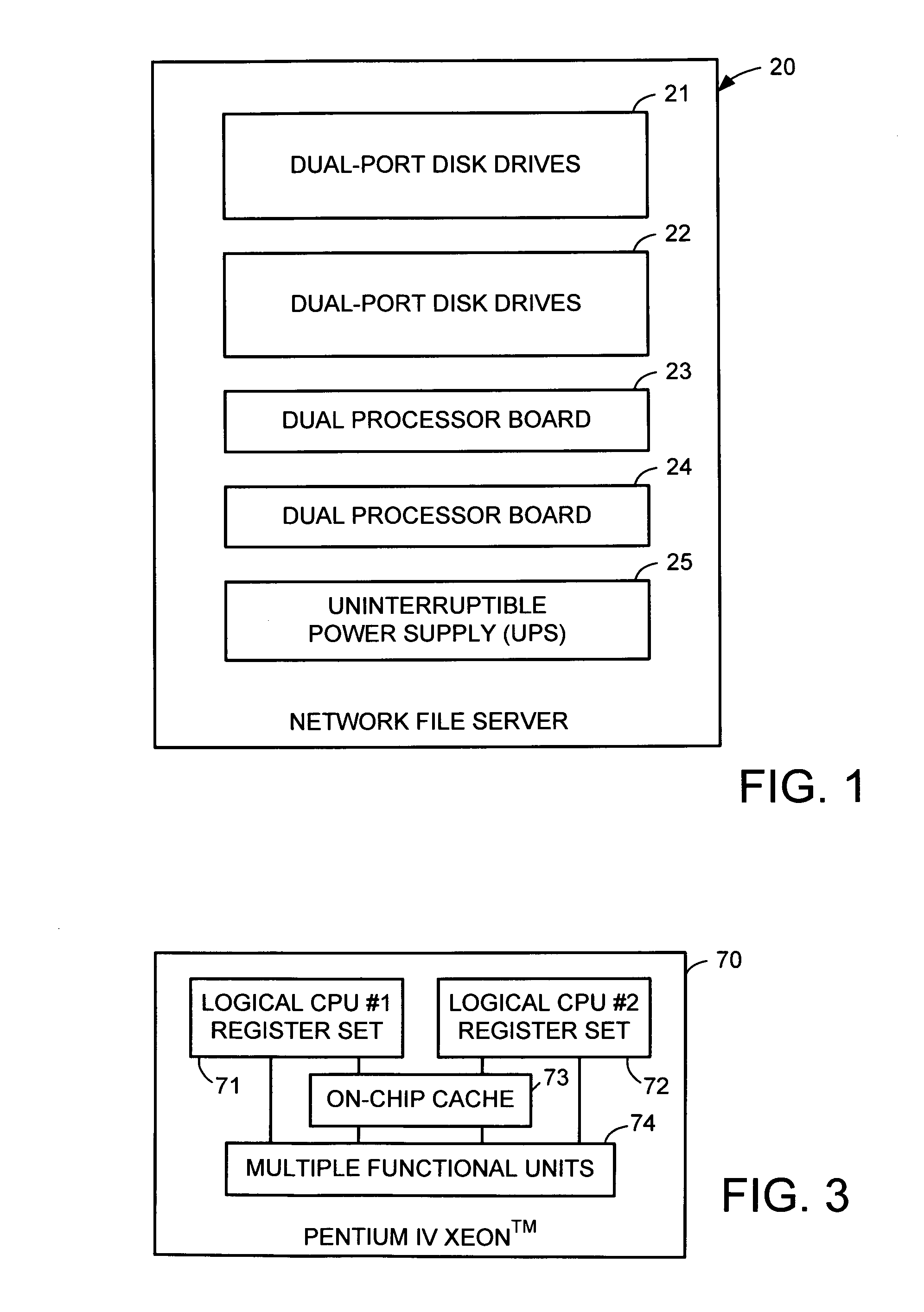

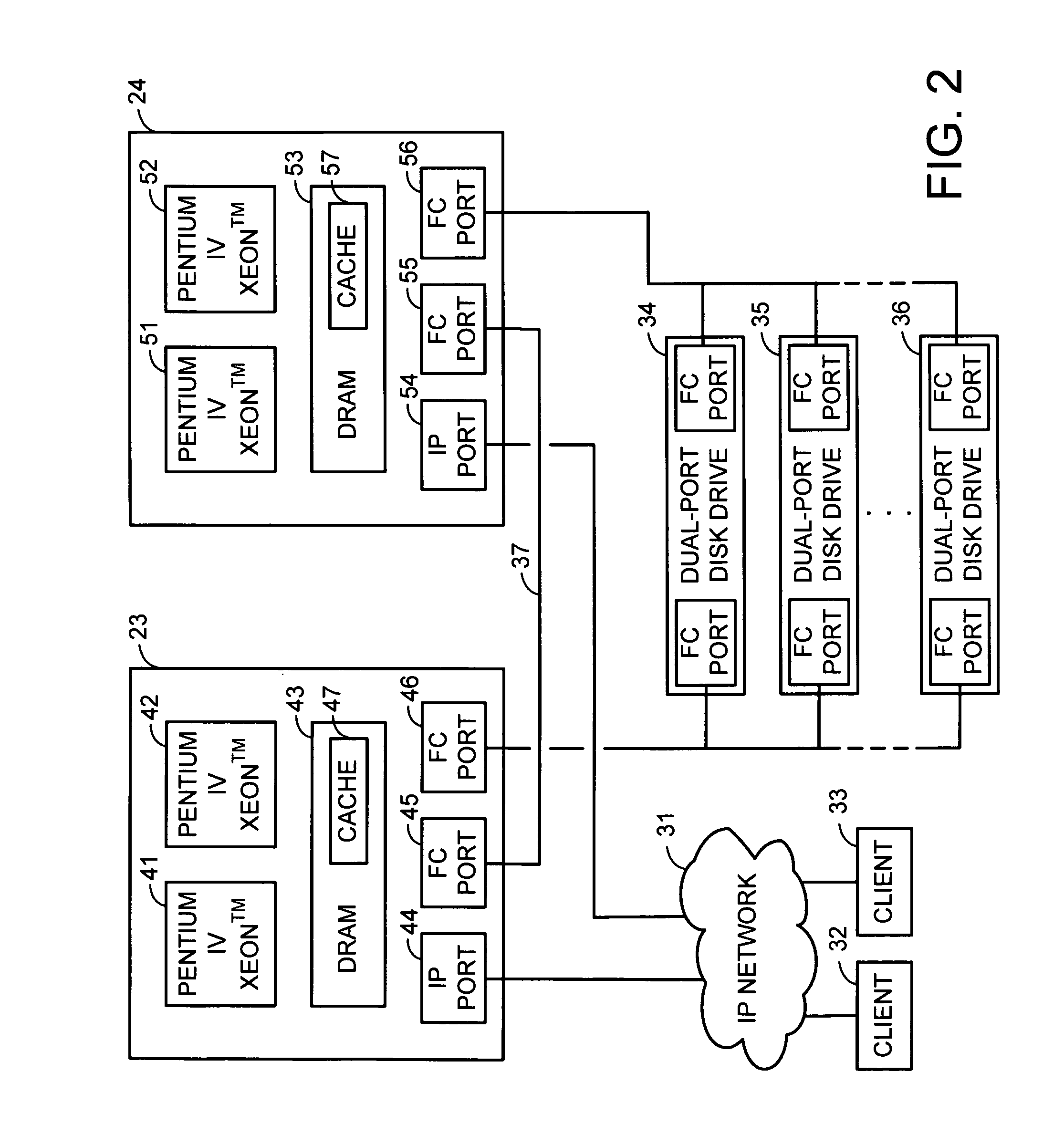

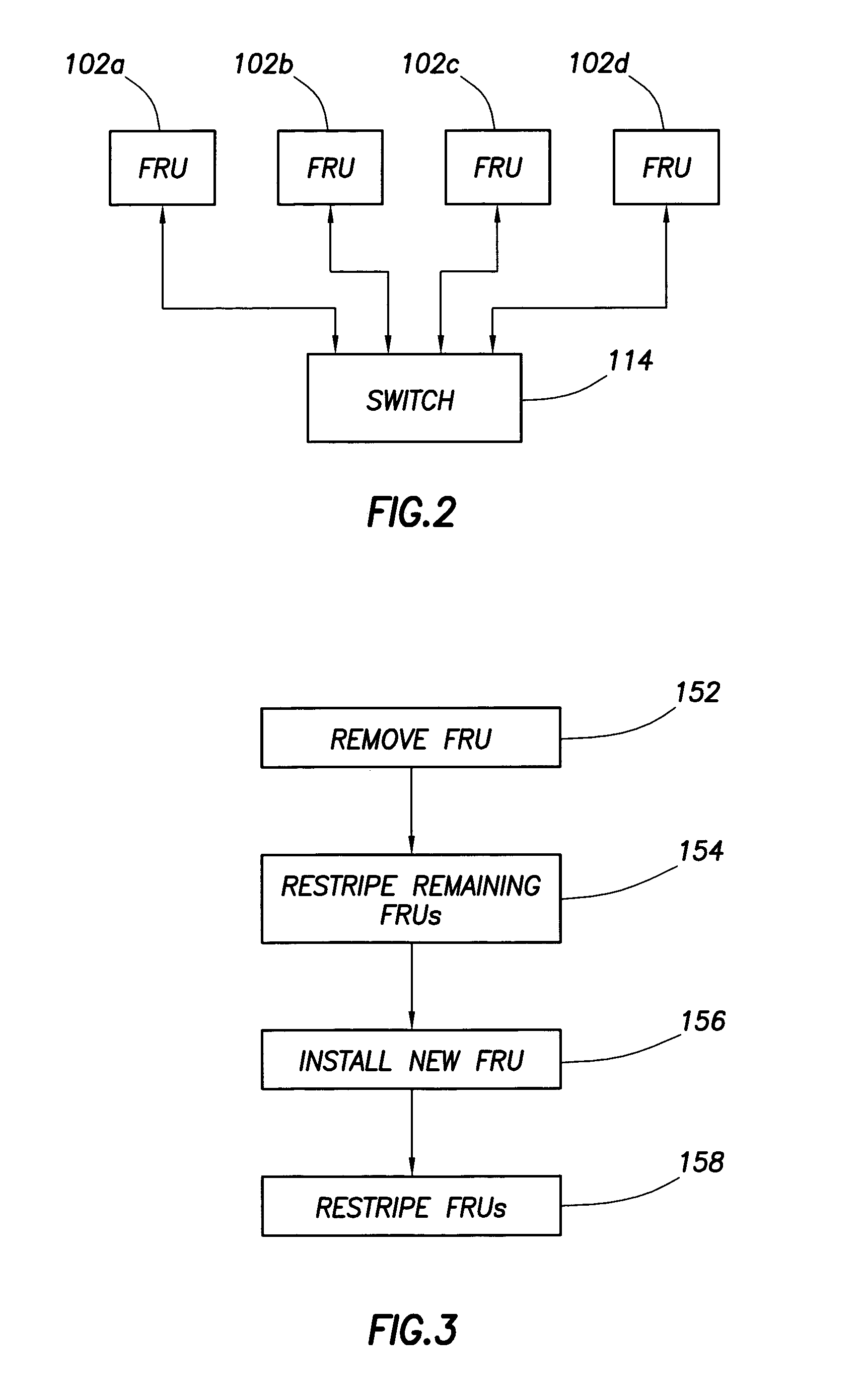

Redundant multi-processor and logical processor configuration for a file server

A redundant file server includes at least two dual processor boards. Each physical processor has two logical processors. The first logical processor of the first physical processor of each board executes storage system code under the Microsoft NT™ operating system. The second logical processor of the first physical processor of each board executes control station code under the Linux operating system. The first logical processor of the second physical processor of each board executes data mover code. The second logical processor of the second physical processor of each board is kept in a stand-by mode for assuming data mover functions upon failure of the first logical processor of the second physical processor on the first or second board.

Owner:EMC IP HLDG CO LLC

Adaptive load balancing in a multi-processor graphics processing system

Active | US7075541B2 | Increase in size | Small size | Cathode-ray tube indicators | Multiple digital computer combinations | Graphics | Multi processor

Systems and methods for balancing a load among multiple graphics processors that render different portions of a frame. A display area is partitioned into portions for each of two (or more) graphics processors. The graphics processors render their respective portions of a frame and return feedback data indicating completion of the rendering. Based on the feedback data, an imbalance can be detected between respective loads of two of the graphics processors. In the event that an imbalance exists, the display area is re-partitioned to increase a size of the portion assigned to the less heavily loaded processor and to decrease a size of the portion assigned to the more heavily loaded processor.

Owner:NVIDIA CORP

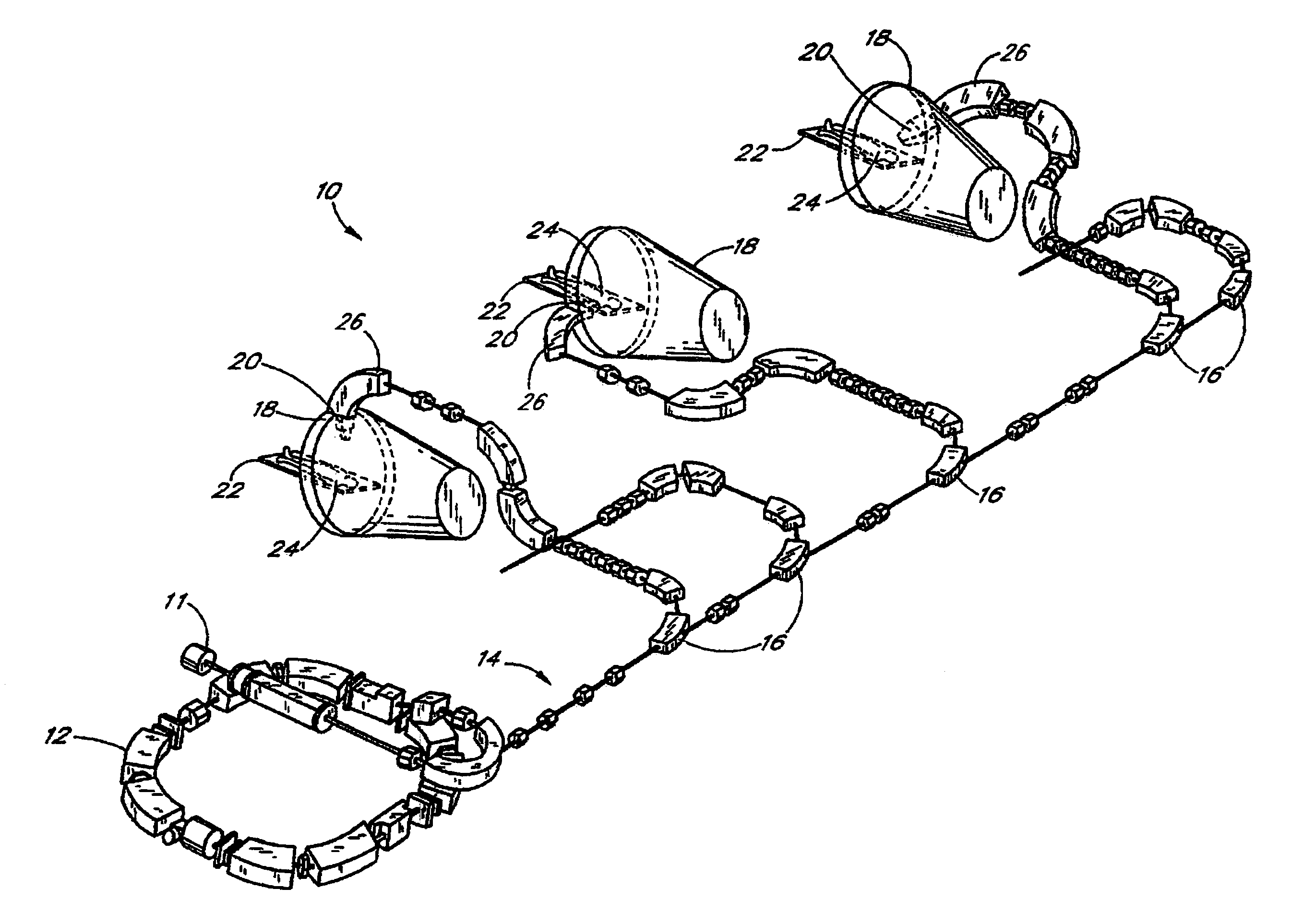

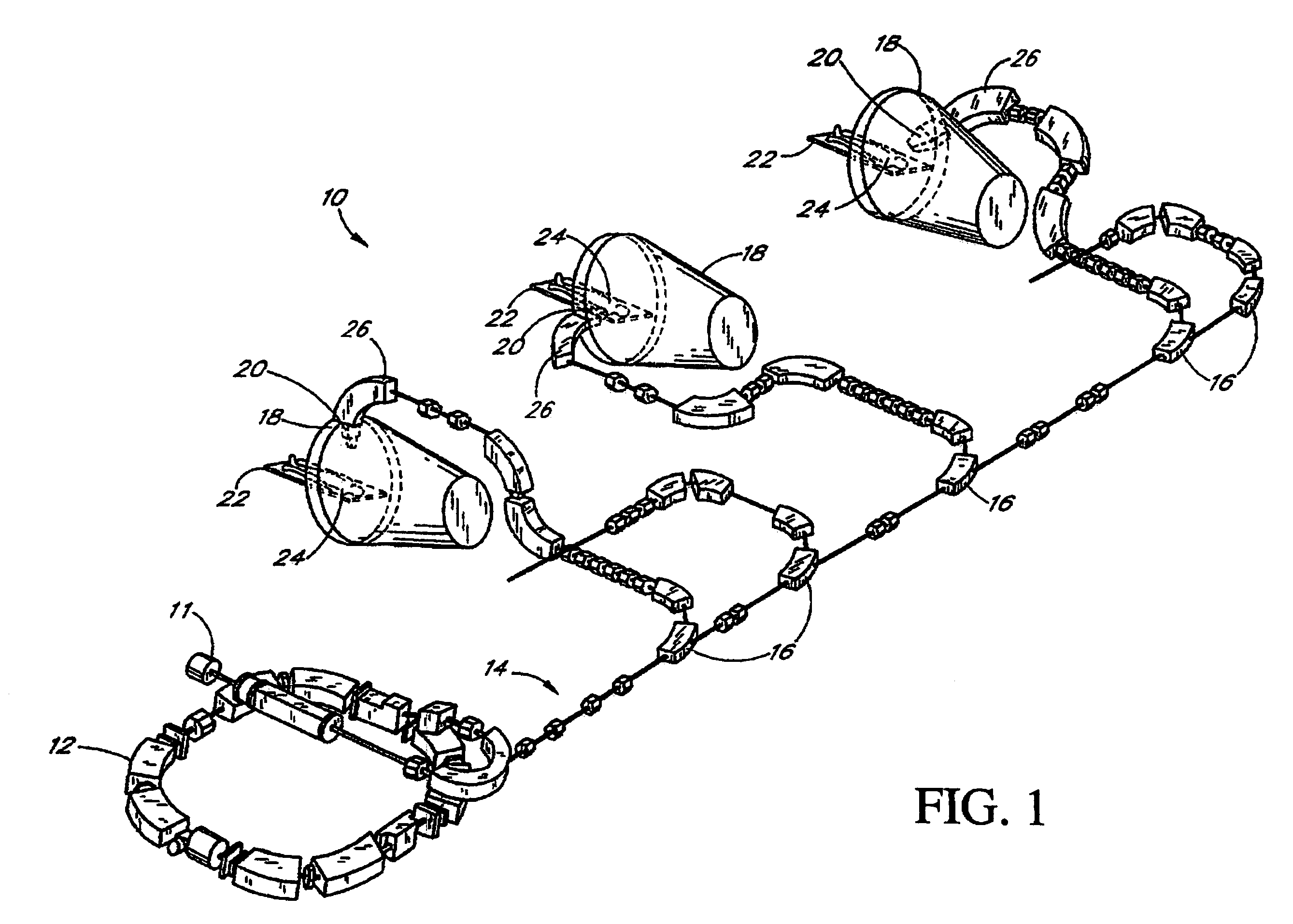

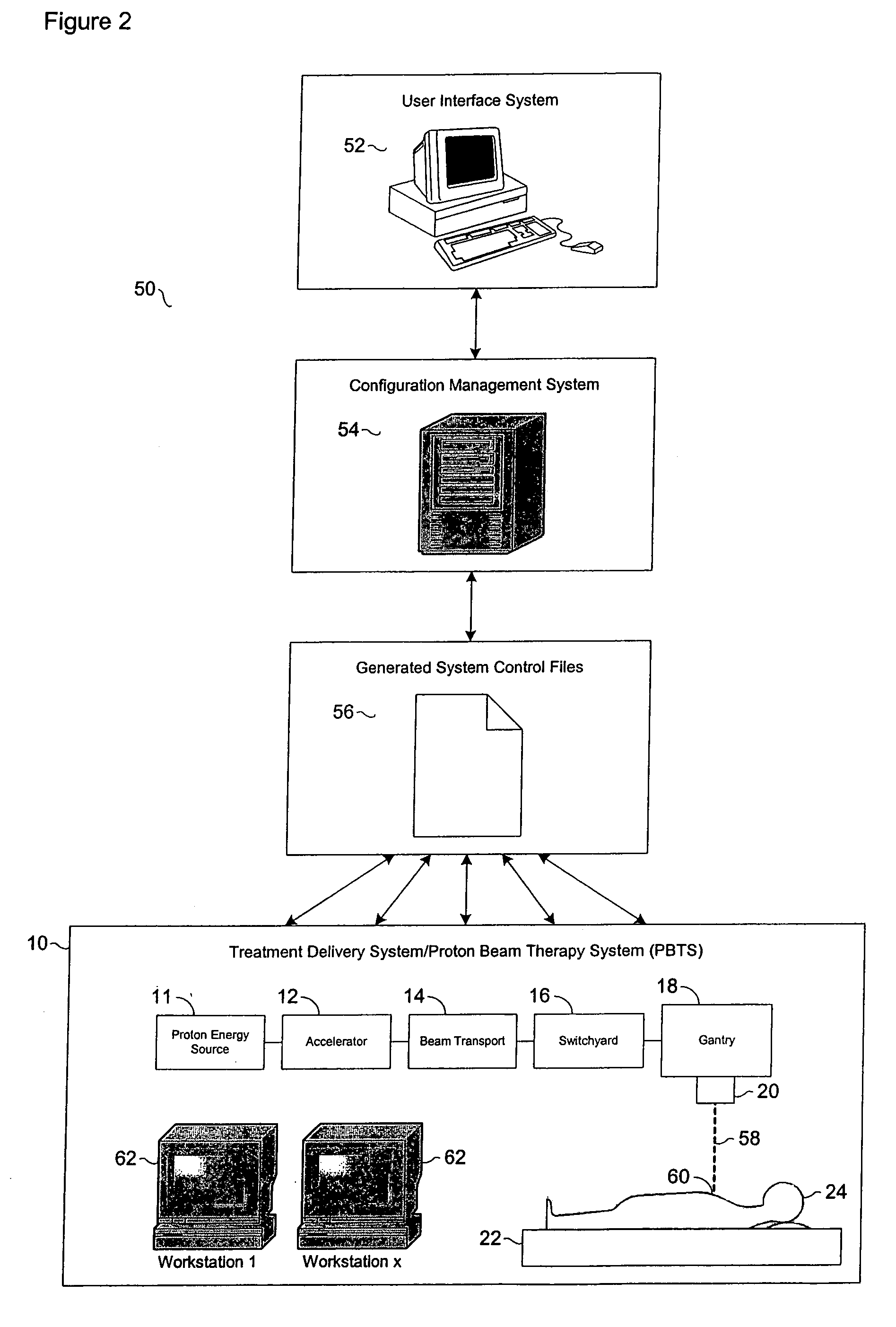

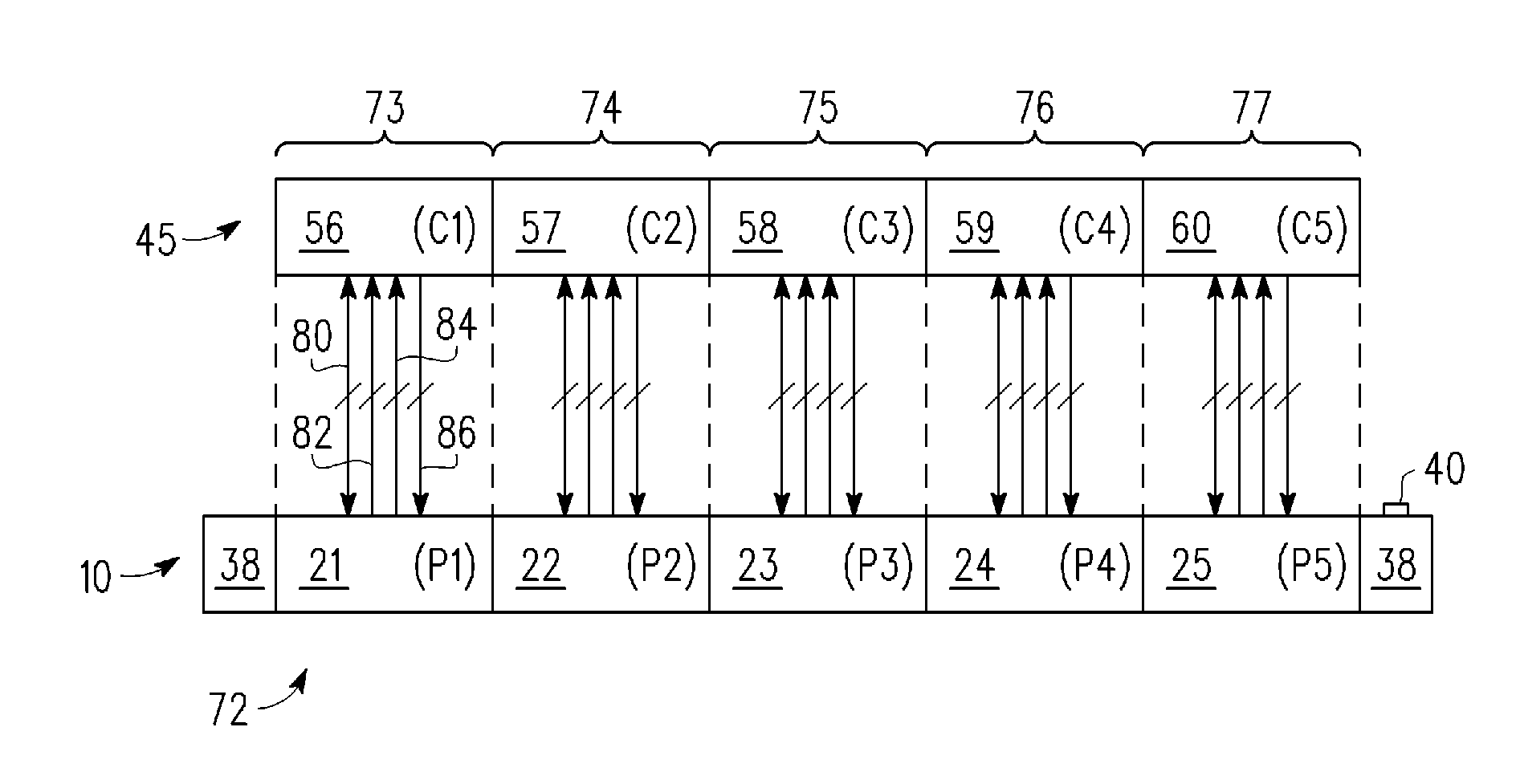

Configuration management and retrieval system for proton beam therapy system

Inactive | US7084410B2 | Reduce generation | Easy access | Nuclear monitoring | Digital computer details | Operation mode | Single point of failure

In a complex, multi-processor software controlled system, such as a proton beam therapy system (PBTS), it may be important to provide treatment configurable parameters that are easily modified by an authorized user to prepare the software controlled systems for various modes of operation. This particular invention relates to a configuration management system for the PBTS that utilizes a database to maintain data and configuration parameters and also to generate and distribute system control files that can be used by the PBTS for treatment delivery. The use of system control files reduces the adverse effects of single point failures in the database by allowing the PBTS to function independently from the database. The PBTS accesses the data, parameters, and control settings from the database through the system control files, which ensures that the data and configuration parameters are accessible when and if single point failures occur with respect to the database.

Owner:LOMA LINDA UNIV MEDICAL CENT

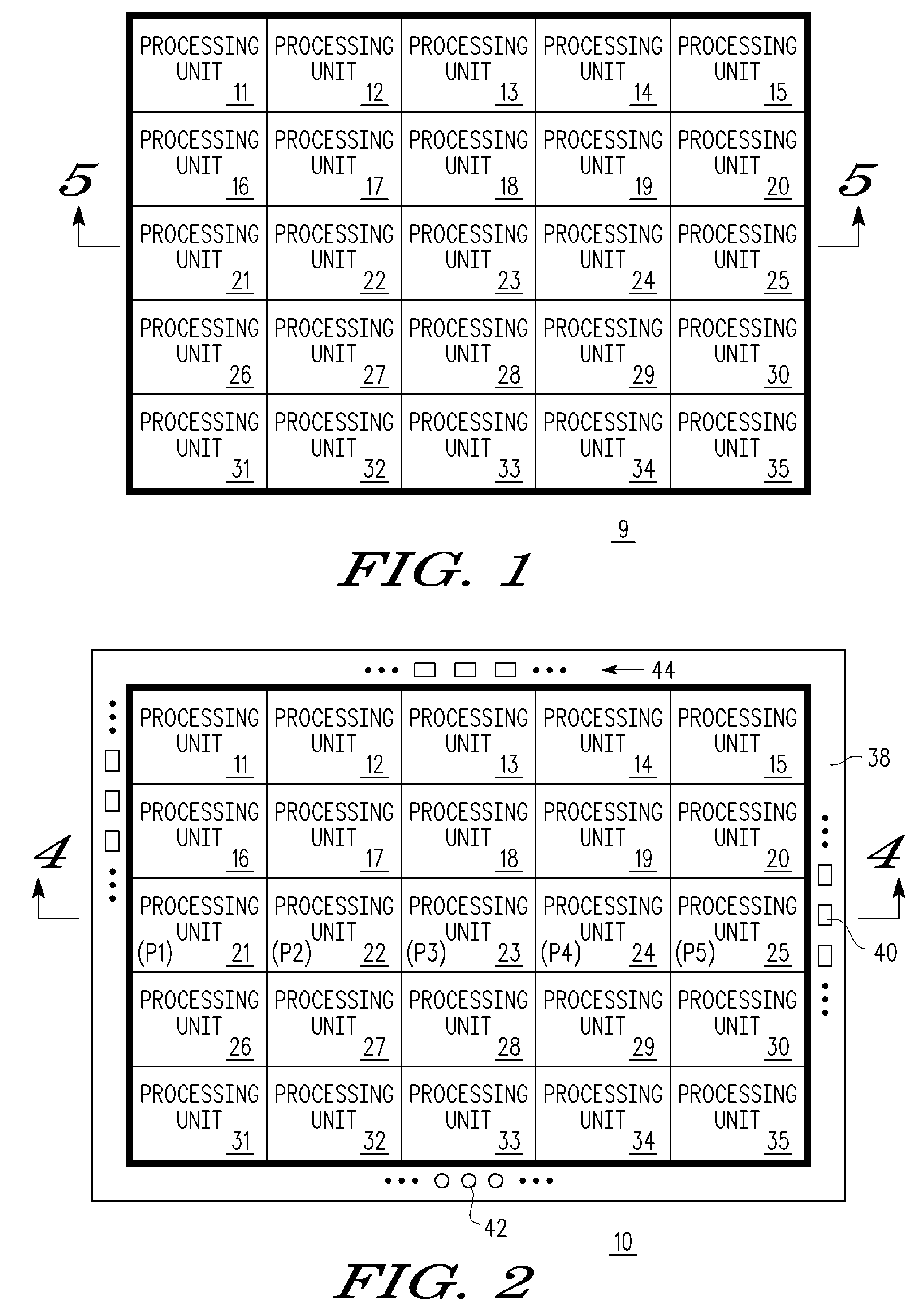

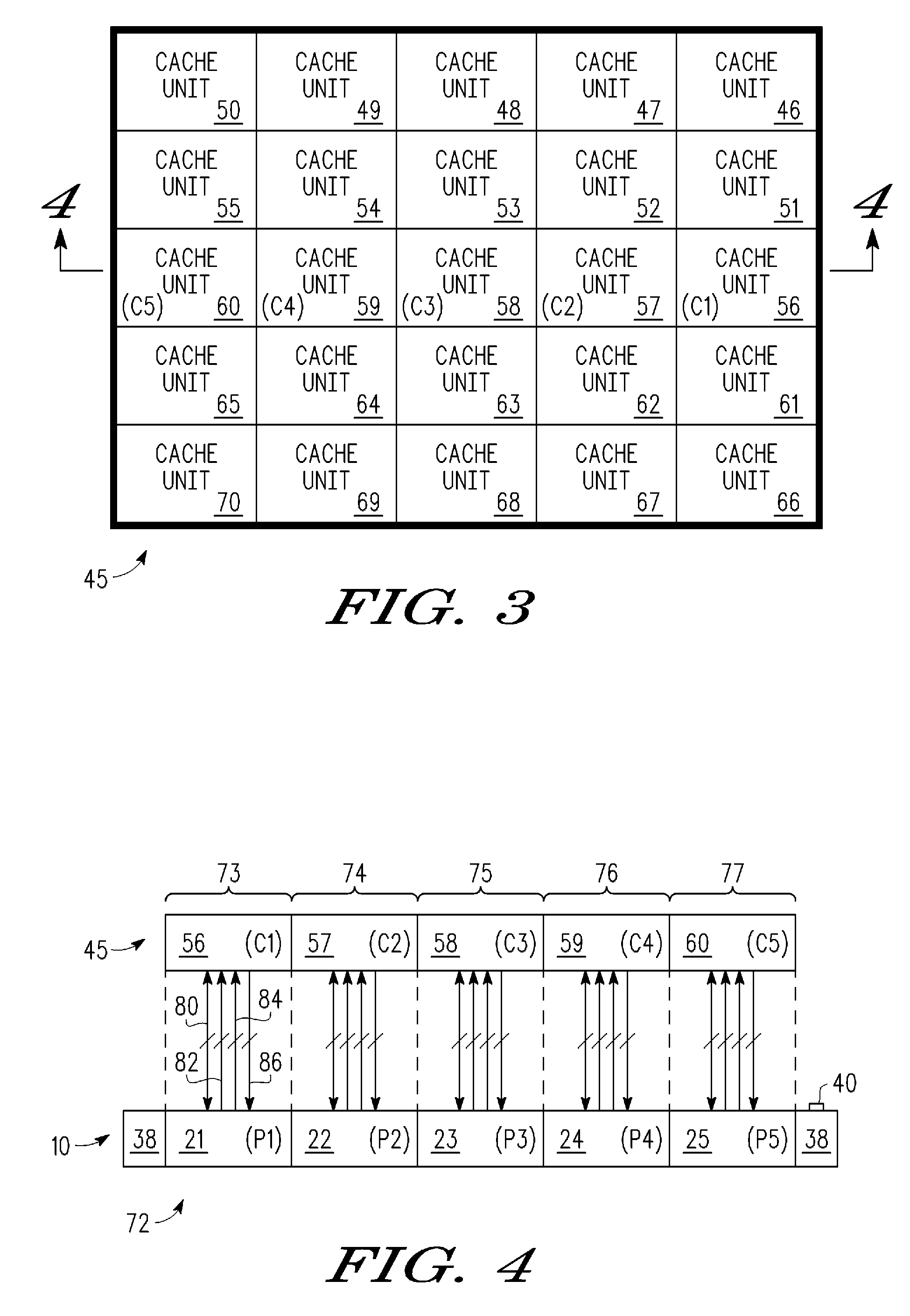

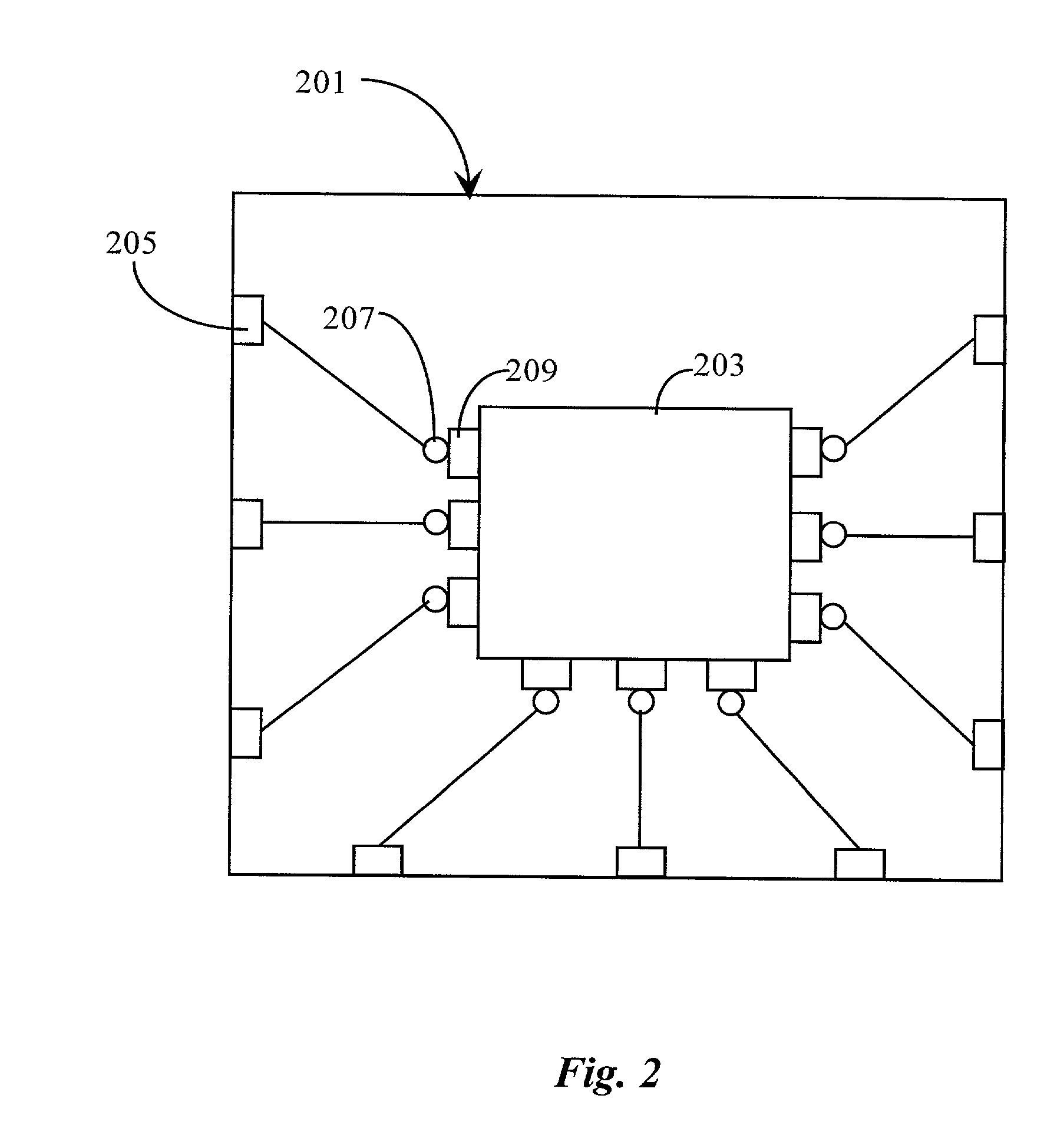

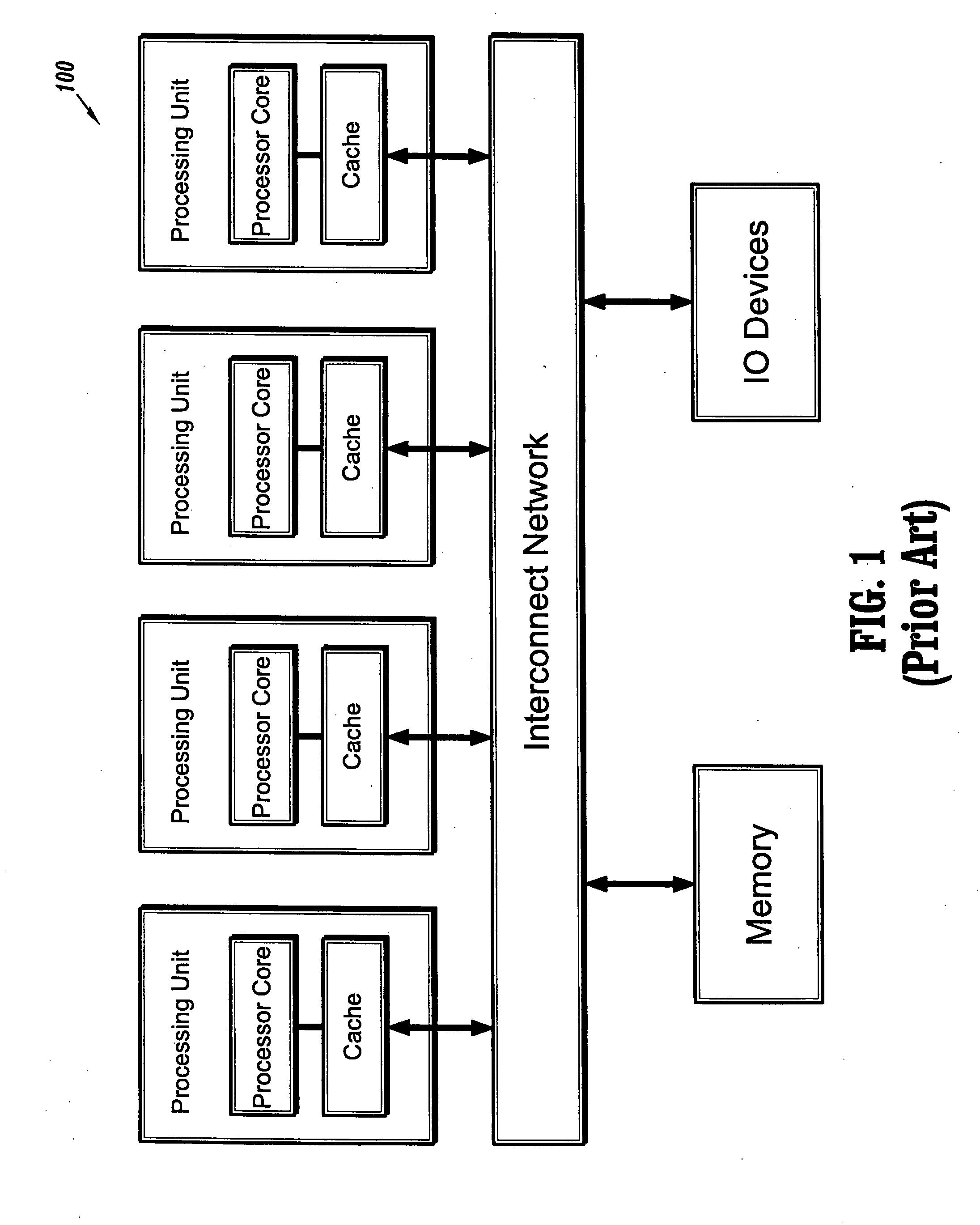

High bandwidth cache-to-processing unit communication in a multiple processor/cache system

Inactive | US7777330B2 | Semiconductor/solid-state device details | Solid-state devices | Contact pad | High bandwidth

A processor/cache assembly has a processor die coupled to a cache die. The processor die has a plurality of processor units arranged in an array. There is a plurality of processor sets of contact pads on the processor units, one processor set for each processor unit. Similarly, the cache die has a plurality of cache units arranged in an array. There is a plurality of cache sets of contact pads on the cache die, one cache set for each cache unit. Each cache set is in contact with one corresponding processor set.

Owner:NORTH STAR INNOVATIONS

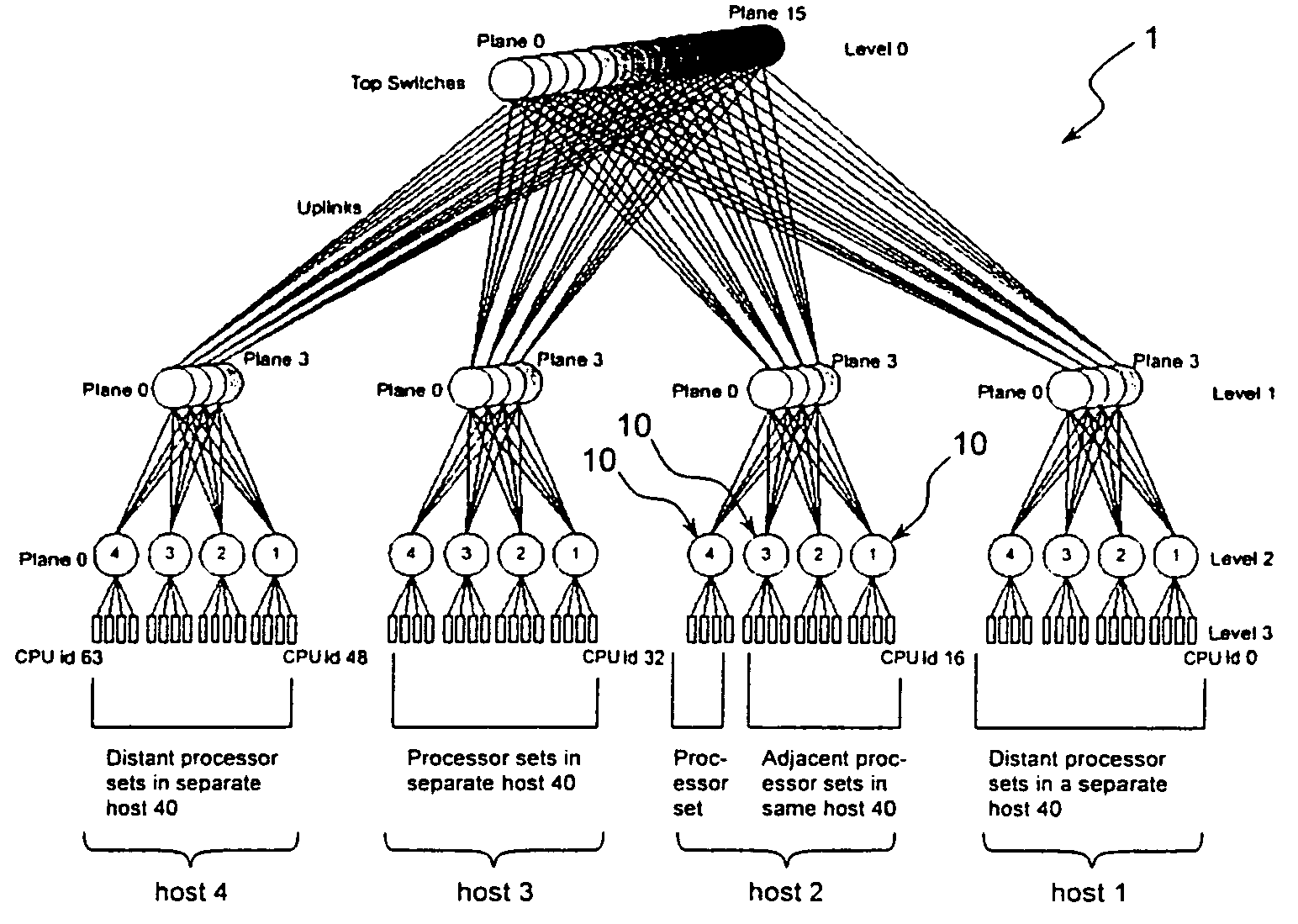

Topology aware scheduling for a multiprocessor system

Inactive | US20050071843A1 | Maximize efficiency | Load balancing | Resource allocation | Memory systems | Operation scheduling | Multi processor

A system and method for scheduling jobs in a multiprocessor machine is disclosed. The status of CPUs on node boards in the multiprocessor machine is periodically determined. The status can indicate the number of CPUs available, and the maximum radius of free CPUs available to execute jobs. Memory allocation is also monitored. This information is provided to a scheduler that compares the status of the resources available against the resource requirements of jobs. The node boards and CPUs, as well as other resources such as memory, are arranged in hosts. The scheduler then schedules jobs to hosts that indicate they have resources available to execute the jobs. If none of the hosts indicate they have resources available to execute the jobs, the scheduler will wait until the resources become available. A best fit of job to resources is attained by scheduling jobs to hosts that have the maximum number of free CPUs for a radius corresponding to the CPU radius requirement of a job. Once the job is scheduled to a host, it is dispatched to the host and resources required to execute the job are allocated to the job at the host.
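The best-fit rule in this abstract (prefer the host with the most free CPUs at the radius the job requires, and wait if no host qualifies) can be sketched as below. The data layout is an assumption: each host is modeled as a mapping from radius to the number of free CPUs available within that radius, and `pick_host` is a hypothetical name.

```python
def pick_host(hosts, cpus_needed, radius):
    """Return the name of the best-fit host, or None if the job must wait.

    hosts -- dict: host name -> {radius: free CPUs within that radius}
             (an assumed representation of the periodically reported status)
    """
    best_name, best_free = None, -1
    for name, free_by_radius in hosts.items():
        free = free_by_radius.get(radius, 0)
        # A host qualifies only if it can satisfy the job at this radius;
        # among qualifying hosts, keep the one with the most free CPUs.
        if free >= cpus_needed and free > best_free:
            best_name, best_free = name, free
    return best_name
```

A return value of `None` corresponds to the case where the scheduler waits until resources become available.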

Owner:PLATFORM COMPUTING CORP

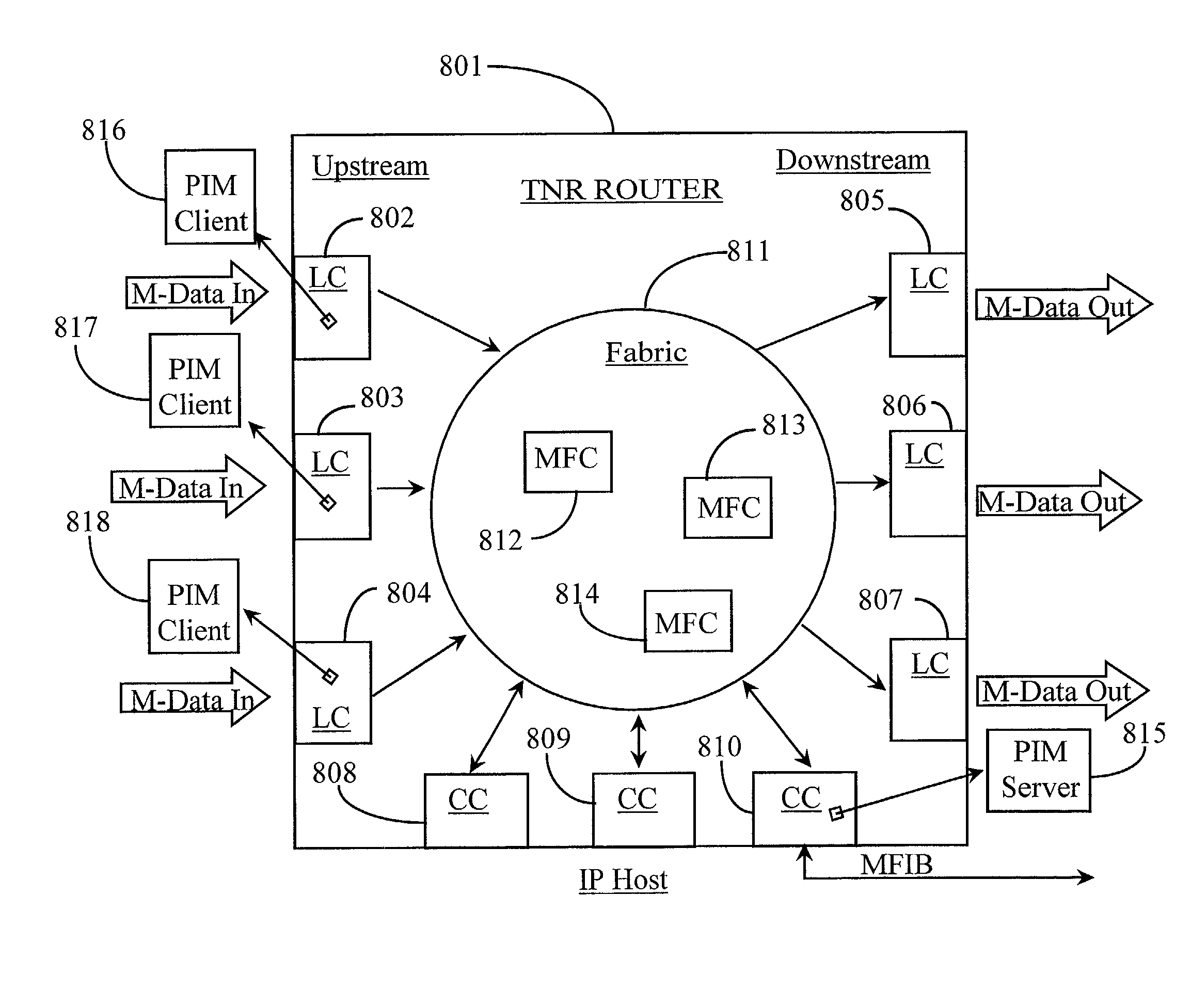

Method and apparatus for distributing routing instructions over multiple interfaces of a data router

Inactive | US20020126671A1 | Special service provision for substation | Error prevention | Communication interface | Information repository

A software application in a multi-processor data router in which a forwarding information base for the router is maintained is provided with a server module and one or more client modules, each client module associated with one or more communication interfaces of the data router. The application is characterized in that the server module sends to each client module only that portion of the forwarding information base specific to the communication interfaces associated with the client module.
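As a rough illustration of the server module sending each client only its own part of the forwarding information base, the sketch below assumes the FIB is a mapping from destination prefix to outgoing interface and that "specific to an interface" means the route leaves through that interface. Both the representation and the name `fib_portion` are assumptions.

```python
def fib_portion(fib, client_interfaces):
    """Return only the FIB entries relevant to one client module.

    fib               -- dict: prefix -> outgoing interface (assumed layout)
    client_interfaces -- interfaces associated with the client module
    """
    wanted = set(client_interfaces)
    return {prefix: iface for prefix, iface in fib.items() if iface in wanted}
```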

Owner:PLURIS

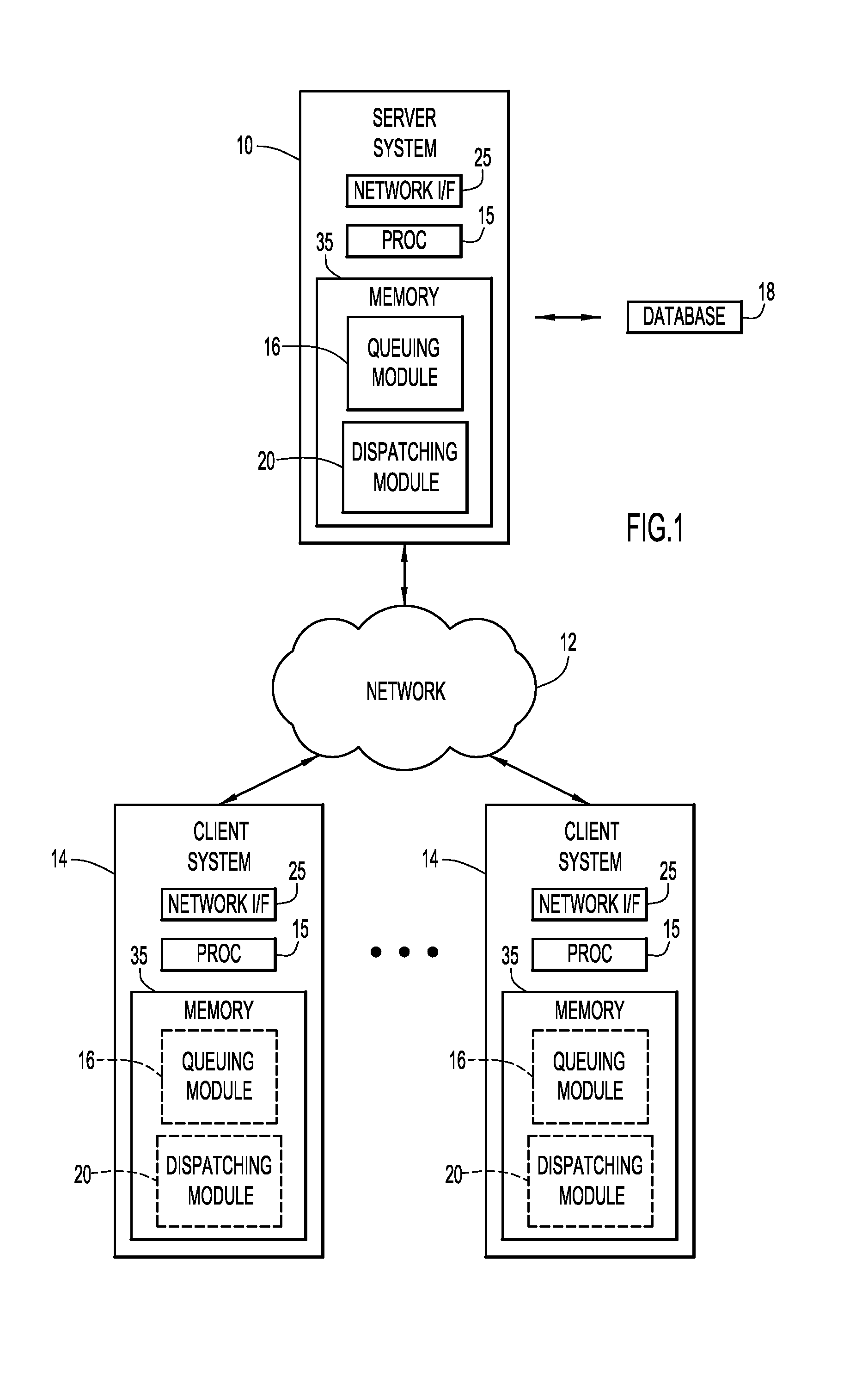

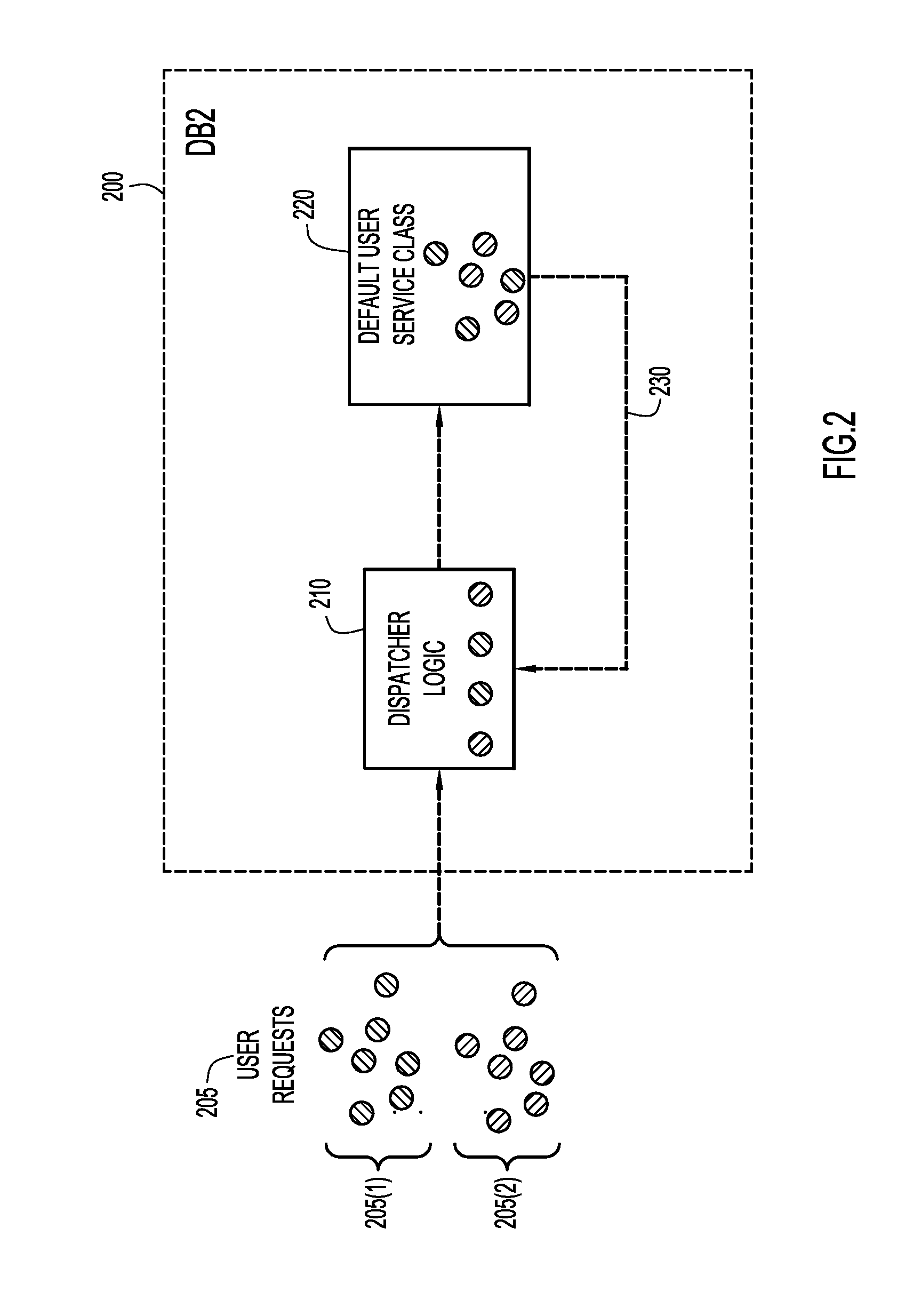

Processor provisioning by a middleware system for a plurality of logical processor partitions

Inactive | US20140181833A1 | Resource allocation | Error detection/correction | Multi processor | Application software

A middleware processor provisioning process provisions a plurality of processors in a multi-processor environment. The processing capability of the multiprocessor environment is subdivided and multiple instances of service applications start protected processes to service a plurality of user processing requests, where the number of protected processes may exceed the number of processors. A single processing queue is created for each processor. User processing requests are portioned and dispatched across the plurality of processing queues and are serviced by protected processes from corresponding service applications, thereby efficiently using available processing resources while servicing the user processing requests in a desired manner.
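The queue-per-processor arrangement can be sketched as follows. The abstract does not say how requests are portioned or how portions are assigned to queues, so the fixed `portion_size` and the round-robin assignment here are assumptions made purely for illustration.

```python
from collections import deque
from itertools import cycle


def dispatch(requests, num_processors, portion_size):
    """Portion user requests and spread them over one queue per processor.

    requests -- iterable of (request_id, list_of_items) pairs
    Returns a list of deques, one per processor.
    """
    queues = [deque() for _ in range(num_processors)]
    targets = cycle(queues)  # round-robin is an assumed dispatch policy
    for request_id, items in requests:
        for start in range(0, len(items), portion_size):
            portion = items[start:start + portion_size]
            next(targets).append((request_id, portion))
    return queues
```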

Owner:IBM CORP

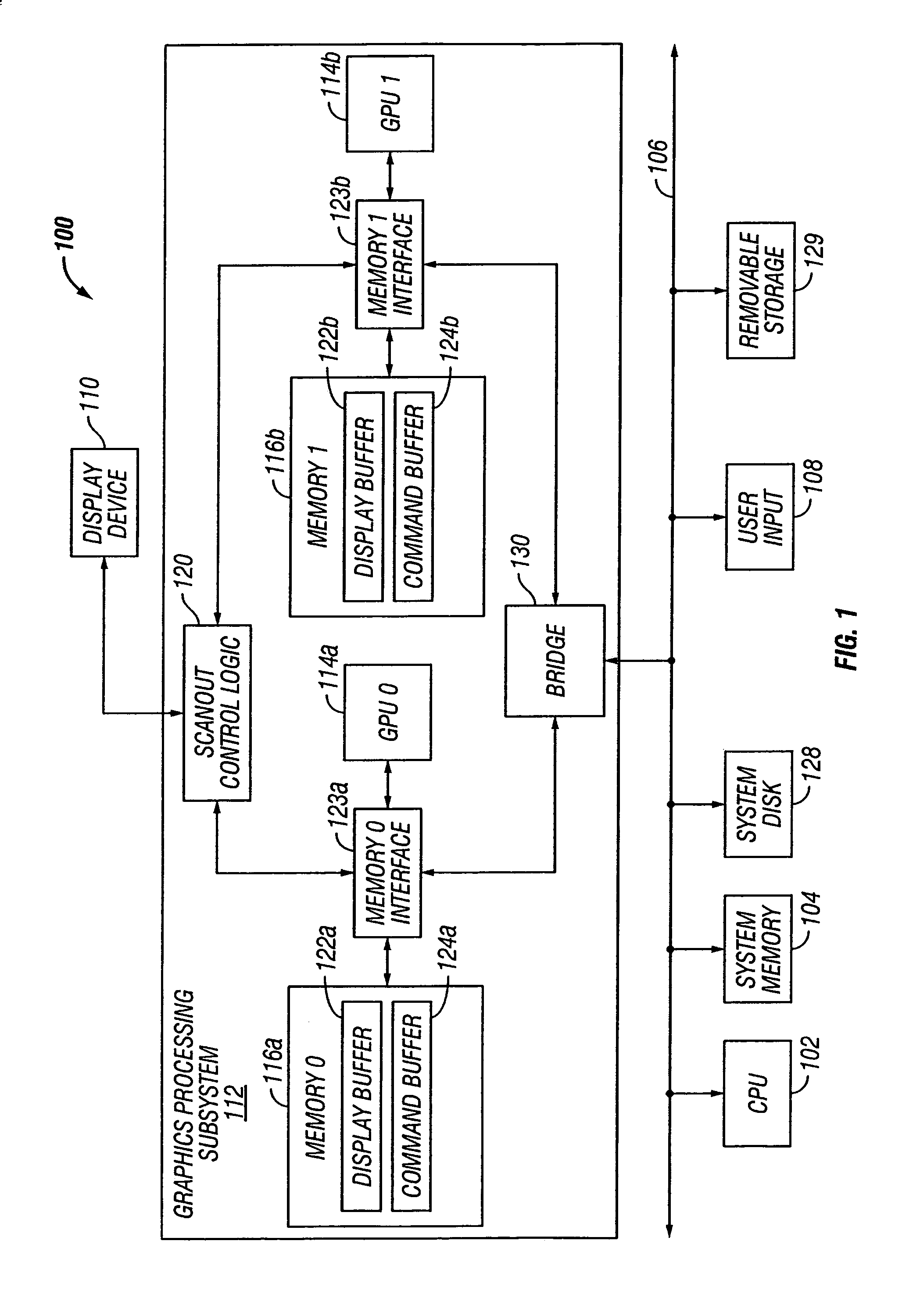

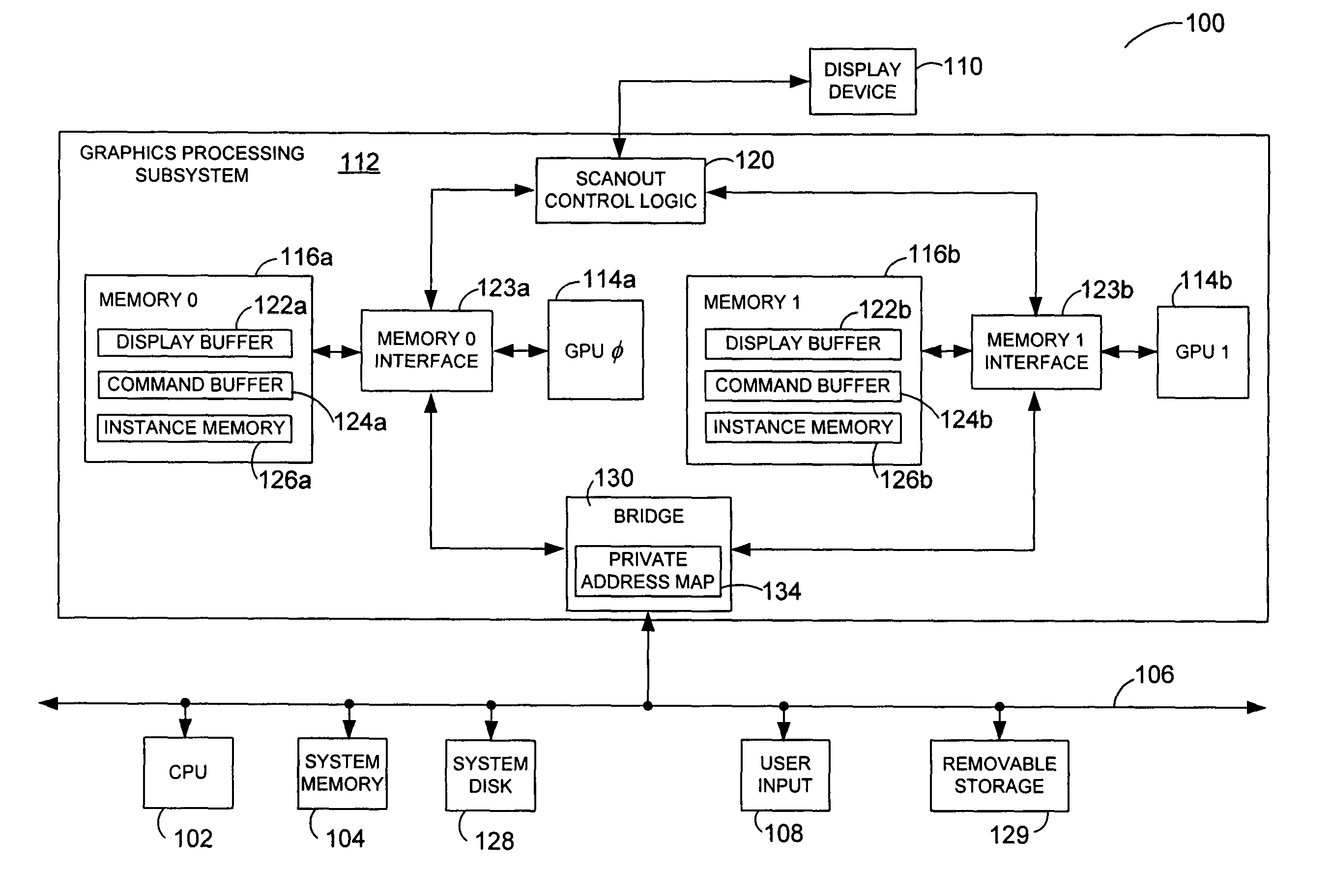

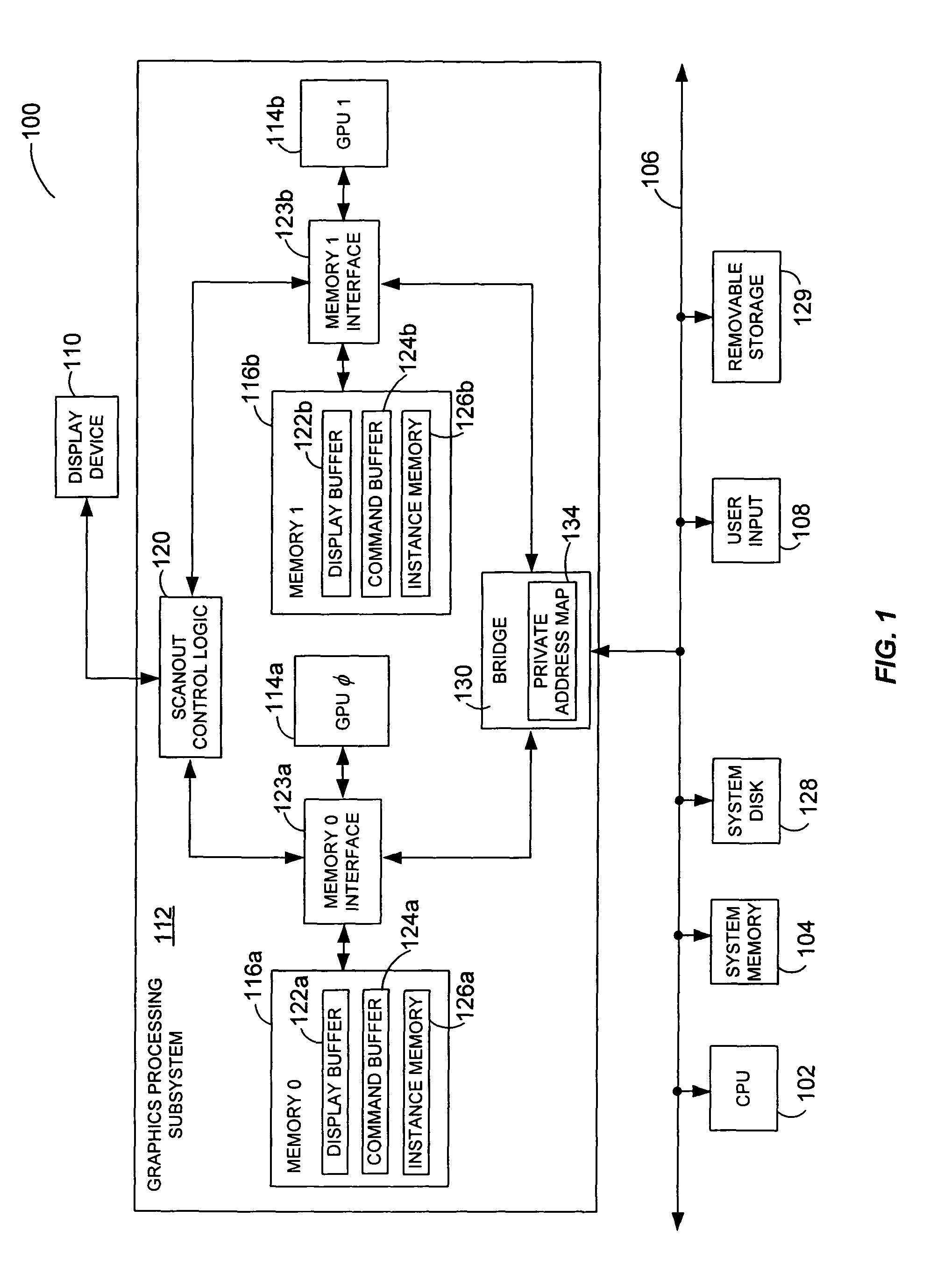

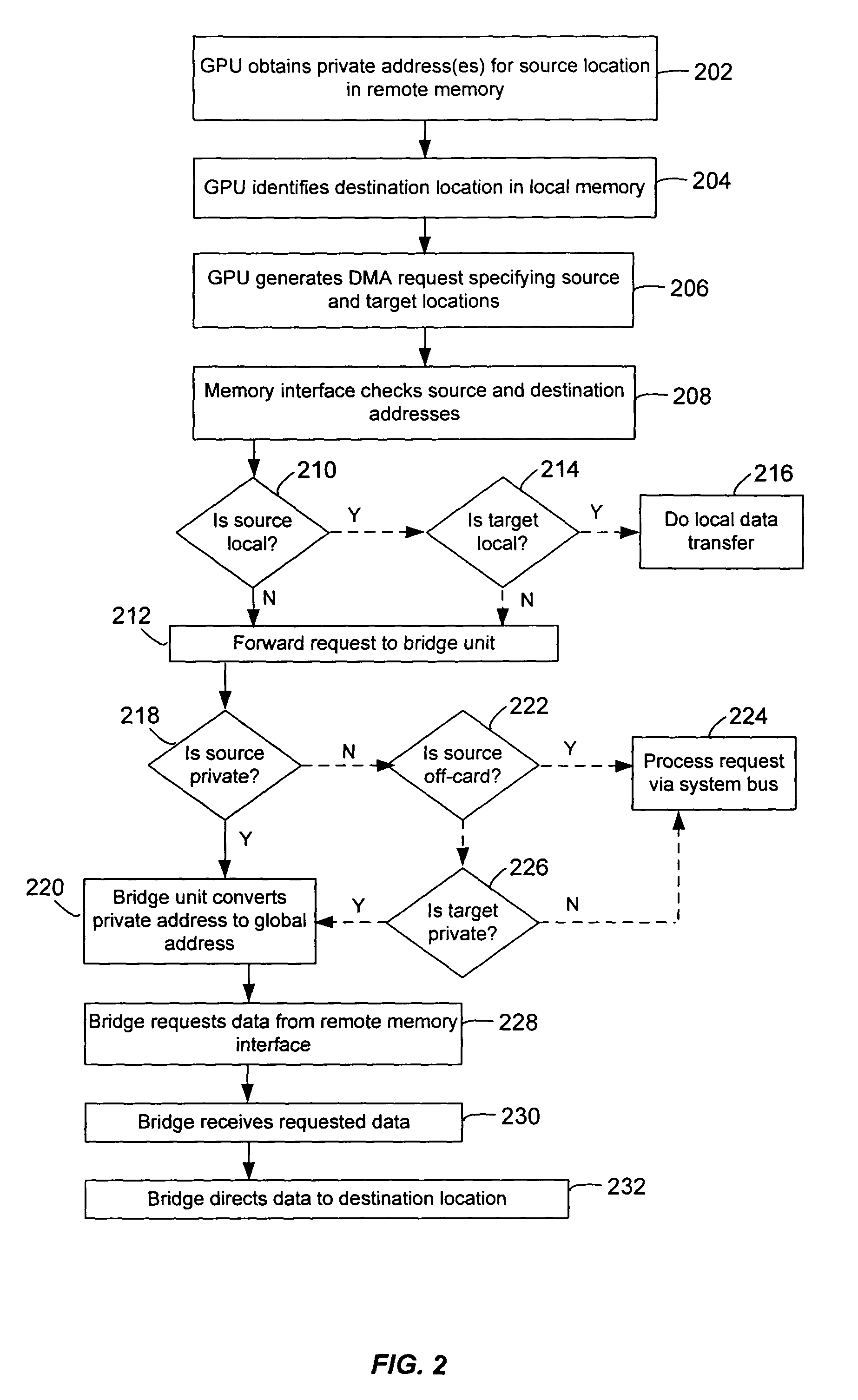

Private addressing in a multi-processor graphics processing system

Active | US6956579B1 | Memory addressing/allocation/relocation | Cathode-ray tube indicators | Graphics | Multi processor

Systems and methods for private addressing in a multi-processor graphics processing subsystem having a number of memories and a number of graphics processors. Each of the memories includes a number of addressable storage locations, and storage locations in different memories may share a common global address. Storage locations are uniquely identifiable by private addresses internal to the graphics processing subsystem. One of the graphics processors is able to access a location in a particular memory by referencing its private address.

Owner:NVIDIA CORP

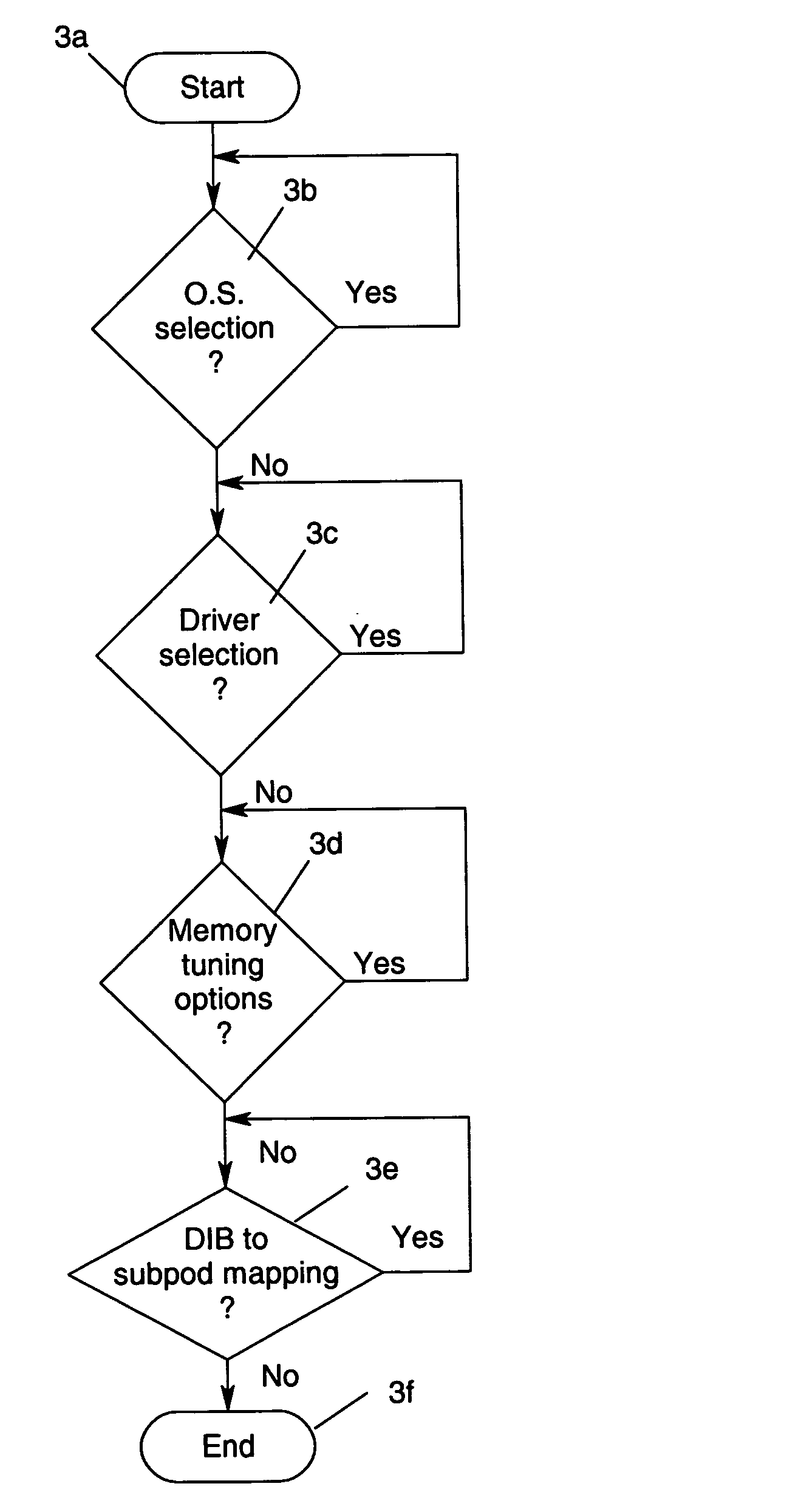

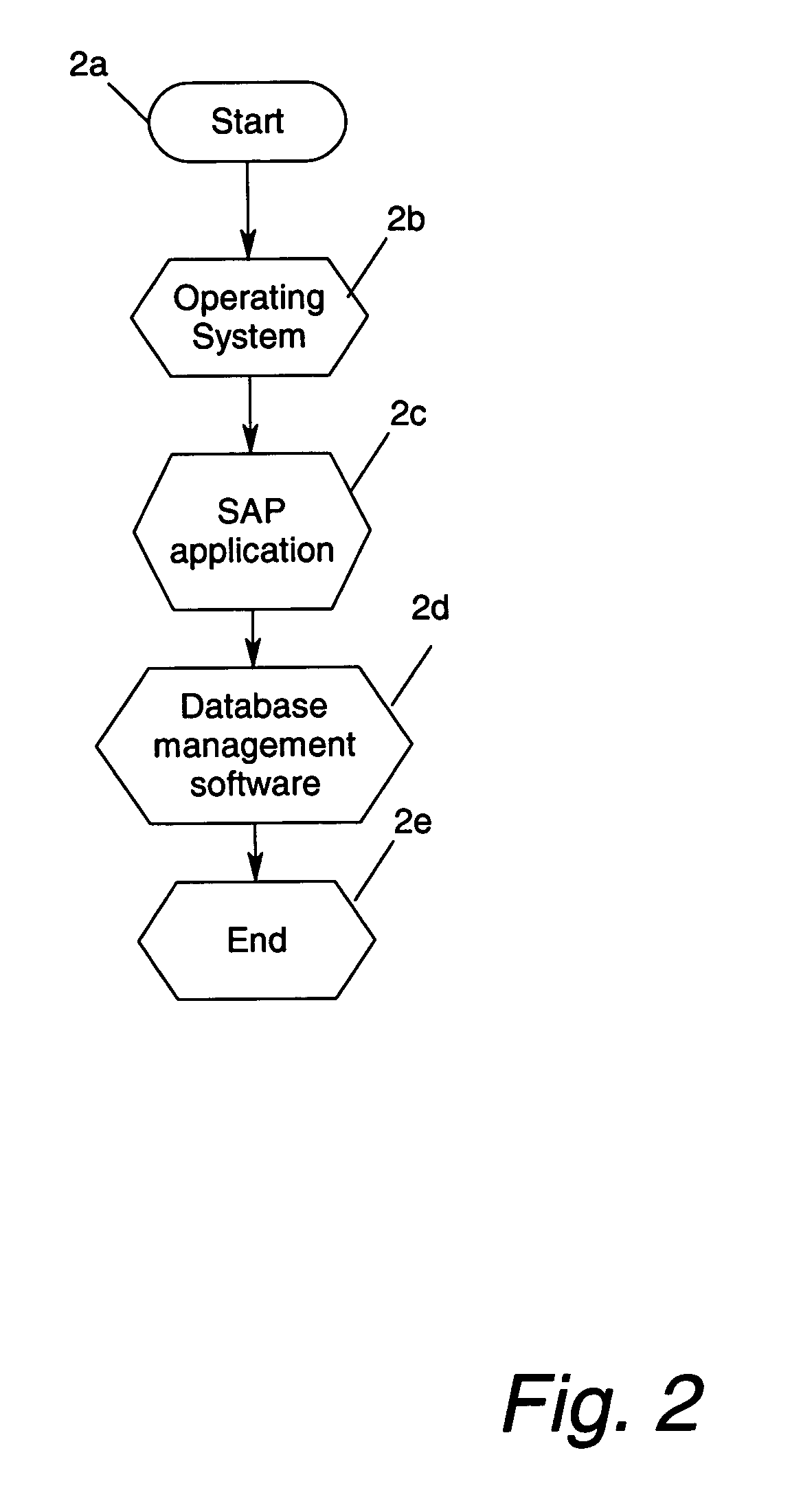

Process for optimizing software components for an enterprise resource planning (ERP) application SAP on multiprocessor servers

Active | US7805706B1 | Improve performance | Bottleneck and poor performance | Error detection/correction | Digital computer details | Multi processor | Enterprise resource planning

In a three-tier ERP implementation, multiple servers are interconnected through one or more network infrastructures. Users may observe poor performance due to the complexity and the number of interconnected components in the implementation. Herein is devised a process for tuning the software component by applying tuning techniques to the OS, SAP application and Database Management System software. For each component, the process identifies potential tuning opportunities of various subcomponents. The process is iterated numerous times through all software components while applying the tuning techniques to derive optimal performance for the ERP implementation.

Owner:UNISYS CORP

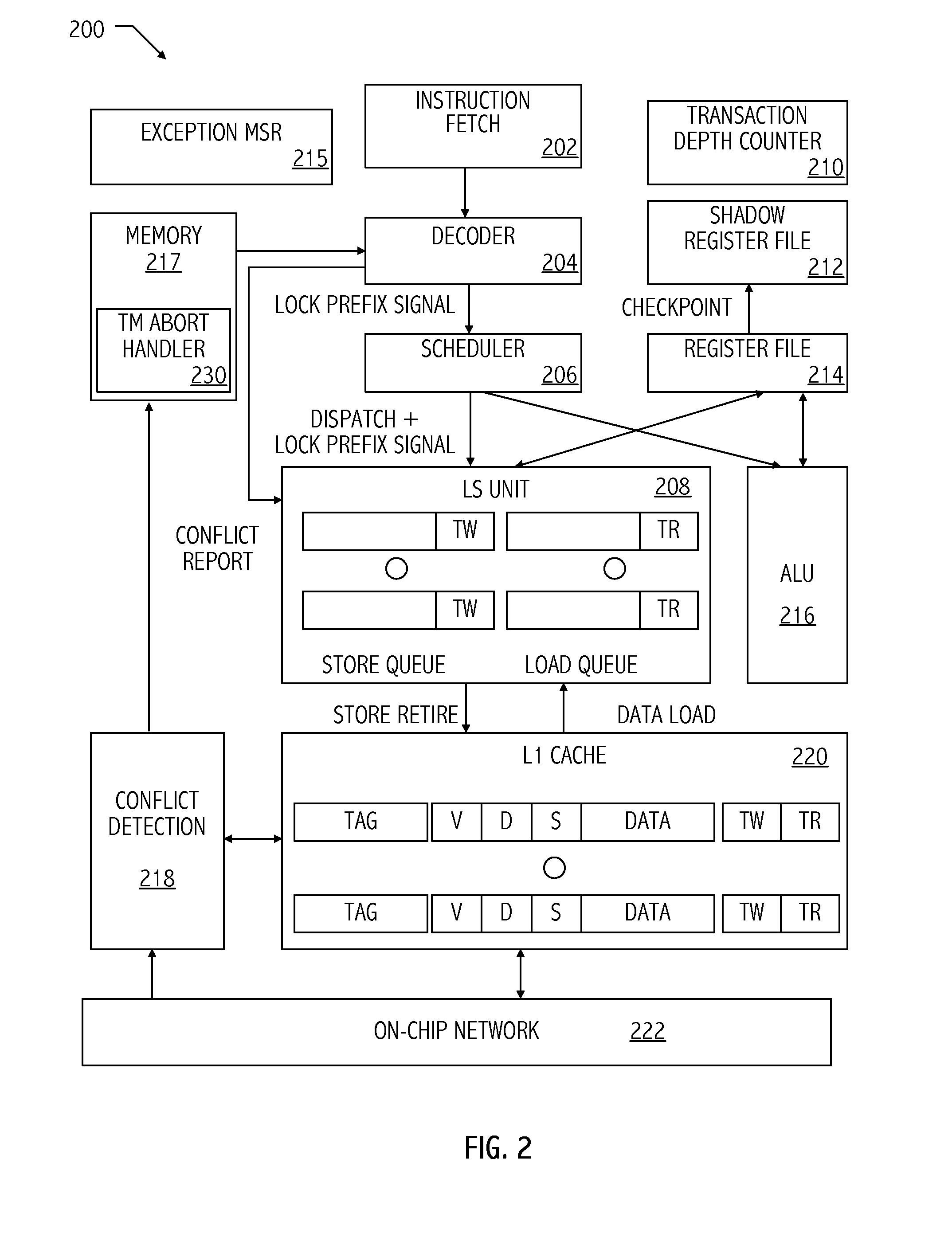

Inverted default semantics for in-speculative-region memory accesses

Inactive | US20110208921A1 | Unauthorized memory use protection | Transaction processing | Direct memory access | Multi processor

A method for accessing memory by a first processor of a plurality of processors in a multi-processor system includes, responsive to a memory access instruction within a speculative region of a program, accessing contents of a memory location using a transactional memory access to the memory access instruction unless the memory access instruction indicates a non-transactional memory access. The method may include accessing contents of the memory location using a non-transactional memory access by the first processor according to the memory access instruction responsive to the instruction not being in the speculative region of the program. The method may include updating contents of the memory location responsive to the speculative region of the program executing successfully and the memory access instruction not being annotated to be a non-transactional memory access.

Owner:ADVANCED MICRO DEVICES INC

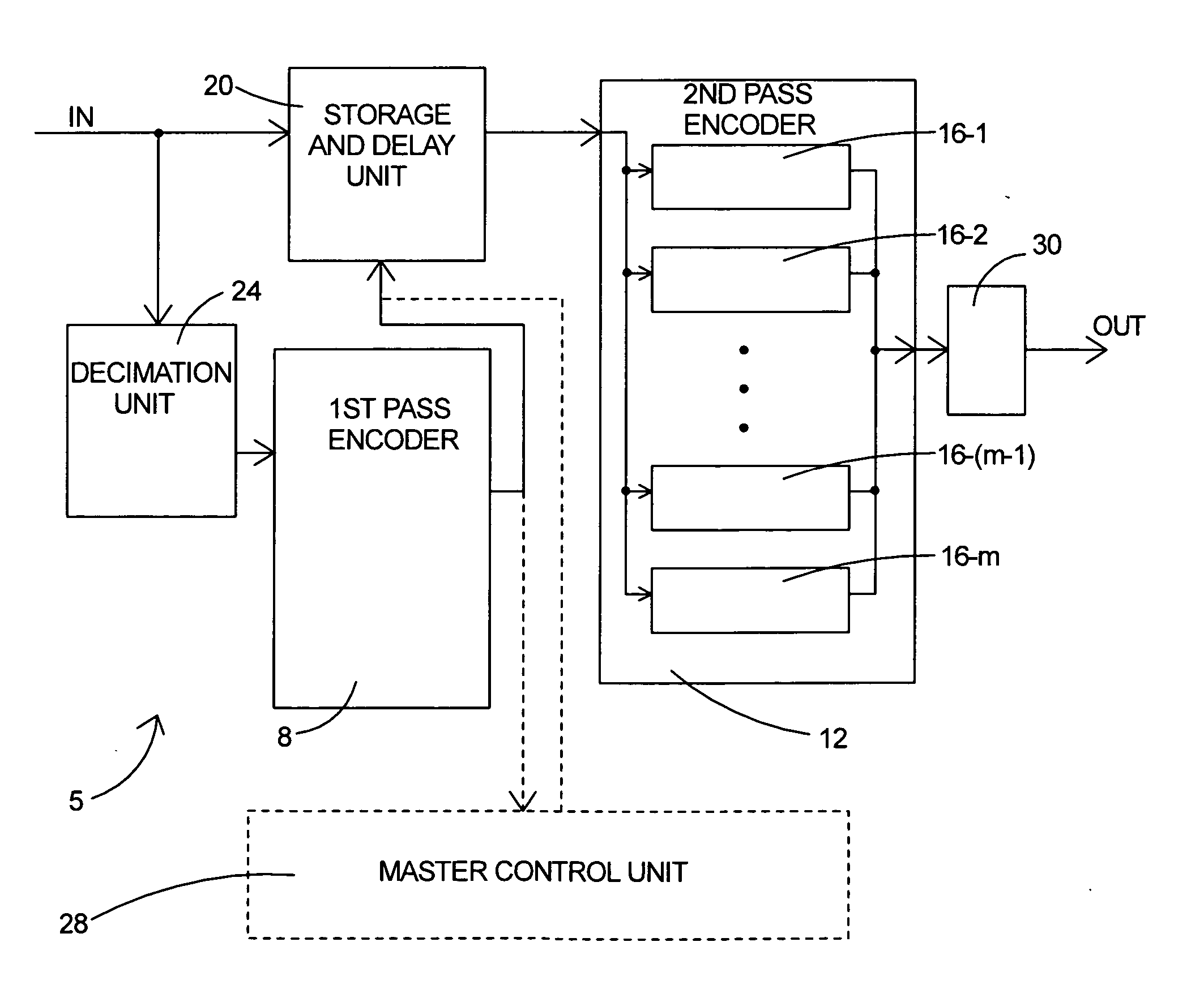

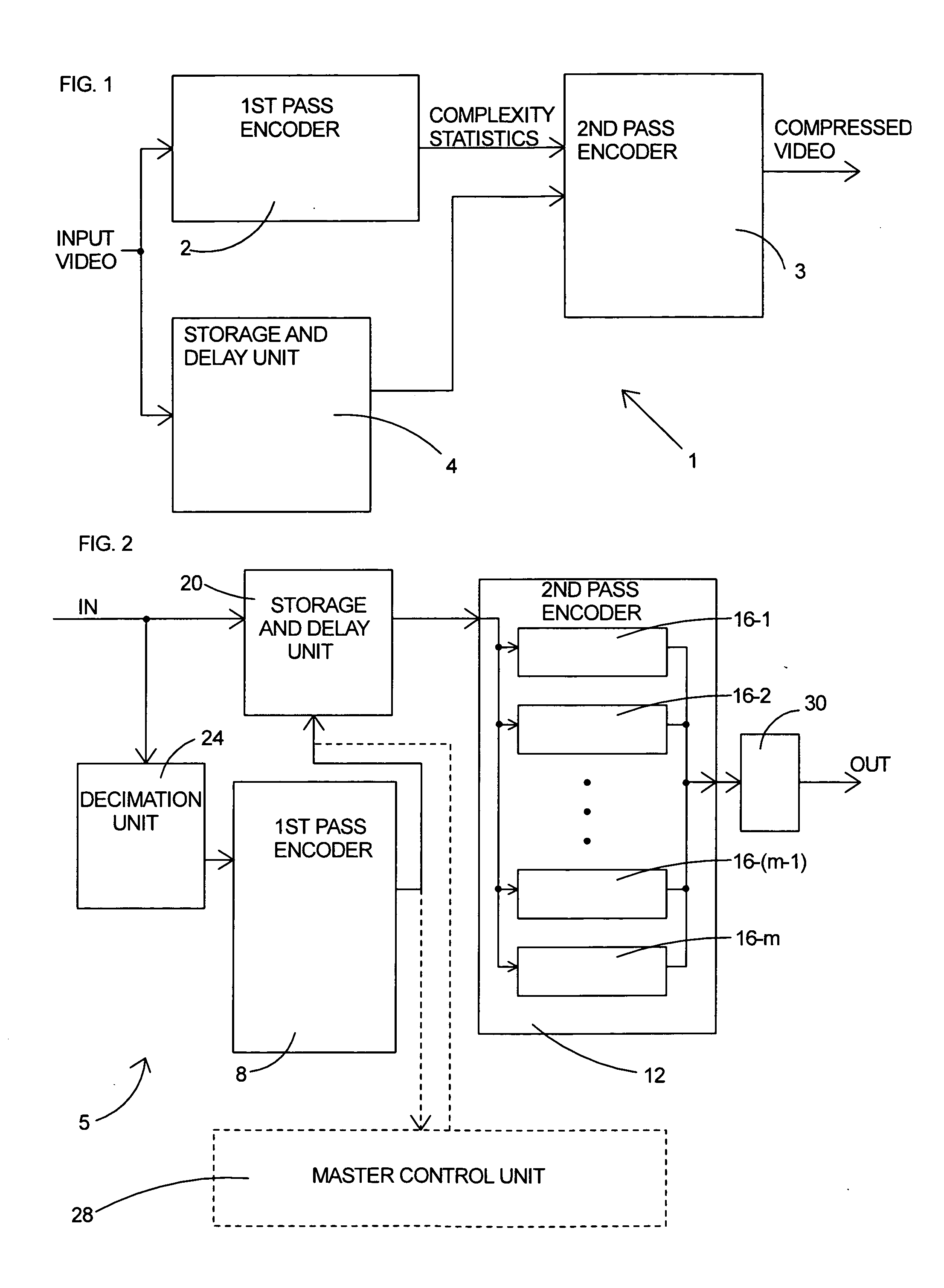

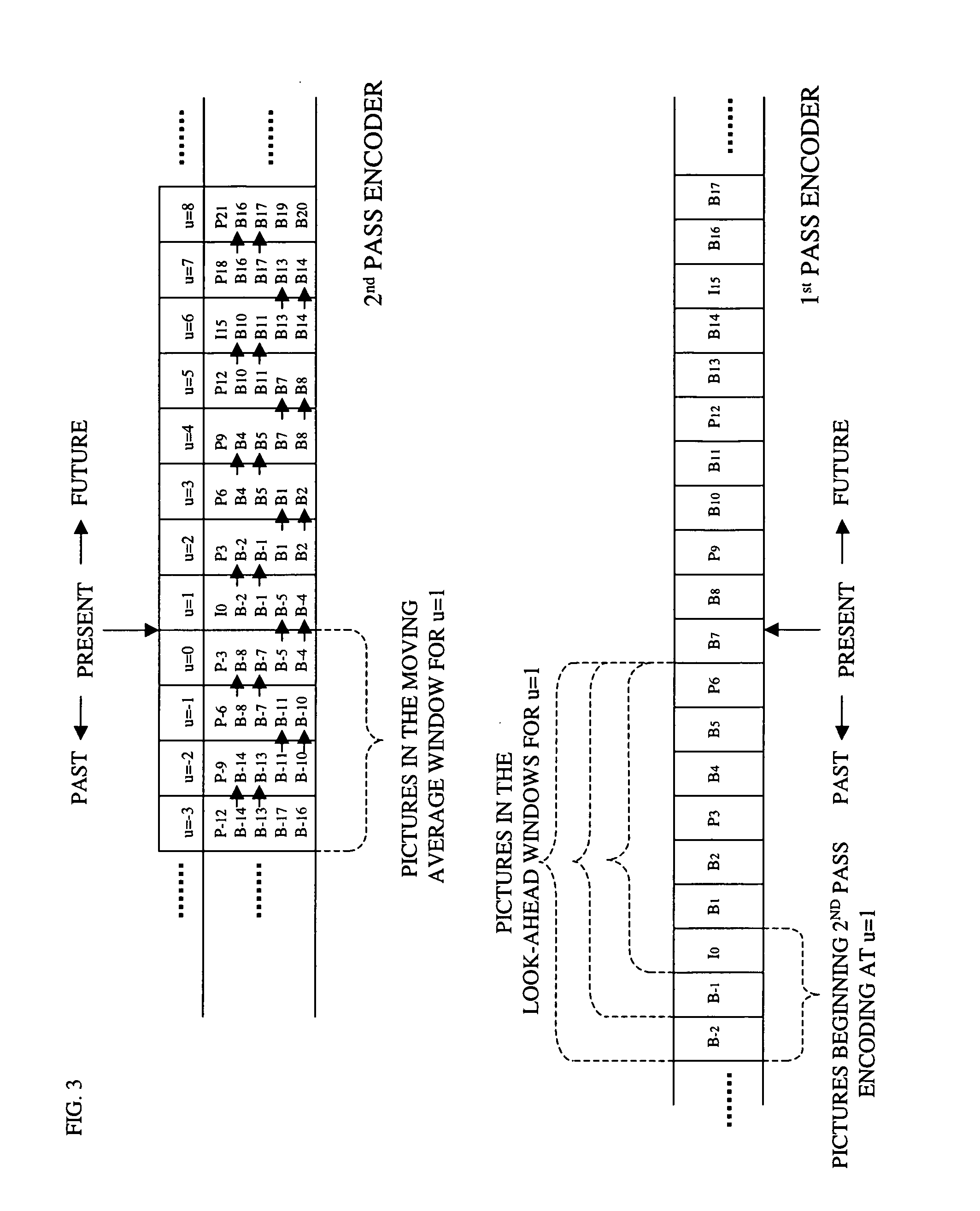

Parallel rate control for digital video encoder with multi-processor architecture and picture-based look-ahead window

Active | US20060126728A1 | Prevent underflow | Increase in size | Color television with pulse code modulation | Color television with bandwidth reduction | Digital video | Multi processor

A method of operating a multi-processor video encoder by determining a target size corresponding to a preferred number of bits to be used when creating an encoded version of a picture in a group of sequential pictures making up a video sequence. The method includes the steps of calculating a first degree of fullness of a coded picture buffer at a first time, operating on the first degree of fullness to return an estimated second degree of fullness of the coded picture buffer at a second time, and operating on the second degree of fullness to return an initial target size for the picture. The first time corresponds to the most recent time an accurate degree of fullness of the coded picture buffer can be calculated and the second time occurs after the first time.

Owner:BISON PATENT LICENSING LLC

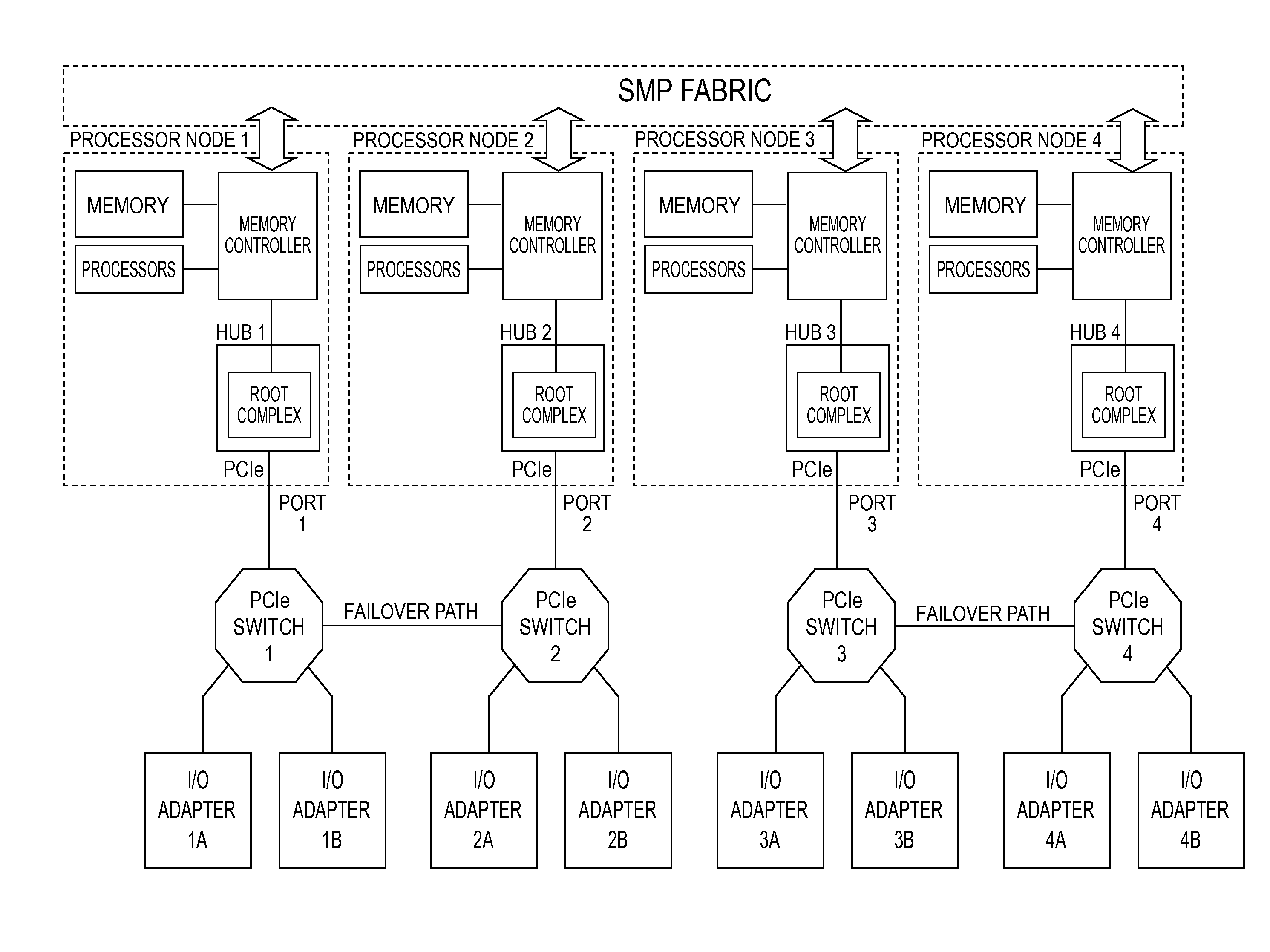

Switch failover control in a multiprocessor computer system

A system and a method for failover control comprising: maintaining a primary device table entry (DTE) in a first table activated for a first adapter in communication with a first processor node having a first root complex via a first switch assembly and maintaining a secondary DTE in standby for a second adapter in communication with a second processor node having a second root complex via a second switch assembly; maintaining a primary DTE in a second table activated for the second adapter and maintaining a secondary DTE in standby for the first adapter; and upon a failover, updating the secondary DTE in the first table as an active entry for the second adapter and forming a path to enable traffic to route from the second adapter through the second switch assembly over to the first switch assembly and up to the first root complex of the first processor node.

Owner:IBM CORP

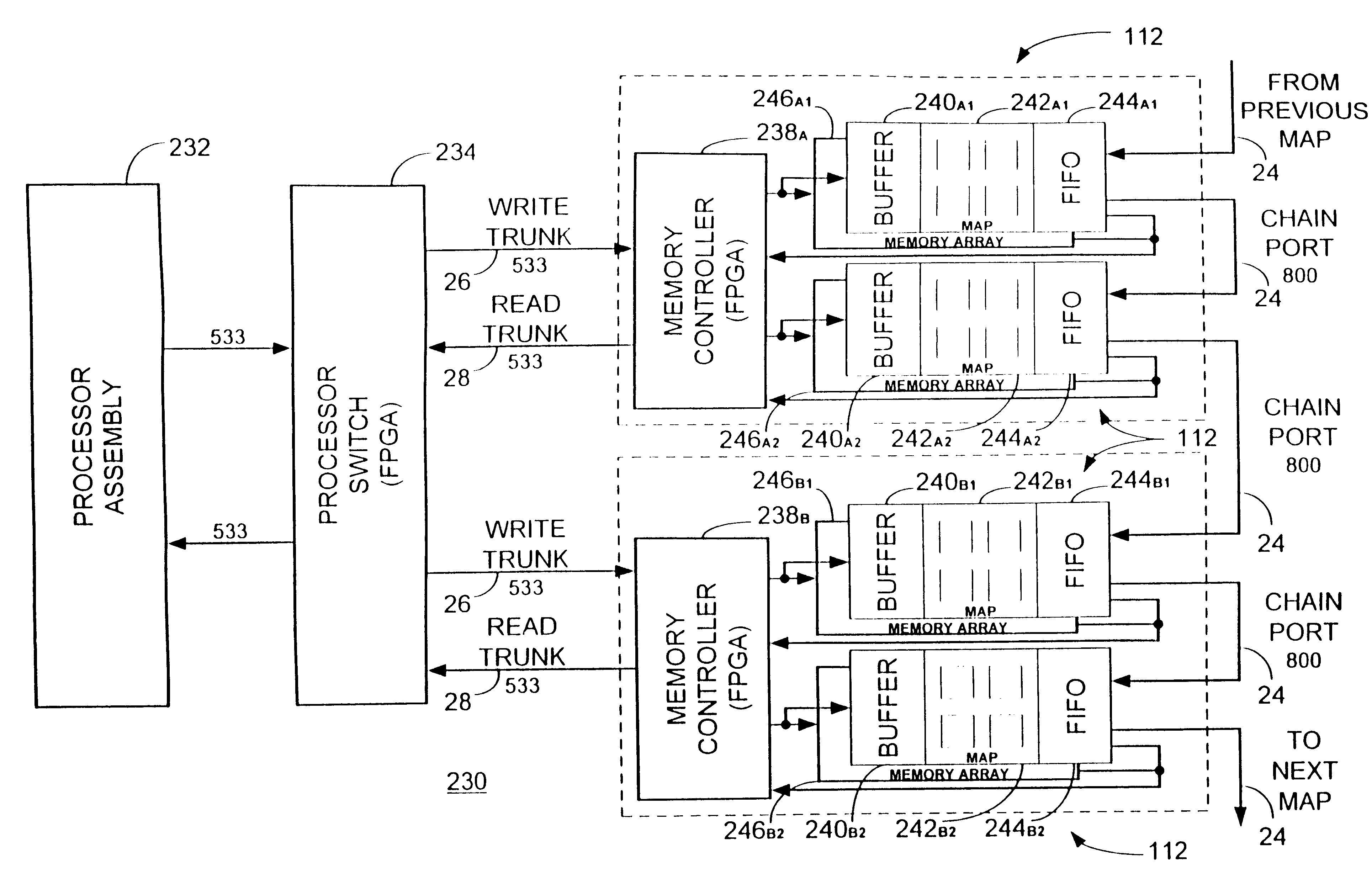

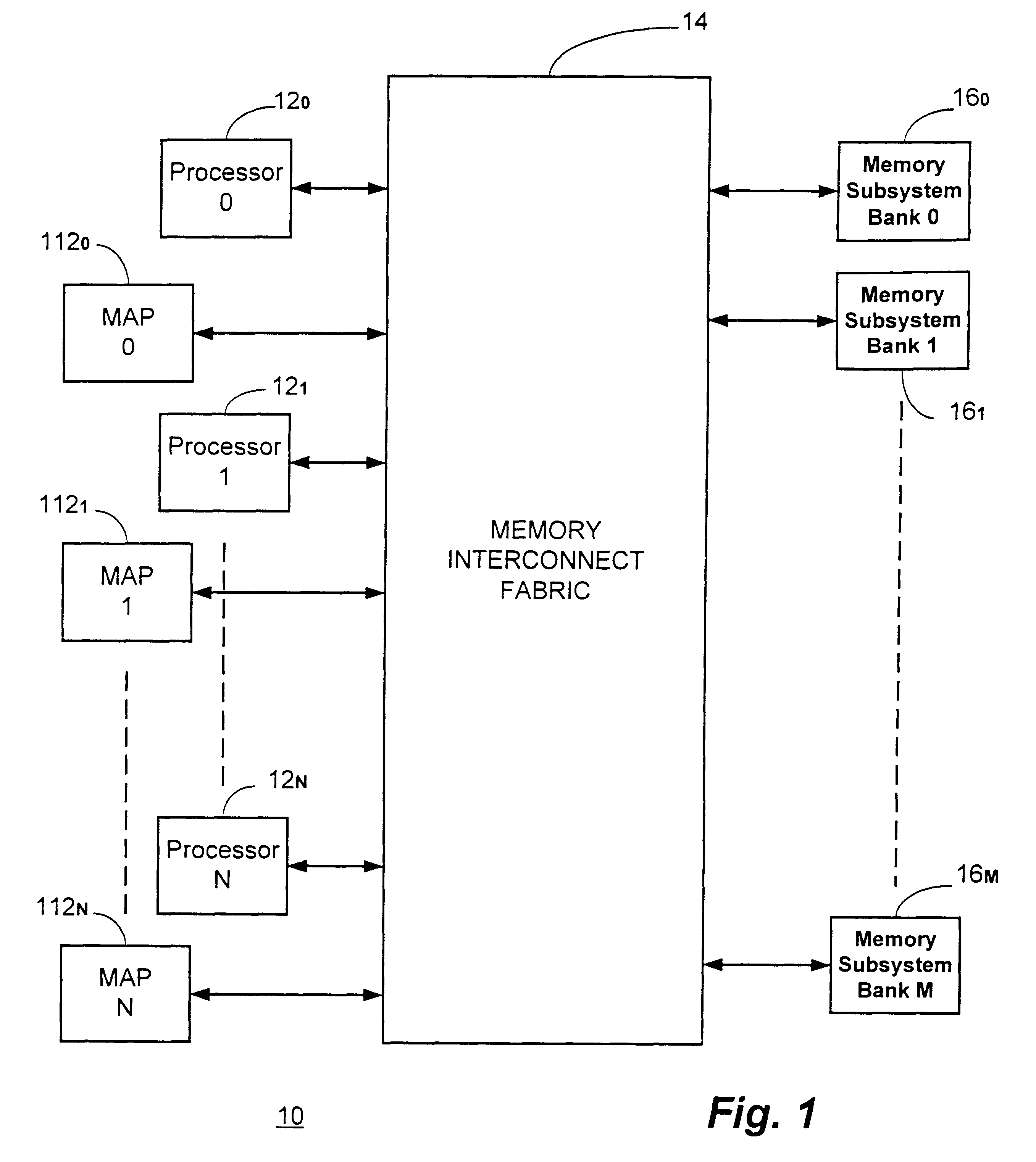

Multiprocessor with each processor element accessing operands in loaded input buffer and forwarding results to FIFO output buffer

Inactive | US6339819B1 | Perform operation | Potential utility | Single instruction multiple data multiprocessors | Memory addressing/allocation/relocation | Processor element | Multi processor

An enhanced memory algorithmic processor ("MAP") architecture for multiprocessor computer systems comprises an assembly that may comprise, for example, field programmable gate arrays ("FPGAs") functioning as the memory algorithmic processors. The MAP elements may further include an operand storage, intelligent address generation, on board function libraries, result storage and multiple input/output ("I/O") ports. The MAP elements are intended to augment, not necessarily replace, the high performance microprocessors in the system and, in a particular embodiment of the present invention, they may be connected through the memory subsystem of the computer system resulting in it being very tightly coupled to the system as well as being globally accessible from any processor in a multiprocessor computer system.

Owner:SRC COMP

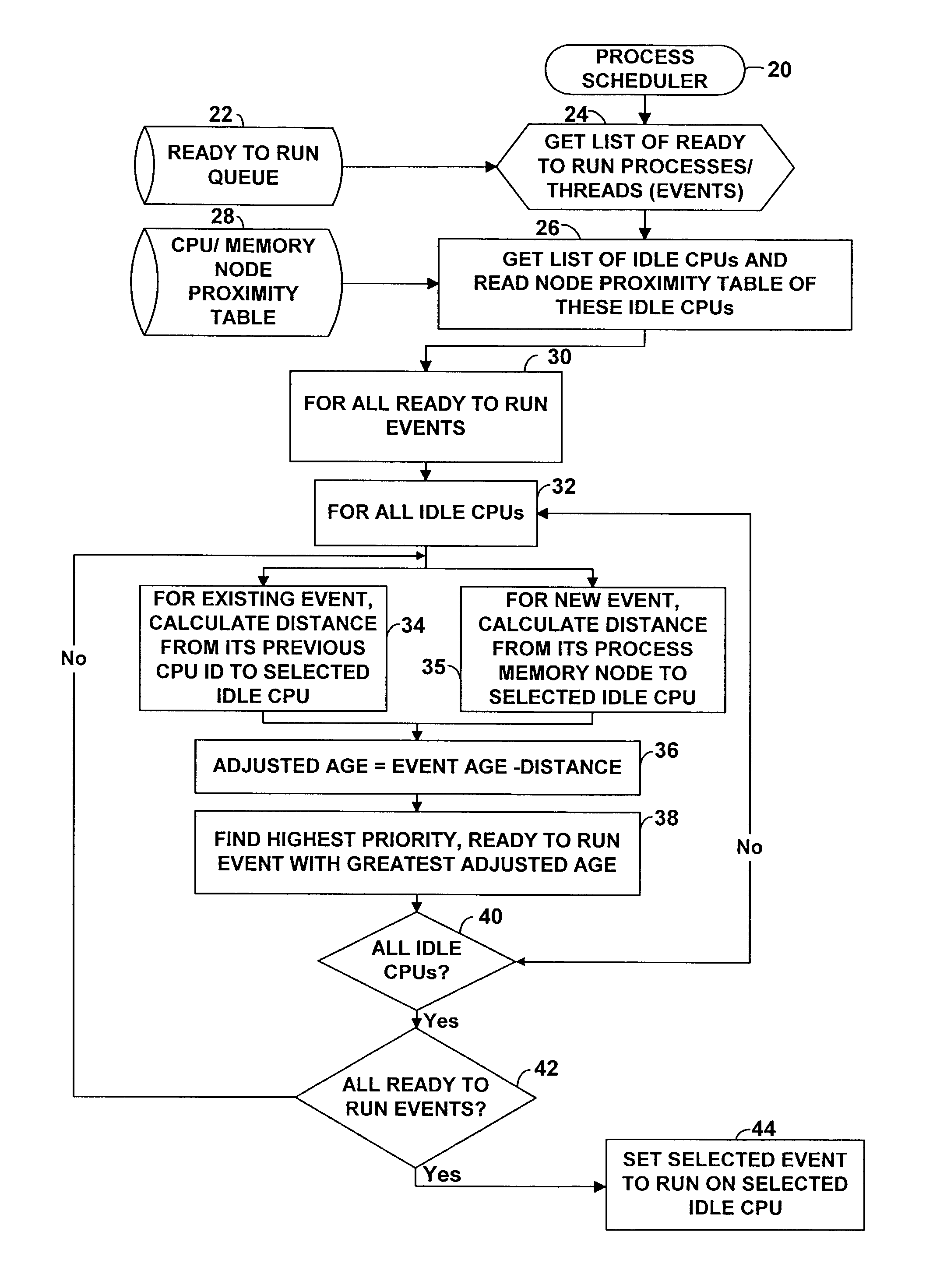

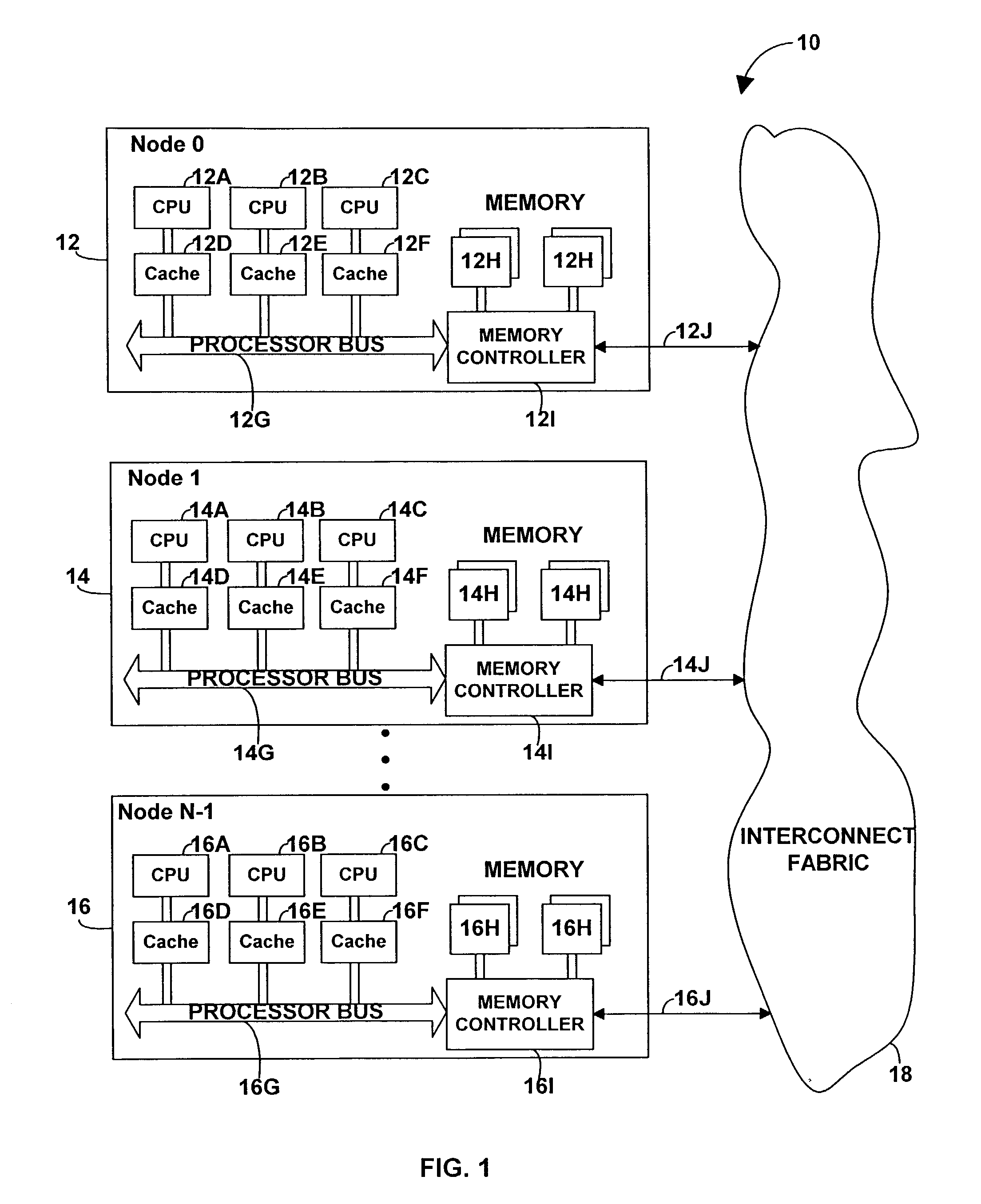

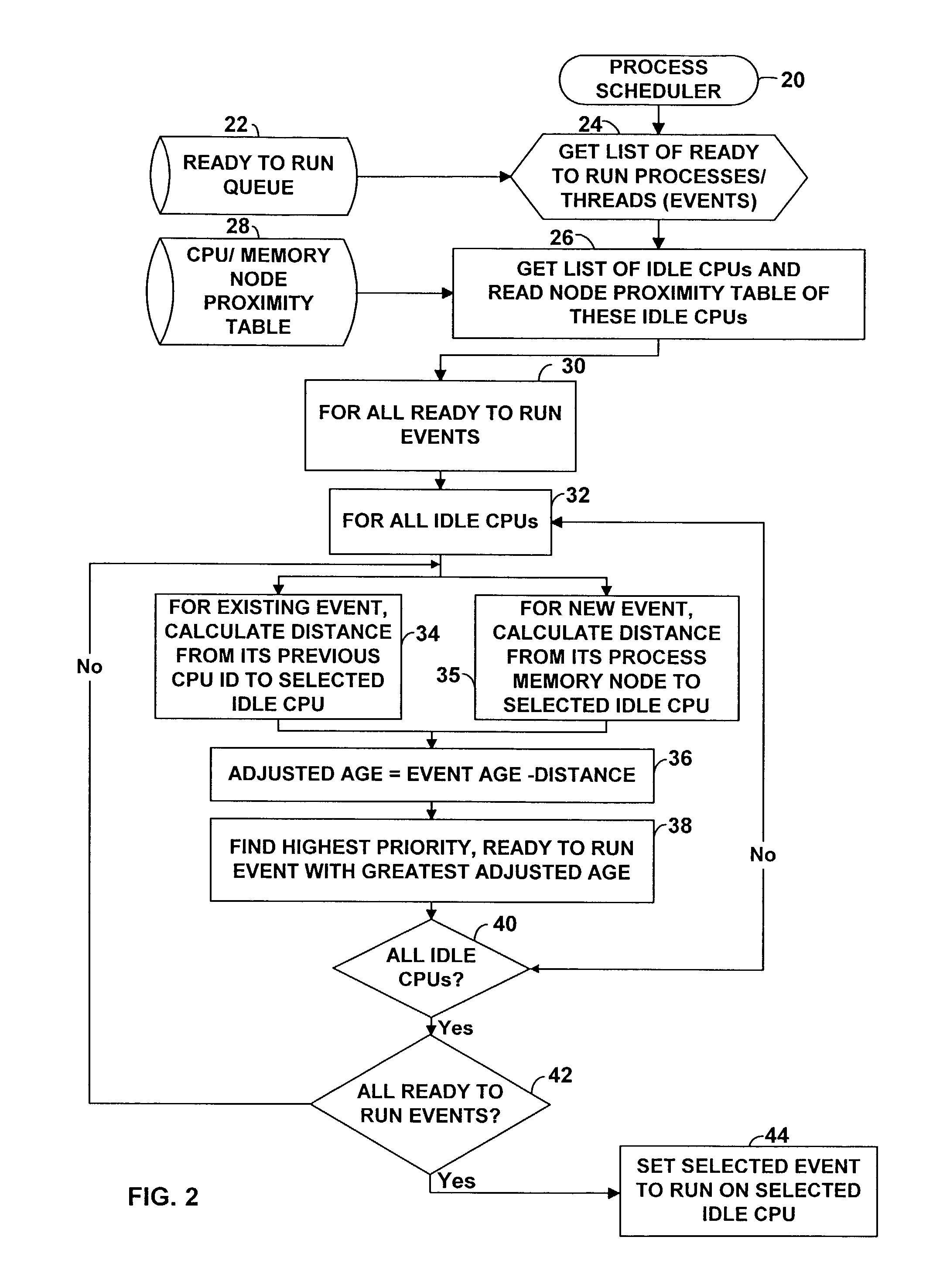

Method and apparatus for optimizing performance in a multi-processing system

Inactive | US7143412B2 | Interprogram communication | Digital computer details | Error processing | Thread scheduling

A technique for improving performance in a multi-processor system by reducing access latency by correlating processor, node and memory allocation. Specifically, a Process/Thread Scheduler is modified such that system mapping and node proximity tables may be referenced to help determine processor assignments for ready-to-run processes/threads. Processors are chosen to minimize access latency. Further, the Page Fault Handler is modified such that free memory pages are assigned to a process based partially on the proximity of the memory with respect to the processor requesting memory allocation.
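A proximity-table lookup of the kind this abstract describes might look like the sketch below. The table shapes are assumptions: `cpu_node` maps each processor to its node, and `proximity[a][b]` is a relative access cost from node `a` to memory on node `b`. The function name `choose_processor` is hypothetical.

```python
def choose_processor(idle_cpus, cpu_node, proximity, memory_node):
    """Pick the idle processor with the lowest access cost to `memory_node`.

    idle_cpus   -- processors currently able to take a ready-to-run thread
    cpu_node    -- dict: processor id -> node id (assumed system mapping)
    proximity   -- proximity[a][b]: relative cost from node a to node b
    memory_node -- node that holds most of the thread's memory
    Returns None when no processor is idle.
    """
    return min(
        idle_cpus,
        key=lambda cpu: proximity[cpu_node[cpu]][memory_node],
        default=None,
    )
```

Ties go to the first processor listed in `idle_cpus`, since `min` returns the first minimal element.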

Owner:HEWLETT PACKARD DEV CO LP

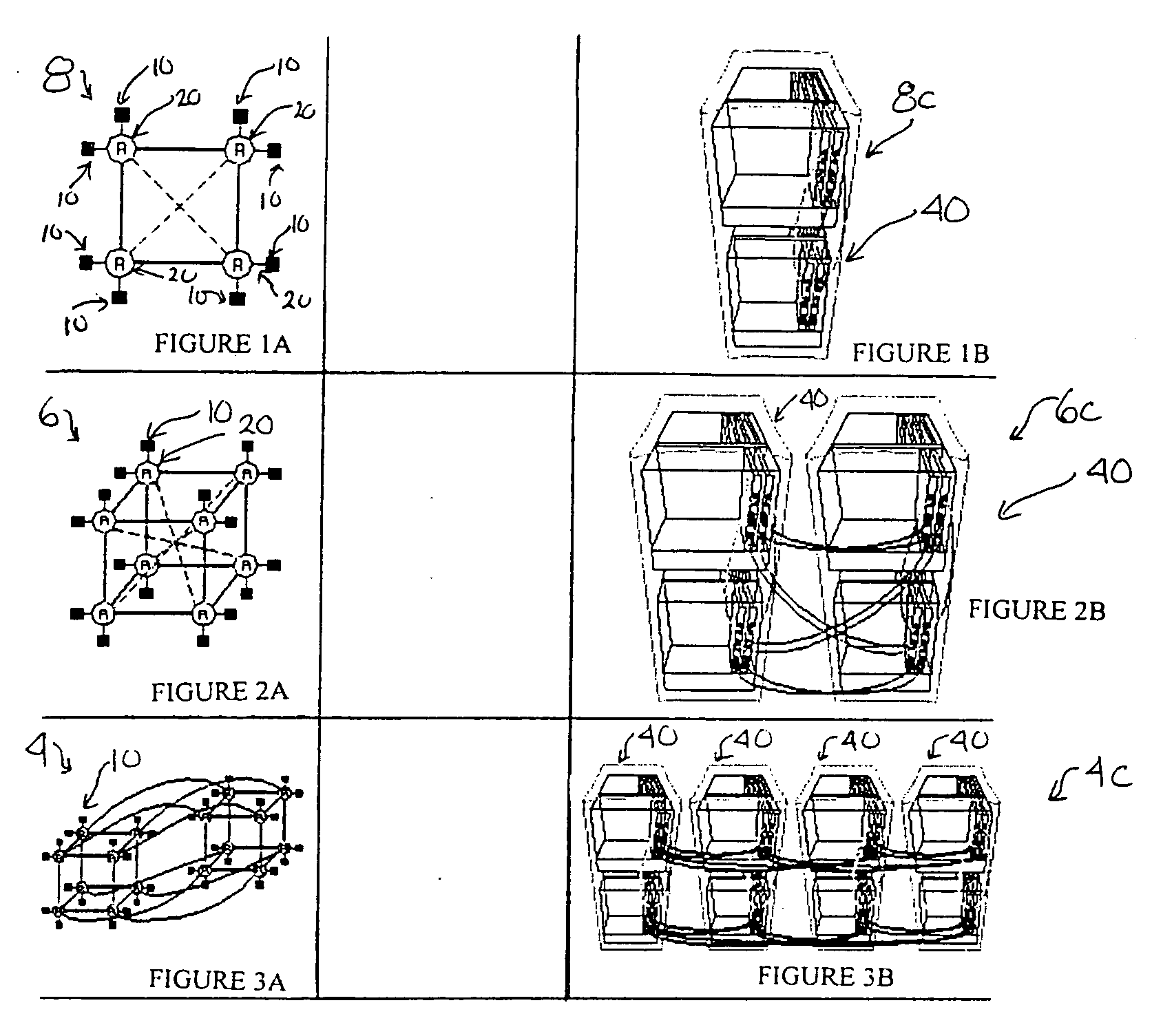

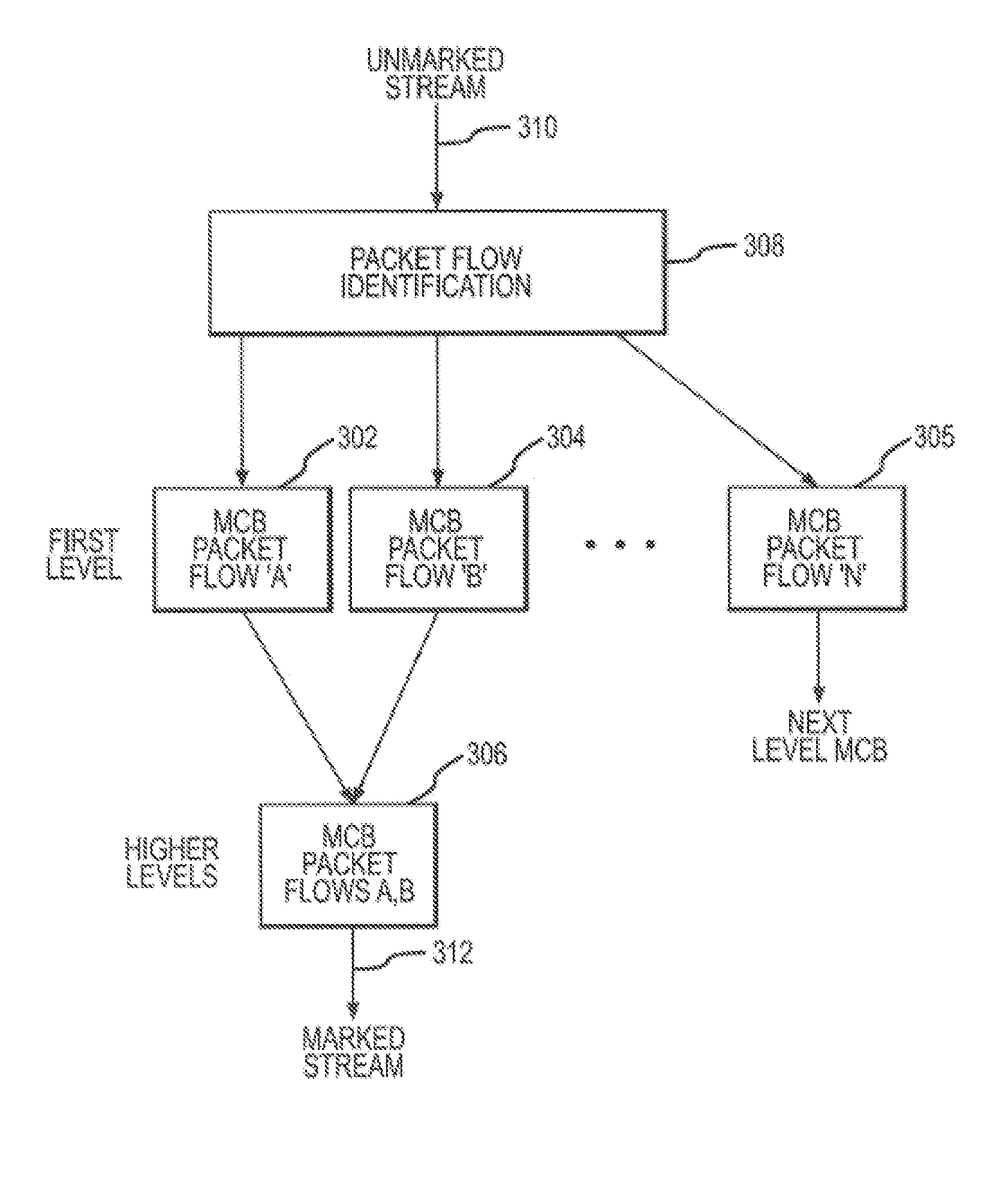

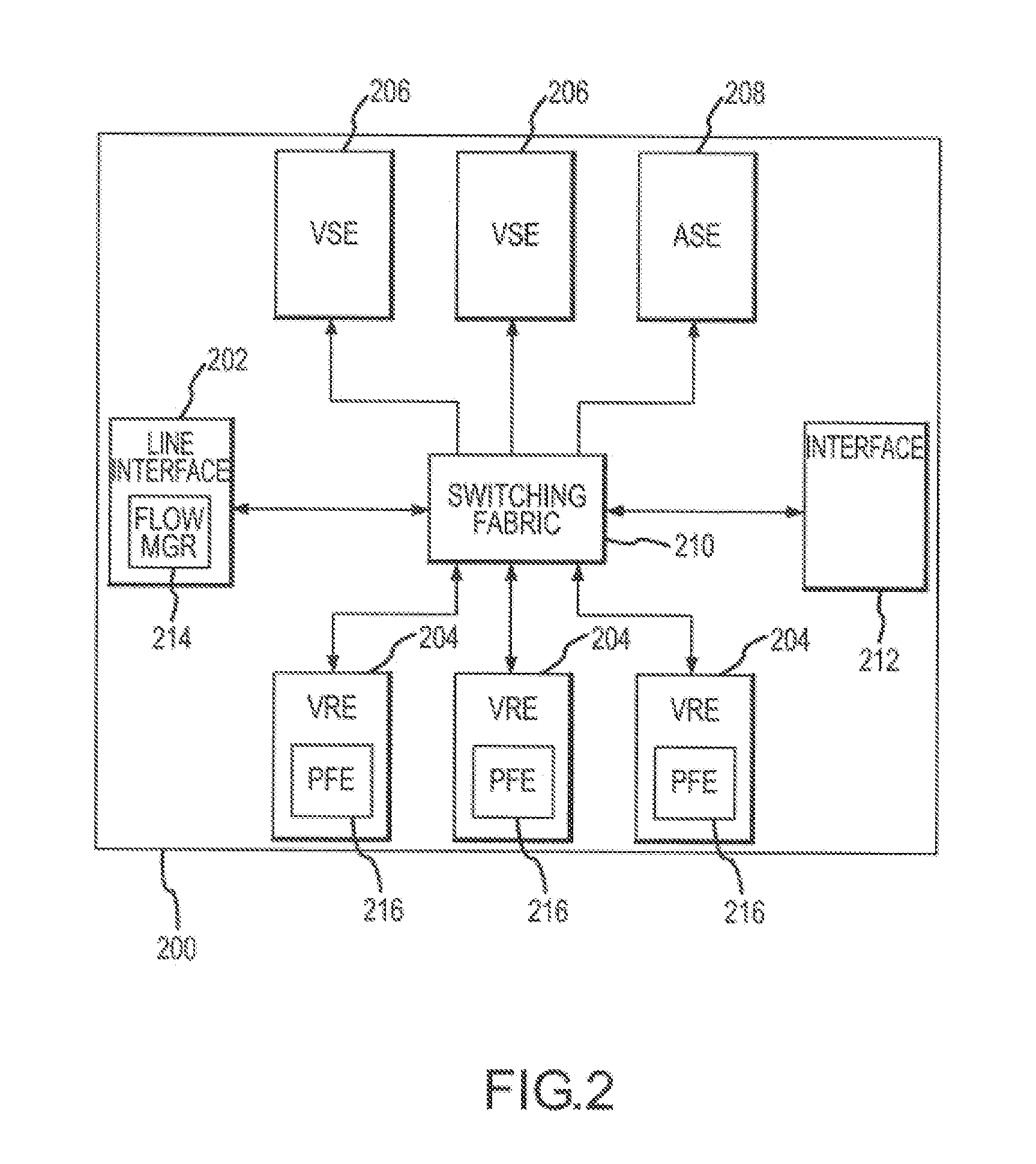

System and method for hierarchical metering in a virtual router based network switch

A virtual routing platform includes a line interface and a plurality of virtual routing engines (VREs) to identify packets of different packet flows and perform a hierarchy of metering including at least first and second levels of metering on the packet flows. A first level of metering may be performed on packets of a first packet flow using a first metering control block (MCB). The first level of metering may be one level of metering in a hierarchy of metering levels. A second level of metering may be performed on the packets of the first packet flow and packets of a second flow using a second MCB. The second level of metering may be another level of metering in the hierarchy. A cache-lock may be placed on the appropriate MCB prior to performing the level of metering. The first and second MCBs may be data structures stored in a shared memory of the virtual routing platform. The cache-lock may be released after performing the level of metering using the MCB. The cache-lock may comprise setting a lock-bit of a cache line index in a cache tag store, which may identify an MCB in the cache memory. The virtual routing platform may be a multiprocessor system utilizing a shared memory having first and second processors to perform levels of metering in parallel. In one embodiment, a virtual routing engine may be shared by a plurality of virtual router contexts running in a memory system of a CPU of the virtual routing engine. In this embodiment, the first packet flow may be associated with one virtual router context and the second packet flow is associated with a second virtual router context. The first and second routing contexts may be of a plurality of virtual router contexts resident in the virtual routing engine.
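Two-level metering with a lock held around each MCB update can be sketched in software as follows. This sketch makes several assumptions that are not in the abstract: each MCB is modeled as a token bucket, a `threading.Lock` stands in for the hardware cache-lock, and a packet is accepted only if it conforms at both levels.

```python
import threading


class MeteringControlBlock:
    """Token-bucket meter; the bucket model is an assumption for illustration."""

    def __init__(self, rate, burst):
        self.rate = rate      # tokens (bytes) added per second
        self.burst = burst    # bucket capacity in bytes
        self.tokens = burst
        self.last = 0.0
        self.lock = threading.Lock()  # stands in for the cache-lock on the MCB

    def conforms(self, size, now):
        with self.lock:  # lock before metering, release afterwards
            elapsed = now - self.last
            self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
            self.last = now
            if size <= self.tokens:
                self.tokens -= size
                return True
            return False


def meter(packet_size, now, flow_mcb, shared_mcb):
    """First level: per-flow MCB. Second level: MCB shared by several flows."""
    return flow_mcb.conforms(packet_size, now) and shared_mcb.conforms(packet_size, now)
```

In this simplified form, a packet that passes the first level but fails the second still consumes first-level tokens; a production meter would have to decide whether that is acceptable.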

Owner:GOOGLE LLC

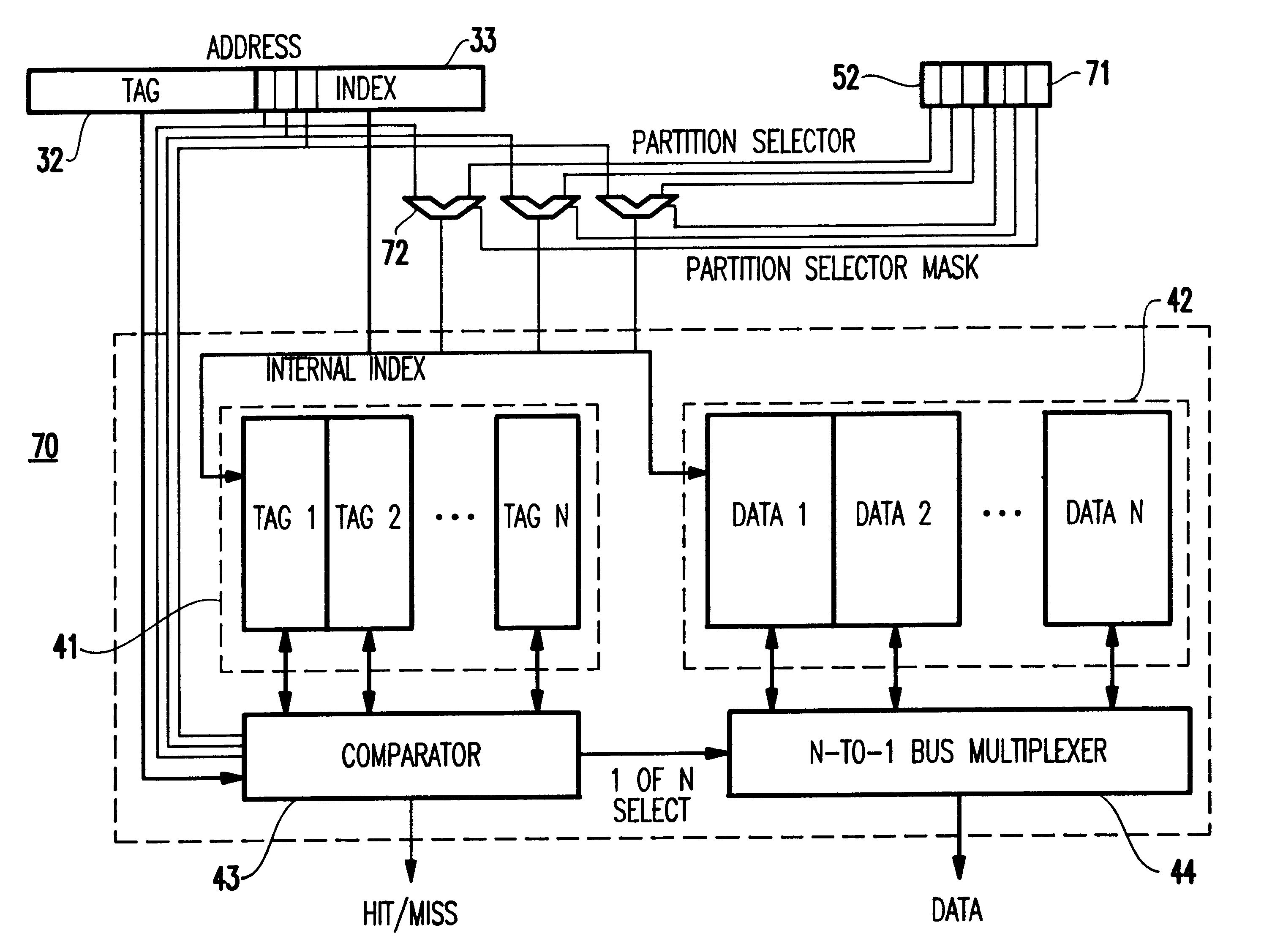

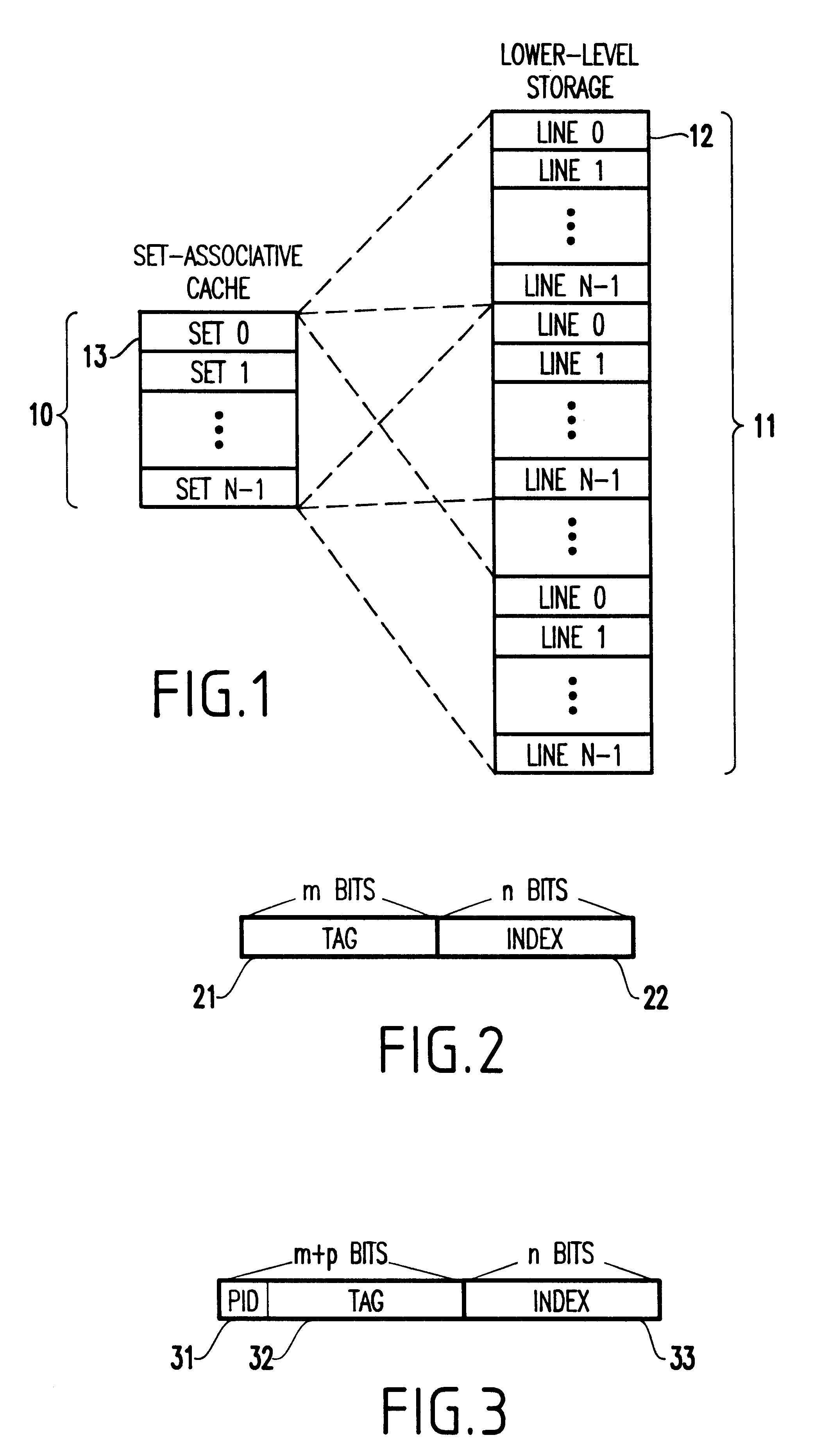

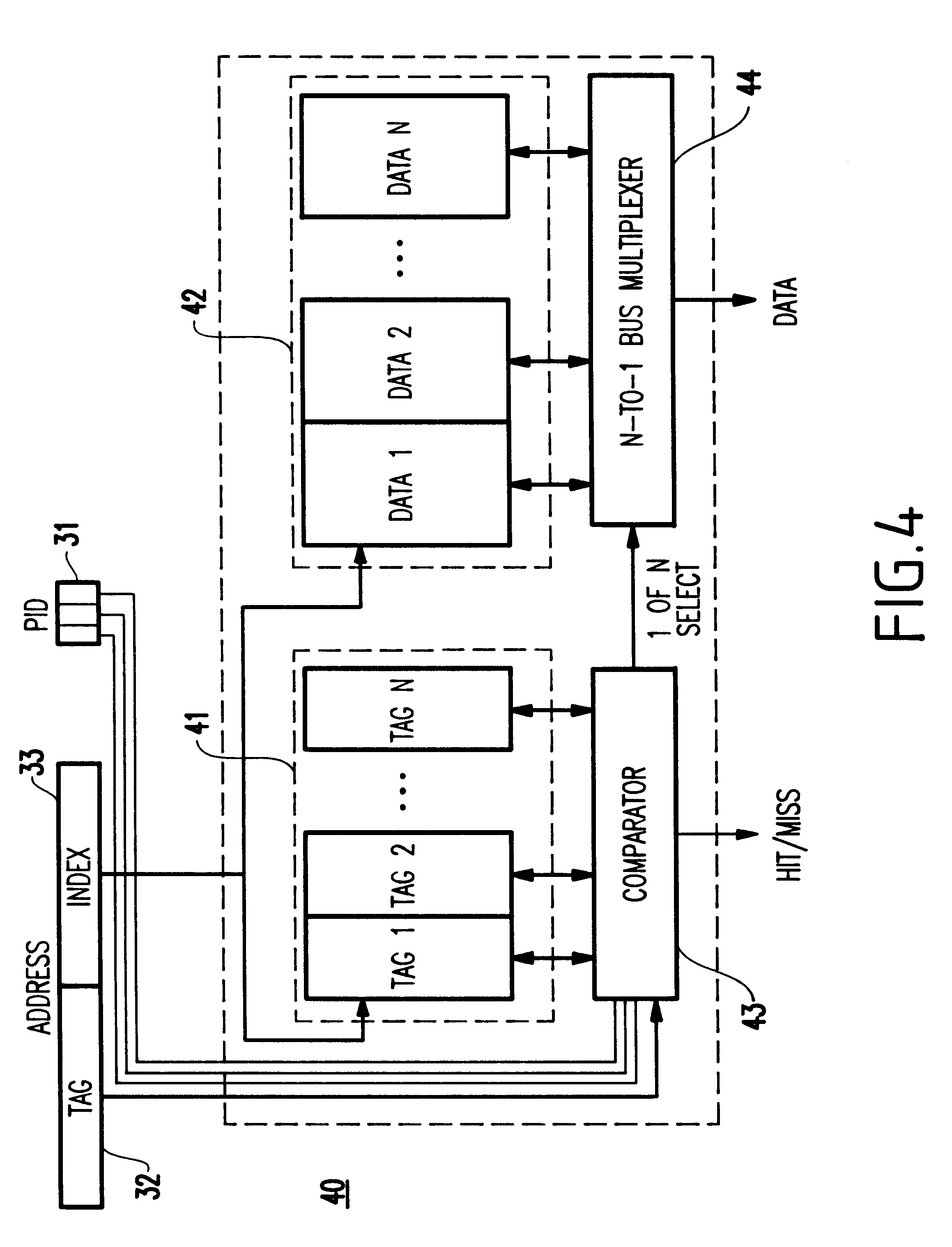

Method and system for dynamically partitioning a shared cache

Inactive | US6493800B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Multi processor | Parallel computing

A cache memory shared among a plurality of separate, disjoint entities each having a disjoint address space, includes a cache segregator for dynamically segregating a storage space allocated to each entity of the entities such that no interference occurs with respective ones of the entities. A multiprocessor system including the cache memory, a method and a signal bearing medium for storing a program embodying the method also are provided.

Owner:IBM CORP

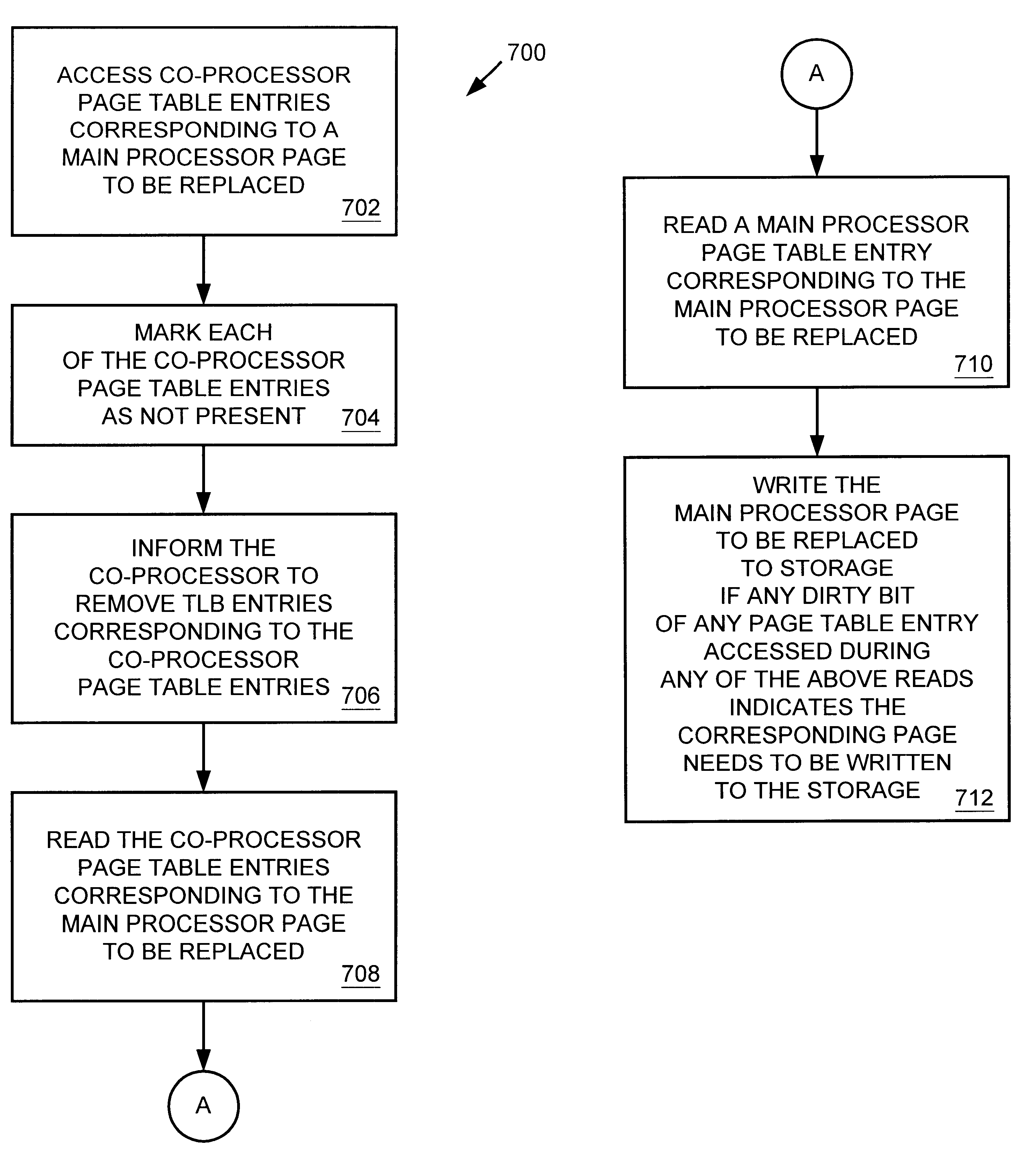

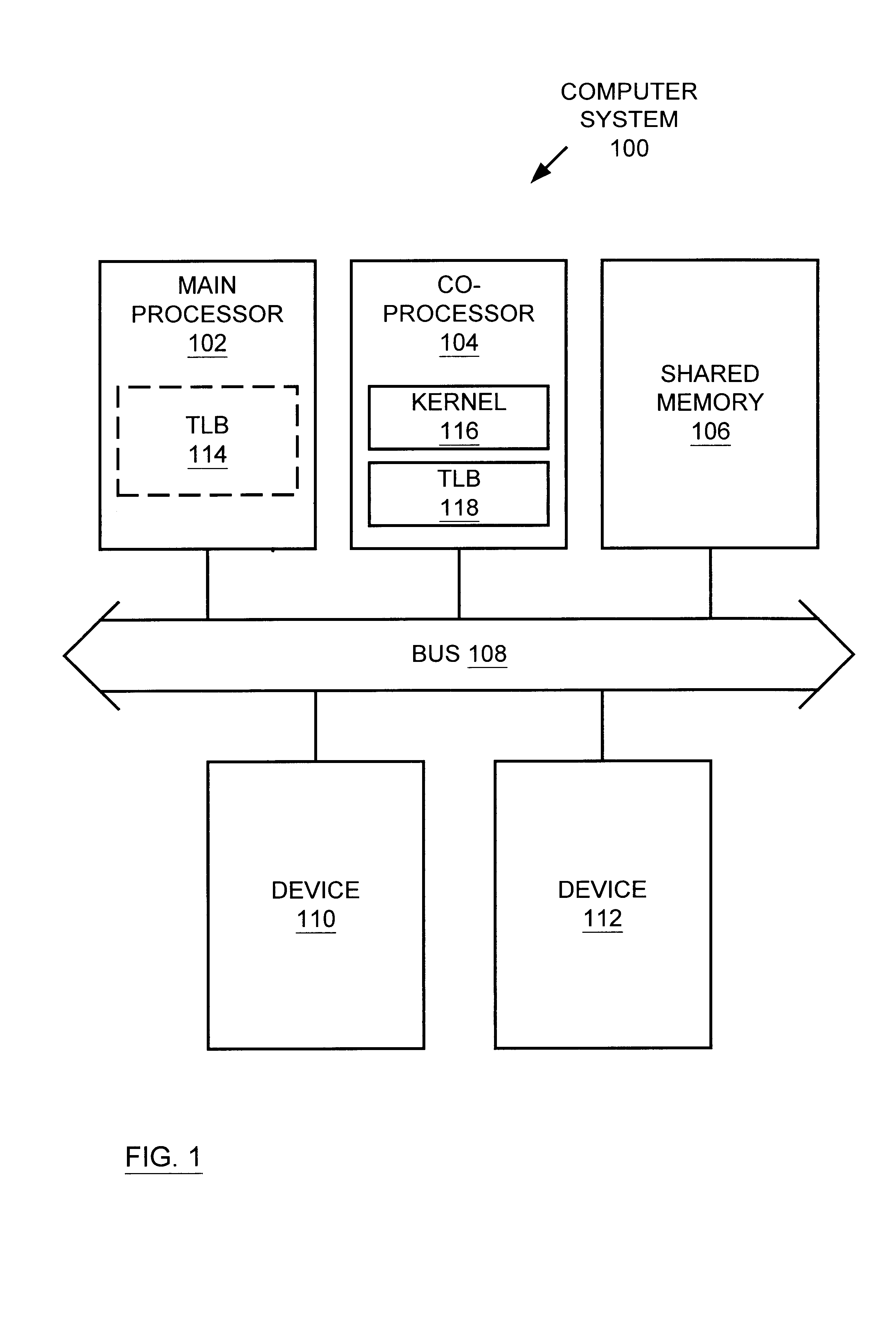

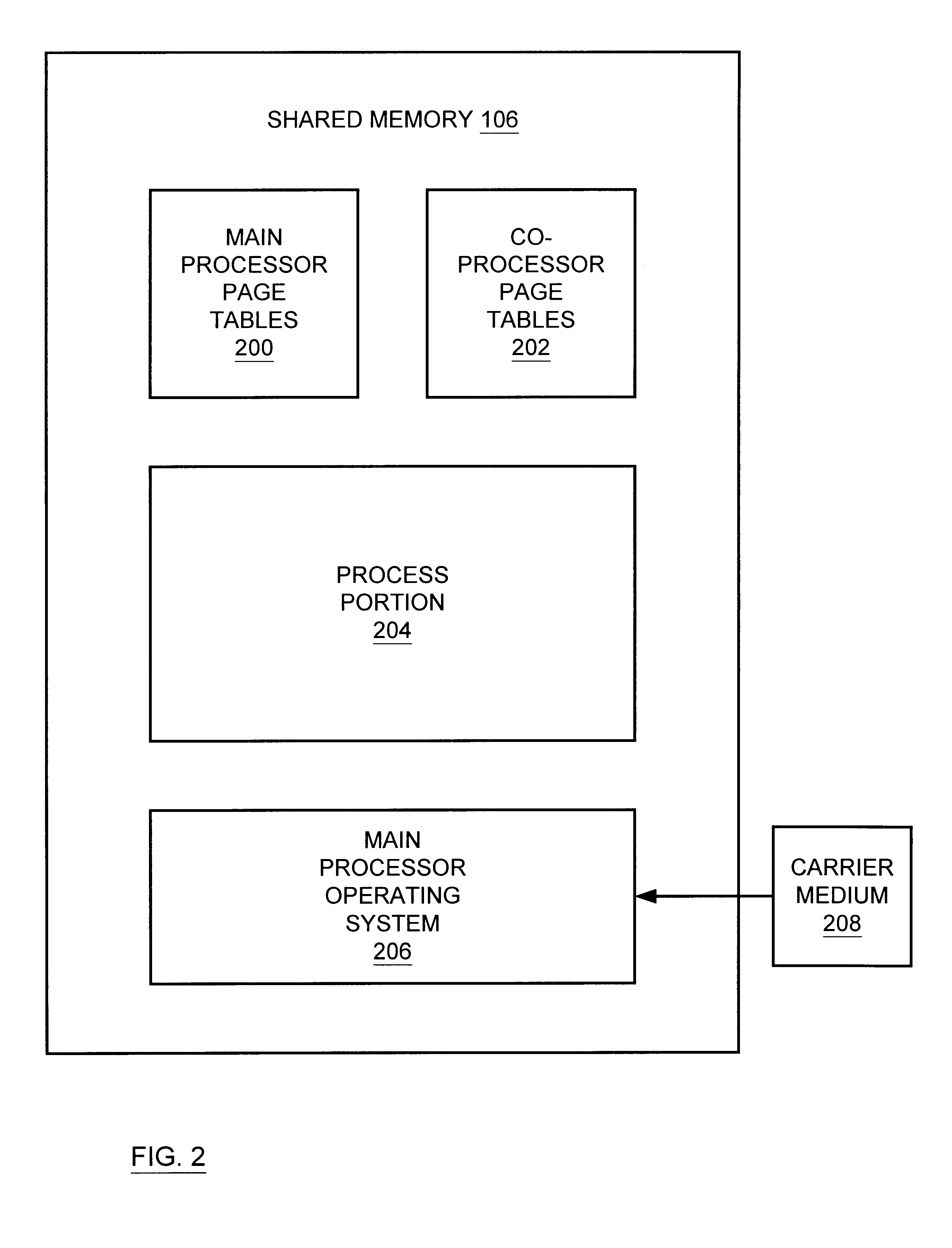

Multiprocessor system implementing virtual memory using a shared memory, and a page replacement method for maintaining paged memory coherence

Inactive | US6684305B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Virtual memory | Computer architecture

A computer system including a first processor, a second processor in communication with the first processor, a memory coupled to the first and second processors (i.e., a shared memory) and including multiple memory locations, and a storage device coupled to the first processor. The first and second processors implement virtual memory using the memory. The first processor maintains a first set of page tables and a second set of page tables in the memory. The first processor uses the first set of page tables to access the memory locations within the memory. The second processor uses the second set of page tables, maintained by the first processor, to access the memory locations within the memory. A virtual memory page replacement method is described for use in the computer system, wherein the virtual memory page replacement method is designed to help maintain paged memory coherence within the multiprocessor computer system.

Owner:GLOBALFOUNDRIES US INC

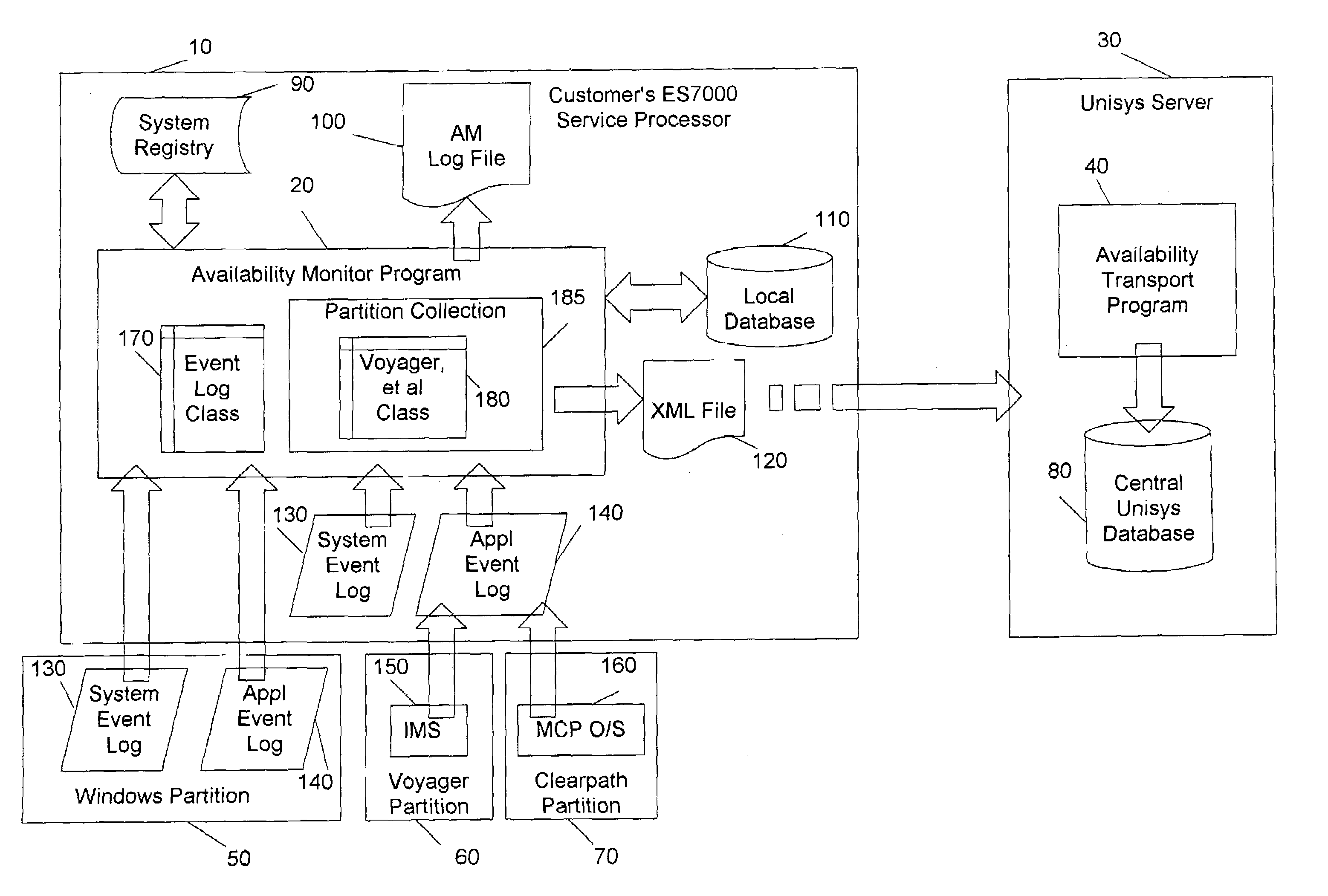

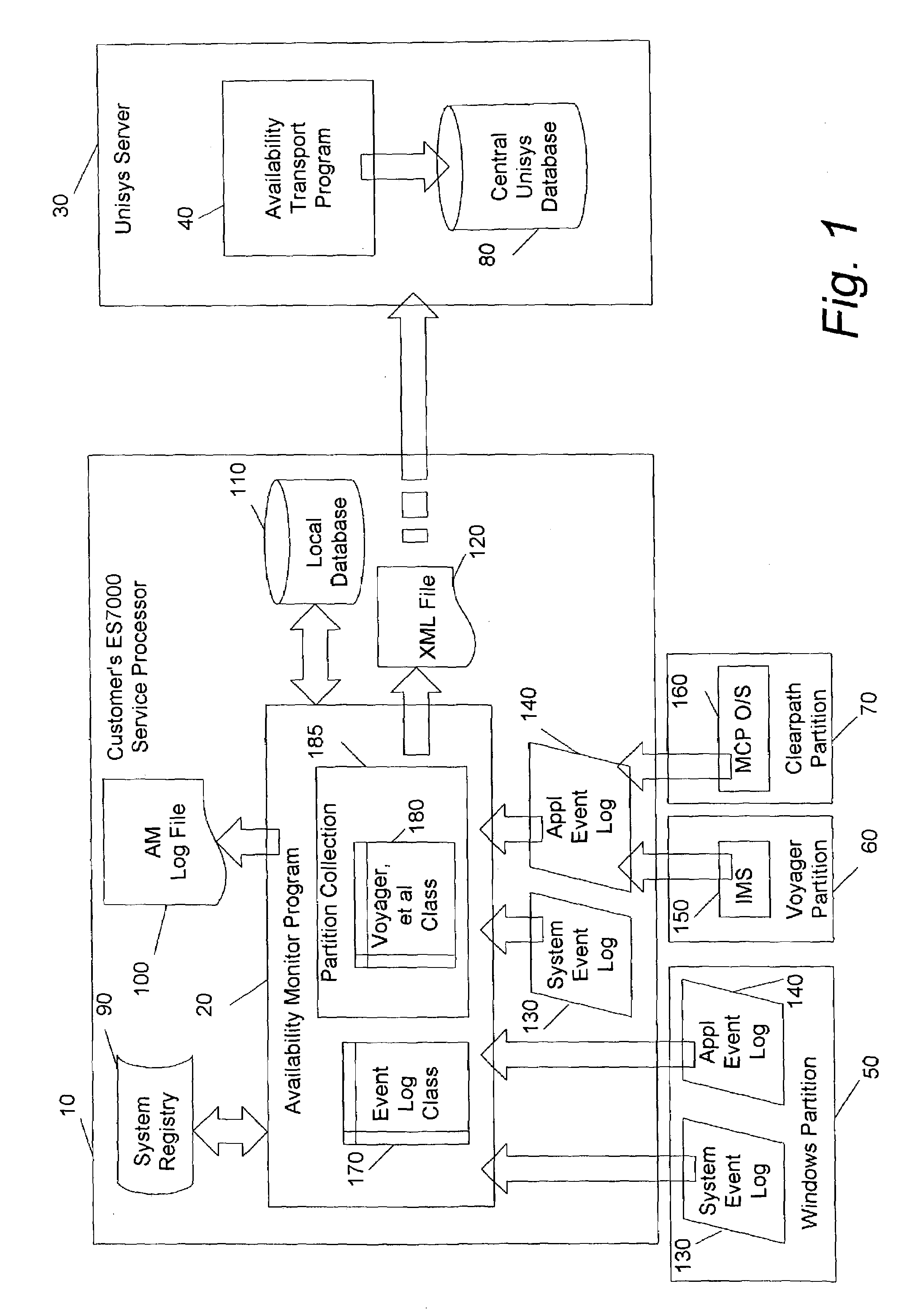

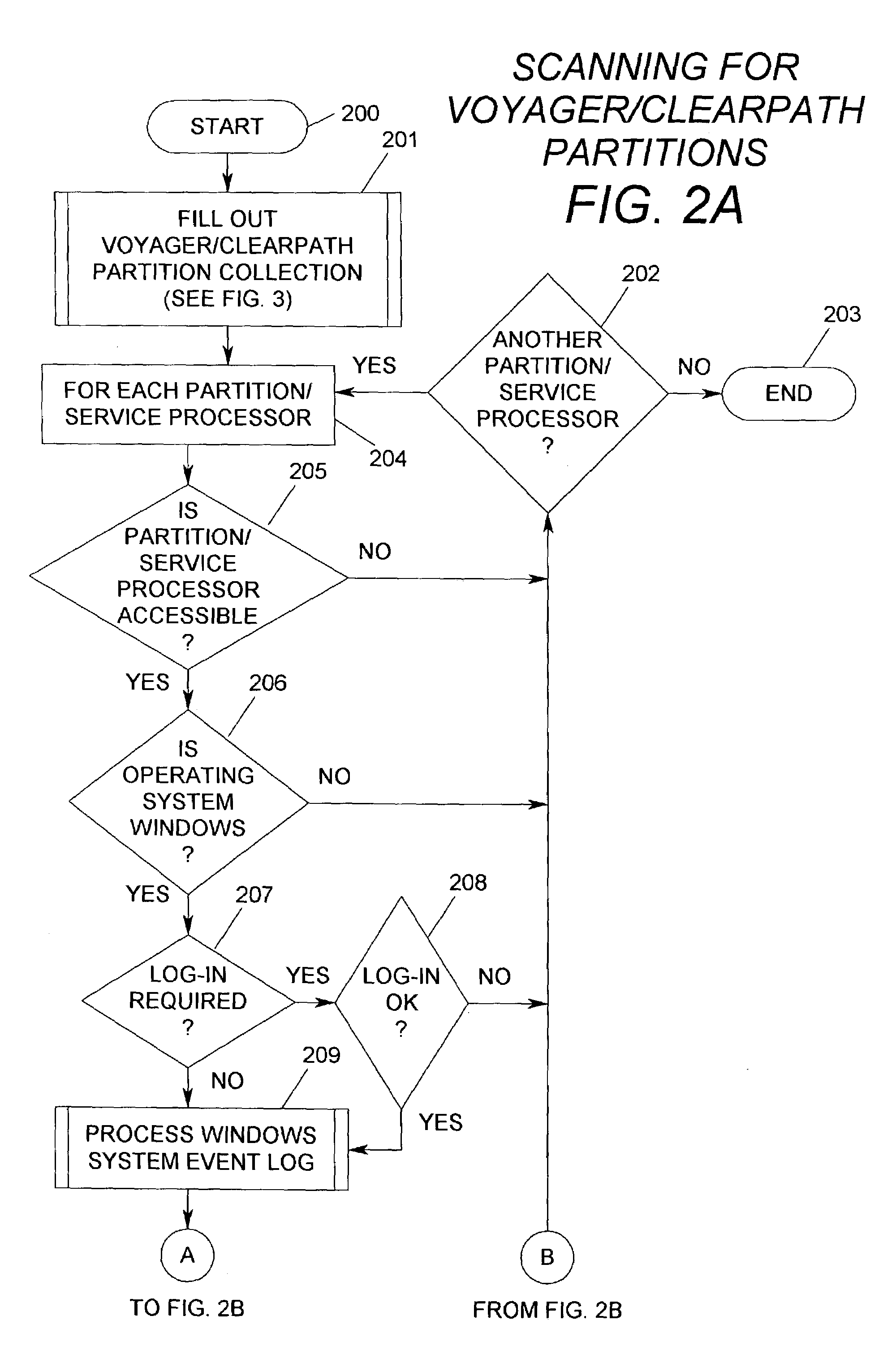

Method variation for collecting stability data from proprietary systems

Active | US7043505B1 | Improve integrity | Uniform compatibility | Data processing applications | Error detection/correction | Unix | Present method

A Cellular Multi-Processor Server provides partitions having different Operating Systems such as Windows, Unix, OS2200 (Unisys), Master Control Program (Unisys) or other Operating Systems, which could be designated as OS-A or OS-B. The present method and system collects and scans availability and reliability information which involves non-Windows partitions with respect to planned and unplanned stops, system starts and different categories of error conditions.

Owner:UNISYS CORP

Method and mechanism for speculatively executing threads of instructions

Inactive | US6574725B1 | Program initiation/switching | General purpose stored program computer | Speculative execution | Operational system

A processor architecture containing multiple closely coupled processors in a form of symmetric multiprocessing system is provided. The special coupling mechanism allows it to speculatively execute multiple threads in parallel very efficiently. Generally, the operating system is responsible for scheduling various threads of execution among the available processors in a multiprocessor system. One problem with parallel multithreading is that the overhead involved in scheduling the threads for execution by the operating system is such that shorter segments of code cannot efficiently take advantage of parallel multithreading. Consequently, potential performance gains from parallel multithreading are not attainable. Additional circuitry is included in a form of symmetrical multiprocessing system which enables the scheduling and speculative execution of multiple threads on multiple processors without the involvement and inherent overhead of the operating system. Advantageously, parallel multithreaded execution is more efficient and performance may be improved.

Owner:GLOBALFOUNDRIES INC

Multiprocessor system in which a cache serving as a highest point of coherency is indicated by a snoop response

Inactive | US6405289B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Multi processor | Parallel computing

A method of maintaining cache coherency, by designating one cache that owns a line as a highest point of coherency (HPC) for a particular memory block, and sending a snoop response from the cache indicating that it is currently the HPC for the memory block and can service a request. The designation may be performed in response to a particular coherency state assigned to the cache line, or based on the setting of a coherency token bit for the cache line. The processing units may be grouped into clusters, while the memory is distributed using memory arrays associated with respective clusters. One memory array is designated as the lowest point of coherency (LPC) for the memory block (i.e., a fixed assignment) while the cache designated as the HPC is dynamic (i.e., changes as different caches gain ownership of the line). An acknowledgement snoop response is sent from the LPC memory array, and a combined response is returned to the requesting device which gives priority to the HPC snoop response over the LPC snoop response.
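The priority rule for the combined response (an HPC snoop response wins over the LPC acknowledgement) can be sketched as below. The response labels `"HPC"`, `"LPC_ACK"` and `"NULL"` and the function name are hypothetical encodings chosen for this illustration.

```python
def combine_responses(responses):
    """Return the source that should service the request, or None.

    responses -- list of (source, kind) pairs, where kind is one of the
                 assumed labels "HPC", "LPC_ACK" or "NULL"
    """
    # Scan by priority: the cache that is currently the HPC first,
    # then the memory array designated as the LPC.
    for wanted in ("HPC", "LPC_ACK"):
        for source, kind in responses:
            if kind == wanted:
                return source
    return None
```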

Owner:IBM CORP

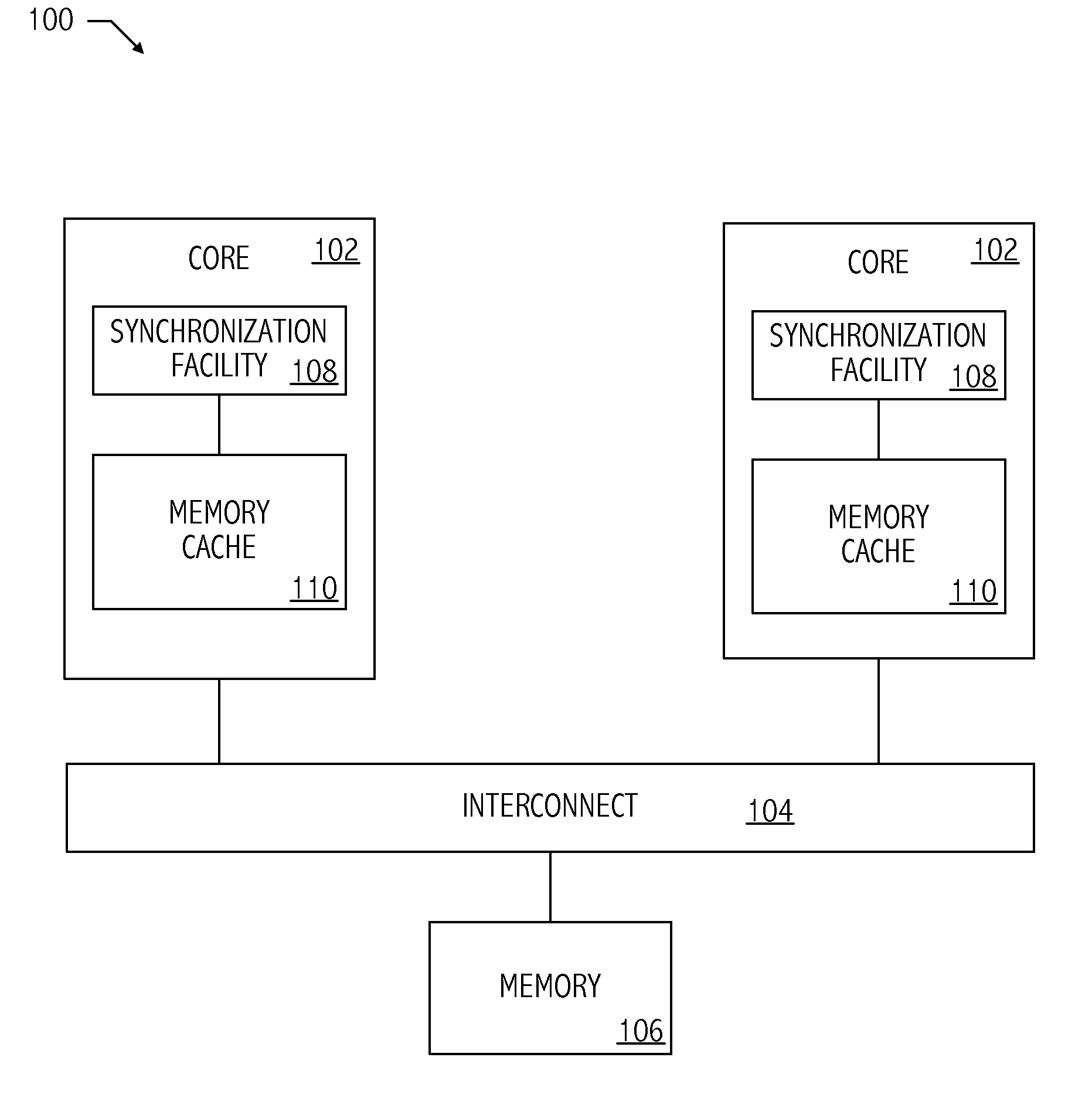

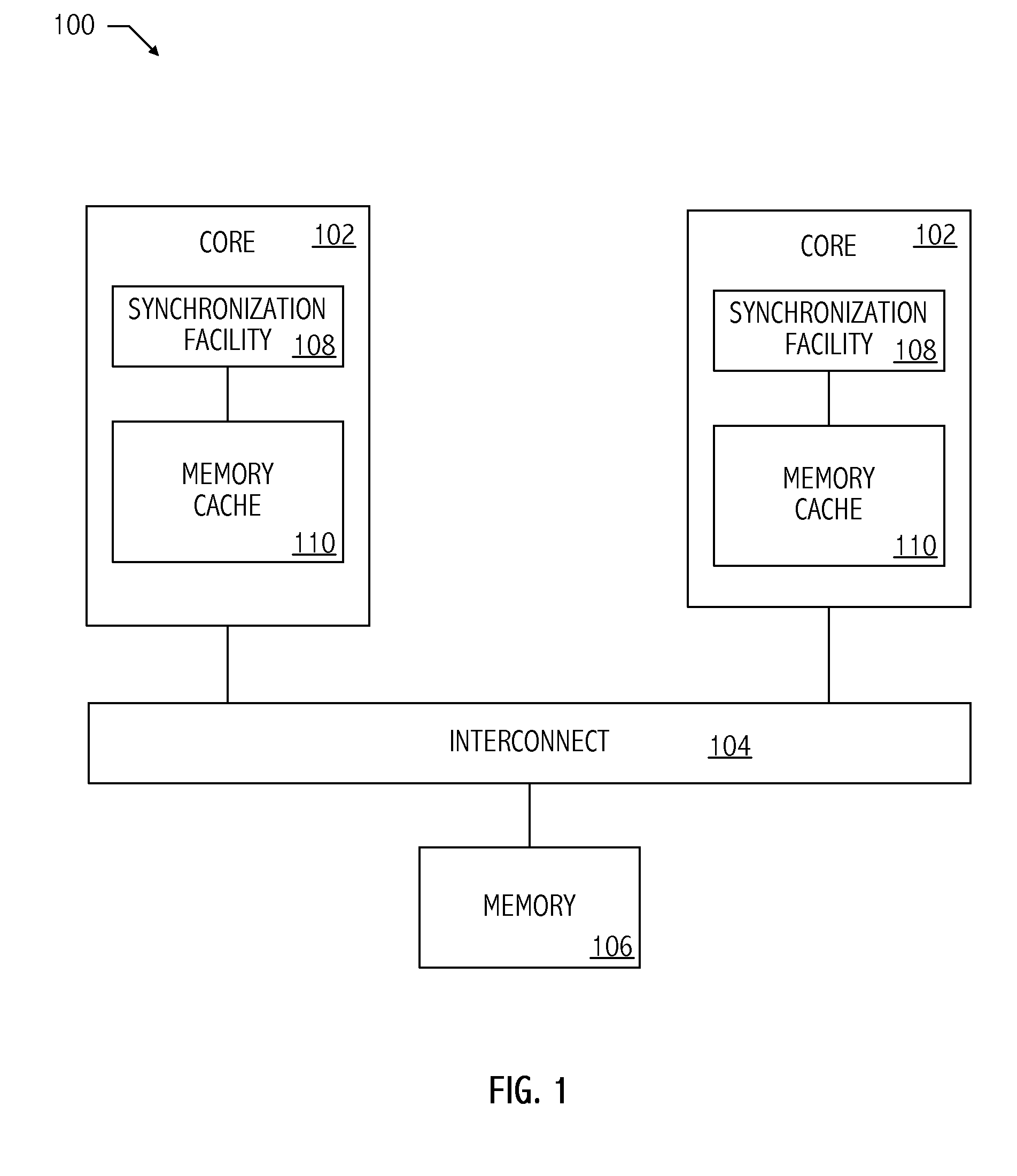

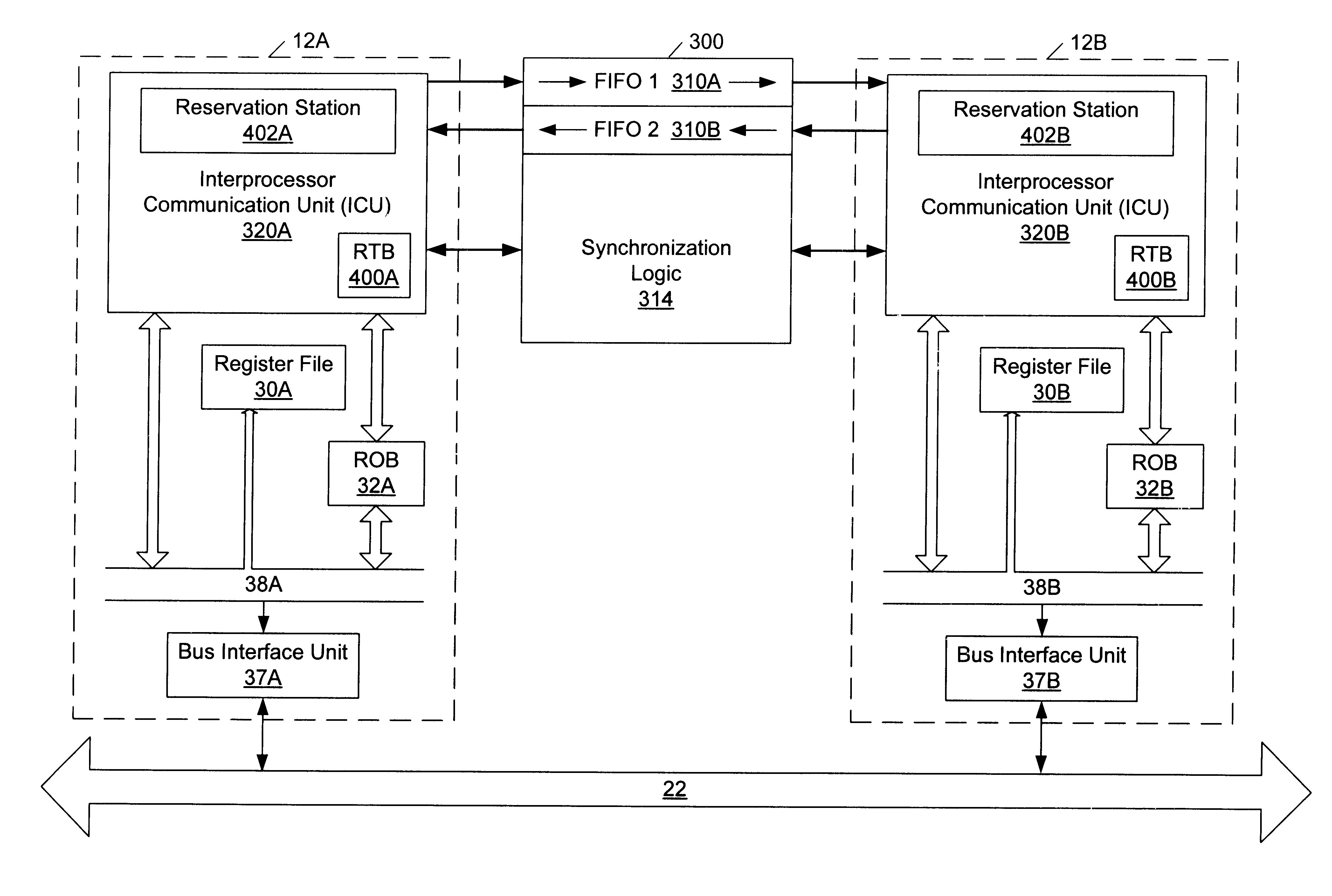

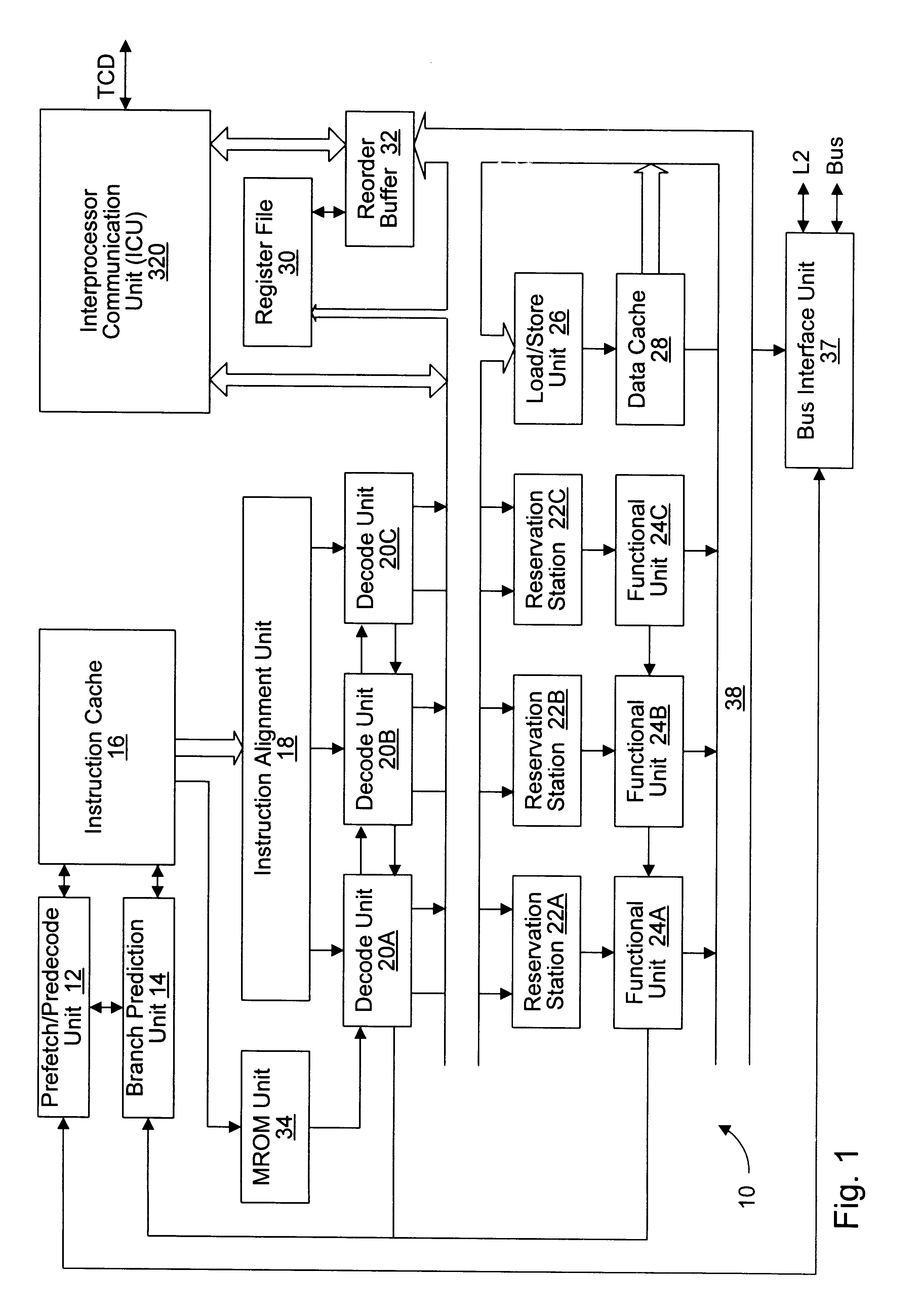

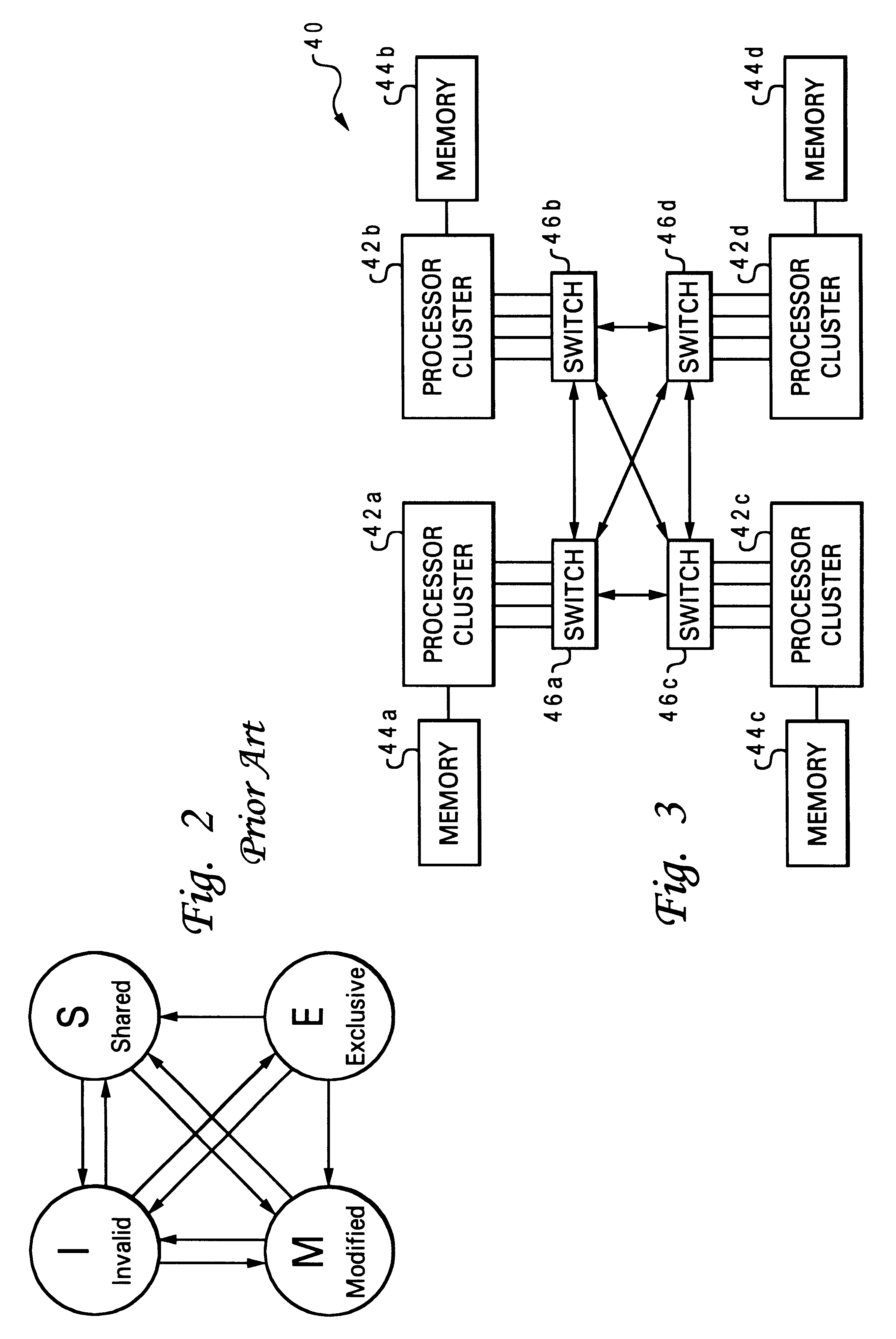

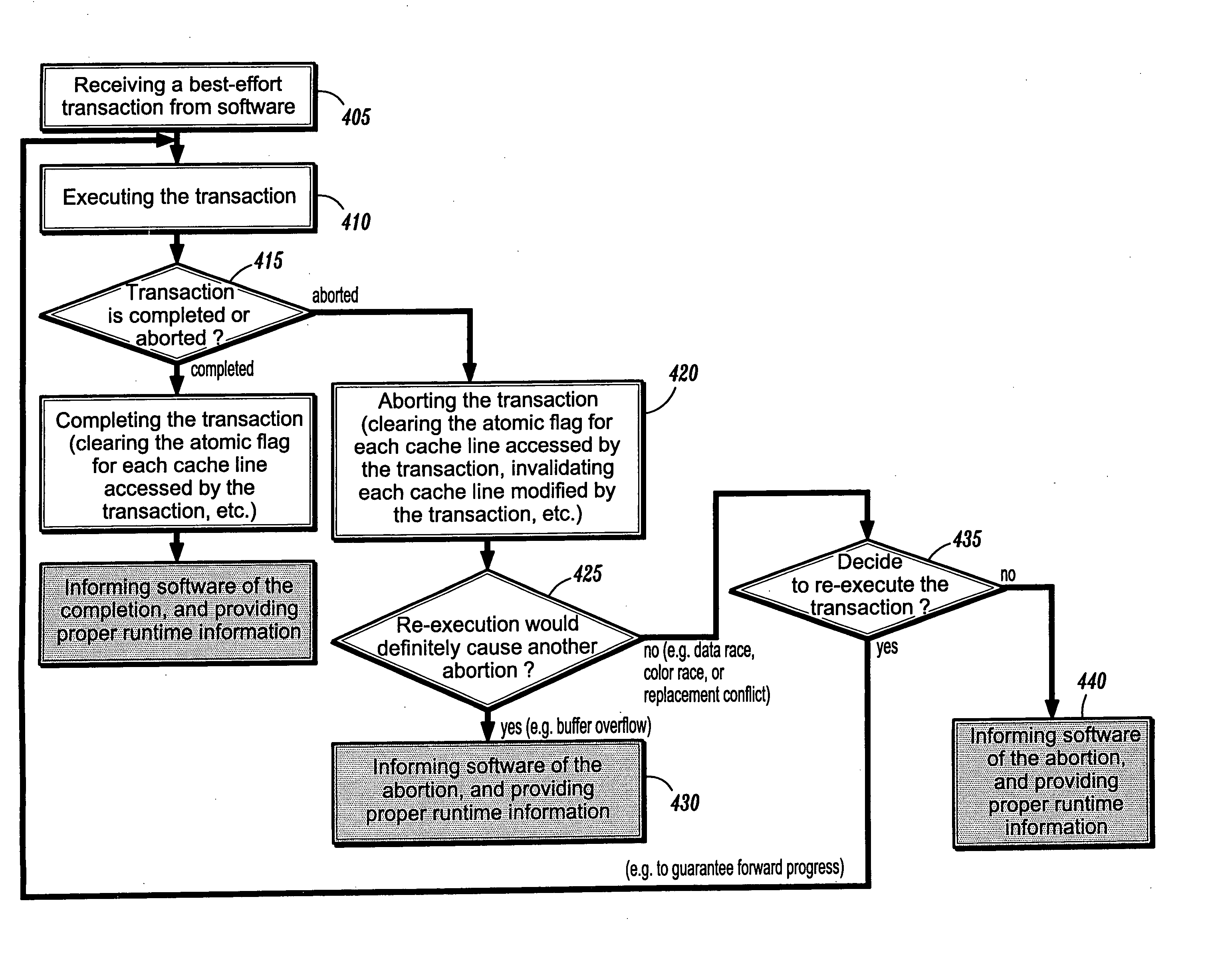

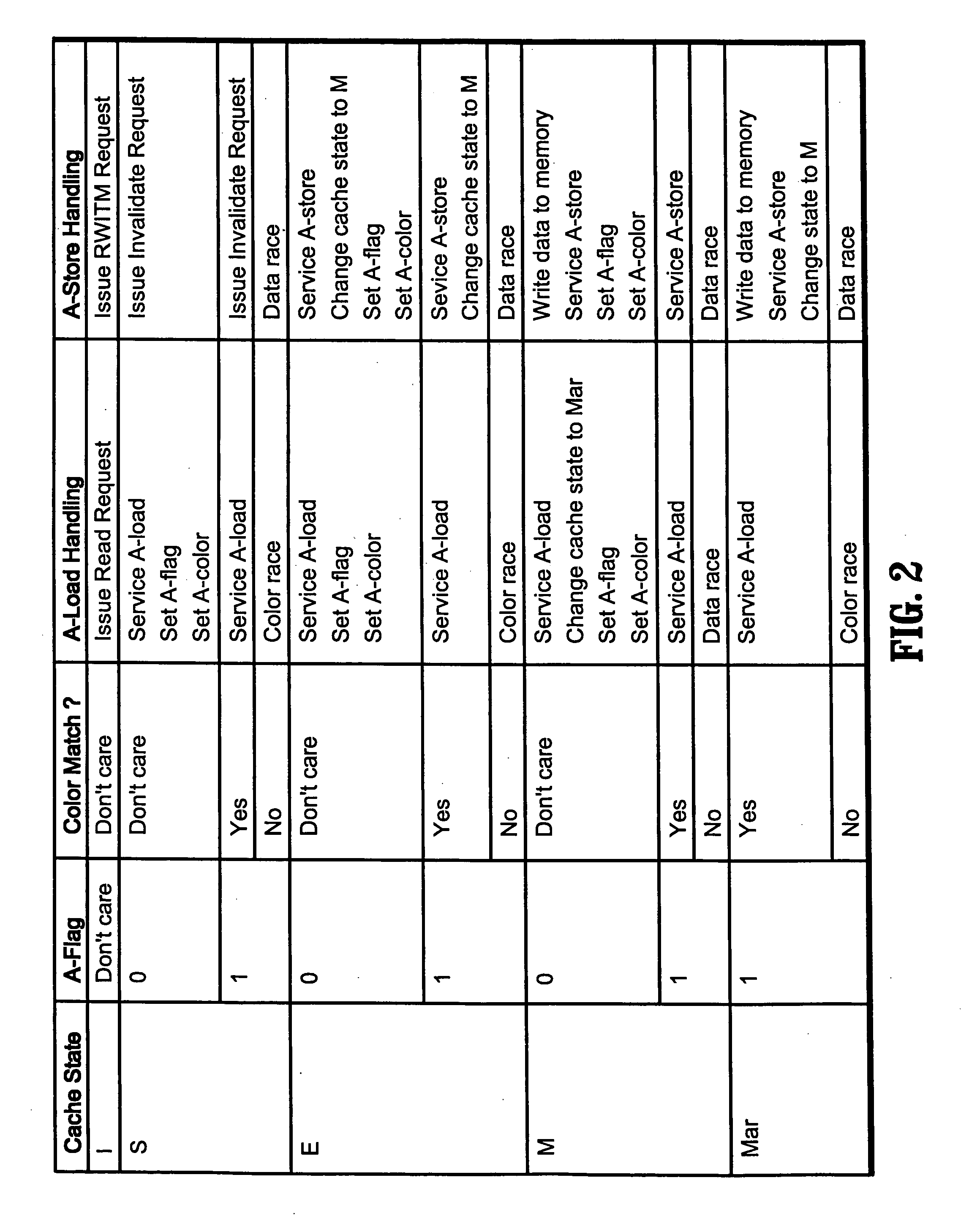

Architecture support of best-effort atomic transactions for multiprocessor systems

An atomic transaction includes one or more memory access operations that are completed atomically. A Best-Effort Transaction (BET) system makes its best effort to complete each atomic transaction without guaranteeing completion of all atomic transactions. When an atomic transaction is aborted, BET may provide software with appropriate runtime information such as cause of the abortion. With proper coherence layer enhancements, BET can be implemented efficiently for multiprocessor systems, using caches as buffers for data accessed by atomic transactions. Furthermore, with appropriate fairness support, forward progress can be guaranteed for atomic transactions that incur no buffer overflow.

Owner:IBM CORP

Virtualized I/O adapter for a multi-processor data processing system

Inactive | US20060195663A1 | Unauthorized memory use protection | General purpose stored program computer | Data processing system | SCSI

An enhanced SCSI storage adapter with multiple queues for use by different server processors or partitions. For a non-partitioned server, the operating system (OS) owns the SCSI storage adapter, controls the adapter queues, both creation of and changes to the queues, and updates the queue table(s) in the storage adapter with queue address information, device list, message signaled interrupt (MSI) information and optional queue priorities. An OS operator can specify that one or more SCSI devices can be accessed by a specific processor or group of processors. The processor or group of processors is given an adapter queue to access the SCSI device or devices. For a partitioned server, one partition, which may be a hosting partition, owns the SCSI storage adapter, controls the adapter queues, both creation of and changes to the queues, and updates the queue table(s) in the storage adapter with queue address information, device list, message signaled interrupt (MSI) information and optional queue priorities. A system operator can assign one or more SCSI devices under a storage adapter to a partition. Each partition that has access to a SCSI device(s) under a SCSI adapter is given an adapter queue to access the device(s).

Owner:IBM CORP

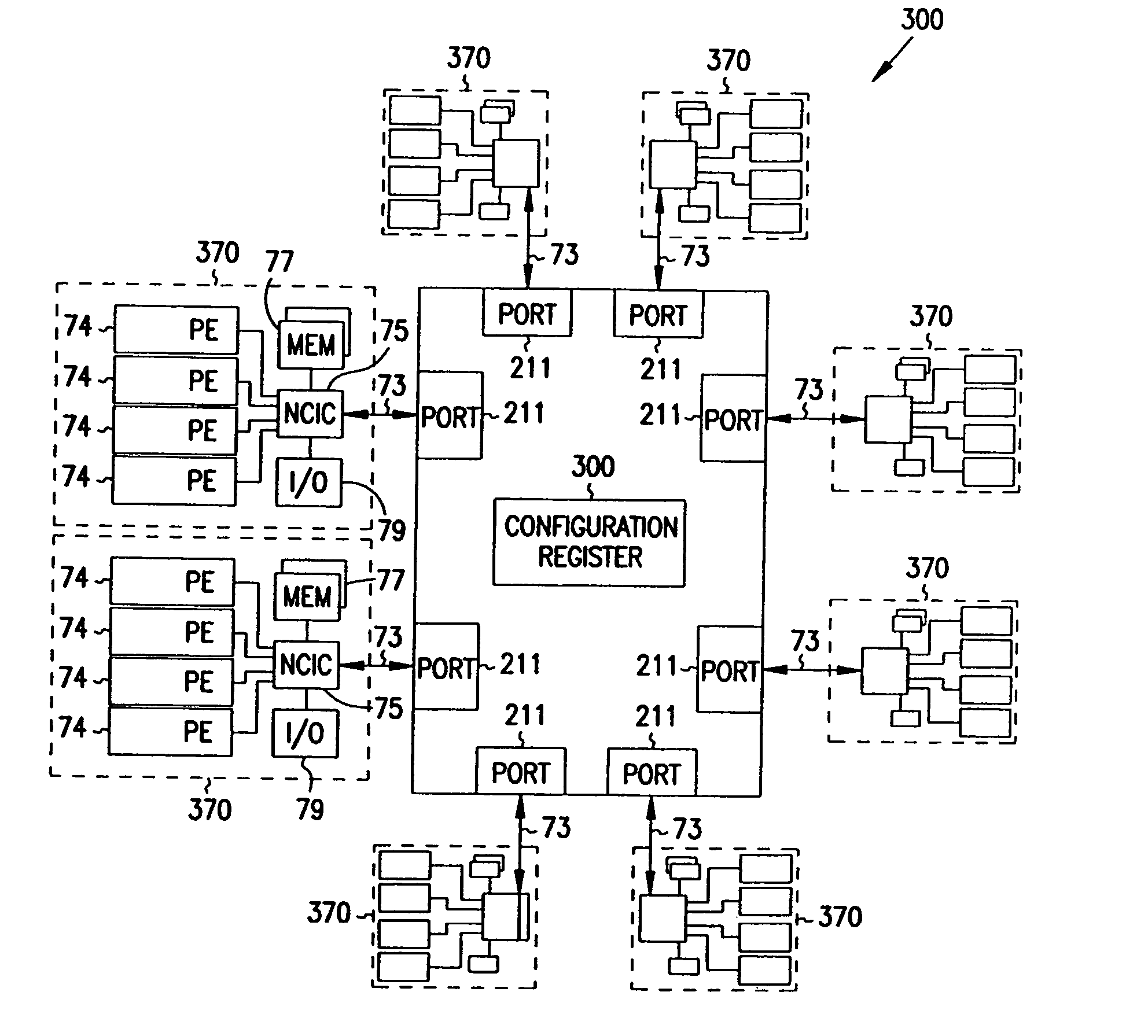

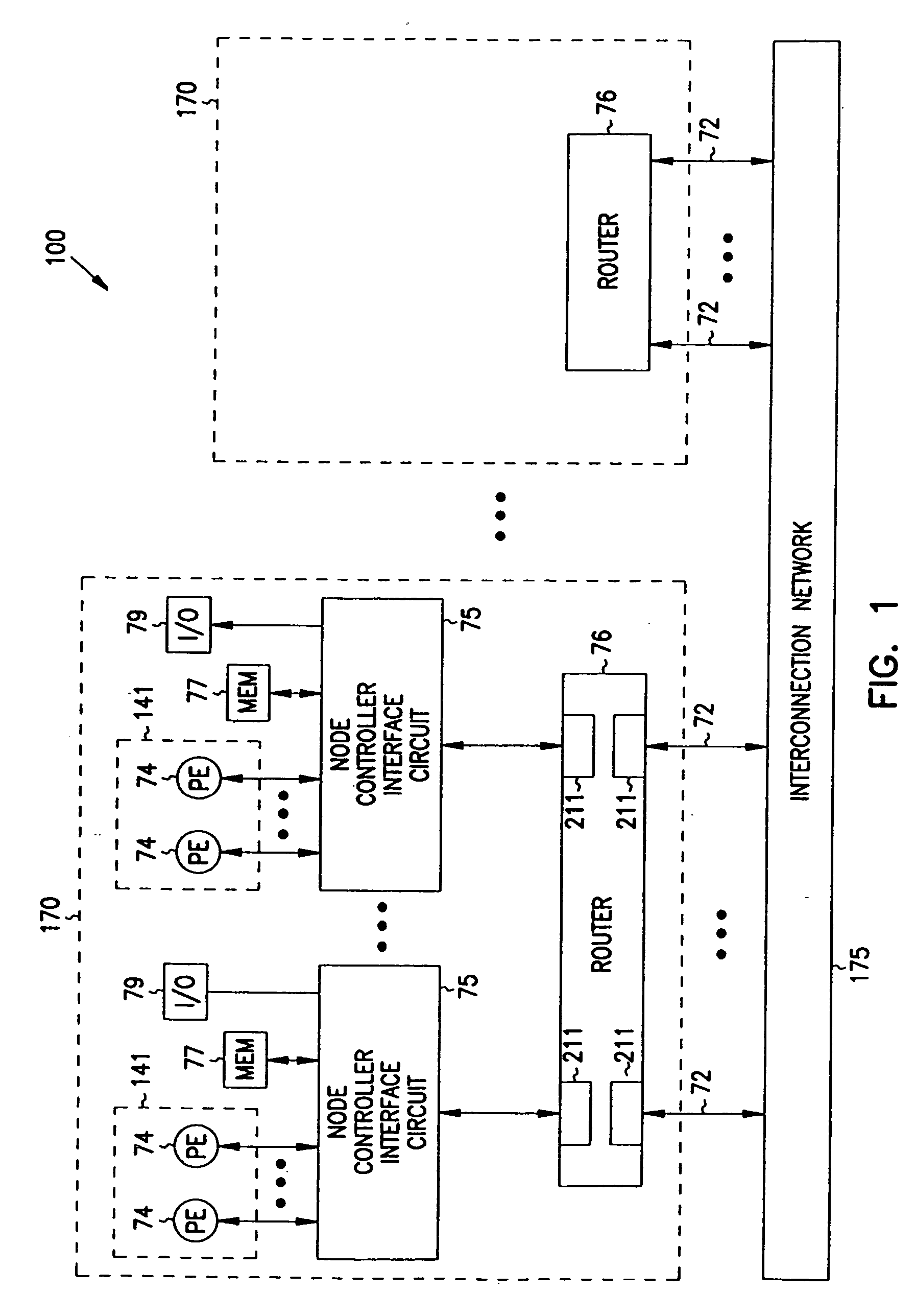

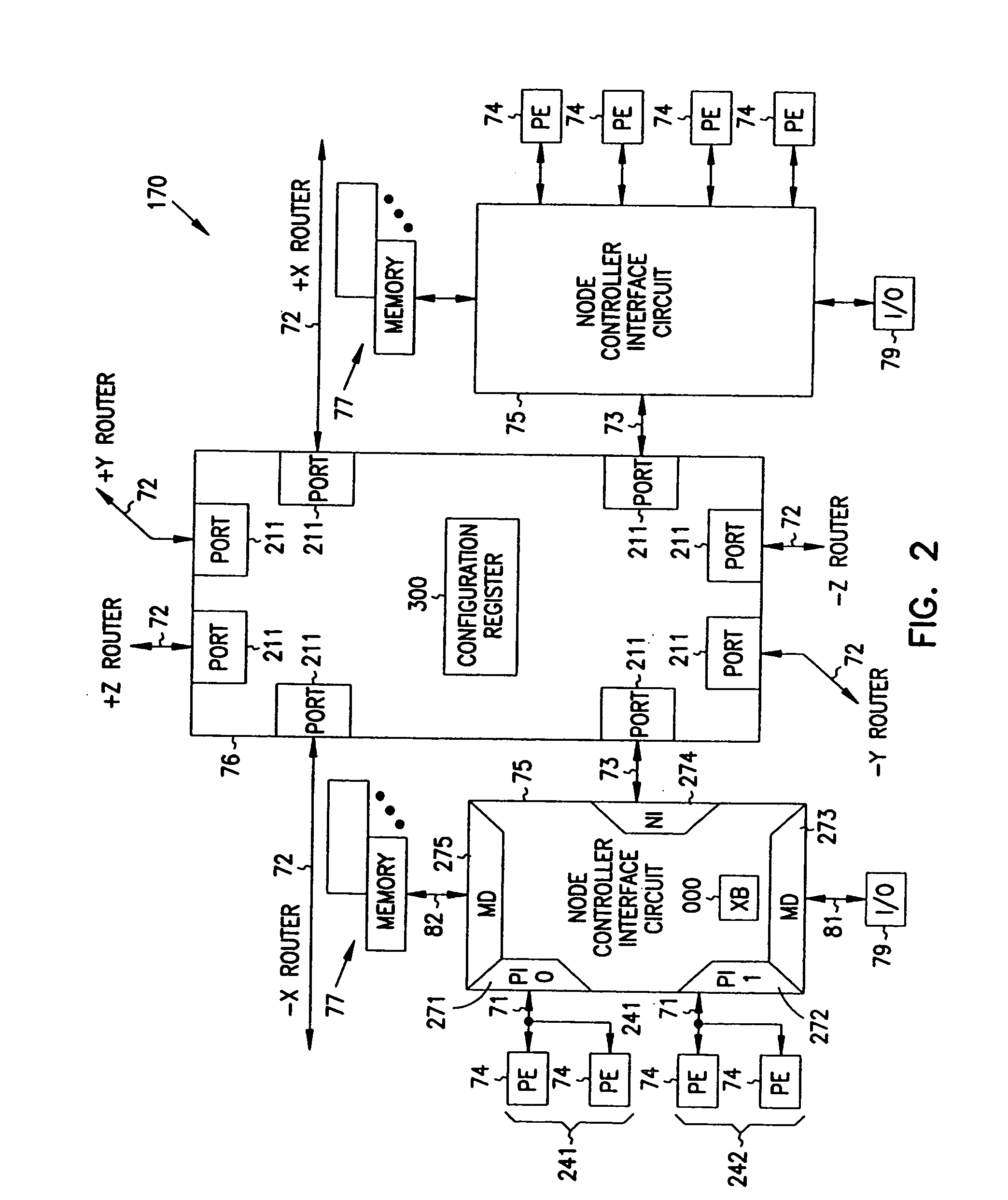

Multiprocessor node controller circuit and method

Inactive | US20050053057A1 | Ease of parallel processing | Improve welfare | Multiplex system selection arrangements | Memory addressing/allocation/relocation | Memory address | Crossbar switch

Improved method and apparatus for parallel processing. One embodiment provides a multiprocessor computer system that includes a first and second node controller, a number of processors being connected to each node controller, a memory connected to each controller, a first input/output system connected to the first node controller, and a communications network connected between the node controllers. The first node controller includes a crossbar unit to which are connected a memory port, an input/output port, a network port, and a plurality of independent processor ports. A first and a second processor port are connected between the crossbar unit and a first subset and a second subset, respectively, of the processors. In some embodiments of the system, the first node controller is fabricated onto a single integrated-circuit chip. Optionally, the memory is packaged on pluggable memory/directory cards wherein each card includes a plurality of memory chips including a first subset dedicated to holding memory data and a second subset dedicated to holding directory data. Further, the memory port includes a memory data port including a memory data bus and a memory address bus coupled to the first subset of memory chips, and a directory data port including a directory data bus and a directory address bus coupled to the second subset of memory chips. In some such embodiments, the ratio of (memory data space) to (directory data space) on each card is set to a value that is based on a size of the multiprocessor computer system.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1
