203 results about "Multiprocessing" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

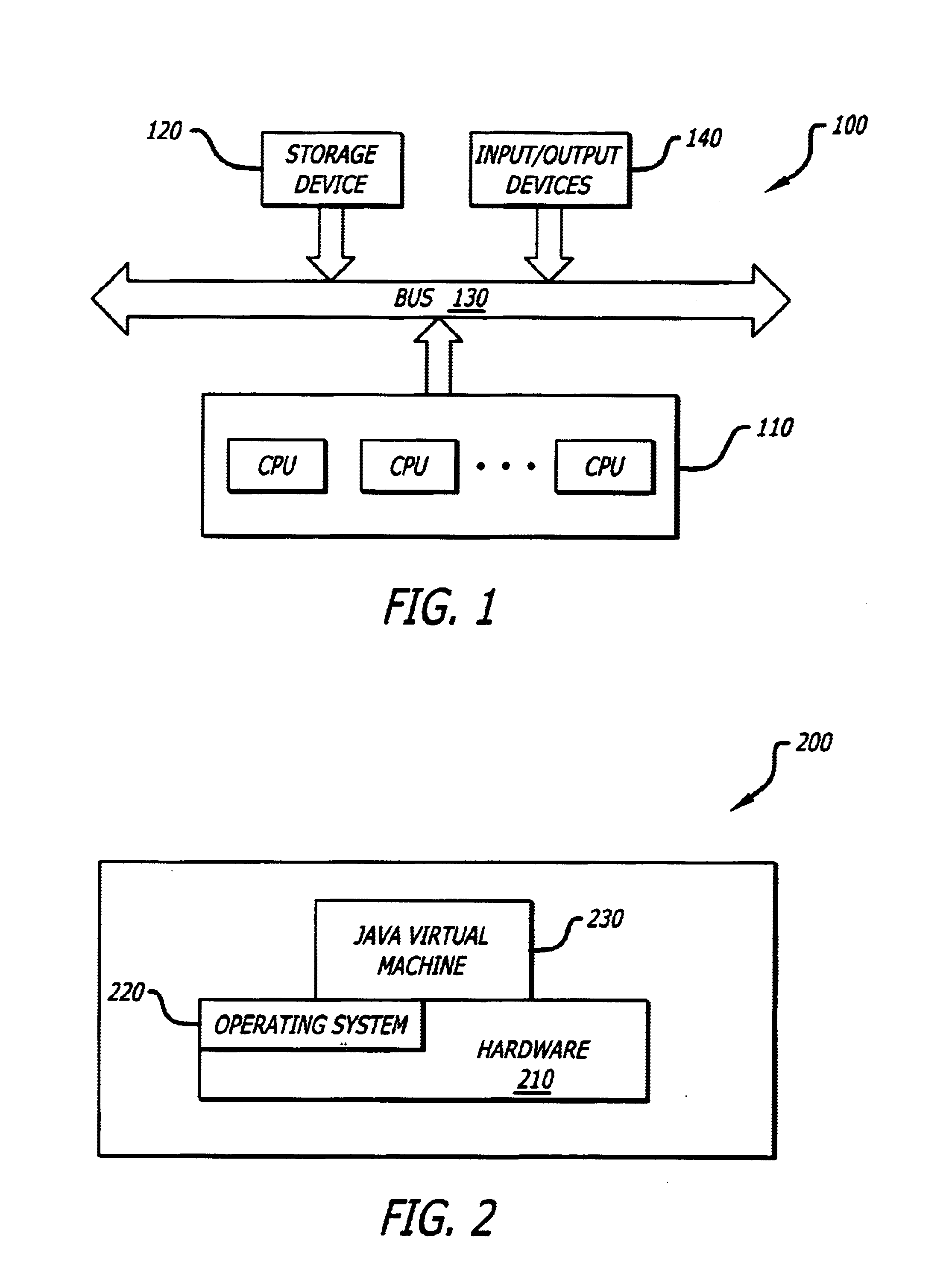

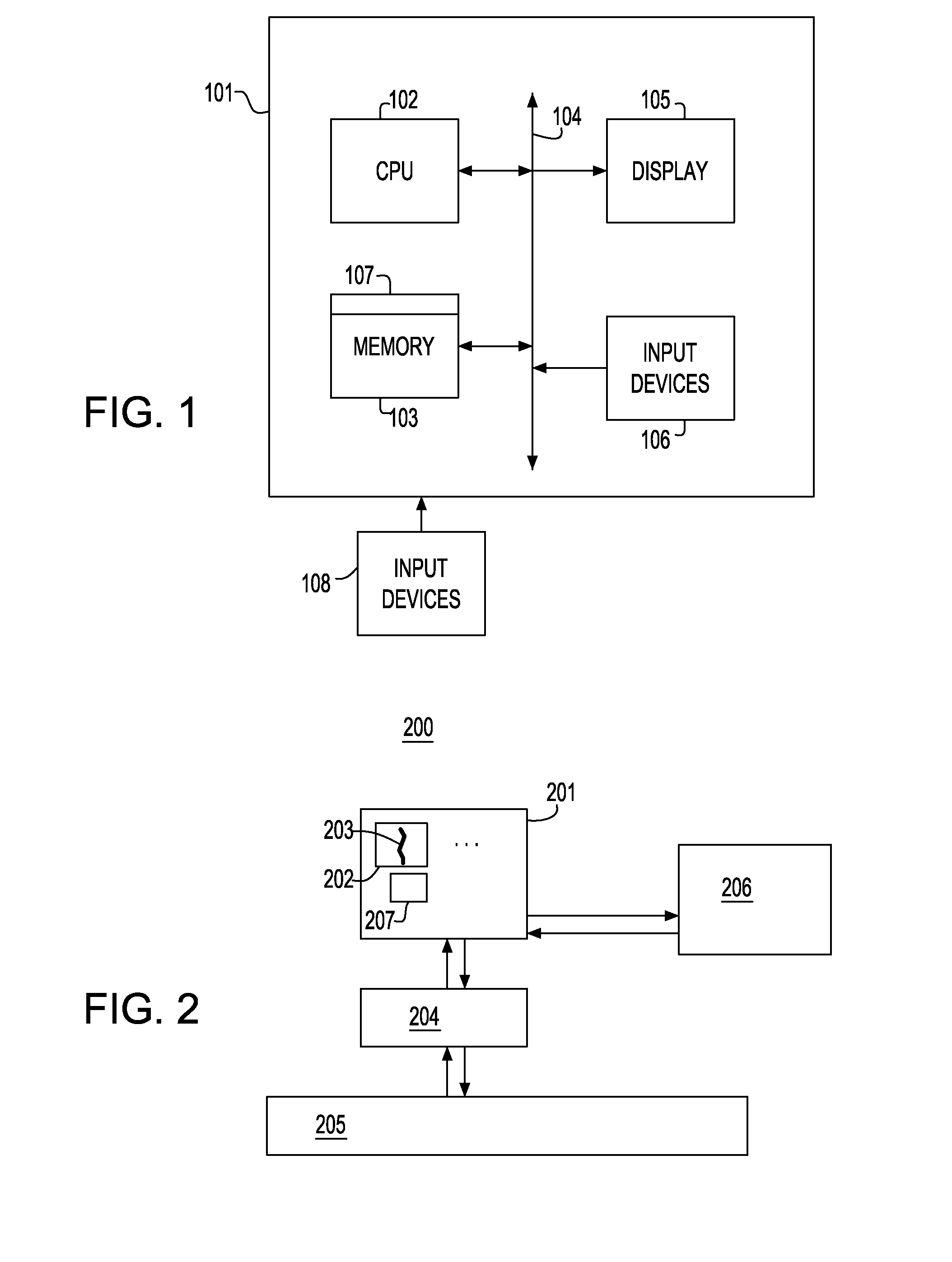

Multiprocessing is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined (multiple cores on one die, multiple dies in one package, multiple packages in one system unit, etc.).

Single chip protocol converter

Active | US20050021874A1 | Increase the number of | High bandwidth | Concurrent instruction execution | Multiple digital computer combinations | Single chip | Multiprocessing

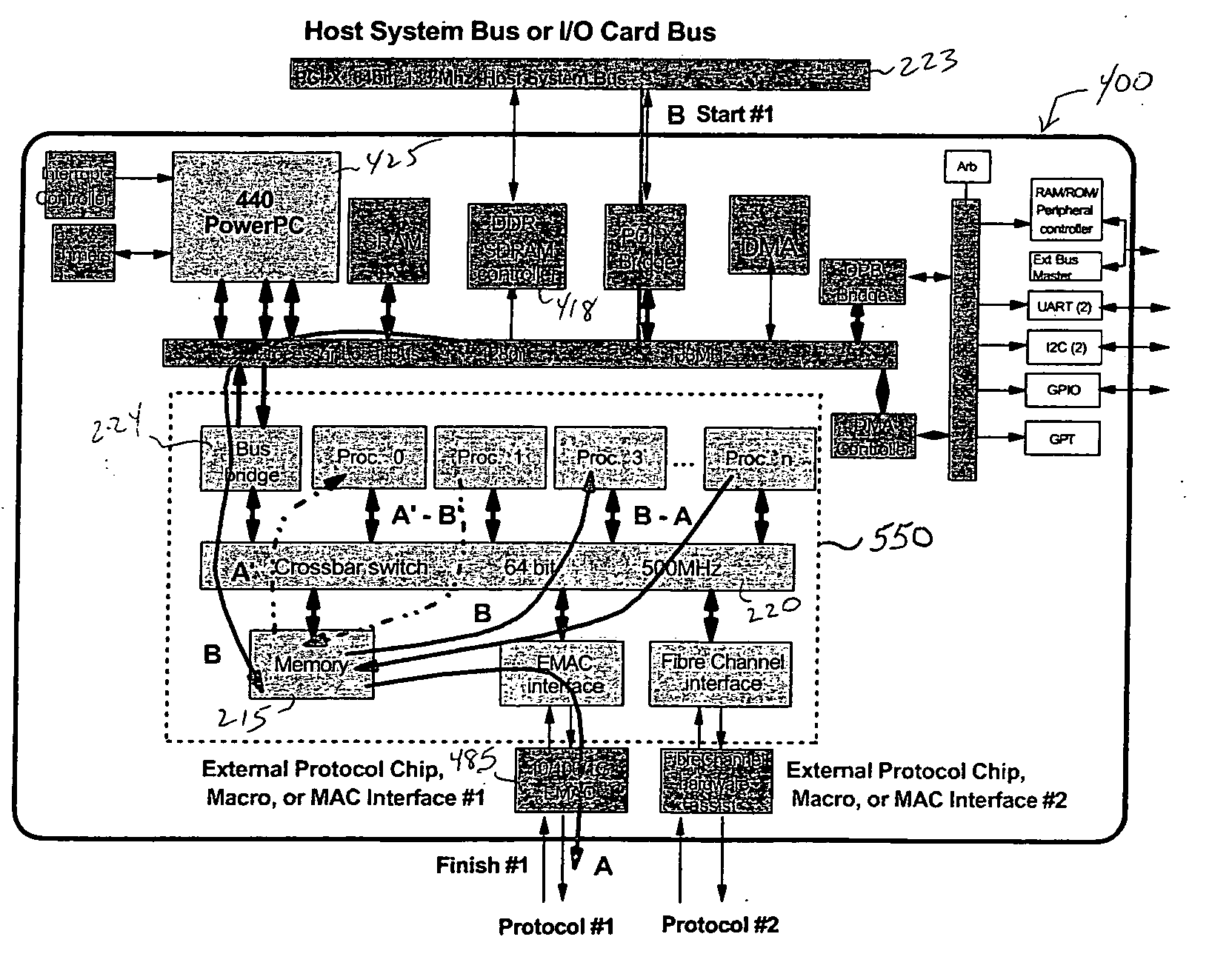

A single chip protocol converter integrated circuit (IC) capable of receiving packets generated according to a first protocol type, processing said packets to implement protocol conversion, and generating converted packets of a second protocol type for output, the process of protocol conversion being performed entirely within the single integrated circuit chip. The single chip protocol converter can be further implemented as a macro core in a system-on-chip (SoC) implementation, wherein the process of protocol conversion is contained within a SoC protocol conversion macro core without requiring the processing resources of a host system. Packet conversion may additionally entail converting packets generated according to a first protocol version level and processing said packets to implement protocol conversion for generating converted packets according to a second protocol version level, but within the same protocol family type. The single chip protocol converter integrated circuit and SoC protocol conversion macro implementation include multiprocessing capability including processor devices that are configurable to adapt and modify the operating functionality of the chip.

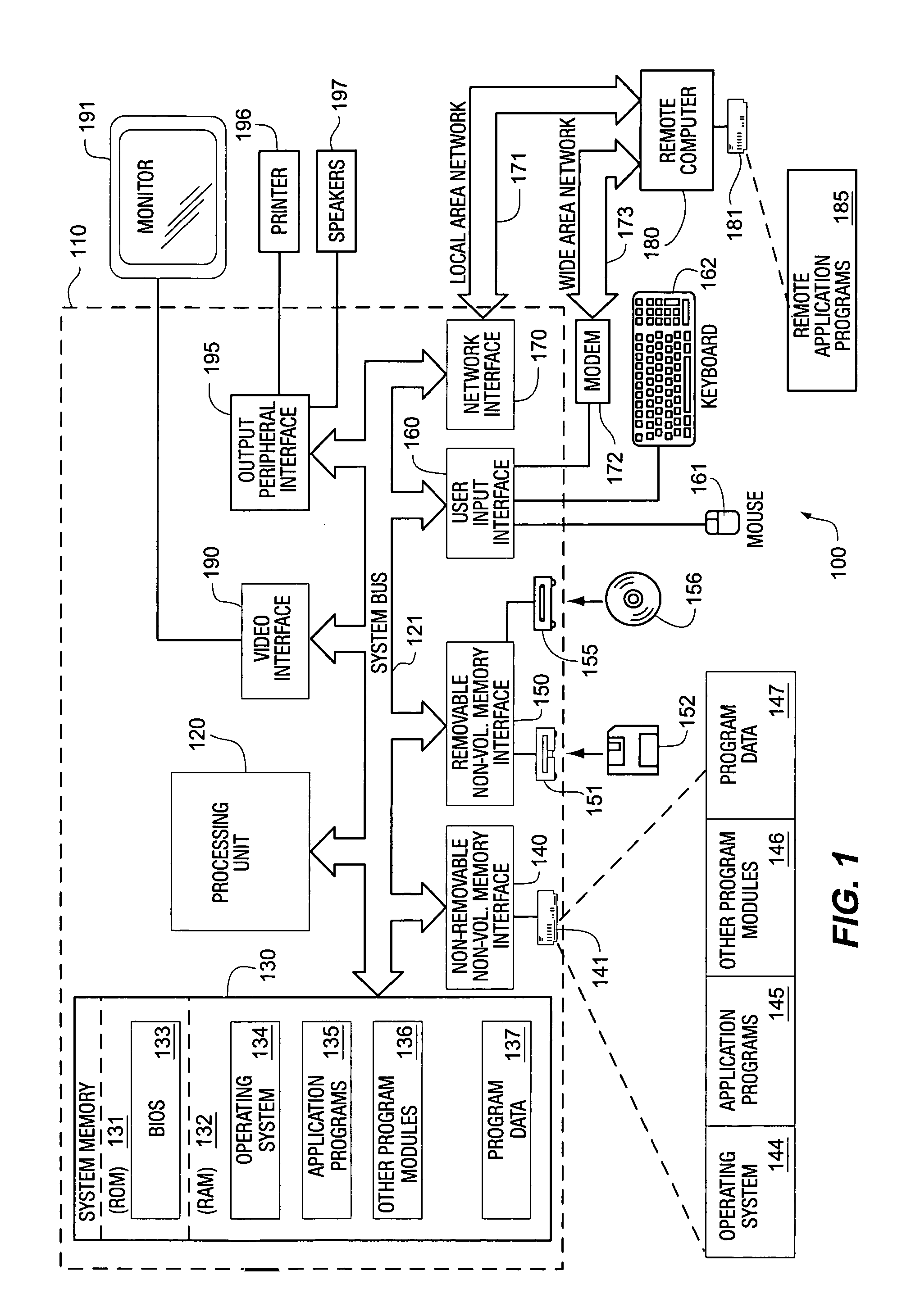

Owner:MICROSOFT TECH LICENSING LLC

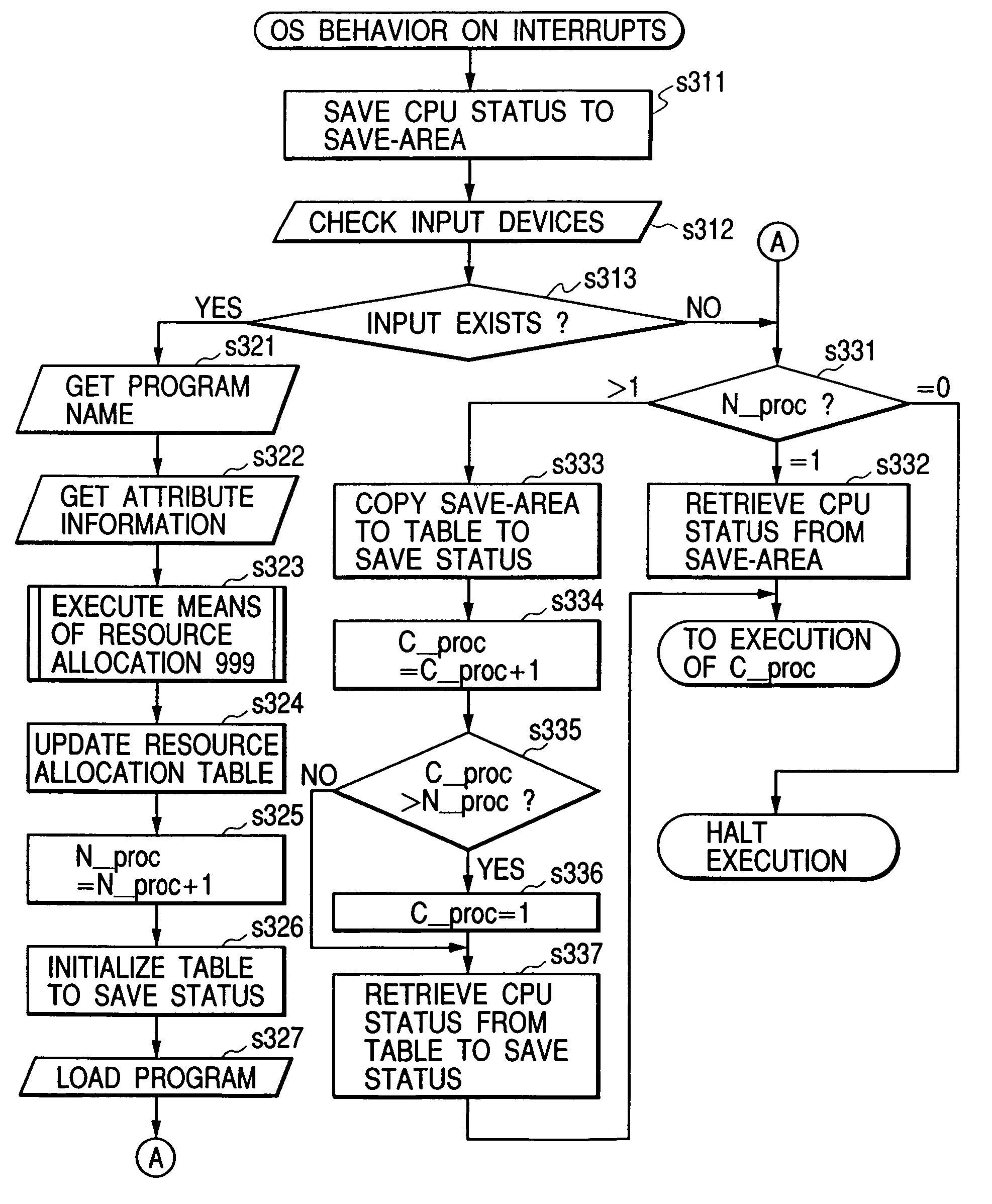

Exception handling with reduced overhead in a multithreaded multiprocessing system

Inactive | US6651163B1 | Digital computer details | Concurrent instruction execution | Multiprocessing | Handling system

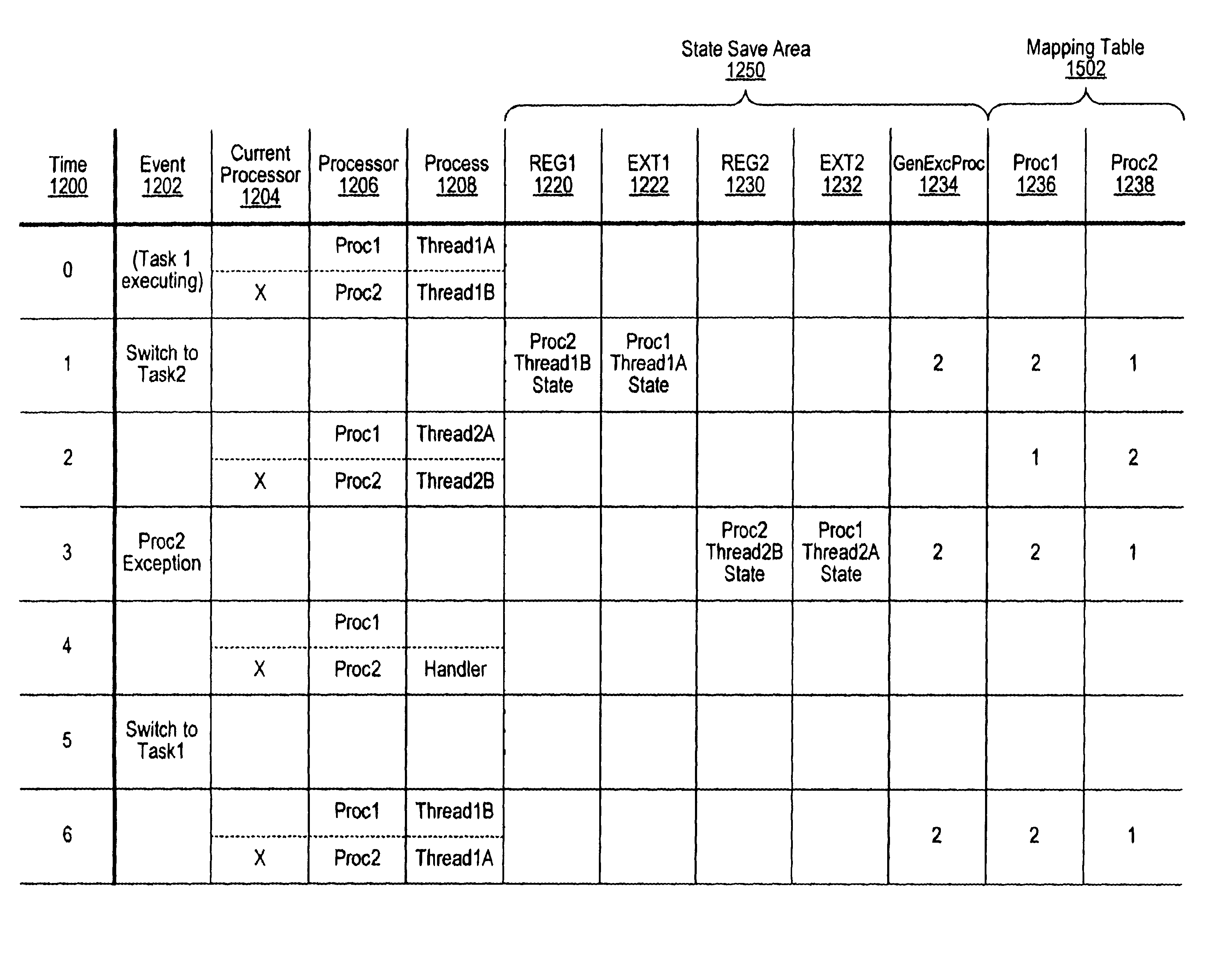

A mechanism for exception and interrupt handling in multithreaded multiprocessors is provided. The mechanism allows the handling of exceptions and interruptions in a multithreaded multiprocessor computer, while hiding the multiprocessor nature of the computer from the operating system. Generally, when an operating system is cognizant of the multiprocessor nature of a computer, additional overhead may be required when handling exceptions and interruptions. Due to the overhead involved in saving and restoring processing states, the performance of a processor may be significantly impacted. Additional circuitry is provided which allows the multiprocessor nature of the computer to be hidden from the operating system, while minimizing the overhead necessary for proper handling.

Owner:GLOBALFOUNDRIES INC

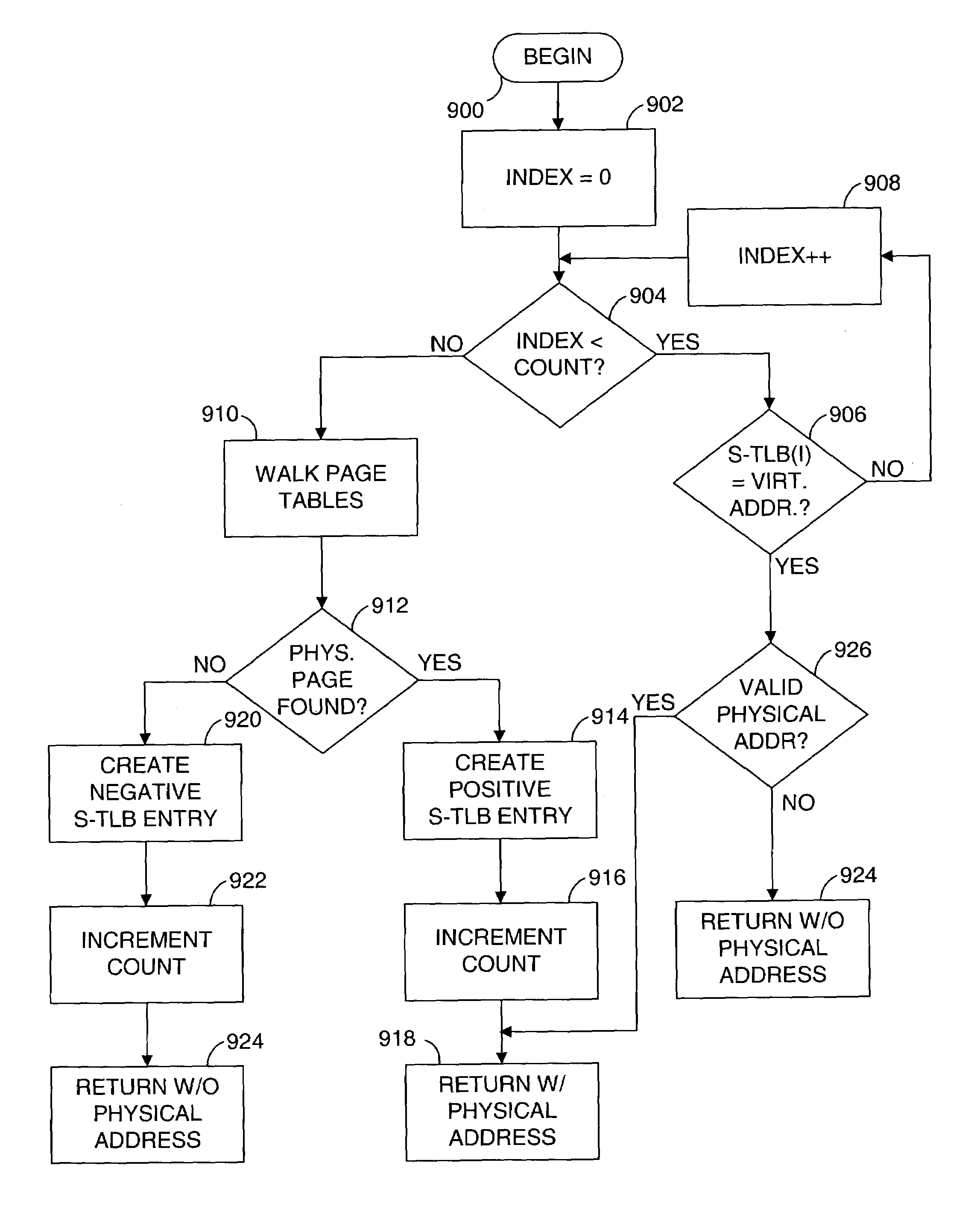

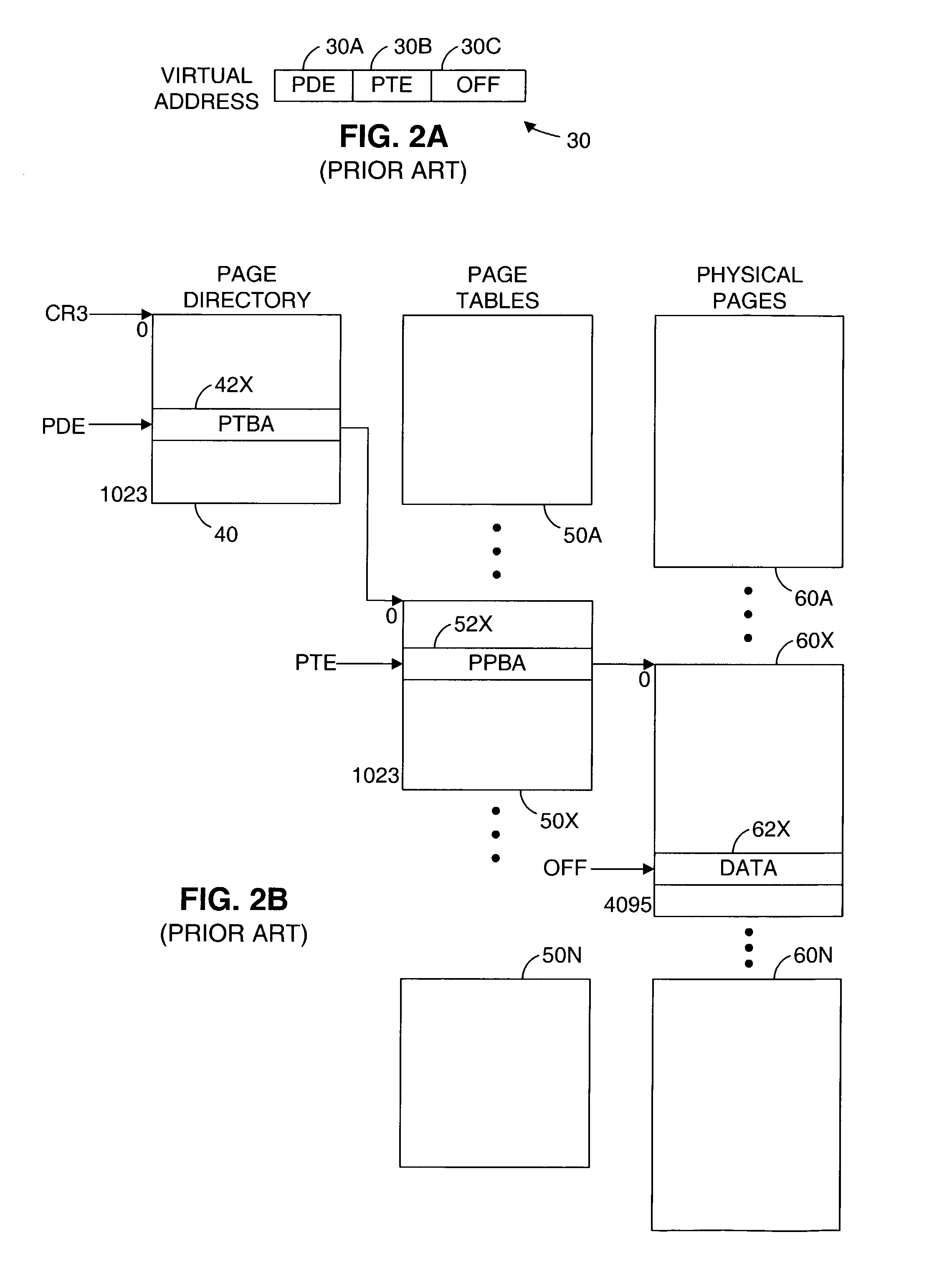

Method and system for performing virtual to physical address translations in a virtual machine monitor

Inactive | US7069413B1 | Memory architecture accessing/allocation | Computer security arrangements | Physical address | Engineering

The invention is used in a virtual machine monitor for a multiprocessing system that includes a virtual memory system. During a software-based processing of a guest instruction, including translating or interpreting a guest instruction, mappings between virtual addresses and physical addresses are retained in memory until processing of the guest instruction is completed. The retained mappings may be cleared after each guest instruction has been processed, or after multiple guest instructions have been processed. Information may also be stored to indicate that an attempt to map a virtual address to a physical address was not successful. The invention may be extended beyond virtual machine monitors to other systems involving the software-based processing of instructions, and beyond multiprocessing systems to other systems involving concurrent access to virtual memory management data.

Owner:VMWARE INC

System and method for reducing power consumption in multiprocessor system

Inactive | US6901522B2 | Volume/mass flow measurement | Multiprogramming arrangements | Computer architecture | Multi processor

A method and apparatus for power management is disclosed. The invention reduces power consumption in multiprocessing systems by dynamically adjusting processor power based on system workload. In particular, the method and apparatus determine the number of required processors based on the number of active threads and set a processor affinity to run the active threads on the determined number of required processors, thereby allowing the free processors to enter a low-power state.

Owner:INTEL CORP
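
As a rough illustration of the power-management abstract above, the following Python sketch derives how many processors stay awake from the number of active threads and splits processor IDs into a busy set and an idle set. The function names, the `threads_per_processor` parameter, and the "keep at least one processor awake" rule are assumptions made for illustration; the abstract does not specify them.

```python
import math


def required_processors(active_threads: int,
                        threads_per_processor: int,
                        total_processors: int) -> int:
    """Return how many processors should stay awake for the current load."""
    if threads_per_processor < 1 or total_processors < 1:
        raise ValueError("counts must be positive")
    needed = math.ceil(active_threads / threads_per_processor)
    # Assumption: keep at least one processor awake, never exceed the total.
    return max(1, min(total_processors, needed))


def plan_affinity(active_threads: int,
                  threads_per_processor: int,
                  total_processors: int):
    """Return (busy, idle) lists of processor IDs.

    Threads would be pinned to the busy set; the idle set is free to
    enter a low-power state.
    """
    n = required_processors(active_threads, threads_per_processor,
                            total_processors)
    busy = list(range(n))
    idle = list(range(n, total_processors))
    return busy, idle


busy, idle = plan_affinity(active_threads=5, threads_per_processor=2,
                           total_processors=8)
print(busy, idle)
```

On Linux, the busy set could then be applied with `os.sched_setaffinity`; that step is left out here because it is platform-specific.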

Conservative shadow cache support in a point-to-point connected multiprocessing node

A point-to-point connected multiprocessing node uses a snooping-based cache-coherence filter to selectively direct relays of data request broadcasts. The filter includes shadow cache lines that are maintained to hold copies of the local cache lines of integrated circuits connected to the filter. The shadow cache lines are provided with additional entries so that if newly referenced data is added to a particular local cache line by “silently” removing an entry in the local cache line, the newly referenced data may be added to the shadow cache line without forcing the “blind” removal of an entry in the shadow cache line.

Owner:ORACLE INT CORP

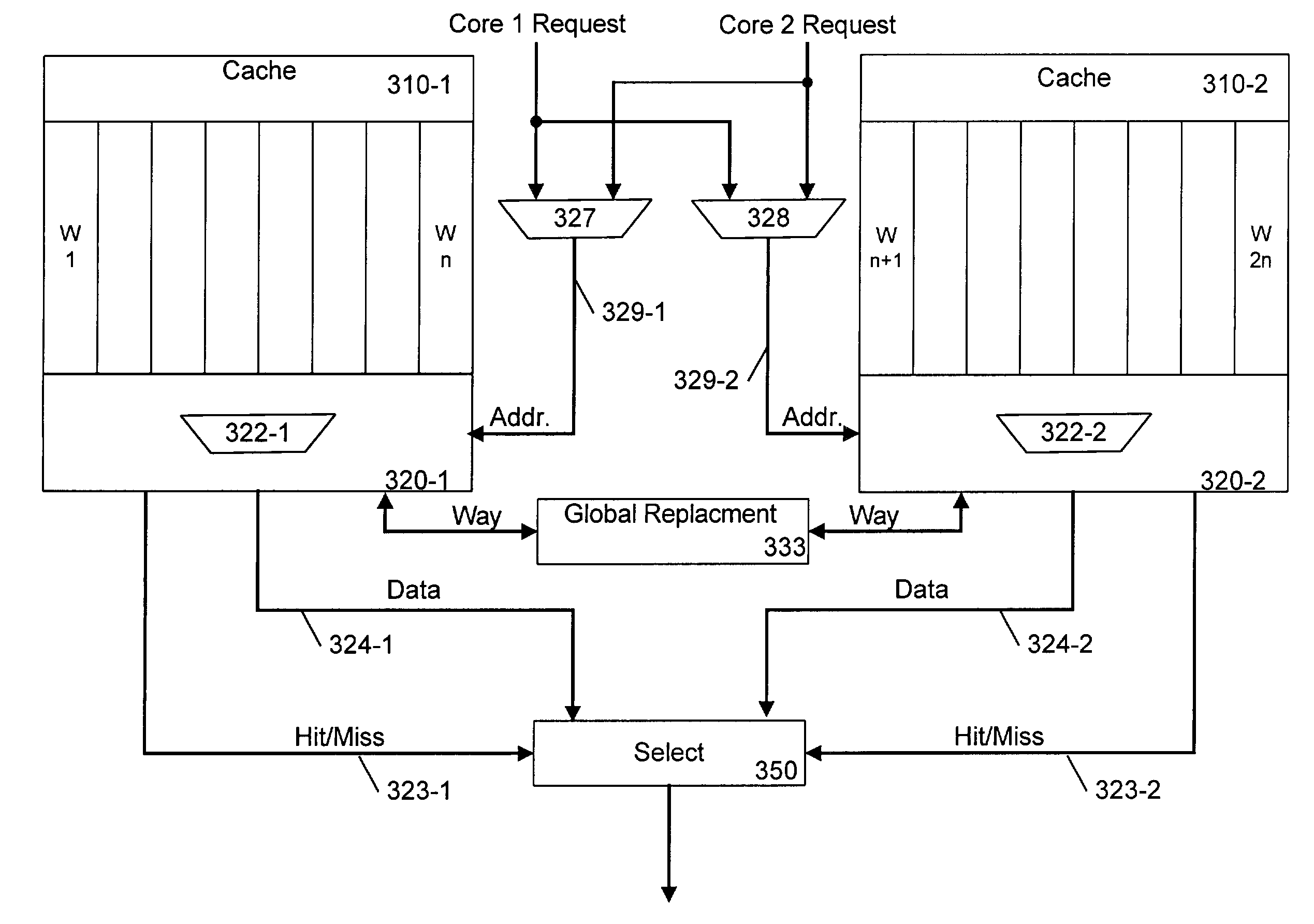

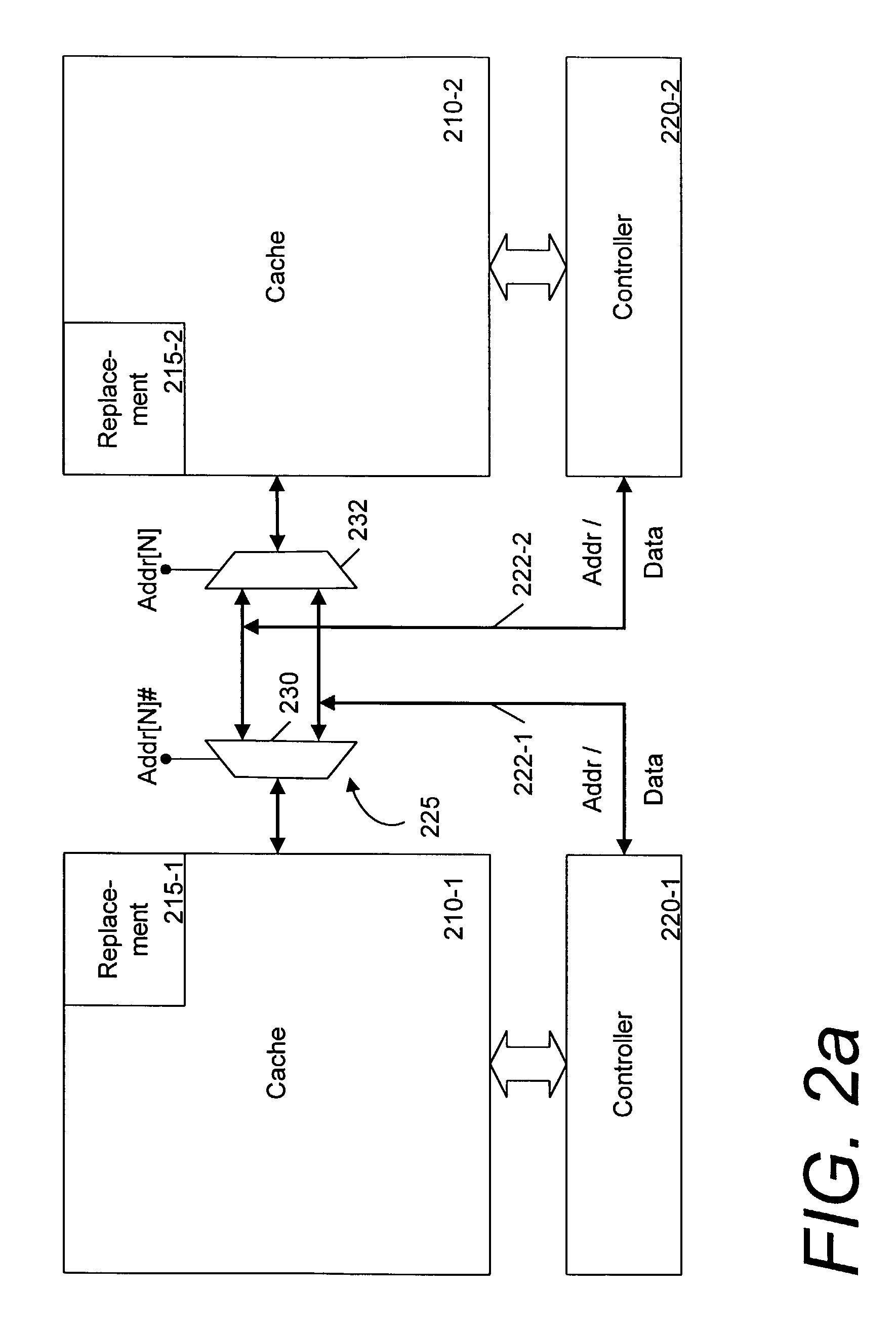

Cache sharing for a chip multiprocessor or multiprocessing system

Inactive | US20040059875A1 | Energy efficient ICT | Memory addressing/allocation/relocation | Multiprocessing | Chip multi processor

Cache sharing for a chip multiprocessor. In one embodiment, a disclosed apparatus includes multiple processor cores, each having an associated cache. A control mechanism is provided to allow sharing between caches that are associated with individual processor cores.

Owner:INTEL CORP

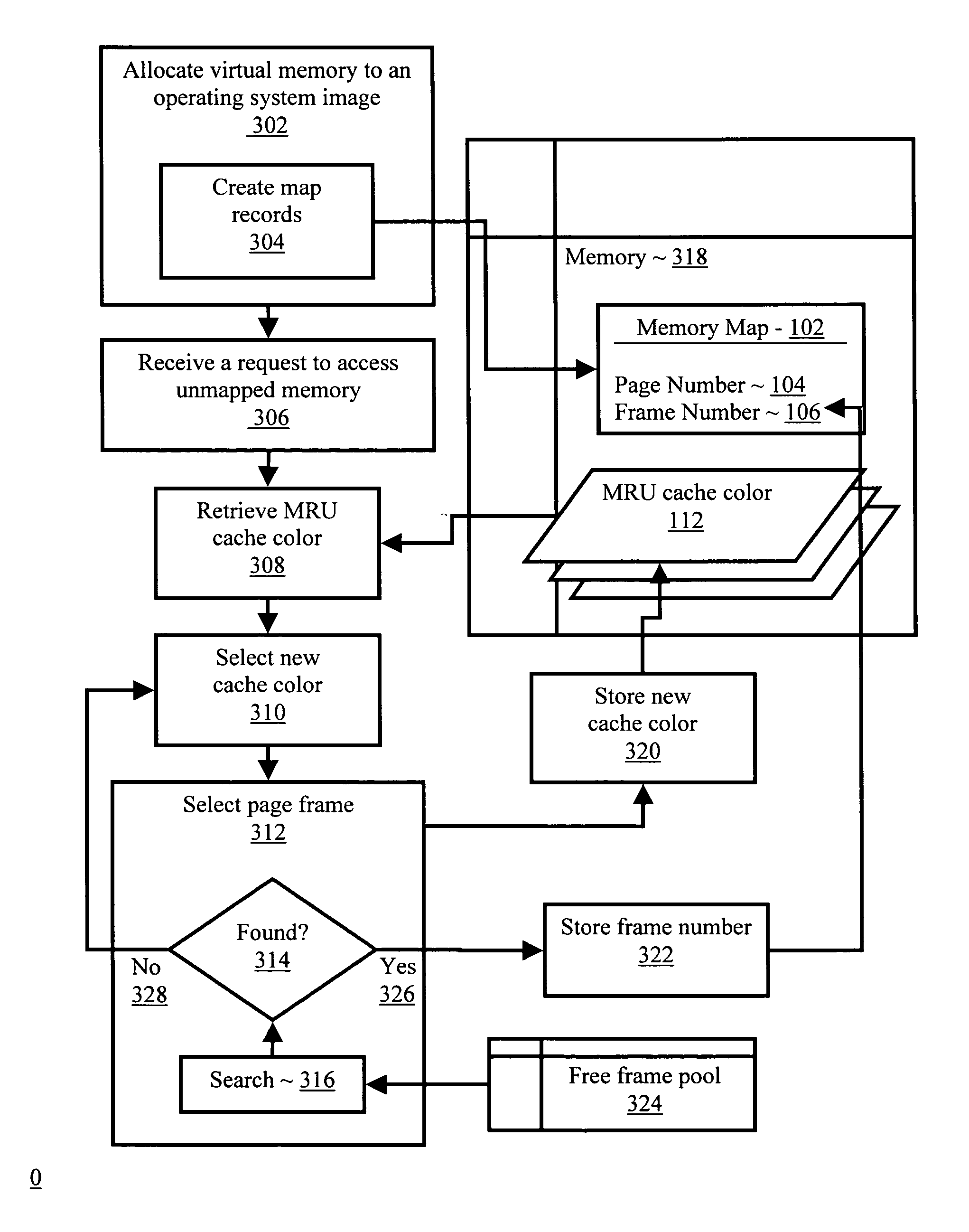

Memory mapping to reduce cache conflicts in multiprocessor systems

Inactive | US7085890B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Virtual memory | Operational system

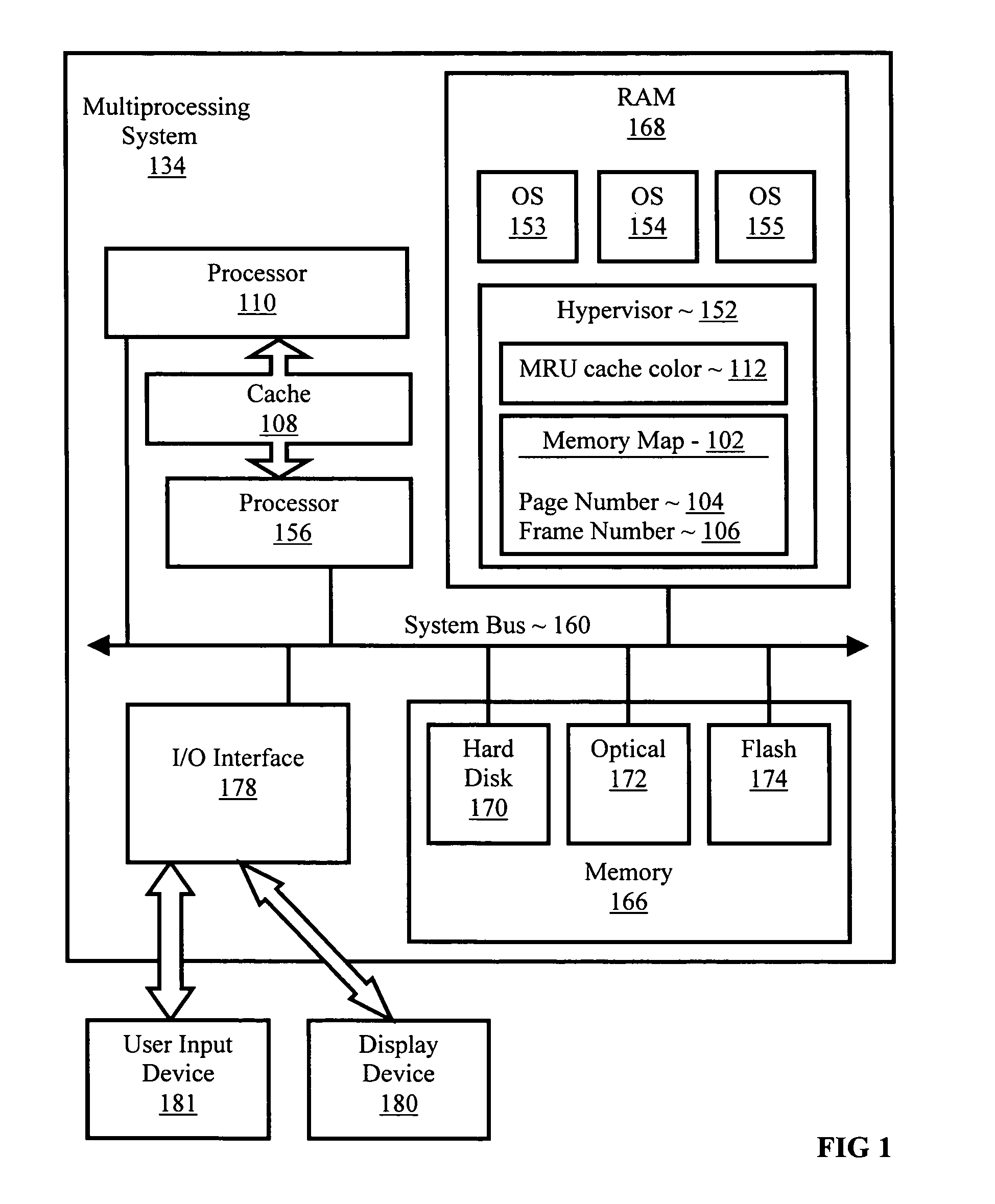

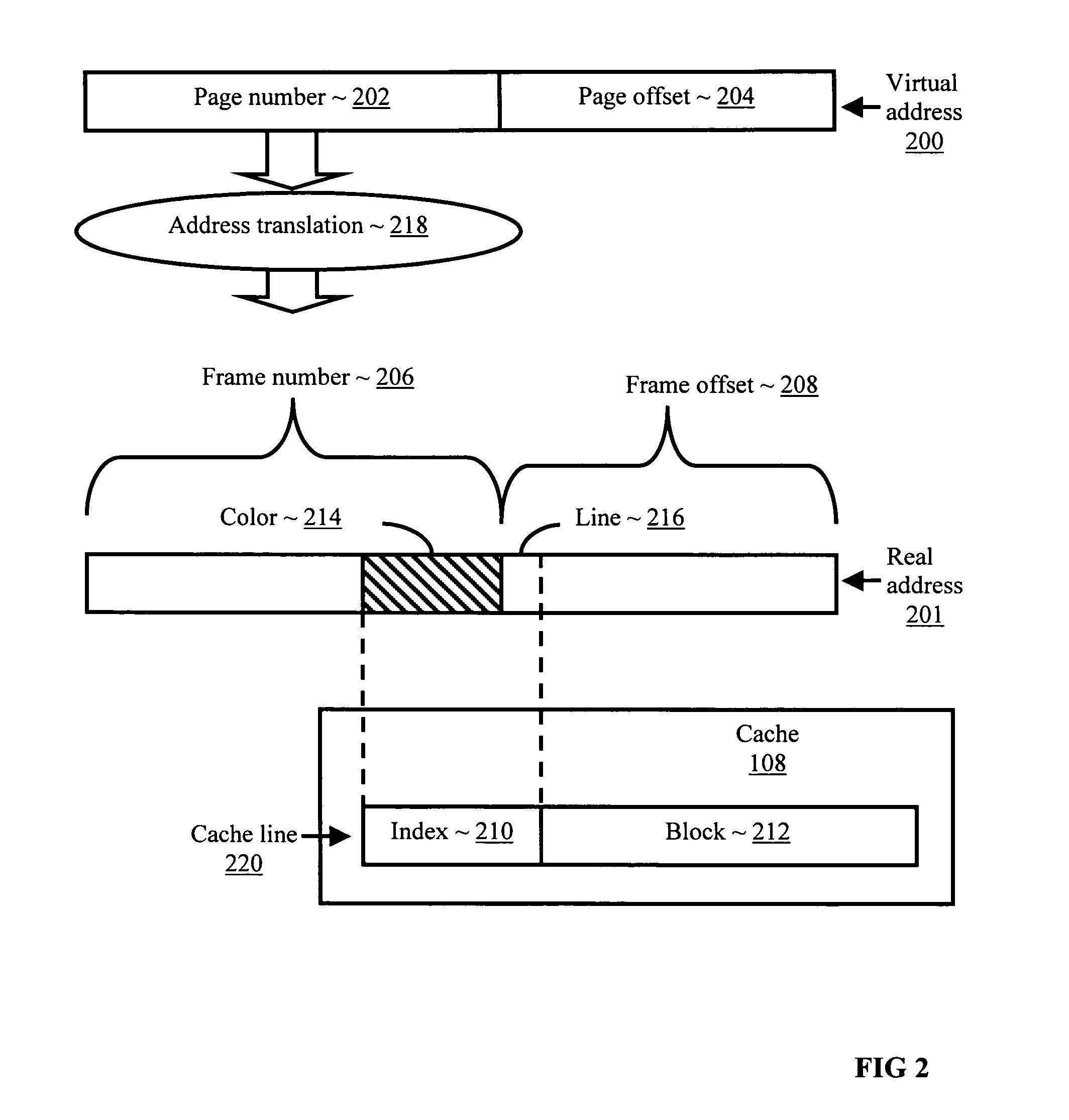

Methods, systems, and computer program products are disclosed for mapping a virtual memory page to a real memory page frame in a multiprocessing environment that supports a multiplicity of operating system images. Typical embodiments include retrieving into an operating system image, from memory accessible to a multiplicity of operating system images, a most recently used cache color for a cache, where the cache is shared by the operating system image with at least one other operating system image; selecting a new cache color in dependence upon the most recently used cache color; selecting in the operating system image a page frame in dependence upon the new cache color; and storing in the memory the new cache color as the most recently used cache color for the cache.

Owner:INT BUSINESS MASCH CORP
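
The color-selection steps in the abstract above can be sketched as follows. This is a minimal model, assuming that a frame's color is its frame number modulo the number of colors and that the "new" color is simply the next one in round-robin order; the abstract only says the new color depends on the most recently used one. `SharedColorState` is a hypothetical stand-in for the memory accessible to all operating system images.

```python
from dataclasses import dataclass


@dataclass
class SharedColorState:
    """Stands in for memory visible to every operating system image."""
    num_colors: int
    most_recent: int = -1  # no color used yet


def frame_color(frame_number: int, num_colors: int) -> int:
    # Assumption: color is the frame number modulo the color count.
    return frame_number % num_colors


def allocate_frame(shared: SharedColorState, free_frames: list) -> int:
    """Pick a free frame whose color follows the most recently used color."""
    start = (shared.most_recent + 1) % shared.num_colors
    # Try the next color first, then fall back to later colors in order.
    for offset in range(shared.num_colors):
        color = (start + offset) % shared.num_colors
        for frame in free_frames:
            if frame_color(frame, shared.num_colors) == color:
                free_frames.remove(frame)
                shared.most_recent = color  # publish for the other images
                return frame
    raise MemoryError("no free page frames")


shared = SharedColorState(num_colors=4)
free = list(range(8))
print([allocate_frame(shared, free) for _ in range(5)])
```

Because every image reads and updates the same `most_recent` value, successive allocations from different images spread across cache colors instead of piling onto one.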

Software implementation of synchronous memory barriers

Inactive | US6996812B2 | Program synchronisation | Digital computer details | Processor register | Inter-processor interrupt

Selectively emulating sequential consistency in software improves efficiency in a multiprocessing computing environment. A writing CPU uses a high priority inter-processor interrupt to force each CPU in the system to execute a memory barrier. This step invalidates old data in the system. Each CPU that has executed a memory barrier instruction registers completion and sends an indicator to a memory location to indicate completion of the memory barrier instruction. Prior to updating the data, the writing CPU must check the register to ensure completion of the memory barrier execution by each CPU. The register may be in the form of an array, a bitmask, or a combining tree, or a comparable structure. This step ensures that all invalidates are removed from the system and that deadlock between two competing CPUs is avoided. Following validation that each CPU has executed the memory barrier instruction, the writing CPU may update the pointer to the data structure.

Owner:IBM CORP

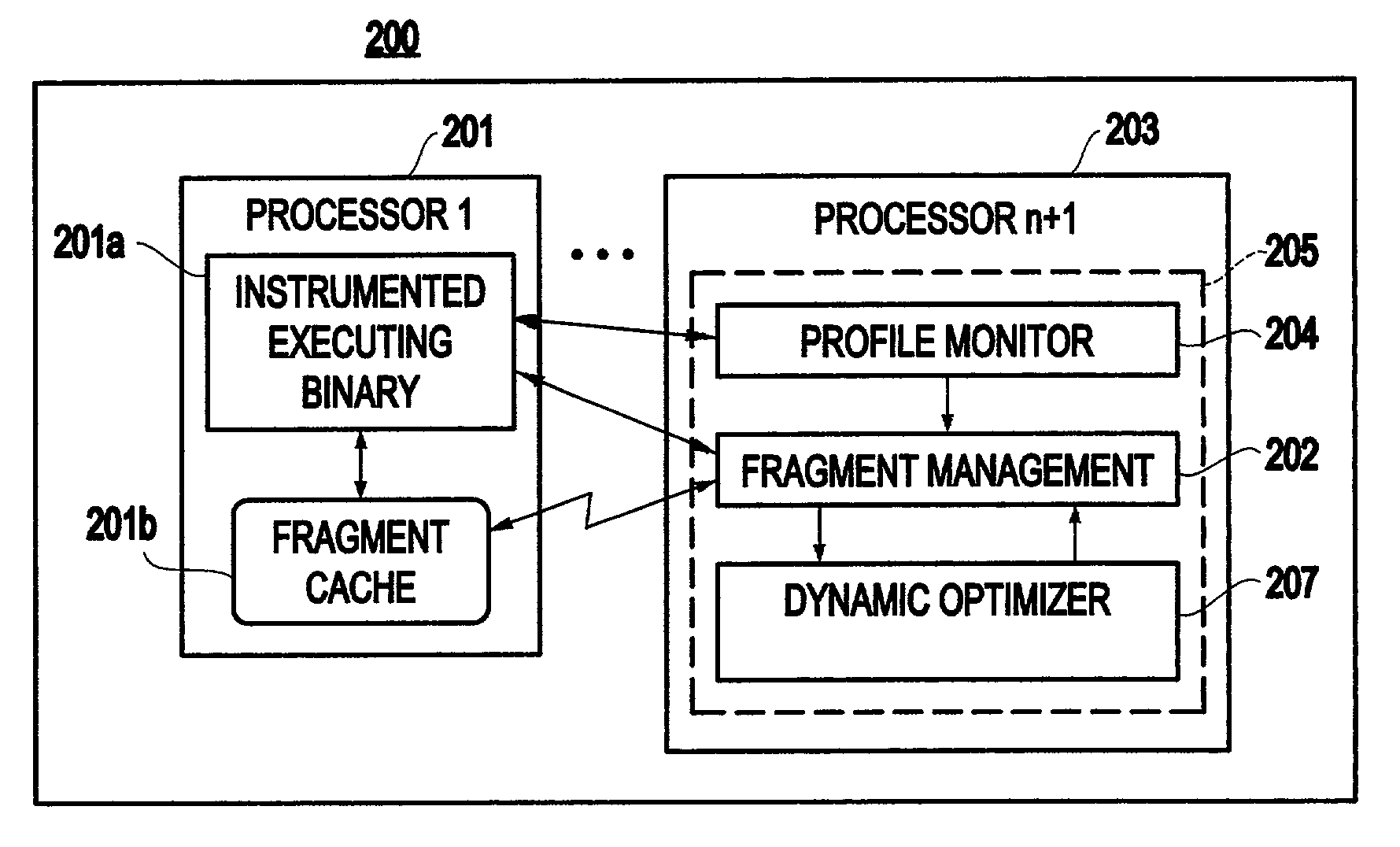

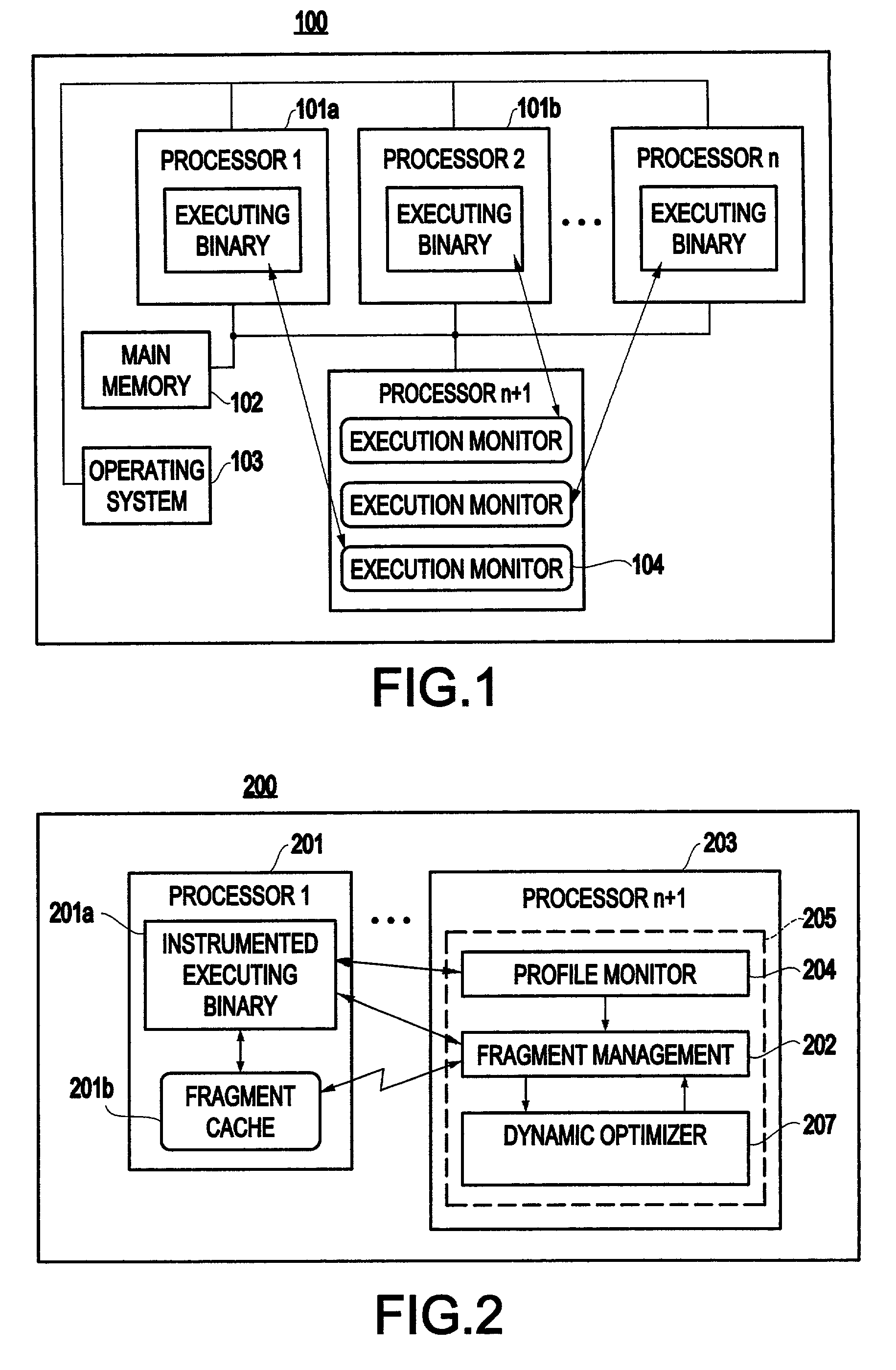

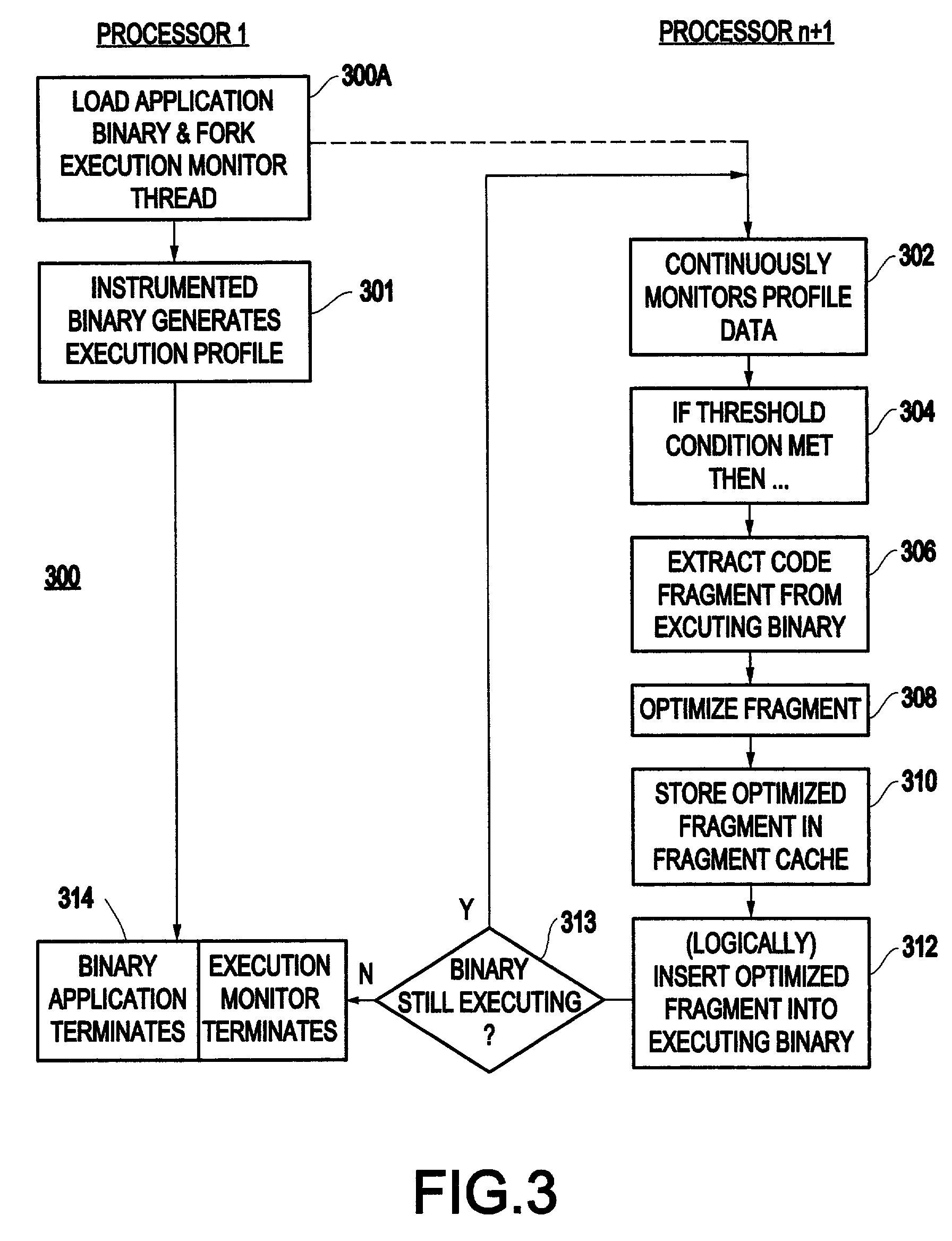

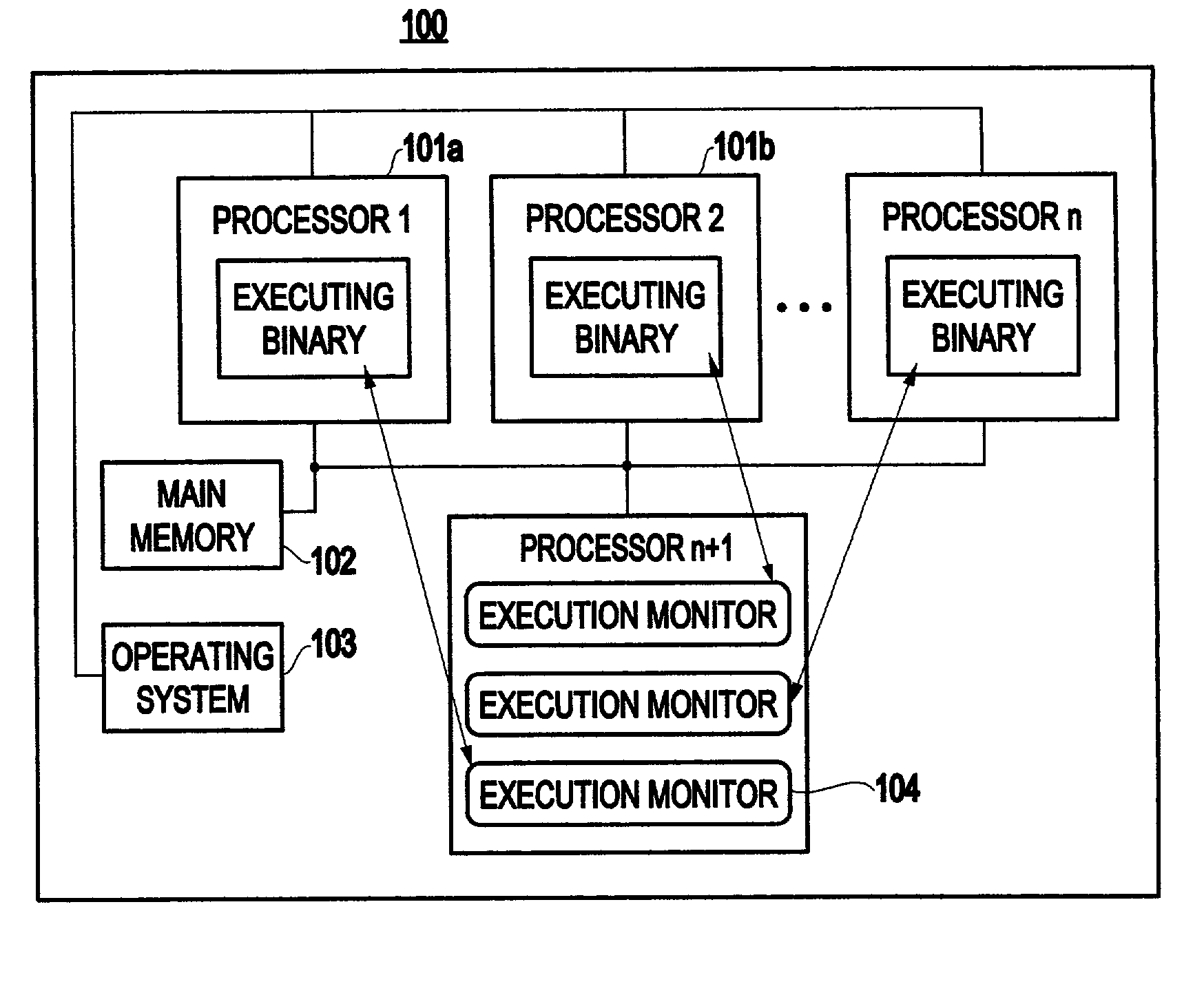

Method and system for transparent dynamic optimization in a multiprocessing environment

Inactive | US7146607B2 | Improved program execution efficiency | Improve performance | Software engineering | Digital computer details | Application software | Multiprocessing

A method (and system) of transparent dynamic optimization in a multiprocessing environment includes monitoring execution of an application on a first processor with an execution monitor running on another processor of the system, and transparently optimizing one or more segments of the original application with a runtime optimizer executing on the other processor of the system.

Owner:INT BUSINESS MASCH CORP

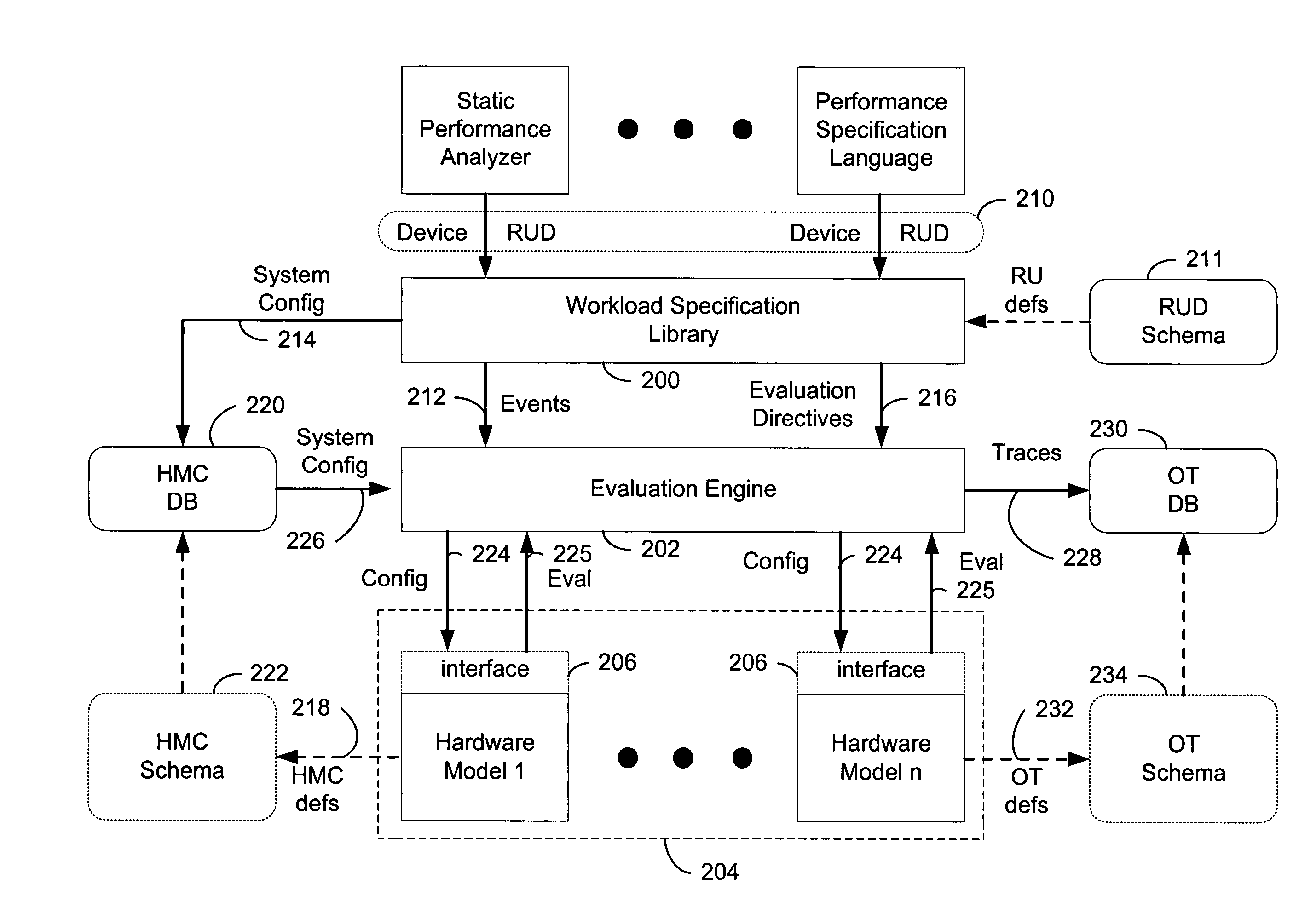

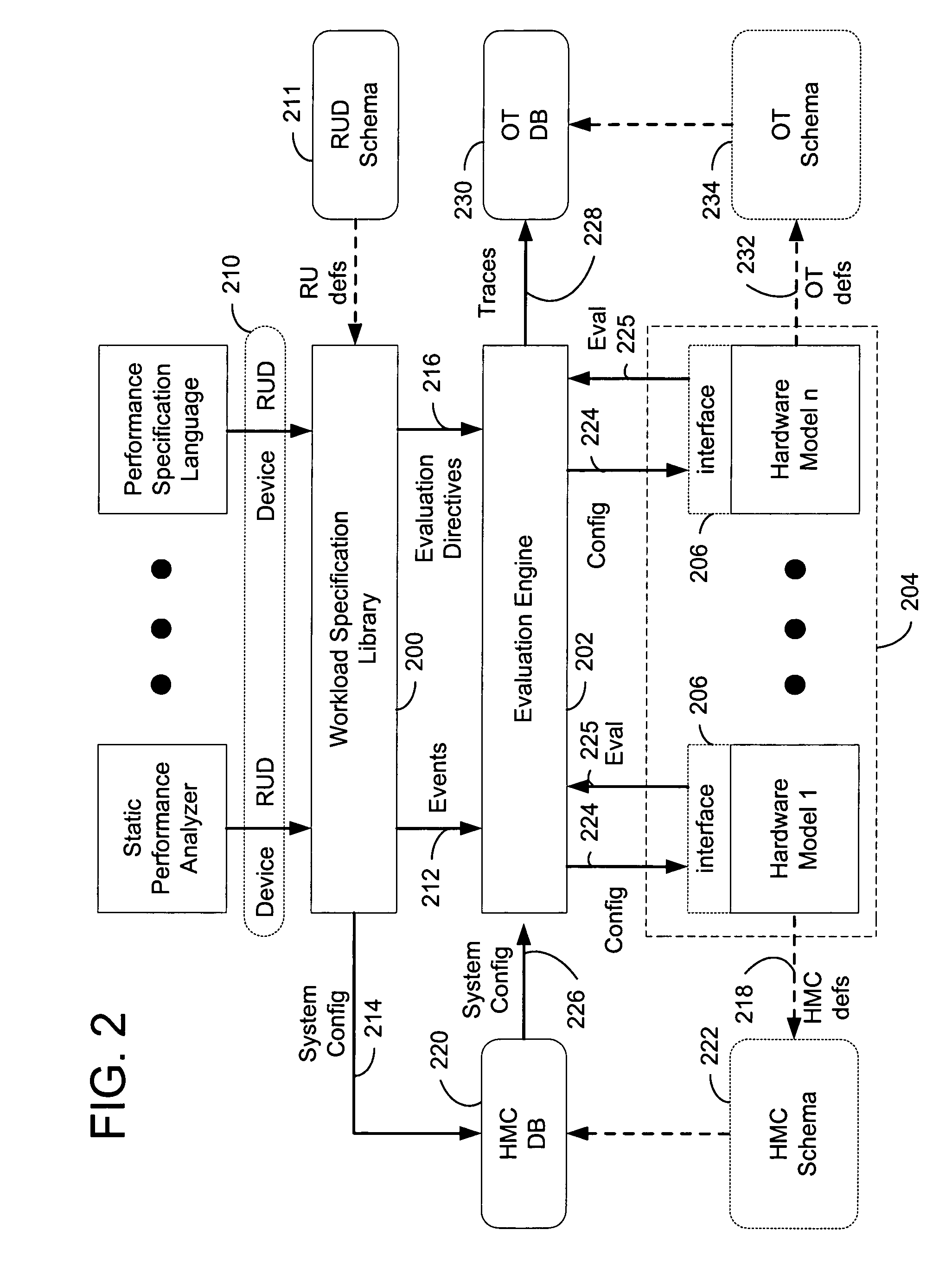

Performance technology infrastructure for modeling the performance of computer systems

Inactive | US6996517B1 | Facilitates flexible/dynamic integration | Simplify the development process | Digital computer details | Nuclear monitoring | Resource element | Computerized system

An infrastructure and a set of steps are disclosed for evaluating performance of computer systems. The infrastructure and method provide a flexible platform for carrying out analysis of various computer systems under various workload conditions. The flexible platform is achieved by allowing/supporting independent designation/incorporation of a workload specification and a system upon which the workload is executed. The analytical framework disclosed and claimed herein facilitates flexible/dynamic integration of various hardware models and workload specifications into a system performance analysis, and potentially streamlines development of customized computer software/system specific analyses. The disclosed performance technology infrastructure includes a workload specification interface facilitating designation of a particular computing instruction workload. The workload comprises a list of resource usage requests. The performance technology infrastructure also includes a hardware model interface facilitating designation of a particular computing environment (e.g., hardware configuration and/or network/multiprocessing load). A disclosed hardware model comprises a specification of delays associated with particular resource uses. A disclosed hardware specification further specifies a hardware configuration describing actual resource elements (e.g., hardware devices) and their interconnections in the system of interest. The performance technology infrastructure further comprises an evaluation engine for performing a system performance analysis in accordance with a specified workload and hardware model incorporated via the workload specification and hardware model interfaces.

Owner:MICROSOFT TECH LICENSING LLC

Method and system for transparent dynamic optimization in a multiprocessing environment

Inactive | US20040054992A1 | Improve performance | Improve execution | Software engineering | Digital computer details | Application software | Multiprocessing

A method (and system) of transparent dynamic optimization in a multiprocessing environment includes monitoring execution of an application on a first processor with an execution monitor running on another processor of the system, and transparently optimizing one or more segments of the original application with a runtime optimizer executing on the other processor of the system.

Owner:IBM CORP

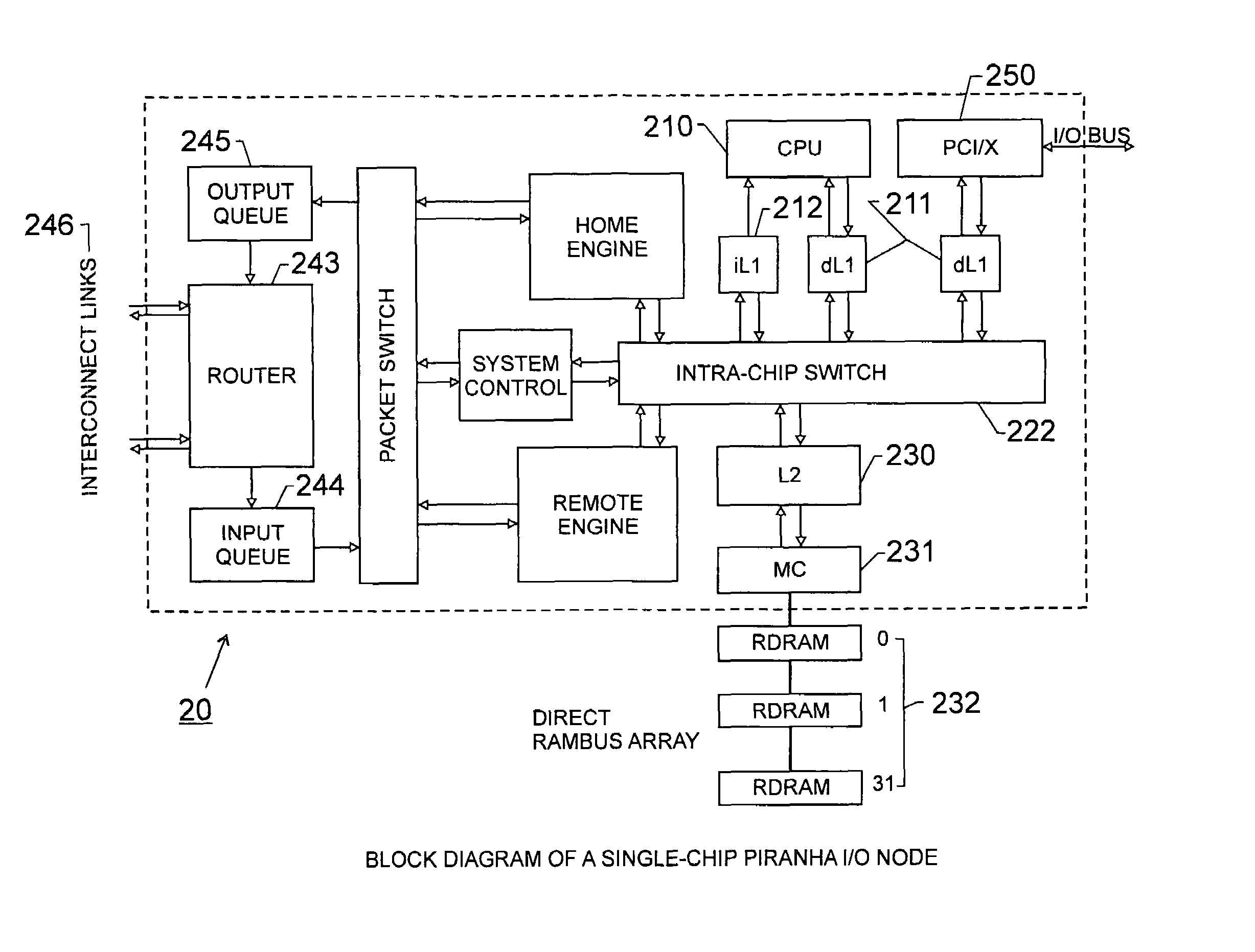

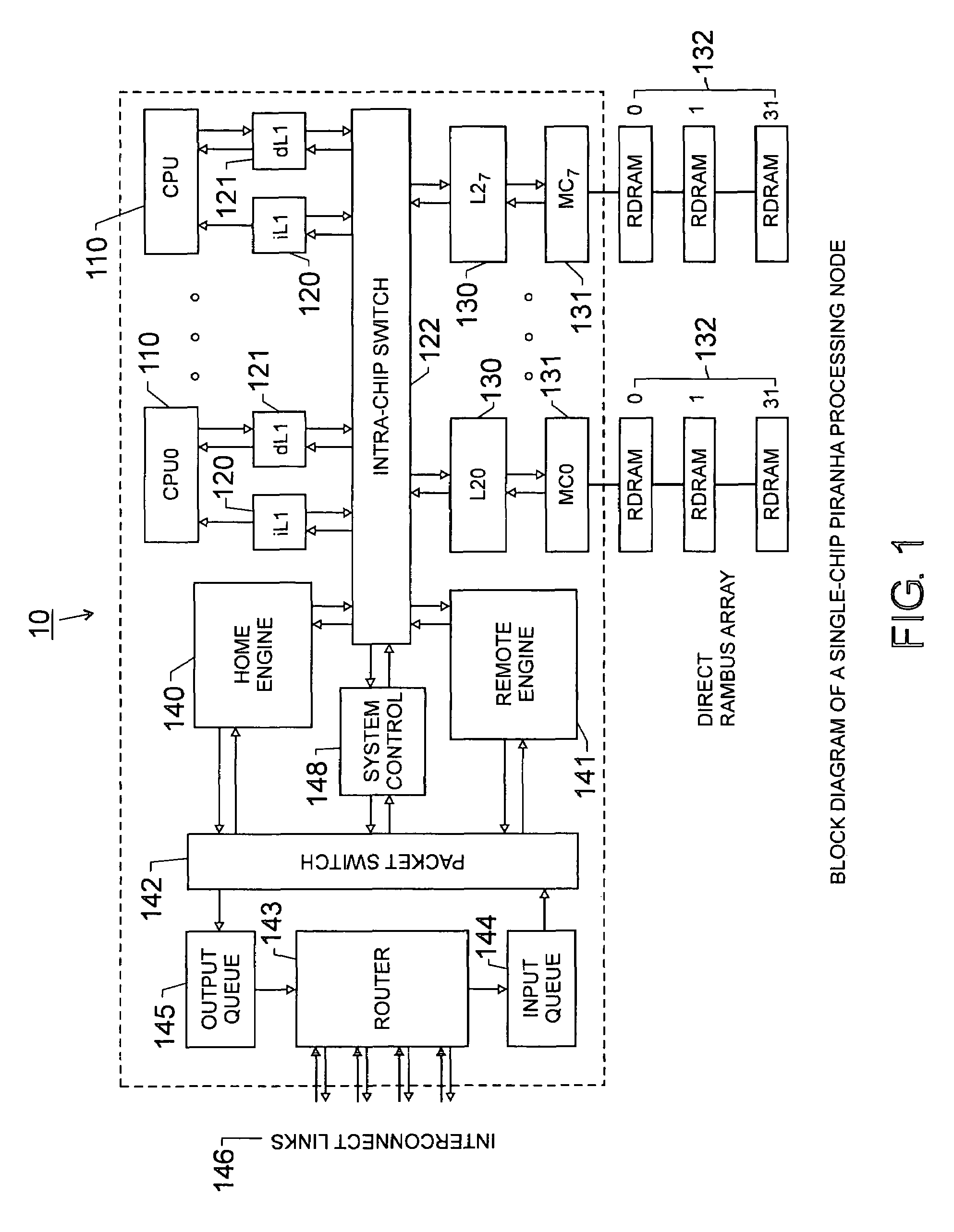

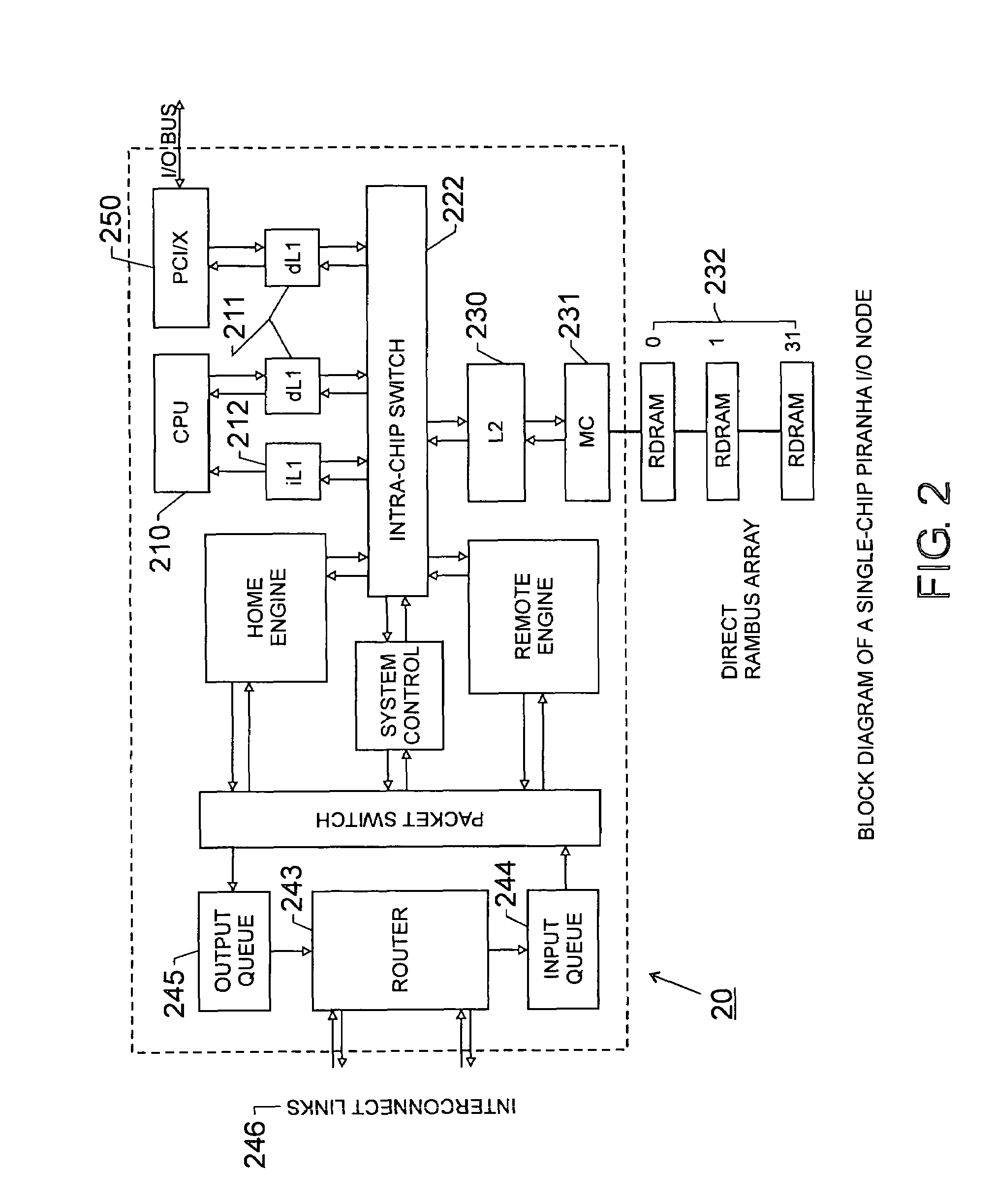

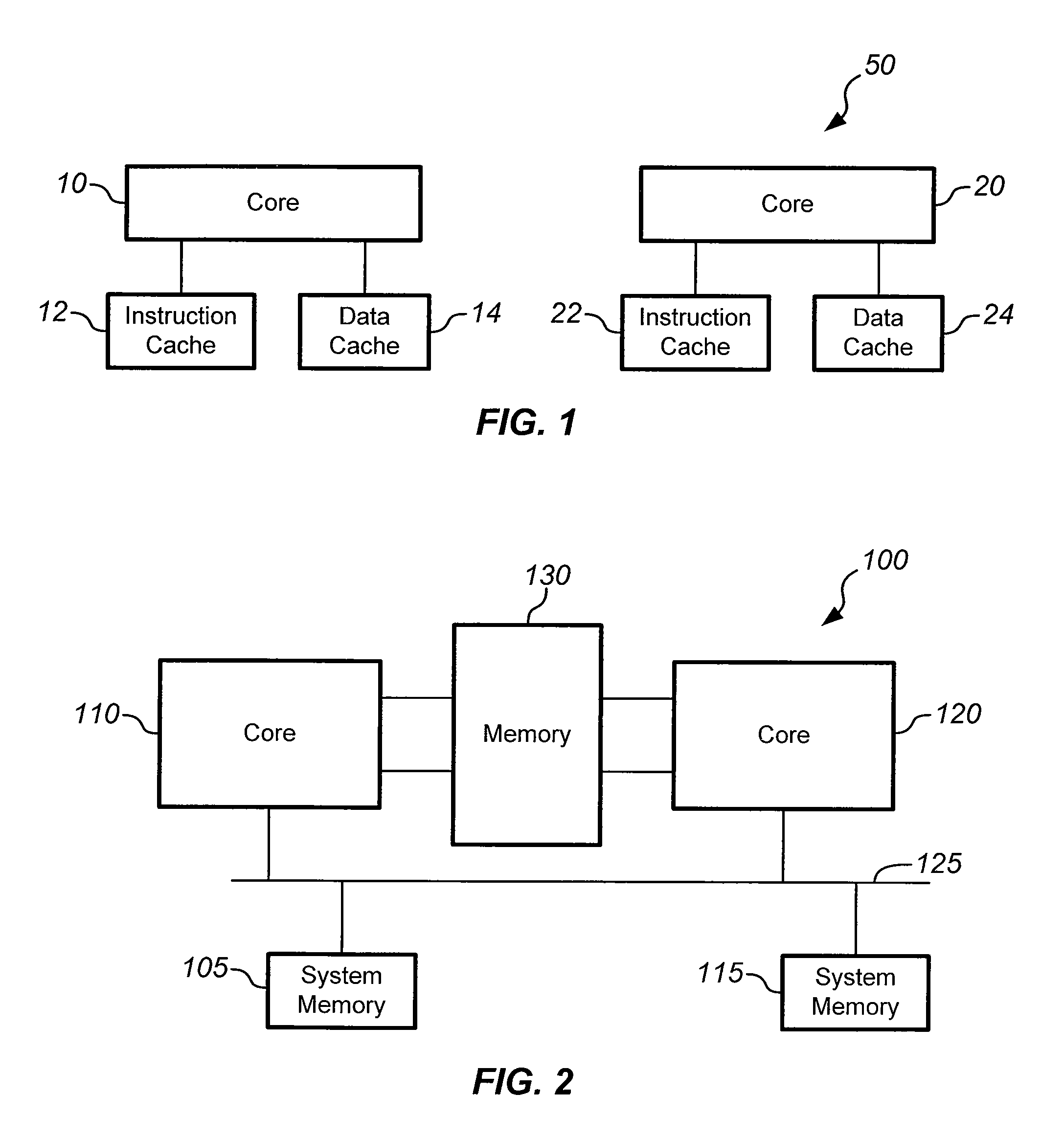

Scalable architecture based on single-chip multiprocessing

Inactive | US6988170B2 | Short time | Small investment | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Processing core

A chip-multiprocessing system with scalable architecture, including on a single chip: a plurality of processor cores; a two-level cache hierarchy; an intra-chip switch; one or more memory controllers; a cache coherence protocol; one or more coherence protocol engines; and an interconnect subsystem. The two-level cache hierarchy includes first level and second level caches. In particular, the first level caches include a pair of instruction and data caches for, and private to, each processor core. The second level cache has a relaxed inclusion property, the second-level cache being logically shared by the plurality of processor cores. Each of the plurality of processor cores is capable of executing an instruction set of the ALPHA™ processing core. The scalable architecture of the chip-multiprocessing system is targeted at parallel commercial workloads. A showcase example of the chip-multiprocessing system, called the PIRANHA™ system, is a highly integrated processing node with eight simpler ALPHA™ processor cores. A method for scalable chip-multiprocessing is also provided.

Owner:SK HYNIX INC

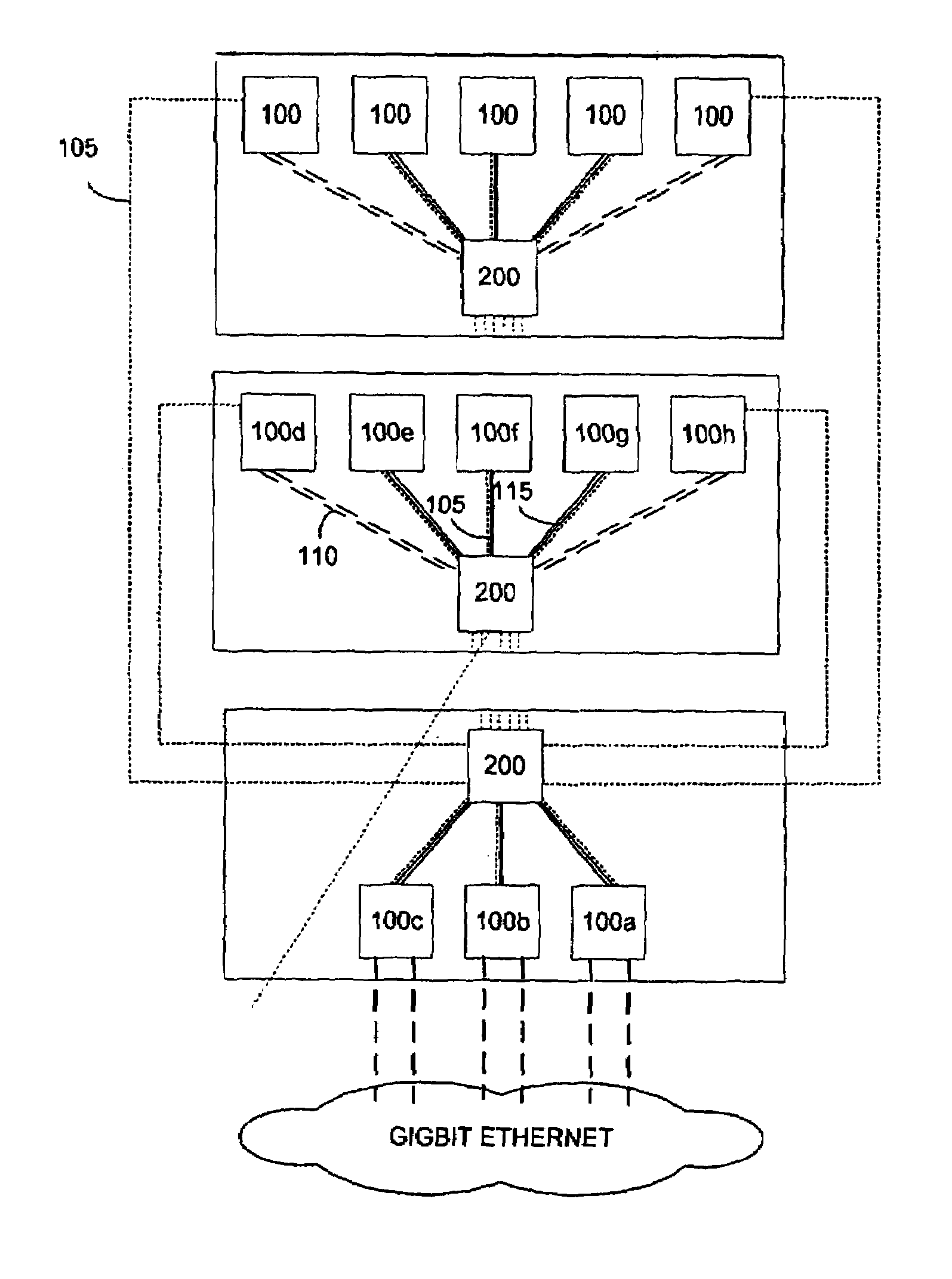

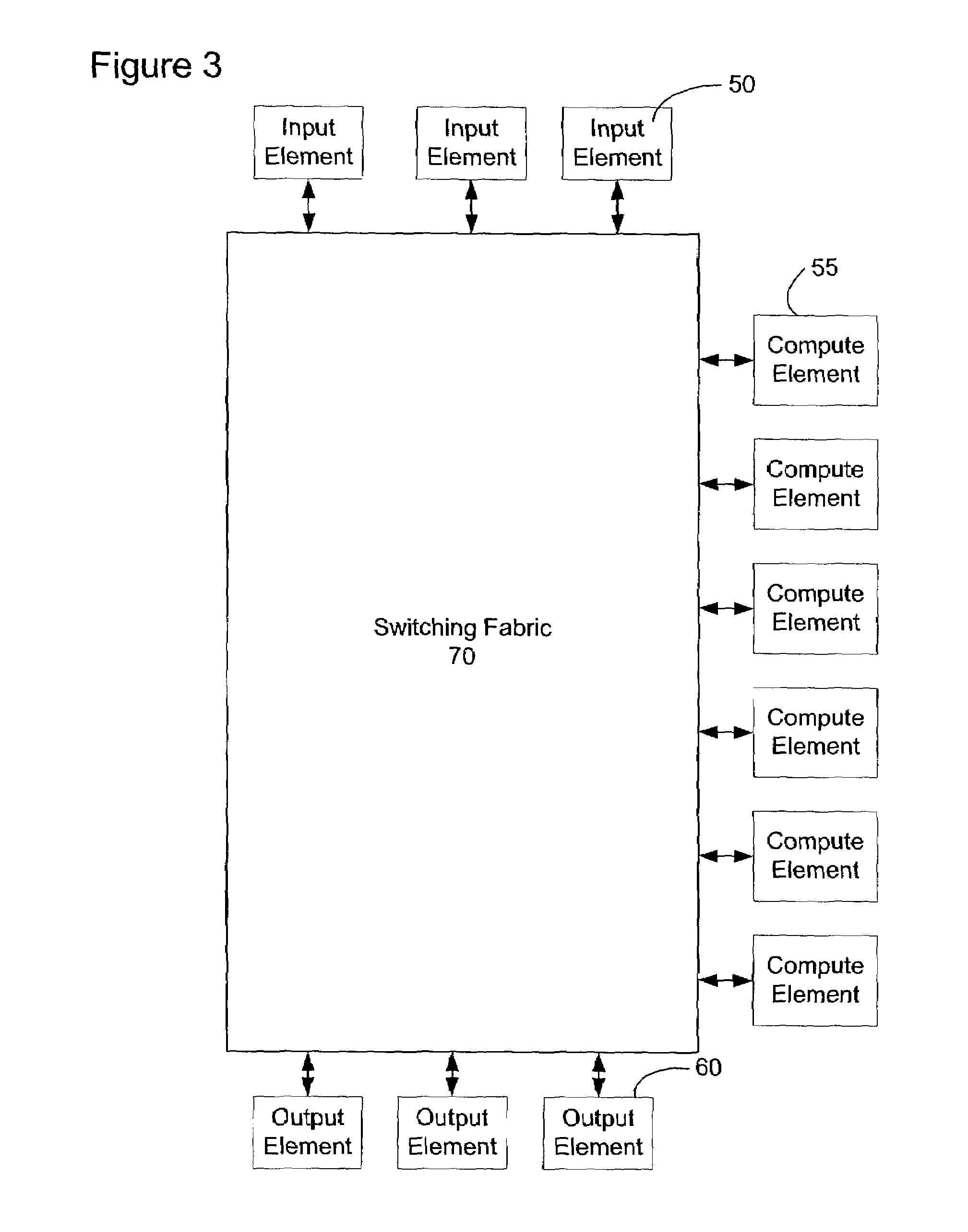

Content service aggregation device for a data center

An architecture for controlling a multiprocessing system to provide at least one network service to subscriber data packets transmitted in the system using a plurality of compute elements, comprising a management compute element including service set-up information for at least one service and at least one processing compute element applying said at least one network service to said data packets and communicating service set-up information with the management compute element in order to perform service specific operations on data packets. In a further embodiment, a method of controlling a processing system including a plurality of processors is disclosed. The method comprises the steps of operating at least one of said processors as a control authority providing service provisioning information for a subscriber; and operating a set of processors as a service specific compute element responsive to the control authority, receiving provisioning information from the subscriber and performing service specific instructions on data packets to provide IP content services.

Owner:JUNIPER NETWORKS INC +1

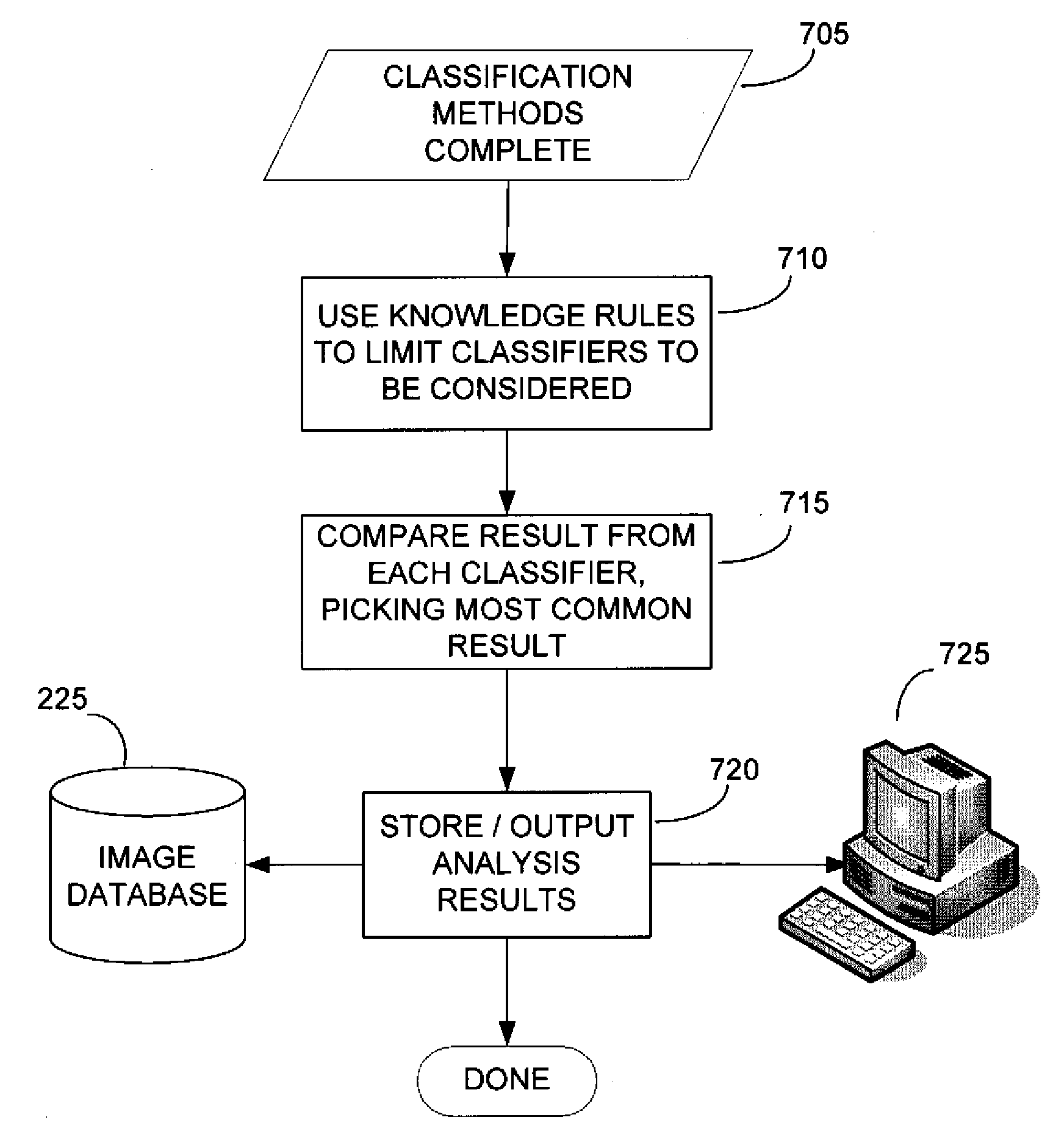

High Volume Earth Observation Image Processing

Active | US20090232349A1 | Simple method | Improve throughput | Image enhancement | Image analysis | Earth observation | Imaging processing

The present invention is related to the processing of data, and more particularly to a method of and system for processing large volumes of Earth observation imagery data. A system for processing a large volume of Earth observation imaging data is described, comprising a computer including a visual display and a user interface, a plurality of servers, an image database storing said Earth observation imaging data as a plurality of separate image data files, and a network for interconnecting the computer, plurality of servers and image database. The plurality of servers is operable to process the separate data files in a distributed manner, at least one of the plurality of servers is operable to process the separate data files in a multiprocessing environment and at least one of the plurality of servers is operable to collate the processed separate data files into a single imaging result.

Owner:PCI GEOMATICS ENTERPRISES

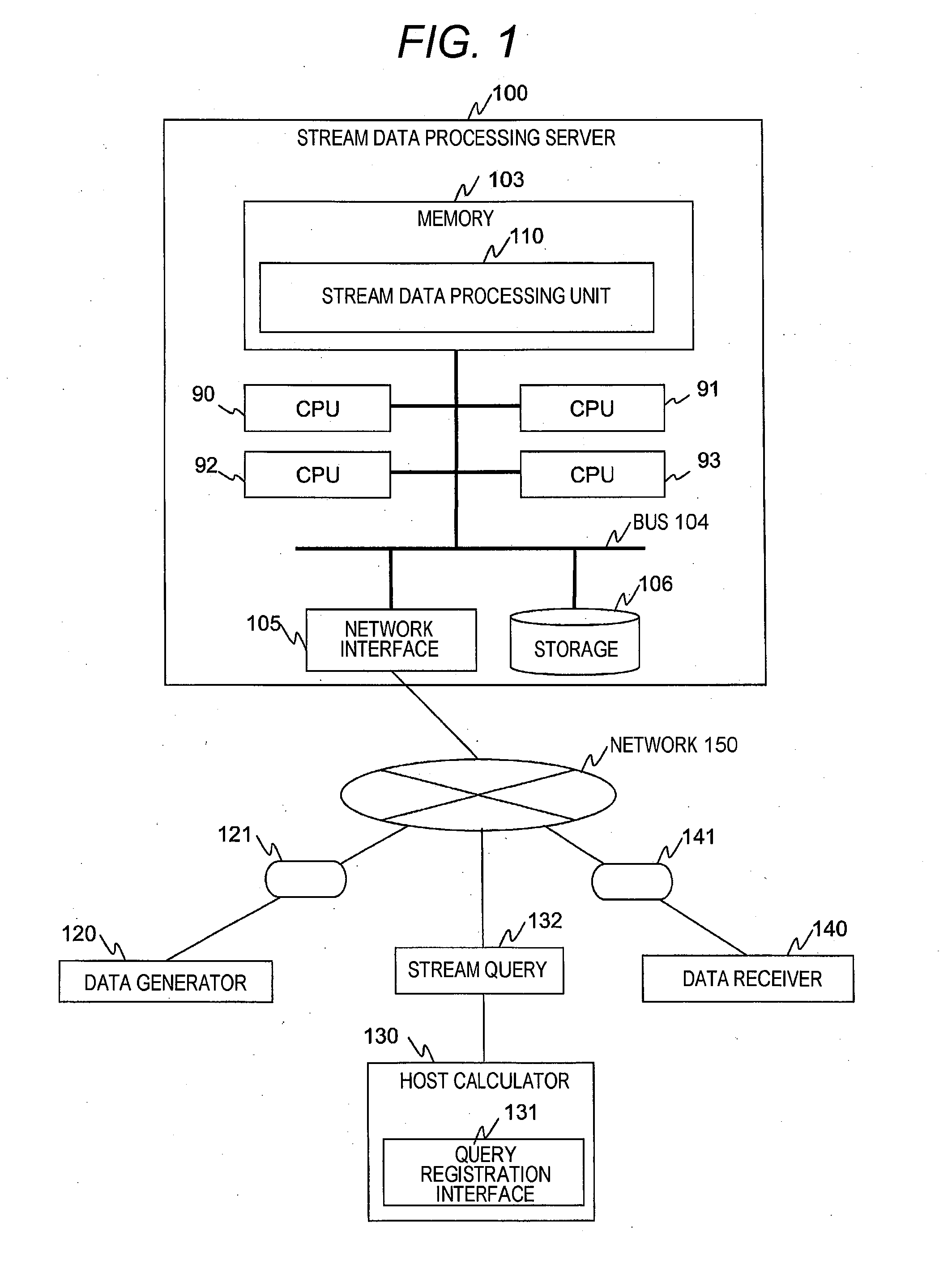

Stream data multiprocessing method

Active | US20150149507A1 | Lower latency | Improve throughput | Digital data information retrieval | Digital data processing details | Streaming data | Theoretical computer science

A query parser converts a query definition into a query graph and decides the execution order of the operators. A set of consecutive operators in the execution order is called a stage, and the sum of the calculation costs of the operators in a stage is called the calculation cost of that stage. The query graph is divided into multiple stages such that the calculation cost of each stage is less than the total cost of all operators divided by the number of calculation cores. Each calculation core extracts tuples one by one from an input stream and takes charge of processing each tuple from the entrance to the exit of the query graph; before executing each stage, the core confirms that processing of that stage has completed for the tuple preceding the one it is in charge of.

Owner:HITACHI LTD
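
The stage-partitioning rule in the abstract above lends itself to a small sketch. The greedy strategy below is an assumption (the abstract states the cost constraint but not how stages are chosen), and `split_into_stages` is a hypothetical name. It only covers the partitioning step, not the per-tuple ordering check between cores.

```python
def split_into_stages(costs, num_cores):
    """Group consecutive operators into stages.

    costs:     calculation cost of each operator, in execution order
    num_cores: number of calculation cores

    Each stage is kept strictly below total_cost / num_cores where
    possible. An operator whose own cost already reaches that limit
    still gets a stage of its own, which then violates the limit.
    """
    if num_cores < 1:
        raise ValueError("num_cores must be positive")
    limit = sum(costs) / num_cores
    stages = []
    current, current_cost = [], 0
    for index, cost in enumerate(costs):
        # Close the current stage before it would reach the limit.
        if current and current_cost + cost >= limit:
            stages.append(current)
            current, current_cost = [], 0
        current.append(index)
        current_cost += cost
    if current:
        stages.append(current)
    return stages


print(split_into_stages([4, 3, 2, 1, 2], num_cores=2))
```

With costs `[4, 3, 2, 1, 2]` and two cores, the limit is 6, and the sketch produces three stages with costs 4, 5, and 3.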

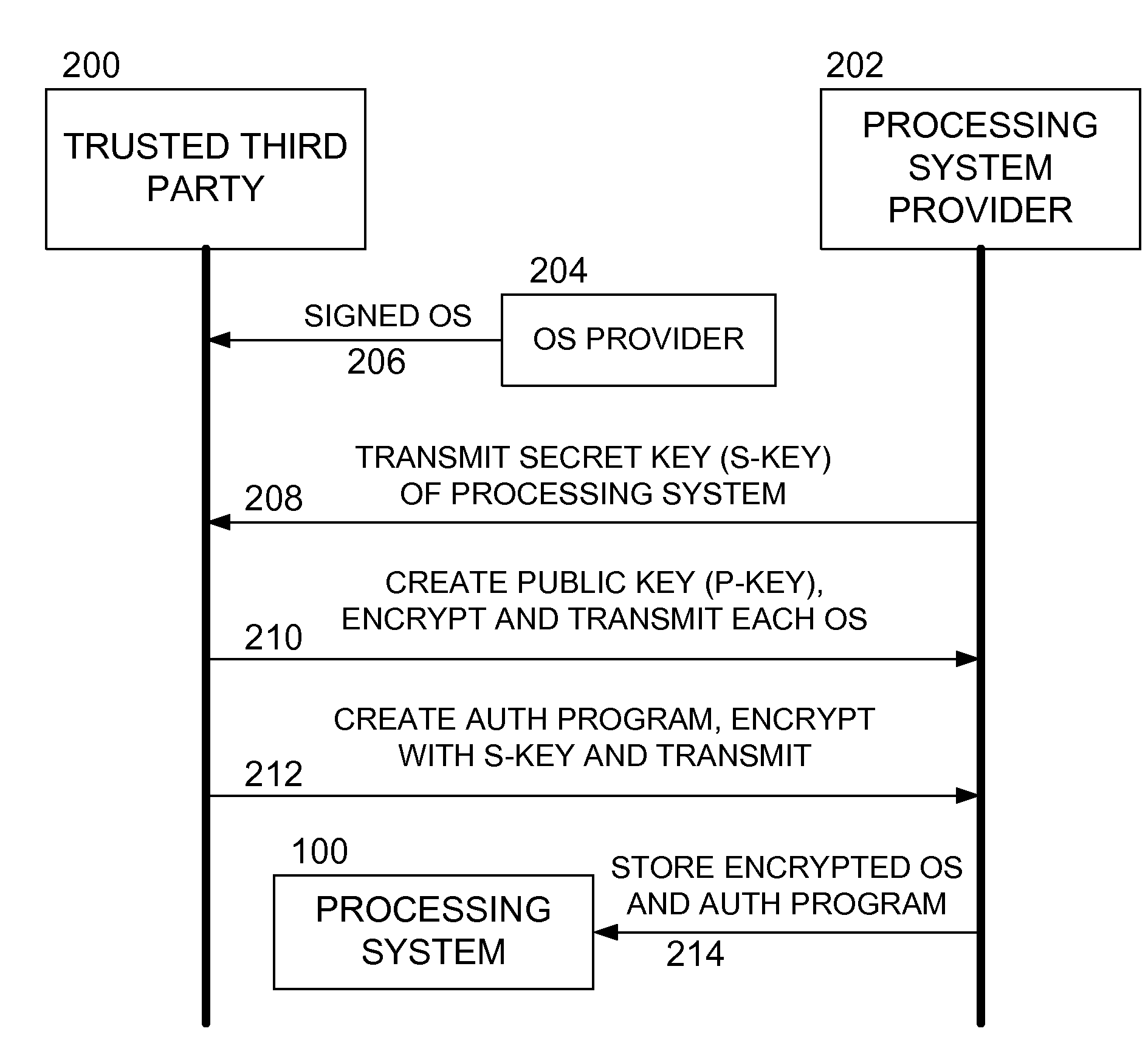

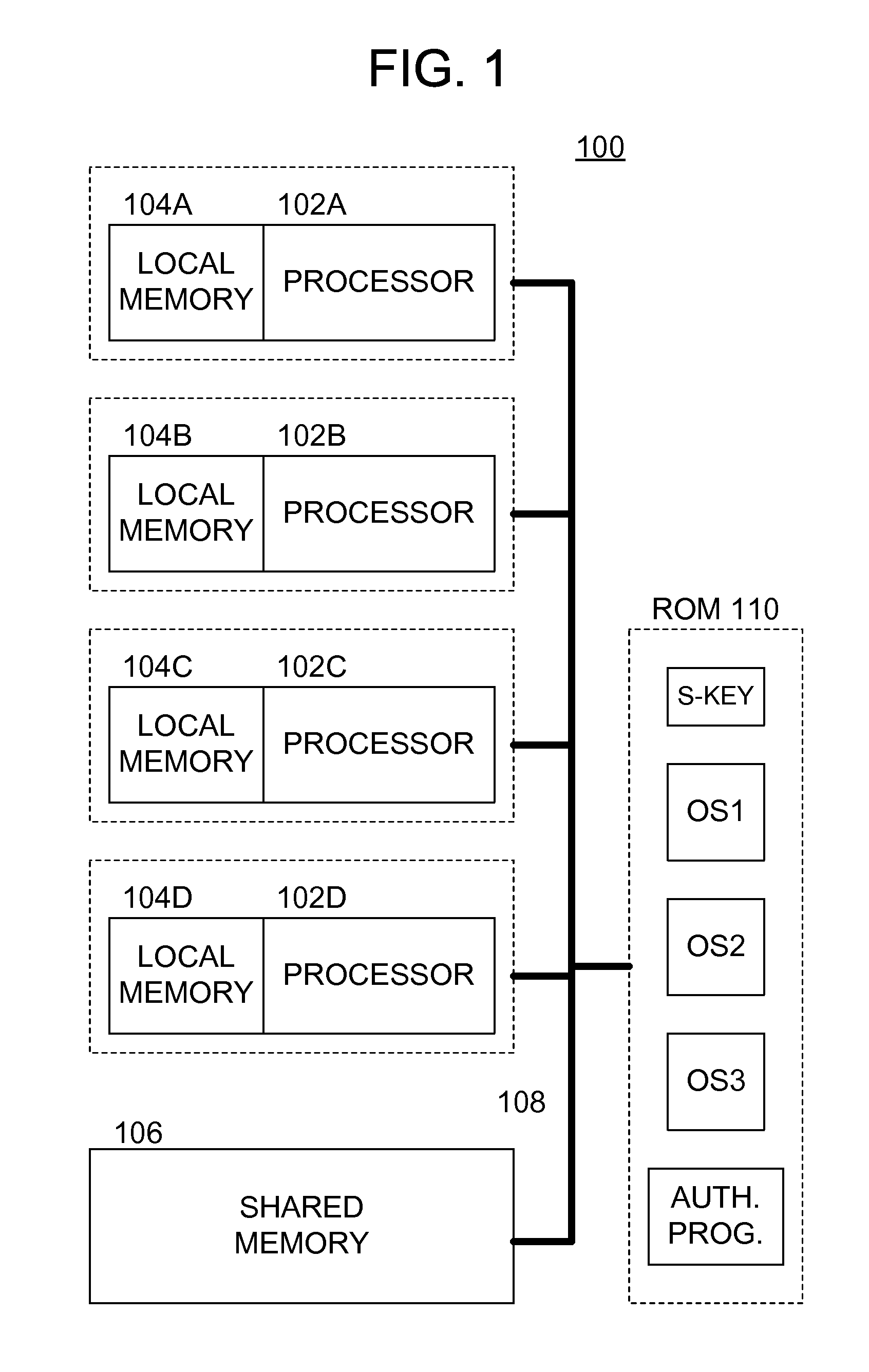

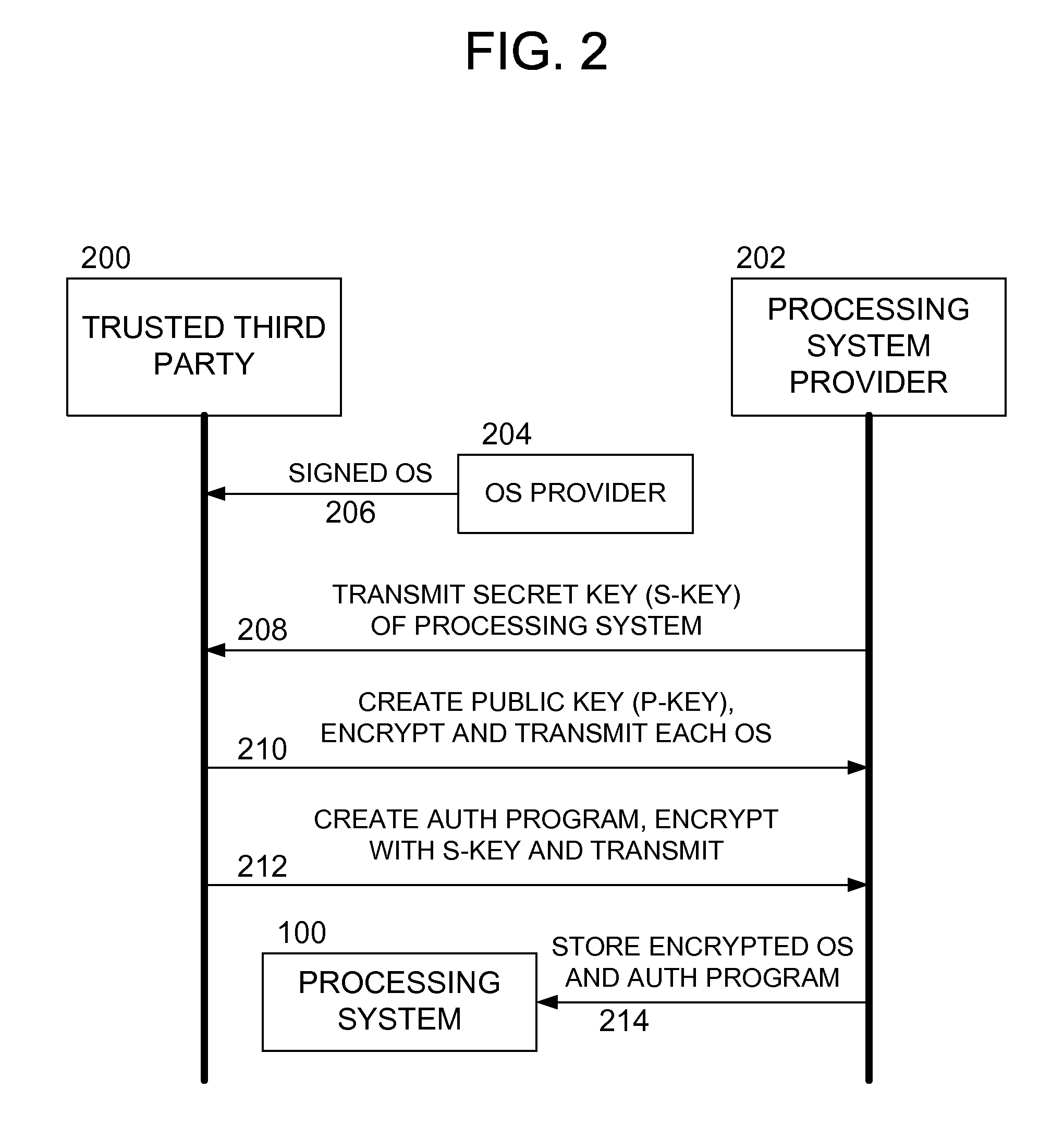

Methods and apparatus for secure operating system distribution in a multiprocessor system

Active | US20080282084A1 | Easy access | Easy to modify | Key distribution for secure communication | Public key for secure communication | Operational system | Multi processor

Methods and apparatus provide for: decrypting a first of a plurality of operating systems (OSs) within a first processor of a multiprocessing system using a private key thereof, the plurality of OSs having been encrypted by a trusted third party, other than a manufacturer of the multiprocessing system, using respective public keys, each paired with the private key; executing an authentication program using the first processor to verify that the first OS is valid; and executing the first OS on the first processor.

Owner:SONY COMPUTER ENTERTAINMENT INC

Comparative updates tracking to synchronize local operating parameters with centrally maintained reference parameters in a multiprocessing system

In a multiprocessing system, a configuration manager maintains various reference parameters that are selectively copied by subordinate managed units to form local operating parameters, which subsequently govern operation of these managed units. A comparative technique is employed to track reference parameter updates, and synchronize each local operating parameter counterpart accordingly. At the configuration manager, reference parameters include reference profiles and reference characteristics. Each reference profile specifies one or more of the reference characteristics. At each managed unit, the operating parameters include subscribed-to profiles and operating characteristics; both are initially copied from the configuration manager's reference profiles/characteristics. Each local operating profile specifies one or more of the operating characteristics. Each managed unit operates according to its locally maintained operating characteristics. When certain update criteria are satisfied, a managed unit and the configuration manager cooperatively synchronize the managed unit's local operating profiles and characteristics with the configuration manager's reference profiles and characteristics. This involves comparing the reference and operating profiles to identify new, updated, or deleted operating characteristics. Also, the local operating profiles and operating characteristics may be cross-referenced to identify any "orphan" characteristics for deletion.

Owner:IBM CORP

Multiprocessing system with automated propagation of changes to centrally maintained configuration settings

Inactive | US6334178B1 | Data processing applications | General purpose stored program computer | Management unit | Multiprocessing

In a multiprocessing system, hierarchically superior configuration managers maintain profiles of operating characteristics to which subordinate managed units selectively subscribe. If the profiles or operating characteristics change, the configuration managers propagate the changes to all managed units. Each configuration manager stores a record of operating characteristics and multiple server profiles, each profile specifying one or more operating characteristics. A subscription list identifies one or more managed units, each associated with one or more server profiles. Each managed unit acts according to its current operating characteristics, stored locally at the managed unit. If the managed unit receives a profile subscription request from a system administrator, the managed unit sends a subscription message to the configuration manager to subscribe to that input profile. Receiving the subscription, the configuration manager enters the subscribing managed unit and the associated profile into the subscription list, and returns the profiled operating characteristics to the subscribing managed unit. The subscribing managed unit stores these operating characteristics in its record of current operating characteristics. If there is a change to the operating characteristics (or to the profiles), the configuration manager transmits the changed matter to all managed units with affected subscriptions. Upon receipt of this data, each subscribing managed unit stores the changed operating characteristics in its record of current operating characteristics.

Owner:IBM CORP

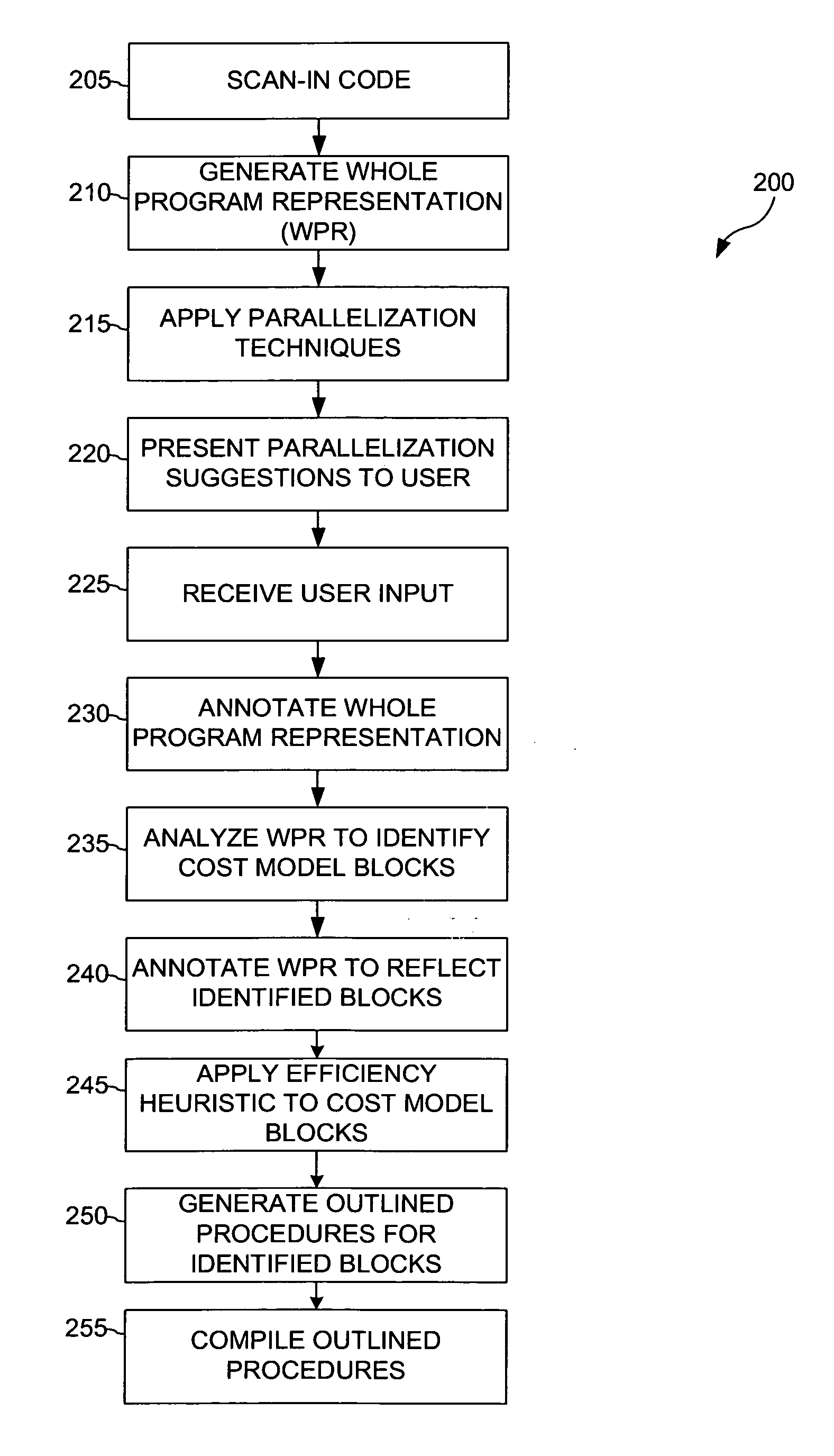

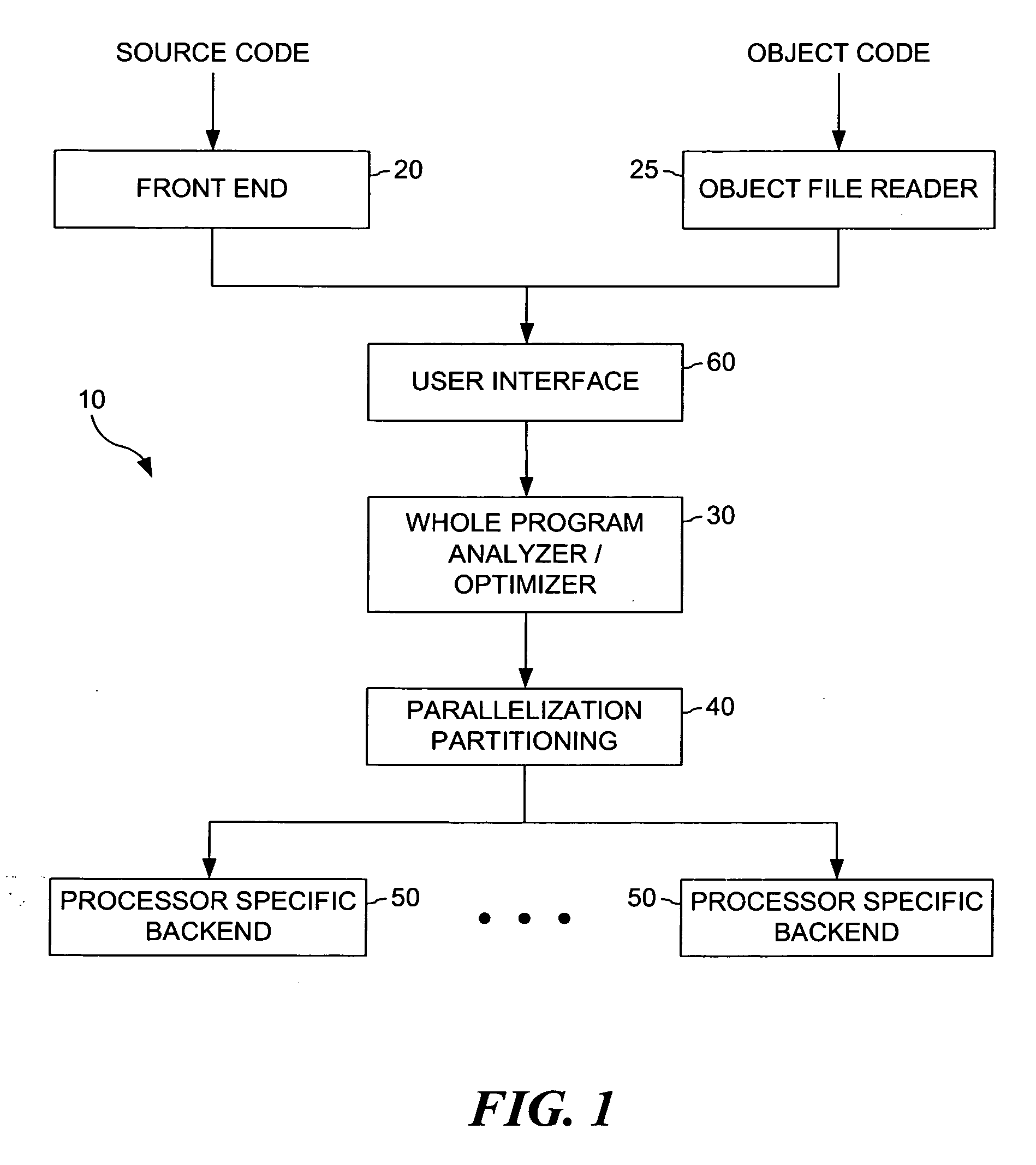

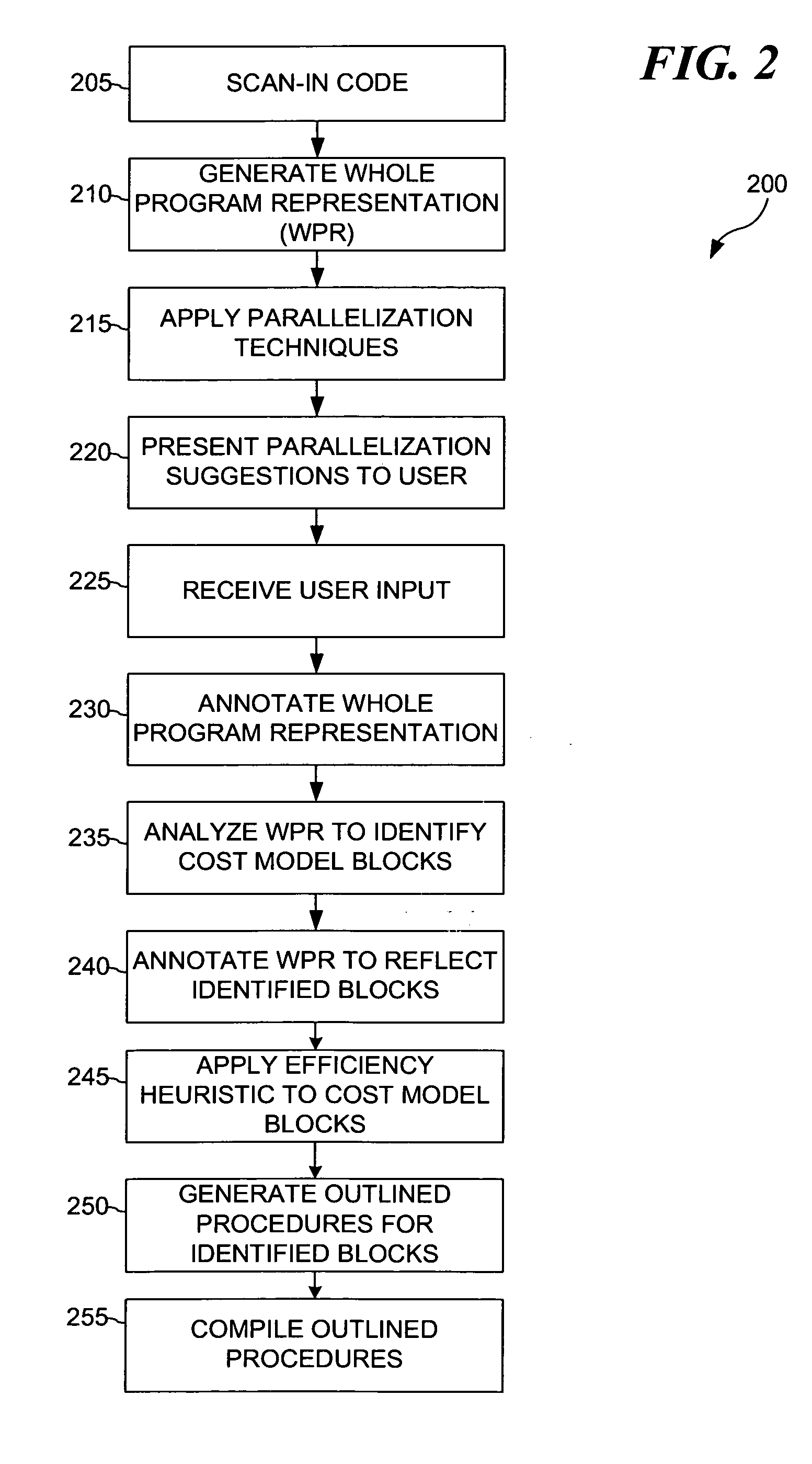

Method and system for exploiting parallelism on a heterogeneous multiprocessor computer system

Inactive | US20060123401A1 | Frees the application programmer | Specific program execution arrangements | Memory systems | Programming complexity | Multi processor

In a multiprocessor system it is generally assumed that peak or near peak performance will be achieved by splitting computation across all the nodes of the system. There exists a broad spectrum of techniques for performing this splitting or parallelization, ranging from careful handcrafting by an expert programmer at the one end, to automatic parallelization by a sophisticated compiler at the other. This latter approach is becoming more prevalent as the automatic parallelization techniques mature. In a multiprocessor system comprising multiple heterogeneous processing elements these techniques are not readily applicable, and the programming complexity again becomes a very significant factor. The present invention provides a method for computer program code parallelization and partitioning for such a heterogeneous multiprocessor system. A single source file targeting a generic multiprocessing environment is received. Parallelization analysis techniques are applied to the received single source file. Parallelizable regions of the single source file are identified based on the applied parallelization analysis techniques. The data reference patterns, code characteristics and memory transfer requirements are analyzed to generate an optimum partition of the program. The partitioned regions are compiled to the appropriate instruction set architecture and a single bound executable is produced.

Owner:IBM CORP

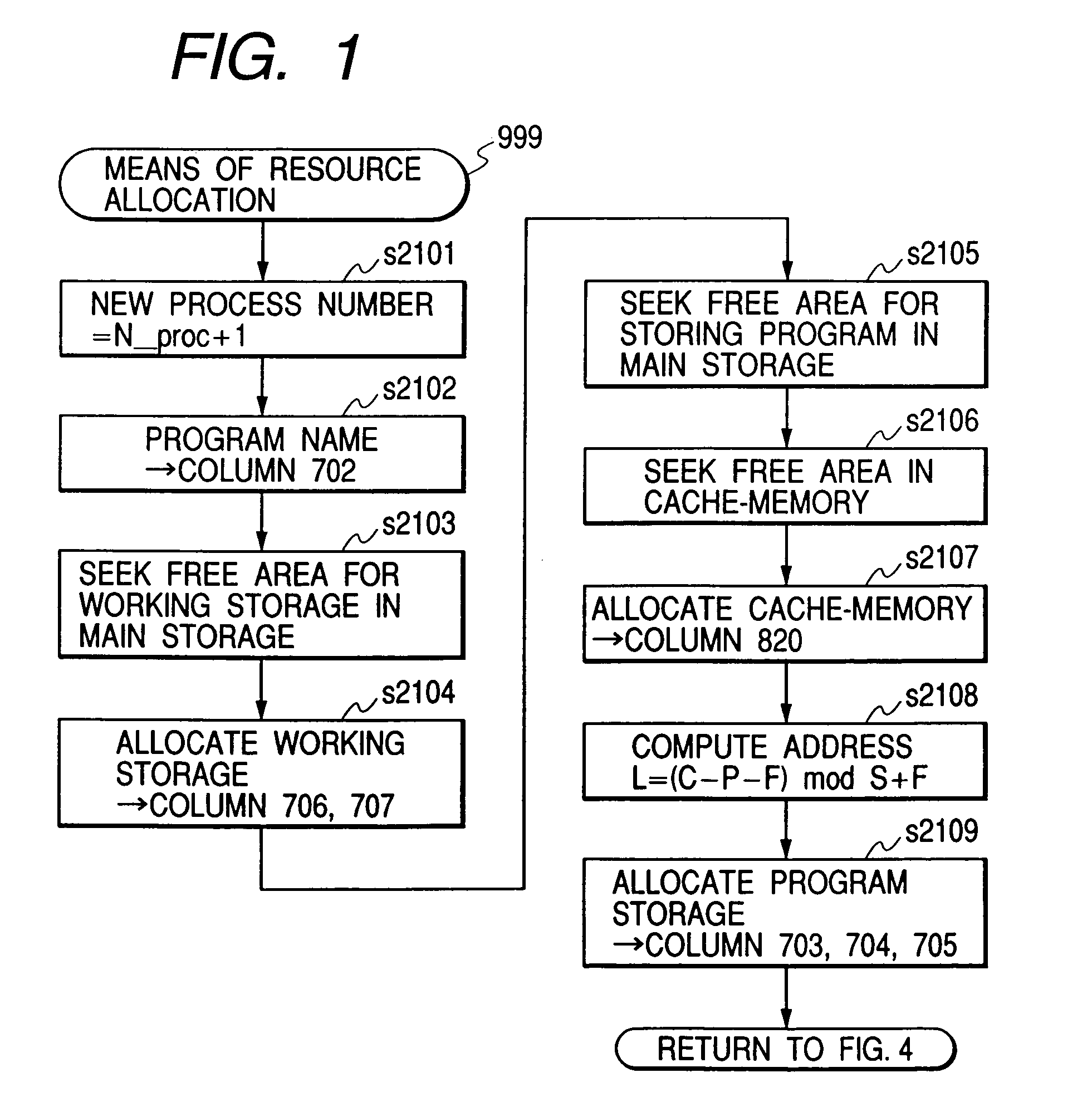

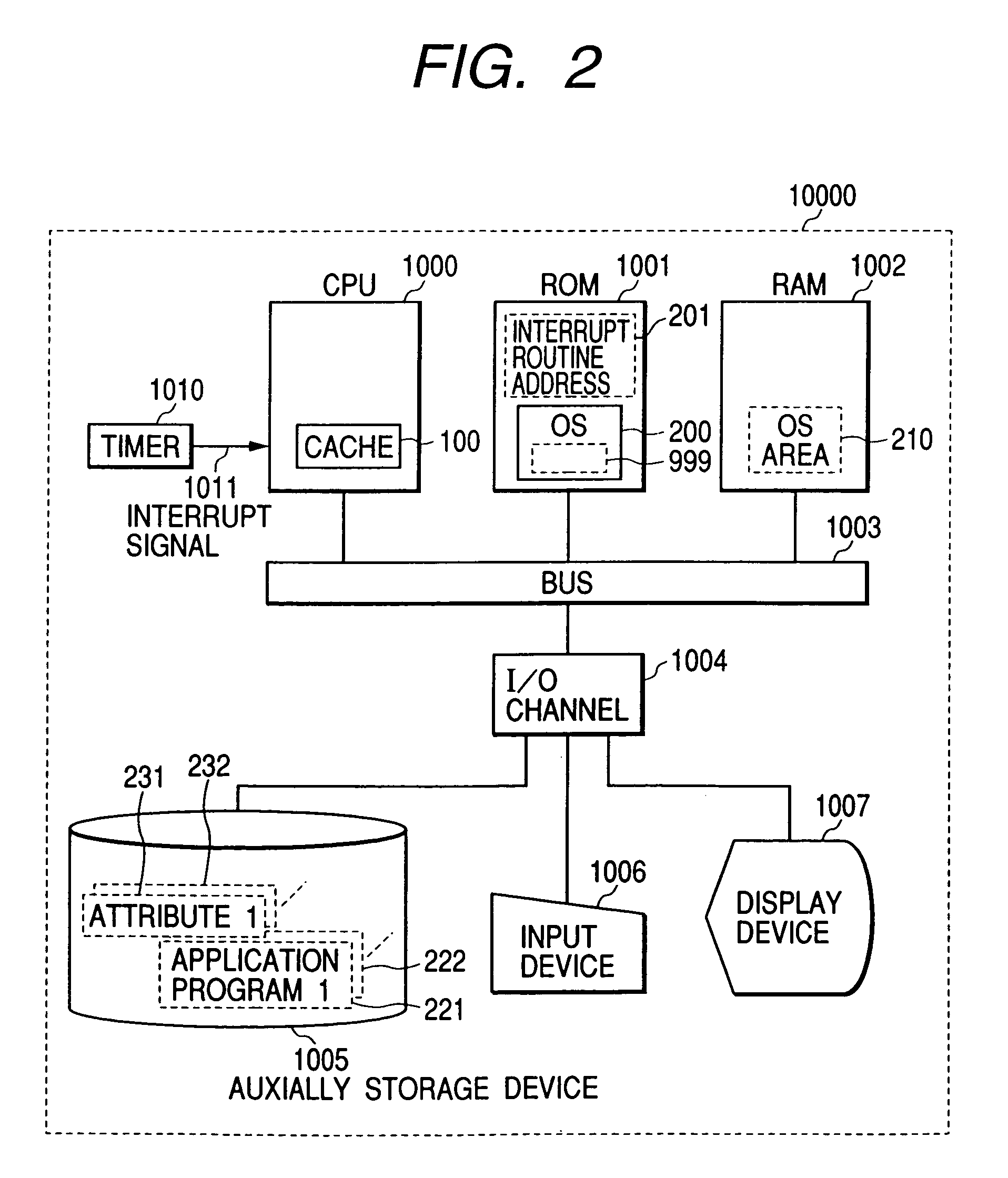

Cache memory allocation method

Inactive | US7000072B1 | Effective preventive measure | Avoid bumping | Memory architecture accessing/allocation | Data processing applications | Distribution method | Computerized system

To assure the multiprocessing performance of CPU on a microprocessor, the invention provides a method of memory mapping for multiple concurrent processes, thus minimizing cache thrashing. An OS maintains a management (mapping) table for controlling the cache occupancy status. When a process is activated, the OS receives from the process the positional information for a specific part (principal part) to be executed most frequently in the process and coordinates addressing of a storage area where the process is loaded by referring to the management table, ensuring that the cache address assigned for the principal part of the process differs from that for any other existing process. Taking cache memory capacity, configuration scheme, and process execution priority into account when executing the above coordination, a computer system is designed such that a highest priority process can have a first priority in using the cache.

Owner:HITACHI LTD

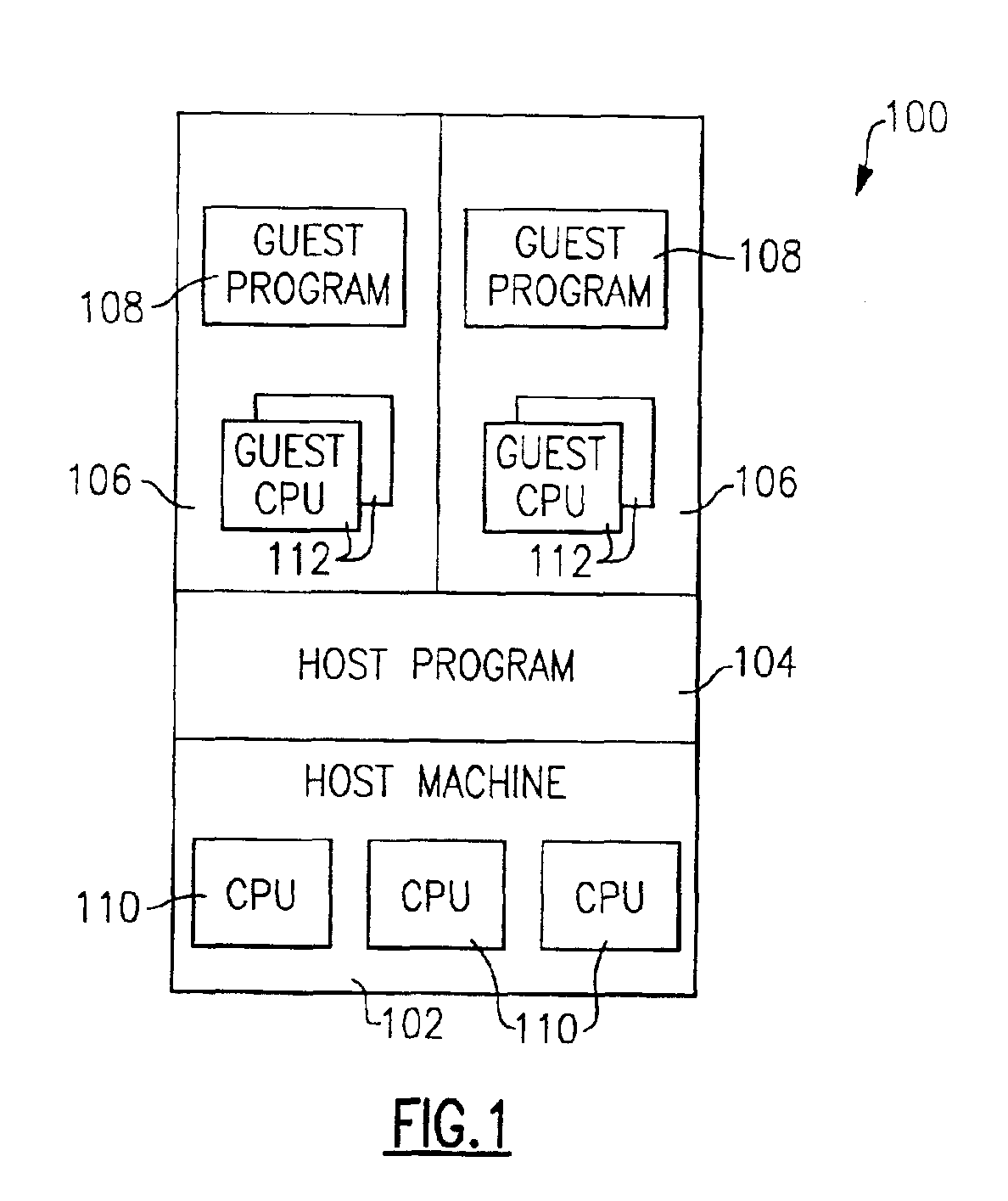

Method and apparatus for managing the execution of a broadcast instruction on a guest processor

Inactive | US7197585B2 | Program synchronisation | Memory addressing/allocation/relocation | Handling system | Client-side

A method and apparatus for managing the execution on guest processors of a broadcast instruction requiring a corresponding operation on other processors of a guest machine. Each of a plurality of processors on an information handling system is operable either as a host processor under the control of a host program executing on a host machine or as a guest processor under the control of a guest program executing on a guest machine. The guest machine is defined by the host program executing on the host machine and contains a plurality of such guest processors forming a guest multiprocessing configuration. A lock is defined for the guest machine containing an indication of whether it is being held by a host lock holder from the host program and a count of the number of processors holding the lock as guest lock holders. Upon decoding a broadcast instruction executing on a processor operating as a guest processor, the lock is tested to determine whether it is being held by a host lock holder. If the lock is being held by a host lock holder, an instruction interception is recognized and execution of the instruction is terminated. If the lock is not being held by a host lock holder, the lock is updated to indicate that it is being held by the guest processor as a shared lock holder, the instruction is executed, and then the lock is updated a second time to indicate that it is no longer being held by the guest processor as a shared lock holder.

Owner:IBM CORP
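
The lock test described in the abstract above can be modeled in a few lines. This is a single-threaded sketch of the decision logic only: in the patented mechanism the test and update would have to be atomic, which plain Python attribute updates do not guarantee. `GuestMachineLock` and `run_broadcast` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class GuestMachineLock:
    """Per-guest-machine lock: a host-holder flag plus a guest count."""
    held_by_host: bool = False
    guest_holders: int = 0


def run_broadcast(lock: GuestMachineLock, execute) -> str:
    """Run a broadcast instruction on a guest processor, or intercept it."""
    if lock.held_by_host:
        # Host lock holder present: recognize an interception, do not run.
        return "intercepted"
    lock.guest_holders += 1      # first update: shared guest holder
    try:
        execute()                # the broadcast instruction itself
    finally:
        lock.guest_holders -= 1  # second update: release the shared hold
    return "executed"


lock = GuestMachineLock()
print(run_broadcast(lock, lambda: None))
lock.held_by_host = True
print(run_broadcast(lock, lambda: None))
```

The count lets several guest processors hold the lock at once, while a single host holder blocks them all.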

System and method for handling overflow in hardware transactional memory with locks

Inactive | US20090177847A1 | Good condition | Easy to implement | Memory systems | Transaction processing | Granularity | Transactional memory

A system, method and computer program product for processing overflow transactions in a transactional memory system. The transactional memory system is provided in a multiprocessing system having one or more processor devices and a shared memory storage system, and implements a best effort hardware transactional memory system. The method includes acquiring, by a requesting processor, lockbits associated with a memory structure of the shared memory storage system to be reserved for an overflowing transaction. The lockbits determine the granularity at which memory reservations for an overflow transaction are recorded. The method includes implementing a control mechanism for controlling concurrency between overflowing and non-overflowing transactions requested by processor devices in the multiprocessing system, enabling only one overflowing transaction to execute at a time in the multiprocessing system.

Owner:IBM CORP

Method and apparatus for profiling execution of code using multiple processors

Inactive | US20070220495A1 | Minimal impact | Error detection/correction | Specific program execution arrangements | Data shipping | Application software

A computer implemented method, apparatus, and computer usable medium for gathering performance related data in a multiprocessing environment. Instrumentation code is executed on a processor that minimizes the distortion to the processor resources used to execute the program to be profiled. Data is written by the instrumentation code to a shared memory in response to an event occurring during execution of the program. The data is generated during execution of the program on the processor and the instrumentation code uses shared memory to convey the data to a profiling application running on a set of profiling processors. The data is collected by the set of profiling processors in the shared memory written by the instrumentation code.

Owner:LINKEDIN

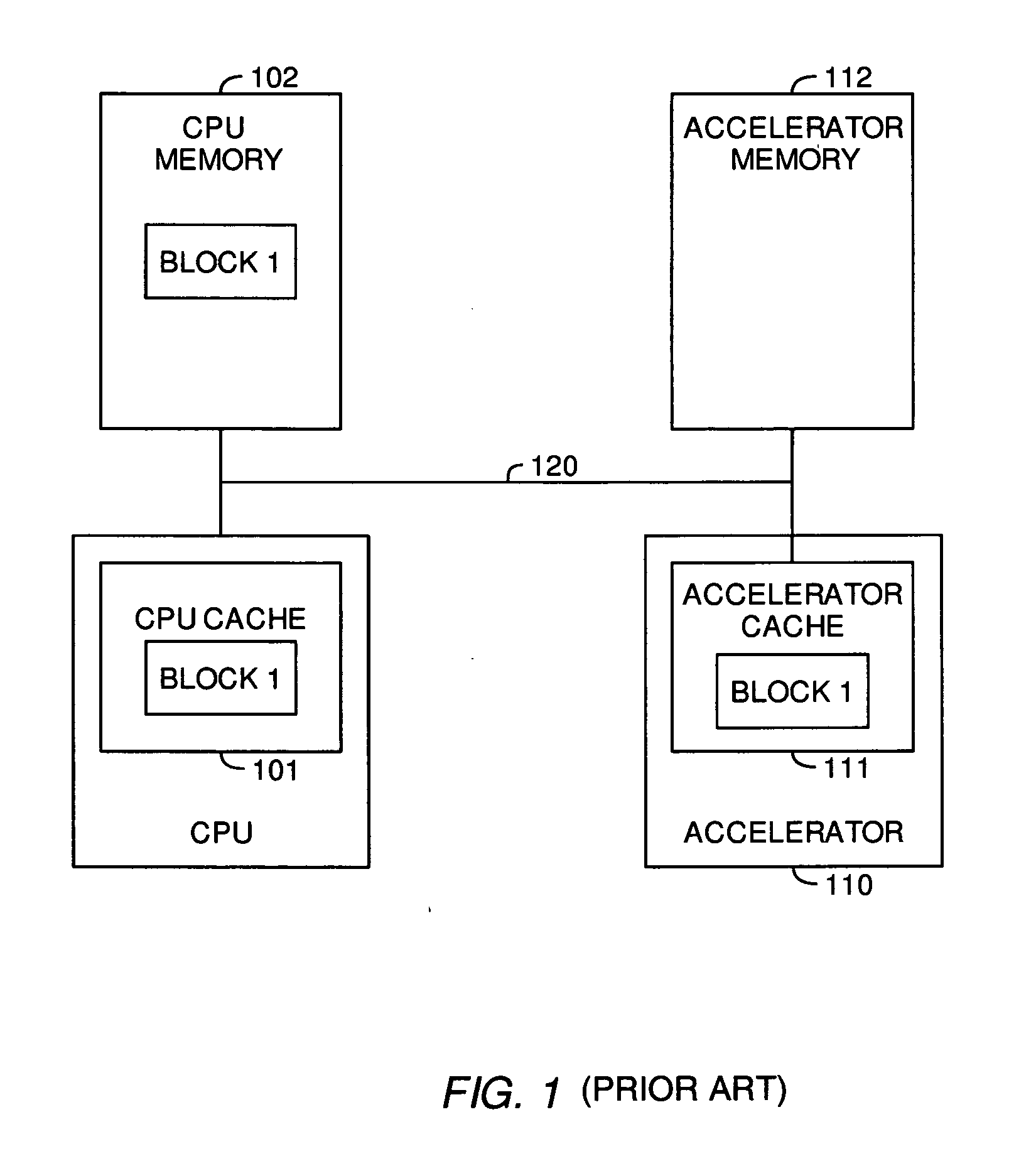

Low-cost cache coherency for accelerators

Active | US20070226424A1 | Reduce consumption | Memory architecture accessing/allocation | Memory systems | Term memory | Multiprocessing

Embodiments of the invention provide methods and systems for reducing the consumption of inter-node bandwidth by communications maintaining coherence between accelerators and CPUs. The CPUs and the accelerators may be clustered on separate nodes in a multiprocessing environment. Each node that contains a shared memory device may maintain a directory to track blocks of shared memory that may have been cached at other nodes. Therefore, commands and addresses may be transmitted to processors and accelerators at other nodes only if a memory location has been cached outside of a node. Additionally, because accelerators generally do not access the same data as CPUs, only initial read, write, and synchronization operations may be transmitted to other nodes. Intermediate accesses to data may be performed non-coherently. As a result, the inter-chip bandwidth consumed for maintaining coherence may be reduced.

Owner:IBM CORP

System and method for command routing and execution in a multiprocessing system

Inactive | US6389543B1 | Digital data processing details | Multiprogramming arrangements | Handling system | Computer science

Any node in a multi-node processing system may be employed to route commands to a selected group of one or more nodes, and initiate local command execution if permitted by local security provisions. The system includes multiple application nodes interconnected by a network, and one or more administrator nodes each coupled to at least one application node. Each administrator node has assigned security credentials. The process starts when the administrator node transmits input to one of the application nodes (an "entry" node). The input includes a command and routing information specifying a list of desired application nodes ("destination" nodes) to execute the command. In response to this input, the entry node transmits messages to all destination nodes to (1) log-in to the destination nodes as the originating administrator node, and (2) request the destination nodes to execute the command. Consulting locally stored security information, each destination node determines whether the entry node's log-in should succeed. If so, the destination node consults locally stored authority information to determine whether the initiating administrator node has authority to execute the requested command. If so, the destination node executes the command. The destination node sends the entry node a response representing the outcome of command execution. The entry node organizes such responses and provides a representative output.

Owner:IBM CORP

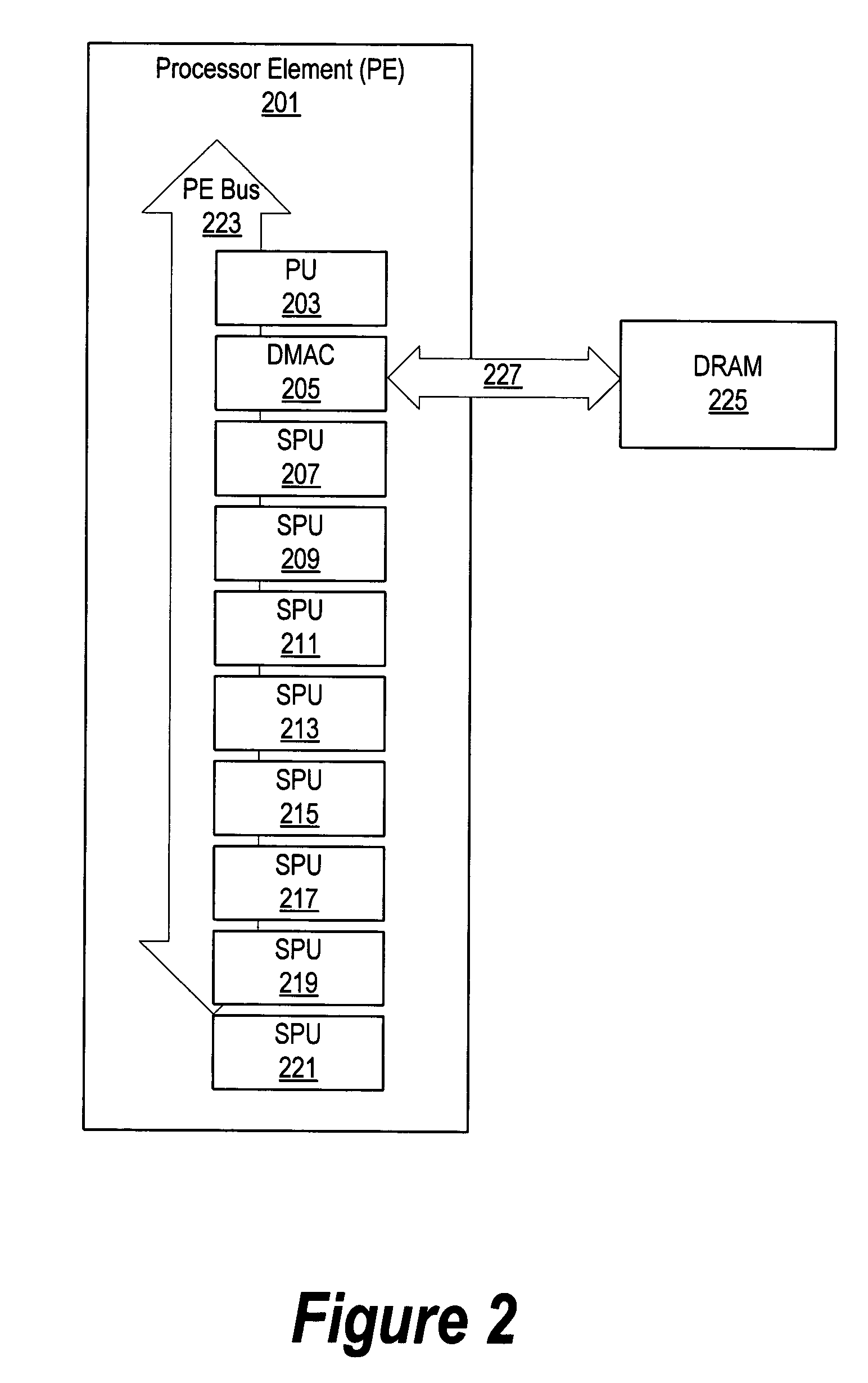

System and method for encrypting data using a plurality of processors

Inactive | US20050071651A1 | Key distribution for secure communication | Digital data processing details | Encrypted function | Memory map

A system and method are provided to dedicate one or more processors in a multiprocessing system to performing encryption functions. When the system initializes, one of the synergistic processing unit (SPU) processors is configured to run in a secure mode wherein the local memory included with the dedicated SPU is not shared with the other processors. One or more encryption keys are stored in the local memory during initialization. During initialization, the SPUs receive nonvolatile data, such as the encryption keys, from nonvolatile register space. This information is made available to the SPU during initialization before the SPU's local storage might be mapped to a common memory map. In one embodiment, the mapping is performed by another processing unit (PU) that maps the shared SPUs' local storage to a common memory map.

Owner:IBM CORP +1

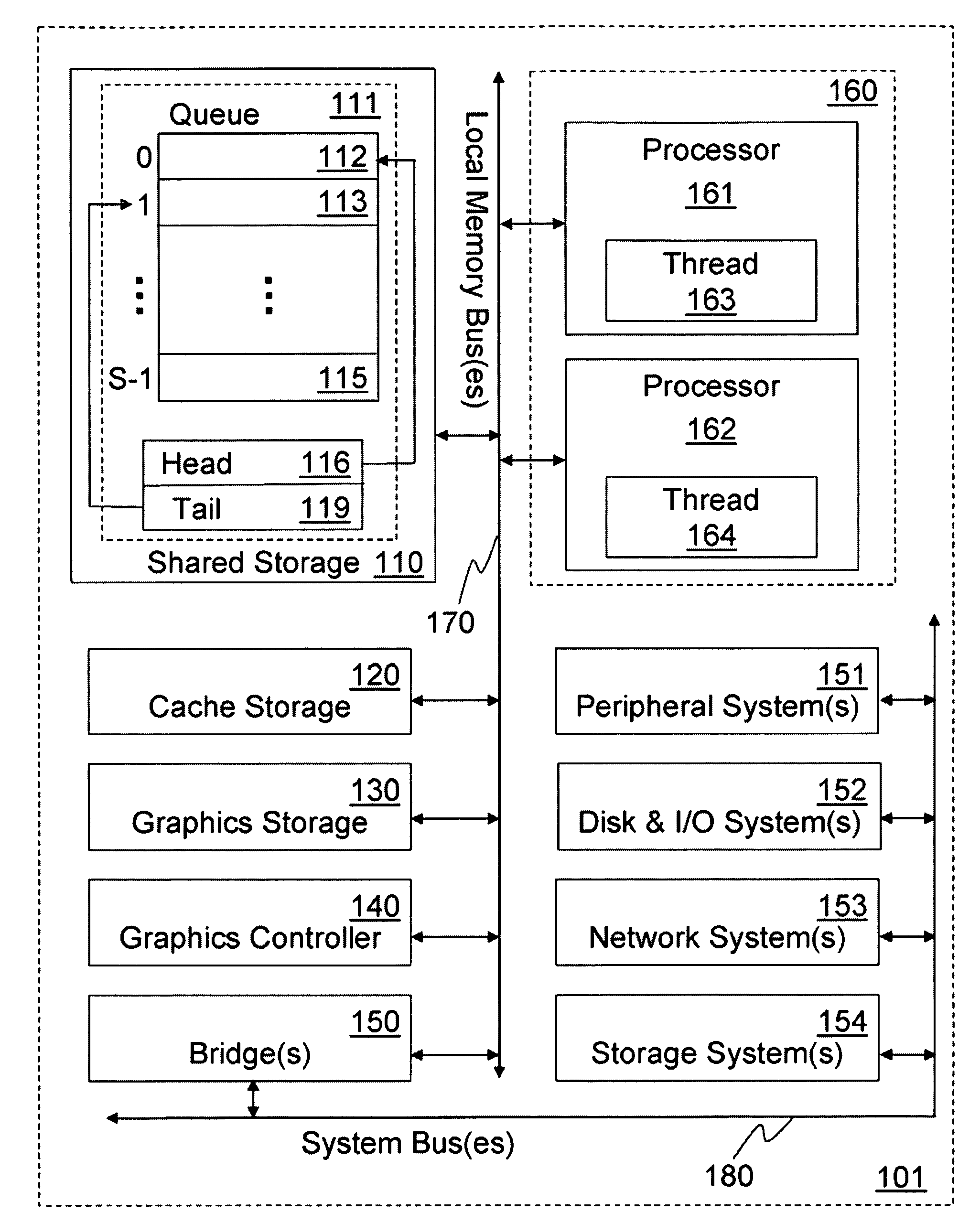

Lock-free circular queue in a multiprocessing system

Lock-free circular queues relying only on atomic aligned read/write accesses in multiprocessing systems are disclosed. In one embodiment, when comparison between a queue tail index and each queue head index indicates that there is sufficient room available in a circular queue for at least one more queue entry, a single producer thread is permitted to perform an atomic aligned write operation to the circular queue and then to update the queue tail index. Otherwise an enqueue access for the single producer thread would be denied. When a comparison between the queue tail index and a particular queue head index indicates that the circular queue contains at least one valid queue entry, a corresponding consumer thread may be permitted to perform an atomic aligned read operation from the circular queue and then to update that particular queue head index. Otherwise a dequeue access for the corresponding consumer thread would be denied.
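
The index comparisons in this abstract can be illustrated with a small Python sketch. It models only the bookkeeping: one tail index written by the producer and one head index per consumer, with the entry written or read before the index is updated. It assumes every consumer reads every entry, which the abstract suggests but does not state outright. The class name `BroadcastRing` and its methods are hypothetical, and plain Python assignments stand in for the atomic aligned accesses a real implementation would need.

```python
class BroadcastRing:
    """Single-producer ring buffer with one head index per consumer.

    Index logic only: real code would rely on atomic aligned reads and
    writes for the slots and indices, which this sketch does not model.
    """

    def __init__(self, capacity: int, consumers: int):
        self.capacity = capacity
        self.slots = [None] * capacity
        self.tail = 0                  # written only by the producer
        self.heads = [0] * consumers   # heads[i] written only by consumer i

    def enqueue(self, item) -> bool:
        # Compare the tail with each head: room exists only if no consumer
        # is a full buffer behind the producer.
        if any(self.tail - head >= self.capacity for head in self.heads):
            return False               # enqueue denied
        self.slots[self.tail % self.capacity] = item  # write the entry first
        self.tail += 1                                # then update the tail
        return True

    def dequeue(self, consumer: int):
        head = self.heads[consumer]
        if head == self.tail:
            return (False, None)       # dequeue denied: no valid entry
        item = self.slots[head % self.capacity]       # read the entry first
        self.heads[consumer] = head + 1               # then update this head
        return (True, item)


q = BroadcastRing(capacity=2, consumers=2)
accepted = [q.enqueue("a"), q.enqueue("b"), q.enqueue("c")]
first = q.dequeue(0)
still_full = q.enqueue("c")   # consumer 1 has not yet read "a"
second = q.dequeue(1)
now_ok = q.enqueue("c")       # both consumers have released the first slot
```

Because each index has exactly one writer, no lock is needed to coordinate the producer with the consumers; the slowest consumer alone determines when a slot can be reused.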

Owner:INTEL CORP

Cache sharing for a chip multiprocessor or multiprocessing system

Inactive | US7076609B2 | Energy efficient ICT | Memory addressing/allocation/relocation | Multiprocessing | Chip multi processor

Owner:INTEL CORP

Multicore memory management system

Active | US7984246B1 | Memory architecture accessing/allocation | Memory systems | Direct communication | Multiprocessing

A multiprocessing system includes, in part, a multitude of processing units each in direct communication with a bus, a multitude of memory units in direct communication with the bus, and at least one shared memory not in direct communication with the bus but directly accessible to the plurality of processing units. The shared memory may be a cache memory that stores instructions and/or data. The shared memory includes a multitude of banks, a first subset of which may store data and a second subset of which may store instructions. A conflict detection block resolves access conflicts to each of the banks in accordance with a number of address bits and a predefined arbitration scheme. The conflict detection block provides each of the processing units with sequential access to the banks during consecutive cycles of a clock signal.
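
A rough Python sketch can show how bank conflicts might be detected from address bits and resolved over consecutive cycles. The abstract does not name the arbitration scheme, so the fixed-priority rule used here (lowest unit id wins) is purely an assumption, as are the function name `schedule_accesses` and the choice of the low address bits as the bank selector.

```python
from collections import defaultdict


def schedule_accesses(requests: dict, num_banks: int) -> list:
    """Serialize conflicting bank accesses over consecutive cycles.

    requests maps a processing-unit id to the address it wants to access.
    num_banks is assumed to be a power of two so that the low address bits
    select the bank. Returns one {unit_id: bank} dict per clock cycle.
    """
    pending = dict(requests)
    cycles = []
    while pending:
        # Conflict detection: group pending requests by their target bank.
        by_bank = defaultdict(list)
        for unit, address in pending.items():
            by_bank[address & (num_banks - 1)].append(unit)
        # Arbitration (hypothetical fixed priority): lowest unit id wins.
        grants = {min(units): bank for bank, units in by_bank.items()}
        for unit in grants:
            del pending[unit]          # losers stay pending for the next cycle
        cycles.append(grants)
    return cycles


# Units 0 and 1 both target bank 0; unit 2 targets bank 1.
cycles = schedule_accesses({0: 0x10, 1: 0x14, 2: 0x11}, num_banks=4)
```

In this run, units 0 and 2 are granted access in the first cycle, and unit 1 is served in the second, which mirrors the sequential access across consecutive clock cycles that the abstract describes.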

Owner:MARVELL ASIA PTE LTD

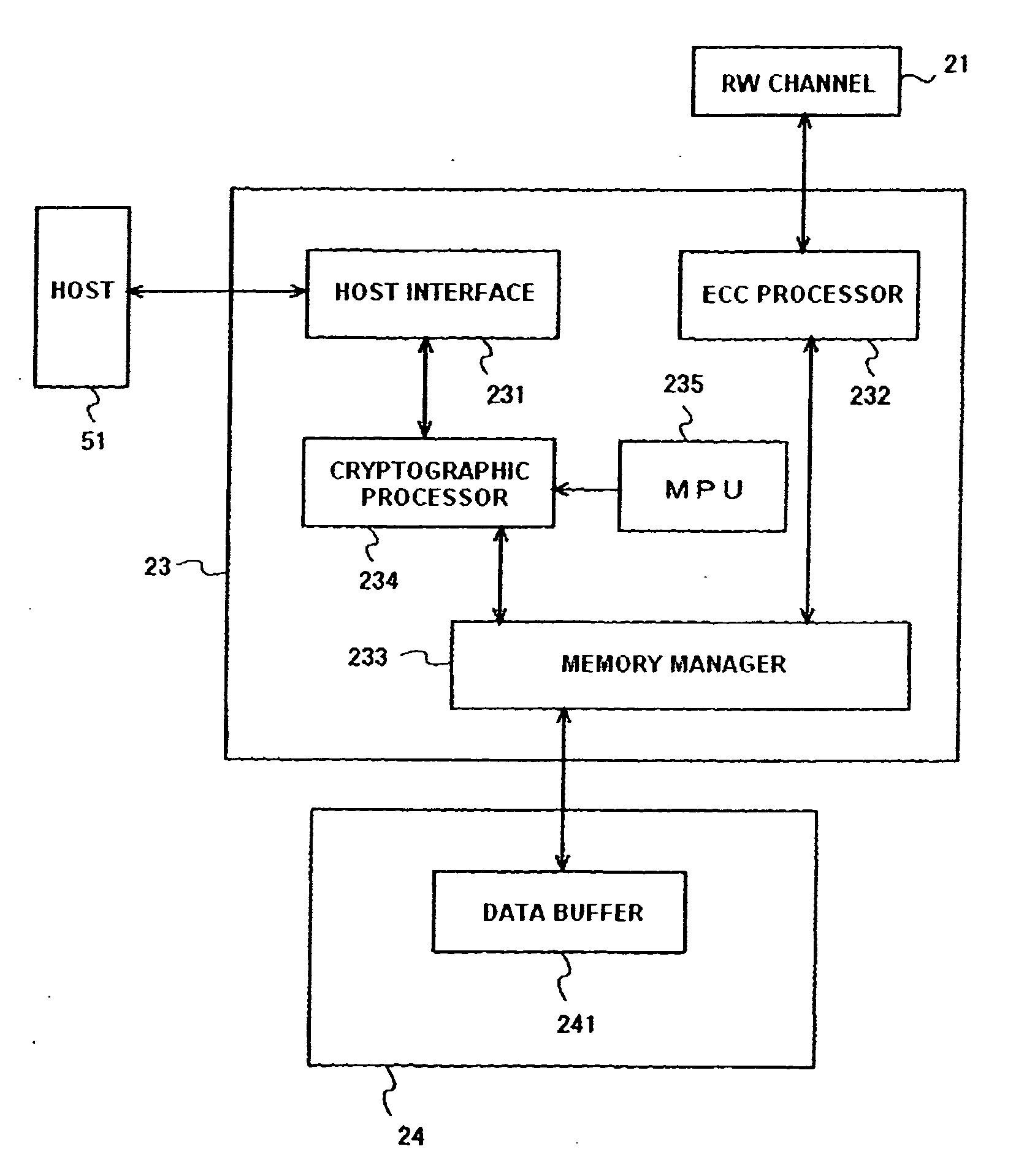

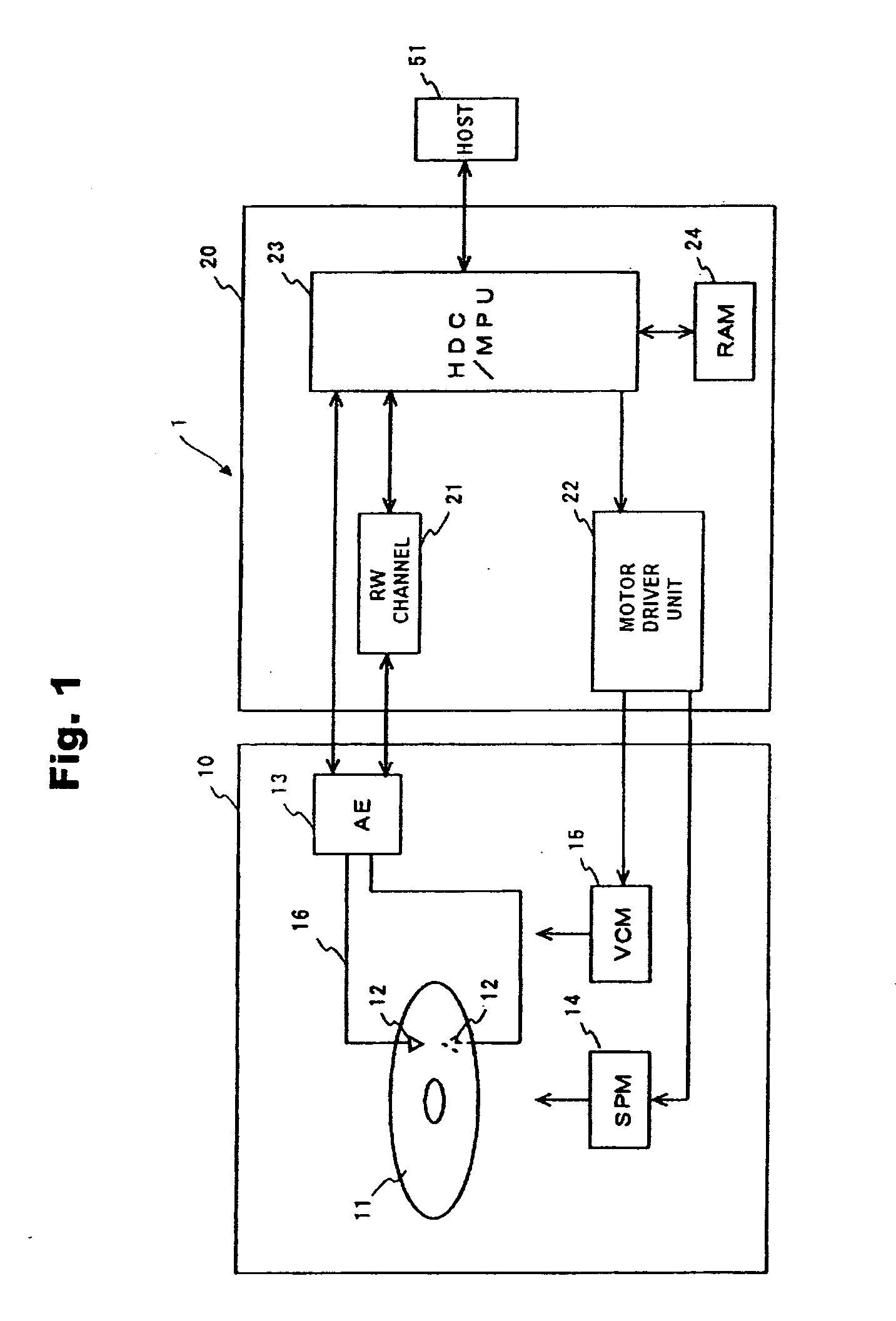

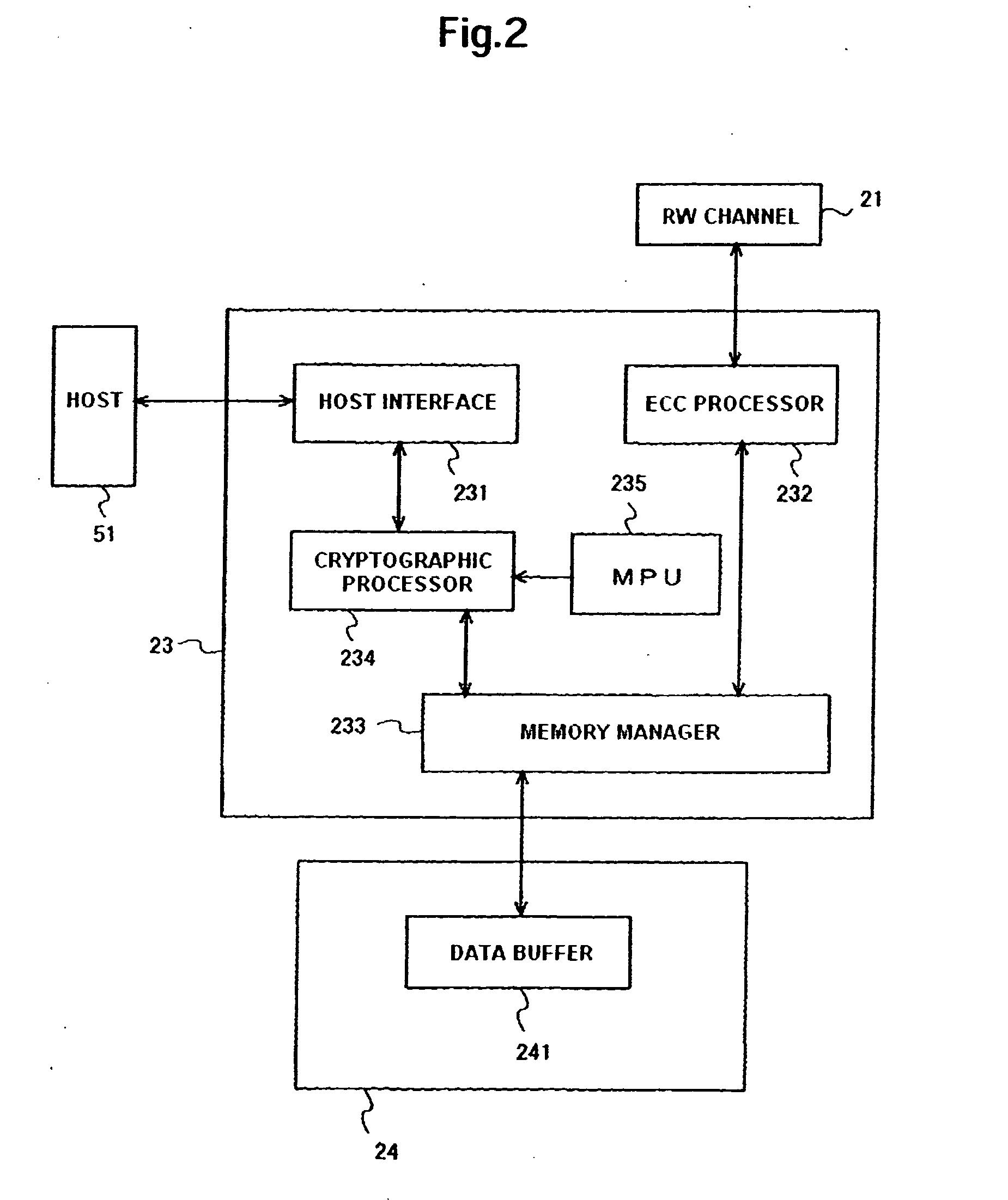

Data storage device and management method of cryptographic key thereof

Active | US20100031061A1 | More security | Unauthorized memory use protection | Record information storage | Hard disc drive | Password

Embodiments of the present invention help to securely manage a data cryptographic key in a data storage device. In an embodiment of the present invention, a cryptographic processor for encrypting and decrypting data is located between a host interface and a memory manager. In parts of the hard disk drive (HDD), except for the host interface, the HDD handles user data in an encrypted state. A data cryptographic key which the cryptographic processor uses to encrypt and decrypt the user data is encrypted and stored in a magnetic disk. A multiprocessing unit (MPU) decrypts the data cryptographic key using a password and a random number to supply it to the cryptographic processor. Using the password and the random number, the HDD can manage the data cryptographic key with more security.
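
The idea of storing the data key only in encrypted form, and recovering it from a password plus a random number, can be sketched as follows. The abstract does not say which algorithms are used, so everything here is an assumption for illustration: the key-encrypting key is derived with PBKDF2-HMAC-SHA256 using the random number as salt, and the data key is wrapped with a simple XOR. The function names are hypothetical, and the XOR wrap is a toy stand-in for a real key-wrapping cipher, not something to deploy.

```python
import hashlib
import secrets


def derive_kek(password: str, random_number: bytes, length: int) -> bytes:
    """Derive a key-encrypting key from the password and the random number."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), random_number, 100_000, dklen=length
    )


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def wrap_key(data_key: bytes, password: str, random_number: bytes) -> bytes:
    """Encrypt the data key for storage (toy XOR wrap, illustration only)."""
    return xor_bytes(data_key, derive_kek(password, random_number, len(data_key)))


def unwrap_key(wrapped: bytes, password: str, random_number: bytes) -> bytes:
    """Recover the data key before handing it to the cryptographic processor."""
    return xor_bytes(wrapped, derive_kek(password, random_number, len(wrapped)))


data_key = secrets.token_bytes(32)        # key used to encrypt user data
random_number = secrets.token_bytes(16)   # kept alongside the wrapped key
wrapped = wrap_key(data_key, "correct horse", random_number)

recovered = unwrap_key(wrapped, "correct horse", random_number)
wrong = unwrap_key(wrapped, "bad guess", random_number)
```

Only `wrapped` and `random_number` would need to be stored; without the password, unwrapping yields a different byte string rather than the data key.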

Owner:WESTERN DIGITAL TECH INC

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com