186 results about "Cache hierarchy" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

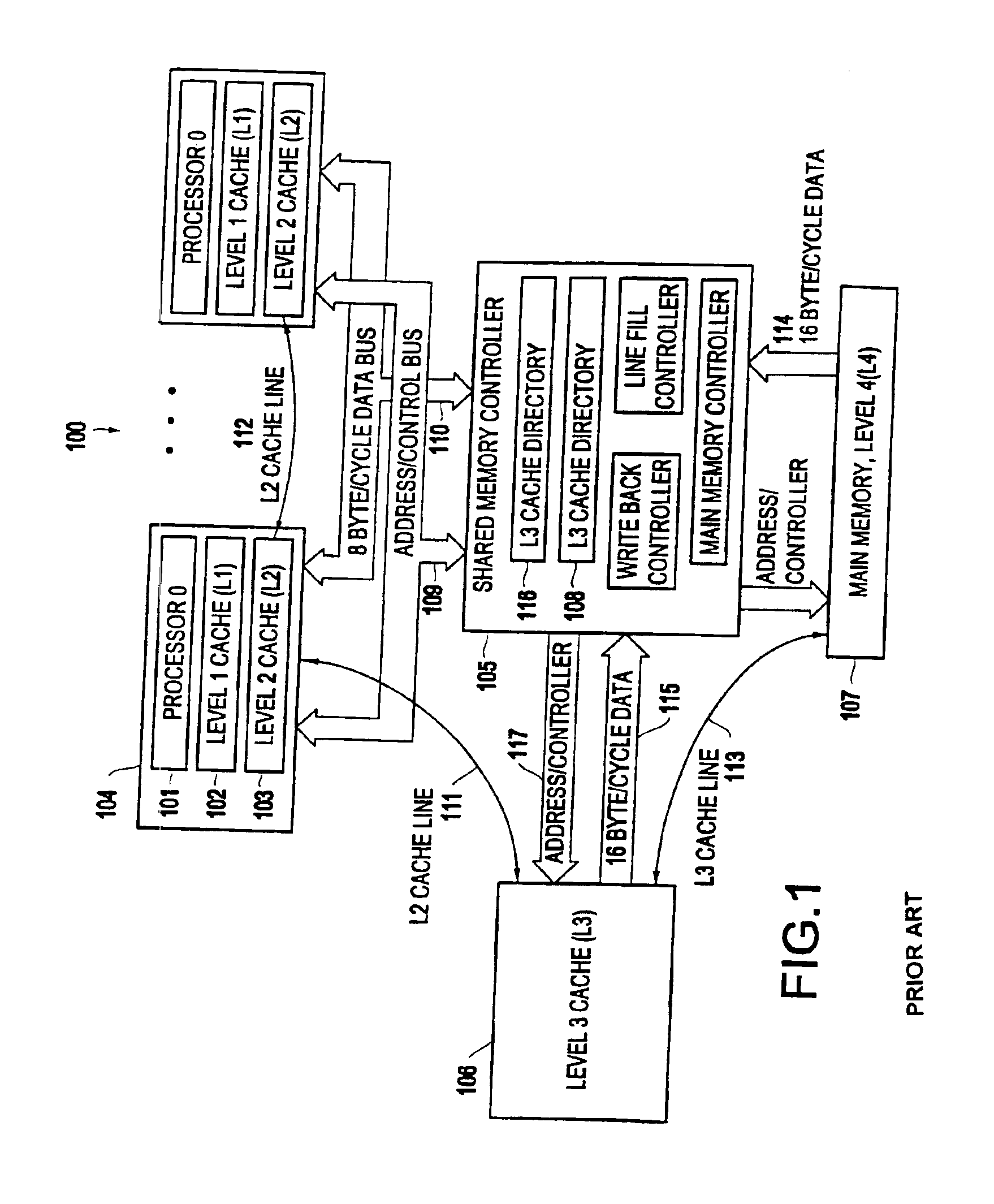

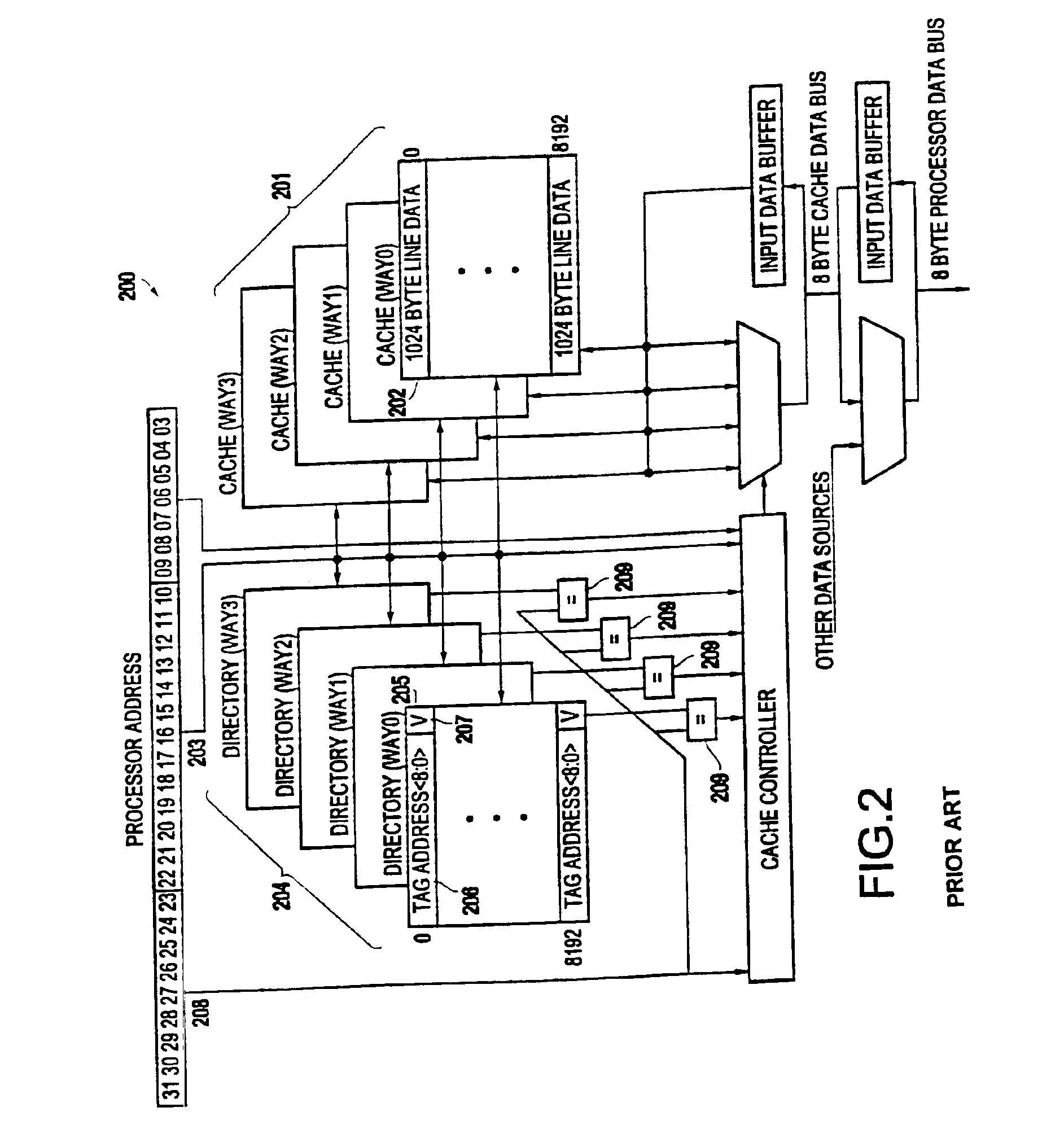

Inventor

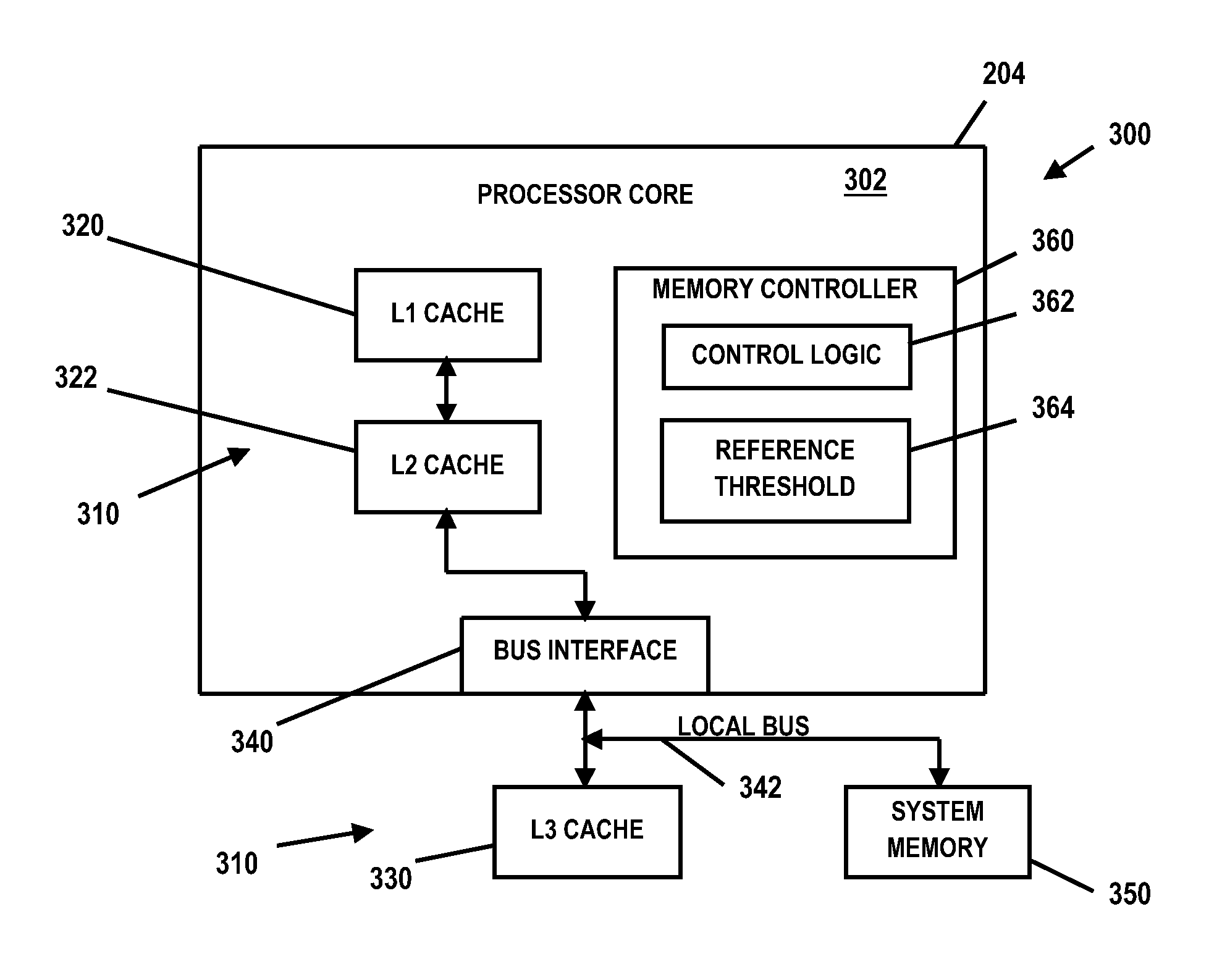

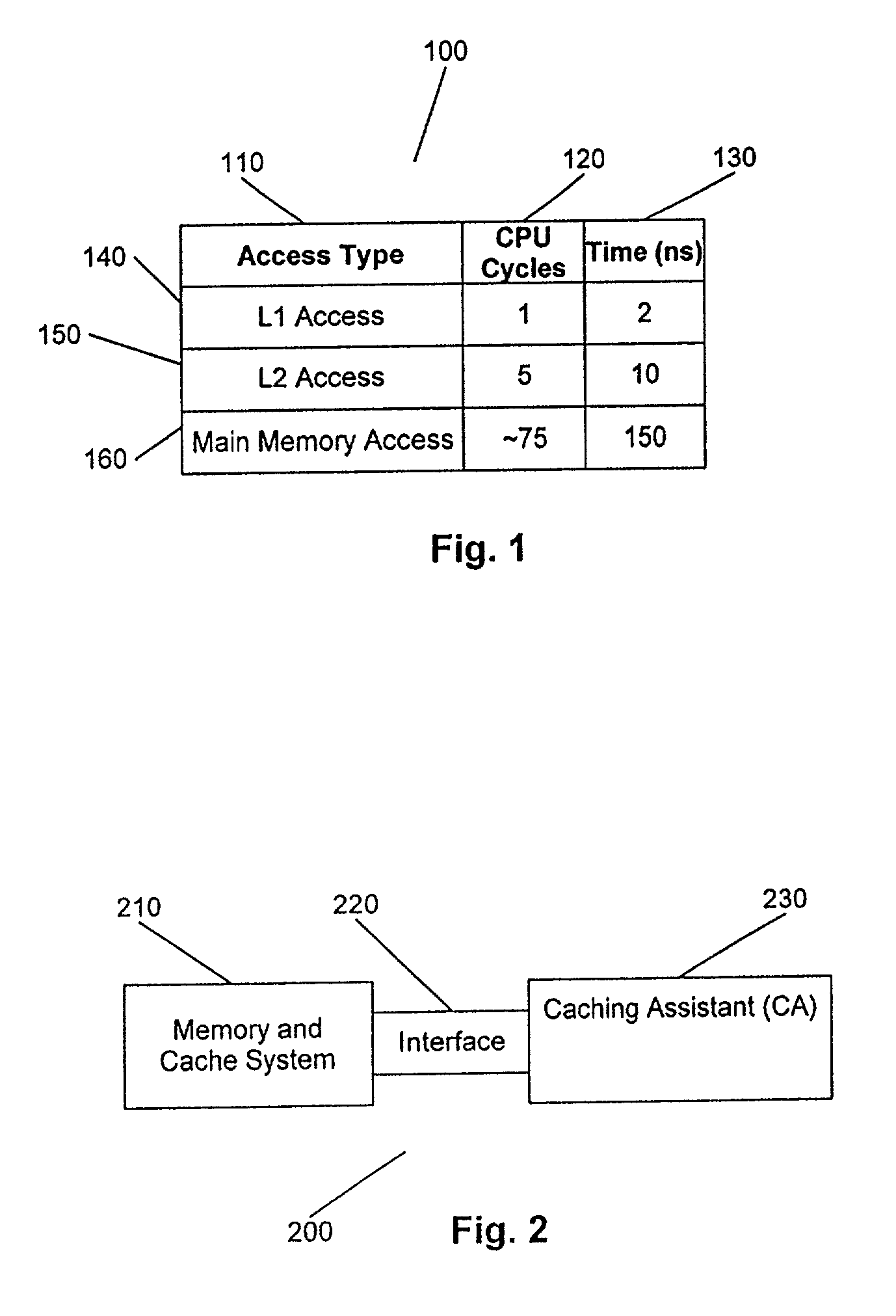

Cache hierarchy, or multi-level caching, refers to a memory architecture that uses a hierarchy of memory stores with varying access speeds to cache data. Frequently requested data is cached in high-speed memory stores, allowing faster access by central processing unit (CPU) cores. Cache hierarchy is part of the memory hierarchy and can be considered a form of tiered storage.
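
As a rough illustration of the lookup order this definition implies, the following minimal Python sketch models a small hierarchy in front of main memory. The `CacheLevel` class, the `read` function, the LRU policy, and the fill-on-miss behavior are all assumptions made for illustration; real hardware differs in many ways.

```python
from collections import OrderedDict


class CacheLevel:
    """One level of the hierarchy: a small LRU-managed store (hypothetical model)."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.lines = OrderedDict()  # key -> value, least recently used first

    def get(self, key):
        if key in self.lines:
            self.lines.move_to_end(key)  # mark as most recently used
            return self.lines[key]
        return None

    def put(self, key, value):
        self.lines[key] = value
        self.lines.move_to_end(key)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line


def read(levels, memory, key):
    """Search the fastest level first; return (value, name of the level that hit)."""
    for i, level in enumerate(levels):
        value = level.get(key)
        if value is not None:
            for upper in levels[:i]:  # copy the line into the faster levels
                upper.put(key, value)
            return value, level.name
    value = memory[key]  # miss everywhere: go to main memory
    for level in levels:
        level.put(key, value)
    return value, "memory"
```

With a two-entry `L1` and a four-entry `L2`, a key that has aged out of `L1` is still served from `L2` rather than from memory, which is the benefit the definition describes.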

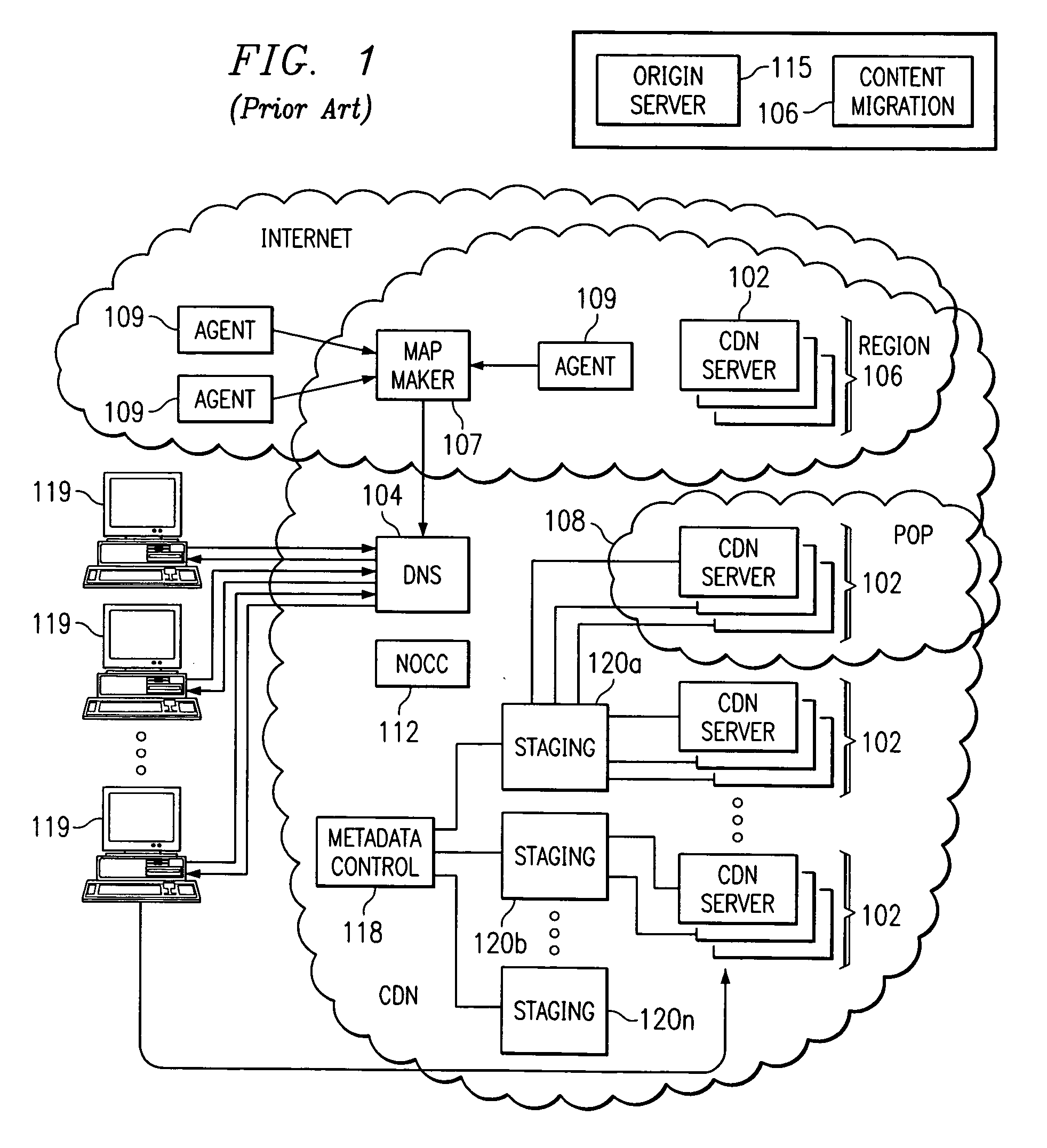

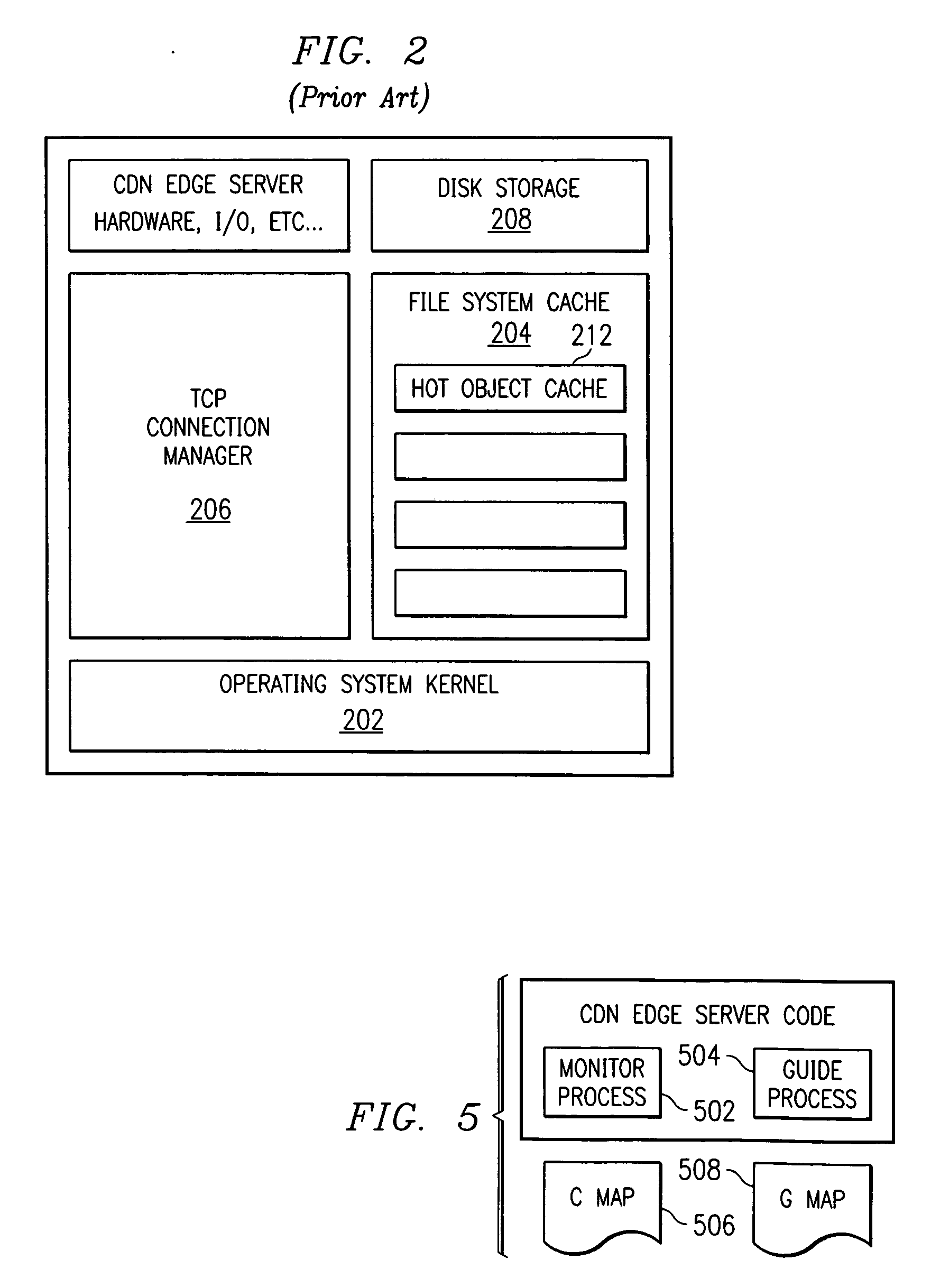

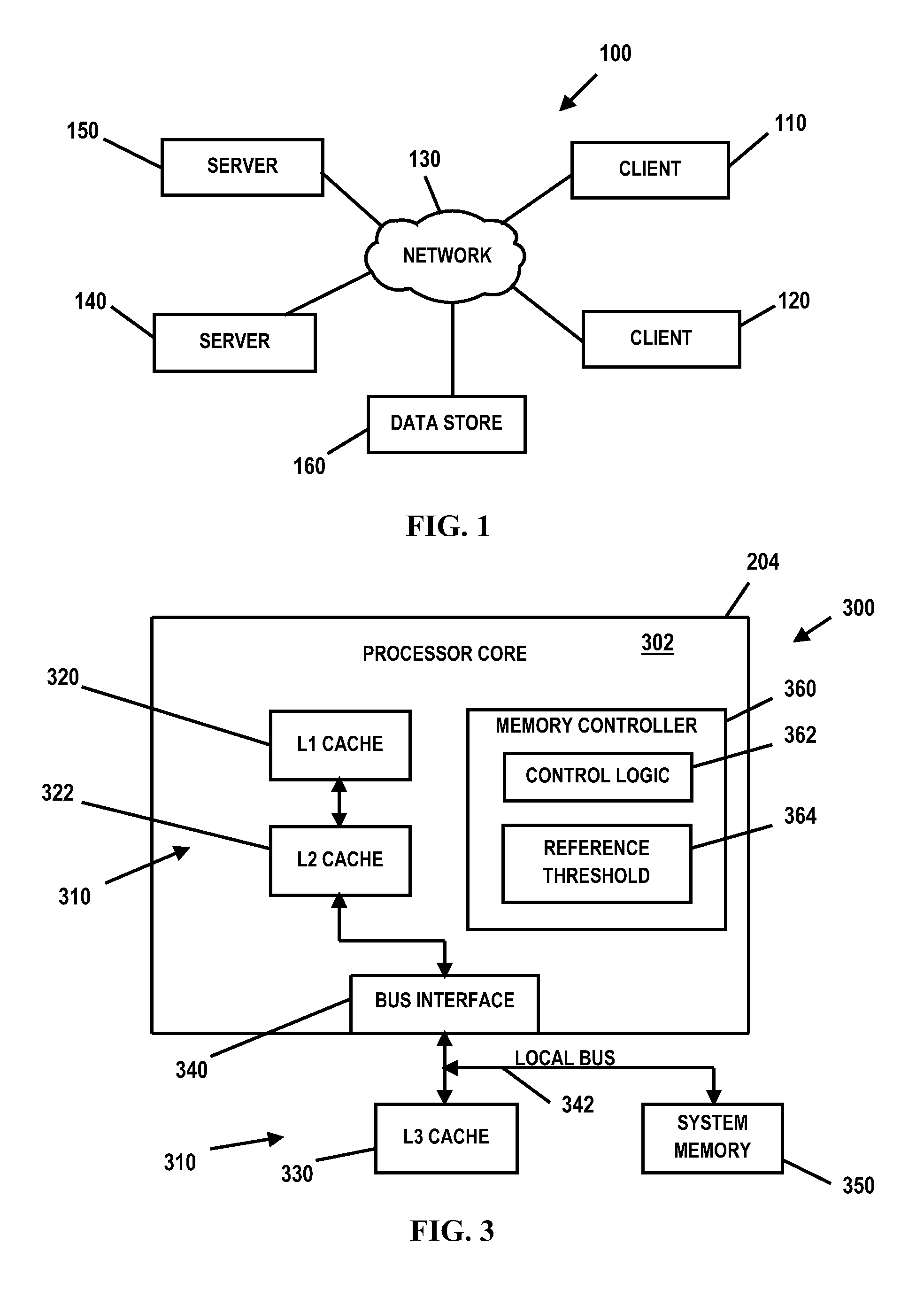

Method and system for tiered distribution in a content delivery network

Inactive | US7133905B2 | Effective buffer | Excessive traffic | Multiple digital computer combinations | Location information based service | Cache hierarchy | Edge server

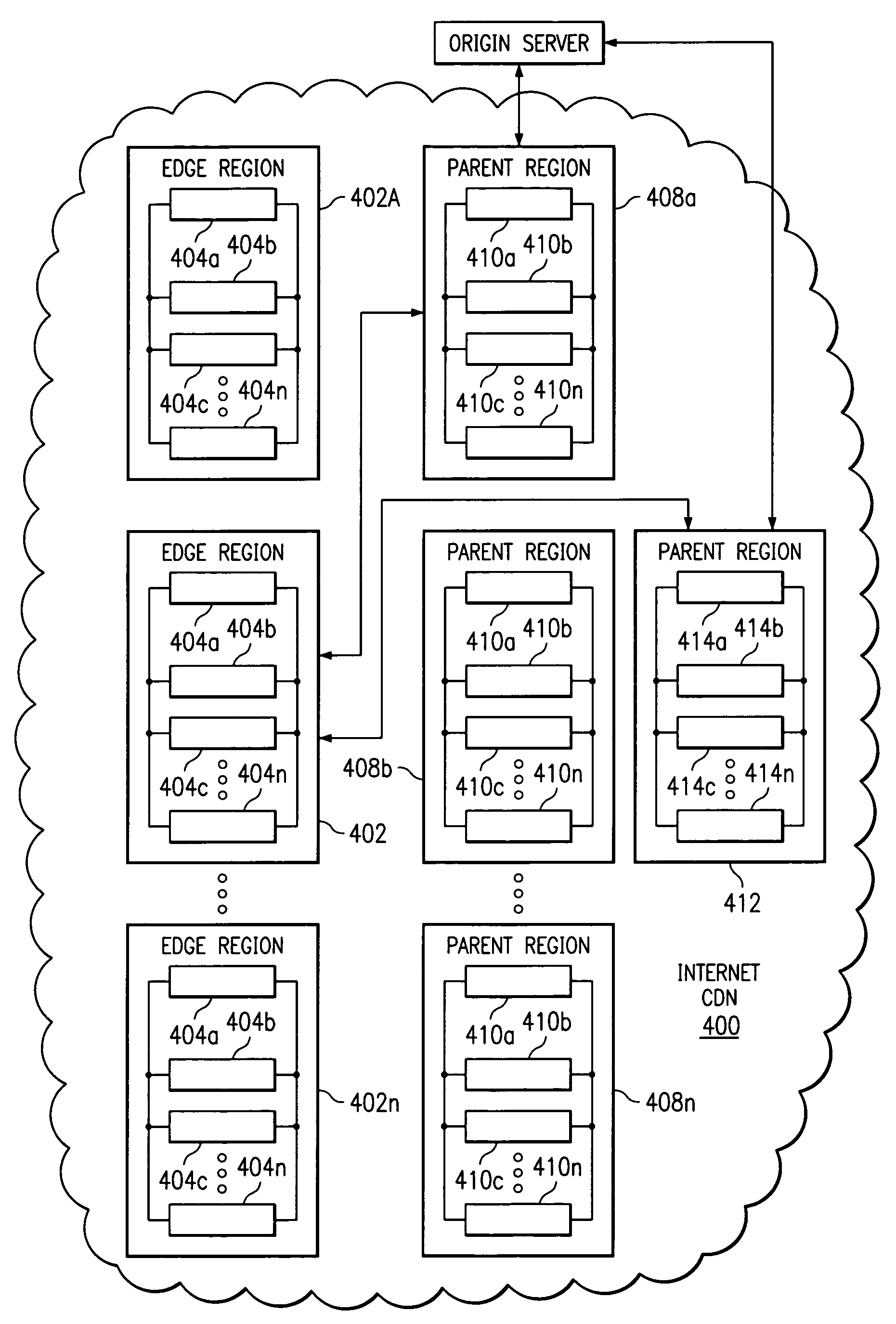

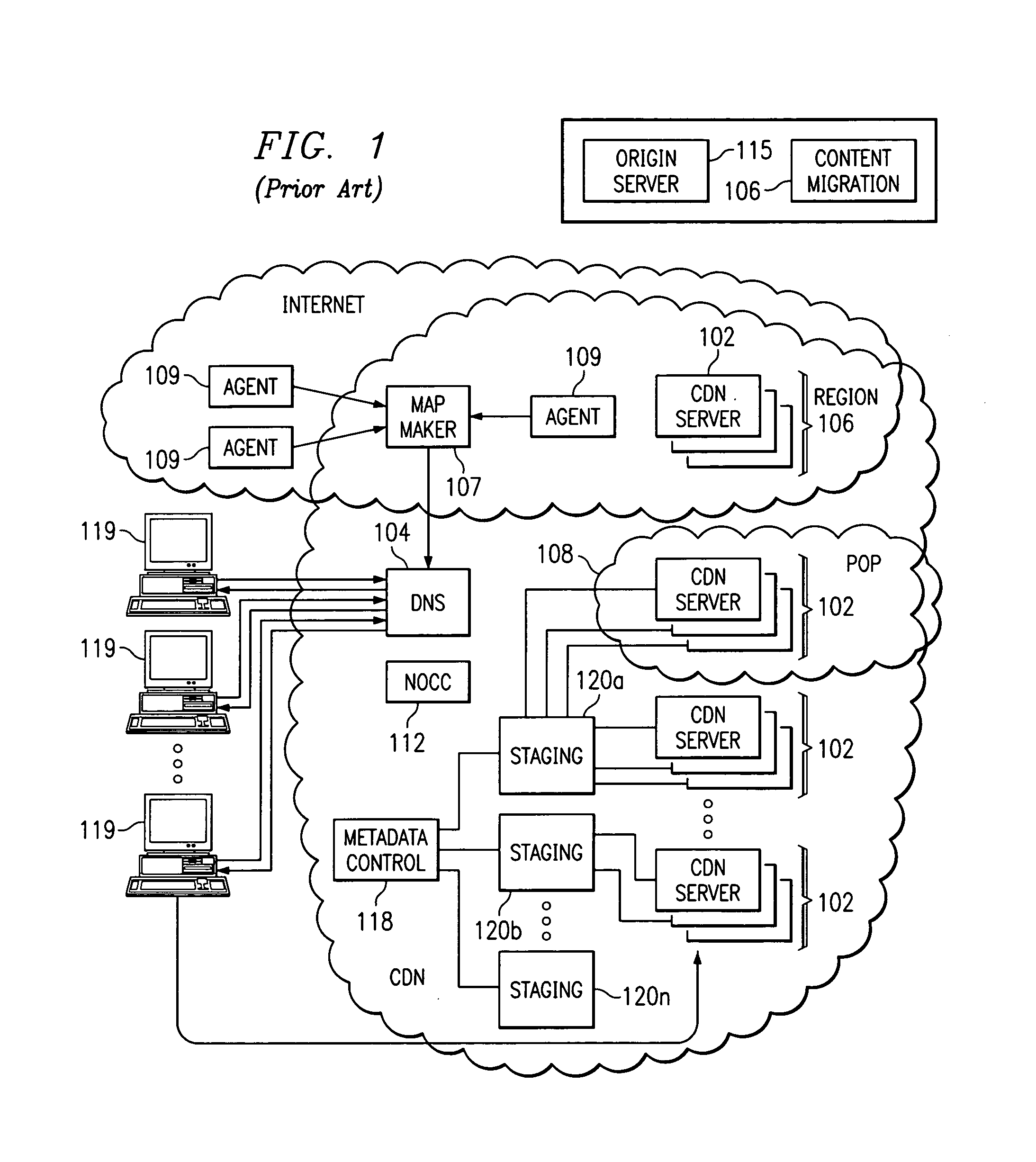

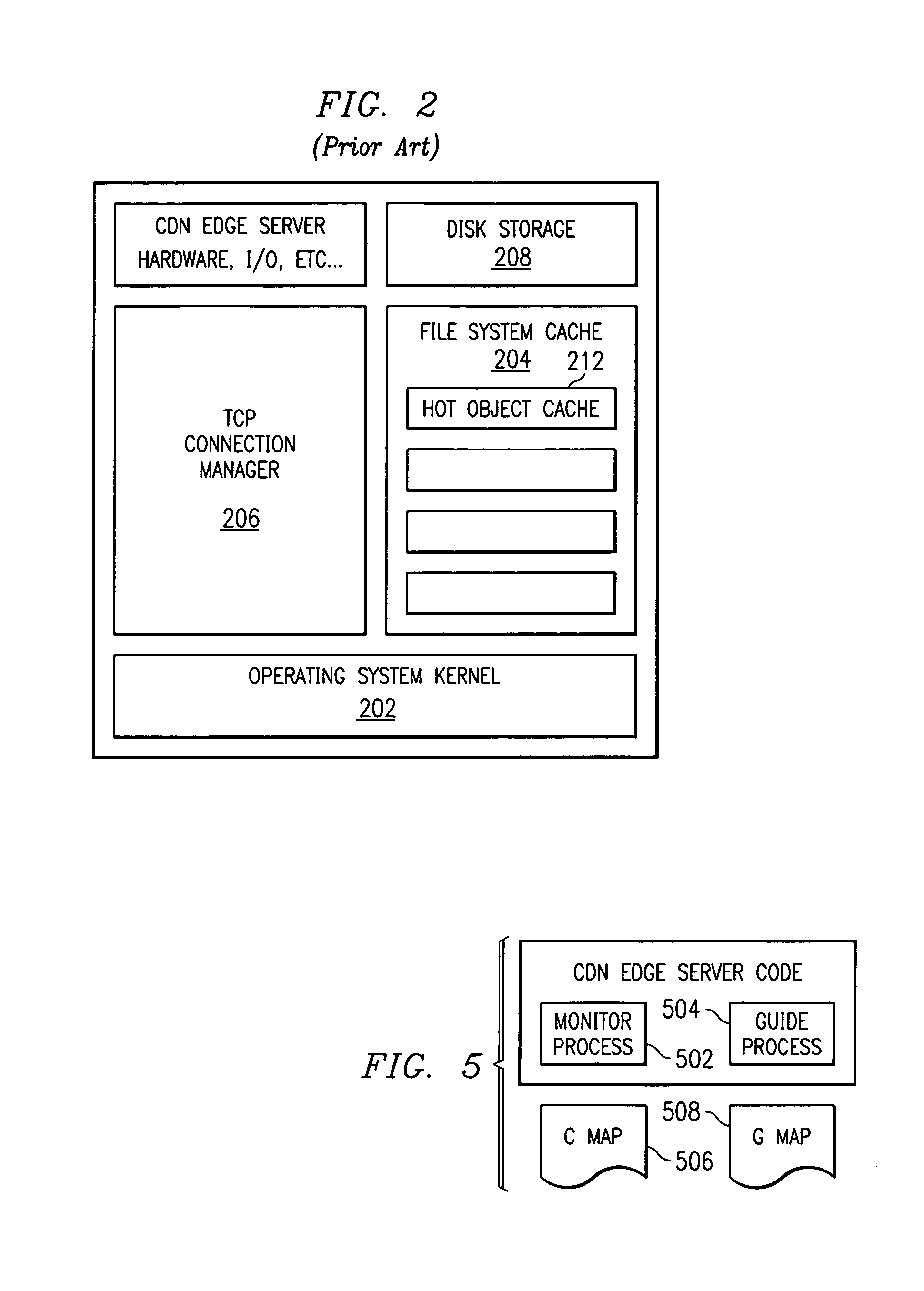

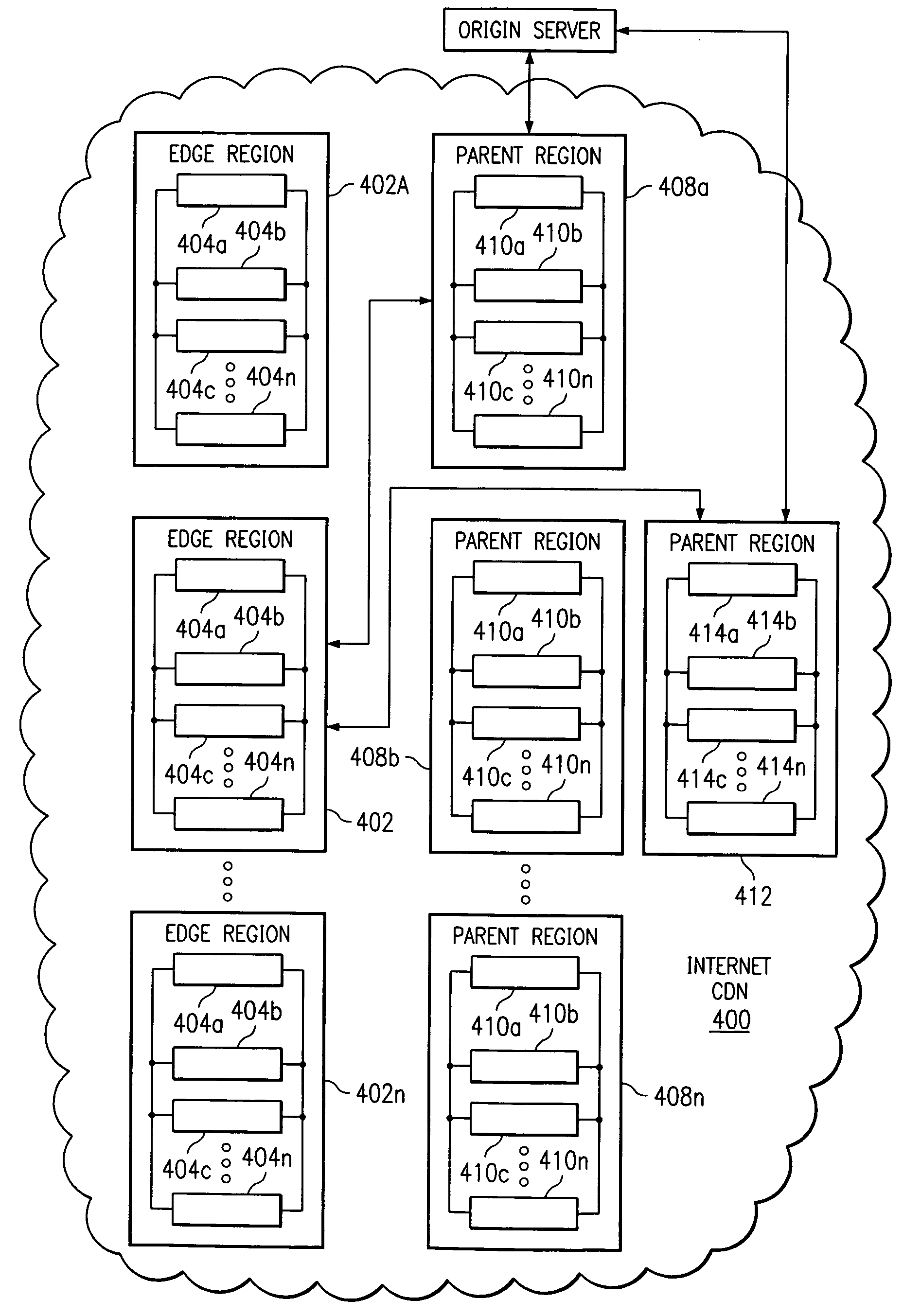

A tiered distribution service is provided in a content delivery network (CDN) having a set of surrogate origin (namely, “edge”) servers organized into regions and that provide content delivery on behalf of participating content providers, wherein a given content provider operates an origin server. According to the invention, a cache hierarchy is established in the CDN comprising a given edge server region and either (a) a single parent region, or (b) a subset of the edge server regions. In response to a determination that a given object request cannot be serviced in the given edge region, instead of contacting the origin server, the request is provided to either the single parent region or to a given one of the subset of edge server regions for handling, preferably as a function of metadata associated with the given object request. The given object request is then serviced, if possible, by a given CDN server in either the single parent region or the given subset region. The original request is only forwarded on to the origin server if the request cannot be serviced by an intermediate node.

Owner:AKAMAI TECH INC
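
The request flow in this abstract (edge region, then parent region, then origin only as a last resort) can be sketched roughly as follows. This is an illustrative simplification and not the patented method: the `serve` function and the use of plain dictionaries for the edge cache, parent cache, and origin are assumptions, and metadata-driven parent selection is omitted.

```python
def serve(url, edge_cache, parent_cache, origin):
    """Return (body, tier that served it) for a request arriving at an edge region."""
    if url in edge_cache:
        return edge_cache[url], "edge"
    if url in parent_cache:
        # Edge miss: ask the parent region instead of contacting the origin.
        edge_cache[url] = parent_cache[url]
        return edge_cache[url], "parent"
    # Only when no intermediate tier can serve the object is the origin contacted.
    body = origin[url]
    parent_cache[url] = body
    edge_cache[url] = body
    return body, "origin"
```

In this sketch, a second edge region that shares the same parent gets the object from the parent, so the origin sees one request instead of one per edge region.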

Method and system for tiered distribution in a content delivery network

Inactive | US20070055764A1 | Excessive traffic | Effectively buffering web site infrastructure | Digital computer details | Location information based service | Cache hierarchy | Edge server

Owner:AKAMAI TECH INC

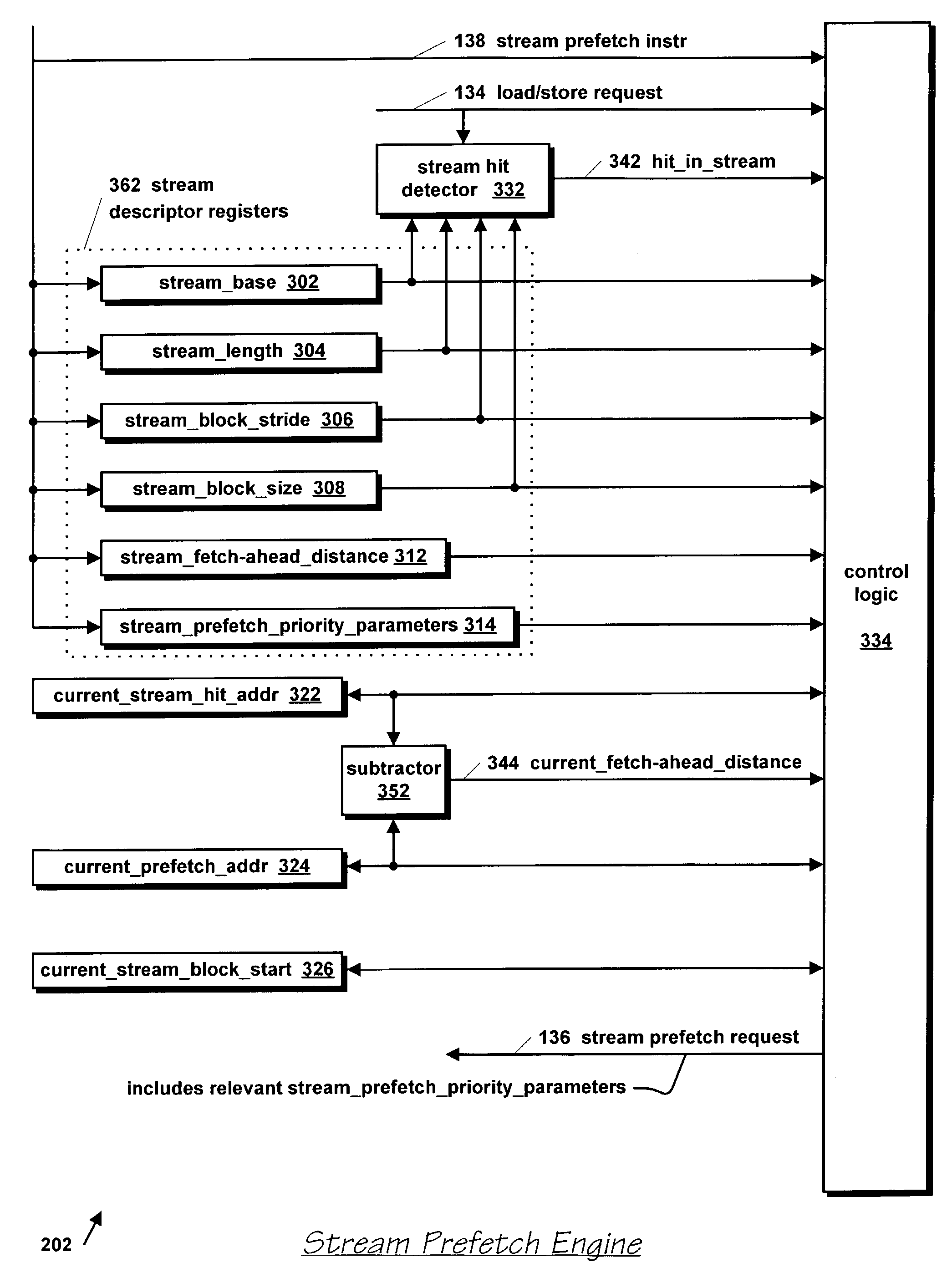

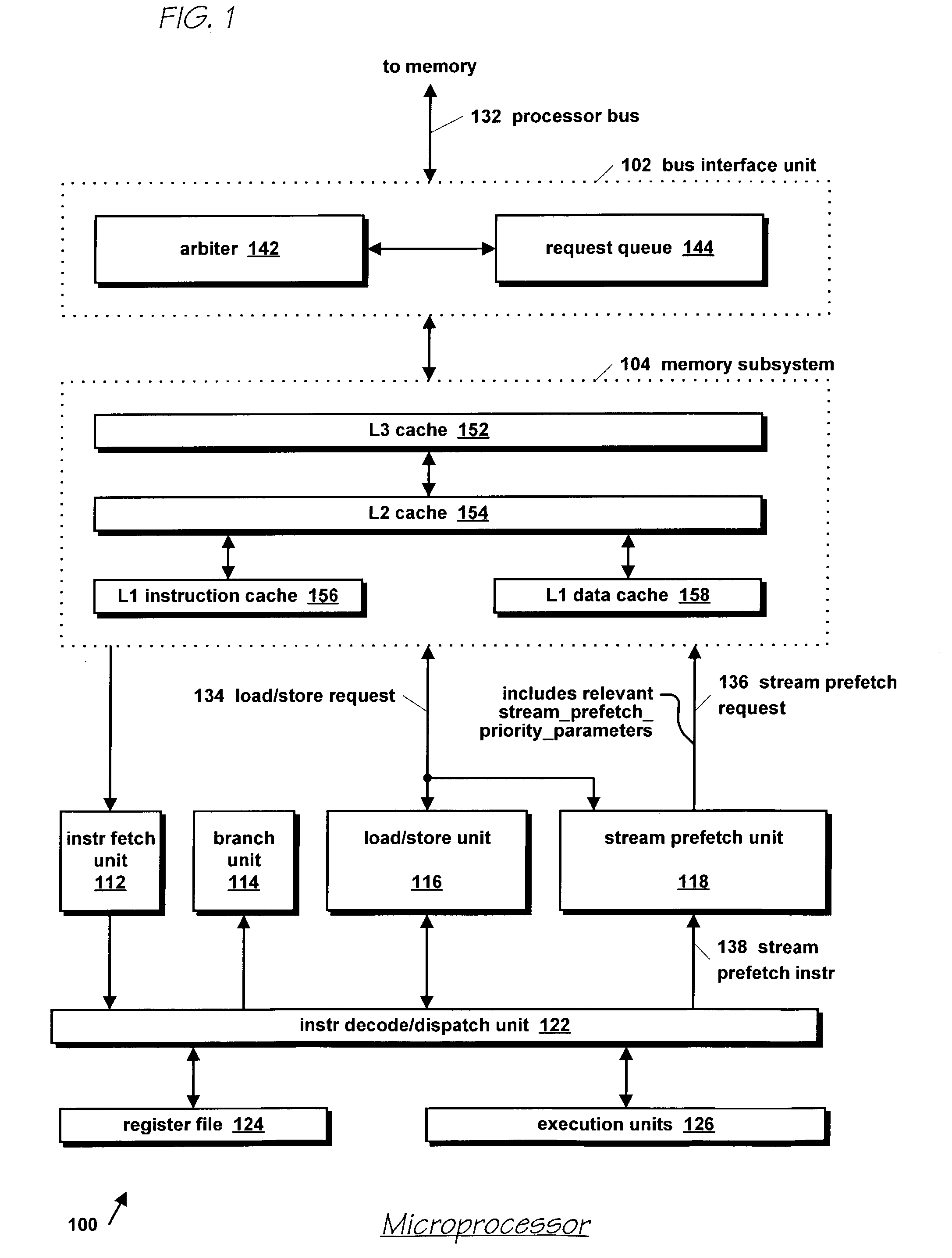

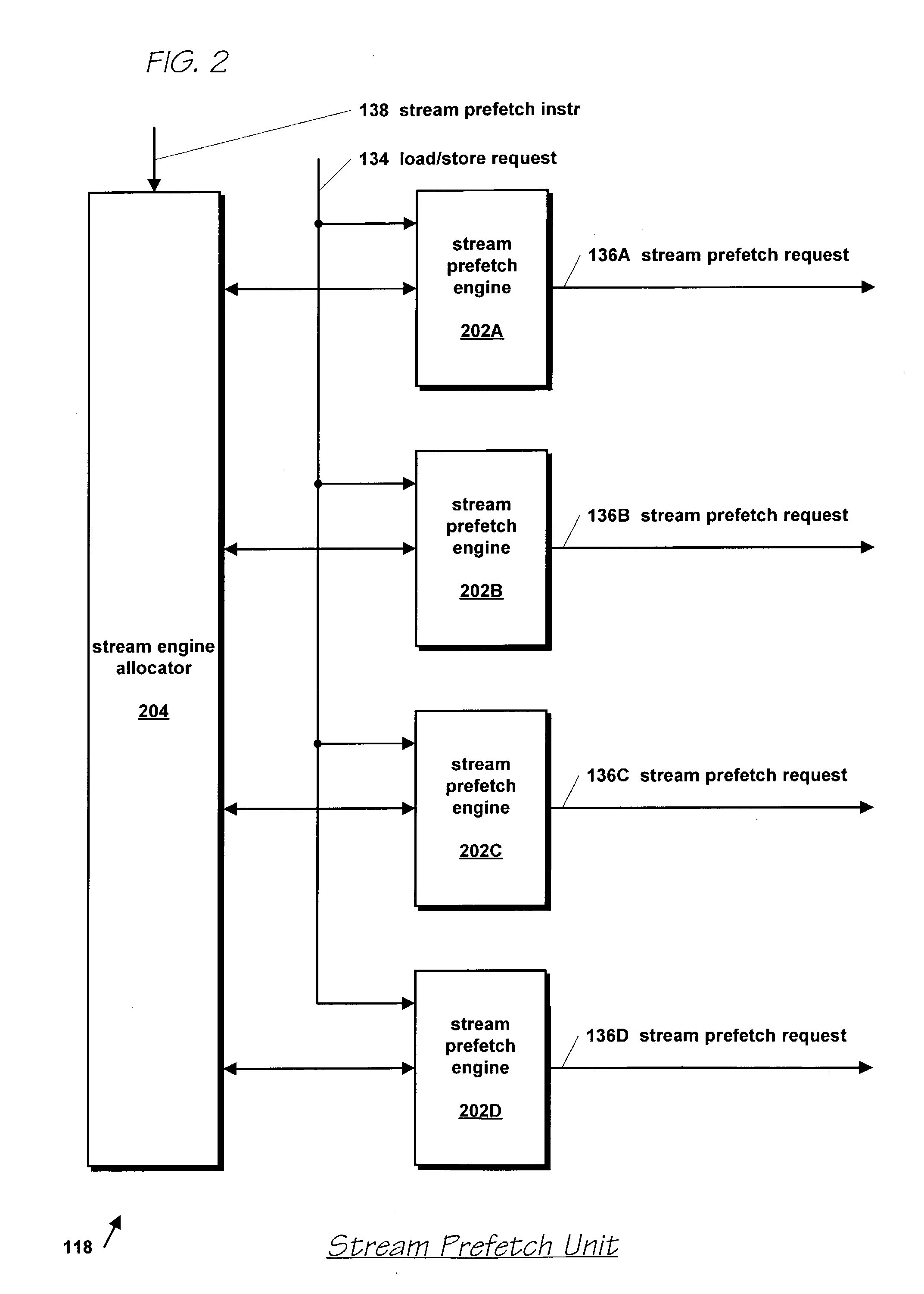

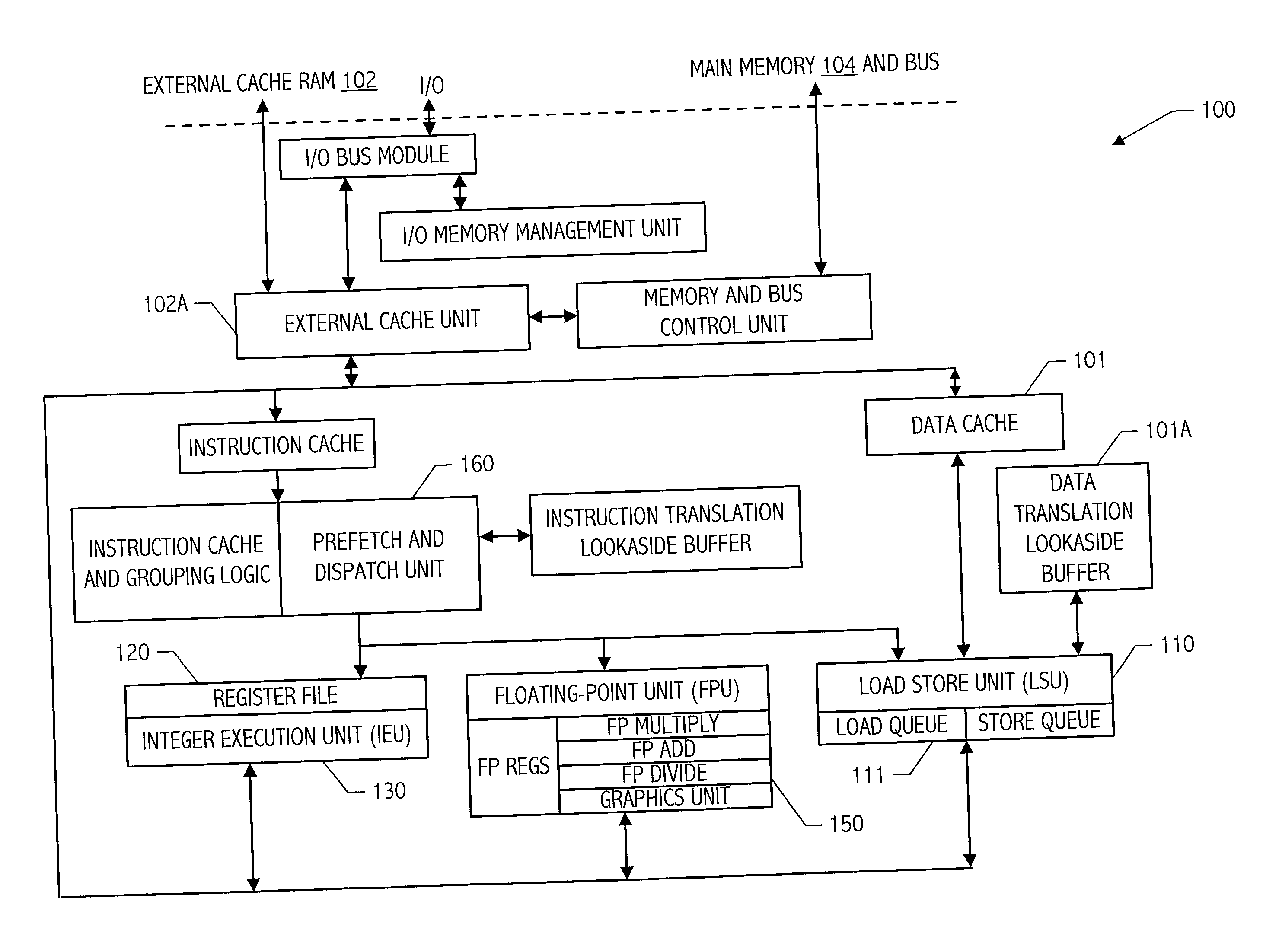

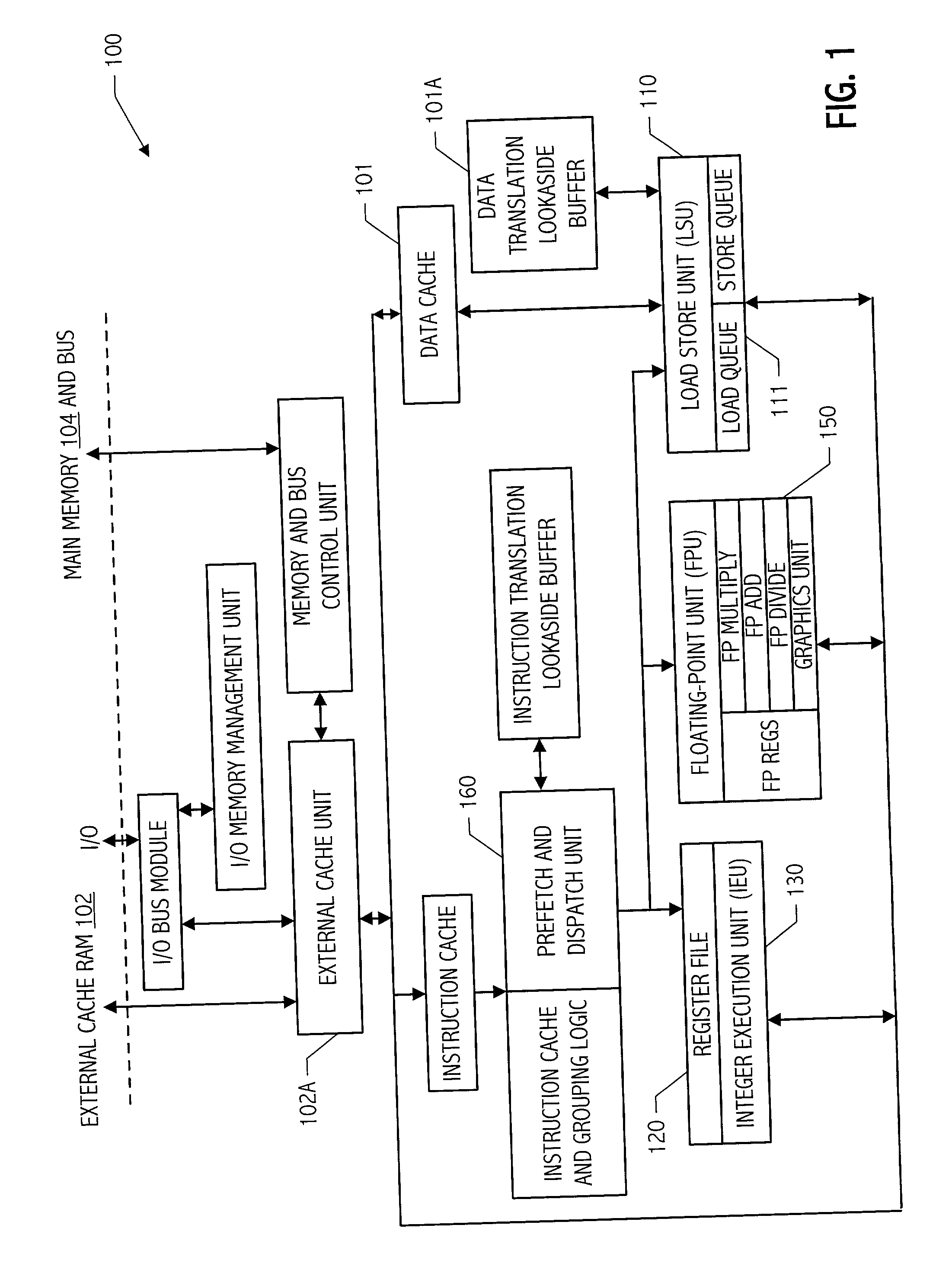

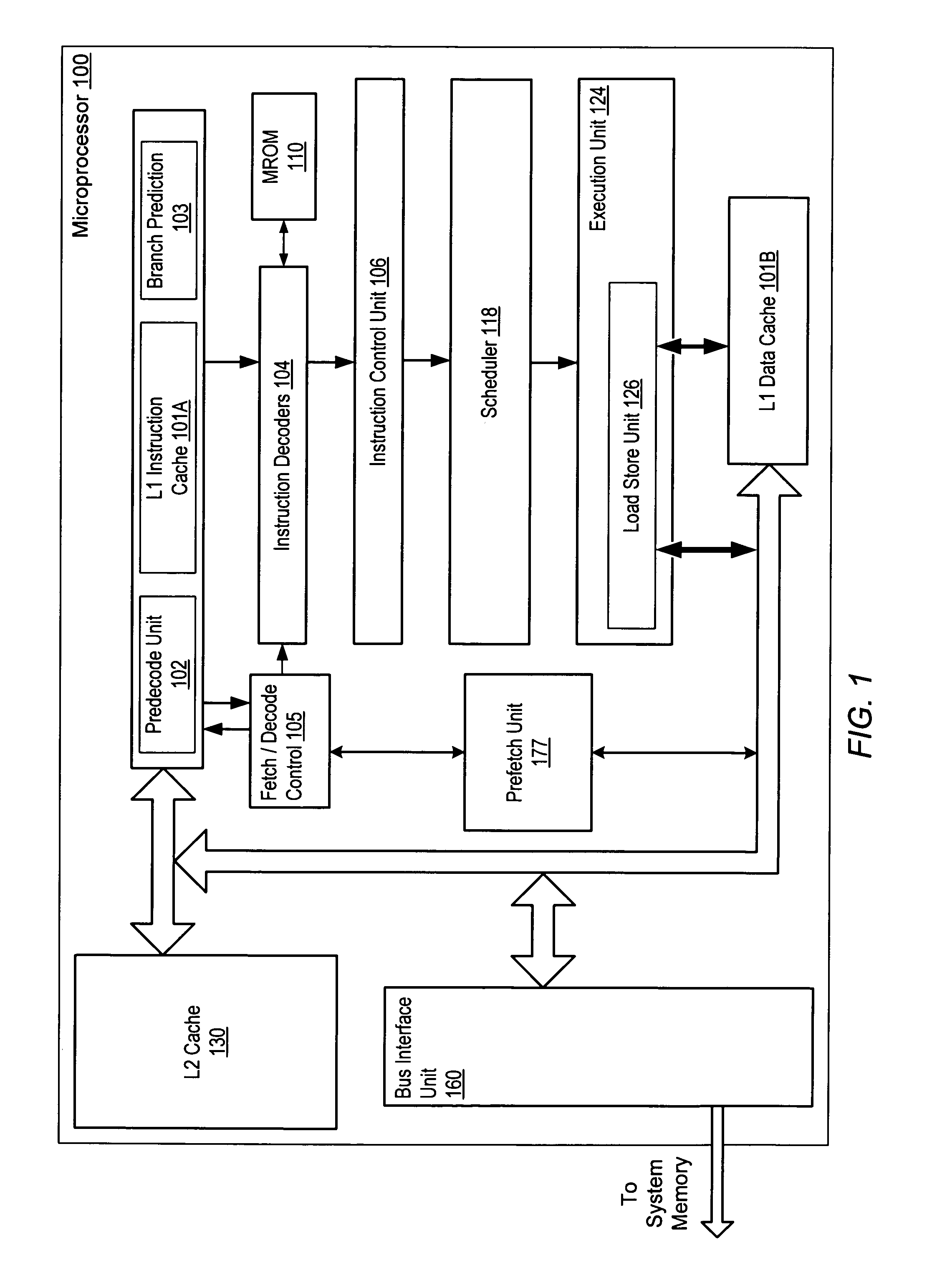

Microprocessor with improved data stream prefetching

Active | US7177985B1 | Improving data stream prefetching | Memory architecture accessing/allocation | Digital computer details | Cache hierarchy | Page fault

A microprocessor with multiple stream prefetch engines each executing a stream prefetch instruction to prefetch a complex data stream specified by the instruction in a manner synchronized with program execution of loads from the stream is provided. The stream prefetch engine stays at least a fetch-ahead distance (specified in the instruction) ahead of the program loads, which may randomly access the stream. The instruction specifies a level in the cache hierarchy to prefetch into, a locality indicator to specify the urgency and ephemerality of the stream, a stream prefetch priority, a TLB miss policy, a page fault miss policy, a protection violation policy, and a hysteresis value, specifying a minimum number of bytes to prefetch when the stream prefetch engine resumes prefetching. The memory subsystem includes a separate TLB for stream prefetches; or a joint TLB backing the stream prefetch TLB and load / store TLB; or a separate TLB for each prefetch engine.

Owner:ARM FINANCE OVERSEAS LTD

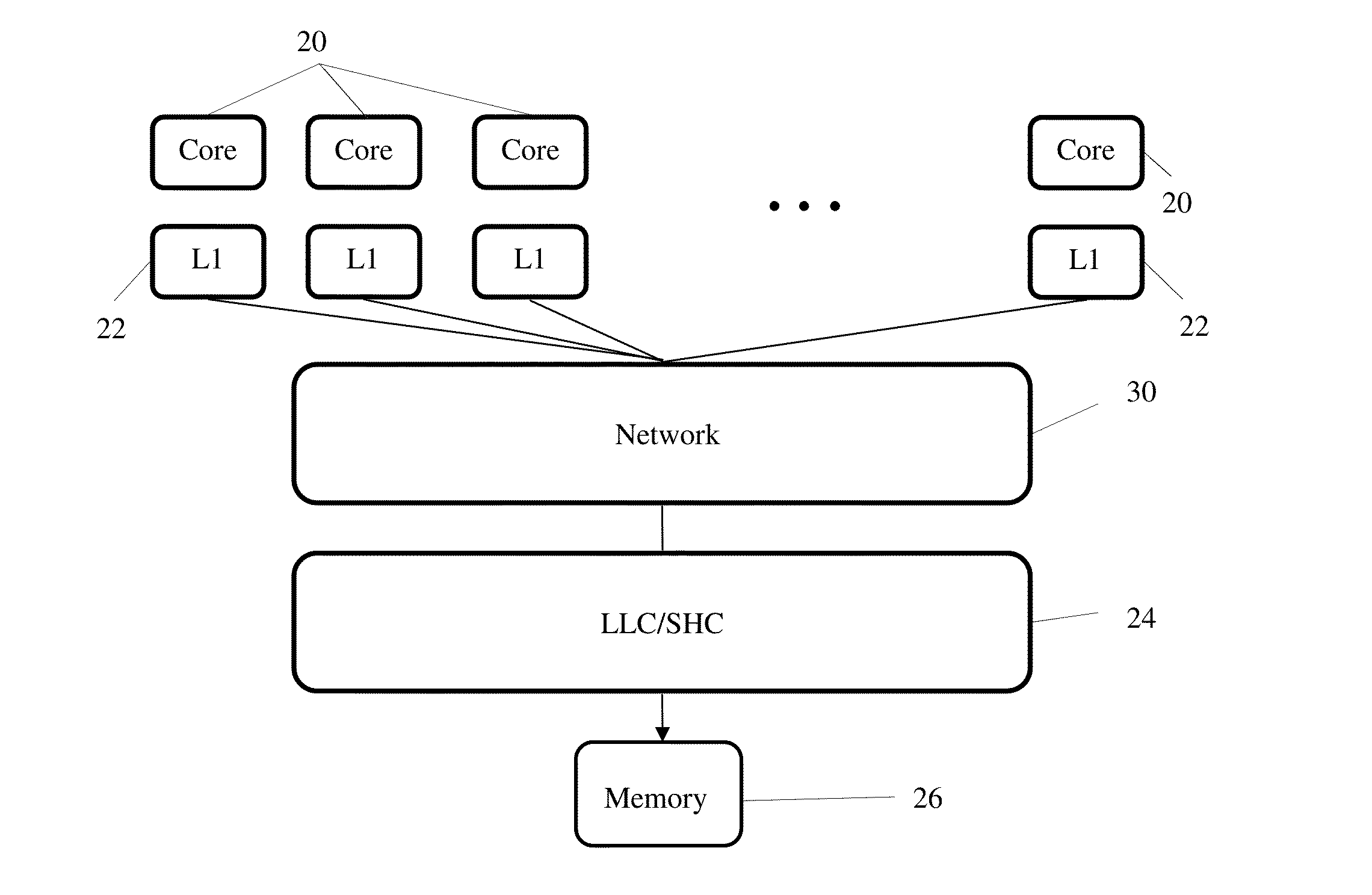

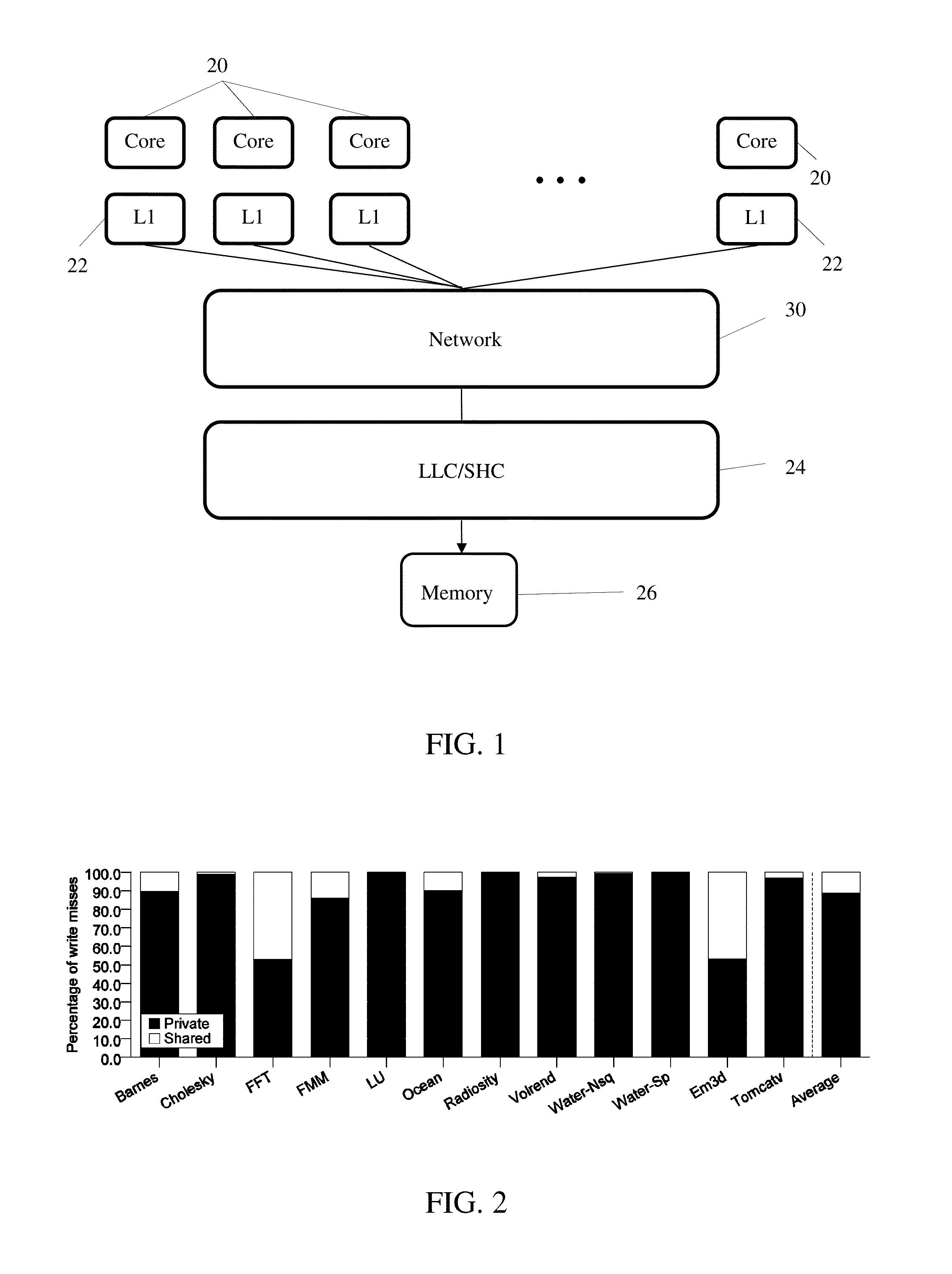

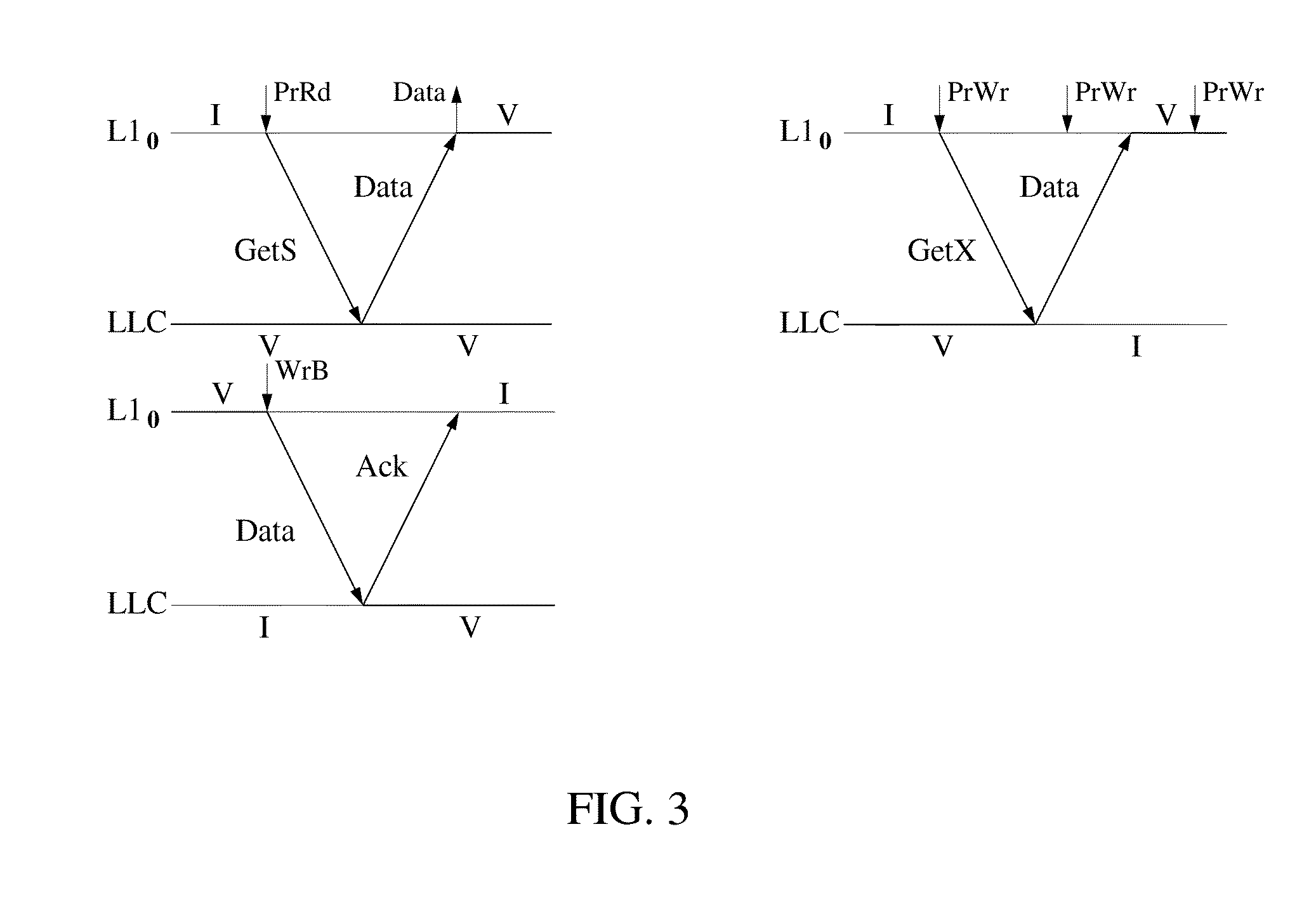

System and method for simplifying cache coherence using multiple write policies

Active | US20130254488A1 | Improve power efficiency | Reduce hardware costs | Energy efficient ICT | Memory addressing/allocation/relocation | Cache hierarchy | Cache coherence

System and methods for cache coherence in a multi-core processing environment having a local / shared cache hierarchy. The system includes multiple processor cores, a main memory, and a local cache memory associated with each core for storing cache lines accessible only by the associated core. Cache lines are classified as either private or shared. A shared cache memory is coupled to the local cache memories and main memory for storing cache lines. The cores follow a write-back to the local memory for private cache lines, and a write-through to the shared memory for shared cache lines. Shared cache lines in local cache memory enter a transient dirty state when written by the core. Shared cache lines transition from a transient dirty to a valid state with a self-initiated write-through to the shared memory. The write-through to shared memory can include only data that was modified in the transient dirty state.

Owner:ETA SCALE AB
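
A minimal sketch of the two write policies described above, under stated assumptions: the `LocalCache` class, its method names, and the state strings are hypothetical, the shared cache is a plain dictionary, and the whole line value is written through rather than only the modified bytes.

```python
class LocalCache:
    """Per-core cache that treats private and shared lines differently (illustrative)."""

    def __init__(self, shared_cache):
        self.shared_cache = shared_cache  # dict standing in for the shared cache
        self.lines = {}                   # addr -> [value, state]

    def write(self, addr, value, is_shared):
        if is_shared:
            # Shared line: enters a transient dirty state until written through.
            self.lines[addr] = [value, "transient_dirty"]
        else:
            # Private line: plain write-back, stays dirty in the local cache.
            self.lines[addr] = [value, "dirty"]

    def self_write_through(self):
        """Self-initiated write-through of every transient dirty shared line."""
        for addr, line in self.lines.items():
            if line[1] == "transient_dirty":
                self.shared_cache[addr] = line[0]
                line[1] = "valid"

    def evict(self, addr):
        """Write back a dirty line on eviction."""
        value, state = self.lines.pop(addr)
        if state in ("dirty", "transient_dirty"):
            self.shared_cache[addr] = value
```

The point of the split is visible in the sketch: private data reaches the shared cache only on eviction, while shared data becomes visible there shortly after it is written.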

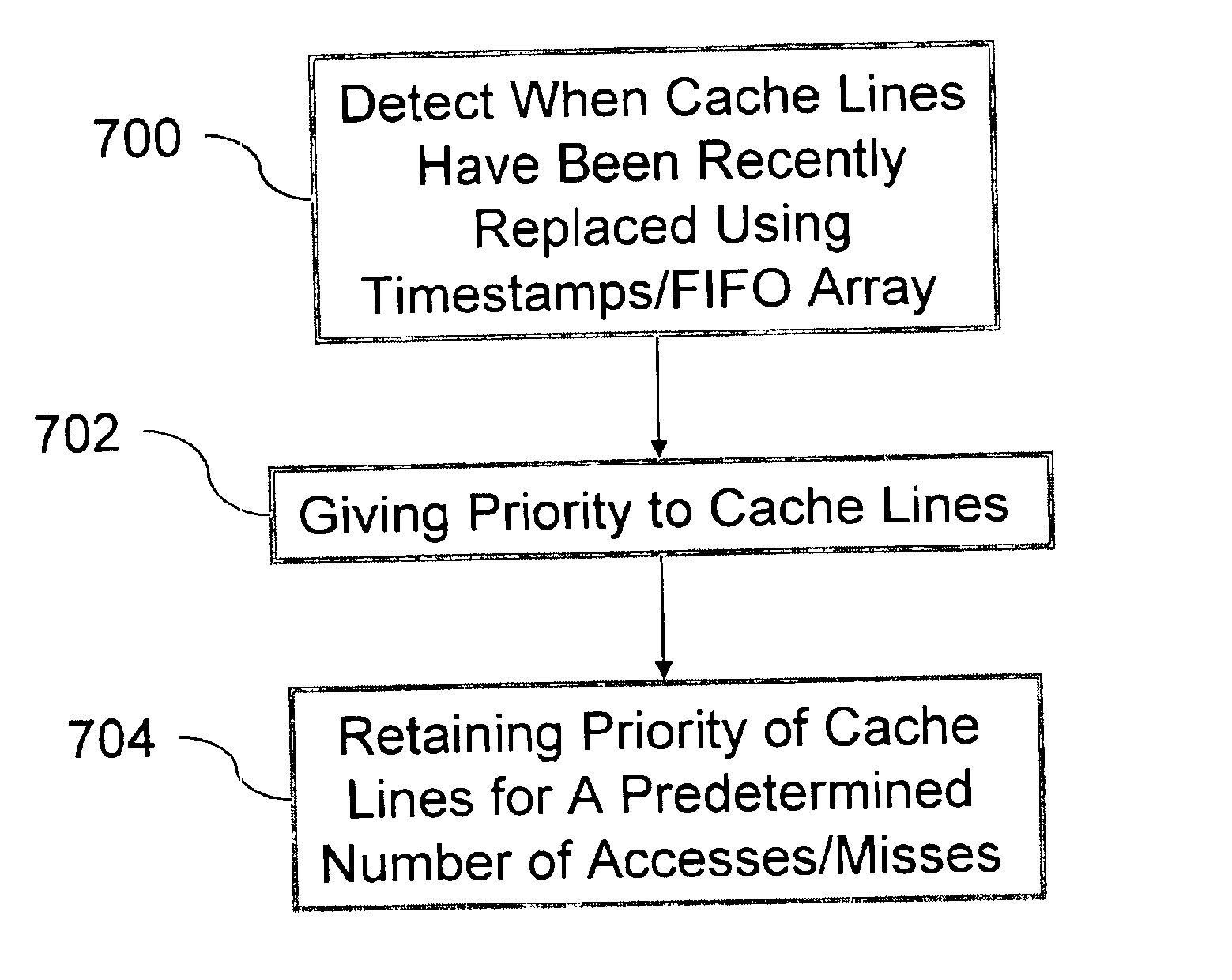

Weighted cache line replacement

Inactive | US20040083341A1 | Avoid replacement | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

A method for selecting a line to replace in an inclusive set-associative cache memory system which is based on a least recently used replacement policy but is enhanced to detect and give special treatment to the reloading of a line that has been recently cast out. A line which has been reloaded after having been recently cast out is assigned a special encoding which temporarily gives priority to the line in the cache so that it will not be selected for replacement in the usual least recently used replacement process. This method of line selection for replacement improves system performance by providing better hit rates in the cache hierarchy levels above, by ensuring that heavily used lines in the levels above are not aged out of the levels below due to lack of use.

Owner:IBM CORP
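
The idea of giving temporary priority to a line that is reloaded shortly after being cast out can be sketched as below. This is an assumption-laden toy model of a single cache set, not the encoding used in the patent: the `CacheSet` class, the fixed-size cast-out history, and the rule that protection is spent the first time the line is skipped are all illustrative choices.

```python
from collections import OrderedDict, deque


class CacheSet:
    """One set of a set-associative cache with LRU plus reload protection."""

    def __init__(self, ways, history=4):
        self.ways = ways
        self.lines = OrderedDict()                    # tag -> protected flag, LRU first
        self.recent_castouts = deque(maxlen=history)  # tags evicted recently

    def access(self, tag):
        """Touch a tag; return the evicted tag, or None if nothing was evicted."""
        if tag in self.lines:
            self.lines.move_to_end(tag)
            return None
        victim = None
        if len(self.lines) >= self.ways:
            victim = self._choose_victim()
            del self.lines[victim]
            self.recent_castouts.append(victim)
        # A line reloaded soon after being cast out is marked as protected.
        self.lines[tag] = tag in self.recent_castouts
        return victim

    def _choose_victim(self):
        for tag, protected in self.lines.items():
            if not protected:
                return tag
            self.lines[tag] = False  # protection is temporary: spend it when skipped
        return next(iter(self.lines))  # every line was protected: fall back to LRU
```

In a two-way set, a reloaded line that has become the LRU entry survives one replacement that plain LRU would have used to evict it again.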

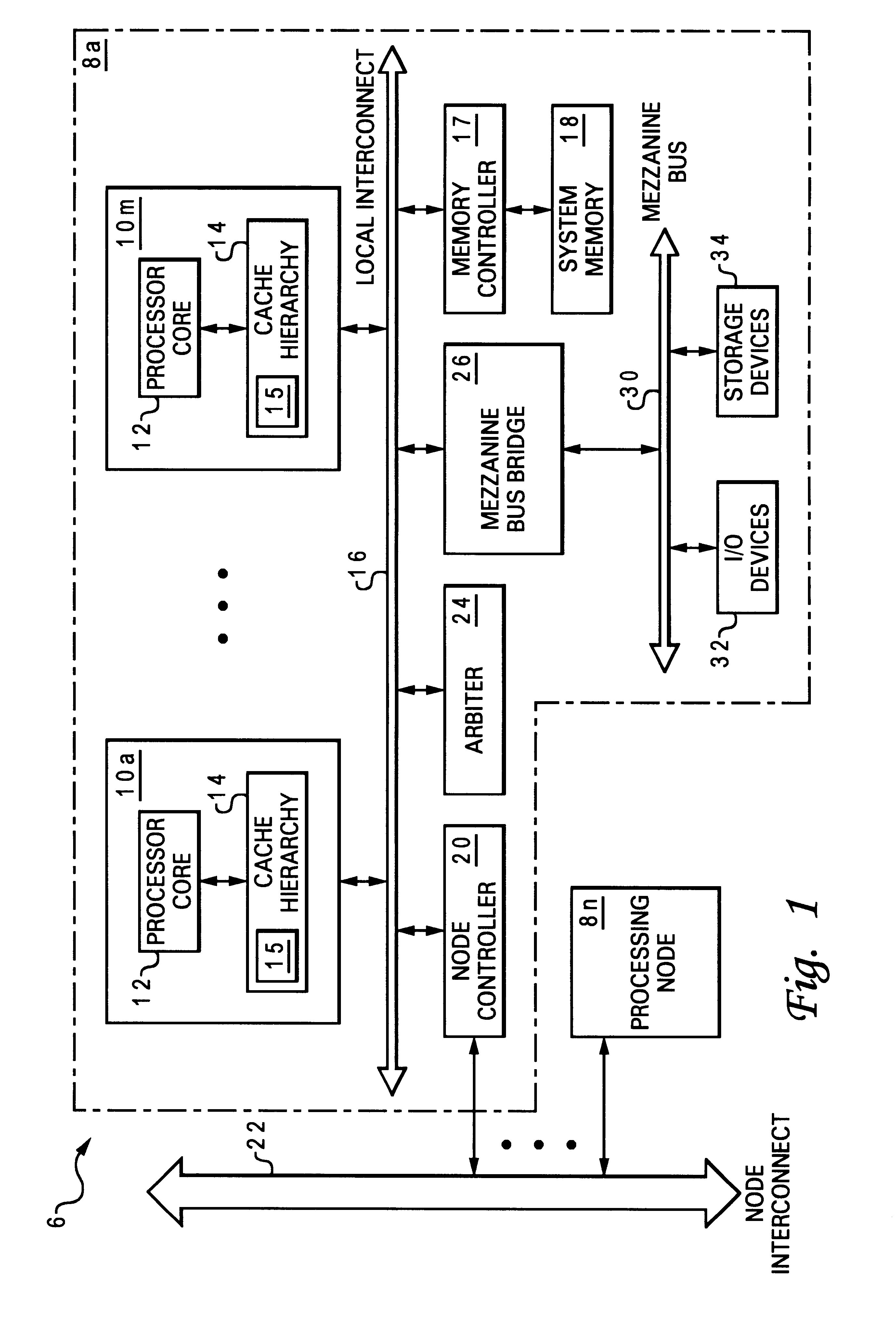

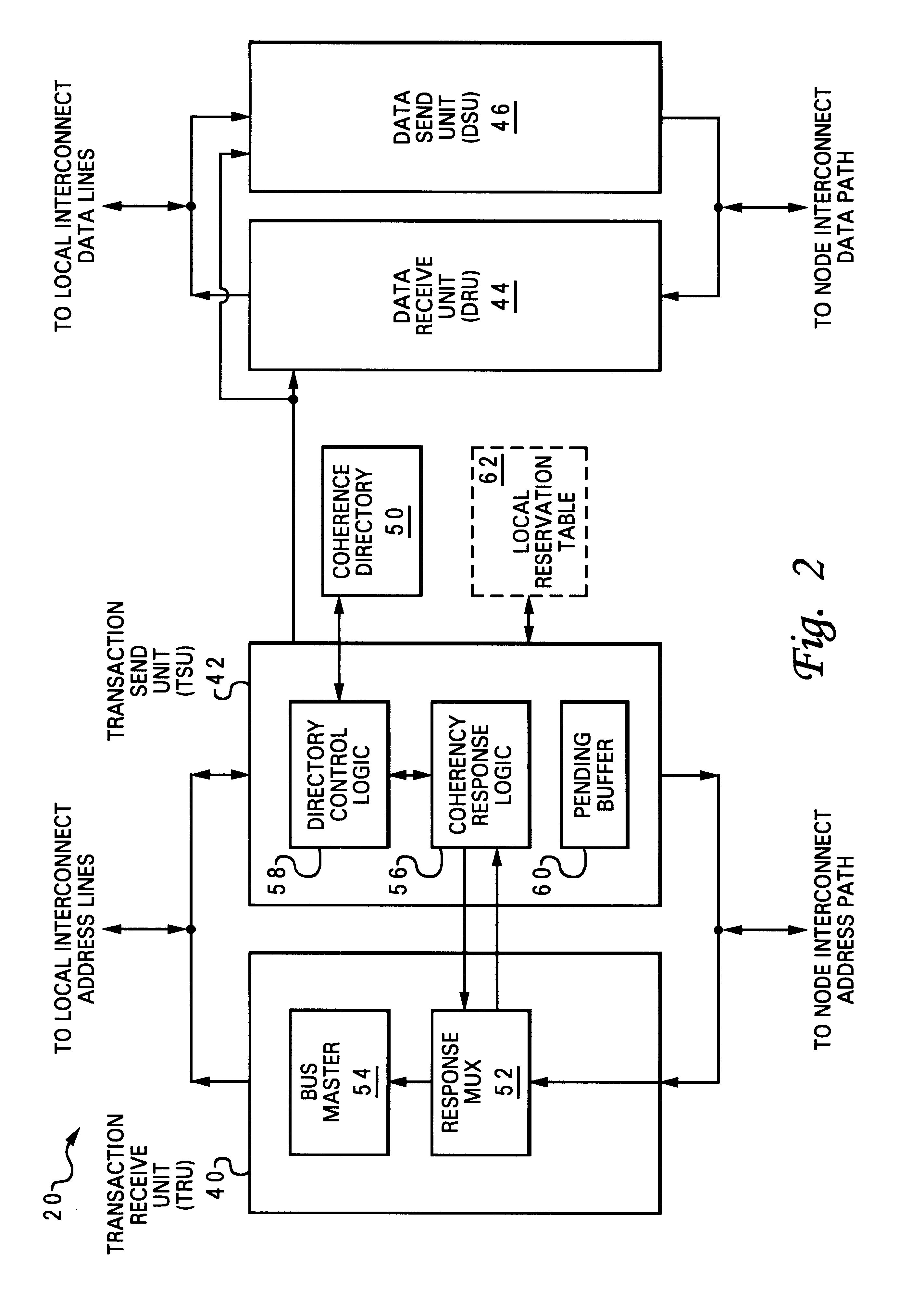

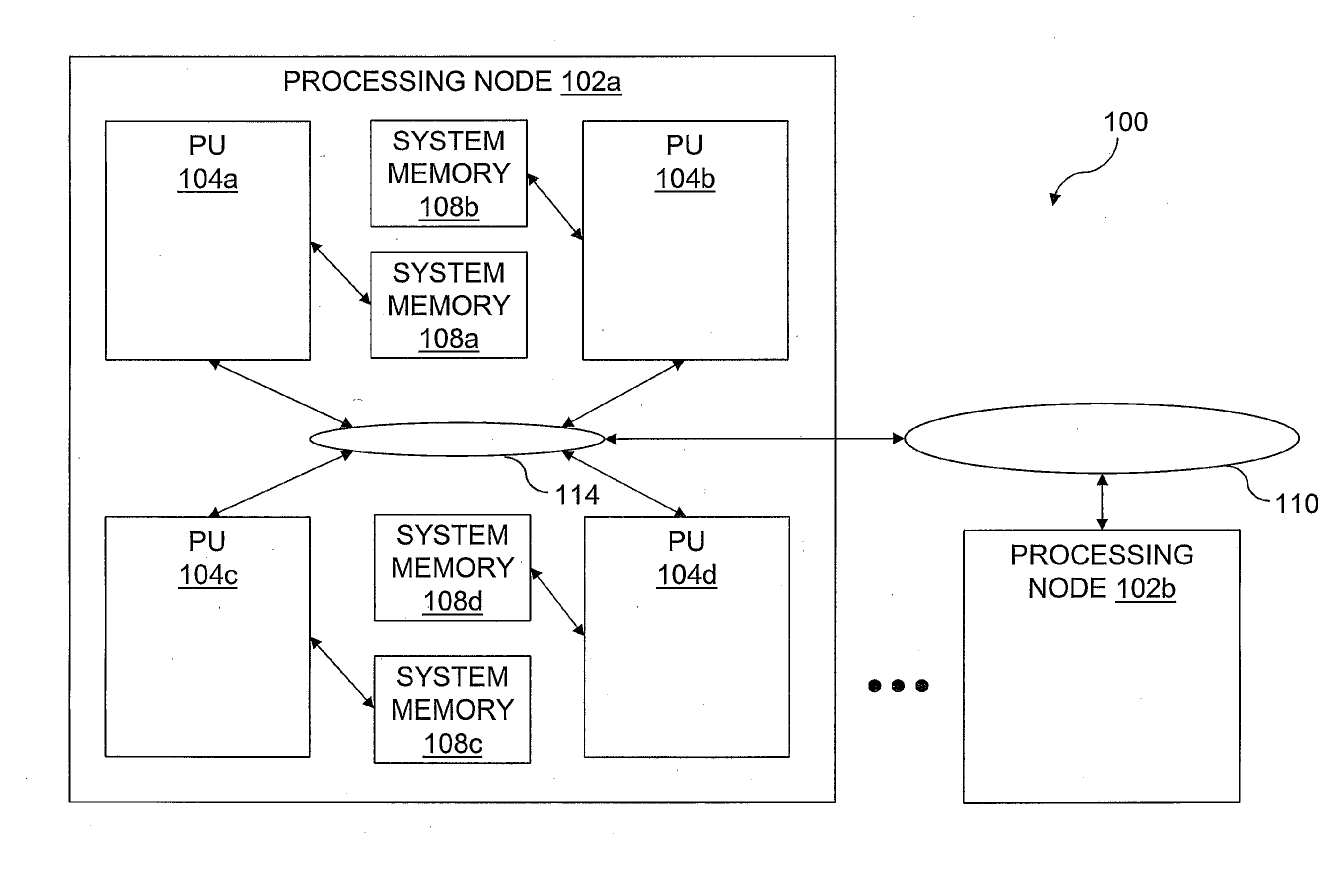

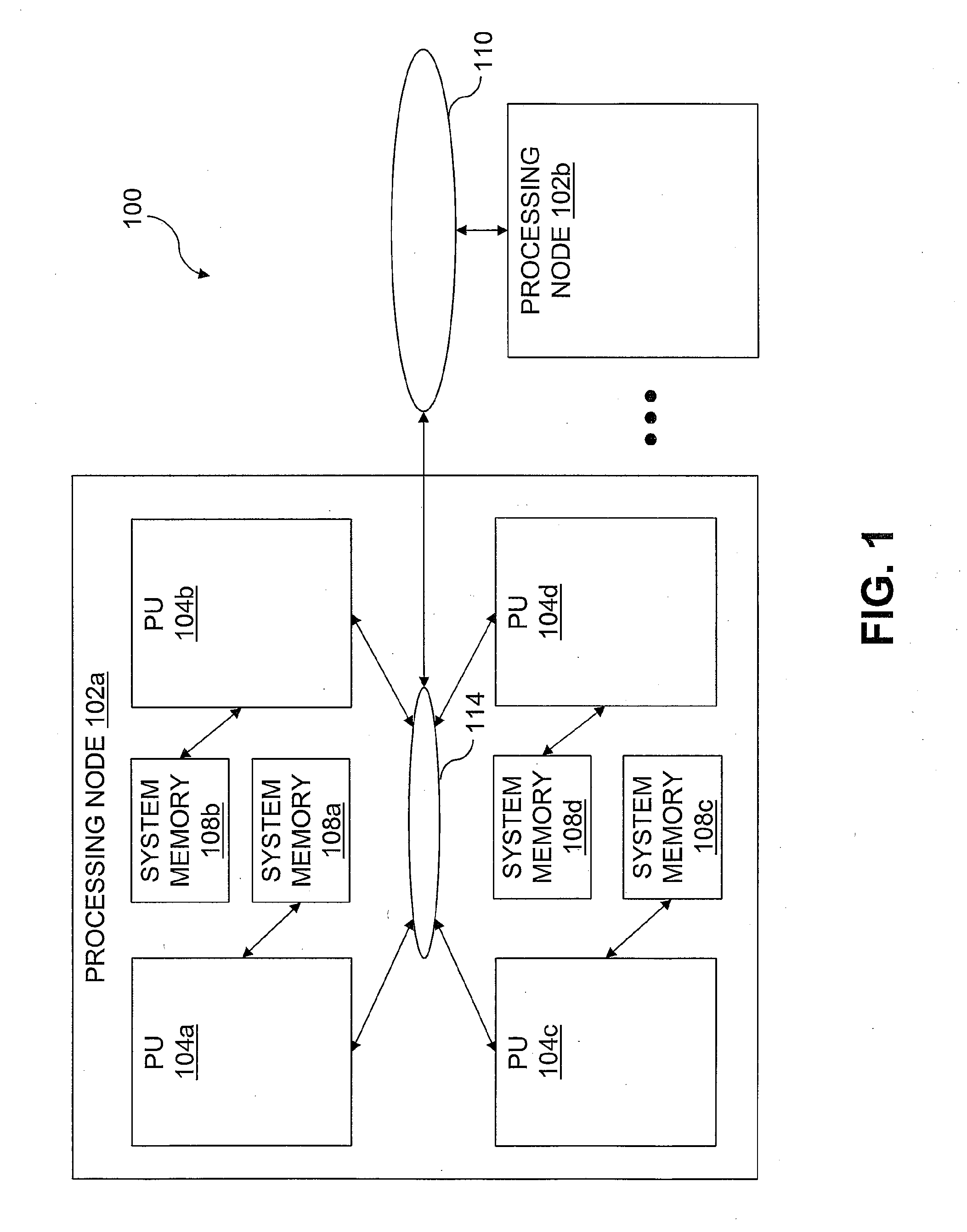

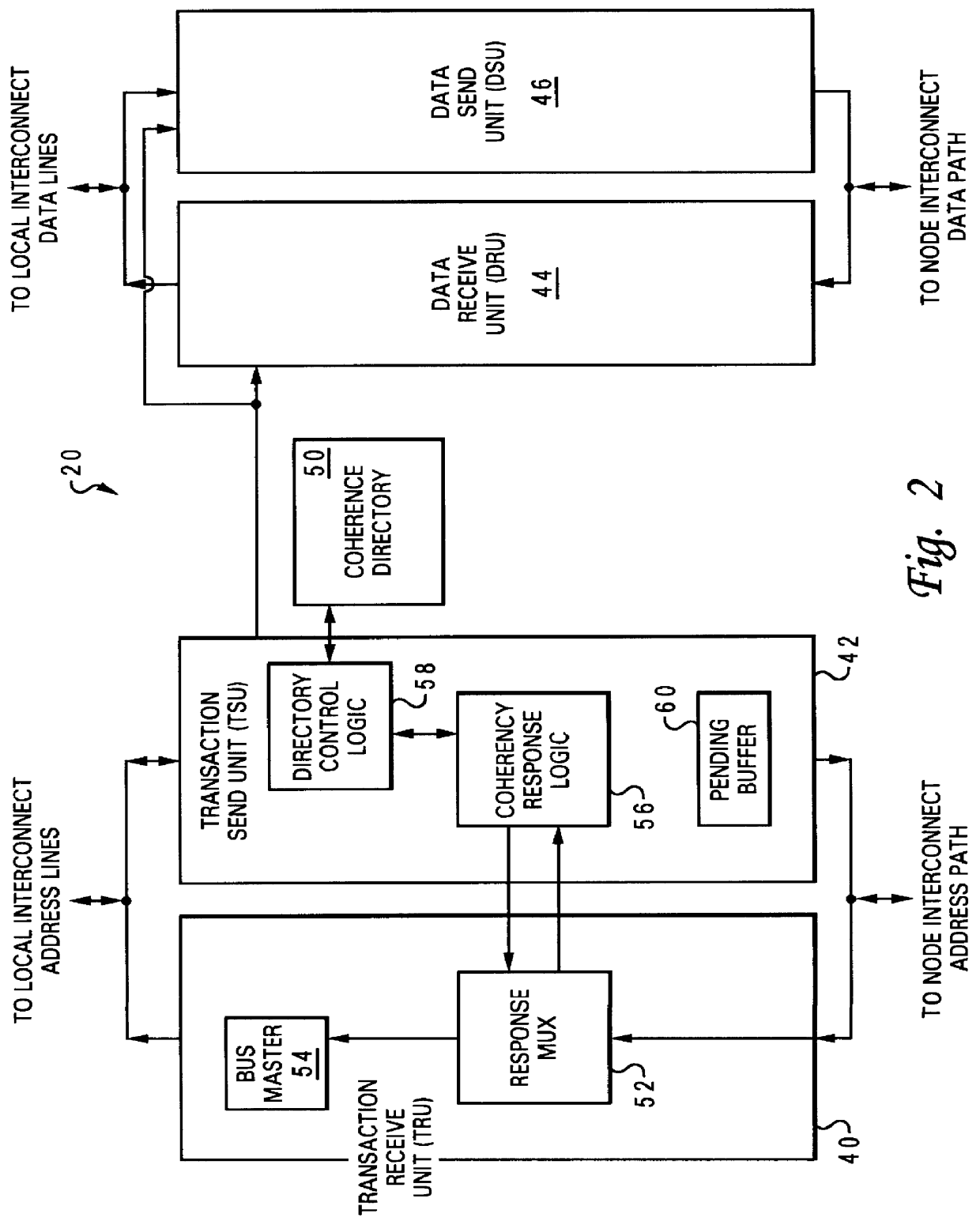

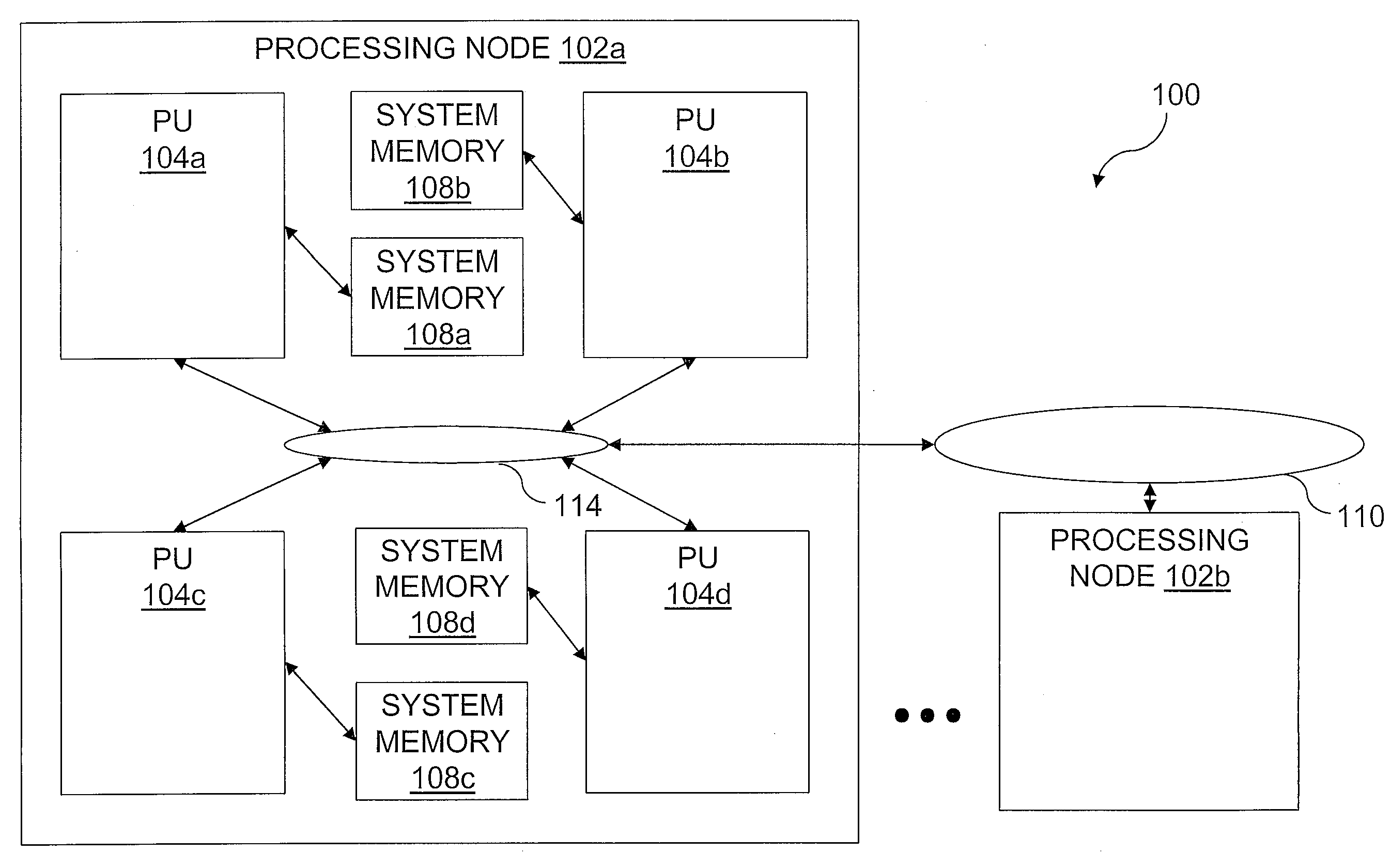

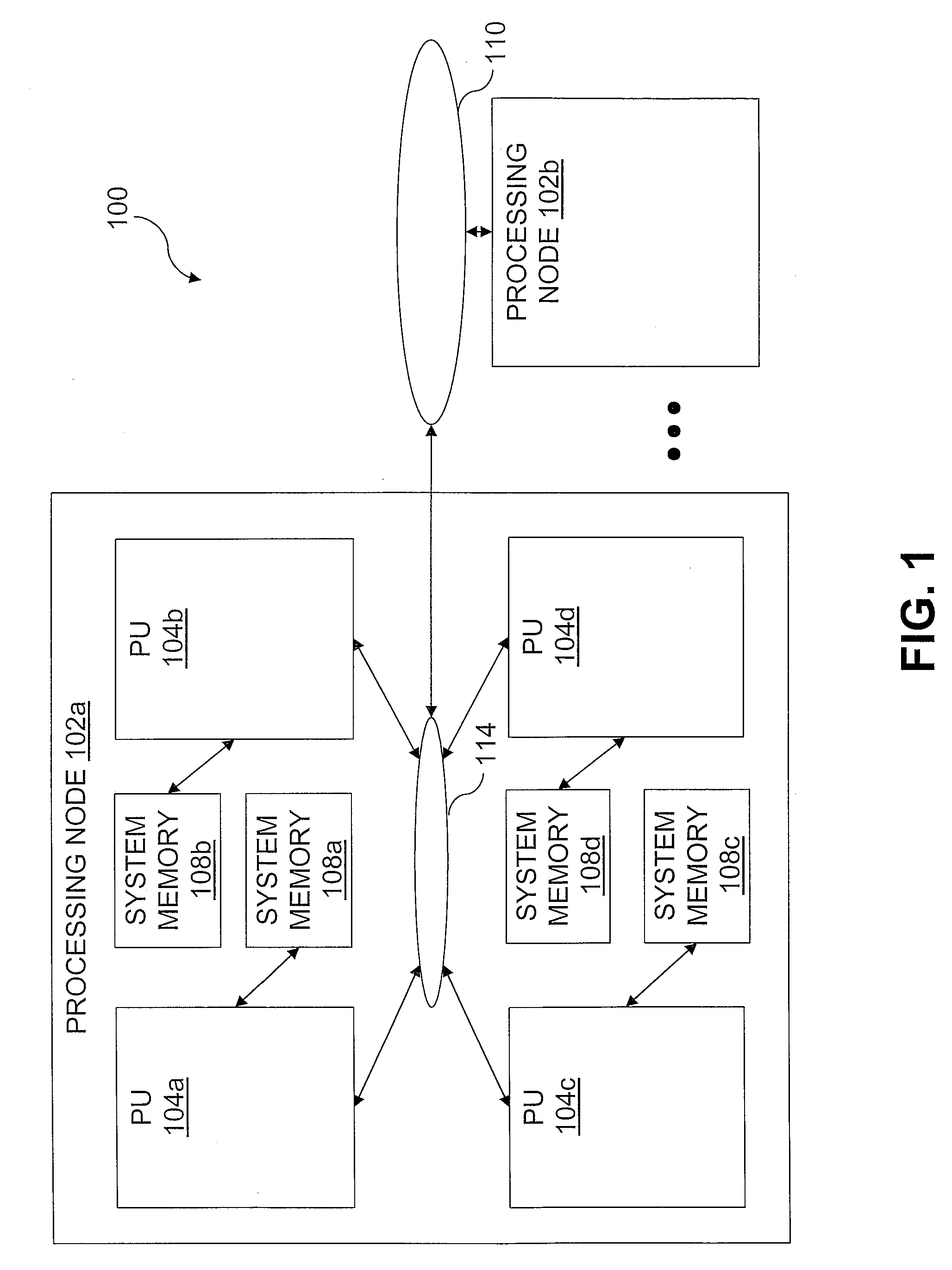

Reservation management in a non-uniform memory access (NUMA) data processing system

Inactive | US6275907B1 | Memory addressing/allocation/relocation | Program control | Data processing system | Cache hierarchy

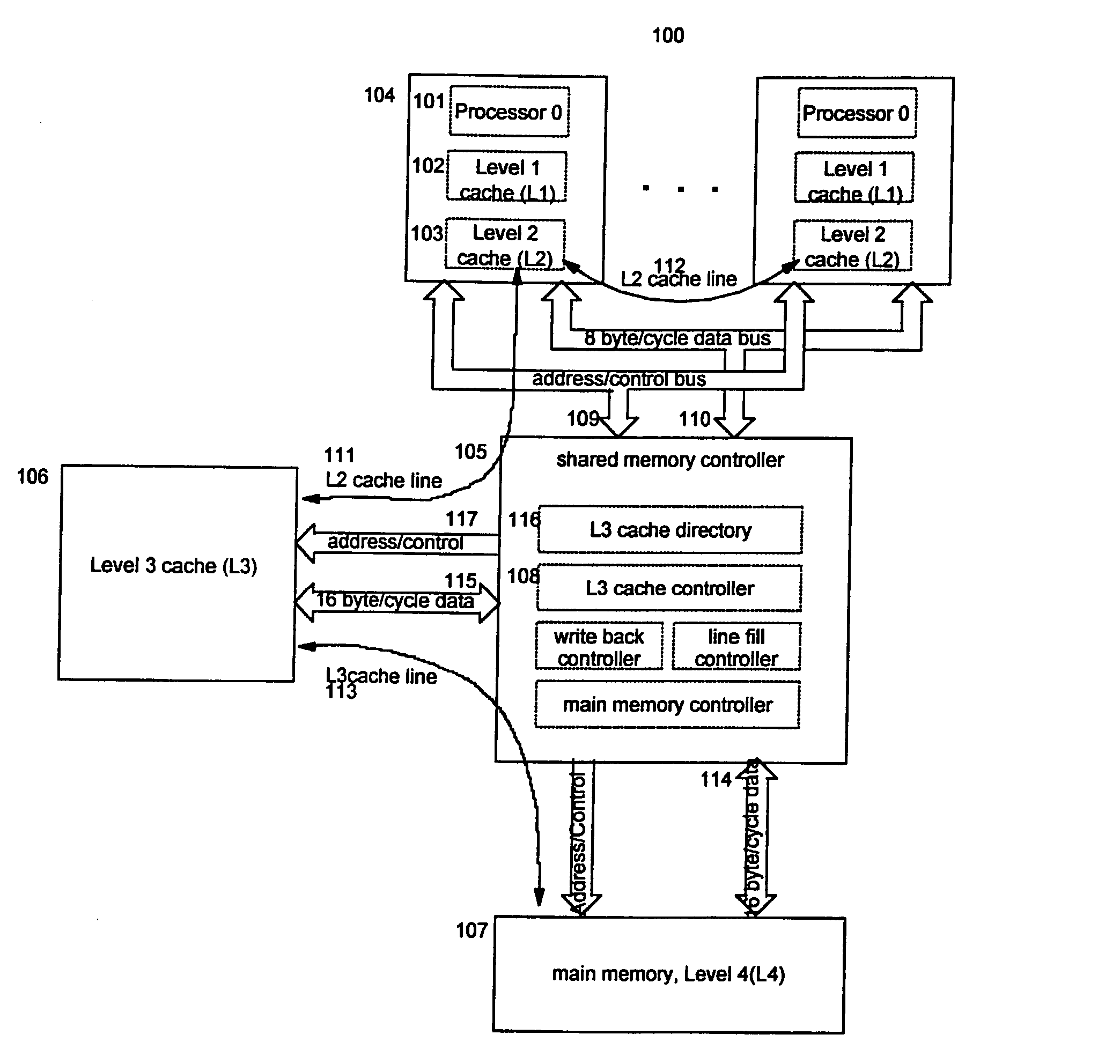

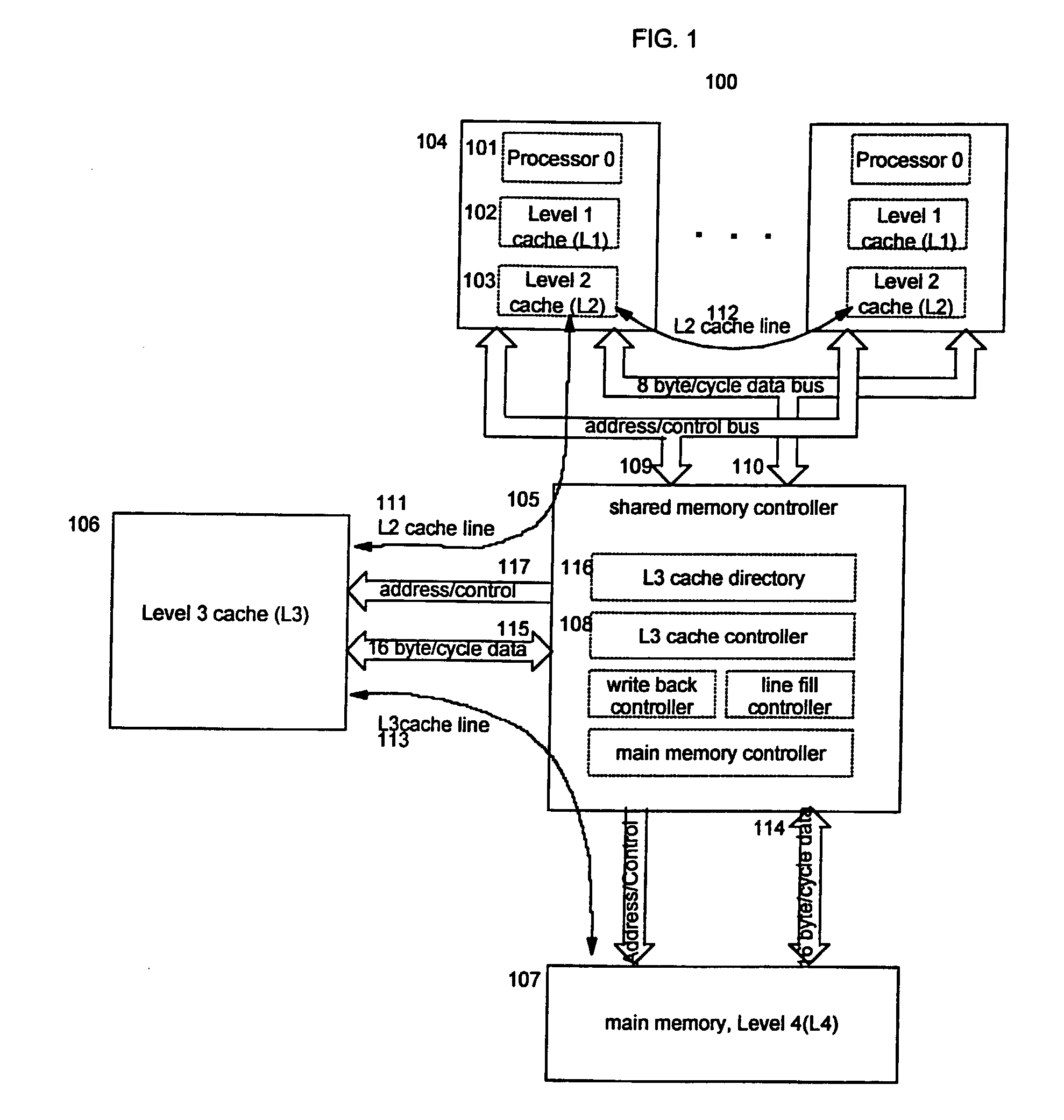

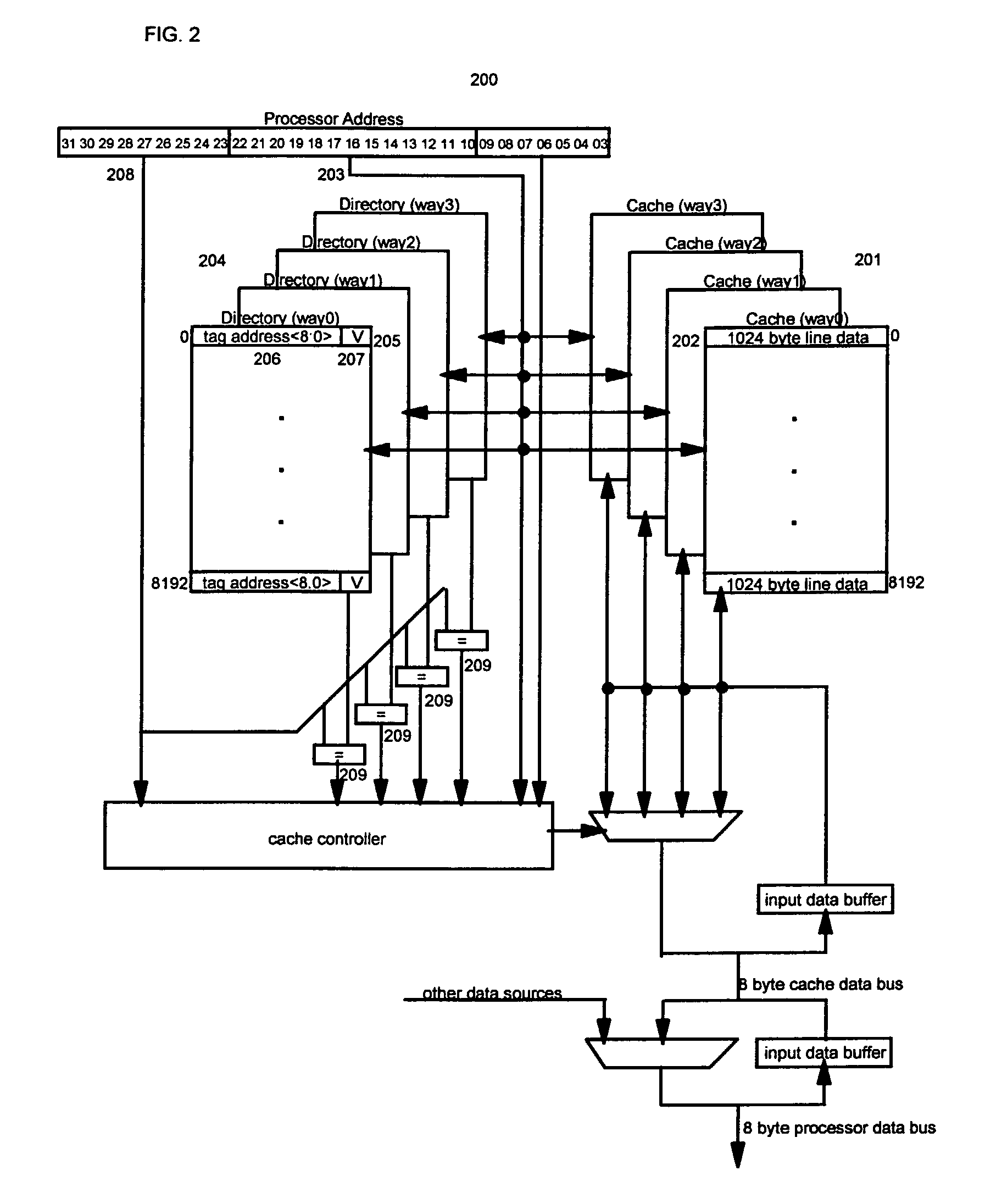

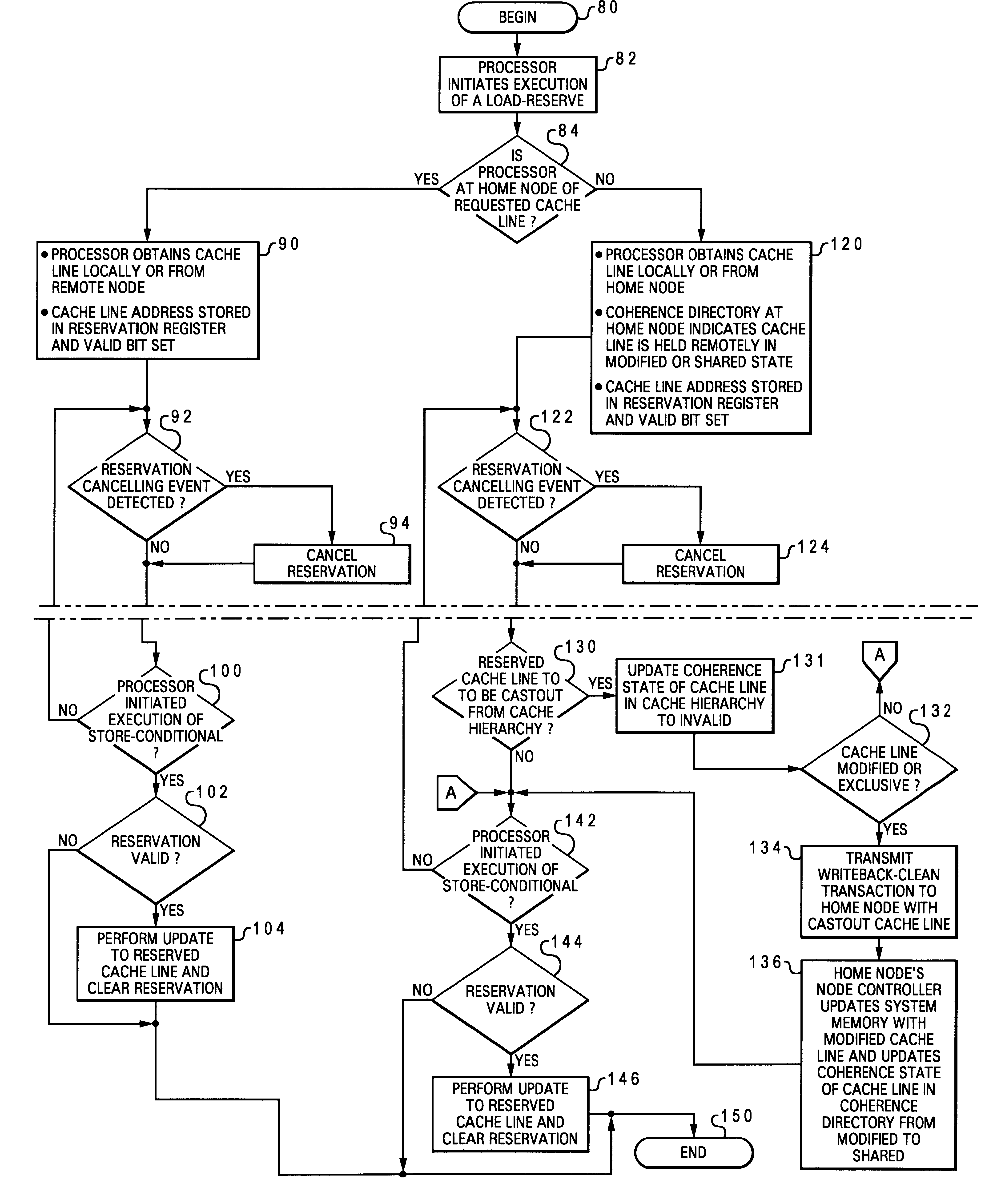

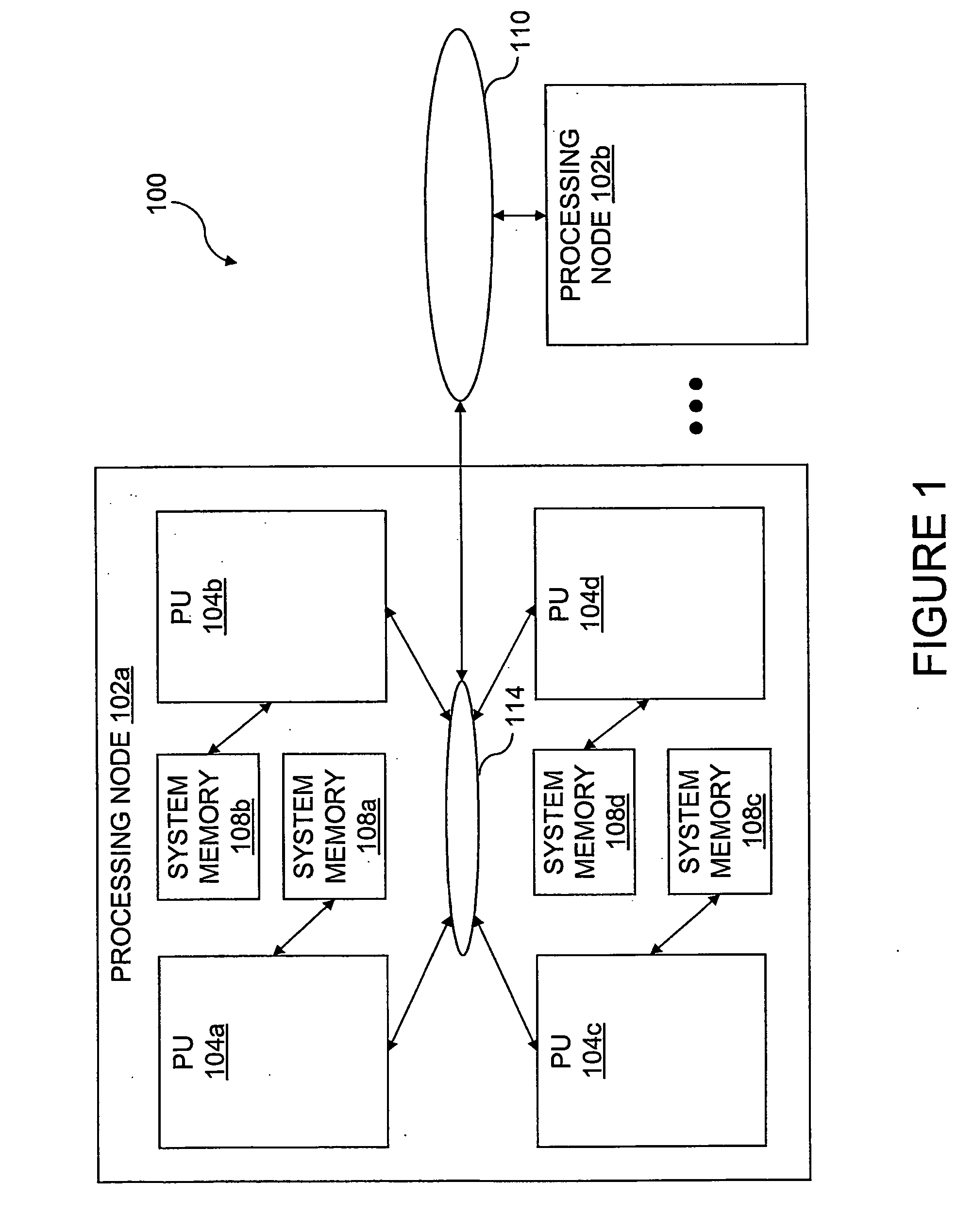

A non-uniform memory access (NUMA) computer system includes a plurality of processing nodes coupled to a node interconnect. The plurality of processing nodes include at least a remote processing node, which contains a processor having an associated cache hierarchy, and a home processing node. The home processing node includes a shared system memory containing a plurality of memory granules and a coherence directory that indicates possible coherence states of copies of memory granules among the plurality of memory granules that are stored within at least one processing node other than the home processing node. If the processor within the remote processing node has a reservation for a memory granule among the plurality of memory granules that is not resident within the associated cache hierarchy, the coherence directory associates the memory granule with a coherence state indicating that the reserved memory granule may possibly be held non-exclusively at the remote processing node. In this manner, the coherence mechanism can be utilized to manage processor reservations even in cases in which a reserving processor's cache hierarchy does not hold a copy of the reserved memory granule.

Owner:IBM CORP

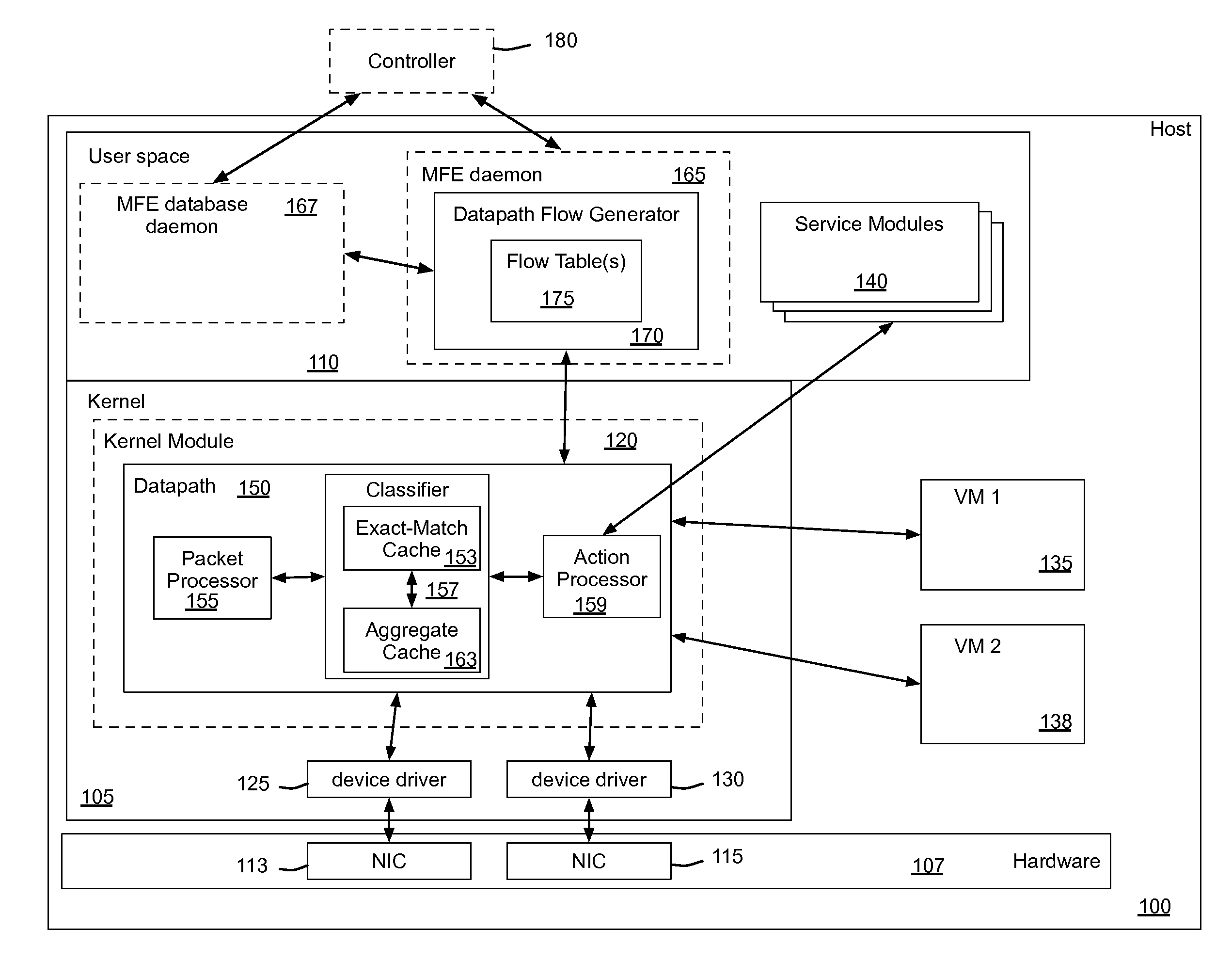

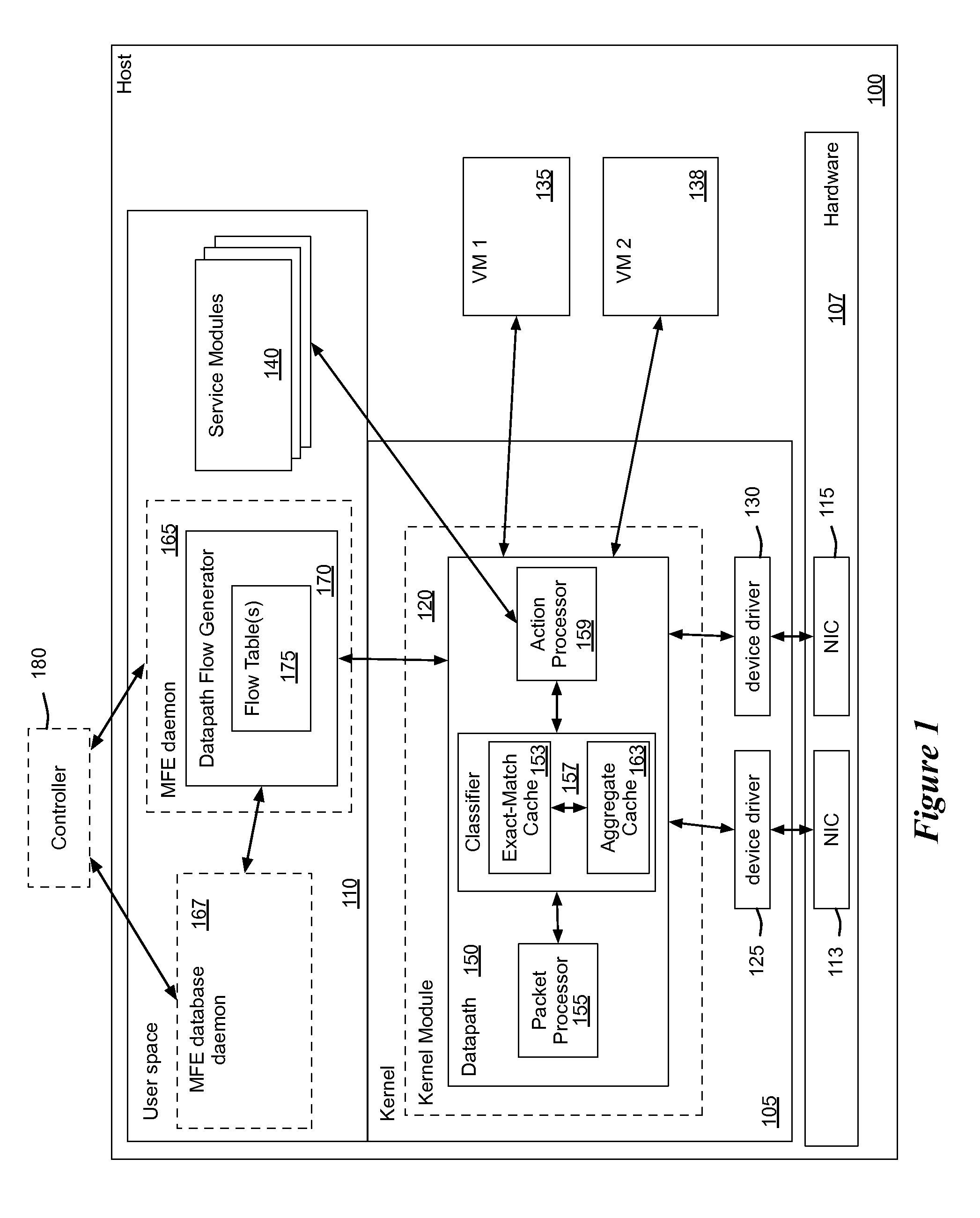

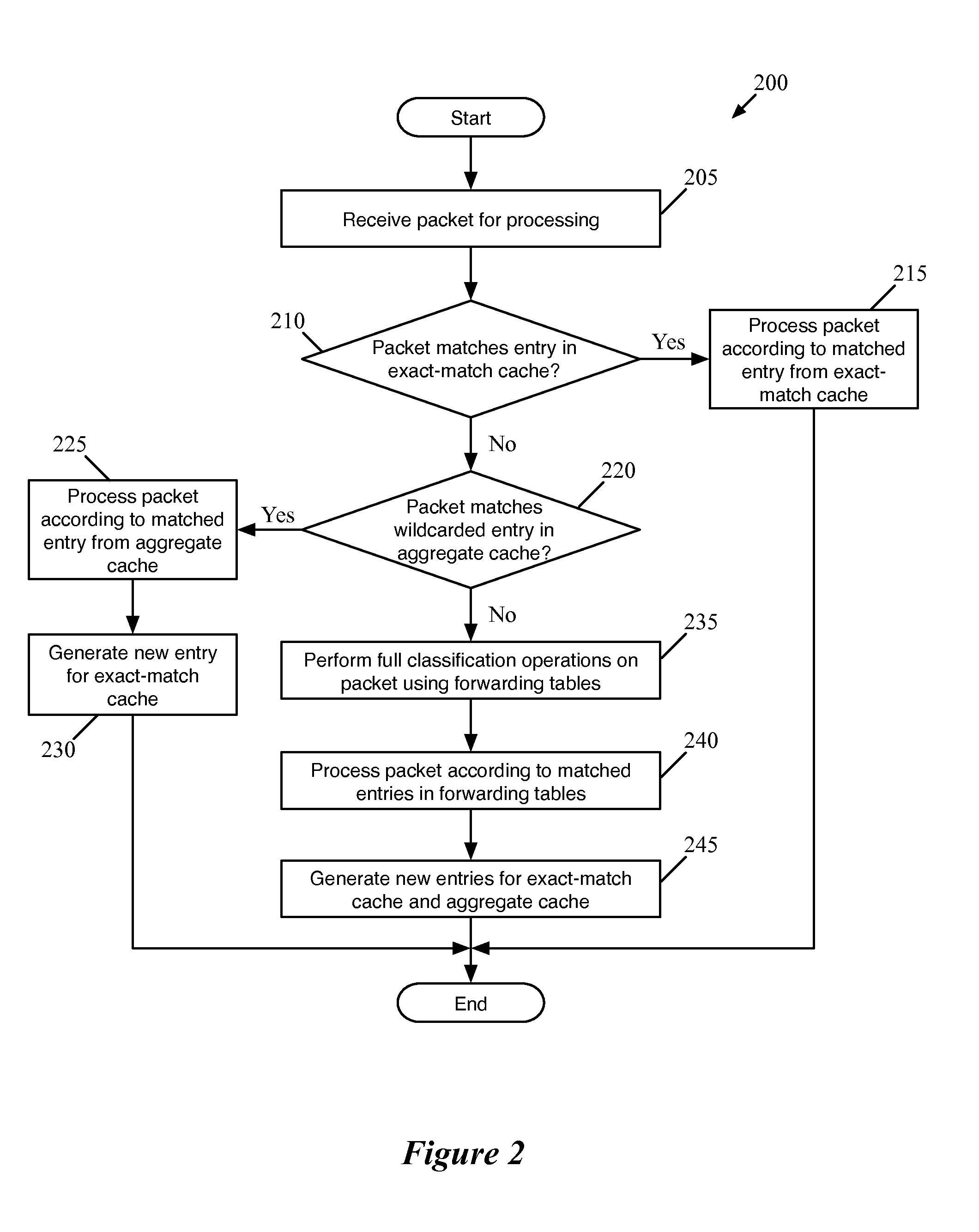

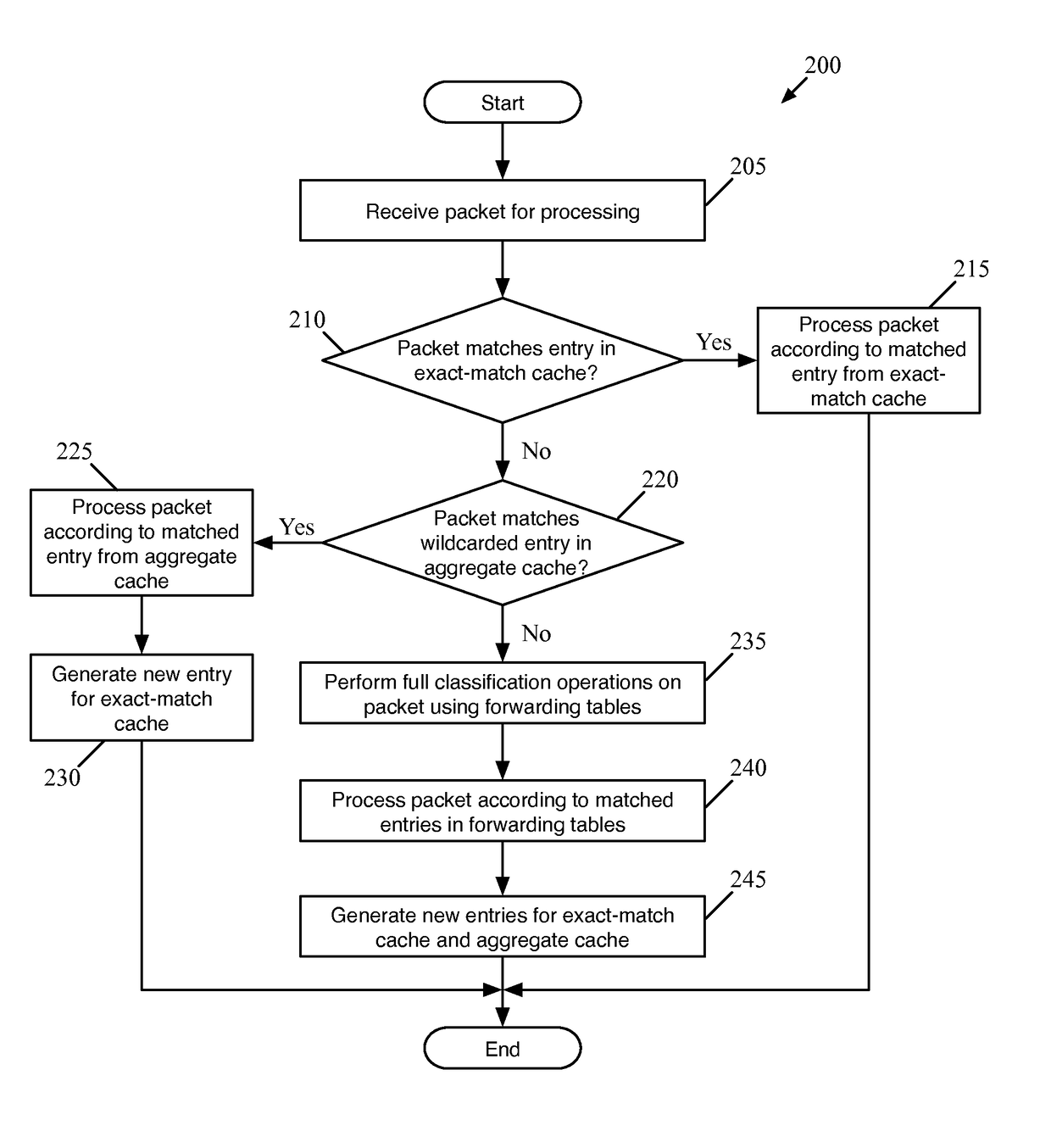

Flow Cache Hierarchy

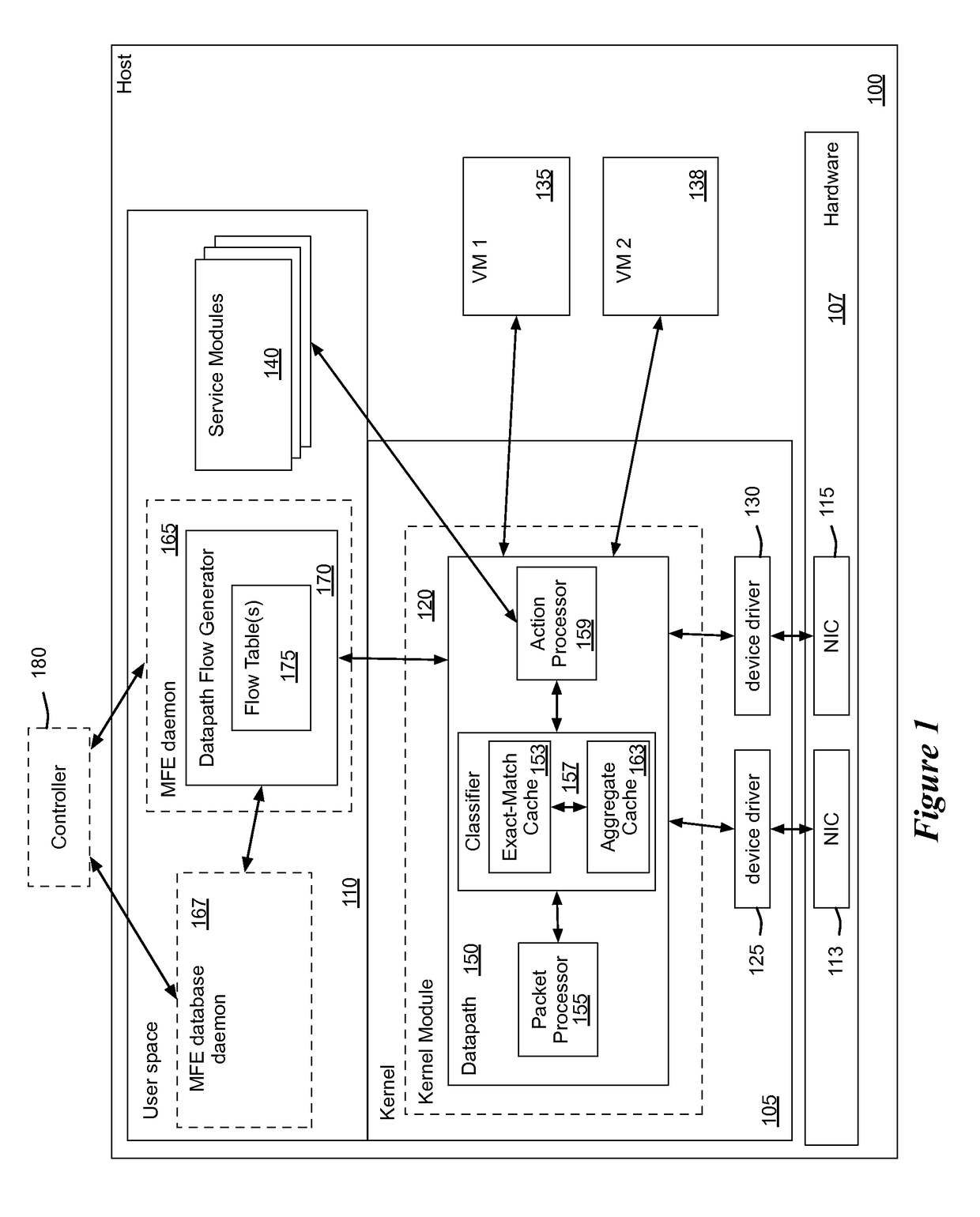

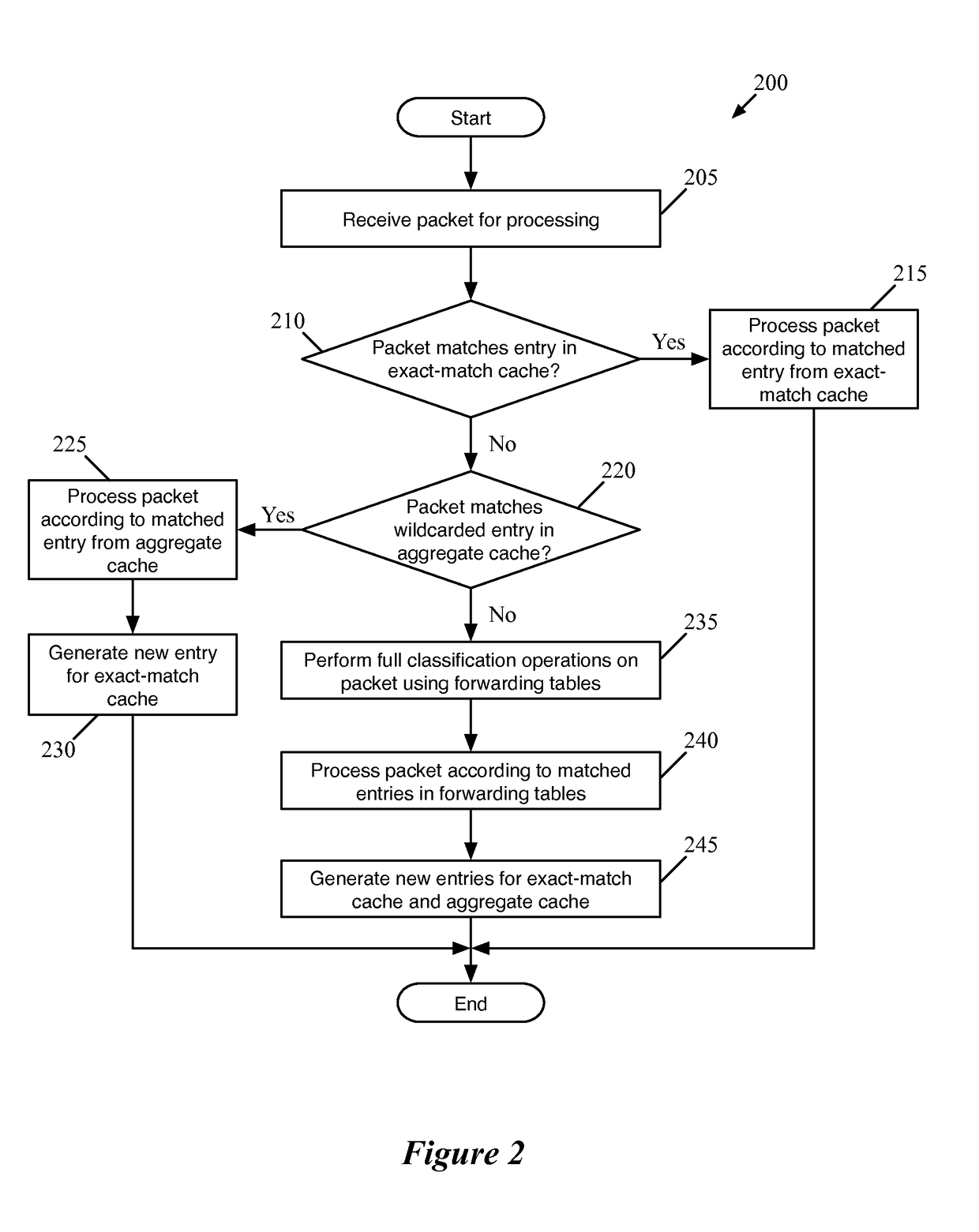

Active | US20150281098A1 | Save computing resources | Save a lot of time | Memory architecture accessing/allocation | Error prevention | Exact match | Cache hierarchy

Some embodiments provide a managed forwarding element (MFE) that includes a set of flow tables including a first set of flow entries for processing packets received by the MFE. The MFE includes an aggregate cache including a second set of flow entries for processing packets received by the MFE. Each of the flow entries of the second set is for processing packets of multiple data flows. At least a subset of packet header fields of the packets of the multiple data flows have a same set of packet header field values, and a same set of operations is applied to said packets. The MFE includes an exact-match cache including a third set of flow entries for processing packets received by the MFE. Each of the flow entries of the third set is for processing packets for a single data flow having a unique set of packet header field values.

Owner:NICIRA
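
The three tiers named in this abstract (exact-match cache, aggregate cache, flow tables) could be modeled roughly as follows. The `Forwarder` class, the dictionary-based packet representation, and the `"drop"` default are assumptions for illustration; the sketch also assumes flow table rules do not overlap, so rule priority is ignored.

```python
class Forwarder:
    """Toy forwarding element with a three-tier lookup (illustrative only)."""

    def __init__(self, flow_table):
        self.flow_table = flow_table  # list of (match dict, action): the slow path
        self.aggregate = {}           # frozenset of (field, value) pairs -> action
        self.exact = {}               # tuple of all header fields -> action

    def process(self, packet):
        """Return (action, name of the tier that decided it)."""
        key = tuple(sorted(packet.items()))
        if key in self.exact:
            # Single data flow: every header field matches a cached entry.
            return self.exact[key], "exact-match cache"
        headers = set(packet.items())
        for fields, action in self.aggregate.items():
            if fields <= headers:
                # Many flows share this entry because only a subset of fields is compared.
                self.exact[key] = action
                return action, "aggregate cache"
        for match, action in self.flow_table:
            if all(packet.get(f) == v for f, v in match.items()):
                self.aggregate[frozenset(match.items())] = action
                self.exact[key] = action
                return action, "flow tables"
        return "drop", "flow tables"
```

A second flow that differs only in a field the rule does not examine is answered by the aggregate cache, and its later packets by the exact-match cache.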

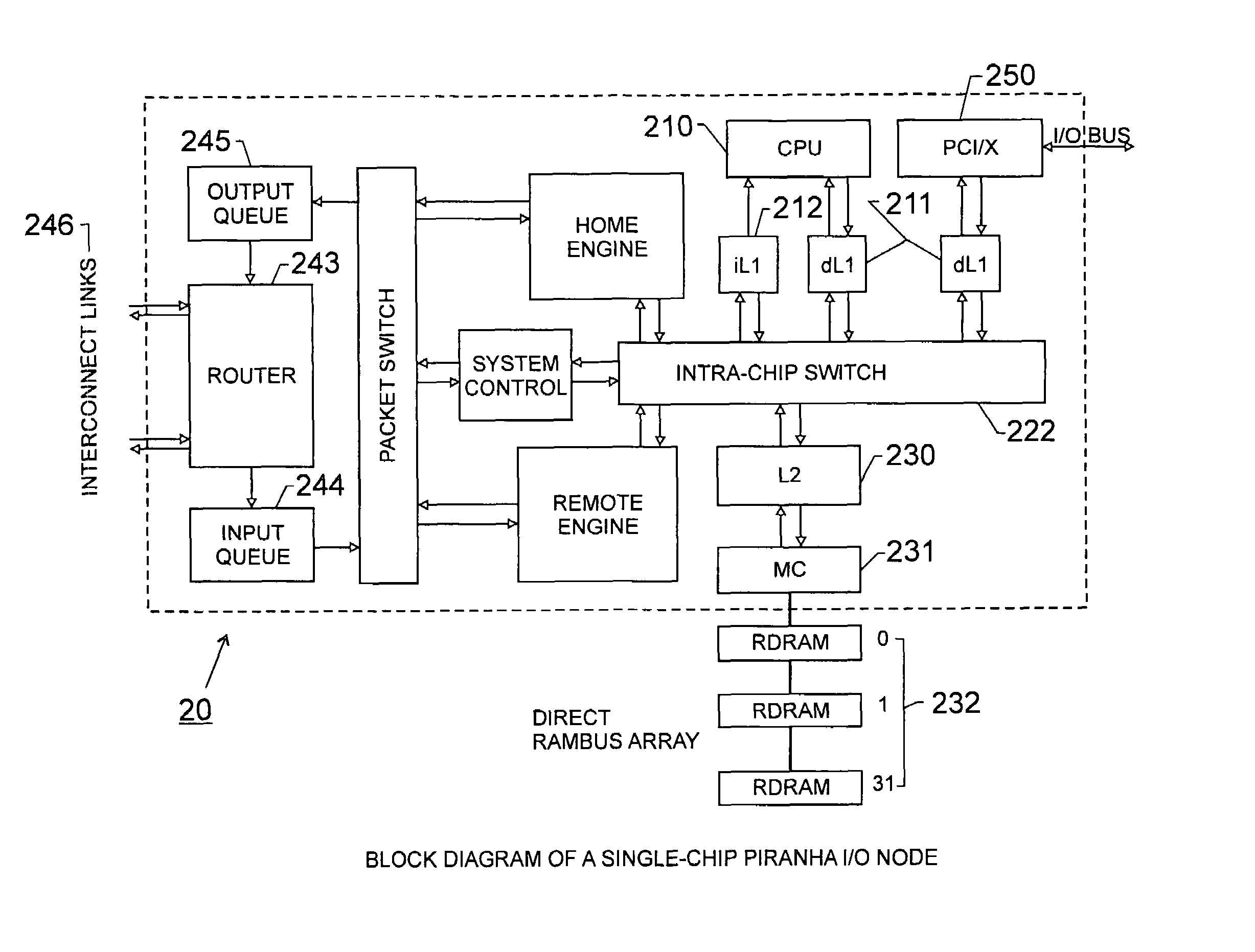

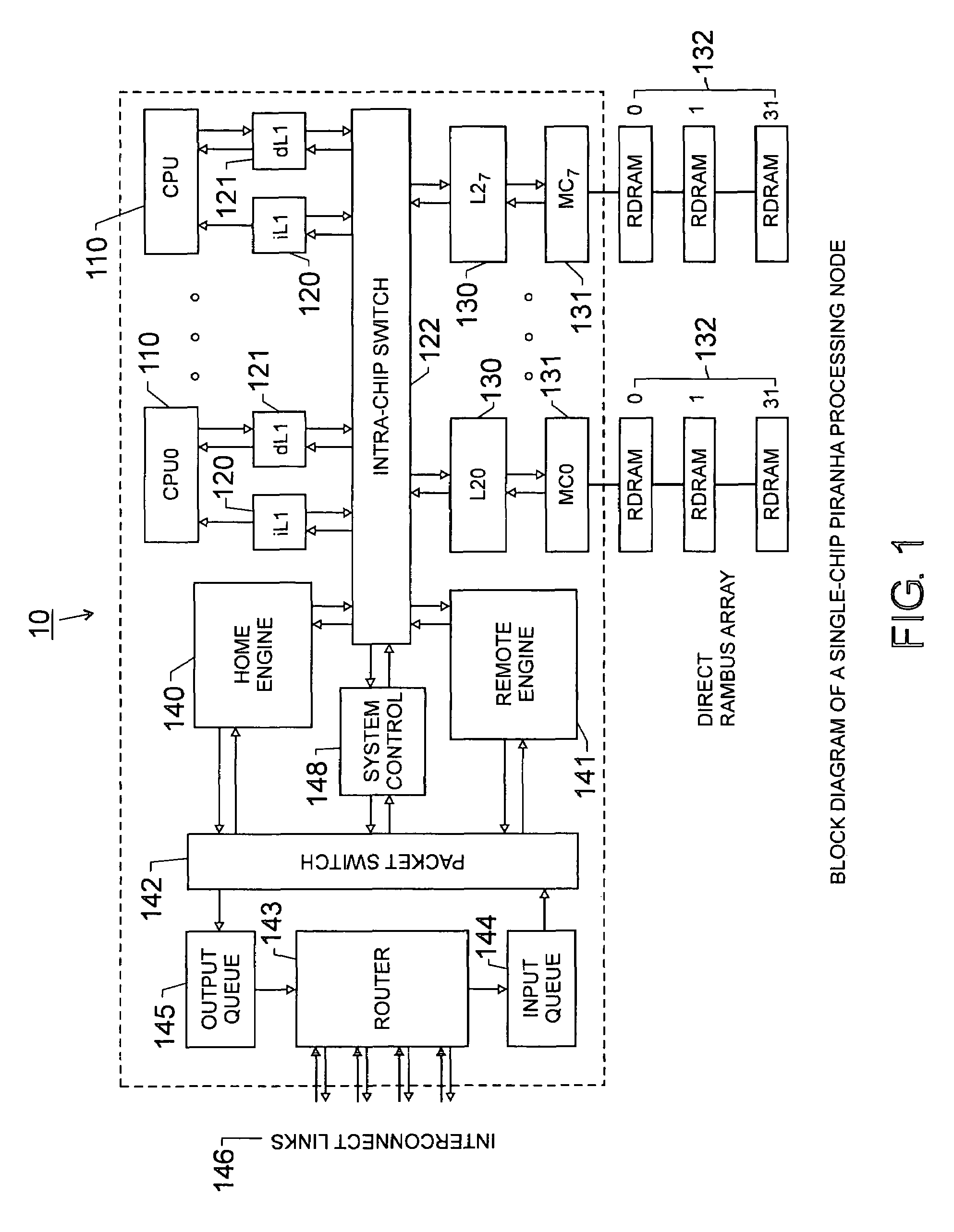

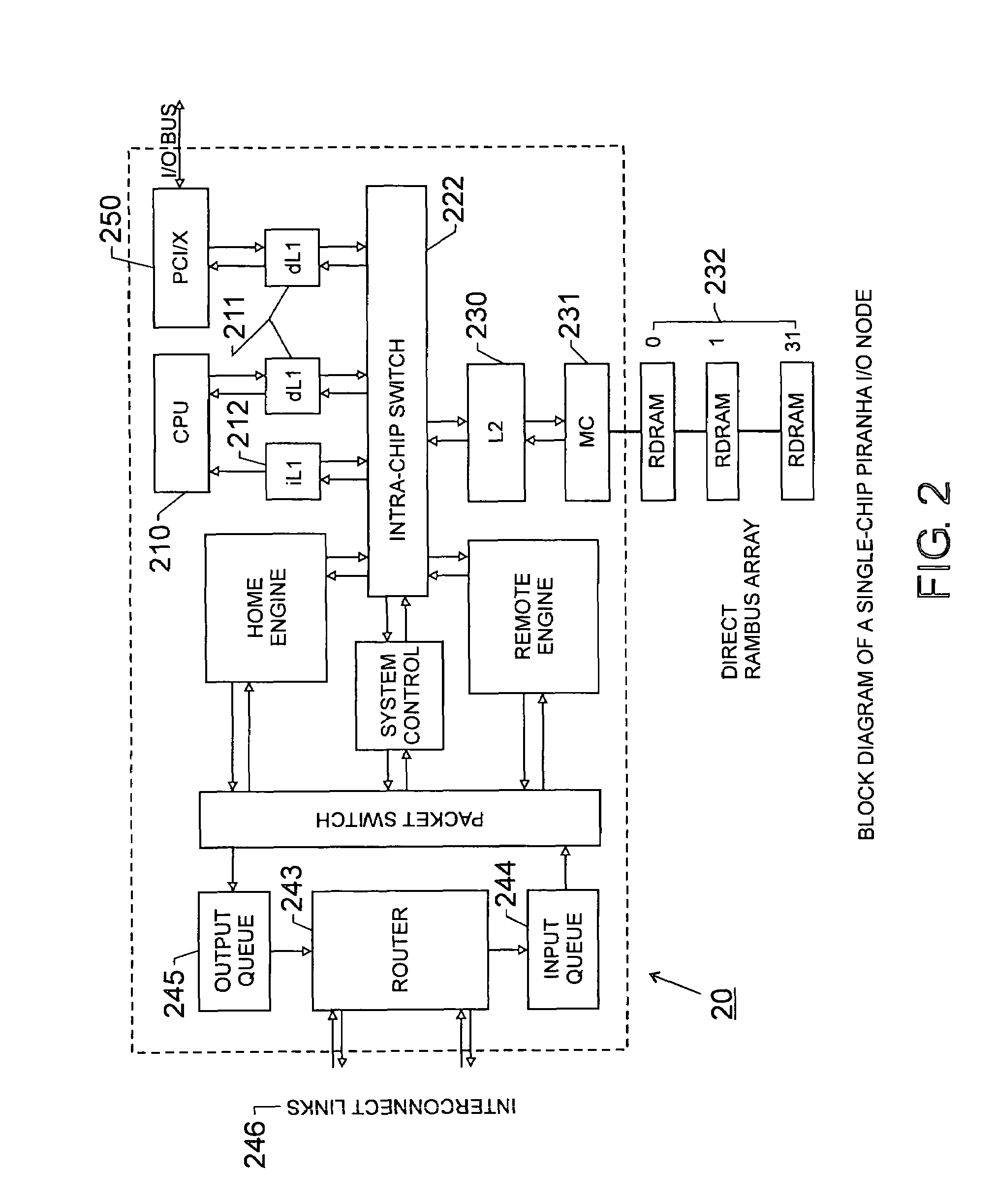

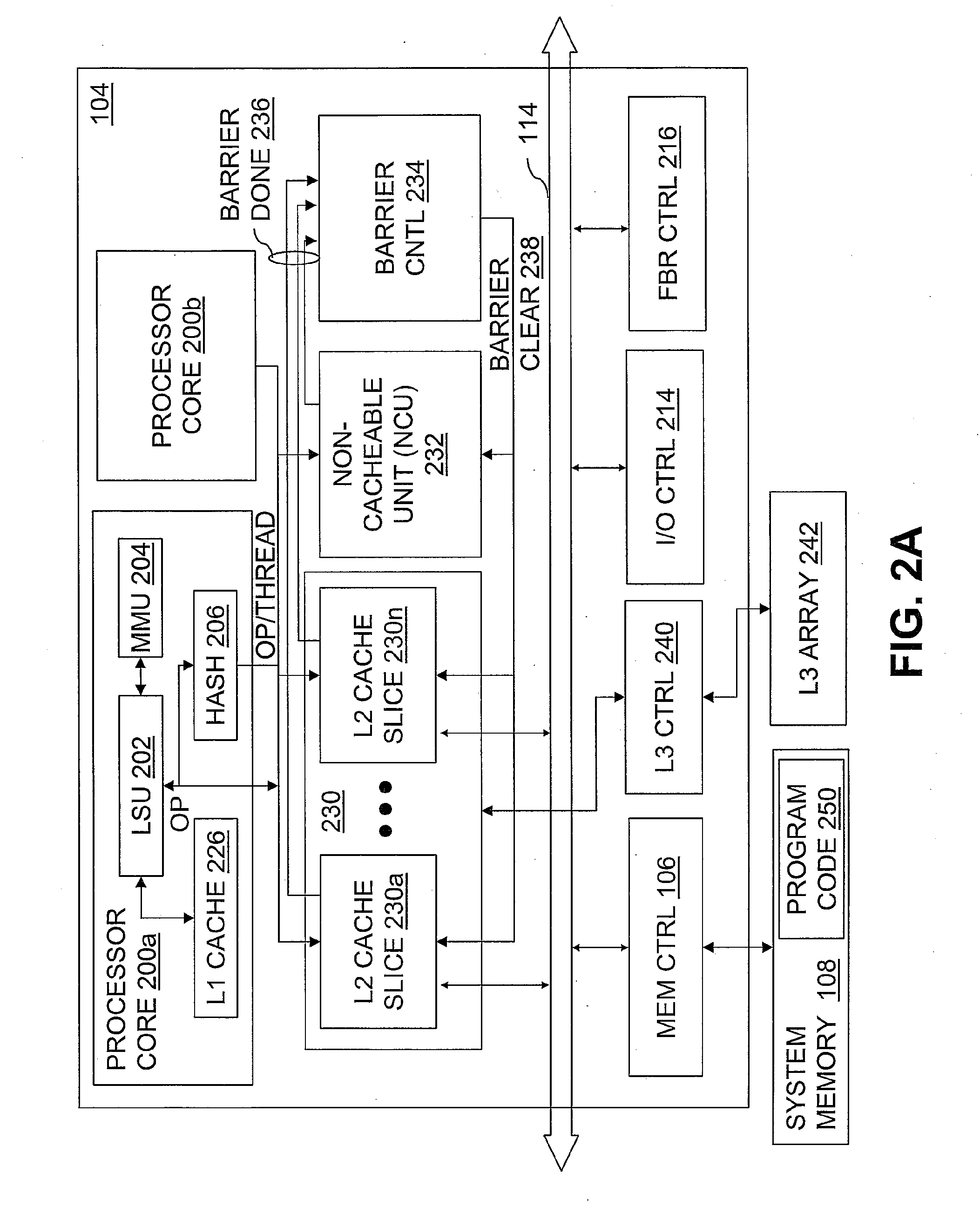

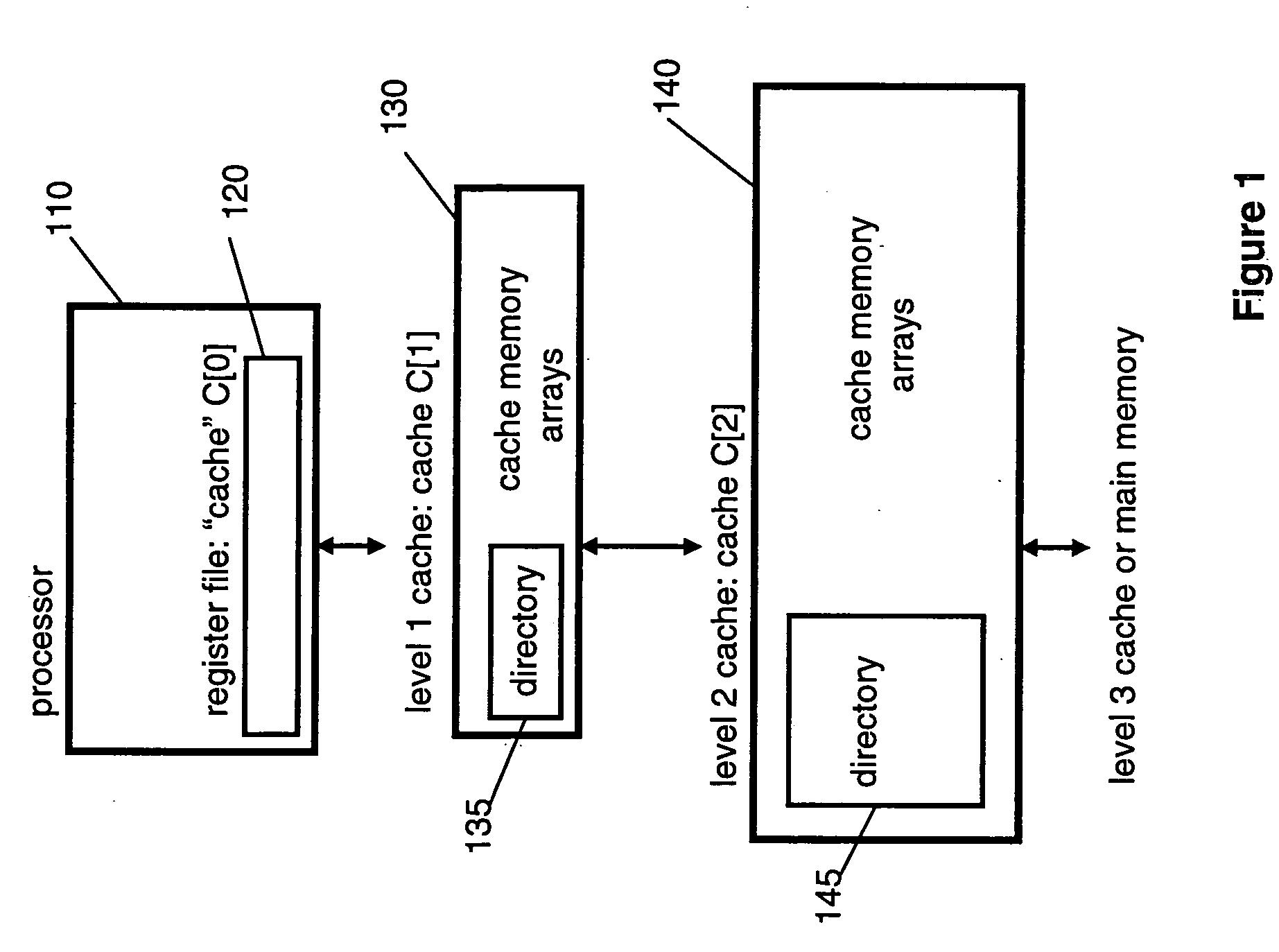

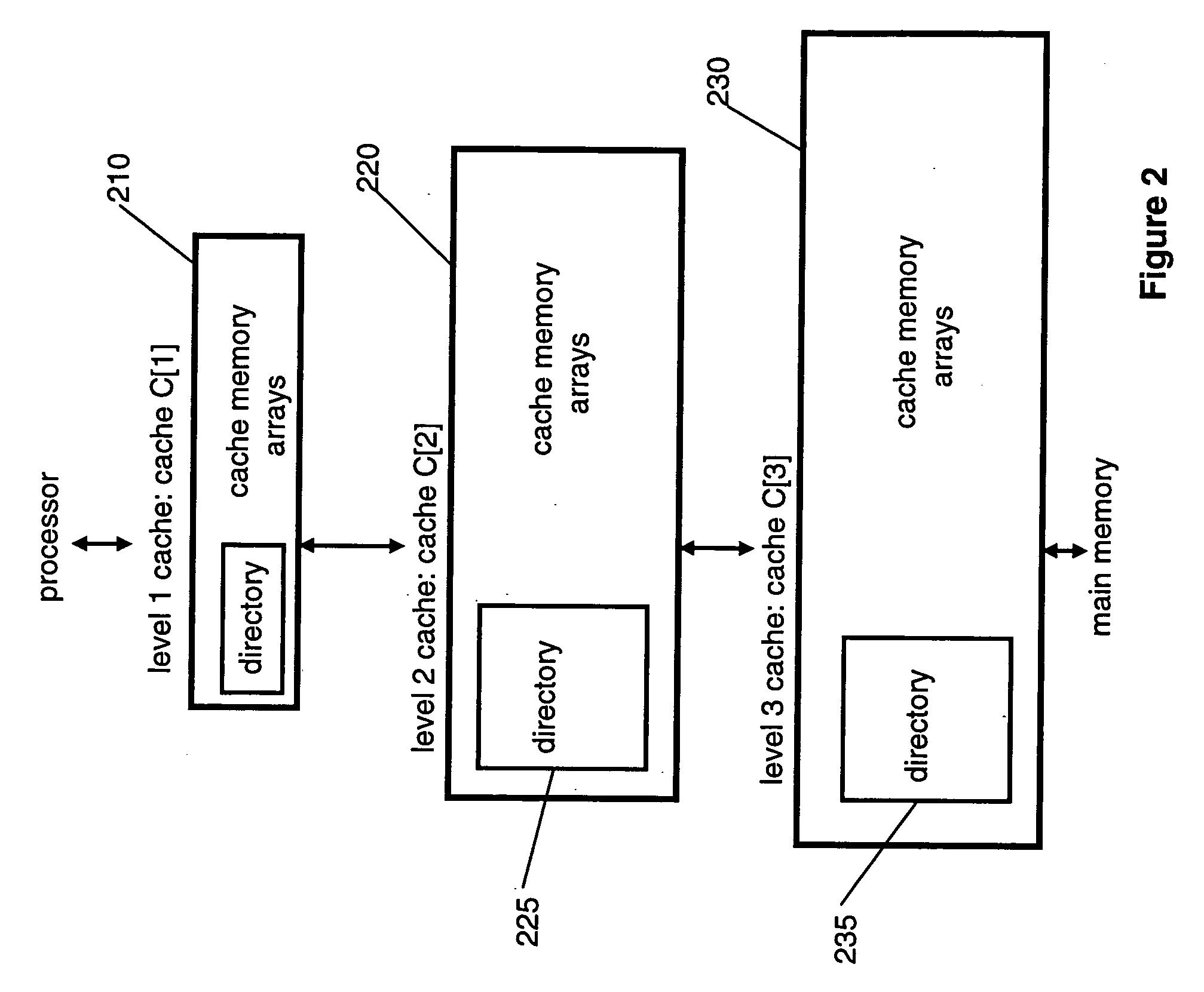

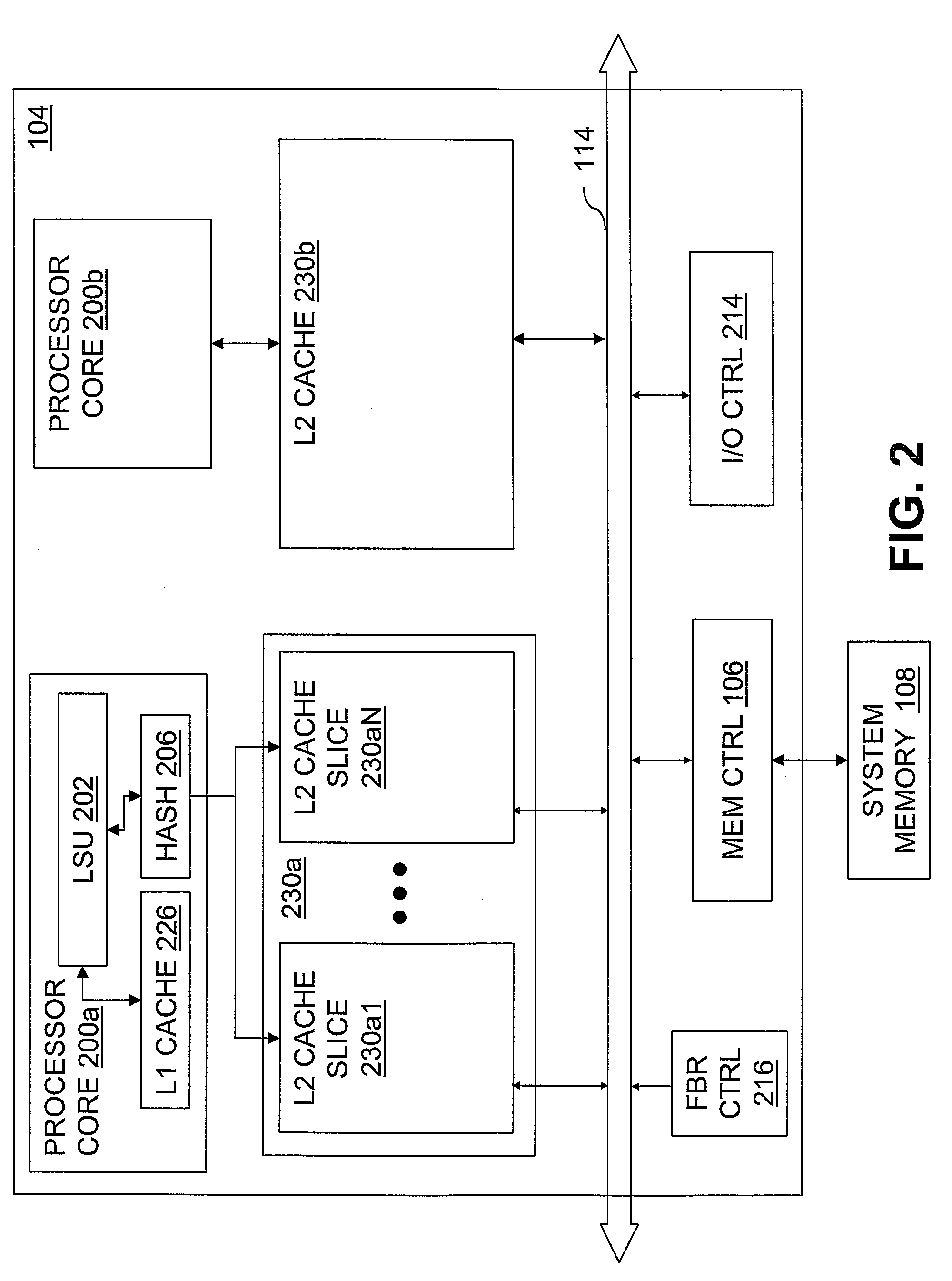

Scalable architecture based on single-chip multiprocessing

Inactive | US6988170B2 | Short time | Small investment | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Processing core

A chip-multiprocessing system with scalable architecture, including on a single chip: a plurality of processor cores; a two-level cache hierarchy; an intra-chip switch; one or more memory controllers; a cache coherence protocol; one or more coherence protocol engines; and an interconnect subsystem. The two-level cache hierarchy includes first level and second level caches. In particular, the first level caches include a pair of instruction and data caches for, and private to, each processor core. The second level cache has a relaxed inclusion property, the second-level cache being logically shared by the plurality of processor cores. Each of the plurality of processor cores is capable of executing an instruction set of the ALPHA™ processing core. The scalable architecture of the chip-multiprocessing system is targeted at parallel commercial workloads. A showcase example of the chip-multiprocessing system, called the PIRAHNA™ system, is a highly integrated processing node with eight simpler ALPHA™ processor cores. A method for scalable chip-multiprocessing is also provided.

Owner:SK HYNIX INC

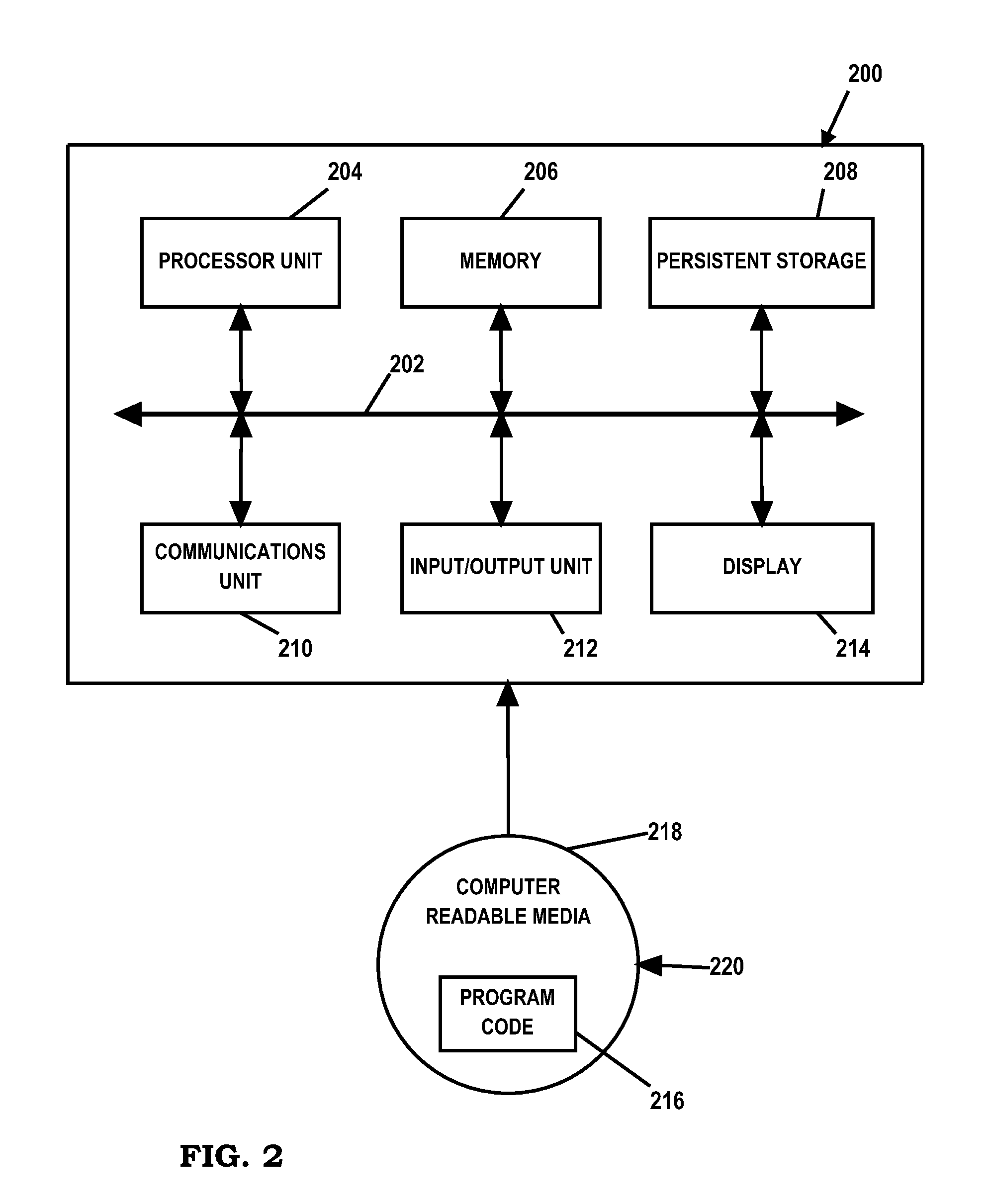

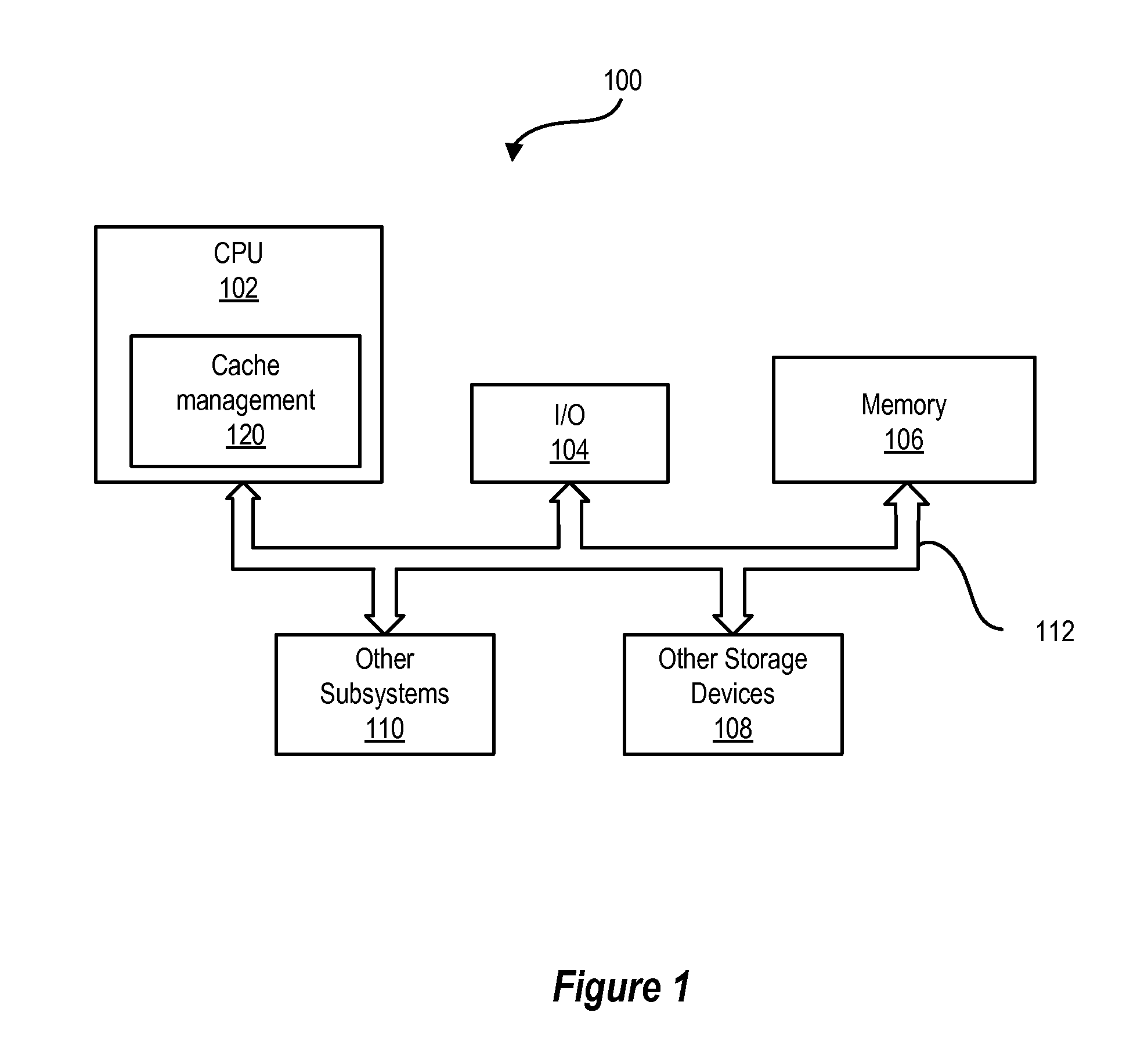

Data Processing System and Method for Reducing Cache Pollution by Write Stream Memory Access Patterns

Inactive | US20080046736A1 | User identity/authority verification | Memory systems | Data processing system | Cache hierarchy

A data processing system includes a system memory and a cache hierarchy that caches contents of the system memory. According to one method of data processing, a storage modifying operation having a cacheable target real memory address is received. A determination is made whether or not the storage modifying operation has an associated bypass indication. In response to determining that the storage modifying operation has an associated bypass indication, the cache hierarchy is bypassed, and an update indicated by the storage modifying operation is performed in the system memory. In response to determining that the storage modifying operation does not have an associated bypass indication, the update indicated by the storage modifying operation is performed in the cache hierarchy.

Owner:IBM CORP
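
The decision described in this abstract reduces to a single branch on the bypass indication. The sketch below is a deliberately small model: the `store` function and the dictionaries standing in for the cache hierarchy and system memory are assumptions, as is the choice to drop any stale cached copy when a store bypasses the cache.

```python
def store(addr, value, cache, memory, bypass=False):
    """Apply a store in the cache, or directly in memory when a bypass is indicated."""
    if bypass:
        # Assumption: discard any cached copy so later reads do not see stale data.
        cache.pop(addr, None)
        memory[addr] = value
        return "memory"
    cache[addr] = value
    return "cache"
```

Streaming writes flagged with `bypass=True` never occupy cache entries in this model, which is how the approach avoids pollution.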

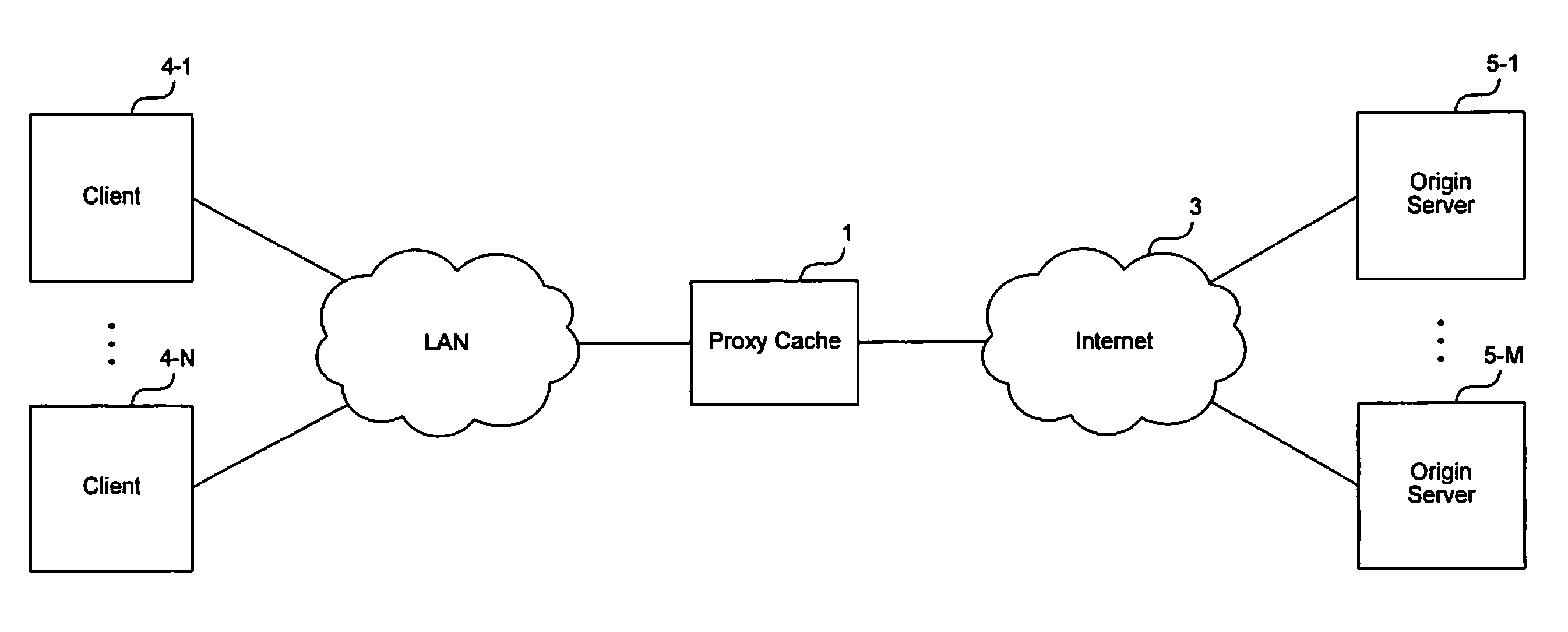

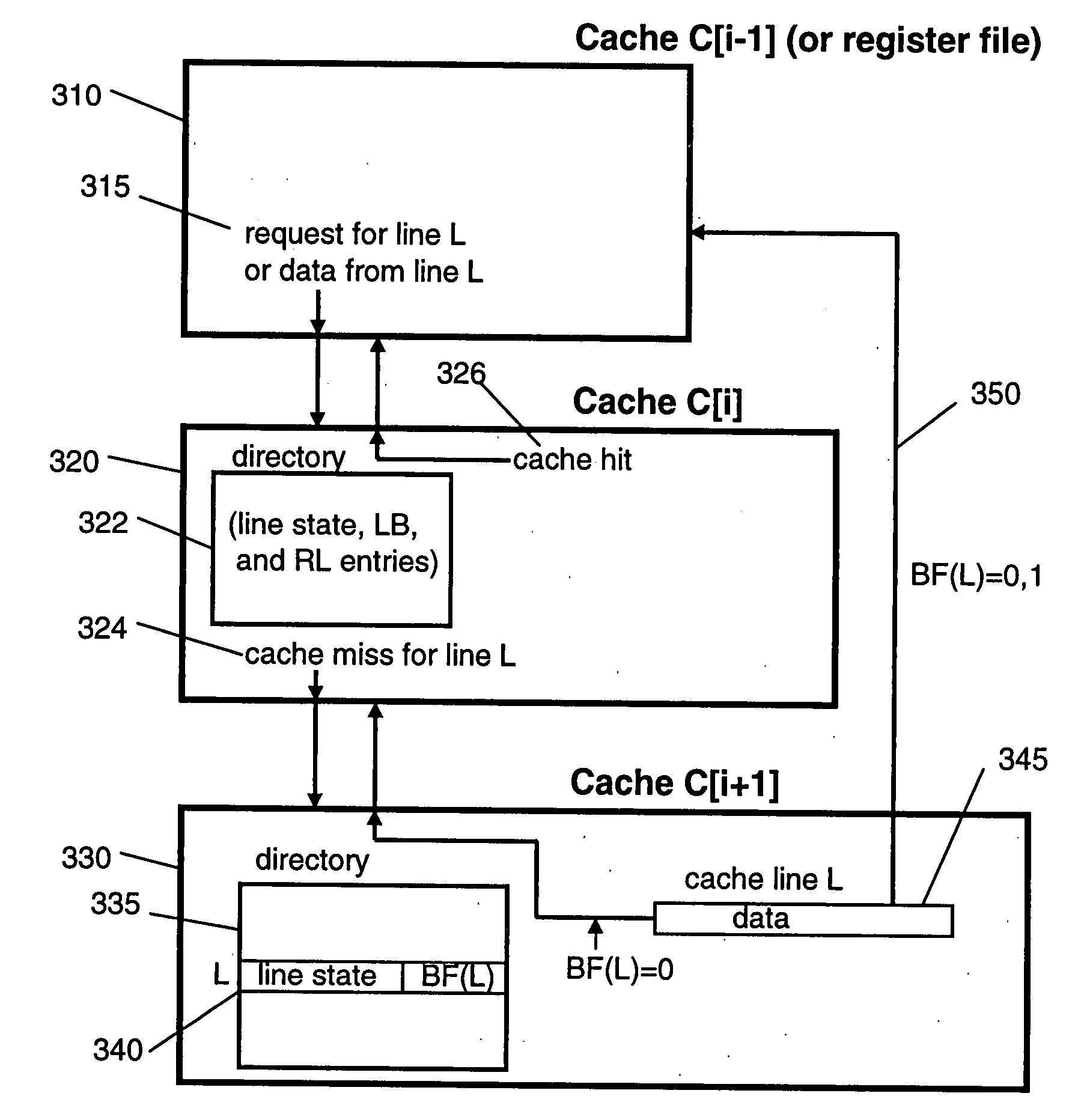

Method and apparatus for forwarding requests in a cache hierarchy based on user-defined forwarding rules

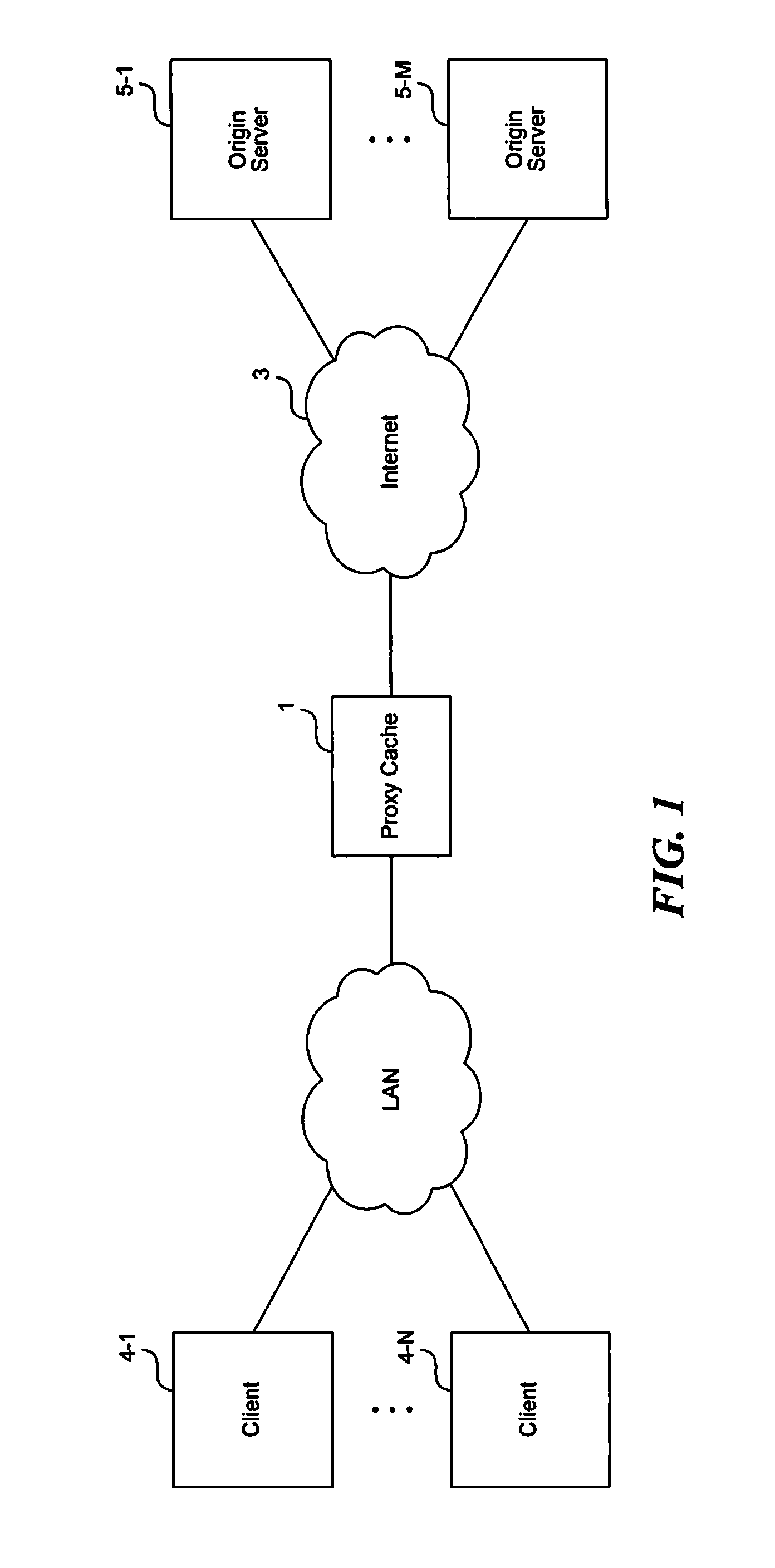

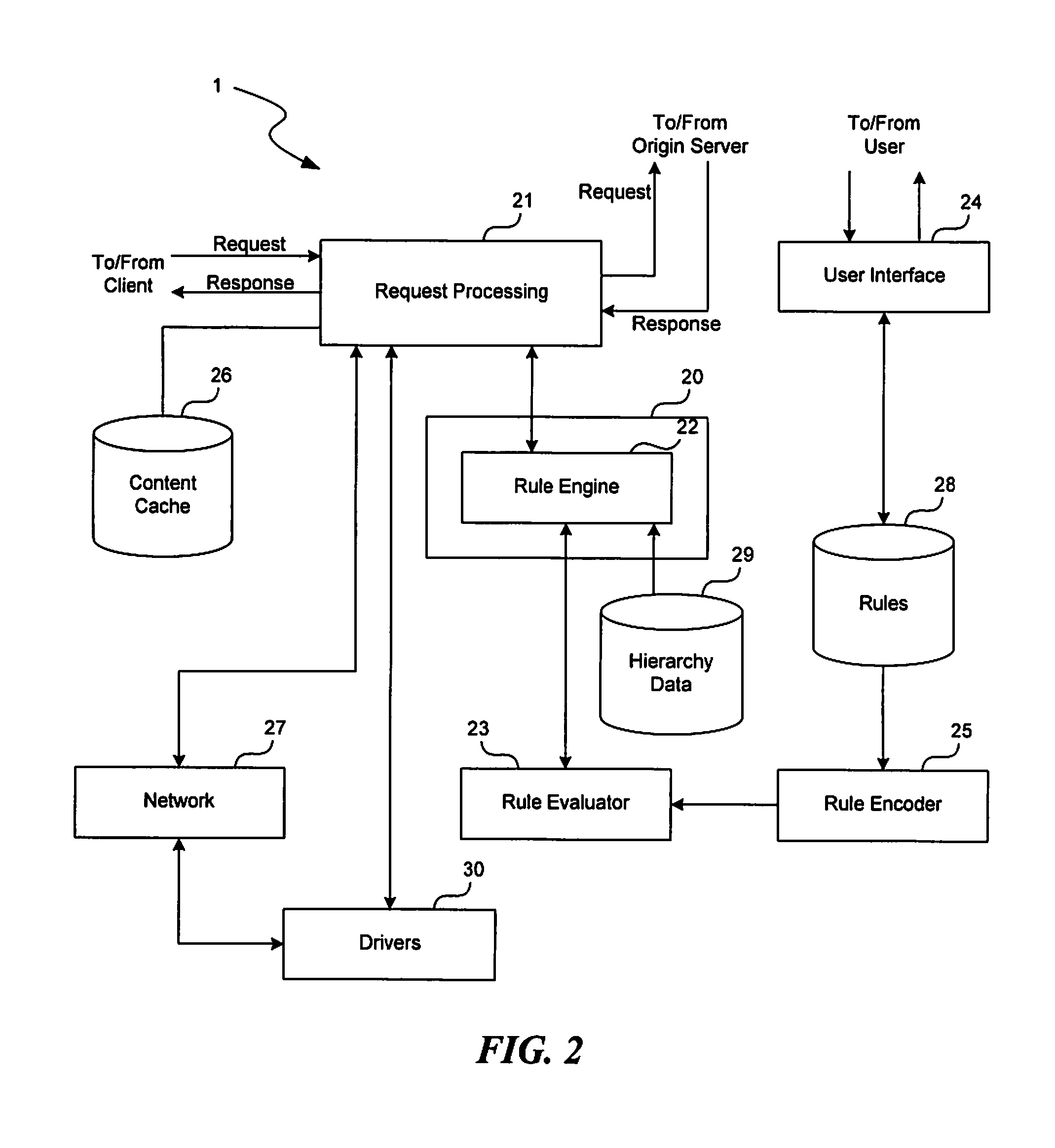

A method and apparatus for forwarding requests in a cache hierarchy based on user-defined forwarding rules are described. A proxy cache on a network provides a user interface that enables a user to define a set of forwarding rules for controlling the forwarding of content requests within a cache hierarchy. When the proxy cache receives a content request from a client and the request produces a cache miss, the proxy cache examines the rules sequentially to determine whether any of the user-defined rules applies to the request. If a rule is found to apply, the proxy cache identifies one or more forwarding destinations from the rule and determines the availability of the destinations. The proxy cache then forwards the request to an available destination according to the applicable rule.

Owner:NETWORK APPLIANCE INC
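
The rule evaluation described here (first applicable rule wins, then the first available destination from that rule) might look like the sketch below. The rule format, shell-style URL patterns, and the `choose_destination` and `is_available` names are hypothetical; the abstract does not specify how rules are expressed.

```python
import fnmatch


def choose_destination(url, rules, is_available):
    """Pick a forwarding destination for a request that missed in the proxy cache.

    rules is an ordered list of (url_pattern, [destinations]) pairs.
    is_available is a callable reporting whether a destination is reachable.
    """
    for pattern, destinations in rules:  # rules are examined sequentially
        if fnmatch.fnmatchcase(url, pattern):
            for dest in destinations:
                if is_available(dest):
                    return dest
            return None  # the rule applies but none of its destinations is up
    return None  # no rule applies
```

For example, with the rules `[("*.jpg", ["img-parent-1", "img-parent-2"]), ("*", ["default-parent"])]`, a request for a `.jpg` URL goes to `img-parent-2` when `img-parent-1` is down.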

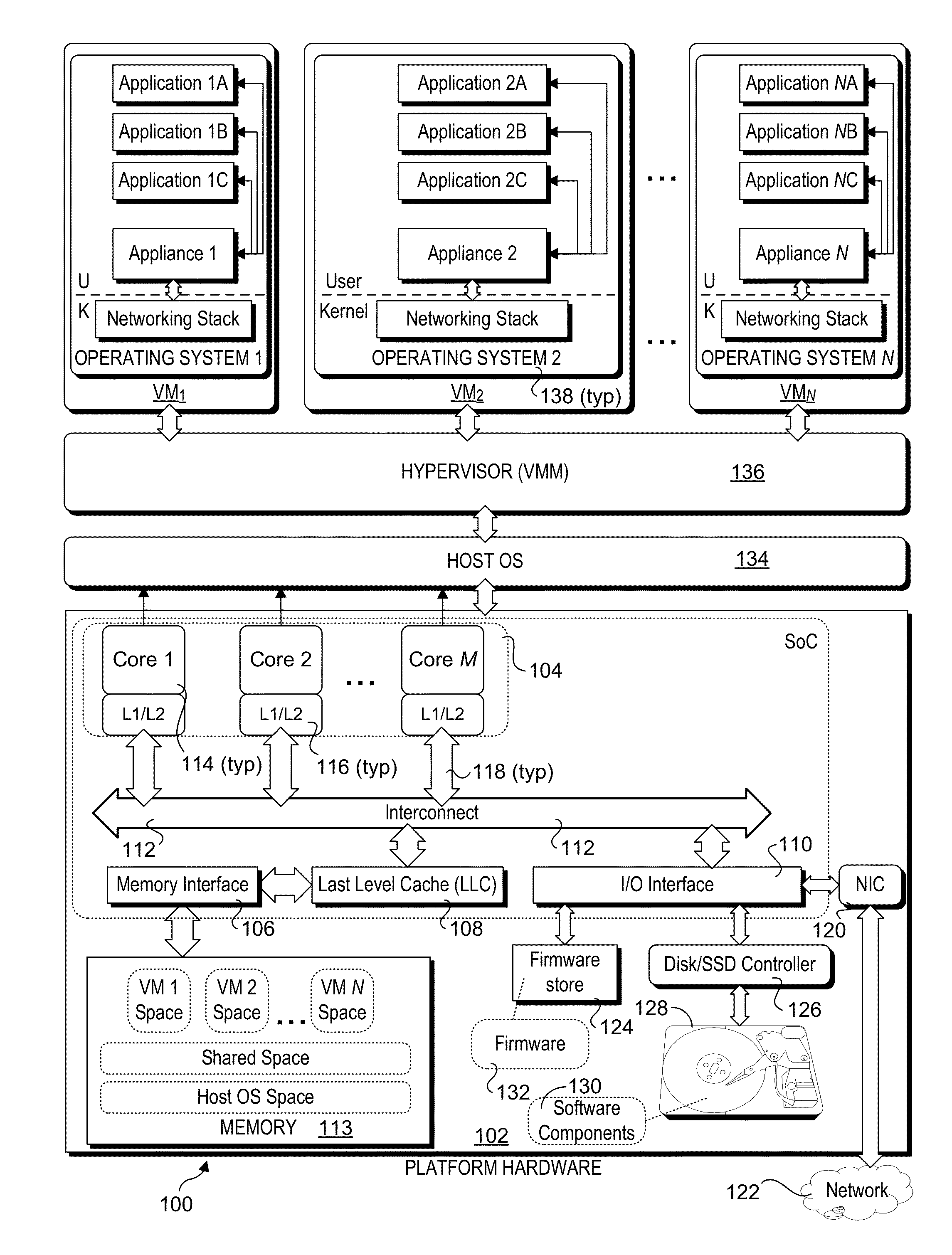

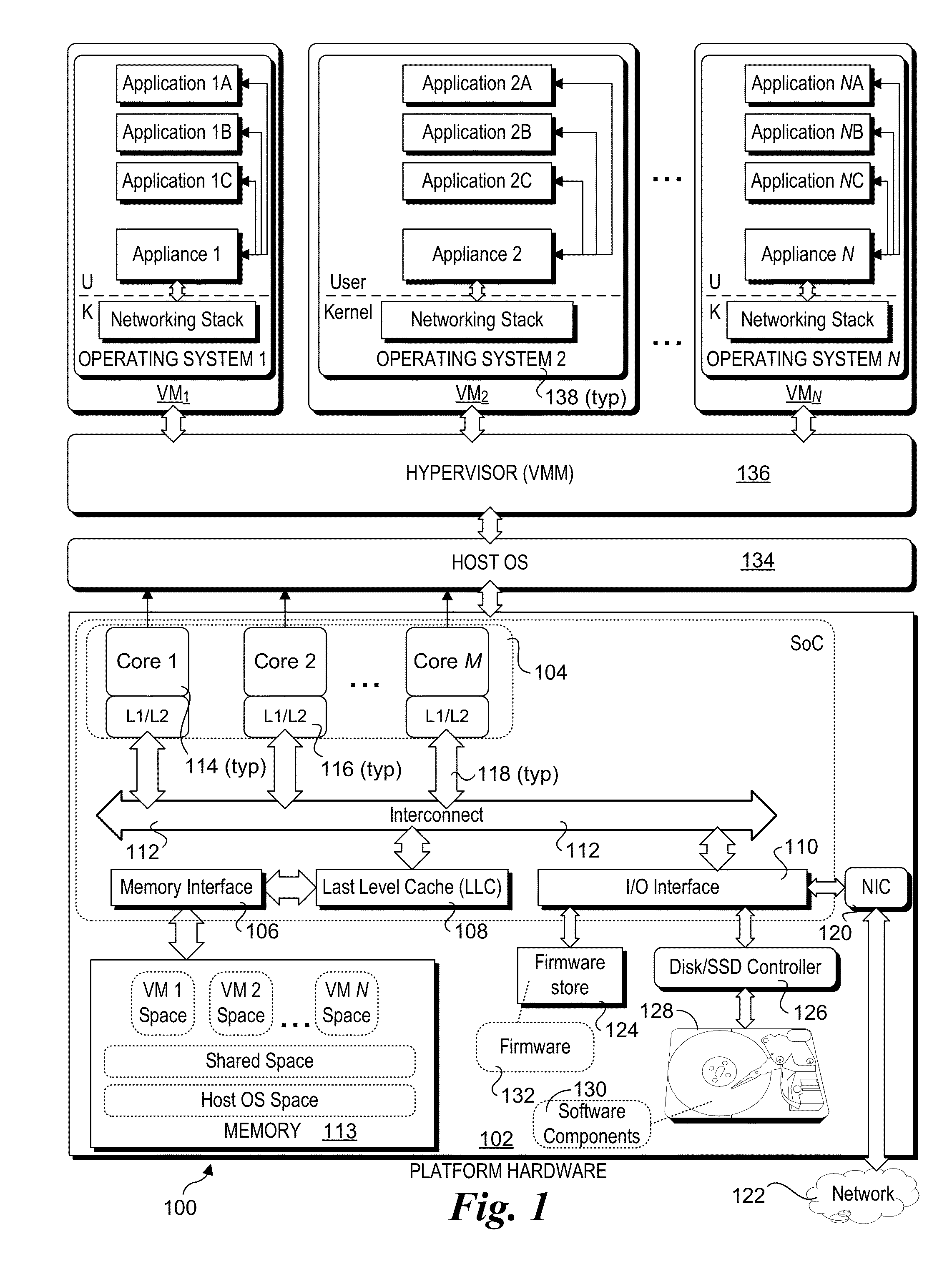

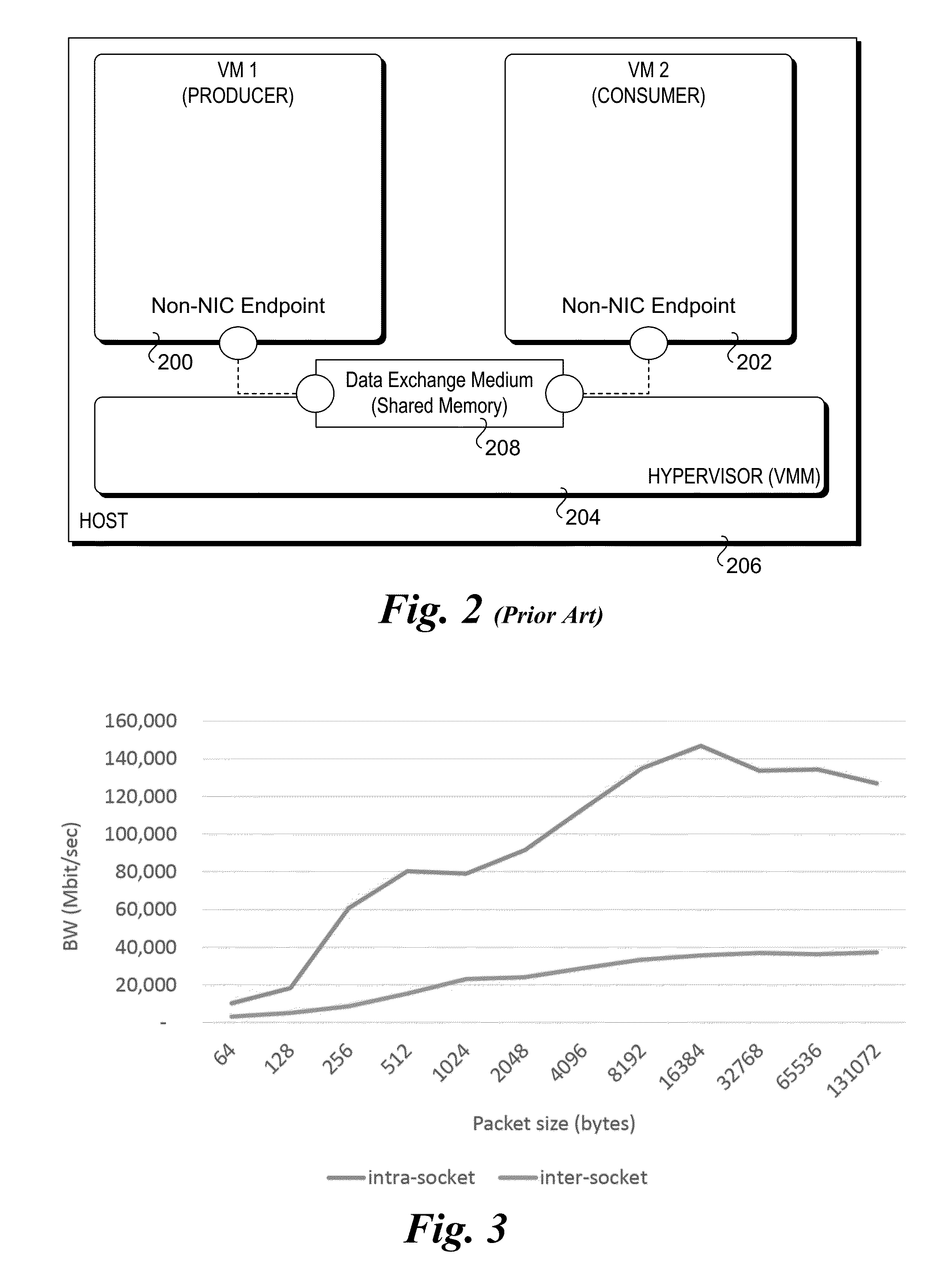

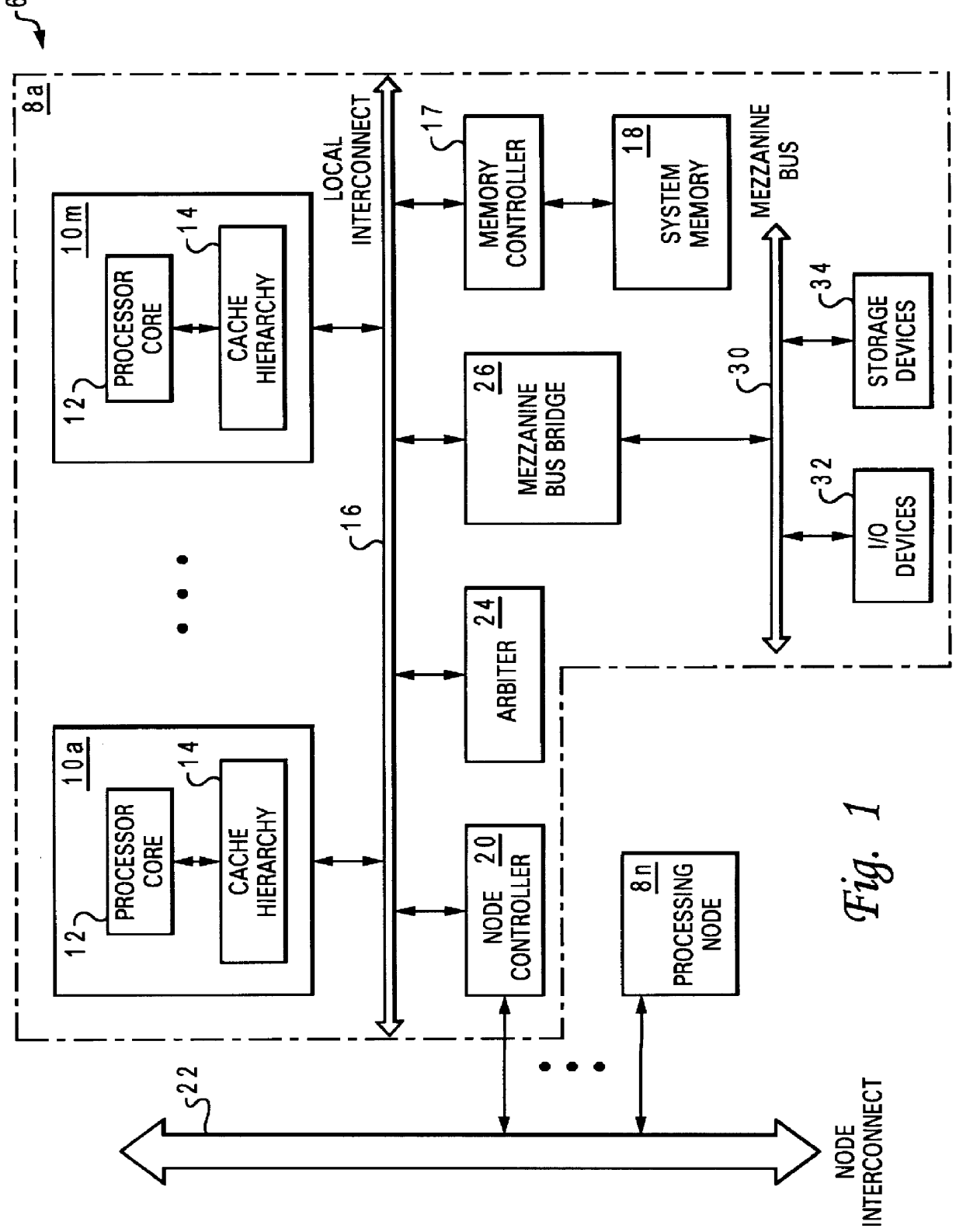

Hardware/software co-optimization to improve performance and energy for inter-vm communication for nfvs and other producer-consumer workloads

Active | US20160188474A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Structure of Management Information

Methods and apparatus implementing Hardware / Software co-optimization to improve performance and energy for inter-VM communication for NFVs and other producer-consumer workloads. The apparatus include multi-core processors with multi-level cache hierarchies including an L1 and L2 cache for each core and a shared last-level cache (LLC). One or more machine-level instructions are provided for proactively demoting cachelines from lower cache levels to higher cache levels, including demoting cachelines from L1 / L2 caches to an LLC. Techniques are also provided for implementing hardware / software co-optimization in a multi-socket NUMA architecture system, wherein cachelines may be selectively demoted and pushed to an LLC in a remote socket. In addition, techniques are disclosed for implementing early snooping in multi-socket systems to reduce latency when accessing cachelines on remote sockets.

Owner:INTEL CORP

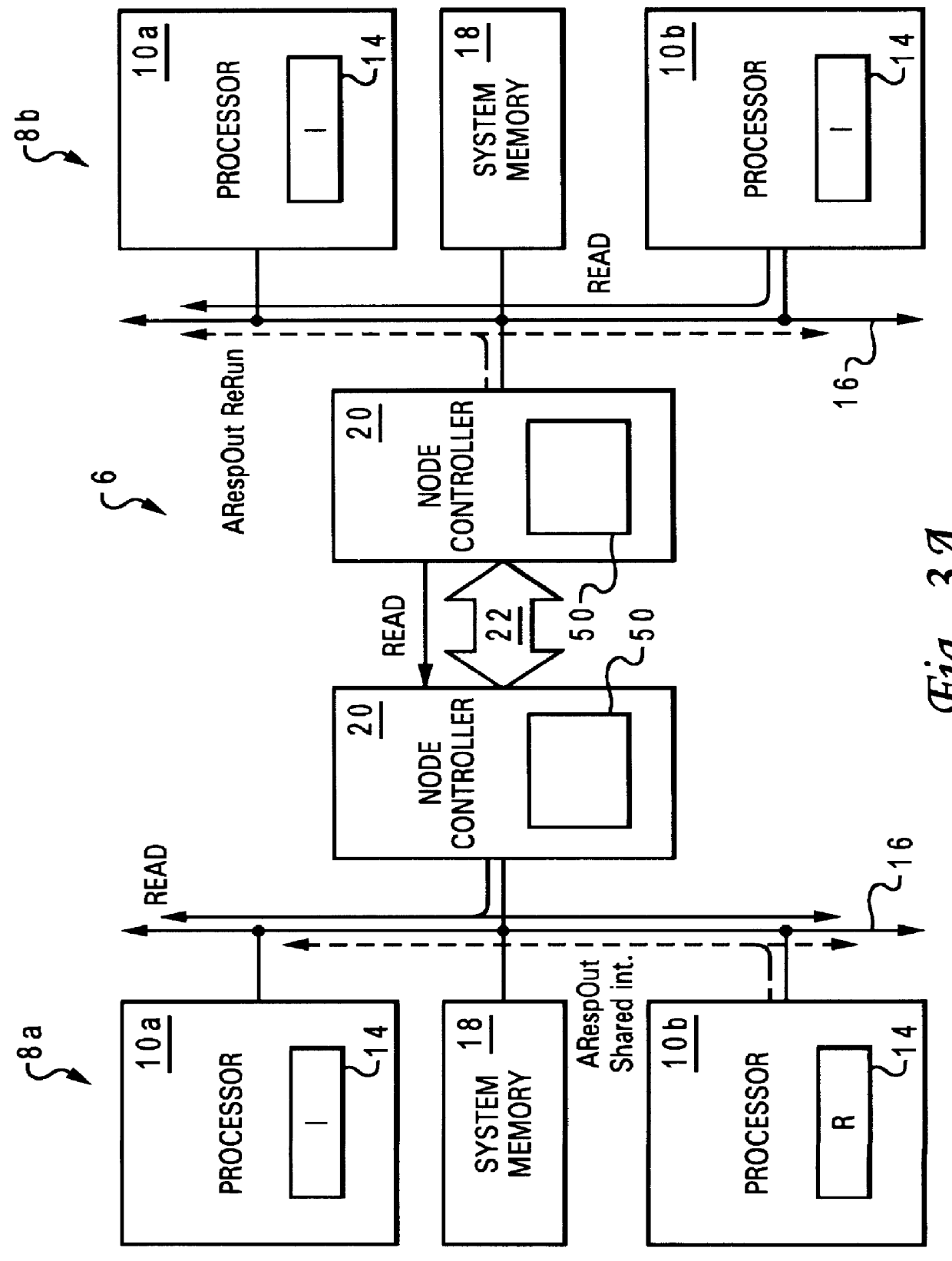

Non-uniform memory access (NUMA) data processing system with multiple caches concurrently holding data in a recent state from which data can be sourced by shared intervention

Inactive | US6108764A | Program synchronisation | Memory addressing/allocation/relocation | Data processing system | Cache hierarchy

A non-uniform memory access (NUMA) computer system includes first and second processing nodes that are coupled together. The first processing node includes a system memory and first and second processors that each have a respective associated cache hierarchy. The second processing node includes at least a third processor and a system memory. If the cache hierarchy of the first processor holds an unmodified copy of a cache line and receives a request for the cache line from the third processor, the cache hierarchy of the first processor sources the requested cache line to the third processor and retains a copy of the cache line in a Recent coherency state from which the cache hierarchy of the first processor can source the cache line in response to subsequent requests.

Owner:IBM CORP

Enabling and disabling cache bypass using predicted cache line usage

Inactive | US20060112233A1 | Improve system performance | Improve hit rate | Memory systems | Cache hierarchy | Cache access

Arrangements and method for enabling and disabling cache bypass in a computer system with a cache hierarchy. Cache bypass status is identified with respect to at least one cache line. A cache line identified as cache bypass enabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is bypassed, while a cache line identified as cache bypass disabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is not bypassed. Included is an arrangement for selectively enabling or disabling cache bypass with respect to at least one cache line based on historical cache access information.

Owner:IBM CORP

Flow cache hierarchy

Active | US9686200B2 | Save a lot of time | Operation is necessary | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Exact match

Some embodiments provide a managed forwarding element (MFE) that includes a set of flow tables including a first set of flow entries for processing packets received by the MFE. The MFE includes an aggregate cache including a second set of flow entries for processing packets received by the MFE. Each of the flow entries of the second set is for processing packets of multiple data flows. At least a subset of packet header fields of the packets of the multiple data flows have a same set of packet header field values, and a same set of operations is applied to said packets. The MFE includes an exact-match cache including a third set of flow entries for processing packets received by the MFE. Each of the flow entries of the third set is for processing packets for a single data flow having a unique set of packet header field values.

Owner:NICIRA

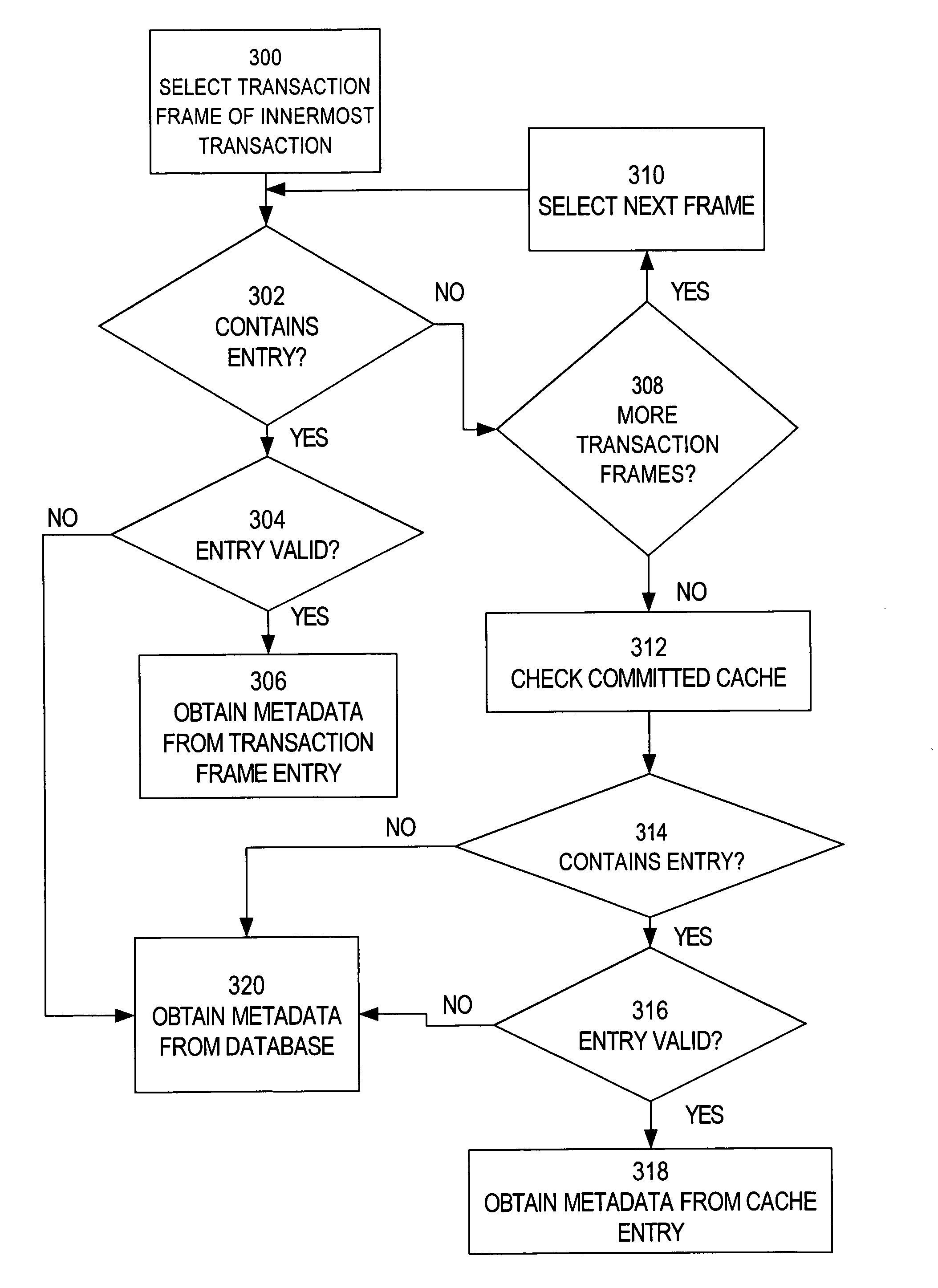

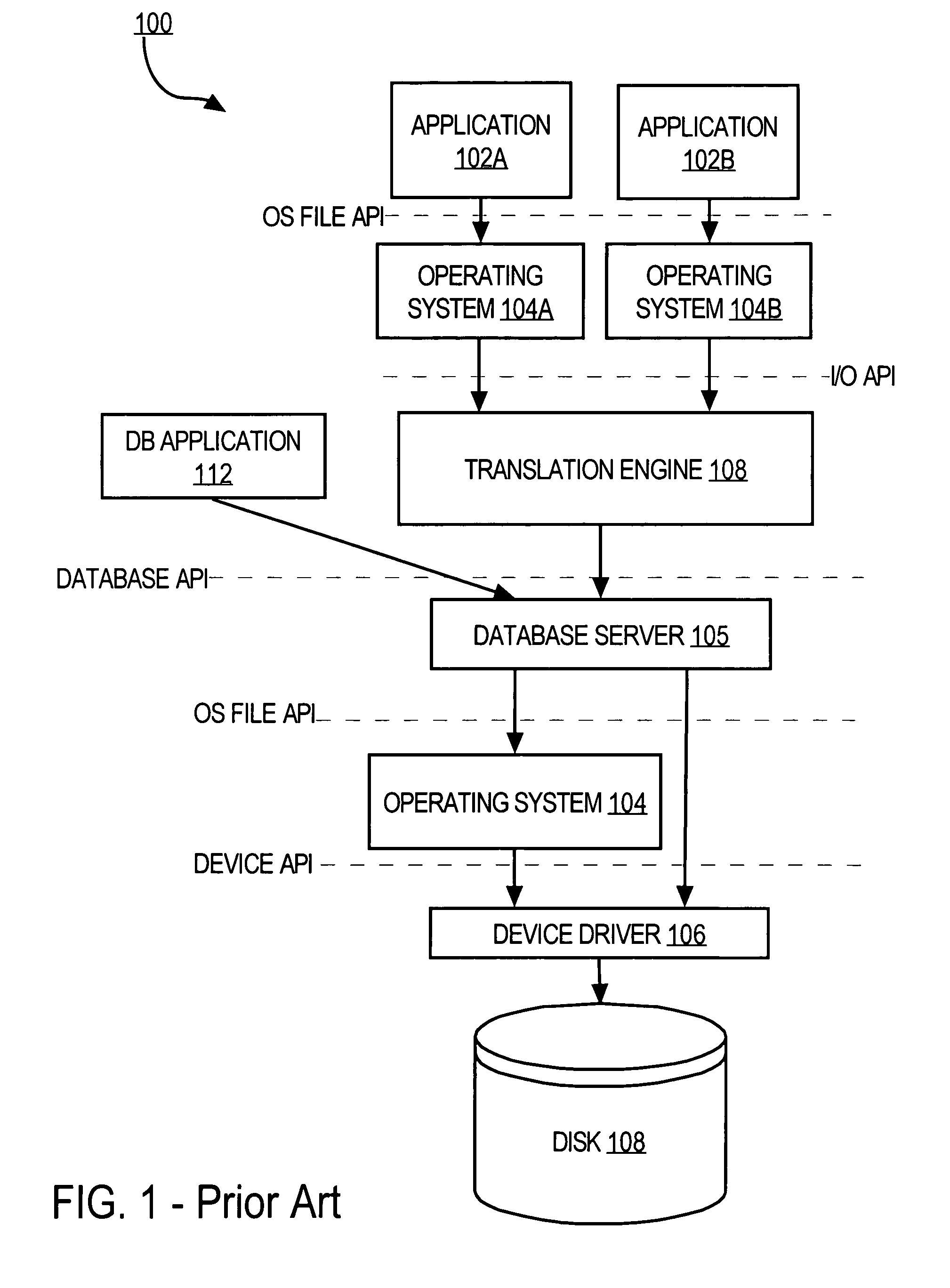

Transaction-aware caching for document metadata

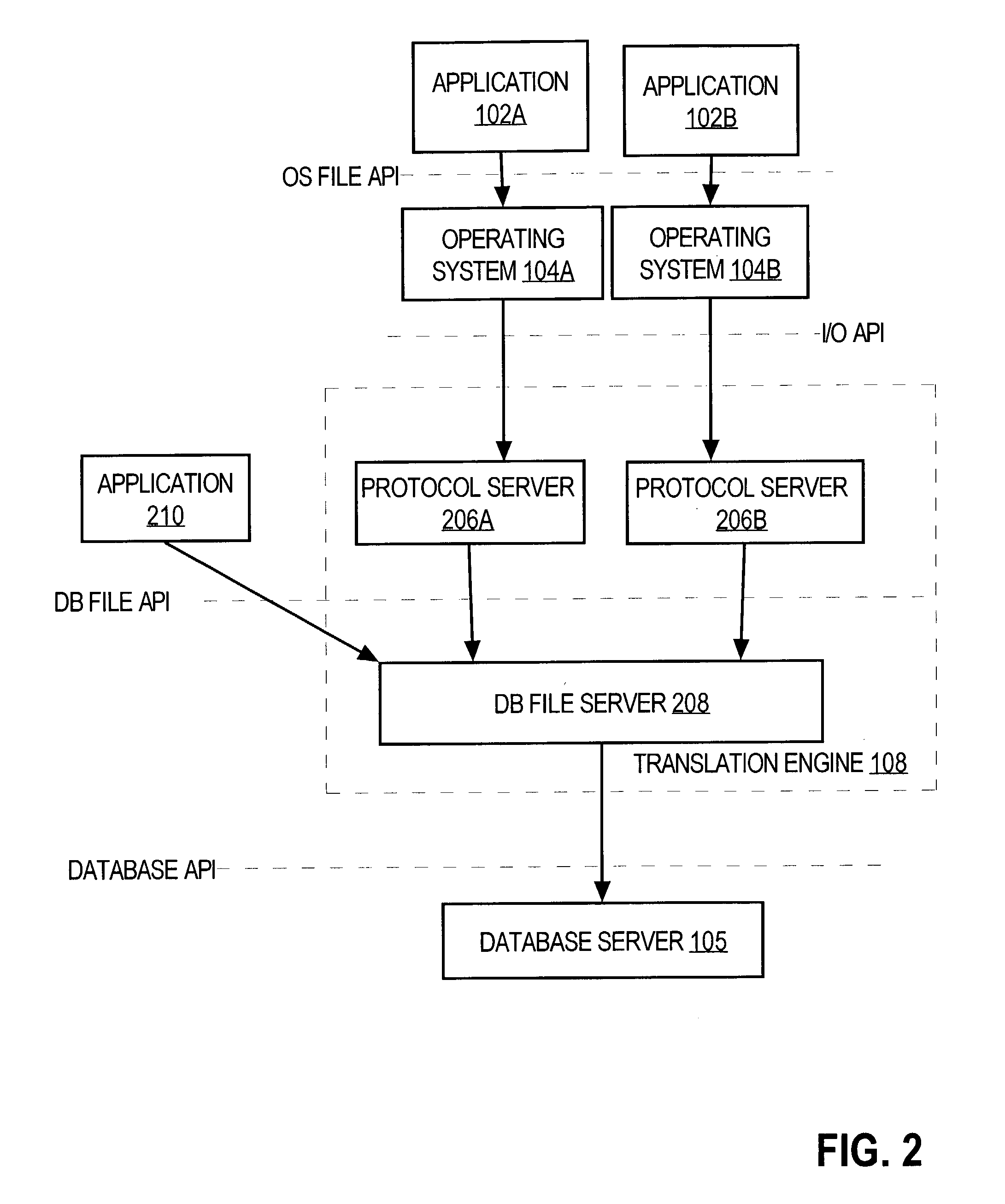

Inactive | US7987217B2 | Improve system performance | Reduce overhead | Digital data processing details | Multiple digital computer combinations | Negative cache | Cache hierarchy

Techniques are provided for performing transaction-aware caching of metadata in an electronic file system. A mechanism is described for providing transaction-aware caching that uses a cache hierarchy, where the cache hierarchy includes uncommitted caches associated with sessions in an application and a committed cache that is shared among the sessions in that application. Techniques are described for caching document metadata, access control metadata and folder path metadata. Also described is a technique for using negative cache entries to avoid unnecessary communications with a server when applications repeatedly request non-existent data.

Owner:ORACLE INT CORP
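
One way to picture the hierarchy in this abstract is a per-session uncommitted cache layered over a committed cache shared by all sessions, with negative entries recording keys the server does not have. The sketch below is an illustrative model only: the `Session` class, the `_MISSING` sentinel, and the dictionary standing in for the server are assumptions, and invalidation across sessions is not modeled.

```python
_MISSING = object()  # sentinel stored as a negative cache entry


class Session:
    """Application session with its own uncommitted cache (hypothetical model)."""

    def __init__(self, committed, server):
        self.committed = committed  # dict shared by all sessions in the application
        self.server = server        # dict standing in for the metadata server
        self.uncommitted = {}       # changes made in this session's open transaction
        self.server_reads = 0       # counts round trips, for demonstration

    def get(self, key):
        if key in self.uncommitted:
            value = self.uncommitted[key]
        elif key in self.committed:
            value = self.committed[key]
        else:
            self.server_reads += 1
            # Cache the answer even when the key does not exist (negative entry).
            value = self.server.get(key, _MISSING)
            self.committed[key] = value
        return None if value is _MISSING else value

    def put(self, key, value):
        self.uncommitted[key] = value  # visible only to this session until commit

    def commit(self):
        self.server.update(self.uncommitted)
        self.committed.update(self.uncommitted)
        self.uncommitted.clear()

    def rollback(self):
        self.uncommitted.clear()
```

Other sessions keep seeing the committed value until `commit` is called, and repeated lookups of a non-existent key cost one server round trip instead of one per request.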

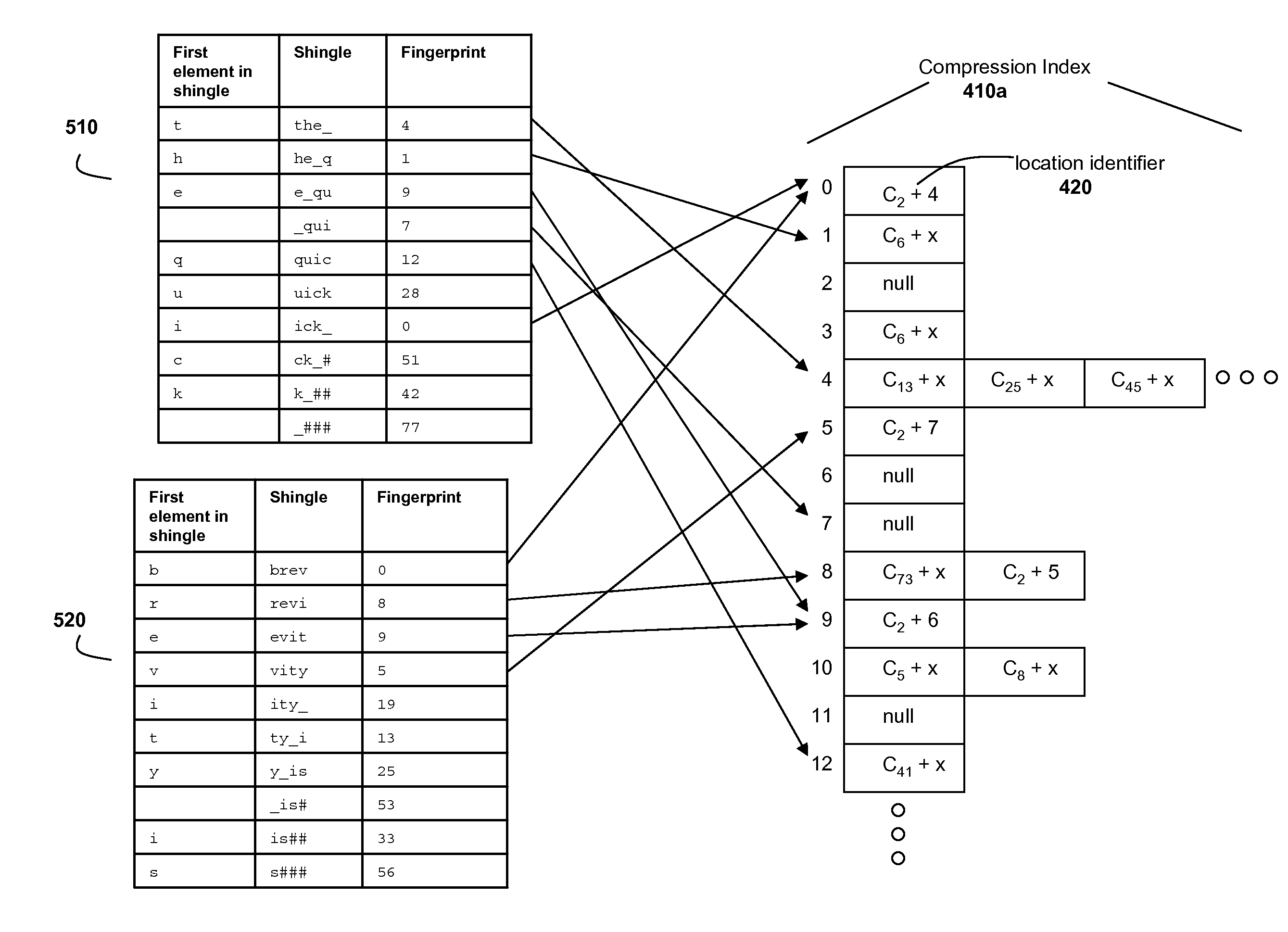

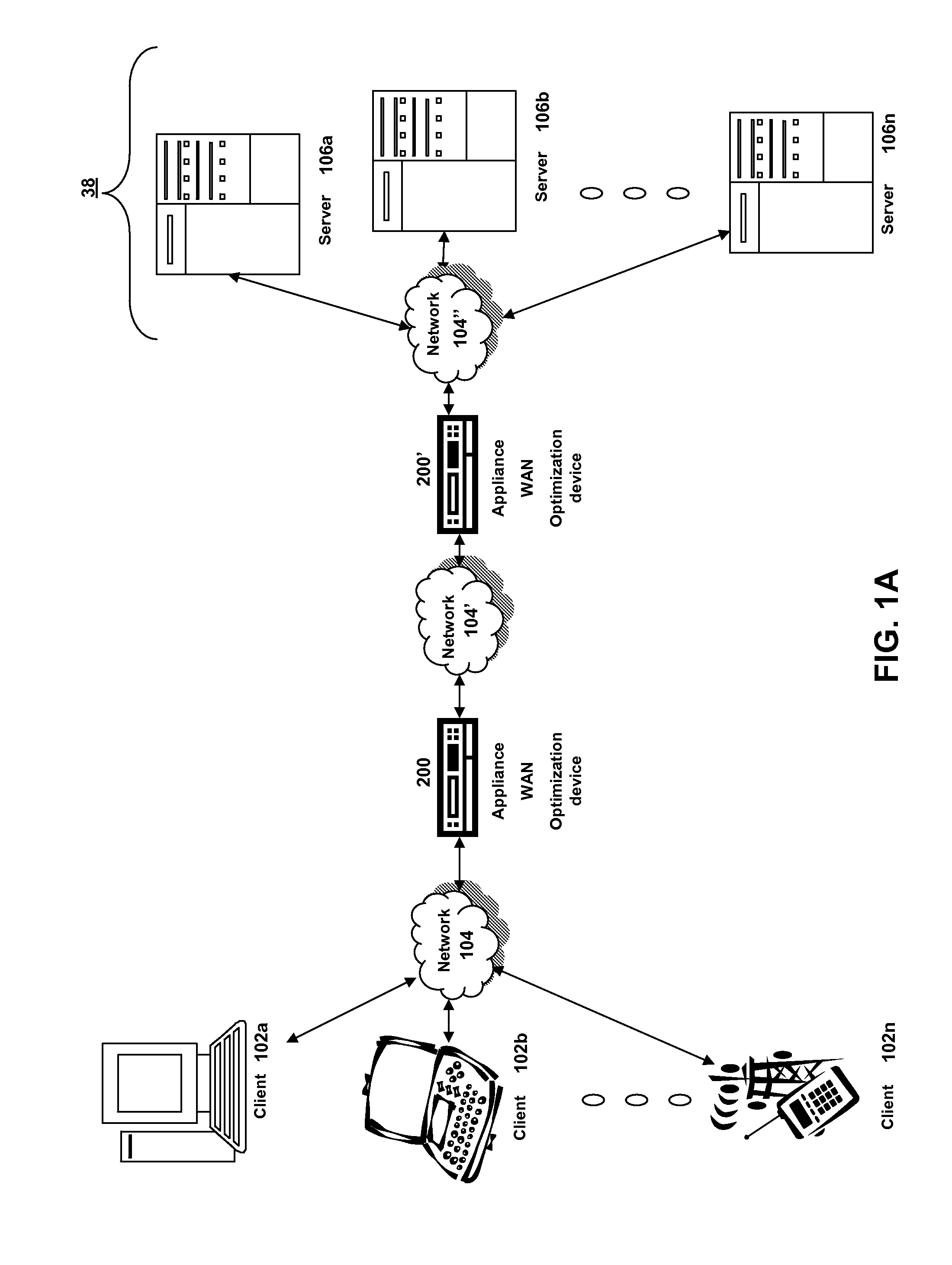

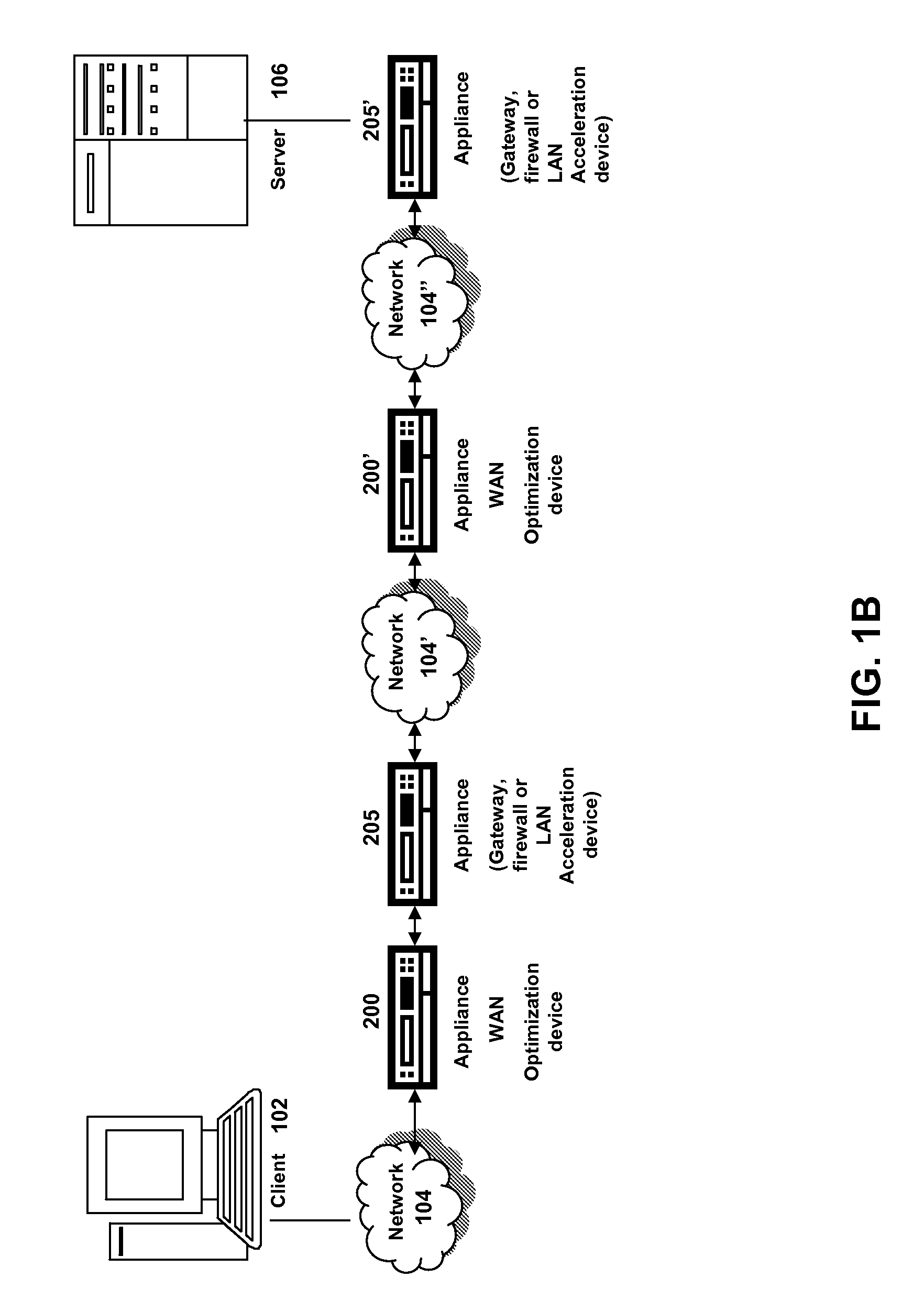

Systems and methods for providing dynamic ad hoc proxy-cache hierarchies

Active | US20080228939A1 | Reduce bandwidth usage | Accelerate future communication | Multiple digital computer combinations | Transmission | Traffic capacity | Cache hierarchy

Systems and methods of storing previously transmitted data and using it to reduce bandwidth usage and accelerate future communications are described. By using algorithms to identify long compression history matches, a network device may improve compression efficiency and speed. A network device may also use application specific parsing to improve the length and number of compression history matches. Further, by sharing compression histories, compression history indexes and caches across multiple devices, devices can utilize data previously transmitted to other devices to compress network traffic. Any combination of the systems and methods may be used to efficiently find long matches to stored data, synchronize the storage of previously sent data, and share previously sent data among one or more other devices.

Owner:CITRIX SYST INC

Derivation method for cached keys in wireless communication system

Inactive | US20060083377A1 | Improve security | Improved roaming time | Network topologies | Secret communication | Cache hierarchy | Communications system

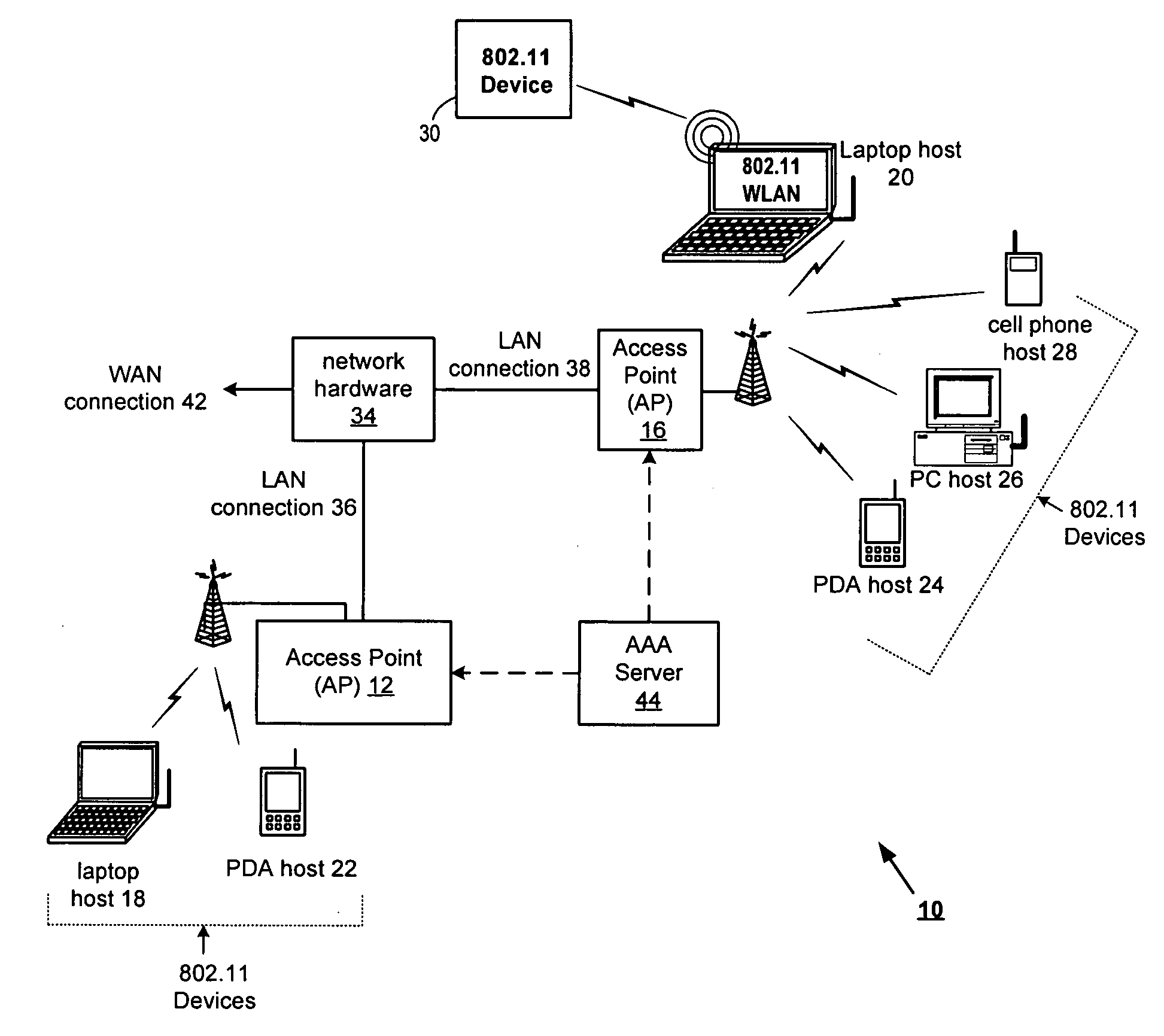

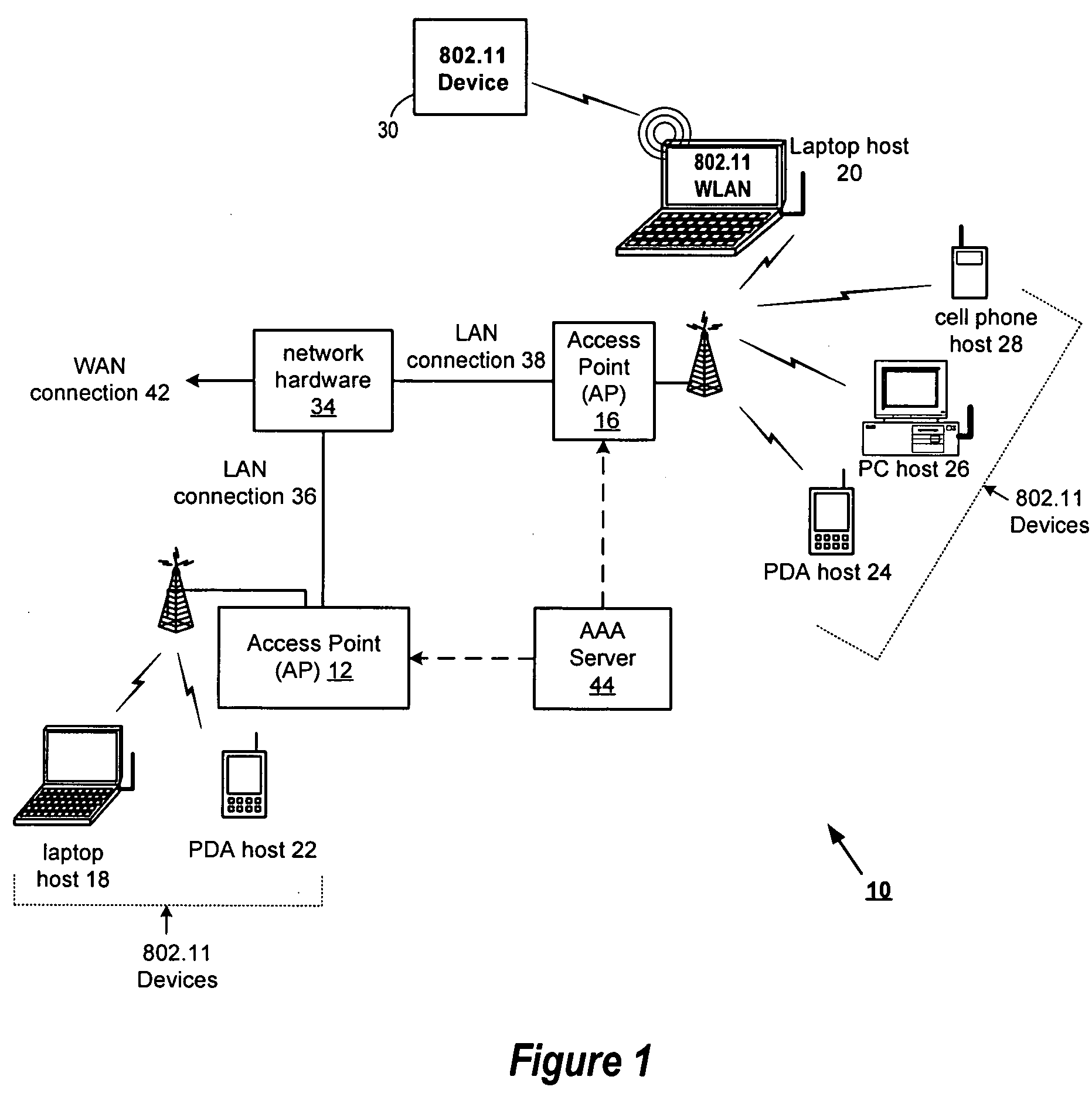

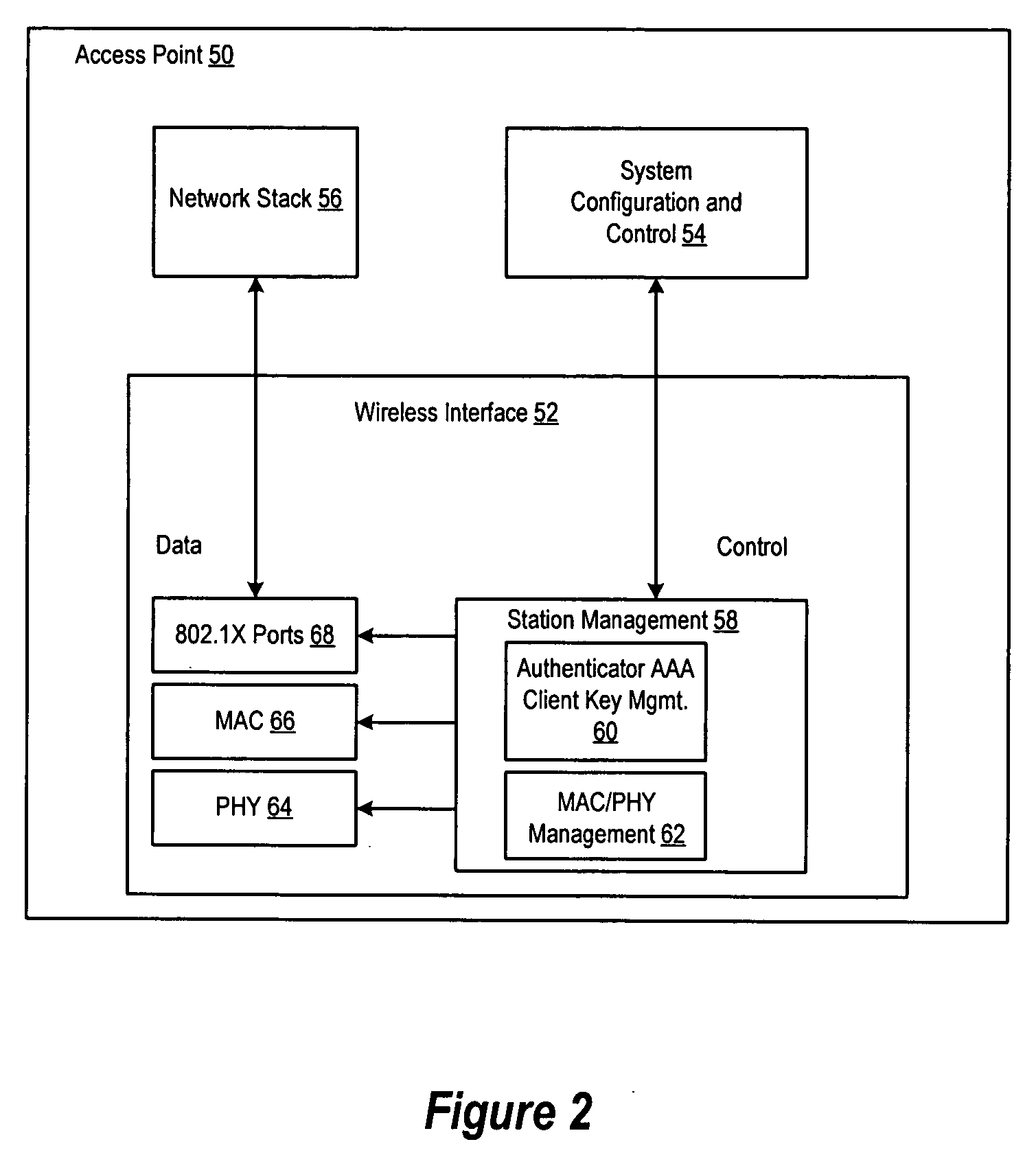

A method and apparatus for providing improved security and improved roaming transition times in wireless networks. In the present invention, the same pairwise master key (PMK) from an authentication server can be used across multiple access points and a new pairwise transition key (PTK) is derived for each association of a station to any of the access points. A plurality of access points are organized in functional hierarchical levels and are operable to advertise an indicator of the PMK cache depth supported by a group of access points (N) and an ordered list of the identifiers for the derivation path. Access points in each level in the cache hierarchy compute the derived pairwise master keys (DPMKs) for devices in the next lower level in the hierarchy and then deliver the DPMKs to those devices. An access point calculates the PTK as part of the security exchange process when the station wishes to associate to the access point. The station also computes the PTK as part of the security exchange process. The station calculates all the DPMKs in the hierarchy as part of computing the PTK. The method and apparatus of the present invention allow the cache depth to vary per station, but it remains constant for a given station within a key circle.

Owner:AVAGO TECH INT SALES PTE LTD

Variable cache line size management

According to one aspect of the present disclosure, a system and technique for variable cache line size management is disclosed. The system includes a processor and a cache hierarchy, where the cache hierarchy includes a sectored upper level cache and an unsectored lower level cache, and wherein the upper level cache includes a plurality of sub-sectors, each sub-sector having a cache line size corresponding to a cache line size of the lower level cache. The system also includes logic executable to, responsive to determining that a cache line from the upper level cache is to be evicted to the lower level cache: identify referenced sub-sectors of the cache line to be evicted; invalidate unreferenced sub-sectors of the cache line to be evicted; and store the referenced sub-sectors in the lower level cache.

Owner:IBM CORP
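
The eviction step listed in this abstract (identify referenced sub-sectors, invalidate the rest, store only the referenced ones) can be sketched as follows. The `evict_line` function, the per-sub-sector reference flags, and the default `sub_size` of 128 bytes are assumptions for illustration.

```python
def evict_line(base_addr, sub_sectors, referenced, lower_cache, sub_size=128):
    """Evict one sectored line; return the lower-level addresses that were written.

    sub_sectors holds the data of each sub-sector, and referenced holds one flag
    per sub-sector saying whether it was touched while in the upper level cache.
    """
    stored = []
    for i, (data, used) in enumerate(zip(sub_sectors, referenced)):
        if used:
            addr = base_addr + i * sub_size
            lower_cache[addr] = data  # one lower-level line per referenced sub-sector
            stored.append(addr)
        # Unreferenced sub-sectors are dropped, which models invalidation.
    return stored
```

Only the sub-sectors that were actually used consume space in the lower level cache after the eviction.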

Load request scheduling in a cache hierarchy

Inactive | US20100268882A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Least recently frequently used

A system and method for tracking core load requests and providing arbitration and ordering of requests. When a core interface unit (CIU) receives a load operation from the processor core, a new entry is allocated in a queue of the CIU. In response to allocating the new entry in the queue, the CIU detects contention between the load request and another memory access request. In response to detecting contention, the load request may be suspended until the contention is resolved. Received load requests may be stored in the queue and tracked using a least recently used (LRU) mechanism. The load request may then be processed when the load request resides in a least recently used entry in the load request queue. The CIU may also suspend issuing an instruction unless a read claim (RC) machine is available. In another embodiment, the CIU may issue stored load requests in a specific priority order.

Owner:IBM CORP

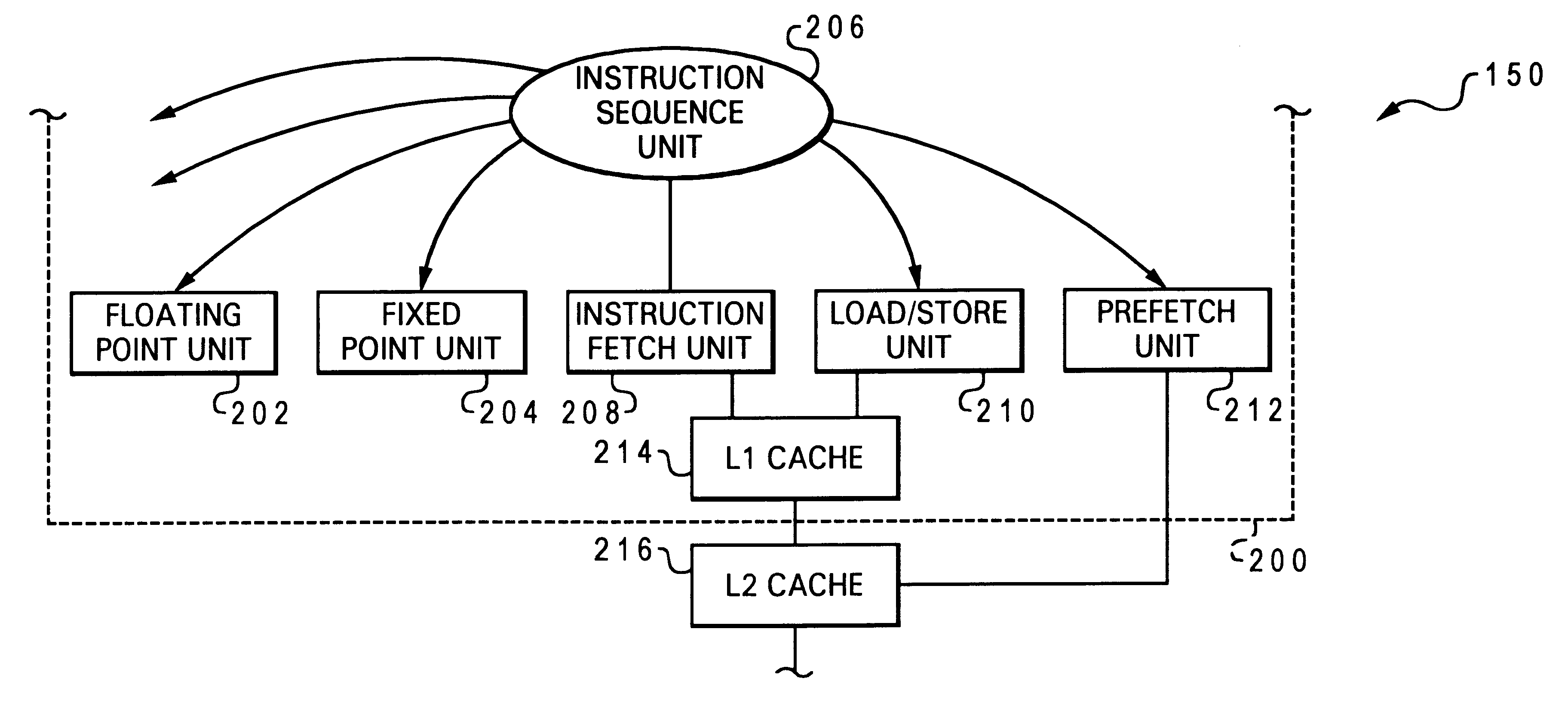

Mechanism for high performance transfer of speculative request data between levels of cache hierarchy

Inactive | US6532521B1 | Memory addressing/allocation/relocation | Concurrent instruction execution | Cache hierarchy | Memory hierarchy

A method of operating a processing unit of a computer system, by issuing an instruction having an explicit prefetch request directly from an instruction sequence unit to a prefetch unit of the processing unit. The invention applies to values that are either operand data or instructions. In a preferred embodiment, two prefetch units are used, the first prefetch unit being hardware independent and dynamically monitoring one or more active streams associated with operations carried out by a core of the processing unit, and the second prefetch unit being aware of the lower level storage subsystem and sending with the prefetch request an indication that a prefetch value is to be loaded into a lower level cache of the processing unit. The invention may advantageously associate each prefetch request with a stream ID of an associated processor stream, or a processor ID of the requesting processing unit (the latter feature is particularly useful for caches which are shared by a processing unit cluster). If another prefetch value is requested from the memory hierarchy, and it is determined that a prefetch limit of cache usage has been met by the cache, then a cache line in the cache containing one of the earlier prefetch values is allocated for receiving the other prefetch value. The prefetch limit of cache usage may be established with a maximum number of sets in a congruence class usable by the requesting processing unit. A flag in a directory of the cache may be set to indicate that the prefetch value was retrieved as the result of a prefetch operation. In the implementation wherein the cache is a multi-level cache, a second flag in the cache directory may be set to indicate that prefetch value has been sourced to an upstream cache. A cache line containing prefetch data can be automatically invalidated after a preset amount of time has passed since the prefetch value was requested.

Owner:IBM CORP

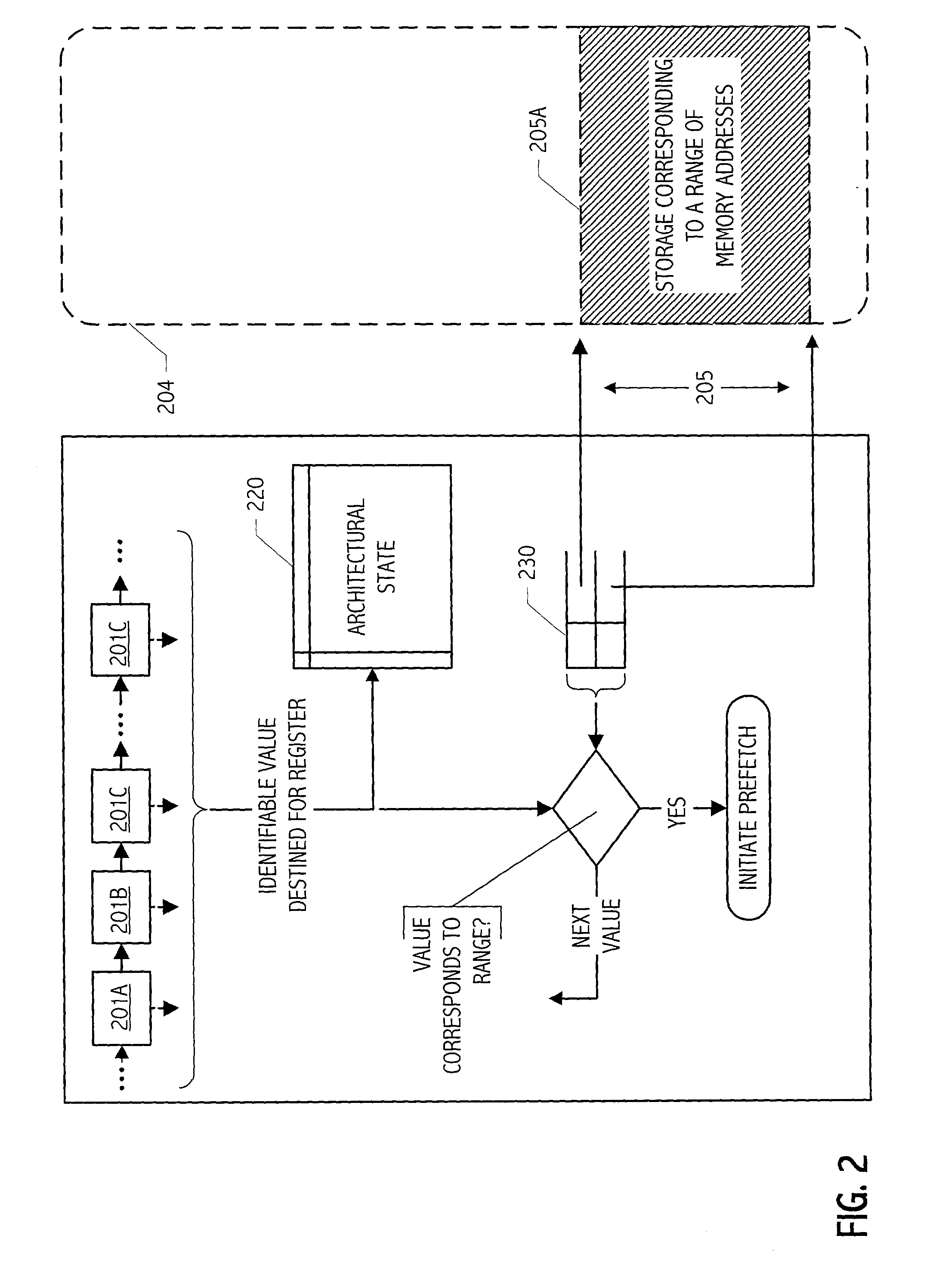

Automatic prefetch of pointers

Inactive | US20030163645A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Memory address

Techniques have been developed whereby likely pointer values are identified at runtime and contents of corresponding storage location can be prefetched into a cache hierarchy to reduce effective memory access latencies. In some realizations, one or more writable stores are defined in a processor architecture to delimit a portion or portions of a memory address space.

Owner:ORACLE INT CORP

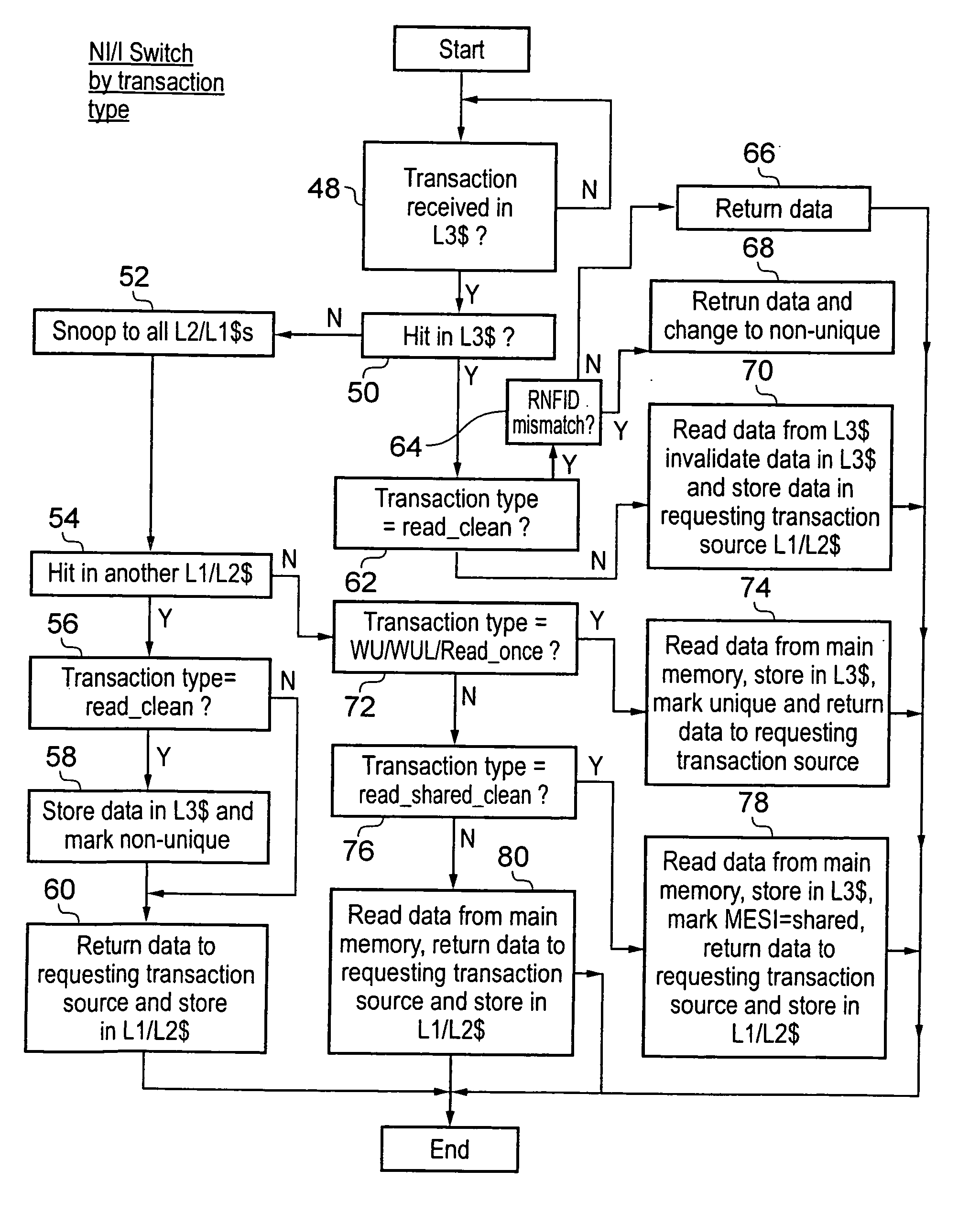

Shared cache memory control

Active | US20130042070A1 | Readily available for sharing | Reduce in quantity | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Cache hierarchy

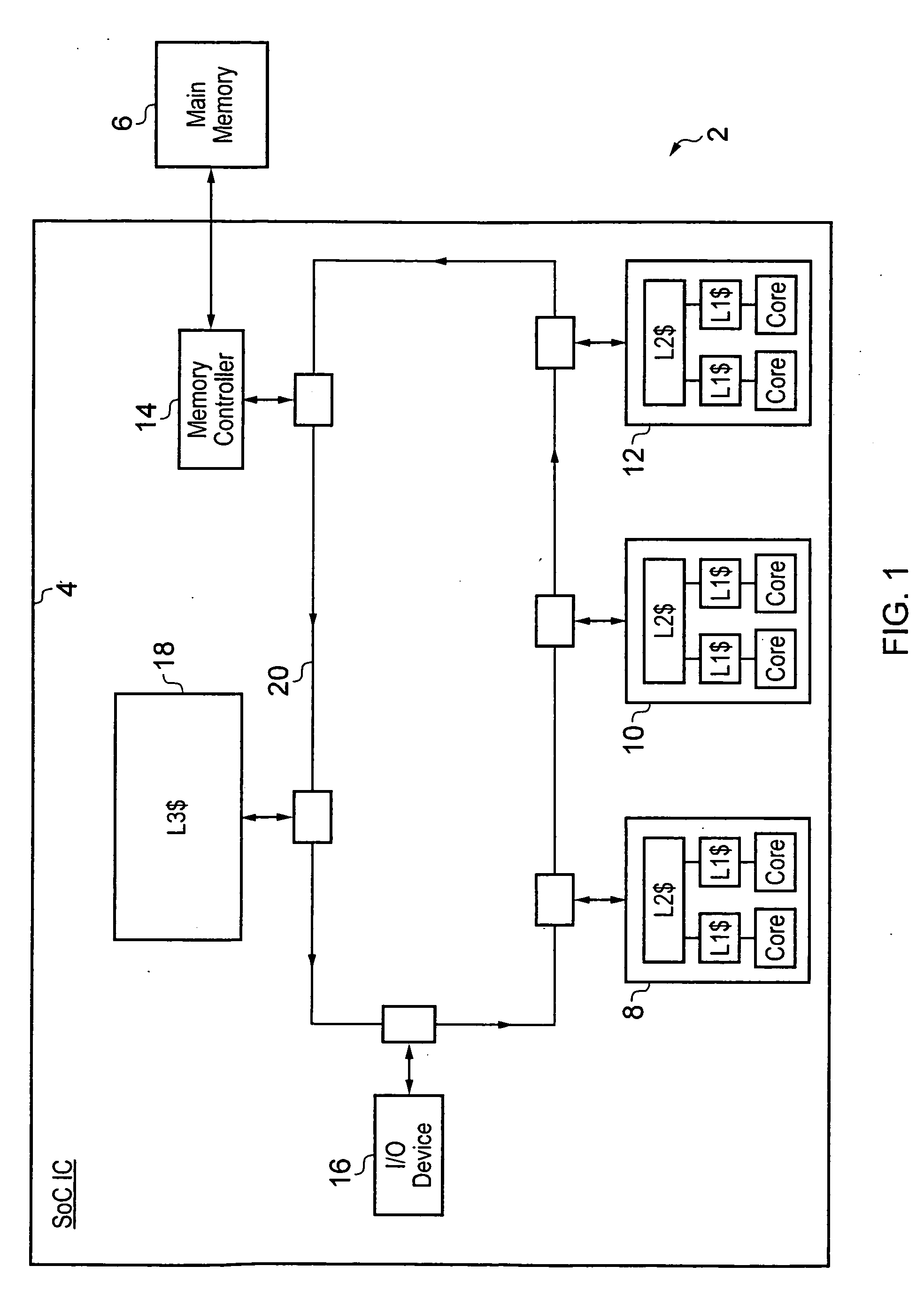

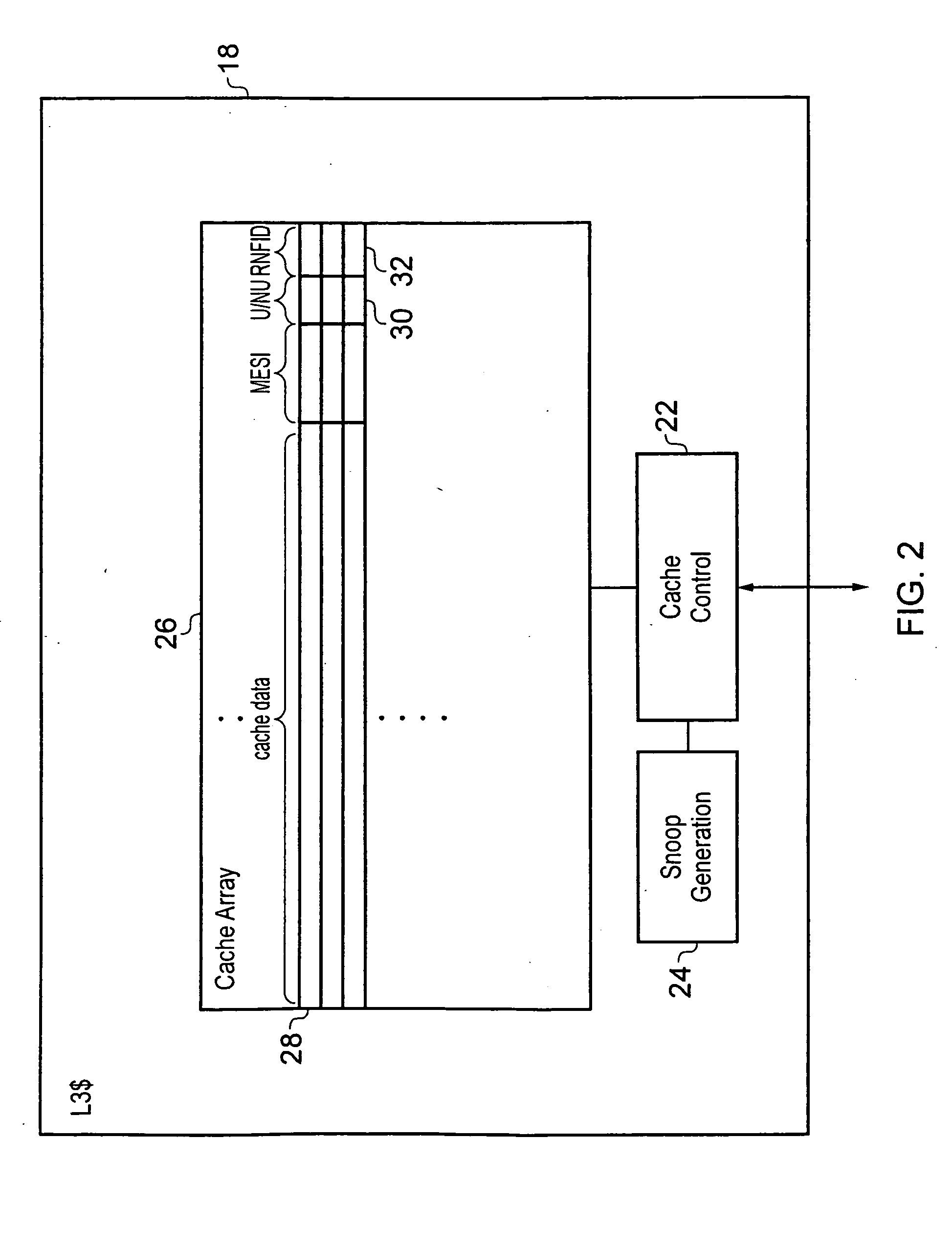

A data processing system includes a cache hierarchy having a plurality of local cache memories and a shared cache memory. State data stored within the shared cache memory on a per cache line basis is used to control whether or not that cache line of data is stored and managed in accordance with non-inclusive operation or inclusive operation of the cache memory system. Snoop transactions are filtered on the basis of data indicating whether or not a cache line of data is unique or non-unique. A switch from non-inclusive operation to inclusive operation may be performed in dependence upon the transaction type of a received transaction requesting a cache line of data.

Owner:ARM LTD

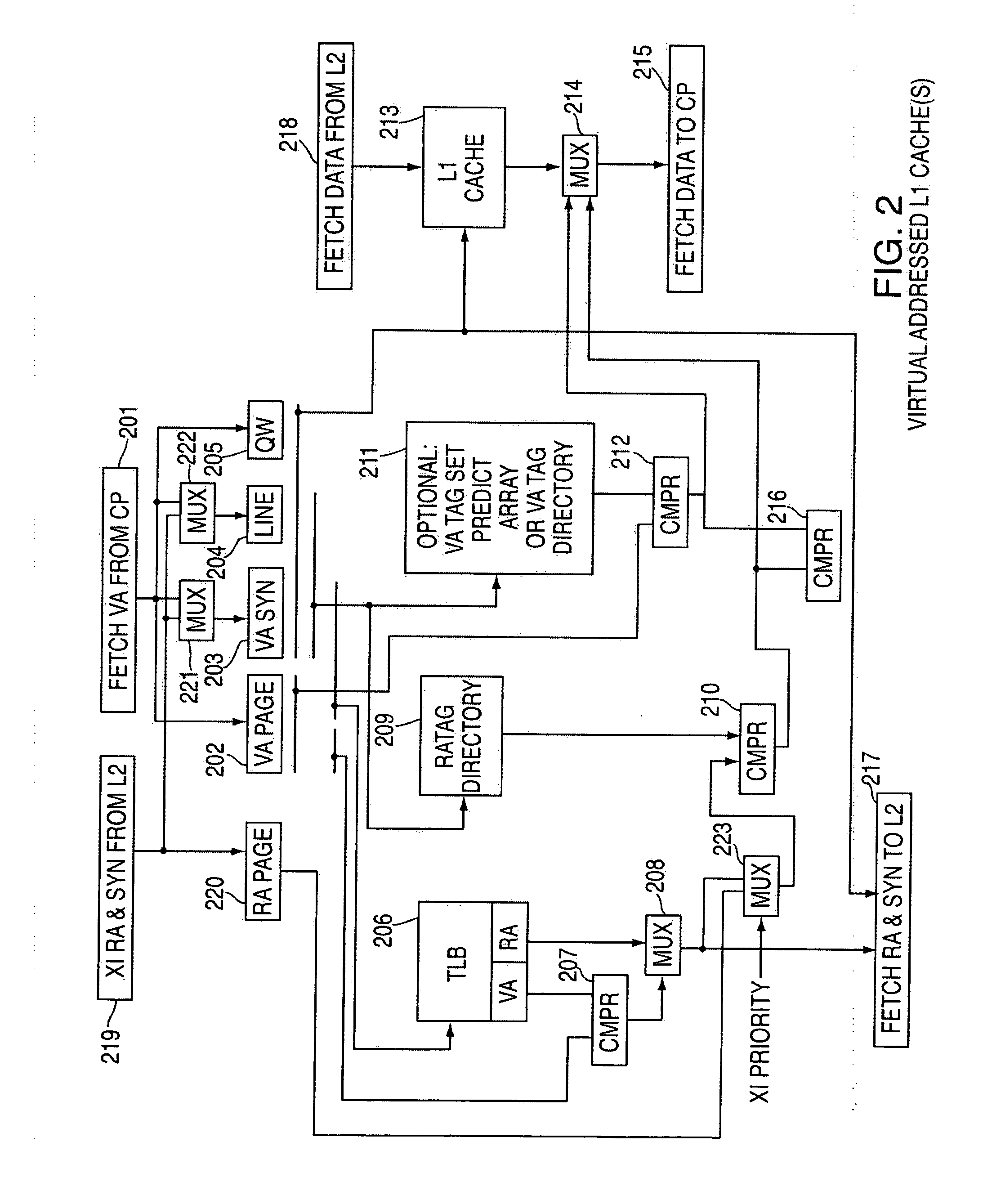

Method and system for a multi-level virtual/real cache system with synonym resolution

Active | US20090216949A1 | Memory addressing/allocation/relocation | Micro-instruction address formation | Cache hierarchy | Parallel computing

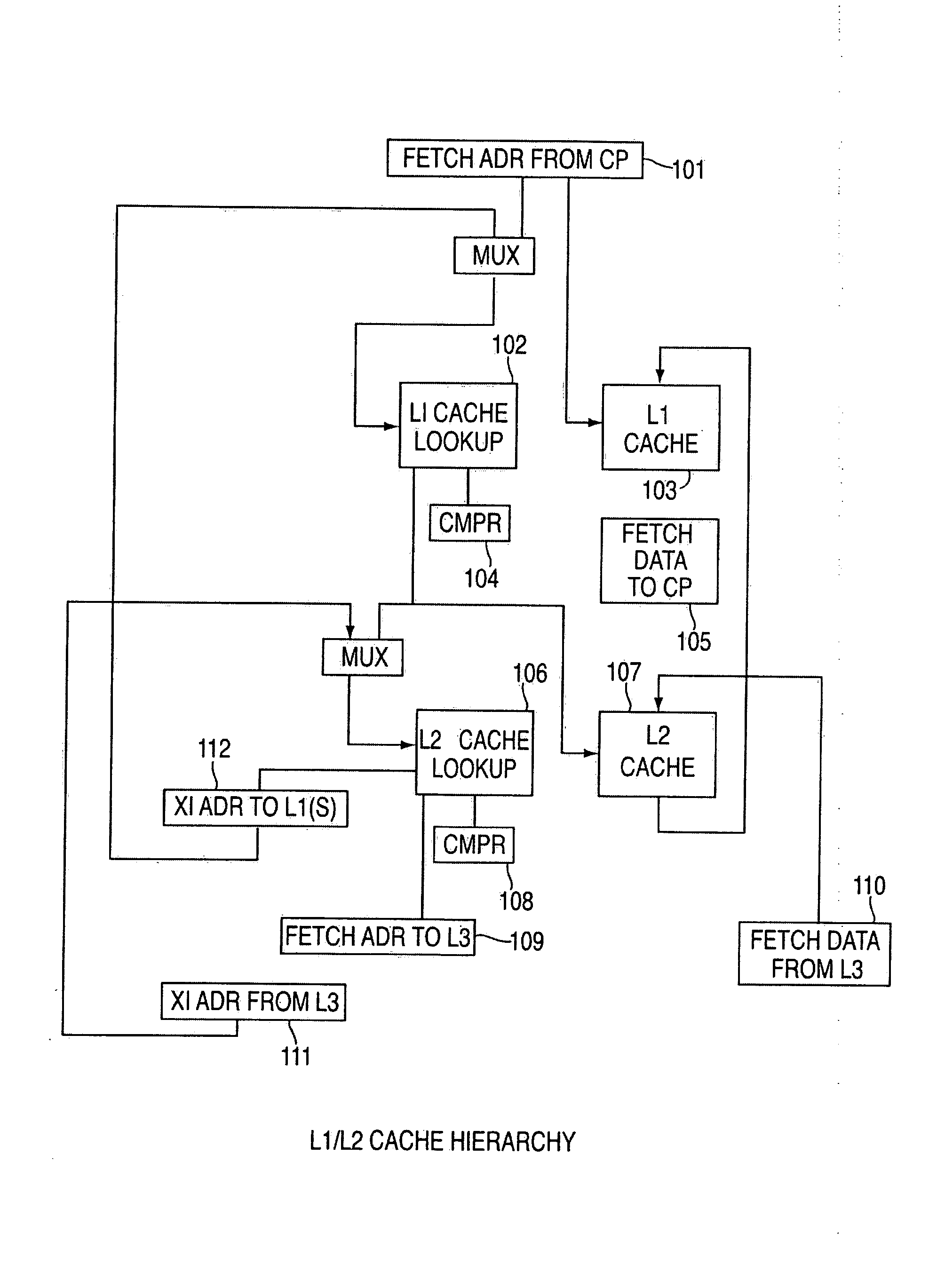

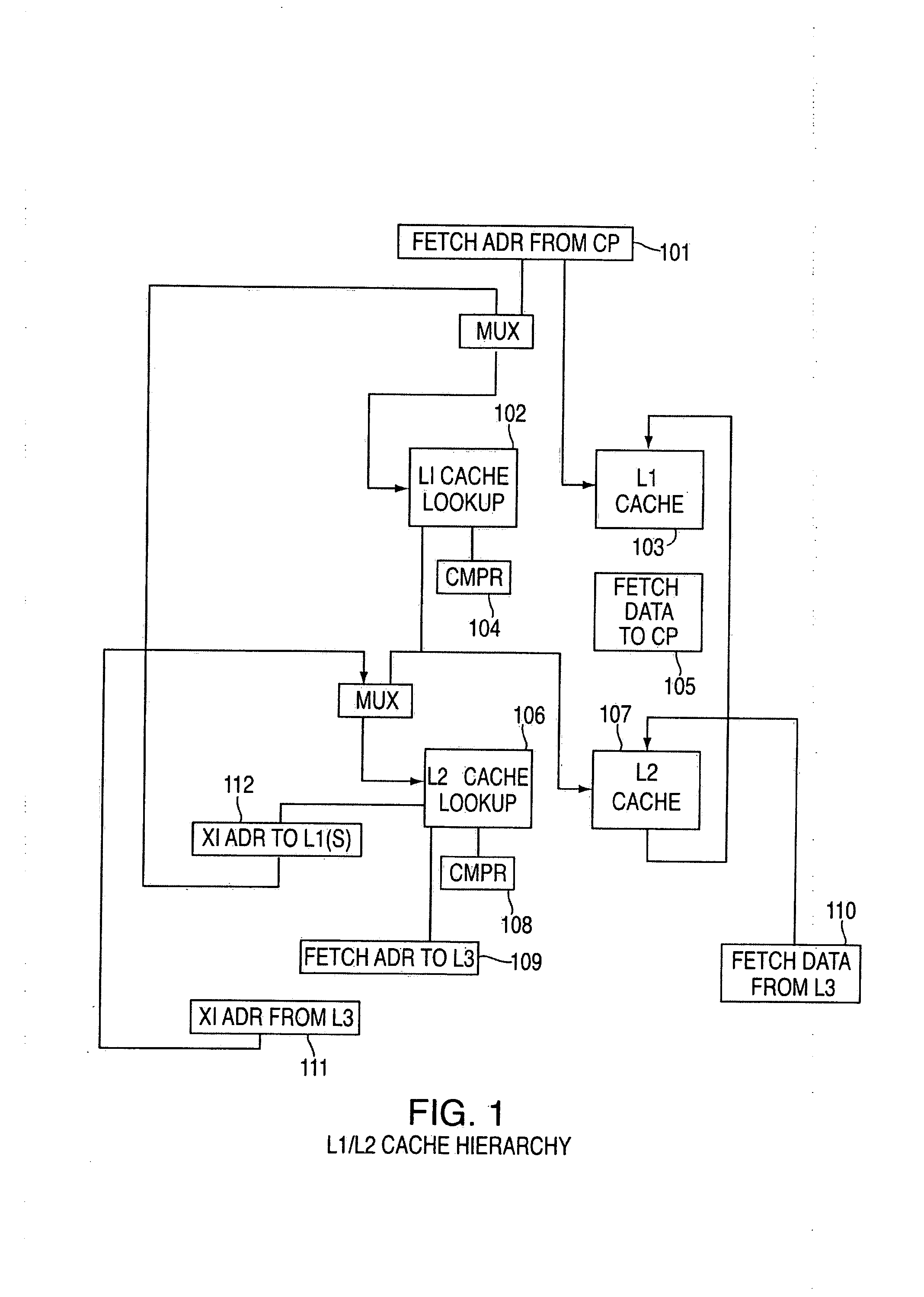

Method and system for a multi-level virtual / real cache system with synonym resolution. An exemplary embodiment includes a multi-level cache hierarchy, including a set of L1 caches associated with one or more processor cores and a set of L2 caches, wherein the set of L1 caches are a subset of the set of L2 caches, wherein the set of L1 caches underneath a given L2 cache are associated with one or more of the processor cores.

Owner:DAEDALUS BLUE LLC

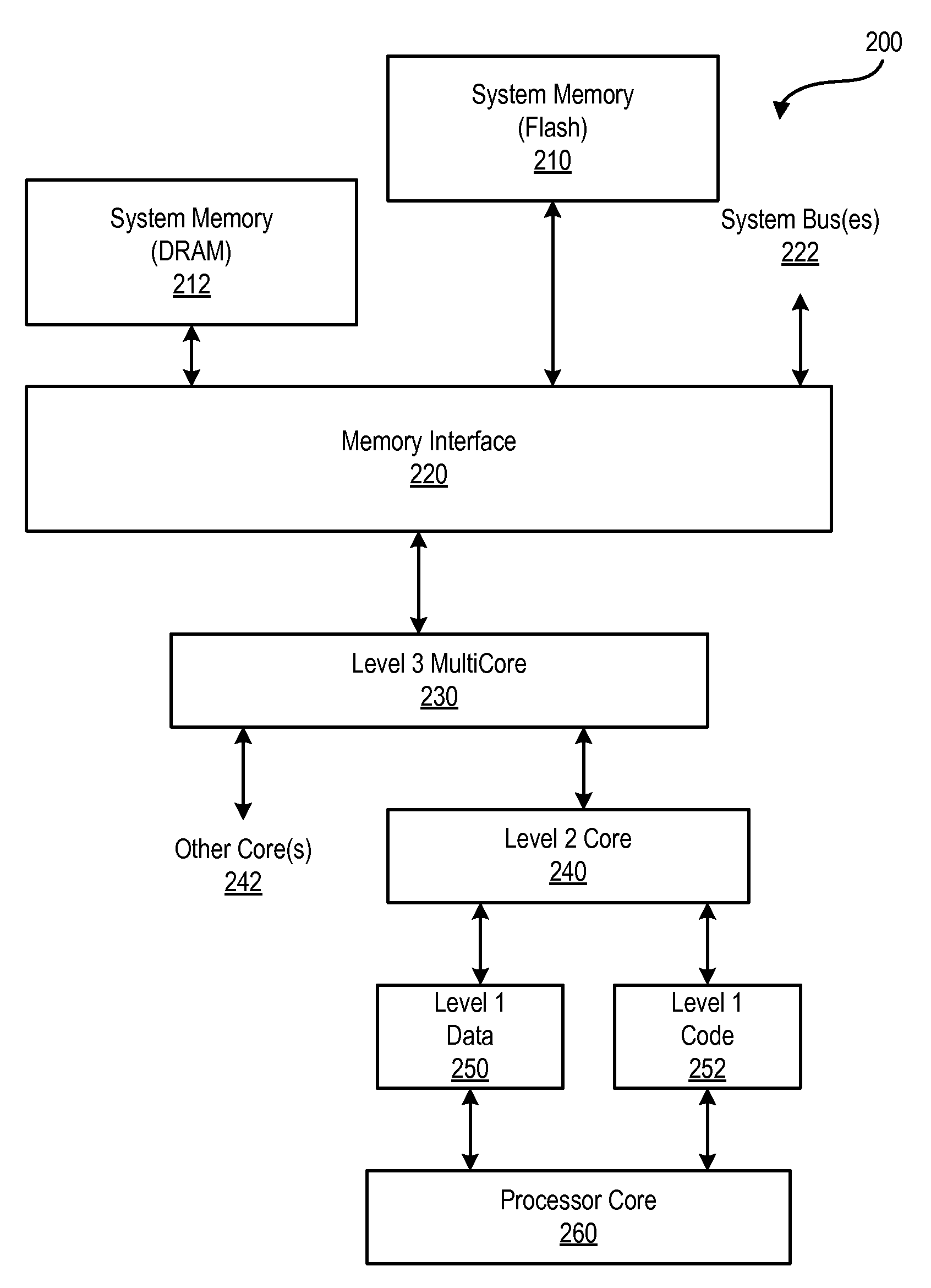

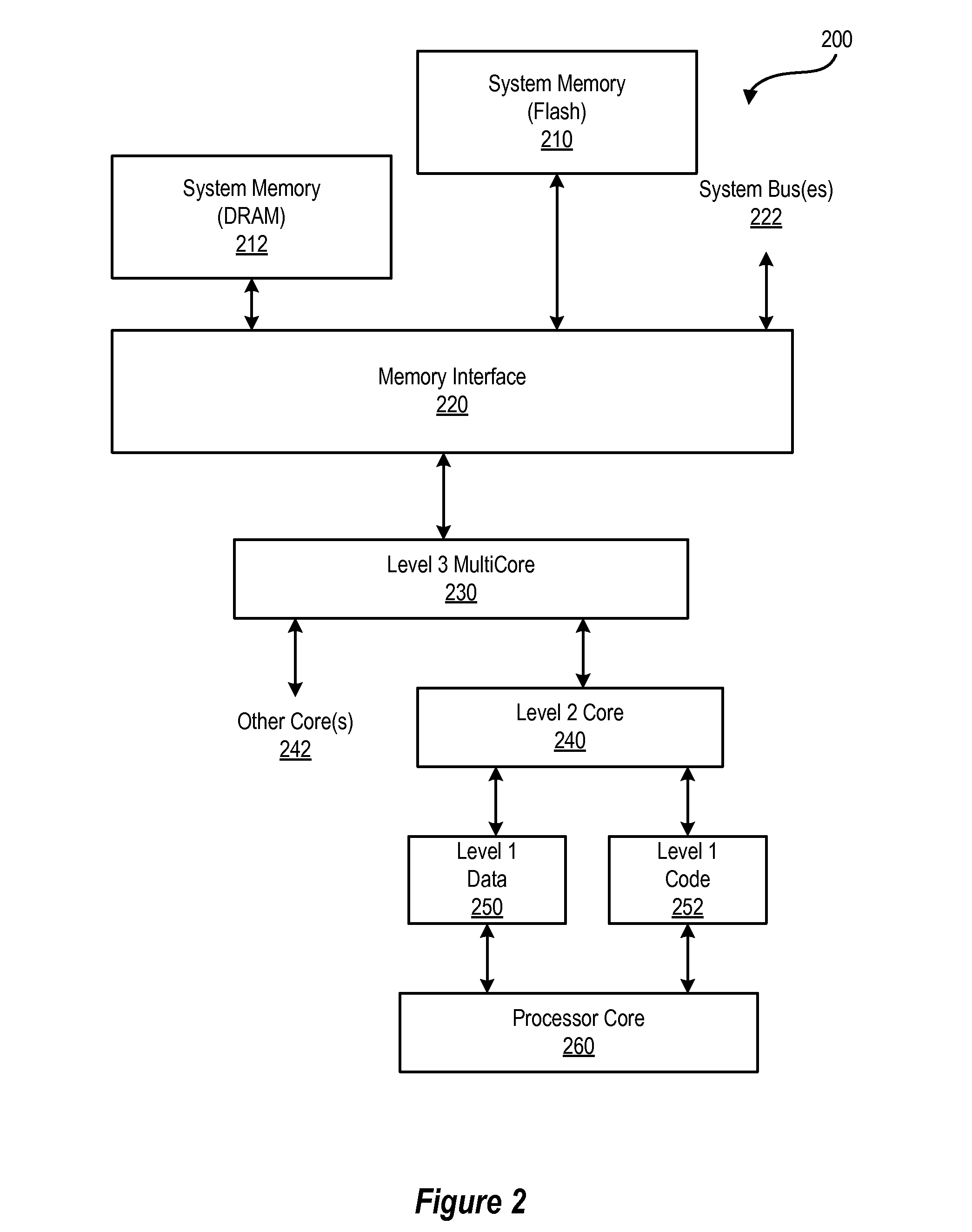

Multiple Cache Line Size

Inactive | US20100185816A1 | Improve performance | Low cost | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

A mechanism which allows pages of flash memory to be read directly into cache. The mechanism enables different cache line sizes for different cache levels in a cache hierarchy, and optionally, multiple line size support, simultaneously or as an initialization option, in the highest level (largest / slowest) cache. Such a mechanism improves performance and reduces cost for some applications.

Owner:DELL PROD LP

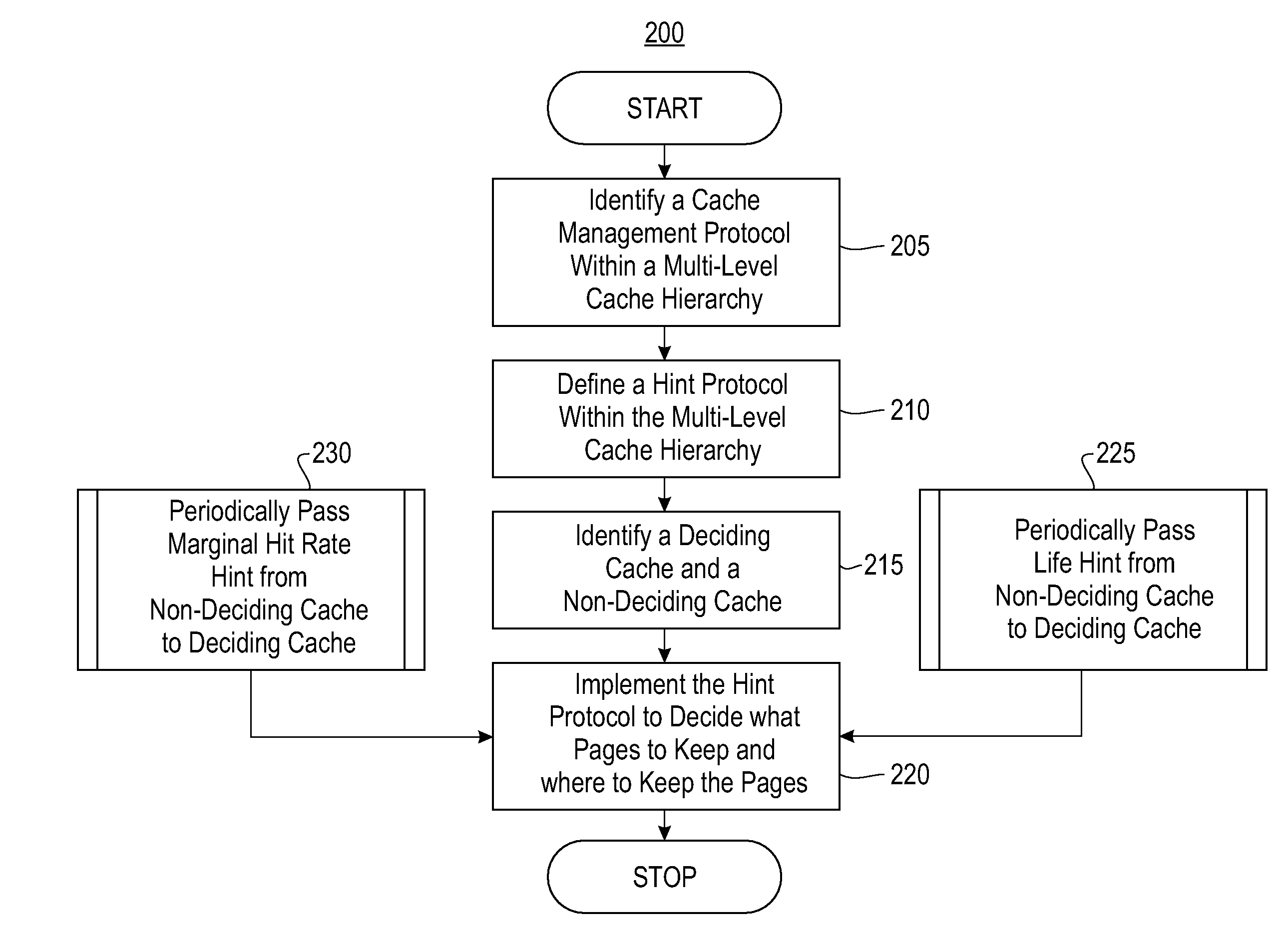

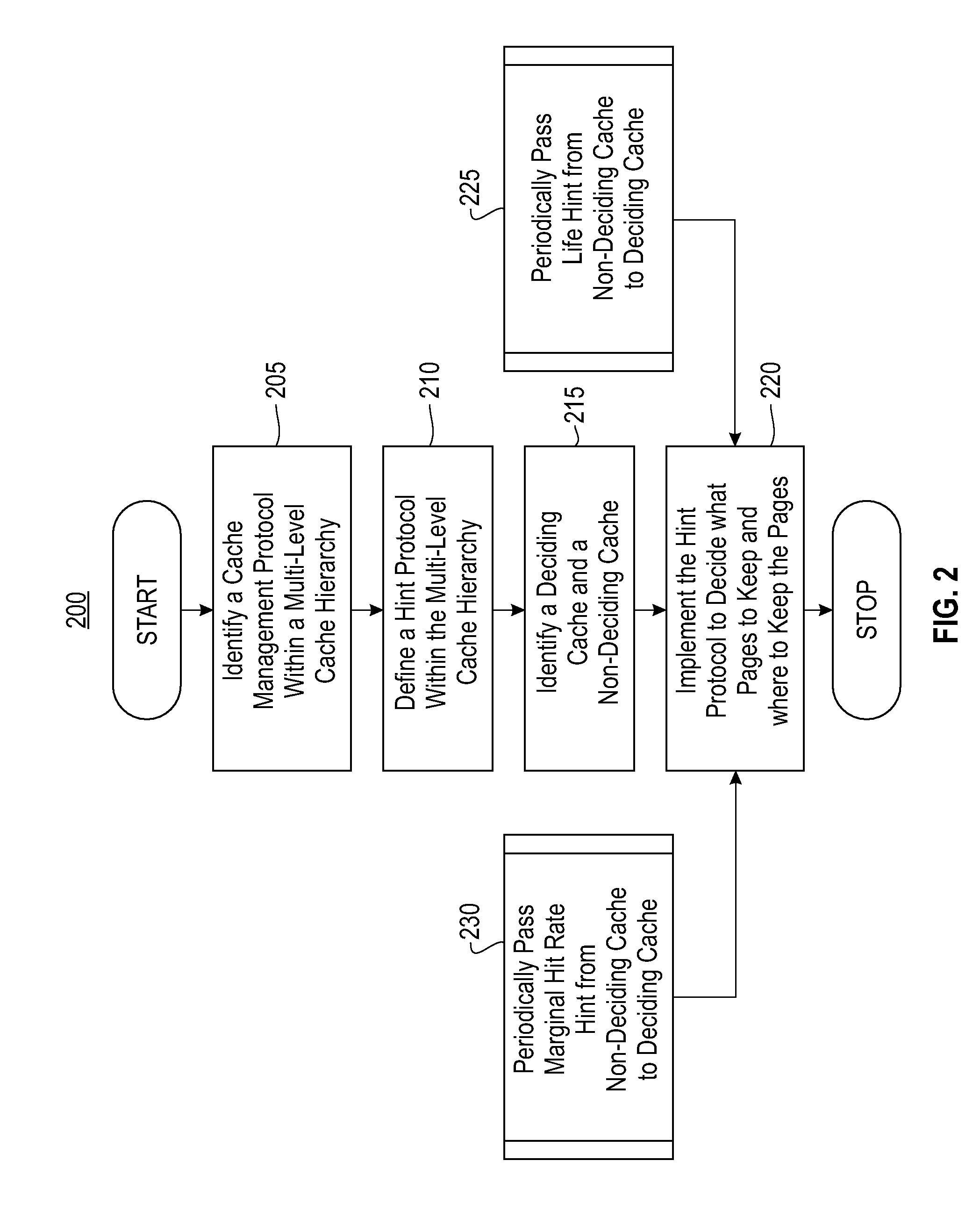

Systems and methods for multi-level exclusive caching using hints

Inactive | US20080256294A1 | Memory architecture accessing/allocation | Memory systems | Cache hierarchy | Parallel computing

Systems and methods for multi-level exclusive caching using hints. Exemplary embodiments include a method for multi-level exclusive caching, the method including identifying a cache management protocol within a multi-level cache hierarchy having a plurality of caches, defining a hint protocol within the multi-level cache hierarchy, identifying deciding caches and non-deciding caches within the multi-level cache hierarchy and implementing the hint protocol in conjunction with the cache management protocol to decide which pages within the multi-level cache to retain and where to store the pages.

Owner:LINKEDIN

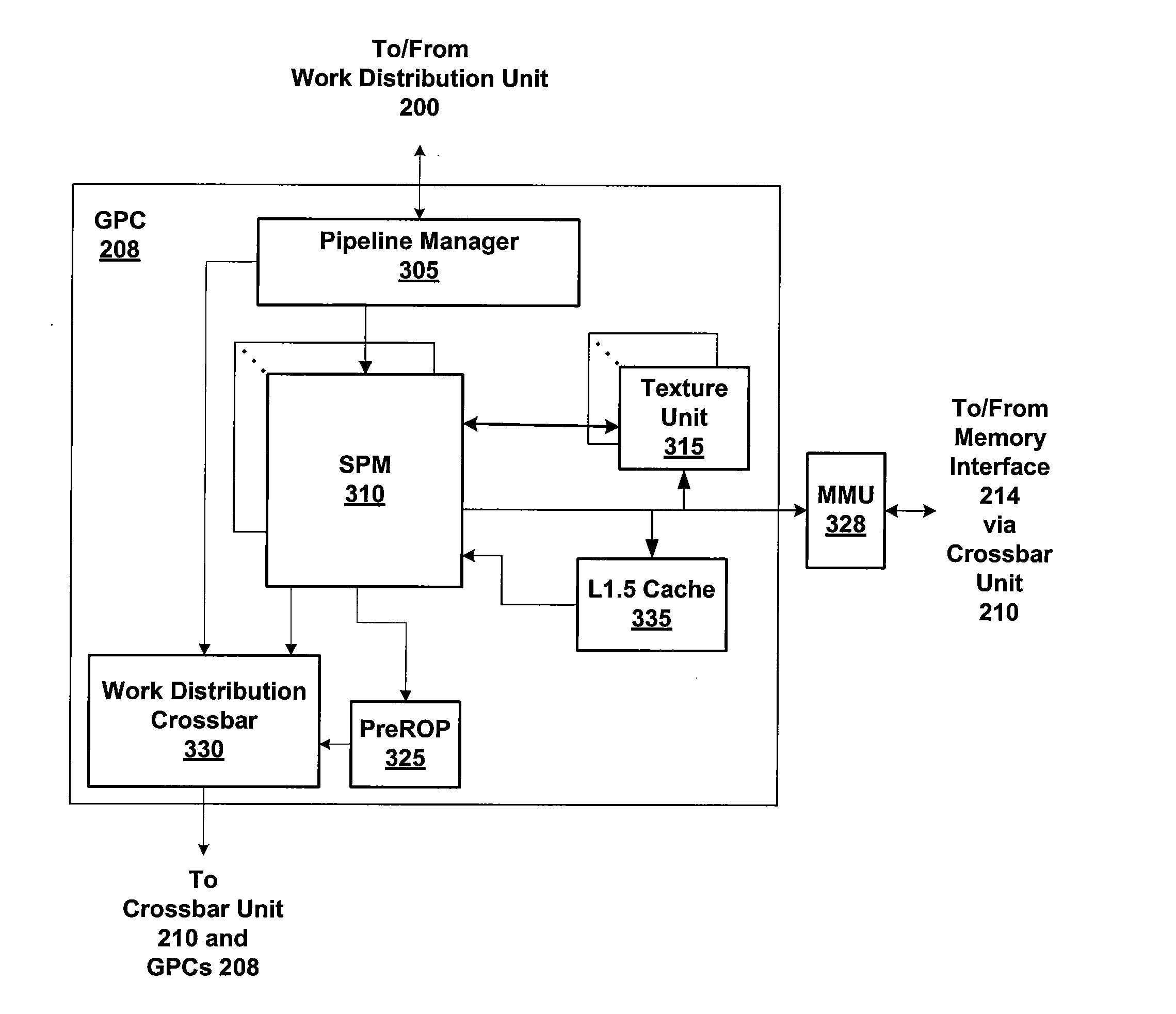

Cache Operations and Policies For A Multi-Threaded Client

A method for managing a parallel cache hierarchy in a processing unit. The method including receiving an instruction that includes a cache operations modifier that identifies a level of the parallel cache hierarchy in which to cache data associated with the instruction; and implementing a cache replacement policy based on the cache operations modifier.

Owner:NVIDIA CORP

Method and apparatus for optimizing cache hit ratio in non L1 caches

Active | US7035979B2 | Improve processor performance | Memory architecture accessing/allocation | Energy efficient ICT | Cache hierarchy | Monitoring data

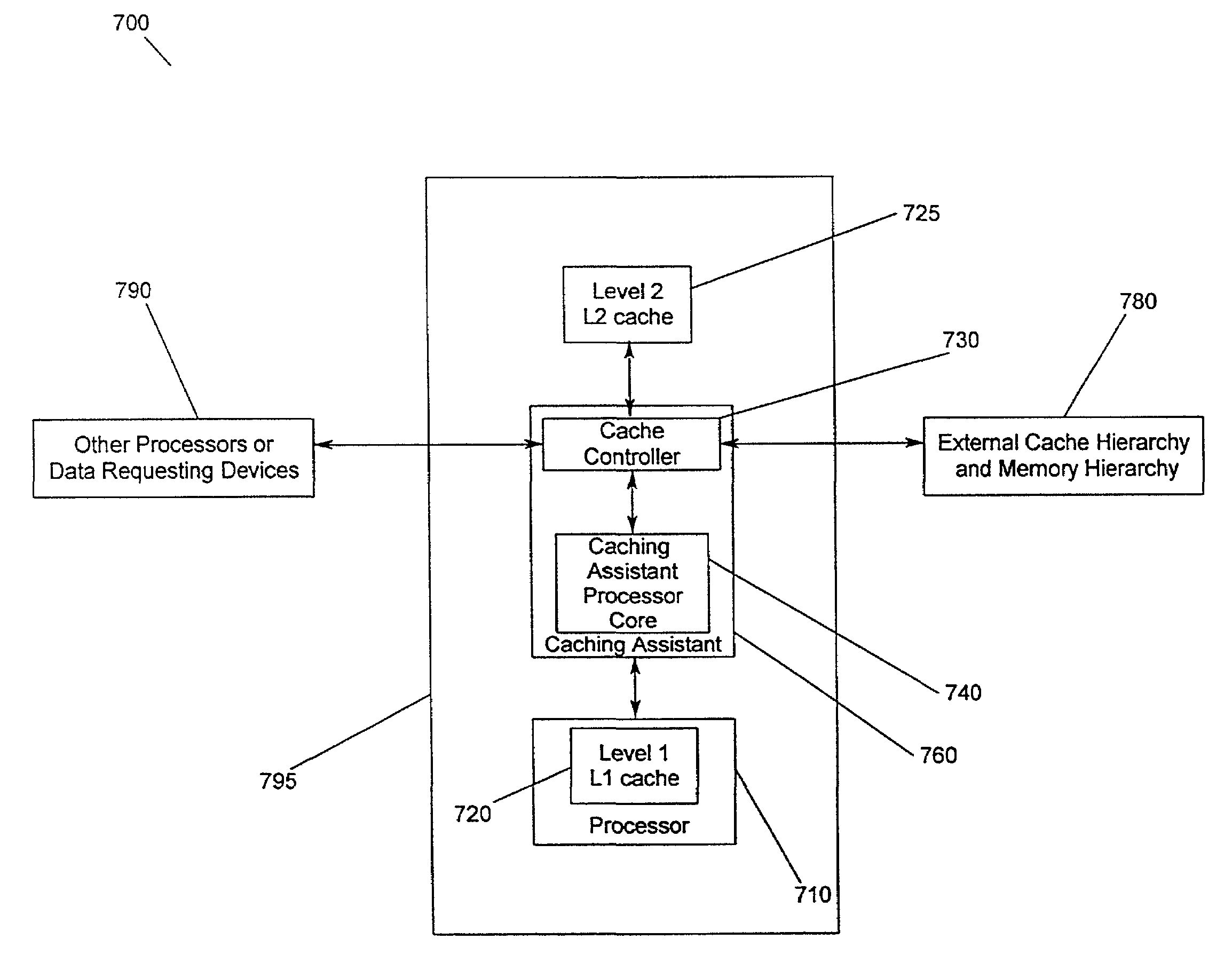

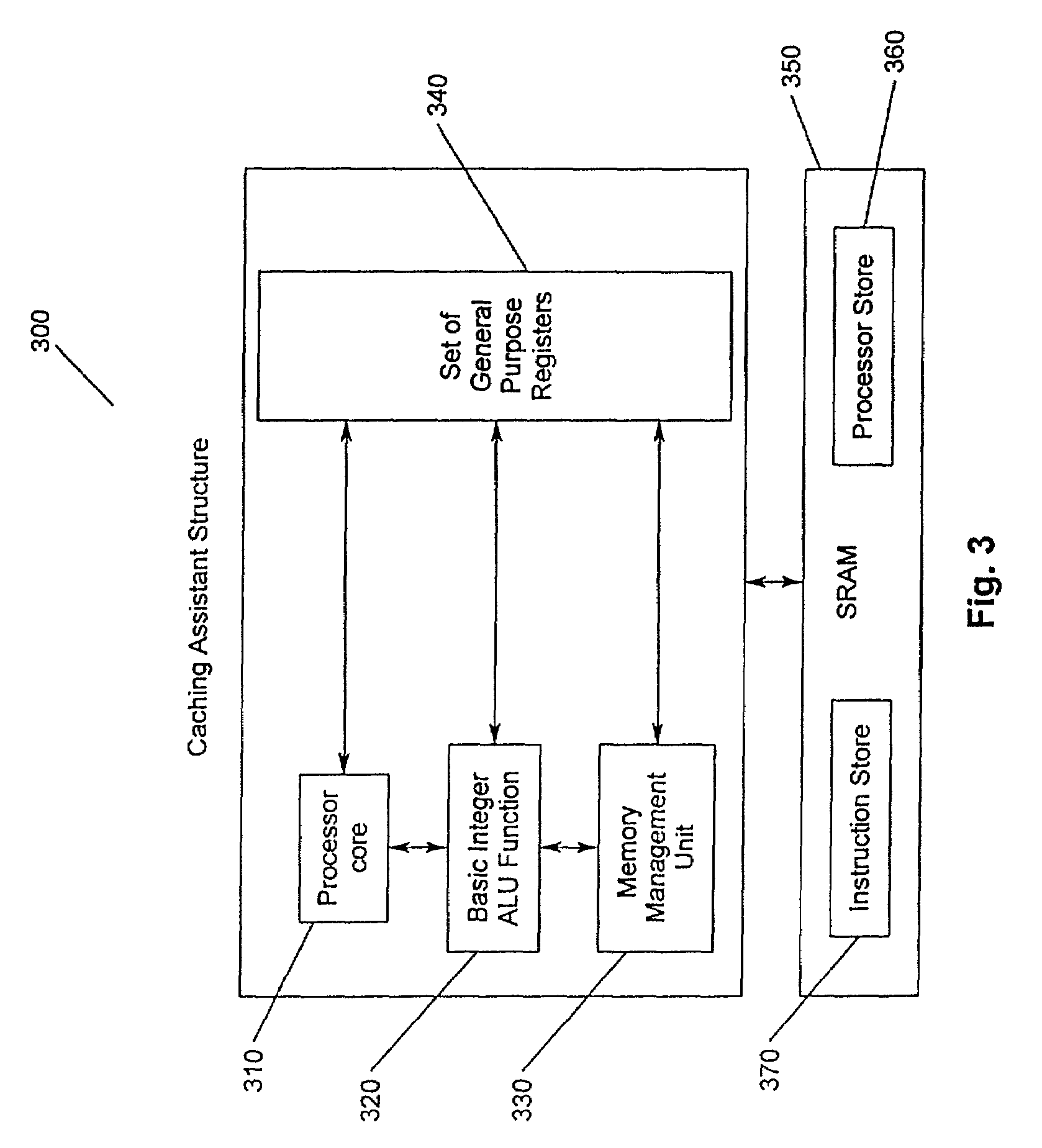

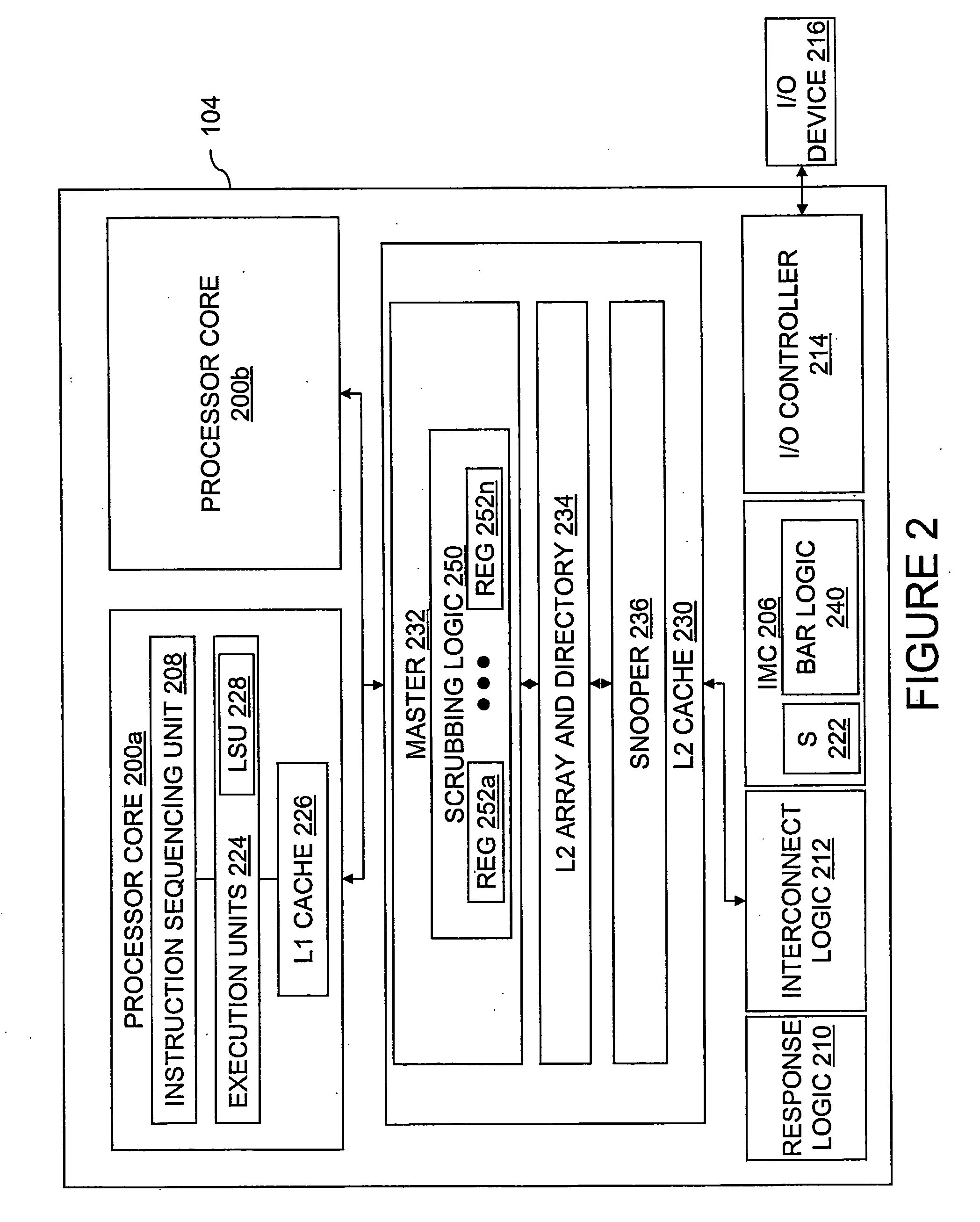

A method and apparatus for increasing the performance of a computing system and increasing the hit ratio in at least one non-L1 cache. A caching assistant and a processor are embedded in a processing system. The caching assistant analyzes system activity, monitors and coordinates data requests from the processor, processors and other data accessing devices, and monitors and analyzes data accesses throughout the cache hierarchy. The caching assistant is provided with a dedicated cache for storing fetched and prefetched data. The caching assistant improves the performance of the computing system by anticipating which data is likely to be requested for processing next, accessing and storing that data in an appropriate non-L1 cache prior to the data being requested by processors or data accessing devices. A method for increasing the processor performance includes analyzing system activity and optimizing a hit ratio in at least one non-L1 cache. The caching assistant performs processor data requests by accessing caches and monitoring the data requests to determine knowledge of the program code currently being processed and to determine if patterns of data accession exist. Based upon the knowledge gained through monitoring data accession, the caching assistant anticipates future data requests.

Owner:IBM CORP

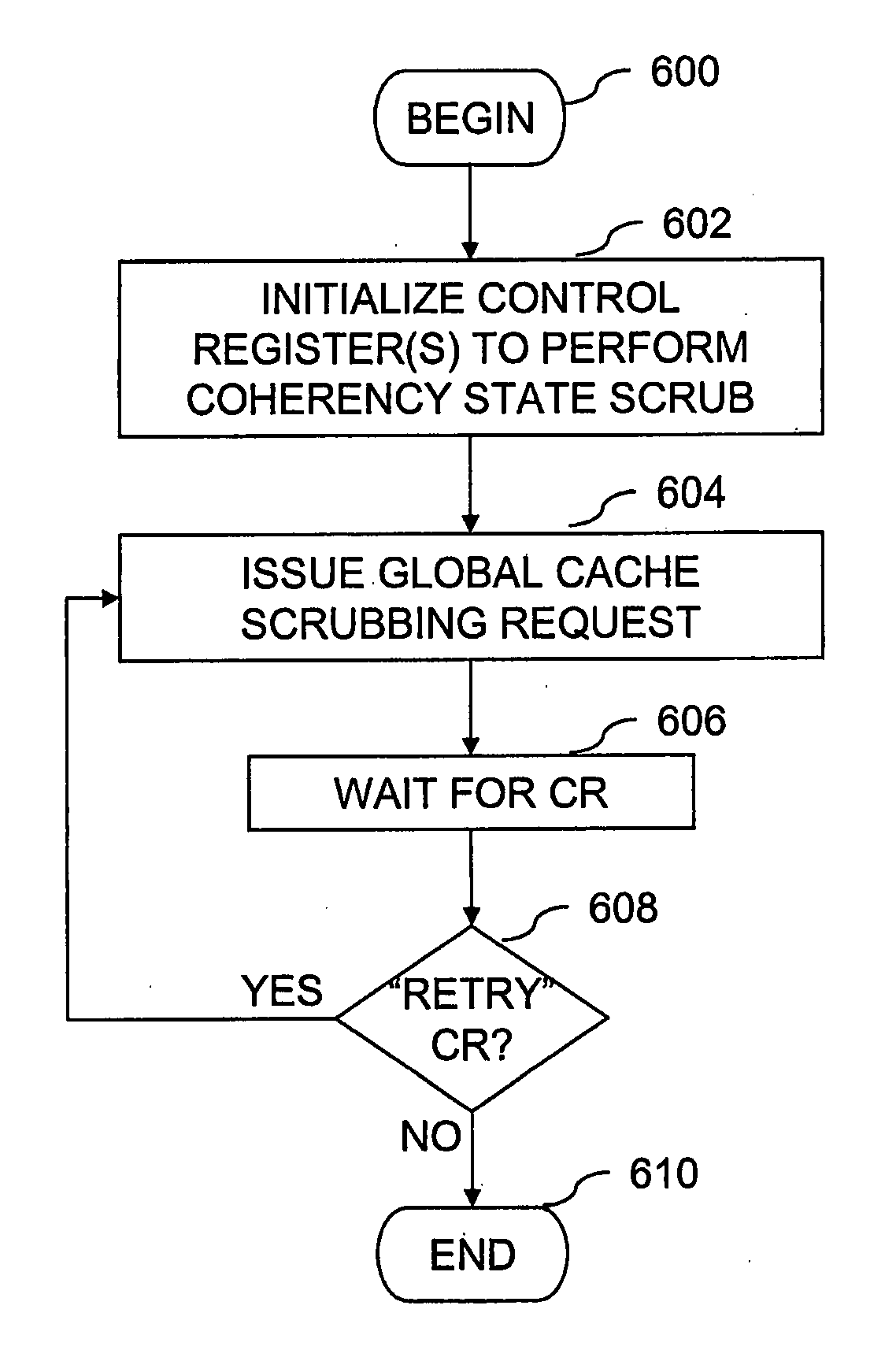

Data processing system, cache system and method for passively scrubbing a domain indication

Scrubbing logic in a local coherency domain issues a domain query request to at least one cache hierarchy in a remote coherency domain. The domain query request is a non-destructive probe of a coherency state associated with a target memory block by the at least one cache hierarchy. A coherency response to the domain query request is received. In response to the coherency response indicating that the target memory block is not cached in the remote coherency domain, a domain indication in the local coherency domain is updated to indicate that the target memory block is cached, if at all, only within the local coherency domain.

Owner:IBM CORP

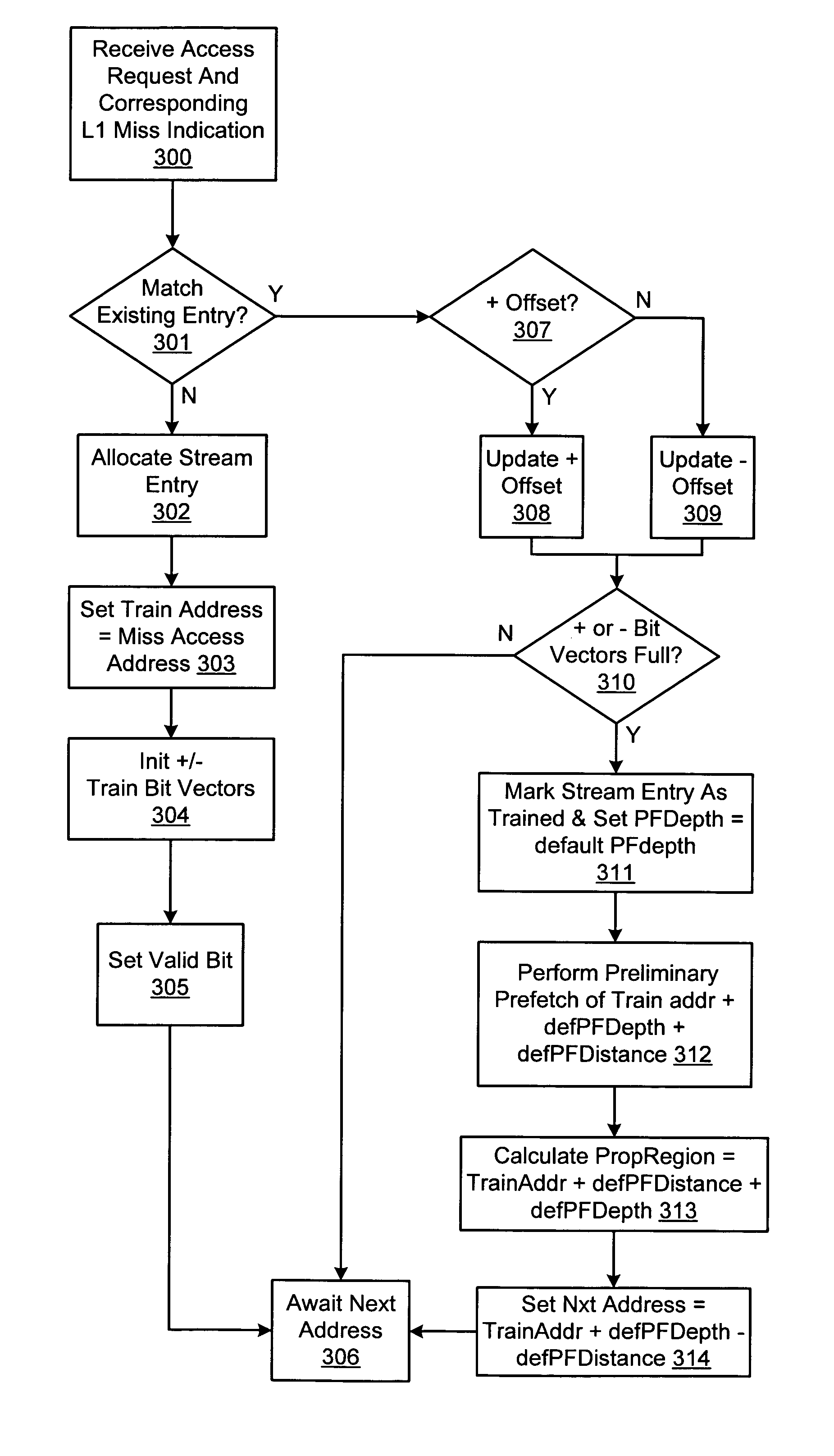

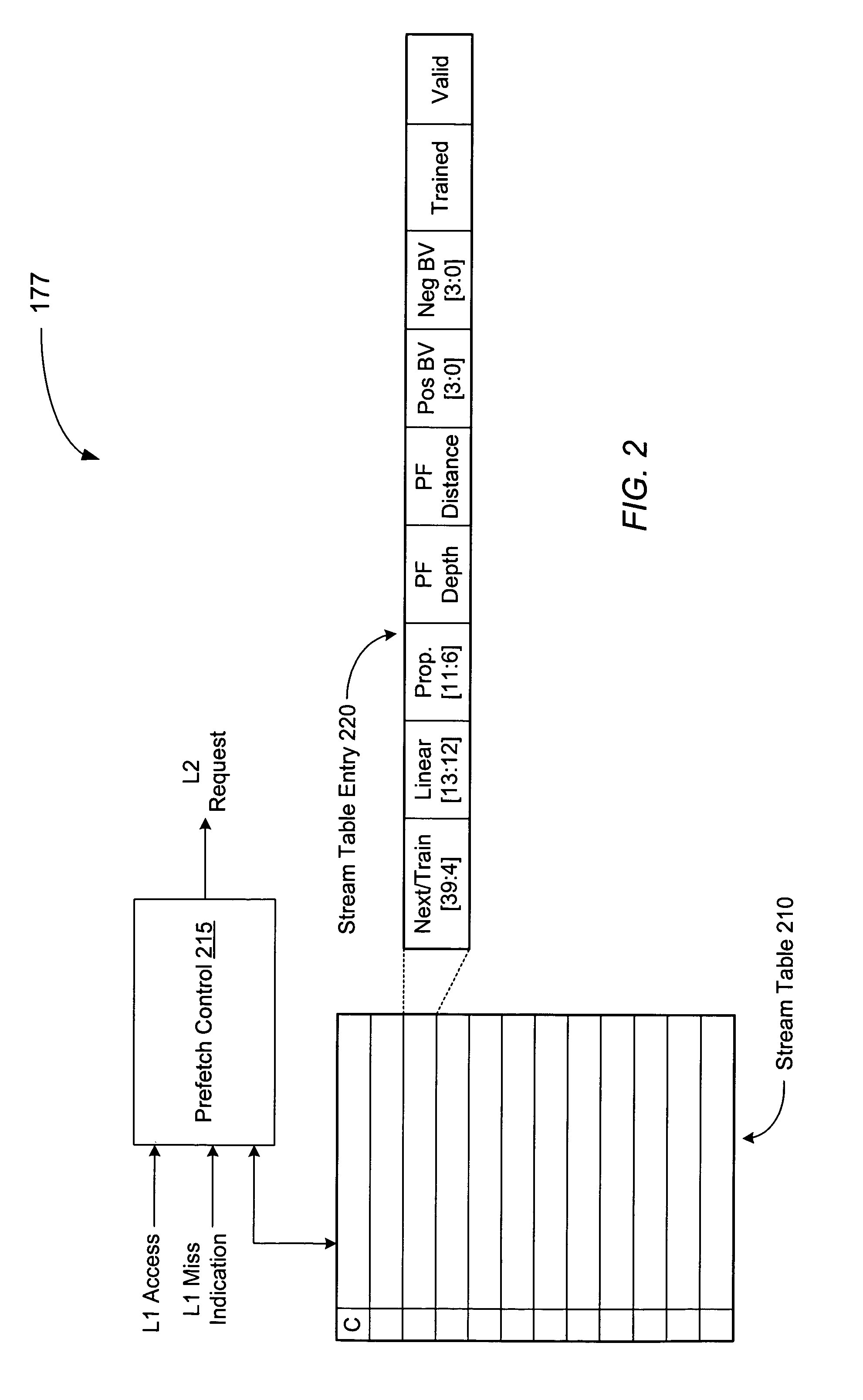

Prefetch unit for use with a cache memory subsystem of a cache memory hierarchy

Active | US7836259B1 | Memory architecture accessing/allocation | Memory systems | Memory hierarchy | Cache hierarchy

A prefetch unit for use with a cache subsystem. The prefetch unit includes a stream storage coupled to a prefetch control. The stream storage may include a plurality of locations configured to store a plurality of entries each corresponding to a respective range of prefetch addresses. The prefetch control may be configured to prefetch an address in response to receiving a cache access request including an address that is within the respective range of prefetch addresses of any of the plurality of entries.

Owner:ADVANCED MICRO DEVICES INC
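
A toy model of the range check described above: each stream entry covers a range of addresses, and an access that falls inside any entry triggers a prefetch. The `StreamPrefetcher` class, the choice to prefetch the address just past the range, and the sliding of the range by one line are assumptions made for illustration; the abstract does not specify them.

```python
class StreamPrefetcher:
    """Stream storage plus a simple prefetch control (illustrative only)."""

    def __init__(self, line_size=64):
        self.line_size = line_size
        self.streams = []  # each entry is [low, high), a range of prefetch addresses

    def train(self, low, high):
        """Allocate a stream entry covering addresses low <= addr < high."""
        self.streams.append([low, high])

    def on_access(self, addr):
        """Return the address to prefetch, or None if addr matches no entry."""
        for entry in self.streams:
            low, high = entry
            if low <= addr < high:
                # Assumption: fetch the next line past the range and slide the range.
                entry[0] = low + self.line_size
                entry[1] = high + self.line_size
                return high
        return None
```

Accesses that keep landing inside the moving range keep the prefetcher one line ahead of the stream, while unrelated addresses trigger nothing.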

Prioritizing and locking removed and subsequently reloaded cache lines

Inactive | US6901483B2 | Low cache hit rate | Inefficiency described above will be avoided | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

Owner:INT BUSINESS MASCH CORP
