1762 results about How to "Improve hit rate" patented technology

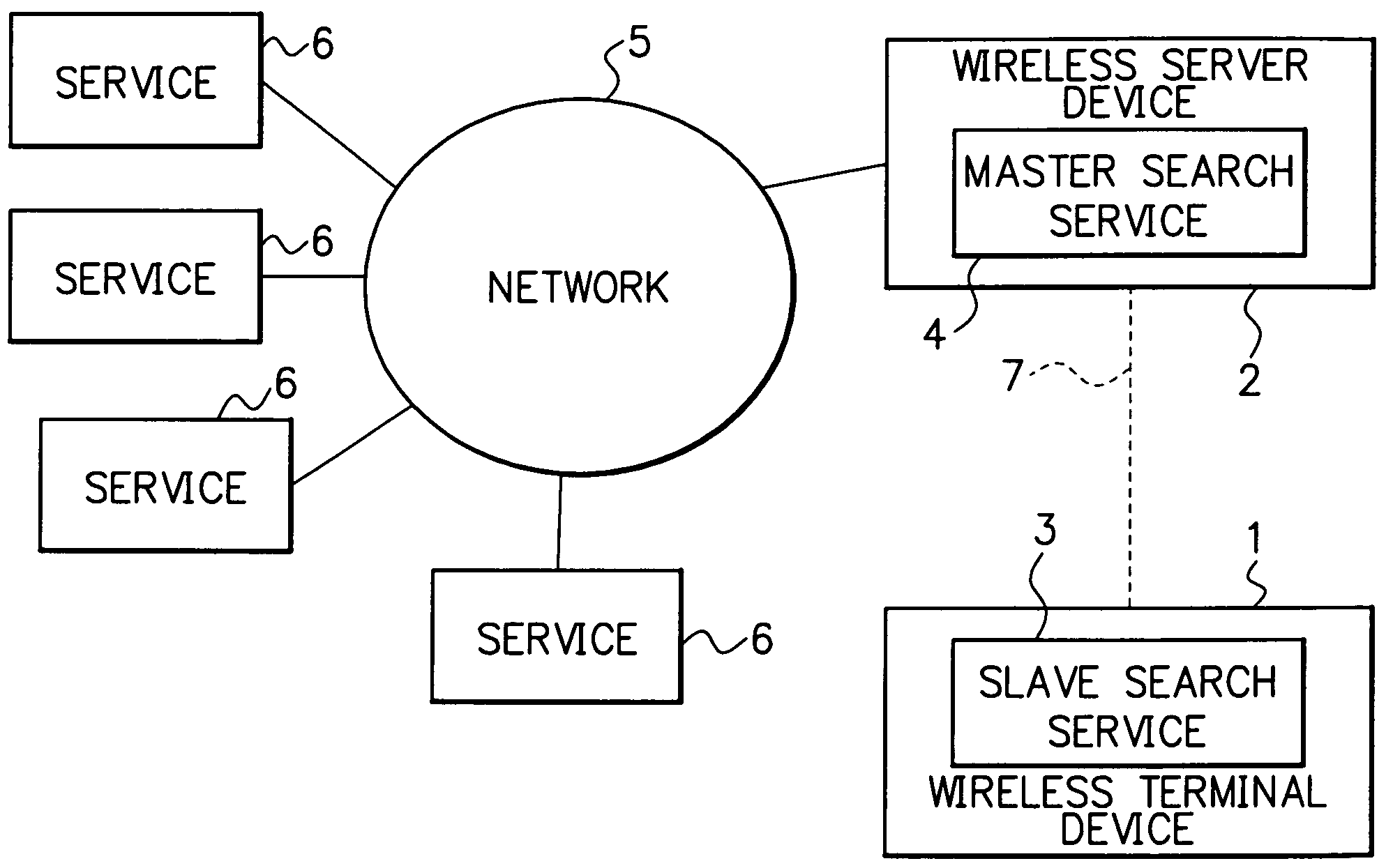

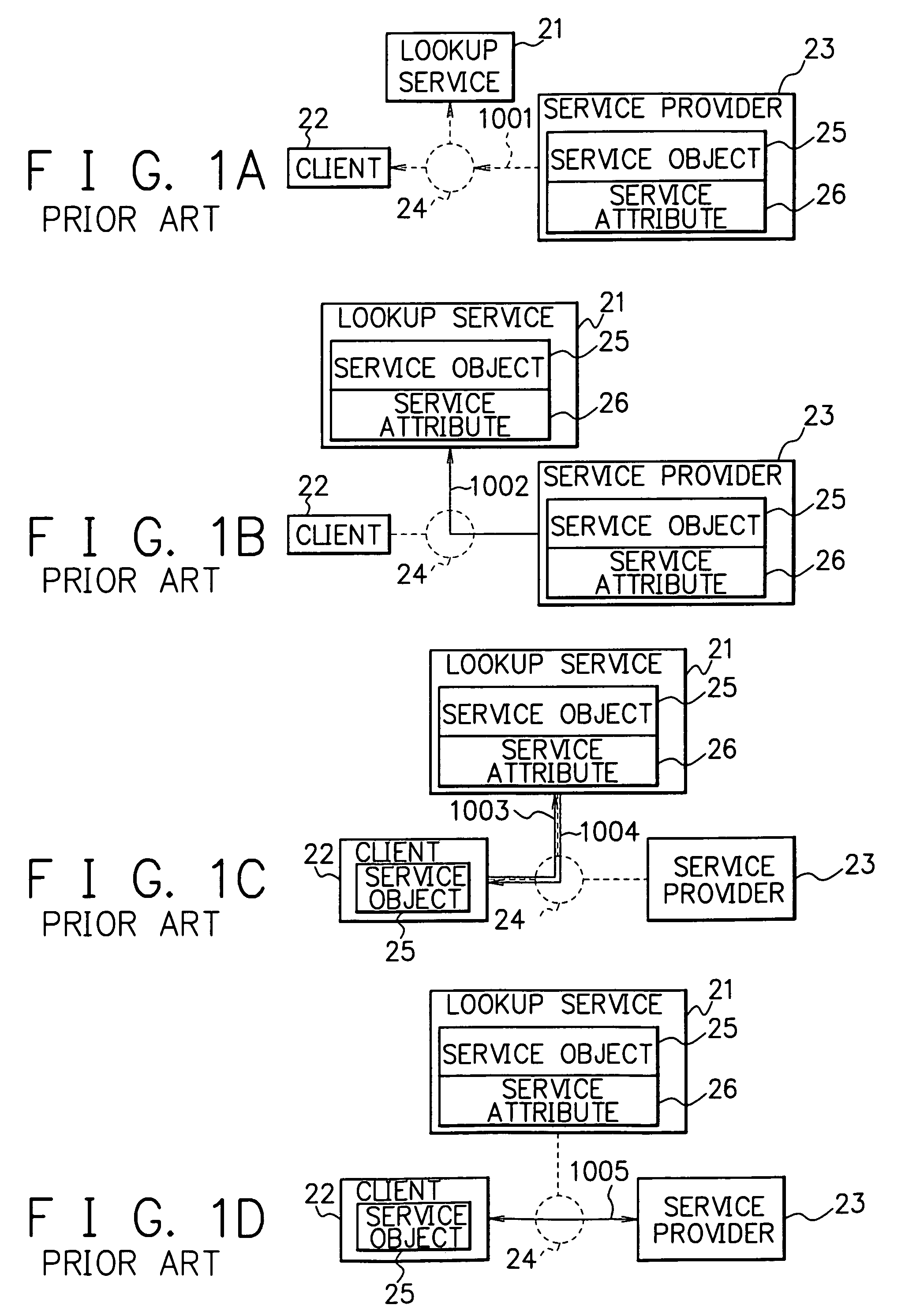

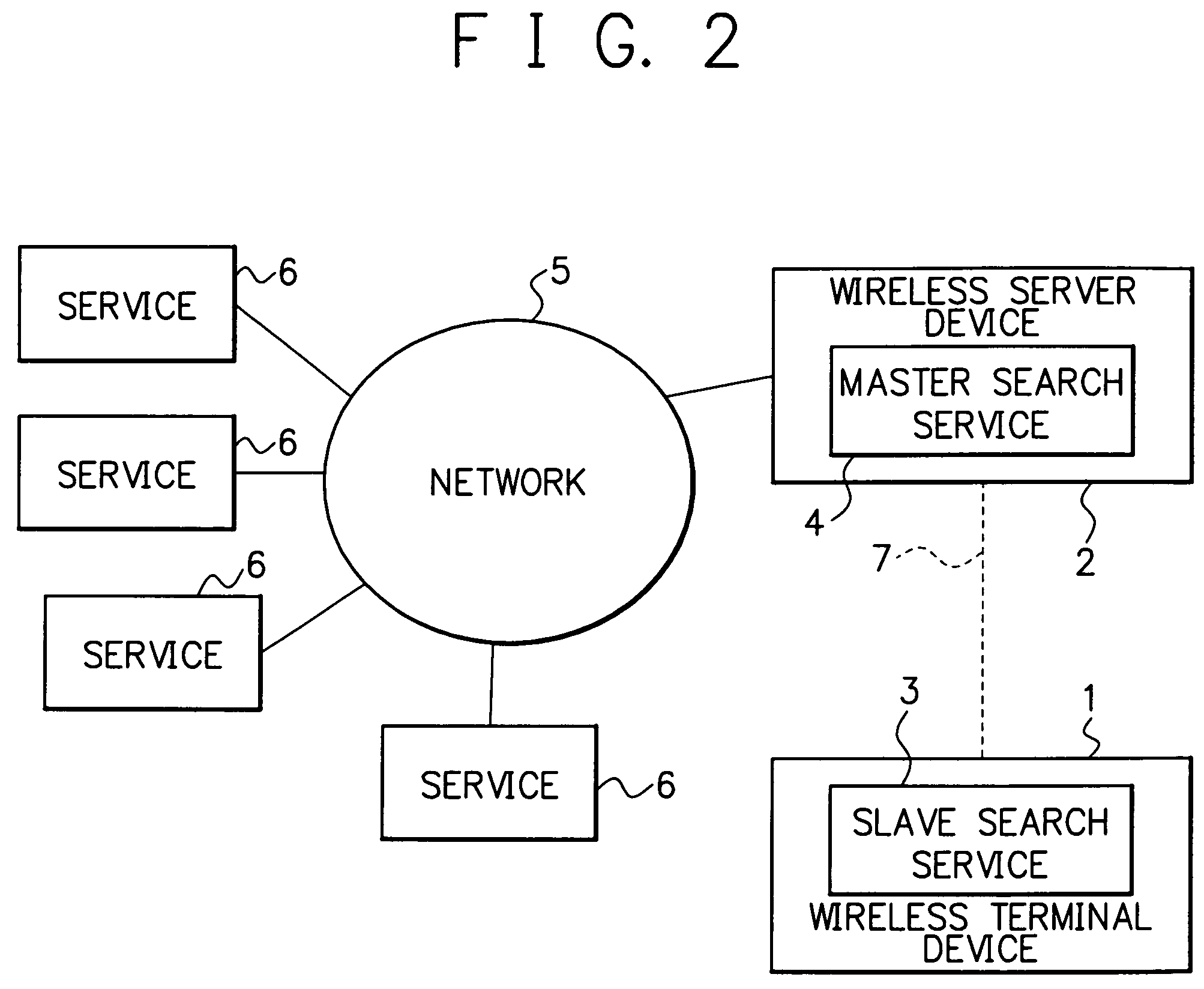

Service searching system

Inactive | US7139569B2 | Improve service rate | Reduce communication data volume | Special service for subscribers | Digital computer details | Terminal equipment | Server appliance

A service searching system for searching a service in a distributed system comprises a wireless server device and a wireless terminal device. The wireless server device is connected to a network and implements a master search service. On the other hand, the wireless terminal device implements a slave search service, and is capable of communicating with the server by wireless and utilizing the master search service. Besides, the wireless terminal device comprises a storage means that caches service objects obtained through the master search service. In addition, in searching the slave search service for a service, the wireless terminal device begins by searching the service objects cached in the storage means. In the case where the service is not detected, the wireless terminal device searches the master search service. The service objects are cached by being related to priority data. Thereby, there is provided a service searching system comprising a wireless terminal device, which is intended to search for a service in a distributed system in which a range of services are distributed in a network, wherein it is possible to cut down a service-searching time and costs, and further, it is possible to cut down communication interruptions by noise etc. in the wireless communication section compared to the conventional one.

Owner:NEC CORP
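
The cache-first lookup described in the abstract above (US7139569B2) can be sketched as follows. This is a minimal illustration, not the patented implementation: the class name `SlaveSearchService`, the `master_lookup` callable standing in for the wireless round trip, and the use of a simple use count as the "priority data" are all assumptions.

```python
from typing import Callable, Dict, Optional


class SlaveSearchService:
    """Terminal-side lookup that consults a local cache before the master."""

    def __init__(self, master_lookup: Callable[[str], Optional[str]], capacity: int = 2):
        self.master_lookup = master_lookup   # hypothetical stand-in for the wireless call
        self.capacity = capacity
        self.cache: Dict[str, str] = {}      # service name -> cached service object
        self.priority: Dict[str, int] = {}   # assumed priority data: a use count
        self.master_calls = 0

    def find(self, name: str) -> Optional[str]:
        if name in self.cache:               # local hit: no wireless traffic
            self.priority[name] += 1
            return self.cache[name]
        self.master_calls += 1               # miss: fall back to the master search service
        obj = self.master_lookup(name)
        if obj is not None:
            if len(self.cache) >= self.capacity:
                # Evict the cached entry with the lowest priority.
                victim = min(self.priority, key=self.priority.get)
                del self.cache[victim], self.priority[victim]
            self.cache[name] = obj
            self.priority[name] = 1
        return obj


registry = {"print": "printer-proxy", "mail": "mail-proxy", "fax": "fax-proxy"}
svc = SlaveSearchService(registry.get)
svc.find("print")   # miss, fetched from the master and cached
svc.find("print")   # served from the local cache
```

Only the first call for a given service reaches the master, which is the source of the reduced search time and communication volume the abstract claims.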

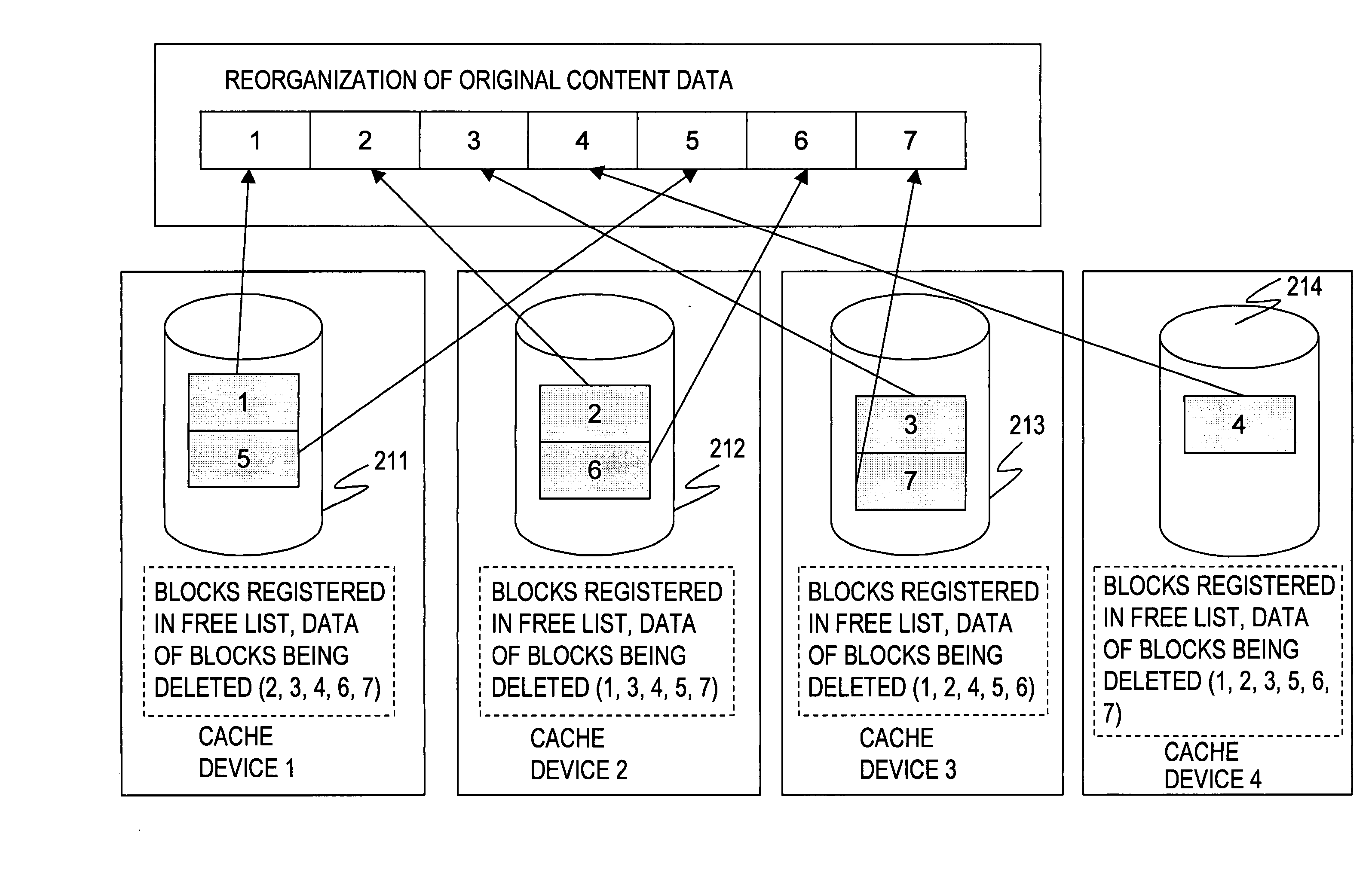

Cache device, cache data management method, and computer program

Inactive | US20050172080A1 | Easy to use | Fast data | Memory addressing/allocation/relocation | Data control | Pending - status

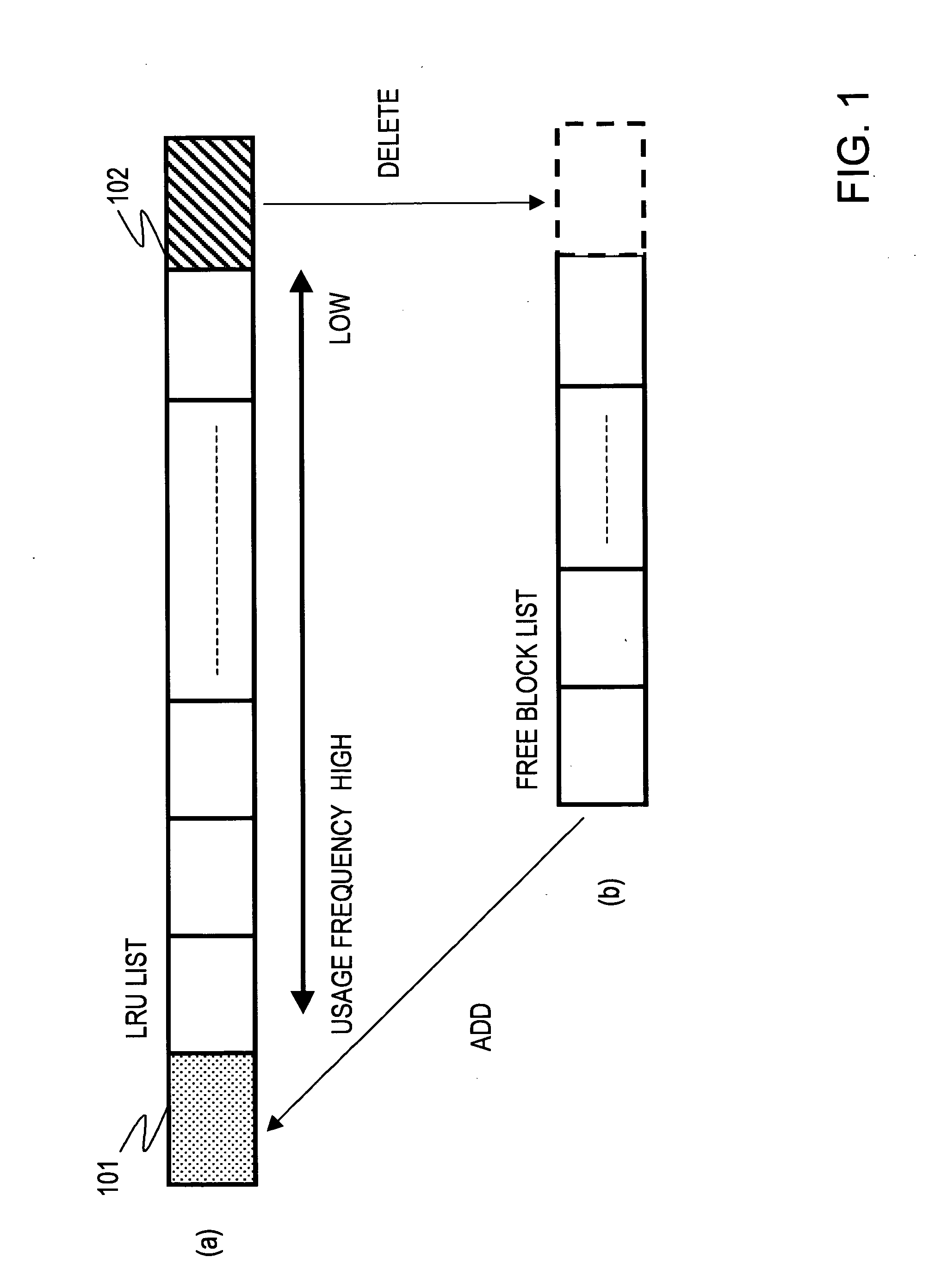

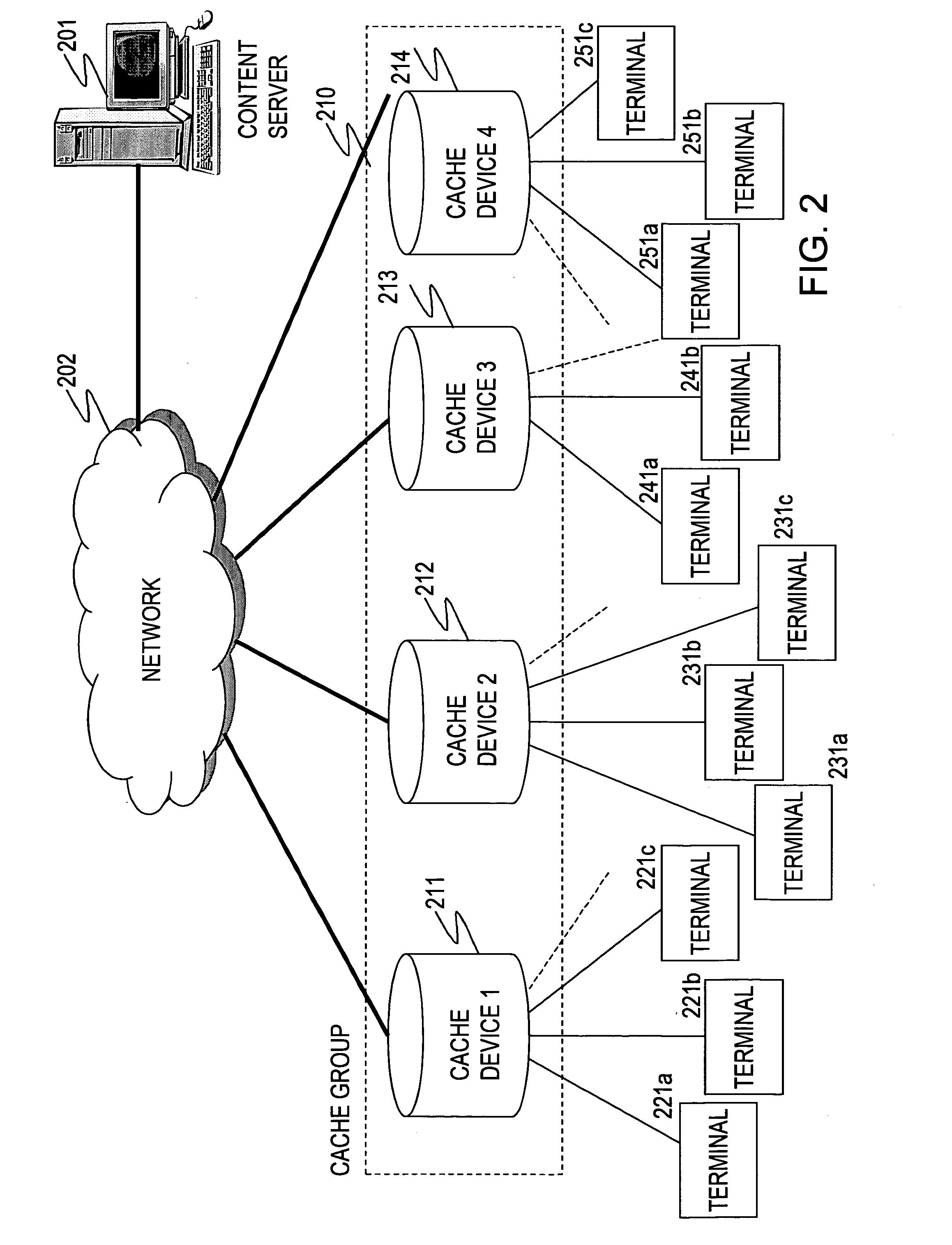

A cache device and a method for controlling cached data that enable efficient use of a storage area and improve the hit ratio are provided. When cache replacement is carried out in cache devices connected to each other through networks, data control is carried out so that data blocks set to a deletion pending status in each cache device, which includes lists regarding the data blocks set to a deletion pending status, in a cache group are different from those in other cache devices in the cache group. In this way, data control using deletion pending lists is carried out. According to the system of the present invention, a storage area can be used efficiently as compared with a case where each cache device independently controls cache replacement, and data blocks stored in a number of cache devices are collected to be sent to terminals in response to data acquisition requests from the terminals, thereby facilitating network traffic and improving the hit rate of the cache devices.

Owner:SONY CORP

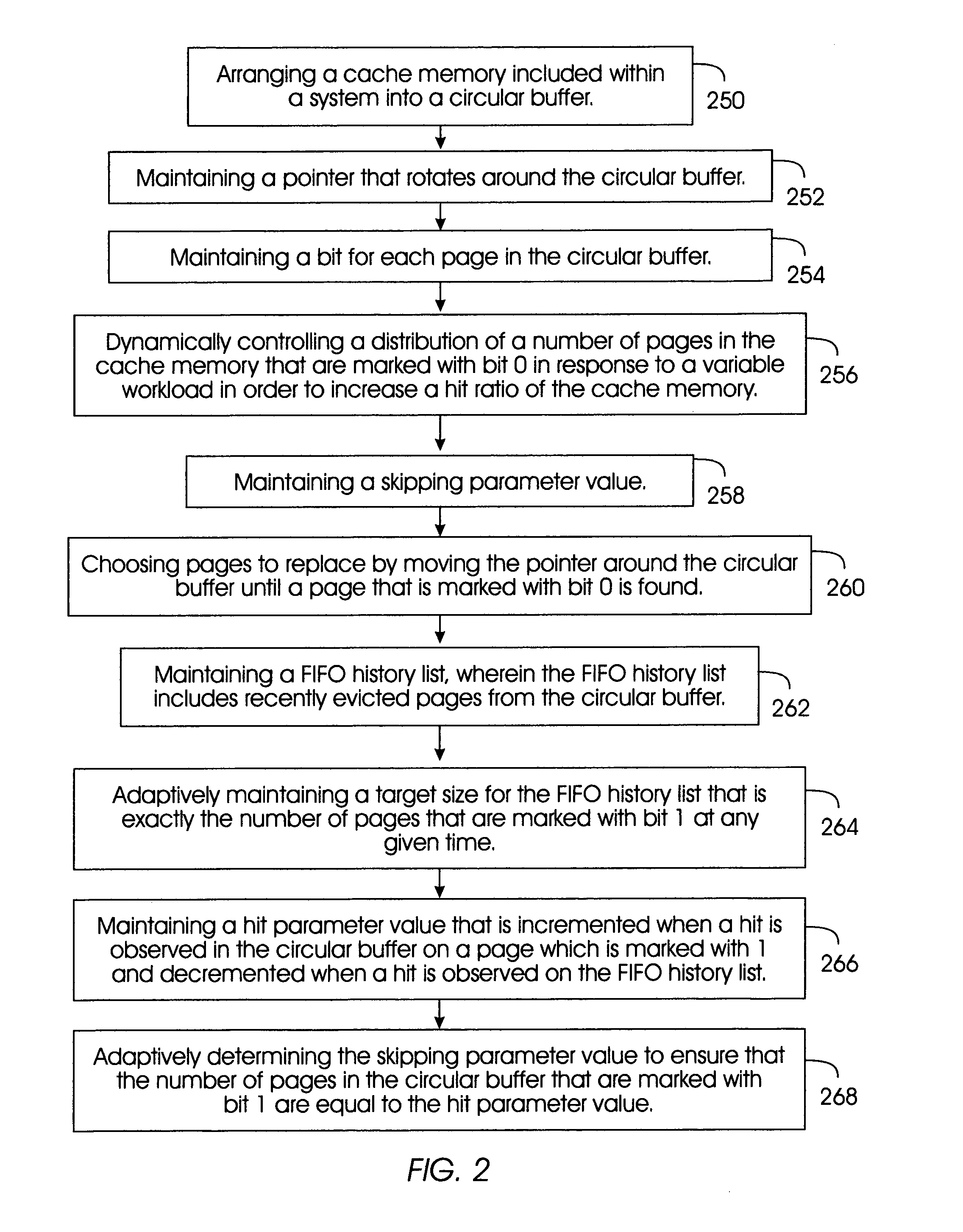

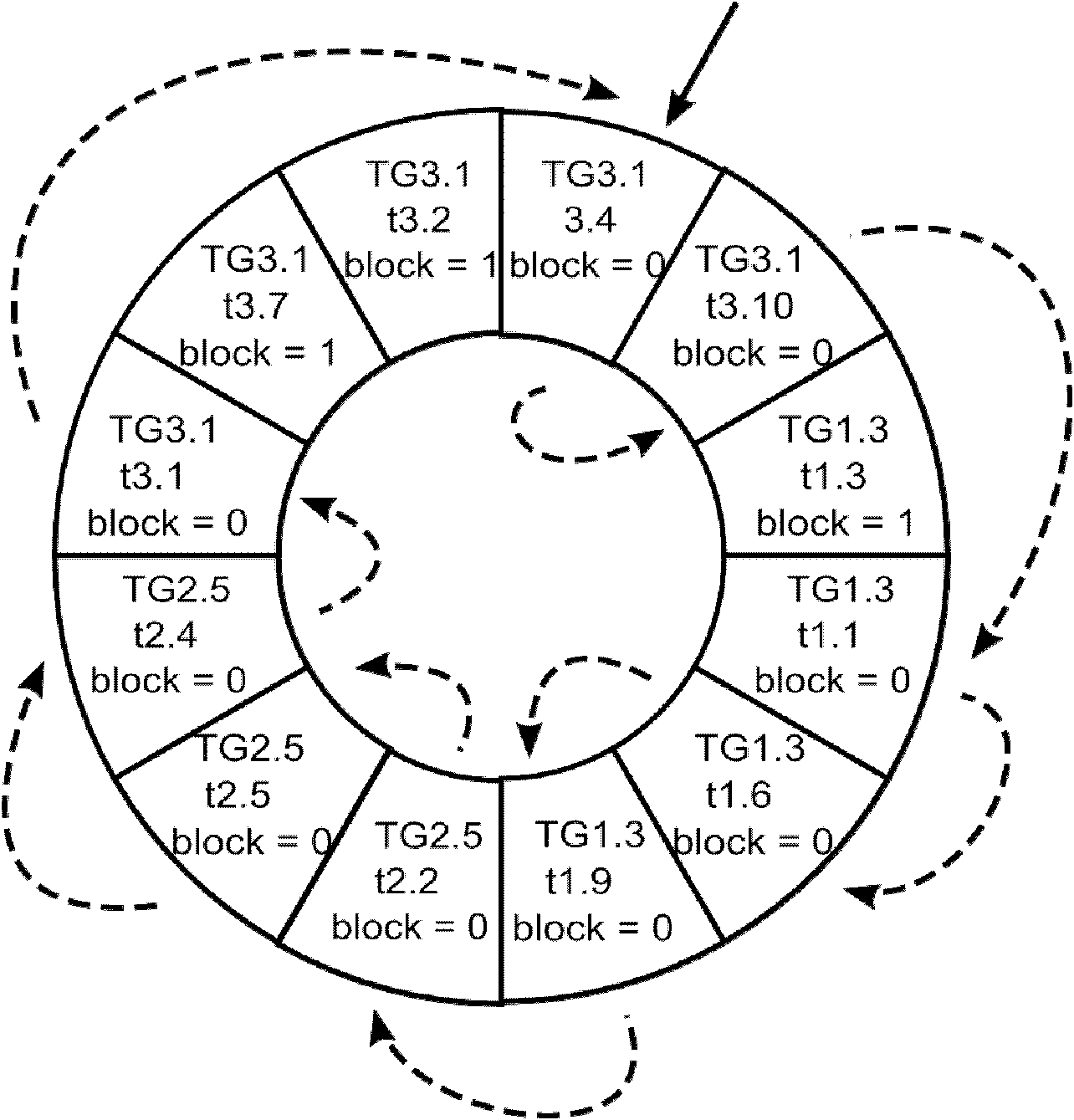

Method and system for a cache replacement technique with adaptive skipping

Inactive | US7096321B2 | Improve hit rate | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Circular buffer | Workload

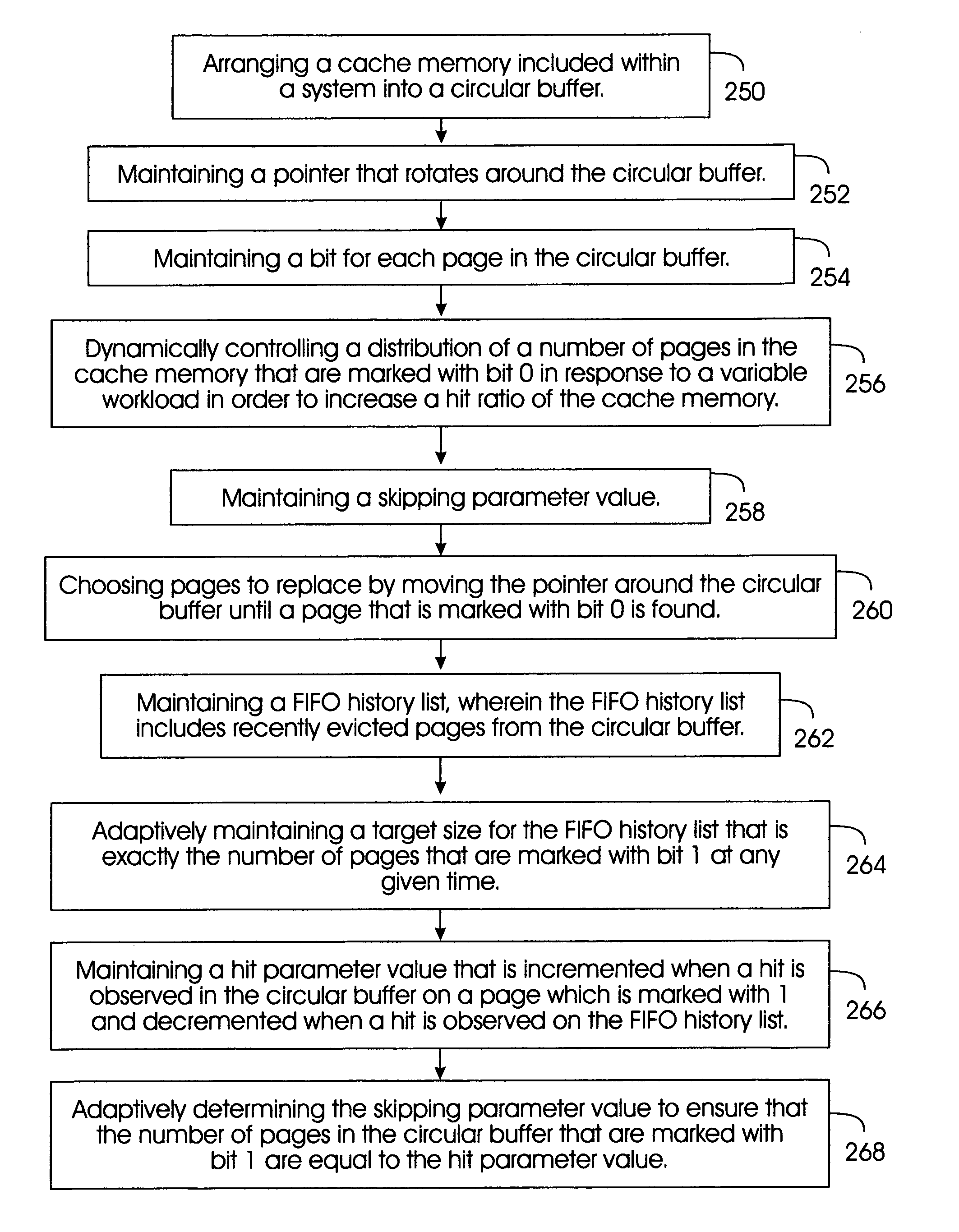

A method, system, and program storage medium for adaptively managing pages in a cache memory included within a system having a variable workload, comprising arranging a cache memory included within a system into a circular buffer; maintaining a pointer that rotates around the circular buffer; maintaining a bit for each page in the circular buffer, wherein a bit value 0 indicates that the page was not accessed by the system since a last time that the pointer traversed over the page, and a bit value 1 indicates that the page has been accessed since the last time the pointer traversed over the page; and dynamically controlling a distribution of a number of pages in the cache memory that are marked with bit 0 in response to a variable workload in order to increase a hit ratio of the cache memory.

Owner:INT BUSINESS MASCH CORP
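
The circular buffer, rotating pointer, and per-page bit in the abstract above (US7096321B2) are the building blocks of the classic CLOCK replacement scheme. The sketch below shows only that baseline; the adaptive control of how many pages carry bit 0 is not reproduced. The class name `ClockCache` and the choice to insert new pages with bit 0 are assumptions.

```python
class ClockCache:
    """Baseline CLOCK replacement: a circular buffer with one reference bit per page."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = [None] * capacity   # circular buffer of page ids
        self.ref = [0] * capacity        # 1 = accessed since the hand last passed
        self.index = {}                  # page id -> slot
        self.hand = 0                    # the rotating pointer
        self.hits = 0
        self.misses = 0

    def access(self, page):
        slot = self.index.get(page)
        if slot is not None:             # hit: just set the reference bit
            self.ref[slot] = 1
            self.hits += 1
            return True
        self.misses += 1
        # Sweep: pages with bit 1 get a second chance (bit cleared, hand moves on).
        while self.pages[self.hand] is not None and self.ref[self.hand] == 1:
            self.ref[self.hand] = 0
            self.hand = (self.hand + 1) % self.capacity
        victim = self.pages[self.hand]
        if victim is not None:
            del self.index[victim]
        self.pages[self.hand] = page
        self.ref[self.hand] = 0          # assumption: new pages start with bit 0
        self.index[page] = self.hand
        self.hand = (self.hand + 1) % self.capacity
        return False


cache = ClockCache(3)
for p in [1, 2, 3, 1, 4, 2]:
    cache.access(p)
```

In this trace, page 1 is re-referenced before the cache fills, so the hand skips it and evicts pages 2 and 3 instead.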

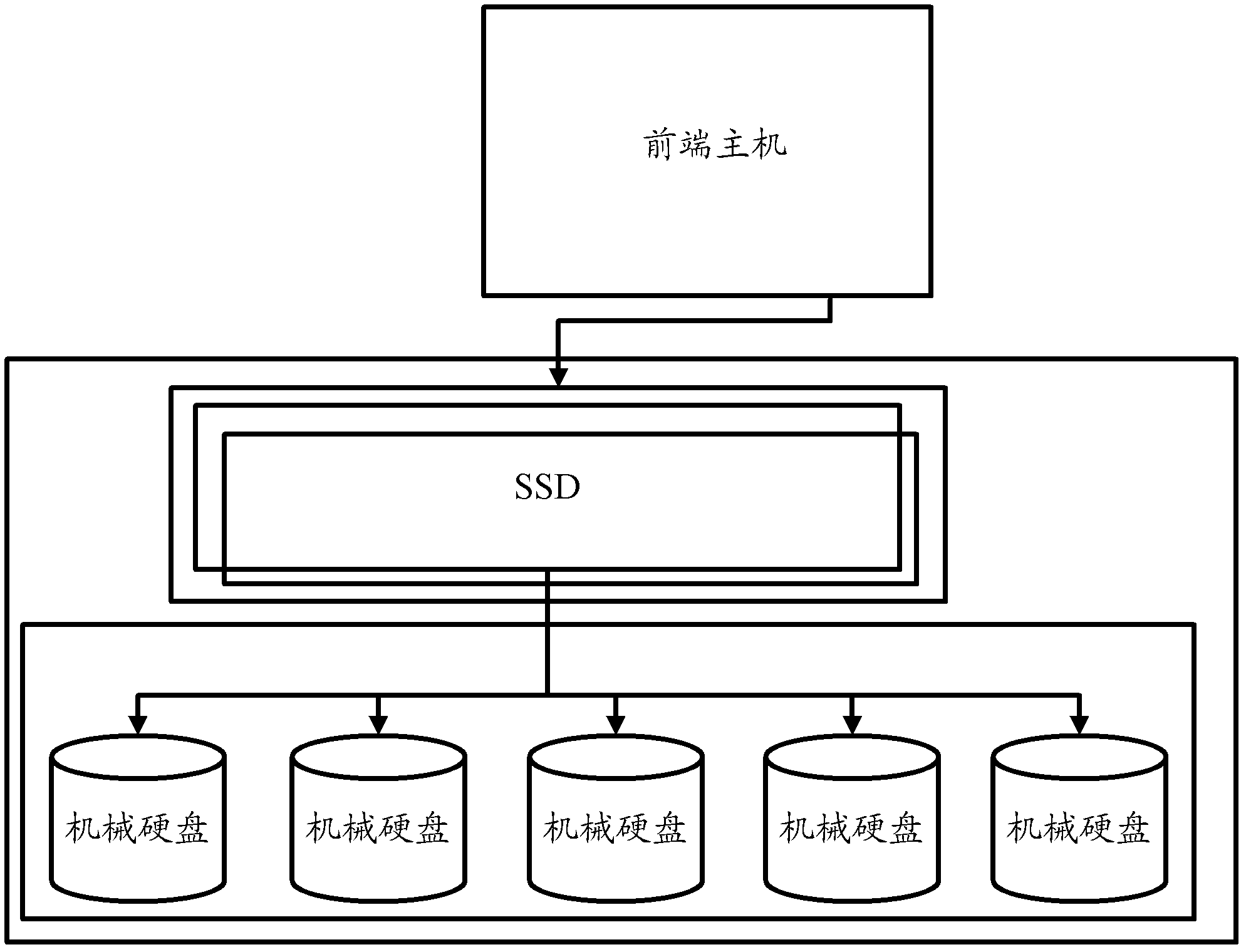

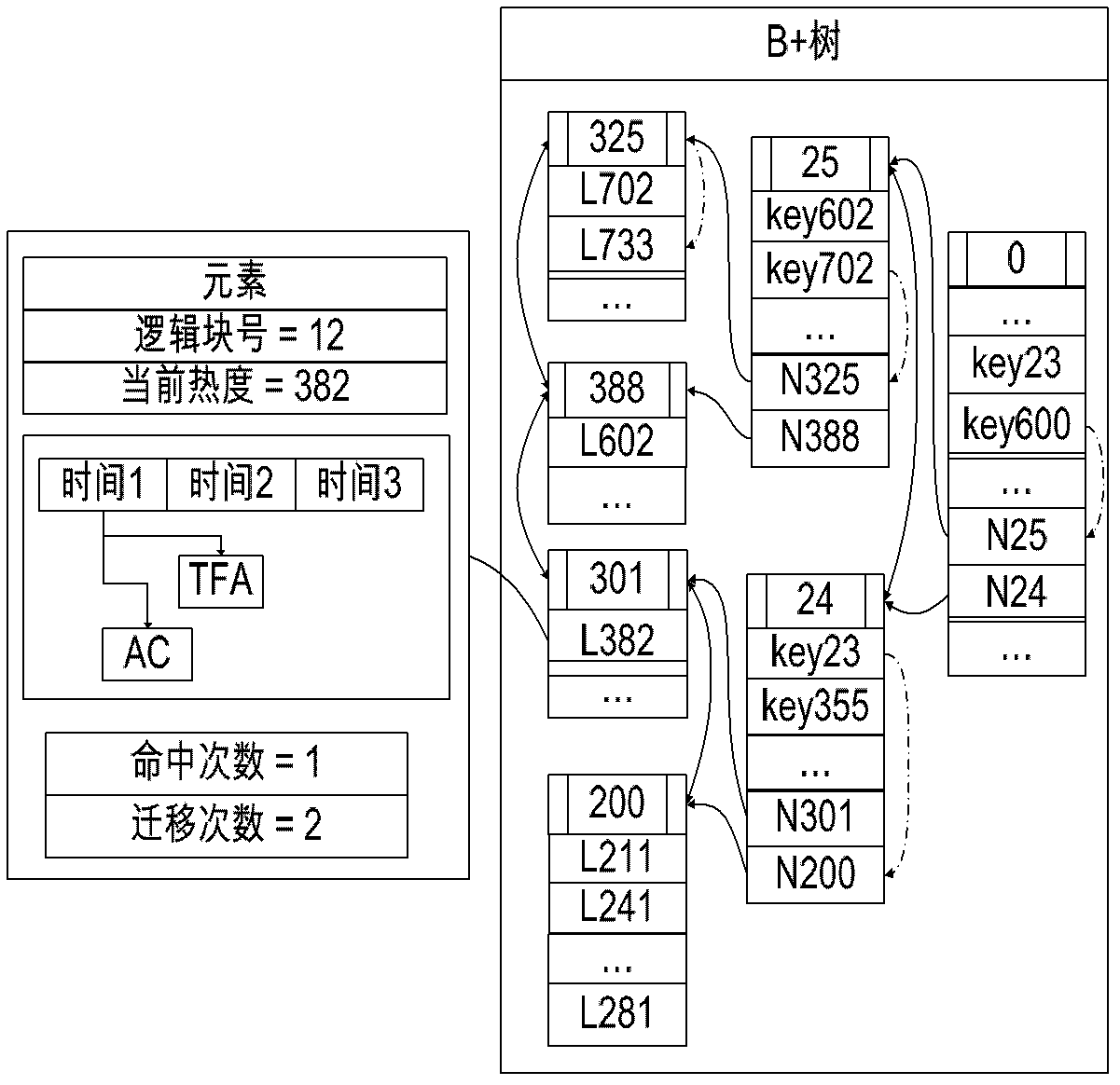

Hybrid storage system and hot spot data block migration method

Inactive | CN103186350A | Good value for money | Low cost | Input/output to record carriers | Memory addressing/allocation/relocation | Solid-state drive | Hybrid storage system

The invention provides a hybrid storage system which comprises at least one solid-state drive (SSD), at least one mechanical hard disk and a control module. The control module migrates hot spot data blocks from the mechanical hard disk to the SSD and migrates non-hot spot data blocks from the SSD to the mechanical hard disk, where hot spot data blocks are data blocks whose access frequency is higher than that of other data blocks and non-hot spot data blocks are data blocks whose access frequency is lower than that of others. The hybrid storage system and the hot spot data block migration method provided by the invention effectively increase the utilization of the SSD and the performance of the hybrid storage system while lowering the cost of the hybrid storage system.

Owner:BEIJING FASTWEB TECH
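
The two-way migration in the abstract above (CN103186350A) can be illustrated with a small planning function. This is a sketch under assumptions: the abstract does not say how "hot" is decided, so the function simply treats the `ssd_capacity` most frequently accessed blocks as hot. The name `plan_migration` and its parameters are hypothetical.

```python
def plan_migration(access_counts, on_ssd, ssd_capacity):
    """Return (promote, demote): blocks to move HDD->SSD and SSD->HDD.

    access_counts: block id -> number of accesses in the last period.
    on_ssd: set of block ids currently stored on the SSD.
    """
    # Assumption: "hot" means among the top ssd_capacity blocks by access count.
    ranked = sorted(access_counts, key=lambda b: (-access_counts[b], b))
    hot = set(ranked[:ssd_capacity])
    promote = sorted(hot - on_ssd)   # hot blocks still on the mechanical disk
    demote = sorted(on_ssd - hot)    # cold blocks occupying SSD space
    return promote, demote


counts = {"a": 50, "b": 3, "c": 40, "d": 1}
promote, demote = plan_migration(counts, on_ssd={"b", "d"}, ssd_capacity=2)
```

Here blocks `a` and `c` are promoted and `b` and `d` are demoted, so the SSD ends up holding the most frequently accessed data.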

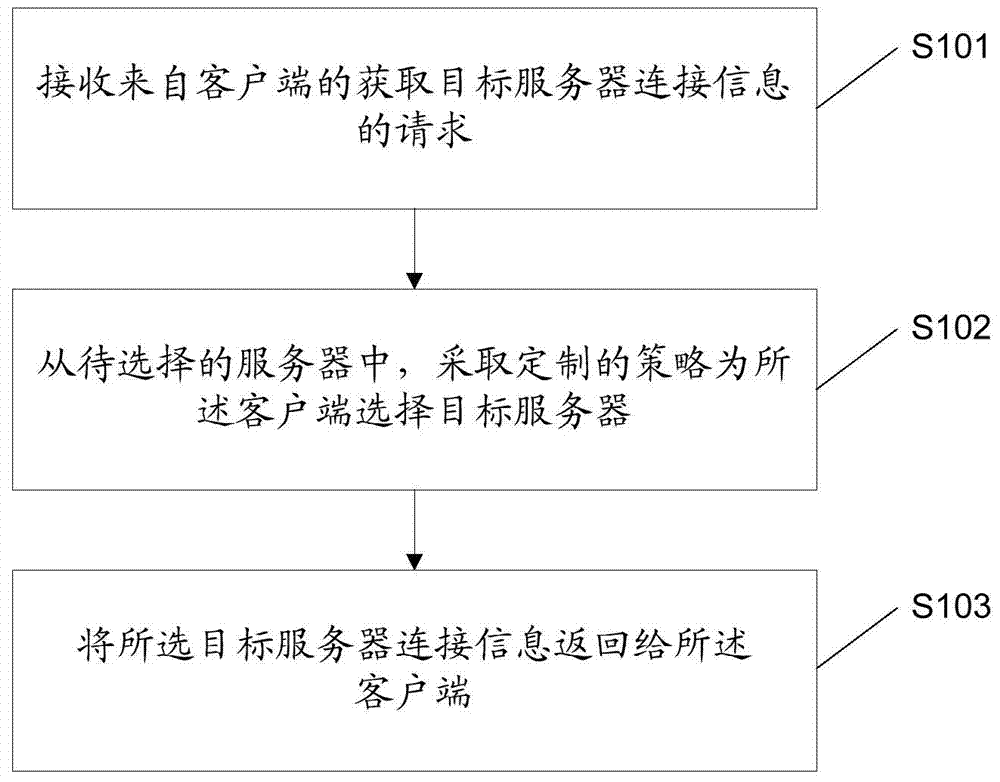

Method for realizing flow distribution based on front-end scheduling, device and system thereof

Inactive | CN104852934A | Avoid interference | Flexible allocation | Data switching networks | Traffic capacity | Fault tolerance

The invention discloses a method for realizing flow distribution based on front-end scheduling, a device thereof, an accessing method based on front-end scheduling, and a system for realizing flow distribution based on front-end scheduling. The method for realizing flow distribution based on front-end scheduling comprises the steps of receiving a request which comes from a client for acquiring target server connecting information; selecting a target server for the client according to a customized strategy from a server to be selected; and returning the target server connecting information to the client. The method of the invention can realize flexible configuration for a client accessing flow, load equalization of a server cluster, fault tolerance and disaster recovery.

Owner:ALIBABA GRP HLDG LTD

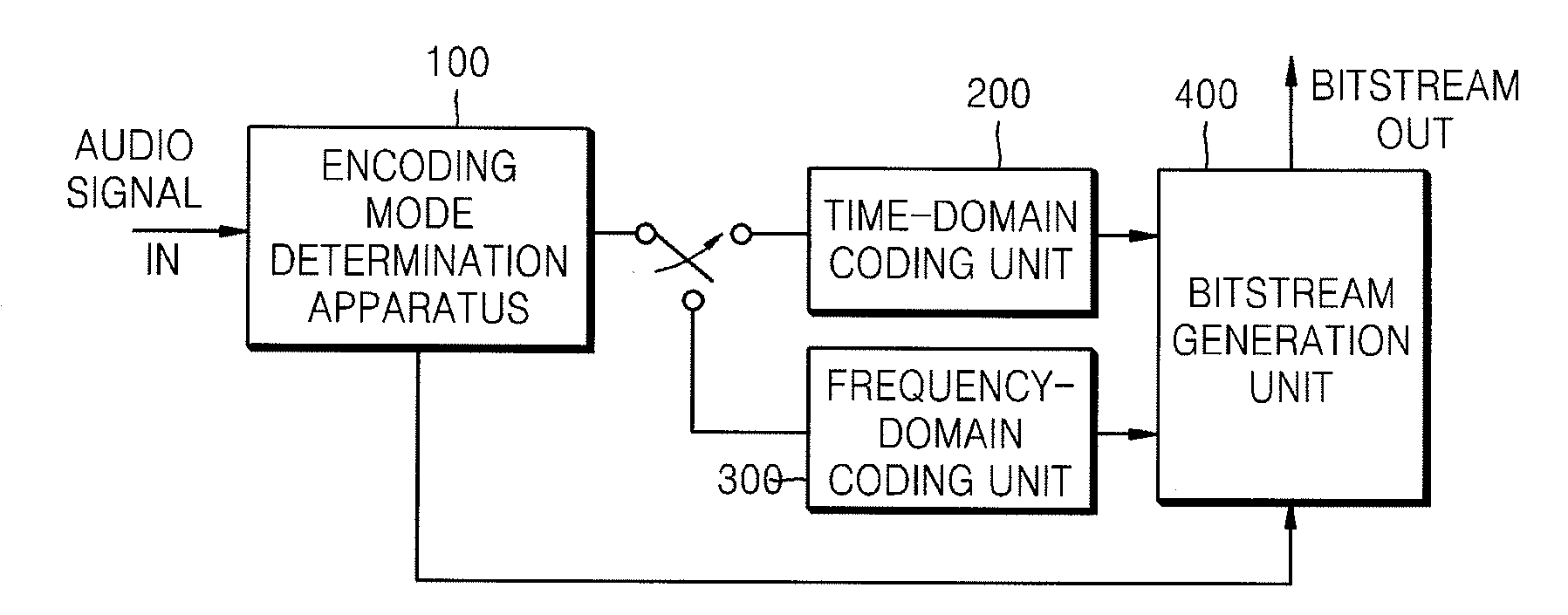

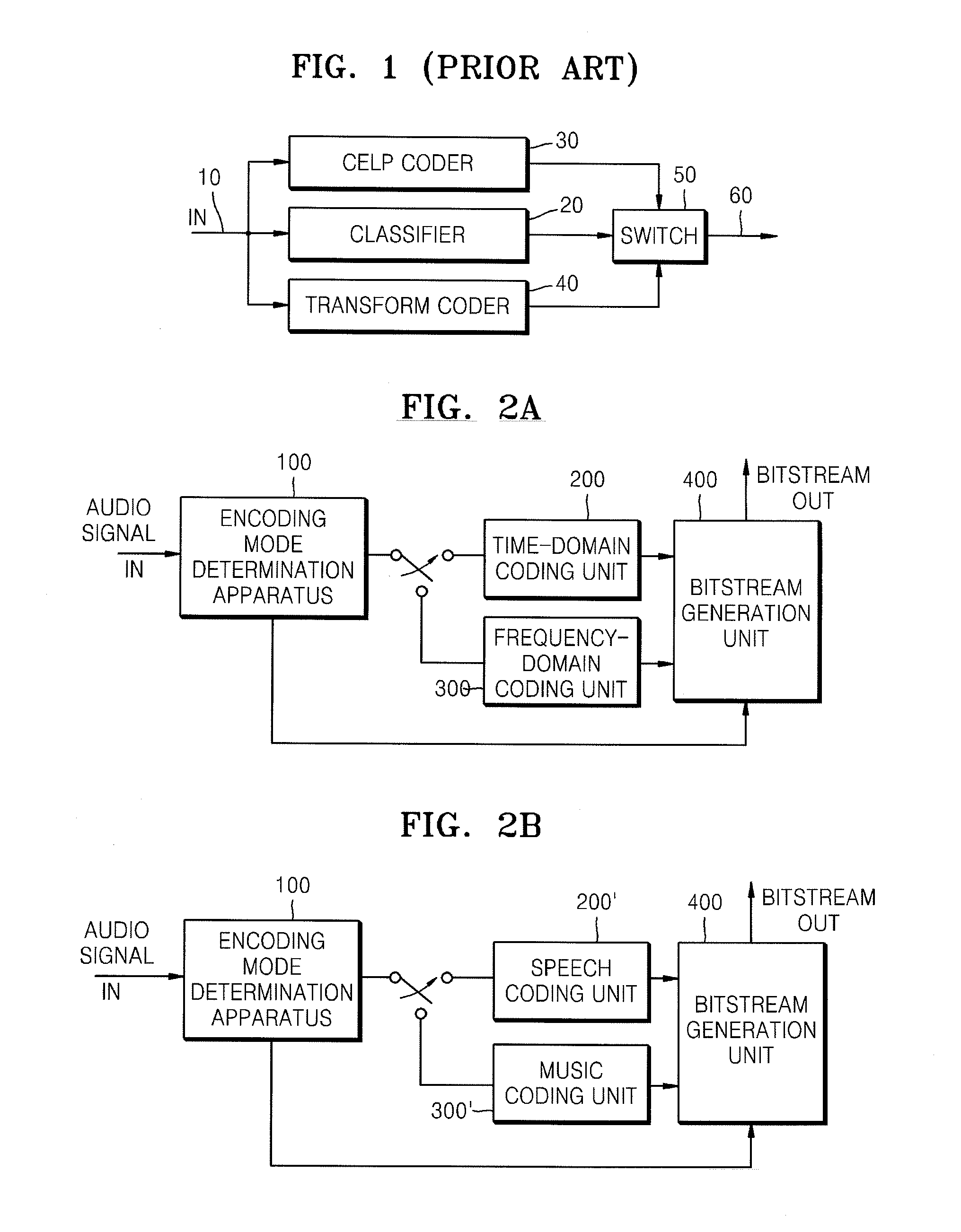

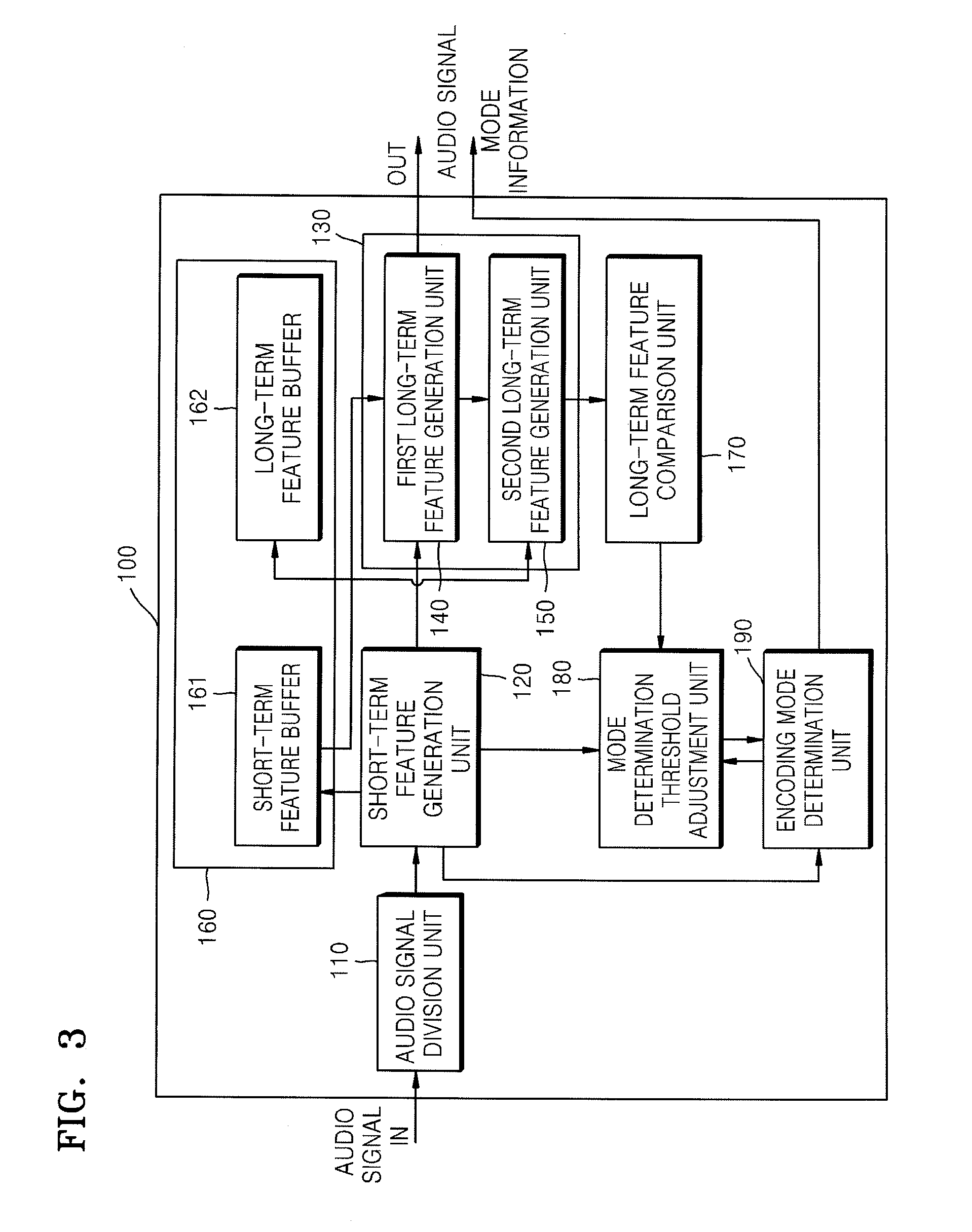

Method and apparatus to determine encoding mode of audio signal and method and apparatus to encode and/or decode audio signal using the encoding mode determination method and apparatus

Inactive | US20080147414A1 | Improve hit rate | Improve determination | Speech analysis | Code conversion | Signal classification | ENCODE

A method and apparatus to determine an encoding mode of an audio signal, and a method and apparatus to encode an audio signal according to the encoding mode. In the encoding mode determination method, the mode determination threshold for the current frame (the frame subject to encoding mode determination) is adaptively adjusted according to a long-term feature of the audio signal, thereby improving the hit rate of encoding mode determination and signal classification, suppressing frequent oscillation of the encoding mode between frames, improving noise tolerance, and improving smoothness of the reconstructed audio signal.

Owner:SAMSUNG ELECTRONICS CO LTD

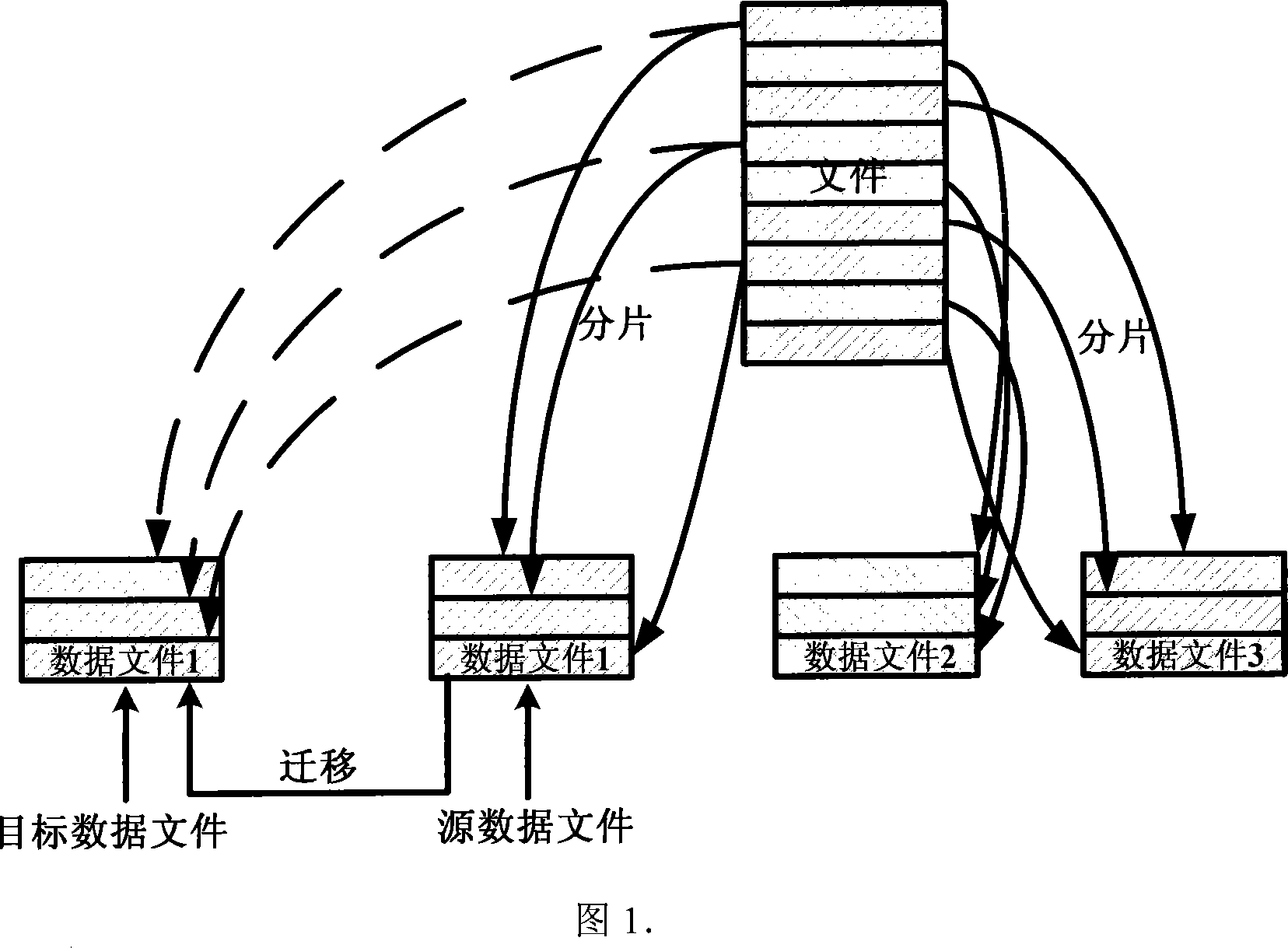

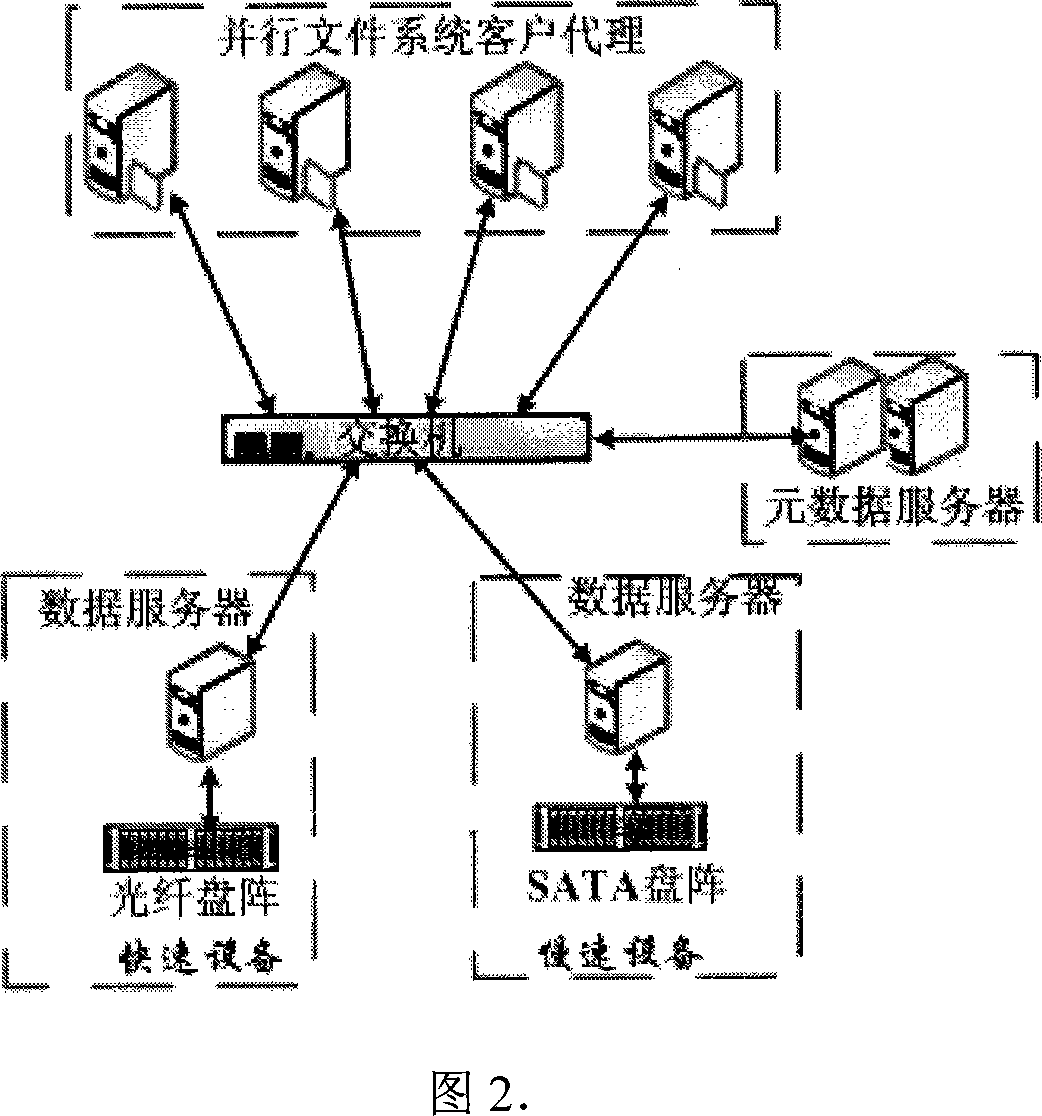

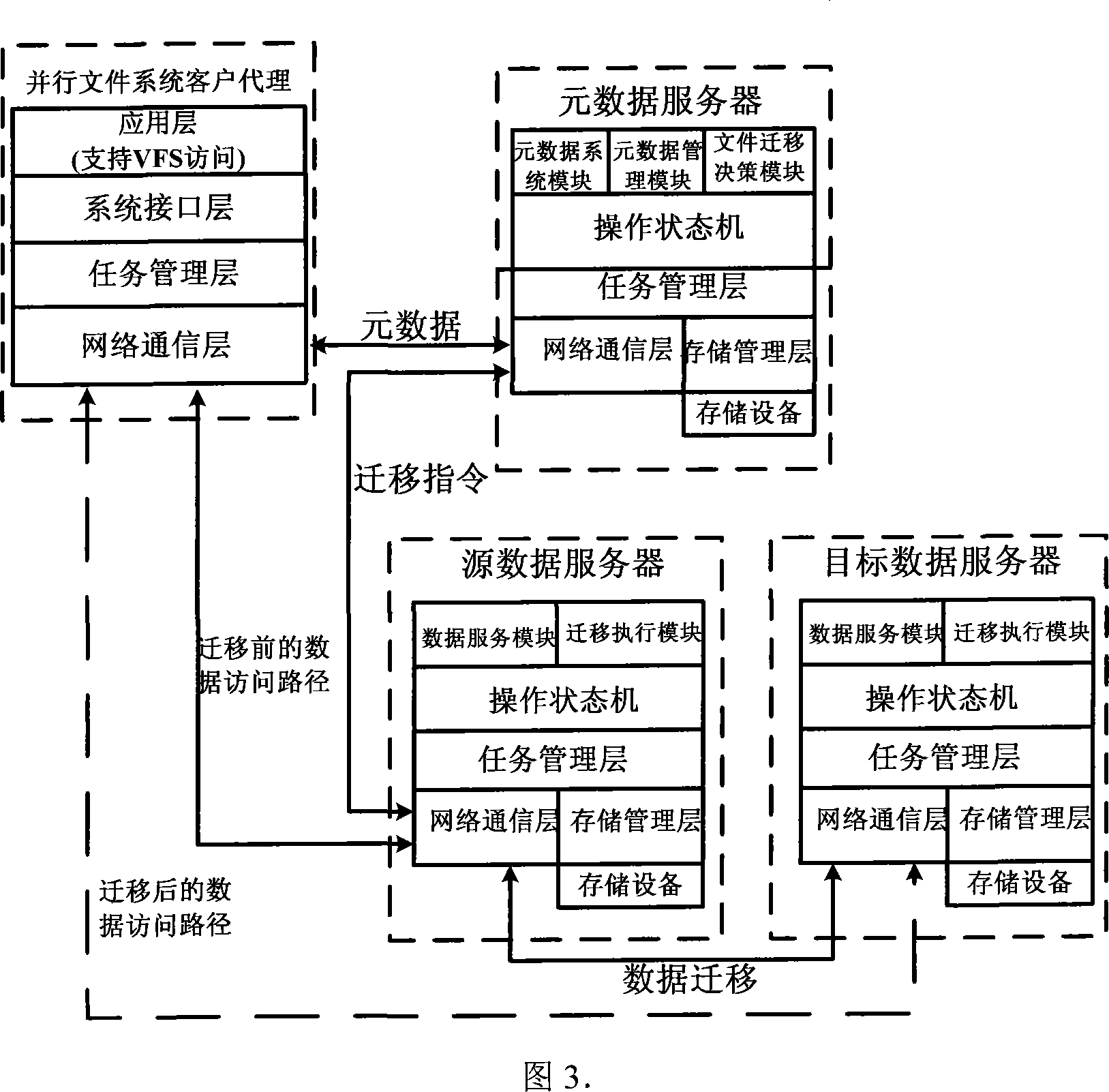

A great magnitude of data hierarchical storage method

Inactive | CN101079902A | No need to scan | Avoid front-end application impact | Transmission | Special data processing applications | Metadata management | File system

This invention relates to a hierarchical storage method for massive data. Client proxy software of a parallel file system on the front-end hosts supports VFS access through a system interface sub-module and a VFS-layer sub-module. A metadata server organizes the data files on different data servers into a unified parallel file system view, and a metadata management module provides metadata access operations. A file migration decision module periodically collects file access information from the data servers and decides on file migration according to the file system load and the tier of each device, and an execution module on the data server carries out the migration.

Owner:TSINGHUA UNIV

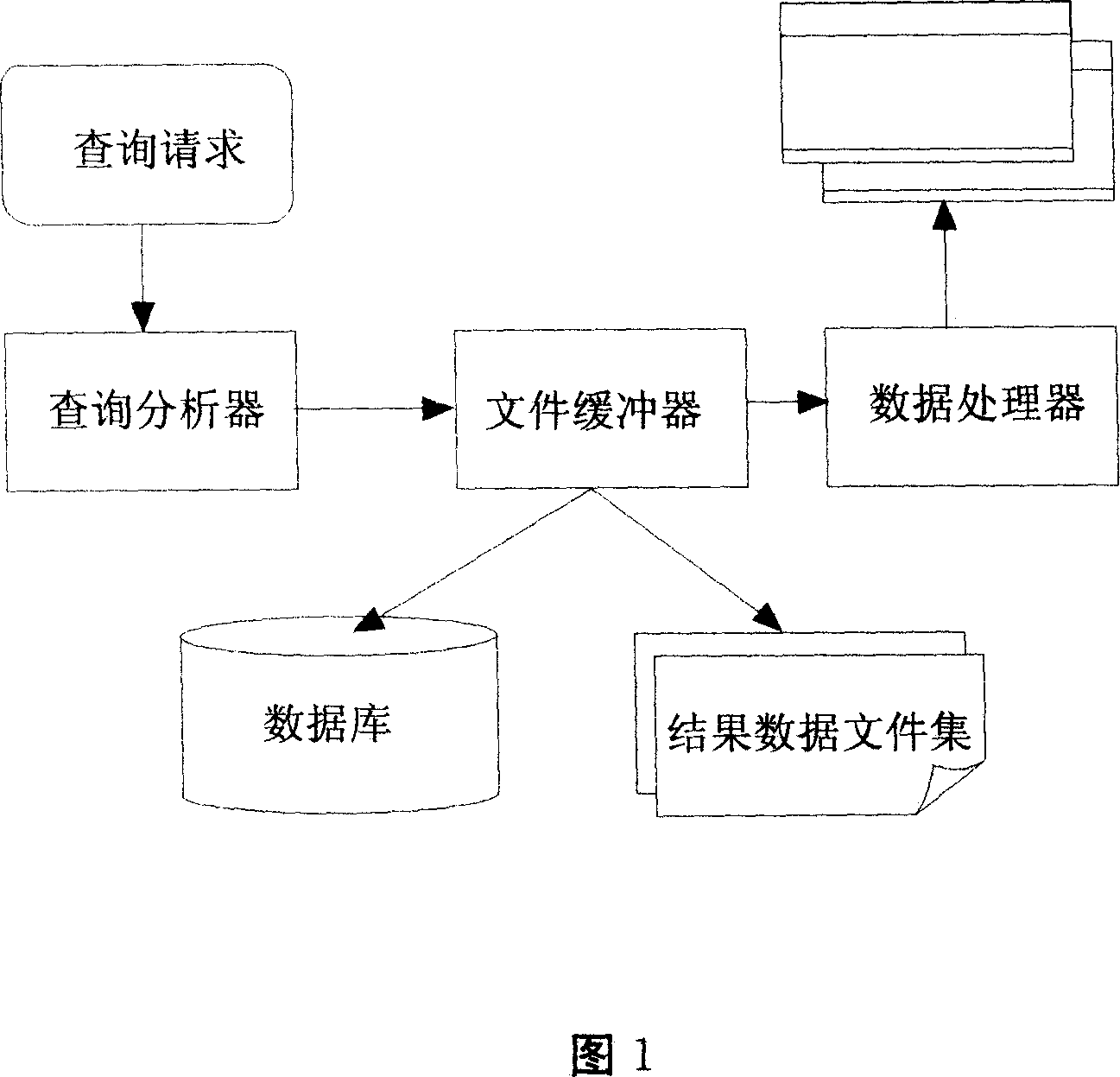

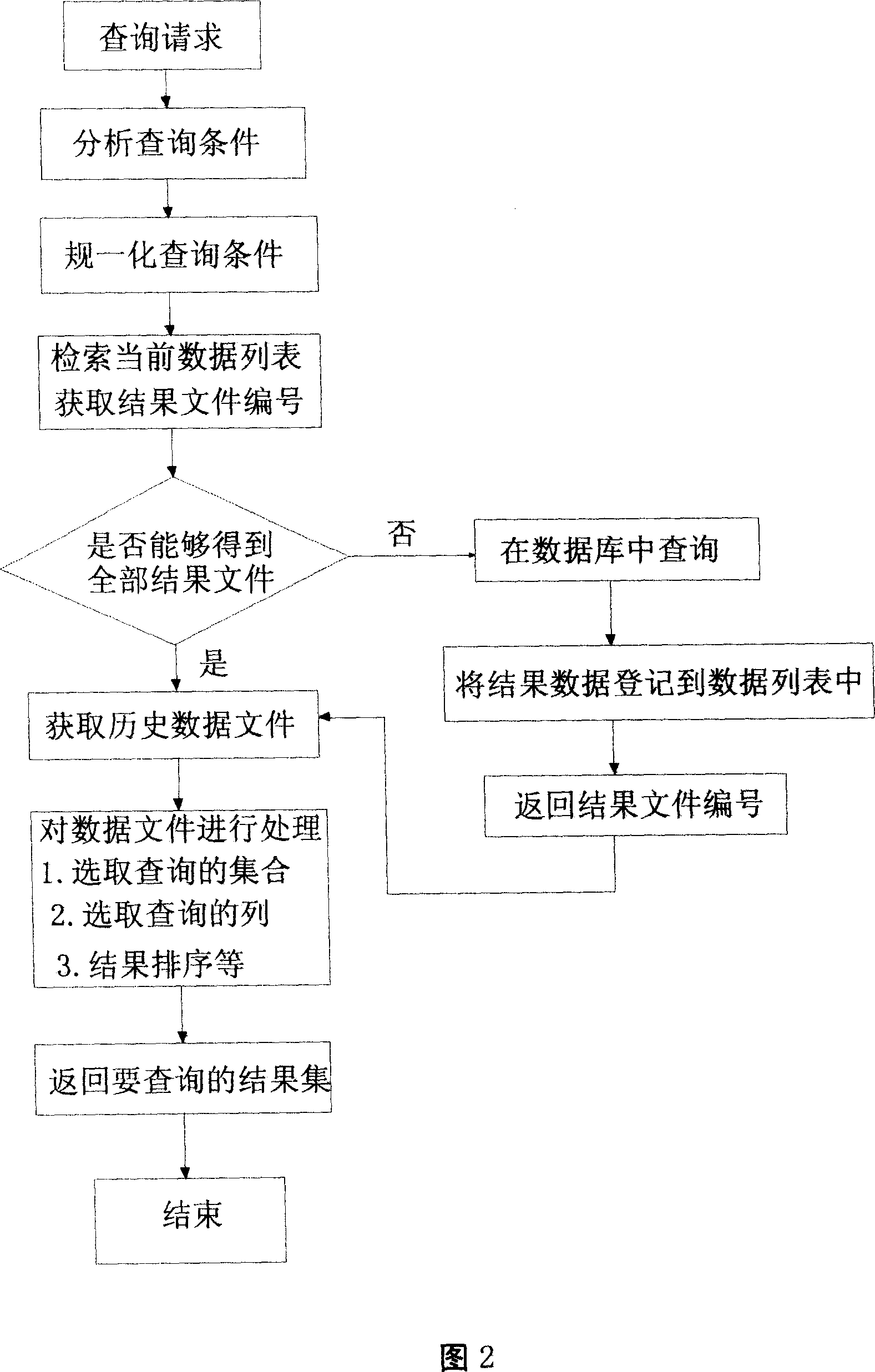

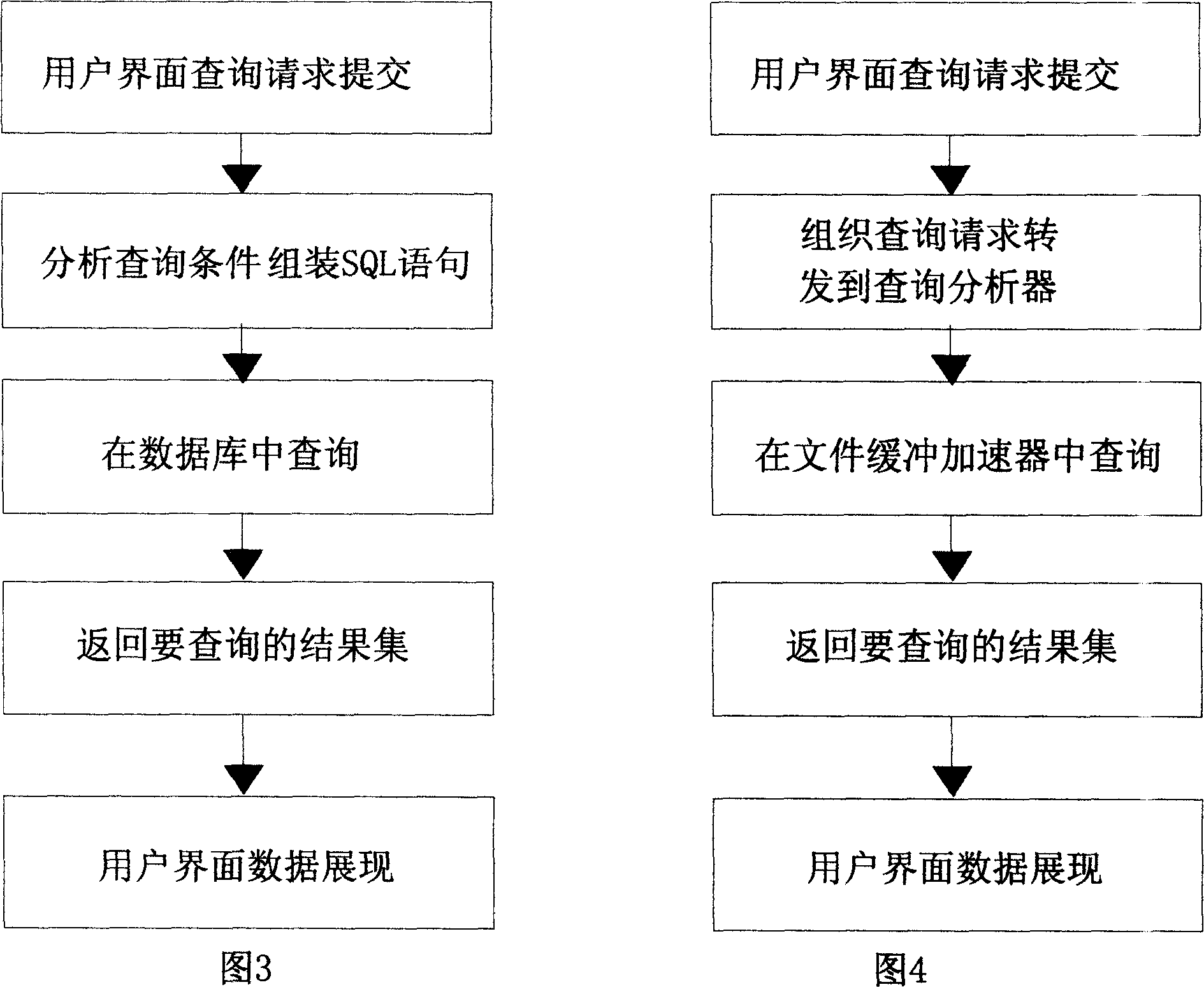

Data speedup query method based on file system caching

Inactive | CN101110074A | Improve hit rate | Increase in inquiries | Special data processing applications | Query analysis | File system

The invention relates to an accelerated data query method for a query system. It provides accelerated data querying based on a file system cache, exploiting the facts that stored historical data does not change and that the same data is queried frequently. The invention saves historical query results and uses them as cached data for later queries, so as to accelerate querying. Upon receiving a query request from a user, the system first passes it to the query analyzer, whose principal function is to analyze and plan the query conditions. The system then checks the file cache to determine whether a cached data file satisfies the present query. If not, the query is carried out in the database and the result is passed to a data processor. The data processor then filters and sorts the data according to the final query requirements and returns the result file to the upper-layer application system. Therefore, without additional hardware investment, this query method greatly reduces the load on the database and system resources and improves query speed.

Owner:INSPUR TIANYUAN COMM INFORMATION SYST CO LTD
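
The flow in the abstract above (CN101110074A), in which the cache is checked first, the database is queried on a miss, and a data processor then filters and sorts, can be sketched as below. The sketch makes several assumptions: an in-memory dictionary stands in for the cached result files, the cache key is a hypothetical `(table, day)` pair, and `CachedQuery`, `run_db_query`, and `fake_db` are illustrative names.

```python
class CachedQuery:
    """Serve repeated queries over unchanging historical data from a result cache."""

    def __init__(self, run_db_query):
        self.run_db_query = run_db_query   # hypothetical callable that hits the database
        self.cache = {}                    # stands in for the cached result files
        self.db_calls = 0

    def query(self, table, day, predicate=None, sort_key=None):
        key = (table, day)                 # assumed output of the query analyzer
        rows = self.cache.get(key)
        if rows is None:                   # cache miss: go to the database once
            self.db_calls += 1
            rows = list(self.run_db_query(table, day))
            self.cache[key] = rows
        # "Data processor" step: filter and sort for this particular request.
        result = [r for r in rows if predicate is None or predicate(r)]
        if sort_key is not None:
            result.sort(key=sort_key)
        return result


def fake_db(table, day):
    return [{"user": "u2", "bytes": 30}, {"user": "u1", "bytes": 10}]


q = CachedQuery(fake_db)
big = q.query("traffic", "2007-01-01", predicate=lambda r: r["bytes"] > 20)
ordered = q.query("traffic", "2007-01-01", sort_key=lambda r: r["user"])
```

Because filtering and sorting happen after the cache lookup, two requests with different conditions over the same historical data share one database query.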

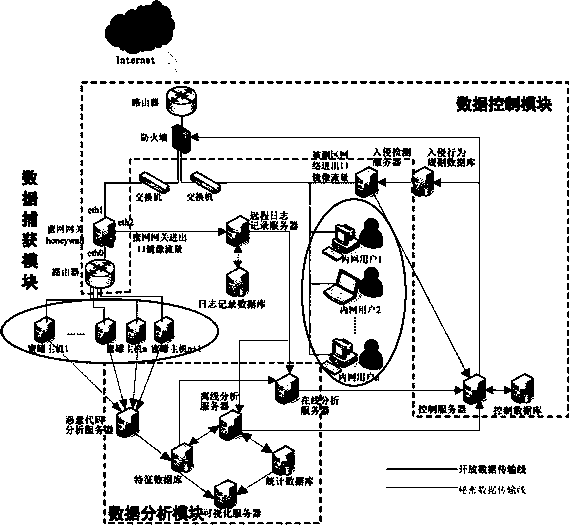

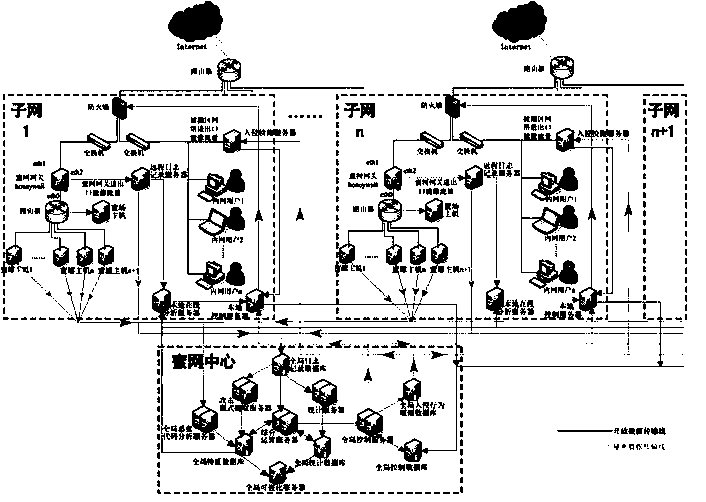

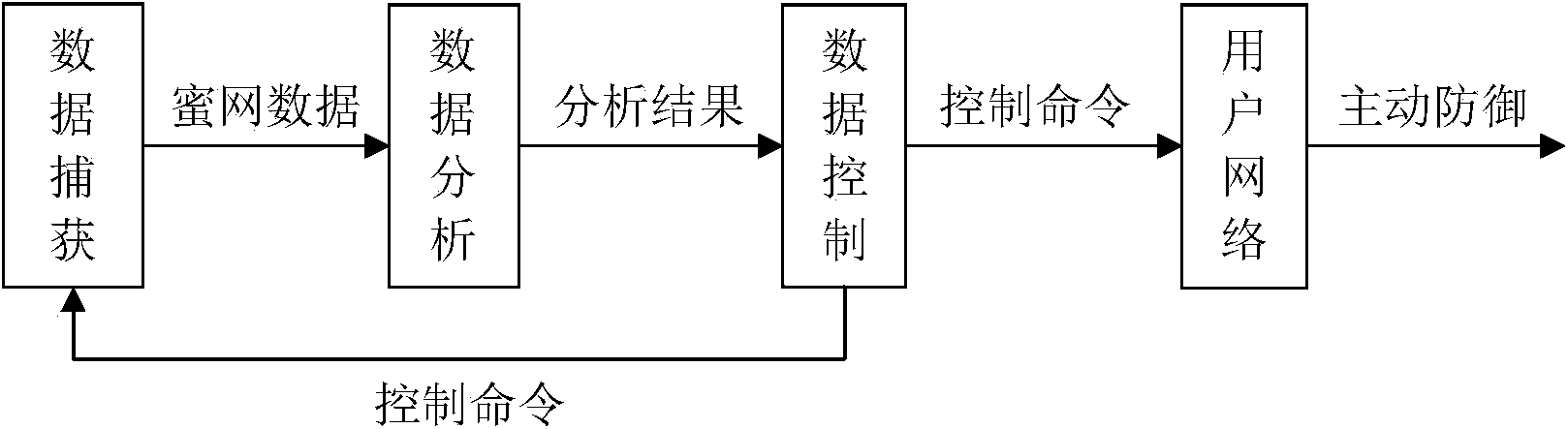

Cooperative type active defense system based on honey nets

The invention provides a cooperative type active defense system based on honey nets. The cooperative type active defense system comprises a data capture module, a data analysis module and a data control module and is characterized in that the data capture module, the data analysis module and the data control module are arranged at the center of one honey net and a plurality of sub nets in a distributed mode. The cooperative type active defense system depends on a honey net technology, a cooperative type active defense thought is adopted, attacker information captured by the different honey nets is shared in real time, active defensiveness of a network layer is achieved, defensive initiative and real-time performance are improved, and the cooperative type active defense system is suitable for large-scale enterprise networks. The cooperative type active defense system built through the method is high in defense rate, hit rate and robustness, and time delay from the time that attackers are firstly found to the time that all network deployment and control is achieved is greatly reduced.

Owner:XI AN JIAOTONG UNIV

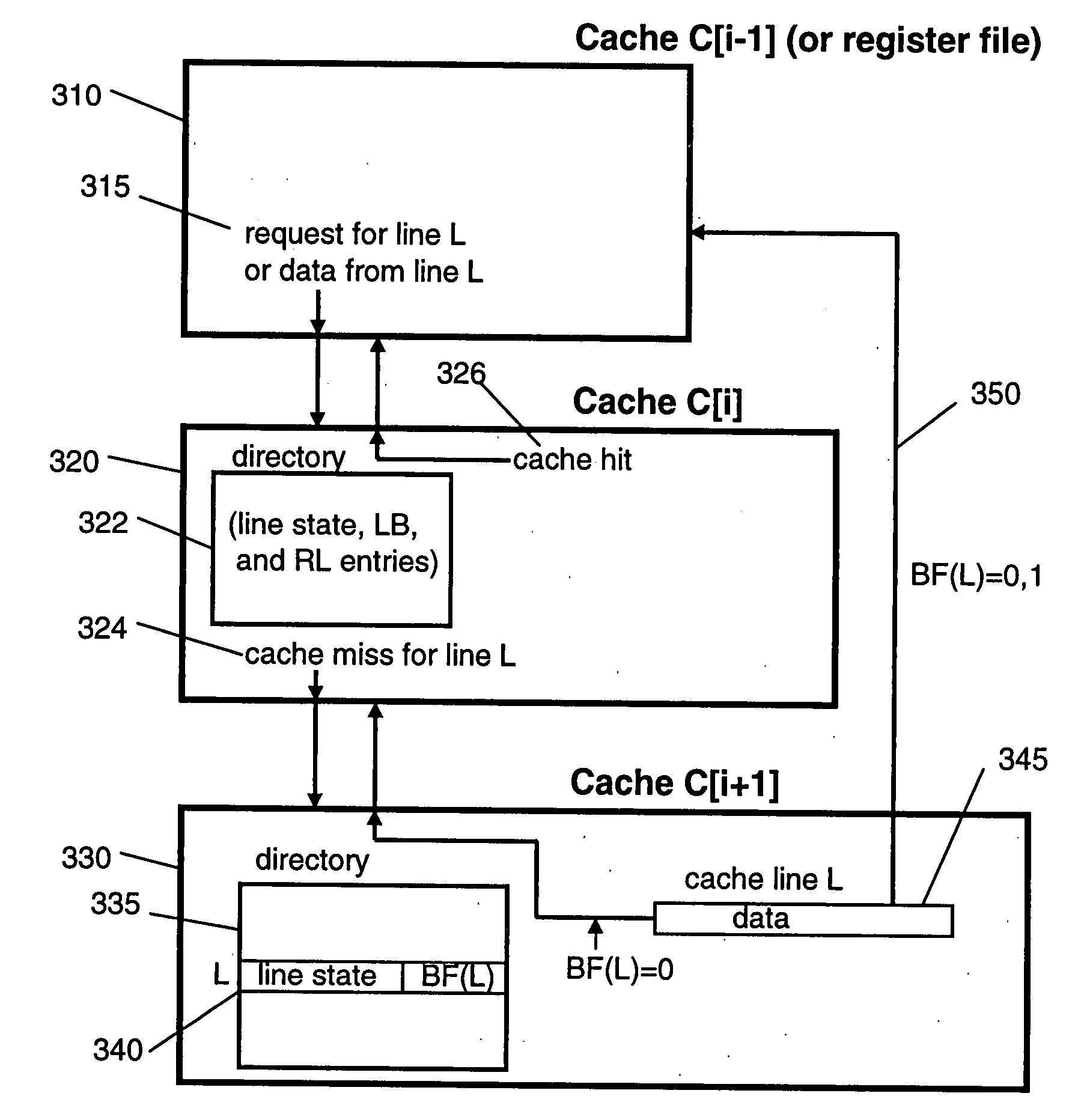

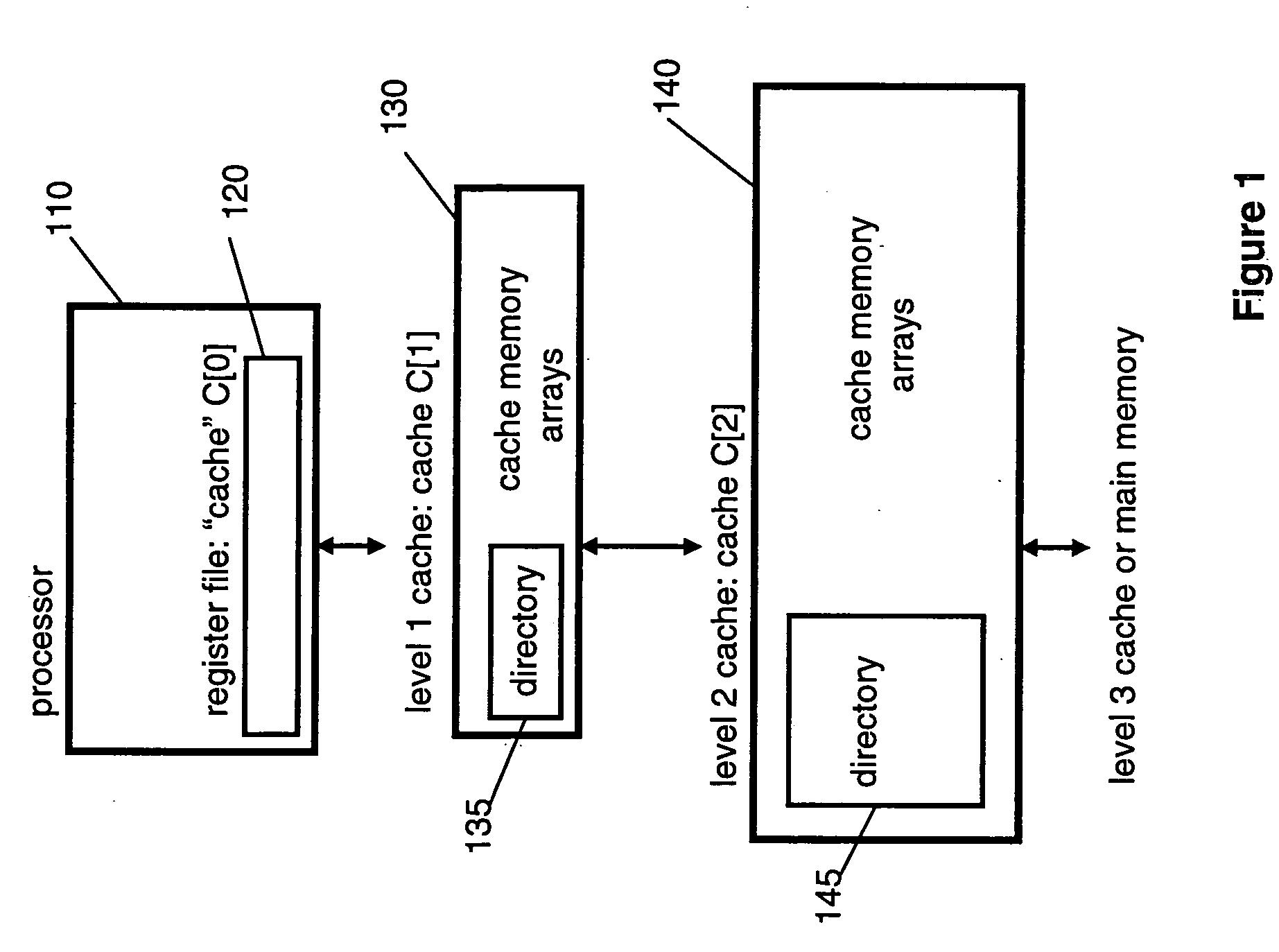

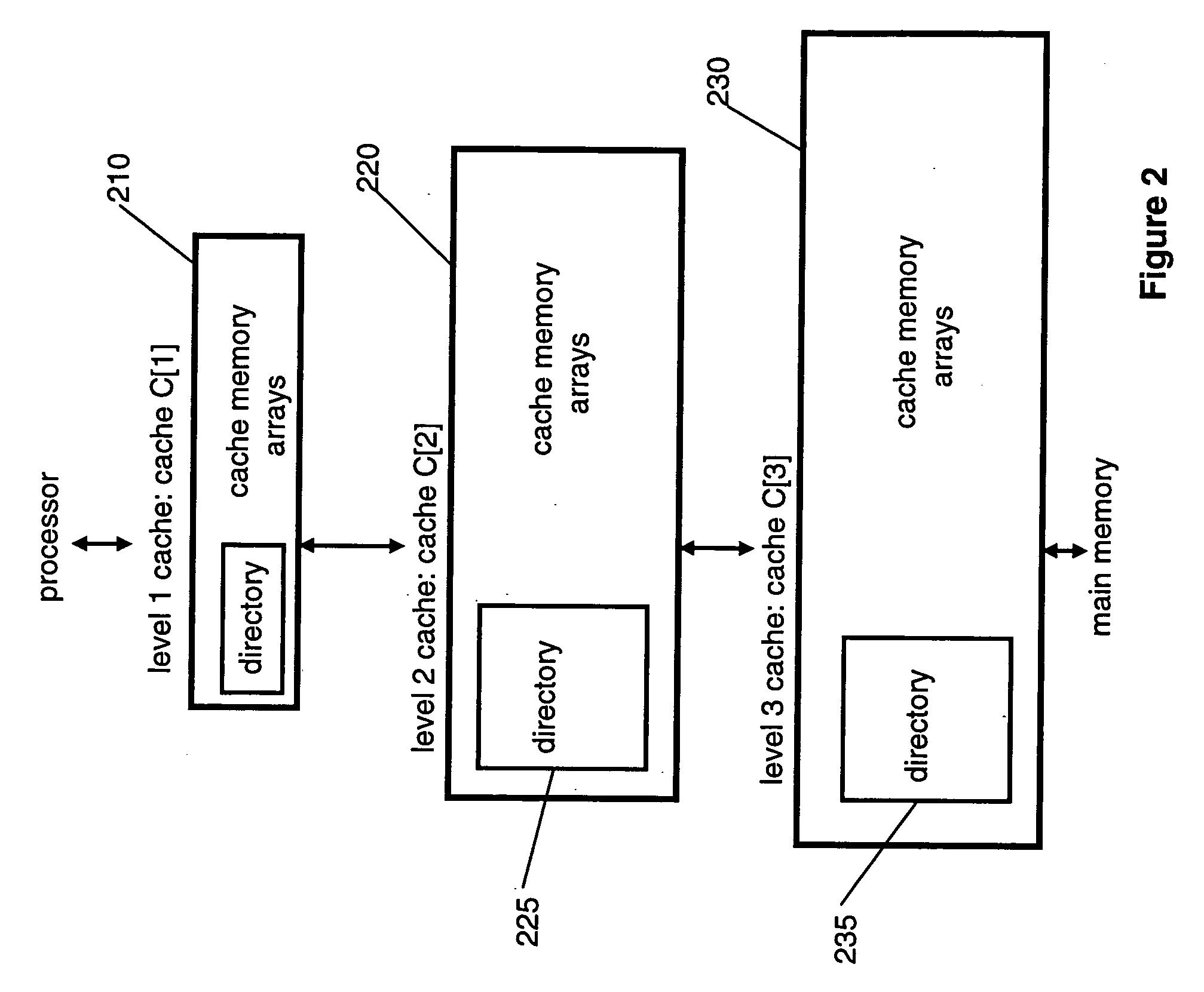

Enabling and disabling cache bypass using predicted cache line usage

Inactive | US20060112233A1 | Improve system performance | Improve hit rate | Memory systems | Cache hierarchy | Cache access

Arrangements and method for enabling and disabling cache bypass in a computer system with a cache hierarchy. Cache bypass status is identified with respect to at least one cache line. A cache line identified as cache bypass enabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is bypassed, while a cache line identified as cache bypass disabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is not bypassed. Included is an arrangement for selectively enabling or disabling cache bypass with respect to at least one cache line based on historical cache access information.

Owner:IBM CORP

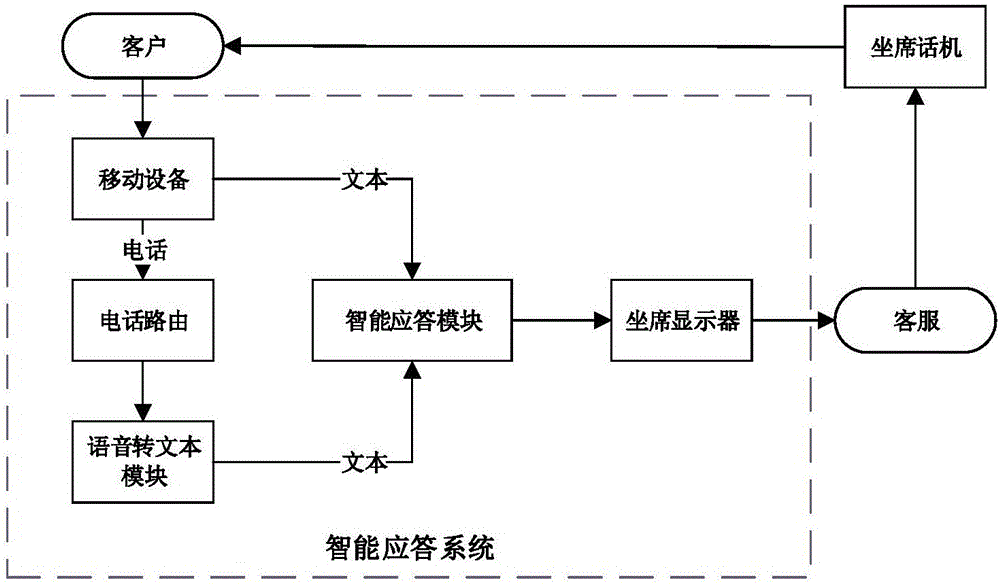

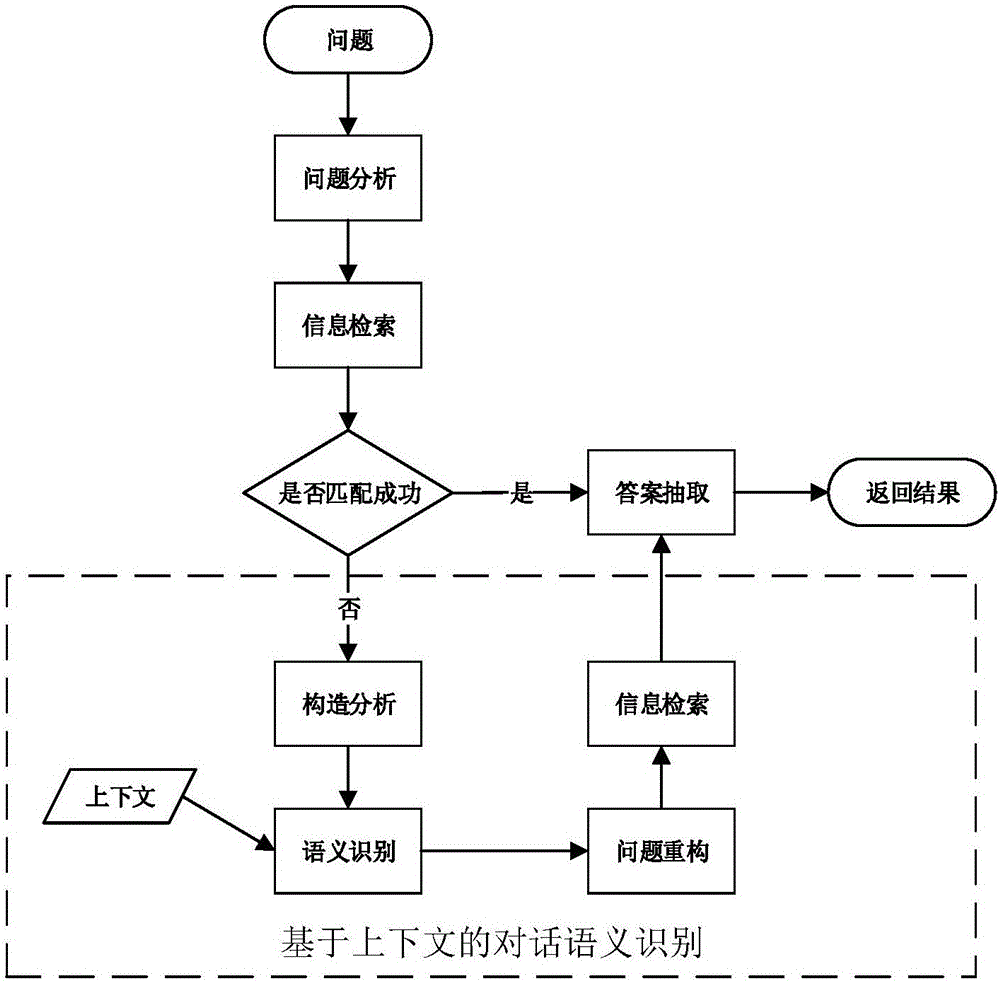

Intelligent response method and system based on context dialogue semantic recognition

Inactive | CN106357942A | Improve recommendation hit rate | Reduce workload | Semantic analysis | Special service for subscribers | Context based | Natural language

The invention discloses an intelligent response method and system based on context dialogue semantic recognition. The method comprises the following steps of: performing formatting and natural language processing on a consulting question to obtain a semantic type of the question, determine a question intention of a user, and extract out keywords; performing answer matching by using a recommend matching algorithm, and outputting hit knowledge points in the form of retrieved results; if no knowledge point with a corresponding matching degree exists, performing structural analysis on the consulting question, and performing semantic recognition on the consulting question in combination with context contents to recompose the consulting question; performing retrieving on the recomposed consulting question; and extracting a suitable answer from retrieval results. According to the method disclosed by the invention, a traditional intelligent response system is improved by virtue of a natural language processing technology, dialogue semantic recognition based on context is added, a user colloquial consulting mode can be effectively processed, the response matching degree of the intelligent response system is promoted, the user experience is greatly improved, and the customer service cost is effectively reduced.

Owner:GUANGZHOU BAILING DATA CO LTD

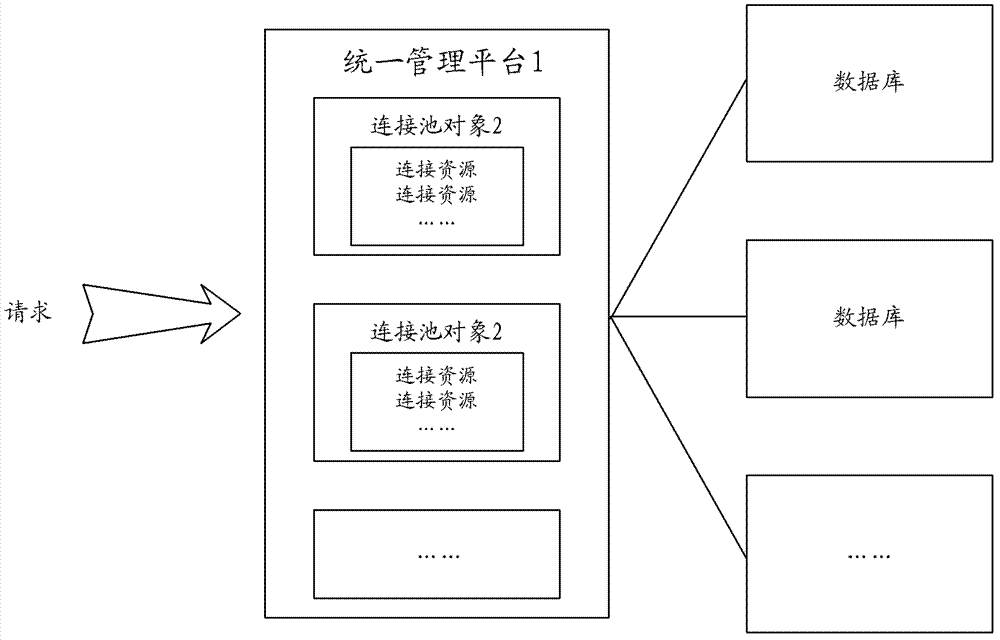

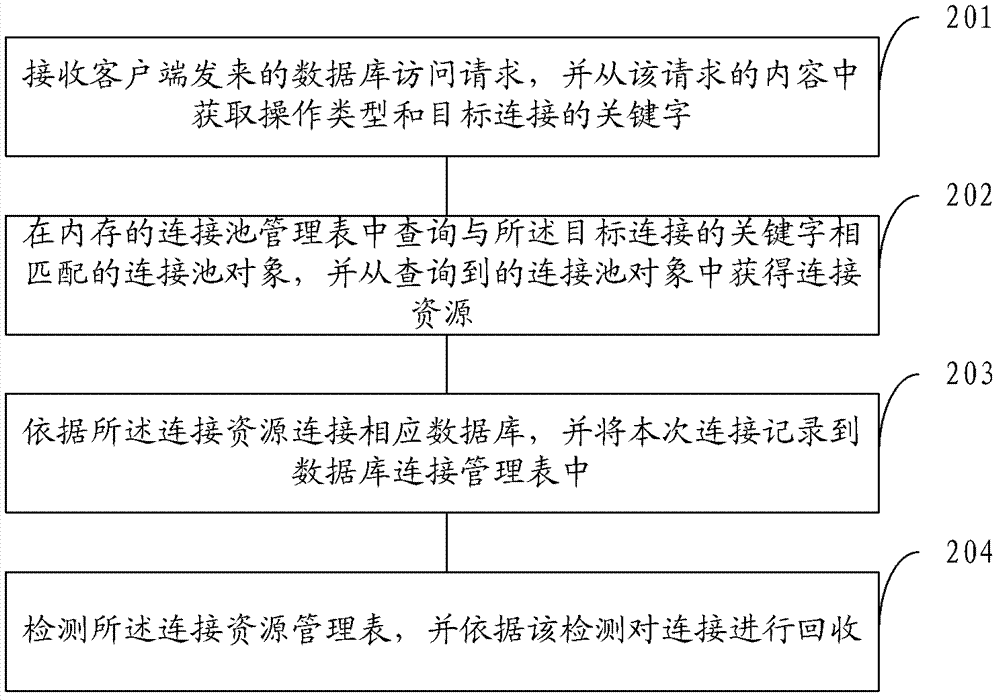

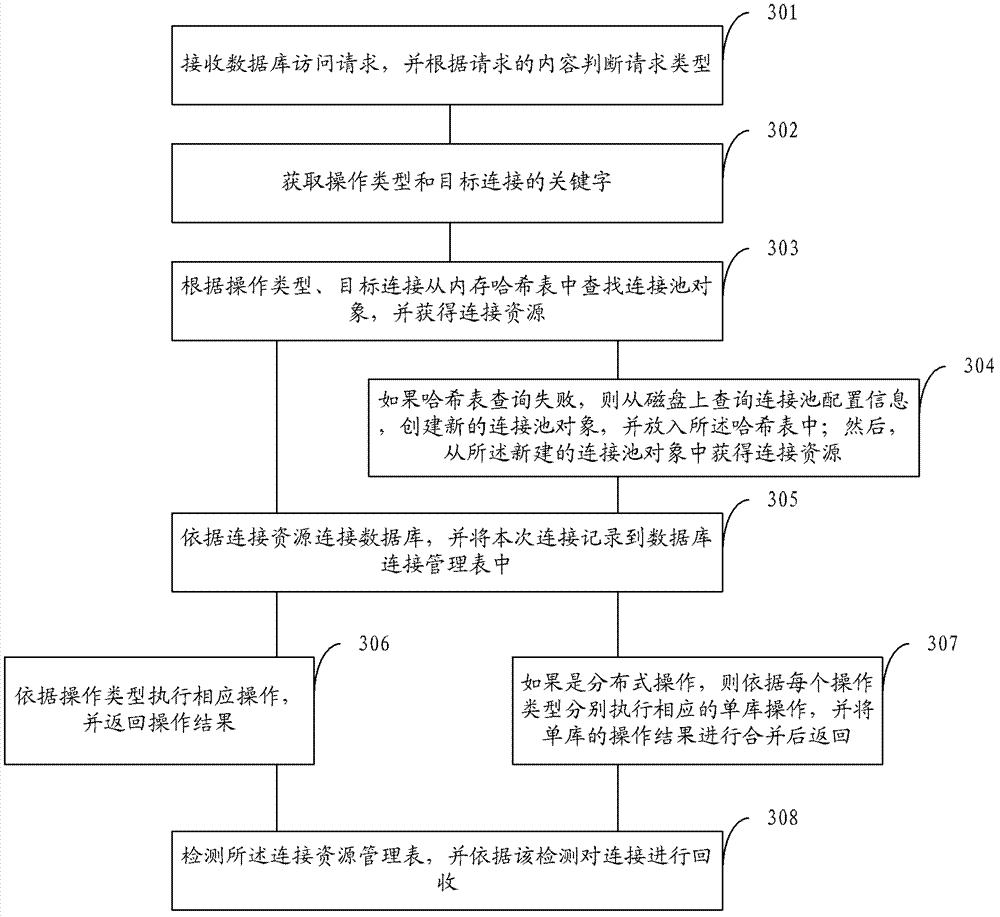

Management method and management system of database connection

Active | CN103365929A | Improve access efficiency | Improve hit rate | Special data processing applications | Connection pool | Resource management

The invention provides a management method and a management system for database connections. The method and the system aim to solve the problem of managing multiple connection pool resources. The method comprises the steps of: receiving a database access request sent by a client; obtaining an operation type and a connection target keyword from the content of the request; querying a connection pool management table in memory for a connection pool object matching the connection target keyword; obtaining a connection resource from the queried connection pool object; connecting to the corresponding database using the connection resource; recording the connection in a database connection management table; executing the corresponding database operation according to the operation type; returning the operation result; checking the connection resource management table; and reclaiming the connection according to the result of the check. With the method and the system, thousands of connection pool resources can be managed uniformly, and the corresponding database connections are dispatched and controlled uniformly by a platform, so that access efficiency is improved significantly compared with the mutually independent management of connection pools in the prior art.

Owner:ALIBABA GRP HLDG LTD

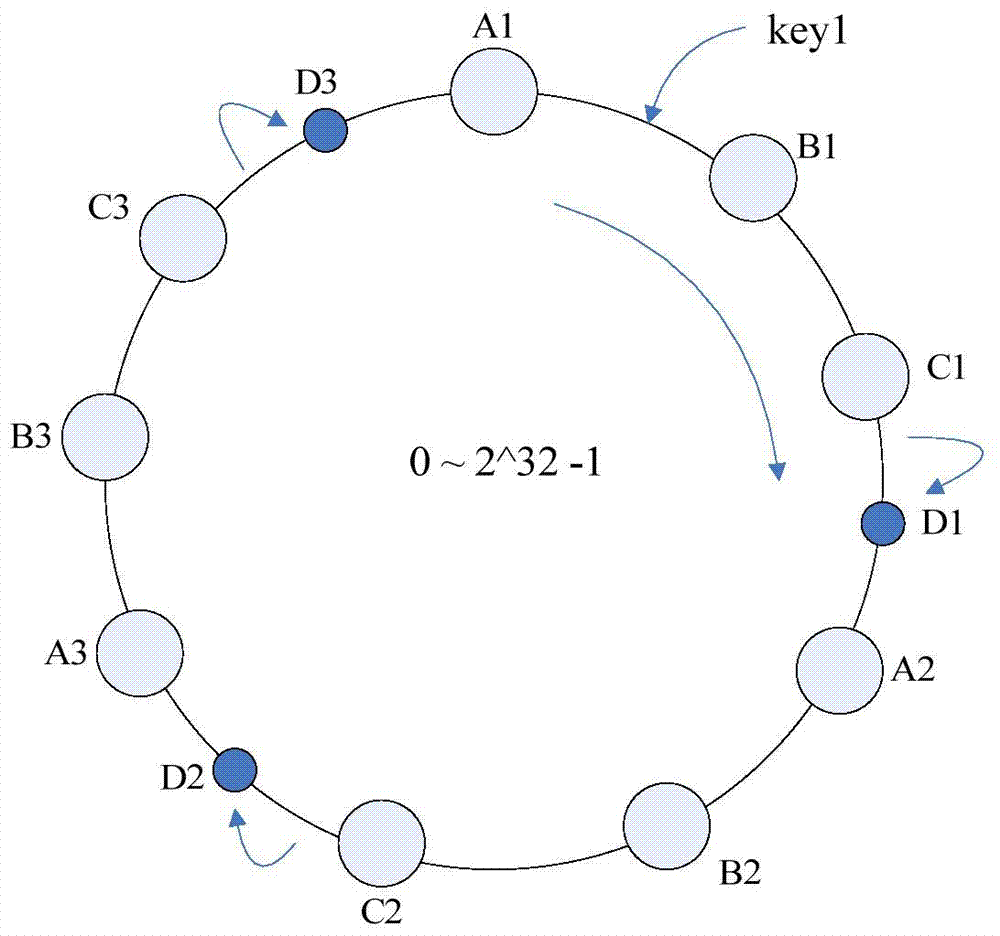

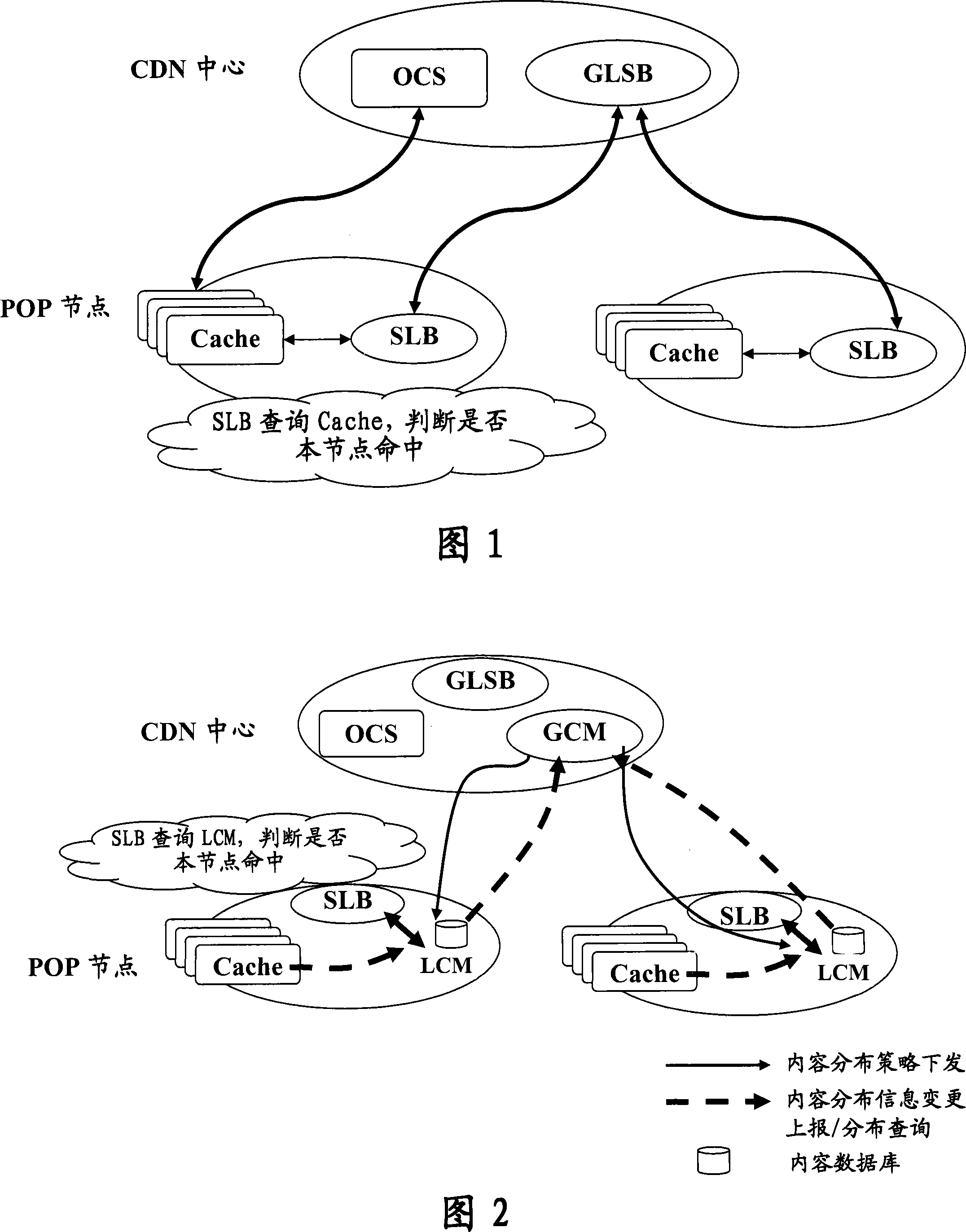

Content distribution network and scheduling method based on content in the network

Active | CN101222424A | Reduce redirects | Efficient integration | Data switching networks | Content distribution | Content management system

The invention relates to a CDN and a content-based scheduling method in the network. In the CDN, a GCM and an LCM are provided to take charge of the uniform maintenance and management of content distribution information and the content distribution strategy across the whole network. When the content a user requests is not hit on a POP node, the LCM of that POP node searches for other POP nodes or the OCS that store the content, and a proxy service is provided for the user.

Owner:CHINA TELECOM CORP LTD

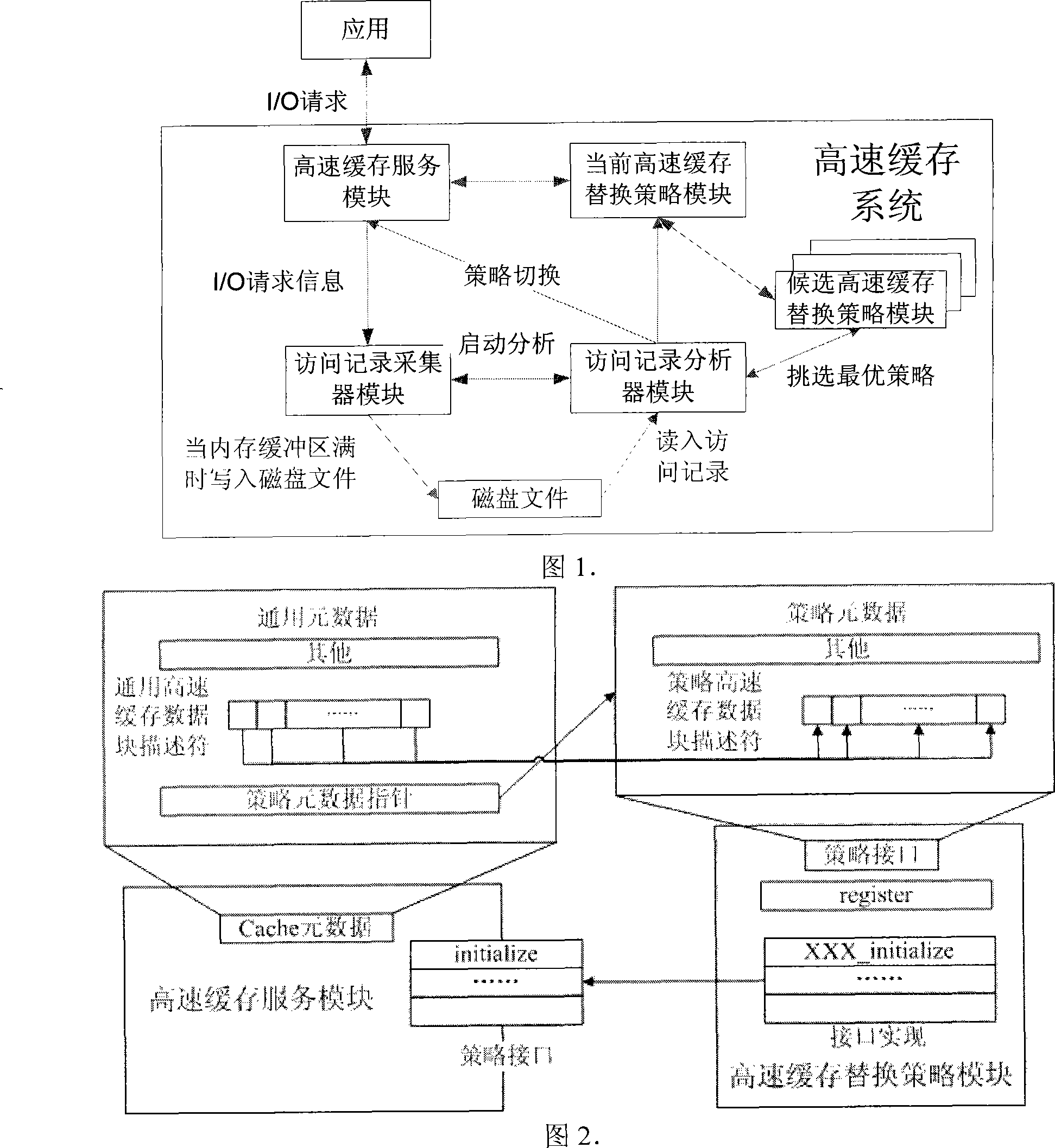

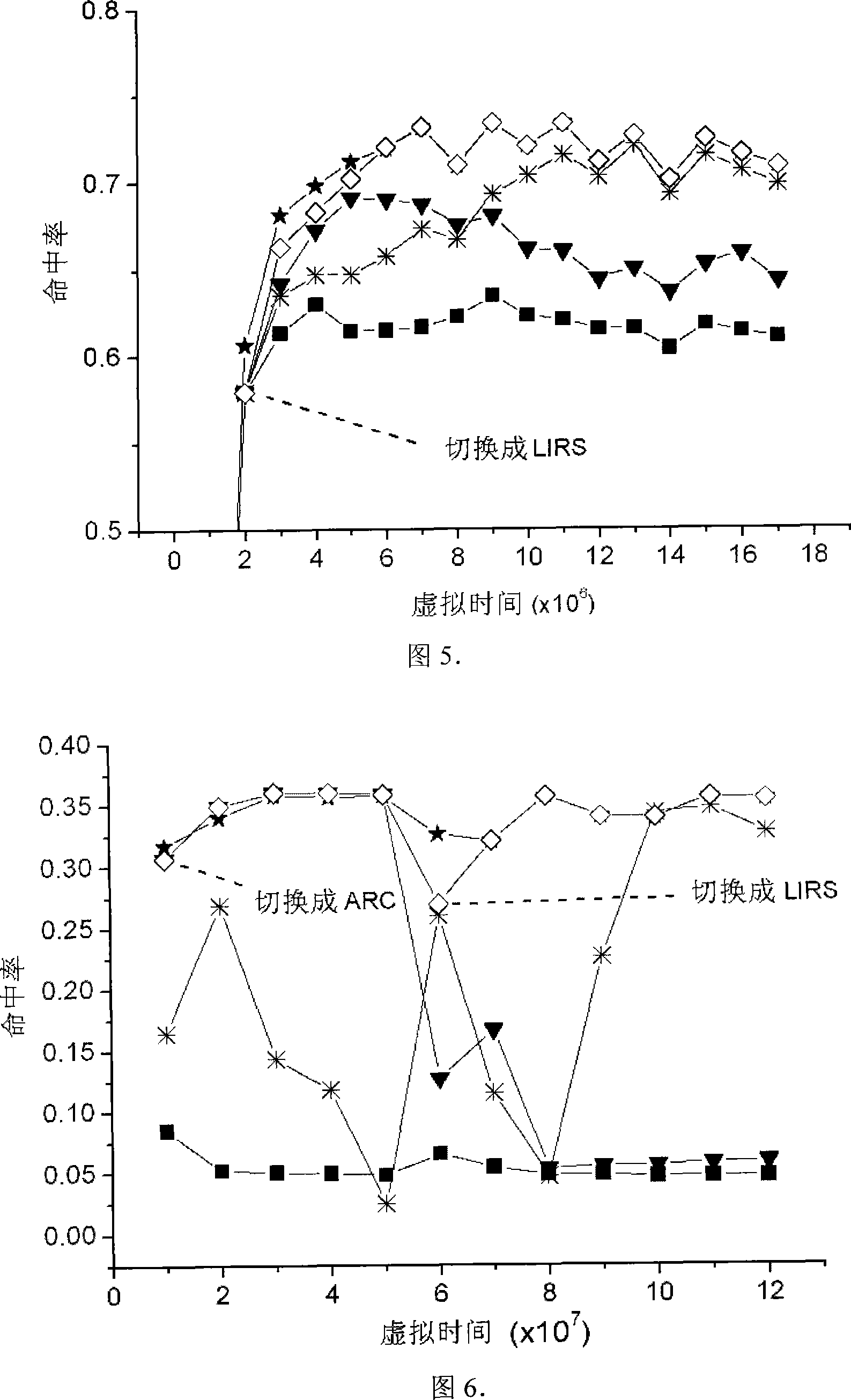

High speed cache replacement policy dynamic selection method

Inactive | CN101236530A | Reduce CPU and memory overhead | Lower average response time | Memory addressing/allocation/relocation | Modularity | Decision-making

The invention relates to a dynamic selection method for cache replacement policies, belonging to the field of memory system caches. The invention is characterized in that: the cache replacement policies are modularized behind uniform interfaces, so that online switching can be performed between any two cache replacement policies and new cache replacement policies can be deployed; the collection and analysis of access records are performed asynchronously, so that CPU and memory overheads are small and the effect on applications is small; and accurate decision results are obtained as early as possible through multiple rounds of policy selection, so that the results are put into use quickly while the overhead of the method is kept as low as possible.

Owner:TSINGHUA UNIV

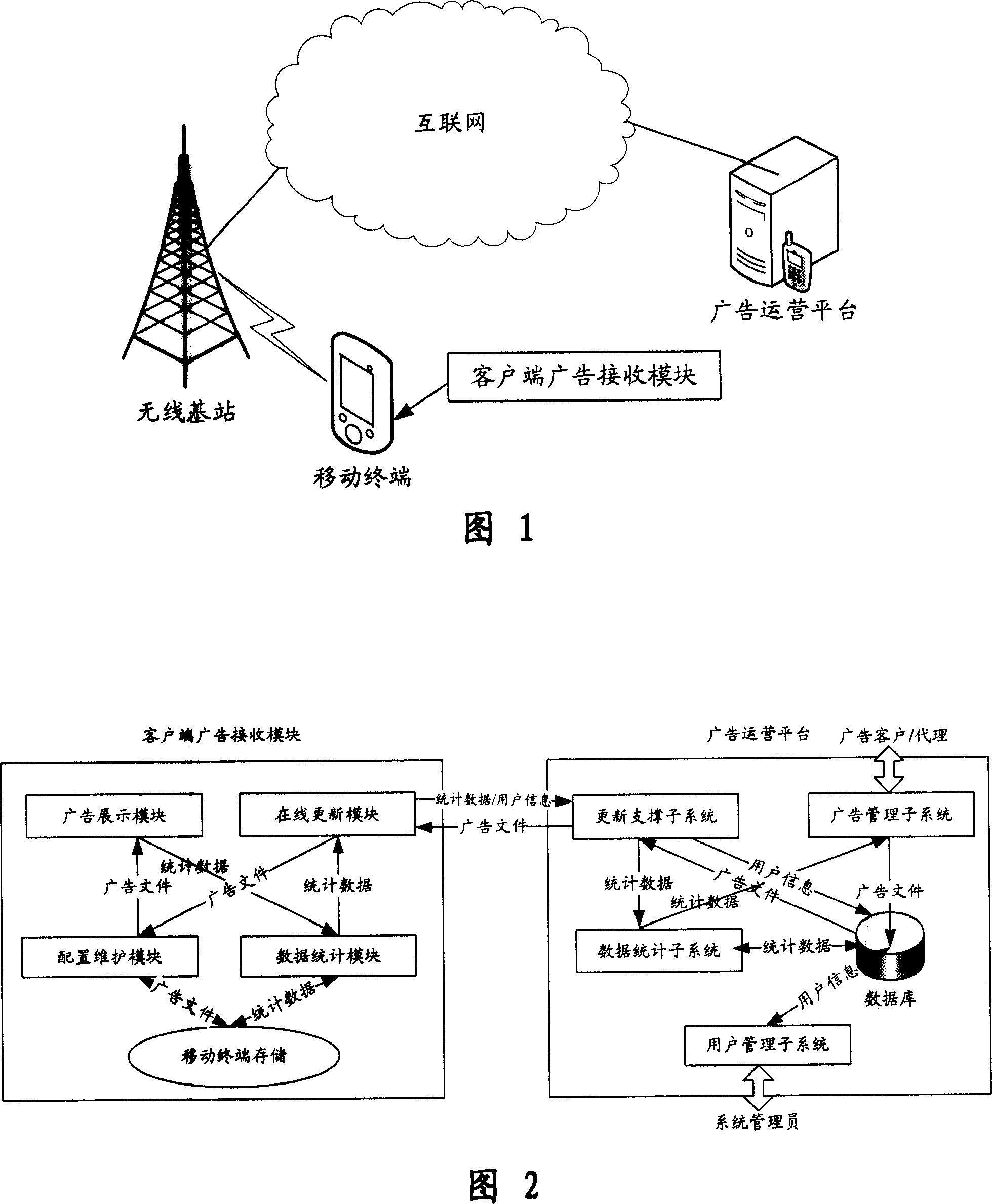

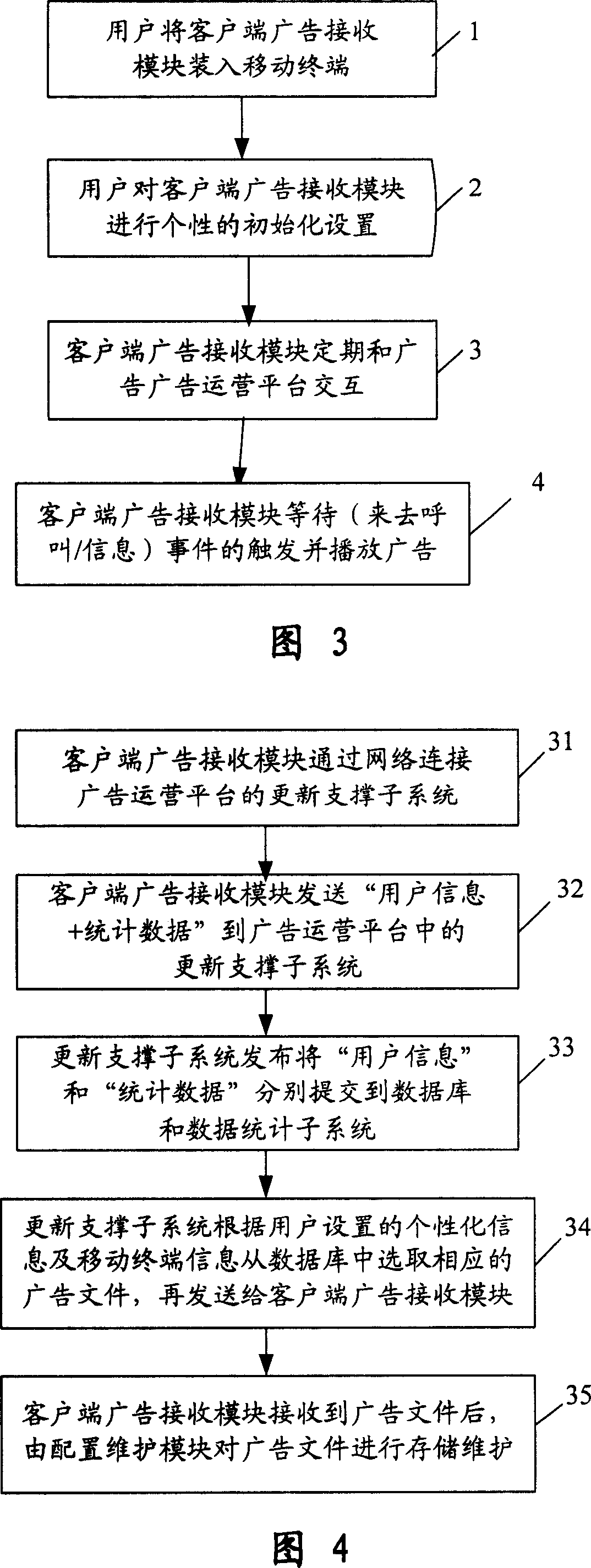

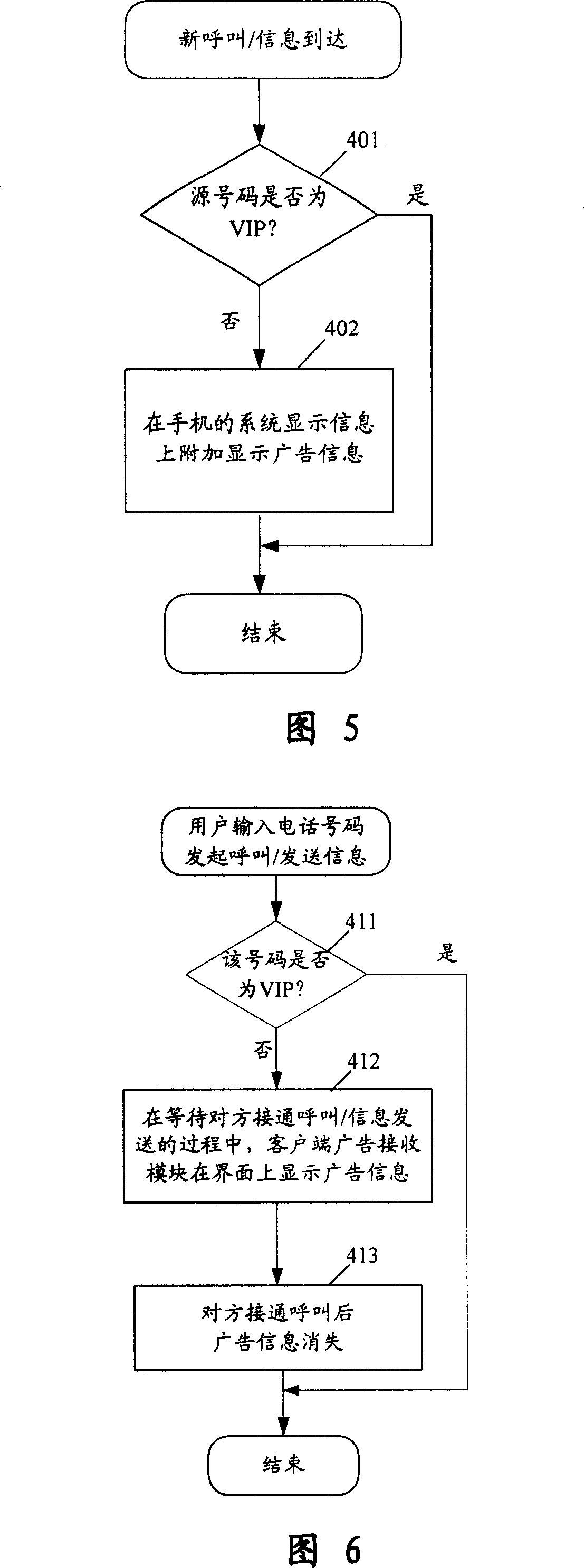

A method and system for publishing the advertisement at the mobile terminal

Inactive | CN101018364A | Improve hit rate | Good effect | Special service provision for substation | Substation equipment | Personalization | The Internet

The method for publishing advertisements on a mobile terminal comprises: (1) the user installs a client ad receiving module on the mobile terminal; (2) the user starts the module; (3) the receiving module periodically interacts with the provider's platform to receive ads; and (4) when the terminal makes or receives a call, the client module displays an ad on the terminal. The invention is simple, requiring only a client ad receiving module on the terminal and a platform connected over the Internet, and it can satisfy users' individual demands while rewarding them with points for displaying ads.

Owner:BEIJING NETQIN TECH

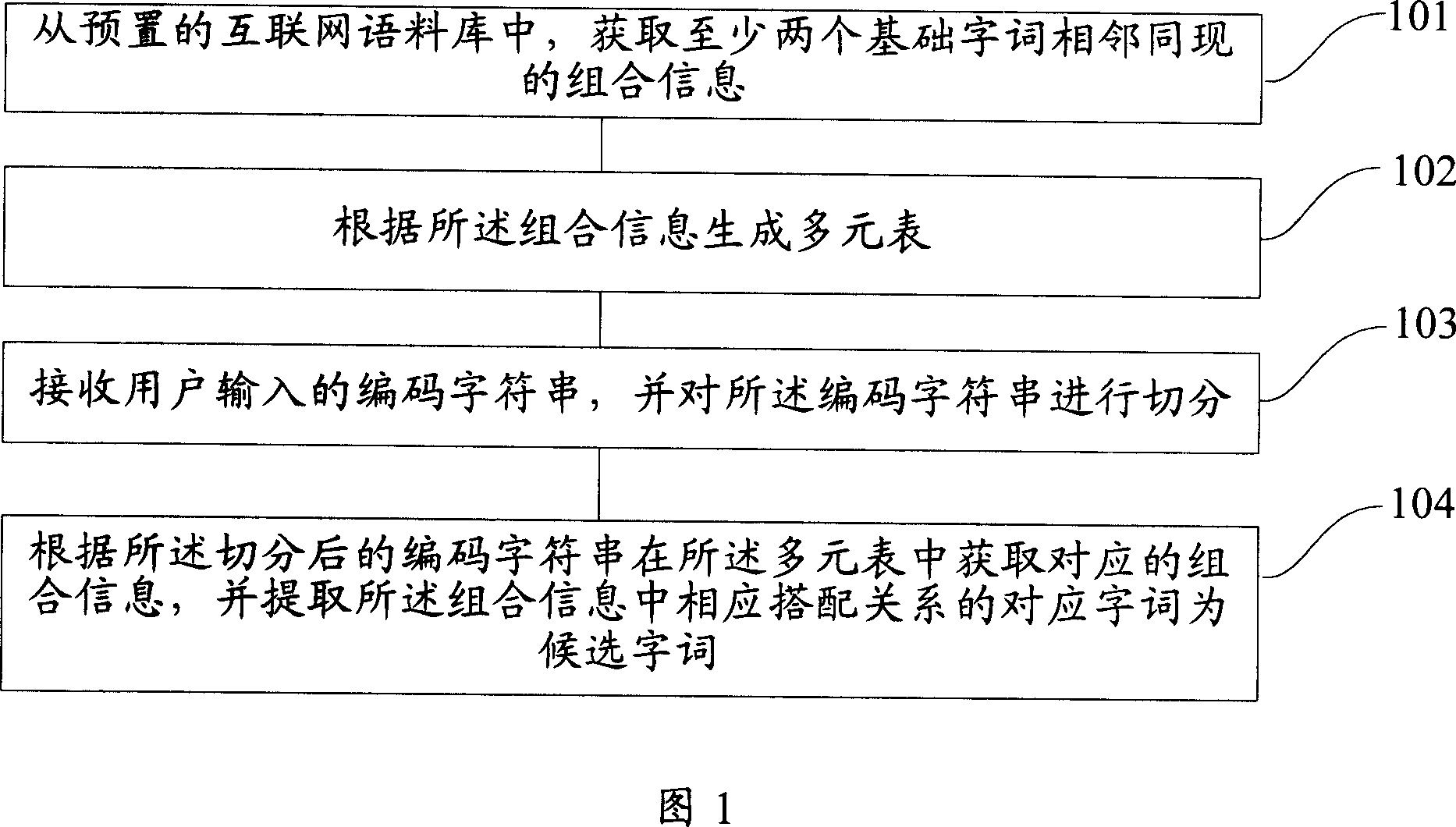

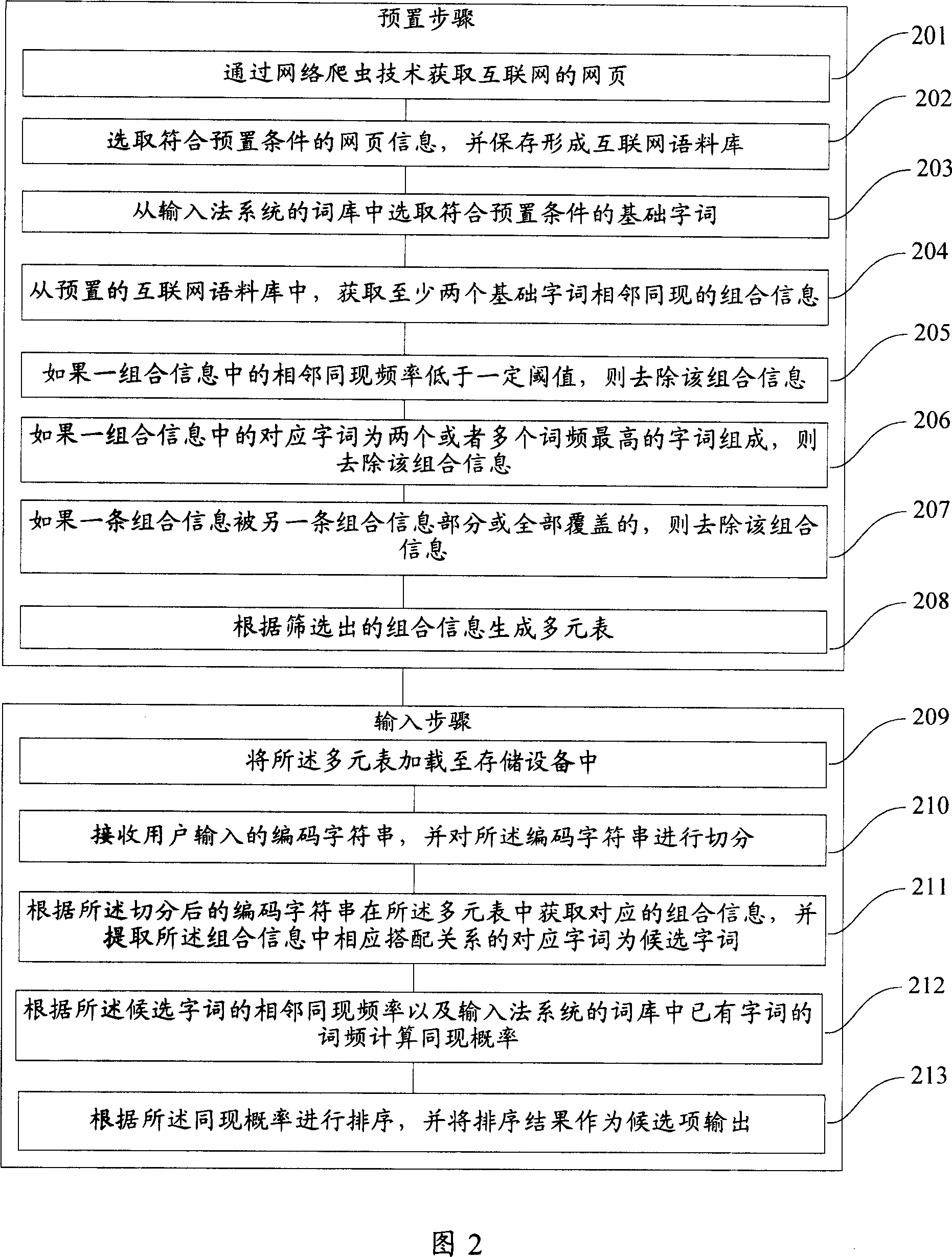

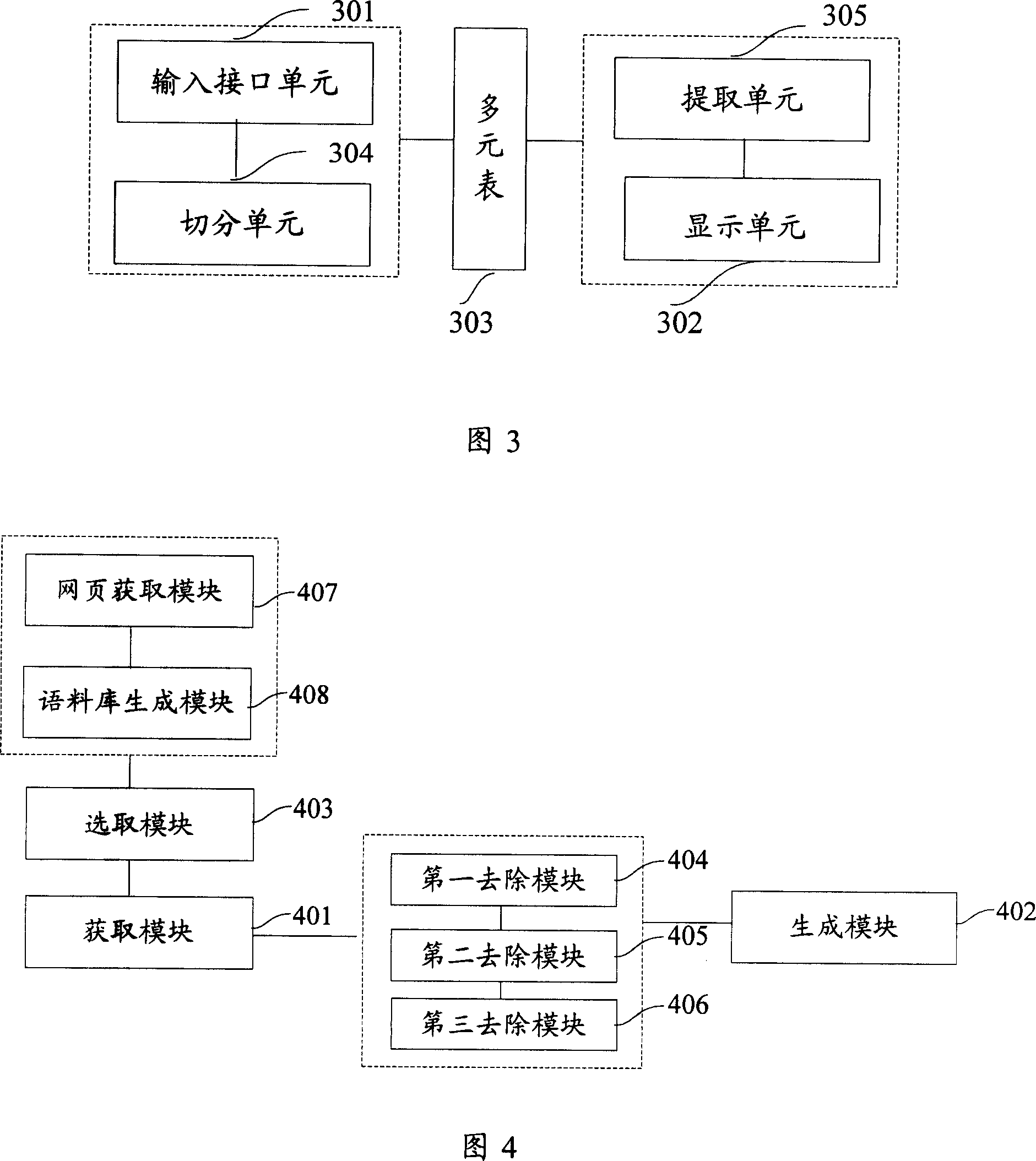

Intelligent word input method and input method system and updating method thereof

Active | CN101013443A | Guaranteed accuracy | Guaranteed representation | Special data processing applications | Input/output processes for data processing | User input | The Internet

The invention discloses an intelligent phrase input method for an input method system, including: obtaining combination information for at least two basic words from a preset internet corpus, the combination information including the combination relationship of the (at least two) words and the frequency with which they occur adjacently; generating a multi-table according to the combination information; receiving the encoded string input by the user and segmenting the encoded string; and looking up the combination information in the multi-table according to the segmented string and extracting the words with corresponding relations in the combination information as candidate words. The invention can improve the first-candidate hit rate when users input words, phrases, short sentences or long sentences, and avoids ineffective repeated calculation, thereby improving the efficiency of user input.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD

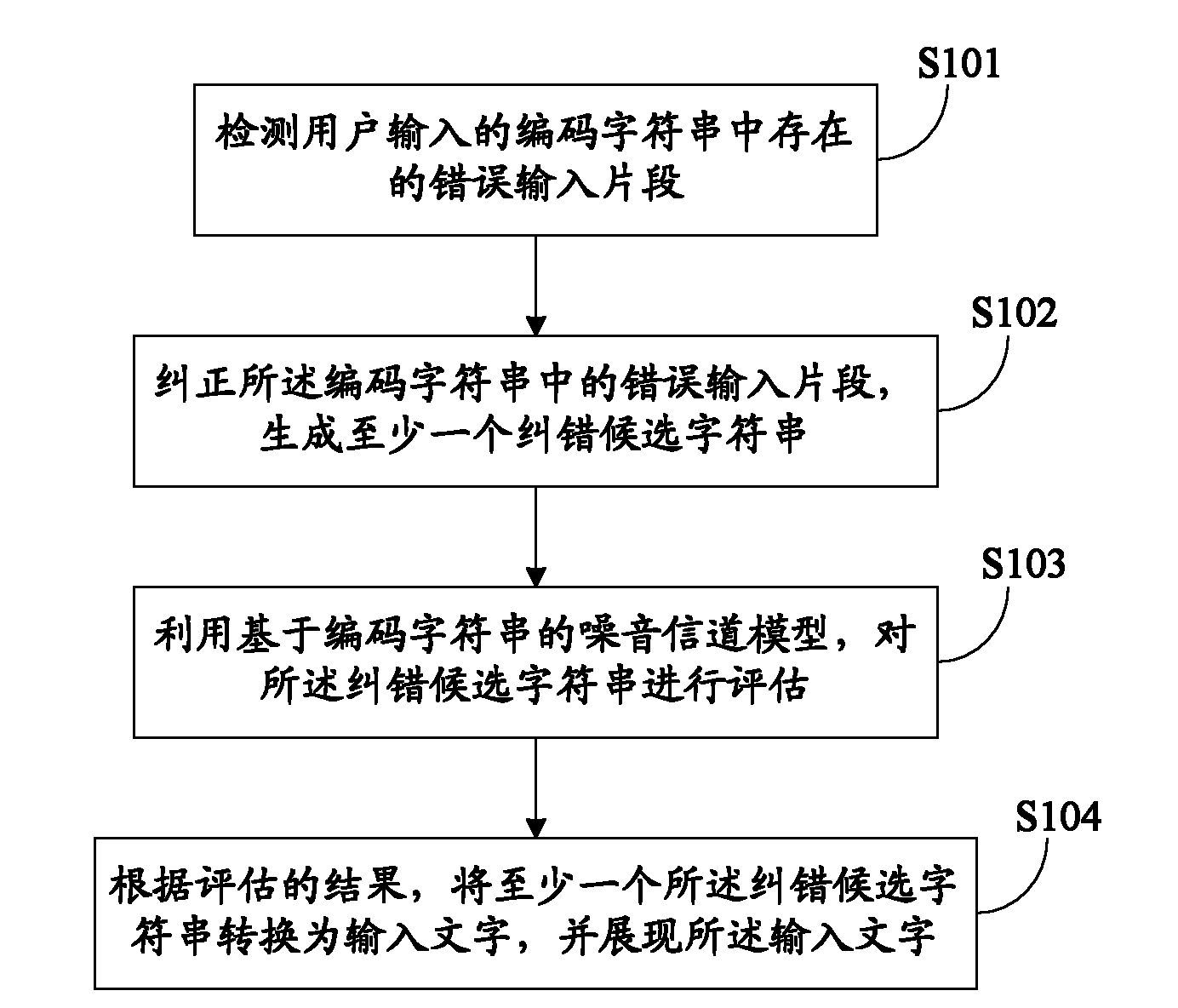

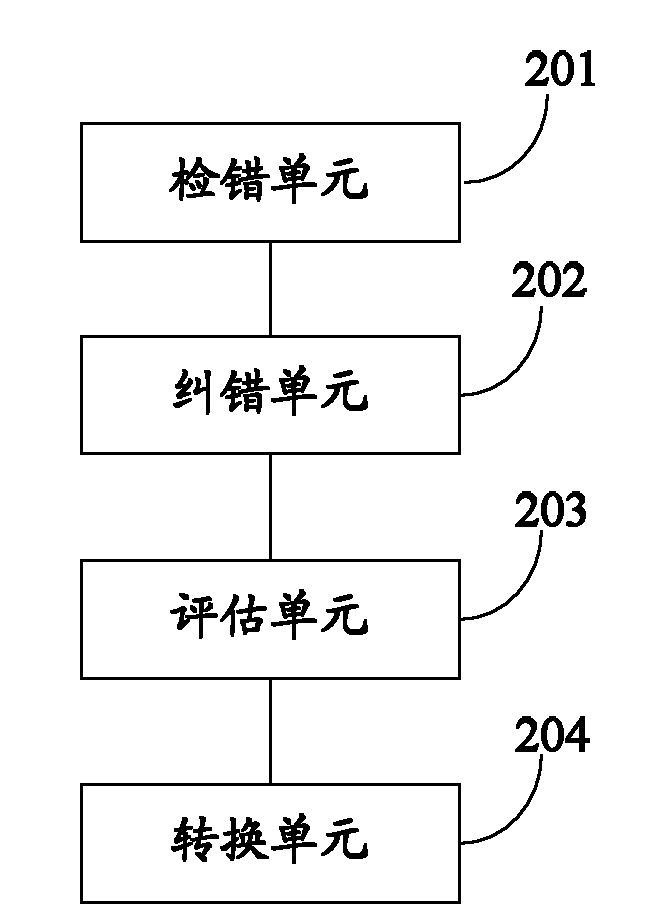

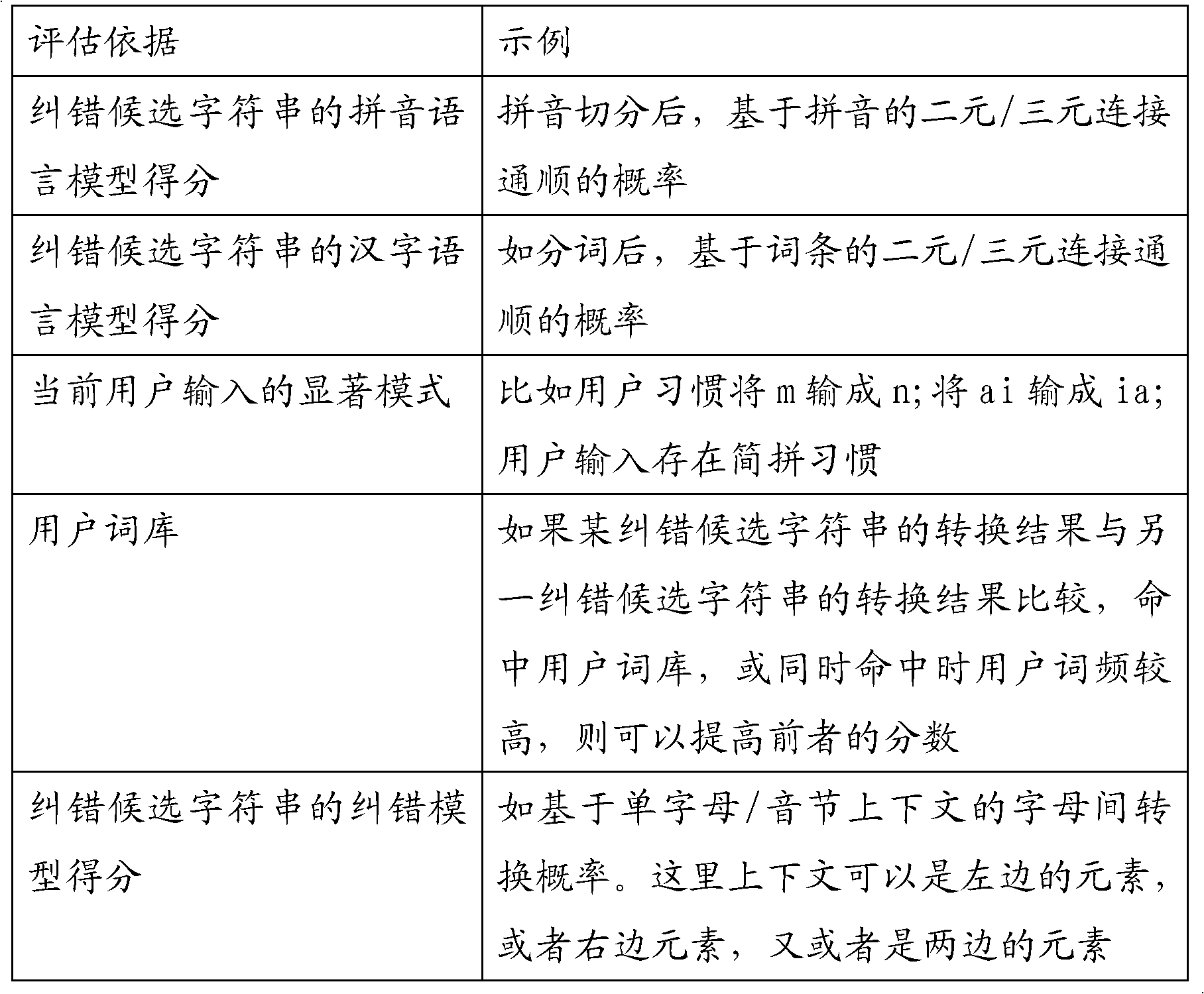

Method and system for correcting error of word input

Active | CN102156551A | Improve effectiveness or hit rate | Improve implementation efficiency | Input/output processes for data processing | Speech recognition | Algorithm

The invention discloses a method and a system for correcting errors in word input. The method comprises the following steps: detecting an erroneous input section in an encoded character string input by a user; correcting the erroneous input section in the encoded character string and generating at least one error-corrected candidate character string; evaluating the error-corrected candidate character string using a noisy channel model based on the encoded character string; and converting the at least one error-corrected candidate character string into an input word according to the evaluation result, and displaying the input word. The invention can improve the effectiveness or hit rate of error correction.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD

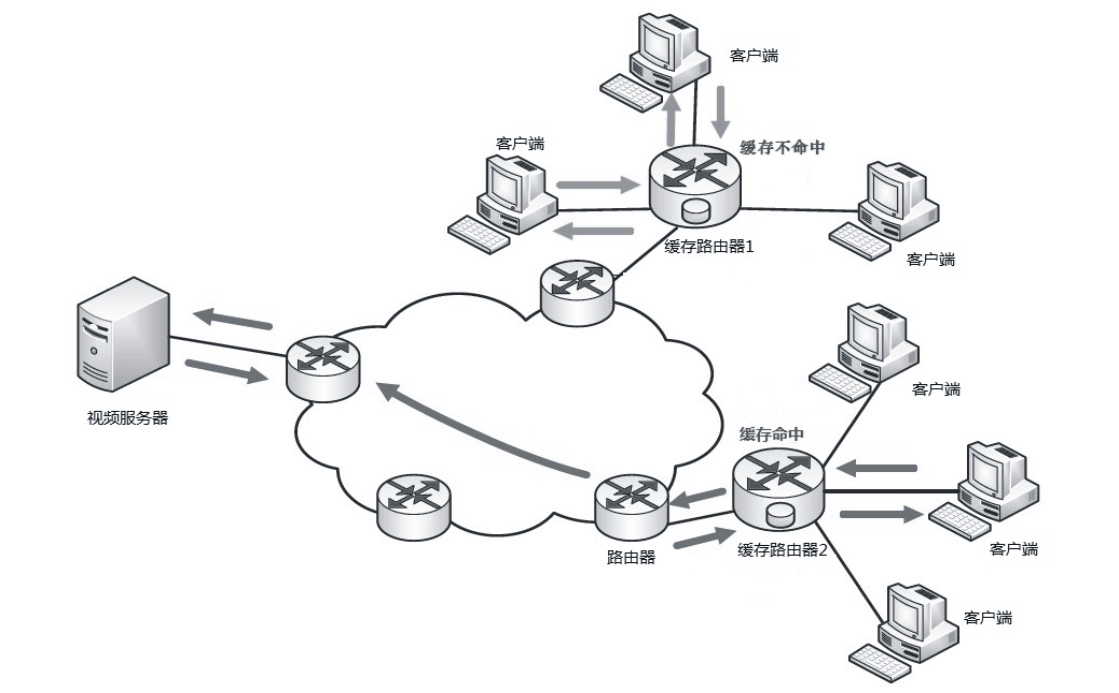

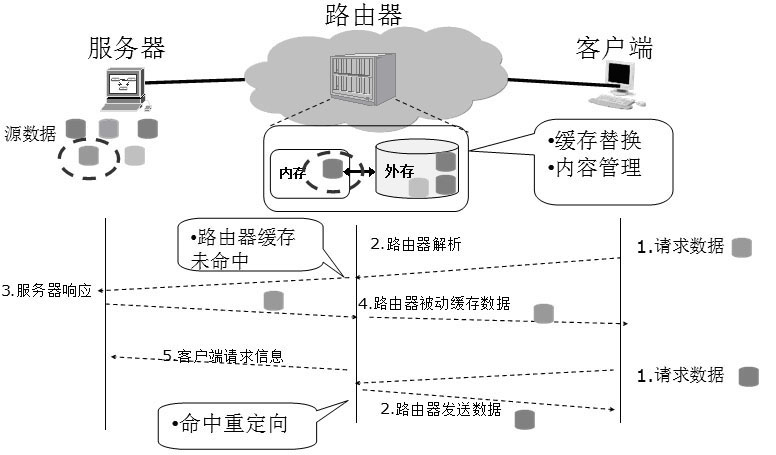

Streaming media system accelerating method based on router cache

Inactive | CN101860550A | Improve experience | Small playback initial delay | Pulse modulation television signal transmission | Transmission | End system | Base function

The invention aims to provide a streaming media system accelerating method based on the router cache, which not only adopts the basic function of a router for storage and transfer, but also adopts the functions of the router to buffer the memory of streaming media data and to respond to requests of a user. Therefore, the streaming media data are closer to the user, thereby reducing the initial delay of the low-end system in video playing and easing the burden of the server. The invention can improve the performance of the streaming media system, reduce the total bandwidth consumption on the network and the access delay of the client, and not only reduce the load of the video server but also increase the total number of the users the system can provide service for.

Owner:FUDAN UNIV

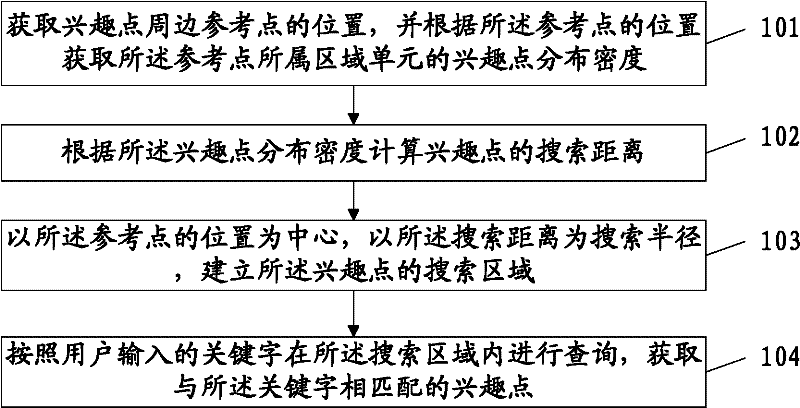

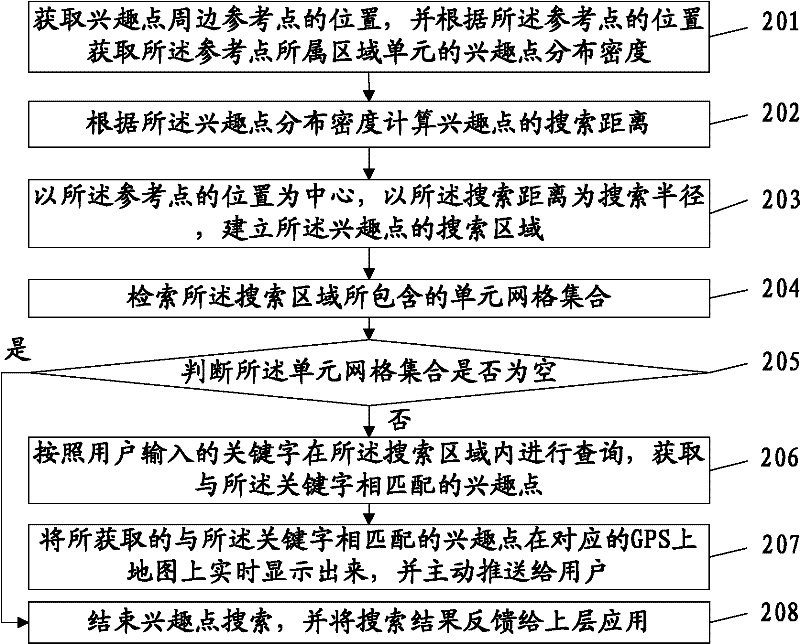

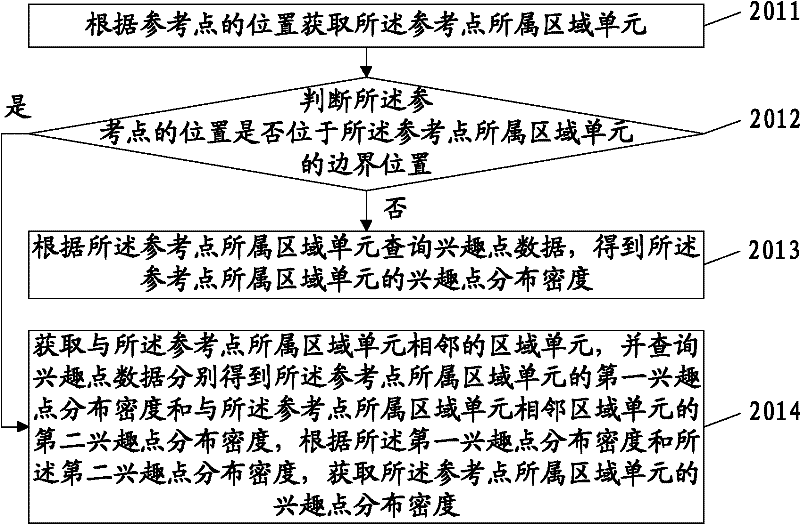

Periphery searching method and device of points of interest

Active | CN102176206A | Guaranteed hit rate | Improve hit rate | Instruments for road network navigation | Special data processing applications | Data mining | Algorithm

The embodiment of the invention discloses a periphery searching method and device for points of interest, relating to the field of navigation. The method and device can reduce the search range and the POI (point of interest) search time in areas with higher POI density while ensuring a certain hit ratio, and can enlarge the search area and improve the hit ratio of POI searching in areas with low POI density. The periphery searching method comprises the steps of: obtaining the position of a reference point around the point of interest, and obtaining the distribution density of points of interest in the area unit to which the reference point belongs according to the position of the reference point; calculating the search distance for the points of interest according to the distribution density of the points of interest; creating a search area for the points of interest by taking the position of the reference point as the center and the search distance as the search radius; and searching for points of interest in the search area according to keywords input by a user, and obtaining the points of interest matching the keywords. The periphery searching method and device disclosed by the embodiment of the invention are mainly used in periphery searches for points of interest.

Owner:SHENZHEN TRANSSION HLDG CO LTD
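
The density-dependent search radius in the abstract above (CN102176206A) can be illustrated as follows. The abstract does not give the formula for the search distance, so this sketch assumes one: choose the radius whose circle is expected to contain `target_hits` POIs, then clamp it. The function names, the default values, and the use of planar kilometre coordinates are all assumptions.

```python
import math


def search_radius(density_per_km2, target_hits=20, r_min=0.2, r_max=10.0):
    """Radius (km) whose circle should contain about target_hits POIs."""
    if density_per_km2 <= 0:
        return r_max                       # no known POIs nearby: search as far as allowed
    # Assumed model: density * pi * r^2 = target_hits, solved for r.
    r = math.sqrt(target_hits / (math.pi * density_per_km2))
    return min(max(r, r_min), r_max)


def pois_within(pois, center, radius_km, keyword):
    """Names of POIs matching keyword inside the circular search area."""
    cx, cy = center
    return [name for name, (x, y) in pois.items()
            if keyword in name and math.hypot(x - cx, y - cy) <= radius_km]


pois = {"cafe north": (0.0, 1.0), "cafe far": (5.0, 5.0), "bank": (0.1, 0.1)}
nearby = pois_within(pois, center=(0.0, 0.0), radius_km=2.0, keyword="cafe")
```

With this model, a dense district yields a small radius (shorter search time) and a sparse one yields a large radius (higher chance of a hit), which matches the behaviour the abstract describes.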

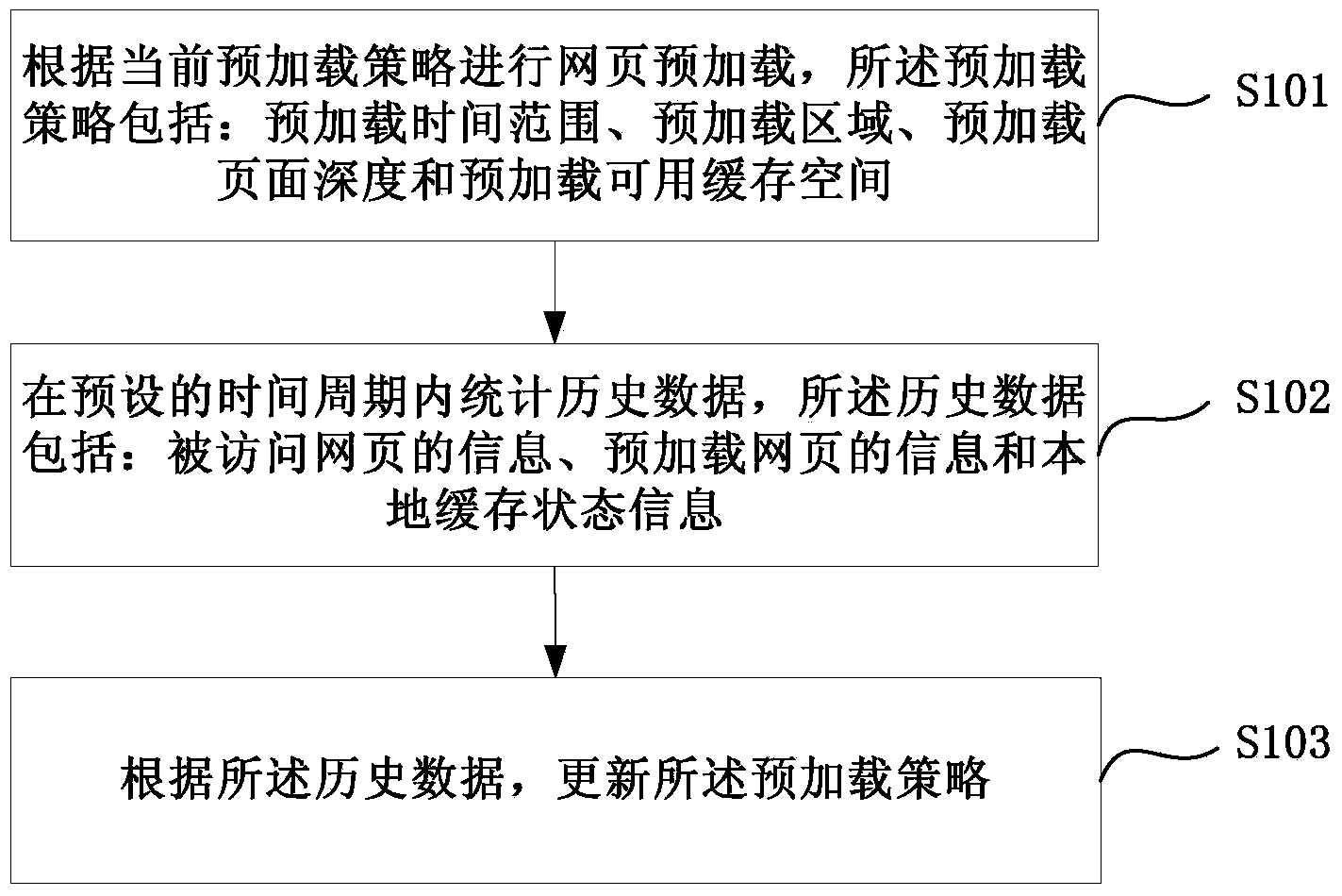

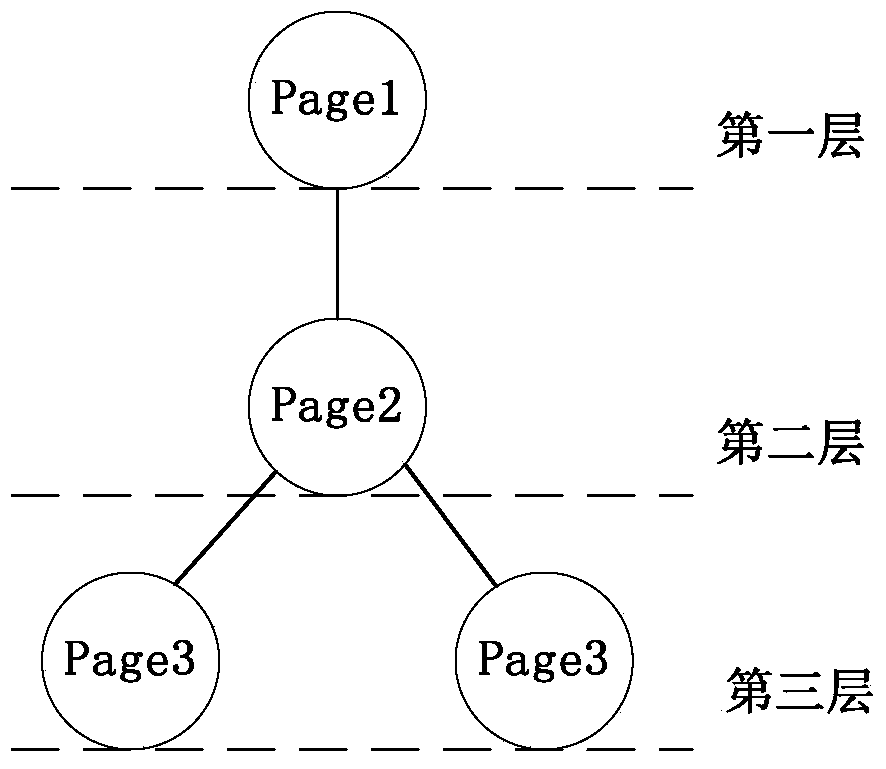

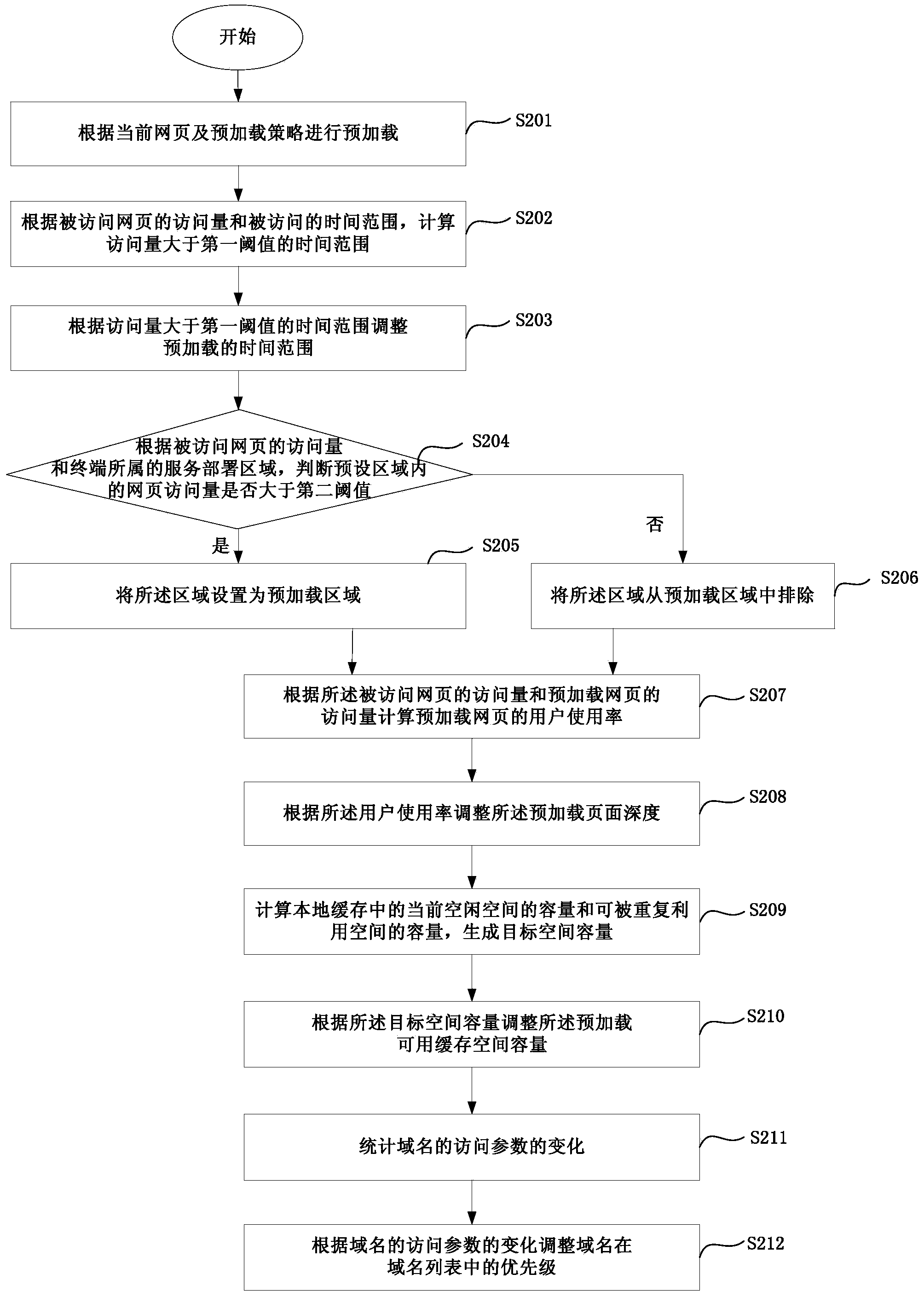

Webpage preloading method and device

Active | CN103729438A | Adjust priority | Adapt to access conditions in real time | Special data processing applications | Time range | Load time

The embodiment of the invention discloses a webpage preloading method and device. The webpage preloading method includes the steps that webpage preloading is carried out according to a current preloading strategy, wherein the preloading strategy relates to the aspects of a preloading time range, preloading areas, preloading webpage depths and available spaces for preloading; statistics of historical data is carried out within a preset time period, wherein the historical data comprise information of an accessed webpage, information of a preloaded webpage and information of local cache states; according to the historical data, the preloading strategy is updated. According to the webpage preloading method, the preloading strategy can be automatically updated through the statistics of the preloaded historical data within the preset time period according to changes of the historical data, so the preloading strategy can adapt to web and user access conditions in real time, and hit accuracy rate of preloading is improved.

Owner:ALIBABA (CHINA) CO LTD

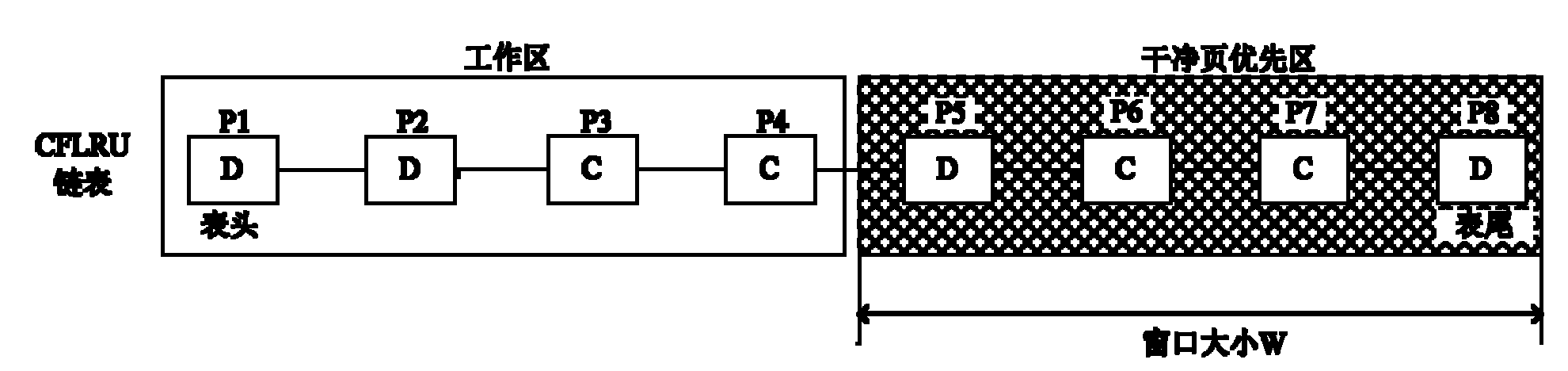

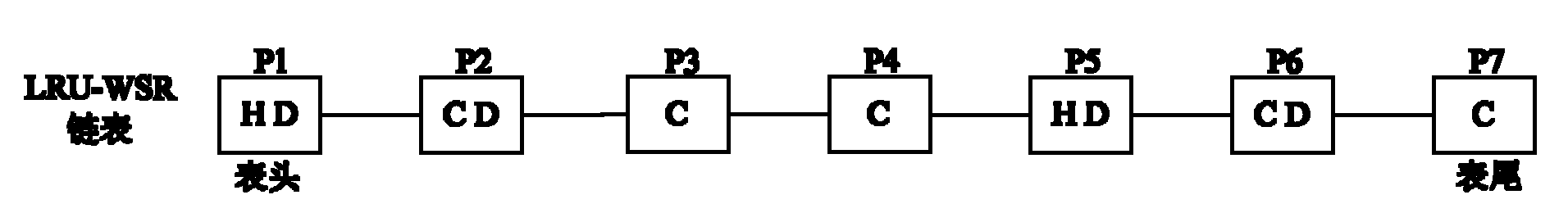

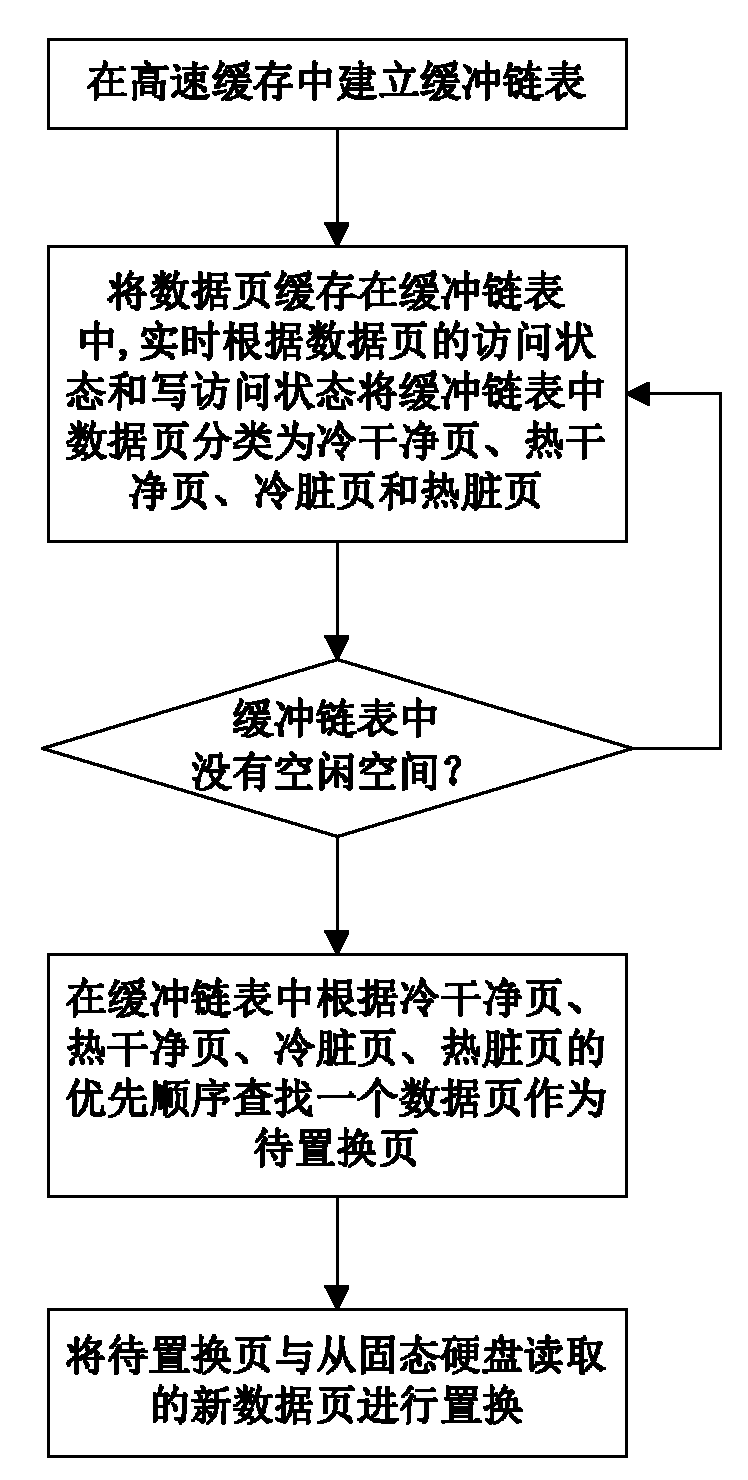

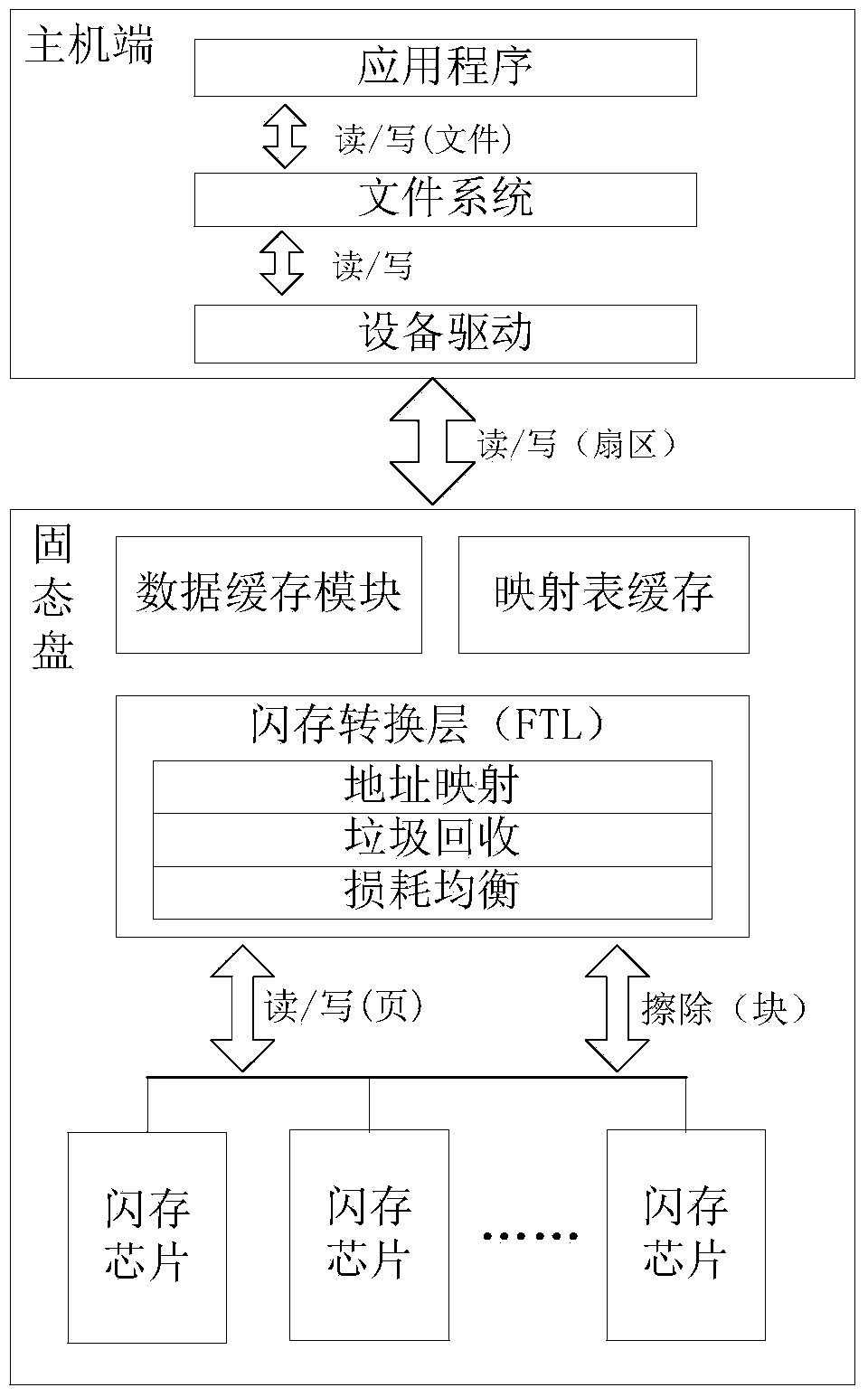

Data page caching method for file system of solid-state hard disc

Active | CN102156753A | Reduce overhead | Improve hit rate | Special data processing applications | Dirty page | External storage

The invention discloses a data page caching method for a file system on a solid-state drive, which comprises the following implementation steps: (1) establishing a buffer linked list for caching data pages in a high-speed cache; (2) caching the data pages read from the solid-state drive in the buffer linked list for access, and classifying the data pages in the buffer linked list in real time into cold clean pages, hot clean pages, cold dirty pages and hot dirty pages according to their access states and write access states; (3) when no free space exists in the buffer linked list, searching the buffer linked list for a page to be replaced in the priority order of cold clean pages, hot clean pages, cold dirty pages and hot dirty pages, and replacing that page with the new data page read from the solid-state drive. The invention makes full use of the characteristics of the solid-state drive, effectively relieves the performance bottleneck of external storage, and improves the storage processing performance of the system; moreover, the data page caching method has the advantages of good I/O (input/output) performance, low replacement cost for cached pages, low overhead and a high hit rate.

Owner:NAT UNIV OF DEFENSE TECH
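
The replacement priority in the abstract above (CN102156753A), namely cold clean, then hot clean, then cold dirty, then hot dirty, can be sketched as below. The abstract does not define "hot", so this sketch assumes a page becomes hot on its second access. `PageBuffer` and its members are hypothetical names, and an `OrderedDict` stands in for the buffer linked list.

```python
from collections import OrderedDict

# Eviction order as (hot, dirty): cold clean, hot clean, cold dirty, hot dirty.
PRIORITY = [(False, False), (True, False), (False, True), (True, True)]


class PageBuffer:
    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = OrderedDict()   # page id -> {"hot": bool, "dirty": bool}, oldest first
        self.flushed = []            # dirty pages written back to the SSD on eviction

    def access(self, page, write=False):
        state = self.pages.get(page)
        if state is not None:
            state["hot"] = True                      # assumption: re-access makes a page hot
            state["dirty"] = state["dirty"] or write
            self.pages.move_to_end(page)
            return
        if len(self.pages) >= self.capacity:
            self._evict()
        self.pages[page] = {"hot": False, "dirty": write}

    def _evict(self):
        # Take the oldest page of the first non-empty class in priority order.
        for hot, dirty in PRIORITY:
            for page, state in self.pages.items():
                if state["hot"] == hot and state["dirty"] == dirty:
                    del self.pages[page]
                    if dirty:
                        self.flushed.append(page)    # eviction of a dirty page costs a write
                    return


buf = PageBuffer(3)
buf.access(1, write=True)   # cold dirty
buf.access(2)               # cold clean
buf.access(3)
buf.access(3)               # hot clean
buf.access(4)               # buffer full: page 2 (cold clean) is evicted
```

Preferring clean victims avoids flash writes where possible, which is the reason the abstract gives for the low replacement cost.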

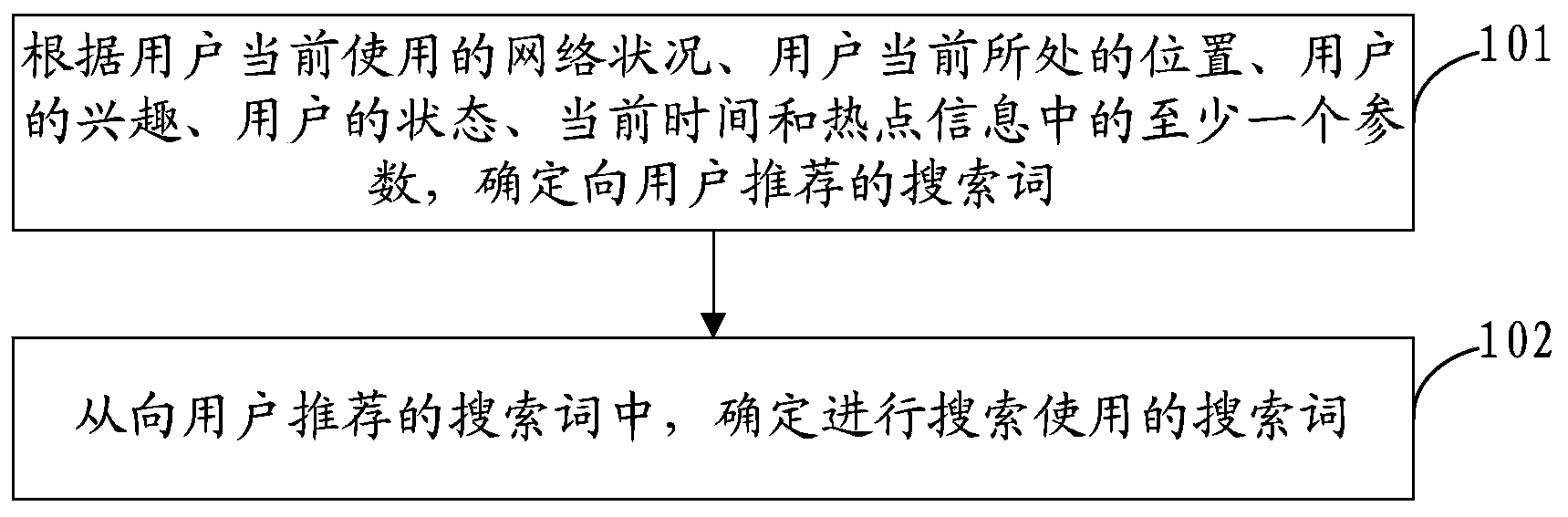

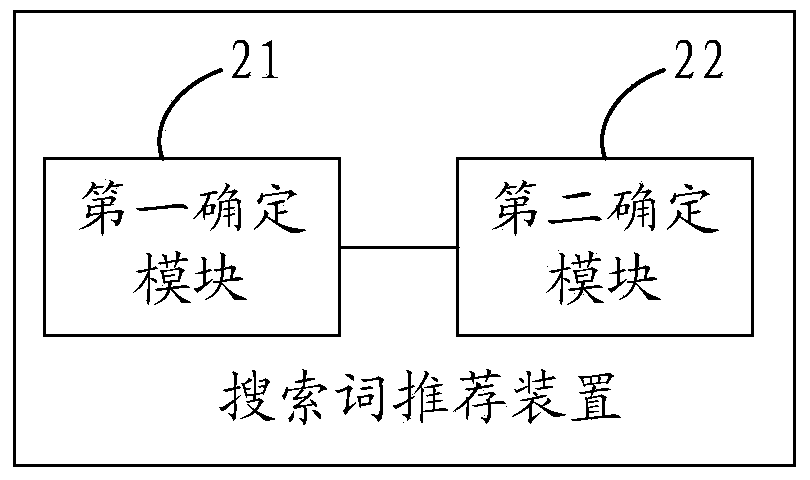

Search term recommendation method and device

Inactive | CN104166700A | Improve hit rate | Improve efficiency in identifying search terms | Special data processing applications | Data mining | Search terms

The invention provides a search term recommendation method and device. The search term recommendation method comprises the steps that search terms recommended to a user are determined according to at least one parameter of the condition of the network currently used by the user, the current position of the user, the interest of the user, the condition of the user, the current time and the hot spot information; the search term used for search is determined from the search terms recommended to the user. By means of the technical scheme, the efficiency of determining the search term for search is improved.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

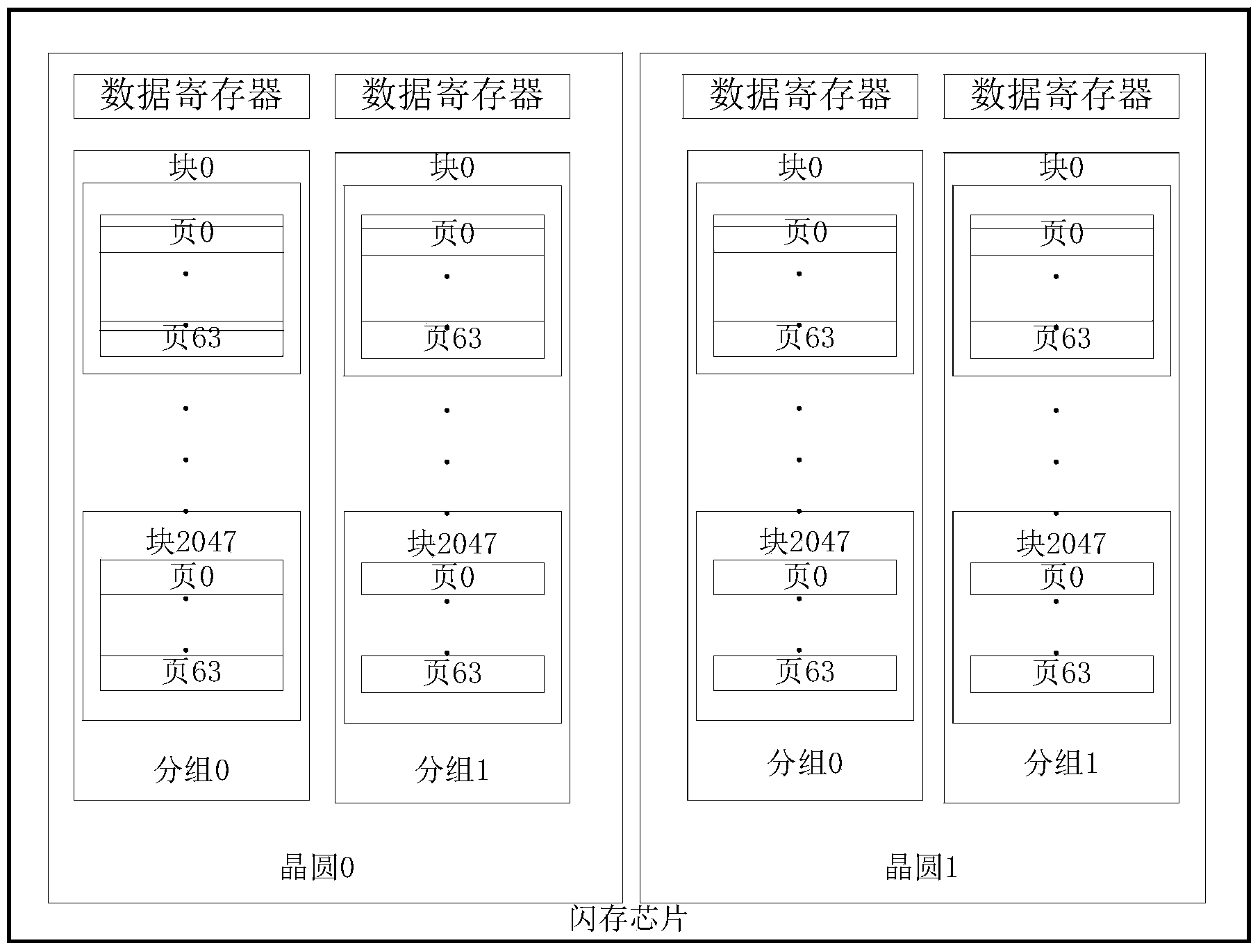

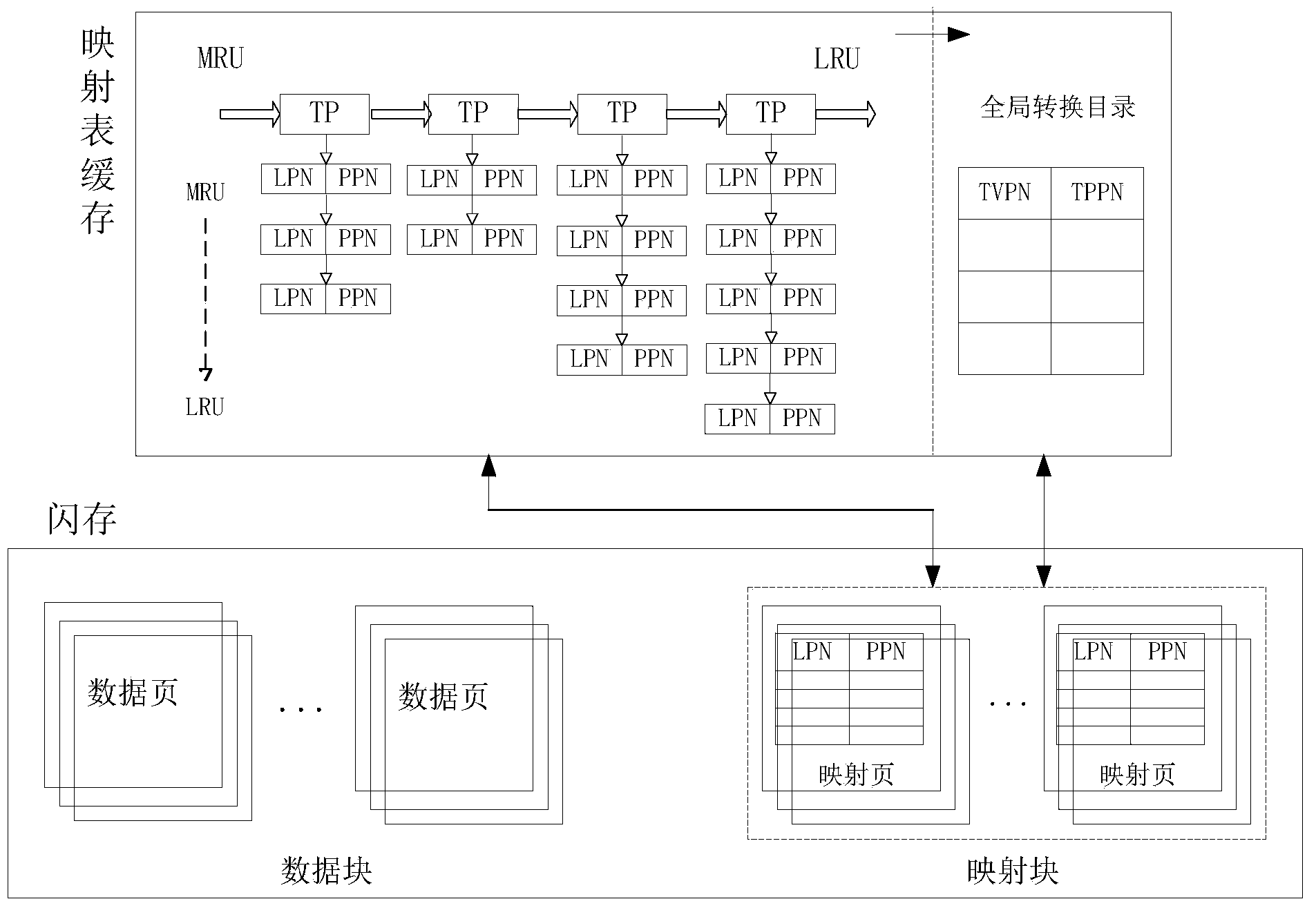

Management method of mapping table caches in solid-state disk system

Inactive | CN104166634A | Improve space utilization | Save storage space | Memory architecture accessing/allocation | Unauthorized memory use protection | Chain type | Cache management

The invention discloses a management method of mapping table caches in a solid-state disk system. For a solid-state disk with page mapping tables stored on a flash memory, a two-stage chain type organizational structure is adopted in the method, and mapping entries belonging to the same mapping page in the caches are organized together. When dirty entries in the caches need to be replaced, replaced dirty entries and other dirty entries belonging to the same mapping page with the replaced dirty entries are updated and returned to the page mapping tables of the flash memory through a batch updating method, and therefore extra overhead caused by cache replacement is greatly reduced; when the caches are not hit, a dynamic pre-fetching technology is used, the pre-fetching length is determined according to loading overhead, replacement cost and the continuous precursor number of historical access, the hit rate of the caches is increased, and extra overhead caused by cache loading is reduced. The performance of the solid-state disk is improved through batch updating and the dynamic pre-fetching technology, and the service life of the solid-state disk is prolonged.

Owner:HUAZHONG UNIV OF SCI & TECH
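
The batch update step in the abstract above (CN104166634A) can be sketched as follows: when a dirty mapping entry is evicted, every other dirty entry belonging to the same mapping page is written back in the same flash write. The dynamic pre-fetching part is not shown. The function name `evict_entry`, the cache layout, and the tiny `ENTRIES_PER_MAPPING_PAGE` value are assumptions chosen for illustration.

```python
ENTRIES_PER_MAPPING_PAGE = 4   # hypothetical; a real mapping page holds far more entries


def evict_entry(cache, victim_lpn):
    """Evict one cached mapping entry and return the LPNs written back together.

    cache: logical page number -> (physical page number, dirty flag).
    """
    ppn, dirty = cache.pop(victim_lpn)
    if not dirty:
        return []                                  # clean entries are simply dropped
    mapping_page = victim_lpn // ENTRIES_PER_MAPPING_PAGE
    written = {victim_lpn: ppn}
    for lpn, (other_ppn, other_dirty) in list(cache.items()):
        if other_dirty and lpn // ENTRIES_PER_MAPPING_PAGE == mapping_page:
            written[lpn] = other_ppn
            cache[lpn] = (other_ppn, False)        # stays cached, now clean
    return sorted(written)                         # one mapping-page write covers all of these


cache = {0: (100, True), 1: (101, True), 2: (102, False), 5: (105, True)}
written_back = evict_entry(cache, 0)
```

Entries 0 and 1 share a mapping page and are flushed together, while entry 5 lives on another mapping page and stays dirty, so a later eviction of entry 1 costs no extra flash write.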

Method for improving page layout display performance of embedded browser

Inactive | CN101221580A | Improve the display effect | Improve hit rate | Memory addressing/allocation/relocation | Special data processing applications | Uniform resource locator | Computer science

The invention discloses a method for improving the page display performance of an embedded browser. The method comprises the following steps: when a picture needs to be displayed in a page, the memory buffer area is checked for the uniform resource locator (URL) corresponding to the picture; if the URL exists, the cached data of the picture is used to display the picture; otherwise, the data of the picture is downloaded and decoded, the URL corresponding to the picture and the decoded picture data are stored in the memory buffer area, and finally the picture is displayed. The method of the invention can remarkably improve the page display performance of the embedded browser.

Owner:ZTE CORP
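
The lookup in the abstract above (CN101221580A) amounts to a cache of decoded pictures keyed by URL. A minimal sketch follows; `DecodedImageCache` and the `download` and `decode` callables are hypothetical stand-ins for the browser's network and image-decoding layers, and no eviction is modelled.

```python
class DecodedImageCache:
    """Keep decoded picture data in memory, keyed by the picture's URL."""

    def __init__(self, download, decode):
        self.download = download   # hypothetical: url -> raw bytes
        self.decode = decode       # hypothetical: raw bytes -> decoded picture data
        self.buffer = {}           # the "memory buffer area": url -> decoded data
        self.downloads = 0

    def get(self, url):
        pixels = self.buffer.get(url)
        if pixels is None:         # URL not in the buffer: download, decode, store
            self.downloads += 1
            pixels = self.decode(self.download(url))
            self.buffer[url] = pixels
        return pixels


cache = DecodedImageCache(download=lambda url: b"raw:" + url.encode(),
                          decode=lambda data: data.upper())
first = cache.get("http://example.com/logo.png")
second = cache.get("http://example.com/logo.png")
```

Storing the decoded data rather than the raw download means a repeated picture skips both the network transfer and the decoding step.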

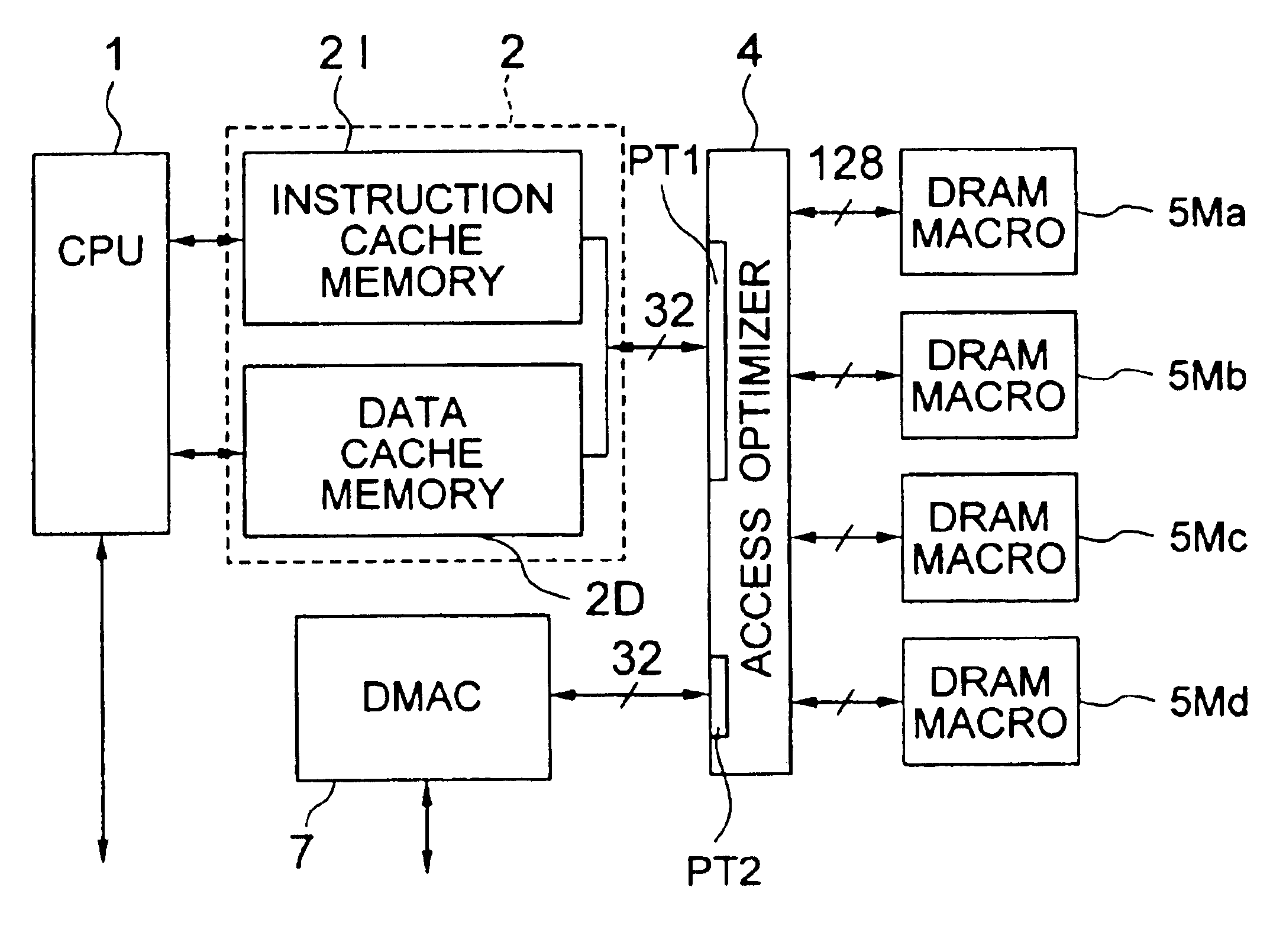

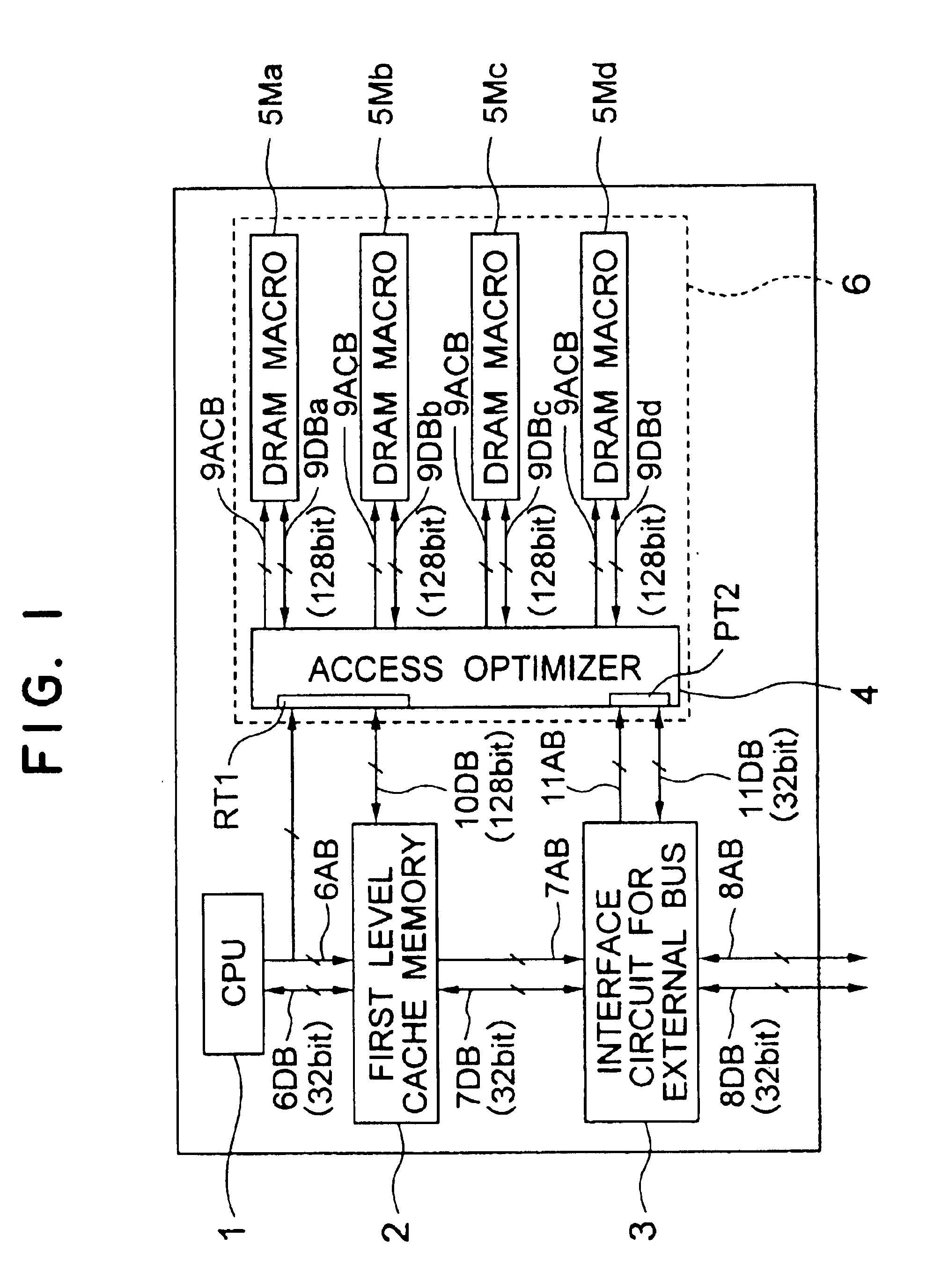

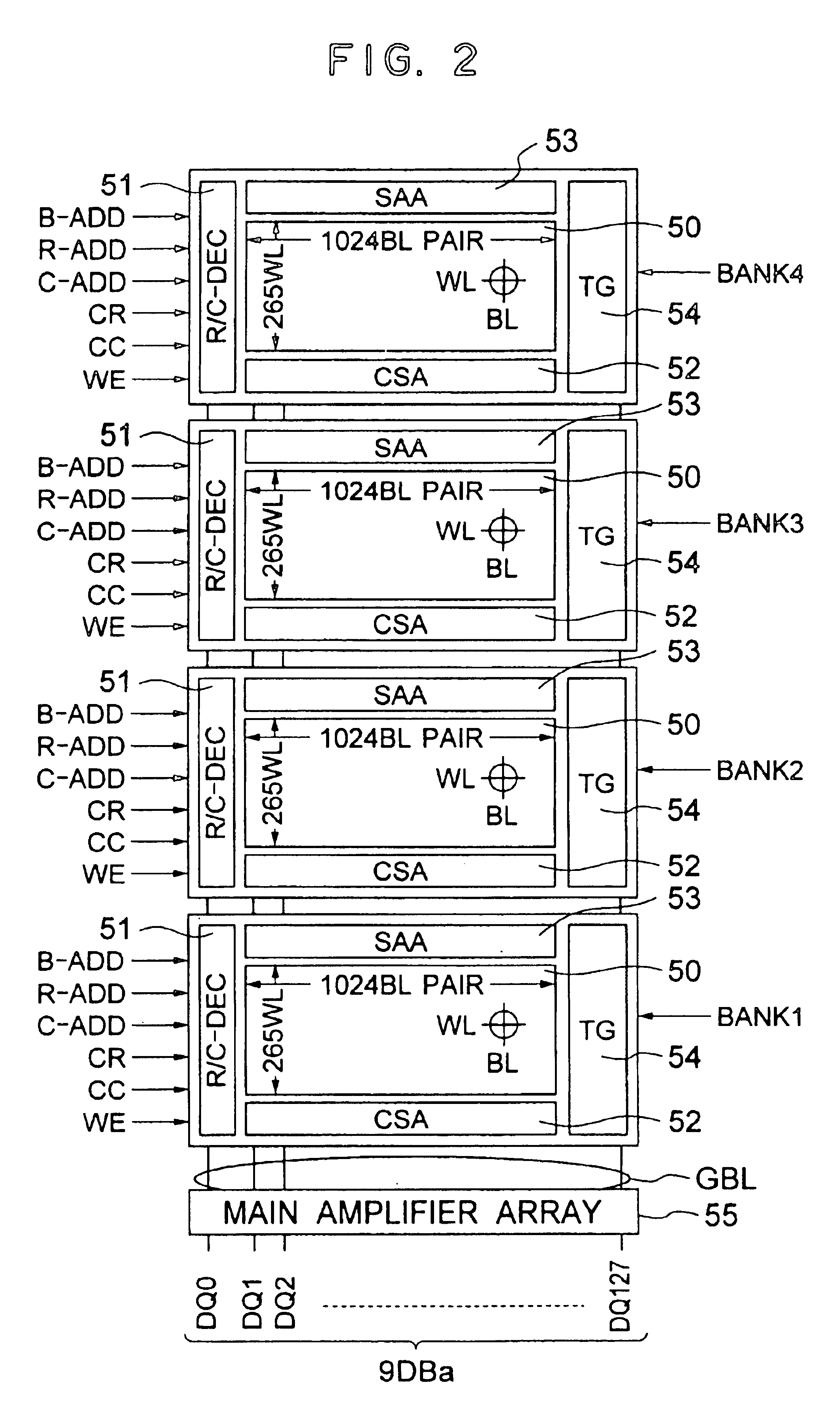

Semiconductor integrated circuit and data processing system

Inactive | US6847578B2 | Increase speed | High data efficiency | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Audio power amplifier

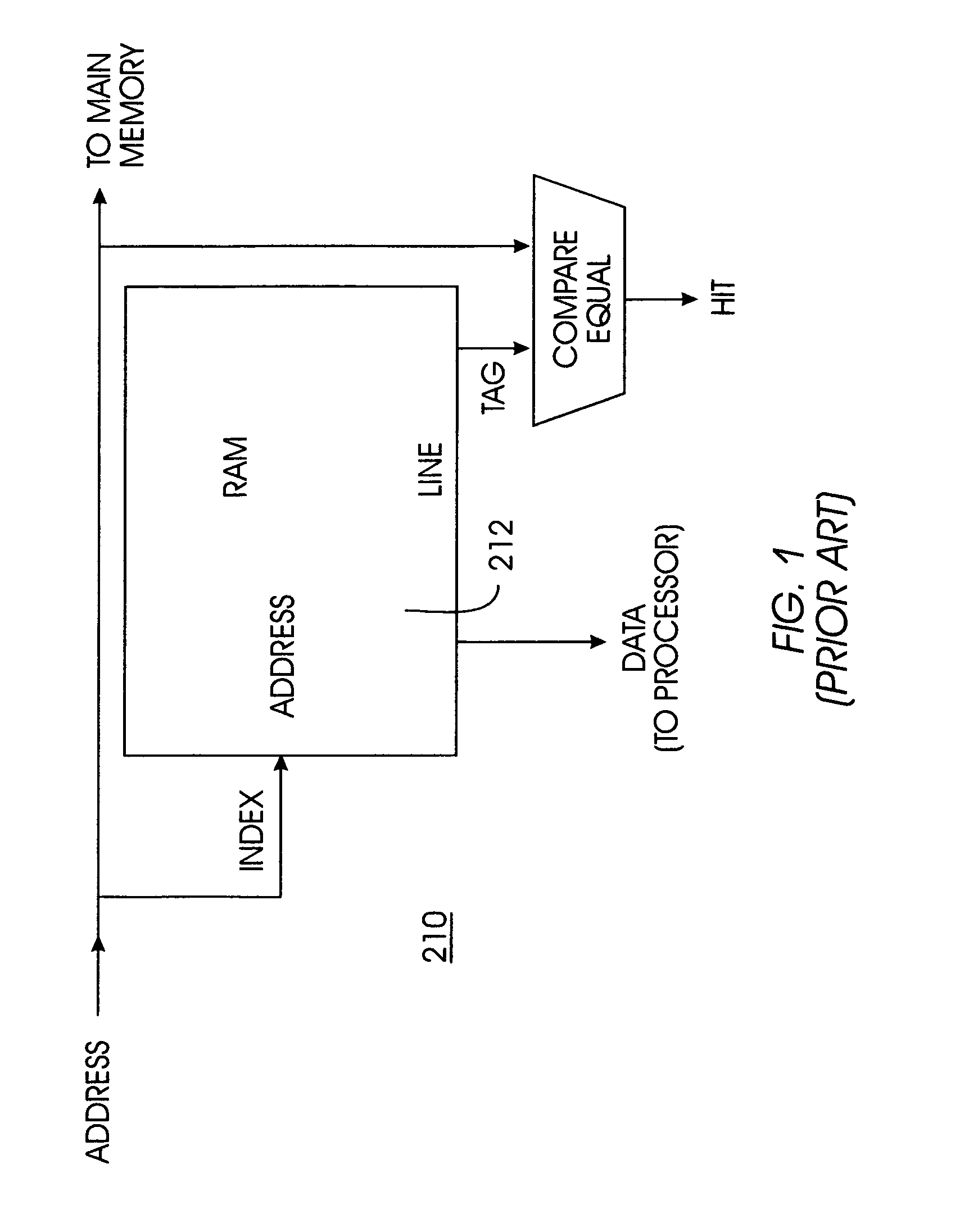

To enhance the speed of first access (read access different in word line from the previous access) to a multi-bank memory, multi-bank memory macro structures are used. Data are held in a sense amplifier for every memory bank. When access is hit to the held data, data latched by the sense amplifier are output to thereby enhance the speed of first access to the memory macro structures. Namely, each memory bank is made to function as a sense amplifier cache. To enhance the hit ratio of such a sense amplifier cache more greatly, an access controller self-prefetches the next address (an address to which a predetermined offset has been added) after access to a memory macro structure so that data in the self-prefetched address are preread by a sense amplifier in another memory bank.

Owner:RENESAS ELECTRONICS CORP
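
The sense-amplifier cache with self-prefetch in the abstract above (US6847578B2) can be modelled in a few lines. This is a behavioural sketch under assumptions, not the circuit: each bank keeps one open row, rows are interleaved across banks, and after every read the row at `addr + prefetch_offset` is opened in another bank. All names and sizes are hypothetical.

```python
class SenseAmpCache:
    """Toy model: each bank's sense amplifiers hold one open row."""

    def __init__(self, n_banks=4, row_size=64, prefetch_offset=64):
        self.n_banks = n_banks
        self.row_size = row_size
        self.prefetch_offset = prefetch_offset
        self.open_row = [None] * n_banks   # row currently latched in each bank
        self.hits = 0
        self.misses = 0

    def _locate(self, addr):
        row = addr // self.row_size
        return row % self.n_banks, row     # assumed mapping: rows interleaved over banks

    def read(self, addr, prefetch=True):
        bank, row = self._locate(addr)
        if self.open_row[bank] == row:     # data already latched: fast access
            self.hits += 1
        else:
            self.misses += 1
            self.open_row[bank] = row
        if prefetch:
            # Self-prefetch the next address into a different bank's sense amplifiers.
            nbank, nrow = self._locate(addr + self.prefetch_offset)
            if nbank != bank:
                self.open_row[nbank] = nrow


def sequential_hits(prefetch):
    mem = SenseAmpCache()
    for addr in range(0, 256, 64):
        mem.read(addr, prefetch=prefetch)
    return mem.hits, mem.misses
```

For a sequential scan over four rows, only the first read misses when prefetch is enabled, whereas every read misses without it.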

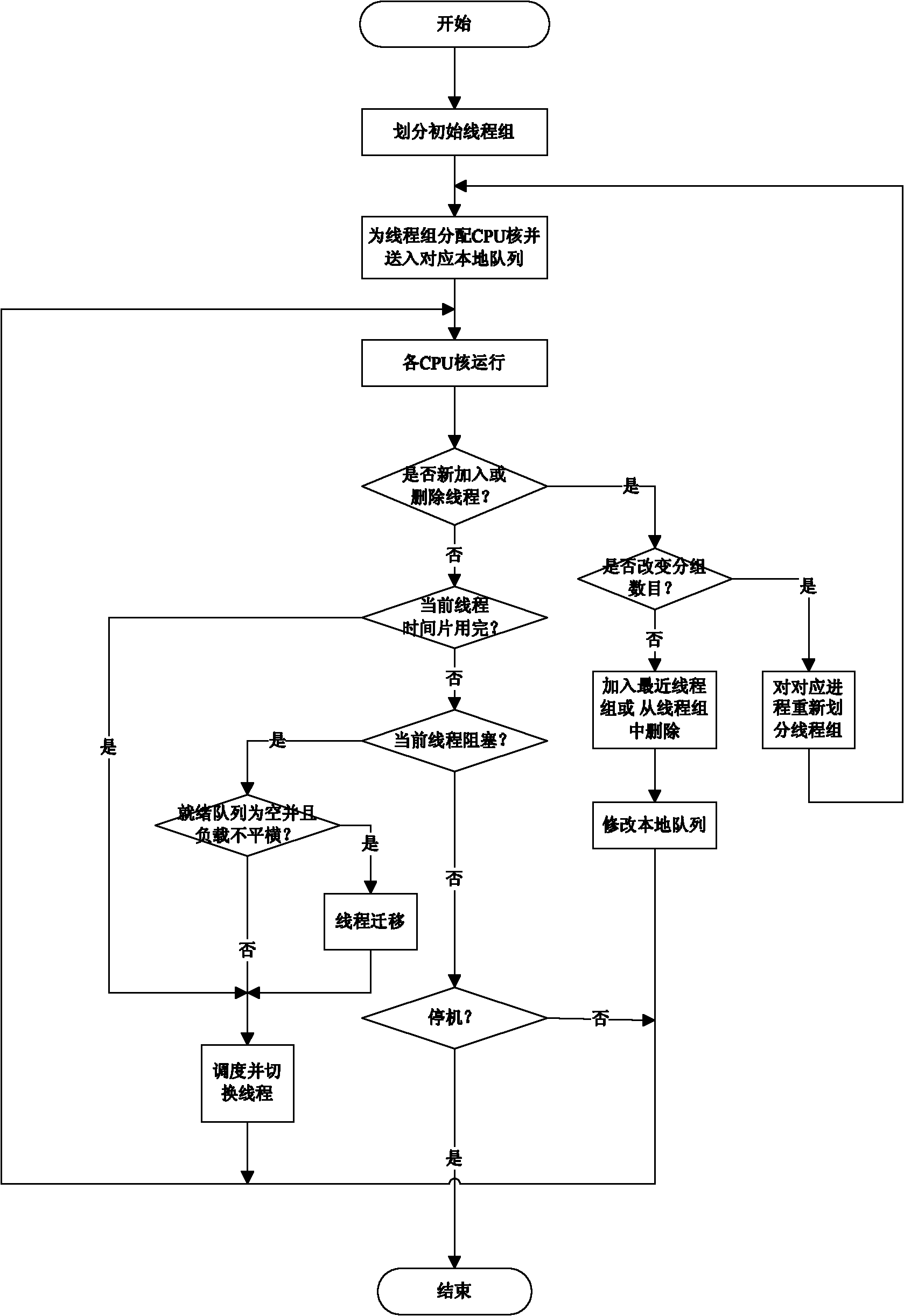

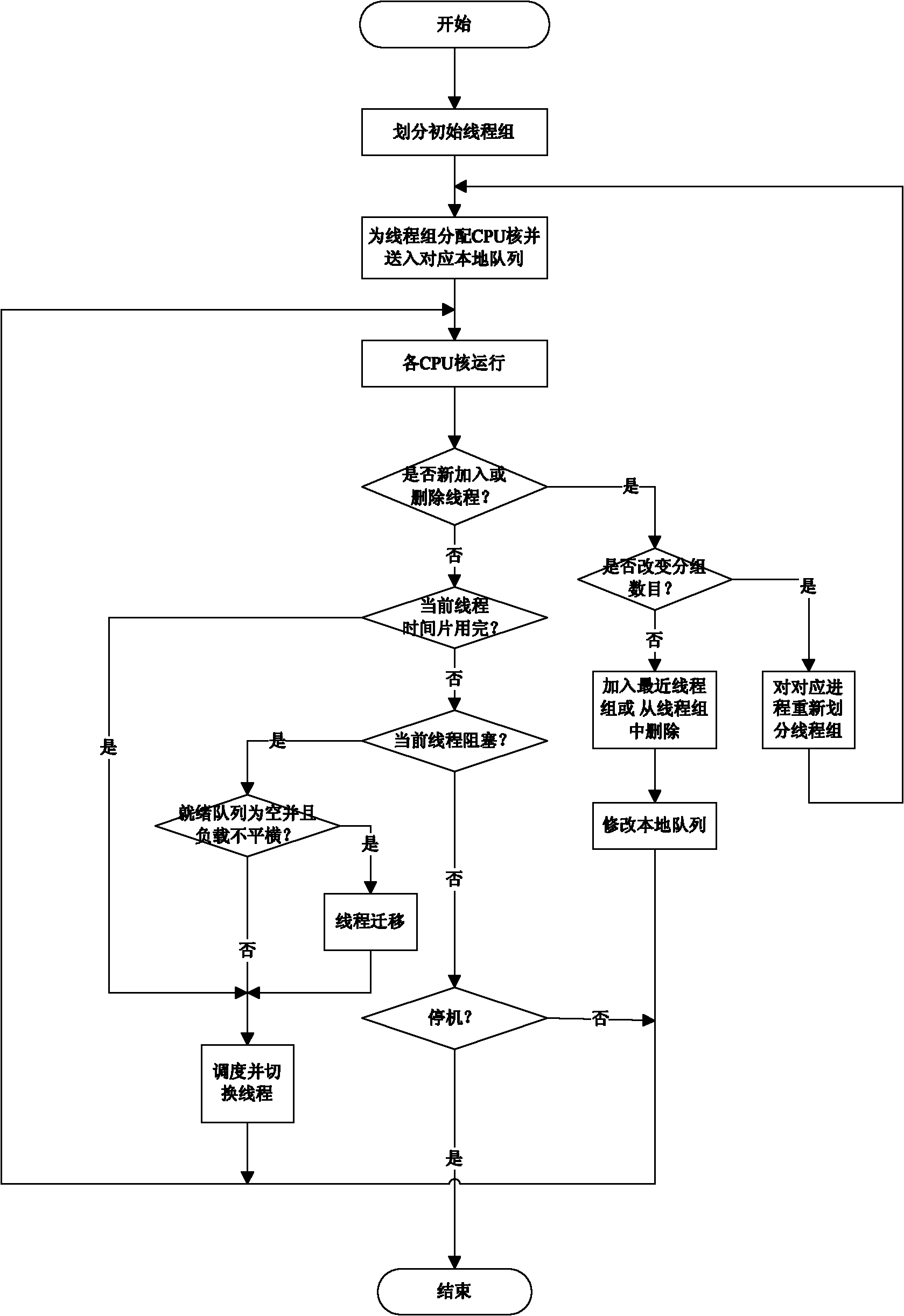

Thread group address space scheduling and thread switching method under multi-core environment

Inactive | CN101923491A | Improve hit rate | Reduce switching times | Program initiation/switching | Resource allocation | Parallel computing | Combined use

The invention relates to a thread group address space scheduling and thread switching method for multi-core environments in the technical field of computers. A thread grouping strategy is introduced to group together threads that can benefit from CPU core assignment and scheduling order arrangement, which reduces the frequency of address space switches during scheduling and improves the cache hit rate, thereby improving the throughput and overall performance of the system. The thread grouping can be adjusted flexibly according to the characteristics of the hardware platform and of the application, so that a thread group division suited to a specific situation can be created, and the method can also be combined with other scheduling methods. In the invention, the threads are grouped, a task queue is provided for each processor core, and threads that benefit from being scheduled together are scheduled consecutively; the invention has the advantages of low scheduling overhead, high task throughput and high scheduling flexibility.

Owner:SHANGHAI JIAO TONG UNIV
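
The grouping idea in this abstract can be sketched as follows. This is an illustrative sketch only: it assumes that threads sharing an address space form one group, that whole groups are placed on the least-loaded core queue, and that an "address space switch" is counted whenever two consecutive queue entries belong to different address spaces. The function names are hypothetical and the patent's actual grouping criteria may differ.

```python
from collections import defaultdict


def group_threads(threads):
    """Group (thread_id, address_space) pairs by address space."""
    groups = defaultdict(list)
    for tid, space in threads:
        groups[space].append(tid)
    return dict(groups)


def build_core_queues(threads, num_cores):
    """Give each core one queue and place whole groups on the shortest queue.

    Threads of one group stay adjacent, so a core switches address space
    only at group boundaries.
    """
    queues = [[] for _ in range(num_cores)]
    groups = group_threads(threads)
    # Largest groups first (assumed heuristic for balancing queue lengths).
    for space, tids in sorted(groups.items(), key=lambda kv: -len(kv[1])):
        target = min(queues, key=len)
        target.extend((tid, space) for tid in tids)
    return queues


def count_space_switches(queue):
    """Count adjacent pairs in a queue that use different address spaces."""
    return sum(1 for prev, cur in zip(queue, queue[1:]) if prev[1] != cur[1])
```

For example, eight threads from address spaces A, B, and C that arrive interleaved cause six address space switches when run in arrival order on one queue, but only one switch in total when grouped onto two core queues with this sketch.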

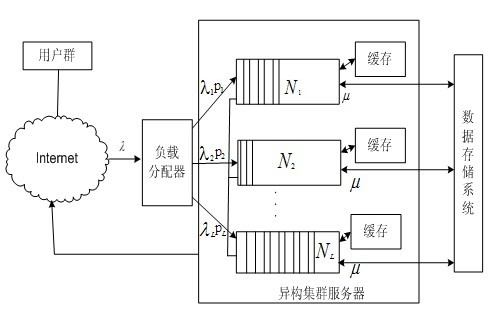

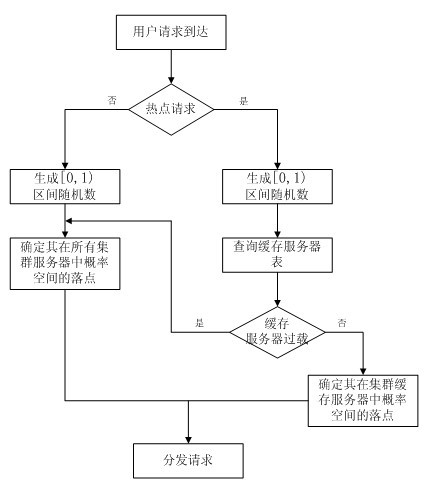

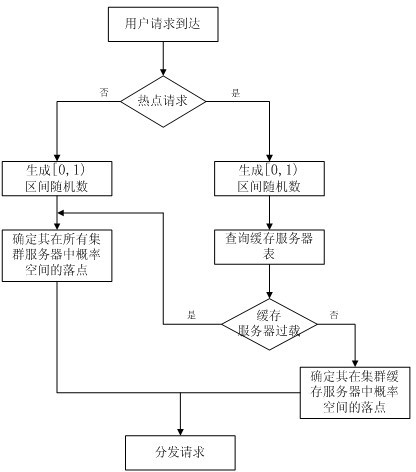

Method for balancing load of network GIS heterogeneous cluster server

The invention discloses a load balancing method for heterogeneous cluster servers in a network GIS (Geographic Information System). The method is based on the inherent property that GIS data access follows a Zipf distribution and on the heterogeneous processing capacities of the servers. Its cluster cache distribution adapts to users' intensive access patterns, balancing the access load of hot-spot data while improving the cache hit rate. Working from the overall performance of the heterogeneous cluster service system, it solves for the minimum cluster processing cost required to serve a data request, so that user response time is optimized while the load of the heterogeneous cluster servers is balanced. Requests are distributed according to their data content, which prevents the access load of hot-spot data from becoming excessively concentrated. The method fits the highly clustered access patterns of large user populations in a network GIS, balances load distribution against access locality, maintains service efficiency while optimizing load, and improves both the service performance of a real network GIS and the utilization of the heterogeneous cluster service system.

Owner:WUHAN UNIV
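
To make the combination of Zipf-distributed access and heterogeneous server capacity concrete, here is a rough sketch. It is not the patented cost model: it simply assumes item popularity proportional to 1/rank^s and uses a greedy rule that places each item, hottest first, on the server whose load relative to capacity would be lowest afterwards. The function names, the exponent `s`, and the capacity figures are all hypothetical.

```python
def zipf_weights(n, s=1.0):
    """Normalised access probabilities for ranks 1..n under a Zipf law."""
    raw = [1.0 / (rank ** s) for rank in range(1, n + 1)]
    total = sum(raw)
    return [w / total for w in raw]


def assign_by_capacity(weights, capacities):
    """Greedy content-based placement, hottest item first.

    Each item goes to the server that would have the smallest
    load-to-capacity ratio after receiving it.
    Returns (placement, loads): placement[i] is the server index of item i.
    """
    loads = [0.0] * len(capacities)
    placement = []
    for w in weights:
        server = min(
            range(len(capacities)),
            key=lambda i: (loads[i] + w) / capacities[i],
        )
        loads[server] += w
        placement.append(server)
    return placement, loads


def normalised_loads(loads, capacities):
    """Load divided by capacity for every server."""
    return [load / cap for load, cap in zip(loads, capacities)]
```

With 100 items and capacities of 2, 1, and 1, the hottest item lands on the largest server and the load-to-capacity ratios of the three servers end up close to one another, which is the kind of balance the abstract aims for.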

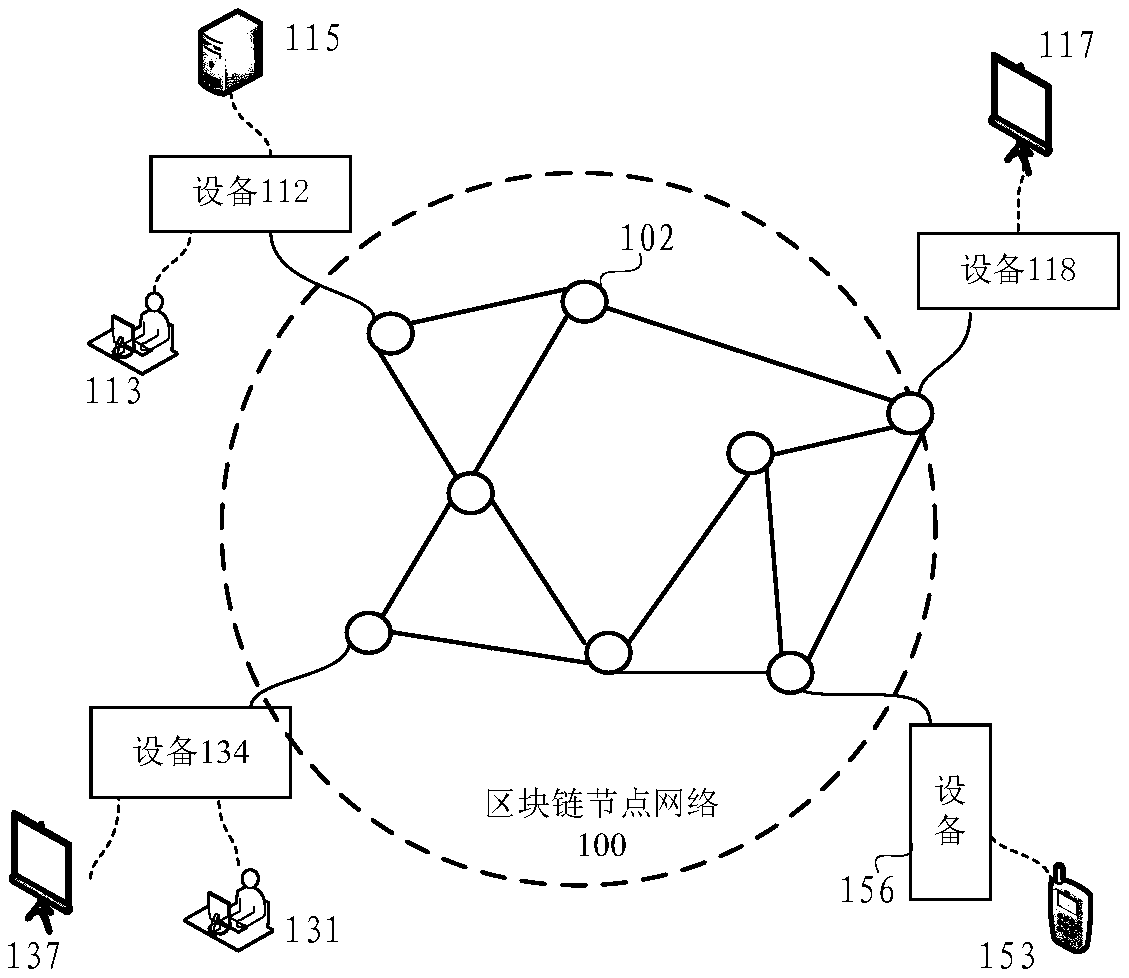

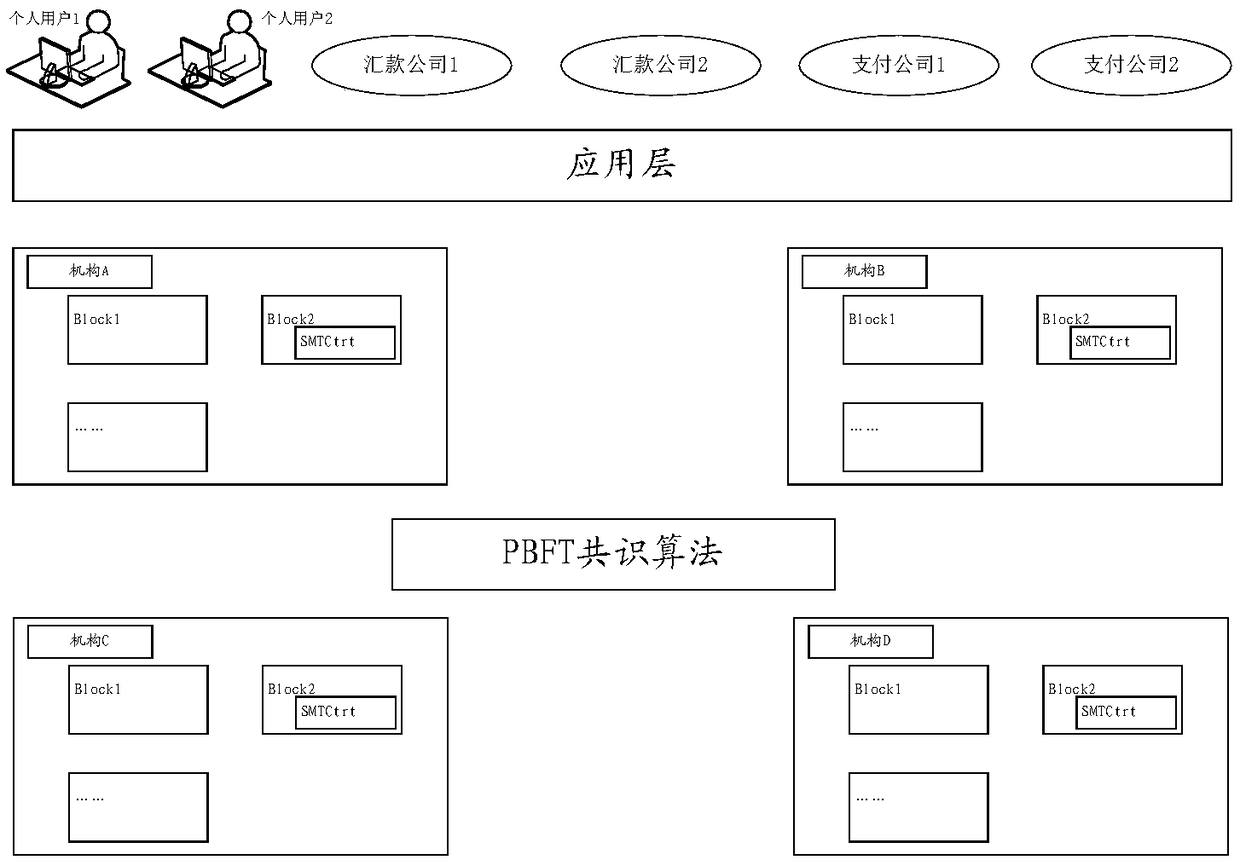

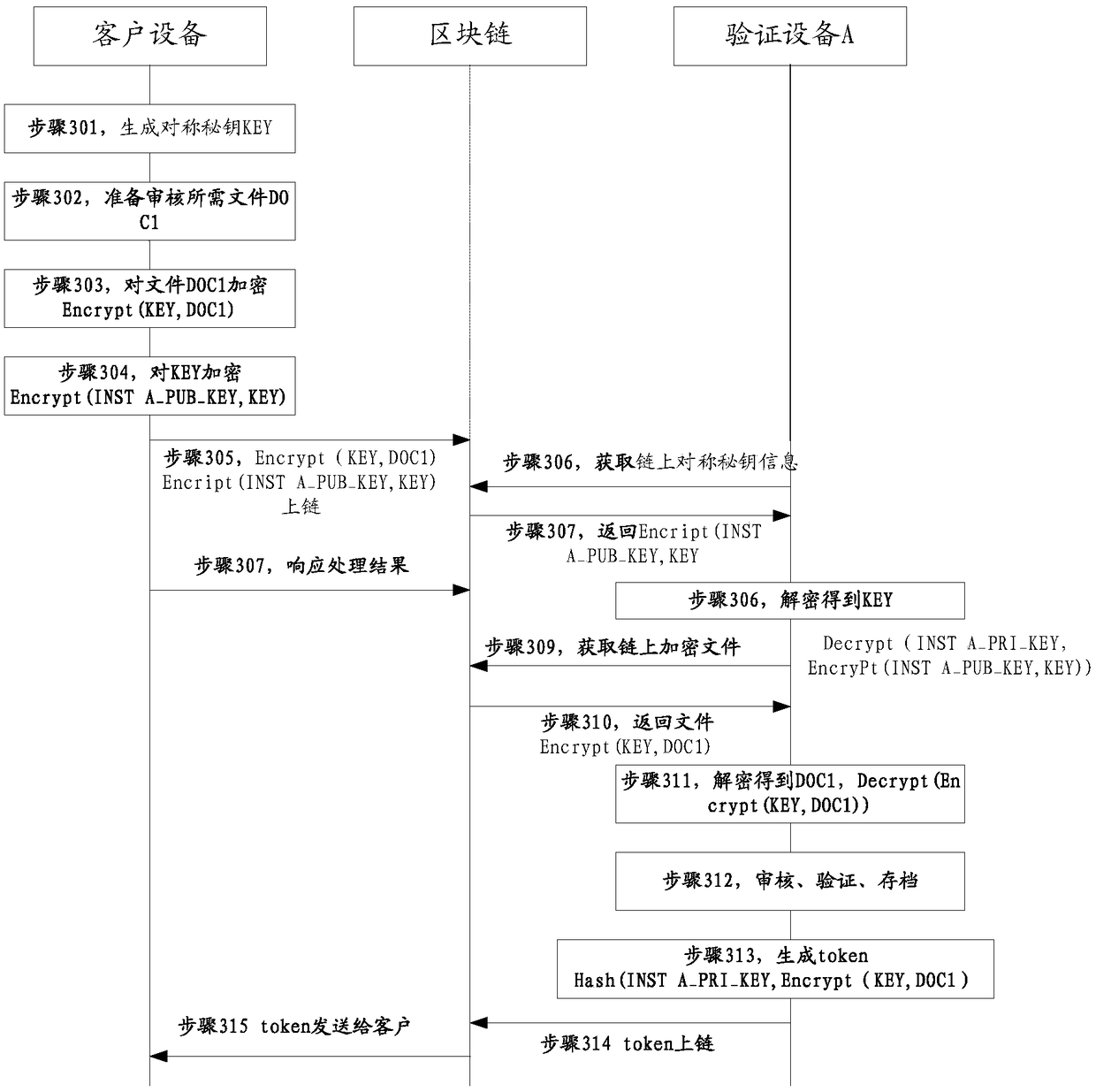

Inter-agency client verification method based on block chain, transaction supervision method and device

Active | CN108765240A | Authentication is convenient | Simplify the calling process | Data processing applications | User identity/authority verification | Inter agency | Engineering

The embodiments of the invention provide a blockchain-based inter-agency client verification method, a transaction supervision method, and corresponding devices. In one embodiment, the client's device encrypts a file with a symmetric key and uploads to the blockchain both the encrypted file and the symmetric key encrypted with the public key of agency A. The client's device then obtains a token issued by agency A based on its check of the file, and sends the token together with the address of the encrypted file on the blockchain to the device of agency B, which speeds up agency B's check of the file. The method simplifies the current process for retrieving files between agencies and increases the hit rate of anti-money-laundering rules.

Owner:ADVANCED NEW TECH CO LTD

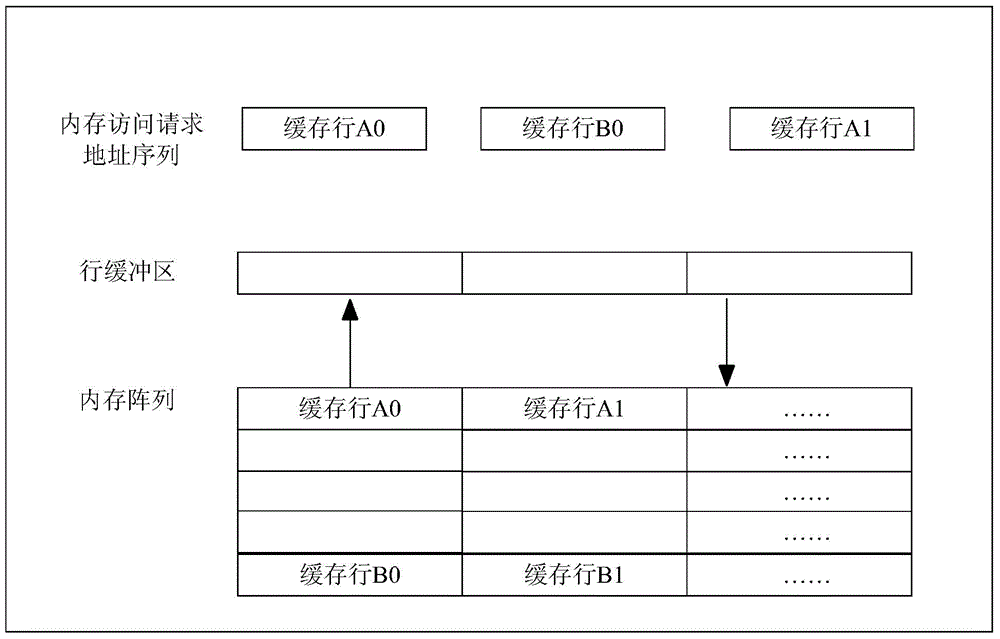

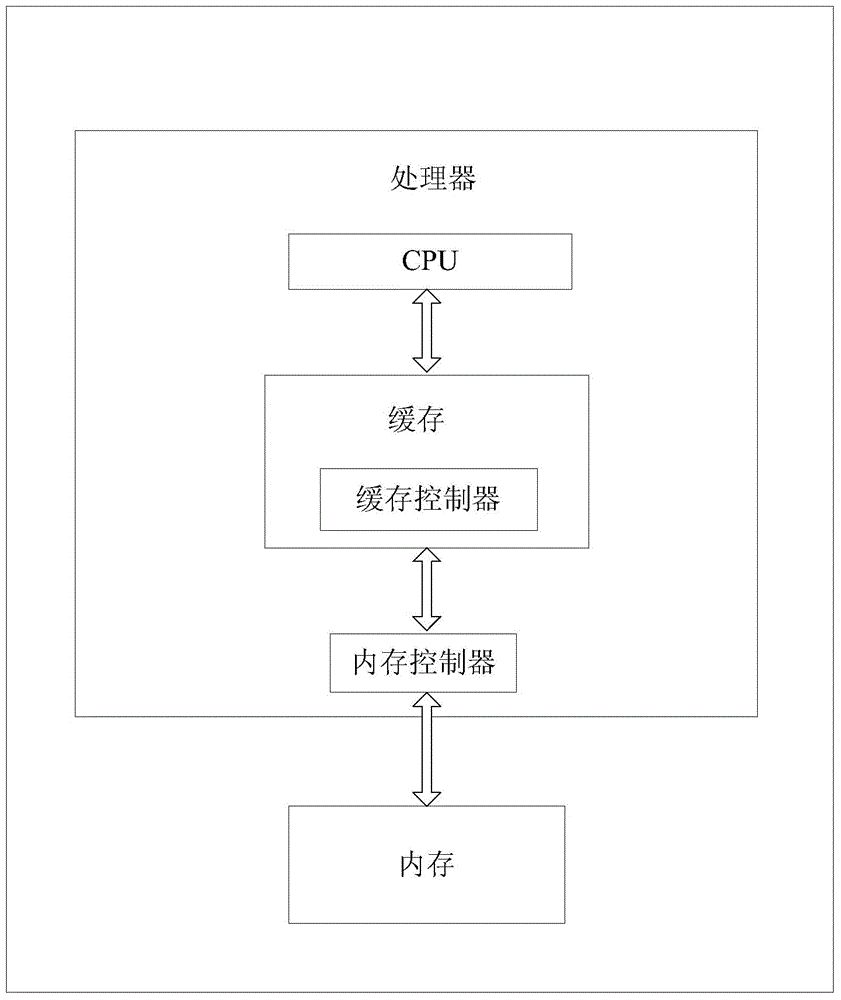

Cache replacing method, cache controller and processor

Active | CN105095116A | Improve access performance | Reduce the number of writes | Memory addressing/allocation/relocation | Parallel computing | Hit ratio

The embodiments of the invention provide a cache replacement method, a cache controller, and a processor. In the method, the cache controller determines the associated cache pool of a cache line to be replaced, where every associated cache line in the pool belongs to the same memory row as the cache line to be replaced. It then selects a cache line to be written back from the associated cache pool according to the access information of the associated cache lines, and writes the data of the cache line to be replaced and of the cache line to be written back into memory at the same time. Because both cache lines belong to the same memory row, the hit rate of the cache region can be improved, which improves memory access performance. Because only the selected cache line in the associated cache pool, rather than the whole pool, is written to memory, the number of memory writes is reduced and the service life of the memory is prolonged.

Owner:HUAWEI TECH CO LTD +1
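
The replacement flow in this abstract can be sketched in a few lines. The sketch makes several assumptions that the abstract does not state: the pool contains only dirty lines, the "access information" is a last-access timestamp, a line is selected for write-back when it has been idle for at least a threshold, and four cache lines map to one memory row. All names are hypothetical.

```python
from dataclasses import dataclass

LINES_PER_MEMORY_ROW = 4  # assumed geometry


@dataclass(eq=False)  # identity-based equality, so list.remove hits the exact object
class CacheLine:
    line_addr: int
    dirty: bool
    last_access: int


def memory_row(line_addr):
    """Memory row that a cache line address maps to."""
    return line_addr // LINES_PER_MEMORY_ROW


def associated_pool(cache, victim):
    """Other dirty lines in the cache that share the victim's memory row."""
    return [
        line for line in cache
        if line is not victim
        and line.dirty
        and memory_row(line.line_addr) == memory_row(victim.line_addr)
    ]


def replace(cache, victim, now, idle_threshold=100):
    """Evict the victim and write back selected pool lines in the same batch.

    Returns the sorted line addresses written to memory together.
    """
    pool = associated_pool(cache, victim)
    # Assumed policy: only lines idle long enough are worth writing back now.
    to_write = [l for l in pool if now - l.last_access >= idle_threshold]
    batch = ([victim] if victim.dirty else []) + to_write
    for line in to_write:
        line.dirty = False      # stays cached, now clean
    cache.remove(victim)
    return sorted(line.line_addr for line in batch)
```

Writing back only the idle lines, instead of every dirty line in the pool, is what keeps the number of memory writes down in this sketch: a recently touched line is likely to be modified again and would need a second write.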

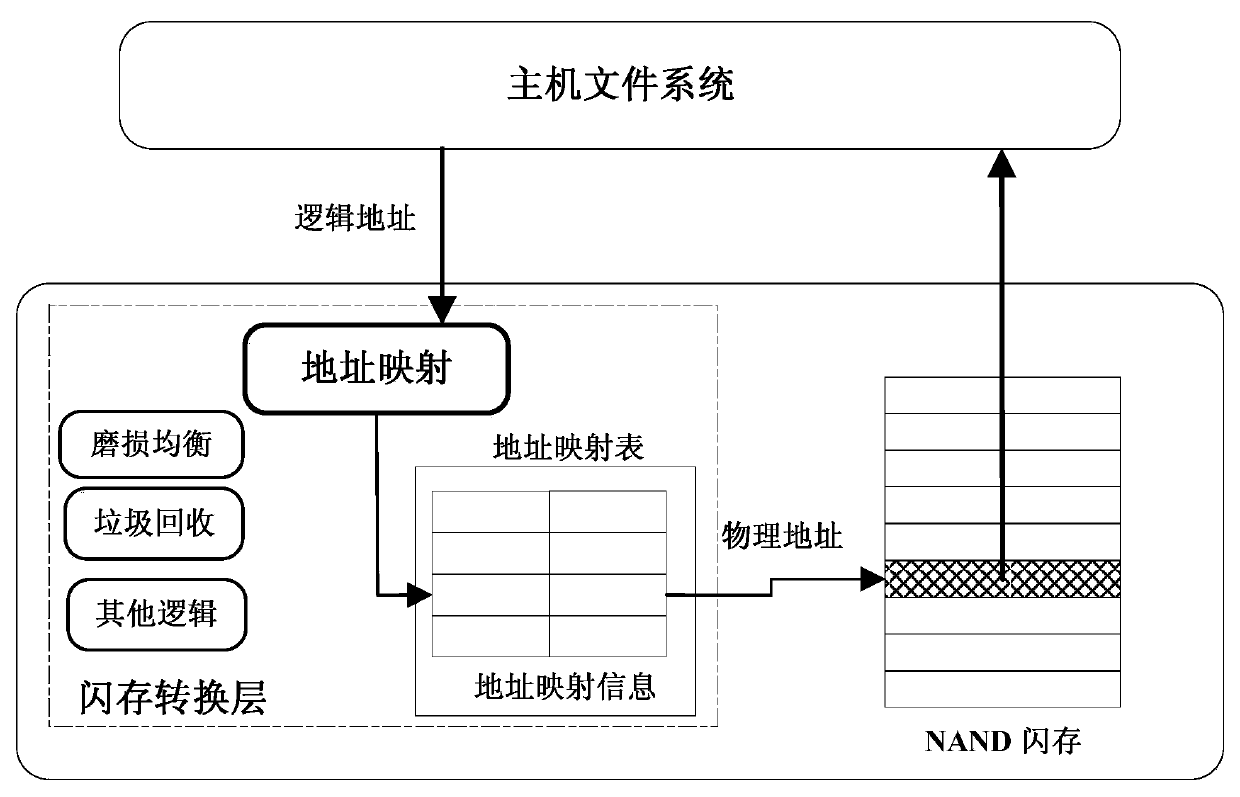

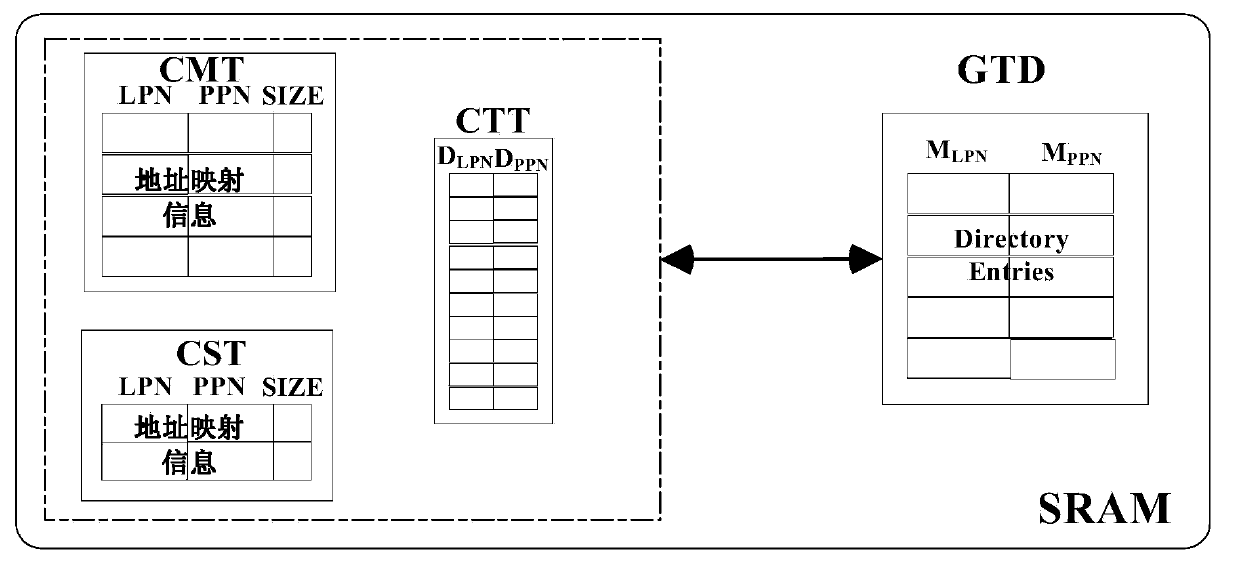

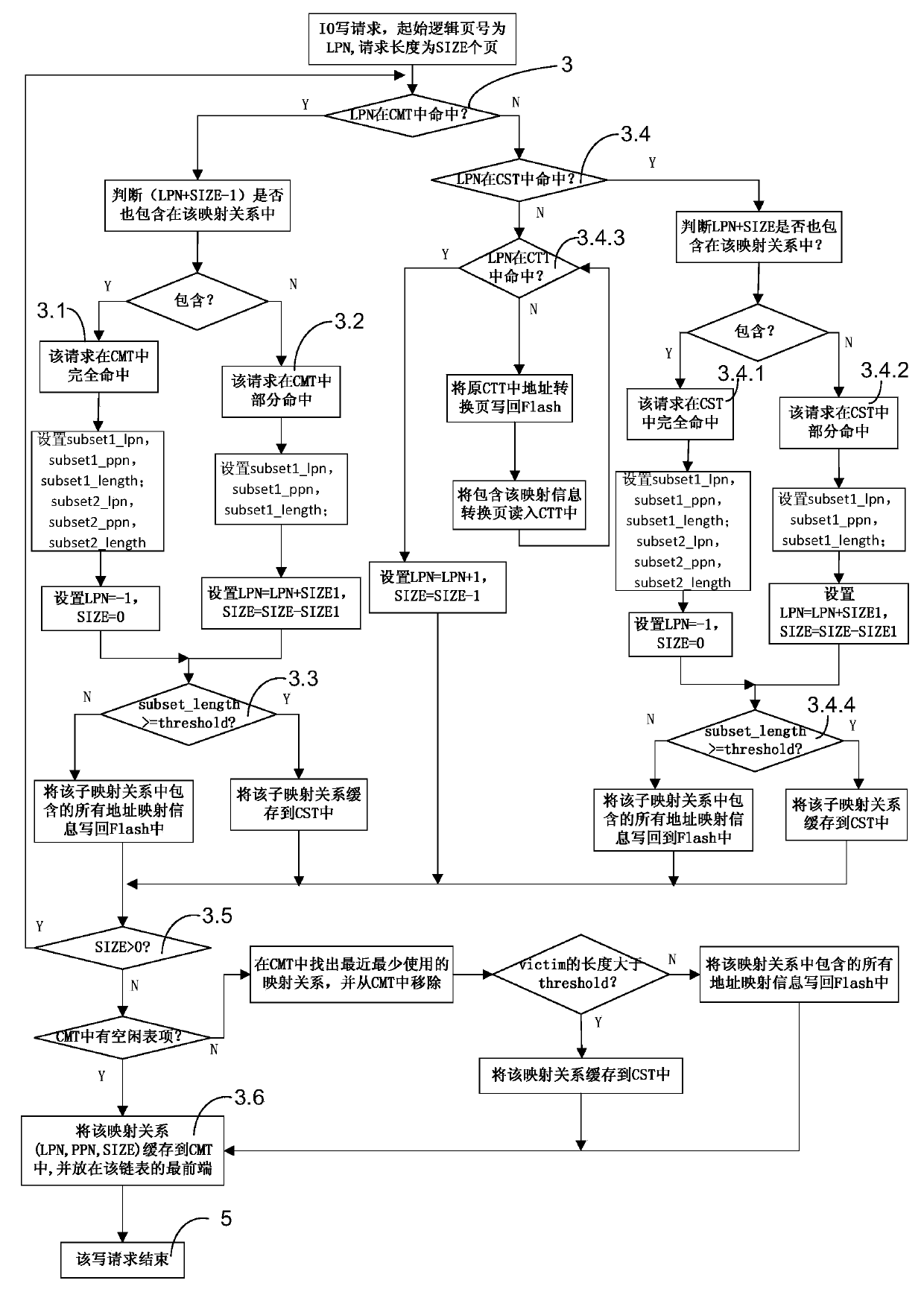

Address mapping method for flash translation layer of solid state drive

Active | CN103425600A | Improve random write performance | Improve hit rate | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Static random-access memory | Solid-state drive

The invention discloses an address mapping method for the flash translation layer of a solid state drive. The method comprises the following steps: (1) a cached mapping table, a cached split table, a cached translation table, and a global translation directory are established in SRAM (static random-access memory) in advance; (2) an IO (input/output) request is received, and the method proceeds to step (3) if it is a write request and to step (4) otherwise; (3) the tables in SRAM are searched in priority order for a hit on the current IO request, the write operation is completed according to the hit mapping information, and the mapping information is cached according to the hit type and a threshold value; (4) the tables in SRAM are searched in priority order for a hit on the current IO request, and the read operation is completed according to the hit mapping information in SRAM. The method improves the random write performance of the solid state drive, prolongs its service life, makes the flash translation layer efficient, achieves a high hit ratio for address mapping information in SRAM, and requires few additional read/write operations between the SRAM and the drive's flash.

Owner:NAT UNIV OF DEFENSE TECH
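
The read path in step (4) can be sketched as a prioritized table lookup. The abstract names the four tables but does not say what each one stores, so the roles below are assumptions: the cached mapping table and cached split table map a logical page number directly to a physical page number, the cached translation table holds whole translation pages, and the global translation directory locates translation pages in flash. The class name, field names, and search order are hypothetical.

```python
class FtlReadPath:
    """Toy read-side lookup over SRAM-resident mapping tables."""

    def __init__(self, flash_pages, gtd, entries_per_page=4):
        self.flash_pages = flash_pages          # flash location -> {lpn: ppn}
        self.gtd = gtd                          # translation page no. -> flash location
        self.entries_per_page = entries_per_page
        self.cmt = {}                           # cached mapping table: lpn -> ppn
        self.cst = {}                           # cached split table: lpn -> ppn
        self.ctt = {}                           # cached translation table: tpn -> {lpn: ppn}
        self.flash_reads = 0                    # extra flash reads caused by misses

    def lookup(self, lpn):
        """Return (ppn, source) for a logical page number."""
        # Search the per-entry tables first (assumed priority order).
        for name, table in (("CMT", self.cmt), ("CST", self.cst)):
            if lpn in table:
                return table[lpn], name
        # Then look for the whole translation page in SRAM.
        tpn = lpn // self.entries_per_page
        page = self.ctt.get(tpn)
        if page is not None:
            return page[lpn], "CTT"
        # Miss in SRAM: locate the translation page via the directory,
        # read it from flash once, and keep it cached.
        page = self.flash_pages[self.gtd[tpn]]
        self.flash_reads += 1
        self.ctt[tpn] = page
        return page[lpn], "FLASH"
```

In this sketch the first lookup of a page in a given translation page costs one flash read, and later lookups in the same translation page are served from SRAM, which is the effect a high SRAM hit ratio is meant to produce.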
