401 results about "Hit rate" patented technology

Hit rate is a measure of business performance traditionally associated with sales. It is defined as the number of sales of a product divided by the number of customers who go online, make a planned call, or visit a company to find out about the product.

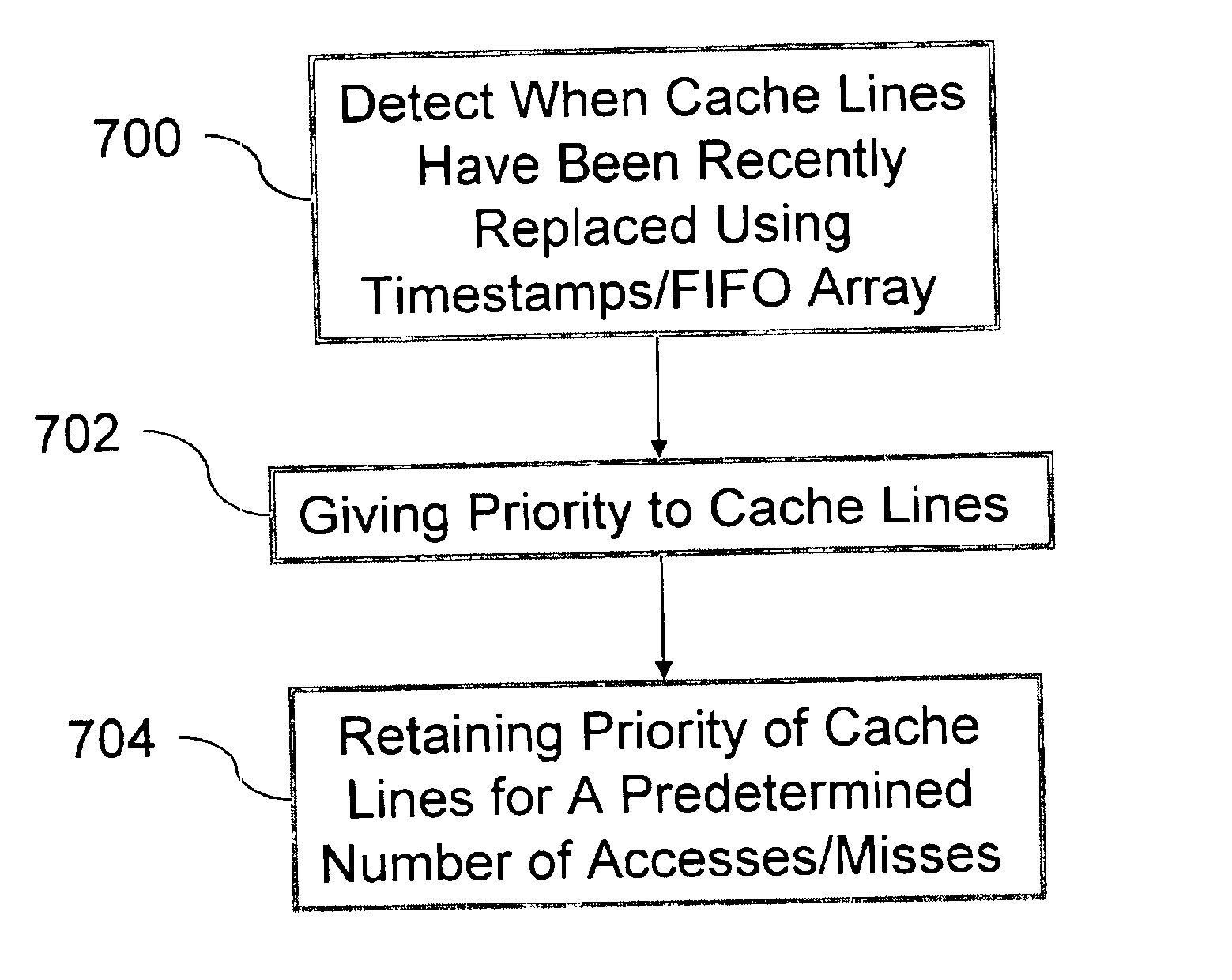

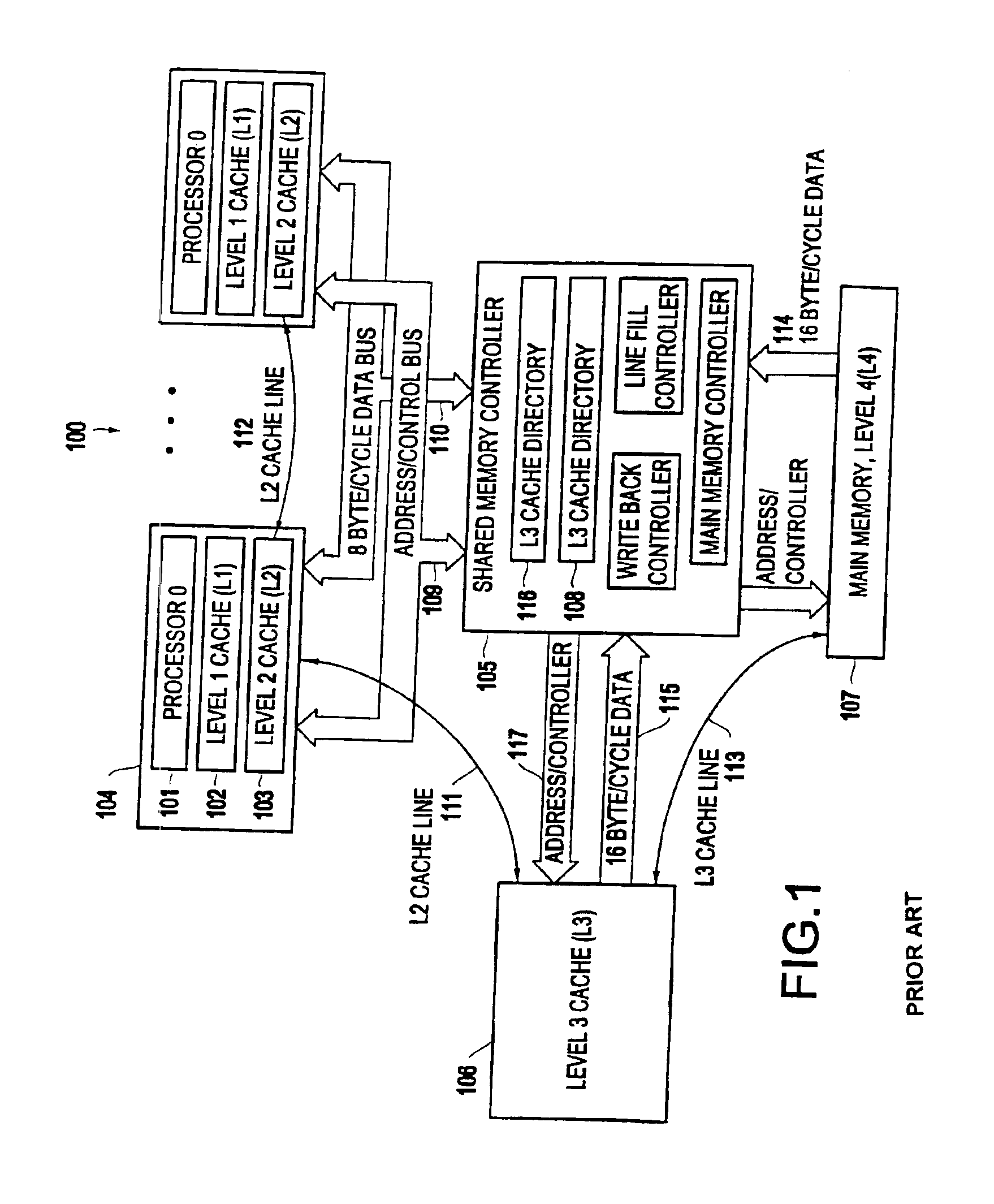

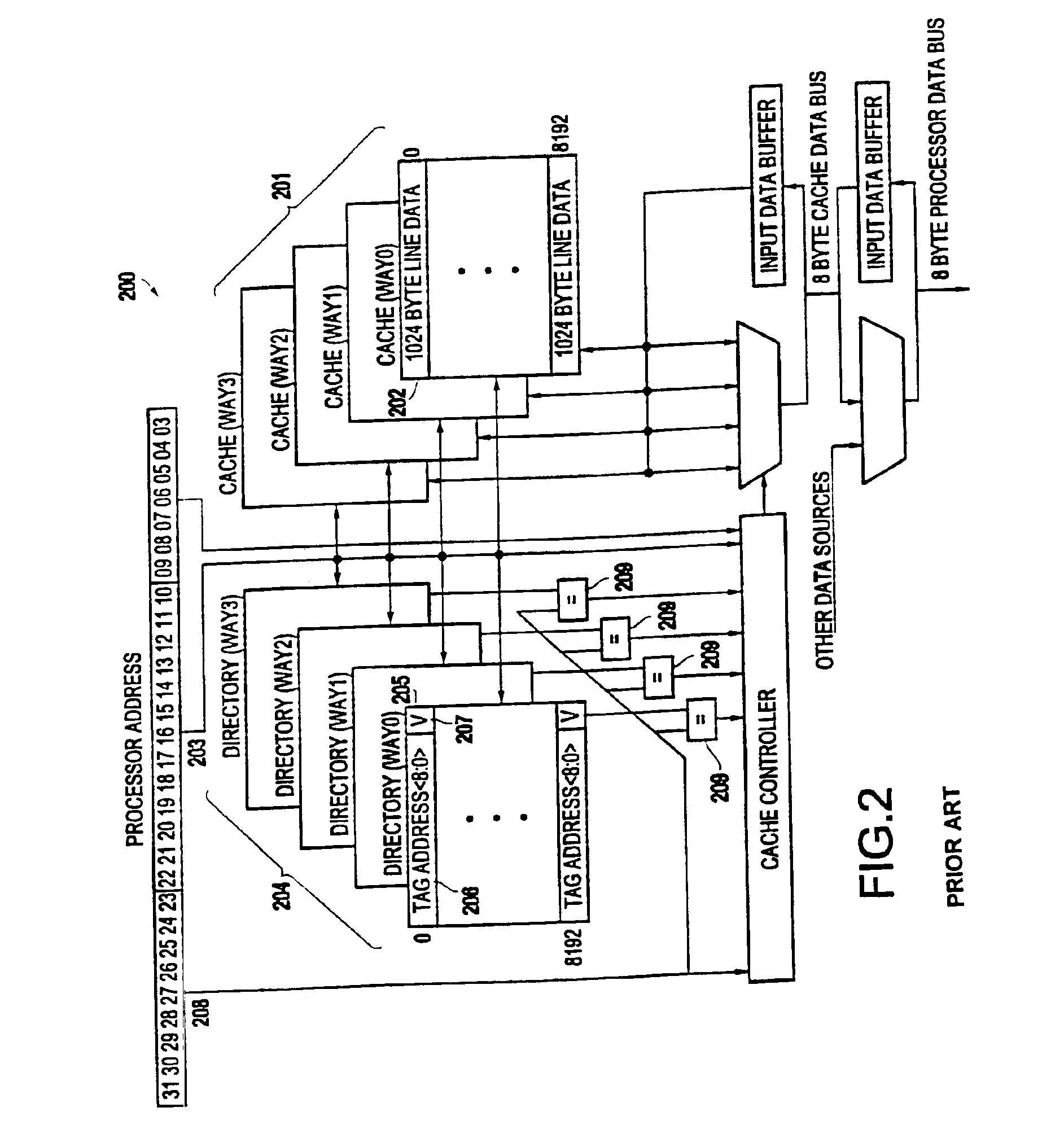

Weighted cache line replacement

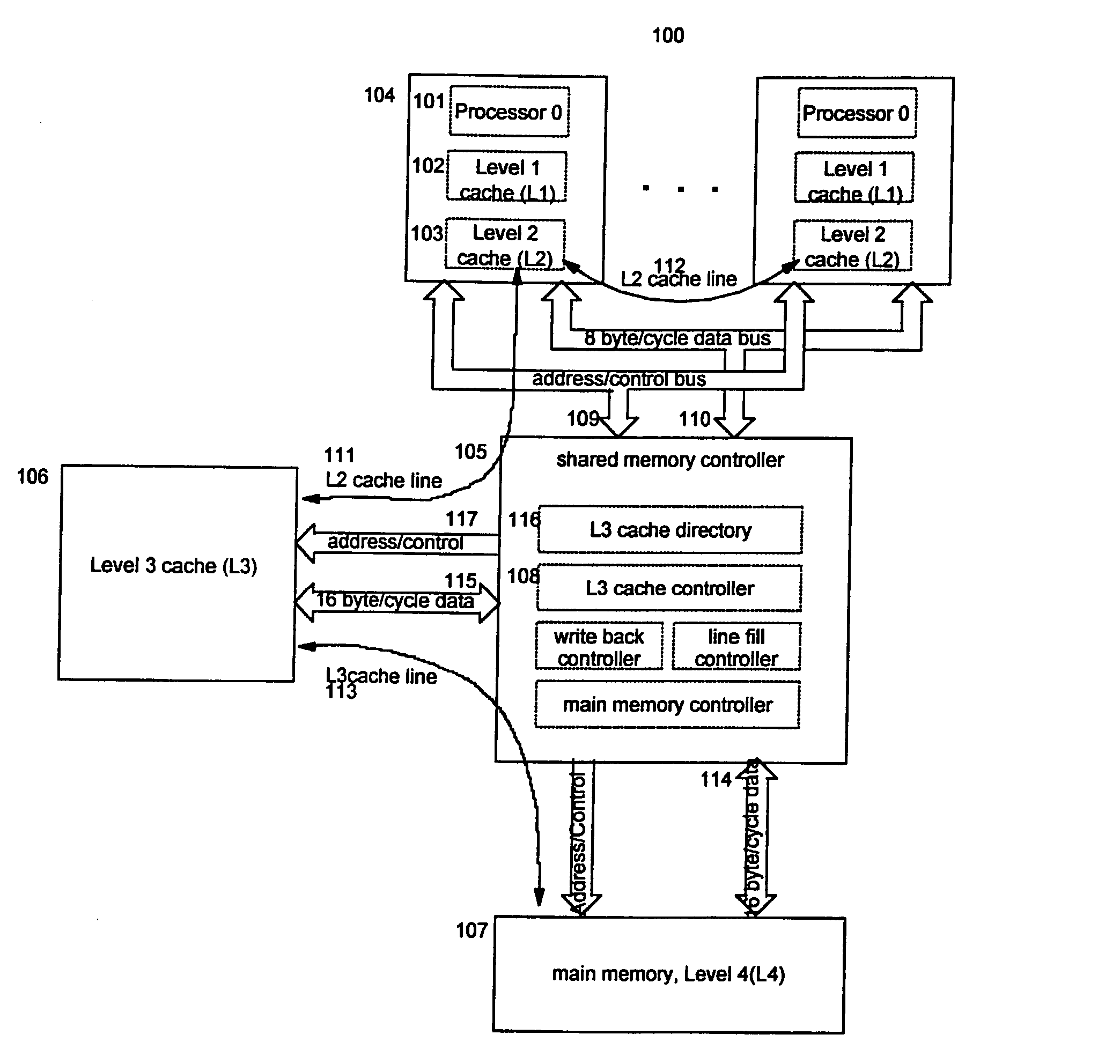

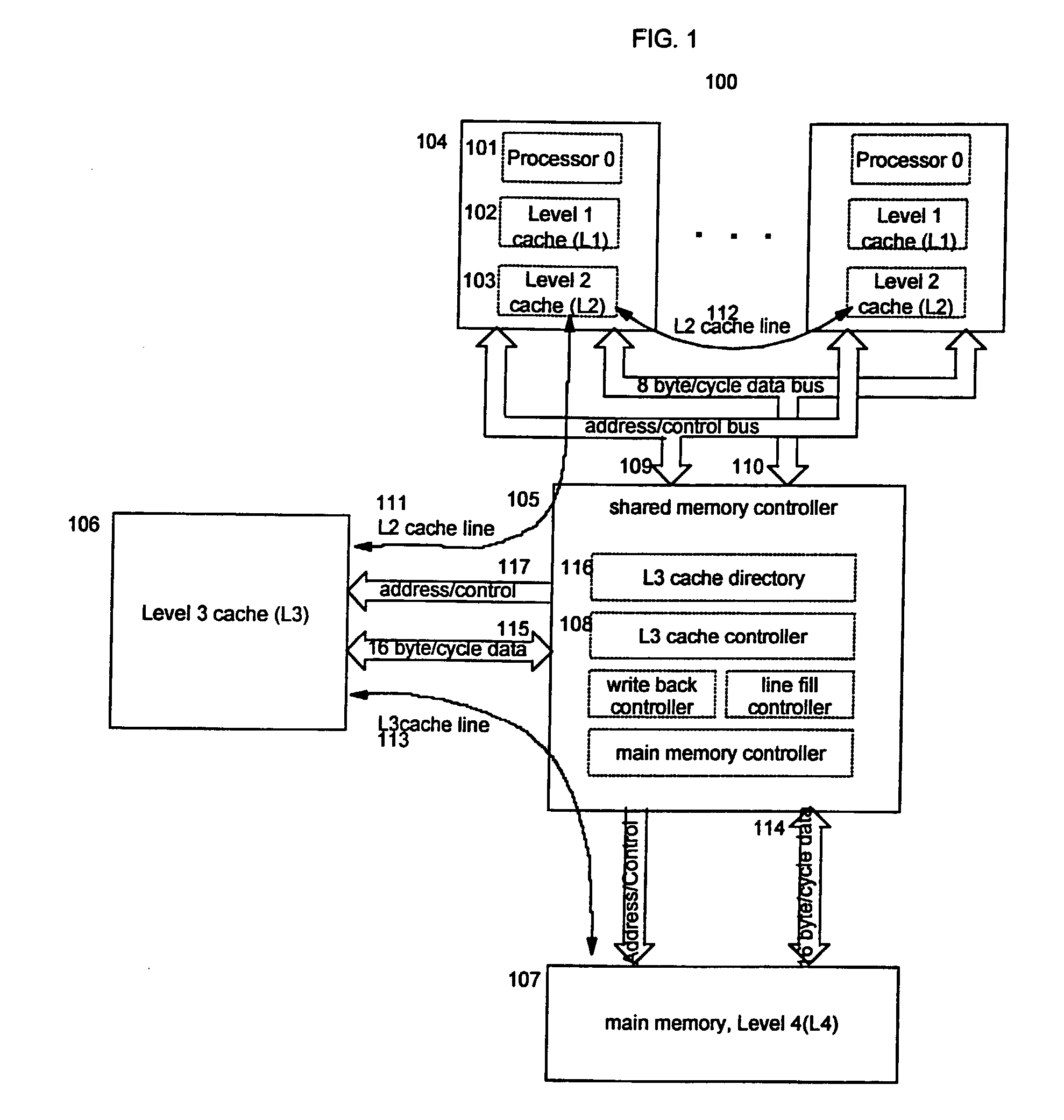

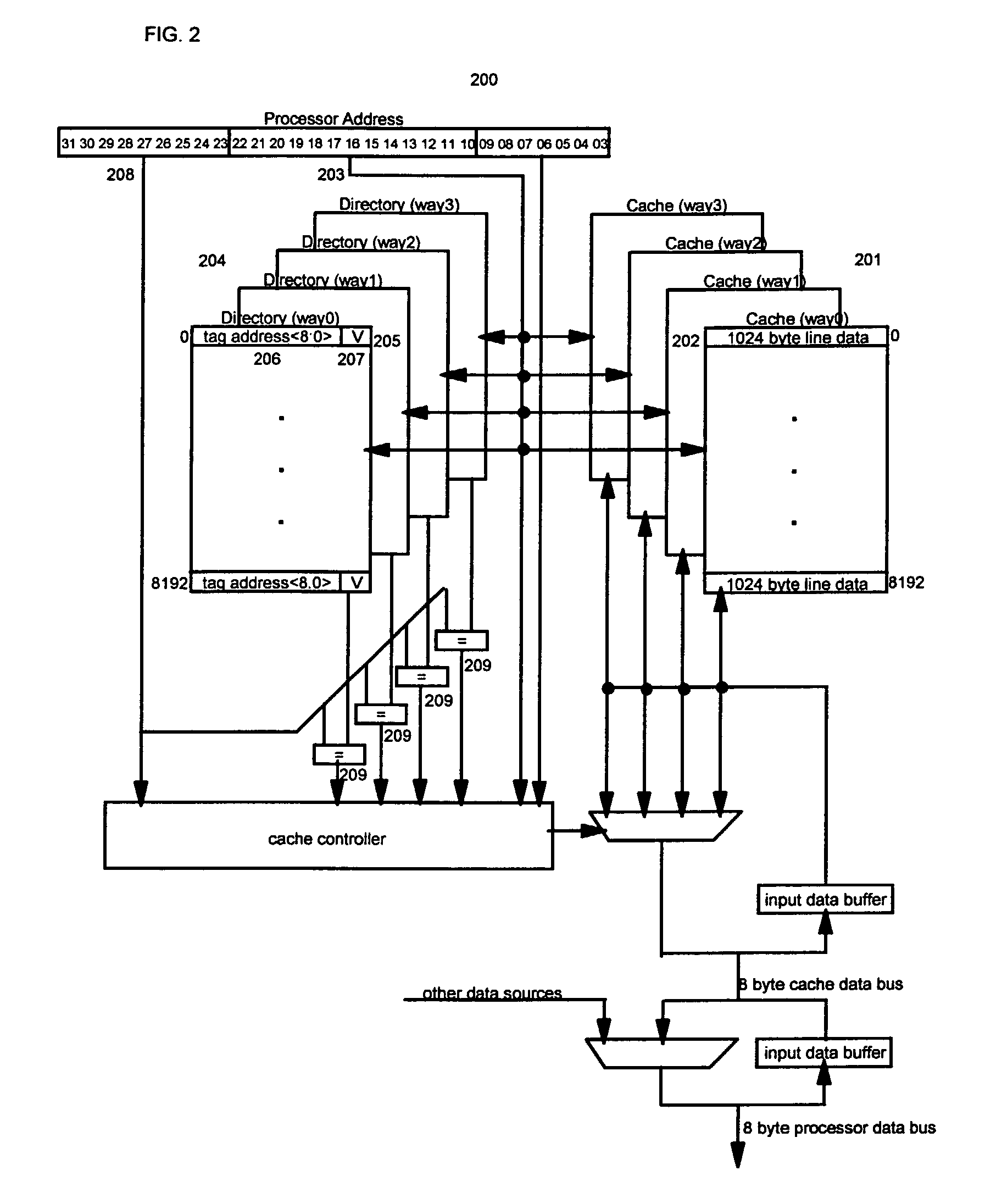

Inactive | US20040083341A1 | Avoid replacement | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

A method for selecting a line to replace in an inclusive set-associative cache memory system which is based on a least recently used replacement policy but is enhanced to detect and give special treatment to the reloading of a line that has been recently cast out. A line which has been reloaded after having been recently cast out is assigned a special encoding which temporarily gives priority to the line in the cache so that it will not be selected for replacement in the usual least recently used replacement process. This method of line selection for replacement improves system performance by providing better hit rates in the cache hierarchy levels above, by ensuring that heavily used lines in the levels above are not aged out of the levels below due to lack of use.

Owner:IBM CORP
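
The replacement policy in the abstract above can be illustrated with a minimal sketch of one cache set. This is not the patented encoding: the class name `ReloadAwareLRU`, the eviction-history length, and the idea of expressing the "special encoding" as a countdown of accesses are all assumptions made for illustration.

```python
from collections import OrderedDict, deque


class ReloadAwareLRU:
    """One cache set with LRU replacement that temporarily protects
    lines reloaded shortly after being cast out (illustrative only)."""

    def __init__(self, capacity, history=4, protect_for=8):
        self.capacity = capacity
        # tag -> remaining protected accesses; dict order is LRU -> MRU
        self.lines = OrderedDict()
        # tags of the most recently cast-out lines (hypothetical history size)
        self.recent_evictions = deque(maxlen=history)
        self.protect_for = protect_for

    def access(self, tag):
        """Return True on a hit, False on a miss (the line is then loaded)."""
        # Age every protection counter so priority is only temporary.
        for t in list(self.lines):
            if self.lines[t] > 0:
                self.lines[t] -= 1
        if tag in self.lines:
            self.lines.move_to_end(tag)
            return True
        if len(self.lines) >= self.capacity:
            self._evict()
        # A line reloaded soon after being cast out gets temporary priority.
        self.lines[tag] = self.protect_for if tag in self.recent_evictions else 0
        return False

    def _evict(self):
        # Least recently used unprotected line; fall back to plain LRU.
        victim = next((t for t, p in self.lines.items() if p == 0), None)
        if victim is None:
            victim = next(iter(self.lines))
        del self.lines[victim]
        self.recent_evictions.append(victim)


cache = ReloadAwareLRU(capacity=2)
trace = ["a", "b", "c", "a", "c", "d", "a"]
results = [cache.access(t) for t in trace]
```

In this trace, line `a` is cast out, reloaded, and then survives the miss on `d` even though it is the least recently used line at that point, so the final access to `a` hits. Plain LRU would have evicted it.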

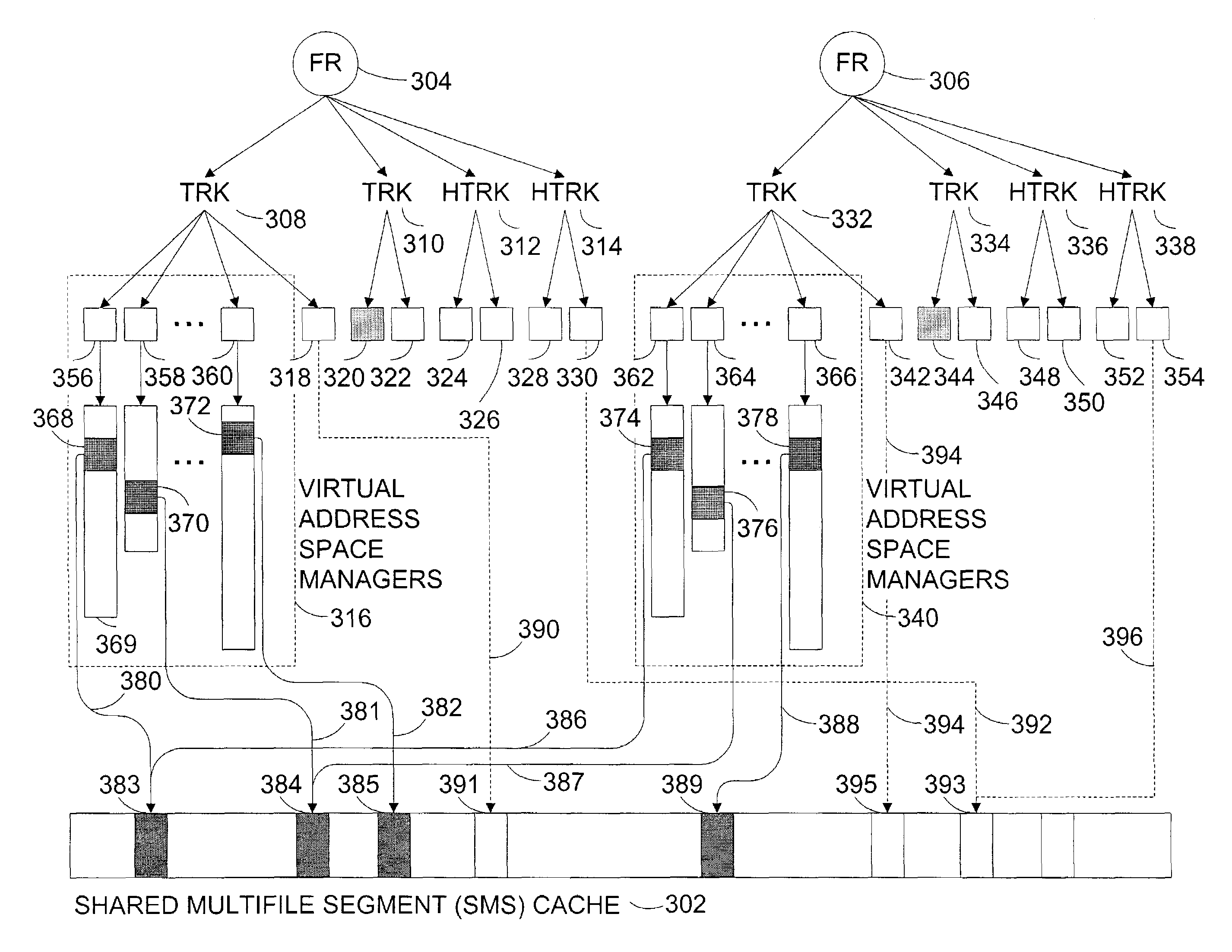

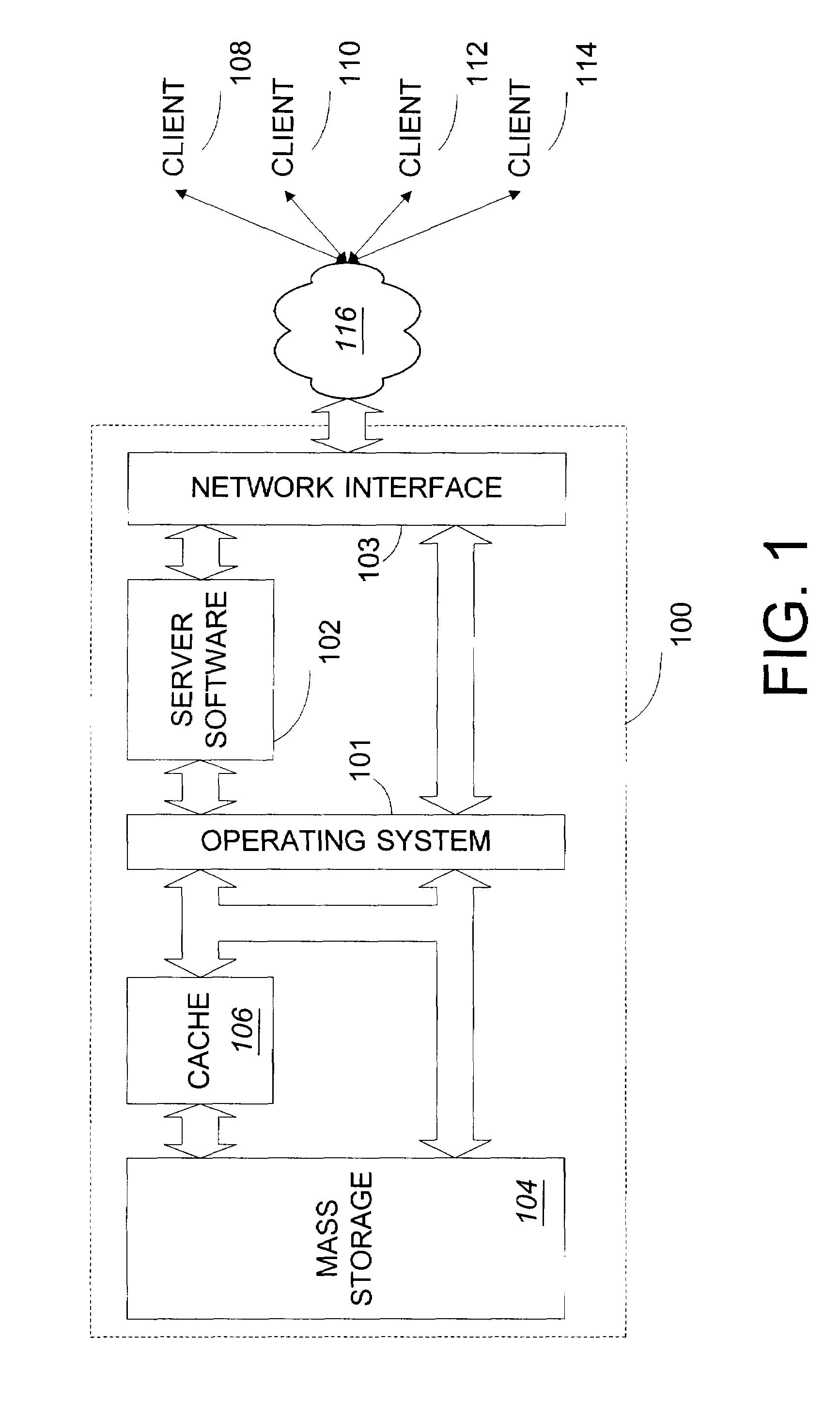

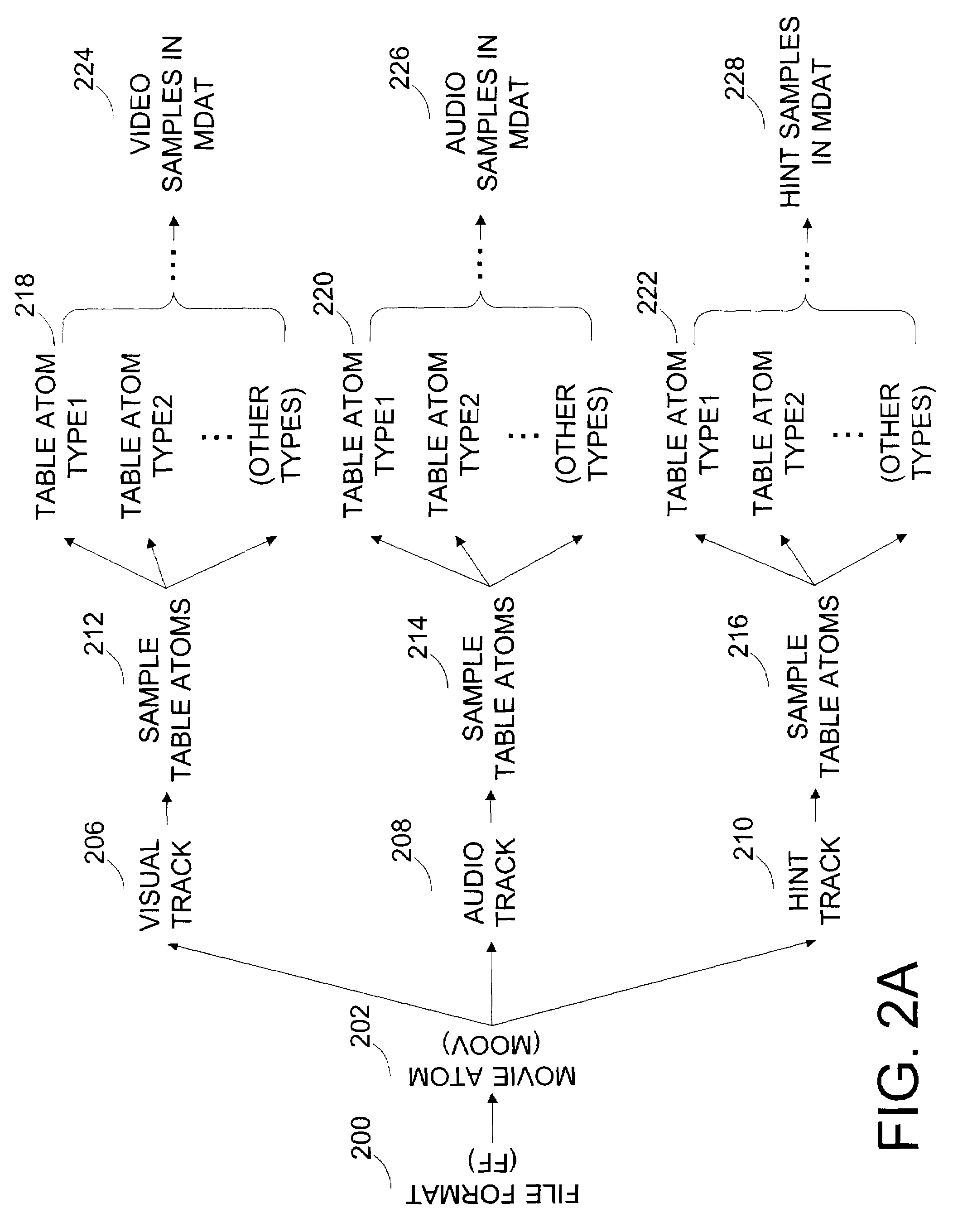

Systems and methods for the efficient reading of data in a server system

The invention is related to methods and apparatus that efficiently store and read data in a cache for a server system, such as a media server. The cache stores data for files in a streamable file format, such as the MPEG-4 file format. Relatively frequently accessed portions of a streamable file are maintained with long-life links. For example, selected child atoms of sample table atoms of MPEG-4 files can be accessed relatively frequently during the streaming of a media presentation. A virtual address space manager for selected child atoms maintains relatively long-life links to pages or segments of data in a cache that hold data corresponding to these child atoms, and can advantageously inhibit the release of these segments to enhance the hit rate of the cache and decrease thrashing, i.e., repeated paging of data to the cache.

Owner:INTEL CORP

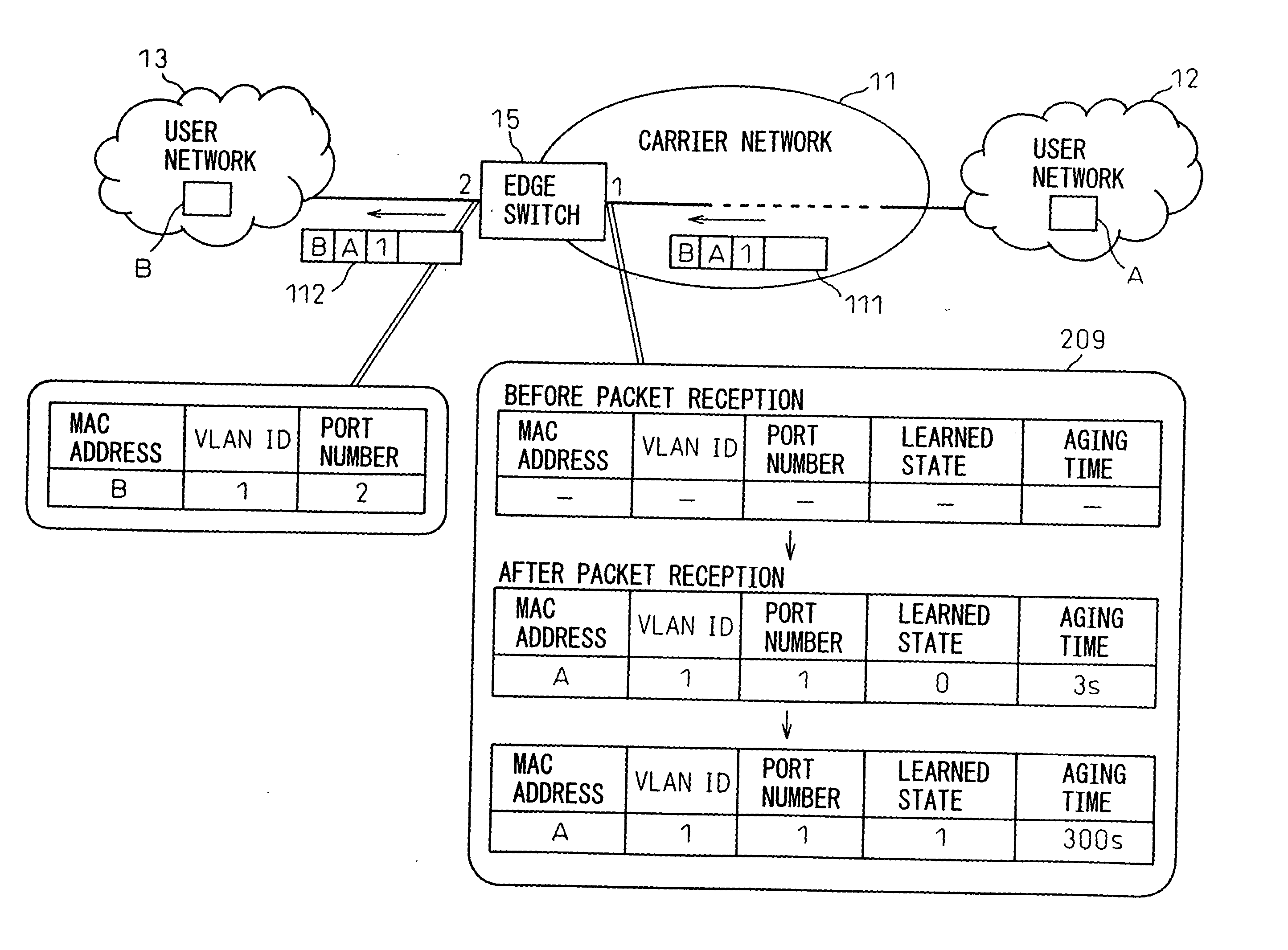

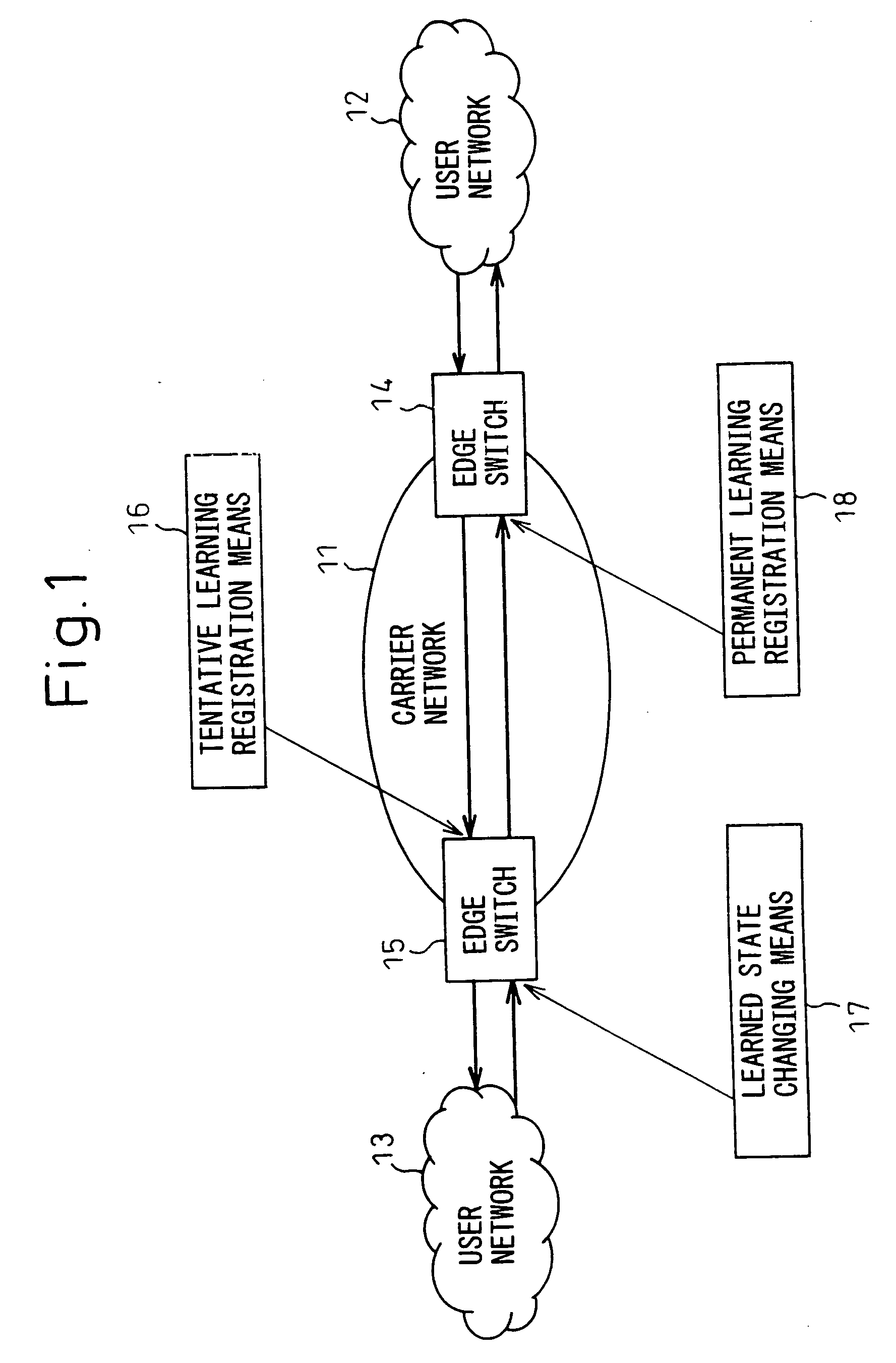

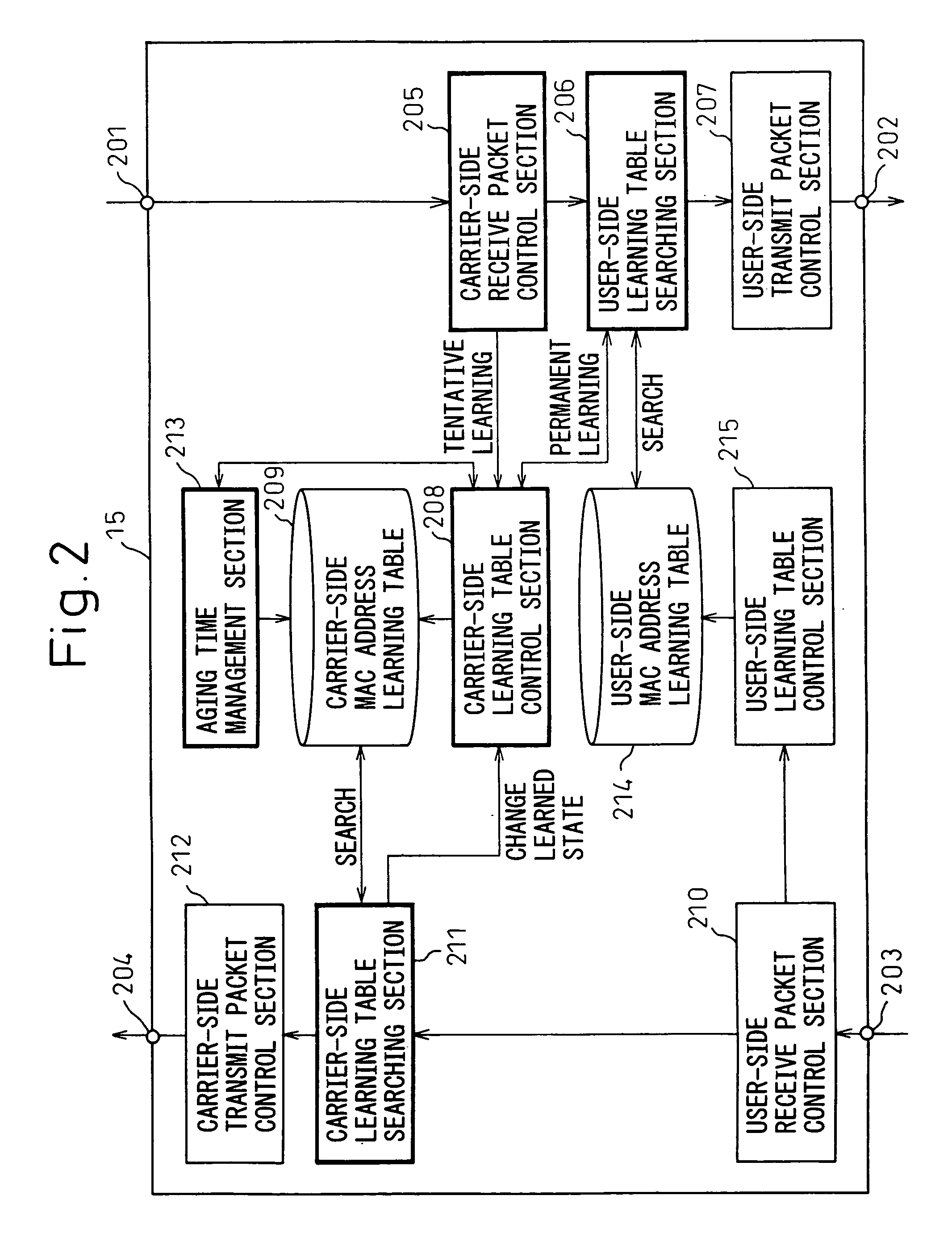

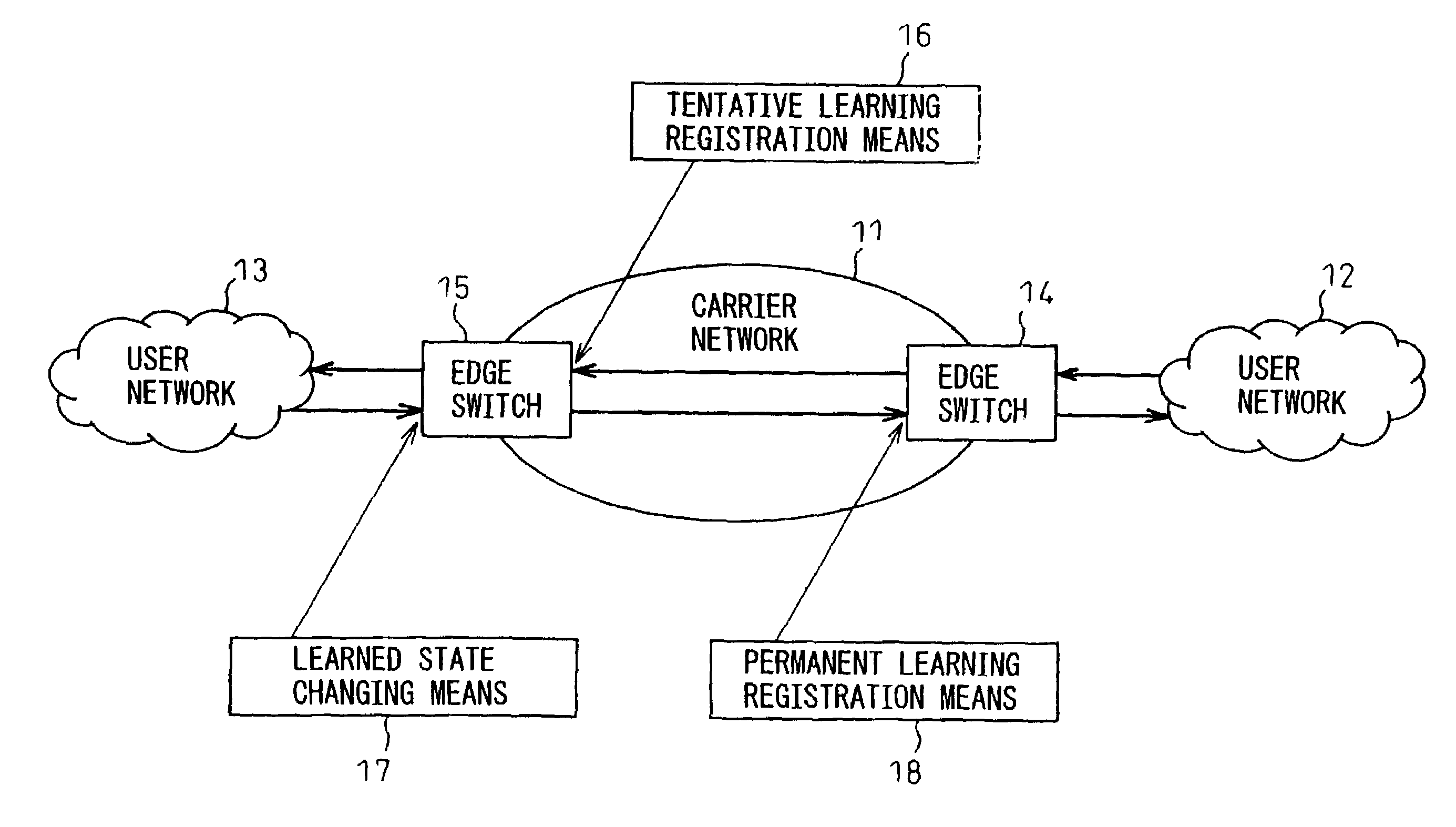

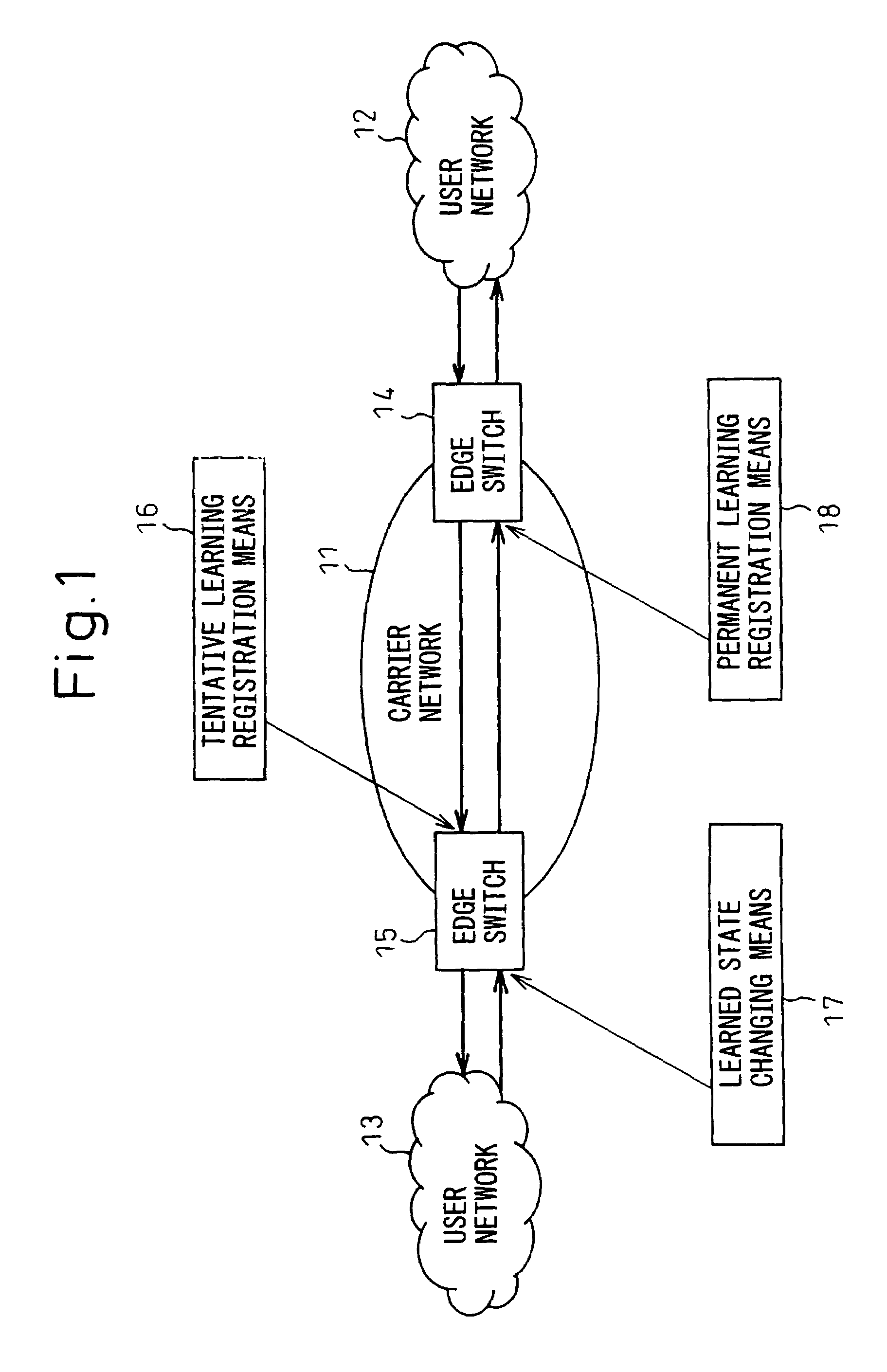

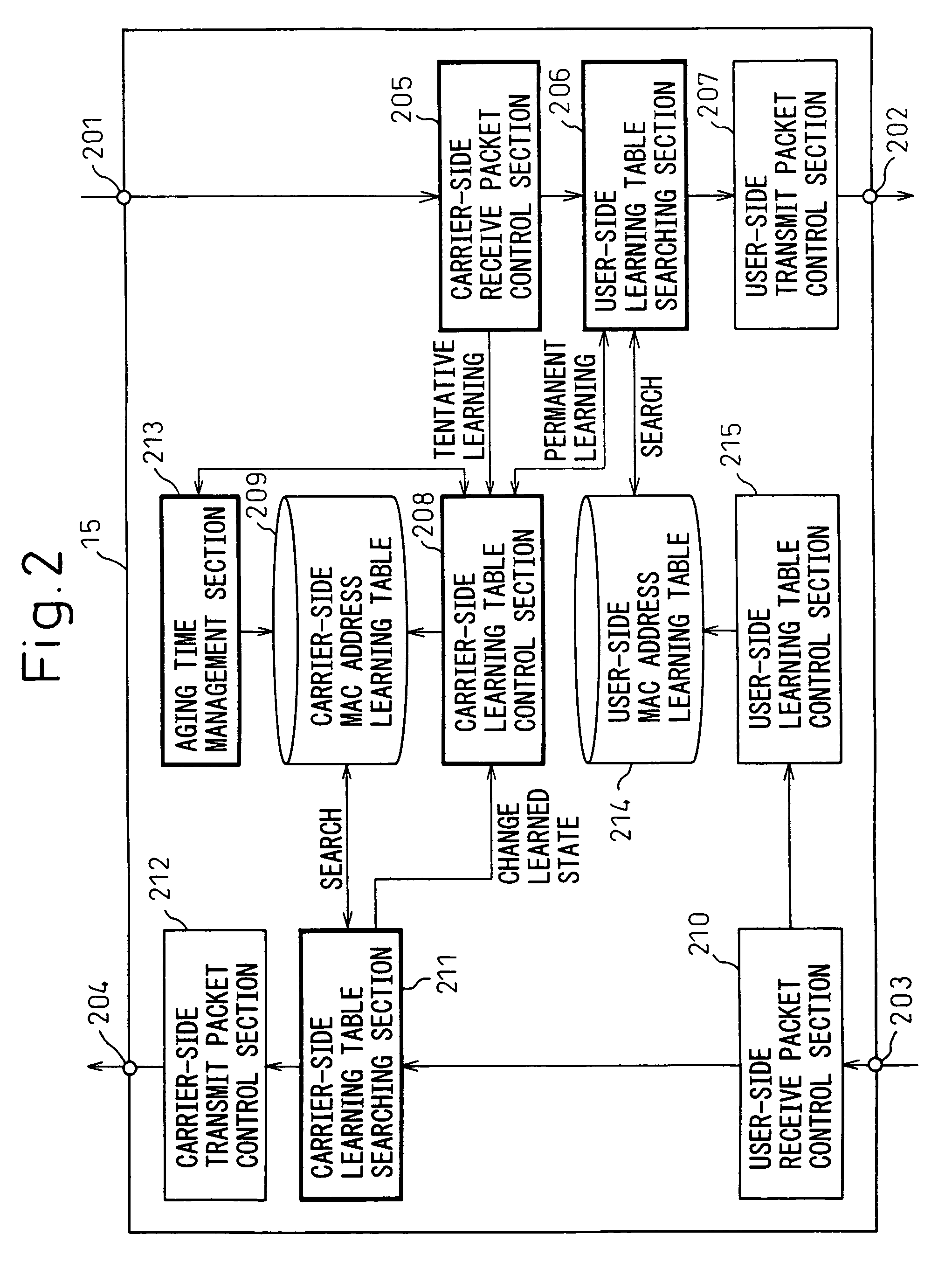

MAC address learning apparatus

Inactive | US20060092860A1 | Avoid saturation | Blocking in network | Networks interconnection | Carrier signal | Hit rate

In a network providing a wide-area LAN service which allows communications to be performed between user networks (12, 13) via a carrier network (11), edge switches (14, 15) each comprise: a tentative learning registration means (16) which, when a packet whose source MAC address and receiving port are not yet learned is received from the carrier network, registers the source MAC address and the receiving port in a tentatively learned state; a learned state changing means (17) which changes the learned state of the destination MAC address of a packet received from an associated one of the user networks to a permanently learned state; and a permanent learning registration means which, when a packet whose destination MAC address is registered in a learned state is received from the carrier network, registers the source MAC address and receiving port of the packet received from the carrier network in a permanently learned state. The invention thus provides a MAC address learning apparatus that improves the hit rate in a MAC address learning table maintained in each of the edge switches (14, 15) provided in a wide-area VLAN carrier network.

Owner:FUJITSU LTD
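
The three registration rules in the abstract above can be sketched as a small state table. This is a rough illustration, not the claimed apparatus: the class and method names are hypothetical, and learning user-side source addresses directly as permanent is an assumption the abstract does not state.

```python
TENTATIVE, PERMANENT = "tentative", "permanent"


class EdgeSwitchTable:
    """Illustrative MAC learning table for one edge switch."""

    def __init__(self):
        self.table = {}  # MAC address -> (port, learned state)

    def from_carrier(self, src, dst, port):
        """Handle a packet received from the carrier network."""
        if dst in self.table:
            # Destination already learned: register the source permanently.
            self.table[src] = (port, PERMANENT)
        elif src not in self.table:
            # Unknown source and unknown destination: tentative entry only.
            self.table[src] = (port, TENTATIVE)

    def from_user(self, src, dst, port):
        """Handle a packet received from the attached user network."""
        # Assumption: local sources are learned permanently as usual.
        self.table[src] = (port, PERMANENT)
        if dst in self.table:
            # A local host talks to this address, so promote it.
            dst_port, _ = self.table[dst]
            self.table[dst] = (dst_port, PERMANENT)


switch = EdgeSwitchTable()
switch.from_carrier("aa", "zz", port=1)   # aa is learned tentatively
switch.from_user("bb", "aa", port=2)      # aa is promoted to permanent
switch.from_carrier("cc", "bb", port=1)   # cc is learned permanently
switch.from_carrier("dd", "yy", port=1)   # dd stays tentative
```

A real table would presumably age out or overwrite tentative entries first when it fills up, which is how the distinction would help avoid saturation. That eviction step is not shown here.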

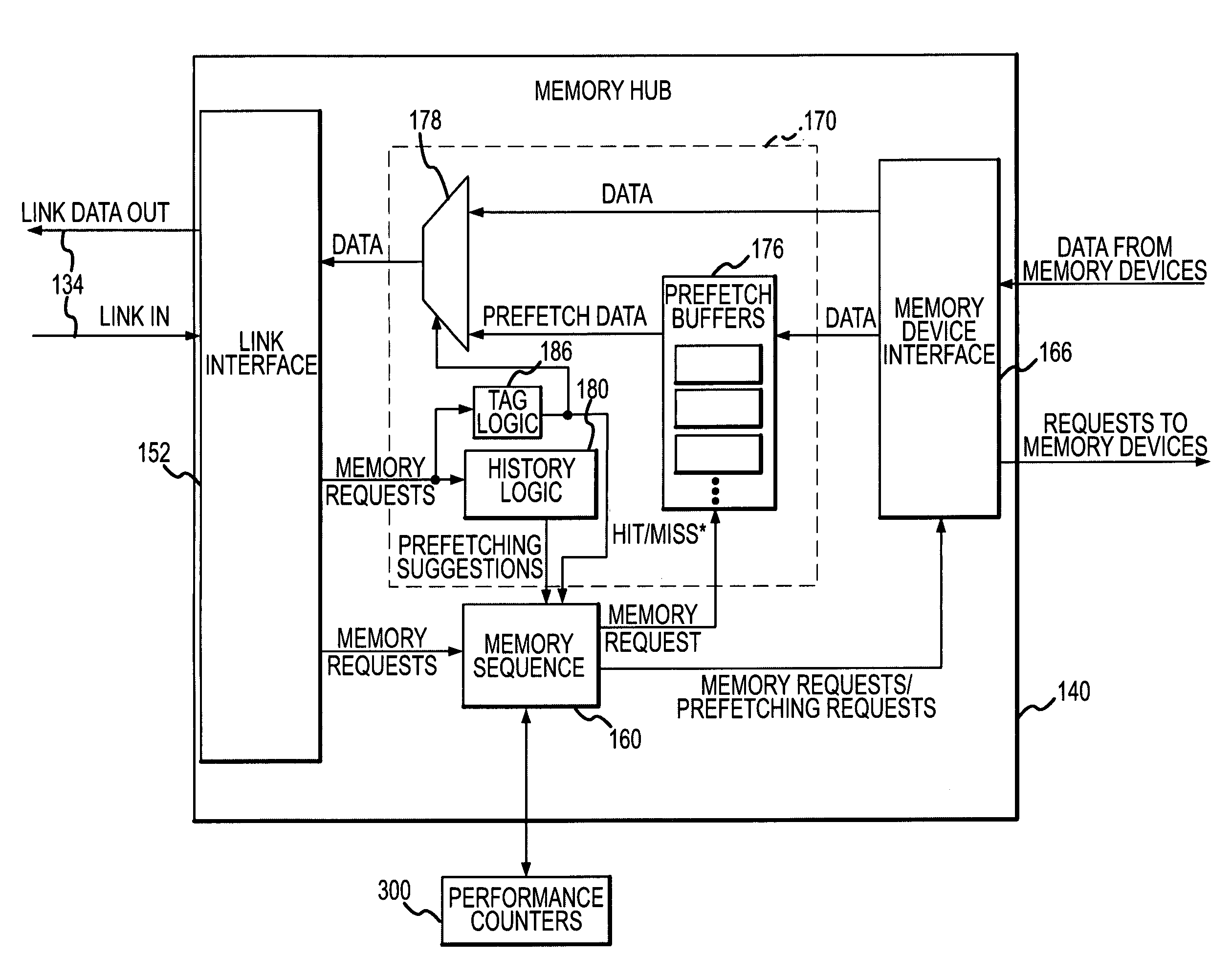

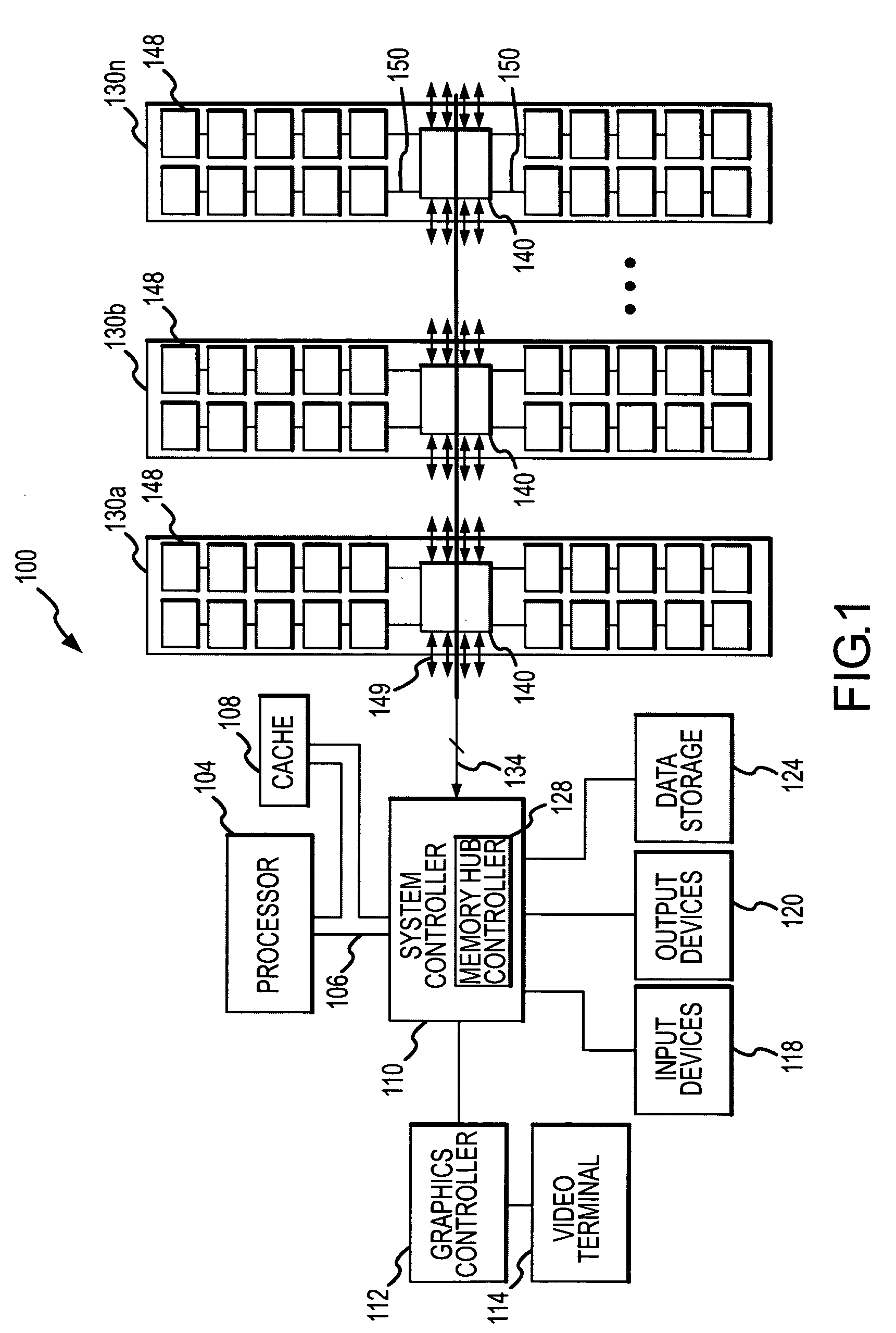

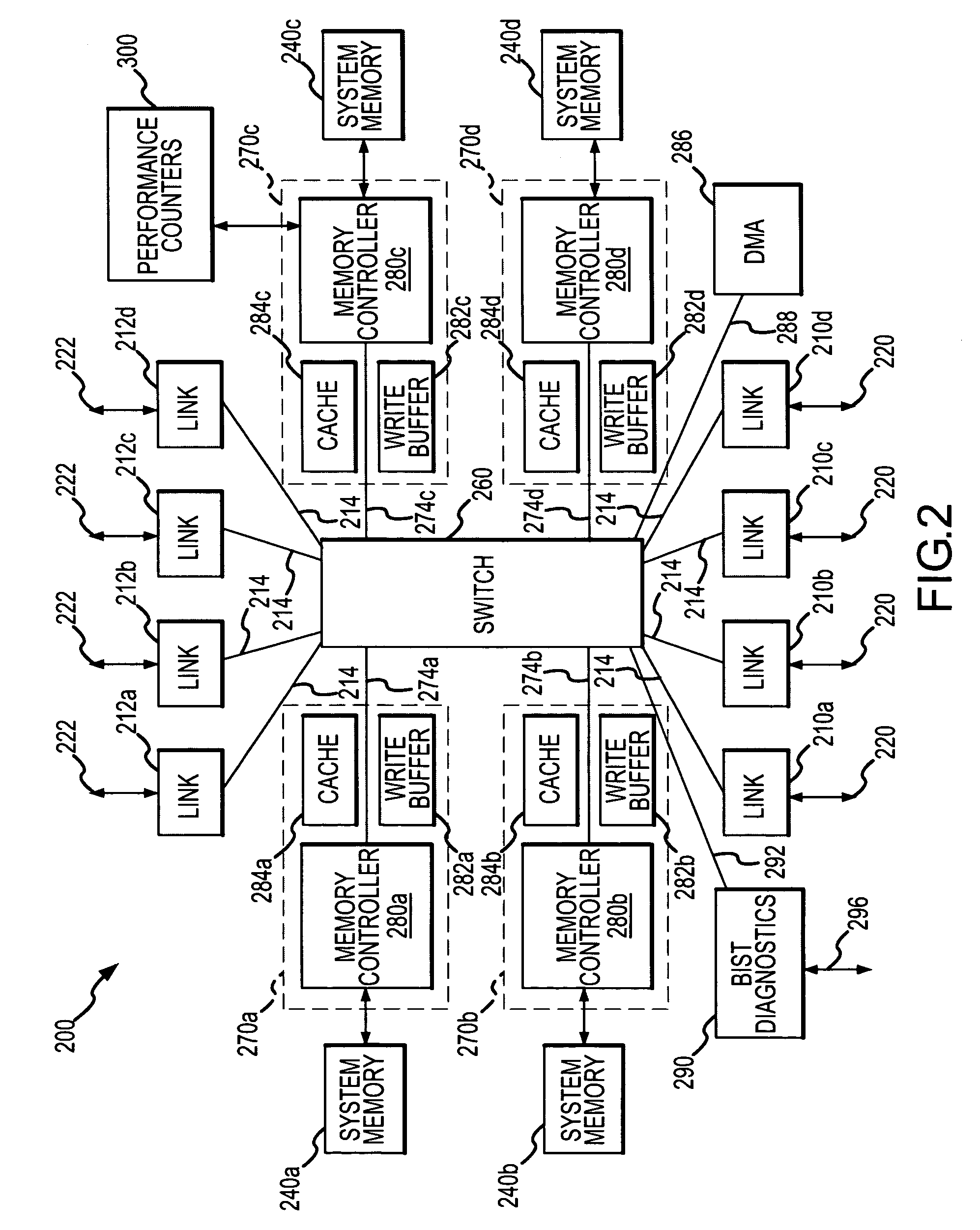

Memory hub and method for memory sequencing

Inactive | US7162567B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Cache hit rate

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics, for example page hit rate, prefetch hits, and/or cache hit rate. The performance counter communicates with a memory sequencer that adjusts its operation based on the system metrics tracked by the performance counter.

Owner:ROUND ROCK RES LLC

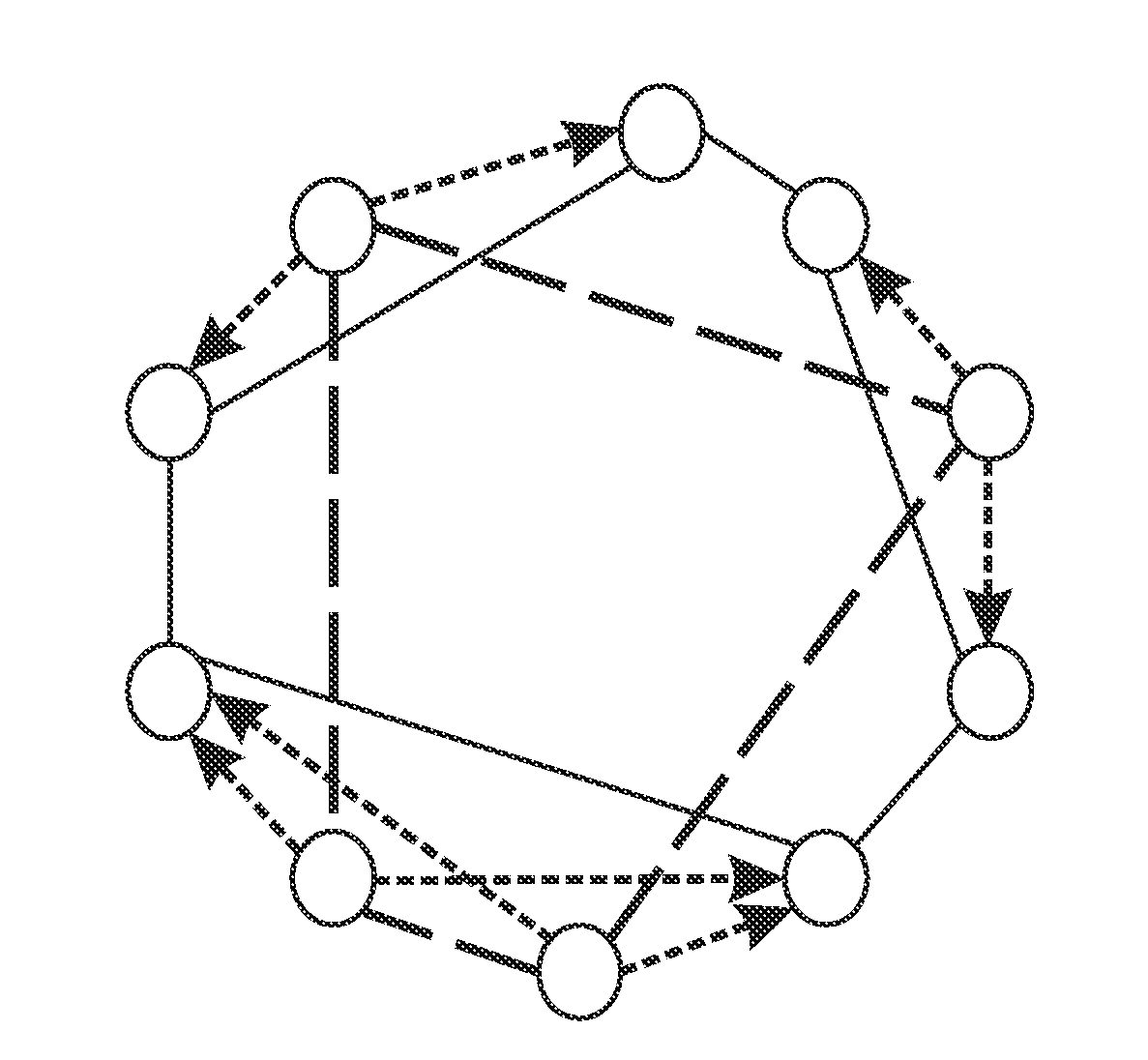

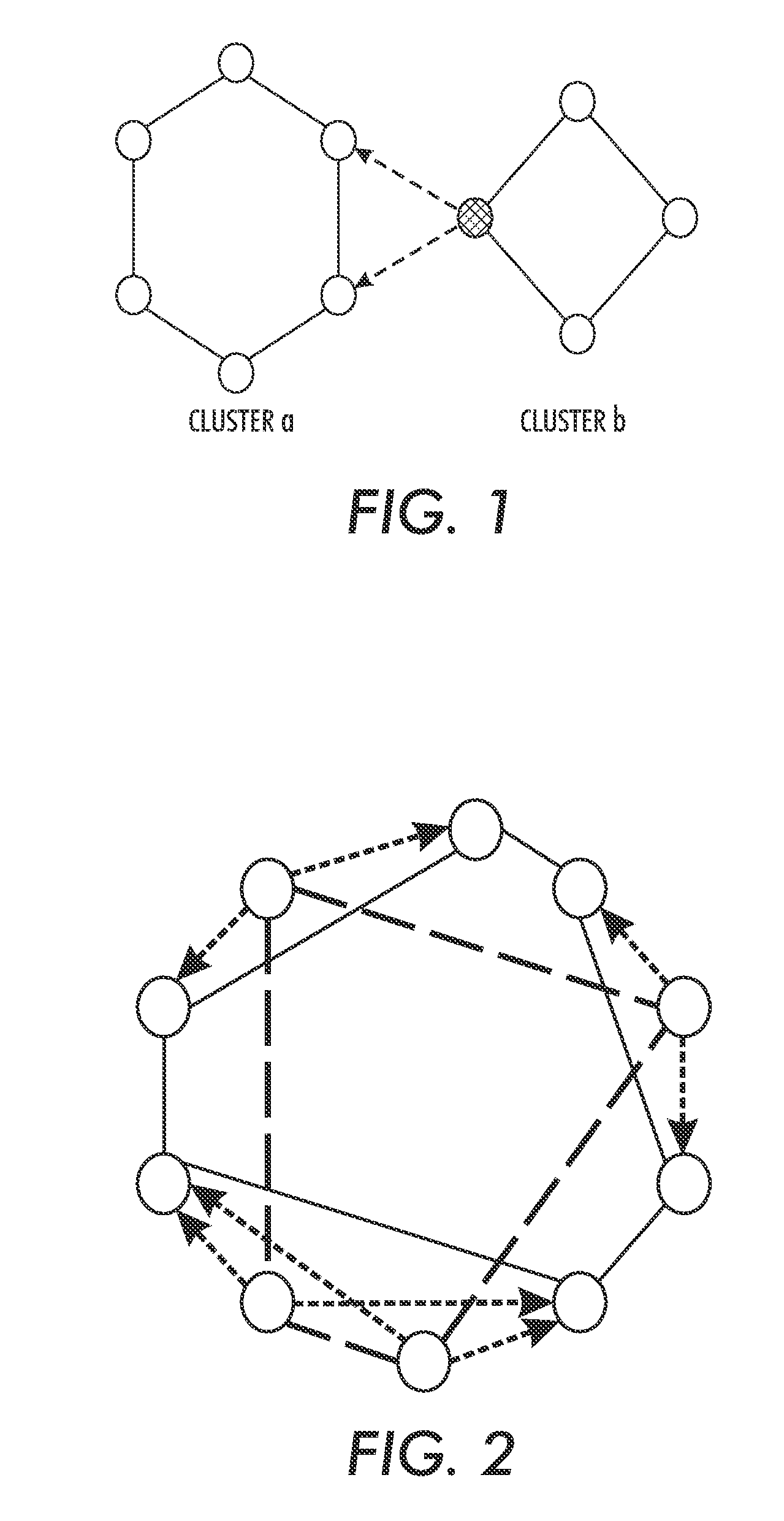

Two-level structured overlay design for cluster management in a peer-to-peer network

Inactive | US20080177767A1 | Multiple digital computer combinations | Data switching networks | File replication | Level structure

A method and system for designing file replication schemes in file sharing systems consider node storage constraints and node up/down statistics, file storage costs, file transfer costs among the nodes, user request rates for the files, and user-specified file availability requirements. Based on these considerations, a systematic method for designing file replication schemes can be implemented. The method first determines the number of copies of the files to be stored in the system to achieve the desired goal (e.g., to satisfy file availability requirements, or to maximize the system hit rate), and then selects the nodes at which to store the file copies to minimize the total expected cost. The file replication scheme for a peer-to-peer file sharing system operates in a distributed and adaptive manner, can scale to a large number of nodes and files, and can handle changes in the user request pattern over time.

Owner:XEROX CORP

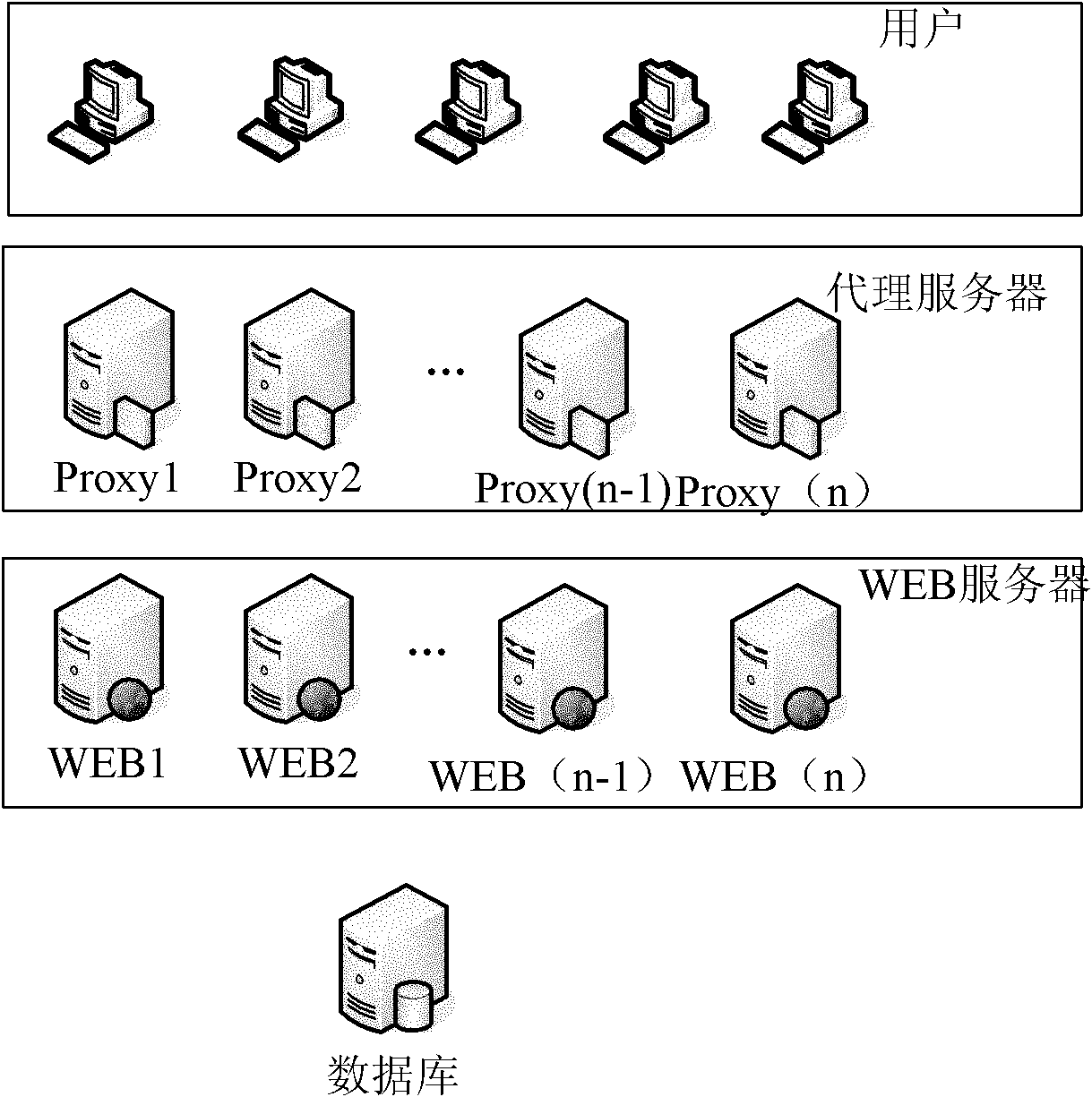

Load balancing system based on content

Inactive | CN101605092A | Solve the problem of inconsistency | Save resources | Data switching networks | Data synchronization | The Internet

The invention relates to a content-based load balancing system comprising content-based load balancing, inspection of the content and health status of backend servers, high availability of the load balancer itself, and a load balancing algorithm. Content-based load balancing means that the system works at layer 7 of the seven-layer OSI model, that is, it performs application-layer load balancing. A system resource inspection module checks the content and health status of the backend servers in real time. To avoid a single point of failure, the load balancing system uses a dual-system configuration for high availability: a main system and a standby system keep client connection information consistent through data synchronization, and when the main system fails, the standby load balancing system automatically takes over services. The system replaces the simplistic load balancing mode of traditional IP-layer load balancing, so the load hit rate can be effectively increased. The load balancing system does not require the backend servers to store the same content, which largely saves server resources.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

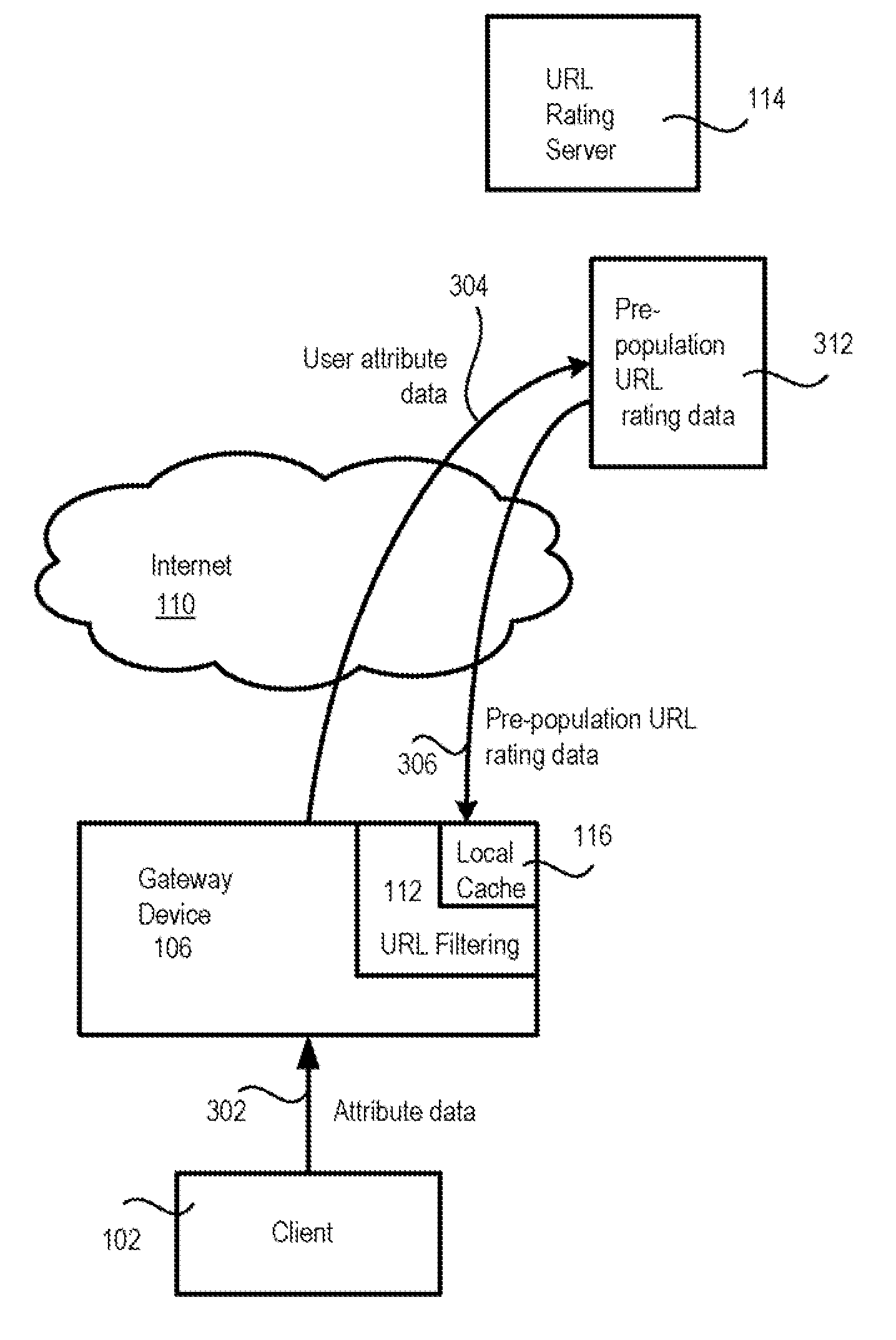

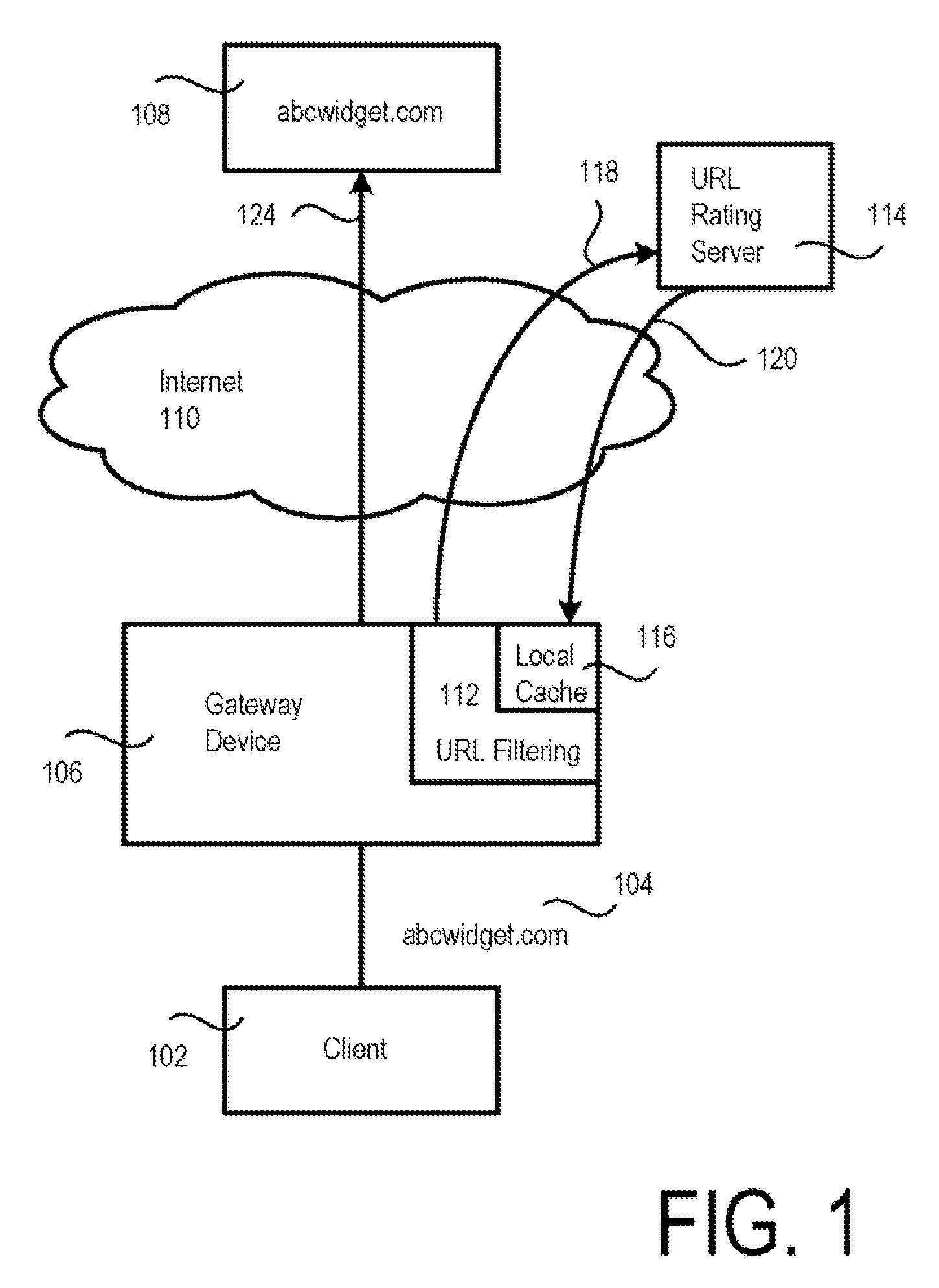

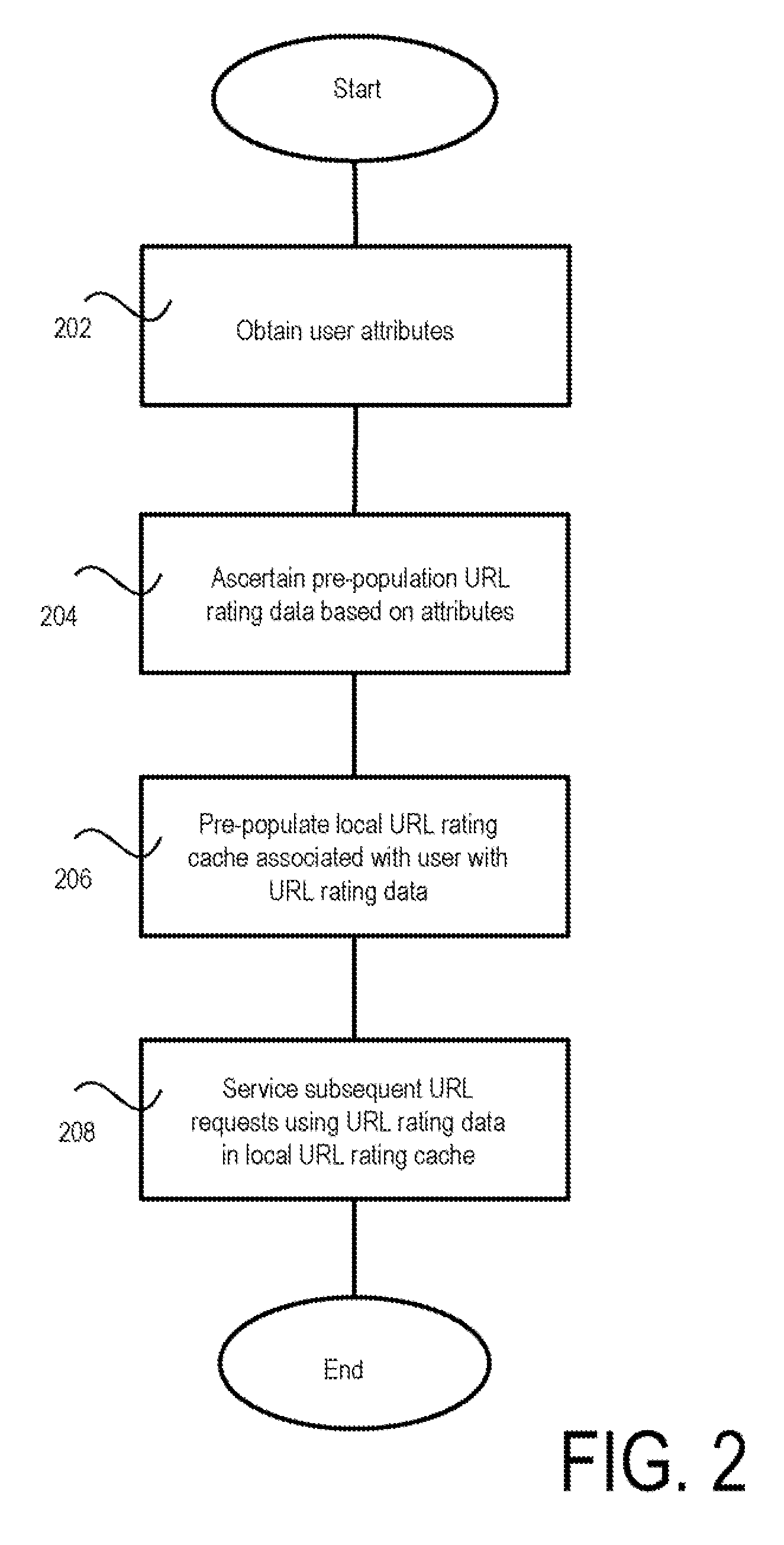

Pre-populating local URL rating cache

Active | US20080163380A1 | Digital data information retrieval | Digital data processing details | Uniform resource locator | Cache hit rate

A method and apparatus for improving the system response time when URL filtering is employed to provide security for web access. The method involves gathering the attributes of the user, and pre-populating a local URL-rating cache with URLs and corresponding ratings associated with analogous attributes from a URL cache database. Thus, the cache hit rate is higher with a pre-populated local URL rating cache, and the system response time is also improved.

Owner:TREND MICRO INC

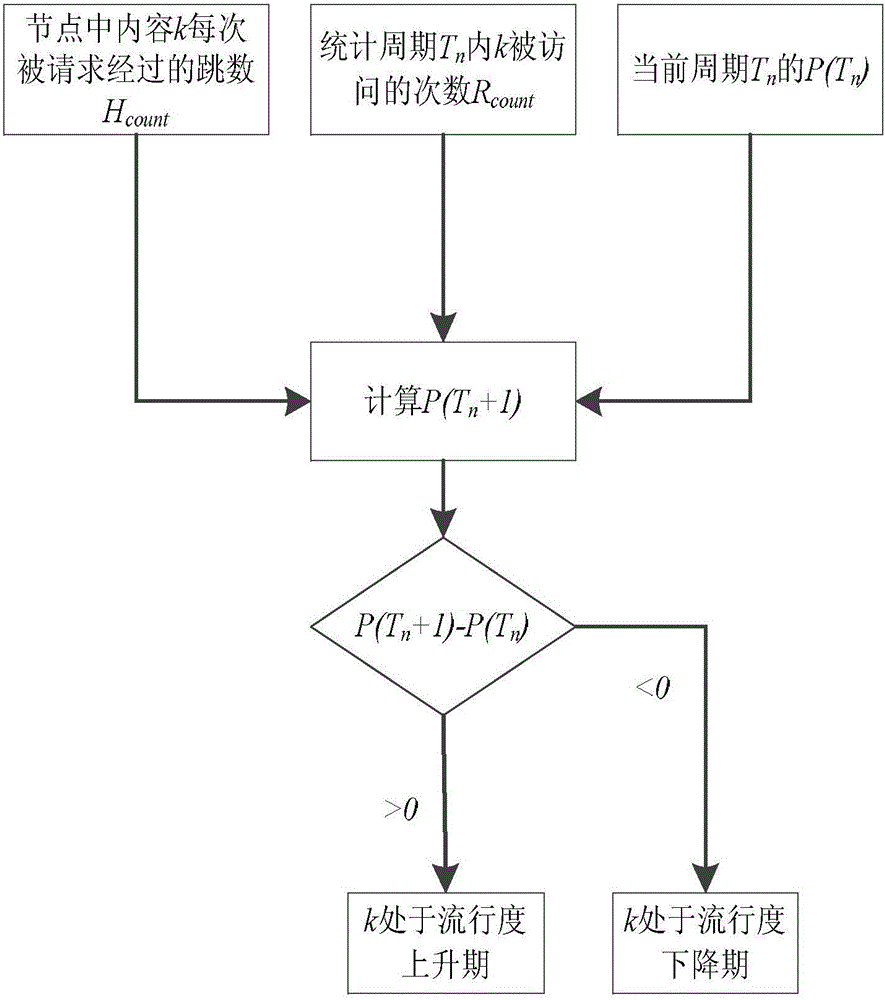

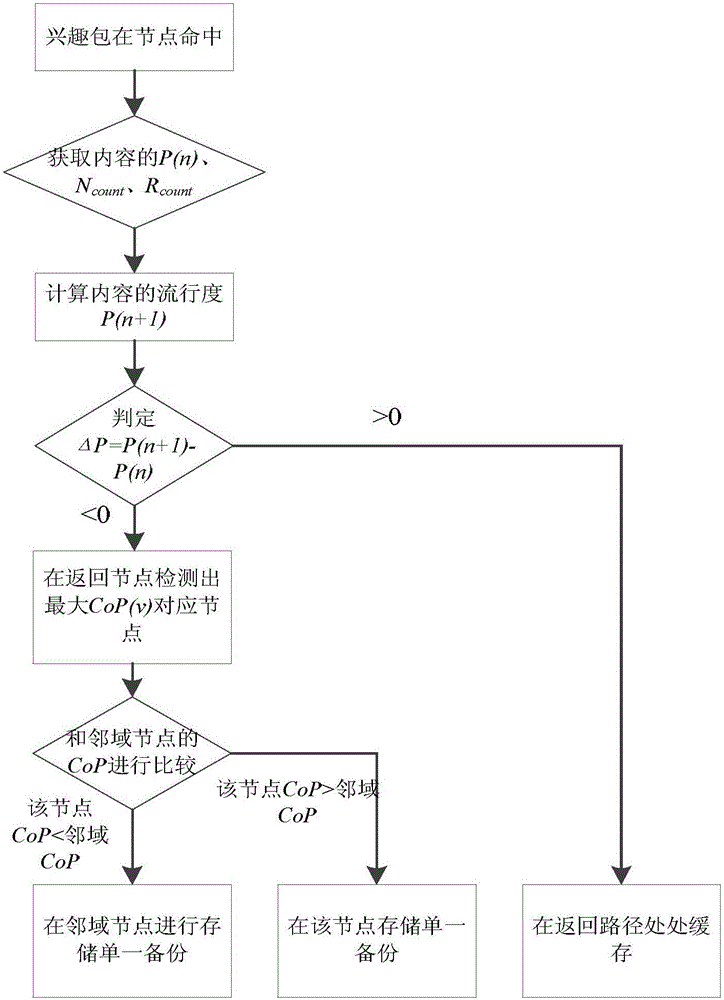

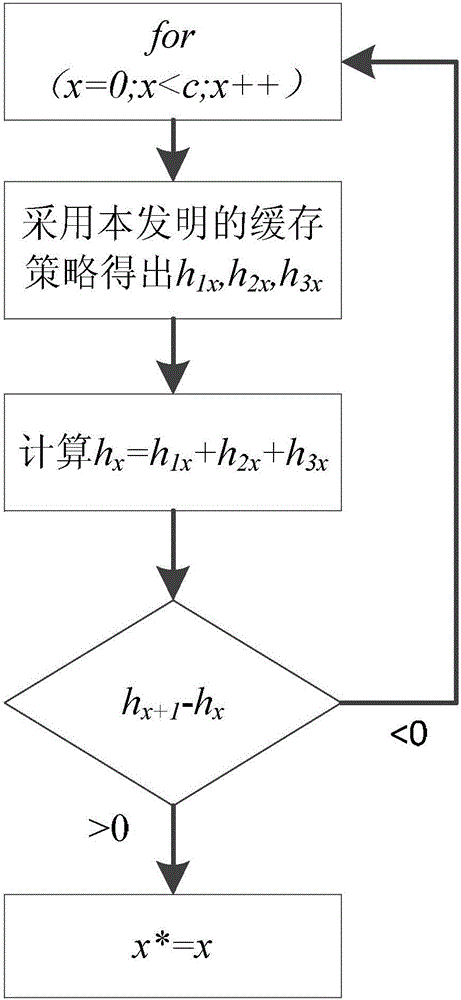

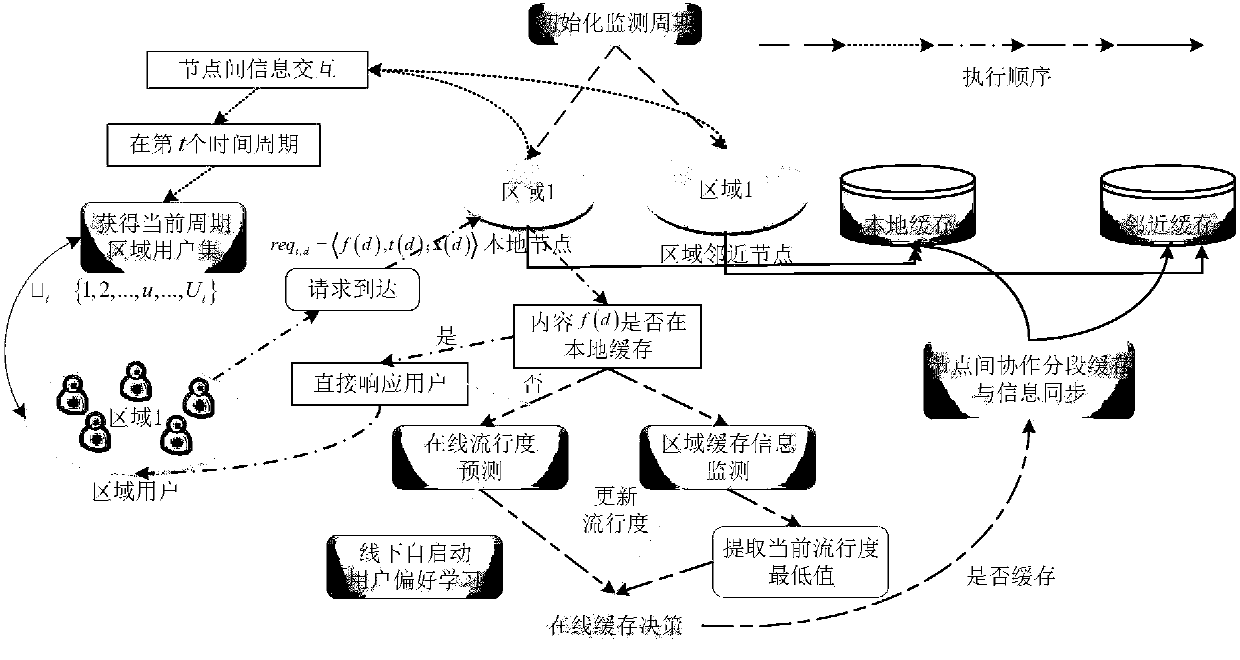

Cooperative caching method based on popularity prediction in named data networking

Active | CN106131182A | Fast push | Satisfy low frequency requests | Transmission | Cache hit rate | Distributed computing

The invention discloses a cooperative caching method based on popularity prediction in named data networking (NDN). When content is stored in NDN, caching it at multiple points along a path, or caching it only at important nodes, causes high redundancy of node data and low utilization of caching space in the network. The method uses a 'partial cooperative caching mode': first, the future popularity of the content is predicted; then a caching space of an optimal proportion is partitioned from each node as local caching space for storing high-popularity content. The remaining part of each node stores relatively low-popularity content through neighborhood cooperation. The optimal space division proportion is calculated by considering the hop count of an interest packet for node hits and server-side request hits in the network. Compared with conventional caching strategies, the method increases the utilization of caching space in the network, reduces caching redundancy, improves the cache hit rate of the nodes, and improves the performance of the whole network.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

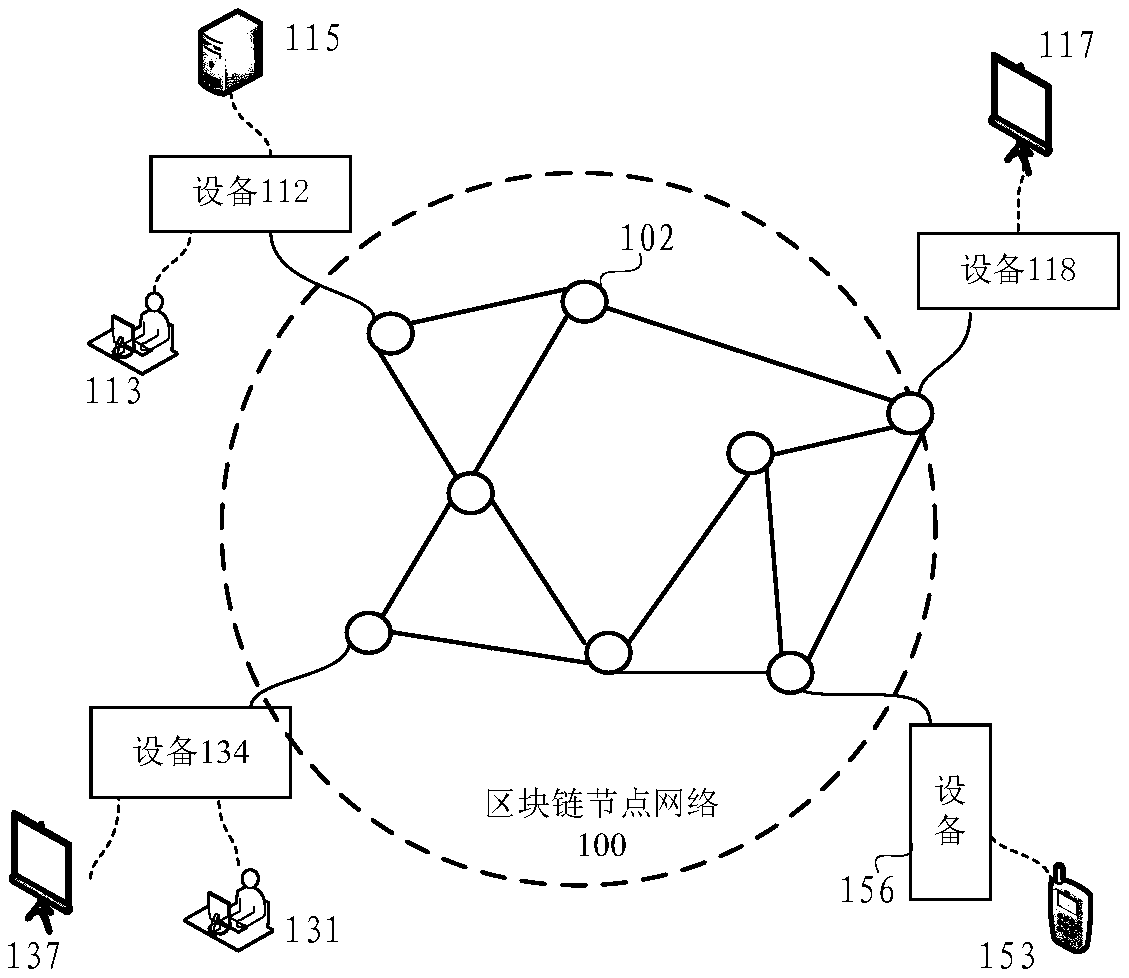

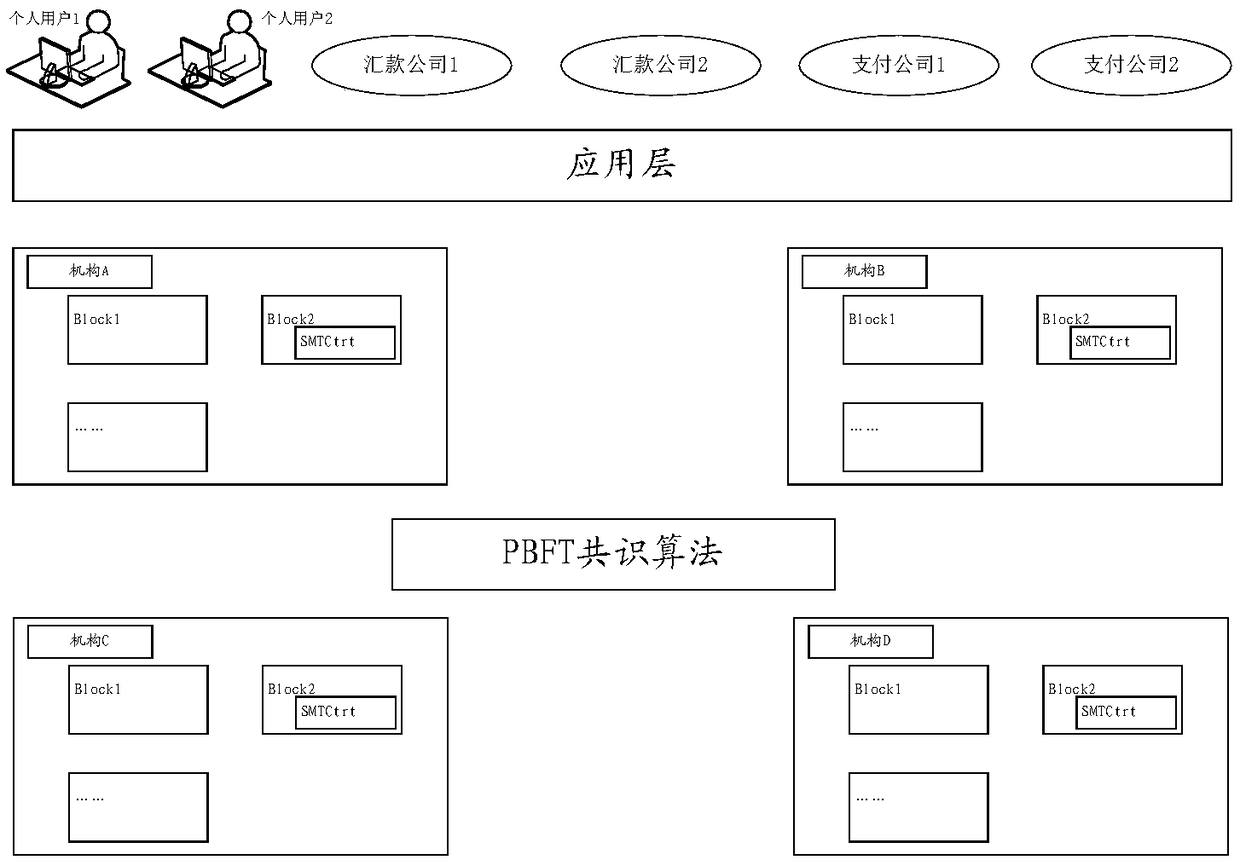

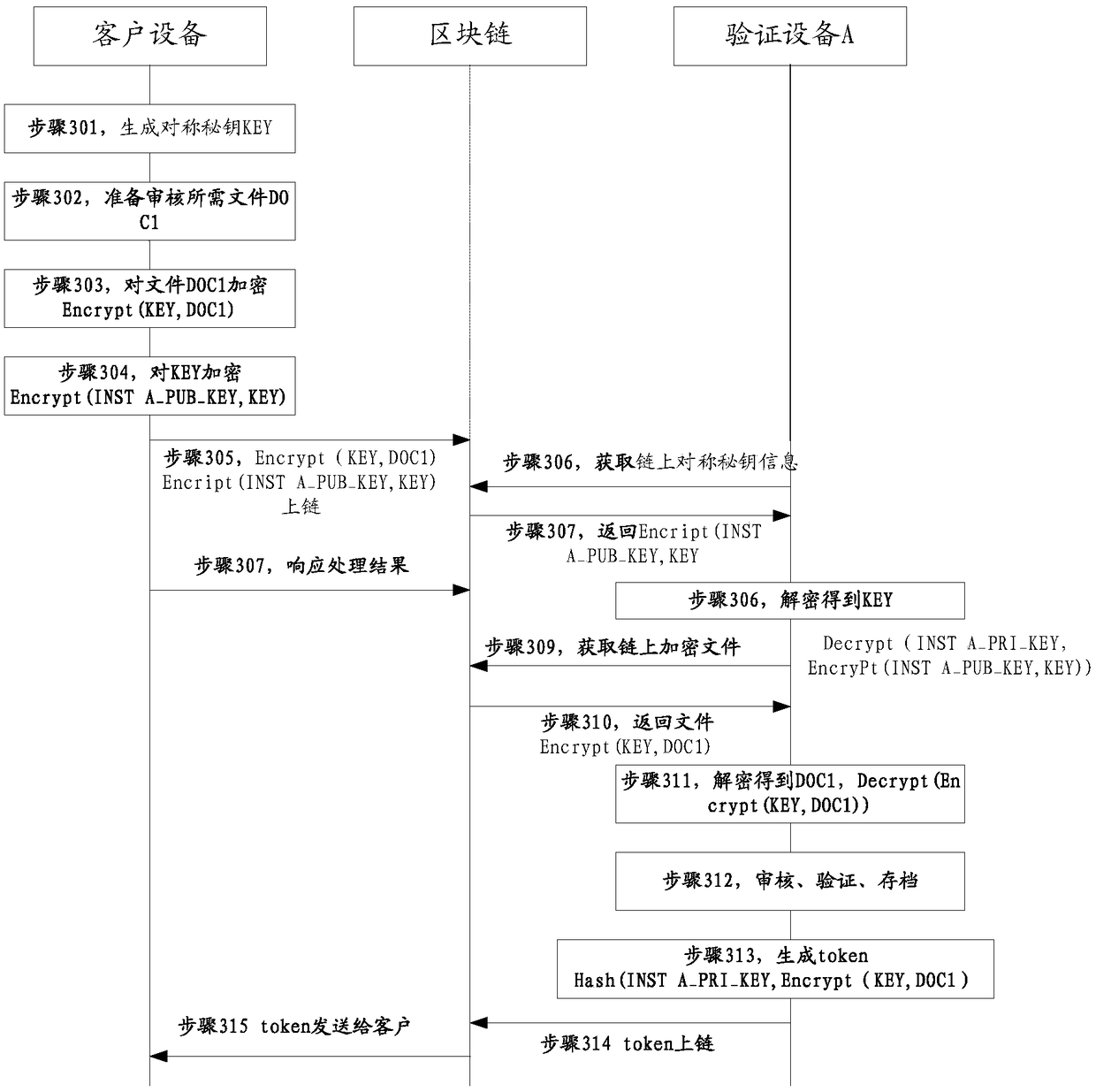

Inter-agency client verification method based on block chain, transaction supervision method and device

Active | CN108765240A | Authentication is convenient | Simplify the calling process | Data processing applications | User identity/authority verification | Inter agency | Engineering

The embodiments of the invention provide a blockchain-based inter-agency client verification method, and a transaction supervision method and device. In one embodiment, the client's device encrypts a file using a symmetric key, and uploads to the blockchain the encrypted file together with the symmetric key encrypted using agency A's public key; the client's device acquires a token issued by agency A based on the result of checking the file; the client's device then sends the token and the address of the encrypted file on the blockchain to agency B's device, thereby accelerating agency B's check of the file. The method simplifies the current process of calling files between agencies and increases the hit rate of anti-money-laundering rules.

Owner:ADVANCED NEW TECH CO LTD

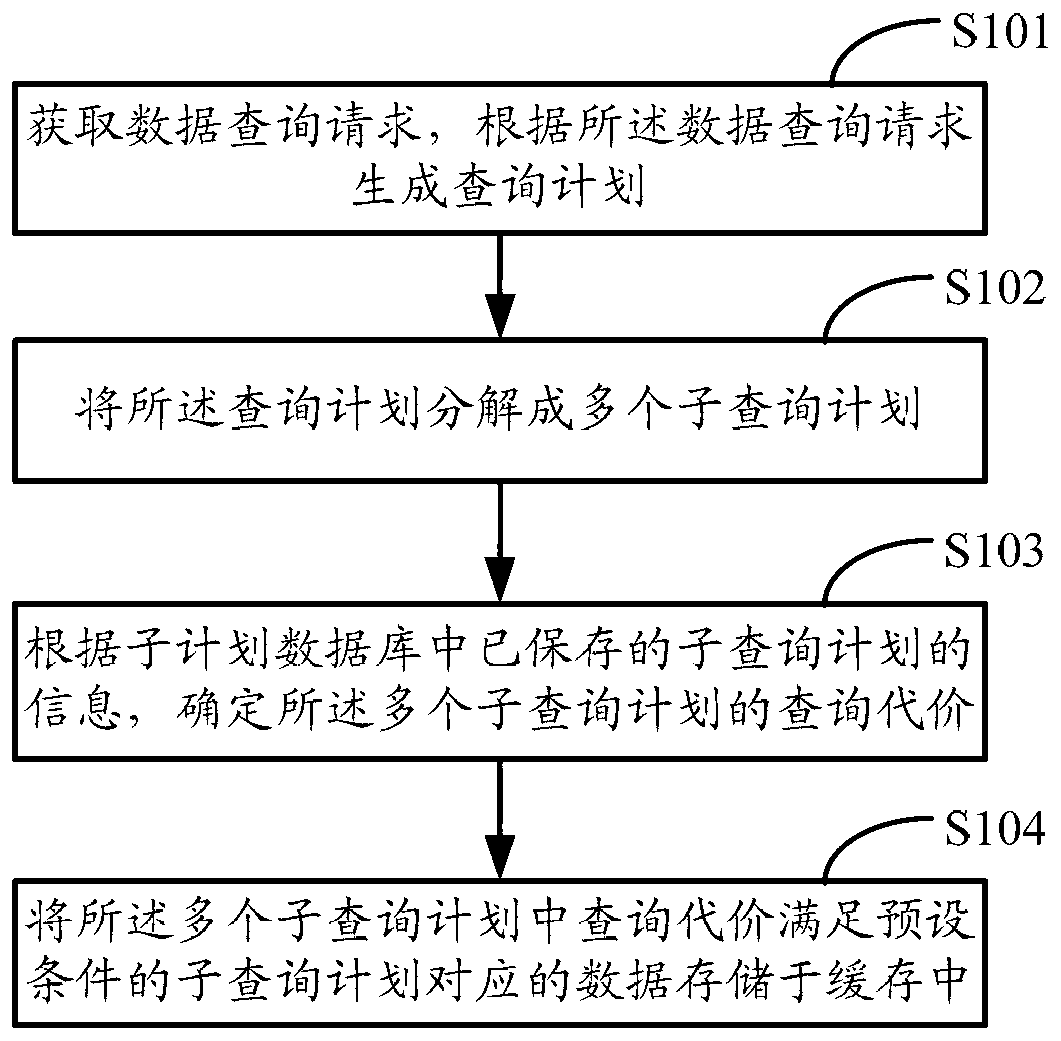

Method and device for processing data

Active | CN103324724A | Increase value | Improve effectiveness | Special data processing applications | Query plan | Data treatment

The invention relates to a method and a device for processing data. The method includes acquiring a data query request and generating a query plan according to the data query request; decomposing the query plan into a plurality of sub-query plans; determining query costs of the multiple sub-query plans according to information of sub-query plans stored in a sub-query database; storing data corresponding to certain sub-query plans among the multiple sub-query plans in a cache. The query costs of the certain sub-query plans meet preset conditions. The method and the device for processing the data have the advantages that the cache can be sufficiently utilized for storing the data, the value realized by the cache is maximized, the validity of the data of the cache is improved, the hit rate of the data of the cache is increased, accordingly, the query response speed of OLAP (on-line analytical processing) is increased, and the query performance of the OLAP is improved.

Owner:HUAWEI TECH CO LTD

Prioritizing and locking removed and subsequently reloaded cache lines

Inactive | US6901483B2 | Low cache hit rate | Inefficiency described above will be avoided | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

Owner:INT BUSINESS MASCH CORP

MAC address learning apparatus

Inactive | US7400634B2 | Saturation of the MAC address learning table at the side of the first network | Avoid saturation | Multiple digital computer combinations | Networks interconnection | Carrier signal | Hit rate

In a network providing a wide-area LAN service which allows communications to be performed between user networks (12, 13) via a carrier network (11), edge switches (14, 15) each comprise: a tentative learning registration means (16) which, when a packet whose source MAC address and receiving port are not yet learned is received from the carrier network, registers the source MAC address and the receiving port in a tentatively learned state; a learned state changing means (17) which changes the learned state of the destination MAC address of a packet received from an associated one of the user networks to a permanently learned state; and a permanent learning registration means which, when a packet whose destination MAC address is registered in a learned state is received from the carrier network, registers the source MAC address and receiving port of the packet received from the carrier network in a permanently learned state. The invention thus provides a MAC address learning apparatus that improves the hit rate in a MAC address learning table maintained in each of the edge switches (14, 15) provided in a wide-area VLAN carrier network.

Owner:FUJITSU LTD

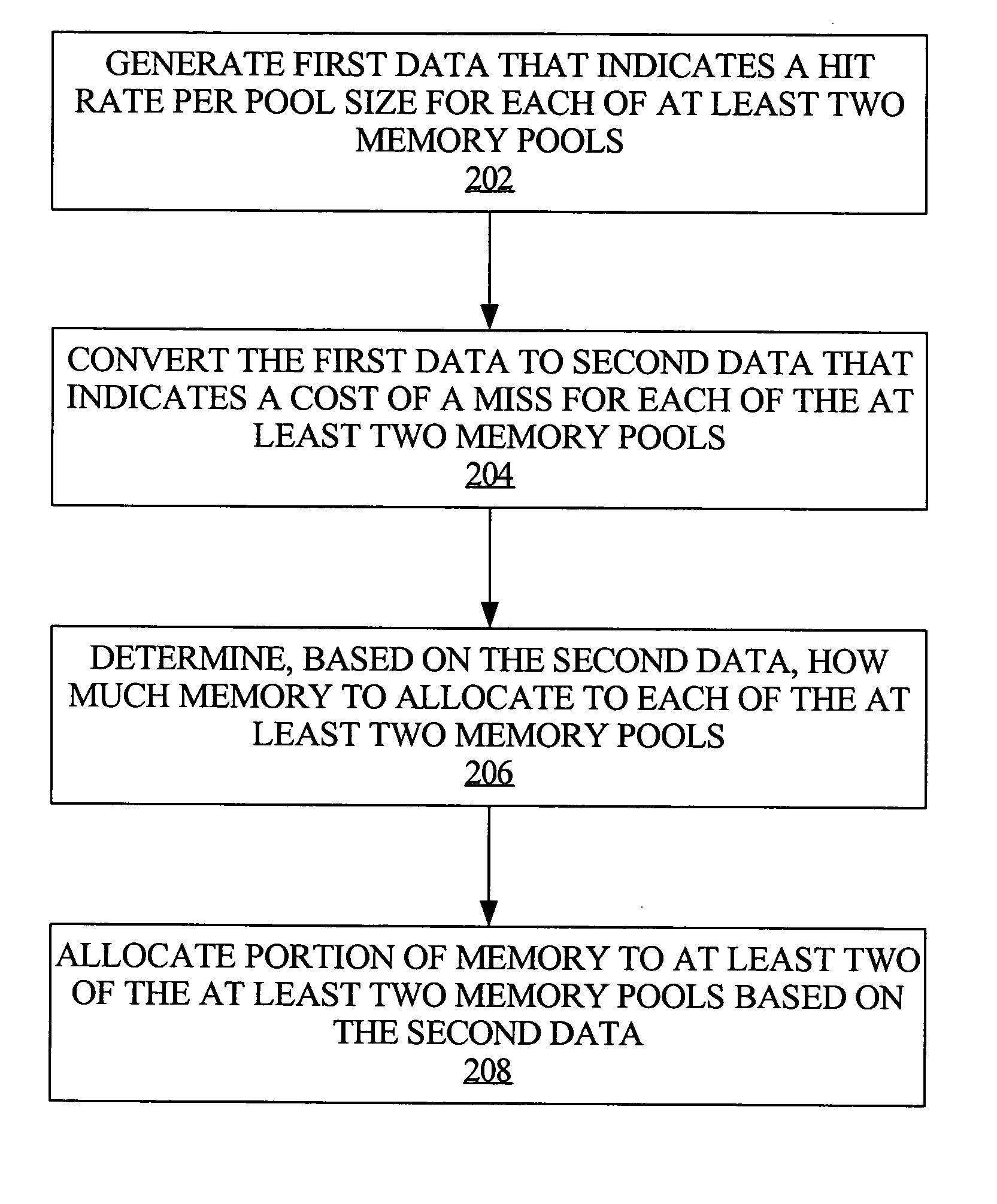

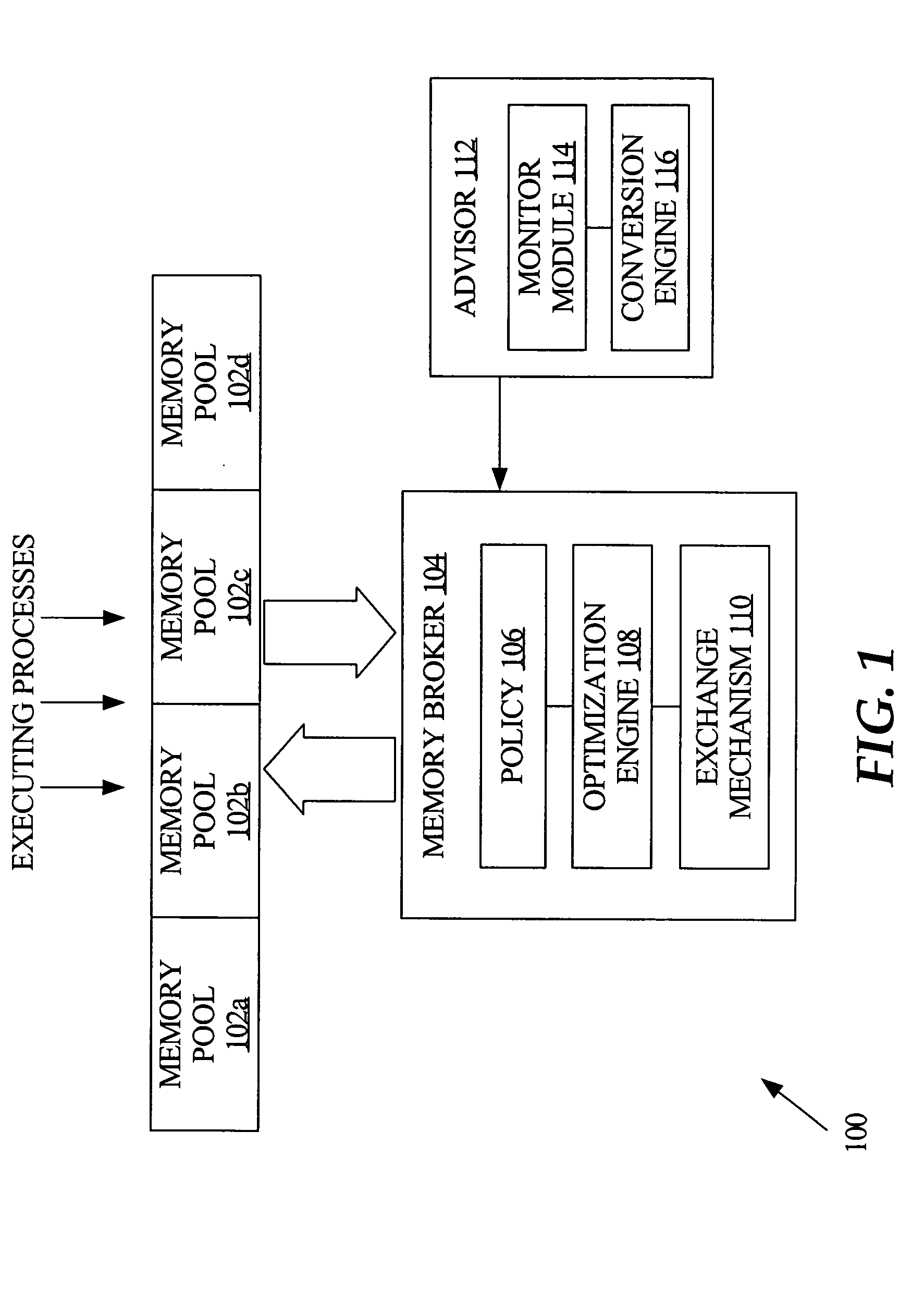

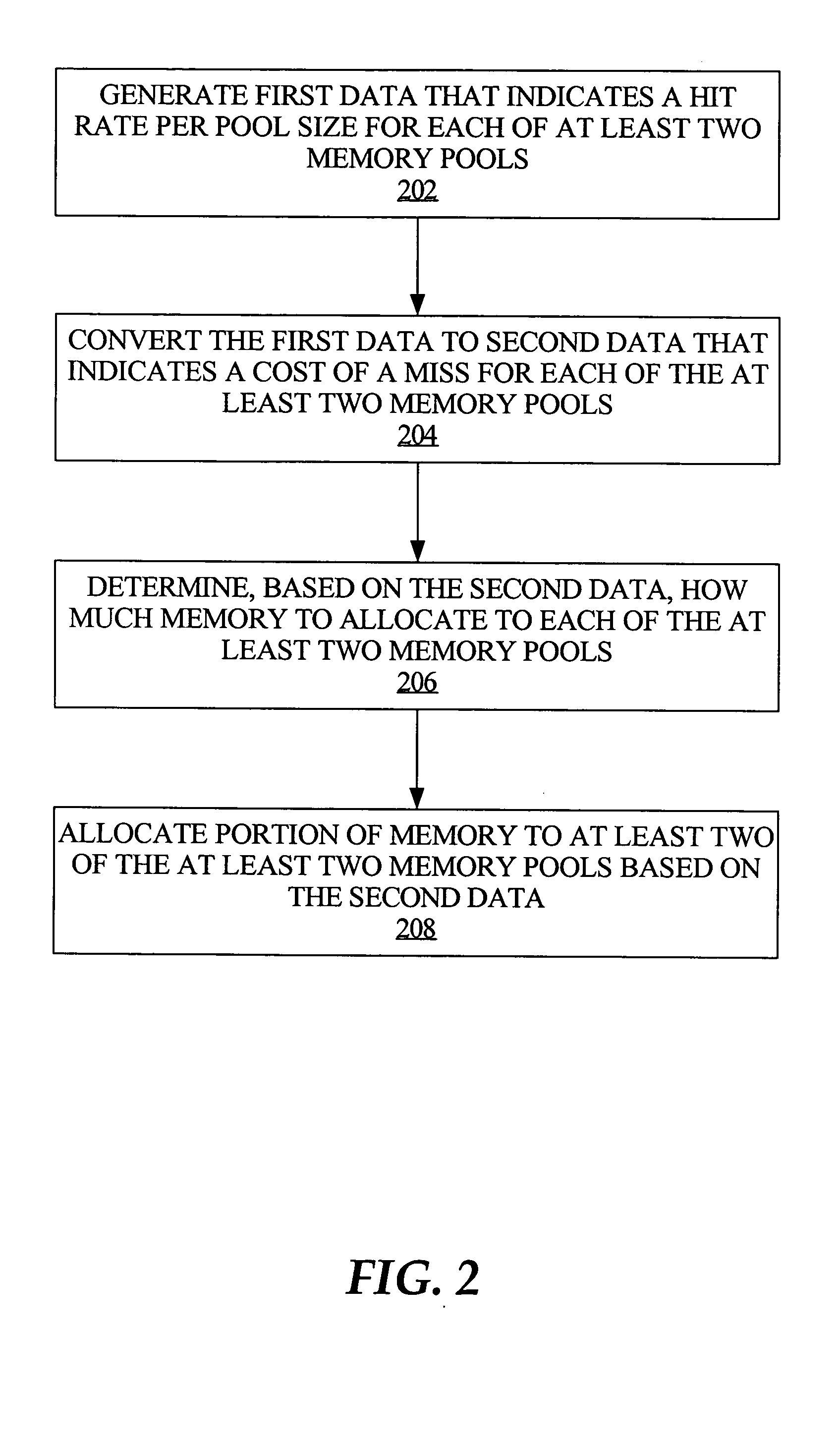

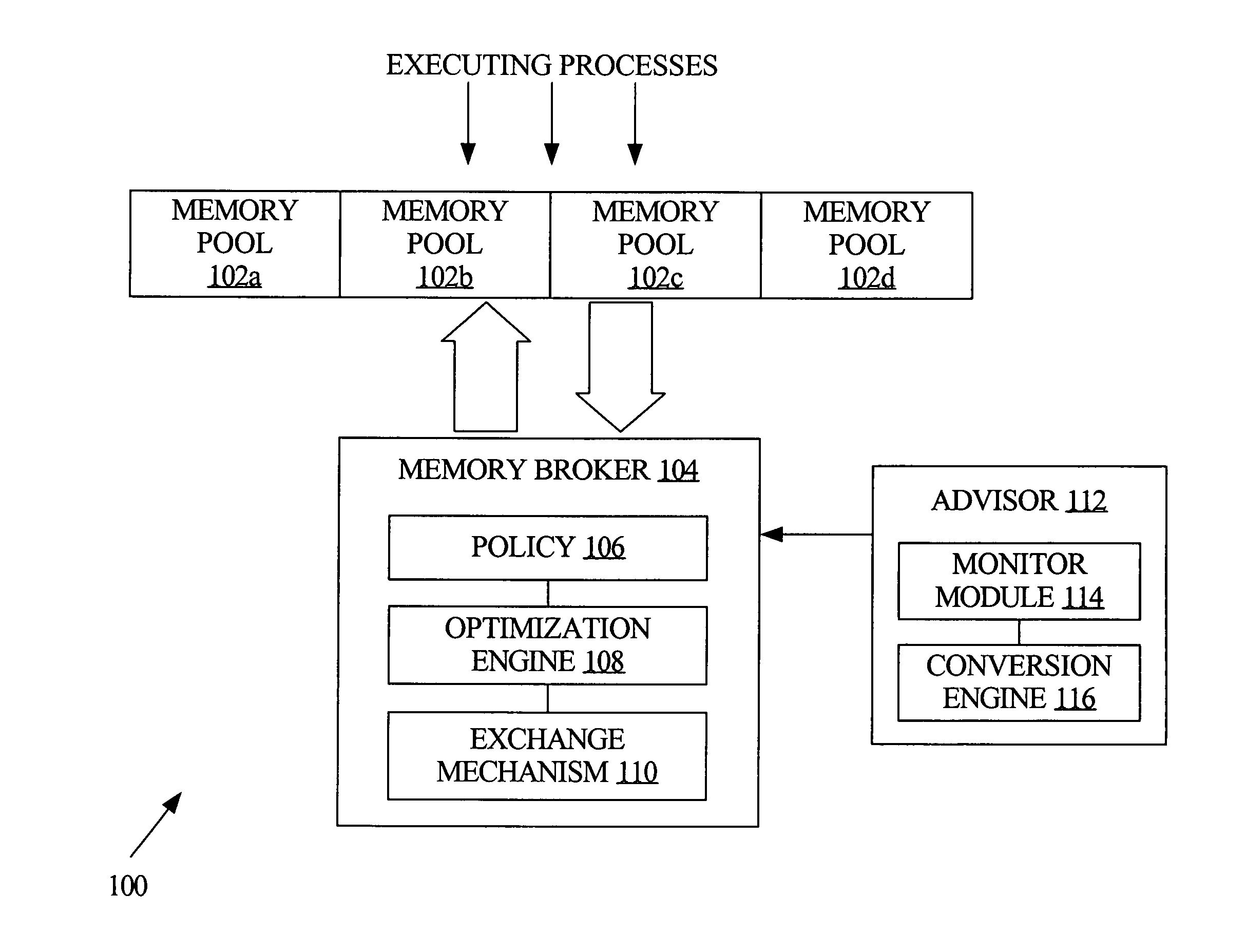

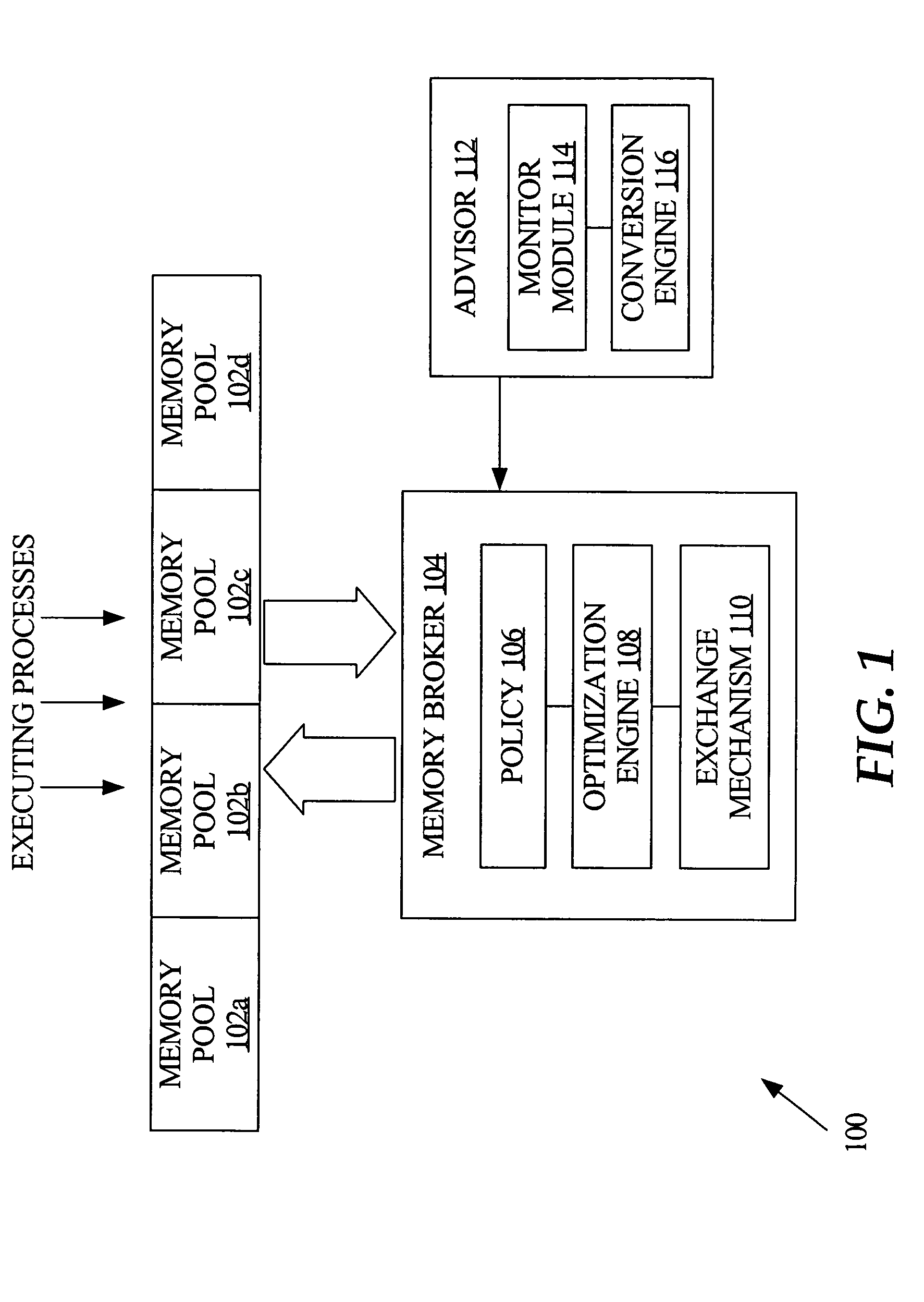

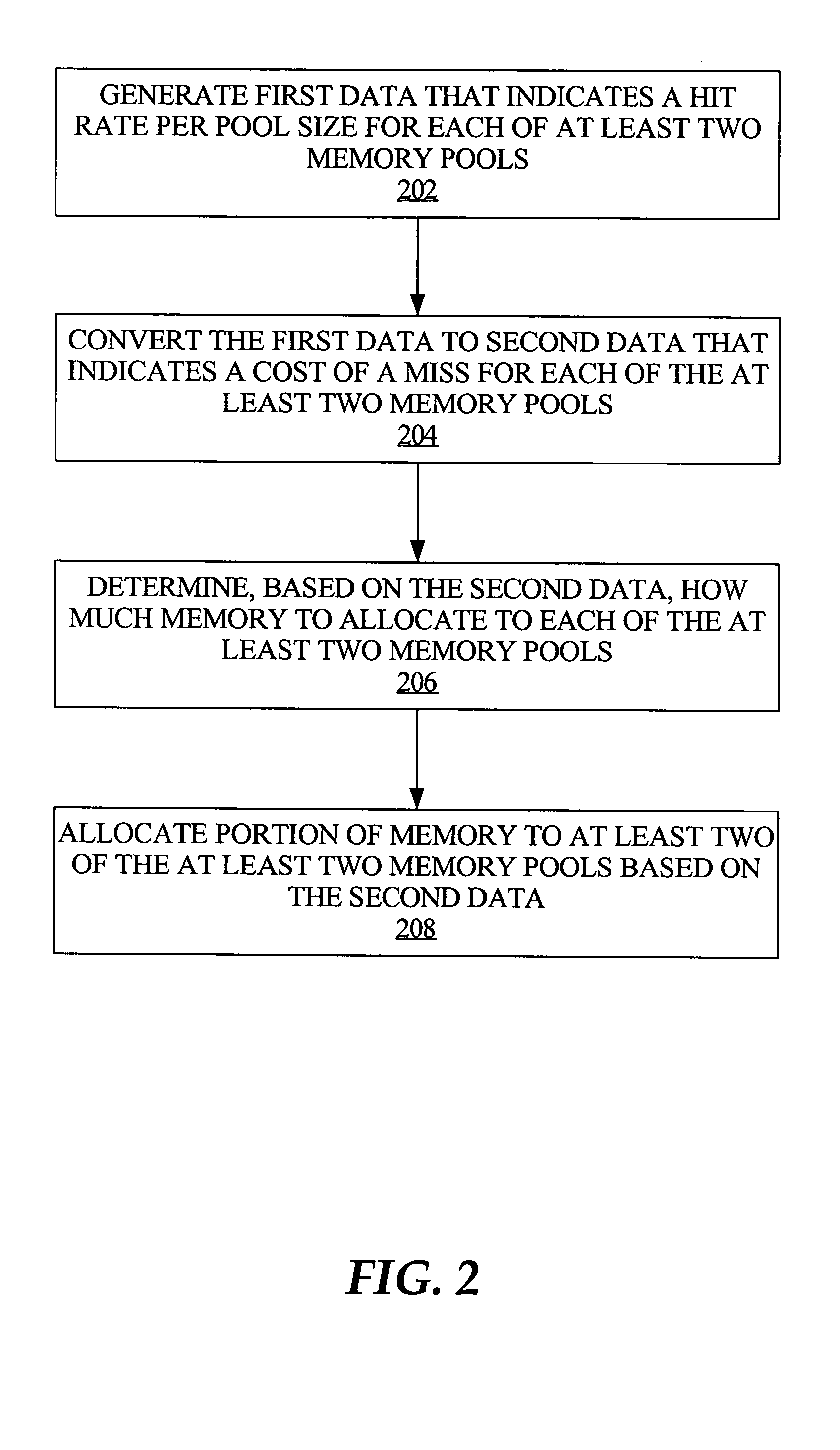

Techniques for automated allocation of memory among a plurality of pools

Active | US20050114621A1 | Resource allocation | Digital data processing details | Parallel computing | Term memory

Allocation of memory is optimized across multiple pools of memory, based on minimizing the time it takes to successfully retrieve a given data item from each of the multiple pools. First data is generated that indicates a hit rate per pool size for each of multiple memory pools. In an embodiment, the generating step includes continuously monitoring attempts to access, or retrieve a data item from, each of the memory pools. The first data is converted to second data that accounts for a cost of a miss with respect to each of the memory pools. In an embodiment, the second data accounts for the cost of a miss in terms of time. How much of the memory to allocate to each of the memory pools is determined, based on the second data. In an embodiment, the steps of converting and determining are automatically performed, on a periodic basis.

Owner:ORACLE INT CORP
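
The flow in the abstract above (hit rate per pool size, converted to a time cost of misses, then used to size the pools) can be sketched as follows. The greedy one-unit-at-a-time strategy, the function name `allocate`, and the pool names and numbers are illustrative assumptions. The abstract does not say how the allocation is actually determined.

```python
def allocate(total_units, hit_rate_curves, miss_cost, accesses):
    """Greedily hand out memory units to the pool where one more unit
    saves the most expected miss time.

    hit_rate_curves[p][s] is the estimated hit rate of pool p at size s
    (the "first data"); multiplying the miss rate by miss_cost[p] and the
    access count gives a time-based cost (the "second data").
    """
    def miss_time(pool, size):
        return accesses[pool] * (1.0 - hit_rate_curves[pool][size]) * miss_cost[pool]

    sizes = {pool: 0 for pool in hit_rate_curves}
    for _ in range(total_units):
        # Only pools whose curve still has a data point for a larger size.
        growable = [p for p in sizes if sizes[p] + 1 < len(hit_rate_curves[p])]
        if not growable:
            break
        best = max(
            growable,
            key=lambda p: miss_time(p, sizes[p]) - miss_time(p, sizes[p] + 1),
        )
        sizes[best] += 1
    return sizes


# Hypothetical measurements for two pools.
curves = {"buffer": [0.0, 0.5, 0.7, 0.8], "sql": [0.0, 0.6, 0.65, 0.66]}
plan = allocate(
    total_units=3,
    hit_rate_curves=curves,
    miss_cost={"buffer": 10.0, "sql": 2.0},   # time per miss
    accesses={"buffer": 100, "sql": 100},
)
```

With these made-up numbers the buffer pool receives two units and the SQL pool one, because a buffer miss is five times as expensive even though the SQL pool's first unit yields a higher raw hit rate.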

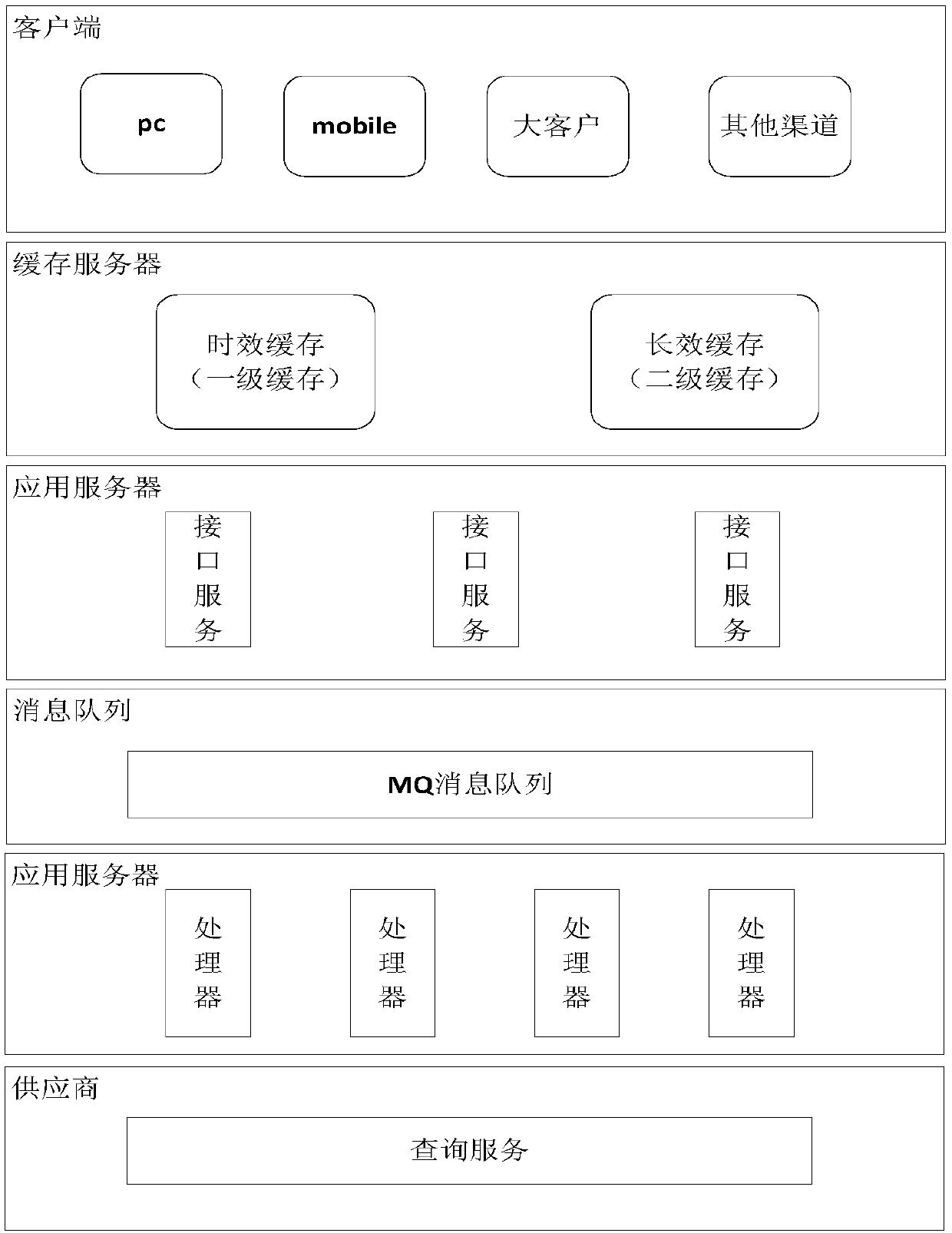

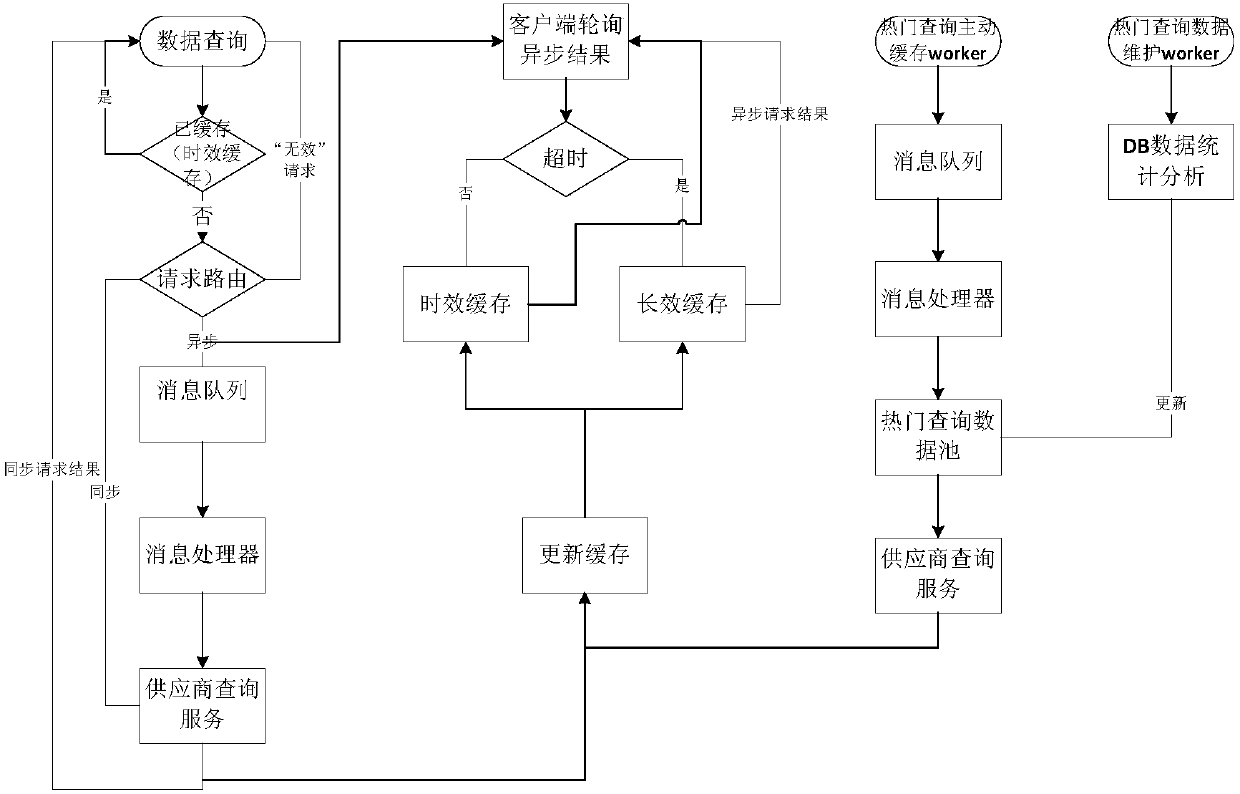

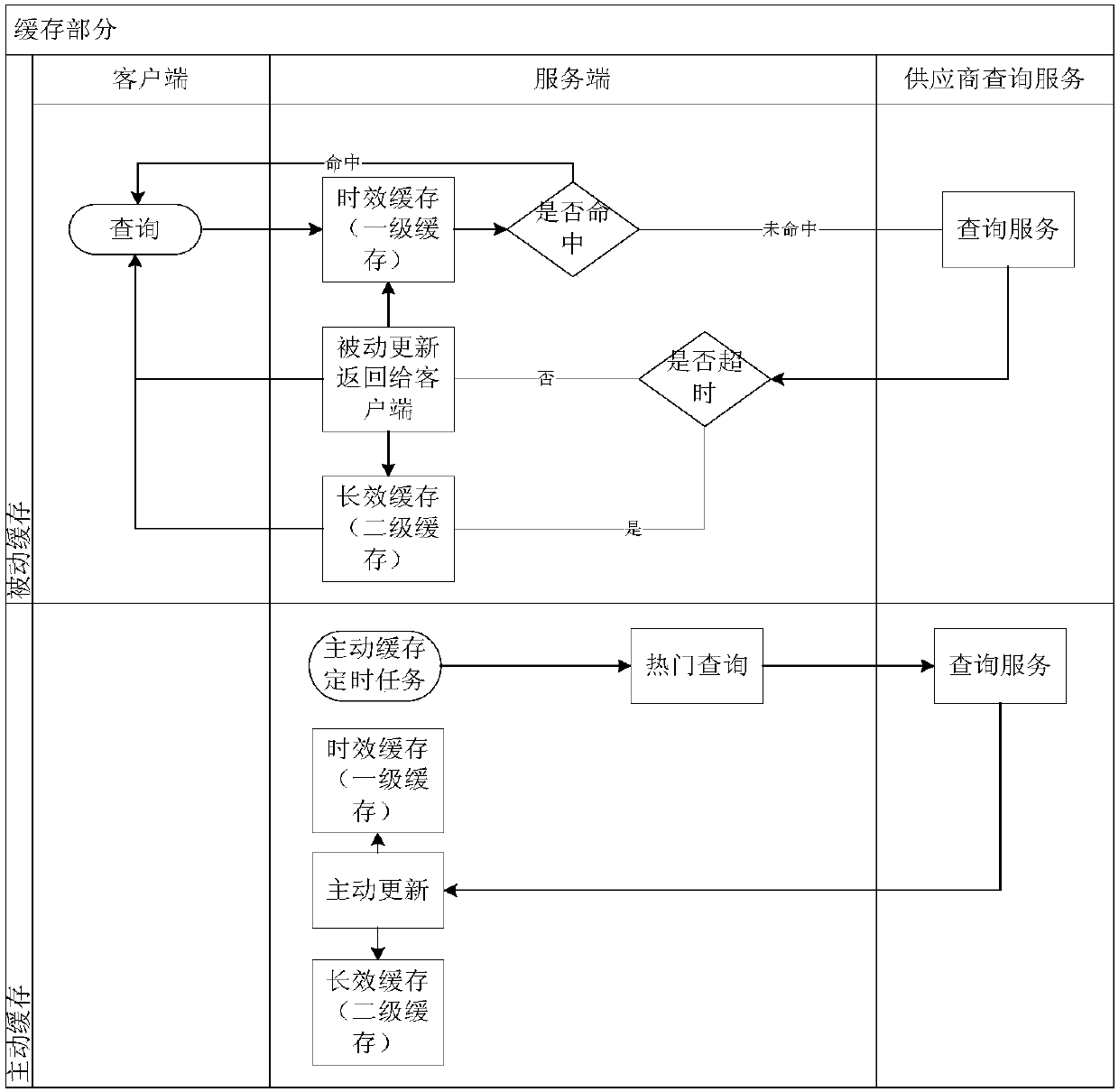

Data query method and device

Active | CN109684358A | Improve usability | Increase risk resistance | Digital data information retrieval | Special data processing applications | Cache hit rate | Data query

The invention provides a data query method and device that can dynamically analyze hot queries, improve the cache hit rate, and reduce query response delay by using a first-level cache and a second-level cache together, and can improve the accuracy of cached data by combining active and passive cache updates. The method comprises the following steps: querying data from the first-level cache according to a received query request, and returning the data if it is found; otherwise, calling the external query service interface to perform the query; if the query does not time out, returning the queried data and updating it into the first-level cache and the second-level cache; and if the query times out, querying the data from the second-level cache and returning it. The first-level cache has a first preset data expiration time and the second-level cache has a second preset data expiration time, the first being shorter than the second.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1
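
The query steps in the abstract above map onto a short sketch. The class names, the default expiration times, and the use of `TimeoutError` to signal a timed-out external query are assumptions for illustration. Only the lookup order and the rule that the first-level expiration is shorter than the second-level one come from the abstract.

```python
import time


class TTLCache:
    """Dictionary cache whose entries expire `ttl` seconds after being stored."""

    def __init__(self, ttl, clock):
        self.ttl, self.clock, self.store = ttl, clock, {}

    def get(self, key):
        entry = self.store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self.store[key]          # expired entries are deleted
            return None
        return value

    def put(self, key, value):
        self.store[key] = (value, self.clock() + self.ttl)


class TwoLevelQuery:
    """First-level cache, then external service, then second-level fallback."""

    def __init__(self, fetch, l1_ttl=60, l2_ttl=3600, clock=time.monotonic):
        if l1_ttl >= l2_ttl:
            raise ValueError("first-level TTL must be shorter than second-level TTL")
        self.fetch = fetch               # hypothetical external query function
        self.l1 = TTLCache(l1_ttl, clock)
        self.l2 = TTLCache(l2_ttl, clock)

    def query(self, key):
        value = self.l1.get(key)
        if value is not None:
            return value                 # first-level hit
        try:
            value = self.fetch(key)      # external query service
        except TimeoutError:
            return self.l2.get(key)      # timed out: fall back to second level
        self.l1.put(key, value)
        self.l2.put(key, value)
        return value
```

The longer-lived second-level cache only serves requests when the external service times out, so slightly stale data is returned instead of an error.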

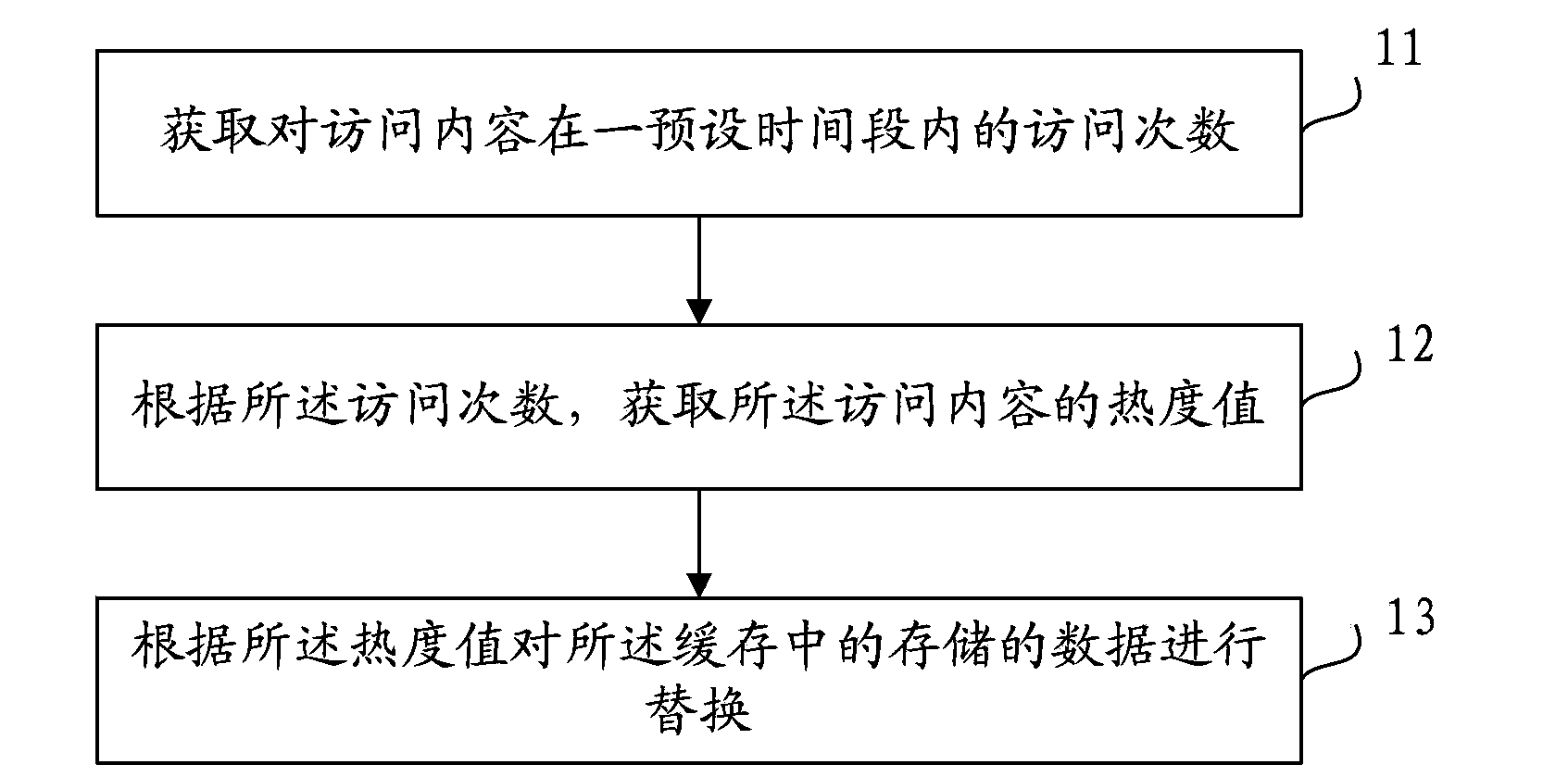

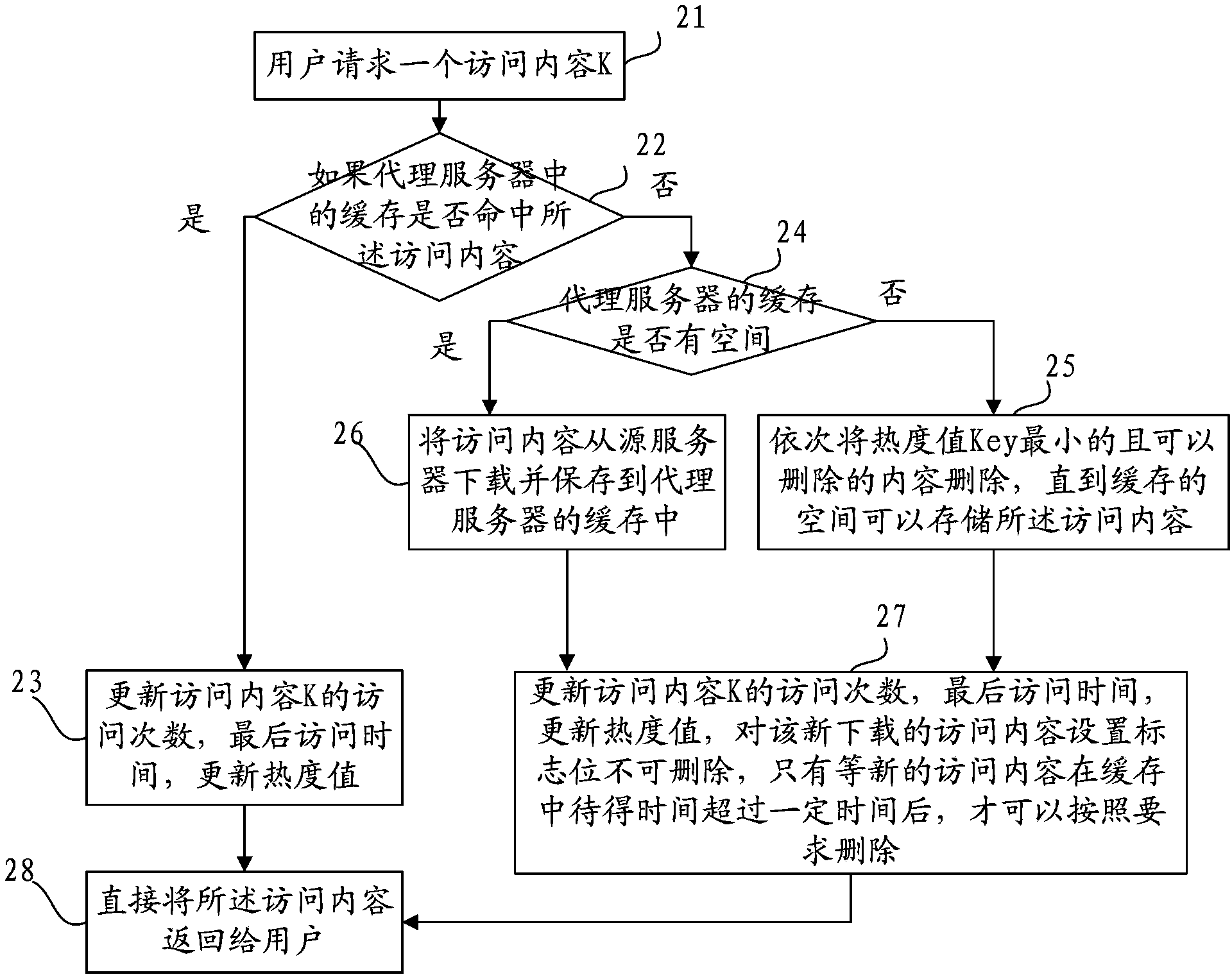

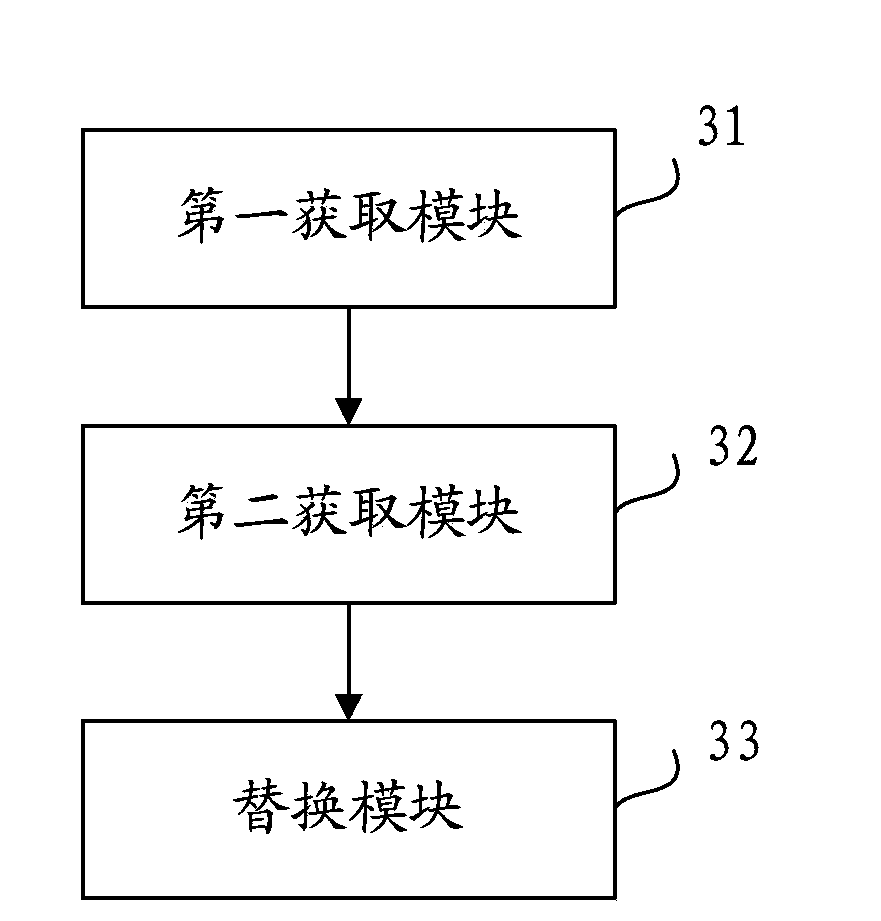

Method and device for replacing data in cache

Active | CN104111900A | Heat Forecast | Improve hit rate | Memory addressing/allocation/relocation | Visit time | Data storing

The invention provides a method and a device for replacing data in a cache. The method comprises the following steps of acquiring visit times of visited contents within a preset time period; acquiring heat value of the visited contents according to the visit times; and replacing the data stored in the cache according to the heat value. By the scheme, heat of the visited contents can be accurately predicted, and the hit rate of the cache is increased.

Owner:CHINA MOBILE COMM GRP CO LTD
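
A minimal sketch of heat-based replacement follows. The abstract does not give the heat formula, so this example assumes that heat is simply the number of visits inside a sliding time window, and that new content replaces the coldest cached item only when it is strictly hotter. The class name `HeatCache` and its parameters are hypothetical.

```python
from collections import defaultdict, deque


class HeatCache:
    """Cache that replaces the item with the lowest heat value."""

    def __init__(self, capacity, window):
        self.capacity, self.window = capacity, window
        self.data = {}
        self.visits = defaultdict(deque)   # key -> timestamps of recent visits

    def heat(self, key, now):
        """Assumed heat value: visits within the last `window` time units."""
        stamps = self.visits[key]
        while stamps and stamps[0] <= now - self.window:
            stamps.popleft()
        return len(stamps)

    def access(self, key, now, load):
        """Return (value, hit). `load` fetches the content on a miss."""
        self.visits[key].append(now)
        if key in self.data:
            return self.data[key], True
        value = load(key)
        if len(self.data) >= self.capacity:
            coldest = min(self.data, key=lambda k: self.heat(k, now))
            if self.heat(coldest, now) >= self.heat(key, now):
                return value, False        # not hotter: cache stays unchanged
            del self.data[coldest]
        self.data[key] = value
        return value, False


cache = HeatCache(capacity=2, window=10)
log = [cache.access(k, t, str.upper)[1]
       for t, k in enumerate(["a", "a", "b", "c", "c", "c"])]
```

In the trace, `c` is refused on its first visit because it is no hotter than `b`, replaces `b` on its second visit, and hits on its third.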

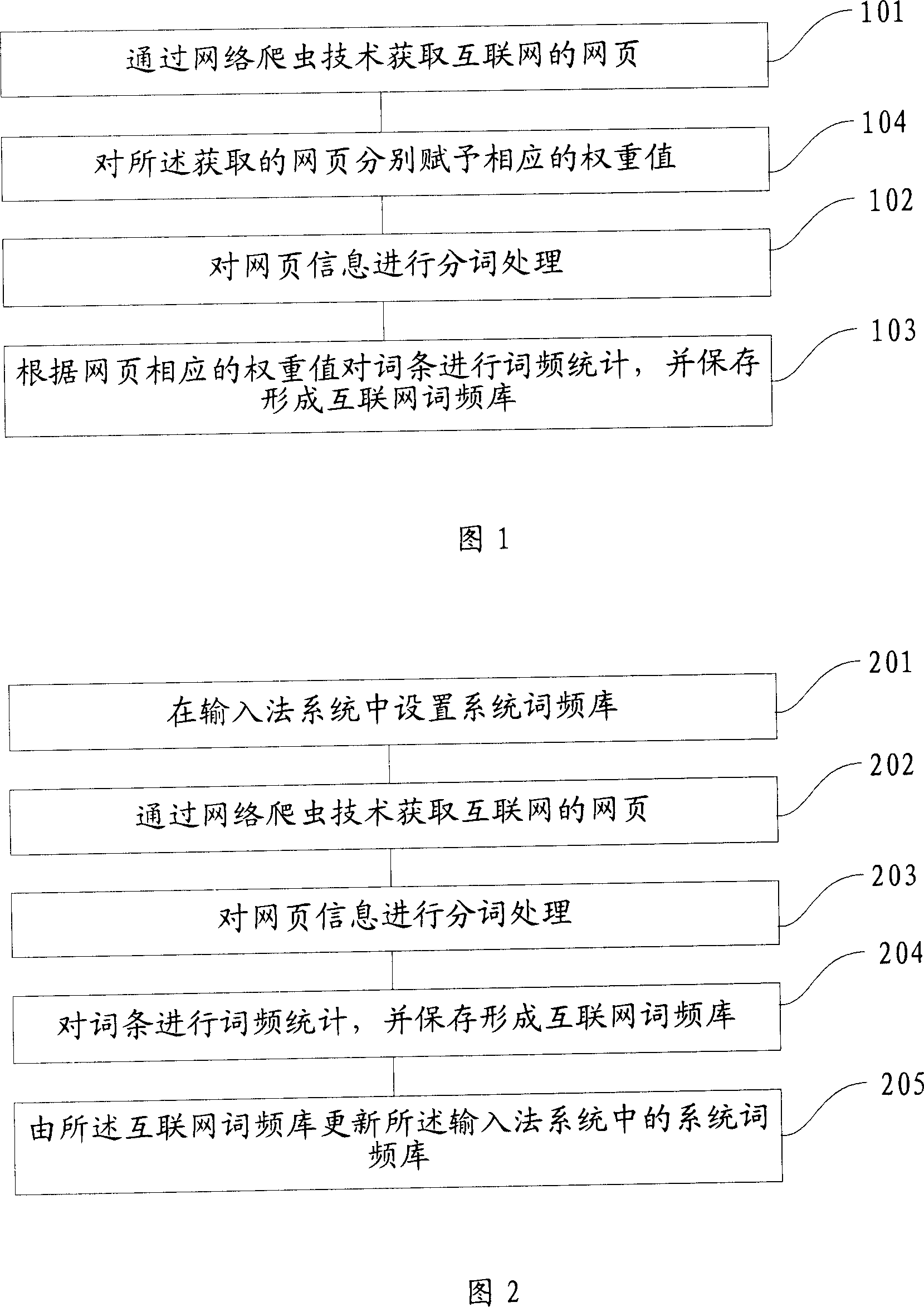

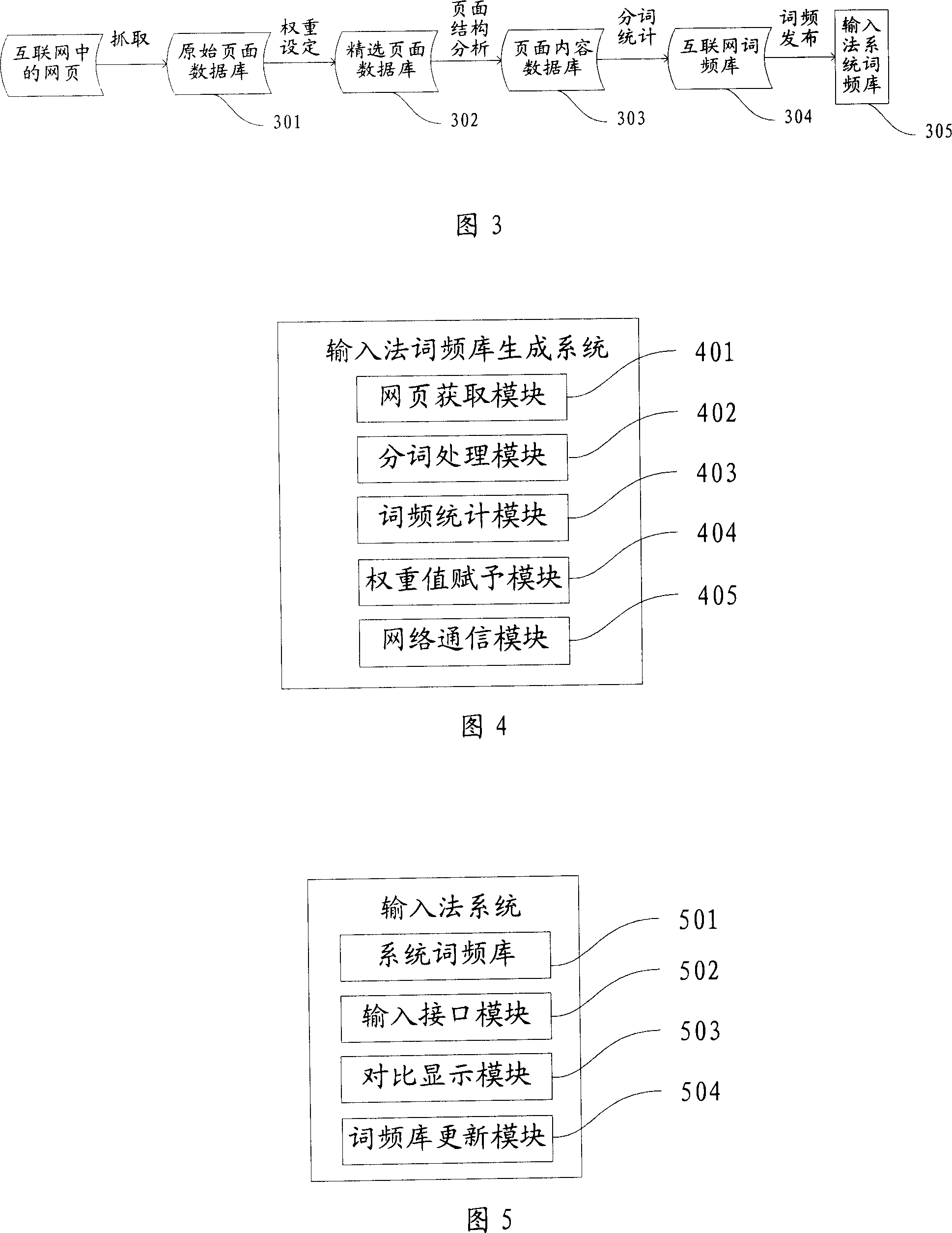

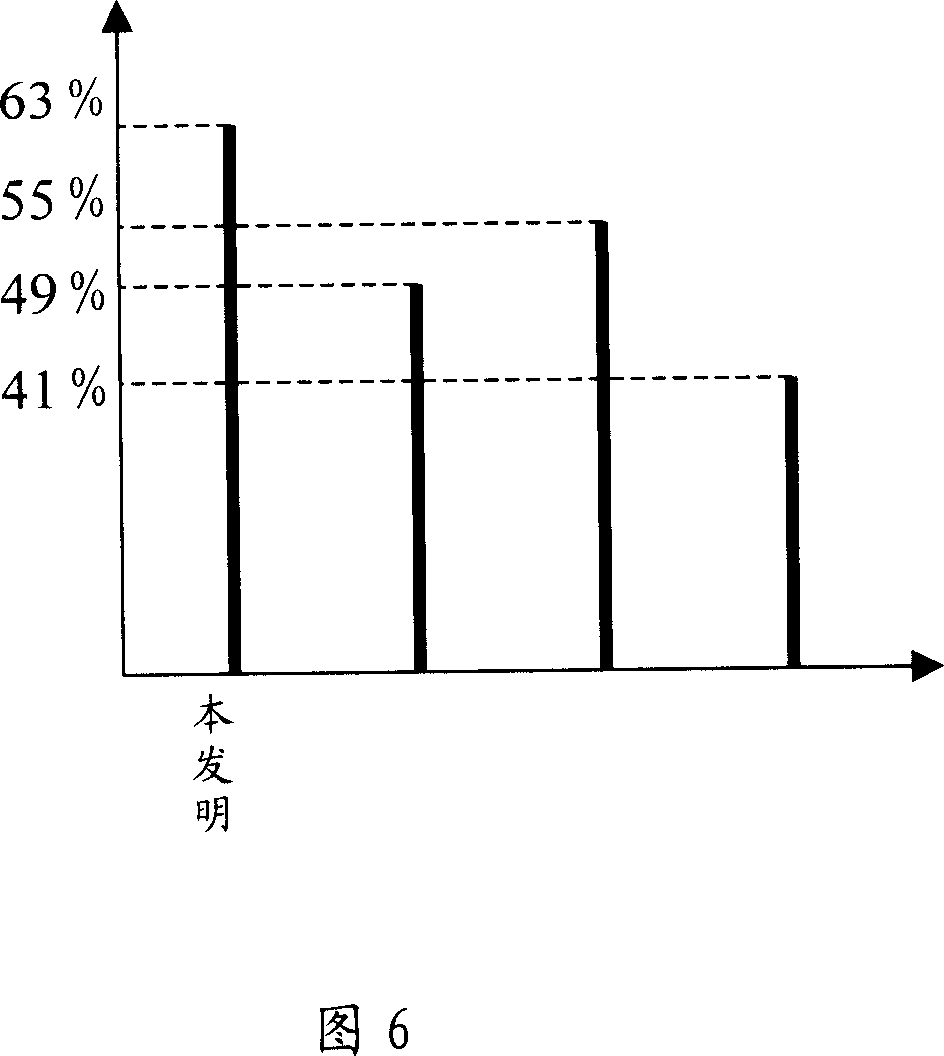

Method and system for generating input-method word frequency base based on internet information

Active | CN1936893A | Improve hit rate | Increase typing speed | Web data indexing | Special data processing applications | Algorithm | The Internet

The method includes the following procedures: using web crawler technology to obtain web pages from the Internet; segmenting the web page text into words; counting word frequencies for the vocabulary entries; and saving the statistics to form an Internet word frequency base. By using public, continuously changing information from the Internet as the source of word frequency statistics, the invention can create up-to-date, optimized word frequency information. Through various convenient channels, the input method system updates its system word frequency base from this information, so that the word frequency base stays consistent with the information on the Internet. The invention raises the hit rate of the first candidate word offered to the user, and thereby raises input speed and efficiency.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD

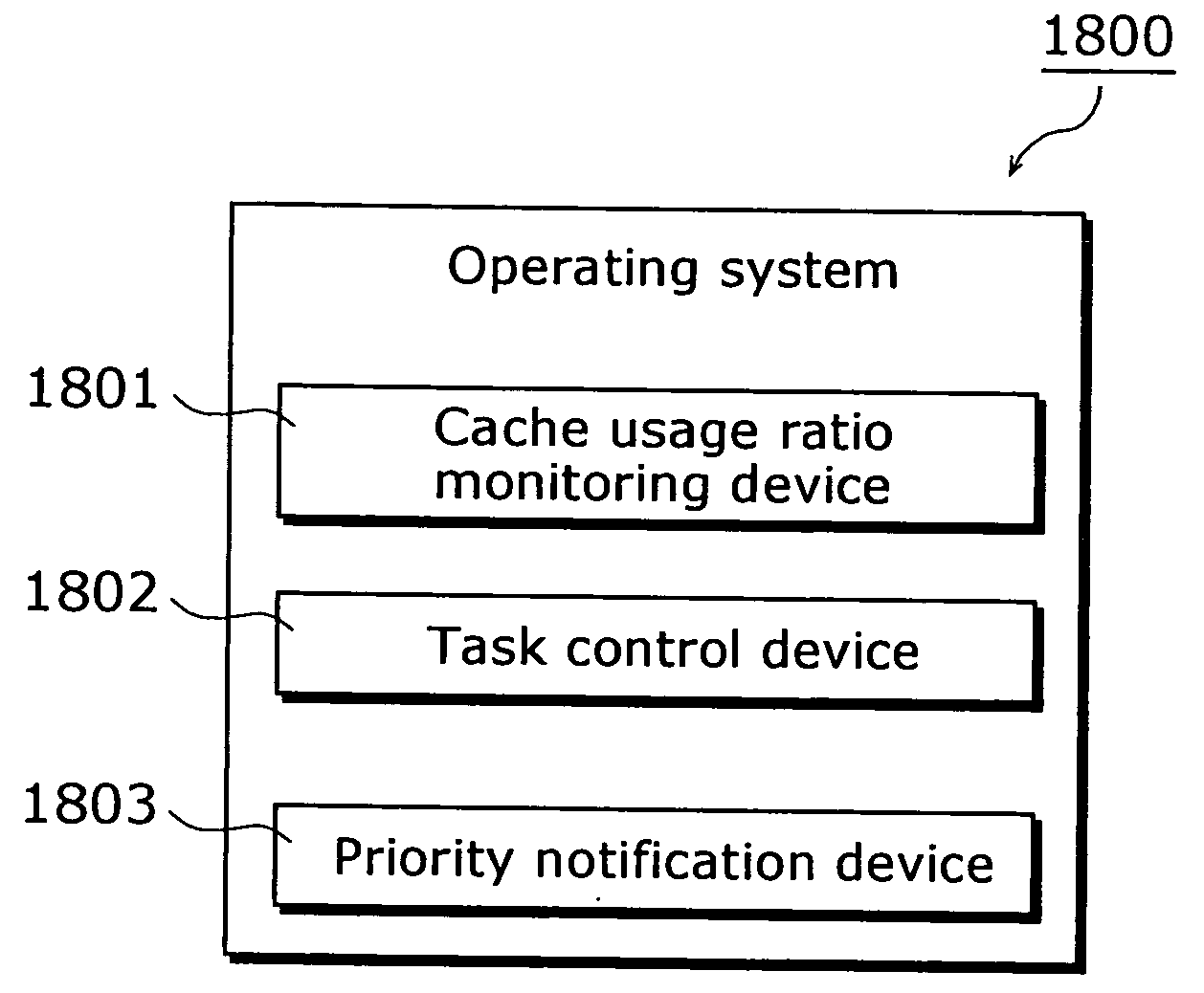

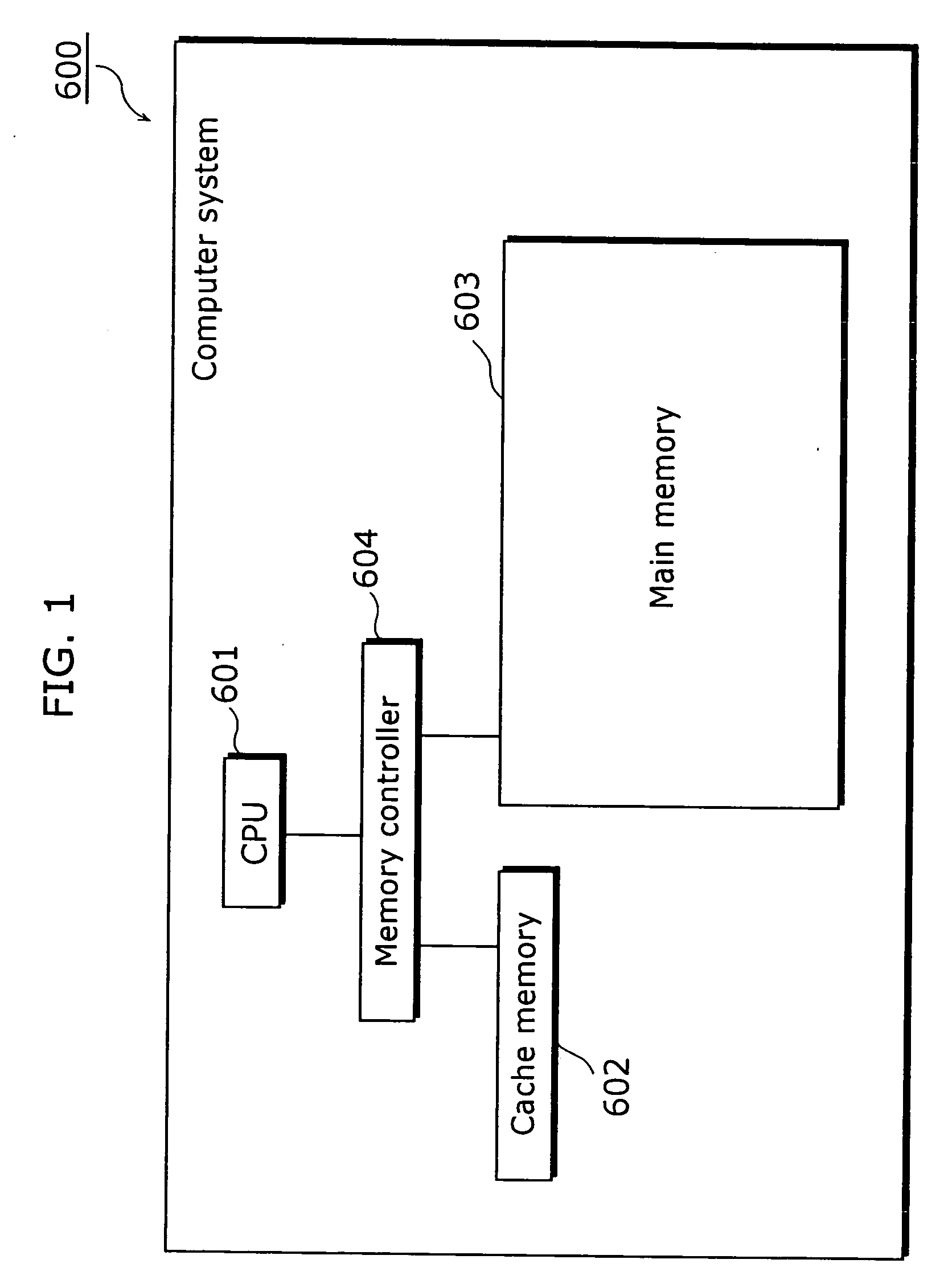

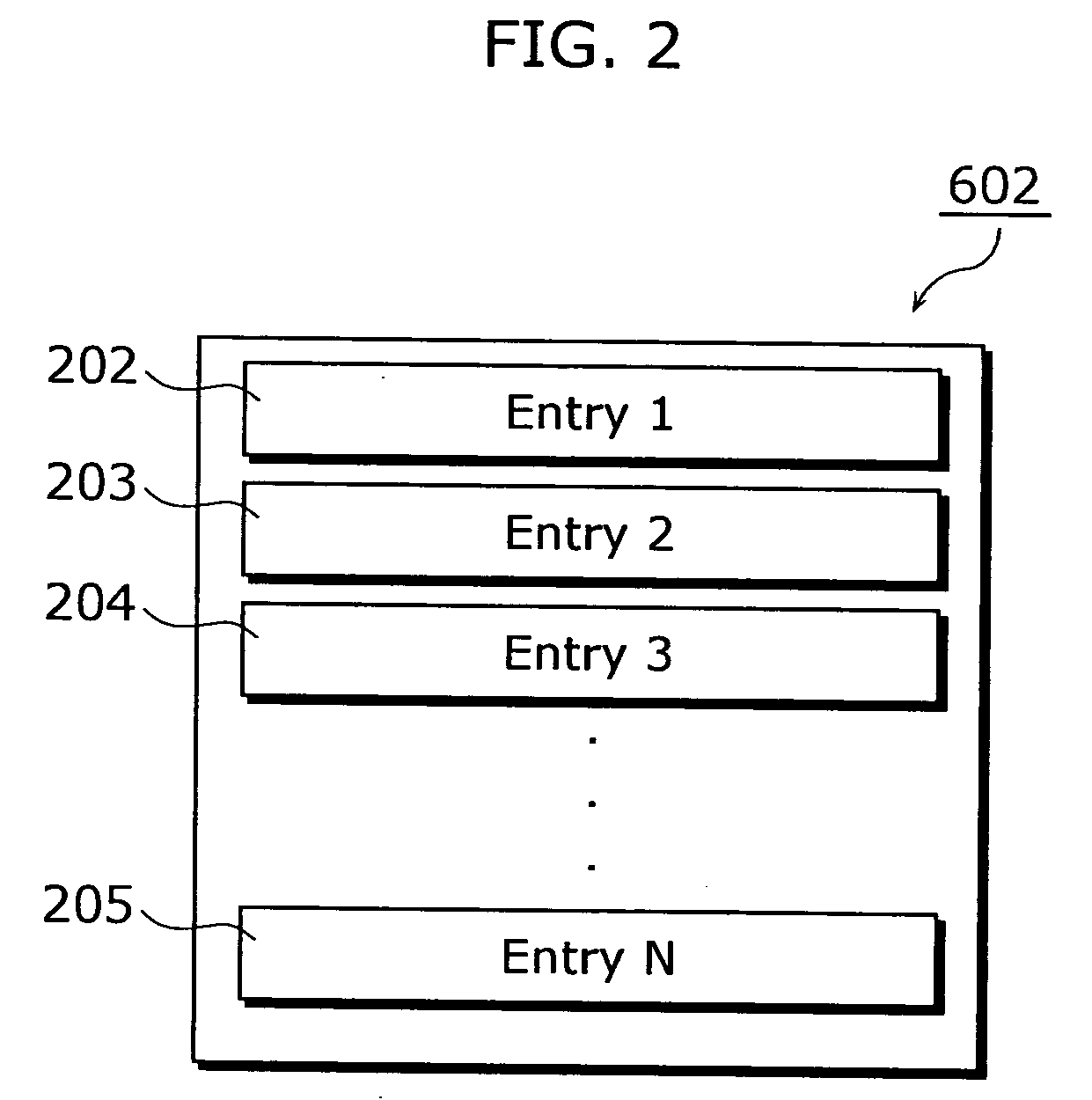

Computer system, compiler apparatus, and operating system

Active | US20050071572A1 | Improve hit rate | Memory architecture accessing/allocation | Software engineering | Operational system | Parallel computing

A compiler apparatus for a computer system that is capable of improving the hit rate of a cache memory comprises a prefetch target extraction device, a thread activation process insertion device, and a thread process creation device, and creates threads for performing prefetch and prepurge. The prefetch and prepurge threads created by this compiler apparatus perform prefetch and prepurge in parallel with the operation of the main program, taking into consideration program priorities and the usage ratio of the cache memory.

Owner:SOCIONEXT INC

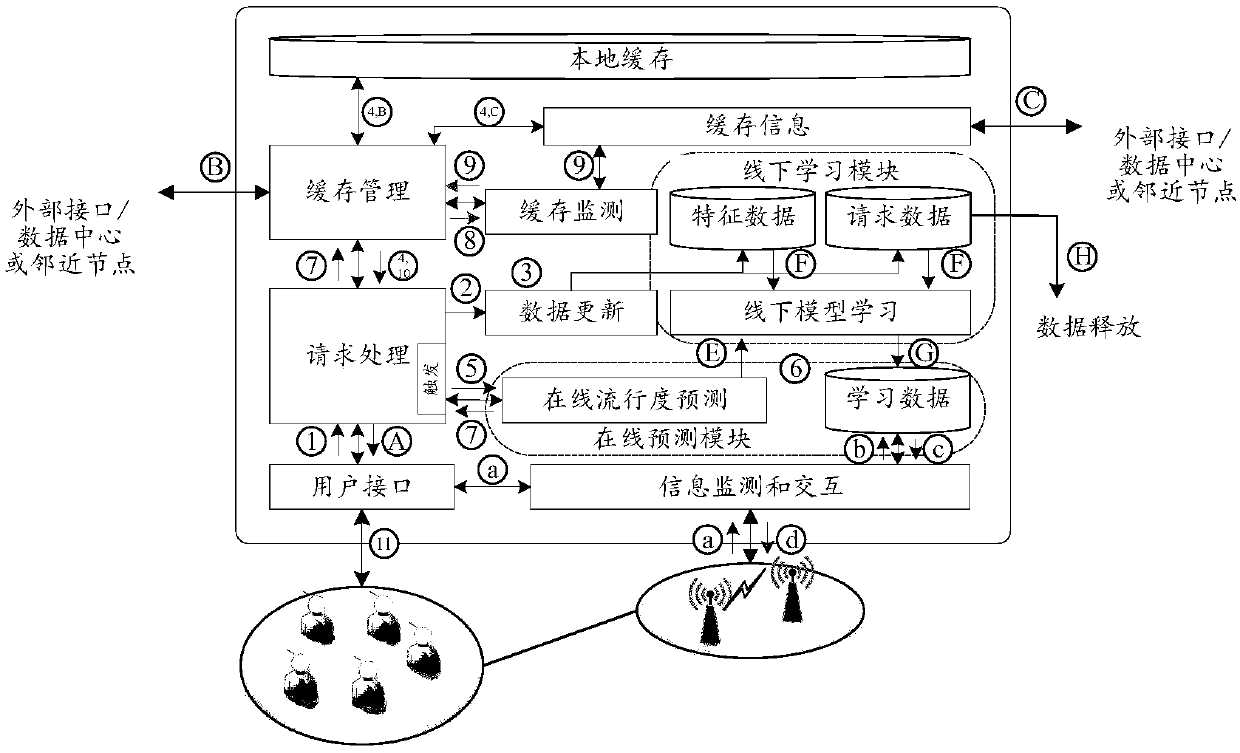

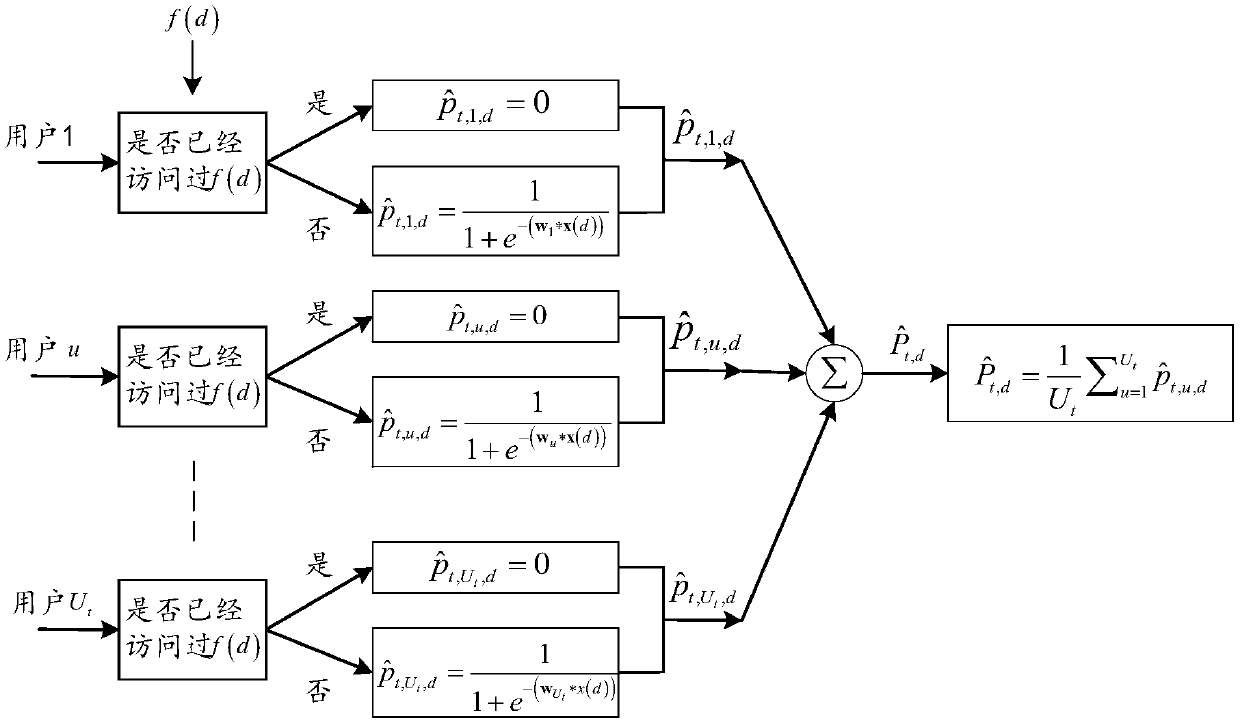

Edge caching system and method based on content popularity prediction

Active | CN107909108A | Improve accuracy | Improve real-time performance | Database updating | Character and pattern recognition | Edge node | Cache hit rate

The invention discloses an edge caching system and method based on content popularity prediction. The method comprises the following steps: (1) according to the historical request information of users, training offline a preference model for each user in the node's coverage region; (2) when a request arrives, if the requested content is not present in the cache, predicting the content popularity online on the basis of the users' preference models; (3) comparing the predicted content popularity with the minimum content popularity in the cache, and making the corresponding caching decision; and (4) updating the content popularity value at the current moment, evaluating the users' preference models, and determining whether to start offline learning of the preference models. With this method, an edge node can predict content popularity online and track its changes in real time, and make caching decisions on the basis of the predicted popularity, so that the edge node continuously caches hot content and obtains a cache hit rate that approaches that of an ideal caching method.

Owner:SOUTHEAST UNIV

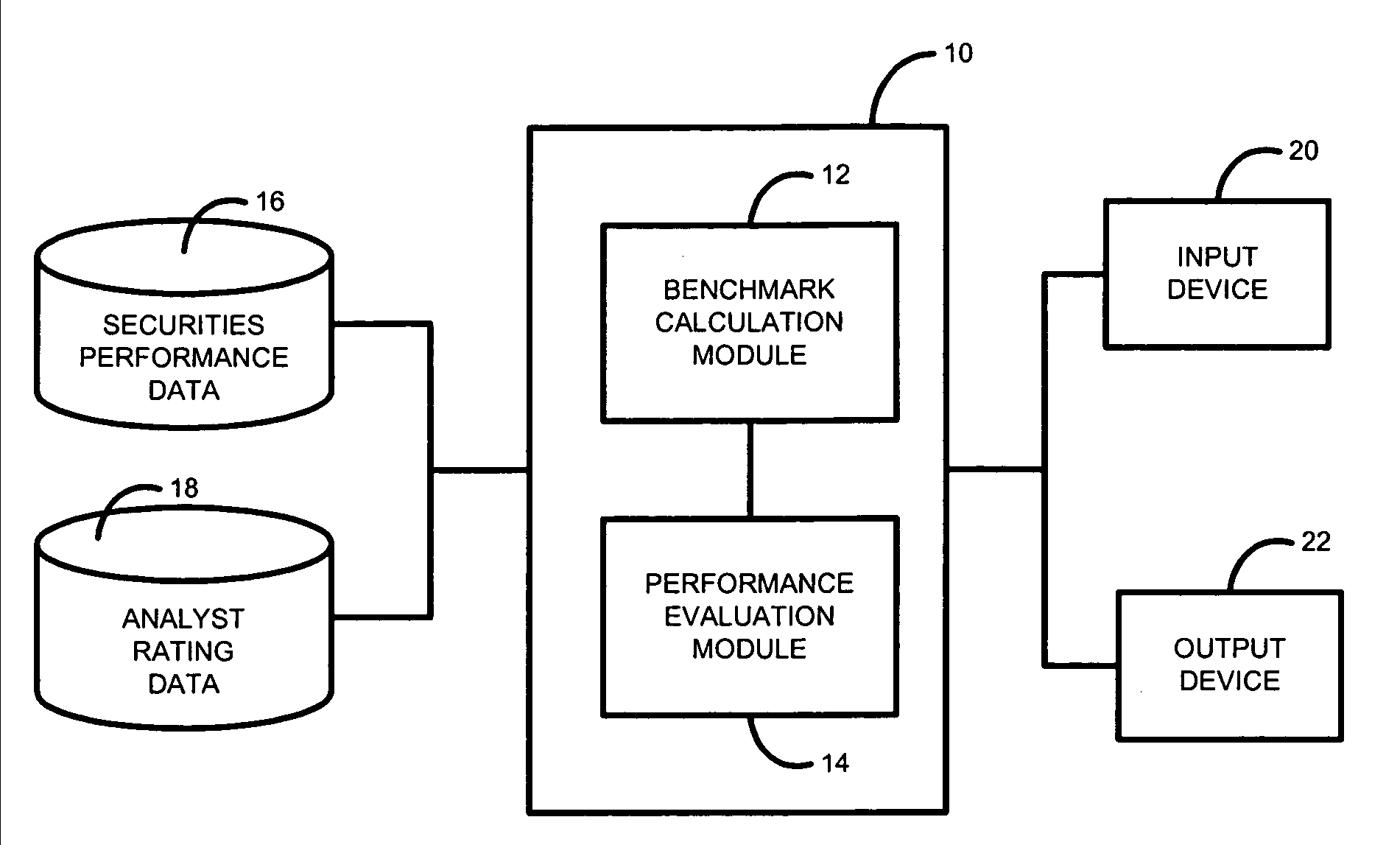

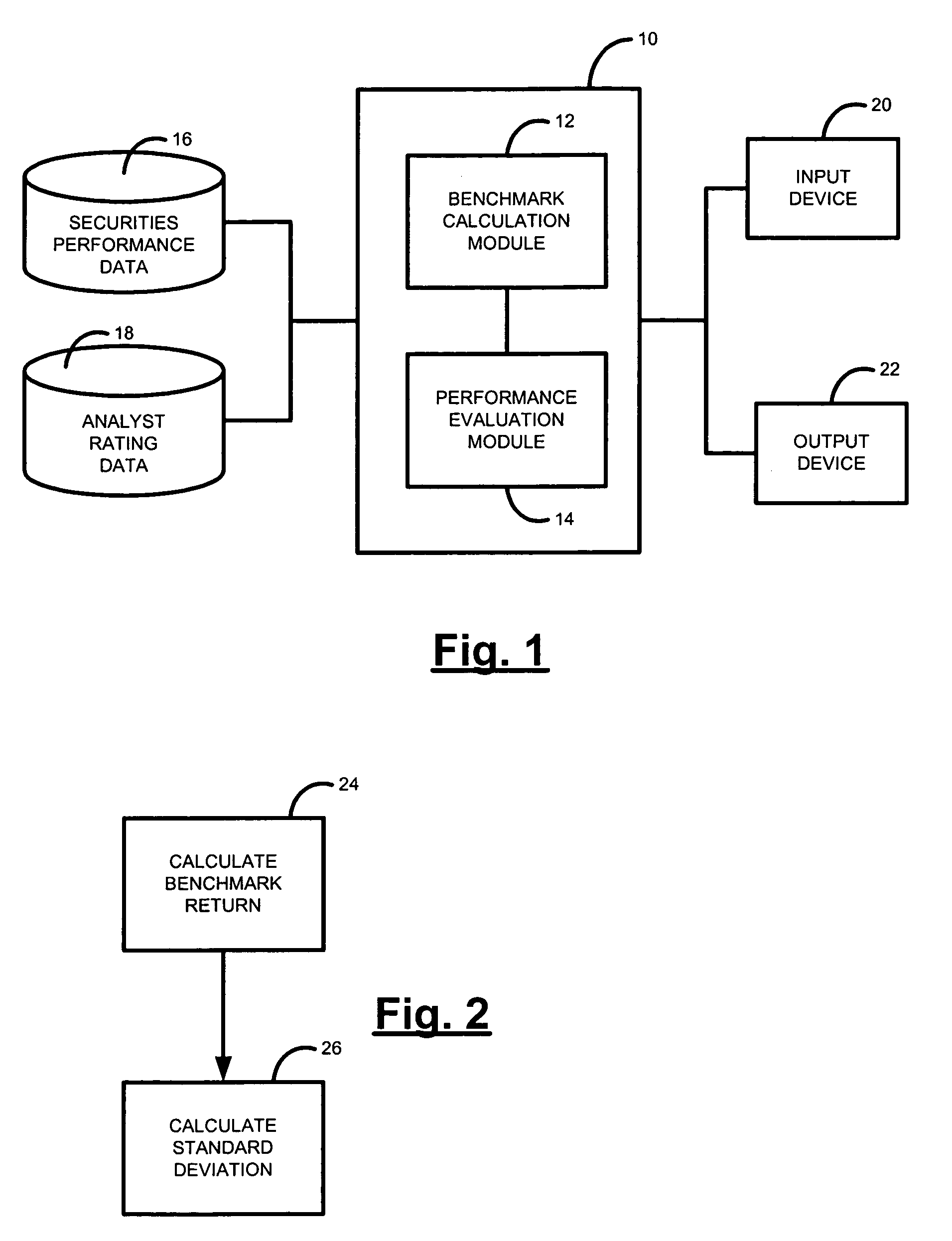

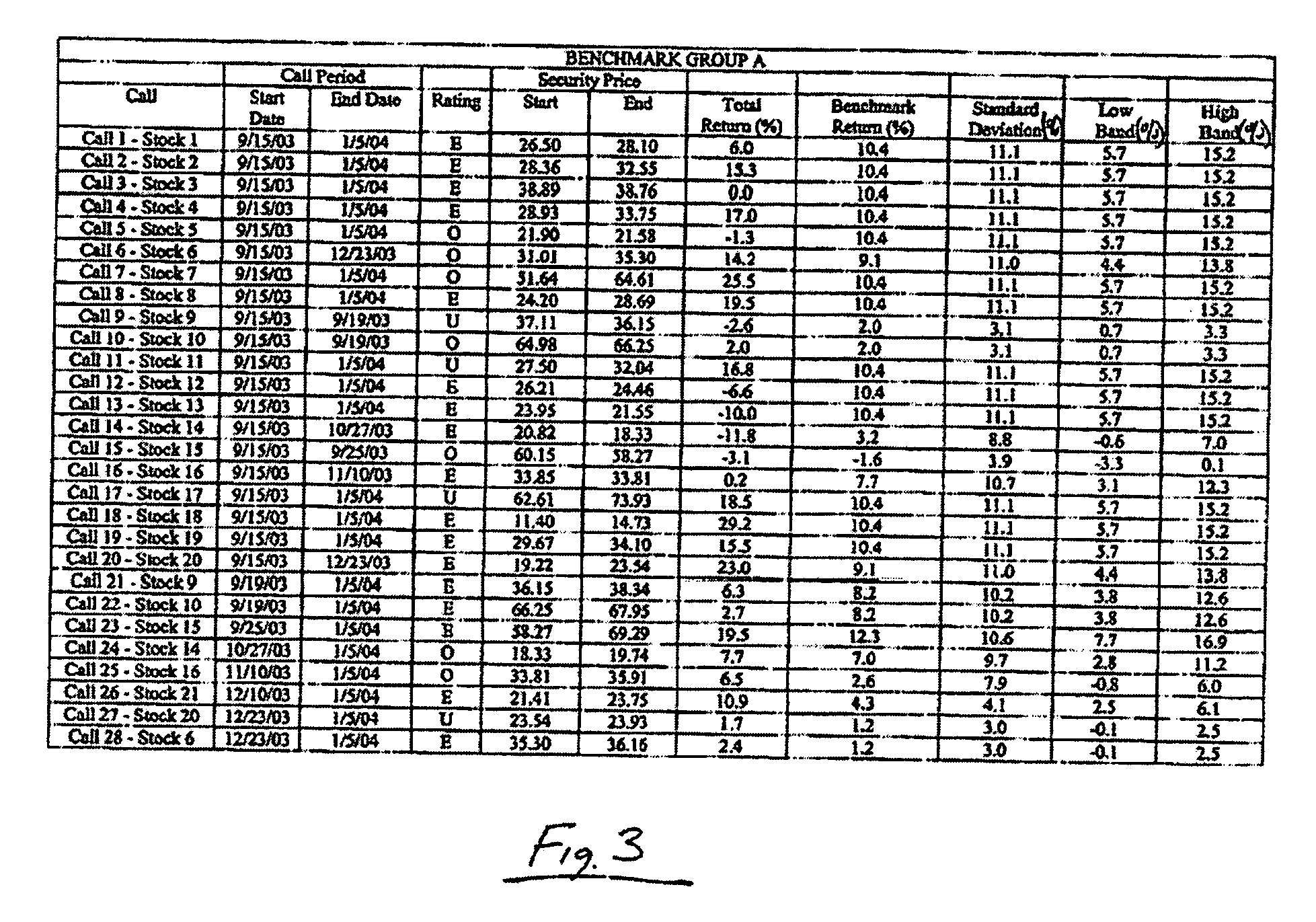

Method and system for evaluating the investment ratings of a securities analyst

Systems and methods for measuring the performance of calls on securities by a securities analyst during an evaluation period are disclosed. According to various embodiments, the system may include a performance evaluation module. The performance evaluation module is for determining a value (called the “hit ratio”) indicative of the success of the calls by the analyst for securities within a benchmark group of securities within an industry covered by the analyst relative to a benchmark return for the benchmark group for the corresponding call periods of the calls. The hit ratio may be computed as the ratio of the sum of the actual excess returns to the sum of the total available excess returns for each call by the analyst over the call evaluation period. The contributions to the hit ratio by each call may be equally weighted or weighted according to market capitalization of the rated security.

Owner:MORGAN STANLEY
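
The hit ratio described above is a ratio of two sums, which a few lines of code can make concrete. The field names `actual_excess`, `available_excess`, and `market_cap` are hypothetical, and the abstract does not say how the available excess return of a call is measured, so it is treated here as a given input.

```python
def hit_ratio(calls, weight_by_cap=False):
    """Sum of actual excess returns divided by sum of available excess returns.

    Each call is a dict with hypothetical keys 'actual_excess',
    'available_excess', and 'market_cap'. Calls are equally weighted
    unless weight_by_cap is True.
    """
    numerator = denominator = 0.0
    for call in calls:
        weight = call["market_cap"] if weight_by_cap else 1.0
        numerator += weight * call["actual_excess"]
        denominator += weight * call["available_excess"]
    return numerator / denominator if denominator else 0.0


# Made-up example: one good call on a small stock, one bad call on a large one.
calls = [
    {"actual_excess": 0.02, "available_excess": 0.05, "market_cap": 1.0},
    {"actual_excess": -0.01, "available_excess": 0.05, "market_cap": 3.0},
]
equal_weighted = hit_ratio(calls)
cap_weighted = hit_ratio(calls, weight_by_cap=True)
```

In this example the equally weighted ratio is positive while the capitalization-weighted ratio is negative, because the losing call was on the larger security.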

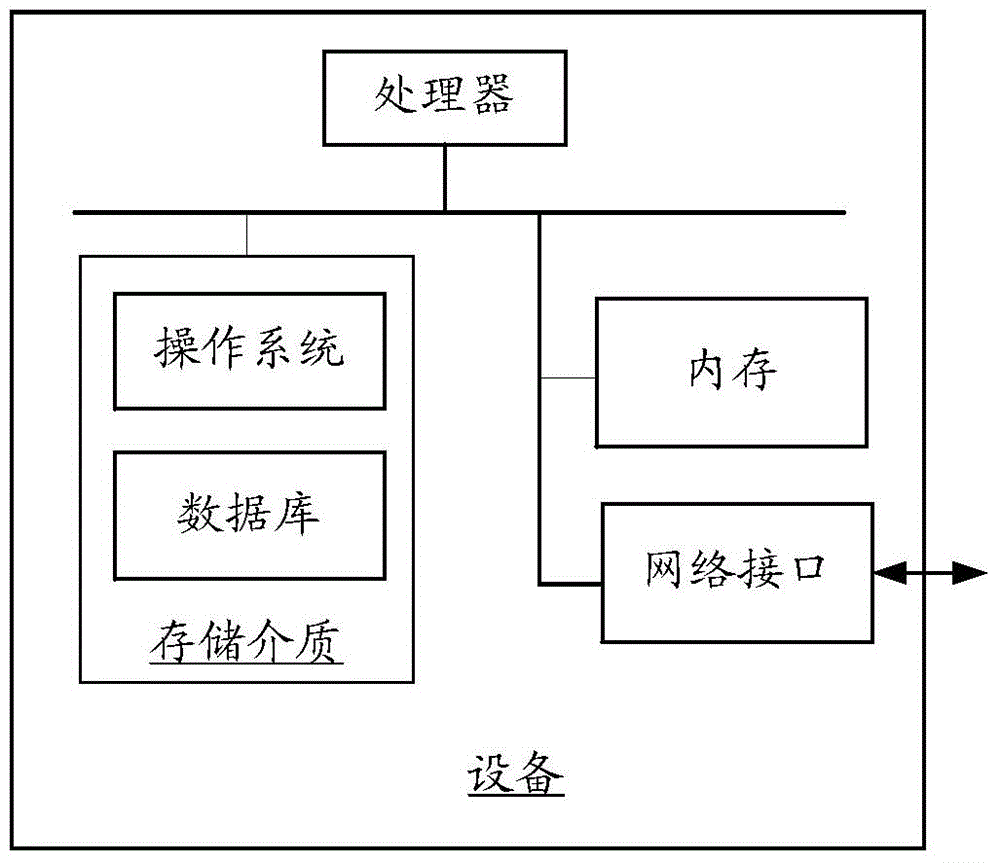

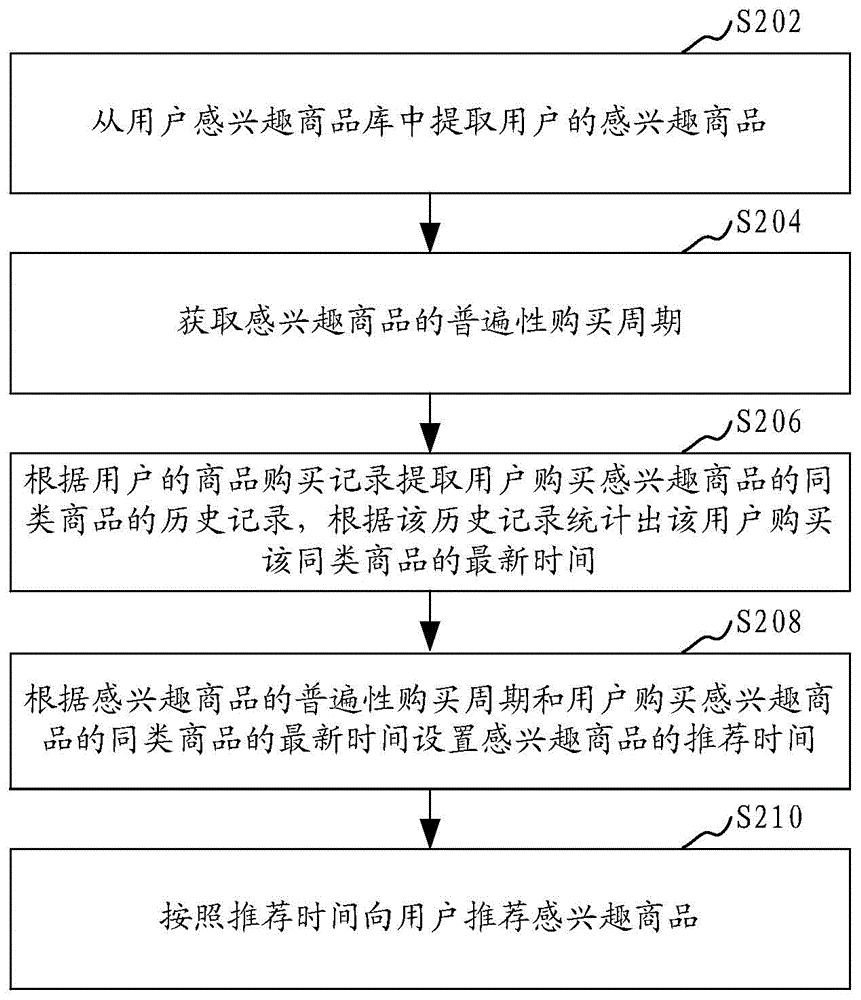

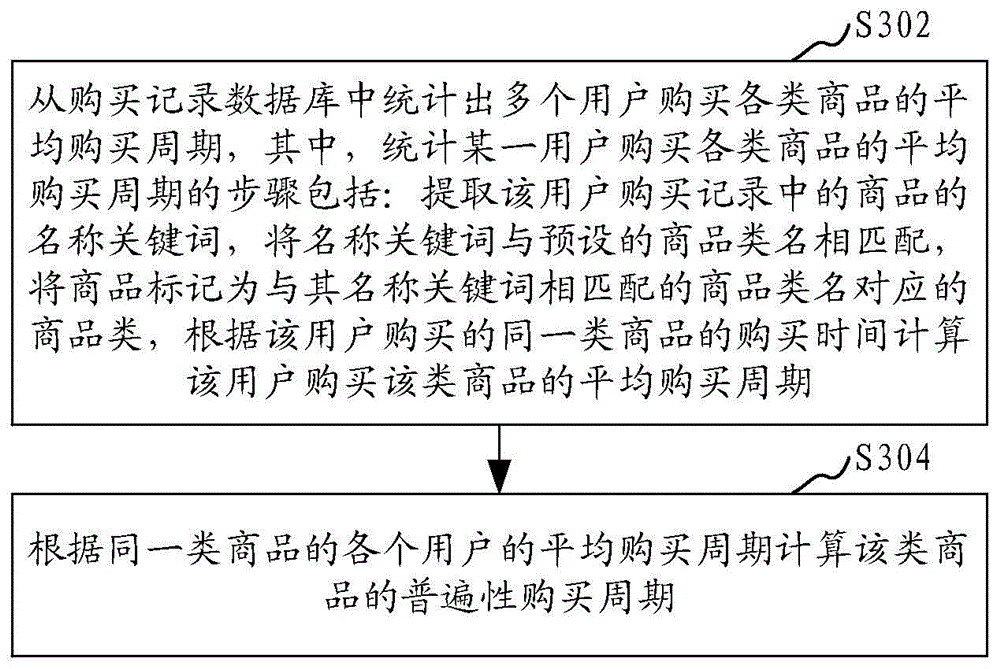

Commodity recommendation method and commodity recommendation device

A commodity recommendation method includes the steps of: extracting a commodity of interest to a user from a database of commodities the user is interested in; obtaining a general purchase cycle for the commodity of interest; extracting, from the user's purchase records, the history of purchases of commodities similar to the commodity of interest, and computing from that history the latest time at which the user purchased the similar commodities; setting a recommendation time for the commodity of interest according to the general purchase cycle and the latest time; and recommending the commodity of interest to the user at the recommendation time. The method can increase the hit rate of commodity push information and effectively increase the utilization of network and computer resources. The invention further provides a commodity recommendation device corresponding to the commodity recommendation method, and a method and a device for recommending another commodity in a bundle containing the commodity of interest.

Owner:TENCENT TECH (SHENZHEN) CO LTD +1
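
One simple reading of "setting a recommendation time according to the purchase cycle and the latest time" is to add the cycle to the most recent purchase date. The sketch below assumes exactly that. The function name and the optional `lead_days` parameter are hypothetical, and the patented method may combine the two inputs differently.

```python
from datetime import date, timedelta


def recommendation_date(purchase_dates, cycle_days, lead_days=0):
    """Date on which to recommend the commodity of interest.

    purchase_dates: dates on which the user bought similar commodities.
    cycle_days:     general purchase cycle of the commodity, in days.
    lead_days:      optional head start before the cycle ends (assumption).
    """
    latest = max(purchase_dates)
    return latest + timedelta(days=cycle_days - lead_days)


history = [date(2024, 1, 1), date(2024, 3, 1)]
when = recommendation_date(history, cycle_days=30)
```

Pushing the recommendation near the end of the purchase cycle, rather than immediately after a purchase, is what would raise the hit rate of the push.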

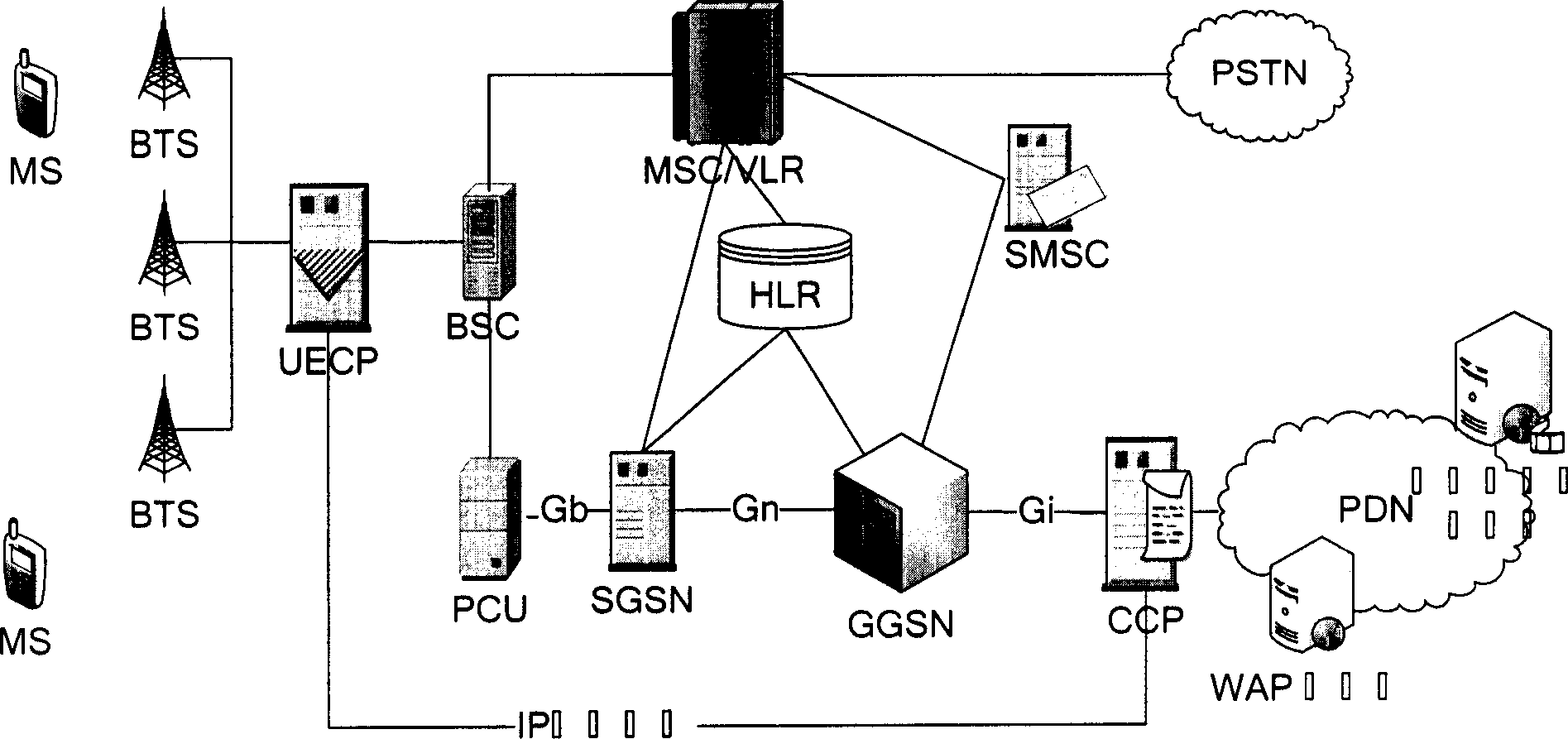

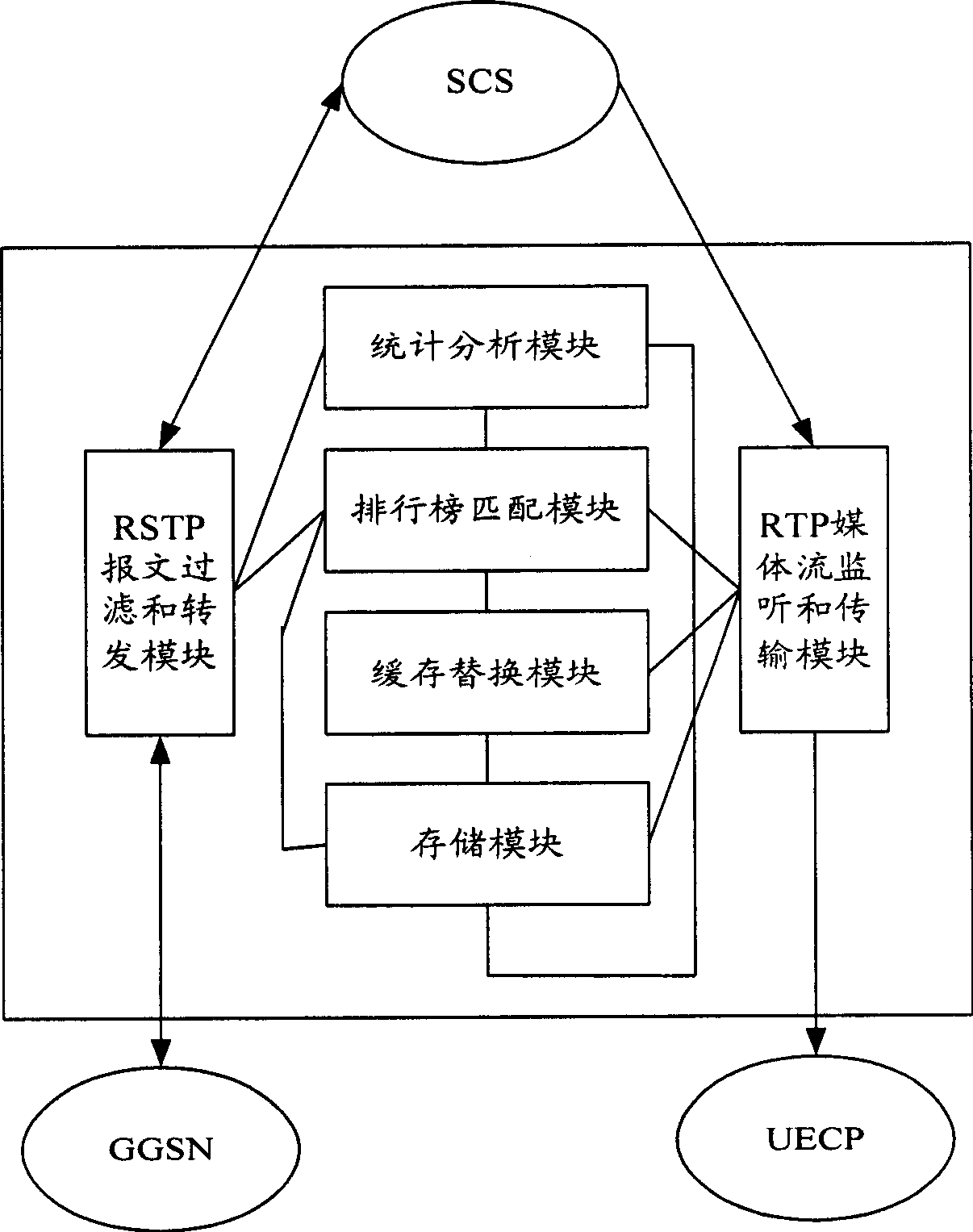

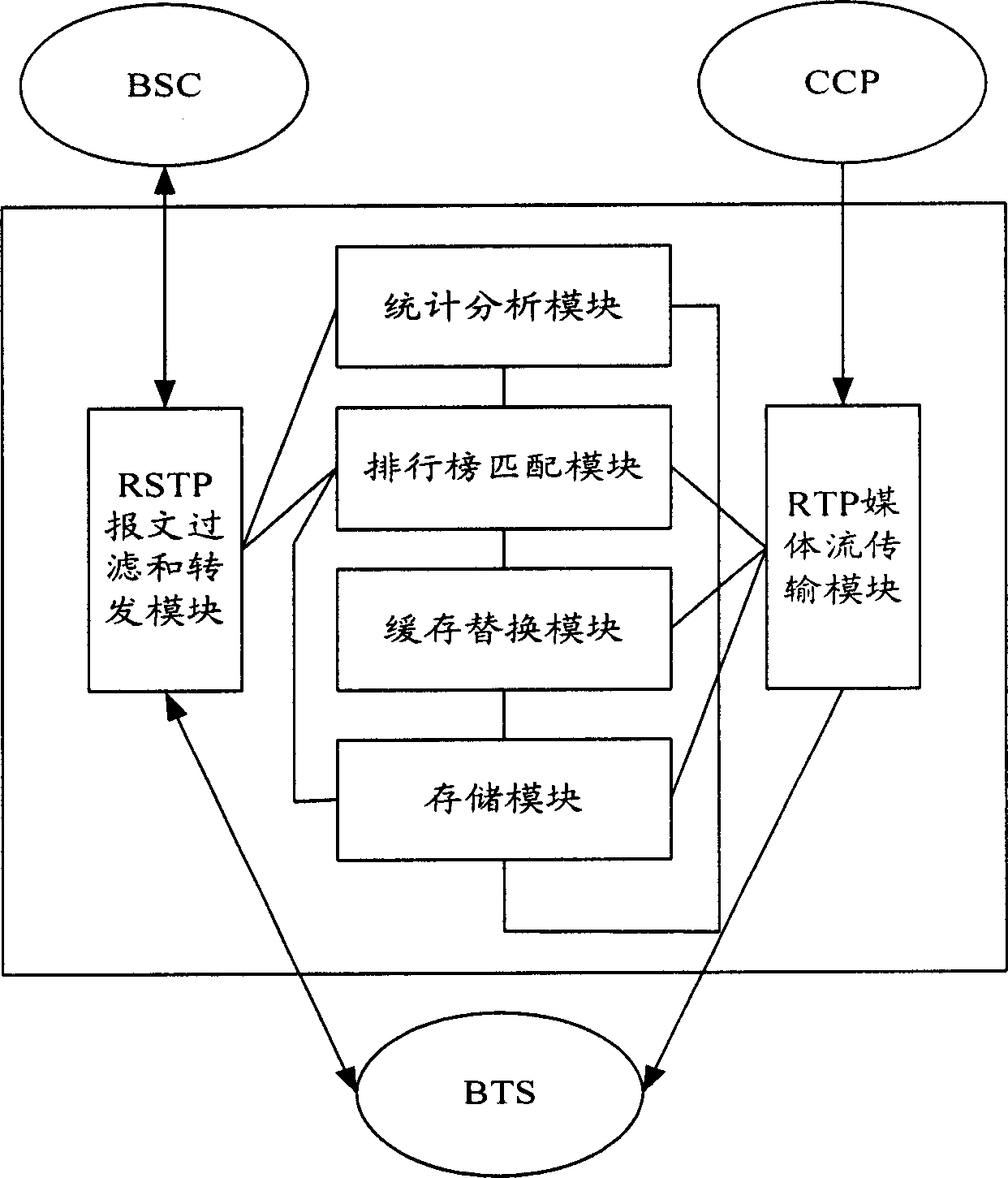

Mobile video order service system with optimized performance and realizing method

Inactive | CN1791213A | Reduce data traffic | Reduce processing load | Transmission | Selective content distribution | Traffic capacity | Alternative strategy

The performance-optimized mobile VOD service system is based on a GPRS mobile network. In addition to the traditional network elements, it comprises: a CCCP server at the boundary between the GGSN and the PDN, which relieves load on the SCS and reduces bandwidth consumption between the GGSN and the SGS; a UECP server in the BSS, between the BSC and the BTS, which adaptively improves the buffer hit rate based on a buffer replacement strategy and ensures fast responses to most user requests; and a high-speed IP DL between the CCP and the UECP, which transmits streaming media content. The two proxy servers form a two-stage buffer that lets large media flows bypass the other links, reducing their data traffic and load and eliminating the effect of VOD on network performance.

Owner:BEIJING UNIV OF POSTS & TELECOMM

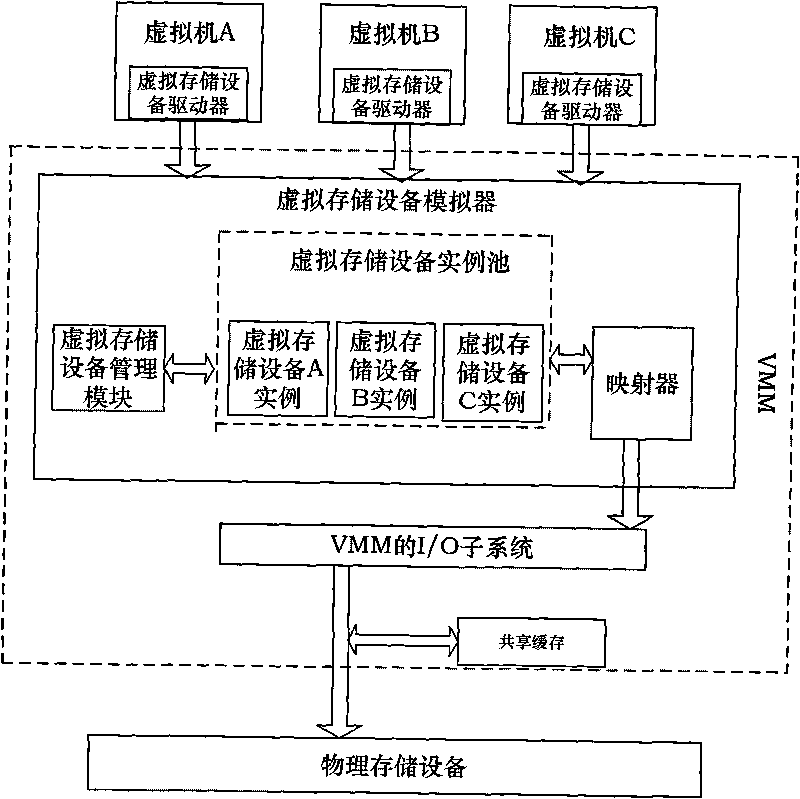

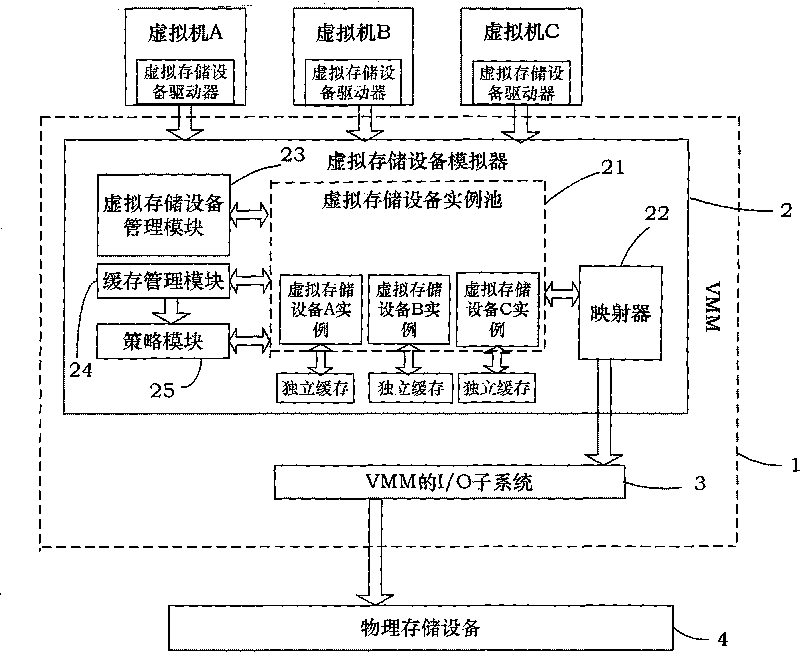

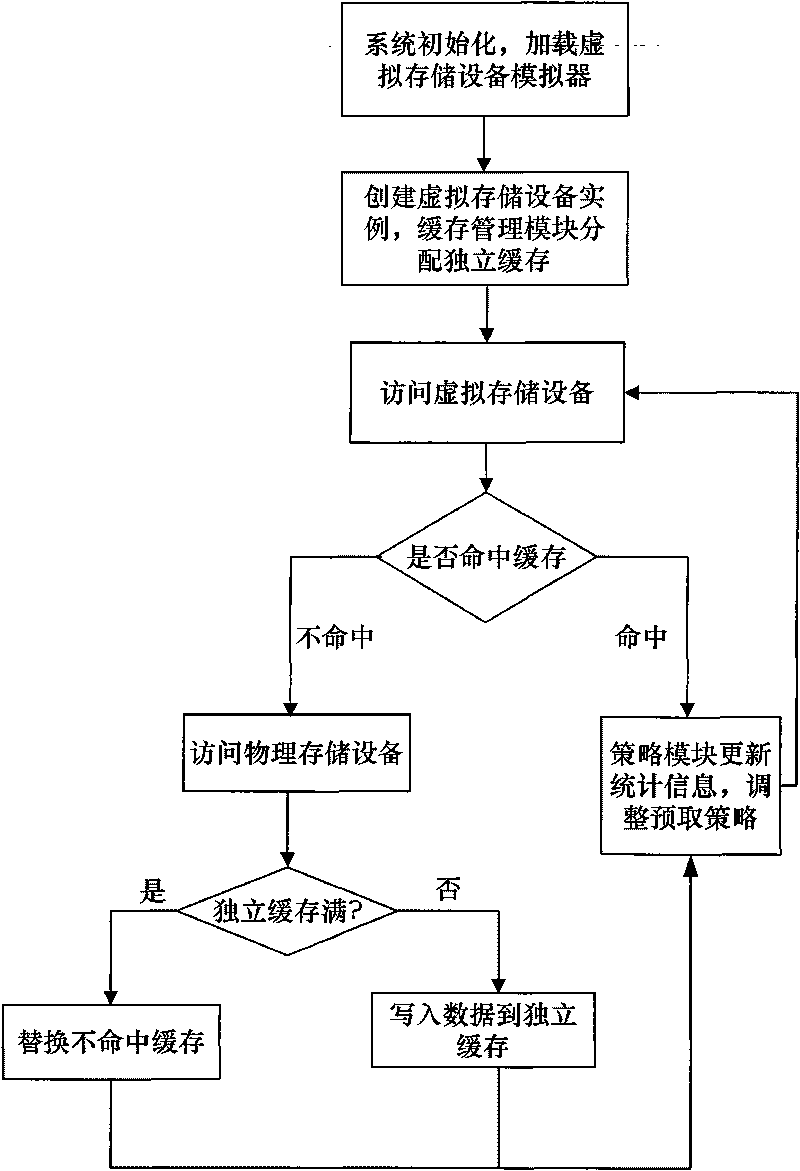

Cache method for virtual storage devices

Inactive | CN101763226A | Improve access performance | Improve hit rate | Input/output to record carriers | Memory addressing/allocation/relocation | Cache management | Virtual storage

The invention provides a cache method for virtual storage devices. A cache management module and a strategy module are added in the virtual storage device simulator of the traditional virtual machine monitor VMM. Firstly, when the virtual storage devices are created, the cache management module distributes respective independent cache for each virtual storage device; secondly, when the virtual storage devices are accessed, the strategy module appoints a prefetch strategy and a replacement strategy for the example of each virtual storage device and dynamically regulates the prefetch strategy. The cache method enables the hit rate of prefetched data to be increased, thereby further improving the access performance of the virtual storage devices.

Owner:BEIHANG UNIV

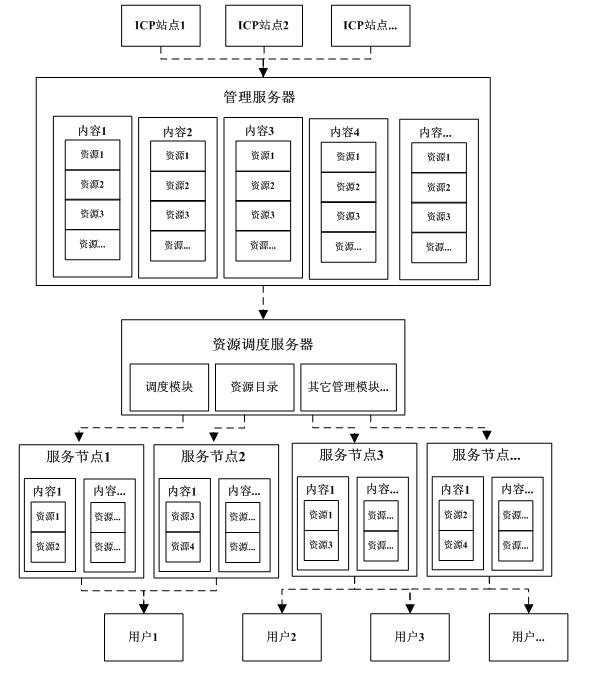

Grid-based Internet content delivery method and system

Inactive | CN102143237A | Reduce content delivery bandwidth | Save space | Data switching networks | Internet content | Computer network

The invention discloses a grid-based Internet content delivery method and a grid-based Internet content delivery system. In the method, a content resource leveling and slicing processing method is provided. The system performs slicing processing on a complete content resource according to preset rules to generate a plurality of small resource files with the same size or different sizes, and then intelligently delivers the plurality of small resource files into each serving node of a network. The problems of content resource redundancy in each serving node are remarkably reduced, system spaces of each serving node are effectively utilized, the hit rate of Internet content accessing of a user is also increased, the user can access nearby networks and intelligently divide network traffic, and the response of the network is greatly quickened.

Owner:宋健 +1

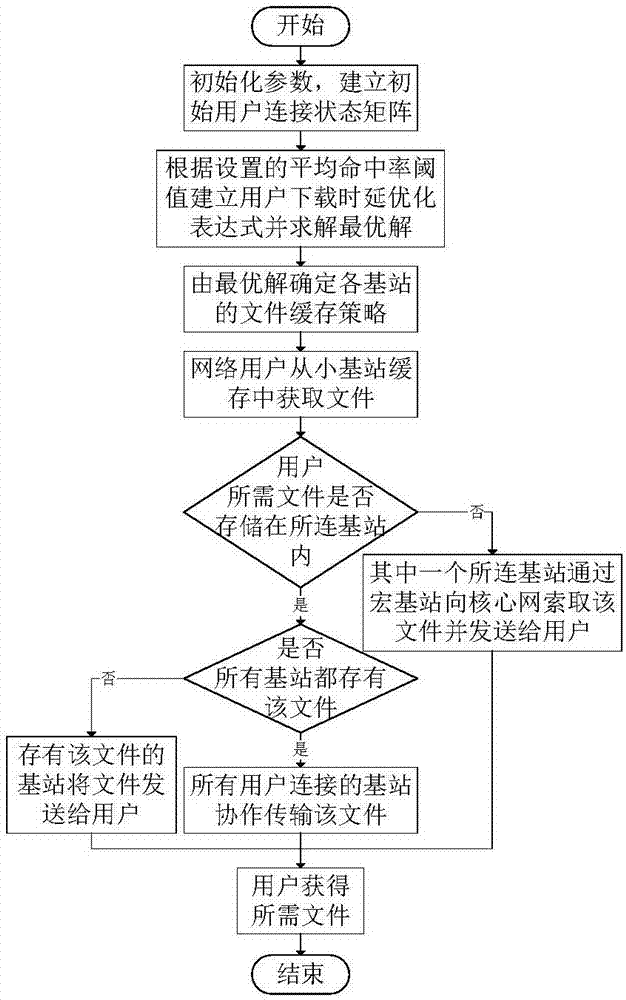

Base station caching method based on minimized user delay in edge caching network

Active | CN107548102A | Implementation Latency | High average hit rate | Network traffic/resource management | Balance problems | Hit rate

The invention discloses a base station caching method based on the minimized user delay in an edge caching network, and belongs to the field of wireless mobile communication. In an edge caching network scene, a connection matrix between a user and a base station is established at first; simultaneously, a strategy that the base station caches a file is generated; a relationship matrix between the base station and the file is established; then, the average hit rate that all users in the whole network obtain the file from the base station is counted; a constraint condition is set to achieve the optimal minimum network user average delay when satisfying a reasonable user average hit rate threshold value; a traversing method is further used for solving and finding an optimal base station content storage strategy; a small base station is deployed according to the strategy; and finally, a user is connected to the small base station to obtain the file. According to the base station caching method based on the minimized user delay in edge caching network disclosed by the invention, the balance problem of the average delay and the average hit rate in a process of designing the small base station file caching strategy of the edge caching network is sufficiently considered; and thus, the purpose of minimizing the user average downloading delay when a certain user average hit rate is satisfied is realized.

Owner:BEIJING UNIV OF POSTS & TELECOMM

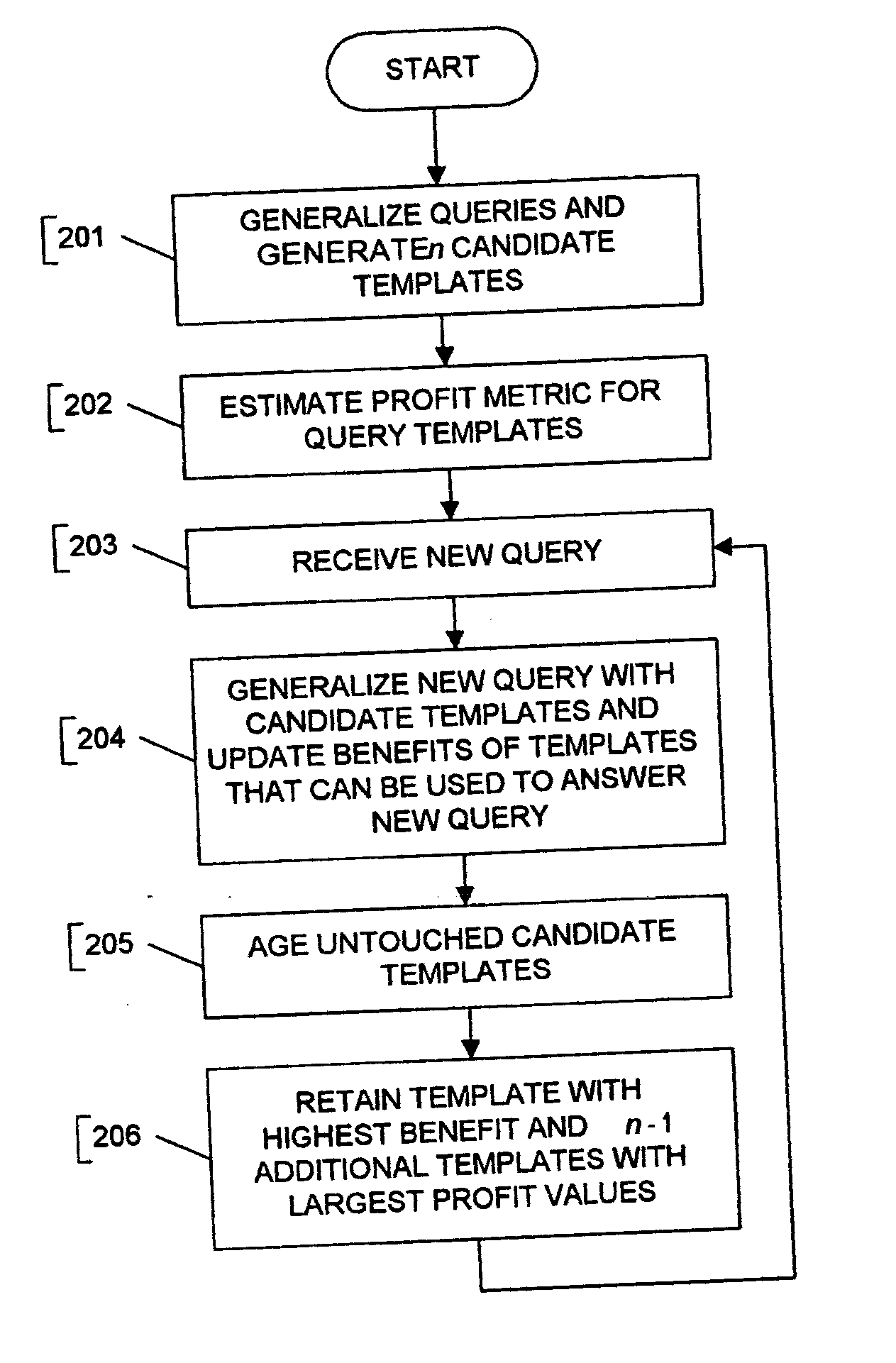

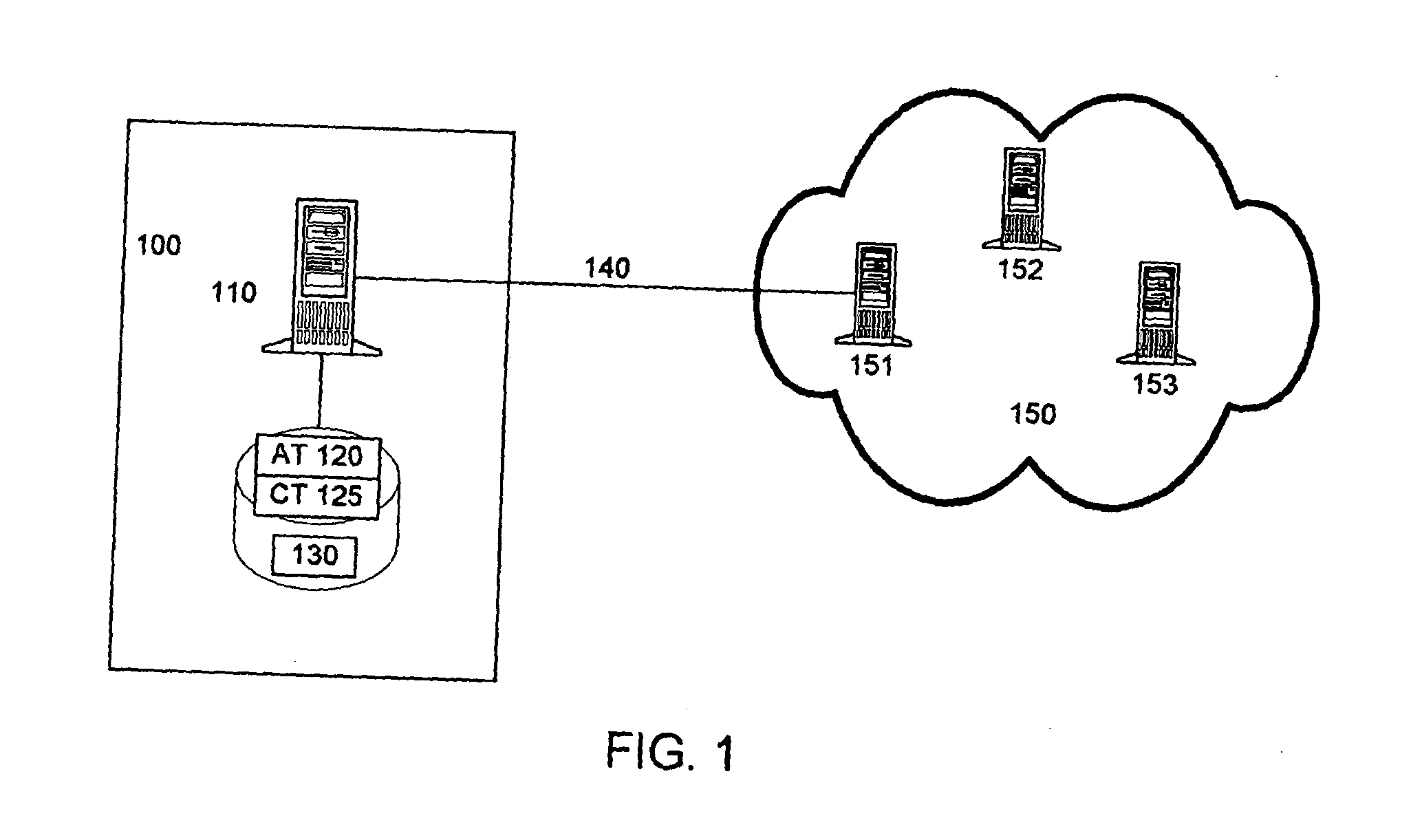

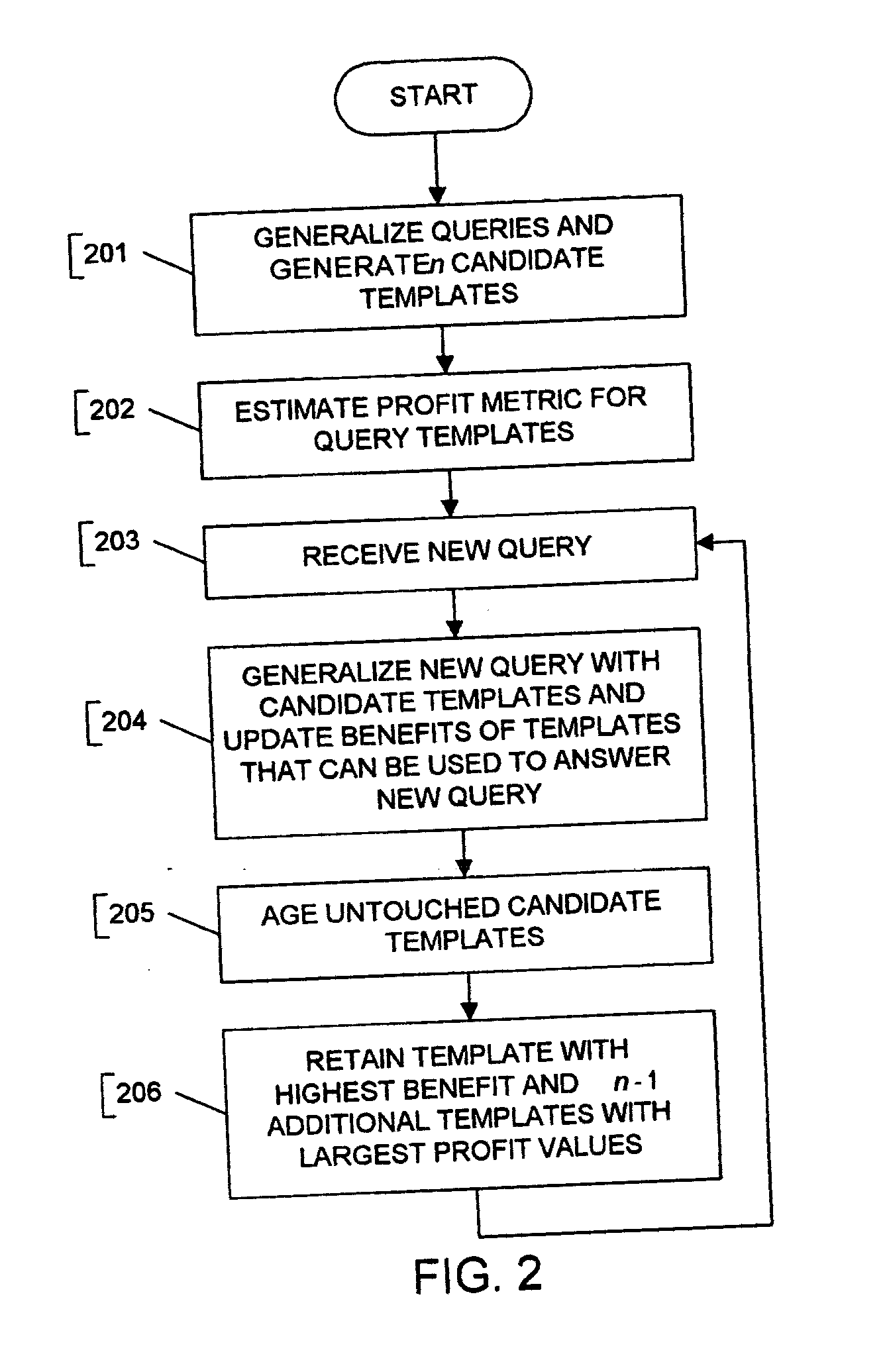

Method for using query templates in directory caches

Inactive | US20050203897A1 | Improve efficiency | Reduce overhead | Digital data information retrieval | Data processing applications | Parallel computing | Client-side

The present invention discloses the use of generalized queries, referred to as query templates, obtained by generalizing individual user queries, as the semantic basis for low-overhead, high-benefit directory caches for handling declarative queries. Caching effectiveness can be improved by maintaining a set of generalizations of queries and admitting such generalizations into the cache when their estimated benefits are sufficiently high. In a preferred embodiment of the invention, the admission of query templates into the cache can be done in what is referred to by the inventors as a “revolutionary” fashion, followed by stable periods where cache admission and replacement can be done incrementally in an evolutionary fashion. The present invention can lead to considerably higher hit rates and lower server-side execution and communication costs than conventional caching of directory queries, while keeping the client-side computational overheads comparable to query caching.

Owner:AT & T INTPROP II LP

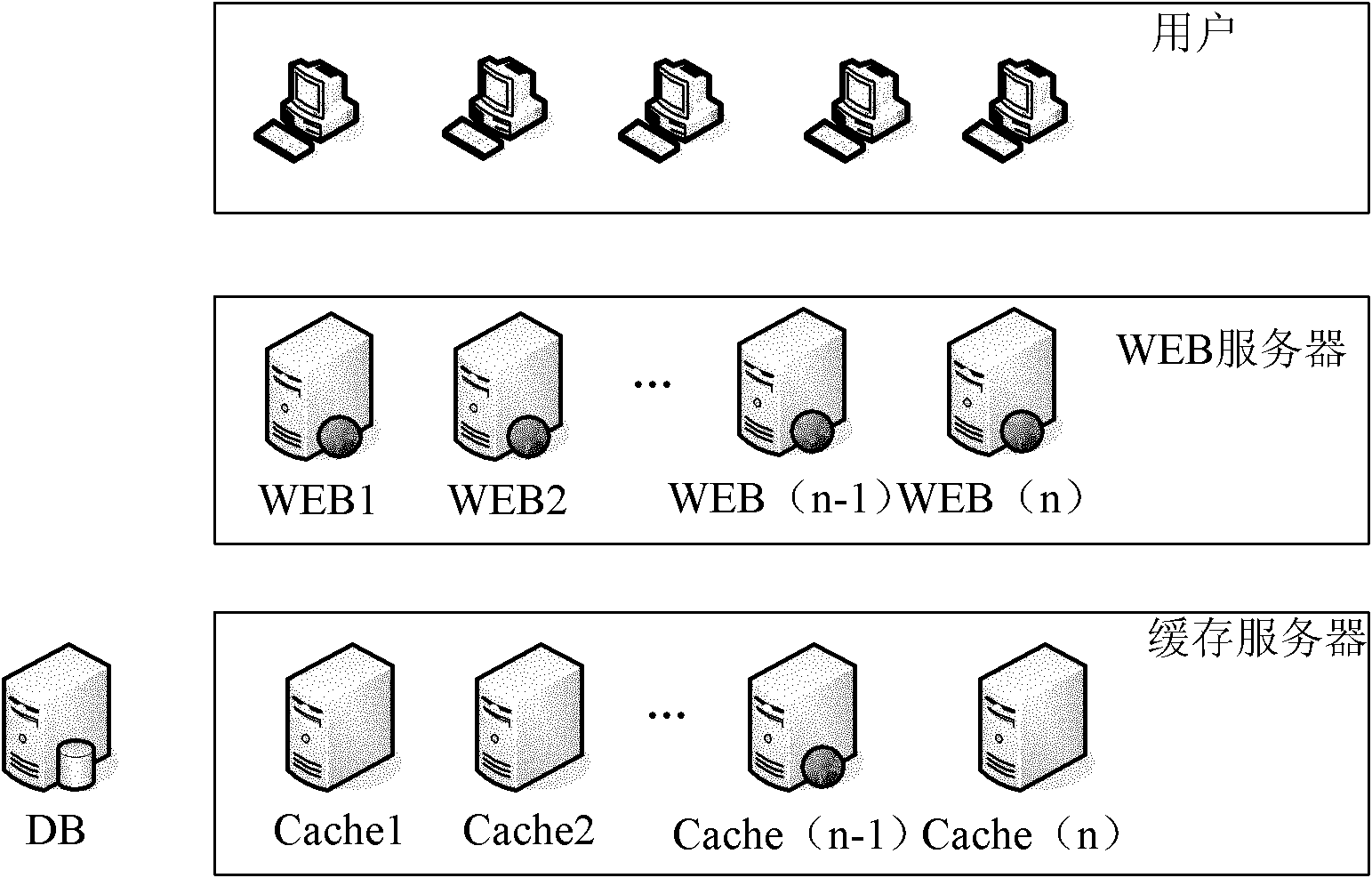

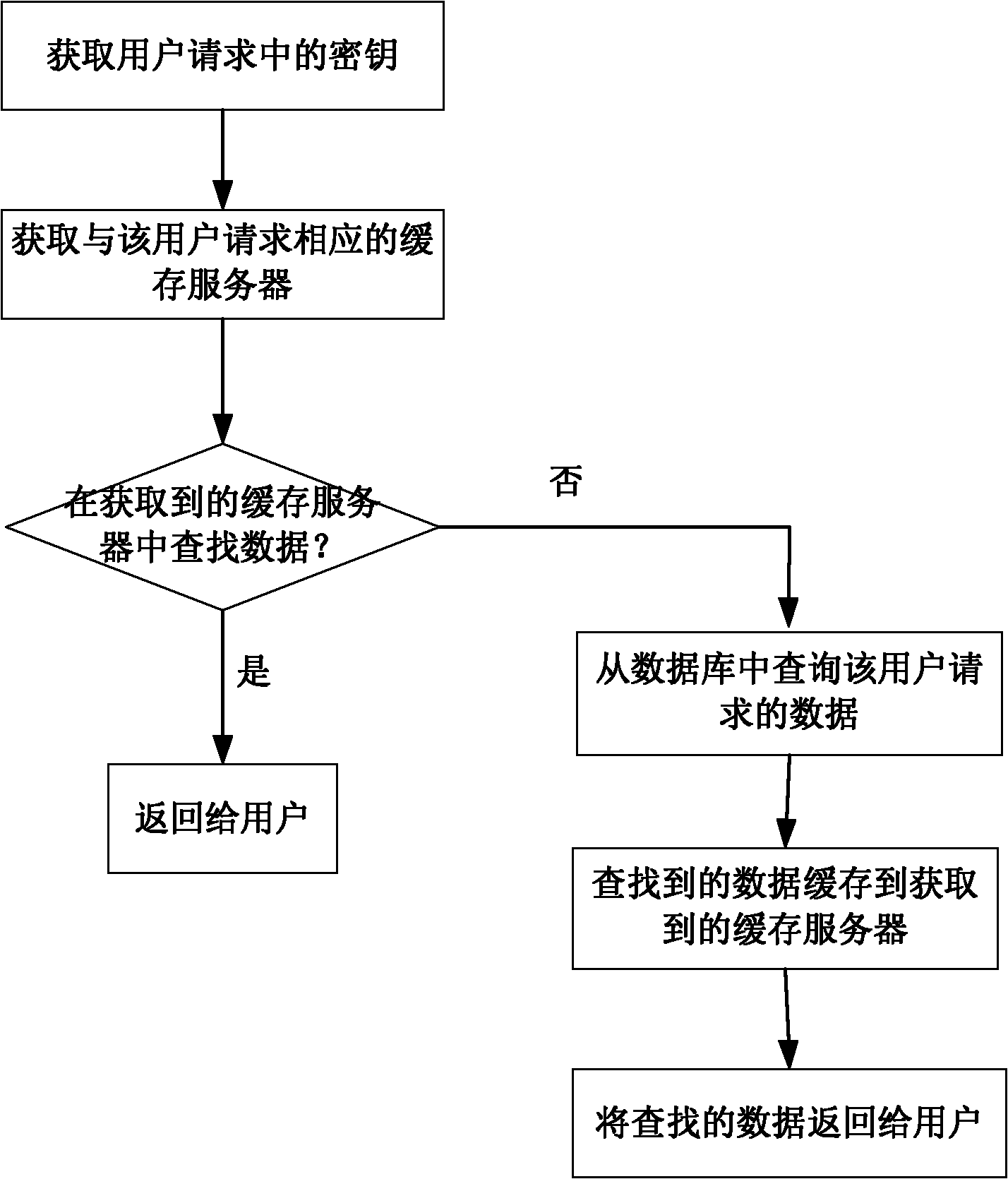

Filter cache method and device, and cache system

Inactive | CN102012931A | Improve scalability | Improve cache hit ratio | Special data processing applications | Cache server | Filter cache

The invention discloses a filter cache method and a filter cache device, and a cache system. The filter cache method comprises the following steps of: 1, acquiring a key in a user request according to the user request; 2, selecting a cache server corresponding to the user request according to the key; and 3, querying data requested by a user from the selected cache server, and directly returning the searched data to the user. The filter cache method can improve the cache data hit rate and the cache expansibility.

Owner:BEIJING RUIXIN ONLINE SYST TECH
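
The three steps above (extract the key, pick a cache server from the key, query that server) can be sketched as follows. The abstract does not say how the server is chosen from the key. Hashing the key and taking it modulo the number of servers is an assumption made here, and the class and function names are hypothetical.

```python
import hashlib


def select_server(key, servers):
    """Map a request key to one cache server (assumed: stable hash modulo)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


class FilterCache:
    """Routes every key to exactly one in-memory 'server' (a dict)."""

    def __init__(self, server_names):
        self.names = list(server_names)
        self.servers = {name: {} for name in self.names}

    def put(self, key, value):
        self.servers[select_server(key, self.names)][key] = value

    def get(self, key):
        # Only the selected server is queried; None means a cache miss.
        return self.servers[select_server(key, self.names)].get(key)


cache = FilterCache(["cache-1", "cache-2", "cache-3"])
cache.put("user:42", {"name": "example"})
found = cache.get("user:42")
missing = cache.get("user:43")
```

Because a given key always lands on the same server, each item is stored once rather than on every server, which is one plausible way such a scheme could raise the overall hit rate for a fixed amount of memory. Simple modulo hashing remaps most keys when a server is added, so a production design would likely use something like consistent hashing instead.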

Techniques for automated allocation of memory among a plurality of pools

Allocation of memory is optimized across multiple pools of memory, based on minimizing the time it takes to successfully retrieve a given data item from each of the multiple pools. First data is generated that indicates a hit rate per pool size for each of multiple memory pools. In an embodiment, the generating step includes continuously monitoring attempts to access, or retrieve a data item from, each of the memory pools. The first data is converted to second data that accounts for a cost of a miss with respect to each of the memory pools. In an embodiment, the second data accounts for the cost of a miss in terms of time. How much of the memory to allocate to each of the memory pools is determined, based on the second data. In an embodiment, the steps of converting and determining are automatically performed, on a periodic basis.

Owner:ORACLE INT CORP

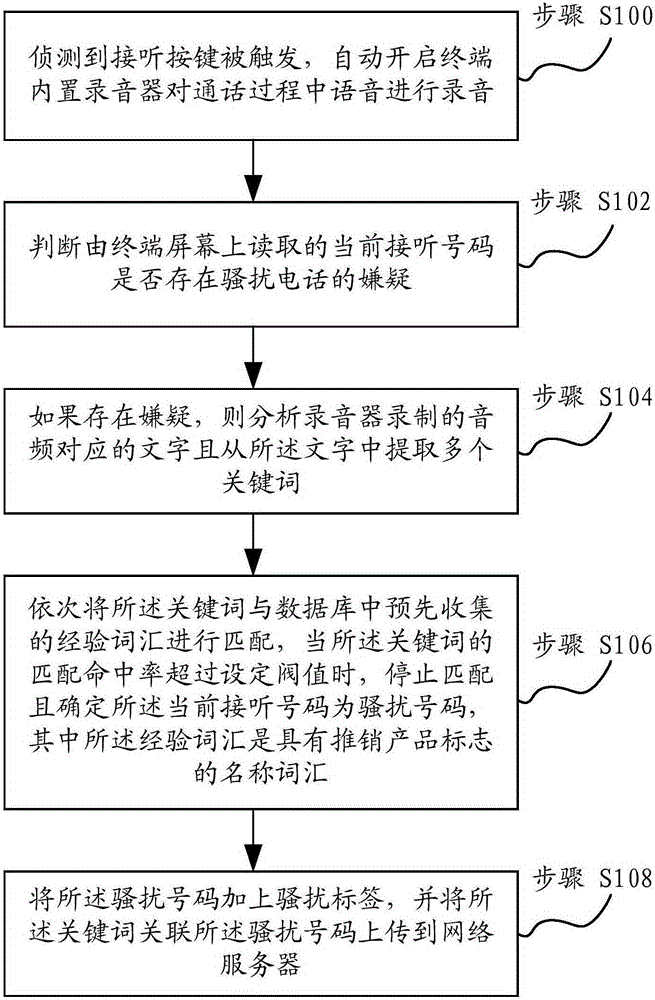

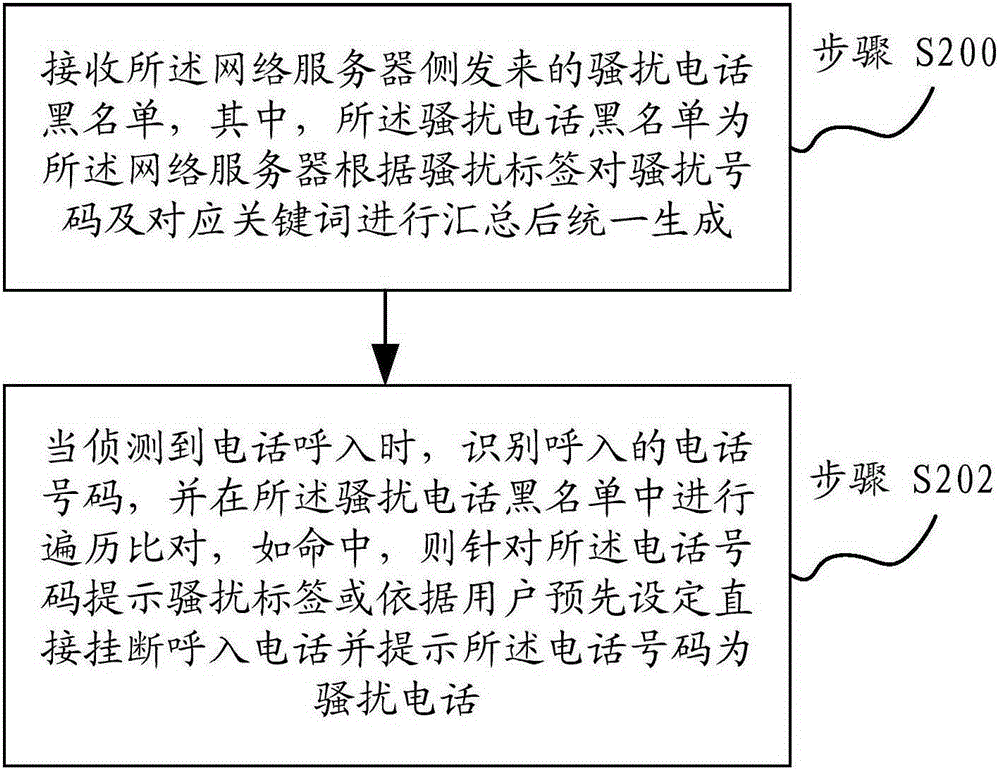

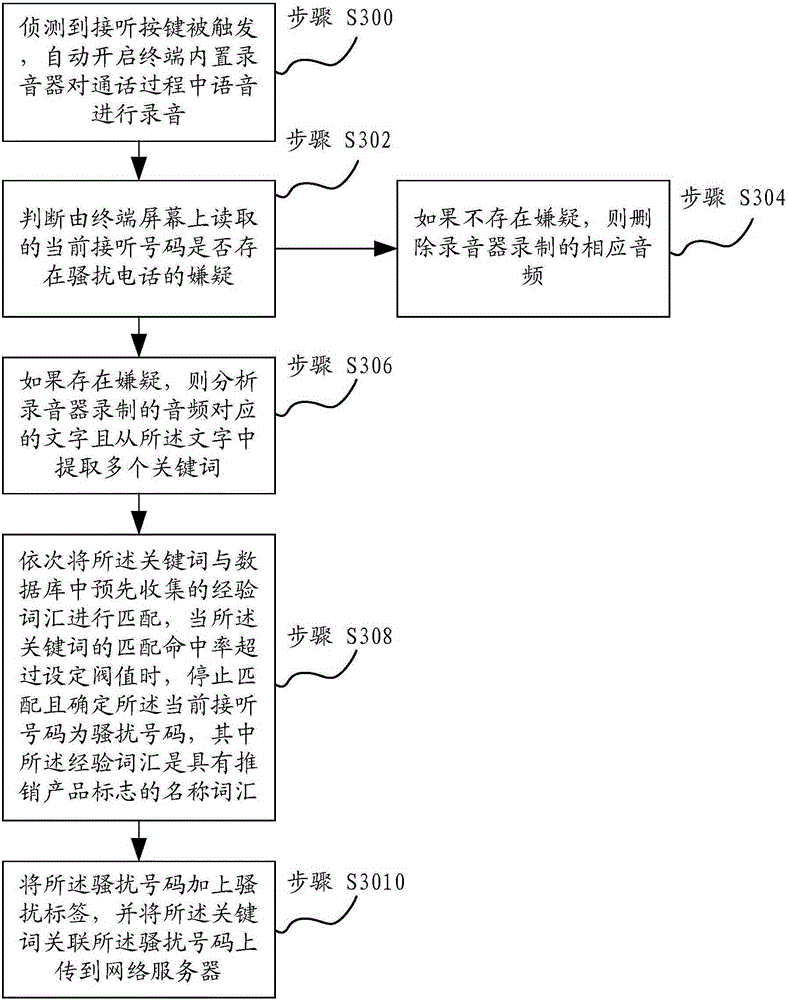

Processing method for adaptively identifying harassing call and processing system thereof

Inactive | CN106686191A | Reduce misjudgment and harassment calls | Reduce the probability of misjudging harassing calls | Substation equipment | Transmission | Data mining | Self adaptive

The invention discloses a processing method for adaptively identifying a harassing call and a processing system thereof. The processing method comprises the following steps: when triggering of the answer key is detected, the terminal's built-in recorder is automatically started to record the voice during the conversation; the number being answered, read from the terminal screen, is checked for suspicion of being a harassing call, and if suspicion exists, the text corresponding to the recorded audio is analyzed and multiple keywords are extracted from it; the keywords are matched in turn against experience words collected in a database in advance; and when the matching hit rate of the keywords exceeds a preset threshold, matching stops and the number is determined to be a harassing number. Recording the conversation and matching keywords extracted from the recording allows an unknown number to be judged and marked as a harassing call or not.

Owner:BEIJING QIHOO TECH CO LTD +1
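The matching step of the abstract above can be sketched as follows. The sketch assumes that the hit rate is the number of matched keywords divided by the total number of extracted keywords; the abstract does not define it. The function name, the sample words, and the threshold value are hypothetical, and speech-to-text and keyword extraction are left out.

```python
from typing import Iterable, Set

def is_harassing(keywords: Iterable[str],
                 experience_words: Set[str],
                 threshold: float) -> bool:
    """Match keywords one by one against the experience words and stop
    as soon as the hit rate exceeds the threshold."""
    keywords = list(keywords)
    if not keywords:
        return False
    hits = 0
    for word in keywords:
        if word in experience_words:
            hits += 1
            # Assumed definition: hits so far / total extracted keywords.
            if hits / len(keywords) > threshold:
                return True  # early stop: the number is treated as harassing
    return False

# Hypothetical experience words and keywords extracted from a transcript.
experience_words = {"loan", "interest", "invoice"}
flagged = is_harassing(["loan", "interest", "weather", "invoice"],
                       experience_words, threshold=0.4)
```

In this example, matching stops after the second keyword, because two hits out of four keywords already exceed the assumed threshold of 0.4.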

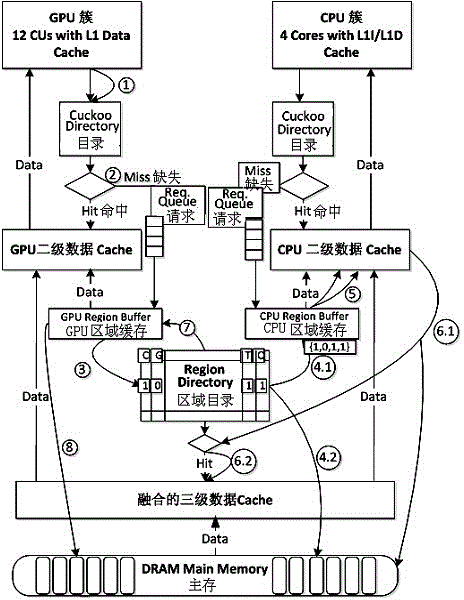

Method for establishing access by fusing multiple levels of cache directories

Inactive | CN103955435A | Reduce failure rate | Reduce bus bandwidth | Memory addressing/allocation/relocation | Energy efficient computing | Failure rate | Three level

The invention relates to a method for establishing access by fusing multiple levels of cache directories, in which a graded, fused, hierarchical cache directory mechanism is established. The method comprises the following steps: multiple CPU and GPU processors form a Quart computing element; a Cuckoo directory is established level by level in the caches built into the CPU or GPU processors; and an area directory and an area directory controller are established outside the Quart computing element. This effectively reduces bus communication bandwidth and lowers the frequency of arbitration conflicts; the data block directory of the three-level fusion Cache can itself be cached, which improves the access hit rate of the three-level fusion Cache. The resulting graded, fused, hierarchical Cache directory mechanism inside and outside the Quart lowers the Cache failure rate, reduces on-chip bus bandwidth, and lowers system power consumption. No new Cache block states need to be added, so the mechanism remains highly compatible with the Cache consistency protocol, and it offers a new approach to constructing scalable, high-performance heterogeneous single-chip multi-core processor systems.

Owner:UNIV OF SHANGHAI FOR SCI & TECH

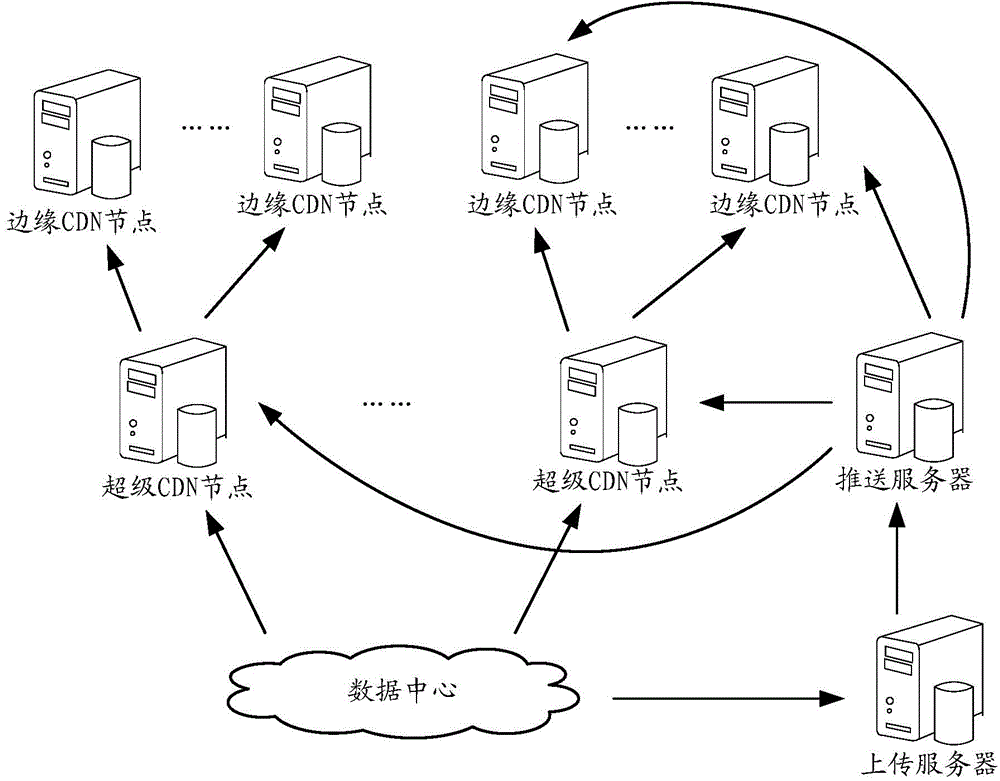

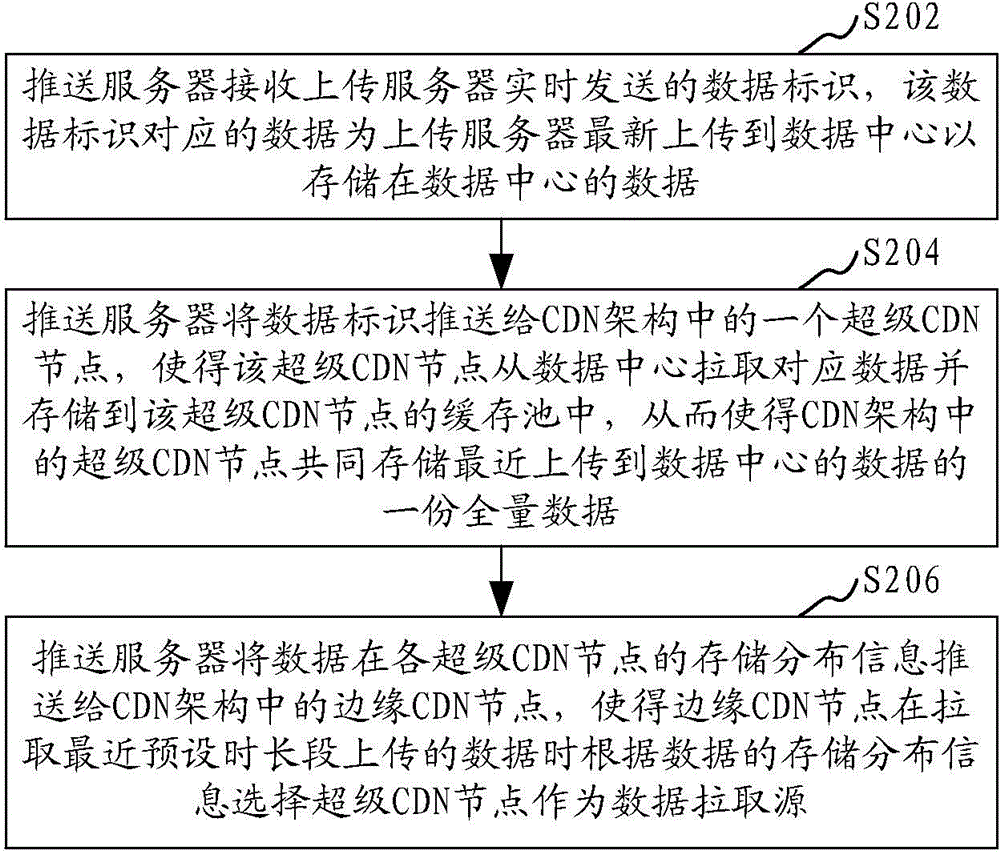

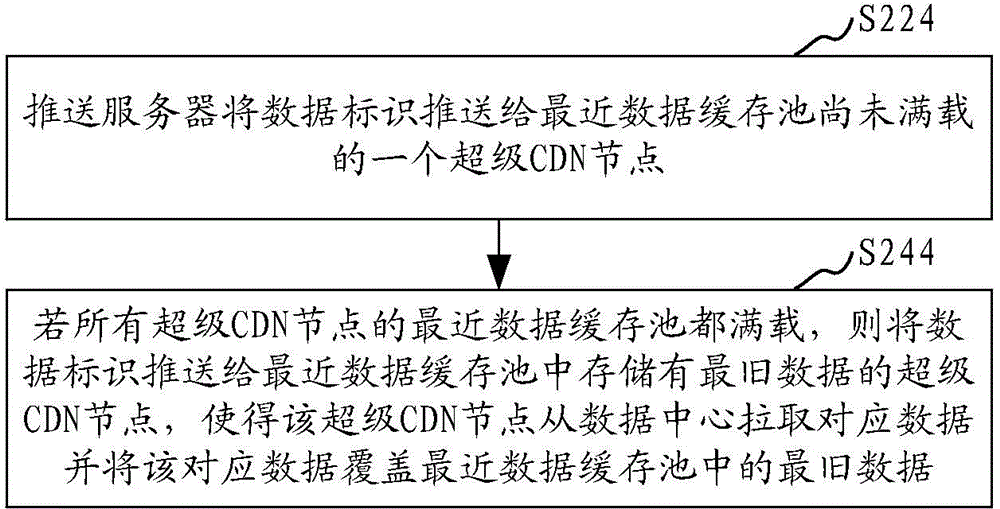

Data push, storage and downloading methods and devices based on CDN architecture

Disclosed is a data push method based on a CDN architecture. The method comprises the following steps: a push server pushes the data identifiers of the latest data uploaded to a data center to the super CDN nodes in the CDN architecture, so that the super CDN nodes pull the corresponding data from the data center and store it in their buffer pools, and the super CDN nodes together hold a full copy of the data most recently uploaded to the data center; the push server also pushes the storage distribution information of the data in each super CDN node to the edge CDN nodes in the CDN architecture, so that an edge CDN node, when pulling data uploaded within a recent preset time period, selects a super CDN node as the data source according to the storage distribution information. The method can improve the data access hit rate of the CDN nodes. The invention further provides a data push device based on a CDN architecture, and data storage and downloading methods and devices based on a CDN architecture.

Owner:SHENZHEN TENCENT COMP SYST CO LTD
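The push flow in the abstract above can be sketched in a few lines. All class and method names are hypothetical, the choice of super node by a CRC32 hash of the data identifier is an assumption (the abstract does not say how data is spread across super nodes), and expiry of the "recent preset time period" is not modeled.

```python
import zlib
from typing import Dict, List

class SuperNode:
    def __init__(self, name: str) -> None:
        self.name = name
        self.buffer_pool: Dict[str, bytes] = {}

    def pull(self, data_id: str, data_center: Dict[str, bytes]) -> None:
        # Fetch the data named by the pushed identifier from the data center.
        self.buffer_pool[data_id] = data_center[data_id]

class EdgeNode:
    def __init__(self) -> None:
        # Storage distribution information: data identifier -> super node name.
        self.distribution: Dict[str, str] = {}

    def pick_source(self, data_id: str) -> str:
        # Use the super node holding the data; otherwise fall back (assumed).
        return self.distribution.get(data_id, "data_center")

class PushServer:
    def __init__(self, supers: List[SuperNode], edges: List[EdgeNode]) -> None:
        self.supers = supers
        self.edges = edges

    def push(self, data_id: str, data_center: Dict[str, bytes]) -> str:
        # Assumption: a stable hash decides which super node stores the item,
        # so the super nodes together hold one full copy of recent uploads.
        node = self.supers[zlib.crc32(data_id.encode()) % len(self.supers)]
        node.pull(data_id, data_center)
        for edge in self.edges:
            edge.distribution[data_id] = node.name
        return node.name

data_center = {"video-1": b"a", "video-2": b"b", "video-3": b"c"}
supers = [SuperNode("super-0"), SuperNode("super-1")]
edge = EdgeNode()
server = PushServer(supers, [edge])
placed = {data_id: server.push(data_id, data_center) for data_id in data_center}
```

After the pushes, `edge.pick_source("video-1")` names the super node whose buffer pool holds that item, so a request for recently uploaded data can be served from a node that is already known to have it.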


© 2025 PatSnap. All rights reserved.