
154 results about "Cache consistency" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

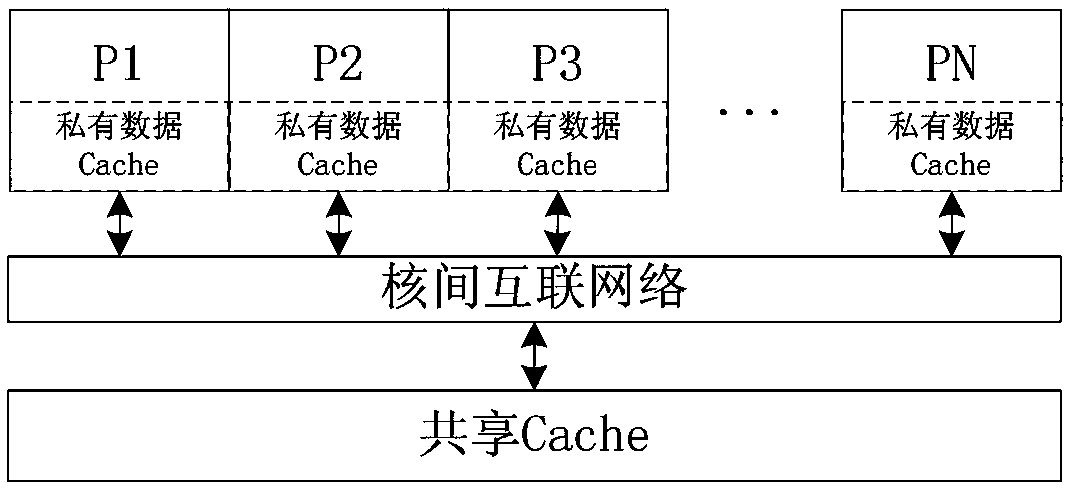

Multi-core processor supporting cache consistency, reading and writing methods and apparatuses as well as device

Active; CN105740164A; Achieve consistency; Improve performance; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Distribution method; Cache consistency

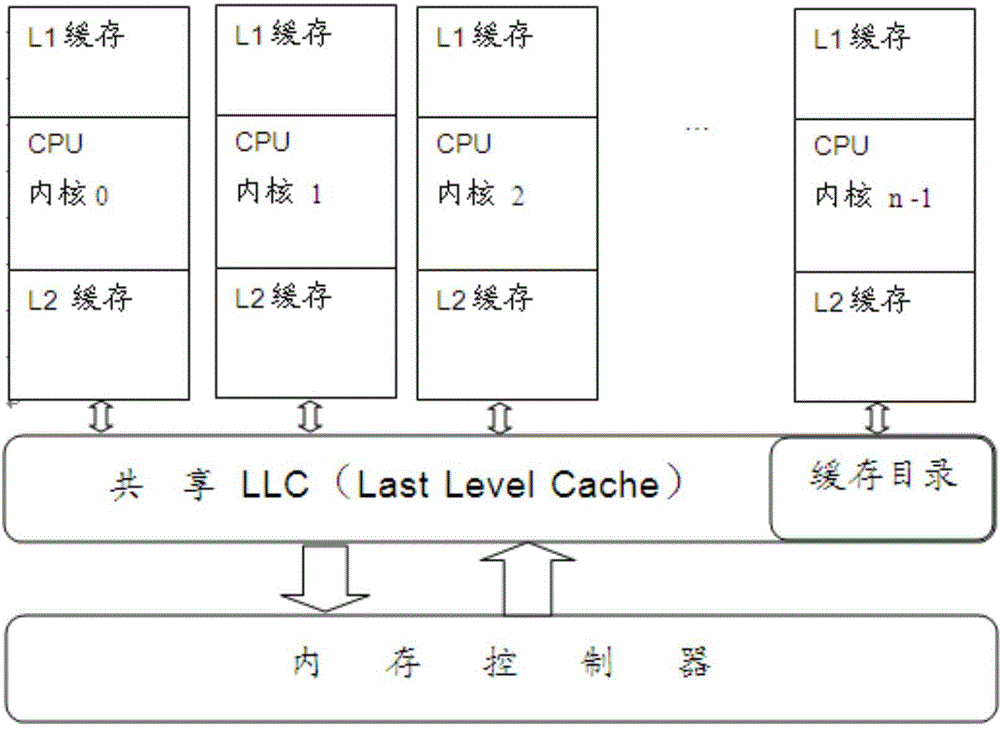

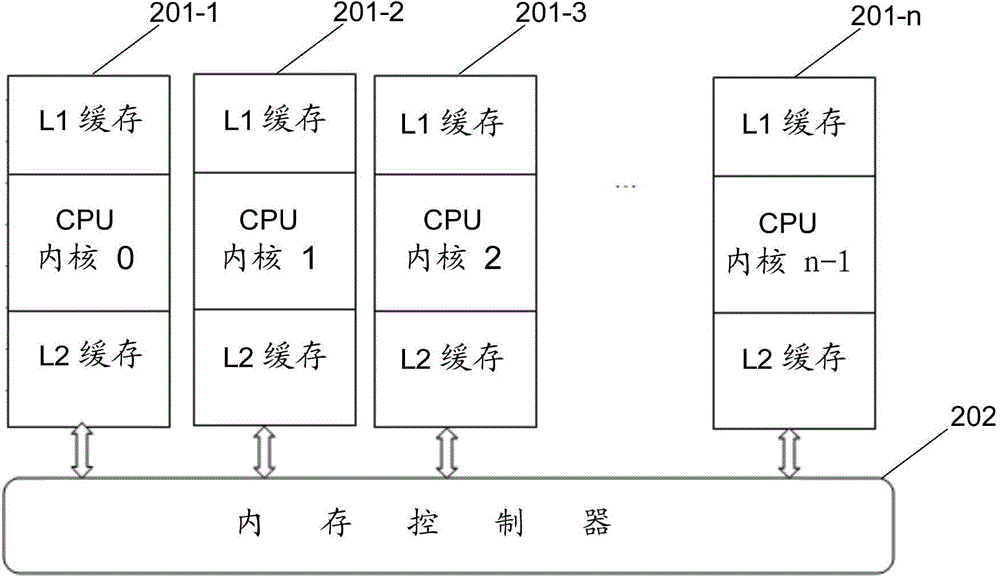

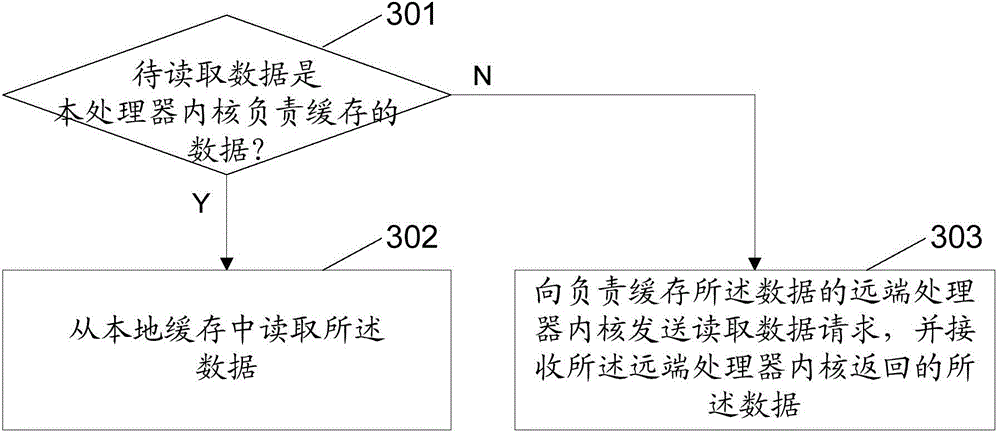

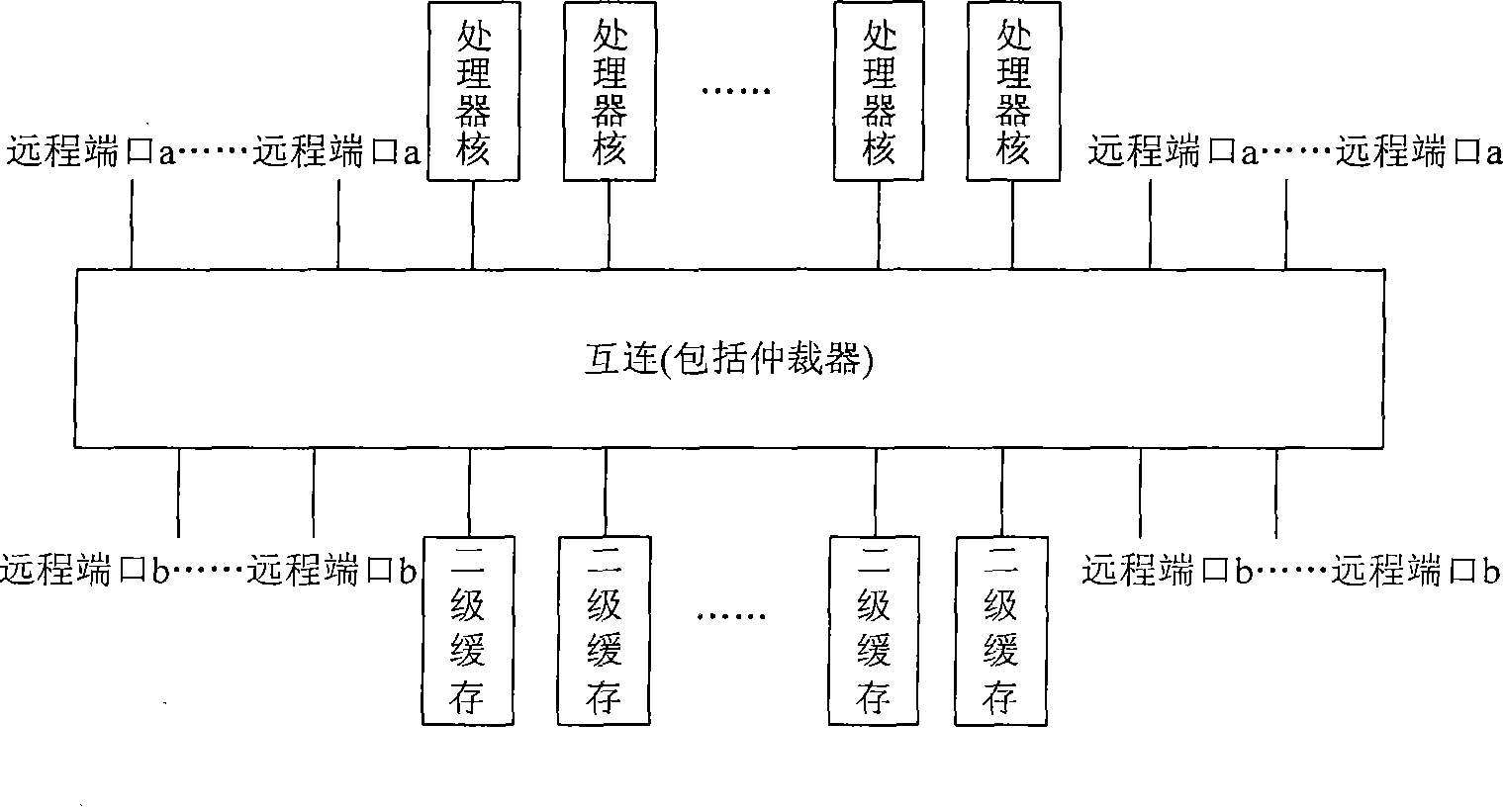

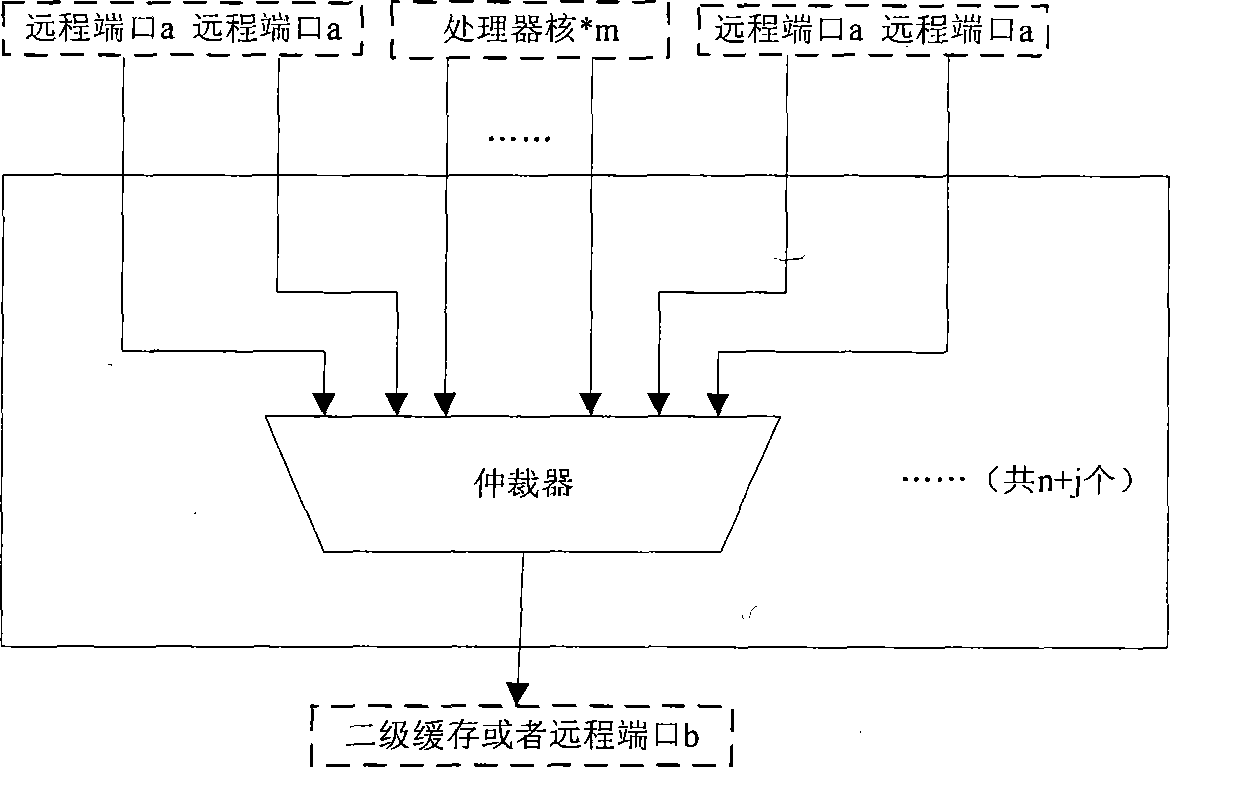

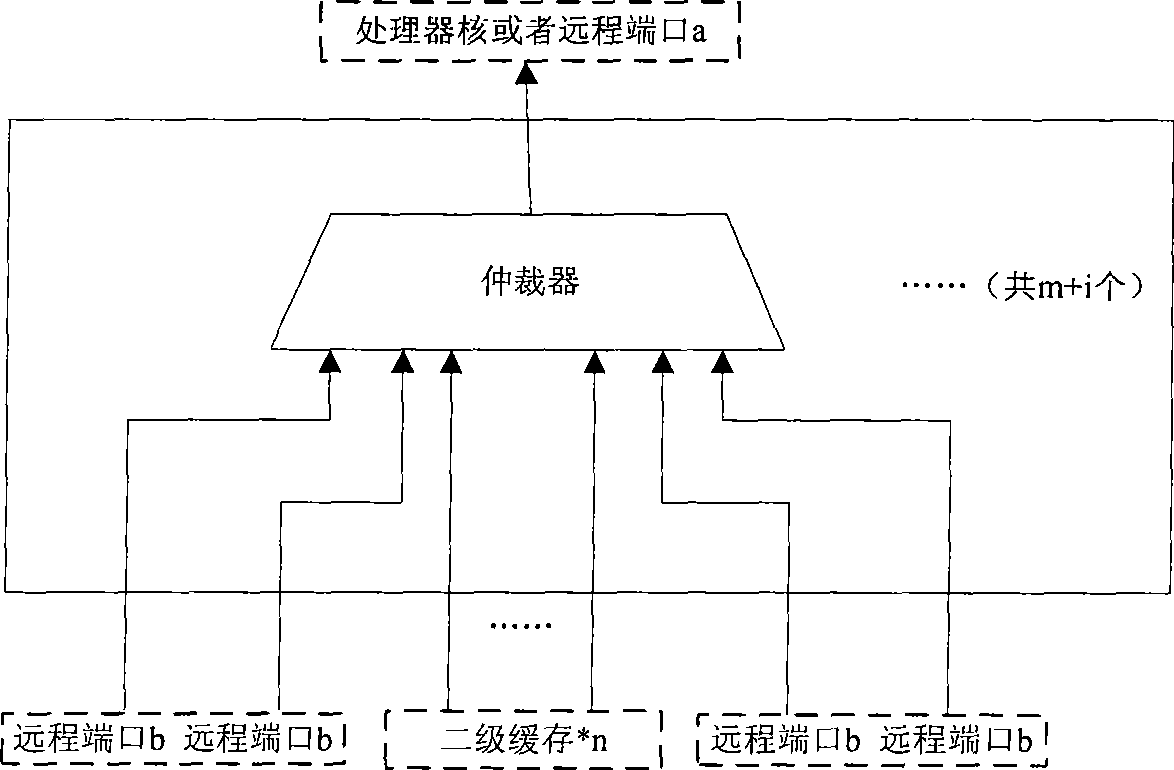

The invention discloses a multi-core processor supporting cache consistency, together with data reading, data writing, and memory allocation methods and apparatuses based on that processor, and a device. The processor comprises a plurality of processor cores, each with a corresponding local cache. The local caches of different cores cache data from memory spaces in different address ranges, and each core accesses data in the local caches of other cores through an interconnection bus. The scheme removes the cache directory and the shared cache from the conventional multi-core architecture: cache consistency is achieved, the private caches are effectively shared, conflicts between private and shared caches are eliminated, and overall processor performance improves.

Owner:ALIBABA GRP HLDG LTD
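
The address-range partitioning described in this abstract can be illustrated with a small model. This is only a sketch under stated assumptions, not the patented design: the class and method names, the 64-byte line size, the 1 MiB range per core, and the interleaved range-to-core mapping are all hypothetical. It shows why no directory is needed: every address has exactly one cache that may hold it.

```python
from dataclasses import dataclass, field

LINE_SIZE = 64        # bytes per cache line (assumed)
RANGE_SIZE = 1 << 20  # bytes of memory assigned to a core at a time (assumed)


@dataclass
class Core:
    core_id: int
    cache: dict = field(default_factory=dict)  # line address -> value


class PartitionedCacheSystem:
    """Hypothetical model: each local cache holds only its own address ranges."""

    def __init__(self, num_cores):
        self.cores = [Core(i) for i in range(num_cores)]
        self.memory = {}          # backing store: line address -> value
        self.remote_accesses = 0  # accesses that crossed the interconnect

    def home_core(self, addr):
        # Exactly one cache may hold a given address, so copies cannot diverge.
        return self.cores[(addr // RANGE_SIZE) % len(self.cores)]

    def _line(self, addr):
        return addr - addr % LINE_SIZE

    def read(self, requester_id, addr):
        home = self.home_core(addr)
        if home.core_id != requester_id:
            self.remote_accesses += 1  # served over the interconnection bus
        line = self._line(addr)
        if line not in home.cache:
            home.cache[line] = self.memory.get(line, 0)  # fill on miss
        return home.cache[line]

    def write(self, requester_id, addr, value):
        home = self.home_core(addr)
        if home.core_id != requester_id:
            self.remote_accesses += 1
        home.cache[self._line(addr)] = value
```

In this model a write by core 0 to address `0x10` followed by a read of the same address from core 2 returns the written value, at the cost of one interconnect access, without any invalidation traffic.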

Mechanism for reordering transactions in computer systems with snoop-based cache consistency protocols

Inactive; US6484240B1; Memory addressing/allocation/relocation; Multiple digital computer combinations; Multi processor; Cache consistency

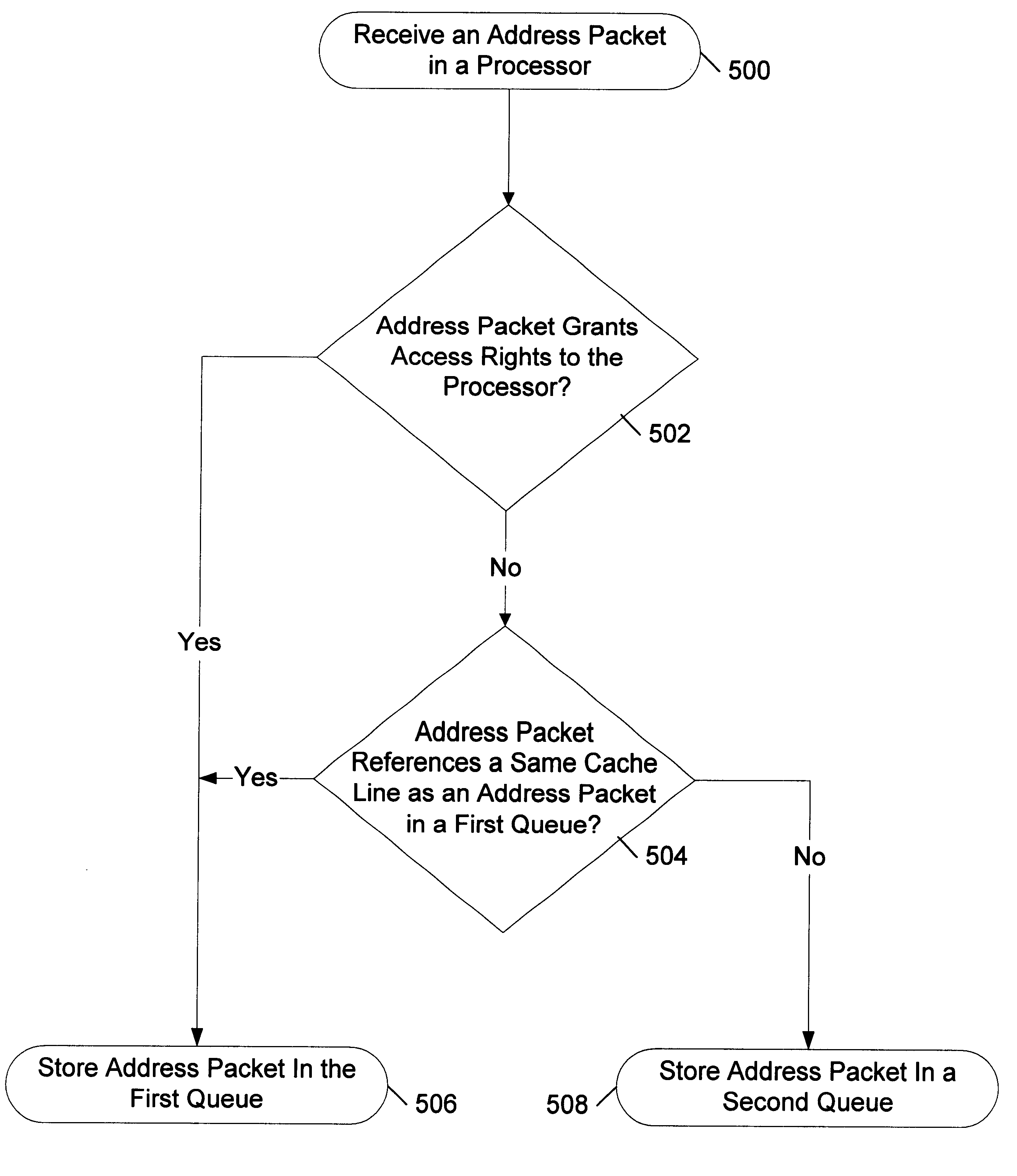

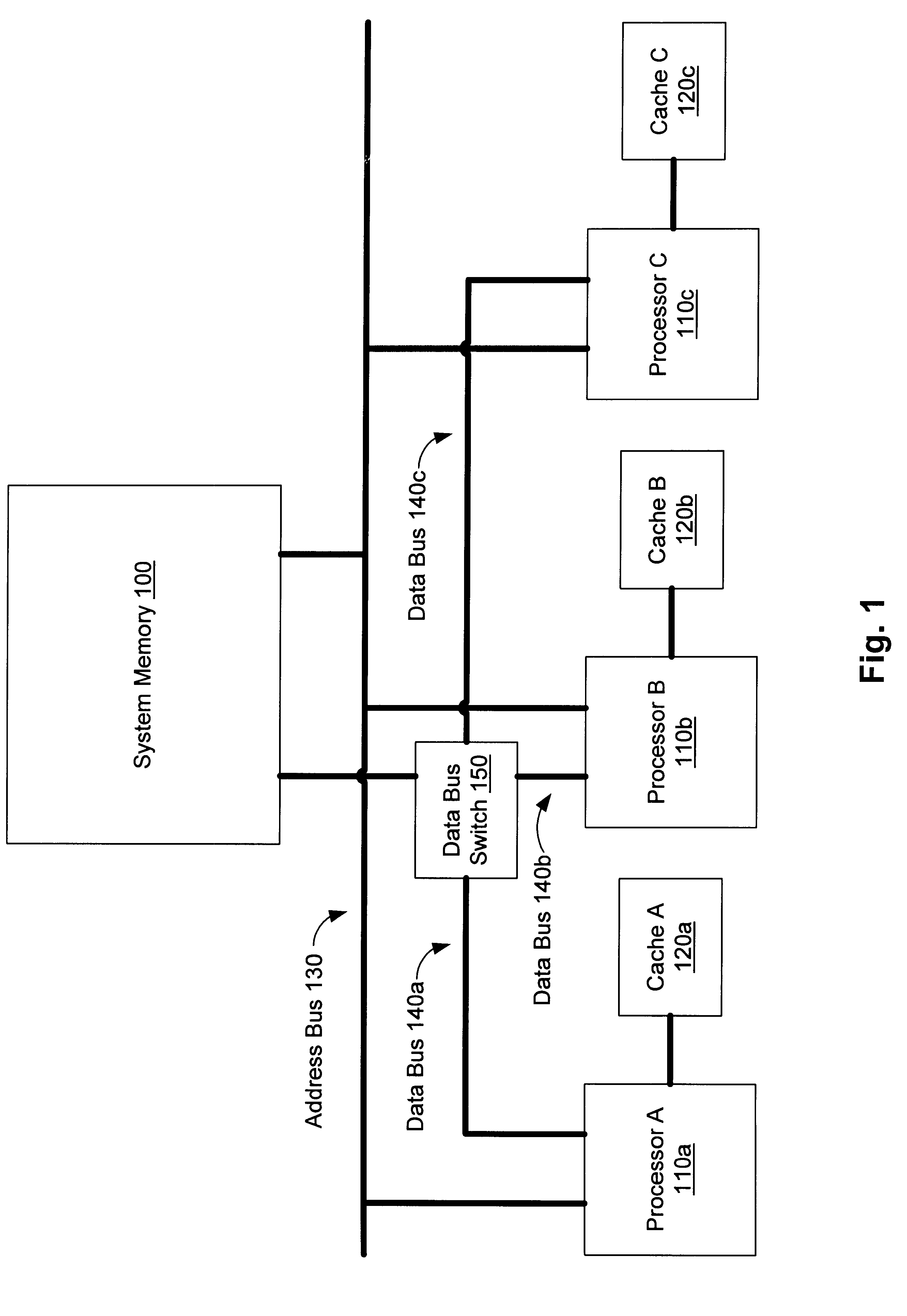

An apparatus and method for expediting the processing of requests in a multiprocessor shared memory system. In a multiprocessor shared memory system, requests can be processed in any order provided two rules are followed. First, no request that grants access rights to a processor can be processed before an older request that revokes access rights from the processor. Second, all requests that reference the same cache line are processed in the order in which they arrive. In this manner, requests can be processed out-of-order to allow cache-to-cache transfers to be accelerated. In particular, foreign requests that require a processor to provide data can be processed by that processor before older local requests that are awaiting data. In addition, newer local requests can be processed before older local requests. As a result, the apparatus and method described herein may advantageously increase performance in multiprocessor shared memory systems by reducing latencies associated with a cache consistency protocol.

Owner:ORACLE INT CORP
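
The two ordering rules in this abstract can be written as a simple eligibility check. The sketch below is an illustration only: the `Request` fields, the `"grant"`/`"revoke"` labels, and the function names are assumptions, and it simplifies by treating every request as either granting or revoking access rights for one processor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    seq: int        # arrival order; lower is older
    processor: int  # processor whose access rights change
    line: int       # cache line address
    effect: str     # "grant" or "revoke" (simplifying assumption)


def can_process(req, pending):
    """Return True if req may be processed ahead of older pending requests."""
    for older in pending:
        if older.seq >= req.seq:
            continue
        # Rule 2: requests to the same cache line keep their arrival order.
        if older.line == req.line:
            return False
        # Rule 1: a grant must not pass an older revoke for the same processor.
        if (req.effect == "grant" and older.effect == "revoke"
                and older.processor == req.processor):
            return False
    return True


def ready_requests(pending):
    """All pending requests that could be processed right now, in any order."""
    return [r for r in pending if can_process(r, pending)]
```

Any request returned by `ready_requests` can be processed immediately, which is what allows a newer request to overtake an older one that is still waiting for data.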

Consistency maintenance device for multi-kernel processor and consistency interaction method

Inactive; CN102346714A; Reduce storage overhead; Improve memory access efficiency; Memory addressing/allocation/relocation; Linear growth; Data access

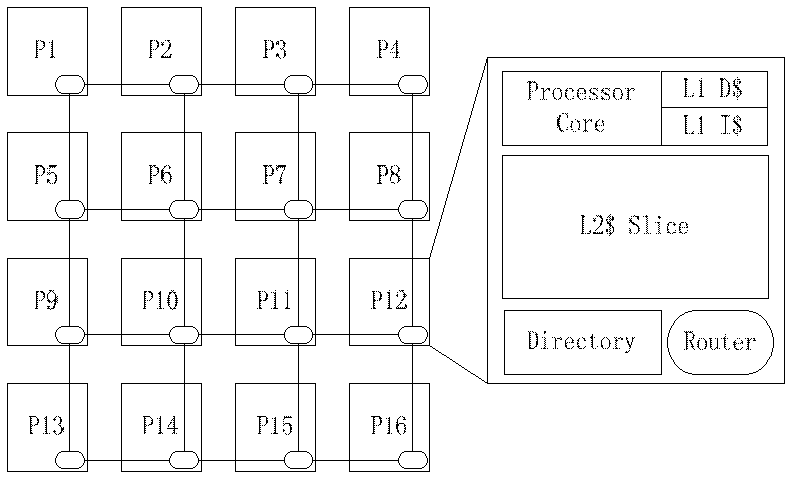

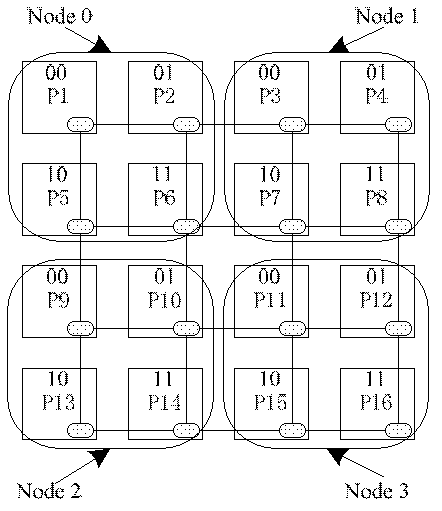

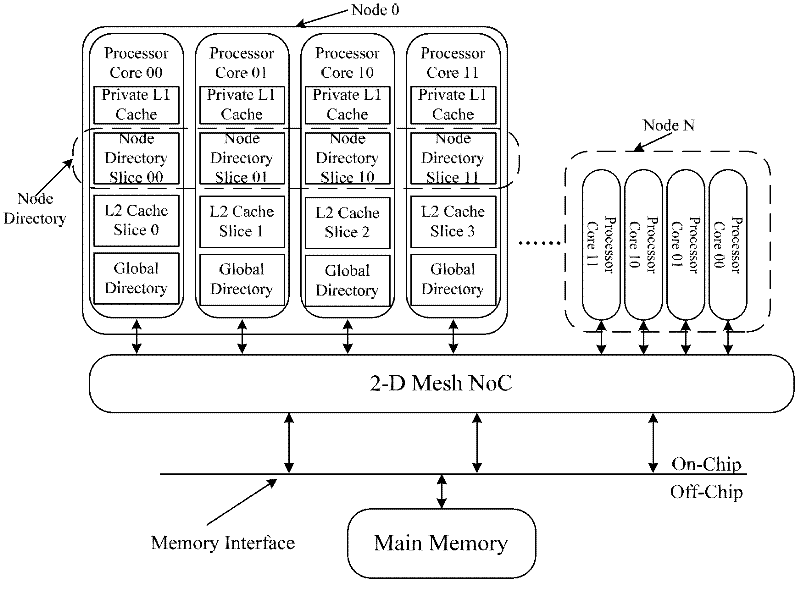

The invention discloses a consistency maintenance device for a multi-kernel processor and a consistency interaction method. It mainly addresses the large directory access delay incurred when the cache consistency protocol of a traditional multi-kernel processor handles read misses and write misses. All kernels of the processor are divided into a plurality of parallel nodes, each comprising several kernels. When a read miss or write miss occurs, a node prediction cache is used to predict, and directly access, the node holding a valid data copy that is closest to the kernel that missed. The directory update is deferred until the data access has finished, so the directory access delay is completely hidden and access efficiency increases. A two-level directory structure changes the growth of directory storage overhead from exponential to linear, which improves scalability. Because prediction is coarse-grained, with the node as the unit, it needs less storage for prediction information than fine-grained prediction with the kernel as the unit.

Owner:XI AN JIAOTONG UNIV

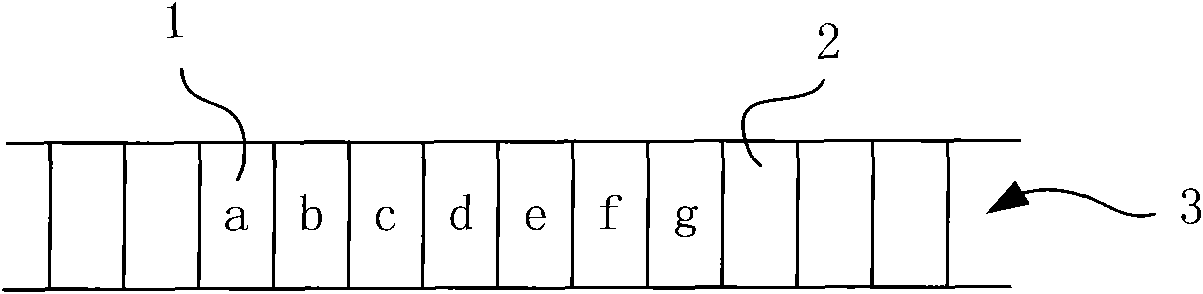

Multiprocessor system and Cache consistency message transmission method

Active; CN101430664A; Realize transmission; Reduce complexity; Memory addressing/allocation/relocation; Multi processor; Cache consistency

The invention provides a multiprocessor system that conforms to the AXI protocol. The system comprises at least two processor cores, each including a first-level cache, and at least two second-level caches; the cores are connected to the second-level caches by a bus. The bus comprises a read address channel, a read data channel, a write address channel, a write data channel, and a write response channel. The lines in each channel are divided into regions according to the content transmitted, and the channels include the regions defined by the AXI protocol. In addition, the write address channel includes an AWDID region identifying the target ID of the write address request and an AWSTATE region carrying the state of the cache block in the first-level cache during a write operation; the write data channel includes a WDID region identifying the target ID of the write data request; the read address channel includes an ARDID region identifying the target ID of the read address request and an ARCMD region representing the read command; and the read data channel includes an RSTATE region representing the read data response.

Owner:LOONGSON TECH CORP

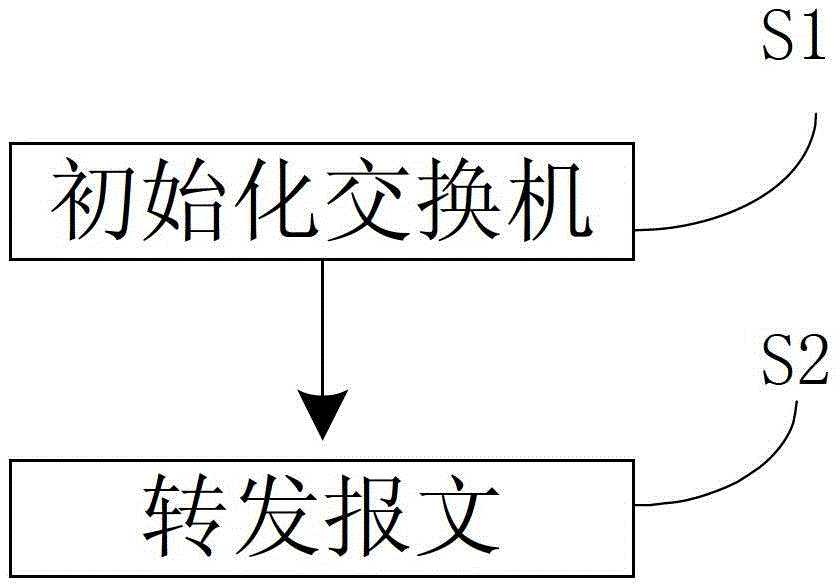

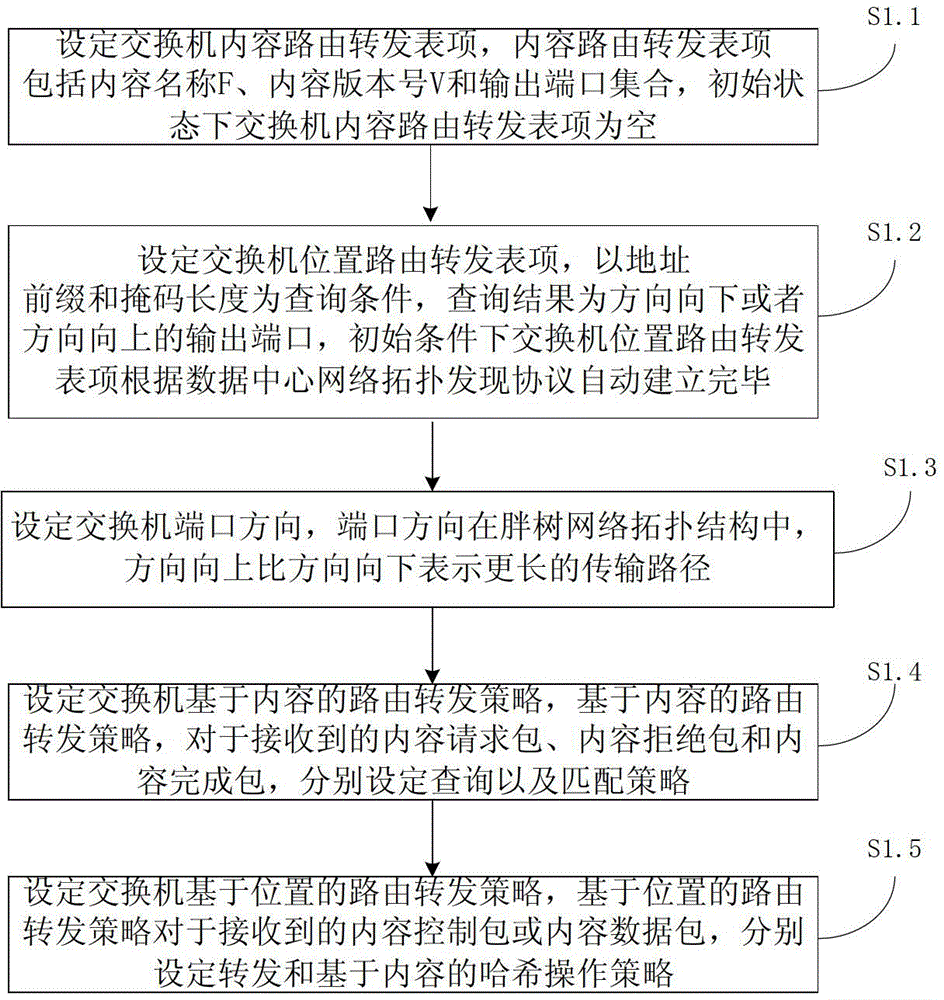

Routing forwarding method for content-based data center network

The invention provides a routing and forwarding method for a content-based data center network, comprising two steps: initializing the switches and forwarding messages. Using a hybrid content-and-position routing strategy, a switch preferentially forwards to a nearby host that has the content cached, without involving a centralized controller. The routing strategy exploits the data center topology so that a switch can judge route length from port direction alone. Cache consistency is maintained by a simple content version number match performed during forwarding. Load balancing is performed during content-based forwarding. During position-based forwarding, a content-based hash is applied to the upward ports, which reduces redundantly stored content forwarding table entries.

Owner:TSINGHUA UNIV

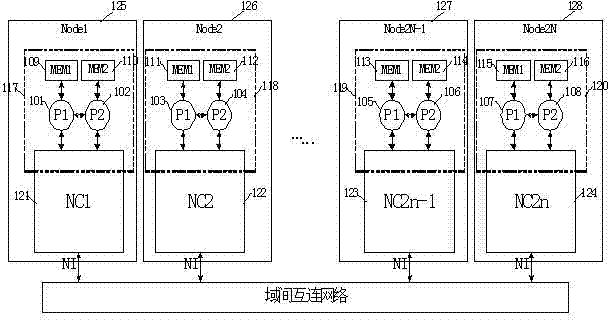

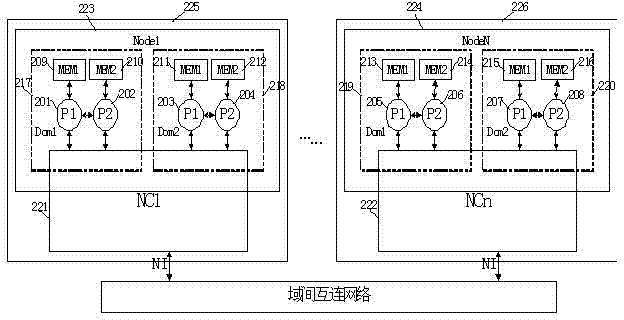

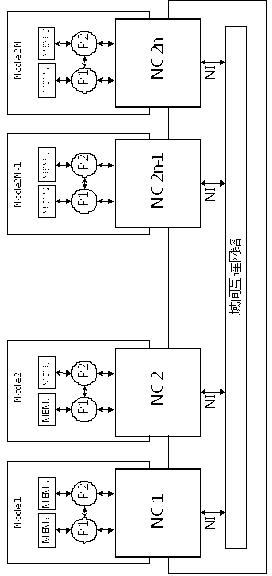

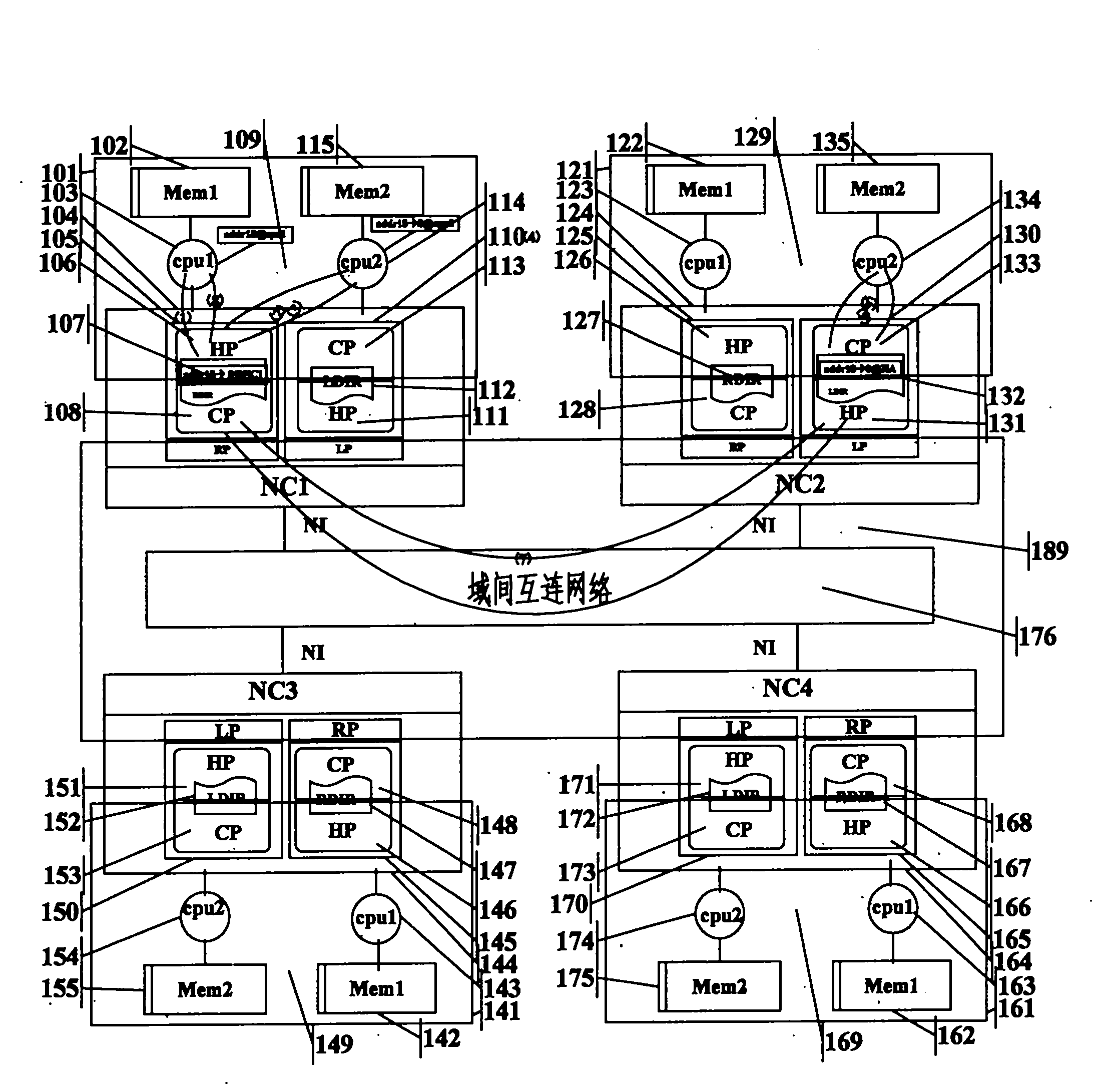

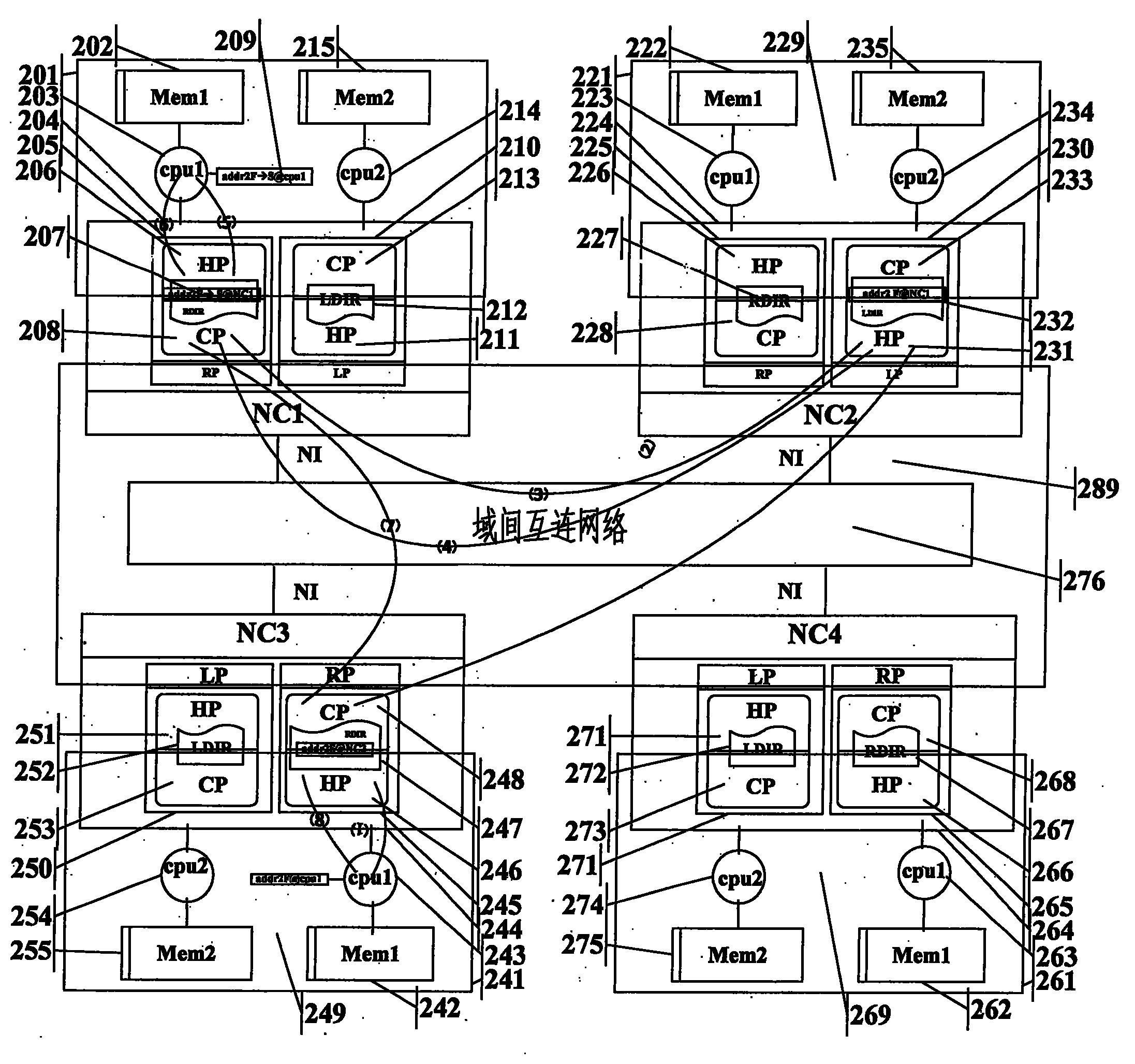

Method for building multi-processor node system with multiple cache consistency domains

Active; CN103049422A; Solve the problem of limited node size; Reduce the number; Memory architecture accessing/allocation; Digital computer details; Multi processor; Computerized system

The invention provides a method for building a multi-processor node system with multiple cache consistency domains. In the system, the directory built into a node controller must include processor domain attribute information, which is obtained by configuring the cache consistency domain attributes of the ports that connect the node controller to the processors. The node controller can thereby support multiple physical cache consistency domains within a node. The method aims to reduce the number of node controllers in the multi-processor computer system, reduce the scale of the node interconnect, reduce its topological complexity, and improve system efficiency. It also addresses the tight limits on the number of processor interconnection ports and on the number of processor IDs supported within a domain, which otherwise create a performance bottleneck when building a large-scale CC-NUMA (cache coherent non-uniform memory access) system.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

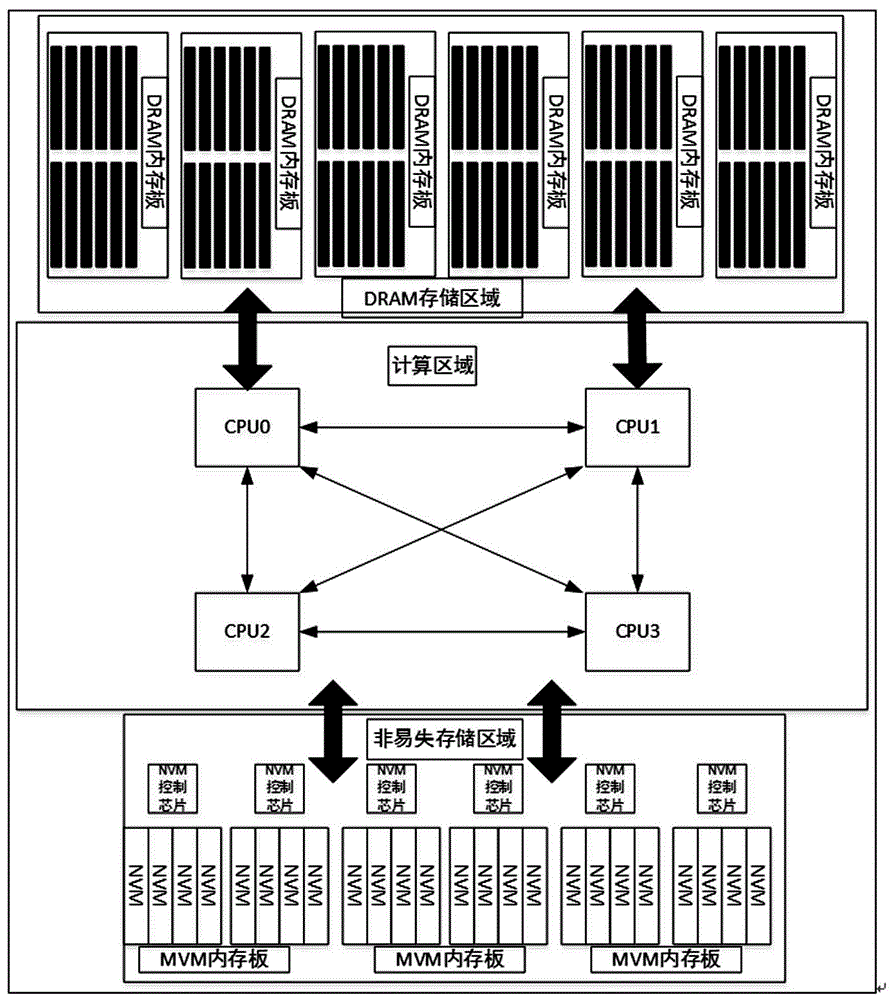

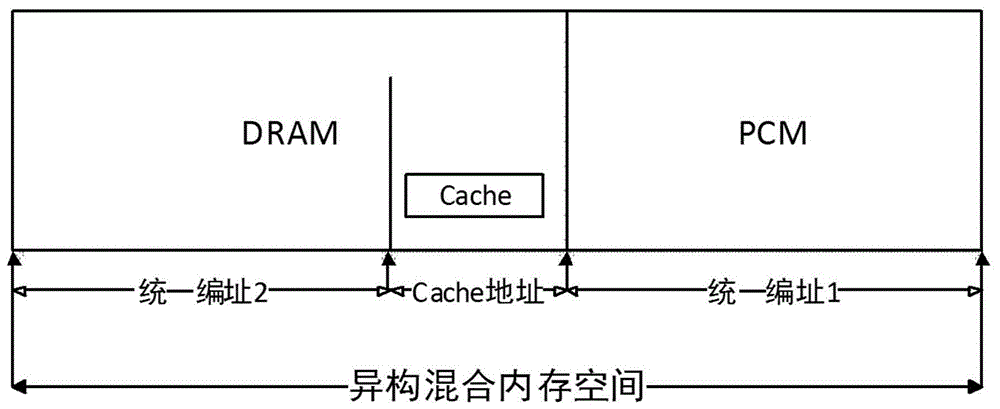

System and method for extending nonvolatile memory based on consistency buses

Inactive; CN106843772A; Improve access performance; Solve technical bottlenecks; Input/output to record carriers; Memory systems; DRAM memory; Parallel computing

The invention discloses a system and method for extending nonvolatile memory based on consistency buses. The system comprises a plurality of nodes connected to one another by a cache-consistent high-speed interconnect bus. Each node has a processor and a hybrid memory made up of DRAM and NVM, with an NVM memory controller between the processor and the NVM and a DRAM memory controller between the processor and the DRAM. The processor connects to both memories over the cache-consistent interconnect bus, and the DRAM and NVM are addressed in a unified way, giving global cache consistency across the heterogeneous hybrid memory system. The method is implemented on this system. Compared with the prior art, the approach relieves existing DRAM bottlenecks, improves NVM access performance, and raises the data processing capability of the whole system; it is described as practical, widely applicable, and easy to adopt.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD

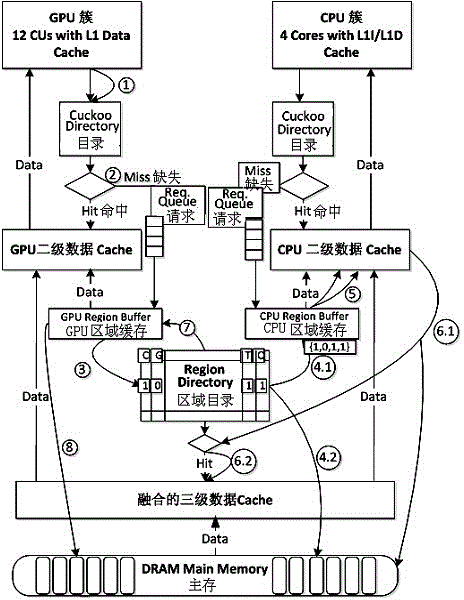

Method for establishing access by fusing multiple levels of cache directories

Inactive; CN103955435A; Reduce failure rate; Reduce bus bandwidth; Memory addressing/allocation/relocation; Energy efficient computing; Failure rate; Three level

The invention relates to a method for establishing access by fusing multiple levels of cache directories, which builds a graded, fused, hierarchical cache directory mechanism. Multiple CPU and GPU processors form a Quart computing element; a Cuckoo directory is established level by level in the caches built into the CPU or GPU processors; and an area directory with an area directory controller is established outside the Quart computing element. This reduces bus communication bandwidth and the frequency of arbitration conflicts, and it allows the data block directory of the three-level fused cache to be cached, which improves that cache's hit rate. The resulting hierarchical directory mechanism inside and outside the Quart lowers the cache miss rate, the on-chip bus bandwidth, and the system power consumption. No new cache block state needs to be added, so the mechanism remains compatible with the cache consistency protocol, and it offers a new approach to building a scalable, high-performance heterogeneous single-chip multi-core processor system.

Owner:UNIV OF SHANGHAI FOR SCI & TECH

Method and device for processing single-producer/single-consumer queue in multi-core system

Inactive; CN101853149A; Improve efficiency; Reduce overhead; Memory addressing/allocation/relocation; Concurrent instruction execution; Cache consistency; Multicore systems

The invention relates to a method and a device for processing a single-producer/single-consumer queue in a multi-core system. A private buffer and a timer are provided at least on the producer side. When the producer produces new data, it writes the data to the private buffer; if and only if the private buffer is full or the timer expires, the producer writes all data in the private buffer to the queue in a single operation. The invention also comprises an enqueuing device, a dequeuing device, and a timing device. One end of the queue is accessed by one processing entity, which writes data to the queue, and the other end is accessed by another processing entity, which reads data from it. This eliminates false-sharing cache misses and substantially reduces the overhead of the cache consistency protocol in a multi-core processor system.

Owner:张力
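
The producer-side batching rule in this abstract (flush only when the private buffer is full or the timer expires) can be sketched as follows. The class name, the default capacity and timeout, and the polling-style timer are assumptions made for illustration. Python cannot show the cache-line effects themselves; the sketch only models when the shared queue is touched.

```python
import time
from collections import deque


class BatchingProducer:
    """Hypothetical producer that batches writes to a shared SPSC queue."""

    def __init__(self, shared_queue, capacity=8, timeout_s=0.01,
                 clock=time.monotonic):
        self.shared_queue = shared_queue
        self.capacity = capacity
        self.timeout_s = timeout_s
        self.clock = clock        # injectable so the timer can be tested
        self.private_buffer = []  # touched only by the producer
        self.deadline = None

    def produce(self, item):
        if not self.private_buffer:
            # Start the timer when the first item of a batch arrives.
            self.deadline = self.clock() + self.timeout_s
        self.private_buffer.append(item)
        if len(self.private_buffer) >= self.capacity:
            self.flush()

    def poll_timer(self):
        # Called periodically; flushes a partial batch once the timer expires.
        if self.private_buffer and self.clock() >= self.deadline:
            self.flush()

    def flush(self):
        # One bulk write to the shared queue instead of one write per item.
        self.shared_queue.extend(self.private_buffer)
        self.private_buffer.clear()
        self.deadline = None
```

Because the shared queue is written once per batch rather than once per item, the producer and consumer contend for the queue's cache lines far less often, which is the effect the abstract attributes to the private buffer.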

Method for synchronously caching by multiple clients in distributed file system

Active; CN102541983A; Ensure consistency; Reduce development costs; Transmission; Special data processing applications; Distributed File System; Cache consistency

The invention provides a method for synchronizing caches among multiple clients in a distributed file system. The metadata server acts as the control node for client cache information and records each client's cache state according to the index nodes (inodes) it maintains. Metadata is divided into read-only cache and writable cache according to the client cache attribute. For read-only cached metadata, when a client reads the metadata for the first time the metadata server grants it a read-only or writable cache permission, and the client retains that permission after the operation finishes. For writable cached metadata, the client's modifications are held locally and written back when a write-back trigger condition is met. The method strictly guarantees cache consistency among clients and, compared with the distributed lock methods of Lustre and the General Parallel File System (GPFS), has a relatively low development cost.

Owner:WUXI CITY CLOUD COMPUTING CENT

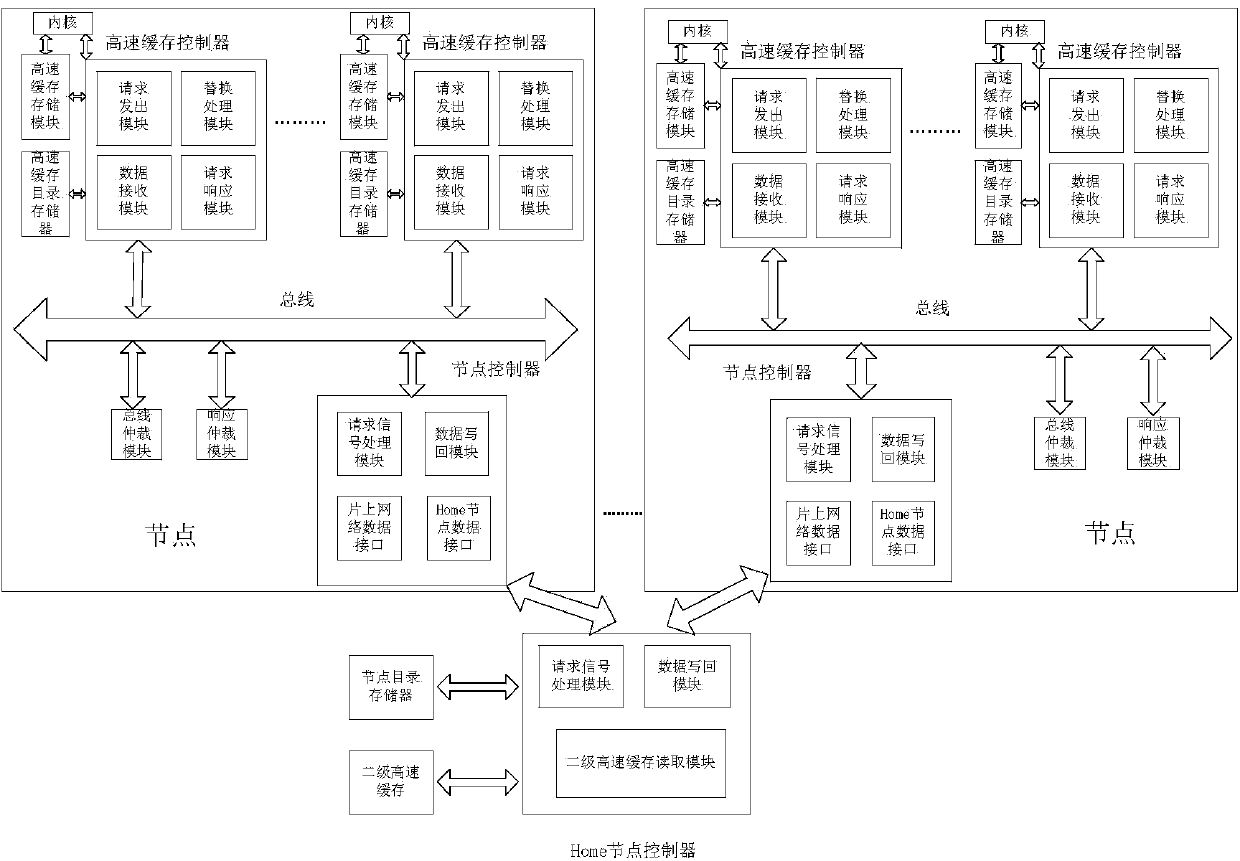

Layering system for achieving caching consistency protocol and method thereof

Inactive; CN103440223A; Easy to adapt; Solve the problem of taking up too much storage space; Memory addressing/allocation/relocation; Digital computer details; Extensibility; The Internet

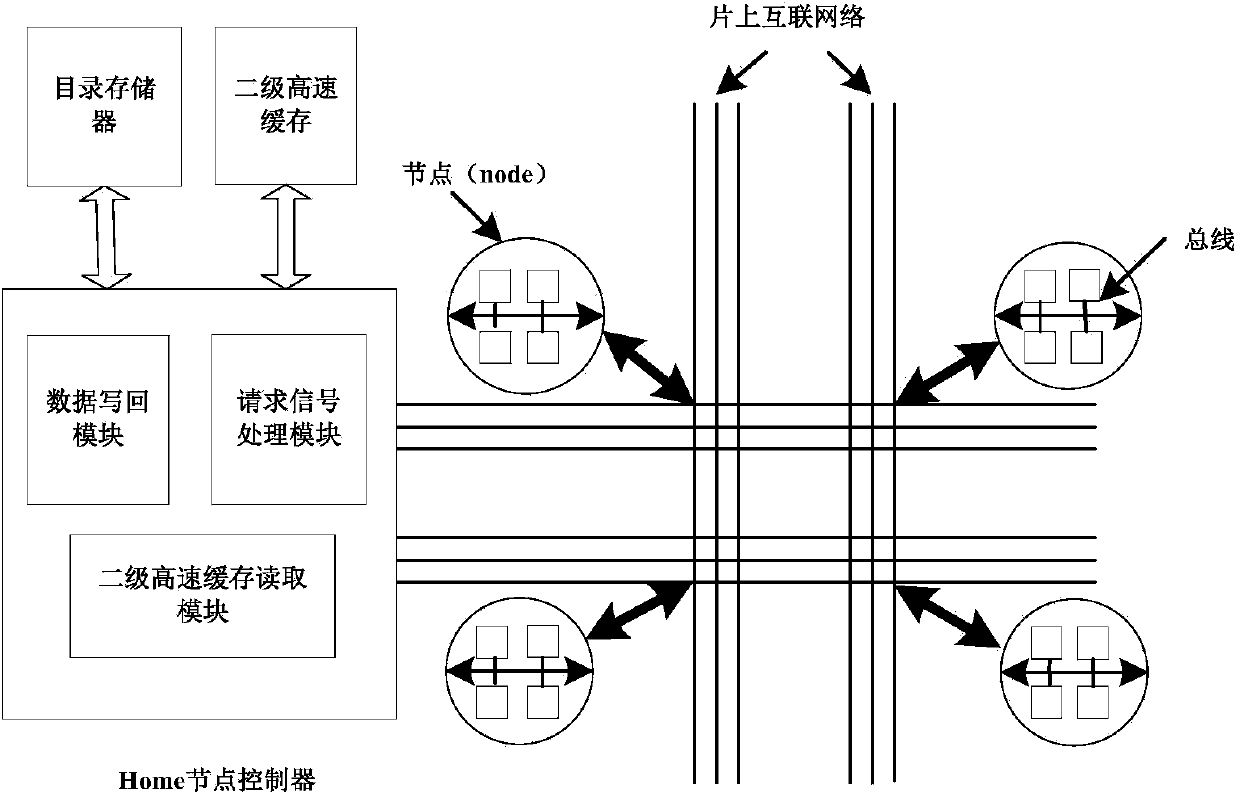

The invention discloses a layered system for implementing a cache consistency protocol and a method thereof. The first layer uses a bus snooping protocol, which suits its shared-bus architecture; the second layer uses a directory-based cache consistency protocol, which suits its network-on-chip (NoC) interconnect architecture. The node controller of each node relays the consistency maintenance signals of the two protocols between the two architectures, so the protocols can communicate with each other and the resulting hybrid protocol maintains cache consistency across the whole system. The stated advantages are high performance, good real-time behavior, good scalability, and low design complexity. The approach relieves the bus bandwidth problem of the shared-bus architecture and the excessive directory storage of a directory-based protocol, so the consistency protocol adapts better to larger-scale multi-core processors.

Owner:XIDIAN UNIV

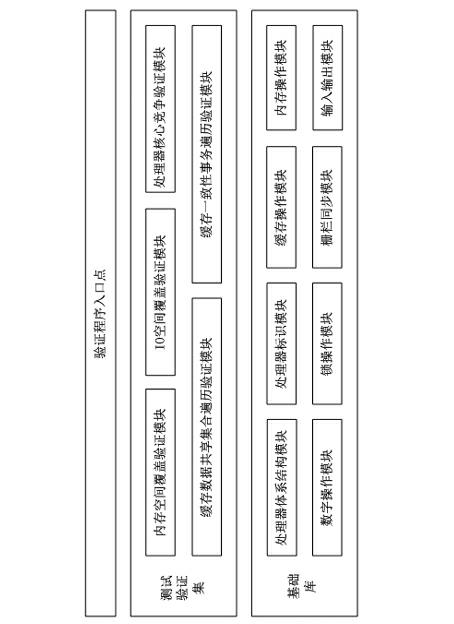

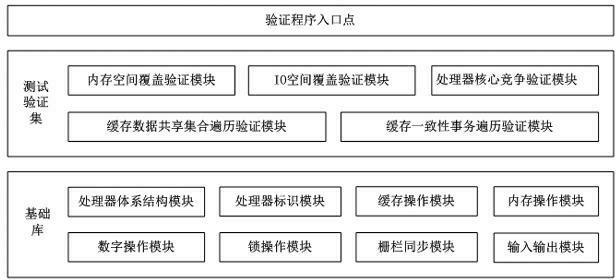

Correctness verifying method of cache consistency protocol

Active; CN102681937A; Make up for efficiency; Make up for poor verification coverage; Digital computer details; Multiprogramming arrangements; Operational system; Term memory

The invention provides a method for verifying the correctness of a cache consistency protocol. Once a computer has booted into an operating system, the complexity of the kernel and applications makes processor behavior hard to control precisely. To keep verification accurate, the verification program for the cache consistency protocol is therefore embedded in the system boot flow, in the BIOS (basic input/output system) code. It starts executing early in system initialization, after the memory subsystem has been initialized. The verification program must be able to control precisely the actions of each processor in the system, let the user select which verification items to run, and report the results to the user. The method verifies the protocol's correctness at the system level and can cover all application scenarios of a real system, making up for the low efficiency and poor coverage of conventional simulation-based verification. It can shorten the design and verification cycles of a processor inter-domain cache consistency chip and help ensure first-pass tape-out success; the method is accordingly described as having broad prospects and high technical value.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

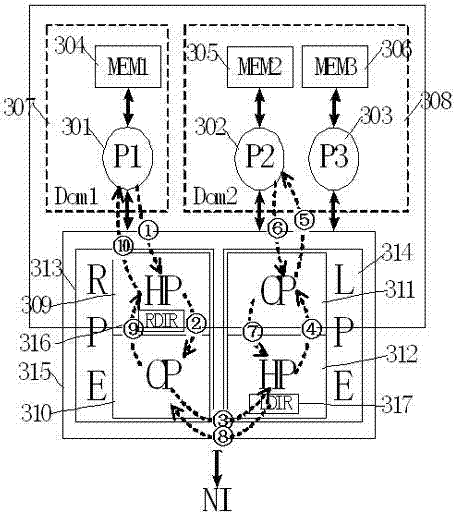

Method for constructing Share-F state in local domain of multi-level cache consistency domain system

Active; CN103294612A; Reduce frequency; Reduce overhead; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Cache consistency

The invention discloses a method for constructing a Share-F (Forwarding) state in a local domain of a multi-level cache consistency domain system. The method comprises: 1) when a request accesses remote data at the same address that is in the S (Shared) state, looking up the remote agent directory RDIR to determine which data copy to access, and judging whether that copy is in the inter-node S state and the intra-node F state; 2) forwarding the data copy directly to the requester and recording the requester's copy as S in the inter-node cache consistency domain and F in the intra-node cache consistency domain; and 3) after the data has been forwarded, setting, in the remote data directory RDIR, the record of the intra-node processor that lost the F permission to S in the inter-node cache consistency domain and F in the intra-node cache consistency domain. The method reduces the frequency and overhead of cross-node accesses and thereby greatly improves the performance of a CC-NUMA system with two or more levels of cache consistency domains.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

Explicit multi-core Cache consistency active management method facing flow application

Active; CN103279428A; Shorten the time; Accurate calculation; Memory addressing/allocation/relocation; Digital computer details; Data operations; Parallel computing

Disclosed is an explicit, actively managed multi-core cache consistency method for streaming applications. The private data cache is given an optional global state descriptor and shared data function bits that identify the cache's read-write state with respect to shared data. The global state descriptor identifies the current operating state of the whole private data cache with respect to shared data. It is configured as Y groups, according to how many regions the cache must be able to lock simultaneously; each group stores the characteristic information of one locked region, which can be a shared address range or lock flag information. The shared data function bits form a two-dimensional register array of width N and depth M. N distinguishes the N different locked shared data regions to which a cache line or block may correspond, and M equals the number of lines or blocks in the private data cache, so that each entry indicates whether the corresponding line or block holds shared data that needs to be read or written. The stated advantages include a simple principle, convenient operation, low hardware cost, good scalability, strong configurability, and improved system efficiency.

Owner:NAT UNIV OF DEFENSE TECH

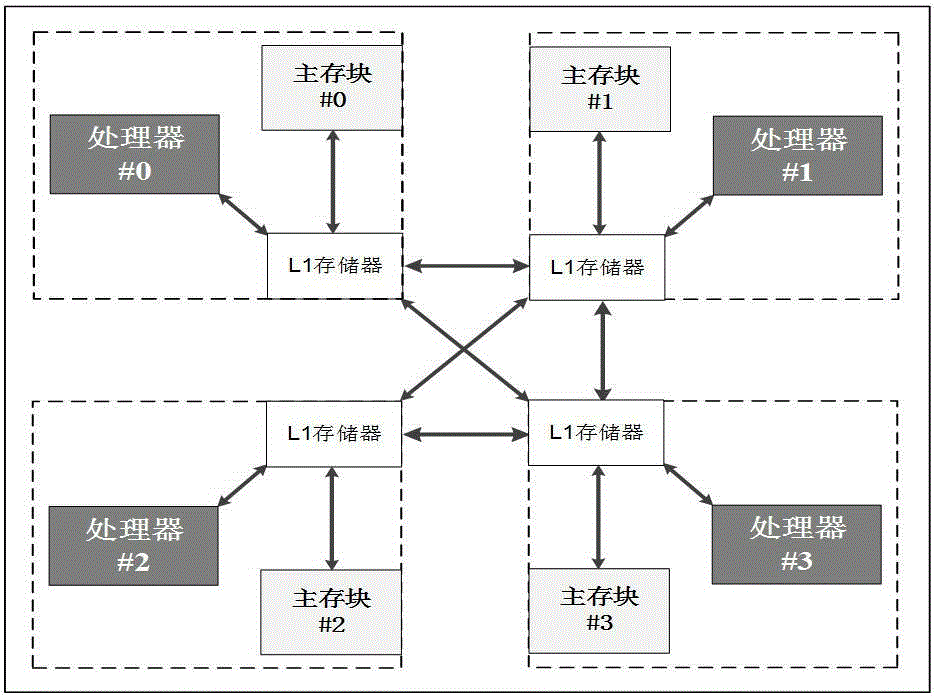

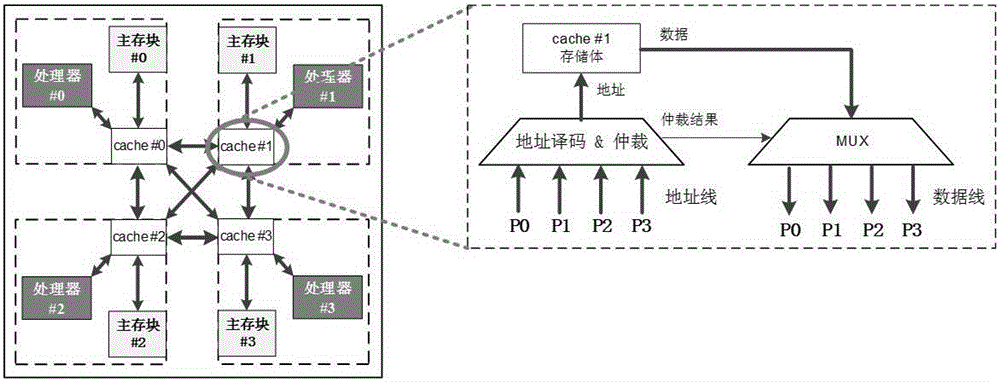

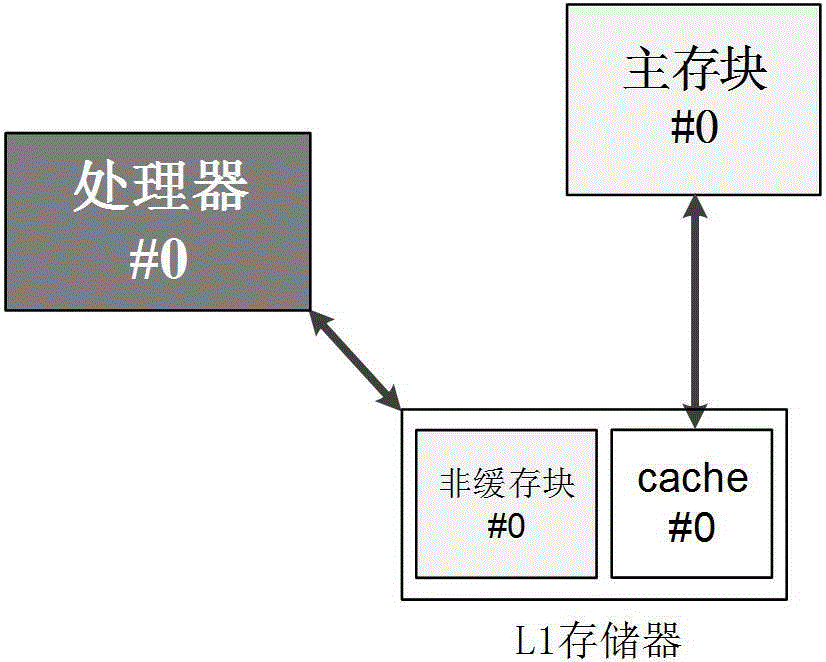

Cache consistency protocol-free distributed sharing on-chip storage framework

Active; CN105183662A; Reduce miss rate; Applicable scalability; Memory addressing/allocation/relocation; Energy efficient computing; Memory address; Direct memory access

The invention belongs to the technical field of processors and relates to a distributed shared on-chip storage architecture that needs no cache consistency protocol. Based on a cluster structure, each cache maps only its local main memory space, and a processor reads and writes the other shared main memory blocks in the cluster by accessing the caches of other cores. Caches of different cores therefore never map overlapping main memory address spaces, which removes the cache consistency problem of a multi-core processor. The L1 storage of each local core is divided into a non-cache memory and a cache, which reduces the cache miss rate and avoids the corresponding complex logic circuits and power consumption. Direct memory access (DMA) is also supported: in streaming applications, DMA moves large data blocks between clusters directly in main memory, which makes the architecture suitable for large-scale expansion.

Owner:FUDAN UNIV

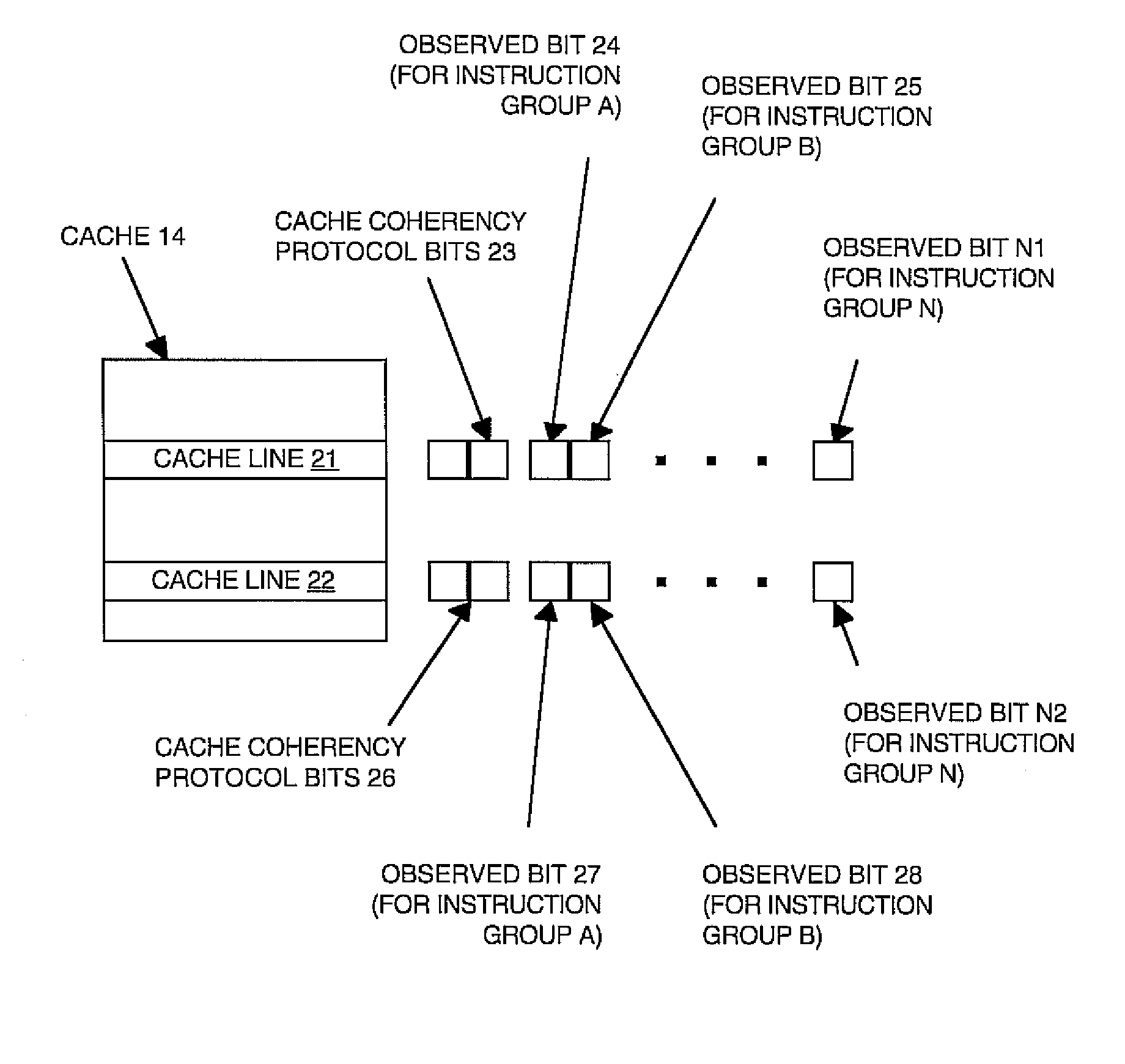

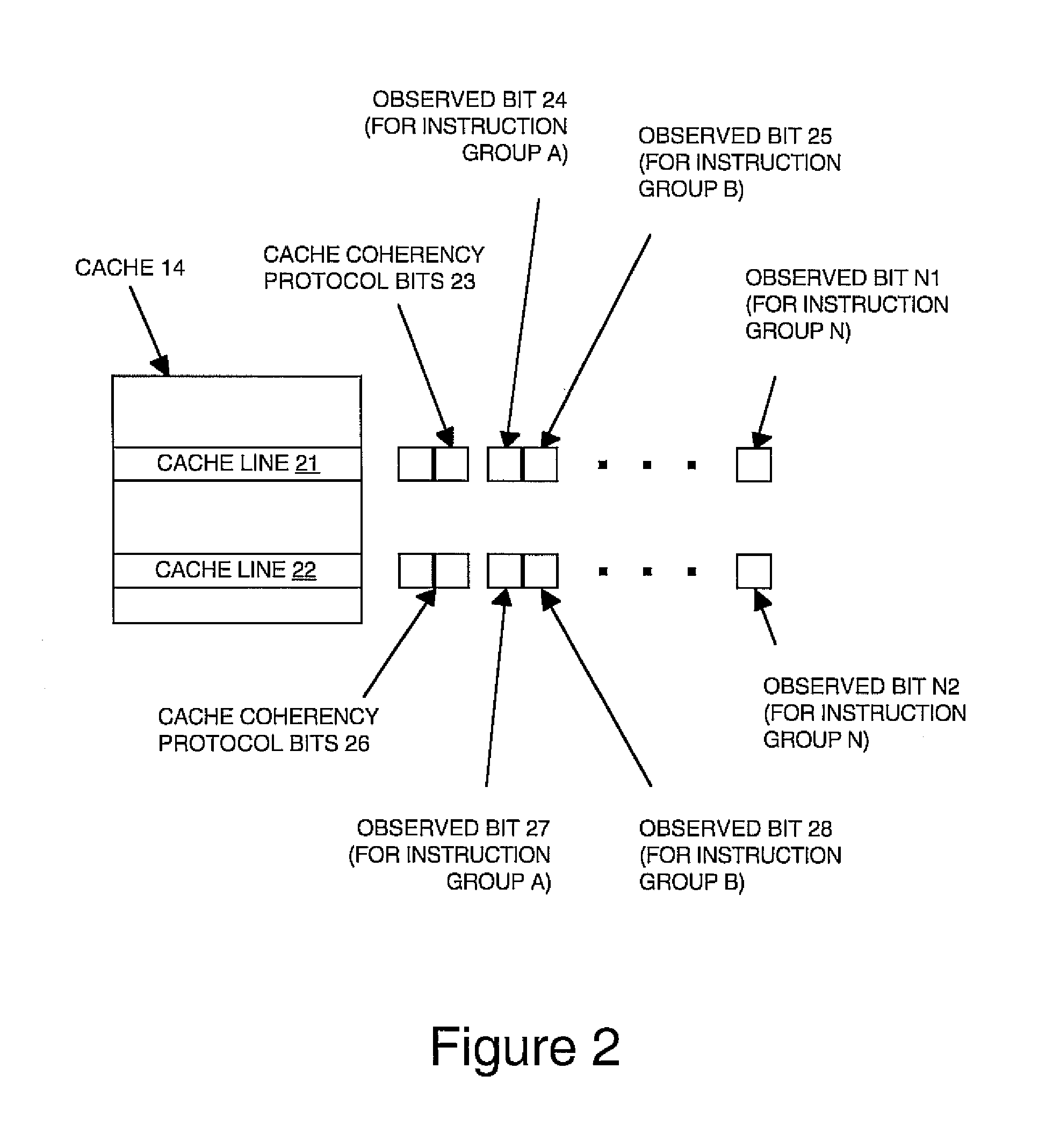

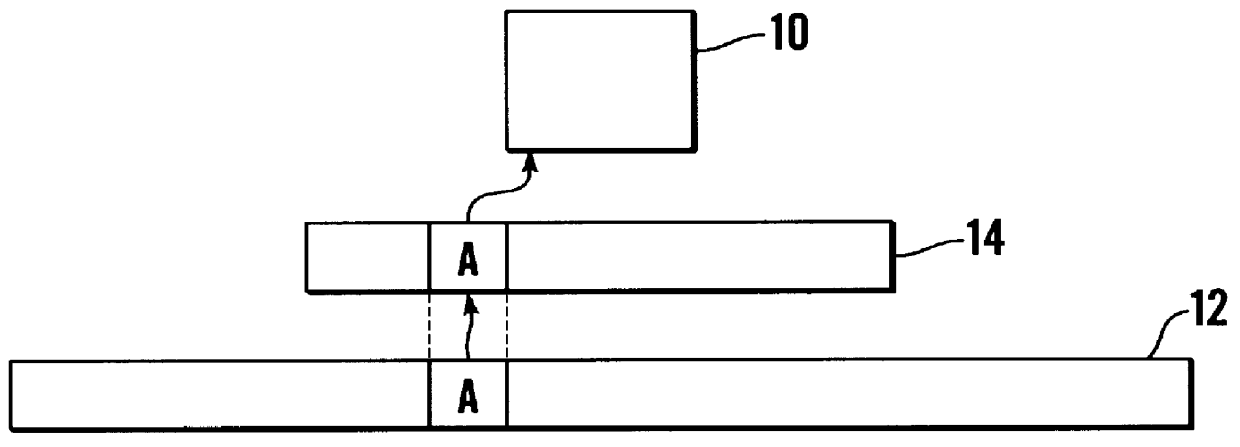

Memory management methods and systems that support cache consistency

Methods and systems for maintaining cache consistency are described. A group of instructions is executed. The group of instructions can include multiple memory operations, and also includes an instruction that when executed causes a cache line to be accessed. In response to execution of that instruction, an indicator associated with the group of instructions is updated to indicate that the cache line has been accessed. The cache line is indicated as having been accessed until execution of the group of instructions is ended.

Owner:INTELLECTUAL VENTURES HOLDING 81 LLC

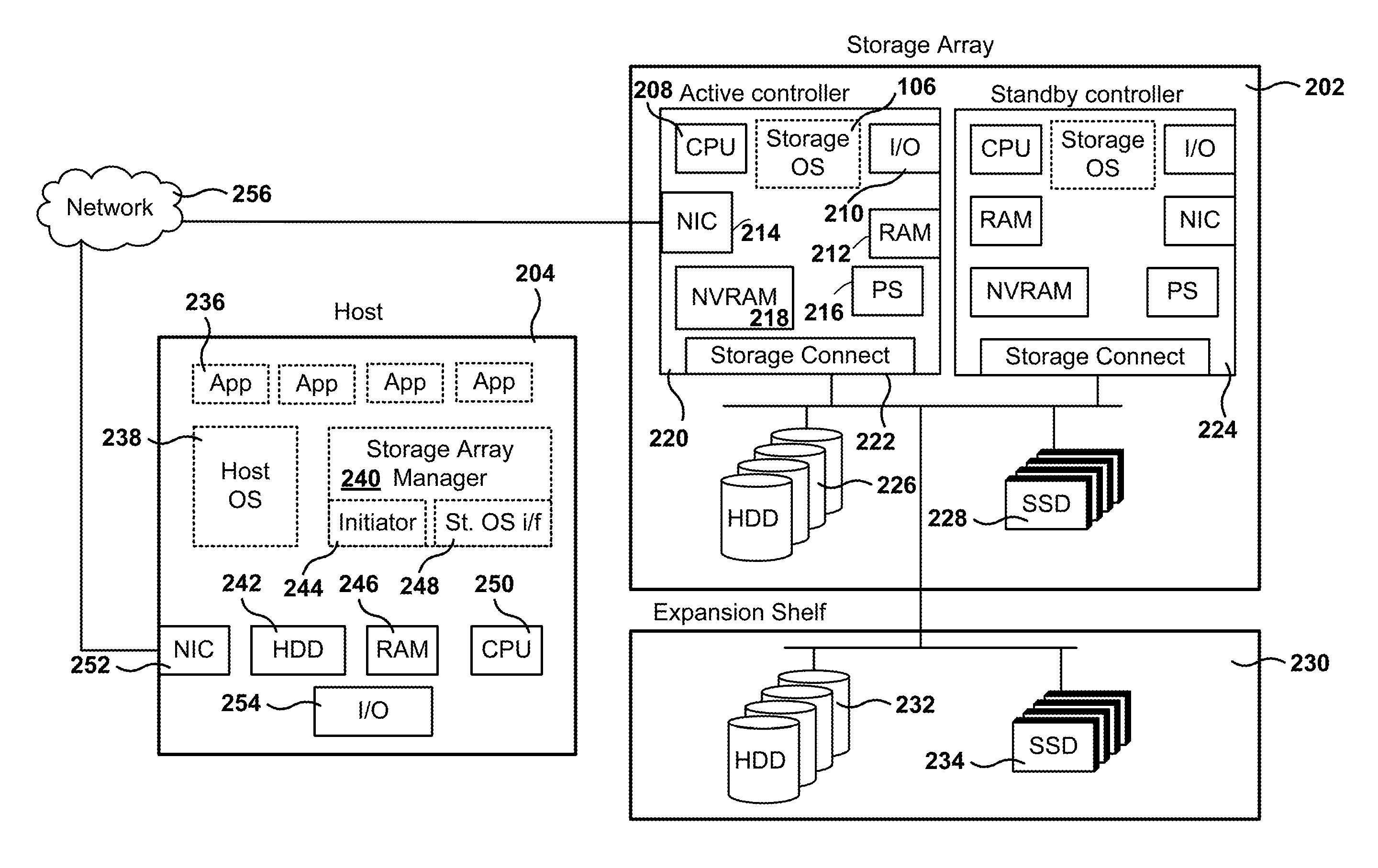

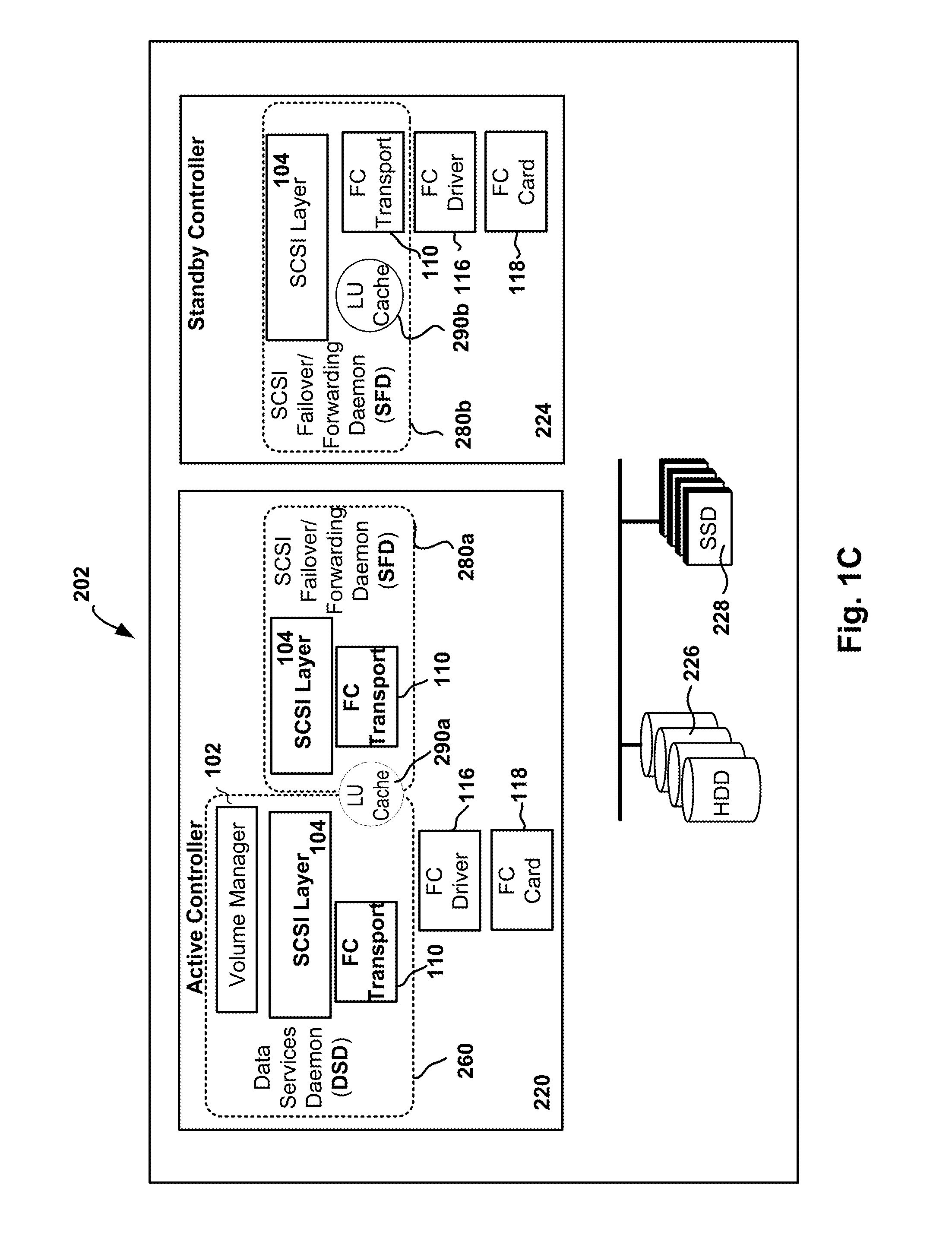

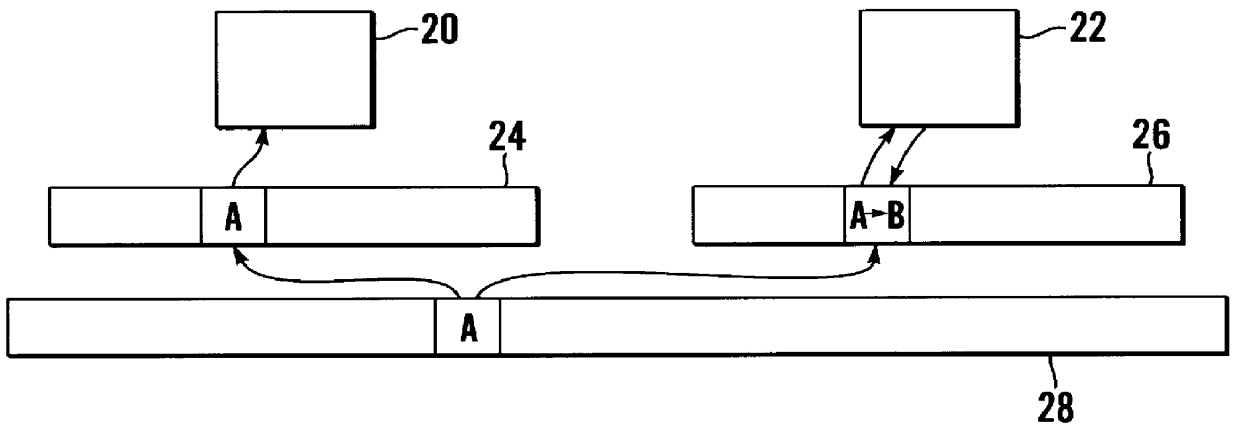

Fibre Channel Storage Array Methods for Handling Cache-Consistency Among Controllers of an Array and Consistency Among Arrays of a Pool

Inactive; US20160077752A1; Maintain consistency; Input/output to record carriers; Redundant operation error correction; Parallel computing; Cache consistency

Storage arrays, systems, and methods for operating storage arrays are provided for maintaining consistency in configuration data between processes running on an active controller and a standby controller of the storage array. One example method includes executing a primary process in user space of the active controller. The primary process is configured to process request commands from one or more initiators, and it has access to a volume manager for serving data input/output (I/O) requests and non-I/O requests. The primary process has primary access to the configuration data and includes a first logical unit (LU) cache for storing the configuration data. The method also includes executing a secondary process in user space of the standby controller. The secondary process is configured to process request commands from one or more of the initiators, but it does not have access to the volume manager. The secondary process has a second LU cache for storing the configuration data, which it uses for responding to non-I/O requests. The method includes receiving, at the primary process, an update to the configuration data and sending the update from the primary process to the secondary process to update the second LU cache. When the primary process receives an acknowledgement from the secondary process that the update was received, the update is committed to the first LU cache of the active controller.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
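
The commit-after-acknowledgement ordering at the end of this abstract can be sketched in a few lines. The class and method names are hypothetical, and the direct method call stands in for whatever inter-controller transport the real system uses.

```python
class SecondaryProcess:
    """Hypothetical standby-controller process with its own LU cache."""

    def __init__(self):
        self.lu_cache = {}

    def apply_update(self, key, value):
        self.lu_cache[key] = value
        return True  # acknowledgement sent back to the primary


class PrimaryProcess:
    """Hypothetical active-controller process; commits only after an ack."""

    def __init__(self, secondary):
        self.lu_cache = {}
        self.secondary = secondary

    def update_config(self, key, value):
        # Send the update to the standby first.
        acked = self.secondary.apply_update(key, value)
        # Commit locally only once the standby confirms receipt, so the
        # primary cache never holds an update the standby has not received.
        if acked:
            self.lu_cache[key] = value
        return acked
```

If the acknowledgement never arrives, the primary's cache keeps the old value, so the active controller does not run ahead of the standby.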

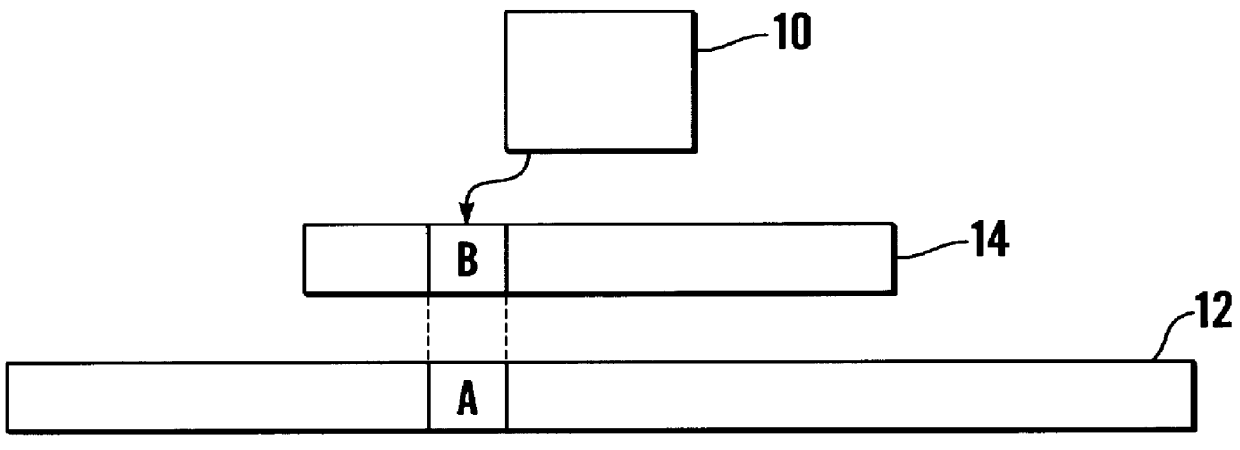

Microprocessor cache consistency

Inactive; US6138216A; Improve performance; Memory addressing/allocation/relocation; Digital computer details; Parallel computing; Cache consistency

A method is described of managing memory in a microprocessor system comprising two or more processors (40, 42). Each processor (40, 42) has a cache memory (44, 46) and the system has a system memory (48) divided into pages subdivided into blocks. The method is concerned with managing the system memory (48) identifying areas thereof as being "cacheable", "non-cacheable" or "free". Safeguards are provided to ensure that blocks of system memory (48) cannot be cached by two different processors (40, 42) simultaneously.

Owner:THALES HLDG UK
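
One way to picture the safeguard in this abstract is a table that records, for each block, whether it is free, cacheable, or non-cacheable, and which single processor owns a cacheable block. The abstract does not describe the actual mechanism, so the `BlockTable` class, its methods, and the single-owner rule below are assumptions for illustration.

```python
class BlockTable:
    """Hypothetical table tracking each block as free, cacheable, or non-cacheable."""

    def __init__(self, num_blocks):
        self.state = ["free"] * num_blocks
        self.owner = [None] * num_blocks  # owning processor of a cacheable block

    def allocate(self, block, processor, cacheable):
        if self.state[block] != "free":
            raise ValueError(f"block {block} is already allocated")
        self.state[block] = "cacheable" if cacheable else "non-cacheable"
        self.owner[block] = processor if cacheable else None

    def may_cache(self, block, processor):
        # Safeguard: only the single recorded owner may cache the block.
        return self.state[block] == "cacheable" and self.owner[block] == processor

    def release(self, block):
        self.state[block] = "free"
        self.owner[block] = None
```

A block allocated as cacheable to one processor is refused to every other processor until it is released, so two caches can never hold the same block at once.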

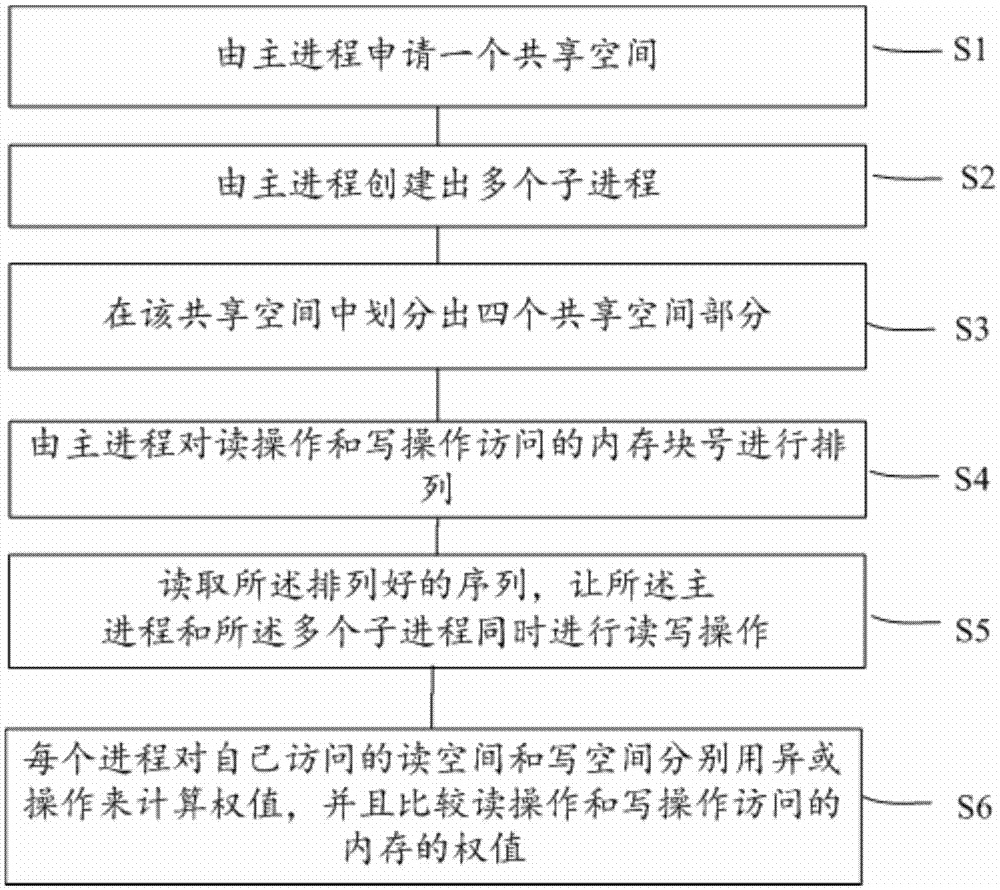

Cache consistency test method

Active; CN105446841A; Reduce design cost; Guarantee the success rate of one shot; Detecting faulty hardware by remote test; Memory address; Exclusive or

The present invention provides a cache consistency test method comprising the following steps: a master process requests a shared space; the master process creates a plurality of sub-processes; the shared space is divided into four parts; the master process arranges the numbers of the memory blocks accessed by read and write operations so that the memory addresses accessed by reads and writes do not overlap, and the sub-processes enter a synchronization interface; once the sub-processes have synchronized successfully, they read the arranged sequence so that the master process and the sub-processes perform read and write operations simultaneously; and after all read and write operations finish, each process uses an exclusive OR operation to compute the weight of the read space and of the write space it accessed, and compares the two weights.

Owner:JIANGNAN INST OF COMPUTING TECH
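
The final comparison step of this test method can be sketched with an XOR fold over the blocks each worker read and wrote. This is a loose illustration under several assumptions: threads stand in for the sub-processes, a `bytearray` stands in for the shared space, each worker simply copies its read blocks into its write blocks, and the block size and function names are invented. It exercises the test logic only, not real hardware coherence.

```python
import threading
from functools import reduce
from operator import xor

BLOCK = 64  # bytes per memory block (assumed)


def xor_weight(buf, blocks):
    """XOR-fold every byte of the given blocks into a single value."""
    data = (byte for b in blocks for byte in buf[b * BLOCK:(b + 1) * BLOCK])
    return reduce(xor, data, 0)


def worker(shared, read_blocks, write_blocks, barrier, results, idx):
    barrier.wait()  # all workers start their accesses together
    for r, w in zip(read_blocks, write_blocks):
        shared[w * BLOCK:(w + 1) * BLOCK] = shared[r * BLOCK:(r + 1) * BLOCK]
    # The weights match only if every written block equals what was read.
    results[idx] = (xor_weight(shared, read_blocks)
                    == xor_weight(shared, write_blocks))


def run_test(num_workers=4, blocks_per_worker=2):
    total_blocks = num_workers * blocks_per_worker * 2
    shared = bytearray((i * 31 + 7) % 256 for i in range(total_blocks * BLOCK))
    barrier = threading.Barrier(num_workers)
    results = [False] * num_workers
    threads = []
    for i in range(num_workers):
        # Read and write block numbers are disjoint, within and across workers.
        base = i * blocks_per_worker * 2
        reads = list(range(base, base + blocks_per_worker))
        writes = list(range(base + blocks_per_worker,
                            base + 2 * blocks_per_worker))
        t = threading.Thread(target=worker,
                             args=(shared, reads, writes, barrier, results, i))
        threads.append(t)
        t.start()
    for t in threads:
        t.join()
    return results
```

On a correct system every entry returned by `run_test()` is `True`; a mismatch for any worker would indicate that data it wrote was not what it read back.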

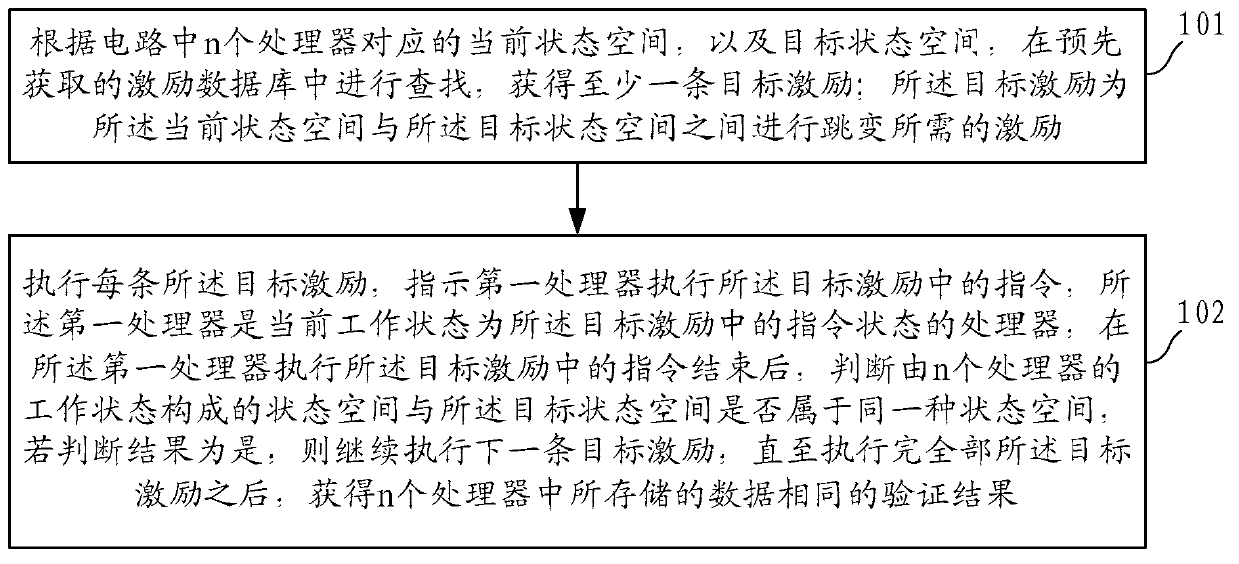

Data verification method, device and system

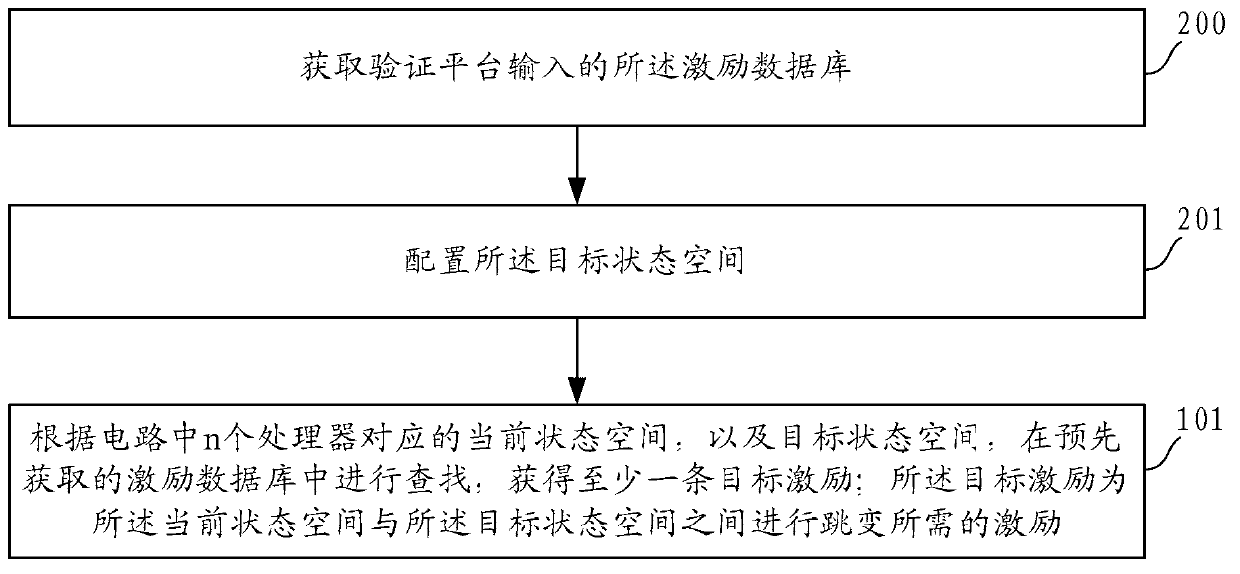

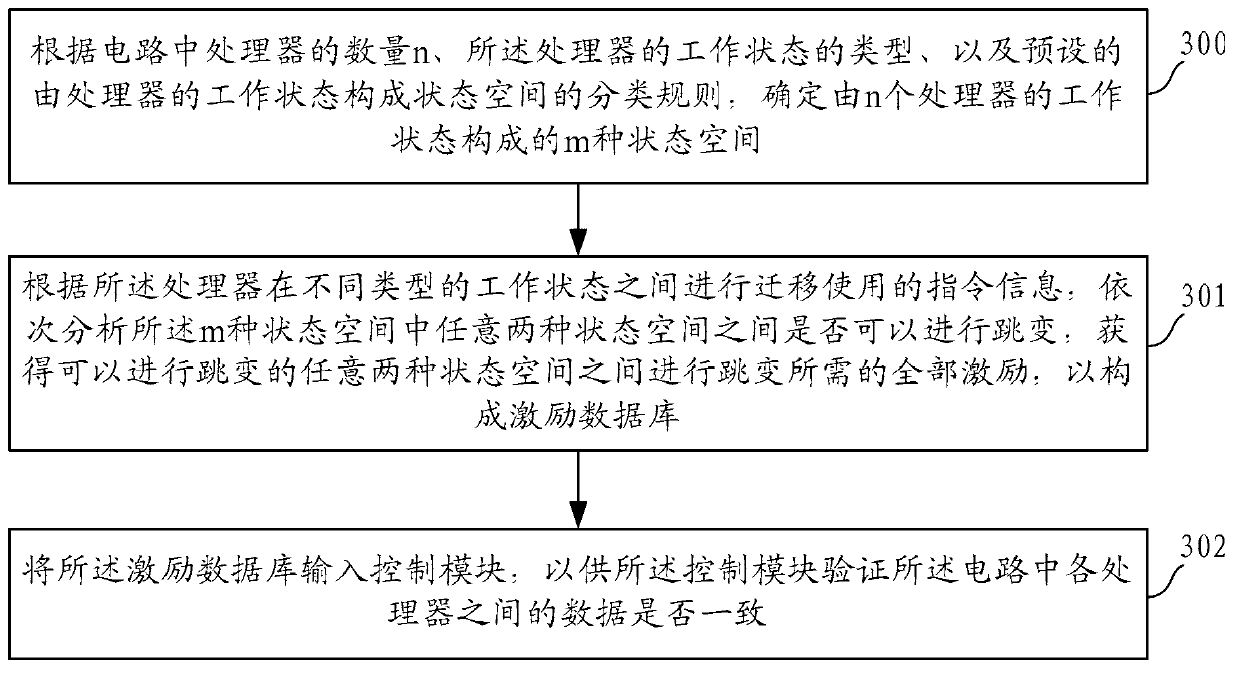

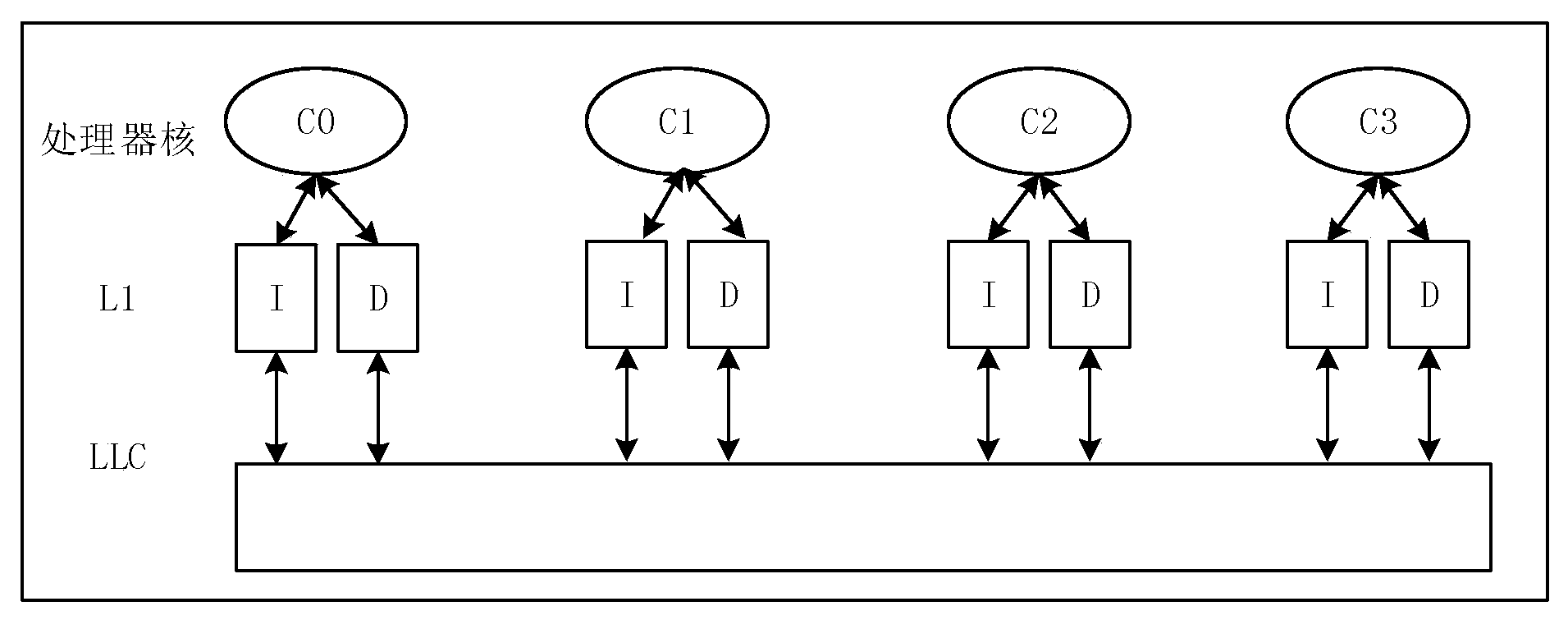

Active; CN102789483A; Reduce data processing; Improve the efficiency of verifying Cache consistency; Special data processing applications; Source Data Verification; Parallel computing

The invention discloses a data verification method, device, and system. The method comprises the following steps. According to the current state space of the n processors in a circuit and a target state space, a pre-acquired excitation (stimulus) database is searched to obtain at least one target excitation, each being an excitation required to move from the current state space to the target state space. Each target excitation is then executed: a first processor is instructed to execute the command in the excitation, and after it finishes, the method judges whether the state space formed by the working states of the n processors belongs to the same type of state space as the target state space. If so, the next target excitation is executed, and so on until all target excitations have been executed, at which point a verification result is obtained indicating that the data stored in the n processors is the same. This effectively improves the efficiency of verifying cache consistency among the CPUs (central processing units) in the circuit.

Owner:泰州市海通资产管理有限公司

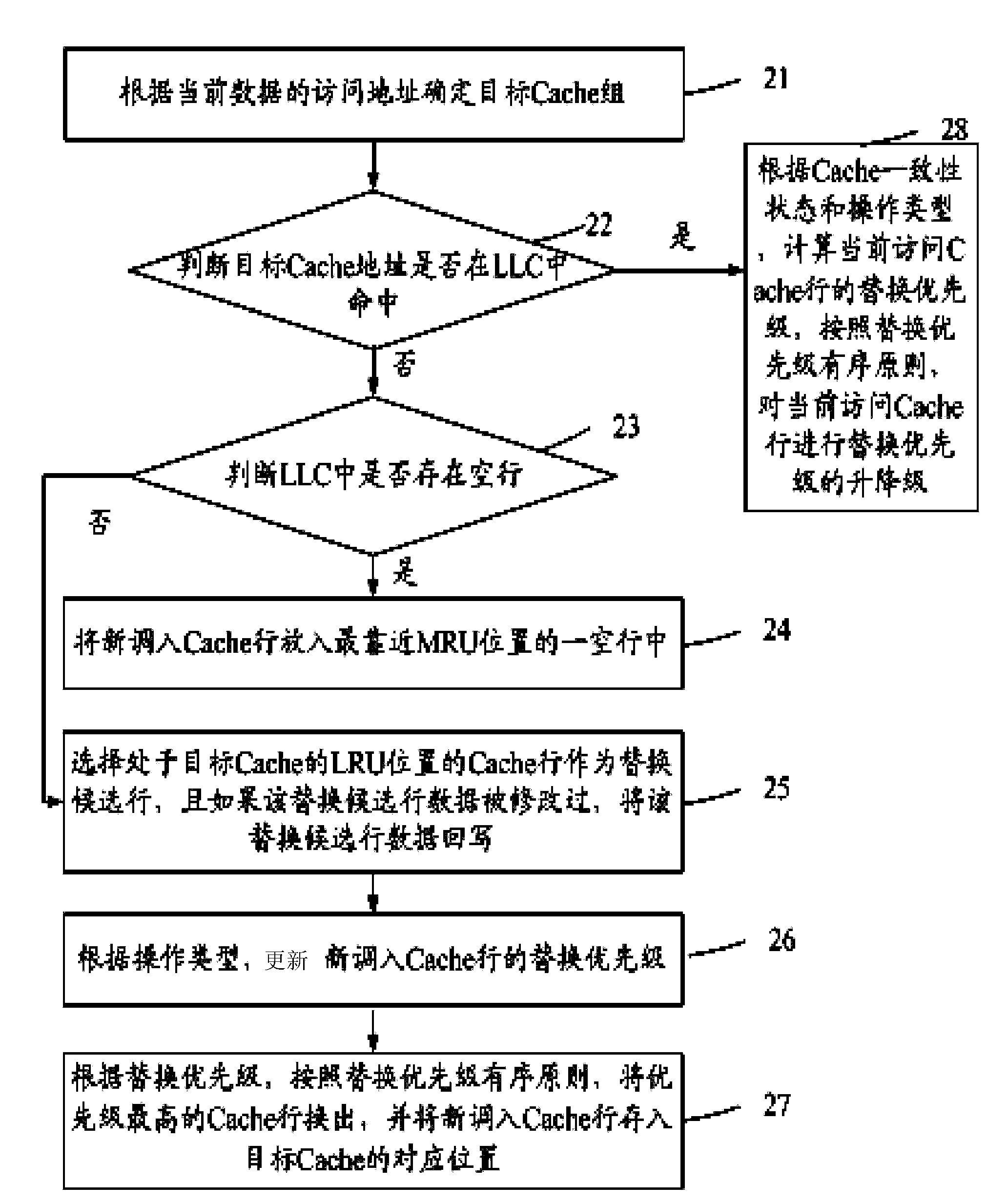

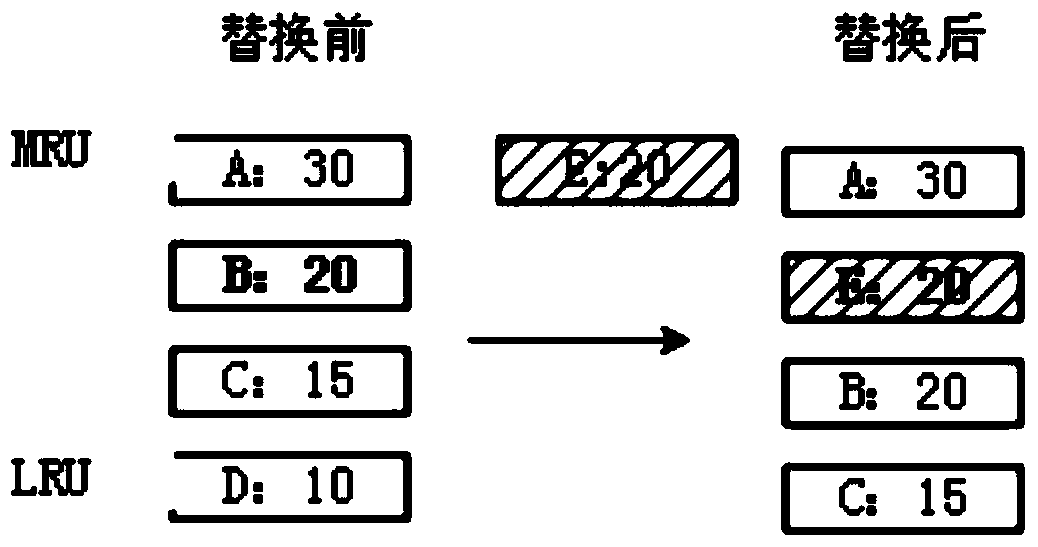

Replacement method for Cache row in LLC

Active; CN104166631A; Improve access performance; Reduce invalidation; Memory addressing/allocation/relocation; Parallel computing; Cache consistency

The invention provides a replacement method for cache lines (Cache rows) in a last level cache (LLC). If the target address misses in the LLC, the replacement priority of the newly fetched cache line is calculated from the operation type, the existing line with the highest replacement priority is evicted according to the replacement priority ordering, and the newly fetched line is stored in the corresponding position. If the target address hits in the LLC, the replacement priority of the accessed line is updated according to its cache consistency state and the operation type, and the line is promoted or demoted according to the replacement priority ordering. The method effectively reduces the performance loss caused by the inclusion victims that replacement introduces in an inclusive cache.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

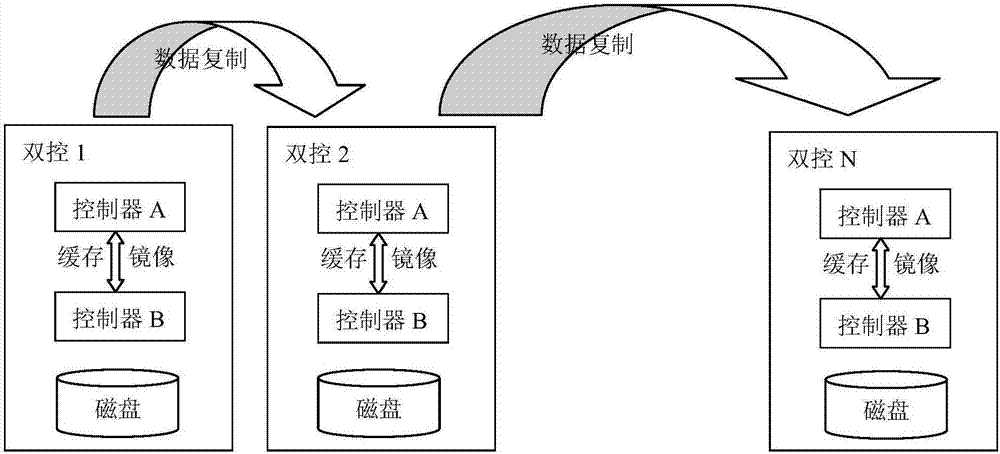

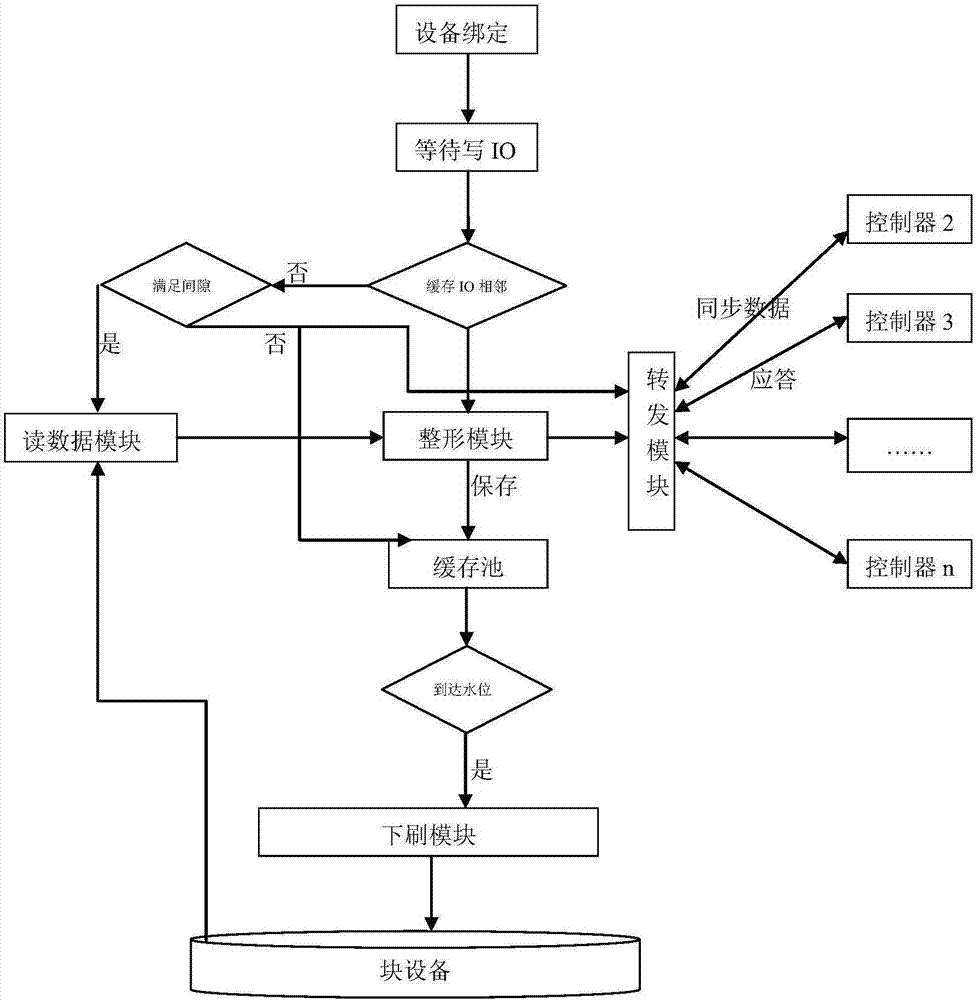

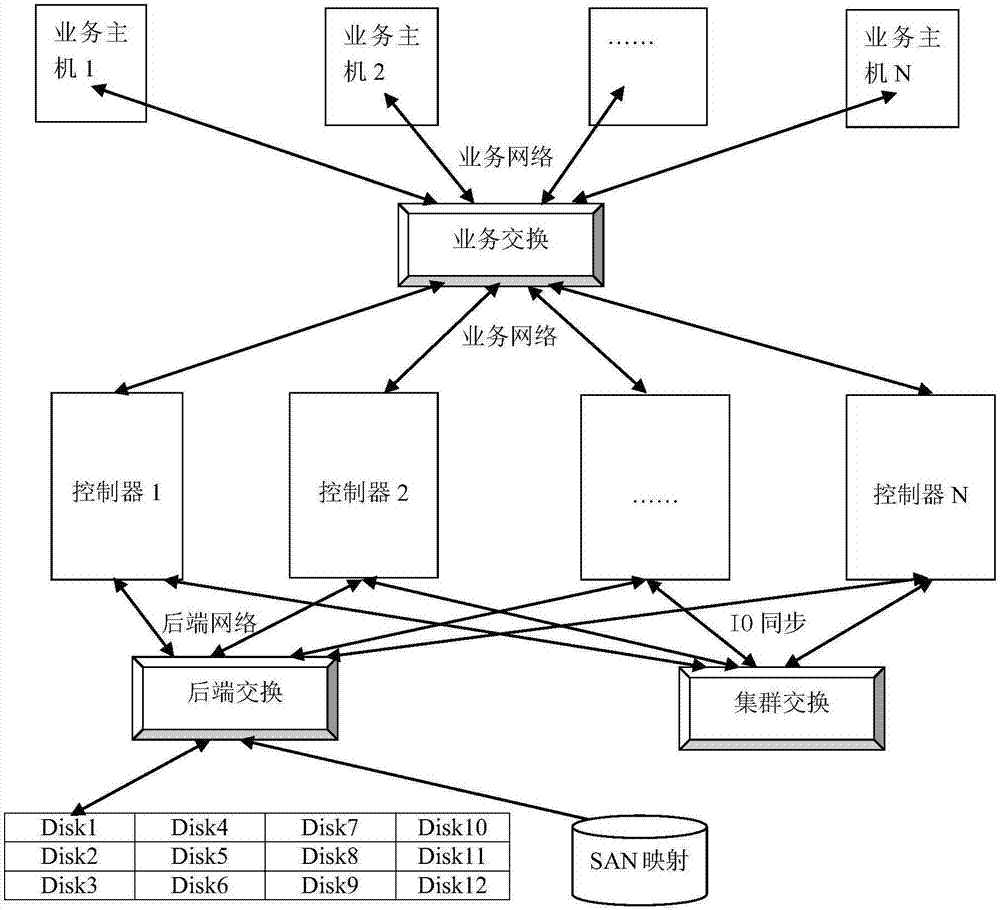

Block equipment write IO shaping and multi-controller synchronization system and method

Active; CN106886368A; Solve write IO performance optimization; Solving Consistency Issues; Input/output to record carriers; Data synchronization; Response delay

The invention relates to a system and method for block device write IO shaping and multi-controller synchronization, in the technical field of mass data storage. At the controller, the system implements a virtual block device driver, binding to the underlying block device, shaping of write IO requests, and synchronization among the controllers of a cluster. It comprises a block device filter driver, an IO shaping module, a read gap module, a cache pool, a forwarding module, and an IO flush module. The back-end physical block devices are shared devices, such as shared disks mapped over FC or disks attached through SAS or other means. Compared with the prior art, multi-controller data synchronization is achieved without occupying double the space; the write IO performance and cache consistency problems of multi-controller cluster storage are addressed; the bandwidth of the block device is fully used and time lost to repeated seeks is avoided; and, because writes return from the write cache, the write jitter of the underlying disk and the IO response delay are reduced.

Owner:TOYOU FEIJI ELECTRONICS

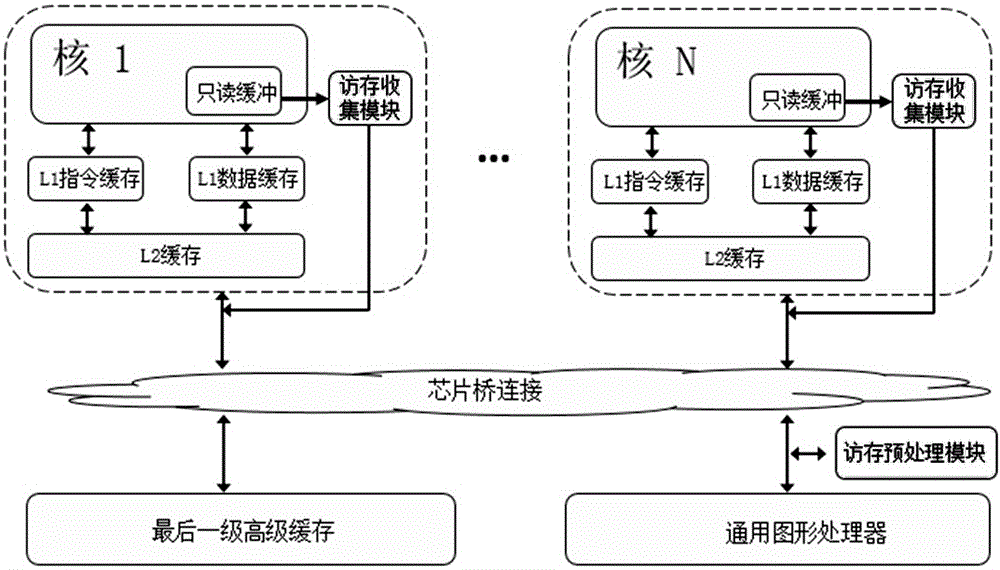

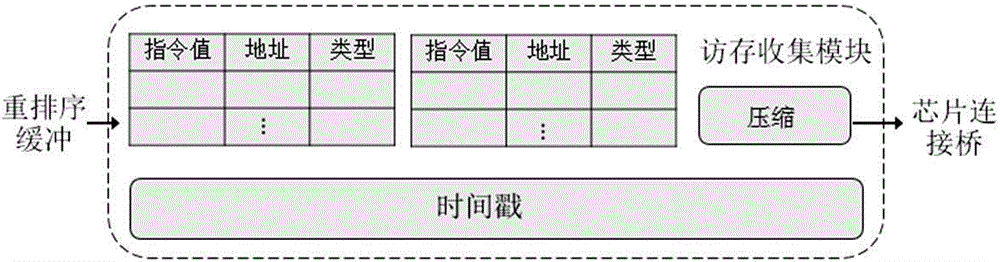

Heterogeneous platform based multi-parallel error detection system framework

Inactive | CN105117369A | Reduce operating overhead | Digital computer details | Electric digital data processing | Graphics | Computer architecture

The invention belongs to the technical field of parallel processors and particularly relates to a heterogeneous-platform-based framework for detecting multiple kinds of concurrency errors. The framework mainly uses the parallel computing power and programmability of the general-purpose graphics processor in a heterogeneous platform to detect several mainstream concurrency errors at the same time, including data races, atomicity violations and order violations. In terms of design complexity, only modest hardware changes are required: the logic of on-chip critical paths (such as the caches or the cache consistency logic) does not need to change, and only a memory access collection module and a memory access preprocessing module are added, which respectively collect the memory access instructions that may cause concurrency errors and provide the related information for error detection. The error detection algorithm achieves high parallelism by running on the general-purpose graphics processor. The proposed hardware framework can discover concurrency errors while the program runs, with very low runtime overhead.

Owner:FUDAN UNIV

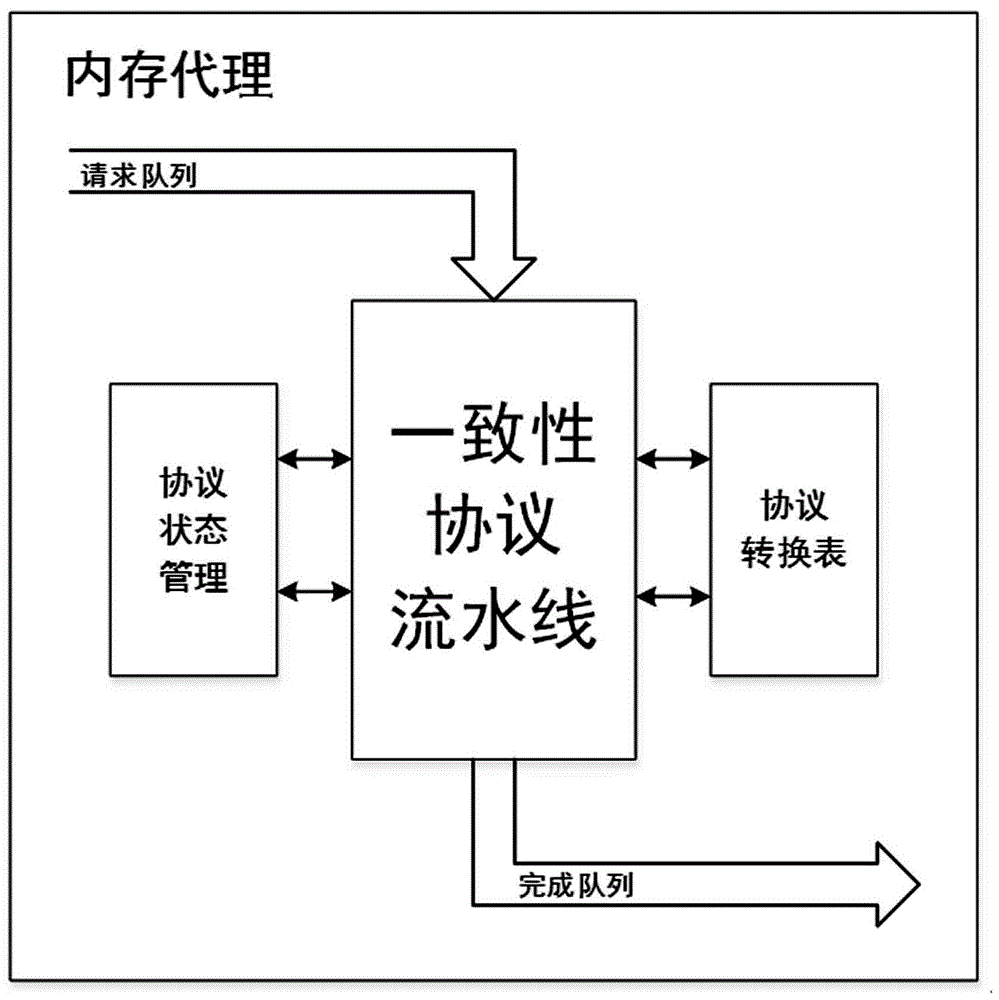

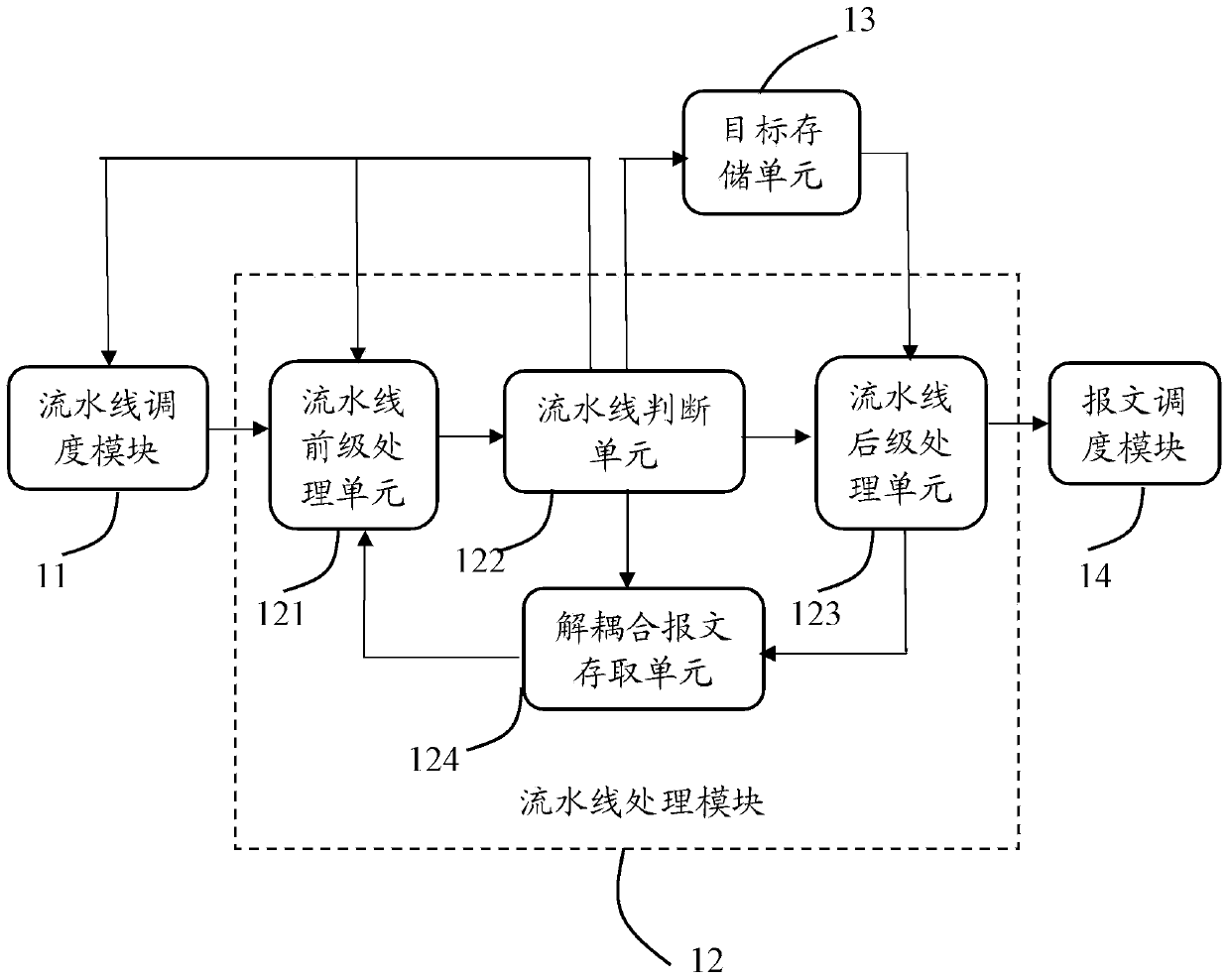

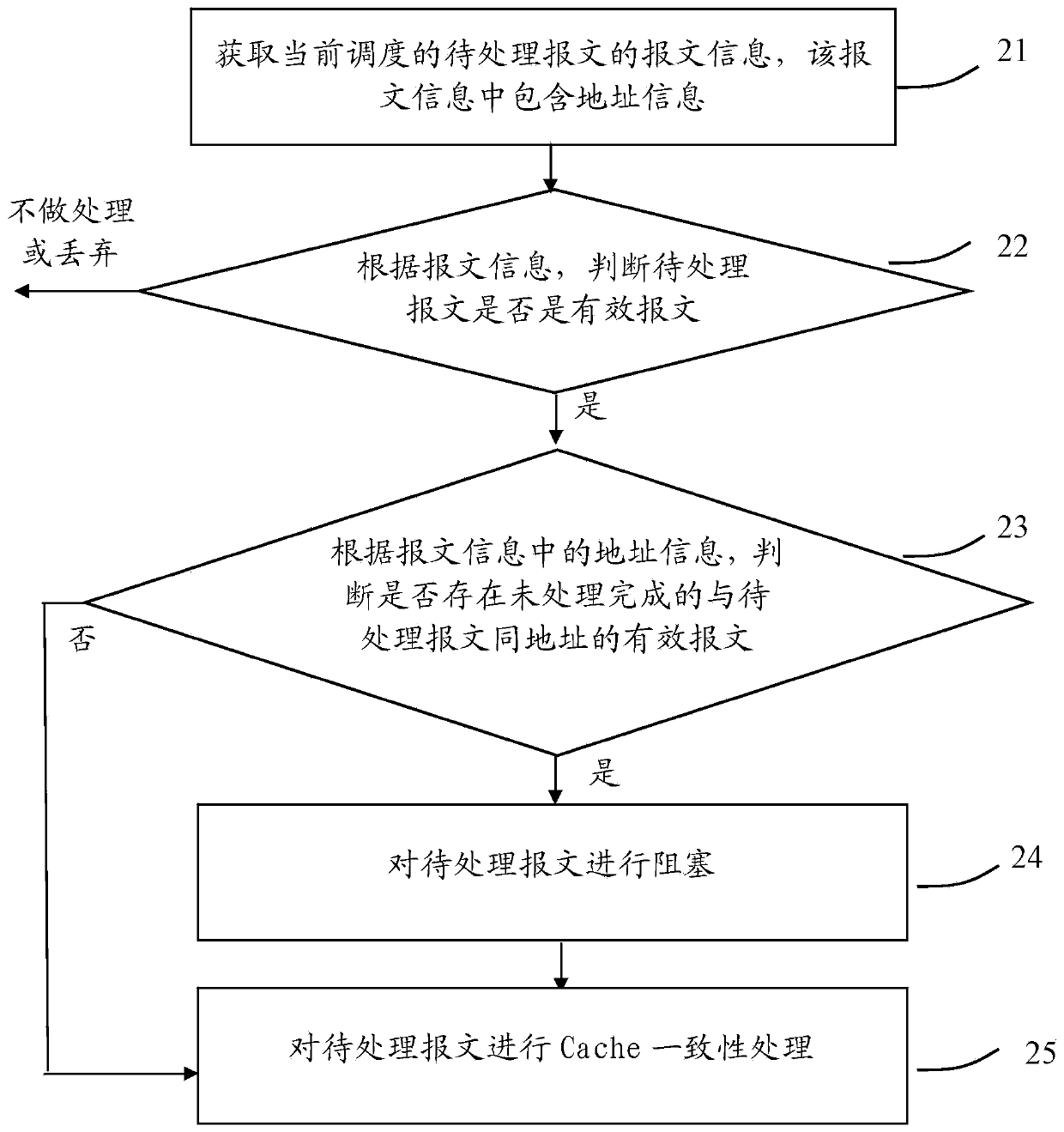

Multistage cache consistency pipeline processing method and device

Inactive | CN104049955A | Improve processing efficiency | Boost timing | Concurrent instruction execution | Cache consistency | Real-time computing

The invention provides a multistage cache consistency pipeline processing method and device. The method comprises: obtaining the message information of the currently scheduled message to be processed, the message information including address information; judging from the message information whether the message is valid; if it is valid, judging from the address information whether an unprocessed valid message with the same address exists; if such a message exists, blocking the message to be processed; and if none exists, performing cache consistency processing on the message. Blocking messages that target the same address while cache consistency processing is under way saves resources and improves timing, which in turn improves system performance.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND
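
The scheduling decision in the abstract above (validity check, same-address check, then block or process) can be sketched as a small model. The class and method names are hypothetical, and two behaviours are assumptions not stated in the abstract: invalid messages are simply dropped, and blocked messages are retried when the conflicting message completes.

```python
from dataclasses import dataclass


@dataclass
class Message:
    valid: bool
    address: int


class CoherencePipeline:
    """Toy model: one valid message per address may be in flight at a time."""

    def __init__(self):
        self.in_flight = set()  # addresses of valid, not yet processed messages
        self.blocked = []       # messages waiting on a same-address conflict

    def schedule(self, msg):
        if not msg.valid:
            return "dropped"            # assumption: invalid messages are ignored
        if msg.address in self.in_flight:
            self.blocked.append(msg)    # same address still unprocessed: block
            return "blocked"
        self.in_flight.add(msg.address)
        return "processing"             # no conflict: do consistency processing

    def complete(self, address):
        """Finish processing an address and retry messages blocked on it."""
        self.in_flight.discard(address)
        retry = [m for m in self.blocked if m.address == address]
        self.blocked = [m for m in self.blocked if m.address != address]
        return [self.schedule(m) for m in retry]
```

For example, two valid messages to address `0x40` scheduled back to back yield `"processing"` and then `"blocked"`; calling `complete(0x40)` lets the blocked one proceed.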

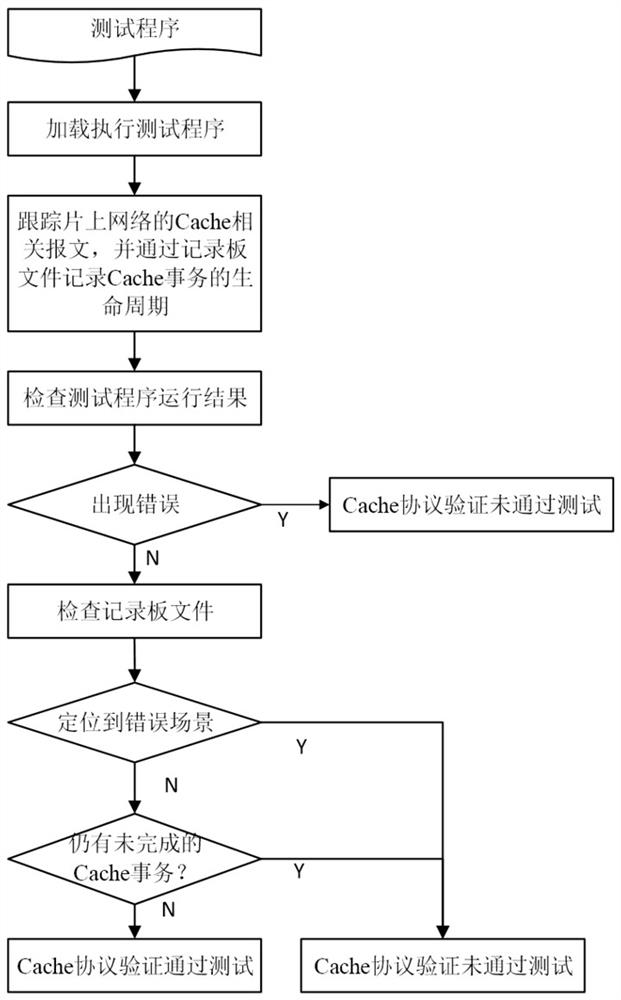

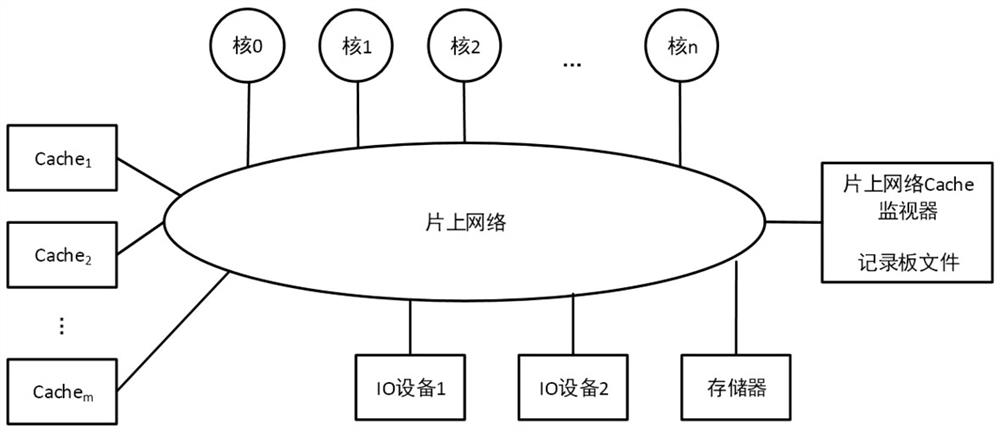

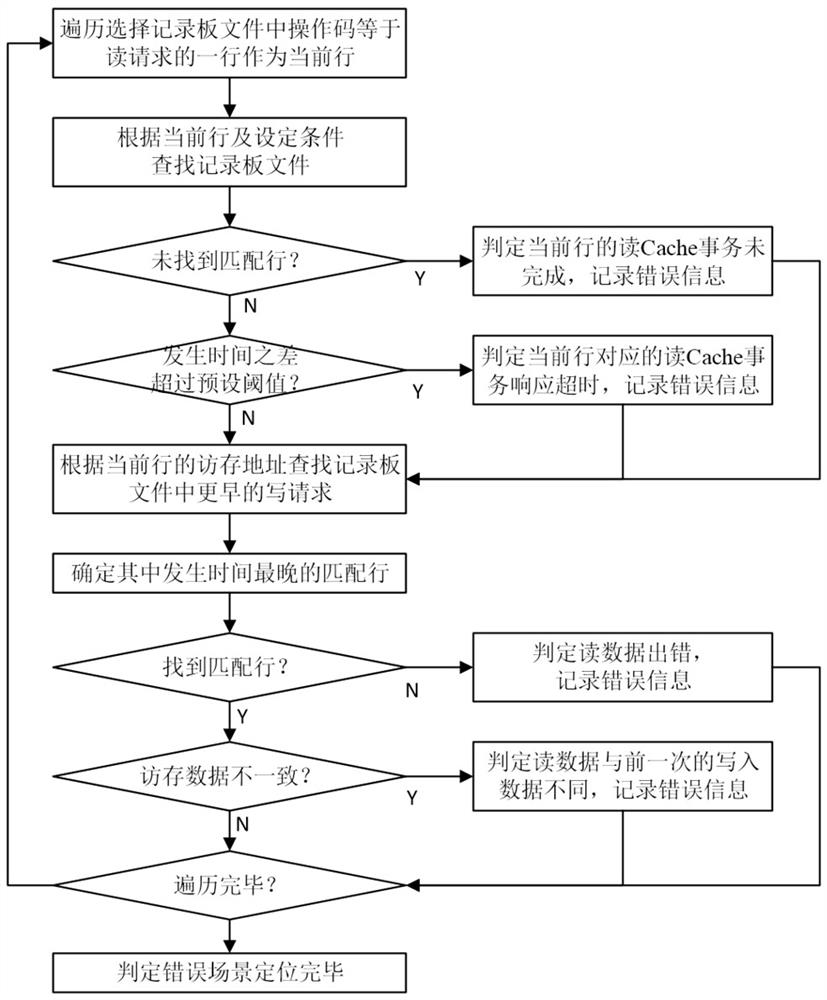

Cache consistency protocol verification method and system for on-chip multi-core processor, and medium

Active | CN111611120A | Precise positioning | Easy to spot design errors | Faulty hardware testing methods | Energy efficient computing | Cache consistency | Networks on chip

The invention discloses a Cache consistency protocol verification method and system for an on-chip multi-core processor, and a medium. The method comprises: loading and executing a test program on the on-chip multi-core processor that implements the Cache protocol to be verified, tracking Cache-related messages on the on-chip network, and recording the life cycle of each Cache transaction in a record board file; checking whether the result of the test program is wrong and, if so, judging that the Cache protocol fails verification and exiting; otherwise, checking the record board file to locate error scenarios that occurred while the test program ran, and judging that the Cache protocol fails verification if an error scenario is found or the record board file still contains an unfinished Cache transaction; otherwise, judging that the Cache protocol passes verification. The invention can accurately locate error scenarios during verification and makes design flaws and errors easy to discover.

Owner:NAT UNIV OF DEFENSE TECH
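
The pass/fail decision in the abstract above can be sketched with an in-memory stand-in for the record board file. The message types `REQ`, `DATA` and `DONE` and the two error rules are hypothetical; the abstract does not specify the message set or what counts as an error scenario.

```python
class RecordBoard:
    """Toy record board tracking the life cycle of each cache transaction."""

    def __init__(self):
        self.open_txns = {}  # txn_id -> message types observed so far
        self.errors = []     # error scenarios found while tracking

    def observe(self, txn_id, msg_type):
        if msg_type == "REQ":
            if txn_id in self.open_txns:
                self.errors.append(f"duplicate request for txn {txn_id}")
            self.open_txns[txn_id] = ["REQ"]
        elif txn_id not in self.open_txns:
            self.errors.append(f"{msg_type} without request for txn {txn_id}")
        else:
            self.open_txns[txn_id].append(msg_type)
            if msg_type == "DONE":
                del self.open_txns[txn_id]  # life cycle finished


def protocol_passes(program_result_ok, board):
    """Apply the decision order from the abstract."""
    if not program_result_ok:
        return False                        # wrong test result: fail and exit
    if board.errors or board.open_txns:
        return False                        # error scenario or unfinished txn
    return True
```

A run passes only when the test program's result is correct, no error scenario was recorded, and every tracked transaction has finished.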

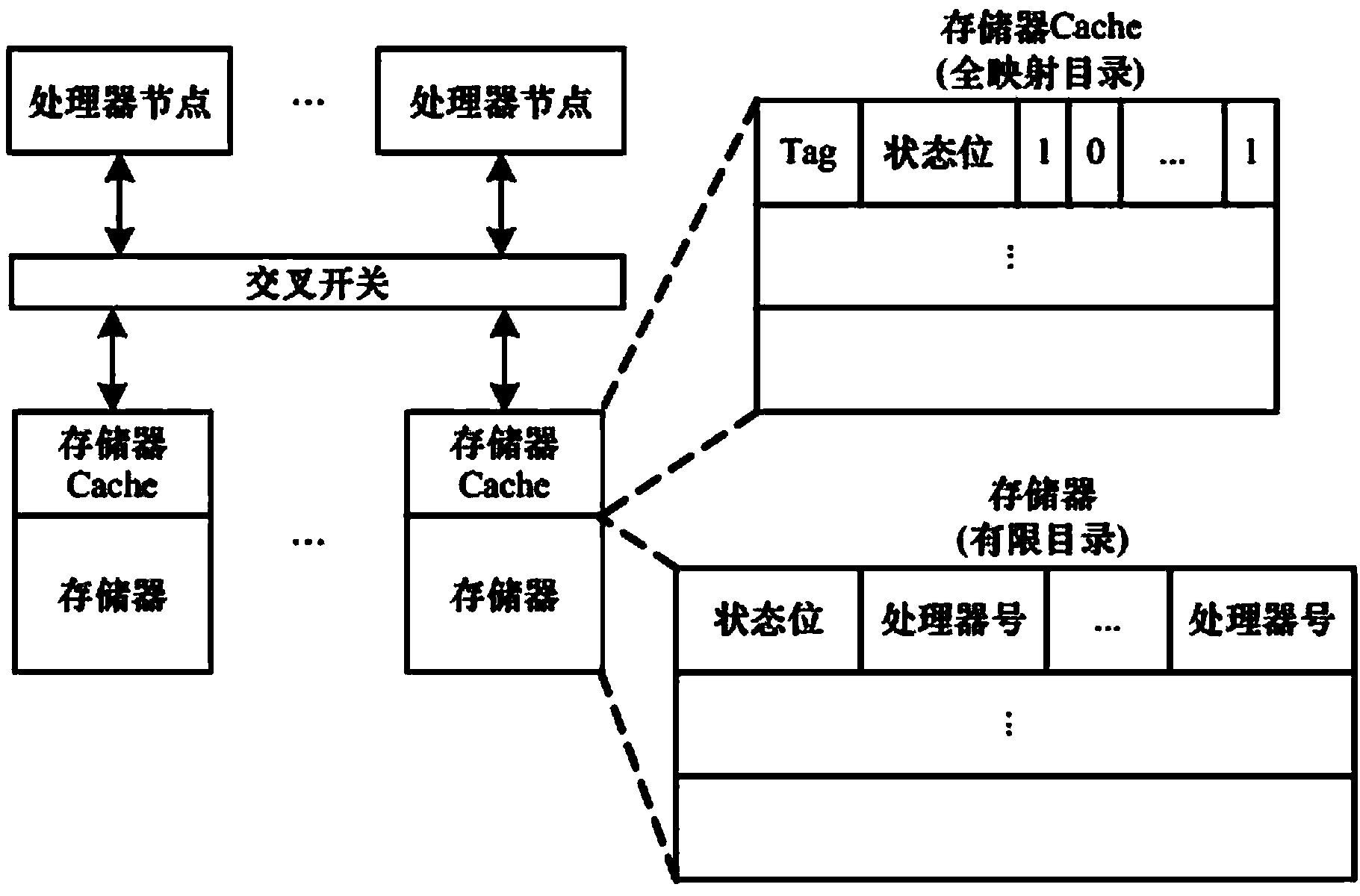

Method for cataloging Cache consistency

Active | CN103729309A | Solve problems such as low time efficiency | Resolve overflow | Memory addressing/allocation/relocation | Cache consistency | Least recently frequently used

The invention provides a directory-based Cache consistency method. The method sets up a two-level directory storage structure that combines two protocols, a limited directory and a full-map directory, on top of a storage Cache, and uses a pseudo-least-recently-used algorithm weighted by sharing count as the replacement algorithm between the storage layer and the storage Cache layer. Compared with the prior art, the method addresses the excessive storage overhead of the full-map directory, the directory entry overflow that constrains the limited directory, and the low time efficiency of the chained directory; it is highly practical and easy to popularize.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

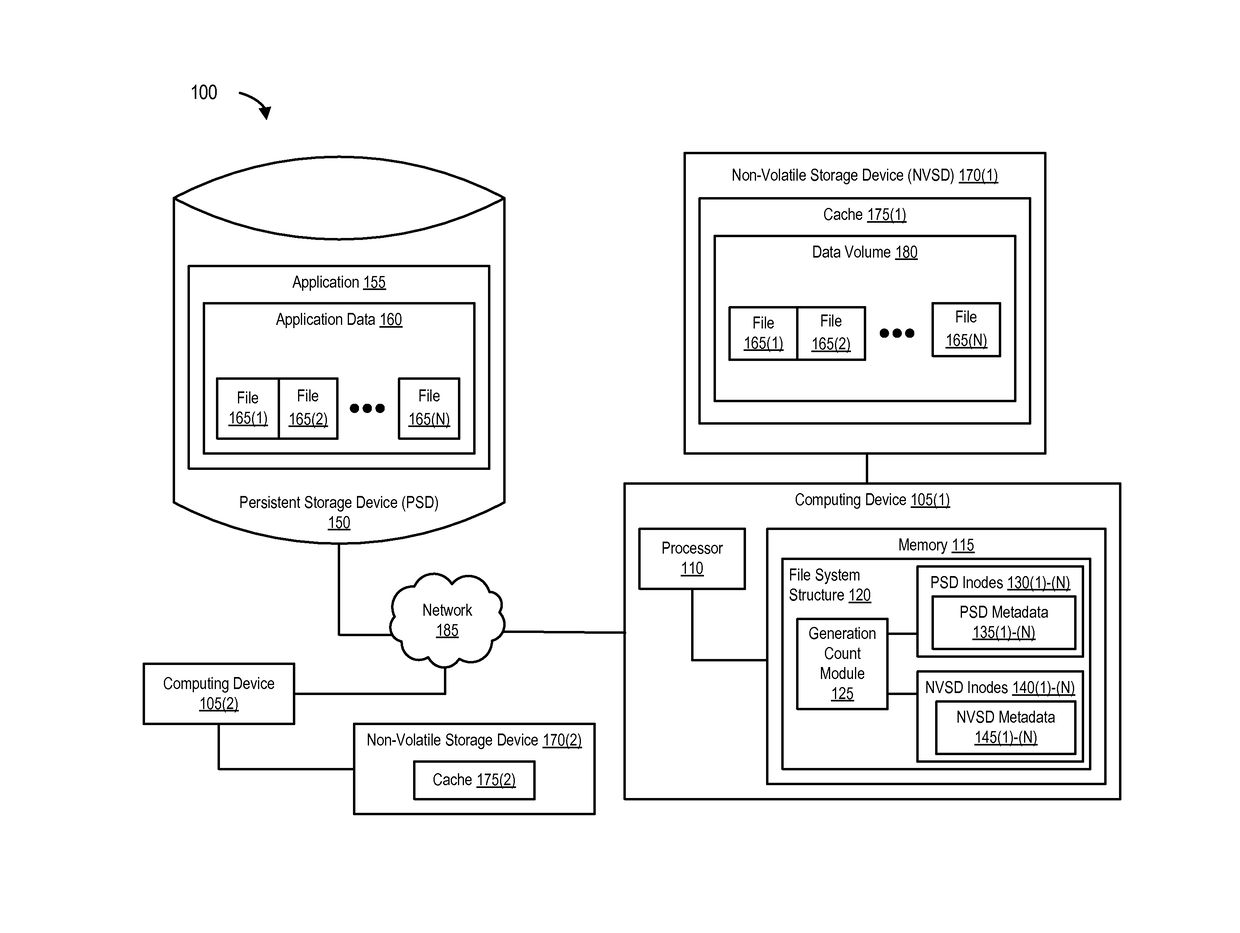

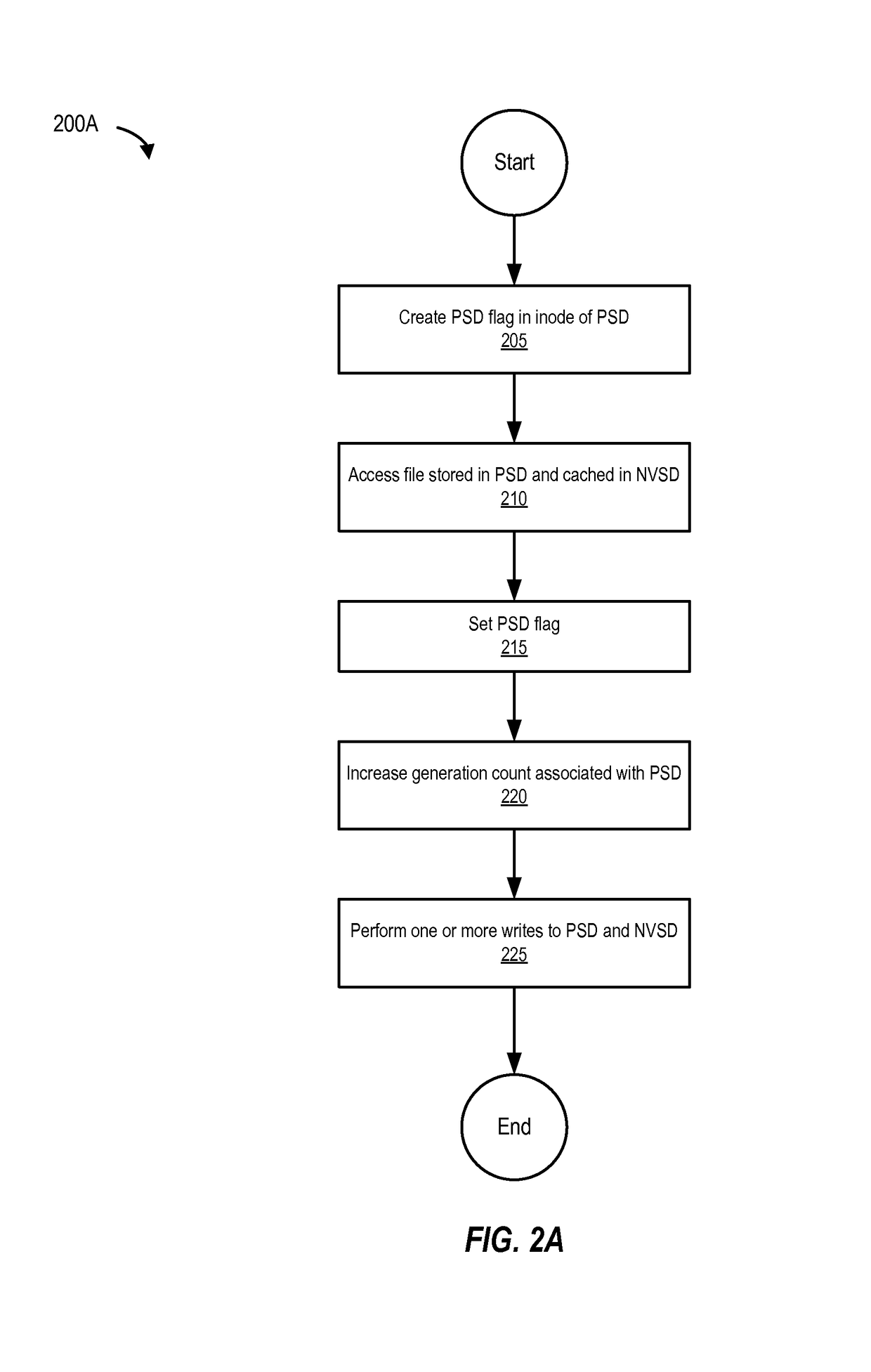

Cache consistency optimization

Active | US9892041B1 | Memory architecture accessing/allocation | Digital data information retrieval | Cache consistency | Non-volatile memory

Various methods and systems for optimizing cache consistency are disclosed. For example, one method involves writing data to a file during a write transaction. The file is stored in a persistent storage device and cached in a non-volatile storage device. The method determines if an in-memory flag associated with the persistent storage device is set. If the in-memory flag is not set, the method increases a generation count associated with the persistent storage device before a write transaction is performed on the file. The method then sets the in-memory flag before performing the write transaction on the file. In other examples, the method involves using a persistent flag associated with the non-volatile storage device to maintain cache consistency during a data freeze related to the taking of a snapshot by synchronizing generation counts associated with the persistent storage device and the non-volatile storage device.

Owner:VERITAS TECH
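
The write path in the abstract above (check the in-memory flag, bump the generation count once, set the flag, then write) can be sketched as follows. The class is a hypothetical stand-in for the persistent storage device, and `clear_flag` is an assumption: the abstract does not say when the flag is reset. The snapshot and persistent-flag handling is not modeled.

```python
class PersistentStore:
    """Toy model of the persistent device's generation count and flag."""

    def __init__(self):
        self.generation = 0          # generation count for the device
        self.in_memory_flag = False  # set once the count has been bumped
        self.files = {}

    def write(self, name, data):
        if not self.in_memory_flag:
            self.generation += 1        # bump before the write transaction
            self.in_memory_flag = True  # later writes skip the bump
        self.files[name] = data         # perform the write transaction

    def clear_flag(self):
        # Assumption: some event outside this sketch resets the flag.
        self.in_memory_flag = False
```

Under this model, any number of writes made while the flag is set cost a single generation-count update, which is the saving the abstract points at.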

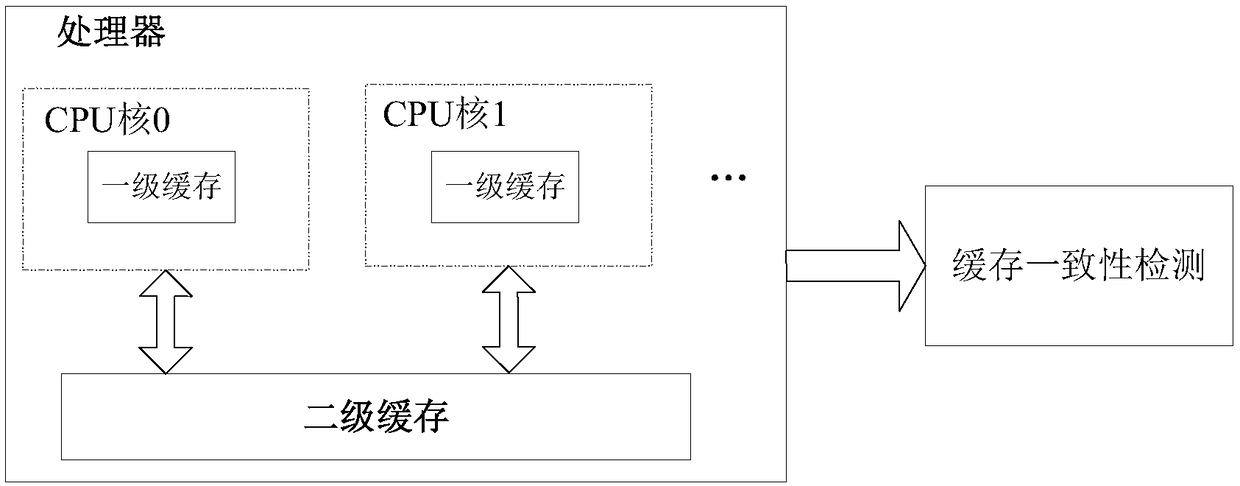

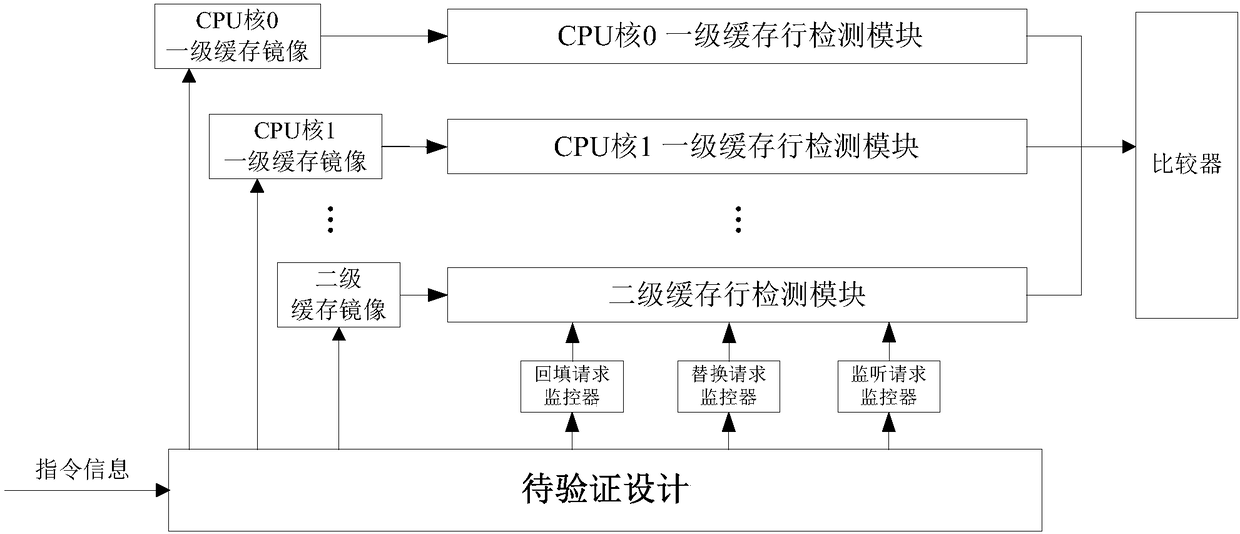

Cache consistency detection system and method

Active | CN109213641A | Shorten the verification cycle | Guarantee the success rate of one shot | Detecting faulty computer hardware | Hardware monitoring | Real systems | Parallel computing

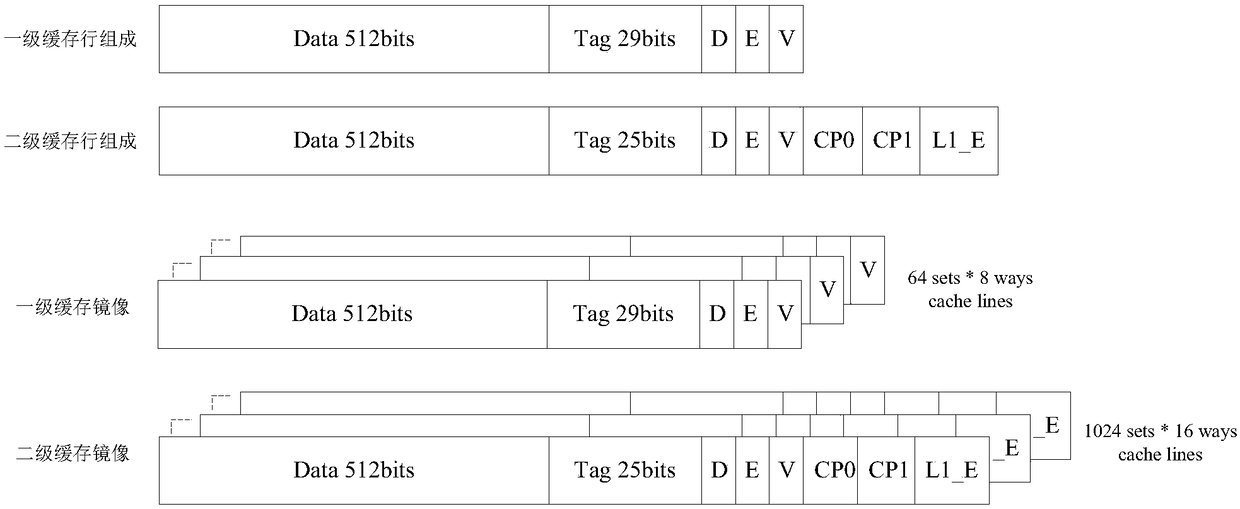

The invention provides a cache consistency detection system and method. The system comprises a plurality of CPU core first-level cache mirrors, first-level cache line detection modules in one-to-one correspondence with the CPU core first-level cache mirrors, a second-level cache mirror, a second-level cache line detection module corresponding to the second-level cache mirror, a backfill request monitor, a replacement request monitor, a snoop request monitor and a comparator. The invention can dynamically cover, in real time, the detection of all application scenarios of the real system, and improves the efficiency of cache consistency detection.

Owner:SPREADTRUM COMM (SHANGHAI) CO LTD

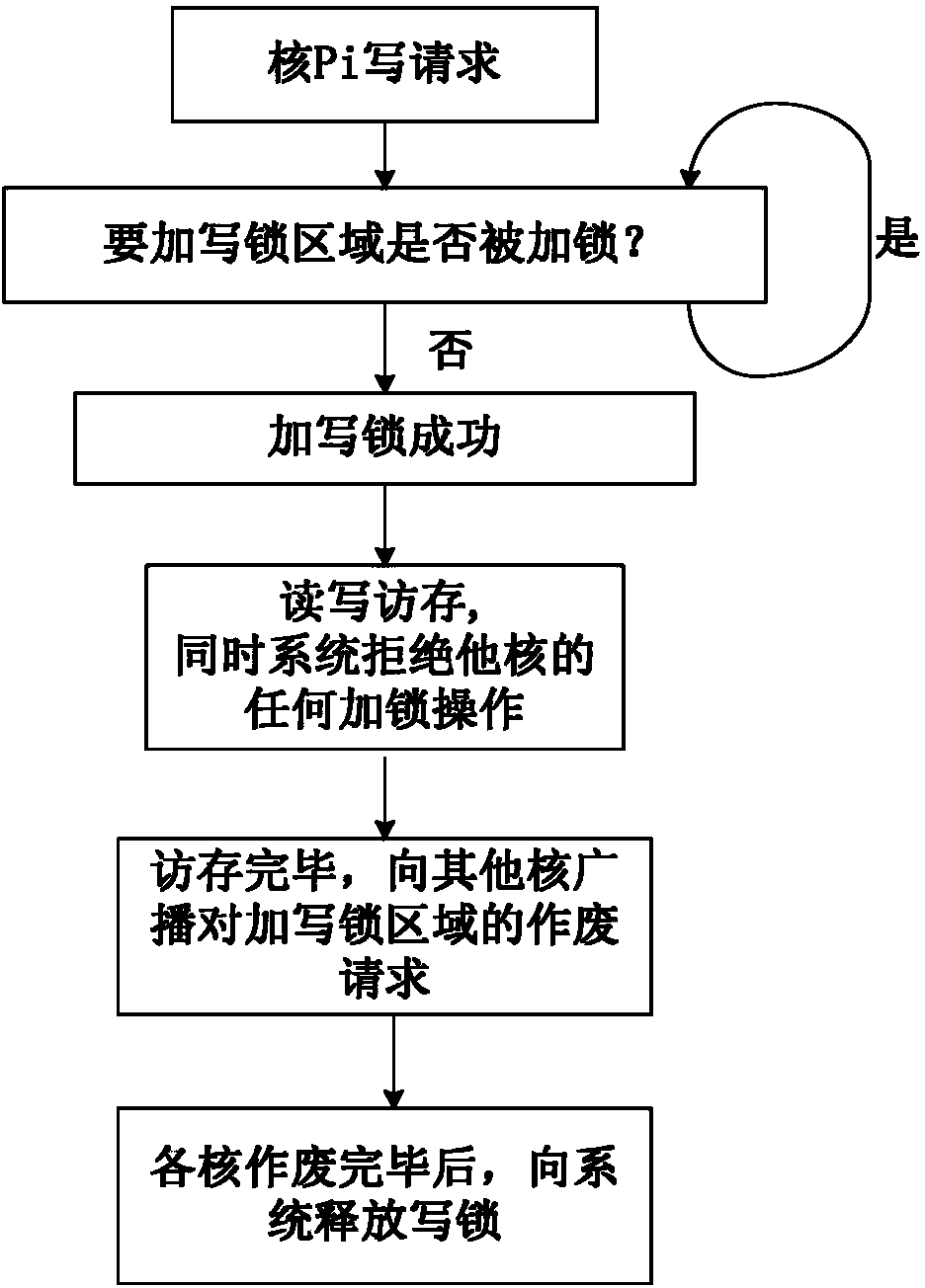

Atomic operation implementation method and device based on cache consistency principle

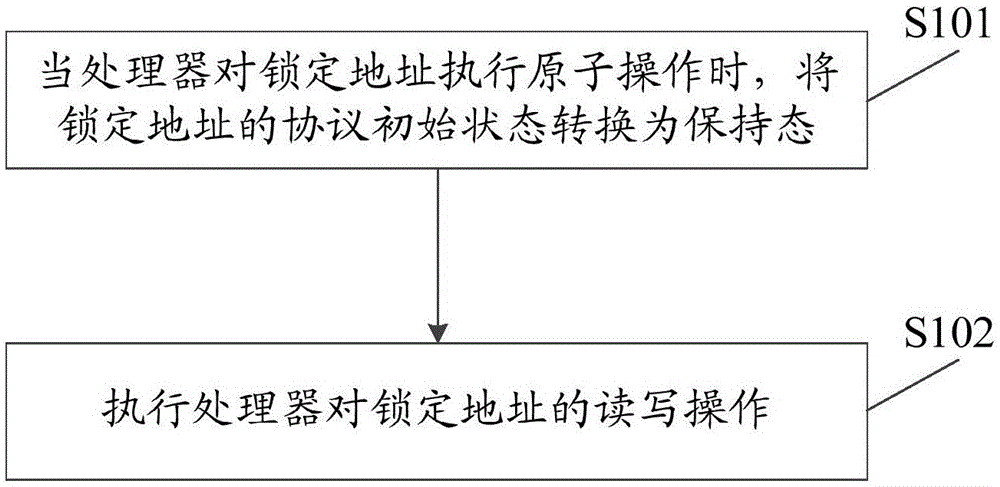

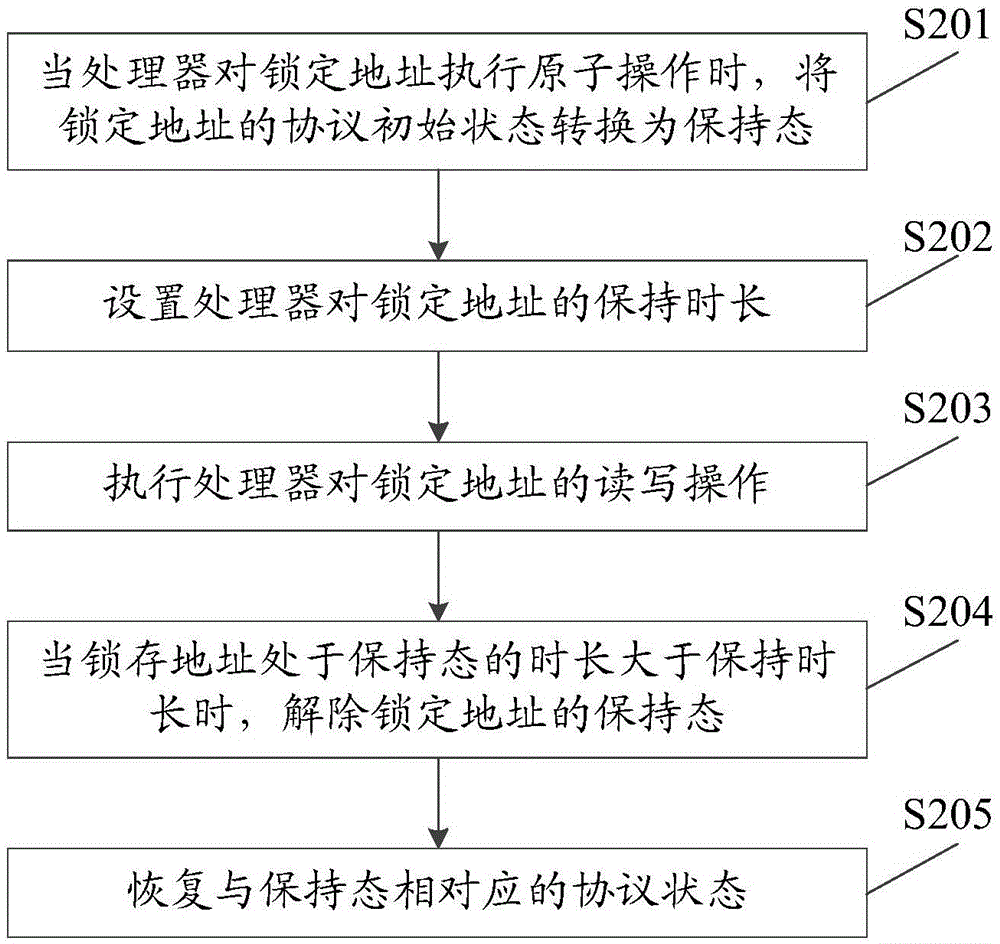

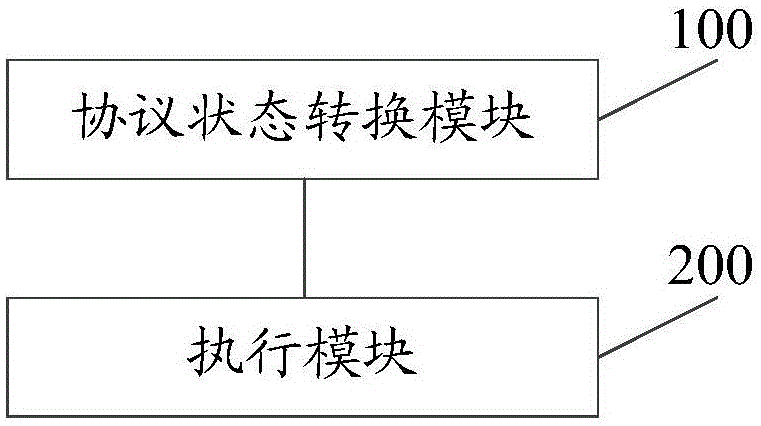

Active | CN105094840A | Atomic operation guarantee | Memory addressing/allocation/relocation | Specific program execution arrangements | Cache consistency | Operating system

The embodiment of the invention discloses an atomic operation implementation method and device based on the cache consistency principle. The method comprises: when a processor executes an atomic operation on a locked address, converting the original protocol state of the locked address into a maintaining state, in which other processors are prevented from performing read-write operations on the locked address; and executing the processor's read-write operation on the locked address. While the locked address is in the maintaining state, the processor's cache consistency maintenance mechanism ensures that only the current processor can read and write the locked address and that other processors must pause and wait before operating on it, which guarantees the atomicity of the processor's operation on the locked address.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

Method for maintaining cache consistency during reordering

Pending | US20200112525A1 | Memory architecture accessing/allocation | Data switching networks | Data pack | Parallel computing

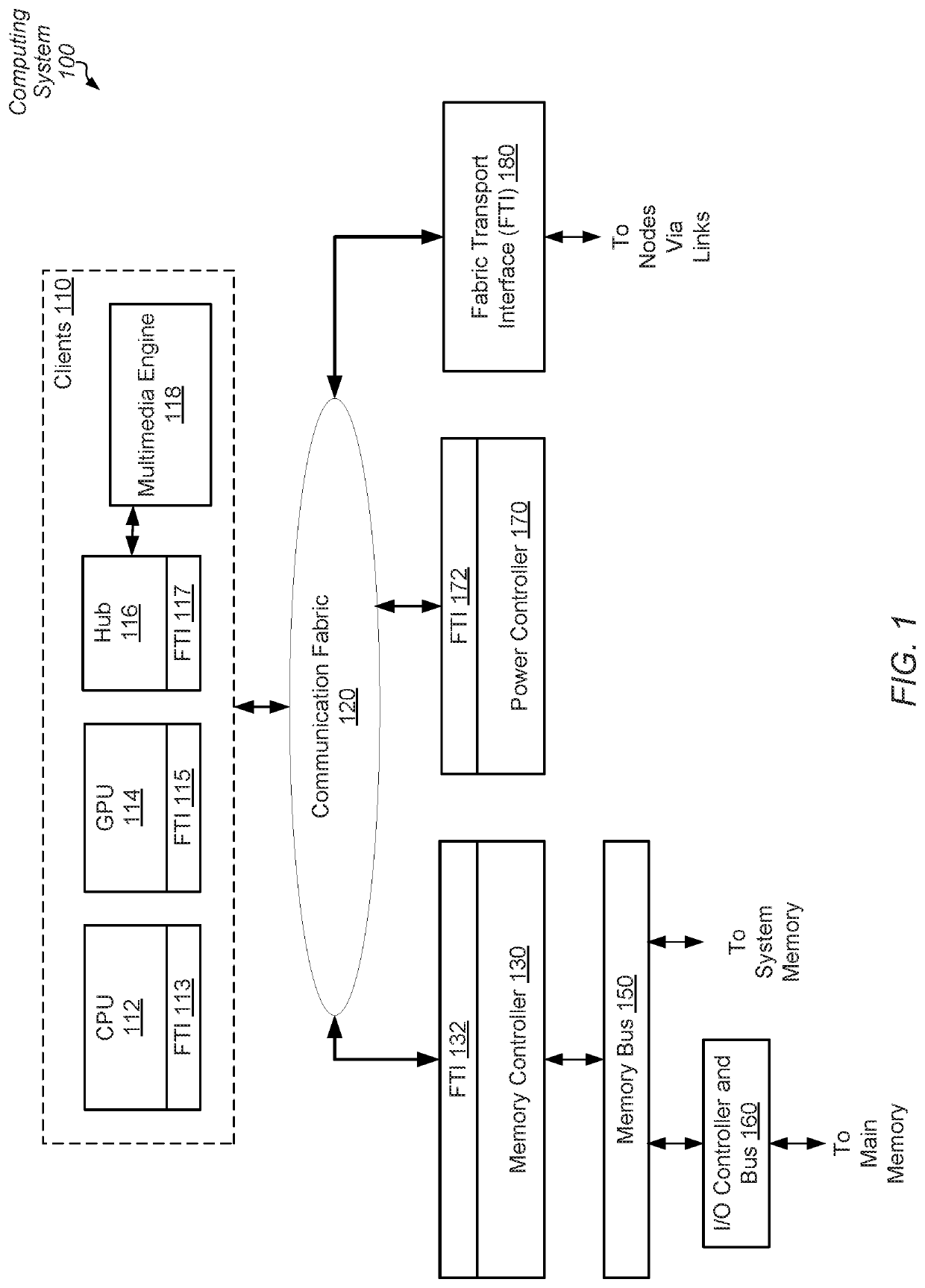

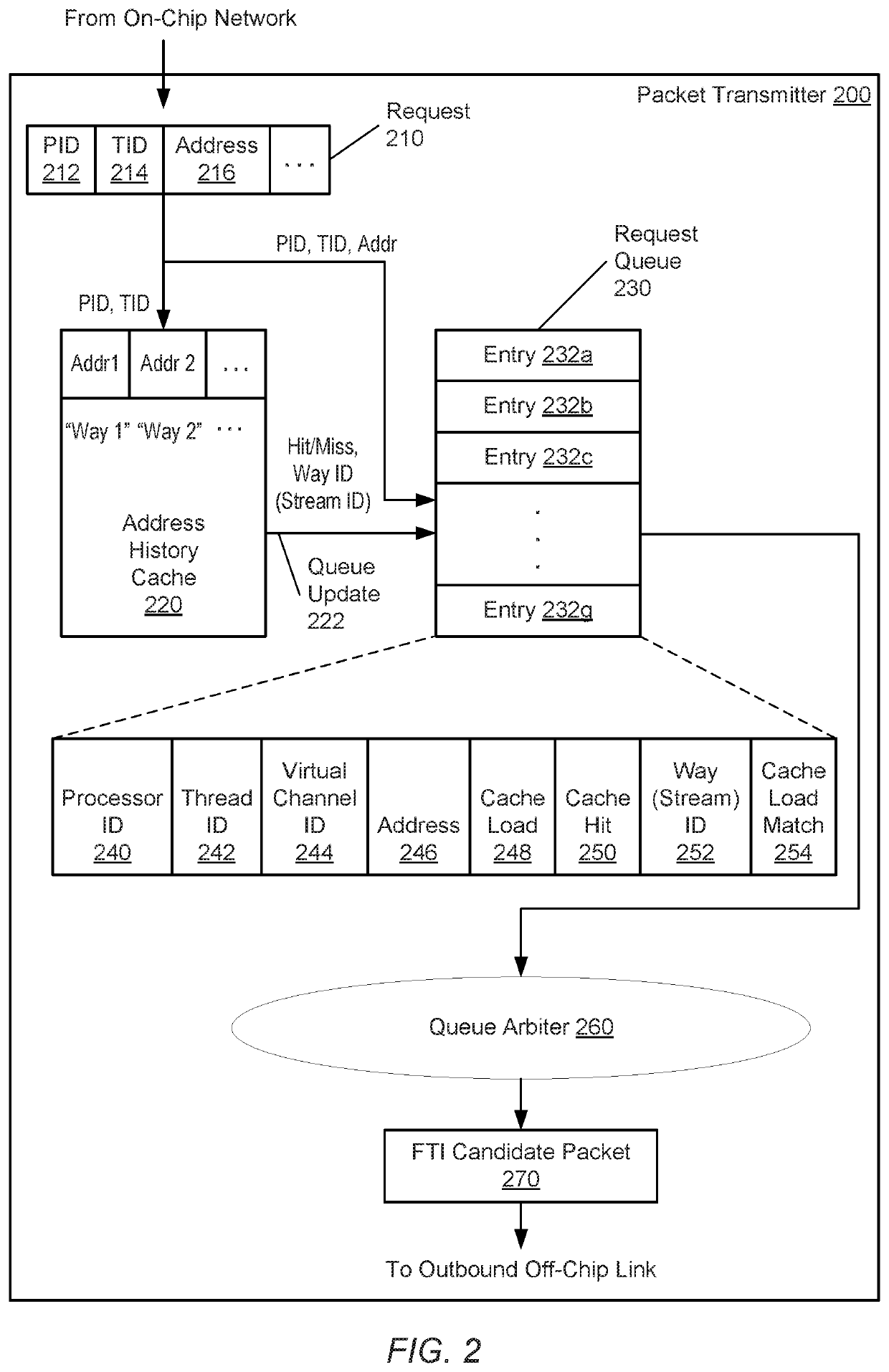

Systems, apparatuses, and methods for performing efficient data transfer in a computing system are disclosed. A computing system includes multiple fabric interfaces in clients and a fabric. A packet transmitter in the fabric interface includes multiple queues, each for storing packets of a respective type, and a corresponding address history cache for each queue. Queue arbiters in the packet transmitter select candidate packets for issue and determine when address history caches on both sides of the link store the upper portion of the address. The packet transmitter sends a source identifier and a pointer for the request in the packet on the link, rather than the entire request address, which reduces the size of the packet. The queue arbiters support out-of-order issue from the queues. The queue arbiters detect conflicts with out-of-order issue and adjust the outbound packets and fields stored in the queue entries to avoid data corruption.

Owner:ADVANCED MICRO DEVICES INC
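
The address history cache idea in the abstract above can be sketched as a pair of mirrored tables, one on each side of the link: once both sides hold the upper address bits, the packet carries only a pointer and the lower bits. Everything concrete here is an assumption (the 12-bit split, the round-robin slot allocation, the packet tuples), and the sketch covers in-order issue only, without the source identifier or the out-of-order conflict handling the abstract mentions.

```python
LOW_BITS = 12  # hypothetical split between upper and lower address bits


class AddressHistoryCache:
    """Small table of recently used upper address portions."""

    def __init__(self, entries=4):
        self.slots = [None] * entries
        self.next = 0  # round-robin allocation pointer

    def lookup(self, upper):
        return self.slots.index(upper) if upper in self.slots else None

    def insert(self, upper):
        idx = self.next
        self.slots[idx] = upper
        self.next = (self.next + 1) % len(self.slots)
        return idx


def encode(tx_cache, address):
    """Transmitter: send the full address once, then pointer + lower bits."""
    upper = address >> LOW_BITS
    lower = address & ((1 << LOW_BITS) - 1)
    idx = tx_cache.lookup(upper)
    if idx is None:
        idx = tx_cache.insert(upper)
        return ("full", idx, address)   # receiver must learn the upper bits
    return ("compressed", idx, lower)   # both sides already store them


def decode(rx_cache, packet):
    """Receiver: mirror the transmitter's table and rebuild the address."""
    kind, idx, payload = packet
    if kind == "full":
        rx_cache.slots[idx] = payload >> LOW_BITS
        return payload
    return (rx_cache.slots[idx] << LOW_BITS) | payload
```

Two requests to nearby addresses illustrate the saving: the first goes out as a `"full"` packet, and the second as a `"compressed"` packet carrying only the slot index and the low 12 bits.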
