153 patent results for "Processor sharing"

Processor sharing or egalitarian processor sharing is a service policy where the customers, clients or jobs are all served simultaneously, each receiving an equal fraction of the service capacity available. In such a system all jobs start service immediately (there is no queueing).
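
As a rough illustration of the definition above, the following Python sketch computes job finish times under egalitarian processor sharing. The function name `ps_finish_times`, the `capacity` parameter, and the event-stepping approach are assumptions made for this example; none of them comes from a listed patent.

```python
def ps_finish_times(arrivals, sizes, capacity=1.0):
    """Finish time of each job when all active jobs share capacity equally."""
    n = len(arrivals)
    remaining = [float(s) for s in sizes]
    finish = [None] * n
    order = sorted(range(n), key=lambda i: arrivals[i])
    active = set()
    now = 0.0
    nxt = 0  # position in `order` of the next job to arrive
    while nxt < n or active:
        if not active:
            # Server is idle: jump straight to the next arrival.
            now = max(now, float(arrivals[order[nxt]]))
        # Every job that has arrived starts service immediately (no queue).
        while nxt < n and arrivals[order[nxt]] <= now:
            active.add(order[nxt])
            nxt += 1
        rate = capacity / len(active)  # equal share per active job
        to_completion = min(remaining[i] for i in active) / rate
        to_arrival = arrivals[order[nxt]] - now if nxt < n else float("inf")
        step = min(to_completion, to_arrival)
        for i in active:
            remaining[i] -= rate * step
        if to_arrival <= to_completion:
            now = float(arrivals[order[nxt]])
        else:
            now += step
        for i in [j for j in active if remaining[j] <= 1e-12]:
            finish[i] = now
            active.remove(i)
    return finish
```

With two jobs of sizes 1 and 2 arriving together on a unit-capacity server, each job is served at rate 0.5 until the smaller one completes, after which the larger job receives the full capacity.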

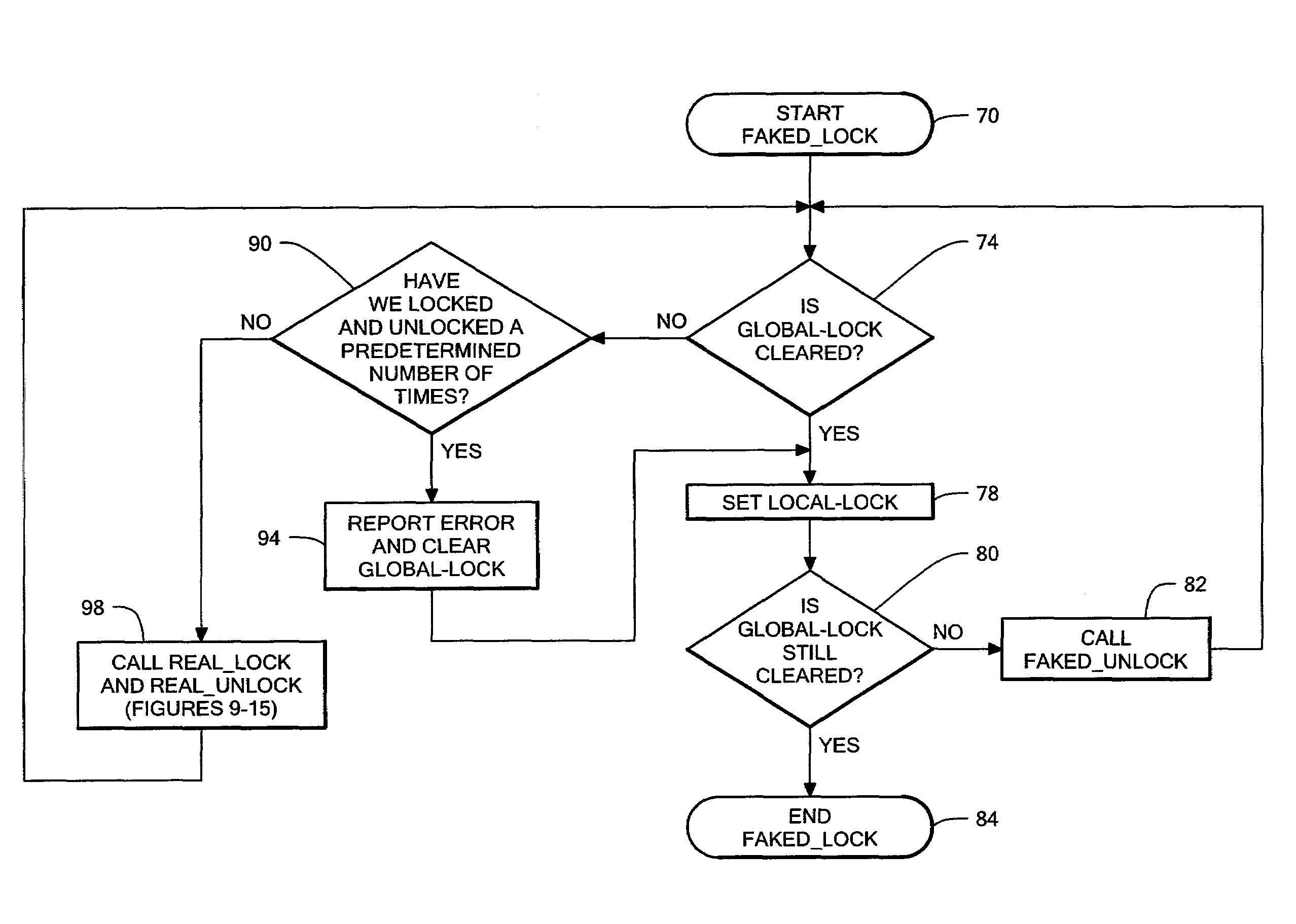

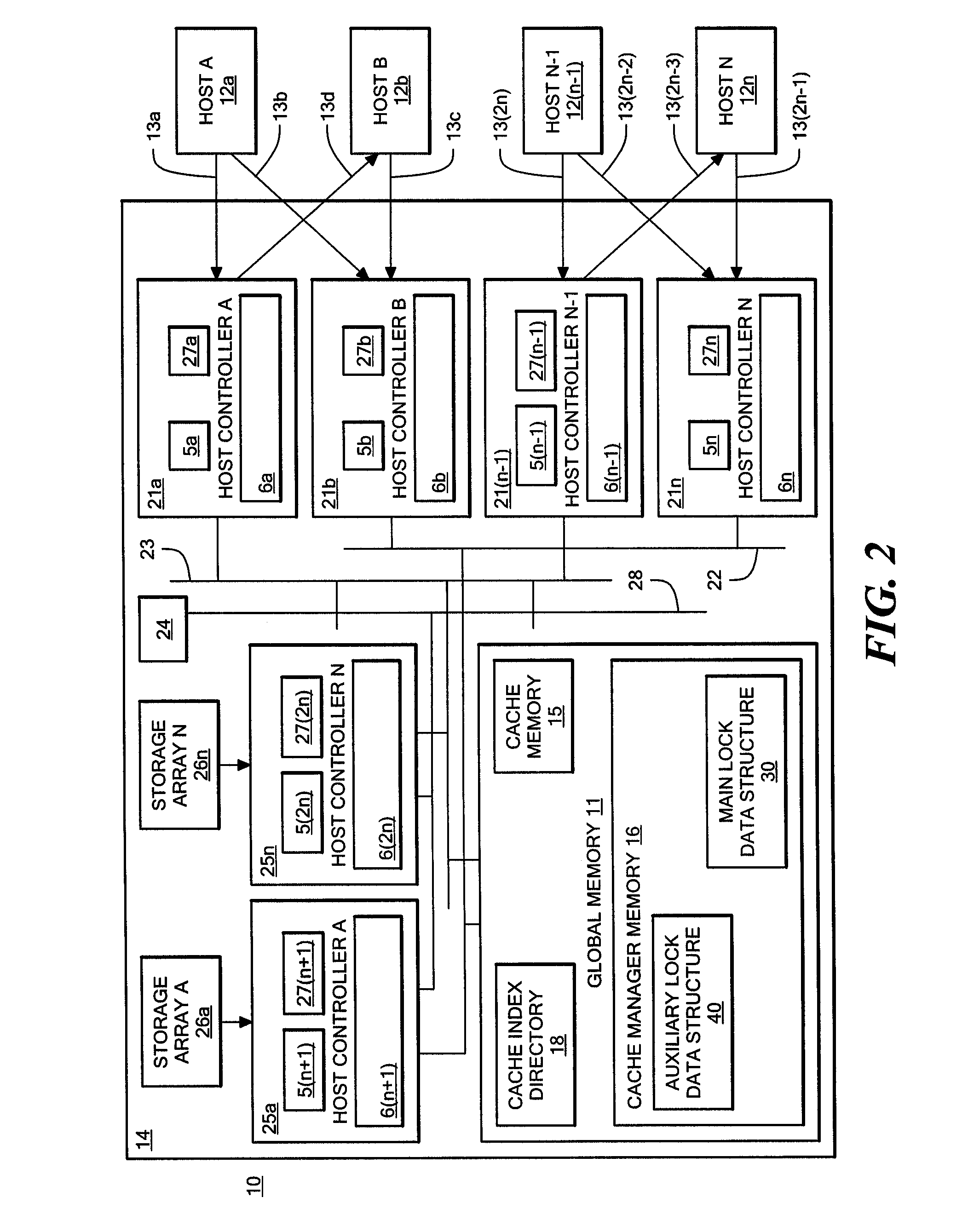

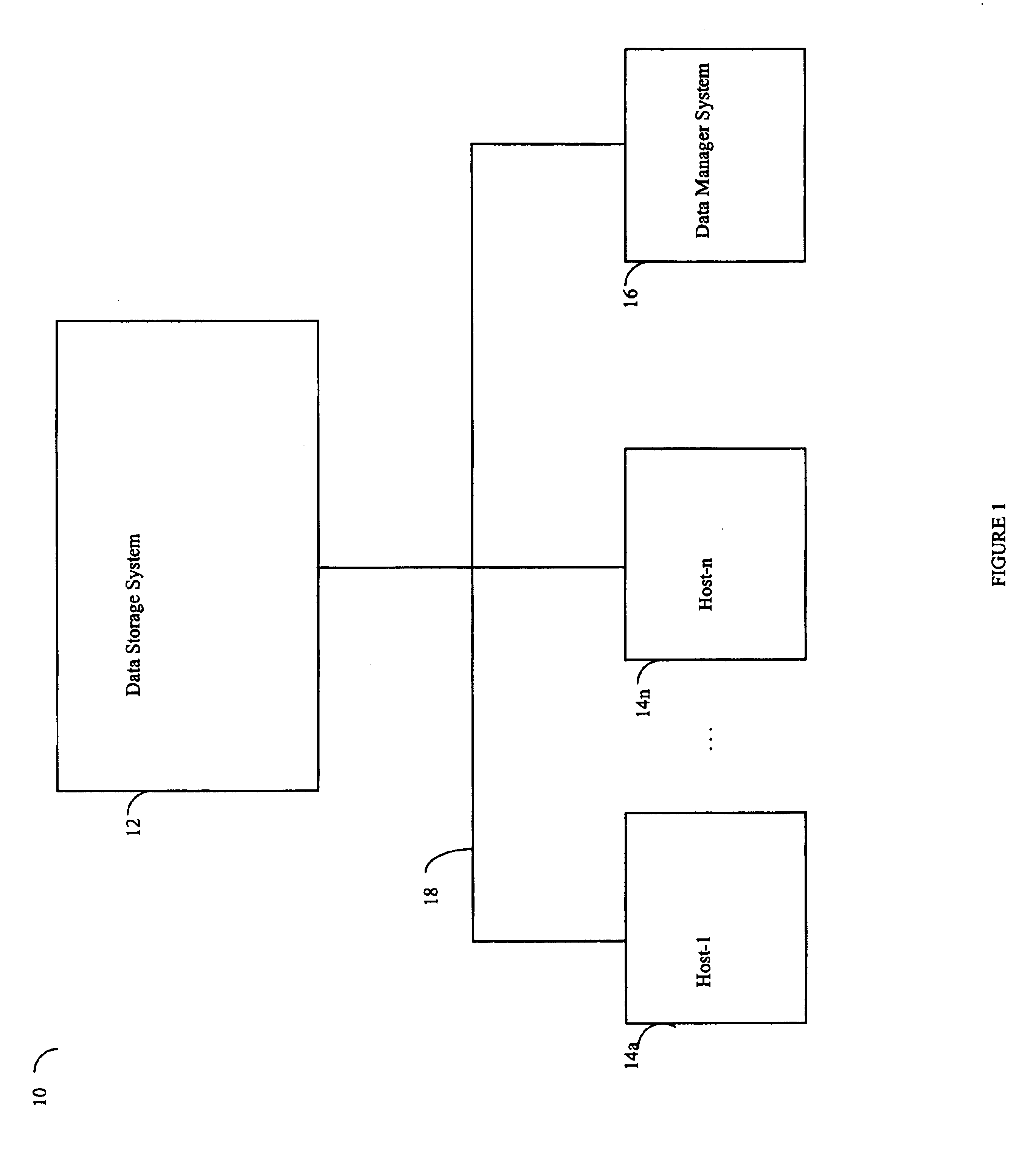

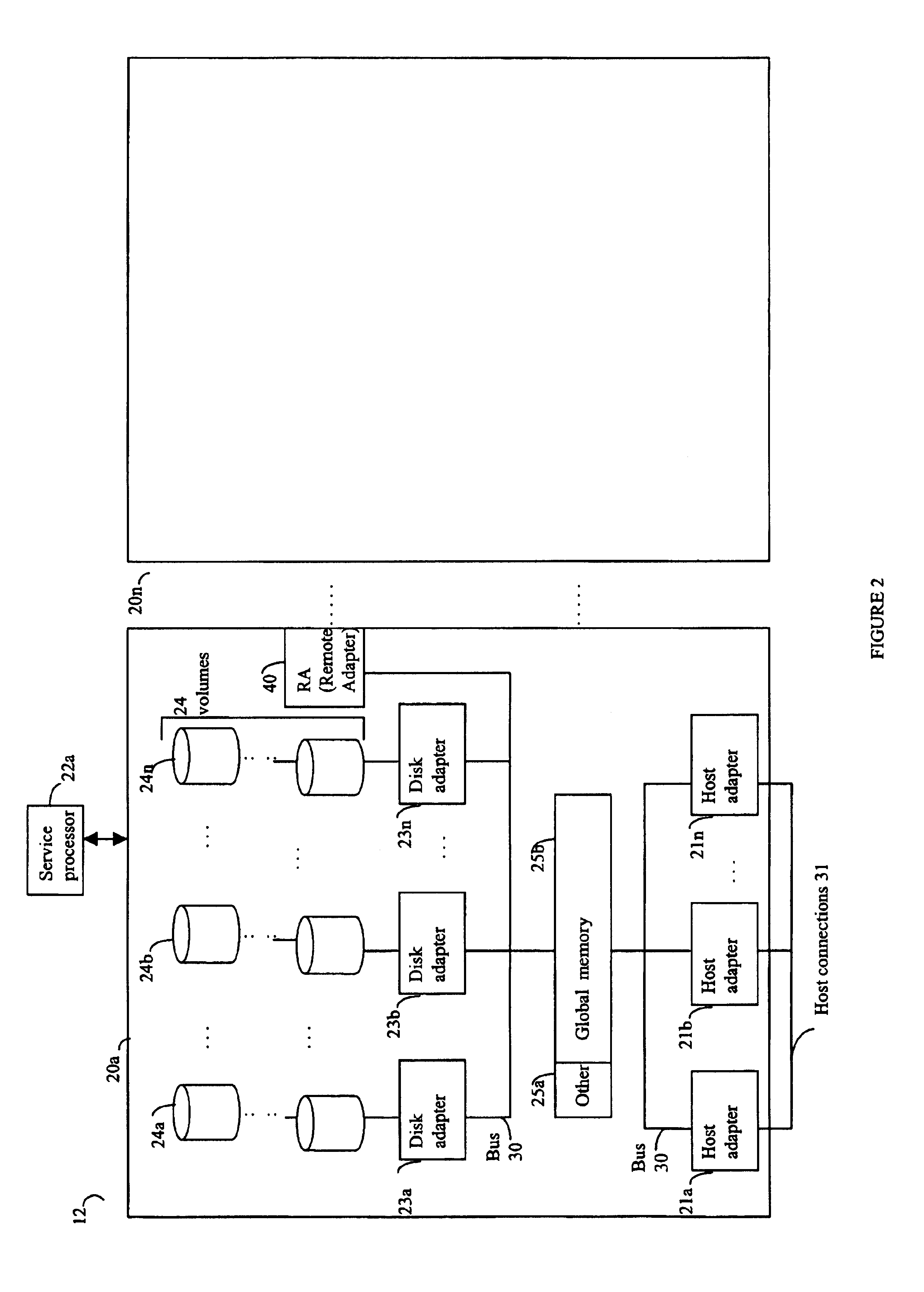

Method and apparatus for controlling exclusive access to a shared resource in a data storage system

Status: Inactive | Patent: US7246187B1 | Tags: Unauthorized memory use protection; Program control; Parallel computing; Shared resource

A method for controlling exclusive access to a resource shared by multiple processors in a data storage system includes providing a system lock procedure to permit a processor to obtain a lock on the shared resource preventing other processors from accessing the shared resource and providing a faked lock procedure to indicate to the system lock procedure that a processor has a lock on the shared resource where such a lock does not exist, and wherein the faked lock procedure prevents another processor from obtaining the lock on the shared resource, but does not prevent other processors from accessing the shared resource. A data storage system according to the invention includes a shared resource, a plurality of processors coupled to the shared resource through a communication channel, and a lock services procedure providing the system lock procedure and the faked lock procedure. In one embodiment, the shared resource is a cache and the system lock procedure permits a processor to lock the entire cache whereas the faked lock procedure is implemented by a processor seeking exclusive access of a cache slot.

Owner:EMC IP HLDG CO LLC

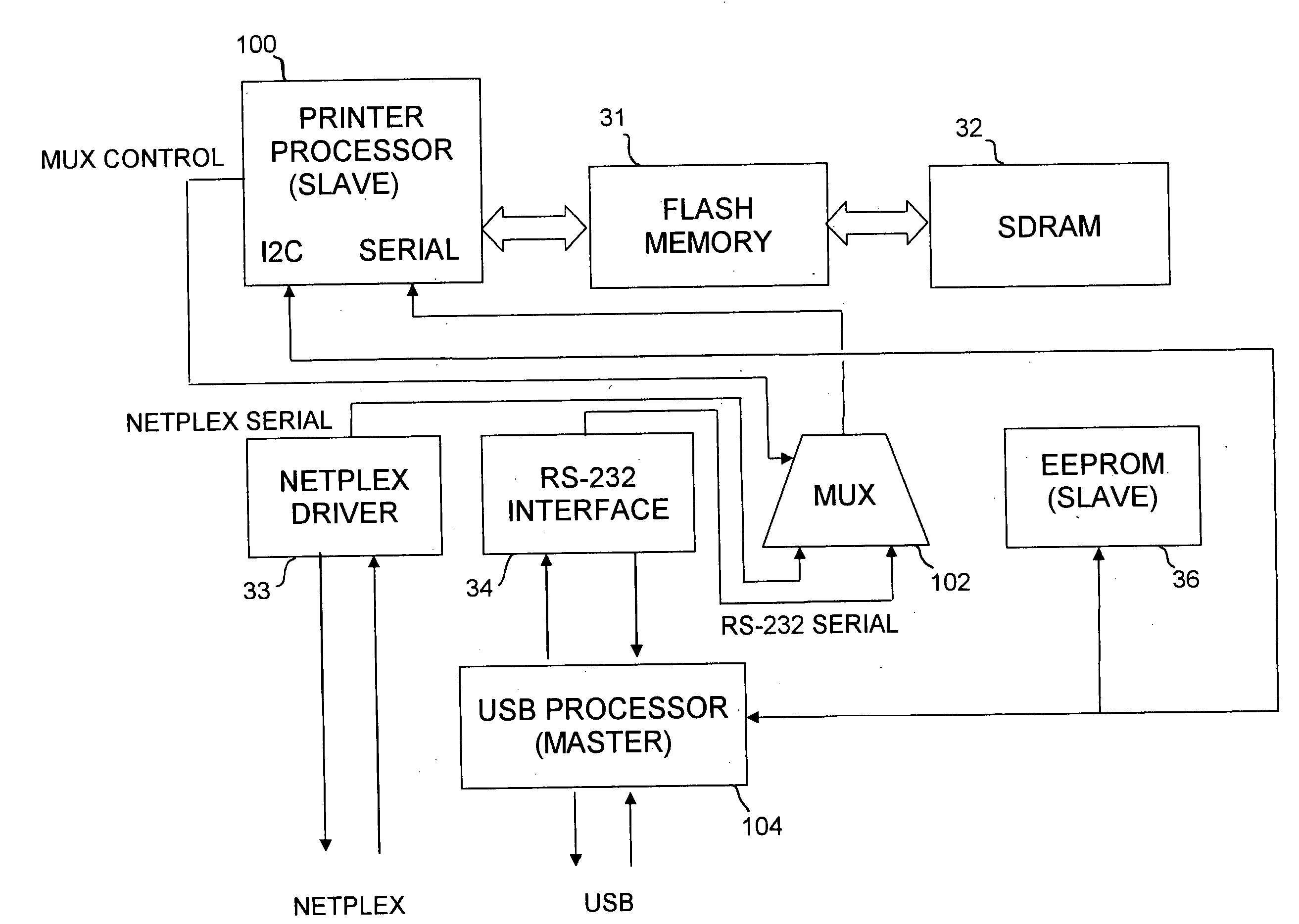

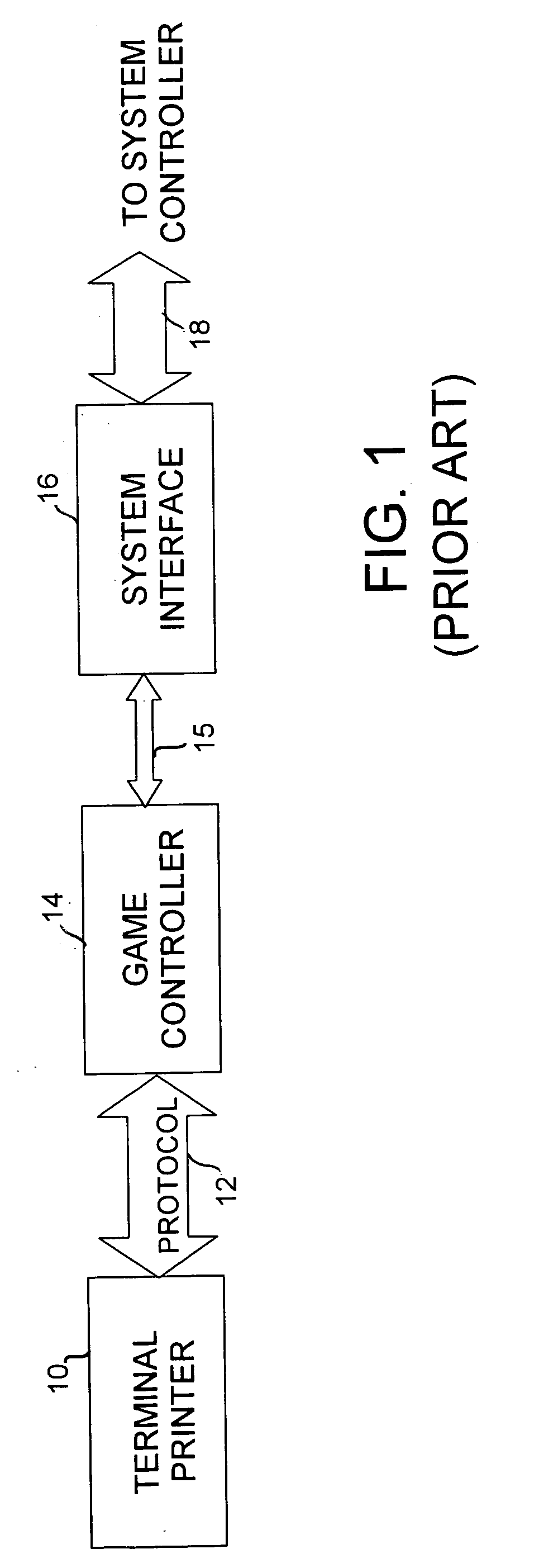

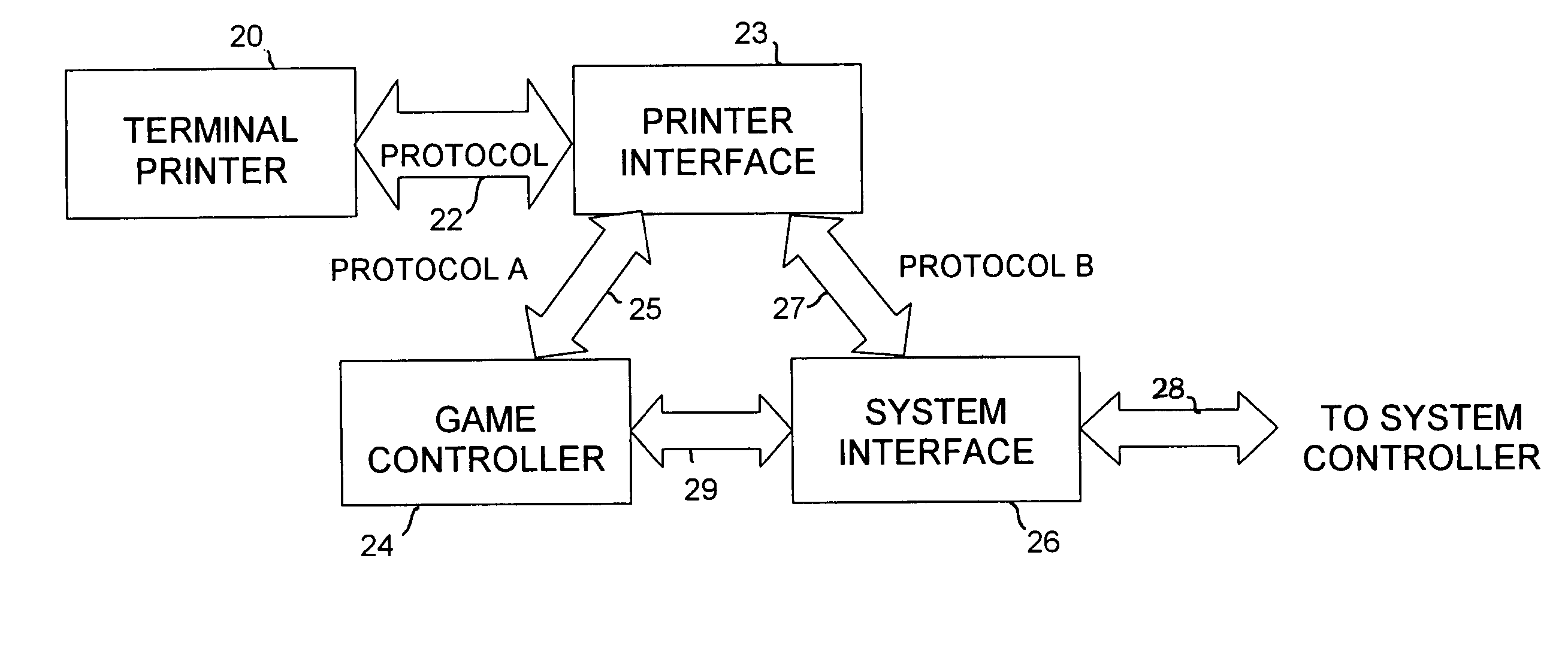

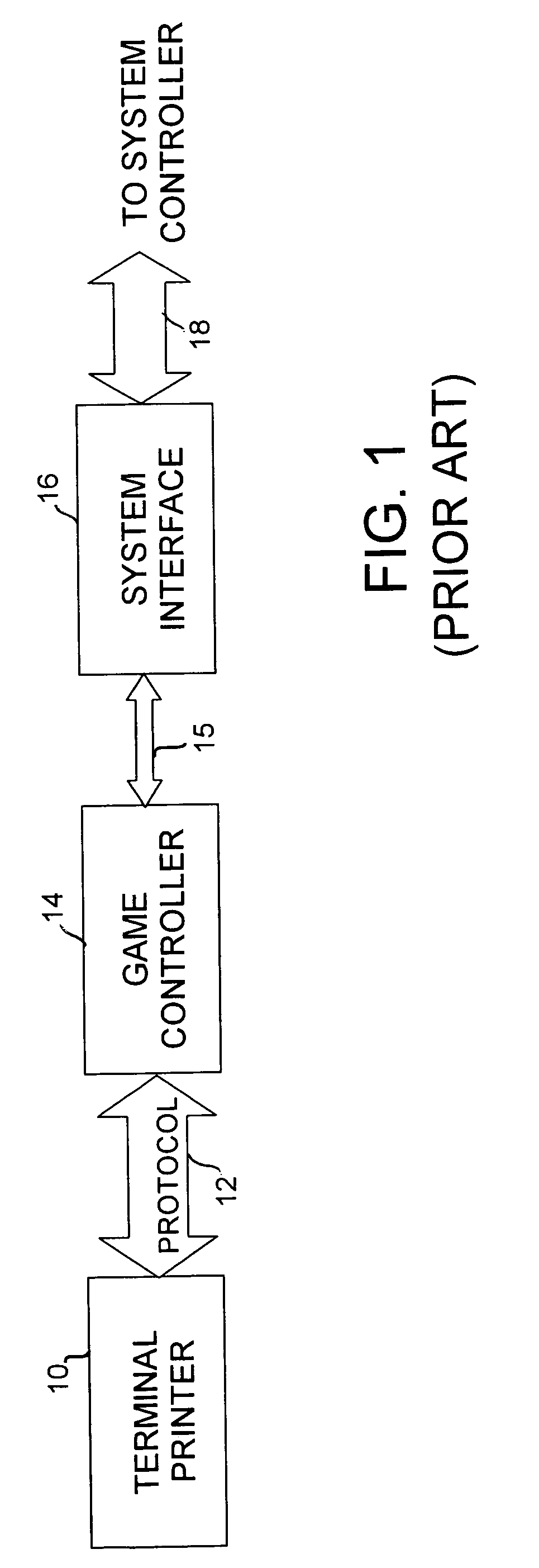

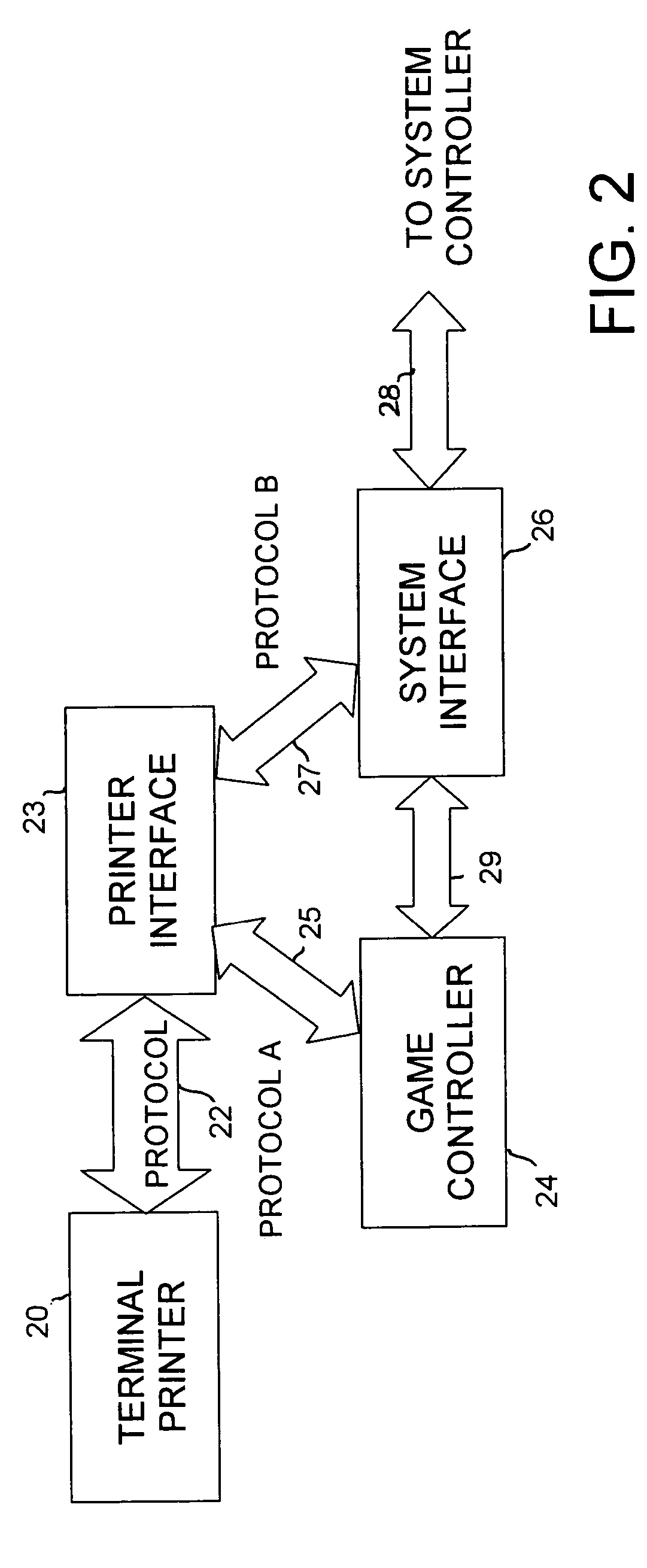

Method and apparatus for controlling a peripheral via different data ports

Status: Inactive | Patent: US20060221386A1 | Tags: Digitally marking record carriers; Digital computer details; Data port; Data format

A peripheral such as a printer is controlled to receive data from different data ports. A first port receives data formatted according to a first protocol. A second port receives data formatted according to a second protocol. A first processor is associated with the peripheral. A second processor is associated with the second port. A switch is adapted to receive (i) first data from the first port and (ii) second data from the second port after processing by the second processor. The switch is controlled in response to a command received via the second port to couple either the first data or second data to the first processor for use in controlling the peripheral. New firmware can be downloaded to the peripheral via the second port in response to the command. The command can be communicated to the first processor via a separate port at the first processor and a memory shared with the second processor.

Owner:TRANSACT TECH

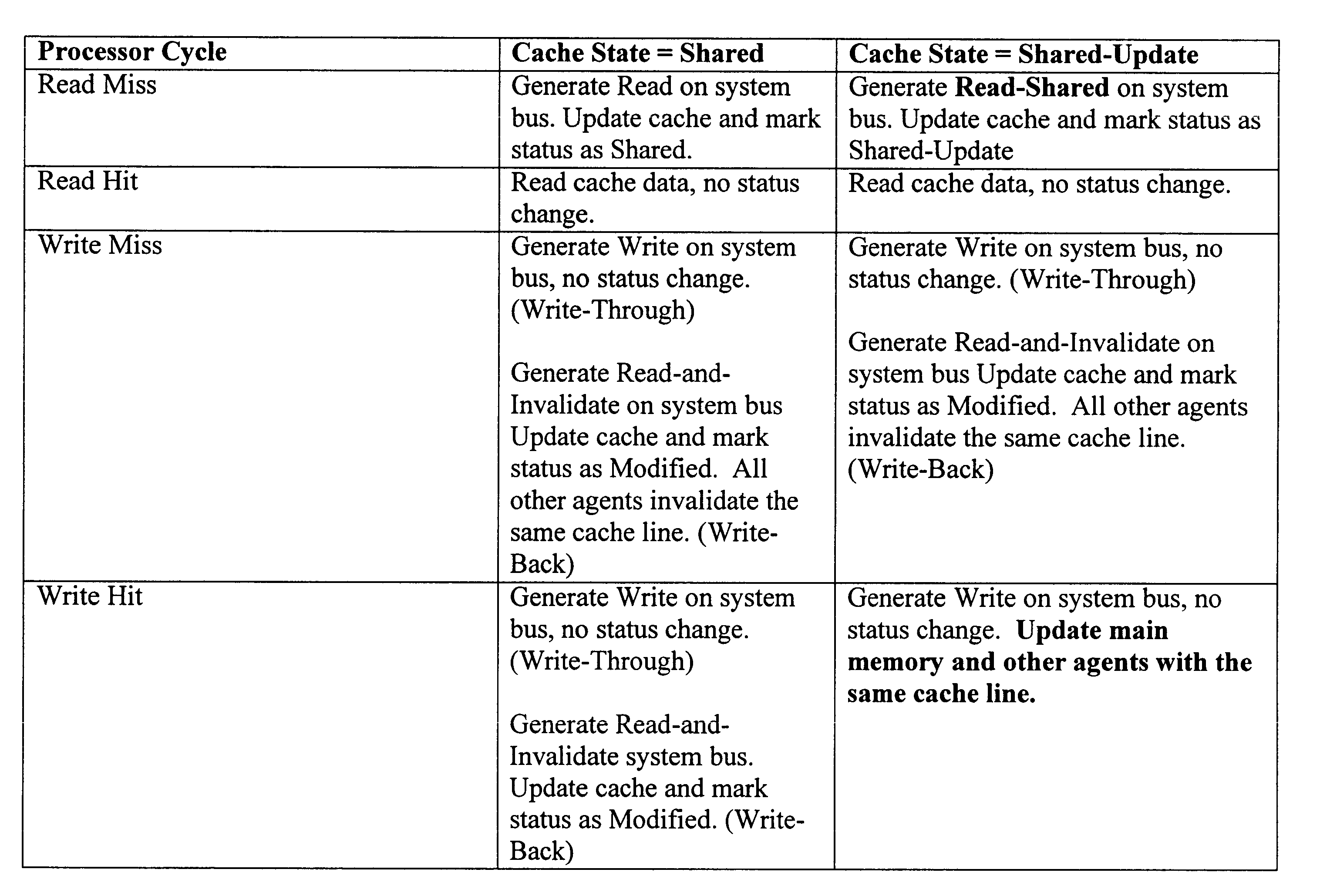

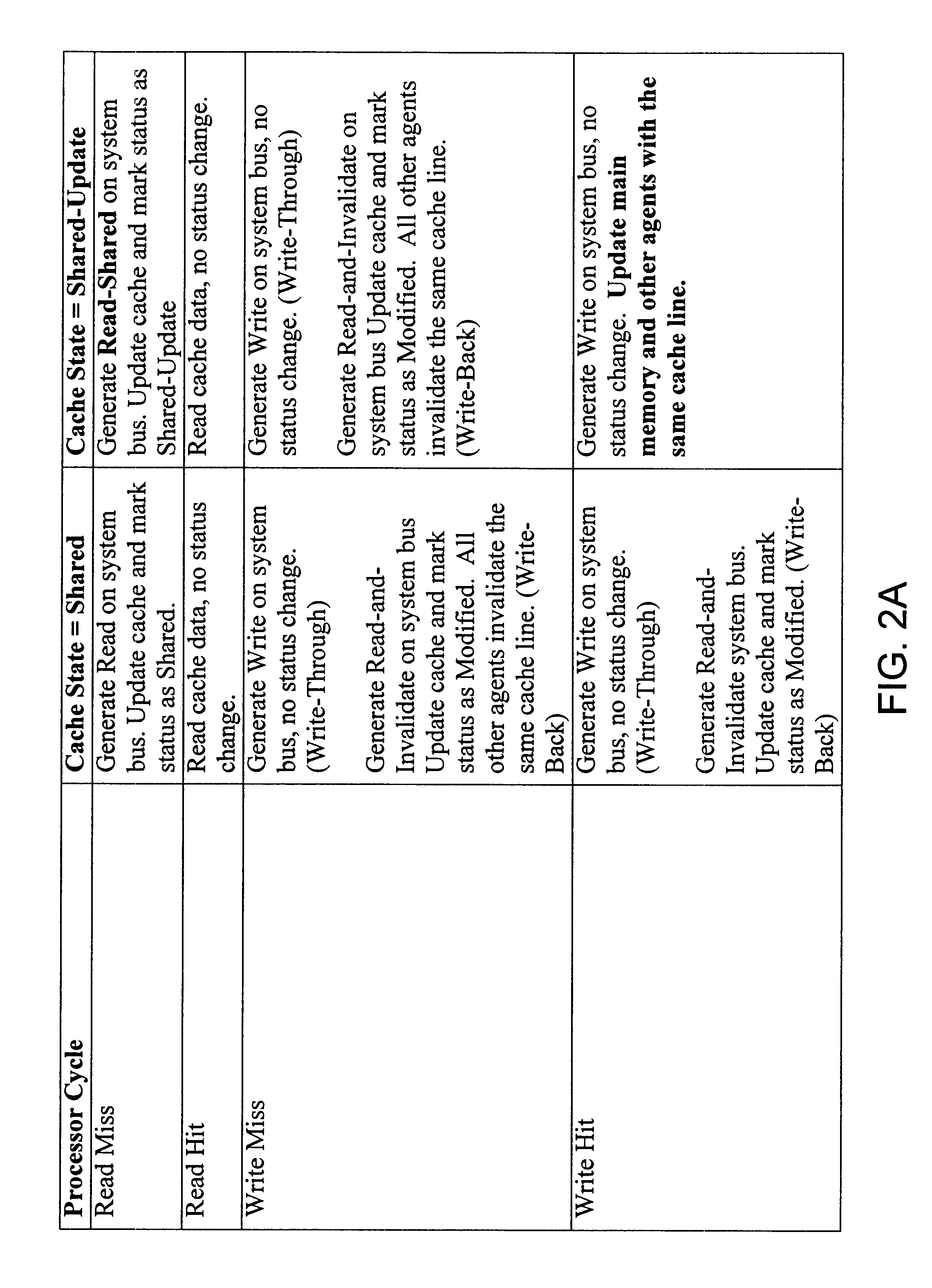

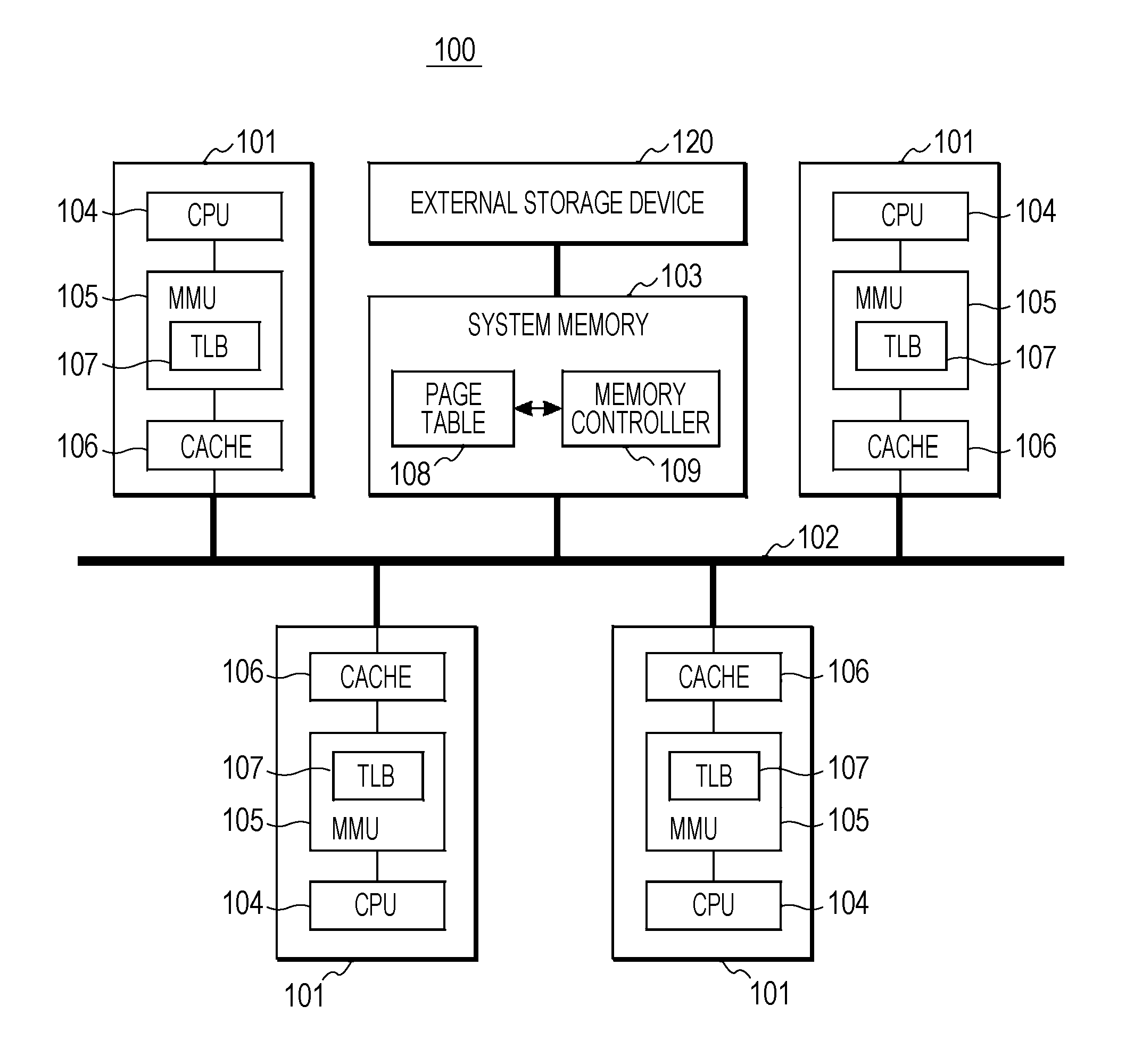

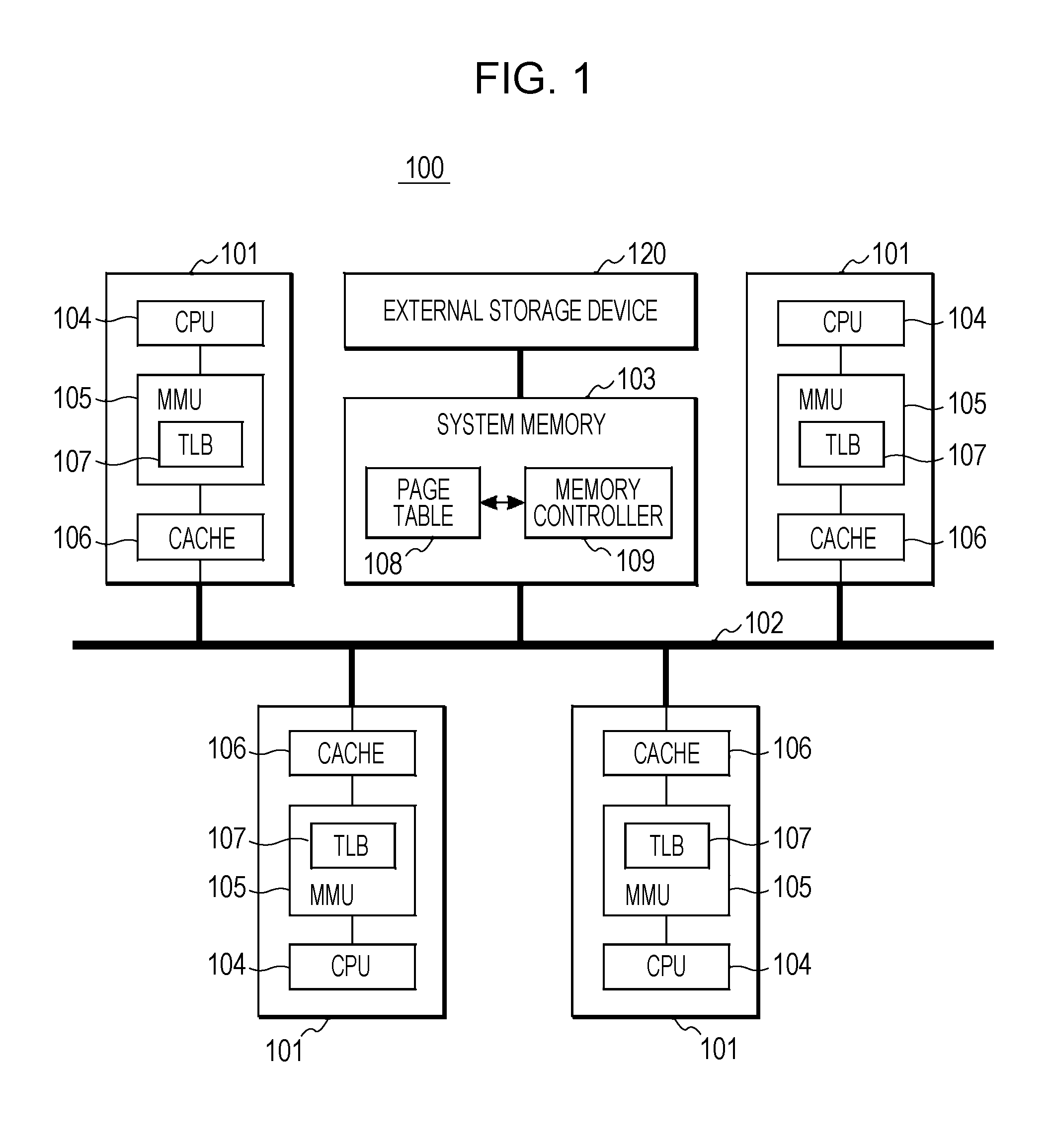

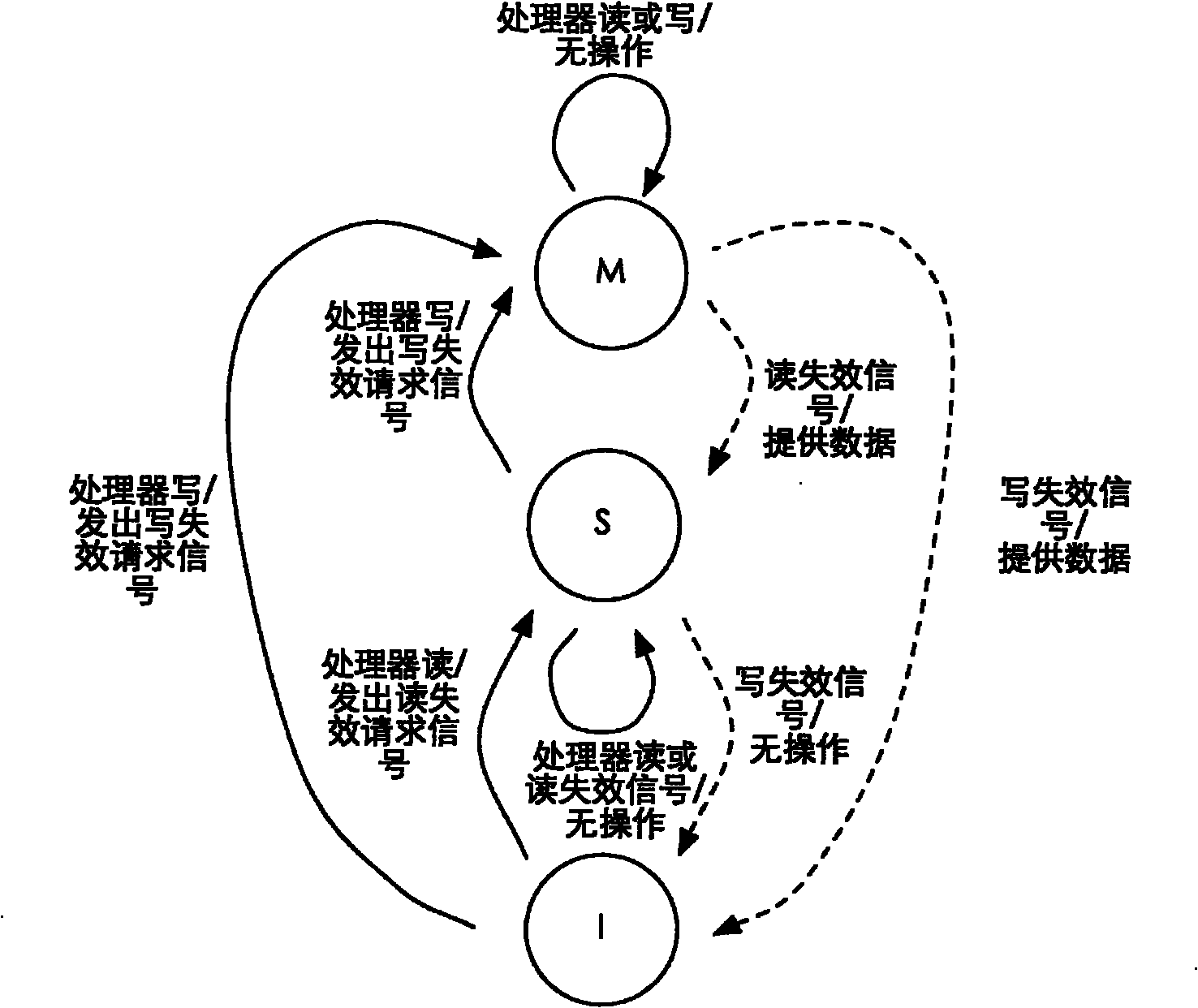

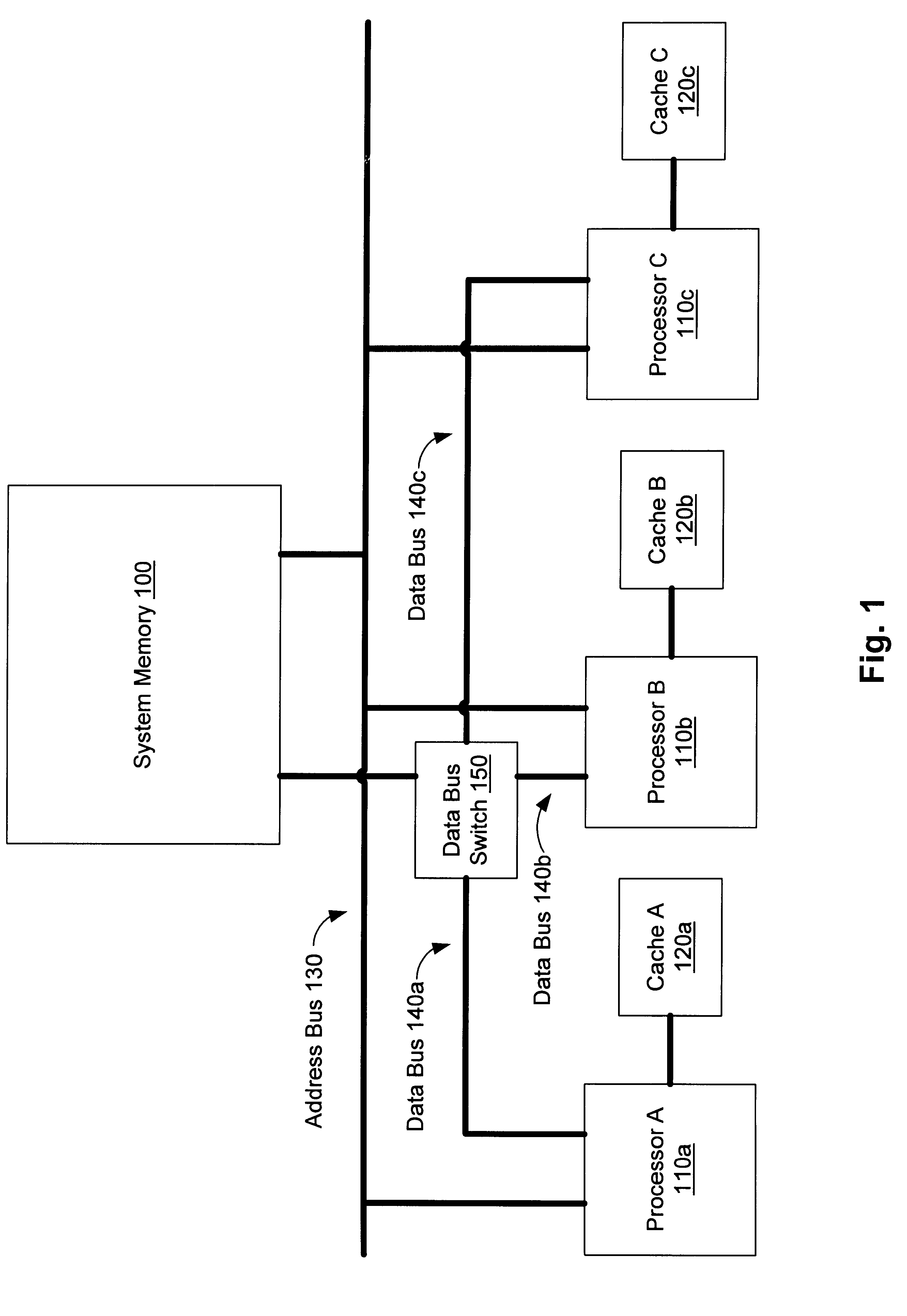

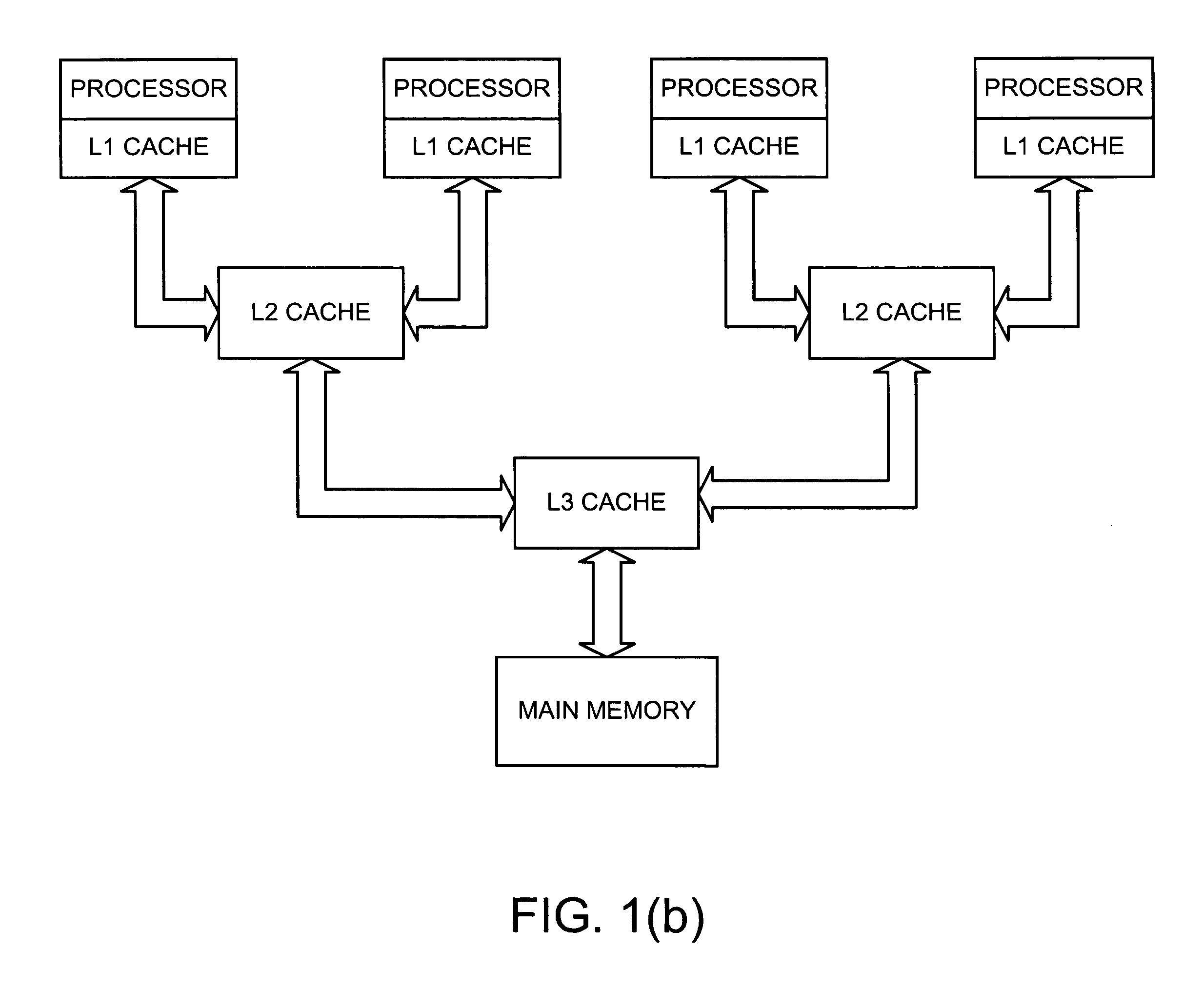

Cache states for multiprocessor cache coherency protocols

Cache states for cache coherency protocols for a multiprocessor system are described. Some embodiments described include a multiprocessor computer system comprising a plurality of cache memories to store a plurality of cache lines and state information for each one of the cache lines. The state information comprises data representing a first state selected from the group consisting of a Shared-Update state, a Shared-Respond state and an Exclusive-Respond state. The multiprocessor computer system further comprises a plurality of processors with at least one cache memory associated with each one of the plurality of processors. The multiprocessor computer system further comprises a system memory shared by the plurality of processors, and at least one bus interconnecting the system memory with the plurality of cache memories and the multiple processors. In some embodiments, one or more of the states (a Shared-Update state, a Shared-Respond state or an Exclusive-Respond state) are implemented in conjunction with the states of the MESI protocol.

Owner:SONY CORP OF AMERICA

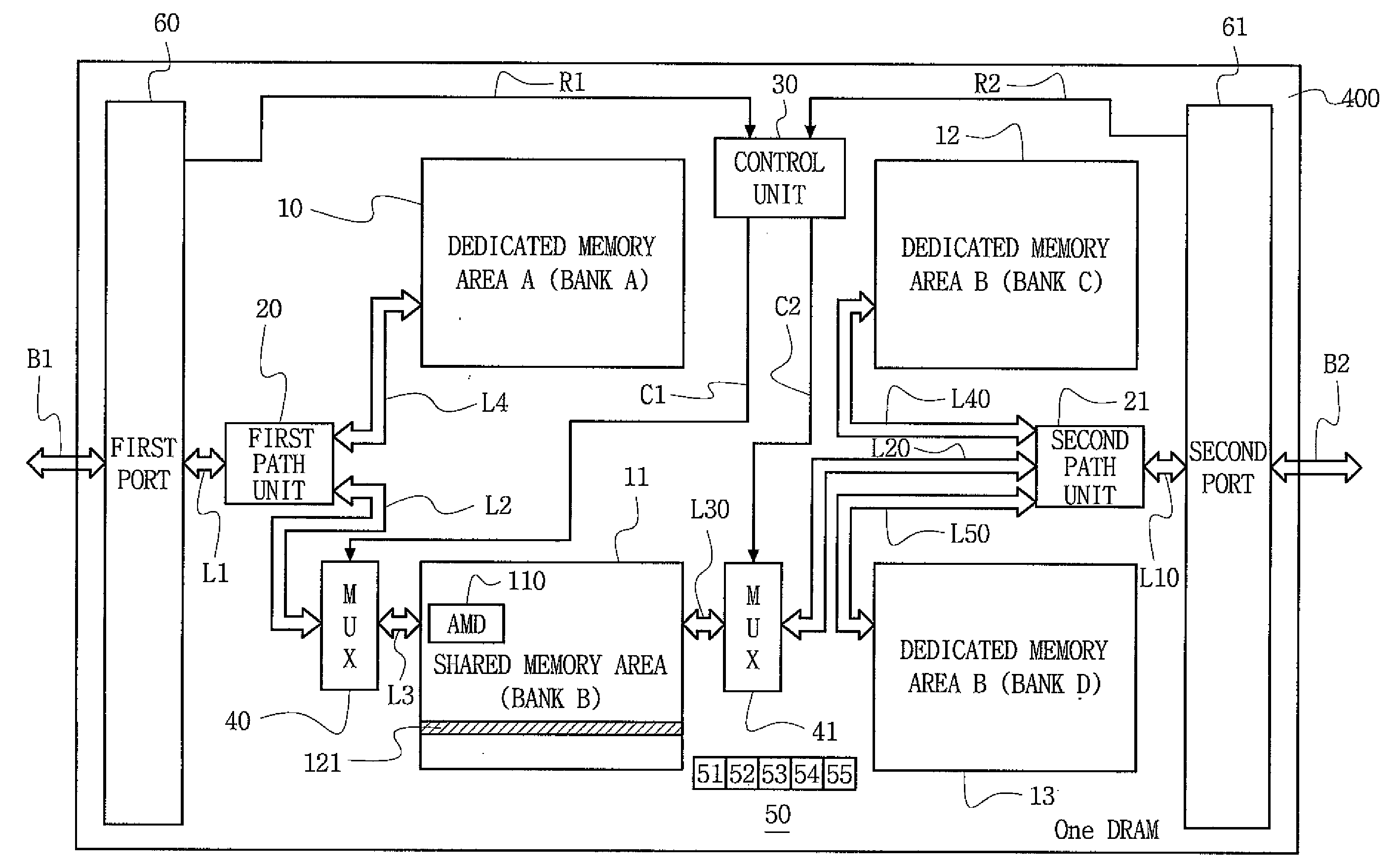

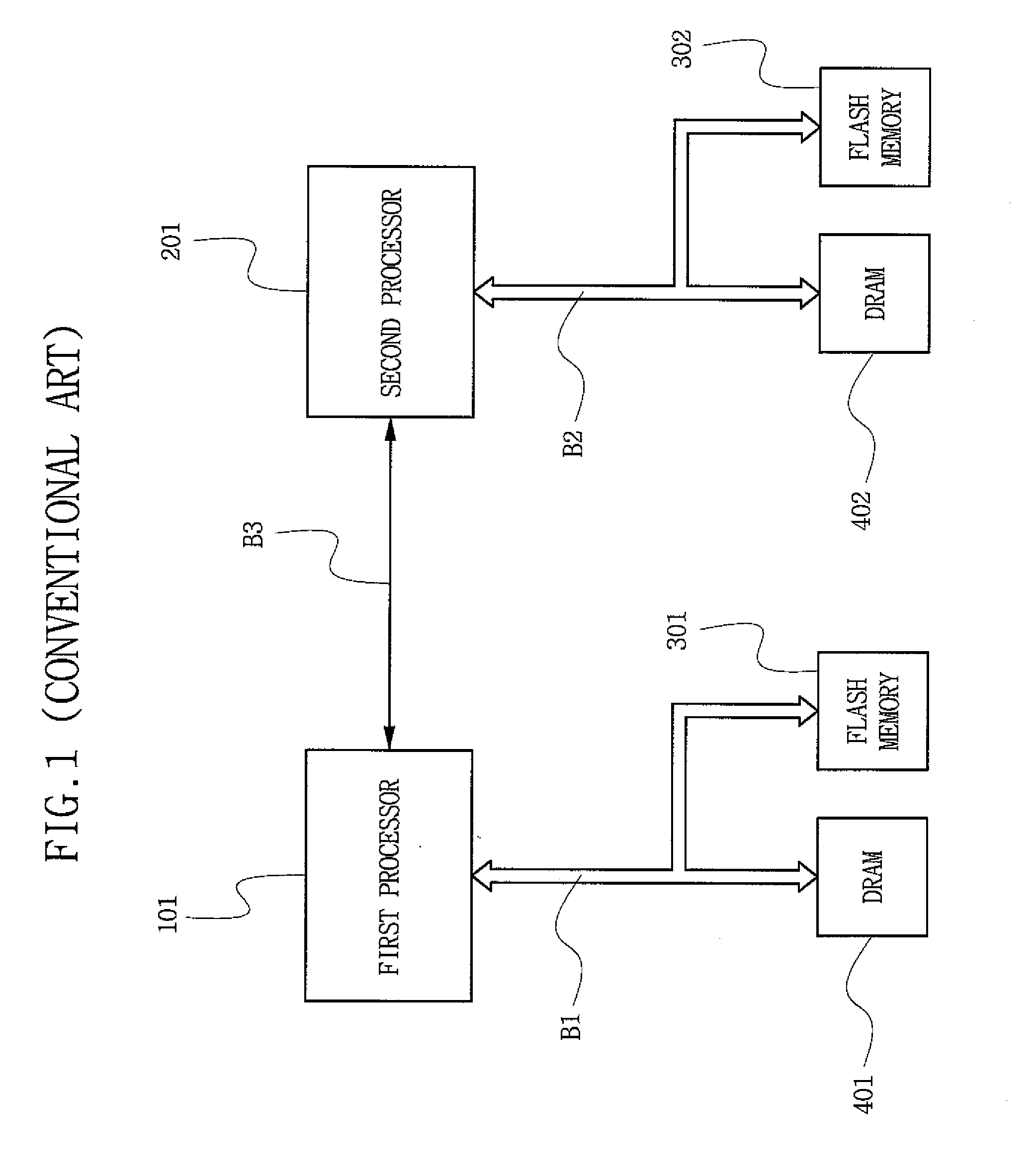

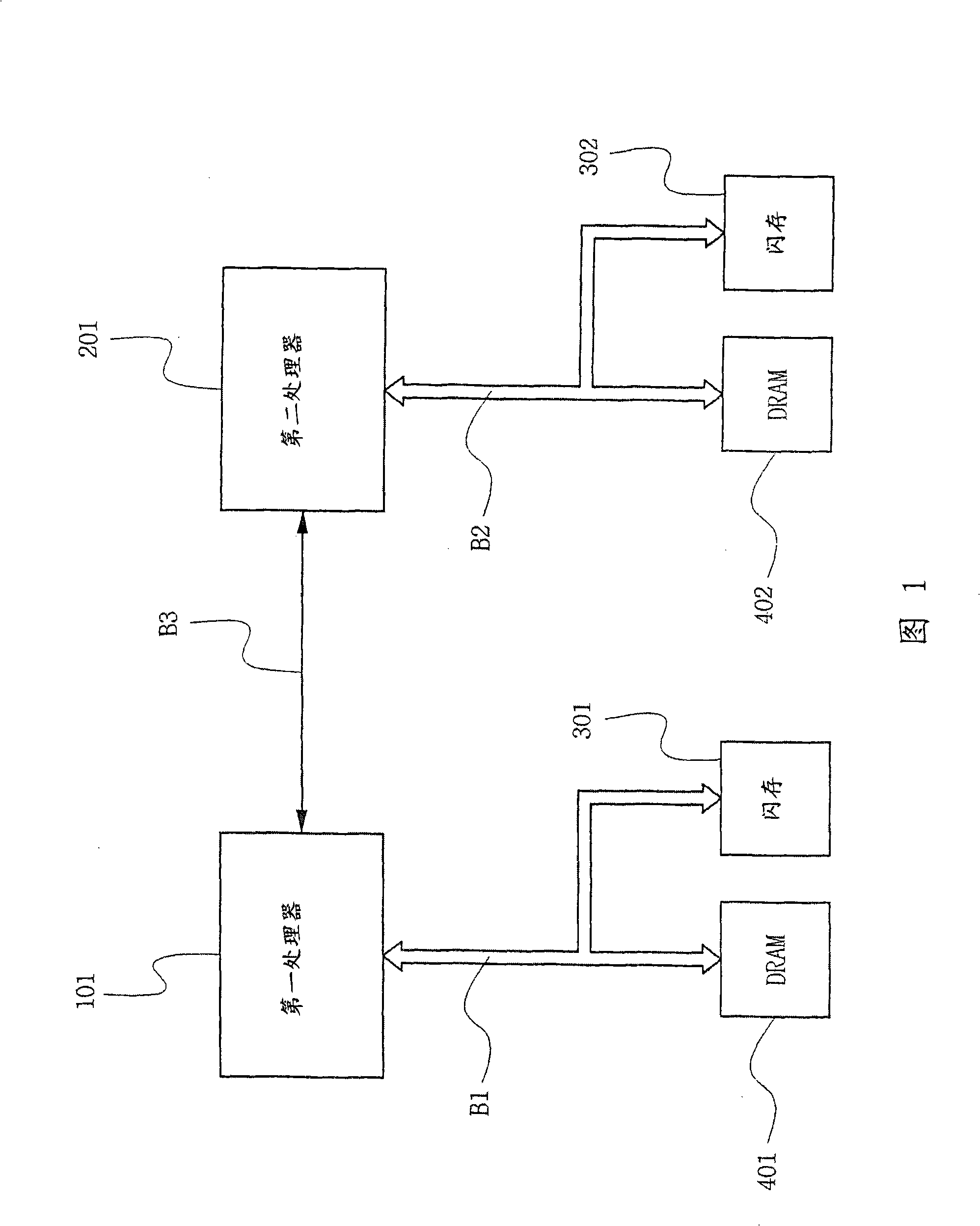

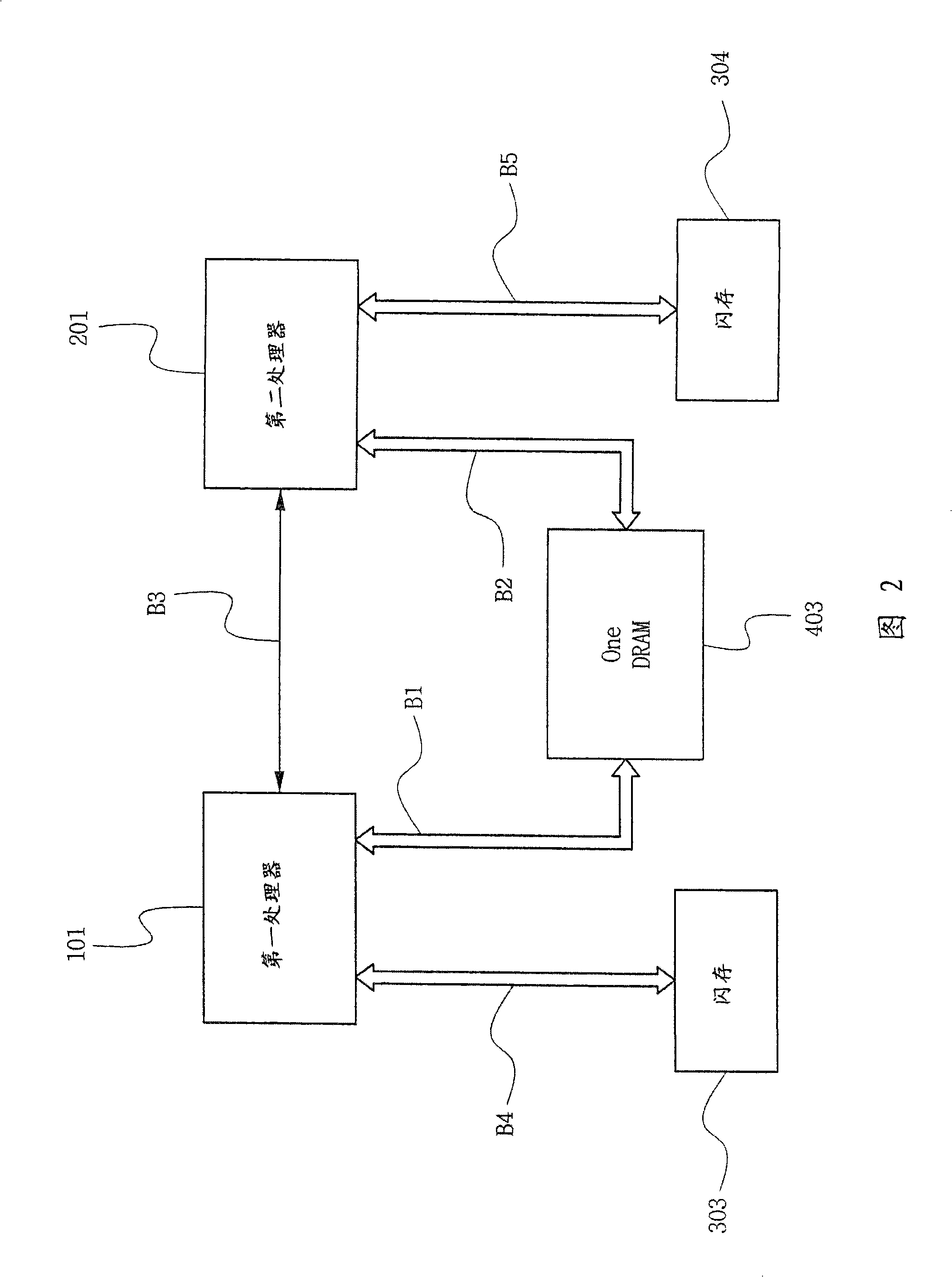

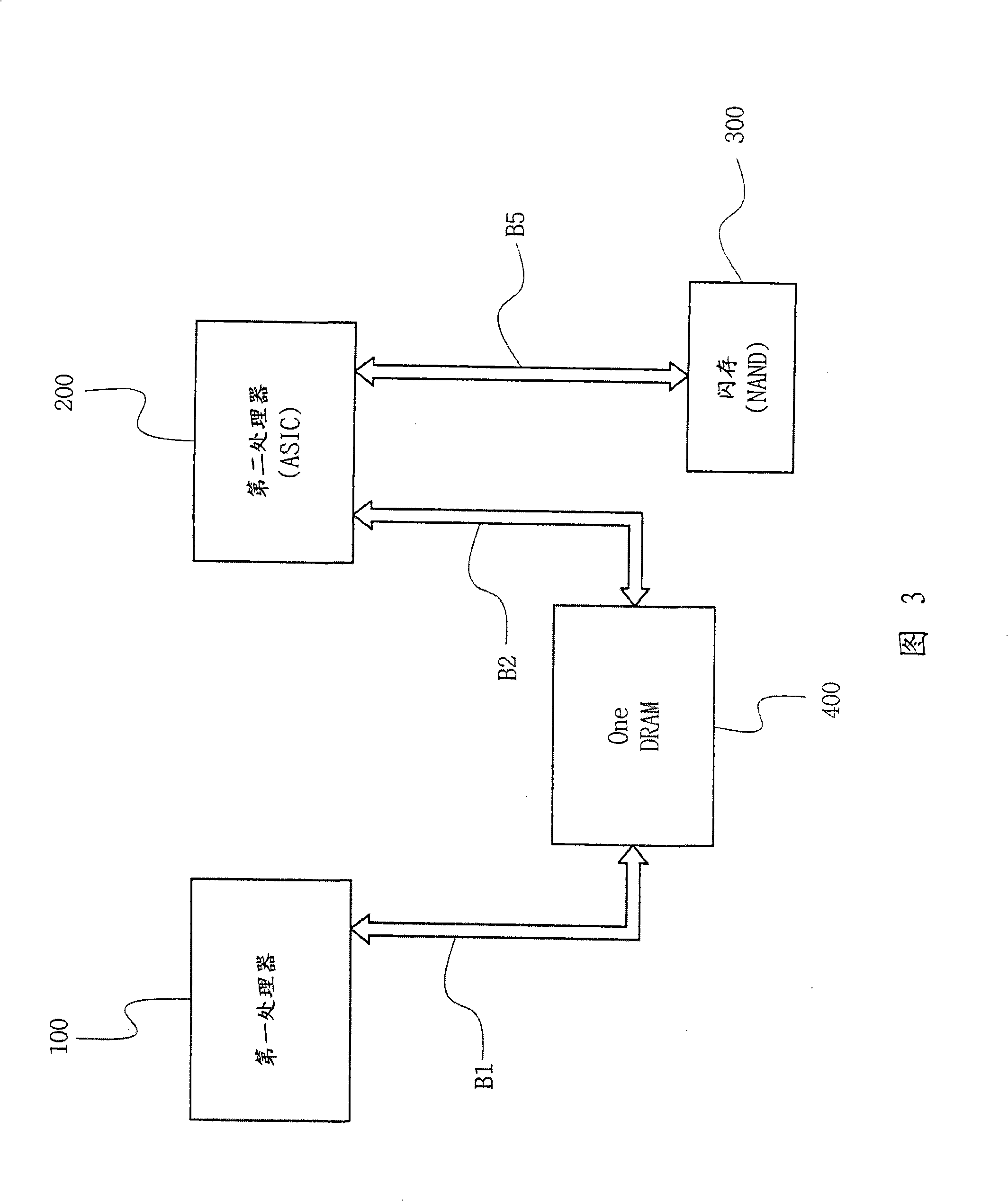

Multipath accessible semiconductor memory device

Status: Inactive | Patent: US20080256305A1 | Tags: Interface function; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Processor register; Multi processor

A multipath accessible semiconductor memory device provides an interfacing function between multiple processors which indirectly controls a flash memory. The multipath accessible semiconductor memory device comprises a shared memory area, an internal register and a control unit. The shared memory area is accessed by first and second processors through different ports and is allocated to a portion of a memory cell array. The internal register is located outside the memory cell array and is accessed by the first and second processors. The control unit provides storage of address map data associated with the flash memory outside the shared memory area so that the first processor indirectly accesses the flash memory by using the shared memory area and the internal register even when only the second processor is coupled to the flash memory. The control unit also controls a connection path between the shared memory area and one of the first and second processors. The processors share the flash memory and a multiprocessor system is provided that has a compact size, thereby substantially reducing the cost of memory utilized within the multiprocessor system.

Owner:SAMSUNG ELECTRONICS CO LTD

CPU and graphics unit with shared cache

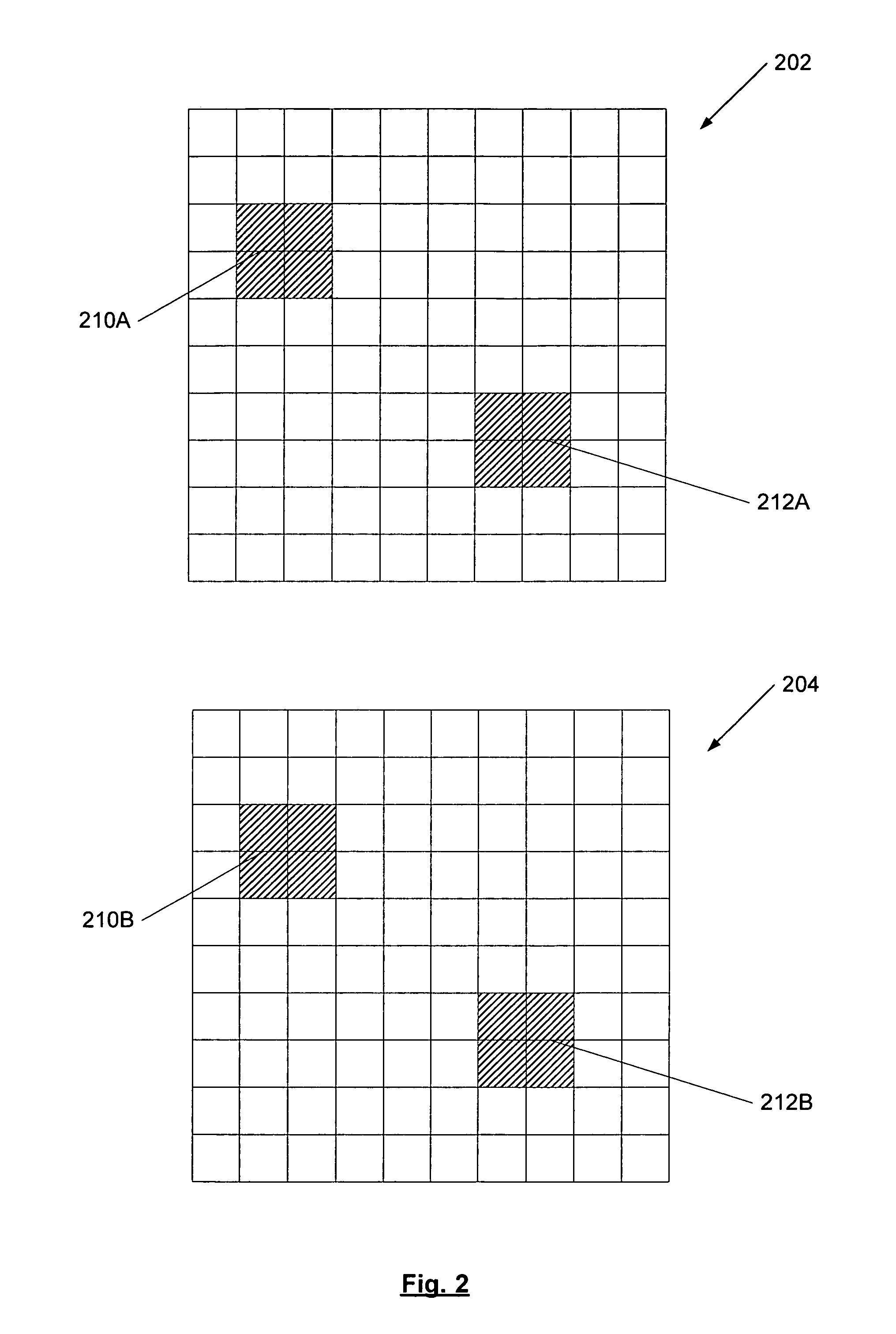

Status: Active | Patent: US7023445B1 | Tags: Memory addressing/allocation/relocation; Cathode-ray tube indicators; Graphics; Processor sharing

A method and mechanism for managing graphics data. A graphics unit is coupled to share a cache and a memory with a processor. The graphics unit is configured to partition rendered images into a plurality of subset areas. During the rendering of an image, data corresponding to subset areas of an image which require a relatively high number of accesses is deemed cacheable for a subsequent rendering. During a subsequent image rendering, if the graphics unit is required to evict data from a local buffer, the evicted data is only stored in the shared cache if a prior rendering indicated that the corresponding data is cacheable.

Owner:ADVANCED MICRO DEVICES INC

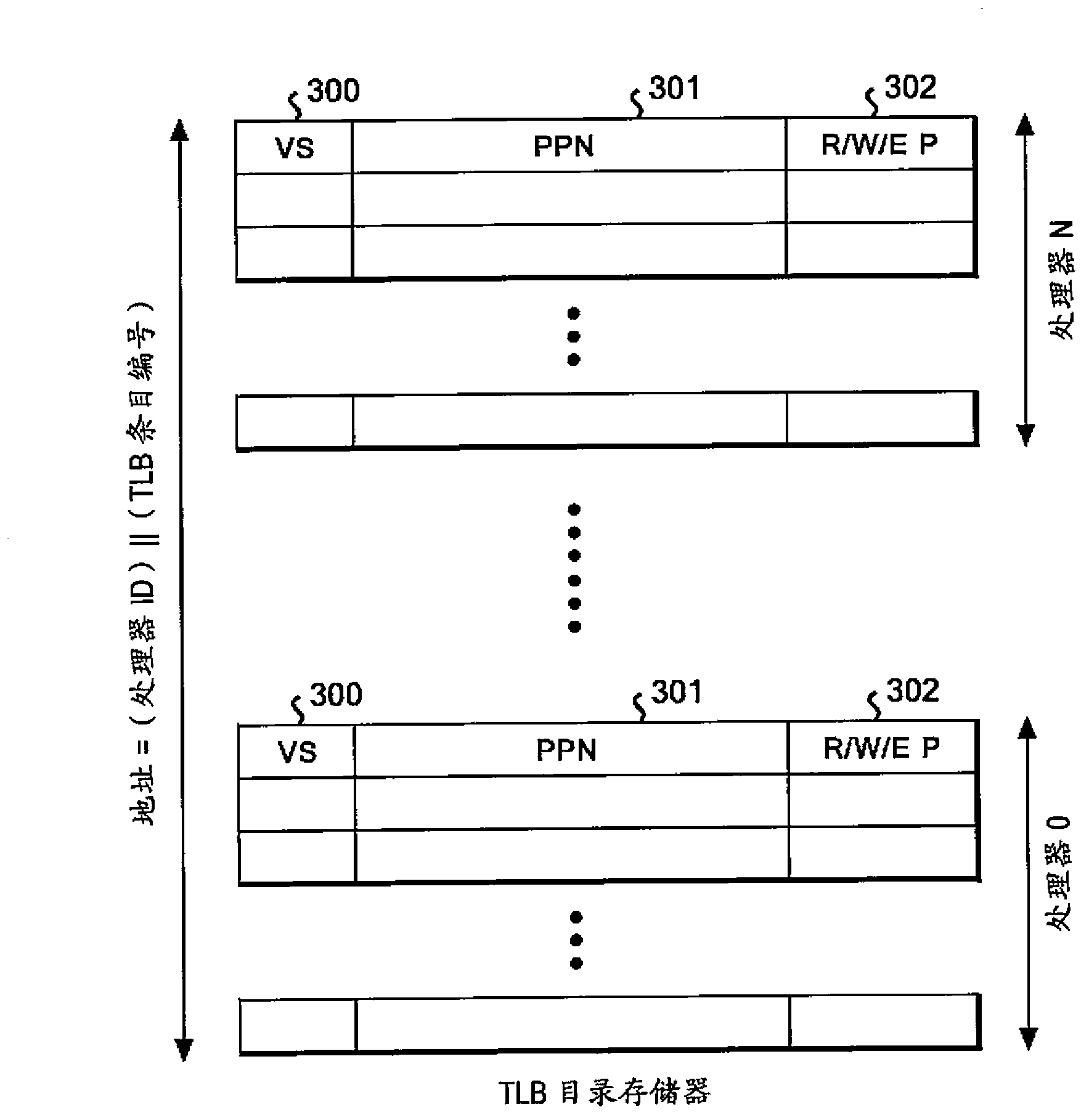

Reducing broadcasts in multiprocessors

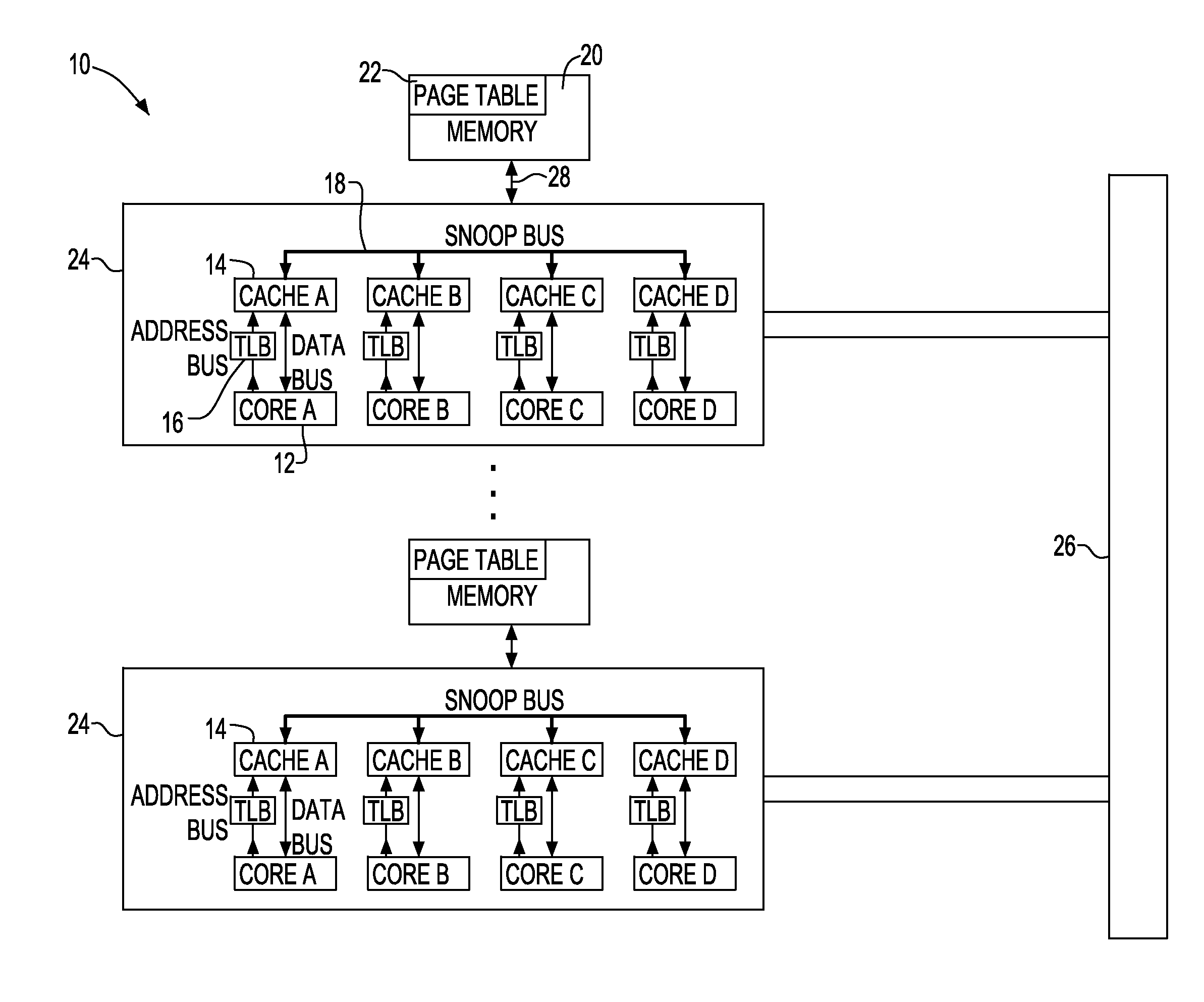

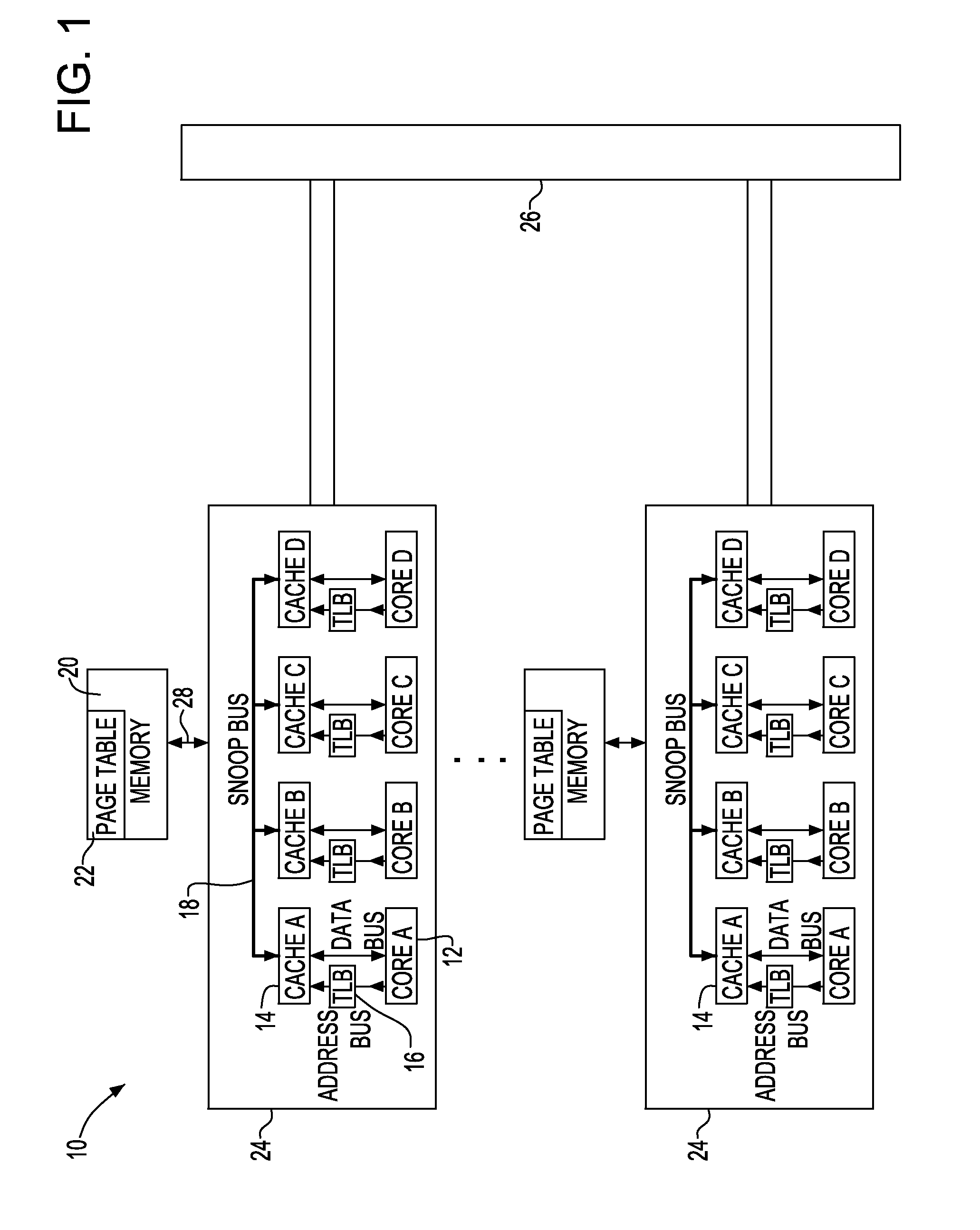

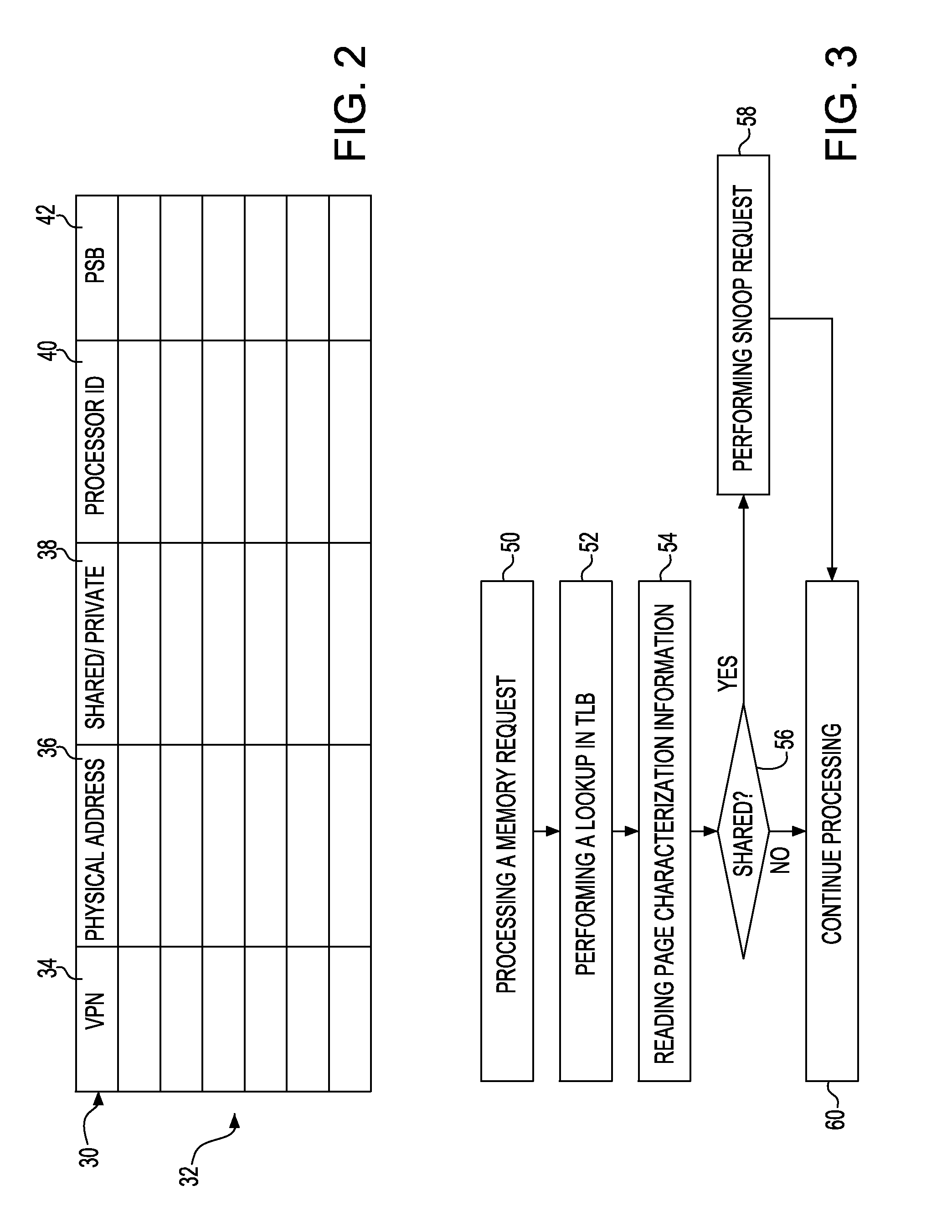

Status: Active | Patent: US20110055515A1 | Tags: Reduce broadcast; Relatively large bandwidth; Energy efficient ICT; Memory addressing/allocation/relocation; Virtual memory; Multi processor

Disclosed is an apparatus to reduce broadcasts in multiprocessors including a plurality of processors; a plurality of memory caches associated with the processors; a plurality of translation lookaside buffers (TLBs) associated with the processors; and a physical memory shared with the processors memory caches and TLBs; wherein each TLB includes a plurality of entries for translation of a page of addresses from virtual memory to physical memory, each TLB entry having page characterization information indicating whether the page is private to one processor or shared with more than one processor. Also disclosed is a computer program product and method to reduce broadcasts in multiprocessors.

Owner:IBM CORP
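
The page characterization idea in the abstract above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patented design: the `Tlb` and `TlbEntry` classes, the 4 KiB `PAGE_SIZE`, and the `needs_broadcast` helper are all hypothetical names introduced here.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # assumed page size, for illustration only


@dataclass
class TlbEntry:
    physical_page: int
    shared: bool  # characterization bit: False means private to one processor


class Tlb:
    def __init__(self):
        self.entries = {}  # virtual page number -> TlbEntry

    def insert(self, virtual_page, physical_page, shared):
        self.entries[virtual_page] = TlbEntry(physical_page, shared)

    def translate(self, vaddr):
        """Return (physical address, shared flag); KeyError models a TLB miss."""
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        entry = self.entries[vpn]
        return entry.physical_page * PAGE_SIZE + offset, entry.shared


def needs_broadcast(tlb, vaddr):
    """A coherence broadcast is only worth sending for pages marked shared."""
    _, shared = tlb.translate(vaddr)
    return shared
```

In this sketch, an access to a private page skips the broadcast entirely, which is the source of the bandwidth saving the abstract describes.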

System and method for encrypting data using a plurality of processors

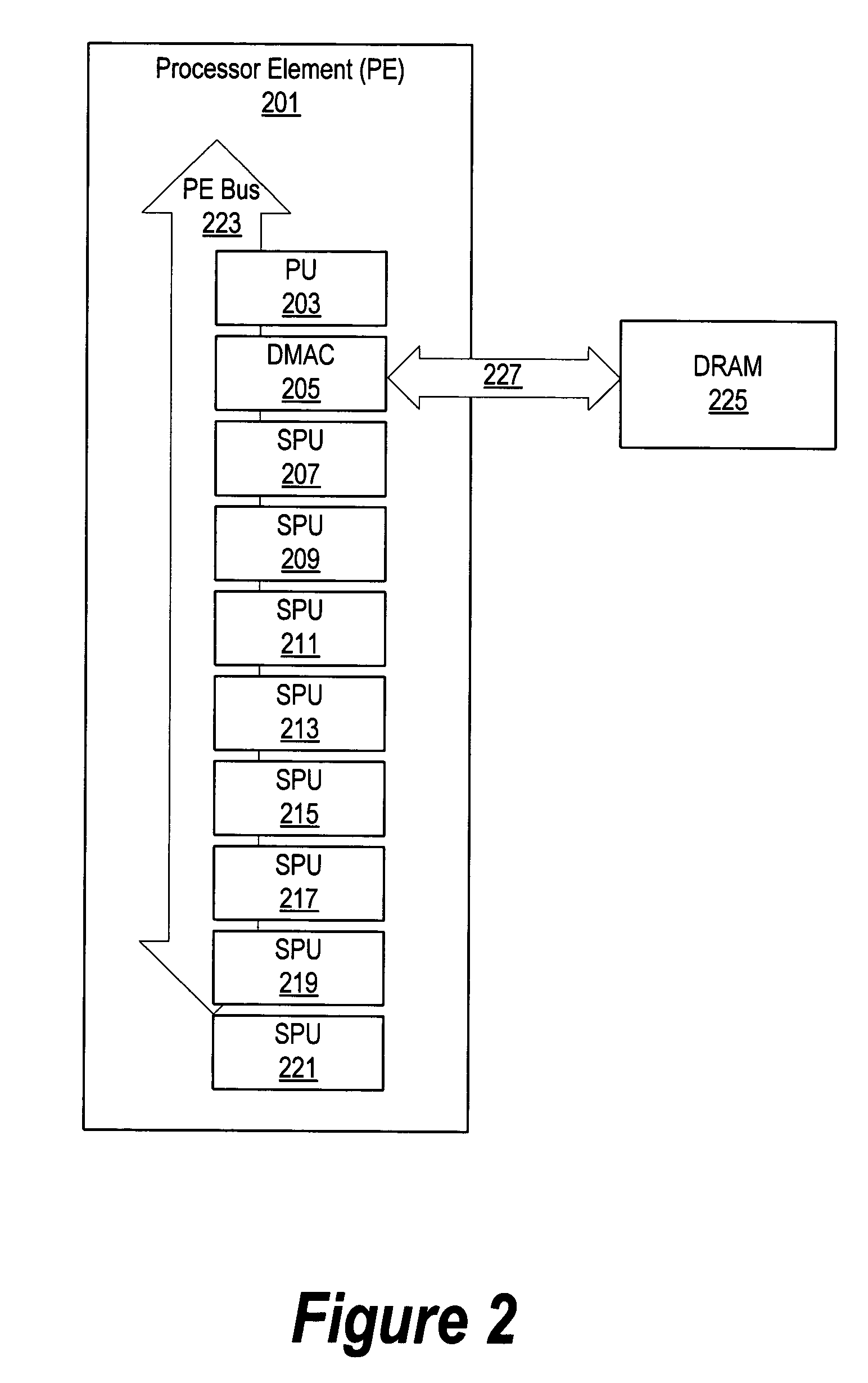

Status: Inactive | Patent: US20050071651A1 | Tags: Key distribution for secure communication; Digital data processing details; Encrypted function; Memory map

A system and method are provided to dedicate one or more processors in a multiprocessing system to performing encryption functions. When the system initializes, one of the synergistic processing unit (SPU) processors is configured to run in a secure mode wherein the local memory included with the dedicated SPU is not shared with the other processors. One or more encryption keys are stored in the local memory during initialization. During initialization, the SPUs receive nonvolatile data, such as the encryption keys, from nonvolatile register space. This information is made available to the SPU during initialization, before the SPU's local storage might be mapped to a common memory map. In one embodiment, the mapping is performed by another processing unit (PU) that maps the shared SPUs' local storage to a common memory map.

Owner:IBM CORP +1

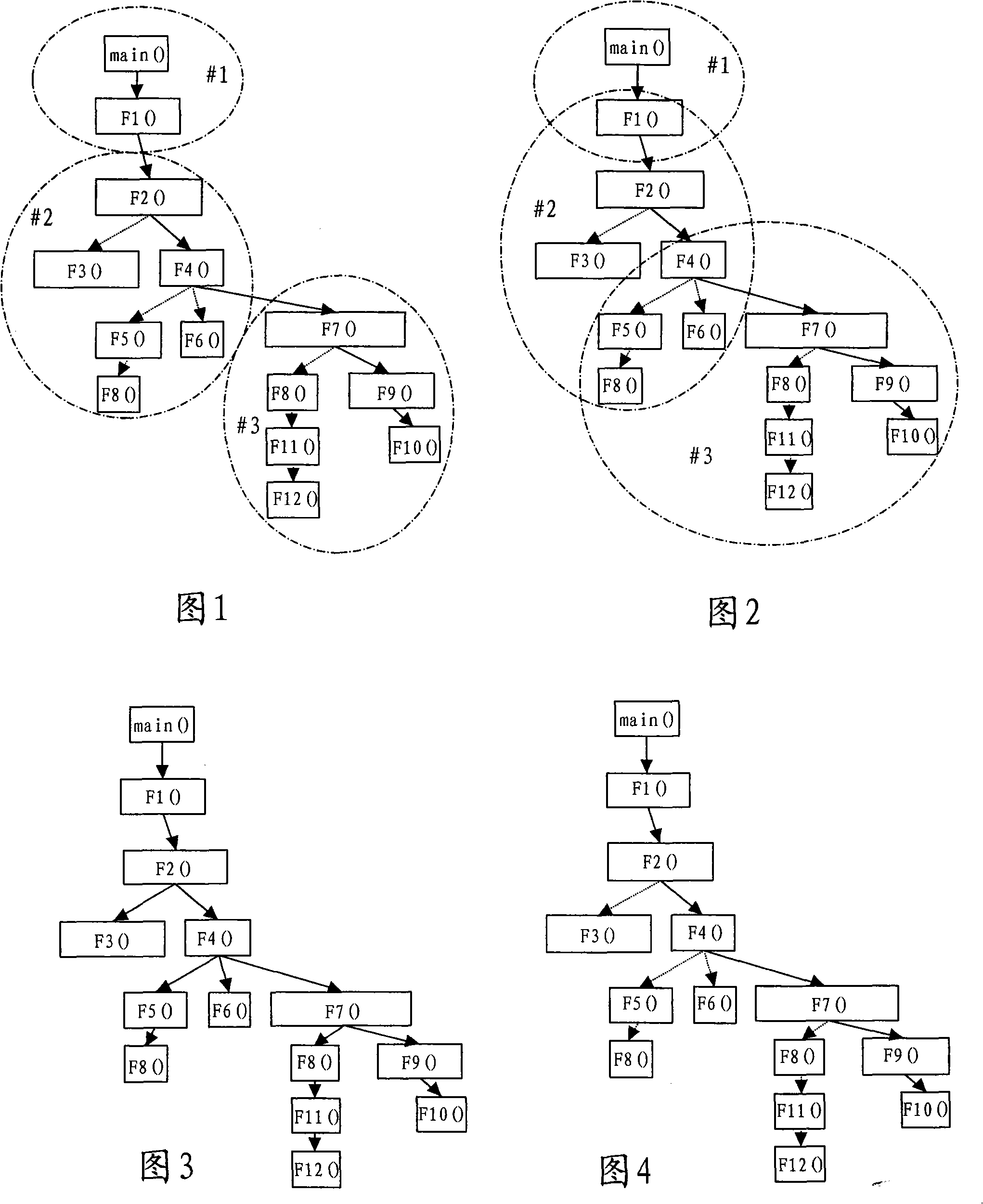

Multi-processor environment assembly line processing method and equipment

Status: Inactive | Patent: CN101339523A | Tags: Realize dynamic balance; High gain; Resource allocation; Dynamic balance; Multi processor

The present invention relates to a method and equipment for pipeline processing in a multiprocessor environment. The method comprises the steps of dividing a task into overlapping subtasks and distributing the subtasks to a plurality of processors, where the overlapping part of each subtask is shared by the processors that handle the corresponding subtasks; determining the state of each processor while it executes its subtask; and dynamically determining, according to the state of each processor, the overlapping part of each subtask to be executed by the processor that handles that subtask. The invention thereby balances the subtasks dynamically across the processors and makes full use of the computing resources of a multicore processor.

Owner:IBM CN

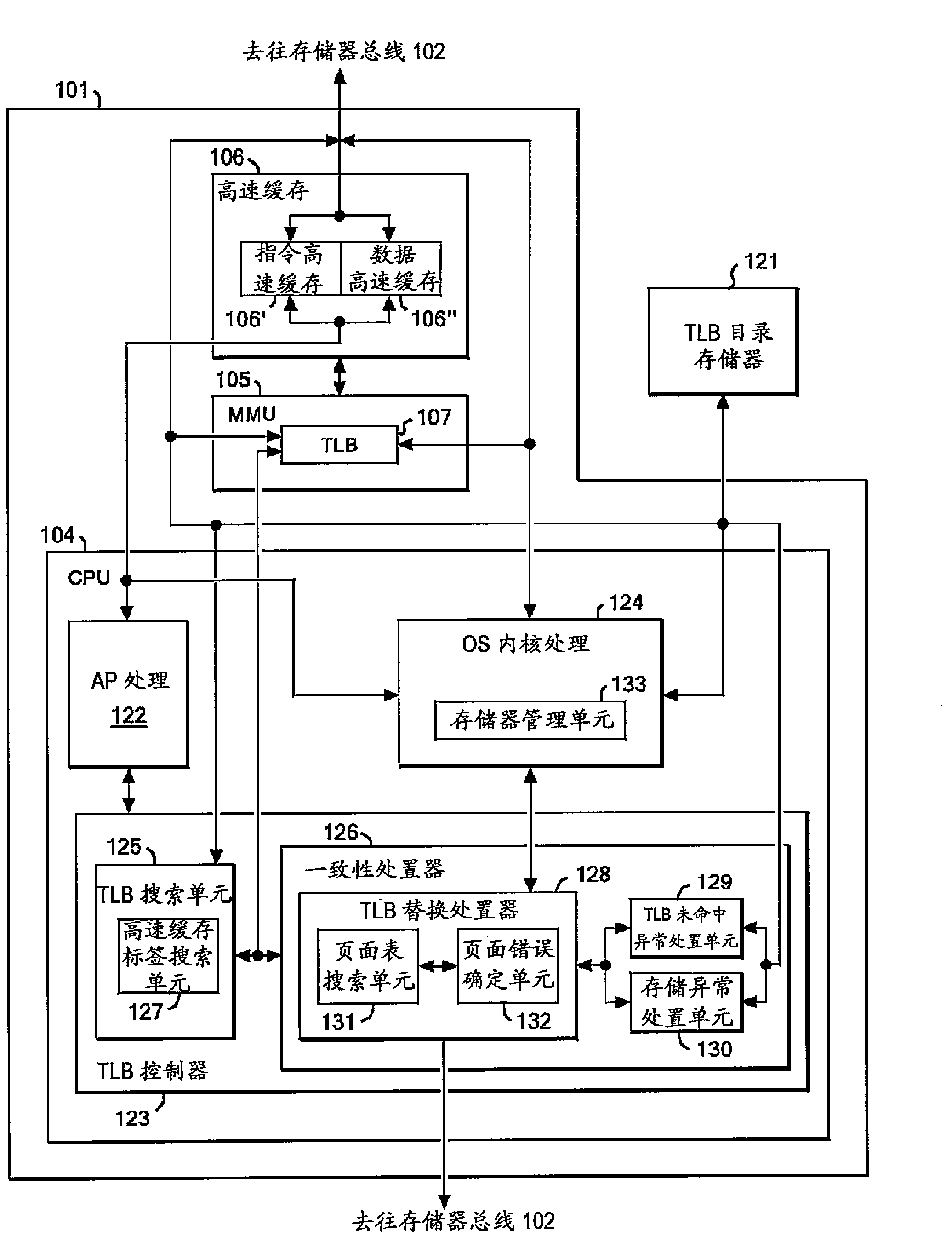

Cache coherency control method, system, and program

Status: Inactive | Patent: US20120137079A1 | Tags: Improve scalability; Meet cost performance requirements; Memory addressing/allocation/relocation; Information processing; Multi processor

In a system for controlling cache coherency of a multiprocessor system in which a plurality of processors share a system memory, each of the plurality of processors including a cache and a TLB, the processor includes a TLB controller including a TLB search unit that performs a TLB search and a coherency handler that performs TLB registration information processing when no hit occurs in the TLB search and a TLB interrupt occurs. The coherency handler includes a TLB replacement handler that searches a page table in the system memory and that replaces the TLB registration information, a TLB miss exception handling unit, and a storage exception handling unit.

Owner:IBM CORP

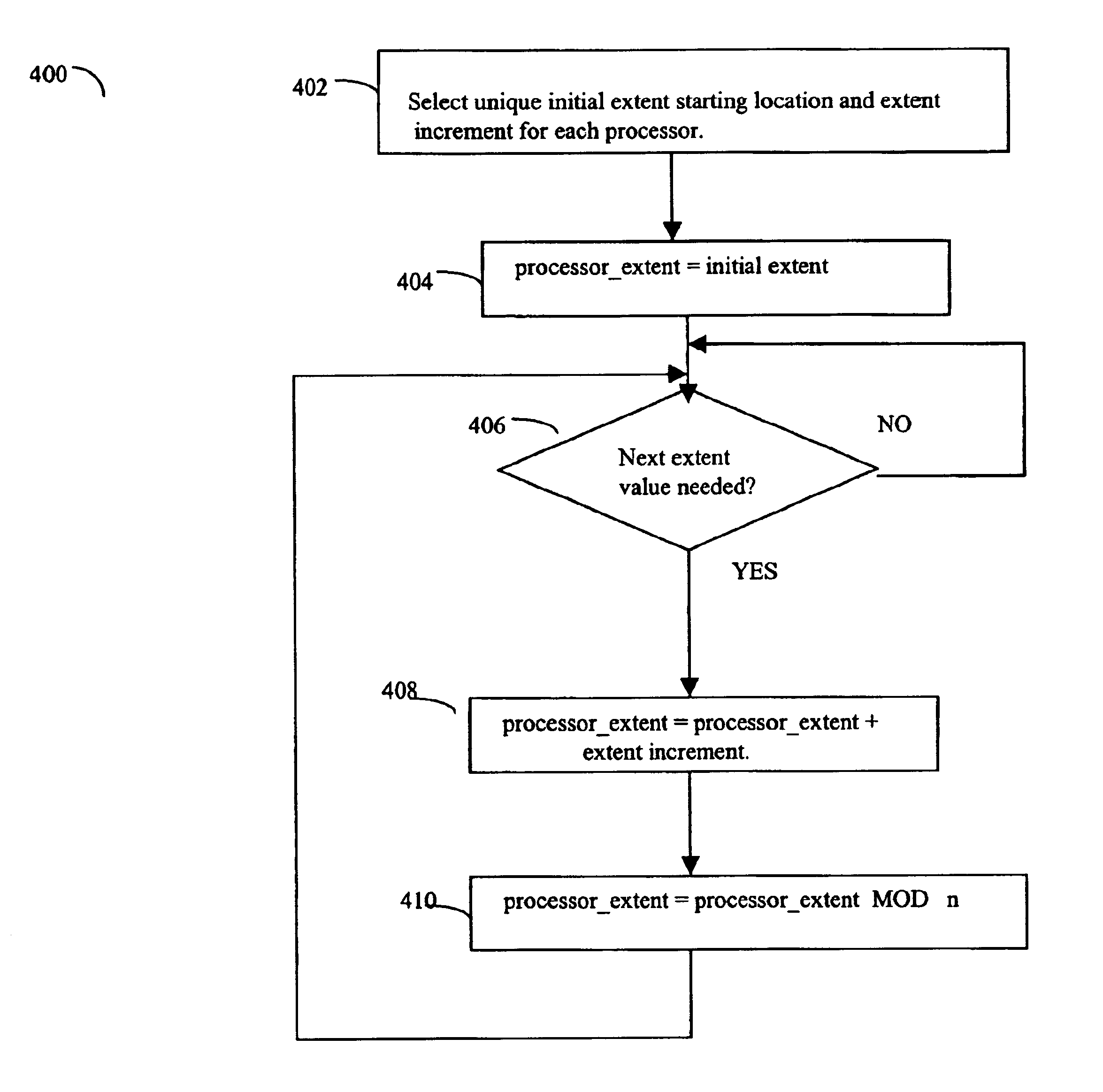

Advancing bank pointer in prime numbers unit

Status: Inactive | Patent: US6807619B1 | Tags: Data processing applications; Memory addressing/allocation/relocation; Memory bank; Parallel computing

The cache arrangement includes a cache that may be organized as a plurality of memory banks in which each memory bank includes a plurality of slots. Each memory bank has an associated control slot that includes groups of extents of tags. Each cache slot has a corresponding tag that includes a bit value indicating the availability of the associated cache slot, and a time stamp indicating the last time the data in the slot was used. The cache may be shared by multiple processors. Exclusive access of the cache slots is implemented using an atomic compare and swap instruction. The time stamp of slots in the cache may be adjusted to indicate ages of slots affecting the amount of time a particular portion of data remains in the cache. Associated with each processor is a unique extent increment used to determine a next location for that particular processor when attempting to locate an available slot.

Owner:EMC IP HLDG CO LLC
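
The abstract above mentions claiming cache slots with an atomic compare-and-swap and giving each processor its own increment when searching for a free slot. The Python sketch below illustrates that general pattern only. Python has no hardware compare-and-swap, so a lock emulates it; the `TagTable` class, the `claim_slot` function, and the `FREE`/`IN_USE` values are hypothetical, and the probe sequence assumes the increment is coprime to the slot count.

```python
import threading

FREE, IN_USE = 0, 1  # assumed encoding of the availability bit


class TagTable:
    """Slot tags with an emulated atomic compare-and-swap."""

    def __init__(self, slot_count):
        self.tags = [FREE] * slot_count
        self._lock = threading.Lock()  # stands in for hardware atomicity

    def compare_and_swap(self, index, expected, new):
        with self._lock:
            if self.tags[index] == expected:
                self.tags[index] = new
                return True
            return False


def claim_slot(table, start, increment):
    """Probe slots start, start+increment, ... and claim the first free one.

    Visits every slot once when `increment` is coprime to the slot count.
    Returns the claimed index, or None if no slot is available.
    """
    count = len(table.tags)
    position = start % count
    for _ in range(count):
        if table.compare_and_swap(position, FREE, IN_USE):
            return position
        position = (position + increment) % count
    return None
```

Giving each processor a different increment spreads the probe sequences apart, so processors searching at the same time are less likely to contend for the same slot.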

Method for realizing cache coherence protocol of chip multiprocessor (CMP) system

Status: Active | Patent: CN102103568A | Tags: Improve performance; Improve stability; Digital computer details; Electric digital data processing; Data information; Data provider

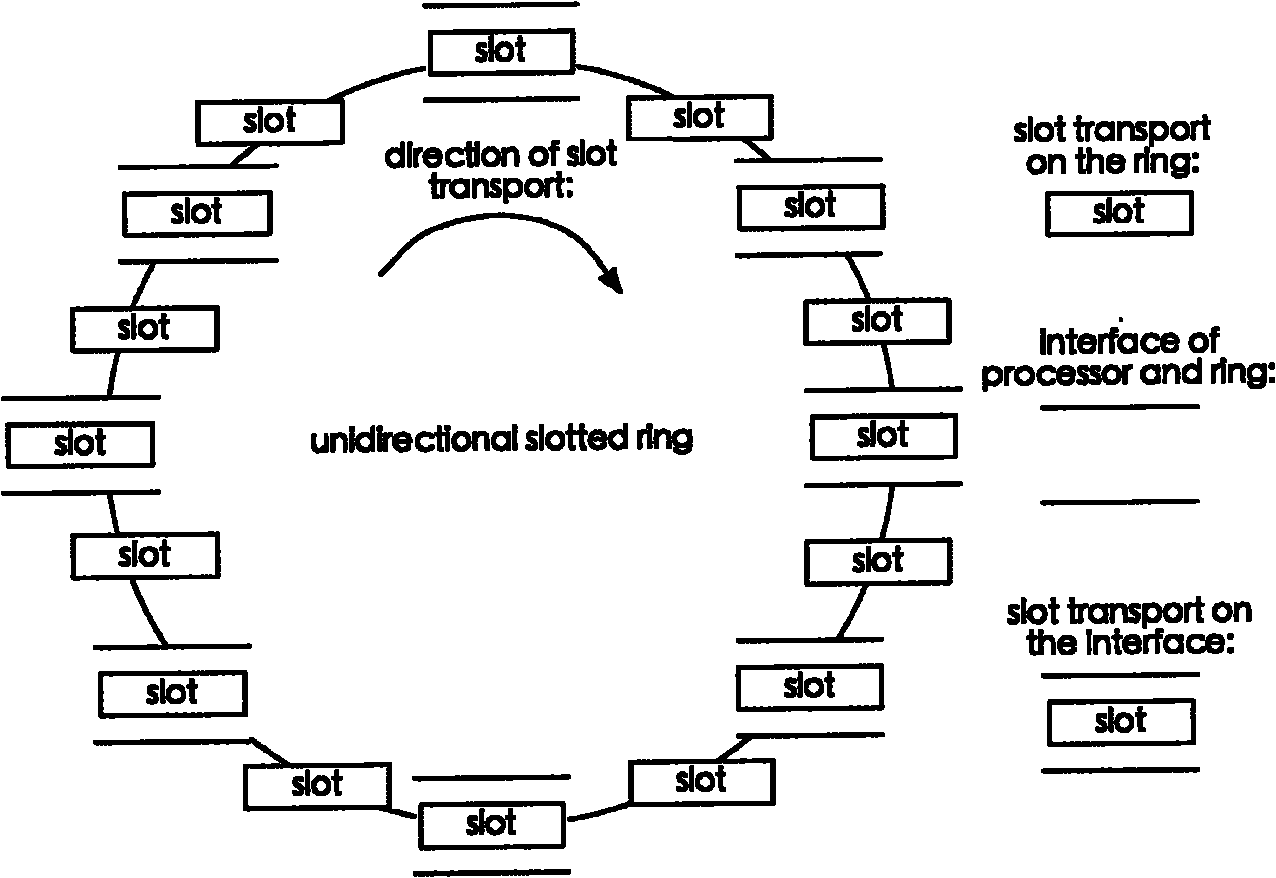

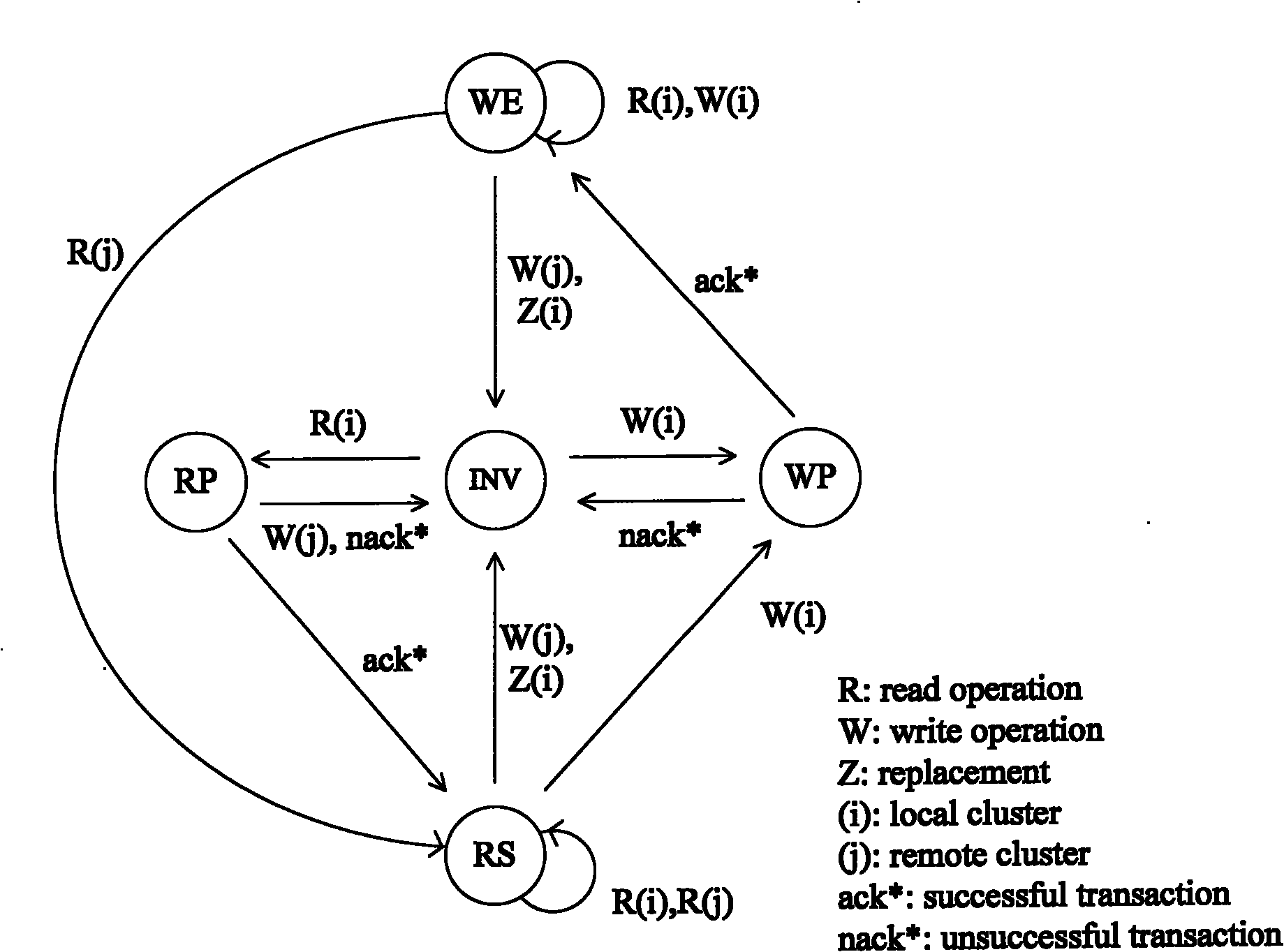

The invention discloses a method for realizing a cache coherence protocol of a chip multiprocessor (CMP) system. The method comprises the following steps: 1, the cache is divided into a primary cache and a secondary cache, wherein the primary cache is private to each processor in the system and the secondary cache is shared by the processors in the system; 2, each processor accesses its private primary cache, and when the access misses, a miss request information slot is generated, sent to a request information ring, and transmitted by the ring to the other processors so that they can snoop it; and 3, after a data provider snoops the miss request, a data information slot is generated, sent to a data information ring, and transmitted by the ring to the requestor, which receives the data blocks and completes the corresponding access operations. The method improves system performance, reduces power consumption and bandwidth utilization, avoids starvation, deadlock and livelock, and improves system stability.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

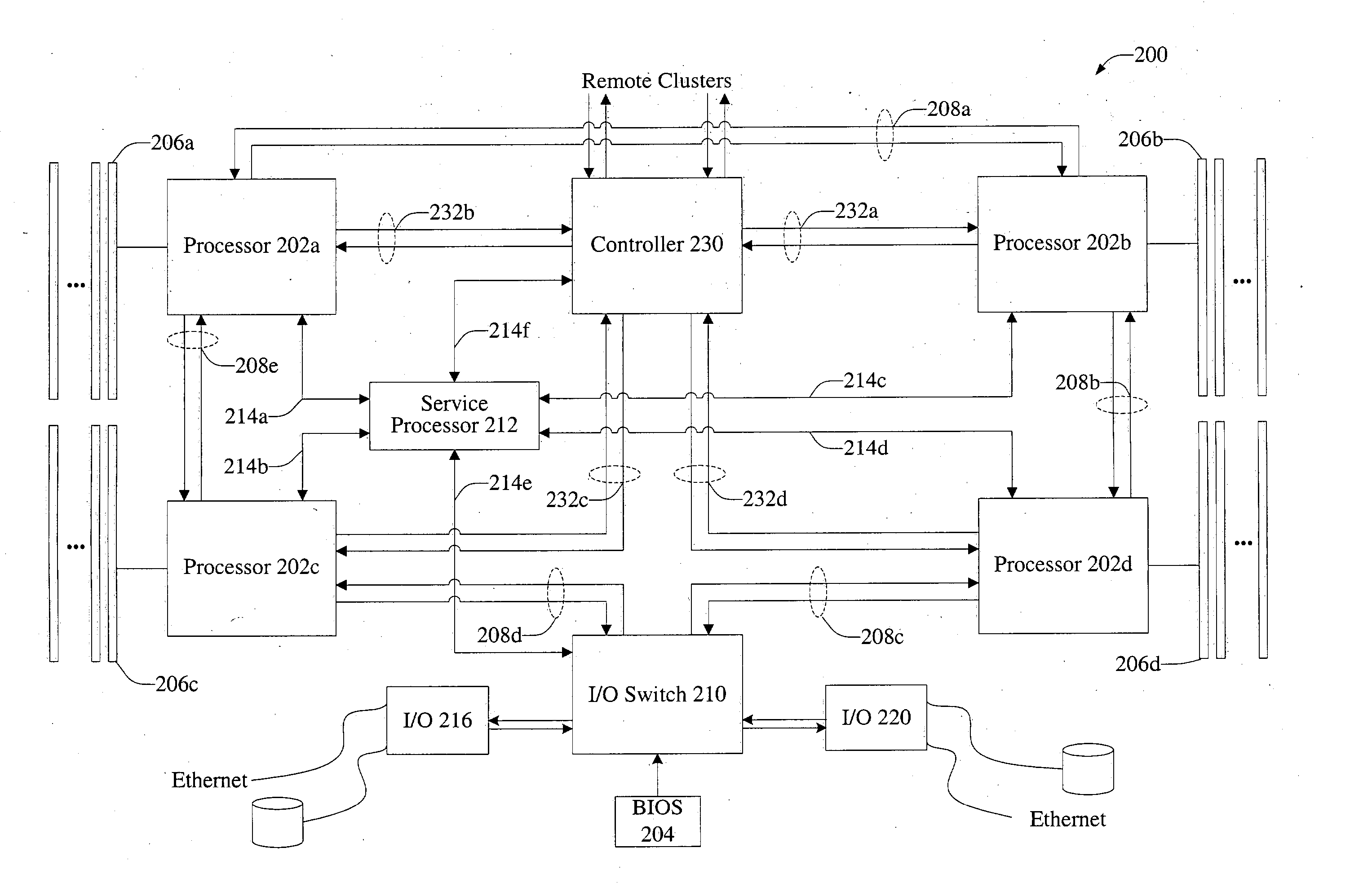

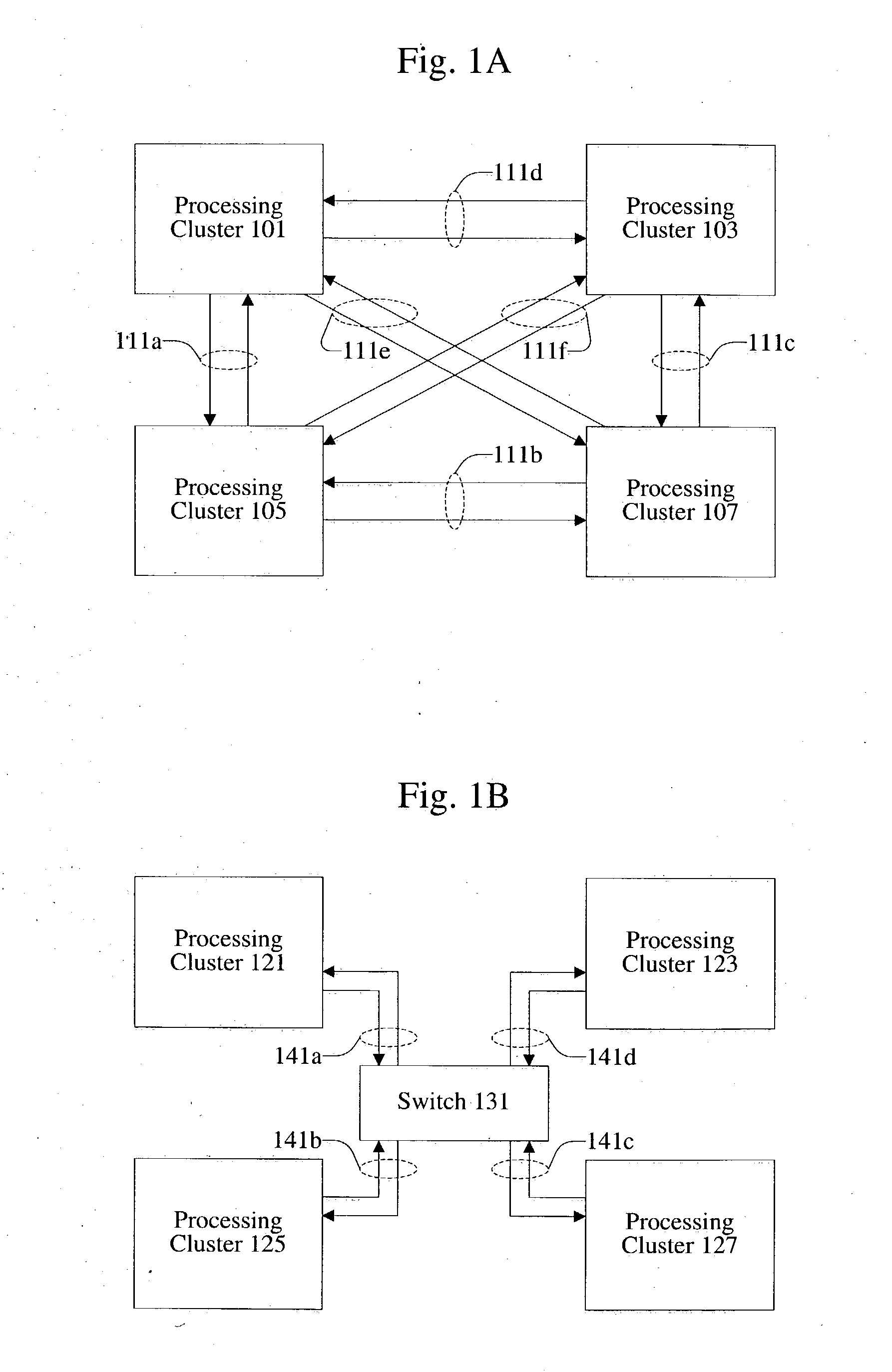

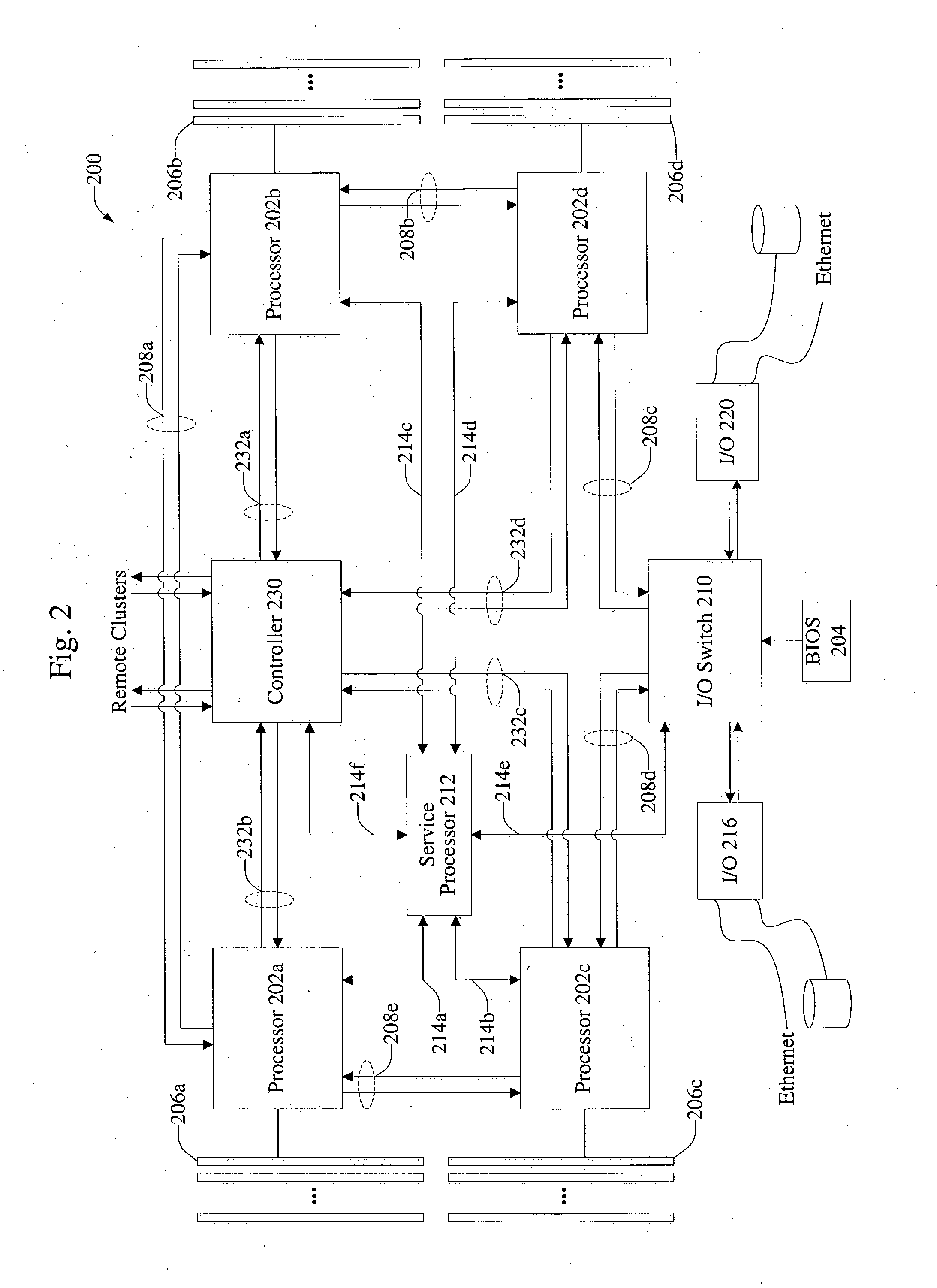

Dynamic multiple cluster system reconfiguration

Status: Inactive | Patent: US20050021699A1 | Tags: General purpose stored program computer; Multiple digital computer combinations; Cluster systems; Distributed computing

According to the present invention, methods and apparatus are provided to allow dynamic multiple cluster system configuration changes. In one example, processors in the multiple cluster system share a virtual address space. Mechanisms for dynamically introducing and removing processors, I/O resources, and clusters are provided. The mechanisms can be implemented during reset or while a system is operating. Links can be dynamically enabled or disabled.

Owner:SANMINA-SCI CORPORATION

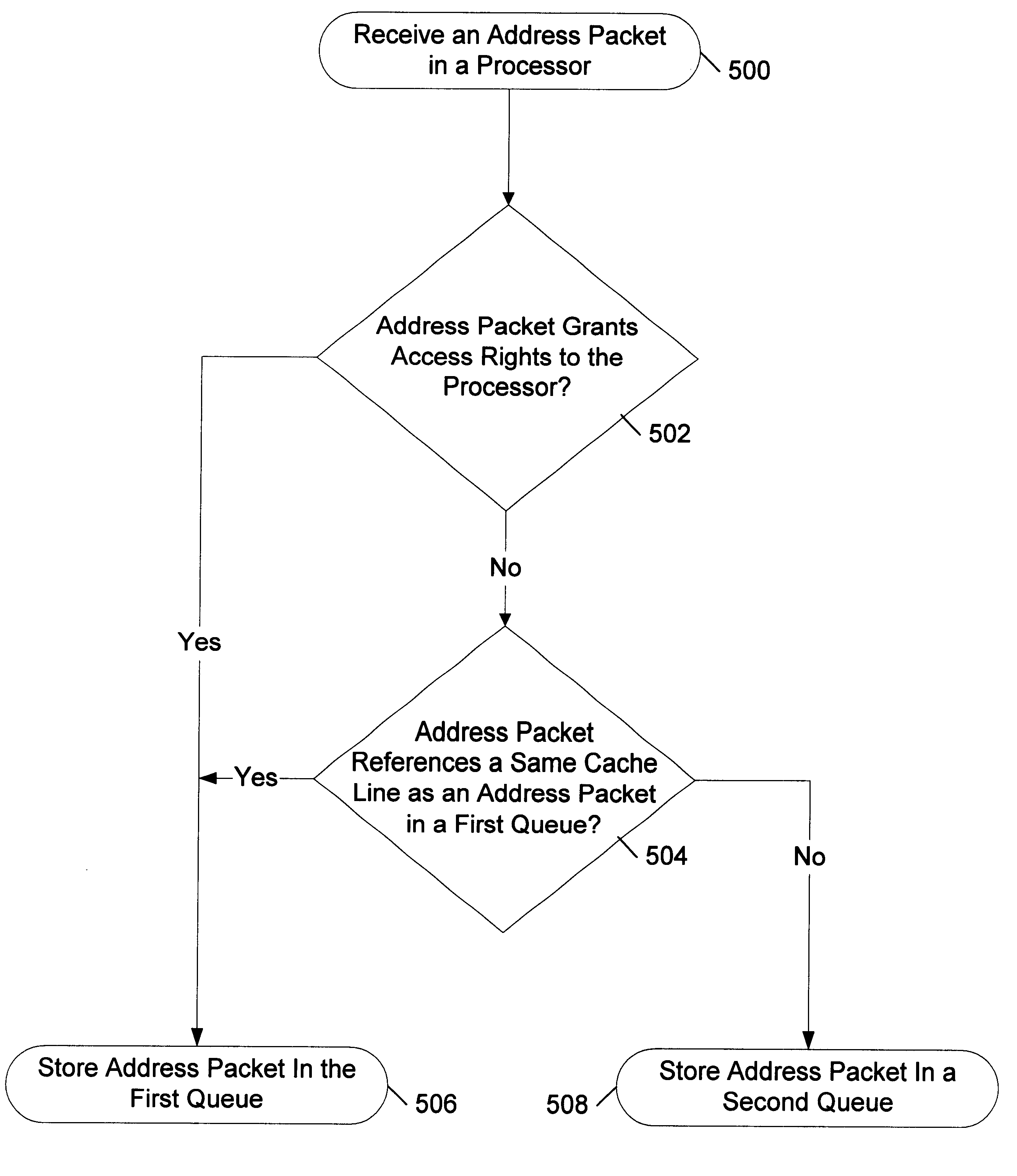

Mechanism for reordering transactions in computer systems with snoop-based cache consistency protocols

Status: Inactive | Patent: US6484240B1 | Tags: Memory addressing/allocation/relocation; Multiple digital computer combinations; Multi processor; Cache consistency

An apparatus and method for expediting the processing of requests in a multiprocessor shared memory system. In a multiprocessor shared memory system, requests can be processed in any order provided two rules are followed. First, no request that grants access rights to a processor can be processed before an older request that revokes access rights from the processor. Second, all requests that reference the same cache line are processed in the order in which they arrive. In this manner, requests can be processed out-of-order to allow cache-to-cache transfers to be accelerated. In particular, foreign requests that require a processor to provide data can be processed by that processor before older local requests that are awaiting data. In addition, newer local requests can be processed before older local requests. As a result, the apparatus and method described herein may advantageously increase performance in multiprocessor shared memory systems by reducing latencies associated with a cache consistency protocol.

Owner:ORACLE INT CORP
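
The two ordering rules stated in the abstract above can be expressed as a small predicate. The following Python sketch is one possible reading under stated assumptions: the `Request` record, its `effect` field values, and the `may_process_now` function are hypothetical names, and `pending` is assumed to list unprocessed requests from oldest to newest.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    line: int        # cache line the request references
    processor: int   # processor whose access rights are affected
    effect: str      # "grant", "revoke", or "none"


def may_process_now(pending, index):
    """Can pending[index] be processed ahead of all older pending requests?"""
    request = pending[index]
    for older in pending[:index]:
        # Rule 2: requests to the same cache line keep their arrival order.
        if older.line == request.line:
            return False
        # Rule 1: a grant must not pass an older revoke for the same processor.
        if (request.effect == "grant" and older.effect == "revoke"
                and older.processor == request.processor):
            return False
    return True
```

Any request for which the predicate holds can be processed out of order, which is what allows cache-to-cache transfers to overtake older requests that are still waiting for data.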

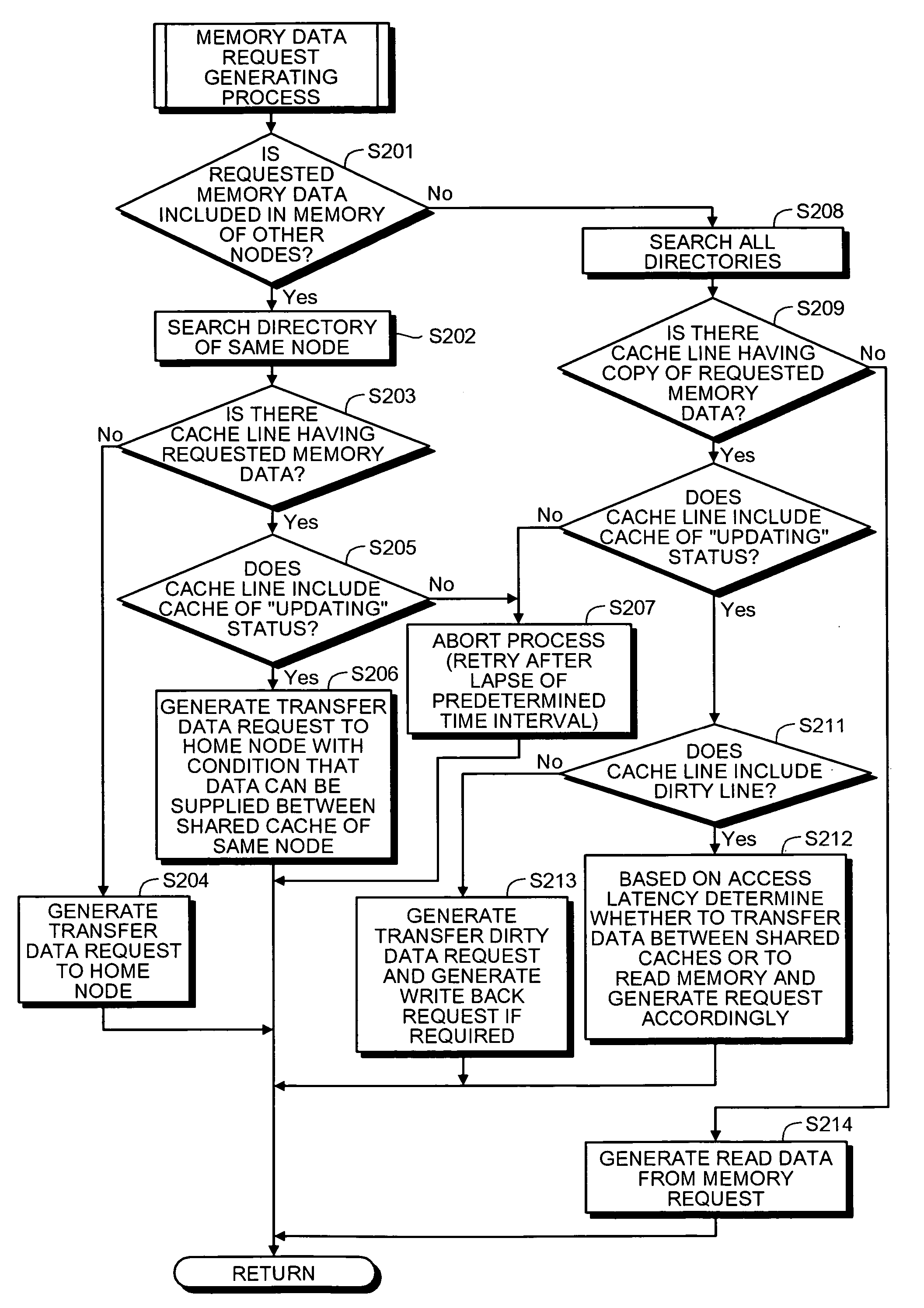

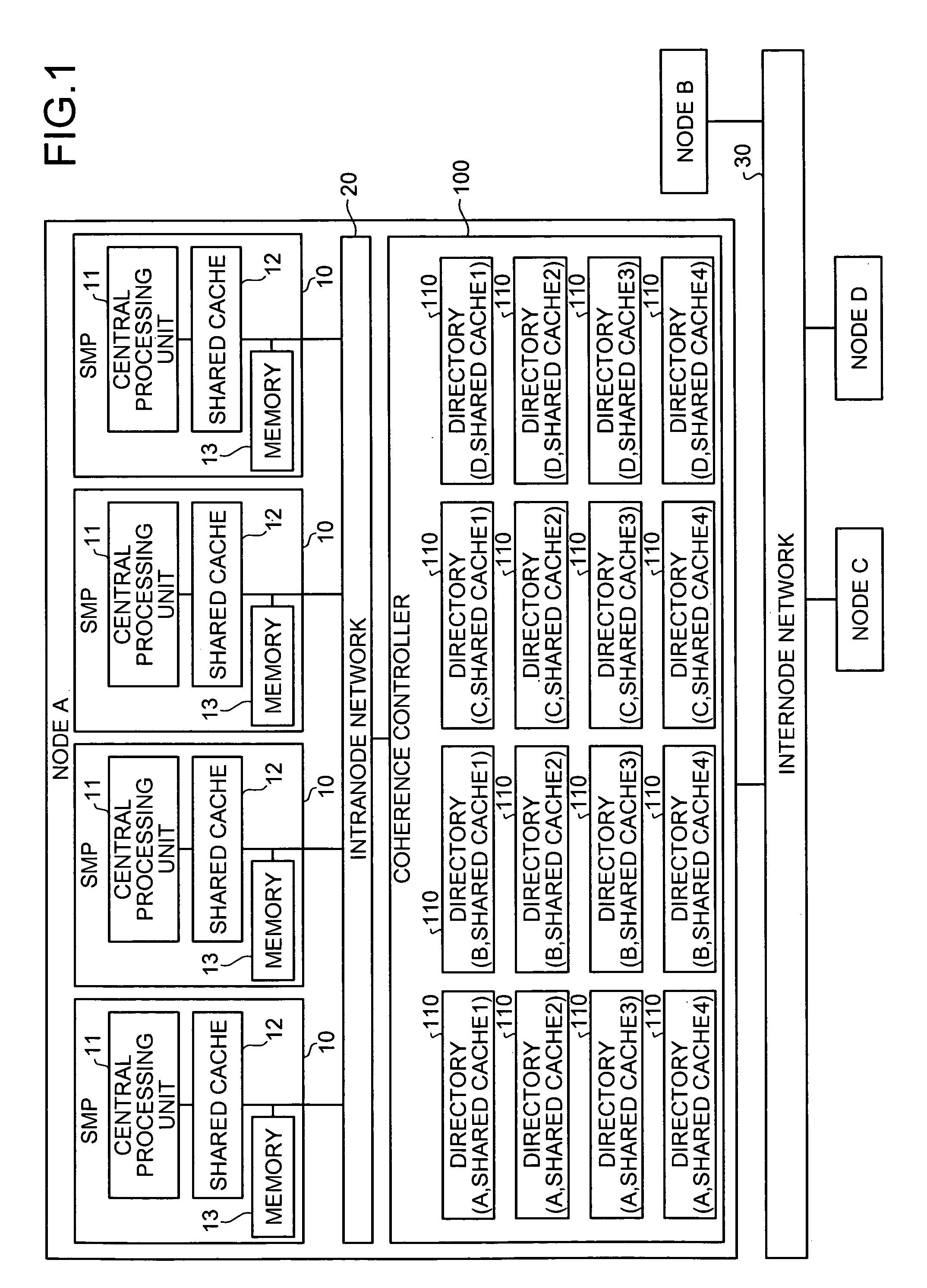

Method and system for maintaining cache coherence of distributed shared memory system

A distributed shared memory system includes a plurality of nodes. Each of the nodes includes a plurality of shared multiprocessors. Each of the shared multiprocessors includes a processor, a shared cache, and a memory. Each of the nodes includes a coherence maintaining unit that maintains cache coherence based on a plurality of directories, each of which corresponds to one of the shared caches included in the distributed shared memory system.

Owner:FUJITSU LTD

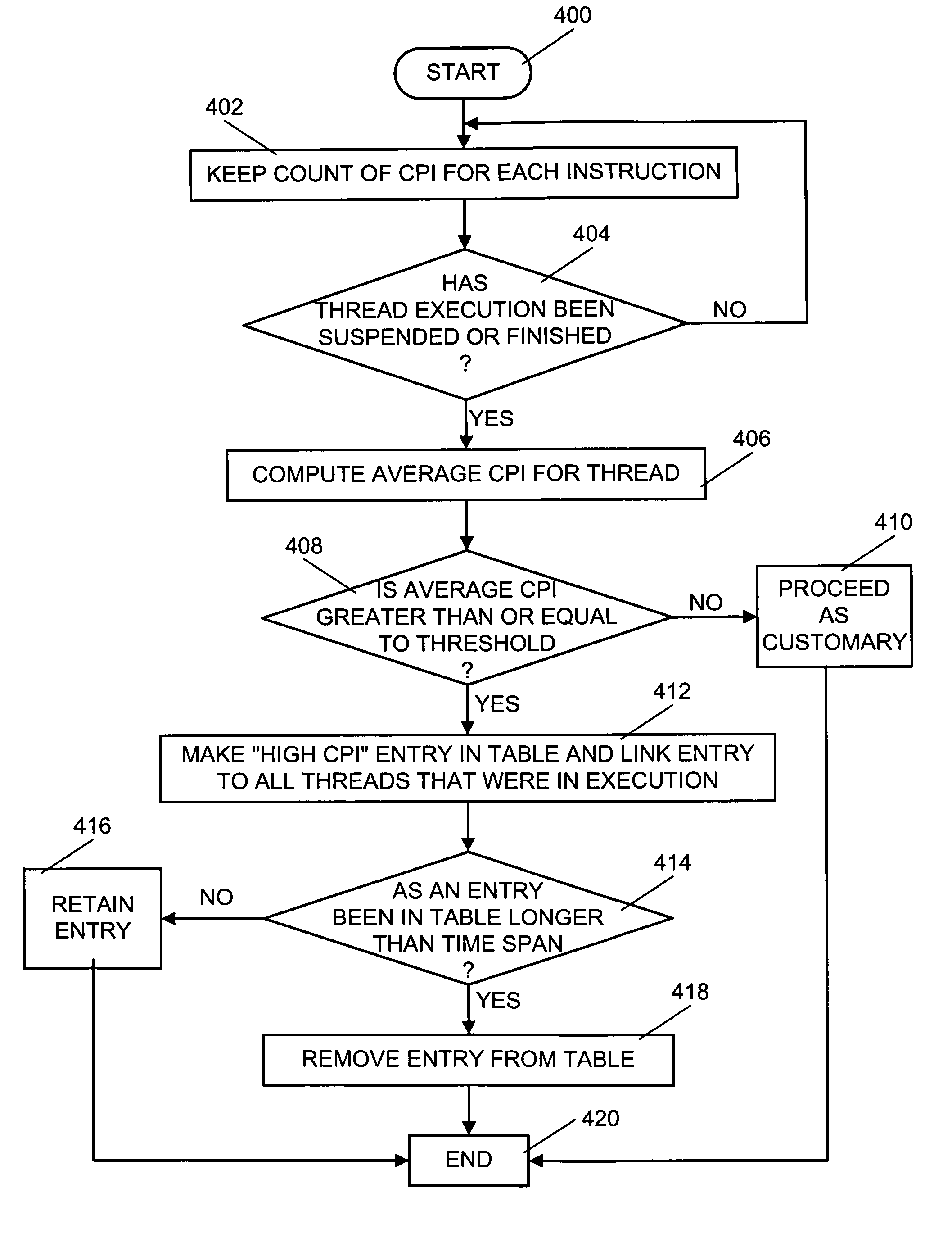

System, application and method of reducing cache thrashing in a multi-processor with a shared cache on which a disruptive process is executing

Status: Inactive | Patent: US20060036810A1 | Tags: Reducing cache thrashing; Low affinity; Unauthorized memory use protection; Program control; Multi processor; Parallel computing

A system, apparatus and method of reducing cache thrashing in a multi-processor with a shared cache executing a disruptive process (i.e., a thread that has a poor cache affinity or a large cache footprint) are provided. When a thread is dispatched for execution, a table is consulted to determine whether the dispatched thread is a disruptive thread. If so, a system idle process is dispatched to the processor sharing a cache with the processor executing the disruptive thread. Since the system idle process may not use data intensively, cache thrashing may be avoided.

Owner:IBM CORP
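
A minimal Python sketch of the dispatch rule in the abstract above follows. It assumes a set of known disruptive thread names in place of the table, a map from each processor to the processors sharing its cache, and an `IDLE` marker for the system idle process; the `dispatch` function and all of these names are hypothetical.

```python
IDLE = "idle"  # stands in for the system idle process


def dispatch(thread, cpu, disruptive_threads, cache_siblings, running):
    """Place `thread` on `cpu`; park cache-sharing siblings if it is disruptive.

    `running` maps each processor to what it currently executes and is
    updated in place, then returned for convenience.
    """
    running[cpu] = thread
    if thread in disruptive_threads:  # the table lookup from the abstract
        for sibling in cache_siblings[cpu]:
            running[sibling] = IDLE
    return running
```

The sketch ignores what happens to the thread that was previously running on a sibling processor; a real scheduler would have to requeue it.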

Automatic group formation and group detection through media recognition

Status: Active | Patent: US20150227609A1 | Tags: Digital data processing details; Relational databases; Message passing; Group detection

Disclosed is a method and system for automatically forming a group to share a media file or adding the media file to an existing group. The method and system includes receiving, by a processor, a media file comprising an image, identifying, by the processor, the face of a first person in the image and the face of a second person in the image, identifying, by the processor, a first profile of the first person from the face of the first person and a second profile of the second person from the face of the second person, automatically forming, by the processor, a group of recipients in a messaging application, the forming of the group of recipients based on the first profile and the second profile, the group of recipients comprising the first person and the second person; and sharing, by the processor, the media file with the group.

Owner:VERIZON PATENT & LICENSING INC


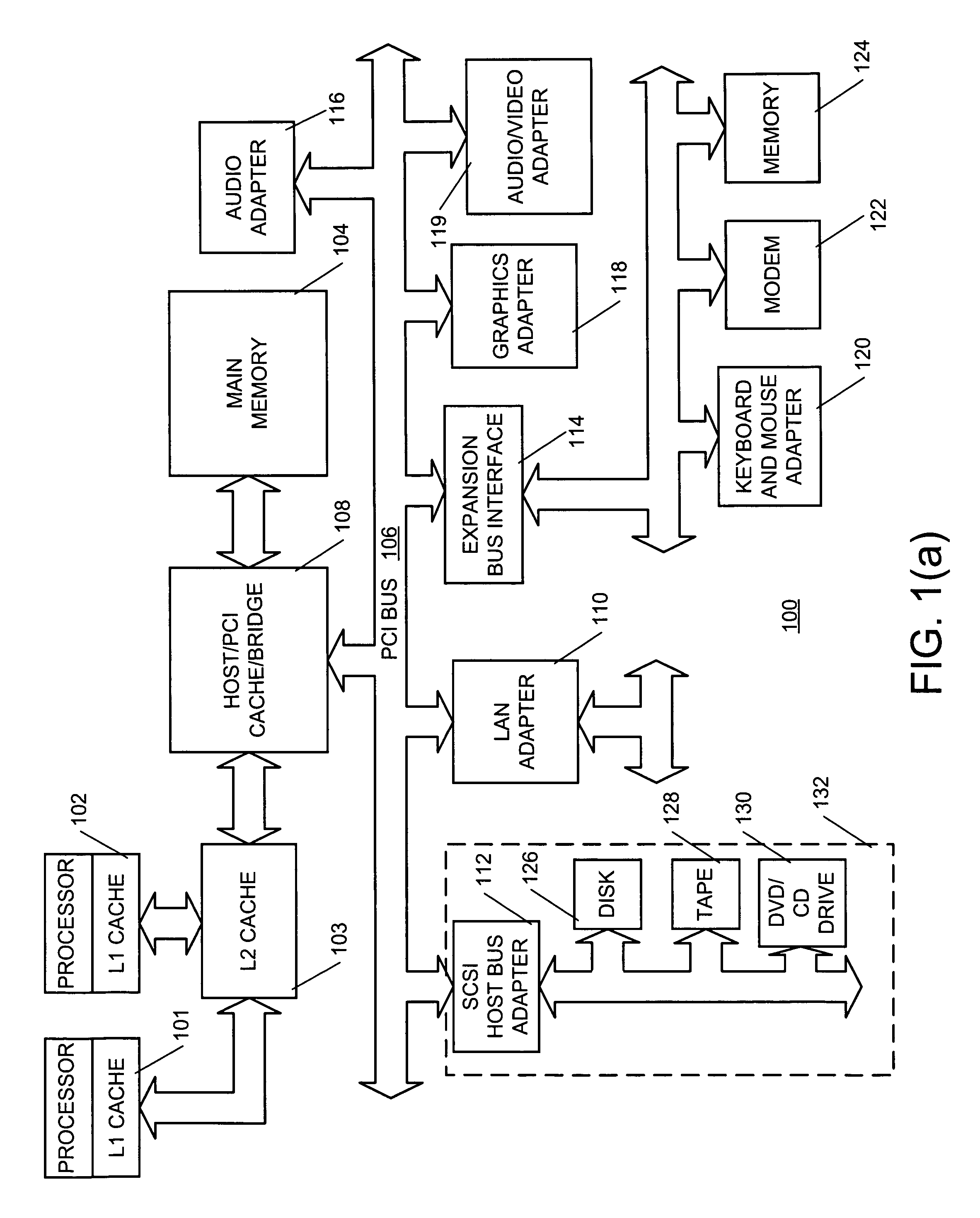

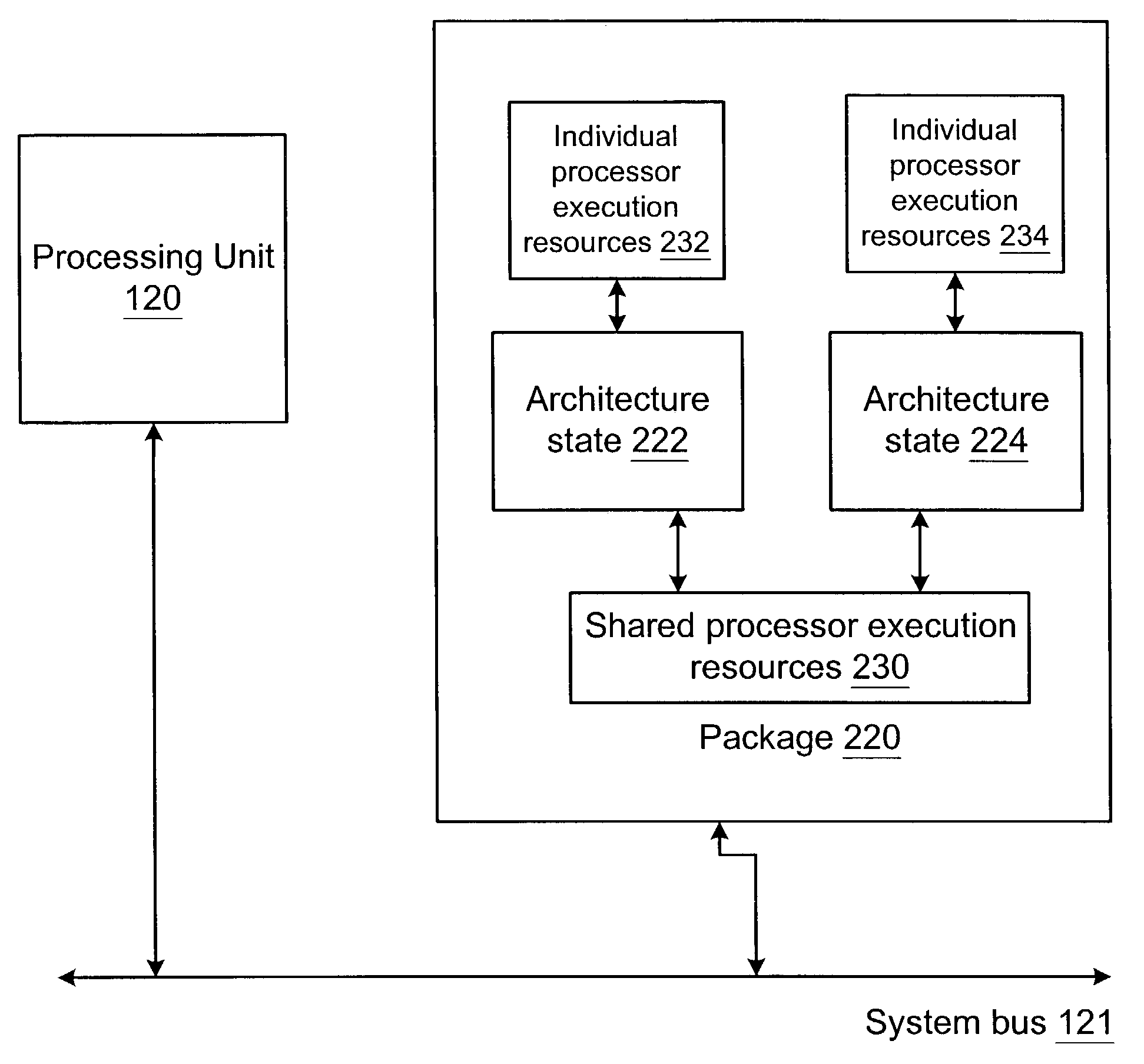

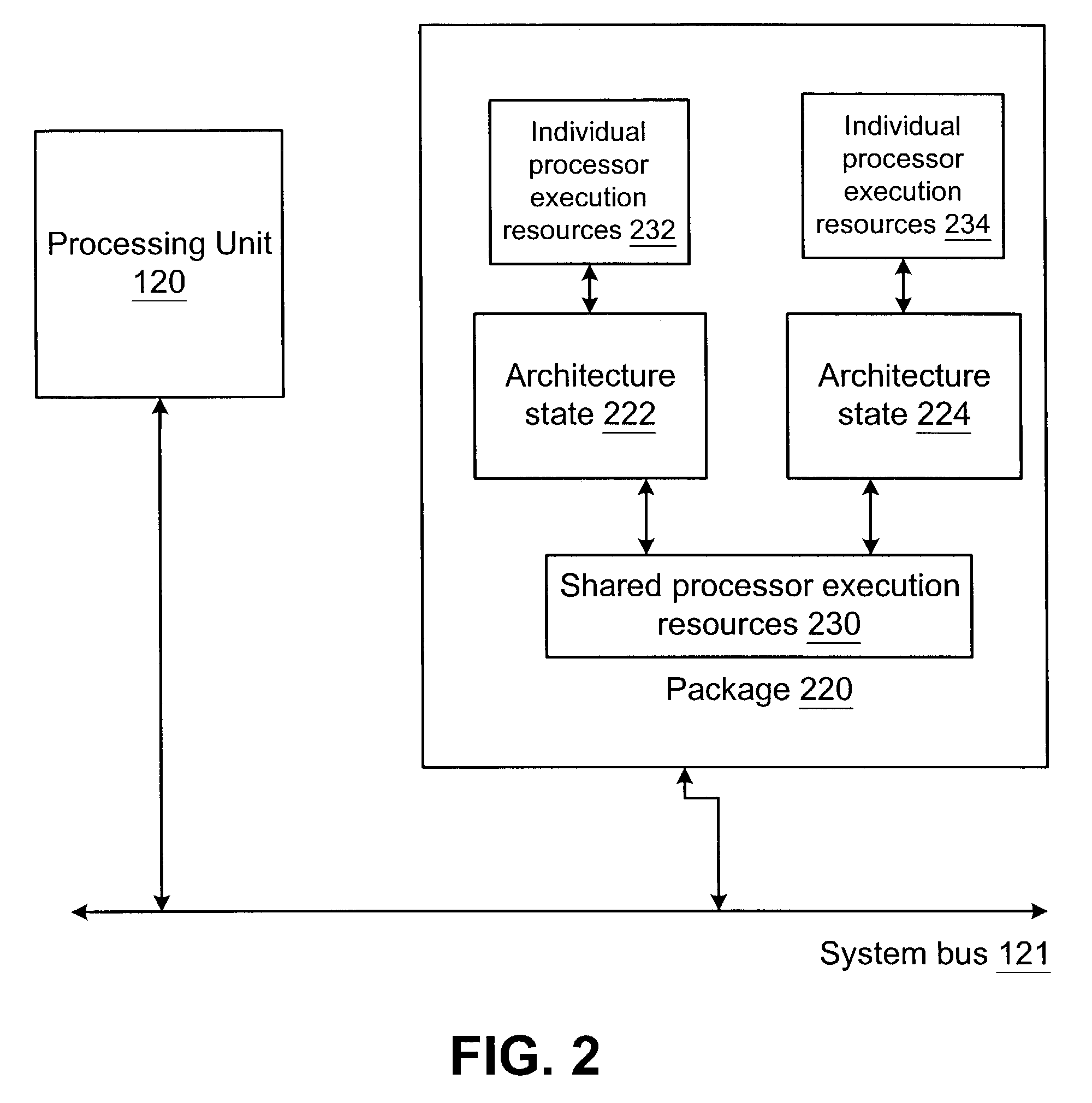

Methods and systems for cooperative scheduling of hardware resource elements

Cooperatively scheduling hardware resources by providing information on shared resources within processor packages to the operating system. Logical processors may be included in packages in which some or all processor execution resources are shared among logical processors. In order to better schedule thread execution, information regarding which logical processors are sharing processor execution resources and information regarding which system resources are shared among processor packages is provided to the operating system. Extensions to the SRAT (static resource affinity table) can be used to provide this information.

Owner:MICROSOFT TECH LICENSING LLC

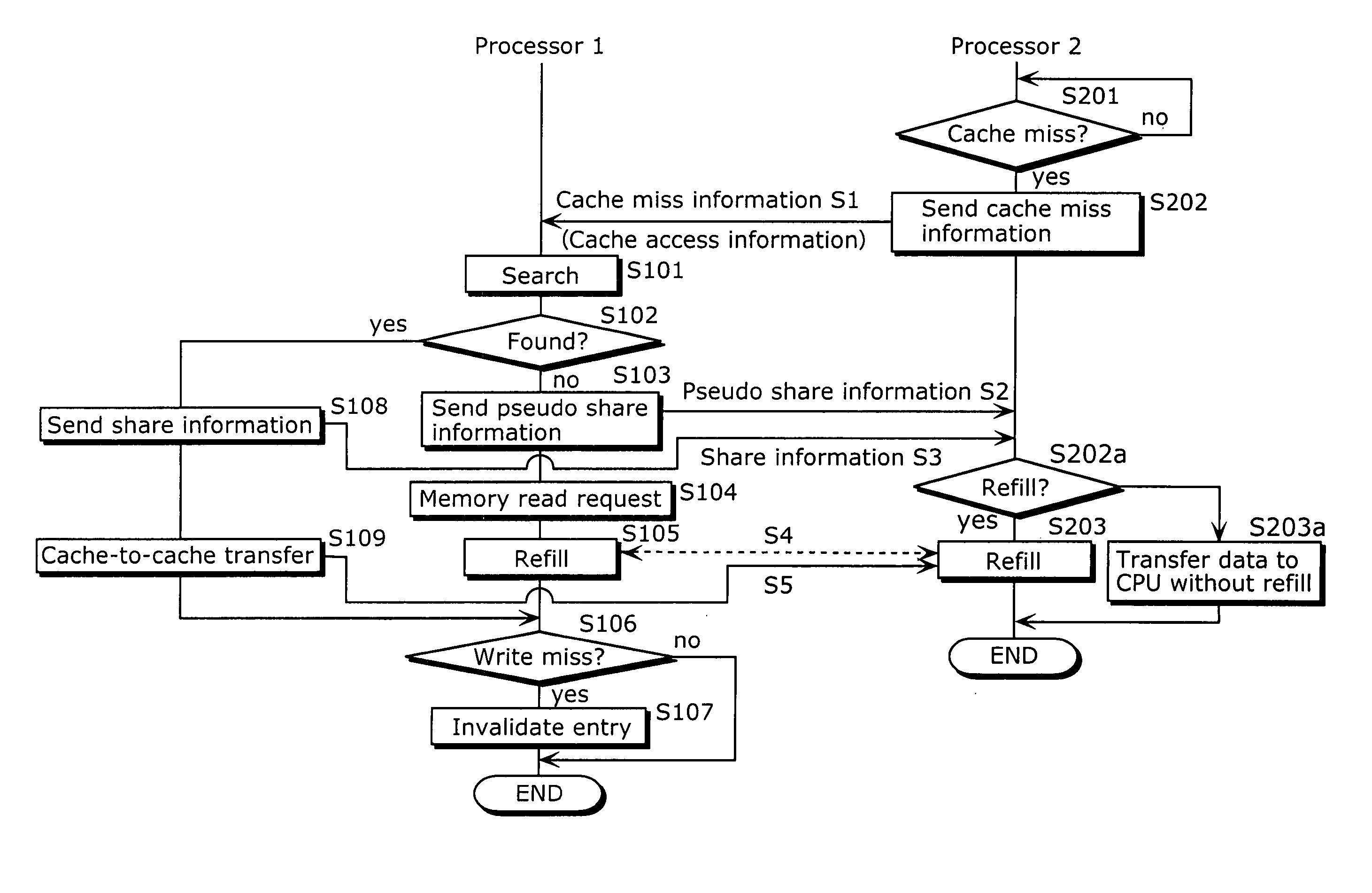

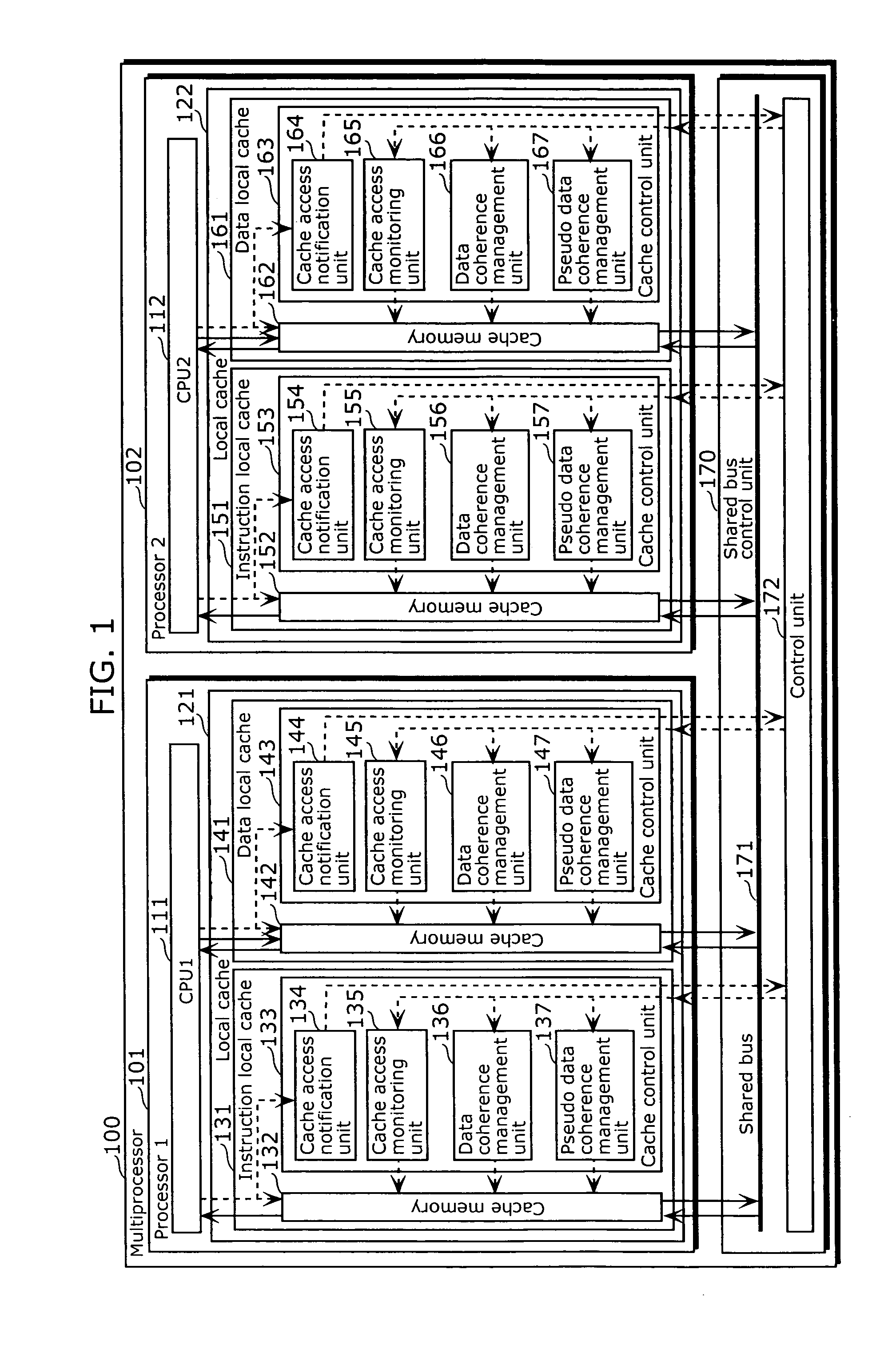

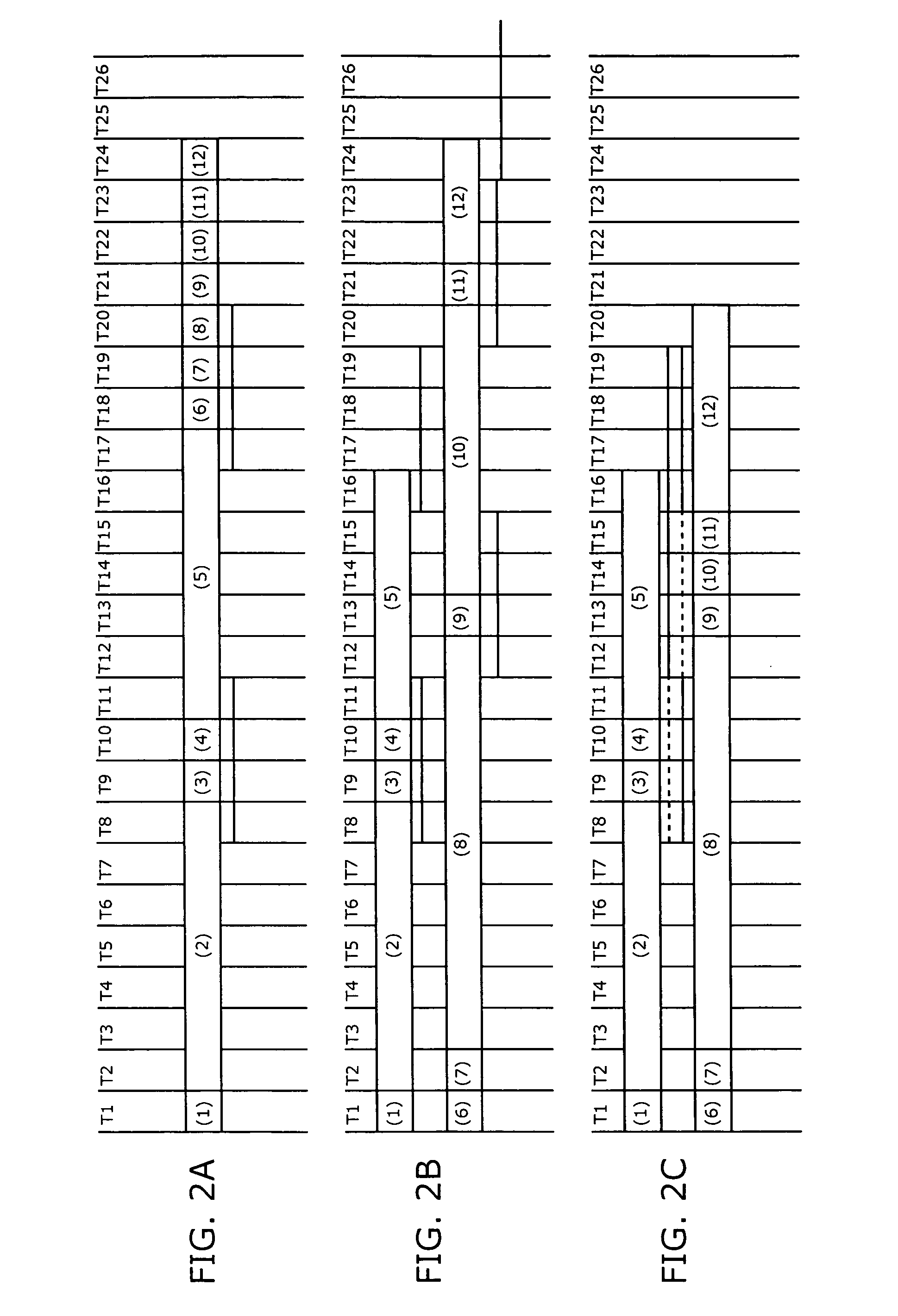

Multiprocessing apparatus

Status: Inactive | Patent: US20060059317A1 | Tags: Miss occurrence ratio; Reducing bus contention; Memory systems; Computer architecture; Control cell

The multiprocessing apparatus of the present invention is a multiprocessing apparatus including a plurality of processors, a shared bus, and a shared bus controller, wherein each of the processors includes a central processing unit (CPU) and a local cache, each of the local caches includes a cache memory, and a cache control unit that controls the cache memory, each of the cache control units includes a data coherence management unit that manages data coherence between the local caches by controlling data transfer carried out, via the shared bus, between the local caches, wherein at least one of the cache control units (a) monitors a local cache access signal, outputted from another one of the processors, for notifying an occurrence of a cache miss, and (b) notifies pseudo information to the another one of the processors via the shared bus controller, the pseudo information indicating that data corresponding to the local cache access signal is stored in the cache memory of the local cache that includes the at least one of the cache control units, even in the case where the data corresponding to the local cache access signal is not actually stored.

Owner:PANASONIC CORP

Method for prefetching non-contiguous data structures

Status: Inactive | Patent: US7529895B2 | Tags: Efficient access; Memory architecture accessing/allocation; Program synchronisation; Multi processor; Latency (engineering)

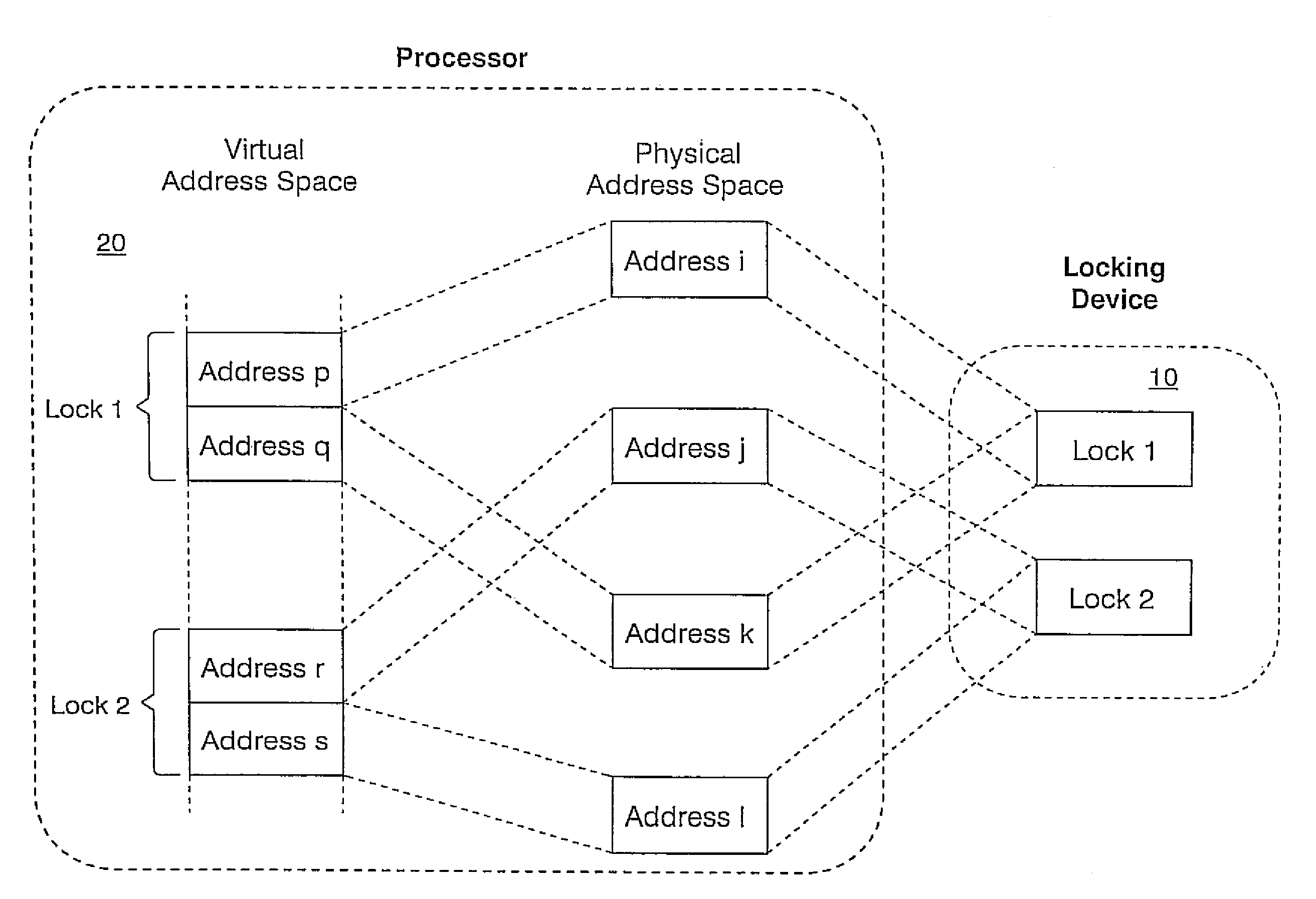

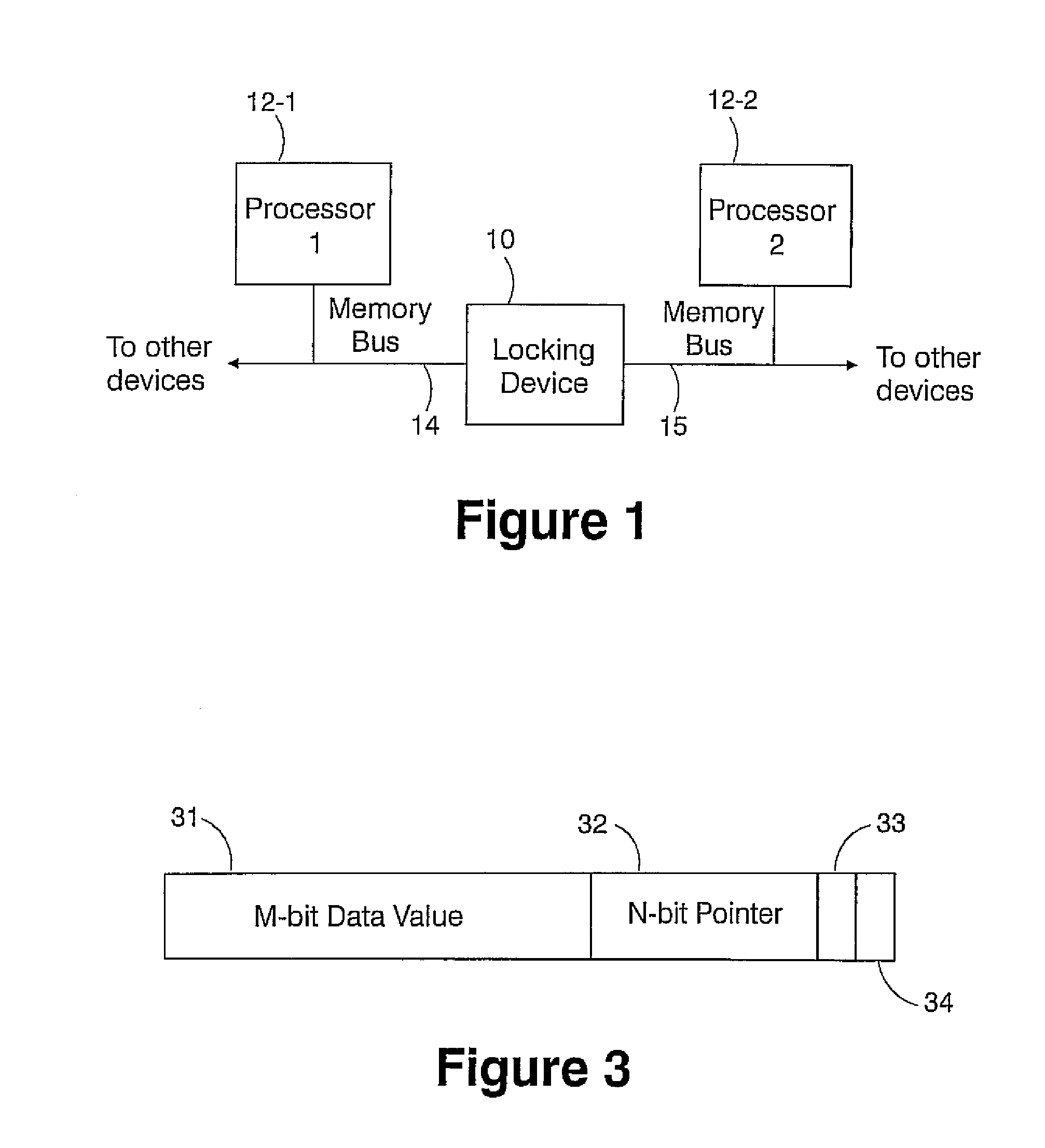

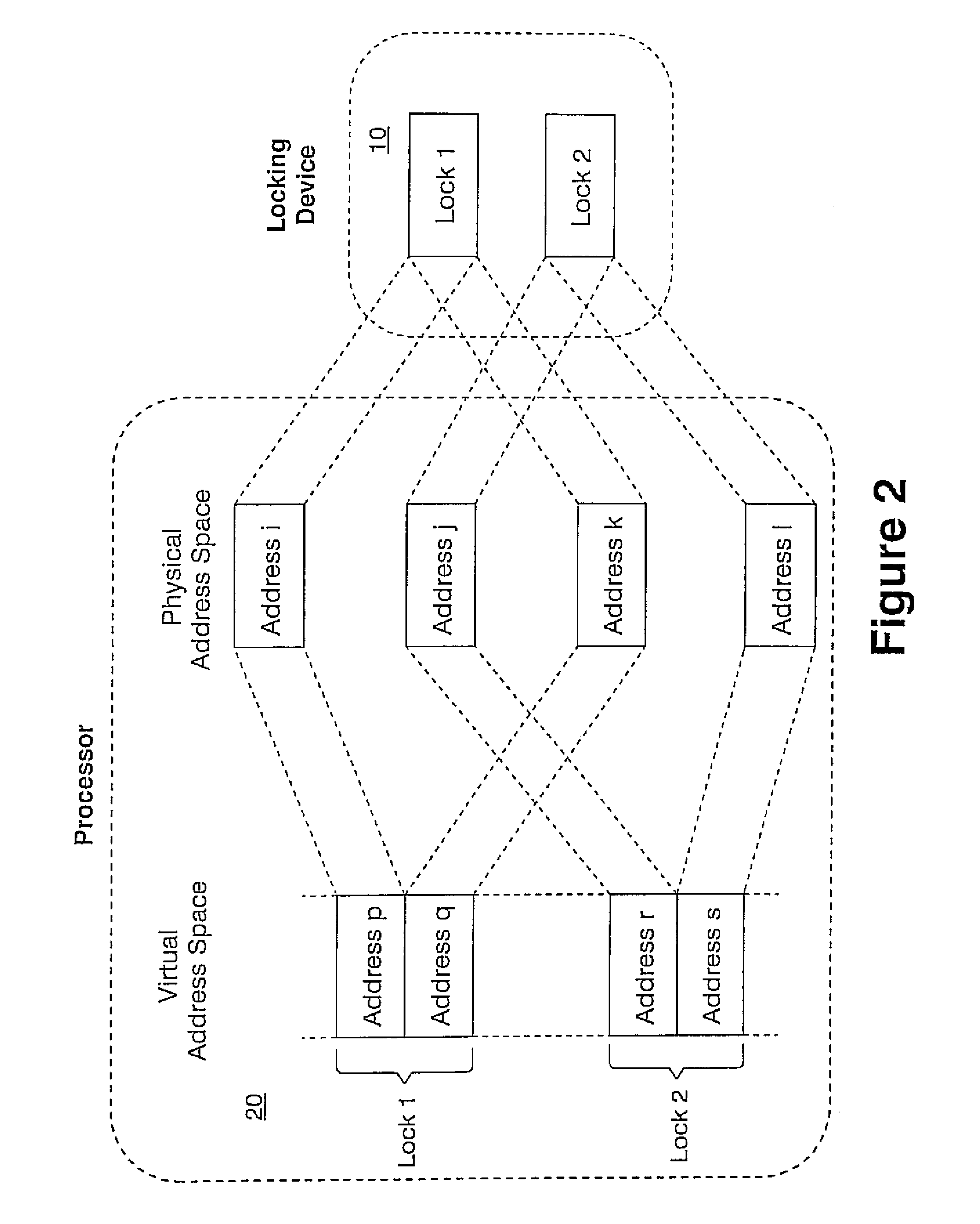

A low latency memory system access is provided in association with a weakly-ordered multiprocessor system. Each processor in the multiprocessor shares resources, and each shared resource has an associated lock within a locking device that provides support for synchronization between the multiple processors in the multiprocessor and the orderly sharing of the resources. A processor only has permission to access a resource when it owns the lock associated with that resource, and an attempt by a processor to own a lock requires only a single load operation, rather than a traditional atomic load followed by store, such that the processor only performs a read operation and the hardware locking device performs a subsequent write operation rather than the processor. A simple prefetching scheme for non-contiguous data structures is also disclosed. A memory line is redefined so that, in addition to the normal physical memory data, every line includes a pointer that is large enough to point to any other line in the memory, wherein the pointers, rather than some other predictive algorithm, are used to determine which memory line to prefetch. This enables hardware to effectively prefetch memory access patterns that are non-contiguous but repetitive.

Owner:INT BUSINESS MASCH CORP

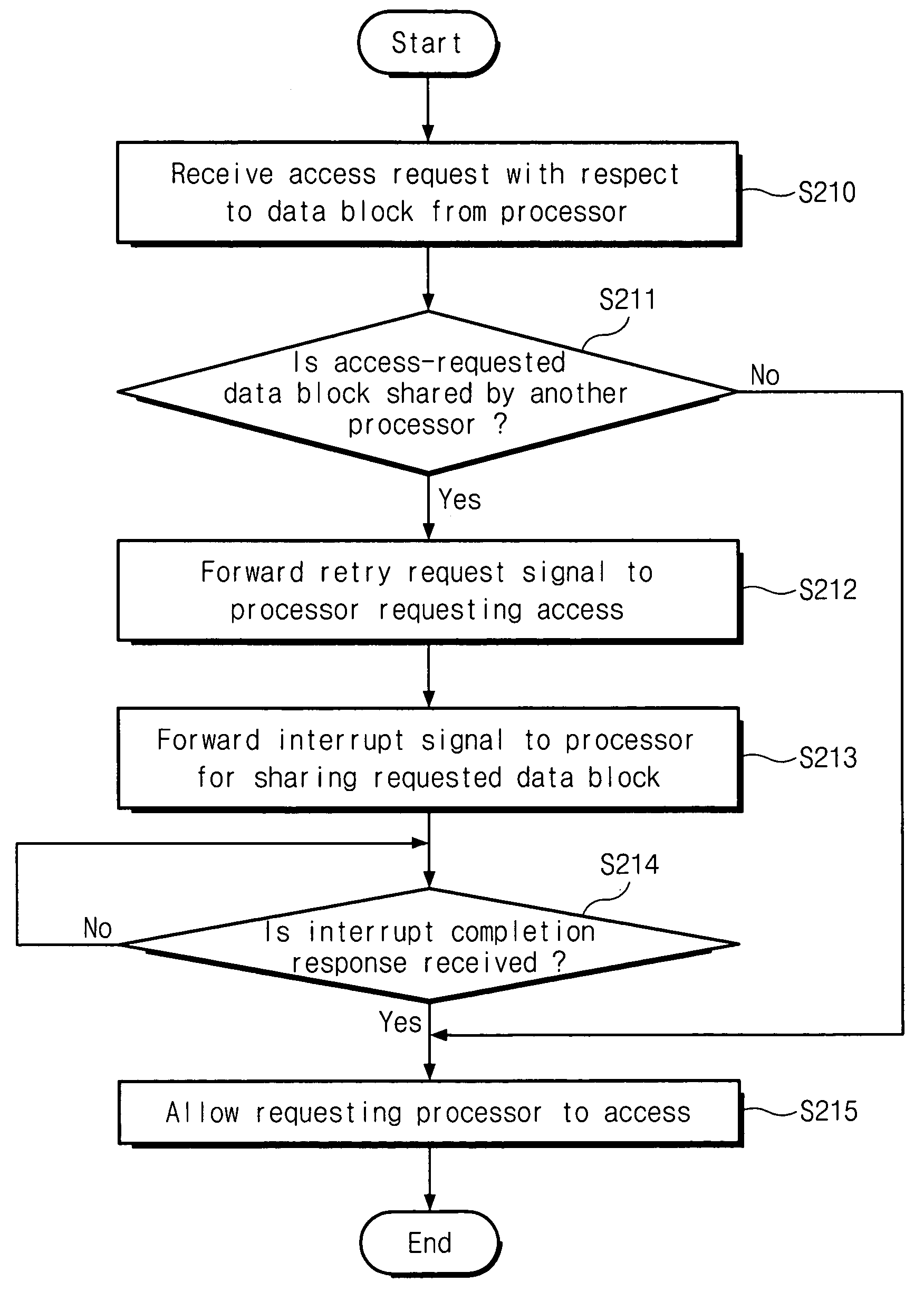

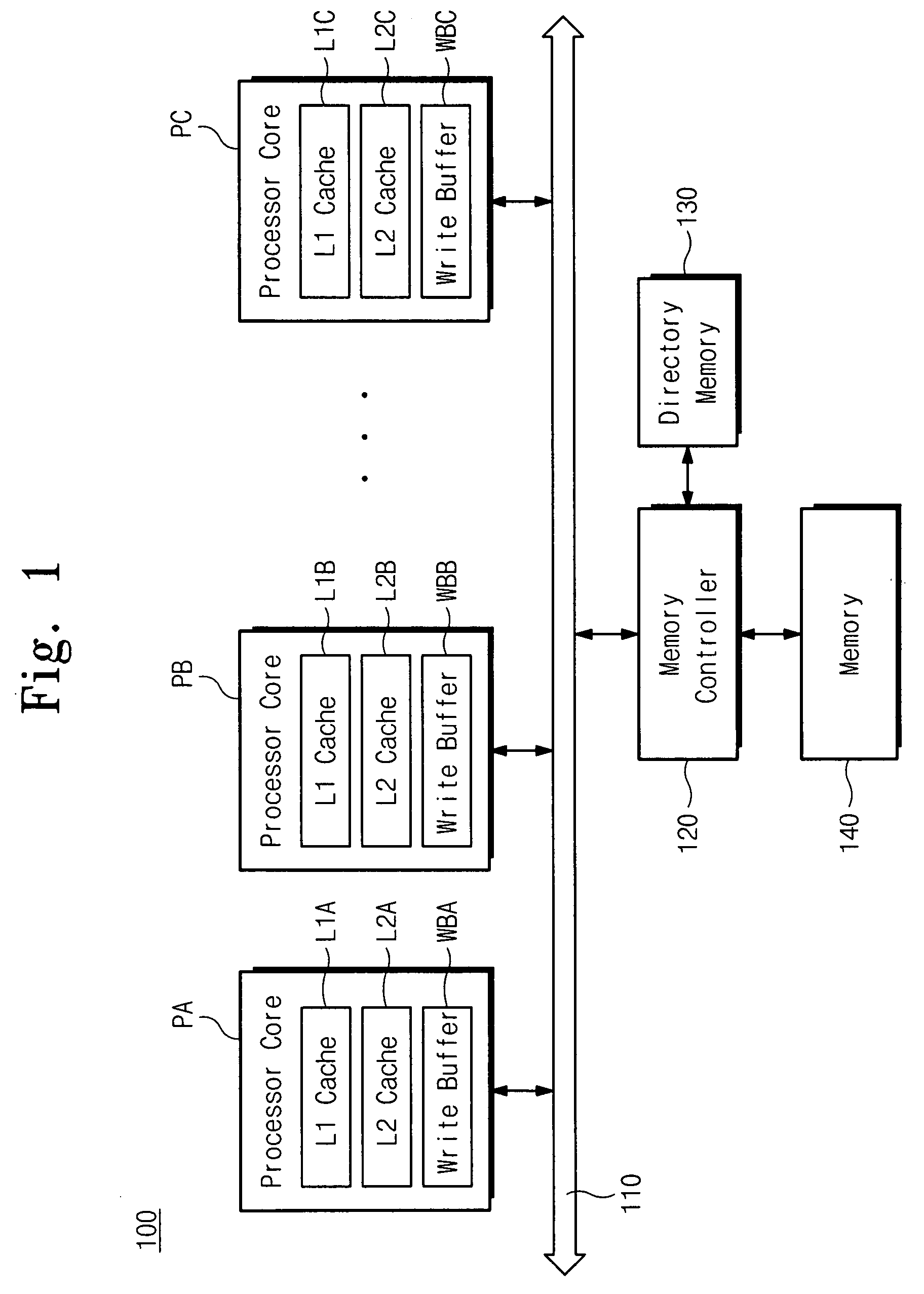

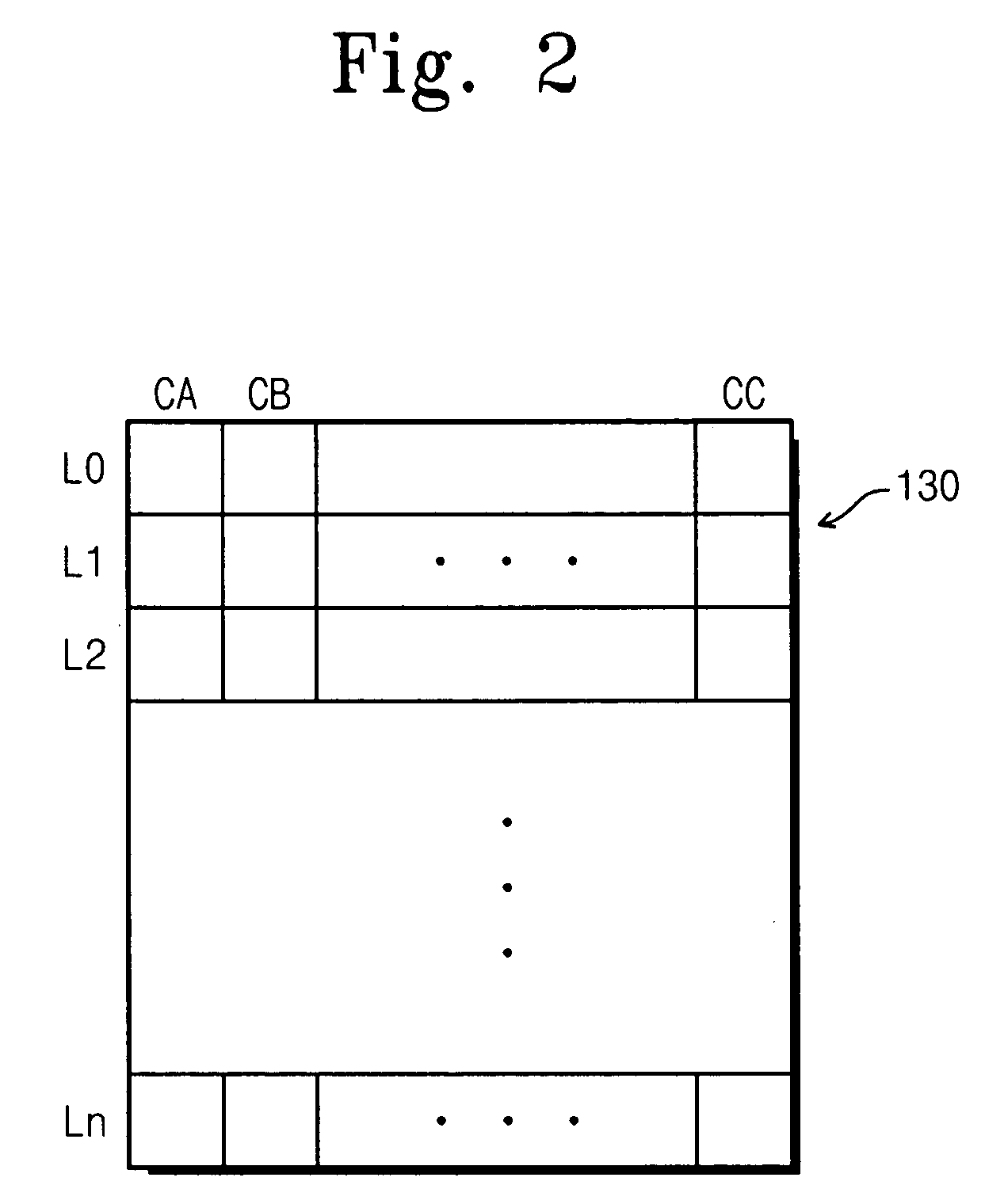

Multiprocessor system and method to maintain cache coherence

Status: Active | Patent: US20050021914A1 | Tags: Energy efficient ICT; Memory addressing/allocation/relocation; Write buffer; Multi processor

A multiprocessor system may have a plurality of processors and a memory unit. Each of the processors may include at least one cache memory. The memory unit may be shared by two of the processors. The multiprocessor system may further include a control unit. If the multiprocessor system receives an access request for a data block of the memory unit from one processor, the control unit may forward an interrupt signal to another processor that shares the requested data block. The directory memory may store information indicating the processors that share data blocks of the memory unit. A memory controller unit may include the memory unit, the directory memory, and a control unit connected to memory unit and the directory memory. Each of the processors may include a processor core including a write buffer and a cache memory. The processors may also include a processing unit. When the processor shares a data block, the processing unit may invalidate the shared data block in the cache memory, write the shared data block from the write buffer to a memory unit, and forward an interrupt completion response to a control unit. When the processor attempts to obtain data, the processing unit may forward an access request for a data block of a memory unit, receive a retry request signal from a control unit, and insert the data block into the cache memory of the processor upon receiving an authorization signal from the control unit.

Owner:SAMSUNG ELECTRONICS CO LTD

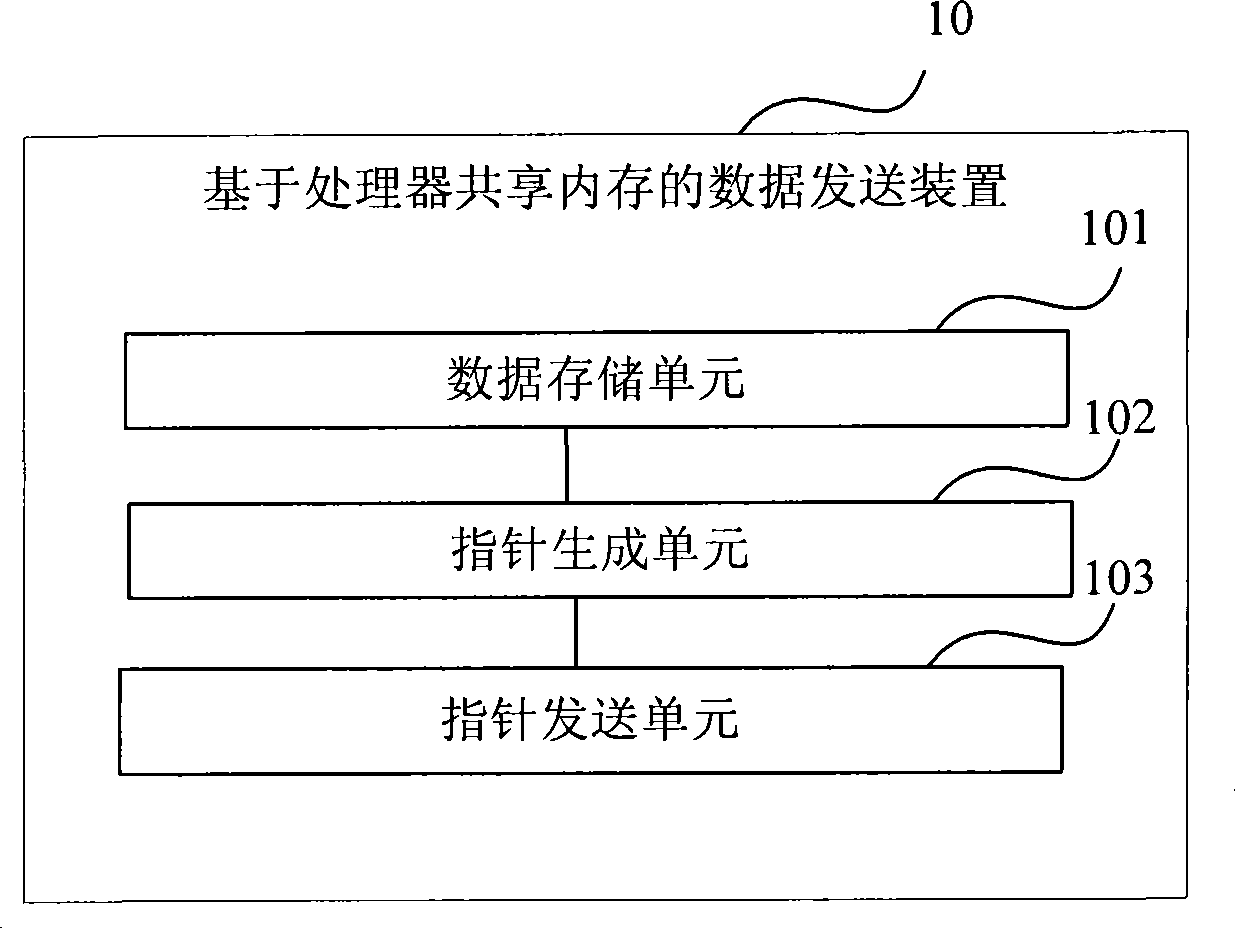

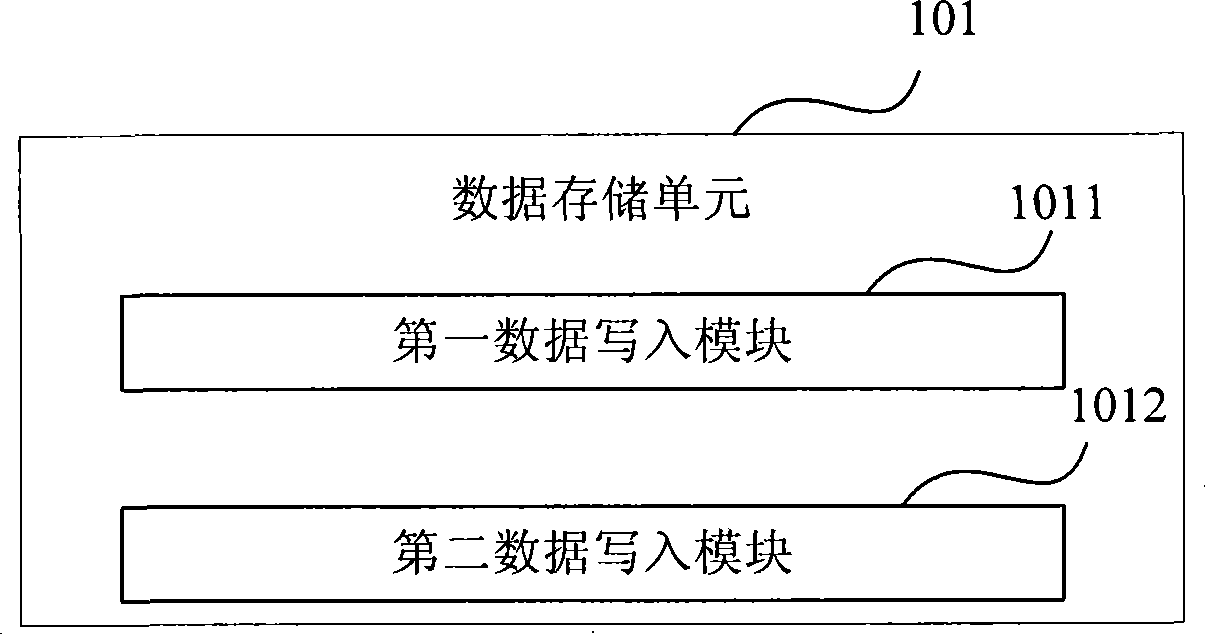

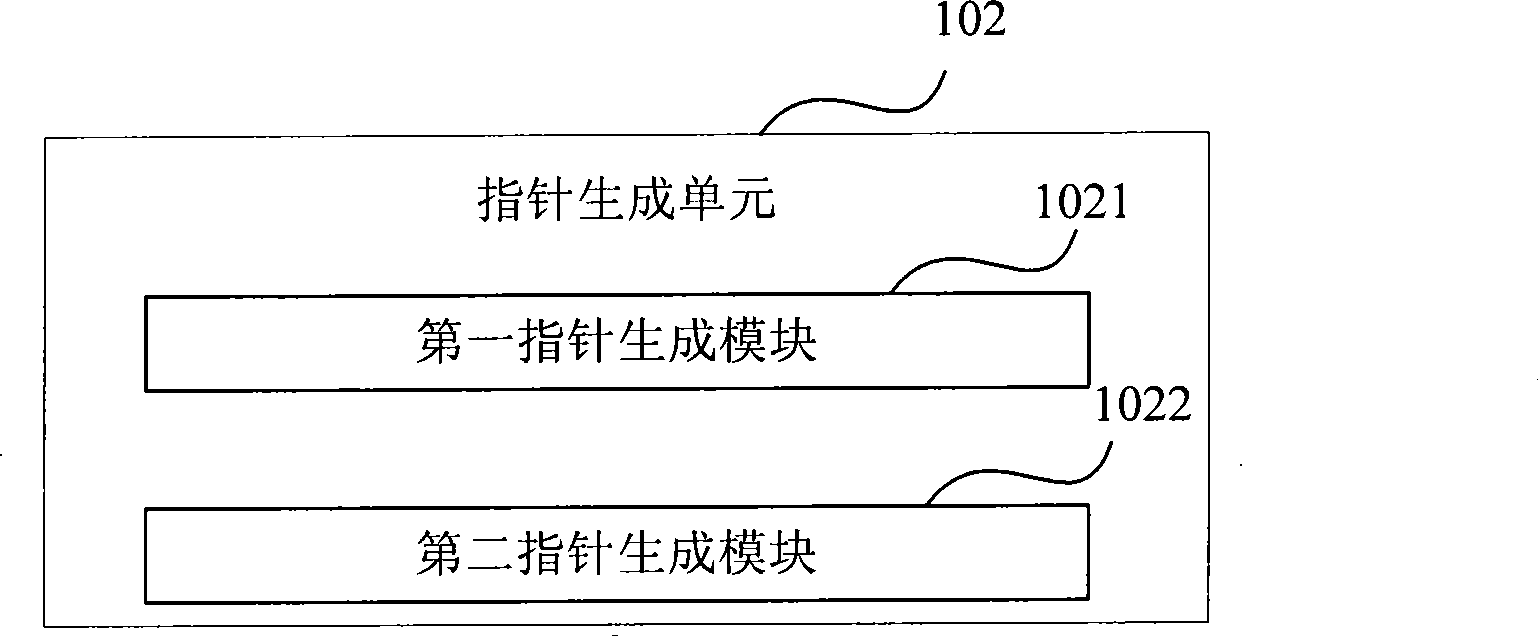

Data transmitting and receiving method and device based on processor sharing internal memory

Status: Active | Patent: CN101504617A | Tags: Overcome the problem of multiple replication; Reduce overhead; Digital computer details; Multiprogramming arrangements; Internal memory; Shared memory

The invention provides a method and a device for sending/receiving data on the basis of processor shared memory, wherein the data-sending method based on processor shared memory comprises the following steps of writing data into at least one memory block in a shared memory pool of a processor, generating a memory block pointer according to the address information of the memory block and sending the memory block pointer to a receiver. The invention also provides a data-sending device based on processor shared memory, as well as a method and a device for receiving data on the basis of processor shared memory. As the invention adopts a technical means of transmitting the memory block pointer instead of message data, the invention has the advantages of solving the problem that the message data is repeatedly copied during message transmission and achieving the technical effect of reducing overhead for both memory and time.

Owner:HUAWEI TECH CO LTD
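
The pointer-passing idea in the abstract above can be illustrated with a small in-process Python sketch. It is not the patented implementation: the `SharedPool` class is hypothetical, a `bytearray` stands in for the shared memory pool, and a `(block, length)` tuple plays the role of the memory block pointer.

```python
from collections import deque


class SharedPool:
    """Fixed-size blocks carved out of one buffer shared by sender and receiver."""

    def __init__(self, block_size, block_count):
        self.block_size = block_size
        self.buffer = bytearray(block_size * block_count)
        self.free = deque(range(block_count))

    def write(self, data):
        """Copy `data` into a free block once and return a handle to it."""
        if len(data) > self.block_size:
            raise ValueError("message larger than one block")
        block = self.free.popleft()  # raises IndexError when the pool is full
        start = block * self.block_size
        self.buffer[start:start + len(data)] = data
        return (block, len(data))  # the "memory block pointer"

    def view(self, handle):
        """Read the message in place, without copying it out of the pool."""
        block, length = handle
        start = block * self.block_size
        return memoryview(self.buffer)[start:start + length]

    def release(self, handle):
        """Give the block back to the pool once the receiver is done."""
        self.free.append(handle[0])
```

Only the small handle travels between sender and receiver, for example through a queue, while the payload stays where it was first written.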

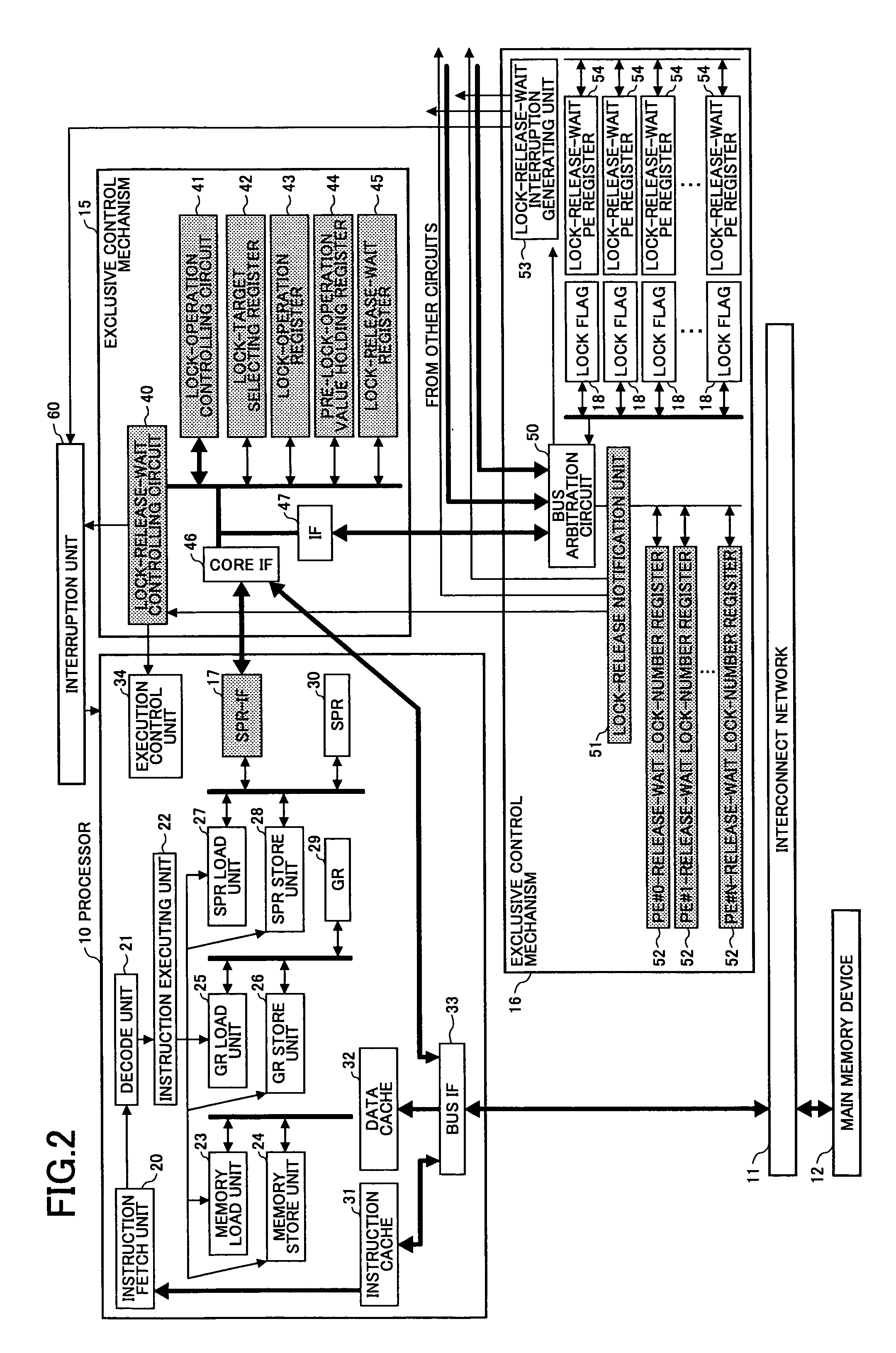

Multiprocessor system with high-speed exclusive control

A multiprocessor system includes a plurality of processors, a shared bus coupled to the plurality of processors, a resource coupled to the shared bus and shared by the plurality of processors, and an exclusive control unit coupled to the plurality of processors and configured to include a lock flag indicative of a locked/unlocked state regarding exclusive use of the resource, wherein the processors include a special purpose register interface coupled to the exclusive control unit, and are configured to access the lock flag by special purpose register access through the special purpose register interface.

Owner:FUJITSU LTD

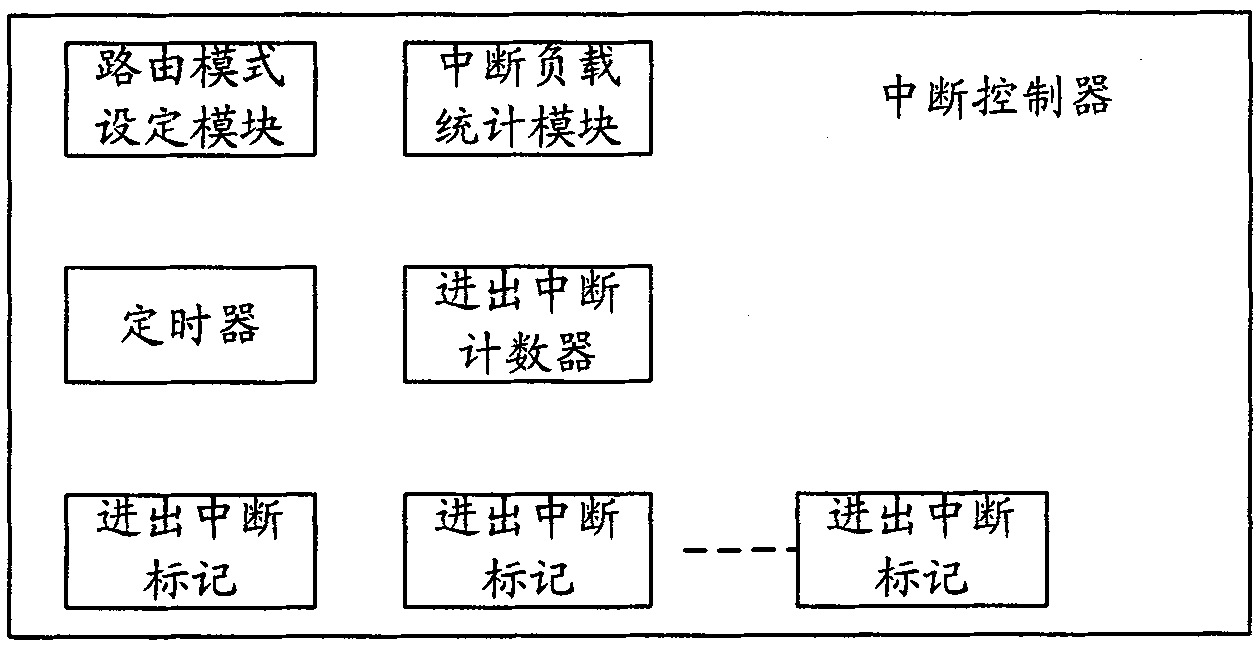

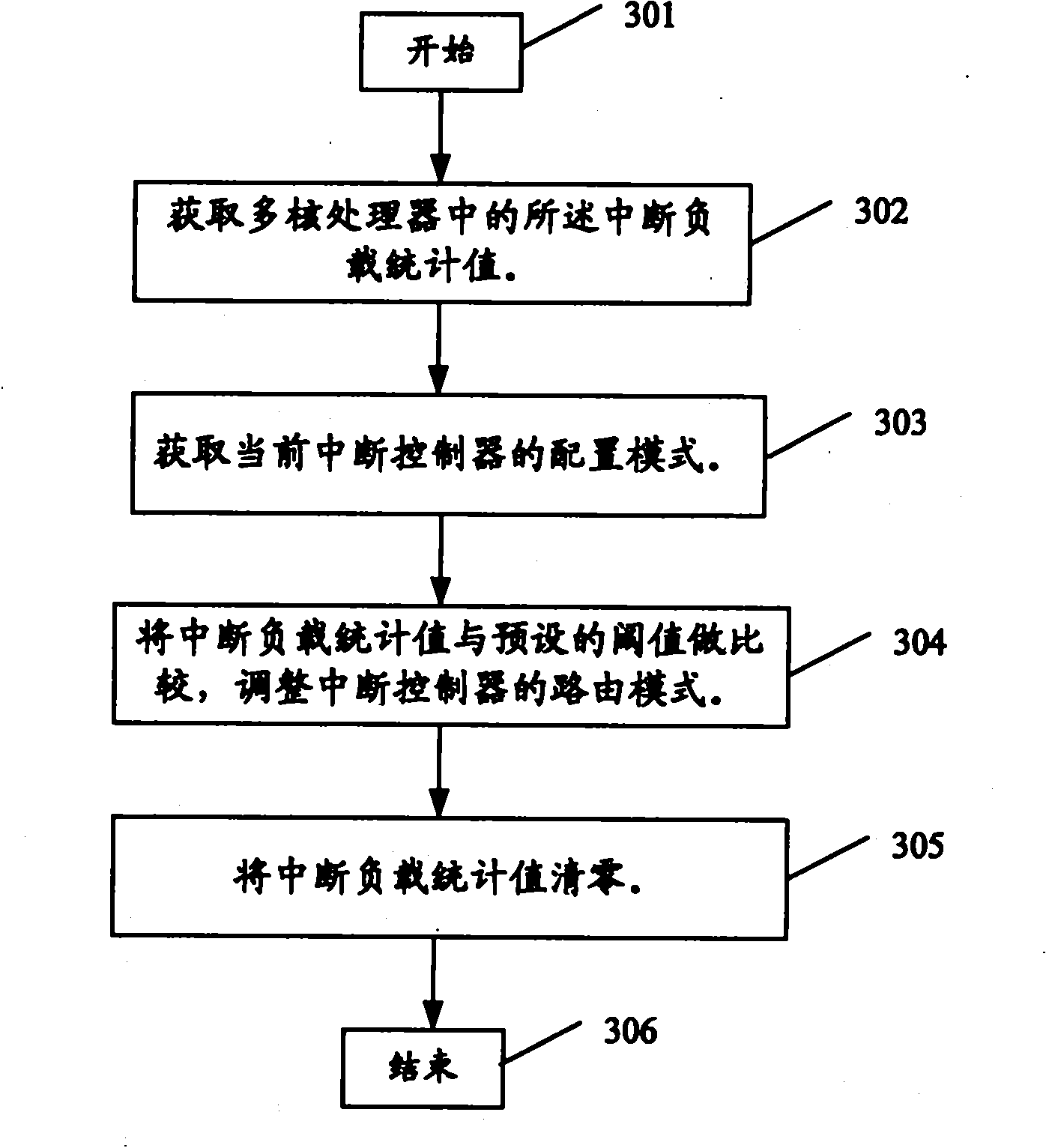

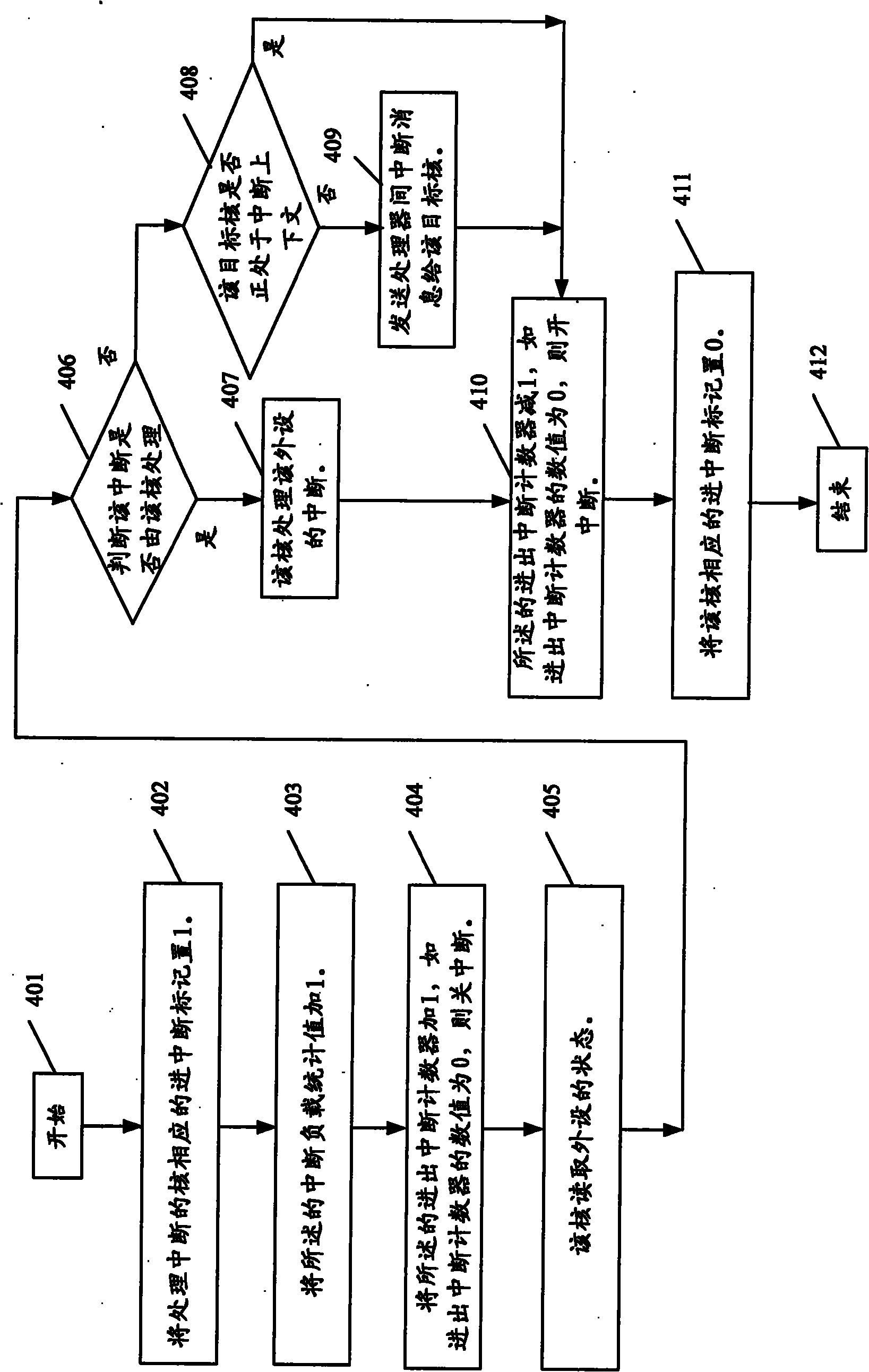

Interrupt controller and method for processing interrupt of multi-core processor shared device

Status: Active | Patent: CN102063335A | Tags: Interrupt load balancing; Improve execution efficiency; Program initiation/switching; Timer; Multi-core processor

The invention discloses an interrupt controller and a method for processing interrupts of a device shared by the cores of a multi-core processor. The interrupt controller monitors the interrupt load on each core of the multi-core processor through a cycle timer and dynamically adjusts its routing policy in a timely manner, so that the interrupt load stays balanced among the cores and the potential of the multi-core structure is fully exploited. The method comprises the following steps: a, a threshold value of an interrupt load statistic is preset; b, the interrupt controller receives a request from a shared interrupt source and adjusts its routing mode according to the current interrupt load statistic; c, the interrupt controller routes the interrupt request to a core according to the routing mode; and d, the core executes an interrupt processing routine.

Owner:DATANG MOBILE COMM EQUIP CO LTD
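
Steps a to d in the abstract above can be sketched in Python as follows. The abstract does not say which statistic or routing modes are used, so this example assumes them: the statistic is the gap between the busiest and idlest core, and the two modes, `"fixed"` and `"least_loaded"`, are hypothetical, as is the `InterruptController` class itself.

```python
class InterruptController:
    def __init__(self, core_count, threshold, fixed_core=0):
        self.load = [0] * core_count  # interrupts handled per core this period
        self.threshold = threshold    # step a: preset threshold
        self.fixed_core = fixed_core
        self.mode = "fixed"

    def route(self):
        """Steps b and c: pick a routing mode, then a core for one request."""
        imbalance = max(self.load) - min(self.load)  # assumed load statistic
        self.mode = "least_loaded" if imbalance > self.threshold else "fixed"
        if self.mode == "fixed":
            core = self.fixed_core
        else:
            core = self.load.index(min(self.load))
        self.load[core] += 1  # step d would run the handler on this core
        return core

    def on_timer_tick(self):
        """Cycle timer expiry: start a fresh measurement period."""
        self.load = [0] * len(self.load)
```

With two cores and a threshold of 2, the first three requests go to core 0, after which the controller starts steering requests to core 1 whenever the gap exceeds the threshold.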

Multipath accessible semiconductor memory device

Status: Inactive | Patent: CN101286144A | Tags: Memory architecture accessing/allocation; Read-only memories; Multi processor; Processor register

A multipath accessible semiconductor memory device provides an interfacing function between multiple processors which indirectly controls a flash memory. The multipath accessible semiconductor memory device comprises a shared memory area, an internal register and a control unit. The shared memory area is accessed by first and second processors through different ports and is allocated to a portion of a memory cell array. The internal register is located outside the memory cell array and is accessed by the first and second processors. The control unit provides storage of address map data associated with the flash memory outside the shared memory area so that the first processor indirectly accesses the flash memory by using the shared memory area and the internal register even when only the second processor is coupled to the flash memory. The control unit also controls a connection path between the shared memory area and one of the first and second processors. The processors share the flash memory and a multiprocessor system is provided that has a compact size, thereby substantially reducing the cost of memory utilized within the multiprocessor system.

Owner:SAMSUNG ELECTRONICS CO LTD

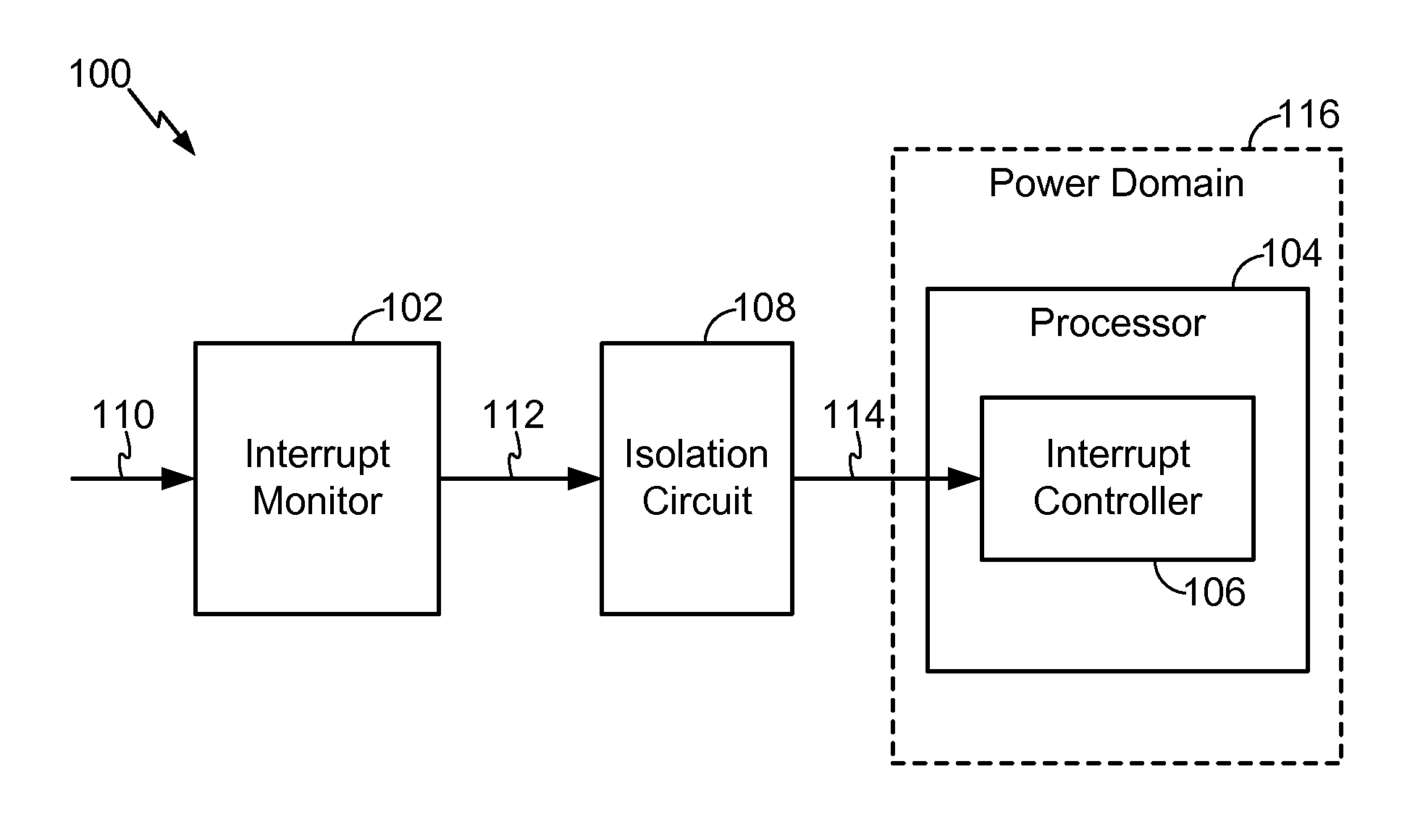

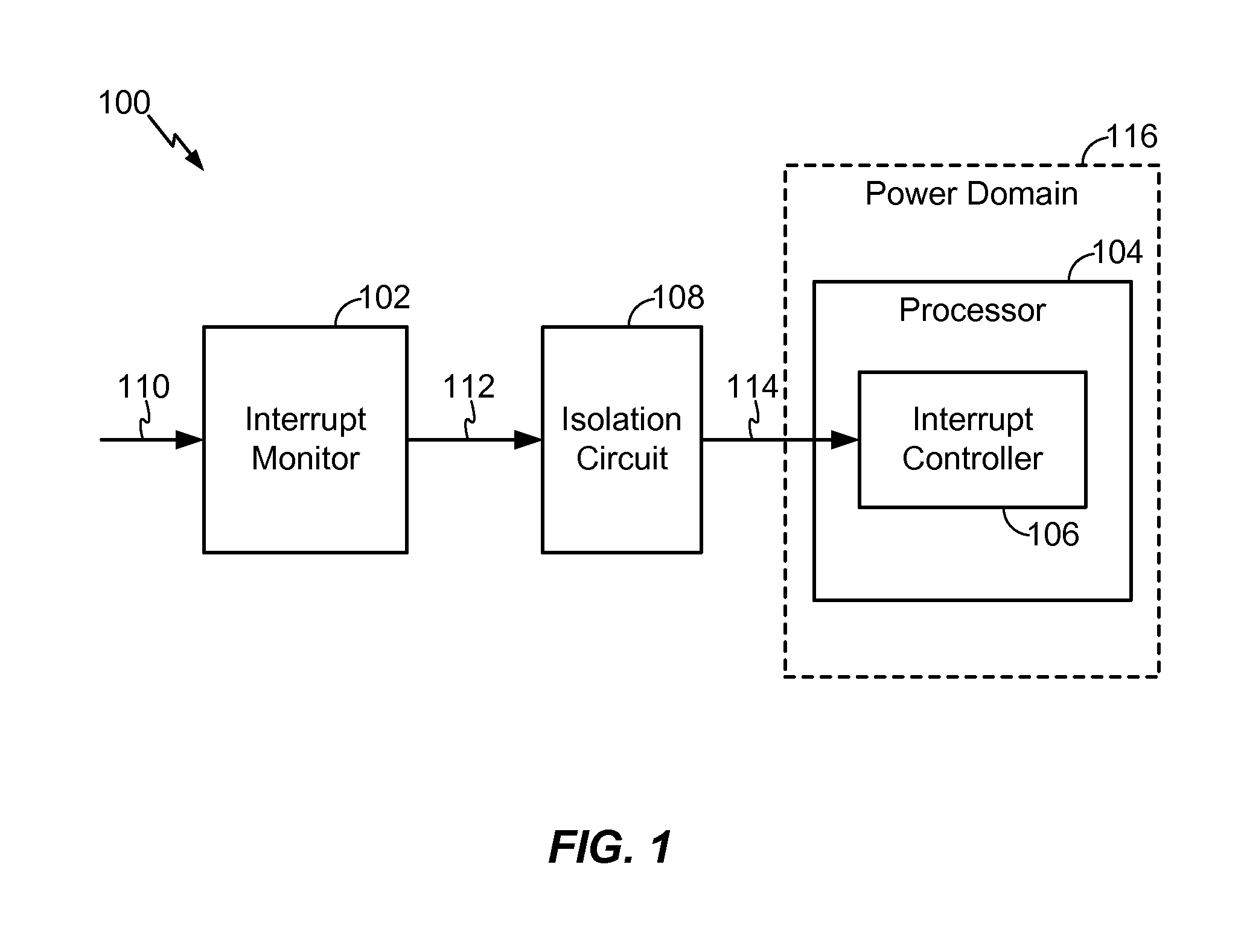

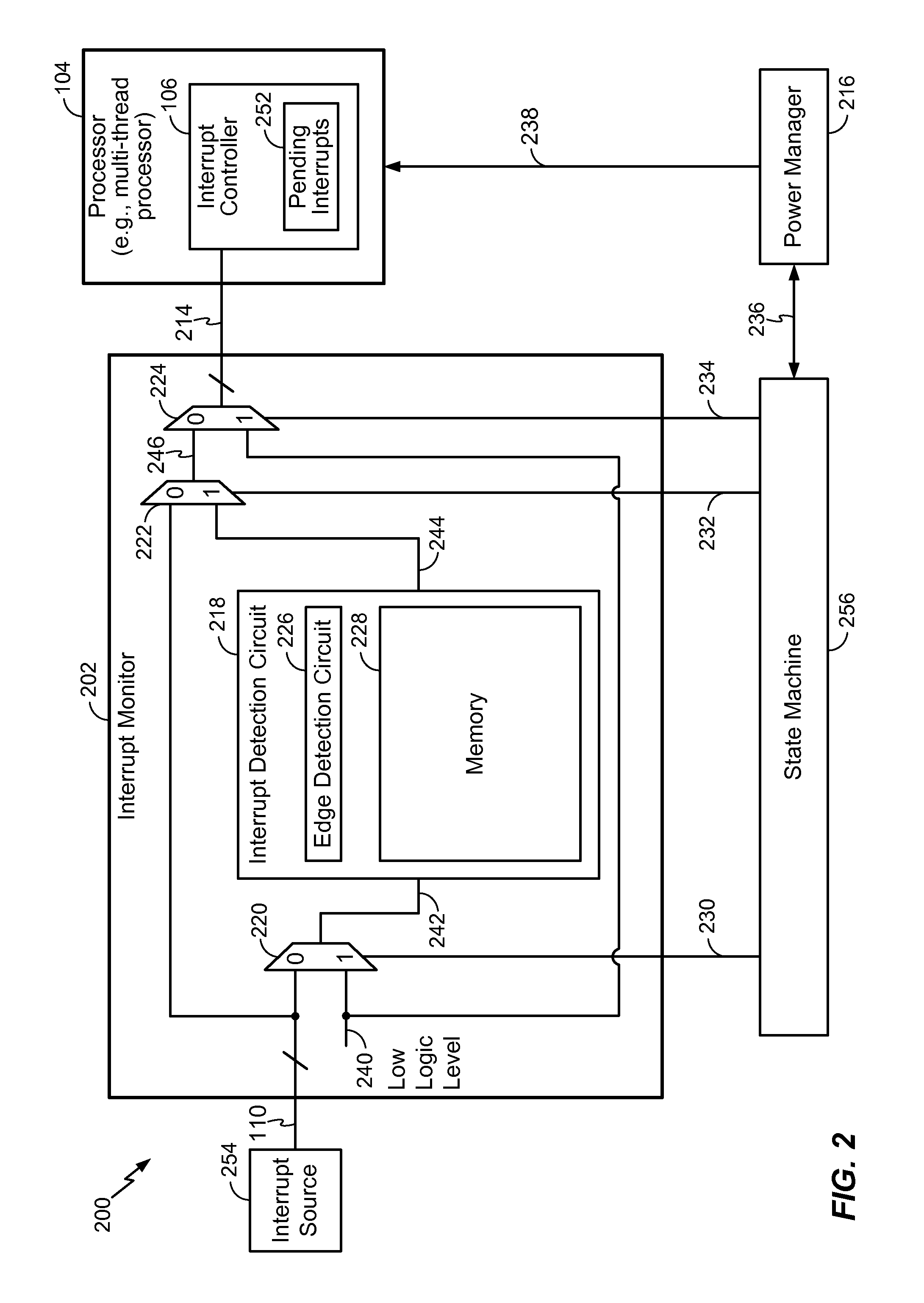

Method and Apparatus for Monitoring Interrupts During a Power Down Event at a Processor

Owner:QUALCOMM INC

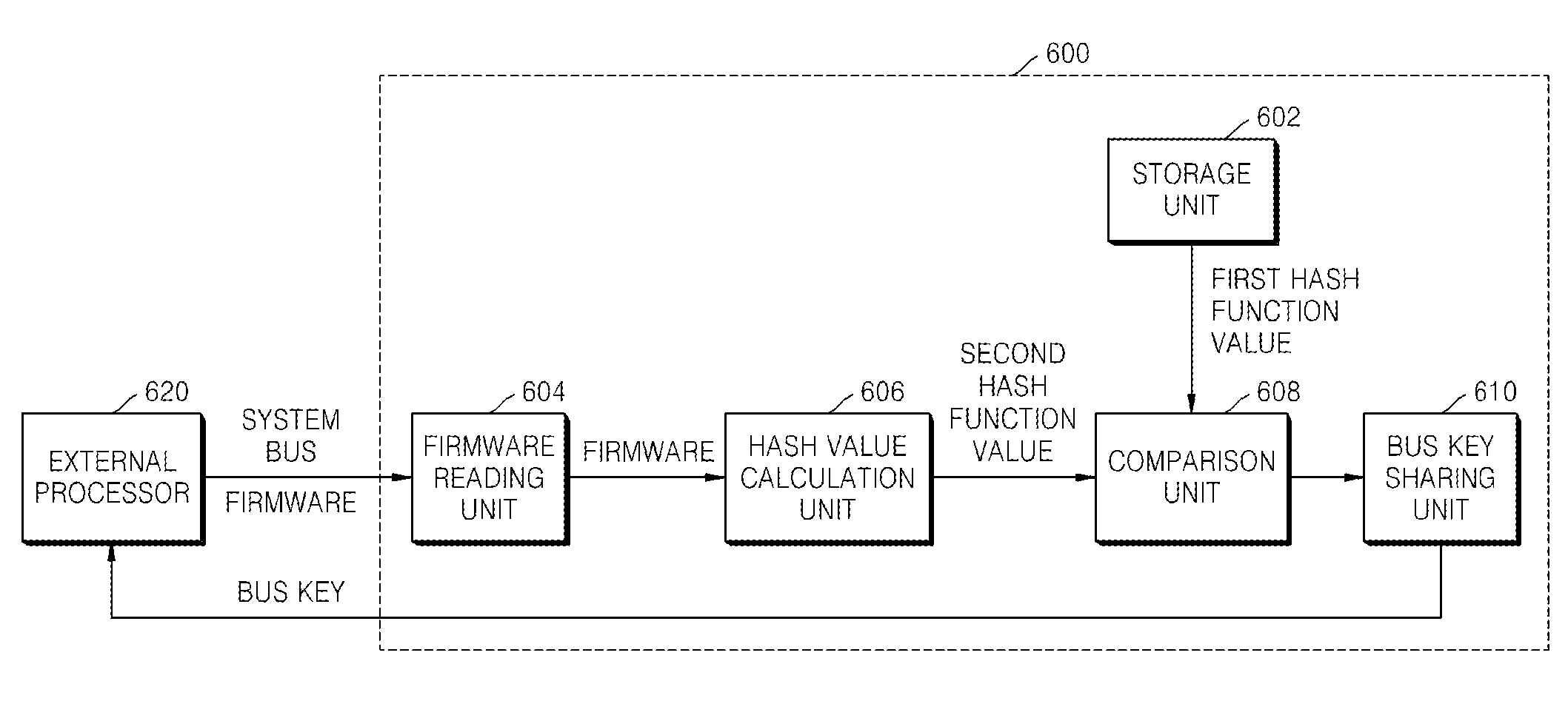

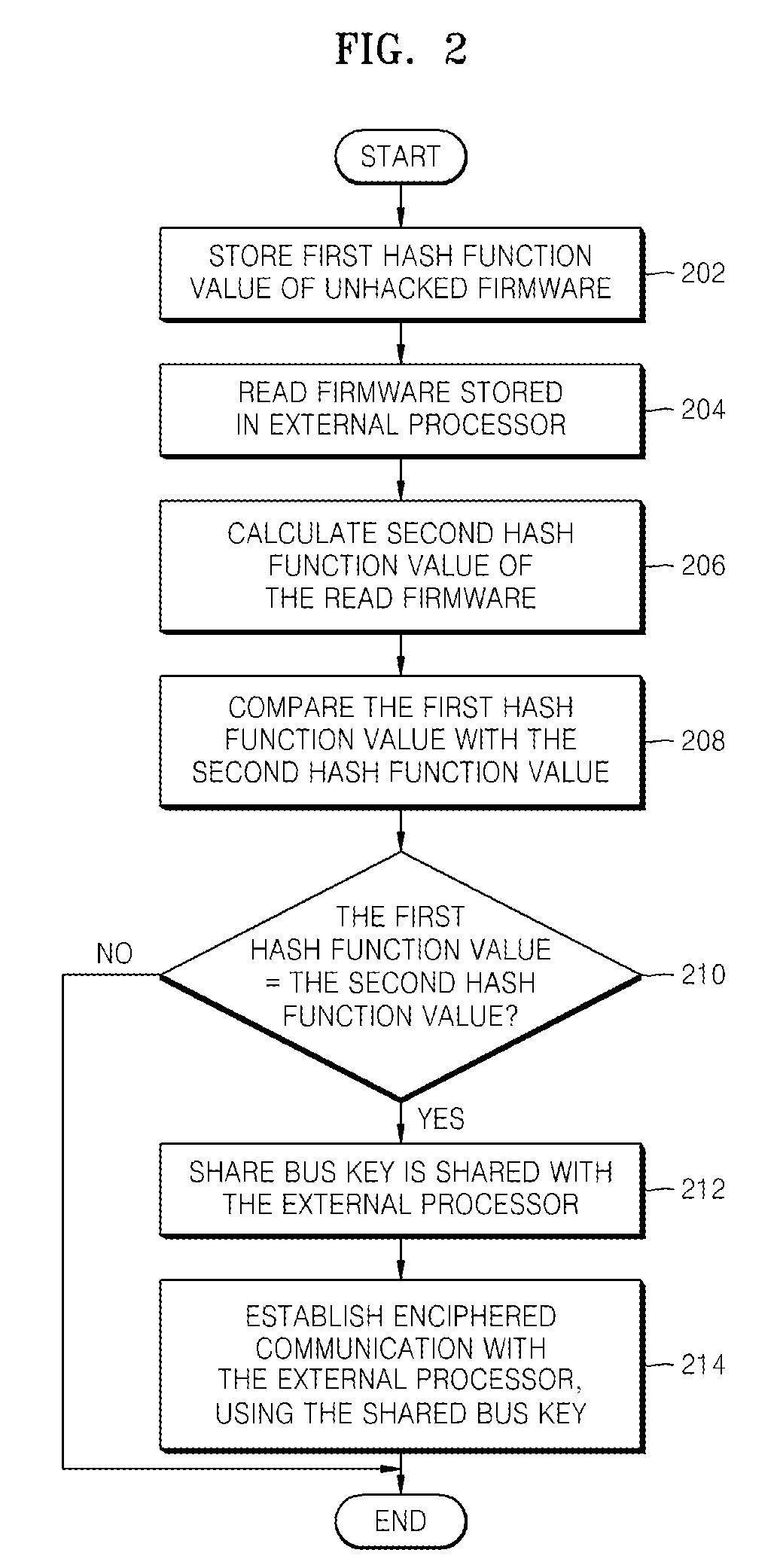

Method and apparatus for checking integrity of firmware

Status: Inactive | Patent: US20080289038A1 | Tags: Reduce the possibility; Key distribution for secure communication; Program control using stored programs; Hash function; Processor sharing

Provided are a method and apparatus for checking the integrity of firmware. The method includes storing a first hash function value of unhacked firmware for determining whether actual firmware of an external processor has been hacked; reading the actual firmware via a bus; calculating a second hash function value of the actual firmware; comparing the first hash function value with the second hash function value; and sharing a bus key with the external processor, based on the comparison result.

Owner:SAMSUNG ELECTRONICS CO LTD
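
The compare-then-share flow in the abstract above can be sketched with Python's standard library. The abstract does not name a hash function or a key format, so SHA-256 and a random 16-byte key are assumptions of this example, as are the function names `firmware_digest` and `share_bus_key_if_intact`.

```python
import hashlib
import hmac
import secrets


def firmware_digest(firmware: bytes) -> bytes:
    """Hash value of a firmware image (SHA-256 is an assumed choice)."""
    return hashlib.sha256(firmware).digest()


def share_bus_key_if_intact(reference_digest: bytes, actual_firmware: bytes):
    """Return a fresh bus key only if the firmware matches the stored hash.

    `reference_digest` is the first hash value, stored for known-good
    firmware; the second hash value is computed from the firmware read
    over the bus. Returns None when the two differ.
    """
    actual_digest = firmware_digest(actual_firmware)
    if hmac.compare_digest(reference_digest, actual_digest):
        return secrets.token_bytes(16)  # assumed 128-bit bus key
    return None
```

`hmac.compare_digest` is used for the comparison so that the time taken does not reveal how many leading bytes matched.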

Method, system, and program for cache coherency control

Status: Inactive | Patent: CN103229152A | Tags: Implement Consistency Control; Improve scalability; Memory addressing/allocation/relocation; Information processing; Page table

The present invention aims to achieve cache coherency control in which the scalability of a shared-memory multiprocessor system is improved and cost-performance is improved by restraining the cost of hardware and software. In a system for controlling cache coherency of a multiprocessor system in which a plurality of processors, each comprising a cache and a TLB, share system memory, each of the processors comprises a TLB control unit further comprising: a TLB search unit for executing a TLB search; and a coherency handler for executing registration information processing of the TLB when no hit is obtained in the TLB search and a TLB interrupt is generated. The coherency handler comprises: a TLB replacement handler for executing a search of a page table in the system memory and replacement of the TLB registration information; a TLB miss exception handling unit for handling a TLB miss interrupt, which occurs when the TLB interrupt is not caused by a page fault but registration information that matches the address does not exist in the TLB; and a storage exception handling unit for handling a storage interrupt, which occurs when registration information that matches the address exists in the TLB but the access authority is violated.

Owner:IBM CORP

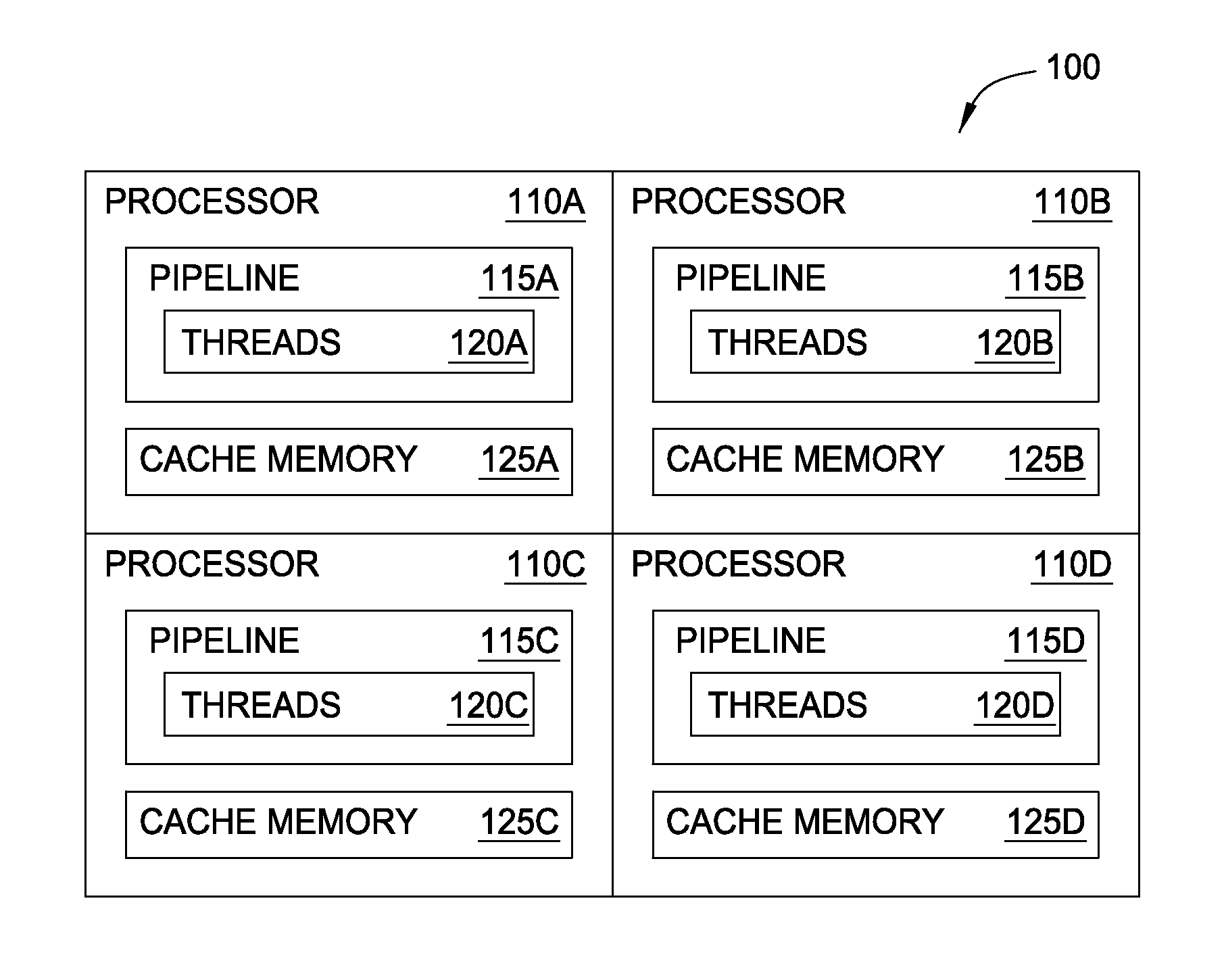

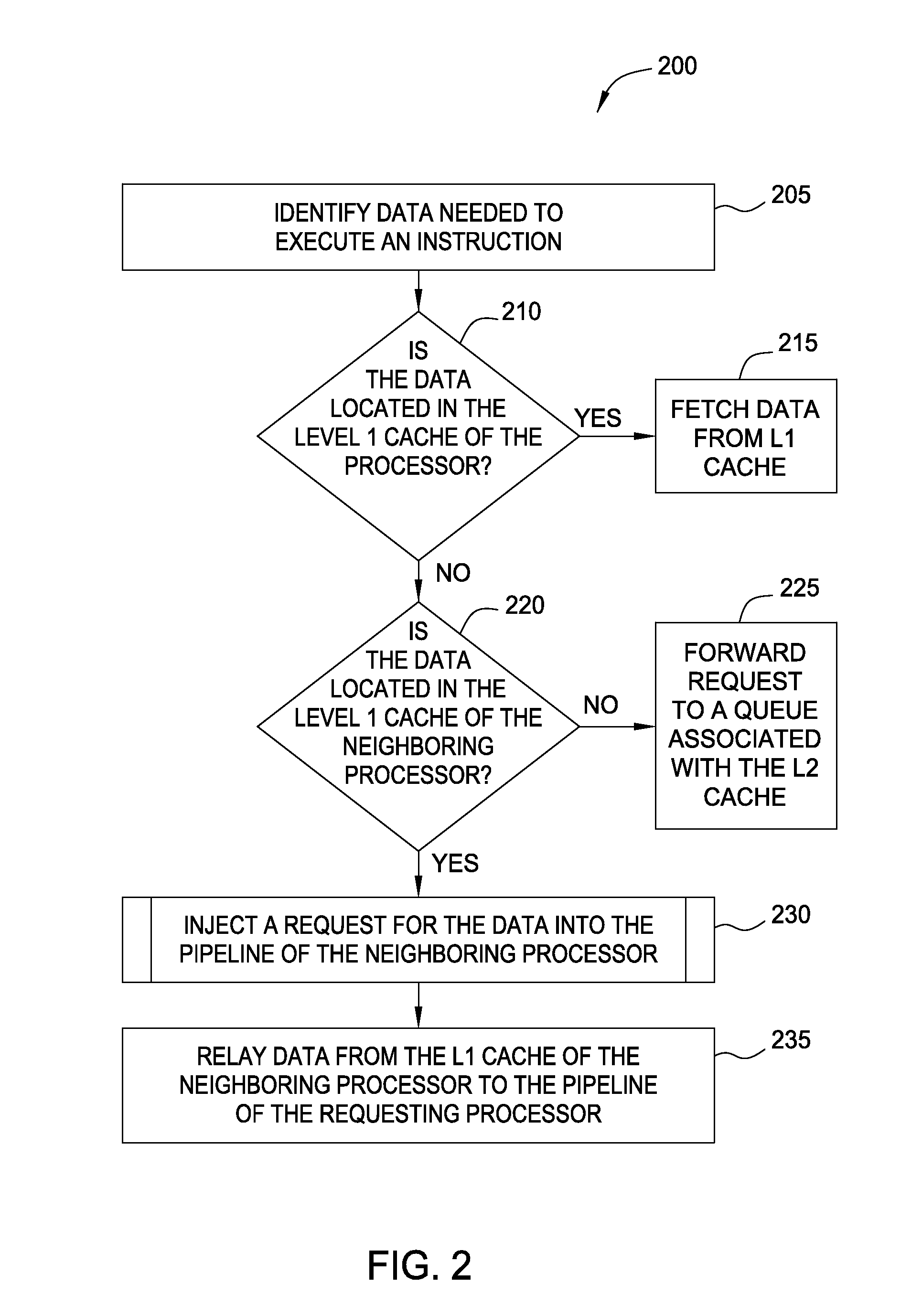

Near neighbor data cache sharing

Parallel computing environments, where threads executing in neighboring processors may access the same set of data, may be designed and configured to share one or more levels of cache memory. Before a processor forwards a request for data to a higher level of cache memory following a cache miss, the processor may determine whether a neighboring processor has the data stored in a local cache memory. If so, the processor may forward the request to the neighboring processor to retrieve the data. Because access to the cache memories for the two processors is shared, the effective size of the memory is increased. This may advantageously decrease cache misses for each level of shared cache memory without increasing the individual size of the caches on the processor chip.

Owner:IBM CORP
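
The lookup order described in the abstract above (local cache, then a neighboring processor's cache, then the next cache level) can be sketched in Python. Dictionaries stand in for the caches, and the `load` function with its returned source label is a hypothetical illustration rather than the patented mechanism.

```python
def load(address, local_cache, neighbor_cache, higher_level):
    """Return (value, source) for `address`, trying the cheapest source first."""
    if address in local_cache:
        return local_cache[address], "local"
    # Local miss: ask the neighbor before going to the higher cache level.
    if address in neighbor_cache:
        return neighbor_cache[address], "neighbor"
    # Neither small cache holds the data: fetch from the higher level
    # and keep a copy locally for later accesses.
    value = higher_level[address]
    local_cache[address] = value
    return value, "higher"
```

In this sketch data found in the neighbor's cache is not copied locally, so the two caches together behave like one larger cache, which matches the effective-size argument in the abstract.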

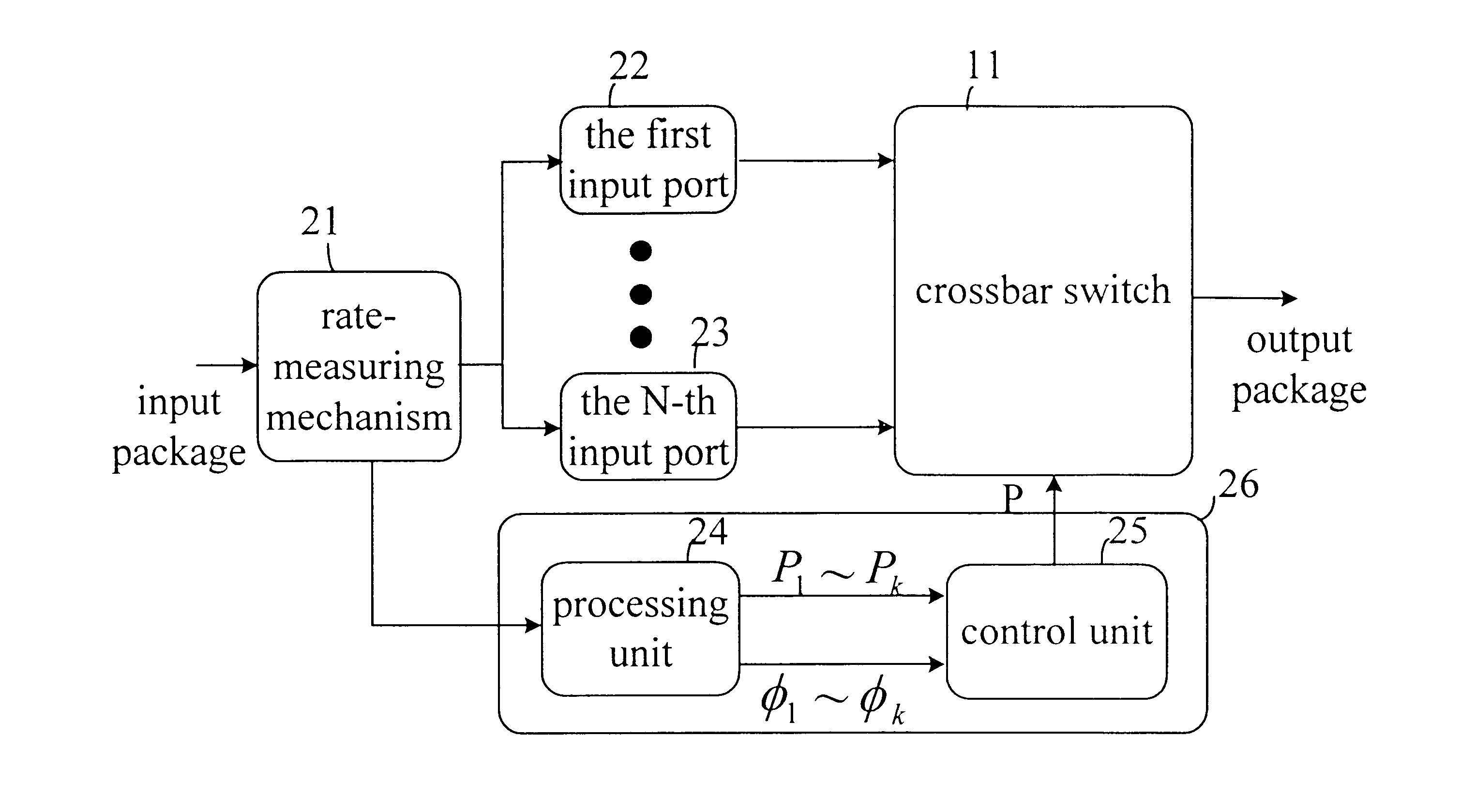

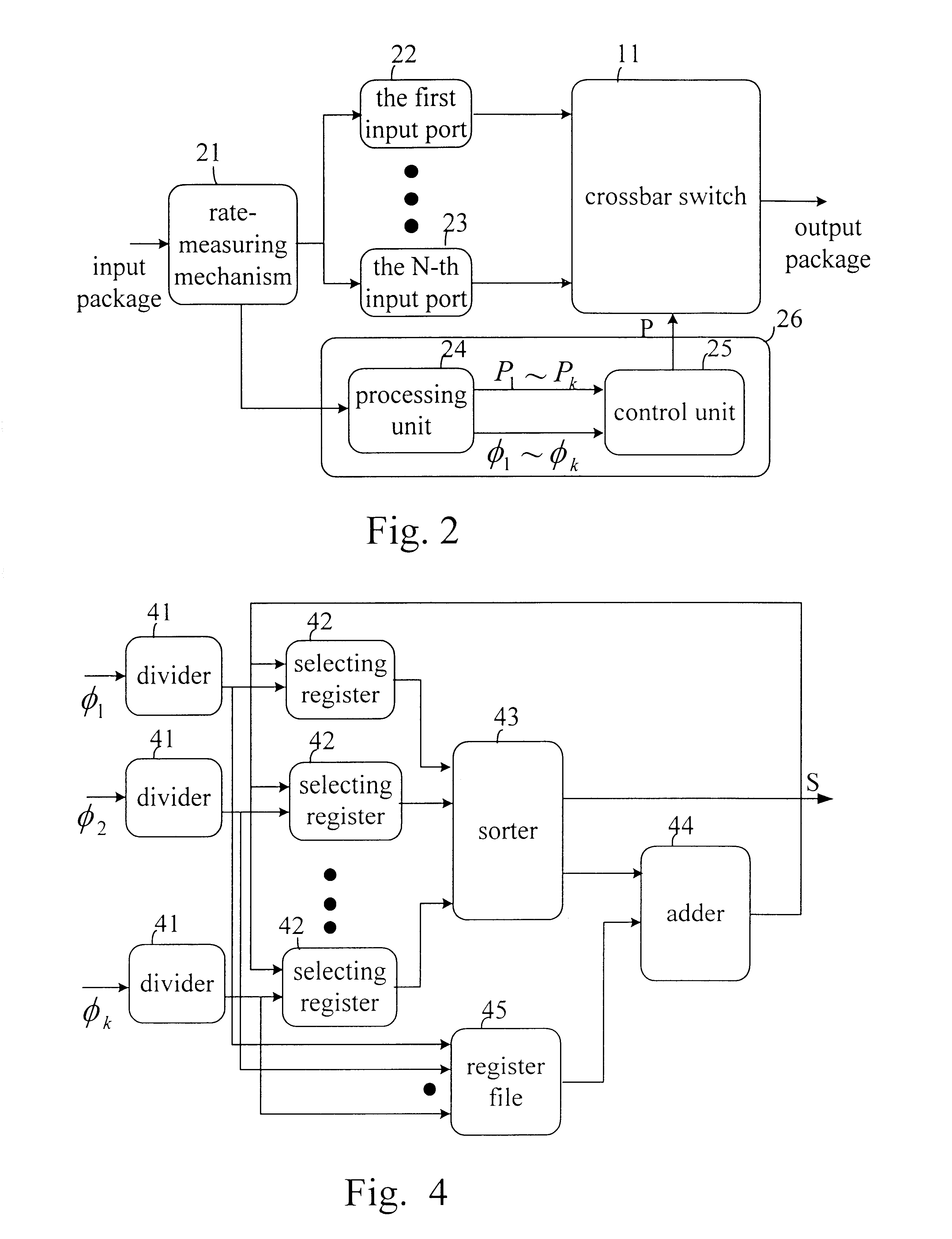

Switching apparatus and method using bandwidth decomposition

Status: Inactive | Patent: US6704312B1 | Tags: % utilization of output rate; Data switching by path configuration; Computer architecture; Decomposition

The present invention discloses a switching apparatus and method using bandwidth decomposition, applying a von Neumann algorithm, a Birkhoff theorem, a Packetized Generalized Processor Sharing algorithm, a water filling algorithm and a dynamically calculating rate algorithm to packet switching in a high speed network. The switching apparatus and method according to the present invention need neither internal speedup nor the computation of a maximal matching between input ports and output ports, so a network using the apparatus and method runs faster, and the invention can be readily implemented with current VLSI technology.

Owner:CHANG CHENG SHANG
