1577 results about "Latency (engineering)" patented technology

Low latency keeps the delay between an input being processed and the corresponding output too small for a human to notice, which gives a system real-time characteristics. This can be especially important for Internet connections used for services such as trading, online gaming and VoIP. VoIP tolerates some latency, since a minor delay between conversation participants is generally attributed to non-technical causes, but substantial delays may impair communication. Online games are more sensitive to latency: fast responses to new events during a game session are rewarded, while slow responses may carry penalties. A player with a high-latency Internet connection may respond slowly despite superior tactics or adequate reaction time, because game events take longer to travel between that player and the other participants in the session. This gives players with low-latency connections a technical advantage and biases game outcomes, so game servers in effect favor players with lower-latency connections, sometimes referred to as low "ping" times.

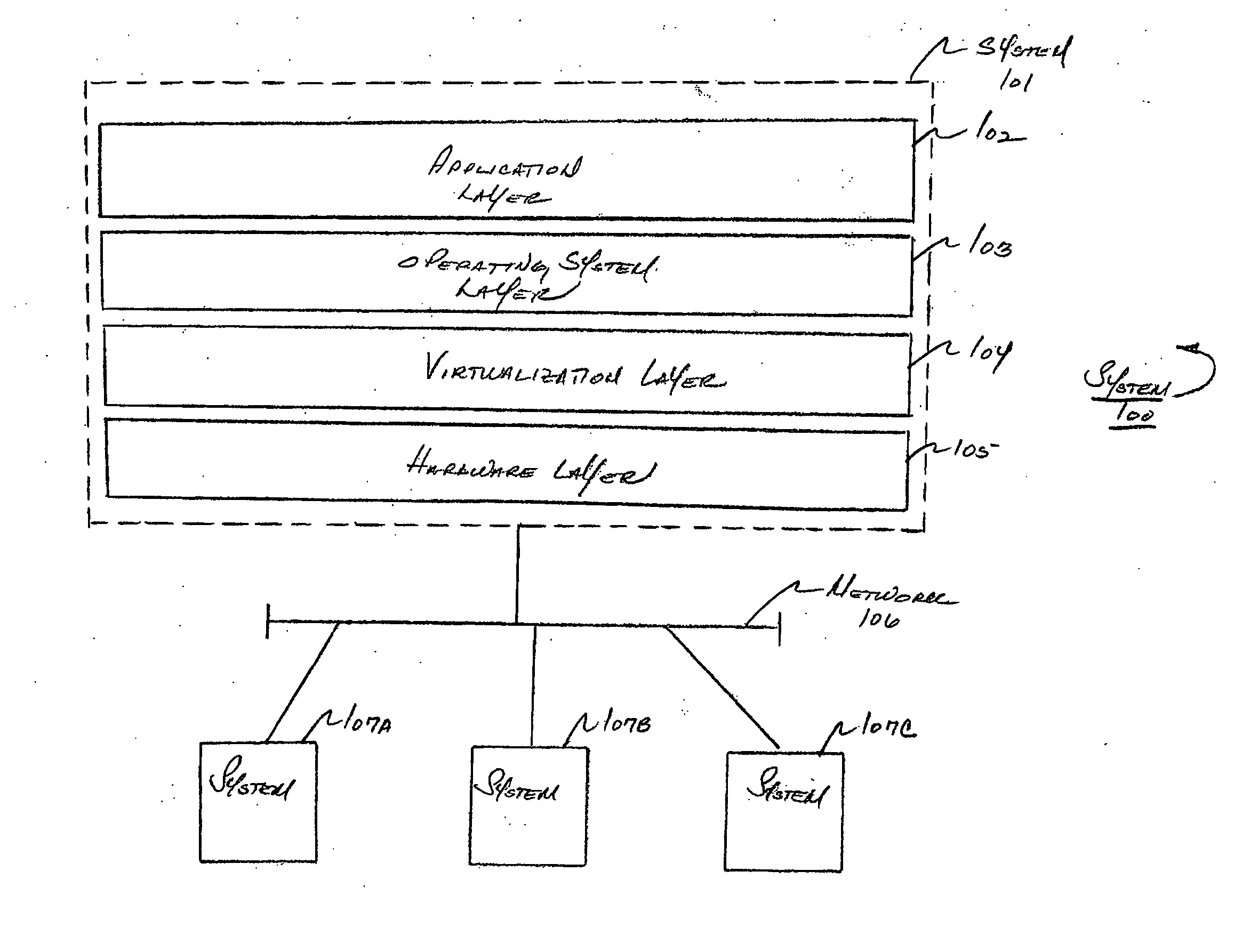

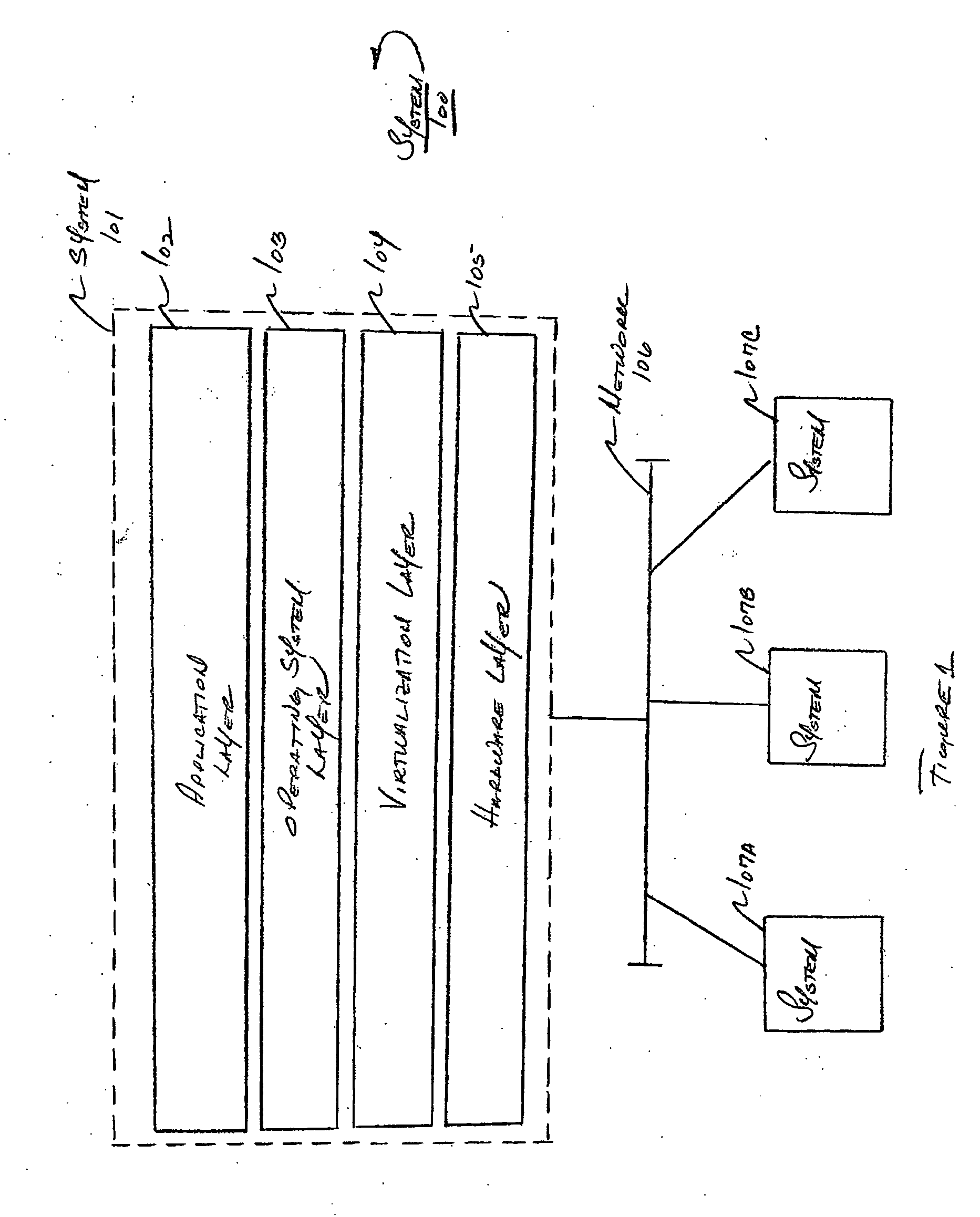

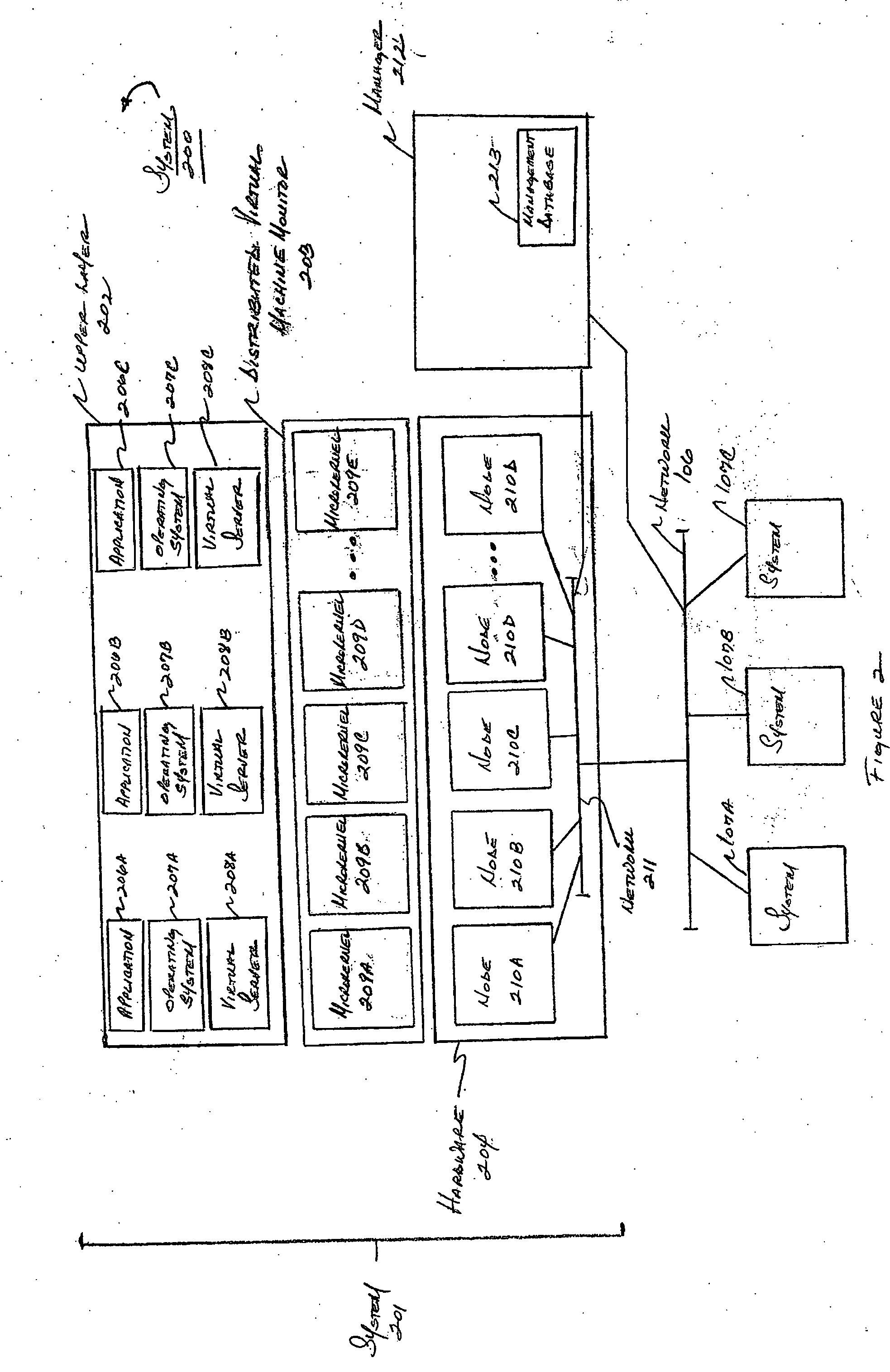

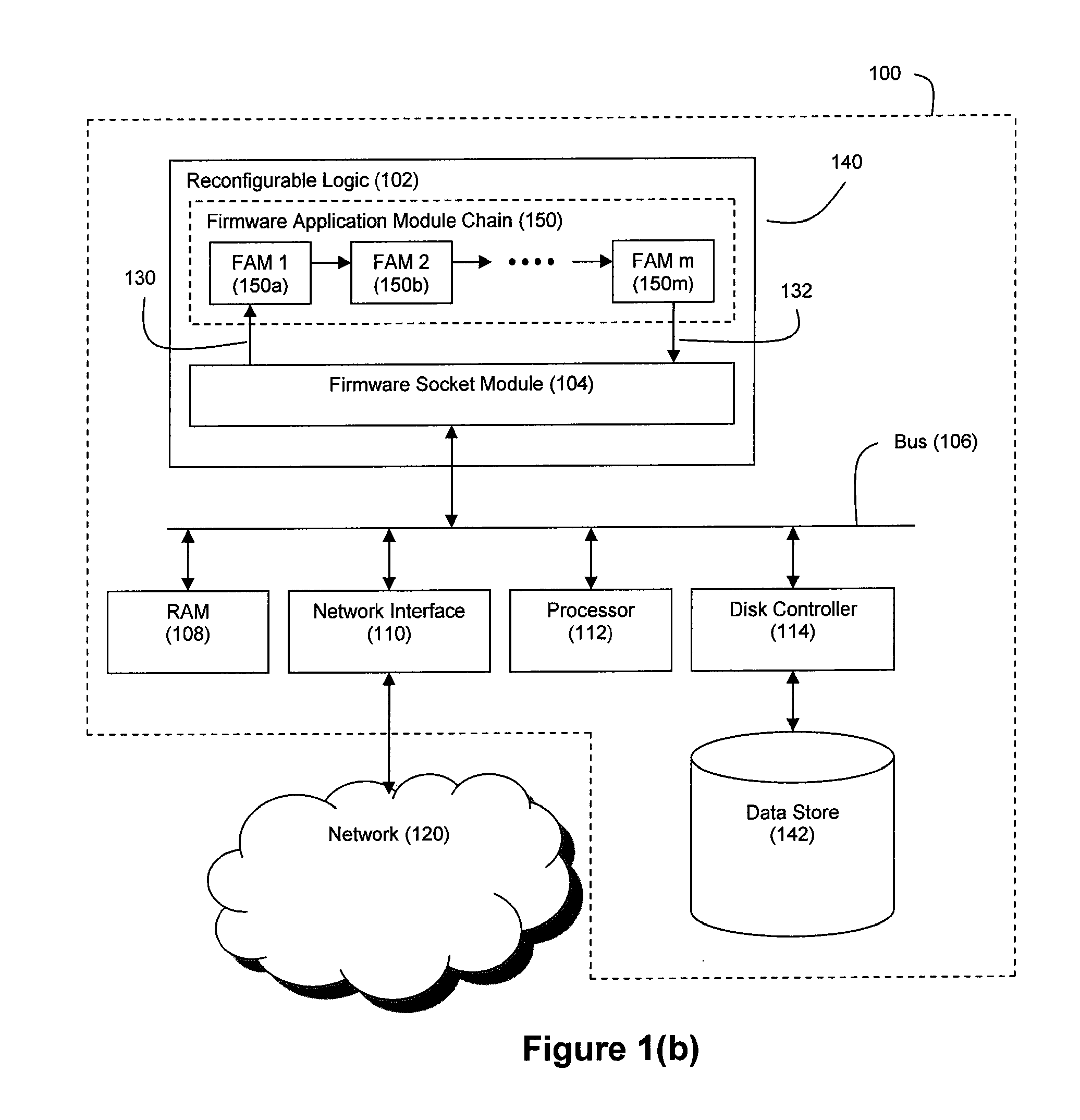

Method and apparatus for providing virtual computing services

Inactive | US20050044301A1 | Easy to manage | Low cost | Resource allocation | Memory addressing/allocation/relocation | Virtualization | Operational system

A level of abstraction is created between a set of physical processors and a set of virtual multiprocessors to form a virtualized data center. This virtualized data center comprises a set of virtual, isolated systems separated by a boundary referred to as a partition. Each of these systems appears as a unique, independent virtual multiprocessor computer capable of running a traditional operating system and its applications. In one embodiment, the system implements this multi-layered abstraction via a group of microkernels, each of which communicates with one or more peer microkernels over a high-speed, low-latency interconnect and forms a distributed virtual machine monitor. Functionally, a virtual data center is provided, including the ability to take a collection of servers and execute a collection of business applications over a compute fabric comprising commodity processors coupled by an interconnect. Processor, memory and I/O are virtualized across this fabric, providing a single system, scalability and manageability. According to one embodiment, this virtualization is transparent to the application, and therefore, applications may be scaled to increasing resource demands without modifying the application.

Owner:ORACLE INT CORP

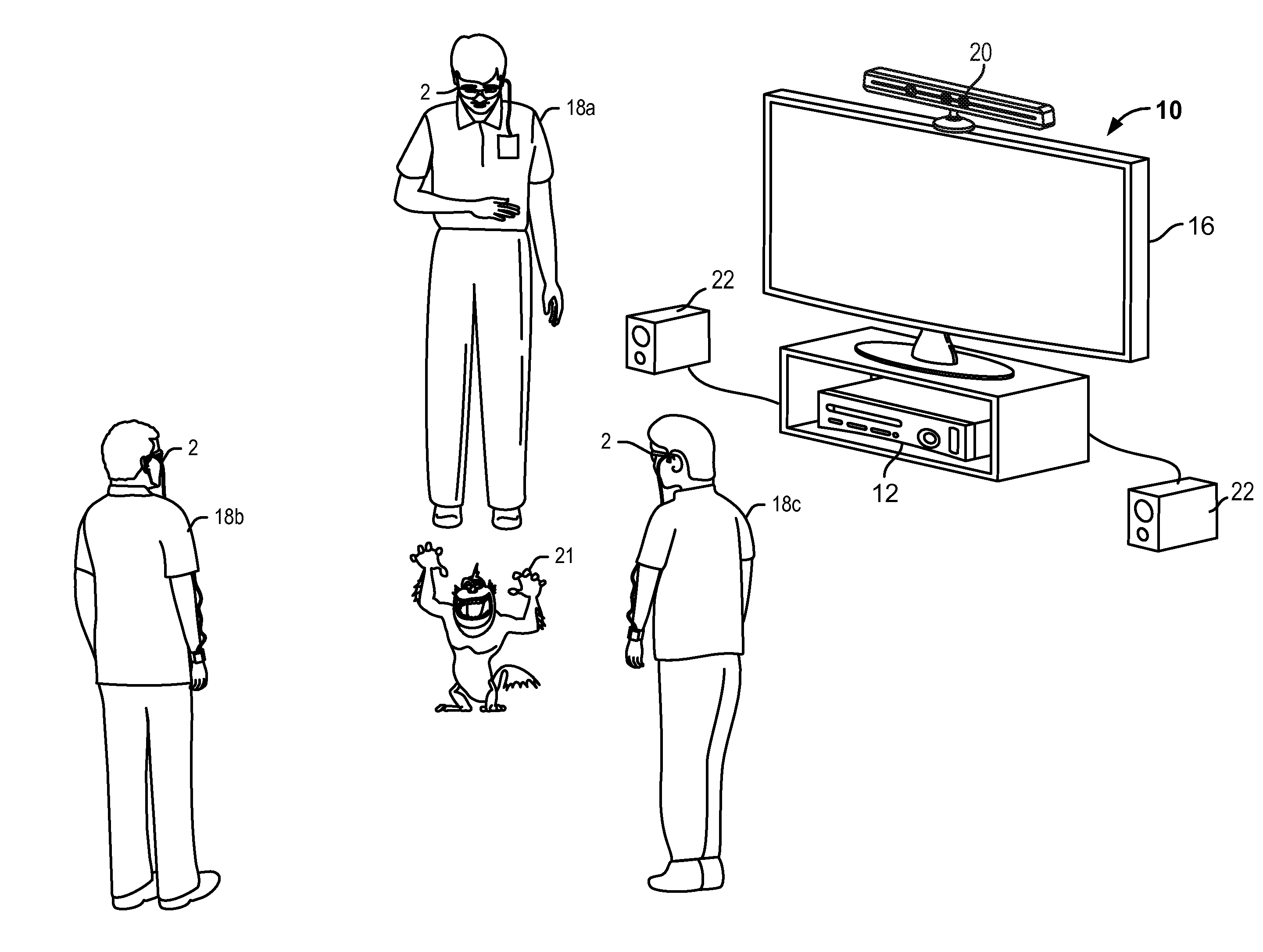

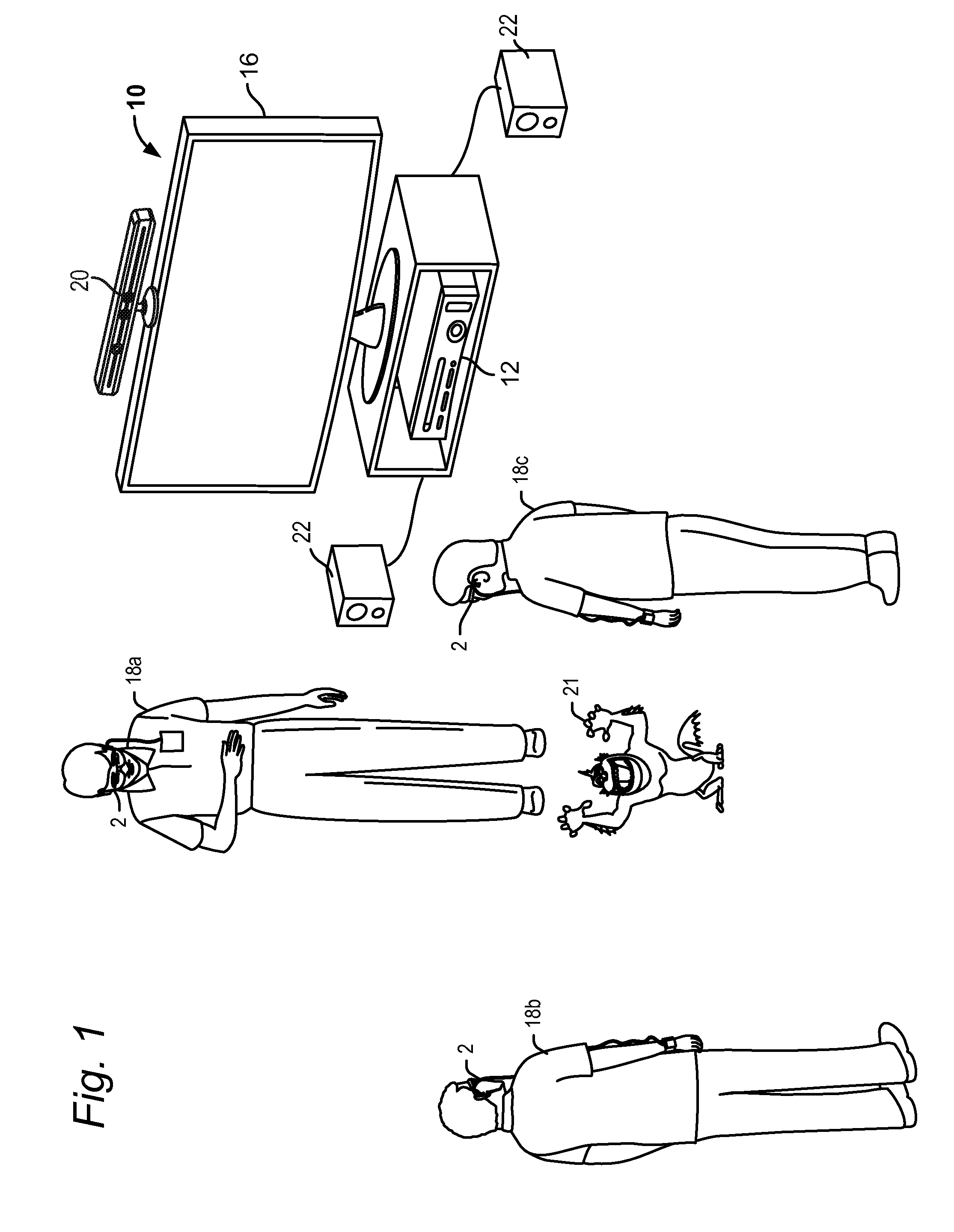

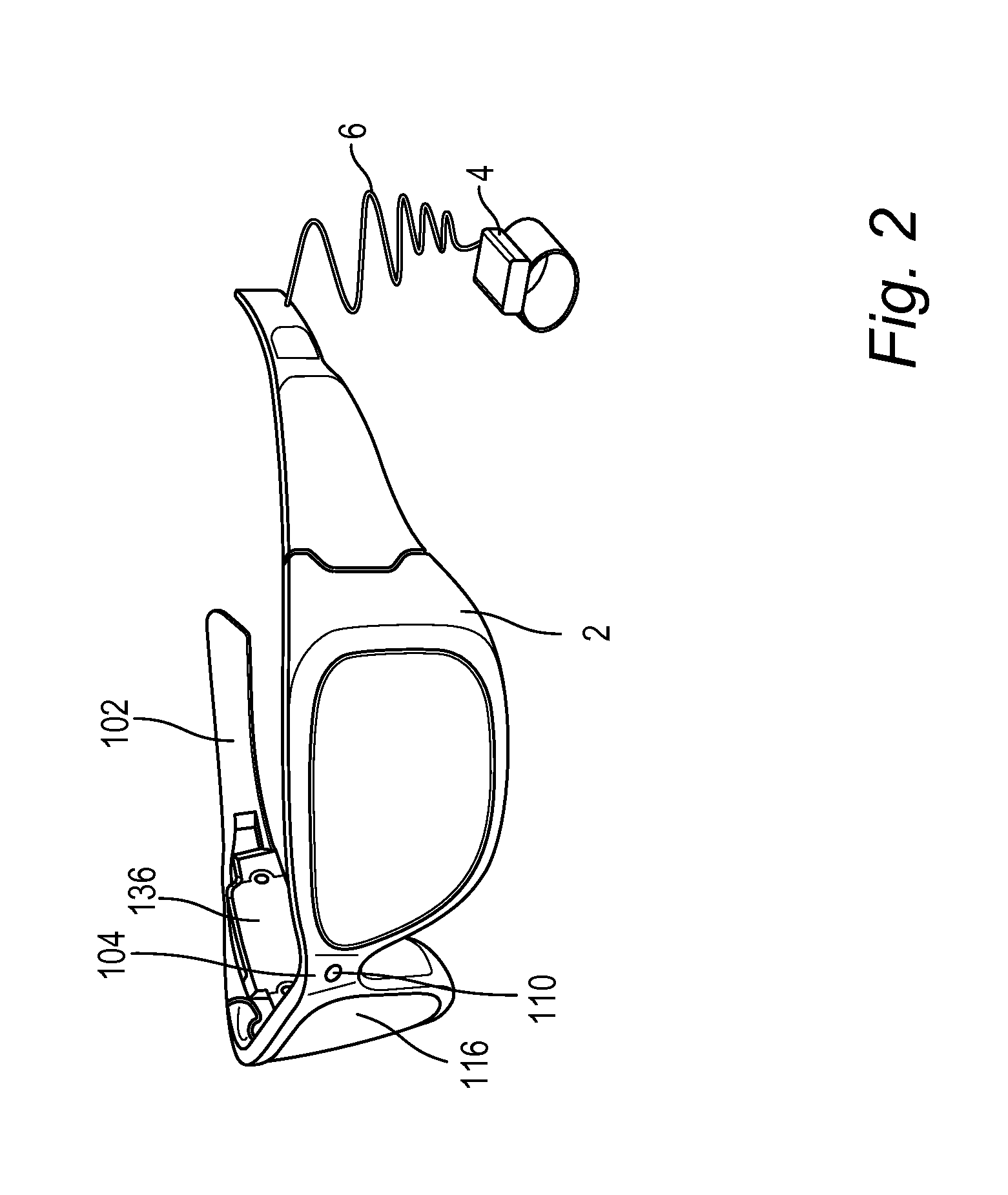

Low-latency fusing of virtual and real content

Active | US20120105473A1 | Introduce inherent latency | Image analysis | Cathode-ray tube indicators | Mixed reality | Latency (engineering)

A system that includes a head mounted display device and a processing unit connected to the head mounted display device is used to fuse virtual content into real content. In one embodiment, the processing unit is in communication with a hub computing device. The processing unit and hub may collaboratively determine a map of the mixed reality environment. Further, state data may be extrapolated to predict a field of view for a user in the future at a time when the mixed reality is to be displayed to the user. This extrapolation can remove latency from the system.

Owner:MICROSOFT TECH LICENSING LLC
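
The extrapolation step mentioned in the abstract above can be illustrated with a minimal sketch. It assumes the tracked state is a single yaw angle sampled at two timestamps and that a constant angular velocity is an acceptable model; `HeadState` and `predict_yaw` are hypothetical names, and the abstract does not say which predictor the patent actually uses.

```python
from dataclasses import dataclass


@dataclass
class HeadState:
    t: float        # sample timestamp in seconds
    yaw_deg: float  # head yaw in degrees (hypothetical single-axis state)


def predict_yaw(prev: HeadState, curr: HeadState, display_time: float) -> float:
    """Linearly extrapolate yaw to the moment the frame will be displayed."""
    dt = curr.t - prev.t
    if dt <= 0:
        # No usable velocity estimate: fall back to the latest sample.
        return curr.yaw_deg
    velocity = (curr.yaw_deg - prev.yaw_deg) / dt  # degrees per second
    return curr.yaw_deg + velocity * (display_time - curr.t)


# Two samples 10 ms apart, frame shown 20 ms after the latest sample.
predicted = predict_yaw(HeadState(0.000, 10.0), HeadState(0.010, 11.0), 0.030)
```

Rendering for the predicted pose rather than the last measured one is what hides the tracking-to-display delay from the user; here the prediction lands at roughly 13 degrees.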

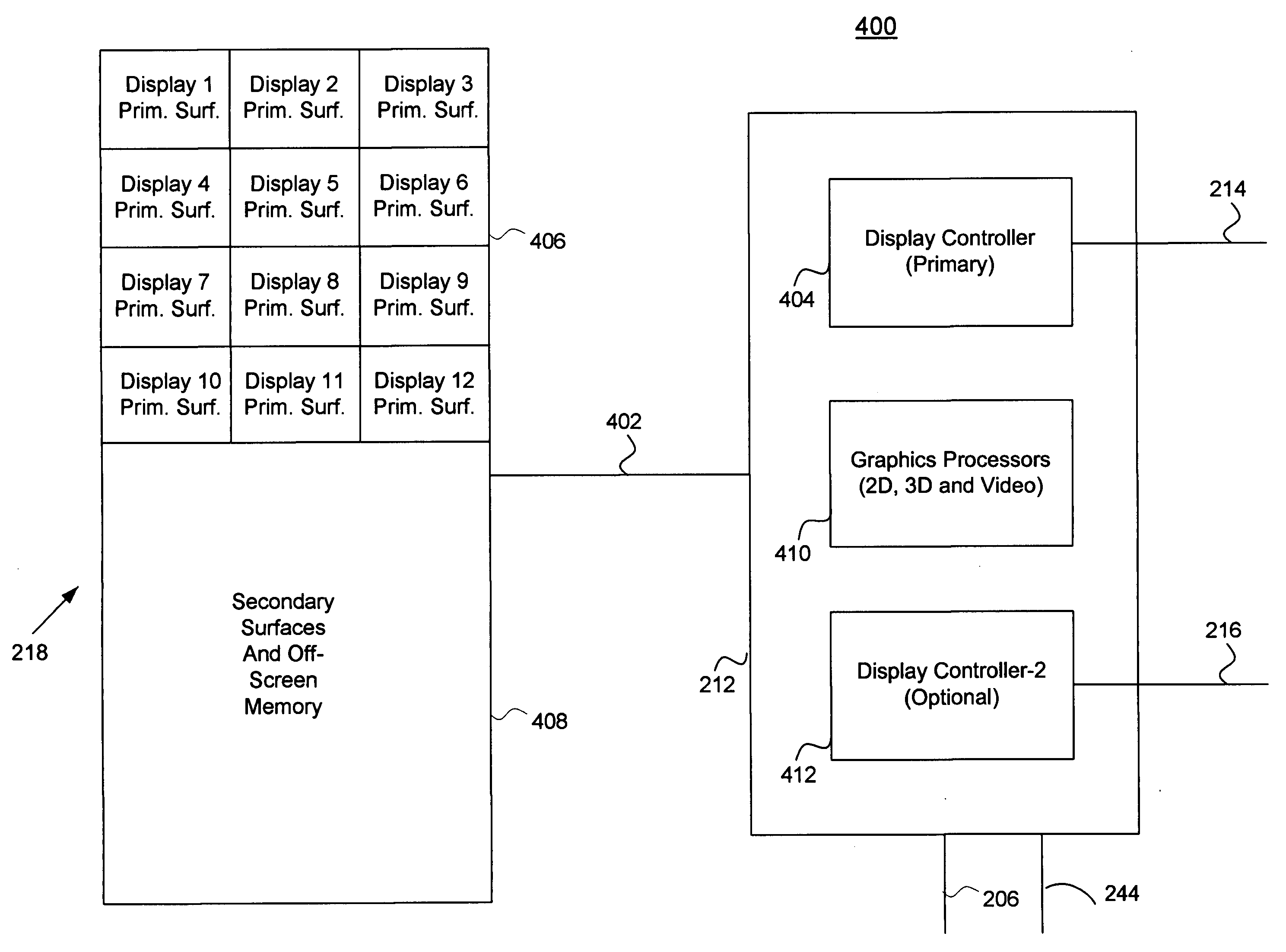

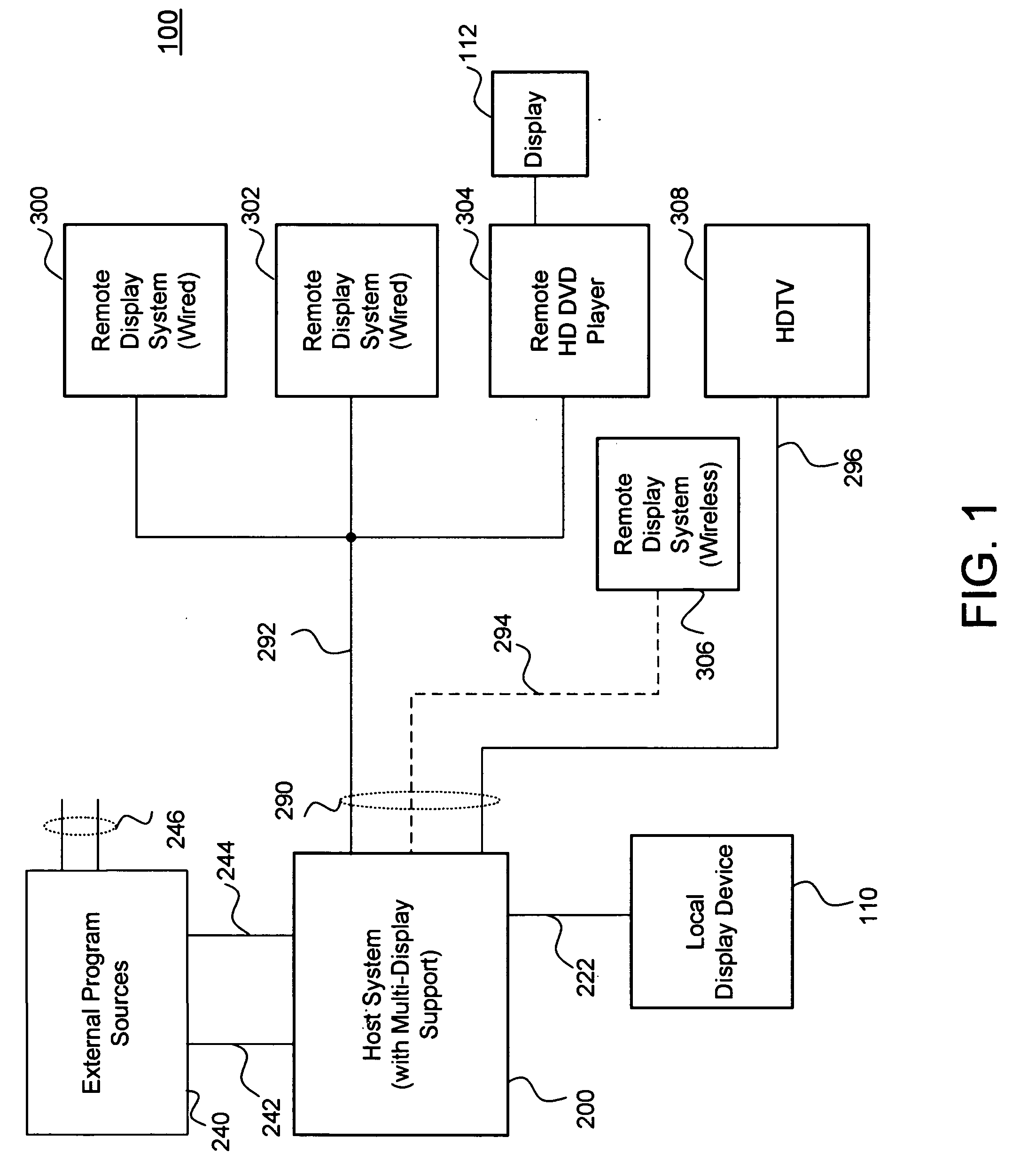

Multiple remote display system

Inactive | US20060282855A1 | Lower latency | Good frame rate | Television system details | Color television details | Graphics | Internet traffic

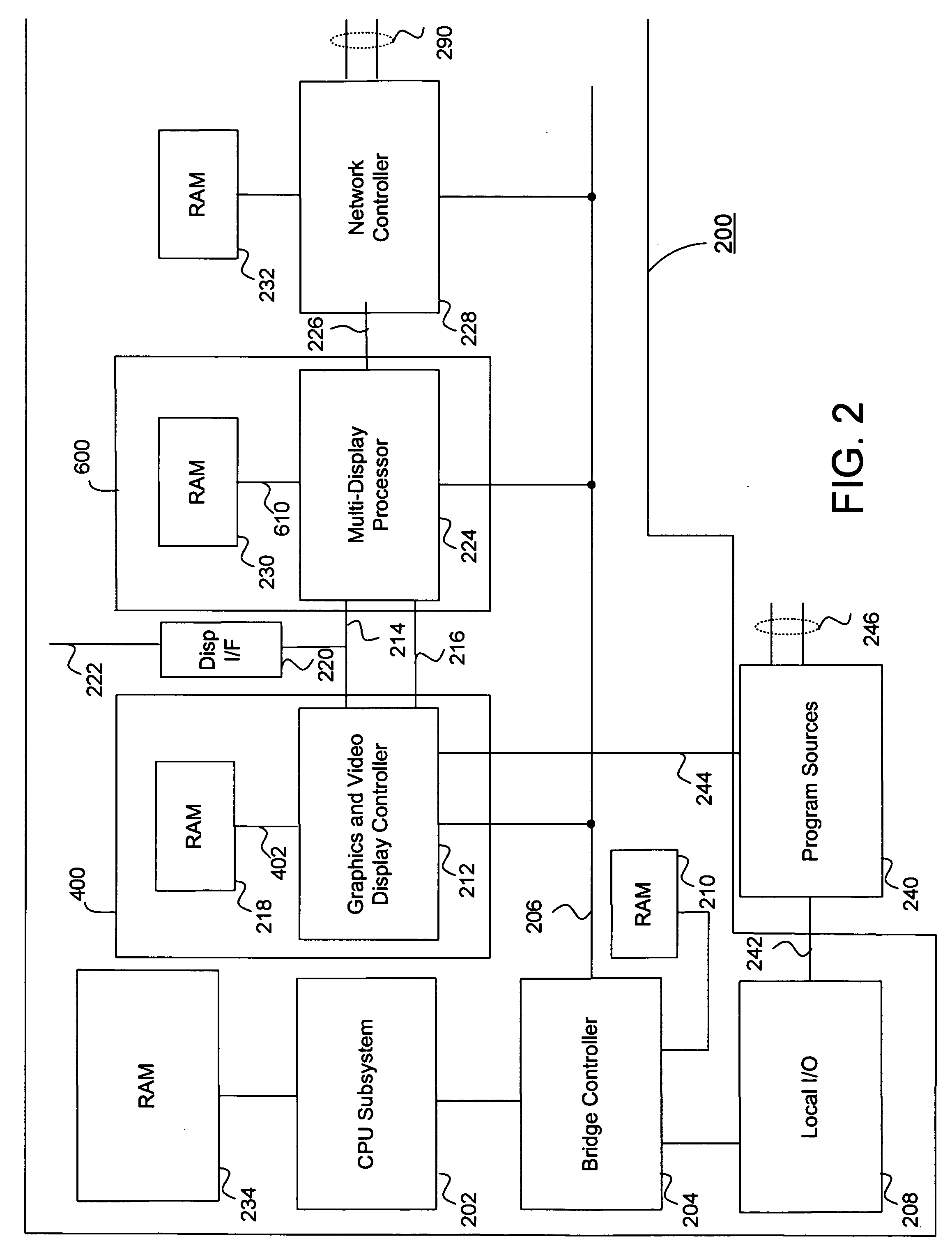

A multi-display system includes a host system that supports both graphics and video based frames for multiple remote displays, multiple users or a combination of the two. For each display and for each frame, a multi-display processor responsively manages each necessary aspect of the remote display frame. The necessary portions of the remote display frame are further processed, encoded and where necessary, transmitted over a network to the remote display for each user. In some embodiments, the host system manages a remote desktop protocol and can still transmit encoded video or encoded frame information where the encoded video may be generated within the host system or provided from an external program source. Embodiments integrate the multi-display processor with either the video decoder unit, graphics processing unit, network controller, main memory controller, or any combination thereof. The encoding process is optimized for network traffic and attention is paid to assure that all users have low latency interactive capabilities.

Owner:III HLDG 1

Method for effectively implementing a multi-room television system

Inactive | US20060117371A1 | Efficient and effective implementation | Improve functionality | Television system details | Pulse modulation television signal transmission | Television system | Video encoding

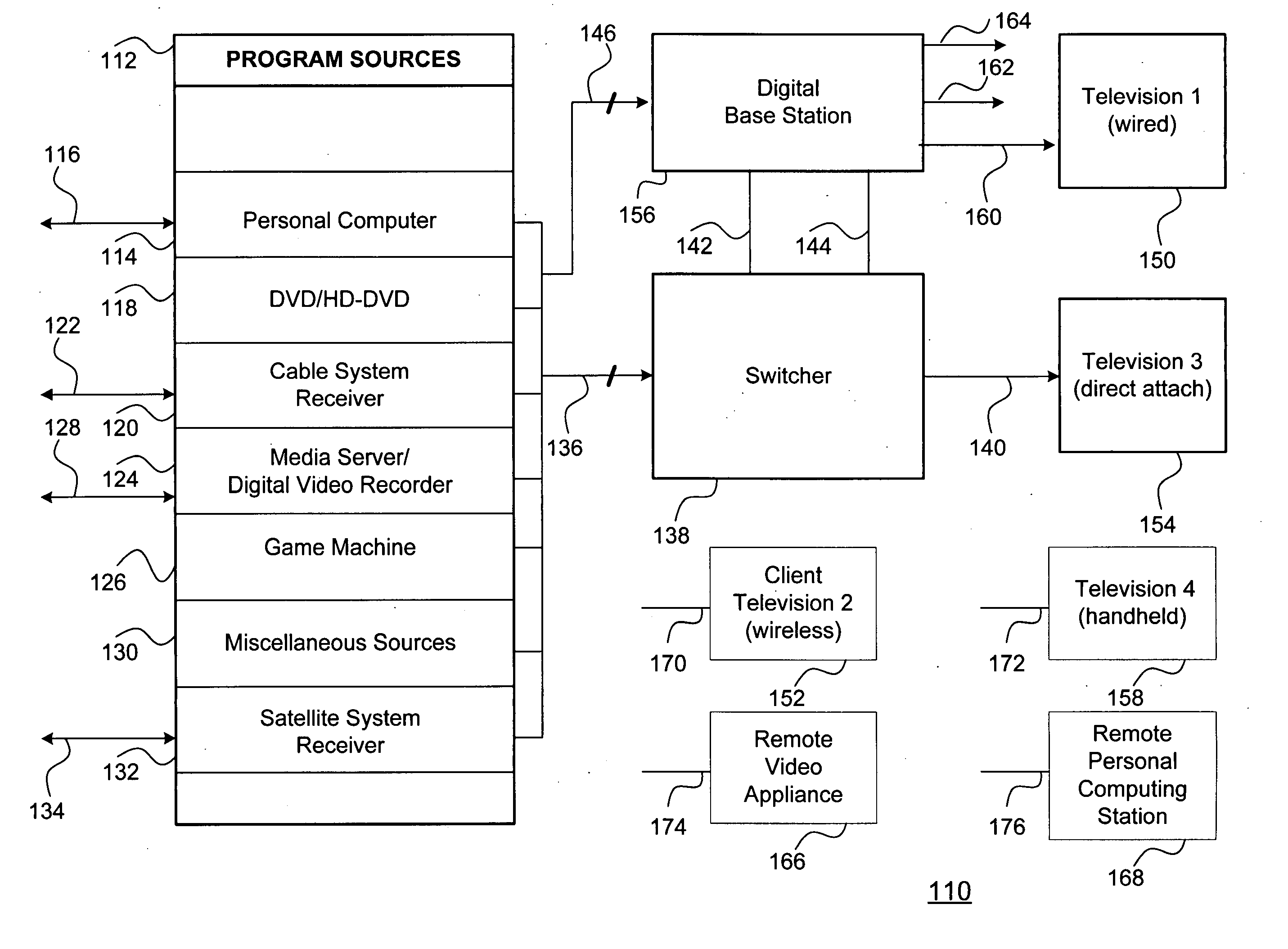

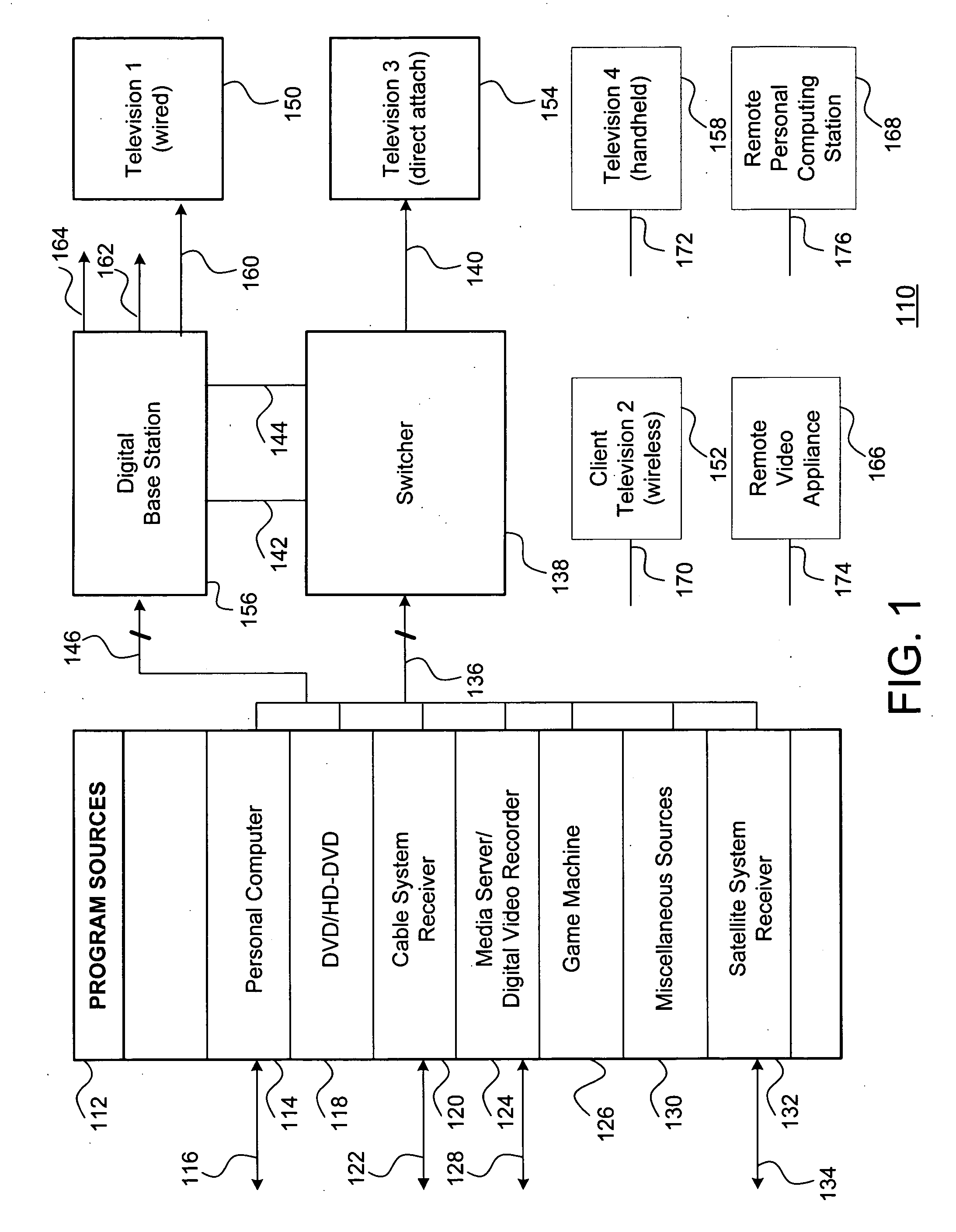

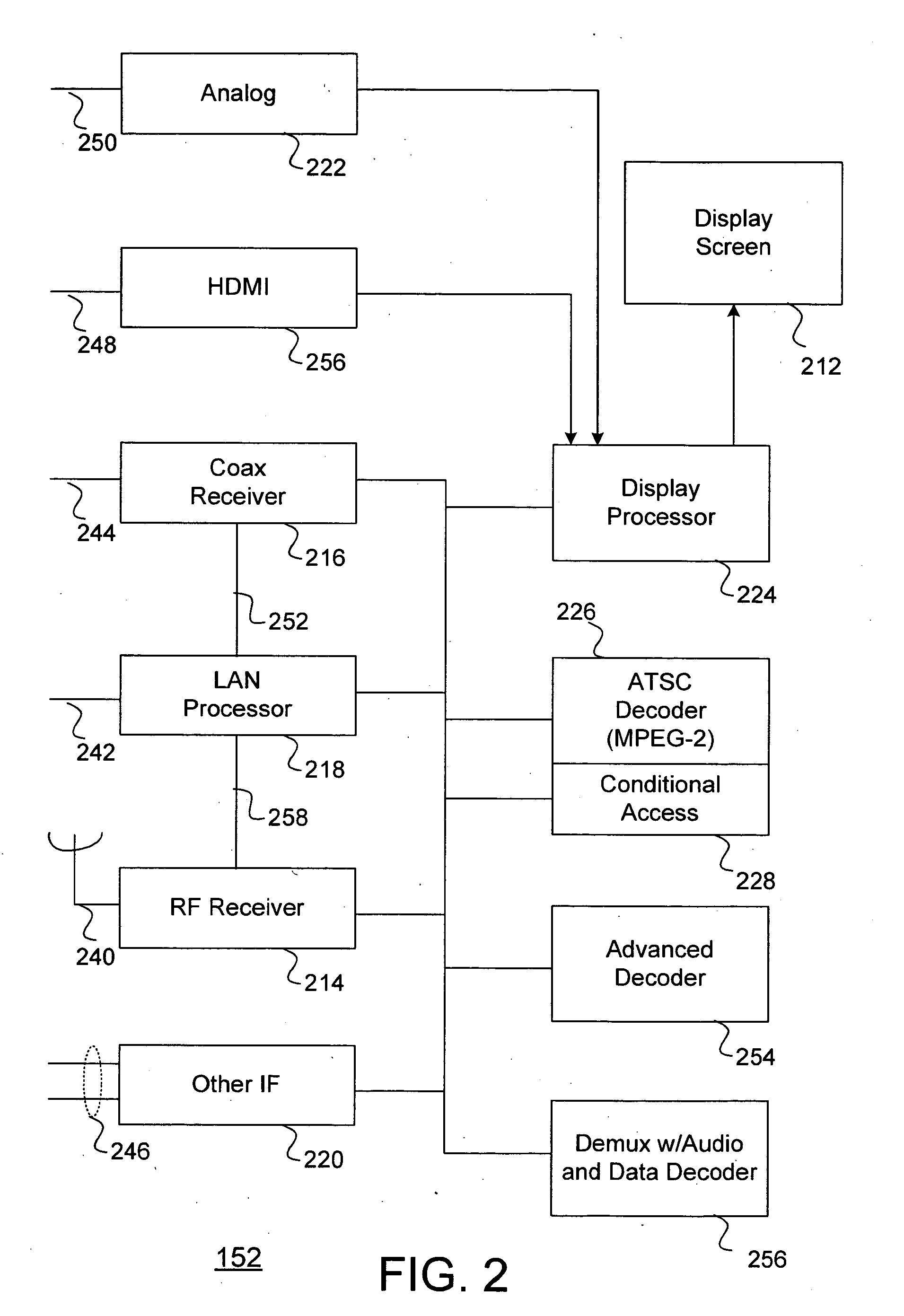

A method for effectively implementing a multi-room television system includes a digital base station that processes and combines various program sources to produce a processed stream. A communications processor then responsively transmits the processed stream as a local composite output stream to various wired and wireless display devices for flexible viewing at variable remote locations. The transmission path performance is used to determine the video encoding process, and special attention is taken to assure that all users have low-latency interactive capabilities.

Owner:SLING MEDIA LLC

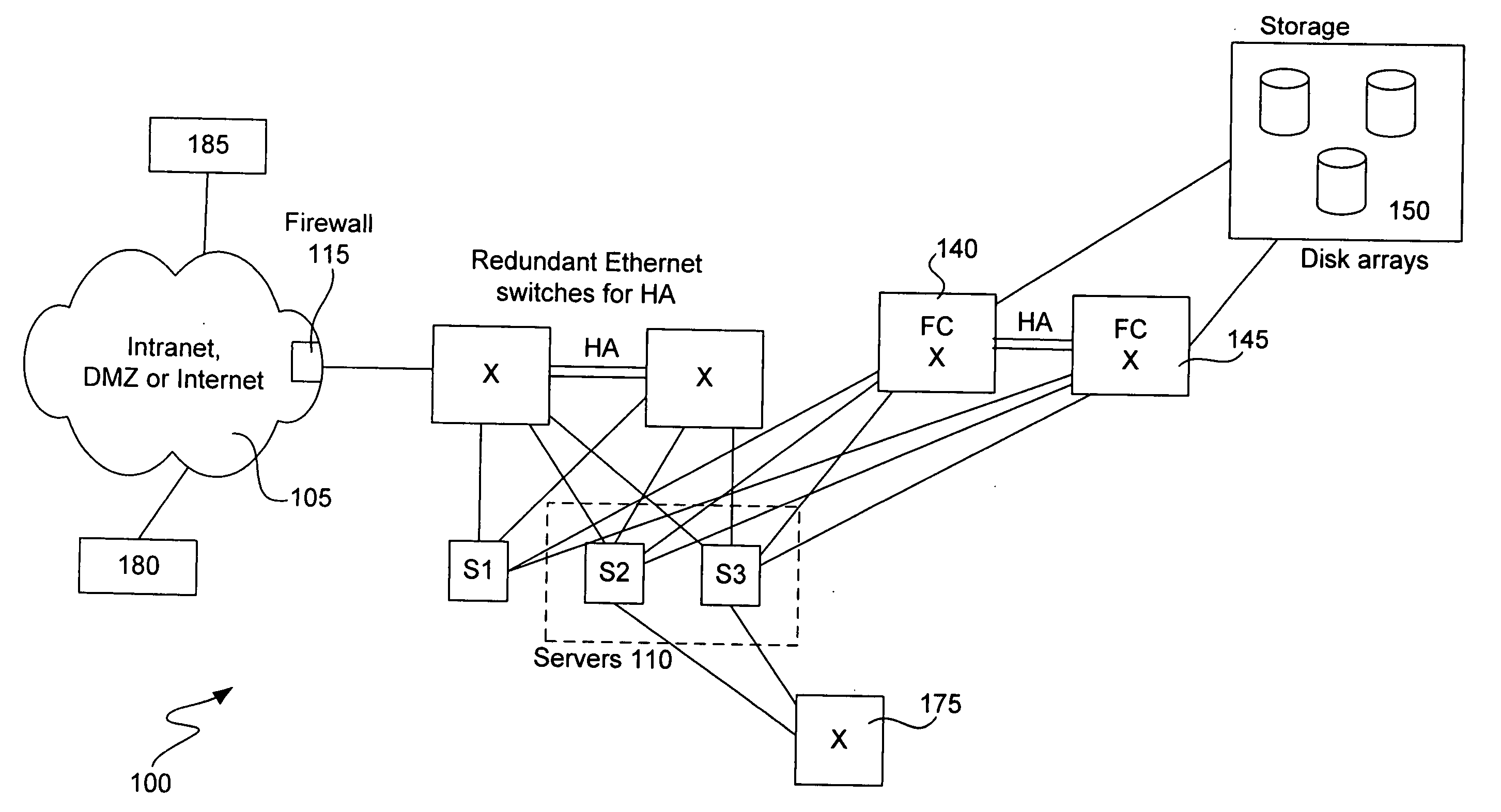

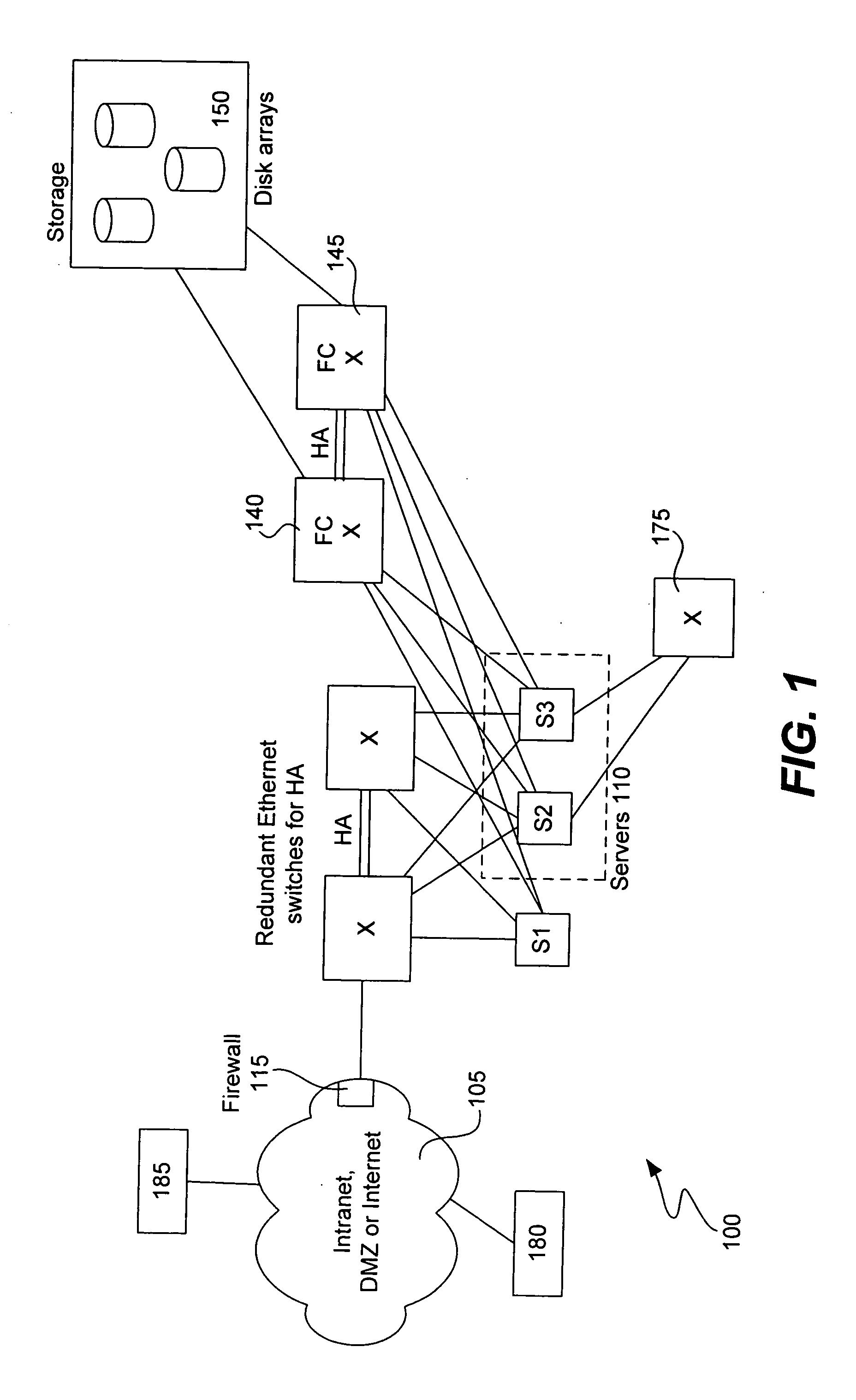

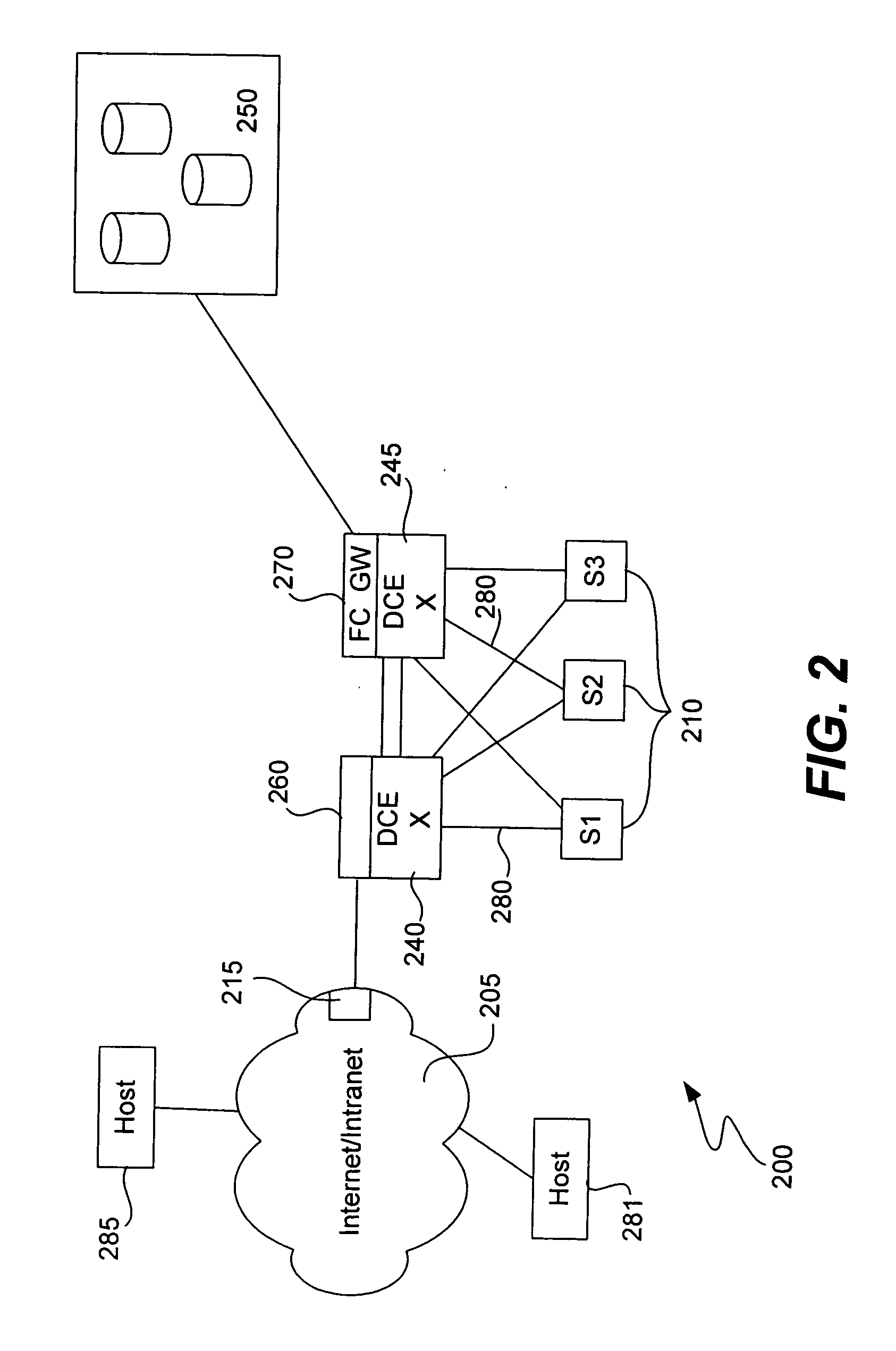

Fibre channel over ethernet

Active | US20060251067A1 | Improve reliability | Lower latency | Time-division multiplex | Data switching by path configuration | High bandwidth | Data center

A Data Center Ethernet (“DCE”) network and related methods and devices are provided. A DCE network simplifies the connectivity of data centers and provides a high bandwidth, low latency network for carrying Ethernet, storage and other traffic. A Fibre Channel (“FC”) frame, including FC addressing information, is encapsulated in an Ethernet frame for transmission on a DCE network. The Ethernet address fields may indicate that the frame includes an FC frame, e.g., by the use of a predetermined Organization Unique Identifier (“OUI”) code in the D_MAC field, but also include Ethernet addressing information. Accordingly, the encapsulated frames can be forwarded properly by switches in the DCE network whether or not these switches are configured for operation according to the FC protocol. As a result, only a subset of Ethernet switches in the DCE network needs to be FC-enabled. Only switches so configured will require an FC Domain_ID.

Owner:CISCO TECH INC

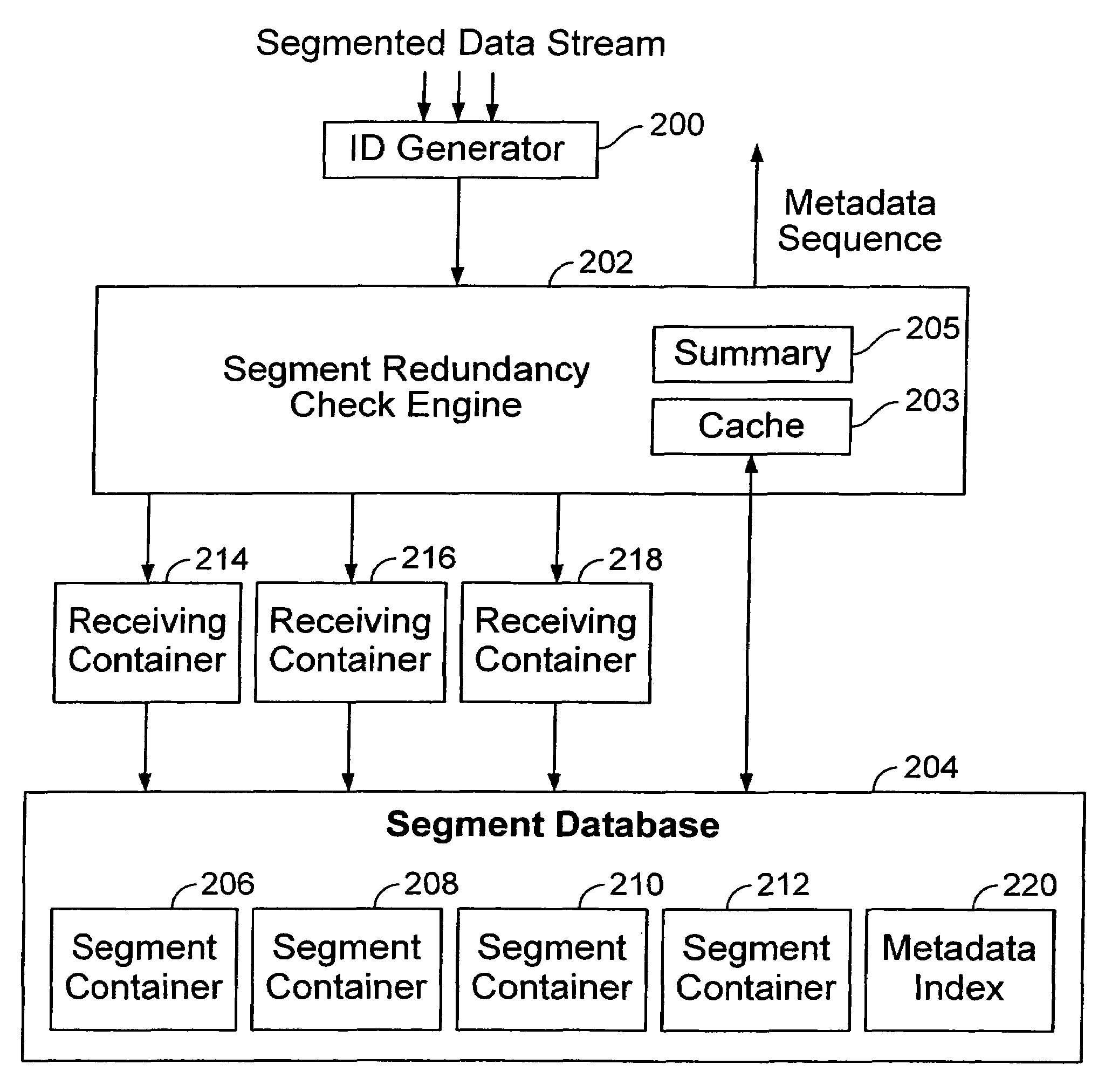

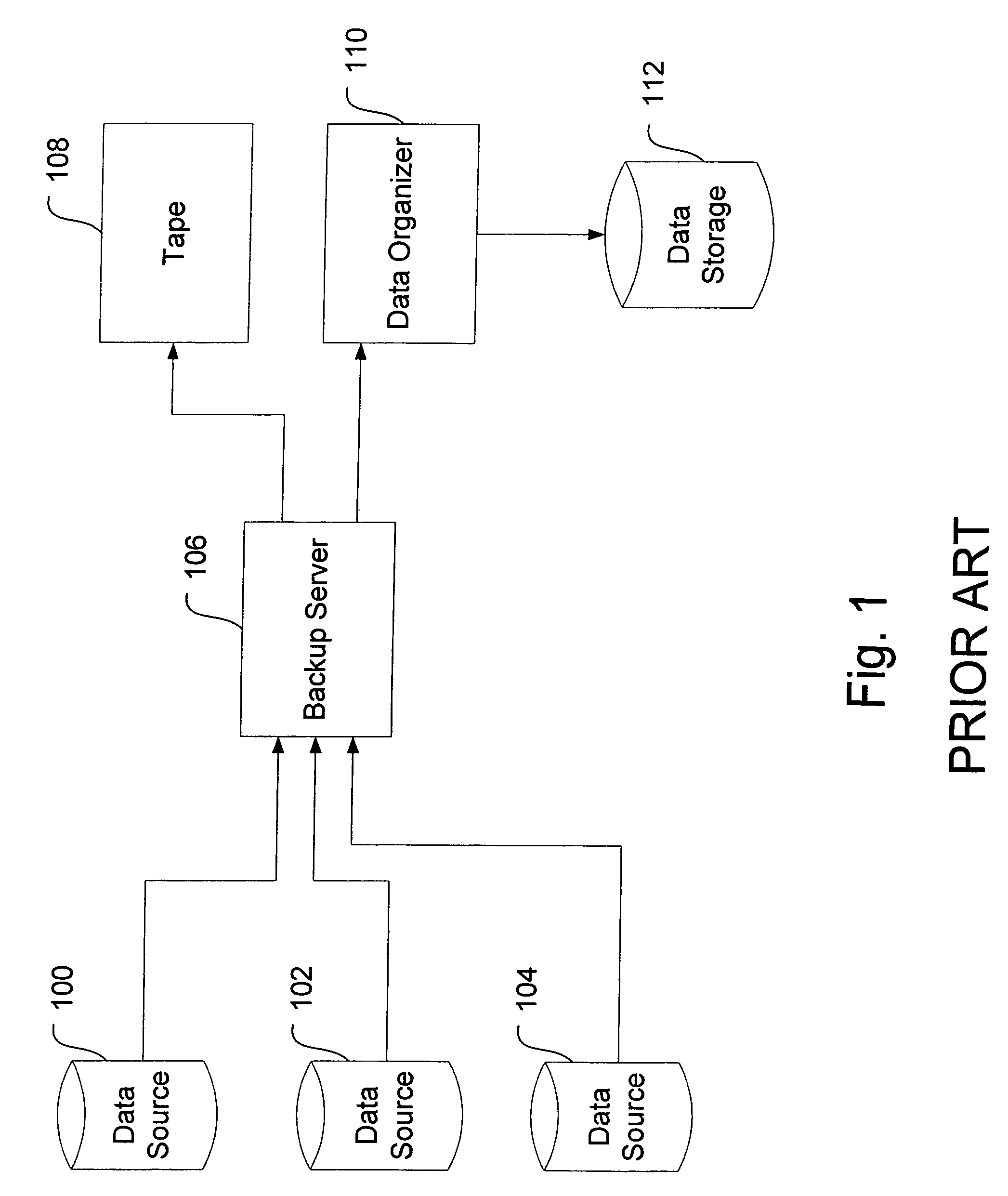

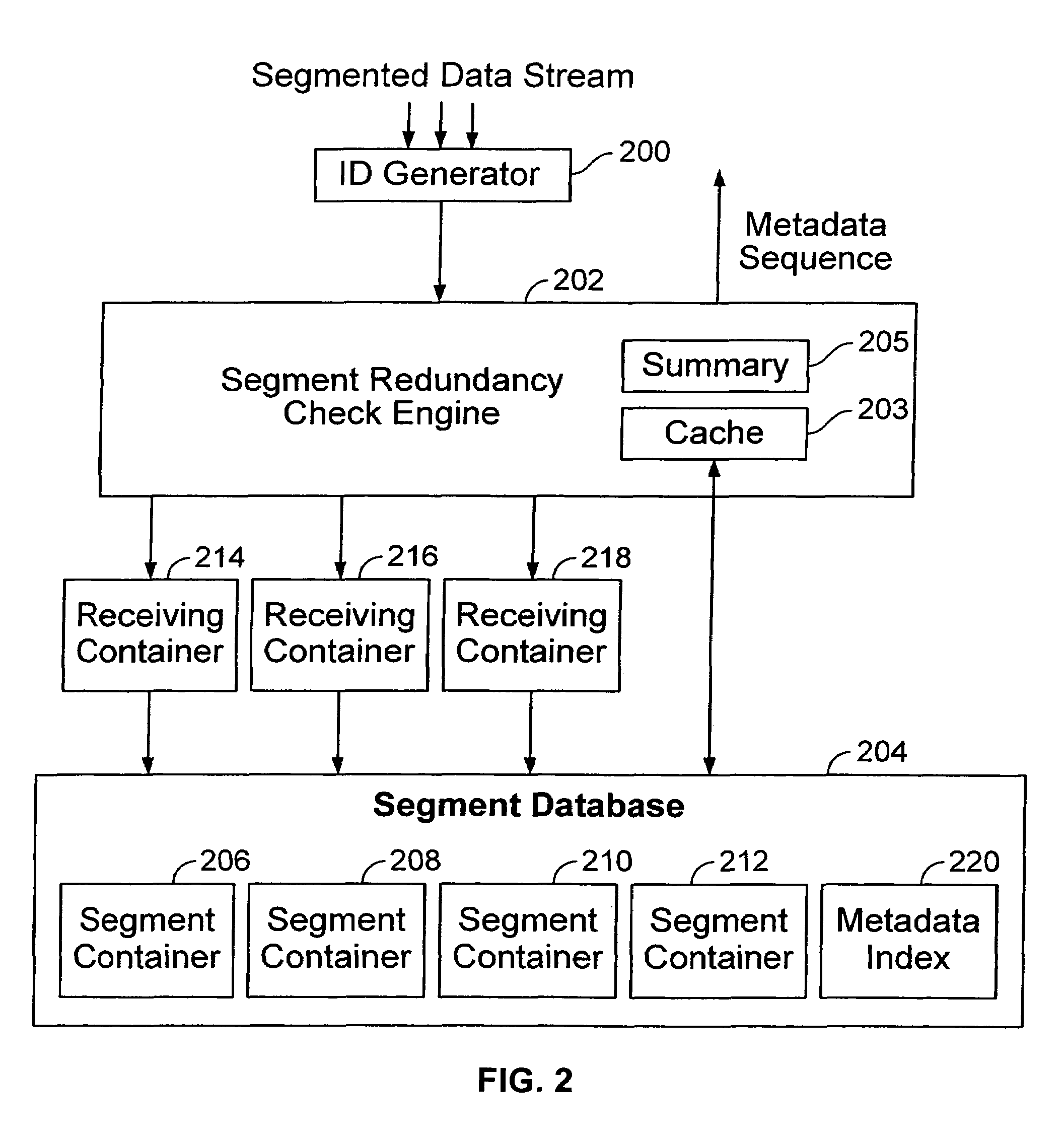

Efficient data storage system

A system and method are disclosed for providing efficient data storage. A plurality of data segments is received in a data stream. The system determines whether a data segment has been stored previously in a low latency memory. In the event that the data segment is determined to have been stored previously, an identifier for the previously stored data segment is returned.

Owner:EMC CORP
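
The lookup described in the abstract above can be sketched as follows. This is a minimal illustration under stated assumptions: an in-memory dictionary stands in for the low latency memory, segments are identified by a SHA-256 fingerprint, and `SegmentStore` and `put` are hypothetical names. The abstract does not specify how segments are fingerprinted or indexed.

```python
import hashlib
from typing import Dict, List, Tuple


class SegmentStore:
    """Toy deduplicating store: each distinct segment is kept once."""

    def __init__(self) -> None:
        # Fingerprint -> segment identifier. A dict stands in for the
        # low latency memory consulted before anything is written.
        self._index: Dict[bytes, int] = {}
        self._segments: List[bytes] = []

    def put(self, segment: bytes) -> Tuple[int, bool]:
        """Return (identifier, was_duplicate) for the given segment."""
        fingerprint = hashlib.sha256(segment).digest()
        if fingerprint in self._index:
            # Previously stored: return the existing identifier only.
            return self._index[fingerprint], True
        segment_id = len(self._segments)
        self._segments.append(segment)
        self._index[fingerprint] = segment_id
        return segment_id, False


store = SegmentStore()
results = [store.put(s) for s in (b"alpha", b"beta", b"alpha")]
```

The third segment repeats the first, so it comes back with the first segment's identifier and is not stored again.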

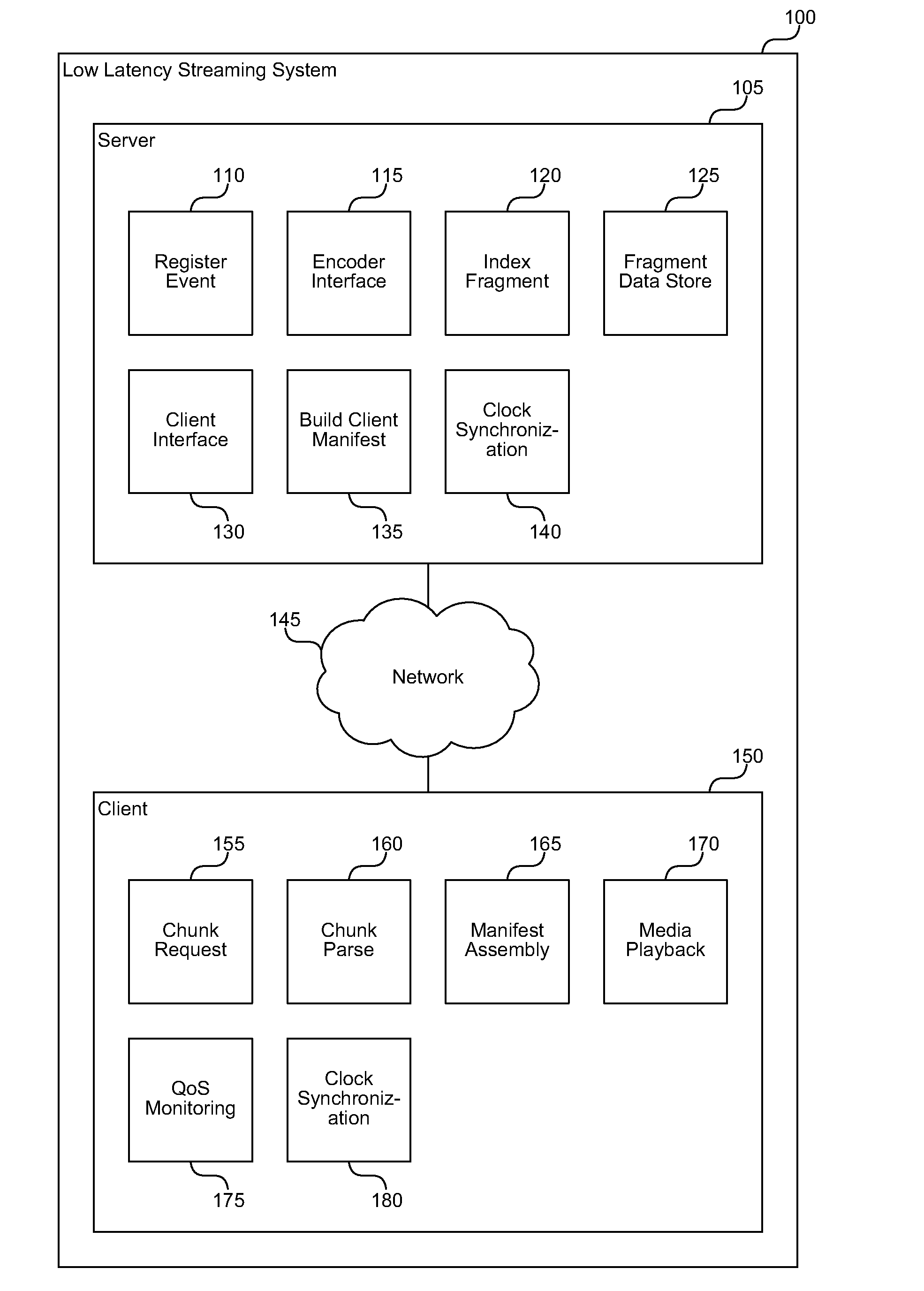

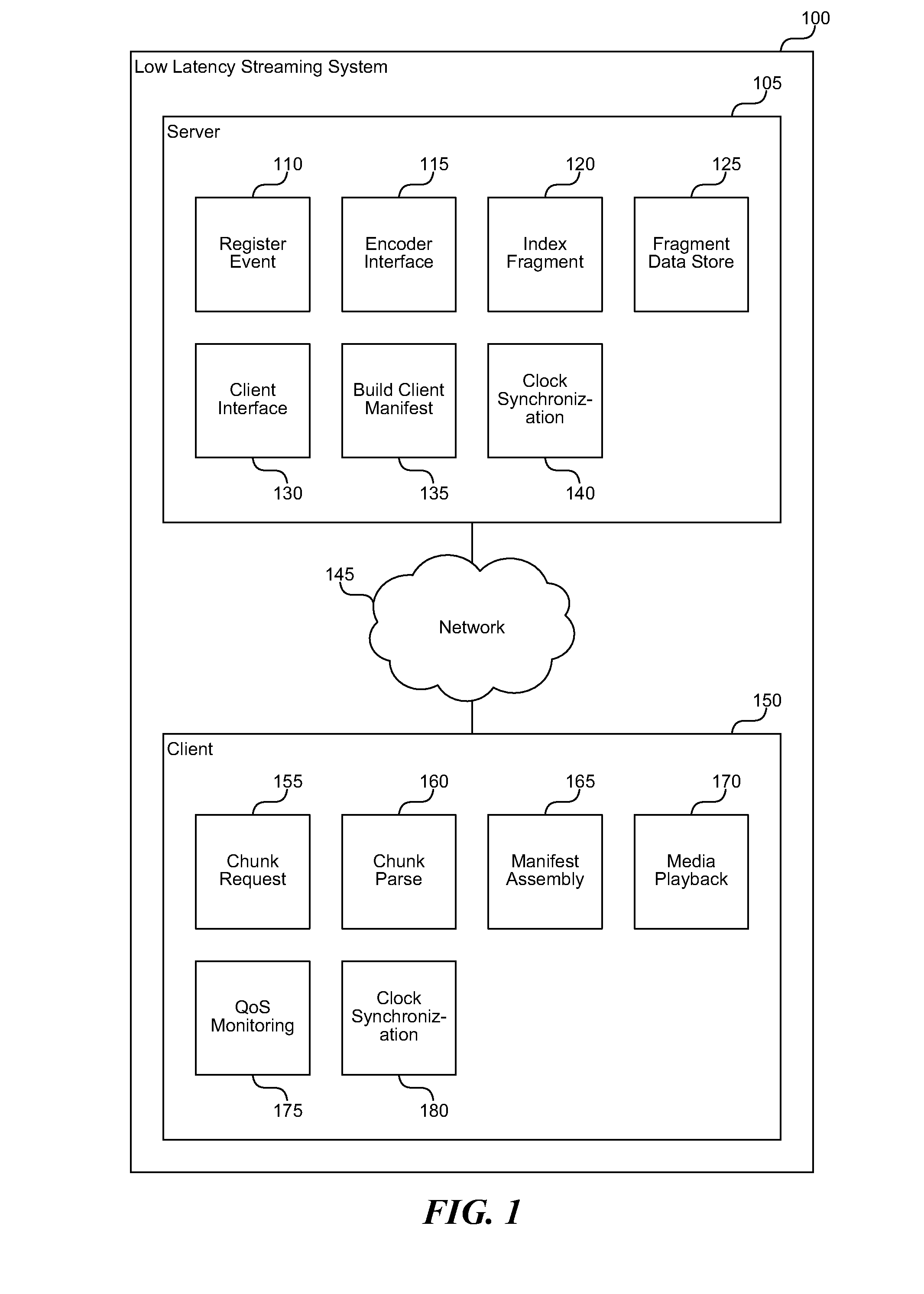

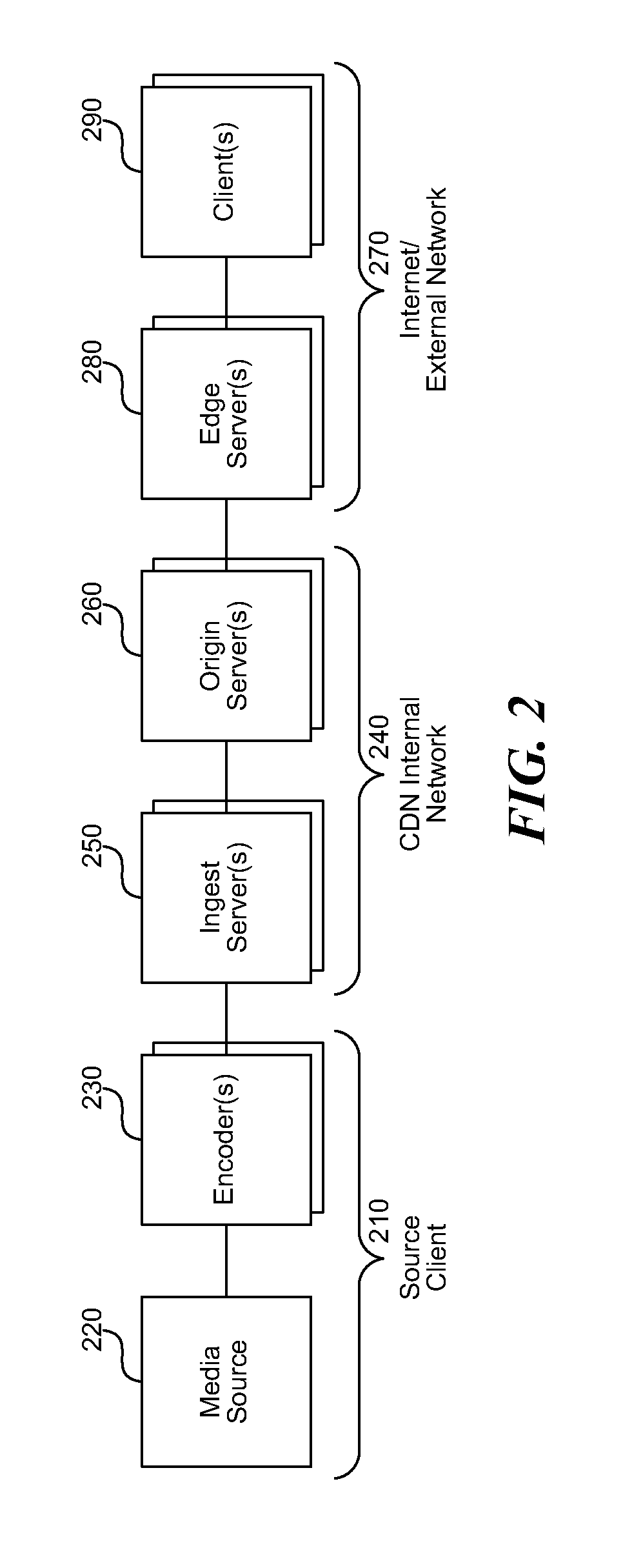

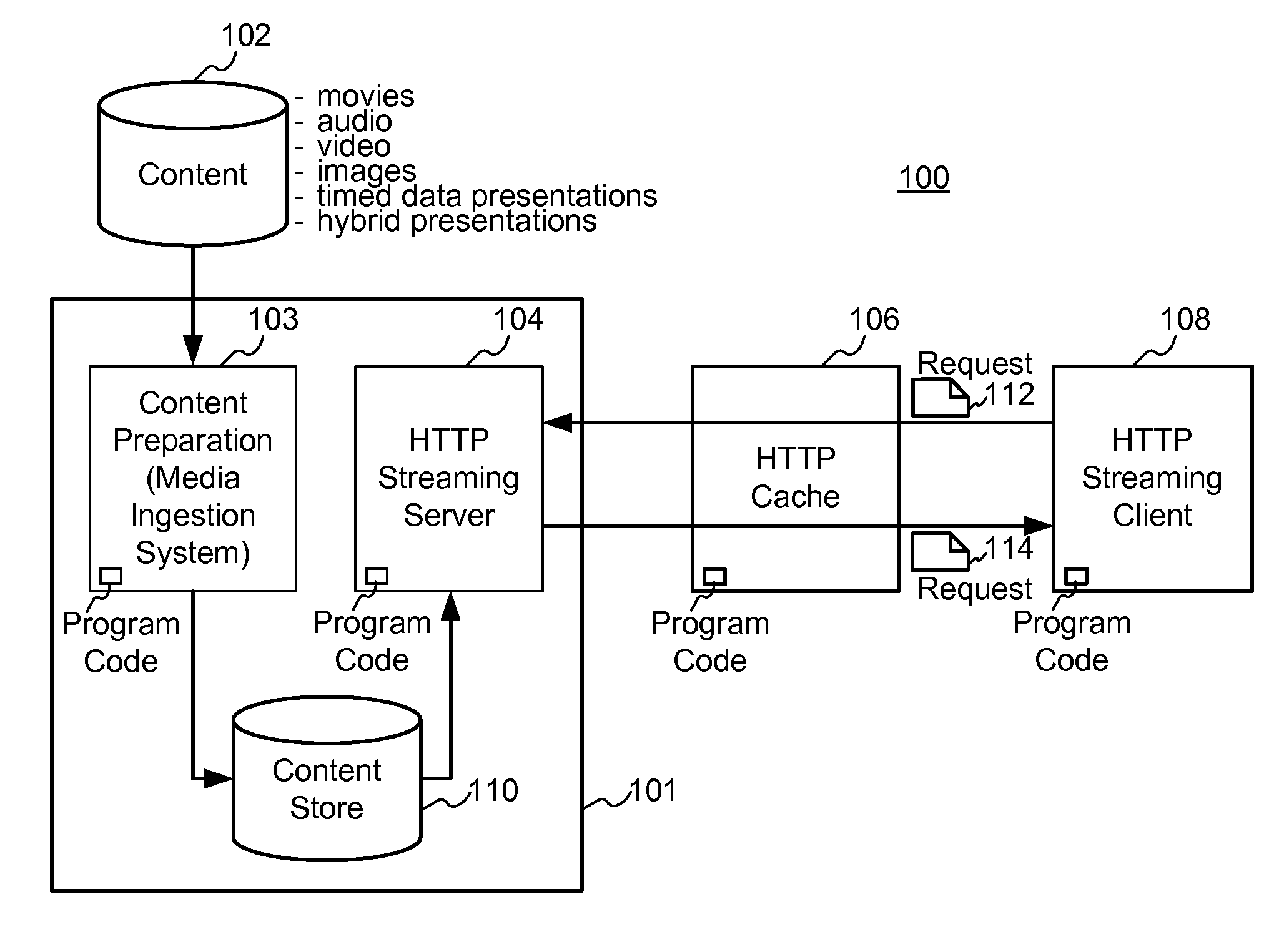

Low latency cacheable media streaming

Active | US20110080940A1 | Lower latency | Raise the possibility | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Group of pictures | Cache server

A low latency streaming system provides a stateless protocol between a client and server with reduced latency. The server embeds incremental information in media fragments that eliminates the usage of a typical control channel. In addition, the server provides uniform media fragment responses to media fragment requests, thereby allowing existing Internet cache infrastructure to cache streaming media data. Each fragment has a distinguished Uniform Resource Locator (URL) that allows the fragment to be identified and cached by both Internet cache servers and the client's browser cache. The system reduces latency using various techniques, such as sending fragments that contain less than a full group of pictures (GOP), encoding media without dependencies on subsequent frames, and by allowing clients to request subsequent frames with only information about previous frames.

Owner:MICROSOFT TECH LICENSING LLC

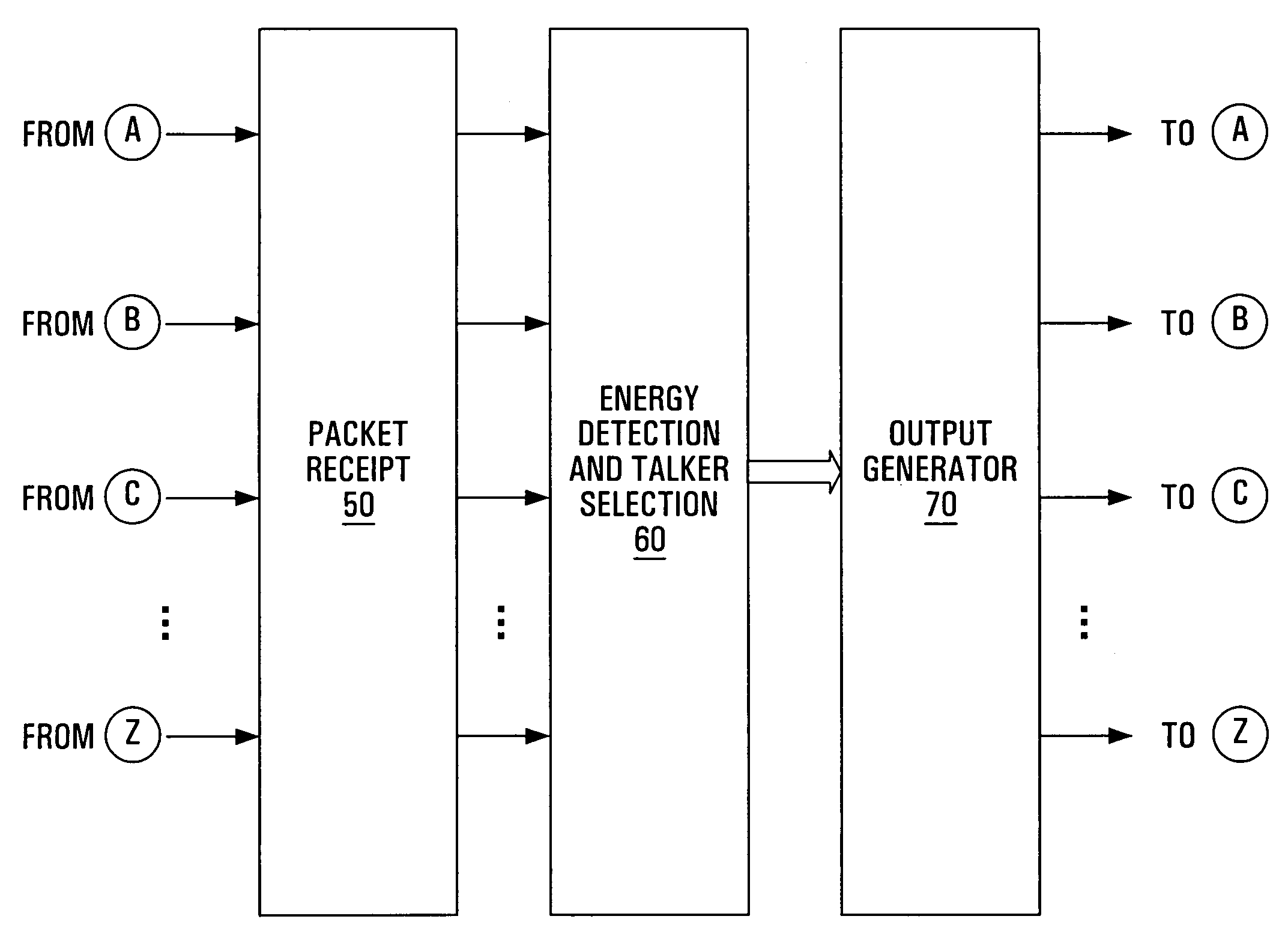

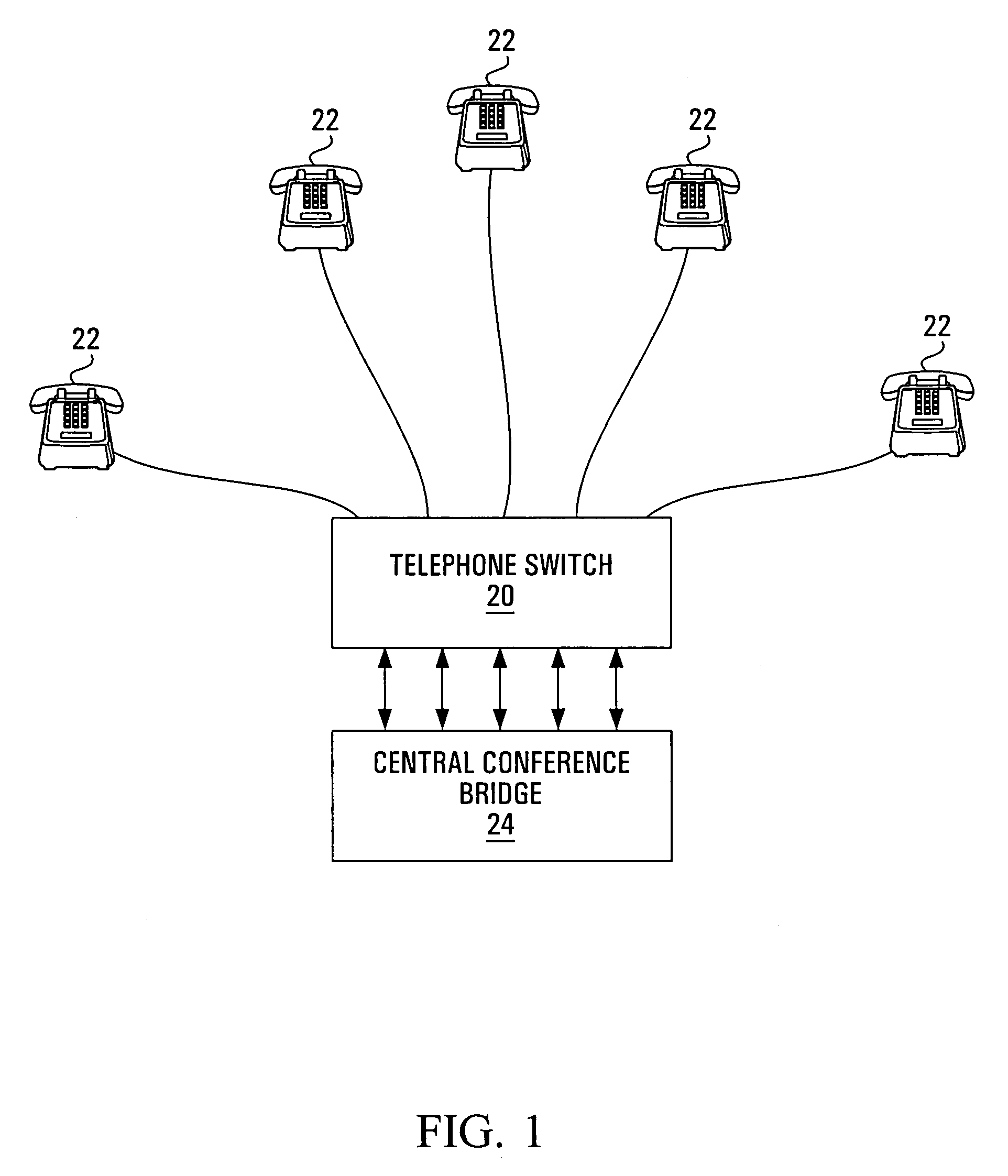

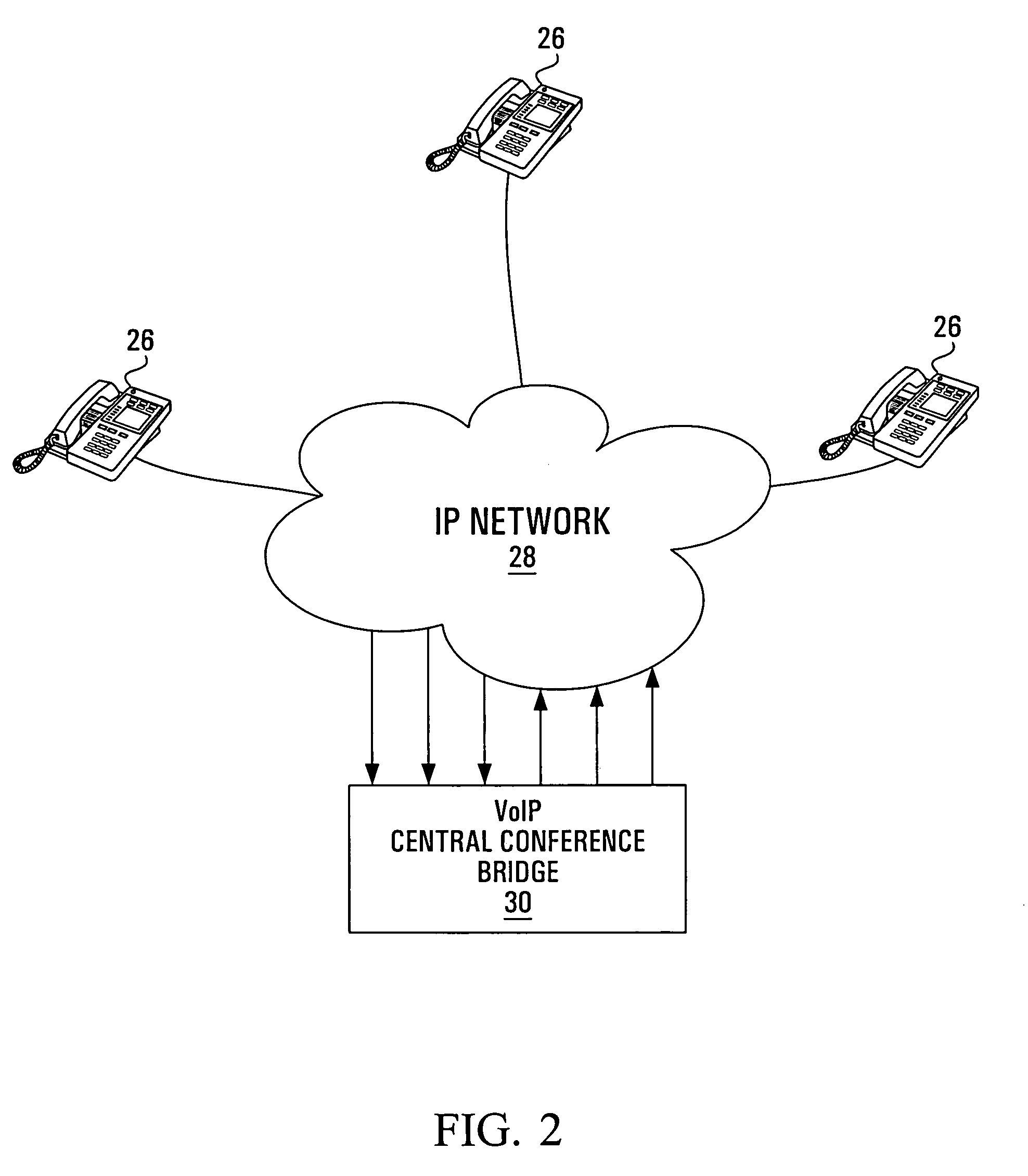

Apparatus and method for packet-based media communications

Inactive | US6940826B1 | Reduction in transcoding | Reduce latency | Special service provision for substation | Multiplex system selection arrangements | Signal quality | Voice communication

Packet-based central conference bridges, packet-based network interfaces and packet-based terminals are used for voice communications over a packet-based network. Modifications to these apparatuses can reduce the latency and the signal processing requirements while increasing the signal quality within a voice conference as well as in point-to-point communications. For instance, selecting the talkers before the voice signals are decompressed can decrease latency and increase signal quality within the voice conference, because the decompression and subsequent compression operations in a conference bridge, which are unnecessary in some circumstances, can be removed. Further, removing the jitter buffers within the conference bridges and moving the mixing operation to the individual terminals and/or network interfaces are modifications that can lower latency and reduce transcoding within the voice conference.

Owner:RPX CLEARINGHOUSE

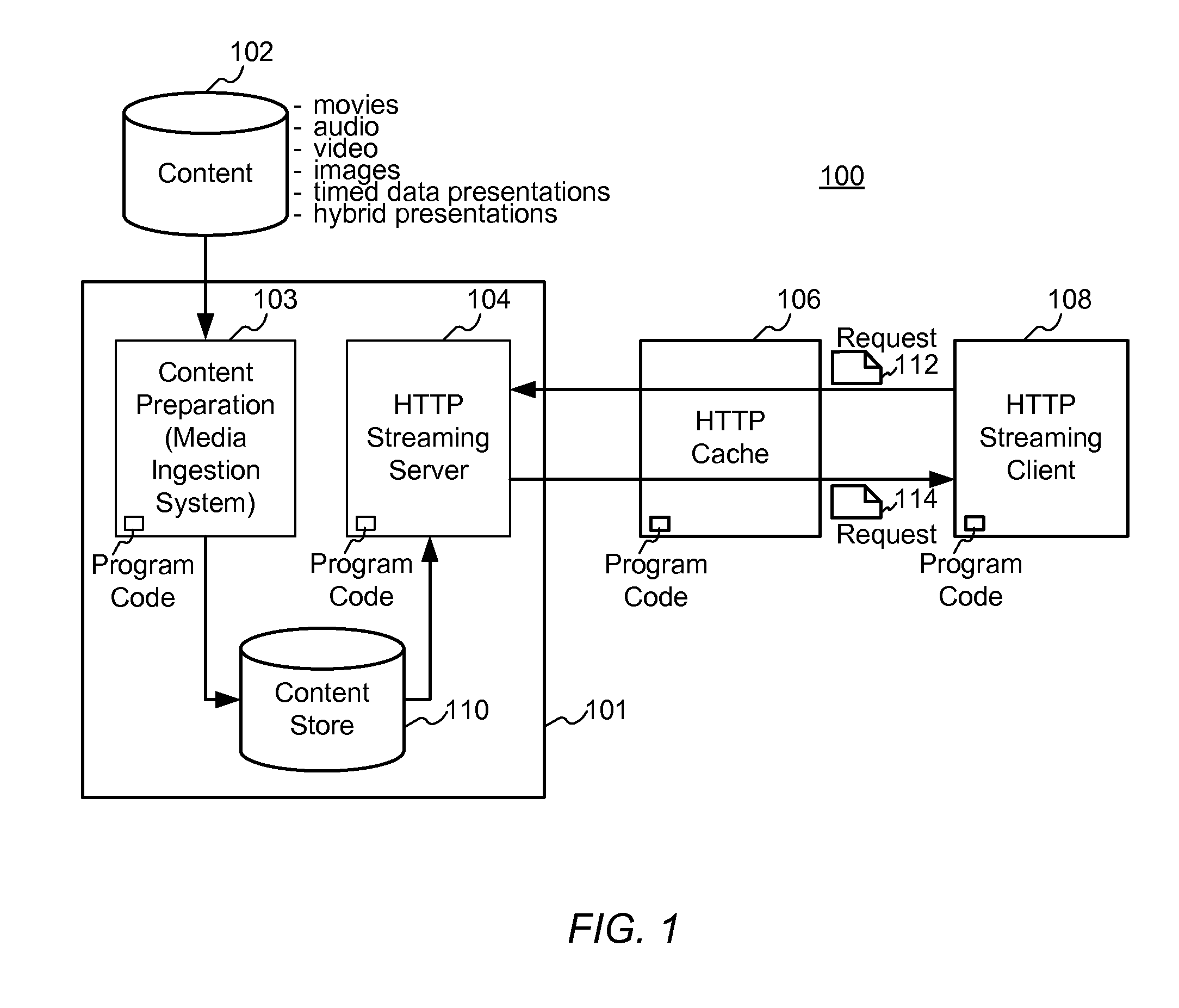

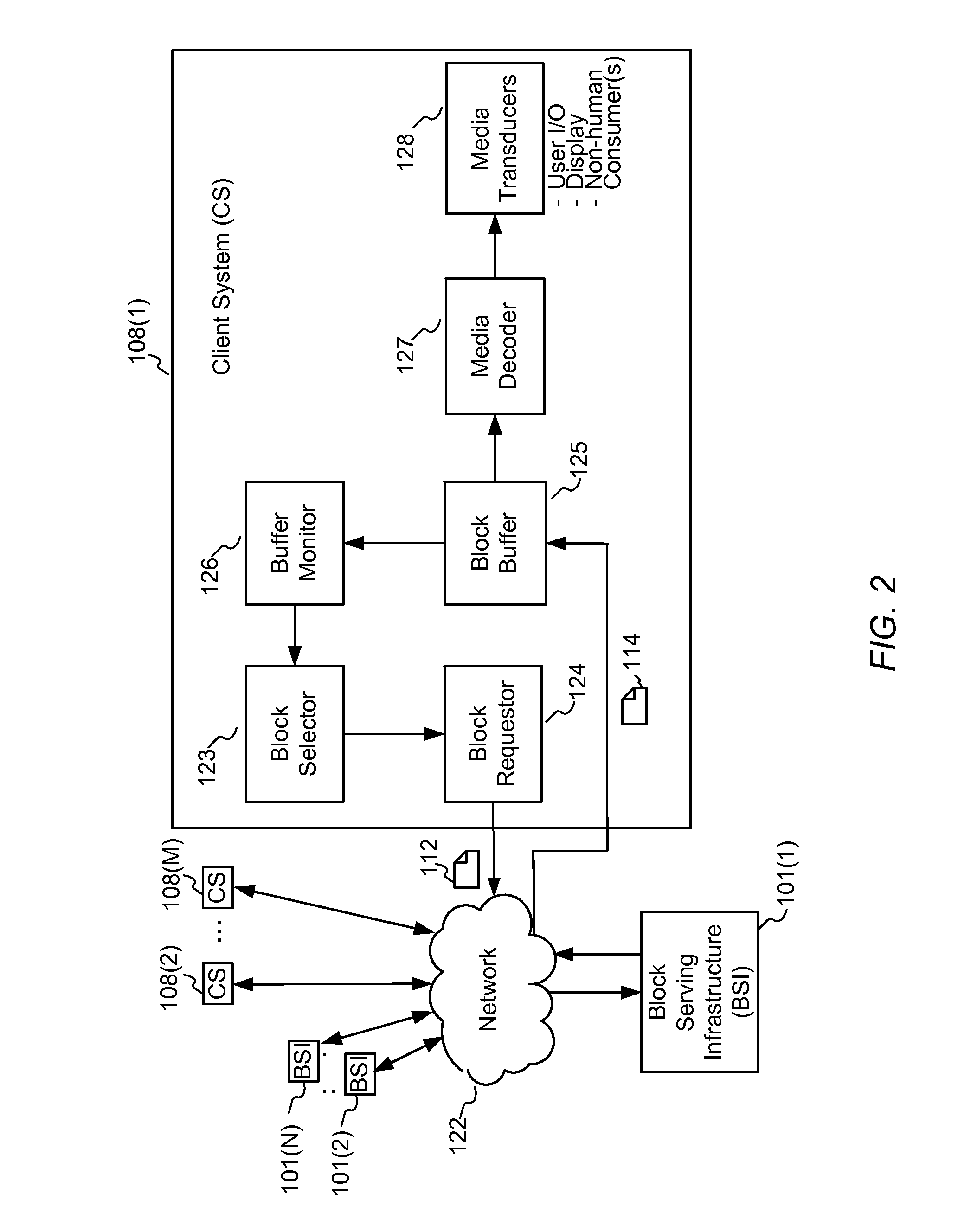

Enhanced block-request streaming system for handling low-latency streaming

Active | US20130007223A1 | Improve bandwidth efficiency | Improve user experience | Multiple digital computer combinations | Transmission | Transport system | Latency (engineering)

A block-request streaming system provides for low-latency streaming of a media presentation. A plurality of media segments are generated according to an encoding protocol. Each media segment includes a random access point. A plurality of media fragments are encoded according to the same protocol. The media segments are aggregated from a plurality of media fragments.

Owner:QUALCOMM INC

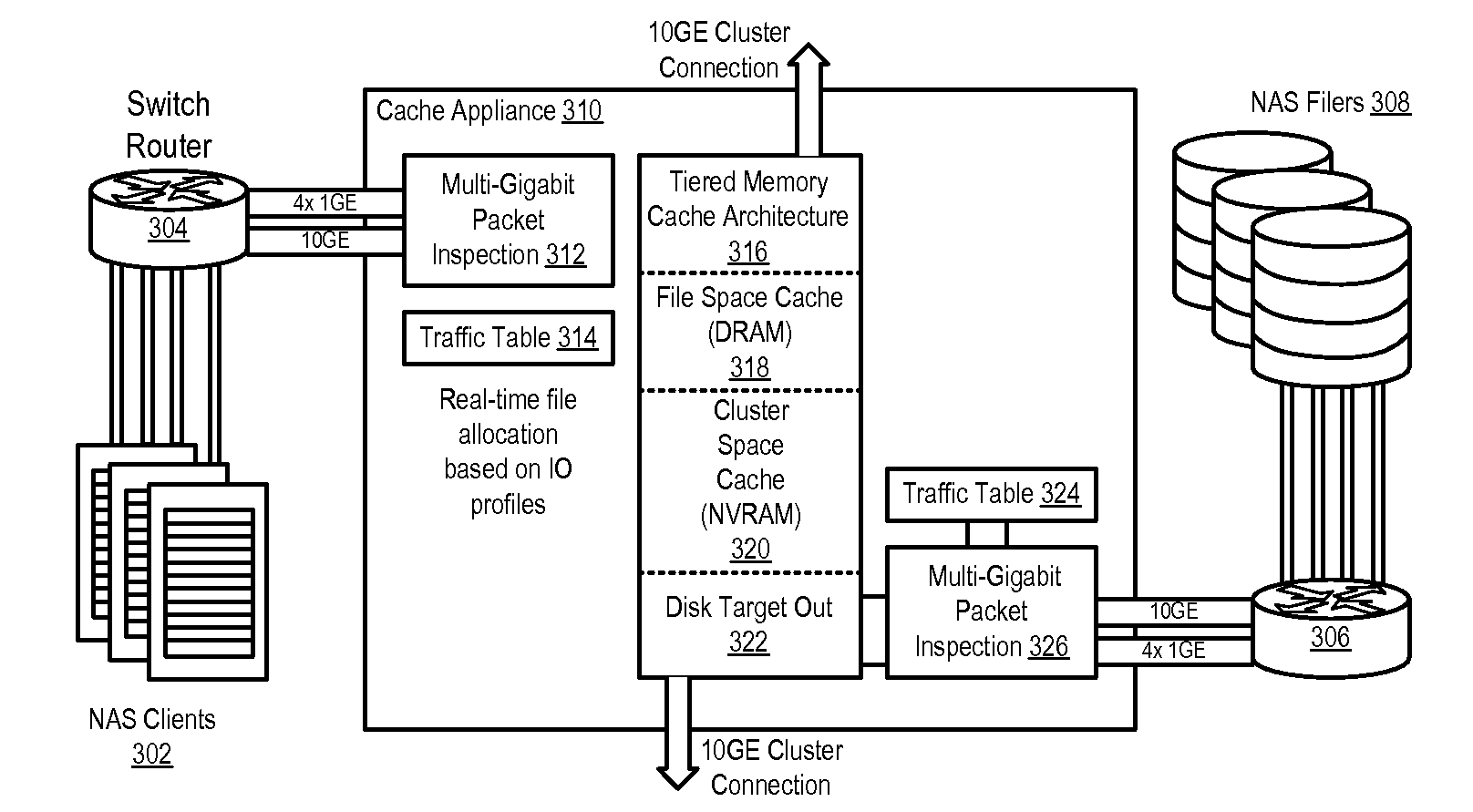

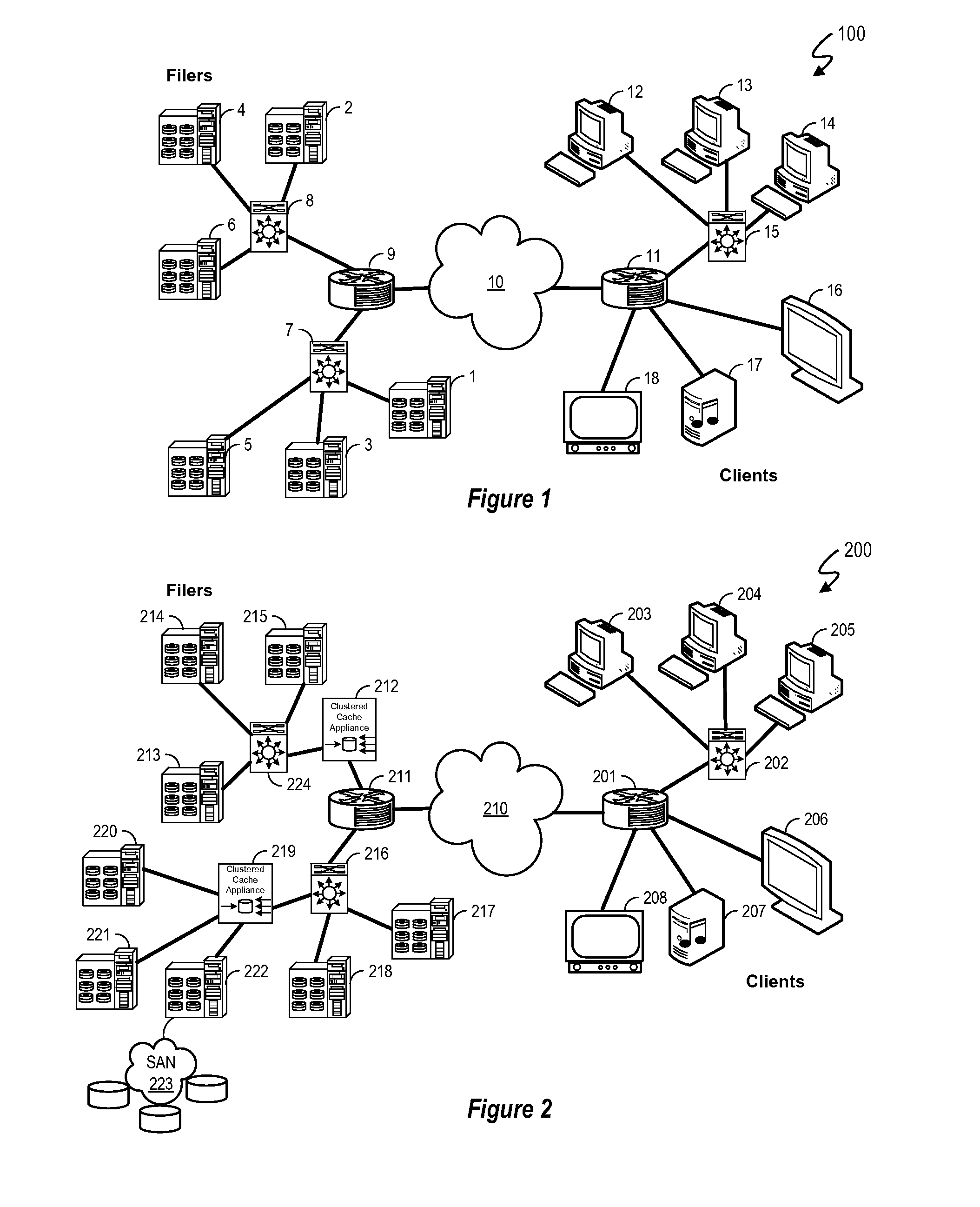

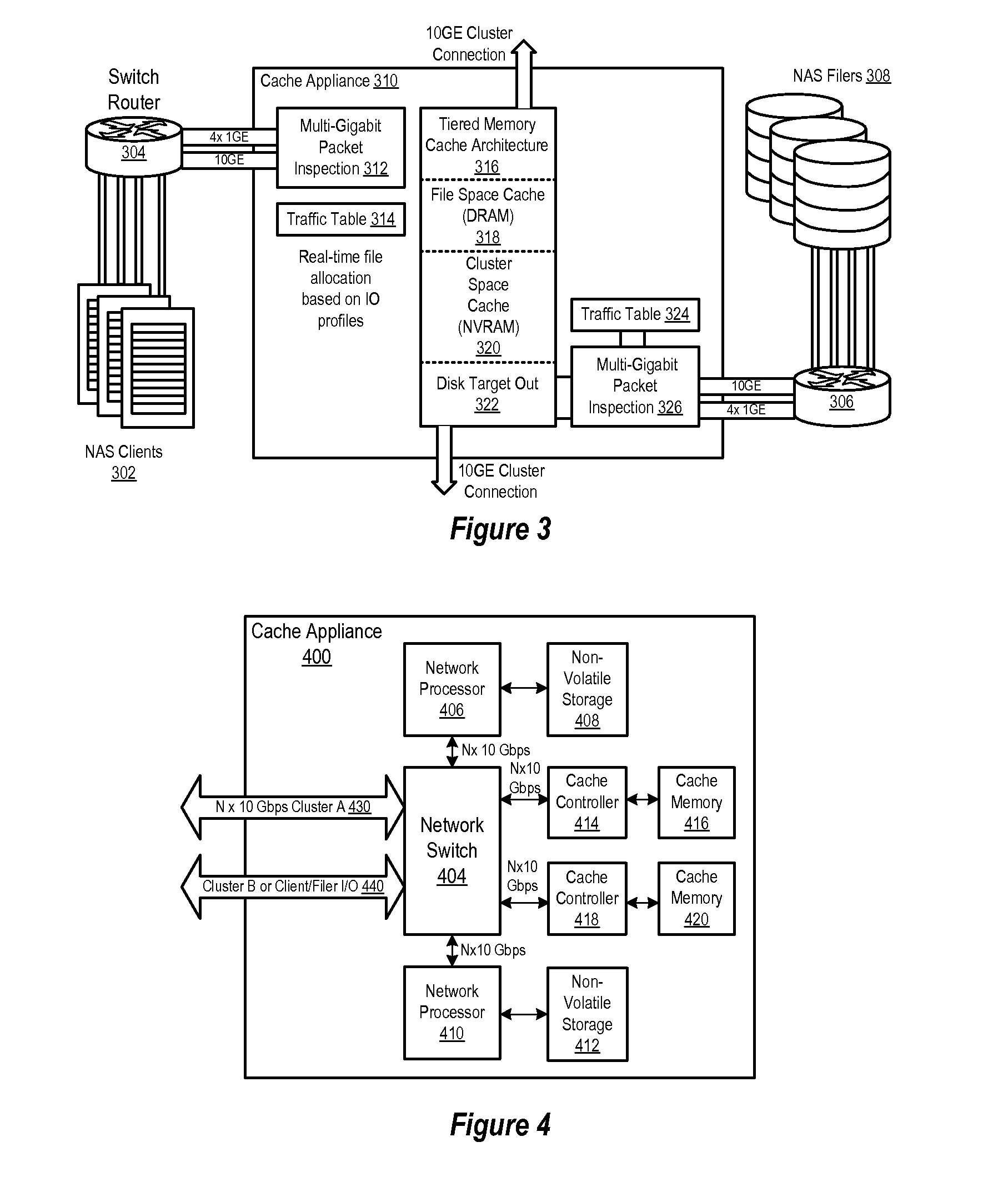

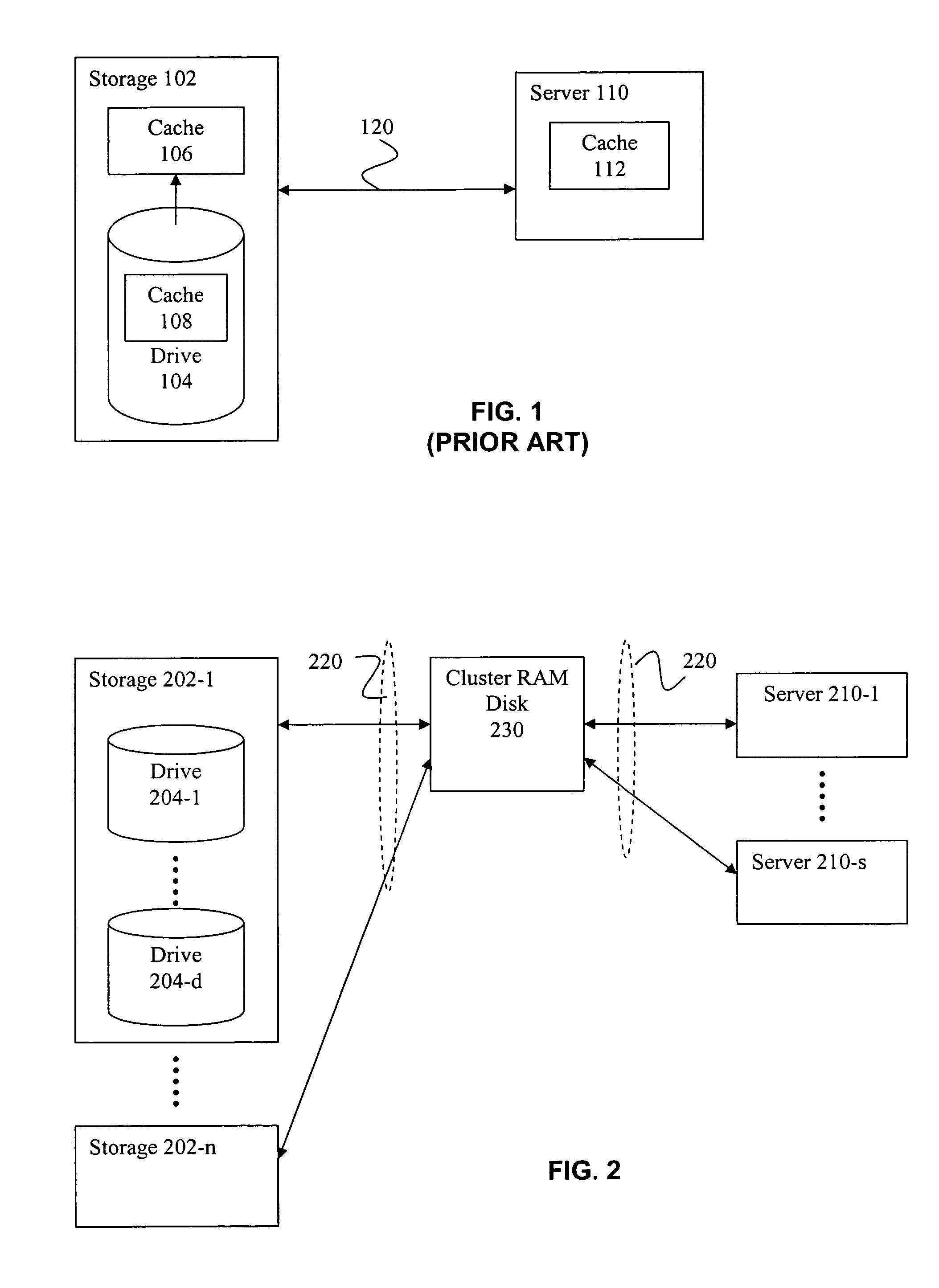

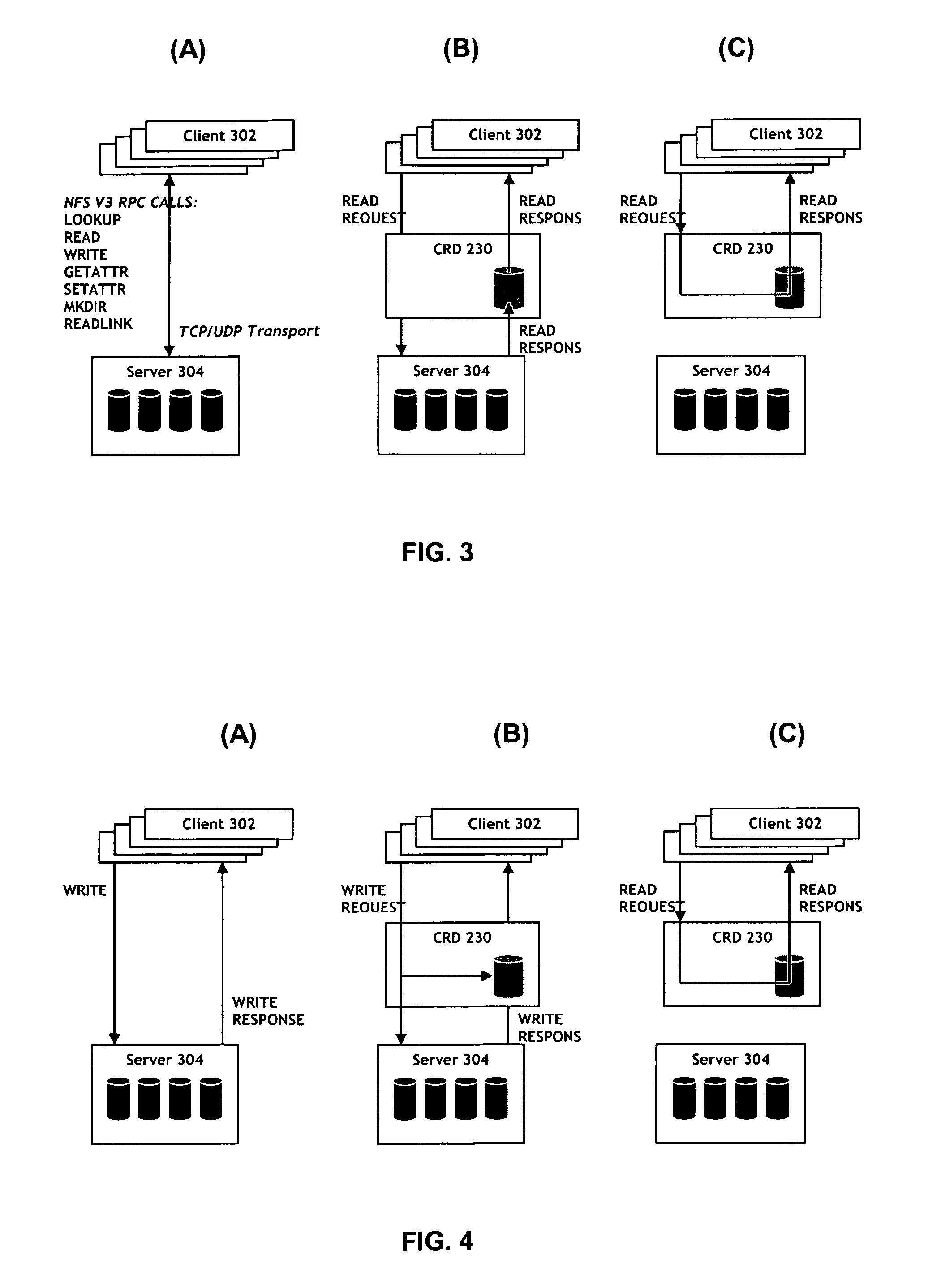

Clustered cache appliance system and methodology

Active | US20090182945A1 | Memory addressing/allocation/relocation | Transmission | Access time | Latency (engineering)

A method, system and program are disclosed for accelerating data storage by providing non-disruptive storage caching using clustered cache appliances with packet inspection intelligence. A cache appliance cluster that transparently monitors NFS and CIFS traffic between clients and NAS subsystems and caches files using dynamically adjustable cache policies provides low-latency access and redundancy in responding to both read and write requests for cached files, thereby improving access time to the data stored on the disk-based NAS filer (group).

Owner:NETWORK APPLIANCE INC

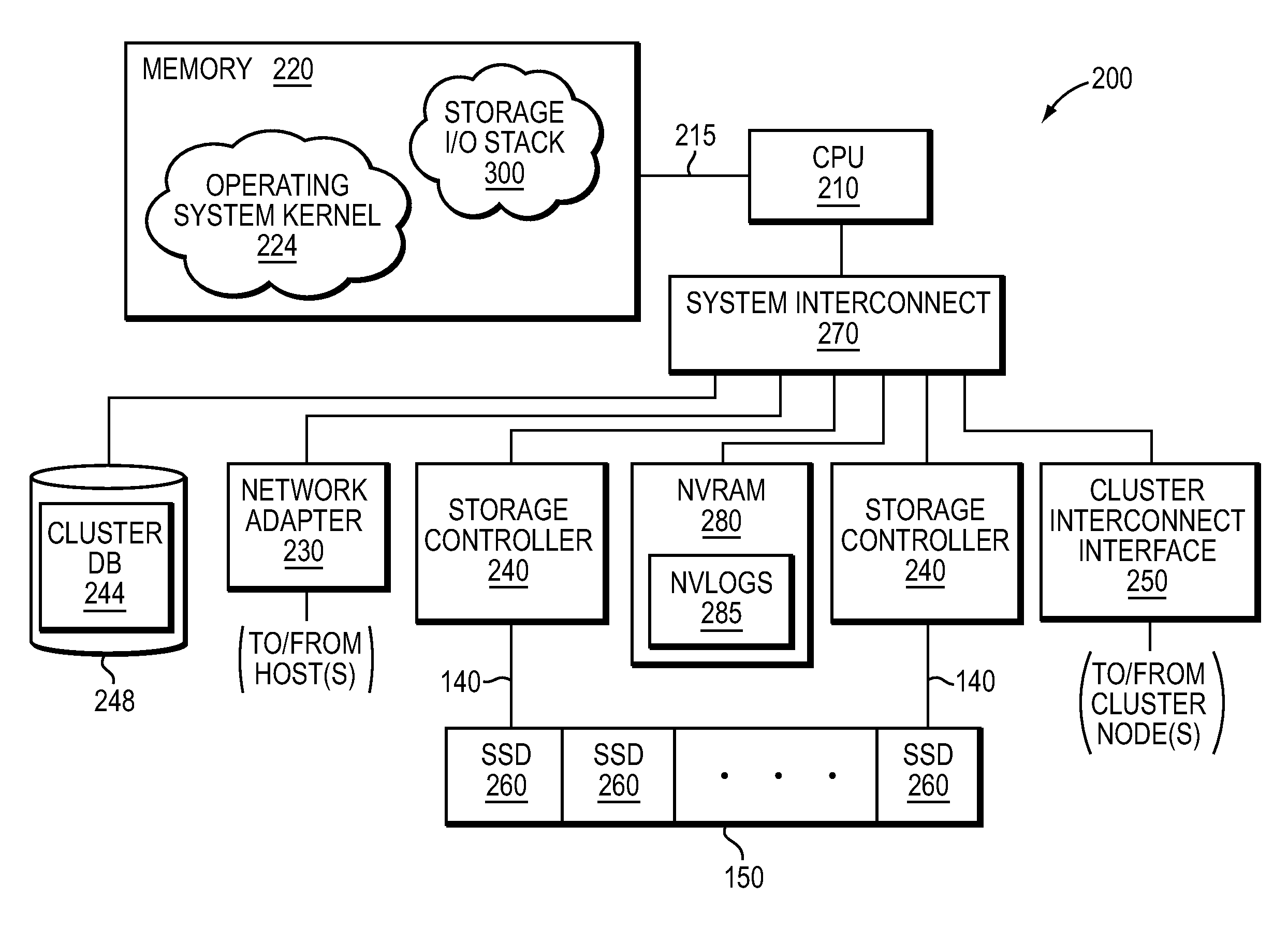

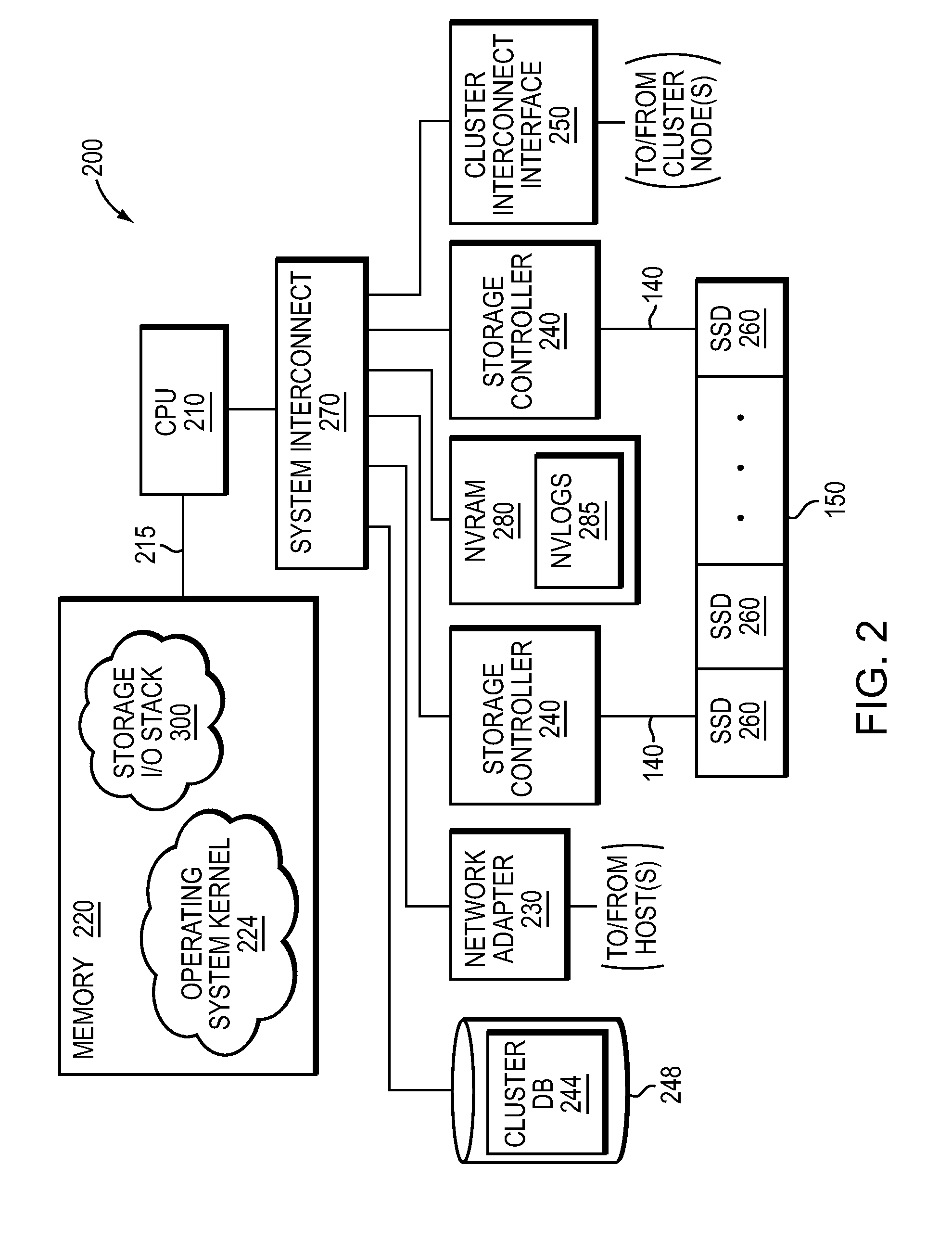

NVRAM caching and logging in a storage system

Active | US8898388B1 | Memory architecture accessing/allocation | Input/output to record carriers | Latency (engineering) | Solid-state drive

In one embodiment, non-volatile random access memory (NVRAM) caching and logging delivers low latency acknowledgements of input/output (I/O) requests, such as write requests, while avoiding loss of data. Write data may be stored in a portion of an NVRAM configured as, e.g., a persistent write-back cache, while parameters of the request may be stored in another portion of the NVRAM configured as one or more logs, e.g., NVLogs. The write data may be organized into separate variable length blocks or extents and “written back” out-of-order from the write-back cache to storage devices, such as solid state drives (SSDs). The write data may be preserved in the write-back cache until each extent is safely and successfully stored on SSD (i.e., in the event of power loss), or operations associated with the write request are sufficiently logged on NVLog.

Owner:NETWORK APPLIANCE INC

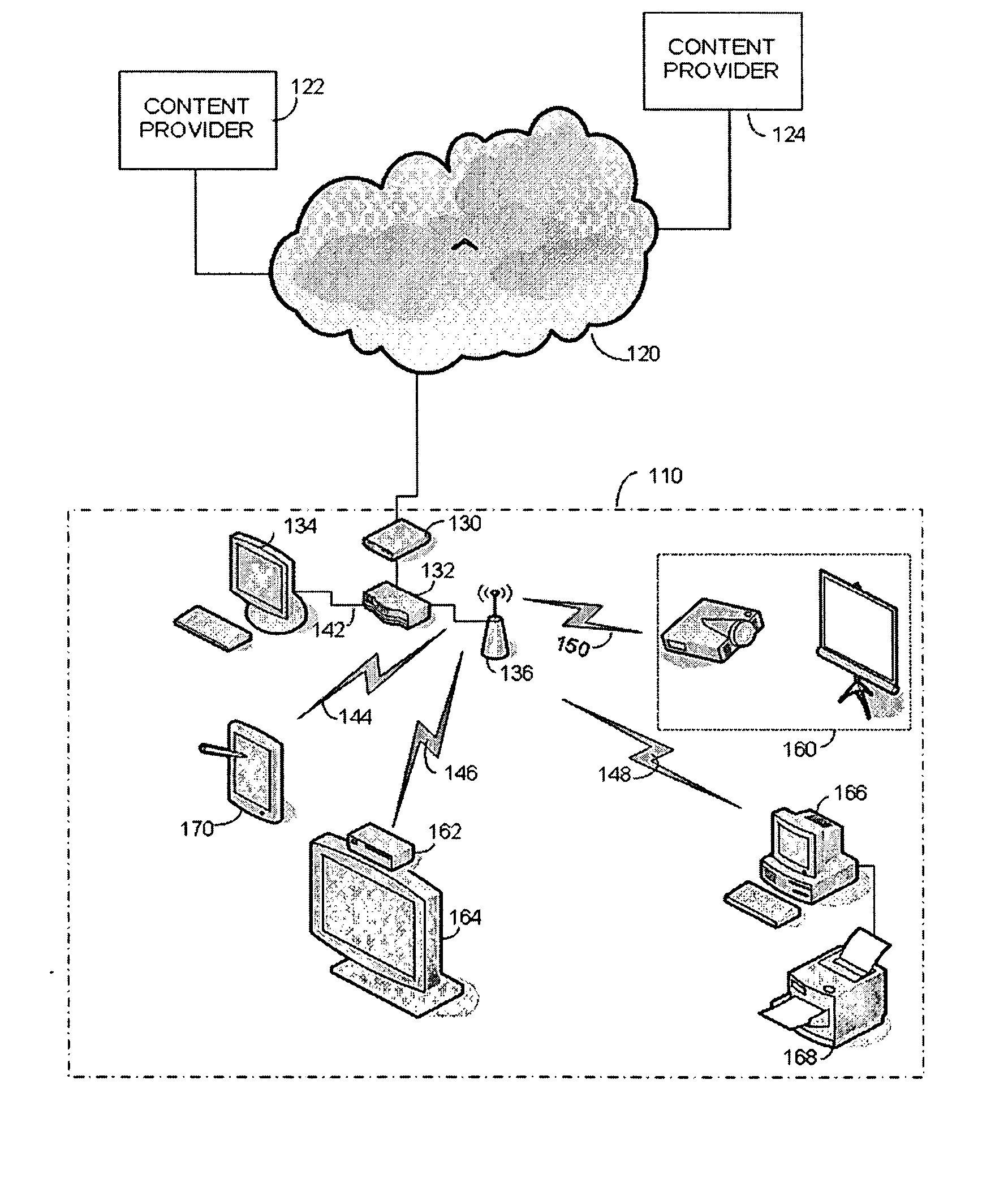

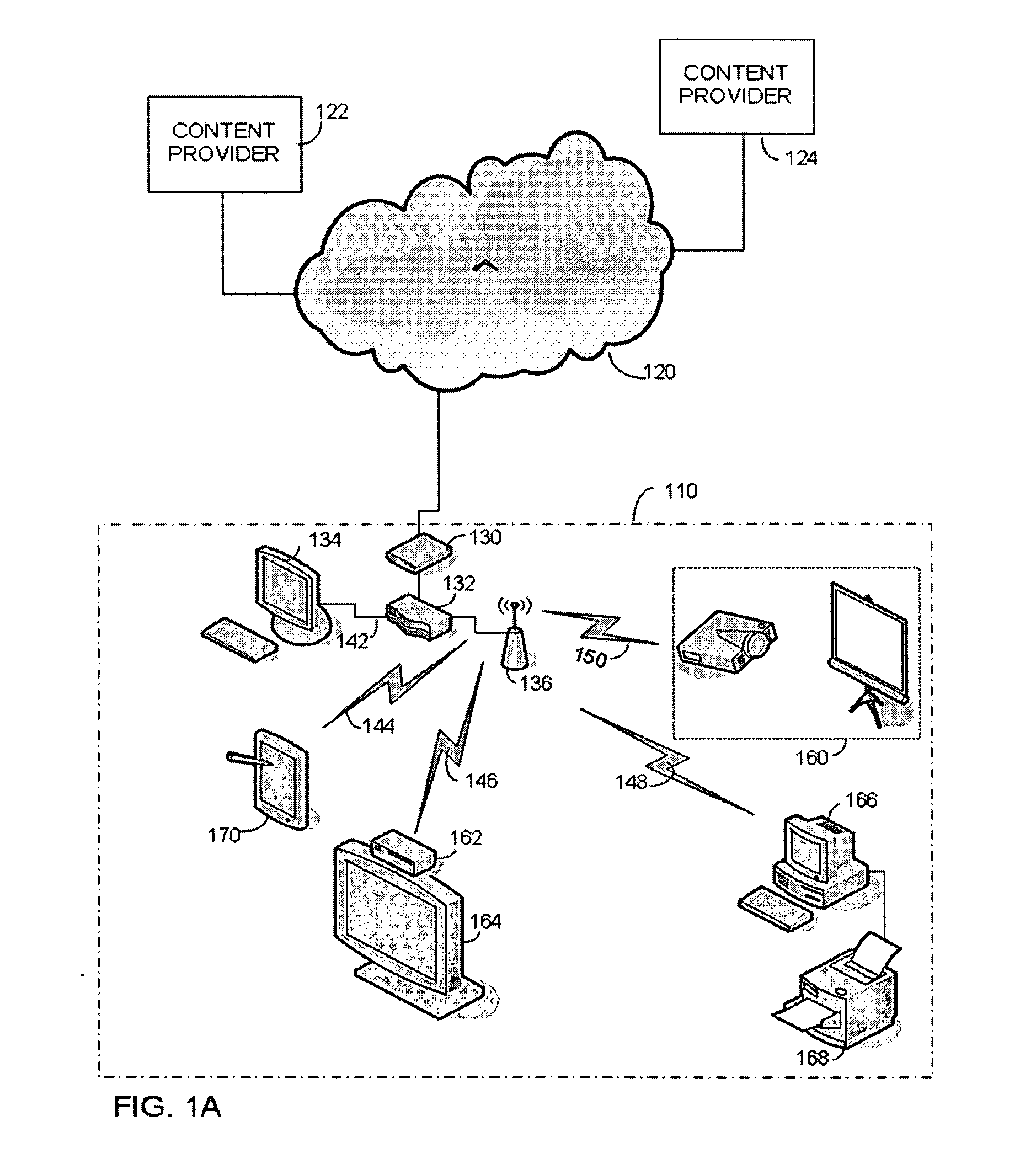

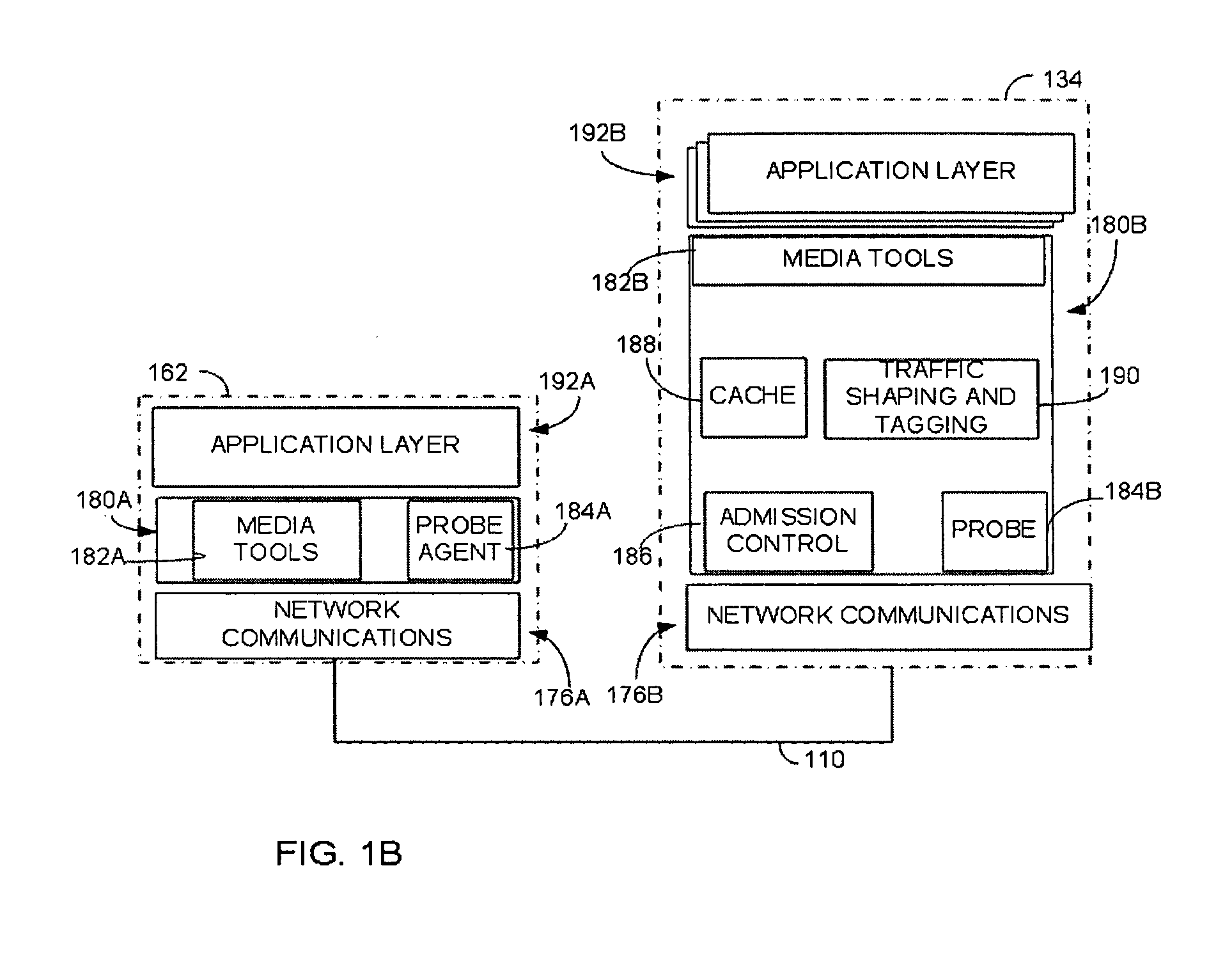

Quality of service support for A/V streams

Active | US20070248100A1 | Speed up the process | Lower latency | Data switching by path configuration | Wireless communication services | Quality of service | Data stream

An access control mechanism in a network connecting one or more sink devices to a server providing audio/visual (A/V) data in streams. As a sink device requests access, the server measures available bandwidth to the sink device. If the measurement of available bandwidth is completed before the sink device requests a stream of audio/visual data, the measured available bandwidth is used to set transmission parameters of the data stream in accordance with a Quality of Service (QoS) policy. If the measurement is not completed when the data stream is requested, the data stream is nonetheless transmitted. In this scenario, the data stream may be transmitted using parameters computed using a cached measurement of the available bandwidth to the sink device. If no cached measurement is available, the data stream is transmitted with a low priority until a measurement can be made. Once the measurement is available, the transmission parameters of the data stream are re-set. With this access control mechanism, A/V streams may be provided with low latency but with transmission parameters accurately set in accordance with the QoS policy.

Owner:MICROSOFT TECH LICENSING LLC
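
The fallback order described in the abstract above (fresh measurement, then cached measurement, then low priority) can be sketched as a small decision function. The function name, the dictionary keys, and the `"per-policy"` and `"low"` labels are hypothetical; the abstract does not define the actual transmission parameters or the QoS policy.

```python
from typing import Optional


def choose_stream_params(measured_kbps: Optional[float],
                         cached_kbps: Optional[float]) -> dict:
    """Pick the basis for a stream's transmission parameters."""
    if measured_kbps is not None:
        # Measurement finished before the stream was requested.
        return {"basis": "measured", "bandwidth_kbps": measured_kbps,
                "priority": "per-policy"}
    if cached_kbps is not None:
        # Measurement still pending: start from the cached value.
        return {"basis": "cached", "bandwidth_kbps": cached_kbps,
                "priority": "per-policy"}
    # Nothing known yet: send anyway, at low priority, until measured.
    return {"basis": "none", "bandwidth_kbps": None, "priority": "low"}


startup = choose_stream_params(None, None)
with_cache = choose_stream_params(None, 2500.0)
after_measurement = choose_stream_params(4000.0, 2500.0)
```

Calling the function again once the measurement completes corresponds to the re-setting of parameters mentioned in the abstract; the stream never waits for the measurement, which is where the low start-up latency comes from.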

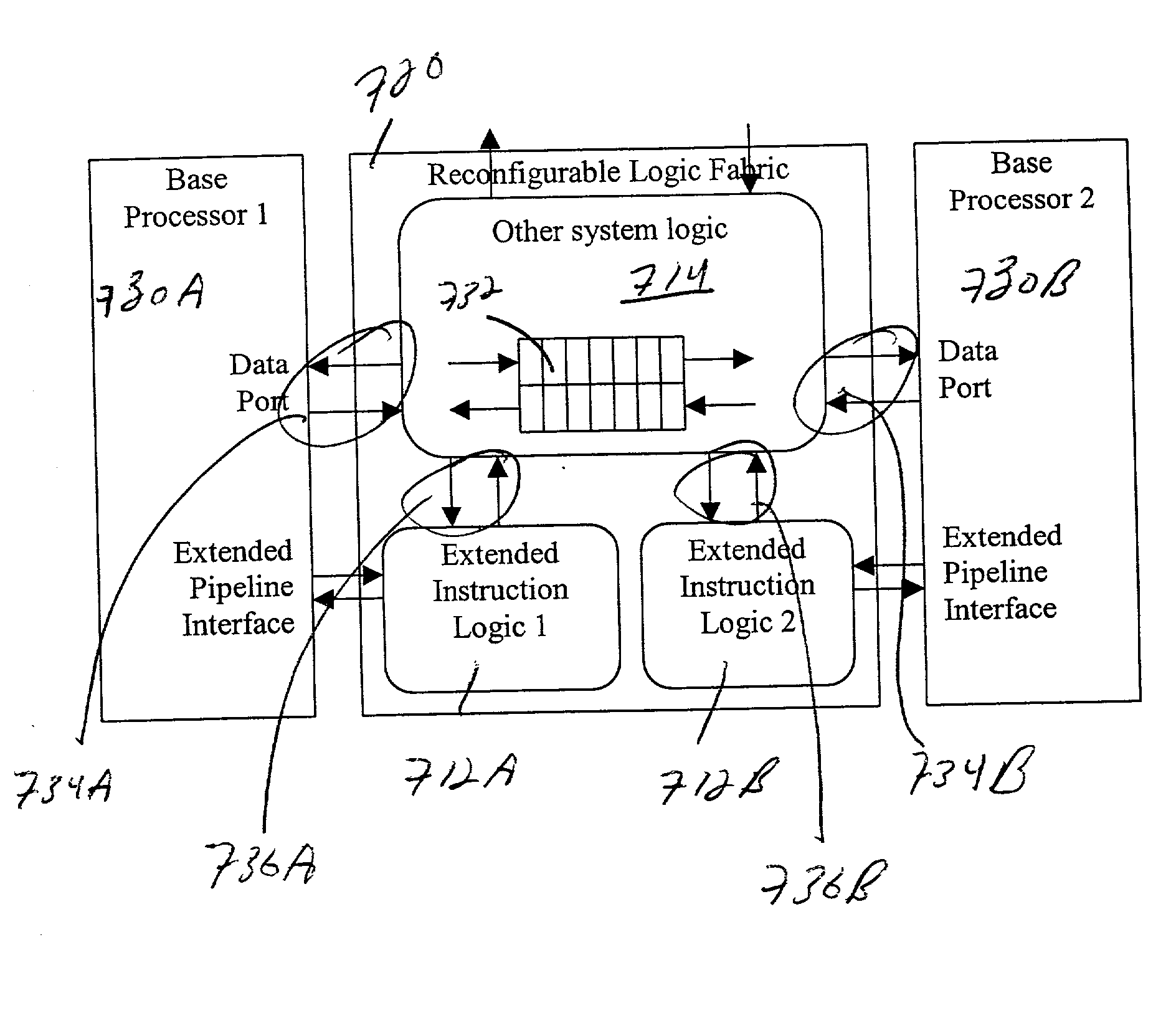

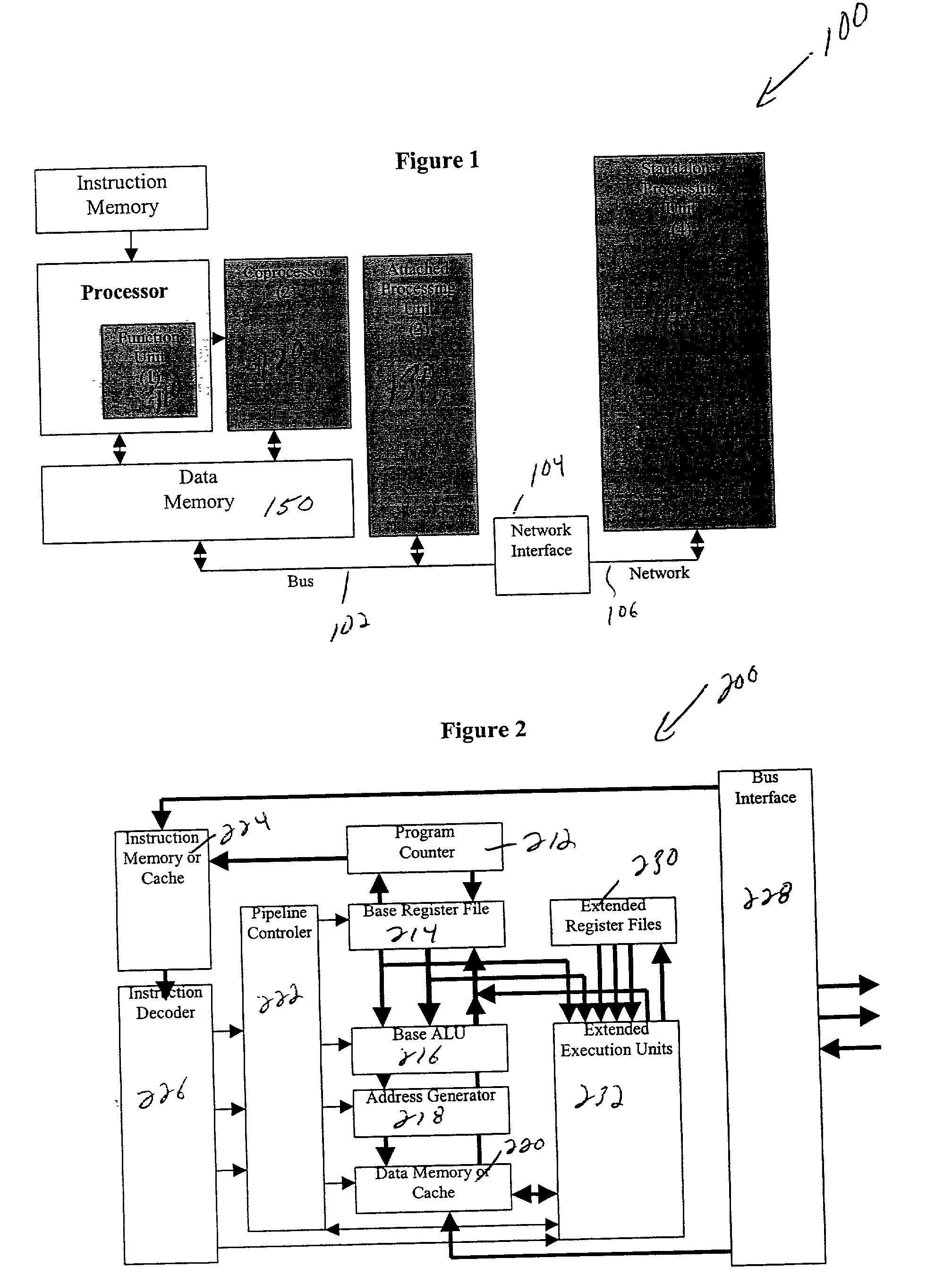

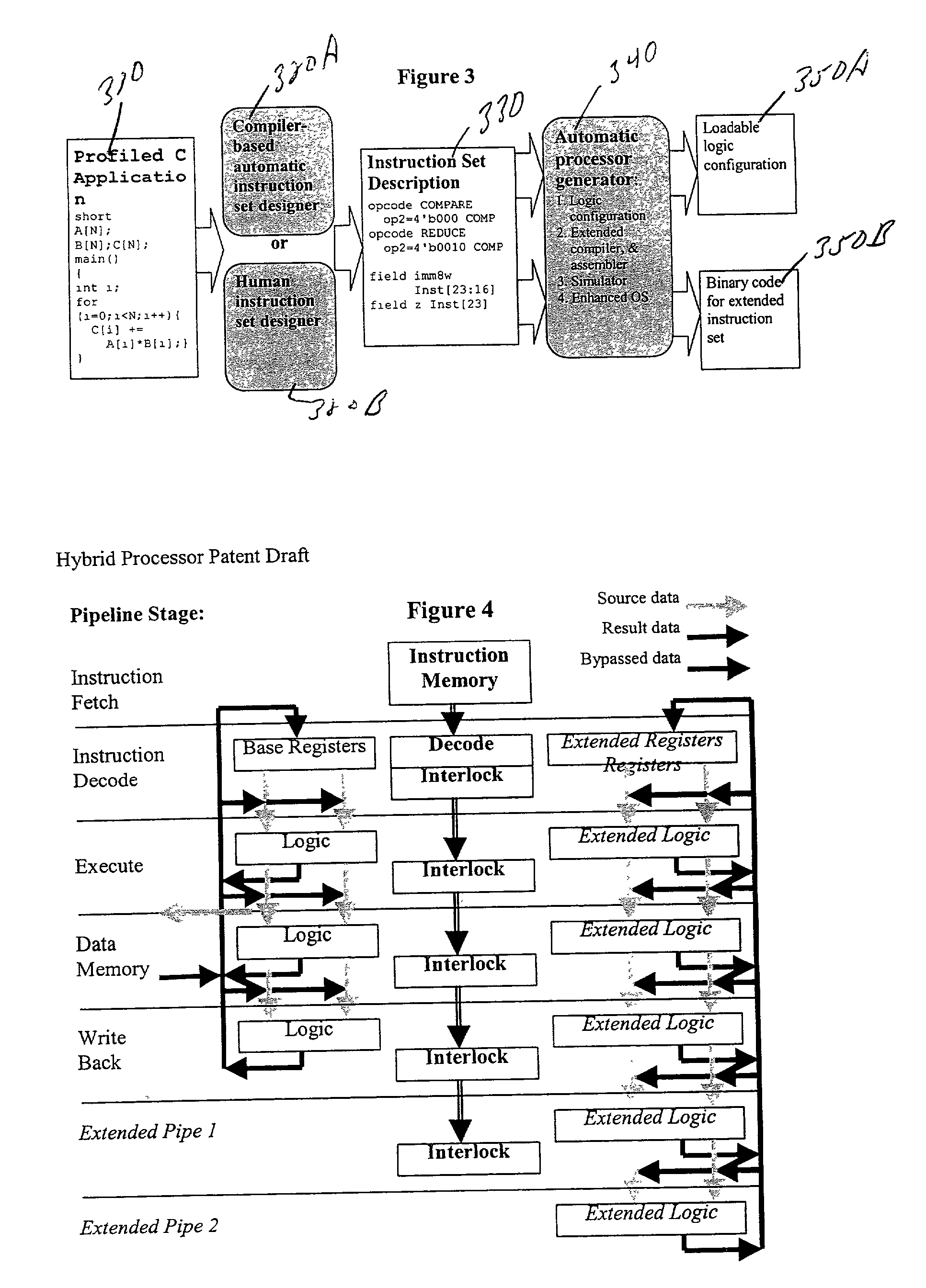

High-performance hybrid processor with configurable execution units

Inactive | US20050166038A1 | High bandwidth | Flexibility | Instruction analysis | Concurrent instruction execution | High bandwidth | Latency (engineering)

A new general method for building hybrid processors achieves higher performance in applications by allowing more powerful, tightly-coupled instruction set extensions to be implemented in reconfigurable logic. New instruction set configurations can be discovered and designed by automatic and semi-automatic methods. Improved reconfigurable execution units support deep pipelining, the addition of registers and register files, compound instructions with many source and destination registers, and wide data paths. New interface methods allow lower latency, higher bandwidth connections between hybrid processors and other logic.

Owner:TENSILICA

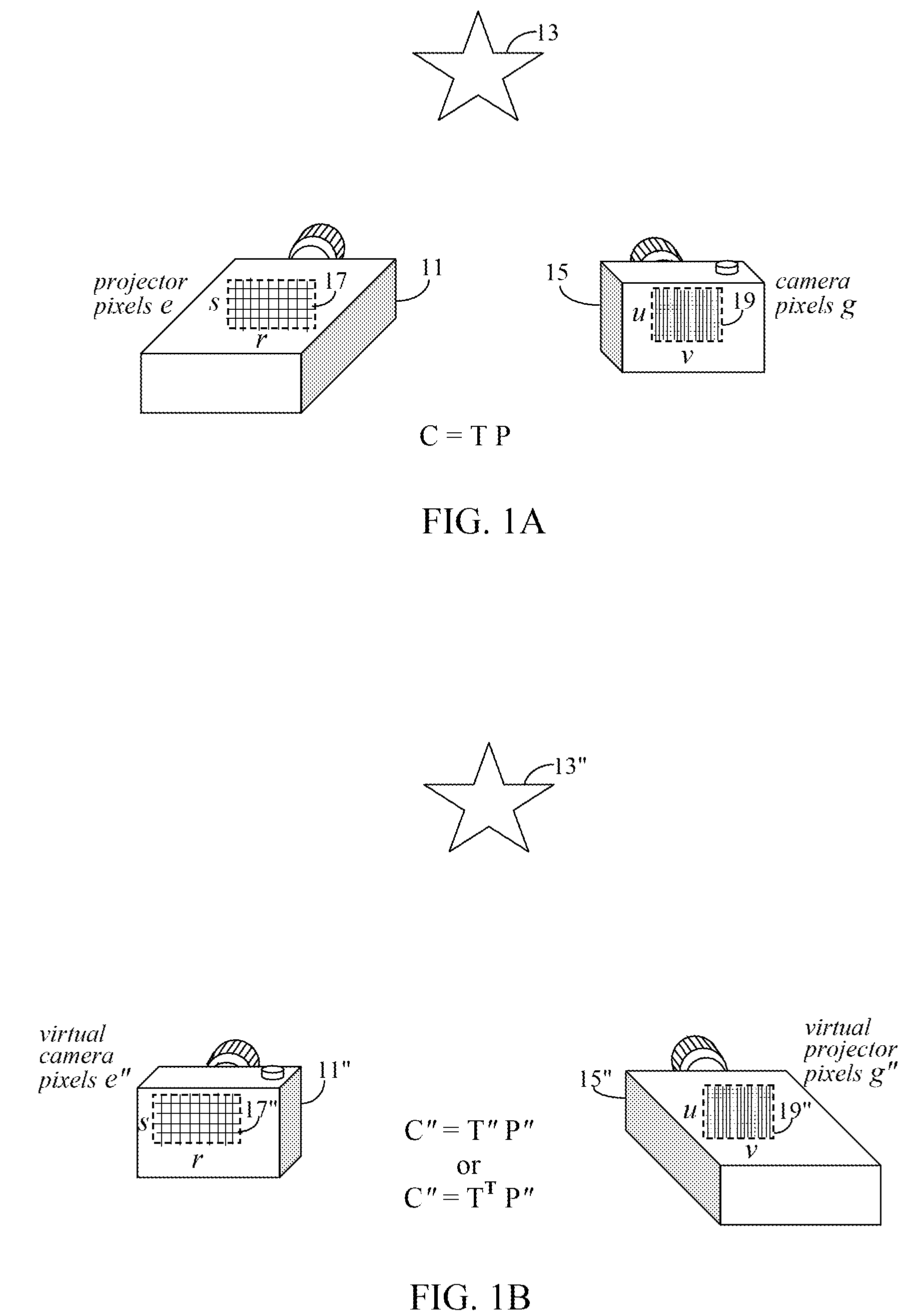

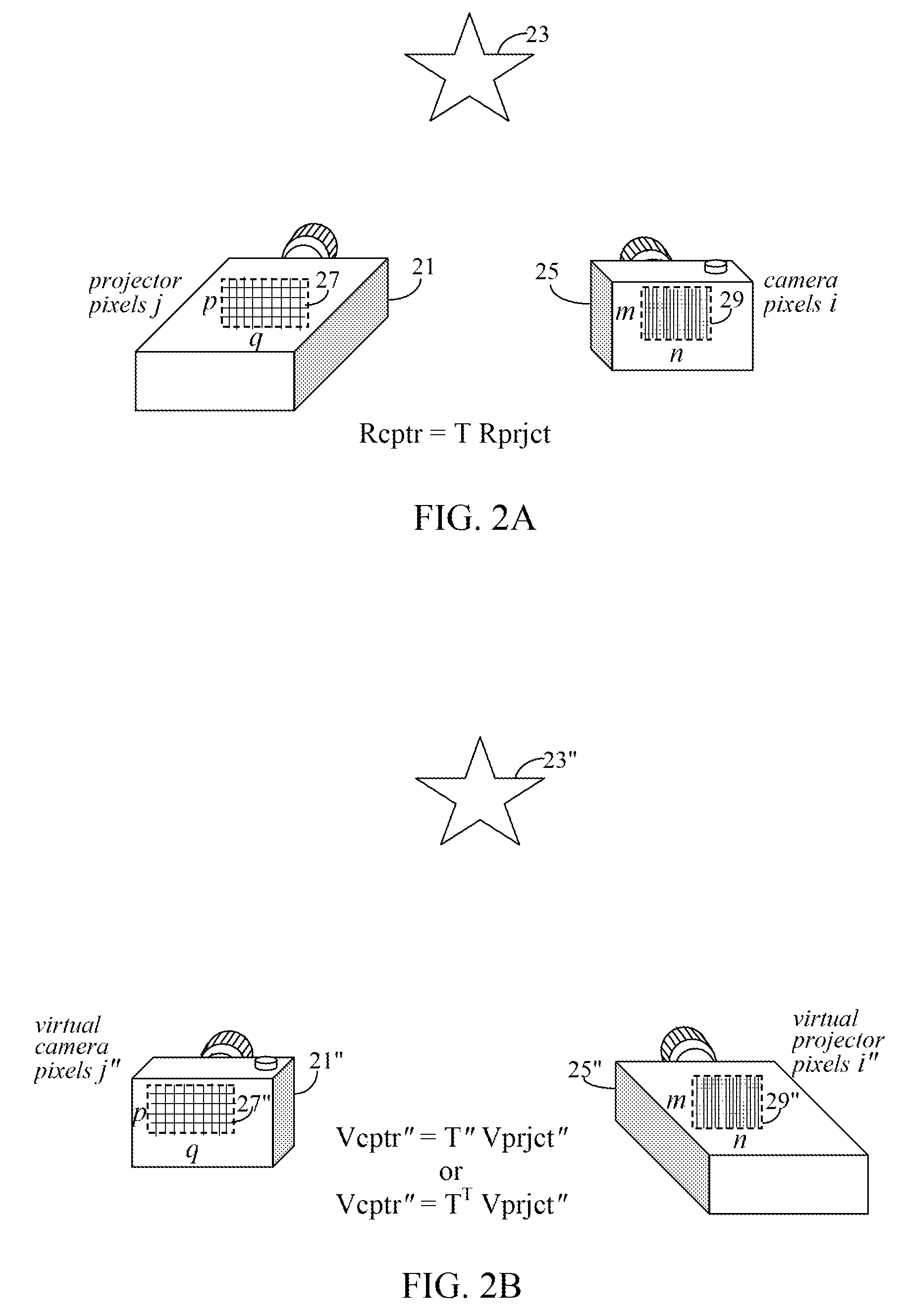

View projection matrix based high performance low latency display pipeline

Inactive | US8013904B2 | Easy to ship | Easy to implement | Television system details | Picture reproducers using projection devices | Hat matrix | Imaging processing

A projection system uses a transformation matrix to transform a projection image p so as to compensate for surface irregularities on a projection surface. The transformation matrix makes use of properties of light transport relating a projector to a camera. A display pipeline of user-supplied image modification processing modules is reduced by first representing the processing modules as multiple, individual matrix operations. All the matrix operations are then combined with, i.e., multiplied to, the transformation matrix to create a modified transformation matrix. The modified transformation matrix is then used in place of the original transformation matrix to simultaneously achieve both image transformation and any pre- and post-image processing defined by the image modification processing modules.

Owner:SEIKO EPSON CORP
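
The pipeline reduction described in the abstract above rests on the associativity of matrix multiplication: a chain of linear stages can be folded into one matrix ahead of time. The sketch below uses tiny 2x2 matrices and a two-pixel "image" purely for illustration; `T`, `pre` and `post` are hypothetical stand-ins, not the patent's actual light-transport or processing matrices.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def apply(m, v):
    """Apply matrix m to column vector v."""
    return [sum(m[i][k] * v[k] for k in range(len(v))) for i in range(len(m))]


T = [[0, 1], [1, 0]]       # hypothetical transformation matrix
pre = [[2, 0], [0, 2]]     # hypothetical pre-processing module
post = [[1, 0], [0, 0.5]]  # hypothetical post-processing module

# Fold every stage into one modified transformation matrix, once.
combined = matmul(post, matmul(T, pre))

p = [3, 4]  # toy projection image
step_by_step = apply(post, apply(T, apply(pre, p)))
one_shot = apply(combined, p)
```

Both routes give the same result, but the per-frame cost drops from three matrix applications to one, which is the source of the latency saving.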

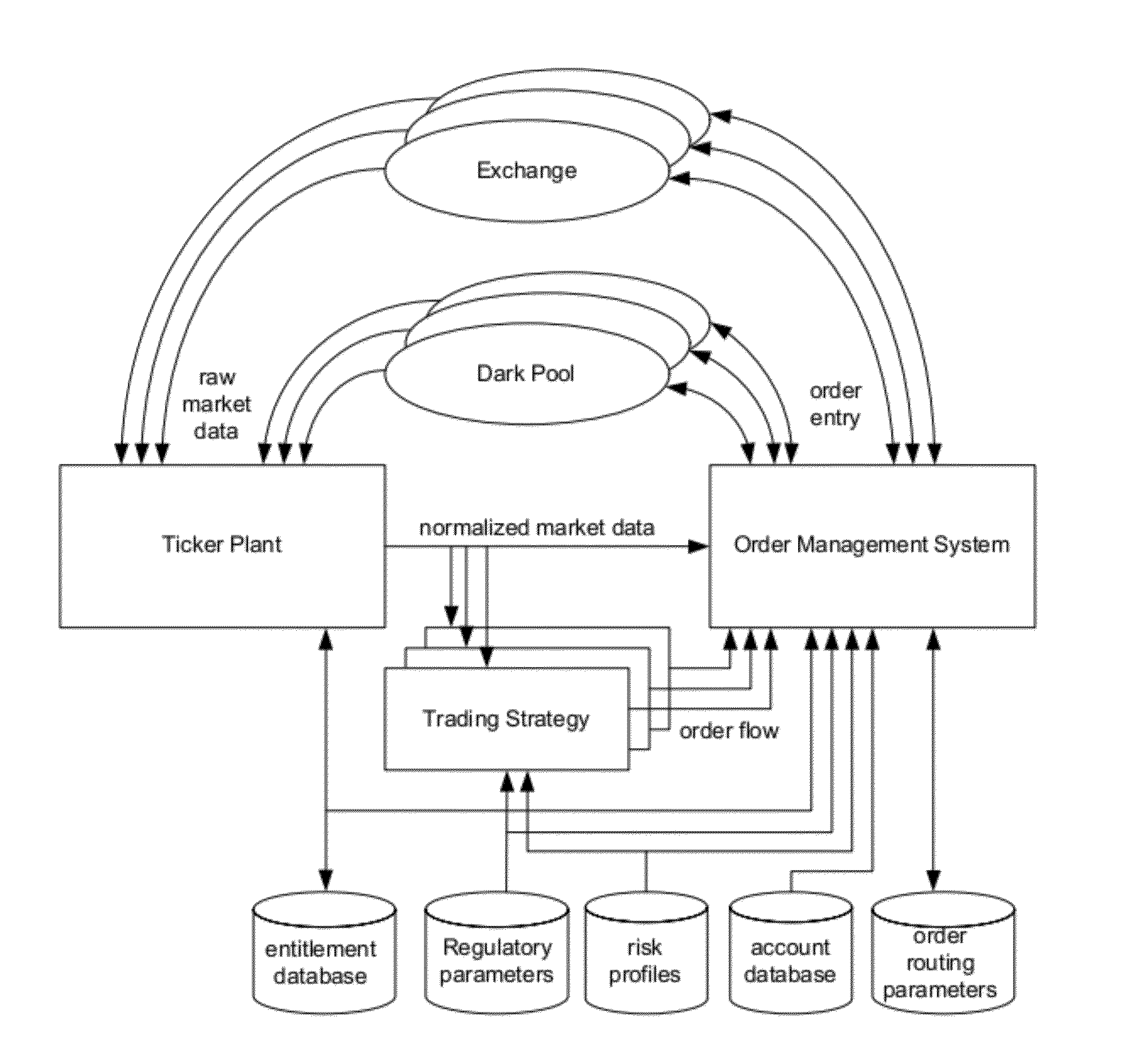

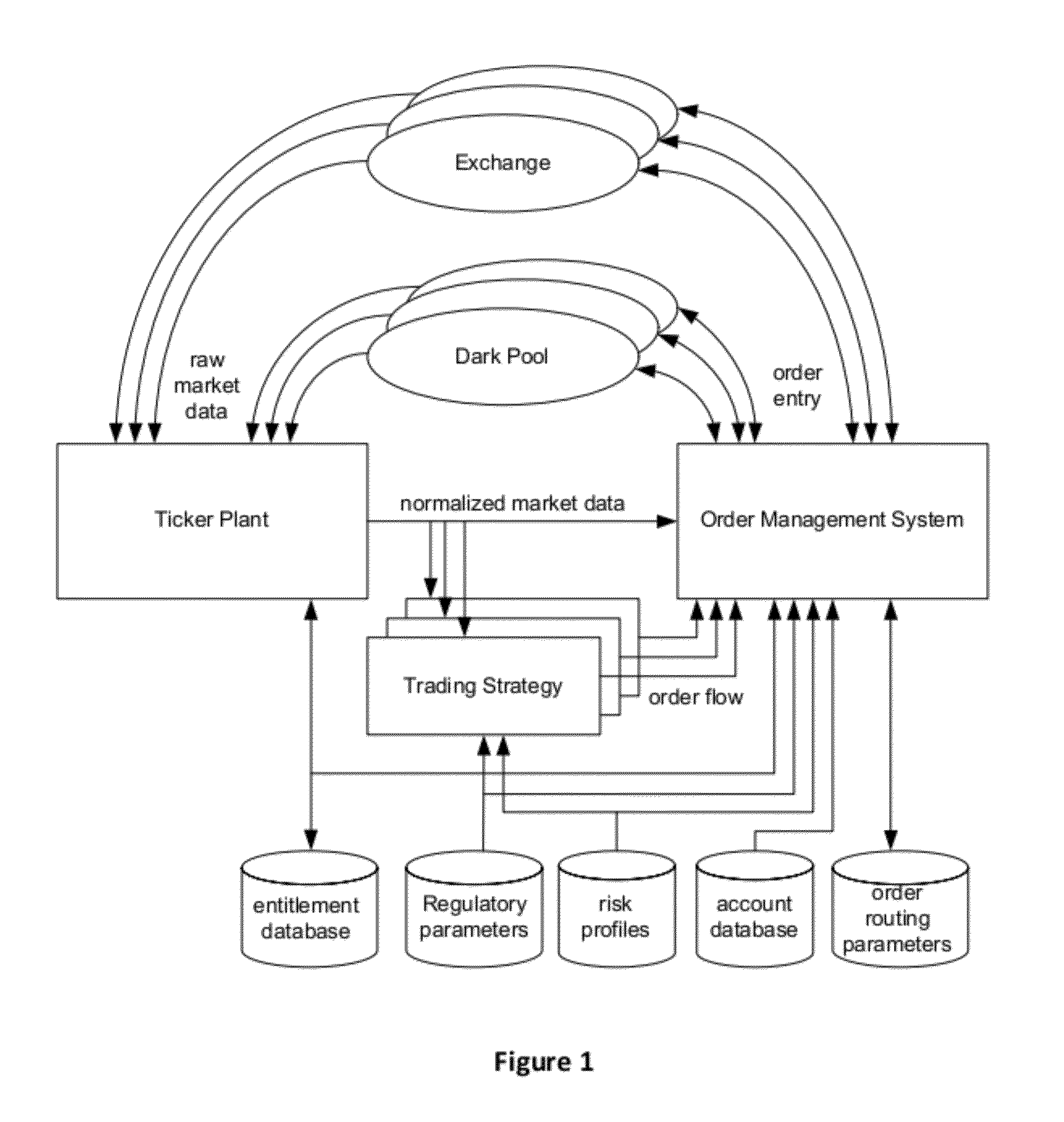

Method and Apparatus for Managing Orders in Financial Markets

Active | US20120246052A1 | Reduce and eliminate opportunity | Limited opportunity | Finance | Latency (engineering) | Engineering

An integrated order management engine is disclosed that reduces the latency associated with managing multiple orders to buy or sell a plurality of financial instruments. Also disclosed is an integrated trading platform that provides low latency communications between various platform components. Such an integrated trading platform may include a trading strategy offload engine.

Owner:EXEGY INC

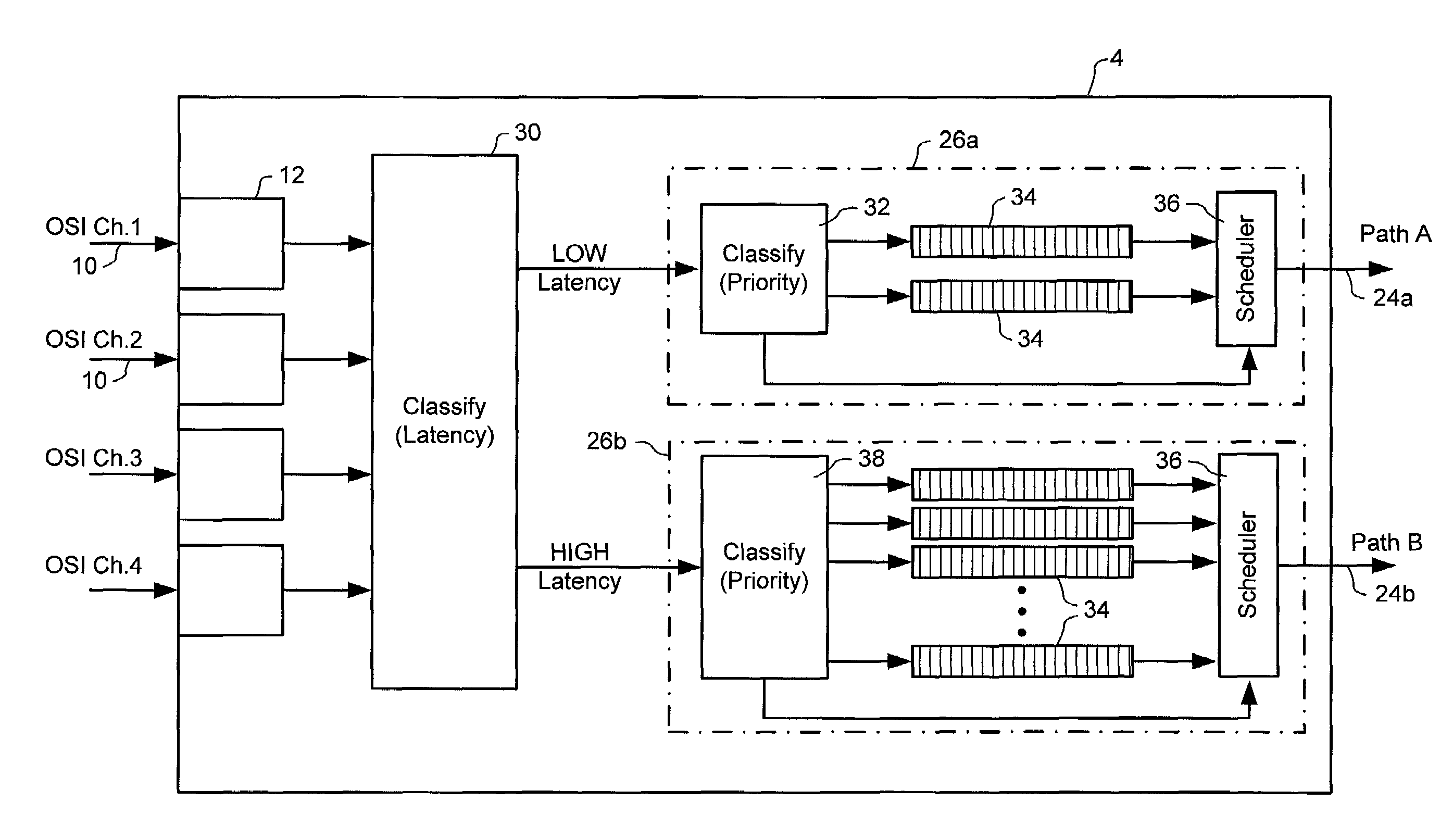

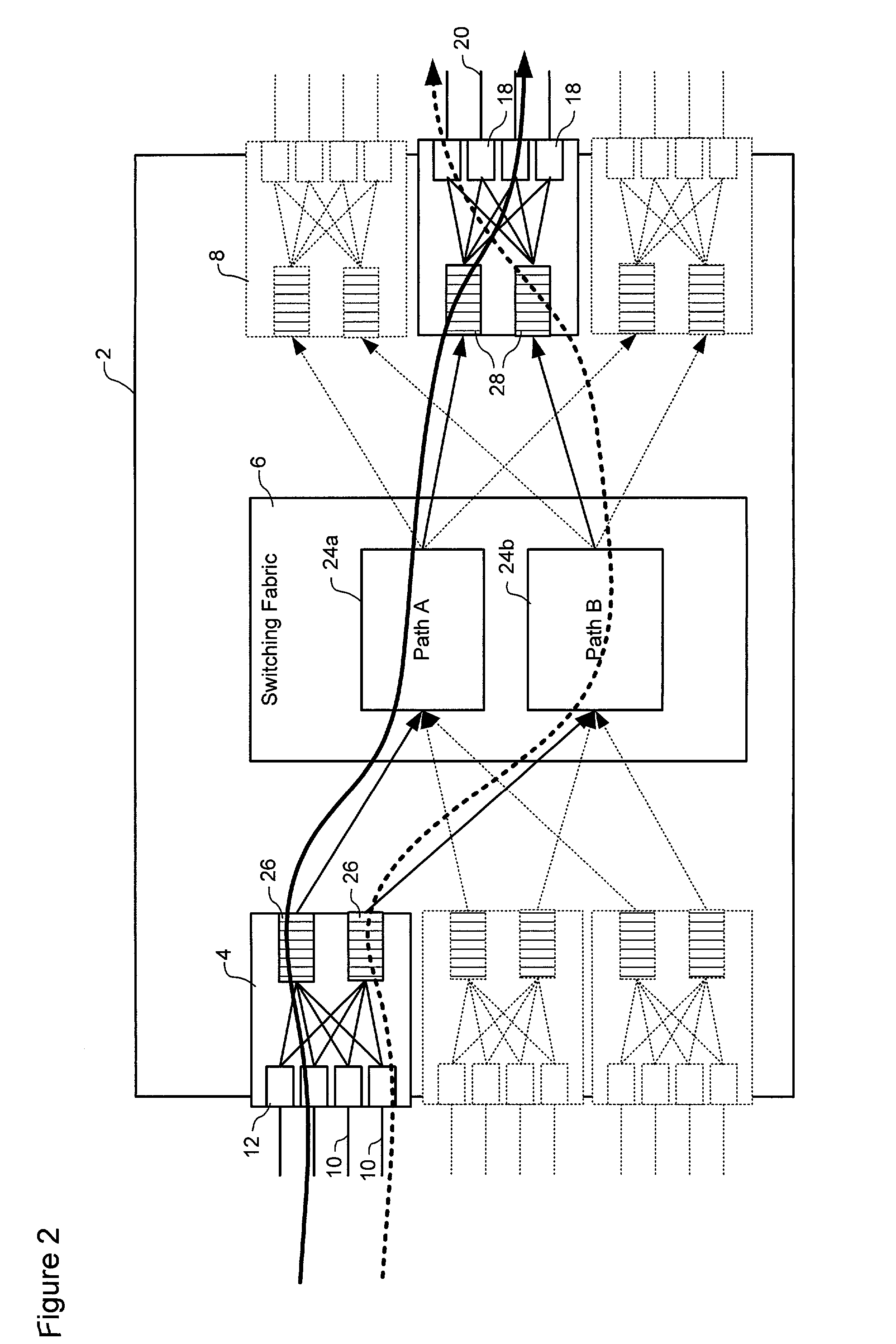

Traffic switching using multi-dimensional packet classification

Active | US7260102B2 | Efficient transport | Multiplex system selection arrangements | Error prevention | Traffic capacity | Latency (engineering)

A method and system for conveying an arbitrary mixture of high and low latency traffic streams across a common switch fabric implements a multi-dimensional traffic classification scheme, in which multiple orthogonal traffic classification methods are successively implemented for each traffic stream traversing the system. At least two diverse paths are mapped through the switch fabric, each path being optimized to satisfy respective different latency requirements. A latency classifier is adapted to route each traffic stream to a selected path optimized to satisfy latency requirements most closely matching a respective latency requirement of the traffic stream. A prioritization classifier independently prioritizes traffic streams in each path. A fairness classifier at an egress of each path can be used to enforce fairness between responsive and non-responsive traffic streams in each path. This arrangement enables traffic streams having similar latency requirements to traverse the system through a path optimized for those latency requirements.

Owner:CIENA
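
Two of the classification stages named in the abstract above, the latency classifier and the per-path prioritization classifier, can be sketched as below. The path names, their target latencies and the stream fields are hypothetical, and the fairness classifier at the path egress is left out to keep the example short.

```python
# Path name -> latency (ms) the path is tuned for. Hypothetical values.
PATHS = {"low_latency": 1.0, "bulk": 50.0}


def classify_latency(required_ms: float) -> str:
    """Pick the path whose target latency is closest to the requirement."""
    return min(PATHS, key=lambda name: abs(PATHS[name] - required_ms))


def schedule(streams):
    """Route each stream to a path, then order each path by priority."""
    per_path = {name: [] for name in PATHS}
    for stream in streams:
        per_path[classify_latency(stream["latency_ms"])].append(stream)
    for queue in per_path.values():
        # Prioritization is independent per path; lower number goes first.
        queue.sort(key=lambda s: s["priority"])
    return {name: [s["id"] for s in queue] for name, queue in per_path.items()}


streams = [
    {"id": "backup", "latency_ms": 100.0, "priority": 2},
    {"id": "voice", "latency_ms": 2.0, "priority": 0},
    {"id": "email", "latency_ms": 60.0, "priority": 1},
    {"id": "video", "latency_ms": 5.0, "priority": 1},
]
plan = schedule(streams)
```

Because the two classifications are orthogonal, a high-priority bulk stream never competes with voice traffic for the low-latency path.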

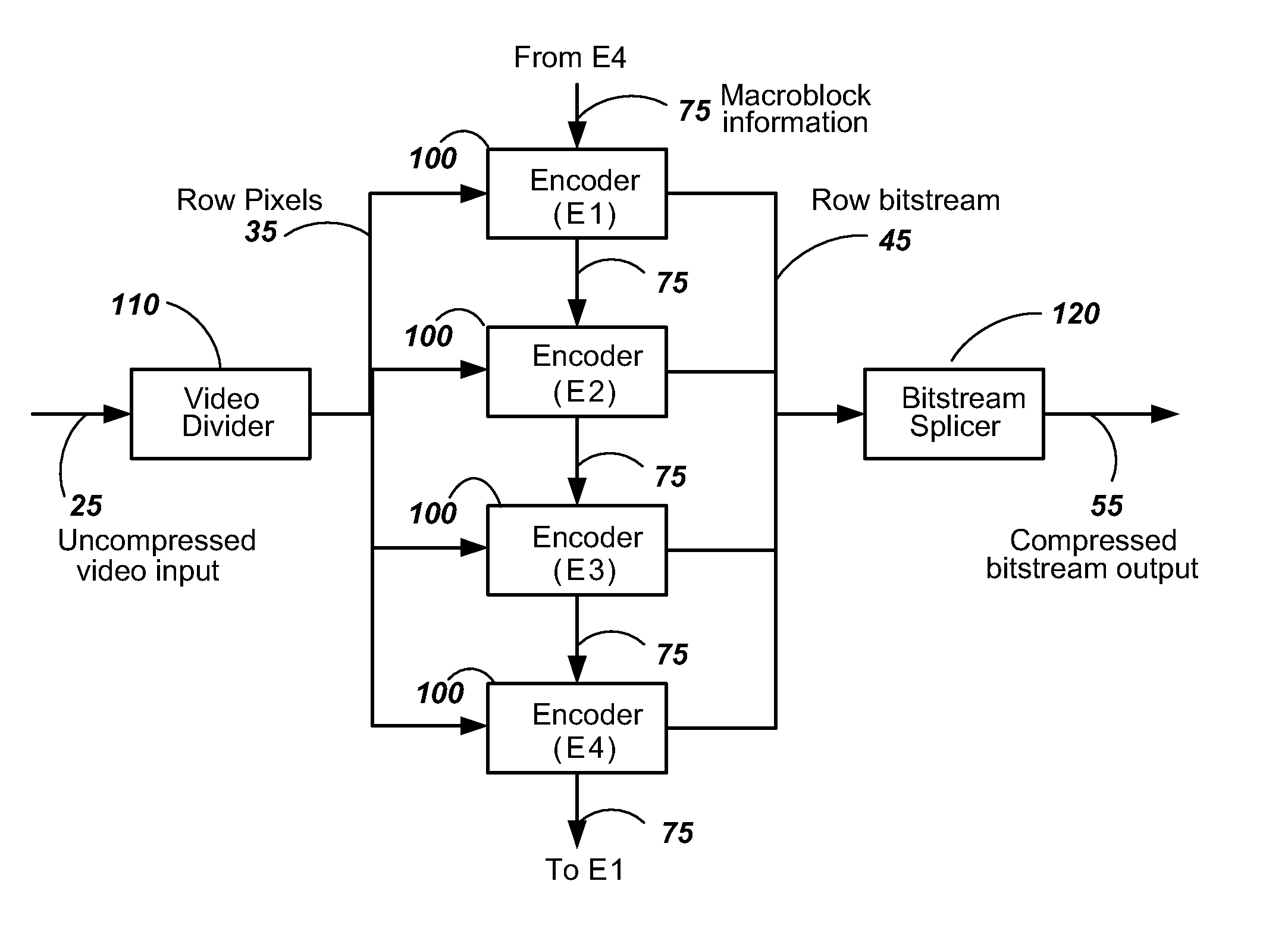

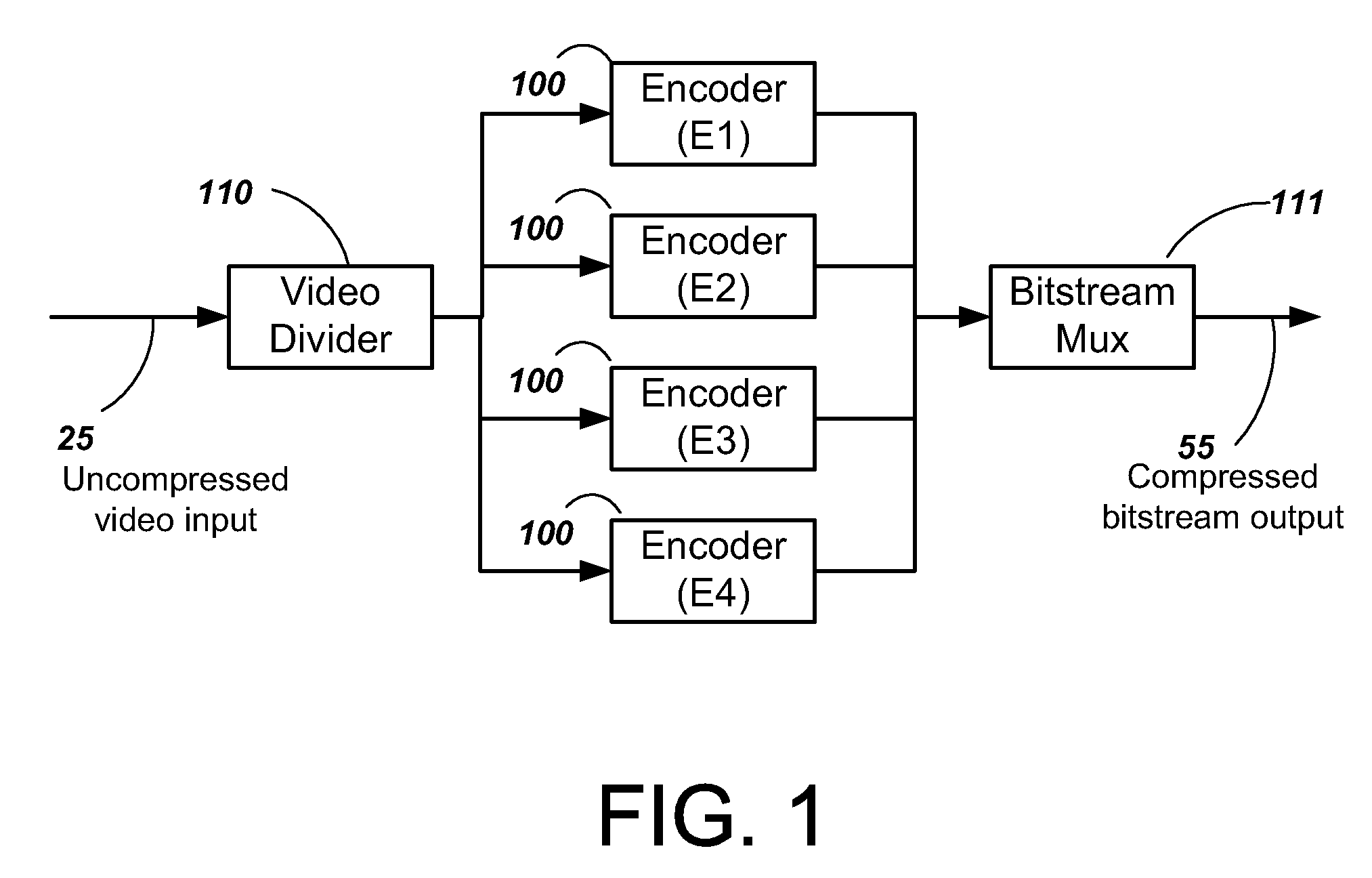

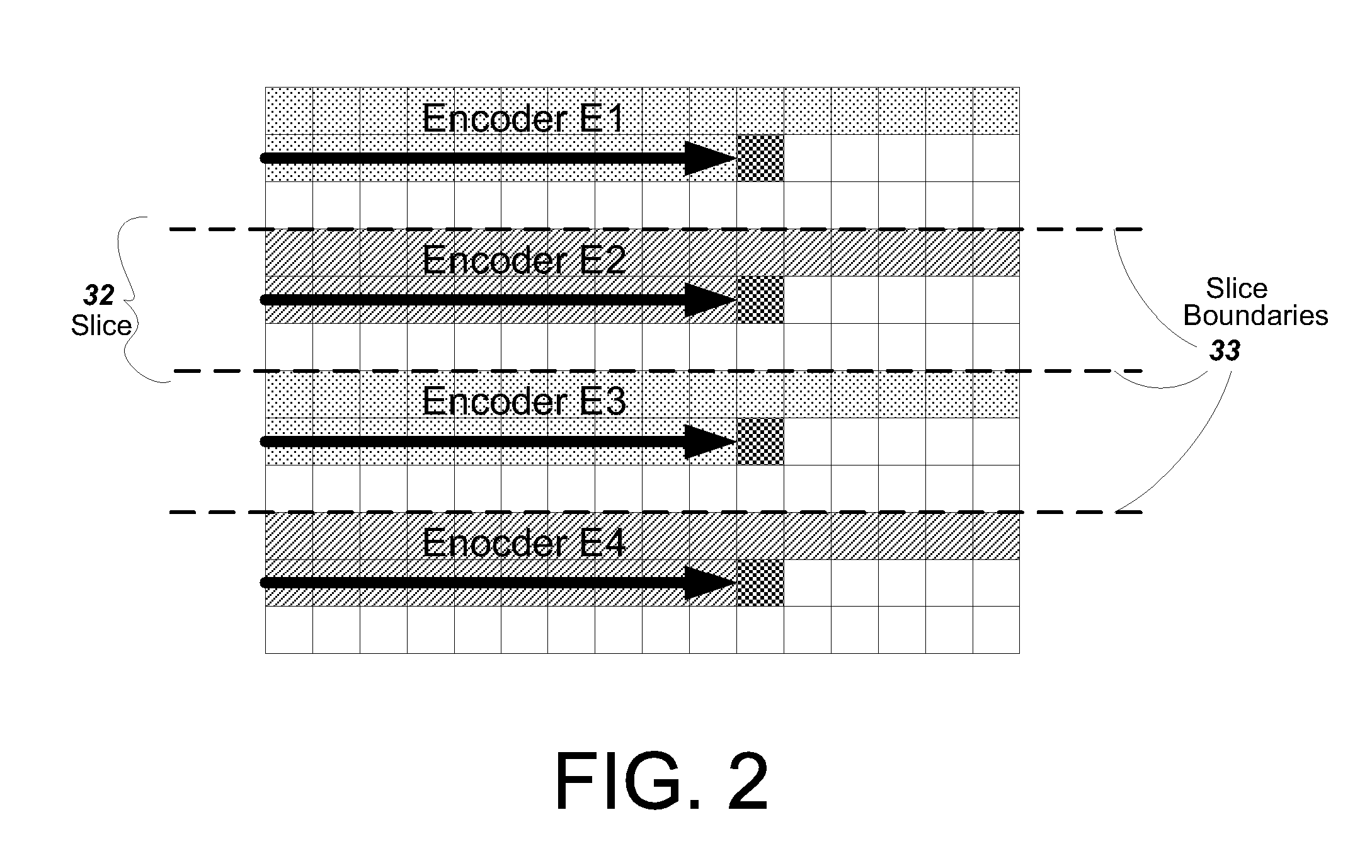

Video encoder with multiple processors

Inactive | US20070086528A1 | Lower latency | Improve compression efficiency | Color television with pulse code modulation | Color television with bandwidth reduction | Computer architecture | Video encoding

A method and system are described for video encoding with multiple parallel encoders. The system uses multiple encoders which operate in different rows of the same slice of the same video frame. Data dependencies between frames, rows, and blocks are resolved through the use of a data network. Block information is passed between encoders of adjacent rows. The system can achieve low latency compared to other parallel approaches.

Owner:CISCO TECH INC

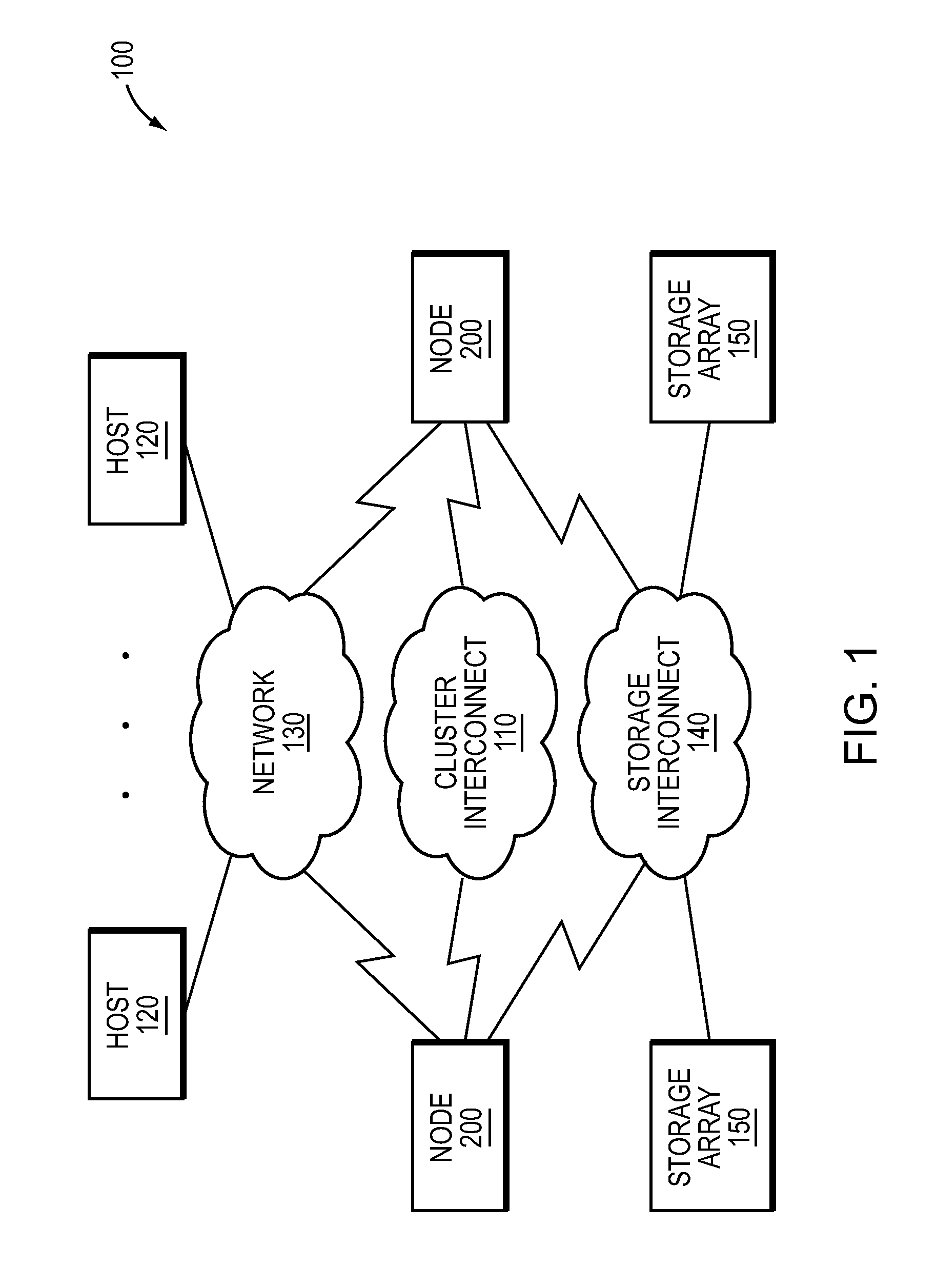

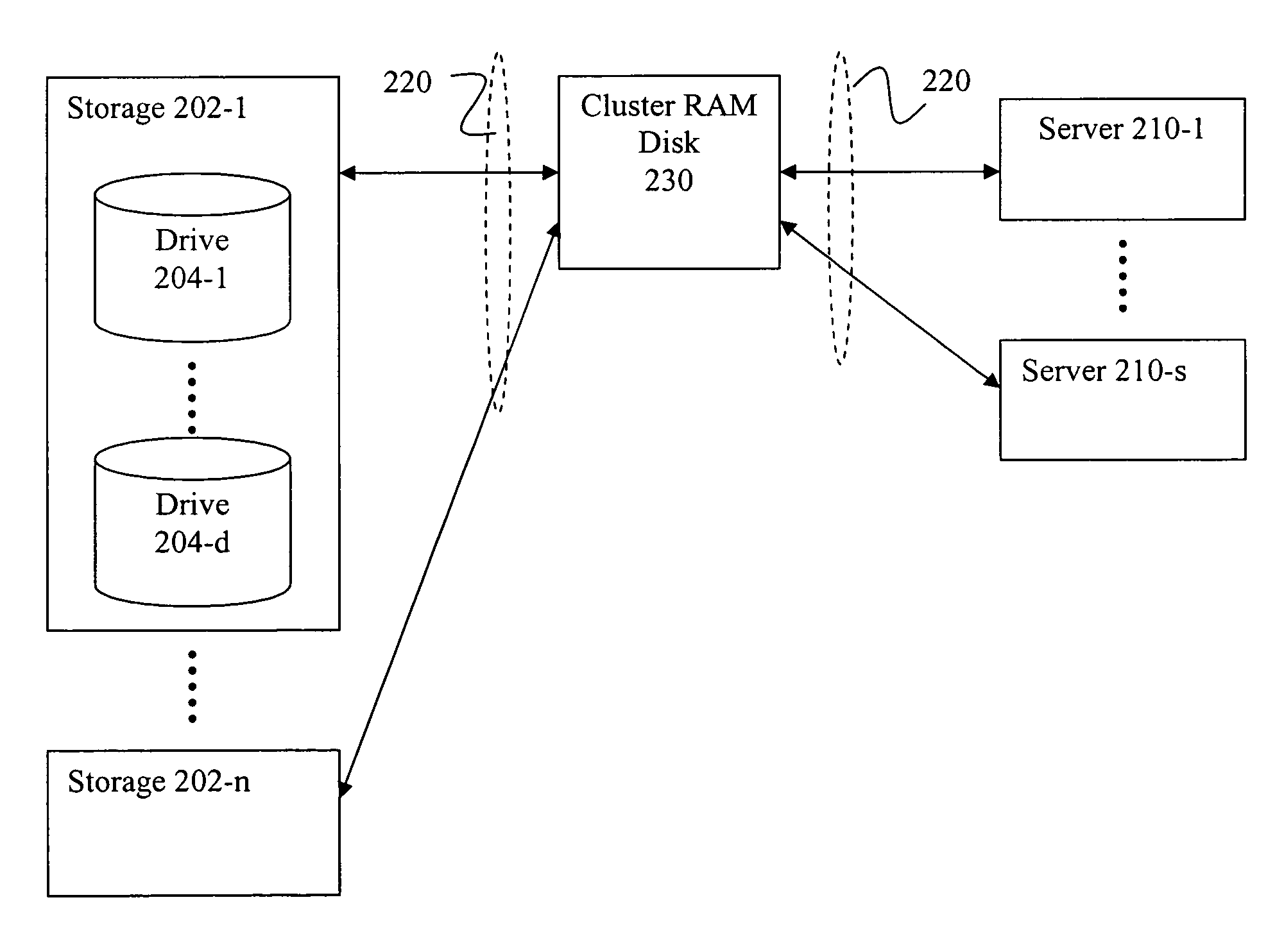

Method and apparatus for providing high-performance and highly-scalable storage acceleration

Active | US9390019B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Latency (engineering) | Datapath

A method and apparatus for providing high performance and highly scalable storage acceleration includes a cluster node-spanning RAM disk (CRD) interposed in the data path between a storage server and a computer server. The CRD addresses performance problems with applications that need to access large amounts of data and are negatively impacted by the latency of classic disk-based storage systems. It solves this problem by placing the data the application needs into a large (with respect to the server's main memory) RAM-based cache where it can be accessed with extremely low latency, hence improving the performance of the application significantly. The CRD is implemented using a novel architecture which has very significant cost and performance advantages over existing or alternative solutions.

Owner:INNOVATIONS IN MEMORY LLC

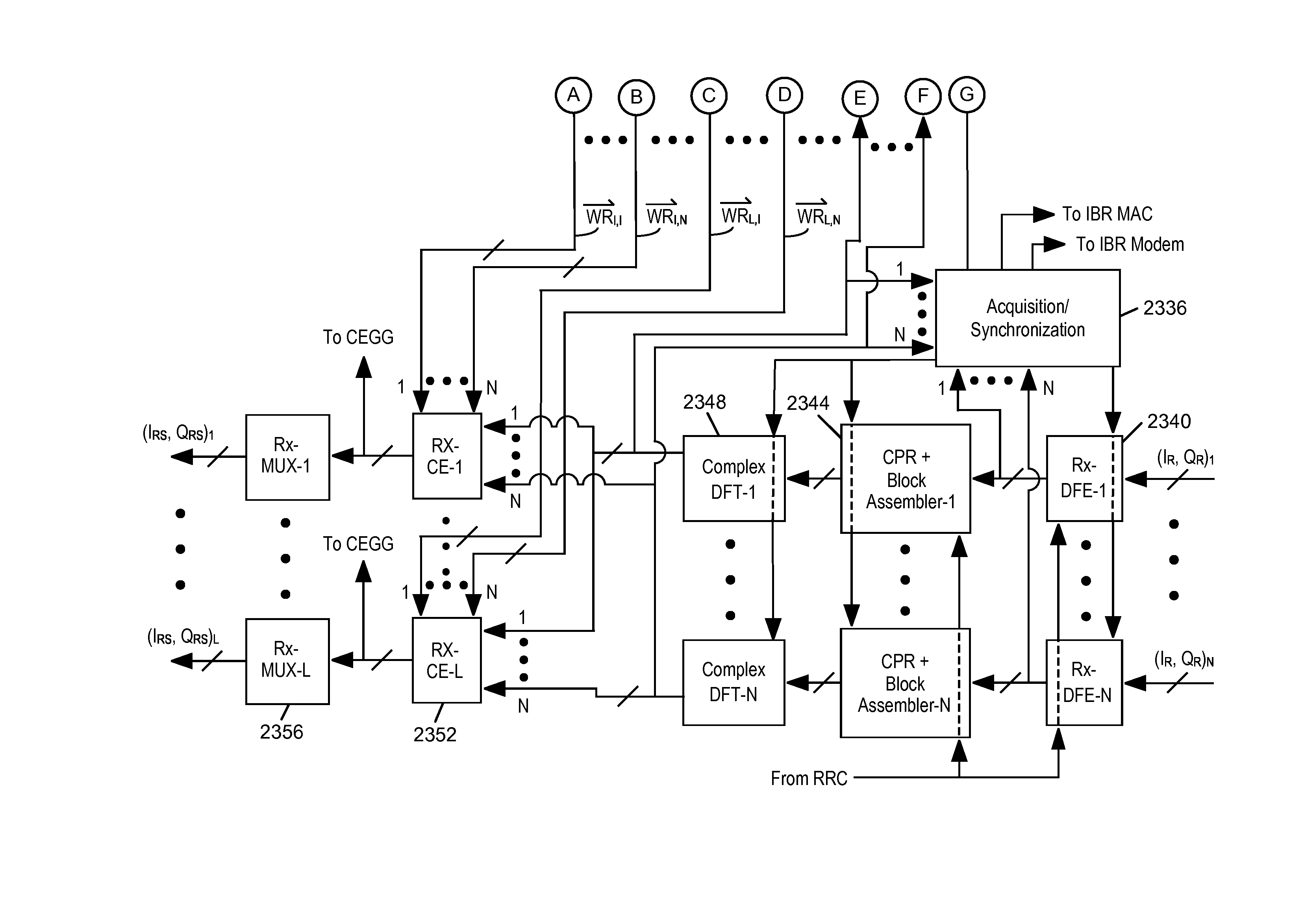

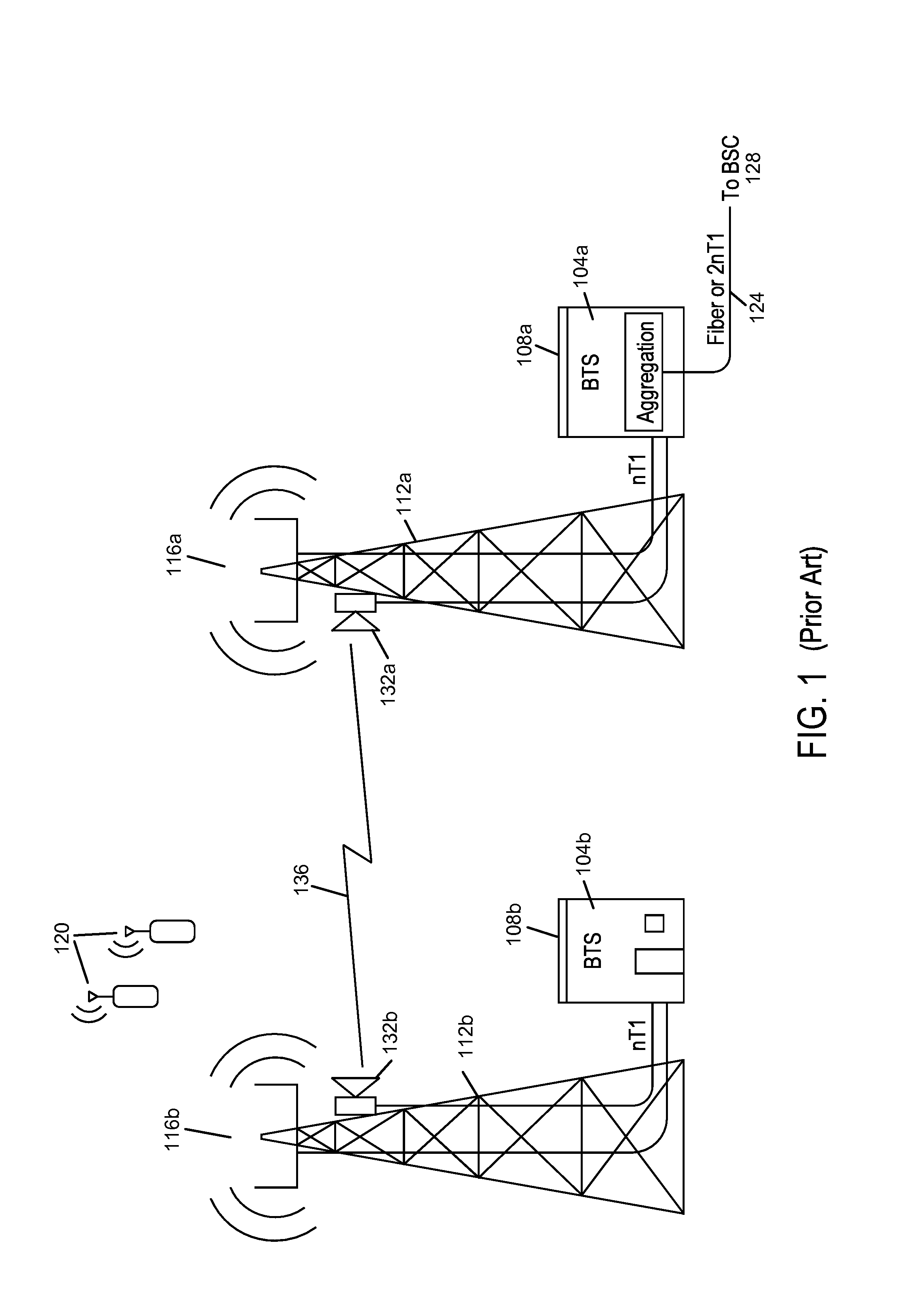

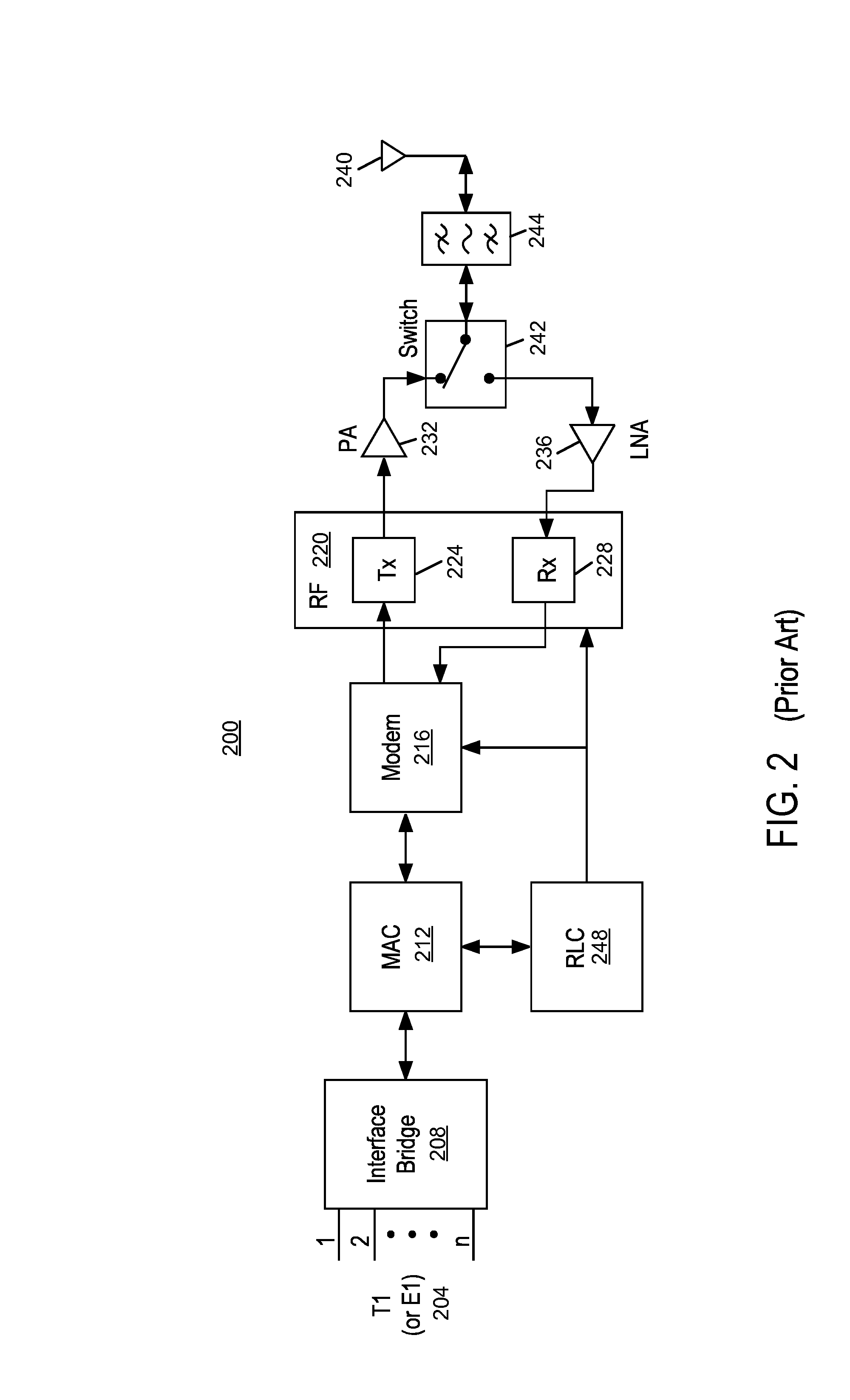

Intelligent backhaul radio

Active | US8238318B1 | Lower latency | Efficient use of resources | Spatial transmit diversity | Simultaneous aerial operations | Frequency spectrum | Latency (engineering)

An intelligent backhaul radio is disclosed that is compact, light and low power for street level mounting, operates at 100 Mb/s or higher at ranges of 300 m or longer in obstructed LOS conditions with low latencies of 5 ms or less, can support PTP and PMP topologies, uses radio spectrum resources efficiently and does not require precise physical antenna alignment.

Owner:COMS IP HLDG LLC

Transfer ready frame reordering

Inactive | US20030056000A1 | Multiple digital computer combinations | Data switching networks | Latency (engineering) | Distributed computing

A system and method for reordering received frames to ensure that transfer ready (XFER_RDY) frames among the received frames are handled at higher priority, and thus with lower latency, than other frames. In one embodiment, an output that is connected to one or more devices may be allocated an additional queue specifically for XFER_RDY frames. Frames on this queue are given a higher priority than frames on the normal queue. XFER_RDY frames are added to the high priority queue, and other frames to the lower priority queue. XFER_RDY frames on the higher priority queue are forwarded before frames on the lower priority queue. In another embodiment, a single queue may be used to implement XFER_RDY reordering. In this embodiment, XFER_RDY frames are inserted in front of other types of frames in the queue.

Owner:BROCADE COMMUNICATIONS SYSTEMS
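
The two-queue embodiment described in the abstract above can be sketched with a pair of FIFO queues. `OutputPort` and the dictionary-based frame representation are hypothetical; only the rule that XFER_RDY frames are forwarded before all other frames comes from the abstract.

```python
from collections import deque


class OutputPort:
    """Output with a dedicated high-priority queue for XFER_RDY frames."""

    def __init__(self) -> None:
        self.high = deque()    # XFER_RDY frames only
        self.normal = deque()  # every other frame type

    def enqueue(self, frame: dict) -> None:
        if frame["type"] == "XFER_RDY":
            self.high.append(frame)
        else:
            self.normal.append(frame)

    def dequeue(self):
        """Forward XFER_RDY frames first; keep FIFO order within a queue."""
        if self.high:
            return self.high.popleft()
        if self.normal:
            return self.normal.popleft()
        return None


port = OutputPort()
for name, kind in [("a", "DATA"), ("b", "XFER_RDY"), ("c", "DATA"), ("d", "XFER_RDY")]:
    port.enqueue({"id": name, "type": kind})
order = [port.dequeue()["id"] for _ in range(4)]
```

Frames arrive as a, b, c, d but leave as b, d, a, c: the XFER_RDY frames overtake queued data frames while keeping their own relative order.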

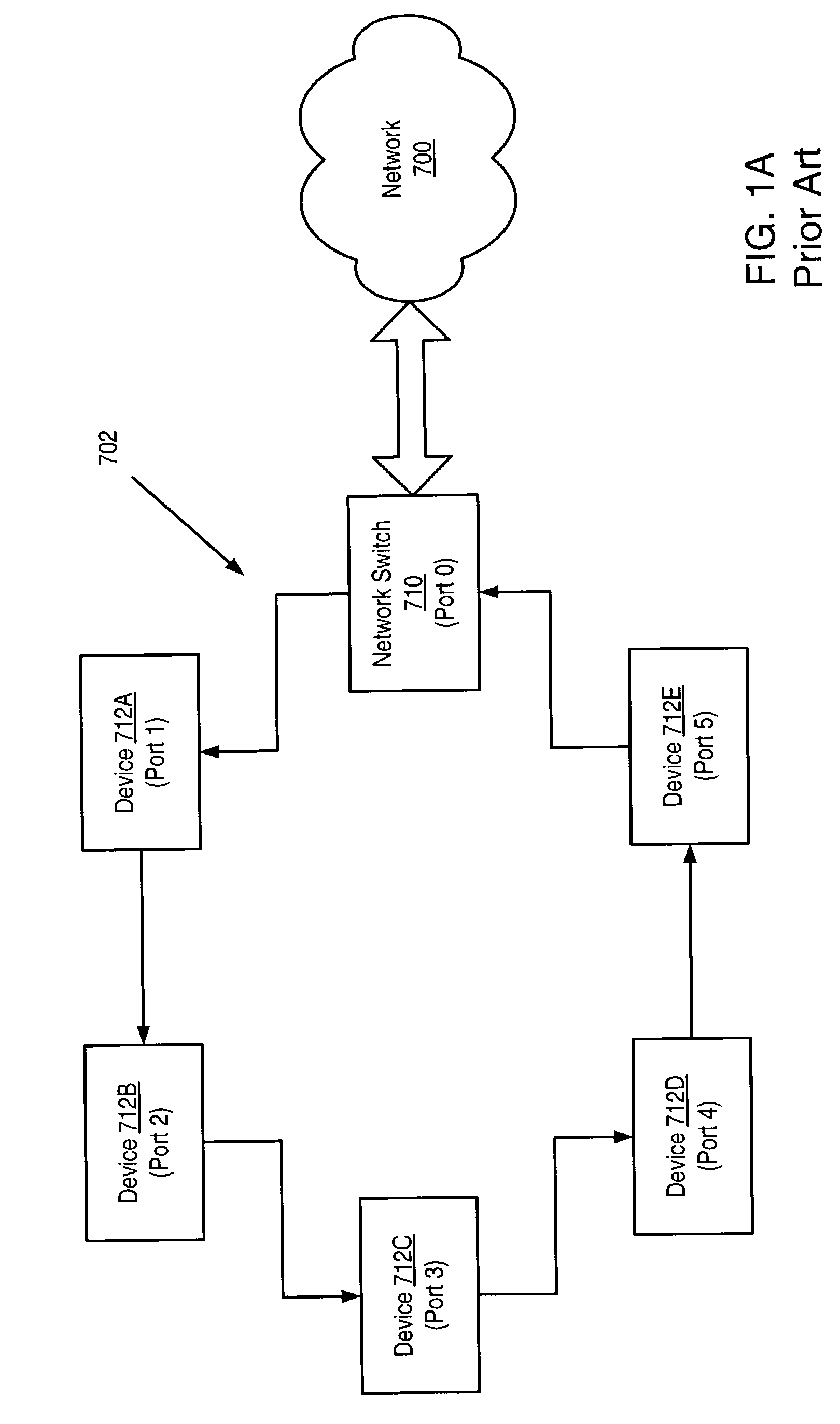

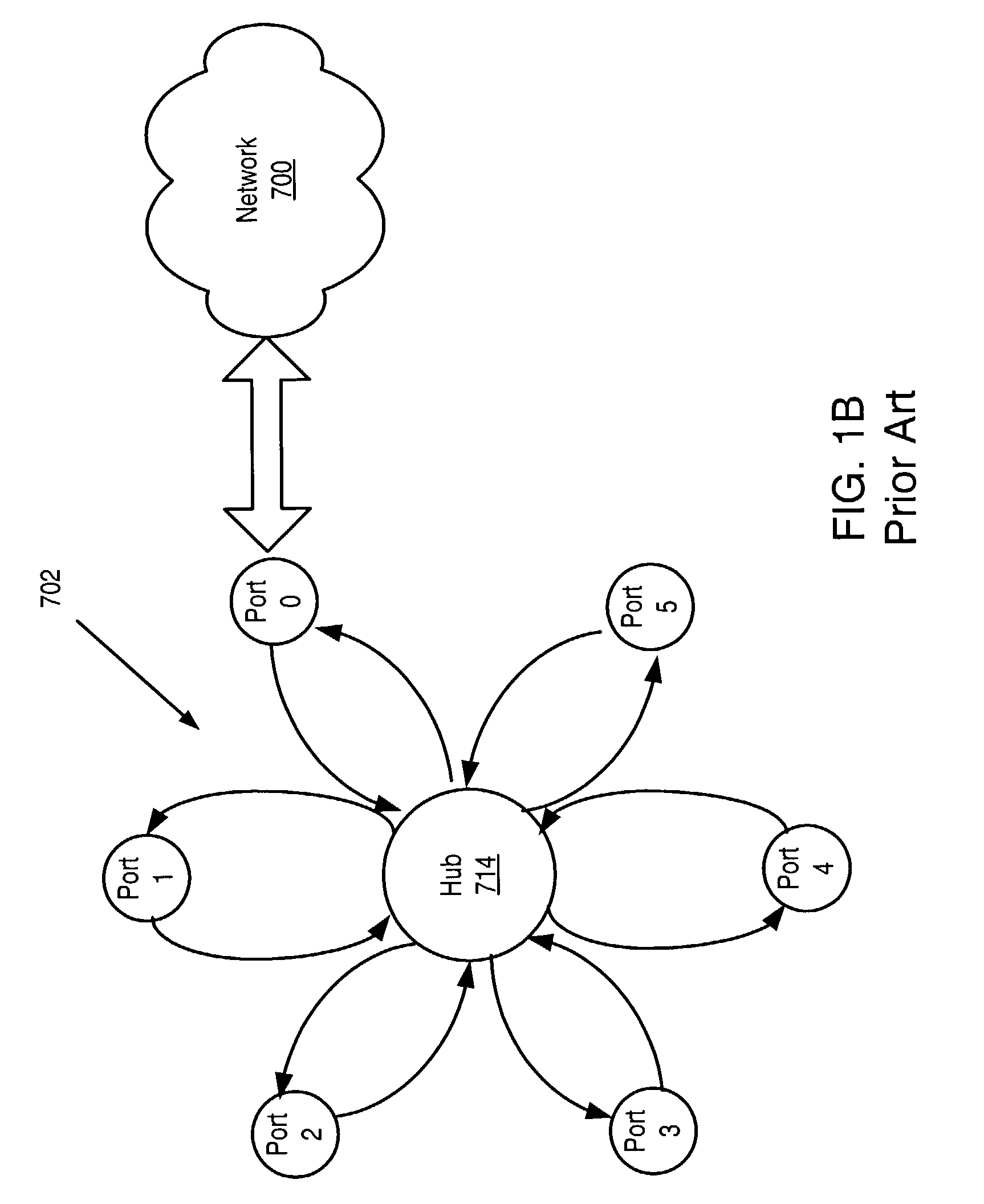

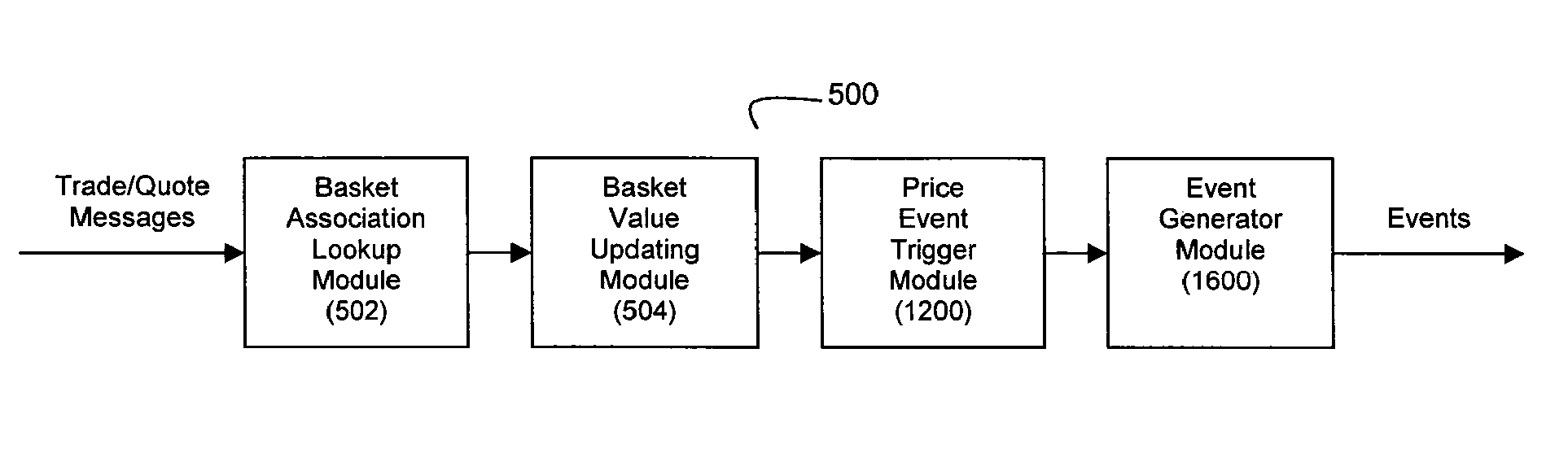

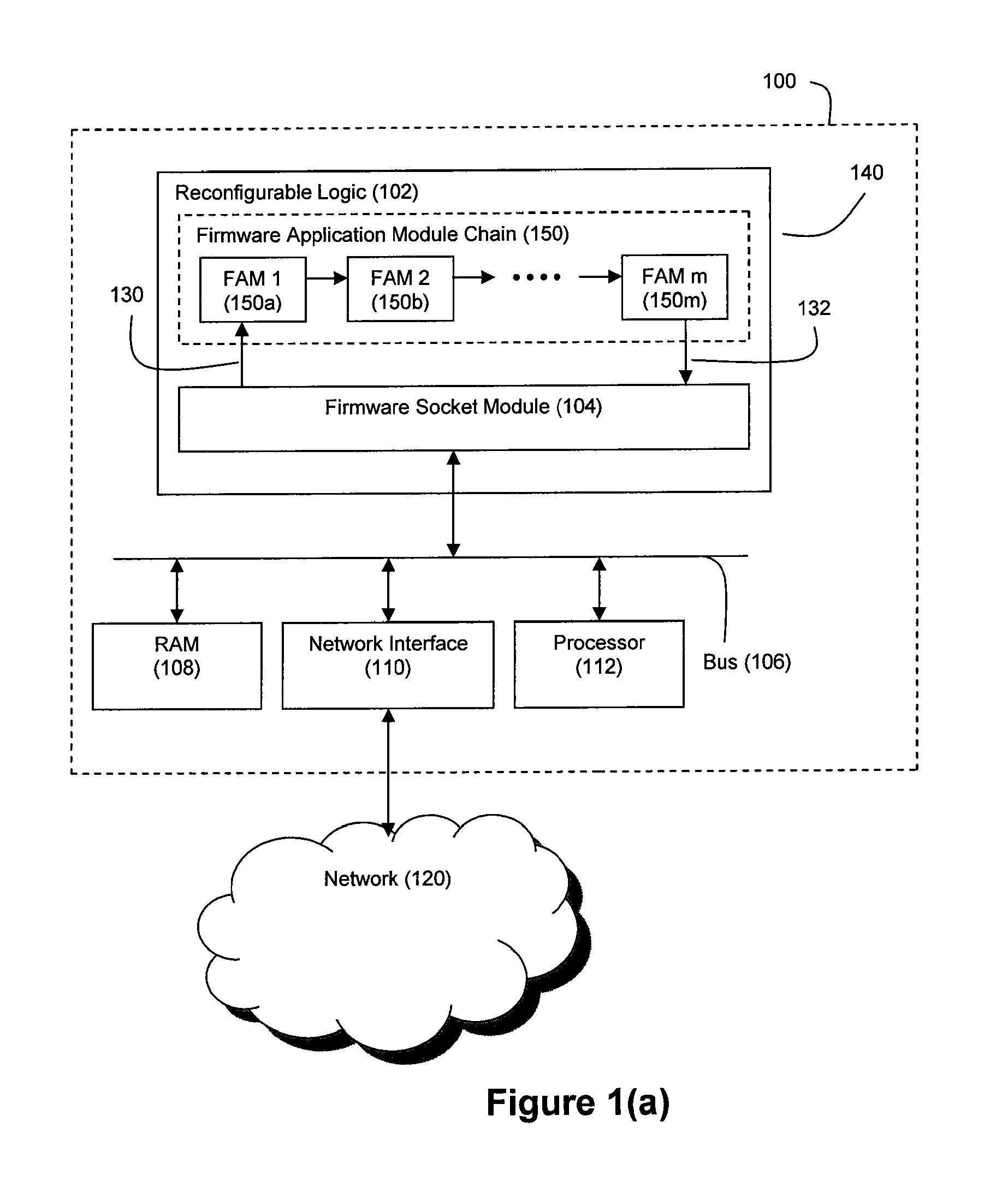

Method and System for Low Latency Basket Calculation

A basket calculation engine is deployed to receive a stream of data and accelerate the computation of basket values based on that data. In a preferred embodiment, the basket calculation engine is used to process financial market data to compute the net asset values (NAVs) of financial instrument baskets. The basket calculation engine can be deployed on a coprocessor and can also be realized via a pipeline, the pipeline preferably comprising a basket association lookup module and a basket value updating module. The coprocessor is preferably a reconfigurable logic device such as a field programmable gate array (FPGA).

Owner:EXEGY INC
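
The two pipeline stages named in the abstract above, a basket association lookup followed by a basket value update, can be sketched in software as below. This is only an illustration: the patent targets a coprocessor such as an FPGA, and the delta-based update, the class name `BasketEngine`, and the symbols and weights are all assumptions.

```python
class BasketEngine:
    """Keep basket values current as individual prices stream in."""

    def __init__(self, baskets: dict) -> None:
        # baskets: basket name -> {symbol: weight}
        self.prices = {}
        self.value = {name: 0.0 for name in baskets}
        # Association lookup table: symbol -> [(basket name, weight), ...]
        self.assoc = {}
        for name, members in baskets.items():
            for symbol, weight in members.items():
                self.assoc.setdefault(symbol, []).append((name, weight))

    def on_price(self, symbol: str, price: float) -> None:
        """Apply one price update to every basket holding the symbol."""
        old = self.prices.get(symbol, 0.0)
        self.prices[symbol] = price
        for name, weight in self.assoc.get(symbol, []):
            # Adjust by the weighted price change instead of re-summing.
            self.value[name] += weight * (price - old)


engine = BasketEngine({"TECH": {"AAA": 2, "BBB": 1}, "MIX": {"BBB": 3}})
engine.on_price("AAA", 10.0)
engine.on_price("BBB", 5.0)
engine.on_price("AAA", 12.0)
```

Each update touches only the baskets that contain the symbol, so the work per message is proportional to the symbol's basket memberships rather than to the total basket count.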

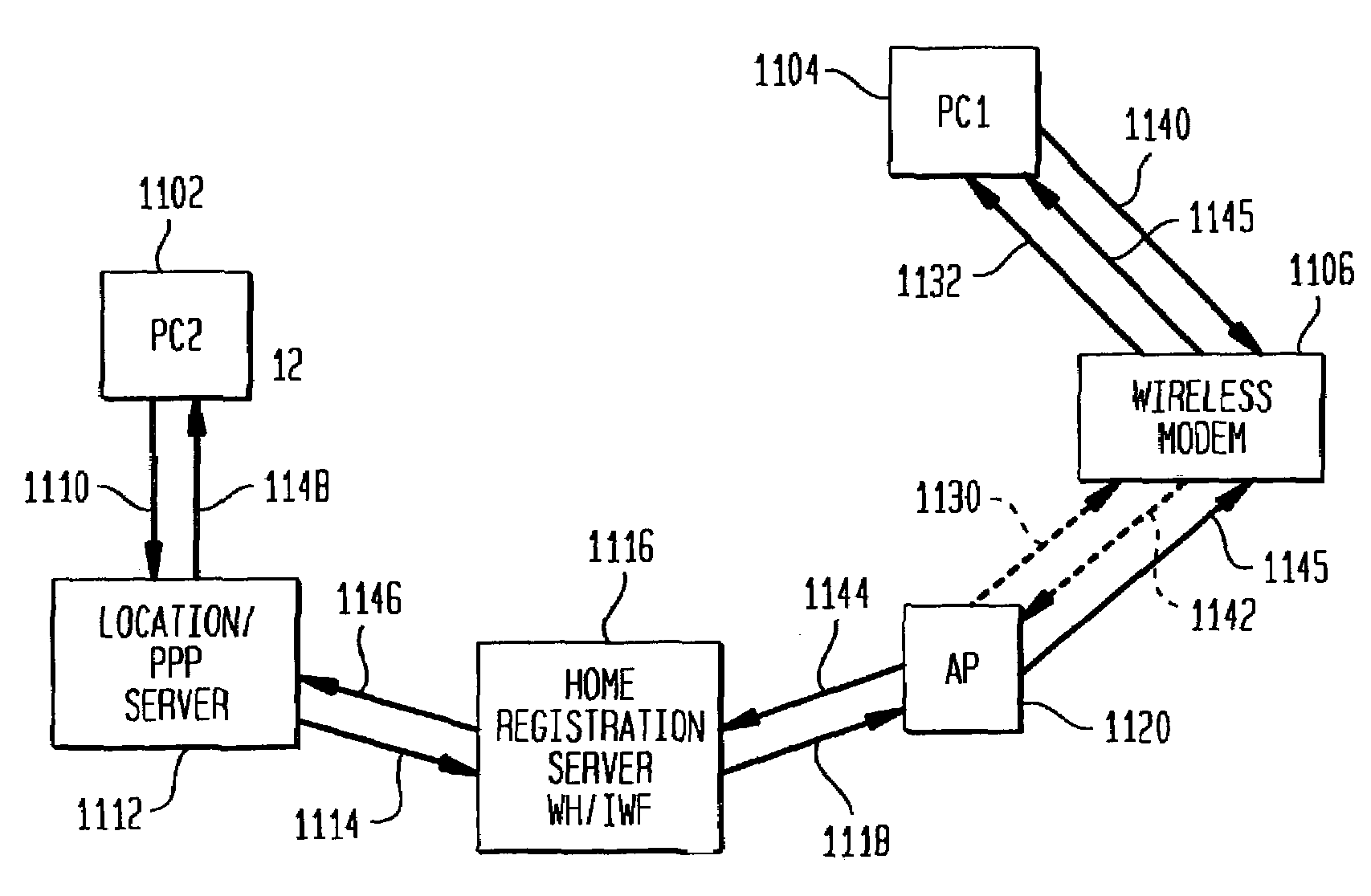

Method for paging a device in a wireless network

Inactive | US7197025B2 | Avoiding all setup messaging | Effective control | Power management | Frequency-division multiplex details | Wireless mesh network | Message flow

A method for access control in a wireless network having a base station and a plurality of remote hosts includes the optional abilities of making dynamic adjustments of the uplink/downlink transmission ratio, making dynamic adjustments of the total number of reservation minislots, and assigning access priorities by message content type within a single user message stream. The method of the invention further provides for remote wireless host paging and for delayed release of active channels by certain high priority users in order to provide low latency of real-time packets by avoiding the need for repeated channel setup signaling messages. In the preferred embodiment, there are N minislots available for contention in the next uplink frame, organized into a plurality of access priority classes. The base station allows m access priority classes. Each remote host of access priority class i randomly picks one contention minislot and transmits an access request, the contention minislot picked being in a range from 1 to $N_i$, where $N_{i+1} < N_i$ and $N_1 = N$. In an alternate embodiment of a method for access control according to the present invention, each remote host of access priority class i with a stack level equal to 0 transmits an access request with a probability $P_i$, where $P_{i+1} < P_i$ and $P_1 = 1$.

Owner:WSOU INVESTMENTS LLC
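
The minislot selection rule of the preferred embodiment described in the abstract above can be sketched as follows. A host in class i draws uniformly from minislots 1 to N_i, with the per-class limits strictly decreasing and the first limit equal to N. The function names and the example limits are hypothetical.

```python
import random


def valid_limits(limits, n: int) -> bool:
    """Check N_1 == N and N_(i+1) < N_i for the per-class limits."""
    return limits[0] == n and all(b < a for a, b in zip(limits, limits[1:]))


def pick_minislot(priority_class: int, limits, rng: random.Random) -> int:
    """Pick a contention minislot in 1..N_i for a host of class i (1-based)."""
    return rng.randint(1, limits[priority_class - 1])


N = 10
limits = [10, 6, 3]  # N_1, N_2, N_3 (hypothetical values)
rng = random.Random(0)
class3_picks = [pick_minislot(3, limits, rng) for _ in range(50)]
class1_picks = [pick_minislot(1, limits, rng) for _ in range(50)]
```

With these limits, minislots 7 to 10 are reachable only by class 1 hosts, so those hosts face less contention than hosts in classes 2 and 3.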

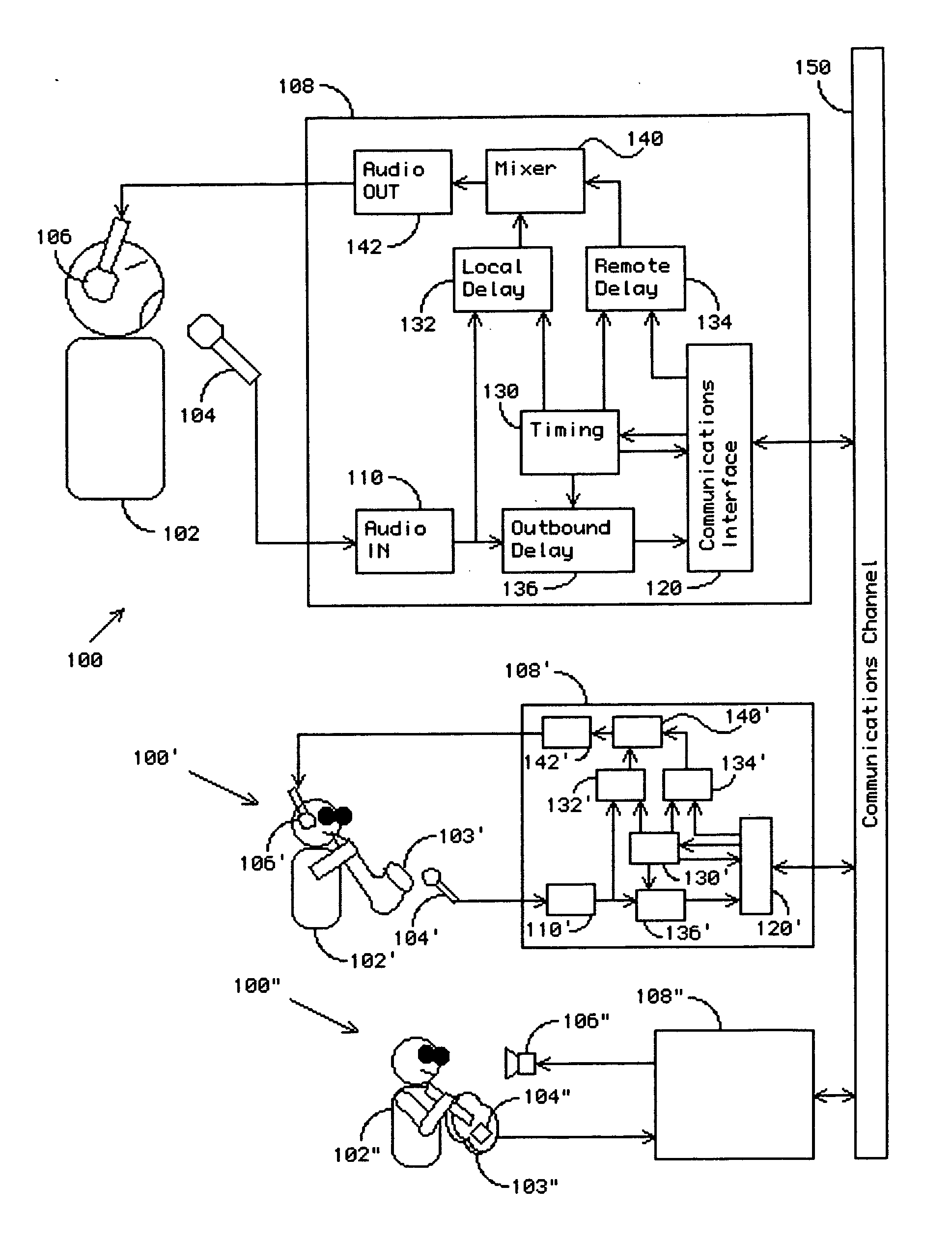

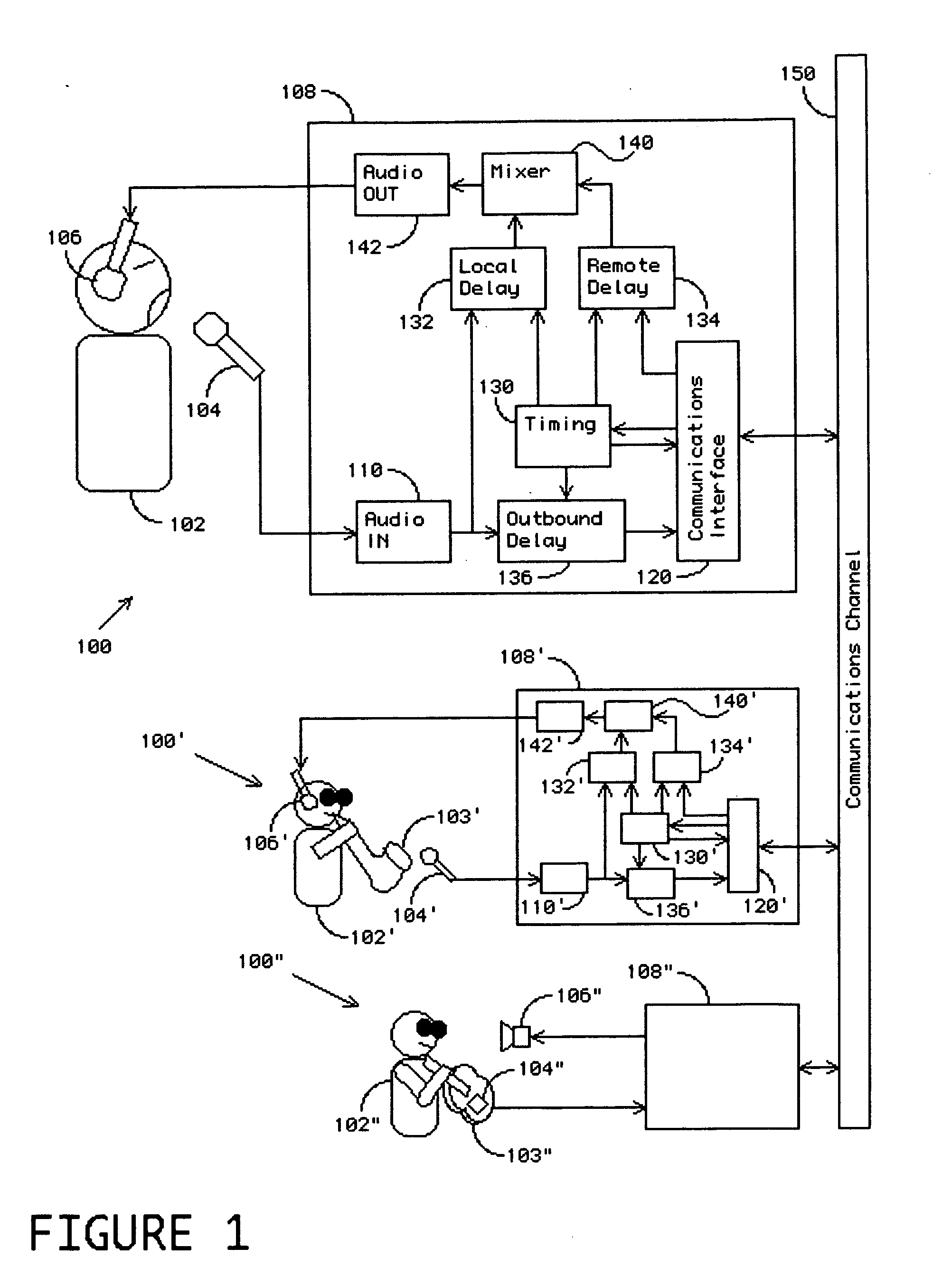

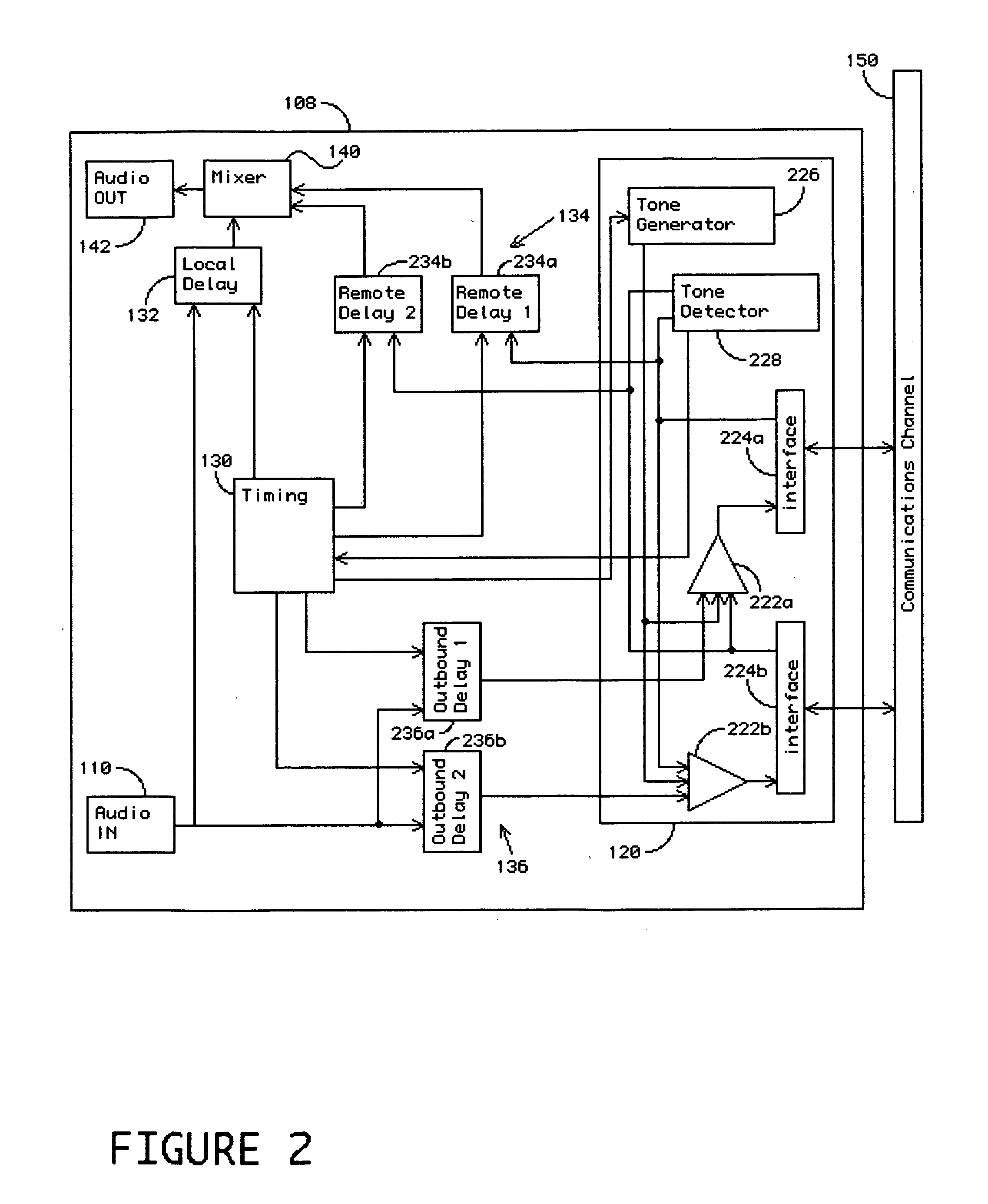

Method and apparatus for remote real time collaborative acoustic performance and recording thereof

Inactive | US20070140510A1 | Electrophonic musical instruments | Public address systems | Latency (engineering) | Audio frequency

A method and apparatus are disclosed to permit real time, distributed acoustic performance by multiple musicians at remote locations. The latency of the communication channel is reflected in the audio monitor used by the performer. This allows a natural accommodation to be made by the musician. Simultaneous remote acoustic performances are played together at each location, though not necessarily simultaneously at all locations. This allows locations having low latency connections to retain some of their advantage. The amount of induced latency can be overridden by each musician. The method preferably employs a CODEC able to aesthetically synthesize packets missing from the audio stream in real time.

Owner:EJAMMING

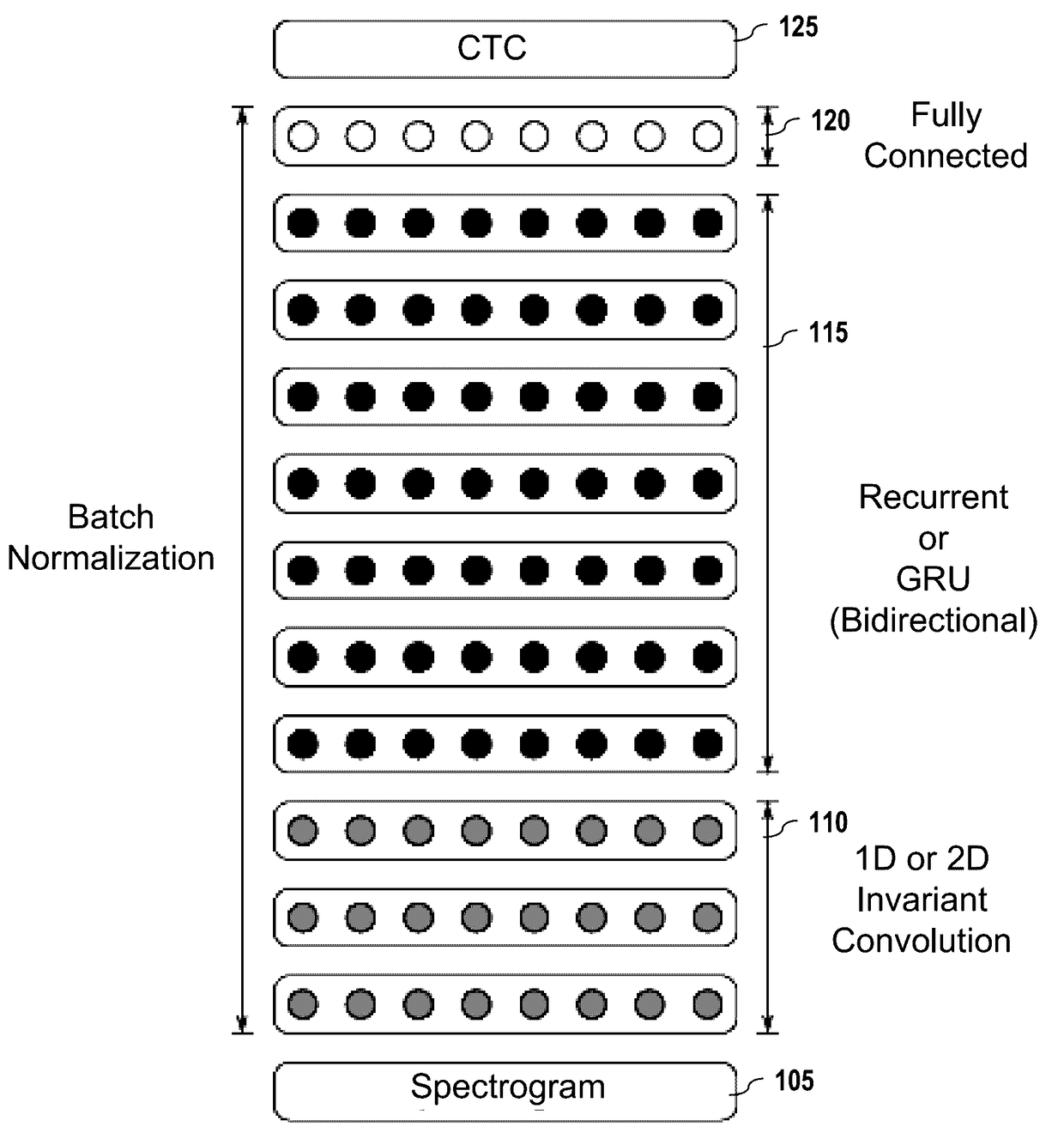

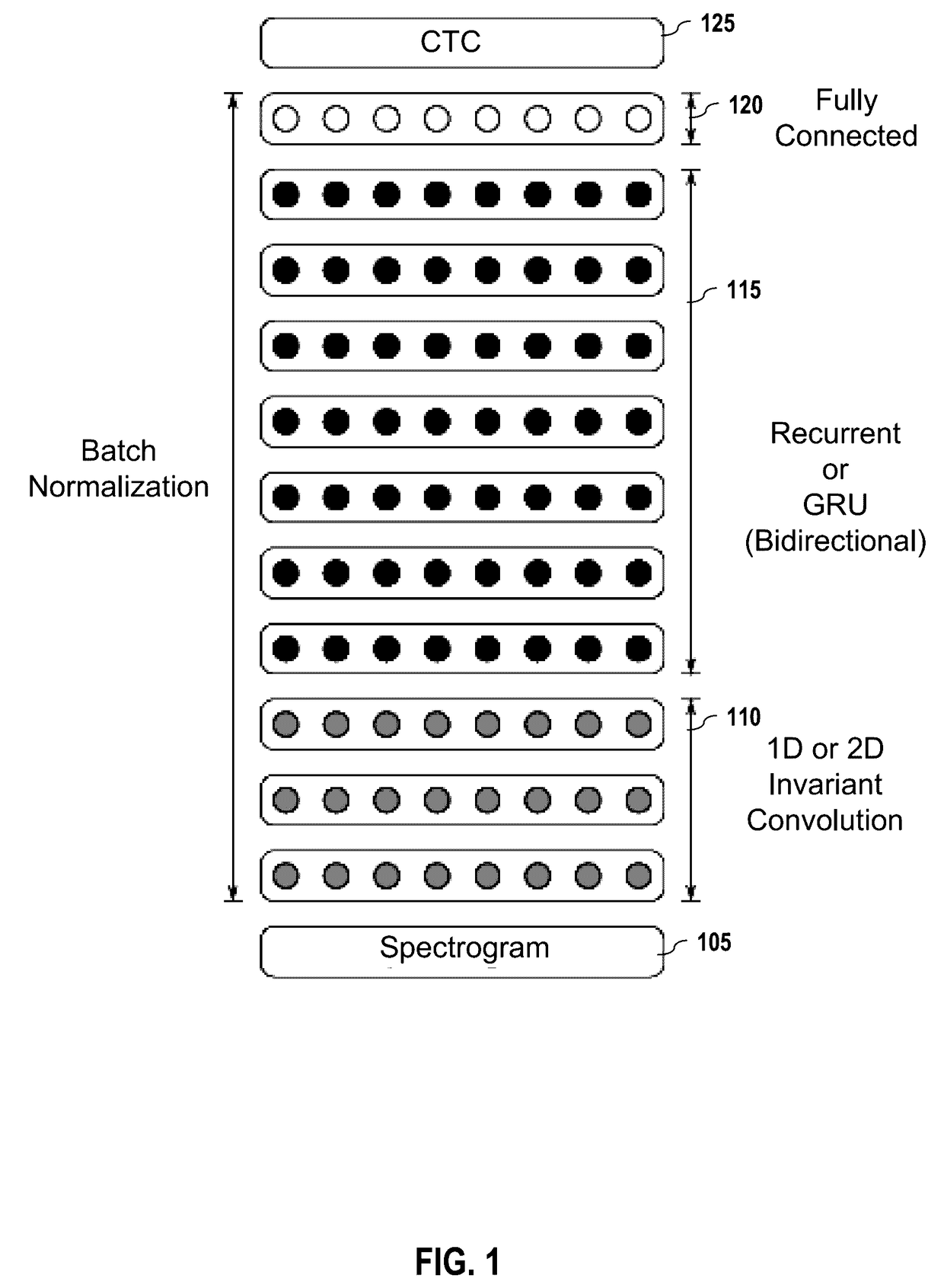

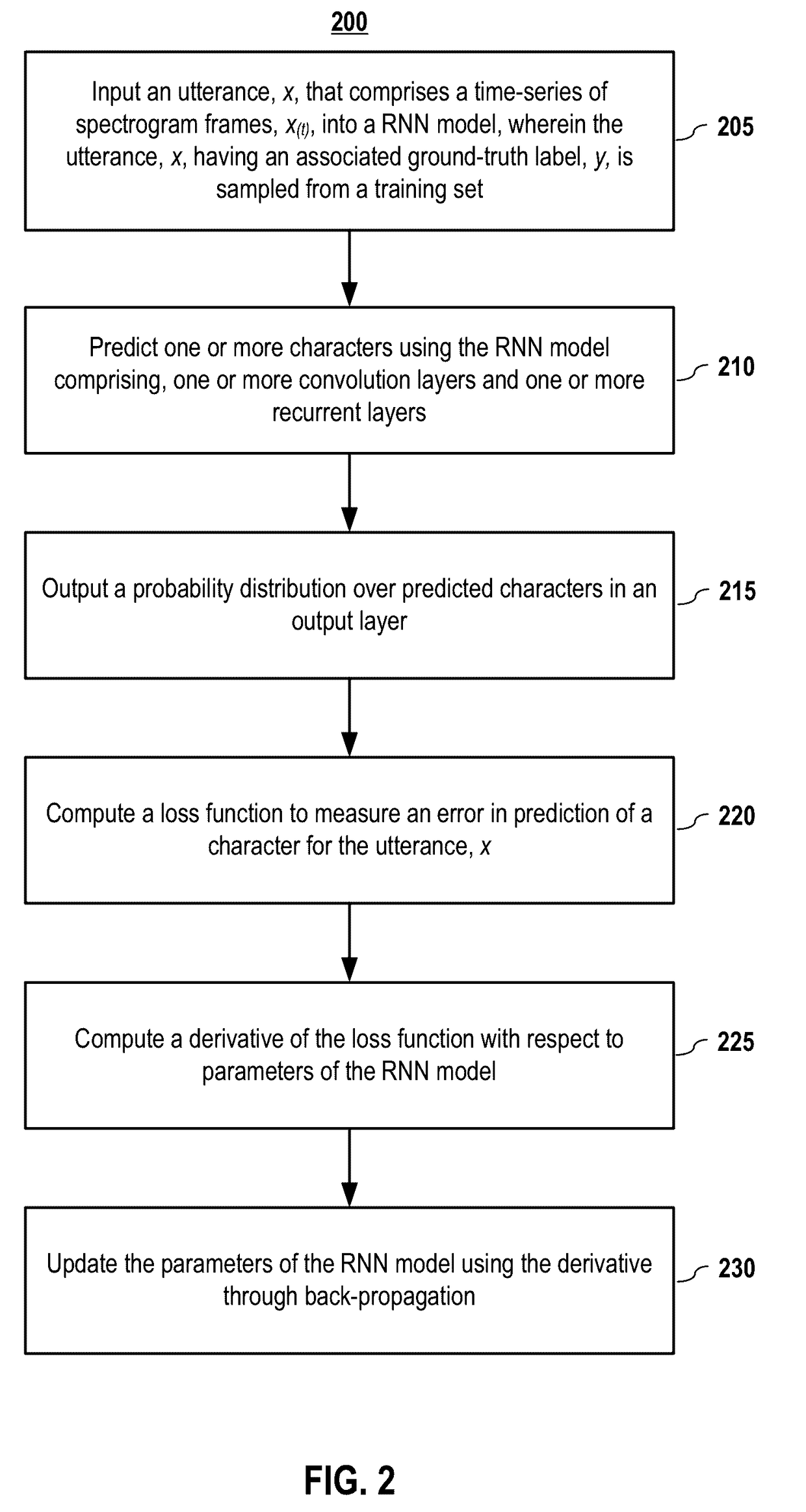

Deployed end-to-end speech recognition

Embodiments of end-to-end deep learning systems and methods are disclosed to recognize speech of vastly different languages, such as English or Mandarin Chinese. In embodiments, the entire pipelines of hand-engineered components are replaced with neural networks, and the end-to-end learning allows handling a diverse variety of speech including noisy environments, accents, and different languages. Using a trained embodiment and an embodiment of a batch dispatch technique with GPUs in a data center, an end-to-end deep learning system can be inexpensively deployed in an online setting, delivering low latency when serving users at scale.

Owner:BAIDU USA LLC

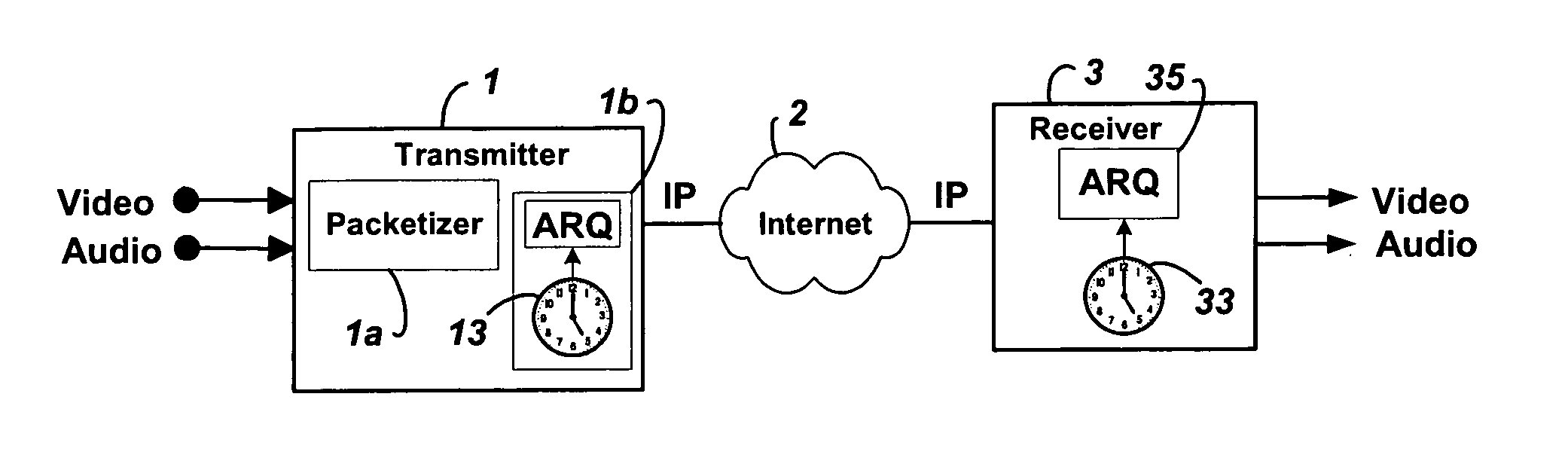

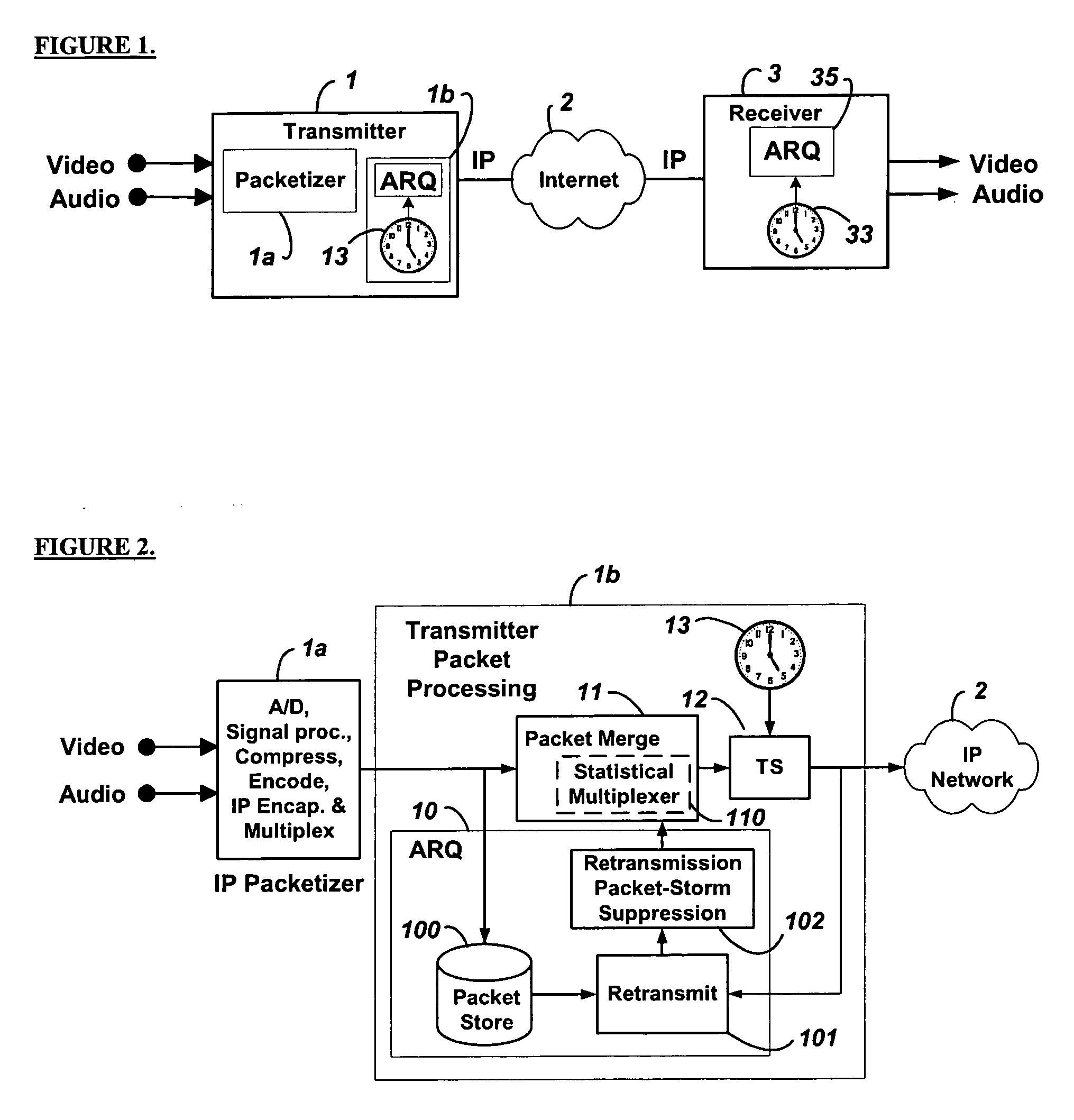

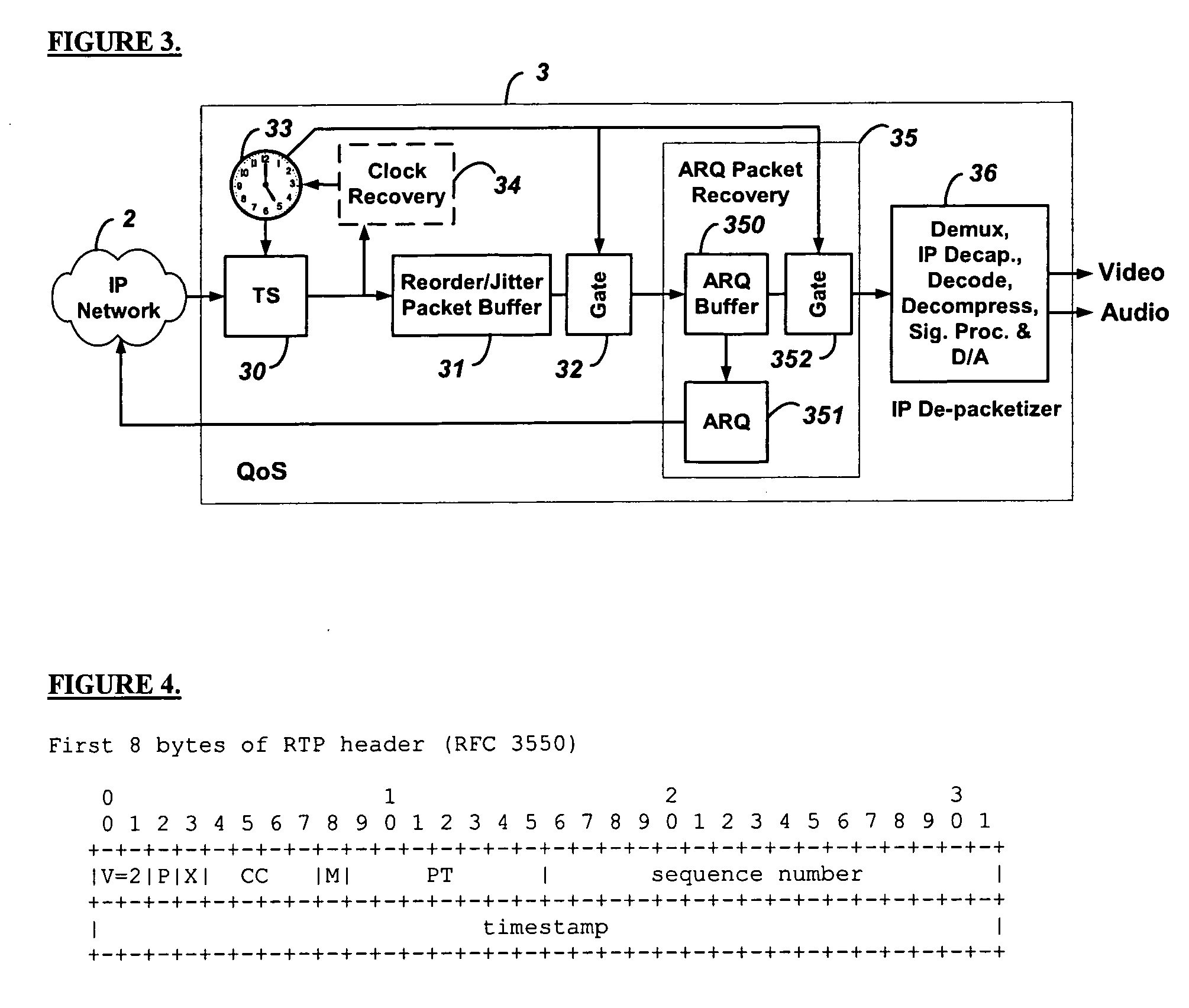

Low-latency automatic repeat request packet recovery mechanism for media streams

Active | US20060104279A1 | Robust and low-latency transport | Delay minimization | Error prevention | Frequency-division multiplex details | Automatic repeat request | Latency (engineering)

An Automatic Repeat reQuest (ARQ) error correction method optimized for protecting real-time audio-video streams for transmission over packet-switched networks. Embodiments of this invention provide bandwidth-efficient and low-latency ARQ for both variable and constant bit-rate audio and video streams. Embodiments of this invention use timing constraints to limit ARQ latency and thereby facilitate the use of ARQ packet recovery for the transport of both constant bit rate and variable bit rate media streams.

Owner:QVIDIUM TECH
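
One way to read the timing constraint mentioned in the abstract above is that a retransmission is requested only if the repaired packet can still arrive before it is needed for playout. The sketch below encodes that reading; the deadline model, the round-trip estimate and both function names are assumptions rather than details taken from the patent.

```python
def can_recover(now_ms: float, deadline_ms: float, rtt_ms: float) -> bool:
    """True if a retransmission requested now can arrive before the deadline."""
    return now_ms + rtt_ms <= deadline_ms


def packets_to_request(missing: dict, now_ms: float, rtt_ms: float) -> list:
    """Select the missing packets that are still worth requesting.

    missing maps sequence number -> playout deadline in milliseconds.
    """
    return sorted(seq for seq, deadline in missing.items()
                  if can_recover(now_ms, deadline, rtt_ms))


# Three lost packets; one round trip takes 60 ms.
requests = packets_to_request(
    {101: 1040.0, 102: 1080.0, 103: 1200.0}, now_ms=1000.0, rtt_ms=60.0
)
```

Packet 101 would arrive after its deadline, so it is skipped rather than retransmitted; this bounds the delay ARQ can add and avoids spending bandwidth on packets that can no longer be played.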

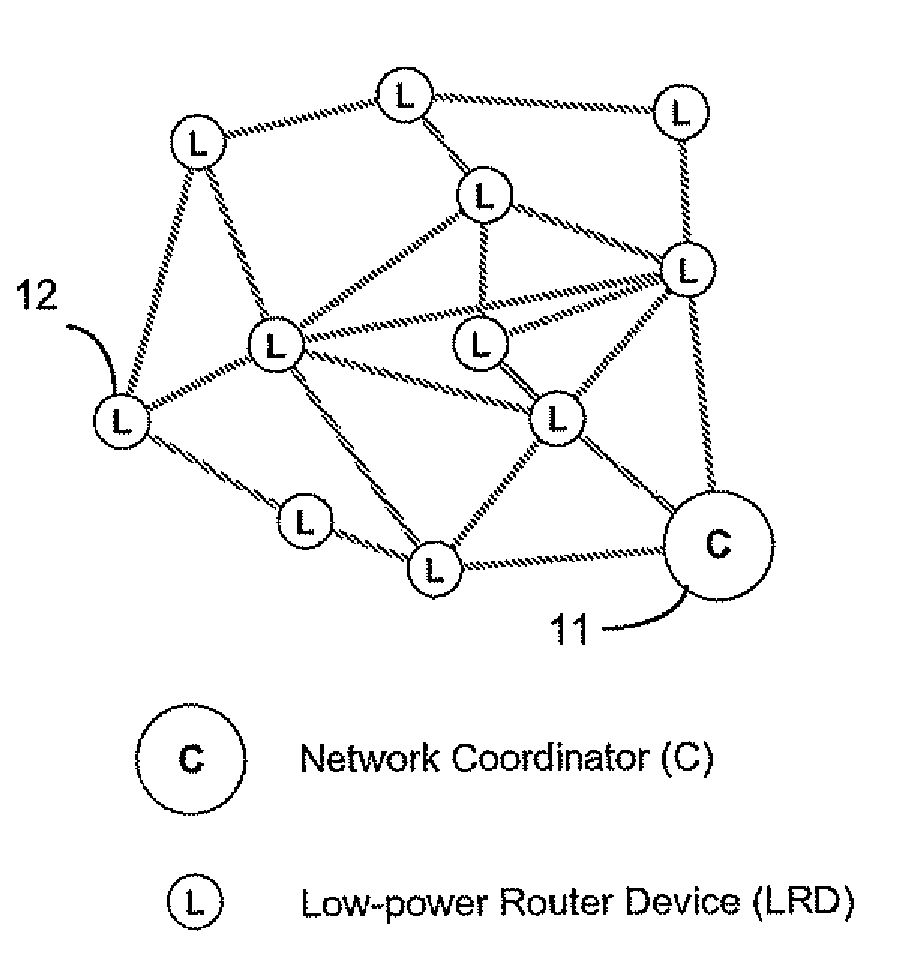

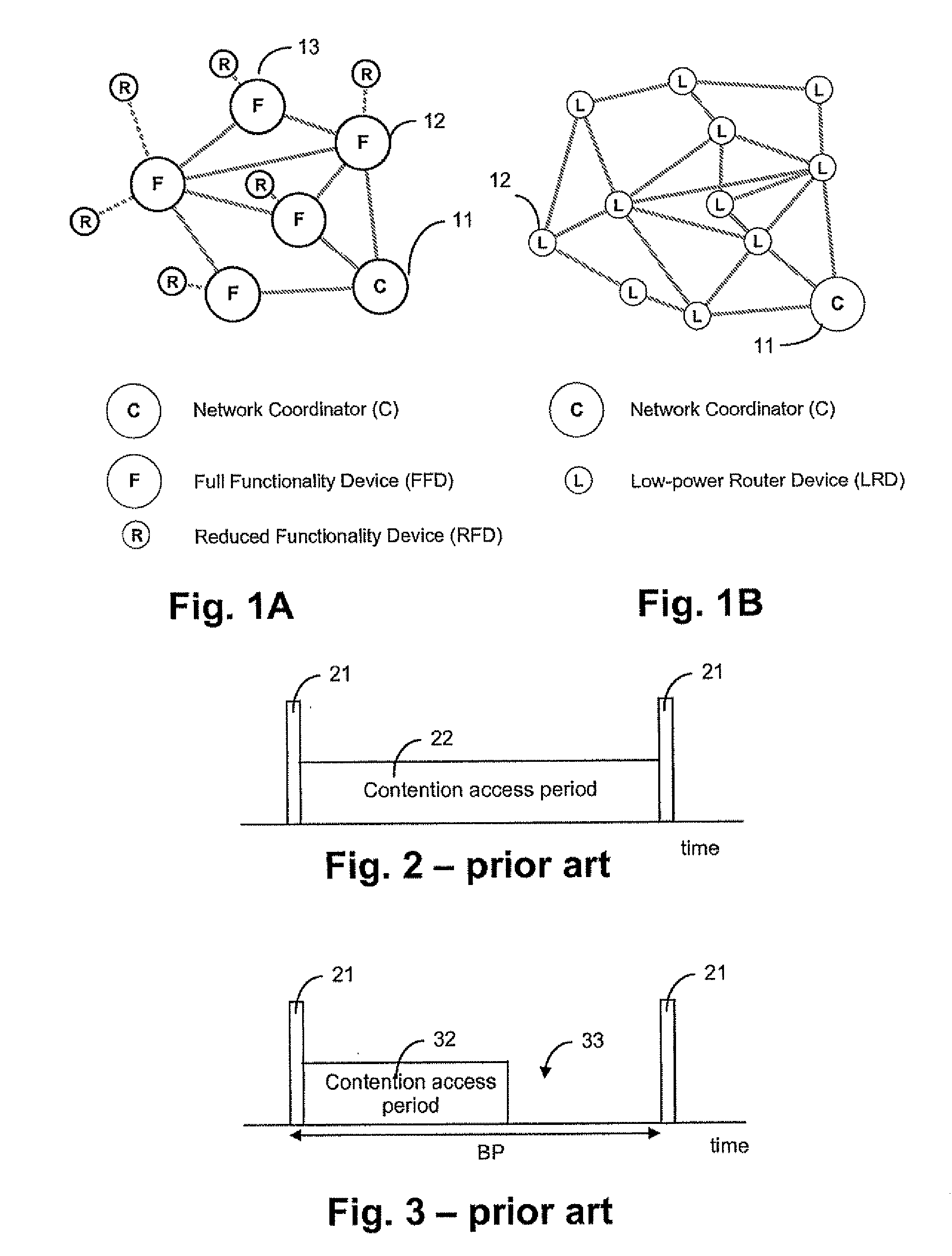

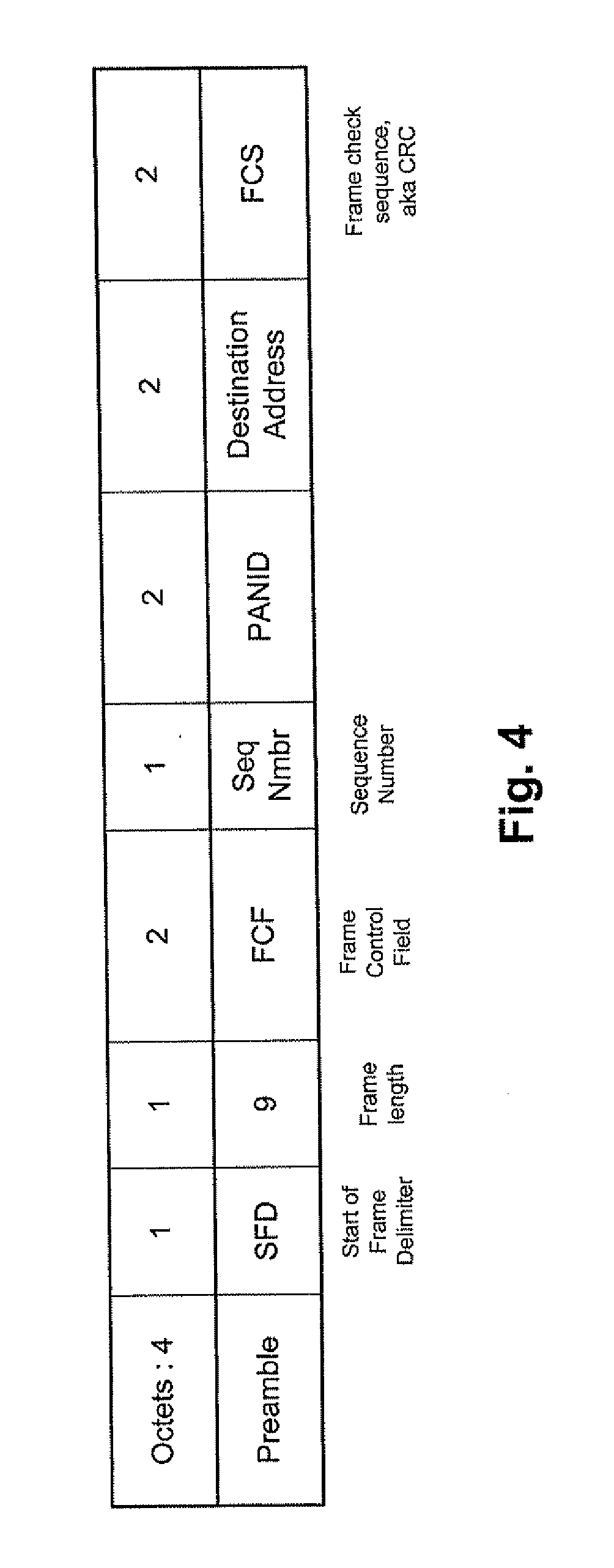

Low-power wireless multi-hop networks

A method of communication or transmission of data between a first and a second node in a wireless multi-hop network comprises bringing the first node and the second node into a fully operative mode for communication with each other and subsequently bringing the first node and the second node into a reduced operative mode where no communication with any other node is possible and, before bringing the second node into the fully operative mode, bringing the second node into a listening mode during a token listening period to check for presence of a wake-up token from the first node, and thereafter bringing the second node into the reduced operative mode if no wake-up token is received, and bringing the second node into the fully operative mode for reception of data from the first node if a wake-up token is received. This wake-up scheme is a kind of scheduled rendezvous scheme with collision avoidance, and may be called an induced wake-up scheme. It is advantageous because of its low power consumption (nodes only wake up completely when a message is present for them to receive) and because of the low latency in data transfer. The wake-up tokens used enable random access to other nodes while keeping the system low-power in operation.

Owner:GREENPEAK TECHNOLOGIES

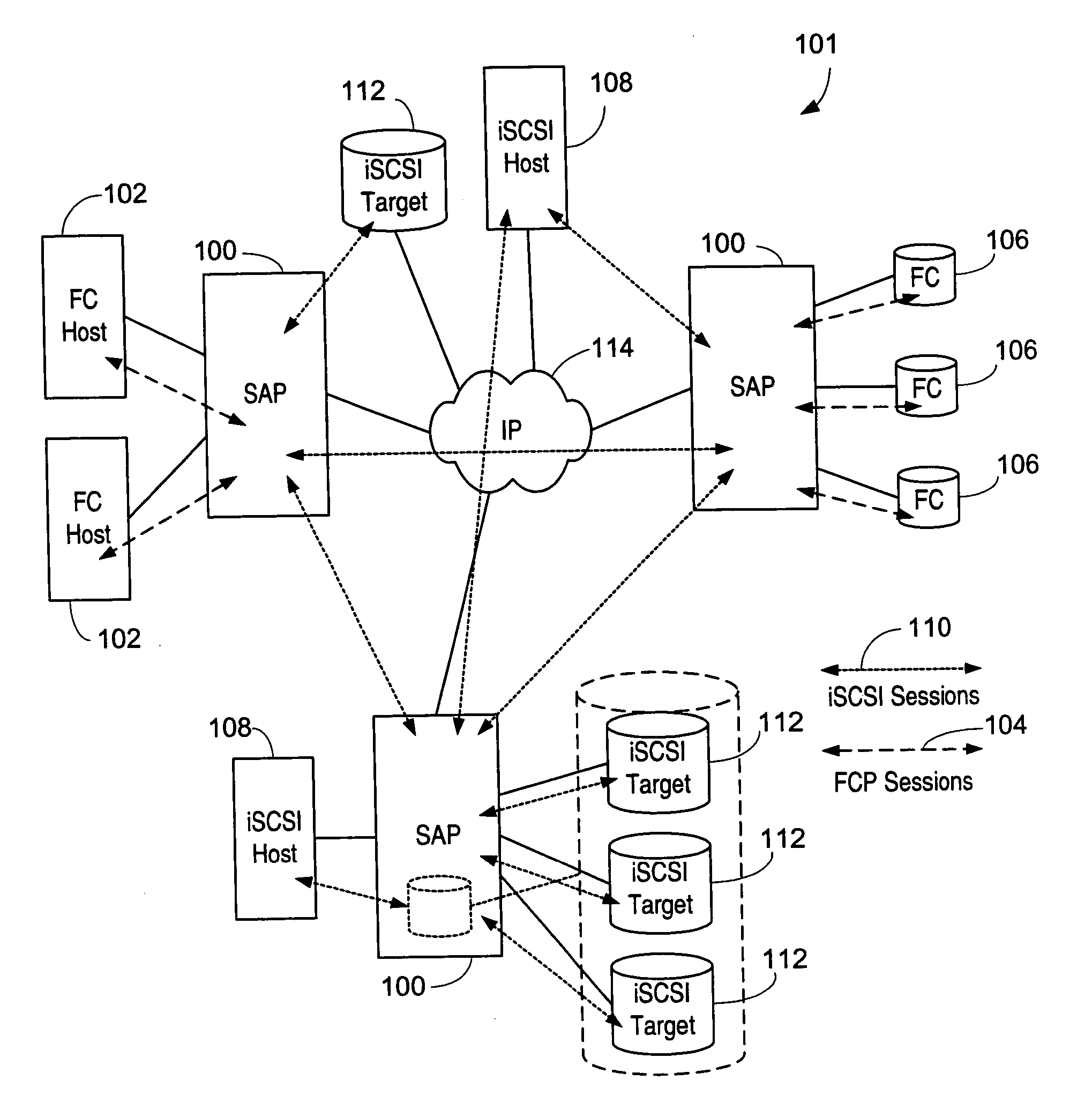

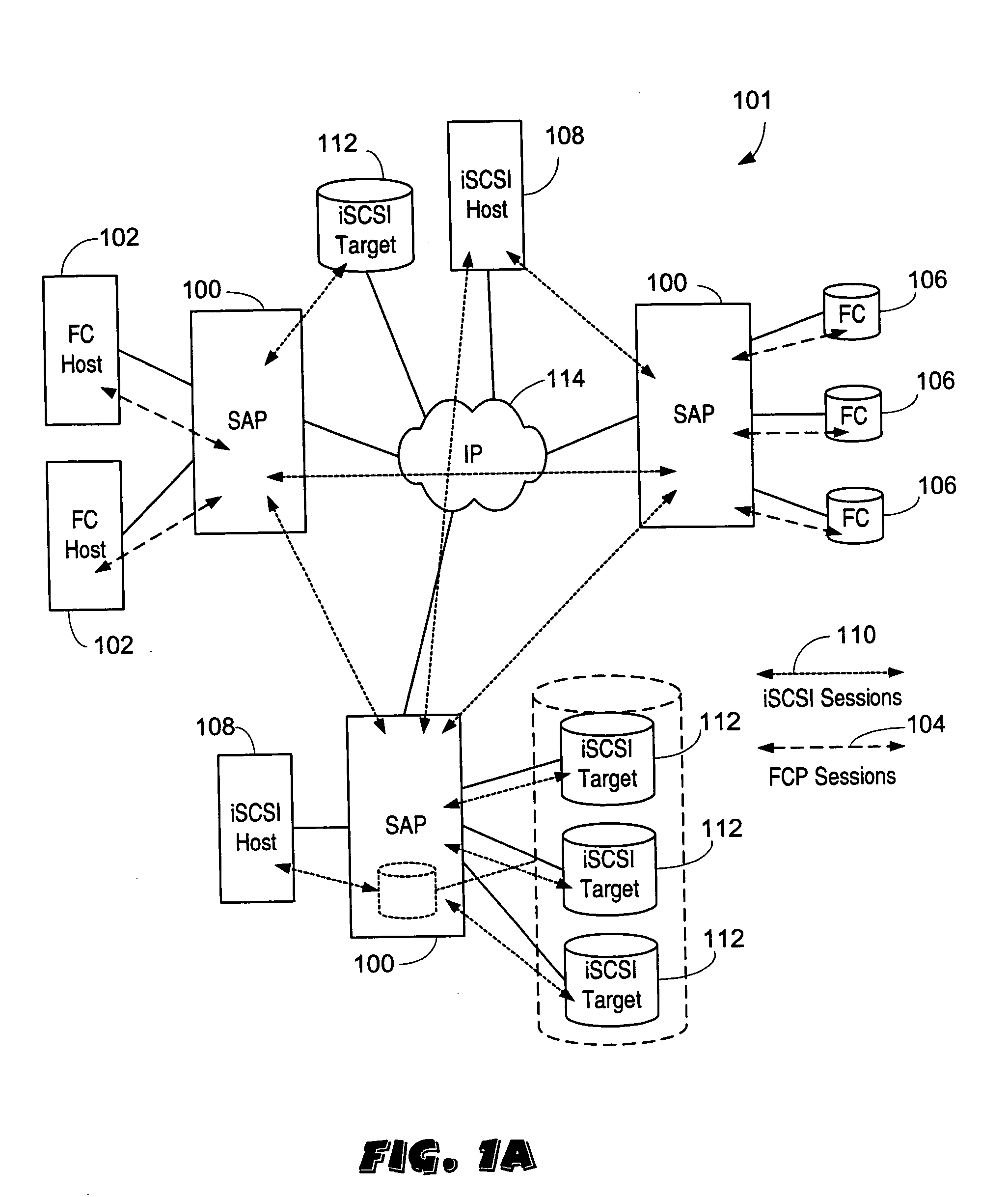

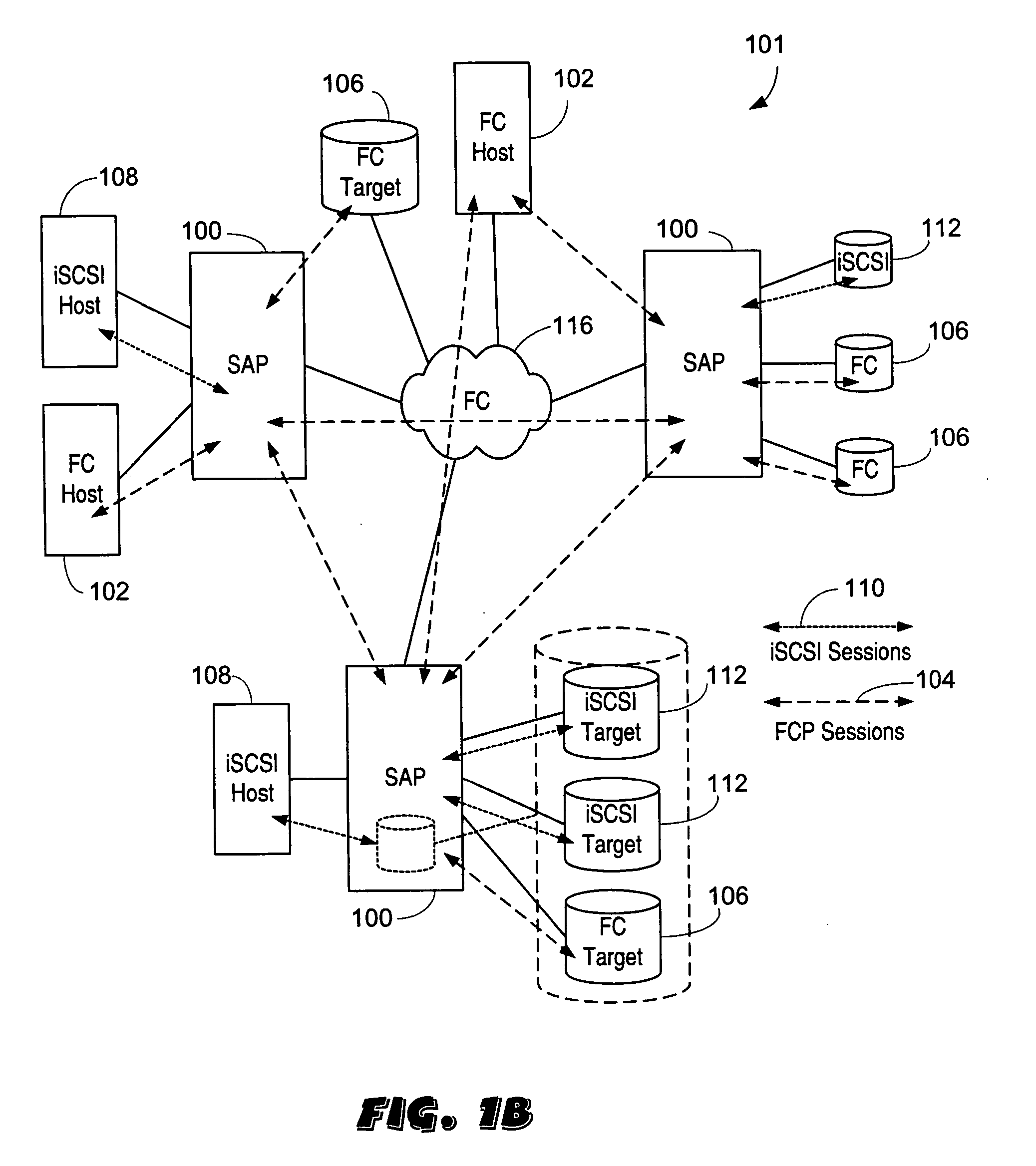

Apparatus and method for data virtualization in a storage processing device

Active | US20050033878A1 | Achieve scale | Provide flexibility | Multiplex system selection arrangements | Input/output to record carriers | Internet traffic | Multi protocol

A system including a storage processing device with an input/output module. The input/output module has port processors to receive and transmit network traffic. The input/output module also has a switch connecting the port processors. Each port processor categorizes the network traffic as fast path network traffic or control path network traffic. The switch routes fast path network traffic from an ingress port processor to a specified egress port processor. The storage processing device also includes a control module to process the control path network traffic received from the ingress port processor. The control module routes processed control path network traffic to the switch for routing to a defined egress port processor. The control module is connected to the input/output module. The input/output module and the control module are configured to interactively support data virtualization, data migration, data journaling, and snapshotting. The distributed control and fast path processors achieve scaling of storage network software. The storage processors provide line-speed processing of storage data using a rich set of storage-optimized hardware acceleration engines. The multi-protocol switching fabric provides a low-latency, protocol-neutral interconnect that integrally links all components with any-to-any non-blocking throughput.

Owner:AVAGO TECH INT SALES PTE LTD

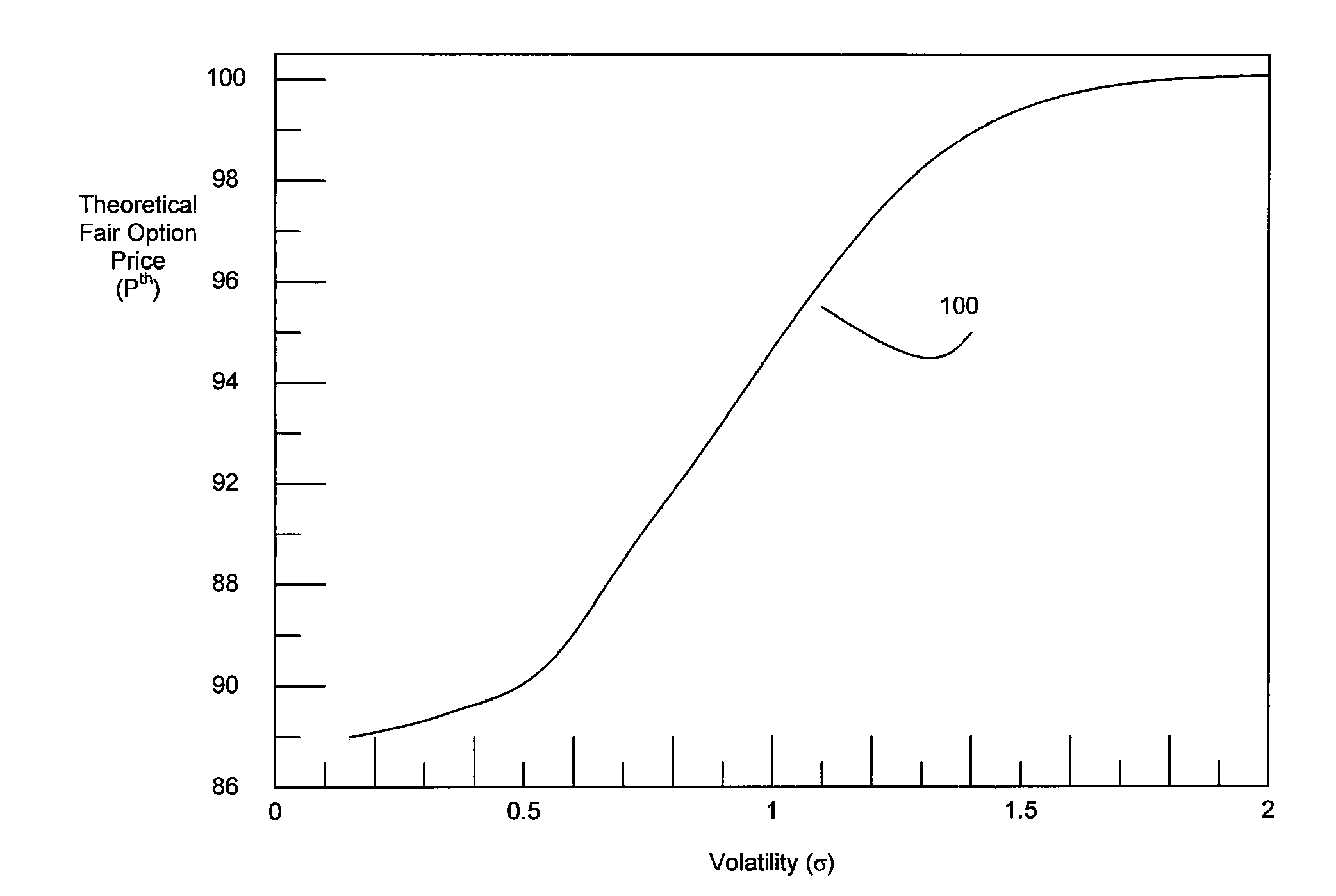

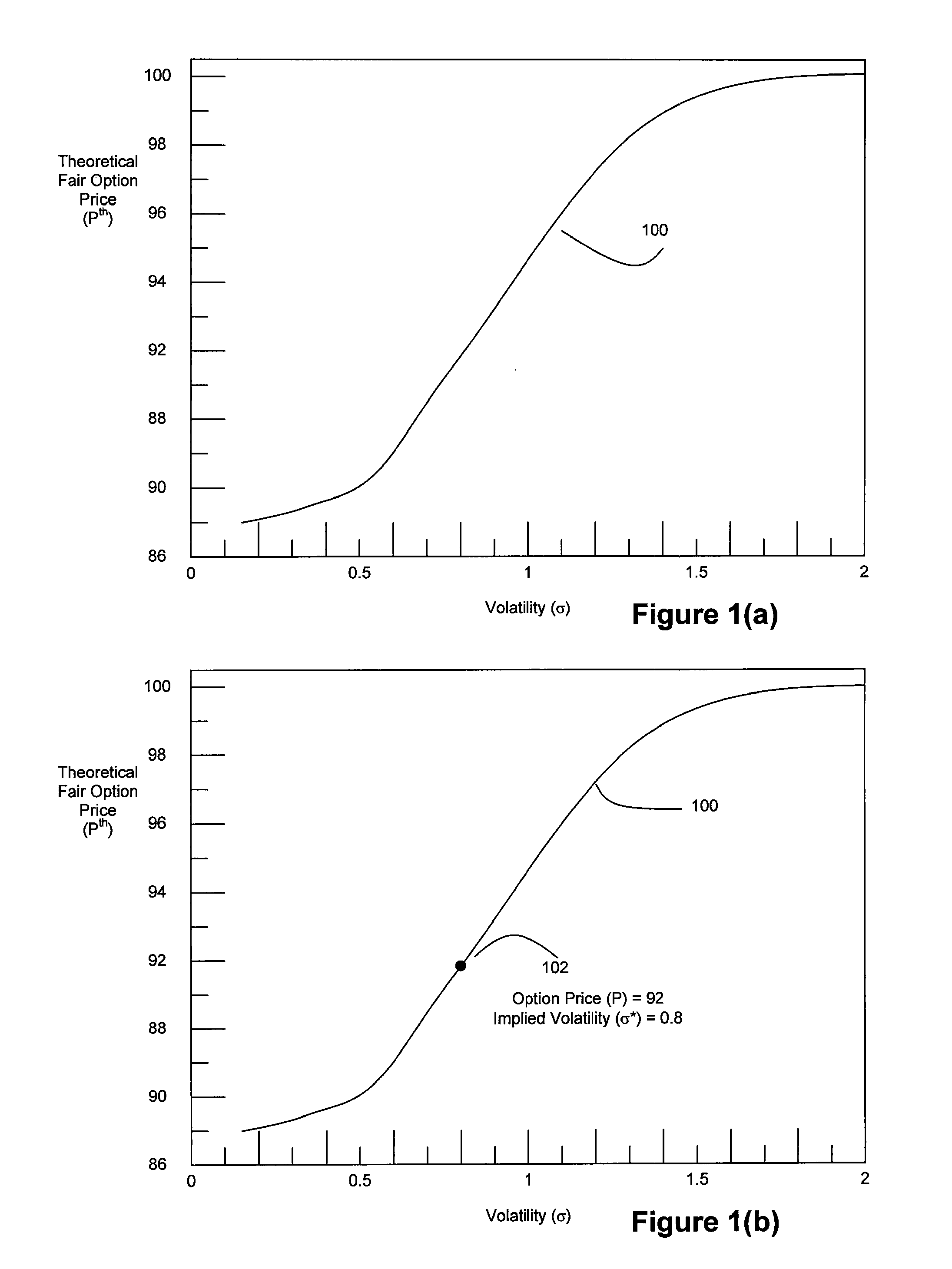

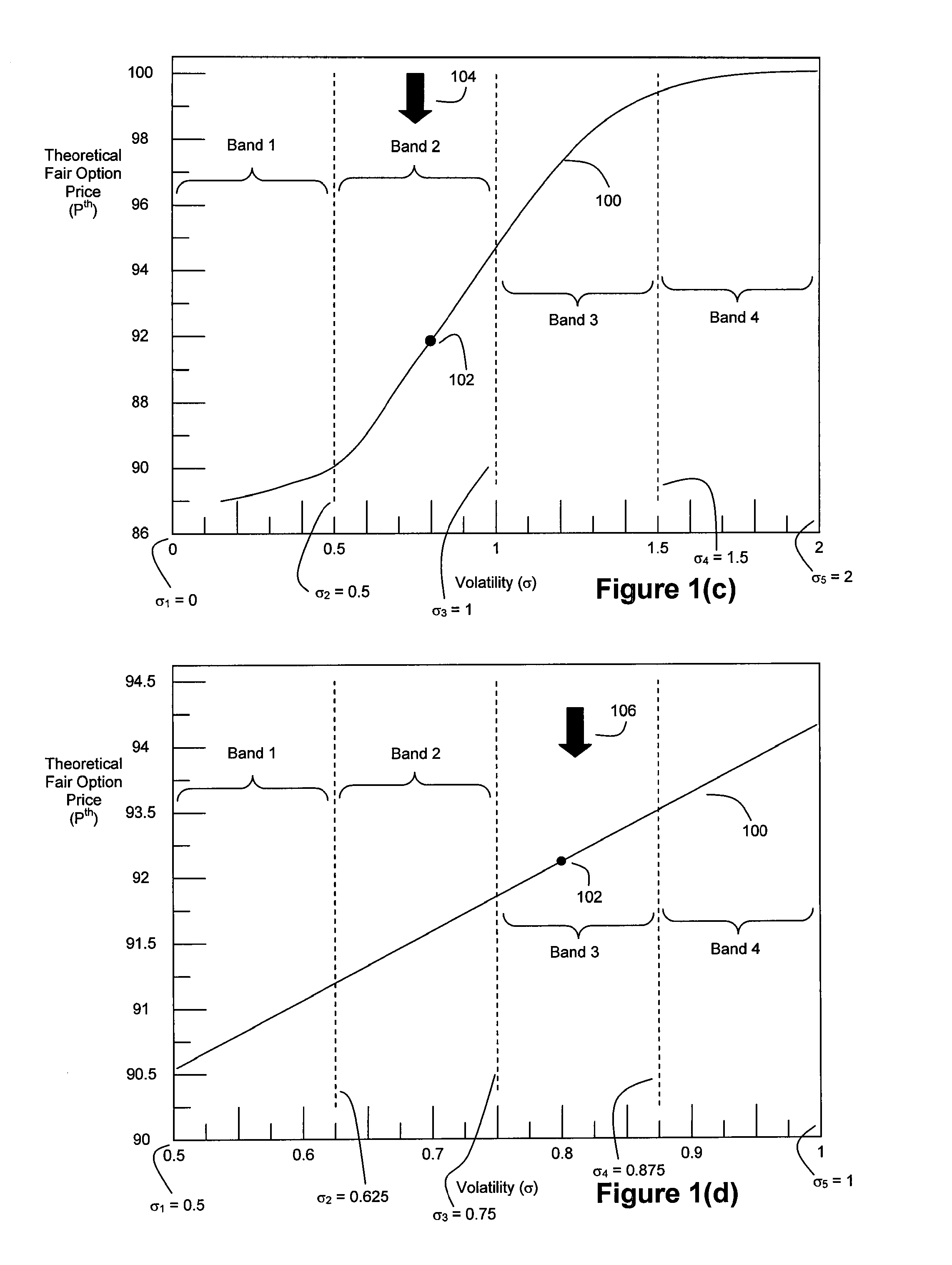

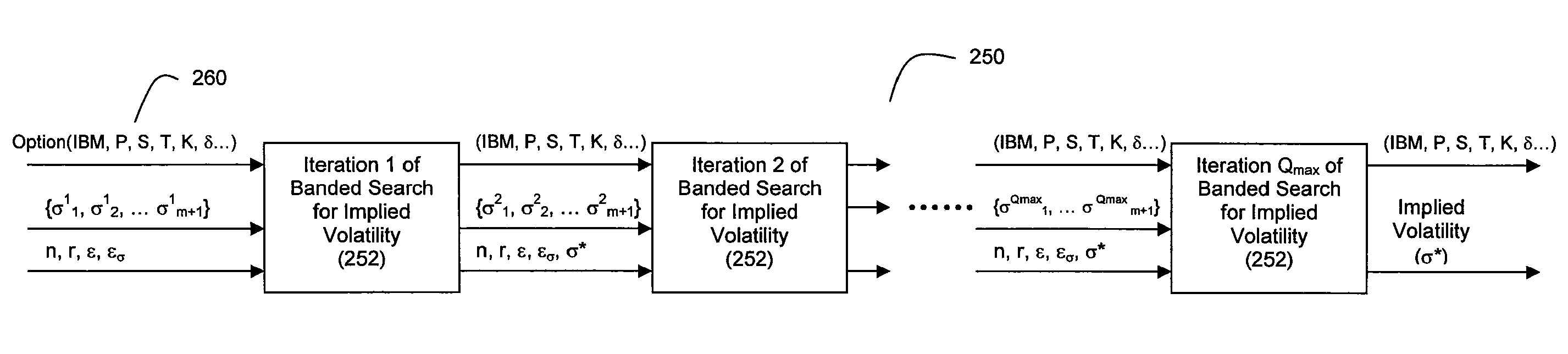

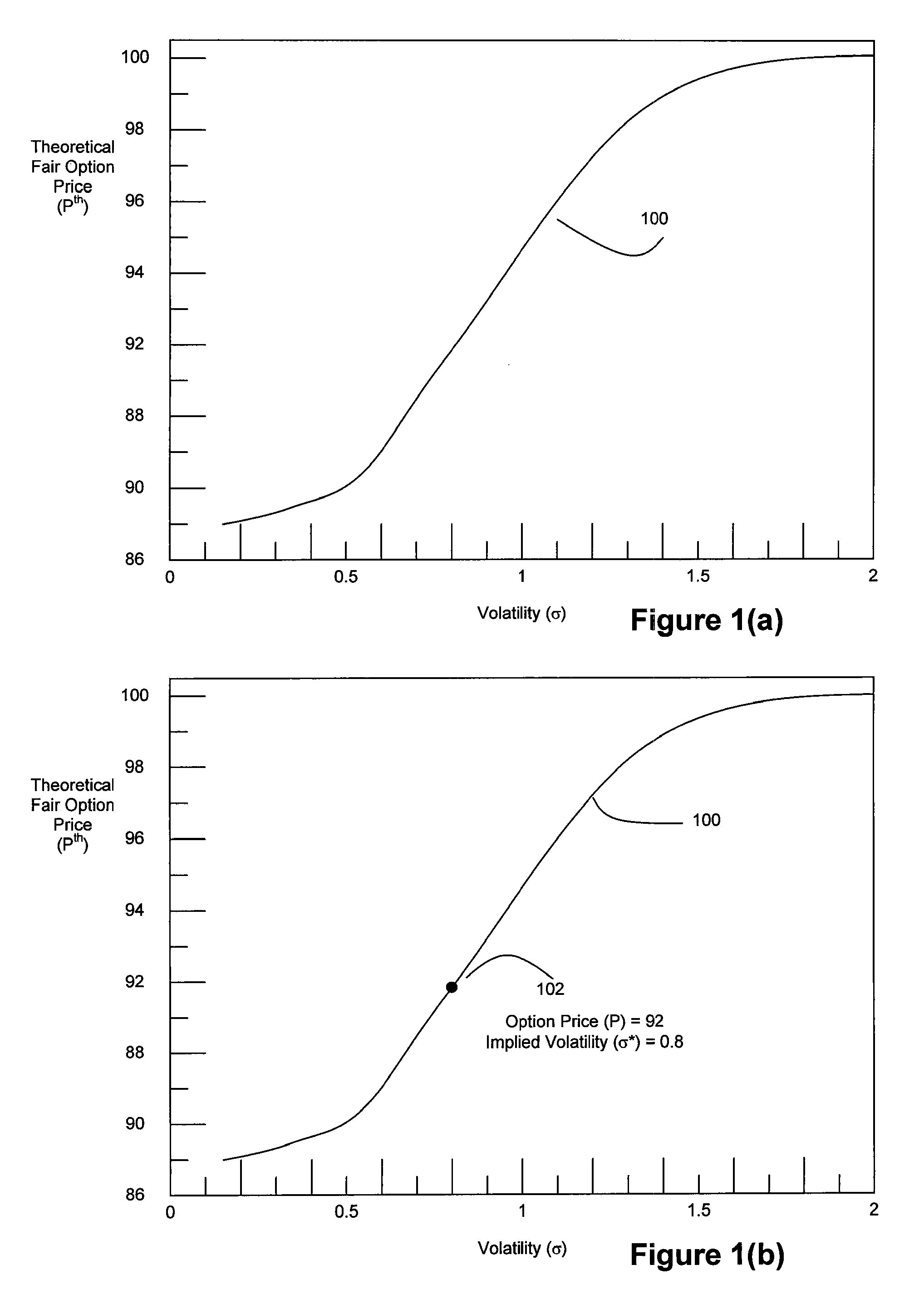

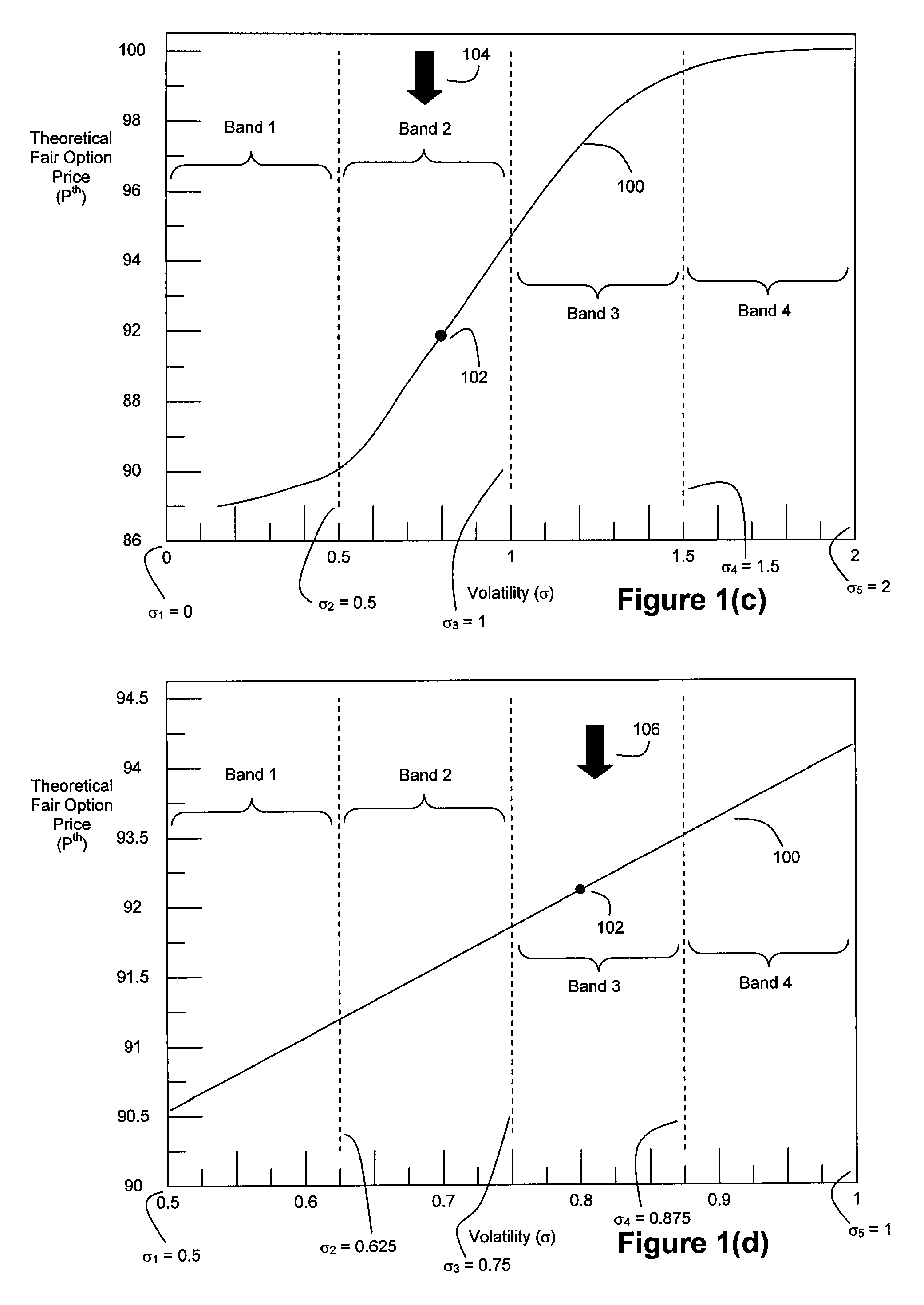

Method and System for High Speed Options Pricing

Active | US20070294157A1 | Speed | Reduction of information | Finance | Theoretical computer science | Latency (engineering)

A high speed technique for options pricing in the financial industry is disclosed that can provide both high throughput and low latency. A parallel/pipelined architecture is disclosed for computing an implied volatility in connection with an option. Parallel/pipelined architectures are also disclosed for computing an option's theoretical fair price. Preferably these parallel/pipelined architectures are deployed in hardware, and more preferably reconfigurable logic such as Field Programmable Gate Arrays (FPGAs) to accelerate the options pricing operations relative to conventional software-based options pricing operations.

Owner:EXEGY INC
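
For readers unfamiliar with the term, implied volatility is the volatility at which a pricing model reproduces an option's observed market price. The sketch below is a plain software illustration, not the patent's hardware architecture: it assumes the Black-Scholes model for a European call and a simple bisection search, neither of which is stated in the abstract, and all function names and inputs are hypothetical.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call(s: float, k: float, t: float, r: float, sigma: float) -> float:
    """Black-Scholes price of a European call (assumed model)."""
    d1 = (math.log(s / k) + (r + 0.5 * sigma * sigma) * t) / (sigma * math.sqrt(t))
    d2 = d1 - sigma * math.sqrt(t)
    return s * norm_cdf(d1) - k * math.exp(-r * t) * norm_cdf(d2)


def implied_vol(price: float, s: float, k: float, t: float, r: float,
                lo: float = 1e-4, hi: float = 5.0, tol: float = 1e-8) -> float:
    """Find sigma with bs_call(...) == price by bisection.

    Works because the call price increases monotonically with sigma.
    """
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if bs_call(s, k, t, r, mid) > price:
            hi = mid
        else:
            lo = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


# Round trip: price an option at 20% volatility, then recover the 20%.
market_price = bs_call(100.0, 100.0, 1.0, 0.05, 0.2)
recovered = implied_vol(market_price, 100.0, 100.0, 1.0, 0.05)
```

Each bisection step needs a full model evaluation, and the evaluations for different options are independent of each other, which is the kind of workload that benefits from the parallel and pipelined execution the abstract describes.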

Method and system for high speed options pricing

Active | US7840482B2 | Speed | Reduction of information | Finance | Latency (engineering) | Theoretical computer science

A high speed technique for options pricing in the financial industry is disclosed that can provide both high throughput and low latency. A parallel/pipelined architecture is disclosed for computing an implied volatility in connection with an option. Parallel/pipelined architectures are also disclosed for computing an option's theoretical fair price. Preferably these parallel/pipelined architectures are deployed in hardware, and more preferably reconfigurable logic such as Field Programmable Gate Arrays (FPGAs) to accelerate the options pricing operations relative to conventional software-based options pricing operations.

Owner:EXEGY INC

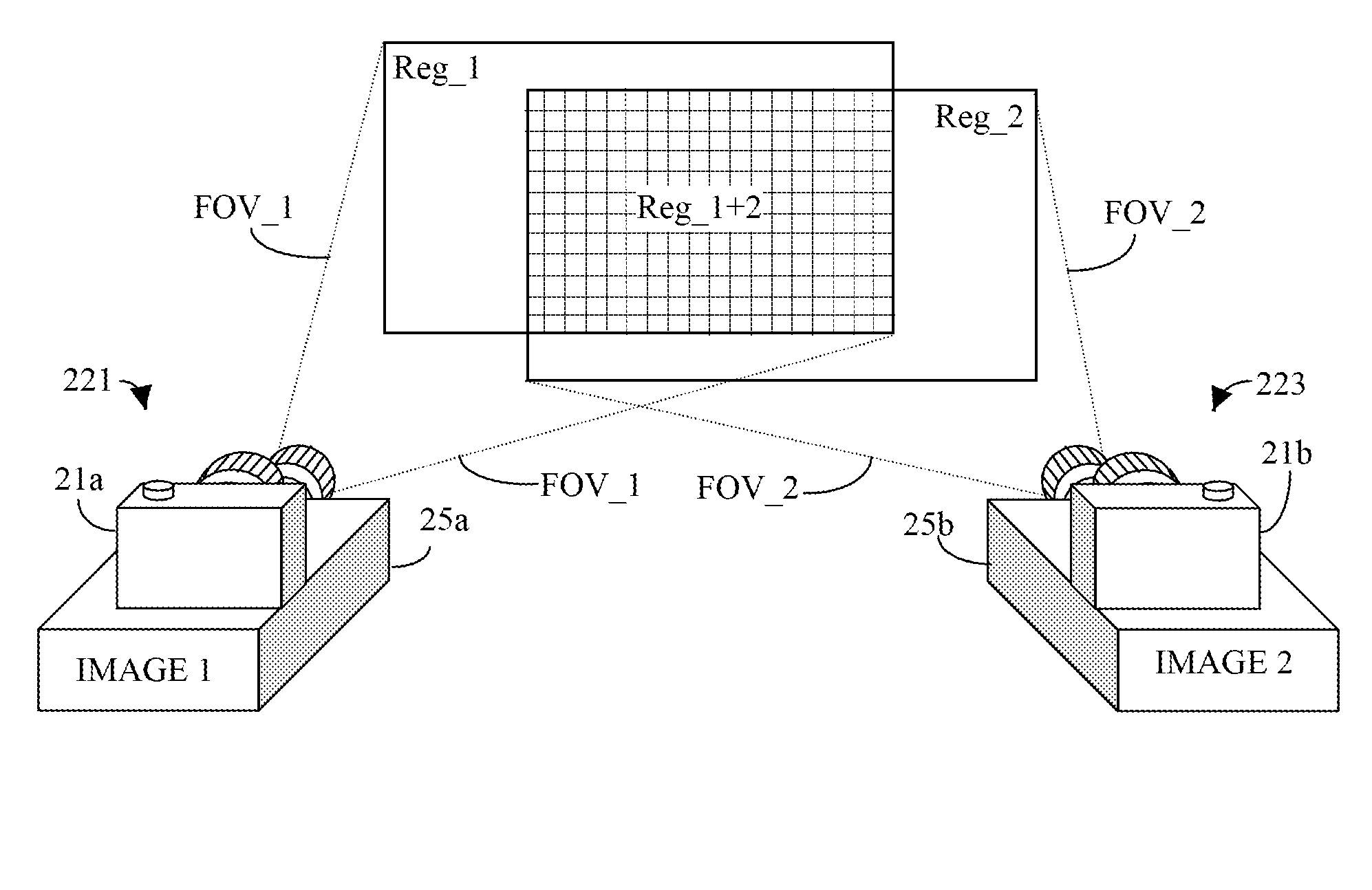

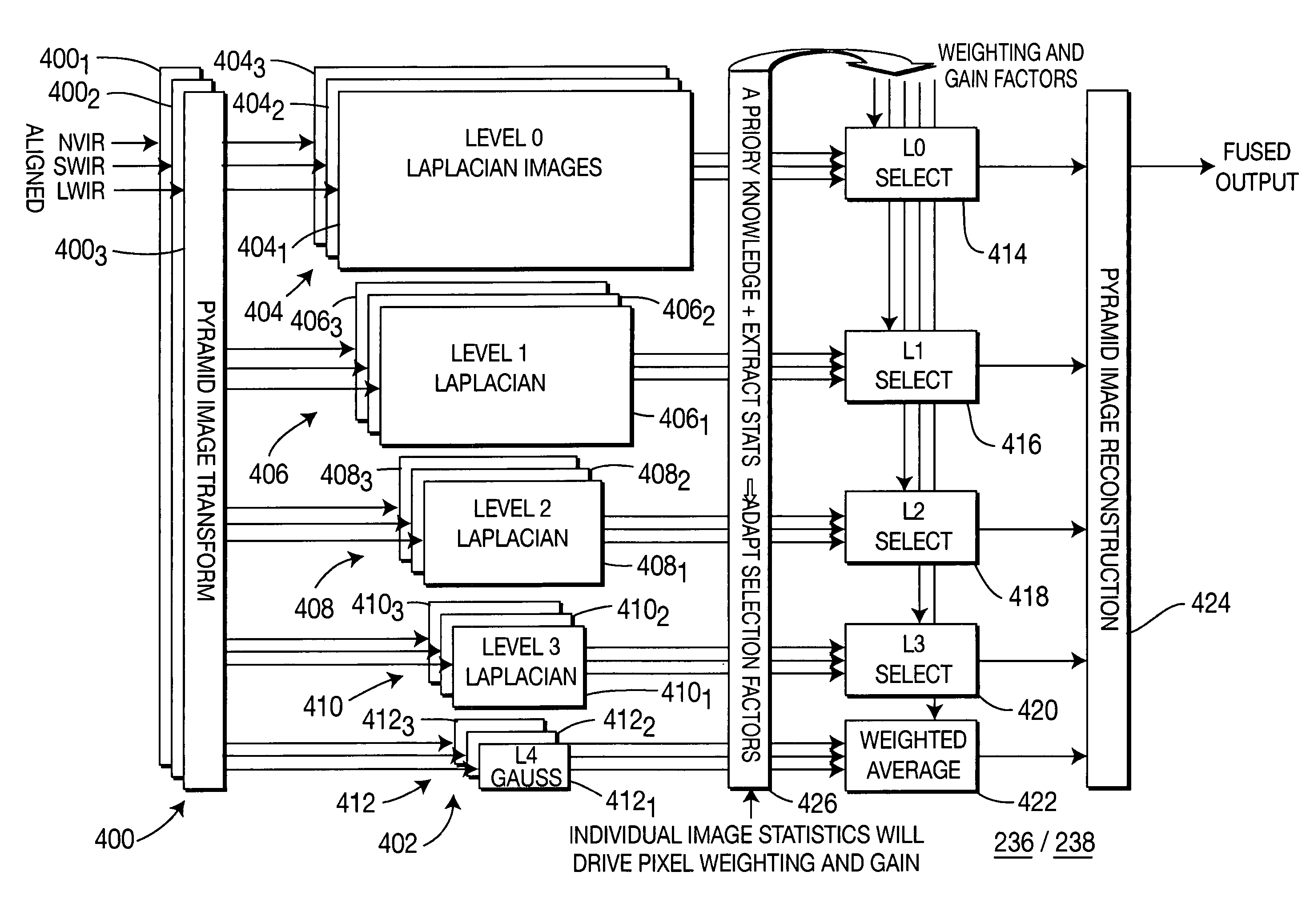

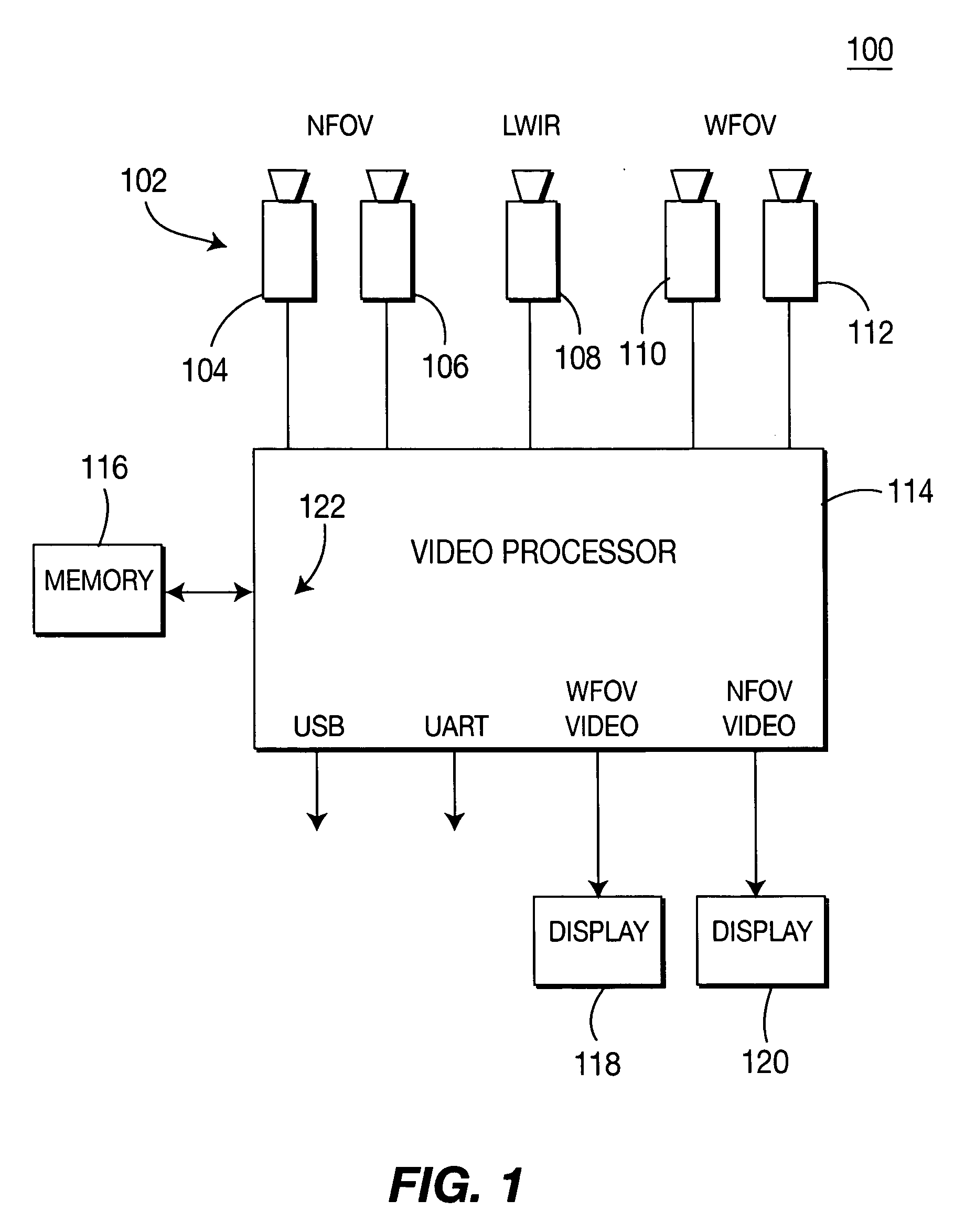

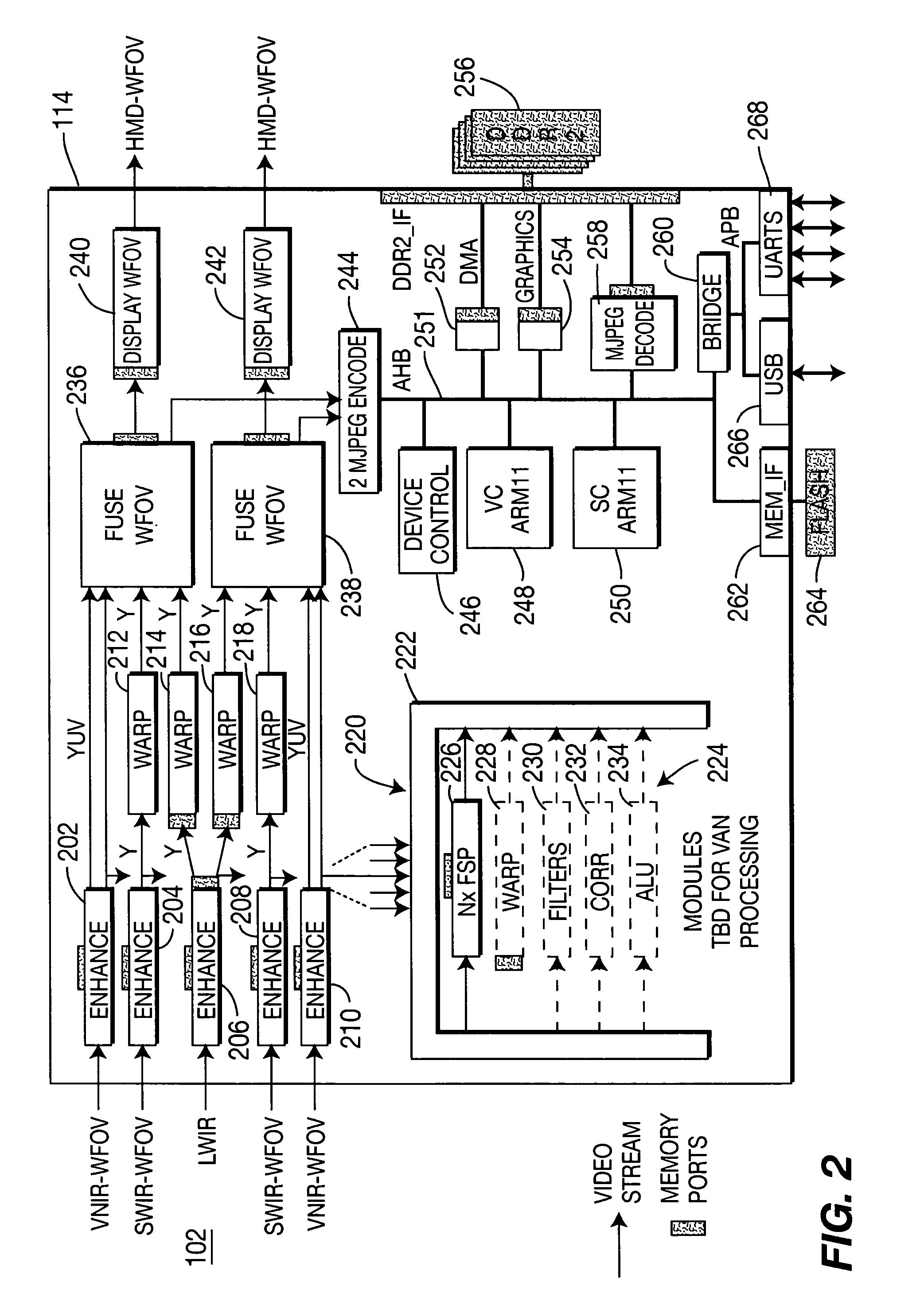

Low latency pyramid processor for image processing systems

A video processor that uses a low latency pyramid processing technique for fusing images from multiple sensors. The imagery from multiple sensors is enhanced, warped into alignment, and then fused with one another in a manner that allows the fusing to occur within a single frame of video, i.e., sub-frame processing. Such sub-frame processing results in a sub-frame delay between the moment the images are captured and the display of the fused imagery.

Owner:SARNOFF CORP

© 2025 PatSnap. All rights reserved.