2823 results about "Lower priority" patented technology

A simple way to lower the priority of a running program is through Windows Task Manager. To view the current priority of every process, go to View -> Select Columns… and tick Base Priority. Then right-click the process, choose Set Priority, and lower it to BelowNormal or Low.

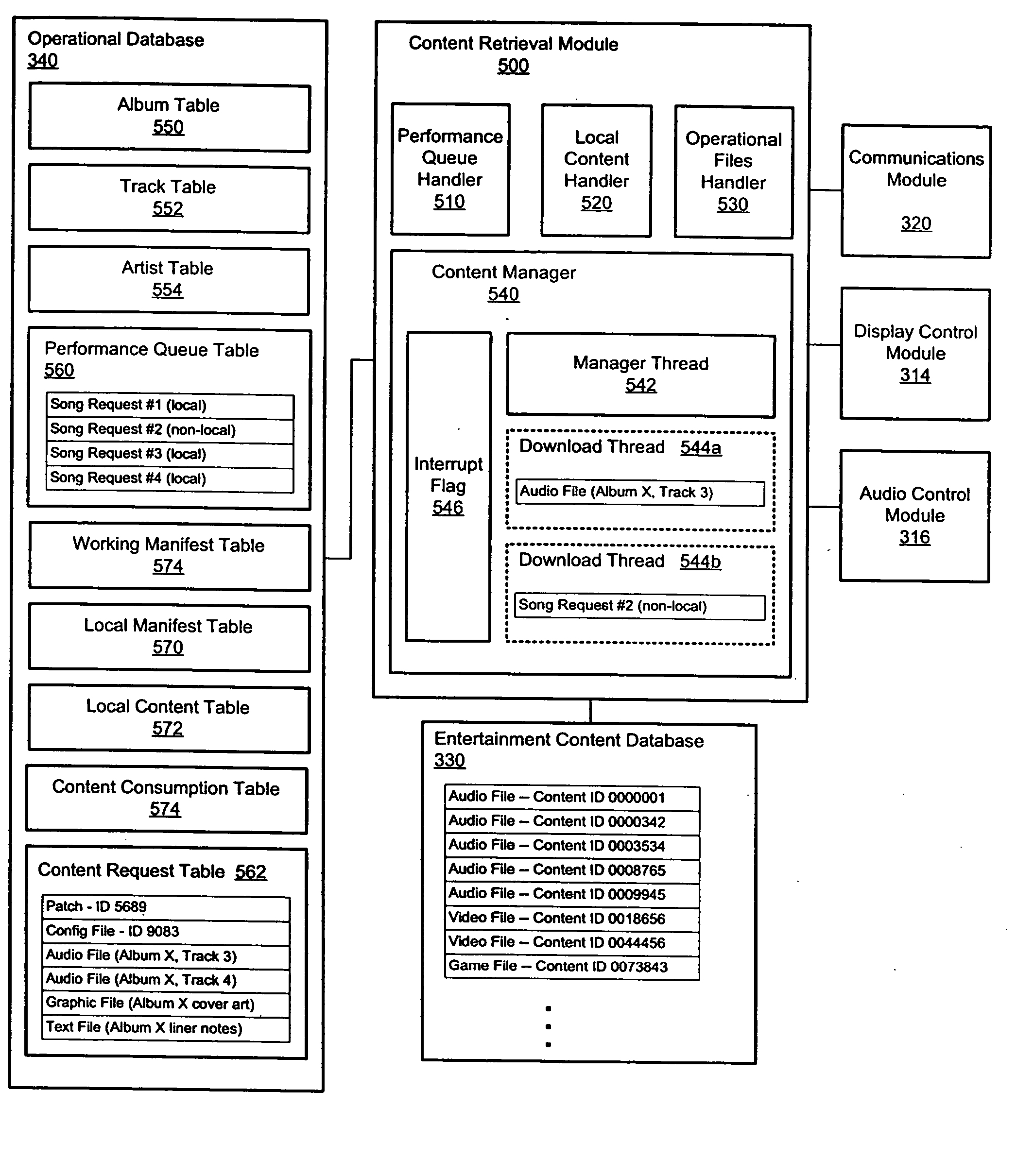

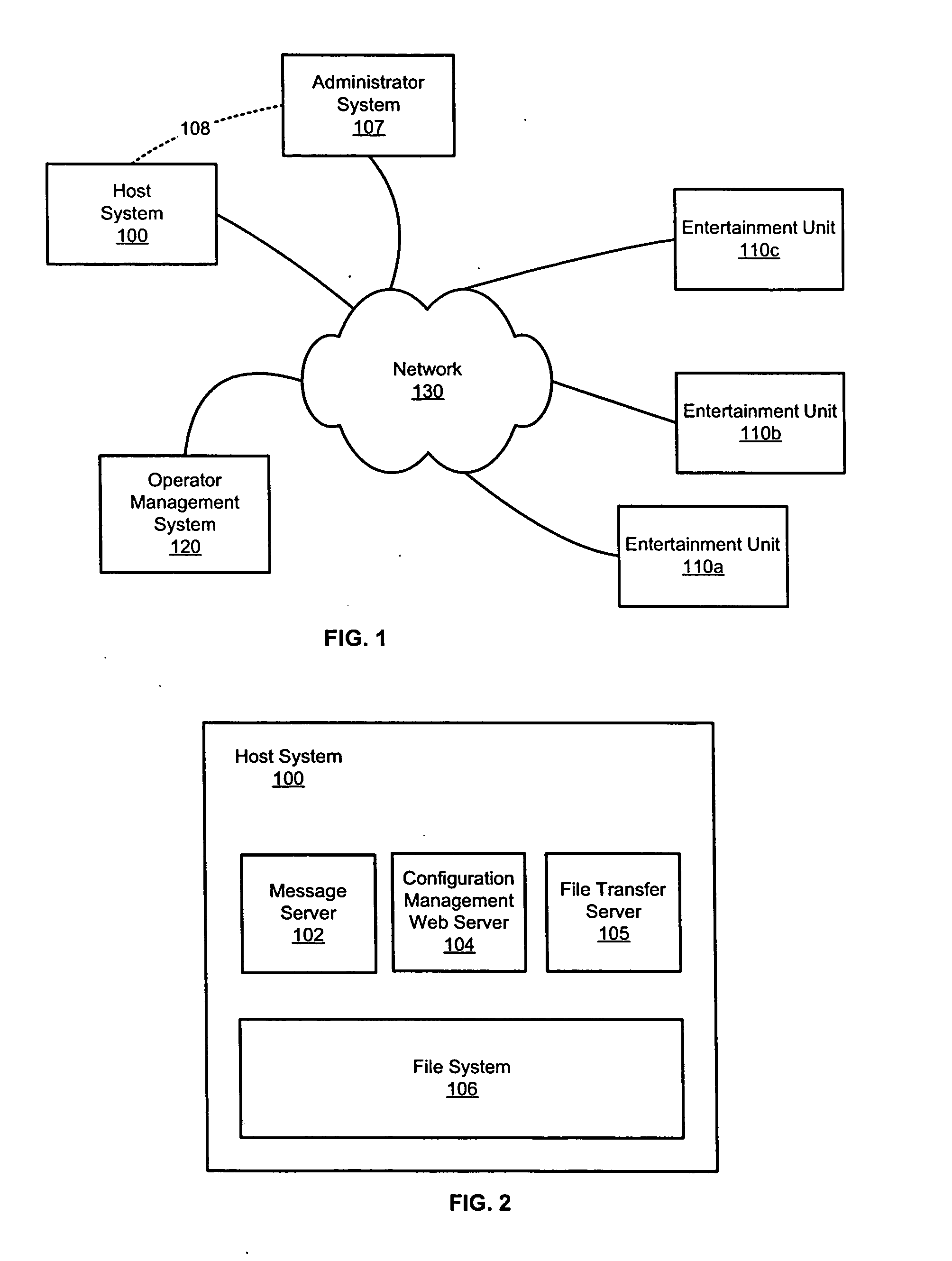

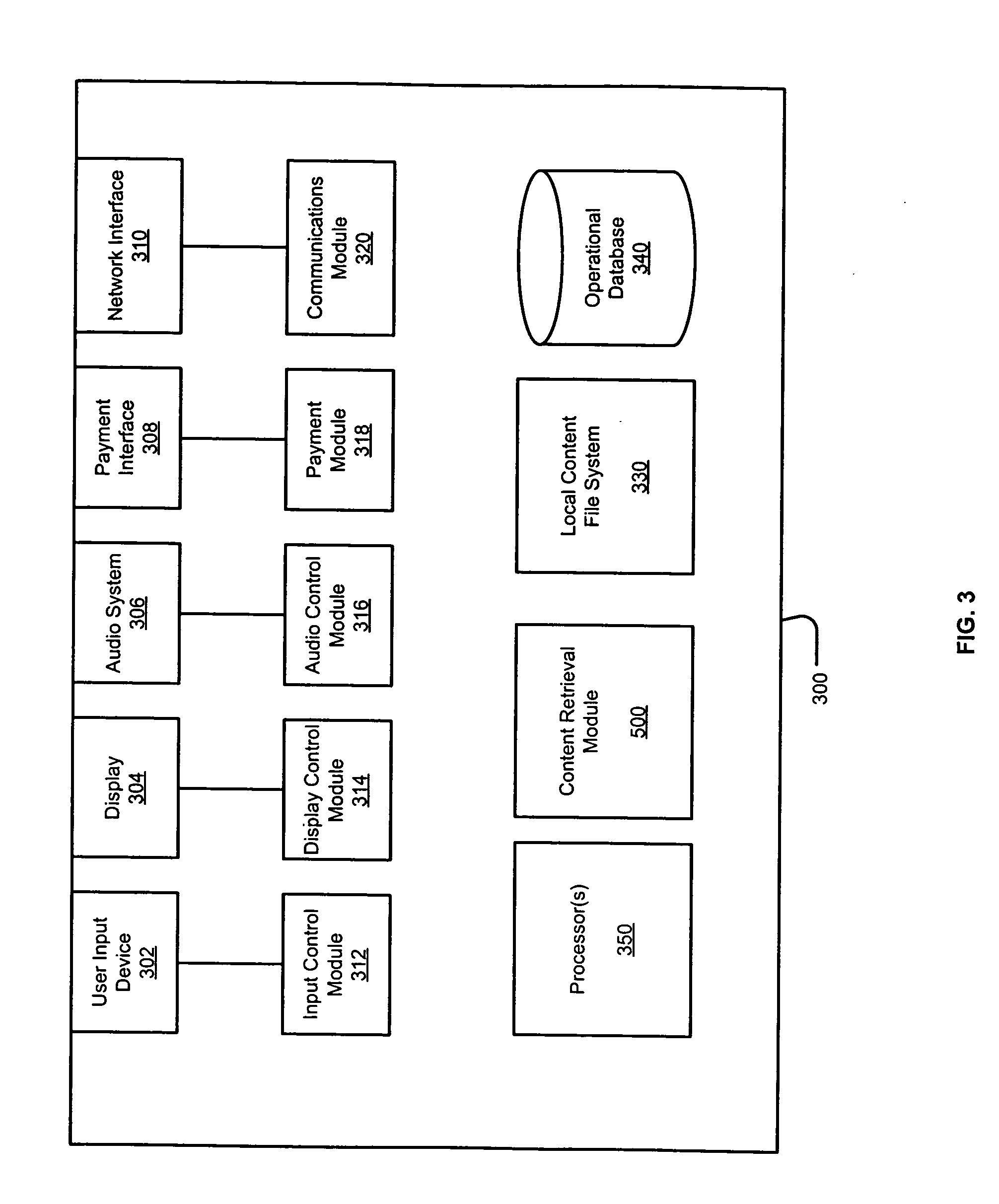

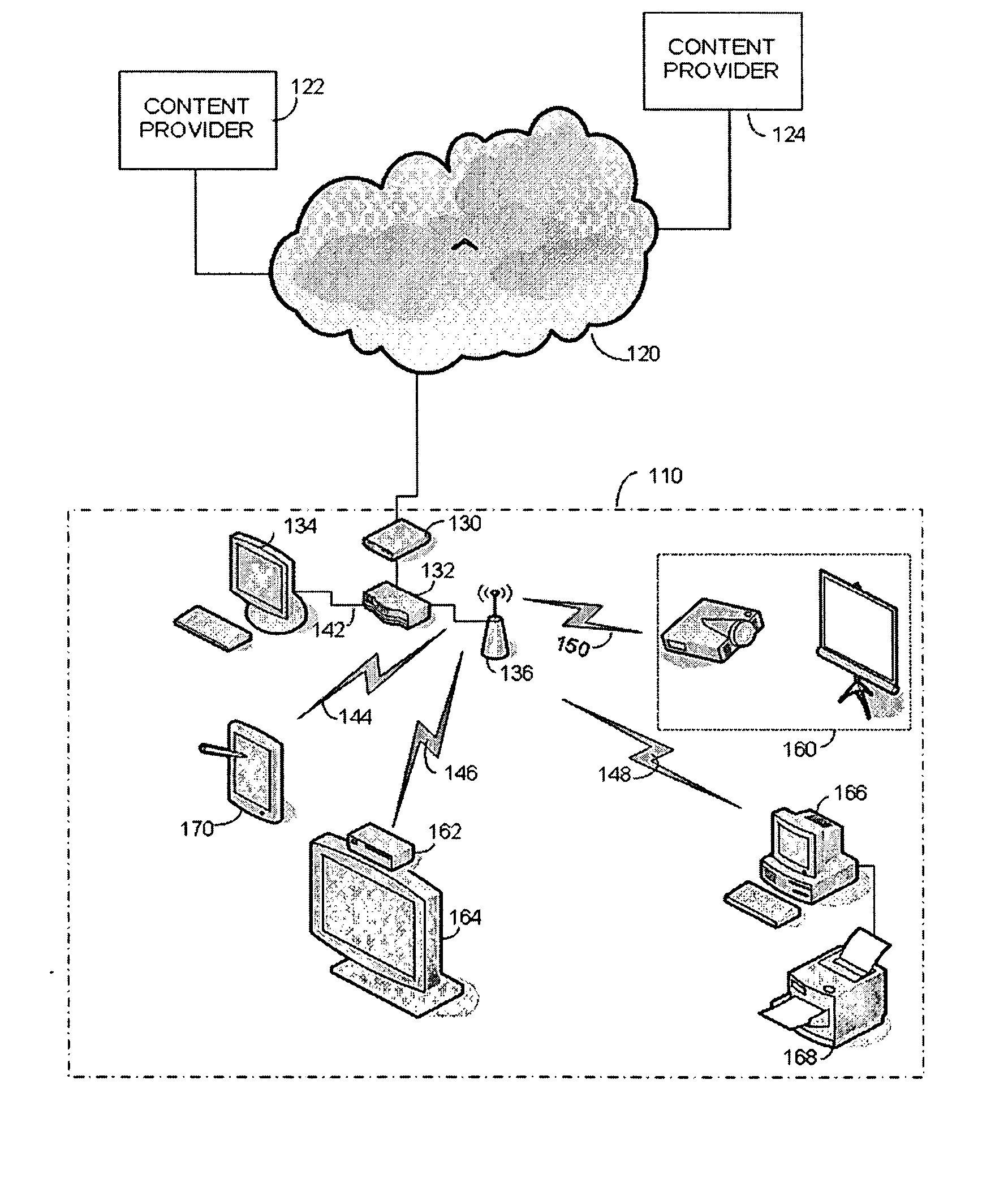

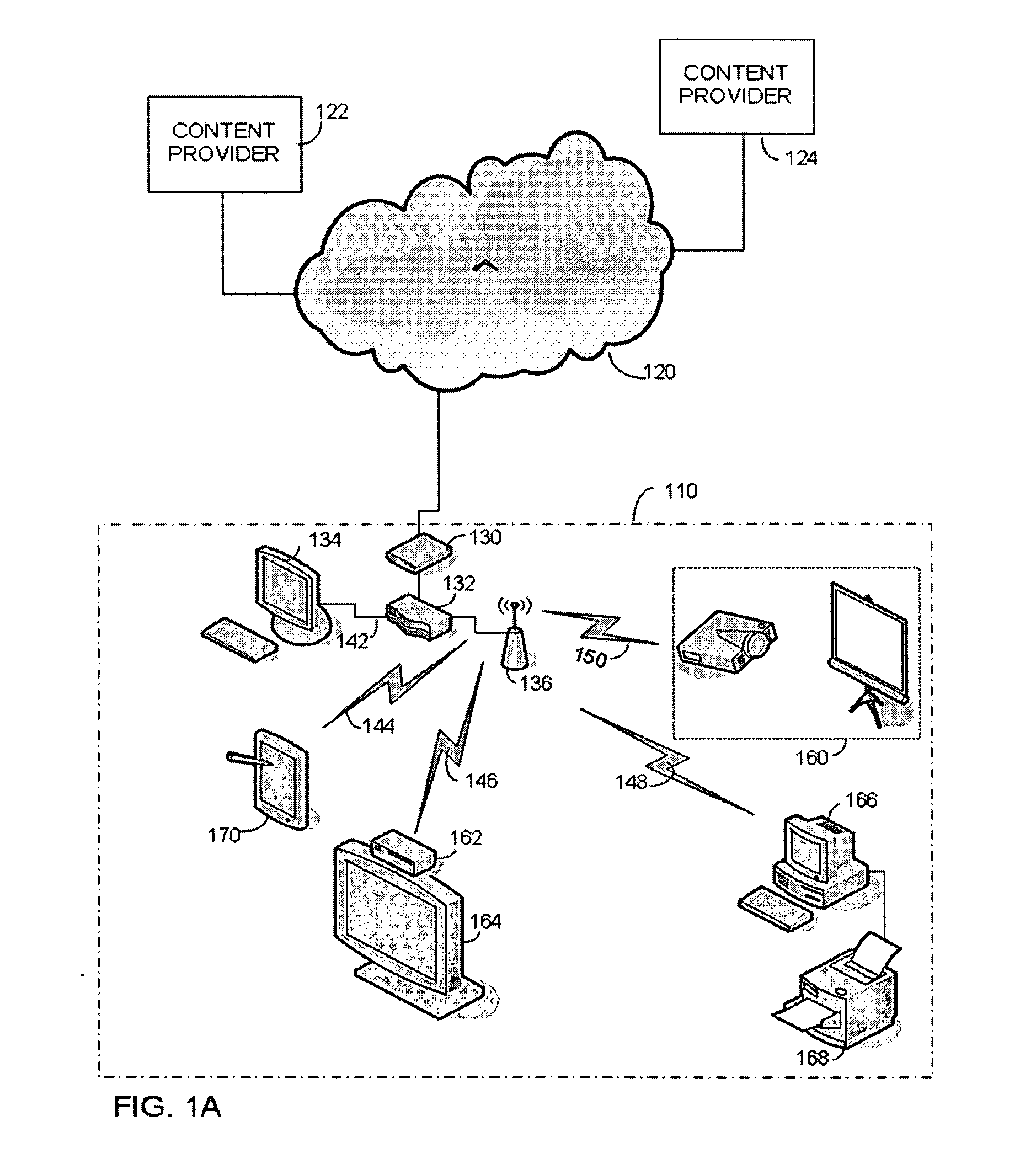

Prioritized content download for an entertainment device

Active | US20060074750A1 | Multiprogramming arrangements; Cash registers; Content retrieval; Distributed computing

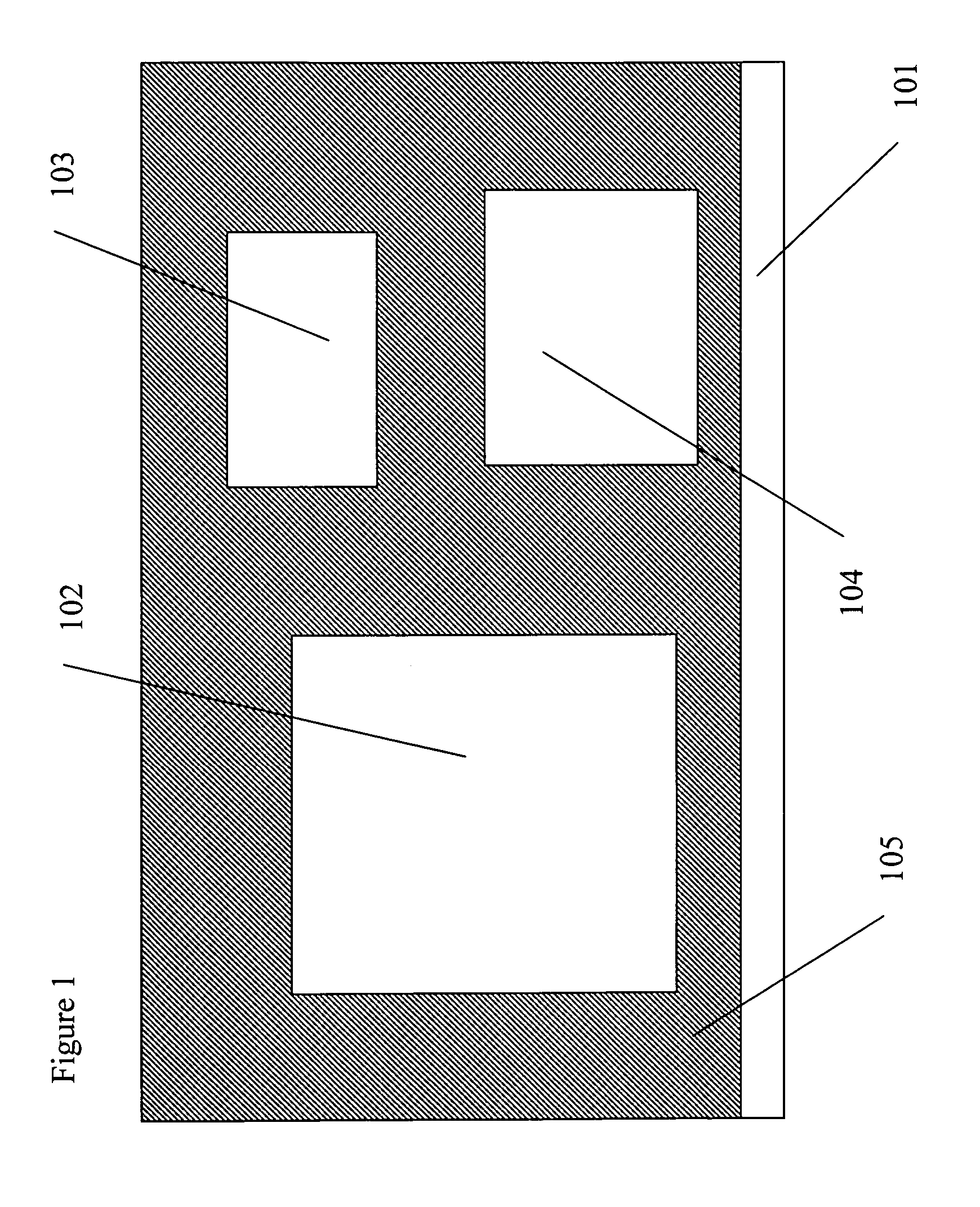

Priority-based content retrieval mechanisms for digital entertainment devices are provided. In various embodiments, the download prioritizations may be interrupt-based, sequence-based, or a combination of the two. In interrupt-based prioritizations, a higher priority download request will interrupt a lower-priority download that is already in progress. In sequence-based prioritizations, a plurality of file download requests may be ordered in a download queue depending on the priority of the request, with higher priority requests being positioned towards the top of the queue and lower priority requests being positioned towards the bottom of the queue.

Owner:AMI ENTERTAINMENT NETWORK
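
As an illustration only, the two prioritization styles can be sketched in Python: a heap-based queue gives the sequence-based ordering, and a simple comparison models the interrupt decision. The sketch assumes larger numbers mean higher priority and that equal-priority requests are served in arrival order; `DownloadQueue` and `should_interrupt` are hypothetical names, not taken from the patent.

```python
import heapq
import itertools


class DownloadQueue:
    """Sequence-based prioritization: higher-priority requests sit nearer the top."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker: FIFO order within one priority

    def push(self, filename, priority):
        # heapq is a min-heap, so store the negated priority to pop the highest first.
        heapq.heappush(self._heap, (-priority, next(self._counter), filename))

    def pop(self):
        _, _, filename = heapq.heappop(self._heap)
        return filename

    def __len__(self):
        return len(self._heap)


def should_interrupt(active_priority, new_priority):
    """Interrupt-based prioritization: a strictly higher-priority request preempts."""
    return new_priority > active_priority


q = DownloadQueue()
q.push("song_b.mp3", priority=1)
q.push("firmware.bin", priority=5)
q.push("song_a.mp3", priority=1)
print([q.pop() for _ in range(len(q))])  # ['firmware.bin', 'song_b.mp3', 'song_a.mp3']
```

A combined scheme would call `should_interrupt` against the download in progress and push the interrupted request back onto the queue.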

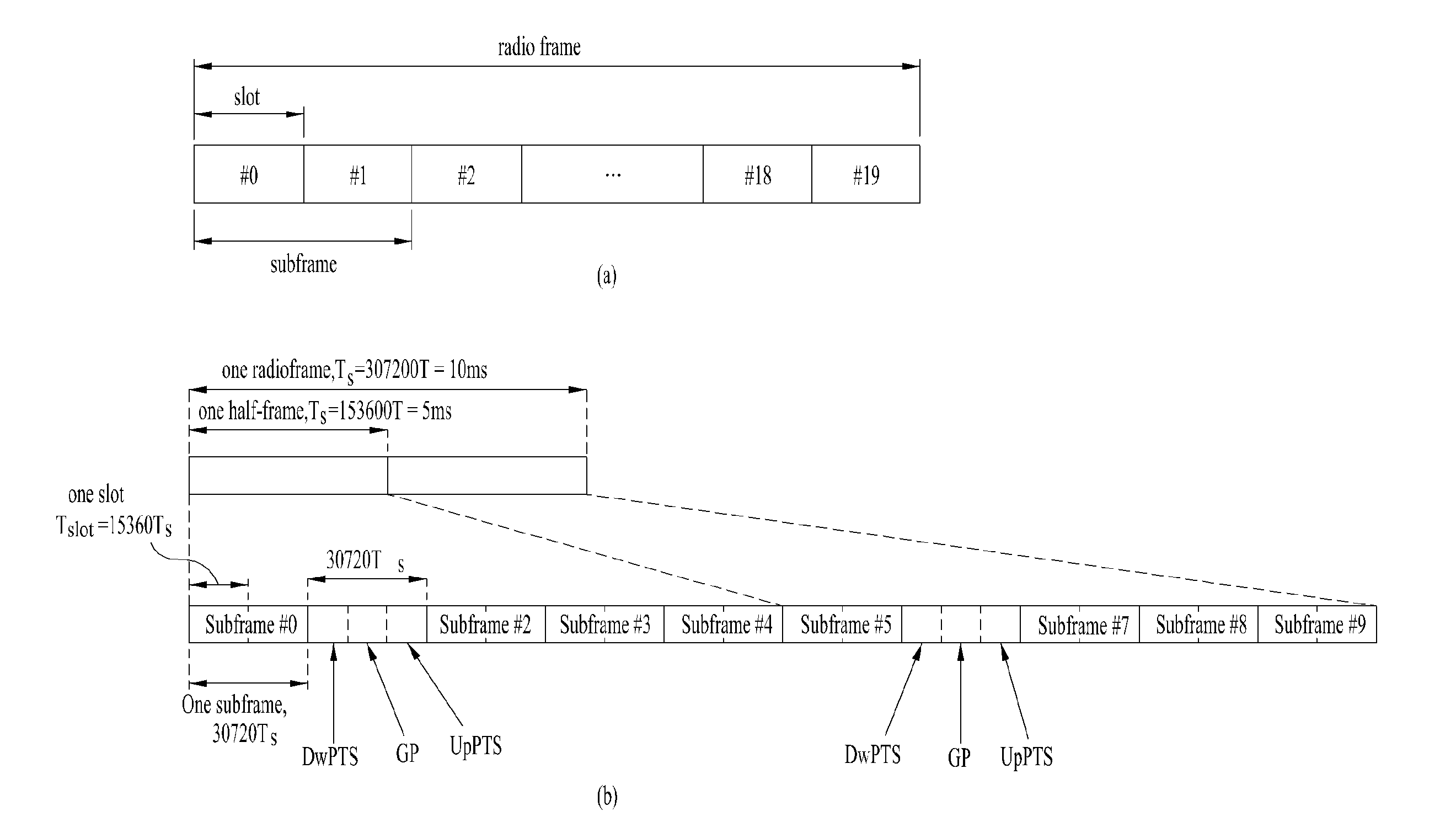

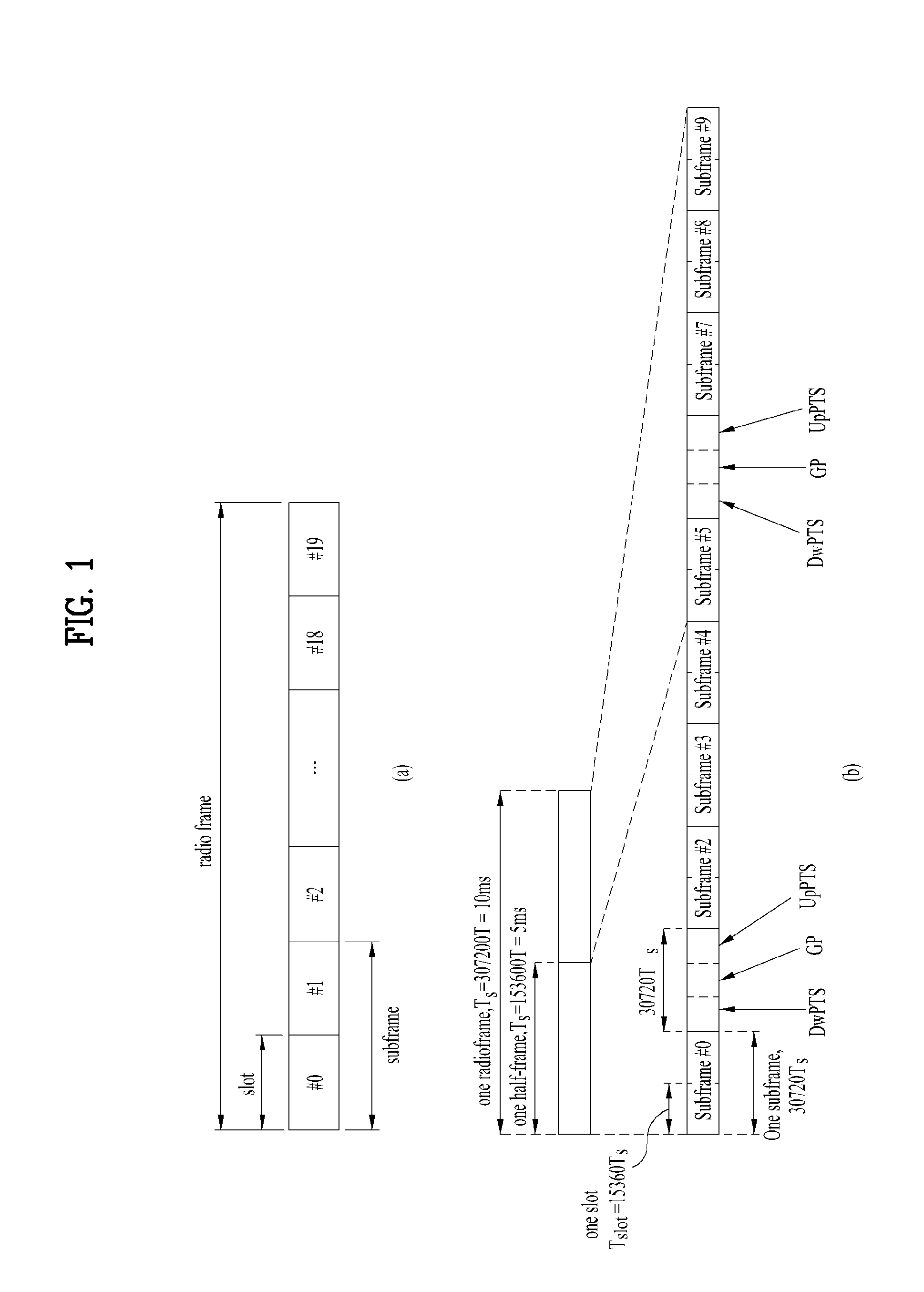

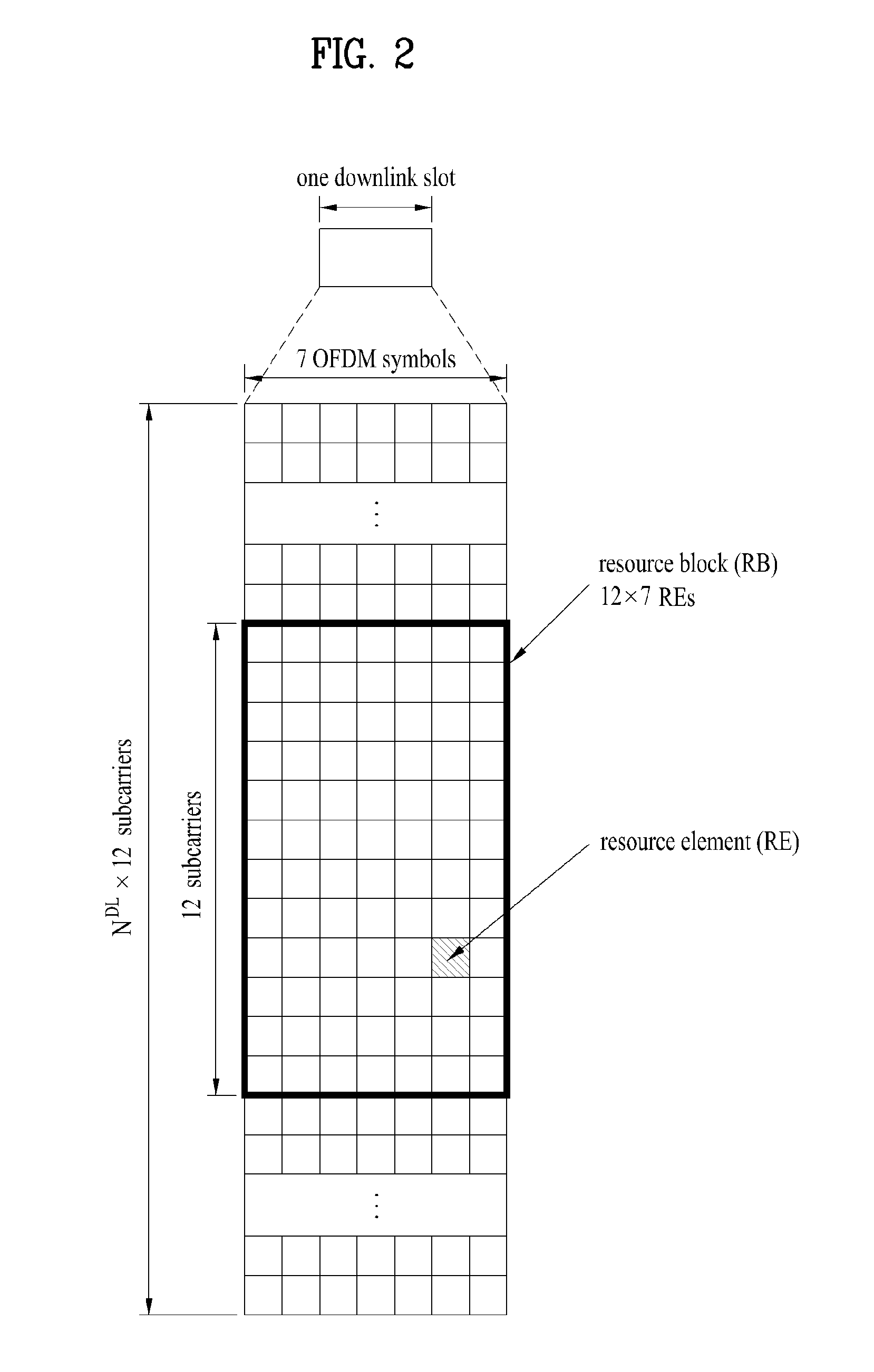

Method and apparatus for performing effective feedback in wireless communication system supporting multiple antennas

Active | US20120076028A1 | Easy to operate; Modulated-carrier systems; Transmission systems; Channel state information; Communications system

A method for transmitting channel status information (CSI) of downlink multi-carrier transmission includes generating the CSI including at least one of a rank indicator (RI), a first precoding matrix index (PMI), a second PMI and a channel quality indicator (CQI) for one or more downlink carriers, the CQI being calculated based on precoding information determined by a combination of the first and second PMIs, determining, when two or more CSIs collide with one another in one uplink subframe of one uplink carrier, a CSI to be transmitted on the basis of priority, and transmitting the determined CSI over an uplink channel. If a CSI including an RI or a wideband first PMI collides with a CSI including a wideband CQI or a subband CQI, the CSI including a wideband CQI or a subband CQI has low priority and is dropped.

Owner:LG ELECTRONICS INC
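
A minimal sketch of the collision rule stated above, assuming each colliding report is tagged with its content type. The type labels (`RI`, `WB_PMI1`, `WB_CQI`, `SB_CQI`), the rank values, and the tie-break by list order are illustrative assumptions; only the single ordering given in the abstract is encoded.

```python
from dataclasses import dataclass

# Hypothetical priority ranks (lower number = higher priority). Reports carrying
# an RI or a wideband first PMI outrank reports carrying a wideband or subband CQI.
PRIORITY_RANK = {
    "RI": 0,
    "WB_PMI1": 0,
    "WB_CQI": 1,
    "SB_CQI": 1,
}


@dataclass
class CsiReport:
    carrier: int
    content: str  # one of the keys in PRIORITY_RANK


def resolve_collision(reports):
    """Return (transmitted, dropped) for CSI reports colliding in one uplink subframe."""
    if not reports:
        return None, []
    # min() returns the first report among equal ranks, so ties go to list order.
    winner = min(reports, key=lambda r: PRIORITY_RANK[r.content])
    dropped = [r for r in reports if r is not winner]
    return winner, dropped


sent, dropped = resolve_collision([CsiReport(0, "SB_CQI"), CsiReport(1, "RI")])
print(sent, len(dropped))  # CsiReport(carrier=1, content='RI') 1
```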

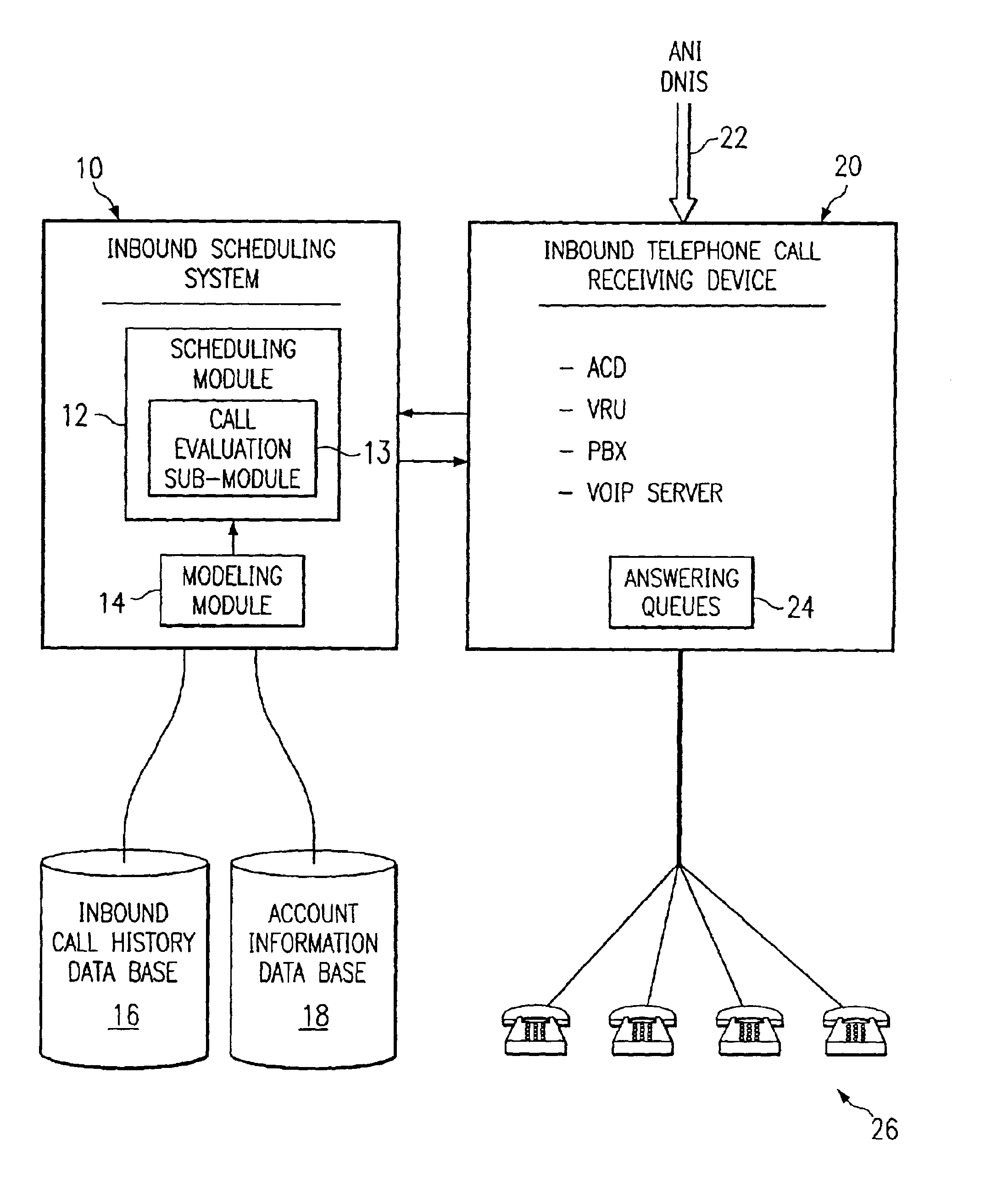

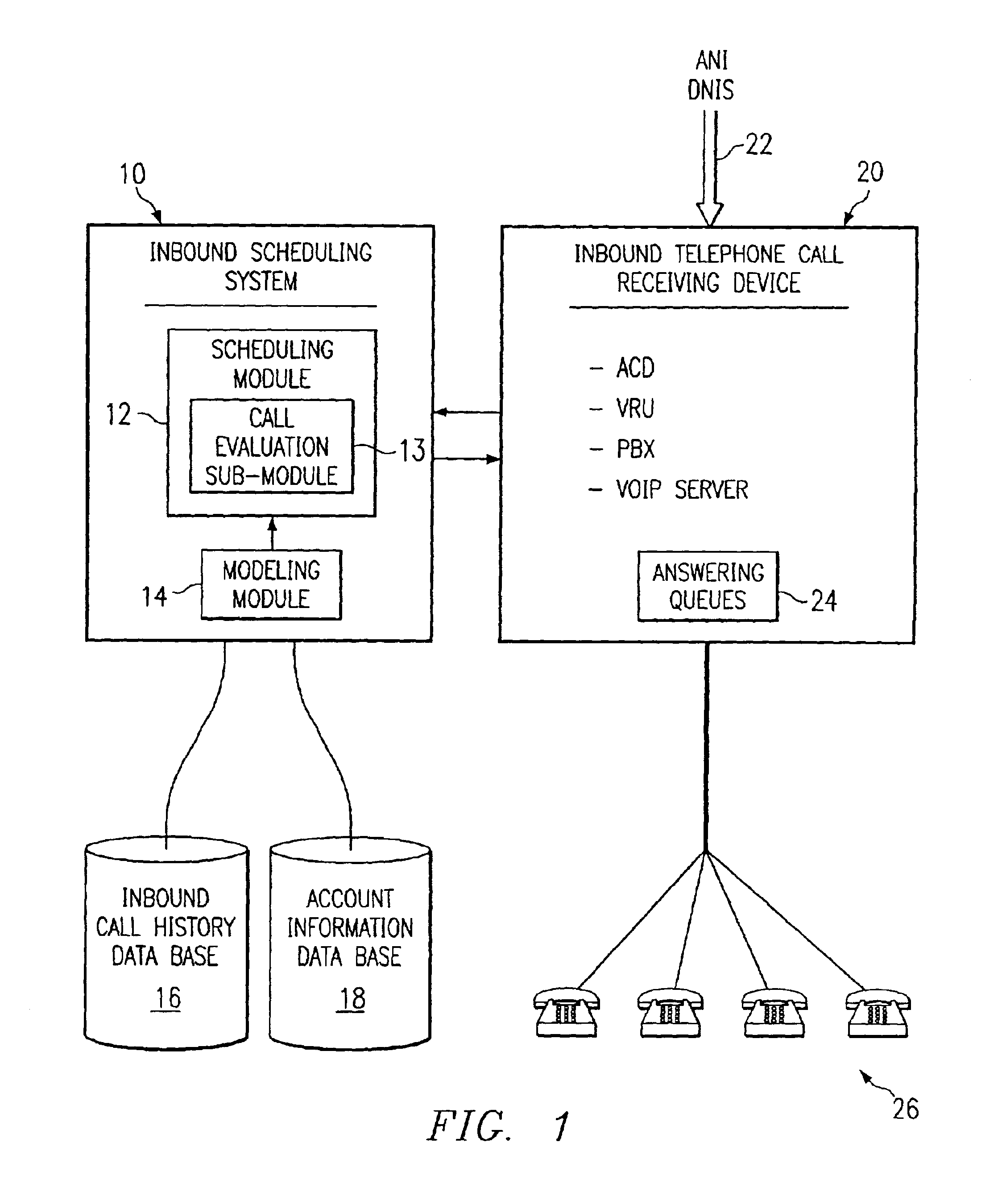

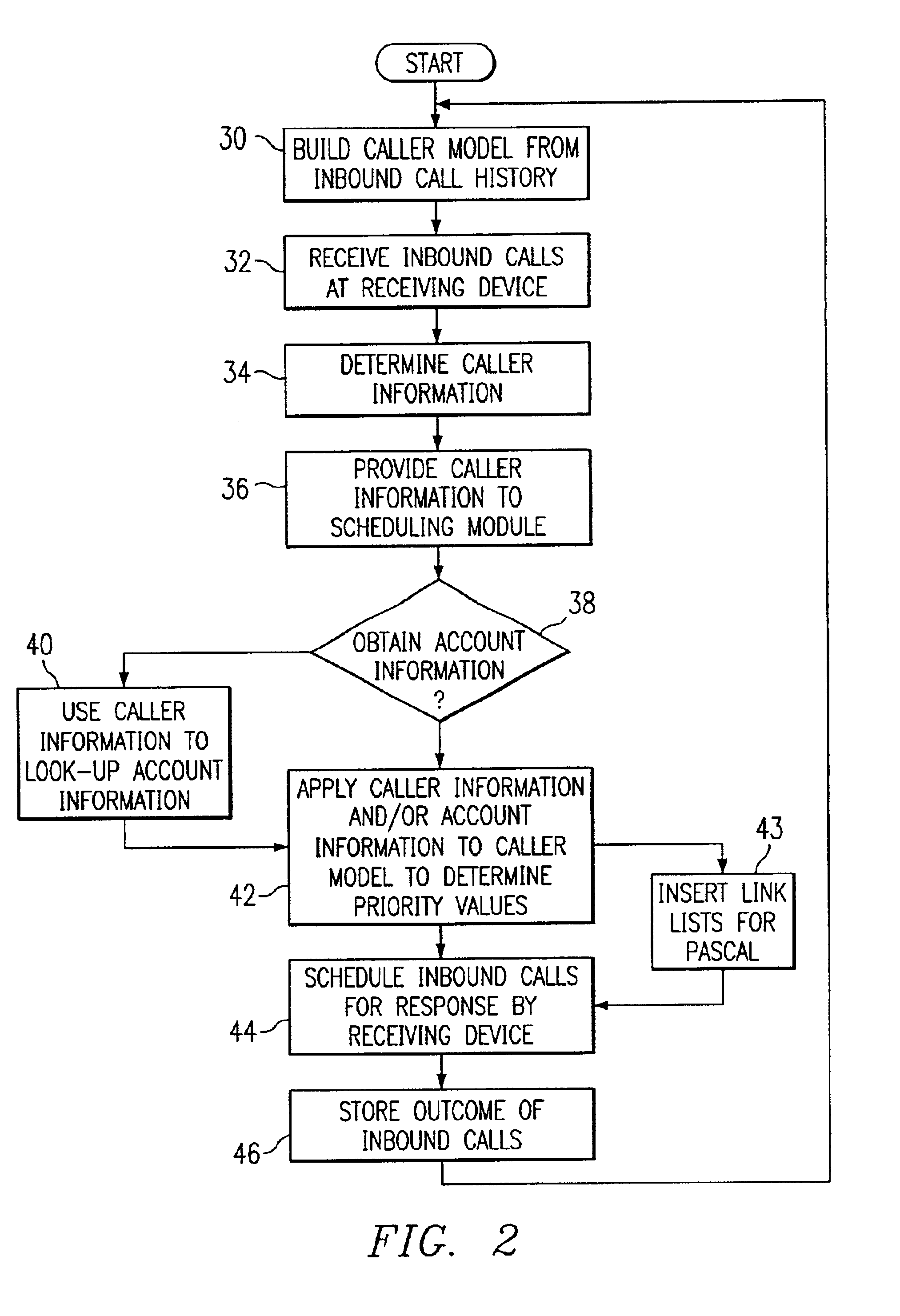

Method and system for self-service scheduling of inbound inquiries

Inactive | US6859529B2 | Accurate modeling; Maximize use; Special service for subscribers; Manual exchanges; Production rate; Regression analysis

A method and system schedules inbound inquiries, such as inbound telephone calls, for response by agents in an order that is based in part on the forecasted outcome of the inbound inquiries. A scheduling module applies inquiry information to a model to forecast the outcome of an inbound inquiry. The forecasted outcome is used to set a priority value for ordering the inquiry. The priority value may be determined by solving a constrained optimization problem that seeks to optimize an objective function, such as maximizing an agent's productivity in producing sales or minimizing inbound call attrition. A modeling module generates models that forecast inquiry outcomes based on history and inquiry information. Statistical analysis, such as regression analysis, determines the model relating the outcome to the nature of the inquiry. Operator wait time is regulated by forcing low-priority and/or highly tolerant inbound inquiries to self-service.

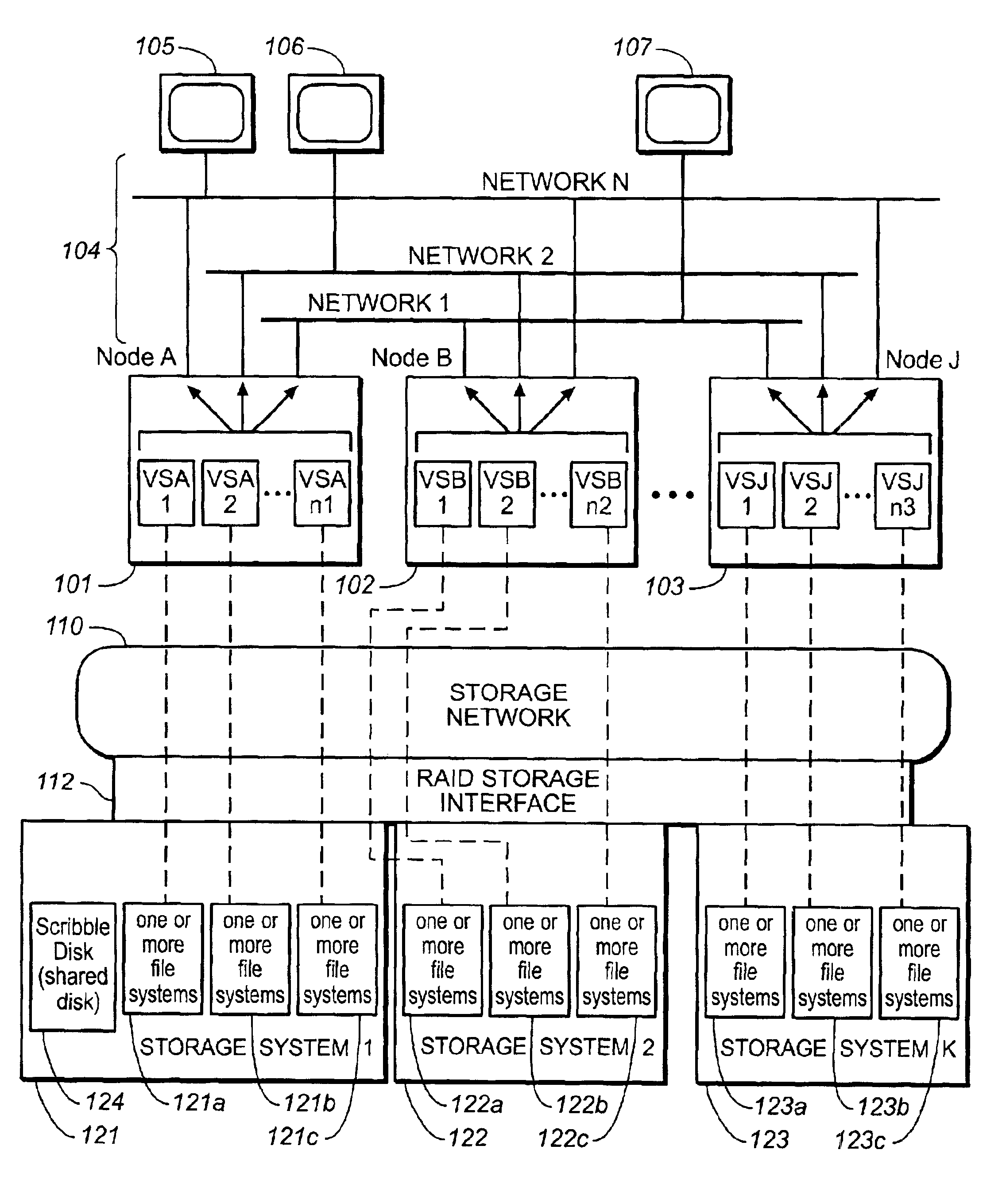

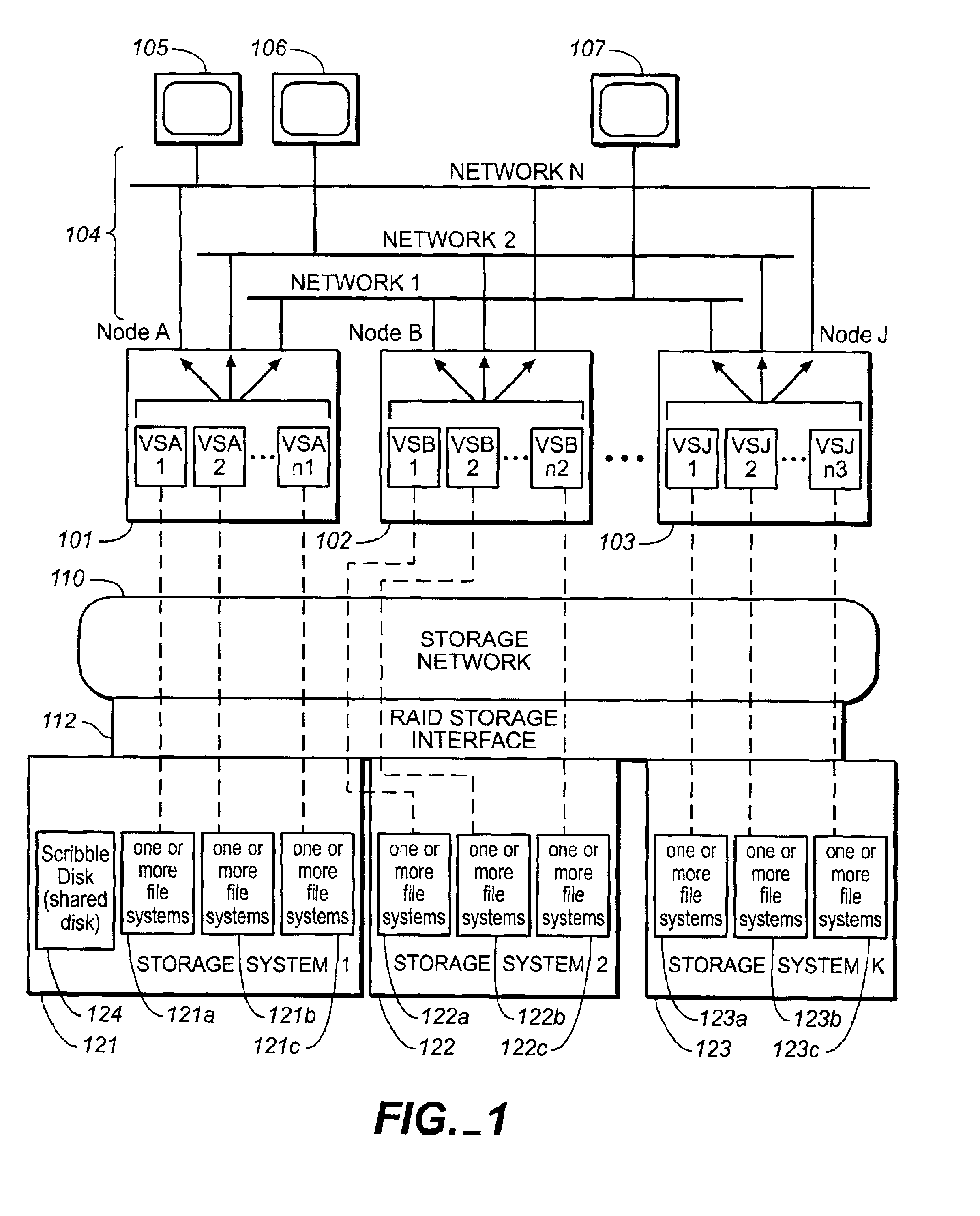

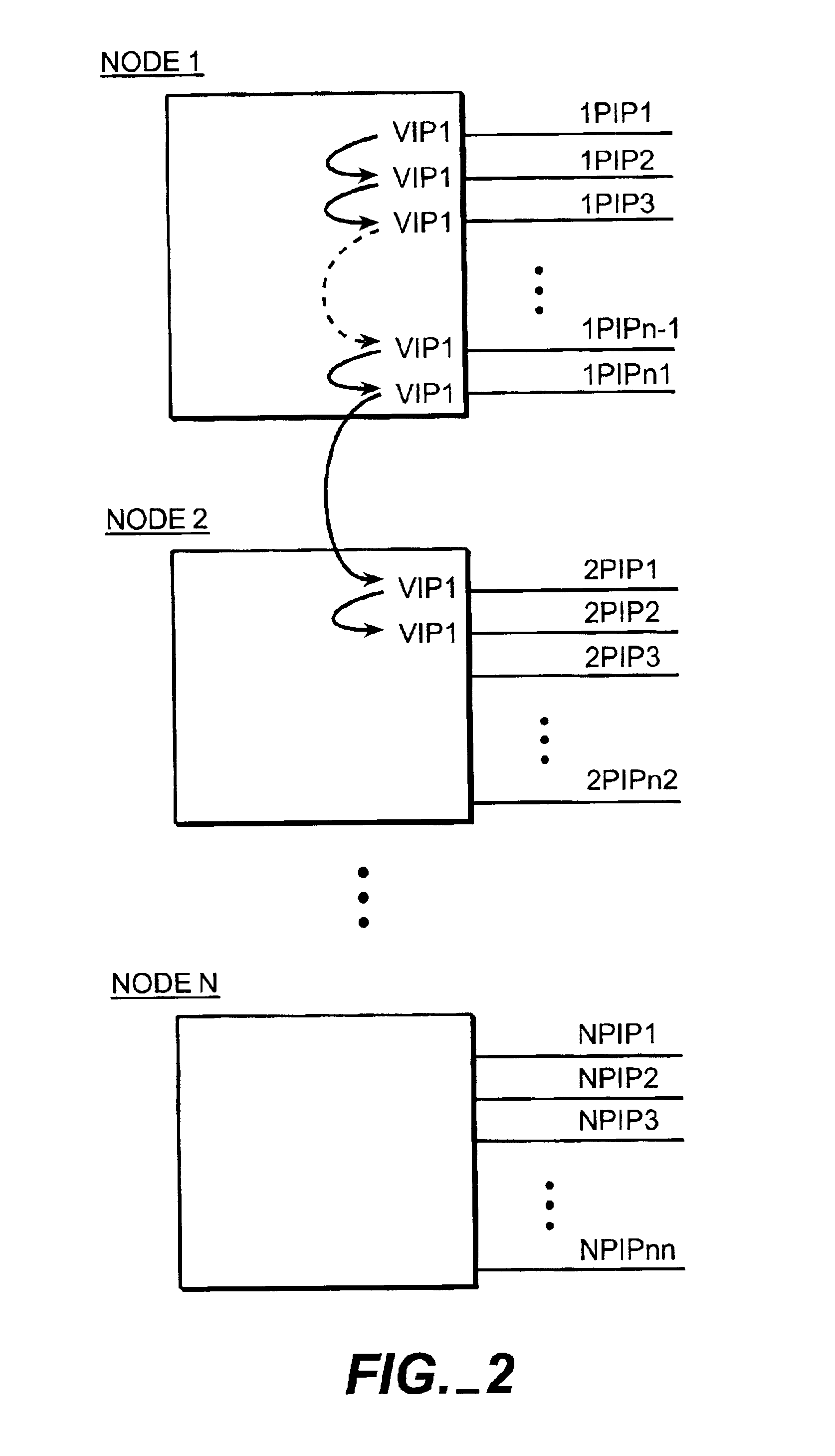

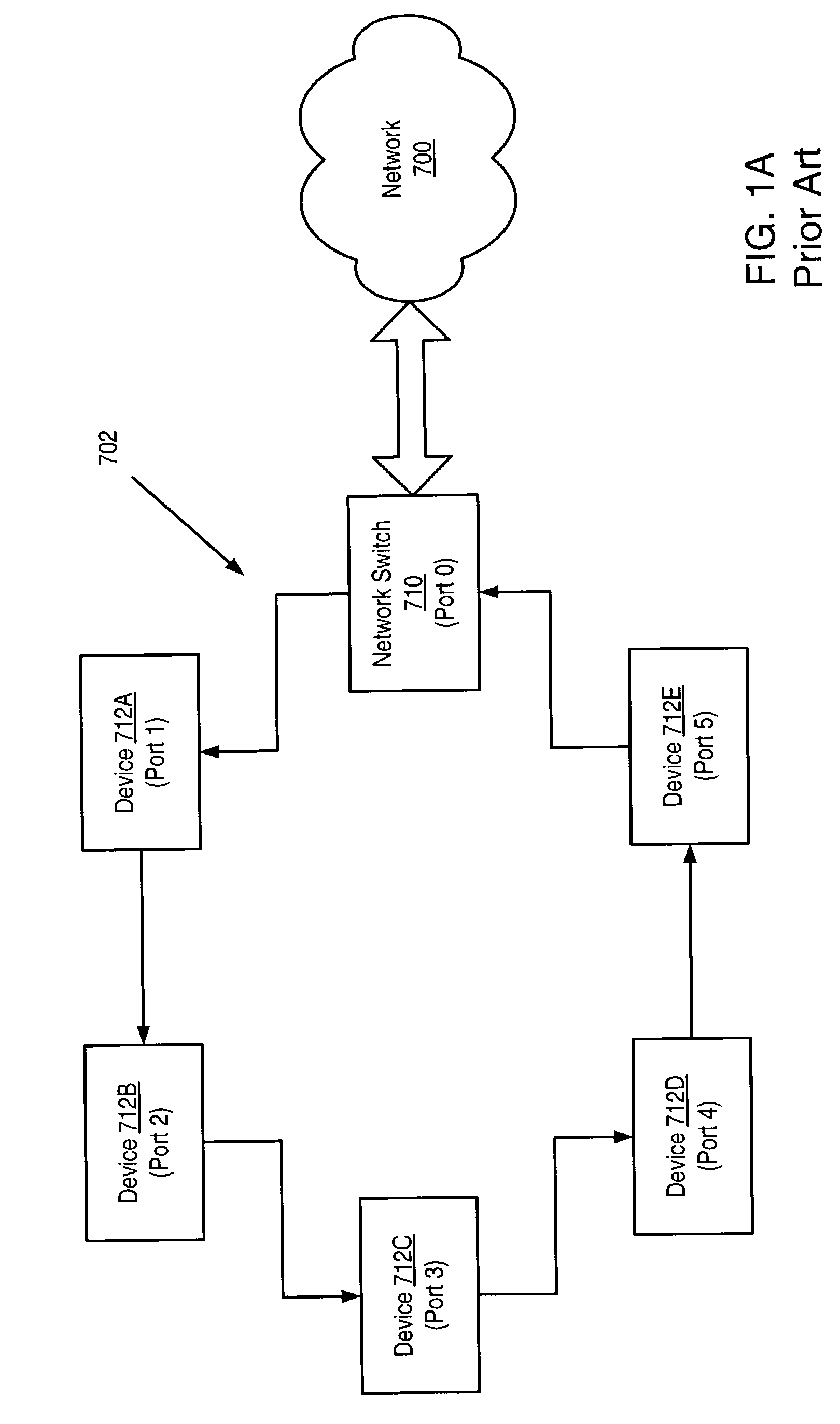

High-availability cluster virtual server system

Inactive | US6944785B2 | Minimize occurrence; Improve system performance; Input/output to record carriers; Data switching networks; Failover; High availability

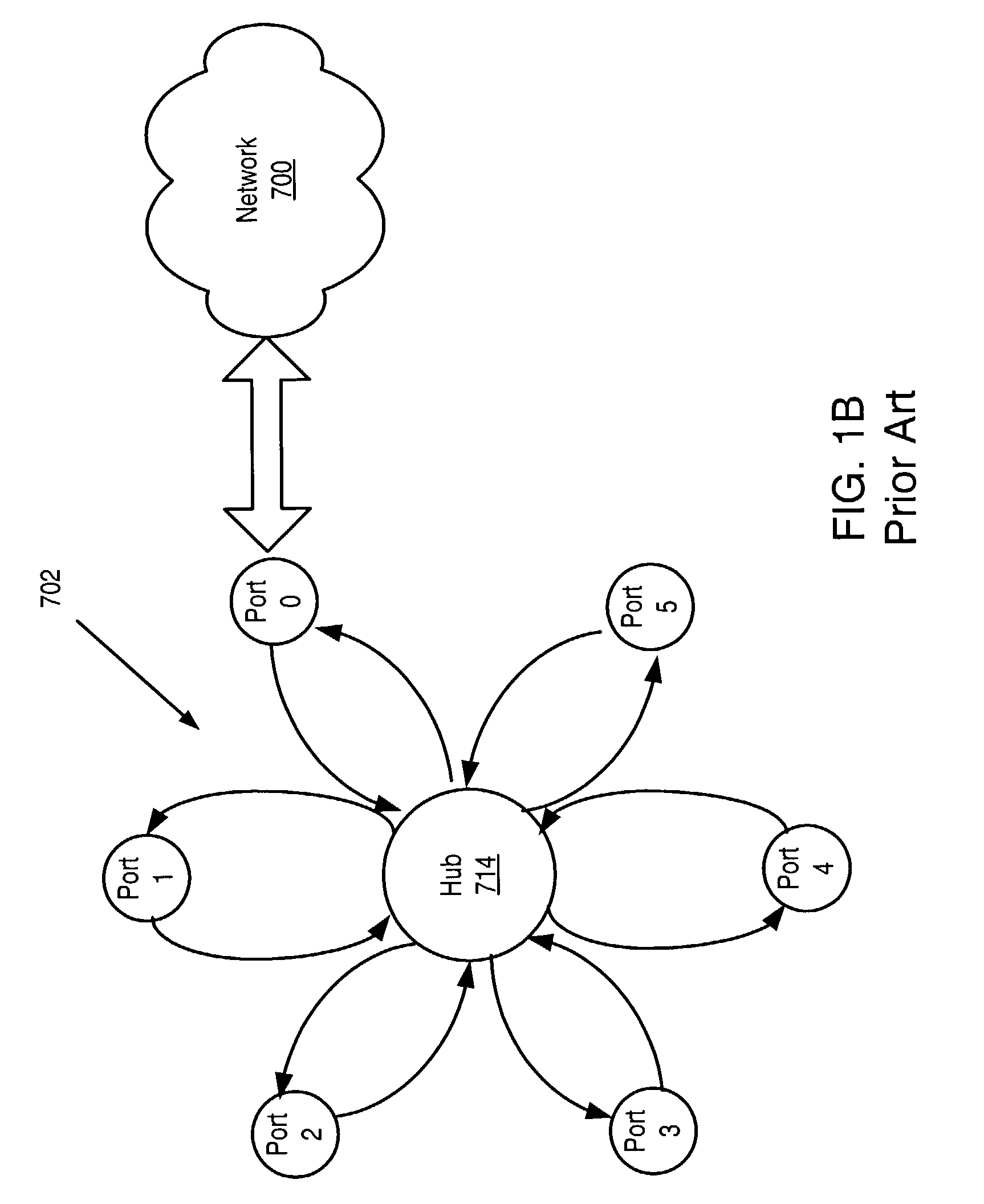

Systems and methods, including computer program products, providing high-availability in server systems. In one implementation, a server system is a cluster of two or more autonomous server nodes, each running one or more virtual servers. When a node fails, its virtual servers are migrated to one or more other nodes. Connectivity between nodes and clients is based on virtual IP addresses, where each virtual server has one or more virtual IP addresses. Virtual servers can be assigned failover priorities, and, in failover, higher priority virtual servers can be migrated before lower priority ones. Load balancing can be provided by distributing virtual servers from a failed node to multiple different nodes. When a port within a node fails, the node can reassign virtual IP addresses from the failed port to other ports on the node until no good ports remain and only then migrate virtual servers to another node or nodes.

Owner:NETWORK APPLIANCE INC
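
The migration order and load spreading described above can be sketched as a greedy loop. This is an assumed, simplified policy (highest failover priority first, each virtual server to the currently least-loaded surviving node), not the patented method; node and server names are hypothetical.

```python
def plan_failover(virtual_servers, surviving_nodes):
    """Order migrations by failover priority and spread them over surviving nodes.

    virtual_servers: list of (name, priority) pairs; higher priority migrates first.
    surviving_nodes: dict of node name -> number of virtual servers it already hosts.
    Returns a list of (virtual_server, target_node) in migration order.
    """
    load = dict(surviving_nodes)
    plan = []
    # sorted() is stable, so equal priorities keep their original order.
    for name, _priority in sorted(virtual_servers, key=lambda vs: -vs[1]):
        # Least-loaded node wins; ties are broken by node name for determinism.
        target = min(load, key=lambda node: (load[node], node))
        load[target] += 1
        plan.append((name, target))
    return plan


print(plan_failover([("vs-web", 1), ("vs-db", 10), ("vs-mail", 5)],
                    {"node2": 0, "node3": 1}))
# [('vs-db', 'node2'), ('vs-mail', 'node2'), ('vs-web', 'node3')]
```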

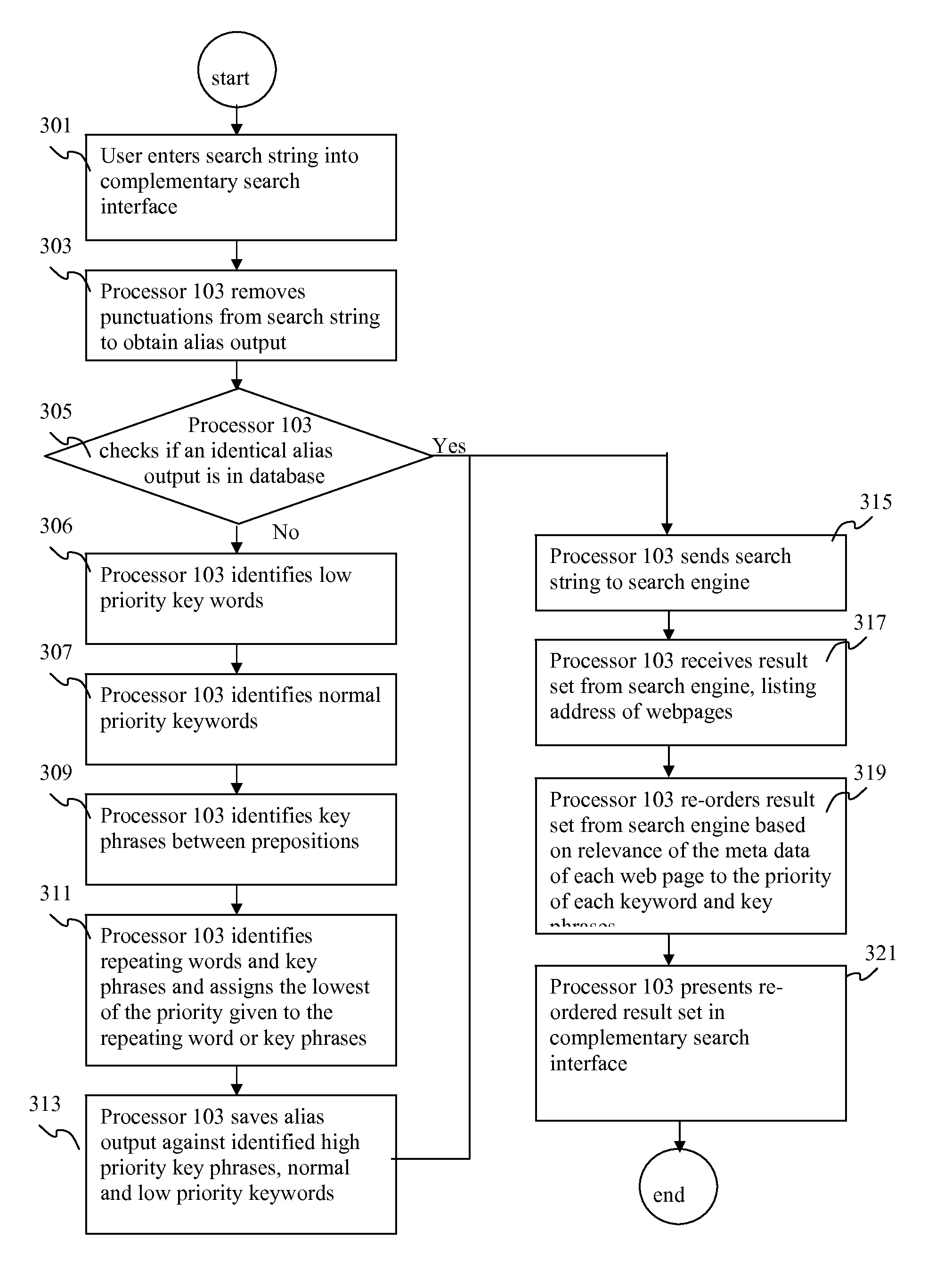

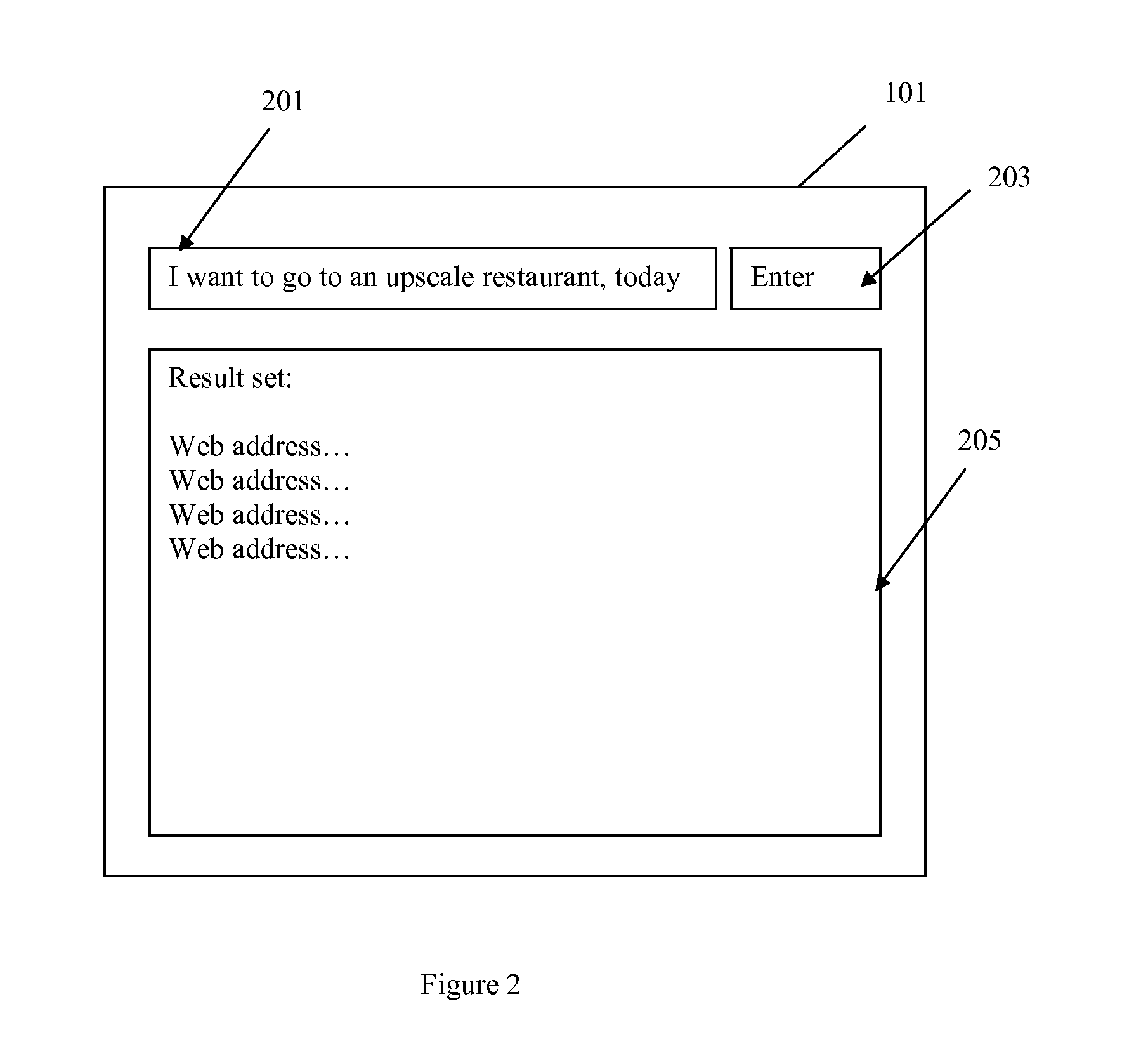

Method Of Sorting The Result Set Of A Search Engine

Inactive | US20110161309A1 | Efficient use of; Digital data information retrieval; Digital data processing details; Result set; Auxiliary verb

A method is disclosed wherein the webpages listed in the result set of a search engine are sorted according to their relevance to a list of prioritised search terms. Search terms that are phrases delimited by prepositions are considered high-priority search terms. Search terms that are nouns are set to high priority. Search terms that are adjectives, verbs, auxiliary verbs, articles, conjunctions, pronouns or prepositions are set to low priority.

Owner:LX1 TECH
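
The prioritised-term idea can be sketched as a weighted relevance score. The part-of-speech table, the 2:1 weighting of high- to low-priority terms, and the whitespace tokenization are assumptions for illustration; the preposition-delimited phrase rule is not modelled.

```python
# Hypothetical part-of-speech lookup; a real system would run a POS tagger.
POS = {"python": "noun", "tutorial": "noun", "the": "article", "best": "adjective"}
HIGH_PRIORITY_POS = {"noun"}
WEIGHTS = {"high": 2, "low": 1}  # assumed weights, not specified in the abstract


def term_priority(term):
    return "high" if POS.get(term) in HIGH_PRIORITY_POS else "low"


def sort_results(pages, query_terms):
    """pages: dict of url -> page text. Returns URLs, most relevant first."""
    def score(text):
        words = set(text.lower().split())
        return sum(WEIGHTS[term_priority(t)] for t in query_terms if t in words)

    # sorted() is stable even with reverse=True, so ties keep their original order.
    return sorted(pages, key=lambda url: score(pages[url]), reverse=True)


pages = {"a.html": "The best tips", "b.html": "Python tutorial"}
print(sort_results(pages, ["the", "best", "python", "tutorial"]))  # ['b.html', 'a.html']
```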

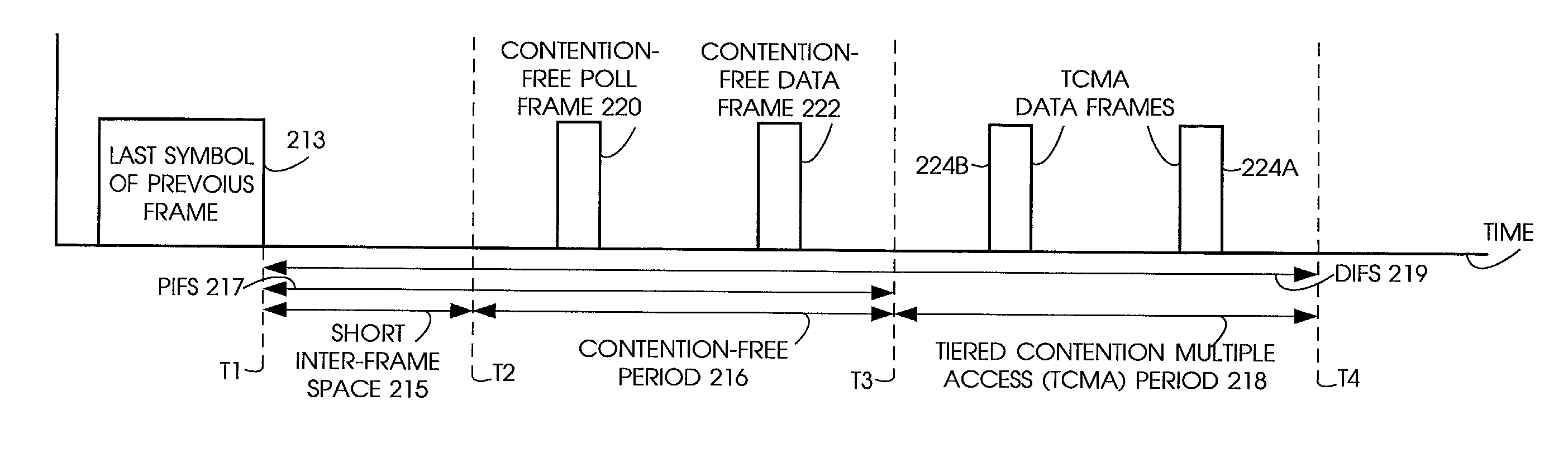

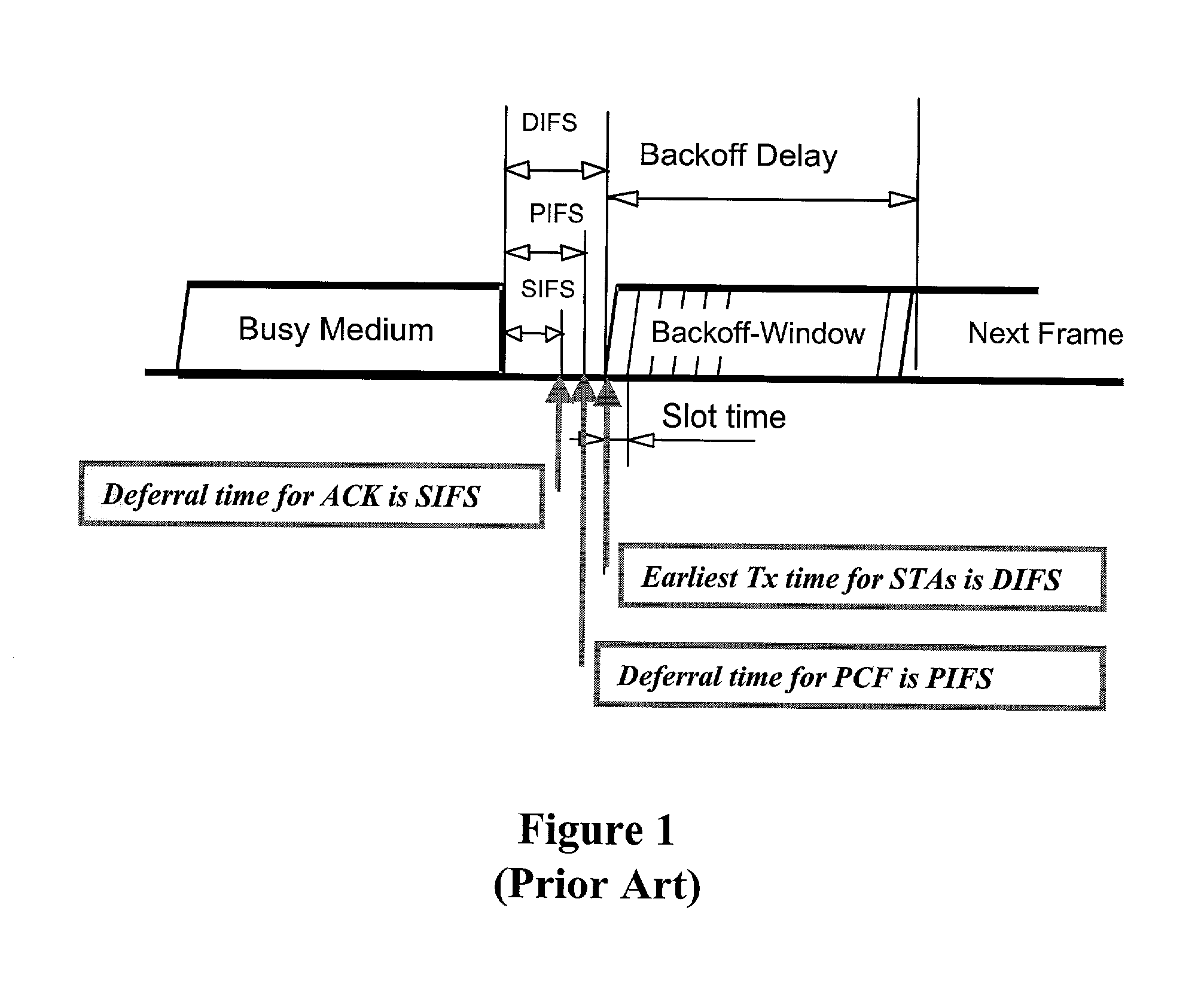

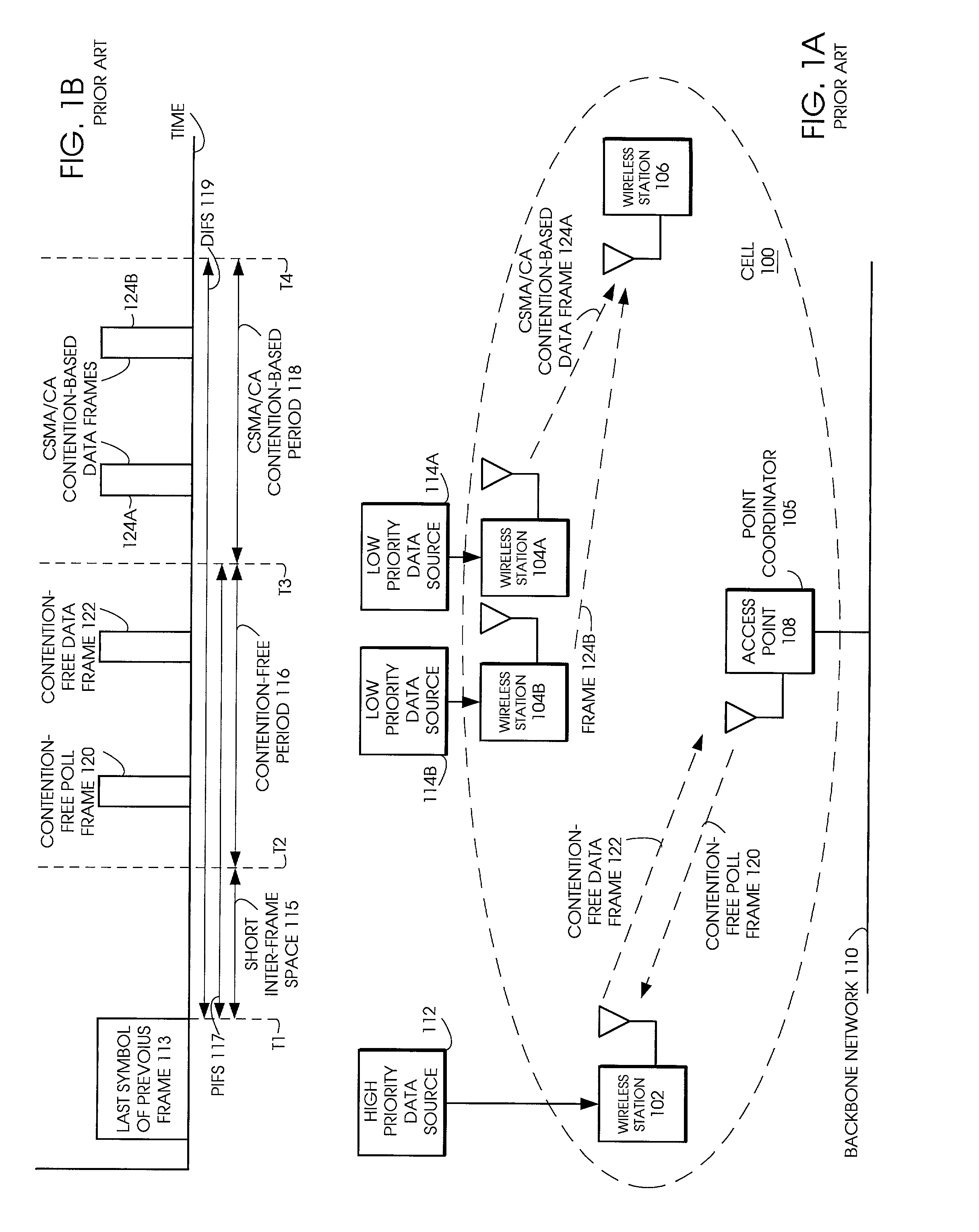

Tiered contention multiple access (TCMA): a method for priority-based shared channel access

Active | US7095754B2 | Minimizing chance; Lower latency; Synchronisation arrangement; Network traffic/resource management; Service-level agreement; Idle time

Quality of Service (QoS) support is provided by means of a Tiered Contention Multiple Access (TCMA) distributed medium access protocol that schedules transmission of different types of traffic based on their service quality specifications. In one embodiment, a wireless station is supplied with data from a source having a lower QoS priority QoS(A), such as file transfer data. Another wireless station is supplied with data from a source having a higher QoS priority QoS(B), such as voice and video data. Each wireless station can determine the urgency class of its pending packets according to a scheduling algorithm. For example, file transfer data is assigned a lower urgency class and voice and video data a higher urgency class. There are several urgency classes, which indicate the desired ordering. Pending packets in a given urgency class are transmitted before packets of a lower urgency class by relying on class-differentiated urgency arbitration times (UATs), which are the idle time intervals required before the random backoff counter is decreased. In another embodiment, packets are reclassified in real time with a scheduling algorithm that adjusts the class assigned to packets based on observed performance parameters and according to negotiated QoS-based requirements. Further, for packets assigned the same arbitration time, additional differentiation into more urgency classes is achieved through the contention resolution mechanism employed, yielding hybrid packet prioritization methods. An Enhanced DCF Parameter Set, sent by the AP to the associated stations in a control packet, contains the class-differentiated parameter values necessary to support TCMA. These parameters can be changed based on different algorithms to support call admission and flow control functions and to meet the requirements of service level agreements.

Owner:AT&T INTPROP I L P

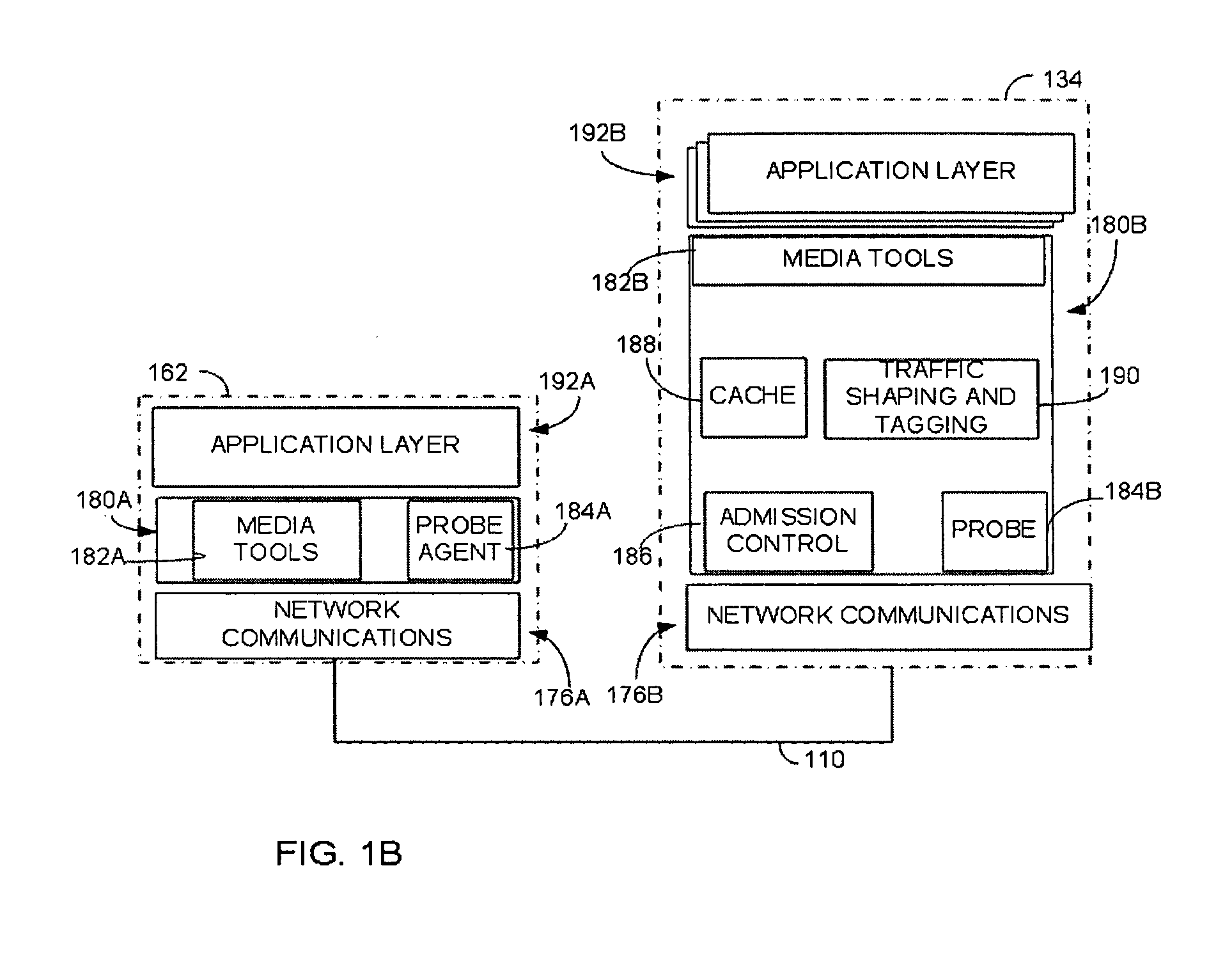

Quality of service support for A/V streams

Active | US20070248100A1 | Speed up the process; Lower latency; Data switching by path configuration; Wireless communication services; Quality of service; Data stream

An access control mechanism in a network connecting one or more sink devices to a server providing audio/visual data (A/V) in streams. As a sink device requests access, the server measures available bandwidth to the sink device. If the measurement of available bandwidth is completed before the sink device requests a stream of audio/visual data, the measured available bandwidth is used to set transmission parameters of the data stream in accordance with a Quality of Service (QoS) policy. If the measurement is not completed when the data stream is requested, the data stream is nonetheless transmitted. In this scenario, the data stream may be transmitted using parameters computed using a cached measurement of the available bandwidth to the sink device. If no cached measurement is available, the data stream is transmitted with a low priority until a measurement can be made. Once the measurement is available, the transmission parameters of the data stream are re-set. With this access control mechanism, A/V streams may be provided with low latency but with transmission parameters accurately set in accordance with the QoS policy.

Owner:MICROSOFT TECH LICENSING LLC
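
The decision sequence above (fresh measurement, then cached measurement, then low priority) maps onto a small function. The 80 % headroom factor, the Mbit/s units, and the returned dictionary layout are hypothetical choices for illustration.

```python
from typing import Optional


def stream_parameters(measured_bw: Optional[float], cached_bw: Optional[float]) -> dict:
    """Pick transmission settings for a newly requested A/V stream.

    Bandwidths are in Mbit/s; None means "not available yet".
    """
    if measured_bw is not None:
        # Measurement finished before the request: apply the QoS policy directly.
        return {"priority": "normal", "bitrate": 0.8 * measured_bw, "source": "measured"}
    if cached_bw is not None:
        # Fall back to an earlier measurement to the same sink device.
        return {"priority": "normal", "bitrate": 0.8 * cached_bw, "source": "cached"}
    # Nothing known yet: transmit anyway at low priority; re-set once measured.
    return {"priority": "low", "bitrate": None, "source": "pending"}


print(stream_parameters(None, None))  # {'priority': 'low', 'bitrate': None, 'source': 'pending'}
```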

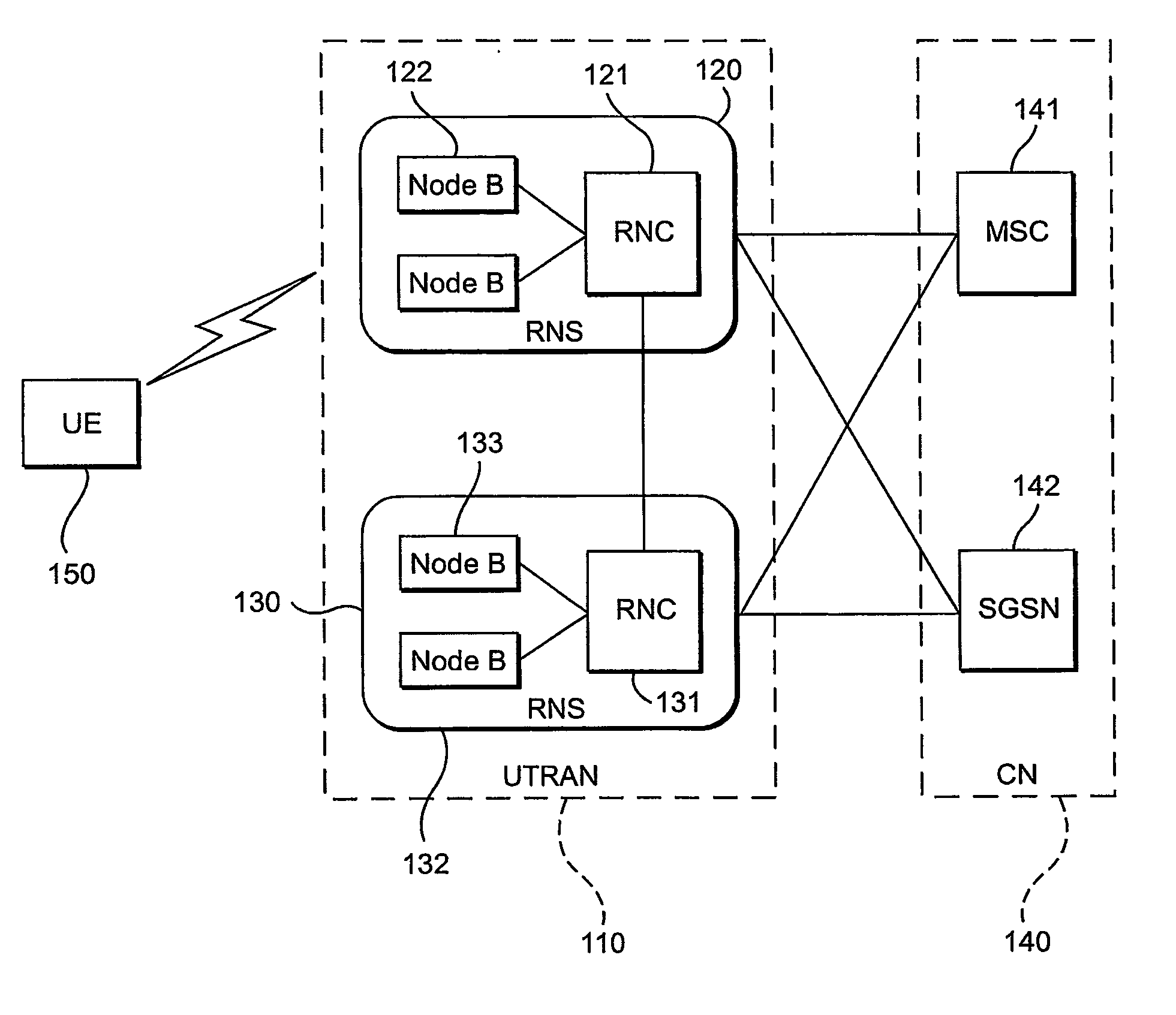

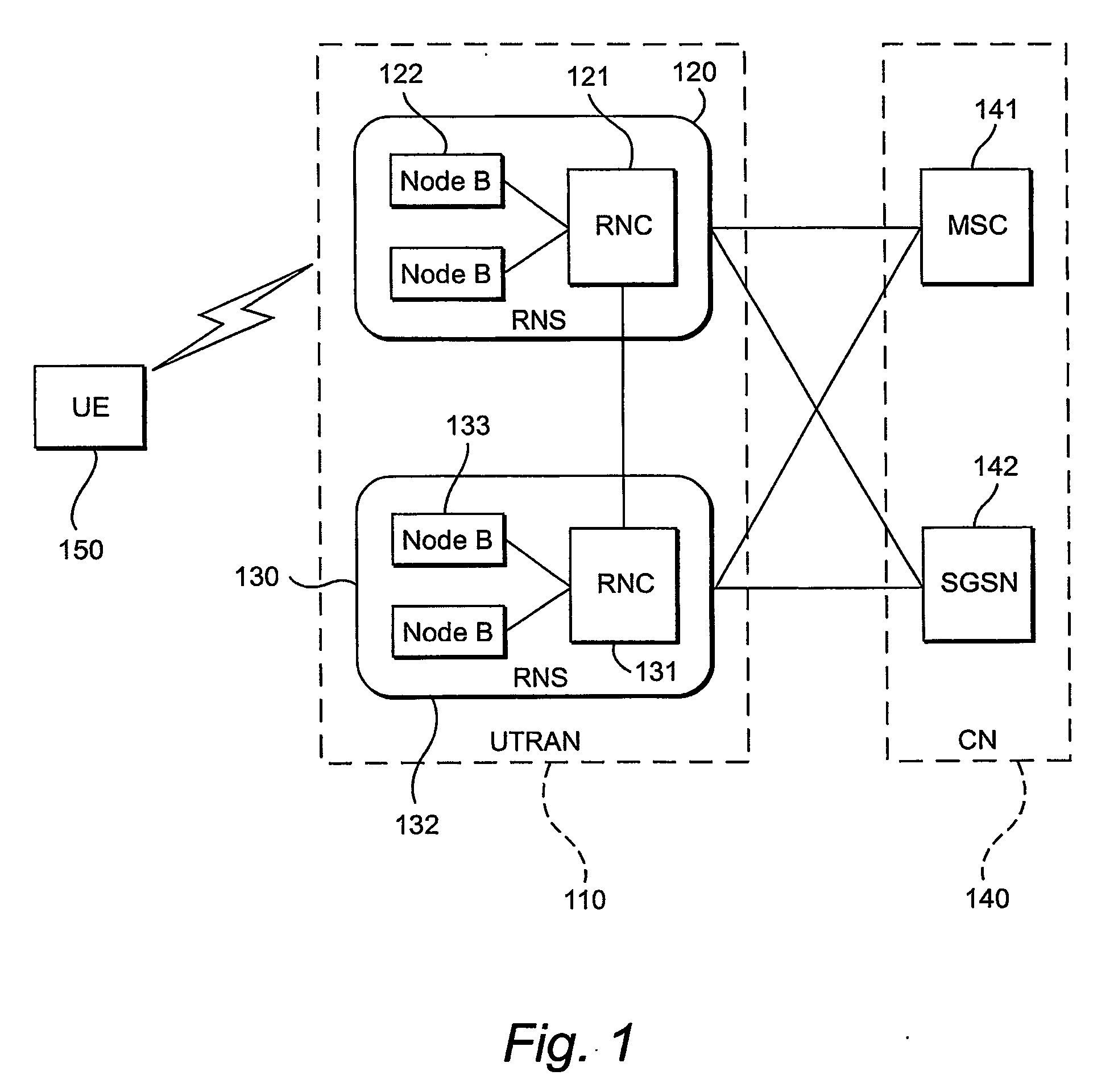

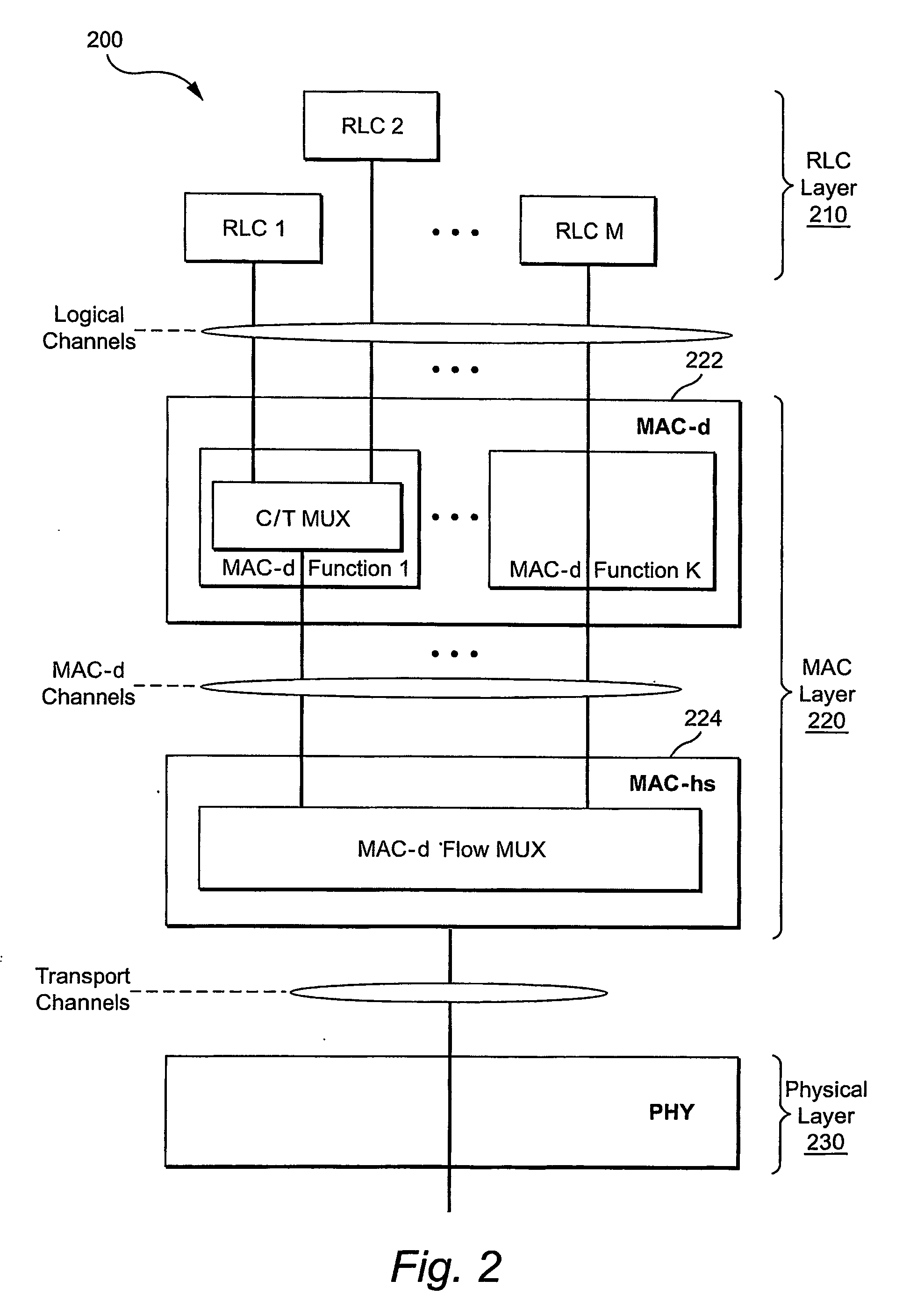

Medium access control priority-based scheduling for data units in a data flow

Inactive | US20070081513A1 | Reduce delays; Improve efficiency; Error prevention/detection by using return channel; Transmission systems; Data stream; Radio networks

A data communication having at least one data flow is established over a wireless interface between a radio network and a user equipment node (UE). A medium access control (MAC) layer located in a radio network node receives data units from a higher radio link control (RLC) layer located in another radio network node. Some or all of the header of an RLC data unit associated with the one data flow is analyzed at the MAC layer. Based on that analysis, the MAC layer determines a priority of the data unit relative to other data units associated with the one data flow. The MAC layer schedules transmission of higher priority data units associated with the one data flow before lower priority data units associated with the one data flow. The priority determination does not require extra priority flags or signaling.

Owner:TELEFON AB LM ERICSSON (PUBL)
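
One way to picture header-based prioritization within a single flow is a stable sort on a priority derived from each PDU's header. The `is_control` and `poll` fields and their relative ranking are hypothetical; the abstract does not say which header fields are used.

```python
from dataclasses import dataclass


@dataclass
class RlcPdu:
    seq: int
    is_control: bool = False  # hypothetical header field: RLC status/control PDU
    poll: bool = False        # hypothetical header field: poll bit set


def mac_priority(pdu: RlcPdu) -> int:
    """Derive a priority from the RLC header alone (lower value = sent first)."""
    if pdu.is_control:
        return 0
    if pdu.poll:
        return 1
    return 2


def schedule(pdus):
    # sorted() is stable, so PDUs of equal priority keep their original order.
    return [p.seq for p in sorted(pdus, key=mac_priority)]


print(schedule([RlcPdu(1), RlcPdu(2, poll=True), RlcPdu(3, is_control=True)]))  # [3, 2, 1]
```

No extra flag is added to the PDU; the priority is computed from fields the header already carries, which mirrors the last sentence of the abstract.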

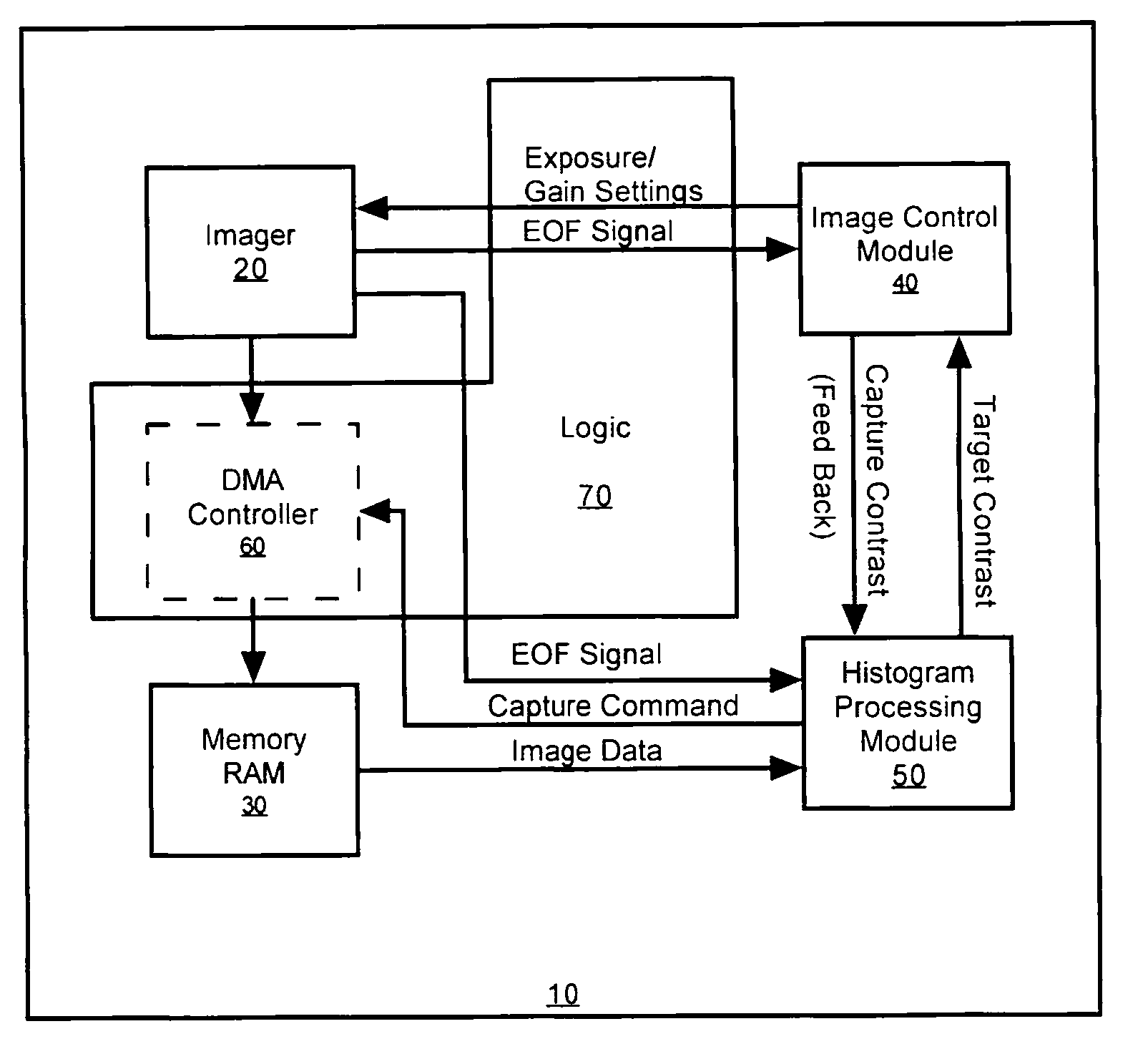

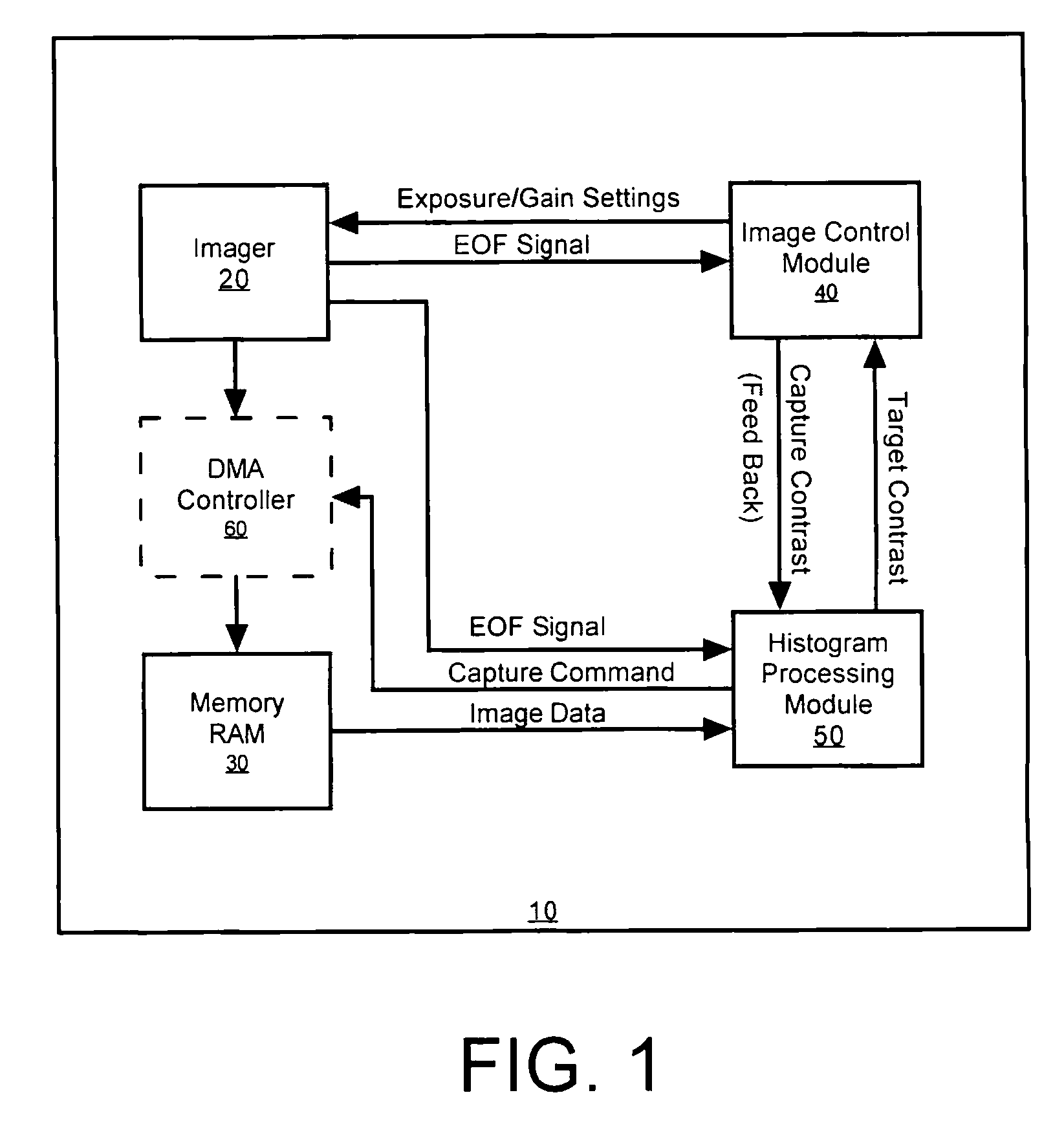

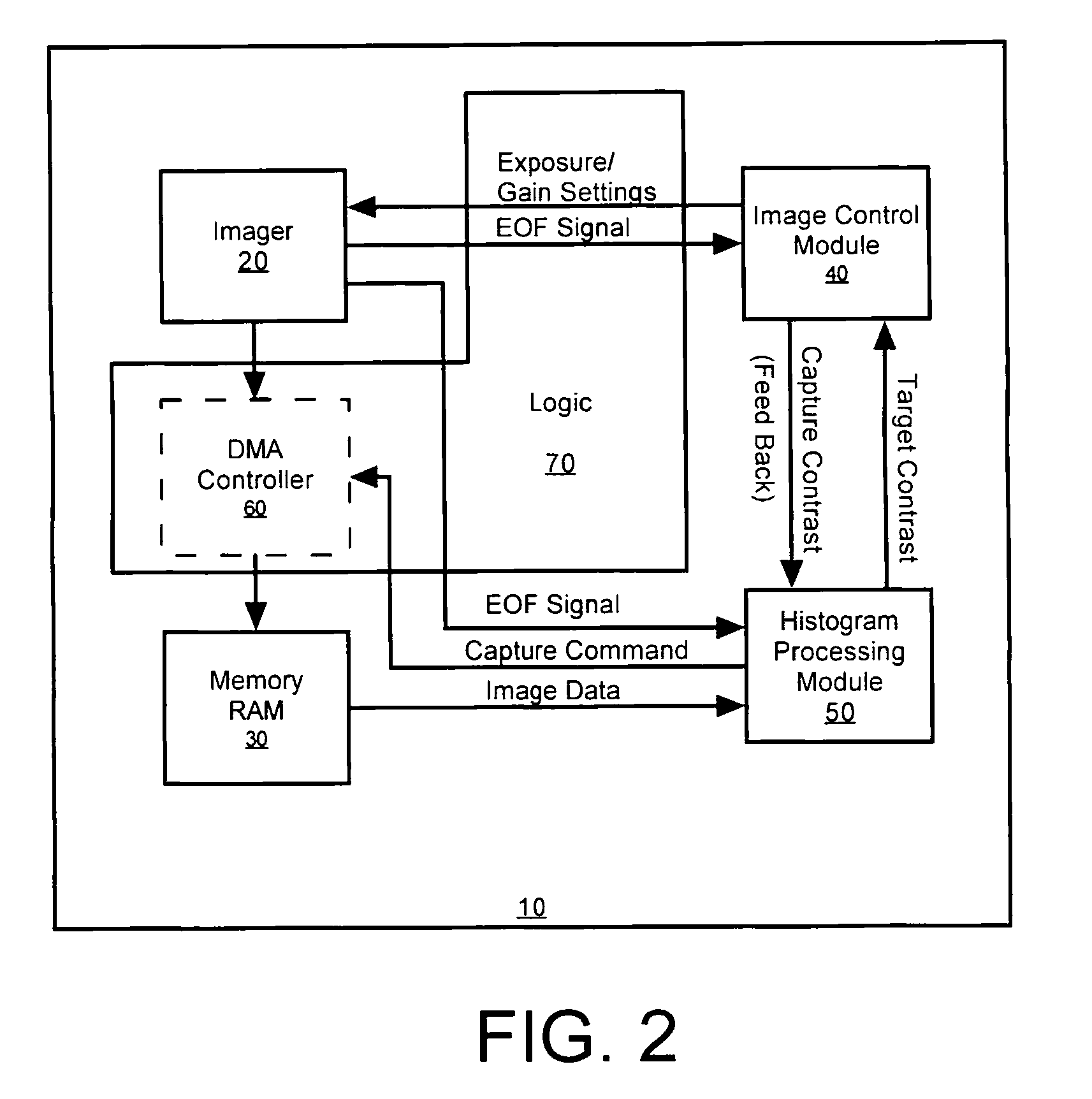

Methods and apparatus for automatic exposure control

A multi-dimensional imaging device and method for automated exposure control that implement two distinct modules to control the exposure and gain settings in the imager so that processing can occur in a multi-tasking, single-CPU environment. The first module, referred to herein as the imager control module, controls the exposure and gain settings in the imager. The first module is typically implemented in a high priority routine, such as an interrupt service routine, to ensure that the module is executed on every captured frame. The second module, referred to herein as the histogram processing module, calculates a target contrast (the product of the targeted exposure and gain settings) based on feedback data from the first module and image data from memory. The second module is typically implemented in a low priority routine, such as a task level routine, to allow the routine to be executed systematically in accordance with priority.

Owner:HAND HELD PRODS

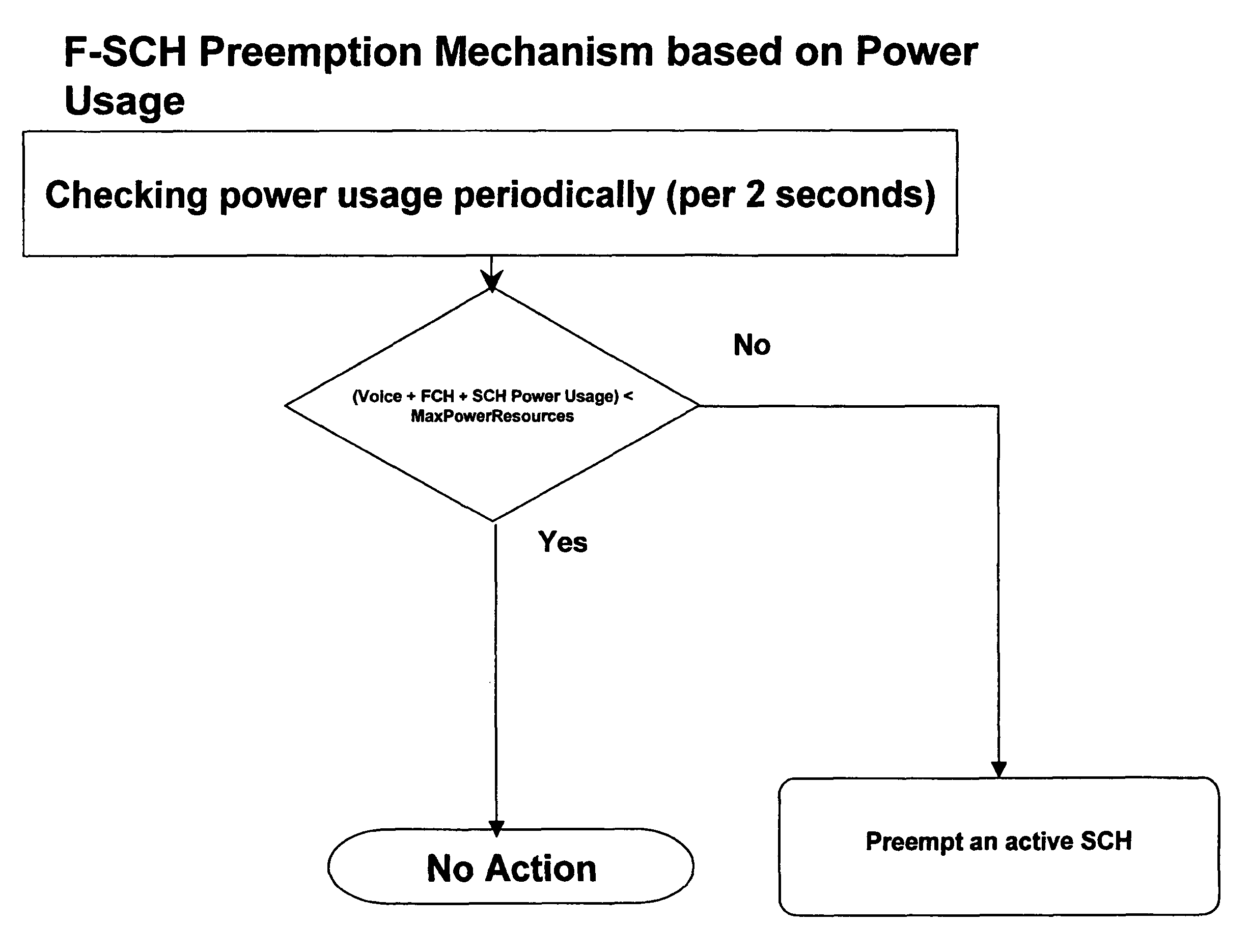

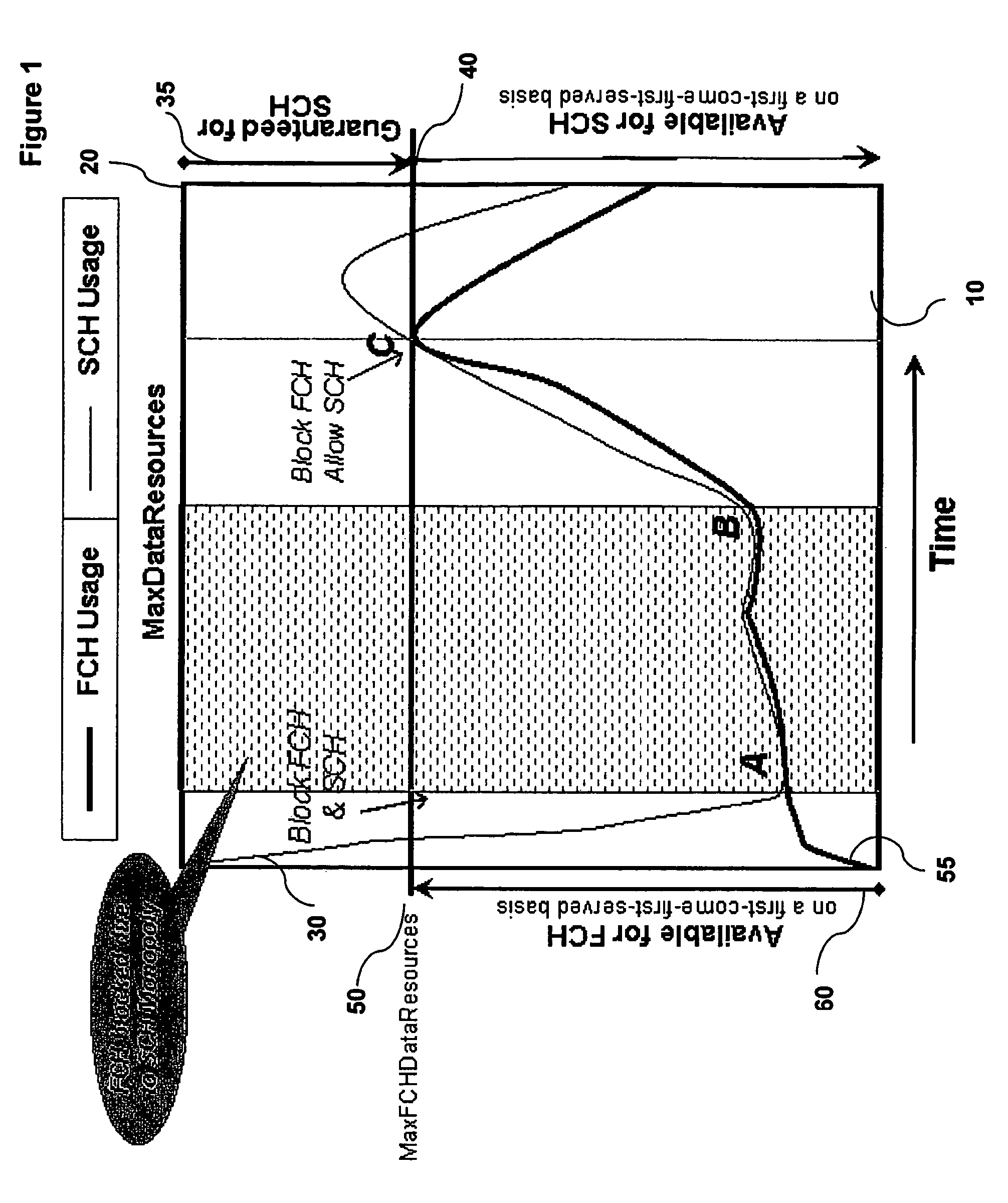

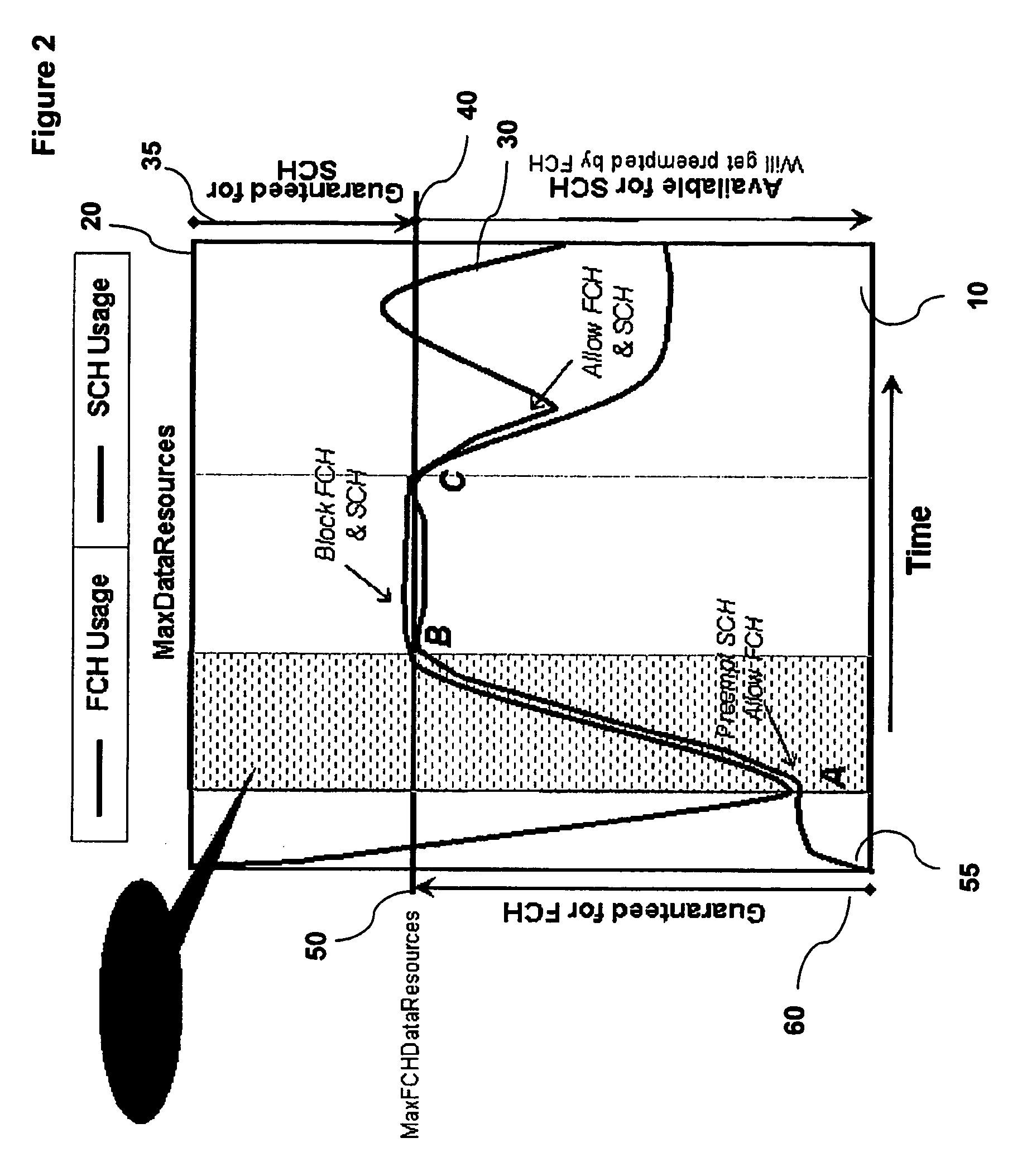

Method and system of managing wireless resources

Active | US8116781B2 | Reducing call blocking; More access; Code division multiplex; Wireless communication services; Priority call; Call blocking rate

The invention provides for the management of wireless resources, which can reduce call blocking by allowing high priority services, under suitable conditions, to use resources allocated to low priority services. Thus high priority services can pre-empt the usage of wireless resources by low priority services. This has the advantage of reducing call blocking for high priority calls, while permitting low priority calls to have more access to radio resources than conventional systems with the same call blocking rate. Thus a base station can implement a preemption mechanism that would reclaim Walsh Code and Forward Power resources from an active Supplemental Channel (SCH) burst in order to accommodate incoming Fundamental Channel (FCH) requests.

Owner:MICROSOFT TECH LICENSING LLC
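
The preemption idea can be sketched as an admission check that reclaims resources from lower-priority holders only when doing so is enough to admit the new request. Resources are abstract units standing in for Walsh codes and forward power, and the lowest-priority-first reclaim order is an assumption.

```python
def allocate(new_user, priority, demand, capacity, active):
    """Admit `new_user`, preempting lower-priority holders if necessary.

    active: dict of user -> (priority, units held); a higher number means higher priority.
    Returns (admitted, preempted_users) and updates `active` in place.
    """
    free = capacity - sum(units for _, units in active.values())
    preempted = []
    # Reclaim from the lowest-priority holders first, and only from lower-priority ones.
    for user, (prio, units) in sorted(active.items(), key=lambda kv: kv[1][0]):
        if free >= demand:
            break
        if prio < priority:
            preempted.append(user)
            free += units
    if free < demand:
        return False, []  # blocked: even preemption would not free enough resources
    for user in preempted:
        del active[user]
    active[new_user] = (priority, demand)
    return True, preempted


active = {"sch_burst": (1, 8)}  # a low-priority supplemental-channel burst
print(allocate("fch_call", 5, 4, 10, active))  # (True, ['sch_burst'])
```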

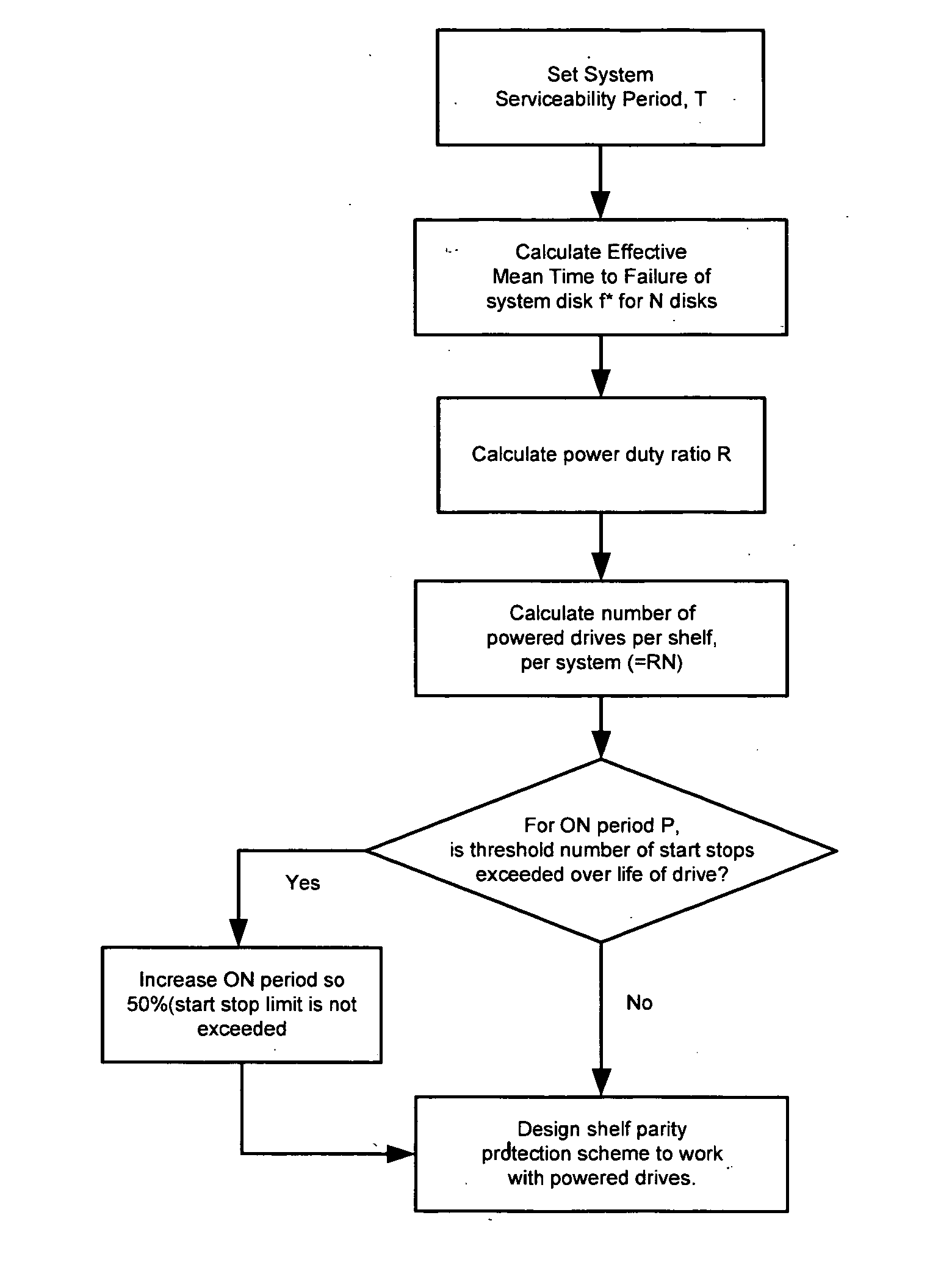

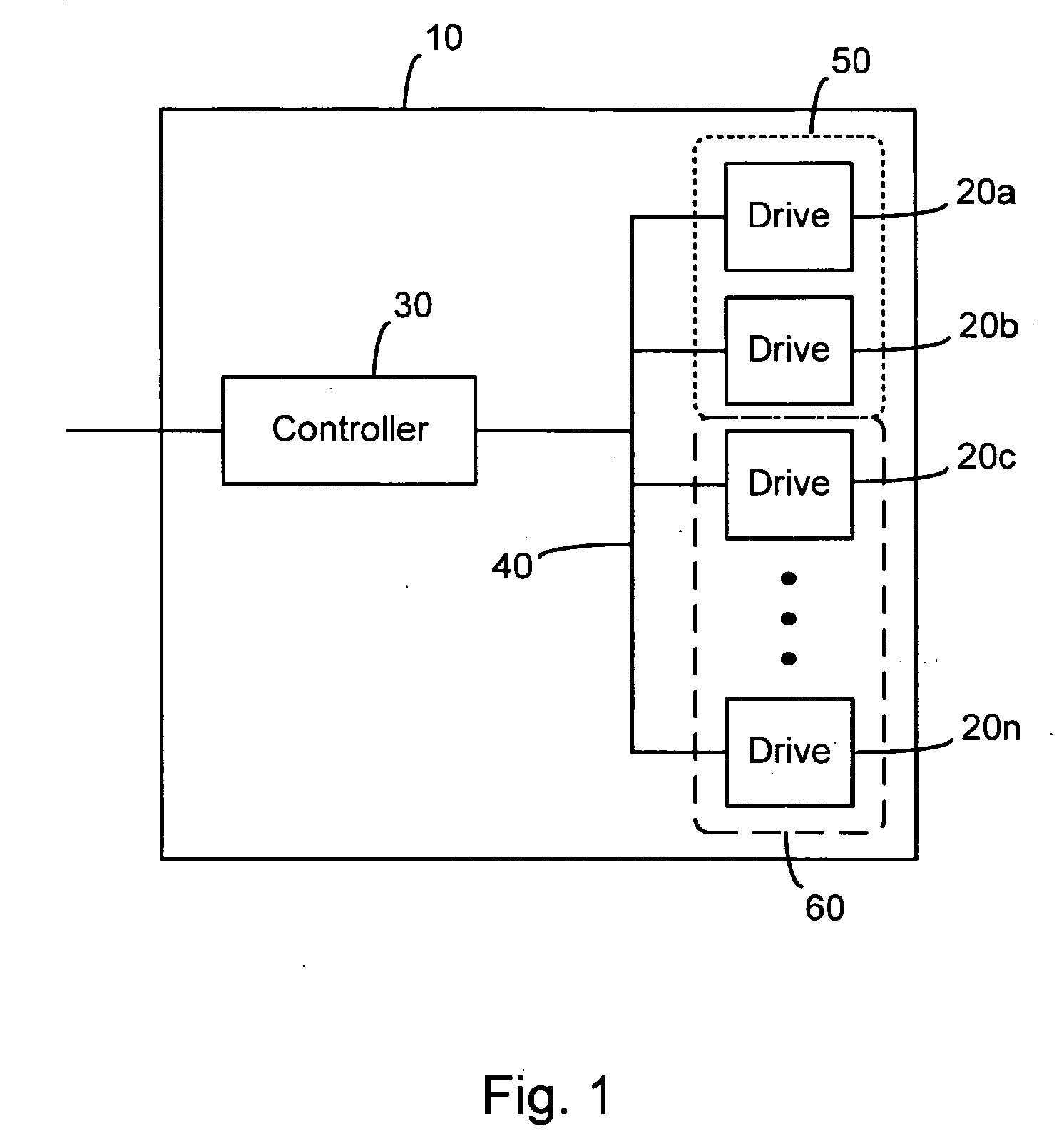

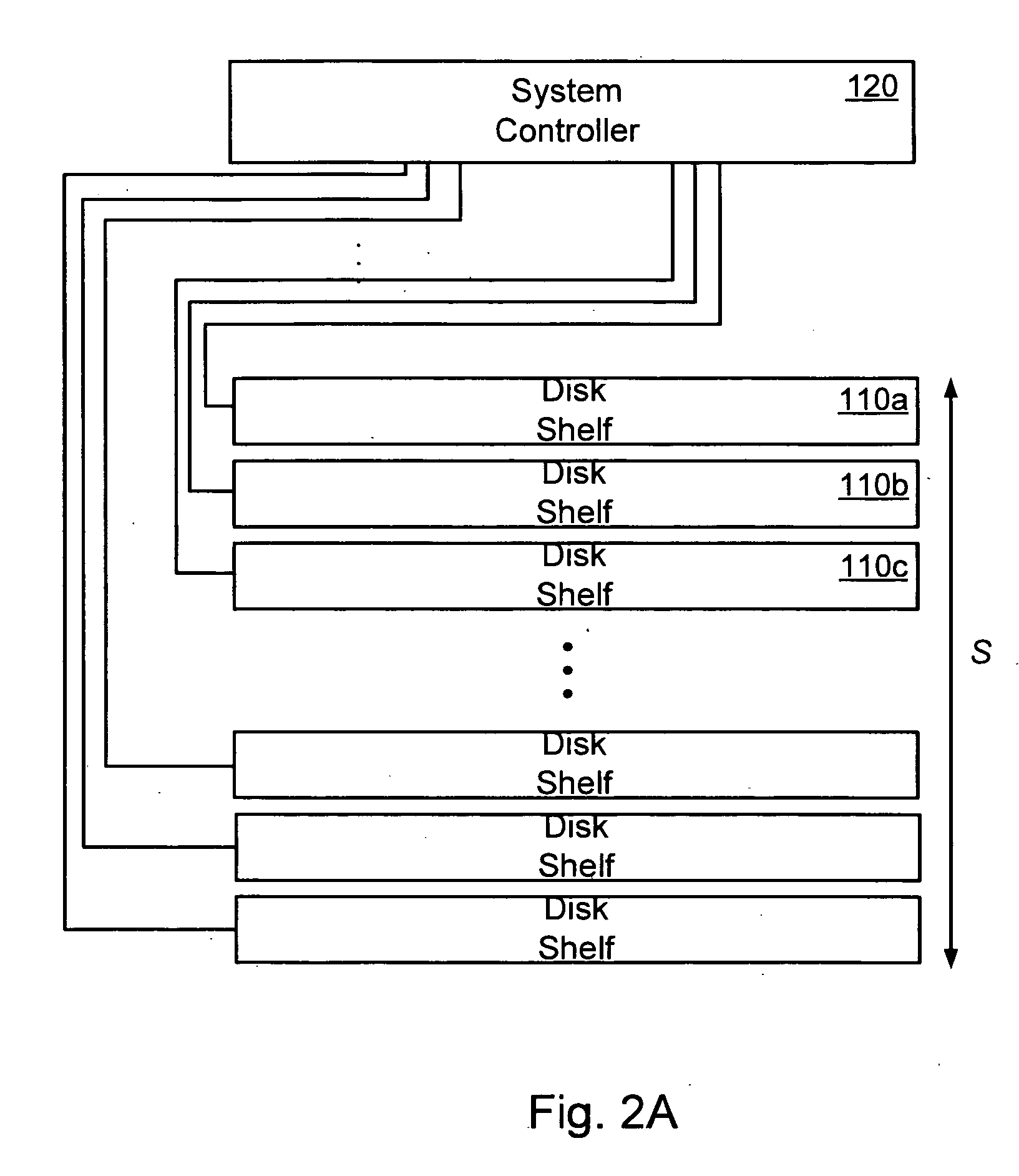

Method and apparatus for power-efficient high-capacity scalable storage system

Inactive | US20050210304A1 | Energy efficient ICT; Volume/mass flow measurement; Power efficient; Lower priority

A method for managing power consumption among a plurality of storage devices is disclosed, together with a system and a computer program product for managing power consumption among a plurality of storage devices. Not all of the storage devices are powered on at the same time. A request is received for powering on a storage device. A priority level for the request is determined, and a future power consumption (FPC) of the plurality of storage devices is predicted. The FPC is compared with a threshold. If the threshold is exceeded, a signal is sent to power off a powered-on device. The signal is sent only when the powered-on device is being used for a request with a lower priority than the determined priority. Once the powered-on device is powered off, the requested storage device is powered on.

Owner:RPX CORP +1
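
A simplified sketch of the power-on decision, assuming the FPC prediction is just the current draw plus the requested device's draw, and that the lowest-priority eligible device is the one powered off. As in the abstract, at most one device is powered off per request; deferring the request when nothing of lower priority is running is an assumption.

```python
def handle_power_on(device, watts, priority, powered_on, threshold):
    """Decide whether `device` may be powered on for a request of `priority`.

    powered_on: dict of device -> (watts drawn, priority of the request it serves).
    Returns (granted, device_powered_off) and updates `powered_on` in place.
    """
    # Naive future power consumption (FPC): current draw plus the new device.
    fpc = sum(w for w, _ in powered_on.values()) + watts
    victim = None
    if fpc > threshold:
        lower = [d for d, (_, p) in powered_on.items() if p < priority]
        if not lower:
            return False, None  # only equal or higher-priority work is running: defer
        victim = min(lower, key=lambda d: powered_on[d][1])
        del powered_on[victim]  # stands in for sending the power-off signal
    powered_on[device] = (watts, priority)
    return True, victim
```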

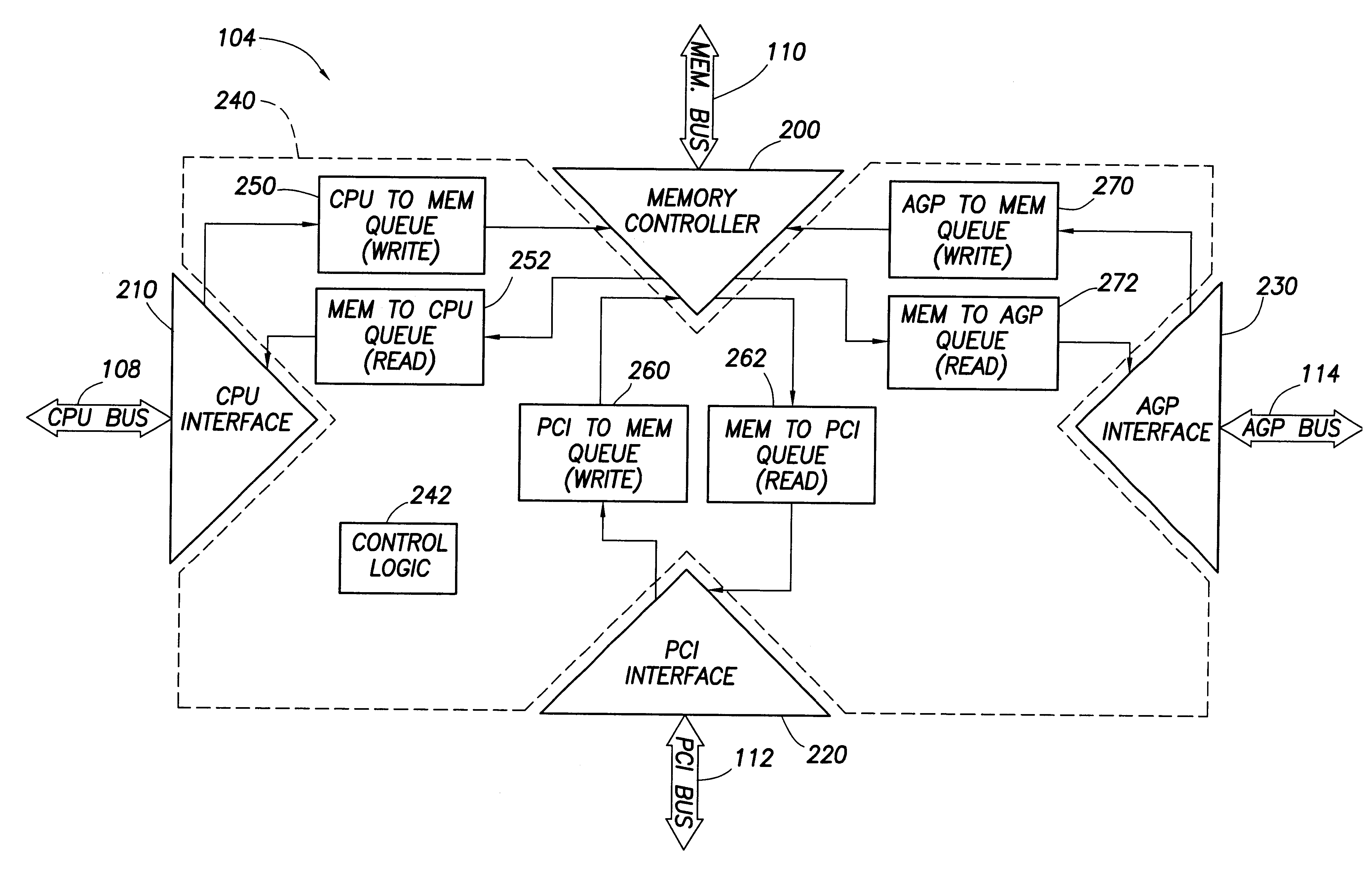

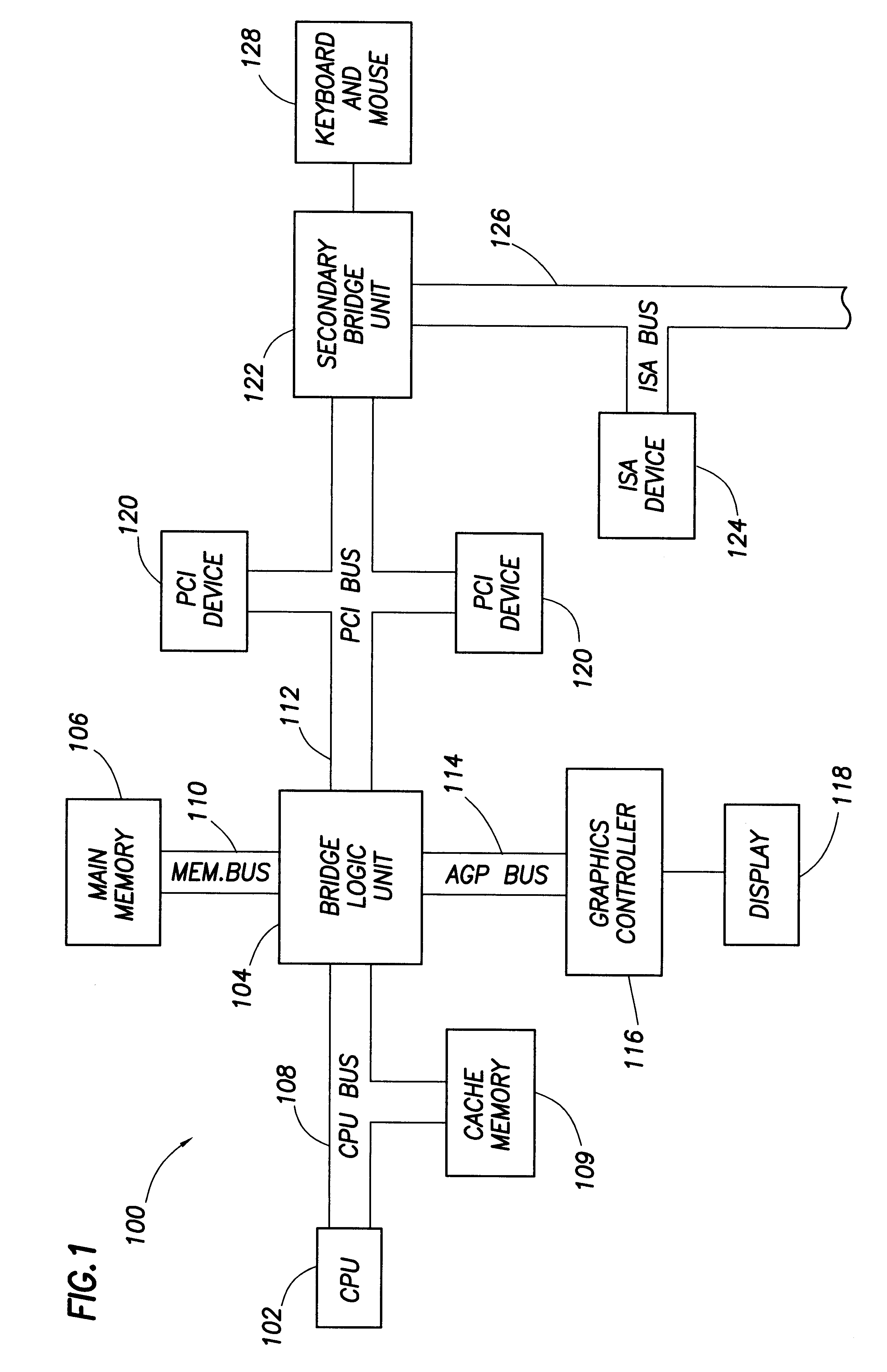

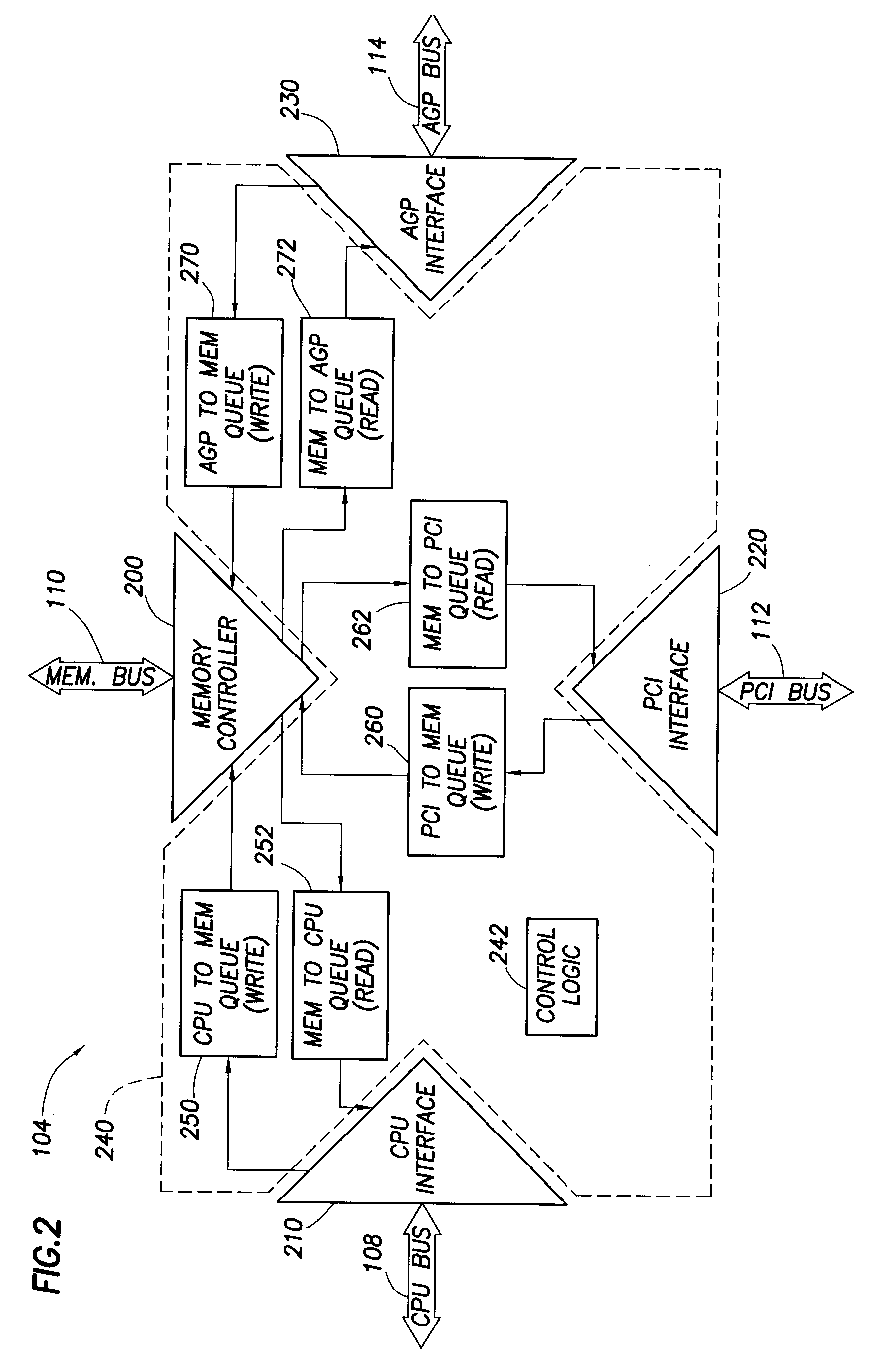

Computer system with adaptive memory arbitration scheme

Inactive | US6286083B1 | Raise priority; Avoid access; Memory systems; Input/output processes for data processing; Out of memory; Parallel computing

A computer system includes an adaptive memory arbiter for prioritizing memory access requests, including a self-adjusting, programmable request-priority ranking system. The memory arbiter adapts during every arbitration cycle, reducing the priority of any request which wins memory arbitration. Thus, a memory request initially holding a low priority ranking may gradually advance in priority until that request wins memory arbitration. Such a scheme prevents lower-priority devices from becoming "memory-starved." Because some types of memory requests (such as refresh requests and memory reads) inherently require faster memory access than other requests (such as memory writes), the adaptive memory arbiter additionally integrates a nonadjustable priority structure into the adaptive ranking system which guarantees faster service to the most urgent requests. Also, the adaptive memory arbitration scheme introduces a flexible method of adjustable priority-weighting which permits selected devices to transact a programmable number of consecutive memory accesses without those devices losing request priority.

Owner:HEWLETT PACKARD DEV CO LP
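
The core of the adaptive scheme, where the winner drops to the lowest rank while a fixed set of urgent requesters bypasses the ranking, can be sketched as follows. Requester names are hypothetical, and the programmable priority-weighting (consecutive accesses without losing priority) is omitted.

```python
class AdaptiveArbiter:
    def __init__(self, requesters, fixed_priority=()):
        self.ranking = list(requesters)    # index 0 = highest adjustable priority
        self.fixed = list(fixed_priority)  # non-adjustable, always checked first

    def arbitrate(self, pending):
        """Pick a winner from the set of pending requesters, or None."""
        for req in self.fixed:
            if req in pending:
                return req  # urgent requests (e.g. refresh) bypass the ranking
        for req in self.ranking:
            if req in pending:
                # The winner drops to the lowest rank, so losing requesters advance
                # and no device is starved.
                self.ranking.remove(req)
                self.ranking.append(req)
                return req
        return None


arb = AdaptiveArbiter(["cpu", "pci", "agp"], fixed_priority=["refresh"])
print([arb.arbitrate({"cpu", "pci"}) for _ in range(3)])  # ['cpu', 'pci', 'cpu']
```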

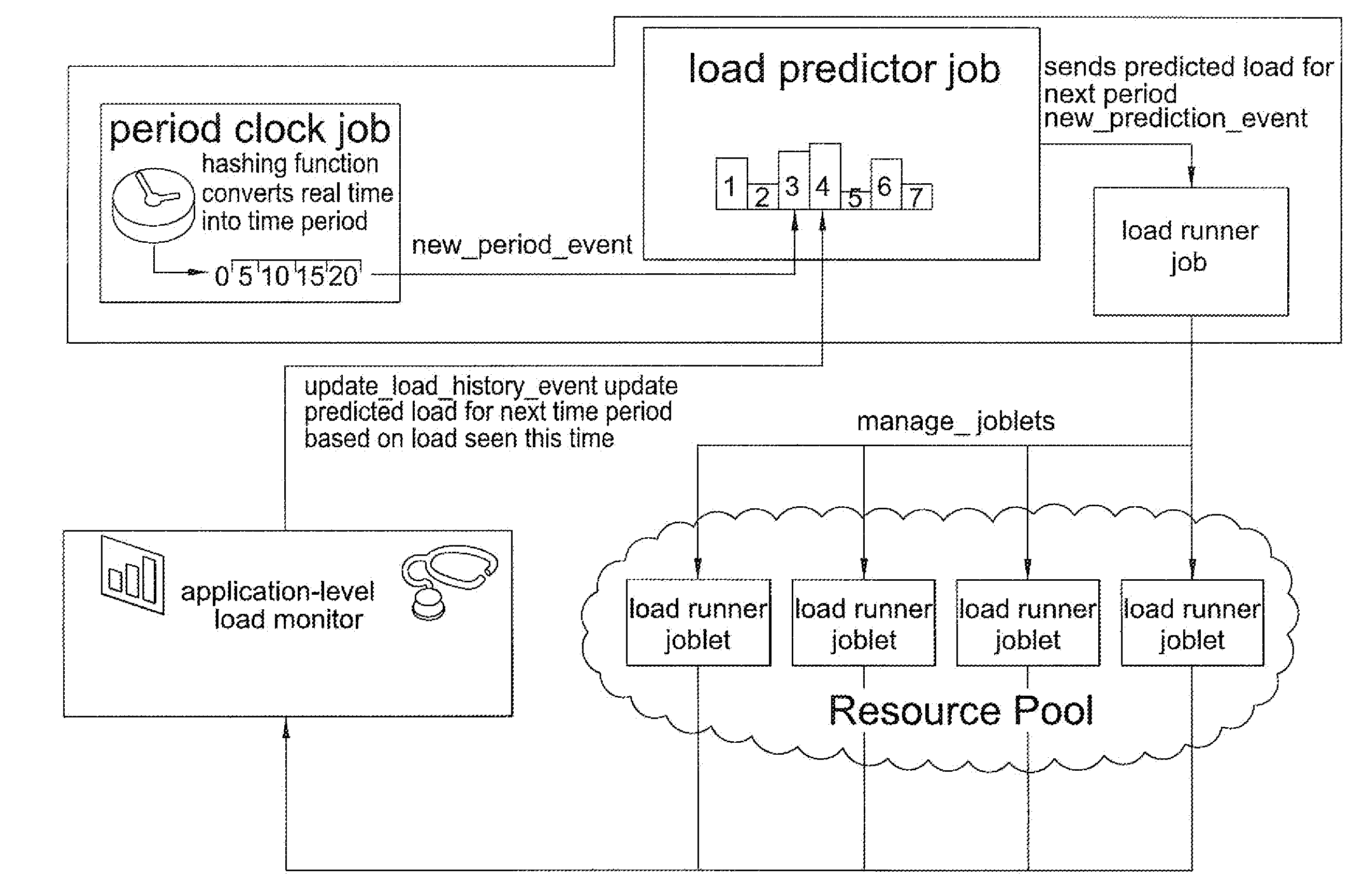

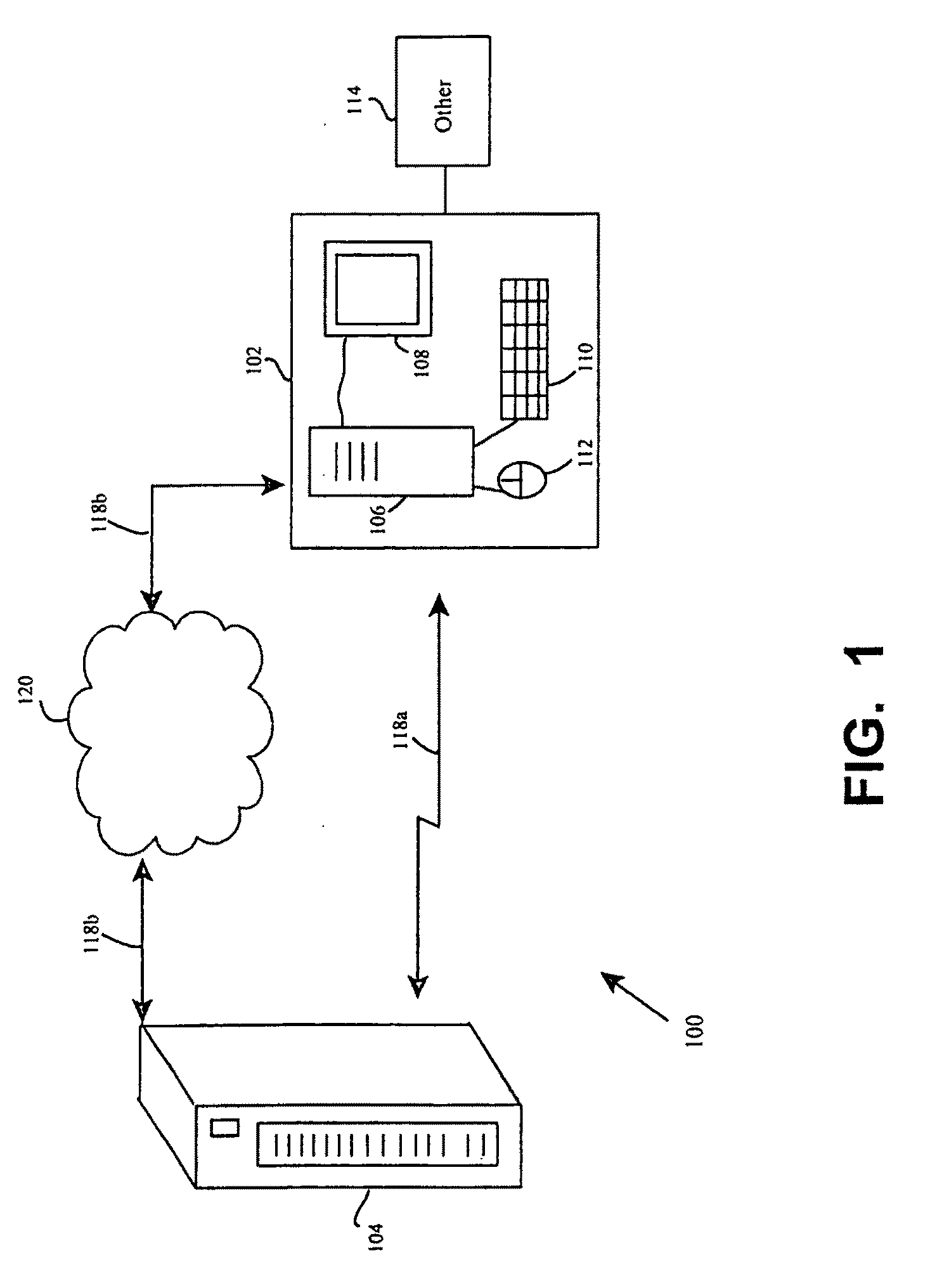

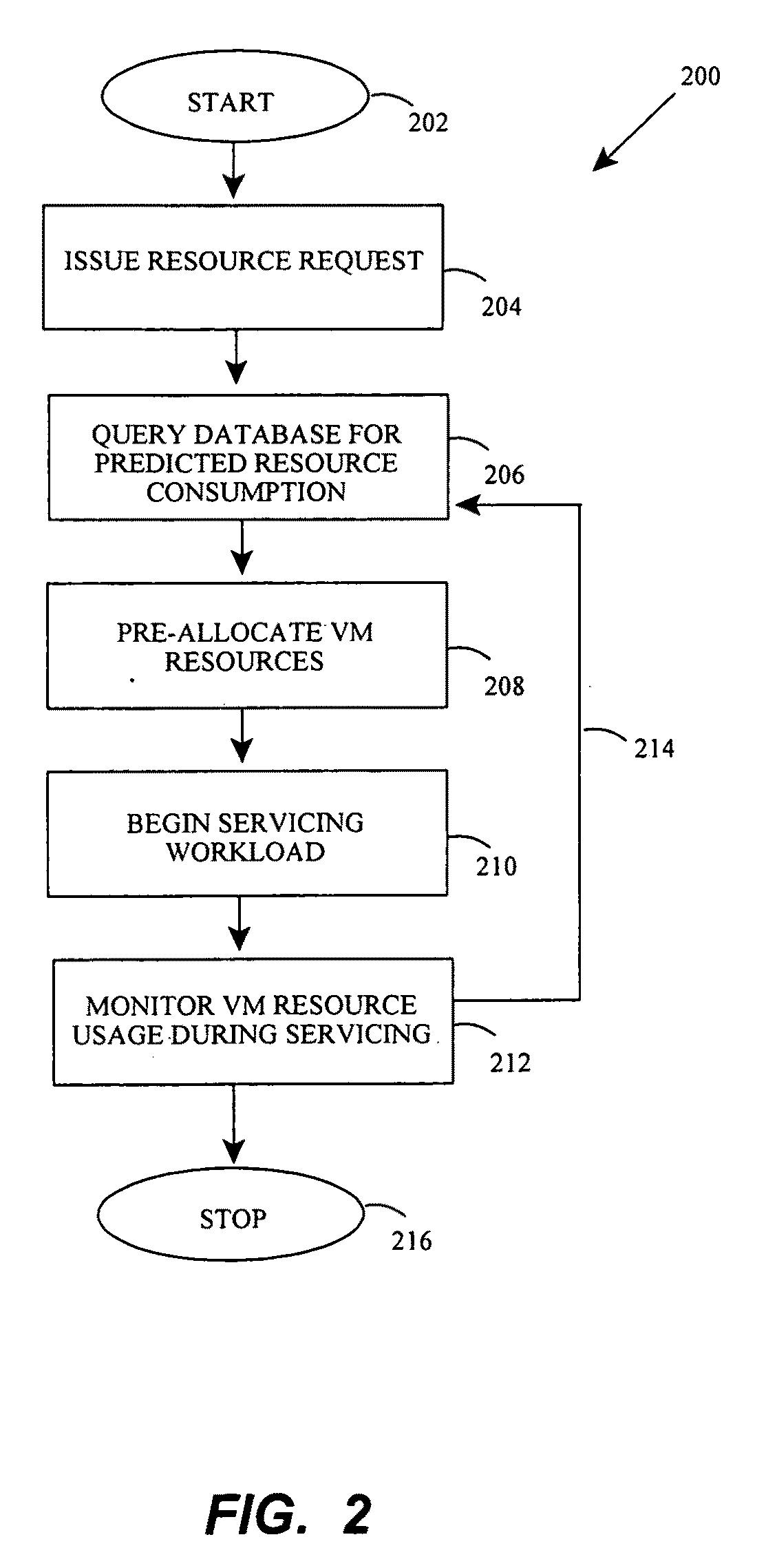

Proactive application workload management

Inactive | US20100131959A1 | Optimize resource allocation; Resource allocation; Memory systems; Resource consumption; Computerized system

A method is provided for continuous optimization of allocation of computing resources for a horizontally scalable application which has a cyclical load pattern wherein each cycle may be subdivided into a number of time slots. A computing resource allocation application pre-allocates computing resources at the beginning of a time slot based on a predicted computing resource consumption during that slot. During the servicing of the workload, a measuring application measures actual consumption of computing resources. On completion of servicing, the measuring application updates the predicted computing resource consumption profile, allowing optimal allocation of resources. Un-needed computing resources may be released, or may be marked as releasable, for use upon request by other applications, including applications having the same or lower priority than the original application. Methods, computer systems, and computer programs available as a download or on a computer-readable medium for installation according to the invention are provided.

Owner:NOVELL INC

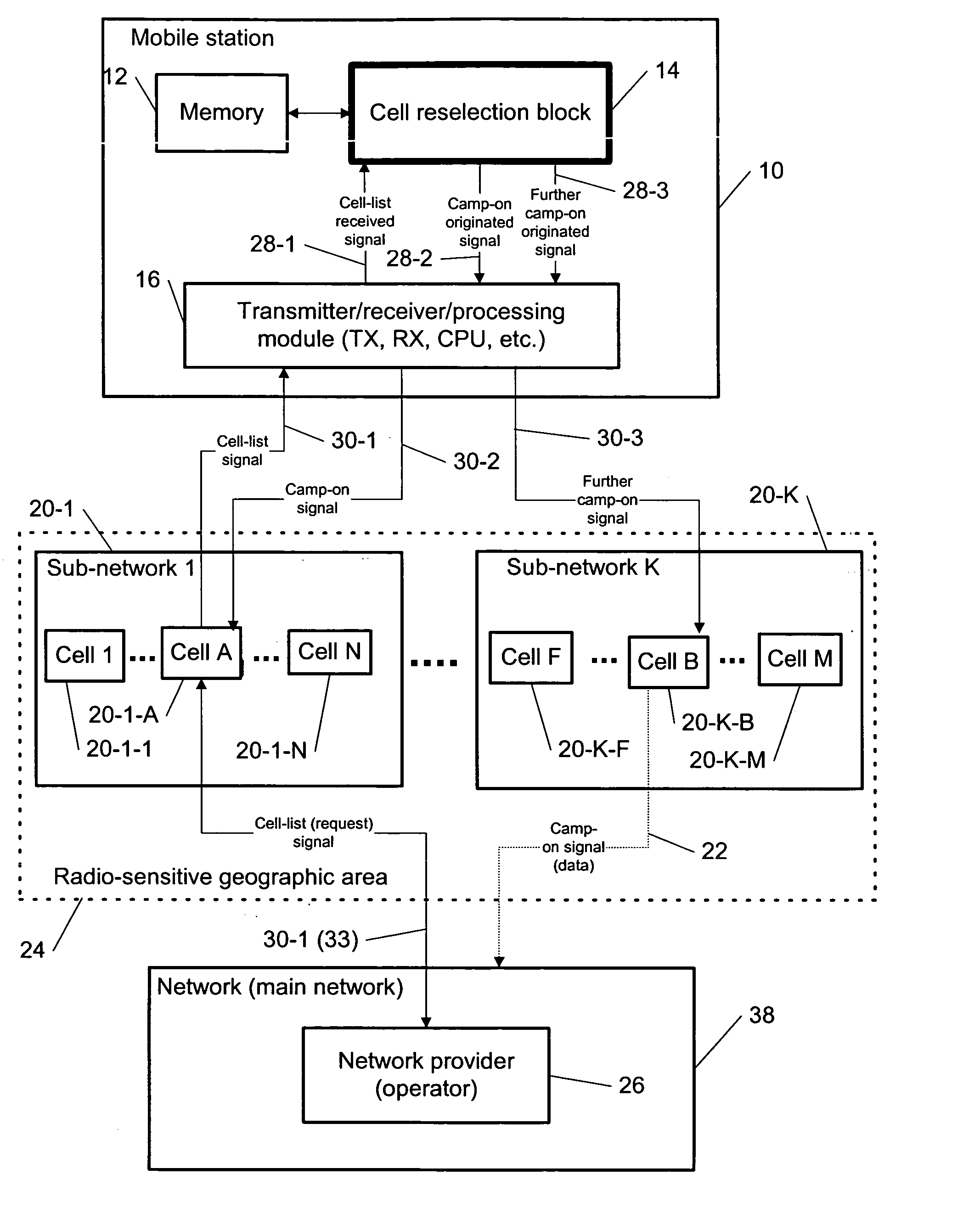

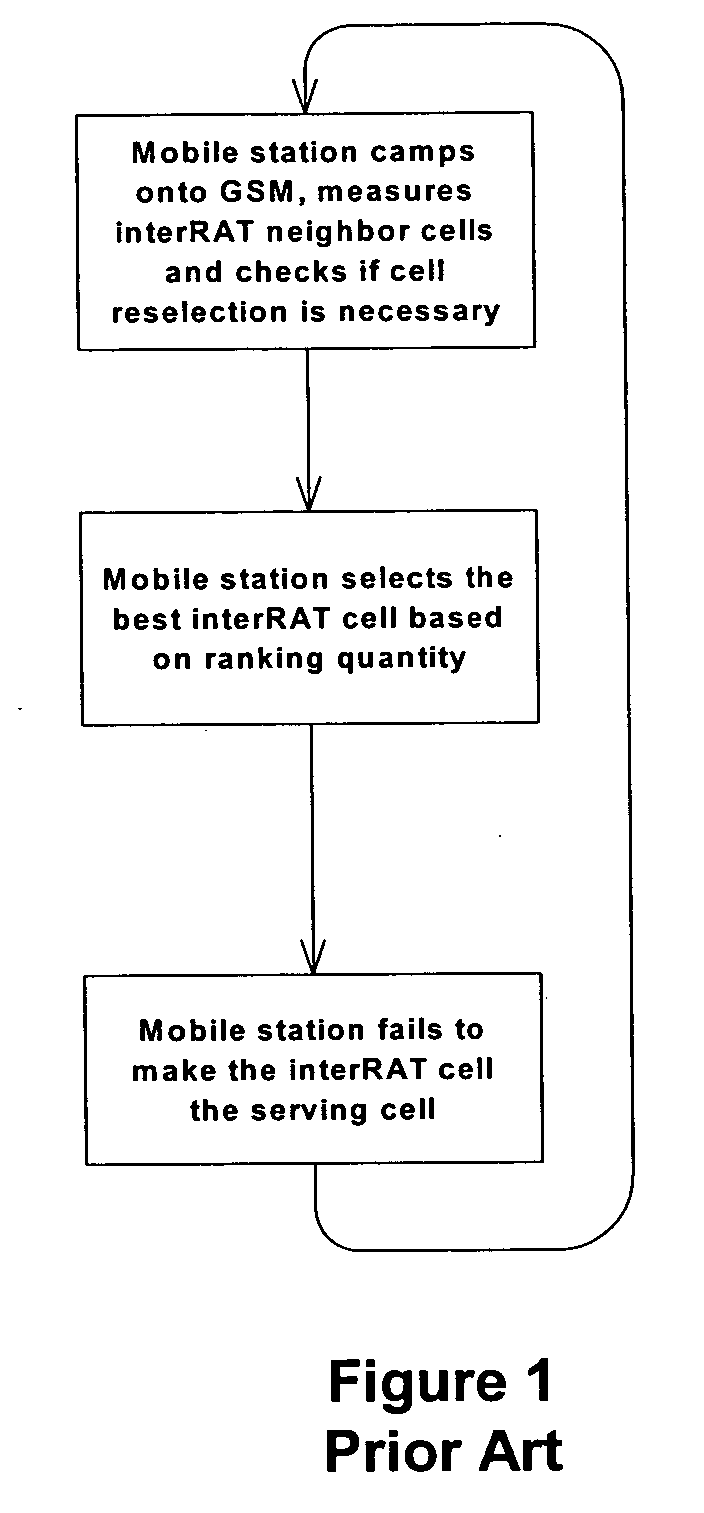

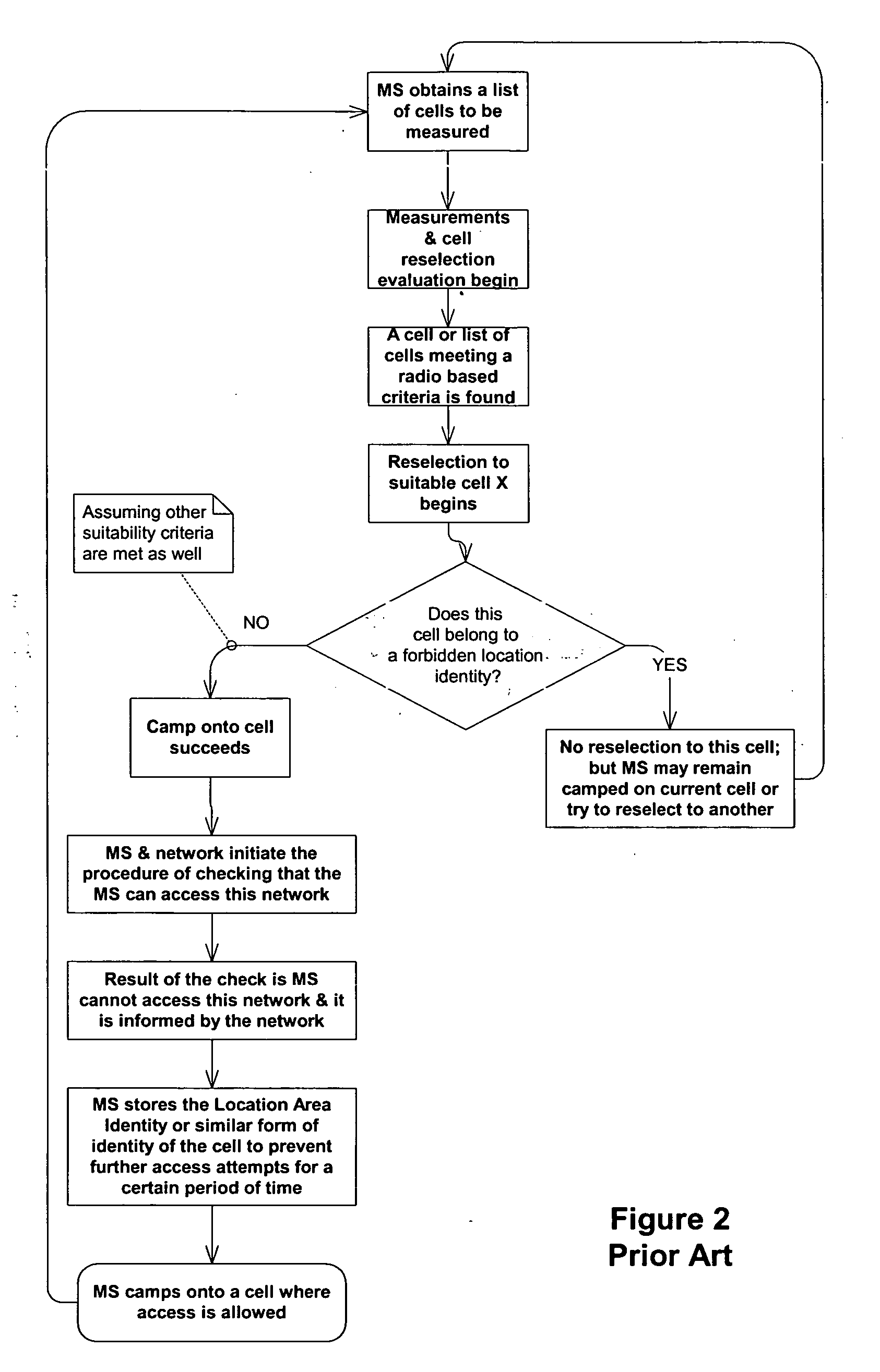

Cell reselection for improving network interconnection

Inactive | US20060084443A1 | Improving network interconnection; Improve interoperability; Assess restriction; Radio/inductive link selection arrangements; Cell selection; Interconnection

This invention describes a new methodology for cell reselection by a mobile station (MS) that improves network interconnection and interoperability in a limited mobile access environment. The invention is applicable to any kind of network and its interconnections. It describes two major improvements that help the MS recover from failed intersystem cell reselection attempts, so that fewer subsequent attempts fail. First, during cell reselection evaluation and candidate-cell selection, the MS takes into consideration whether it has previously been unsuccessful in reselecting the cell under consideration. This means treating neighbor cells to which the MS has previously failed reselection with a lower priority in subsequent cell reselection evaluations. Second, the MS is allowed to stop monitoring, and thus stop evaluating, cells to which access was earlier found to be forbidden.

Owner:RPX CORP

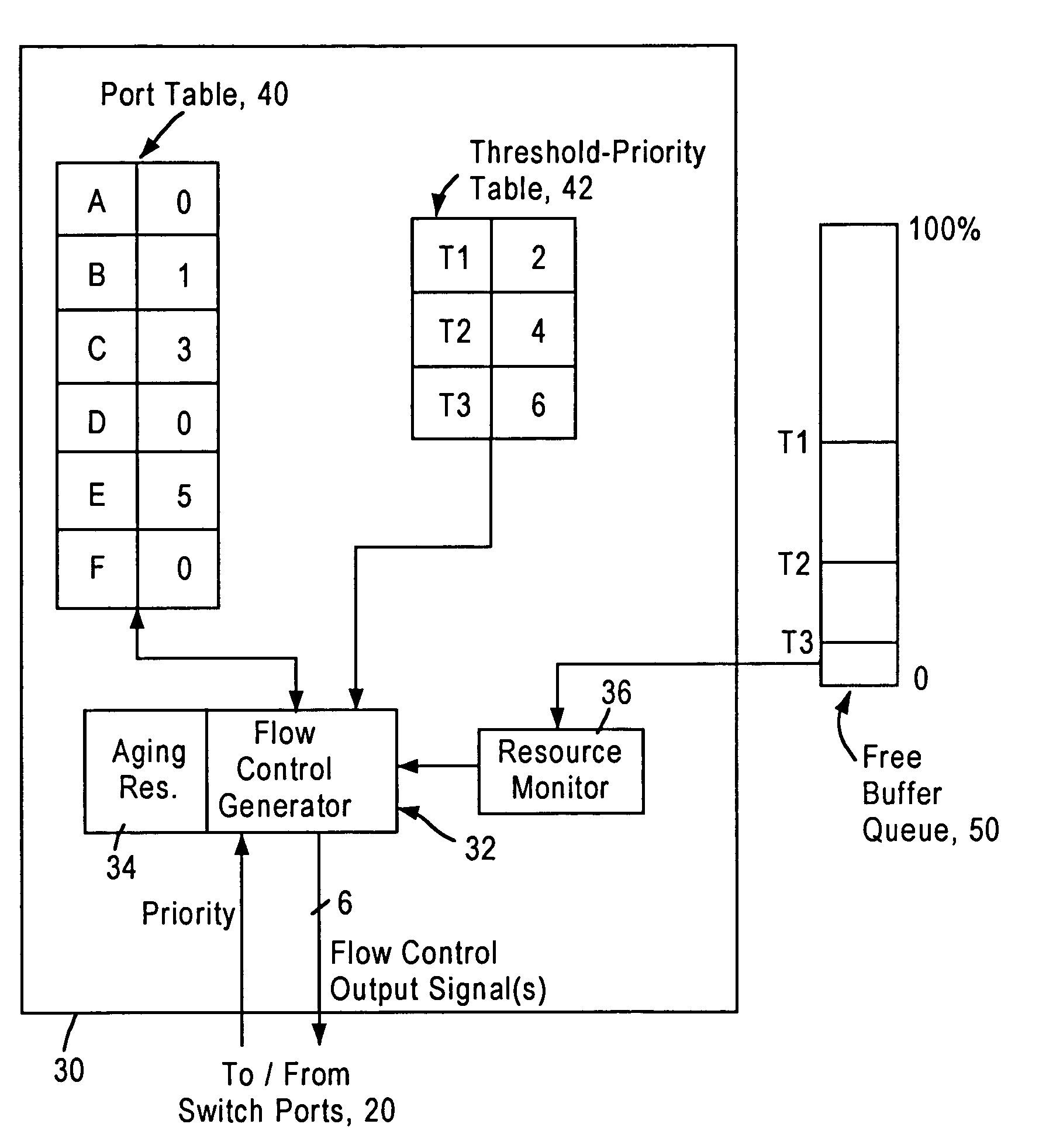

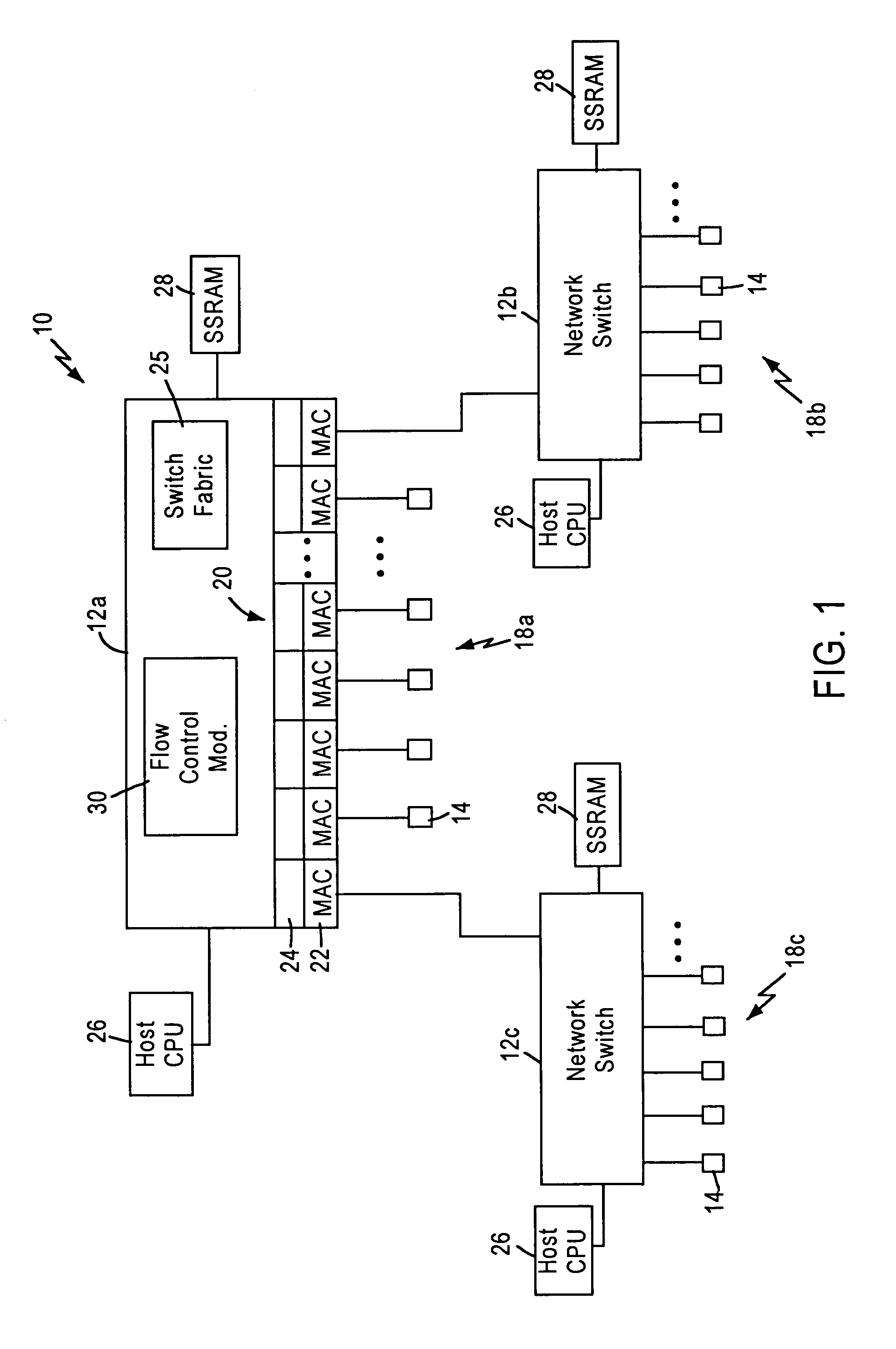

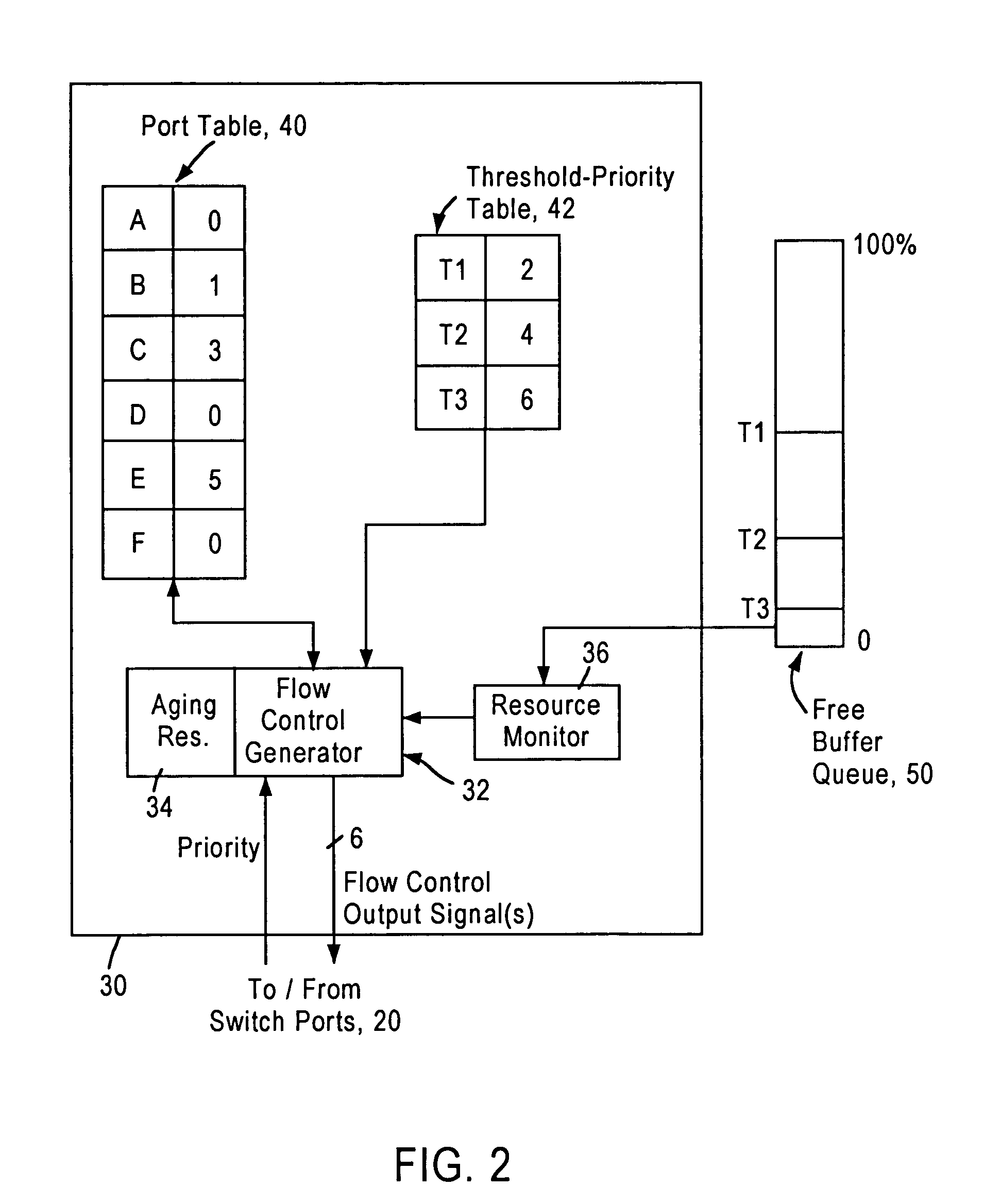

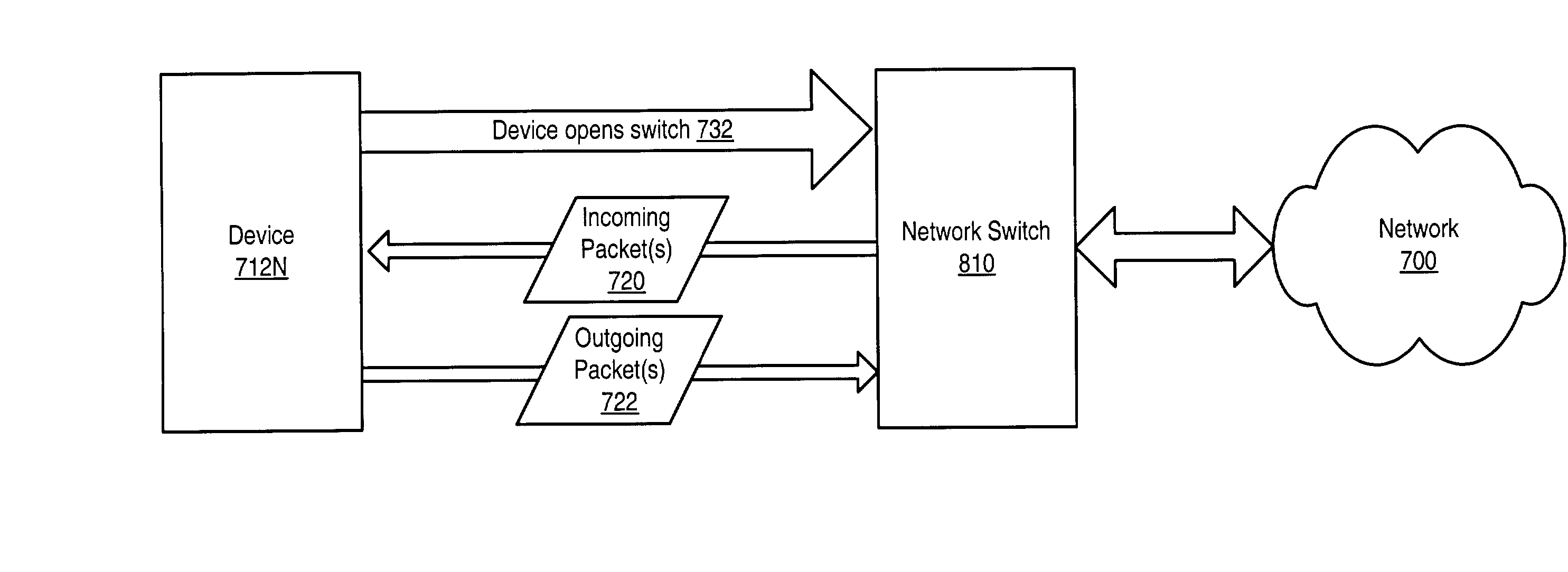

Flow control arrangement in a network switch based on priority traffic

Inactive | US6981054B1 | Avoid congestion; Maintain reliability; Error prevention; Frequency-division multiplex details; Traffic capacity; Frame based

A network switch includes network switch ports, each including a port filter configured for detecting user-selected attributes from a received layer 2 frame. Each port filter, upon detecting a user-selected attribute in a received layer 2 frame, sends a signal to a switching module indicating the determined presence of the user-selected attribute, for example whether the data packet has a prescribed priority value. The network switch includes a flow control module that determines which of the network switch ports should output a flow control frame based on the determined depletion of network switch resources and based on the corresponding priority value of the network traffic on each network switch port. Hence, any network switch port that receives high priority traffic does not output a flow control frame to the corresponding network station, enabling that network station to continue transmission of the high priority traffic. In most cases, the congestion and depletion of network switch resources can be alleviated by sending flow control frames on only those network switch ports that receive lower priority traffic, enabling the network switch to reduce congestion without interfering with high priority traffic.

Owner:GLOBALFOUNDRIES US INC
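
A minimal sketch of the port-selection rule, assuming each port is tagged with the priority value of the traffic it receives and that resource depletion is detected with a buffer high-water mark. The 80 % mark and the cut-off of 4 for "high priority" on the 0 to 7 IEEE 802.1p scale are illustrative assumptions.

```python
def ports_to_pause(port_priorities, buffer_used, buffer_capacity,
                   high_water=0.8, high_priority_min=4):
    """Return the ports that should send a flow-control frame.

    port_priorities: dict of port -> priority value observed on that port's traffic.
    """
    if buffer_used < high_water * buffer_capacity:
        return []  # resources not depleted: no flow control needed
    # Only ports carrying lower-priority traffic are throttled, so stations
    # sending high-priority traffic can keep transmitting.
    return sorted(p for p, prio in port_priorities.items() if prio < high_priority_min)


print(ports_to_pause({1: 6, 2: 0, 3: 2}, buffer_used=90, buffer_capacity=100))  # [2, 3]
```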

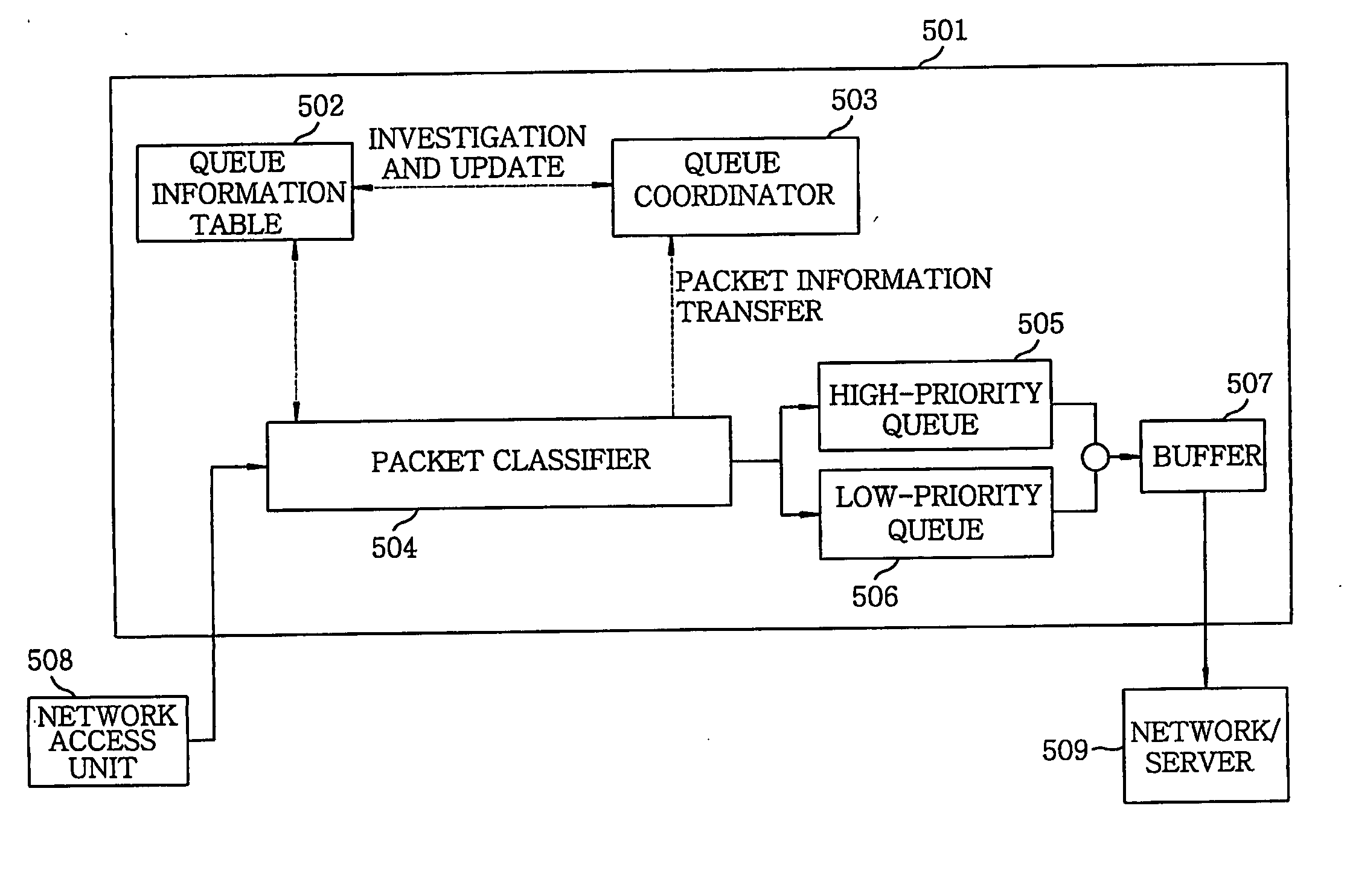

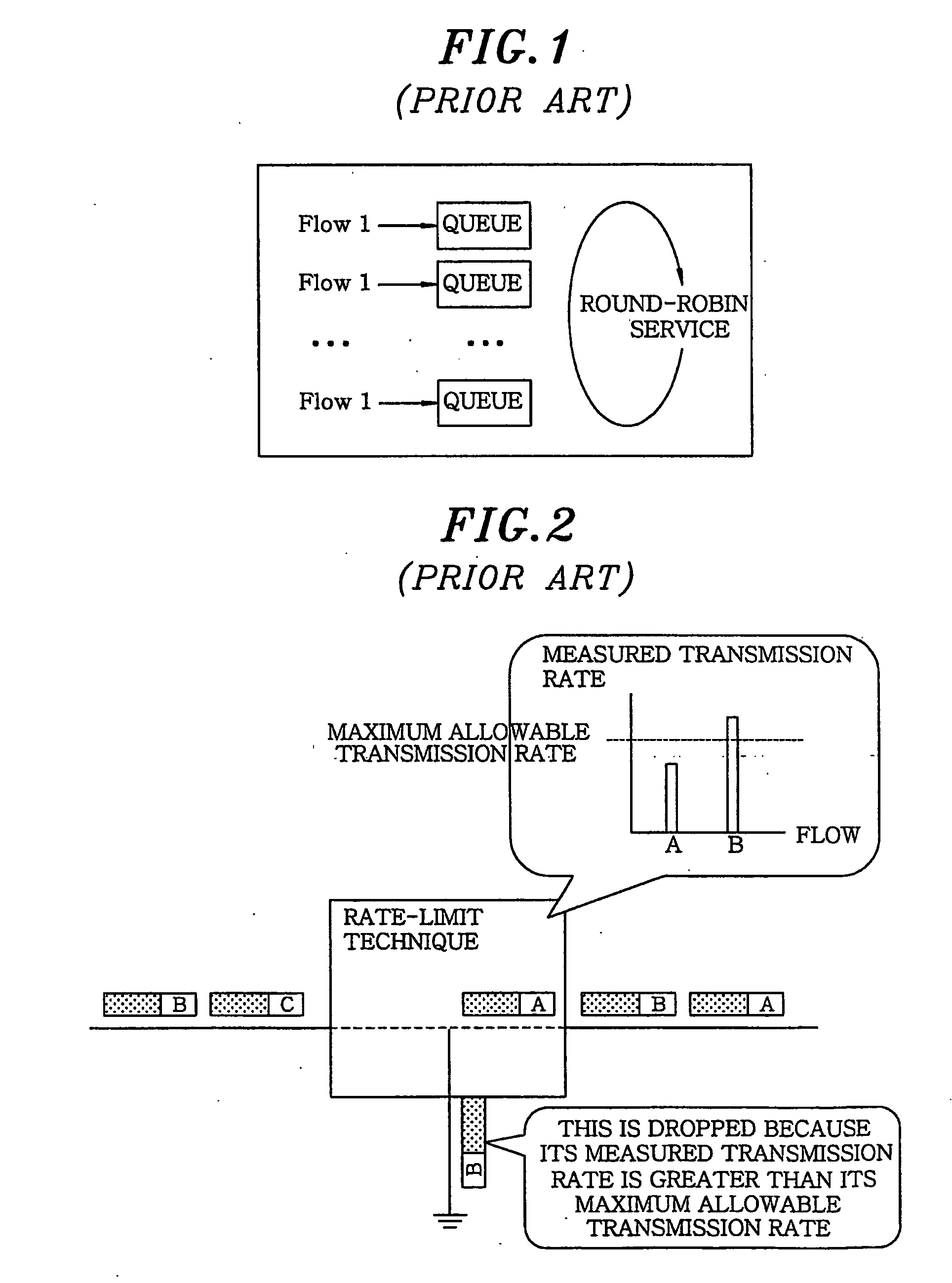

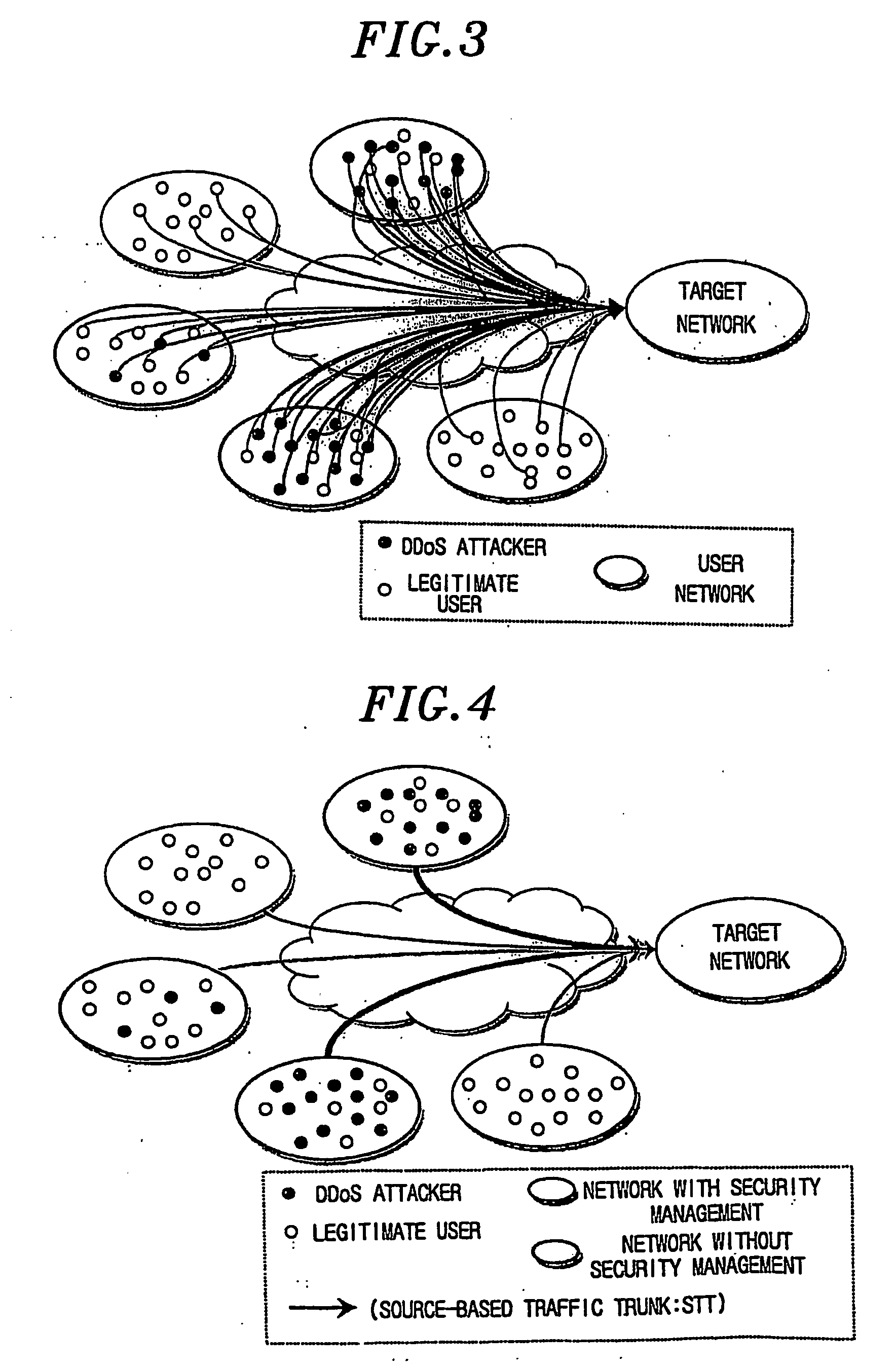

Method and apparatus for protecting legitimate traffic from DoS and DDoS attacks

Inactive | US20060041667A1 | Protection attack; Error prevention; Frequency-division multiplex details; Distributed computing; Lower priority

An apparatus for protecting legitimate traffic from DoS and DDoS attacks has a high-priority queue (505) and a low-priority queue (506). In addition, a queue information table (402) holds STT (Source-based Traffic Trunk) service queue information for a specific packet. A queue coordinator (502) updates the queue information table (502) based on the load of a provided STT and the load of the high-priority queue (505). A packet classifier (504) receives a packet from the network access unit (508), looks up the packet's STT service queue in the queue information table (502), selectively transfers the packet to the high-priority (505) or low-priority (506) queue, and provides information on the packet to the queue coordinator (503). A buffer (507) buffers the outputs of the high-priority (505) and low-priority (506) queues and provides them to the network (509) to be protected.

Owner:ELECTRONICS & TELECOMM RES INST

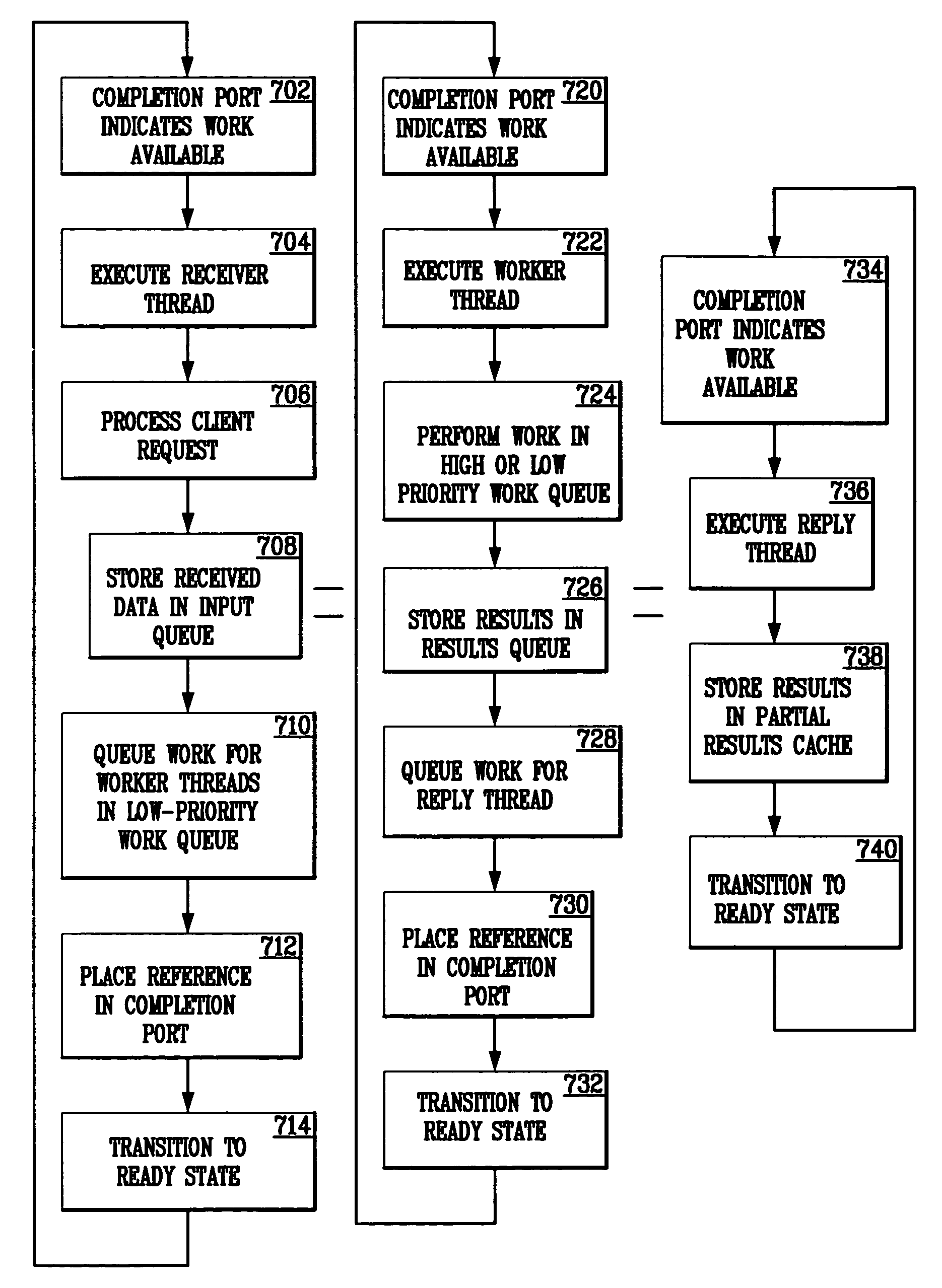

Generic application server and method of operation therefor

Inactive | US7051330B1 | Multiprogramming arrangements; Multiple digital computer combinations; Application server; State switching

A generic application server is capable of simultaneously receiving requests, processing requested work, and returning results using multiple, conceptual thread pools. In addition, functions are programmable as state machines. While executing such a function, when a worker thread encounters a potentially blocking condition, the thread issues an asynchronous request for data, a state transition is performed, and the thread is released to do other work. After the blocking condition is relieved, another worker thread is scheduled to advance to the next function state and continue the function. Multiple priority work queues are used to facilitate completion of functions already in progress. In addition, lower-priority complex logic threads can be invoked to process computationally intense logic that may be necessitated by a request. Throttling functions are also implemented, which control the quantity of work accepted into the server and server response time.

Owner:MICROSOFT TECH LICENSING LLC

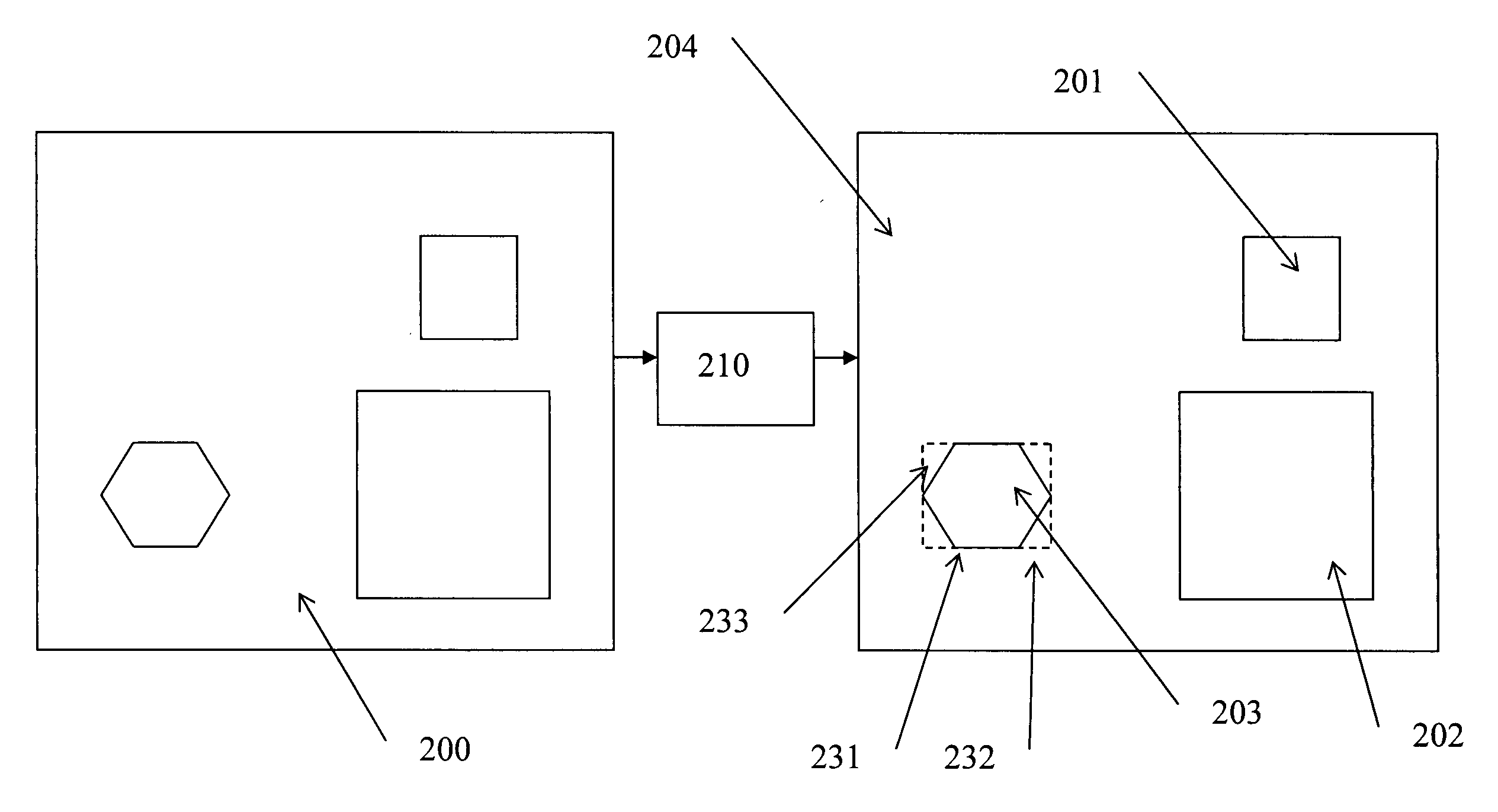

Method and device for image and video transmission over low-bandwidth and high-latency transmission channels

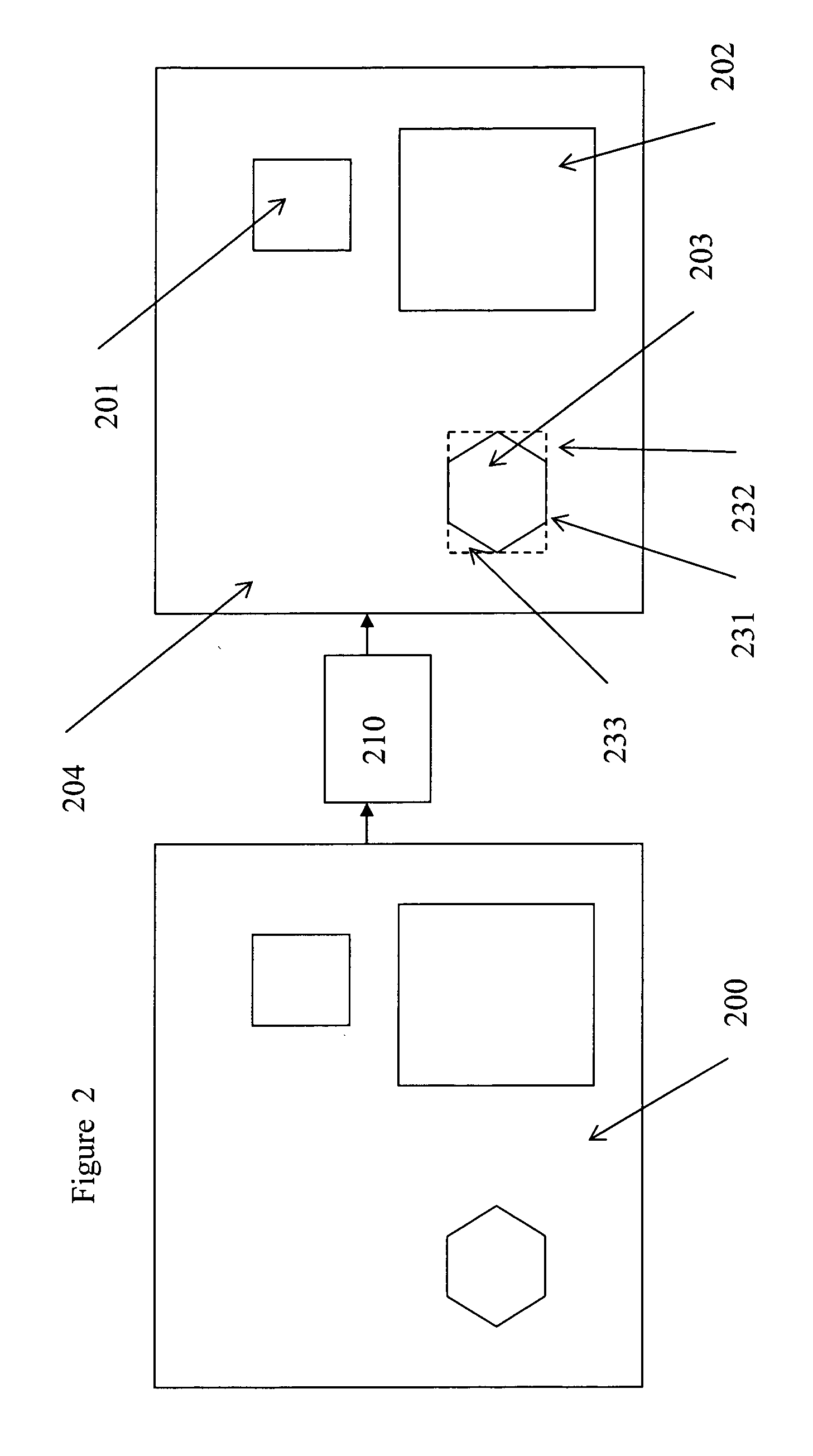

Inactive | US20070183493A1 | Increase the compression ratio; Low priority; Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Video transmission; Encoding algorithm

The present invention provides a method for transmitting images and/or video over bandwidth-limited transmission channels with varying available bandwidth. The method uses a classification algorithm to decompose the images and/or video to be transmitted into multiple spatial areas, each area having a specific image type; detects the image type of each of those areas; and separately selects for each area an image and/or video encoding algorithm having a compression ratio. The classification algorithm prioritizes each of the areas and, when bandwidth decreases, increases the compression ratio of the encoding algorithm dedicated to spatial areas having lower priority.

Owner:BARCO NV

Transfer ready frame reordering

Inactive | US20030056000A1 | Multiple digital computer combinations; Data switching networks; Latency (engineering); Distributed computing

A system and method for reordering received frames to ensure that transfer ready (XFER_RDY) frames among the received frames are handled at higher priority, and thus with lower latency, than other frames. In one embodiment, an output that is connected to one or more devices may be allocated an additional queue specifically for XFER_RDY frames. Frames on this queue are given a higher priority than frames on the normal queue. XFER_RDY frames are added to the high priority queue, and other frames to the lower priority queue. XFER_RDY frames on the higher priority queue are forwarded before frames on the lower priority queue. In another embodiment, a single queue may be used to implement XFER_RDY reordering. In this embodiment, XFER_RDY frames are inserted in front of other types of frames in the queue.

Owner:BROCADE COMMUNICATIONS SYSTEMS
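
The two-queue embodiment can be sketched with a pair of FIFOs. Frame types are plain strings here, and the class and method names are hypothetical.

```python
from collections import deque


class OutputPort:
    """Two-queue XFER_RDY reordering: a dedicated high-priority queue per output."""

    def __init__(self):
        self.high = deque()    # XFER_RDY frames only
        self.normal = deque()  # all other frames

    def enqueue(self, frame_type, payload):
        target = self.high if frame_type == "XFER_RDY" else self.normal
        target.append((frame_type, payload))

    def dequeue(self):
        # Frames on the higher-priority queue are always forwarded first.
        if self.high:
            return self.high.popleft()
        if self.normal:
            return self.normal.popleft()
        return None


port = OutputPort()
port.enqueue("DATA", "d1")
port.enqueue("XFER_RDY", "x1")
port.enqueue("DATA", "d2")
print([port.dequeue() for _ in range(3)])
# [('XFER_RDY', 'x1'), ('DATA', 'd1'), ('DATA', 'd2')]
```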

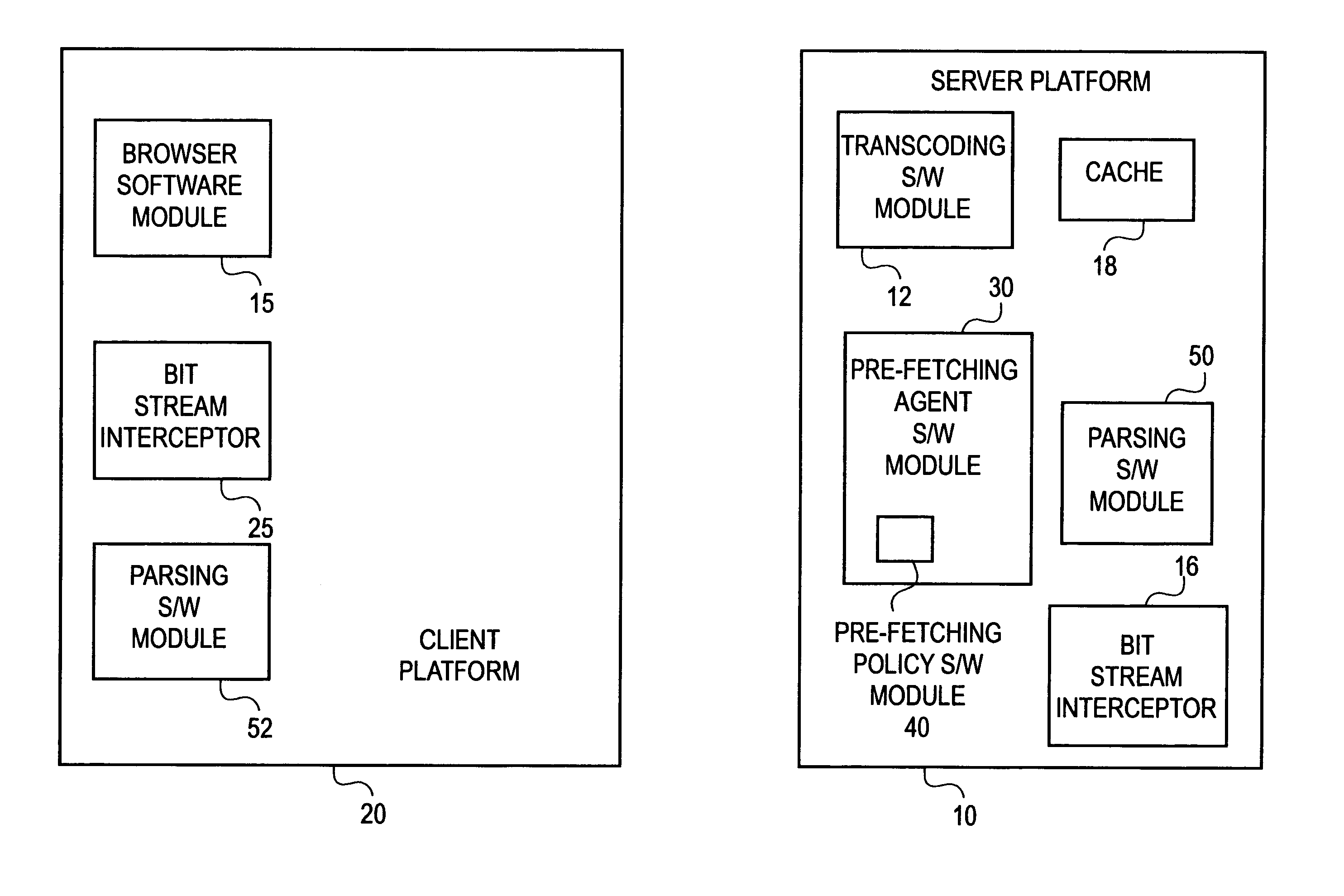

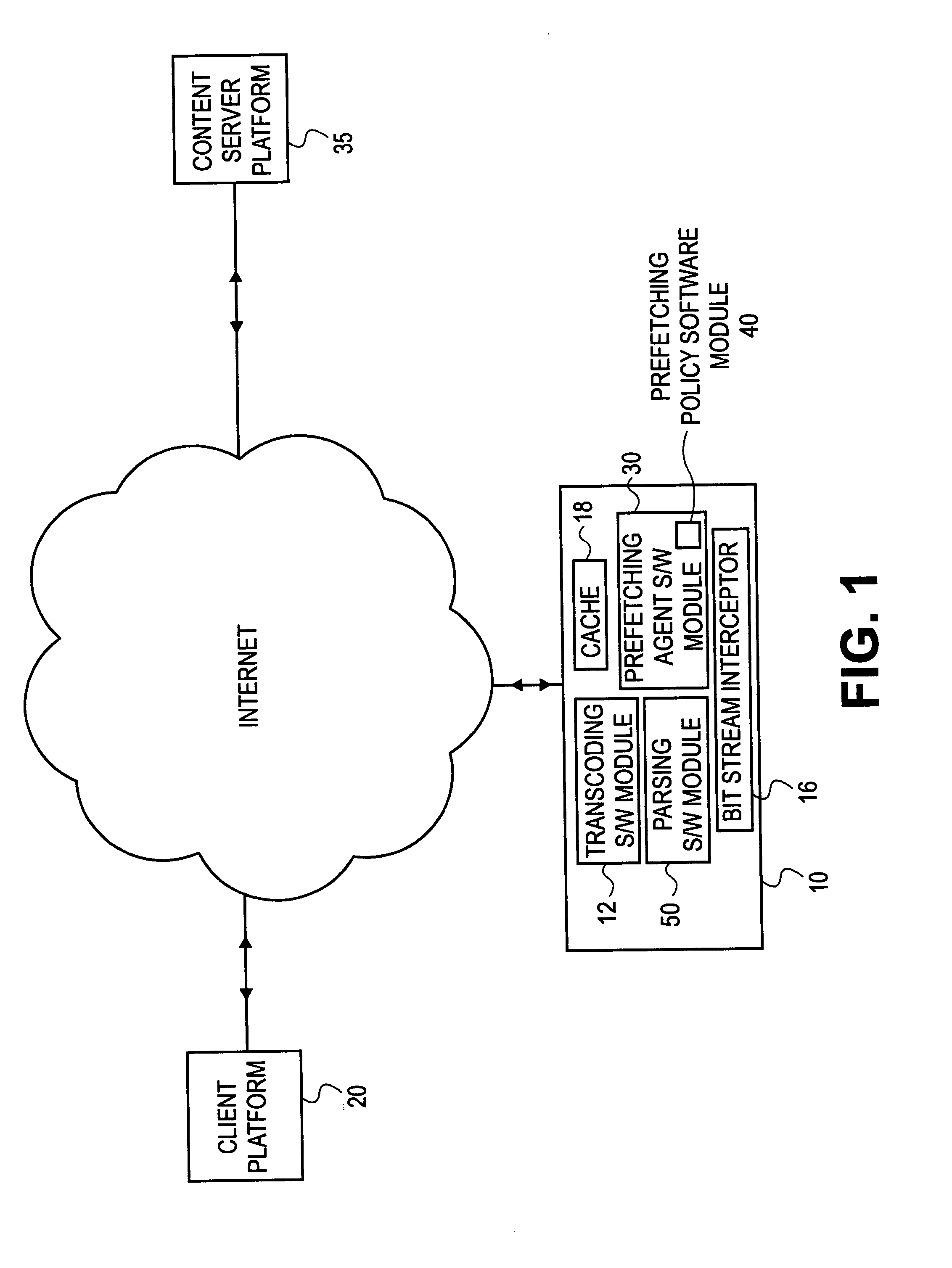

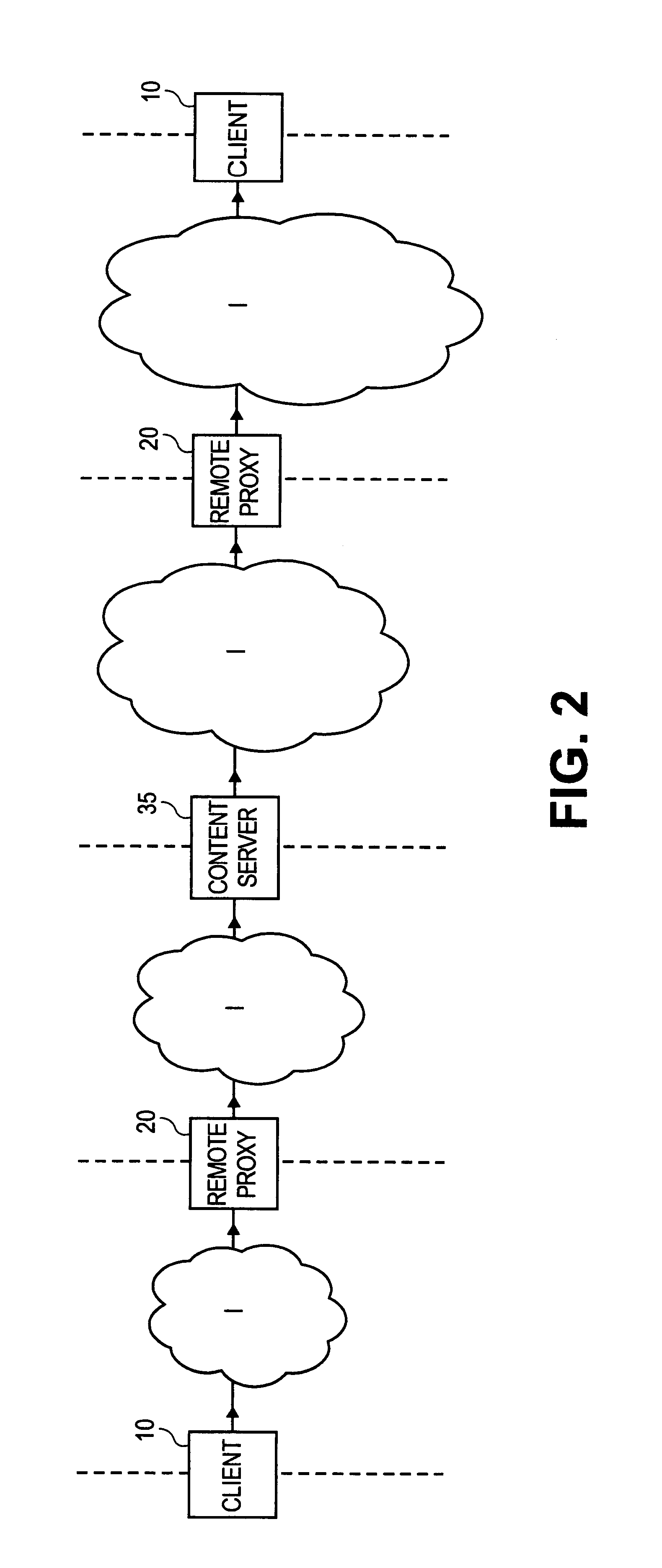

Method of proxy-assisted predictive pre-fetching with transcoding

Inactive | US6959318B1 | Digital data information retrieval; Multiple digital computer combinations; Network connection; Transcoding

Briefly, in accordance with one embodiment of the invention, a method of suspending a network connection used for low priority transmissions between a client platform and a server platform includes: determining a characteristic of a transmission between the client platform and the server platform, said characteristic being either a high priority transmission or a low priority transmission; and suspending the connection if the transmission is a high priority transmission. Briefly, in accordance with another embodiment, a method of using a network connection between a client platform and a server platform includes: producing on one of the platforms a list of Uniform Resource Locators (URLs) from a requested network page, said list comprising links in said requested network page; and pre-fetching via said connection at least one of said URLs to a remote proxy server.

Owner:INTEL CORP

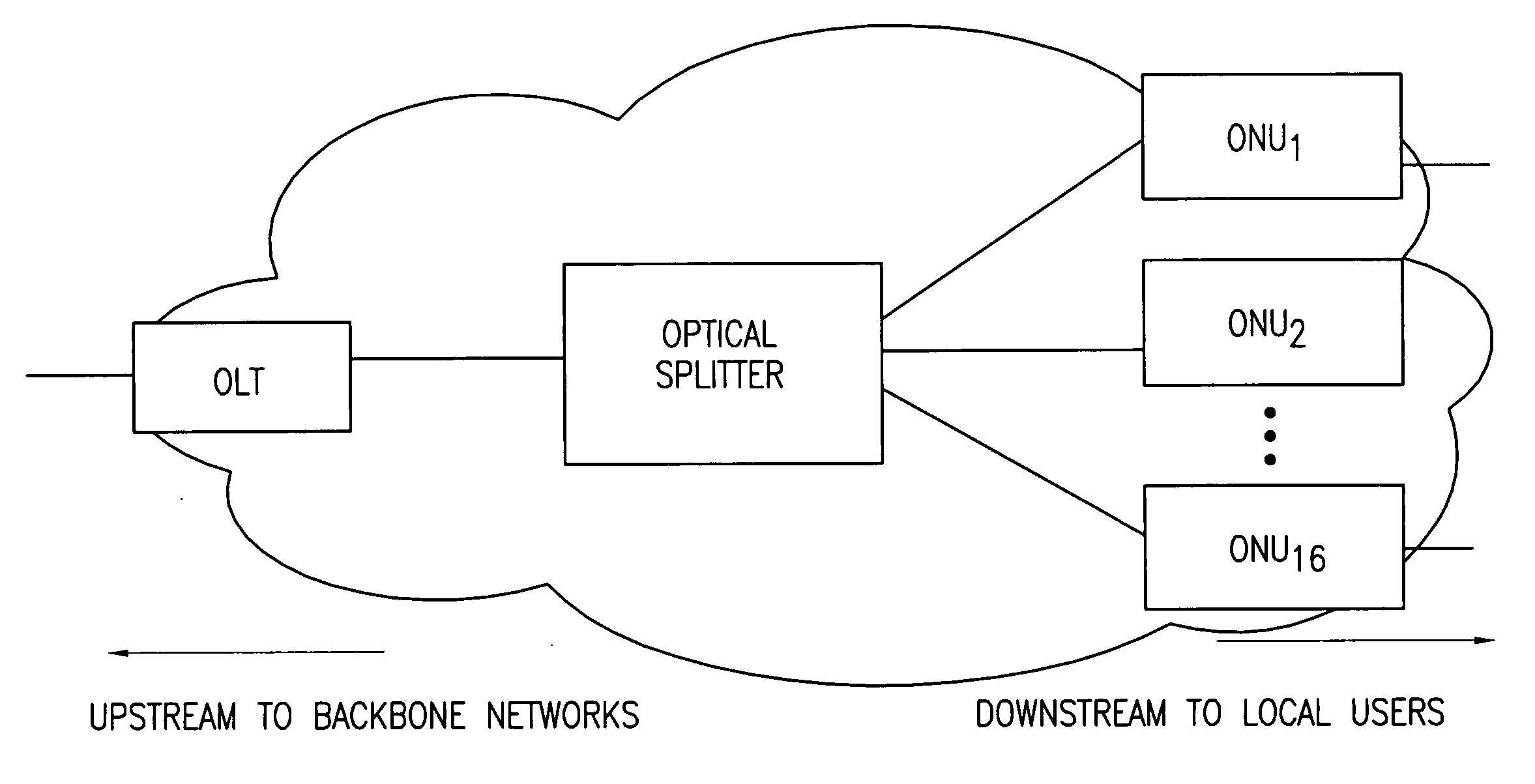

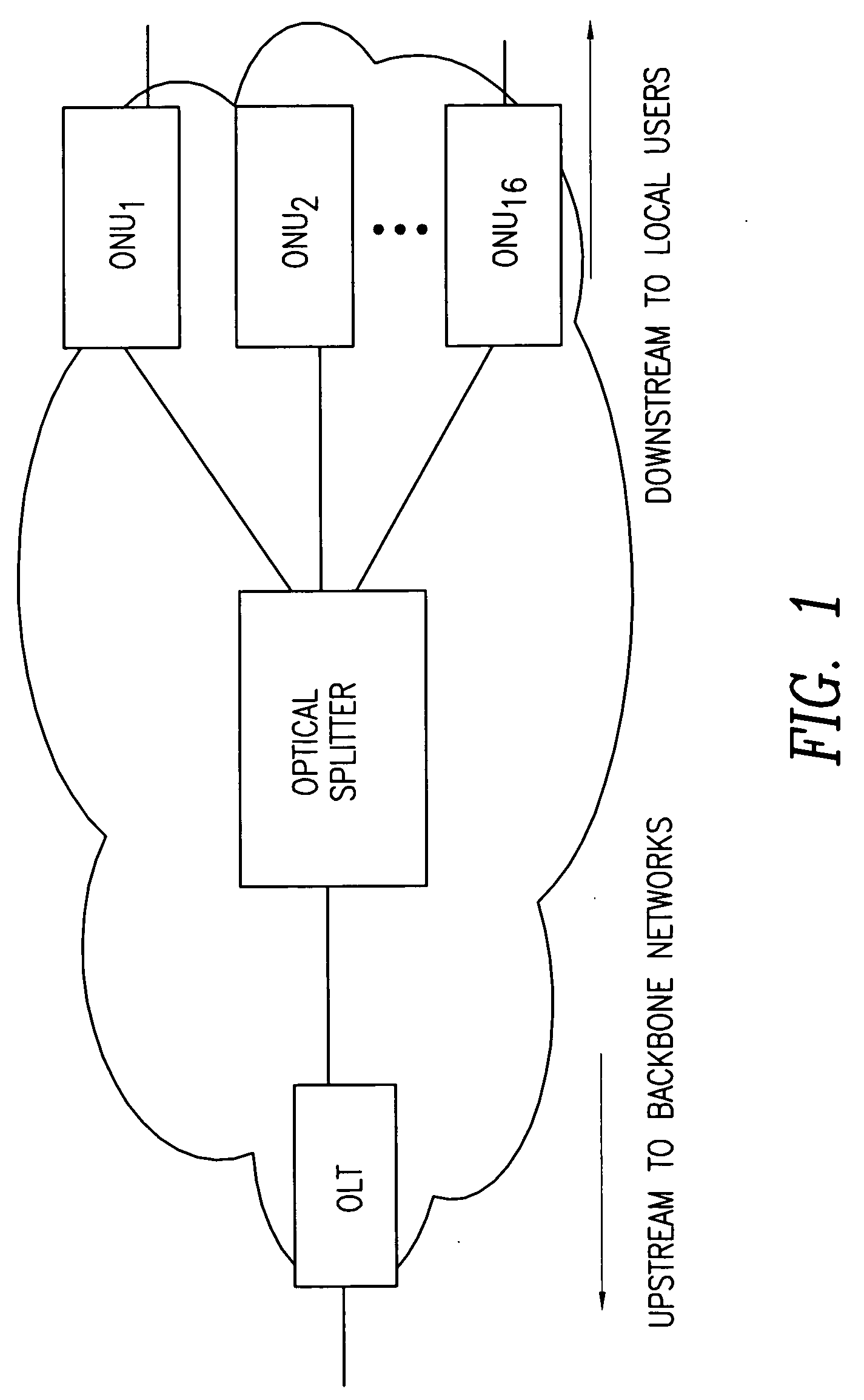

Dynamic bandwidth allocation and service differentiation for broadband passive optical networks

Inactive | US20060268704A1 | Effective bandwidth; Space complexity; Multiplex system selection arrangements; Error prevention; Service-level agreement; Traffic prediction

A dynamic upstream bandwidth allocation scheme is disclosed, i.e., limited sharing with traffic prediction (LSTP), to improve the bandwidth efficiency of upstream transmission over PONs. LSTP adopts the PON MAC control messages, and dynamically allocates bandwidth according to the on-line traffic load. The ONU bandwidth requirement includes the already buffered data and a prediction of the incoming data, thus reducing the frame delay and alleviating the data loss. ONUs are served by the OLT in a fixed order in LSTP to facilitate the traffic prediction. Each optical network unit (ONU) classifies its local traffic into three classes with descending priorities: expedited forwarding (EF), assured forwarding (AF), and best effort (BE). Data with higher priority replace data with lower priority when the buffer is full. In order to alleviate uncontrolled delay and unfair drop of the lower priority data, the priority-based scheduling is employed to deliver the buffered data in a particular transmission timeslot. The bandwidth allocation incorporates the service level agreements (SLAs) and the on-line traffic dynamics. The basic limited sharing with traffic prediction (LSTP) scheme is extended to serve the classified network traffic.

Owner:NEW JERSEY INSTITUTE OF TECHNOLOGY
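
The buffer-replacement and in-timeslot scheduling rules for the three classes can be sketched as below. Buffer capacity is counted in frames, and evicting the most recently buffered frame of the lowest class is an assumption; the abstract only says that higher-priority data replaces lower-priority data.

```python
CLASS_RANK = {"EF": 0, "AF": 1, "BE": 2}  # lower rank = higher priority


class OnuBuffer:
    def __init__(self, capacity):
        self.capacity = capacity  # counted in frames, for simplicity
        self.frames = []          # (traffic_class, frame_id) in arrival order

    def enqueue(self, cls, frame_id):
        """Buffer a frame. Returns whichever frame was dropped, or None."""
        if len(self.frames) < self.capacity:
            self.frames.append((cls, frame_id))
            return None
        # Lowest-priority buffered frame; among equals, the most recently buffered.
        idx = max(range(len(self.frames)),
                  key=lambda i: (CLASS_RANK[self.frames[i][0]], i))
        victim = self.frames[idx]
        if CLASS_RANK[victim[0]] > CLASS_RANK[cls]:
            del self.frames[idx]  # higher-priority data replaces lower-priority data
            self.frames.append((cls, frame_id))
            return victim
        return (cls, frame_id)    # nothing lower-priority to evict: drop the arrival

    def drain(self, n):
        """Send up to n frames in one timeslot: EF, then AF, then BE, FIFO within a class."""
        order = sorted(range(len(self.frames)),
                       key=lambda i: CLASS_RANK[self.frames[i][0]])[:n]
        sent = [self.frames[i] for i in order]
        chosen = set(order)
        self.frames = [f for i, f in enumerate(self.frames) if i not in chosen]
        return sent
```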

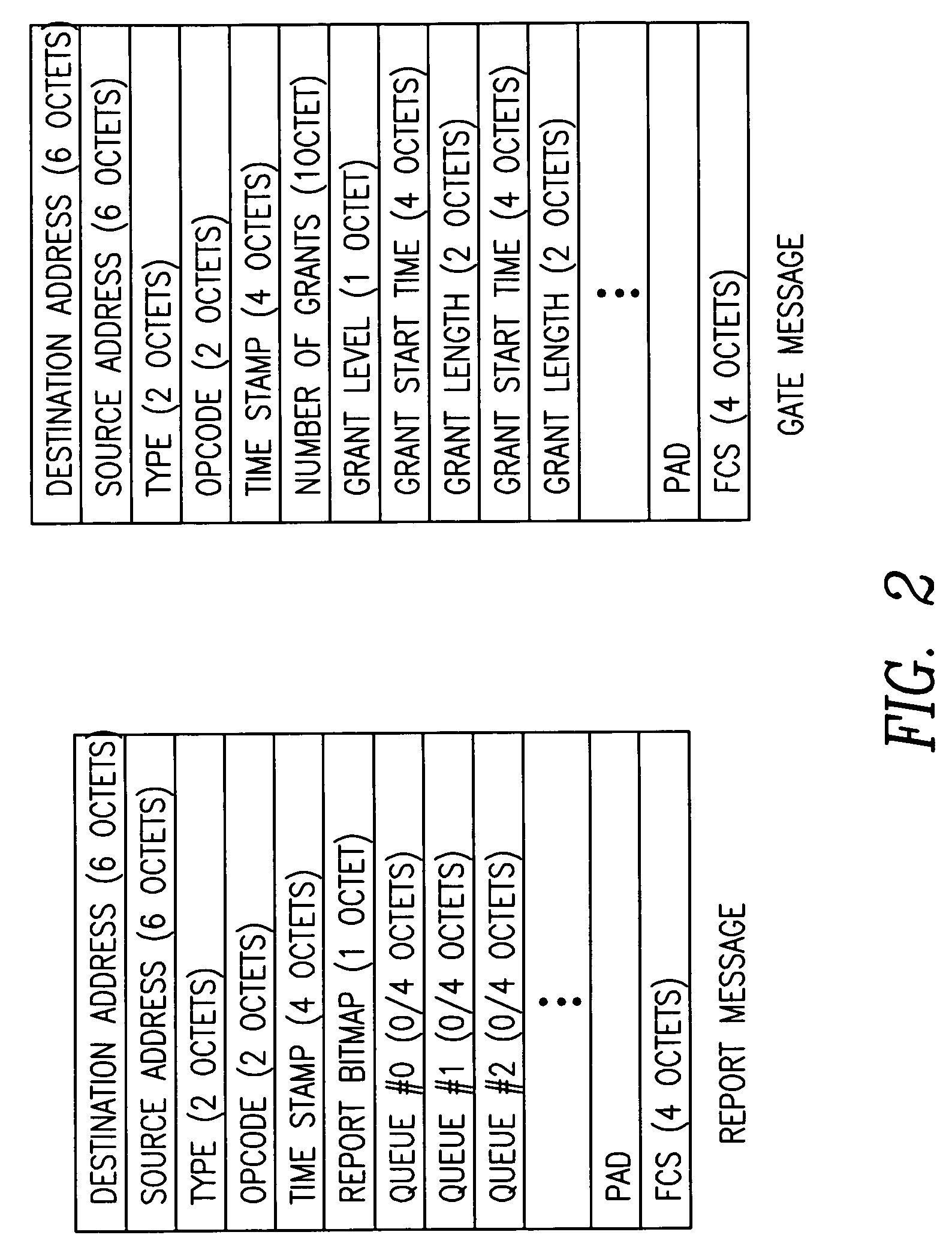

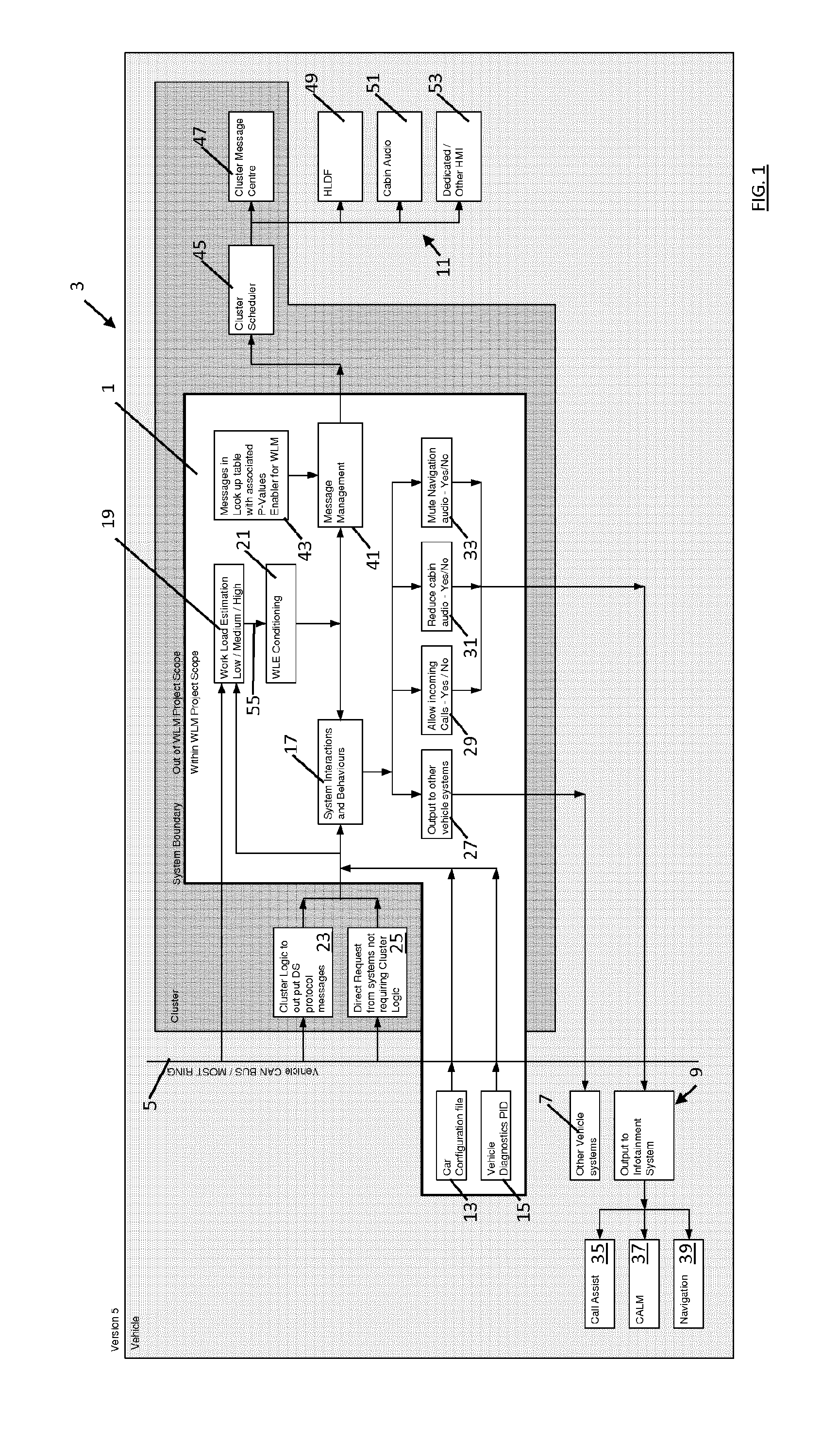

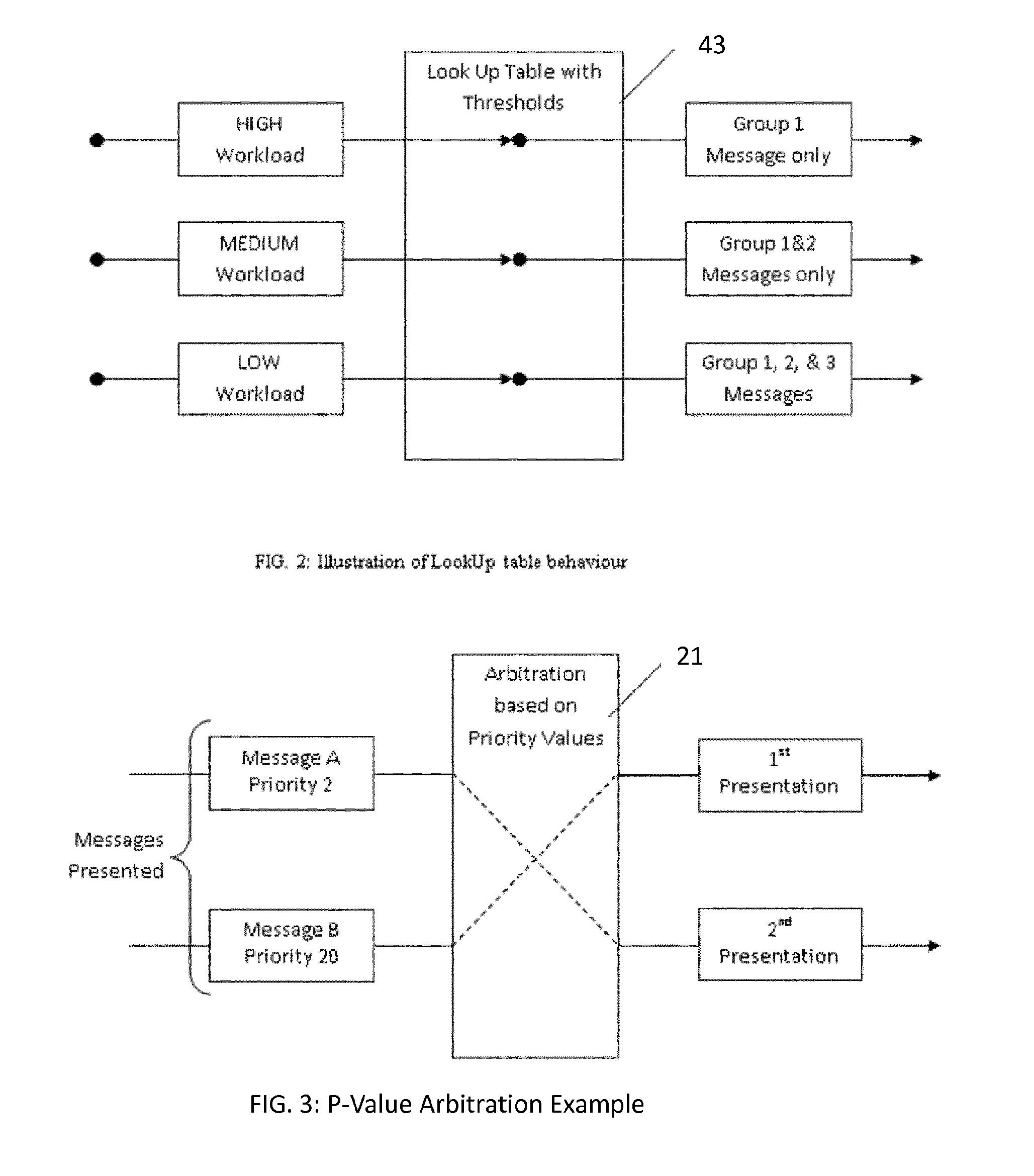

Control System and Method

Active | US20150051752A1 | Lower performance requirements; Avoid lower priority information being withheld indefinitely; Digital data processing details; Instrument arrangements/adaptations; Driver/operator; Control system

The present invention relates to a method and system for controlling the output of information to a driver. It monitors at least one operating parameter of a vehicle and estimates driver workload based on said at least one operating parameter, using a plurality of thresholds (HIGH, MEDIUM, LOW) defining driver workload ratings. Output of information to the driver is controlled based on the estimated driver workload rating. To ensure that low priority information is also output, the invention periodically cycles through some or all of the defined driver workload ratings that are lower than the current estimated workload rating.

Owner:JAGUAR LAND ROVER LTD

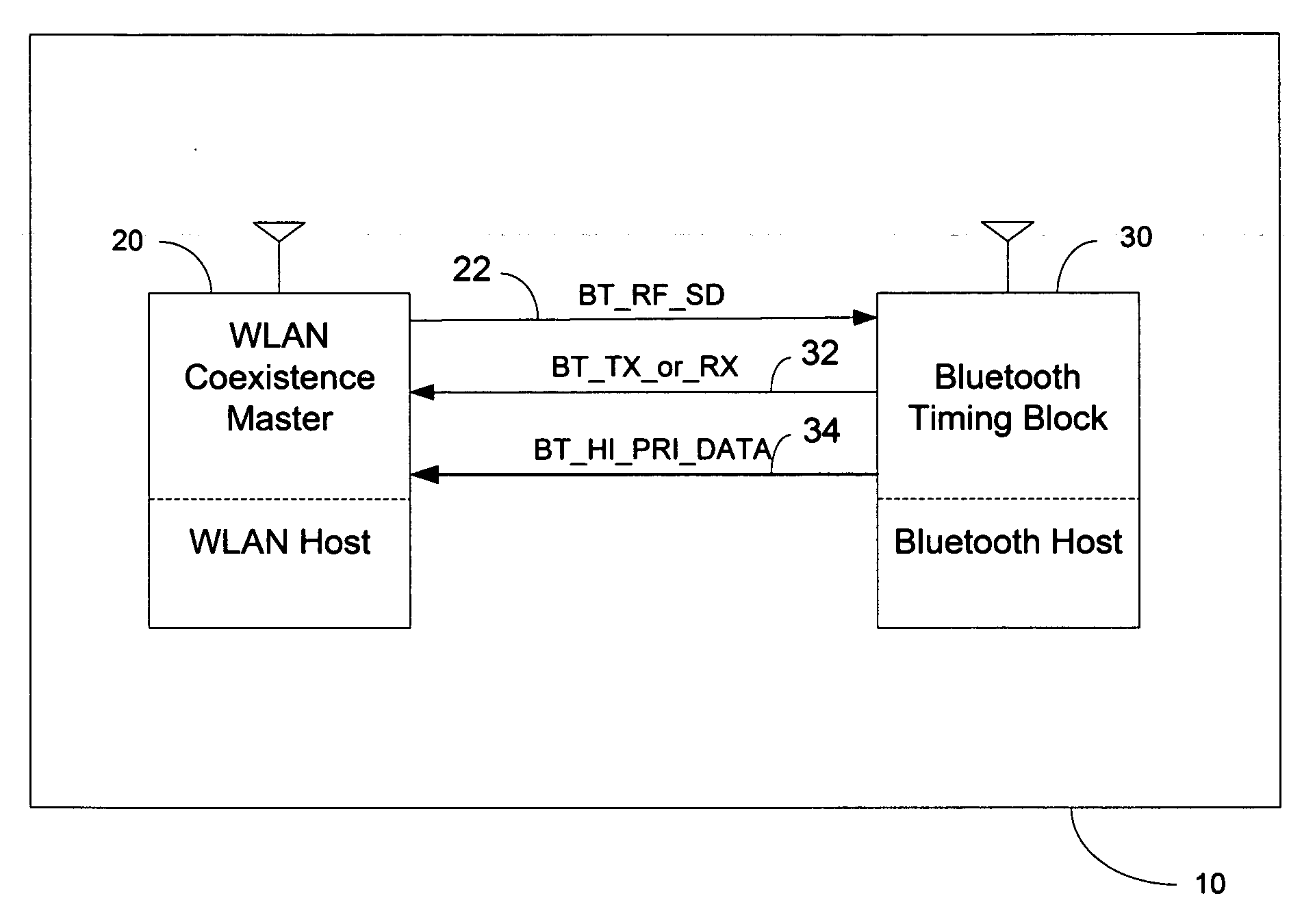

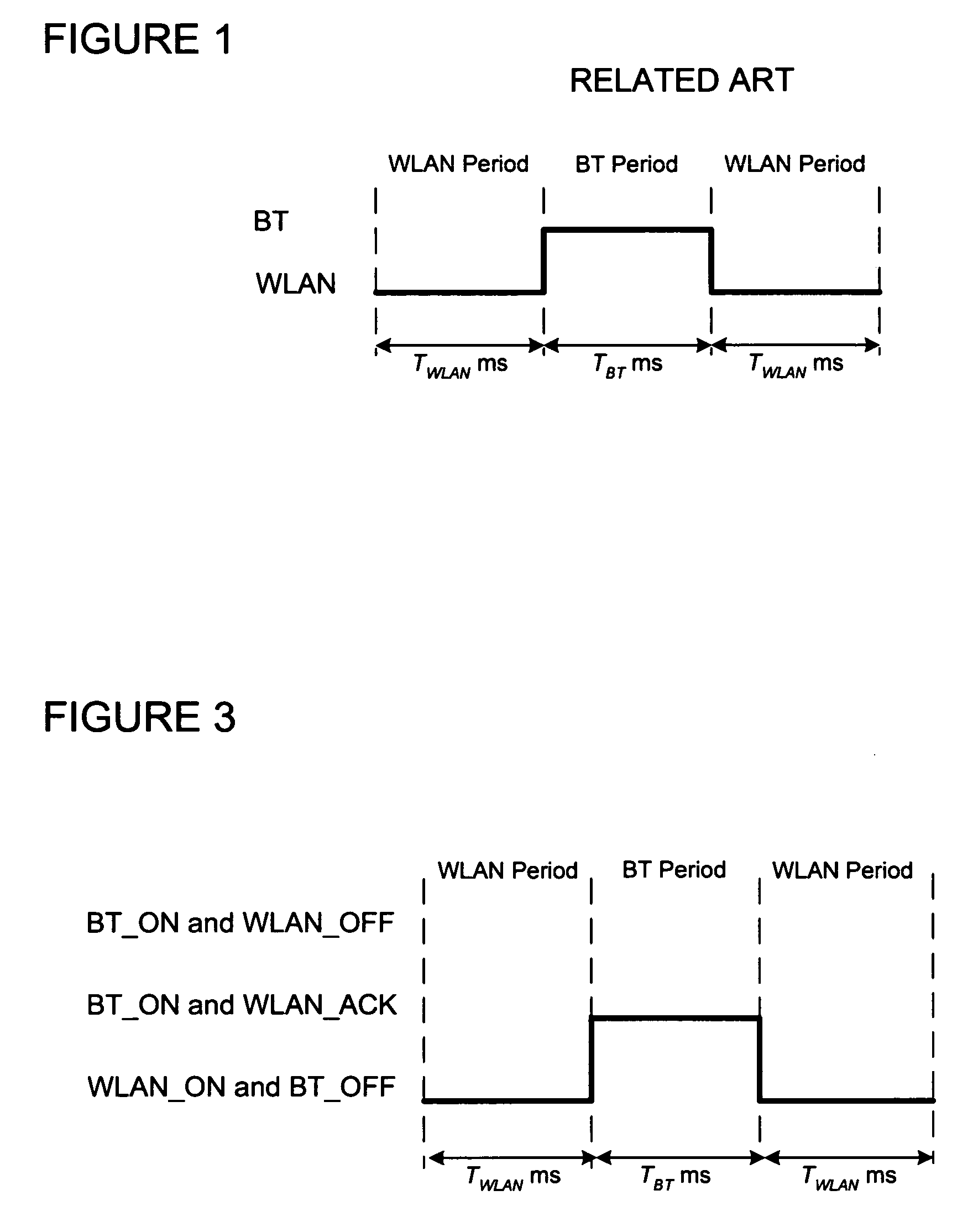

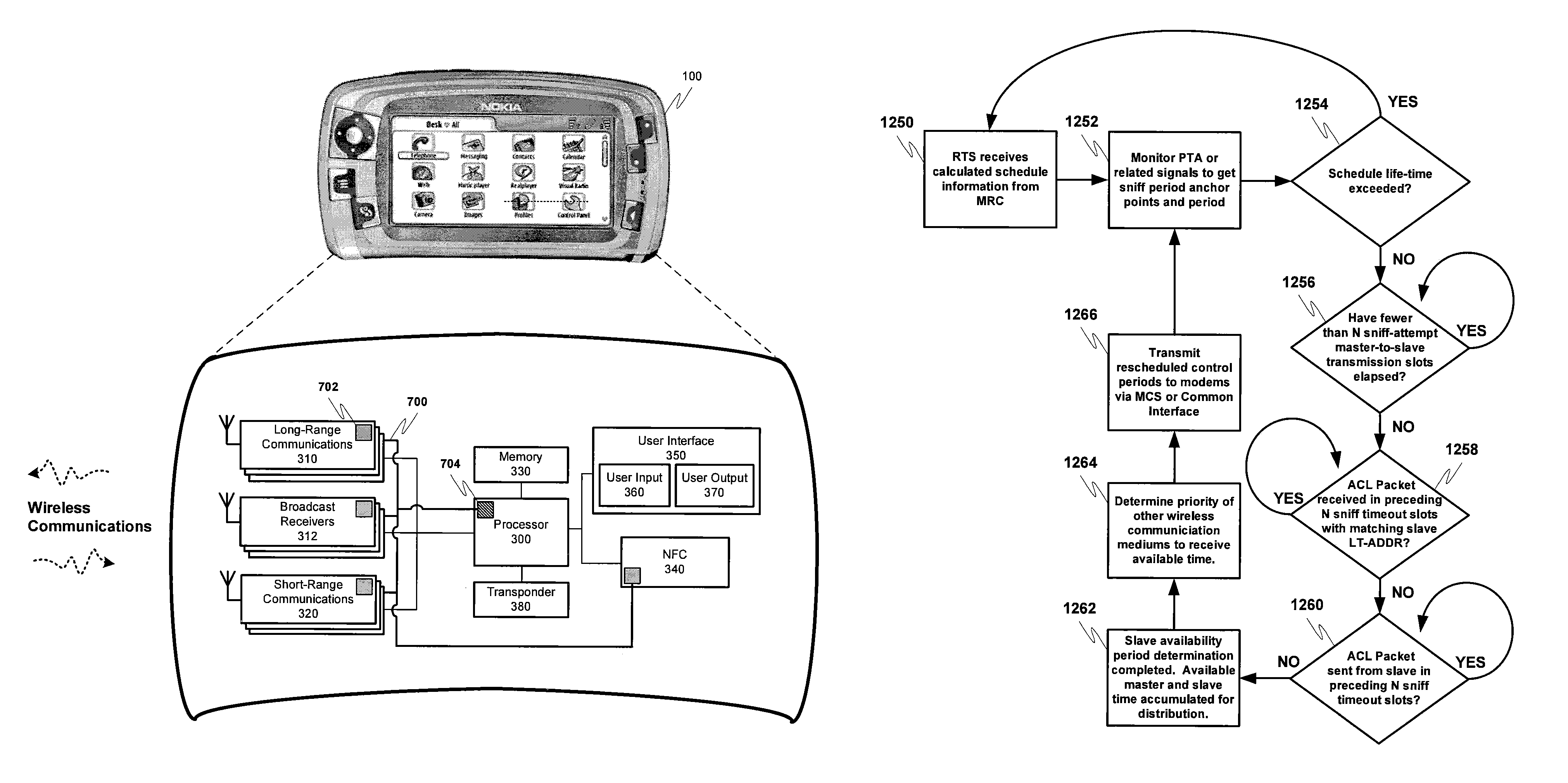

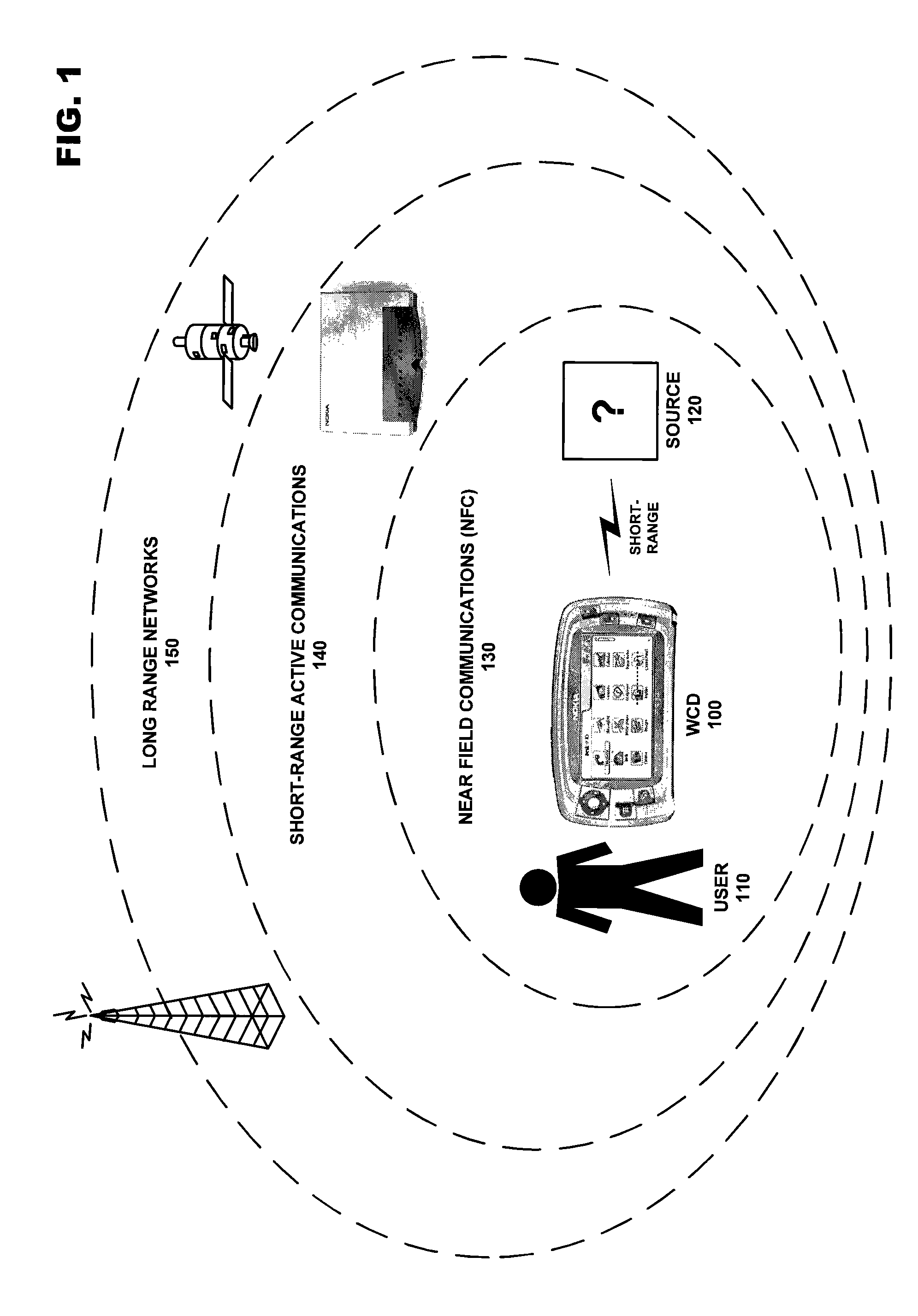

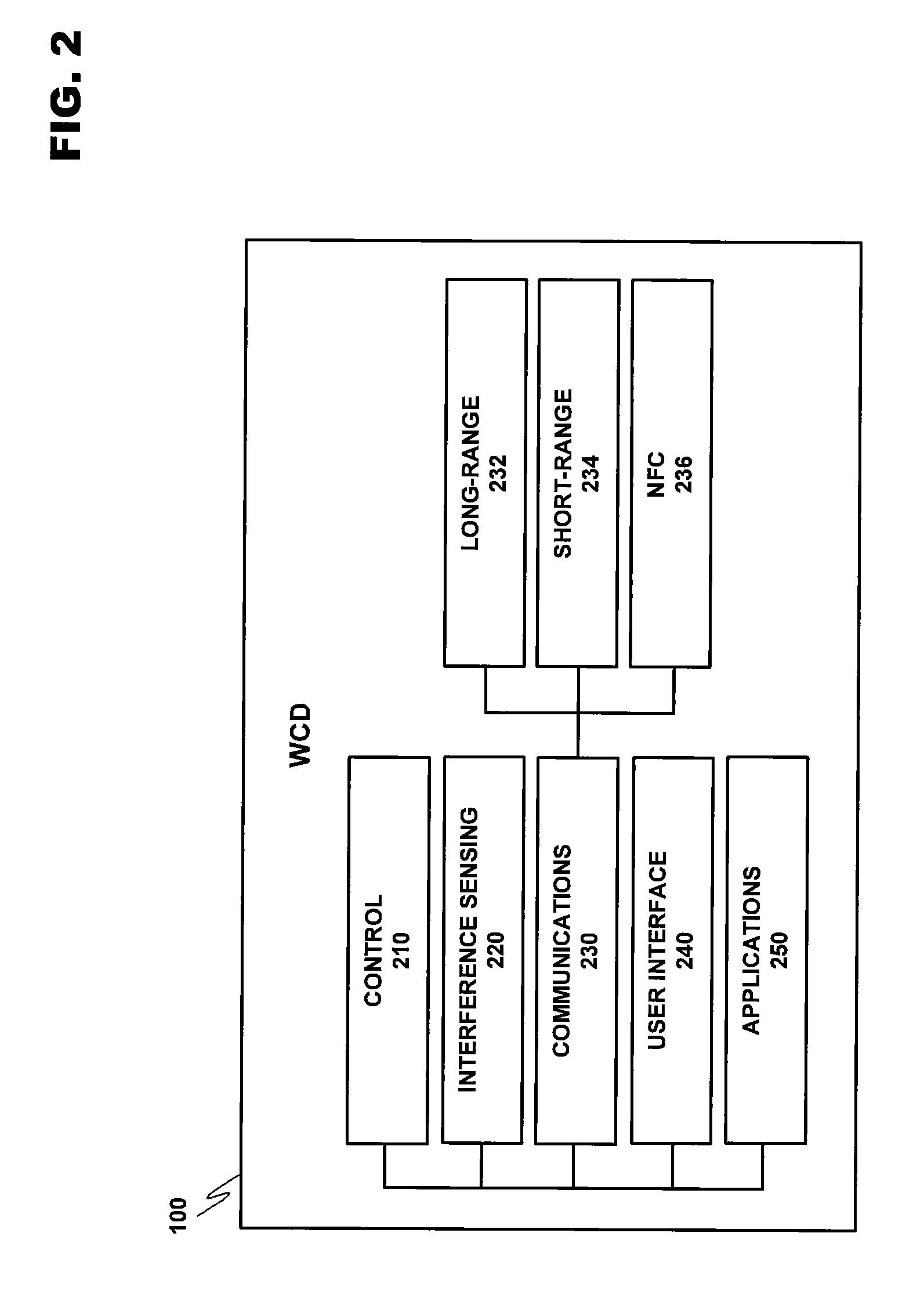

Method of wireless local area network and Bluetooth network coexistence in a collocated device

Inactive | US20060292987A1 | Power management; Devices with wireless LAN interface; Wireless LAN; Time-division multiplexing

A collocated wireless local area network/Bluetooth (WLAN/BT) device avoids radio interference between the two wireless systems through collaborative coexistence mechanisms. The collocated WLAN/BT device and coexistence methods include time division multiplexing based on various operating states of the collocated WLAN and BT systems, respectively. Such operating states include the transmission of low priority WLAN and BT data signals during WLAN and BT periods, the sleep mode of the collocated WLAN system, the transmission of high priority data signals from the collocated BT system during time-division-multiplexed WLAN and BT periods, the transition of the collocated BT system from an active state to an idle state, and the transition of the collocated WLAN system from an active state to an idle state.

Owner:TEXAS INSTR INC

Bandwidth conservation by reallocating unused time scheduled for a radio to another radio

Inactive | US7778603B2 | Avoid communication; Transmission systems; Substation equipment; Time schedule; Modem device

A system for managing the operation of a plurality of radio modems contained within the same wireless communication device. The radio modems may be managed so that simultaneous communication involving two or more radio modems utilizing conflicting wireless communication mediums may be avoided. More specifically, a multiradio controller may identify when scheduled communication time in a radio modem using a more dominant, or higher priority, wireless communication medium will actually go unused, and may reallocate some or all of the now-available scheduled time to radio modems that use a lower priority wireless communication medium and have messages to transact.

Owner:NOKIA CORP

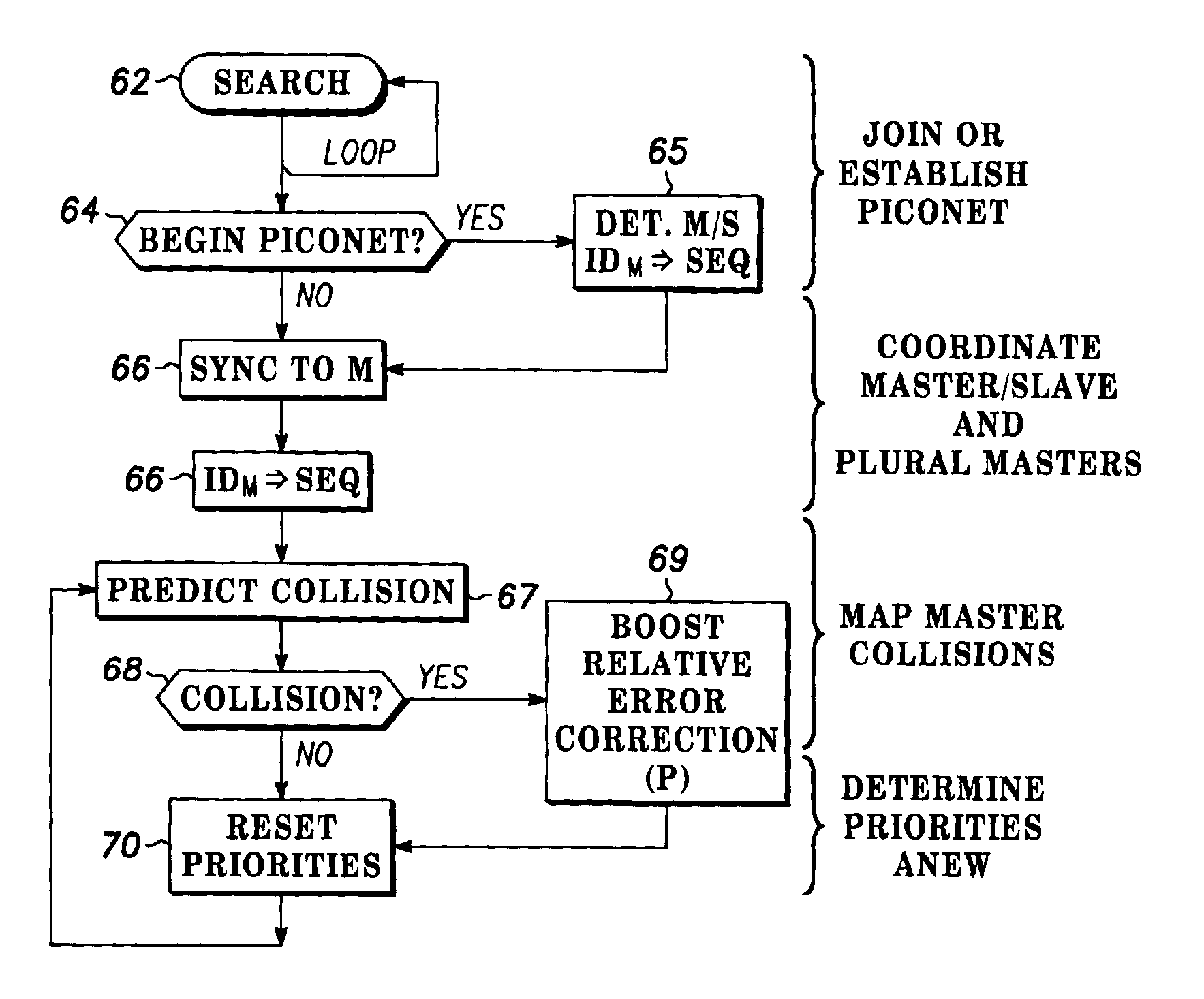

Multiple access frequency hopping network with interference anticipation

Inactive | US6920171B2 | Spreading over bandwidth; Secret communication; Multi-frequency code systems; Message queue; Radio equipment

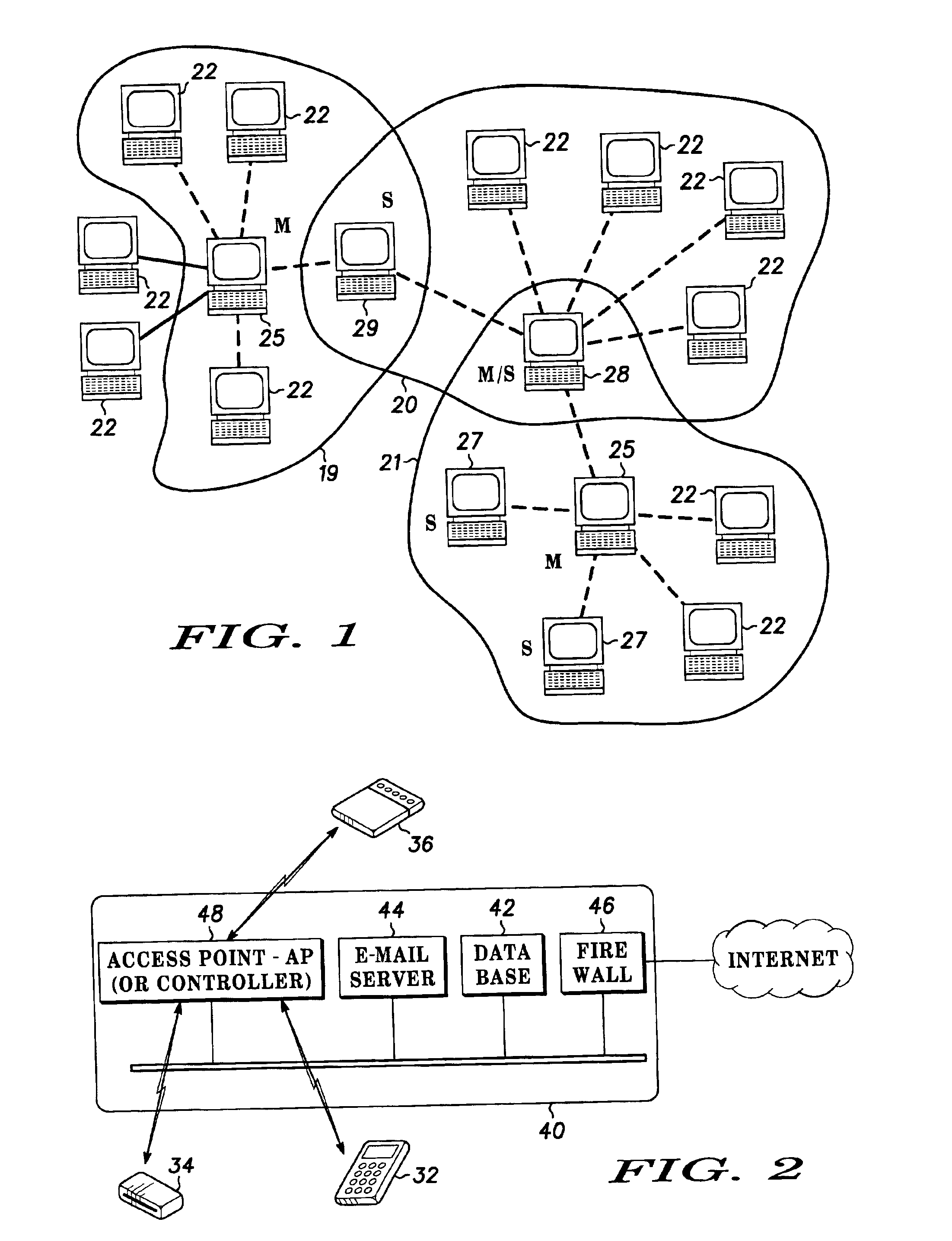

Spread spectrum packet-switching radio devices (22) are operated in two or more ad-hoc networks or pico-networks (19, 20, 21) that share frequency-hopping channels and time slots that may collide. The frequency hopping sequences (54) of two or more masters (25) are exchanged using identity codes, permitting the devices to anticipate collision time slots (52). Priorities are assigned to the simultaneously operating piconets (19, 20, 21) during collision slots (52), e.g., as a function of their message queue size or latency, or other factors. Lower priority devices may abstain from transmitting during predicted collision slots (52), and/or a higher priority device may employ enhanced transmission resources during those slots, such as higher error correction levels, or various combinations of abstinence and error correction may be applied. Collisions are avoided or the higher priority piconet (19, 20, 21) is made likely to prevail in a collision.

Owner:GOOGLE TECH HLDG LLC +1

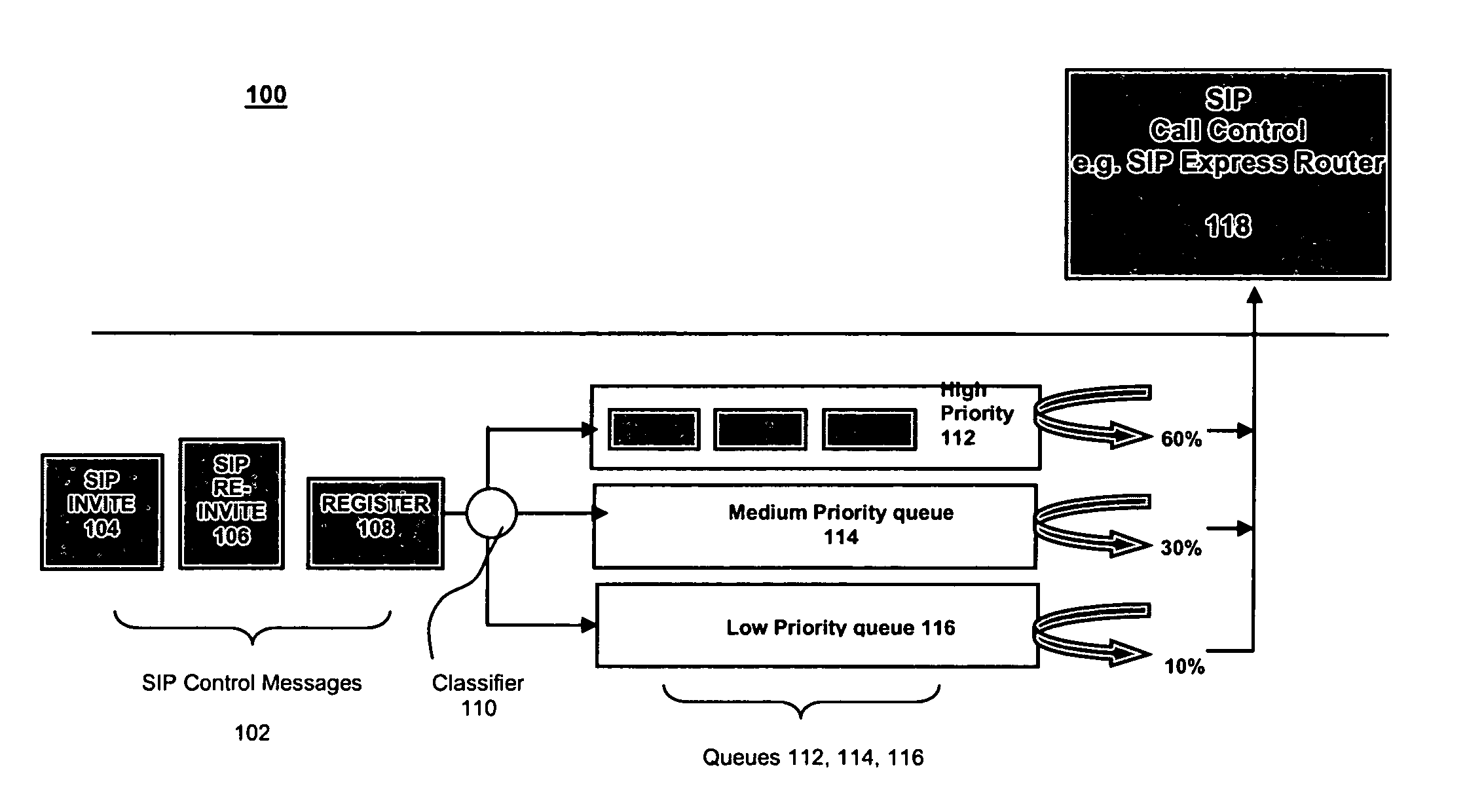

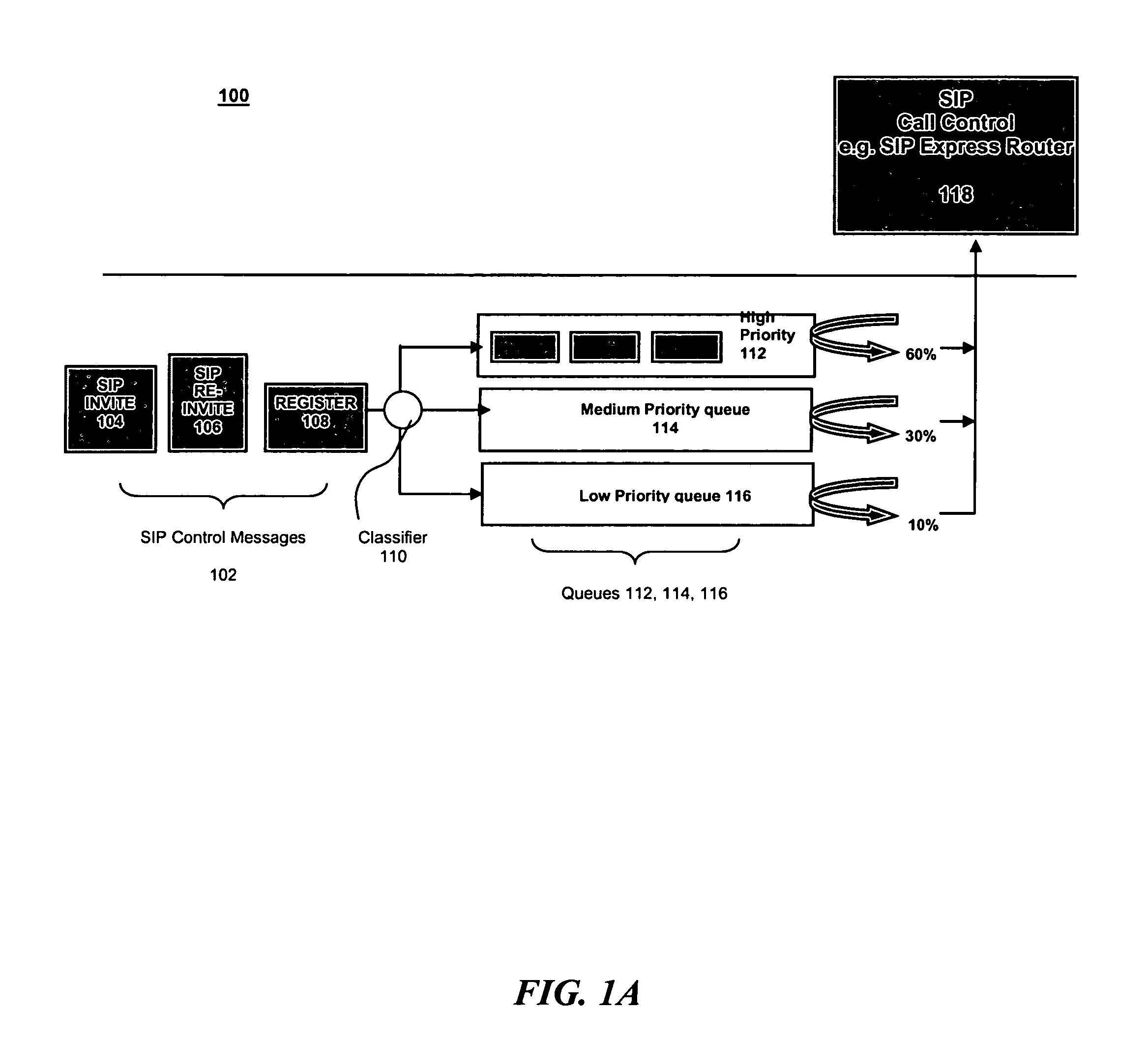

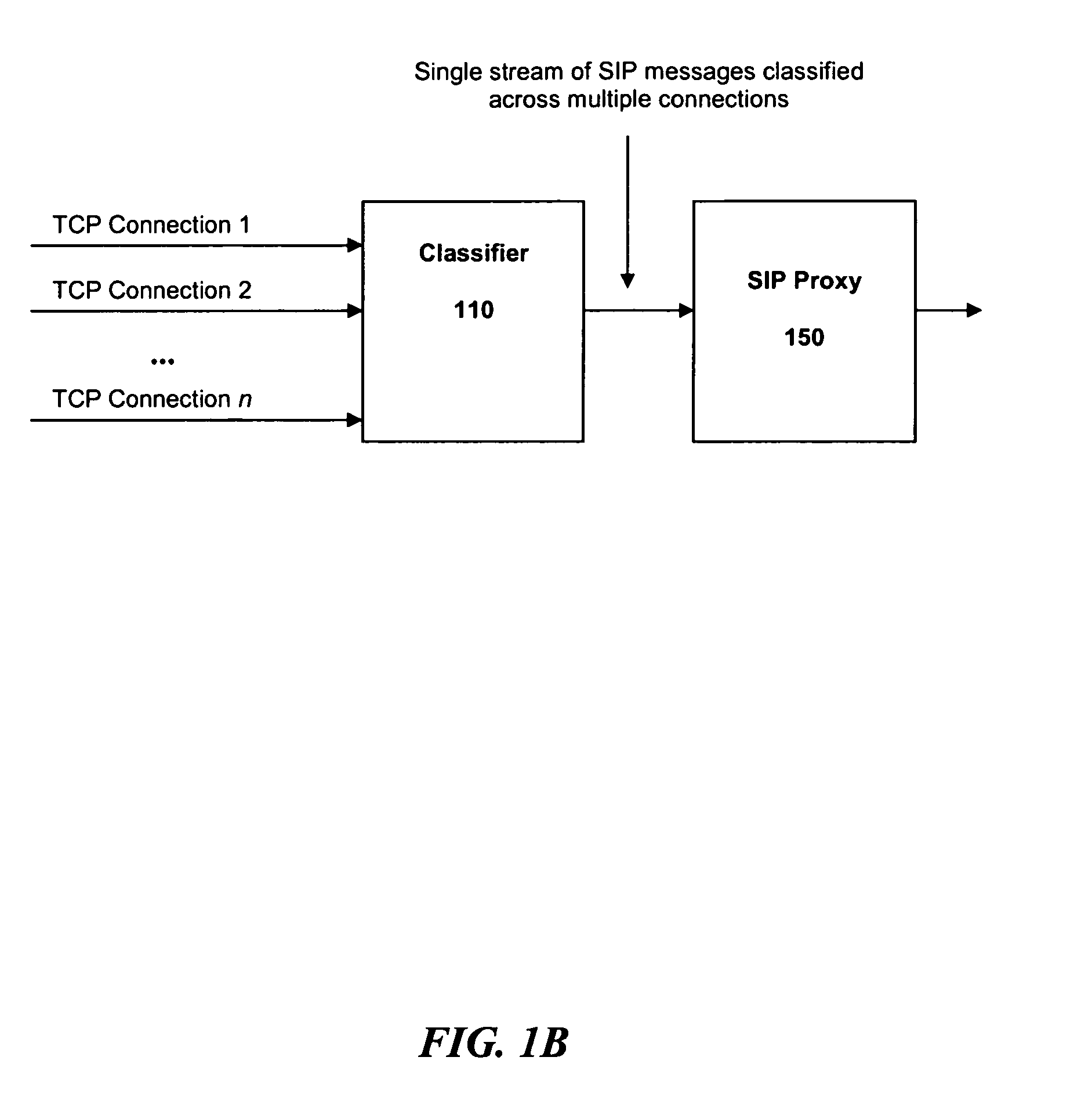

Overload protection for SIP servers

Inactive | US7522581B2 | Data switching by path configuration; Network connections; Computer science; Distributed computing

A method for operating a server having a maximum capacity for servicing requests comprises the following steps: receiving a plurality of requests; classifying each request according to a value; determining a priority for handling the request according to the value, such that requests with higher values are assigned higher priorities; placing each request in one of multiple queues according to its priority value; and dropping the requests with the lowest priority when the plurality of requests is received at a rate that exceeds the maximum capacity. The server operates according to the Session Initiation Protocol (SIP). Classifying each request comprises running a classification algorithm, which comprises the steps of: receiving a rule set, each rule comprising headers and conditions; creating a condition table by taking a union of all conditions in the rules; creating a header table by extracting a common set of headers from the condition table; extracting the relevant headers from the header table; determining a matching rule; creating a bit vector table; selecting the matching rule according to data in the bit vector table; and applying the rule to place the message in the appropriate queue.

Owner:INT BUSINESS MASCH CORP

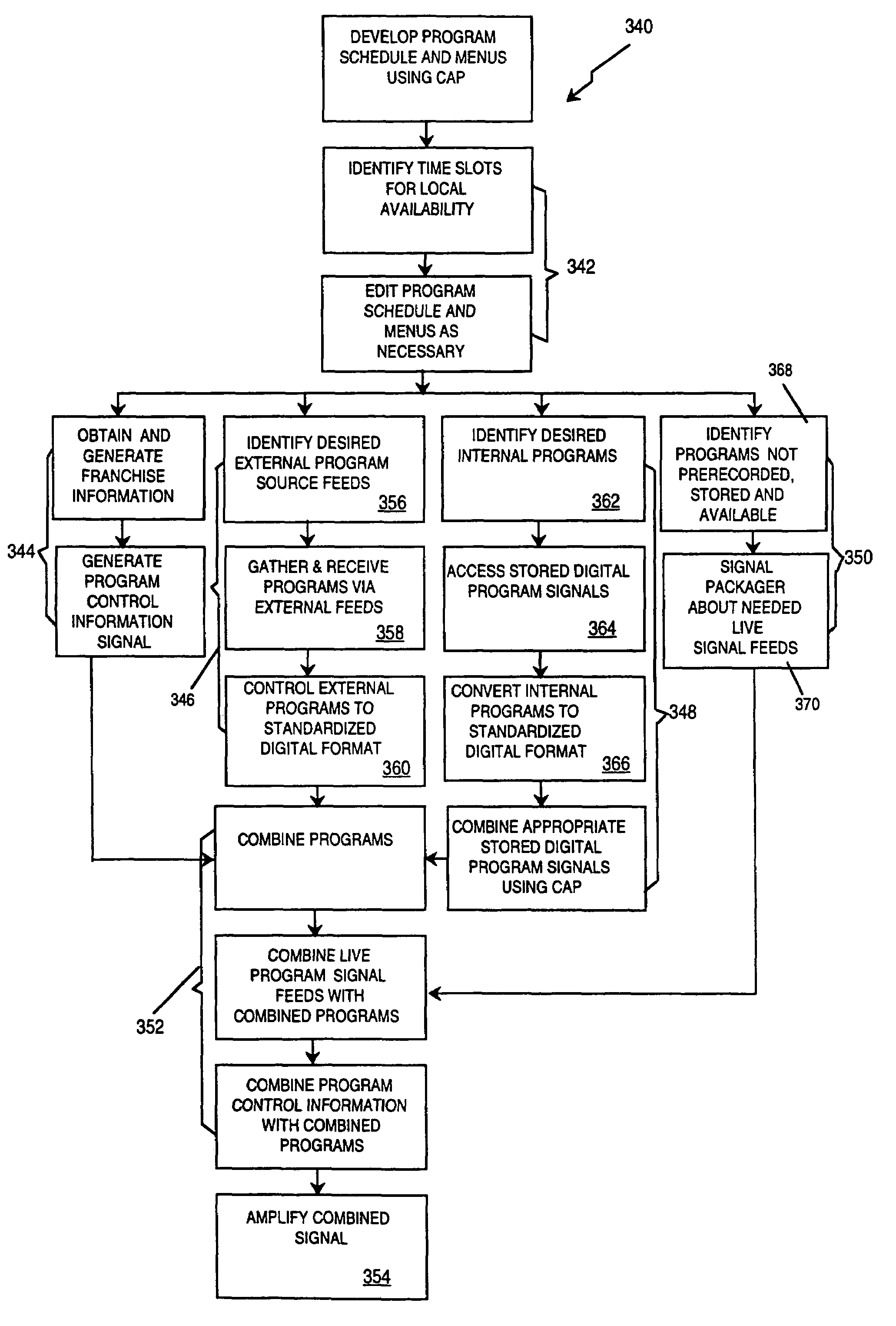

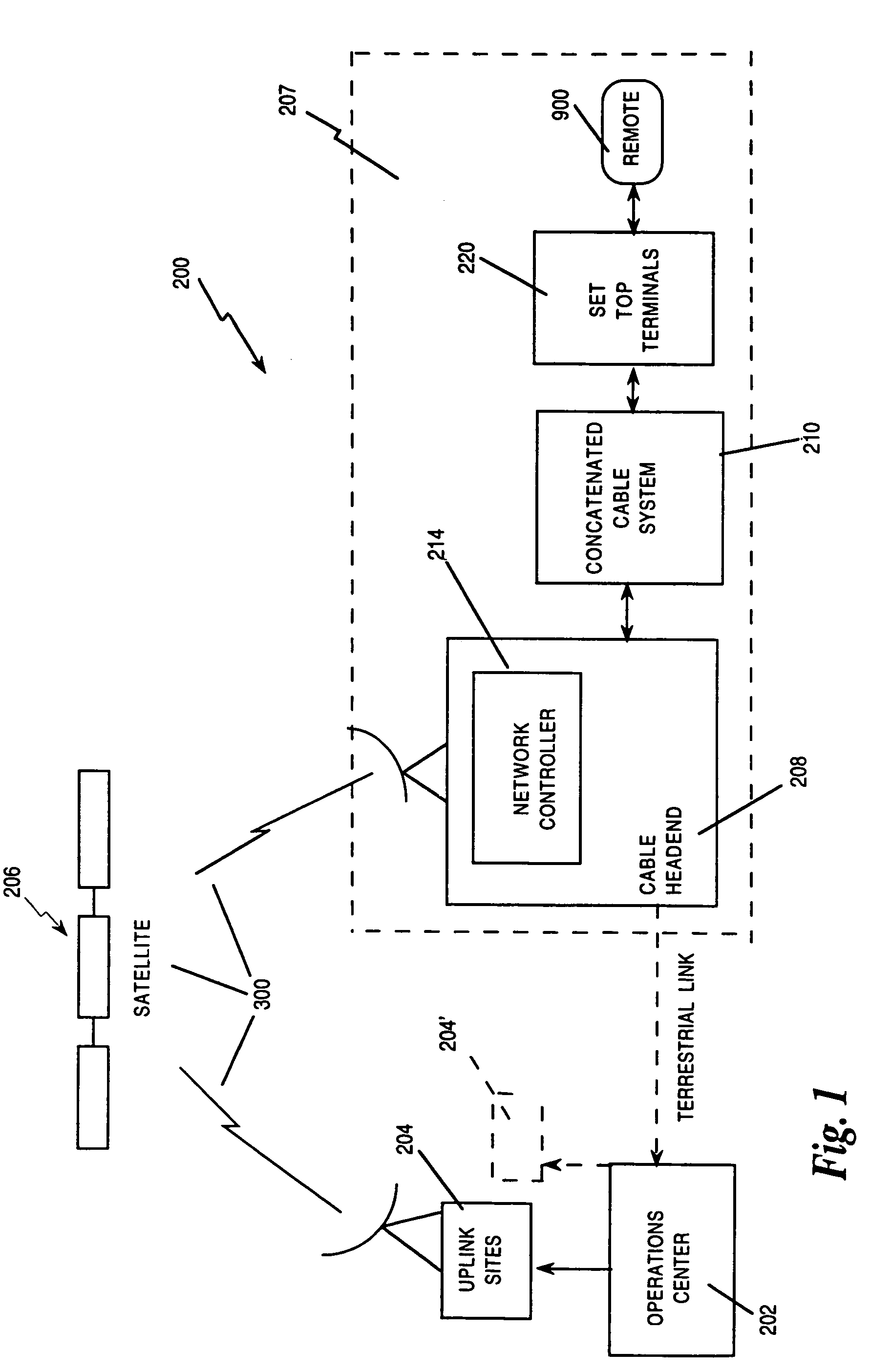

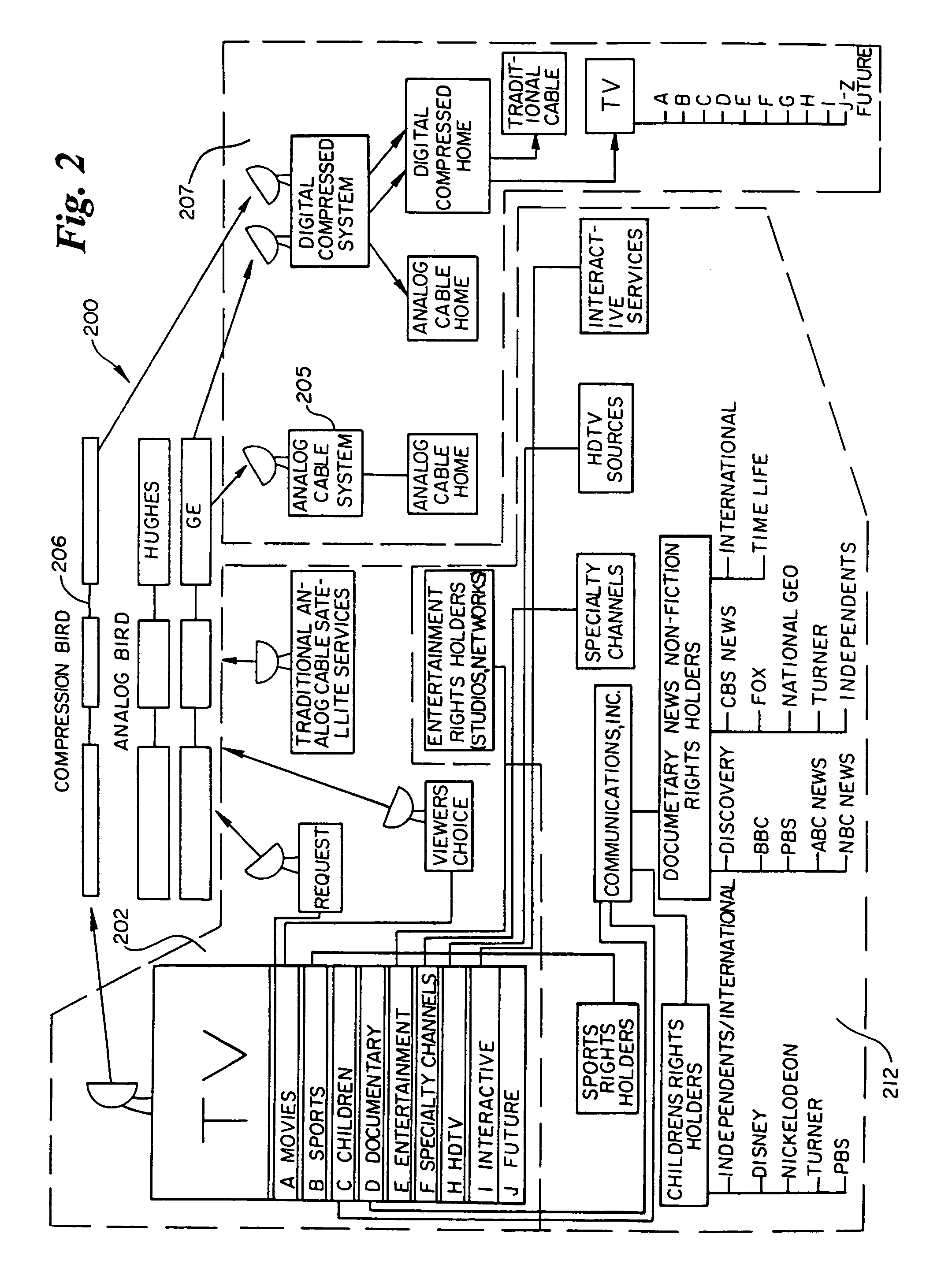

Bandwidth allocation for a television program delivery system

Inactive | US7207055B1 | Avoid difficult choices; Organize effectively; Television system details; Resource management arrangements; Consumer demand; Distributed computing

This invention is a method of allocating bandwidth for a television program delivery system. This method selects specific programs from a plurality of programs, allocates the selected programs to a segment of bandwidth, and continues to allocate the programs until all the programs are allocated or all of the available bandwidth is allocated. The programs may be selected based on a variety of different factors or combination of factors. The selected programs may also be prioritized so that higher priority programs are distributed before lower priority programs in case there is not enough bandwidth to transmit all of the programs. This invention allows a television program delivery system to prioritize a large number of television programs and distribute these programs based on their priority levels. The invention also permits a television program delivery system to dynamically allocate bandwidth over time or based on marketing information, such as consumer demand.
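A minimal sketch of priority-ordered allocation, assuming each program has a single numeric priority (larger means more important) and a fixed bandwidth requirement in a hypothetical integer unit. The `Program` type and `allocate` function are illustrative names. This version skips a program that does not fit and keeps trying lower-priority ones; a stricter reading of "until all of the available bandwidth is allocated" might stop at the first program that does not fit.

```python
from dataclasses import dataclass


@dataclass
class Program:
    name: str
    priority: int   # larger = more important (assumed convention)
    bandwidth: int  # required bandwidth in a hypothetical unit, e.g. Mbit/s


def allocate(programs: list[Program], total_bandwidth: int) -> tuple[list[str], int]:
    """Assign bandwidth to programs in descending priority order.

    Returns the names of the allocated programs and the unused bandwidth.
    """
    allocated: list[str] = []
    remaining = total_bandwidth
    for program in sorted(programs, key=lambda p: p.priority, reverse=True):
        if program.bandwidth <= remaining:
            allocated.append(program.name)
            remaining -= program.bandwidth
    return allocated, remaining
```

With 12 units available, a high-priority sports feed (4) and news channel (6) are placed first, the 8-unit movie no longer fits, and the 2-unit kids' channel fills the remainder.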

Owner:COMCAST IP HLDG I

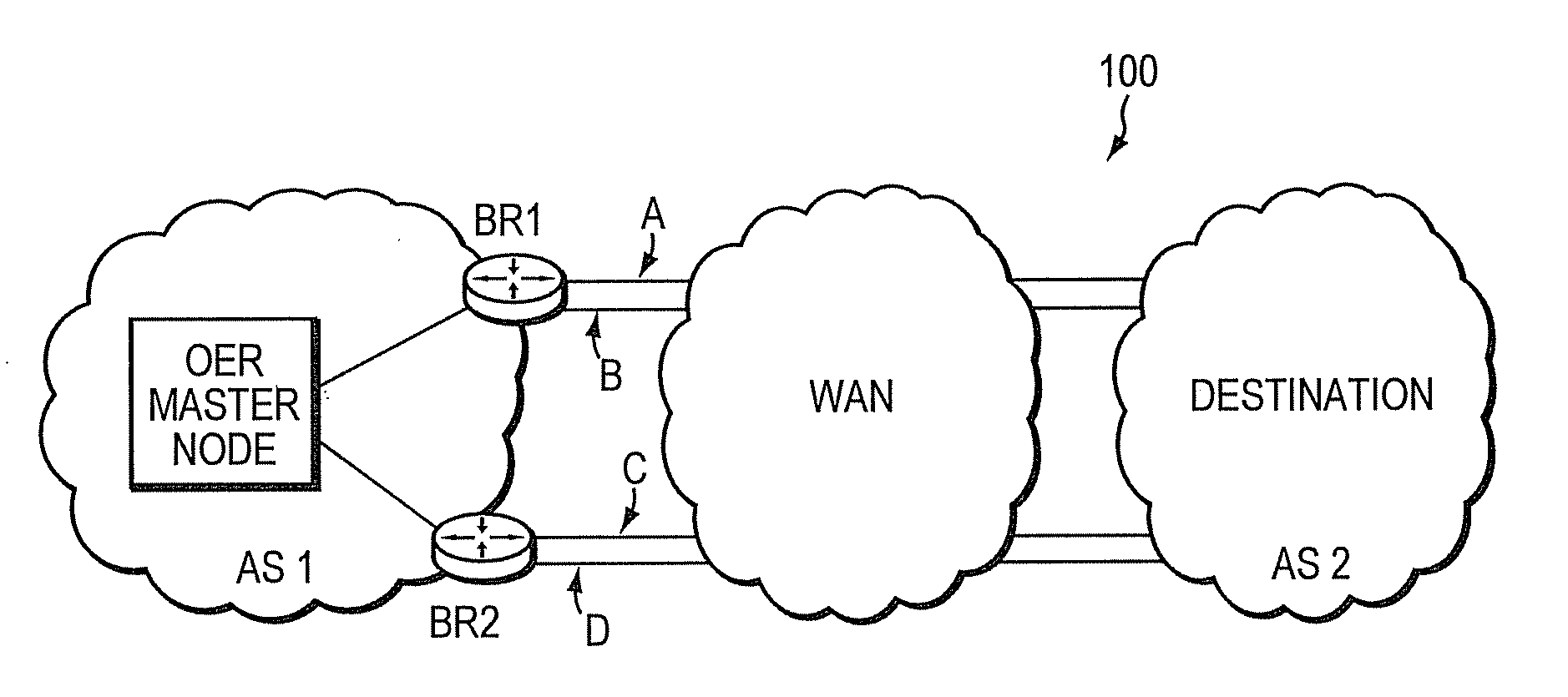

Technique for policy conflict resolution using priority with variance

Active | US20100265825A1 | Reduce variance; Error prevention; Transmission systems; Computer science; Lower priority

In one embodiment, a value of an option for a particular policy of a plurality of policies that are ranked in a priority order is ascertained. A variance to the value associated with the option for the particular policy is applied to define a range of acceptable values for the particular policy. A determination is made whether one or more other options exist that have values within the range of acceptable values for the particular policy. If no other options exist that have values within that range, the option is selected. If other options exist that have values within that range, the ascertaining, applying and determining steps are repeated for the next lower-priority policy of the plurality of policies to consider the other options, with the repetition continuing successively until an option is selected.
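The successive narrowing can be sketched as follows, assuming each policy is a score function where higher is better plus a non-negative variance, and that the "value" ascertained for a policy is the best score among the remaining candidates. The `resolve` function, the link-selection example and the final tie-break are hypothetical; the abstract does not say what happens if ties survive every policy.

```python
from typing import Callable, Sequence

Policy = tuple[Callable[[str], float], float]  # (score function, variance)


def resolve(options: Sequence[str], policies: Sequence[Policy]) -> str:
    """Pick one option, consulting policies from highest to lowest priority."""
    candidates = list(options)
    best = candidates[0]
    for score, variance in policies:
        best = max(candidates, key=score)
        best_value = score(best)
        # The variance widens the single best value into a range of acceptable values.
        candidates = [o for o in candidates if abs(score(o) - best_value) <= variance]
        if len(candidates) == 1:
            return best  # no other option is close enough: select it
    # Assumption: if ties survive every policy, keep the last policy's best option.
    return best
```

In a hypothetical case where links A, B and C offer bandwidths of 100, 95 and 60 and latencies of 30, 10 and 5, a bandwidth policy with variance 10 keeps A and B in contention, and a lower-priority latency policy then selects B. With a variance of 0 on the bandwidth policy, A would be chosen immediately.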

Owner:CISCO TECH INC

User and content aware object-based data stream transmission methods and arrangements

Inactive | US7093028B1 | Quality improvement; Improve interactivity; Multiple digital computer combinations; Data switching networks; Object based; Data stream

A scalable video transmission scheme is provided in which client interaction and video content itself are taken into consideration during transmission. Methods and arrangements are provided to prioritize/classify different types of information according to their importance and to packetize or otherwise arrange the prioritized information in a manner such that lower-priority information may be dropped during transmission. Thus, when network congestion occurs or there is not enough network bandwidth to transmit all of the prioritized information about an object, some (e.g., lower-priority) information may be dropped at the server or at an intermediate network node to reduce the bit rate. Likewise, when the server transmits multiple video objects over a channel of limited bandwidth capacity, the bit rate allocated to each object can be adjusted according to several factors, such as information importance and client interaction.
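Dropping lower-priority information at a server or network node can be sketched as shedding packets from the least important end until the total fits a bit budget. This assumes each packet carries a single integer priority (higher means more important) and a size in bits; the `Packet` type, the labels and `shed_low_priority` are hypothetical. Ties are broken by arrival order because Python's sort is stable.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    label: str
    priority: int  # higher = more important (assumed convention)
    size: int      # size in bits


def shed_low_priority(packets: list[Packet], budget: int) -> tuple[list[str], int]:
    """Drop the least important packets until the total size fits the budget.

    Returns the labels of the kept packets (in original order) and their total size.
    """
    total = sum(p.size for p in packets)
    dropped: set[int] = set()
    for i in sorted(range(len(packets)), key=lambda i: packets[i].priority):
        if total <= budget:
            break
        dropped.add(i)
        total -= packets[i].size
    kept = [p.label for i, p in enumerate(packets) if i not in dropped]
    return kept, total
```

Cutting the budget from 1400 to 900 bits in the test data removes only the enhancement-texture packet; cutting it to 600 also removes the base texture.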

Owner:MICROSOFT TECH LICENSING LLC

Threshold-based and power-efficient scheduling request procedure

Active | US20150117342A1 | Shorten total active time; Reduce power consumption; Connection management; Channel coding adaptation; Power efficient; Request procedure

The invention relates to methods for improving scheduling request transmission between a UE and a base station. The transmission of the scheduling request is postponed by implementing a threshold that the data in the transmission buffer has to reach before a transmission of the scheduling request is triggered. In one variant, the data in the transmission buffer needs to reach a specific amount to trigger a scheduling request. The invention also relates to further improvements: the PDCCH monitoring time window is delayed after sending a scheduling request, and the dedicated scheduling request resources of the PUCCH are prioritized differently such that low-priority scheduling requests are transmitted less often.
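The buffer-threshold trigger can be sketched as a small state machine, assuming the threshold is expressed in bytes and that a scheduling request stays pending until an uplink grant arrives. `SchedulingRequestTrigger`, `on_uplink_data` and `on_grant` are hypothetical names, not 3GPP MAC primitives; the delayed PDCCH monitoring window and PUCCH resource prioritization are not modelled.

```python
class SchedulingRequestTrigger:
    """Postpone scheduling requests until buffered uplink data reaches a threshold."""

    def __init__(self, threshold_bytes: int):
        self.threshold = threshold_bytes
        self.buffered = 0
        self.sr_pending = False

    def on_uplink_data(self, nbytes: int) -> bool:
        """Buffer new data; return True if a scheduling request should be sent now."""
        self.buffered += nbytes
        if not self.sr_pending and self.buffered >= self.threshold:
            self.sr_pending = True
            return True
        return False

    def on_grant(self, granted_bytes: int) -> None:
        """An uplink grant drains the buffer and clears the pending request."""
        self.buffered = max(0, self.buffered - granted_bytes)
        self.sr_pending = False
```

With a 100-byte threshold, arrivals of 40 and 50 bytes do not trigger a request; the next 20 bytes push the buffer to 110 bytes and trigger one, and further data is simply buffered until a grant arrives.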

Owner:APPLE INC

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com