71 results about "Packet prioritization" patented technology

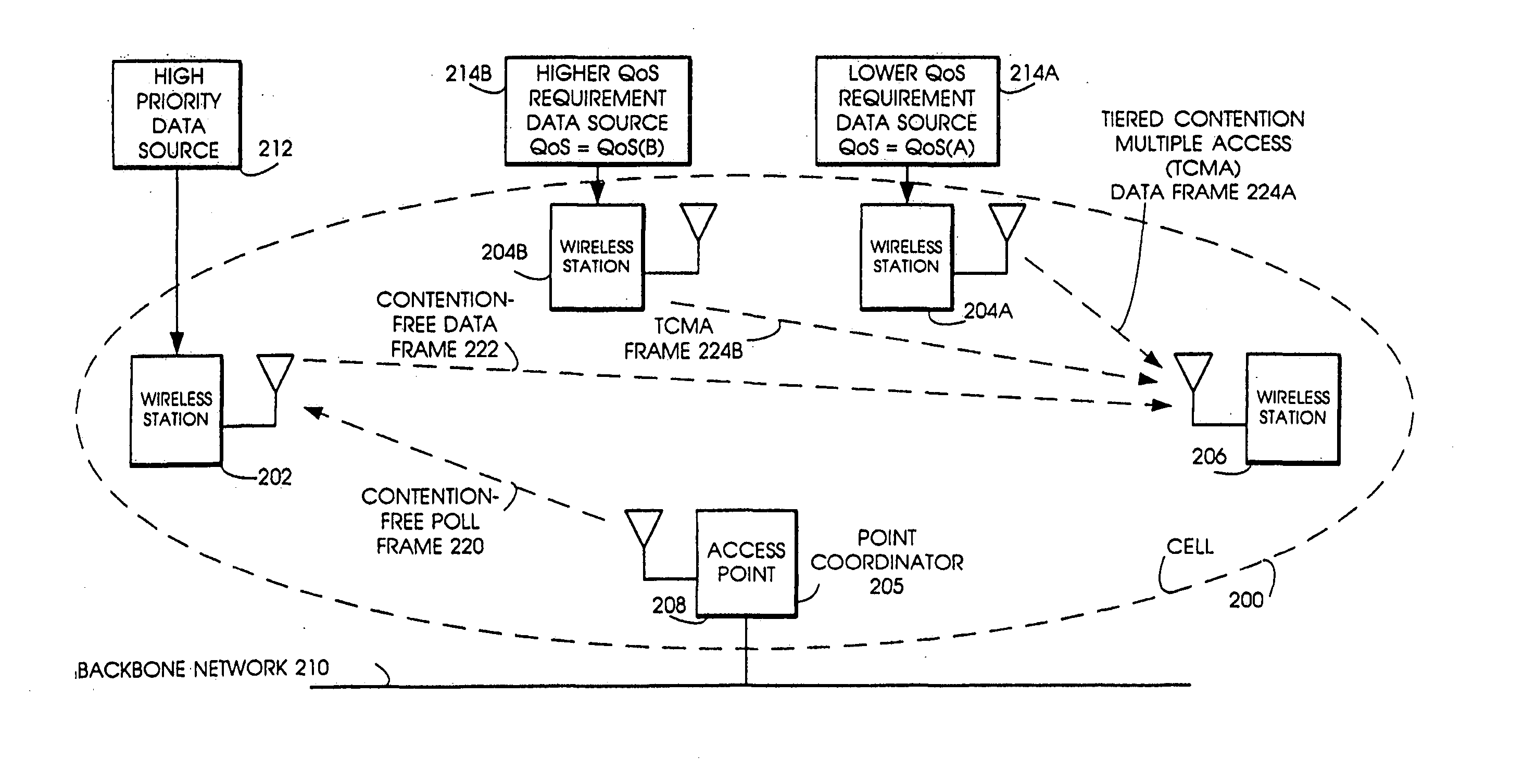

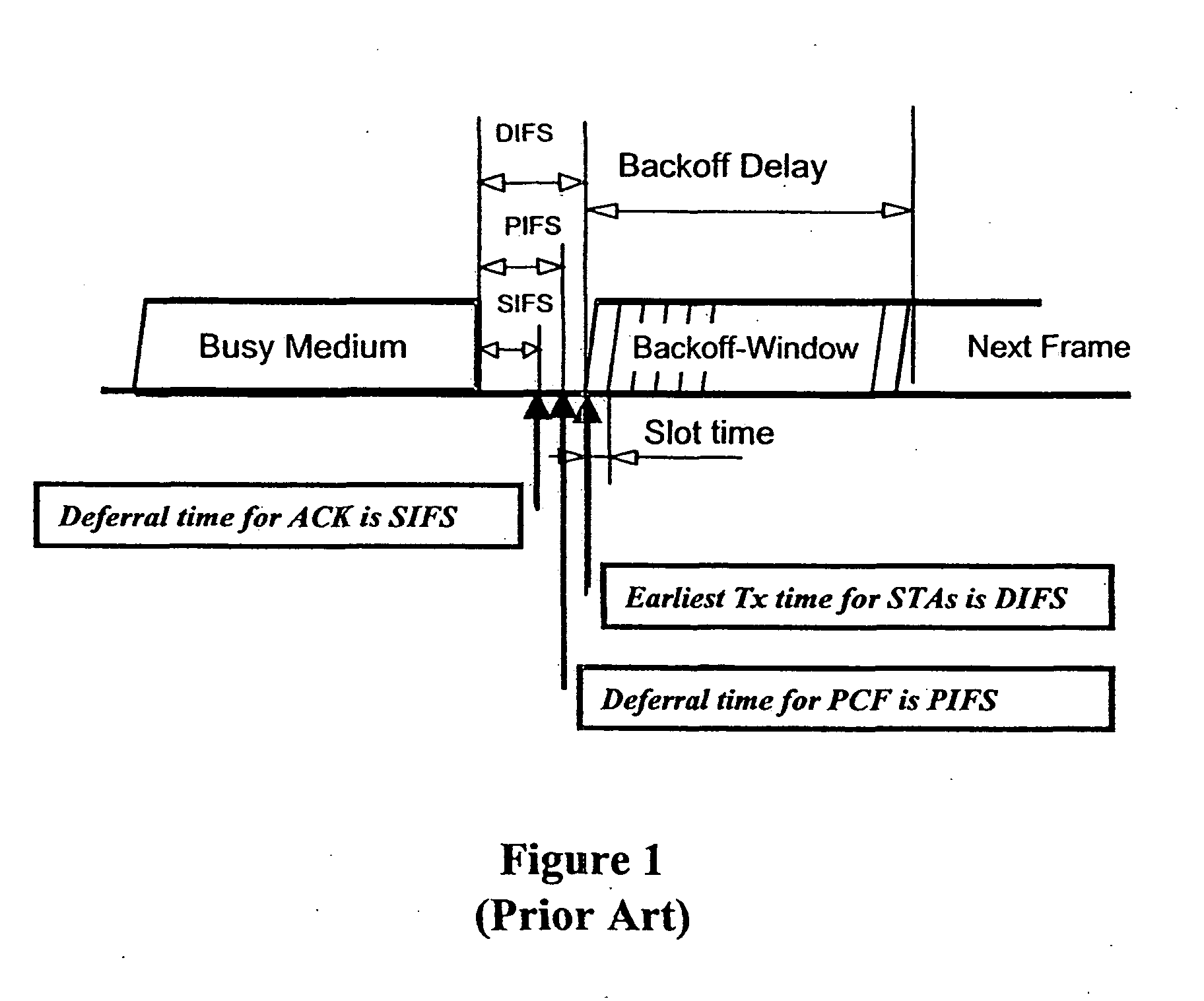

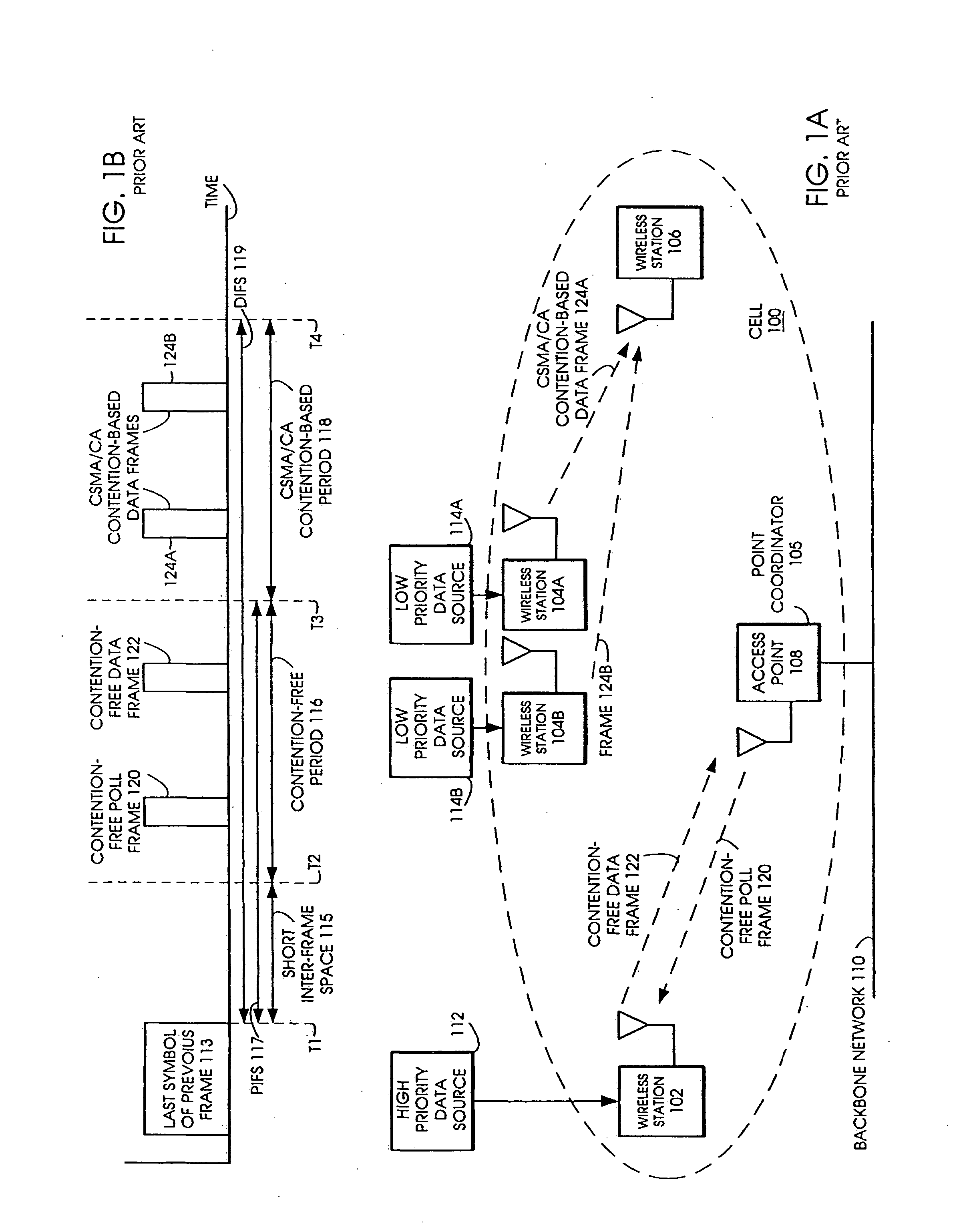

Tiered contention multiple access (TCMA): a method for priority-based shared channel access

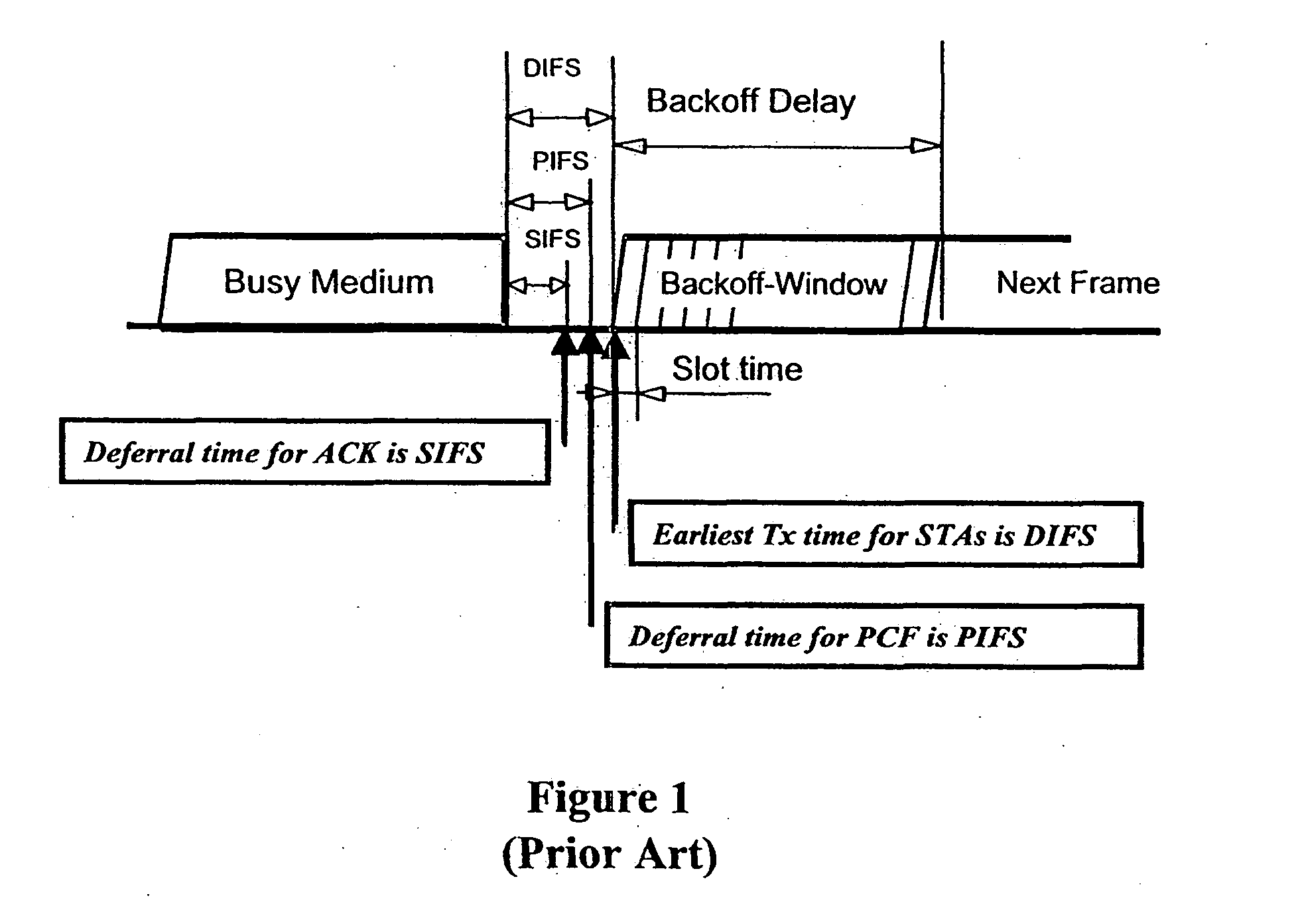

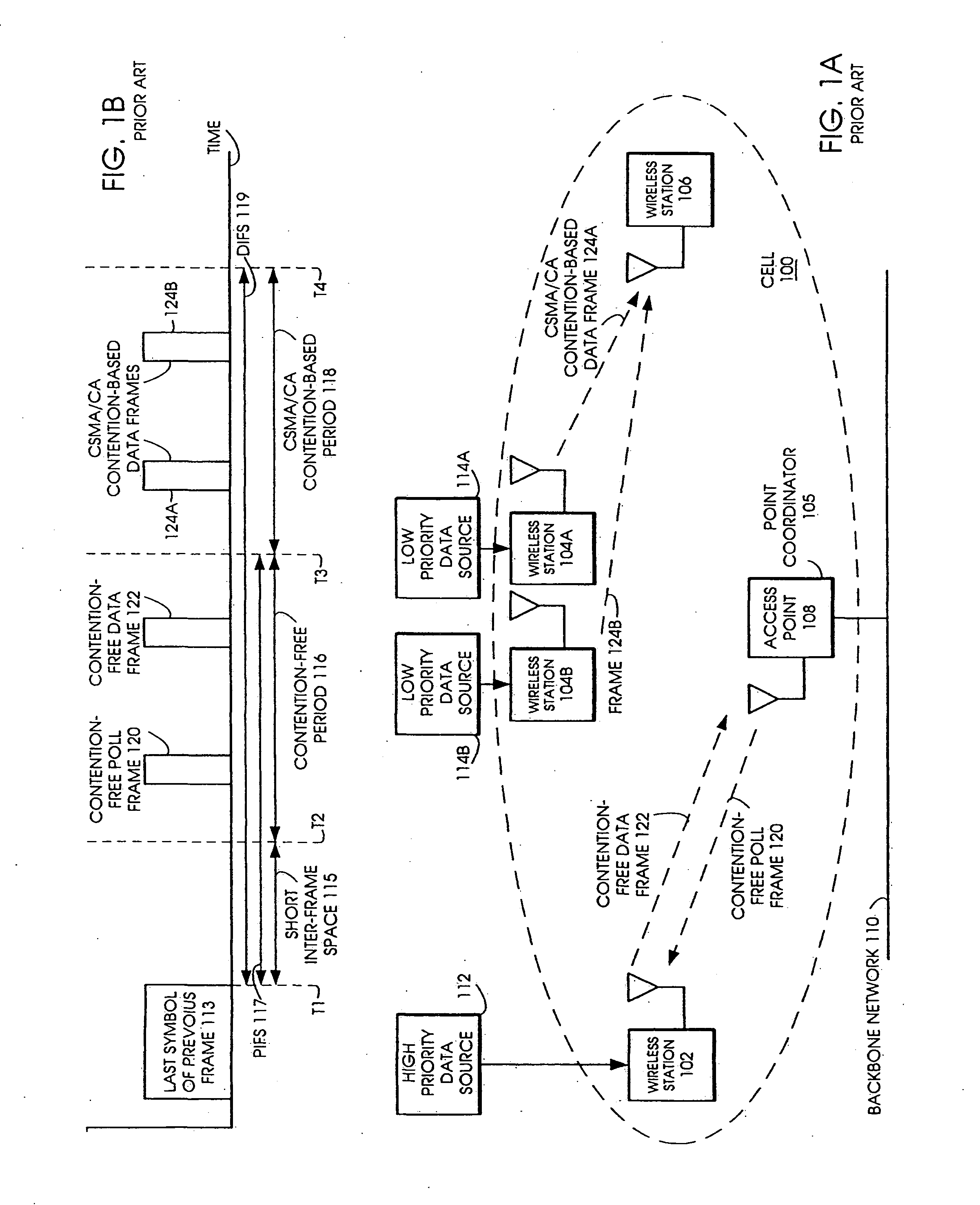

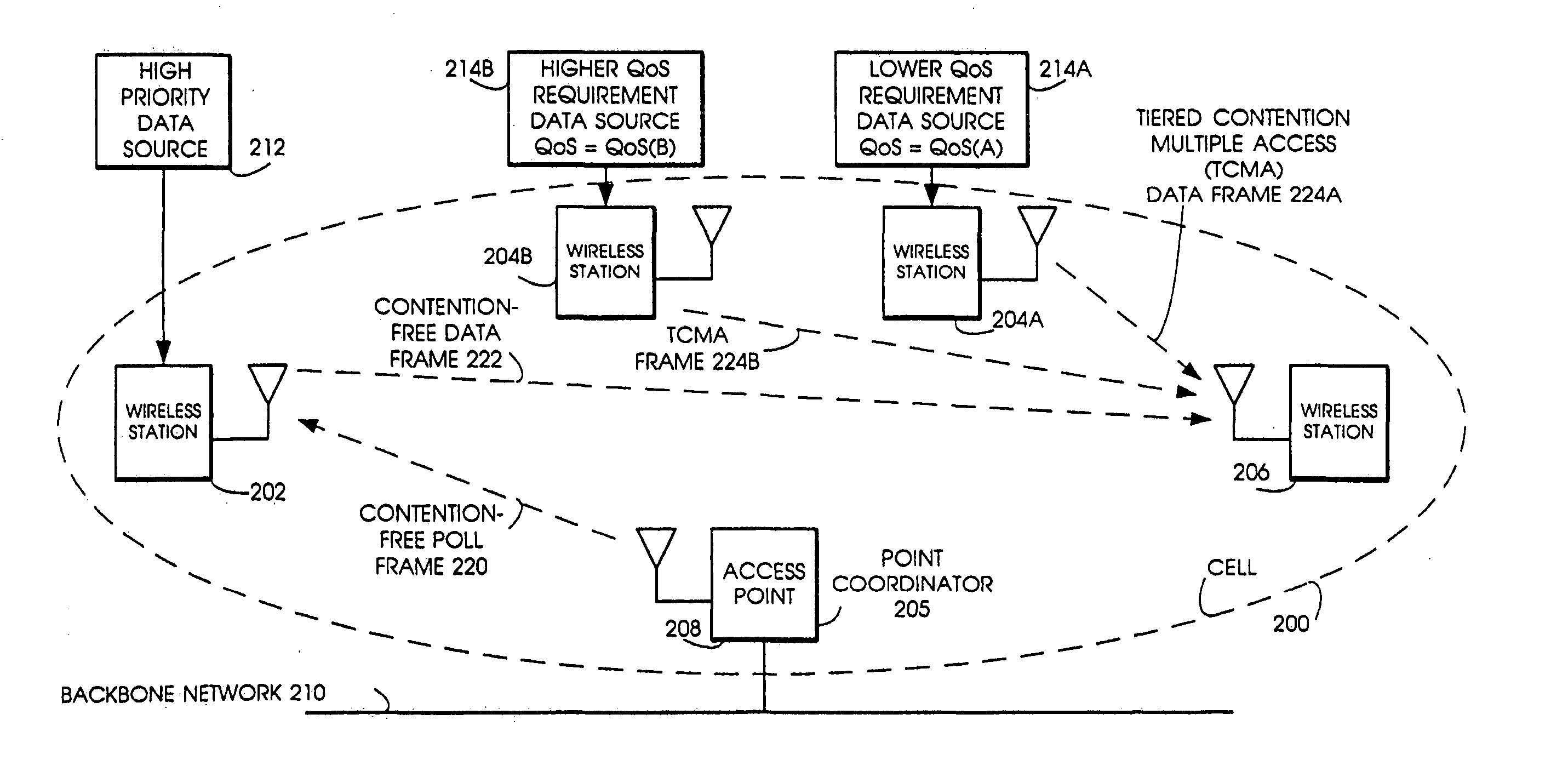

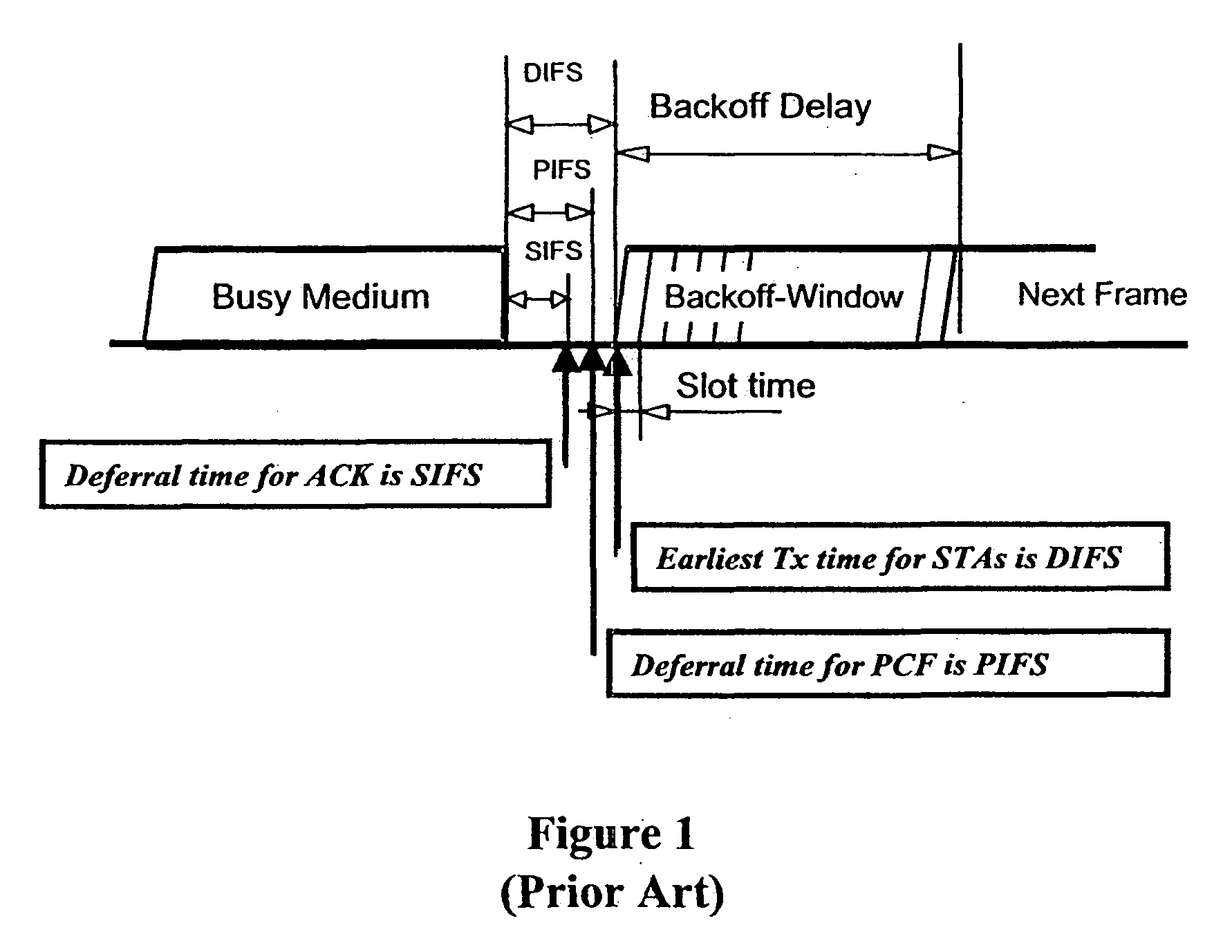

Active | US7095754B2 | Minimizing chance | Lower latency | Synchronisation arrangement | Network traffic/resource management | Service-level agreement | Idle time

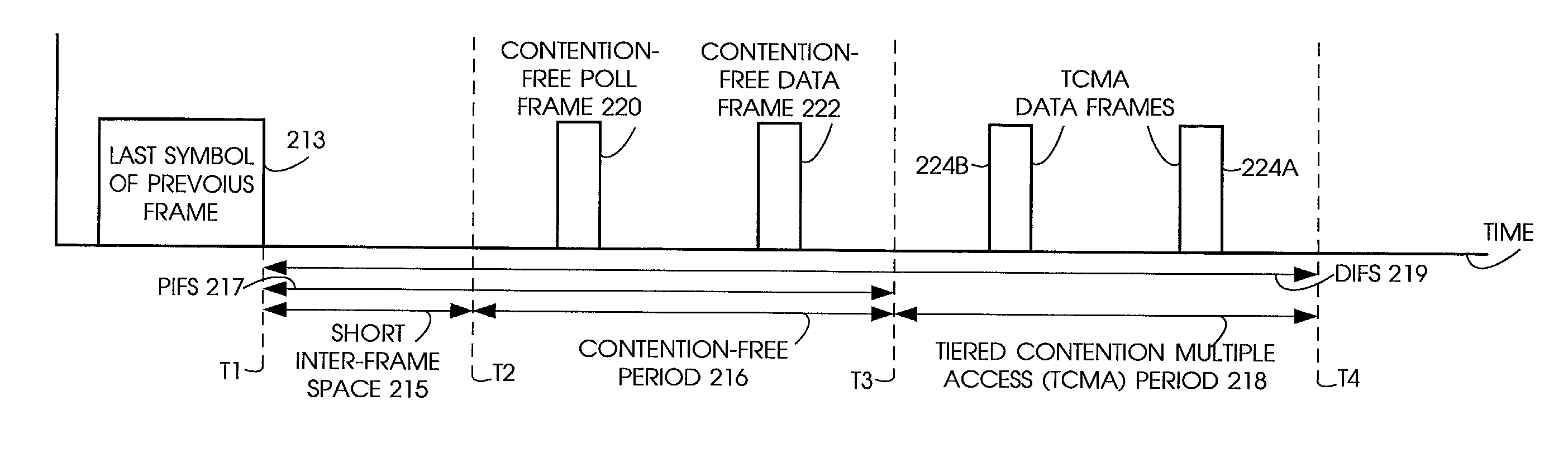

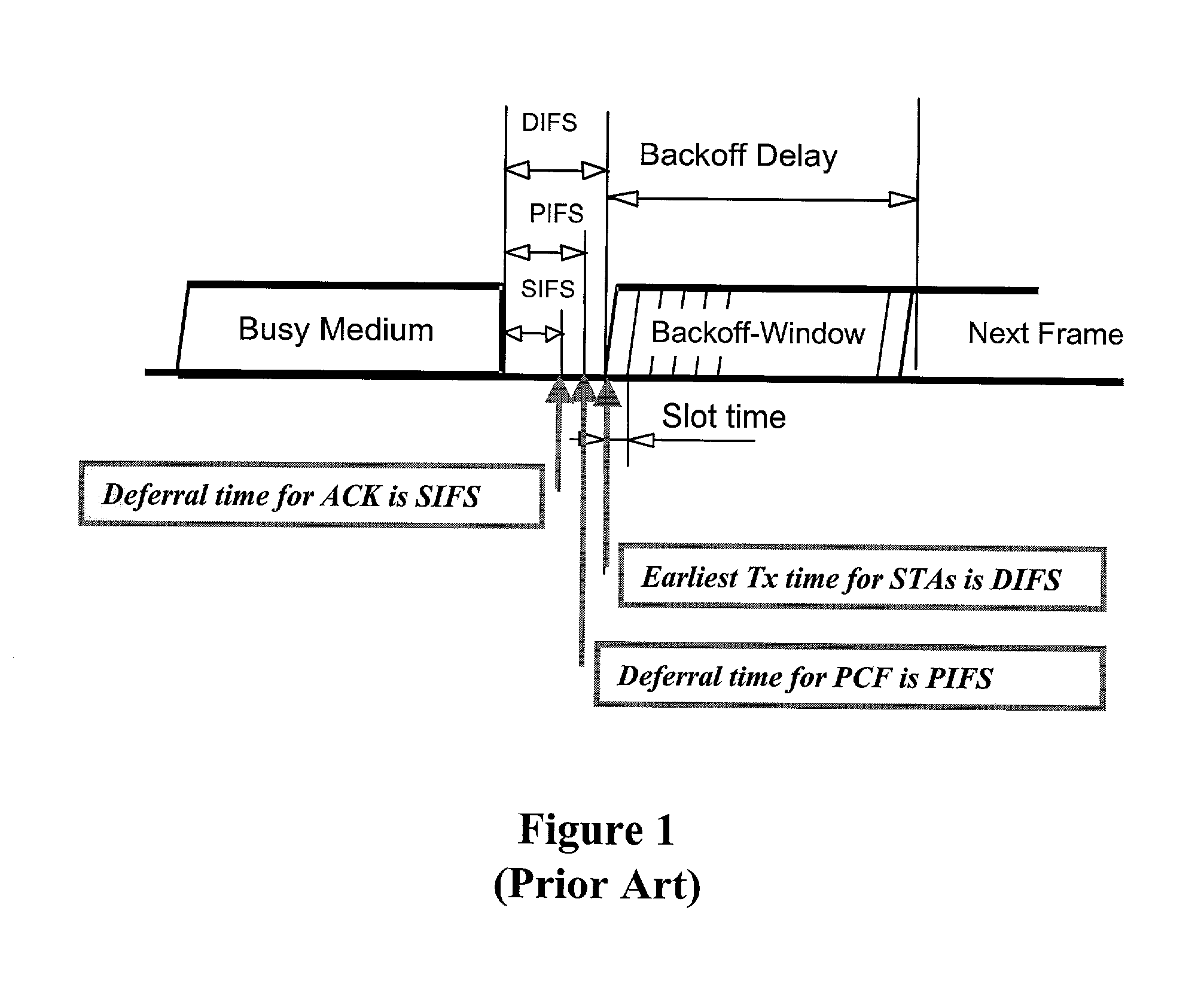

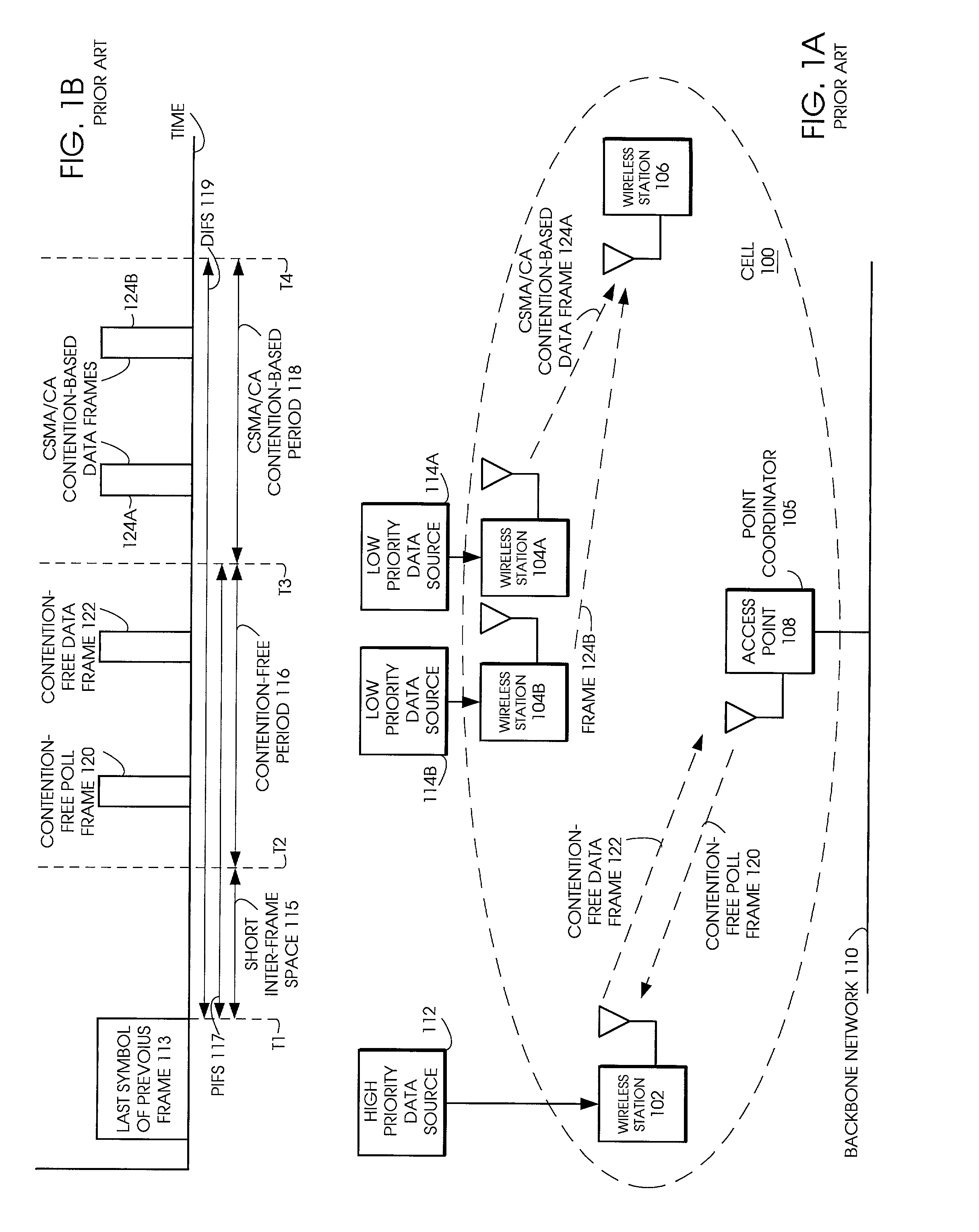

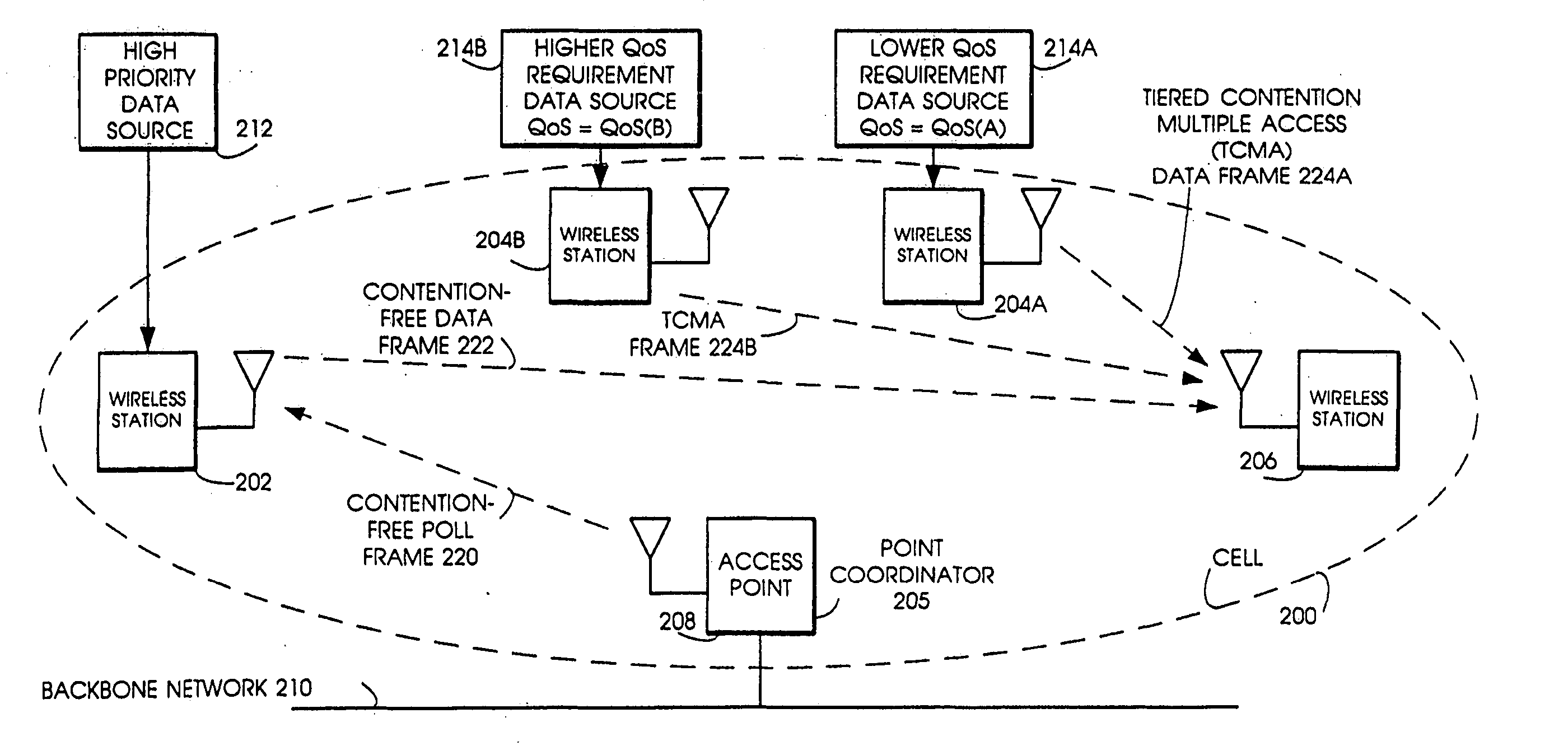

Quality of Service (QoS) support is provided by means of a Tiered Contention Multiple Access (TCMA) distributed medium access protocol that schedules transmission of different types of traffic based on their service quality specifications. In one embodiment, a wireless station is supplied with data from a source having a lower QoS priority QoS(A), such as file transfer data. Another wireless station is supplied with data from a source having a higher QoS priority QoS(B), such as voice and video data. Each wireless station can determine the urgency class of its pending packets according to a scheduling algorithm. For example file transfer data is assigned lower urgency class and voice and video data is assigned higher urgency class. There are several urgency classes which indicate the desired ordering. Pending packets in a given urgency class are transmitted before transmitting packets of a lower urgency class by relying on class-differentiated urgency arbitration times (UATs), which are the idle time intervals required before the random backoff counter is decreased. In another embodiment packets are reclassified in real time with a scheduling algorithm that adjusts the class assigned to packets based on observed performance parameters and according to negotiated QoS-based requirements. Further, for packets assigned the same arbitration time, additional differentiation into more urgency classes is achieved in terms of the contention resolution mechanism employed, thus yielding hybrid packet prioritization methods. An Enhanced DCF Parameter Set is contained in a control packet sent by the AP to the associated stations, which contains class differentiated parameter values necessary to support the TCMA. These parameters can be changed based on different algorithms to support call admission and flow control functions and to meet the requirements of service level agreements.

Owner:AT&T INTPROP I L P
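
The arbitration step in the TCMA abstract can be illustrated with a minimal sketch. It assumes that the urgency arbitration time (UAT) and the backoff counter are both counted in idle slots, so a station transmits once the channel has been idle for its UAT plus its backoff. The `Station` class, the field names, and the slot values are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class Station:
    name: str
    uat_slots: int  # urgency arbitration time, in idle slots (assumed unit)
    backoff: int    # backoff counter already drawn for this contention round


def first_to_transmit(stations):
    """Return the names of the stations whose countdown expires first.

    A station only starts decrementing its backoff after the channel has
    been idle for its own UAT, so it transmits after uat_slots + backoff
    idle slots. More than one name in the result means a collision.
    """
    expiry = {s.name: s.uat_slots + s.backoff for s in stations}
    earliest = min(expiry.values())
    return sorted(name for name, slots in expiry.items() if slots == earliest)


# Voice gets a short UAT, file transfer a long one (illustrative values).
voice = Station("voice", uat_slots=1, backoff=3)
ftp = Station("file-transfer", uat_slots=4, backoff=1)
print(first_to_transmit([voice, ftp]))
```

With these values the voice station wins even though it drew the larger backoff, because the shorter UAT lets it start counting down earlier. The sketch ignores retransmission, frozen counters, and the exact slot boundaries.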

Tiered contention multiple access (TCMA): a method for priority-based shared channel access

Inactive | US20070019664A1 | Minimizing chance | Lower latency | Synchronisation arrangement | Error prevention | Service-level agreement | Class of service

Quality of Service (QoS) support is provided by means of a Tiered Contention Multiple Access (TCMA) distributed medium access protocol that schedules transmission of different types of traffic based on their service quality specifications. In one embodiment, a wireless station is supplied with data from a source having a lower QoS priority QoS(A), such as file transfer data. Another wireless station is supplied with data from a source having a higher QoS priority QoS(B), such as voice and video data. Each wireless station can determine the urgency class of its pending packets according to a scheduling algorithm. For example file transfer data is assigned lower urgency class and voice and video data is assigned higher urgency class. There are several urgency classes which indicate the desired ordering. Pending packets in a given urgency class are transmitted before transmitting packets of a lower urgency class by relying on class-differentiated urgency arbitration times (UATs), which are the idle time intervals required before the random backoff counter is decreased. In another embodiment packets are reclassified in real time with a scheduling algorithm that adjusts the class assigned to packets based on observed performance parameters and according to negotiated QoS-based requirements. Further, for packets assigned the same arbitration time, additional differentiation into more urgency classes is achieved in terms of the contention resolution mechanism employed, thus yielding hybrid packet prioritization methods. An Enhanced DCF Parameter Set is contained in a control packet sent by the AP to the associated stations, which contains class differentiated parameter values necessary to support the TCMA. These parameters can be changed based on different algorithms to support call admission and flow control functions and to meet the requirements of service level agreements.

Owner:AT&T INTPROP II L P

Tiered contention multiple access (TCMA): a method for priority-based shared channel access

Inactive | US20070041398A1 | Minimizing chance | Lower latency | Synchronisation arrangement | Network traffic/resource management | Service-level agreement | Traffic capacity

Quality of Service (QoS) support is provided by means of a Tiered Contention Multiple Access (TCMA) distributed medium access protocol that schedules transmission of different types of traffic based on their service quality specifications. In one embodiment, a wireless station is supplied with data from a source having a lower QoS priority QoS(A), such as file transfer data. Another wireless station is supplied with data from a source having a higher QoS priority QoS(B), such as voice and video data. Each wireless station can determine the urgency class of its pending packets according to a scheduling algorithm. For example file transfer data is assigned lower urgency class and voice and video data is assigned higher urgency class. There are several urgency classes which indicate the desired ordering. Pending packets in a given urgency class are transmitted before transmitting packets of a lower urgency class by relying on class-differentiated urgency arbitration times (UATs), which are the idle time intervals required before the random backoff counter is decreased. In another embodiment packets are reclassified in real time with a scheduling algorithm that adjusts the class assigned to packets based on observed performance parameters and according to negotiated QoS-based requirements. Further, for packets assigned the same arbitration time, additional differentiation into more urgency classes is achieved in terms of the contention resolution mechanism employed, thus yielding hybrid packet prioritization methods. An Enhanced DCF Parameter Set is contained in a control packet sent by the AP to the associated stations, which contains class differentiated parameter values necessary to support the TCMA. These parameters can be changed based on different algorithms to support call admission and flow control functions and to meet the requirements of service level agreements.

Owner:AT & T INTPROP II LP

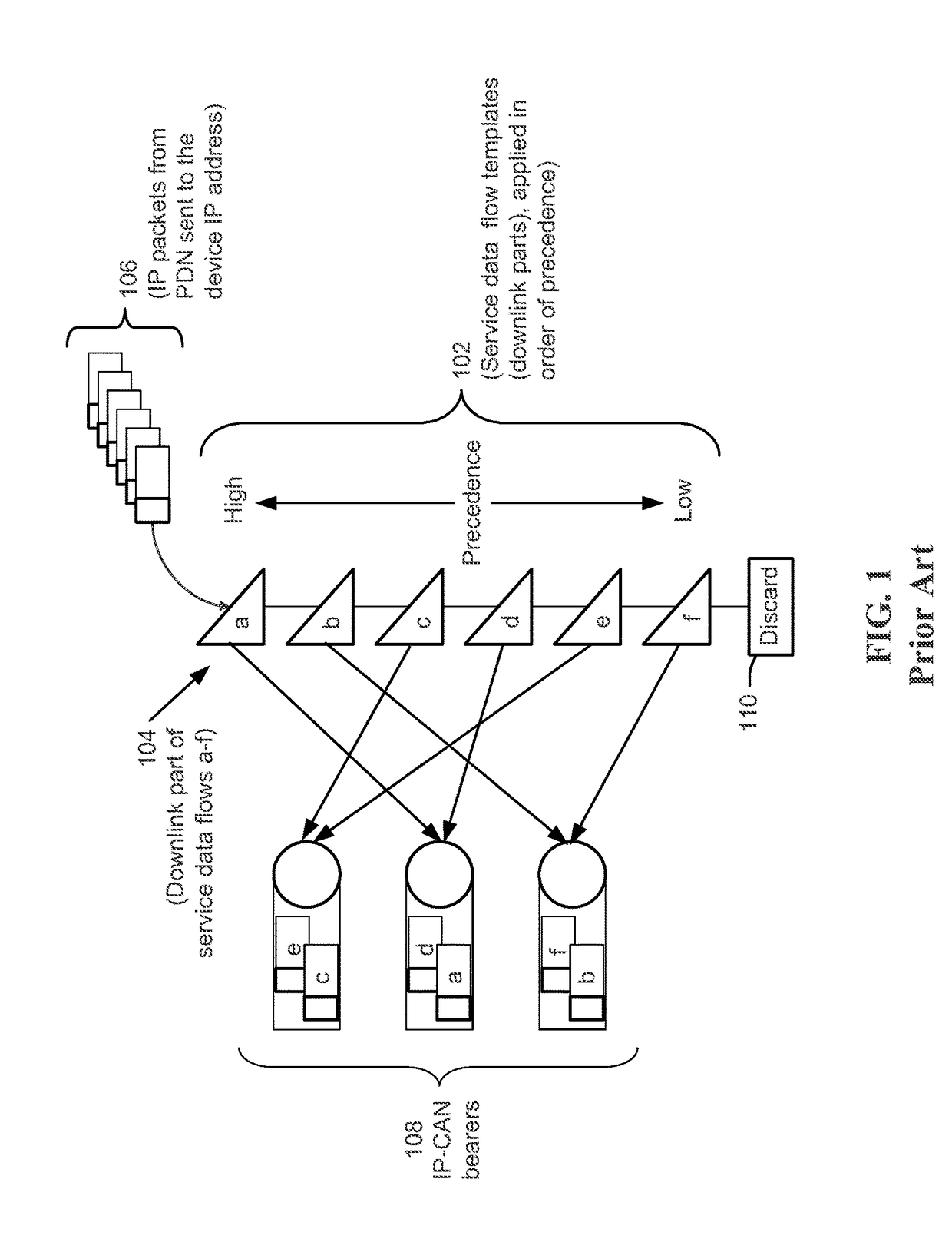

Hierarchical virtual queuing

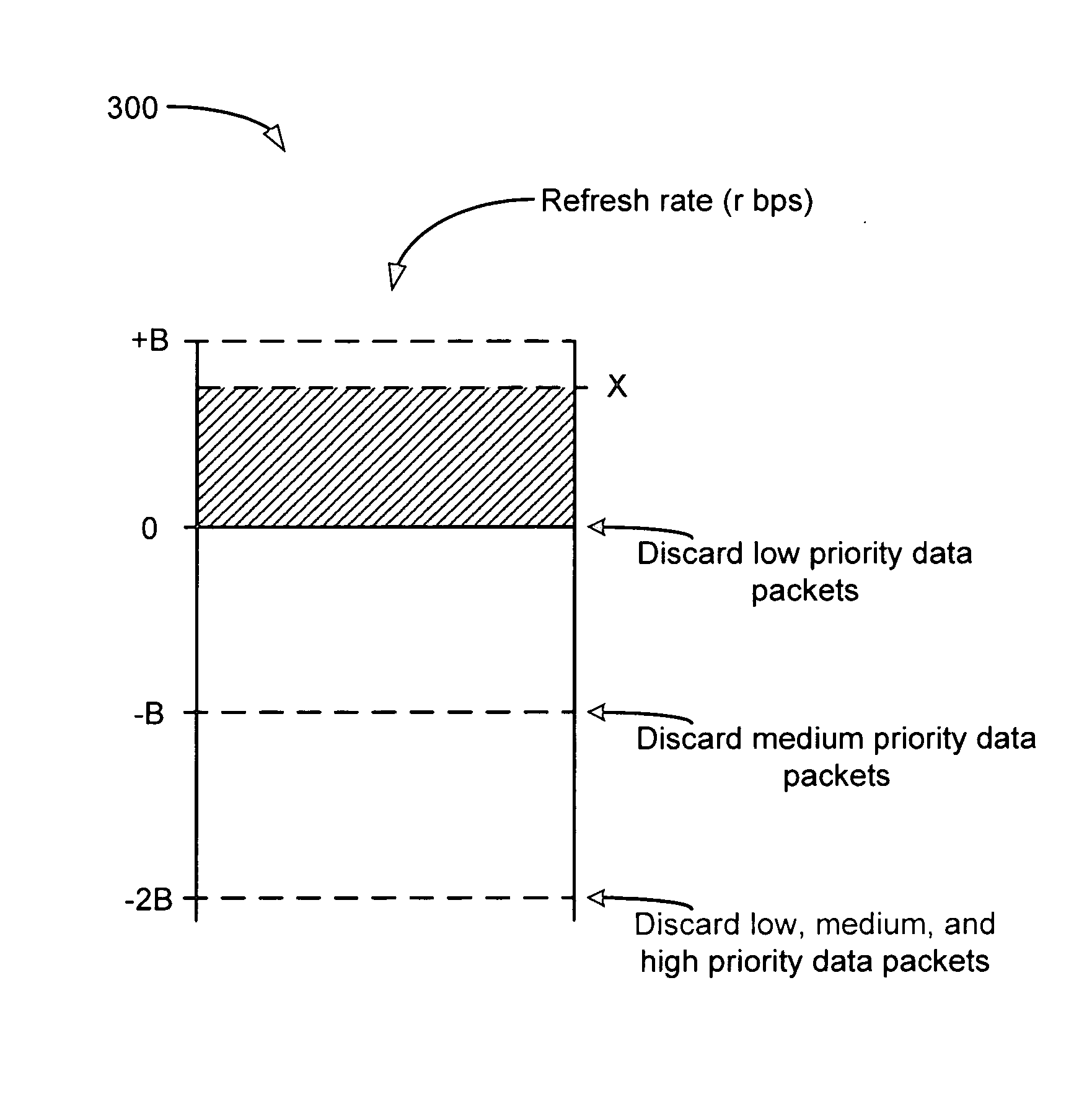

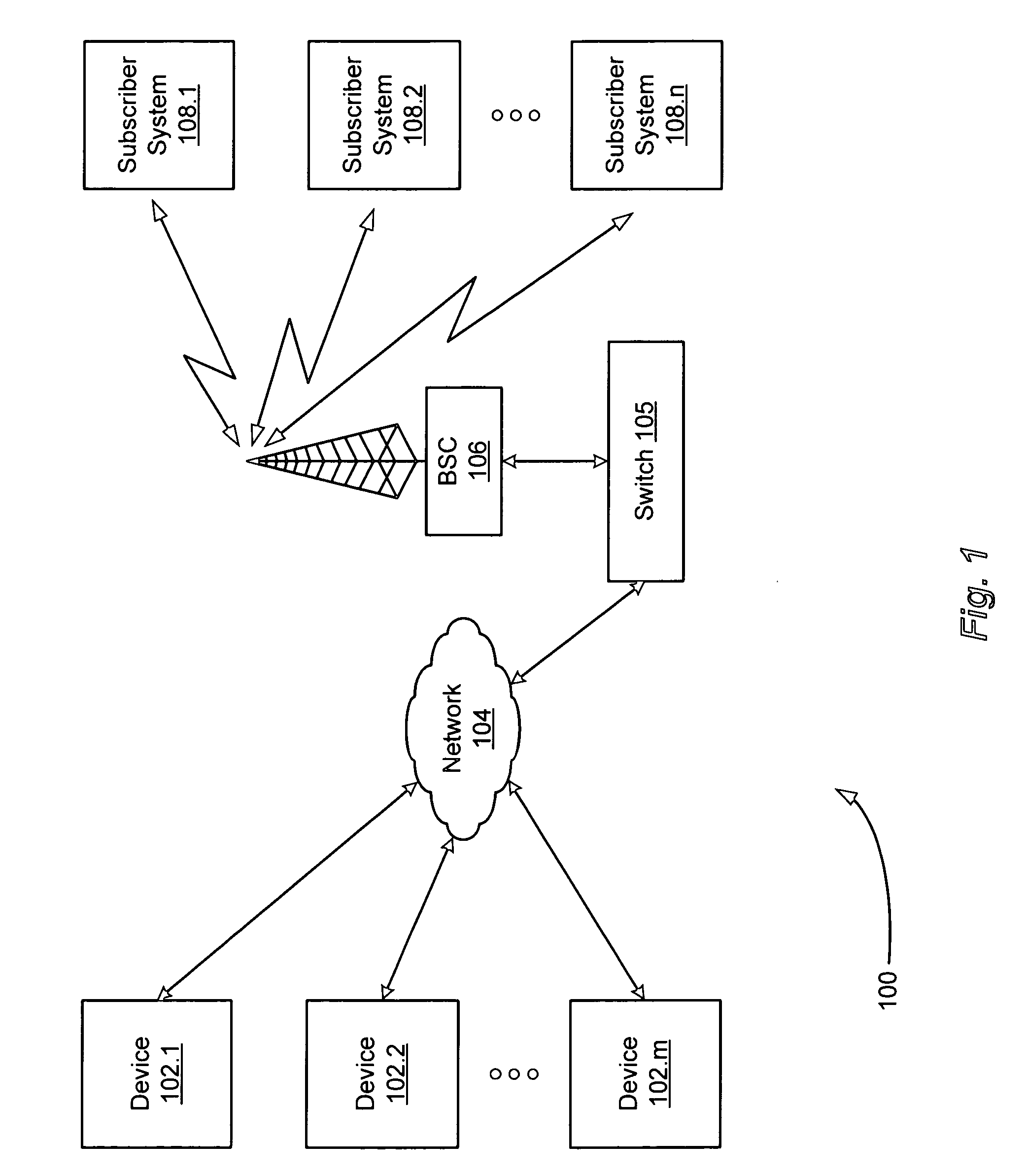

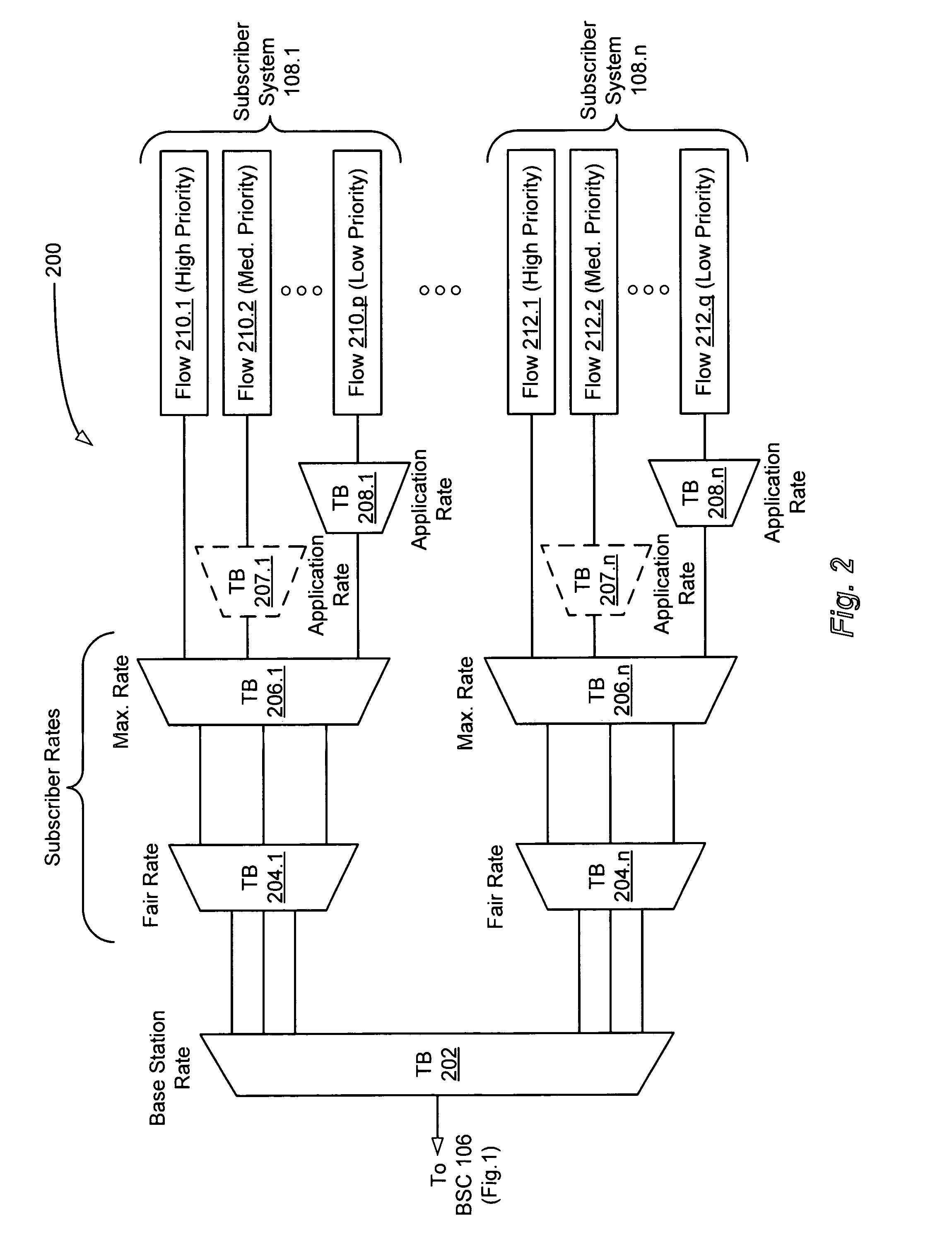

Inactive | US20080159135A1 | Unwanted latency | Fair sharing | Error prevention | Transmission systems | Data stream | Service planning

A system and method of providing high speed, prioritized delivery of data packets over broadband communications networks that avoids inducing unwanted latency in data packet transmission. The system employs a hierarchical, real-time, weighted token bucket prioritization scheme that provides for fair sharing of the available network bandwidth. At least one token bucket is employed at each level of the hierarchy to meter data flows providing service applications included in multiple subscribers' service plans. Each token bucket passes, discards, or marks as being eligible for subsequent discarding data packets contained in the data flows using an algorithm that takes into account the priority of the data packets, including strict high, strict medium, and strict low priorities corresponding to strict priority levels that cannot be overridden. The algorithm also takes into account weighted priorities of at least a subset of the low priority data packets. The priority levels of these low priority data packets are weighted to provide for fair sharing of the available network bandwidth among the low priority data flows, and to assure that none of the low priority data flows is starved of service.

Owner:ELLACOYA NETWORKS
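
As a rough sketch of the metering idea in this abstract, the code below models one token bucket that passes, marks, or discards a packet, and a helper that sends a packet through several levels in turn. The rule used when tokens run out (strict-priority packets are marked as discard-eligible, all others are discarded) is an assumption made for illustration, as are the class, function, and parameter names. The weighted sharing among low-priority flows is not modelled.

```python
from dataclasses import dataclass

PASS, MARK, DISCARD = "pass", "mark", "discard"


@dataclass
class TokenBucket:
    rate: float          # tokens (bytes) added per second
    capacity: float      # maximum burst size, in bytes
    tokens: float = 0.0  # tokens currently available
    last: float = 0.0    # time of the last refill, in seconds

    def refill(self, now):
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now

    def meter(self, size, strict, now):
        """Return PASS, MARK, or DISCARD for a packet of `size` bytes."""
        self.refill(now)
        if self.tokens >= size:
            self.tokens -= size
            return PASS
        # Assumed policy: out-of-profile strict-priority packets are only
        # marked as discard-eligible; other packets are dropped here.
        return MARK if strict else DISCARD


def meter_through(levels, size, strict, now):
    """Run a packet through each level of the hierarchy, lowest level first."""
    decision = PASS
    for bucket in levels:
        result = bucket.meter(size, strict, now)
        if result == DISCARD:
            return DISCARD
        if result == MARK:
            decision = MARK
    return decision


subscriber = TokenBucket(rate=100.0, capacity=200.0, tokens=200.0)
link = TokenBucket(rate=1000.0, capacity=2000.0, tokens=2000.0)
print(meter_through([subscriber, link], size=150, strict=False, now=0.0))
```

Time is passed in explicitly rather than read from a clock, which keeps the sketch deterministic and easy to test.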

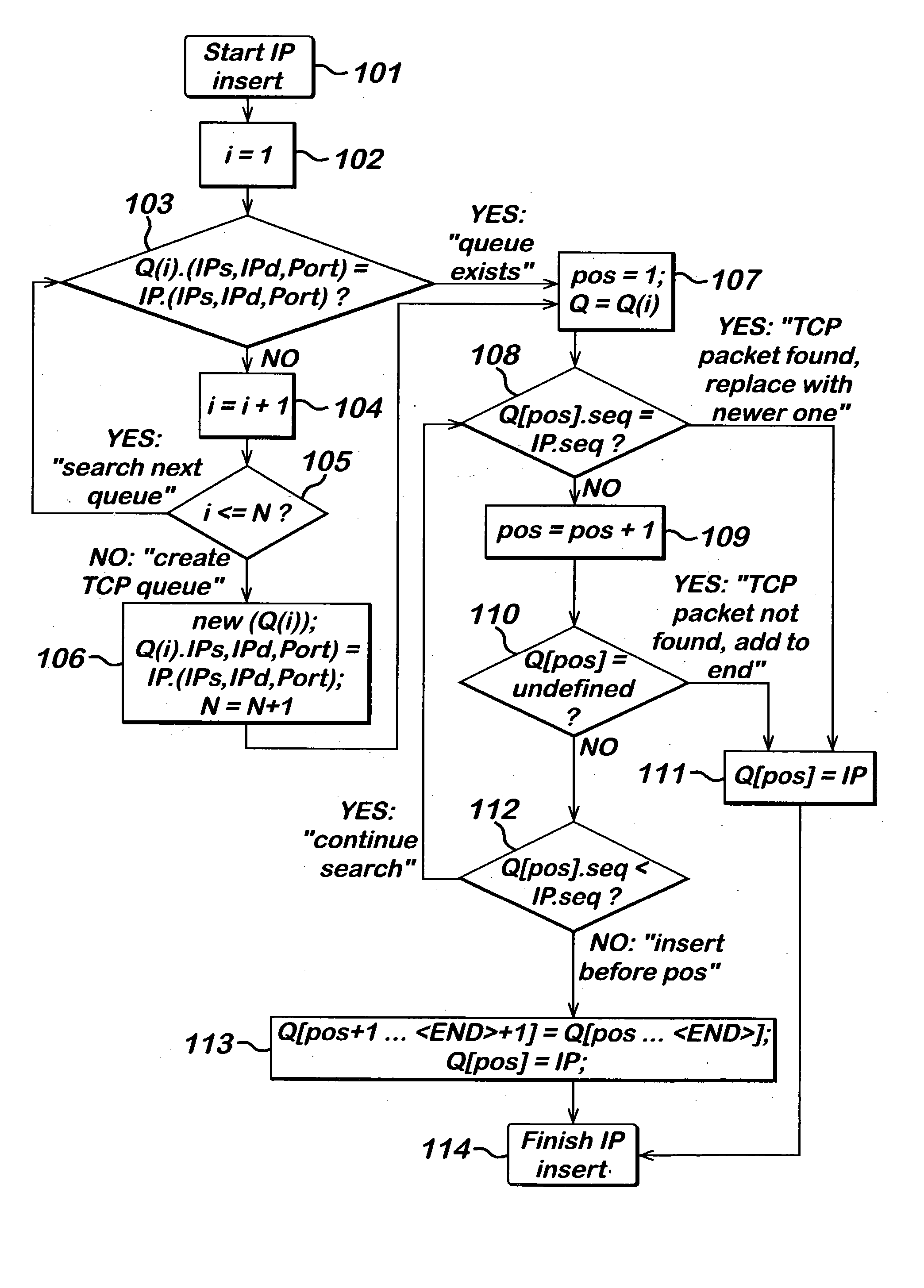

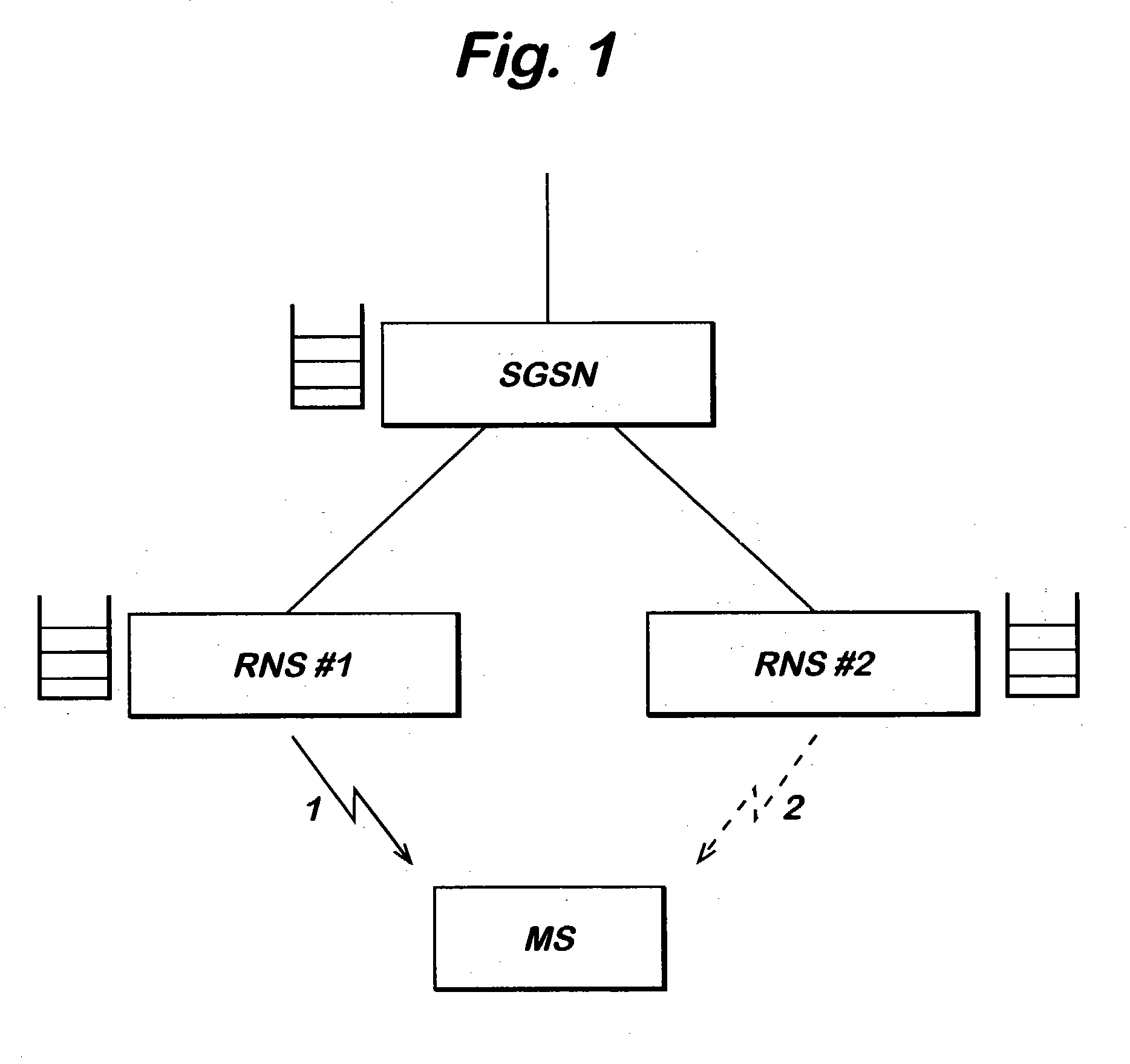

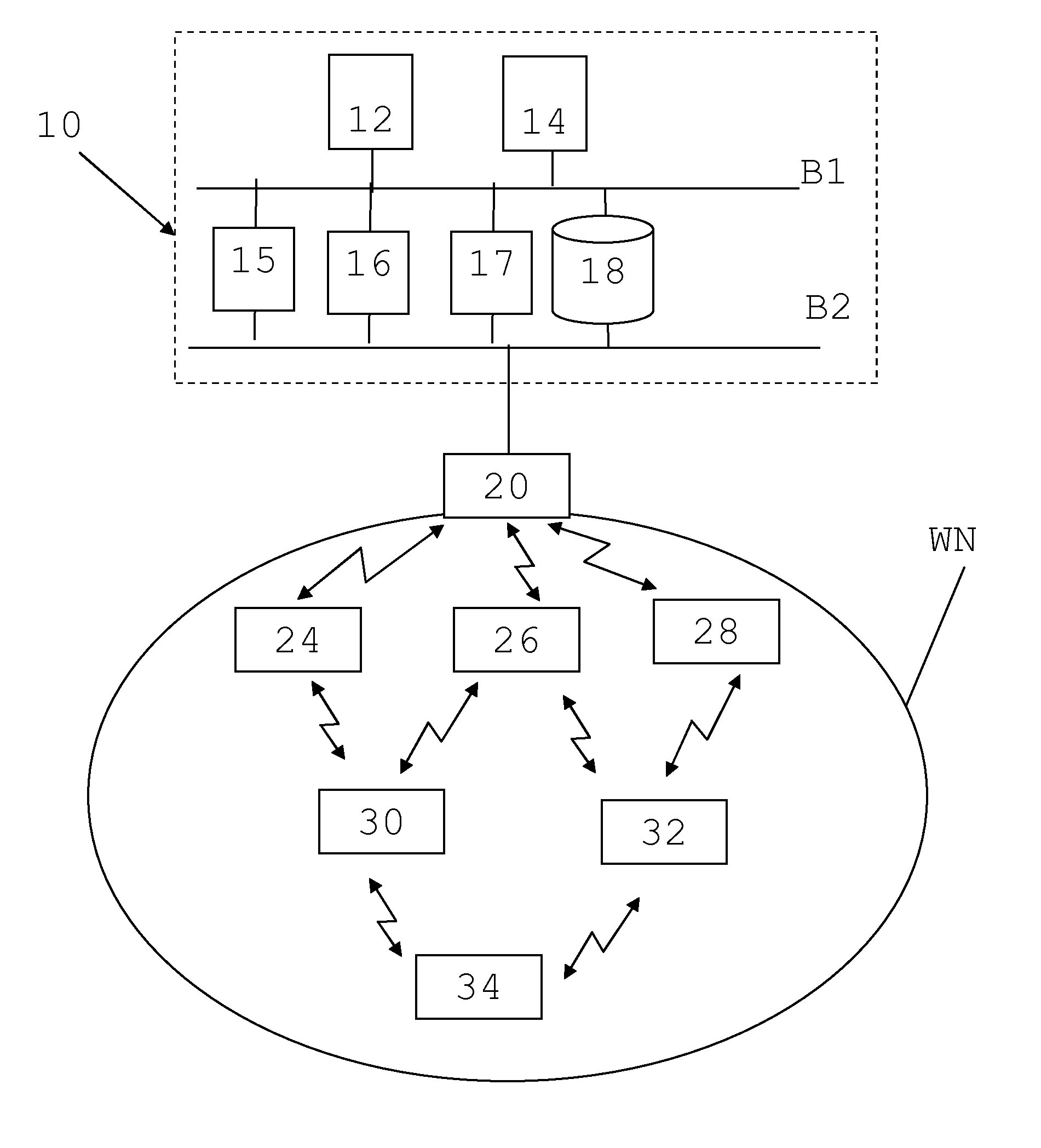

Packet ordering method and apparatus in a mobile communication network employing hierarchical routing with data packet forwarding

Active | US20040131040A1 | Enhancement of packet data performance | Reduce the impact | Network traffic/resource management | Time-division multiplex | User equipment | Hierarchical routing

The present invention refers, in particular, to a data packet ordering method in a mobile communication network employing hierarchical routing with data packet forwarding, comprising the steps of: providing at least one data message encompassing a predefined sequence of data packets; forwarding at least one of said data packets of the sequence via a first network element over a first transmission path to a user equipment, whereby a part of the data packets are temporarily buffered in the first network element during transmission; establishing a second transmission path, while forwarding the data packet sequence, such that the remaining data packets of the sequence not yet transmitted over the first path are forwarded via a second network element; forwarding the data packets buffered in the first network element to the second network element, so that all data packets of the sequence are provided to the user equipment; receiving and ordering the data packets within said second network element according to the packet data priority given by the data packet sequence; and forwarding the ordered data packets to the user equipment.

Owner:ALCATEL-LUCENT USA INC +1

Tiered contention multiple access (TCMA): a method for priority-based shared channel access

Inactive | US20070019665A1 | Minimizing chance | Lower latency | Synchronisation arrangement | Error prevention | Service-level agreement | Traffic capacity

Quality of Service (QoS) support is provided by means of a Tiered Contention Multiple Access (TCMA) distributed medium access protocol that schedules transmission of different types of traffic based on their service quality specifications. In one embodiment, a wireless station is supplied with data from a source having a lower QoS priority QoS(A), such as file transfer data. Another wireless station is supplied with data from a source having a higher QoS priority QoS(B), such as voice and video data. Each wireless station can determine the urgency class of its pending packets according to a scheduling algorithm. For example file transfer data is assigned lower urgency class and voice and video data is assigned higher urgency class. There are several urgency classes which indicate the desired ordering. Pending packets in a given urgency class are transmitted before transmitting packets of a lower urgency class by relying on class-differentiated urgency arbitration times (UATs), which are the idle time intervals required before the random backoff counter is decreased. In another embodiment packets are reclassified in real time with a scheduling algorithm that adjusts the class assigned to packets based on observed performance parameters and according to negotiated QoS-based requirements. Further, for packets assigned the same arbitration time, additional differentiation into more urgency classes is achieved in terms of the contention resolution mechanism employed, thus yielding hybrid packet prioritization methods. An Enhanced DCF Parameter Set is contained in a control packet sent by the AP to the associated stations, which contains class differentiated parameter values necessary to support the TCMA. These parameters can be changed based on different algorithms to support call admission and flow control functions and to meet the requirements of service level agreements.

Owner:AT&T INTPROP II L P

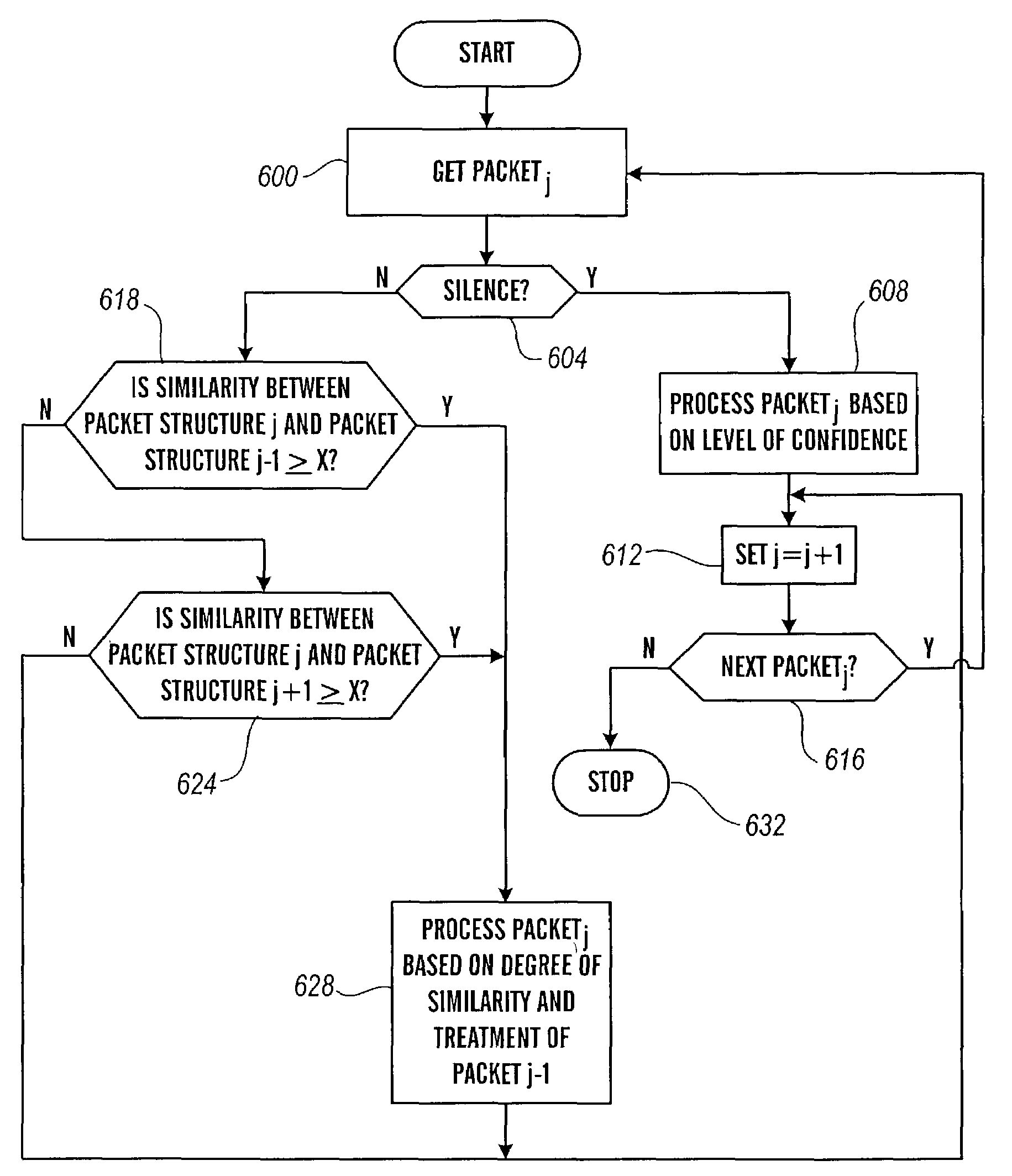

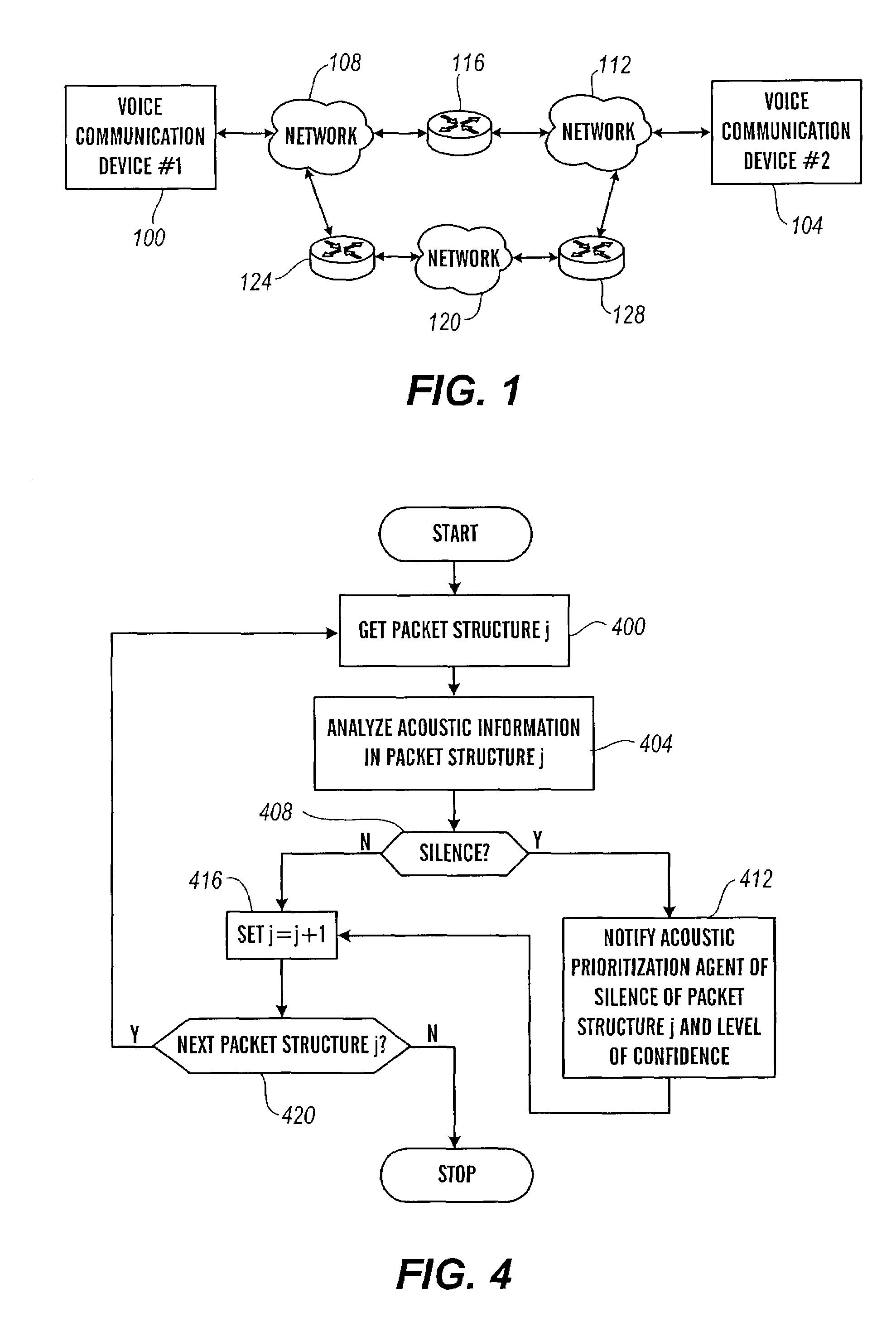

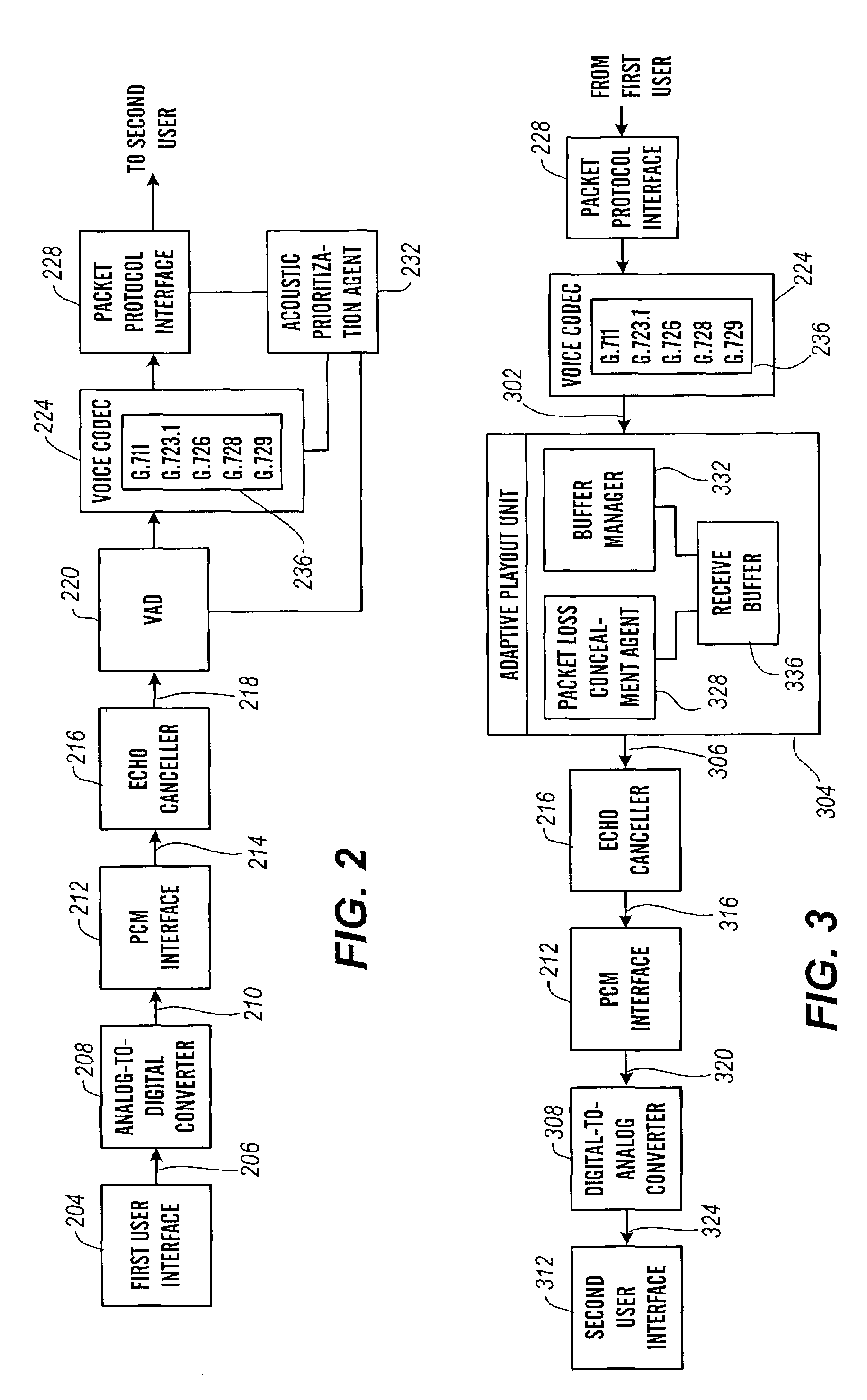

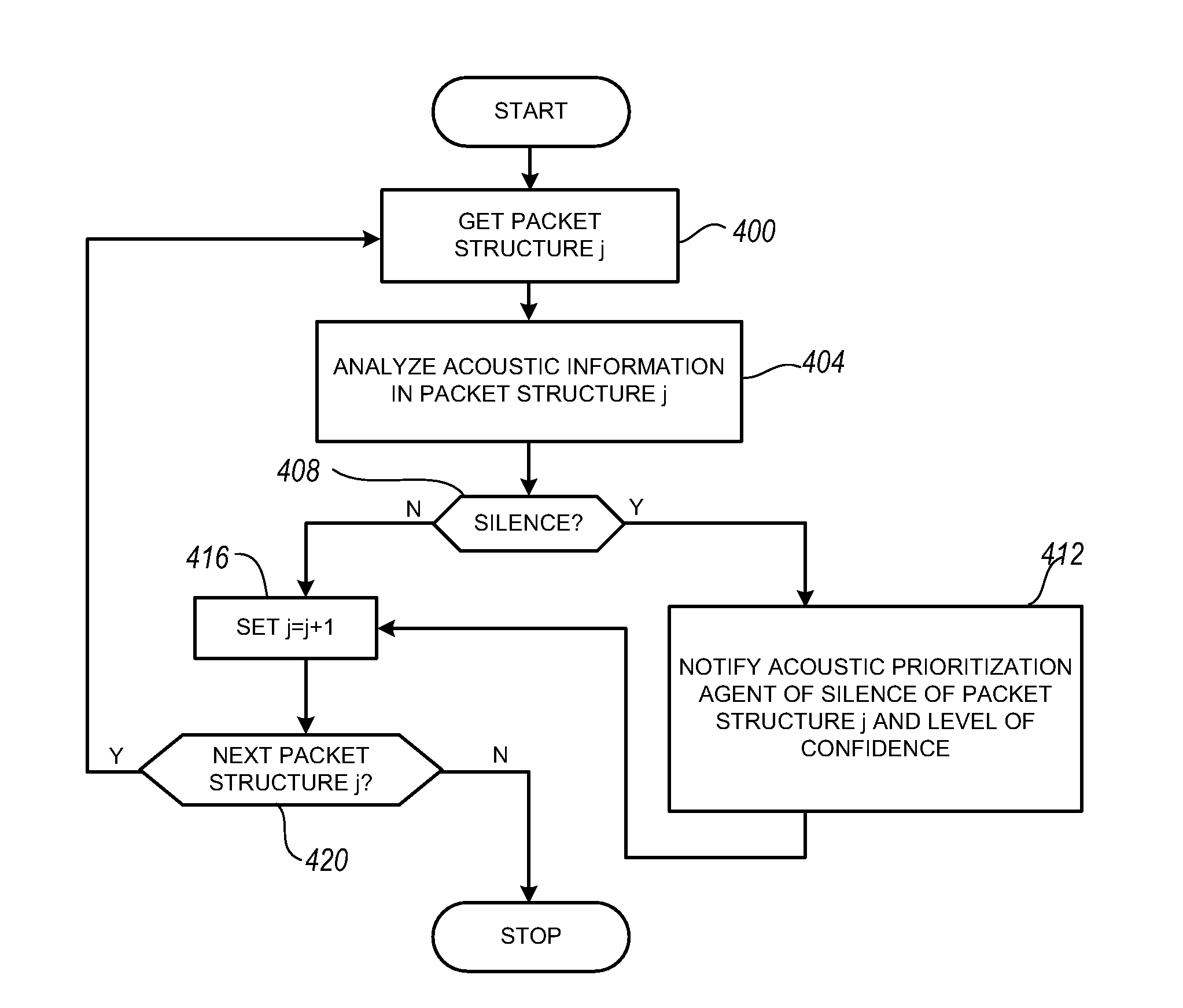

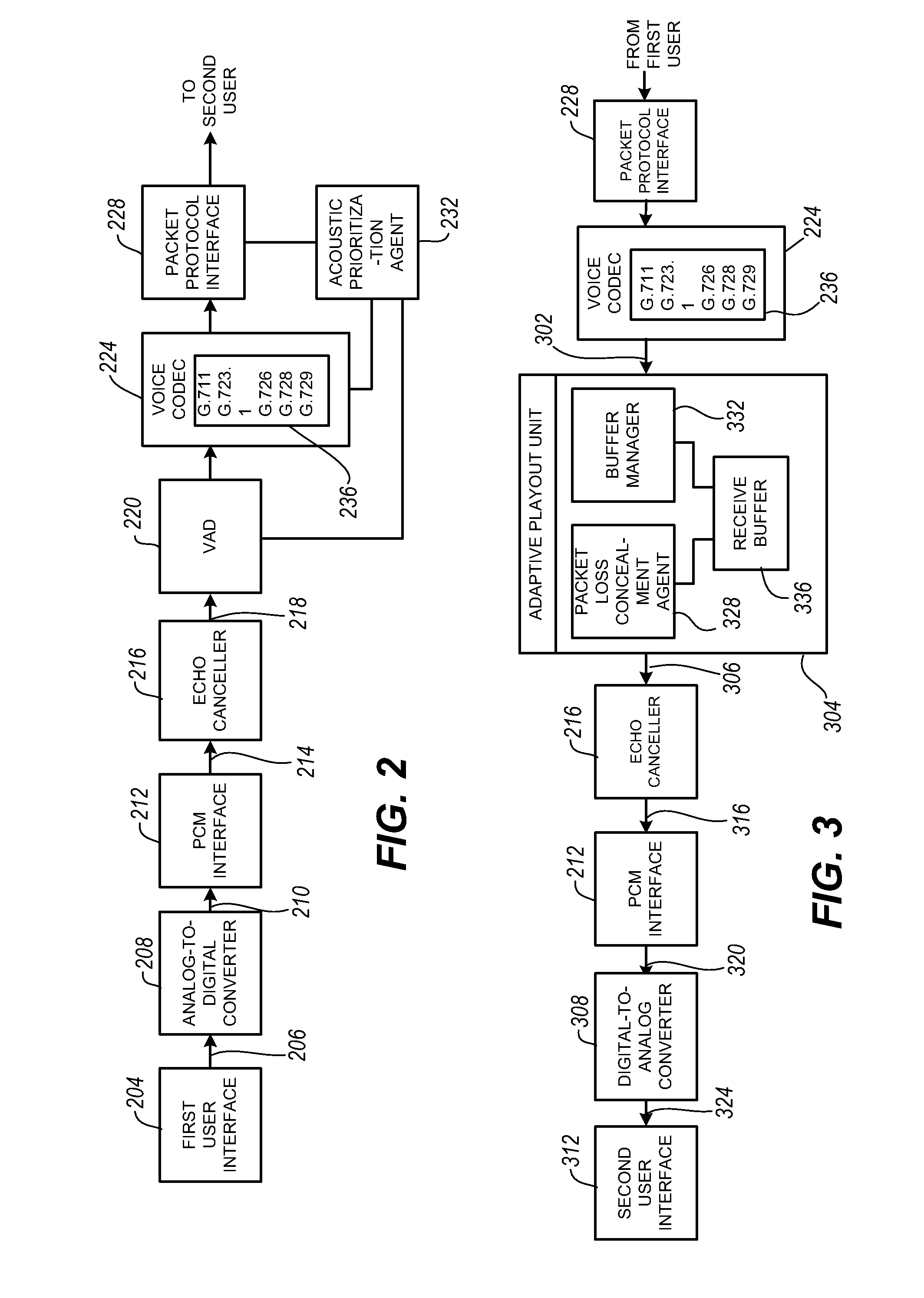

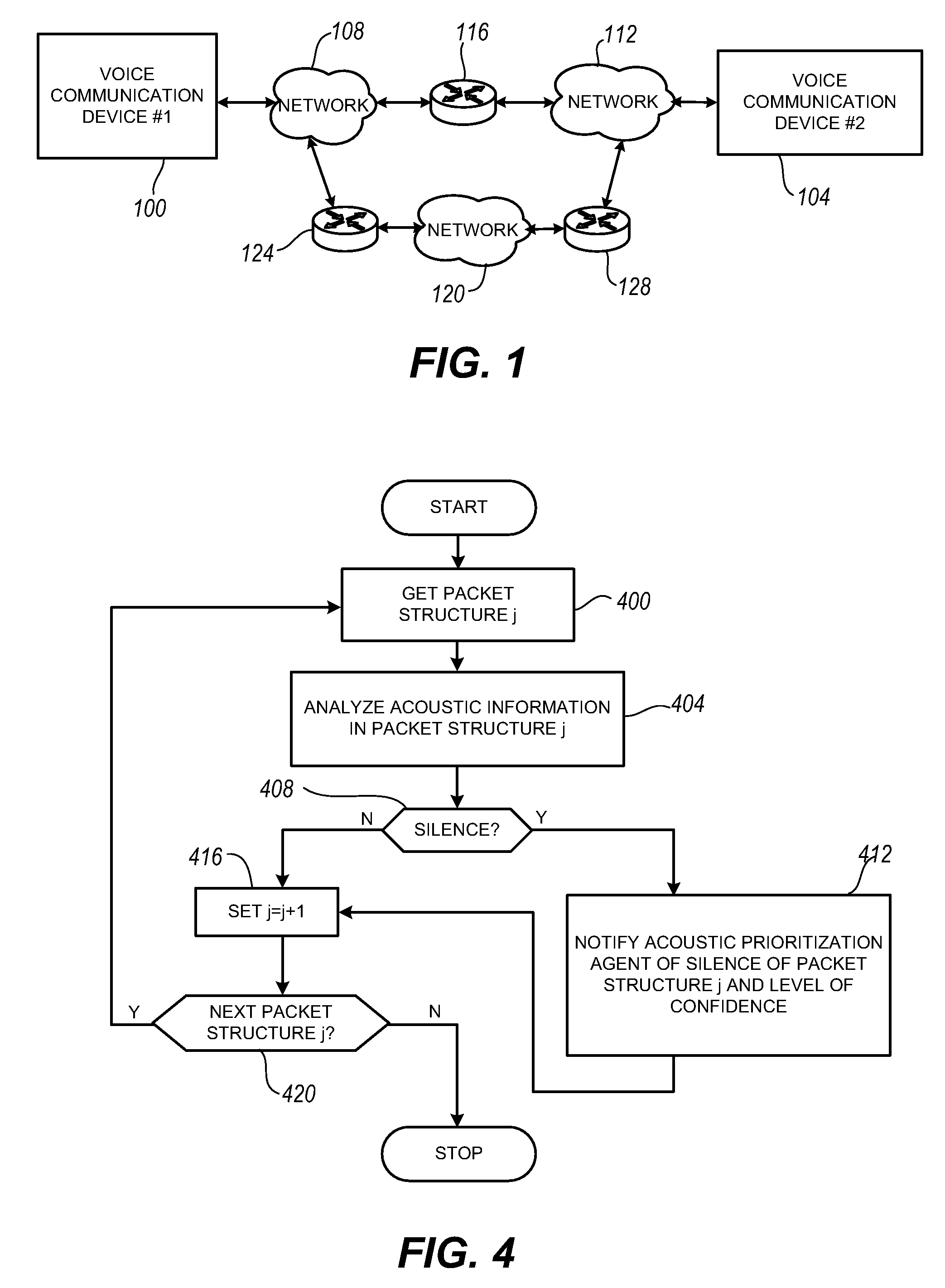

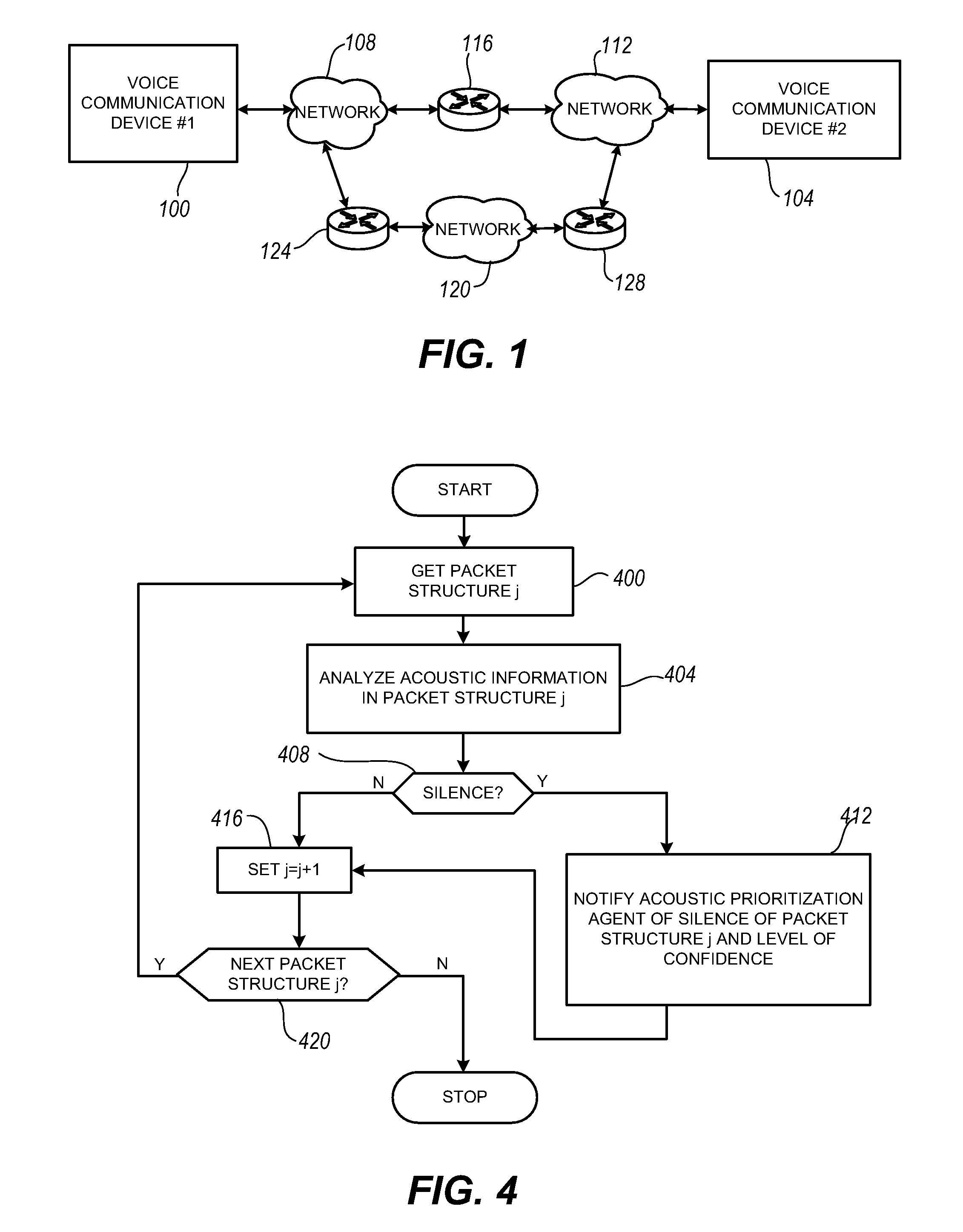

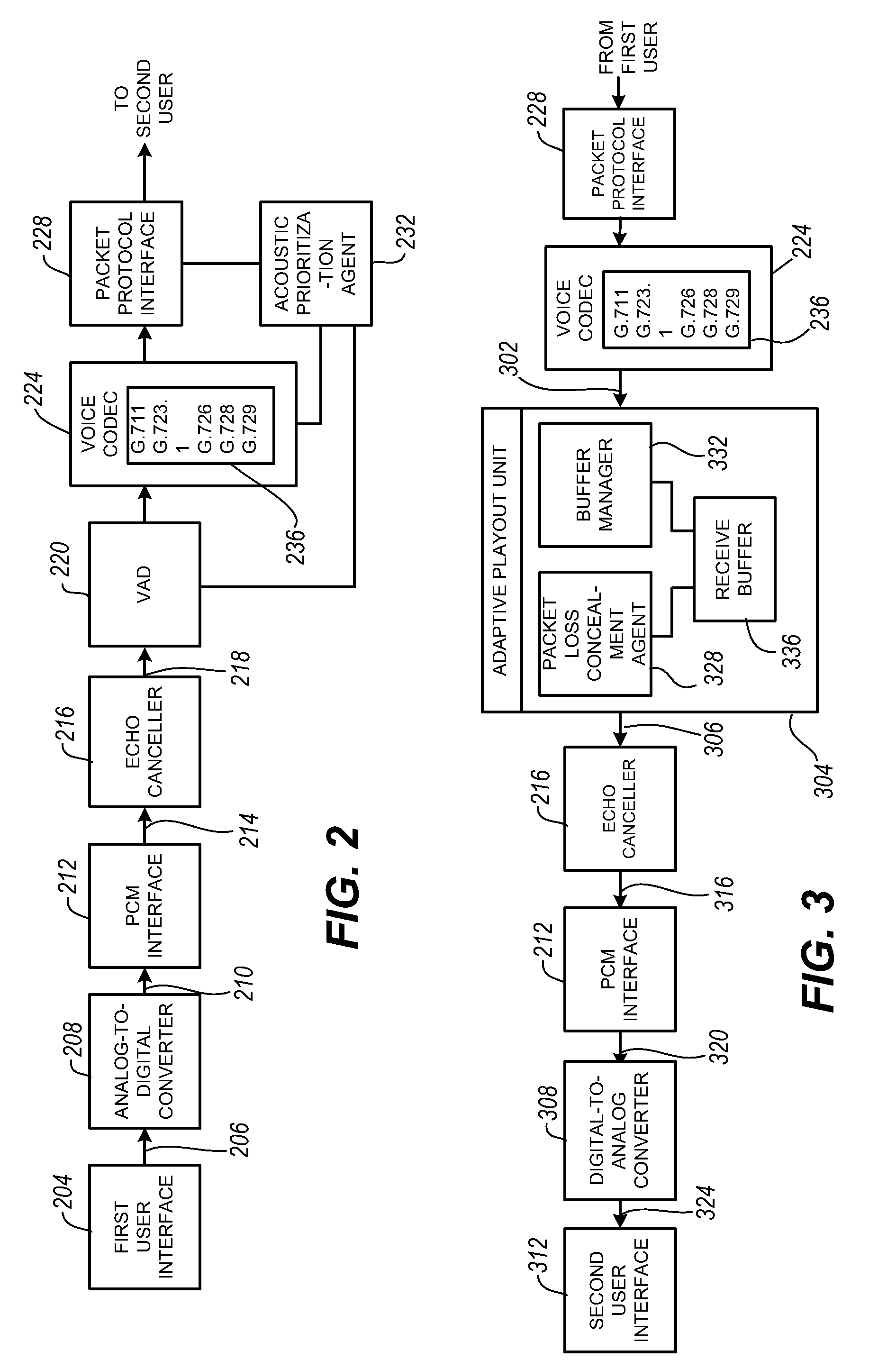

Packet prioritization and associated bandwidth and buffer management techniques for audio over IP

Active | US7359979B2 | Raise priority | Reduce the possibility | Multiple digital computer combinations | Speech recognition | Voice communication | Voice activity

The present invention is directed to voice communication devices in which an audio stream is divided into a sequence of individual packets, each of which is routed via pathways that can vary depending on the availability of network resources. All embodiments of the invention rely on an acoustic prioritization agent that assigns a priority value to the packets. The priority value is based on factors such as whether the packet contains voice activity and the degree of acoustic similarity between this packet and adjacent packets in the sequence. A confidence level, associated with the priority value, may also be assigned. In one embodiment, network congestion is reduced by deliberately failing to transmit packets that are judged to be acoustically similar to adjacent packets; the expectation is that, under these circumstances, traditional packet loss concealment algorithms in the receiving device will construct an acceptably accurate replica of the missing packet. In another embodiment, the receiving device can reduce the number of packets stored in its jitter buffer, and therefore the latency of the speech signal, by selectively deleting one or more packets within sustained silences or non-varying speech events. In both embodiments, the ability of the system to drop appropriate packets may be enhanced by taking into account the confidence levels associated with the priority assessments.

Owner:AVAYA INC
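
The sender-side embodiment in this abstract, which withholds packets that are acoustically similar to their neighbours, might be sketched as follows. The frame layout (a `voiced` flag and a `features` vector), the Euclidean distance measure, and the threshold are all assumptions made for illustration. The confidence level mentioned in the abstract is left out.

```python
import math


def acoustic_priority(frame, previous, similarity_threshold=0.1):
    """Return 0 (droppable) or 1 (keep) for one audio frame.

    `frame` and `previous` are dicts with "voiced" (bool) and "features"
    (list of floats). Both fields are hypothetical.
    """
    if not frame["voiced"]:
        return 0  # no voice activity: first candidate to drop
    if previous is not None and previous["voiced"]:
        distance = math.dist(frame["features"], previous["features"])
        if distance < similarity_threshold:
            return 0  # nearly identical to the preceding frame
    return 1


def packets_to_send(frames, congested):
    """Return the indices of the frames to transmit."""
    kept = []
    previous = None
    for index, frame in enumerate(frames):
        if acoustic_priority(frame, previous) == 1 or not congested:
            kept.append(index)
        previous = frame
    return kept


frames = [
    {"voiced": True, "features": [0.0, 1.0]},
    {"voiced": True, "features": [0.0, 1.05]},
    {"voiced": False, "features": [0.0, 0.0]},
    {"voiced": True, "features": [1.0, 0.0]},
]
print(packets_to_send(frames, congested=True))
```

Each frame is compared with the preceding frame whether or not that frame was sent, so a long run of similar frames would be dropped in full. A practical scheme would likely cap consecutive drops so that packet loss concealment in the receiver still has nearby packets to work from.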

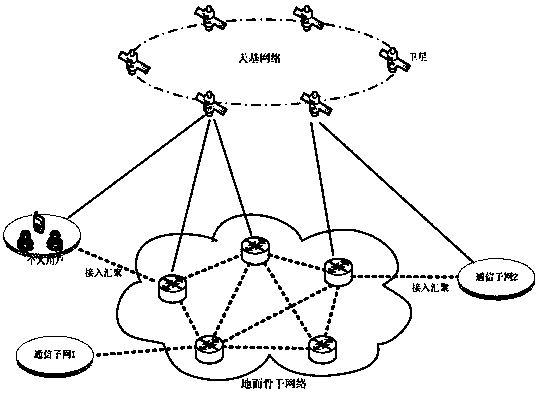

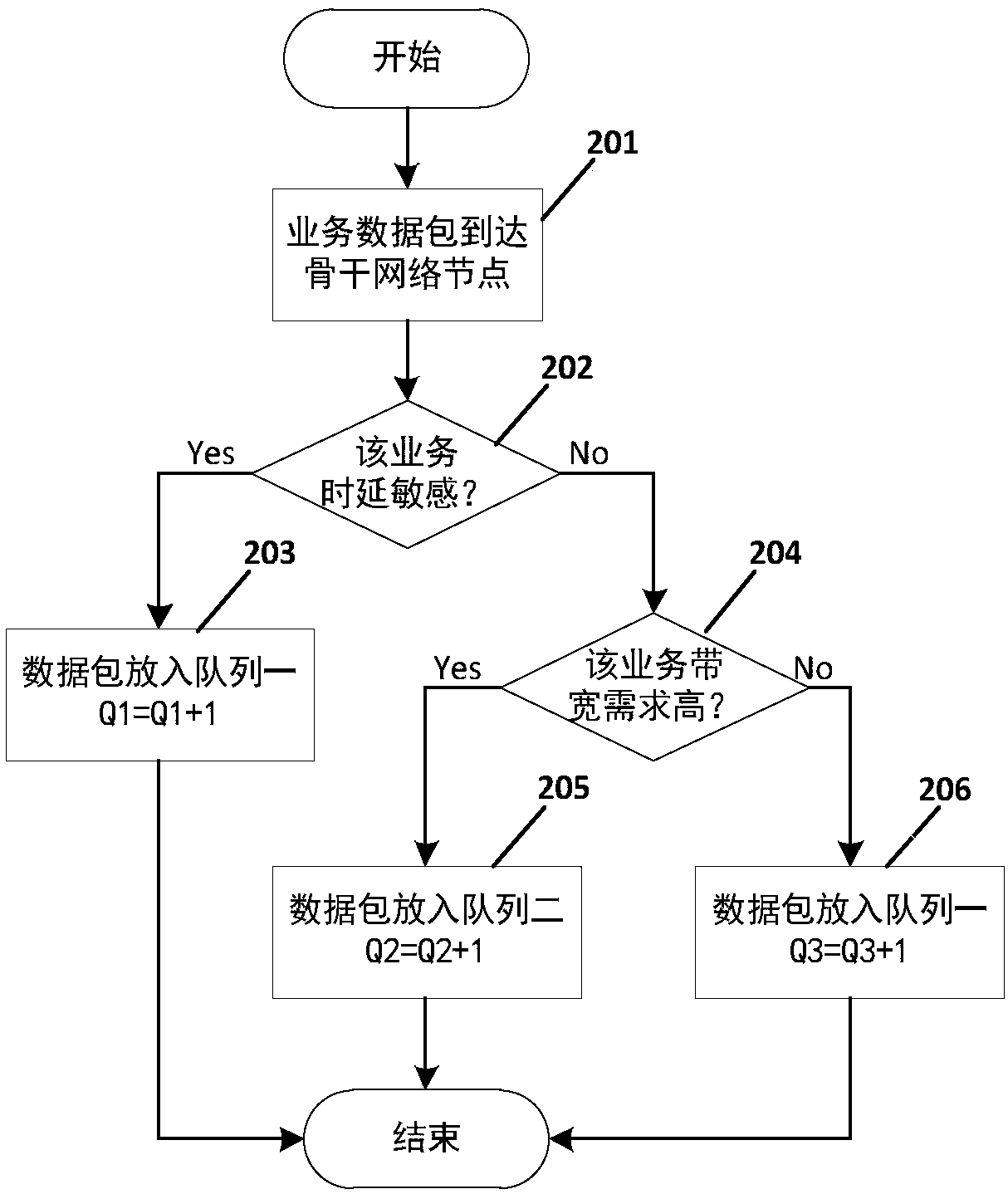

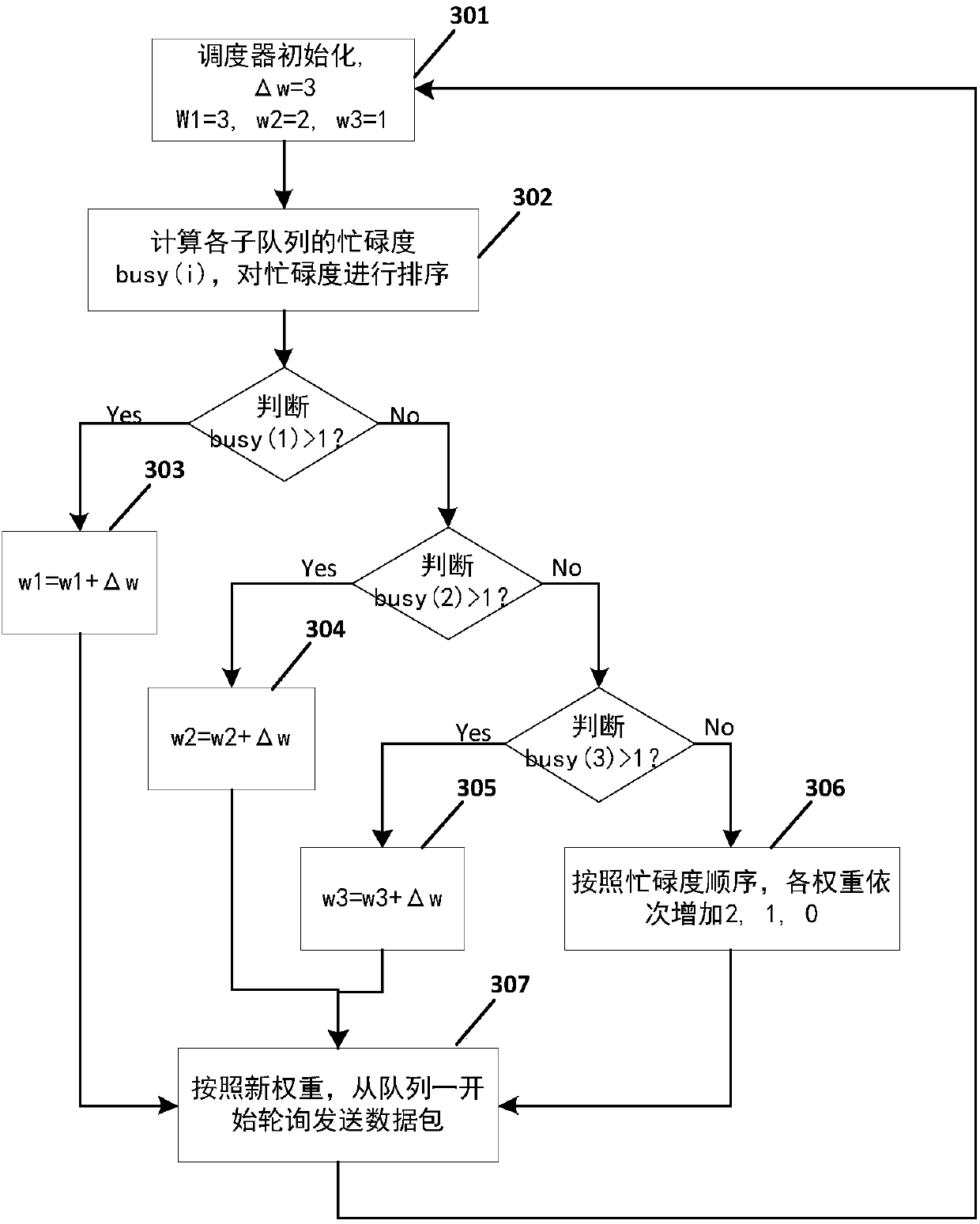

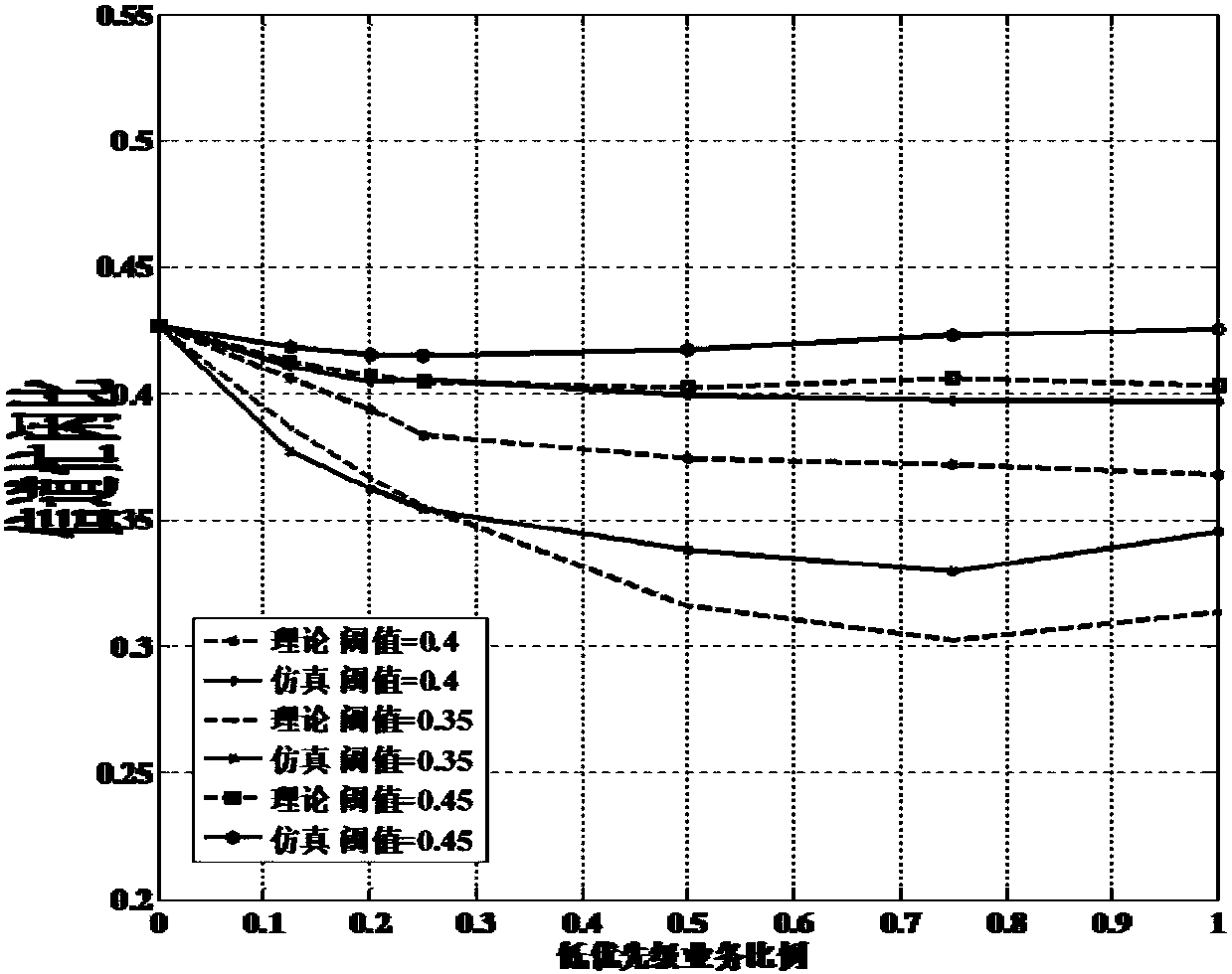

Dynamic weighted round robin scheduling policy method based on priorities

Inactive | CN107733689A | Increase profit | Improve transmission quality | Radio transmission | Data switching networks | Data stream | Resource utilization

The invention provides a priority-based dynamic weighted round robin scheduling method. With this method, the network resource utilization rate is high, bandwidth allocation is fair, and network resource scheduling is reasonable and efficient. The method is realized through the following technical scheme: for data streams of different priorities, the method comprises a queue management module and a round robin scheduling module. The queue management module divides all traffic in the network into sub-queues of n priorities. After backbone network nodes receive traffic data packets, the priority of each arriving packet is judged according to the QoS demands of the traffic, and the packet is inserted into the corresponding cache sub-queue according to its priority. The round robin scheduling module carries out periodic round robin: the busy degree of each sub-queue is calculated from its current queue length Q, the busy degrees are ranked, the round robin weights of the sub-queues are dynamically adjusted according to the ranking, and the sub-queues are then served in sequence and their data packets sent.

Owner:10TH RES INST OF CETC
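
One round of the scheduling described in this abstract could look like the sketch below. The abstract does not give the busy-degree formula or the weight rule, so two assumptions are made: the busy degree is the queue length divided by a common capacity, and the weight of a sub-queue is its rank in that ordering (1 for the least busy). All names are illustrative.

```python
from collections import deque


def dynamic_weights(queues, capacity):
    """Map each sub-queue index to the number of packets it may send this round."""
    busy = [len(q) / capacity for q in queues]  # assumed busy-degree formula
    order = sorted(range(len(queues)), key=lambda i: busy[i])  # least busy first
    return {index: rank + 1 for rank, index in enumerate(order)}


def run_round(queues, capacity):
    """Serve the sub-queues once in priority order and return the packets sent."""
    weights = dynamic_weights(queues, capacity)
    sent = []
    for index, queue in enumerate(queues):  # index 0 is the highest priority
        for _ in range(weights[index]):
            if not queue:
                break
            sent.append(queue.popleft())
    return sent


queues = [
    deque(["h1", "h2"]),
    deque(["m1", "m2", "m3", "m4"]),
    deque(["l1"]),
]
print(run_round(queues, capacity=8))
```

Here the medium-priority queue is the busiest, so it receives the largest weight for this round, while the sub-queues are still visited in priority order.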

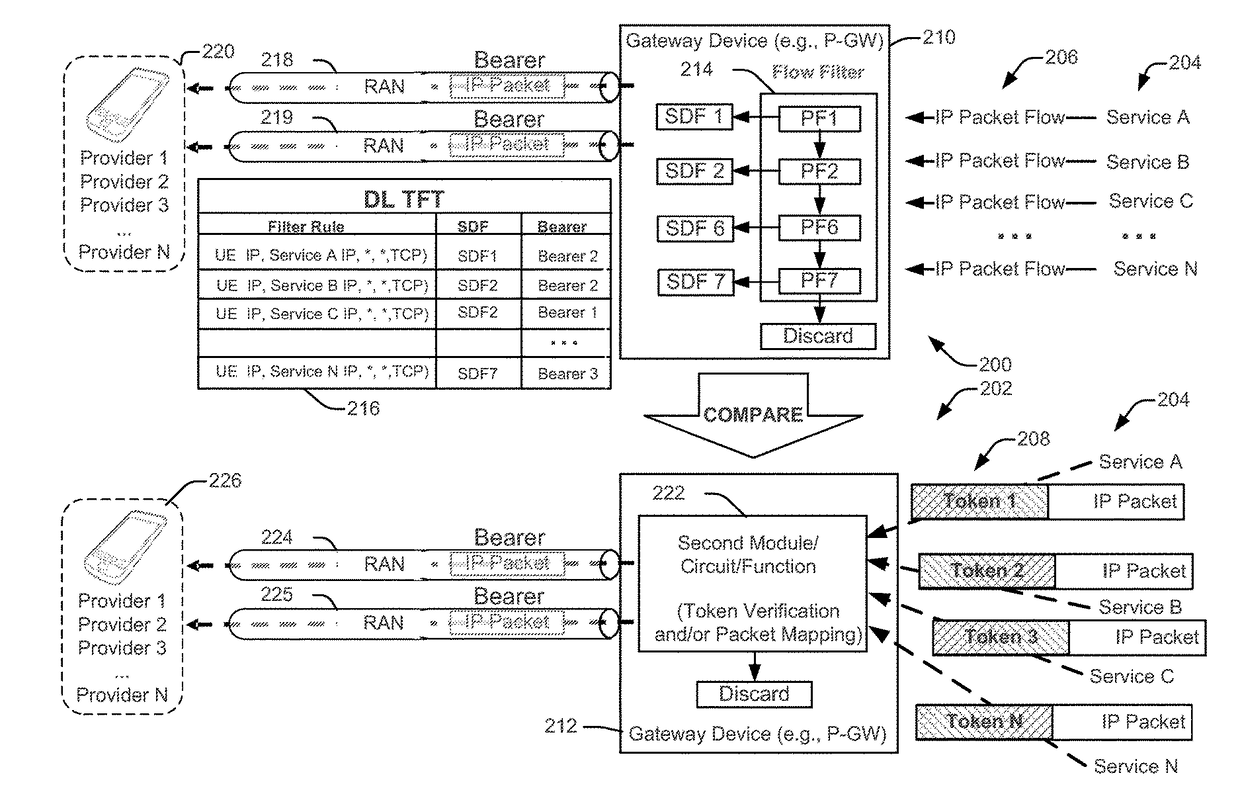

In-flow packet prioritization and data-dependent flexible QOS policy

Active | US20170324652A1 | Well formed | Network traffic/resource management | Network topologies | Data dependent | Real-time computing

A method, operational at a device, includes receiving at least one packet belonging to a first set of packets of a packet flow marked with an identification value, determining that the at least one packet is marked with the identification value, determining to change a quality of service (QoS) treatment of packets belonging to the first set of packets marked with the identification value that are yet to be received, and sending a request to change the QoS treatment of packets belonging to the first set of packets marked with the identification value that are yet to be received to trigger a different QoS treatment of packets within the packet flow, responsive to determining to change the QoS treatment. Other aspects, embodiments, and features are also claimed and described.

Owner:QUALCOMM INC

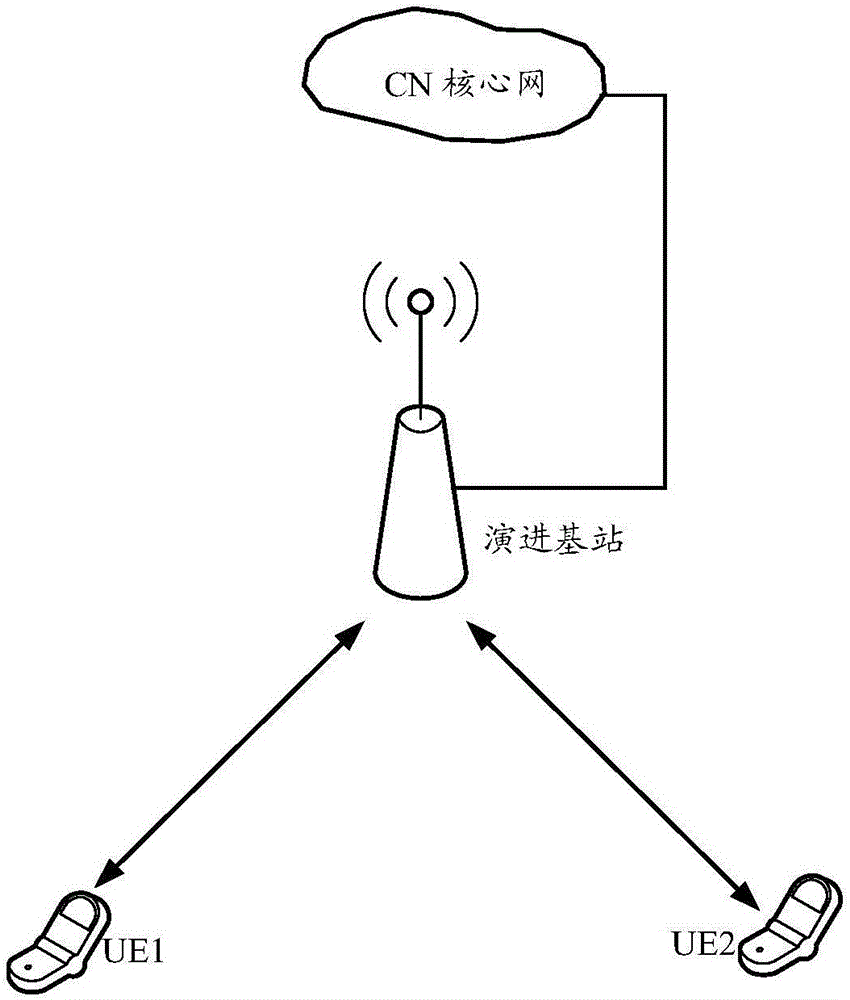

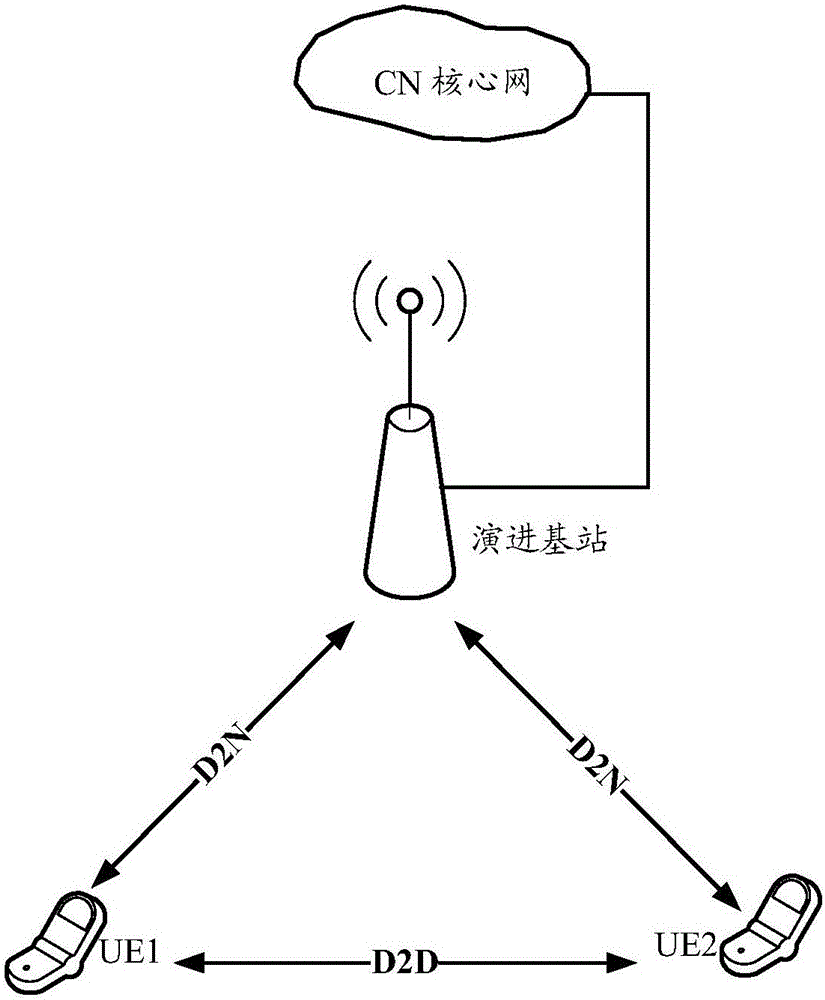

Resource allocation method and resource allocation device

Active | CN106454687A | Improve performance | Wireless communication services | Transmission | Resource allocation | Real-time computing

The invention relates to the field of wireless communication technology, and in particular to a resource allocation method and a resource allocation device. They address a problem in the prior art: with the conventional base-station-scheduled resource allocation mode for D2D communication, scheduling based on D2D data packet priority cannot be realized. A terminal notifies a network-side device of a first correspondence, and the network-side device determines the PPP corresponding to the LCG ID of each destination address in the sidelink BSR MAC CE according to the first correspondence. Resources are allocated to each destination address of the terminal according to the determined PPP and the buffer status information corresponding to the LCG ID of each destination address in the sidelink BSR MAC CE. Because resources are allocated to each destination address of the terminal according to the PPP, system performance is improved.

Owner:DATANG MOBILE COMM EQUIP CO LTD

Packet prioritization and associated bandwidth and buffer management techniques for audio over IP

Inactive | US20080151921A1 | Raise priority | Reduce the possibility | Data switching by path configuration | Voice communication | Voice activity

The present invention is directed to voice communication devices in which an audio stream is divided into a sequence of individual packets, each of which is routed via pathways that can vary depending on the availability of network resources. All embodiments of the invention rely on an acoustic prioritization agent that assigns a priority value to the packets. The priority value is based on factors such as whether the packet contains voice activity and the degree of acoustic similarity between this packet and adjacent packets in the sequence. A confidence level, associated with the priority value, may also be assigned. In one embodiment, network congestion is reduced by deliberately failing to transmit packets that are judged to be acoustically similar to adjacent packets; the expectation is that, under these circumstances, traditional packet loss concealment algorithms in the receiving device will construct an acceptably accurate replica of the missing packet. In another embodiment, the receiving device can reduce the number of packets stored in its jitter buffer, and therefore the latency of the speech signal, by selectively deleting one or more packets within sustained silences or non-varying speech events. In both embodiments, the ability of the system to drop appropriate packets may be enhanced by taking into account the confidence levels associated with the priority assessments.

Owner:AVAYA INC

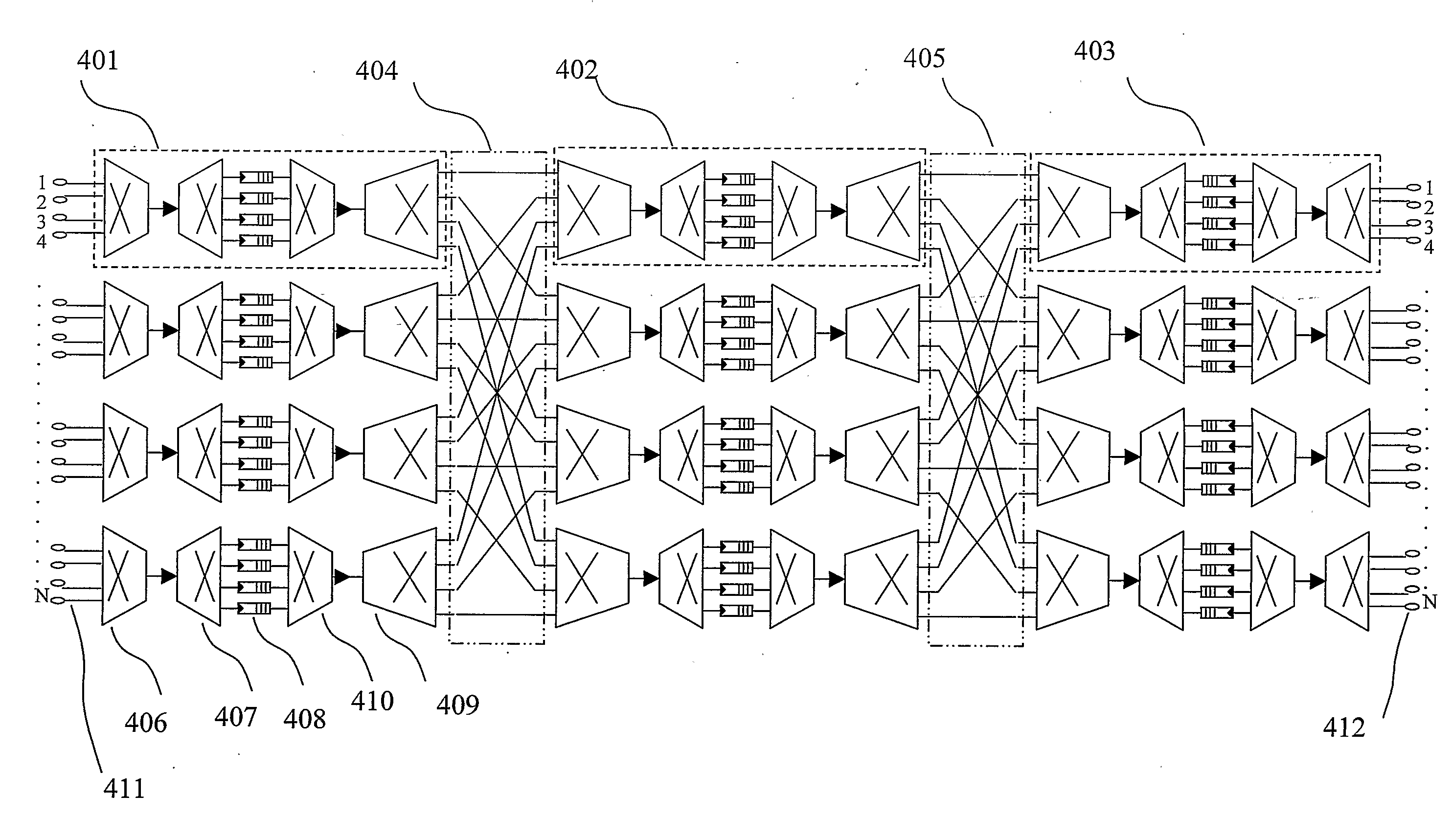

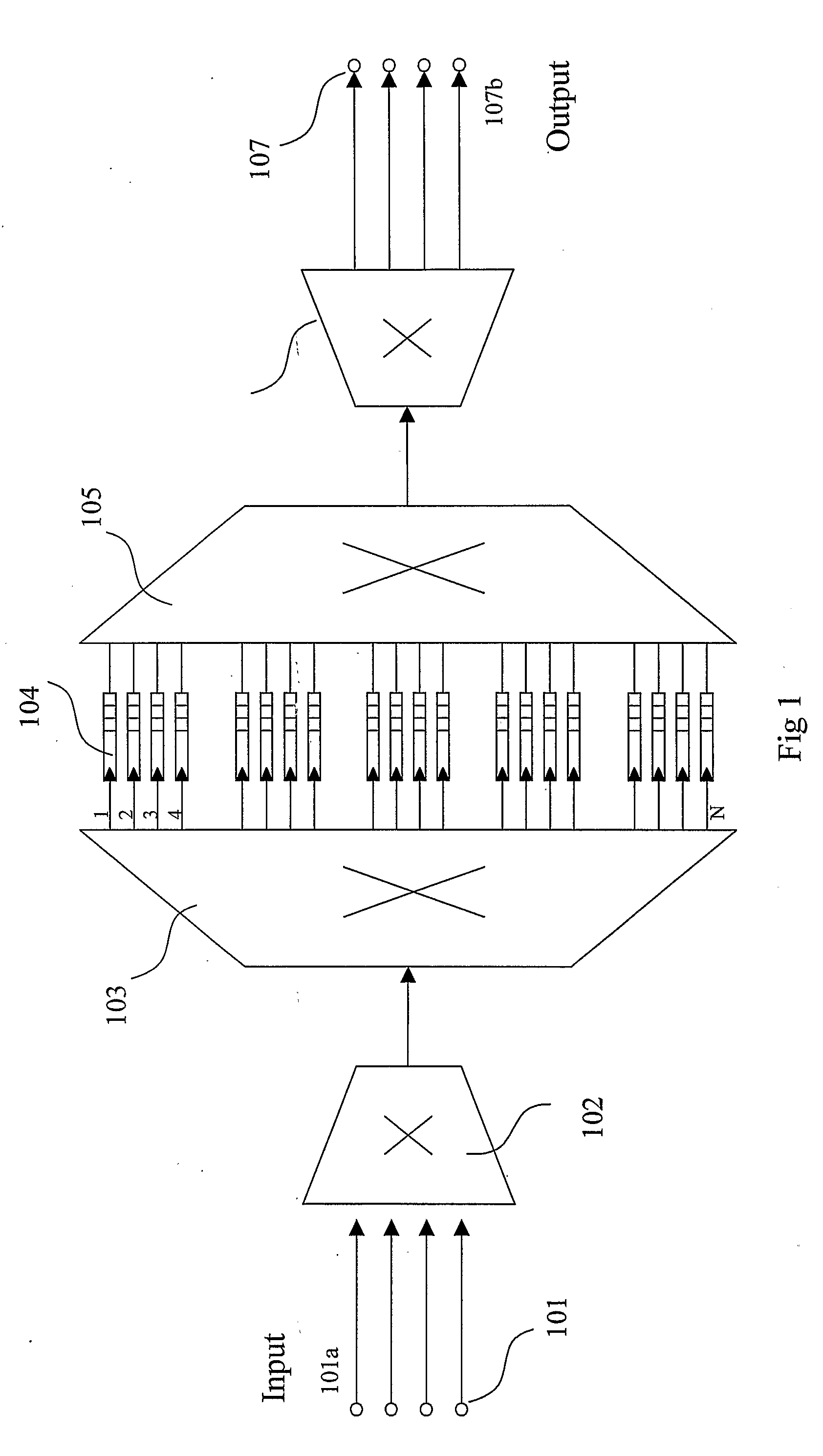

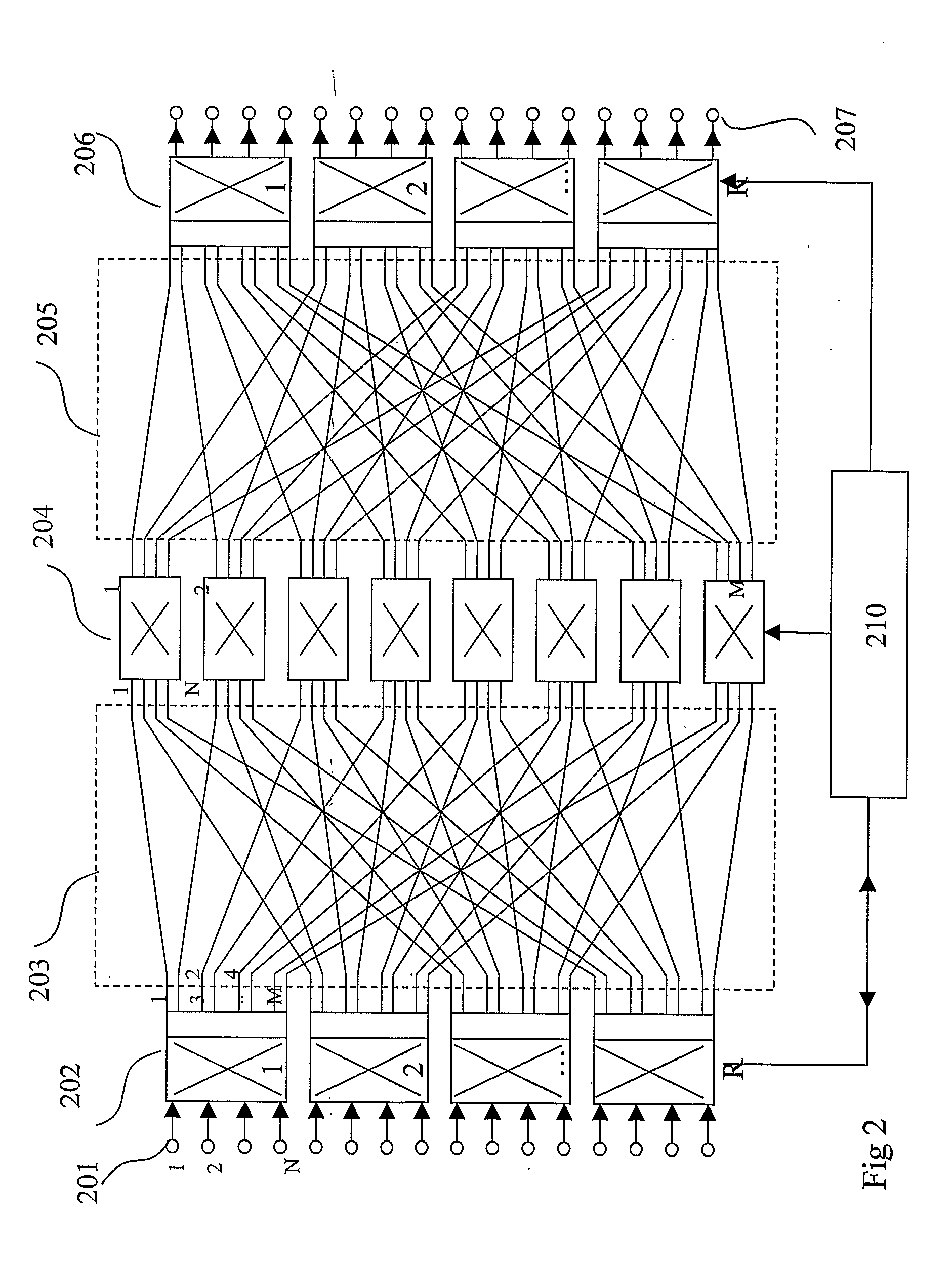

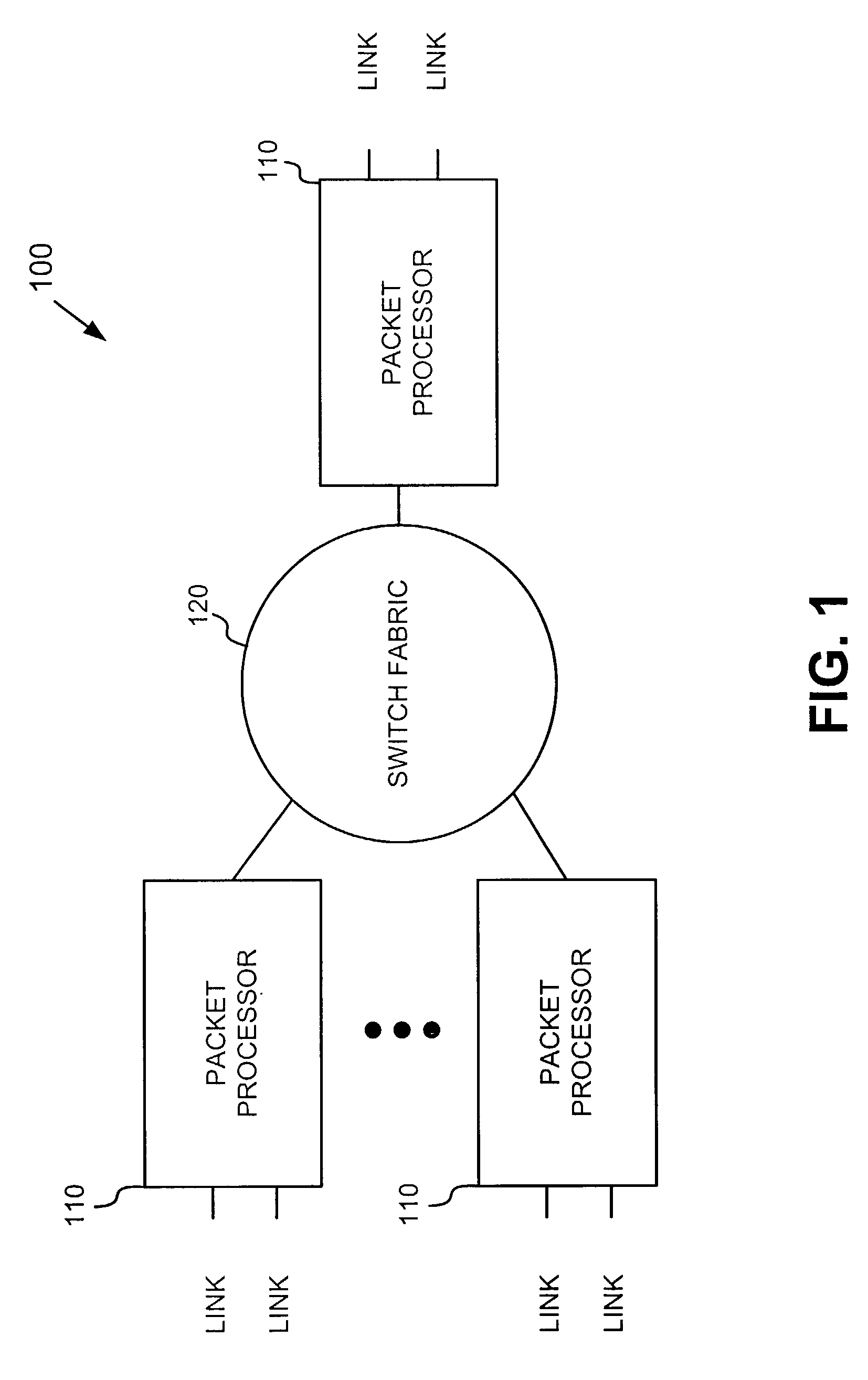

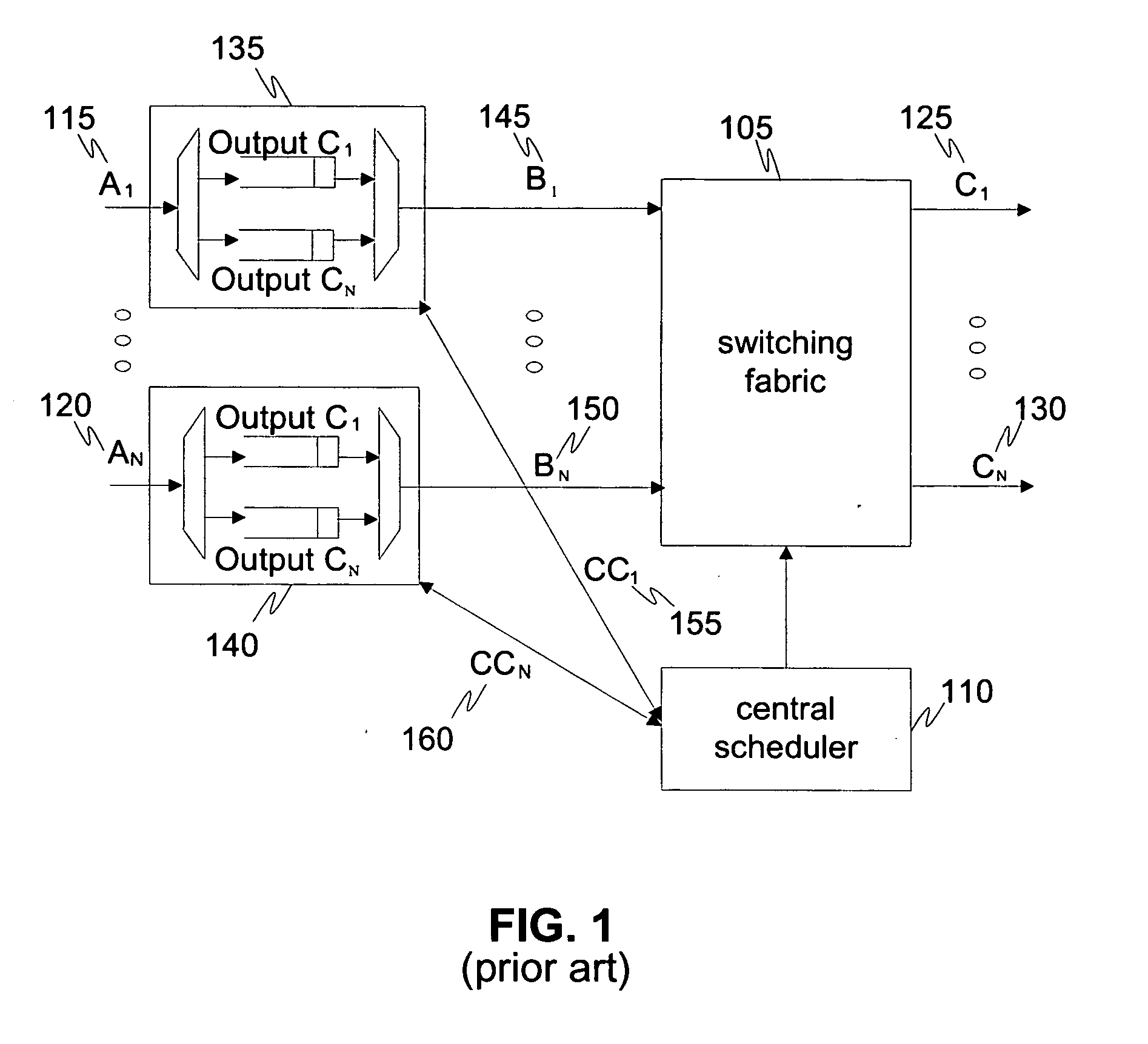

Compact Load Balanced Switching Structures for Packet Based Communication Networks

Inactive | US20080267204A1 | Meet demand | Load balancing | Data switching by path configuration | Real-time computing | Throughput delay

A switching node is disclosed for the routing of packetized data employing a multi-stage packet based routing fabric combined with a plurality of memory switches employing memory queues. The switching node allows reduced throughput delays, dynamic provisioning of bandwidth, and packet prioritization.

Owner:HALL TREVOR DR

Resource distribution method and device

Active | CN106412794A | Improve performance | Network traffic/resource management | Wireless communication services | Distribution method | Computer science

The embodiment of the present invention relates to the field of wireless communication technology, and in particular to a resource distribution method and device that address a problem in the prior art: with the base-station-scheduled resource distribution mode for device-to-device (D2D) communication, scheduling based on D2D data packet priority cannot be realized. According to the embodiment, a network-side device notifies a terminal of the first correspondence between the priority per packet (PPP) and the LCG ID of each destination address. According to the first correspondence, the PPP corresponding to the LCG ID of each destination address in a sidelink buffer status report (BSR) MAC CE is determined. Resources are then distributed to each destination address of the terminal according to the determined PPP and the buffer status information corresponding to the LCG ID of each destination address in the sidelink BSR MAC CE. Because resources are distributed to each destination address of the terminal according to the PPP, the performance of the system is improved.

Owner:DATANG MOBILE COMM EQUIP CO LTD

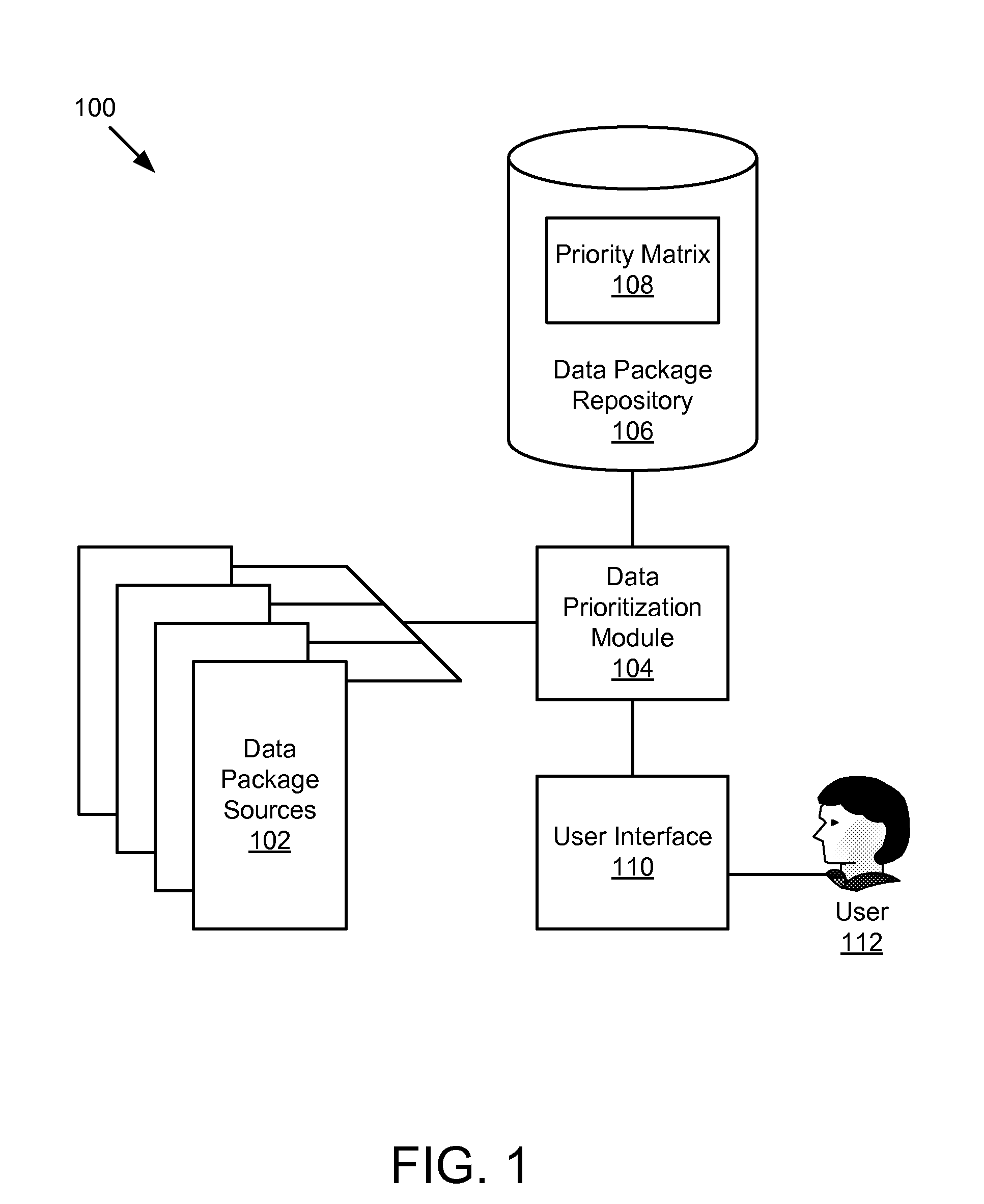

Apparatus, system, and method for automated error priority determination of call home records

An apparatus, system, and method are disclosed for data priority determination. A receiving module receives a data package. A parsing module parses out priority indicators from the data package. A comparison module compares the priority indicators to entries in a priority matrix, determining whether the data package is of a defined data package type. In response to a determination that the data package is of the defined data package type, a priority determination module determines a data package priority of the data package based on a data package priority of the defined data package type. In response to a determination that the data package is not of the defined data package type, a type definition module defines a new data package type having a data package priority based on the priority indicators. A priority update module updates the data package priority of the defined data package type.

Owner:IBM CORP
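
The lookup-or-define flow in this abstract can be sketched with a dictionary standing in for the priority matrix. The indicator names, the `severity` field, and the rule that a new type takes its priority from that field are hypothetical. The abstract only says the new priority is based on the priority indicators.

```python
def _key(indicators):
    # Order-independent, hashable form of the parsed priority indicators.
    return tuple(sorted(indicators.items()))


def determine_priority(indicators, matrix):
    """Look the indicators up in the matrix, defining a new type on a miss."""
    key = _key(indicators)
    if key not in matrix:
        # Hypothetical rule: a new type takes its priority from "severity".
        matrix[key] = {
            "type": f"type-{len(matrix) + 1}",
            "priority": indicators.get("severity", 0),
        }
    return matrix[key]["priority"]


def update_priority(indicators, matrix, priority):
    """Overwrite the stored priority of an already defined package type."""
    matrix[_key(indicators)]["priority"] = priority


matrix = {}
record = {"component": "disk", "severity": 3}
print(determine_priority(record, matrix))  # first sight: a new type is defined
update_priority(record, matrix, 5)
print(determine_priority(record, matrix))  # now served from the matrix
```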

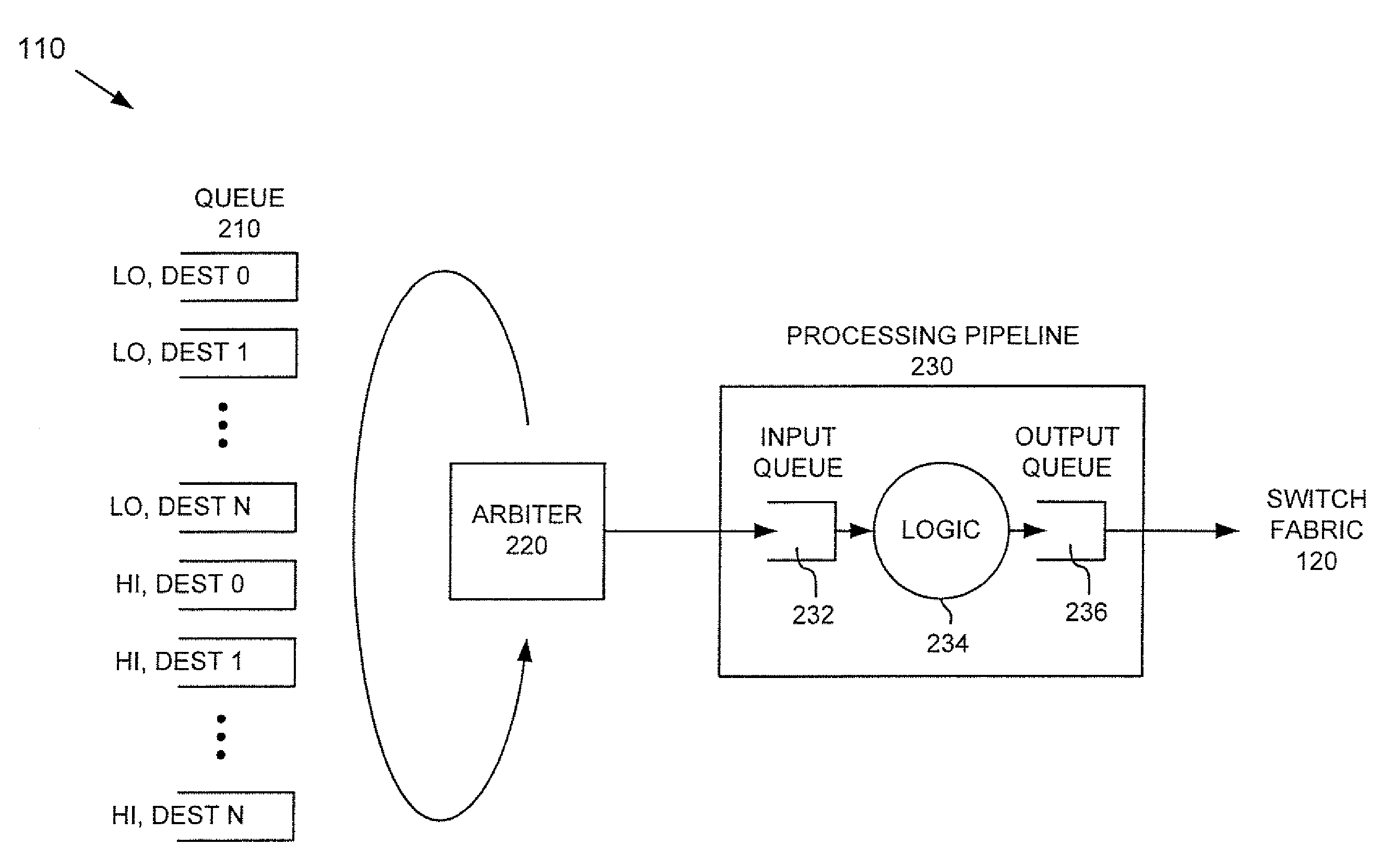

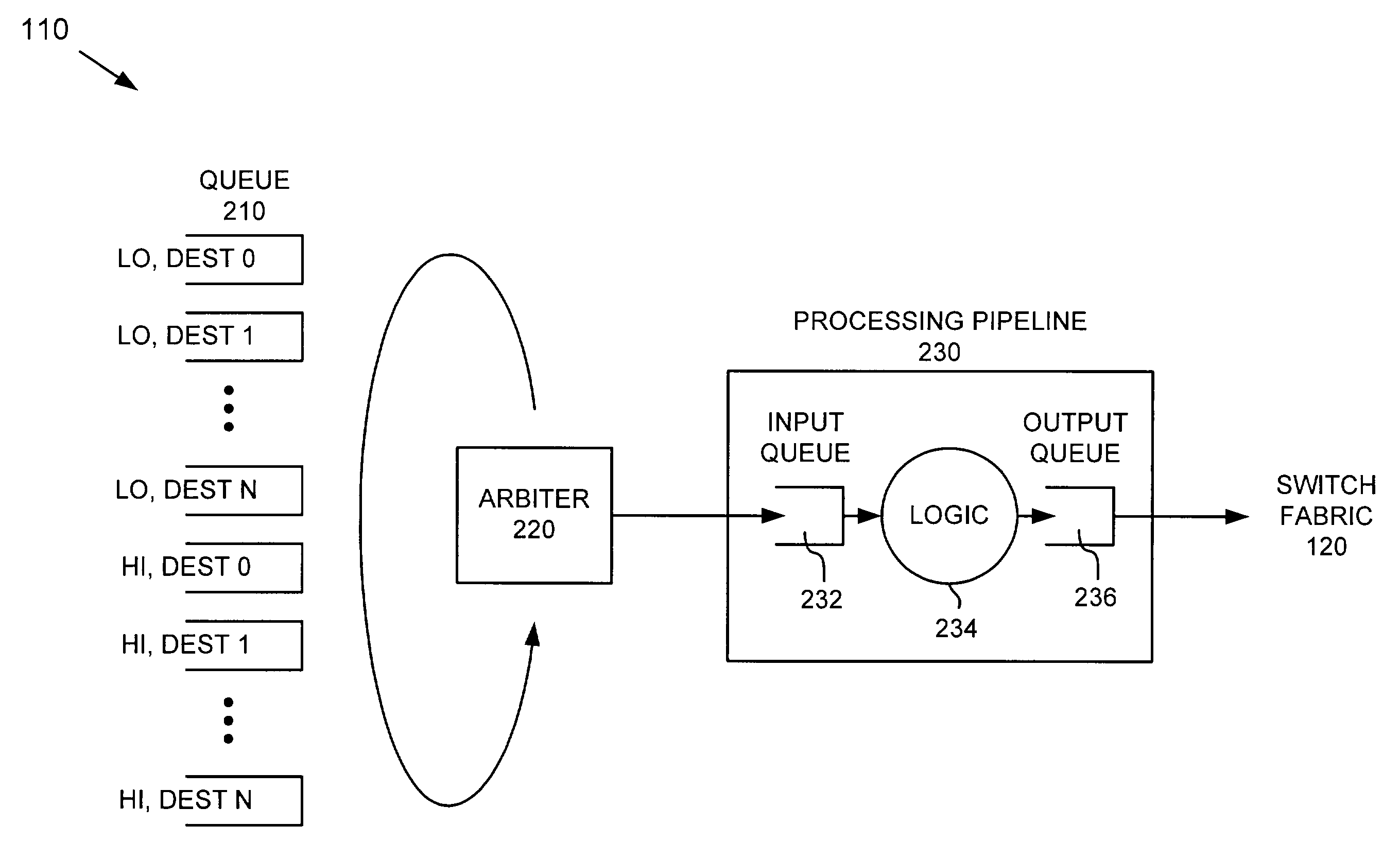

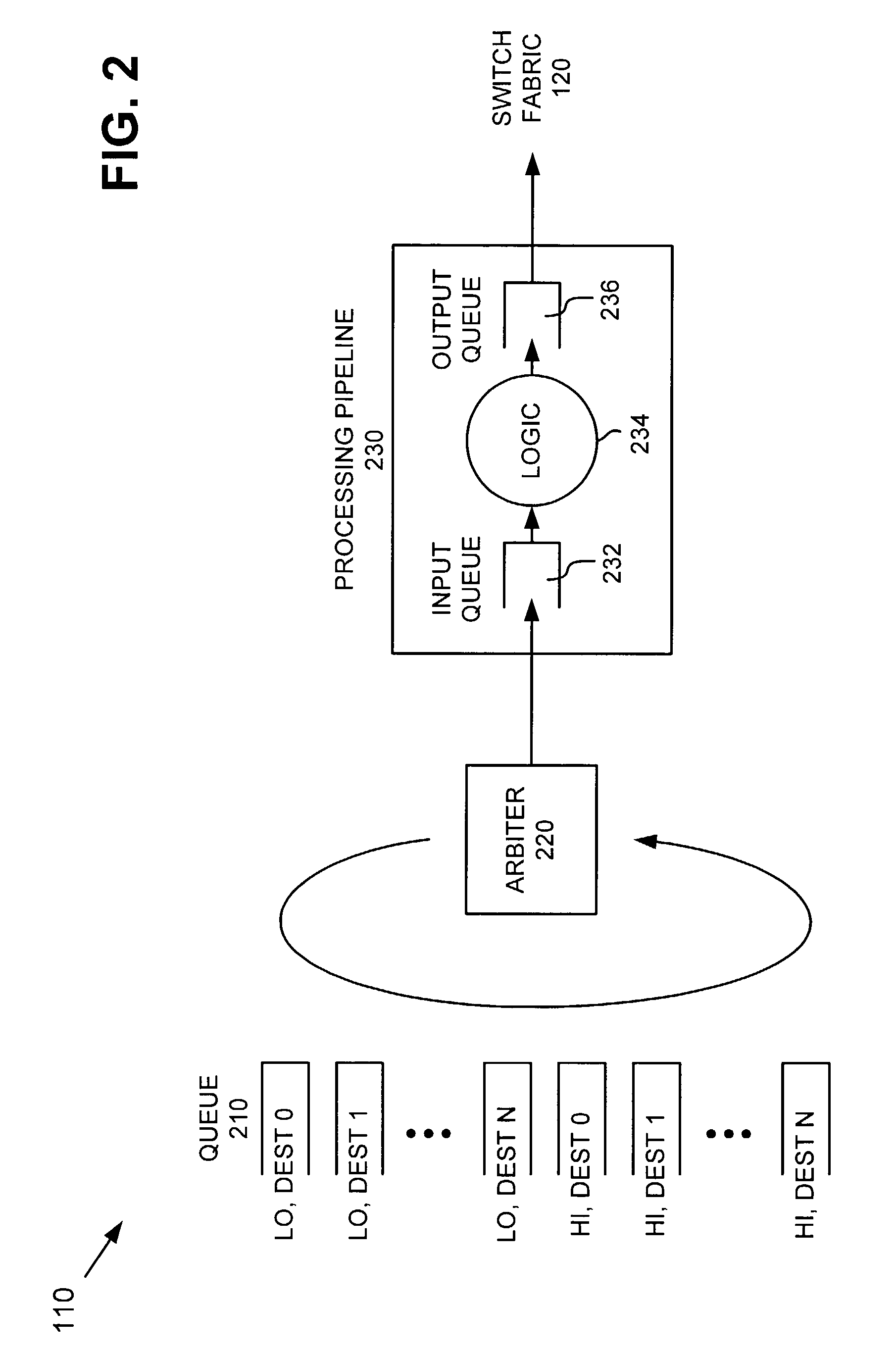

Packet prioritization systems and methods using address aliases

Inactive | US7415533B1 | Multiple digital computer combinations | Data switching networks | Switched fabric | Real-time computing

Owner:JUNIPER NETWORKS INC

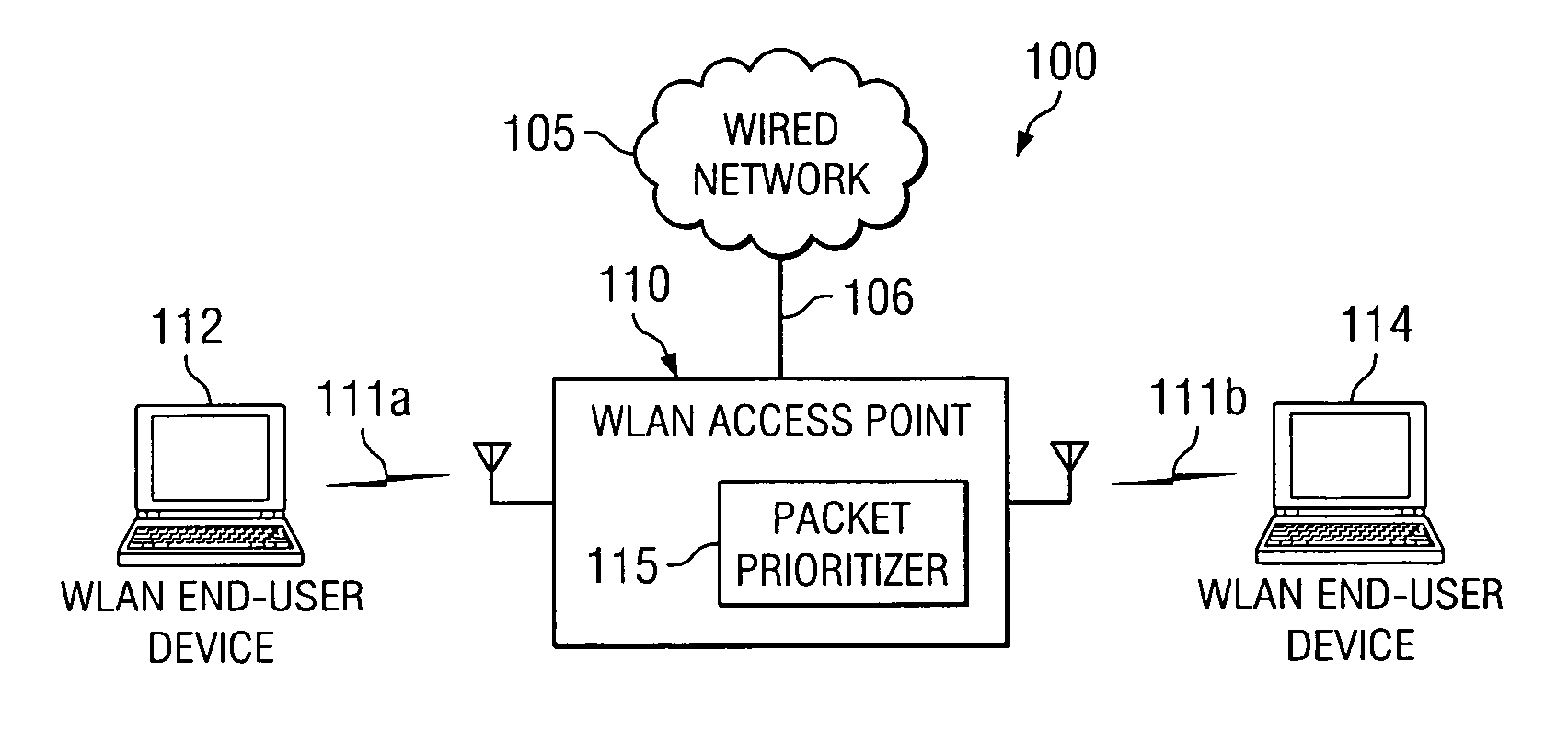

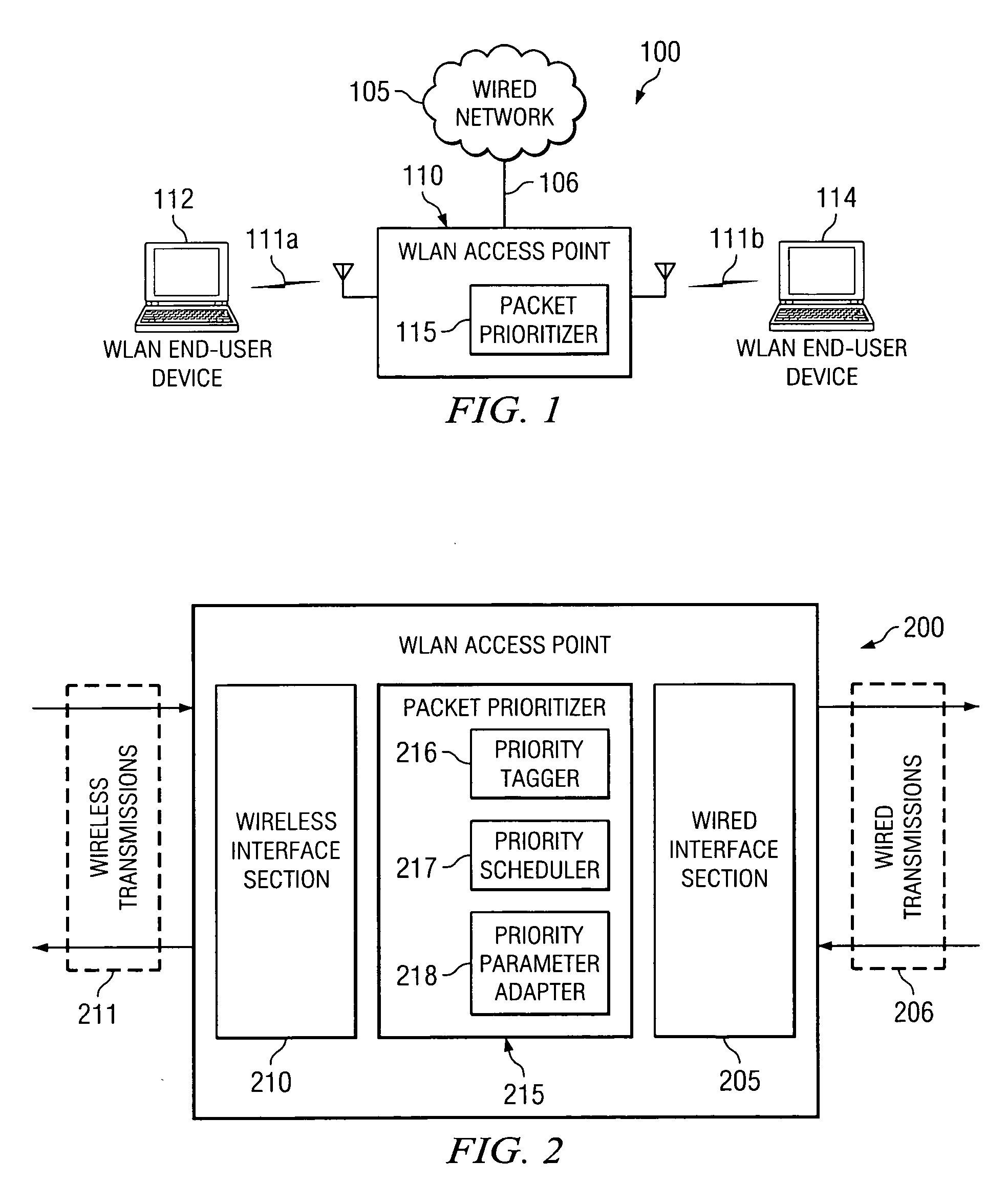

Packet-level service differentiation for quality of service provisioning over wireless local area networks

The present invention provides a packet prioritizer for use with a wireless local area network (WLAN) access point. In one embodiment, the packet prioritizer includes a priority tagger configured to provide a packet priority for a WLAN packet. Additionally, the packet prioritizer also includes a priority scheduler coupled to the priority tagger and configured to provide a strict priority scheduling of the WLAN packet through the WLAN access point based on the packet priority.

Owner:TEXAS INSTR INC
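
A priority tagger feeding a strict priority scheduler, as outlined in this abstract, might be sketched like this. The mapping from traffic kind to priority level and the packet layout are assumptions made for illustration.

```python
from collections import deque

# Hypothetical tagging table: lower number means higher priority.
PRIORITY_BY_KIND = {"voice": 0, "video": 1, "data": 2}


def tag(packet):
    """Priority tagger: derive a packet priority from its traffic kind."""
    return PRIORITY_BY_KIND.get(packet["kind"], len(PRIORITY_BY_KIND) - 1)


class StrictPriorityScheduler:
    def __init__(self, levels):
        self.queues = [deque() for _ in range(levels)]  # index 0 is served first

    def enqueue(self, packet):
        self.queues[tag(packet)].append(packet)

    def dequeue(self):
        """Return the oldest packet of the highest non-empty level, or None."""
        for queue in self.queues:
            if queue:
                return queue.popleft()
        return None


scheduler = StrictPriorityScheduler(levels=3)
for kind in ["data", "voice", "video", "voice"]:
    scheduler.enqueue({"kind": kind})
print([scheduler.dequeue()["kind"] for _ in range(4)])
```

Under strict priority a lower level is served only when every higher level is empty, so sustained voice traffic can starve data traffic in this sketch.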

Packet prioritization systems and methods using address aliases

Active | US7191249B1 | Multiple digital computer combinations | Data switching networks | Switched fabric | Real-time computing

Owner:JUNIPER NETWORKS INC

Packet prioritization and associated bandwidth and buffer management techniques for audio over IP

Inactive | US20080151886A1 | Raise priority | Reduce the possibility | Data switching by path configuration | Voice communication | Voice activity

The present invention is directed to voice communication devices in which an audio stream is divided into a sequence of individual packets, each of which is routed via pathways that can vary depending on the availability of network resources. All embodiments of the invention rely on an acoustic prioritization agent that assigns a priority value to the packets. The priority value is based on factors such as whether the packet contains voice activity and the degree of acoustic similarity between this packet and adjacent packets in the sequence. A confidence level, associated with the priority value, may also be assigned. In one embodiment, network congestion is reduced by deliberately failing to transmit packets that are judged to be acoustically similar to adjacent packets; the expectation is that, under these circumstances, traditional packet loss concealment algorithms in the receiving device will construct an acceptably accurate replica of the missing packet. In another embodiment, the receiving device can reduce the number of packets stored in its jitter buffer, and therefore the latency of the speech signal, by selectively deleting one or more packets within sustained silences or non-varying speech events. In both embodiments, the ability of the system to drop appropriate packets may be enhanced by taking into account the confidence levels associated with the priority assessments.

Owner:AVAYA INC

Packet prioritization and associated bandwidth and buffer management techniques for audio over IP

Inactive | US20080151898A1 | Raise priority | Reduce the possibility | Data switching by path configuration | Packet loss concealment | Real-time computing

The present invention is directed to voice communication devices in which an audio stream is divided into a sequence of individual packets, each of which is routed via pathways that can vary depending on the availability of network resources. All embodiments of the invention rely on an acoustic prioritization agent that assigns a priority value to the packets. The priority value is based on factors such as whether the packet contains voice activity and the degree of acoustic similarity between this packet and adjacent packets in the sequence. A confidence level, associated with the priority value, may also be assigned. In one embodiment, network congestion is reduced by deliberately failing to transmit packets that are judged to be acoustically similar to adjacent packets; the expectation is that, under these circumstances, traditional packet loss concealment algorithms in the receiving device will construct an acceptably accurate replica of the missing packet. In another embodiment, the receiving device can reduce the number of packets stored in its jitter buffer, and therefore the latency of the speech signal, by selectively deleting one or more packets within sustained silences or non-varying speech events. In both embodiments, the ability of the system to drop appropriate packets may be enhanced by taking into account the confidence levels associated with the priority assessments.

Owner:AVAYA INC

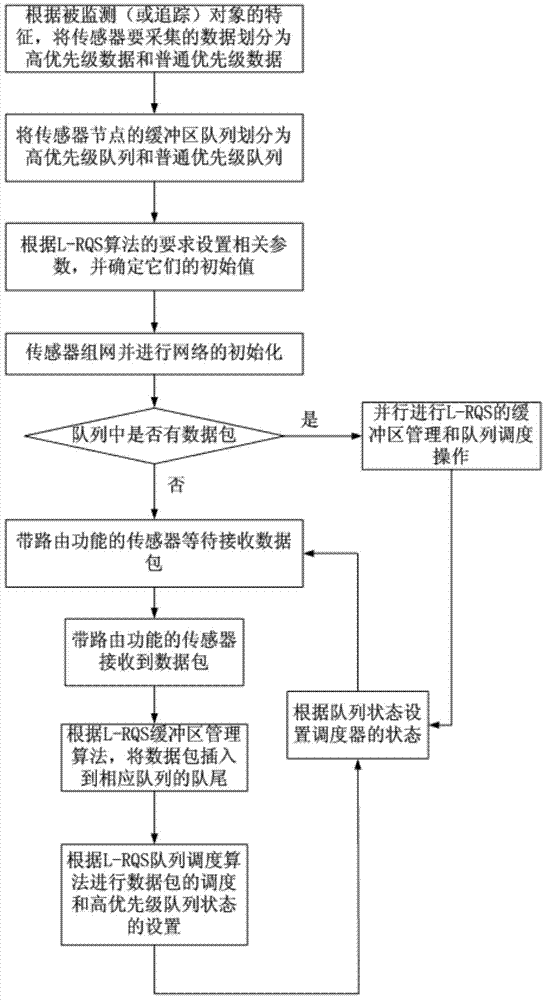

Scheduling method for guaranteeing real-time transmission of wireless sensor network information

Active | CN103532877A | Guaranteed Priority Scheduling | Increase profit | Network traffic/resource management | Network topologies | Mobile wireless sensor network | Management algorithm

The invention relates to a scheduling method for guaranteeing real-time transmission of wireless sensor network information. The scheduling method comprises the following steps: 1) prioritizing data received by sensor nodes according to a wireless sensor network application environment and a monitoring object; 2) prioritizing buffer zone queues of wireless sensor nodes with routing functions according to the prioritization; 3) configuring corresponding parameters of an L-RQS (LCFS-based Real-time Queue Scheduling) algorithm and determining the initial values of the parameters; 4) building a wireless sensor network and initializing the network for enabling sensors to normally work; 5) when the sensor nodes receive data packets, performing a corresponding operation according to a state of a current queue and the priority of the data packets by a buffer zone management algorithm in L-RQS; 6) selecting a corresponding data packet for scheduling and setting a state of a high-priority queue according to the number of continuously transmitted high-priority data packets or waiting time by a queue scheduling algorithm in L-RQS; 7) when finishing the scheduling of the corresponding data packet at a time, selecting the state according to the number of the data packets in the queue by a scheduler. The scheduling method is used in the field of an application of the wireless sensor network with higher real-time requirement.

Owner:NORTH CHINA INST OF SCI & TECH

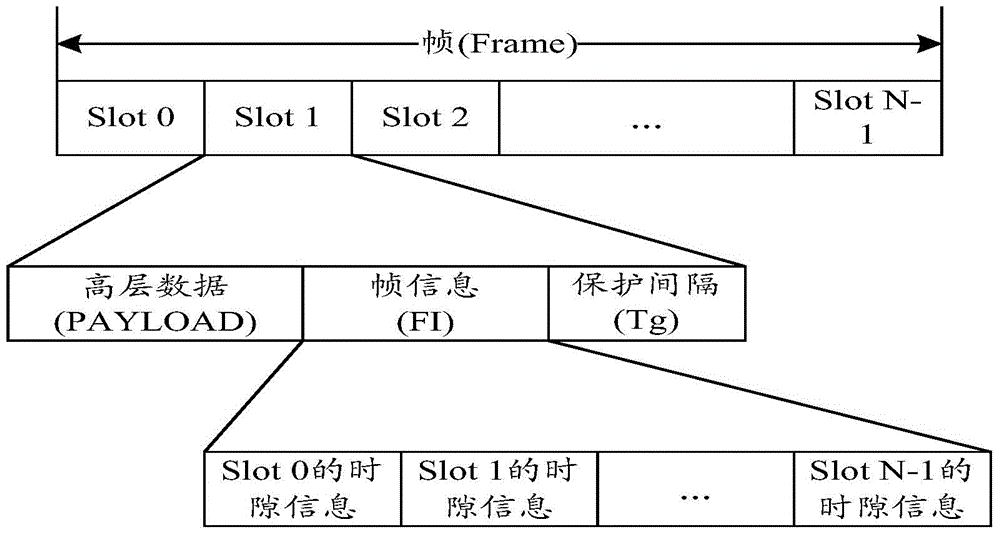

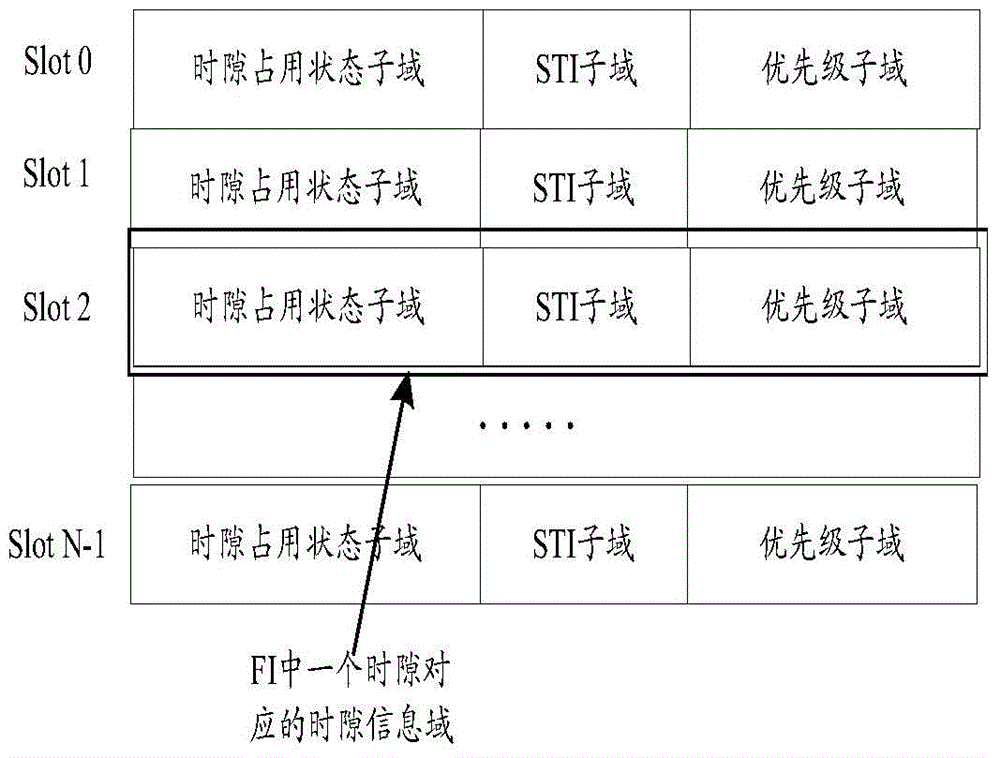

Data transmission method and device

Active | CN104918281A | Support priority sending | Ensure fairness | Network traffic/resource management | Transmission time delay | Data transmission

The invention relates to a data transmission method and a data transmission device that transmit data by combining a resource scheduling algorithm based on remaining time with a time slot resource allocation algorithm based on time slot reservation. By matching the time slot priority to the data packet priority as closely as possible, they better support the priority transmission of high-priority data when time slot resources are stolen or collide in congestion scenarios, and better ensure fairness when each node accesses the channel. The data transmission method comprises the steps of: maintaining the transmission remaining time of a data packet according to the transmission time delay information corresponding to the data packet when the data packet arrives at a transmission cache queue; and, when the service time of the transmission resources used for transmitting data packets is reached, determining the data packet to be transmitted currently according to the transmission remaining time of the data packets in the transmission cache queue, their corresponding priorities, and the resource priority of the transmission resources available to the node, and transmitting that data packet through the currently arrived transmission resources.

Owner:DATANG GOHIGH INTELLIGENT & CONNECTED TECH (CHONGQING) CO LTD

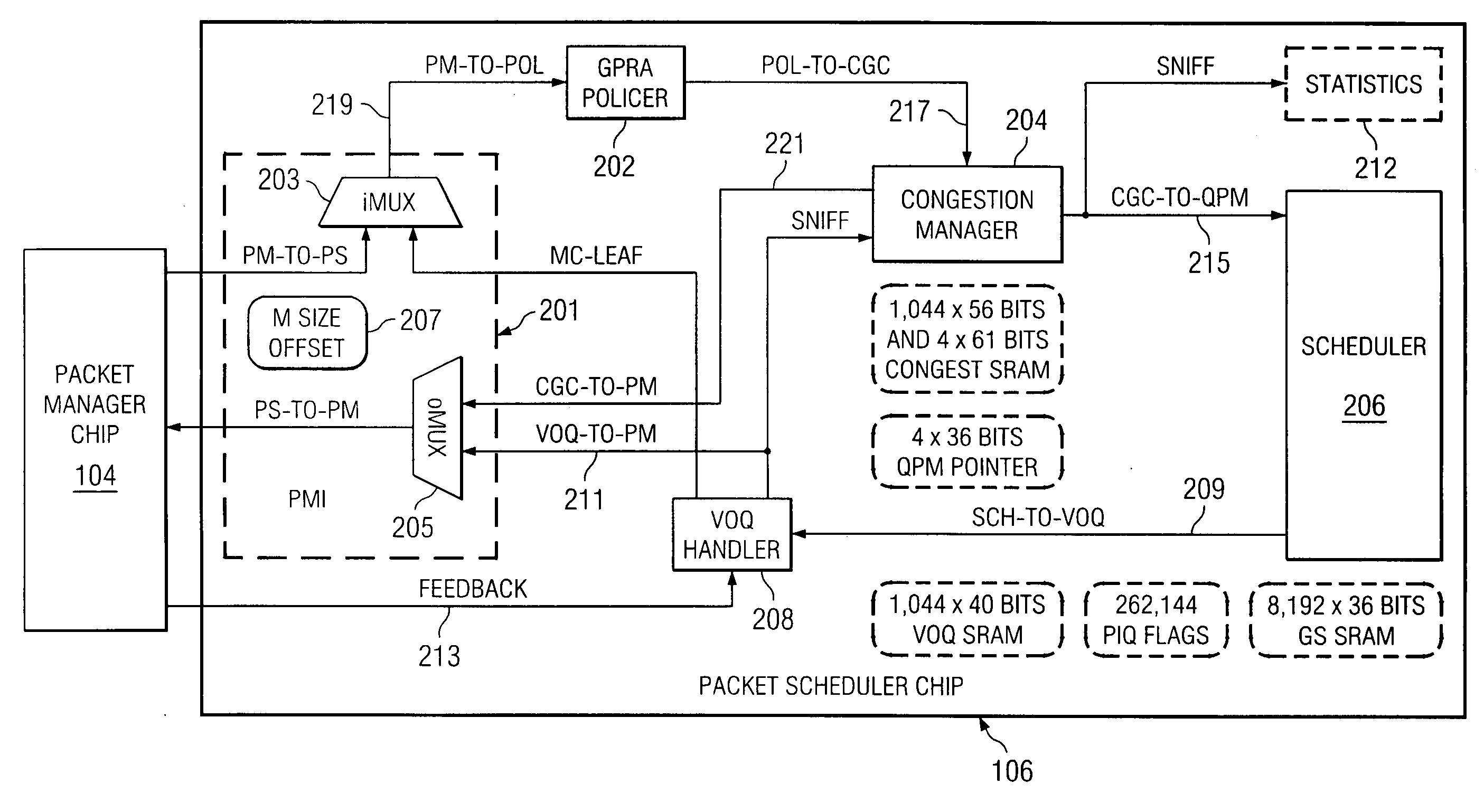

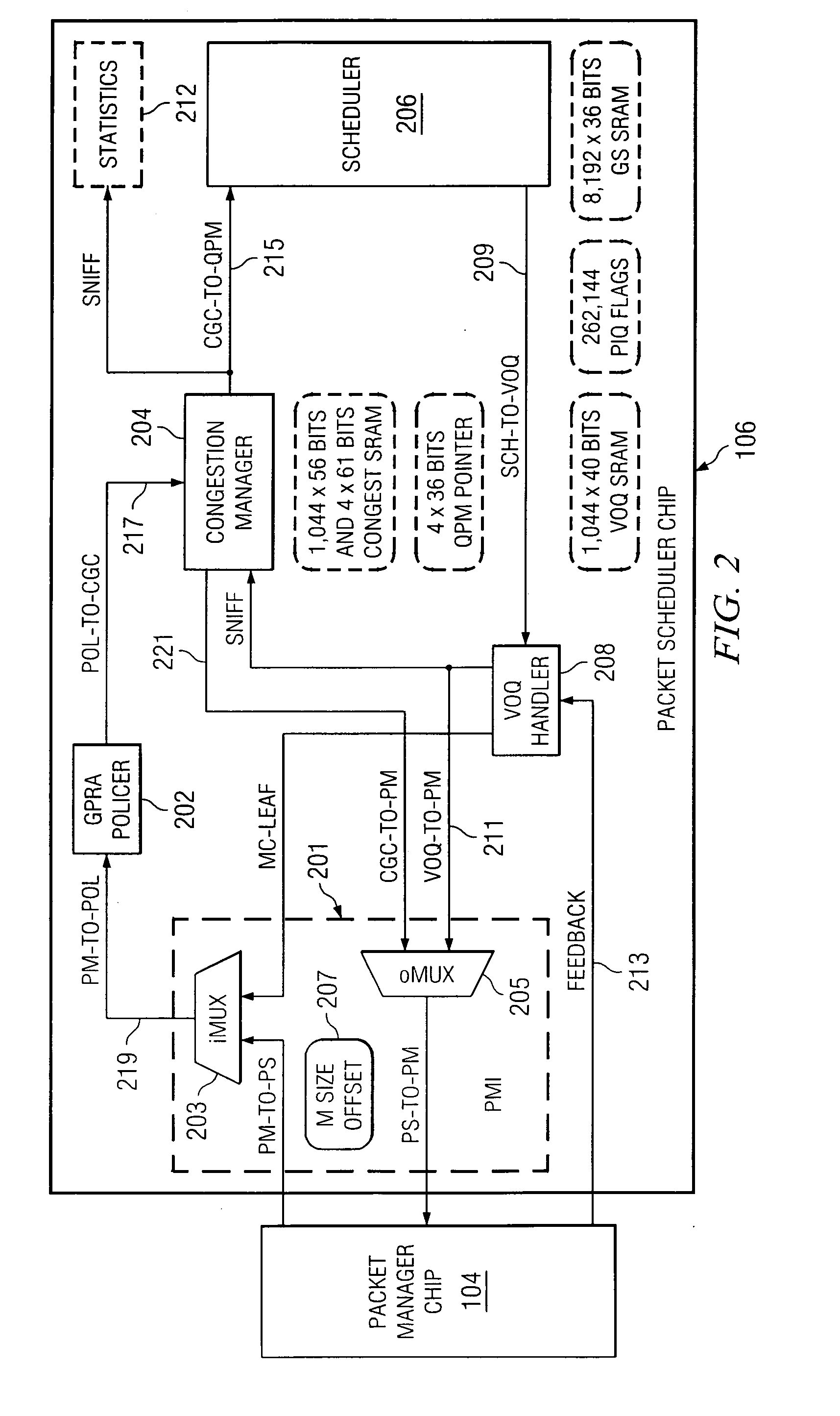

Apparatus and methods for scheduling packets in a broadband data stream

A packet scheduler includes a packet manager interface, a policer, a congestion manager, a scheduler, and a virtual output queue (VOQ) handler. The policer assigns a priority to each packet. Depending on congestion levels, the congestion manager determines whether to send a packet based on the packet's priority assigned by the policer. The scheduler schedules packets in accordance with configured rates for virtual connections and group shapers. A scheduled packet is queued at a virtual output queue (VOQ) by the VOQ handler. In one embodiment, the VOQ handler sends signals to a packet manager (through the packet manager interface) to instruct the packet manager to transmit packets in a scheduled order.

Owner:TELLABS OPERATIONS

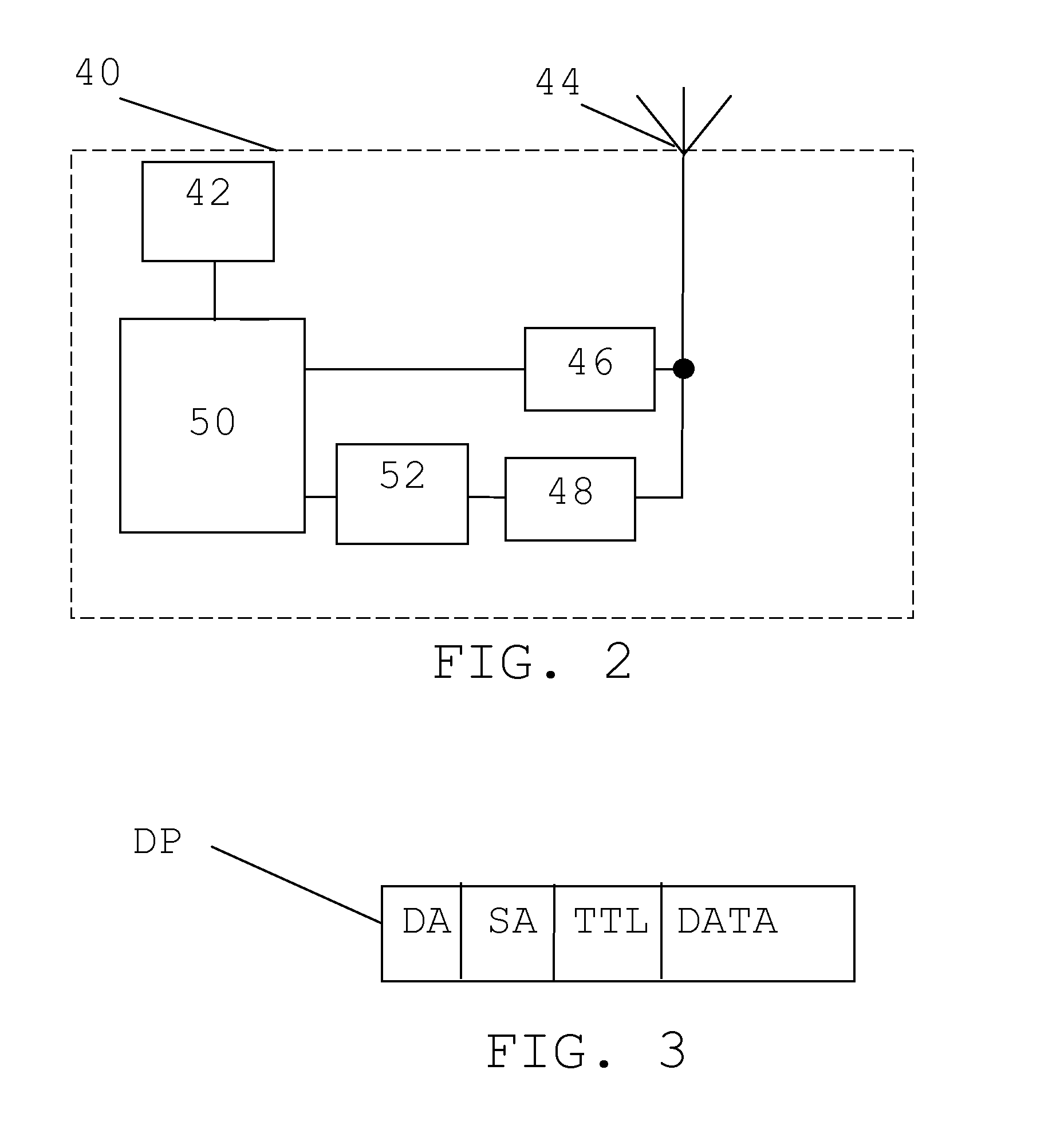

Packet prioritizing in an industrial wireless network

ActiveUS20150244635A1Simple methodError preventionNetwork traffic/resource managementWireless transmitterCommunication device

A wireless communication device providing a node in the network includes a wireless transmitter, a wireless receiver, a transmission buffer, and a packet prioritizing unit. The packet prioritizing unit is configured to obtain data relating to packets to be transmitted in the network; obtain indications of the remaining lifetime of the packets, where the indications are based on the time needed to reach a destination node; prioritize the packets according to the remaining lifetime indications, in ascending order from low remaining lifetime to high remaining lifetime; and place the packets in the transmission buffer for transmission according to the prioritization.

Owner:ABB (SCHWEIZ) AG
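The ordering rule in the abstract above (lowest remaining lifetime first) can be sketched in a few lines of Python. The `deadline` and `time_to_destination` fields and the formula combining them are assumptions for illustration; the abstract only says that the lifetime indication is based on the time needed to reach the destination node.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Packet:
    packet_id: str
    deadline: float             # hypothetical absolute delivery deadline
    time_to_destination: float  # estimated time needed to reach the destination


def remaining_lifetime(packet: Packet, now: float) -> float:
    # Assumed indication: slack left after accounting for the remaining journey.
    return packet.deadline - now - packet.time_to_destination


def prioritize(packets: List[Packet], now: float) -> List[Packet]:
    # Ascending order: the packet with the lowest remaining lifetime goes first.
    return sorted(packets, key=lambda p: remaining_lifetime(p, now))


transmission_buffer = prioritize(
    [Packet("a", 10.0, 2.0), Packet("b", 5.0, 1.0), Packet("c", 6.0, 4.0)],
    now=0.0,
)
```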

Priority packet processing

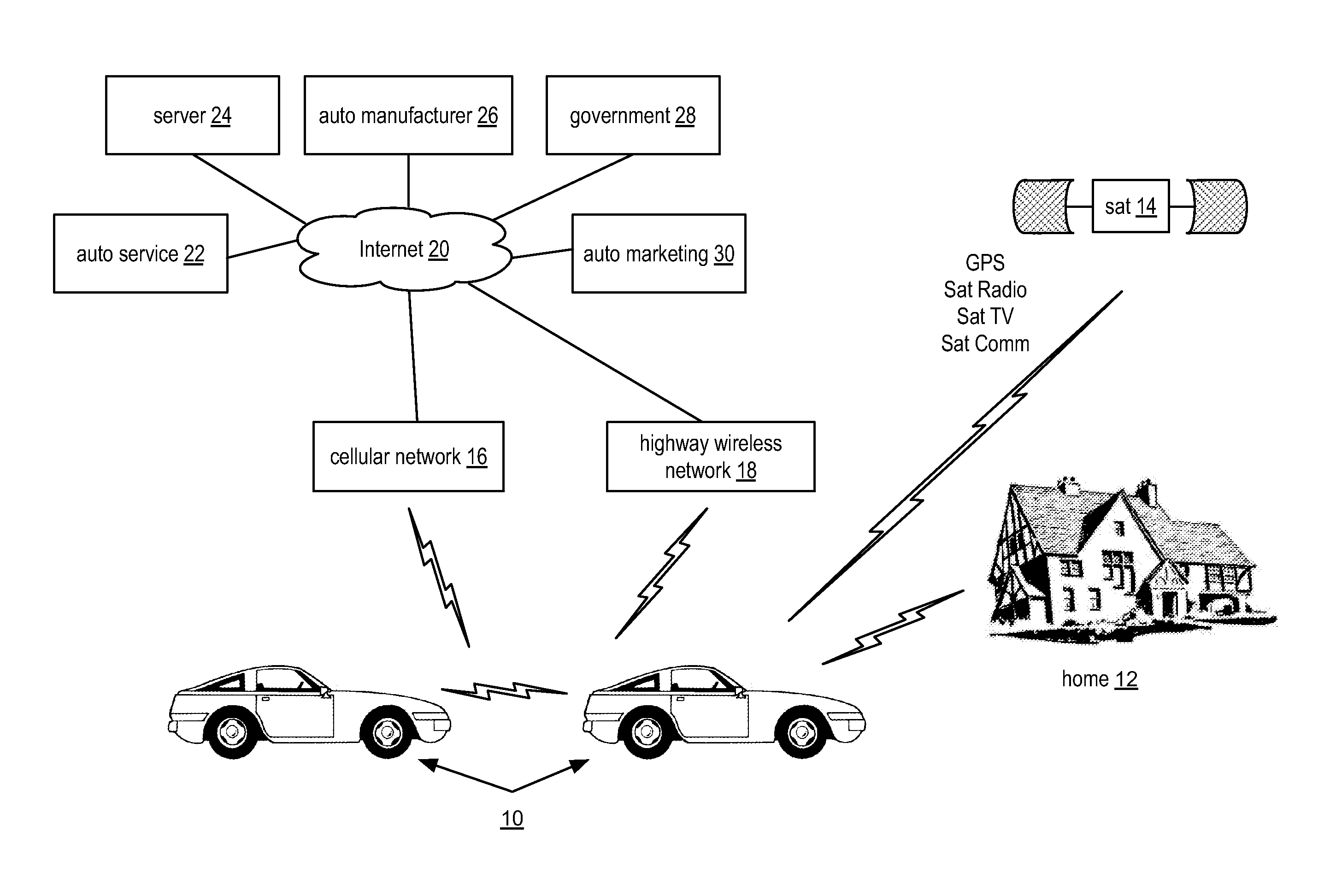

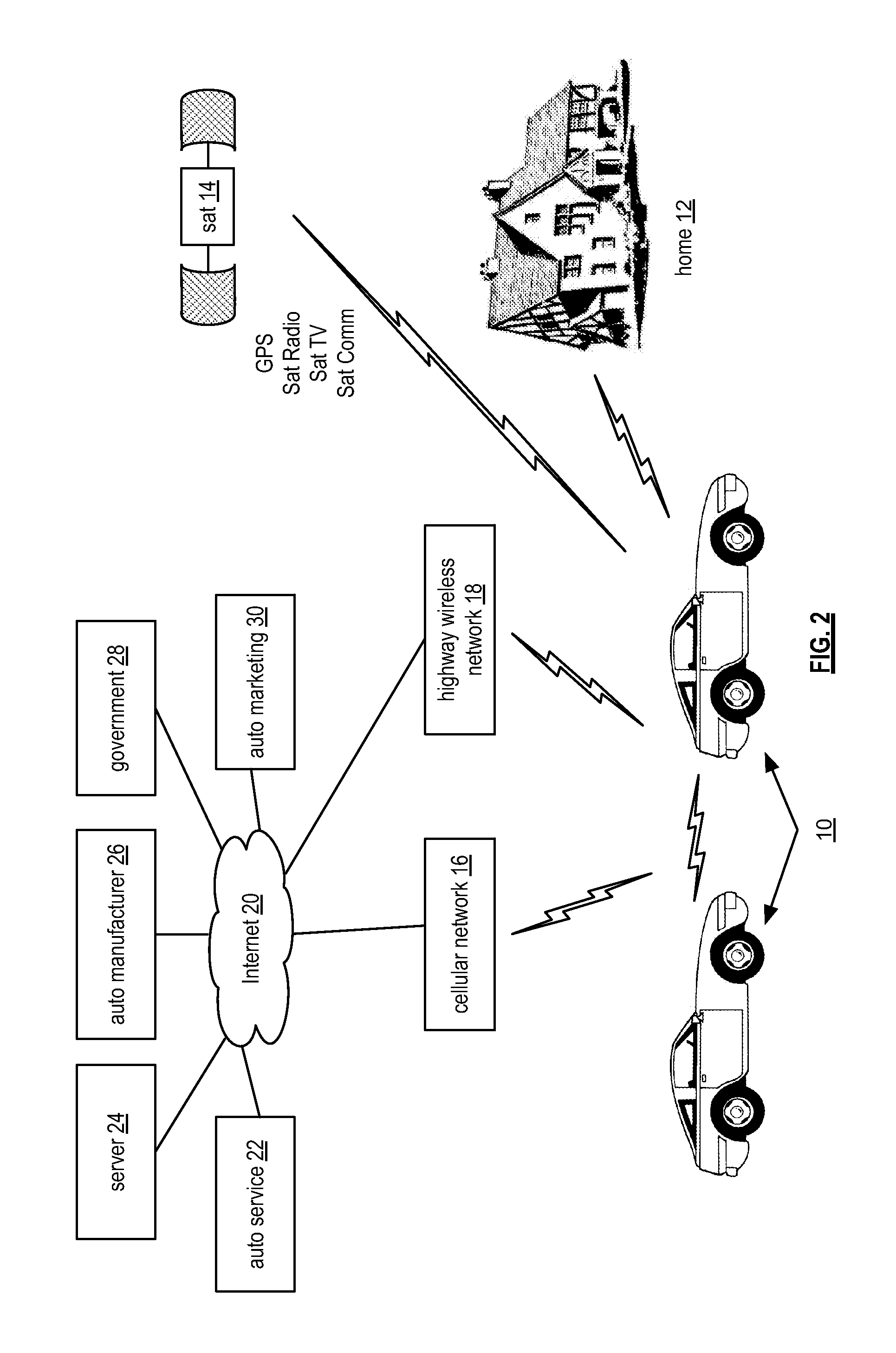

Active | US20120106447A1 | Registering/indicating working of vehicles | Digital data processing details | Time to live | Packet processing

A network node in a vehicular network processes packets based on a prioritization scheme. The prioritization scheme uses packet type, priority, source, destination, or other information to determine a priority of the packets. Packets can be stored in one of multiple queues organized according to packet type or other criteria. In some cases, only one queue is used. The packets are timestamped when put into a queue, and a time to live is calculated based on the timestamp. The time to live, as well as other factors such as packet type, packet priority, packet source, and packet destination, can be used to adjust a packet's priority within the queue. Packets are transmitted from the queues in priority order. In some cases, the network node can identify a top-priority packet and transmit it without first storing the packet in the queue.

Owner:AVAGO TECH INT SALES PTE LTD
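One way to picture the timestamp and time-to-live adjustment described above is the Python sketch below. The constants `URGENT_TTL` and `URGENT_BOOST`, the per-packet `lifetime` field, and the additive boost are hypothetical choices; the abstract only states that time to live can be used to adjust a packet's priority within the queue.

```python
from dataclasses import dataclass
from typing import List

URGENT_TTL = 0.2    # seconds; hypothetical threshold for "about to expire"
URGENT_BOOST = 5    # hypothetical priority bonus for nearly expired packets


@dataclass
class QueuedPacket:
    name: str
    base_priority: int   # larger means more important
    enqueued_at: float   # timestamp taken when the packet entered the queue
    lifetime: float      # hypothetical allowed time in the queue


def time_to_live(pkt: QueuedPacket, now: float) -> float:
    return pkt.enqueued_at + pkt.lifetime - now


def effective_priority(pkt: QueuedPacket, now: float) -> int:
    boost = URGENT_BOOST if time_to_live(pkt, now) <= URGENT_TTL else 0
    return pkt.base_priority + boost


def dequeue(queue: List[QueuedPacket], now: float) -> QueuedPacket:
    # Highest effective priority first; earlier timestamps win ties.
    best = max(queue, key=lambda p: (effective_priority(p, now), -p.enqueued_at))
    queue.remove(best)
    return best


queue = [
    QueuedPacket("map", 1, 0.0, 1.0),
    QueuedPacket("safety", 3, 0.0, 10.0),
    QueuedPacket("video", 2, 0.0, 10.0),
]
order = [dequeue(queue, now=0.9).name for _ in range(3)]
```

In this run the low-priority "map" packet is sent first because it is about to expire, which shows how a time-to-live adjustment can override the base priority.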

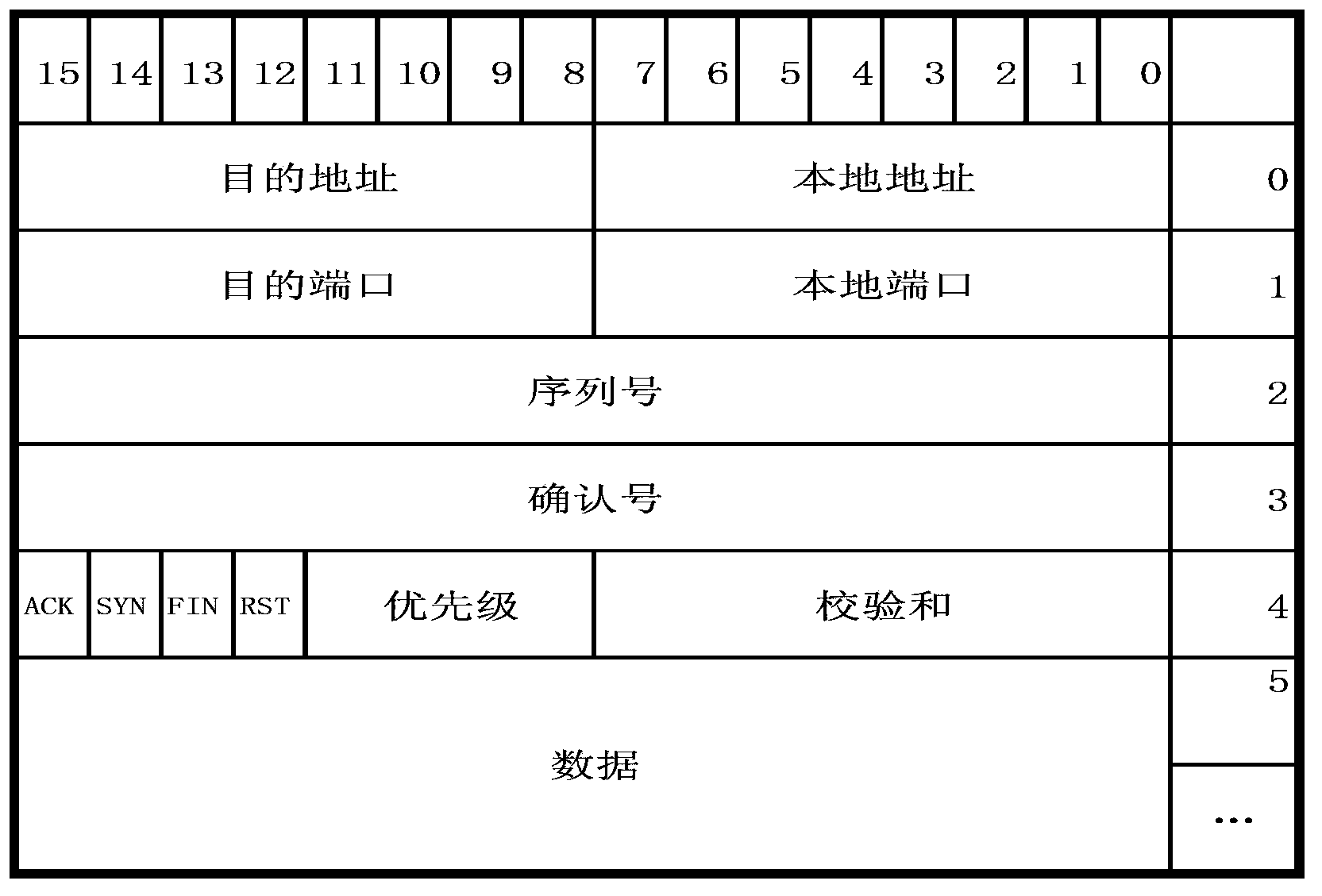

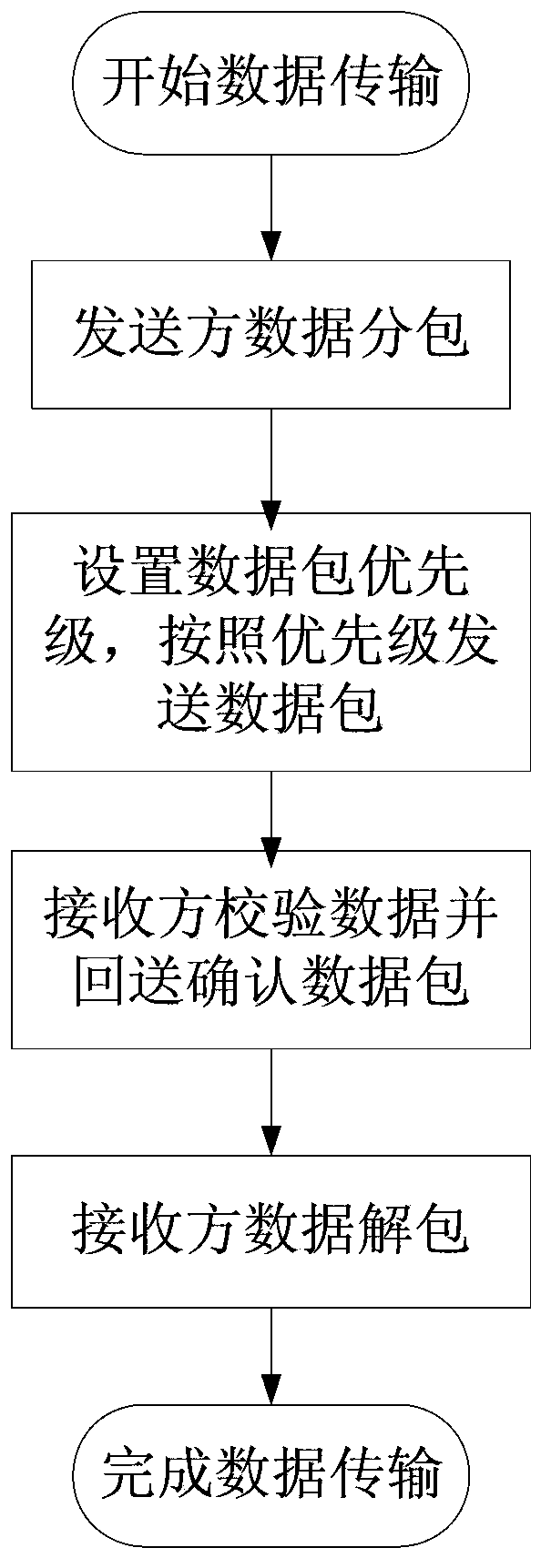

High real-time spacecraft data transmission method based on priorities

Inactive | CN103414692A | Improve reliability | Guaranteed reliability | Data switching networks | Complete data | Data transmission

The invention discloses a priority-based, high real-time spacecraft data transmission method. The method comprises: a first step of partitioning the data to be sent into a plurality of data packets and allocating consecutive serial numbers to the data packets according to the order in which the data is organized; a second step of setting priorities for all data packets and sending them in order from high priority to low priority; a third step in which the receiver, after receiving a data packet, verifies its integrity and correctness and sends the sender an acknowledgement packet containing the serial number of the next data packet to be received; a fourth step in which the sender sends the next data packet after receiving the acknowledgement packet and, if the acknowledgement packet is not received by the sender within the assigned time, the acknowledgement packet is sent again; and a fifth step in which, after all data packets have been sent to the receiver, the receiver combines all received data packets into the complete data in serial-number order. The method offers high real-time performance and reliability.

Owner:BEIJING INST OF CONTROL ENG
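The partitioning, priority-ordered sending, and serial-number reassembly steps above can be illustrated with a short Python sketch. The packet size, the priority table, and the tie-break on serial number are assumptions; the acknowledgement and timeout handling in the third and fourth steps is deliberately left out.

```python
from typing import Dict, List, Tuple

DataPacket = Tuple[int, bytes]  # (serial number, payload)


def partition(data: bytes, size: int) -> List[DataPacket]:
    # Step 1: split the data and number the packets consecutively.
    return [(seq, data[start:start + size])
            for seq, start in enumerate(range(0, len(data), size))]


def send_order(packets: List[DataPacket],
               priorities: Dict[int, int]) -> List[DataPacket]:
    # Step 2: highest priority first; serial number breaks ties (assumed).
    return sorted(packets, key=lambda p: (-priorities[p[0]], p[0]))


def reassemble(received: List[DataPacket]) -> bytes:
    # Step 5: restore the original order using the serial numbers.
    return b"".join(payload for _, payload in sorted(received, key=lambda p: p[0]))


packets = partition(b"ABCDEFGH", size=3)
sent = send_order(packets, priorities={0: 1, 1: 3, 2: 2})
restored = reassemble(sent)
```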

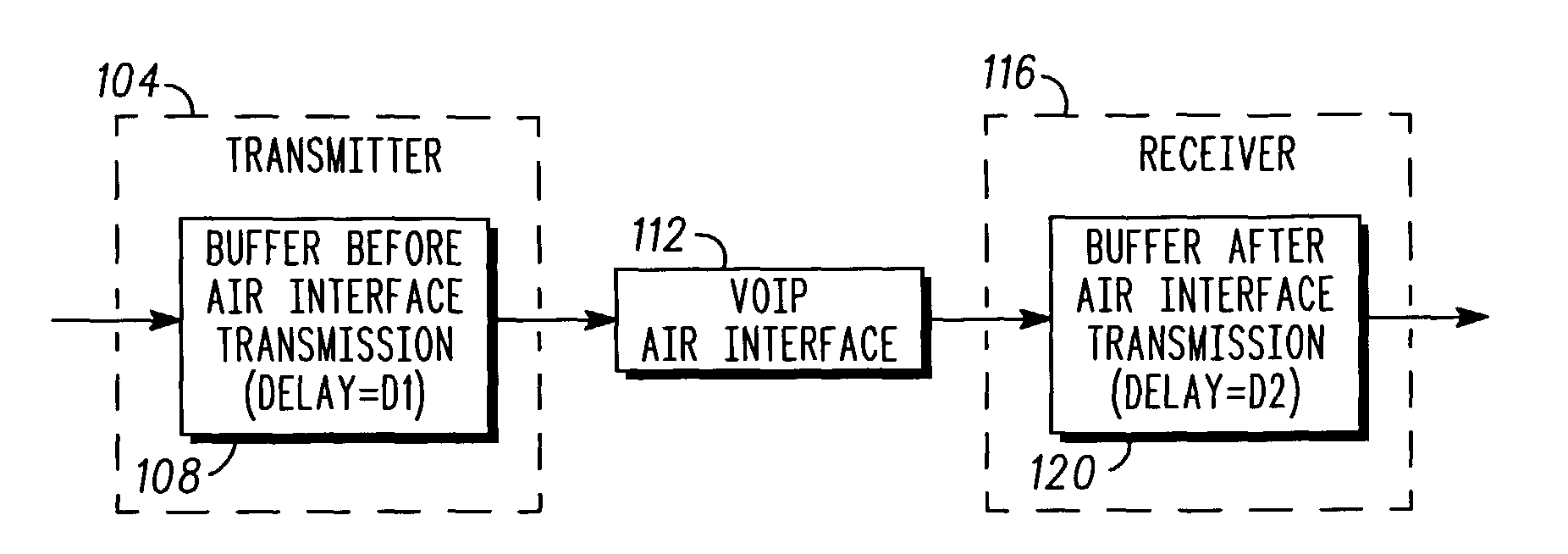

VOIP transmitter and receiver devices and methods therefor

A Voice Over Internet Protocol (VOIP) receiver (630), operating in conjunction with a transmitter (606), receives a sequence of voice packets representing a speech utterance transmitted over a VOIP wireless interface (112). A receive packet buffer (120) buffers the received sequence of voice packets after receipt and before playback of reconstructed speech. A processor (650), operating under program control, determines a transmission buffer (108) delay of a first packet in the sequence of packets representing the speech utterance. The control processor (650) further sets a prescribed amount of delay in the receive packet buffer (120) based upon the transmission buffer (108) delay, so that the transmission buffer delay plus the receive buffer delay equals a predetermined total delay. The status of the receive buffer (120) is monitored and tracked by, or fed back to, the transmitter side to minimize receive buffer (120) under-runs by use of CDMA soft capacity (200), link dependent prioritization (300), real-time packet prioritization (400) and/or variation of vocoder (624) rates (500).

Owner:GOOGLE TECH HLDG LLC
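The delay relation stated above (transmission buffer delay plus receive buffer delay equals a predetermined total) reduces to a one-line calculation, sketched here in Python. The function name, the millisecond units, and the clamp to zero when the transmission delay already exceeds the total are assumptions not stated in the abstract.

```python
def receive_buffer_delay(total_delay_ms: float,
                         transmission_buffer_delay_ms: float) -> float:
    """Delay to set in the receive buffer so the two delays sum to the total."""
    # Clamp at zero (assumed) when the transmit side has used the whole budget.
    return max(0.0, total_delay_ms - transmission_buffer_delay_ms)


# Example: with a 100 ms total budget and 30 ms spent in the transmit buffer,
# the receive buffer is asked to hold the first packet for the remainder.
delay = receive_buffer_delay(100.0, 30.0)
```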

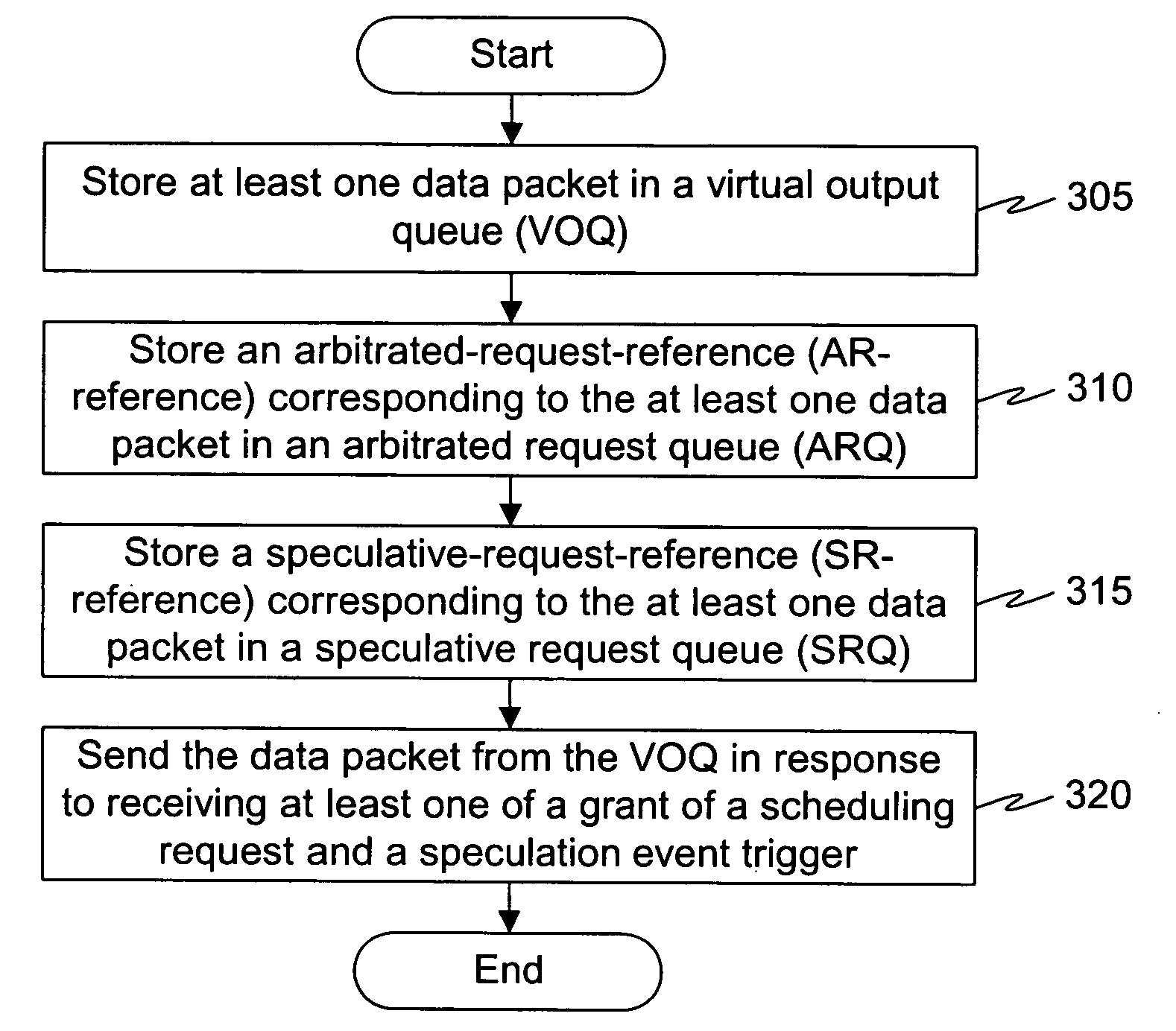

Method and system for high-concurrency and reduced latency queue processing in networks

Inactive | US20070201497A1 | Response was lost | Maintain consistency | Time-division multiplex | Data switching by path configuration | Packet arrival | Event triggered

A method and a system for controlling a plurality of queues of an input port in a switching or routing system. The method supports the regular request-grant protocol along with speculative transmission requests in an integrated fashion. Each regular scheduling request or speculative transmission request is stored in request order using references to minimize memory usage and operation count. Data packet arrival and speculation event triggers can be processed concurrently to reduce operation count and latency. The method supports data packet priorities using a unified linked list for request storage. A descriptor cache is used to hide linked list processing latency and allow central scheduler response processing with reduced latency. The method further comprises processing a grant of a scheduling request, an acknowledgement of a speculation request or a negative acknowledgement of a speculation request. Grants and speculation responses can be processed concurrently to reduce operation count and latency. A queue controller allows request queues to be dequeued concurrently on central scheduler response arrival. Speculation requests are stored in a speculation request queue to maintain request queue consistency and allow scheduler response error recovery for the central scheduler.

Owner:IBM CORP

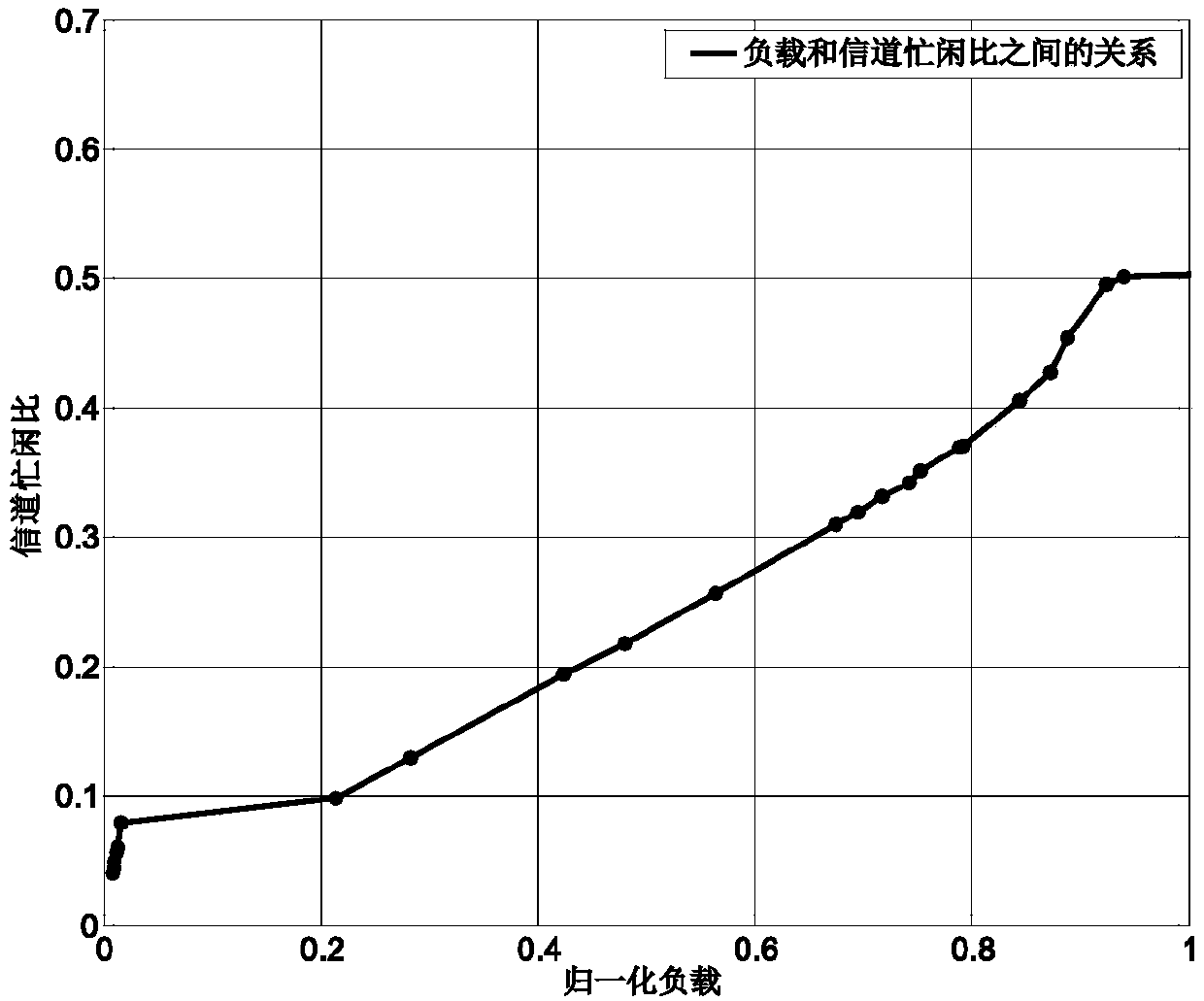

Long distance CSMA/CA protocol with QoS assurance

The invention discloses a long distance CSMA/CA (Carrier Sense Multiple Access with Collision Avoidance) protocol with QoS (Quality of Service) assurance. A node in a communication channel executes the following steps: (1) entering a backoff stage after the previous data packet in the sending queue has been sent; (2) according to the priorities of the data packets currently in the sending queue, extracting the data packet with the highest priority and placing it at the head of the sending queue; (3) calculating the channel busy-to-idle ratio and the priority threshold corresponding to the data packet at the head of the sending queue; (4) after the backoff stage ends, detecting the busy-to-idle ratio of the current channel and comparing it with the priority threshold corresponding to the data packet at the head of the sending queue; if the busy-to-idle ratio of the current channel is less than that priority threshold, the data packet is allowed to access the channel and is sent, and the node returns to step (1). With this protocol, the performance requirements of high-priority data packets are met by delaying channel access for low-priority data packets, which reduces the collision probability and improves throughput.

Owner:CHINESE AERONAUTICAL RADIO ELECTRONICS RES INST +1
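Steps (2) to (4) of the protocol above can be sketched in Python as follows. The threshold table, the integer priority encoding (larger means more urgent), and the way the busy-to-idle ratio is measured from two time totals are all hypothetical; the abstract does not give the formulas.

```python
from typing import Dict, List, Tuple

QueuedPacket = Tuple[str, int]  # (name, priority); larger priority is more urgent

# Hypothetical thresholds: higher priorities tolerate a busier channel.
PRIORITY_THRESHOLDS: Dict[int, float] = {0: 0.5, 1: 1.0, 2: 2.0}


def promote_highest_priority(queue: List[QueuedPacket]) -> List[QueuedPacket]:
    # Step 2: move the highest-priority packet to the head of the sending queue.
    if not queue:
        return []
    idx = max(range(len(queue)), key=lambda i: queue[i][1])
    return [queue[idx]] + queue[:idx] + queue[idx + 1:]


def busy_to_idle_ratio(busy_time: float, idle_time: float) -> float:
    # Step 3: ratio of observed busy time to observed idle time on the channel.
    return float("inf") if idle_time == 0 else busy_time / idle_time


def may_transmit(priority: int, ratio: float) -> bool:
    # Step 4: access the channel only if the ratio is below the packet's threshold.
    return ratio < PRIORITY_THRESHOLDS[priority]


queue = promote_highest_priority([("a", 0), ("b", 2), ("c", 1)])
ratio = busy_to_idle_ratio(busy_time=6.0, idle_time=4.0)
head_can_send = may_transmit(queue[0][1], ratio)
```

With a ratio of 1.5, only the priority-2 packet is allowed onto the channel, while lower priorities keep waiting, which matches the stated goal of delaying low-priority access.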

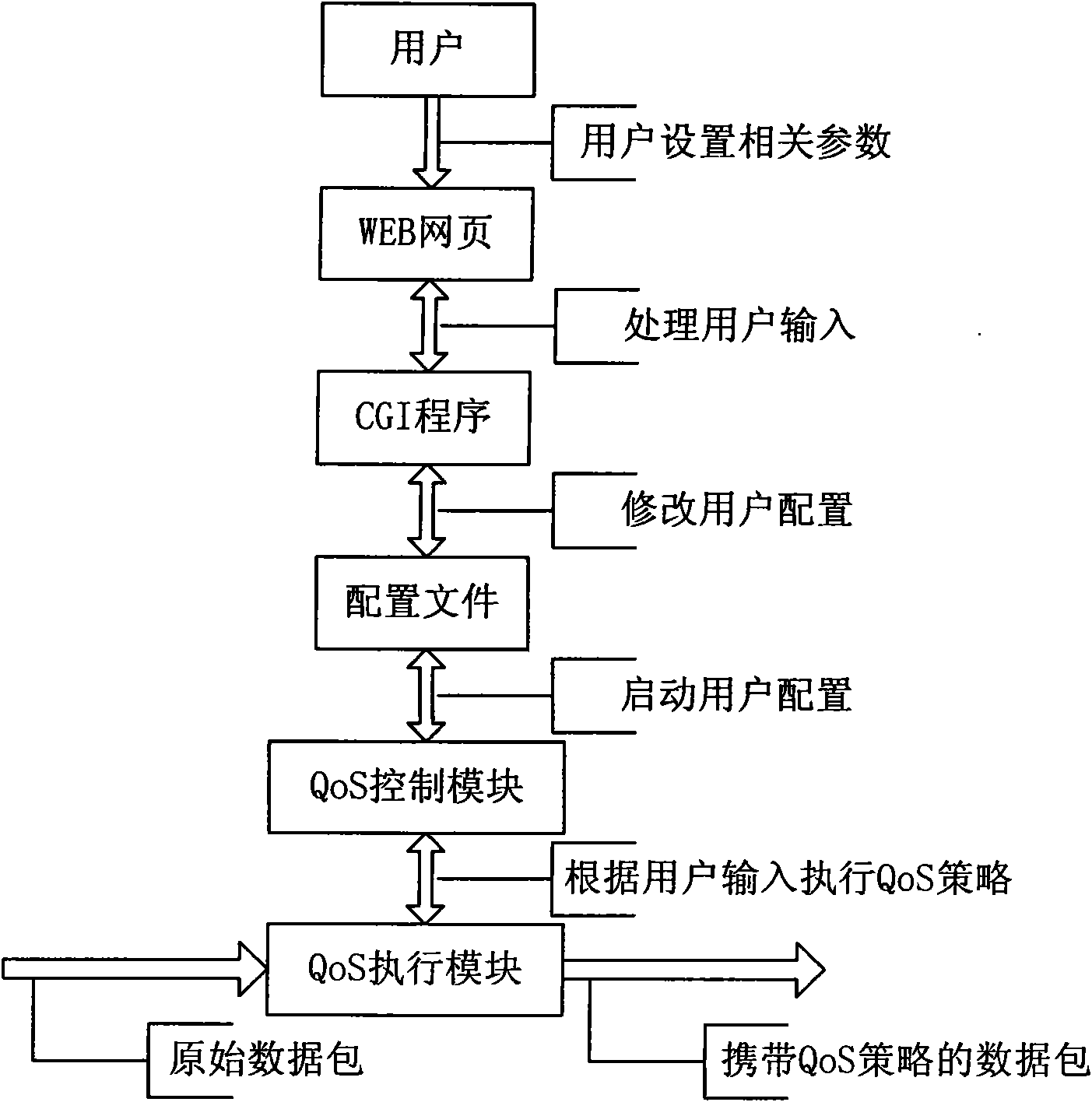

Method and system for realizing terminal quality of service of internet voice transmission system

Inactive | CN101631066A | Guaranteed Voice Quality | Meeting basic needs | Interconnection arrangements | Data switching networks | Traffic capacity | Service control

The invention discloses a method and a system for realizing terminal quality of service in an internet voice transmission system. The quality of service link of the system comprises a quality of service control module and a quality of service execution module. The method comprises the following steps: a user configures parameters, and a CGI module writes the parameters into a configuration file; the quality of service control module turns the configuration file into a flow control script, executes it, and writes a quality of service policy into the kernel of the system; and when the kernel receives a data packet, the quality of service execution module processes the data packet according to that policy. A first-in first-out policy with a determined priority and an allocated bandwidth is introduced to configure QoS, so that end-to-end quality of service in an internet voice transmission application system is unified and a superior QoS processing mechanism is ensured on the VoIP terminal. The voice quality of the terminal is guaranteed, IP phone calls run smoothly in real time, and the basic requirements of the user are met; the system is also low in cost and easy to develop and maintain.

Owner:ZTE CORP

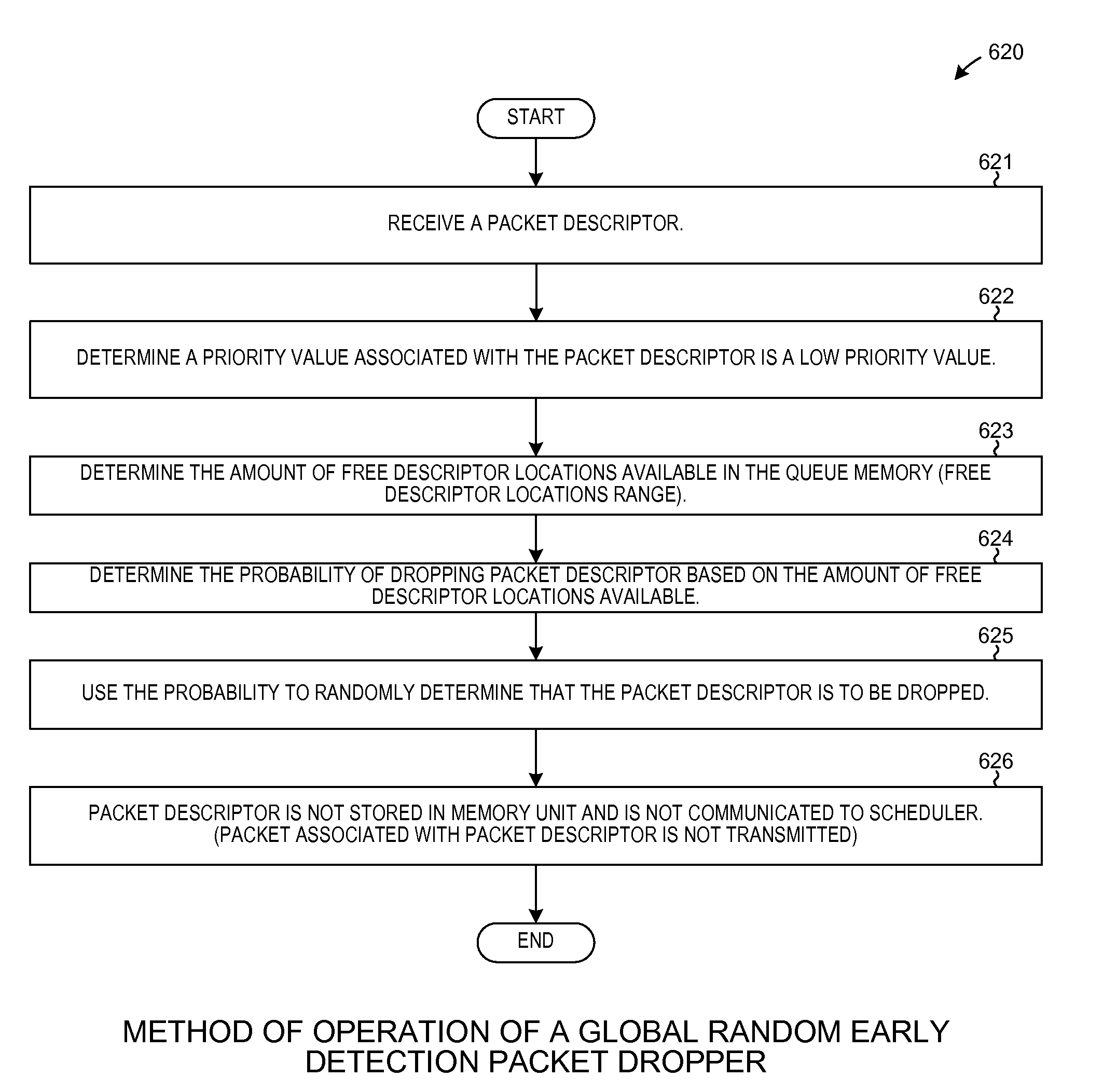

Packet storage distribution based on available memory

A method includes: receiving a packet descriptor that includes a priority indicator and a queue number indicating a queue stored within a first memory unit; storing a packet associated with the packet descriptor in a second memory unit; determining a first amount of free memory in the first memory unit; determining whether the first amount of free memory is above a threshold value; writing the packet from the second memory unit to a third memory unit when the first amount of free memory is above the threshold value and the priority indicator is equal to a first value; and not writing the packet from the second memory unit to the third memory unit when the first amount of free memory is below the threshold value or the priority indicator is equal to a second value. The priority indicator is equal to the first value for high-priority packets and to the second value for low-priority packets.

Owner:NETRONOME SYST
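The write decision in the abstract above is a two-condition test, sketched below in Python. The numeric encodings `HIGH_PRIORITY` and `LOW_PRIORITY` are hypothetical stand-ins for the "first value" and "second value", and treating free memory exactly equal to the threshold as "not above" is an assumption, since the abstract does not cover that case.

```python
HIGH_PRIORITY = 1  # hypothetical encoding of the "first value"
LOW_PRIORITY = 0   # hypothetical encoding of the "second value"


def should_write_to_third_memory(free_first_memory: int,
                                 threshold: int,
                                 priority_indicator: int) -> bool:
    """Return True when the packet is copied from the second to the third memory."""
    # Both conditions must hold: enough free memory and a high-priority packet.
    return free_first_memory > threshold and priority_indicator == HIGH_PRIORITY


decision = should_write_to_third_memory(
    free_first_memory=4096, threshold=1024, priority_indicator=HIGH_PRIORITY
)
```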

