4299 results for "Linked list" patented technology

In computer science, a linked list is a linear collection of data elements whose order is not given by their physical placement in memory. Instead, each element points to the next. It is a data structure consisting of a collection of nodes which together represent a sequence. In its most basic form, each node contains data and a reference (in other words, a link) to the next node in the sequence. This structure allows efficient insertion or removal of elements at any position in the sequence during iteration, because only the links of the neighboring nodes need to change. More complex variants add additional links, allowing more efficient insertion or removal of nodes at arbitrary positions. A drawback of linked lists is that access time is linear in the position of the element (and difficult to pipeline), so faster access, such as random access, is not feasible. Arrays also have better cache locality than linked lists.
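To make the node-and-link description concrete, here is a minimal singly linked list sketch in Python. The names `Node`, `SinglyLinkedList`, `insert_after` and `remove_after` are illustrative and are not taken from any of the patents below. The sketch shows why inserting or removing next to a node you already hold is O(1), while reaching the i-th element costs i link traversals.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass
class Node:
    """One element of the list: the data plus a link to the next node."""
    value: int
    next: Optional[Node] = None


class SinglyLinkedList:
    def __init__(self) -> None:
        self.head: Optional[Node] = None

    def push_front(self, value: int) -> Node:
        """O(1): the new node becomes the head and links to the old head."""
        self.head = Node(value, self.head)
        return self.head

    def insert_after(self, node: Node, value: int) -> Node:
        """O(1) given a reference to `node`: only one existing link changes."""
        node.next = Node(value, node.next)
        return node.next

    def remove_after(self, node: Node) -> None:
        """O(1) given the predecessor: bypass the node that follows it."""
        if node.next is not None:
            node.next = node.next.next

    def get(self, index: int) -> int:
        """O(index): there is no random access, so links are followed from the head."""
        if index < 0:
            raise IndexError(index)
        node = self.head
        for _ in range(index):
            if node is None:
                break
            node = node.next
        if node is None:
            raise IndexError(index)
        return node.value

    def __iter__(self) -> Iterator[int]:
        node = self.head
        while node is not None:
            yield node.value
            node = node.next
```

For example, pushing 3 and then 1 and calling `insert_after(lst.head, 2)` yields the sequence 1, 2, 3 without moving any existing element, whereas `get(2)` has to follow two links from the head.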

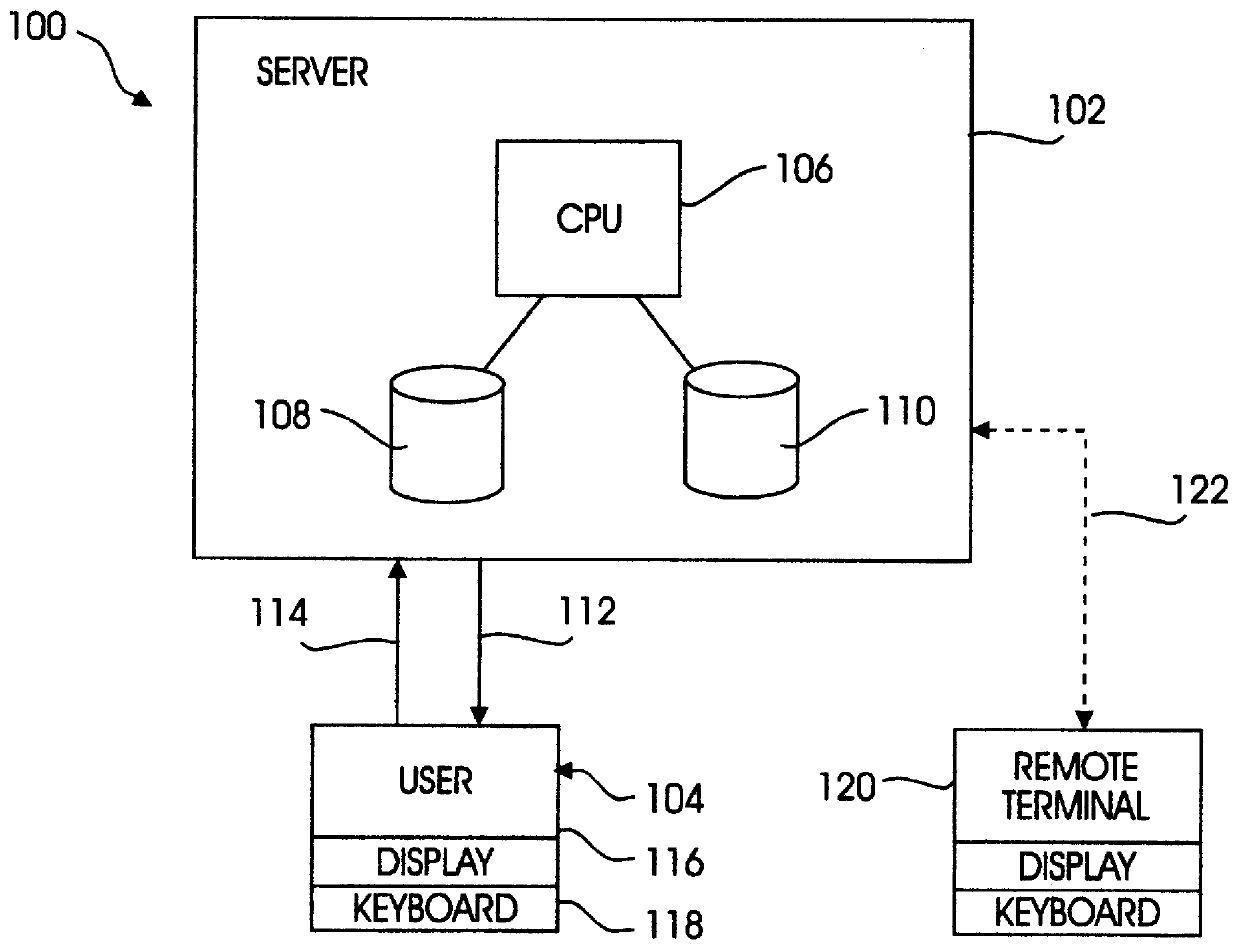

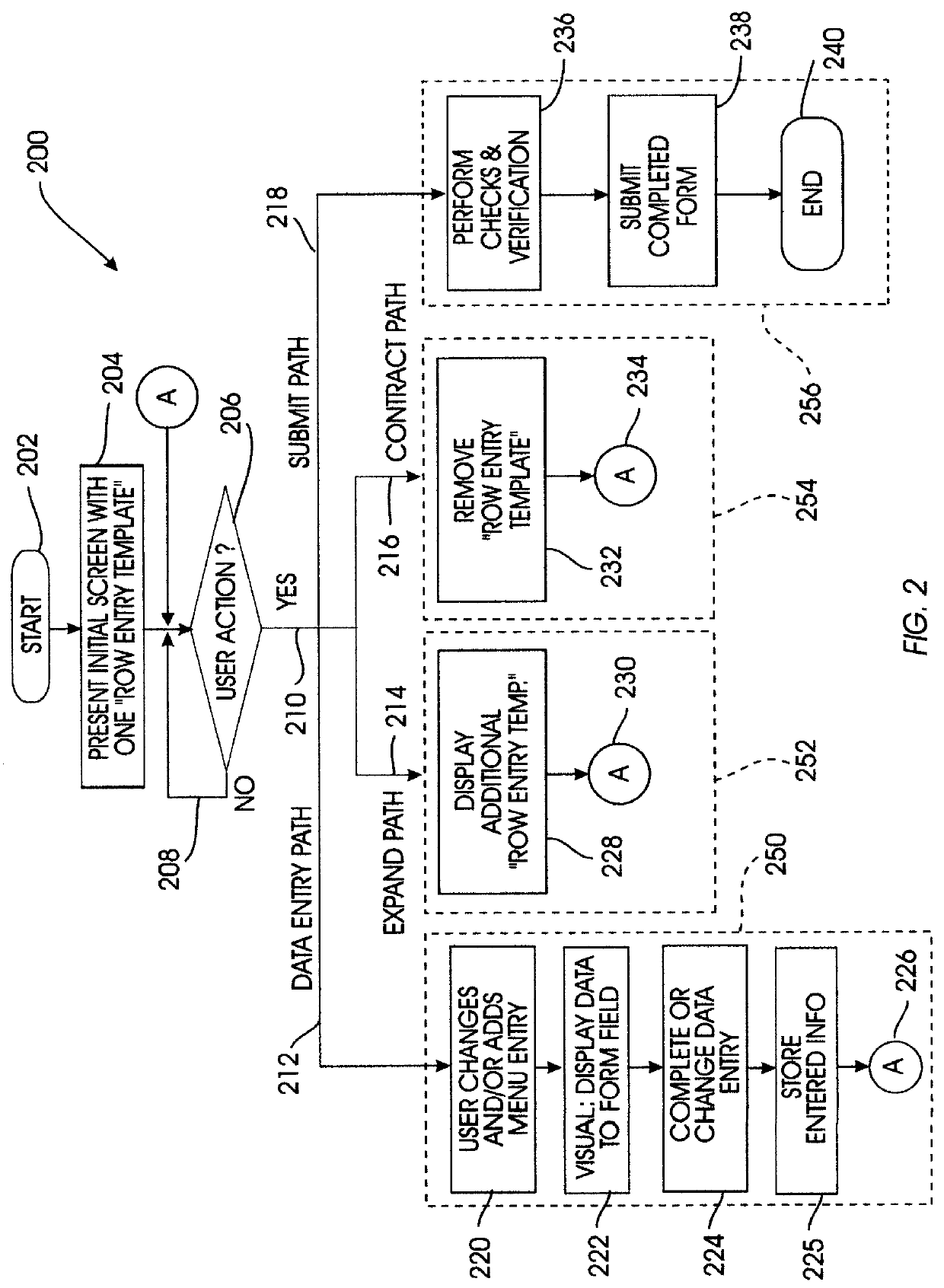

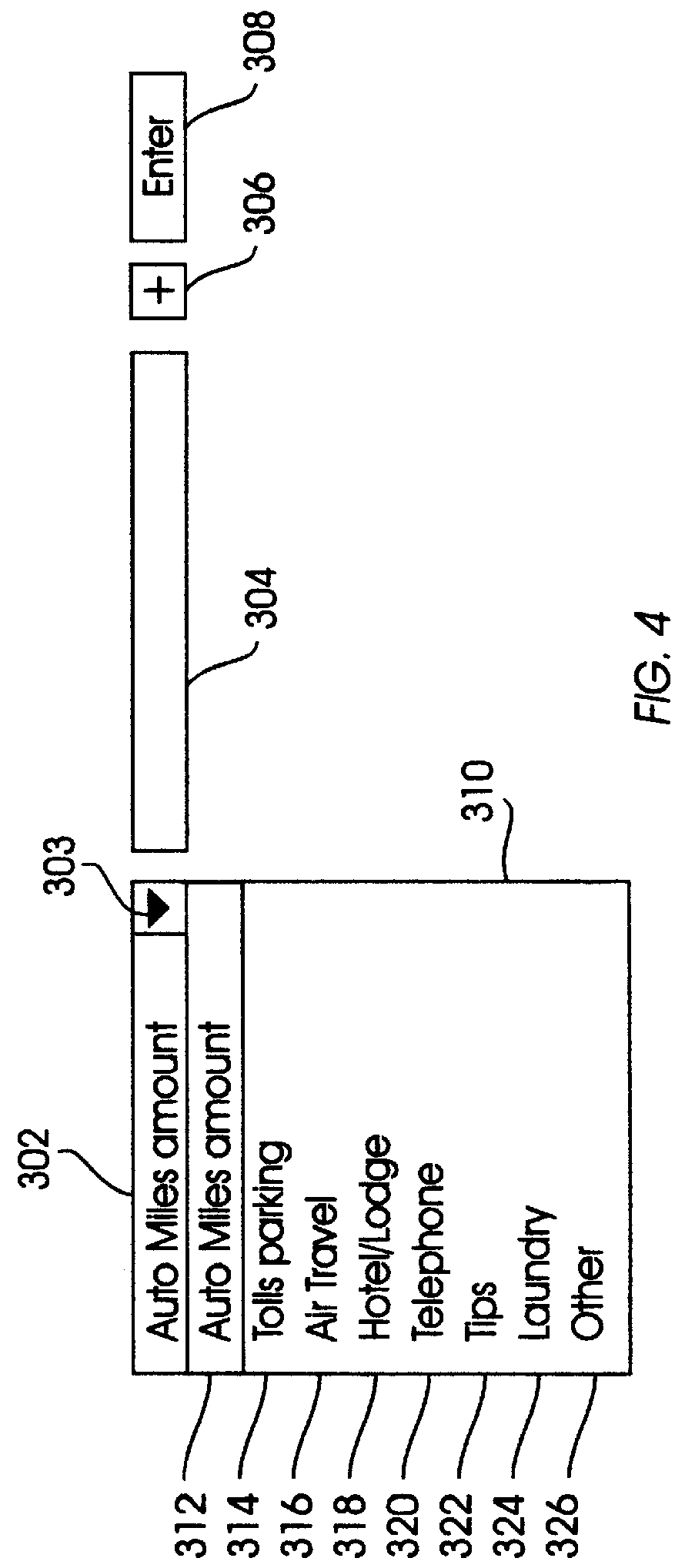

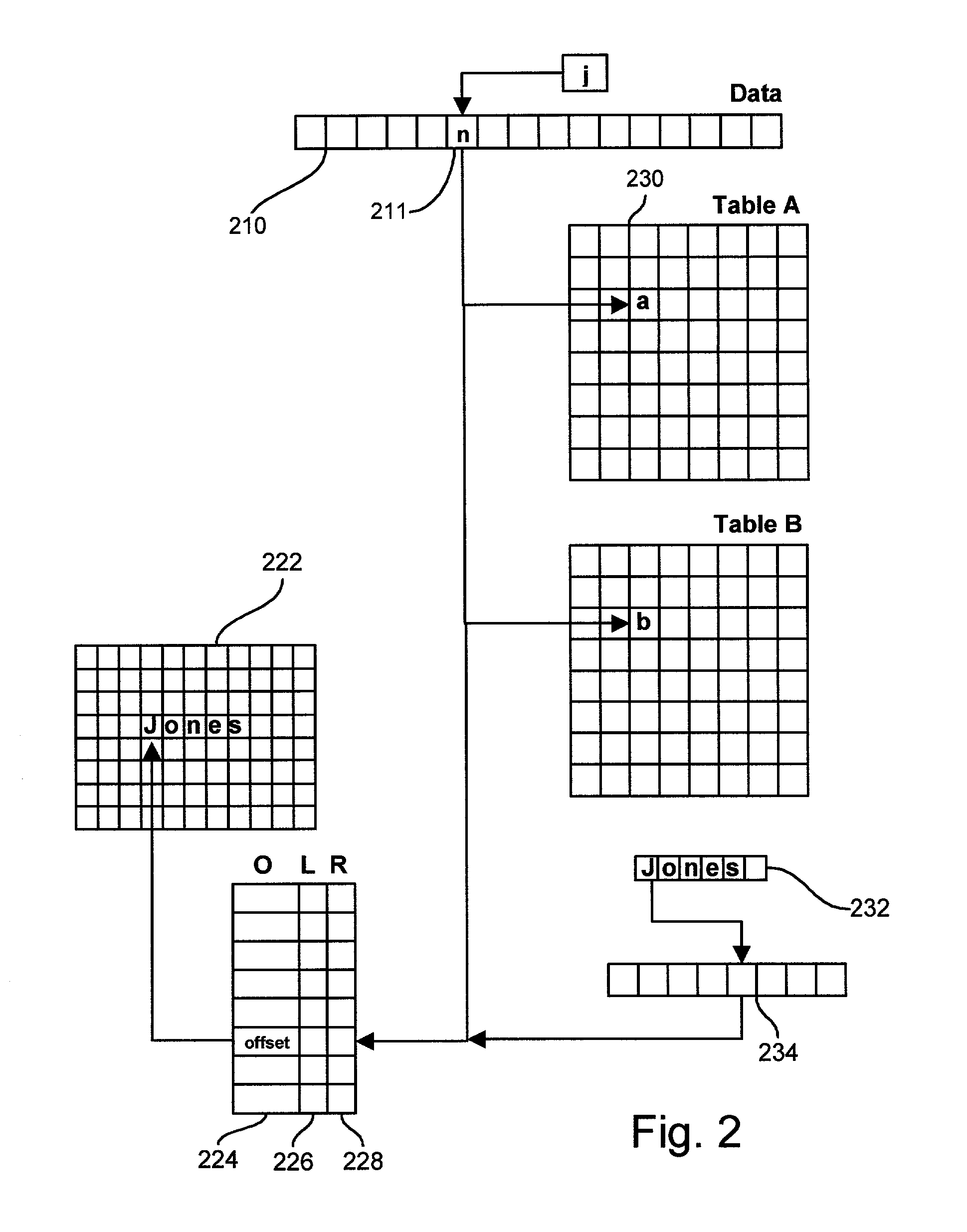

System for directly accessing fields on electronic forms

Inactive · US6084585A · Tags: Quickly and efficiently complete; Minimization requirements; Natural language data processing; Special data processing applications; Graphical user interface; Linked list

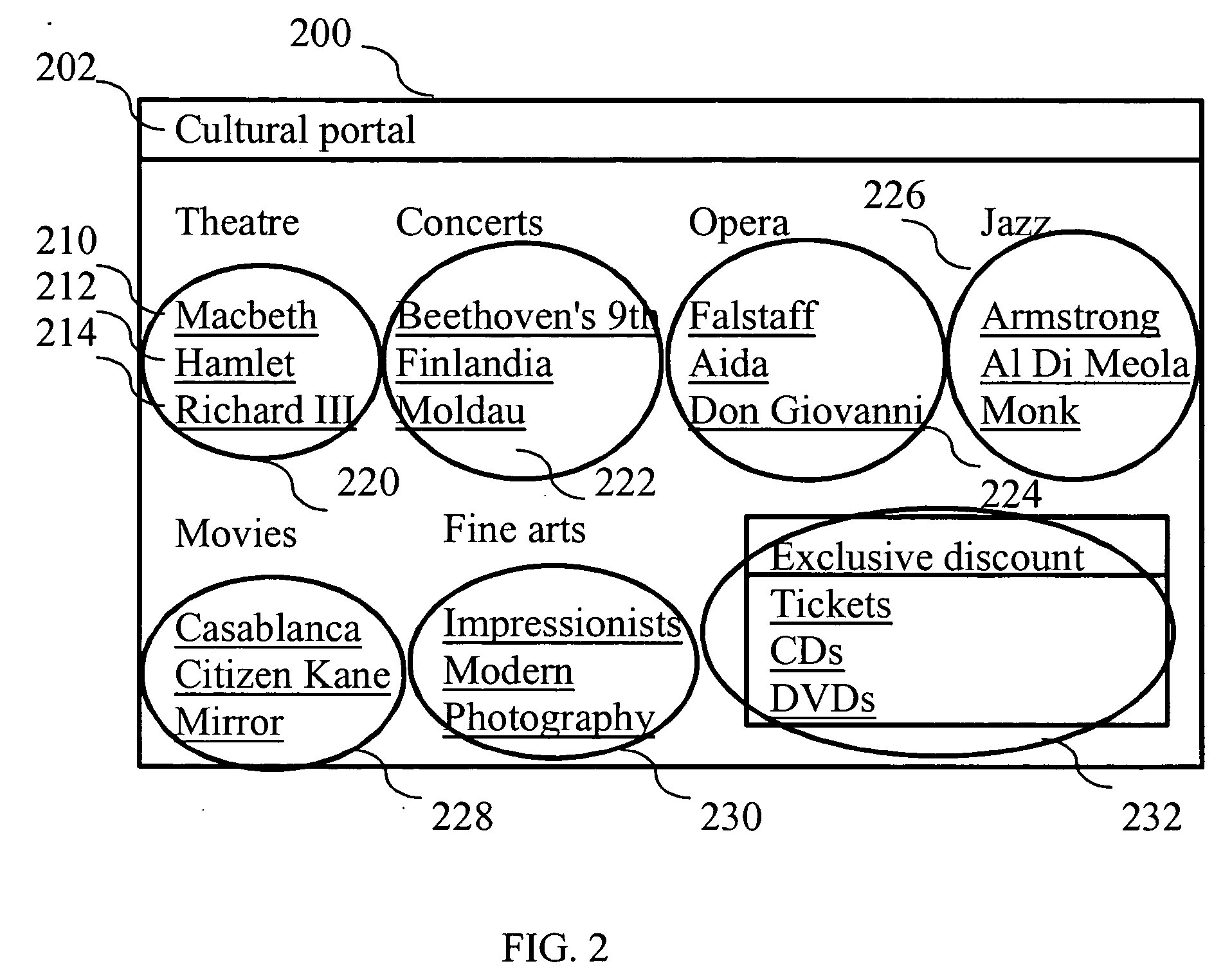

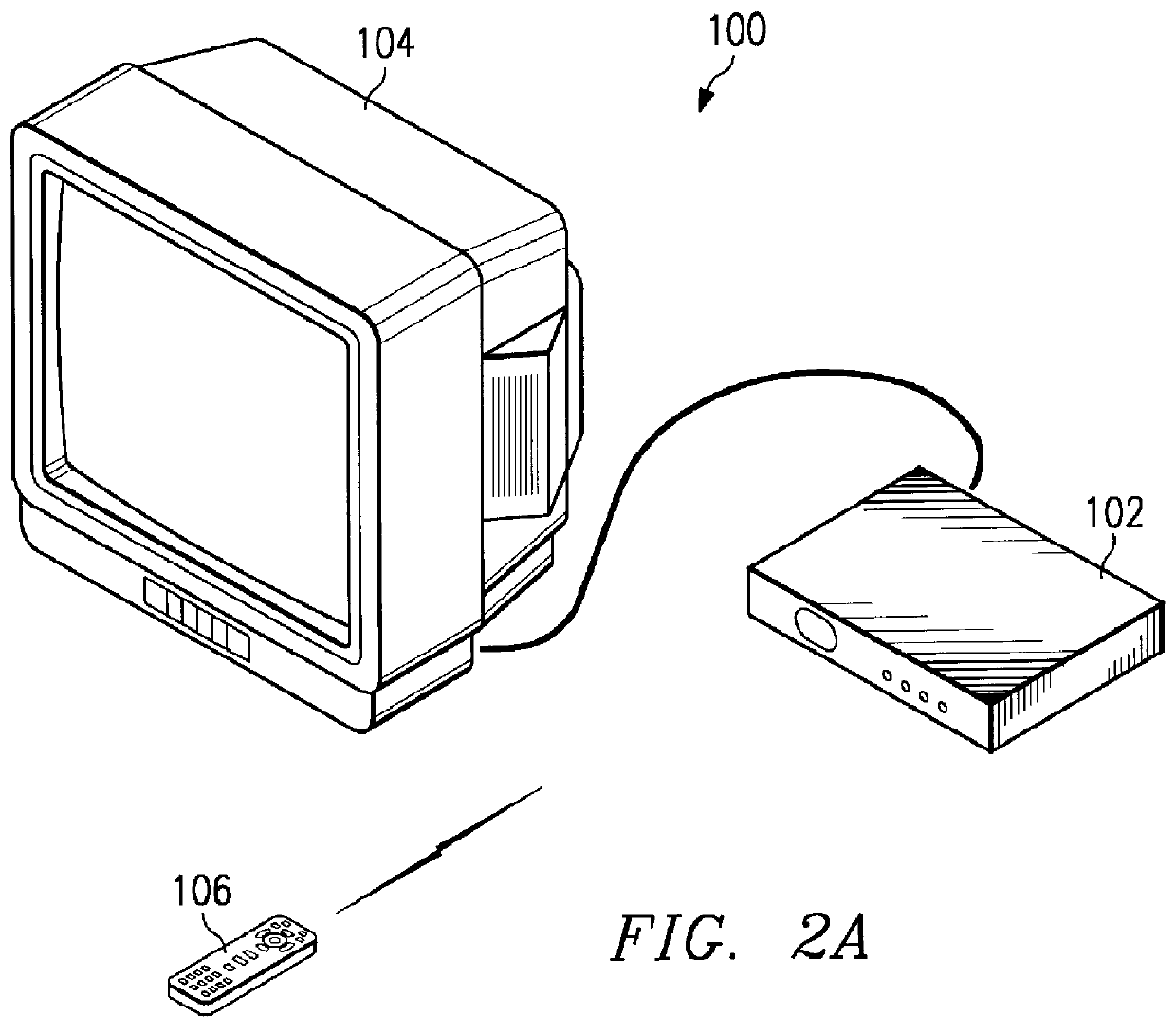

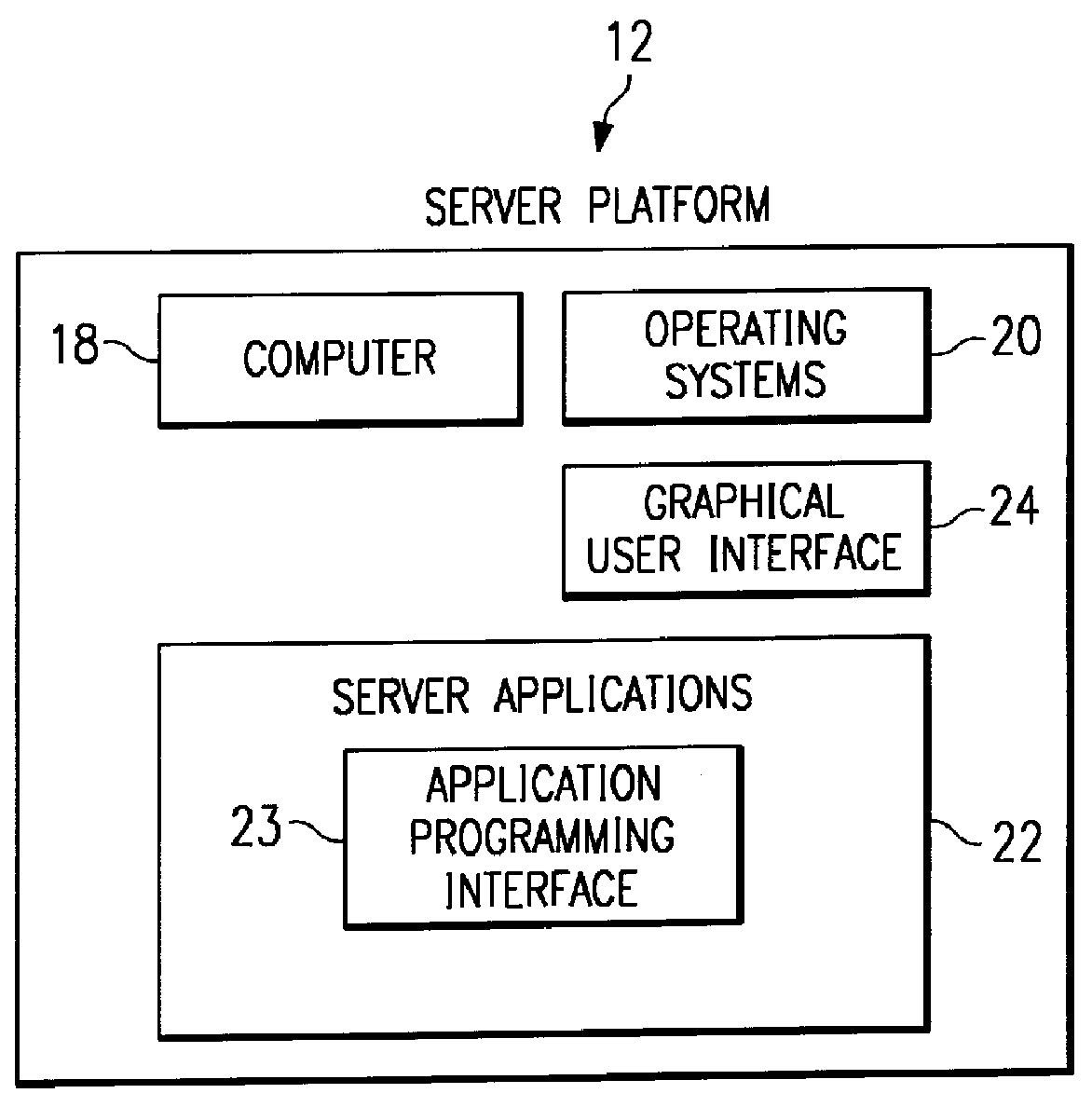

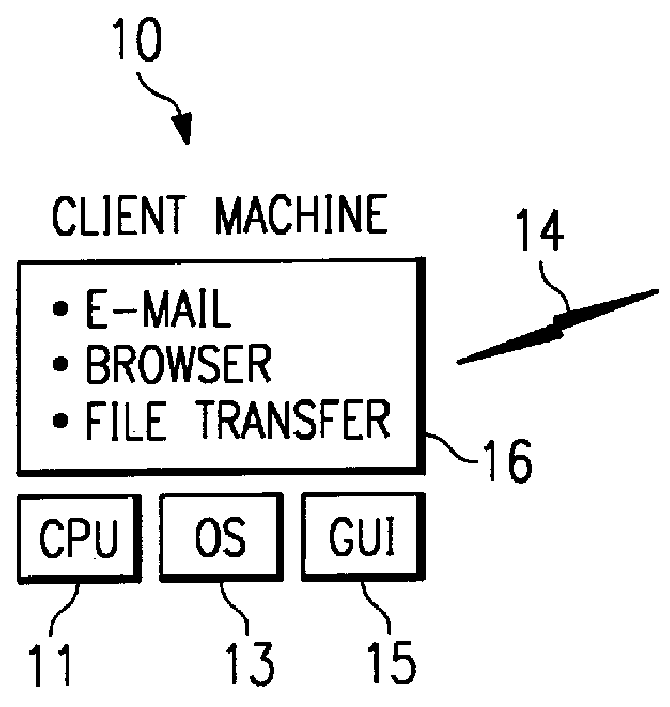

A computer system provides a graphical user interface (GUI) to assist a user in completing electronic forms. The computer includes components such as a processor, user interface, and video display. Using the video display, the processor presents a row entry template including a menu field and an associated data field. The user completes the menu field by selecting a desired menu entry from a list of predefined menu entries, and completes the data field by entering data into it. This format is especially useful when the data entry provides data categorized by the menu entry, explains the menu entry, or otherwise pertains to the menu entry. Each time the GUI detects activation of a form expand key, it presents an additional row entry template for completion by the user. Upon selection of a submit key, data of the completed form is sent to a predefined destination, such as a linked list, table, database, or another computer. Thus, by planned selection of menu entries, the user can limit his or her completion of an electronic form to blanks applicable to that user, avoiding the others. Nonetheless, the form can be easily expanded row by row to accommodate as many different blanks as the user wishes to complete. The invention may be implemented by a host sending a remote computer machine-executable instructions which the remote computer executes to provide the GUI, where the remote computer ultimately returns the completed form data to the host.

Owner:IBM CORP

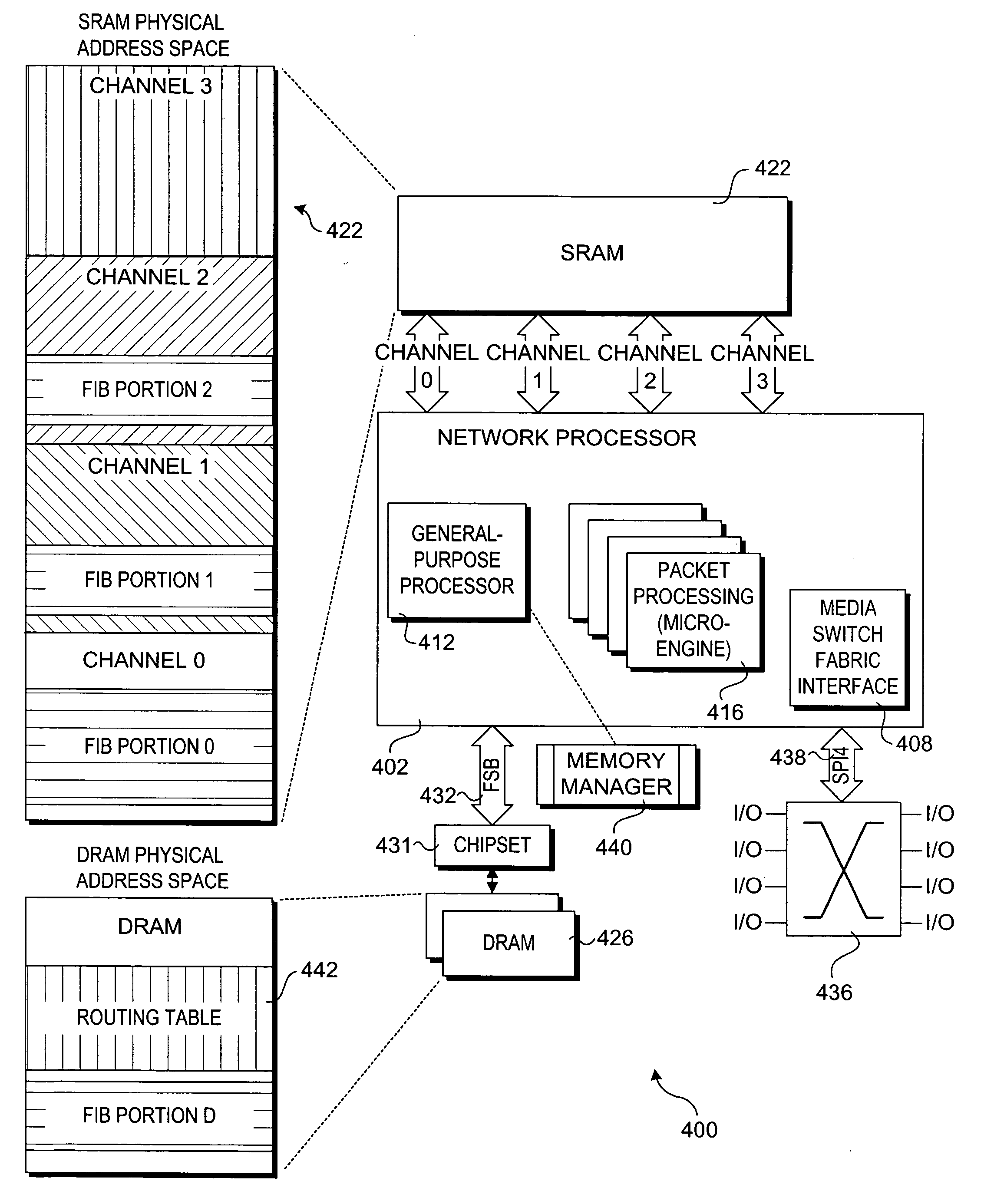

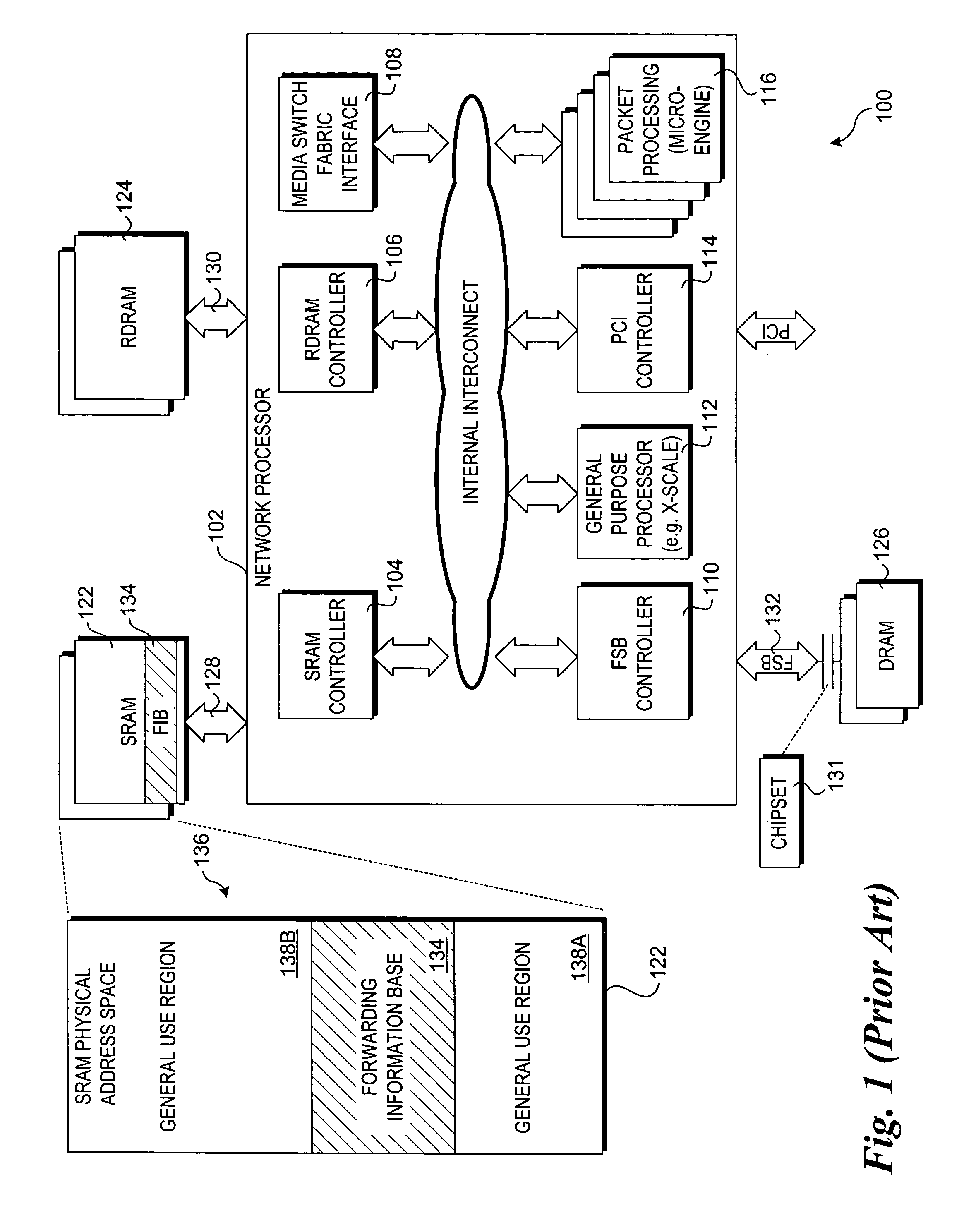

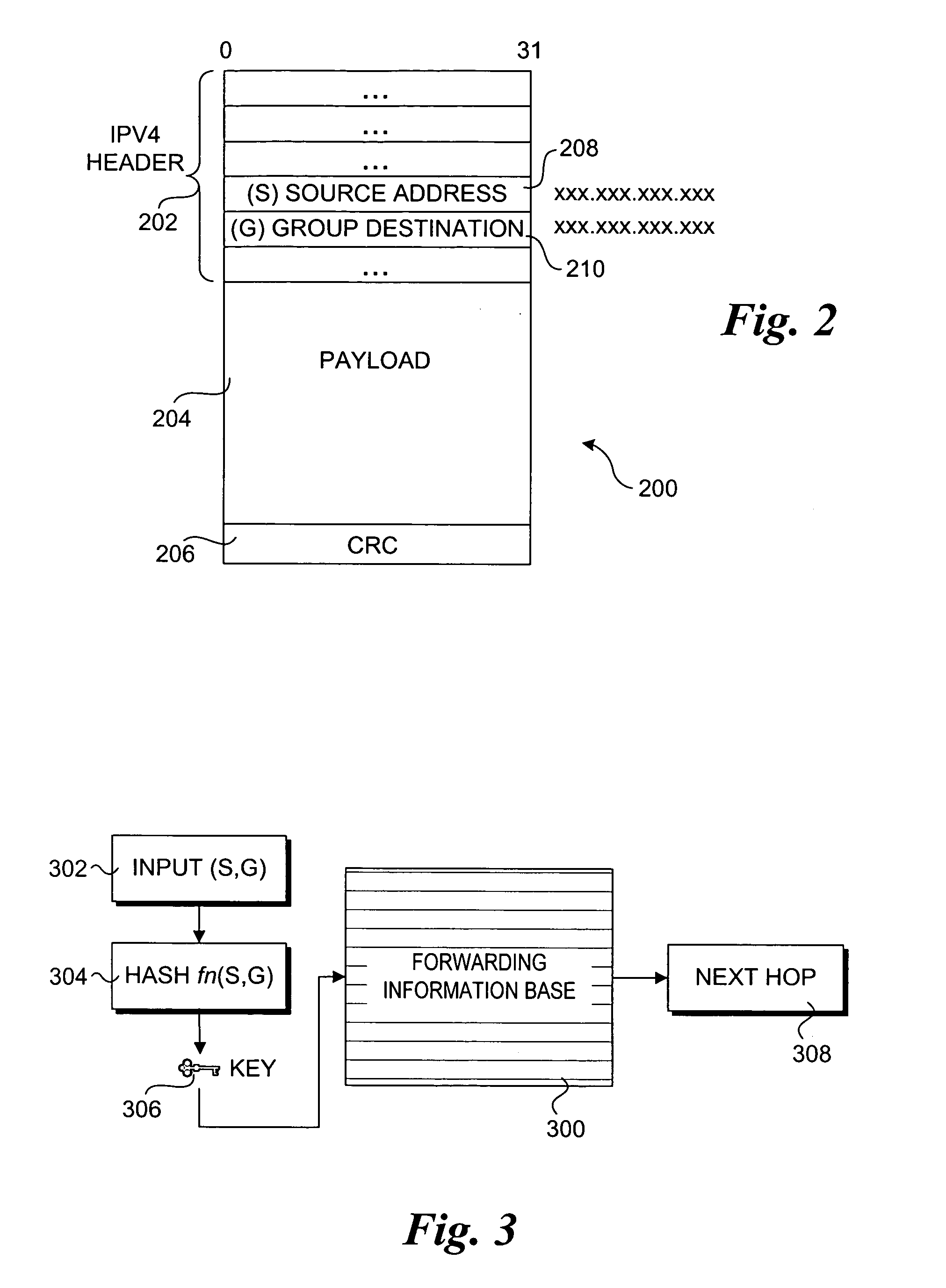

Method to improve forwarding information base lookup performance

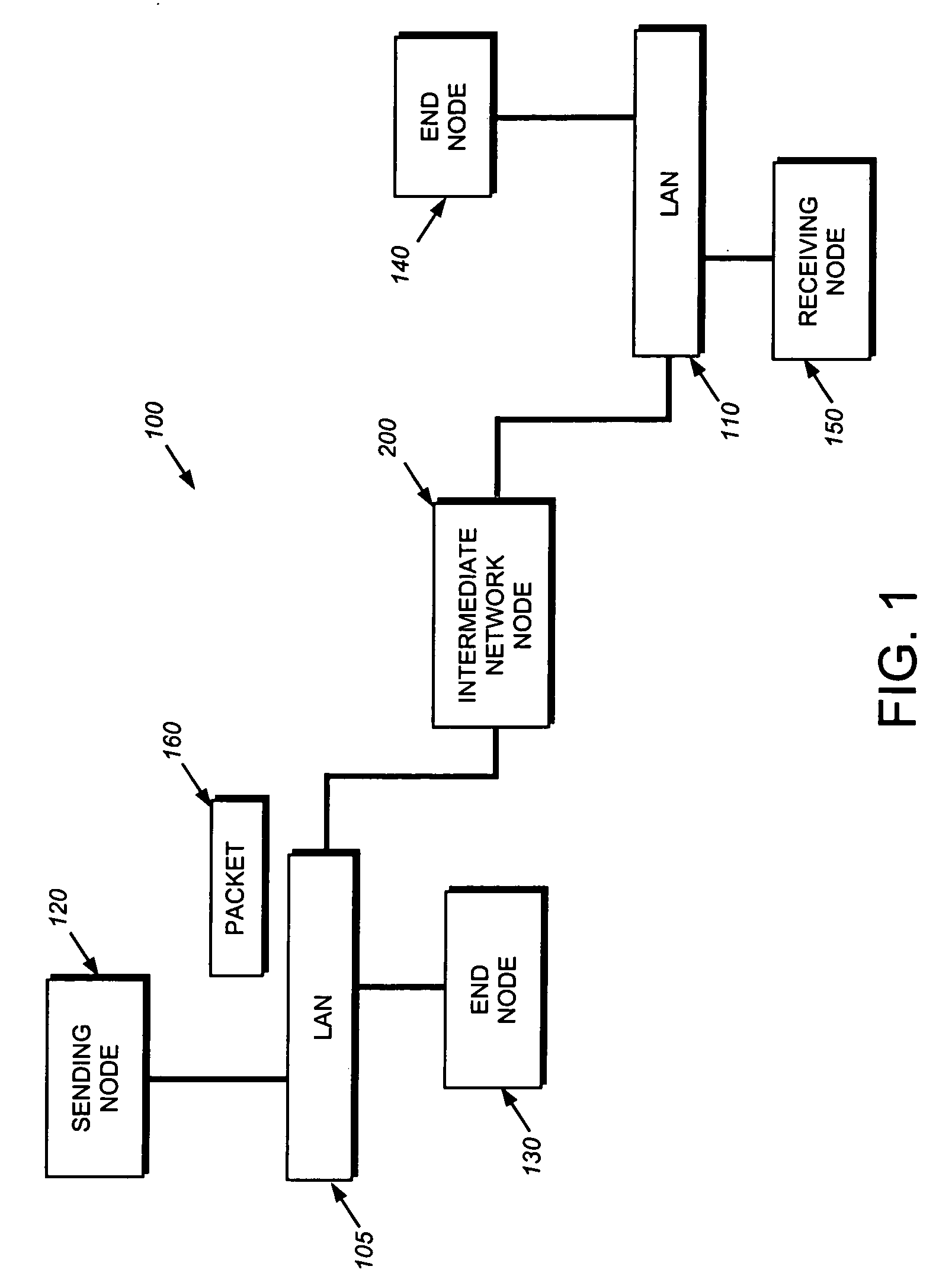

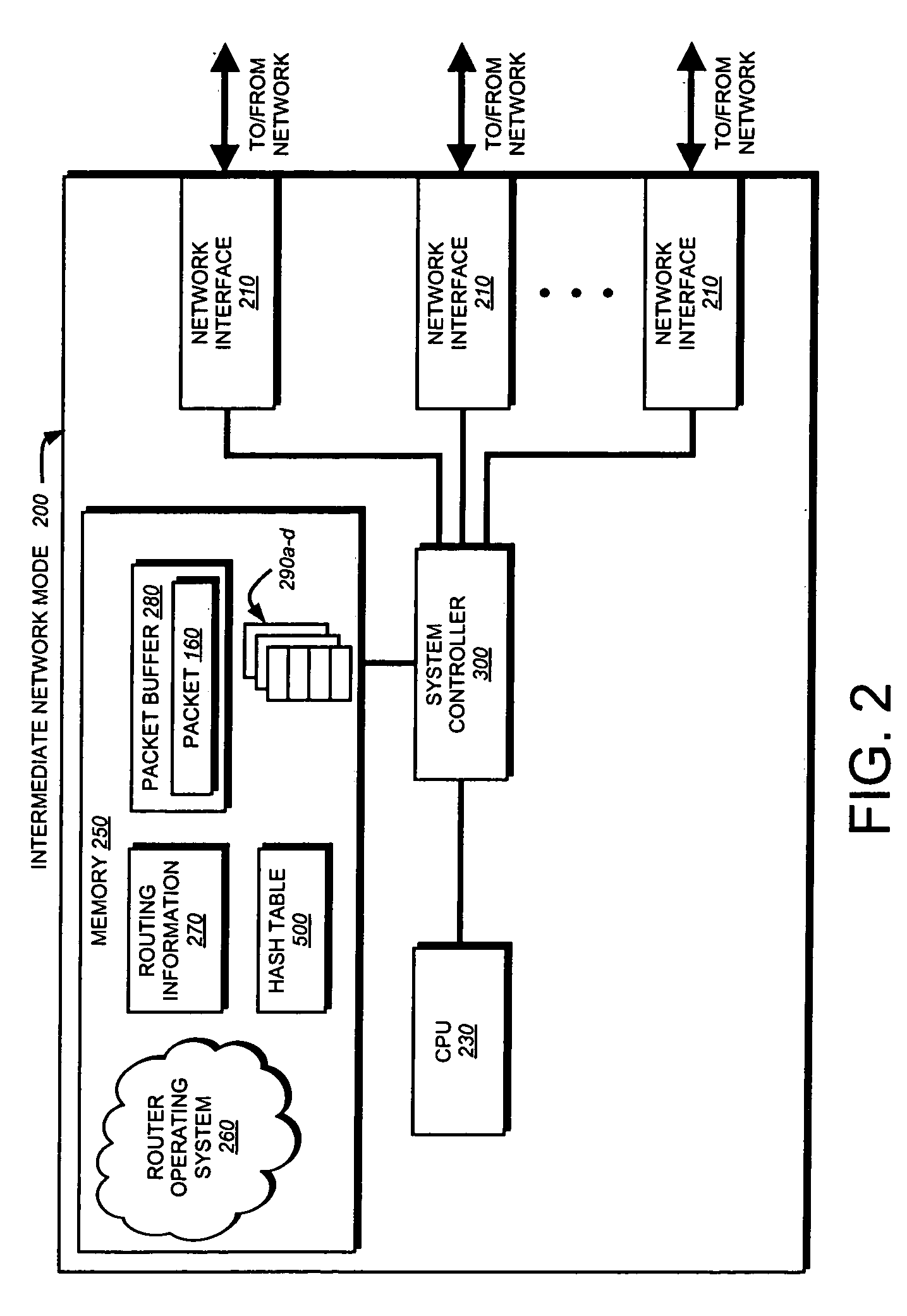

Inactive · US20050259672A1 · Tags: Multiprogramming arrangements; Data switching by path configuration; Information repository; Depth level

A method and apparatus for improving forwarding information base (FIB) lookup performance. An FIB is partitioned into multiple portions that are distributed across segments of a multi-channel SRAM store to form a distributed FIB that is accessible to a network processor. Primary entries corresponding to a linked list of FIB entries are stored in a designated FIB portion. Secondary FIB entries are stored in other FIB portions (a portion of the secondary FIB entries may also be stored in the designated primary entry portion), enabling multiple FIB entries to be concurrently accessed via respective channels. A portion of the secondary FIB entries may also be stored in a secondary (e.g., DRAM) store. A depth level threshold is set to limit the number of accesses to a linked list of FIB entries by a network processor micro-engine thread; an access depth that would exceed the threshold generates an exception that is handled by a separate execution thread to maintain line-rate throughput.

Owner:INTEL CORP
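A minimal sketch of the depth-threshold idea, assuming a single-memory hash table whose buckets are linked lists of FIB entries with exact-match keys (real FIBs use longest-prefix matching, and the multi-channel SRAM partitioning is not modeled). The names `ChainedFib`, `fast_lookup`, `lookup` and `DepthExceeded` are hypothetical; the unbounded walk inside `lookup` merely stands in for handing the exception to a separate execution thread.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FibEntry:
    key: str                          # hypothetical exact-match key
    next_hop: str
    next: Optional["FibEntry"] = None


class DepthExceeded(Exception):
    """Raised when the fast path would walk past the depth threshold."""


class ChainedFib:
    def __init__(self, num_buckets: int, depth_threshold: int) -> None:
        self.buckets: List[Optional[FibEntry]] = [None] * num_buckets
        self.depth_threshold = depth_threshold

    def _bucket(self, key: str) -> int:
        return hash(key) % len(self.buckets)

    def insert(self, key: str, next_hop: str) -> None:
        b = self._bucket(key)
        self.buckets[b] = FibEntry(key, next_hop, self.buckets[b])  # push at head

    def _walk(self, key: str, limit: Optional[int]) -> Optional[str]:
        entry, depth = self.buckets[self._bucket(key)], 0
        while entry is not None:
            depth += 1
            if limit is not None and depth > limit:
                raise DepthExceeded(key)
            if entry.key == key:
                return entry.next_hop
            entry = entry.next
        return None

    def fast_lookup(self, key: str) -> Optional[str]:
        """Bounded walk: touches at most `depth_threshold` entries."""
        return self._walk(key, self.depth_threshold)

    def lookup(self, key: str) -> Optional[str]:
        try:
            return self.fast_lookup(key)
        except DepthExceeded:
            # Stand-in for the separate exception-handling thread: unbounded walk.
            return self._walk(key, None)
```

The point of the bound is that the fast path has a fixed worst-case cost per packet; only the rare long chains pay for the full traversal.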

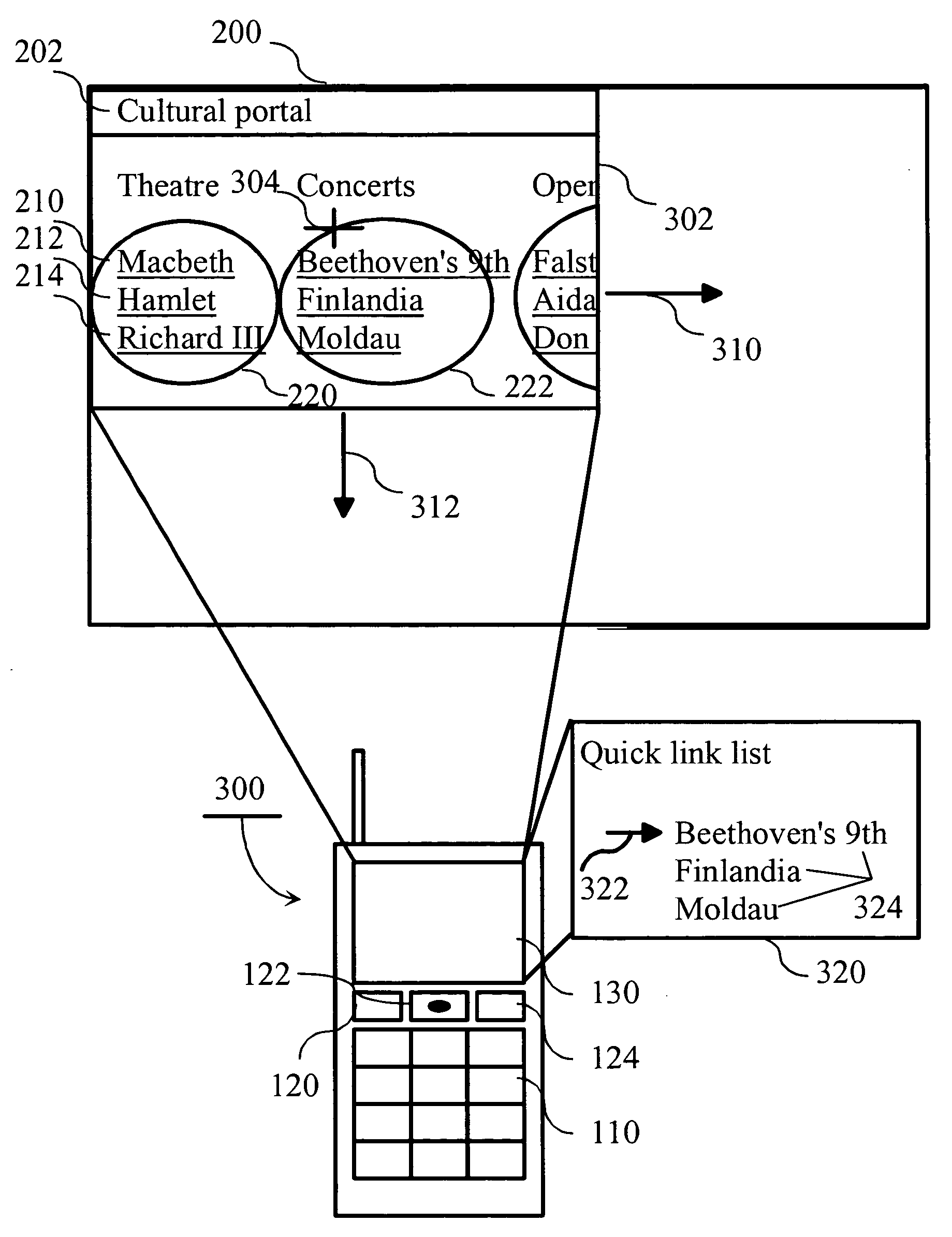

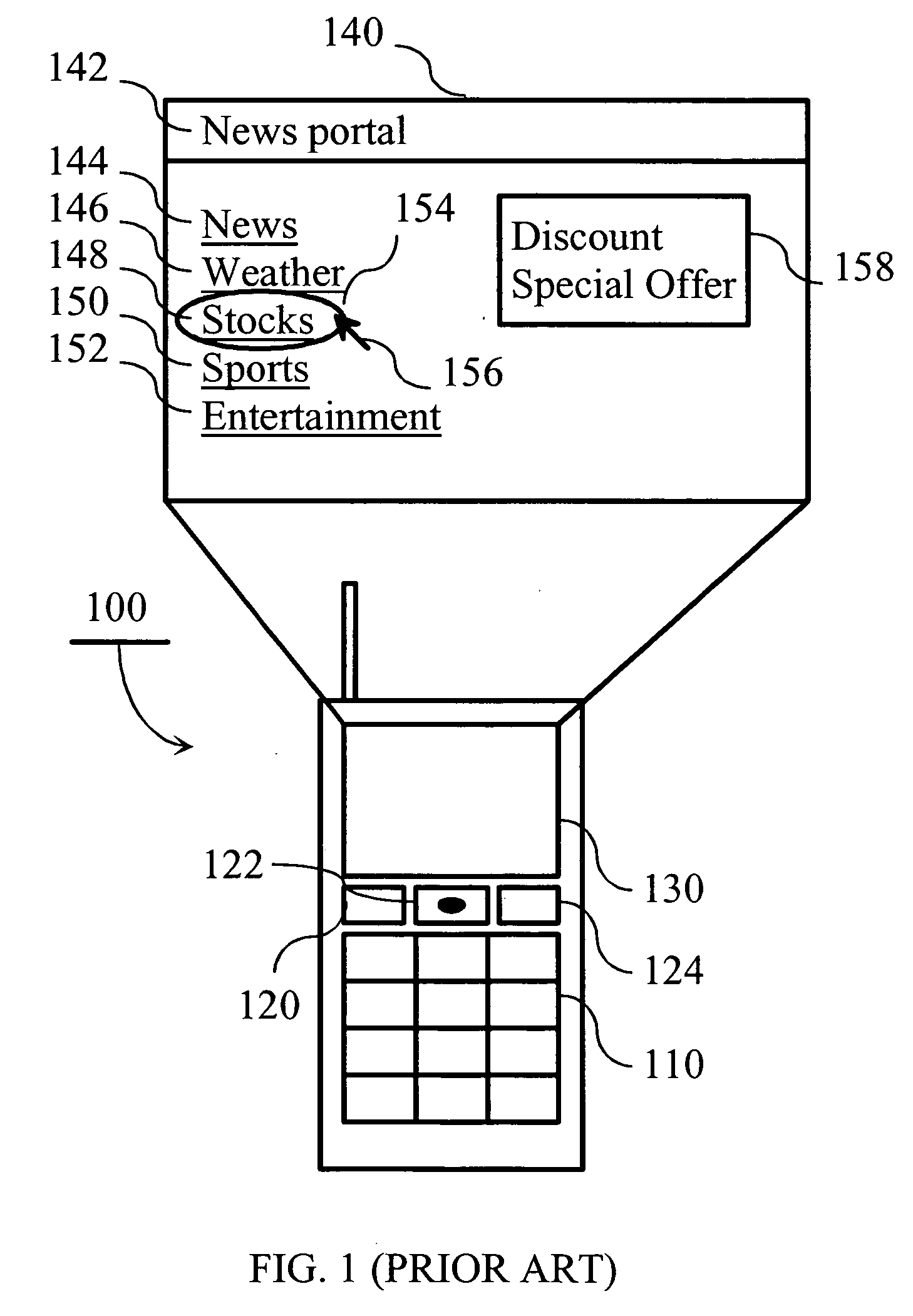

Method for the presentation and selection of document links in small screen electronic devices

Inactive · US20050229119A1 · Tags: Increase flexibility; Avoid precision; Digital data information retrieval; Special data processing applications; Paper document; Document preparation

A method, an electronic device, and a computer program are provided for document link presentation and selection in an electronic device. In the method, a first hypertext page comprising at least one separate link area is opened in an electronic device. At least part of the first hypertext page is displayed in a view window that can be moved within the area of the first hypertext page. The link area nearest to a first point in the view window is determined, and a link list comprising the links associated with that link area is formed. When the user selects a first link in the link list, a second hypertext page indicated by the first link is opened in the electronic device.

Owner:NOKIA CORP

Method of saving a web page to a local hard drive to enable client-side browsing

Inactive · US6163779A · Tags: Easy to operate; Digital data information retrieval; Data processing applications; Document preparation; Client-side

A method of copying a Web page presented for display on a browser of a Web client. The Web page comprises a base HTML document and a plurality of hypertext references, one or more of which may be associated with embedded objects (such as image files). The operation begins by copying the base HTML document to the client local storage and establishing a pointer to the copied document. A first linked list of the hypertext references in the base document is then generated. Thereafter, and for each hypertext reference in the first linked list, the following operations are performed. If the hypertext reference refers to an embedded object in the base HTML document, the embedded object is saved on the client local storage and the file name of the saved embedded object is stored (as a fully-qualified URL) in a second linked list. If the hypertext reference does not refer to an embedded object in the base HTML document, the fully-qualified URL of the hypertext reference is stored in the second linked list. Then, the fully-qualified URLs of the second linked list (including those associated with the stored images) are updated to point to the files located on the client local storage. At the end of this operation, there is a new HTML page with links for images pointing to files on the local hard drive. When the user desires to retrieve the copied page, a link to the pointer is activated.

Owner:IBM CORP
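The two-list rewrite can be sketched as follows, under several assumptions: `src=` attributes mark embedded objects and `href=` attributes mark ordinary links, double-quoted attributes are matched with a simple regular expression rather than a real HTML parser, and a Python list and dict stand in for the patent's linked lists. `save_page`, the `fetch` callback and the `file:///local/` prefix are hypothetical.

```python
import re
from typing import Callable, Dict, List, Tuple
from urllib.parse import urljoin

# Simplified, hypothetical pattern: double-quoted src= and href= attributes only.
REF_PATTERN = re.compile(r'\b(src|href)="([^"]+)"')
LOCAL_PREFIX = "file:///local/"   # hypothetical local-storage location


def save_page(base_url: str, html: str,
              fetch: Callable[[str], bytes],
              storage: Dict[str, bytes]) -> str:
    """Copy embedded objects into `storage` and return the rewritten HTML."""
    # First list: every hypertext reference found in the base document.
    refs: List[Tuple[str, str]] = REF_PATTERN.findall(html)
    # Second list (here a dict): original reference -> rewritten target.
    rewritten: Dict[str, str] = {}
    for attr, ref in refs:
        absolute = urljoin(base_url, ref)            # fully qualified URL
        if attr == "src":                            # embedded object: save it locally
            local = LOCAL_PREFIX + absolute.rsplit("/", 1)[-1]
            storage[local] = fetch(absolute)
            rewritten[ref] = local
        else:                                        # ordinary link: keep it absolute
            rewritten[ref] = absolute

    def replace(match) -> str:
        return f'{match.group(1)}="{rewritten[match.group(2)]}"'

    return REF_PATTERN.sub(replace, html)
```

Rewriting every reference to an absolute or local target is what lets the saved copy render offline while its ordinary links still resolve to the original site.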

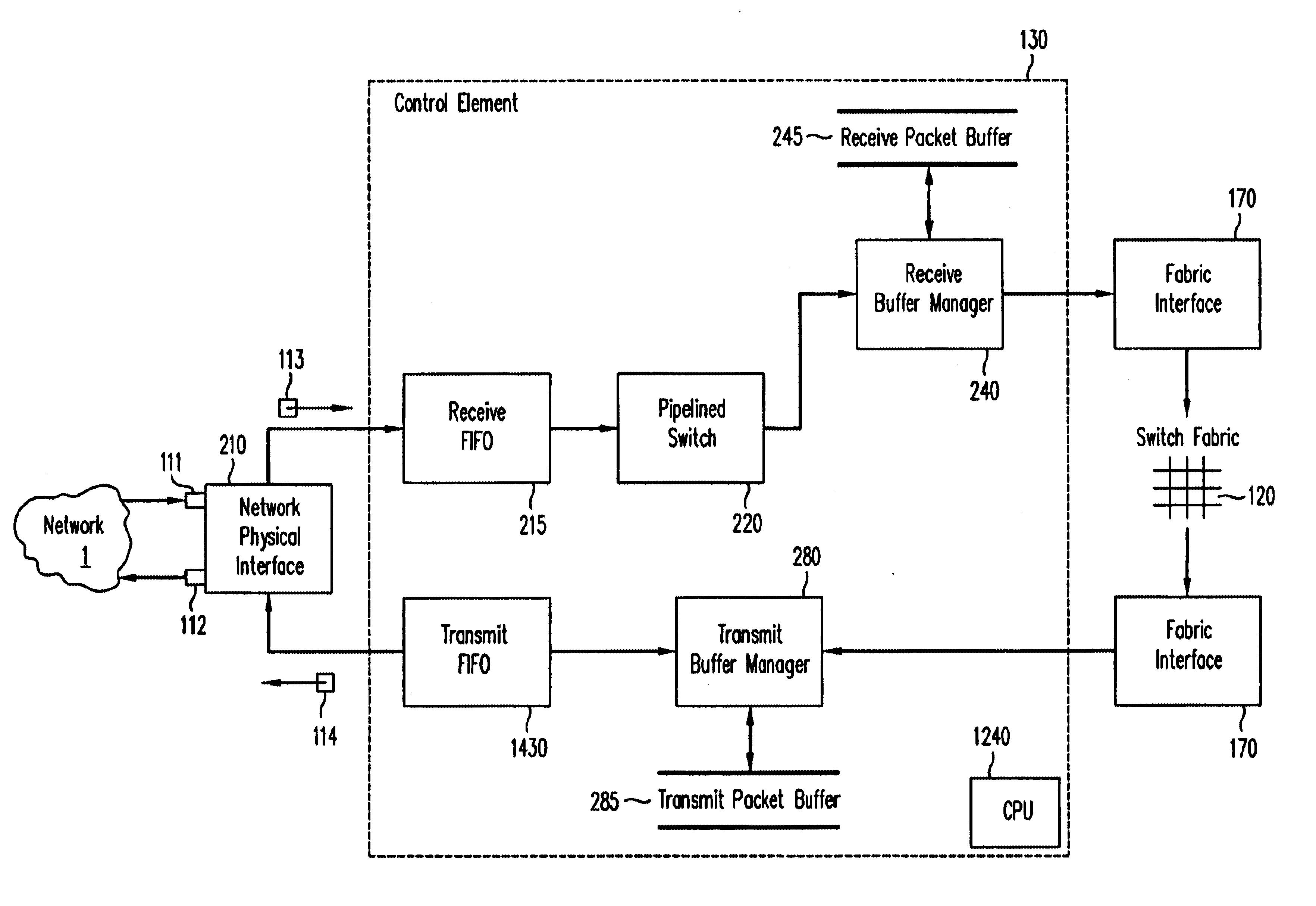

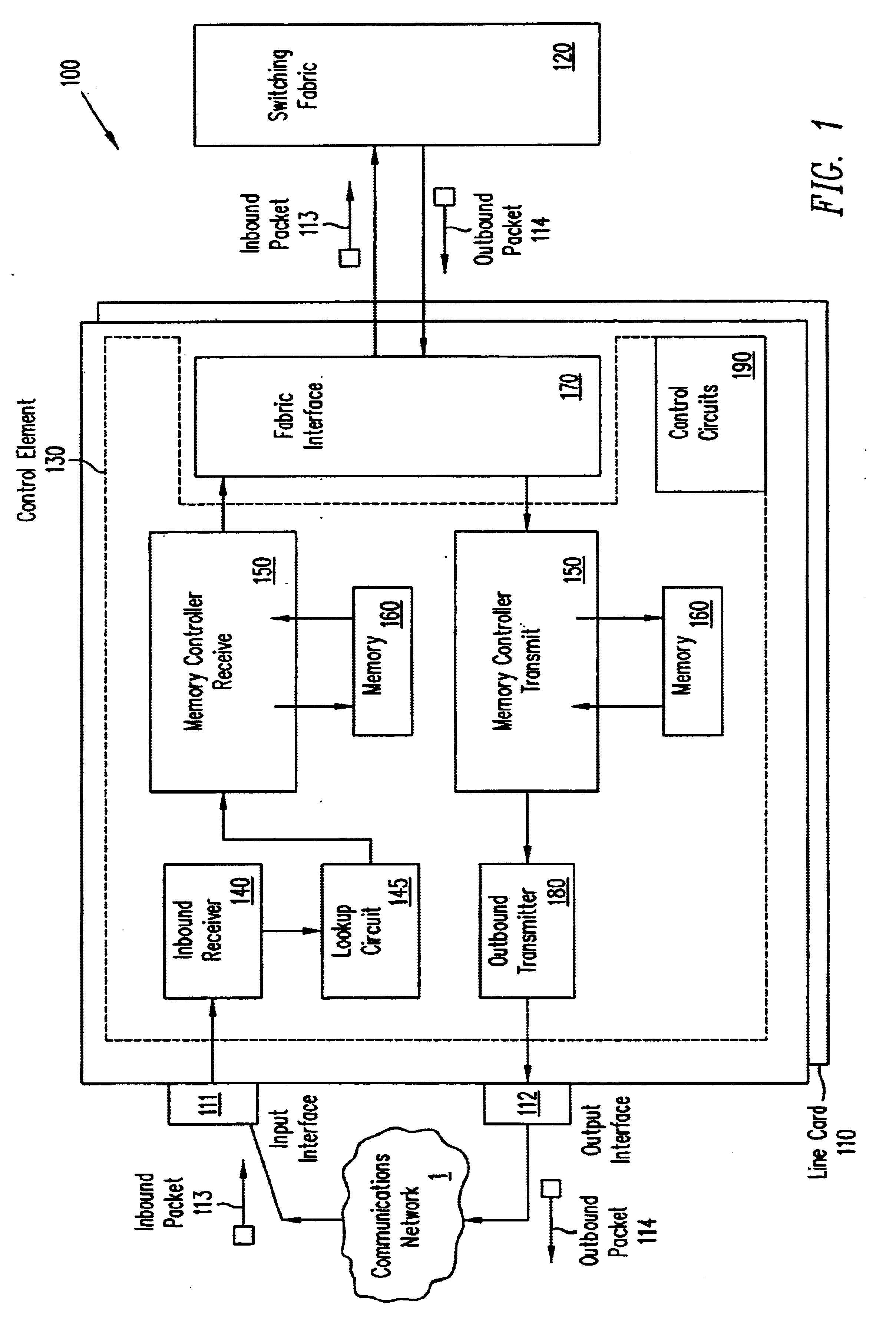

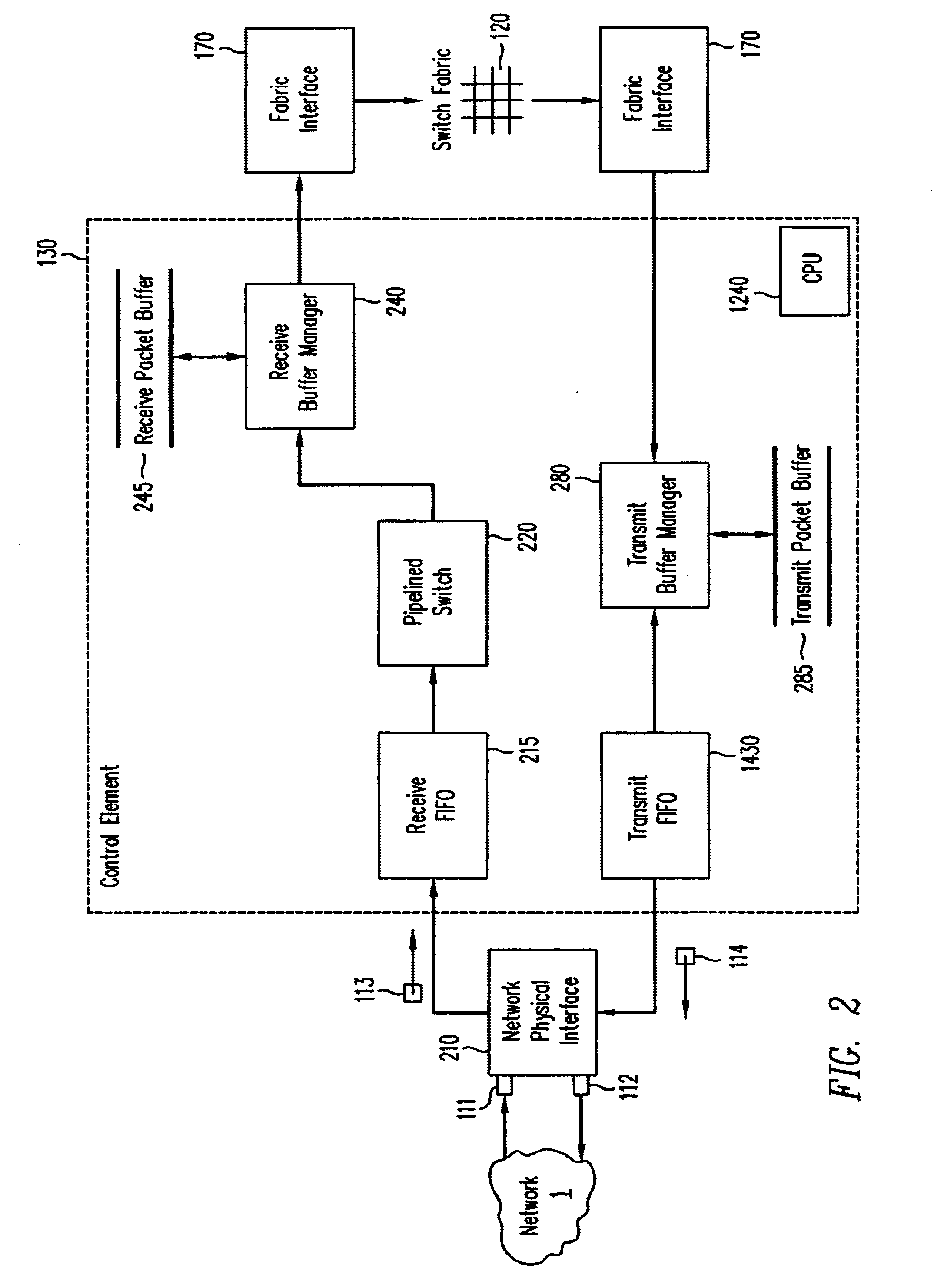

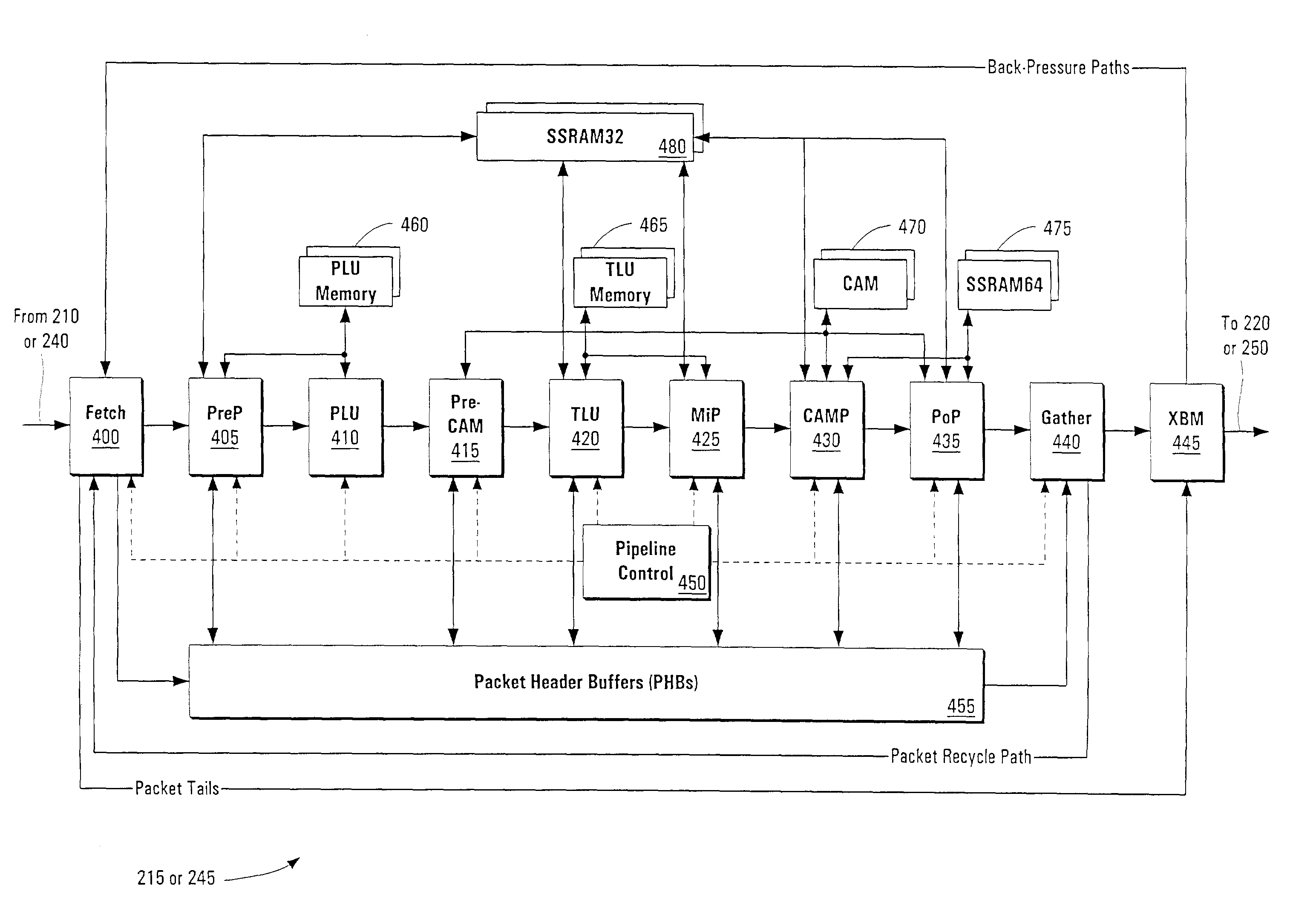

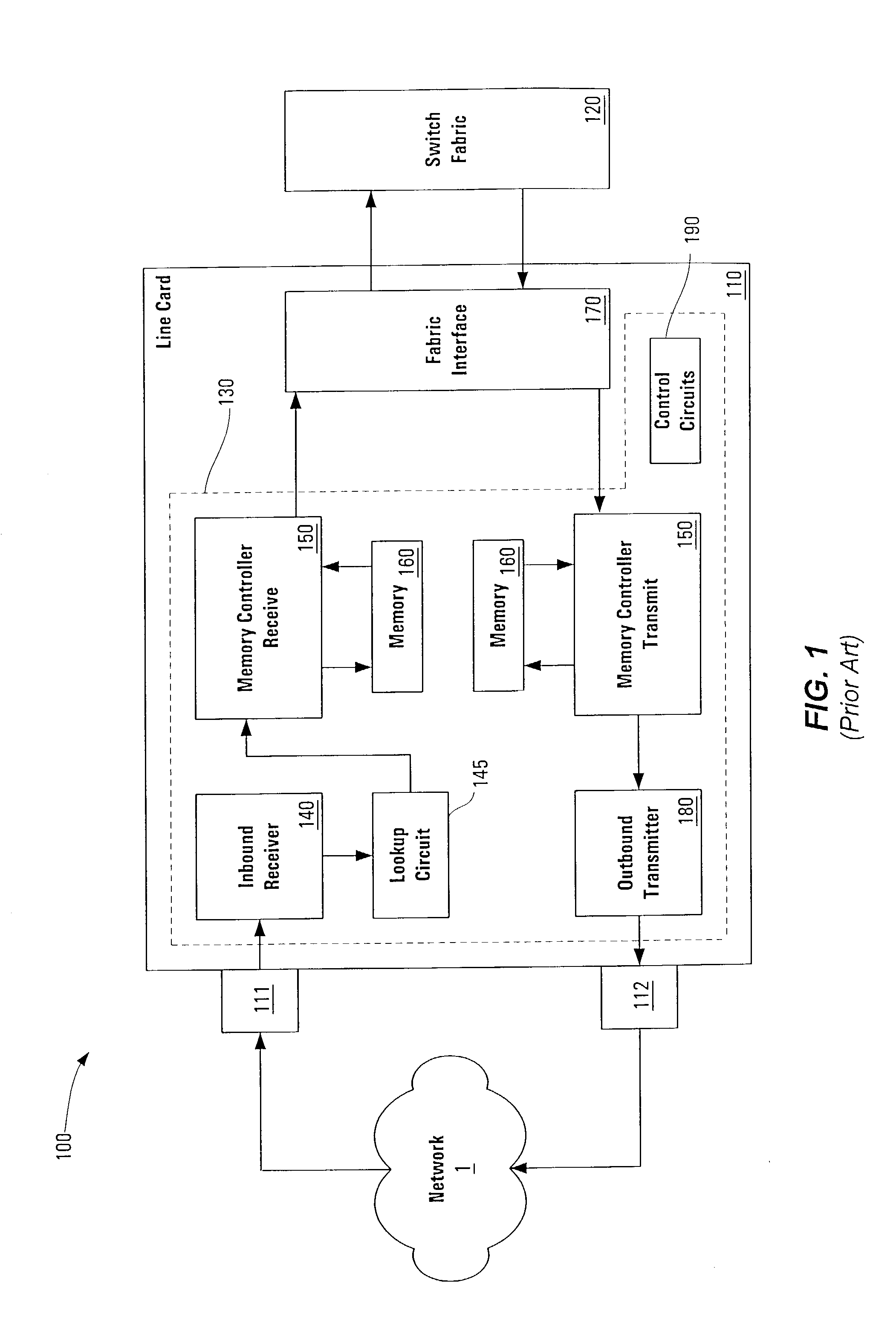

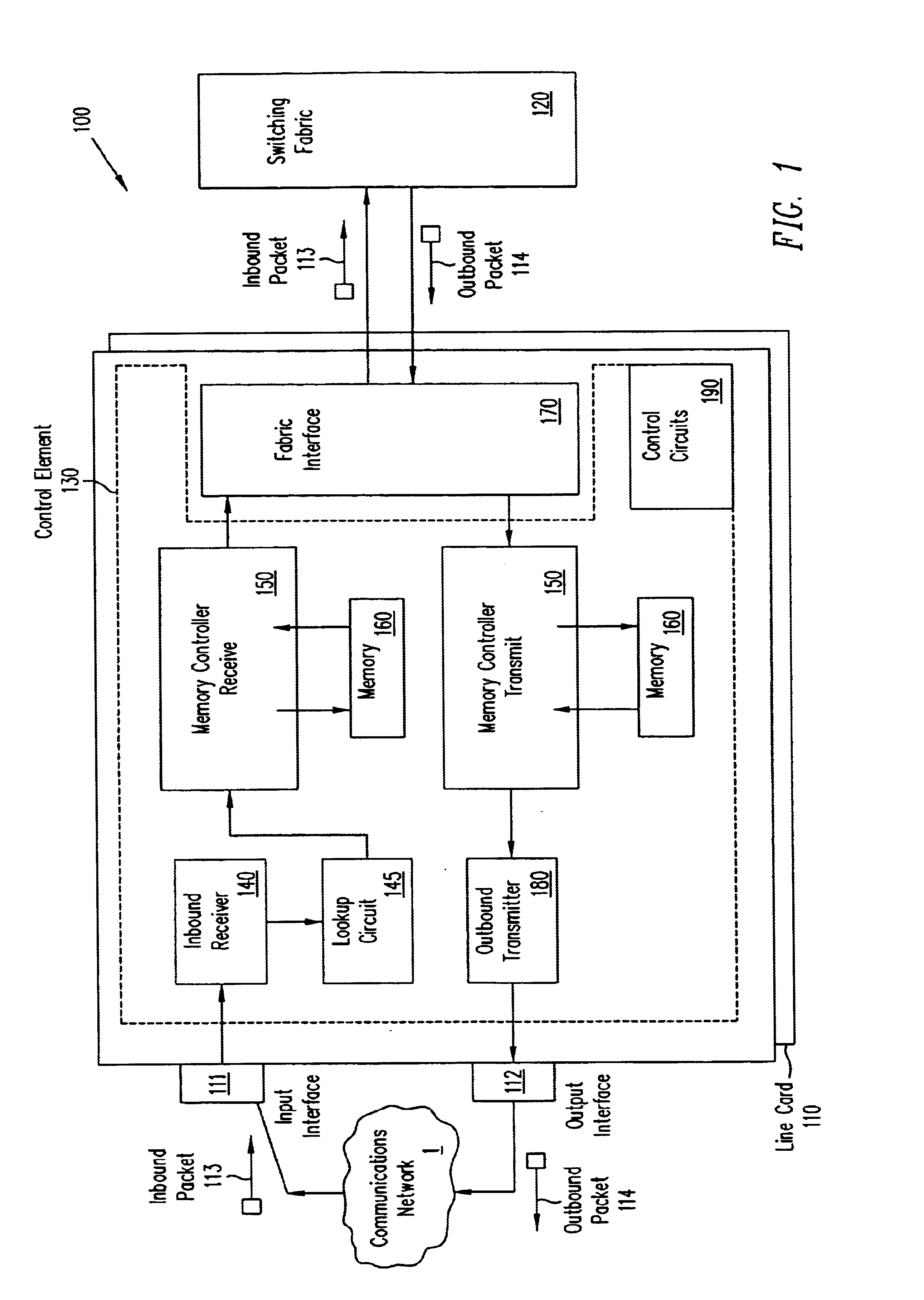

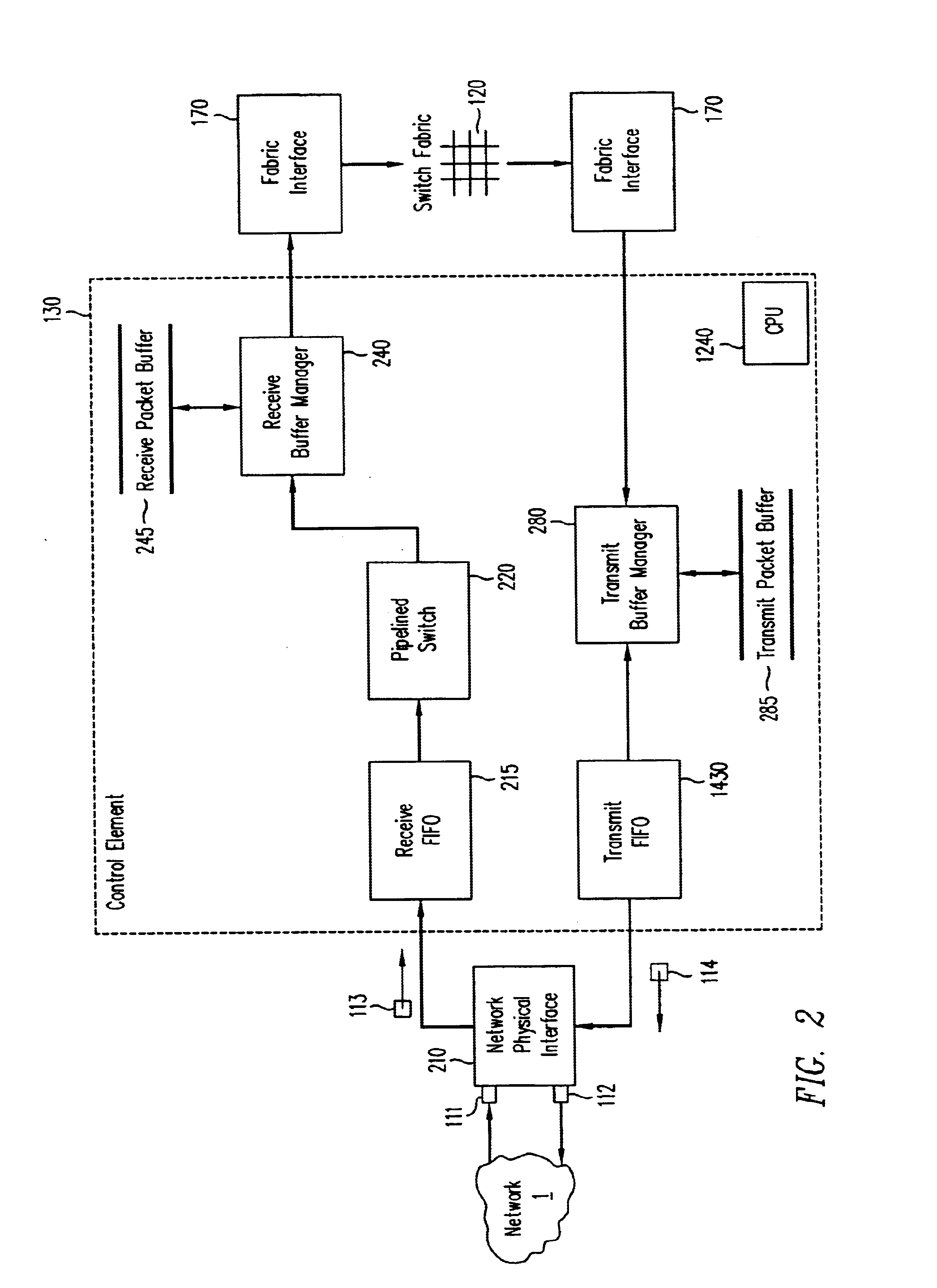

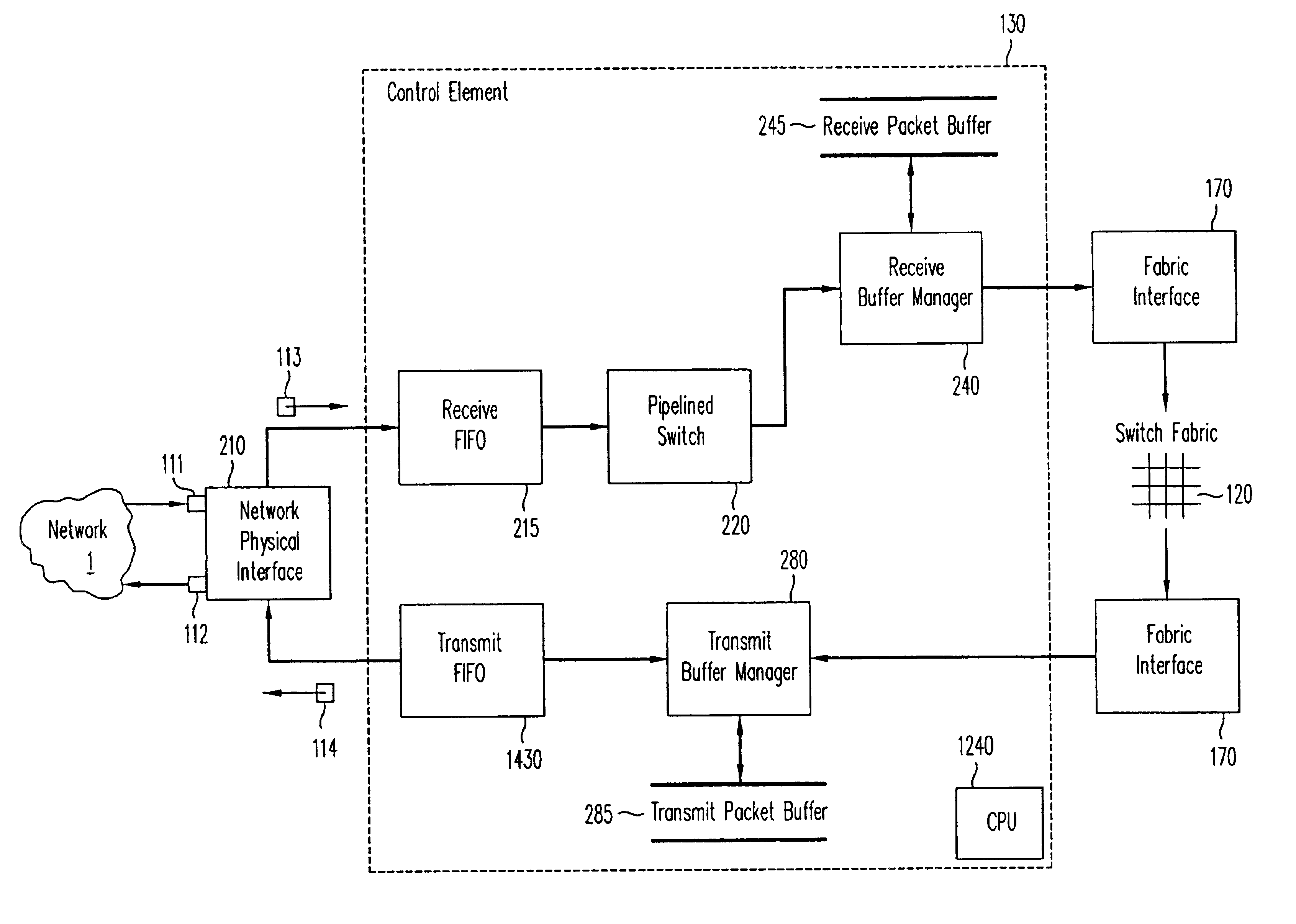

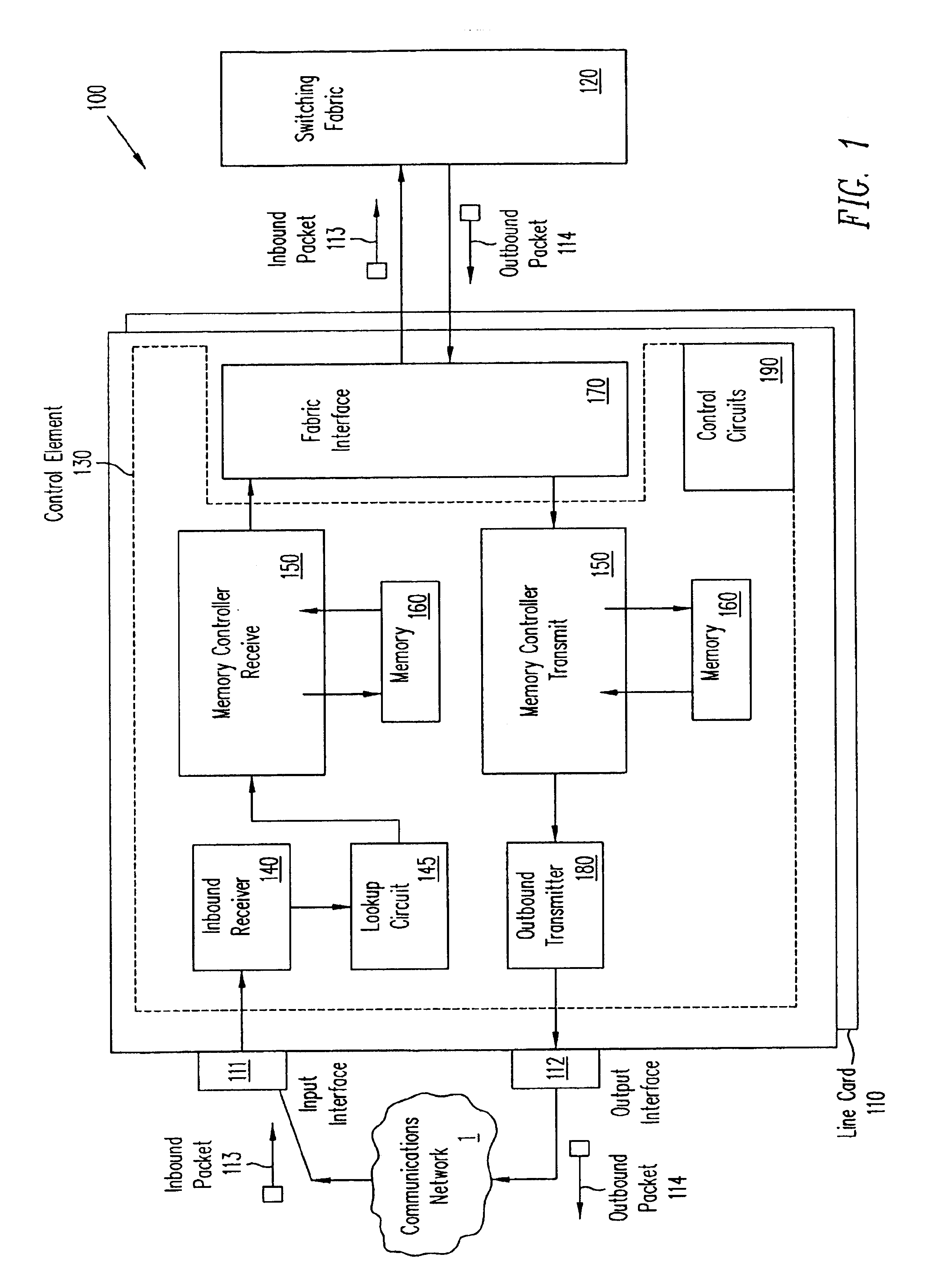

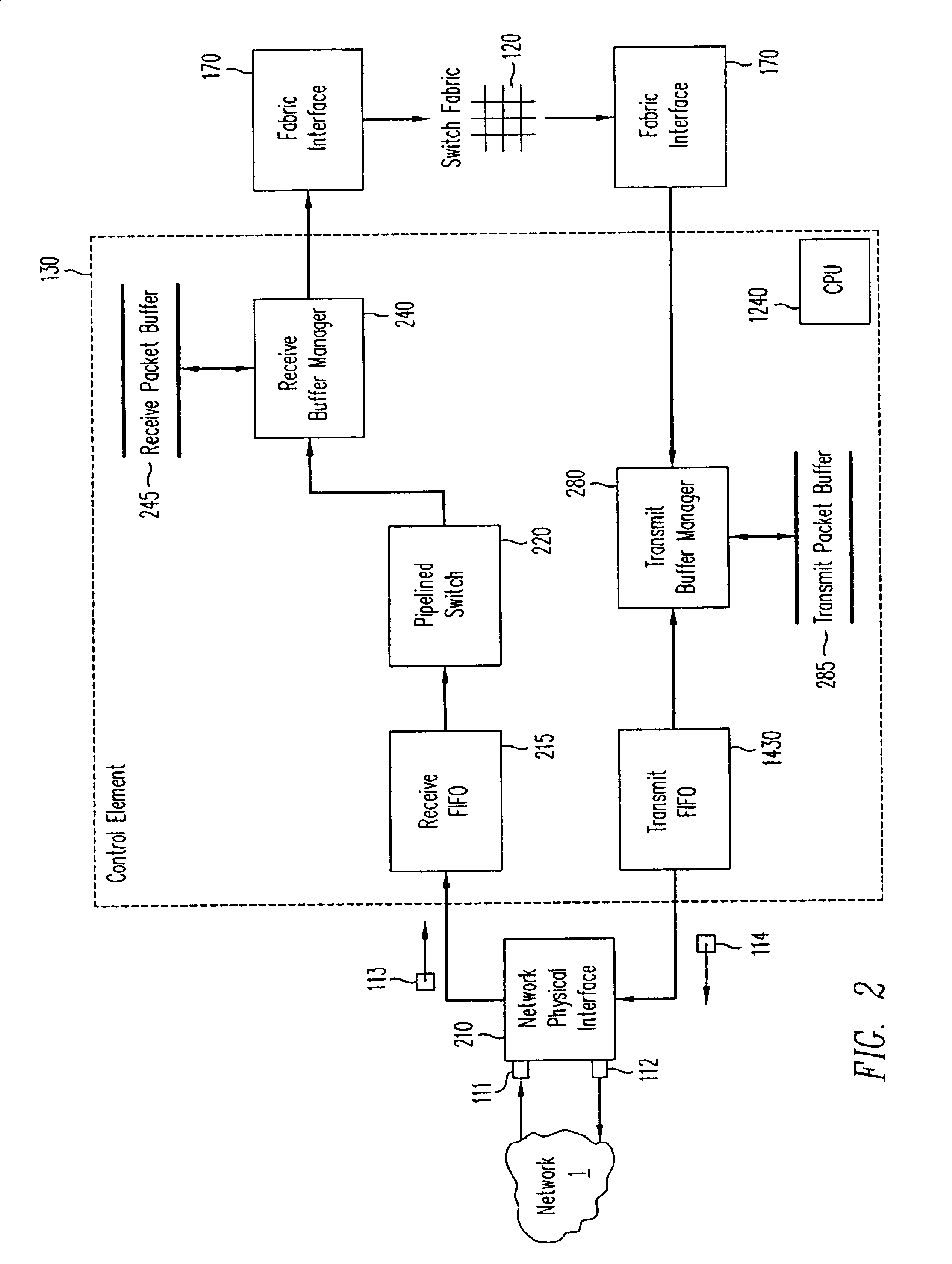

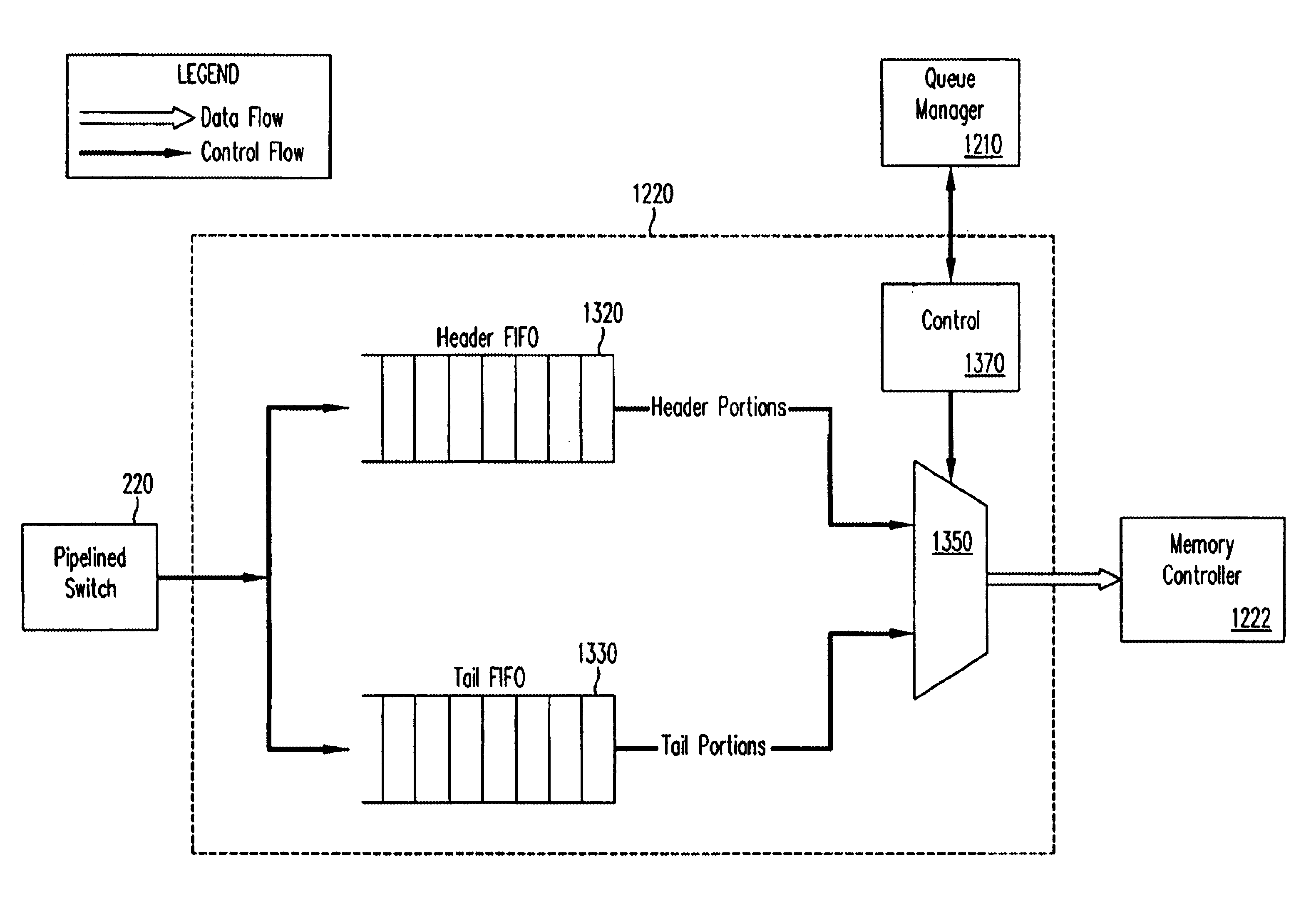

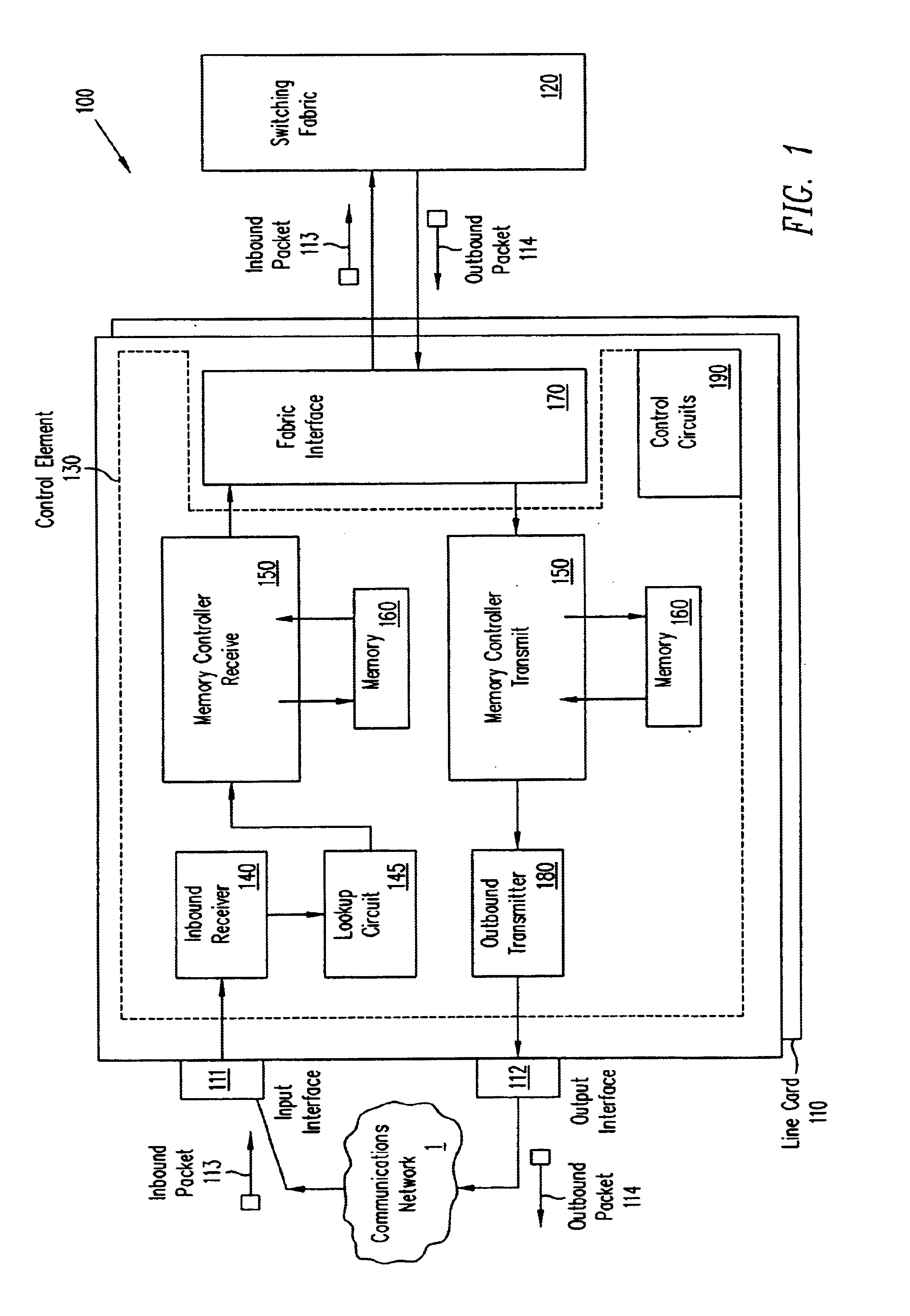

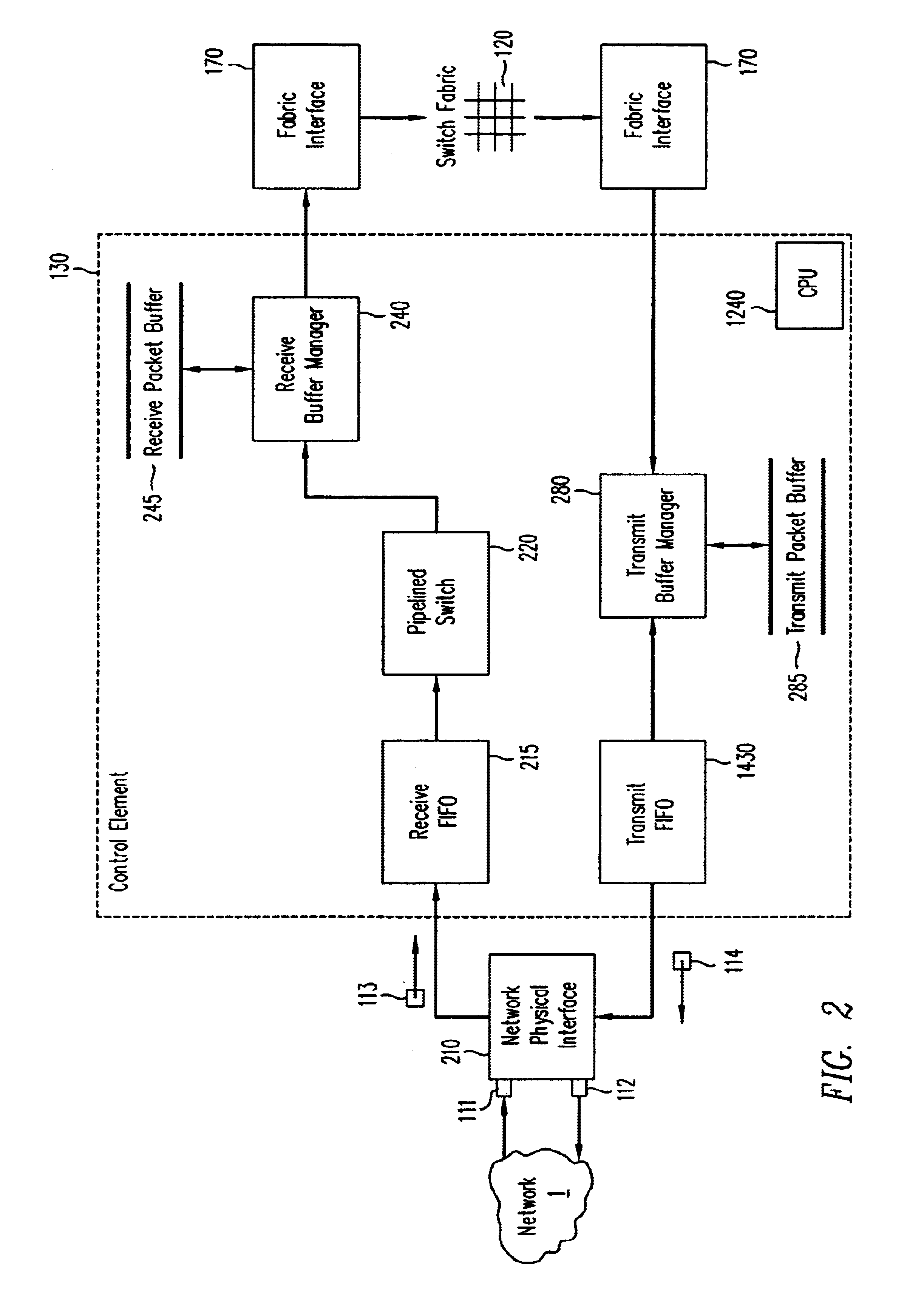

Flexible engine and data structure for packet header processing

Inactive · US6721316B1 · Tags: Multiplex system selection arrangements; Data switching by path configuration; Web transport; Linked list

A pipelined linecard architecture for receiving, modifying, switching, buffering, queuing and dequeuing packets for transmission in a communications network. The linecard has two paths: the receive path, which carries packets into the switch device from the network, and the transmit path, which carries packets from the switch to the network. In the receive path, received packets are processed and switched in an asynchronous, multi-stage pipeline utilizing programmable data structures for fast table lookup and linked list traversal. The pipelined switch operates on several packets in parallel while determining each packet's routing destination. Once that determination is made, each packet is modified to contain new routing information as well as additional header data to help speed it through the switch. Each packet is then buffered and enqueued for transmission over the switching fabric to the linecard attached to the proper destination port. The destination linecard may be the same physical linecard as that receiving the inbound packet or a different physical linecard. The transmit path consists of a buffer / queuing circuit similar to that used in the receive path. Both enqueuing and dequeuing of packets are accomplished using CoS-based decision making apparatus and congestion avoidance and dequeue management hardware. The architecture of the present invention has the advantages of high throughput and the ability to rapidly implement new features and capabilities.

Owner:CISCO TECH INC

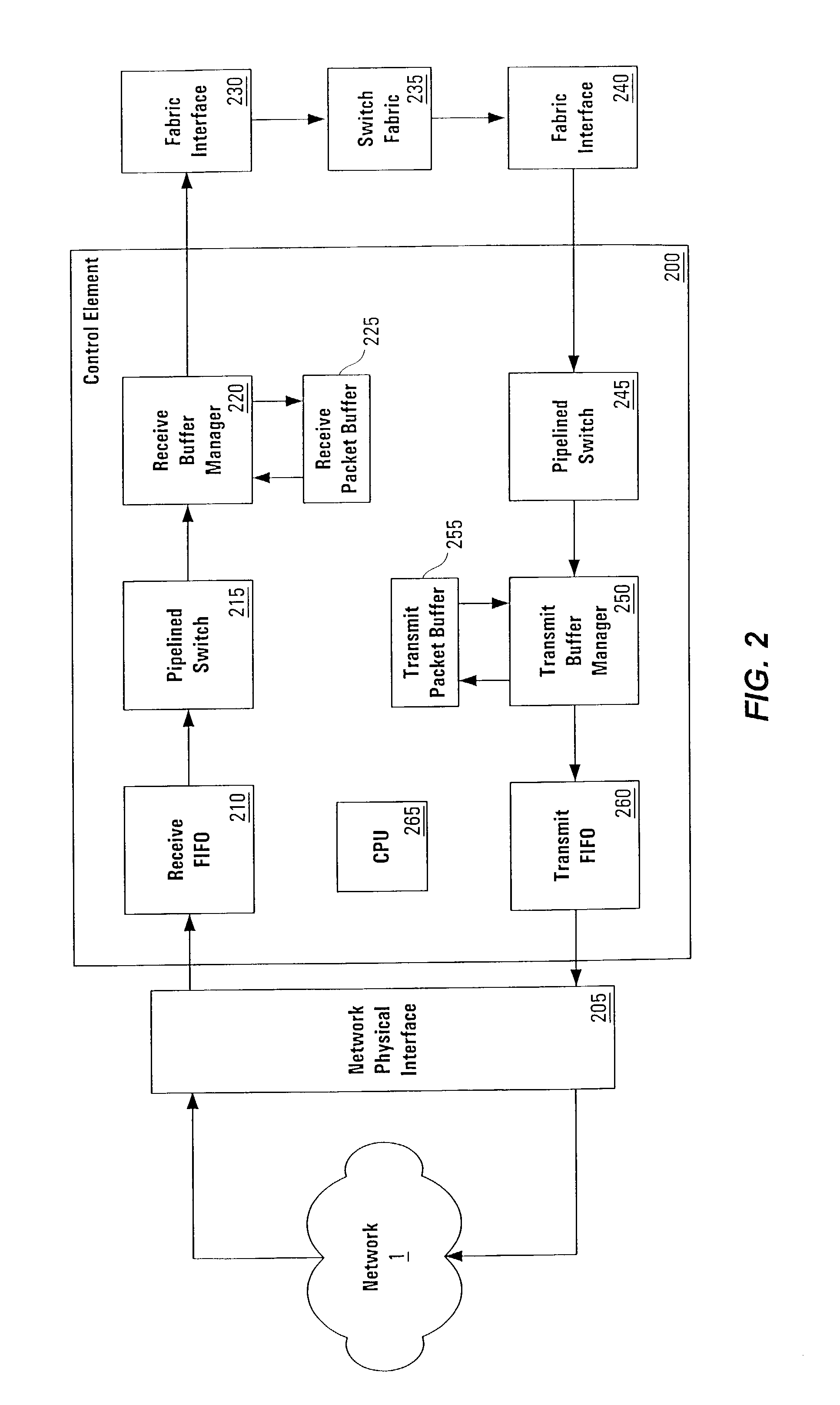

Pipelined packet switching and queuing architecture

Inactive · US6980552B1 · Tags: Quick implementation; Improve throughput; Data switching by path configuration; Parallel processing; Bandwidth management

A pipelined linecard architecture for receiving, modifying, switching, buffering, queuing and dequeuing packets for transmission in a communications network. The linecard has two paths: the receive path, which carries packets into the switch device from the network, and the transmit path, which carries packets from the switch to the network. In the receive path, received packets are processed and switched in a multi-stage pipeline utilizing programmable data structures for fast table lookup and linked list traversal. The pipelined switch operates on several packets in parallel while determining each packet's routing destination. Once that determination is made, each packet is modified to contain new routing information as well as additional header data to help speed it through the switch. Using bandwidth management techniques, each packet is then buffered and enqueued for transmission over the switching fabric to the linecard attached to the proper destination port. The destination linecard may be the same physical linecard as that receiving the inbound packet or a different physical linecard. The transmit path includes a buffer / queuing circuit similar to that used in the receive path and can include another pipelined switch. Both enqueuing and dequeuing of packets are accomplished using CoS-based decision making apparatus, congestion avoidance, and bandwidth management hardware.

Owner:CISCO TECH INC

High-speed hardware implementation of MDRR algorithm over a large number of queues

Inactive · US6778546B1 · Tags: Data switching by path configuration; Store-and-forward switching systems; Hardware implementations; Computer science

The abstract of this patent is identical to that of "Flexible engine and data structure for packet header processing" (US6721316B1) above.

Owner:CISCO TECH INC

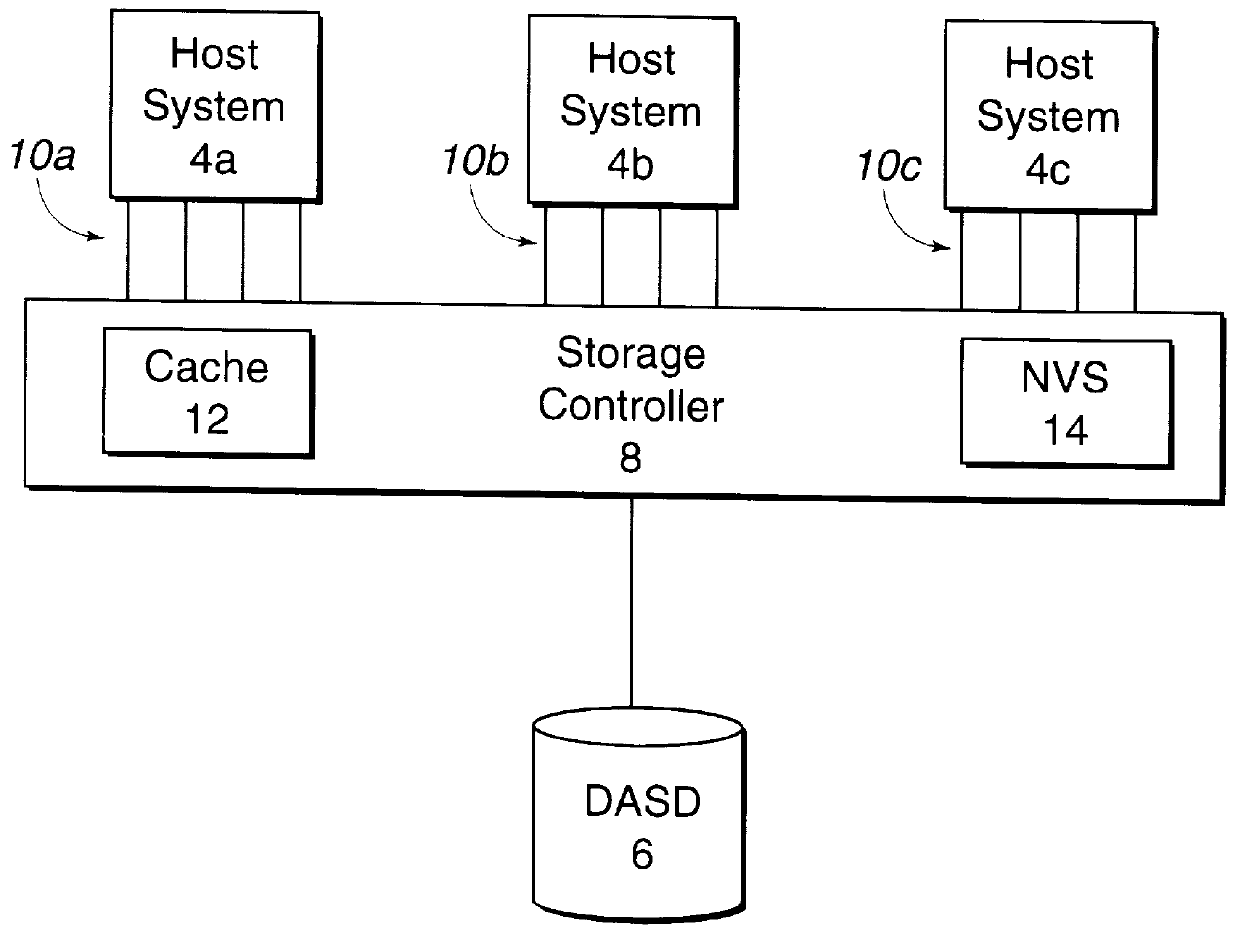

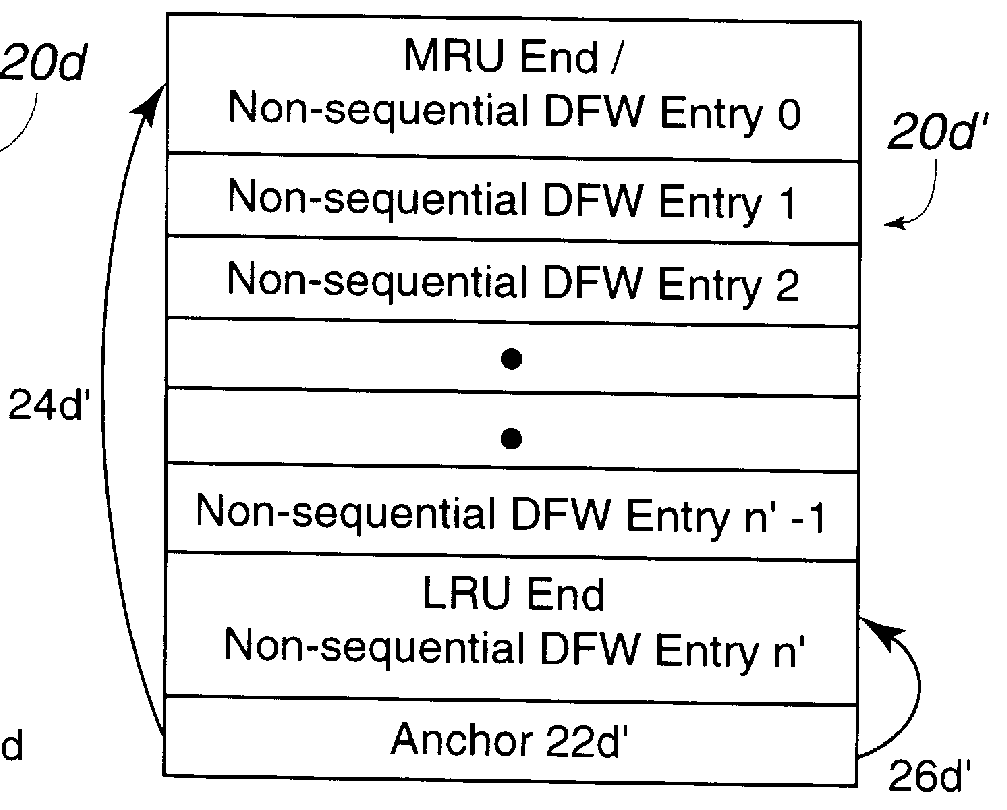

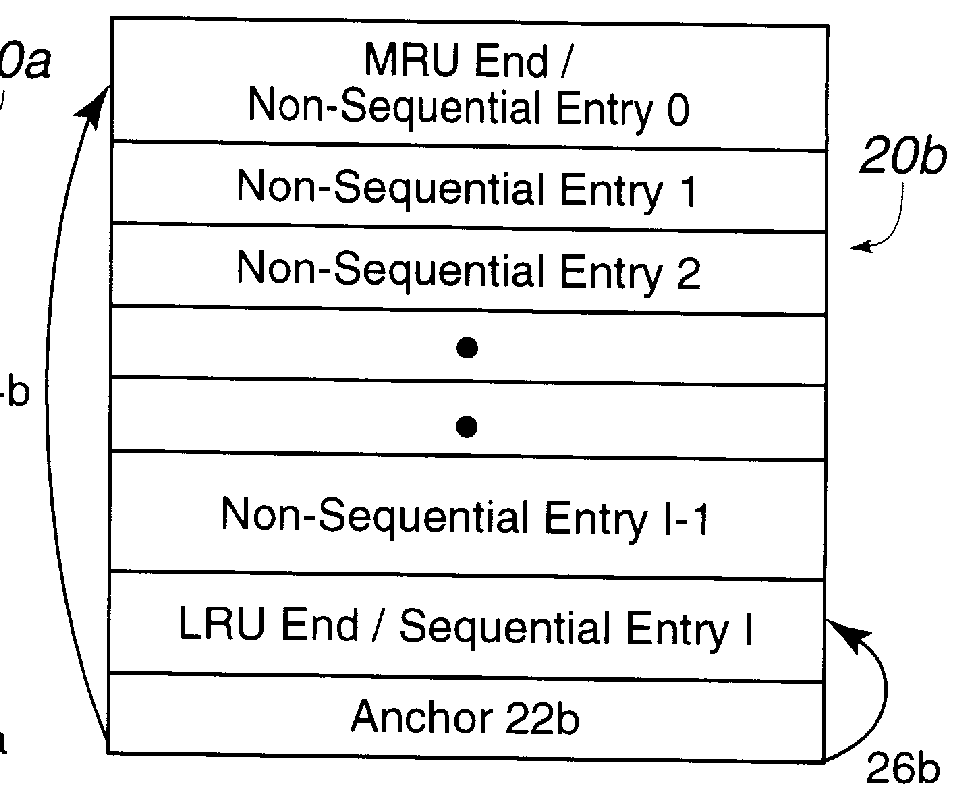

Method and system for managing data in cache using multiple data structures

Inactive · US6141731A · Tags: Efficient management; Choose accurately; Memory addressing/allocation/relocation; Least recently frequently used; Cache management

Disclosed is a cache management scheme using multiple data structures. First and second data structures, such as linked lists, indicate data entries in a cache. Each data structure has a most recently used (MRU) entry, a least recently used (LRU) entry, and a time value associated with each data entry indicating when the data entry was added to the MRU entry of the data structure. A processing unit receives a new data entry. In response, the processing unit processes the first and second data structures to determine the LRU data entry in each, and selects, from the two LRU data entries, the one that was least recently used. The processing unit then demotes the selected LRU data entry from the cache and from the data structure that contains it. The processing unit adds the new data entry to the cache and indicates the new data entry as located at the MRU entry of one of the first and second data structures.

Owner:IBM CORP
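A sketch of the two-list eviction rule, assuming a logical clock in place of real timestamps and letting the caller choose which list receives the new entry (the abstract does not say how that choice is made). Each `OrderedDict` keeps one list in LRU-to-MRU order; `TwoListCache` and its methods are hypothetical names.

```python
import itertools
from collections import OrderedDict
from typing import Hashable, Optional


class TwoListCache:
    """Entries live in one of two LRU lists; on overflow, the older of the two
    LRU entries (by the time it was placed at its list's MRU end) is demoted."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        # Each OrderedDict runs LRU (first) -> MRU (last); value = time added at MRU.
        self.lists = (OrderedDict(), OrderedDict())
        self._clock = itertools.count()   # logical clock standing in for real time

    def __len__(self) -> int:
        return sum(len(lst) for lst in self.lists)

    def __contains__(self, key: Hashable) -> bool:
        return any(key in lst for lst in self.lists)

    def _demote_oldest_lru(self) -> Hashable:
        # The LRU entry of each non-empty list is its first item.
        candidates = [(next(iter(lst.items())), lst) for lst in self.lists if lst]
        (key, _time), owner = min(candidates, key=lambda c: c[0][1])
        del owner[key]
        return key

    def add(self, key: Hashable, which: int) -> Optional[Hashable]:
        """Place `key` at the MRU end of list `which` (0 or 1); return any demoted key."""
        for lst in self.lists:
            lst.pop(key, None)             # re-adding an entry moves it
        demoted = None
        if len(self) >= self.capacity:
            demoted = self._demote_oldest_lru()
        self.lists[which][key] = next(self._clock)
        return demoted
```

Only the two list tails need to be compared on each insertion, so choosing the victim stays O(1) regardless of how many entries each list holds.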

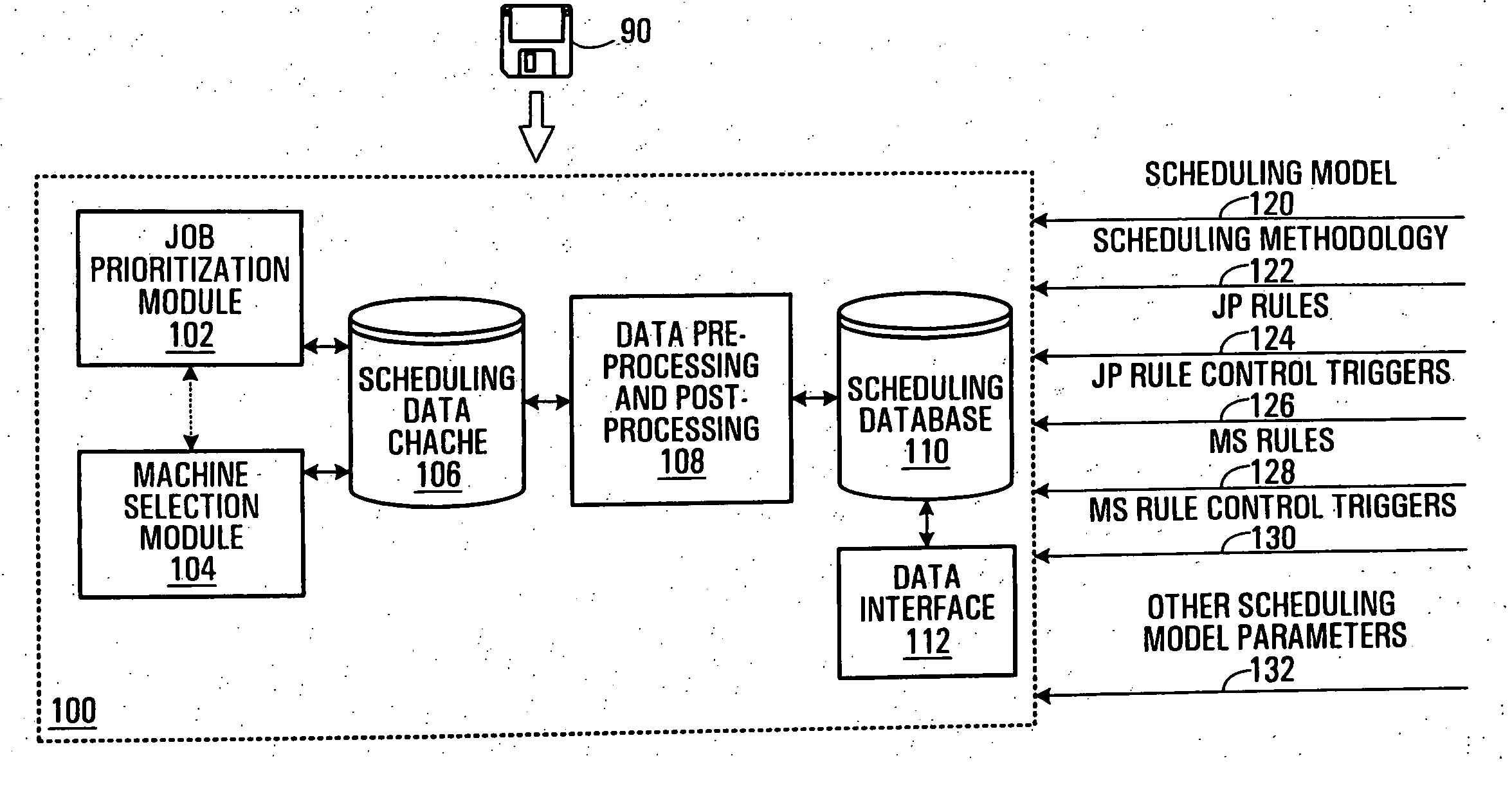

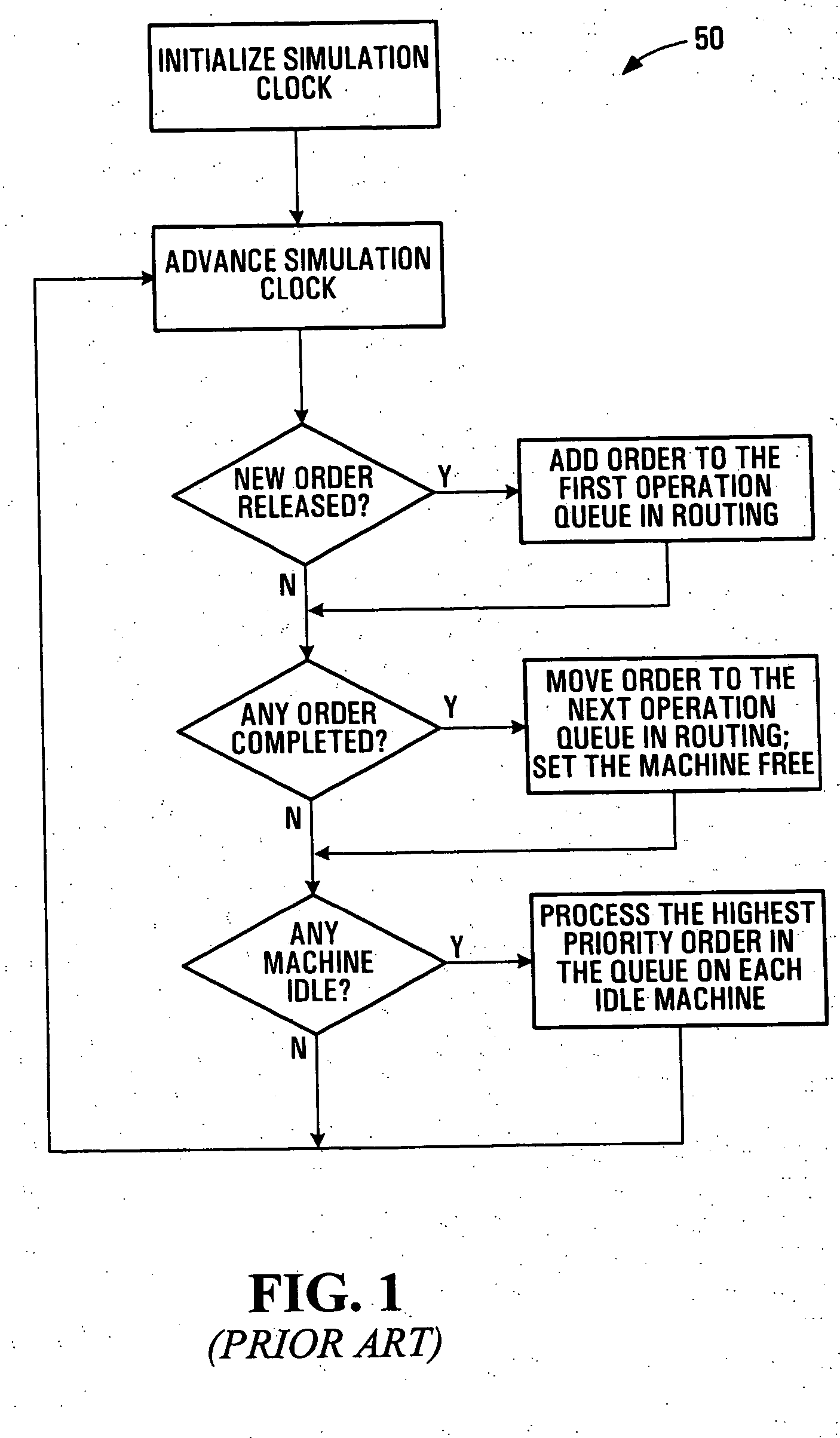

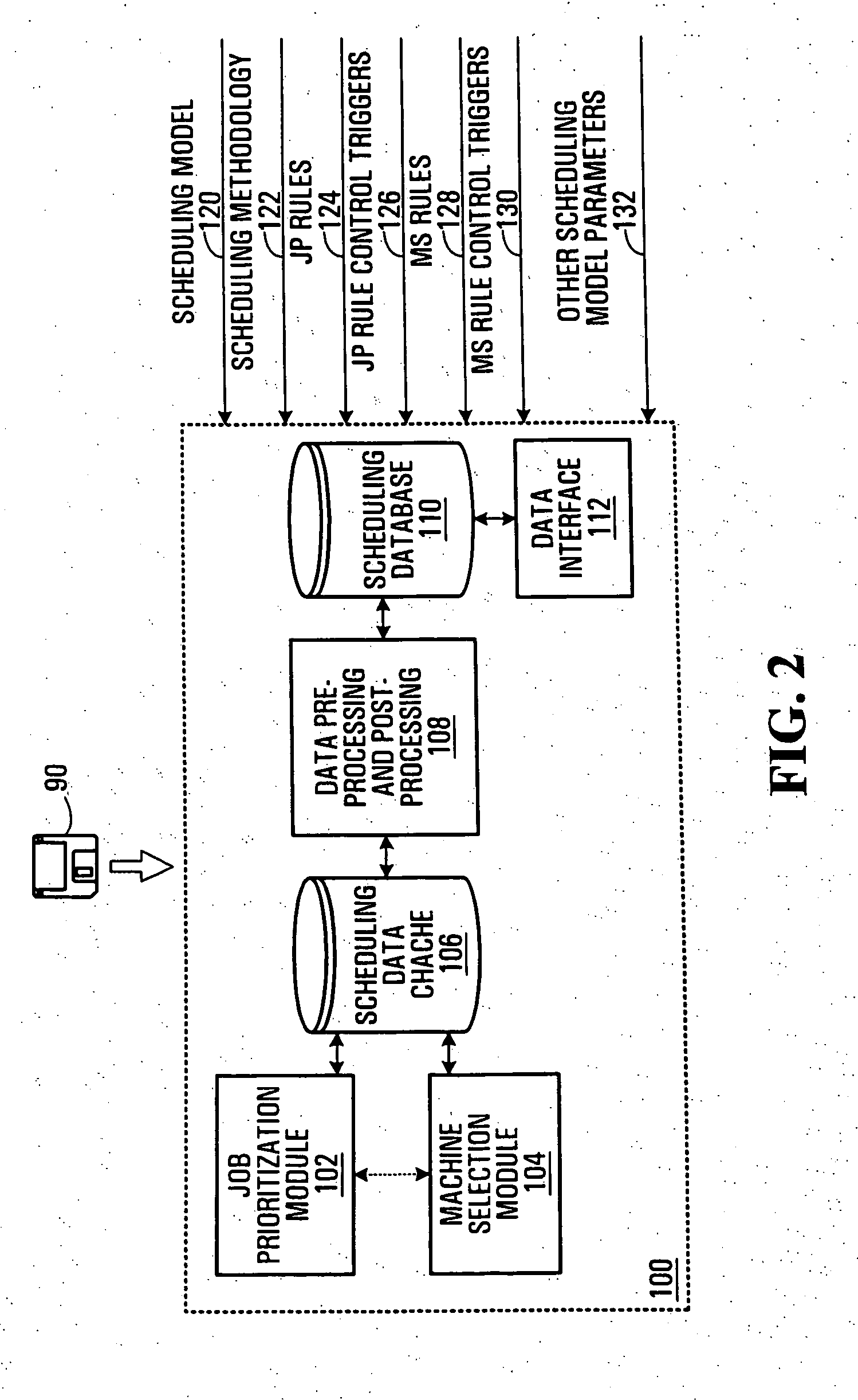

Finite capacity scheduling using job prioritization and machine selection

Inactive · US20050154625A1 · Tags: Improve machine utilization; Resources; Special data processing applications; Machine selection; Machine utilization

In a method, device, and computer-readable medium for finite capacity scheduling, heuristic rules are applied in two integrated stages: Job Prioritization and Machine Selection. During Job Prioritization (“JP”), jobs are prioritized based on a set of JP rules which are machine independent. During Machine Selection (“MS”), jobs are scheduled for execution at machines that are deemed to be best suited based on a set of MS rules. The two-stage approach allows scheduling goals to be achieved for performance measures relating to both jobs and machines. For example, machine utilization may be improved while product cycle time objectives are still met. Two user-configurable options, namely scheduling model (job shop or flow shop) and scheduling methodology (forward, backward, or bottleneck), govern the scheduling process. A memory may store a three-dimensional linked list data structure for use in scheduling work orders for execution at machines assigned to work centers.

Owner:AGENCY FOR SCI TECH & RES

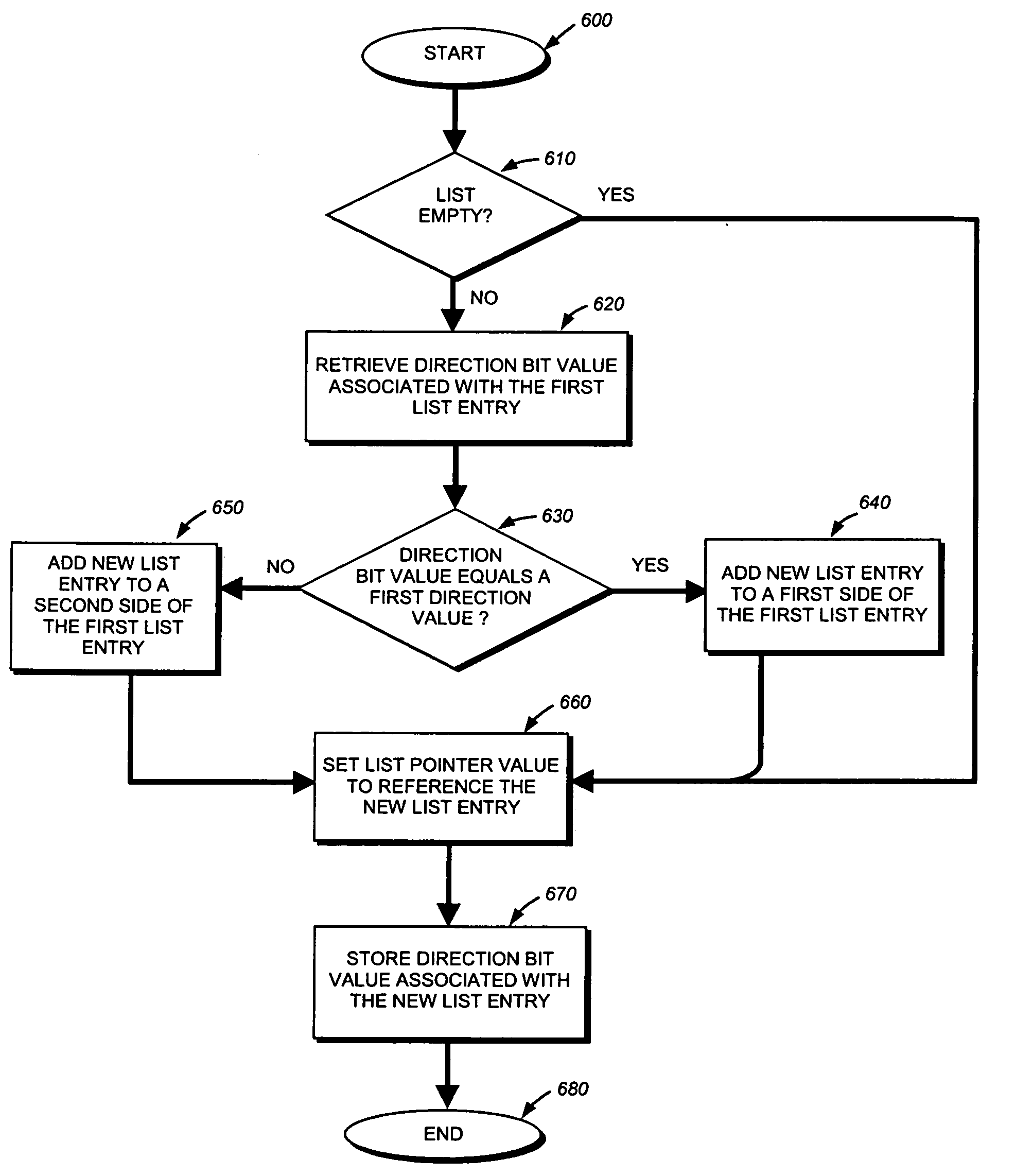

Memory efficient hashing algorithm

Inactive · US20050171937A1 · Tags: Efficient search; Less processing time; Digital data information retrieval; Special data processing applications; Term memory; Computer science

A technique efficiently searches a hash table. Conventionally, a predetermined set of “signature” information is hashed to generate a hash-table index which, in turn, is associated with a corresponding linked list accessible through the hash table. The indexed list is sequentially searched, beginning with the first list entry, until a “matching” list entry is located containing the signature information. For long list lengths, this conventional approach may search a substantially large number of list entries. In contrast, the inventive technique reduces, on average, the number of list entries that are searched to locate the matching list entry. To that end, list entries are partitioned into different groups within each linked list. Thus, by searching only a selected group (e.g., subset) of entries in the indexed list, the technique consumes fewer resources, such as processor bandwidth and processing time, than previous implementations.

Owner:CISCO TECH INC
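The grouping idea can be sketched as below. The abstract does not say how a group is selected, so this sketch assumes the group index is derived from other bits of the same hash value; `GroupedChainTable` and its methods are hypothetical. `search` also returns how many list entries it compared, which makes the saving over a single chain visible.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ListEntry:
    signature: str
    value: int
    next: Optional["ListEntry"] = None


class GroupedChainTable:
    """Hash table whose per-index linked list is split into `groups` sub-lists."""

    def __init__(self, buckets: int, groups: int) -> None:
        self.buckets, self.groups = buckets, groups
        self.heads: List[List[Optional[ListEntry]]] = [
            [None] * groups for _ in range(buckets)
        ]

    def _locate(self, signature: str) -> Tuple[int, int]:
        h = hash(signature)
        # Assumption: the low part of the hash picks the bucket, the next part the group.
        return h % self.buckets, (h // self.buckets) % self.groups

    def insert(self, signature: str, value: int) -> None:
        b, g = self._locate(signature)
        self.heads[b][g] = ListEntry(signature, value, self.heads[b][g])

    def search(self, signature: str) -> Tuple[Optional[int], int]:
        """Return (value or None, number of list entries compared)."""
        b, g = self._locate(signature)
        entry, compared = self.heads[b][g], 0
        while entry is not None:
            compared += 1
            if entry.signature == signature:
                return entry.value, compared
            entry = entry.next
        return None, compared
```

With one bucket and four groups, a lookup scans only the entries of one group instead of the whole chain, which is the "fewer list entries searched on average" effect the abstract describes.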

High-speed hardware implementation of RED congestion control algorithm

Inactive · US6813243B1 · Tags: Error prevention; Frequency-division multiplex details; Parallel processing; Hardware implementations

The abstract of this patent is identical to that of "Flexible engine and data structure for packet header processing" (US6721316B1) above.

Owner:CISCO TECH INC

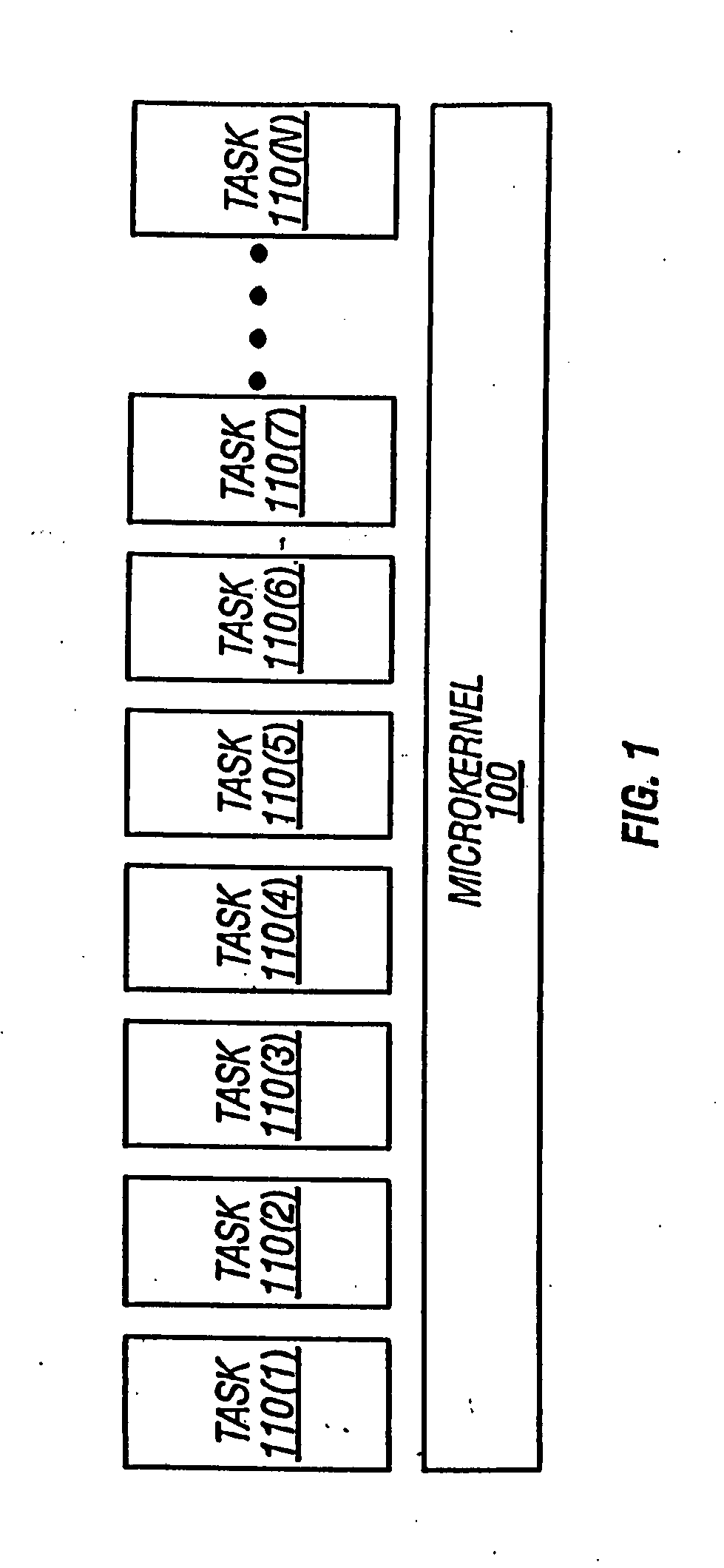

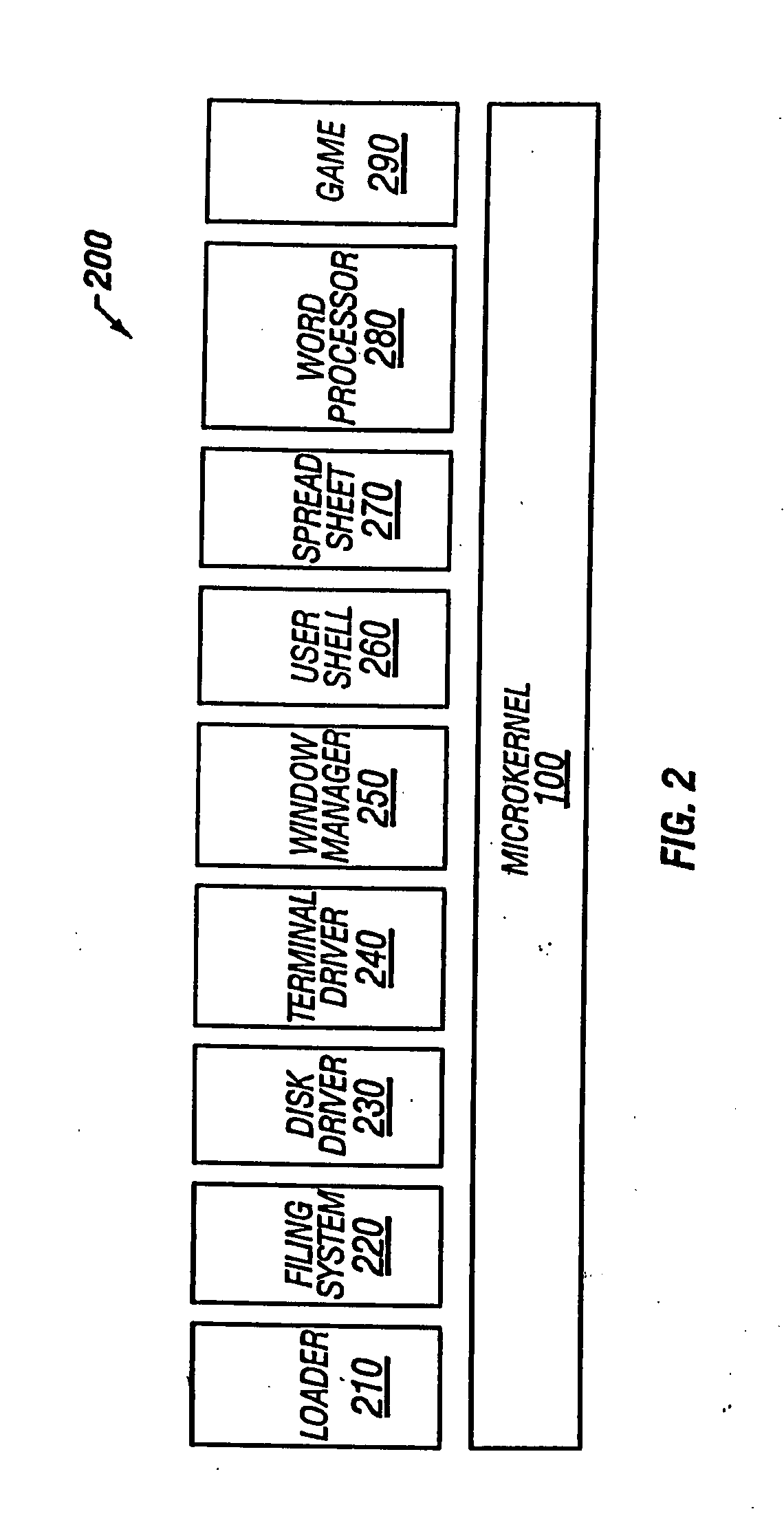

Lock-free implementation of concurrent shared object with dynamic node allocation and distinguishing pointer value

A novel linked-list-based concurrent shared object implementation has been developed that provides non-blocking and linearizable access to the concurrent shared object. In an application of the underlying techniques to a deque, non-blocking completion of access operations is achieved without restricting concurrency in accessing the deque's two ends. In various realizations in accordance with the present invention, the set of values that may be pushed onto a shared object is not constrained by use of distinguishing values. In addition, an explicit reclamation embodiment facilitates use in environments or applications where automatic reclamation of storage is unavailable or impractical.

Owner:ORACLE INT CORP

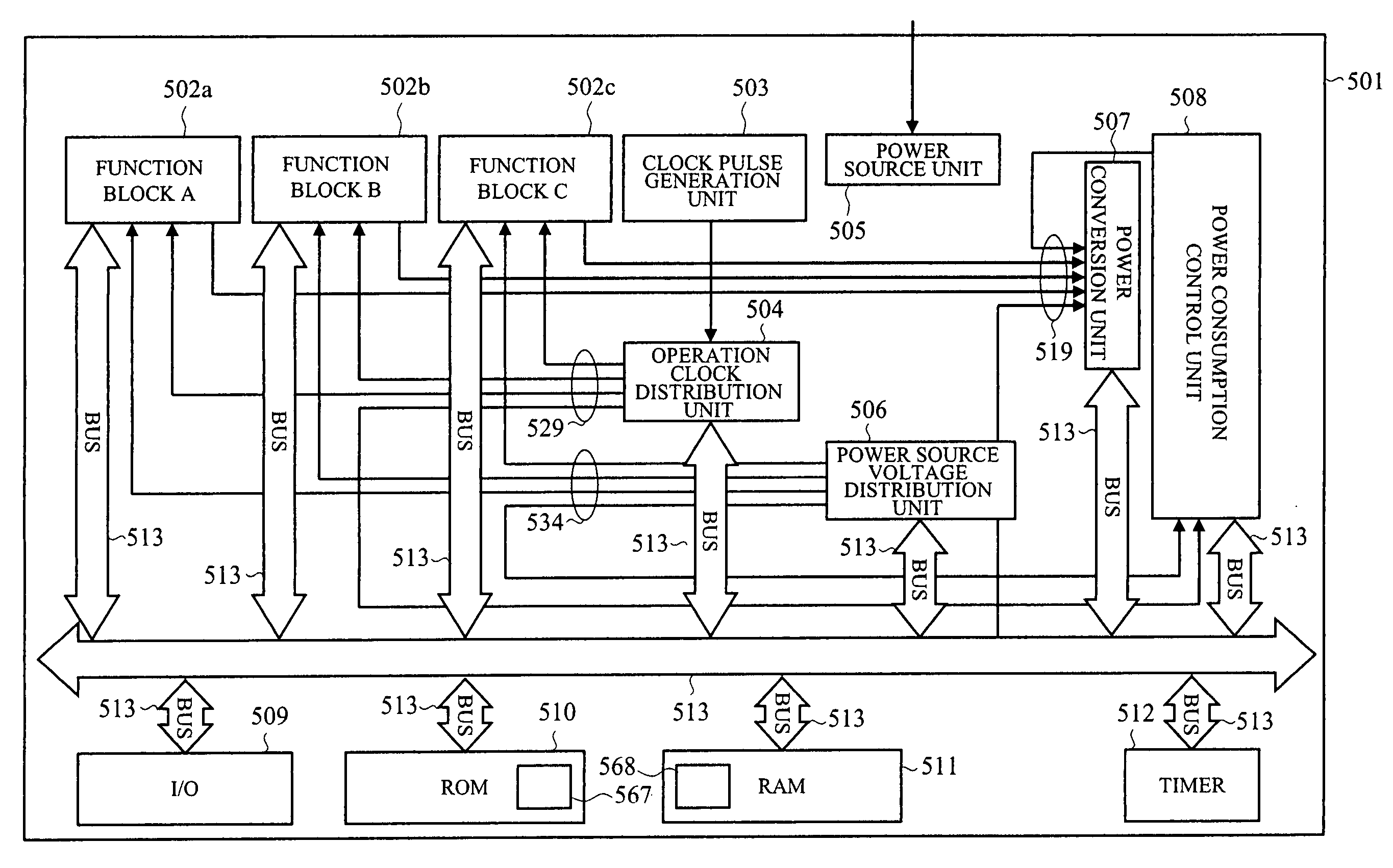

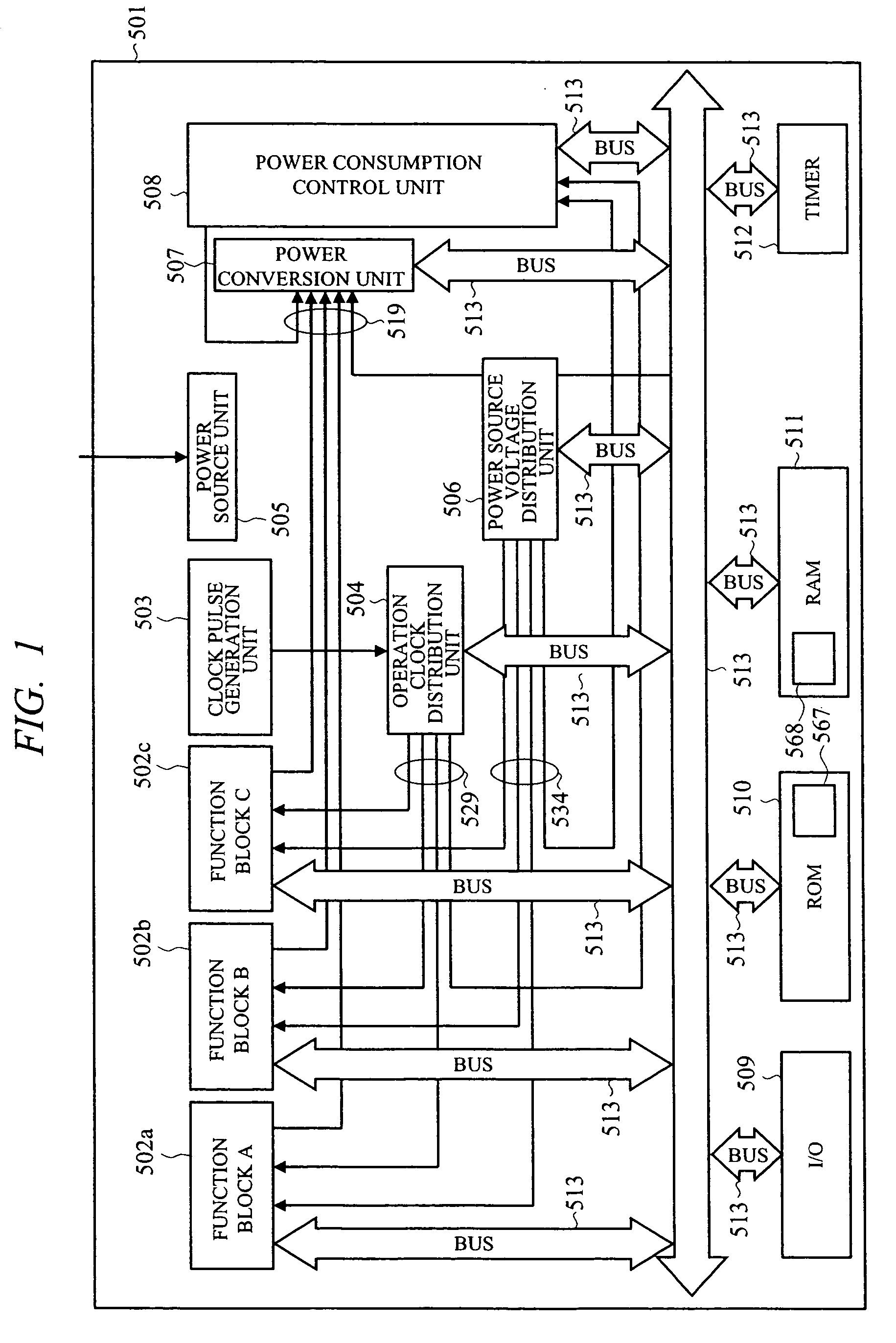

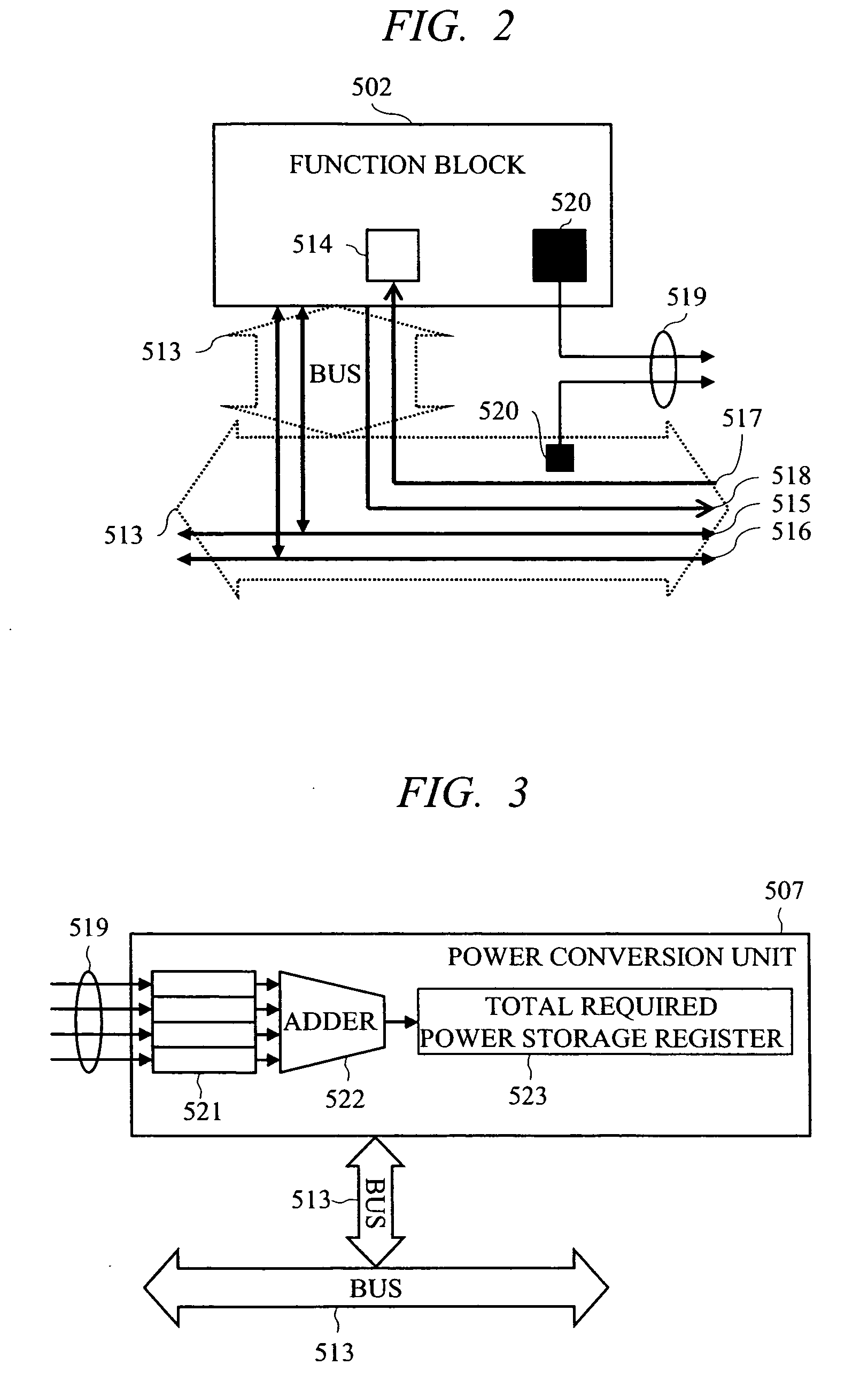

Semiconductor integrated circuit device and power consumption control device

Active · US20070083779A1 · Tags: Guaranteed uptime; Reduce power consumption; Energy efficient ICT; Digital data processing details; Power mode; Power budget

The aim is to schedule execution of function blocks so that their total required power stays within the available power budget, thereby achieving stable operation at low power consumption. Function block identifiers are assigned to all the function blocks, and in a RAM area that the power consumption control device can read and write, list entries storing each identifier together with its task priority, a power mode value indicating its power state, and a power mode time indicating how long that power state is held can be linked. One or more linked lists control the scheduling of tasks running on the function blocks; further linked lists track the function blocks currently executing in high power mode, those currently stopped in stop mode, and those currently executing in low power mode. Using these lists, the power consumption control device controls the power supply and the operating clock.

Owner:RENESAS ELECTRONICS CORP

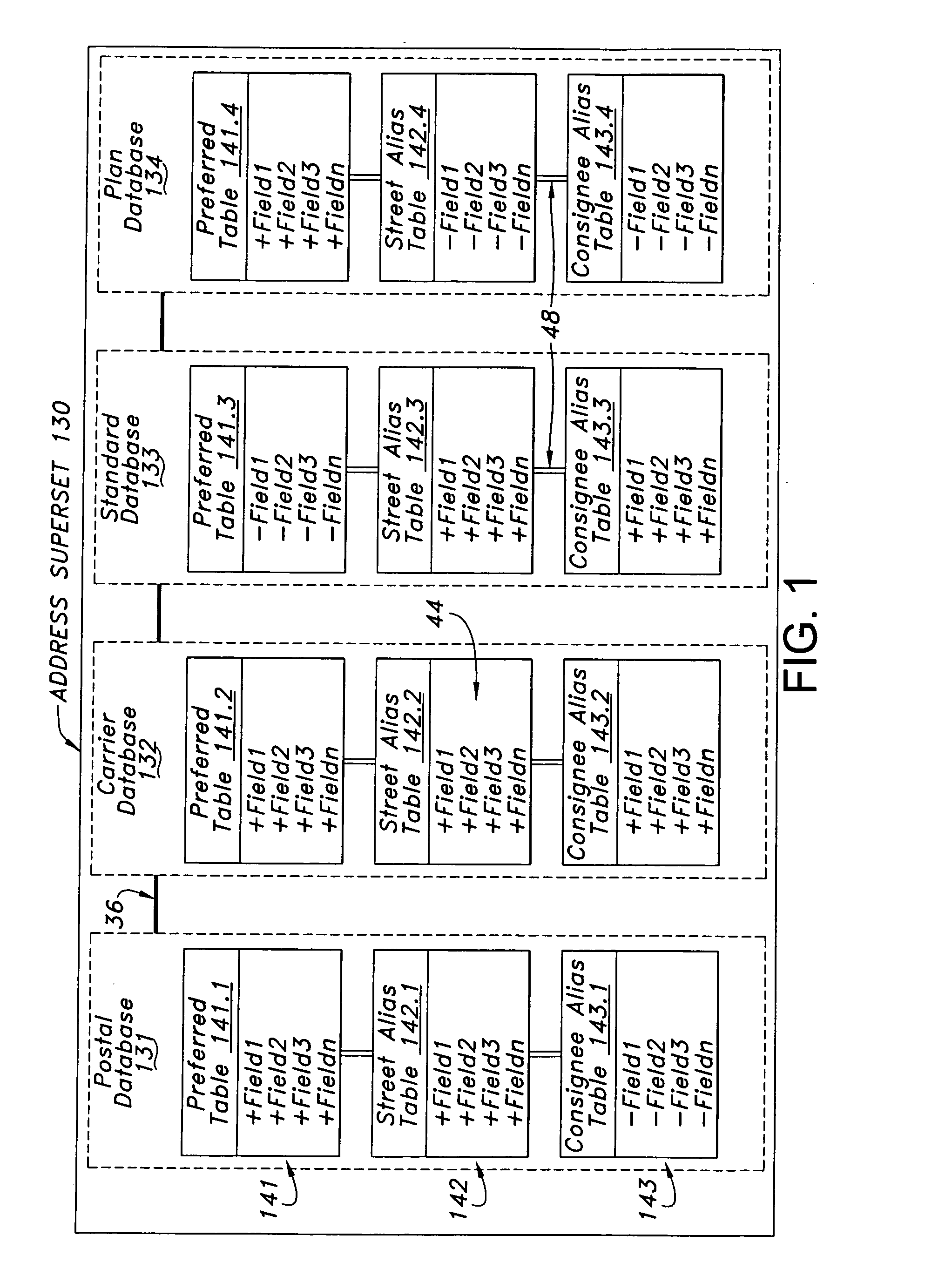

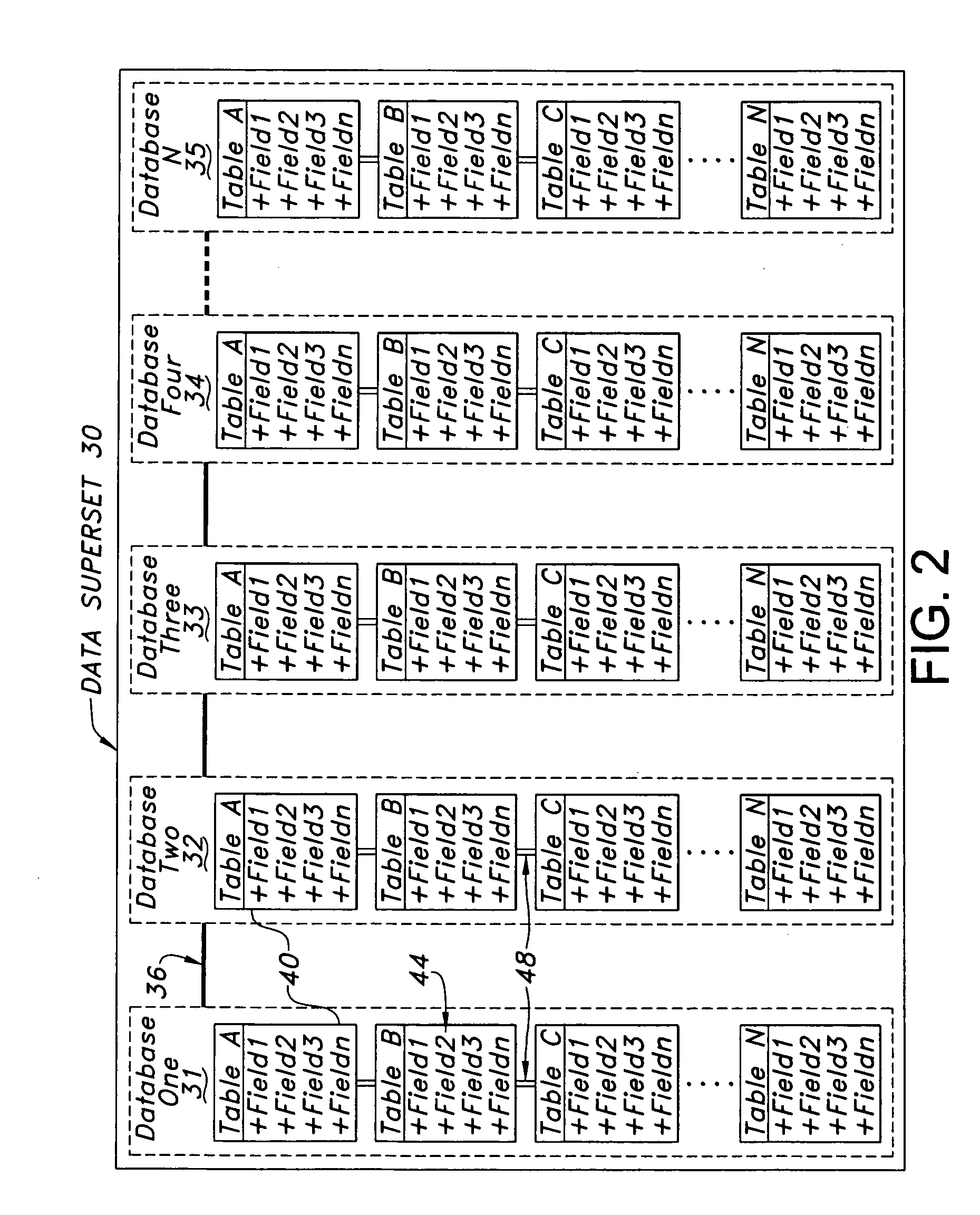

Data structure and management system for a superset of relational databases

Active · US20050086256A1 · Tags: Facilitate prompt and efficient validation; Easy construction; Data processing applications; Relational databases; Table (database); Relational database

A data structure, database management system, and methods of validating data are disclosed. A data structure is described that includes a superset of interconnected relational databases containing multiple tables having a common data structure. The tables may be stored as a sparse matrix linked list. A method is disclosed for ordering records in hierarchical order, in a series of levels from general to specific. An example use with address databases is described, including a method for converting an input address having a subject representation into an output address having a preferred representation. Preferred artifacts may be marked with a token. Alias tables may be included. This Abstract is provided to comply with the rules, which require an abstract to quickly inform a searcher or other reader about the subject matter of the application. This Abstract is submitted with the understanding that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:UNITED PARCEL SERVICE OF AMERICA INC
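The abstract mentions storing tables as a sparse matrix linked list without giving details. One common form, assumed here, stores only non-empty cells and links the cells of each row in column order, so empty cells cost no memory. The `SparseMatrix` class is a hypothetical illustration, not the patent's actual layout.

```python
from dataclasses import dataclass
from typing import Dict, Iterator, Optional, Tuple


@dataclass
class Cell:
    col: int
    value: str
    next: Optional["Cell"] = None


class SparseMatrix:
    """Only non-empty cells are stored; each row is a column-sorted linked list."""

    def __init__(self) -> None:
        self.rows: Dict[int, Optional[Cell]] = {}

    def set(self, row: int, col: int, value: str) -> None:
        prev, cur = None, self.rows.get(row)
        while cur is not None and cur.col < col:
            prev, cur = cur, cur.next
        if cur is not None and cur.col == col:
            cur.value = value              # overwrite an existing cell
            return
        cell = Cell(col, value, cur)       # splice the new cell in before `cur`
        if prev is None:
            self.rows[row] = cell
        else:
            prev.next = cell

    def get(self, row: int, col: int) -> Optional[str]:
        cur = self.rows.get(row)
        while cur is not None and cur.col < col:
            cur = cur.next
        return cur.value if cur is not None and cur.col == col else None

    def items(self) -> Iterator[Tuple[int, int, str]]:
        """Yield (row, col, value) for every stored cell in row-major order."""
        for row in sorted(self.rows):
            cur = self.rows[row]
            while cur is not None:
                yield row, cur.col, cur.value
                cur = cur.next
```

Keeping each row sorted by column lets `get` stop as soon as it passes the target column instead of scanning the whole row.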

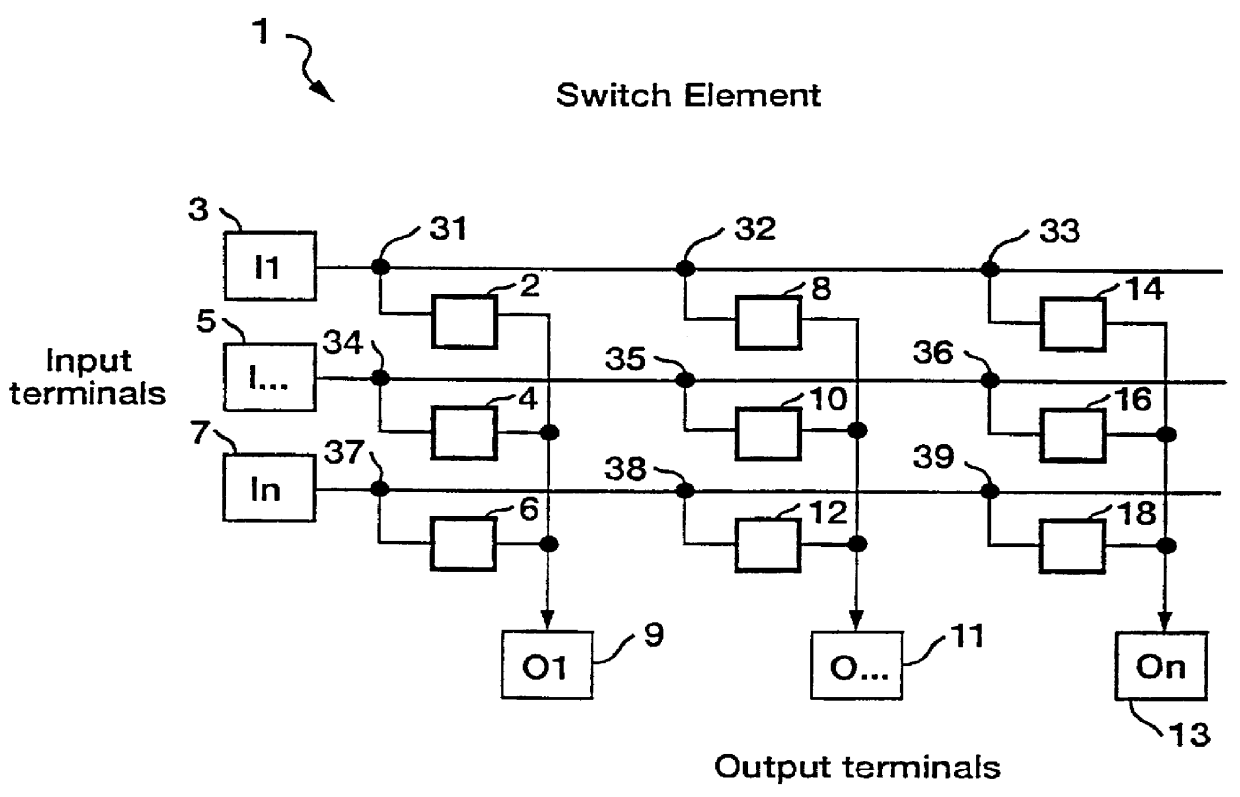

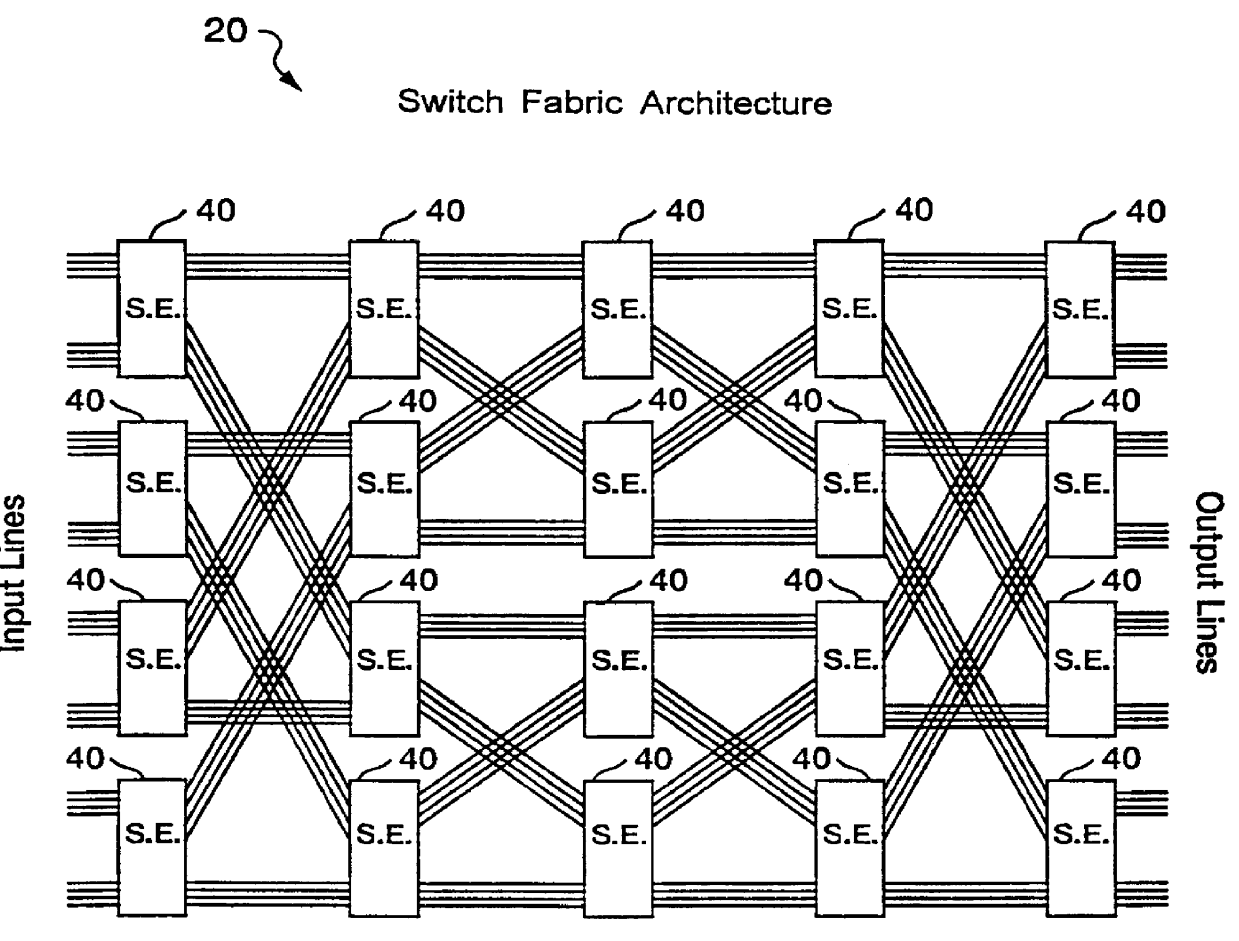

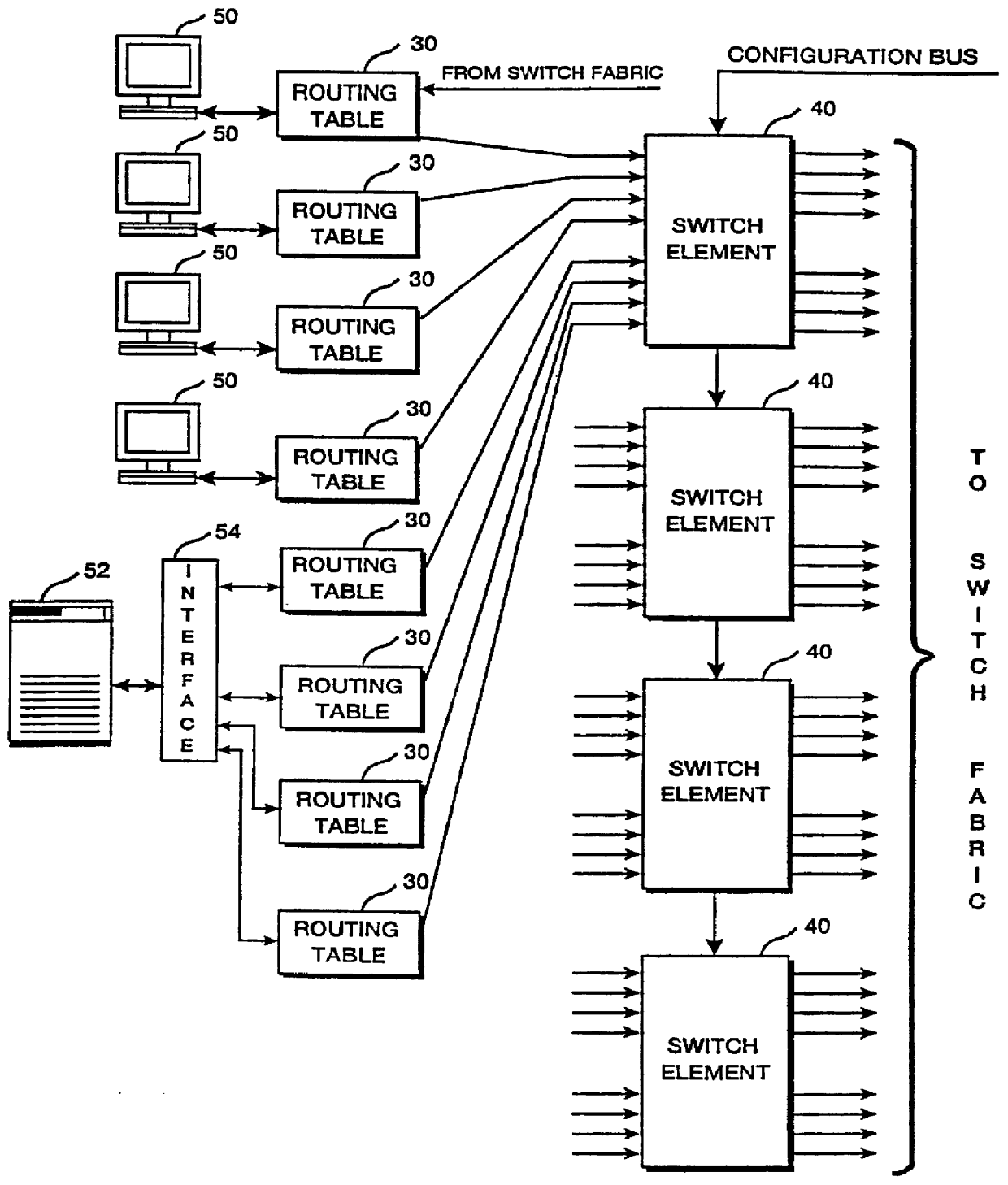

ATM architecture and switching element

An ATM switching system architecture of the switch-fabric type is built of a plurality of ATM switch element circuits and routing table circuits for each physical connection to or from the switch fabric. A shared pool of memory is employed to eliminate the need to provide memory at every crosspoint. Each routing table maintains a marked interrupt linked list for storing information about which of its virtual channels are experiencing congestion. This linked list is available to a processor in the external workstation to alert the processor when a congestion condition exists in one of the virtual channels. The switch element circuit typically has up to eight 4-bit-wide nibble inputs and eight 4-bit-wide nibble outputs and is capable of connecting cells received at any of its inputs to any of its outputs, based on the information in a routing tag uniquely associated with each cell.

Owner:PMC SIERRA

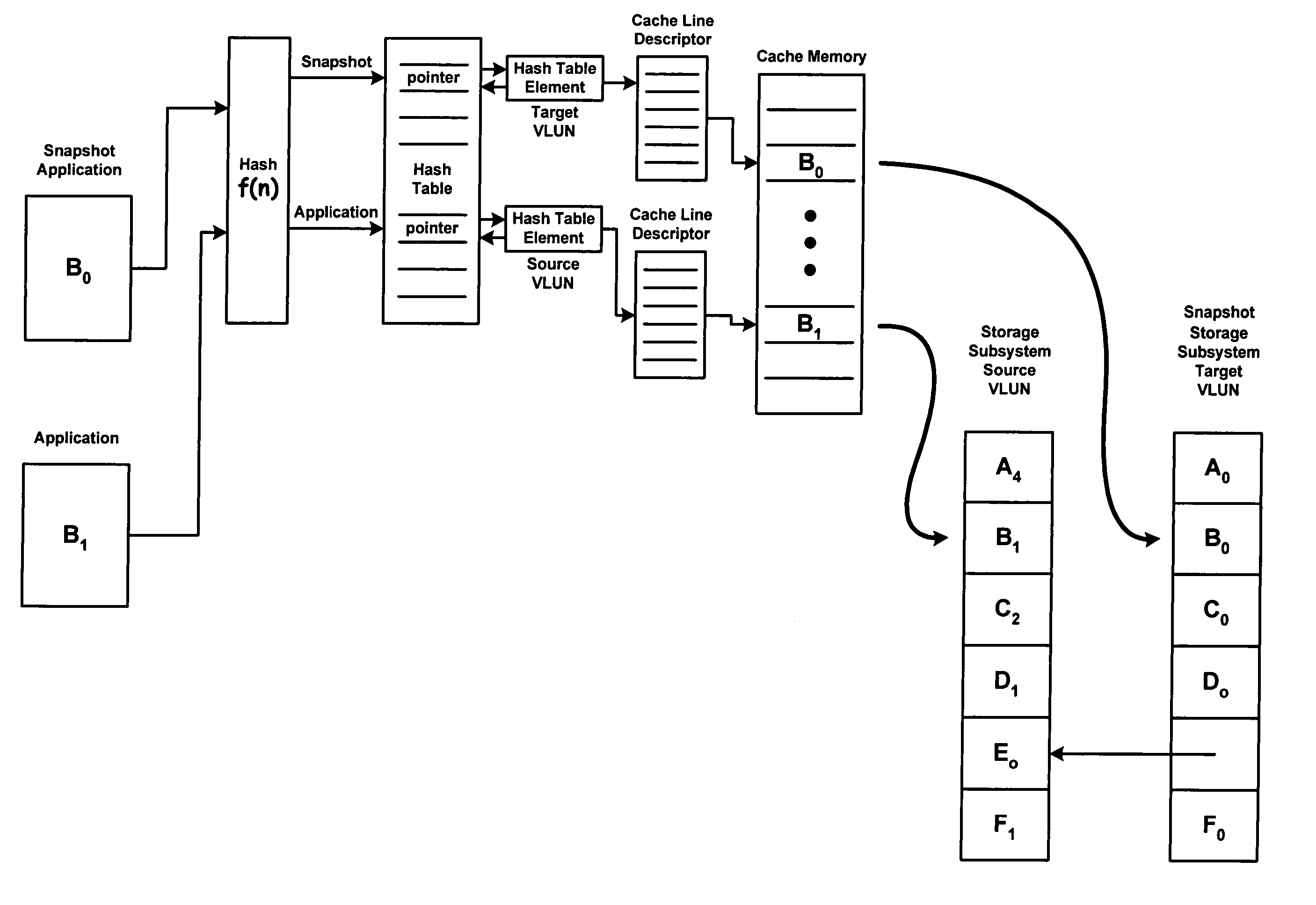

Methods and systems of cache memory management and snapshot operations

Inactive · US20060265568A1 · Tags: Memory architecture accessing/allocation; Memory loss protection; Parallel computing; Hash table

The present invention relates to a cache memory management system suitable for use with snapshot applications. The system includes a cache directory including a hash table, hash table elements, cache line descriptors, and cache line functional pointers, and a cache manager running a hashing function that converts a request for data from an application to an index to a first hash table pointer in the hash table. The first hash table pointer in turn points to a first hash table element in a linked list of hash table elements, where one of the hash table elements of that linked list points to a first cache line descriptor in the cache directory. The system also includes a cache memory comprising a plurality of cache lines, wherein the first cache line descriptor has a one-to-one association with a first cache line. The present invention also provides a method of converting a request for data to an input to a hashing function, addressing a hash table based on a first index output from the hashing function, searching the hash table elements pointed to by the first index for the requested data, determining that the requested data is not in cache memory, and allocating a first hash table element and a first cache line descriptor that associates with a first cache line in the cache memory.

Owner:ORACLE INT CORP
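A minimal sketch of the lookup path described above: hash the request to a table slot, walk that slot's linked list of hash table elements, and on a miss allocate a new element plus a cache line descriptor bound one-to-one to a free cache line. The modulo hash, the dict used as backing storage, and all class names are assumptions; eviction, cache line functional pointers and snapshot handling are not modeled.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class CacheLineDescriptor:
    line_index: int        # one-to-one association with a cache line slot
    address: int           # which data the line currently holds


@dataclass
class HashTableElement:
    descriptor: CacheLineDescriptor
    next: Optional["HashTableElement"] = None


class CacheDirectory:
    def __init__(self, table_size: int, num_lines: int) -> None:
        self.table: List[Optional[HashTableElement]] = [None] * table_size
        self.lines: List[Optional[bytes]] = [None] * num_lines   # the cache memory
        self.free_lines = list(range(num_lines))

    def _index(self, address: int) -> int:
        return address % len(self.table)   # stand-in hashing function

    def lookup(self, address: int) -> Optional[CacheLineDescriptor]:
        elem = self.table[self._index(address)]
        while elem is not None:            # walk the linked list of elements
            if elem.descriptor.address == address:
                return elem.descriptor
            elem = elem.next
        return None

    def read(self, address: int, backing: Dict[int, bytes]) -> Optional[bytes]:
        desc = self.lookup(address)
        if desc is None:                   # miss: allocate element + descriptor
            if not self.free_lines:
                raise MemoryError("no free cache line (eviction not sketched)")
            desc = CacheLineDescriptor(self.free_lines.pop(), address)
            idx = self._index(address)
            self.table[idx] = HashTableElement(desc, self.table[idx])
            self.lines[desc.line_index] = backing[address]
        return self.lines[desc.line_index]
```

Addresses that hash to the same slot (0 and 4 with a table of size 4) simply share one linked list, and each still gets its own descriptor and cache line.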

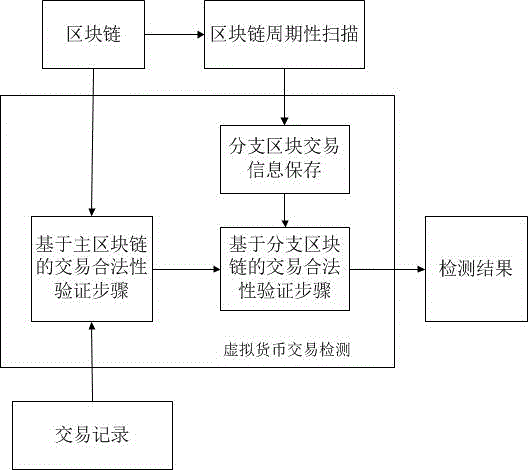

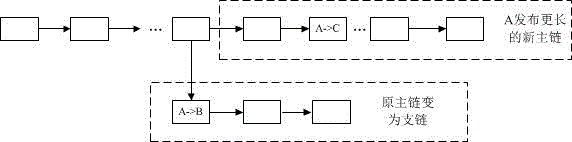

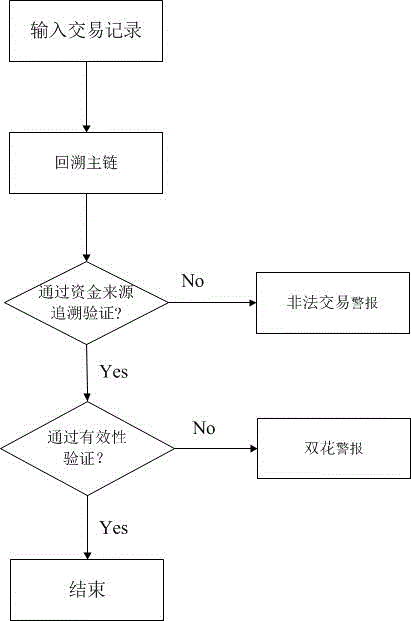

Virtual currency transaction validation method based on block chain multi-factor cross-validation

The invention provides a virtual currency transaction validation method based on block chain multi-factor cross-validation. It addresses the double-spending problem that arises because block-chain-based virtual currencies are vulnerable to 51% attacks, which can be used to generate double-spending transactions. The method periodically queries and backs up historical block chain branches, organizes confirmed transaction information into a hash chain list array that is easy to query, and thereby avoids losing the transaction information on branches as the block chain evolves. During a virtual currency transaction, the method not only checks the payer information, recipient information, fund source, transaction amount, and similar data recorded in the current main block chain, but also queries the backed-up branch block chains to check whether the current transaction and a historical transaction on a branch share the same fund source. If any transaction fails the check, a miner raises an alarm about that transaction across the whole network, so the method can prevent double spending caused by illegal transactions.

Owner:SICHUAN UNIV

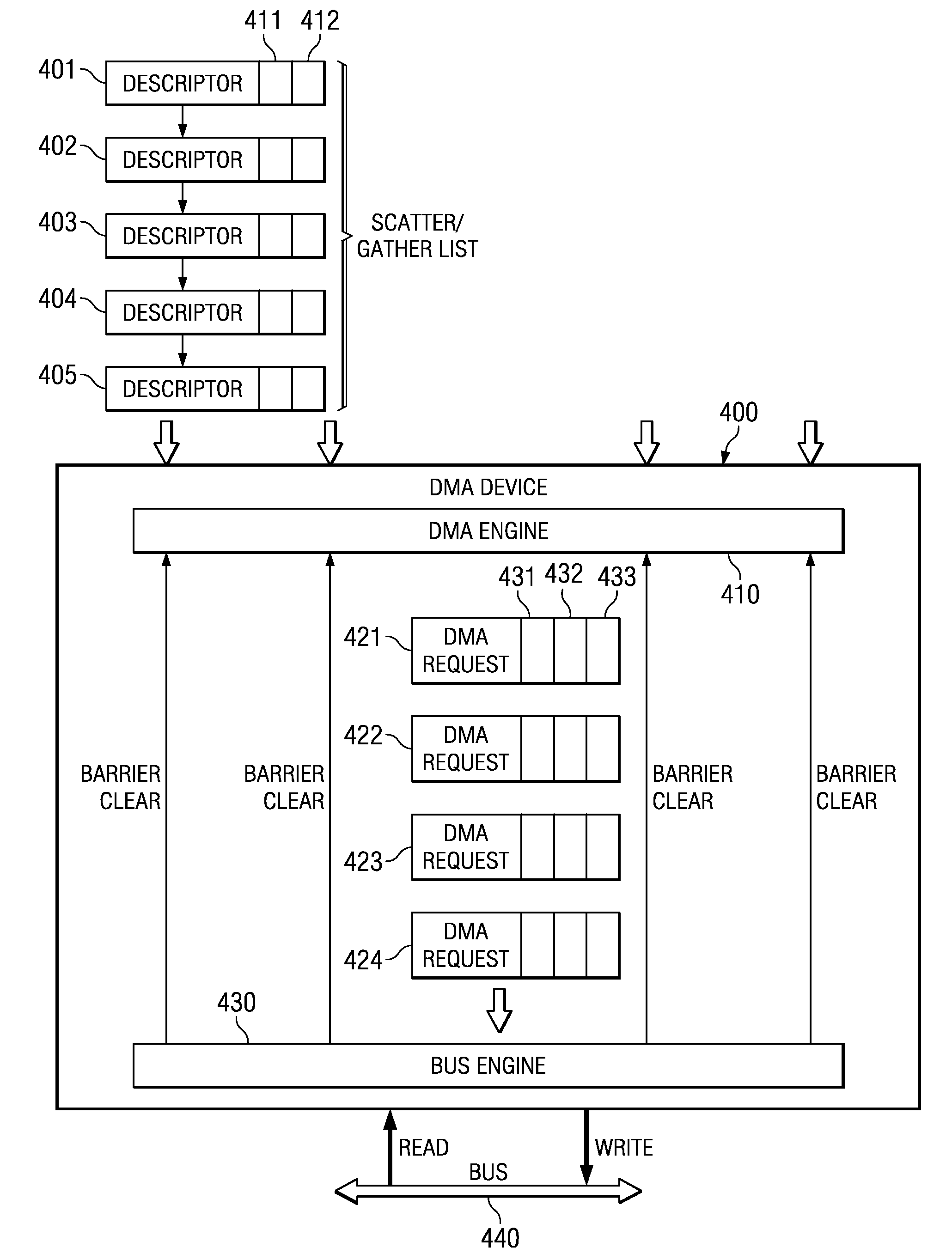

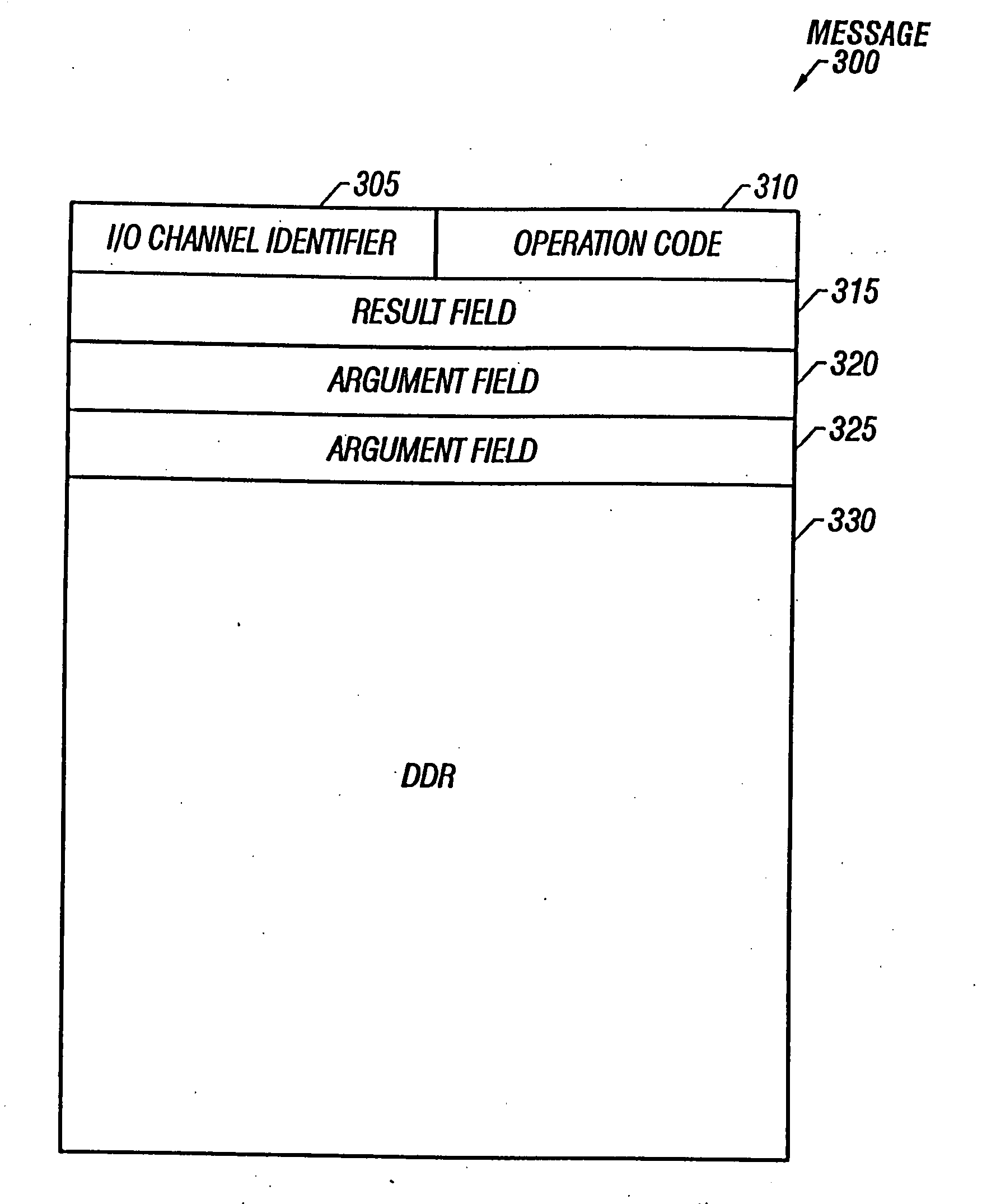

Barrier and Interrupt Mechanism for High Latency and Out of Order DMA Device

A direct memory access (DMA) device includes a barrier and interrupt mechanism that allows interrupt and mailbox operations to occur in such a way that ensures correct operation, but still allows for high performance out-of-order data moves to occur whenever possible. Certain descriptors are defined to be “barrier descriptors.” When the DMA device encounters a barrier descriptor, it ensures that all of the previous descriptors complete before the barrier descriptor completes. The DMA device further ensures that any interrupt generated by a barrier descriptor will not assert until the data move associated with the barrier descriptor completes. The DMA controller only permits interrupts to be generated by barrier descriptors. The barrier descriptor concept also allows software to embed mailbox completion messages into the scatter / gather linked list of descriptors.

Owner:IBM CORP
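The barrier rule can be simulated in a few lines. This hypothetical sketch walks a linked list of descriptors, lets the caller supply the order in which the hardware finishes data moves (to mimic out-of-order completion), completes ordinary descriptors immediately, and completes a barrier descriptor, raising its interrupt, only once its own move and every earlier descriptor are done. Mailbox messages and real DMA hardware are not modeled; `build_chain` and `run` are illustrative names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Descriptor:
    ident: int
    barrier: bool = False
    next: Optional["Descriptor"] = None


def build_chain(specs: List[Tuple[int, bool]]) -> Optional[Descriptor]:
    """Link (ident, is_barrier) pairs into a scatter/gather-style descriptor list."""
    head = None
    for ident, barrier in reversed(specs):
        head = Descriptor(ident, barrier, head)
    return head


def run(head: Optional[Descriptor], hw_order: List[int]) -> List[str]:
    """Simulate completions; `hw_order` is the order in which data moves finish."""
    chain: List[Descriptor] = []
    node = head
    while node is not None:
        chain.append(node)
        node = node.next
    by_id = {d.ident: d for d in chain}
    moved, completed, log = set(), set(), []

    for ident in hw_order:
        moved.add(ident)
        if not by_id[ident].barrier:
            completed.add(ident)          # ordinary descriptors complete out of order
            log.append(f"complete {ident}")
        # A barrier completes only after its own move and every earlier descriptor;
        # only barriers raise interrupts, and only at that point.
        for i, d in enumerate(chain):
            if d.barrier and d.ident in moved and d.ident not in completed:
                if all(p.ident in completed for p in chain[:i]):
                    completed.add(d.ident)
                    log.append(f"complete {d.ident}")
                    log.append(f"interrupt {d.ident}")
    return log
```

For a chain 1, 2, 3 (barrier), 4 whose moves finish in the order 3, 4, 2, 1, descriptor 4 is allowed to complete early, but the interrupt for 3 is raised only after 1 and 2 have completed.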

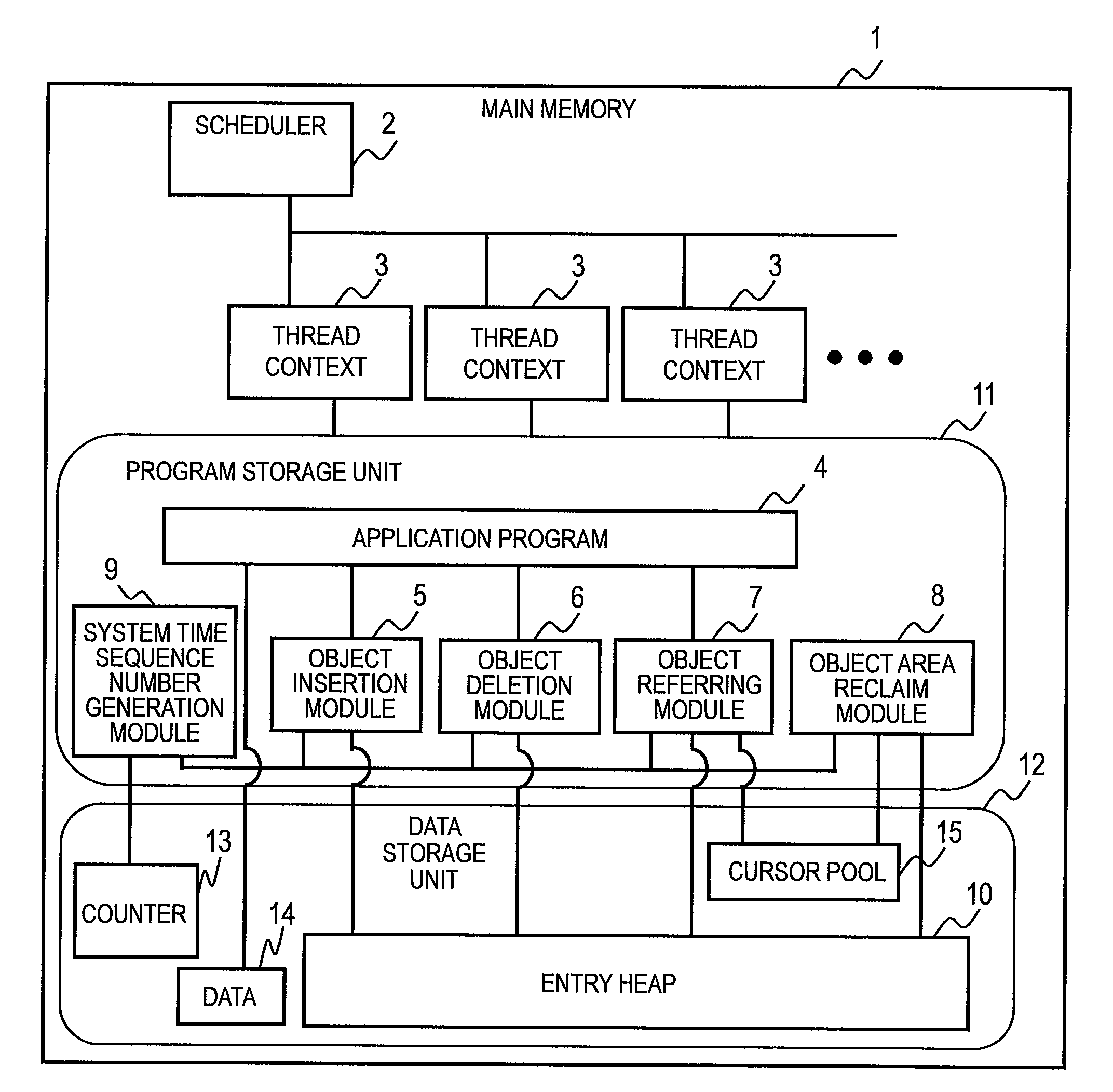

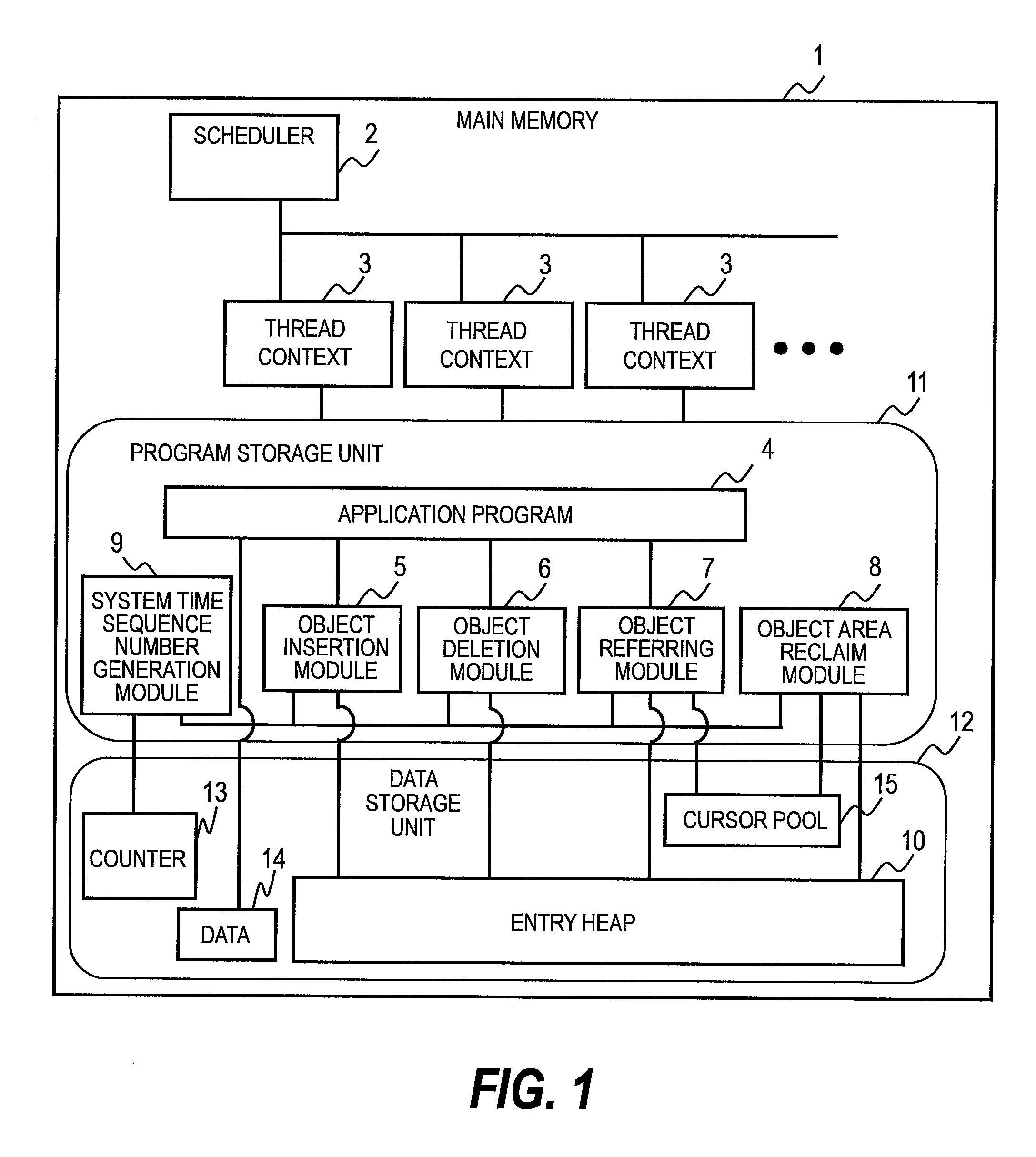

Data management method, data management program, and data management device

Inactive · US20090271435A1 · Tags: Overhead cost; Digital data information retrieval; Program control; Insertion time; Data management

Provided is a data management method. Each piece of data corresponds to an entry that includes a reference to another entry, and the data is managed as a set, that is, a collection of pieces of data. The set corresponds to a linked list in which the entries are linked in the order in which their data was added. Each entry includes the sequence number assigned when it was inserted into the linked list and information indicating whether its data has been deleted from the set; a deleted entry is separated from the linked list at a predetermined timing. The data is referenced by tracing the linked list. When the insertion sequence number of the entry being referenced is later than that of an entry that has already been referenced, it is judged that the referenced entry has been separated from the linked list.

Owner:HITACHI LTD
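A sketch of the sequence-number check, under two assumptions the abstract does not state: new entries are linked at the head, so a valid traversal sees strictly decreasing numbers, and a separated entry's storage may be reused as a new head entry, which is one way a reader can end up following a link to a later number. `SeqLinkedSet`, `SeparatedEntryError` and `demo_separation` are hypothetical names.

```python
import itertools
from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass
class Entry:
    seq: int                      # insertion-time sequence number
    data: str
    deleted: bool = False         # set when the data is removed from the set
    next: Optional["Entry"] = None


class SeparatedEntryError(Exception):
    """The traversal reached an entry via an entry that had been separated."""


class SeqLinkedSet:
    def __init__(self) -> None:
        self.head: Optional[Entry] = None
        self._seq = itertools.count(1)

    def add(self, data: str) -> Entry:
        # Assumption: new entries are linked at the head, so sequence numbers
        # strictly decrease along any traversal of the live list.
        self.head = Entry(next(self._seq), data, next=self.head)
        return self.head

    def traverse(self) -> Iterator[str]:
        last_seq: Optional[int] = None
        entry = self.head
        while entry is not None:
            if last_seq is not None and entry.seq > last_seq:
                raise SeparatedEntryError(entry.seq)   # caller may restart the walk
            last_seq = entry.seq
            if not entry.deleted:
                yield entry.data
            entry = entry.next


def demo_separation() -> bool:
    """Hypothetical race: a reader is paused on b while b is separated and reused."""
    s = SeqLinkedSet()
    a, b, c = s.add("a"), s.add("b"), s.add("c")   # live list: c(3) -> b(2) -> a(1)
    reader = s.traverse()
    next(reader)                                    # visits c
    next(reader)                                    # visits b
    c.next = a                                      # separate b from the list
    b.seq, b.data, b.next = next(s._seq), "d", s.head   # reuse b's storage as new head
    s.head = b
    try:
        next(reader)                                # b.next now leads to c: 3 > 2
    except SeparatedEntryError:
        return True
    return False
```

The check costs one integer comparison per step, so readers can detect a stale path without taking a lock on the list.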

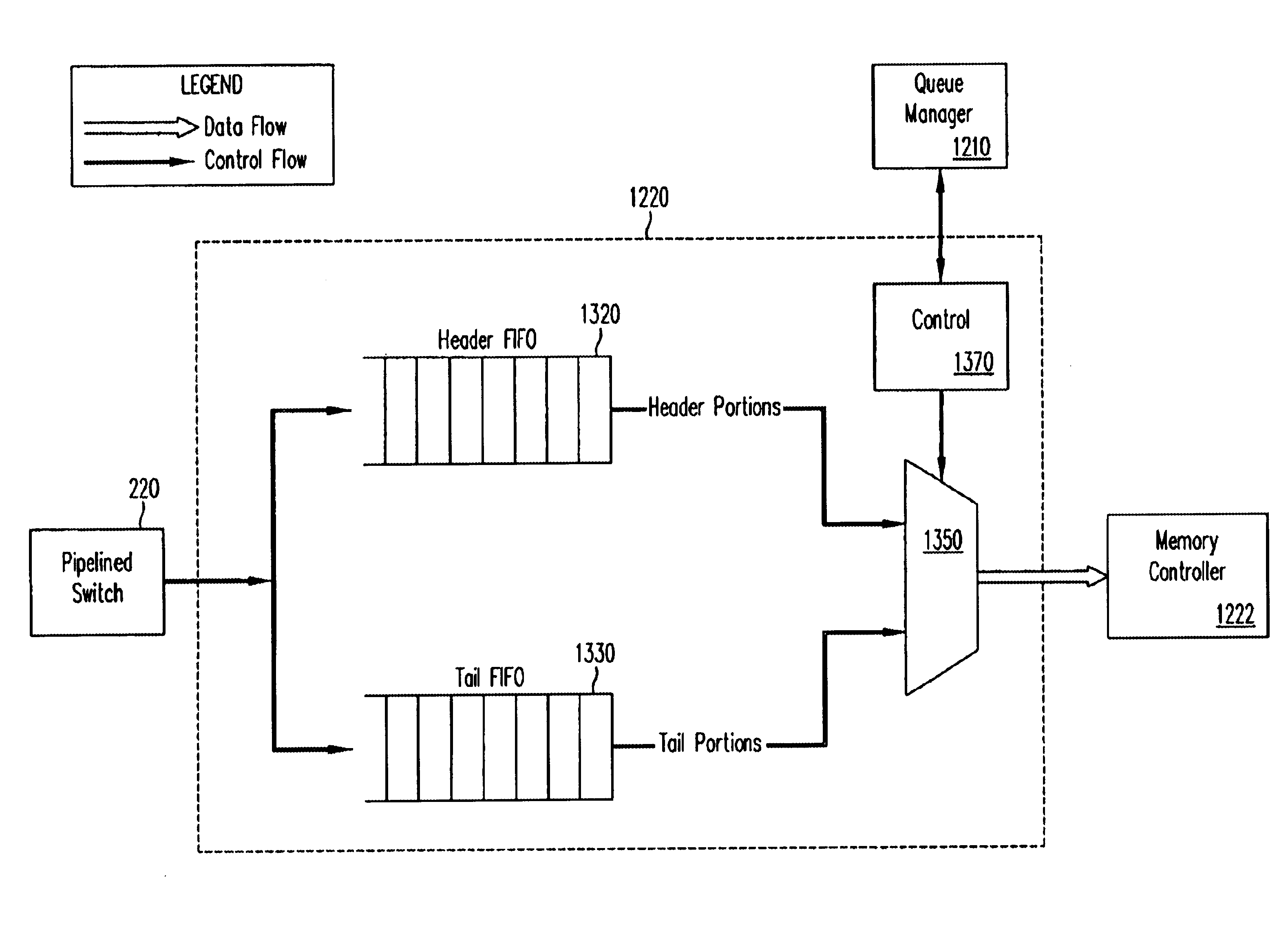

Flexible DMA engine for packet header modification

The abstract of this patent is identical to that of "Flexible engine and data structure for packet header processing" (US6721316B1) above.

Owner:CISCO TECH INC

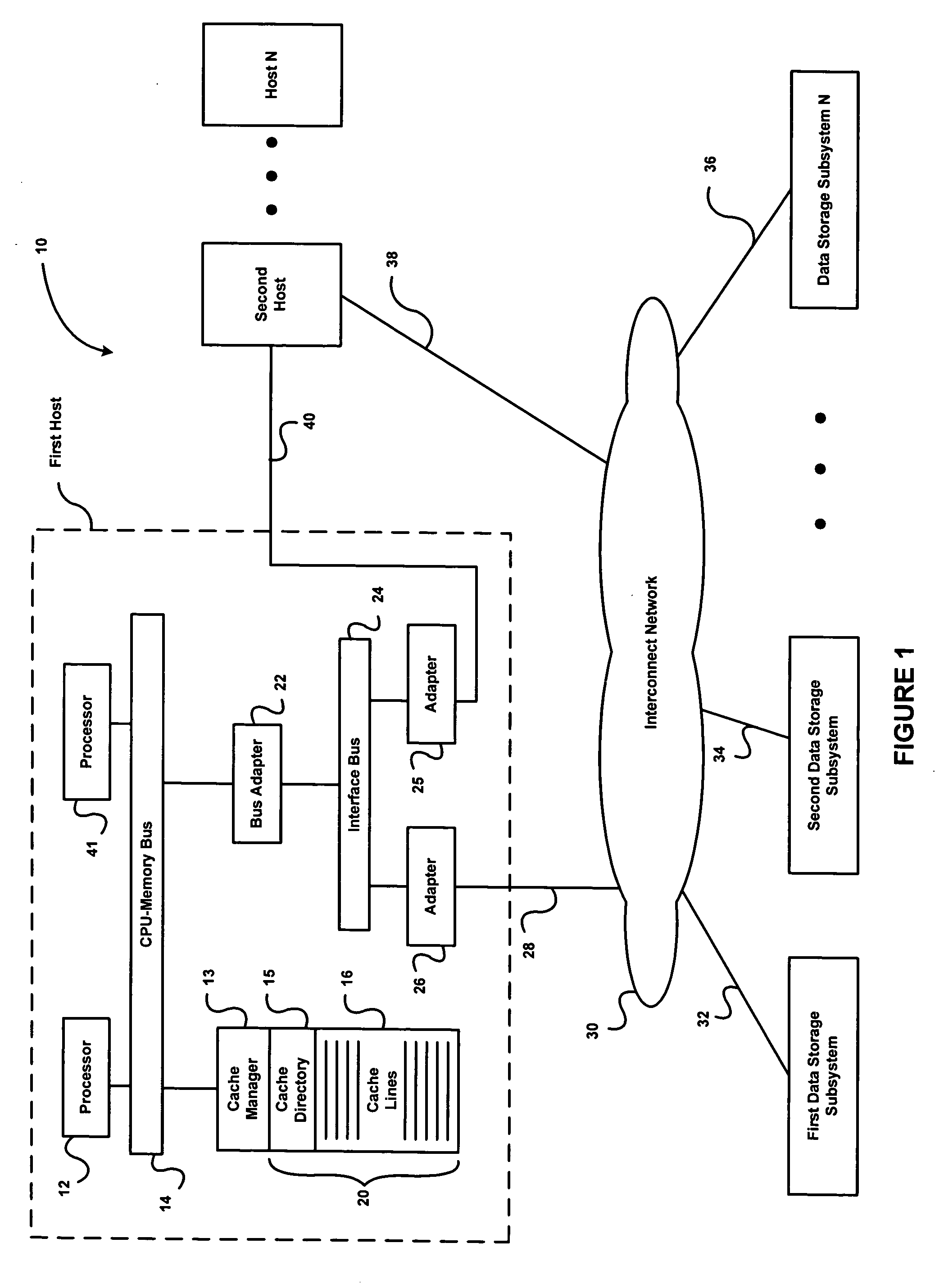

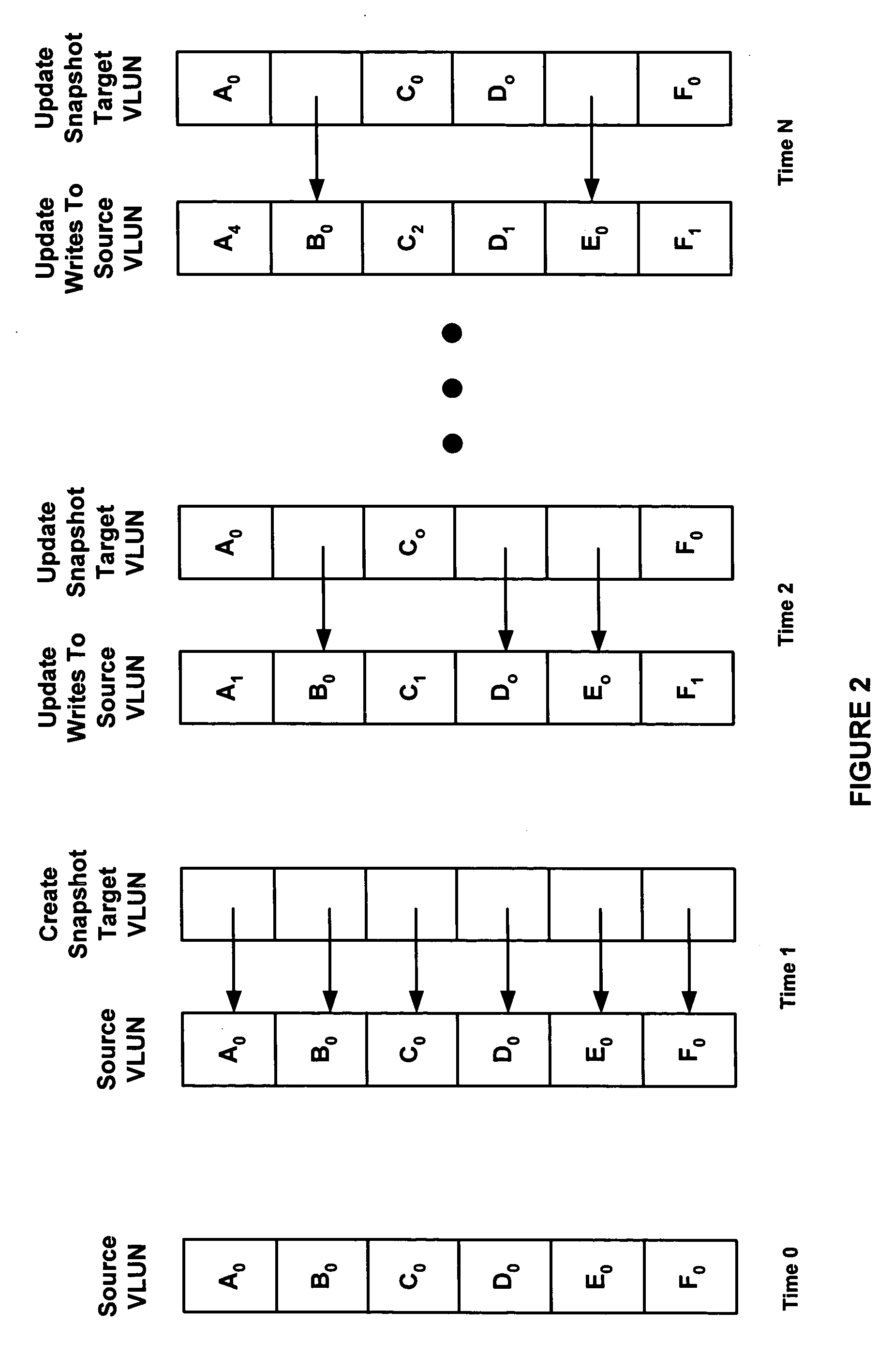

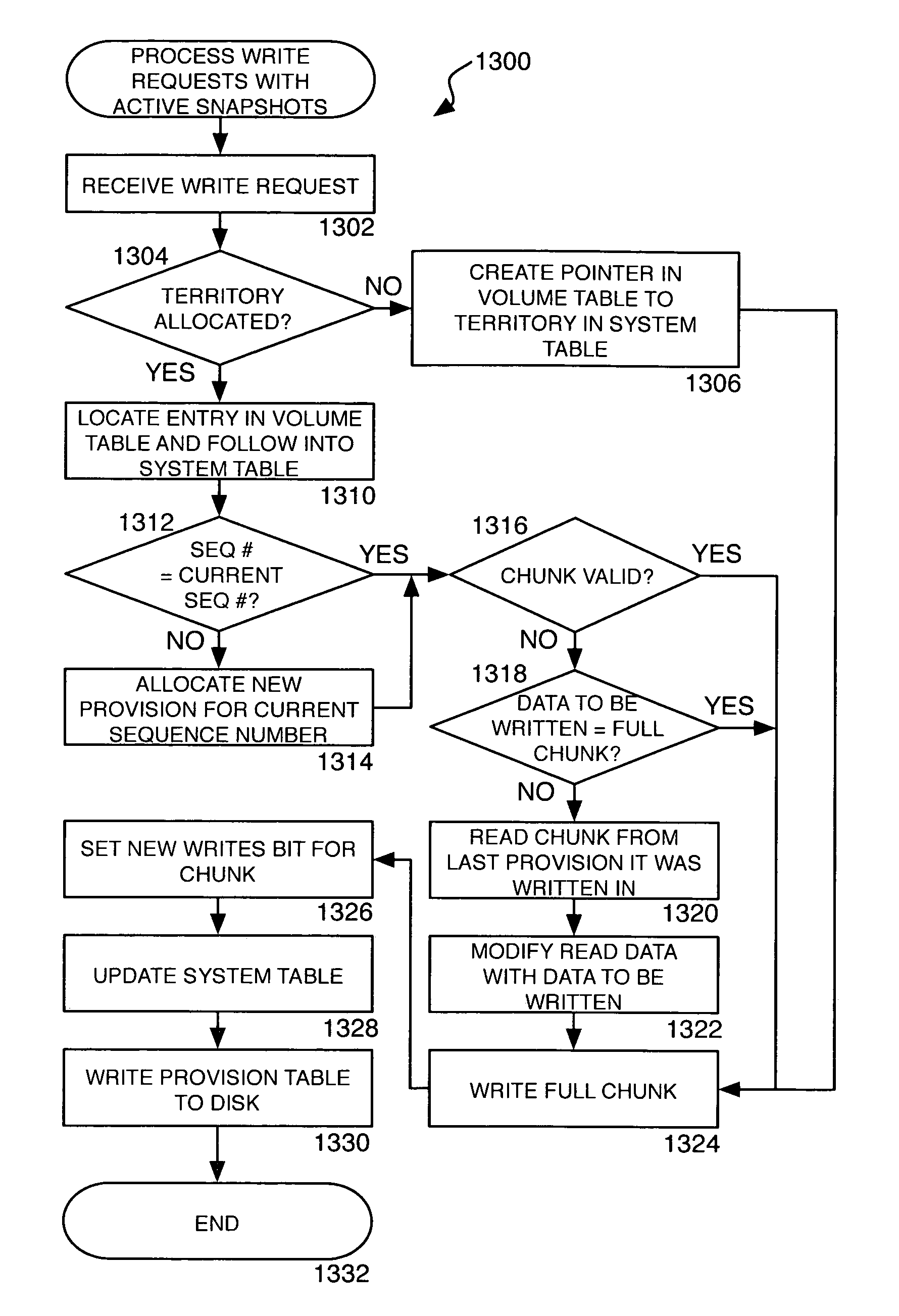

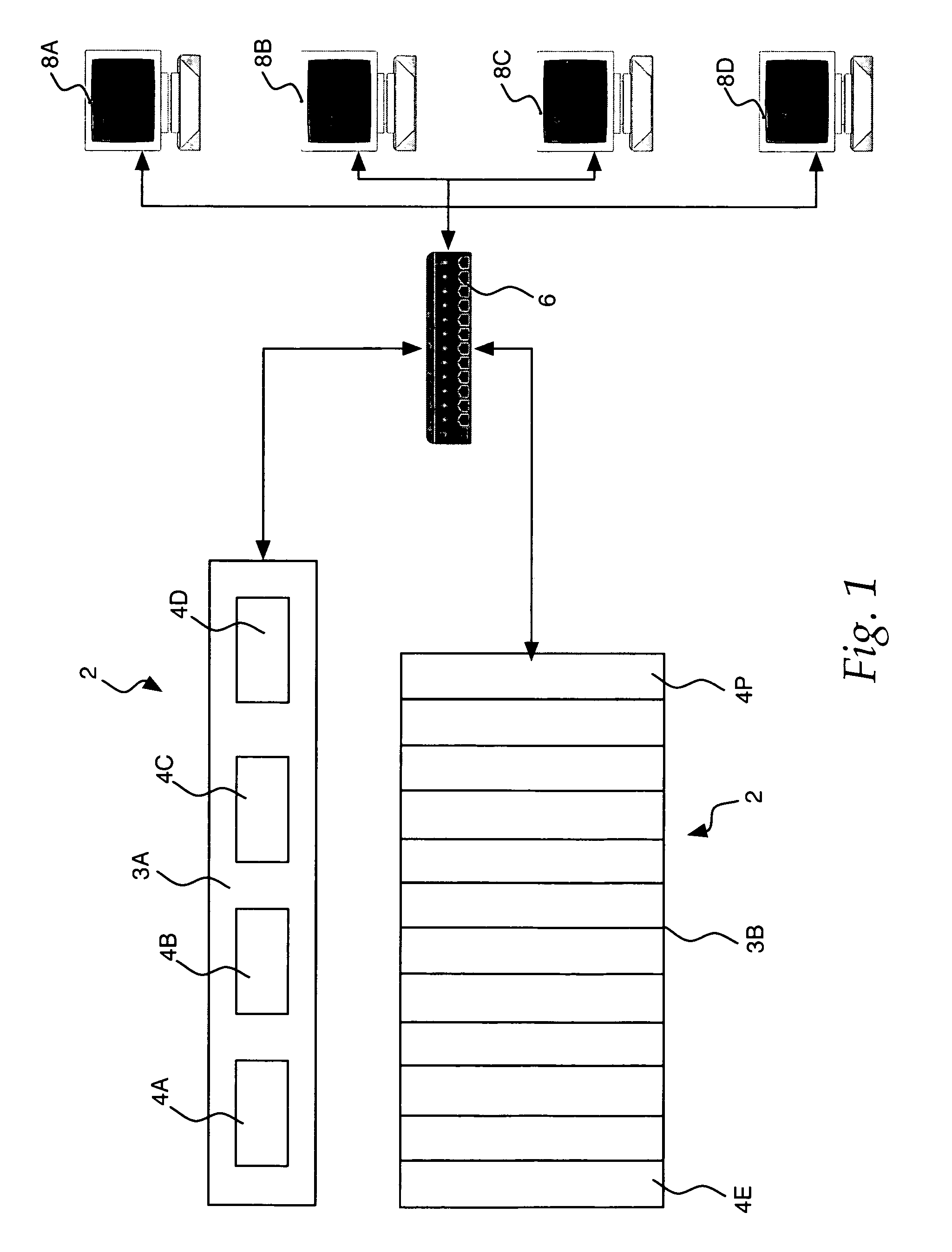

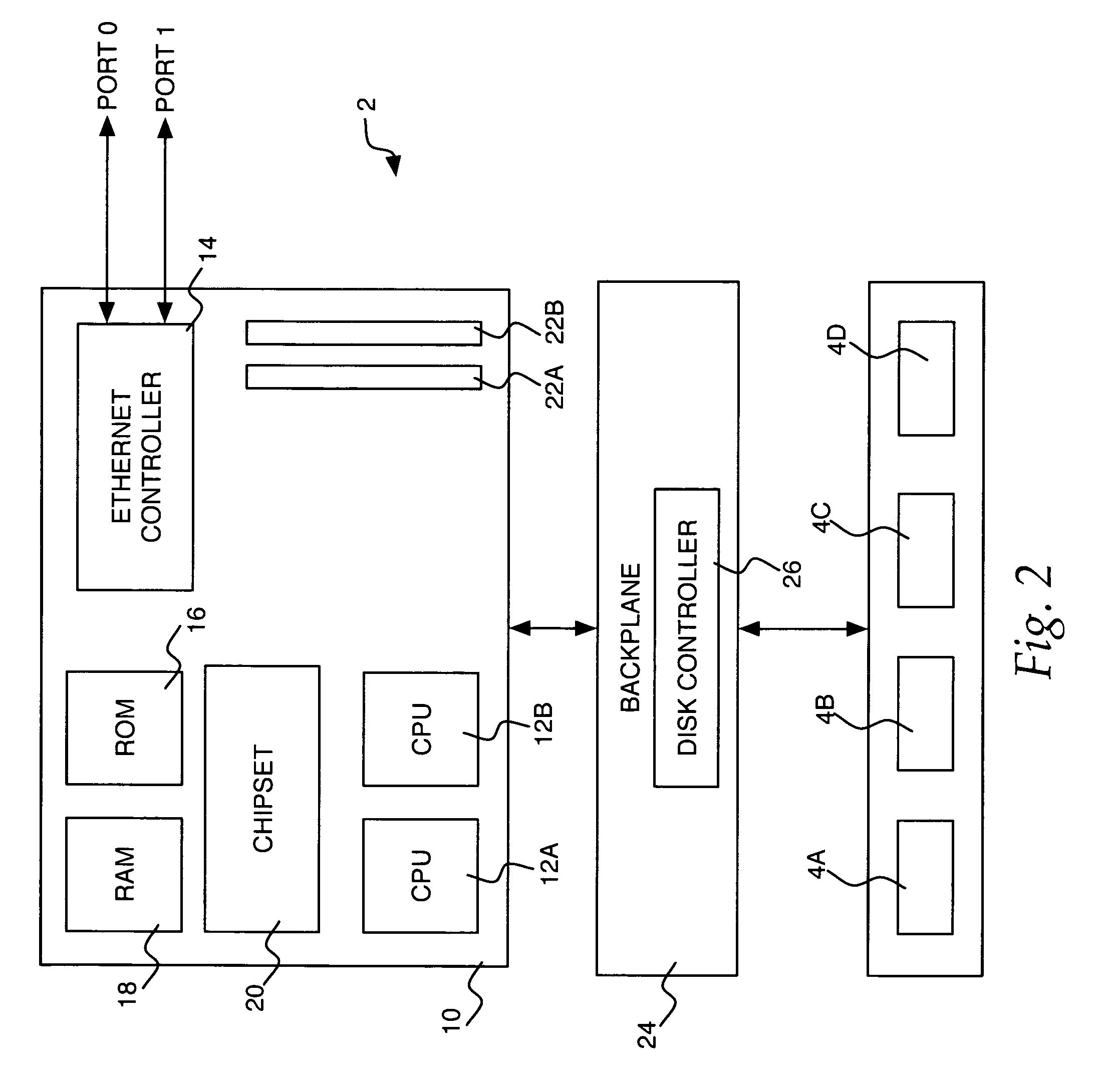

Method, system, apparatus, and computer-readable medium for taking and managing snapshots of a storage volume

Active · US7373366B1 · Tags: No performance loss; Scroll fast; Error detection/correction; Special data processing applications; Granularity; Invalid Data

A method, system, apparatus, and computer-readable medium are provided for taking snapshots of a storage volume. According to aspects of one method, each snapshot is represented as a unique sequence number. Every fresh write access to a volume in a new snapshot lifetime is allocated a new section in the disk, called a provision, which is labeled with the sequence number. Read-modify-write operations are performed on a sub-provision level at the granularity of a chunk. Because each provision contains chunks with valid data and chunks with invalid data, a bitmap is utilized to identify the valid and invalid chunks within each provision. Provisions corresponding to different snapshots are arranged in a linked list. Branches from the linked list can be created for storing writable snapshots. Provisions may also be deleted and rolled back by manipulating the contents of the linked lists.

Owner:AMZETTA TECH LLC
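A minimal copy-on-write sketch of the provision chain, assuming a fixed, hypothetical number of chunks per provision, whole-chunk writes (so no read-modify-write), and an integer bitmap per provision. Reads walk a region's linked list from the newest to the oldest provision and return the first valid chunk whose sequence number is visible in the requested snapshot. Writable snapshot branches, deletion and rollback are omitted, and all names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

CHUNKS_PER_PROVISION = 4   # hypothetical granularity


@dataclass
class Provision:
    seq: int                                  # snapshot sequence number label
    chunks: List[Optional[bytes]] = field(
        default_factory=lambda: [None] * CHUNKS_PER_PROVISION)
    valid: int = 0                            # bitmap: bit i set => chunk i is valid
    older: Optional["Provision"] = None       # link to the previous provision


class SnapshotVolume:
    def __init__(self) -> None:
        self.current_seq = 0
        self.heads: Dict[int, Provision] = {}     # region index -> newest provision

    def take_snapshot(self) -> int:
        """Freeze the current sequence number; later writes go to new provisions."""
        frozen = self.current_seq
        self.current_seq += 1
        return frozen

    def write(self, chunk_no: int, data: bytes) -> None:
        region, offset = divmod(chunk_no, CHUNKS_PER_PROVISION)
        head = self.heads.get(region)
        if head is None or head.seq != self.current_seq:
            # First write to this region in the current snapshot lifetime.
            head = Provision(self.current_seq, older=head)
            self.heads[region] = head
        head.chunks[offset] = data
        head.valid |= 1 << offset

    def read(self, chunk_no: int, as_of: Optional[int] = None) -> Optional[bytes]:
        """Read the live volume, or the snapshot with sequence number `as_of`."""
        region, offset = divmod(chunk_no, CHUNKS_PER_PROVISION)
        prov = self.heads.get(region)
        while prov is not None:
            visible = as_of is None or prov.seq <= as_of
            if visible and (prov.valid >> offset) & 1:
                return prov.chunks[offset]
            prov = prov.older
        return None
```

Because the bitmap marks which chunks a newer provision actually holds, an unwritten chunk falls through to the older provision in the list rather than being copied forward.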

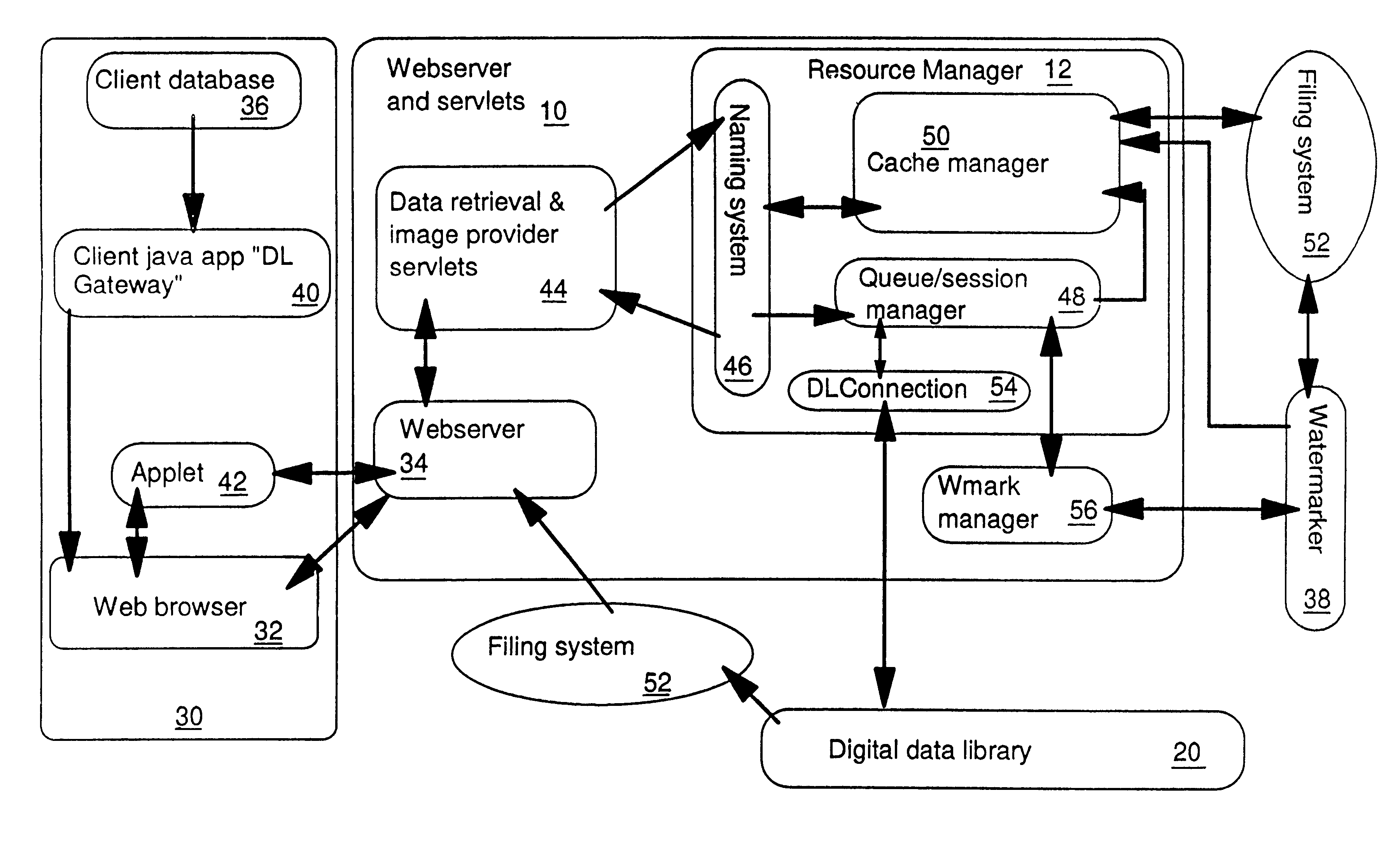

Handling processor-intensive operations in a data processing system

Inactive · US6389421B1 · Tags: Affects process throughput; Time can be spent; Data processing applications; Resource allocation; Data processing system; Data retrieval

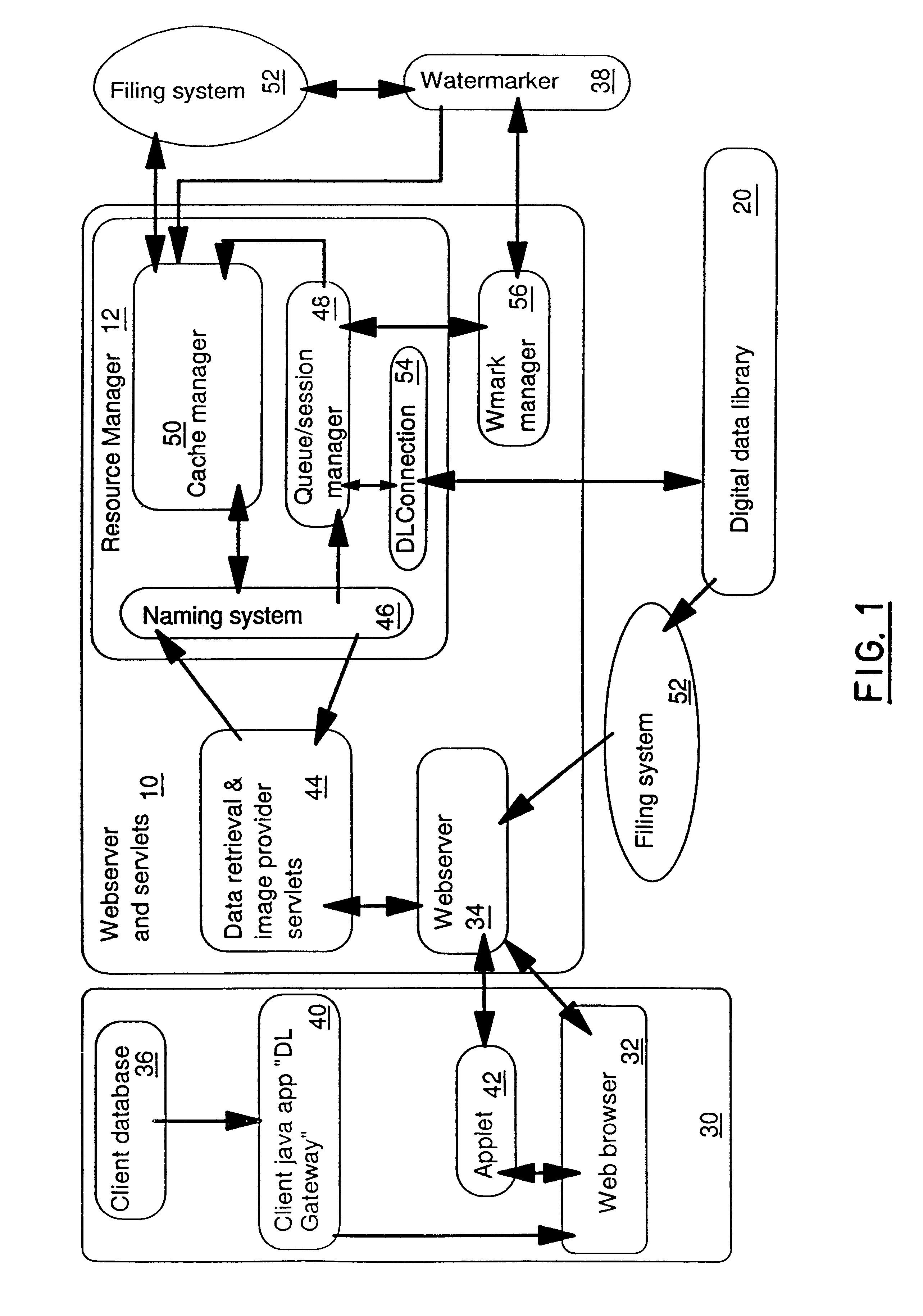

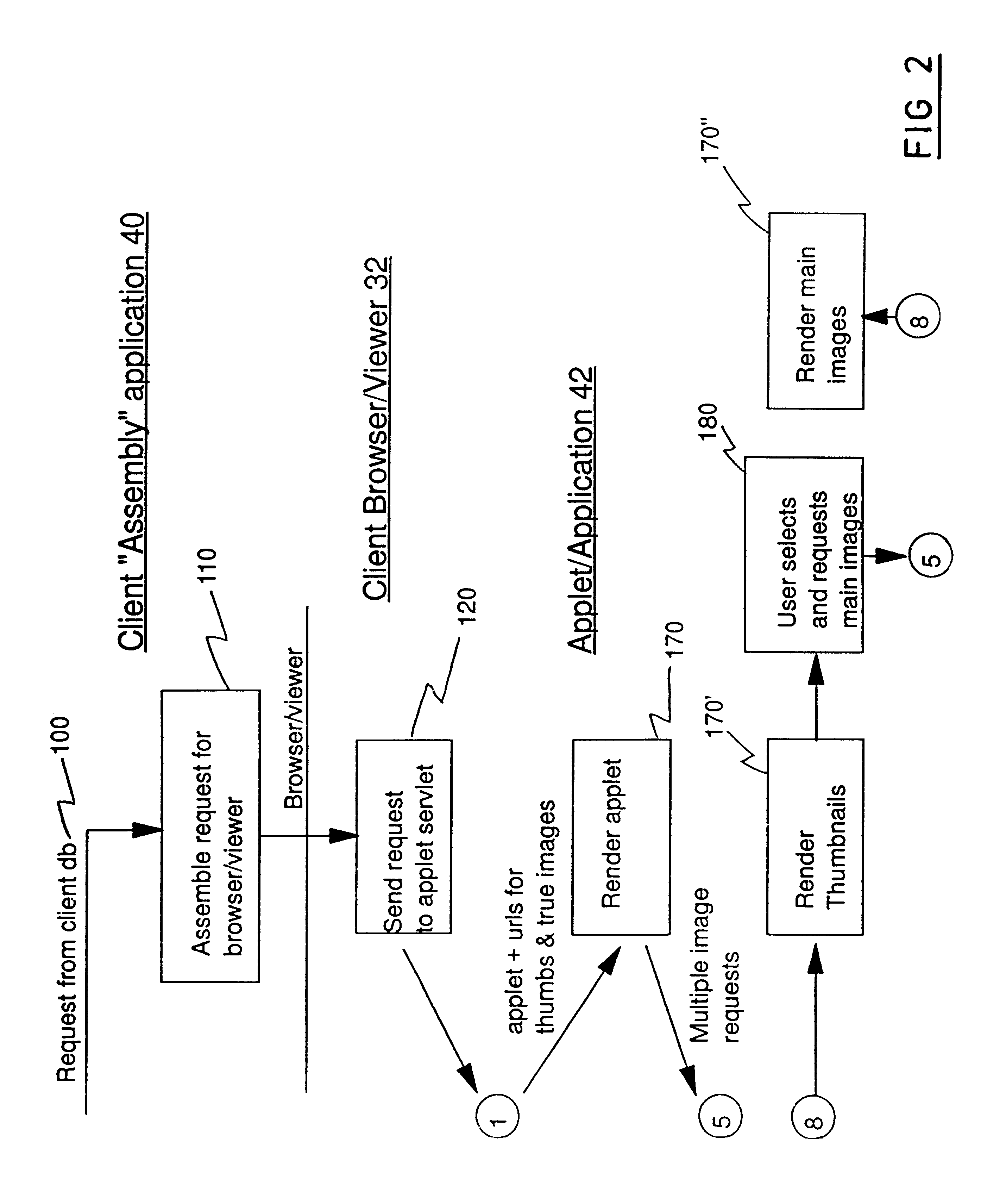

Provided are a system and a method for data retrieval that identify, among the requested data retrieval operations, those that require a particular processing task to be performed, and then separate them from operations that do not require the task. The two sets are queued separately for independent processing. This enables resource scheduling that prevents operations not requiring the task from having to wait for operations that do. This is an advantage where the task is processor-intensive, such as digital watermarking of images. A particular resource allocation method enqueues the set of operations requiring the processing task in a circularly linked list and then employs a scheduler to allocate resources round-robin to each of the system users in turn. Also provided is a pre-fetch policy whereby sets of data objects are retrieved from a data repository for processing in response to data retrieval requests, and post-retrieval processing such as watermarking is initiated before an individual data object in the set has been selected.

Owner:IBM CORP
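The round-robin allocation over a circularly linked list can be sketched as follows, assuming one pending-operation queue per user and one operation served per user per turn. `RoundRobinRing`, `enqueue` and `dispatch` are hypothetical names, and the pre-fetch policy is not modeled.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, Optional


@dataclass
class UserNode:
    user: str
    pending: Deque[str] = field(default_factory=deque)   # queued intensive operations
    next: Optional["UserNode"] = None


class RoundRobinRing:
    """Circularly linked list of users; each turn serves one operation per user."""

    def __init__(self) -> None:
        self.tail: Optional[UserNode] = None   # tail.next is the user served next
        self.nodes: Dict[str, UserNode] = {}

    def enqueue(self, user: str, op: str) -> None:
        node = self.nodes.get(user)
        if node is None:
            node = UserNode(user)
            self.nodes[user] = node
            if self.tail is None:
                node.next = node               # a ring of one
            else:
                node.next = self.tail.next     # insert after the current tail...
                self.tail.next = node
            self.tail = node                   # ...and make it the new tail
        node.pending.append(op)

    def dispatch(self) -> Optional[str]:
        """Return the next operation in round-robin order, or None if idle."""
        if self.tail is None:
            return None
        node = self.tail.next
        op = node.pending.popleft()
        if node.pending:
            self.tail = node                   # this user moves to the back of the ring
        else:
            del self.nodes[node.user]          # unlink the now-idle user
            if node is self.tail:              # it was the only user left
                self.tail = None
            else:
                self.tail.next = node.next
        return op
```

If alice queues three operations and bob and carol one each, the dispatch order is a1, b1, c1, a2, a3: a user with a long backlog cannot starve the others.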

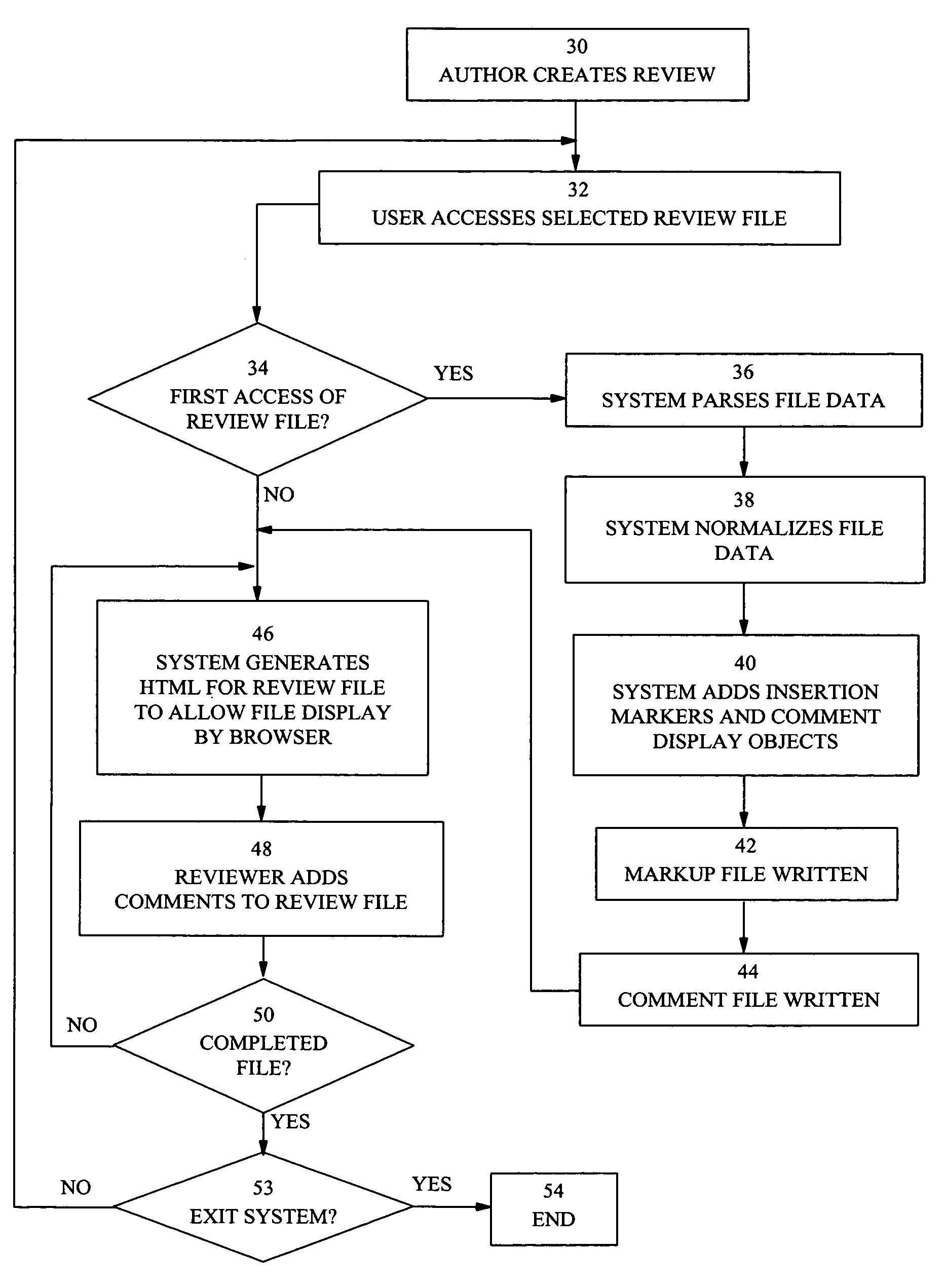

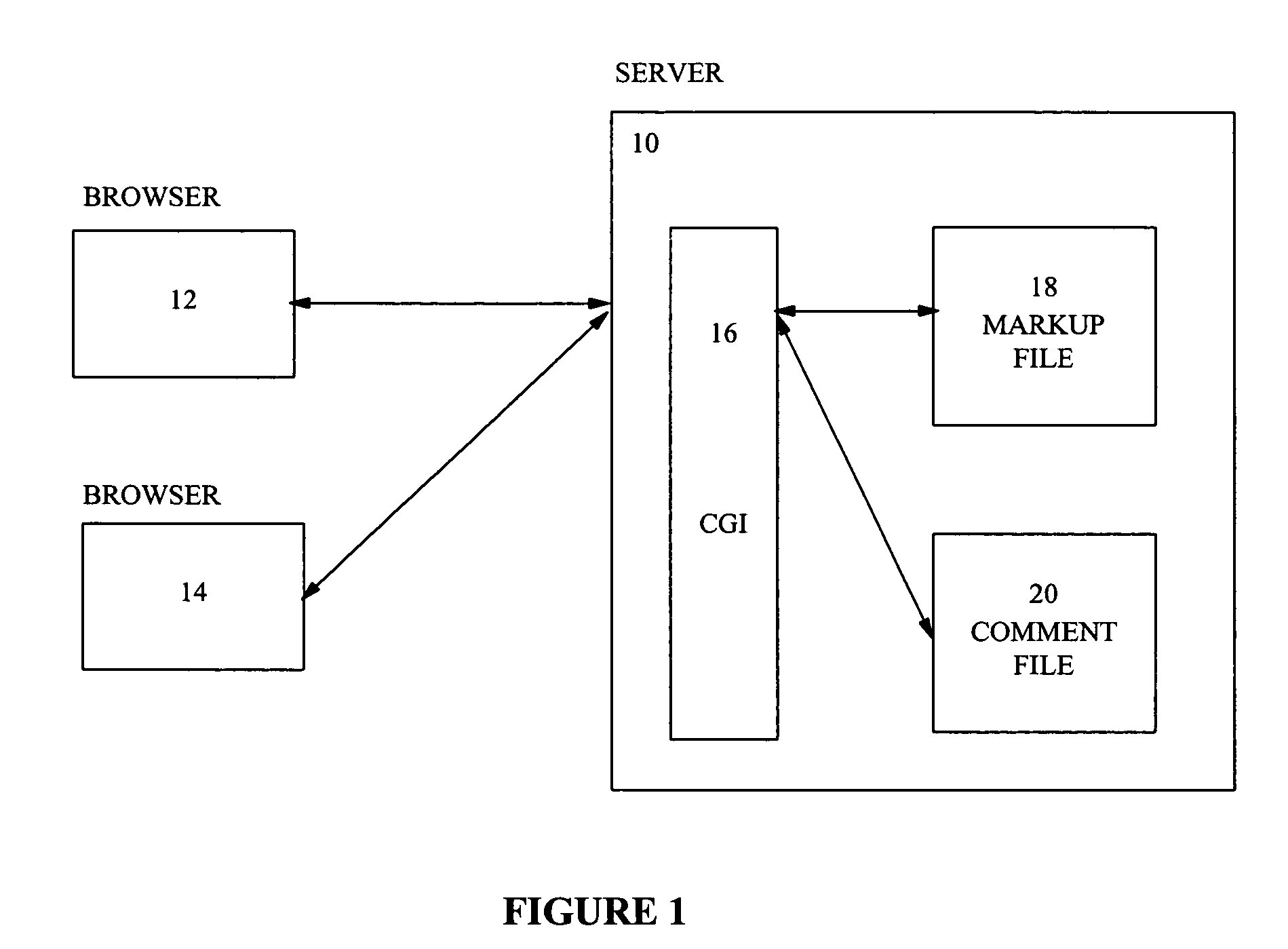

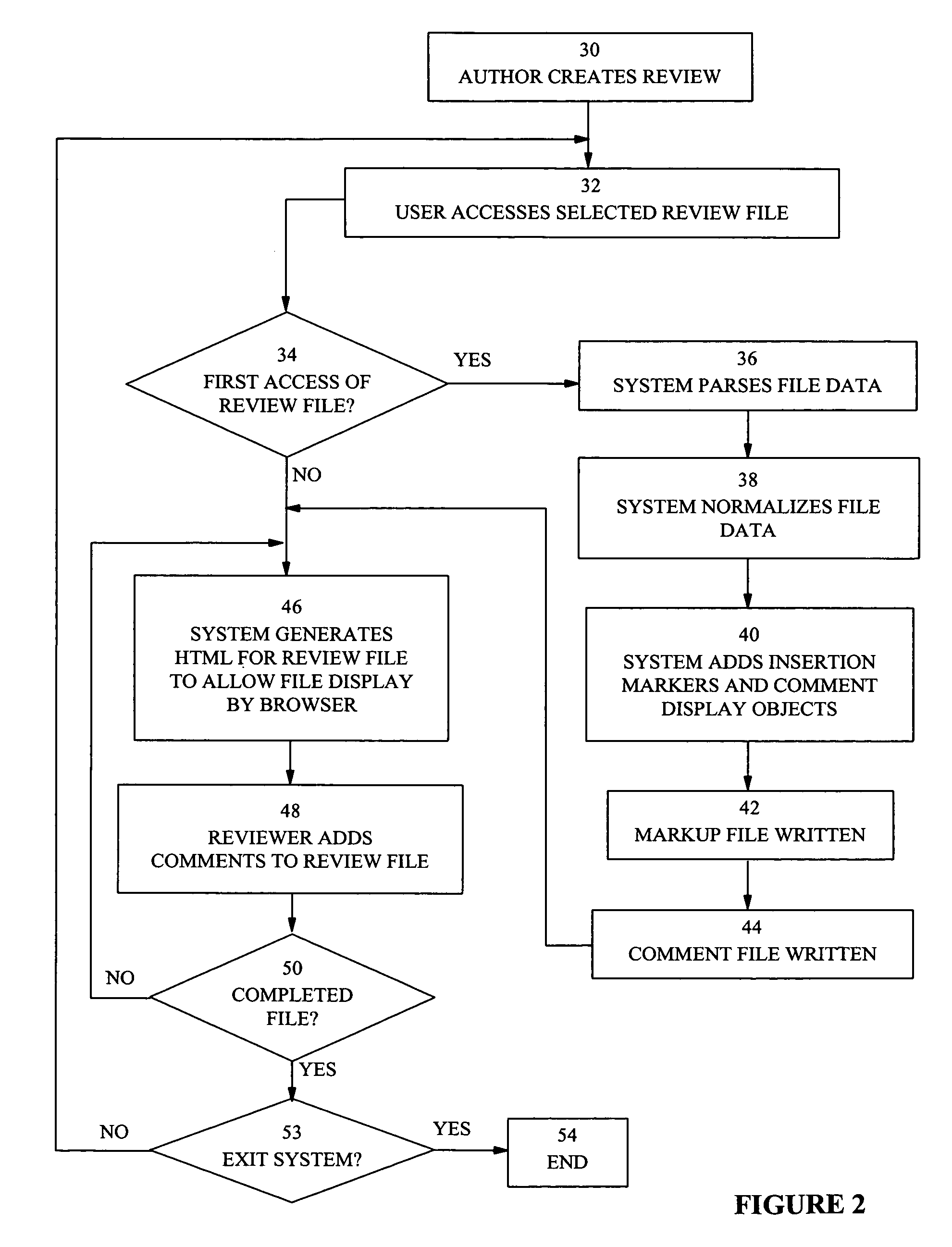

Web-based file review system utilizing source and comment files

Inactive | US7194679B1 | Reduce impact; Digital computer details; Natural language data processing; Document preparation; Common Gateway Interface

A system for reviewing files which permits comments to be inserted in files to be viewed with a hypertext browser. When the hypertext mark-up language employed is HTML, text files are converted to an HTML representation. An HTML file is represented by a linked list of objects. Comment insertion markers and comment display objects are inserted at predefined points in the HTML linked list representation. The linked list is stored as a binary file and has a comment file associated with it. Access to the HTML file by reviewers and authors causes the regeneration of the HTML document by a Common Gateway Interface which recreates the linked list representation of the document from the binary file and which then generates HTML code from the linked list. Comments may be entered by reviewers working in parallel on the HTML document. Comments are displayed as inserted at the next regeneration of the HTML document by the system.

Owner:IBM CORP
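
The linked-list representation with comment insertion markers can be sketched as below. This is an illustration under assumptions, not IBM's code: the object kinds (`tag`, `text`, `marker`, `comment`), the marker IDs and the `<div class="comment">` output are hypothetical, and the binary-file storage and CGI regeneration steps are omitted.

```python
from dataclasses import dataclass
from html import escape
from typing import Optional


@dataclass
class Obj:
    kind: str                    # "tag", "text", "marker" or "comment" (hypothetical kinds)
    value: str
    next: Optional["Obj"] = None


def from_paragraphs(paras: list[str]) -> Obj:
    """Build a linked list of objects with a comment insertion marker after each paragraph."""
    head = tail = Obj("tag", "<html><body>")
    for i, text in enumerate(paras):
        for obj in (Obj("tag", "<p>"), Obj("text", text), Obj("tag", "</p>"),
                    Obj("marker", f"m{i}")):
            tail.next = obj
            tail = obj
    tail.next = Obj("tag", "</body></html>")
    return head


def add_comment(head: Obj, marker_id: str, comment: str) -> None:
    node = head
    while node is not None:
        if node.kind == "marker" and node.value == marker_id:
            node.next = Obj("comment", comment, node.next)   # splice in after the marker
            return
        node = node.next
    raise KeyError(marker_id)


def to_html(head: Obj) -> str:
    """Regenerate HTML by walking the list; markers emit nothing themselves."""
    out, node = [], head
    while node is not None:
        if node.kind == "text":
            out.append(escape(node.value))
        elif node.kind == "tag":
            out.append(node.value)
        elif node.kind == "comment":
            out.append(f'<div class="comment">{escape(node.value)}</div>')
        node = node.next
    return "".join(out)
```

Because comments are spliced in after fixed markers, several reviewers can add comments independently and all of them appear at the next regeneration.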

Data structure describing logical data spaces

Inactive | US20070156729A1 | Automatic and efficient; Avoid disadvantages; Data processing applications; Digital data information retrieval; Data storing; Data store

Owner:SUN MICROSYSTEMS INC

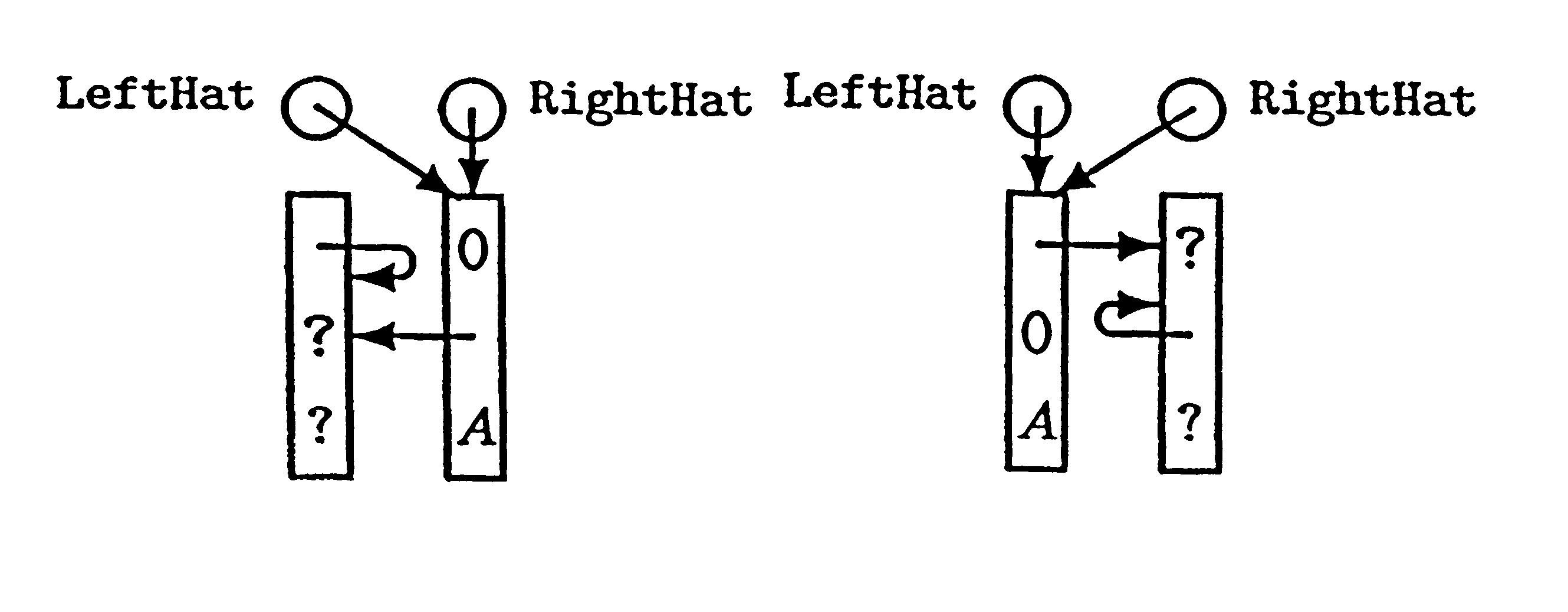

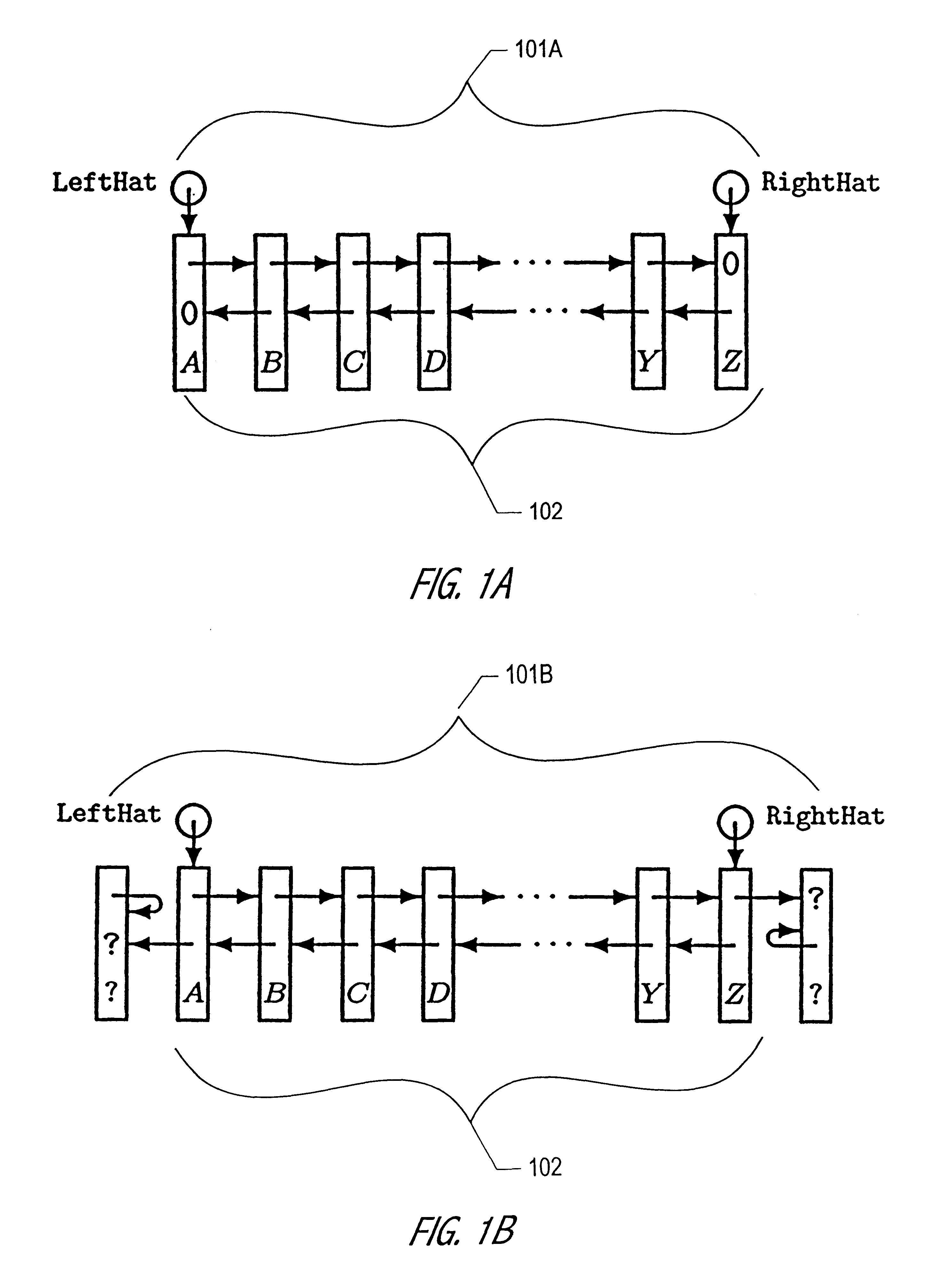

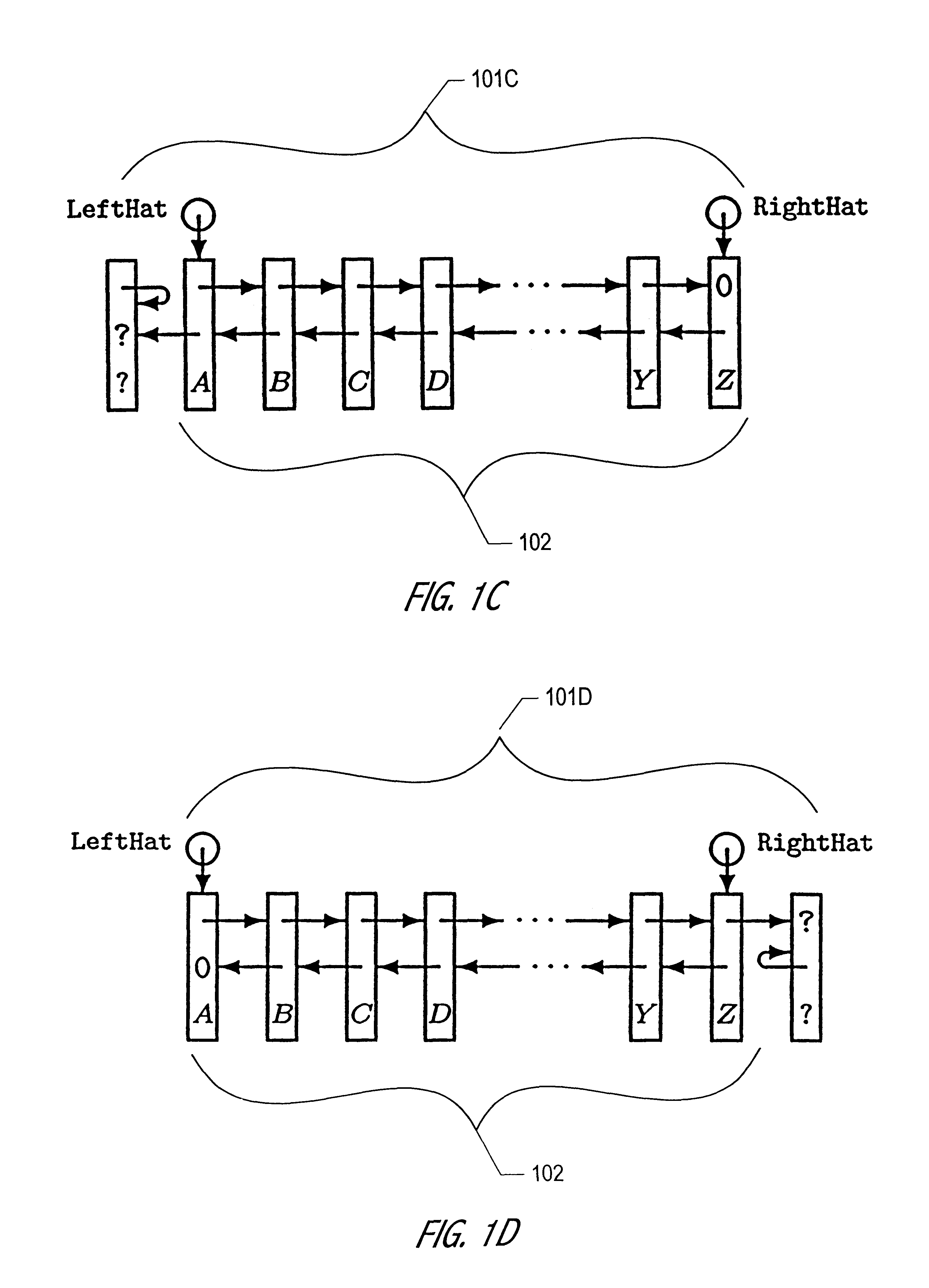

Concurrent shared object implemented using a linked-list with amortized node allocation

Inactive | US20010047361A1 | Useful for promotion; Handling data according to predetermined rules; Multiprogramming arrangements; Granularity; Waste collection

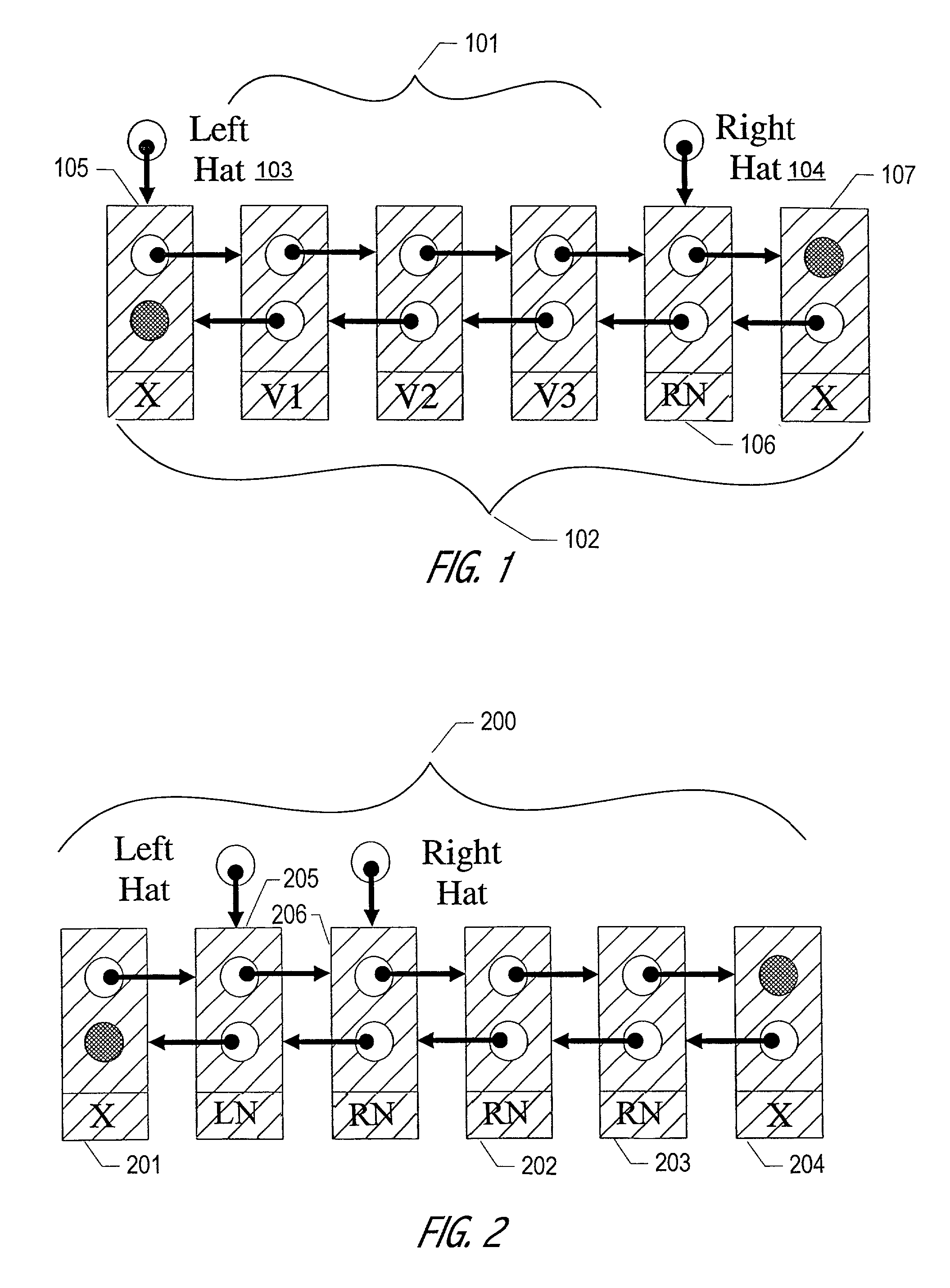

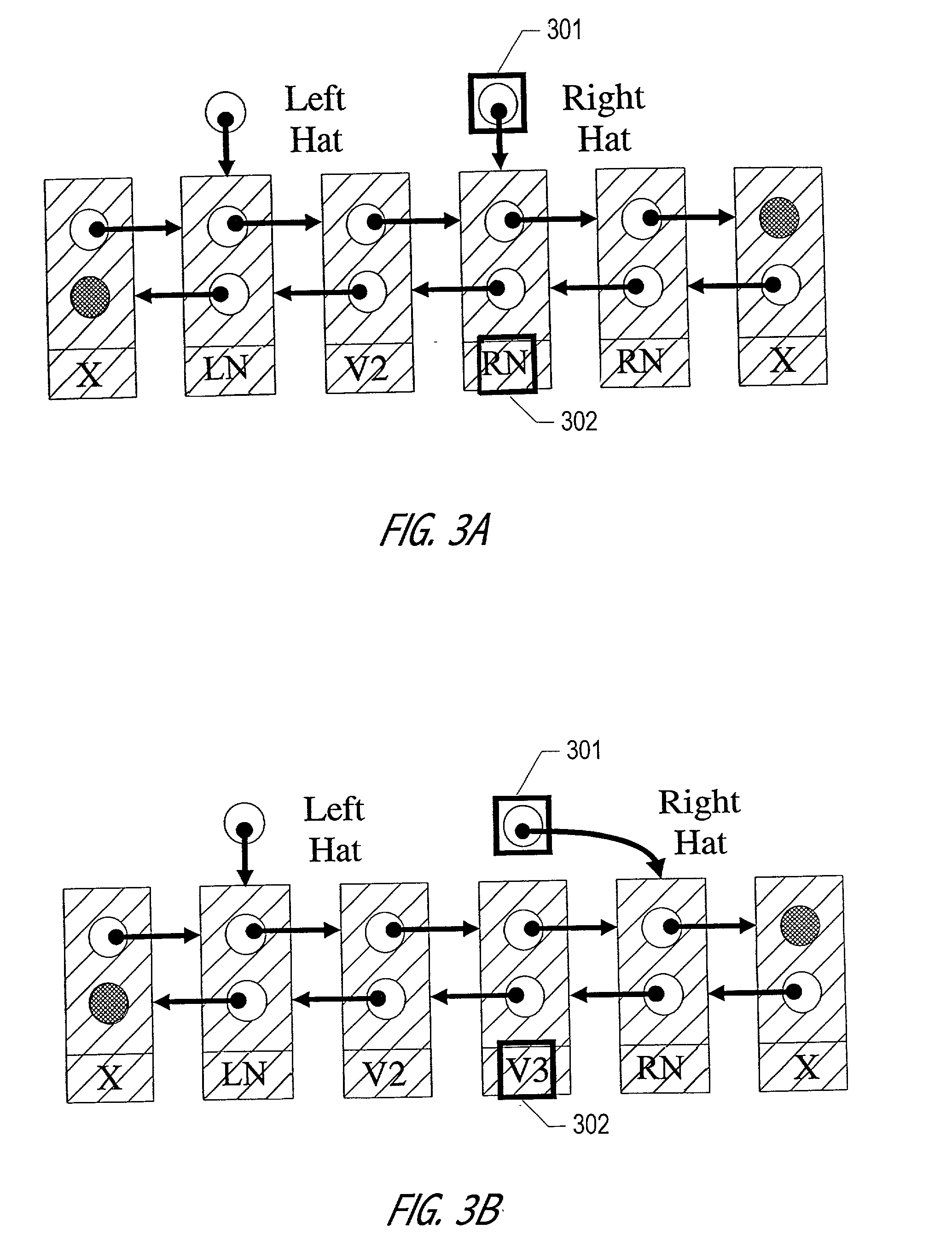

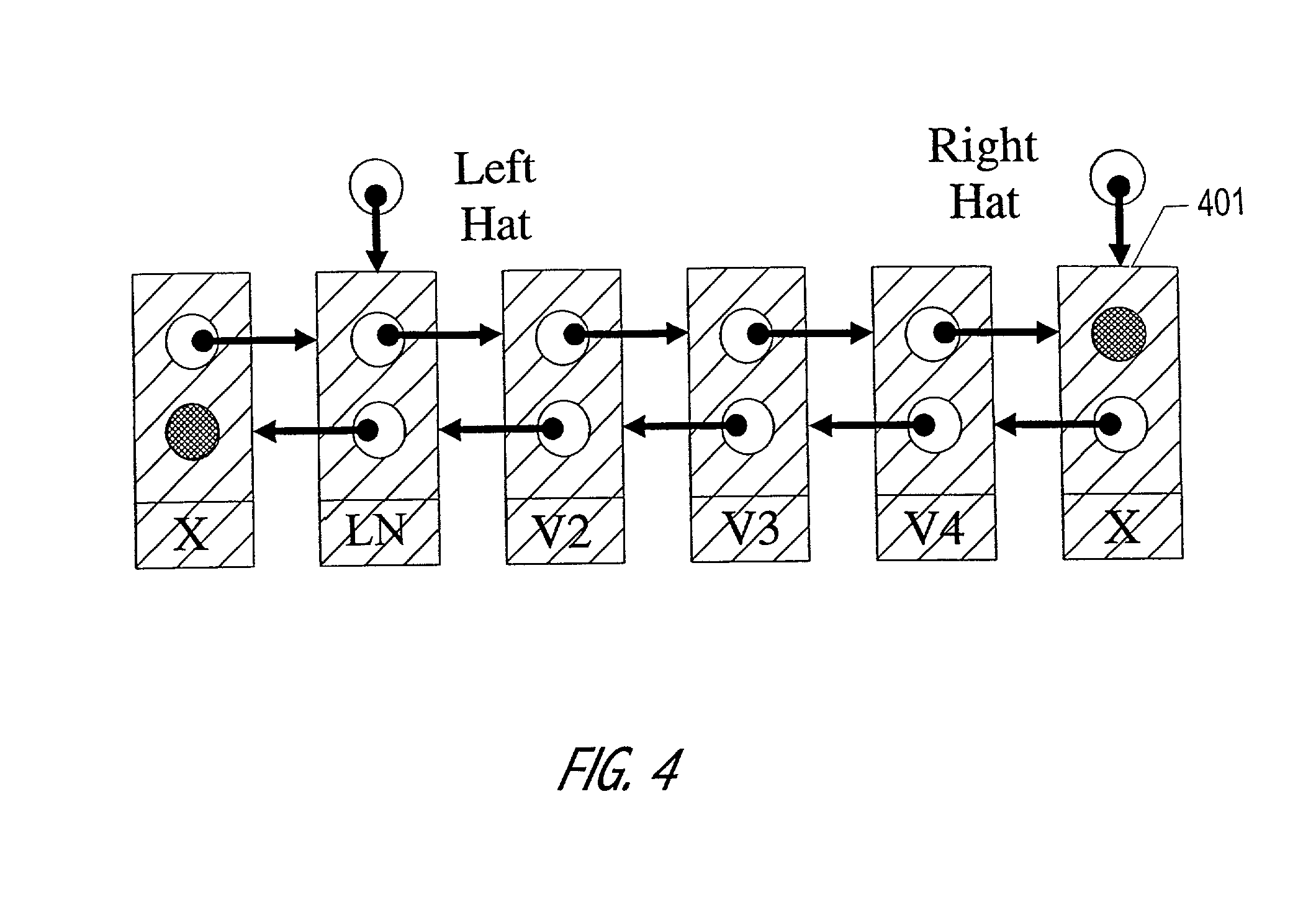

The Hat Trick deque requires only a single DCAS (double compare-and-swap) for most pushes and pops. The left and right ends do not interfere with each other until there is at most one item in the queue, at which point a DCAS adjudicates between competing pops. By choosing a granularity greater than a single node, the user can amortize the cost of adding storage over the multiple push (and pop) operations that use the added storage. A suitable removal strategy can provide similar amortization advantages. The technique of leaving spare nodes linked in the structure allows an indefinite number of pushes and pops at a given deque end to proceed without invoking memory allocation or reclamation, as long as the difference between the number of pushes and the number of pops remains within given bounds. Both garbage-collection-dependent and explicit-reclamation implementations are described.

Owner:ORACLE INT CORP
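
The amortized-allocation idea can be shown without any concurrency. The sketch below is single-threaded and uses no DCAS, so it is not the Hat Trick algorithm itself; it only illustrates allocating `granularity` nodes at a time and leaving popped nodes linked as spares. `SpareNodeDeque` and its `allocations` counter are hypothetical names.

```python
from typing import Any, Optional


class Node:
    __slots__ = ("value", "prev", "next")

    def __init__(self) -> None:
        self.value: Any = None
        self.prev: Optional["Node"] = None
        self.next: Optional["Node"] = None


class SpareNodeDeque:
    """Items live strictly between the empty boundary slots self.left and self.right."""

    def __init__(self, granularity: int = 4) -> None:
        self.granularity = granularity
        self.allocations = 0                  # how many times storage was grown
        self.left, self.right = Node(), Node()
        self.left.next, self.right.prev = self.right, self.left

    def _grow(self, end: Node, toward_right: bool) -> None:
        # Link `granularity` fresh nodes beyond `end` in one allocation step.
        self.allocations += 1
        for _ in range(self.granularity):
            n = Node()
            if toward_right:
                n.prev, end.next = end, n
            else:
                n.next, end.prev = end, n
            end = n

    def push_right(self, v: Any) -> None:
        self.right.value = v
        if self.right.next is None:           # no spare node left on this side
            self._grow(self.right, toward_right=True)
        self.right = self.right.next

    def push_left(self, v: Any) -> None:
        self.left.value = v
        if self.left.prev is None:
            self._grow(self.left, toward_right=False)
        self.left = self.left.prev

    def pop_right(self) -> Any:
        if self.left.next is self.right:
            raise IndexError("pop from empty deque")
        self.right = self.right.prev          # vacated node stays linked as a spare
        v, self.right.value = self.right.value, None
        return v

    def pop_left(self) -> Any:
        if self.left.next is self.right:
            raise IndexError("pop from empty deque")
        self.left = self.left.next
        v, self.left.value = self.left.value, None
        return v
```

Because popped nodes stay linked, pushing again after popping reuses them, so the allocation count only grows when one end runs past its spares.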

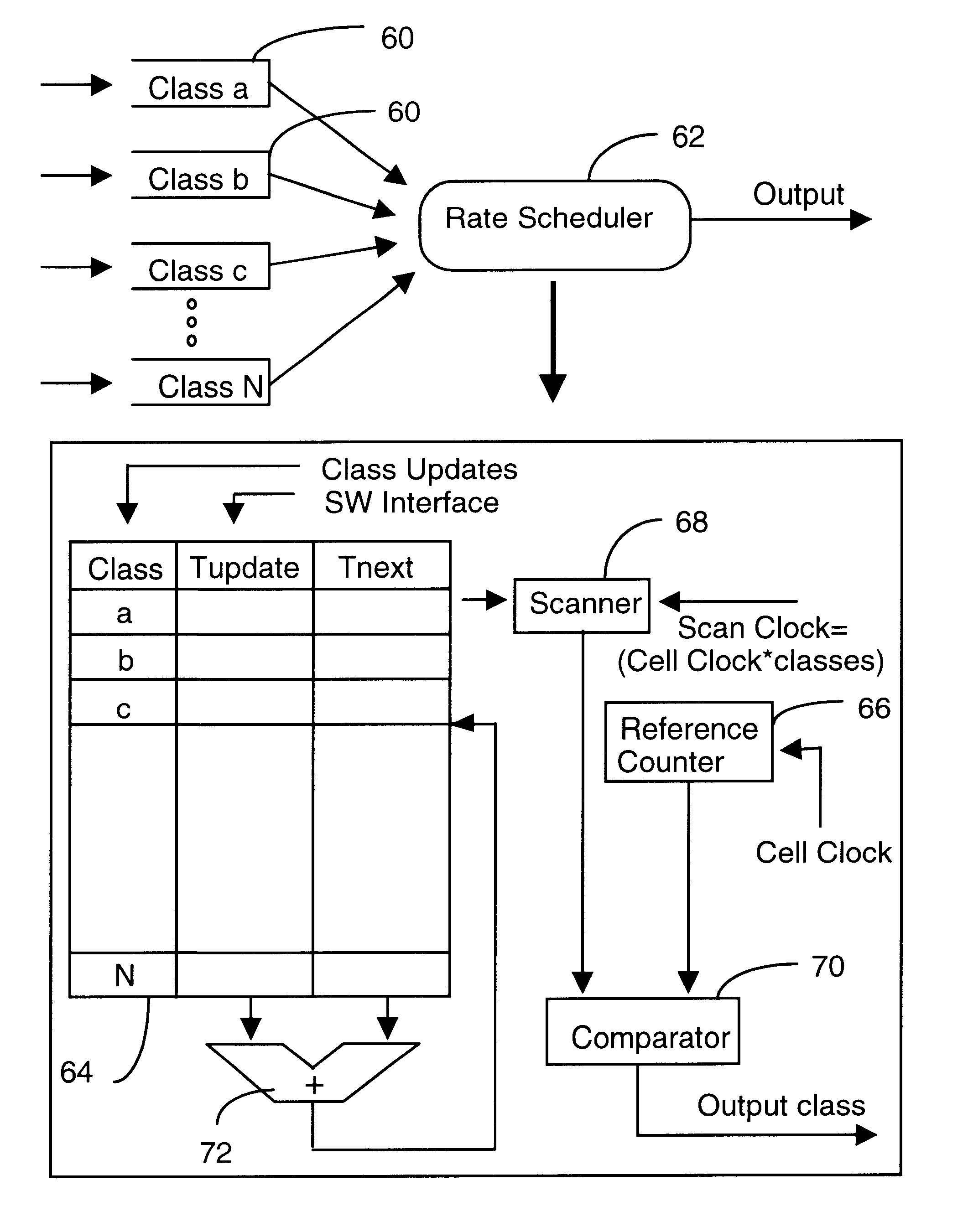

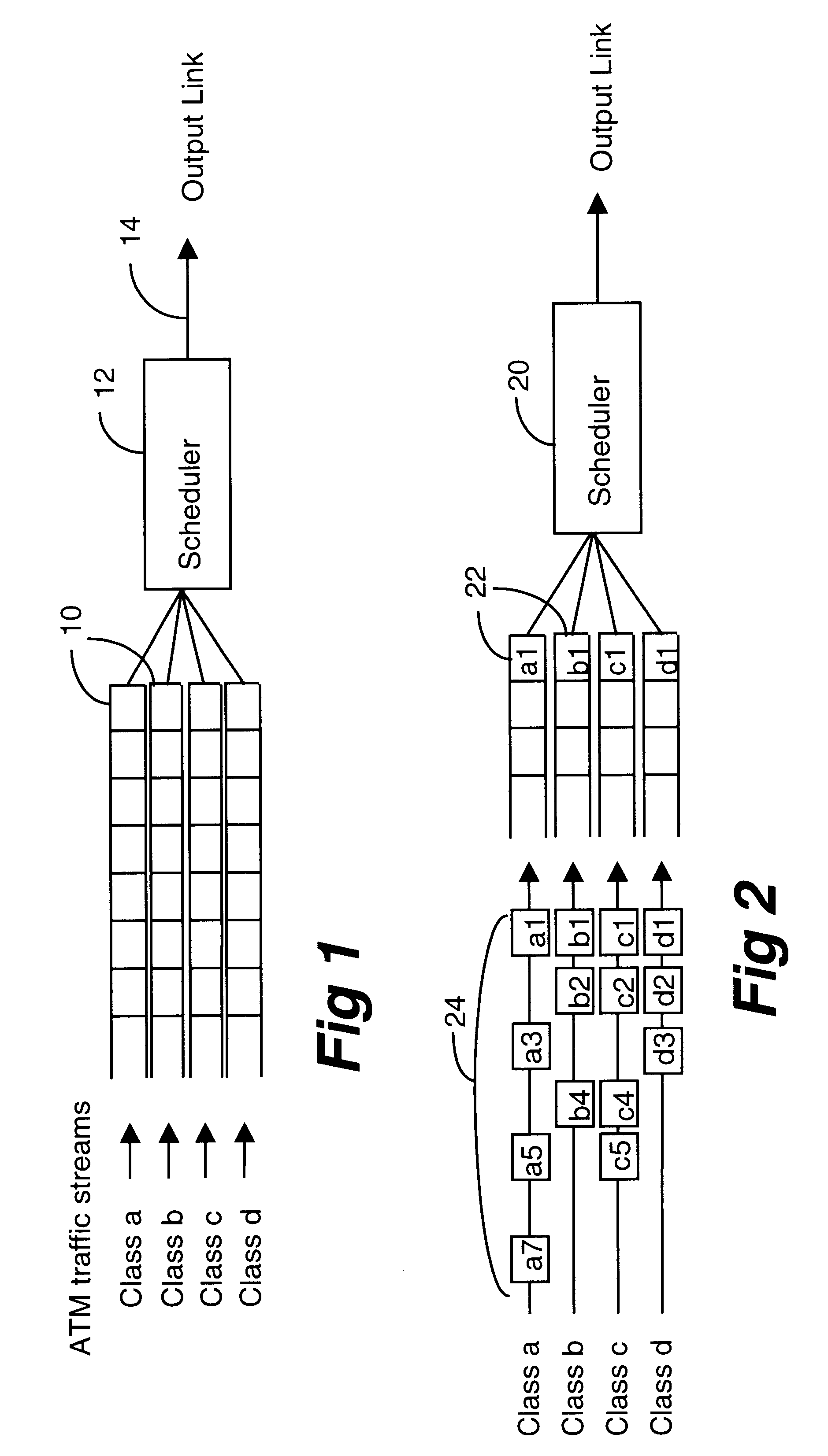

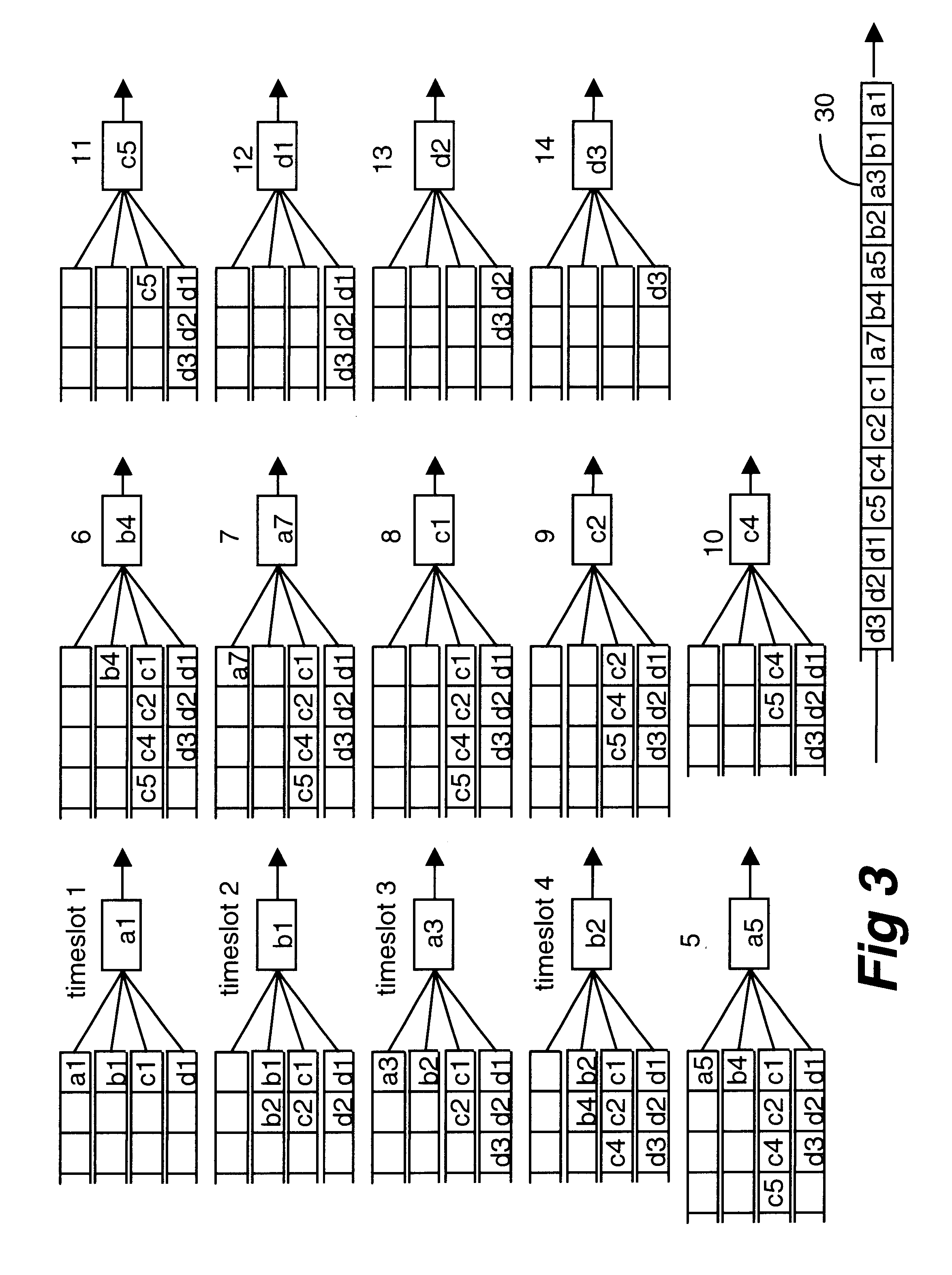

Time linked scheduling of cell-based traffic

A node in a packet network such as an ATM network receives a plurality of traffic classes and outputs them to an output link. The invention relates to a technique for scheduling the traffic classes at the node in a time-linked fashion, based on the bandwidth allocated to each traffic class. Traffic scheduling based strictly on priority may not satisfy the delay and loss guarantees of traffic classes when there are many classes with many different priority levels. Rate-based scheduling in a time-linked fashion solves these problems by limiting the bandwidth available to each class. According to one embodiment, the invention uses timeslot values and a linked list of the traffic classes.

Owner:TELEFON AB LM ERICSSON (PUBL)
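
One way to read "timeslot values and a linked list of the traffic classes" is sketched below: each class gets an interval of `1 / share` timeslots, the list is kept sorted by each class's next eligible timeslot, and a class that has just sent a cell is re-linked at its next slot. This is an interpretation for illustration, not Ericsson's claimed method; the names and the exact-fraction timeslots are assumptions.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Optional


@dataclass
class TrafficClass:
    name: str
    interval: Fraction                  # timeslots between cells = 1 / bandwidth share
    next_slot: Fraction = Fraction(0)   # earliest timeslot this class may send in
    next: Optional["TrafficClass"] = None


def insert_sorted(head: Optional[TrafficClass], tc: TrafficClass) -> TrafficClass:
    """Insert tc into a list sorted by next_slot; ties go after existing entries."""
    if head is None or tc.next_slot < head.next_slot:
        tc.next = head
        return tc
    node = head
    while node.next is not None and node.next.next_slot <= tc.next_slot:
        node = node.next
    tc.next, node.next = node.next, tc
    return head


def schedule(shares: dict[str, Fraction], slots: int) -> list[str]:
    head = None
    for name, share in shares.items():
        head = insert_sorted(head, TrafficClass(name, 1 / share))
    output = []
    for now in range(slots):
        if head is not None and head.next_slot <= now:
            tc, head = head, head.next
            output.append(tc.name)
            tc.next_slot += tc.interval      # time-link the class to its next slot
            head = insert_sorted(head, tc)
        else:
            output.append("idle")            # no class is eligible in this slot
    return output
```

When no class is eligible the link idles, which is what enforces the per-class bandwidth ceiling that strict priority scheduling lacks.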

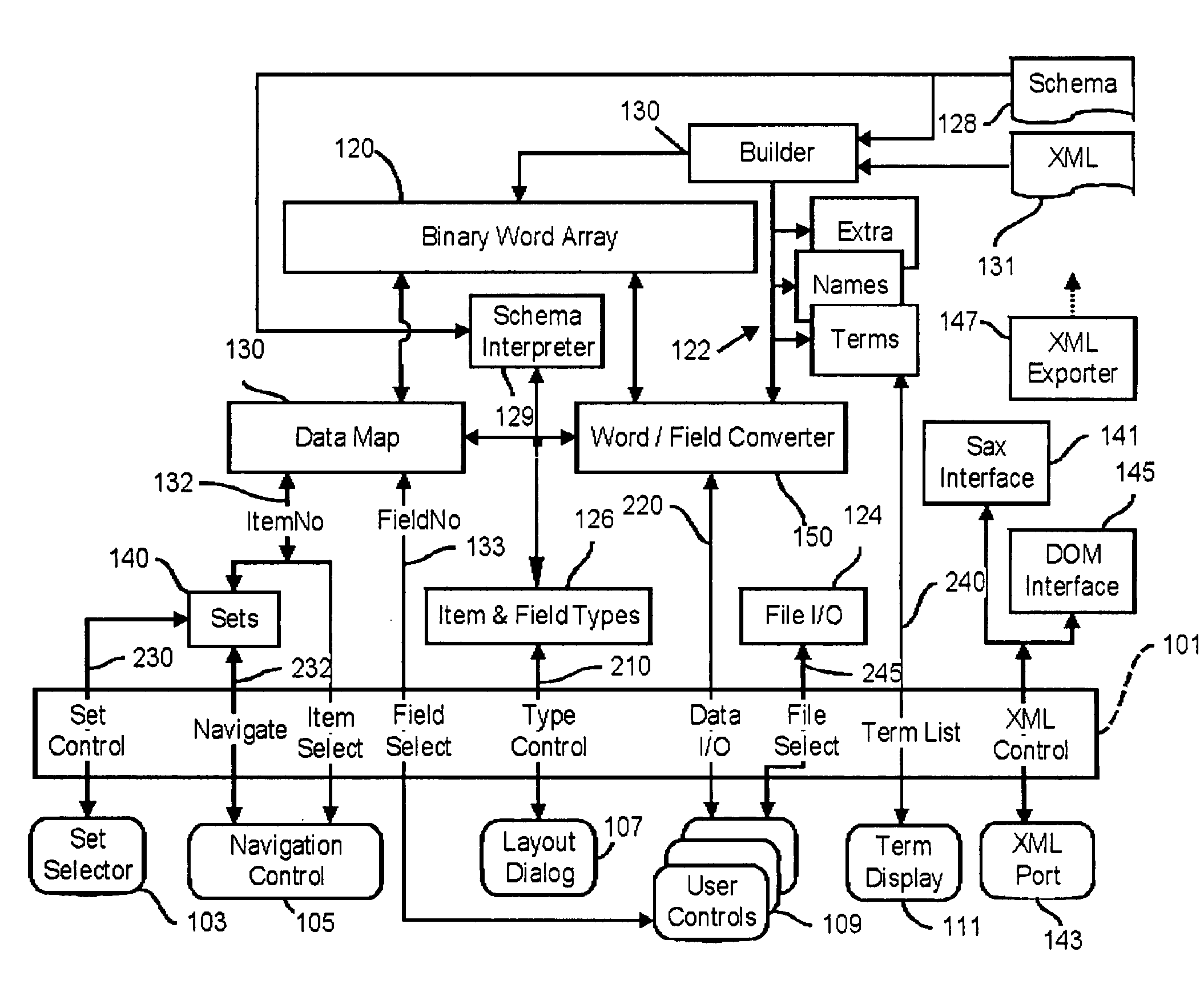

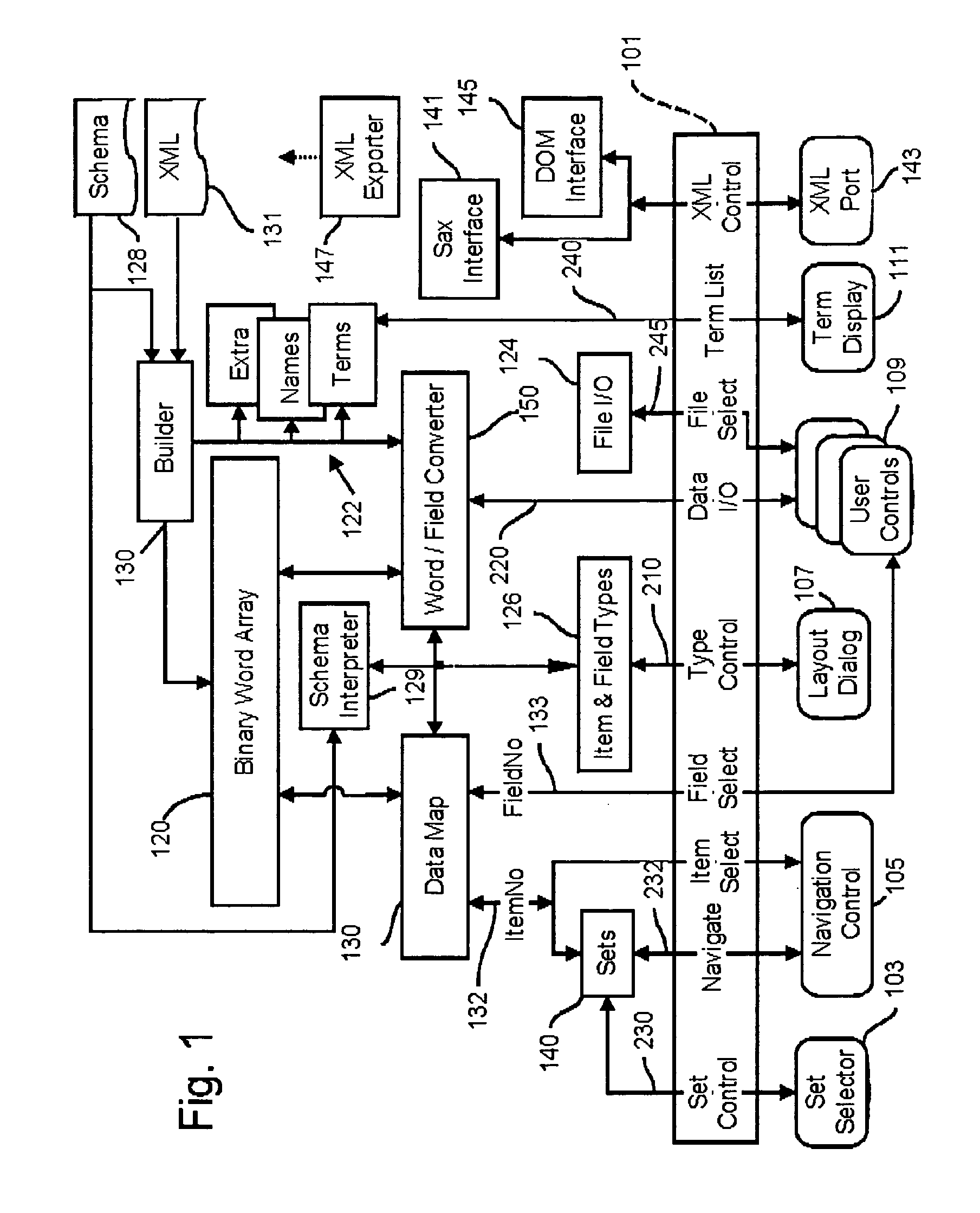

Methods and apparatus for storing and manipulating variable length and fixed length data elements as a sequence of fixed length integers

Inactive | US7178100B2 | Less transmission bandwidth; Easy to handle; Data processing applications; Natural language data processing; Array data structure; Theoretical computer science

Apparatus for storing and processing a plurality of data items, each comprising supplied data values organized in one or more fields, each of which stores typed data. Character strings and natural language text are converted to numerical token values in an array of fixed-length integers, and other forms of typed data (real numbers, dates, times, boolean values, etc.) are also converted to integer form and stored in the array. Stored metadata specifies the data type of all data in the integer array so that each integer can be rapidly accessed and interpreted. When fixed-length data types are present, the metadata specifies the location, size and type of each fixed-length element. When variable-length data is stored in the integer array, size and location data stored in the array are used to access the variable-size data rapidly and directly. The presence of implicit or explicit size information for each data structure, including variable-size structures, speeds processing by eliminating the need to scan the data for delimiters and by reducing the processing needed for memory allocation, data movement, lookup operations and data addressing. Data stored in the integer array is subdivided into items, and items are subdivided into fields. Items may be organized into more complex data structures, such as relational tables, hierarchical object structures, linked lists and trees, using special fields called links which identify other referenced items.

Owner:CALL CHARLES G
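
The core encoding idea, with words turned into integer tokens and every field prefixed by its type and size so that later fields can be reached without scanning for delimiters, can be sketched as follows. The `[type, size, payload...]` layout, the `INT`/`TEXT` codes and the `Encoder` class are hypothetical; the patent's actual metadata format and its link fields are not modeled.

```python
from typing import Union

INT, TEXT = 1, 2   # hypothetical type codes


class Encoder:
    def __init__(self) -> None:
        self.vocab: dict[str, int] = {}
        self.words: list[str] = []

    def token(self, word: str) -> int:
        # Map each distinct word to a fixed-length integer token.
        if word not in self.vocab:
            self.vocab[word] = len(self.words)
            self.words.append(word)
        return self.vocab[word]

    def encode(self, fields: list[Union[int, str]]) -> list[int]:
        out: list[int] = []
        for f in fields:
            if isinstance(f, int):
                out += [INT, 1, f]
            else:
                toks = [self.token(w) for w in f.split()]
                out += [TEXT, len(toks), *toks]    # explicit size: no delimiter scan
        return out

    def field(self, arr: list[int], index: int) -> Union[int, str]:
        pos = 0
        for _ in range(index):                     # hop over earlier fields by size
            pos += 2 + arr[pos + 1]
        kind, size = arr[pos], arr[pos + 1]
        payload = arr[pos + 2 : pos + 2 + size]
        return payload[0] if kind == INT else " ".join(self.words[t] for t in payload)
```

Reaching field `n` costs one hop per earlier field rather than a scan over every stored token.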

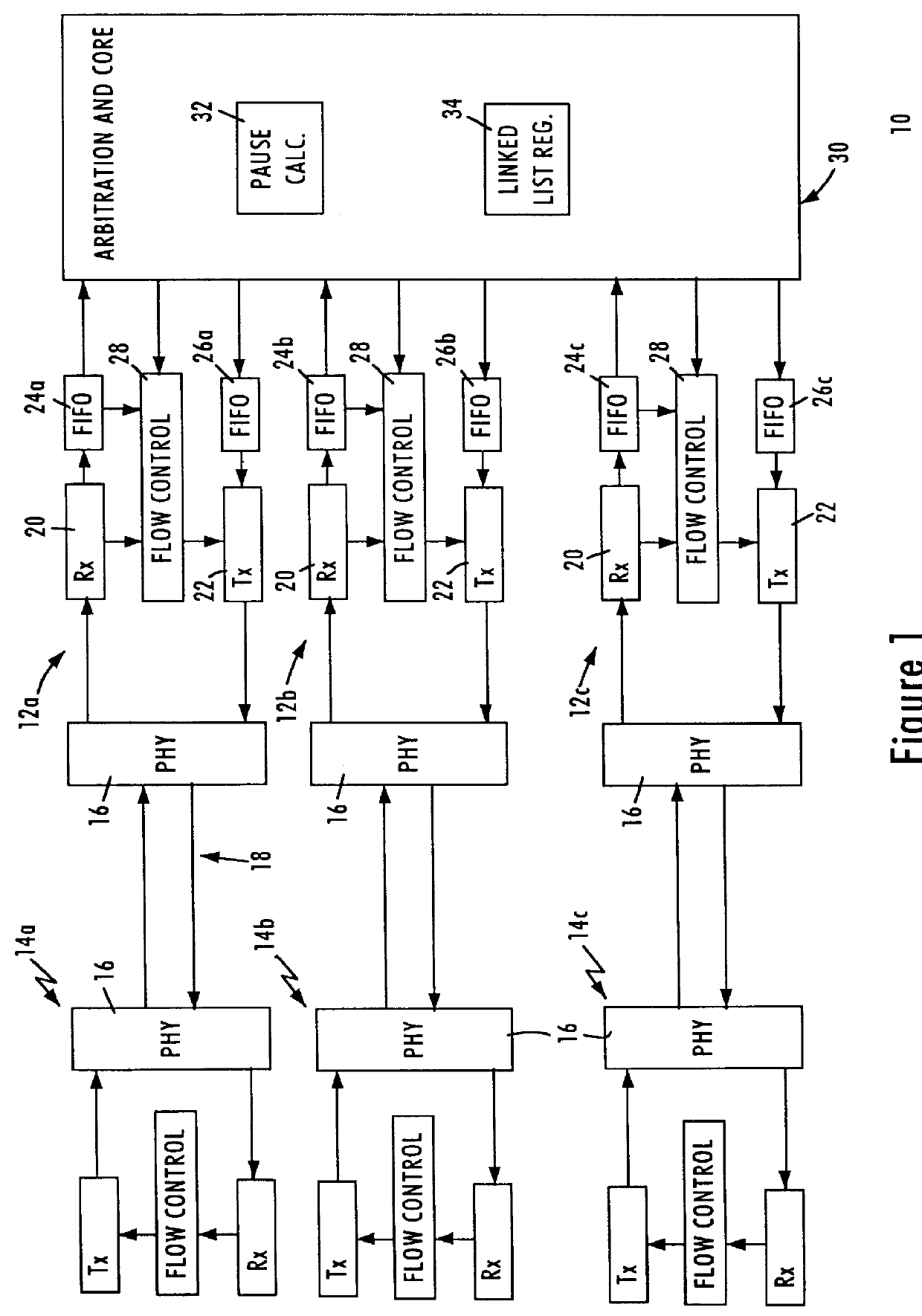

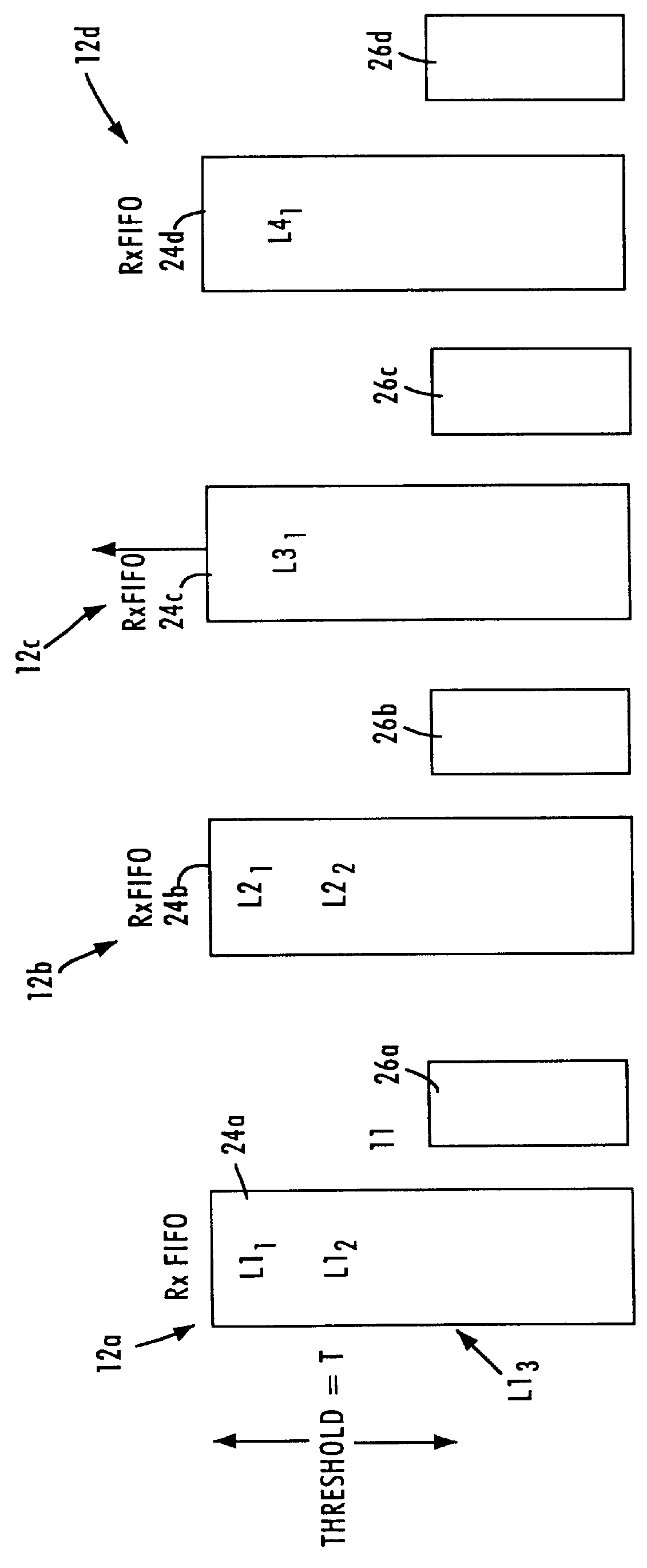

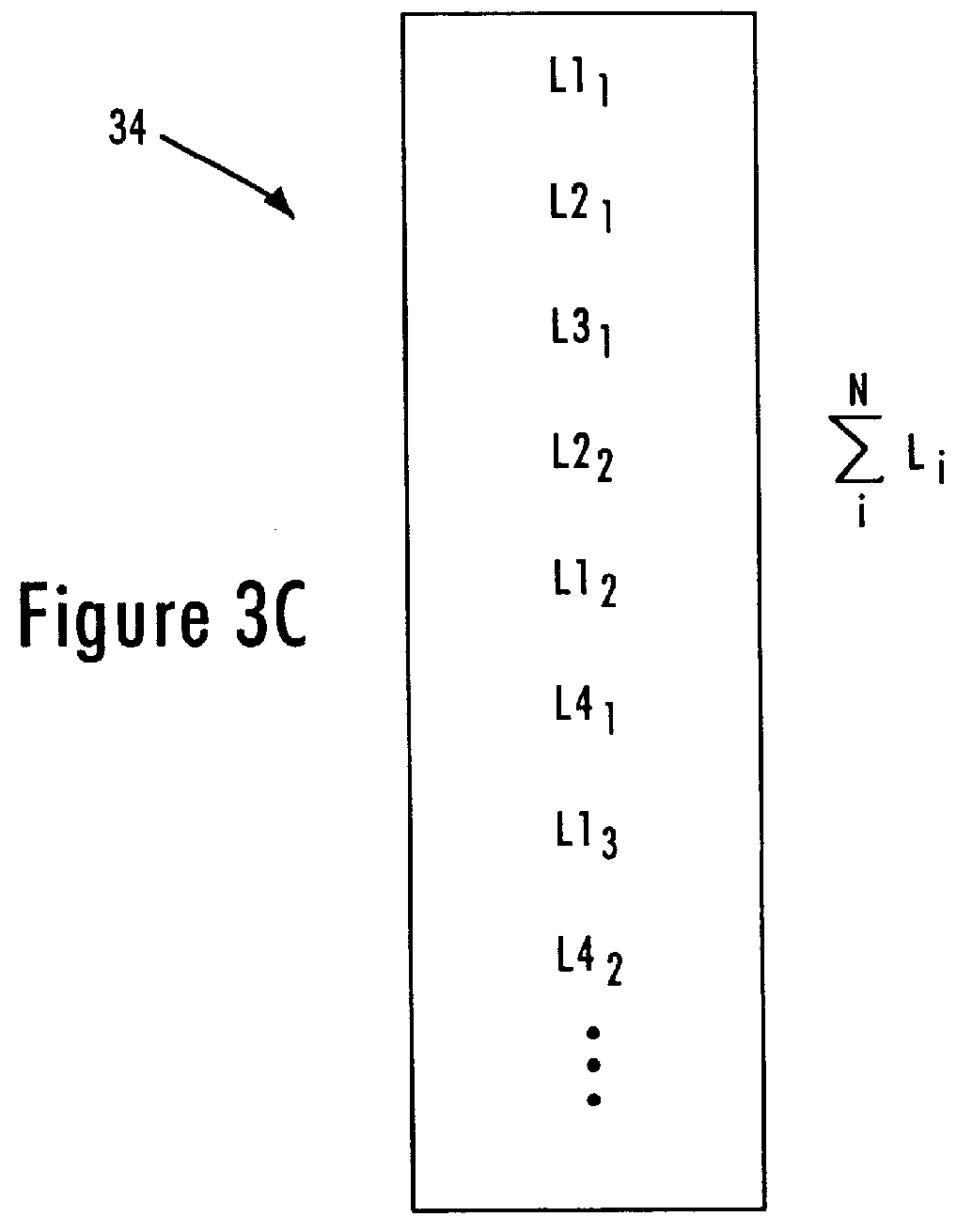

Apparatus and method for generating a pause frame in a buffered distributor based on lengths of data packets distributed according to a round robin repeater arbitration

Inactive | US6031821A | Eliminate congestion problems; Error prevention; Transmission systems; Frame based; Processor register

A buffered distributor (i.e., a full-duplex repeater) having receive buffers for respective network ports calculates pause frames based on the size of the stored data packets that the repeater core must output, according to a round-robin sequence, before congestion in an identified receive buffer is eliminated. The distribution core within the buffered distributor includes a linked list register that stores the determined links of received data packets for each network port. Upon detecting a congestion condition in one of the receive buffers for a corresponding port, the buffered distributor determines the relative position of the congested port within the round-robin sequence and calculates the pause interval based on the length of the data packets that need to be output before congestion is eliminated. The sum of the data packet lengths is compared to an output data rate of the distribution core, and switching delays within the core are also taken into account. The calculated pause frames, in conjunction with the prescribed congestion threshold, ensure that buffers can be sized efficiently and at low cost without increasing the risk of lost data packets.

Owner:GLOBALFOUNDRIES INC
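
The pause-interval arithmetic can be sketched as a single function. It assumes the caller has already collected, from the linked list register, the lengths of the packets the core must output ahead of the congested port, and that each packet incurs one fixed switching delay; `pause_quanta` and its parameters are hypothetical. The result is expressed in IEEE 802.3x pause quanta of 512 bit times, capped at the maximum of the 16-bit pause_time field.

```python
import math


def pause_quanta(packet_lengths: list[int], core_rate_bps: float,
                 switch_delay_s: float, link_rate_bps: float) -> int:
    """Pause time, in 512-bit-time quanta, for a congested port.

    packet_lengths: byte lengths of the packets scheduled ahead of the
    congested port in the round-robin sequence (hypothetical input).
    """
    drain_s = sum(packet_lengths) * 8 / core_rate_bps   # time to output those packets
    drain_s += len(packet_lengths) * switch_delay_s      # assumed: one switch per packet
    quanta = math.ceil(drain_s * link_rate_bps / 512)    # one quantum = 512 bit times
    return min(quanta, 0xFFFF)                           # pause_time is a 16-bit field
```

For example, 1500-, 64- and 512-byte packets on a 1 Gb/s core with a 1 µs switching delay take about 19.6 µs to drain, which rounds up to 39 quanta on a 1 Gb/s link.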

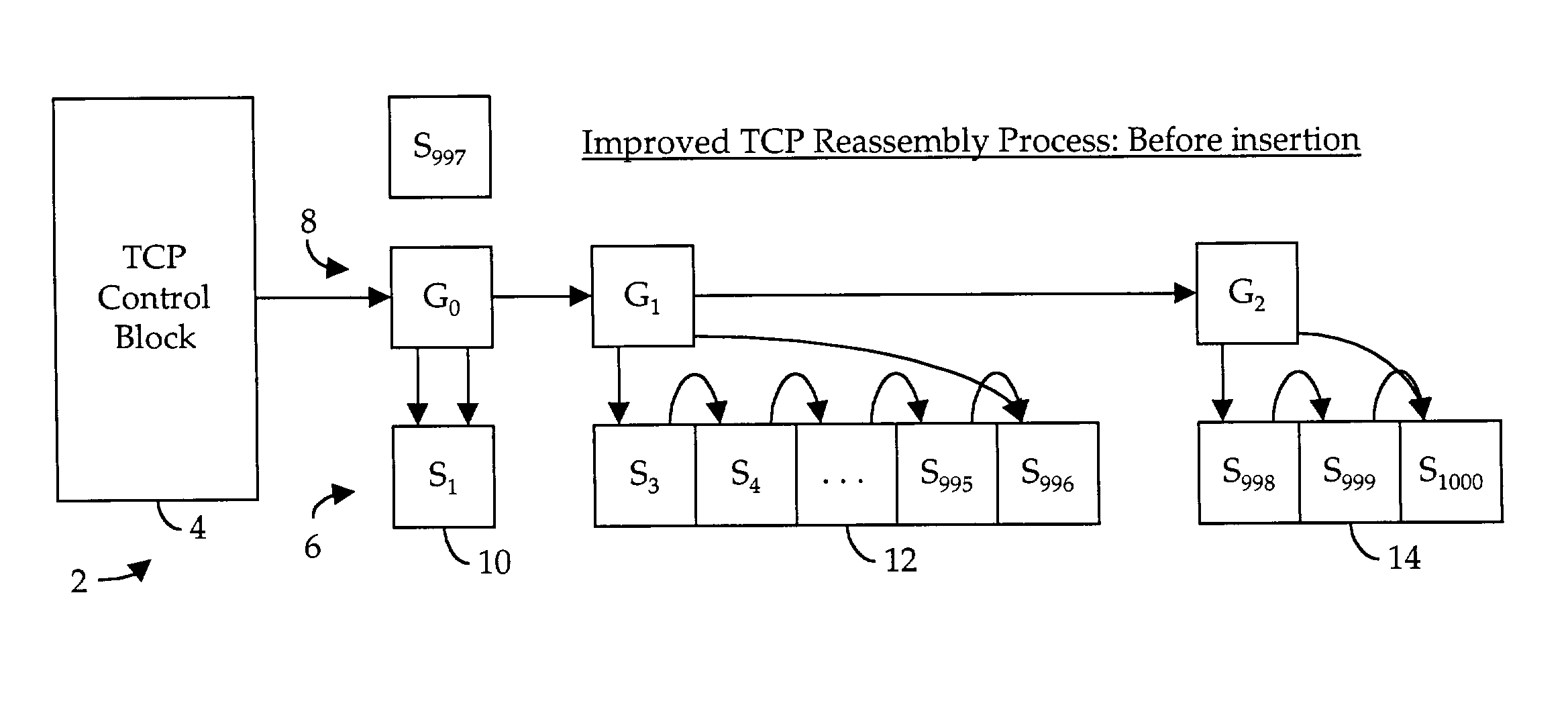

Structure and method for maintaining ordered linked lists

Inactive | US7685121B2 | Data processing applications; Digital data information retrieval; Facilitated; Segment; Linked list

A hierarchically organized linked list structure has a first level comprising sections of sequentially ordered segments, and a second level comprising a representative of each section at the first level. A method is also provided for maintaining the hierarchically organized linked list structure to facilitate segment insertion, retrieval and removal.

Owner:AVAGO TECH INT SALES PTE LTD
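
A two-level structure in this spirit can be sketched as one sorted singly linked list of segments plus a second list of section representatives, each pointing at its section's first segment. Insertion walks the short representative list first and then only one section. The split threshold `max_section` and the names `Seg`, `Rep` and `TwoLevelList` are hypothetical; retrieval and removal are left out, and this is not Avago's specific method.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Seg:                     # first-level node: one ordered segment
    key: int
    next: Optional["Seg"] = None


@dataclass
class Rep:                     # second-level node: represents one section
    first: Seg                 # first segment of the section
    size: int
    next: Optional["Rep"] = None


class TwoLevelList:
    def __init__(self, max_section: int = 4) -> None:
        self.max_section = max_section      # hypothetical split threshold
        self.reps: Optional[Rep] = None

    def insert(self, key: int) -> None:
        seg = Seg(key)
        if self.reps is None:
            self.reps = Rep(seg, 1)
            return
        # Second level: find the last section whose first key is <= key.
        rep = self.reps
        while rep.next is not None and rep.next.first.key <= key:
            rep = rep.next
        # First level: find the insertion point inside that section only.
        if key < rep.first.key:             # only possible in the first section
            seg.next, rep.first = rep.first, seg
        else:
            node = rep.first
            limit = rep.next.first if rep.next else None
            while node.next is not limit and node.next.key <= key:
                node = node.next
            seg.next, node.next = node.next, seg
        rep.size += 1
        if rep.size > self.max_section:     # split an oversized section in two
            mid = rep.first
            for _ in range(rep.size // 2 - 1):
                mid = mid.next
            rep.next = Rep(mid.next, rep.size - rep.size // 2, rep.next)
            rep.size //= 2

    def keys(self) -> list[int]:
        out, node = [], self.reps.first if self.reps else None
        while node is not None:
            out.append(node.key)
            node = node.next
        return out
```

The first-level segments stay one continuous sorted list, so a full traversal never needs the representatives; they only shorten the search for an insertion point.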

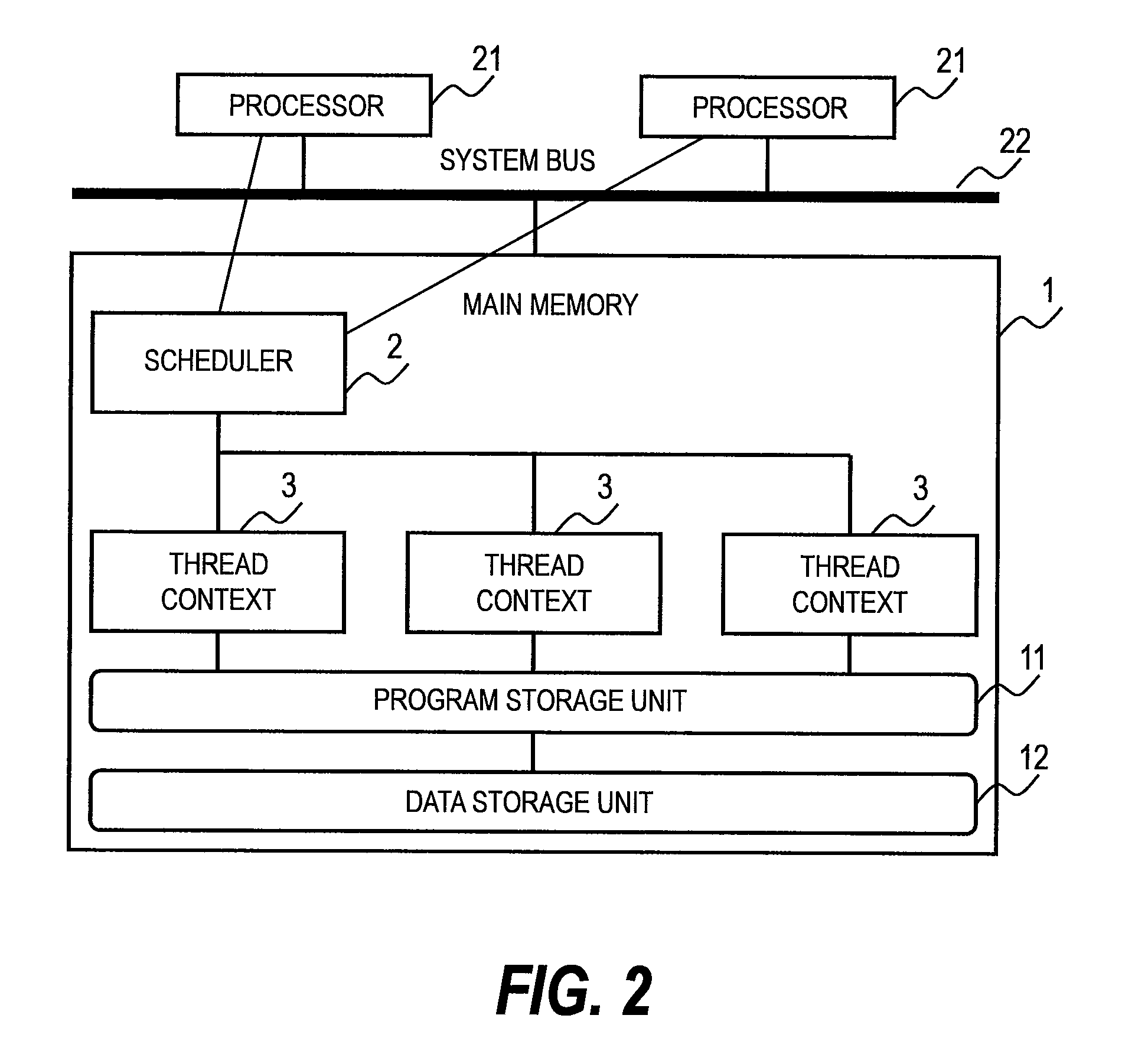

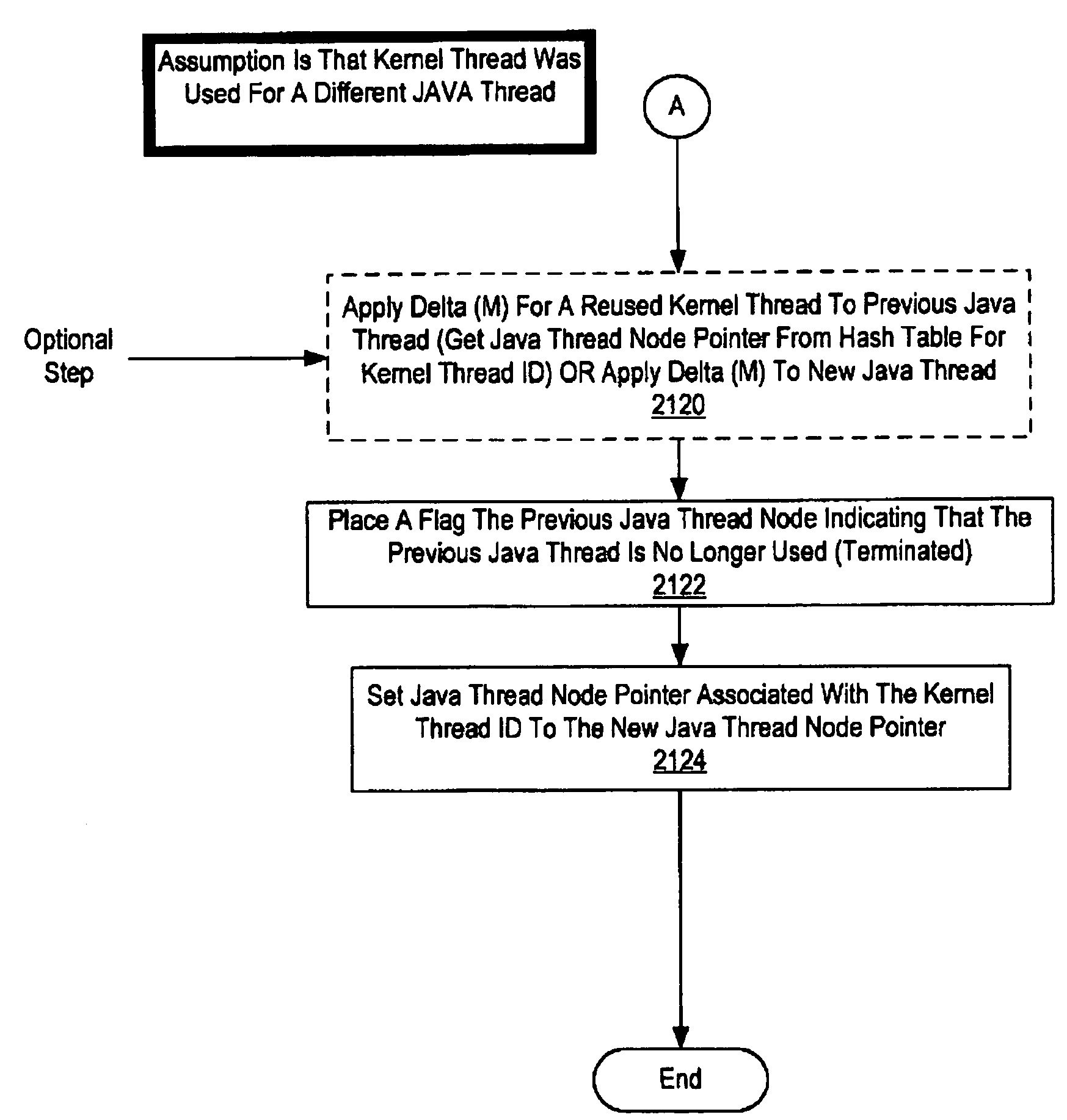

Method and system for tracing profiling information using per thread metric variables with reused kernel threads

Inactive | US7389497B1 | Error detection/correction; Specific program execution arrangements; Operational system; Operating system

A method and system for tracing profiling information using per-thread metric variables with reused kernel threads is disclosed. In one embodiment, kernel-thread-level metrics are stored by the operating system kernel. A profiler requests metric information from the operating system kernel in response to an event. After the kernel-thread-level metrics are read by the operating system for the profiler, the operating system kernel resets their values to zero. The profiler then applies the metric values to the base metric values of the appropriate Java threads, which are stored in nodes of a tree structure, based on the type of event and on whether the kernel thread has been reused. In another embodiment, non-zero values of thread-level metrics are entered on a linked list. In response to a request from a profiler, the operating system kernel reads each kernel thread's entry in the linked list and zeros each entry. The profiler can then update the intermediate full-tree snapshots of profiling information with the collection of non-zero metric variables.

Owner:IBM CORP
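
The second embodiment, in which only threads with non-zero metrics sit on a linked list that the kernel drains and zeroes on each profiler request, can be sketched as follows. `ThreadEntry`, `KernelMetrics` and `Profiler` are hypothetical names, the kernel/user boundary is collapsed into plain Python objects, and the Java-thread tree and thread-reuse handling are not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ThreadEntry:
    tid: int
    metric: int = 0
    listed: bool = False                  # already on the non-zero list?
    next: Optional["ThreadEntry"] = None


class KernelMetrics:
    """Stand-in for the kernel side (hypothetical)."""

    def __init__(self) -> None:
        self.threads: dict[int, ThreadEntry] = {}
        self.nonzero: Optional[ThreadEntry] = None   # head of the non-zero list

    def add(self, tid: int, amount: int) -> None:
        e = self.threads.setdefault(tid, ThreadEntry(tid))
        e.metric += amount
        if e.metric and not e.listed:     # link the entry in once it becomes non-zero
            e.listed, e.next, self.nonzero = True, self.nonzero, e

    def drain(self) -> dict[int, int]:
        """Read every listed entry, zero it, and empty the list."""
        out: dict[int, int] = {}
        e = self.nonzero
        while e is not None:
            out[e.tid] = e.metric
            e.metric, e.listed = 0, False
            nxt, e.next = e.next, None
            e = nxt
        self.nonzero = None
        return out


class Profiler:
    def __init__(self, kernel: KernelMetrics) -> None:
        self.kernel = kernel
        self.totals: dict[int, int] = {}  # accumulated metric per thread

    def sample(self) -> None:
        # Apply each drained delta to the thread's running total.
        for tid, delta in self.kernel.drain().items():
            self.totals[tid] = self.totals.get(tid, 0) + delta
```

Only threads that actually accumulated something are visited on each request, which is the point of keeping the non-zero entries on their own list.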

© 2025 PatSnap. All rights reserved.