1317 results for "Single node" patented technology

A single-node cluster is a special implementation of a cluster that runs on a standalone node. You can deploy a single-node cluster if your workload requires only a single node and does not need nondisruptive operations.

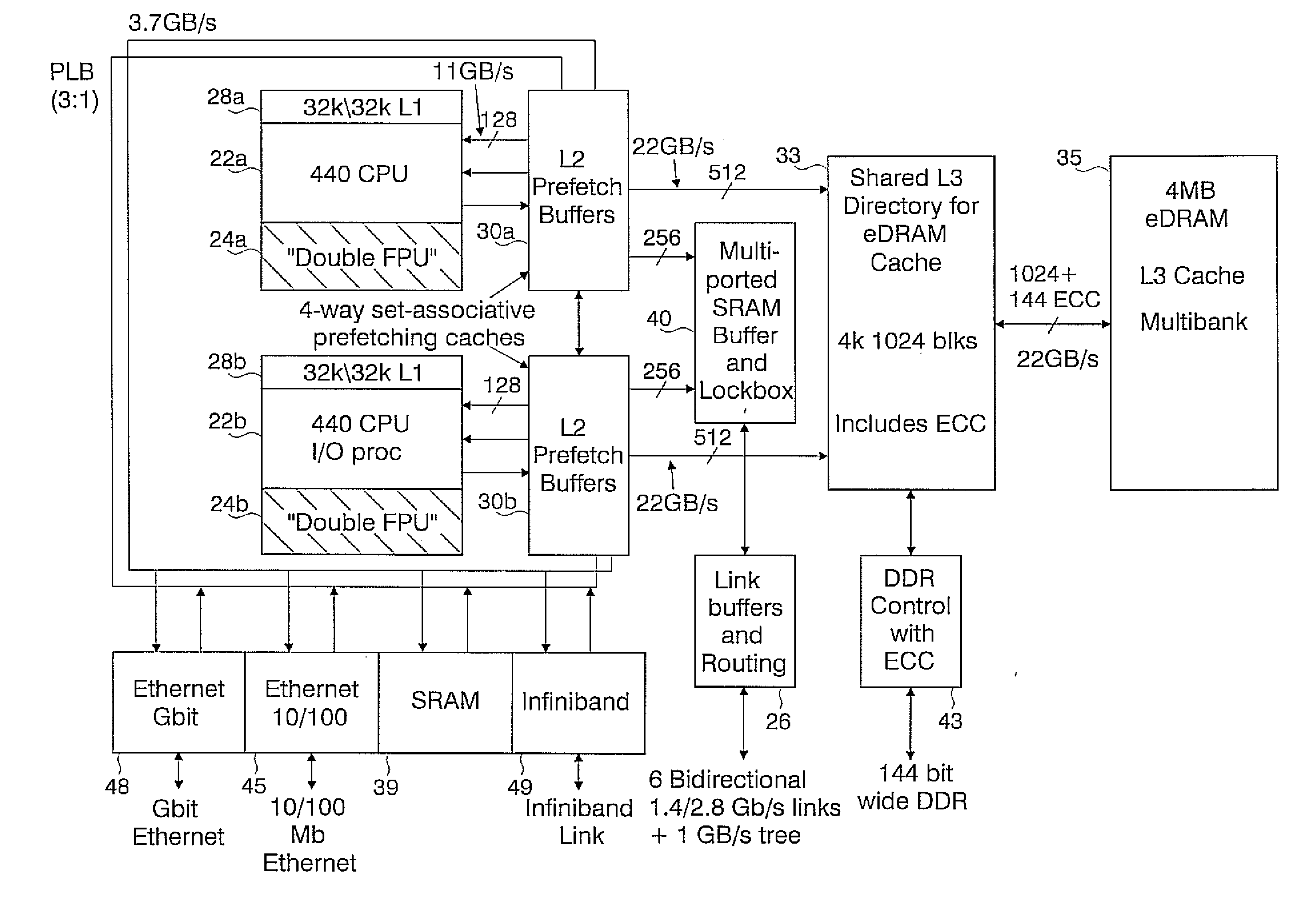

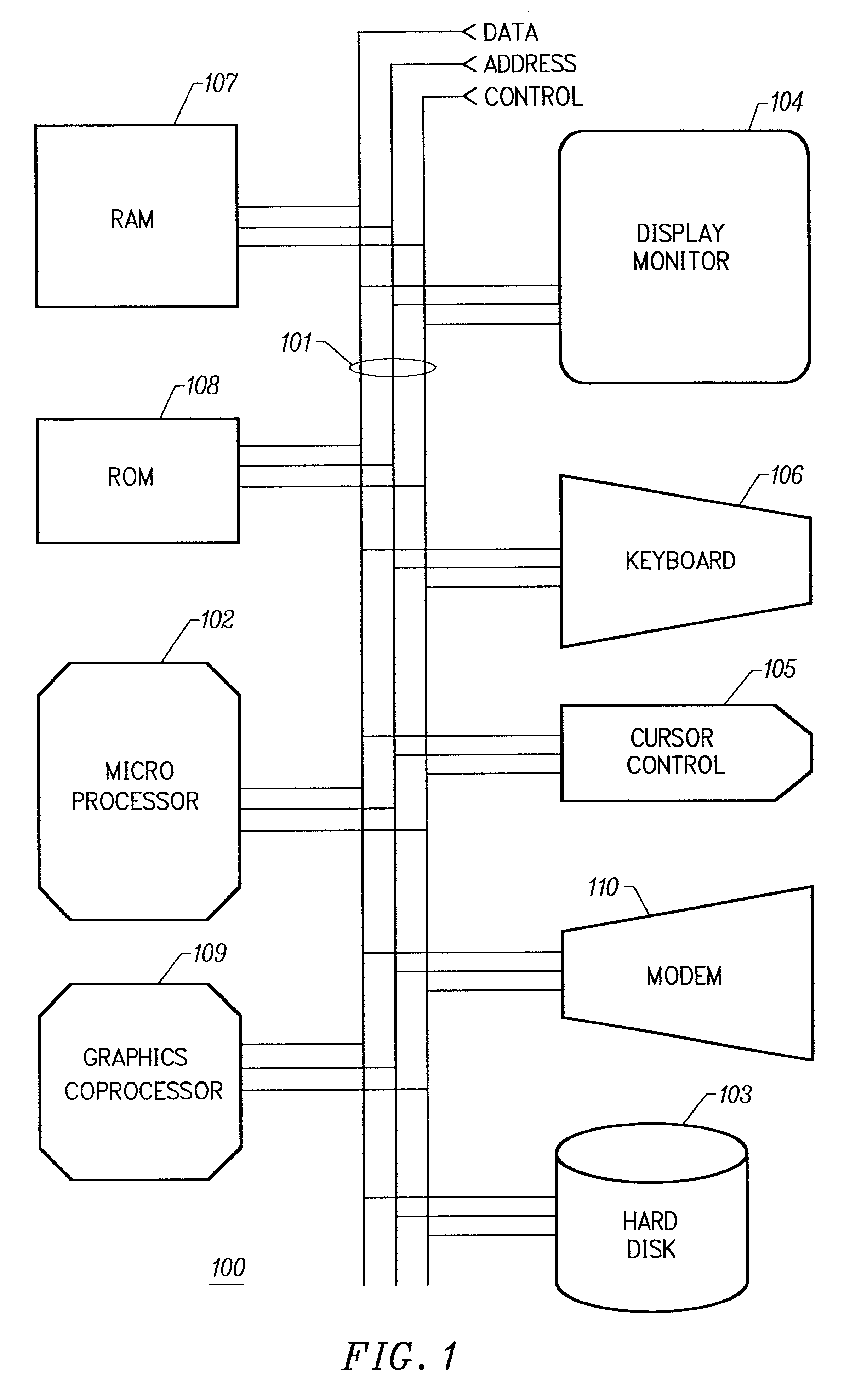

Novel massively parallel supercomputer

Inactive | US20090259713A1 | Tags: Low cost, Reduced footprint, Error prevention, Program synchronisation, Supercomputer, Packet communication

Owner: INT BUSINESS MASCH CORP

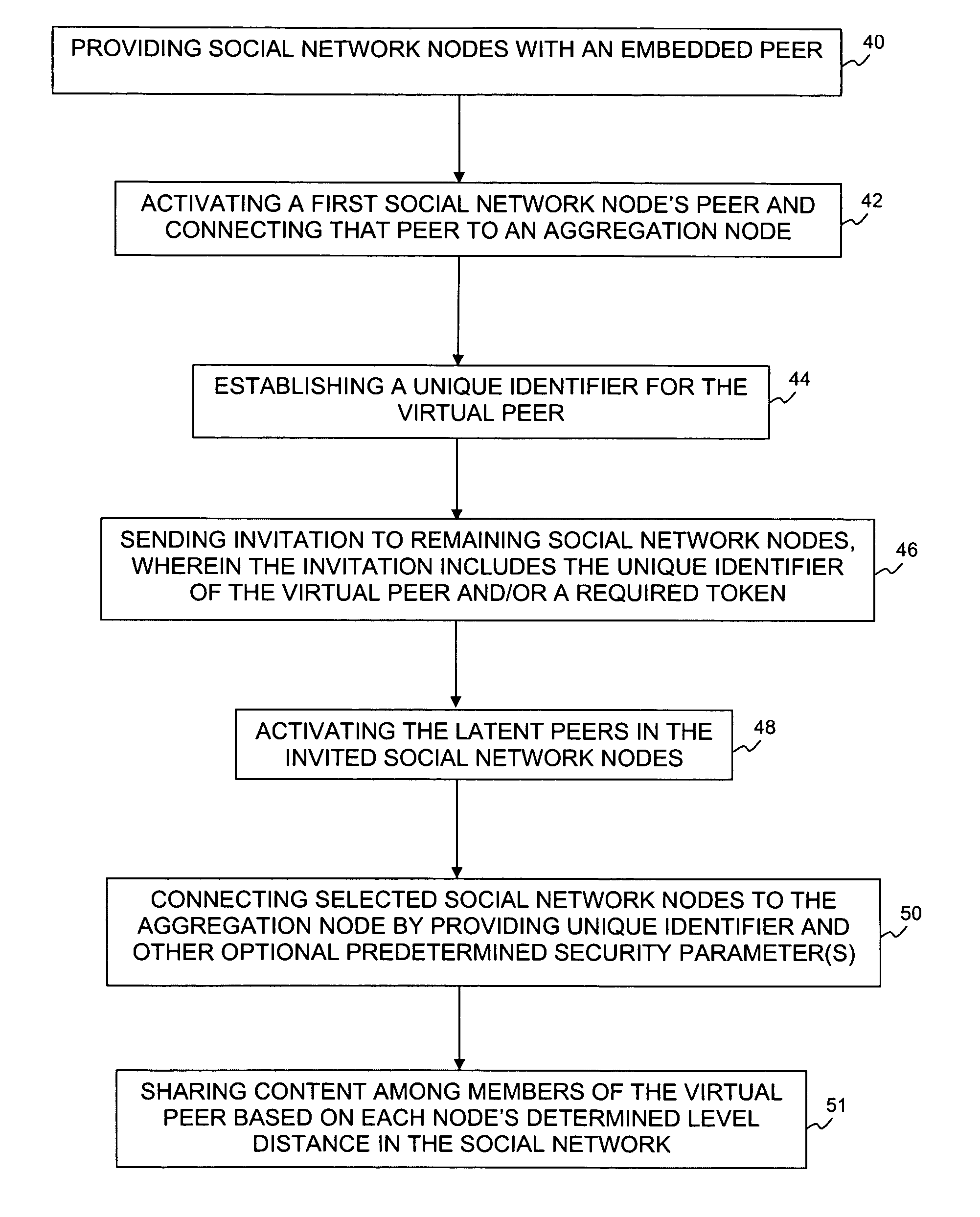

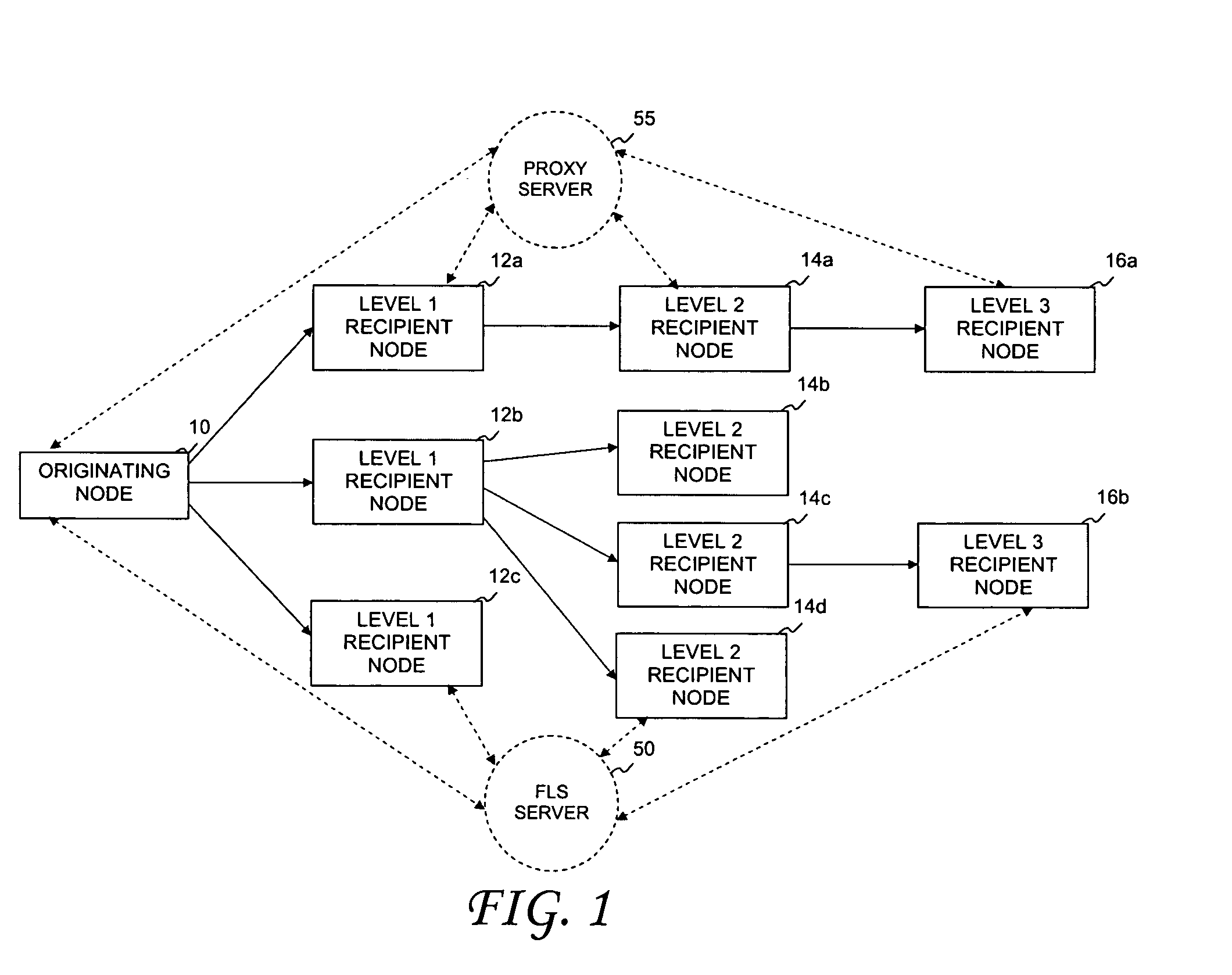

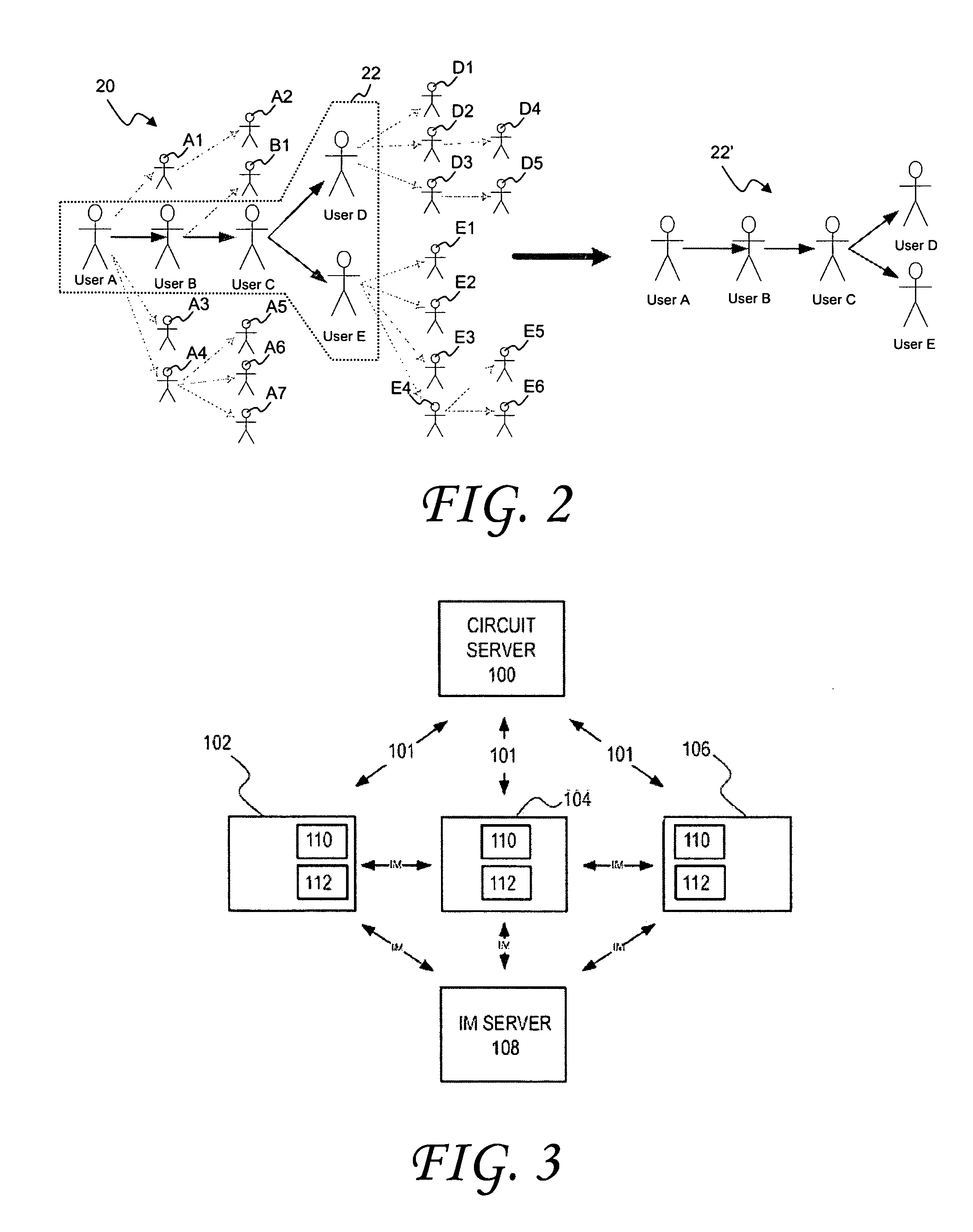

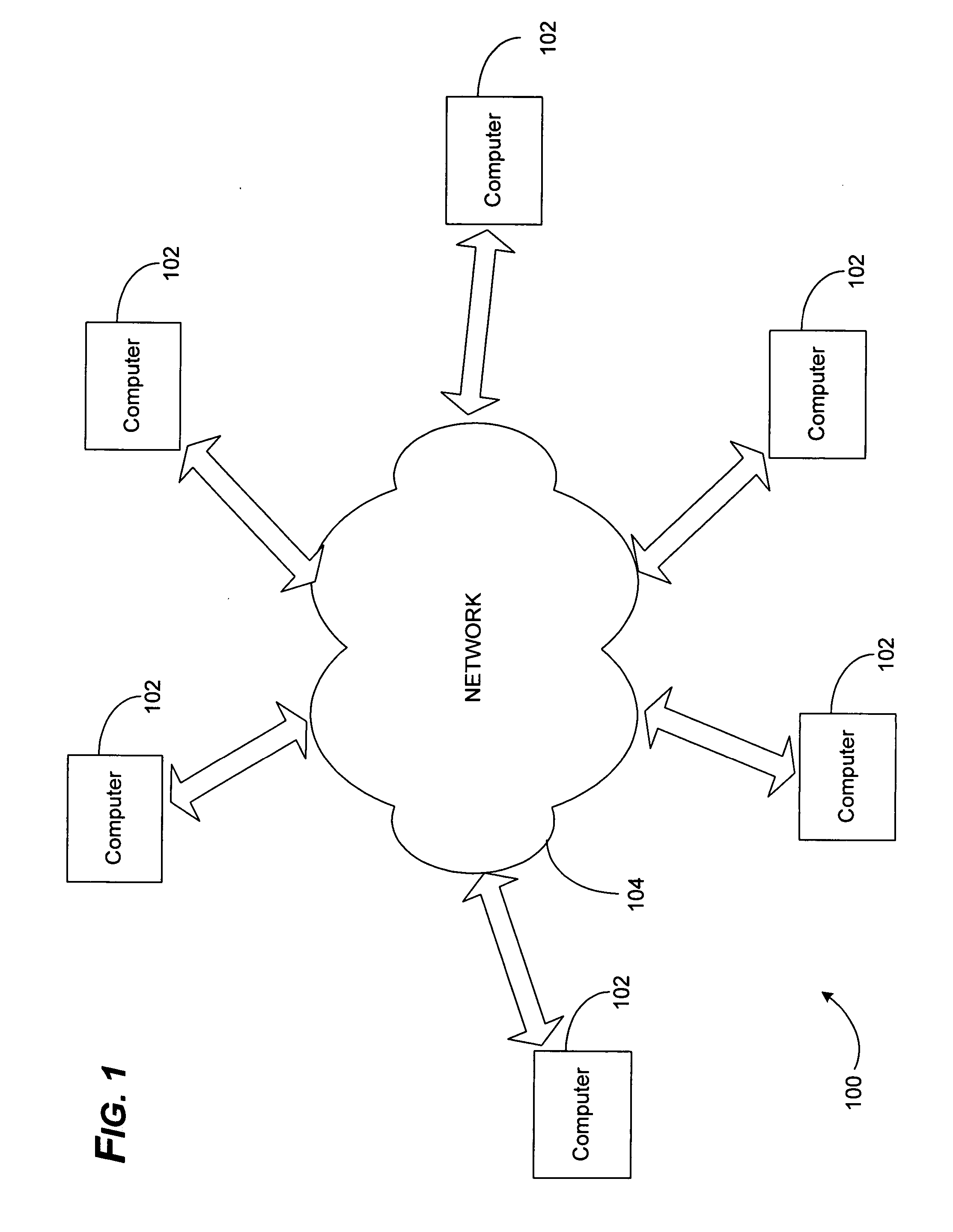

System and method of sharing content among multiple social network nodes using an aggregation node

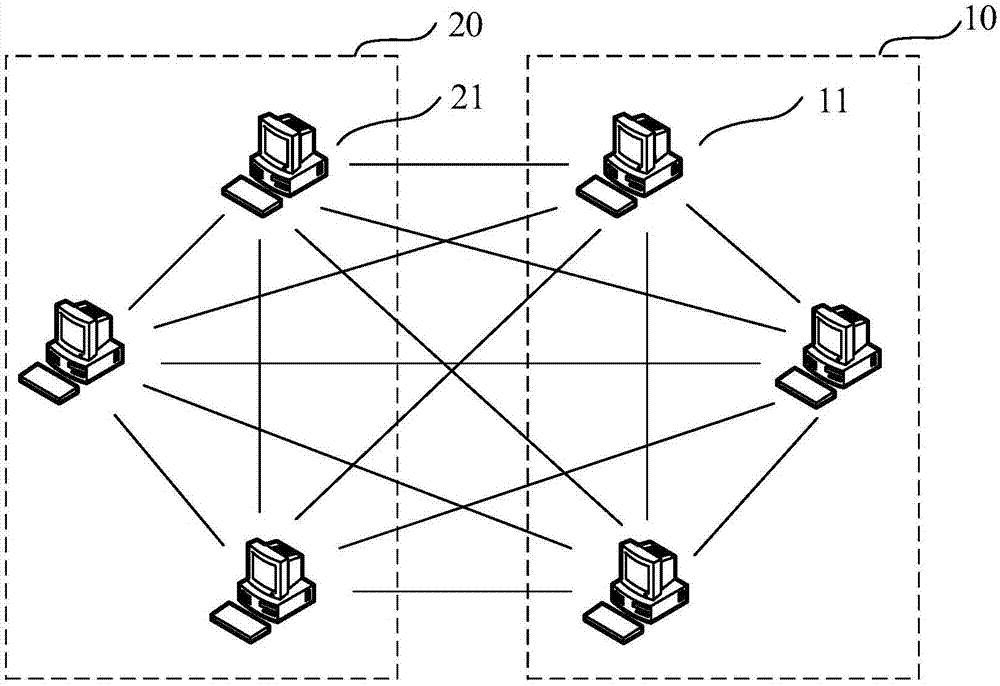

The present invention generally relates to the communication and controlled sharing of digital content among networked users, and more particularly to the creation of an improved sharing network for relaying data among linked nodal members of a social network. An ad hoc network may be a hybrid P2P network that conforms to a virtual peer representation, accessible as a single node from outside the given social network nodes. Members of a pre-existing social network may selectively activate embedded latent peers and link to an aggregation node to form a hybrid P2P network. Content may be shared in a controlled distribution according to level rights among participating network nodes. Playlists and/or specialized user interfaces may also be employed to facilitate content sharing among the social network members of a virtual peer.

Owner: QURIO HLDG

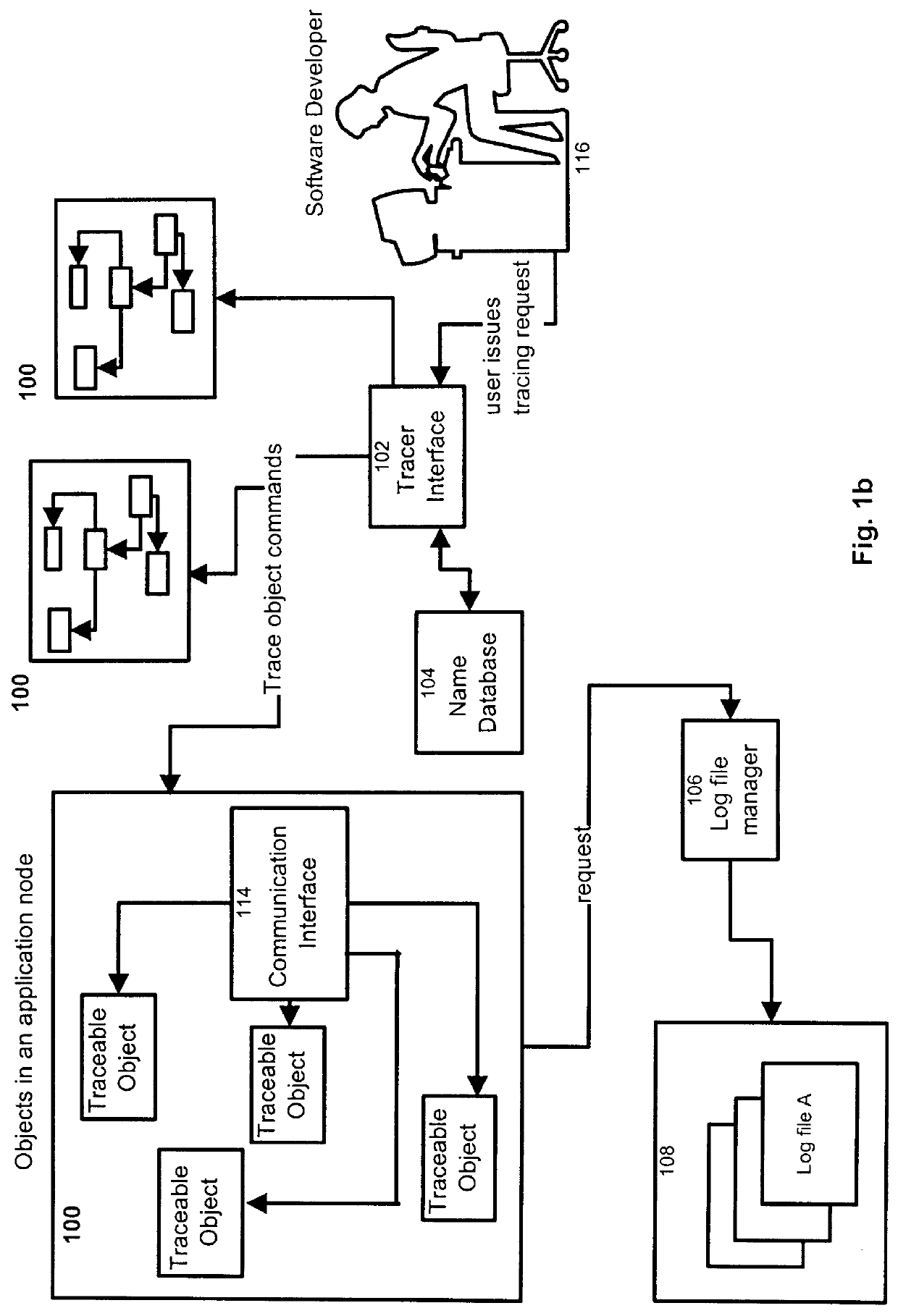

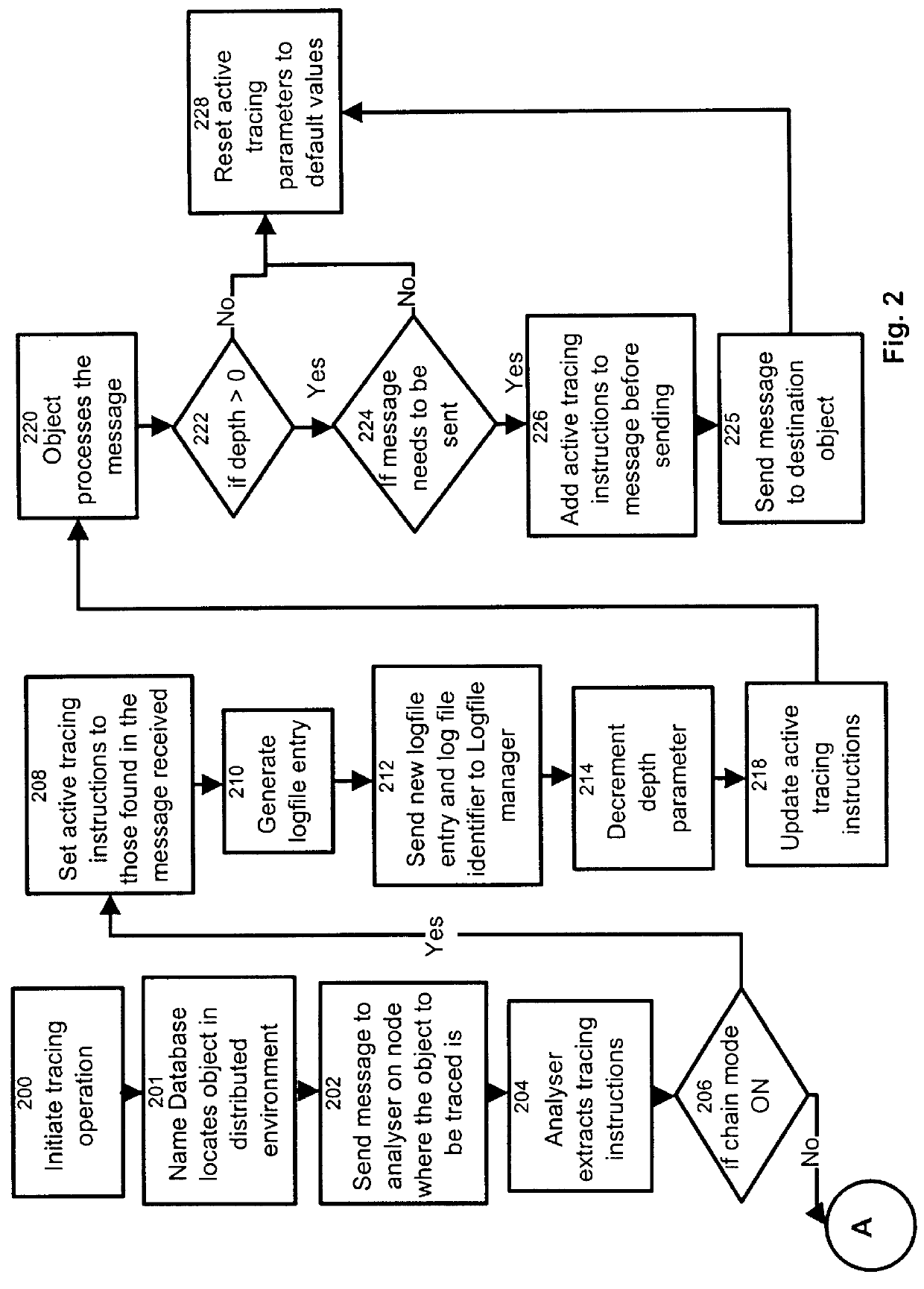

Process and apparatus for tracing software entities in a distributed system

Inactive | US6083281A | Tags: Hardware monitoring, Software testing/debugging, Control flow, Distributed Computing Environment

The invention relates to a process and apparatus for tracing software entities, more particularly a tracing tool providing tracing capabilities to entities in an application. The object-tracing tool provides software components to allow tracing the execution of an application. Tracing software entities is important for software developers to permit the quick localization of errors and hence facilitate the debugging process. It is also useful for the software user who wishes to view the control flow and perhaps add some modifications to the software. Traditionally, software-tracing tools have been confined to single node systems where all the components of an application run on a single machine. The novel tracing tool presented in this application provides a method and an apparatus for tracing software entities in a distributed computing environment. This is done by using a network management entity to keep track of the location of the entities in the system and by using a library of modules that can be inherited to provide tracing capabilities. It also uses a log file to allow the program developer or user to examine the flow, the occurrence of events during a trace and the values of designated attributes. The invention also provides a computer readable storage medium containing a program element to direct a processor of a computer to implement the software tracing process.

Owner: RPX CLEARINGHOUSE

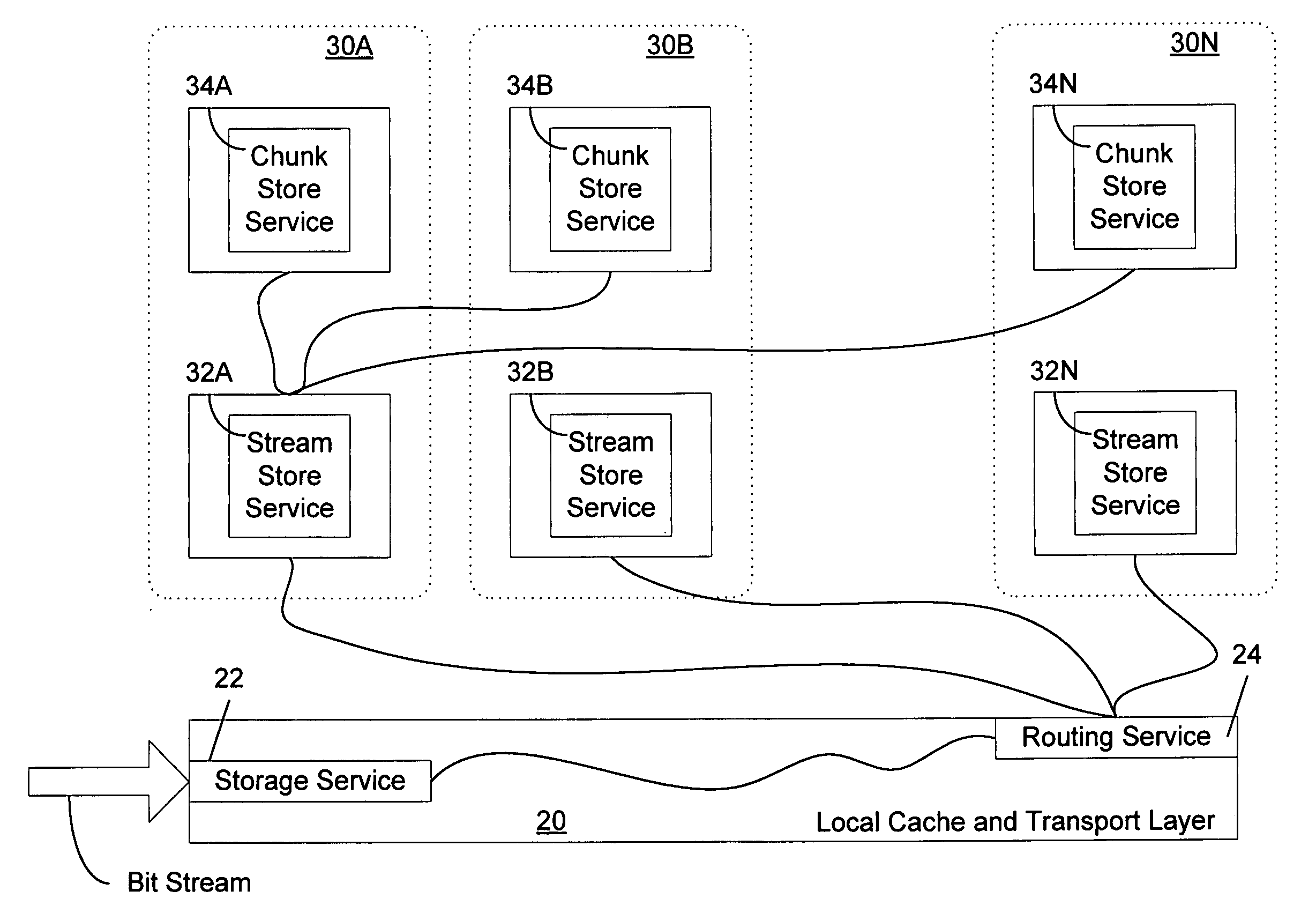

Systems and methods for providing distributed, decentralized data storage and retrieval

Active | US20060242155A1 | Tags: Improve reliability, Improve availability, Digital data information retrieval, Multiple digital computer combinations, Data store, Distributed computing

Systems and methods for distributed, decentralized storage and retrieval of data in an extensible SOAP environment are disclosed. Such systems and methods decentralize not only the bandwidth required for data storage and retrieval, but also the computational requirements. Accordingly, such systems and methods alleviate the need for one node to do all the storage and retrieval processing, and no single node is required to send or receive all the data.

Owner: MICROSOFT TECH LICENSING LLC

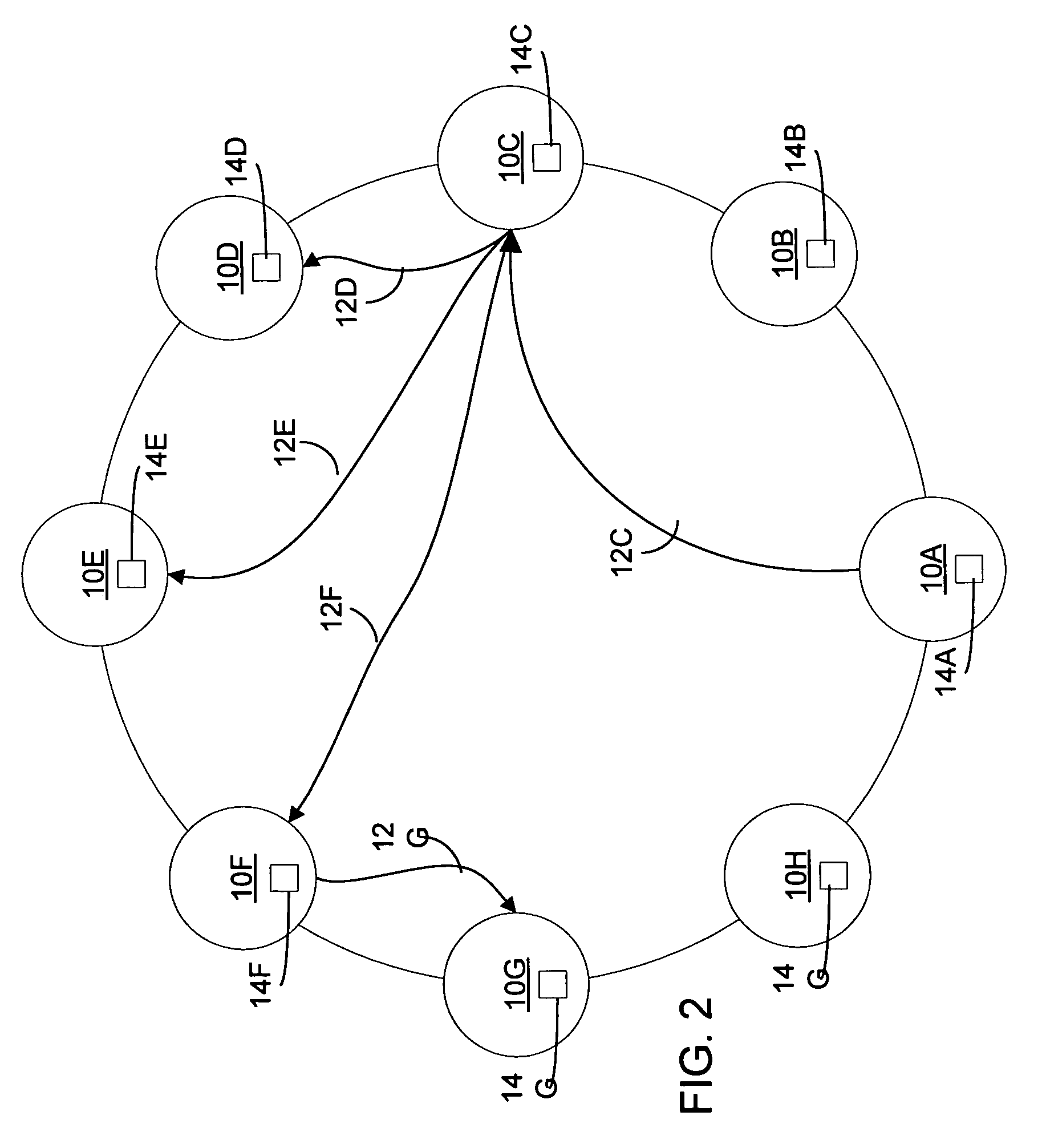

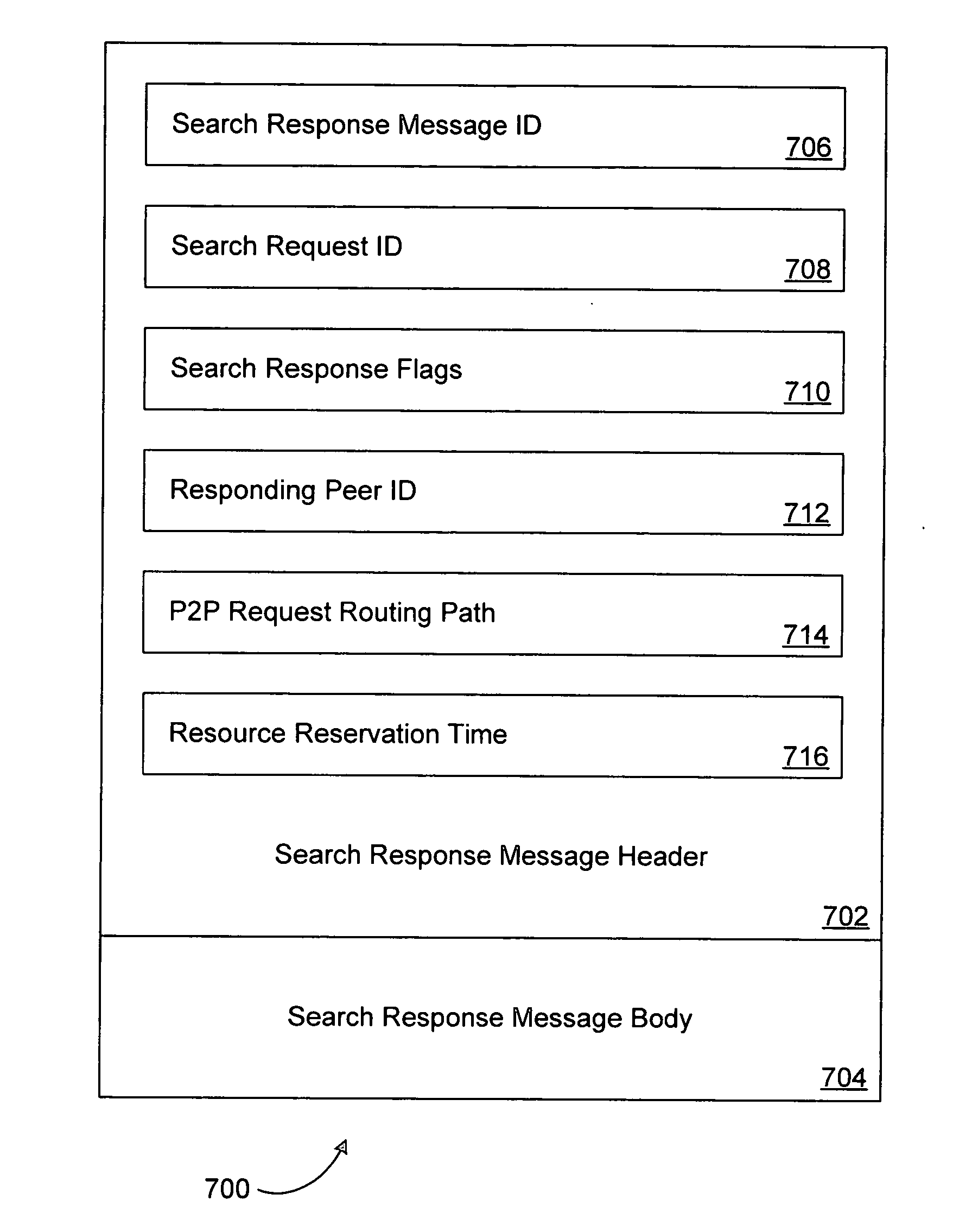

System and method for searching a peer-to-peer network

A peer-to-peer (P2P) search request message may be multicast from an originating peer to its neighboring peers. Each neighboring peer may multicast the request message in turn until a search radius is reached. Each peer receiving the request message may conduct a single node search; if that search is successful, a P2P search response message may be generated. Each receiving peer may filter duplicate messages and may multicast to fewer than 100% of its neighbors. Responses may be cached, and cached responses may be sent in reply to request messages, expanding the effective search radius of a given P2P search. The multicast probability for a neighbor may be a function of how frequently that neighbor has previously responded to a particular search type. To reduce abuse by impolite or malicious peers, originating peers may be required to solve a computationally expensive puzzle in addition to rate-based throttling.

Owner: MICROSOFT TECH LICENSING LLC
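
The radius-limited multicast search in this abstract can be illustrated as a breadth-first flood with a hop limit. This is a minimal sketch, not the patented method: the neighbor graph, the `local_search` predicate, and a single fixed `forward_prob` are assumptions, and response caching, per-neighbor response-history probabilities, and puzzle-based throttling are omitted.

```python
import random
from collections import deque


def p2p_search(graph, origin, radius, local_search, forward_prob=1.0, seed=0):
    """Return the set of peers whose single node search succeeded.

    graph: dict mapping each peer to a list of neighbor peers (hypothetical topology).
    radius: maximum number of hops the request message may travel.
    local_search: hypothetical predicate standing in for the single node search.
    forward_prob: probability of forwarding to each neighbor (1.0 floods all neighbors).
    """
    rng = random.Random(seed)
    seen = {origin}                 # duplicate filtering: a peer handles the request once
    hits = set()
    frontier = deque([(origin, 0)])
    while frontier:
        peer, hops = frontier.popleft()
        if hops > 0 and local_search(peer):
            hits.add(peer)          # stands in for generating a P2P search response
        if hops == radius:
            continue                # search radius reached: stop forwarding
        for neighbor in graph.get(peer, []):
            if neighbor in seen:
                continue
            if rng.random() < forward_prob:   # multicast to a random subset of neighbors
                seen.add(neighbor)
                frontier.append((neighbor, hops + 1))
    return hits
```

On a chain of peers `a - b - c - d` searched from `a` with radius 2, peer `d` is never reached, so only a match at `c` would be reported. Lowering `forward_prob` trades coverage for less traffic.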

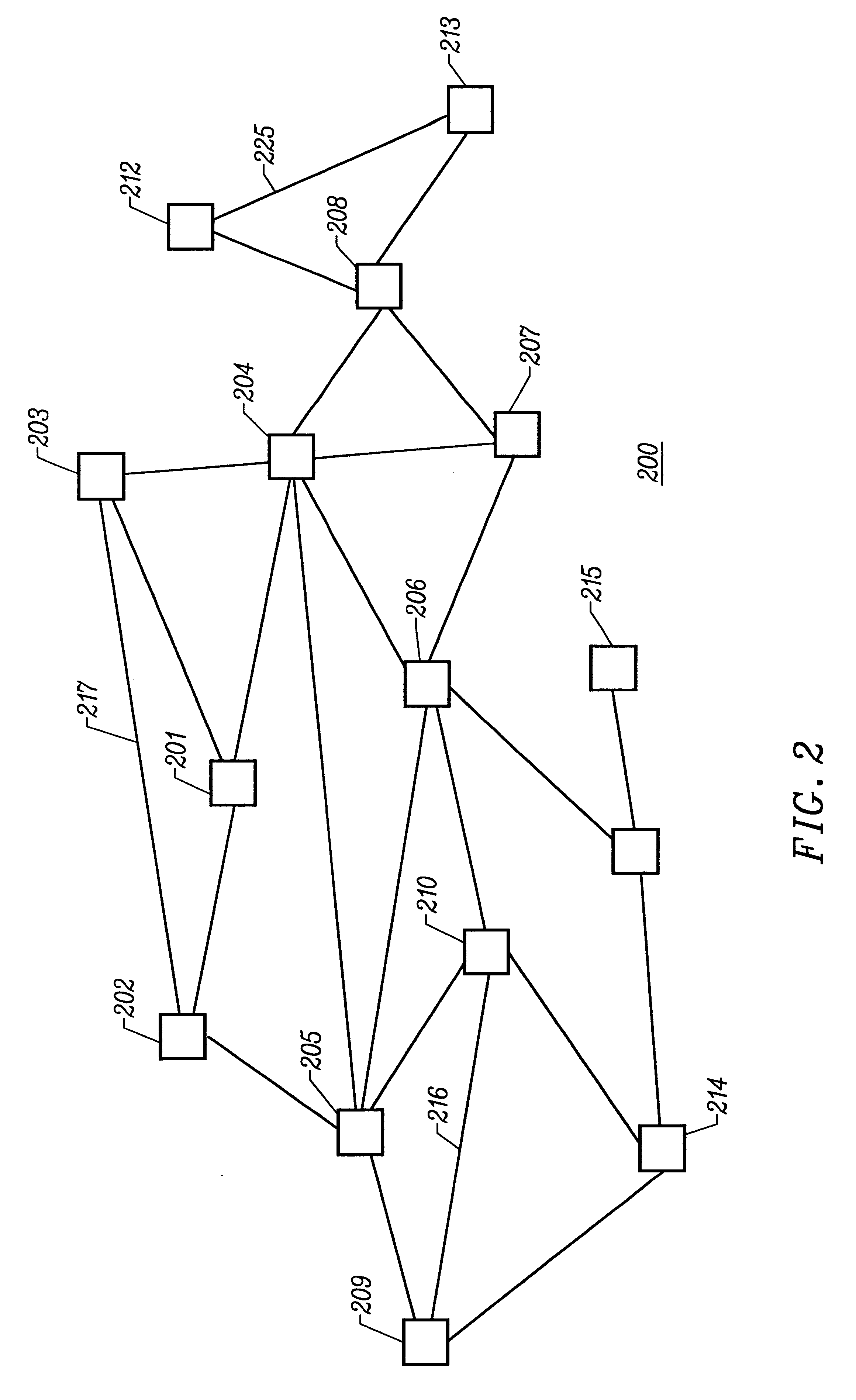

Methods for visualizing transformations among related series of graphs

A method for displaying in a coherent manner the changes over time of a web site's structure, usage, and content is disclosed. Time tubes are generated by a method of displaying a related series of graphs. Time tubes illustrate changes in a graph that undergoes one or more transformations from one state to another. The transformations are displayed using the length of the cylindrical tube, filling the length of the time tube with planar slices which represent the data at various stages of the transformations. Time tubes may encode several dimensions of the transformations simultaneously by altering the representation of size, color, and layout among the planar slices. Temporal transformations occur when web pages are added or deleted over time. Value-based transformations include node colors, which may be used to encode a specific page's usage parameter. Spatial transformations include the scaling of physical dimension as graphs expand or contract in size. The states of a graph at various times are represented as a series of related graphs. In a preferred embodiment, an inventory of all existing nodes is performed so as to generate a list of all nodes that have existed at any time. This inventory is used to produce a layout template in which each unique node is assigned a unique layout position. To produce each planar slice, the specific nodes which exist in the slice are placed at their respective positions assigned in the layout template. In another aspect, corresponding nodes in planar slices are linked, such as with translucent streamlines, in response to a user selecting a node in a planar slice by placing his cursor over the selected node, or to show clustering of two or more nodes in one planar slice into a single node in an adjacent planar slice.

Owner: GOOGLE LLC
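
The layout-template step in this abstract (inventory every node that ever exists, give each a unique position, then place each slice's nodes at those positions) can be sketched as follows. This assumes each snapshot of the web site is a set of page identifiers and spreads positions evenly on a unit circle; the abstract does not specify the layout algorithm, so the circle is purely illustrative.

```python
import math


def build_layout_template(snapshots):
    """Assign every node that exists in any snapshot one fixed (x, y) position."""
    inventory, seen = [], set()
    for graph_nodes in snapshots:            # inventory of all nodes across time
        for node in sorted(graph_nodes):     # sorted for a deterministic order
            if node not in seen:
                seen.add(node)
                inventory.append(node)
    n = len(inventory)
    return {
        node: (math.cos(2 * math.pi * i / n), math.sin(2 * math.pi * i / n))
        for i, node in enumerate(inventory)
    }


def planar_slices(snapshots, template):
    """Place the nodes present in each snapshot at their template positions."""
    return [{node: template[node] for node in graph_nodes} for graph_nodes in snapshots]
```

Because positions come from the template rather than from each snapshot, a page that persists across time stays at the same spot in every planar slice, which is what lets the slices be stacked into a coherent tube.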

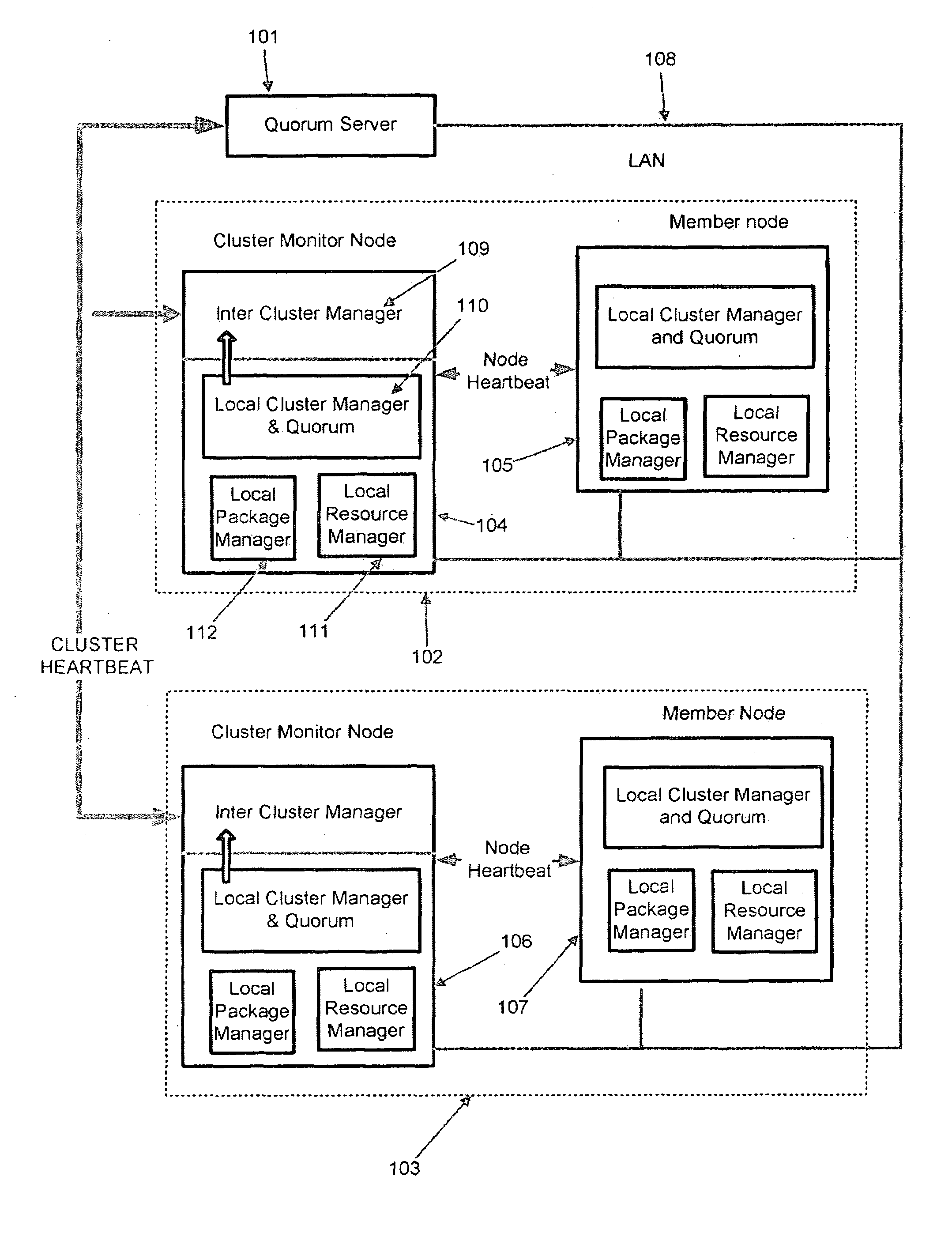

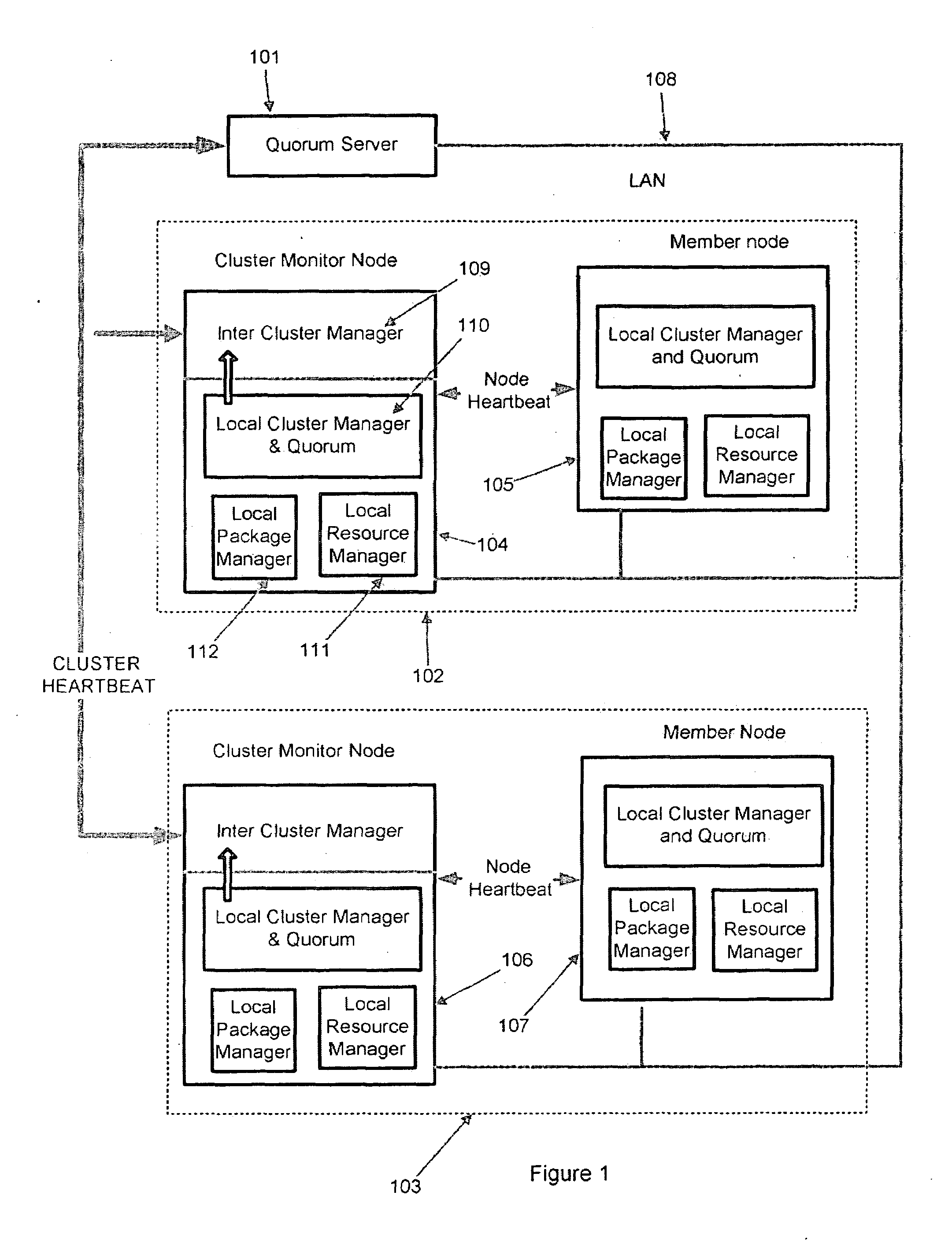

Method And System For Clustering

Active | US20090113034A1 | Tags: Multiple digital computer combinations, Electric digital data processing, High availability, Selection criterion

A system and a method are disclosed for automatic cluster formation by automatically selecting nodes based on selection criteria configured by the user. During a cluster re-formation process, a failed node may automatically be replaced by one of the healthy nodes in its network that is not part of any cluster, thereby avoiding cluster failures. If node failures reduce a cluster to a single node and the failed nodes cannot be replaced because no free nodes are available, the single node cluster may be merged with an existing healthy cluster in the network, providing a constant level of high availability. The proposed method may also provide effective load balancing by maintaining a constant number of member nodes in a cluster, automatically replacing dead nodes with healthy ones.

Owner: HEWLETT-PACKARD ENTERPRISE DEV LP
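
The re-formation rules in this abstract (replace a failed node from the free pool; if none is free and the cluster is down to one node, merge it into another healthy cluster) can be sketched with an in-memory model. The data layout, the `select_key` stand-in for the user's selection criterion, and the "merge into the smallest other cluster" policy are assumptions for illustration only.

```python
def handle_failure(clusters, free_nodes, failed, select_key=lambda node: node):
    """Re-form clusters after node `failed` dies (hypothetical in-memory model).

    clusters: dict mapping cluster name -> set of member nodes.
    free_nodes: list of healthy nodes that belong to no cluster.
    select_key: stand-in for the user-configured selection criterion;
    the free node with the smallest key is chosen as the replacement.
    """
    for name, members in list(clusters.items()):
        if failed not in members:
            continue
        members.discard(failed)
        if free_nodes:
            # Replace the dead node so the cluster keeps a constant size.
            replacement = min(free_nodes, key=select_key)
            free_nodes.remove(replacement)
            members.add(replacement)
        elif len(members) == 1:
            # No free node: merge the single node cluster into the smallest
            # other non-empty cluster, if one exists.
            others = [c for c in clusters if c != name and clusters[c]]
            if others:
                target = min(others, key=lambda c: len(clusters[c]))
                clusters[target] |= members
                del clusters[name]
        break  # a node belongs to at most one cluster in this model
    return clusters
```

Keeping the replacement step ahead of the merge step mirrors the abstract's ordering: merging is the fallback only when no free node can restore the cluster's size.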

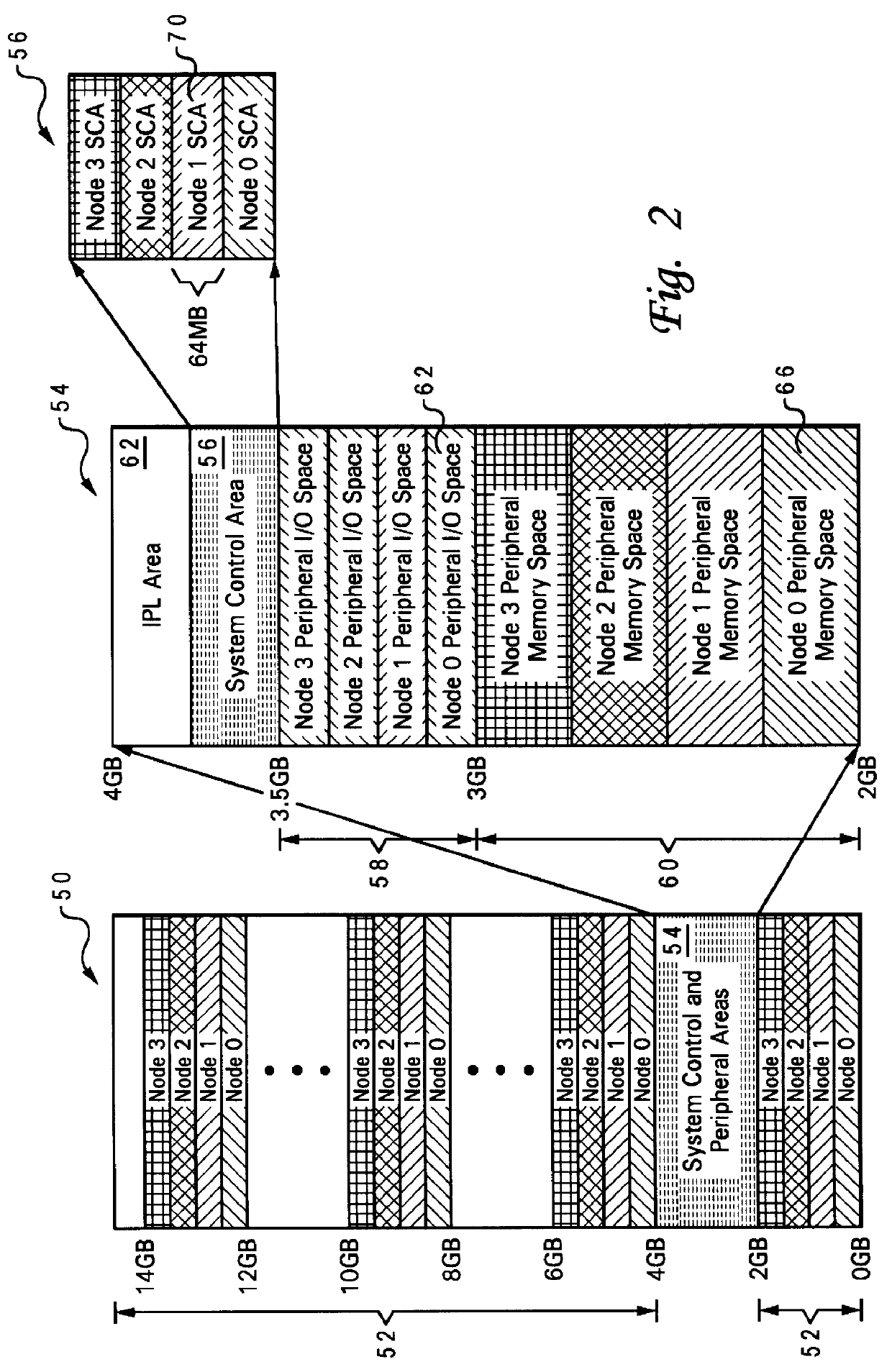

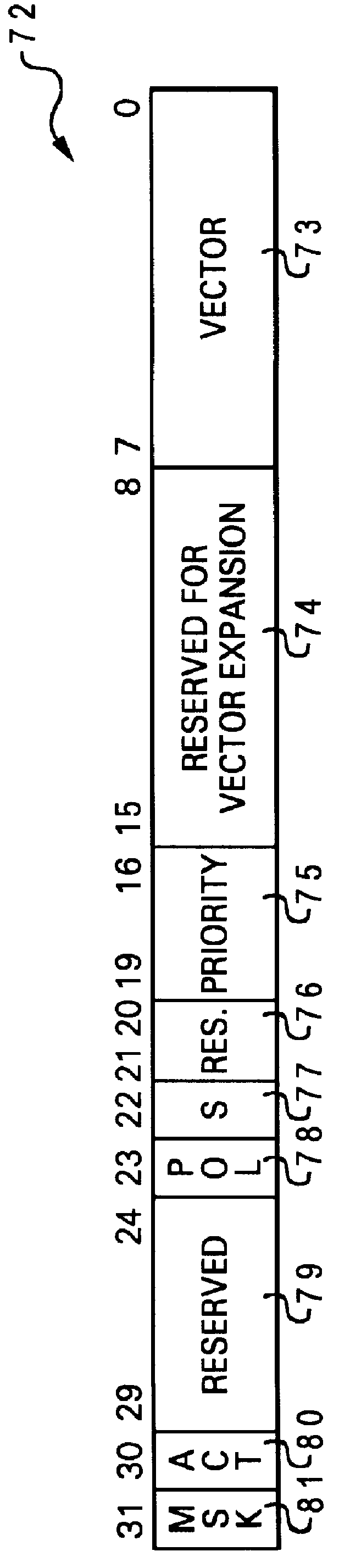

Interrupt architecture for a non-uniform memory access (NUMA) data processing system

Inactive | US6148361A | Tags: Small size, Promote dissemination, Program initiation/switching, General purpose stored program computer, Extensibility, Data processing system

A non-uniform memory access (NUMA) computer system includes at least two nodes coupled by a node interconnect, where at least one of the nodes includes a processor for servicing interrupts. The nodes are partitioned into external interrupt domains so that an external interrupt is always presented to a processor within the external interrupt domain in which the interrupt occurs. Although each external interrupt domain typically includes only a single node, interrupt channeling or interrupt funneling may be implemented to route external interrupts across node boundaries for presentation to a processor. Once presented to a processor, interrupt handling software may then execute on any processor to service the external interrupt. Servicing external interrupts is expedited by reducing the size of the interrupt handler polling chain as compared to prior art methods. In addition to external interrupts, the interrupt architecture of the present invention supports inter-processor interrupts (IPIs) by which any processor may interrupt itself or one or more other processors in the NUMA computer system. IPIs are triggered by writing to memory mapped registers in global system memory, which facilitates the transmission of IPIs across node boundaries and permits multicast IPIs to be triggered simply by transmitting one write transaction to each node containing a processor to be interrupted. The interrupt hardware within each node is also distributed for scalability, with the hardware components communicating via interrupt transactions conveyed across shared communication paths.

Owner: IBM CORP

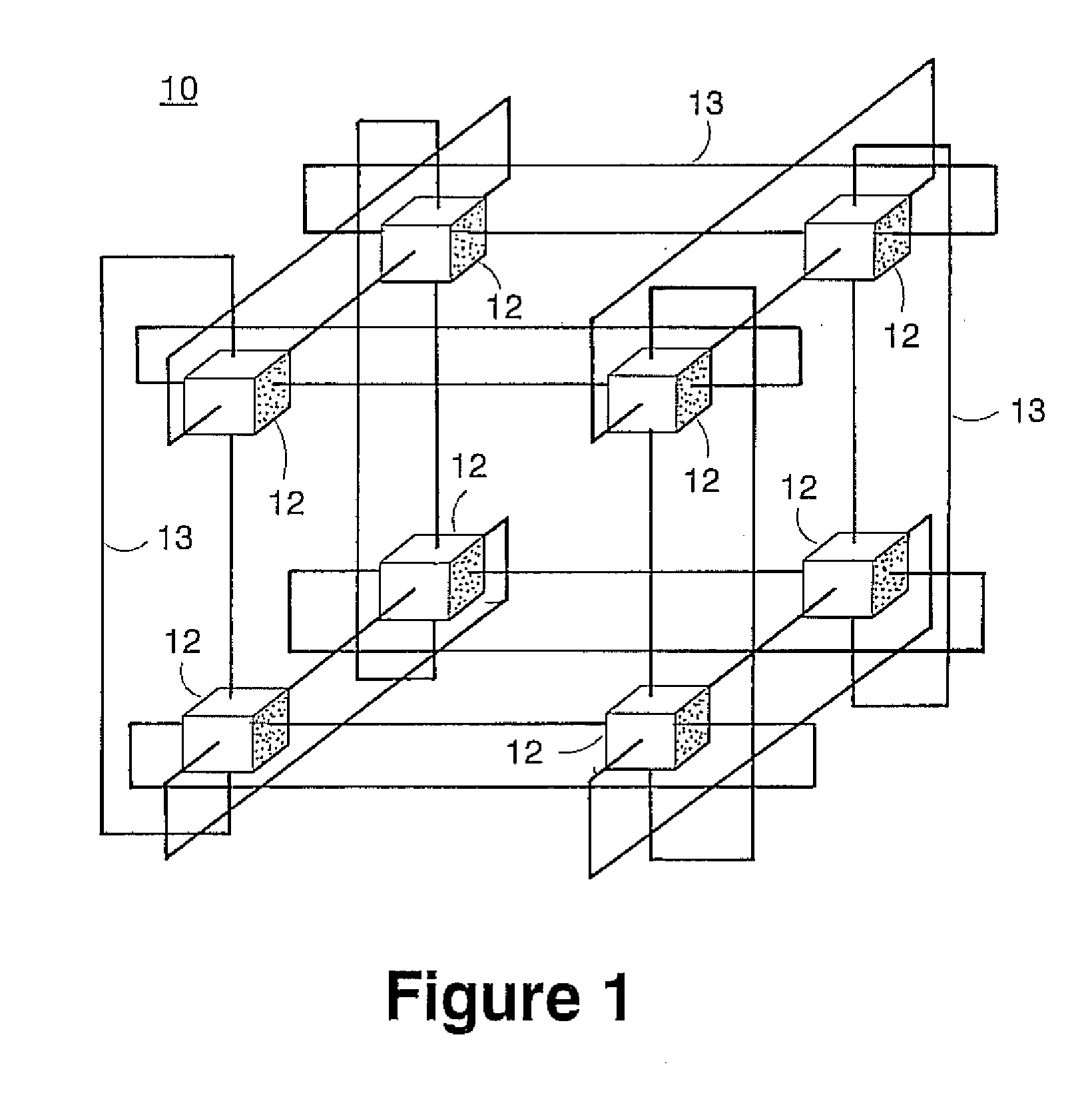

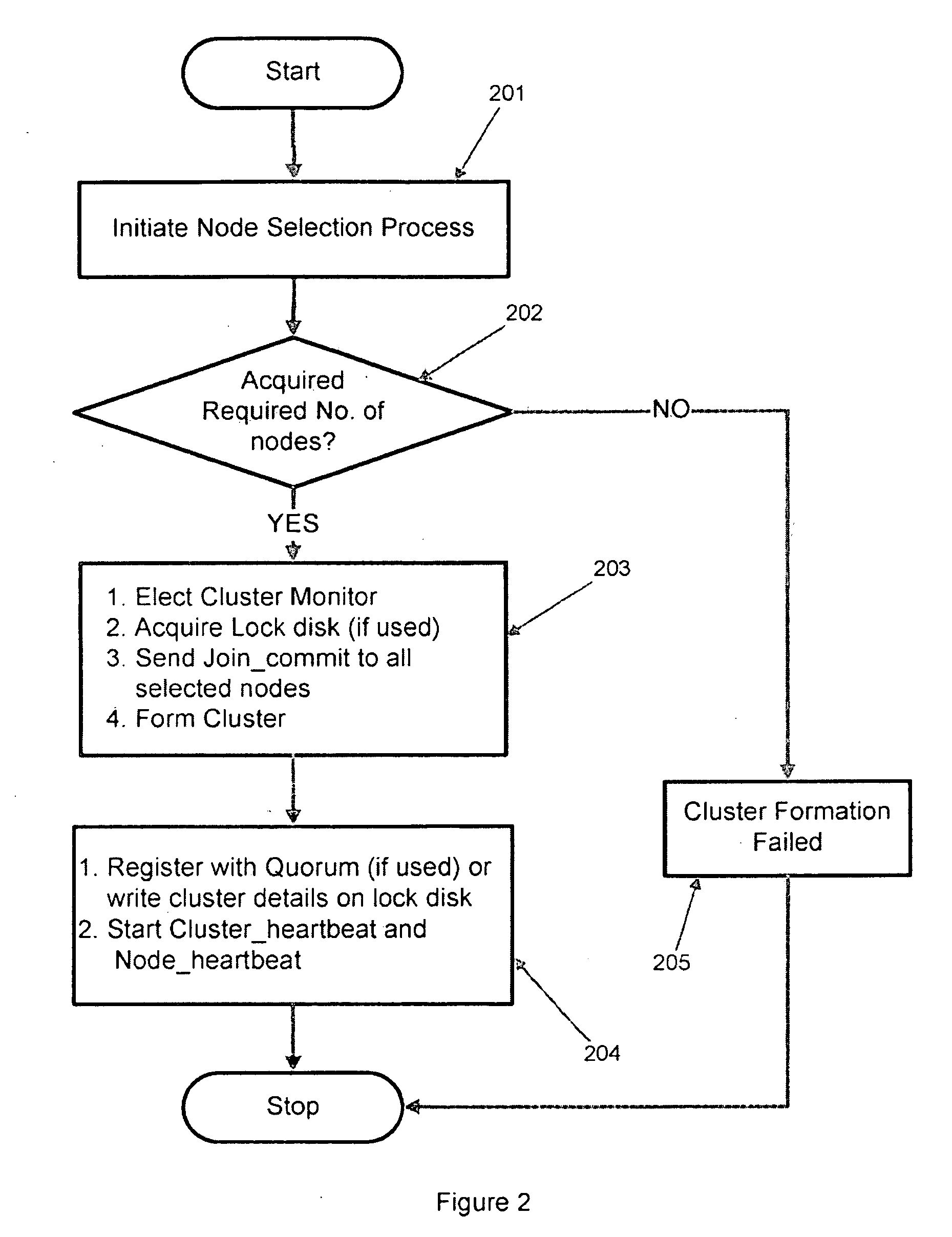

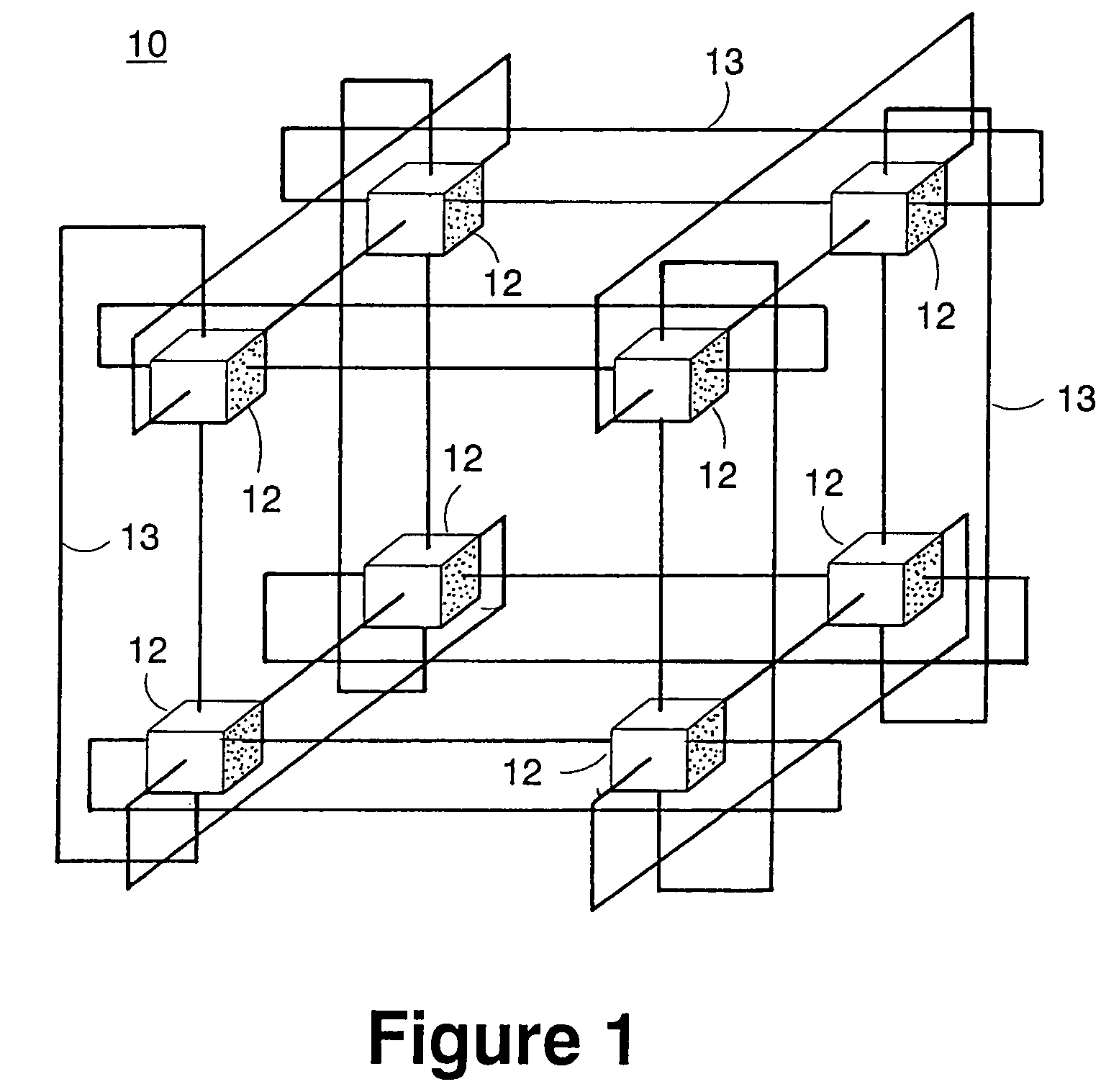

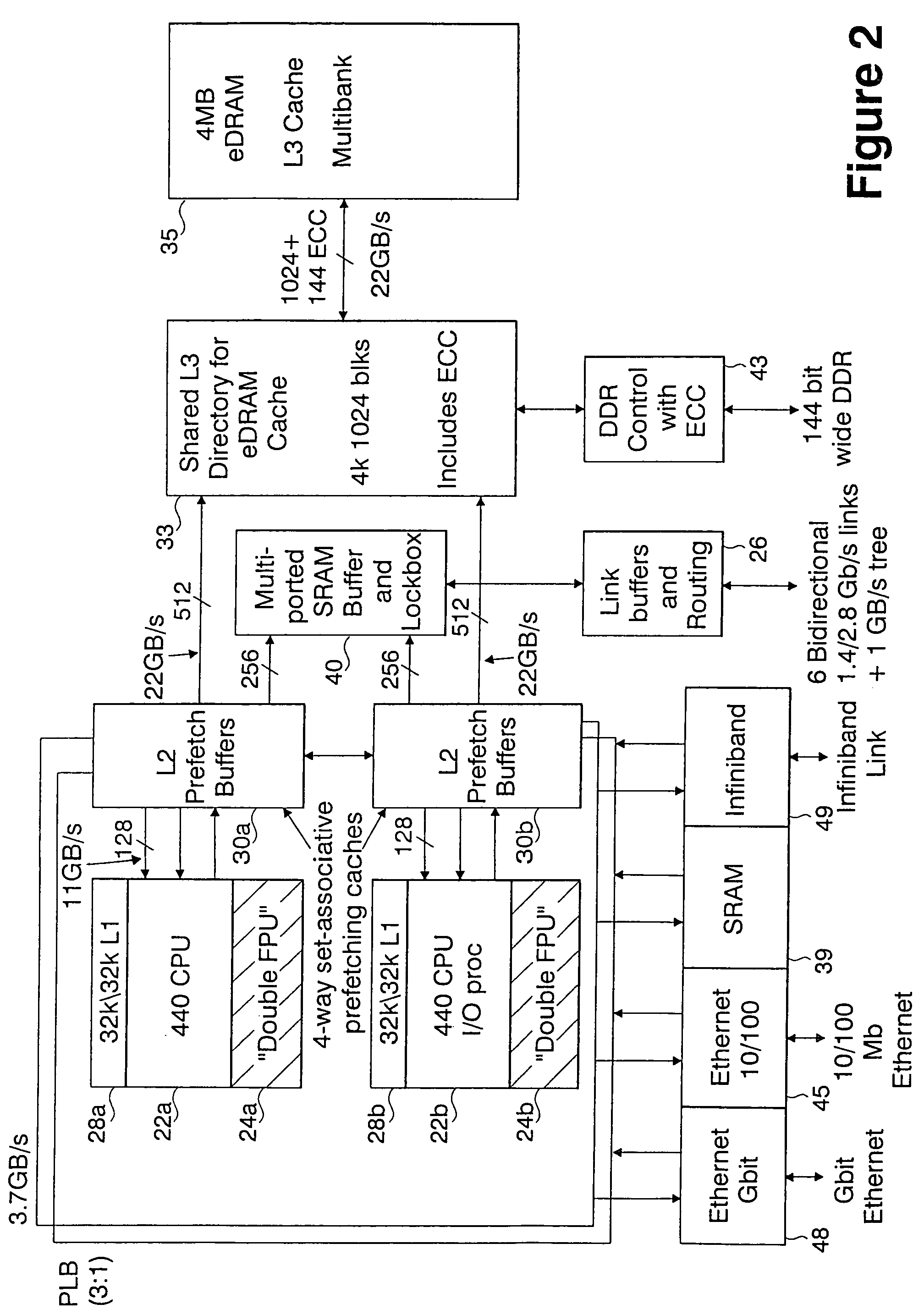

Massively parallel supercomputer

Inactive | US7555566B2 | Tags: Massive level of scalability, Unprecedented level of scalability, Error prevention, Program synchronisation, Packet communication, Supercomputer

A novel massively parallel supercomputer of hundreds of teraOPS scale includes node architectures based on System-on-a-Chip technology; that is, each processing node comprises a single Application Specific Integrated Circuit (ASIC). Within each ASIC node is a plurality of processing elements, each consisting of a central processing unit (CPU) and a plurality of floating point processors, to enable an optimal balance of computational performance, packaging density, low cost, and power and cooling requirements. The processors within a single node may be used individually or simultaneously to work on any combination of computation or communication, as required by the particular algorithm being solved or executed at any point in time. The system-on-a-chip ASIC nodes are interconnected by multiple independent networks that maximize packet communication throughput and minimize latency. In the preferred embodiment, the multiple networks include three high-speed networks for parallel algorithm message passing: a Torus, a Global Tree, and a Global Asynchronous network that provides global barrier and notification functions. These networks may be used collaboratively or independently, according to the needs or phases of an algorithm, to optimize algorithm processing performance. For particular classes of parallel algorithms, or parts of parallel calculations, this architecture exhibits exceptional computational performance, and it may be enabled to perform calculations for new classes of parallel algorithms. Additional networks are provided for external connectivity and are used for Input/Output, System Management and Configuration, and Debug and Monitoring functions. Special node packaging techniques implementing midplane and other hardware devices facilitate partitioning of the supercomputer into multiple networks for optimizing supercomputing resources.

Owner: IBM CORP

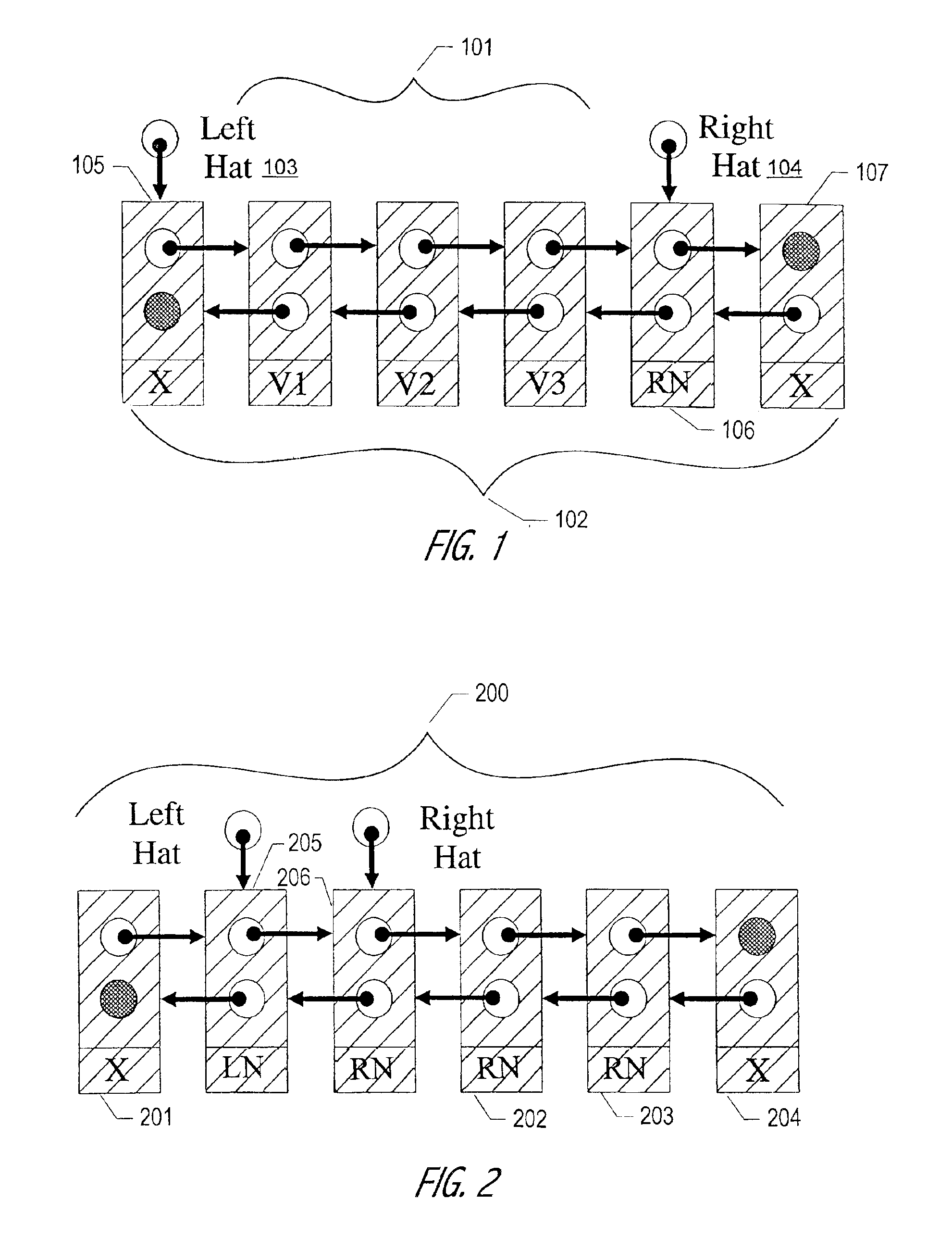

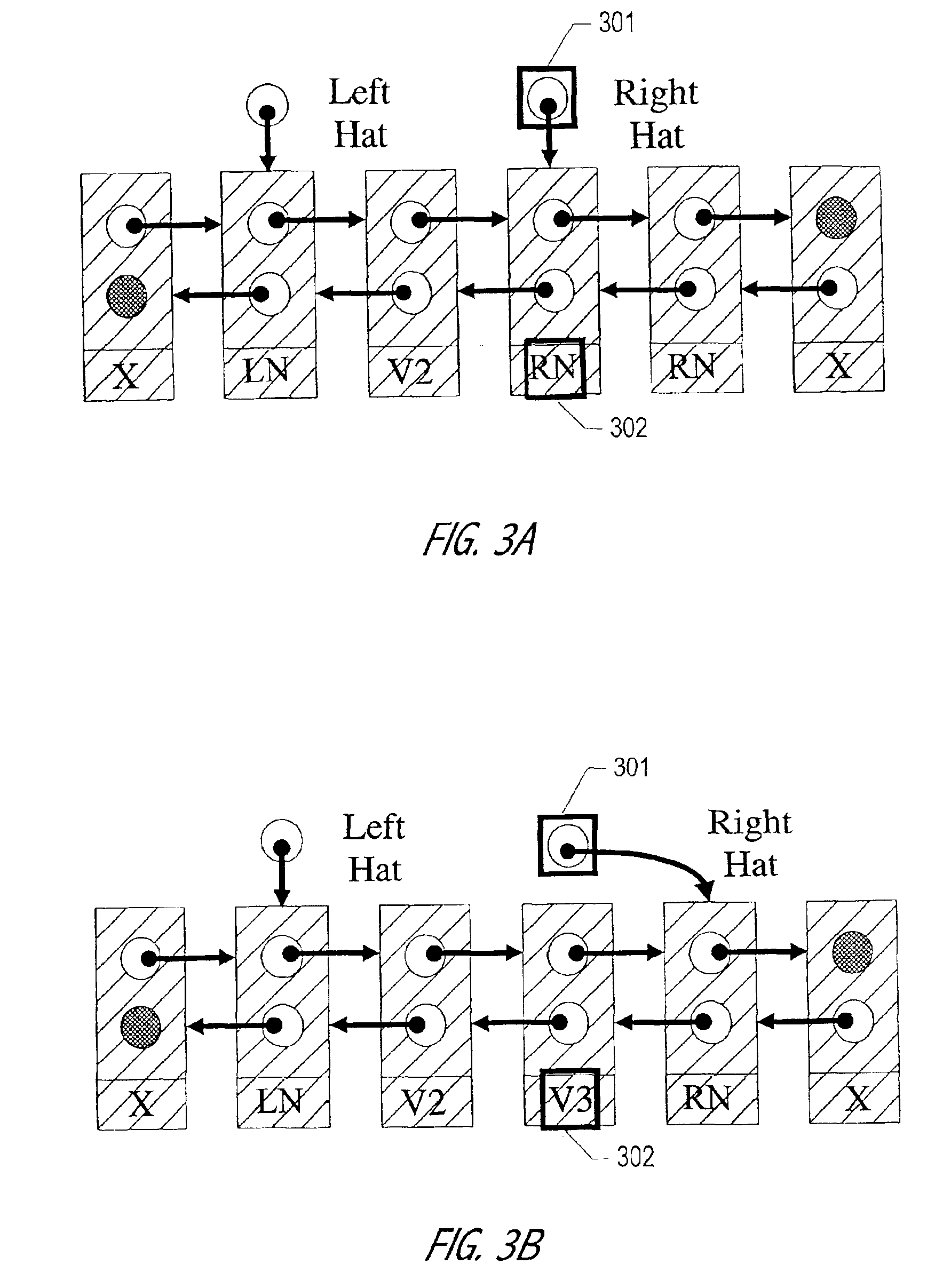

Concurrent shared object implemented using a linked-list with amortized node allocation

Inactive | US20010047361A1 | Tags: Useful for promotion, Handling data according to predetermined rules, Multiprogramming arrangements, Granularity, Waste collection

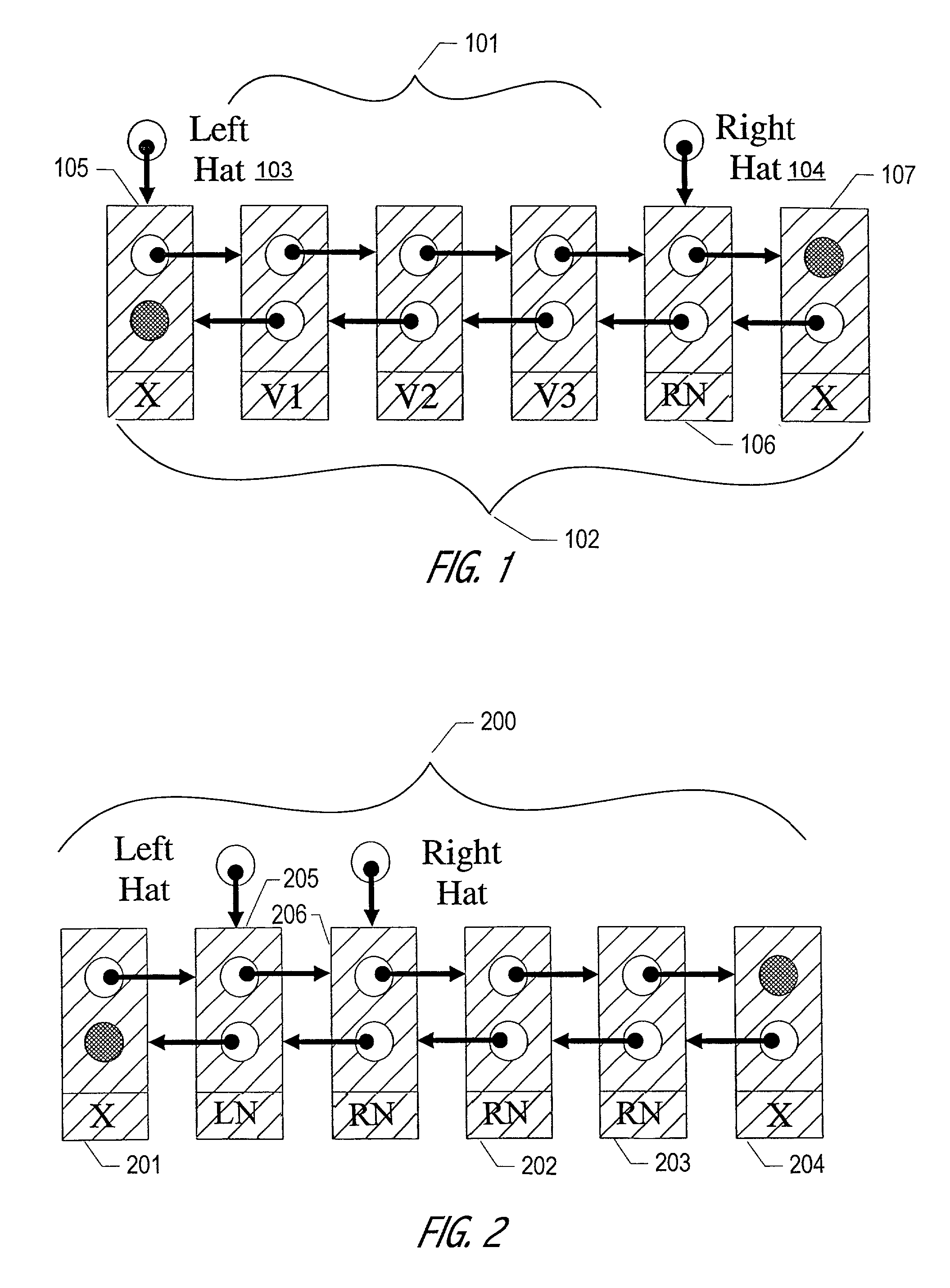

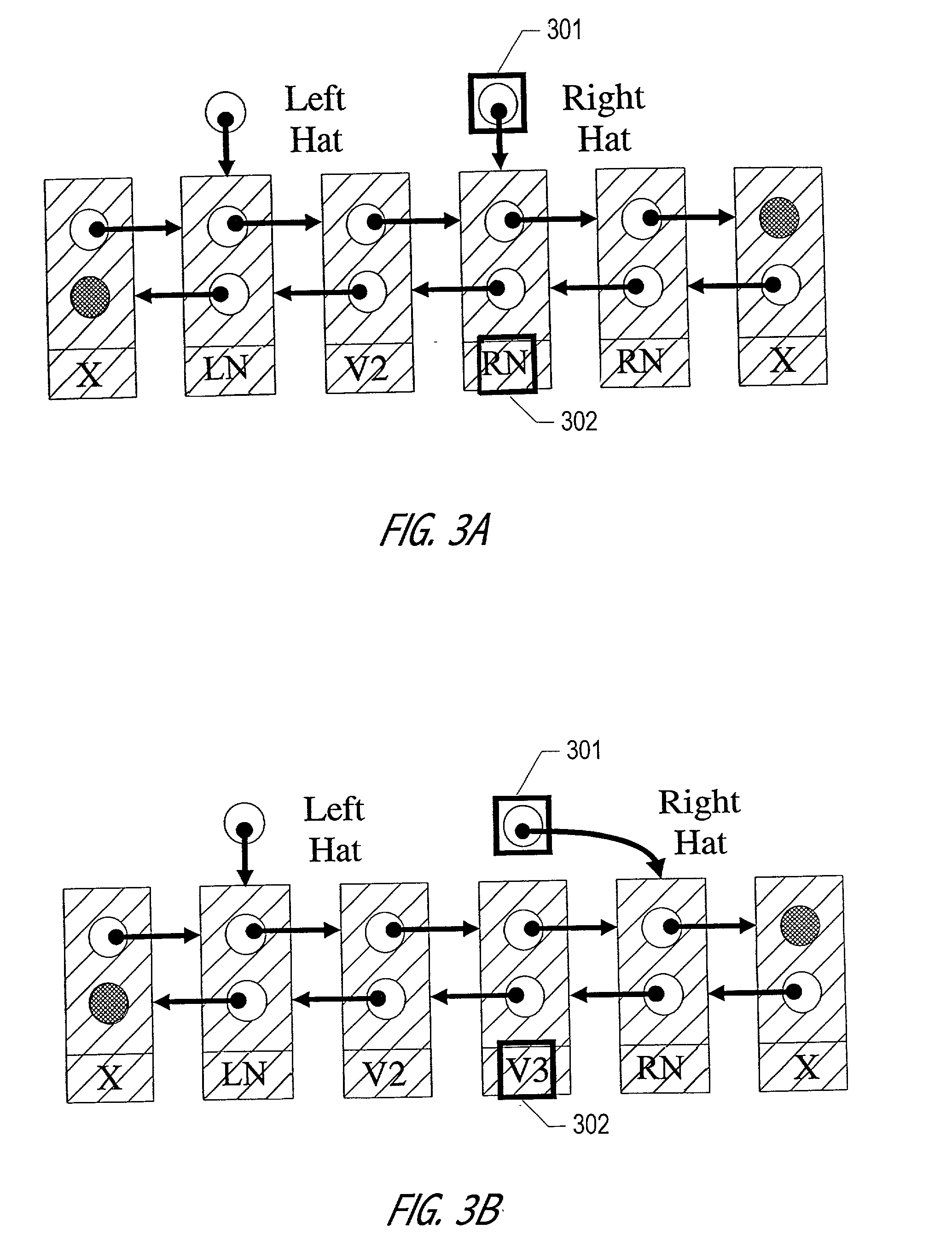

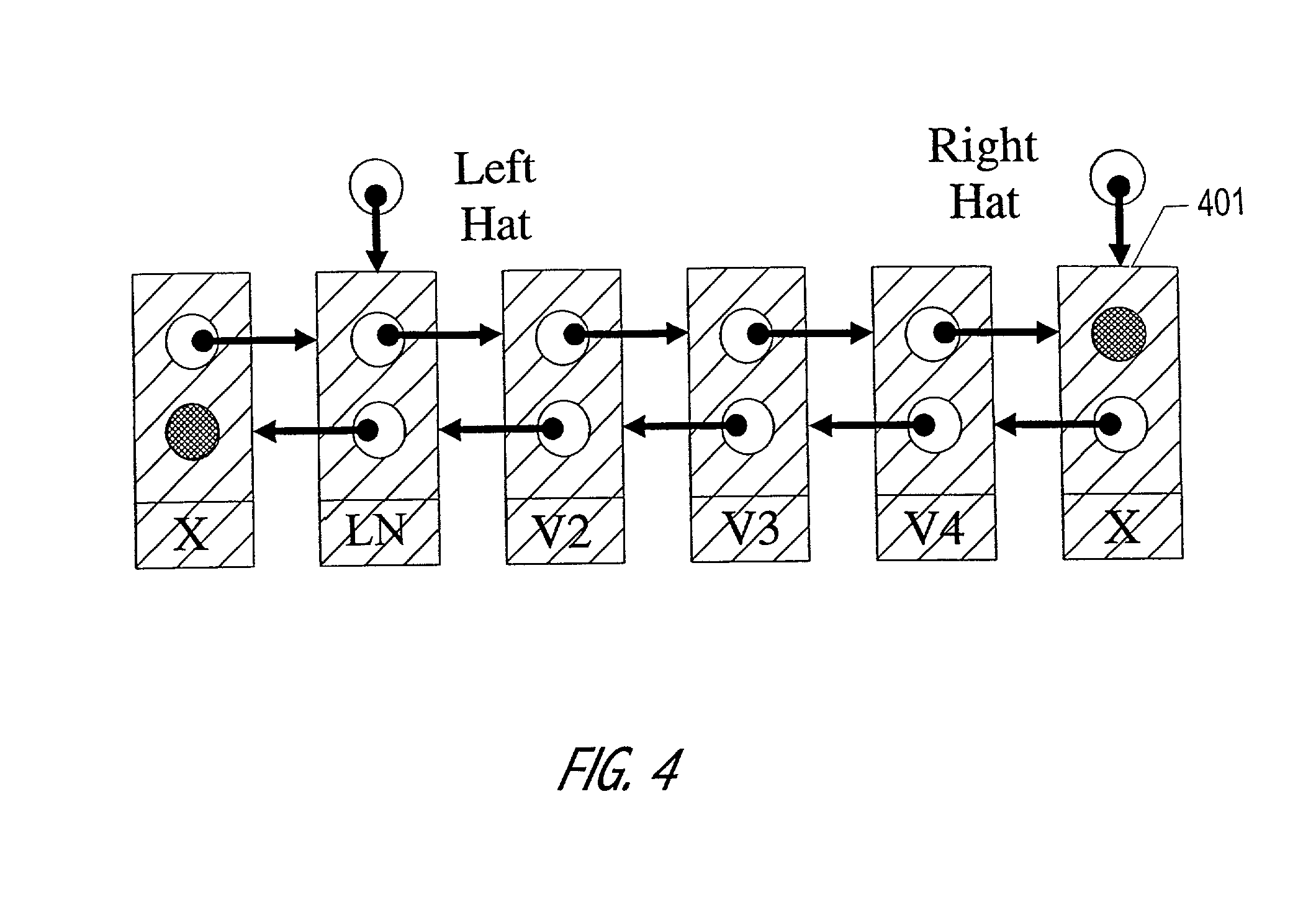

The Hat Trick deque requires only a single DCAS for most pushes and pops. The left and right ends do not interfere with each other until there is one or fewer items in the queue, and then a DCAS adjudicates between competing pops. By choosing a granularity greater than a single node, the user can amortize the costs of adding additional storage over multiple push (and pop) operations that employ the added storage. A suitable removal strategy can provide similar amortization advantages. The technique of leaving spare nodes linked in the structure allows an indefinite number of pushes and pops at a given deque end to proceed without the need to invoke memory allocation or reclamation so long as the difference between the number of pushes and the number of pops remains within given bounds. Both garbage collection dependent and explicit reclamation implementations are described.

Owner: ORACLE INT CORP

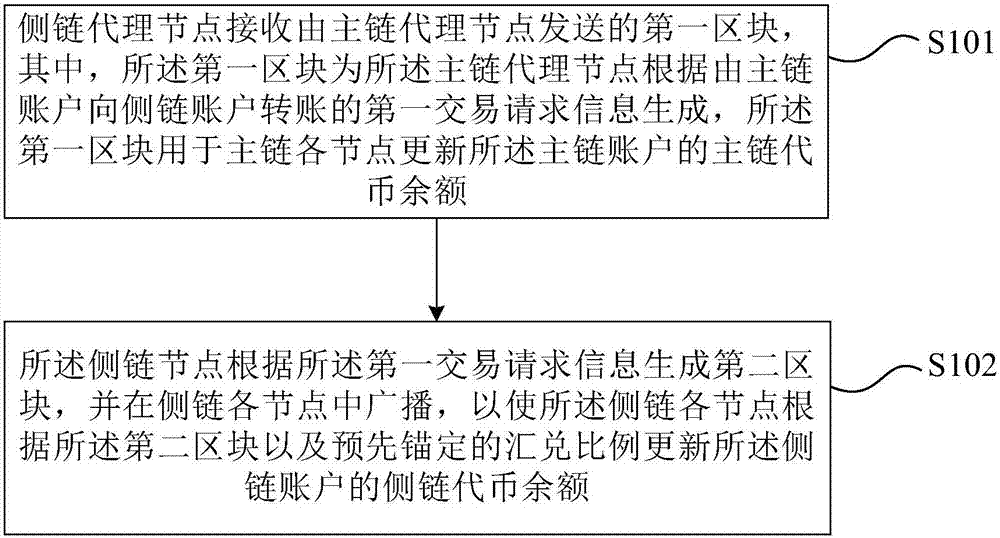

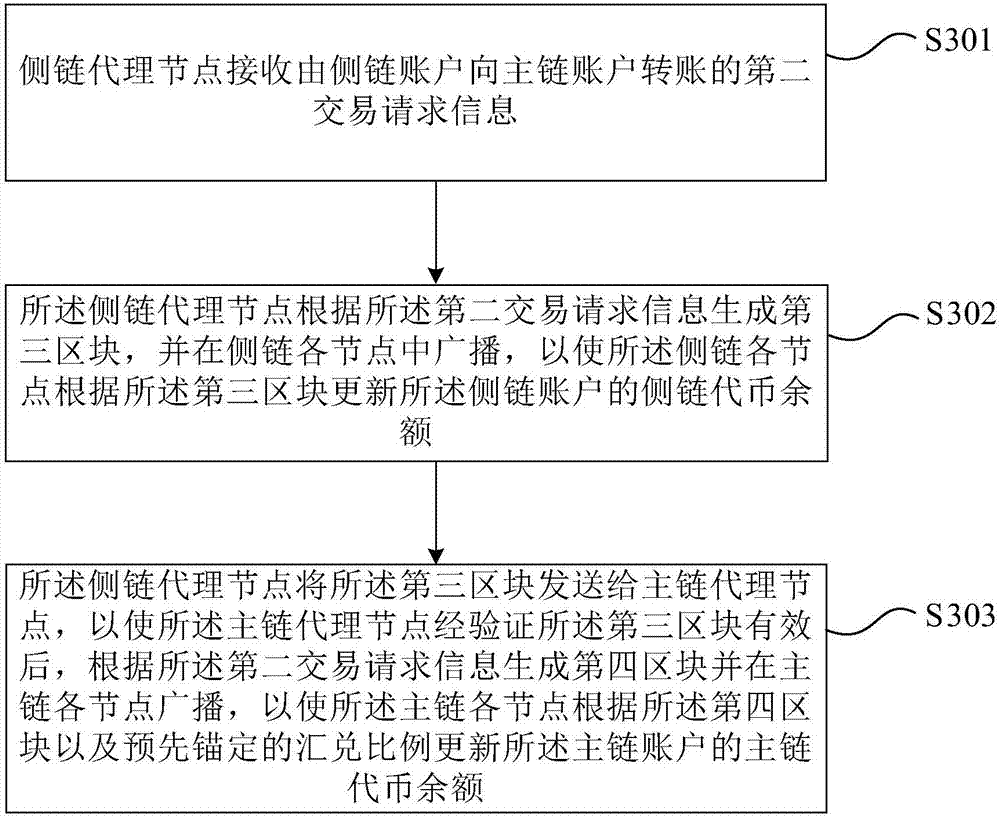

Method and system for transactions between main chain and side chain of block chain

Active | CN107464106A | Tags: Reduce transaction volume, Reduce data volume, Finance, Broadcast service distribution, Side chain, Transaction data

The invention provides a method and a system for transactions between the main chain and a side chain of a blockchain. Side chains expand the service types the blockchain can support, and diverting traffic to them reduces the transaction volume on the main chain. Nodes of the main chain and nodes of the side chain each store and verify the block data and transaction data of their own chain. This reduces the data volume and computation per transaction, and solves the data-storage and computation growth problems that arise on a single node when different services are supported by the same chain. In addition, a decentralized scheme enables two-way anchoring of funds between the side chain and the main chain; multiple side chains can use or anchor funds on the same main chain, which reduces the number of fund types, avoids the problem of exchanging between different funds, and keeps the services clear and well structured.

Owner: 北京果仁宝科技有限公司 (Beijing Guorenbao Technology Co., Ltd.)

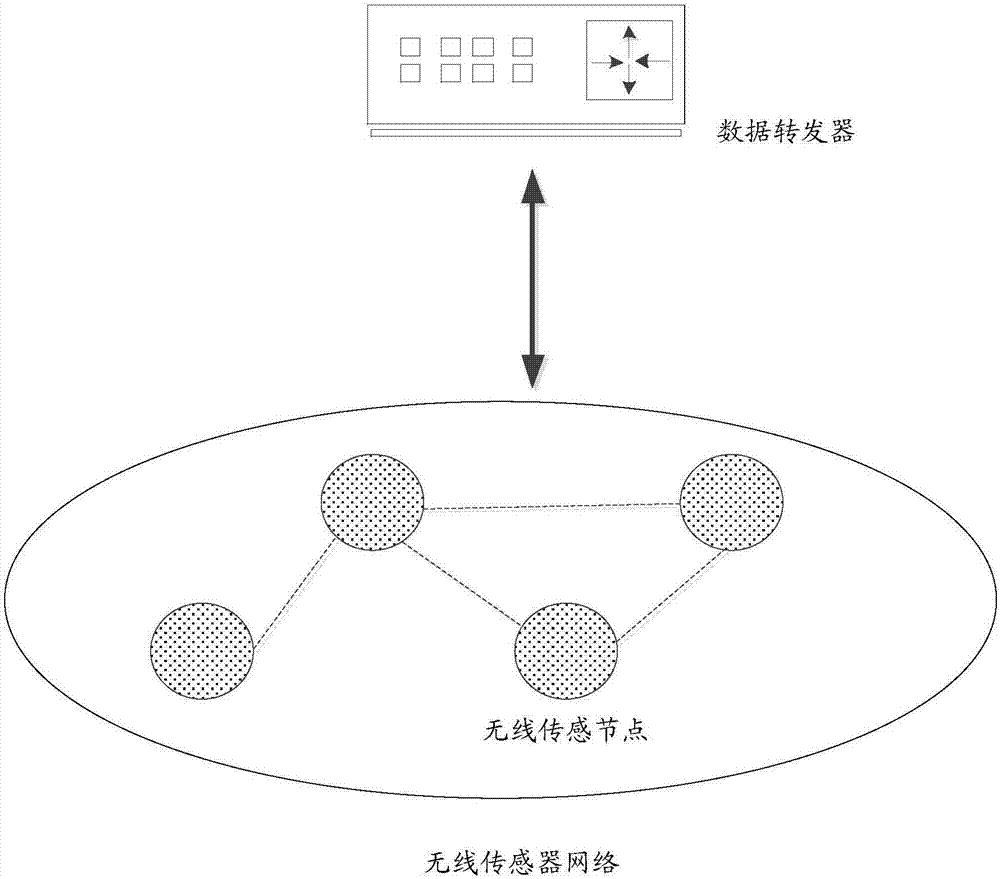

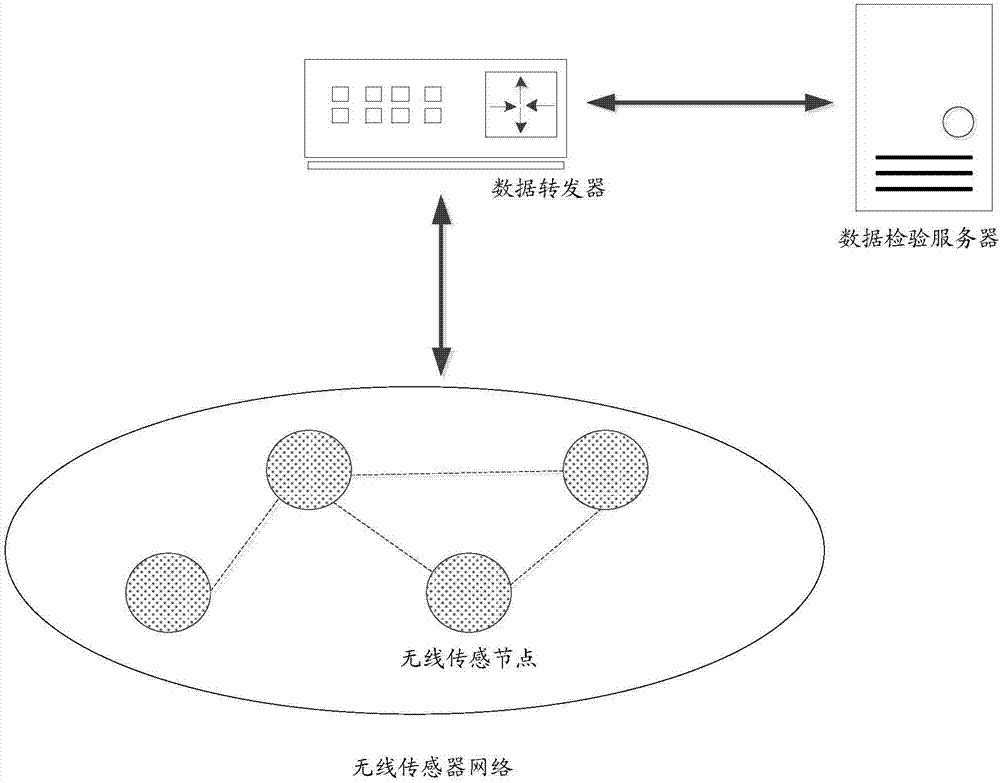

Data verification method based on block chains and data verification system thereof

Active | CN107249009A | Tags: Overcome efficiency, Overcome speed, Wireless communication services, Transmission, Source Data Verification, Data verification

The invention discloses a blockchain-based data verification method and system. In the method, multiple wireless sensor nodes of a wireless sensor network acquire sensing data about the environment and/or household device states, process it, and upload it to a data repeater; the data repeater receives the processed sensing data and forwards it to a data verification network; and multiple data verification nodes in that network verify and save the sensing data. All data verification nodes are blockchain nodes, and together they form a distributed database. Because verification is distributed from the data repeater to the verification nodes, the method overcomes the low efficiency, low speed, high transmission delay, and vulnerability caused by an overly centralized verification task. Nodes are incentivized to actively authenticate data and complete verification quickly, so the verification system as a whole is not affected by the failure of any single node.

Owner: GUANGDONG UNIV OF TECH

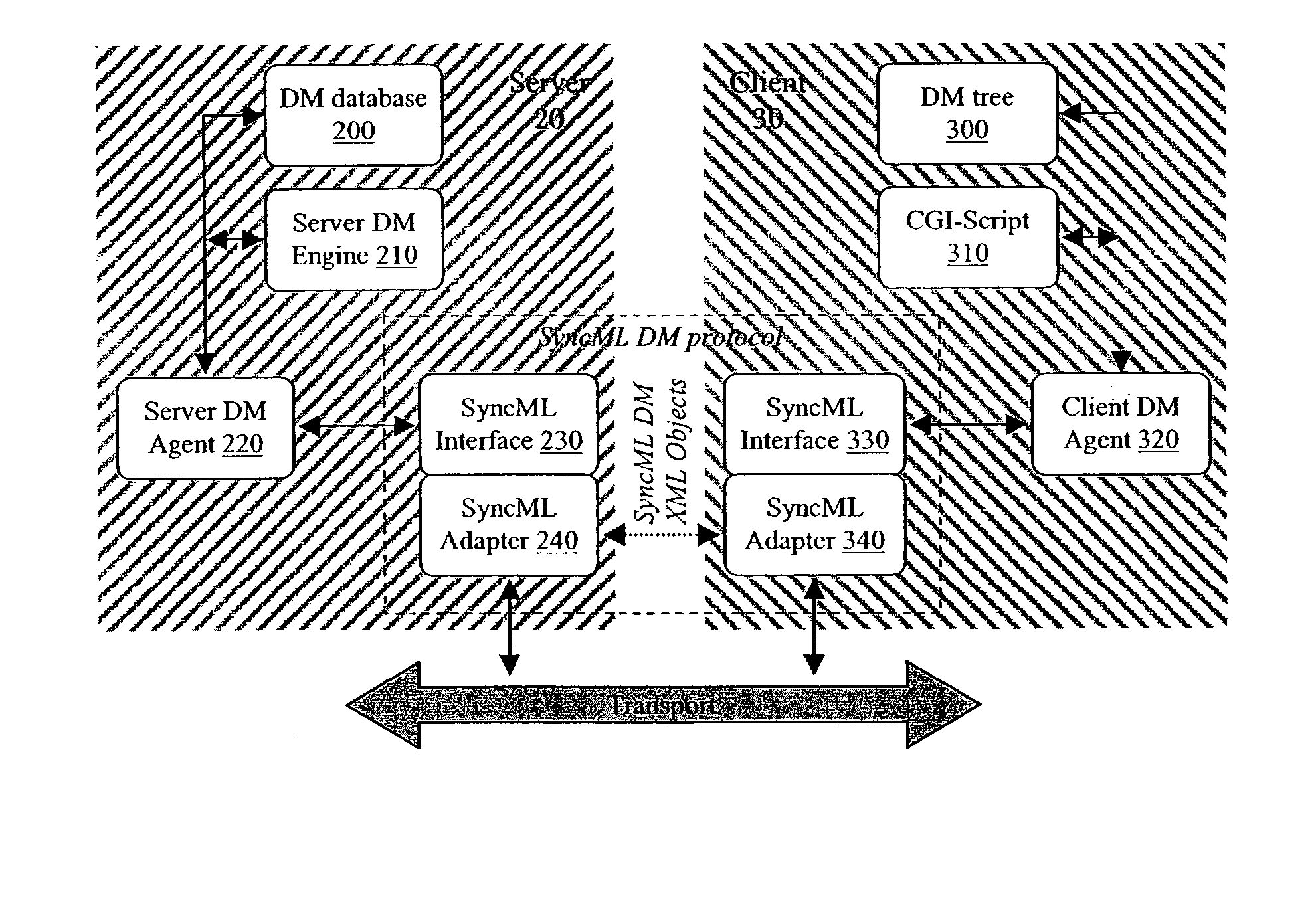

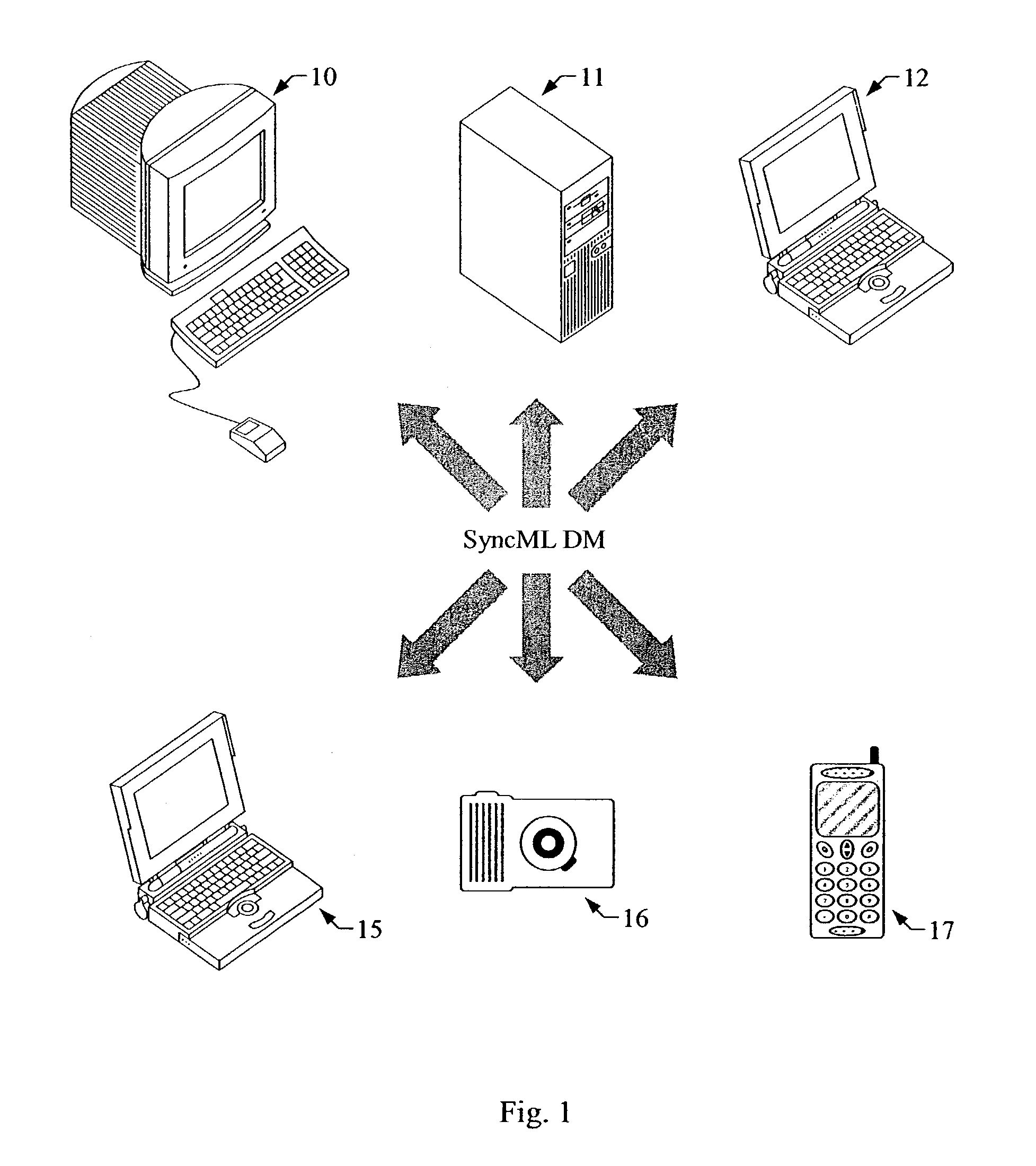

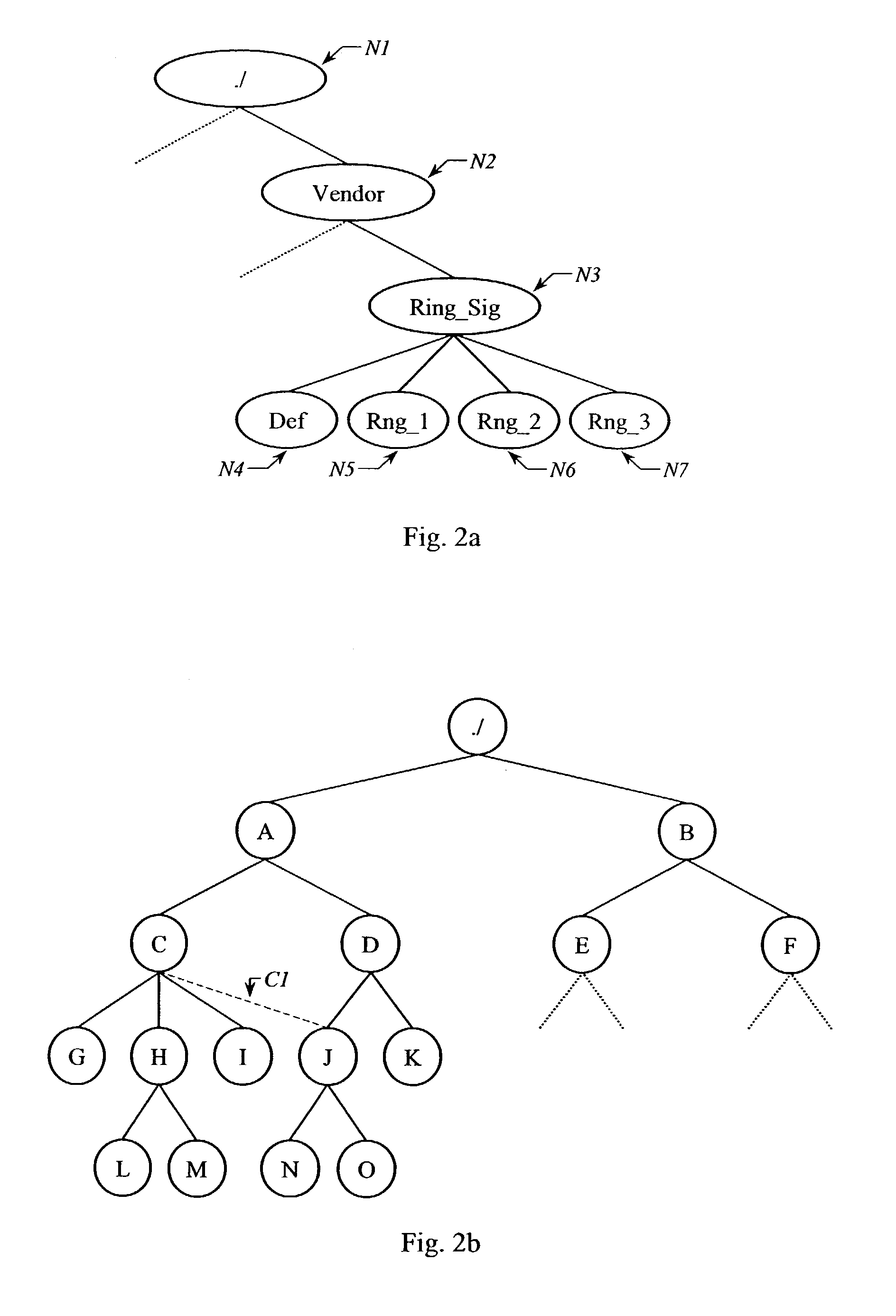

Method and device for management of tree data exchange

Active | US7269821B2 | Tags: Efficient and economical and time, Service provisioning, Digital computer details, Time efficient, Management process

A management tree, i.e., nodes arranged hierarchically in a tree, is used to manage, contain, and map the information of a manageable device according to the SyncML DM protocol standard. A management server can use a GET command to request, from such a device, the information contained in a particular node of the management tree. The manageable device responds by transmitting the requested information from the management tree. The inventive concept provides methods that allow information to be requested not only from one single node but from a plurality of nodes at the same time, resulting in an efficient, time- and cost-saving management process.

Owner: NOKIA TECHNOLOGIES OY

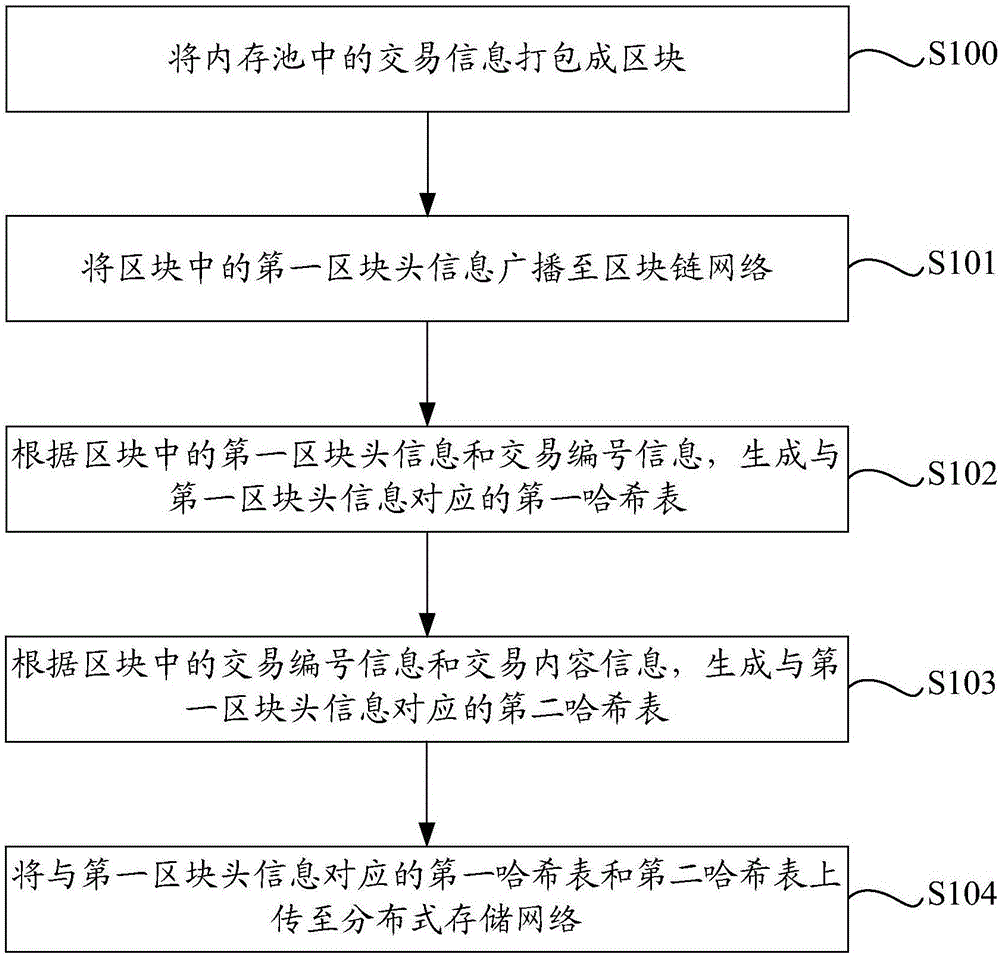

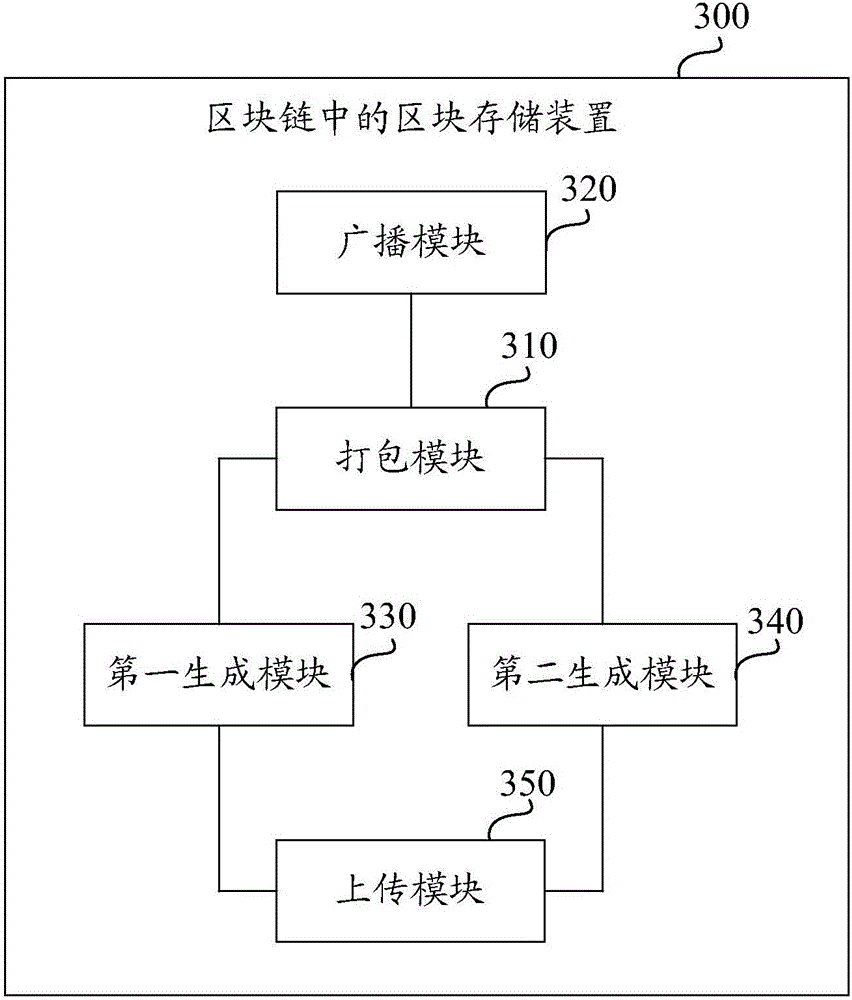

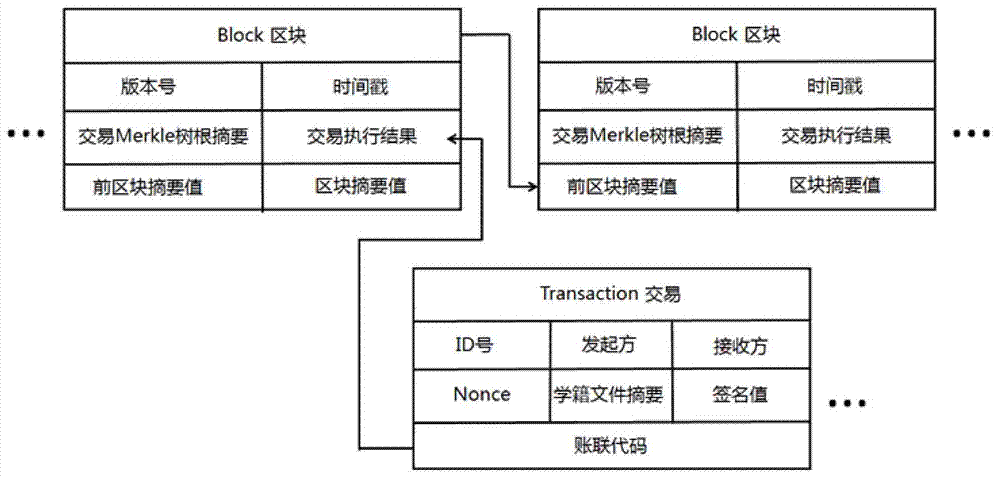

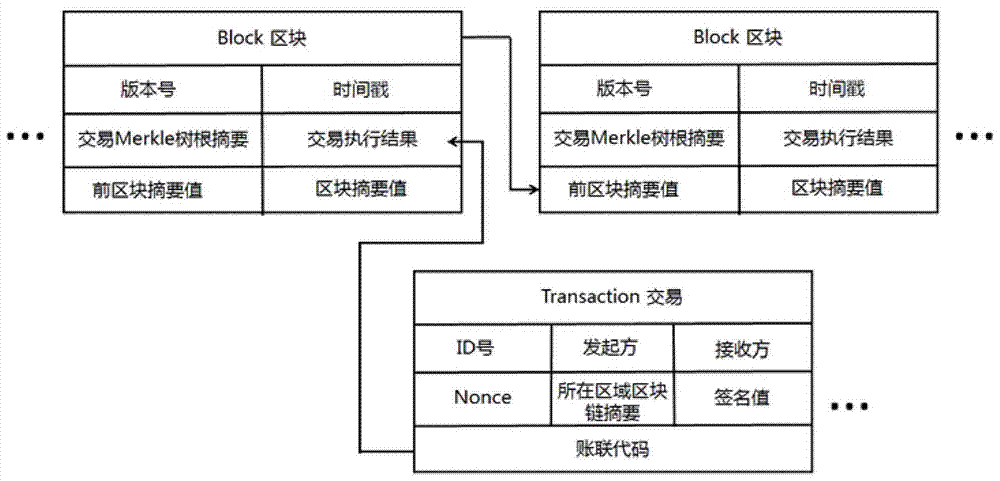

Block storage method and device in blockchain

The invention discloses a block storage method and a block storage device in a blockchain. The method is implemented by a miner node and comprises: packing transaction information from a memory pool into a block; broadcasting first block header information of the block to a blockchain network, wherein the first block header information comprises a hash value of the block's transaction serial numbers; generating a first hash table corresponding to the first block header information from the first block header information and the transaction serial number information in the block; generating a second hash table corresponding to the first block header information from the transaction serial number information and the transaction content information in the block; and uploading the first hash table and the second hash table corresponding to the first block header information to a distributed storage network. The method and device effectively reduce the network transmission load and the storage load on a single node.

Owner: JIANGSU PAYEGIS TECH CO LTD
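
The two hash tables in this abstract can be sketched as follows, assuming SHA-256, JSON serialization, and a plain dictionary standing in for the distributed storage network. The field names (`prev_hash`, `tx_serials_hash`) and helper functions are hypothetical; the point is that only the small header is broadcast, while the serial-number and content tables go to storage.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def pack_block(prev_hash, transactions):
    """Pack (serial_number, content) pairs from the memory pool into a block.

    Returns the header to broadcast, its identifier, and the two hash tables.
    """
    serials = [serial for serial, _ in transactions]
    header = {
        "prev_hash": prev_hash,
        # hash value over the block's transaction serial numbers
        "tx_serials_hash": sha256_hex(json.dumps(serials).encode()),
    }
    header_id = sha256_hex(json.dumps(header, sort_keys=True).encode())
    first_table = {header_id: serials}                 # header -> serial numbers
    second_table = dict(transactions)                  # serial number -> content
    return header, header_id, first_table, second_table


def upload(storage, header_id, first_table, second_table):
    """Stand-in for uploading both tables to a distributed storage network."""
    storage[header_id] = {"serials": first_table, "contents": second_table}
```

A node holding only the header can later fetch the tables by `header_id` and check the serial numbers against `tx_serials_hash`, so it need not store every transaction body itself.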

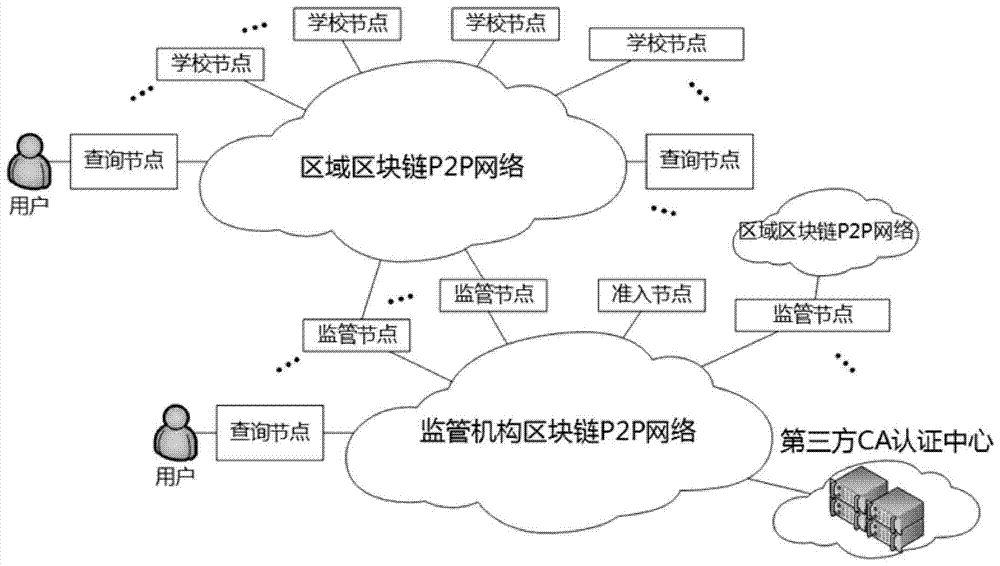

School roll tracing authentication method based on block chains

Active | CN107257341A | Tags: Protect student privacy, Retrospective Certification Realization, Data processing applications, Transmission, Third party, Authentication

The invention discloses a blockchain-based method for tracing and authenticating school roll (student enrollment) records. The method is implemented as follows: first, configure a school blockchain comprising multiple regional blockchains, where in each regional blockchain multiple school nodes form an independent P2P network; configure a monitoring blockchain, in which multiple monitoring nodes corresponding to the school blockchain and at least one admission node form an independent P2P network; configure a third-party CA authentication center, connected to the monitoring blockchain, to issue certificates to school nodes that pass authentication; and configure a query node that verifies and traces school roll files by querying the school blockchain and the monitoring blockchain. Compared with the prior art, partitioning by region allows blockchain generation to be completed more efficiently and improves computational efficiency; and because each region has multiple monitoring bodies and node admission is strictly controlled, supervision is improved and the single node failure problem is effectively solved.

Owner: SHANDONG INSPUR SCI RES INST CO LTD

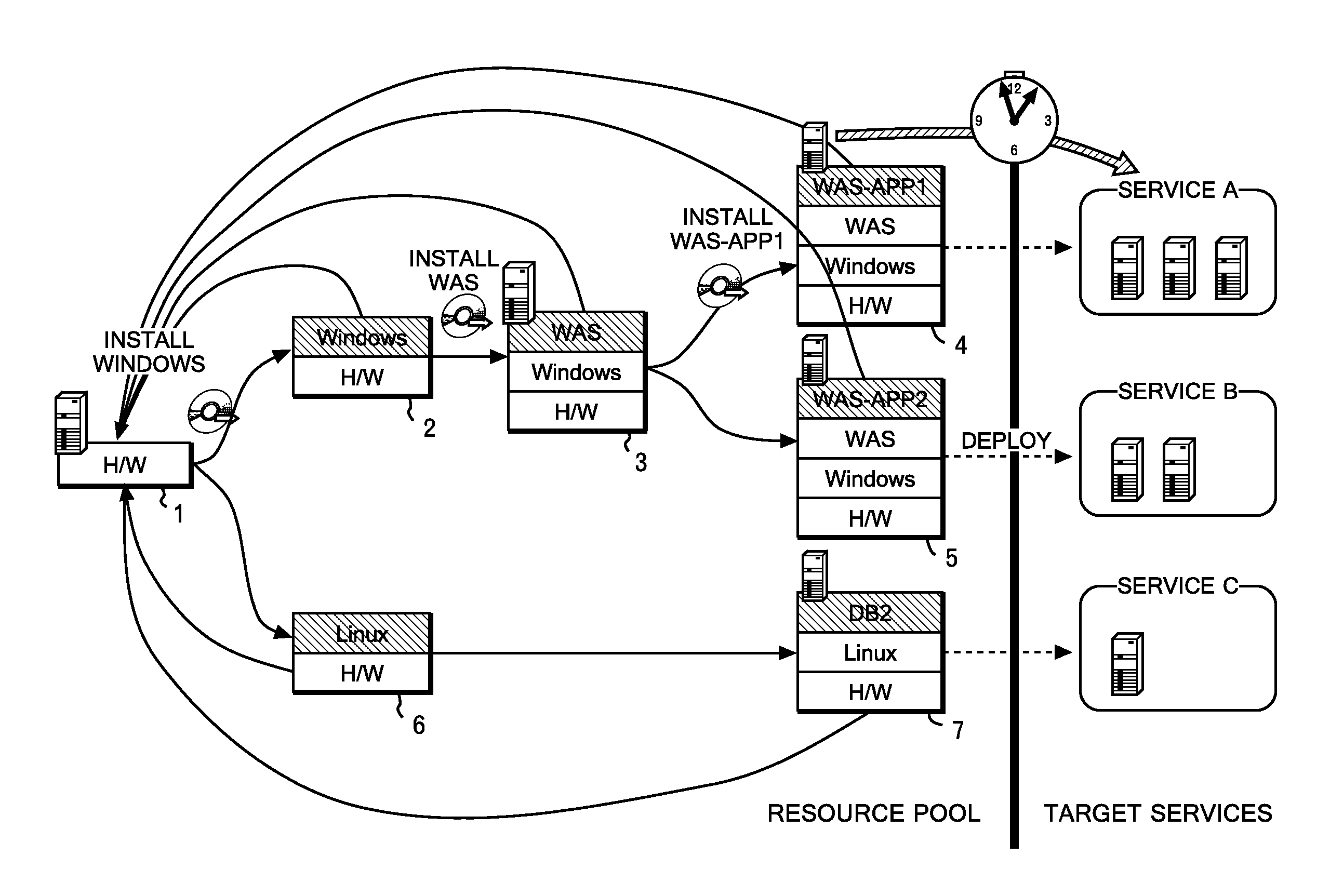

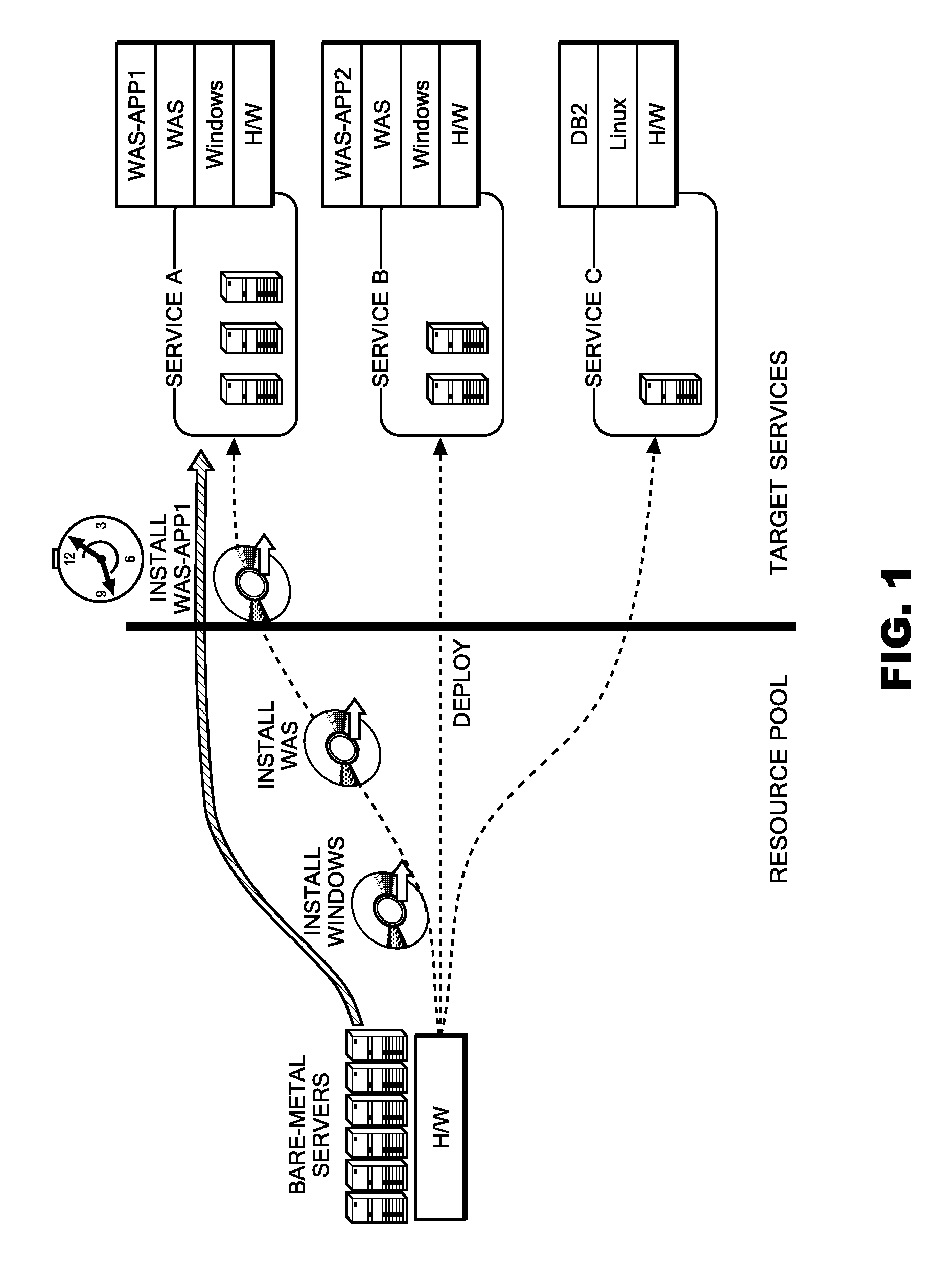

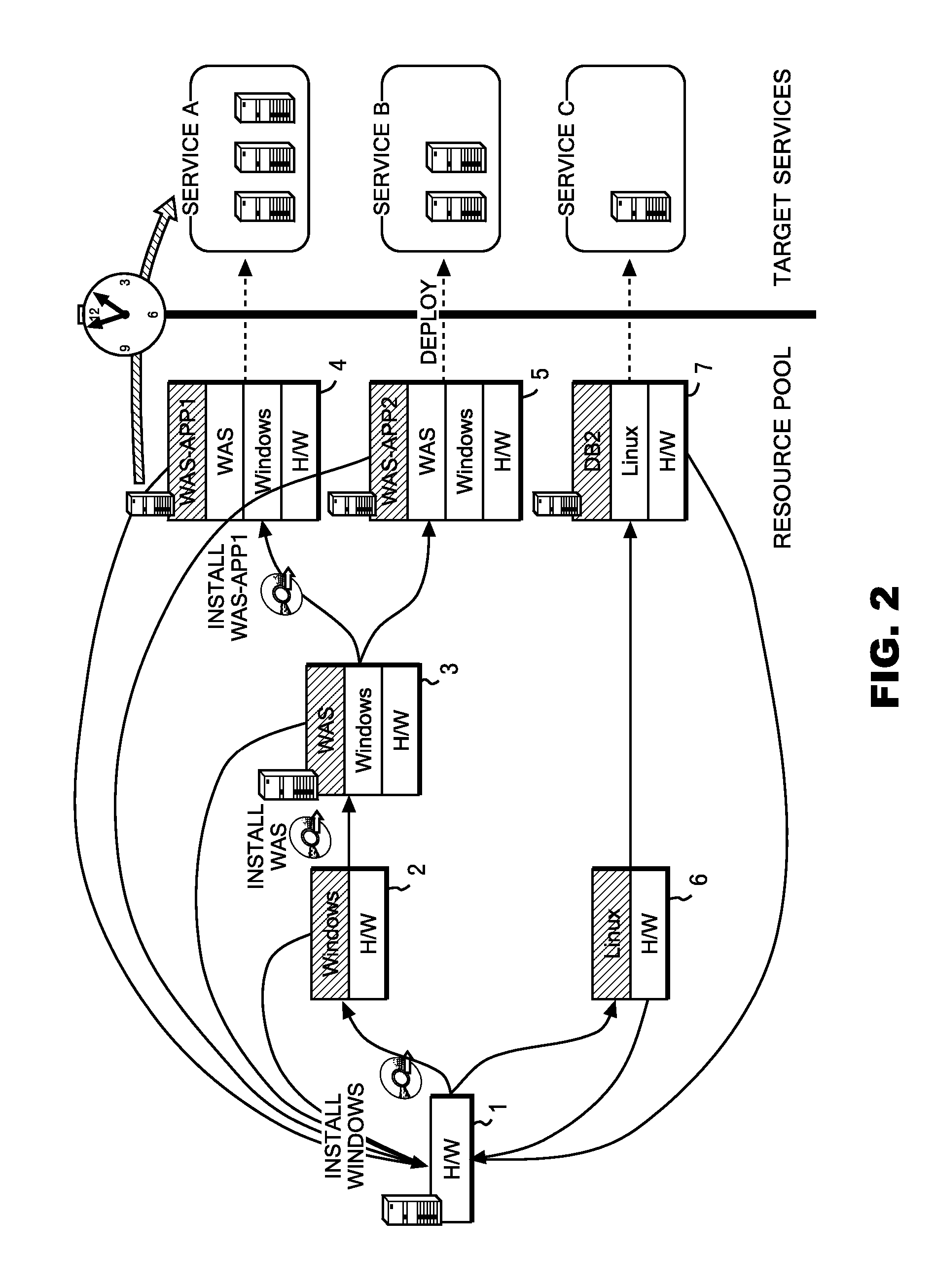

Method for provisioning resources

In present IT environments, particularly places such as data centers where resources are concentrated, a provisioning mechanism is required that allocates an excess resource (a server, a network apparatus, storage, or the like) to a service in response to load fluctuations of that service. In some cases, the setting operations performed during provisioning take a long time, making it impossible to respond to abrupt load fluctuations. To solve this, the procedures that start from an initial state of a resource and end in a state where the resource is deployed for each service are identified, and these procedures are expressed as a directed graph in which each node (stage) is a state of the resource in a given phase and each edge is a setting operation. Even deployments intended for different services may share the initial state and some intermediate states; in those cases, the common intermediate states are expressed as a single node. Allocating resources in advance to nodes close to the state in which a service is deployed reduces the time required to provision them to that service.

Owner: IBM CORP
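
The state graph in this abstract can be sketched as a weighted directed graph, with the remaining provisioning time computed by Dijkstra's shortest-path algorithm. The state names, setup durations, and the "minimize the worst case over services" rule for choosing where to park a spare resource are hypothetical choices for illustration, not the patented procedure.

```python
import heapq


def remaining_time(graph, start, target):
    """Minimum total setup time from state `start` to state `target` (Dijkstra).

    graph: dict mapping state -> list of (next_state, seconds); each edge is a
    setting operation with a hypothetical duration.
    """
    dist = {start: 0}
    heap = [(0, start)]
    while heap:
        d, state = heapq.heappop(heap)
        if state == target:
            return d
        if d > dist.get(state, float("inf")):
            continue                      # stale heap entry
        for nxt, cost in graph.get(state, []):
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                heapq.heappush(heap, (nd, nxt))
    return float("inf")                   # target unreachable from start


def best_staging_state(graph, candidates, services):
    """Pick the state to pre-configure a spare resource into, minimizing the
    worst-case remaining time to deploy it for any of the given services."""
    return min(candidates, key=lambda s: max(remaining_time(graph, s, t) for t in services))
```

With a shared `os_installed` state feeding both a web and a database deployment, parking the spare server there keeps it within a short final step of either service, whereas parking it at `web_ready` would make the database service unreachable.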

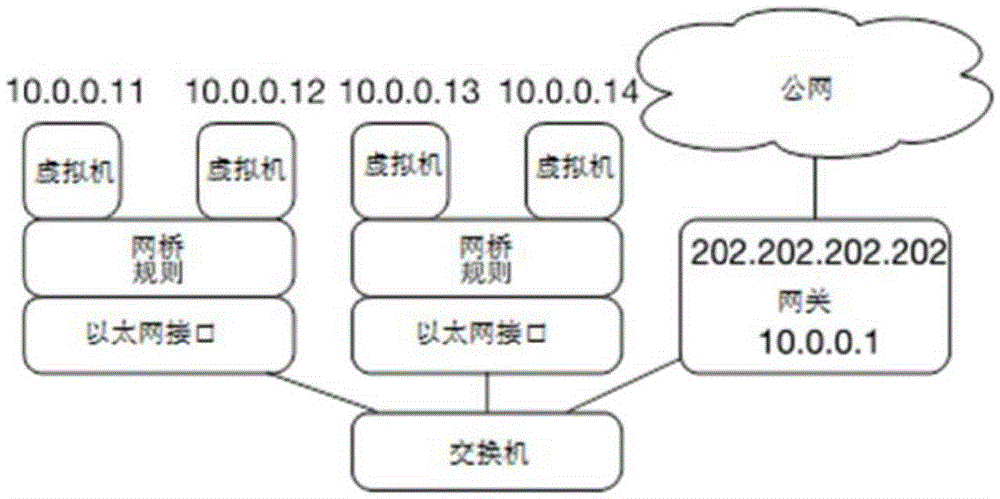

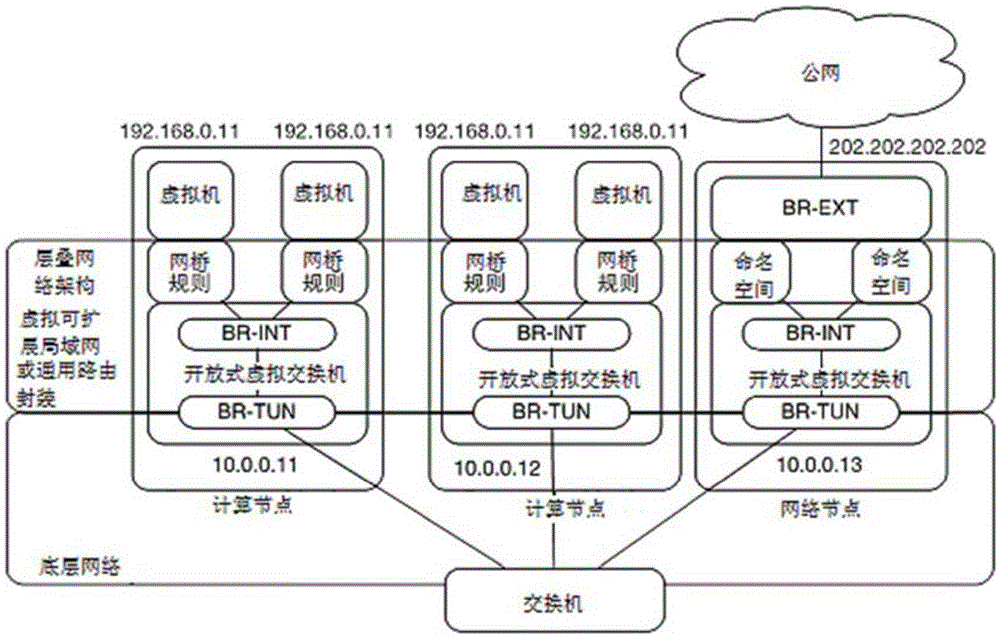

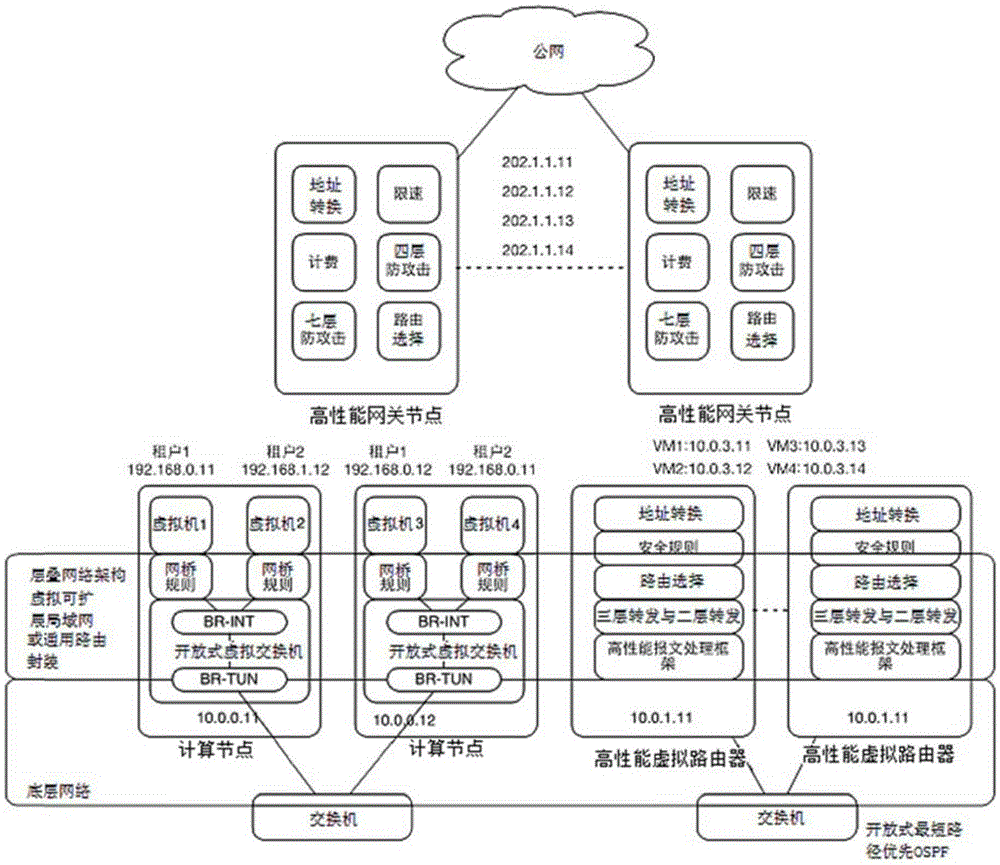

Multi-tenant-oriented cloud network architecture

Active | CN105391771A | Tags: Does not affect availability, Improve performance, Data switching networks, Private network, IP address

The invention discloses a multi-tenant-oriented cloud network architecture comprising computing nodes, a virtual router cluster, and a cloud gateway. Virtual machines on the computing nodes exchange messages with a public server in a private network through the virtual router cluster, and exchange messages with a public network through the virtual router cluster and the cloud gateway. The virtual router cluster comprises at least two virtual routers, each of which advertises the same IP address to a private network switch. The cloud gateway comprises at least two gateway nodes; each gateway node advertises an equal-cost default route to the private network switch and advertises the same floating IP address to a public network router or public network switch. This enables cluster expansion of the cloud network architecture, prevents a fault in a single node from reducing the availability of the whole network, and improves the network's ability to defend against attacks.

Owner: 北京云启志新科技股份有限公司 (Beijing Yunqi Zhixin Technology Co., Ltd.)

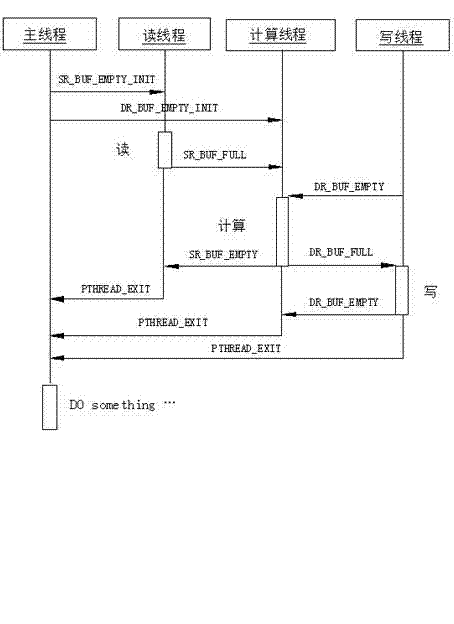

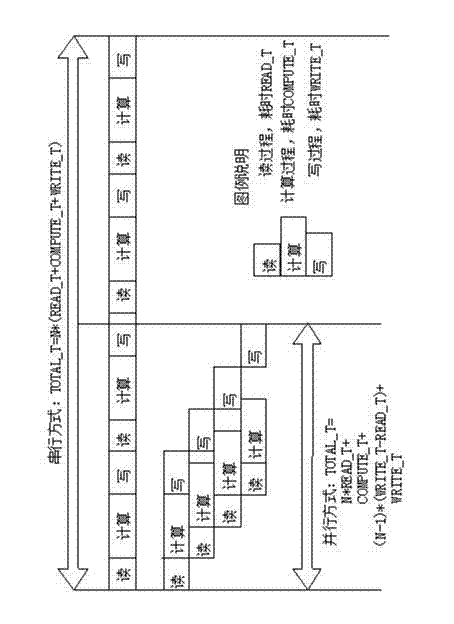

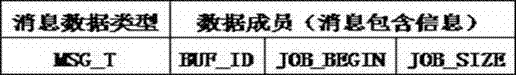

Multi-thread parallel processing method based on multi-thread programming and message queue

Active | CN102902512A | Tags: Fast and efficient multi-threaded transformation, Reduce running time, Concurrent instruction execution, Computer architecture, Concurrent computation

The invention provides a multi-thread parallel processing method based on multi-thread programming and message queues, in the field of high-performance computing. Traditional single-threaded serial software is parallelized using modern multi-core CPU (Central Processing Unit) computing equipment, pthread multi-thread parallel computing, and message queues for inter-thread communication. The method comprises the following steps: within a single node, creating three types of pthread threads, namely reading threads, computing threads, and writing threads, where the number of threads of each type is flexible and configurable; adopting multi-buffering and creating four queues for inter-thread communication; and allocating computing tasks and managing buffer space resources. The method is widely applicable to fields with multi-thread parallel processing requirements. It guides software developers in converting existing software to multi-threaded form so as to optimize system resource utilization, noticeably improves hardware resource utilization, and improves the computational efficiency and overall performance of the software.

Owner: LANGCHAO ELECTRONIC INFORMATION IND CO LTD
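
The read/compute/write thread structure in this abstract can be sketched with Python's `threading` and `queue` modules standing in for pthreads and message queues. This is a simplified illustration under stated assumptions: it uses two bounded queues and a single writer thread rather than the abstract's four queues and configurable writer count, and `compute` is a hypothetical per-item function. Under CPython's global interpreter lock, CPU-bound compute threads will not run truly in parallel, so the sketch shows the pipeline shape rather than the speedup.

```python
import queue
import threading


def run_pipeline(items, compute, n_readers=1, n_workers=2, maxsize=4):
    """Reading -> computing -> writing threads connected by bounded queues.

    Results are returned in input order.
    """
    input_q = queue.Queue(maxsize)   # reader -> compute; bounded, so only a few items are buffered
    output_q = queue.Queue(maxsize)  # compute -> writer
    stop = object()                  # sentinel telling a thread to exit
    jobs = list(enumerate(items))
    results = {}

    def reader(part):
        for job in part:
            input_q.put(job)         # blocks while the buffer is full

    def worker():
        while True:
            job = input_q.get()
            if job is stop:
                break
            index, item = job
            output_q.put((index, compute(item)))

    def writer():
        while True:
            job = output_q.get()
            if job is stop:
                break
            index, value = job
            results[index] = value

    readers = [threading.Thread(target=reader, args=(jobs[i::n_readers],))
               for i in range(n_readers)]
    workers = [threading.Thread(target=worker) for _ in range(n_workers)]
    writer_thread = threading.Thread(target=writer)
    for t in readers + workers + [writer_thread]:
        t.start()
    for t in readers:
        t.join()
    for _ in workers:
        input_q.put(stop)            # one sentinel per compute thread
    for t in workers:
        t.join()
    output_q.put(stop)
    writer_thread.join()
    return [results[i] for i in range(len(jobs))]
```

Sentinels are enqueued only after every reader has finished, so each compute thread drains all real work before it sees its stop signal, and the writer is stopped only after all compute threads have exited.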

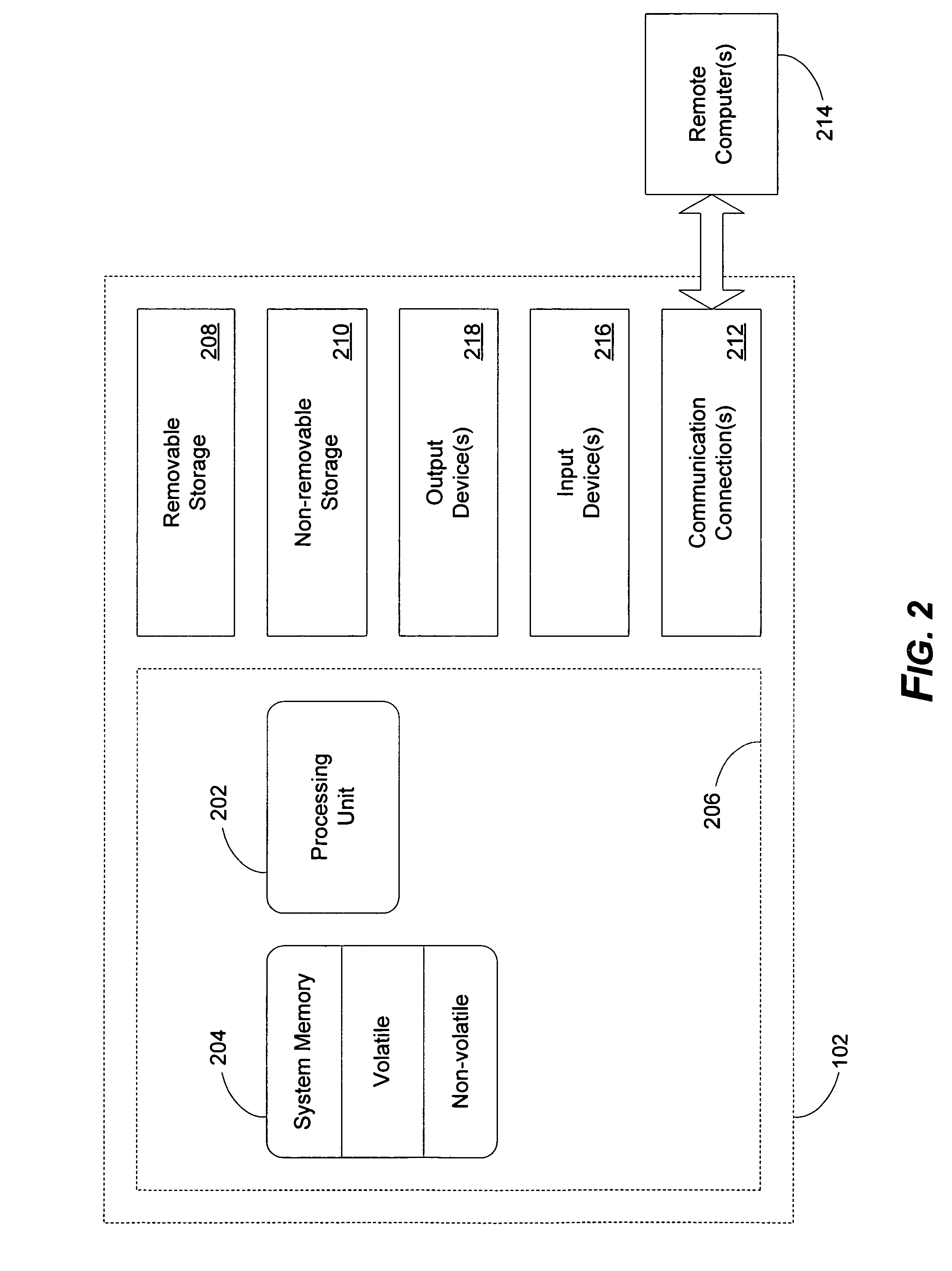

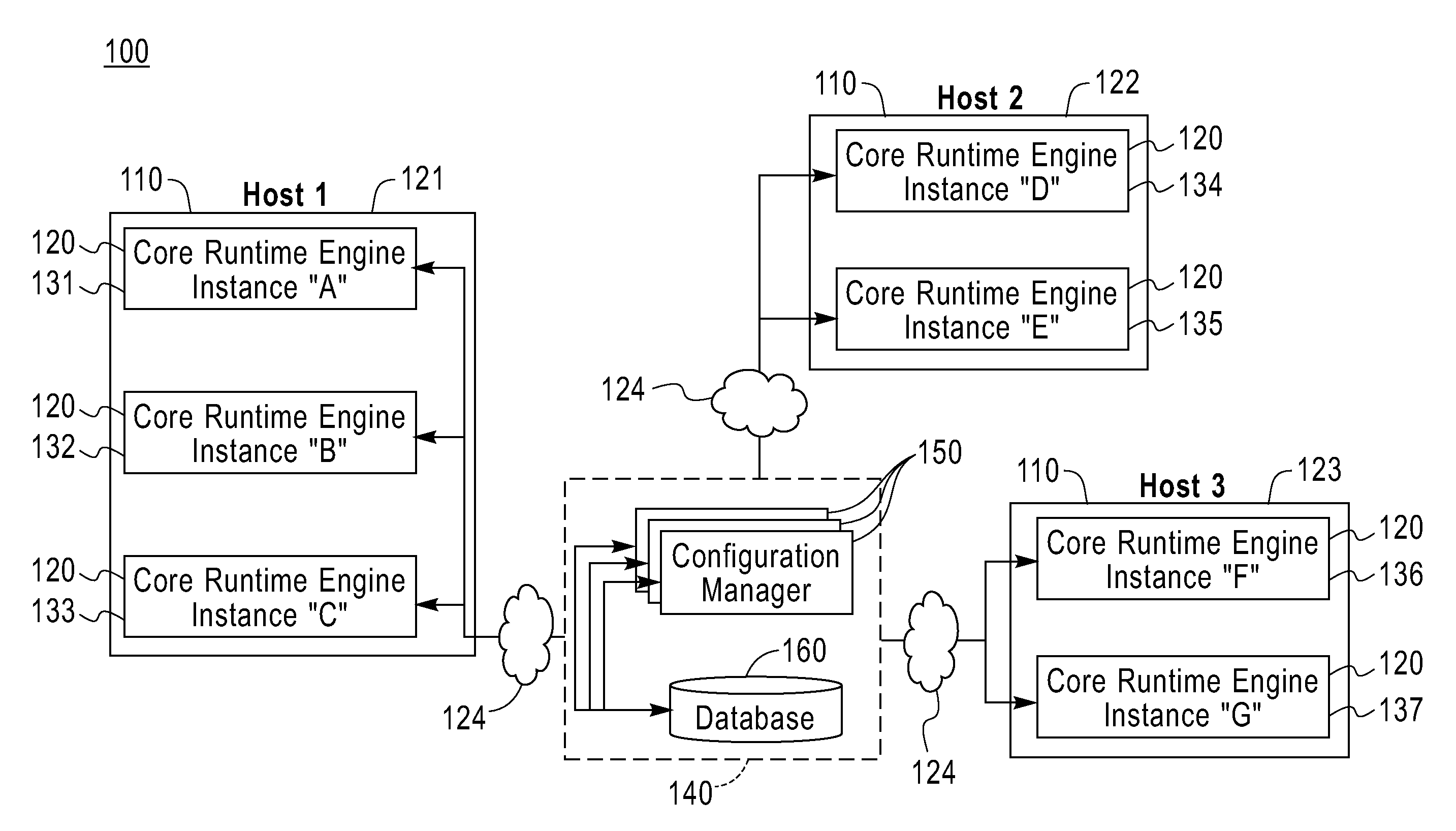

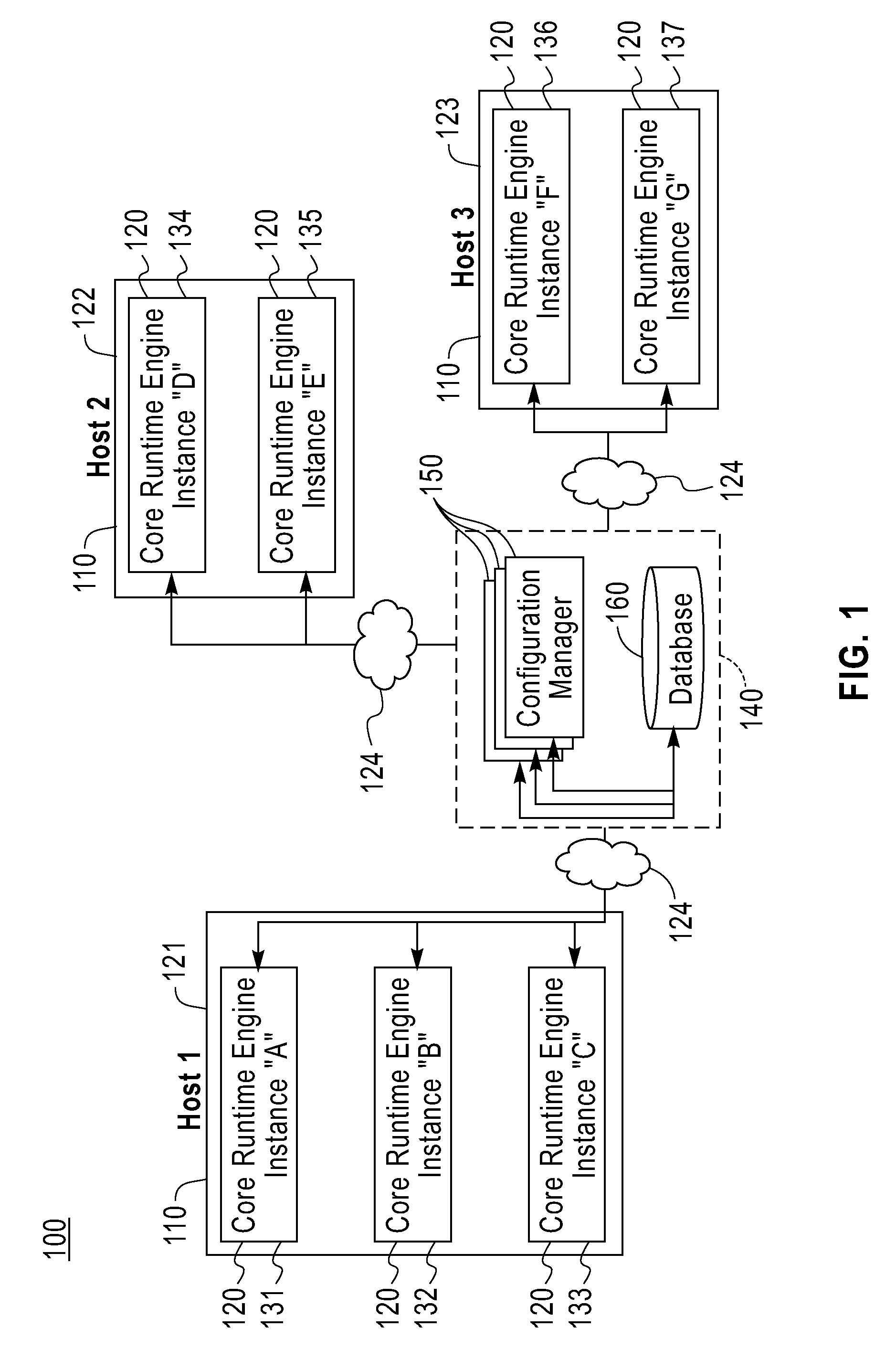

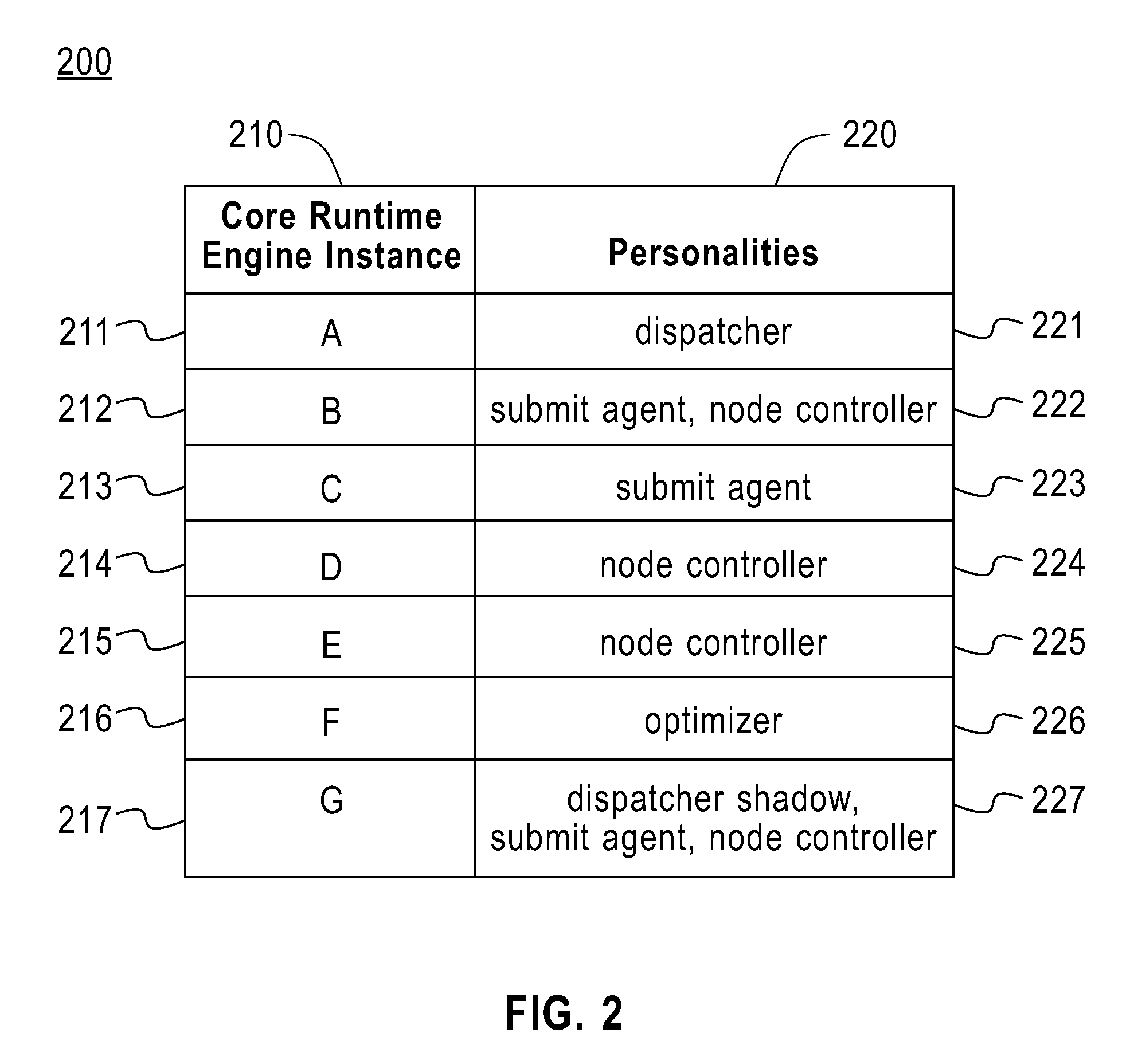

Distributed Pluggable Middleware Services

Inactive | US20080235710A1 | Tags: Improve utilization, Easy to manage, Specific program execution arrangements, Memory systems, Programming language, Application software

Plug-in configurable middleware is provided for managing distributed applications. The middleware includes at least one core runtime engine configured as a plurality of concurrent instantiations on one or more hosts within a distributed architecture. These hosts can represent separate nodes or a single node within the architecture. Each core runtime engine instance provides the minimum functionality required to support the plug-in architecture, that is, to support the instantiation of one or more plug-ins within that core runtime engine instance. Each core runtime engine instance communicates with the other concurrent core runtime engine instances and can share the functionality of plug-in instances with them, for example through the use of proxies. A plurality of personalities representing pre-defined functions is defined, and one or more of these personalities is associated with each core runtime engine instance. A plurality of pre-defined plug-ins is defined and associated with the personalities. Each plug-in is a unit of function containing runtime code that provides a portion of the function of the personality with which the plug-in is associated. The plug-ins are instantiated on the appropriate core runtime engine instances to provide each instance with the function defined in its associated personality.

Owner: IBM CORP

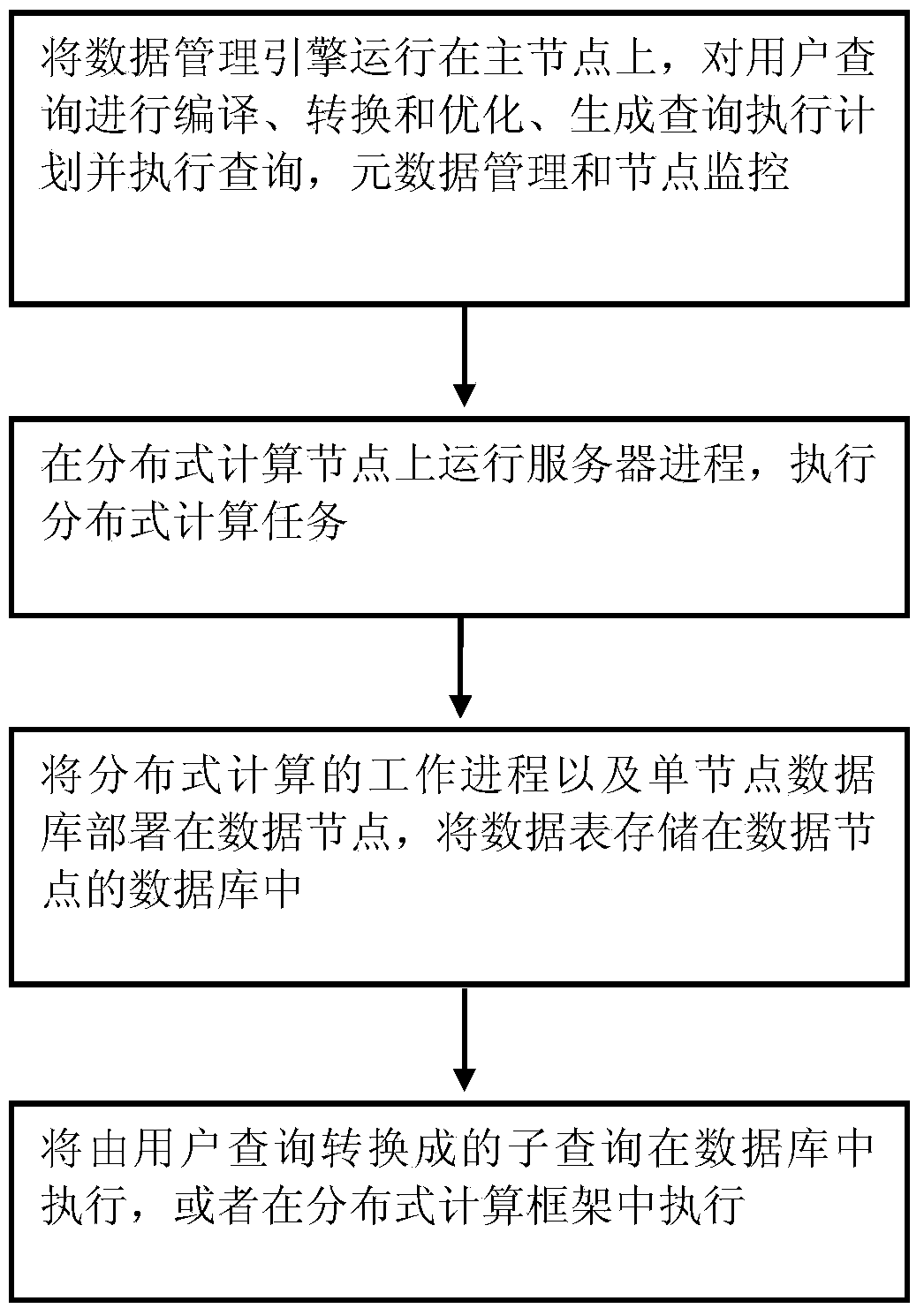

Big data distributed storage method and system

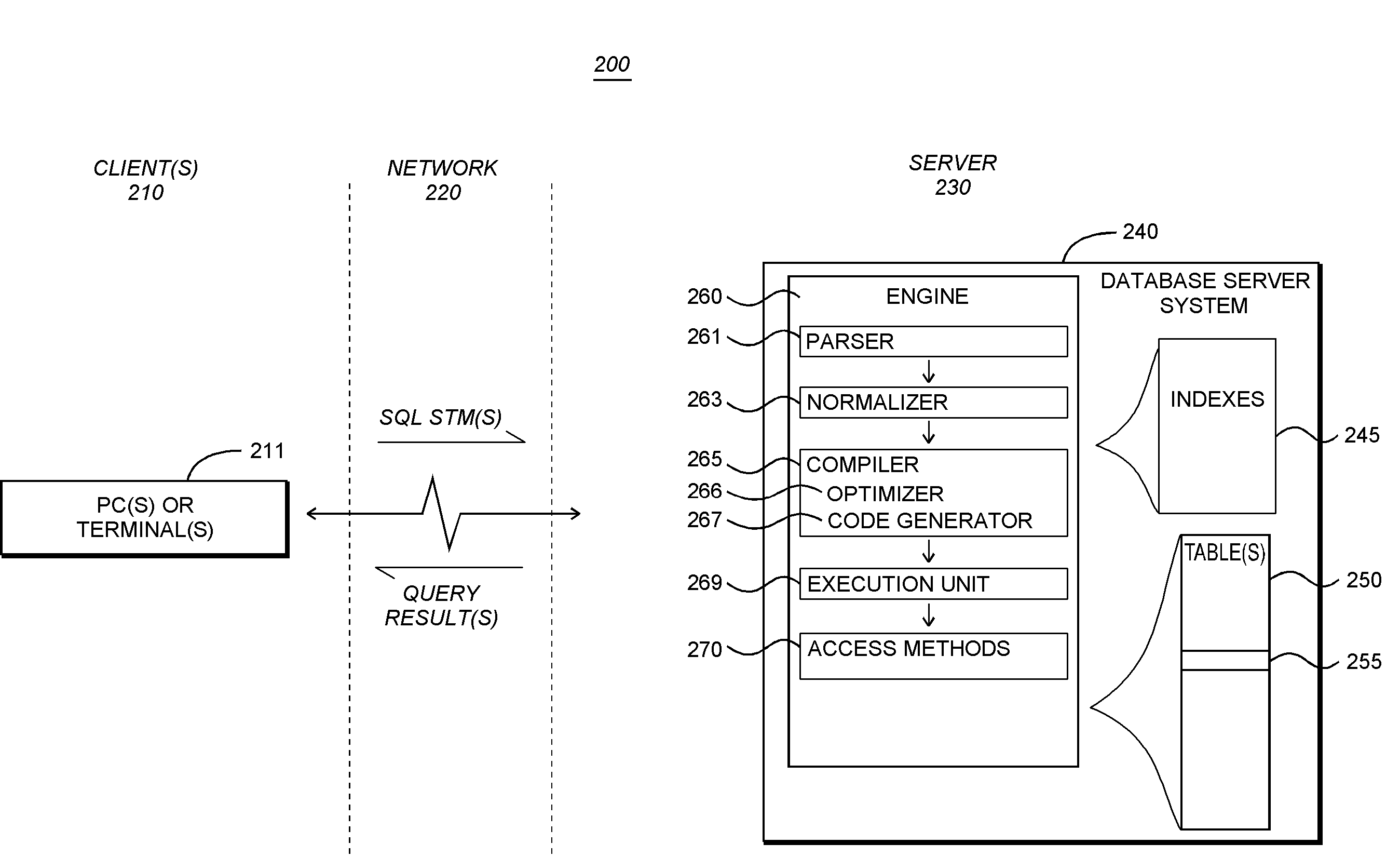

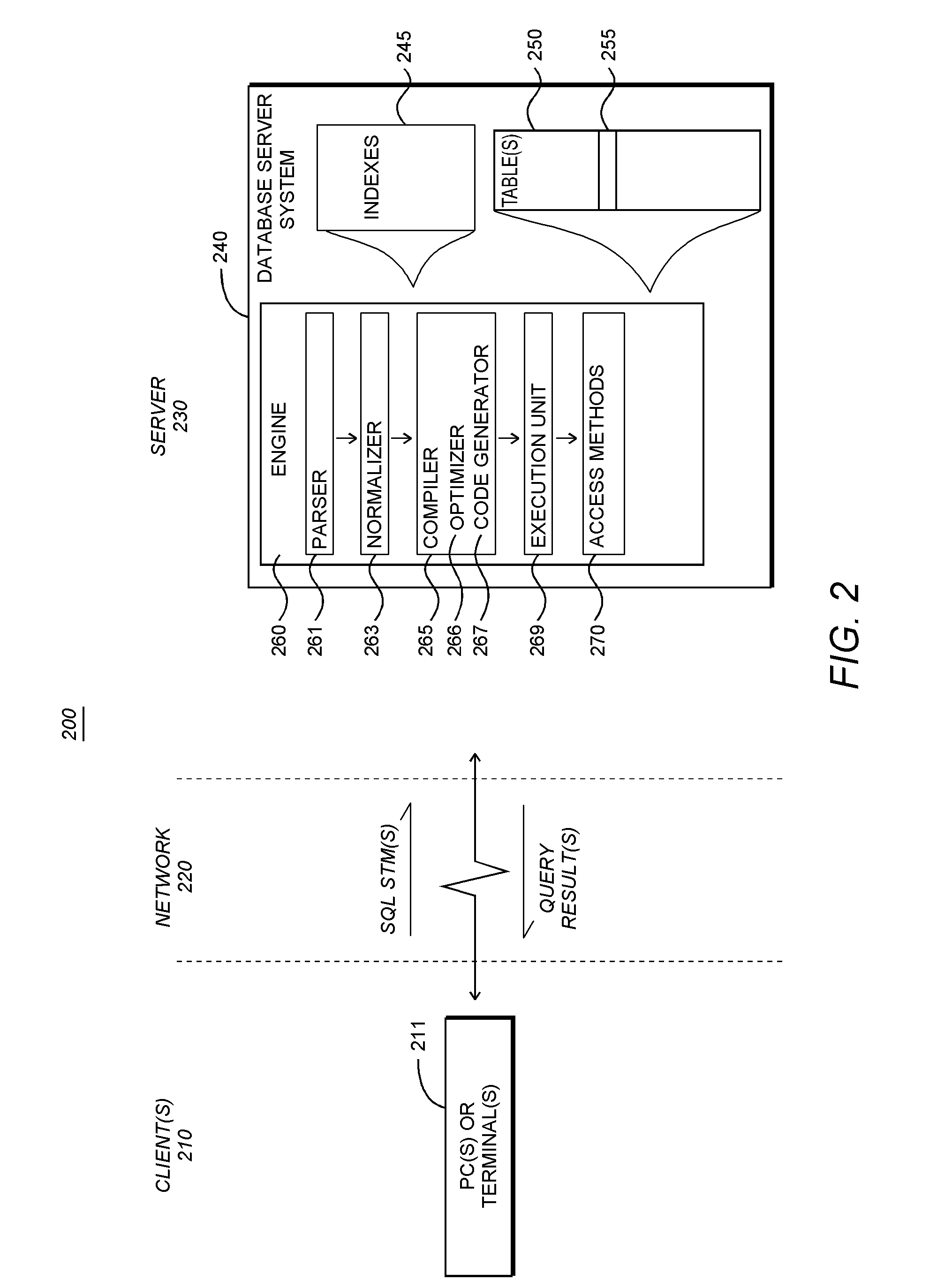

Inactive | CN104063486A | Tags: Avoid transmission costs, Improved distributed storage method, Special data processing applications, Execution plan, Metadata management

The invention provides a big data distributed storage method and system. The method comprises: running a data management engine on a master node that compiles, converts, and optimizes user queries, generates and executes query execution plans, and performs metadata management and node monitoring; running server processes on distributed computing nodes to execute distributed computation tasks; deploying distributed computation worker processes and a single-node database on each data node; and executing subqueries either in the database or in the distributed computation framework. The method and system increase the opportunities for pushing queries down to the database for execution, avoid the data transmission cost of cross-node joins, and improve query performance.

Owner: SICHUAN FEDSTORE TECH

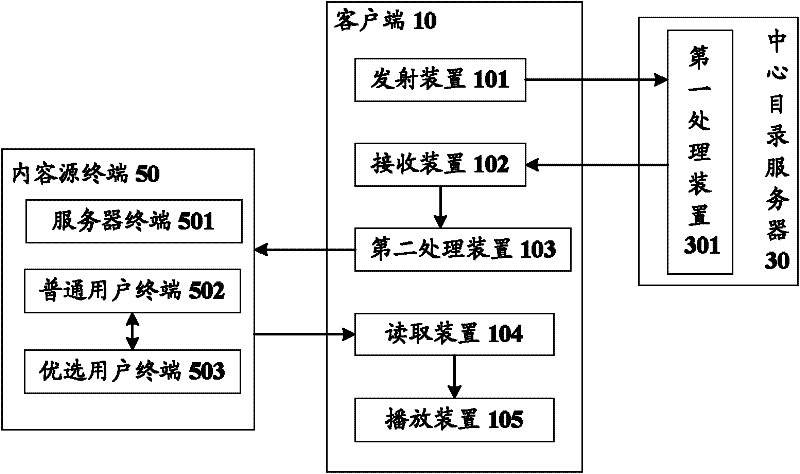

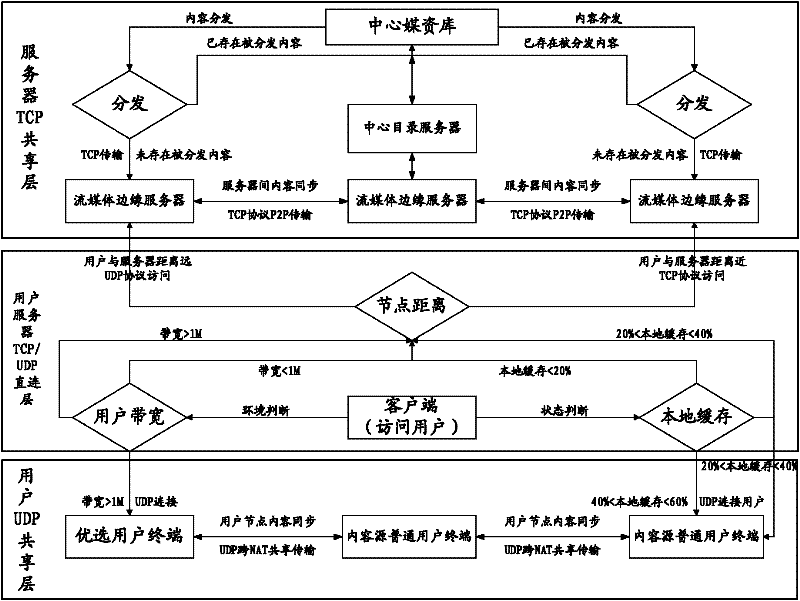

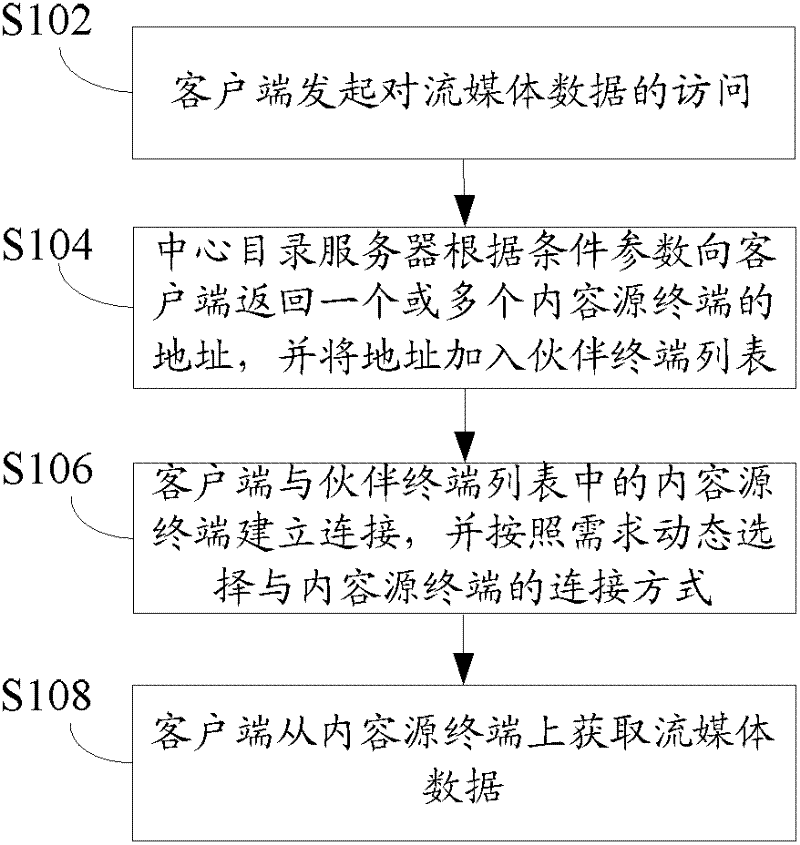

Cloud streaming media data transmission method and system

Inactive | CN102355448A | Tags: Guaranteed timeliness, Guarantee stability, Transmission, Network conditions, Computer terminal

The invention discloses a cloud streaming media data transmission method and system. In the method, a client initiates access to streaming media data; a central directory server returns the address(es) of one or more content source terminals to the client according to condition parameters and adds them to a partner terminal list; the client establishes a connection with a content source terminal in the partner terminal list, dynamically selecting the connection method as needed; and the client acquires the streaming media data from the content source terminal. Because the client obtains data dynamically based on its own network conditions, streaming media data can be distributed on demand. This solves the problem that streaming media transmission methods in the related art cannot guarantee the timeliness and stability of streaming media data, and keeps data acquisition timely and stable even under failures such as network jitter or the failure of a single node.

Owner: 卫小刚 (Wei Xiaogang)

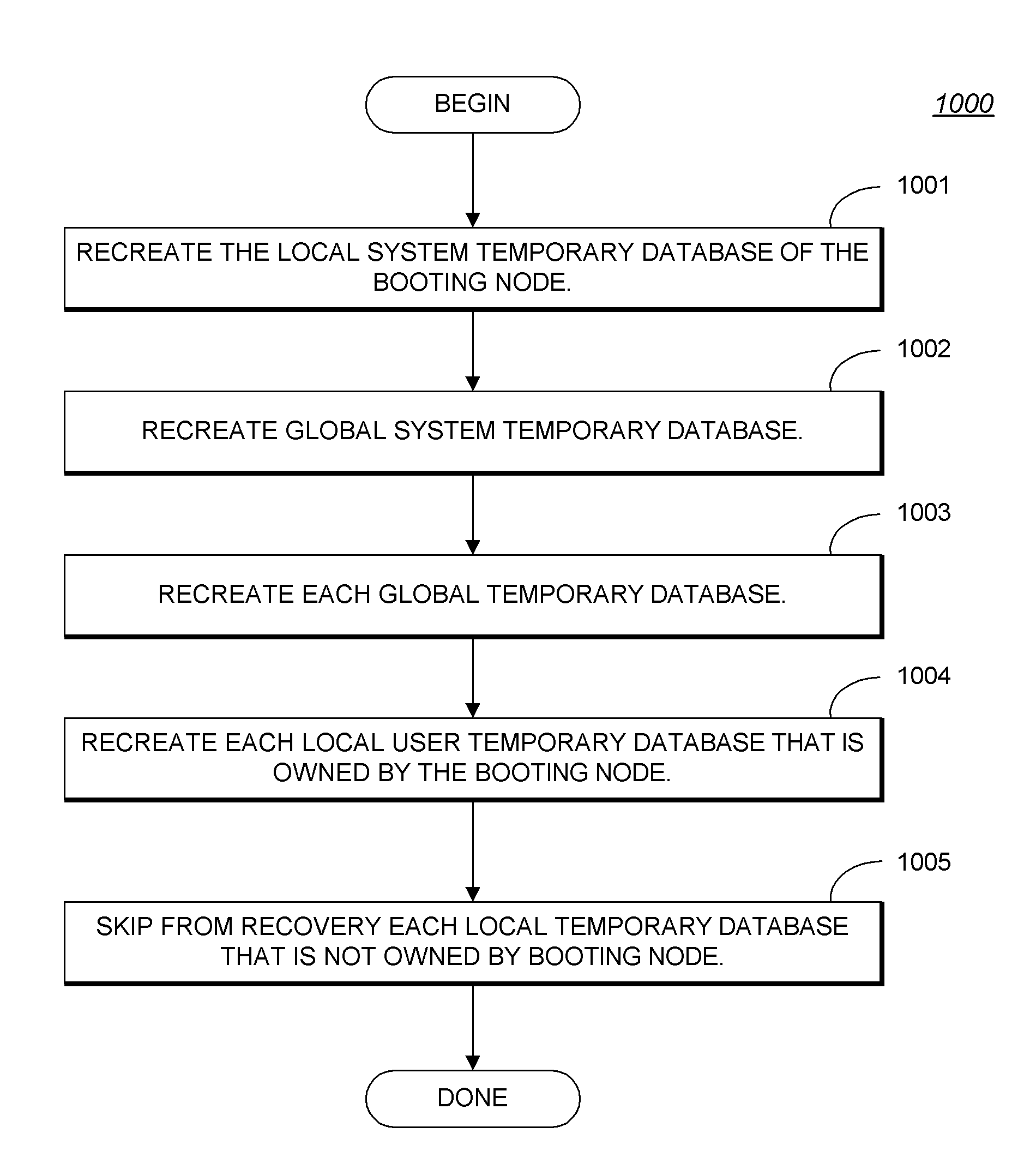

System and Methods For Temporary Data Management in Shared Disk Cluster

Active | US20080086480A1 | Tags: Digital data processing details, Error detection/correction, Cluster systems, Data management

Systems and methods for temporary data management in shared disk cluster configurations are described. In one embodiment, a method for managing temporary data storage comprises: creating, on shared storage, a global temporary database accessible to all nodes of the cluster; creating a local temporary database accessible to only a single node (the owner node) of the cluster; providing failure recovery for the global temporary database without providing failure recovery for the local temporary database, so that changes to the global temporary database are transactionally recovered upon failure of a node; binding an application or database login to the local temporary database on the owner node, to provide the application with local temporary storage when connected to the owner node; and storing temporary data used by the application or database login in the local temporary database without requiring write-ahead logging for transactional recovery of the temporary data.

Owner: SYBASE INC

Multi-tenant data management model for SAAS (software as a service) platform

Inactive | CN103455512A | Tags: Support independent customization, Support data unified management, Special data processing applications, Data access, Data node

The invention provides a multi-tenant data management model for an SaaS (software as a service) platform. The model comprises a data storage unit, a data index unit, and a data splitting and synchronous migration unit. In the model, metadata is linked to user-customized data through data mapping, and data indexes are built from position indexes and tenant logical indexes. Through these indexes, the model provides each tenant's complete customized data within a single node, while a single tenant's data can be operated on in multiple nodes simultaneously. This effectively supports independent tenant customization and unified data management, facilitates data sharing among nodes, ensures the scalability of the platform's data nodes in the cloud, and improves the uniformity, scalability, and usability of the SaaS delivery platform's data access patterns.

Owner: 上海博腾信息科技(集团)有限公司 (Shanghai Boteng Information Technology (Group) Co., Ltd.)

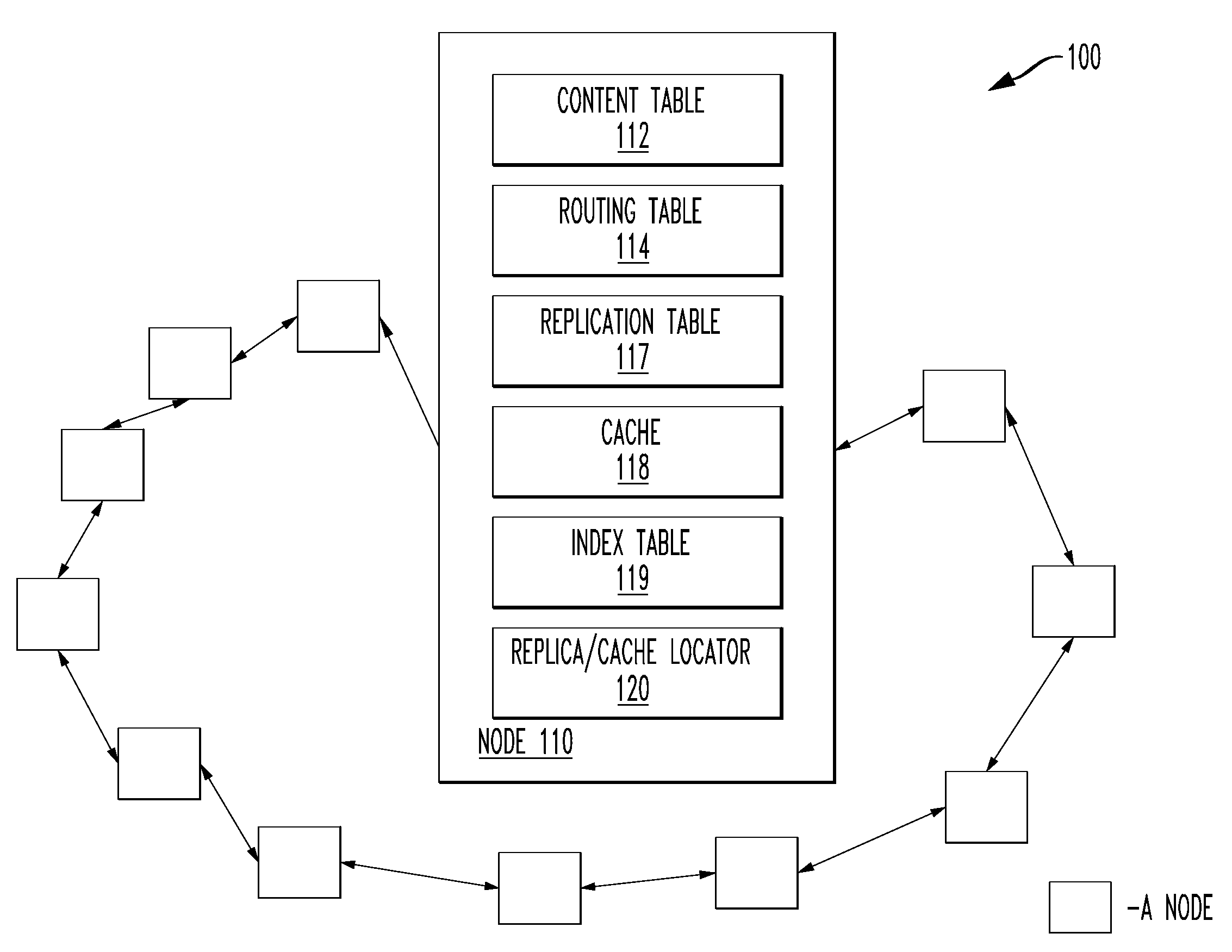

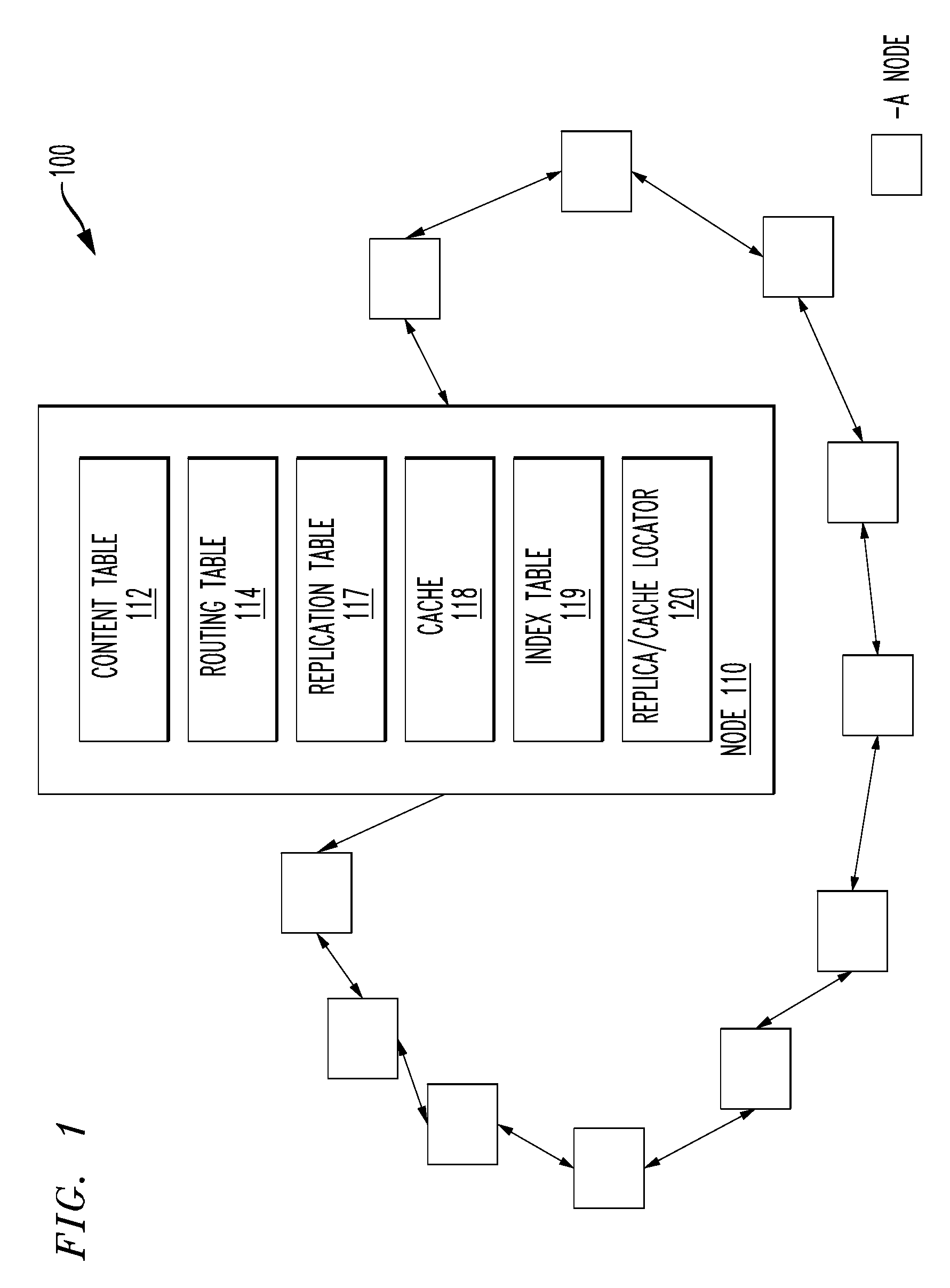

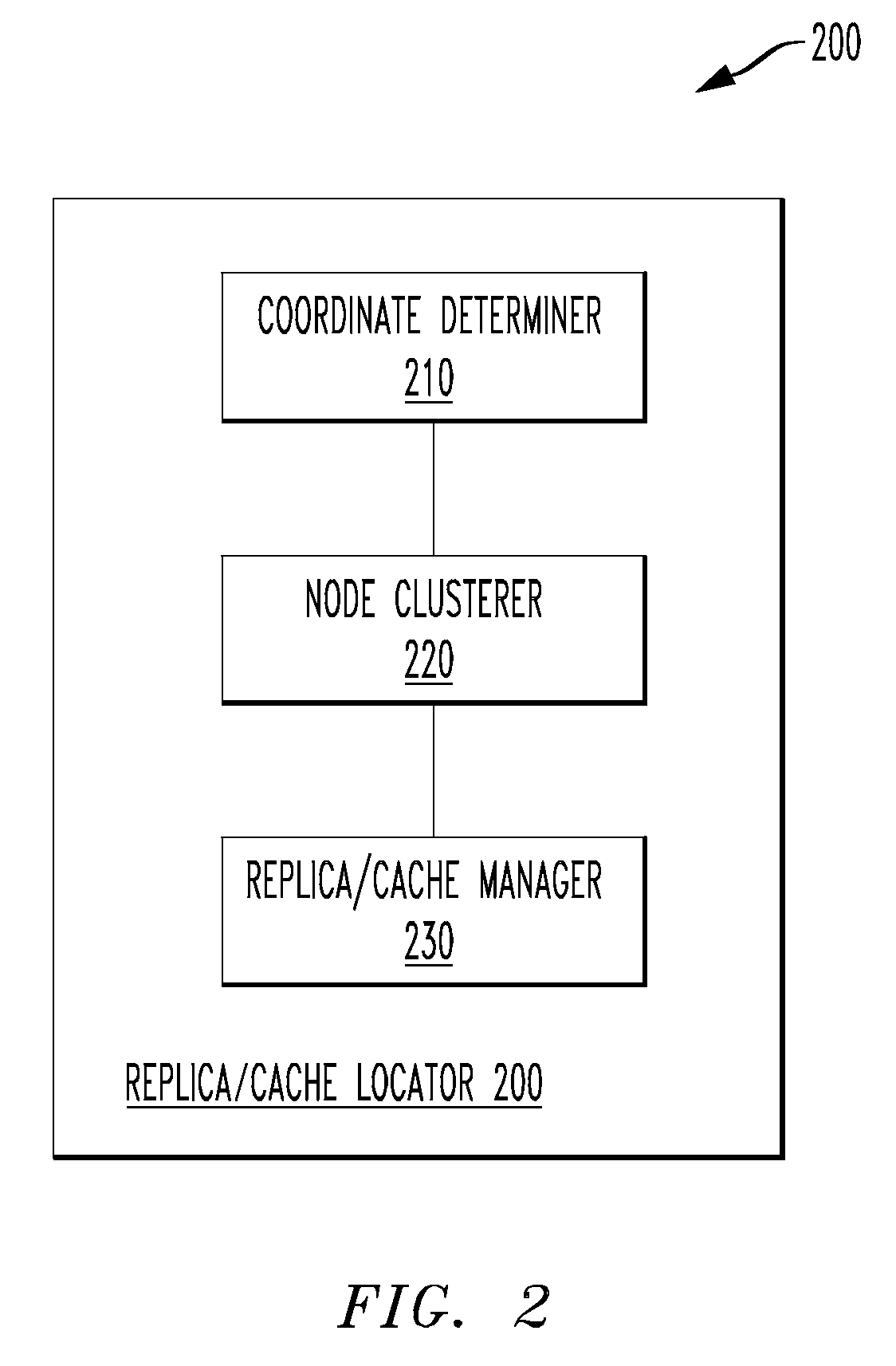

Replica/cache locator, an overlay network and a method to locate replication tables and caches therein

A replica/cache locator, an overlay network having multiple nodes, and a method to locate replication tables and caches in that overlay network are disclosed. In one embodiment, the method includes: (1) determining a network distance between a first node of the multiple nodes in the overlay network and each of the remaining nodes, (2) calculating m clusters of the multiple nodes based on those network distances, and (3) designating at least one node from the m clusters to include a replication table of the first node.

Owner:RPX CORP
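
A minimal sketch of the three steps, assuming the network distances from the first node have already been measured. The function `choose_replica_holders` and its inputs are hypothetical. Because the abstract does not name a clustering algorithm, this version swaps in a simple one-dimensional split at the m - 1 largest gaps between sorted distances, then designates the nearest node of each cluster as a replica holder.

```python
def choose_replica_holders(first, distance, m):
    """Cluster the other nodes by distance from `first` and pick one per cluster.

    `distance` maps node name -> measured network distance from `first`
    (hypothetical input). Returns (holders, clusters).
    """
    others = sorted((n for n in distance if n != first), key=lambda n: (distance[n], n))
    if not 1 <= m <= len(others):
        raise ValueError("m must be between 1 and the number of other nodes")
    # Candidate cut i separates others[i - 1] from others[i]; keep the m - 1
    # largest distance gaps as cluster boundaries (stand-in for real clustering).
    by_gap = sorted(
        range(1, len(others)),
        key=lambda i: distance[others[i]] - distance[others[i - 1]],
        reverse=True,
    )
    cuts = sorted(by_gap[: m - 1]) + [len(others)]
    clusters, start = [], 0
    for cut in cuts:
        clusters.append(others[start:cut])
        start = cut
    # Designate the nearest node of each cluster to hold the replication table.
    holders = [cluster[0] for cluster in clusters]
    return holders, clusters
```

Picking one holder per distance band spreads replicas so that both near and far parts of the overlay have a copy within reach; a production locator would likely use a proper clustering method over pairwise distances instead.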

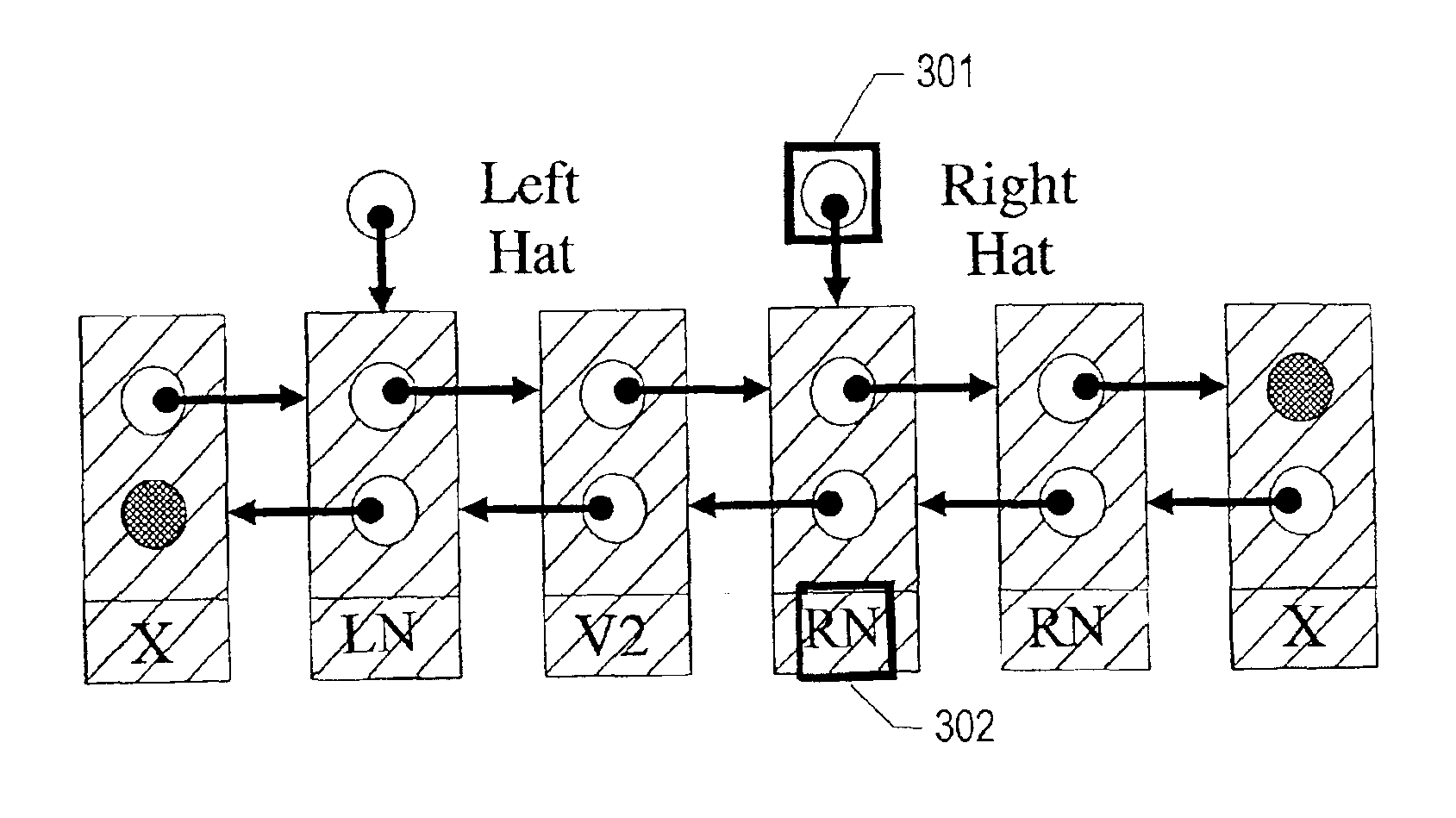

Concurrent shared object implemented using a linked-list with amortized node allocation

Inactive | US7017160B2 | Useful for promotion | Interprogram communication | Handling data according to predetermined rules | Granularity | Waste collection

The Hat Trick deque requires only a single DCAS for most pushes and pops. The left and right ends do not interfere with each other until the deque holds one or fewer items, and then a DCAS adjudicates between competing pops. By choosing a granularity greater than a single node, the user can amortize the costs of adding additional storage over multiple push (and pop) operations that employ the added storage. A suitable removal strategy can provide similar amortization advantages. The technique of leaving spare nodes linked in the structure allows an indefinite number of pushes and pops at a given deque end to proceed without the need to invoke memory allocation or reclamation, so long as the difference between the number of pushes and the number of pops remains within given bounds. Both garbage-collection-dependent and explicit reclamation implementations are described.

Owner:ORACLE INT CORP
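
The amortized-allocation idea can be shown without the concurrency. The Python sketch below is single-threaded: it omits the DCAS operations that make the Hat Trick deque concurrent and illustrates only two points from the abstract, allocating `granularity` nodes in one step and leaving popped nodes linked as spares. `ChunkedDeque`, `granularity`, and the `allocations` counter are hypothetical names.

```python
class _Node:
    __slots__ = ("value", "left", "right")

    def __init__(self):
        self.value = None
        self.left = None
        self.right = None


class ChunkedDeque:
    """Single-threaded sketch of amortized node allocation for a linked deque.

    Items live strictly between the `left` and `right` boundary nodes, which are
    always free slots. Popped nodes stay linked as spares. No DCAS, no threads.
    """

    def __init__(self, granularity=4):
        if granularity < 1:
            raise ValueError("granularity must be >= 1")
        self.granularity = granularity
        self.allocations = 0  # number of chunk-allocation steps performed
        self.left, self.right = _Node(), _Node()
        self.left.right, self.right.left = self.right, self.left

    def _grow(self, node, toward_left):
        """Link `granularity` fresh nodes beyond `node` in one allocation step."""
        self.allocations += 1
        cur = node
        for _ in range(self.granularity):
            new = _Node()
            if toward_left:
                cur.left, new.right = new, cur
            else:
                cur.right, new.left = new, cur
            cur = new

    def push_left(self, value):
        self.left.value = value
        if self.left.left is None:  # no spare node beyond the left boundary
            self._grow(self.left, toward_left=True)
        self.left = self.left.left

    def push_right(self, value):
        self.right.value = value
        if self.right.right is None:  # no spare node beyond the right boundary
            self._grow(self.right, toward_left=False)
        self.right = self.right.right

    def pop_left(self):
        node = self.left.right
        if node is self.right:
            raise IndexError("pop from empty deque")
        value, node.value = node.value, None
        self.left = node  # the old boundary node stays linked as a spare
        return value

    def pop_right(self):
        node = self.right.left
        if node is self.left:
            raise IndexError("pop from empty deque")
        value, node.value = node.value, None
        self.right = node  # the old boundary node stays linked as a spare
        return value
```

With a granularity of 4, eight pushes at one end cost two allocation steps, and any later pop/push sequence at that end that stays within the spare nodes already linked allocates nothing.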

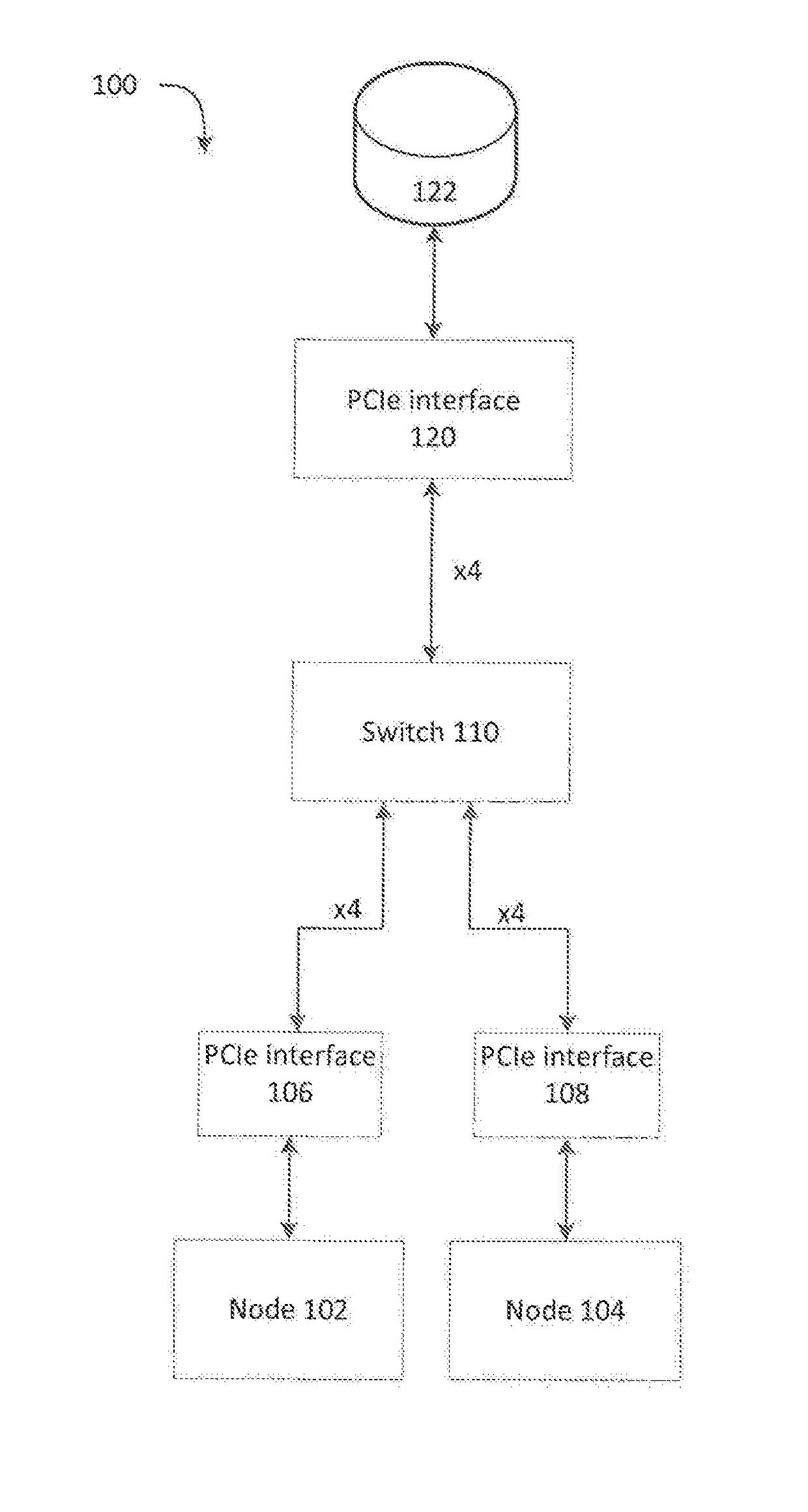

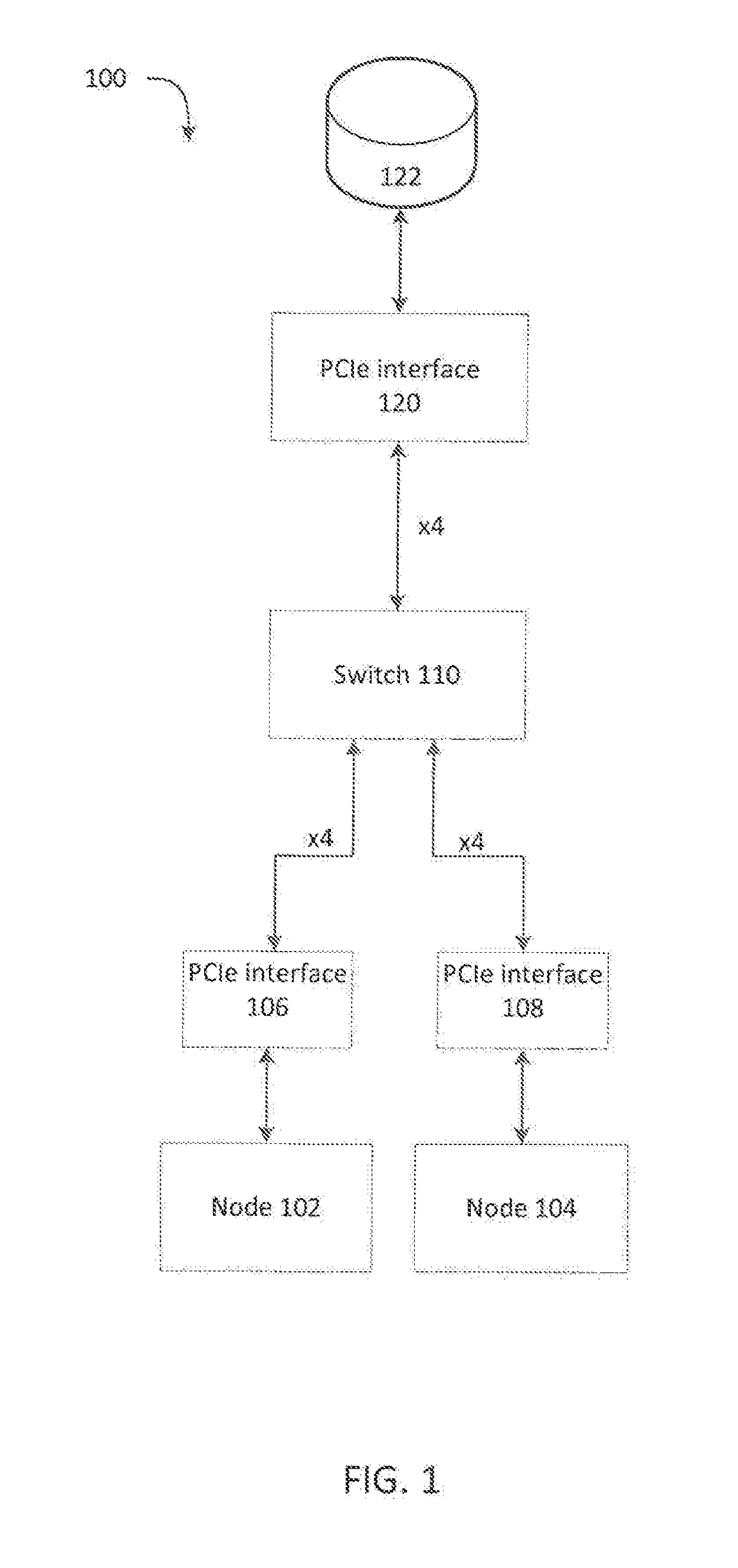

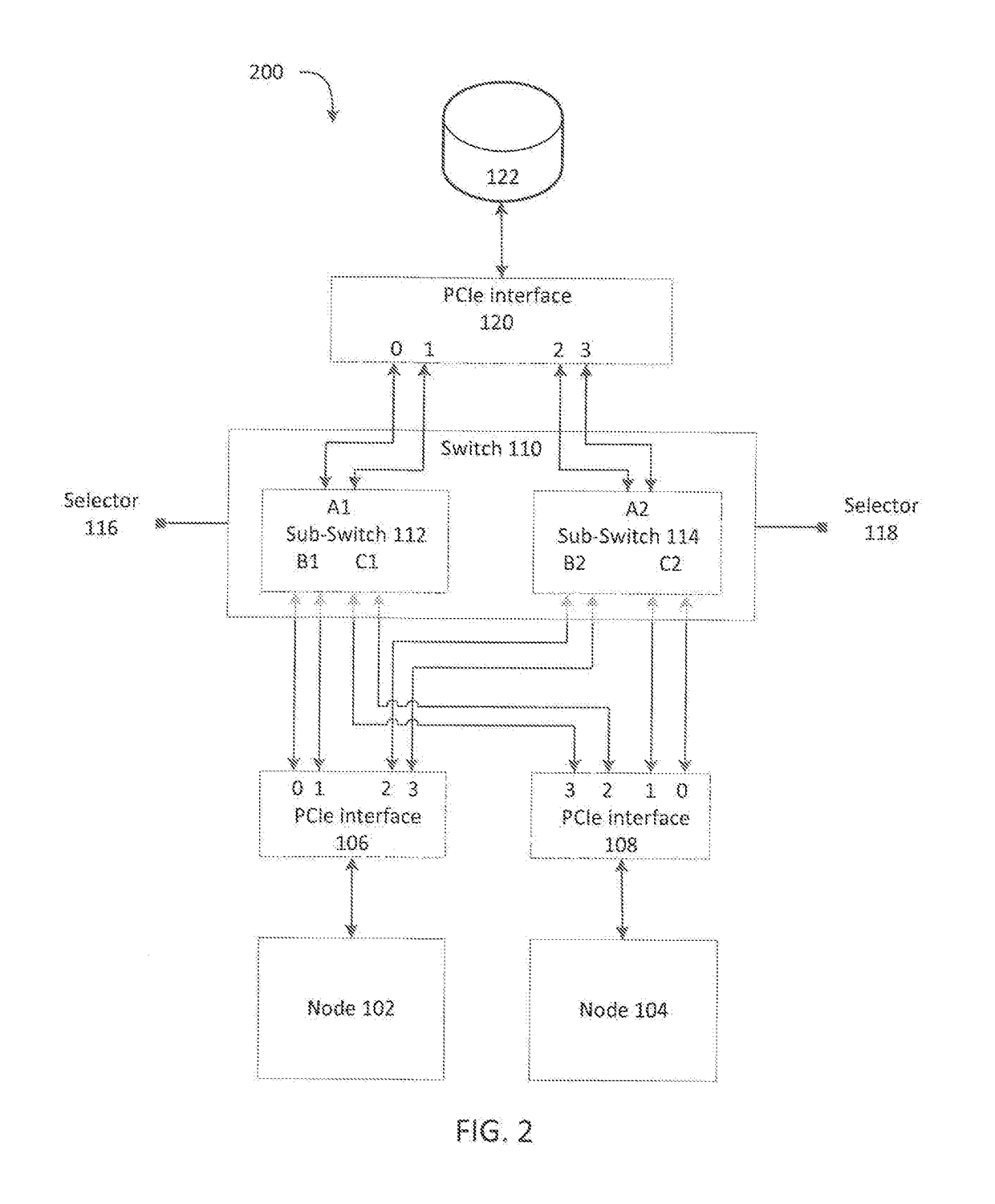

System for switching between a single node PCIe mode and a multi-node PCIe mode

Active | US20170212858A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Control signal | Engineering

A system for switching between a high-performance mode and a dual-path mode is disclosed. The system includes a first device, a second device, a third device, and a switch configured to receive control signals and, in response, to selectively couple one or more first lanes of the first device or one or more second lanes of the second device to third lanes of the third device, yielding enabled lanes. The number of enabled lanes is less than or equal to the number of third lanes, and the switch is configured to route the enabled lanes associated with the first device to a first portion of the third lanes in increasing order and to route the enabled lanes associated with the second device to a second portion of the third lanes in decreasing order.

Owner:QUANTA COMPUTER INC
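
The lane-ordering rule in the abstract can be expressed as a small mapping function. This is a hypothetical sketch: `route_lanes` is an illustrative name, lane indices start at 0, and the second device's lane `j` is assumed to land on third-device lane `N - 1 - j`. Treating single-node mode as `(16, 0)` and dual-path mode as `(8, 8)` on a 16-lane third device is an illustrative assumption, not something the abstract specifies.

```python
def route_lanes(first_enabled, second_enabled, third_lanes):
    """Map enabled lanes of two upstream devices onto a third device's lanes.

    Returns {(device, lane): third_lane}. The first device fills third lanes
    from index 0 upward; the second device fills them from the top index down.
    """
    if min(first_enabled, second_enabled, third_lanes) < 0:
        raise ValueError("lane counts must be non-negative")
    if first_enabled + second_enabled > third_lanes:
        raise ValueError("enabled lanes exceed the third device's lanes")
    mapping = {}
    for lane in range(first_enabled):
        mapping[("first", lane)] = lane  # increasing order from lane 0
    for lane in range(second_enabled):
        mapping[("second", lane)] = third_lanes - 1 - lane  # decreasing order
    return mapping


# Hypothetical modes on a 16-lane third device.
single_node = route_lanes(16, 0, 16)
dual_path = route_lanes(8, 8, 16)
```

Filling from opposite ends means the two portions can never overlap as long as the total number of enabled lanes fits, which is exactly the constraint the abstract states.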

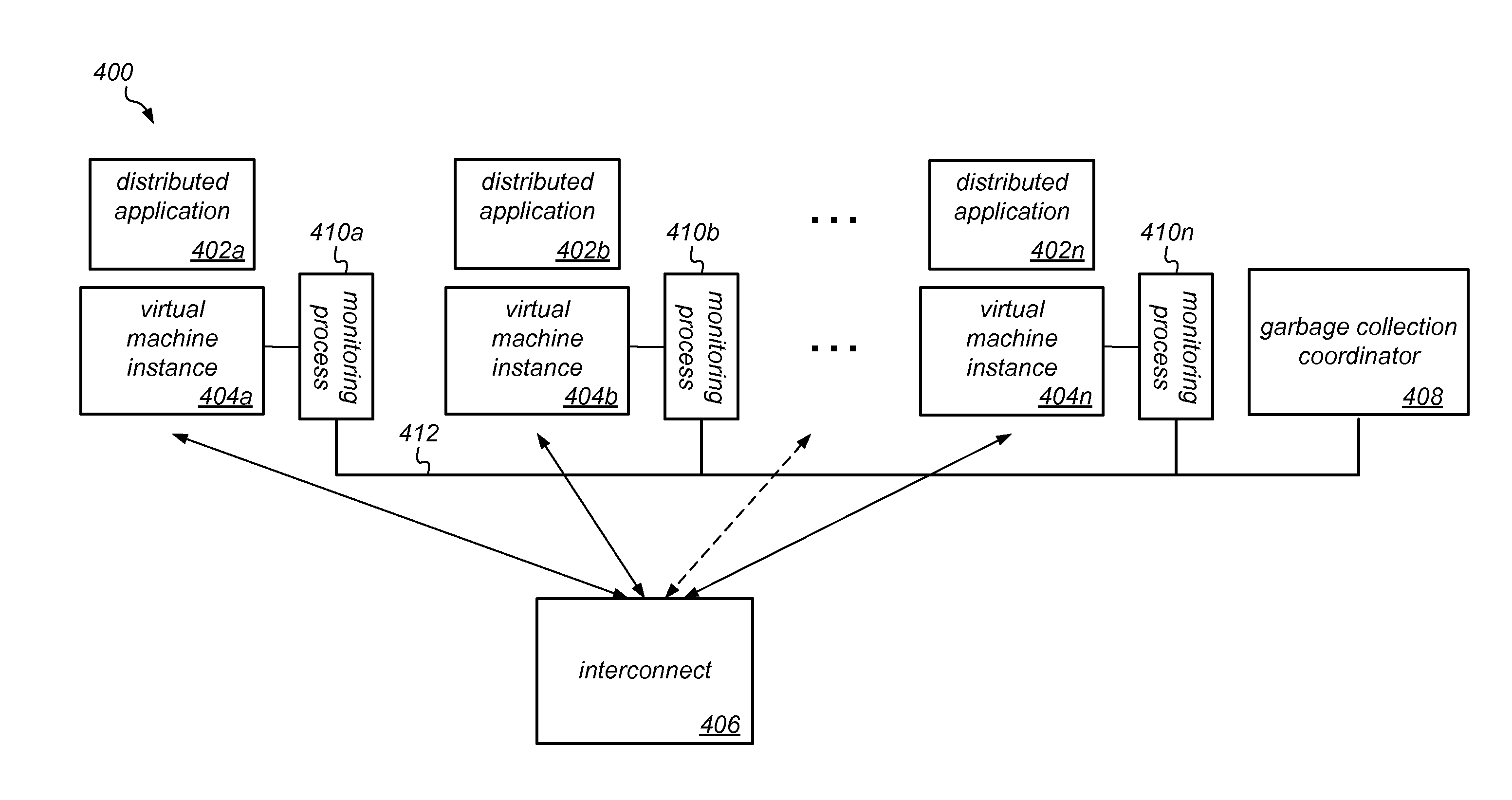

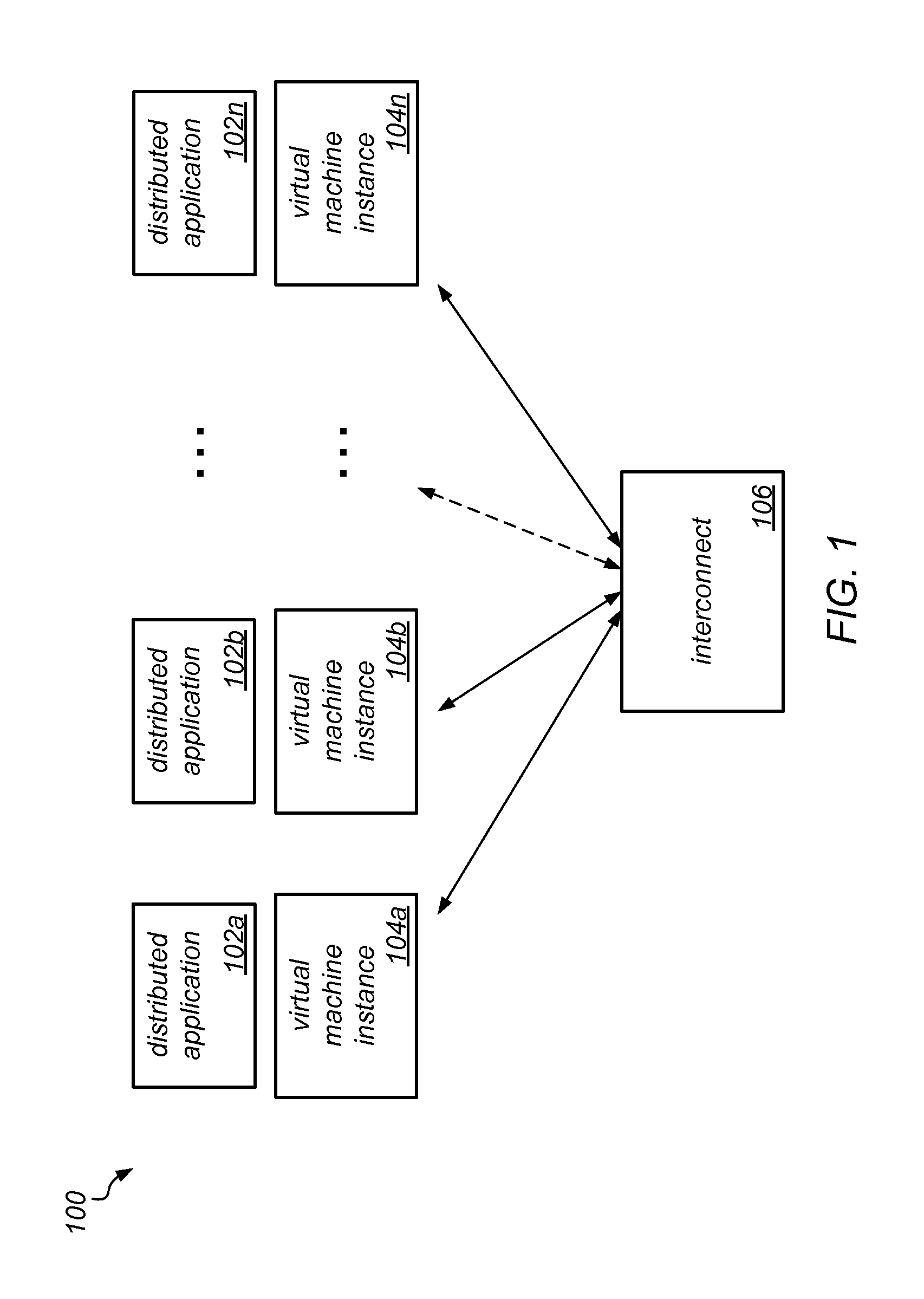

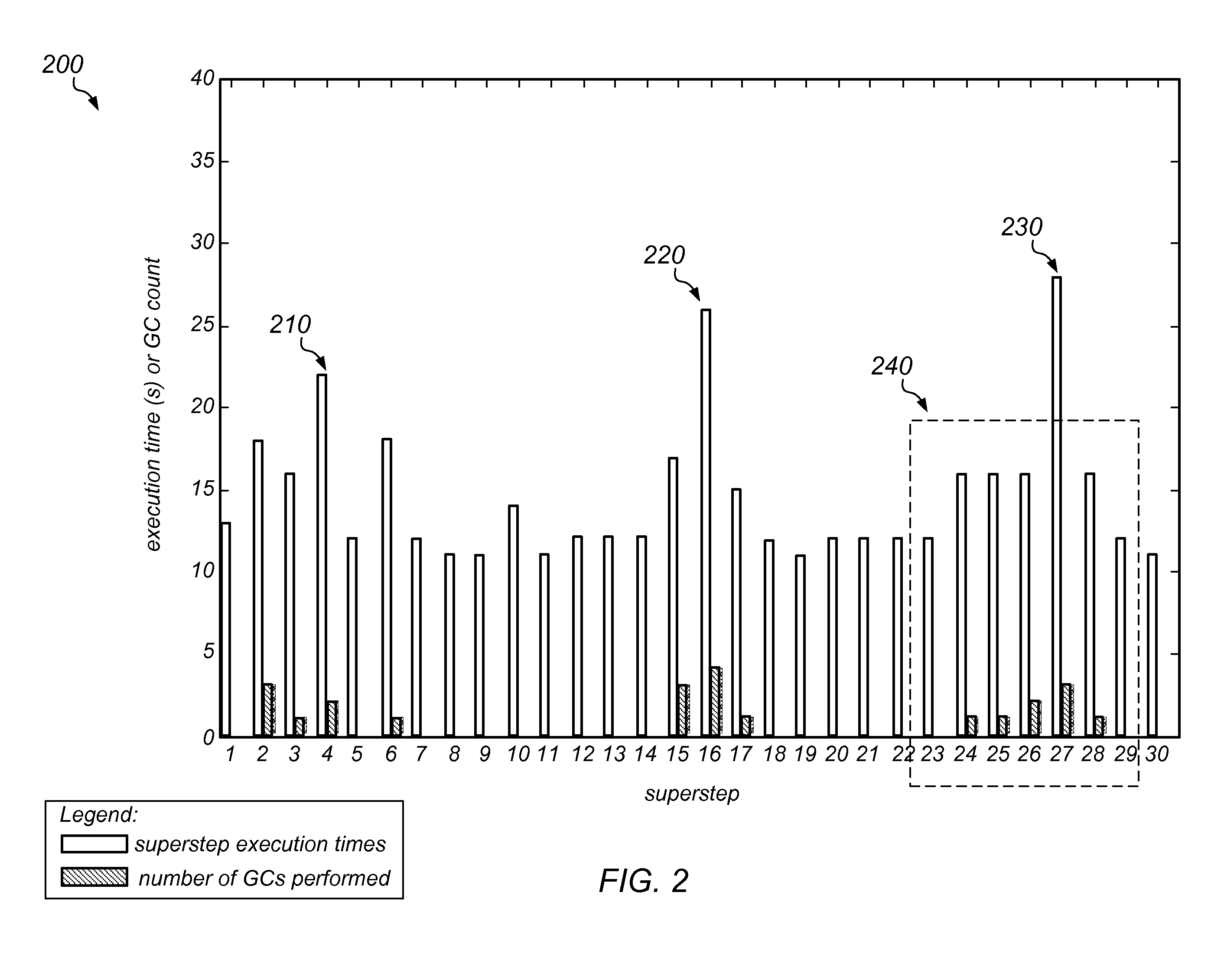

Coordinated Garbage Collection in Distributed Systems

Active | US20160070593A1 | Reduce the impact | Reduce throughput | Memory architecture accessing/allocation | Program initiation/switching | Waste collection | Application software

Fast modern interconnects may be exploited to control when garbage collection is performed on the nodes (e.g., virtual machines, such as JVMs) of a distributed system in which the individual processes communicate with each other and in which the heap memory is not shared. A garbage collection coordination mechanism (a coordinator implemented by a dedicated process on a single node or distributed across the nodes) may obtain or receive state information from each of the nodes and apply one of multiple supported garbage collection coordination policies to reduce the impact of garbage collection pauses, dependent on that information. For example, if the information indicates that a node is about to collect, the coordinator may trigger a collection on all of the other nodes (e.g., synchronizing collection pauses for batch-mode applications where throughput is important) or may steer requests to other nodes (e.g., for interactive applications where request latencies are important).

Owner:ORACLE INT CORP
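
The two coordination policies in this abstract can be sketched as a pure decision function. Everything here is an assumption for illustration: `Policy`, `NodeState`, `plan`, the heap-occupancy `threshold` used to decide that a node is about to collect, and the round-robin `route` helper are hypothetical, and a real coordinator would gather node state over the interconnect rather than receive a list.

```python
from dataclasses import dataclass
from enum import Enum


class Policy(Enum):
    SYNC_COLLECT = "sync"     # batch mode: align collection pauses across nodes
    STEER_REQUESTS = "steer"  # interactive mode: route work away from collectors


@dataclass
class NodeState:
    name: str
    heap_used: float  # fraction of heap in use, 0.0 to 1.0 (hypothetical metric)


def plan(nodes, policy, threshold=0.9):
    """Return (nodes to trigger collection on, nodes to steer requests away from)."""
    about_to_collect = {n.name for n in nodes if n.heap_used >= threshold}
    if not about_to_collect:
        return set(), set()
    if policy is Policy.SYNC_COLLECT:
        # Trigger collection on every node so the pauses overlap.
        return {n.name for n in nodes}, set()
    # Only the nearly full nodes collect; requests go to the others.
    return about_to_collect, about_to_collect


def route(request_id, nodes, avoid):
    """Round-robin over nodes not in `avoid`; fall back to all nodes if none remain."""
    names = [n.name for n in nodes]
    candidates = [n for n in names if n not in avoid] or names
    return candidates[request_id % len(candidates)]
```

Synchronizing pauses trades a short cluster-wide stall for fewer stragglers, which suits throughput-bound batch jobs, while steering keeps tail latency low for interactive requests at the cost of temporarily uneven load.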

System and methods for temporary data management in shared disk cluster

Active | US8156082B2 | Digital data processing details | Error detection/correction | Cluster systems | Application software

Systems and methods for temporary data management in shared disk cluster configurations are described. In one embodiment, a method for managing temporary data storage comprises: creating a global temporary database accessible to all nodes of the cluster on shared storage; creating a local temporary database accessible to only a single node (owner node) of the cluster; providing failure recovery for the global temporary database without providing failure recovery for the local temporary database, so that changes to the global temporary database are transactionally recovered upon failure of a node; binding an application or database login to the local temporary database on the owner node for providing the application with local temporary storage when connected to the owner node; and storing temporary data used by the application or database login in the local temporary database without requiring use of write-ahead logging for transactional recovery of the temporary data.

Owner:SYBASE INC
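
The relationship between the two kinds of temporary database can be modeled in a few lines. This Python sketch is a hypothetical model, not Sybase's implementation or API: `TempDB`, `Cluster`, `bind`, `temp_db_for`, and `fail` are illustrative names, the fallback to the global temporary database for a login that is unbound or connected to another node is an assumption, and clearing a list stands in for losing an unlogged database when its node fails.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TempDB:
    name: str
    logged: bool                 # True: write-ahead logged, recovered after failure
    owner: Optional[str] = None  # None: global database on shared storage
    rows: list = field(default_factory=list)


class Cluster:
    def __init__(self, nodes):
        self.global_temp = TempDB("global_tempdb", logged=True)
        self.local_temp = {
            n: TempDB(f"local_tempdb_{n}", logged=False, owner=n) for n in nodes
        }
        self.bindings = {}  # login -> owner node of its local temporary database

    def bind(self, login, node):
        self.bindings[login] = node

    def temp_db_for(self, login, node):
        """Local temp storage only when a bound login is on its owner node
        (assumed fallback: otherwise use the global temporary database)."""
        if self.bindings.get(login) == node:
            return self.local_temp[node]
        return self.global_temp

    def fail(self, node):
        """Node failure: the unlogged local database is discarded, while the
        logged global database keeps its rows (standing in for recovery)."""
        self.local_temp[node].rows.clear()
```

Skipping write-ahead logging for the local database is safe in this model because nothing outside the owner node can see that data, so there is nothing to recover once the node is gone.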

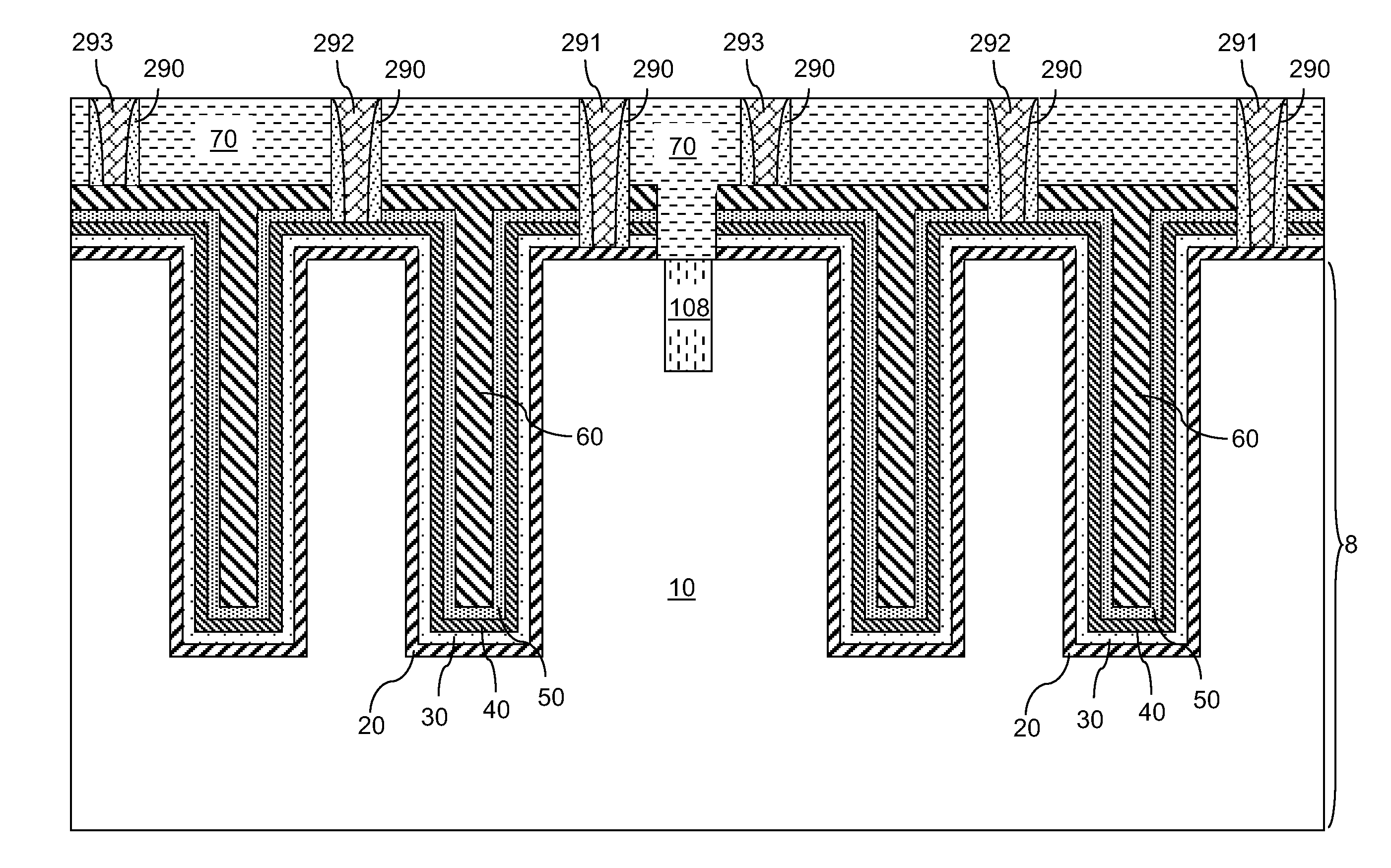

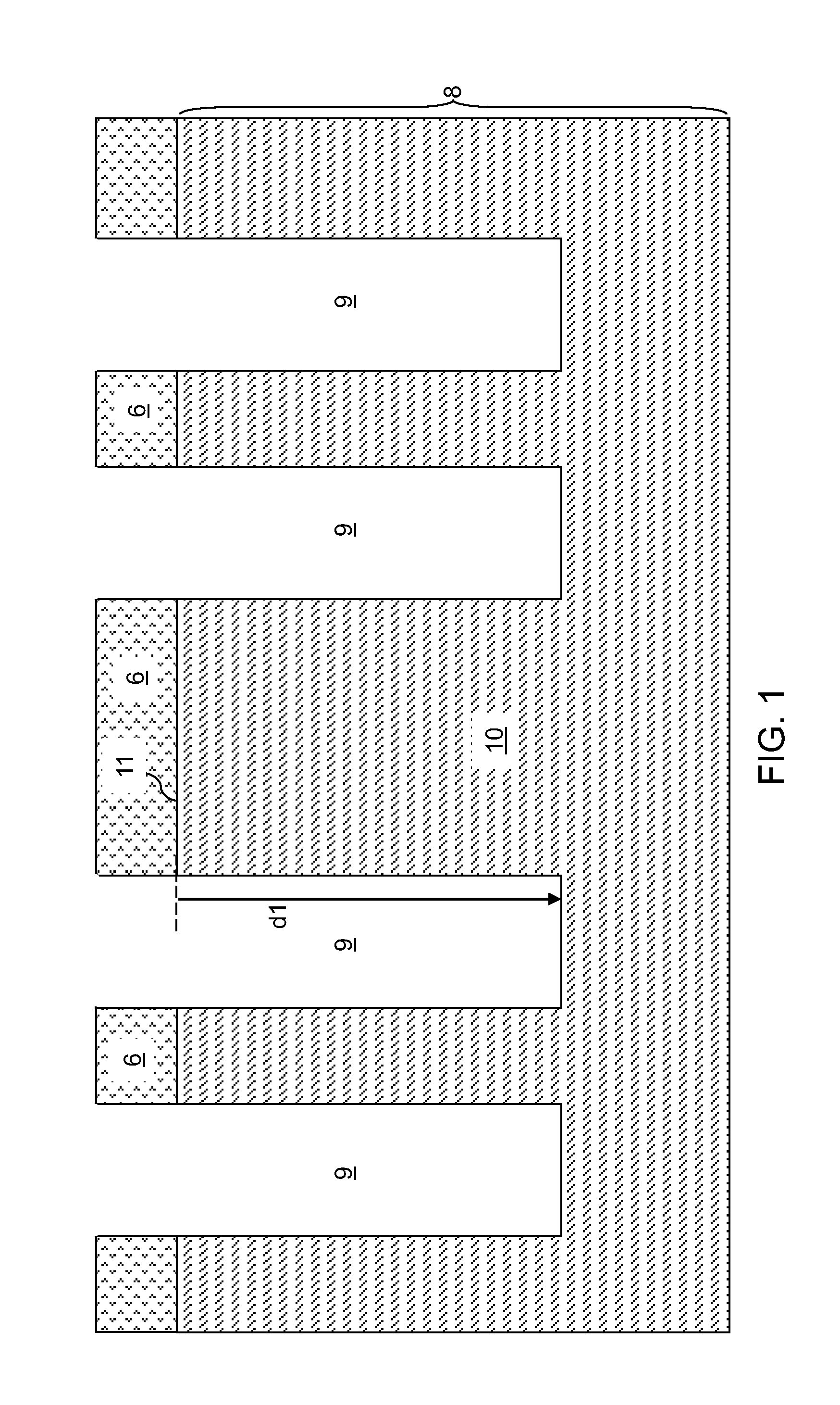

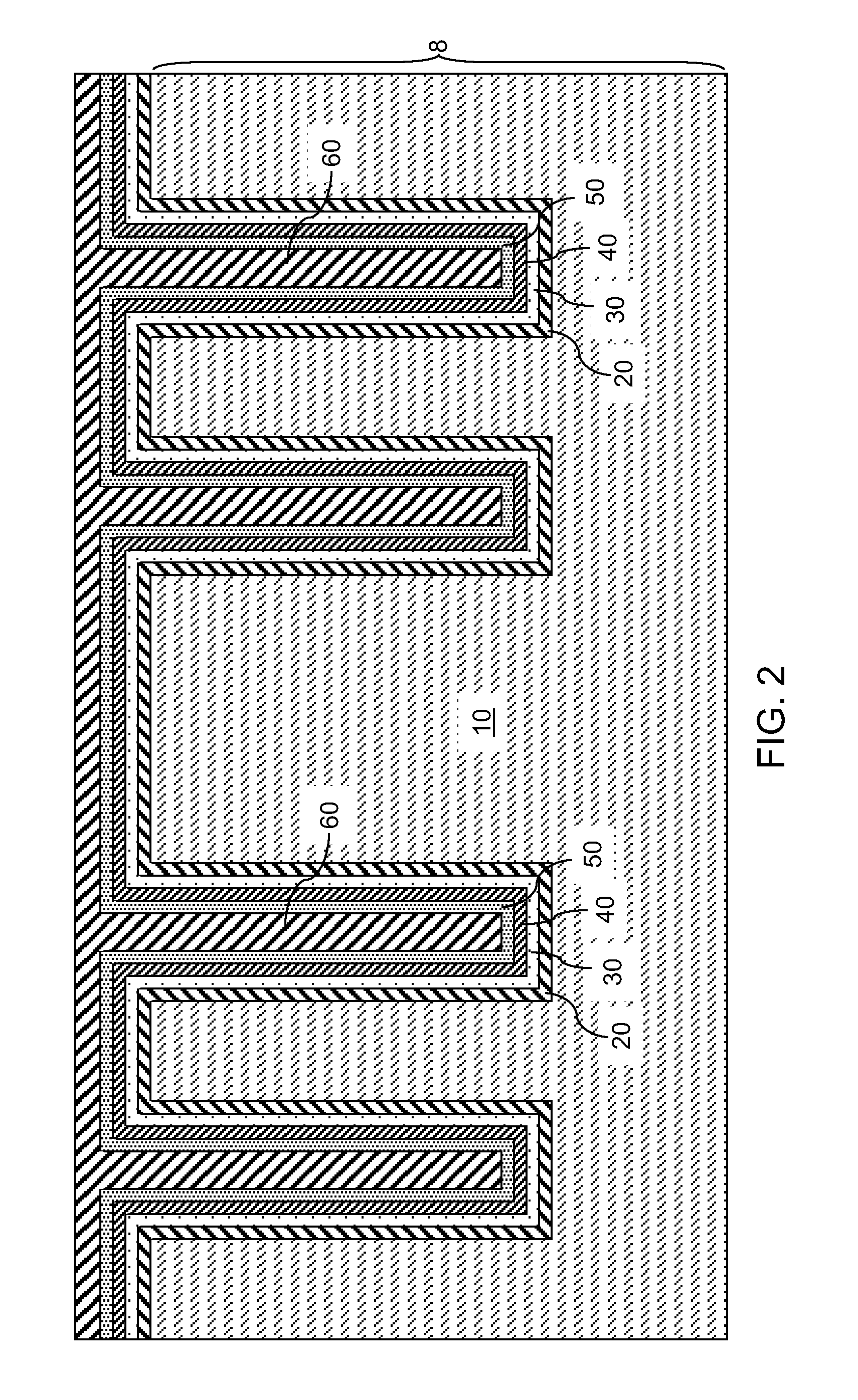

High capacitance trench capacitor

Active | US20120061798A1 | Solid-state devices | Semiconductor/solid-state device manufacturing | Capacitance | Engineering

A dual node dielectric trench capacitor includes a stack of layers formed in a trench. From bottom to top, the stack includes a first conductive layer, a first node dielectric layer, a second conductive layer, a second node dielectric layer, and a third conductive layer. The dual node dielectric trench capacitor includes two back-to-back capacitors: the first capacitor comprises the first conductive layer, the first node dielectric layer, and the second conductive layer; the second capacitor comprises the second conductive layer, the second node dielectric layer, and the third conductive layer. The dual node dielectric trench capacitor can provide about twice the capacitance of a trench capacitor employing a single node dielectric layer with a composition and thickness comparable to those of the first and second node dielectric layers.

Owner:GLOBALFOUNDRIES US INC
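
Using the parallel-plate approximation (an assumption, since real trench geometry is not planar), the doubling claim can be checked term by term. Here κ is the dielectric constant of the node dielectric, A_k the electrode area, and t_k the thickness of the k-th node dielectric layer. The step C_1 + C_2 assumes the first and third conductive layers are tied together as one terminal, so the two back-to-back capacitors sharing the second conductive layer act in parallel.

```latex
\begin{aligned}
C_1 &\approx \frac{\varepsilon_0 \kappa A_1}{t_1}, \qquad
C_2 \approx \frac{\varepsilon_0 \kappa A_2}{t_2}, \qquad
C_{\text{single}} \approx \frac{\varepsilon_0 \kappa A}{t} \\
C_{\text{dual}} &= C_1 + C_2
  \approx \frac{2\,\varepsilon_0 \kappa A}{t}
  = 2\,C_{\text{single}}
  \qquad \text{when } t_1 \approx t_2 \approx t,\; A_1 \approx A_2 \approx A
\end{aligned}
```

Because the second dielectric lines a slightly narrower opening inside the trench, A_2 is presumably a little smaller than A_1, which is consistent with the abstract claiming "about" twice the capacitance rather than exactly twice.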

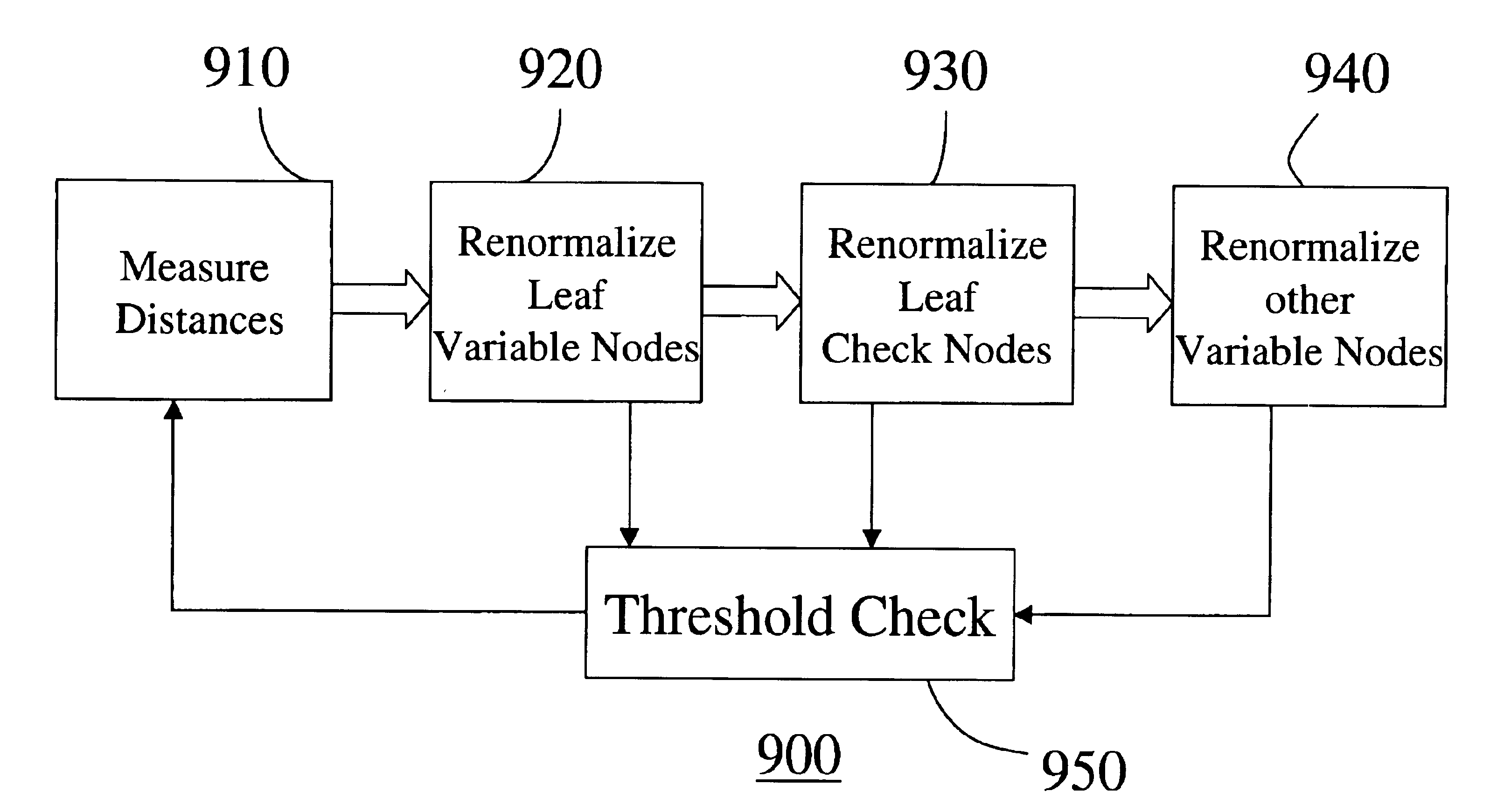

Evaluating and optimizing error-correcting codes using a renormalization group transformation

Inactive | US6857097B2 | Improve performance | Correct operation testing | Other decoding techniques | Renormalization | Parity-check matrix

A method evaluates an error-correcting code for a data block of finite size. The error-correcting code is defined by a parity-check matrix whose columns represent variable bits and whose rows represent parity bits. The parity-check matrix is represented as a bipartite graph. Nodes of the bipartite graph are renormalized one at a time, iteratively, until the number of nodes in the graph is less than a predetermined threshold. During the iterative renormalization, a particular variable node is selected as the target node, and the distance between the target node and every other node in the bipartite graph is measured. Then, if there is at least one leaf variable node, the leaf variable node farthest from the target node is renormalized; otherwise, if there is a leaf check node, the leaf check node farthest from the target node is renormalized; otherwise, the variable node that is farthest from the target node and has the fewest directly connected check nodes is renormalized. By evaluating many error-correcting codes with this method, an optimal code according to selected criteria can be obtained.

Owner:MITSUBISHI ELECTRIC RES LAB INC
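
The node-selection order in this abstract can be sketched on the bipartite (Tanner) graph of the parity-check matrix. The sketch is partial and hypothetical: it implements only the choice of which node to renormalize next and the stopping threshold. The renormalization step itself, which in the described method transforms the graph around the removed node, is replaced here by simply deleting the node. Node labels `("v", j)` and `("c", i)`, tie-breaking by label, and all function names are assumptions.

```python
from collections import deque


def tanner_graph(H):
    """Bipartite graph of parity-check matrix H: ("v", j) variables, ("c", i) checks."""
    adj = {("v", j): set() for j in range(len(H[0]))}
    adj.update({("c", i): set() for i in range(len(H))})
    for i, row in enumerate(H):
        for j, bit in enumerate(row):
            if bit:
                adj[("c", i)].add(("v", j))
                adj[("v", j)].add(("c", i))
    return adj


def bfs_distances(adj, source):
    """Hop distance from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist


def next_to_renormalize(adj, target):
    """Selection order from the abstract; returns None if no candidate remains."""
    dist = bfs_distances(adj, target)
    reachable = [n for n in dist if n != target]
    leaf_vars = [n for n in reachable if n[0] == "v" and len(adj[n]) == 1]
    if leaf_vars:
        return max(leaf_vars, key=lambda n: (dist[n], n))
    leaf_checks = [n for n in reachable if n[0] == "c" and len(adj[n]) == 1]
    if leaf_checks:
        return max(leaf_checks, key=lambda n: (dist[n], n))
    variables = [n for n in reachable if n[0] == "v"]
    if not variables:
        return None
    # Farthest first; among equally far nodes, fewest directly connected checks.
    return max(variables, key=lambda n: (dist[n], -len(adj[n]), n))


def renormalization_order(H, target_col, threshold):
    """Order in which nodes would be renormalized until fewer than `threshold` remain."""
    adj = tanner_graph(H)
    target = ("v", target_col)
    order = []
    while len(adj) >= threshold:
        node = next_to_renormalize(adj, target)
        if node is None:
            break
        # Placeholder for the real renormalization: just remove the node.
        for nbr in adj.pop(node):
            adj[nbr].discard(node)
        order.append(node)
    return order
```

Working inward from the farthest leaves keeps the target node's neighbourhood intact for as long as possible, which is presumably why the abstract prefers distant leaf nodes first.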

© 2025 PatSnap. All rights reserved.