298 results about "Parallel algorithm" patented technology

In computer science, a parallel algorithm, as opposed to a traditional serial algorithm, is an algorithm that can perform multiple operations at the same time. Computer science has traditionally described serial algorithms in terms of abstract machine models, most often the random-access machine (RAM). Similarly, many researchers have used the parallel random-access machine (PRAM) as a parallel (shared-memory) abstract machine.
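As a rough illustration of the PRAM view, the sketch below simulates an inclusive prefix sum computed in a logarithmic number of synchronous rounds (the Hillis-Steele scan). The function name `pram_prefix_sum` is a hypothetical choice for this example, and the simulation runs serially; on a real PRAM each index would be handled by its own processor in every round.

```python
def pram_prefix_sum(values):
    """Inclusive prefix sum in O(log n) synchronous rounds (Hillis-Steele scan)."""
    current = list(values)
    n = len(current)
    step = 1
    while step < n:
        # Each round reads only the previous round's values, which models
        # the synchronous read-then-write step of a PRAM. Conceptually,
        # every index i is updated by a separate processor.
        current = [
            current[i] + current[i - step] if i >= step else current[i]
            for i in range(n)
        ]
        step *= 2
    return current


print(pram_prefix_sum([3, 1, 4, 1, 5, 9, 2, 6]))  # [3, 4, 8, 9, 14, 23, 25, 31]
```

A serial loop needs n - 1 dependent additions; the scan above needs only about log2(n) rounds, at the cost of more total work.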

Novel massively parallel supercomputer

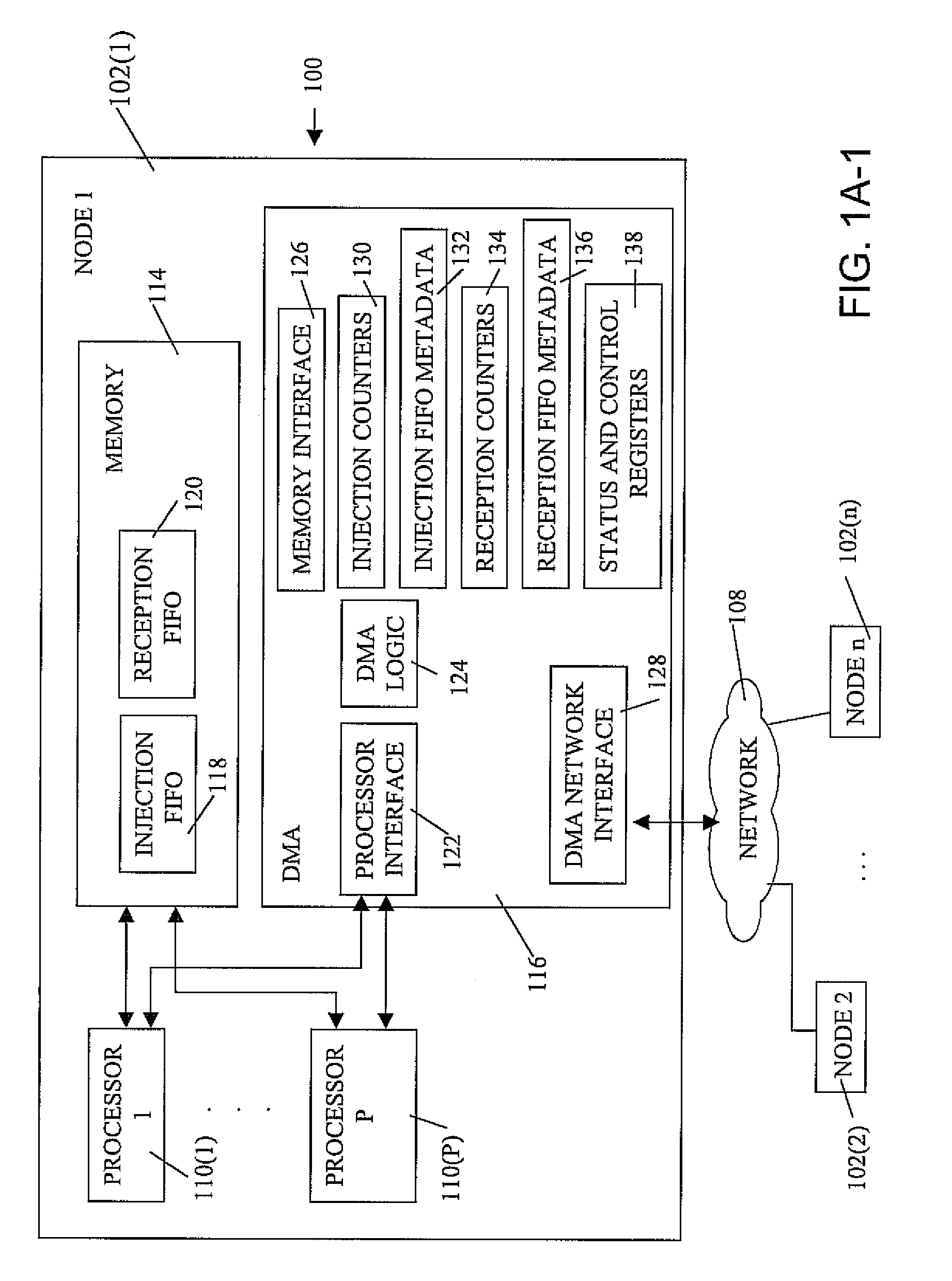

Inactive | US20090259713A1 | Low cost | Reduced footprint | Error prevention | Program synchronisation | Supercomputer | Packet communication

Owner:INT BUSINESS MASCH CORP

Ultrascalable petaflop parallel supercomputer

Inactive | US7761687B2 | Maximize throughput | Delay minimization | General purpose stored program computer | Electric digital data processing | Supercomputer | Packet communication

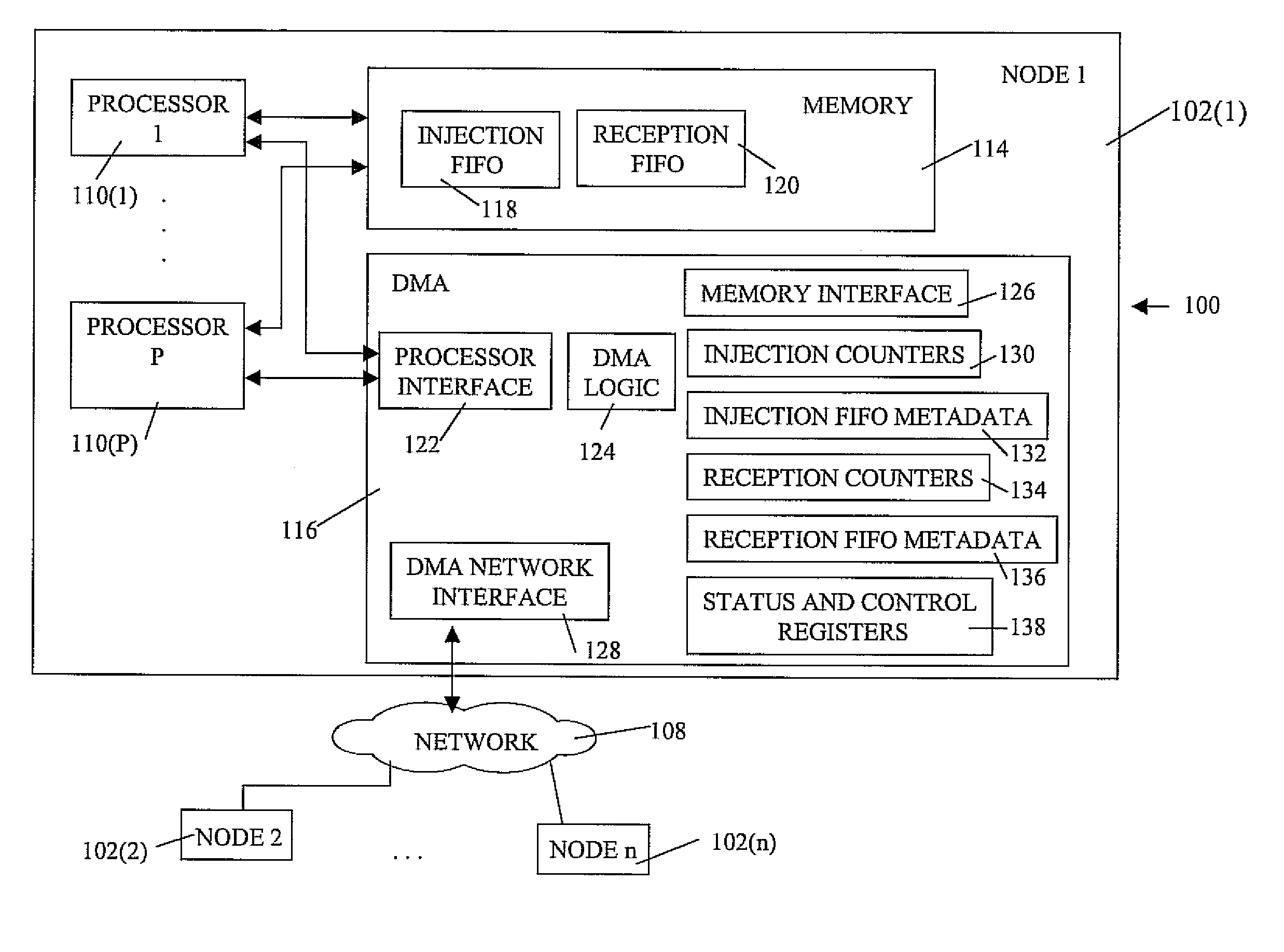

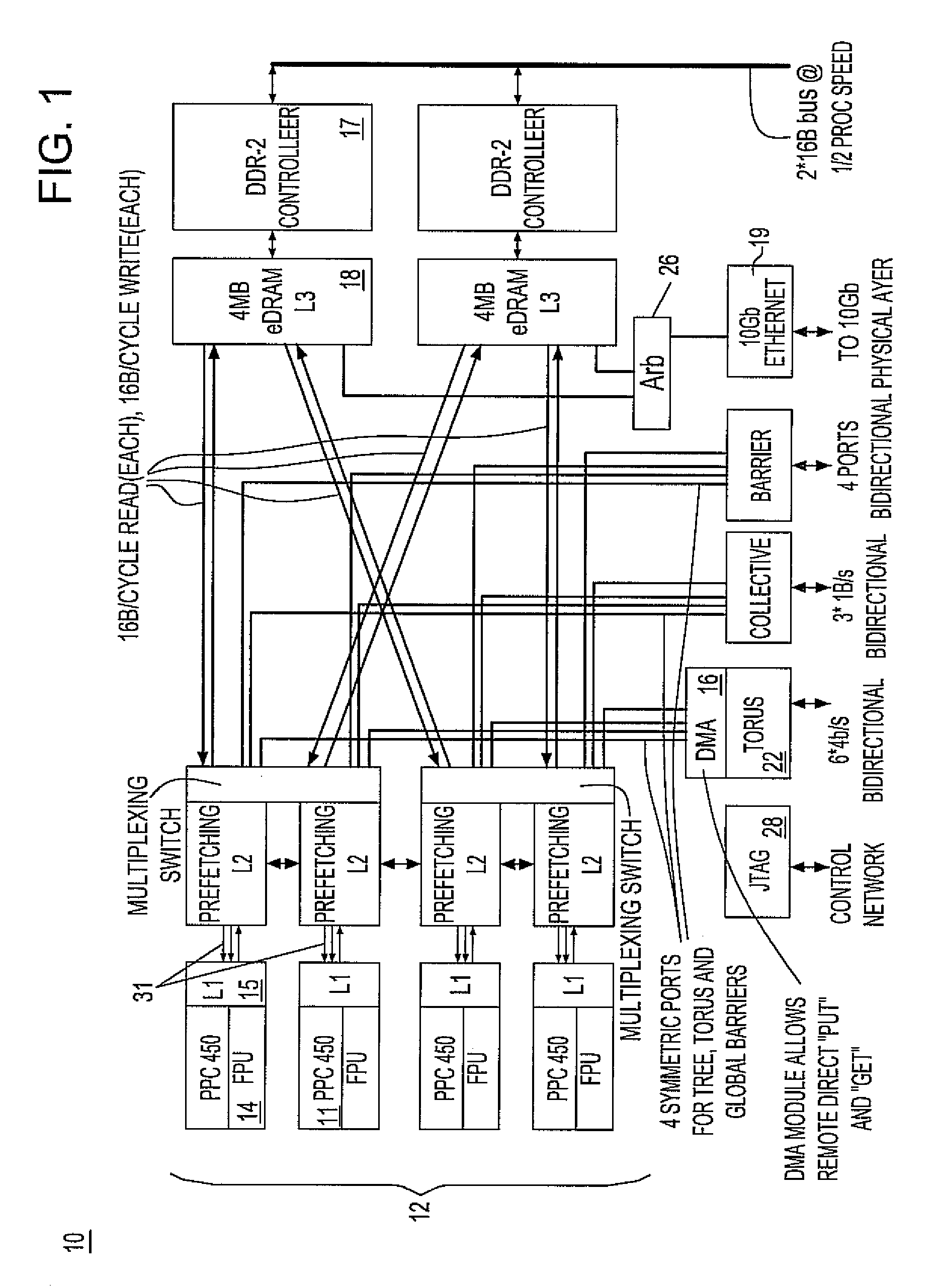

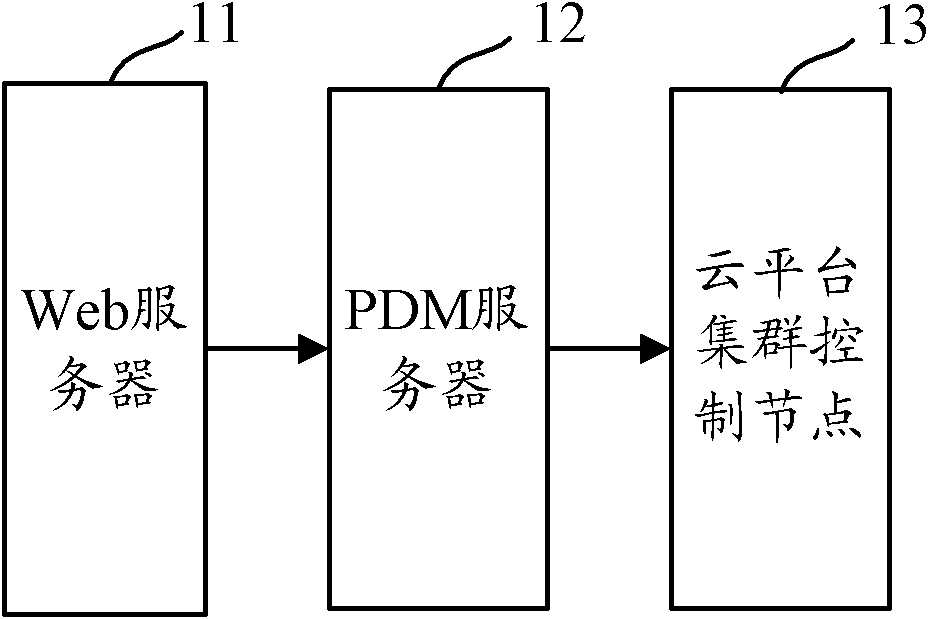

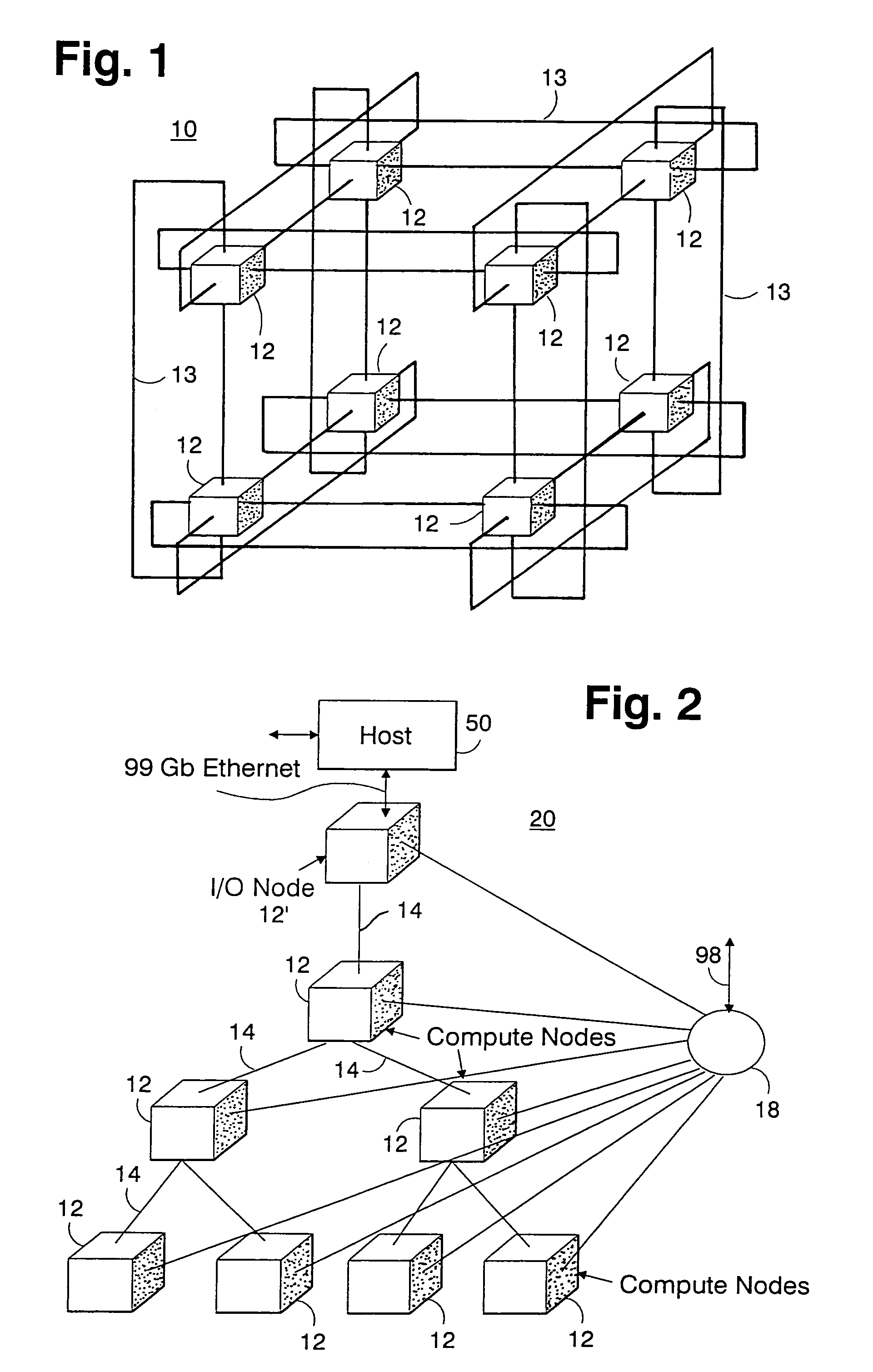

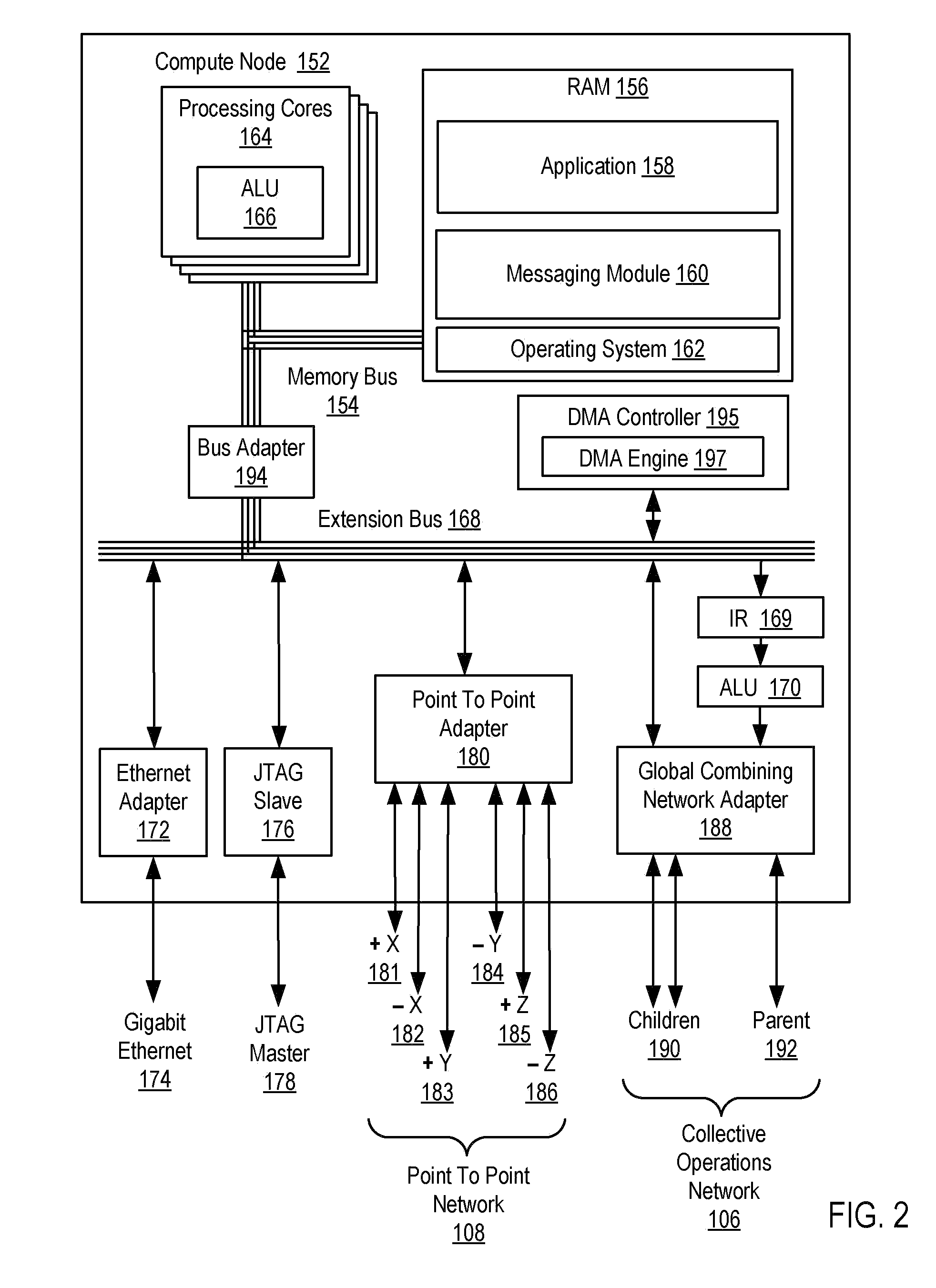

A massively parallel supercomputer of petaOPS-scale includes node architectures based upon System-On-a-Chip technology, where each processing node comprises a single Application Specific Integrated Circuit (ASIC) having up to four processing elements. The ASIC nodes are interconnected by multiple independent networks that optimally maximize the throughput of packet communications between nodes with minimal latency. The multiple networks may include three high-speed networks for parallel algorithm message passing including a Torus, collective network, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be collaboratively or independently utilized according to the needs or phases of an algorithm for optimizing algorithm processing performance. The use of a DMA engine is provided to facilitate message passing among the nodes without the expenditure of processing resources at the node.
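To make the torus interconnect concrete, here is a minimal sketch of how a node's nearest neighbors can be found on a torus with wraparound links. The three-dimensional shape and the function name `torus_neighbors` are assumptions for illustration; the abstract does not state the torus dimensions.

```python
def torus_neighbors(coord, dims):
    """Return the 2 * len(dims) neighbors of `coord` on a torus of shape `dims`."""
    neighbors = []
    for axis, size in enumerate(dims):
        for delta in (-1, 1):
            nxt = list(coord)
            # The modulo implements the wraparound link that turns a mesh
            # into a torus: the node at index 0 connects to index size - 1.
            nxt[axis] = (coord[axis] + delta) % size
            neighbors.append(tuple(nxt))
    return neighbors


# Hypothetical 4 x 4 x 4 torus; the corner node still has six neighbors.
print(torus_neighbors((0, 0, 0), (4, 4, 4)))
```

Because of the wraparound, every node has the same number of links, which keeps point-to-point message passing uniform across the machine.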

Owner:INT BUSINESS MASCH CORP

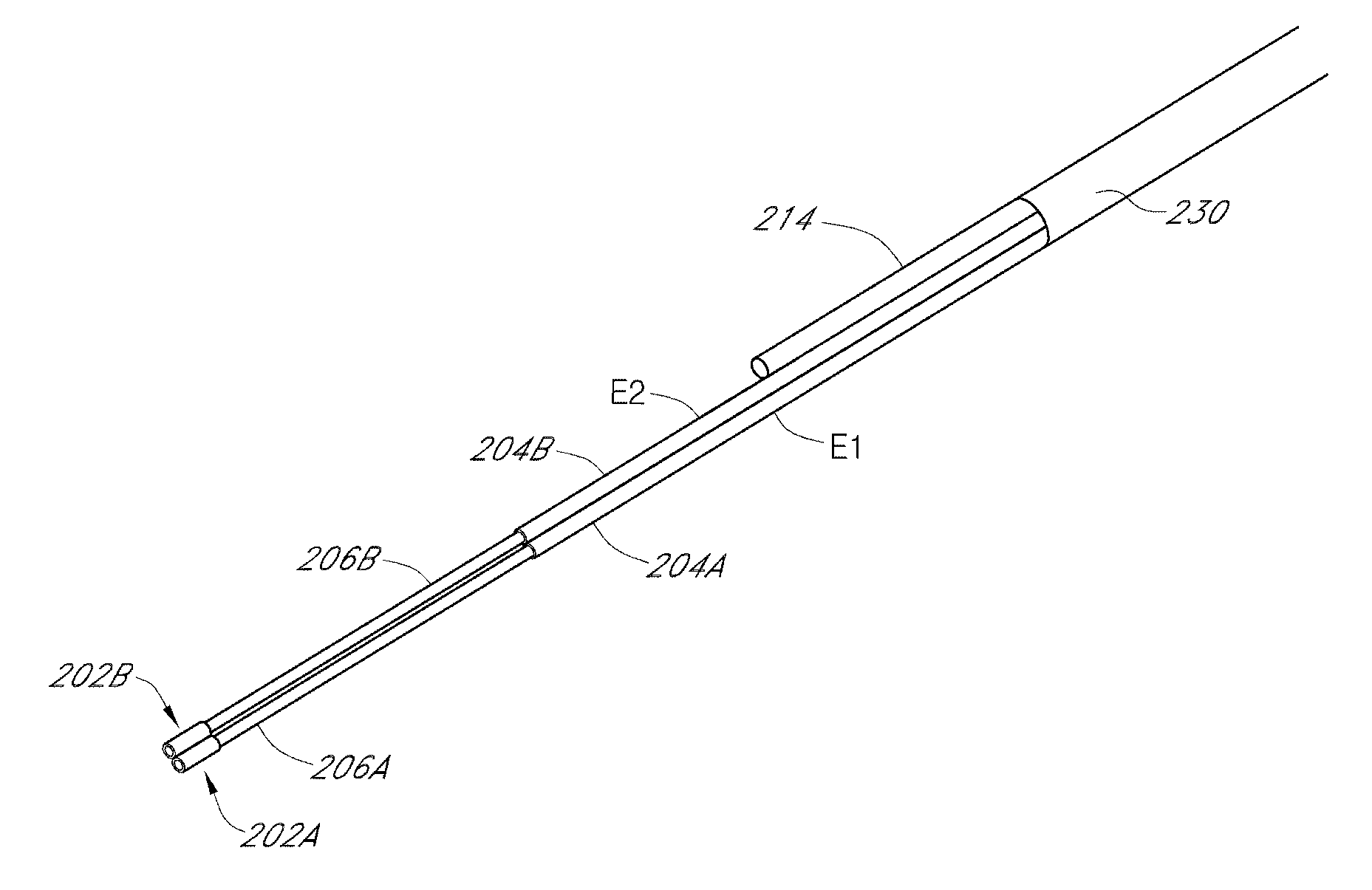

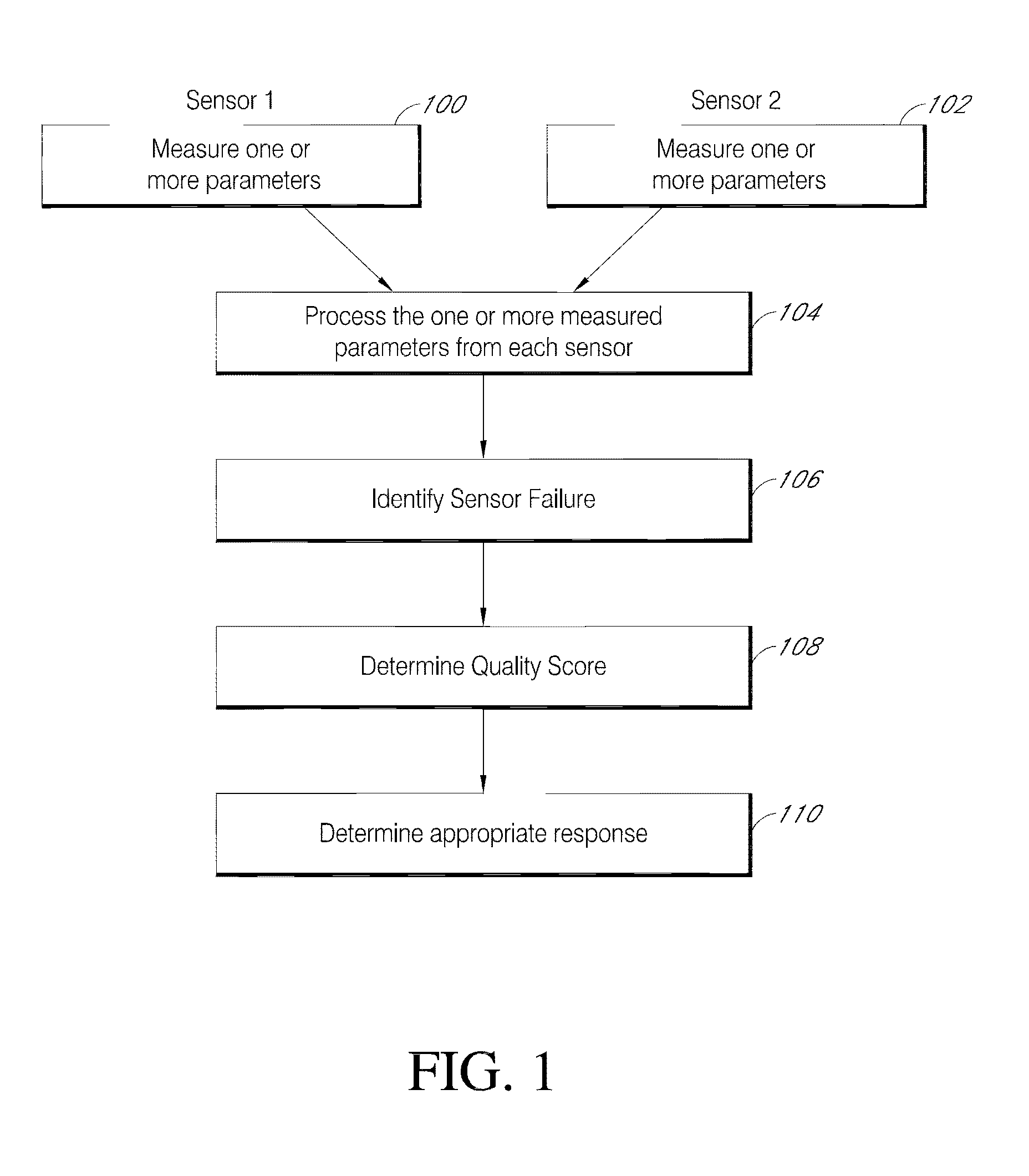

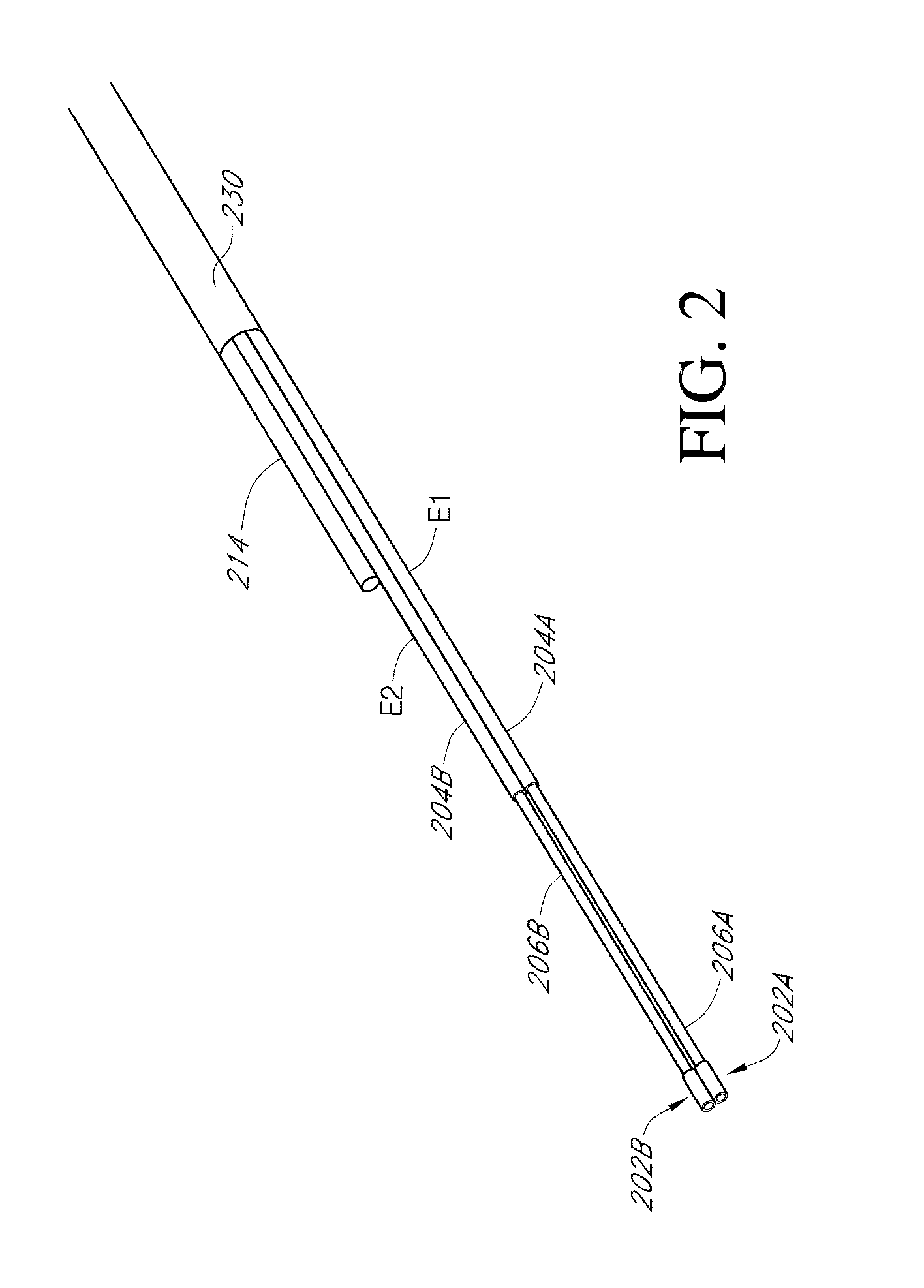

Use of sensor redundancy to detect sensor failures

Devices, systems, and methods for providing more accurate and reliable sensor data and for detecting sensor failures. Two or more electrodes can be used to generate data, and the data can be subsequently compared by a processing module. Alternatively, one sensor can be used, and the data processed by two parallel algorithms to provide redundancy. Sensor performance, including sensor failures, can be identified. The user or system can then respond appropriately to the information related to sensor performance or failure.
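The redundancy check described above can be sketched as a comparison of two signals that should agree. This is only an illustrative assumption about how a processing module might compare the data: the function name `find_discrepancies`, the fixed `tolerance`, and the sample values are all hypothetical.

```python
def find_discrepancies(readings_a, readings_b, tolerance):
    """Return indices where two redundant signals differ by more than `tolerance`."""
    return [
        i
        for i, (a, b) in enumerate(zip(readings_a, readings_b))
        if abs(a - b) > tolerance
    ]


# Two hypothetical data streams: either two electrodes, or one sensor
# processed by two parallel algorithms.
stream_a = [100, 102, 105, 110]
stream_b = [101, 103, 140, 111]
print(find_discrepancies(stream_a, stream_b, tolerance=5))  # [2]
```

A non-empty result flags samples where the redundant paths disagree, which a system could then treat as a possible sensor failure.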

Owner:DEXCOM

Massively parallel supercomputer

Inactive | US7555566B2 | Massive level of scalability | Unprecedented level of scalability | Error prevention | Program synchronisation | Packet communication | Supercomputer

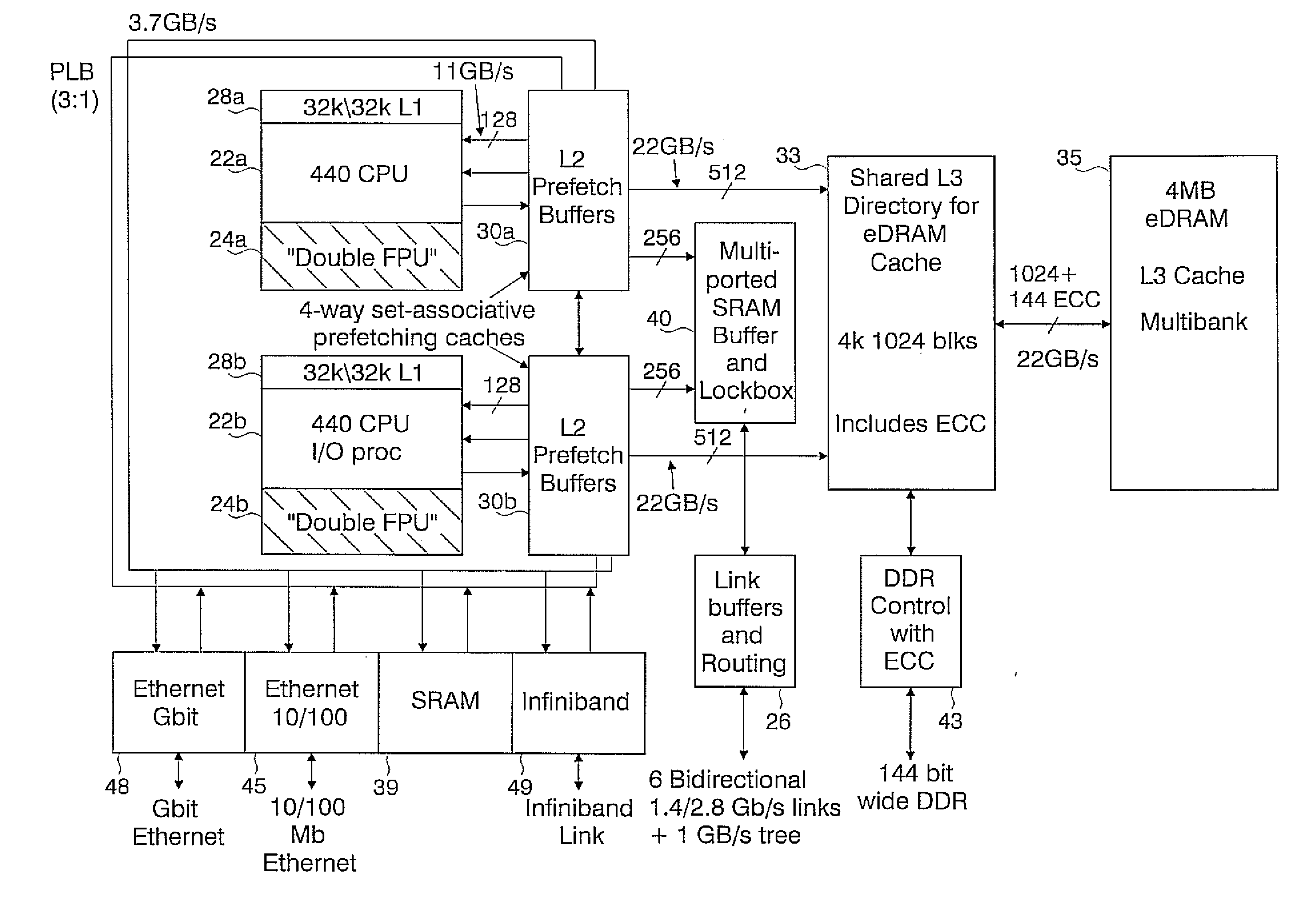

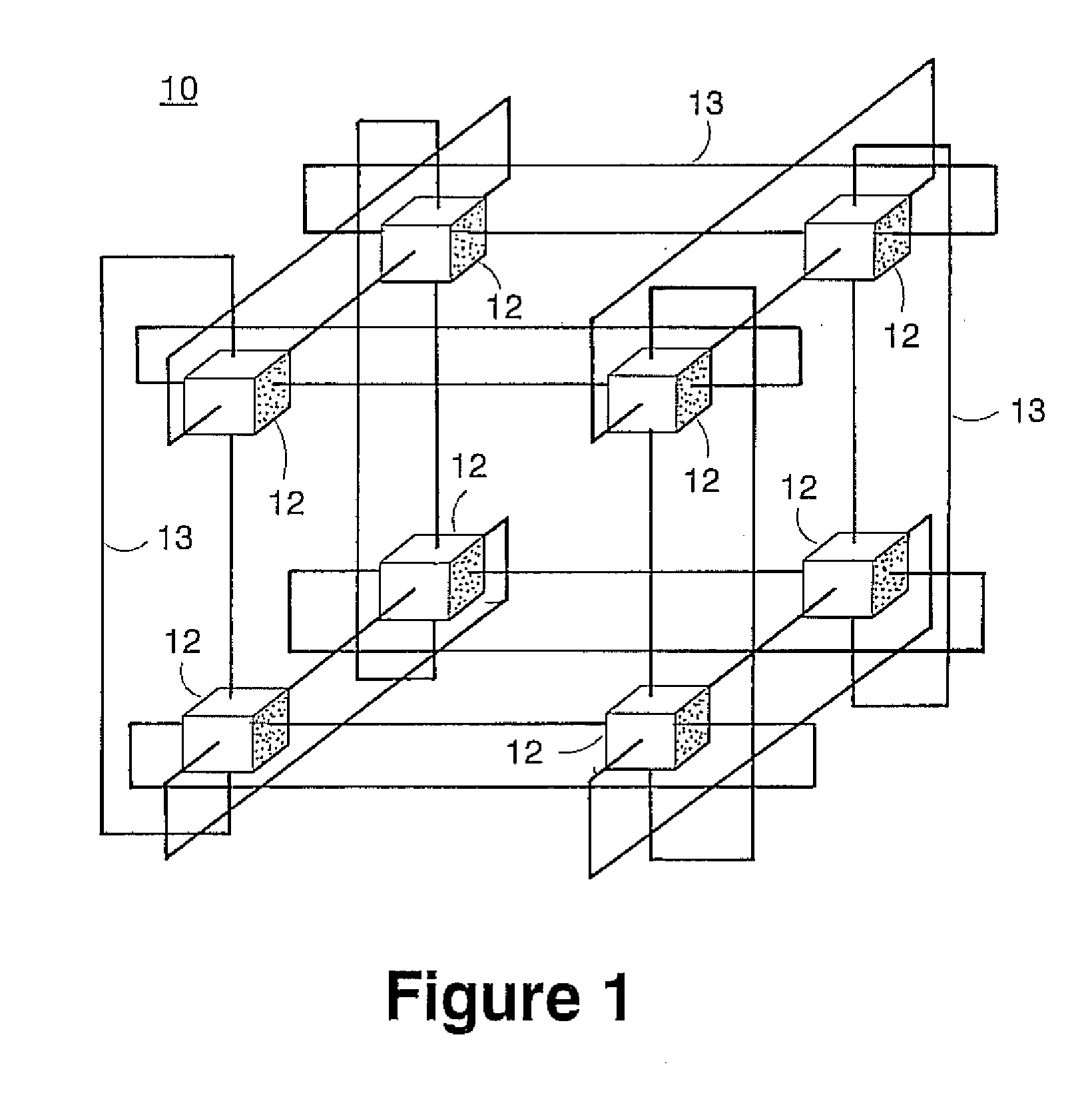

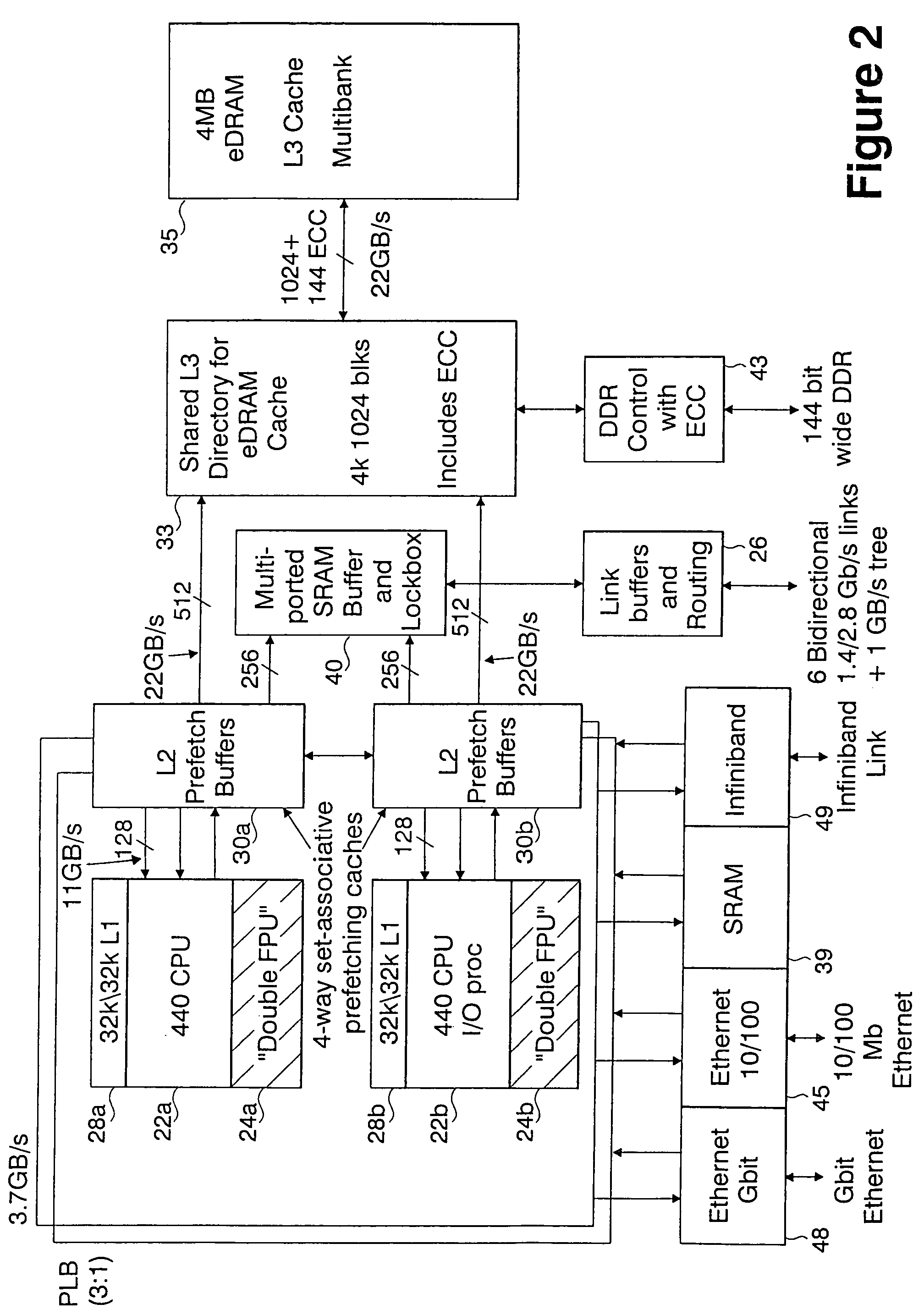

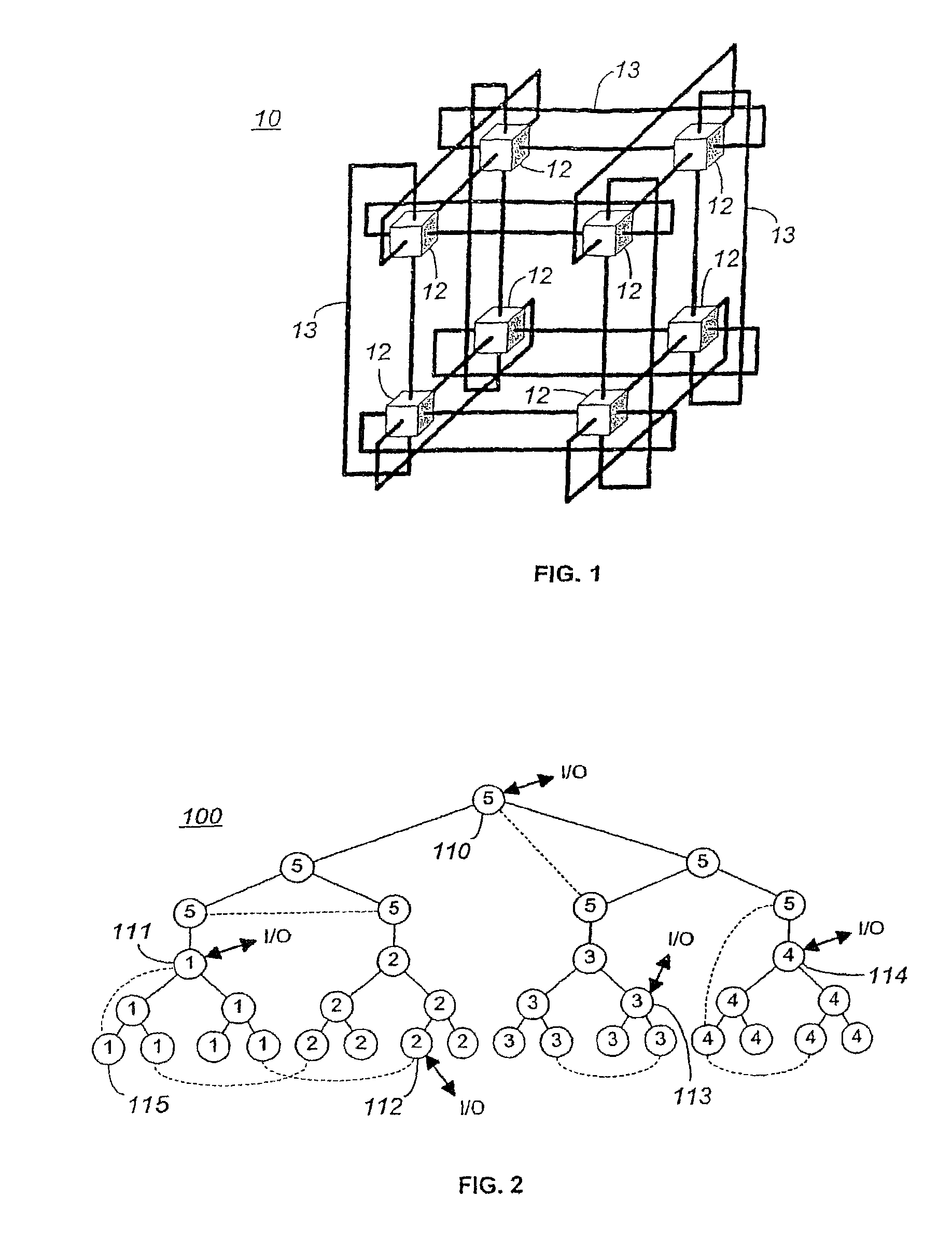

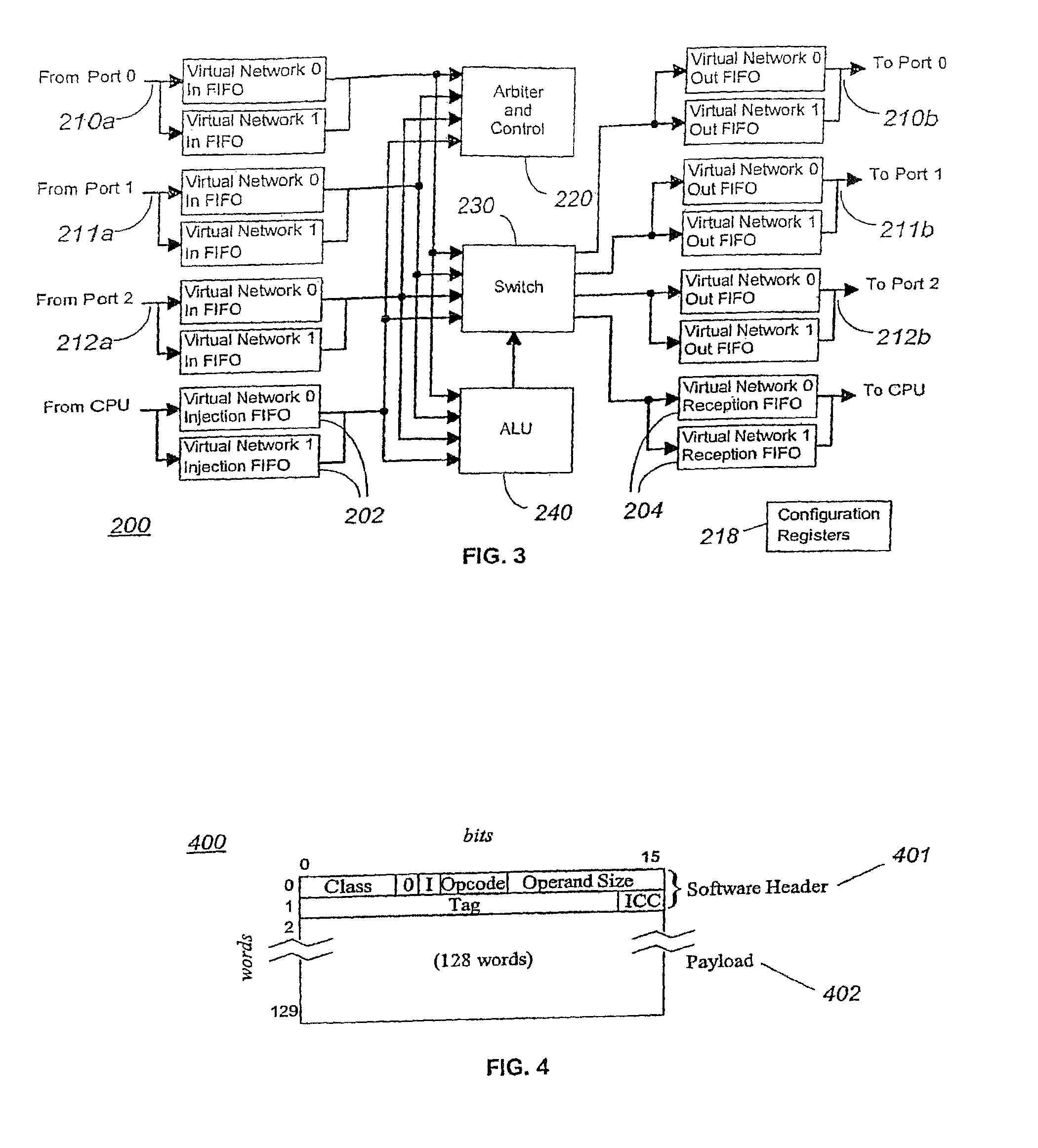

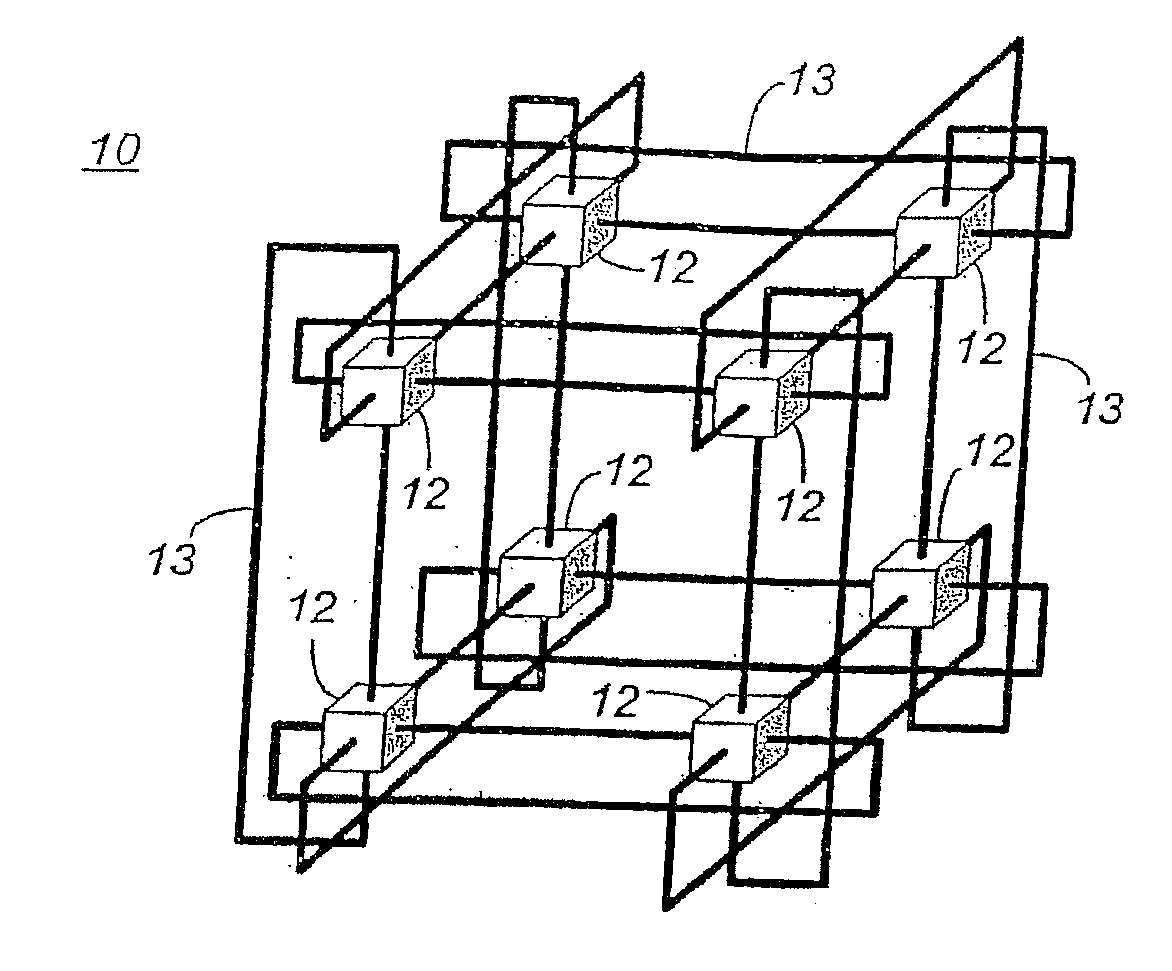

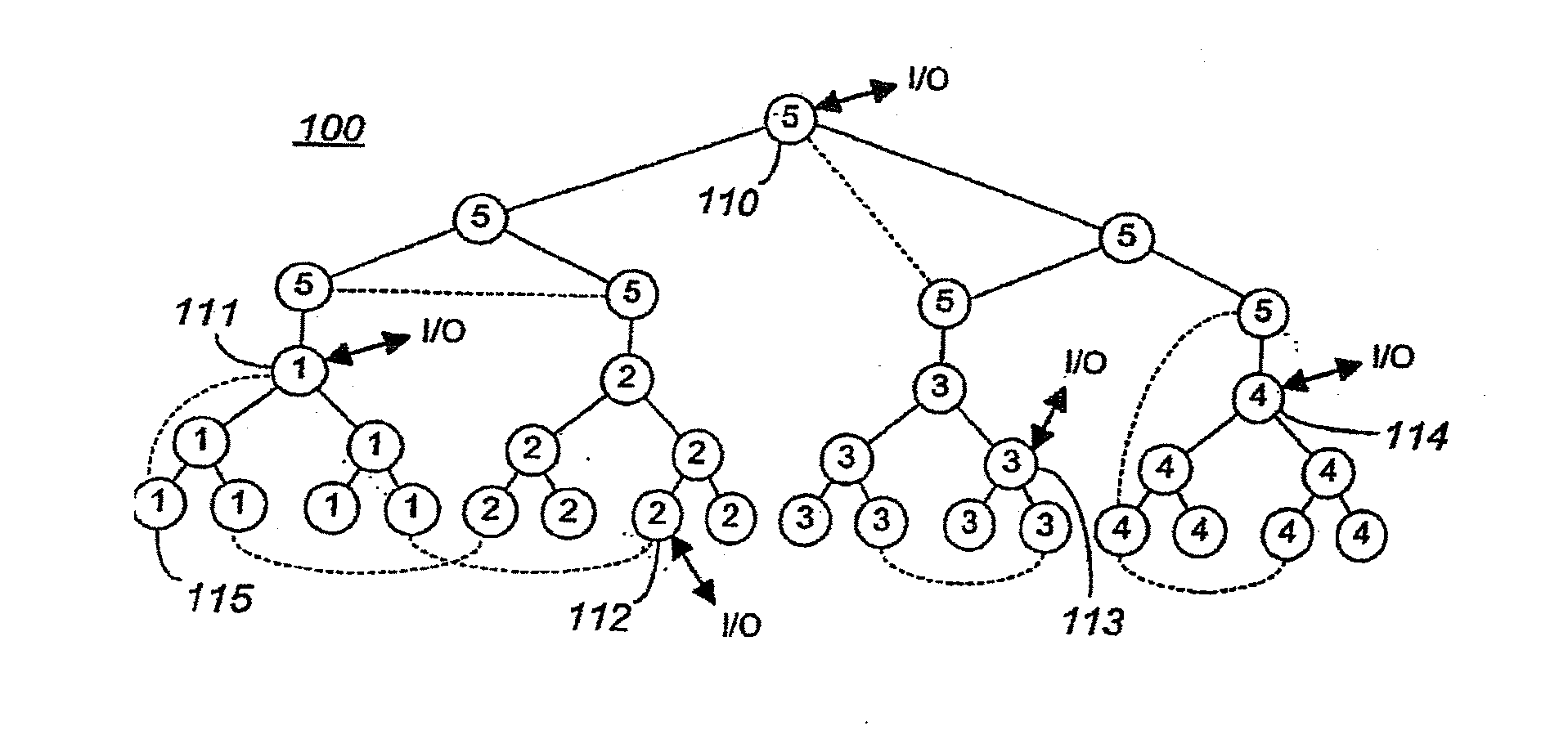

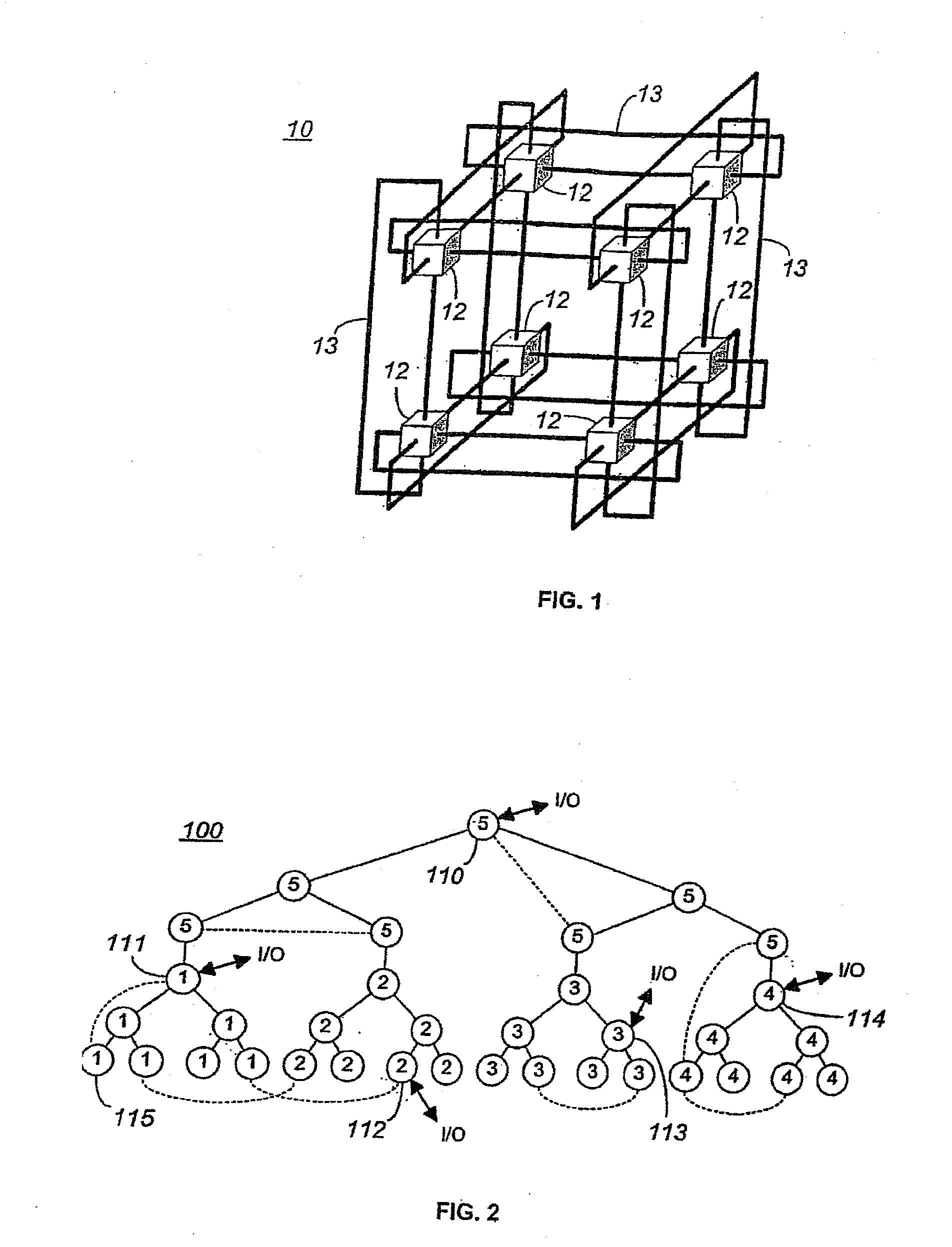

A novel massively parallel supercomputer of hundreds of teraOPS-scale includes node architectures based upon System-On-a-Chip technology, i.e., each processing node comprises a single Application Specific Integrated Circuit (ASIC). Within each ASIC node is a plurality of processing elements, each of which consists of a central processing unit (CPU) and a plurality of floating point processors to enable an optimal balance of computational performance, packaging density, low cost, and power and cooling requirements. The plurality of processors within a single node may be used individually or simultaneously to work on any combination of computation or communication as required by the particular algorithm being solved or executed at any point in time. The system-on-a-chip ASIC nodes are interconnected by multiple independent networks that optimally maximize packet communications throughput and minimize latency. In the preferred embodiment, the multiple networks include three high-speed networks for parallel algorithm message passing including a Torus, Global Tree, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be collaboratively or independently utilized according to the needs or phases of an algorithm for optimizing algorithm processing performance. For particular classes of parallel algorithms, or parts of parallel calculations, this architecture exhibits exceptional computational performance, and may be enabled to perform calculations for new classes of parallel algorithms. Additional networks are provided for external connectivity and used for Input / Output, System Management and Configuration, and Debug and Monitoring functions. Special node packaging techniques implementing midplane and other hardware devices facilitate partitioning of the supercomputer in multiple networks for optimizing supercomputing resources.

Owner:IBM CORP

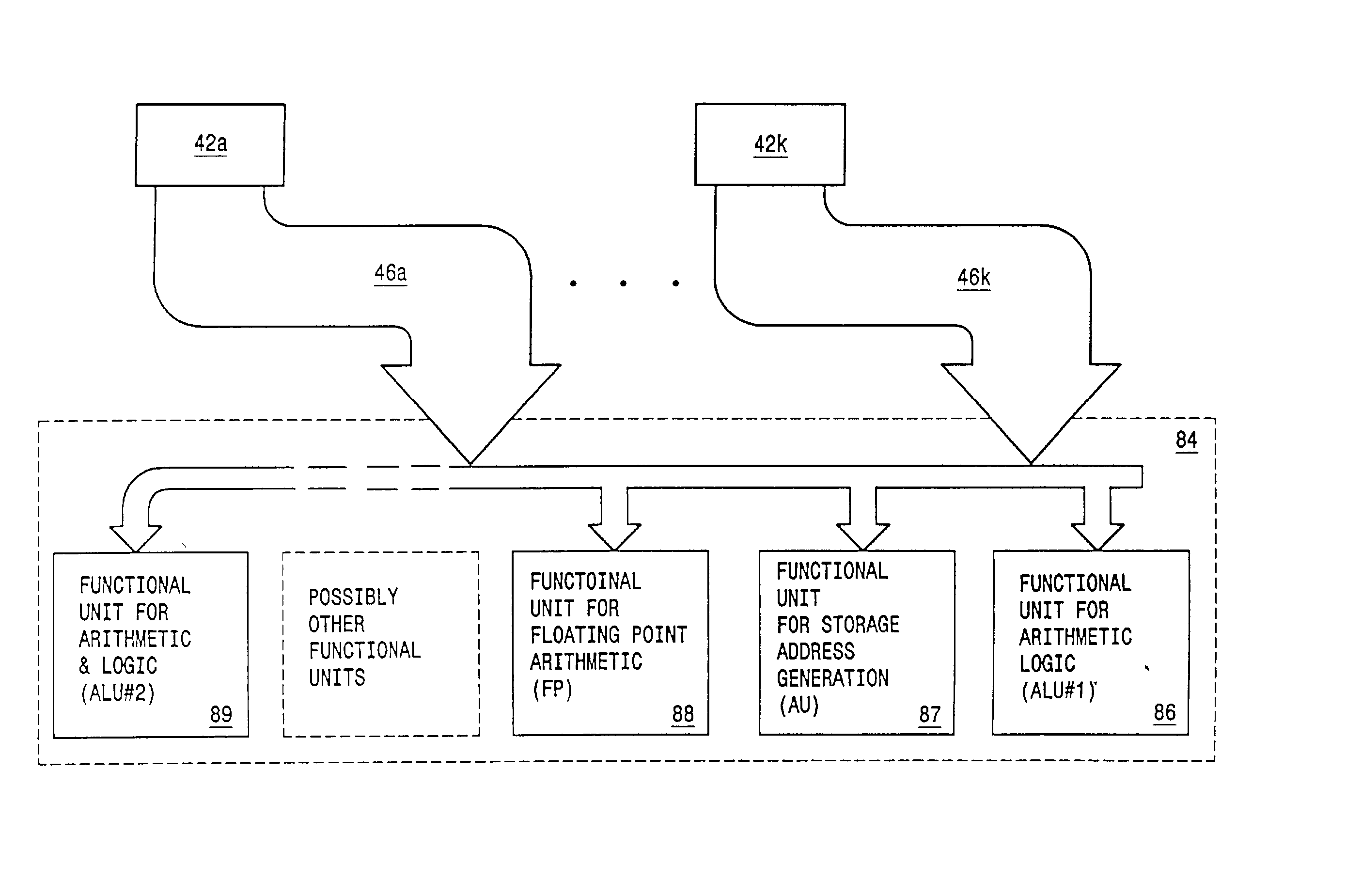

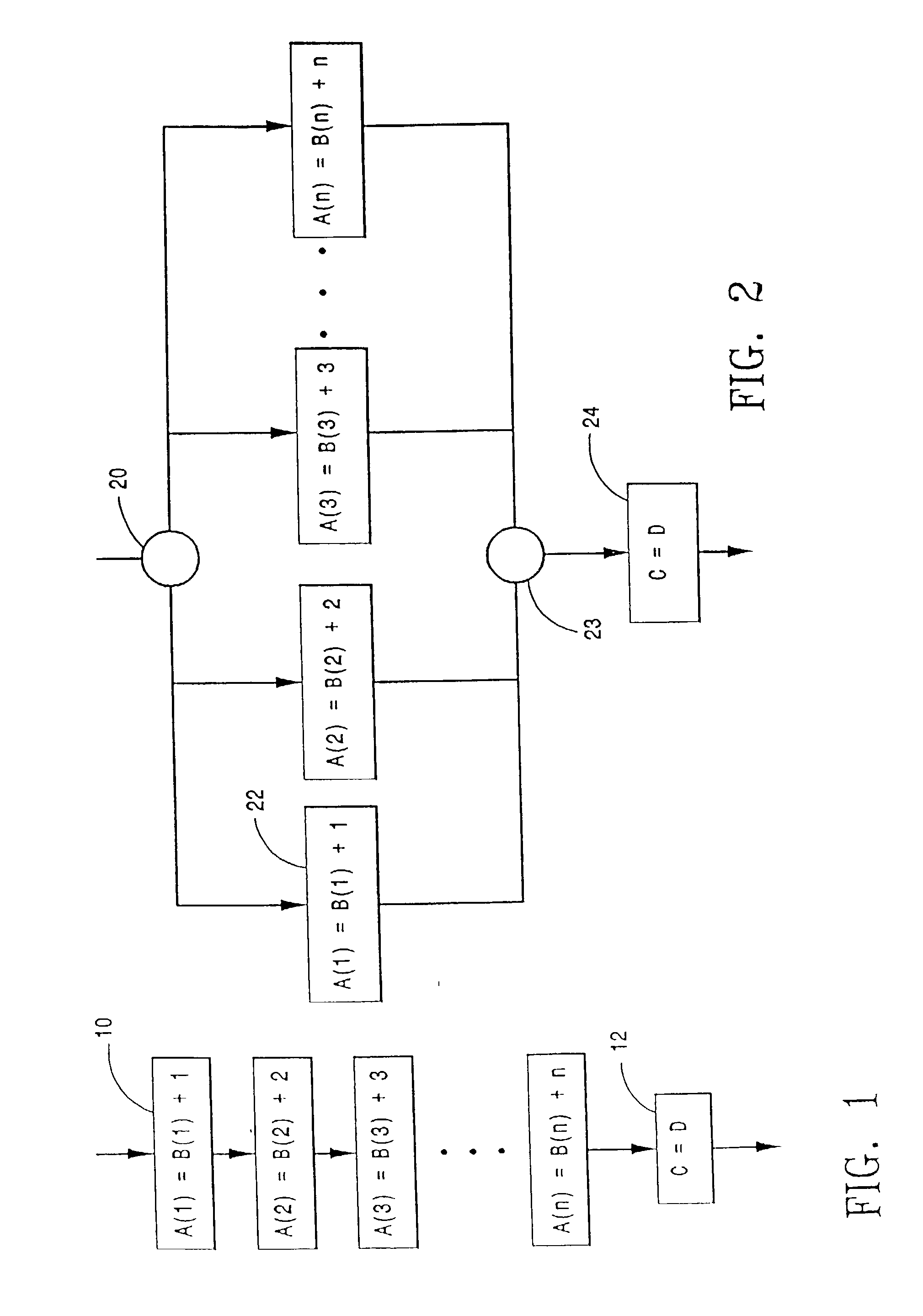

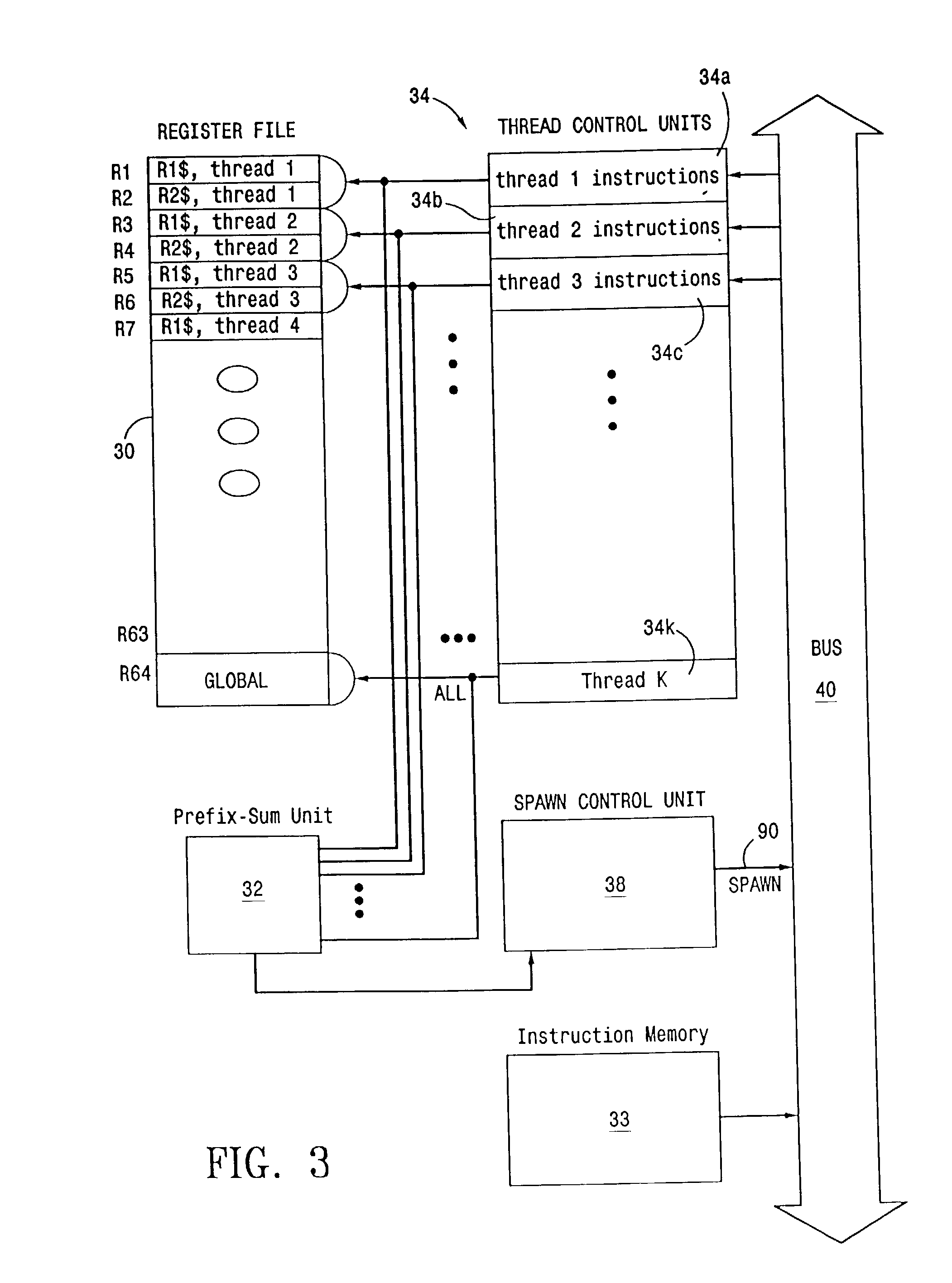

Spawn-join instruction set architecture for providing explicit multithreading

Inactive | US6463527B1 | Apparent advantage | Program initiation/switching | Program synchronisation | Instruction memory | Frequency spectrum

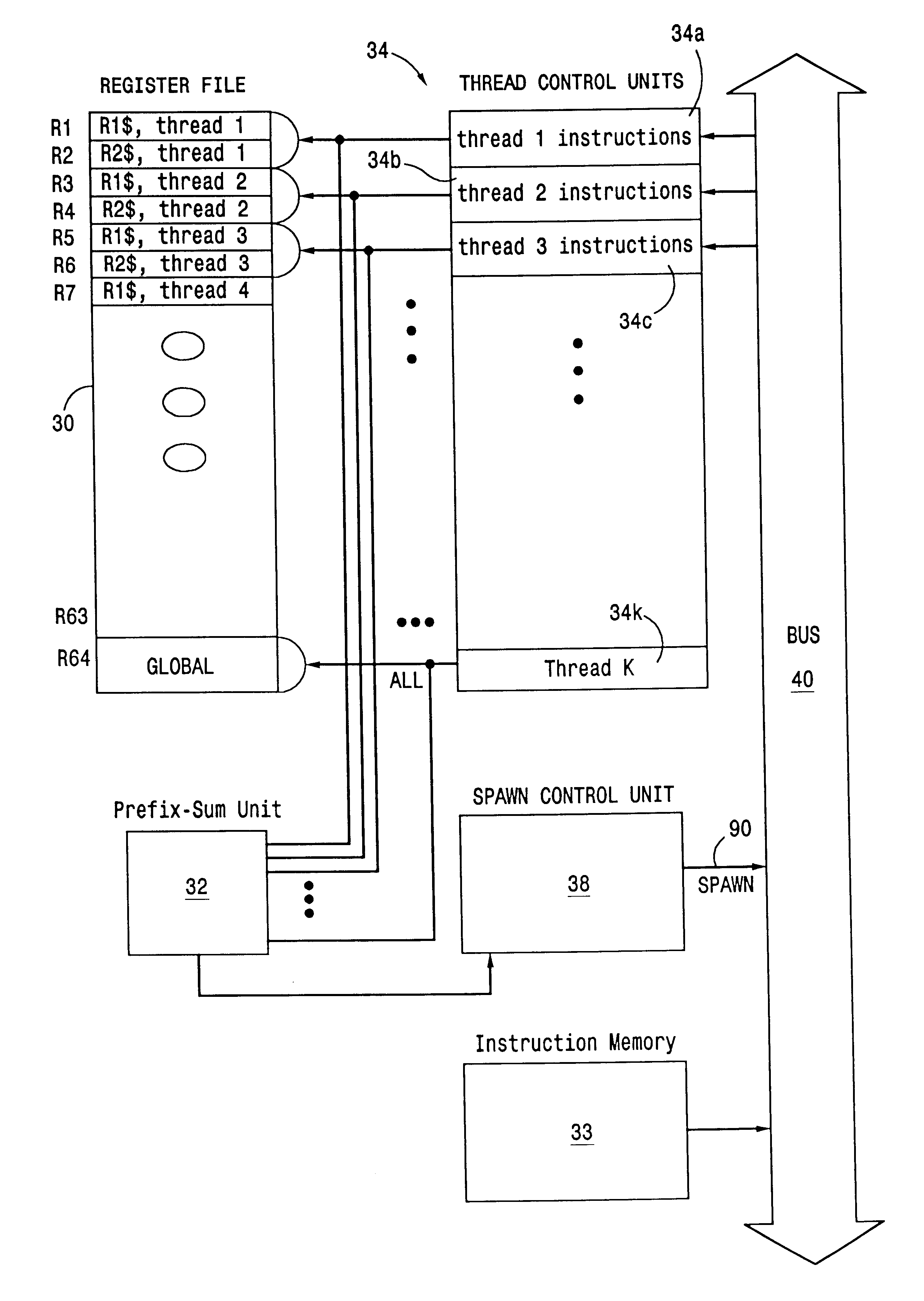

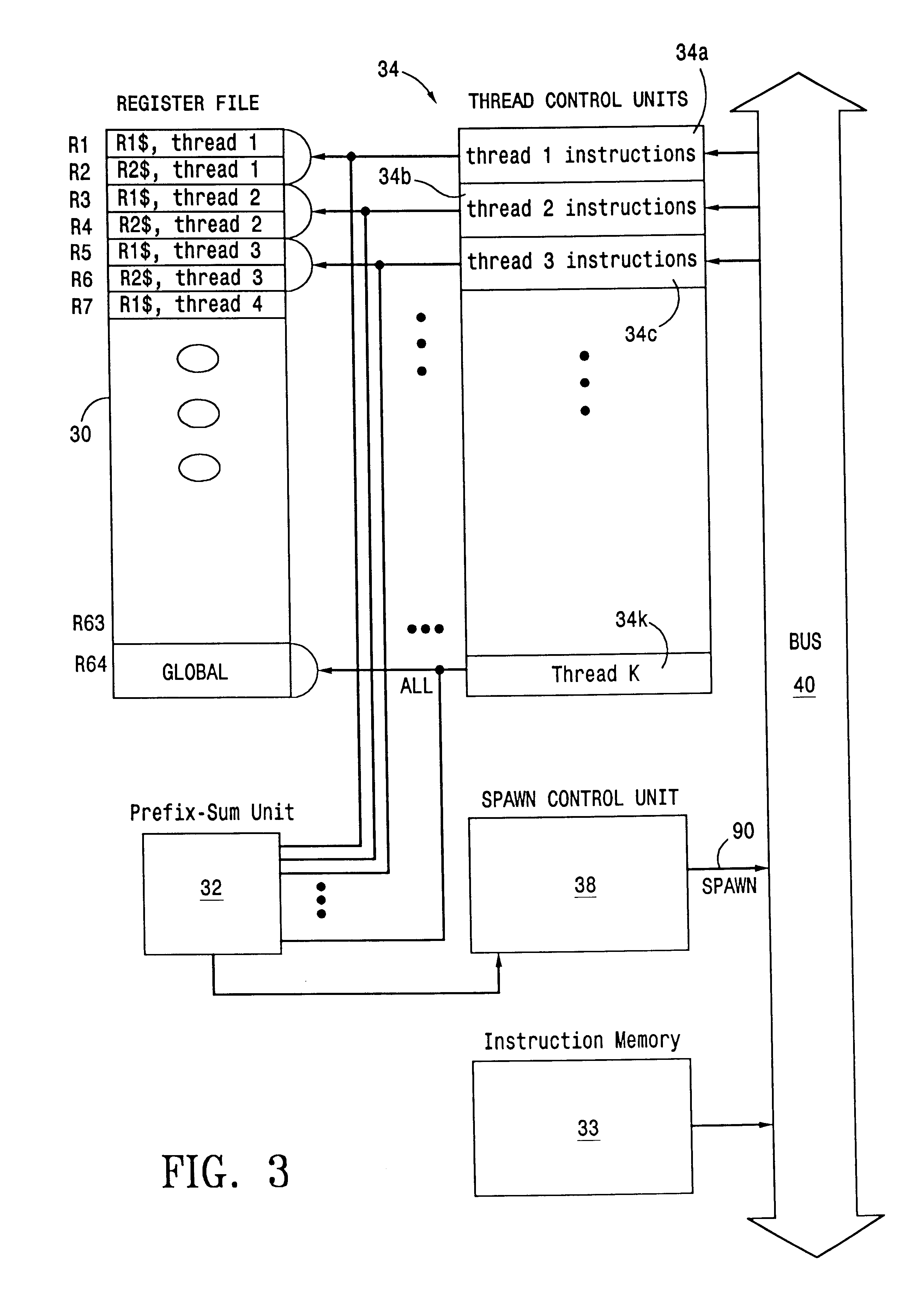

The invention presents a unique computational paradigm that provides the tools to take advantage of the parallelism inherent in parallel algorithms to the full spectrum from algorithms through architecture to implementation. The invention provides a new processing architecture that extends the standard instruction set of the conventional uniprocessor architecture. The architecture used to implement this new computational paradigm includes a thread control unit (34), a spawn control unit (30), and an enabled instruction memory (50). The architecture initiates multiple threads and executes them in parallel. Control of the threads is provided such that the threads may be suspended or allowed to execute each at its own pace.
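The spawn-join pattern itself can be illustrated in software. The sketch below uses ordinary Python threads and is not the patented instruction set architecture; the helper name `spawn_join` is hypothetical.

```python
import threading


def spawn_join(task, count):
    """Spawn `count` threads that each run task(thread_id), then join them all."""
    results = [None] * count

    def run(thread_id):
        # Each thread writes only its own slot, so no lock is needed.
        results[thread_id] = task(thread_id)

    threads = [threading.Thread(target=run, args=(i,)) for i in range(count)]
    for thread in threads:
        thread.start()  # spawn: all threads proceed at their own pace
    for thread in threads:
        thread.join()   # join: serial execution resumes once all have finished
    return results


squares = spawn_join(lambda i: i * i, 8)
print(squares)  # [0, 1, 4, 9, 16, 25, 36, 49]
```

The code between spawn and join is the parallel section; everything before and after it runs serially, which mirrors the alternation between serial and parallel modes that the spawn-join model relies on.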

Owner:VISHKIN UZI Y

Generating a set of pre-fetch address candidates based on popular sets of address and data offset counters

Inactive | US7430650B1 | Memory architecture accessing/allocation | Digital computer details | Operational system | Parallel algorithm

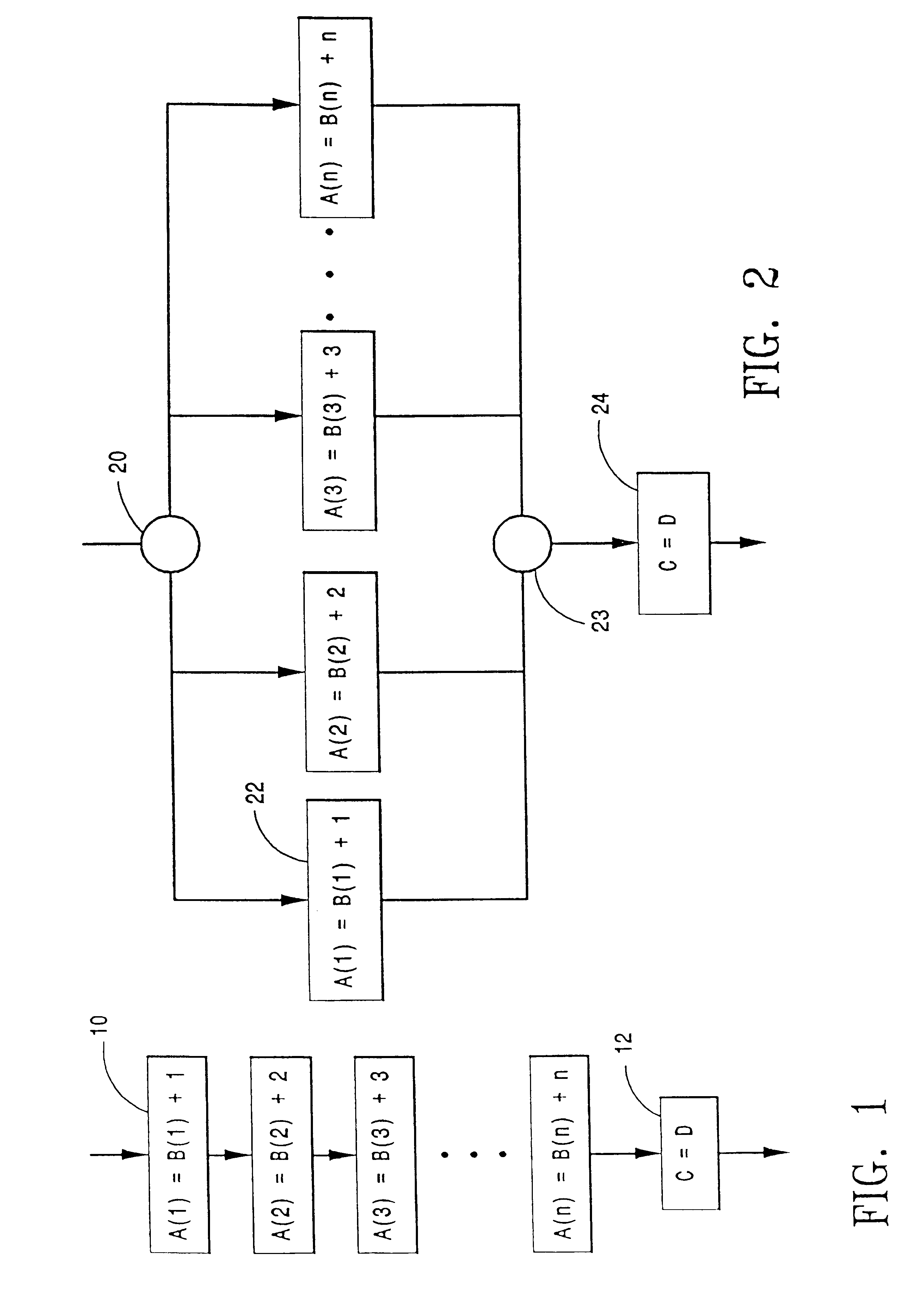

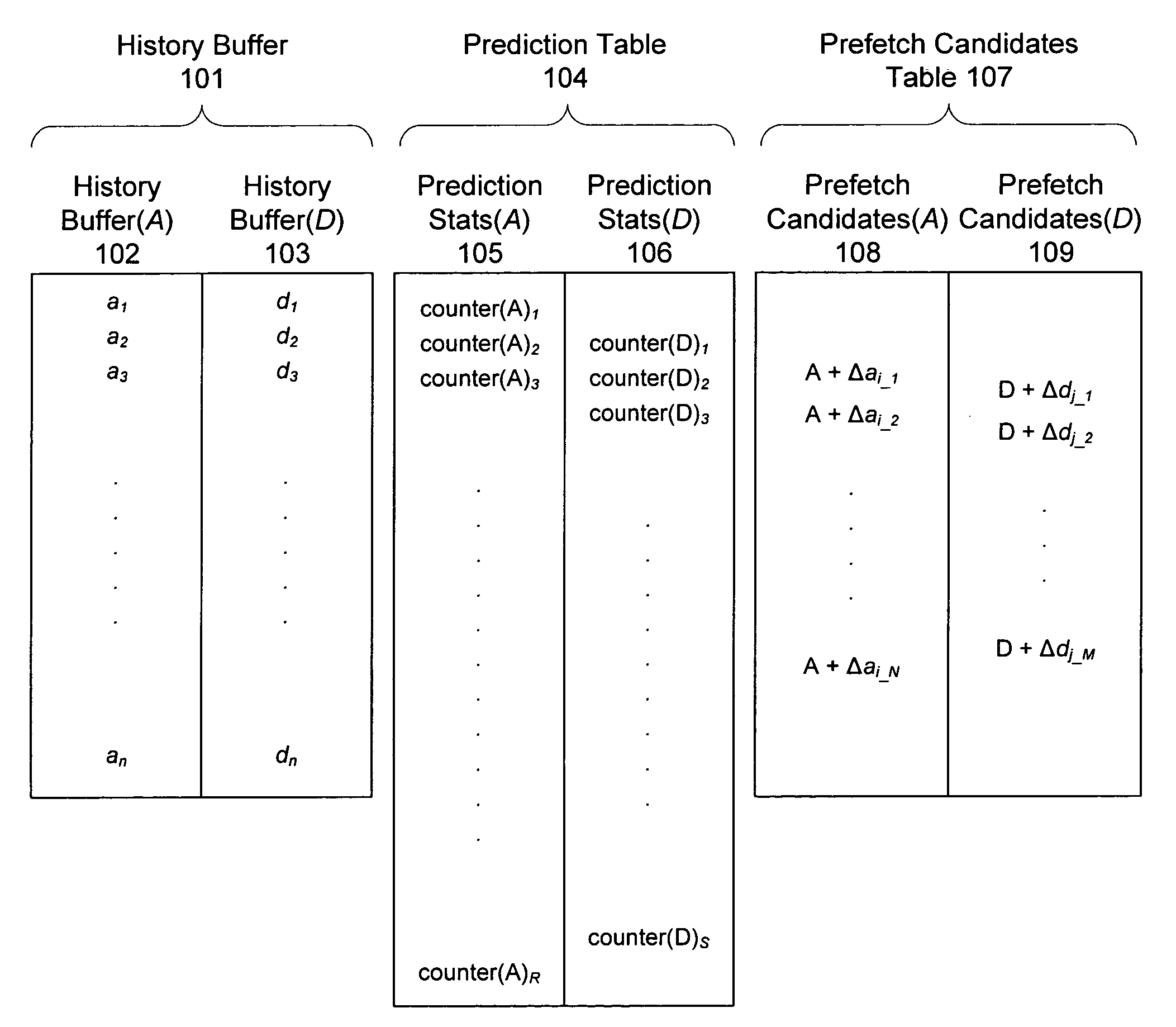

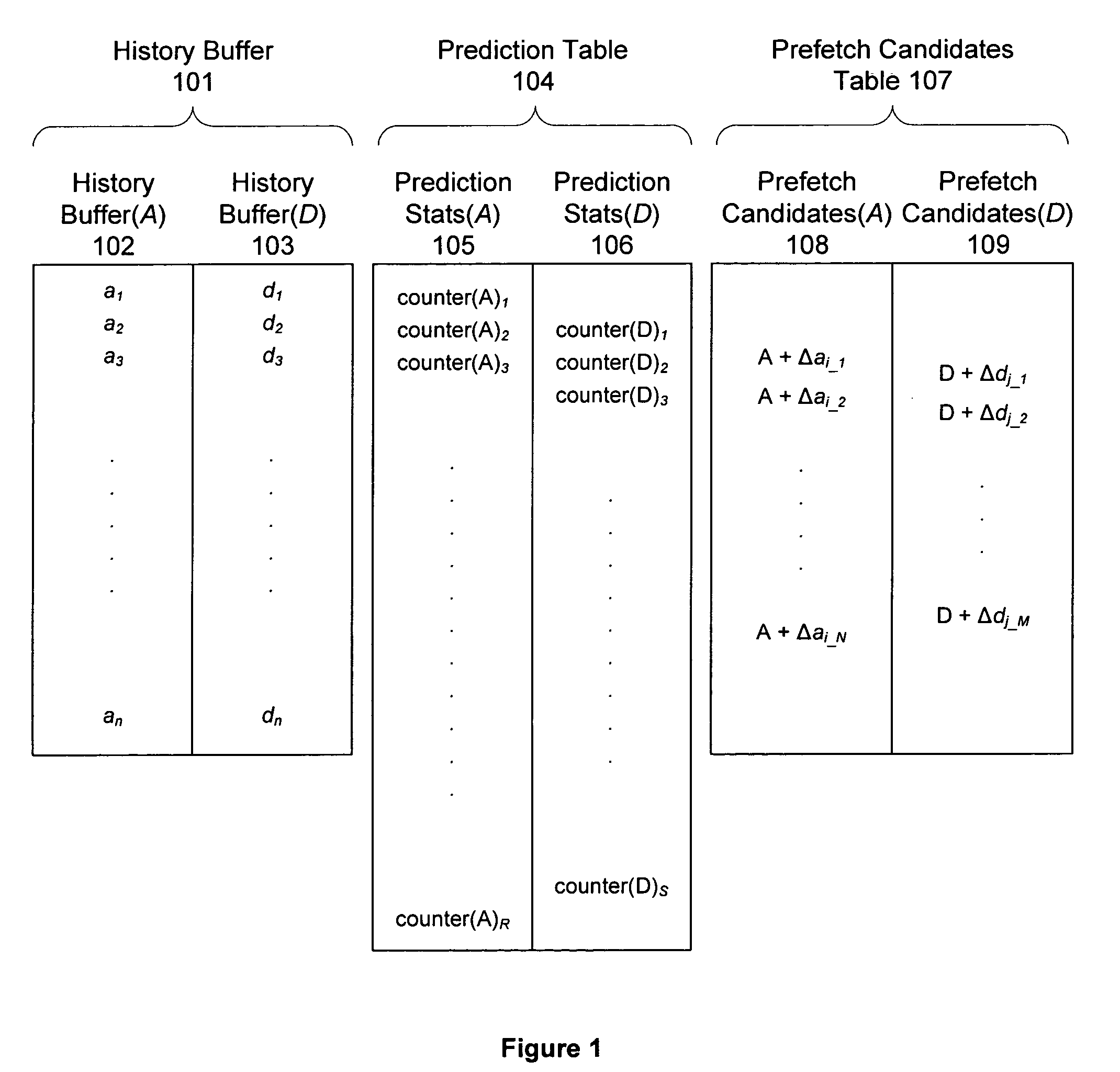

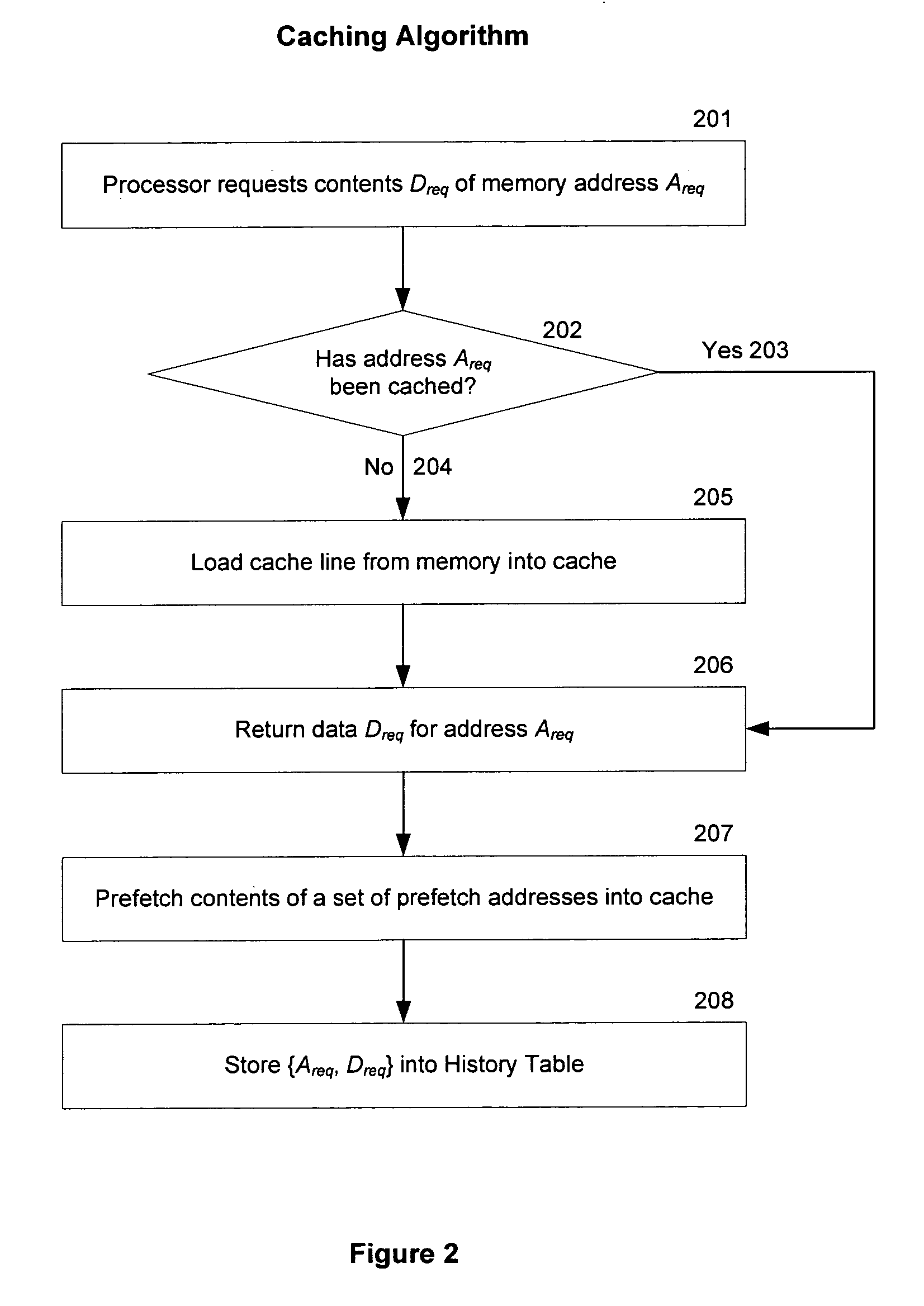

A cache prefetching algorithm uses previously requested address and data patterns to predict future data needs and prefetch such data from memory into cache. A requested address is compared to previously requested addresses and returned data to compute a set of increments, and the set of increments is added to the currently requested address and returned data to generate a set of prefetch candidates. Weight functions are used to prioritize prefetch candidates. The prefetching method requires no changes to application code or the operating system (OS) and is transparent to the compiler and the processor. The prefetching method comprises a parallel algorithm well-suited to implementation on an application-specific integrated circuit (ASIC) or field-programmable gate array (FPGA), or to integration into a processor.
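The candidate-generation step can be sketched as follows. This simplified example works on addresses only (it ignores returned data), and its weight function, which counts how often an offset separates two requests seen so far, is an assumption made for illustration rather than the patent's actual weighting. The name `prefetch_candidates` and the sample addresses are hypothetical.

```python
from collections import Counter
from itertools import combinations


def prefetch_candidates(history, current, max_candidates=2):
    """Rank prefetch addresses built from offsets between current and past requests."""
    addresses = list(history) + [current]
    # Assumed weight function: how many pairs of requests (earlier, later)
    # are separated by each offset.
    offset_counts = Counter(
        later - earlier for earlier, later in combinations(addresses, 2)
    )
    # Increments between the current address and each earlier address.
    increments = {current - previous for previous in history if previous != current}
    ranked = sorted(increments, key=lambda inc: (-offset_counts[inc], abs(inc)))
    # Adding an increment to the current address yields a candidate.
    return [current + inc for inc in ranked[:max_candidates]]


# A hypothetical stride-64 access pattern.
print(prefetch_candidates([100, 164, 228], 292))  # [356, 420]
```

Each increment can be scored independently of the others, which is one reason a scheme of this shape maps naturally onto parallel hardware.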

Owner:ROSS RICHARD

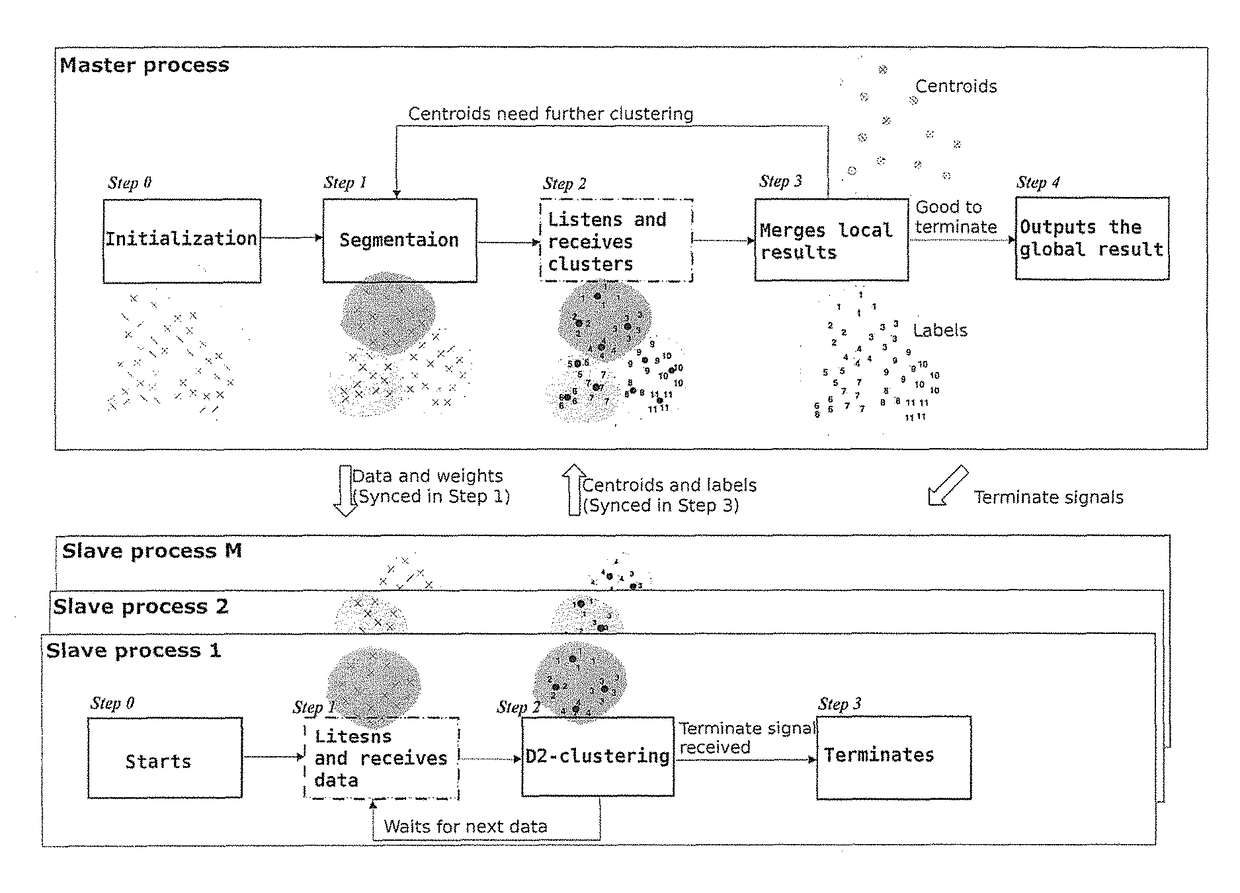

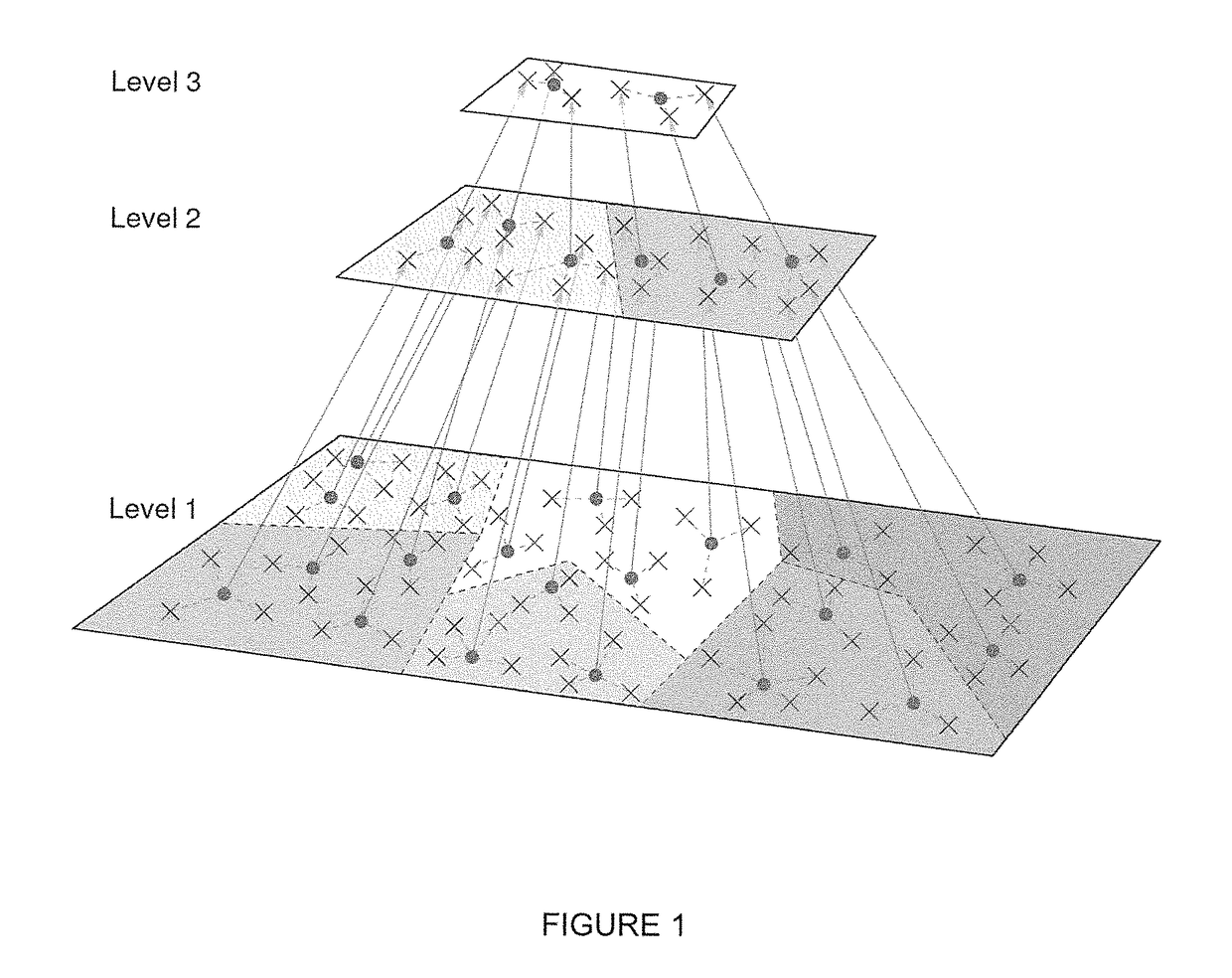

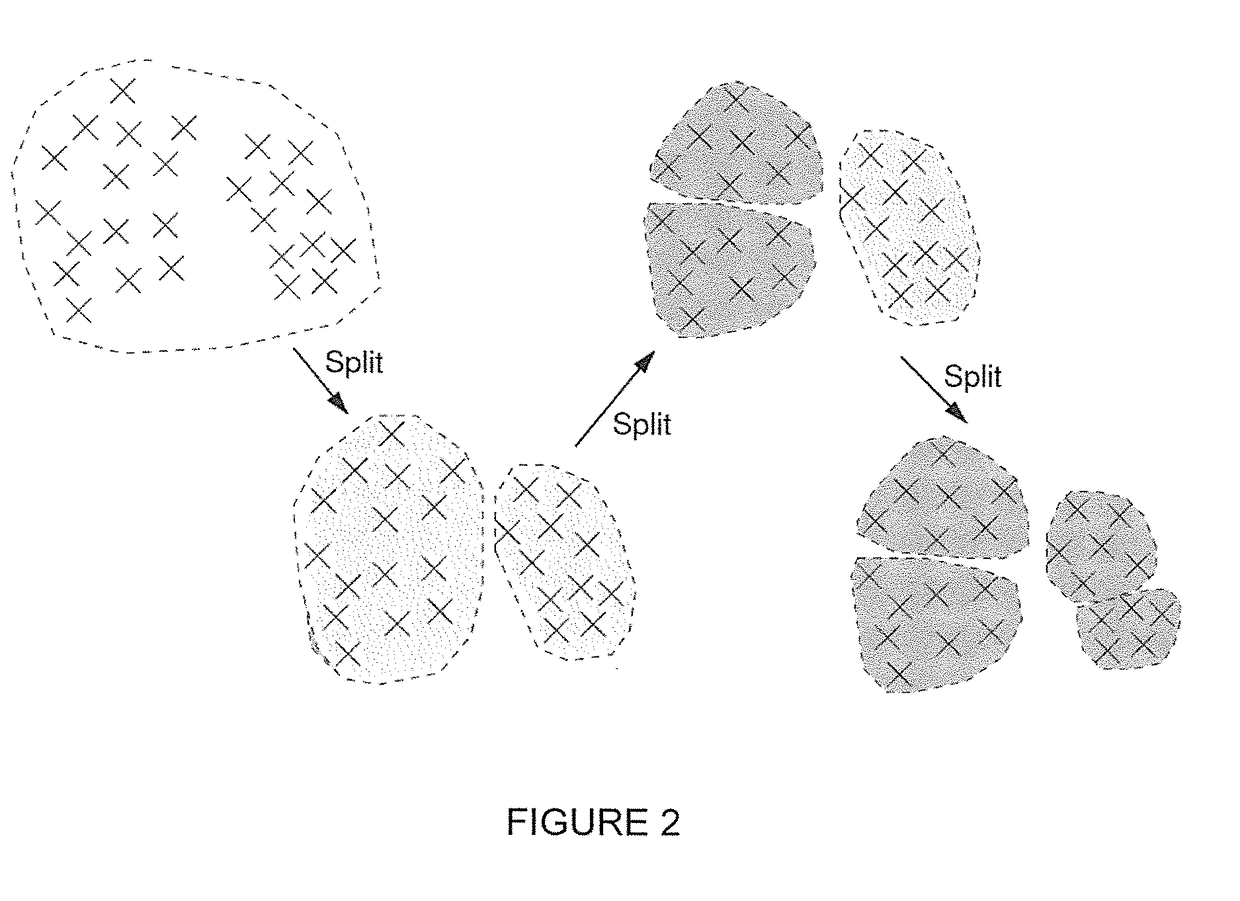

Massive clustering of discrete distributions

Active | US20140143251A1 | Perform data efficiently | Efficient execution | Digital data processing details | Relational databases | Canopy clustering algorithm | Cluster algorithm

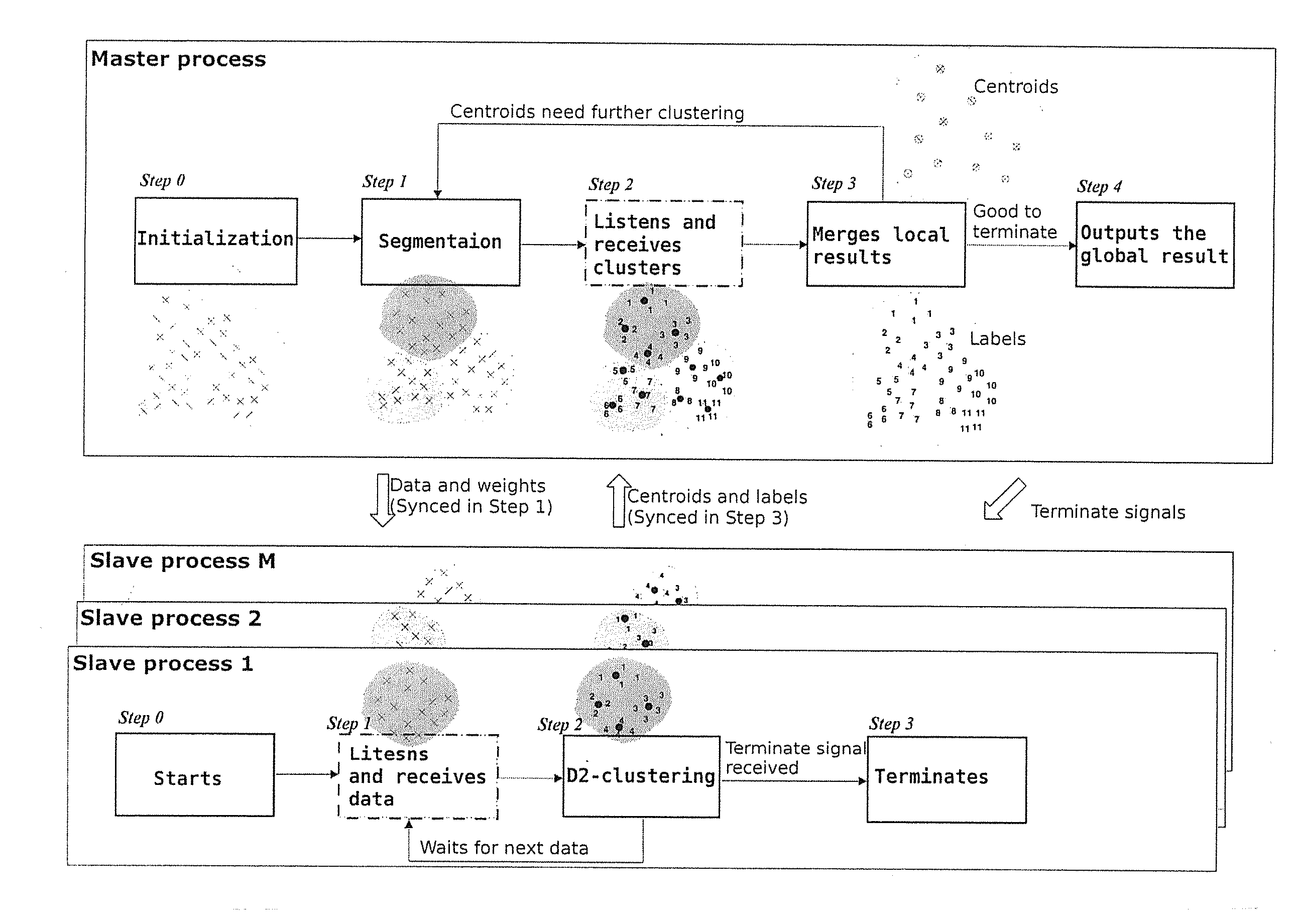

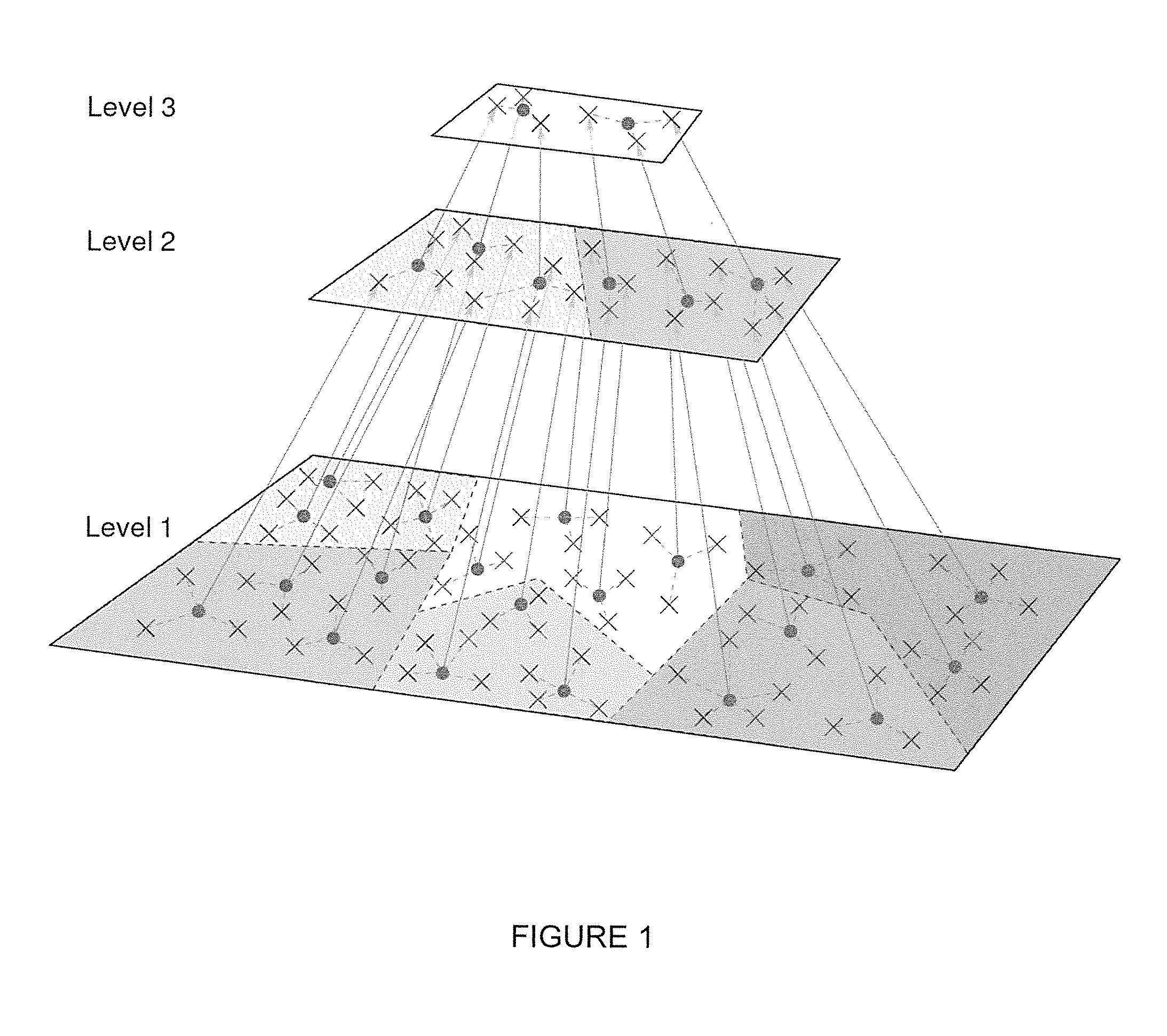

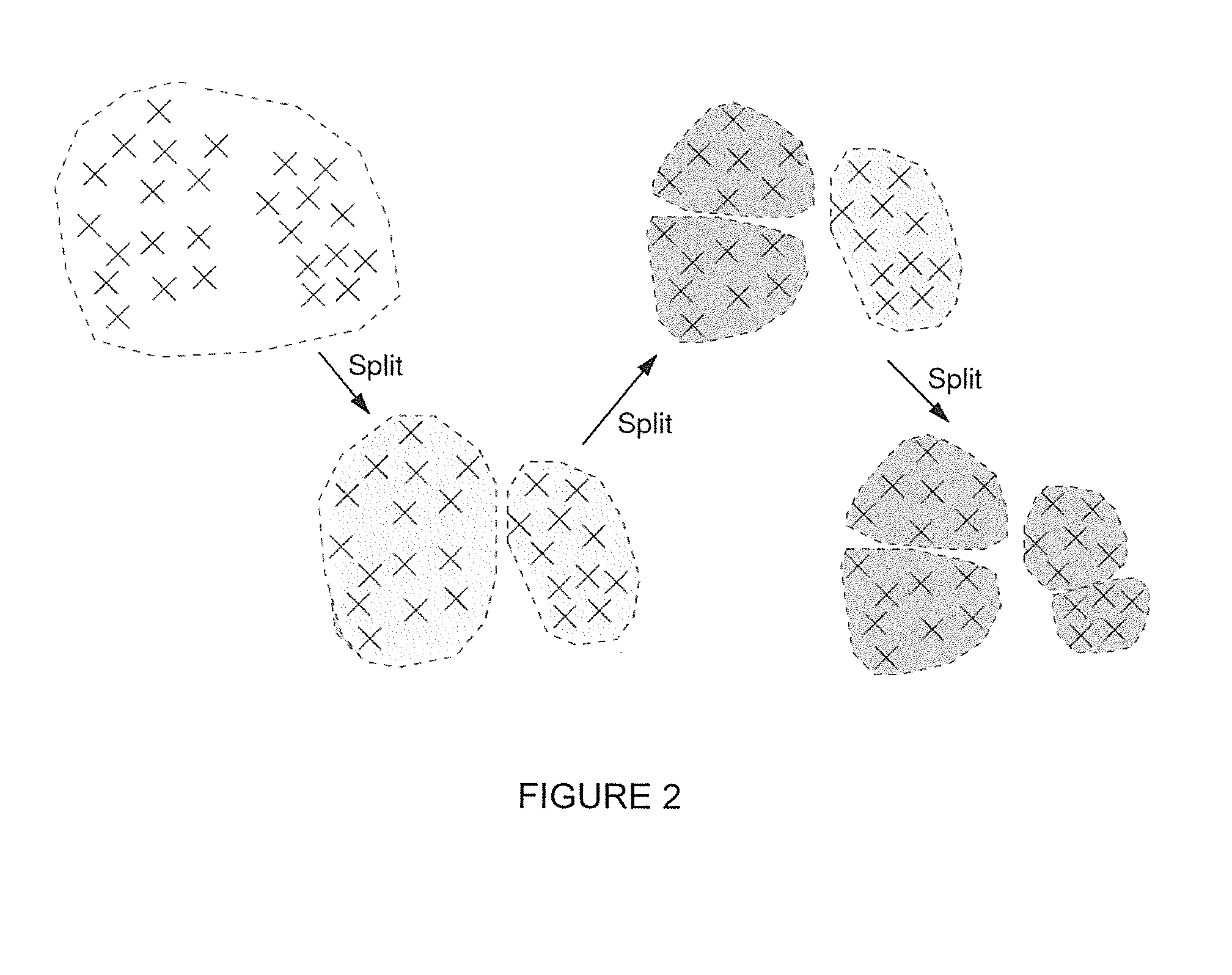

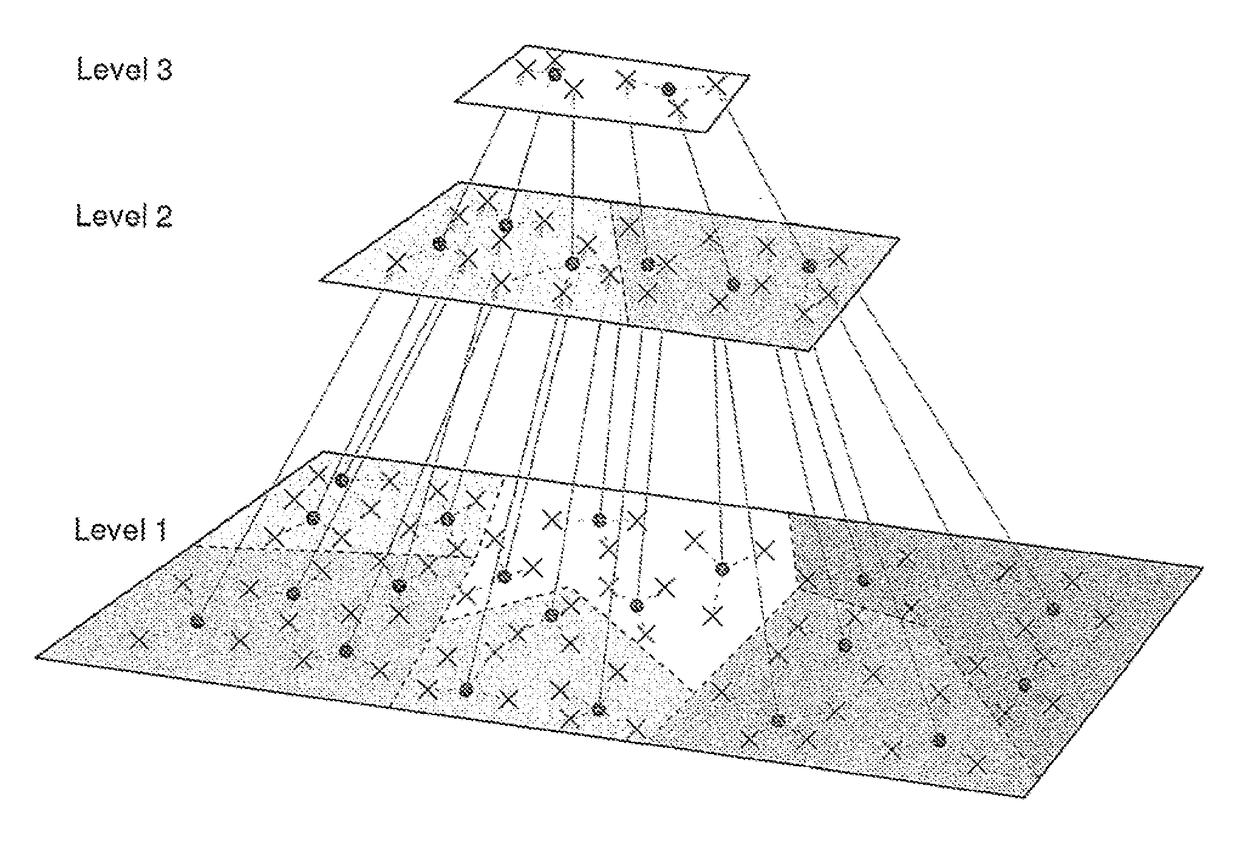

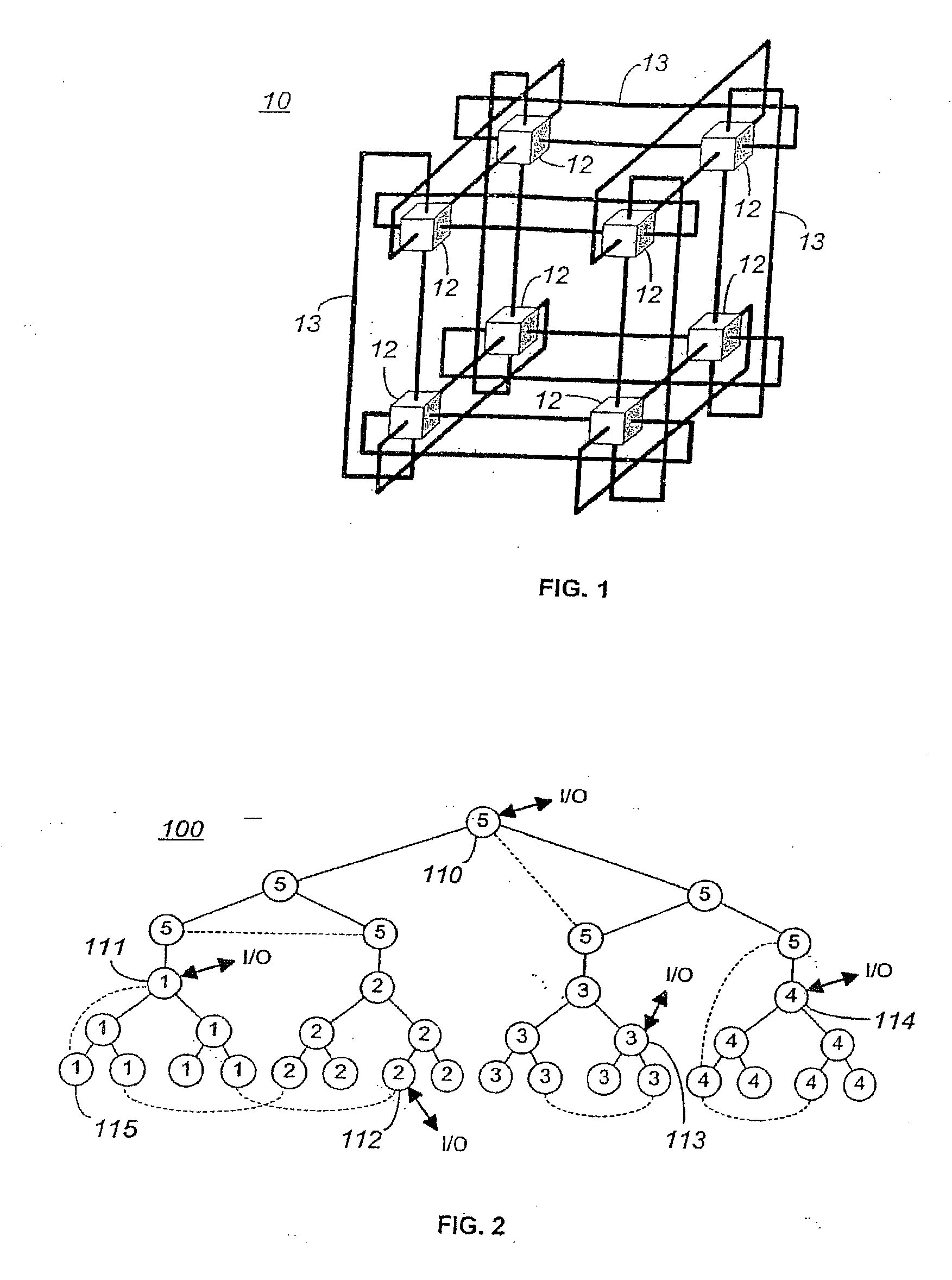

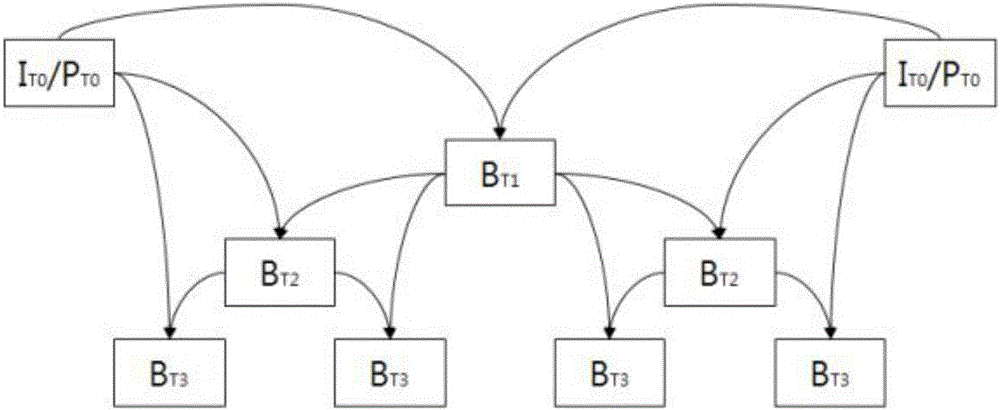

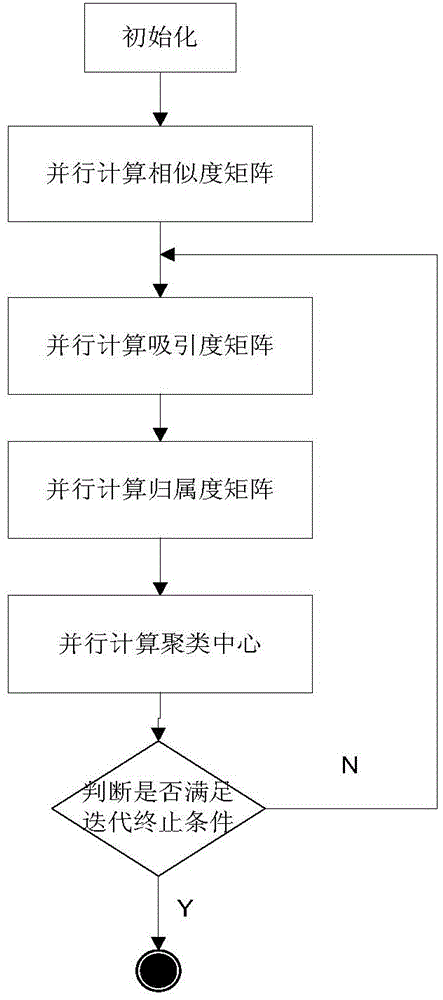

The trend of analyzing big data in artificial intelligence requires more scalable machine learning algorithms, among which clustering is a fundamental and arguably the most widely applied method. To extend the applications of regular vector-based clustering algorithms, the Discrete Distribution (D2) clustering algorithm has been developed for clustering bags of weighted vectors which are well adopted in many emerging machine learning applications. The high computational complexity of D2-clustering limits its impact in solving massive learning problems. Here we present a parallel D2-clustering algorithm with substantially improved scalability. We develop a hierarchical structure for parallel computing in order to achieve a balance between the individual-node computation and the integration process of the algorithm. The parallel algorithm achieves significant speed-up with minor accuracy loss.

Owner:PENN STATE RES FOUND

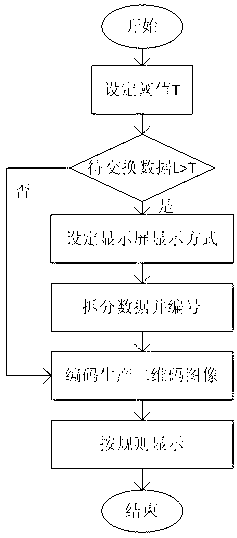

Intranet-extranet physical isolation data exchange method based on QR (quick response) code

Inactive | CN103268461A | Make up for the high price | Make up for defects such as labor required | Co-operative working arrangements | Internal/peripheral component protection | Parallel algorithm | Data exchange

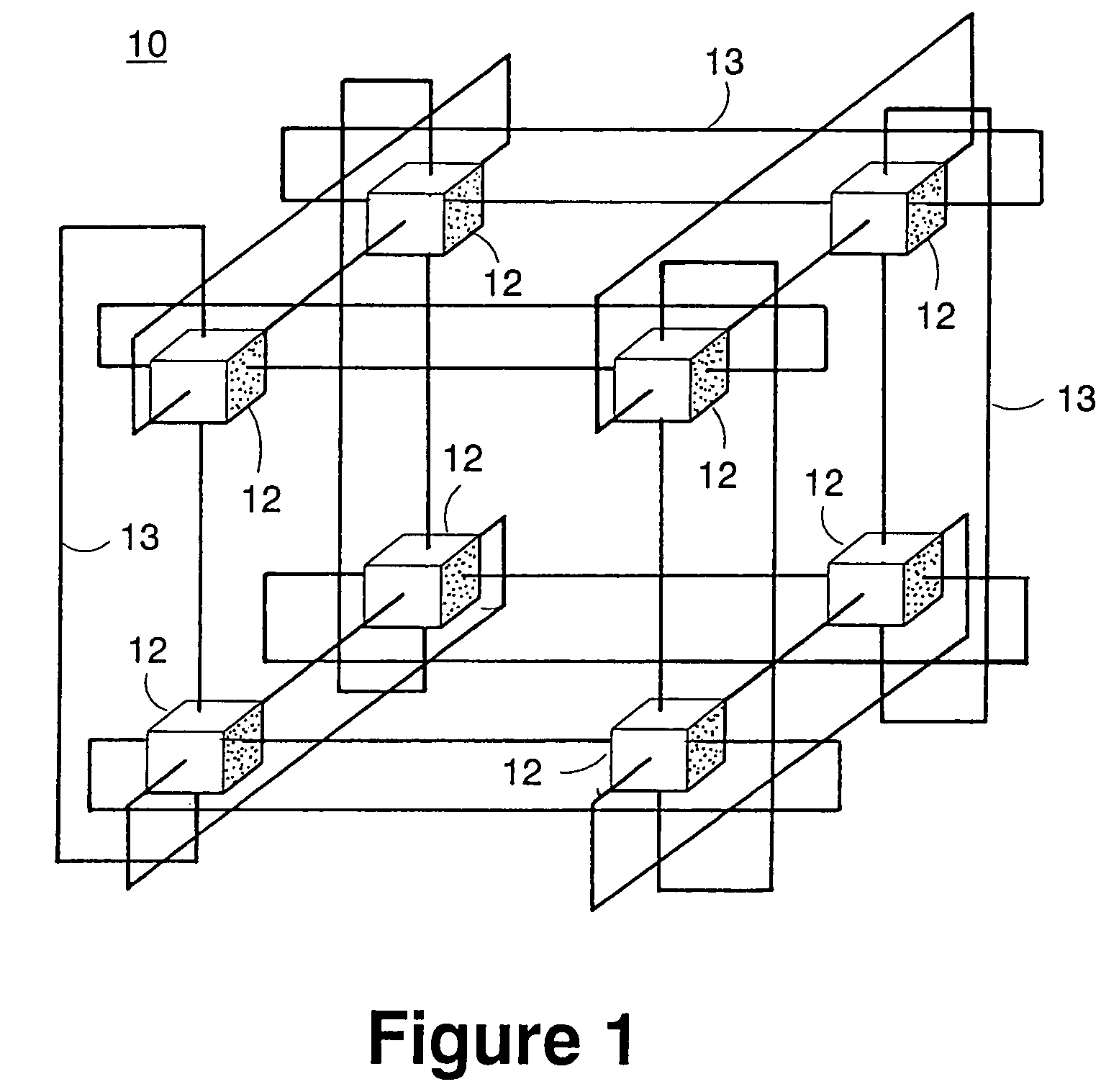

The invention discloses an intranet-extranet physical isolation data exchange method based on QR (quick response) code. The method of exchanging data from an intranet to an extranet includes: firstly, extracting the data to be exchanged from an intranet database, encoding the data to generate a plurality of QR code images, and arranging the images regularly as a QR code matrix on the display screen of the intranet system terminal equipment; secondly, using a camera of the extranet system terminal equipment to capture the regularly arranged matrix of QR code images displayed on the display screen of the intranet system terminal equipment; and thirdly, decoding the captured QR code sequences with a parallel algorithm on the extranet system terminal equipment to obtain the exchanged data. The method overcomes the defects of existing exchange methods such as gateway copying or USB (universal serial bus) flash drive copying, namely high price and the need for labor; it is highly reliable, highly secure and low in operation and maintenance cost, shortens exchange time, and improves the efficiency of intranet-extranet data exchange.
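The split, parallel-decode and reassemble flow can be sketched without a QR library. In the example below, base64 stands in for QR encoding and decoding, and each tile carries a sequence index so that order can be restored; these choices and the names `split_payload`, `decode_tile` and `reassemble` are assumptions for illustration only.

```python
import base64
from concurrent.futures import ThreadPoolExecutor


def split_payload(data, chunk_size):
    """Split bytes into (index, encoded_chunk) tiles; base64 stands in for QR encoding."""
    return [
        (i // chunk_size, base64.b64encode(data[i:i + chunk_size]))
        for i in range(0, len(data), chunk_size)
    ]


def decode_tile(tile):
    """Decode one tile independently of all the others."""
    index, encoded = tile
    return index, base64.b64decode(encoded)


def reassemble(tiles):
    """Decode all tiles in parallel, then restore the original order by index."""
    with ThreadPoolExecutor() as pool:
        decoded = list(pool.map(decode_tile, tiles))
    return b"".join(chunk for _, chunk in sorted(decoded))


tiles = split_payload(b"intranet to extranet", 6)
# Tiles may be captured in any order; the index restores the sequence.
print(reassemble(list(reversed(tiles))))
```

Because each tile decodes independently, the decoding step parallelizes without any coordination beyond the final sort.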

Owner:浙江成功软件开发有限公司

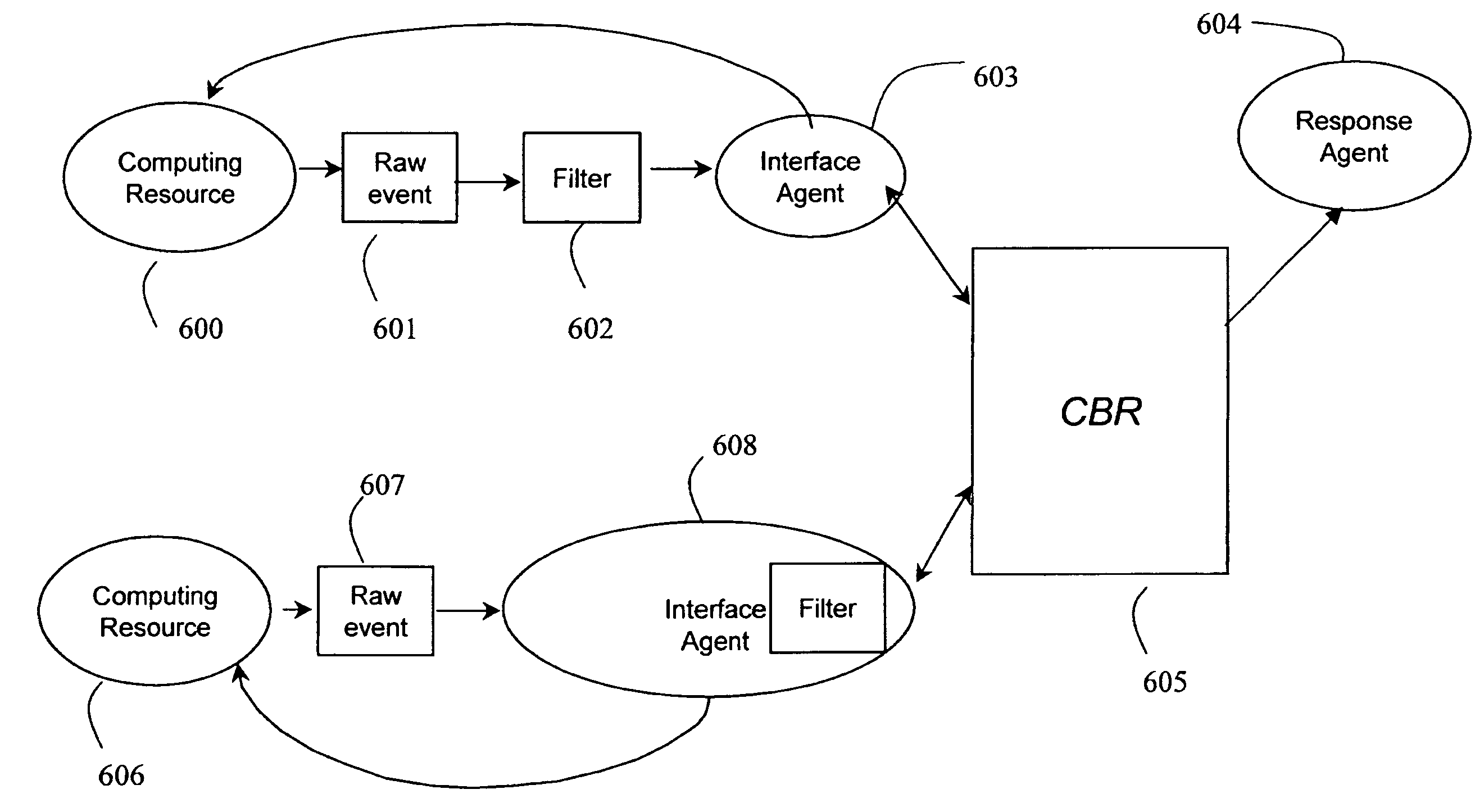

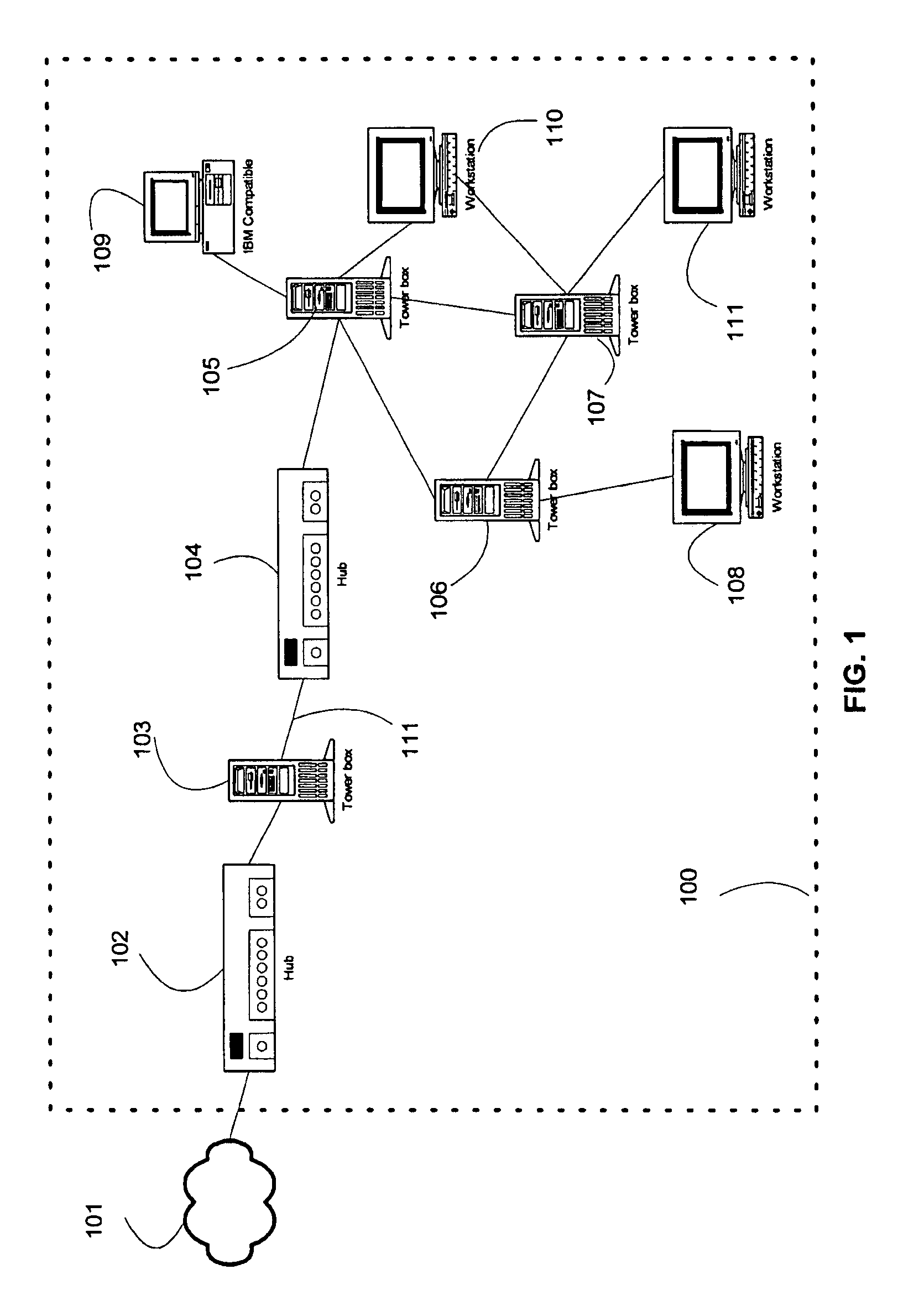

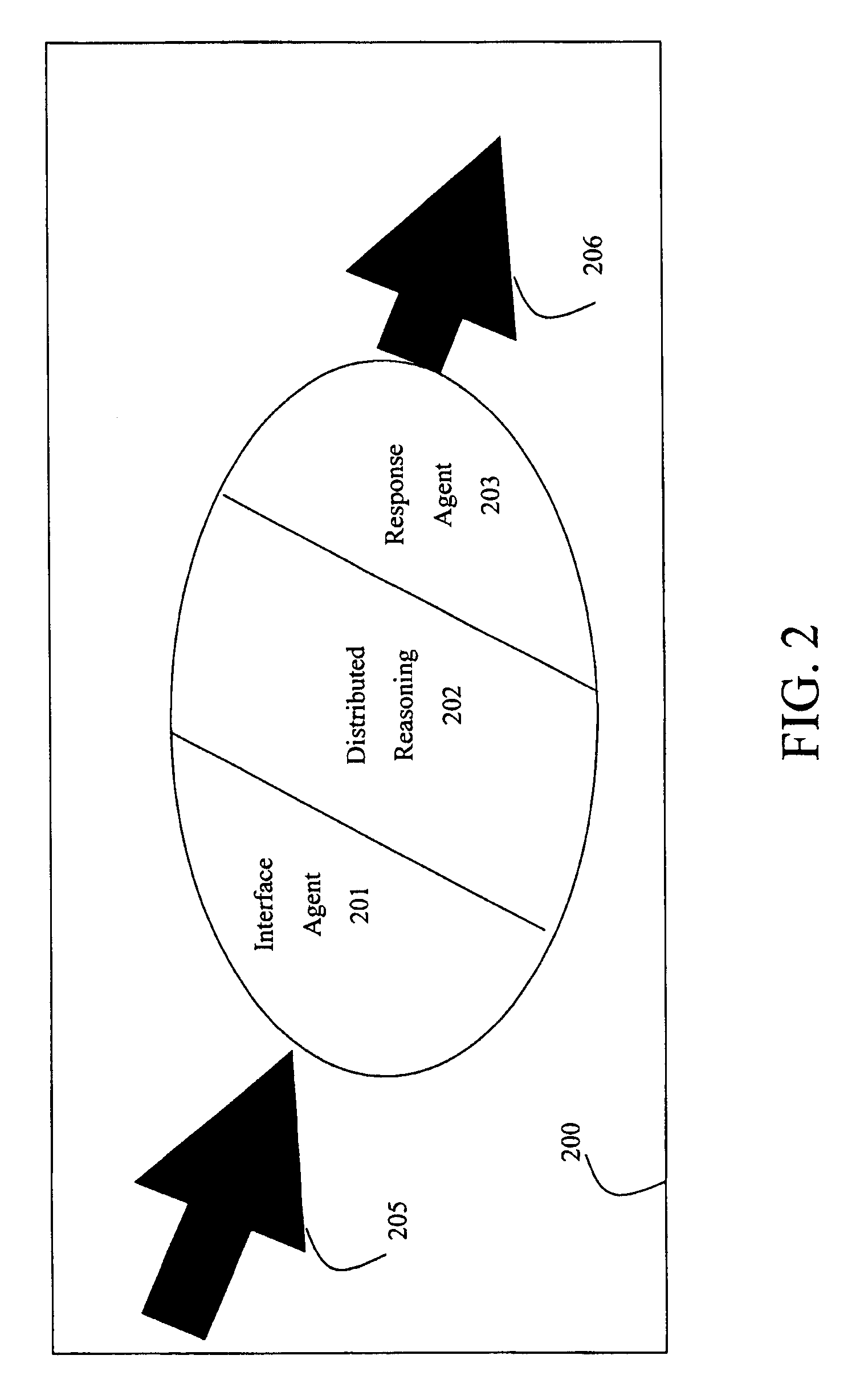

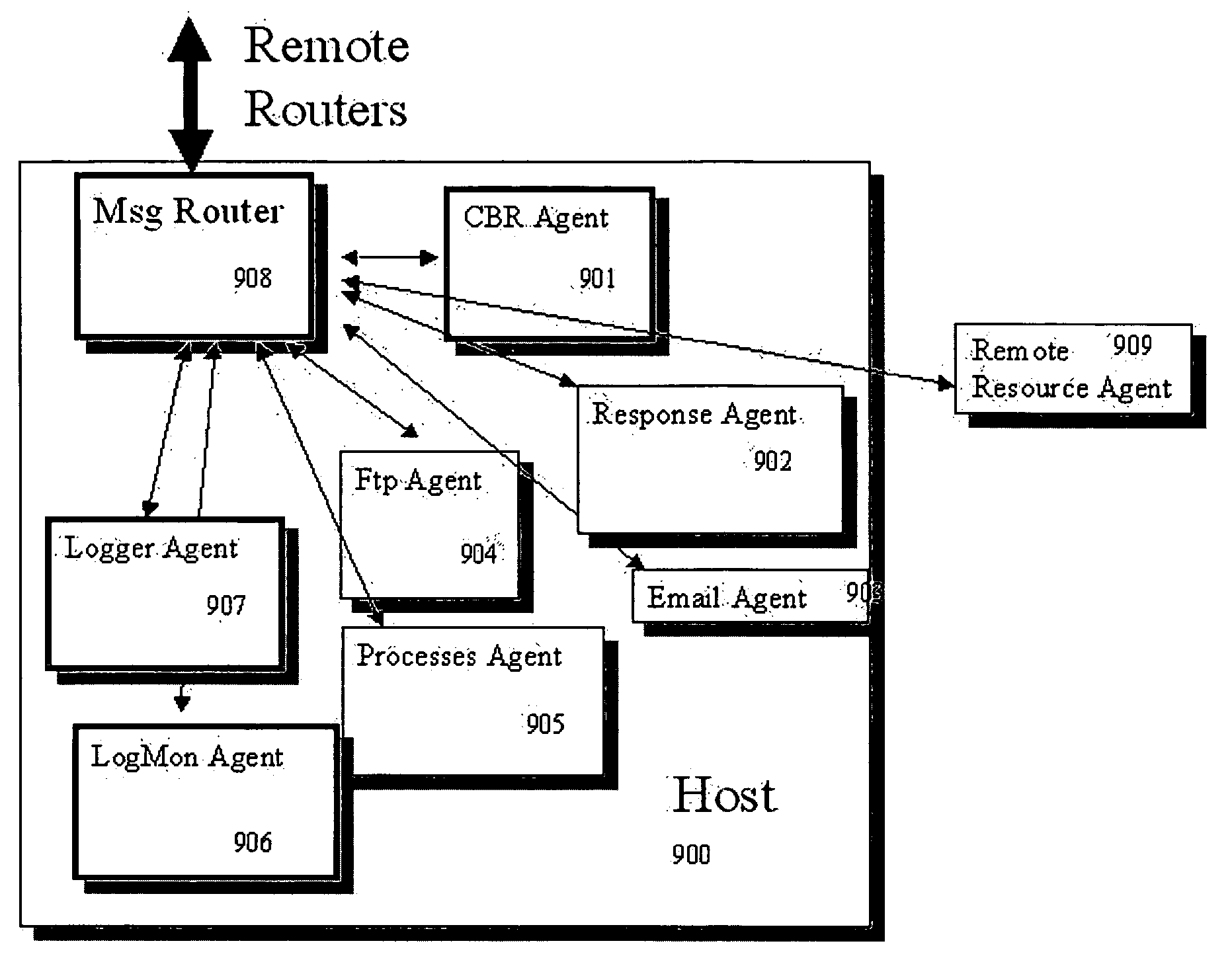

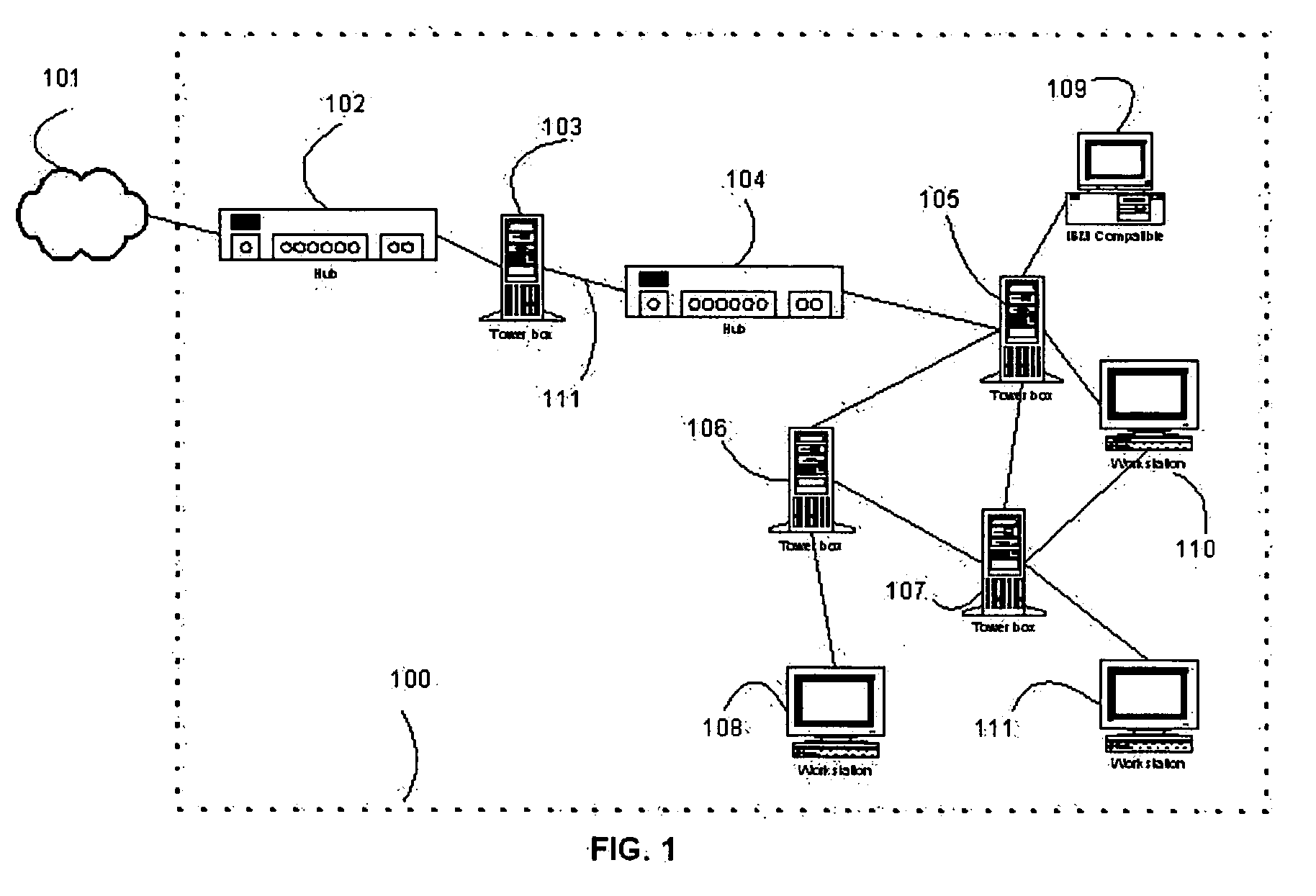

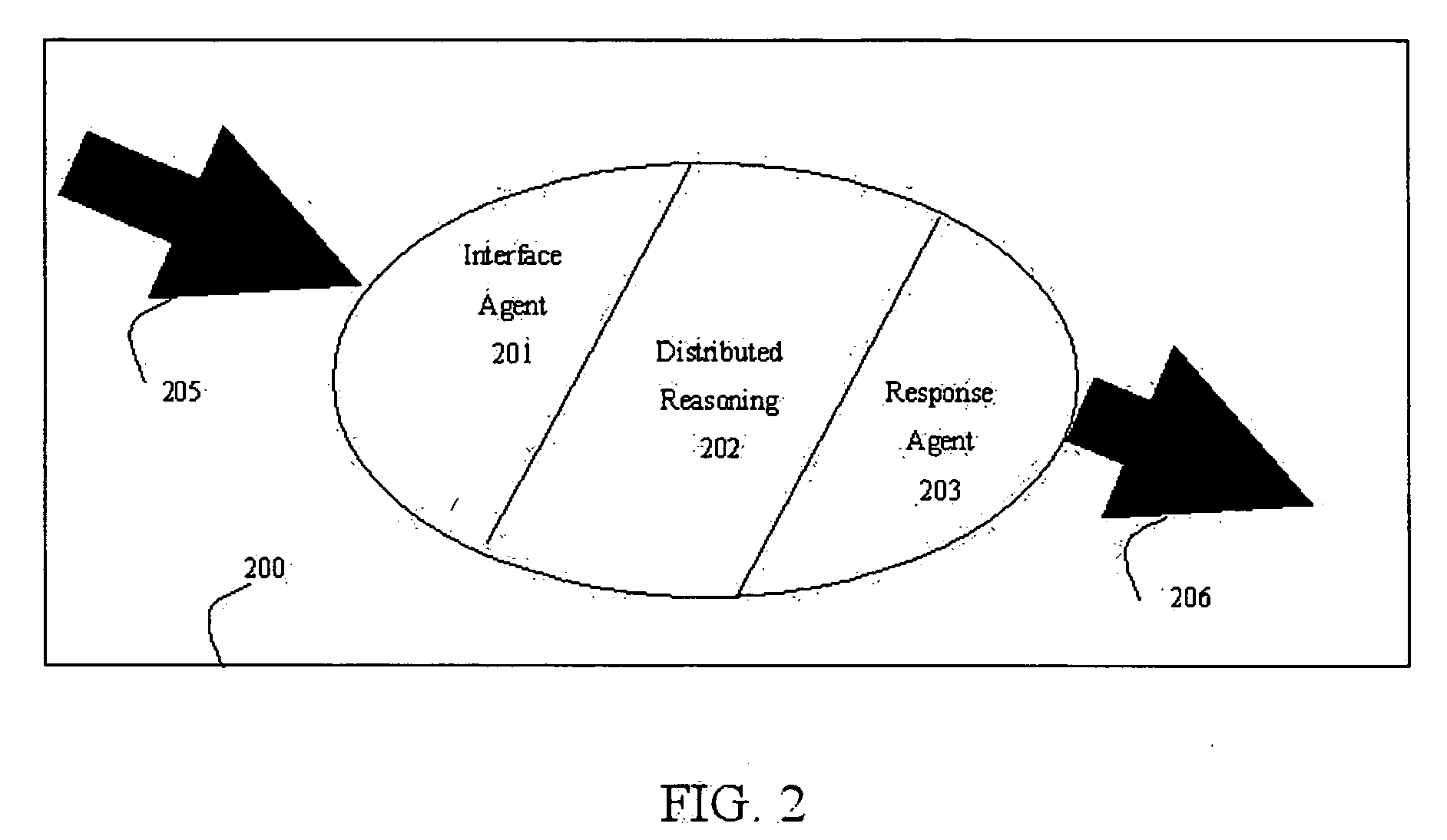

System and method for using agent-based distributed reasoning to manage a computer network

Inactive | US7469239B2 | Efficient and effective management | Easy to manage | Knowledge representation | Transmission | Parallel algorithm | Distributed computing

The present invention describes a system and method for using agent-based distributed reasoning to manage a computer network. In particular, the system includes interface agents to integrate event streams, distributed reasoning agents, and response agents, which run on hosts in the network. An interface agent monitors a resource in the network and reports an event to an appropriate distributed reasoning agent. The distributed reasoning agent, using one or more knowledge bases, determines a response to the event. An appropriate response agent implements the response. Characteristics of the reasoning agents mean that they can work together collaboratively as well as implement parallel algorithms.

Owner:MUSMAN SCOTT A

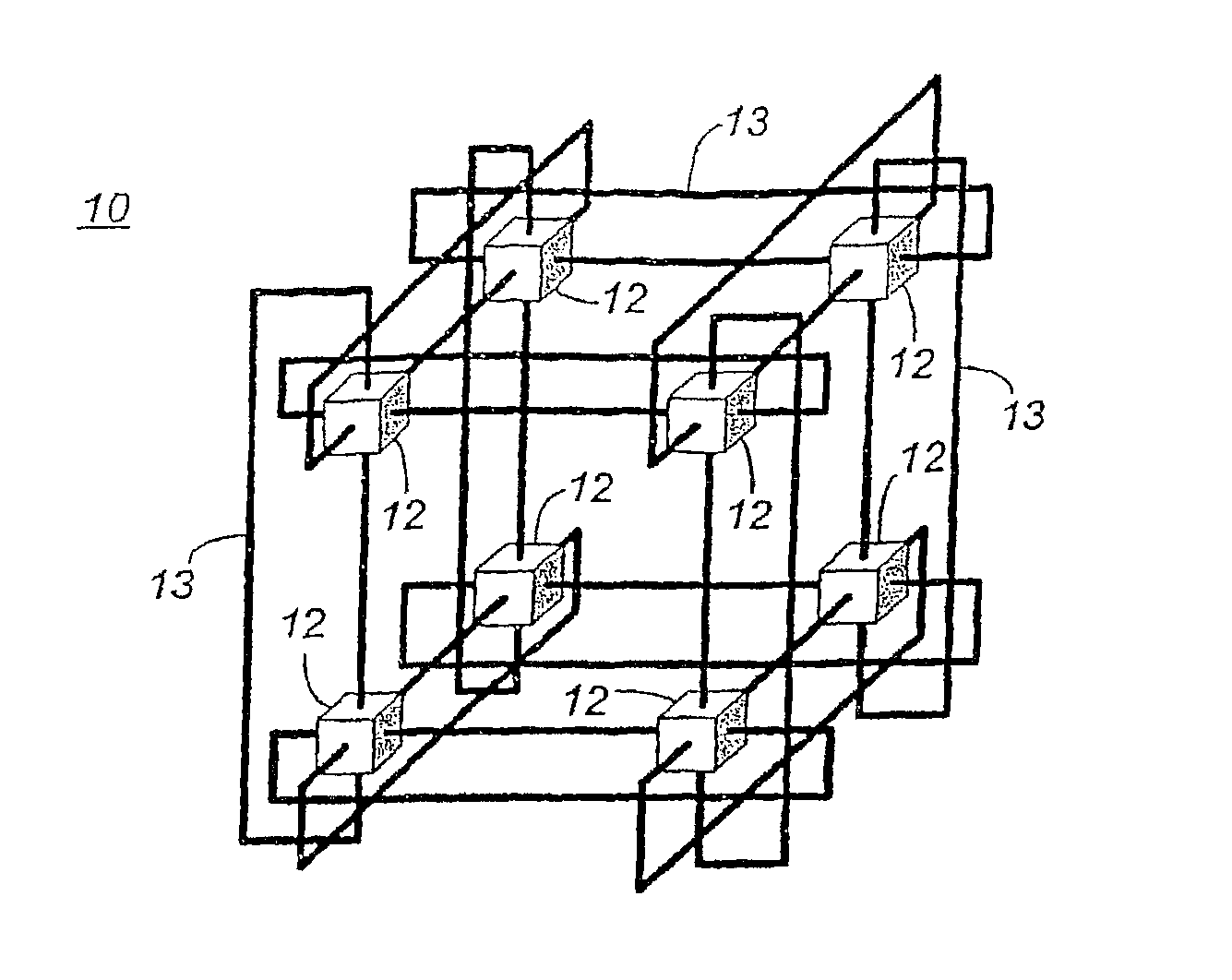

Collective network for computer structures

Inactive | US8001280B2 | Efficient and reliable computation | Limited resource | Error prevention | General purpose stored program computer | Collective communication | Parallel algorithm

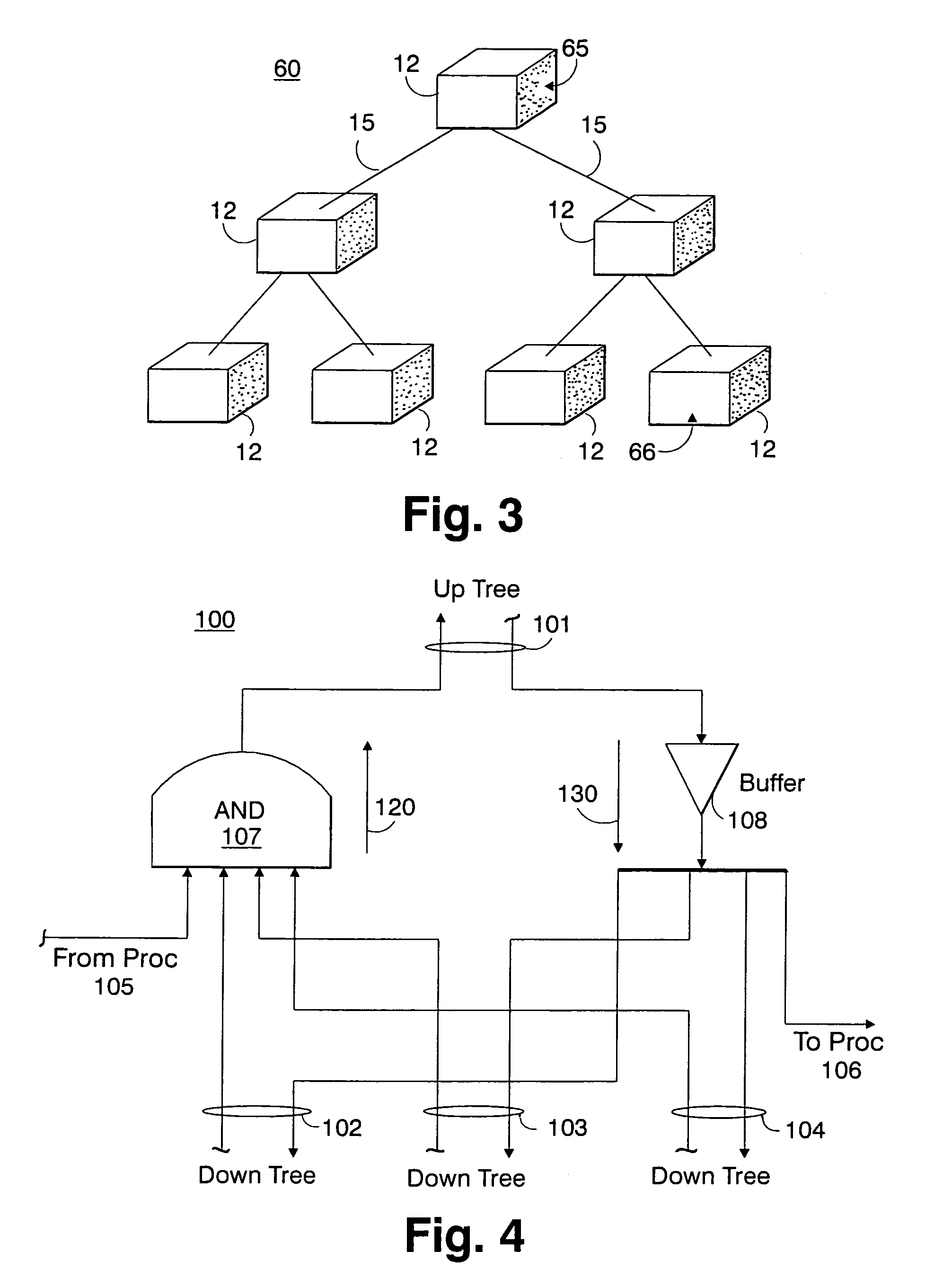

A system and method for enabling high-speed, low-latency global collective communications among interconnected processing nodes. The global collective network optimally enables collective reduction operations to be performed during parallel algorithm operations executing in a computer structure having a plurality of the interconnected processing nodes. Router devices are included that interconnect the nodes of the network via links to facilitate performance of low-latency global processing operations at nodes of the virtual network and class structures. The global collective network may be configured to provide global barrier and interrupt functionality in asynchronous or synchronized manner. When implemented in a massively-parallel supercomputing structure, the global collective network is physically and logically partitionable according to needs of a processing algorithm.
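A collective reduction over a tree can be sketched as pairwise combining in rounds. The example is a serial simulation under the assumption of a binary combining tree; it does not model the patented router hardware, and the name `tree_reduce` is hypothetical.

```python
import operator


def tree_reduce(node_values, combine):
    """Combine one value per node pairwise, level by level; return (result, rounds)."""
    level = list(node_values)
    if not level:
        raise ValueError("tree_reduce needs at least one node value")
    rounds = 0
    while len(level) > 1:
        # All pairs in a round are independent, so a network could combine
        # them simultaneously; an unpaired last value is forwarded unchanged.
        level = [
            combine(level[i], level[i + 1]) if i + 1 < len(level) else level[i]
            for i in range(0, len(level), 2)
        ]
        rounds += 1
    return level[0], rounds


print(tree_reduce([4, 7, 1, 9, 3], max))           # (9, 3)
print(tree_reduce([4, 7, 1, 9, 3], operator.add))  # (24, 3)
```

Five values are reduced in three rounds rather than four sequential steps, and the gap widens as the node count grows, since the number of rounds grows only logarithmically.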

Owner:INT BUSINESS MASCH CORP

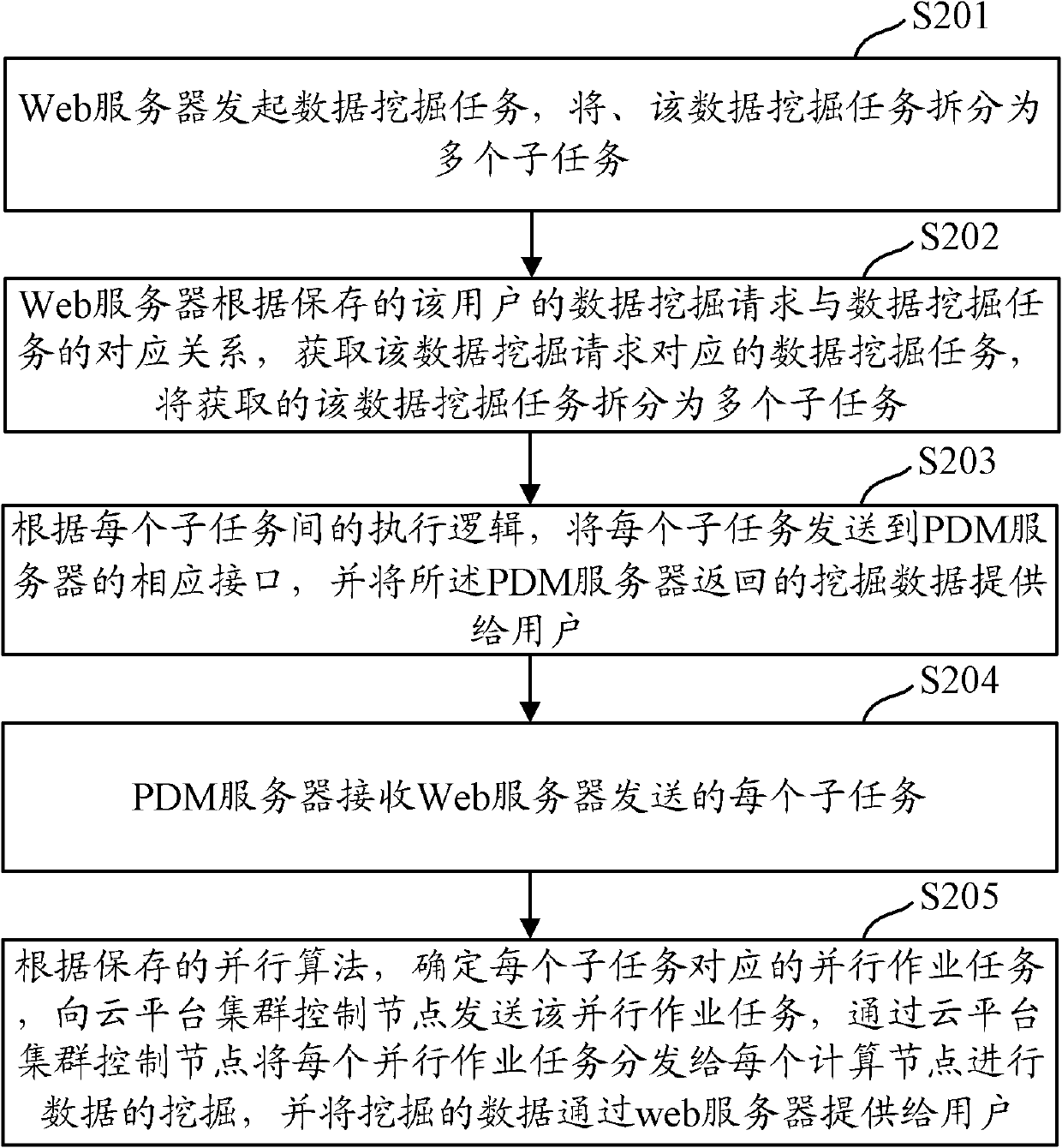

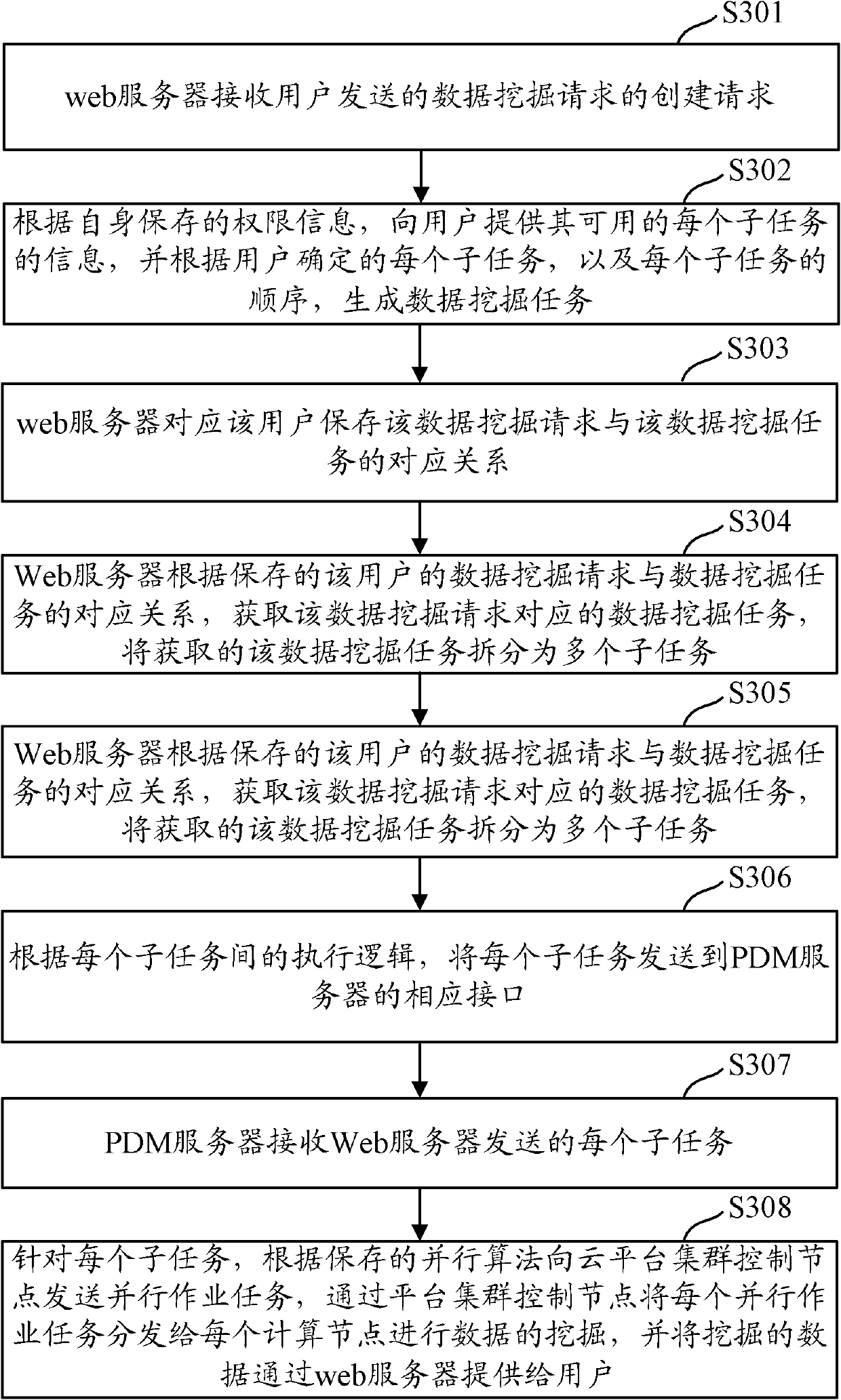

Method, system and device for data mining on basis of cloud computing

The invention discloses a method, a system and a device for data mining based on cloud computing, which address the low efficiency of the data mining process and its failure to meet mass data processing requirements. When a product data management (PDM) server receives the subtasks into which a web server has split a user's data mining request, the system determines the parallel job task corresponding to each subtask according to a saved parallel algorithm, sends the parallel job tasks to a cluster control node of a cloud platform, and integrates the mining data fed back by the cluster control node before providing it to the web server. Because the data mining process is realized in a web mode in the embodiment of the invention, the data mining method can be provided to a plurality of users simultaneously; and because mining is carried out on the basis of the parallel job tasks, data mining efficiency is effectively improved.

Owner:CHINA MOBILE COMM GRP CO LTD

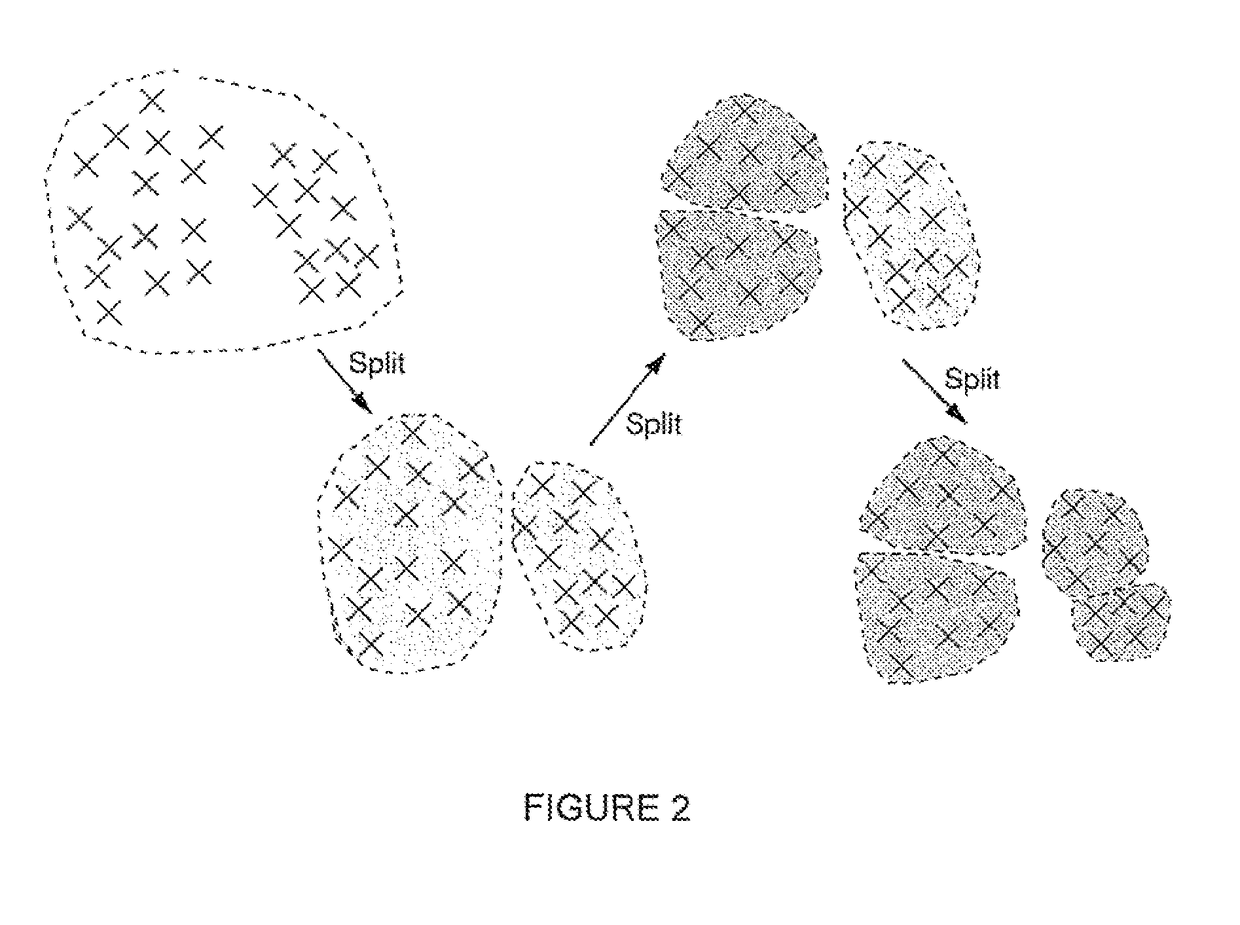

Accelerated discrete distribution clustering under wasserstein distance

Active | US20170083608A1 | Improve scalability | Adequate scale | Kernel methods | Relational databases | Cluster algorithm | Parallel algorithm

Computationally efficient accelerated D2-clustering algorithms are disclosed for clustering discrete distributions under the Wasserstein distance with improved scalability. Three first-order methods include subgradient descent method with re-parametrization, alternating direction method of multipliers (ADMM), and a modified version of Bregman ADMM. The effects of the hyper-parameters on robustness, convergence, and speed of optimization are thoroughly examined. A parallel algorithm for the modified Bregman ADMM method is tested in a multi-core environment with adequate scaling efficiency subject to hundreds of CPUs, demonstrating the effectiveness of AD2-clustering.

Owner:PENN STATE RES FOUND

System and method for using agent-based distributed reasoning to manage a computer network

Inactive | US20060036670A1 | Ease management task | Efficient and effective management | Multiple digital computer combinations | Knowledge representation | Parallel algorithm | Distributed computing

The present invention describes a system and method for using agent-based distributed reasoning to manage a computer network. In particular, the system includes interface agents to integrate event streams, distributed reasoning agents, and response agents, which run on hosts in the network. An interface agent monitors a resource in the network and reports an event to an appropriate distributed reasoning agent. The distributed reasoning agent, using one or more knowledge bases, determines a response to the event. An appropriate response agent implements the response. Characteristics of the reasoning agents mean that they can work together collaboratively as well as implement parallel algorithms.

Owner:MUSMAN SCOTT A

Massive clustering of discrete distributions

Active | US9720998B2 | Relational databases | Special data processing applications | Canopy clustering algorithm | Cluster algorithm

The trend of analyzing big data in artificial intelligence requires more scalable machine learning algorithms, among which clustering is a fundamental and arguably the most widely applied method. To extend the applications of regular vector-based clustering algorithms, the Discrete Distribution (D2) clustering algorithm has been developed for clustering bags of weighted vectors which are well adopted in many emerging machine learning applications. The high computational complexity of D2-clustering limits its impact in solving massive learning problems. Here we present a parallel D2-clustering algorithm with substantially improved scalability. We develop a hierarchical structure for parallel computing in order to achieve a balance between the individual-node computation and the integration process of the algorithm. The parallel algorithm achieves significant speed-up with minor accuracy loss.

Owner:PENN STATE RES FOUND

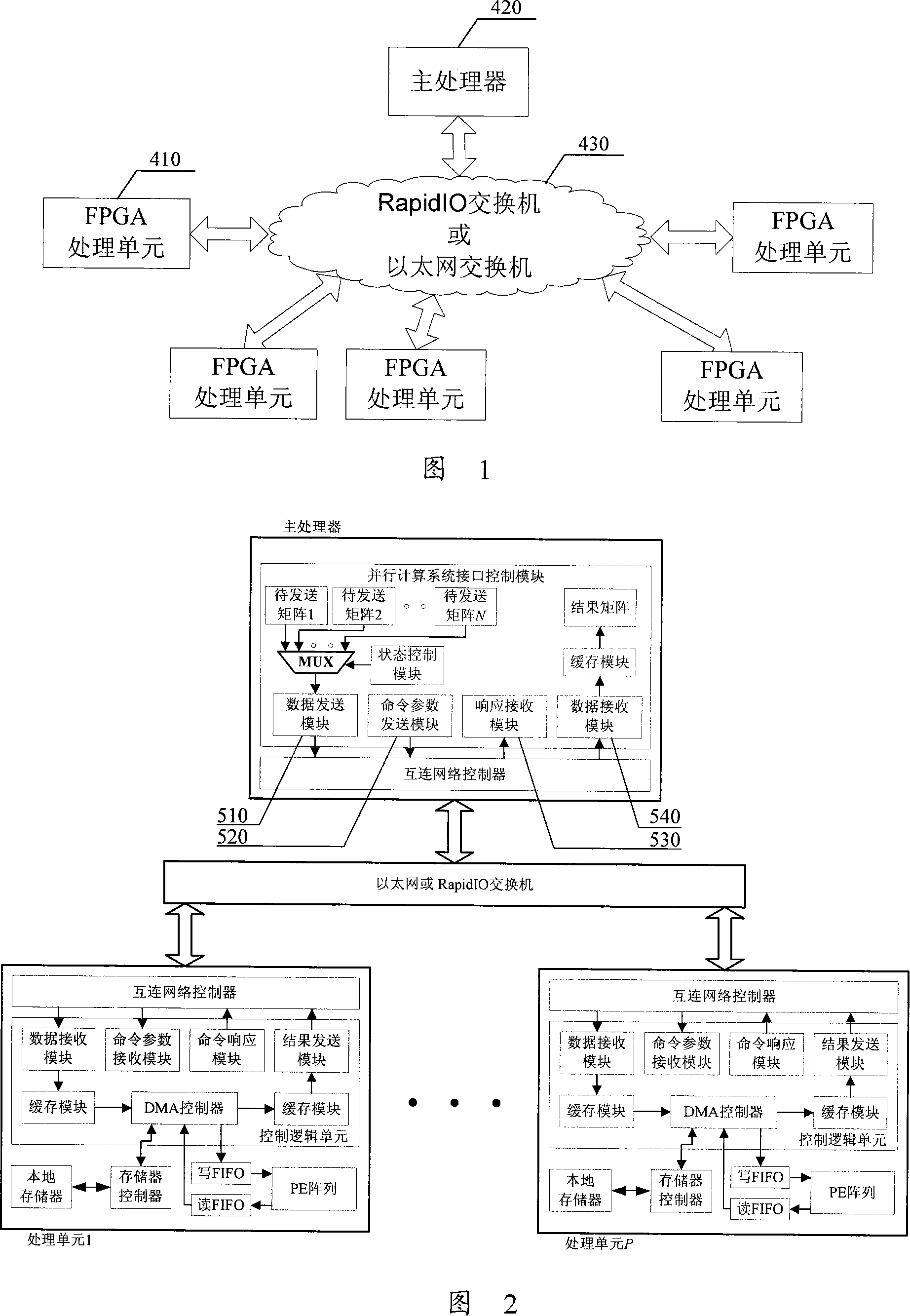

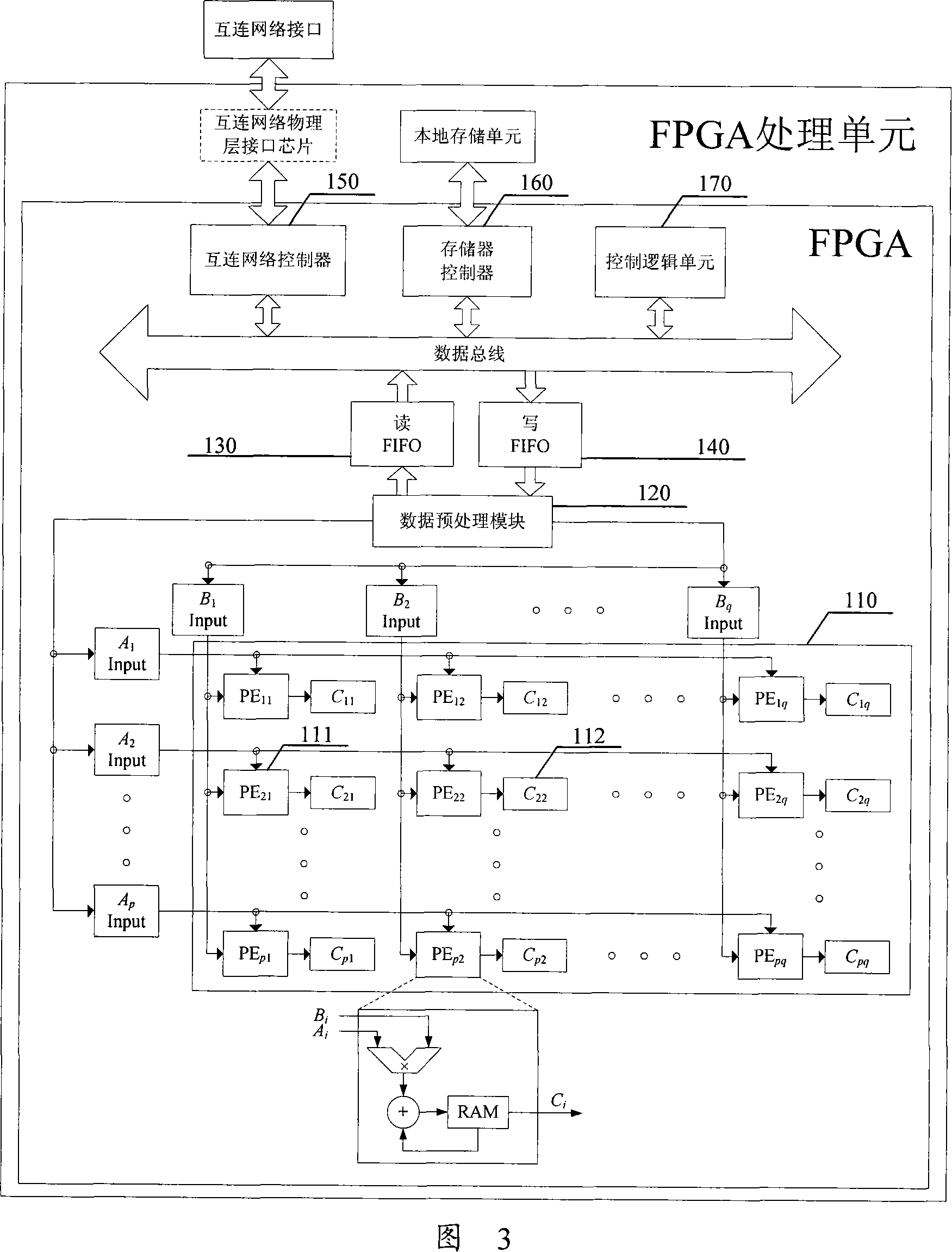

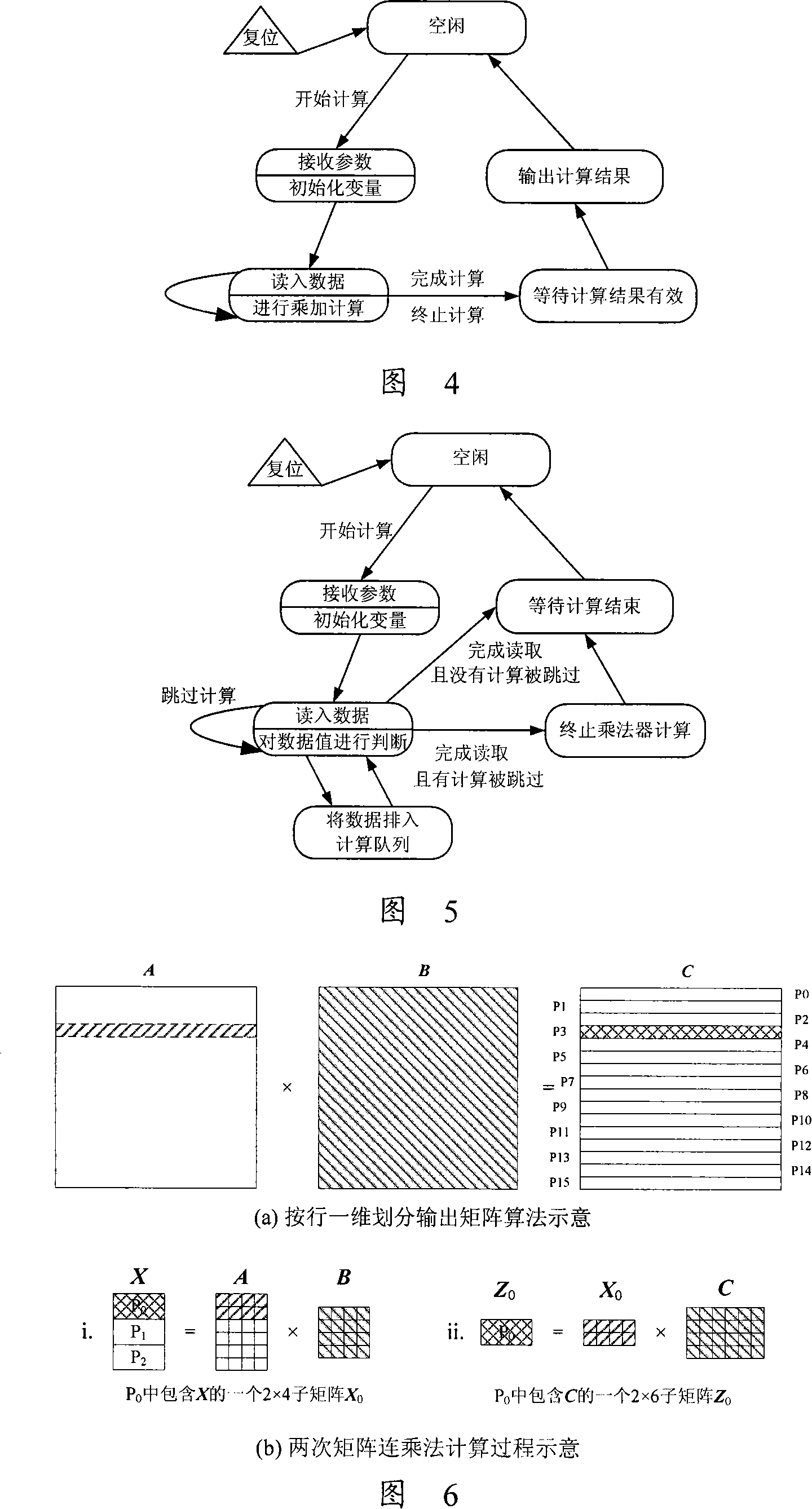

Matrix multiplication parallel computing system based on multi-FPGA

Inactive | CN101089840A | Reduce resource requirements | Improve computing power | Digital computer details | Data switching networks | Binary multiplier | Parallel algorithm

A matrix multiplication parallel computing system based on multiple FPGAs uses FPGAs as processing units to perform dense matrix multiplication and to improve sparse matrix multiplication capability. It uses Ethernet and a star topology to form a master-slave distributed FPGA computing system, uses Ethernet multicast to send data to the processing units that require the same data, and uses a parallel algorithm based on a one-dimensional row-wise partition of the output matrix to perform the matrix multiplication in parallel, which decreases the communication overhead of the system.
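The one-dimensional row-wise partition of the output matrix can be sketched in software. In this illustration, worker threads stand in for the FPGA processing units and the shared matrix `b` plays the role of the data multicast to every unit; the function names are hypothetical, and Python threads show the partitioning rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def multiply_rows(a_rows, b):
    """Compute the block of output rows that corresponds to `a_rows`."""
    columns = list(zip(*b))
    return [
        [sum(x * y for x, y in zip(row, col)) for col in columns]
        for row in a_rows
    ]


def parallel_matmul(a, b, workers=2):
    """Multiply a by b, giving each worker a contiguous block of output rows."""
    chunk = max(1, -(-len(a) // workers))  # ceiling division, at least 1
    blocks = [a[i:i + chunk] for i in range(0, len(a), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Every worker needs all of b but only its own rows of a.
        partial = list(pool.map(multiply_rows, blocks, [b] * len(blocks)))
    return [row for block in partial for row in block]


a = [[1, 2], [3, 4], [5, 6]]
b = [[7, 8], [9, 10]]
print(parallel_matmul(a, b))  # [[25, 28], [57, 64], [89, 100]]
```

With this partition no worker ever needs another worker's result, so the only communication is distributing the inputs and collecting the output blocks.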

Owner:ZHEJIANG UNIV

Collective Network For Computer Structures

Inactive | US20080104367A1 | Efficient and reliable computation | Limited resource | Error prevention | Multiple digital computer combinations | Collective communication | Parallel algorithm

A system and method for enabling high-speed, low-latency global collective communications among interconnected processing nodes. The global collective network optimally enables collective reduction operations to be performed during parallel algorithm operations executing in a computer structure having a plurality of the interconnected processing nodes. Router devices are included that interconnect the nodes of the network via links to facilitate performance of low-latency global processing operations at nodes of the virtual network and class structures. The global collective network may be configured to provide global barrier and interrupt functionality in asynchronous or synchronized manner. When implemented in a massively-parallel supercomputing structure, the global collective network is physically and logically partitionable according to needs of a processing algorithm.

Owner:IBM CORP

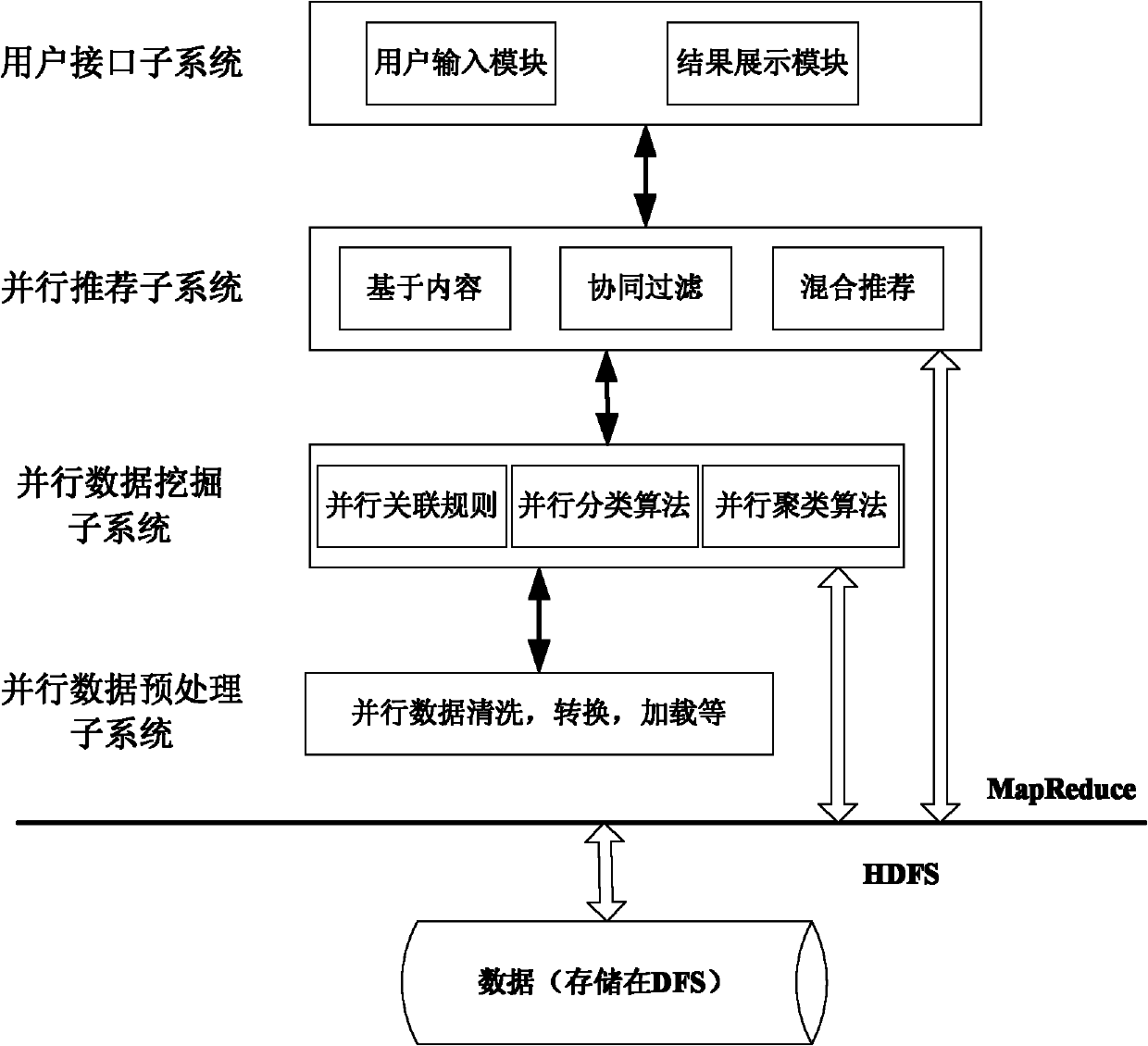

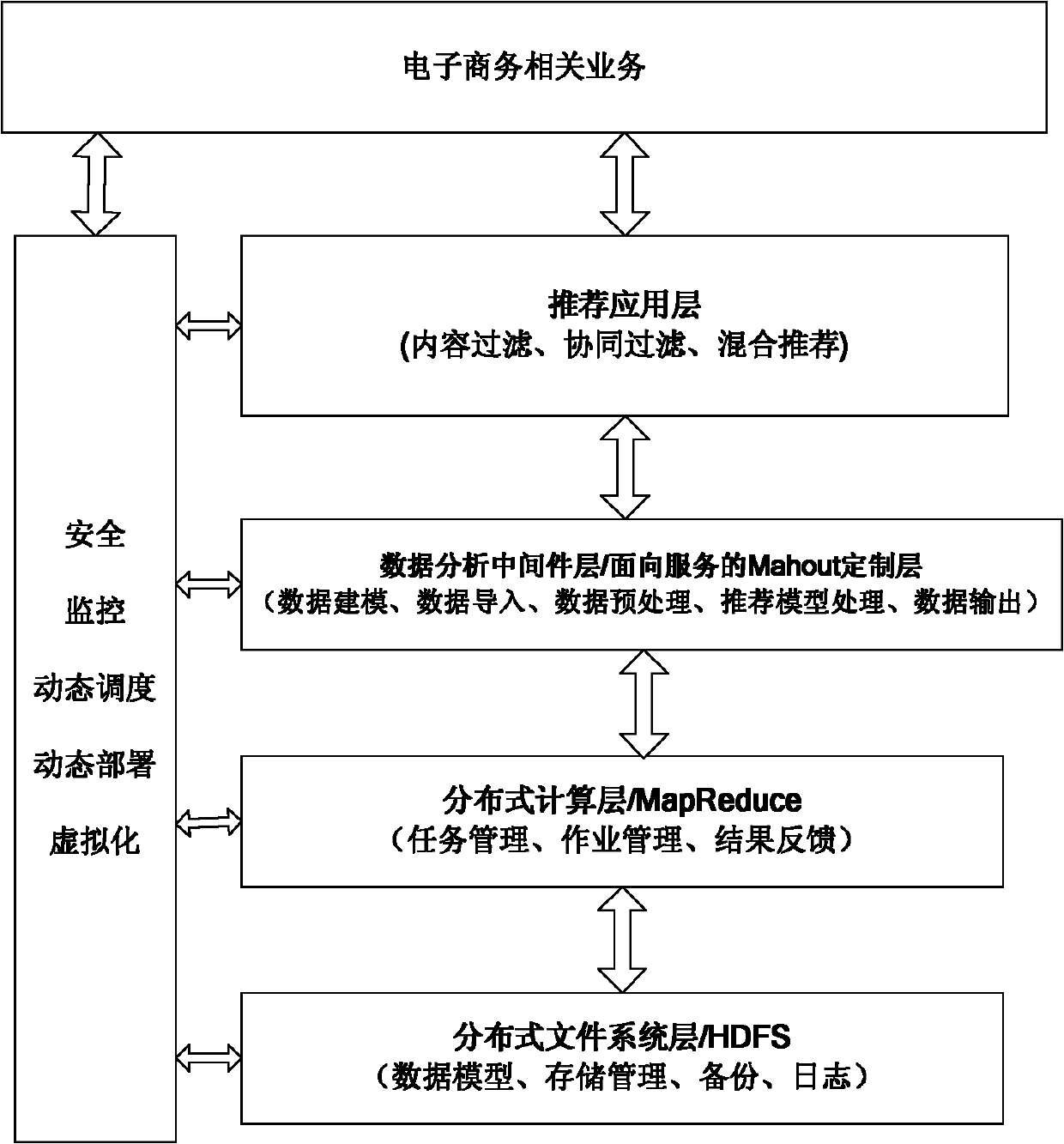

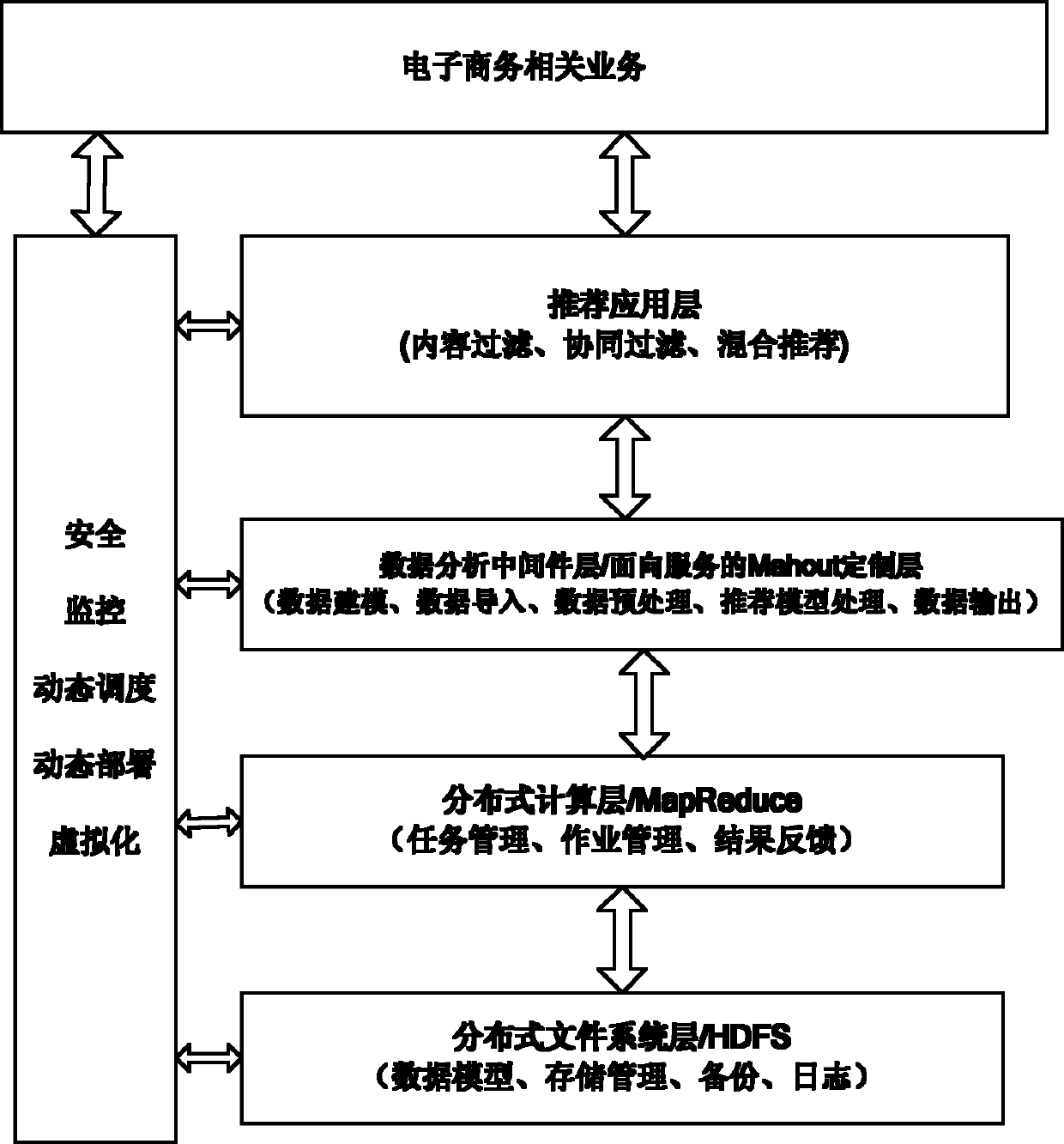

Recommendation system building method based on cloud computing

Inactive | CN102169505A | Flexible formulation | Improve processing efficiency | Commerce | Special data processing applications | User needs | Parallel algorithm

The invention discloses a recommendation system building method based on cloud computing, which belongs to the field of cloud computing and recommendation system building. In the method, a Hadoop cloud platform with a plurality of nodes is built first; a Mahout middleware is then built on Hadoop; a Mahout algorithm library is customized according to business demands; a traditional recommendation algorithm, a pseudo-distributed recommendation algorithm and a distributed algorithm are realized on the Mahout middleware; and finally a recommendation application framework is built according to the demands of users. Because the invention combines a serial recommendation algorithm with MapReduce to realize a parallel algorithm, processing efficiency is effectively improved, large volumes of data that cannot be processed on a single machine can be handled, and recommendation results can be supplied to users very quickly.
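The idea of combining a serial recommendation algorithm with MapReduce can be sketched with an item co-occurrence recommender written as explicit map and reduce phases. This is plain Python, not the Hadoop or Mahout API, and the co-occurrence approach, function names and sample data are assumptions chosen for illustration.

```python
from collections import defaultdict
from itertools import permutations


def map_phase(user_items):
    """Emit ((item_a, item_b), 1) for every ordered pair of items of one user."""
    for a, b in permutations(sorted(user_items), 2):
        yield (a, b), 1


def reduce_phase(pairs):
    """Sum the counts for each (item_a, item_b) key."""
    counts = defaultdict(int)
    for key, value in pairs:
        counts[key] += value
    return counts


def recommend(baskets, item, top_n=1):
    """Recommend the items that most often co-occur with `item`."""
    # On a cluster, each basket's map output could be produced on a different node.
    pairs = (kv for basket in baskets for kv in map_phase(basket))
    counts = reduce_phase(pairs)
    related = [(other, n) for (a, other), n in counts.items() if a == item]
    related.sort(key=lambda entry: (-entry[1], entry[0]))
    return [other for other, _ in related[:top_n]]


baskets = [{"A", "B"}, {"A", "B", "C"}, {"A", "C"}, {"A", "B"}]
print(recommend(baskets, "A"))  # ['B']
```

The map phase touches each user's data independently, which is what lets the workload spread across nodes when the data no longer fits on one machine.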

Owner:SUZHOU LIANGJIANG TECH

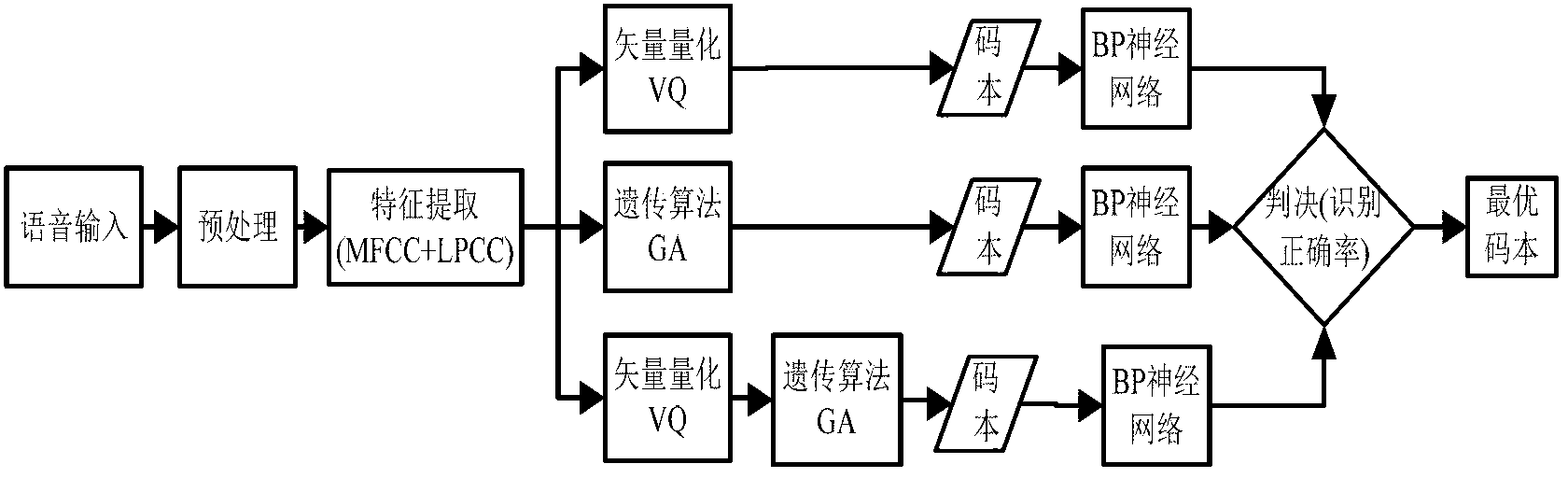

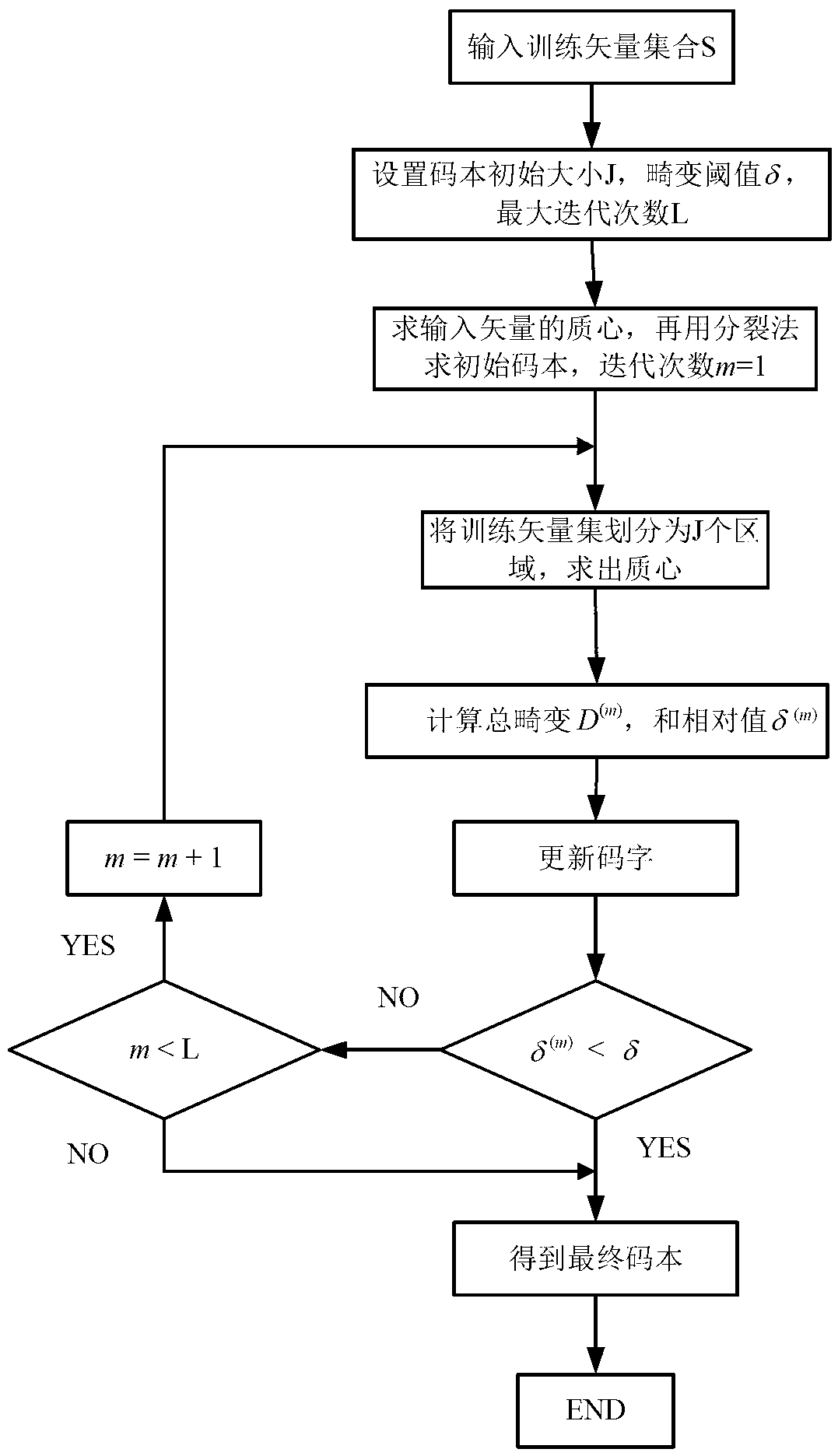

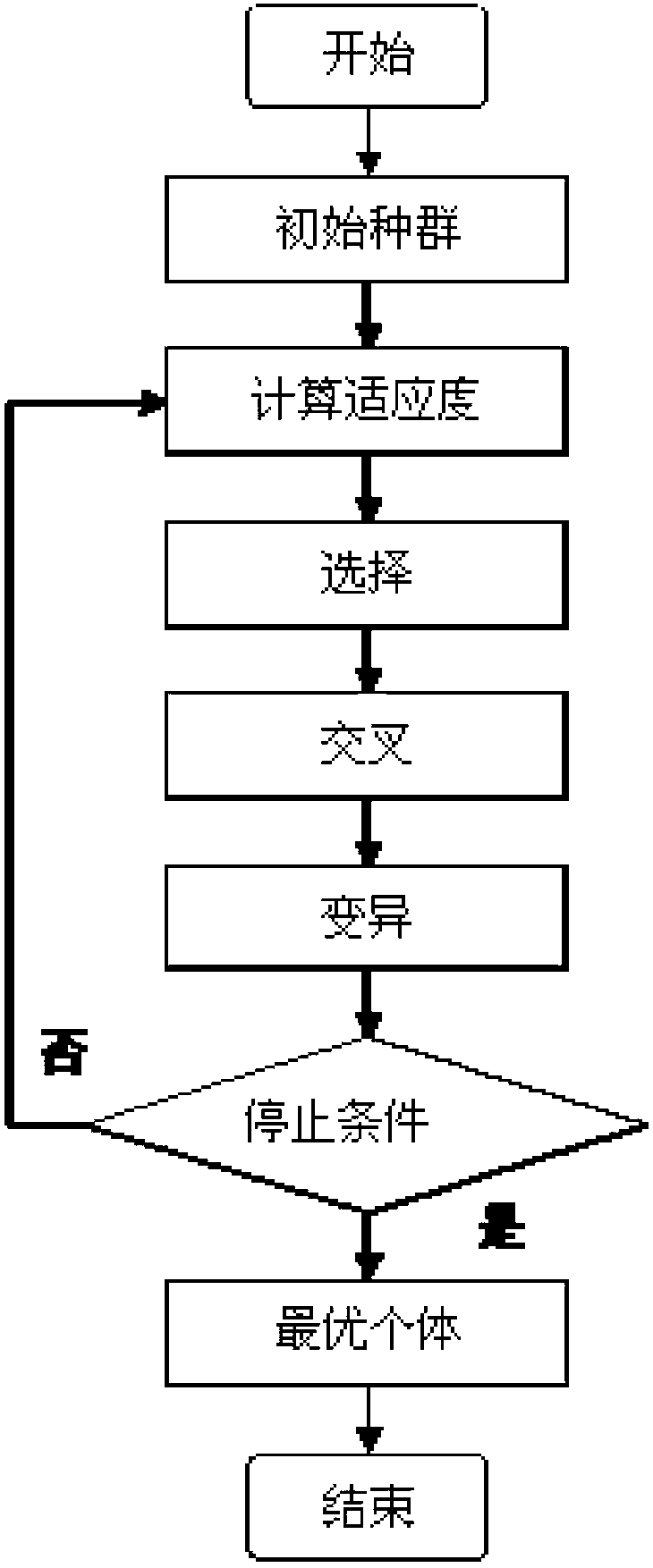

Optimal codebook design method for voiceprint recognition system based on neural network

Inactive | CN102800316A | Improve adaptability | Improve stability | Speech recognition | Nerve network | Parallel algorithm

The invention relates to an optimal codebook design method for a voiceprint recognition system based on a neural network. The method comprises five steps: voice signal input, voice signal preprocessing, voice signal characteristic parameter extraction, three-way initial codebook generation with neural network training, and optimal codebook selection. MFCC (Mel Frequency Cepstrum Coefficient) and LPCC (Linear Prediction Cepstrum Coefficient) parameters are extracted at the same time after preprocessing; a locally optimal vector quantization (VQ) method and a globally optimal genetic algorithm (GA) are then adopted so that a hybrid phonetic feature parameter matrix generates initial codebooks through three-way parallel algorithms based on VQ, GA and VQ as well as GA; and the optimal codebook is selected by judging the neural network recognition accuracy of the three codebooks. The method achieves the following effects: the optimal codebook gives the voiceprint recognition system a higher recognition rate and higher stability and improves the adaptivity of the system; and compared with pattern recognition based on a single codebook, a voiceprint recognition system that uses the neural-network-based optimal codebook performs markedly better.

Owner:CHONGQING UNIV

Spawn-join instruction set architecture for providing explicit multithreading

Inactive | US20030088756A1 | Program initiation/switching | General purpose stored program computer | Instruction memory | Frequency spectrum

The invention presents a unique computational paradigm that provides the tools to take advantage of the parallelism inherent in parallel algorithms to the full spectrum from algorithms through architecture to implementation. The invention provides a new processing architecture that extends the standard instruction set of the conventional uniprocessor architecture. The architecture used to implement this new computational paradigm includes a thread control unit (34), a spawn control unit (38), and an enabled instruction memory (50). The architecture initiates multiple threads and executes them in parallel. Control of the threads is provided such that the threads may be suspended or allowed to execute each at its own pace.

Owner:VISHKIN UZI Y

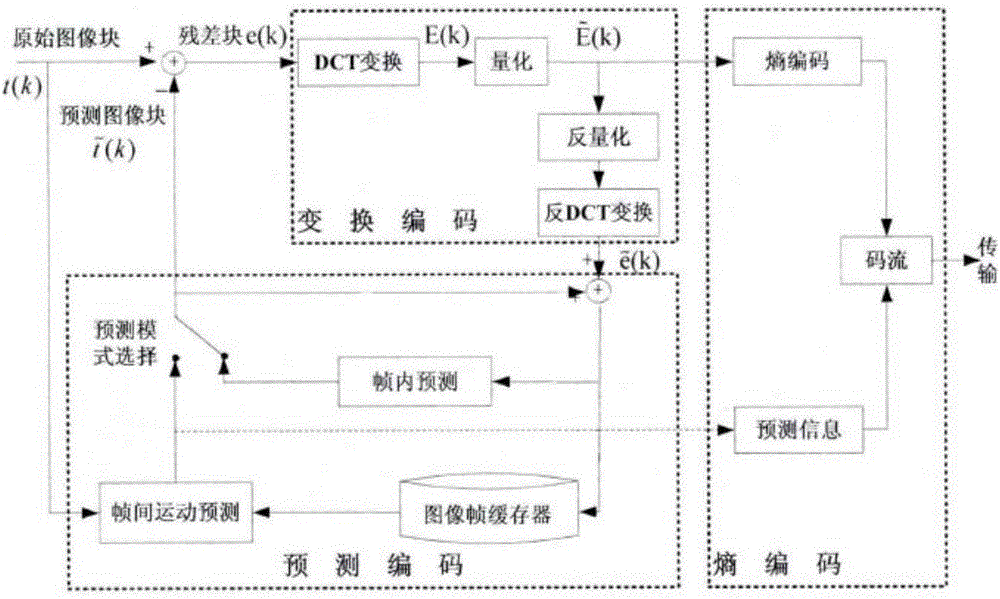

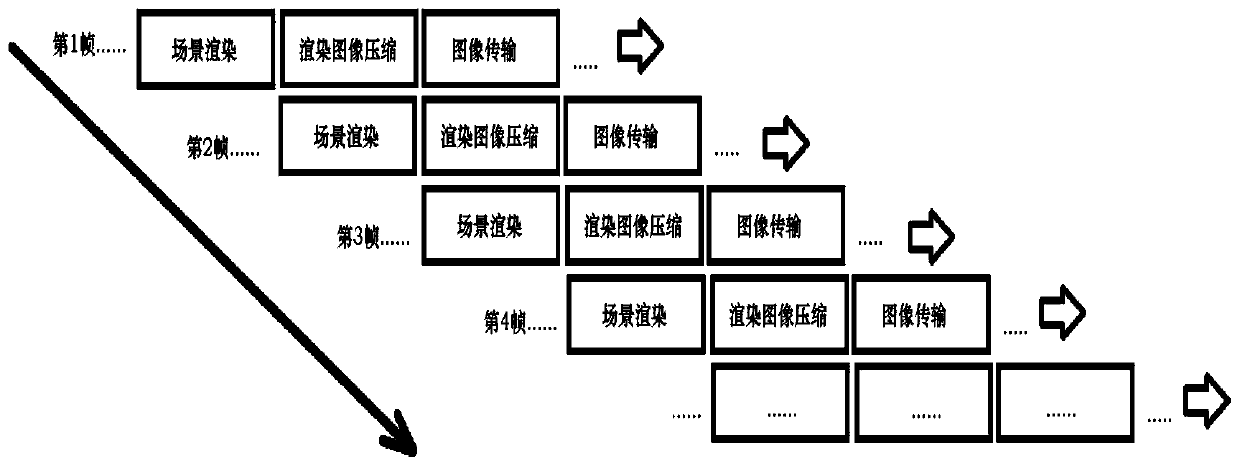

Multilevel multitask parallel decoding algorithm on multicore processor platform

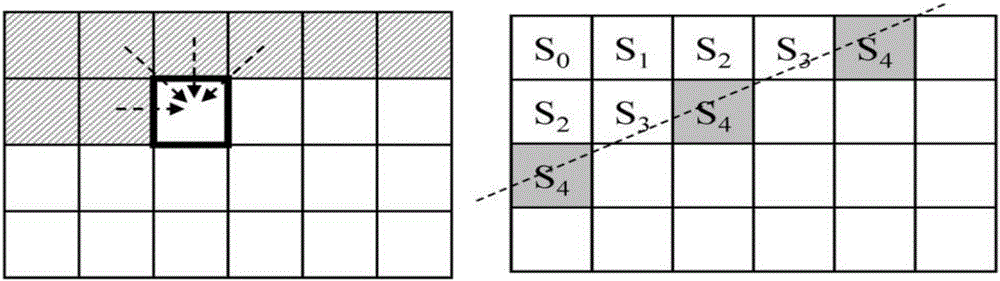

Active | CN105992008A | Optimal Design Structure | Improve the design structure to more effectively play the function of the processor | Digital video signal modification | Round complexity | Imaging quality

The invention discloses a multilevel multitask parallel decoding algorithm on a multicore processor platform. Aimed at the massive data volume of high-definition video and the very high processing complexity of HEVC decoding, the algorithm exploits the dependencies in HEVC data to combine task-level and data-level parallelism effectively on the multicore processor platform. HEVC decoding is divided into two tasks, frame-layer entropy decoding and CTU-layer data decoding, which are processed in parallel at different granularities: the entropy decoding task is parallelized at the frame level, and the CTU data decoding task is parallelized by CTU row. Each task is performed by an independent thread bound to an independent core, so that the parallel computing performance of the multicore processor is fully utilized and real-time parallel decoding of a full high-definition HEVC single code stream that uses no parallel coding technology can be realized. Compared with serial decoding, the multicore parallel algorithm greatly improves the parallel decoding speedup while guaranteeing decoded image quality.

Owner:NANJING UNIV OF POSTS & TELECOMM

Method and apparatus for efficient execution of concurrent processes on a multithreaded message passing system

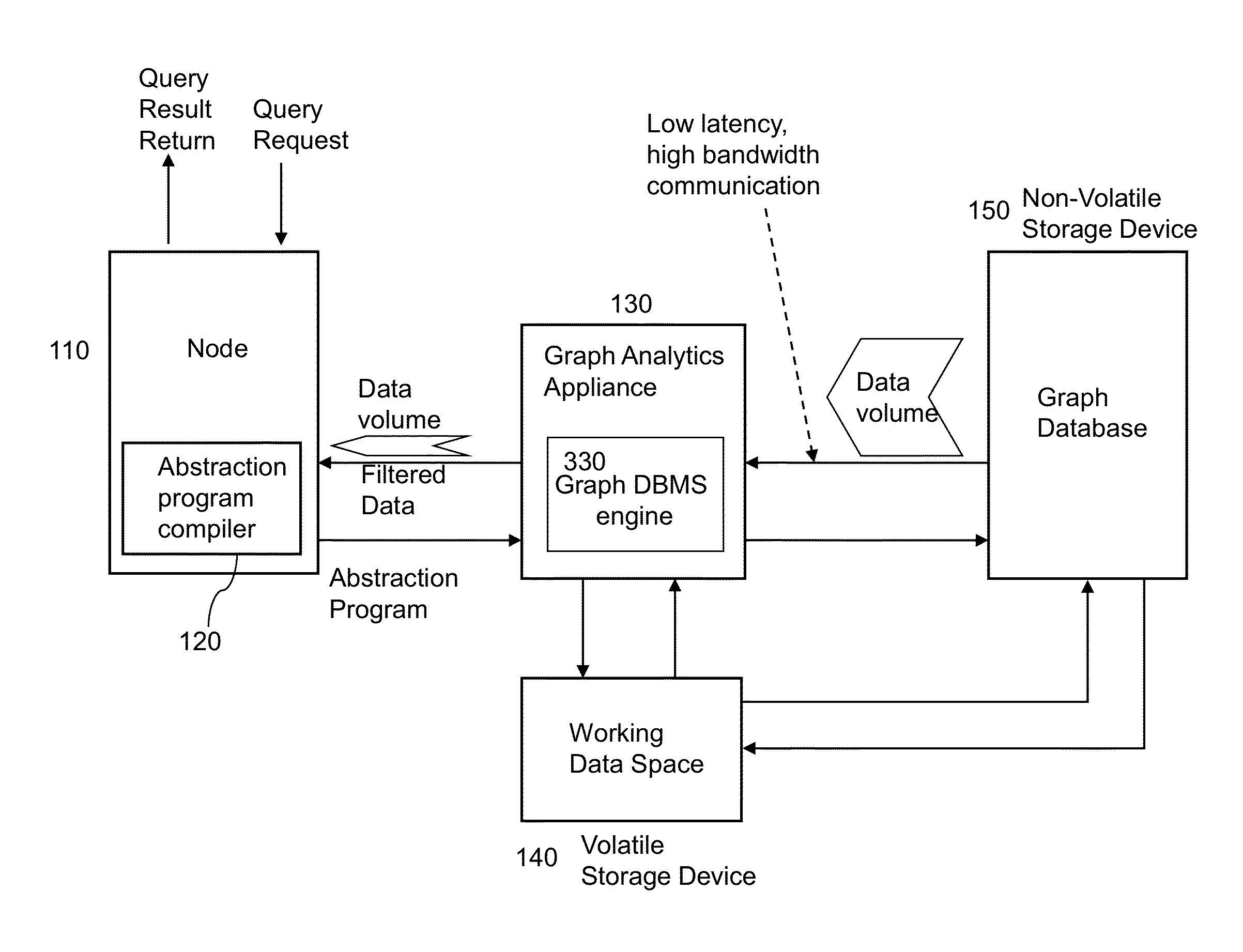

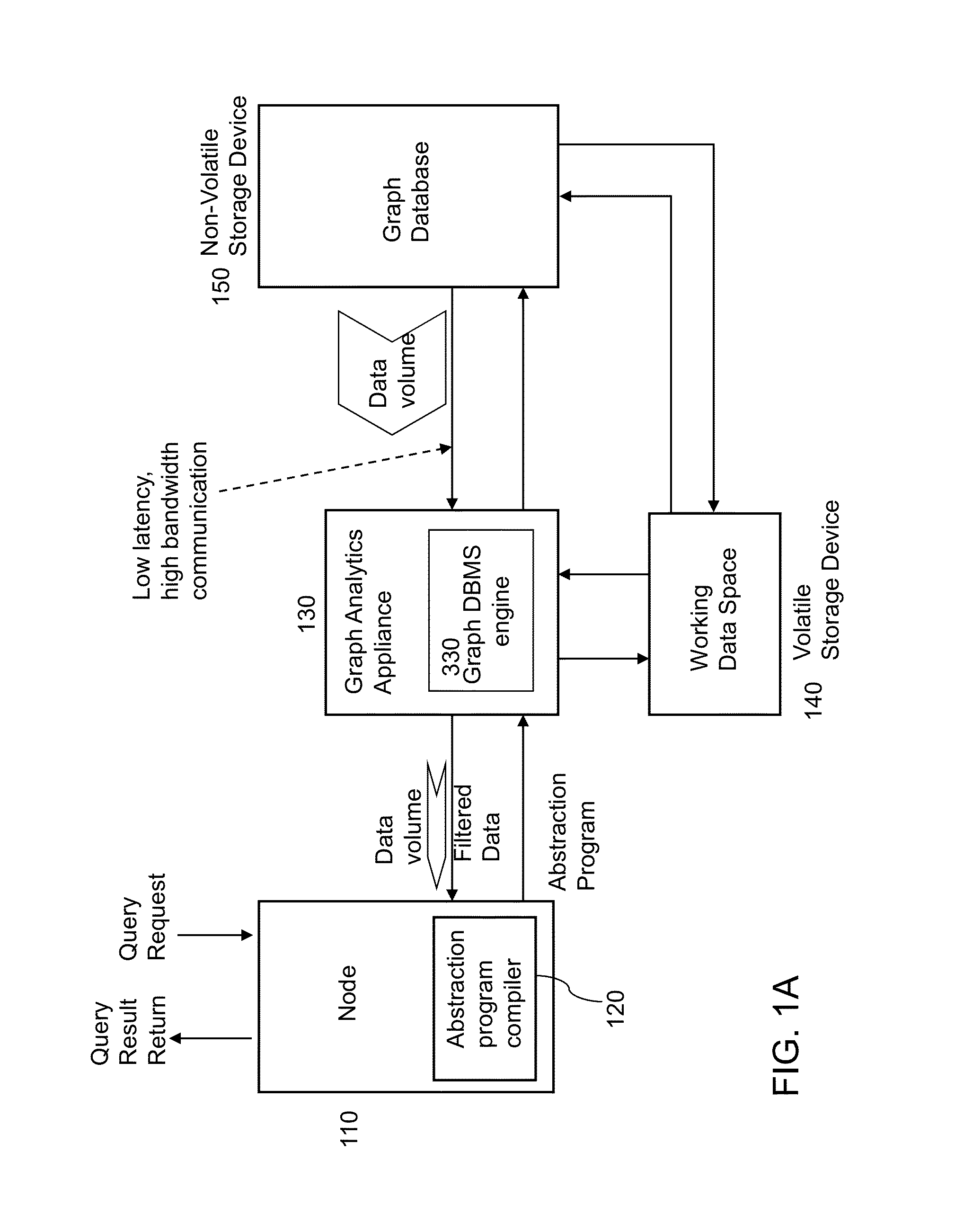

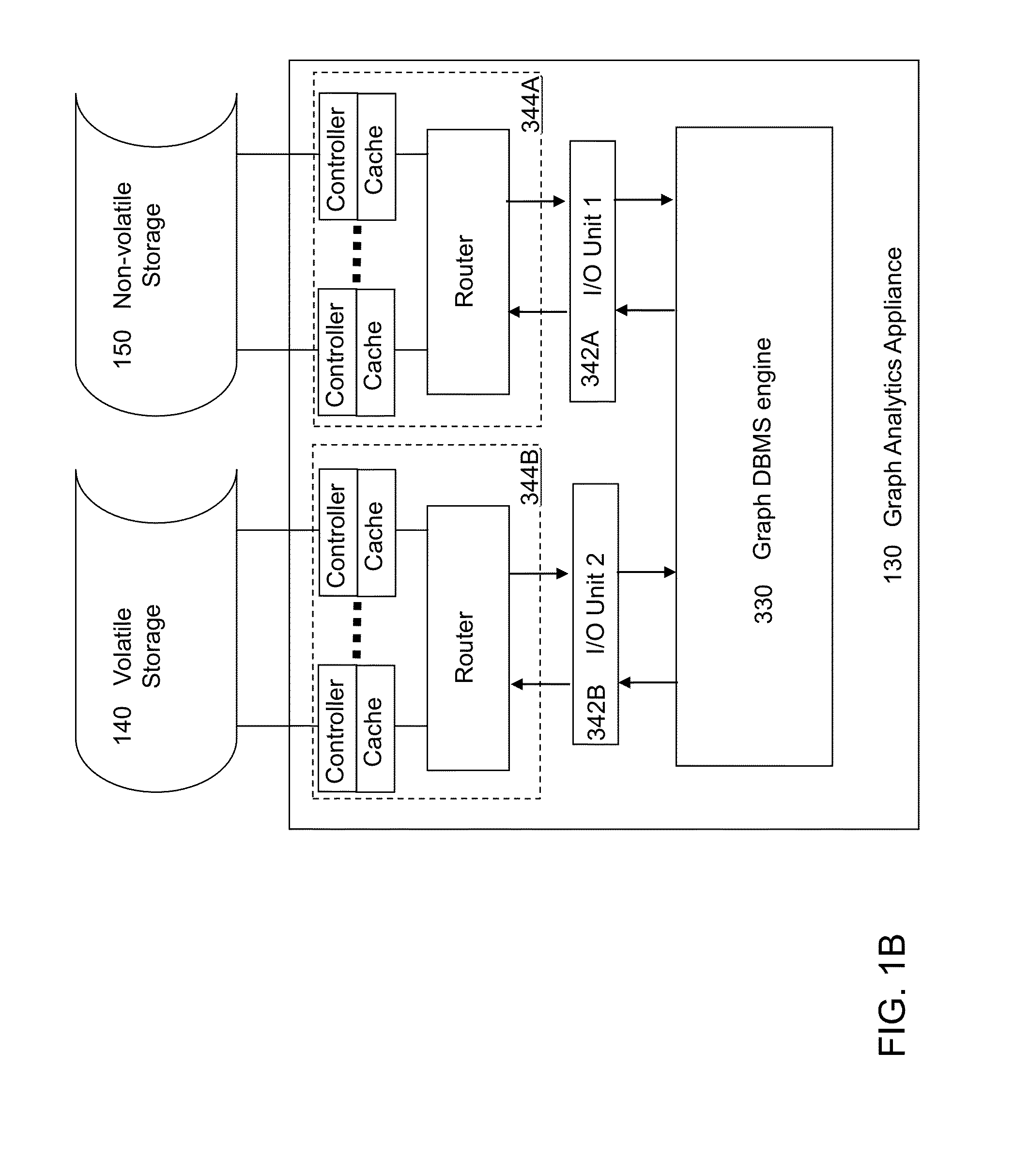

A graph analytics appliance can be employed to extract data from a graph database in an efficient manner. The graph analytics appliance includes a router, a worklist scheduler, a processing unit, and an input / output unit. The router receives an abstraction program including a plurality of parallel algorithms for a query request from an abstraction program compiler residing on a computational node or the graph analytics appliance. The worklist scheduler generates a prioritized plurality of parallel threads for executing the query request from the plurality of parallel algorithms. The processing unit executes multiple threads selected from the prioritized plurality of parallel threads. The input / output unit communicates with a graph database.

Owner:IBM CORP

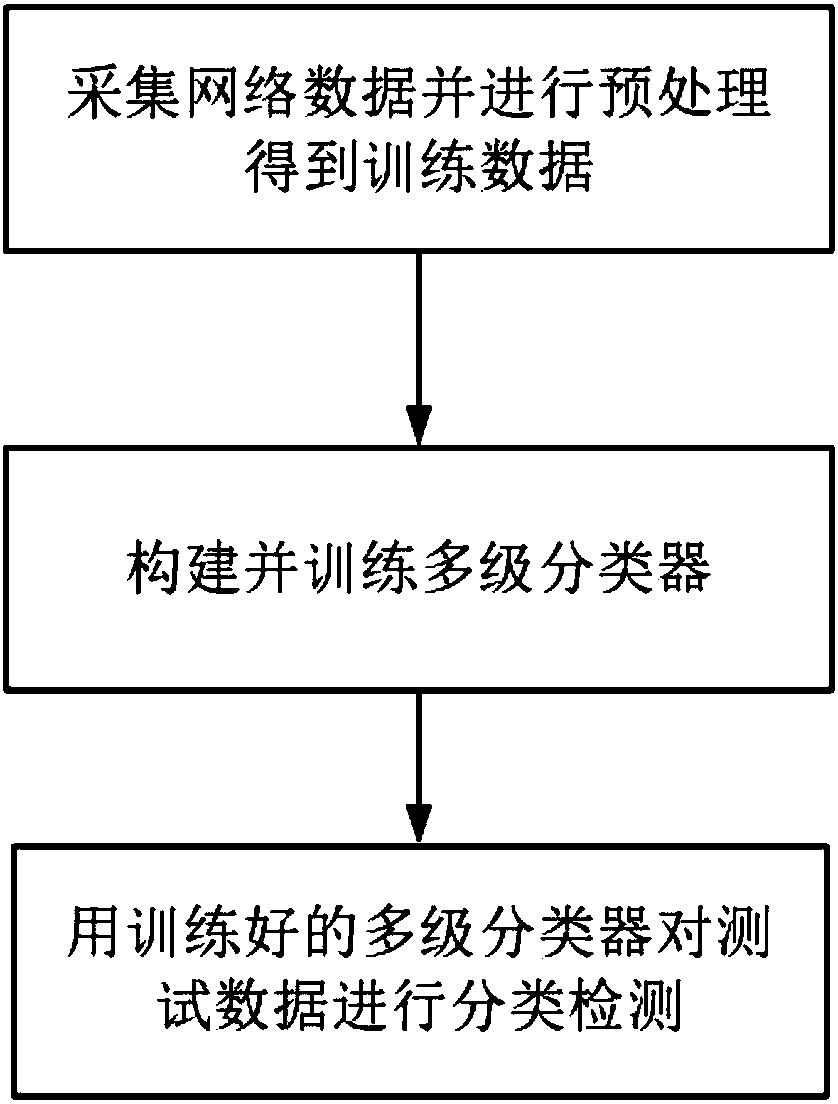

Method for carrying out classification detection on network attack behaviors through utilization of machine learning technology

Inactive | CN108540451A | Small scale | Improve efficiency | Character and pattern recognition | Transmission | Pretreatment method | Parallel algorithm

The invention relates to a method for classifying and detecting network attack behaviors using machine learning technology, and belongs to the technical field of information. The method comprises the steps of: 1, collecting network data and preprocessing it to obtain training data; 2, establishing and training a multilevel classifier; and 3, carrying out classification detection on test data with the trained multilevel classifier. Compared with the prior art, the method has the following advantages: 1, the preprocessing method applied to the collected data reduces the data scale and removes some unrelated data, improving overall efficiency; 2, the multilevel classifier and the ensemble learning approach solve the problem that a single classifier has low fitting precision, greatly improving the detection precision of the system; and 3, a data blocking method based on an improved random forest algorithm allows detection of different types of attack behavior to be realized as parallel algorithms, improving the overall detection speed of the system.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

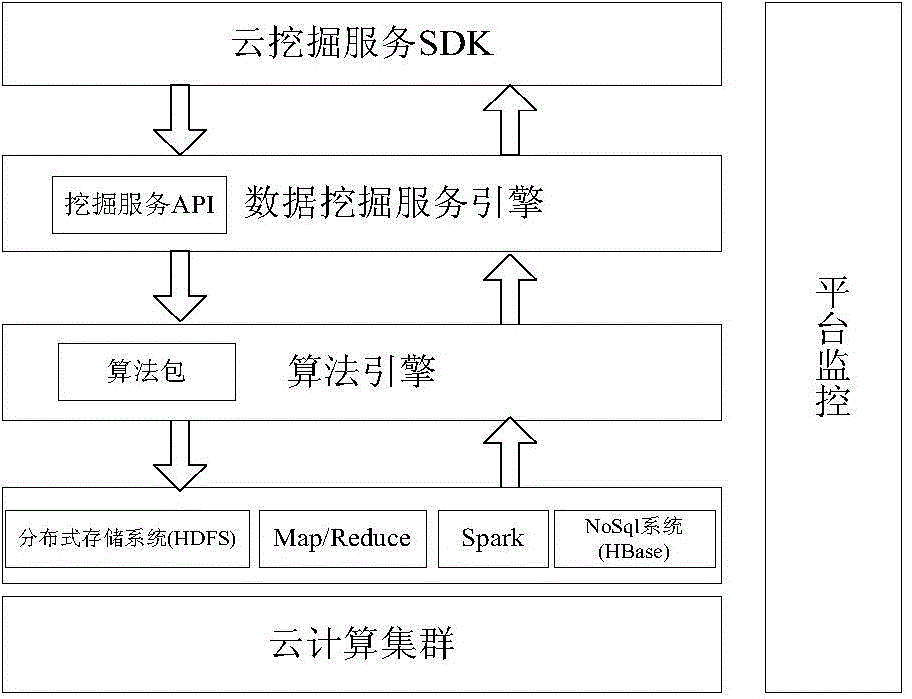

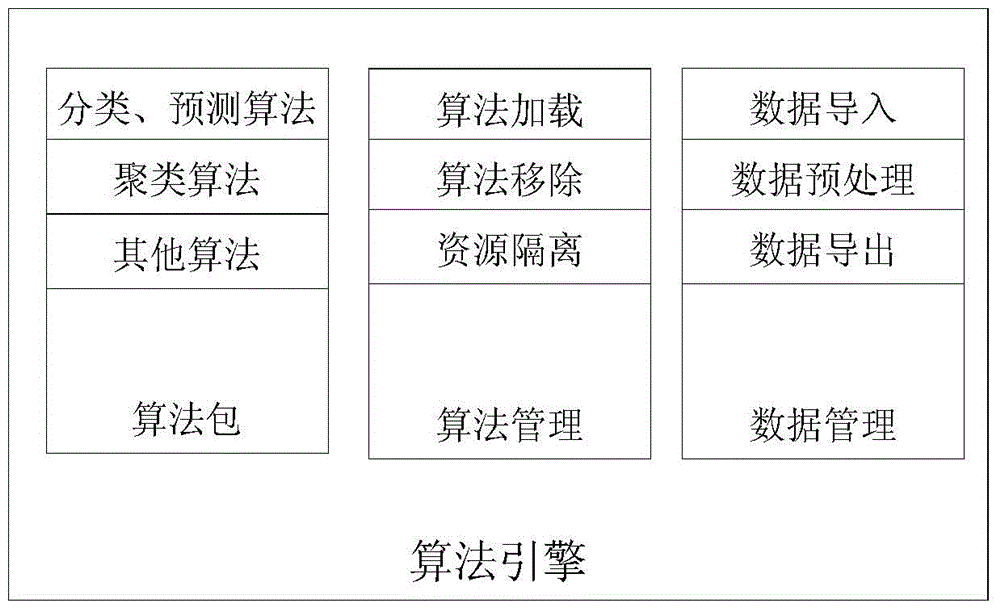

Data mining REST service platform based on cloud computing

The invention discloses a data mining REST service platform based on cloud computing. The platform comprises a cloud computing cluster layer, an algorithm engine layer, a data mining service engine layer and a cloud mining service SDK (software development kit). The cloud computing cluster layer provides cloud storage and parallel computing capacity. The algorithm engine layer provides parallel data mining capacity and various parallel algorithm libraries. The data mining service engine layer provides mining cloud services externally, with all services exposed through a RESTful interface. The cloud mining service SDK provides a way to call the mining cloud services locally, so that other business systems can use the data mining and analysis functions by importing the SDK. The platform is well suited to mass data processing and is high in product profit.

Owner:ZHEJIANG UNIV OF TECH

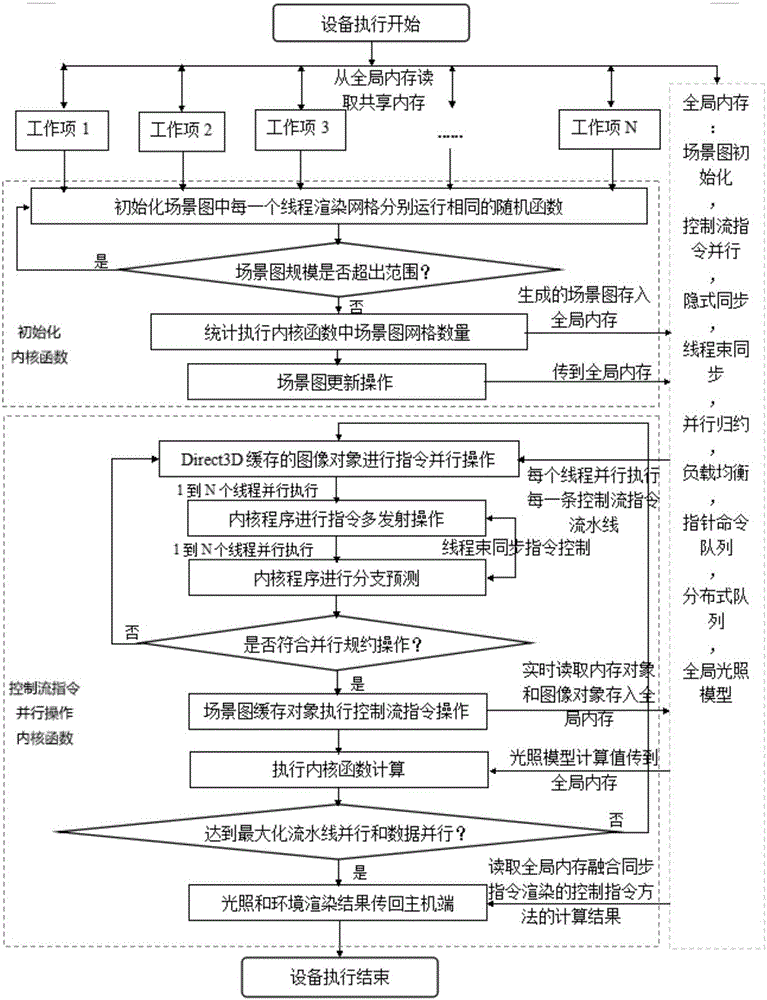

Fractal image generation and rendering method based on game engine and CPU parallel processing

Inactive | CN105787865A | High Speed Image Rendering | Shorten the time | Concurrent instruction execution | Processor architectures/configuration | Parallel algorithm | Data information

The invention provides a fractal image generation and rendering method based on a game engine and CPU parallel processing, and relates to the technical field of CPU parallel processing in an image game engine. It aims to solve the technical problems in the prior art that image data is divided unreasonably, that rendering performance and efficiency are low because of the limited arrangement of instructions such as parallel processing and synchronous operation, and that the performance requirements of real-time rendering cannot be met. The invention also addresses a further technical problem: how to achieve efficient, high-quality image rendering while using more than two fractal algorithms in parallel. A software rendering generation algorithm is constructed based on fractal characteristics; CPU parallel processing is used to process the geometric characteristics of fractal images in real time; and a control-flow instruction parallel algorithm is used to optimize the rendering generation pipeline. Rapid generation and rendering of a fractal image model are thereby realized, and a rapid and accurate display is achieved on the user's PC.

Owner:XIHUA UNIV

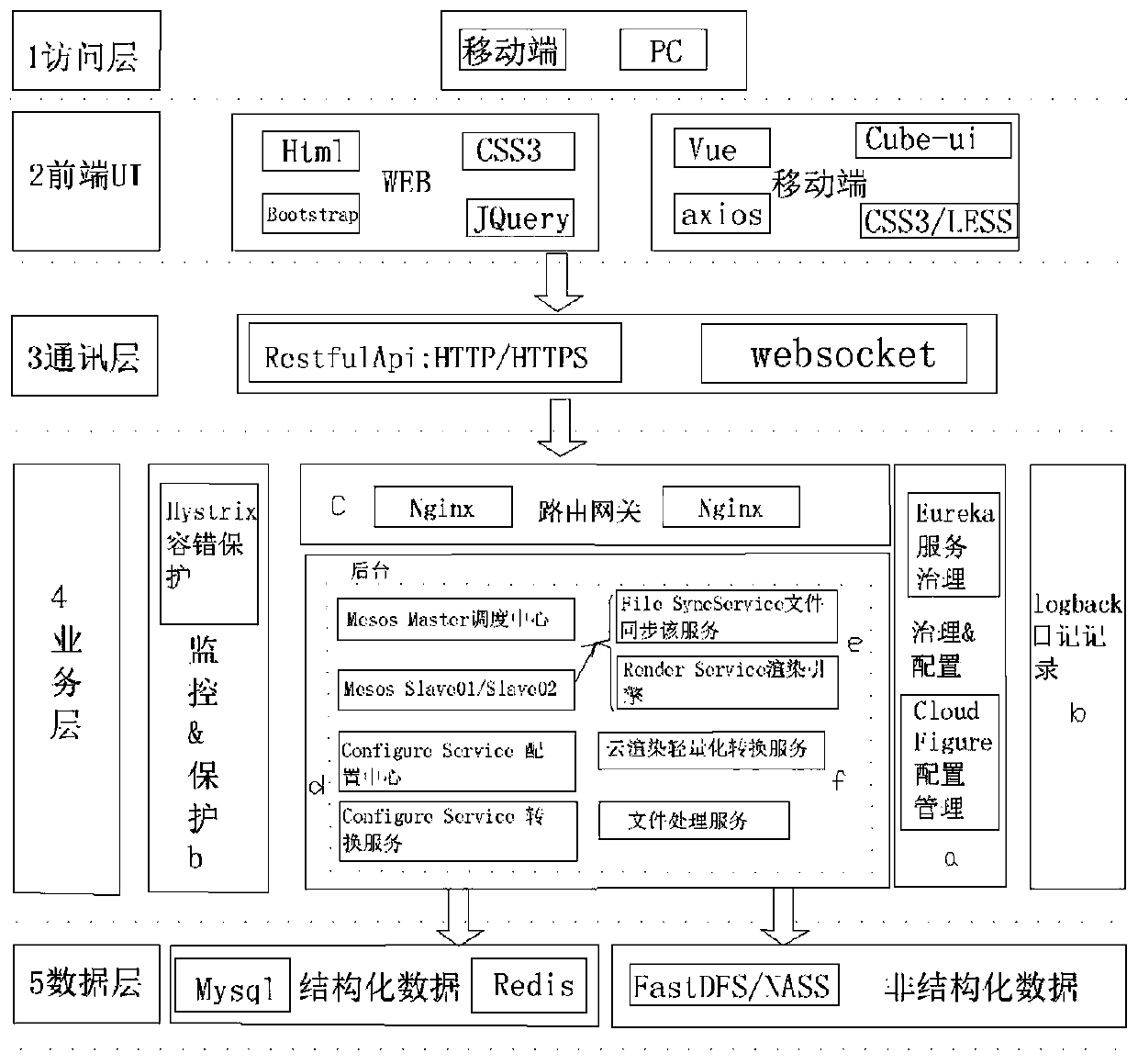

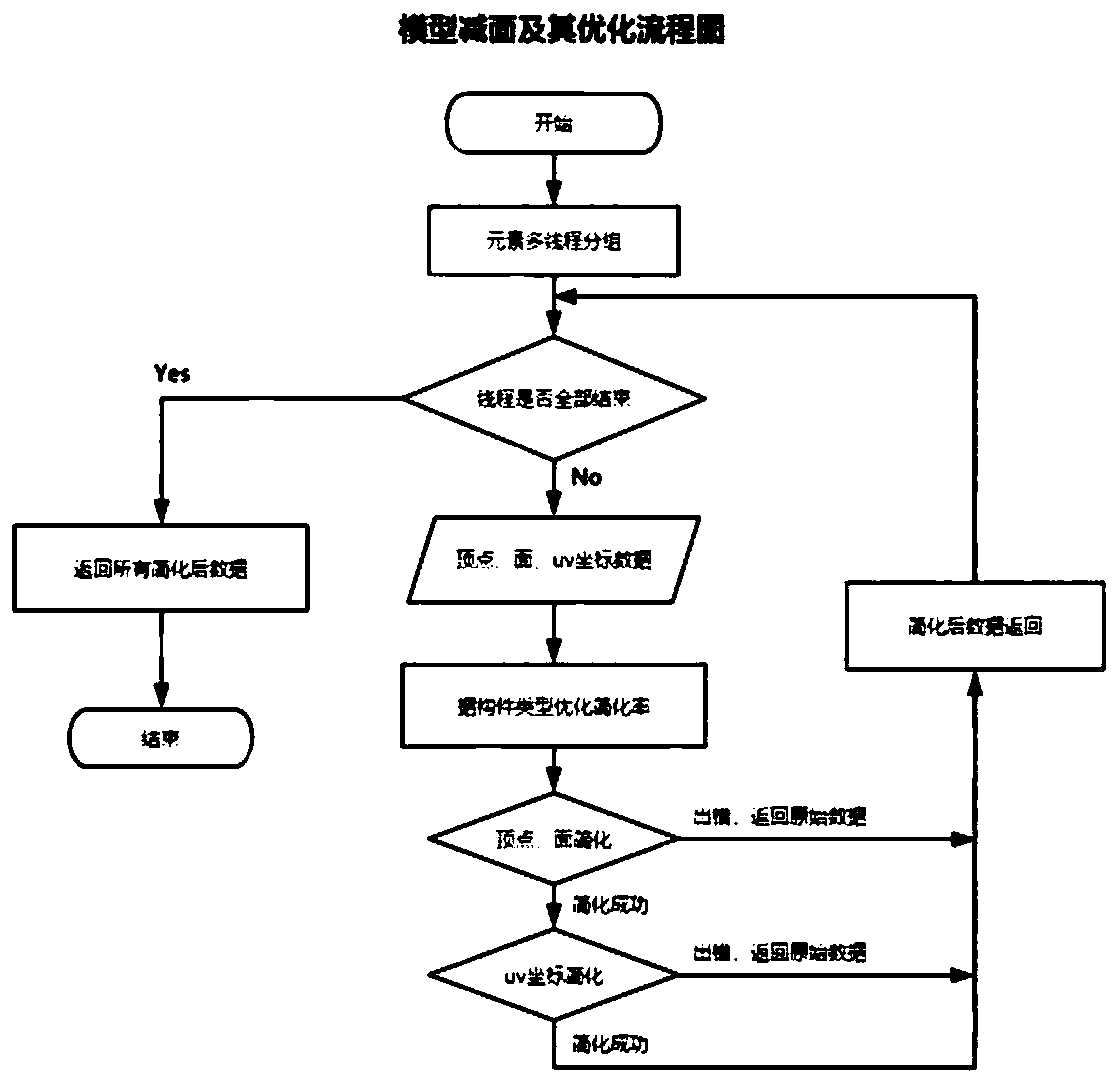

Online three-dimensional rendering technology and system based on cloud platform

Pending | CN110751712A | Quality improvement | Render hd | Processor architectures/configuration | 3D-image rendering | Computer hardware | Parallel algorithm

The invention discloses an online three-dimensional rendering technology and system based on a cloud platform. The system comprises a WEB front-end system, a communication layer, a service layer and a data layer; the WEB front-end system comprises an access layer and a front-end UI, and the access layer comprises mobile phone and PC terminals. A model face-reduction optimization algorithm is adopted to carry out lightweight processing on a model file, including a step of compressing the lightweight model after face-reduction optimization and a step of optimizing the model file through a real-time communication protocol. In addition, a parallel algorithm and a dynamic computing power rendering algorithm of the rendering pipeline are adopted to carry out lightweight processing on the model file, so that a user can obtain high-quality 3D rendering on any lightweight terminal. With the online three-dimensional rendering system of the cloud platform, a product under design is rendered and generated in the cloud server; designers from different countries or regions can design on the same platform, communicate directly with each other, reach conclusions, and verify the modified design together.

Owner:中设数字技术有限公司

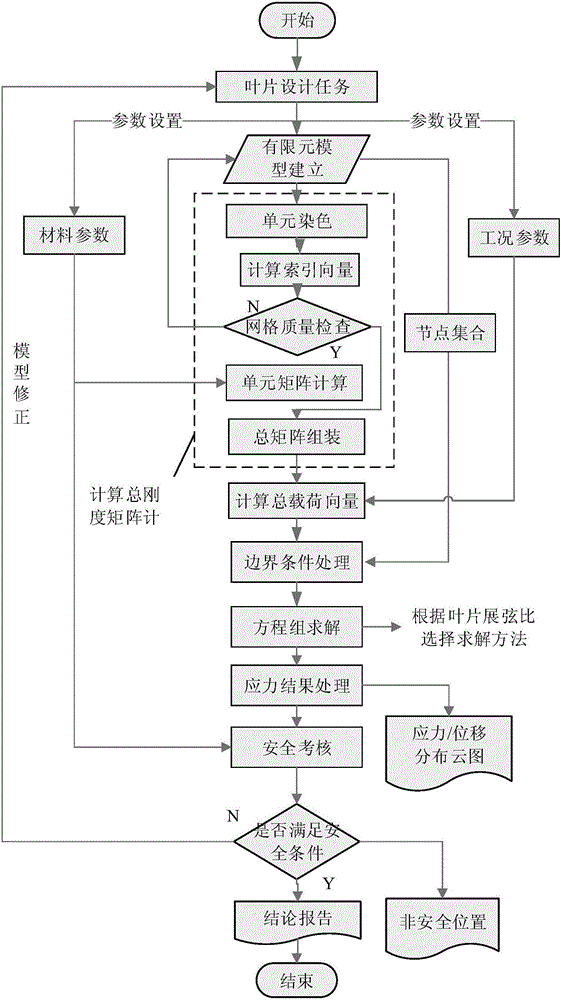

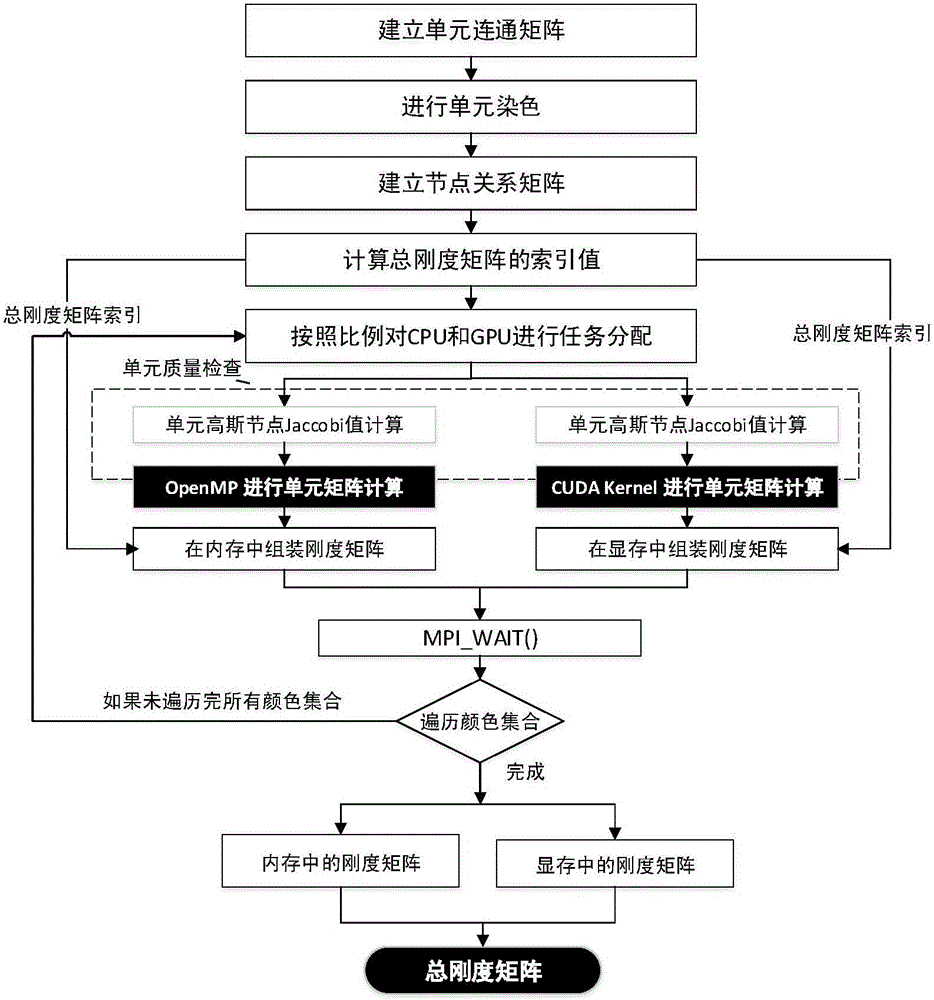

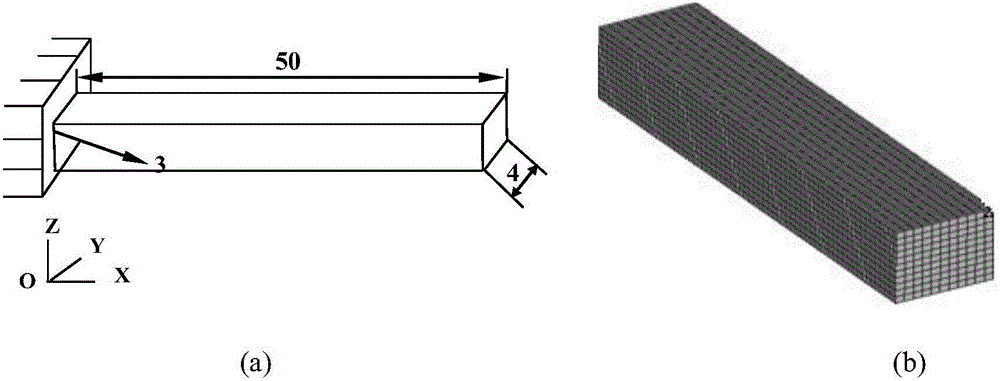

Method for analyzing static strength characteristics of turbomachinery blade based on CPU+GPU heterogeneous parallel computing

Active | CN106570204A | Shorten the design cycle | Accurate static strength characteristic analysis results | Geometric CAD | Design optimisation/simulation | Element model | Parallel algorithm

The invention discloses a method for analyzing static strength characteristics of a turbomachinery blade based on CPU+GPU heterogeneous parallel computing. The method comprises the following steps: first, establishing a finite element model and computing the total stiffness matrix of the model; next, computing the centrifugal load vector and the pneumatic load vector of the turbomachinery blade, applying displacement constraints and node coupling, and correcting the total stiffness matrix; then solving the equation set formed by the total stiffness matrix and the load vector in parallel on the CPU and GPU to obtain the node displacement vector; then computing the principal strain and the von Mises equivalent stress and drawing a distribution contour plot; and finally performing a safety check. With the disclosed method, project planners can conveniently carry out static strength analysis and design of the turbomachinery blade. Because a CPU+GPU parallel algorithm is adopted, the computing speed of the finite element method is effectively increased, an accurate and rapid blade static strength analysis result is provided for the design of the turbomachinery blade, and the design cycle of the blade is greatly shortened.

Owner:XI AN JIAOTONG UNIV

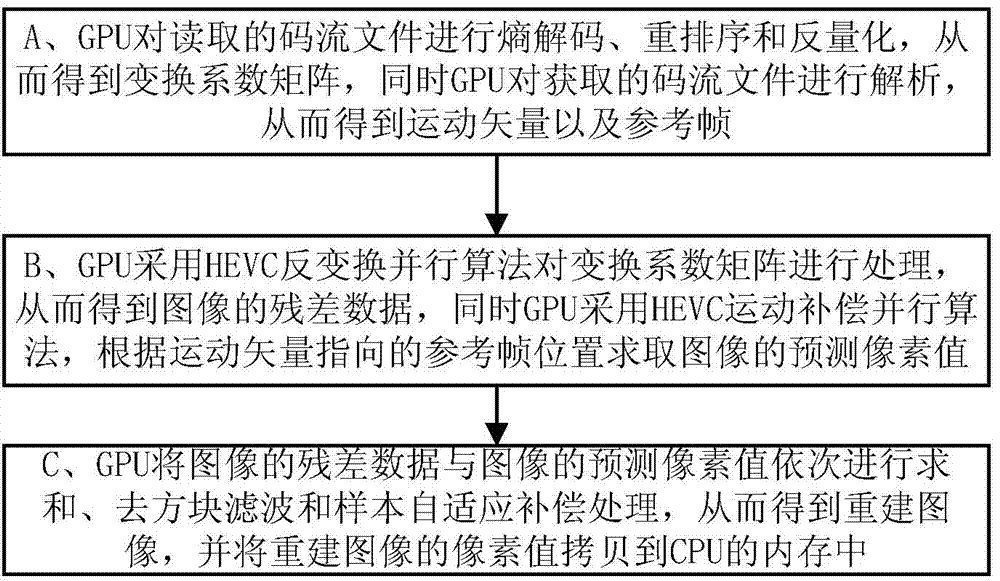

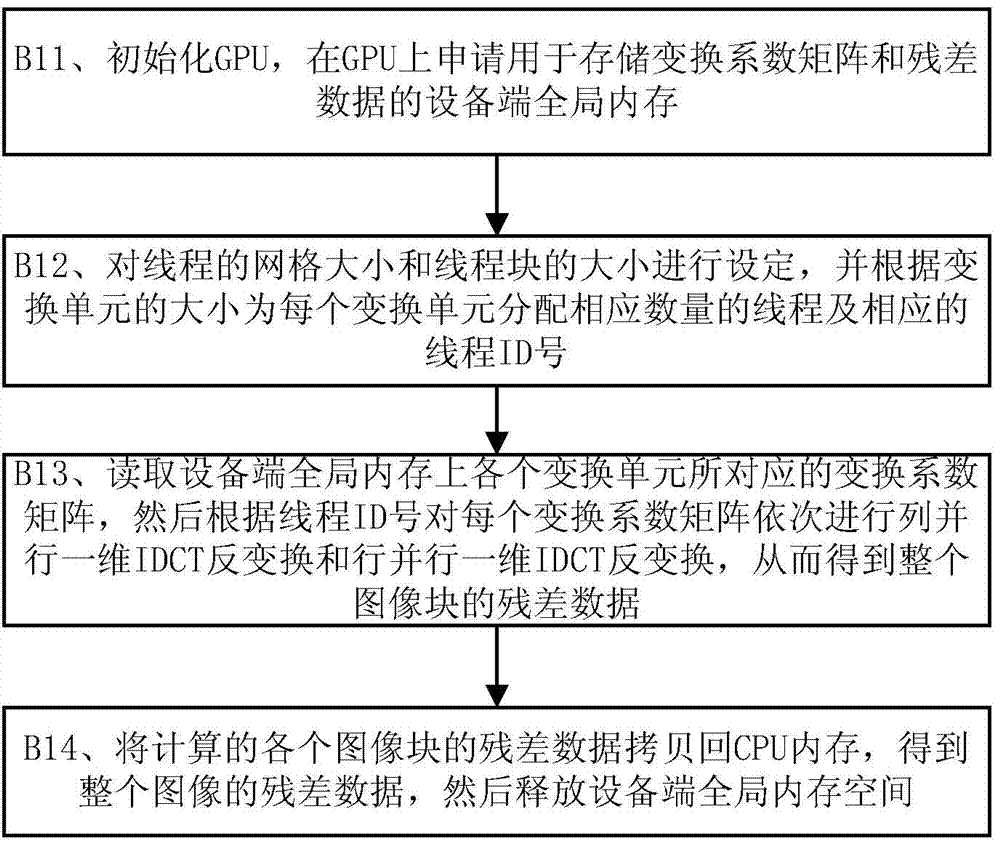

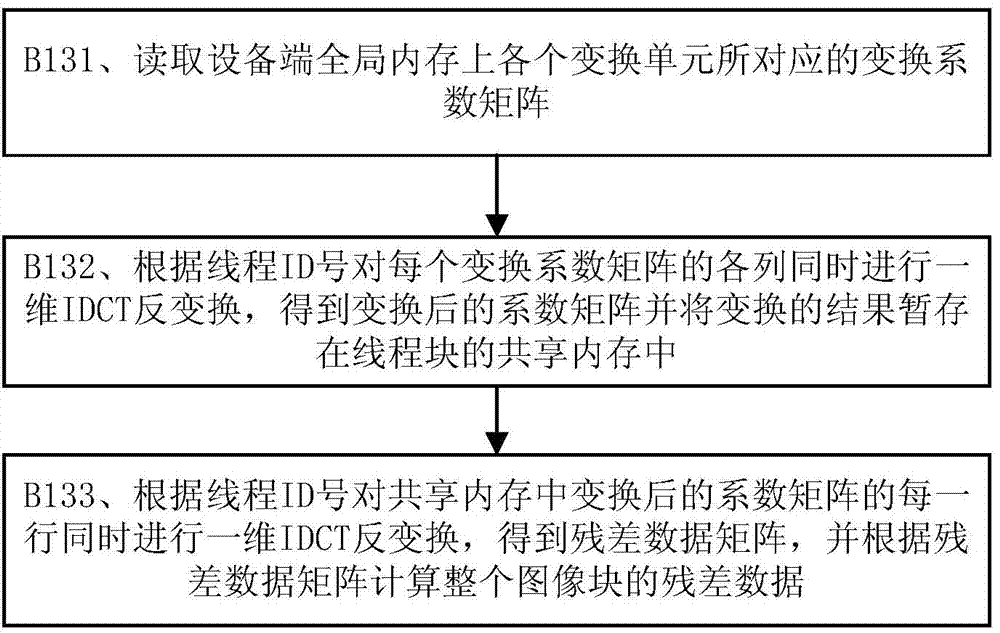
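The last two steps named in the abstract above (von Mises equivalent stress, then a safety check) can be illustrated with a short sketch. The equivalent-stress formula is the standard one for a general stress state; the function names and the acceptance criterion (equivalent stress no greater than yield strength divided by a safety factor) are assumptions made for illustration and are not taken from the patent.

```python
import math


def von_mises(sx, sy, sz, txy, tyz, tzx):
    """Von Mises equivalent stress from normal (s*) and shear (t*) stress components."""
    normal_part = 0.5 * ((sx - sy) ** 2 + (sy - sz) ** 2 + (sz - sx) ** 2)
    shear_part = 3.0 * (txy ** 2 + tyz ** 2 + tzx ** 2)
    return math.sqrt(normal_part + shear_part)


def passes_safety_check(equivalent_stress, yield_strength, safety_factor):
    """Hypothetical criterion: stress must not exceed yield strength / safety factor."""
    return equivalent_stress <= yield_strength / safety_factor
```

In a finite element code this evaluation runs once per element or integration point, and each evaluation is independent of the others, so the step parallelizes naturally.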

GPU (Graphics Processing Unit)-based HEVC (High Efficiency Video Coding) parallel decoding method

InactiveCN104125466AImprove decoding speedImprove decoding efficiencyDigital video signal modificationMotion vectorParallel algorithm

The invention discloses a GPU-based HEVC parallel decoding method. In the method, a GPU performs entropy decoding, re-ordering and inverse quantization on a read code stream file to obtain a transformation coefficient matrix, and parses the code stream file to obtain a motion vector and a reference frame. The GPU processes the transformation coefficient matrix with an HEVC inverse transformation parallel algorithm to obtain the residual data of an image, and uses an HEVC motion compensation parallel algorithm to obtain the predicted pixel values of the image from the reference frame position to which the motion vector points. The GPU then sequentially performs summation, deblocking filtering and sample adaptive offset (SAO) on the residual data and the predicted pixel values to obtain a reconstructed image, and the pixel values of the reconstructed image are copied to the memory of the CPU. The method effectively improves decoding speed and efficiency and can be widely used in the field of video coding and decoding.

Owner:SUN YAT SEN UNIV

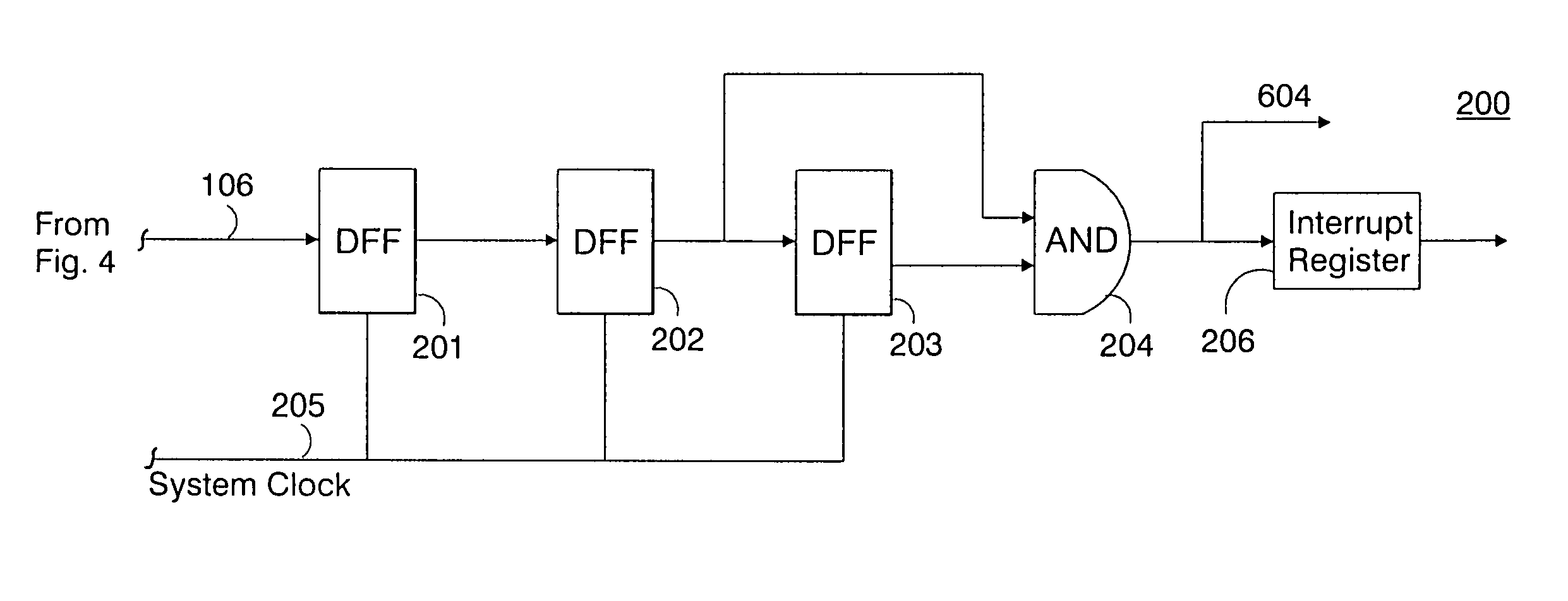
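The summation step in the abstract above (residual plus predicted pixel value) is a per-sample operation, which is why it maps well onto a GPU. The sketch below shows that step alone in plain Python, assuming 8-bit samples by default and a hypothetical function name `reconstruct_block`; it is not the patent's GPU kernel, and deblocking and SAO are not shown.

```python
def reconstruct_block(predicted, residual, bit_depth=8):
    """Add residual to prediction sample by sample and clip to the valid range."""
    max_value = (1 << bit_depth) - 1
    return [
        [min(max(p + r, 0), max_value) for p, r in zip(pred_row, res_row)]
        for pred_row, res_row in zip(predicted, residual)
    ]
```

Because no output sample depends on any other, a GPU implementation could assign one thread per sample.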

Global interrupt and barrier networks

InactiveUS7444385B2Efficient executionEffective controlError preventionProgram synchronisationParallel algorithmAsynchronous operation

A system and method for generating global asynchronous signals in a computing structure. In particular, a global interrupt and barrier network is provided that implements logic for generating global interrupt and barrier signals, which control global asynchronous operations performed by processing elements at selected processing nodes of a computing structure in accordance with a processing algorithm; the network also includes the physical interconnection of the processing nodes for communicating the global interrupt and barrier signals to the elements via low-latency paths. The global asynchronous signals respectively initiate interrupt and barrier operations at the processing nodes at times selected to optimize the performance of the processing algorithms. In one embodiment, the global interrupt and barrier network is implemented in a scalable, massively parallel supercomputing device structure comprising a plurality of processing nodes interconnected by multiple independent networks, with each node including one or more processing elements for performing computation or communication activity as required when performing parallel algorithm operations. One of the multiple independent networks is a global tree network that enables high-speed global tree communications among global tree network nodes or sub-trees thereof. The global interrupt and barrier network may operate in parallel with the global tree network to provide global asynchronous sideband signals.

Owner:IBM CORP
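The patent above describes a hardware network, but the barrier semantics it provides can be illustrated in software. The following is a minimal sketch using Python's standard `threading.Barrier`, with threads standing in for processing nodes; the function name `run_phases` and the phase labels are hypothetical.

```python
import threading


def run_phases(num_workers):
    """Run a two-phase computation where no worker starts phase 2 early."""
    barrier = threading.Barrier(num_workers)
    log = []
    lock = threading.Lock()

    def worker(rank):
        with lock:
            log.append(("compute", rank))   # phase 1: local work
        barrier.wait()                      # block until every worker arrives
        with lock:
            log.append(("exchange", rank))  # phase 2: starts only after all arrive

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log
```

Whatever order the threads are scheduled in, every `"compute"` entry appears in the log before any `"exchange"` entry, which is the guarantee a global barrier gives a parallel algorithm between phases.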

Collective network for computer structures

InactiveUS20110219280A1Efficient and reliable computationLimited resourceError prevention/detection by using return channelTransmission systemsCollective communicationParallel algorithm

A system and method for enabling high-speed, low-latency global collective communications among interconnected processing nodes. The global collective network optimally enables collective reduction operations to be performed during parallel algorithm operations executing in a computer structure having a plurality of the interconnected processing nodes. Router devices are included that interconnect the nodes of the network via links to facilitate performance of low-latency global processing operations at nodes of the virtual network and class structures. The global collective network may be configured to provide global barrier and interrupt functionality in an asynchronous or synchronized manner. When implemented in a massively parallel supercomputing structure, the global collective network is physically and logically partitionable according to the needs of a processing algorithm.

Owner:IBM CORP

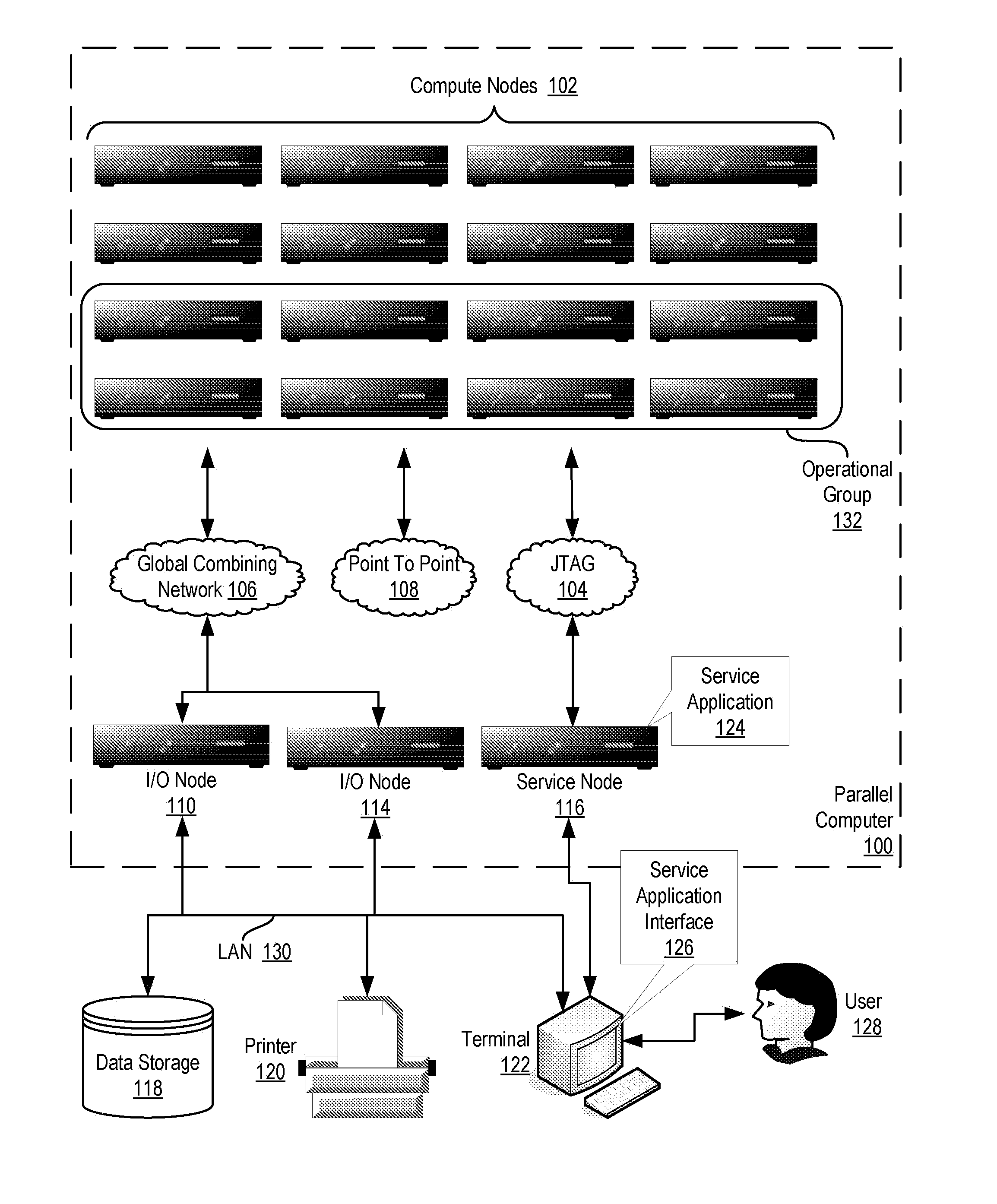

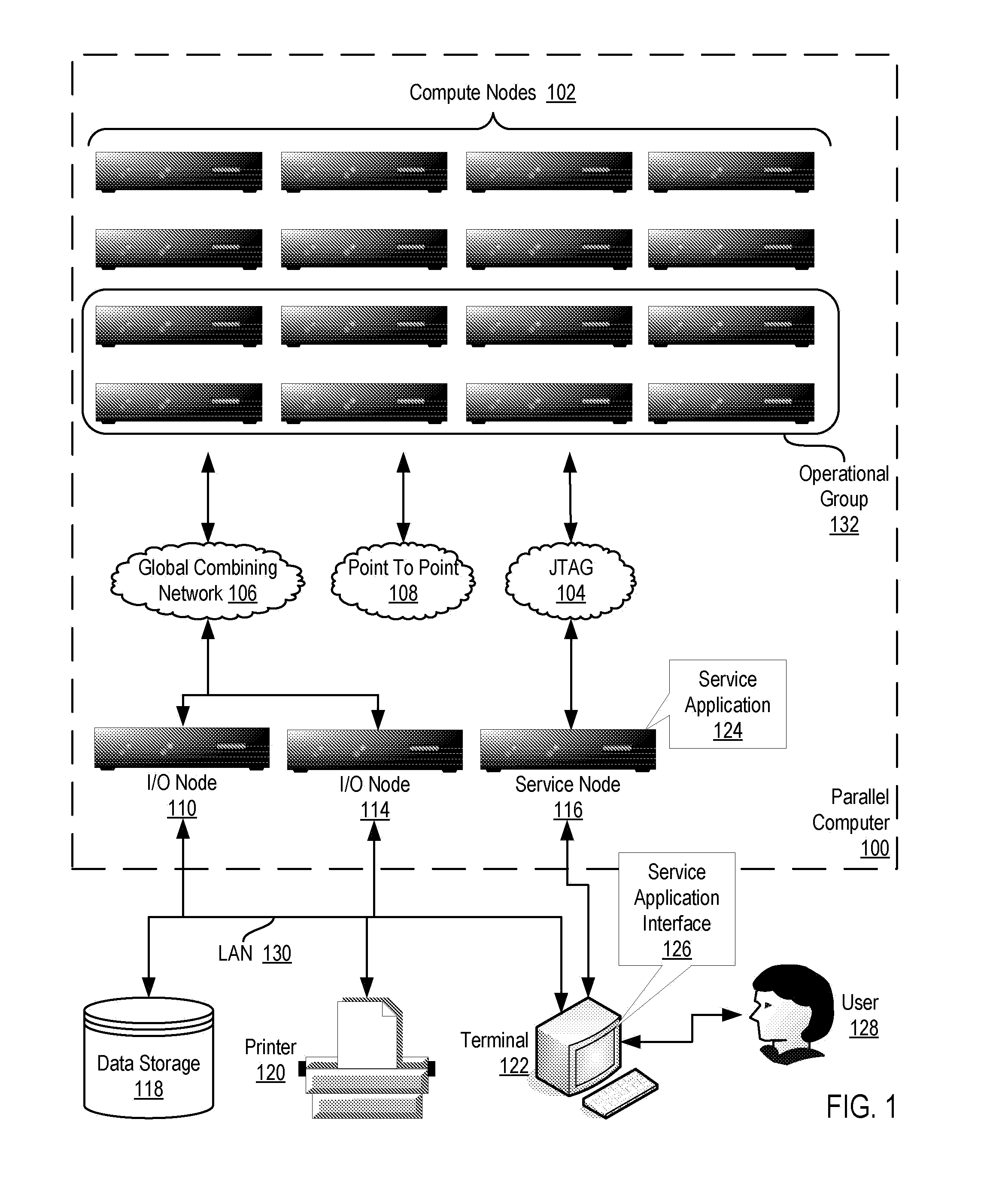
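A collective reduction of the kind mentioned above combines one value per node into a single result. As a rough software illustration only (the patent concerns network hardware, and the name `tree_reduce` is hypothetical), the sketch below combines values pairwise, level by level, so that n values need about log2(n) combining rounds rather than n - 1 sequential steps.

```python
import operator


def tree_reduce(values, op):
    """Reduce values pairwise in rounds; return (result, number_of_rounds)."""
    if not values:
        raise ValueError("need at least one value")
    level = list(values)
    rounds = 0
    while len(level) > 1:
        # Each pair in a round is independent and could be combined concurrently.
        combined = [op(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            combined.append(level[-1])  # odd element passes through to the next round
        level = combined
        rounds += 1
    return level[0], rounds
```

For example, `tree_reduce(range(1, 9), operator.add)` sums eight values in three rounds.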

Distributing parallel algorithms of a parallel application among compute nodes of an operational group in a parallel computer

ActiveUS20090204789A1Energy efficient ICTProgram control using stored programsParallel algorithmApplication software

Methods, apparatus, and products for distributing parallel algorithms of a parallel application among compute nodes of an operational group in a parallel computer are disclosed that include establishing a hardware profile, the hardware profile describing thermal characteristics of each compute node in the operational group; establishing a hardware independent application profile, the application profile describing thermal characteristics of each parallel algorithm of the parallel application; and mapping, in dependence upon the hardware profile and application profile, each parallel algorithm of the parallel application to a compute node in the operational group.

Owner:RAKUTEN GRP INC
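The abstract above says only that the mapping depends on the hardware profile and the application profile; it does not state a policy. As one hypothetical policy, the sketch below assigns the algorithm expected to generate the most heat to the coolest node. The function name, the dictionary-based profiles, and the numeric scales are all assumptions made for illustration.

```python
def map_algorithms(node_temps, algo_heat):
    """Pair the hottest-running algorithms with the coolest nodes.

    node_temps: node name -> current temperature (hypothetical hardware profile)
    algo_heat:  algorithm name -> expected heat output (hypothetical application profile)
    """
    if len(algo_heat) > len(node_temps):
        raise ValueError("more algorithms than compute nodes")
    coolest_first = sorted(node_temps, key=node_temps.get)
    hottest_first = sorted(algo_heat, key=algo_heat.get, reverse=True)
    return dict(zip(hottest_first, coolest_first))
```

With nodes `{"n0": 70, "n1": 55, "n2": 62}` and algorithms `{"fft": 9, "sort": 3, "stencil": 6}`, the heaviest algorithm `fft` lands on the coolest node `n1`.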

