1140 results about "Switched fabric" patented technology

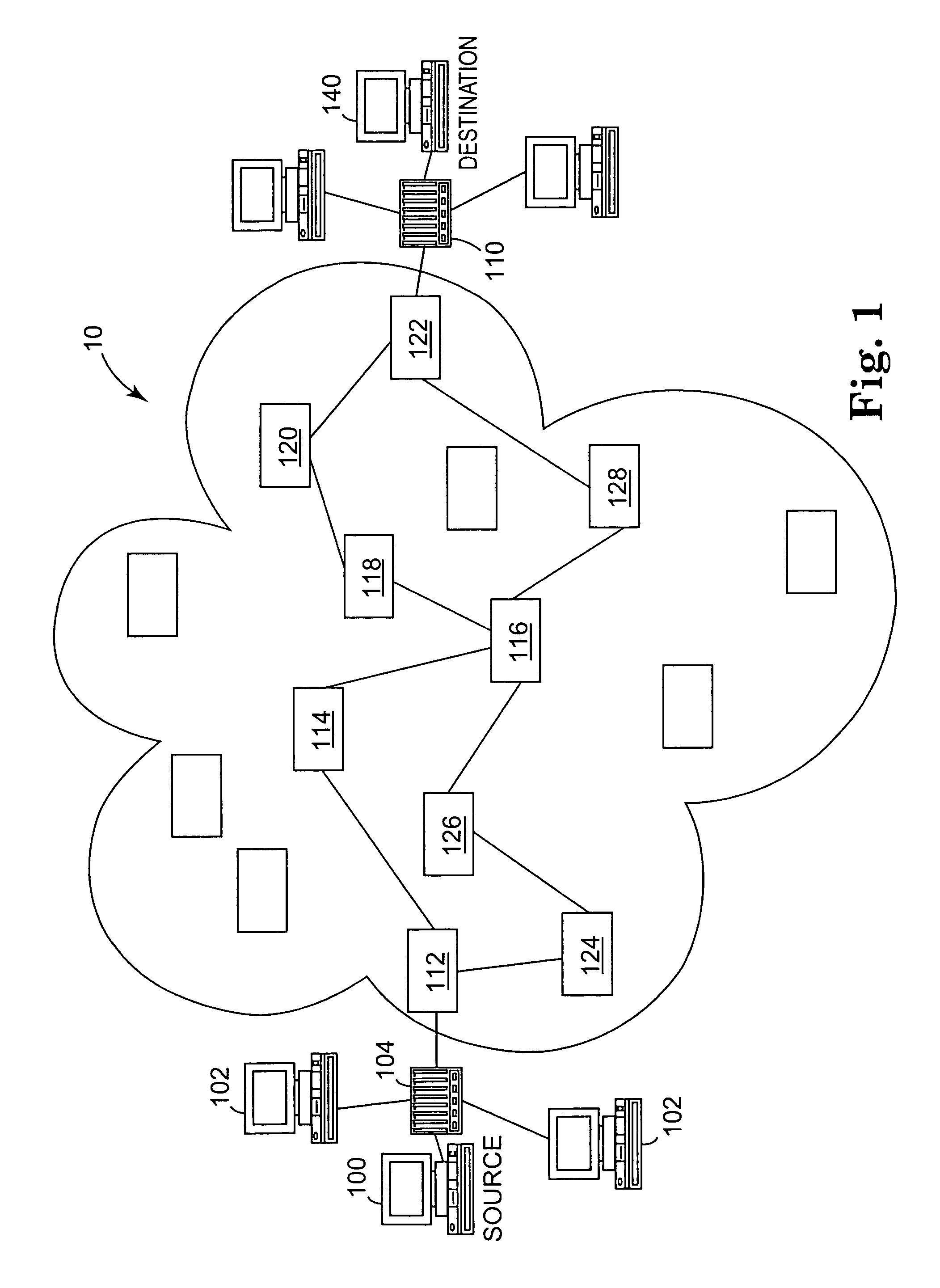

Switched Fabric or switching fabric is a network topology in which network nodes interconnect via one or more network switches (particularly crossbar switches). Because a switched fabric network spreads network traffic across multiple physical links, it yields higher total throughput than broadcast networks, such as the early 10BASE5 version of Ethernet, or most wireless networks such as Wi-Fi.

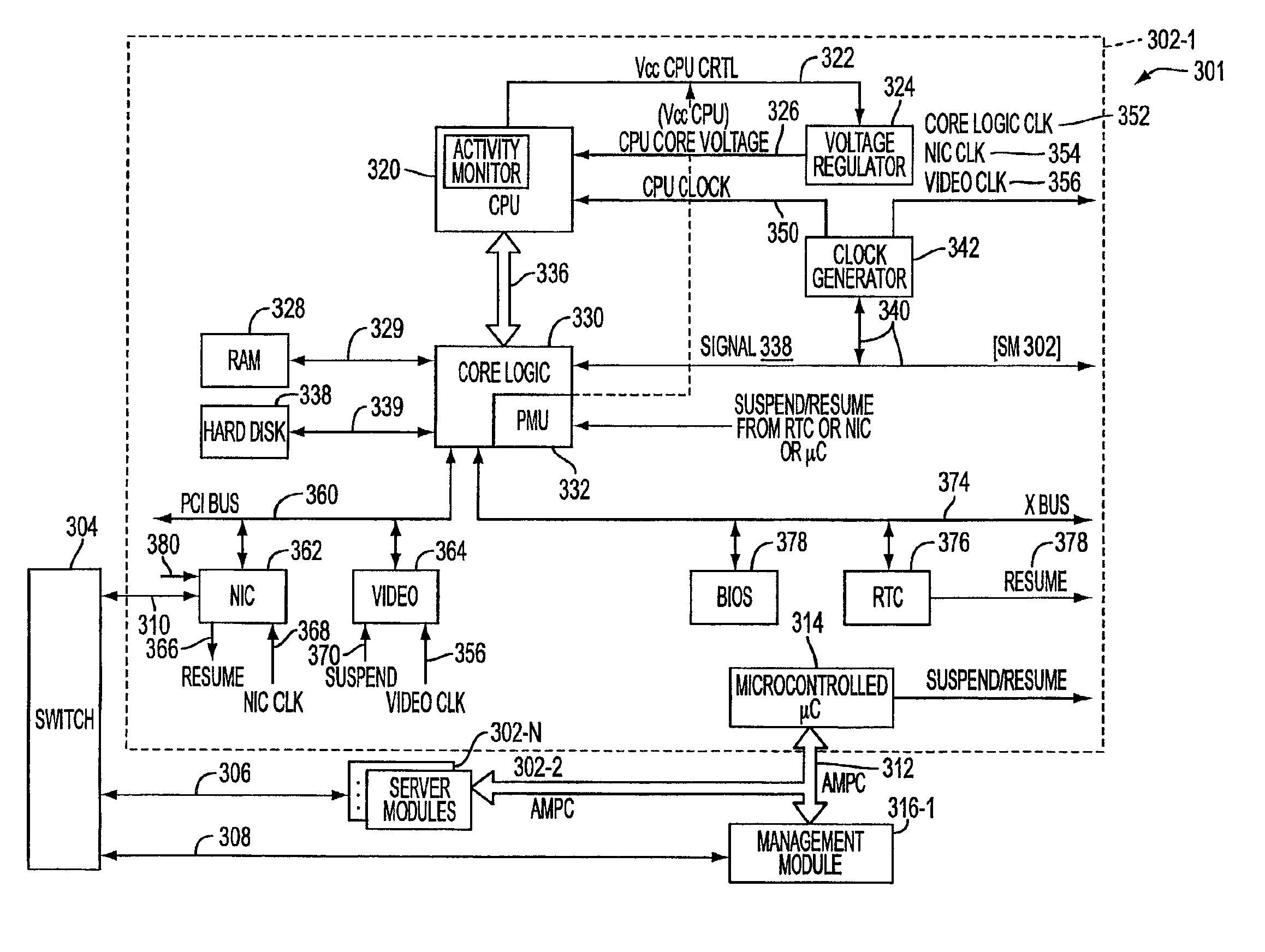

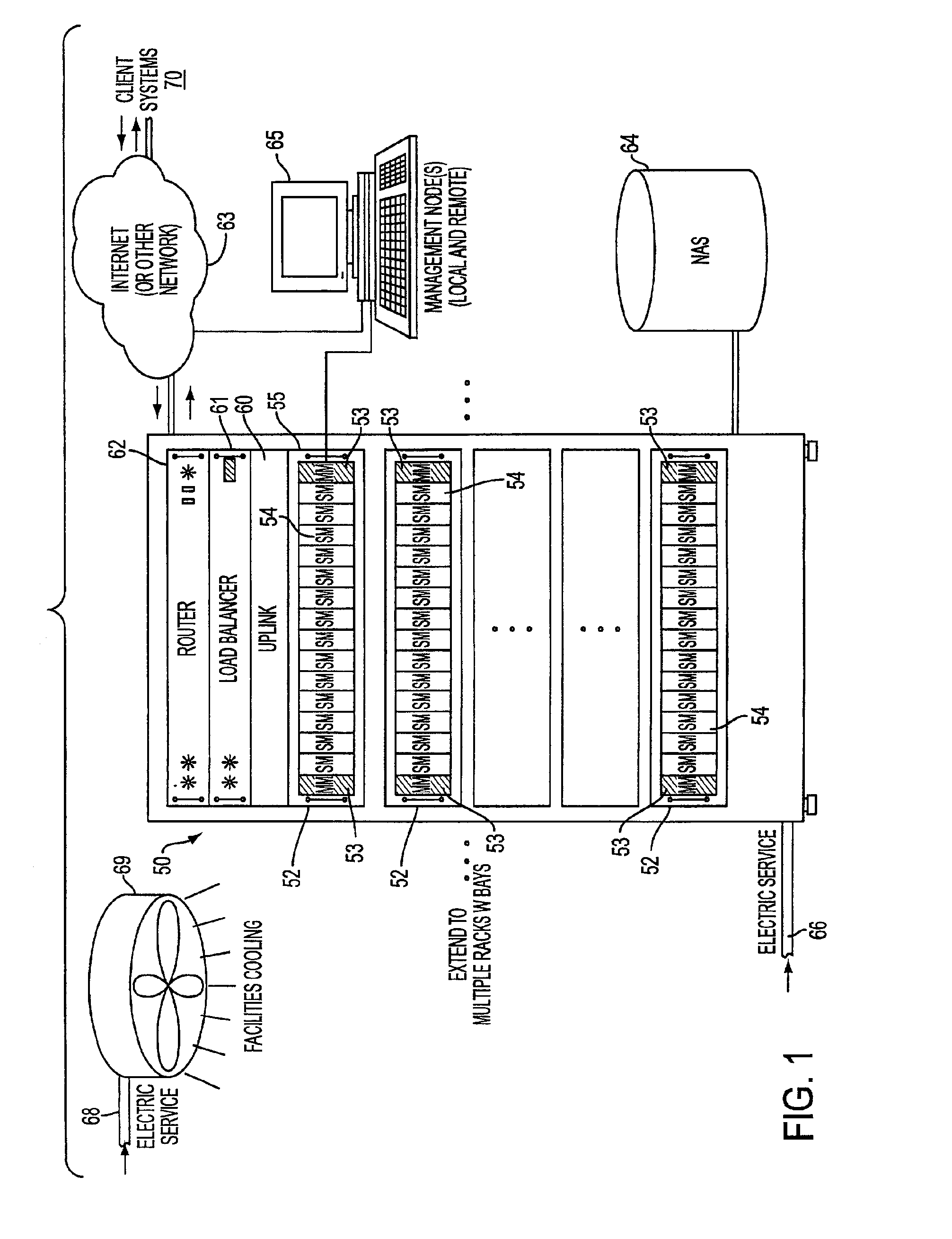

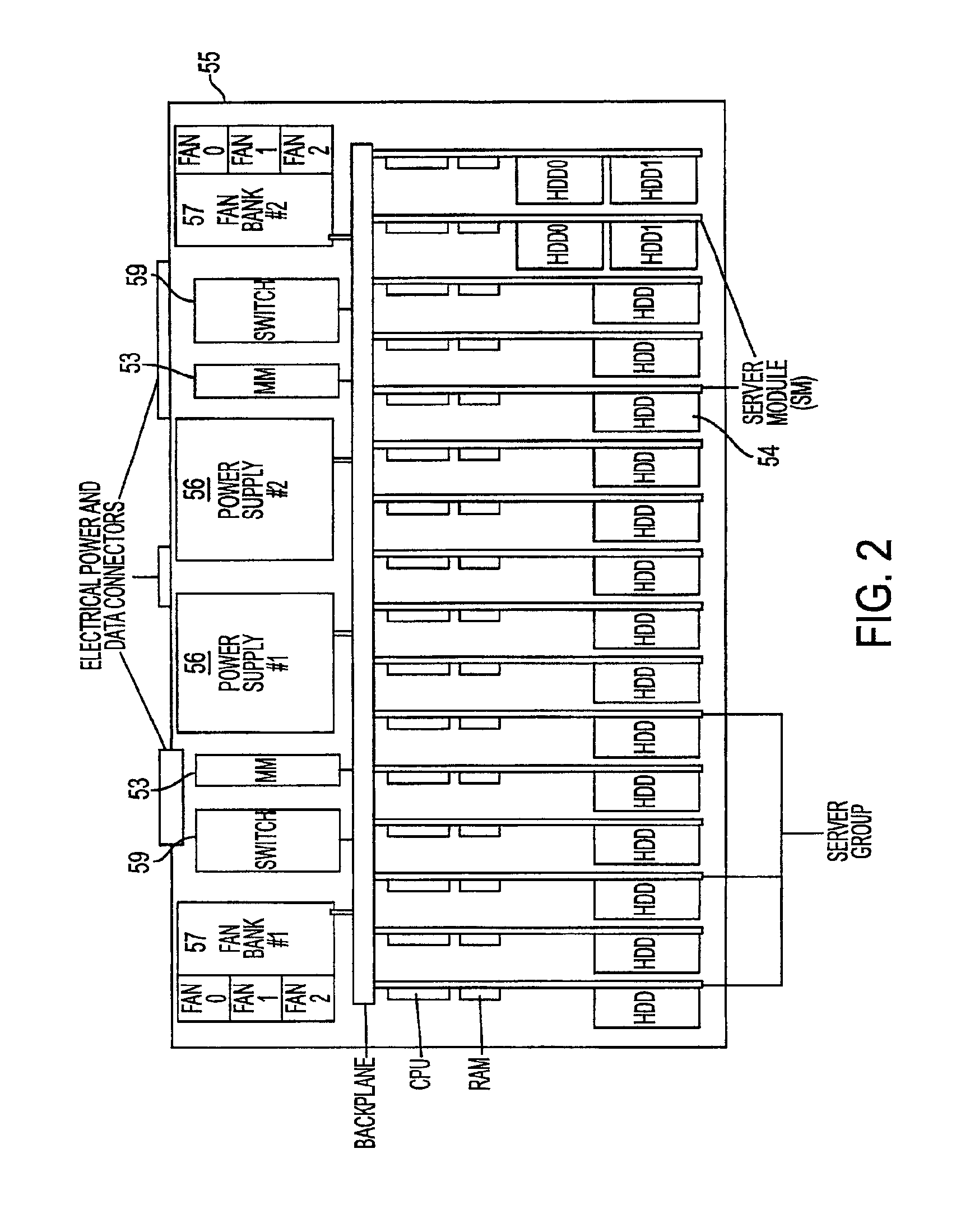

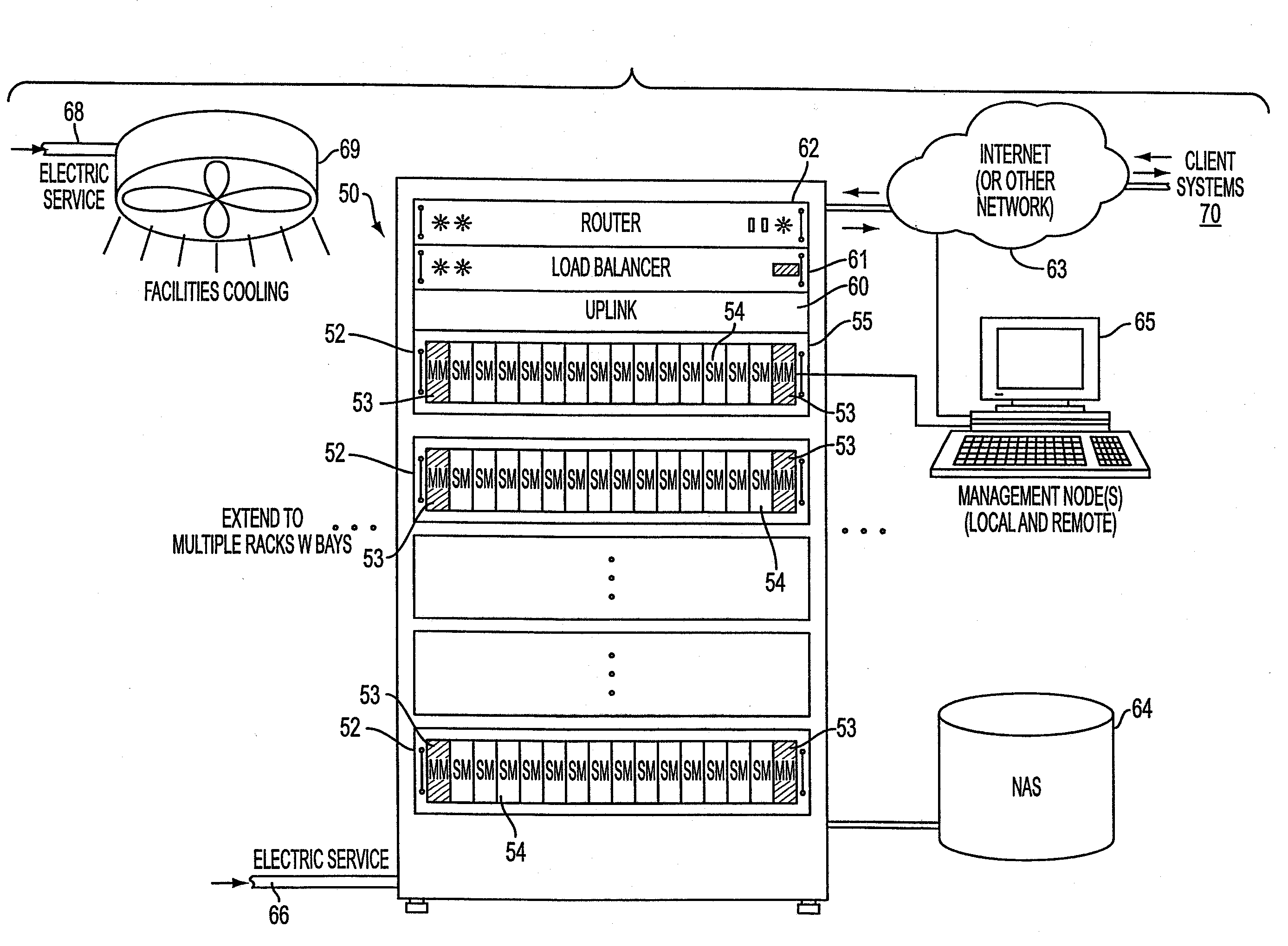

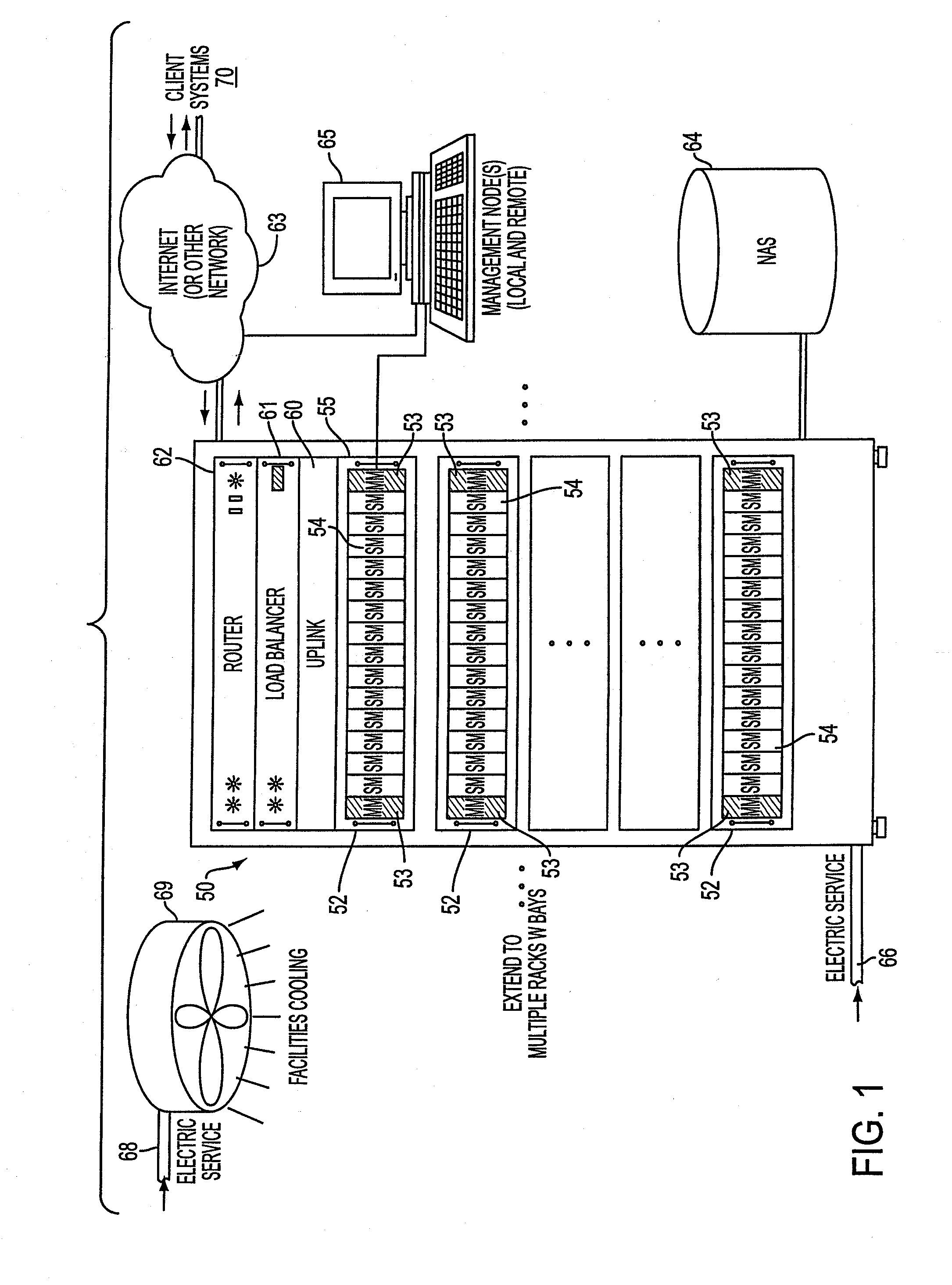

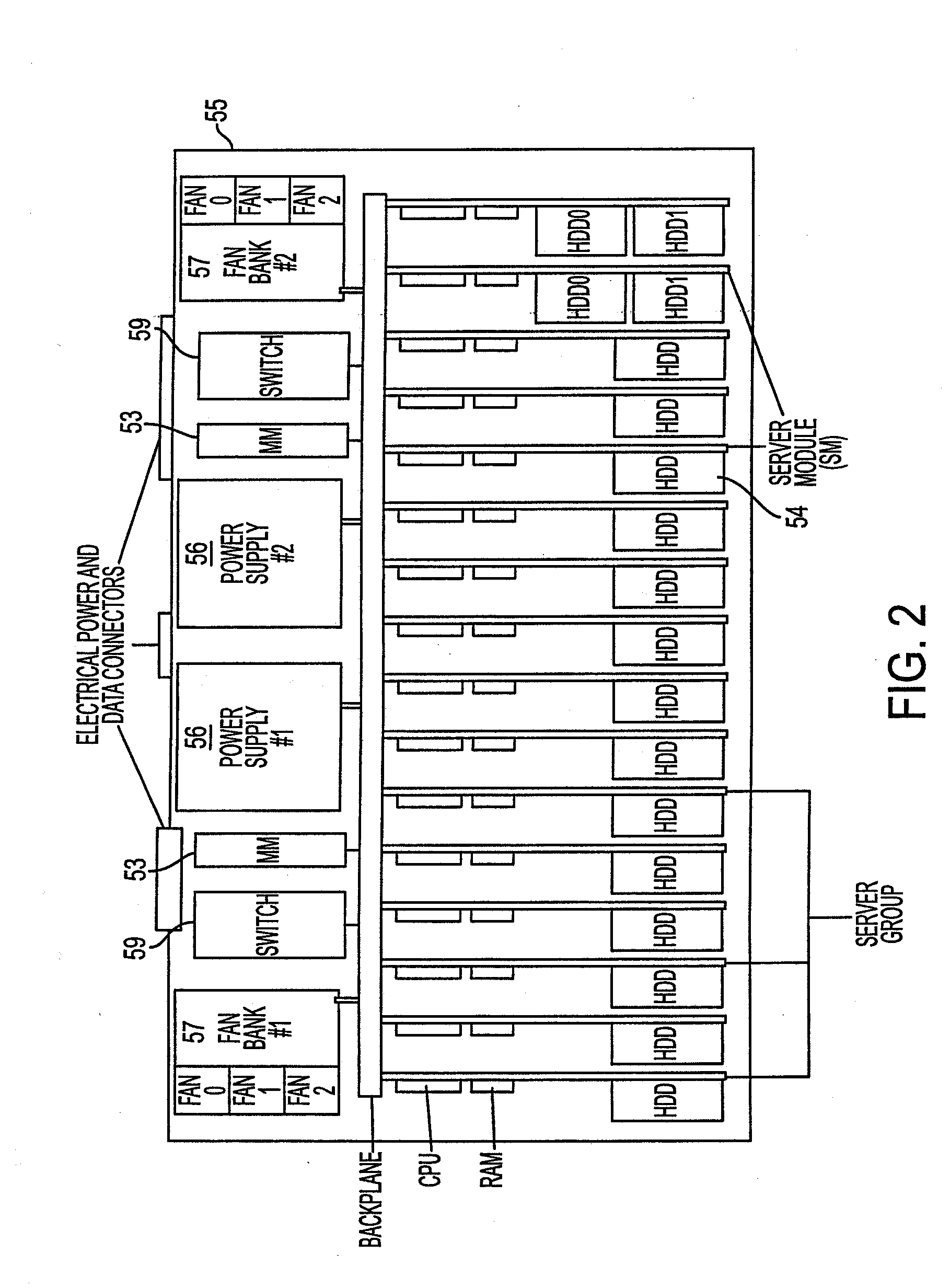

System, method, and architecture for dynamic server power management and dynamic workload management for multi-server environment

Inactive · US6859882B2 · Save energy · Conserving method · Energy efficient ICT · Volume/mass flow measurement · Network architecture · Workload management

Network architecture, computer system and/or server, circuit, device, apparatus, method, and computer program and control mechanism for managing power consumption and workload in computer system and data and information servers. Further provides power and energy consumption and workload management and control systems and architectures for high-density and modular multi-server computer systems that maintain performance while conserving energy and method for power management and workload management. Dynamic server power management and optional dynamic workload management for multi-server environments is provided by aspects of the invention. Modular network devices and integrated server system, including modular servers, management units, switches and switching fabrics, modular power supplies and modular fans and a special backplane architecture are provided as well as dynamically reconfigurable multi-purpose modules and servers. Backplane architecture, structure, and method that has no active components and separate power supply lines and protection to provide high reliability in server environment.

Owner:HURON IP

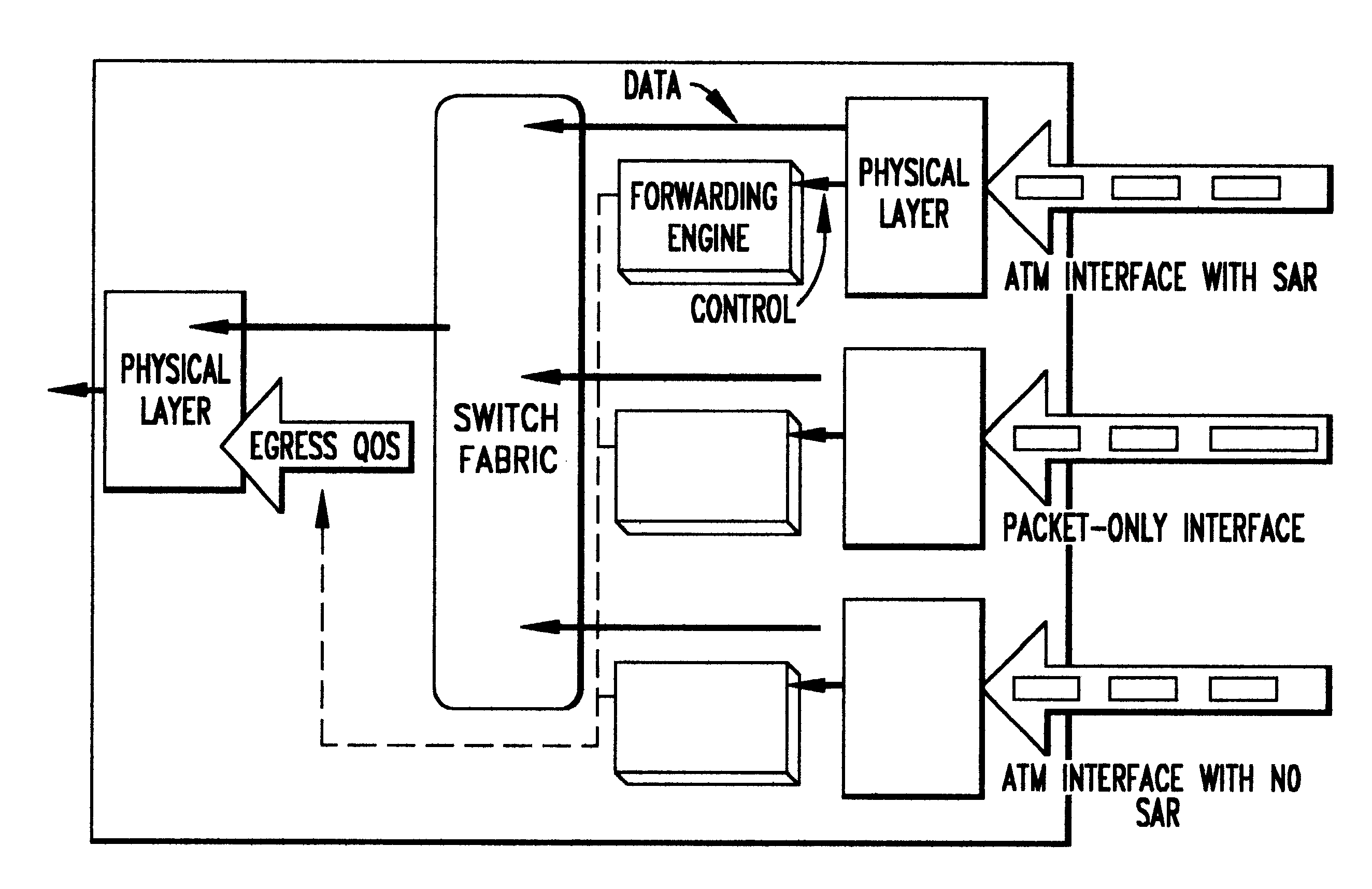

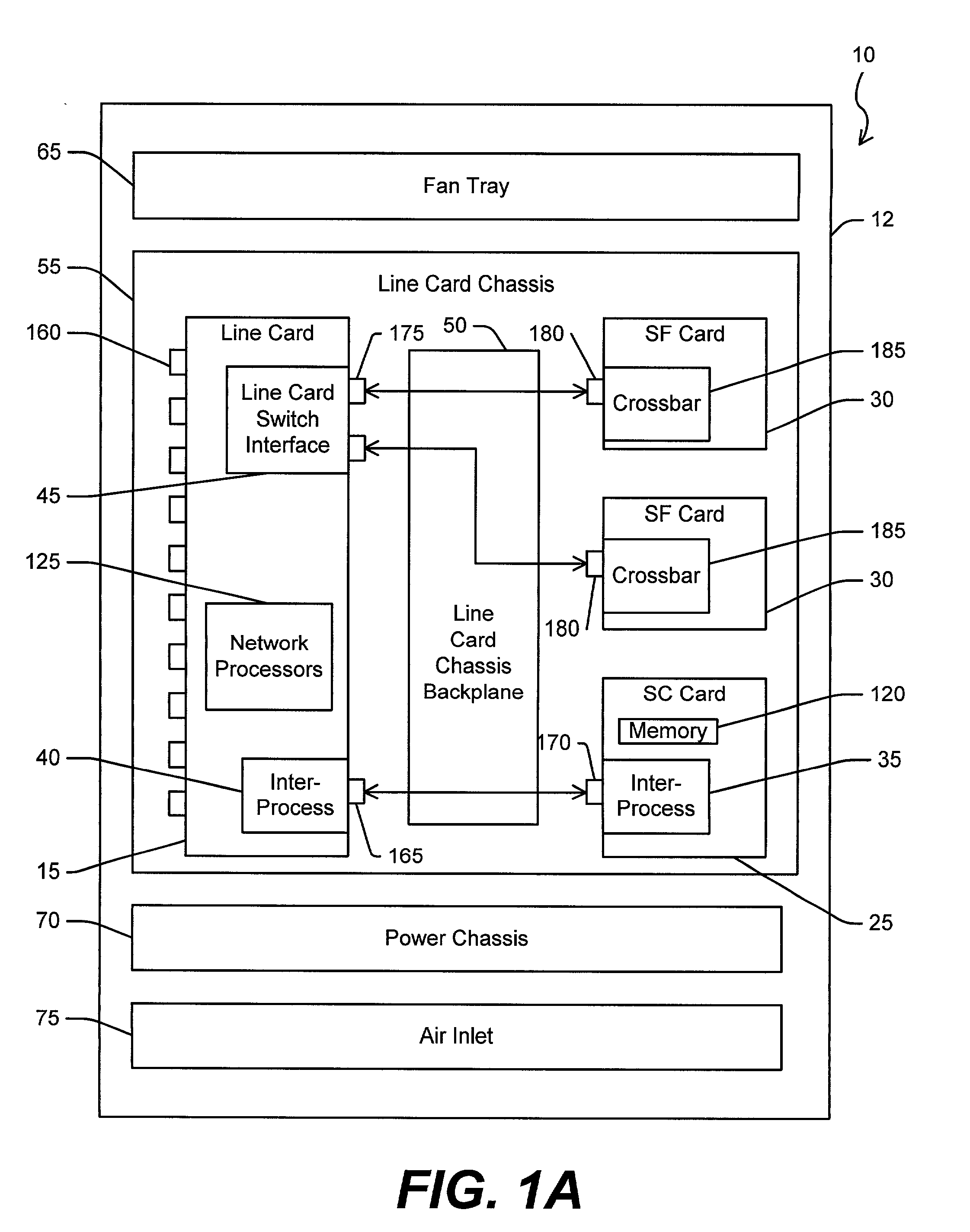

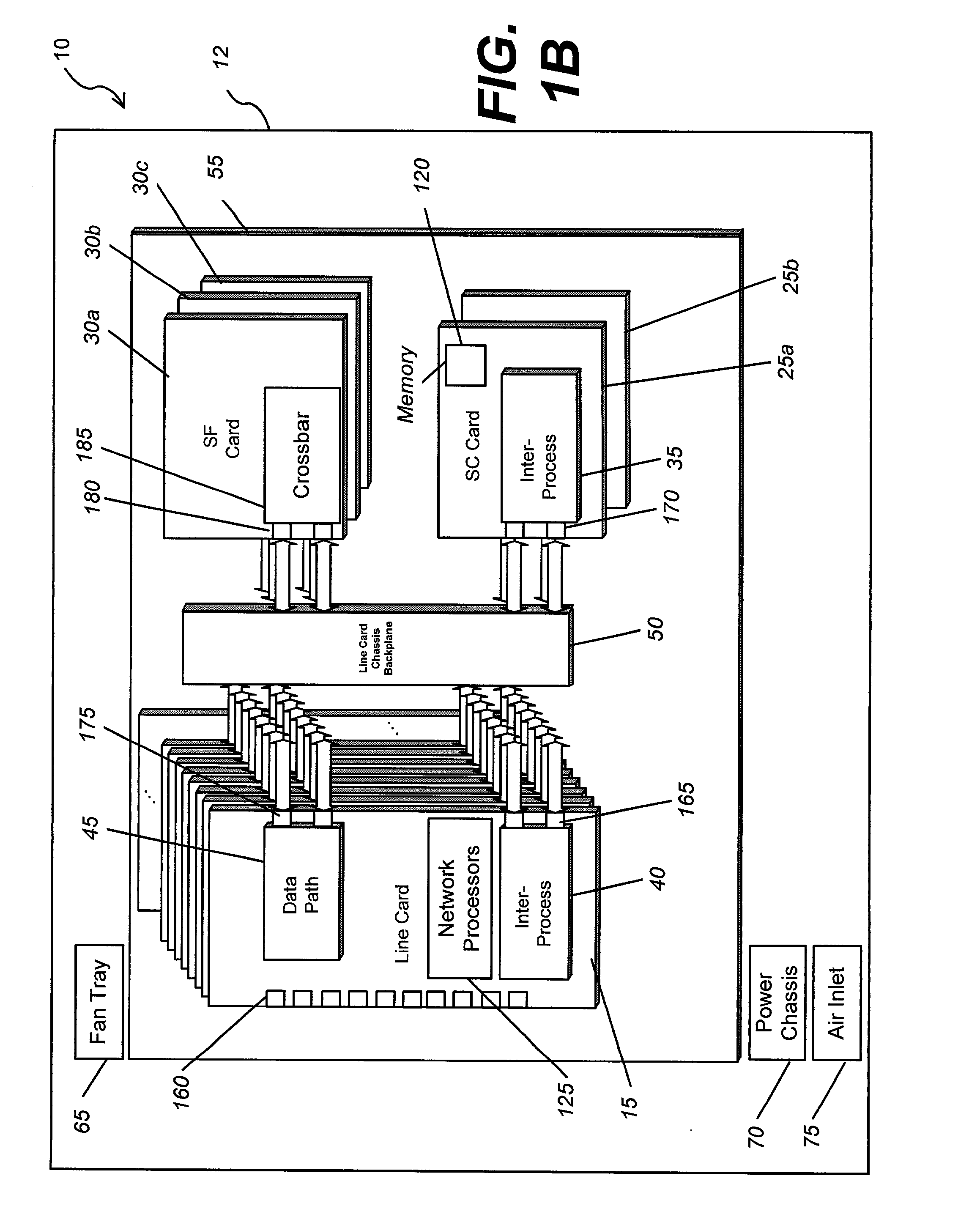

System architecture for and method of processing packets and/or cells in a common switch

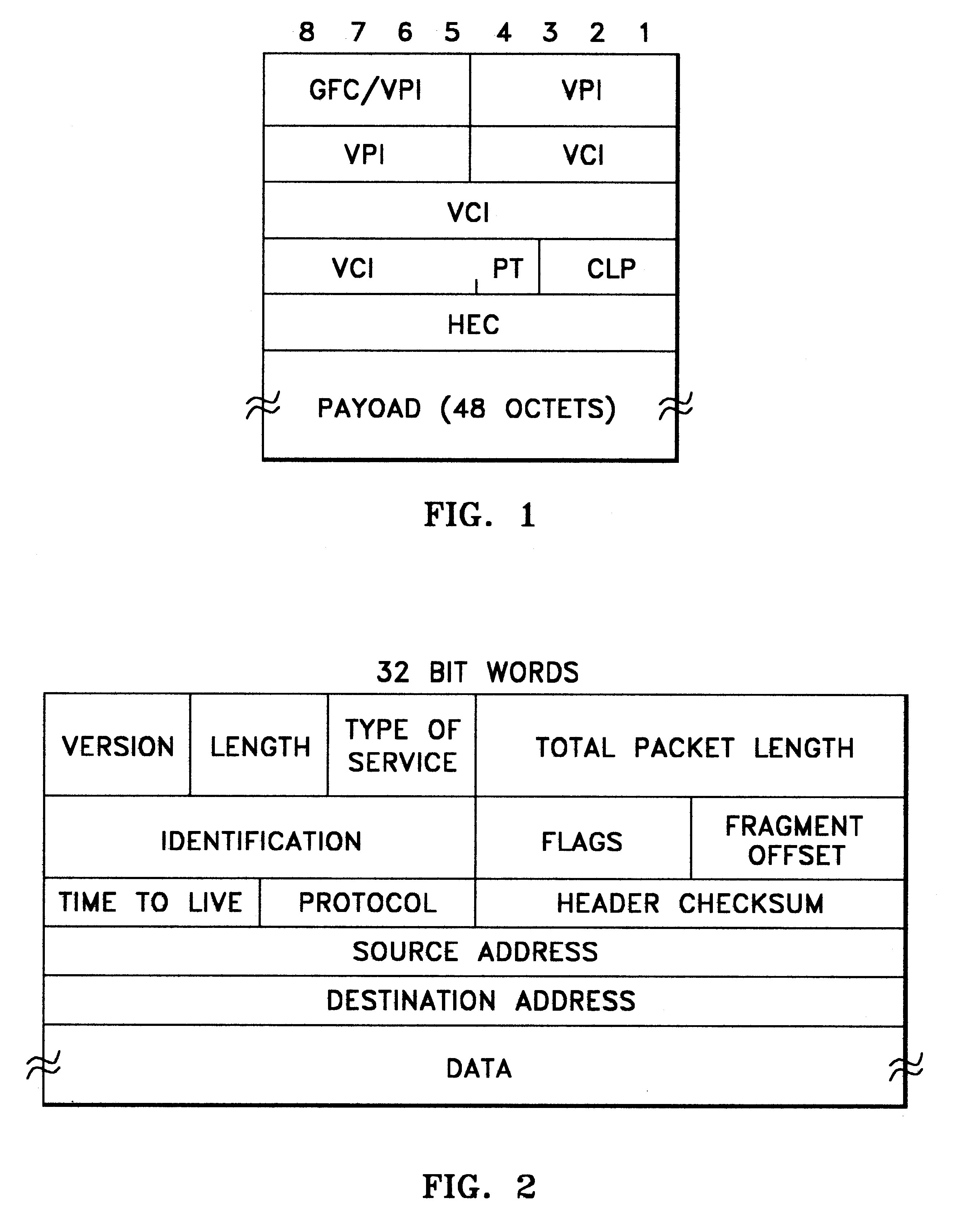

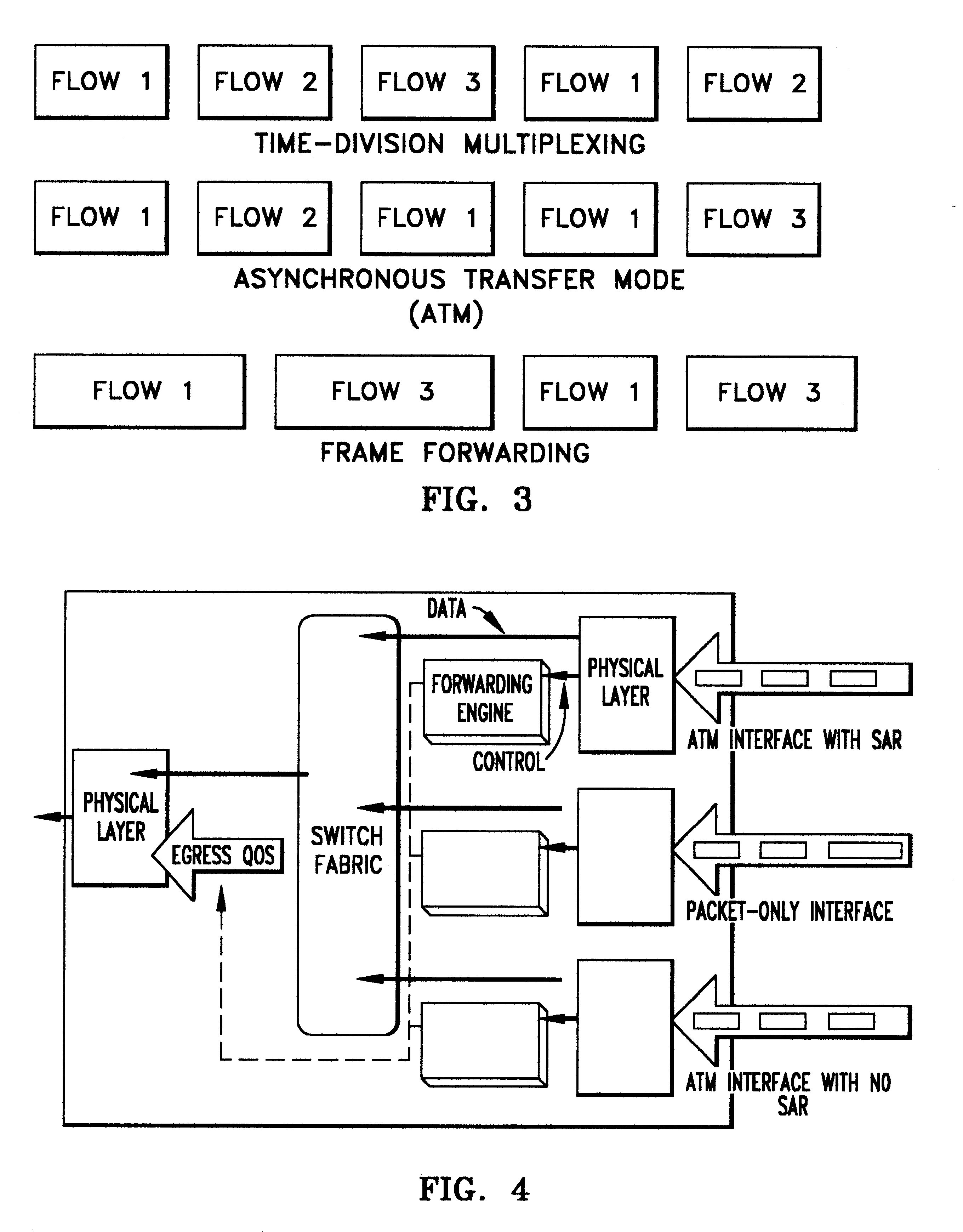

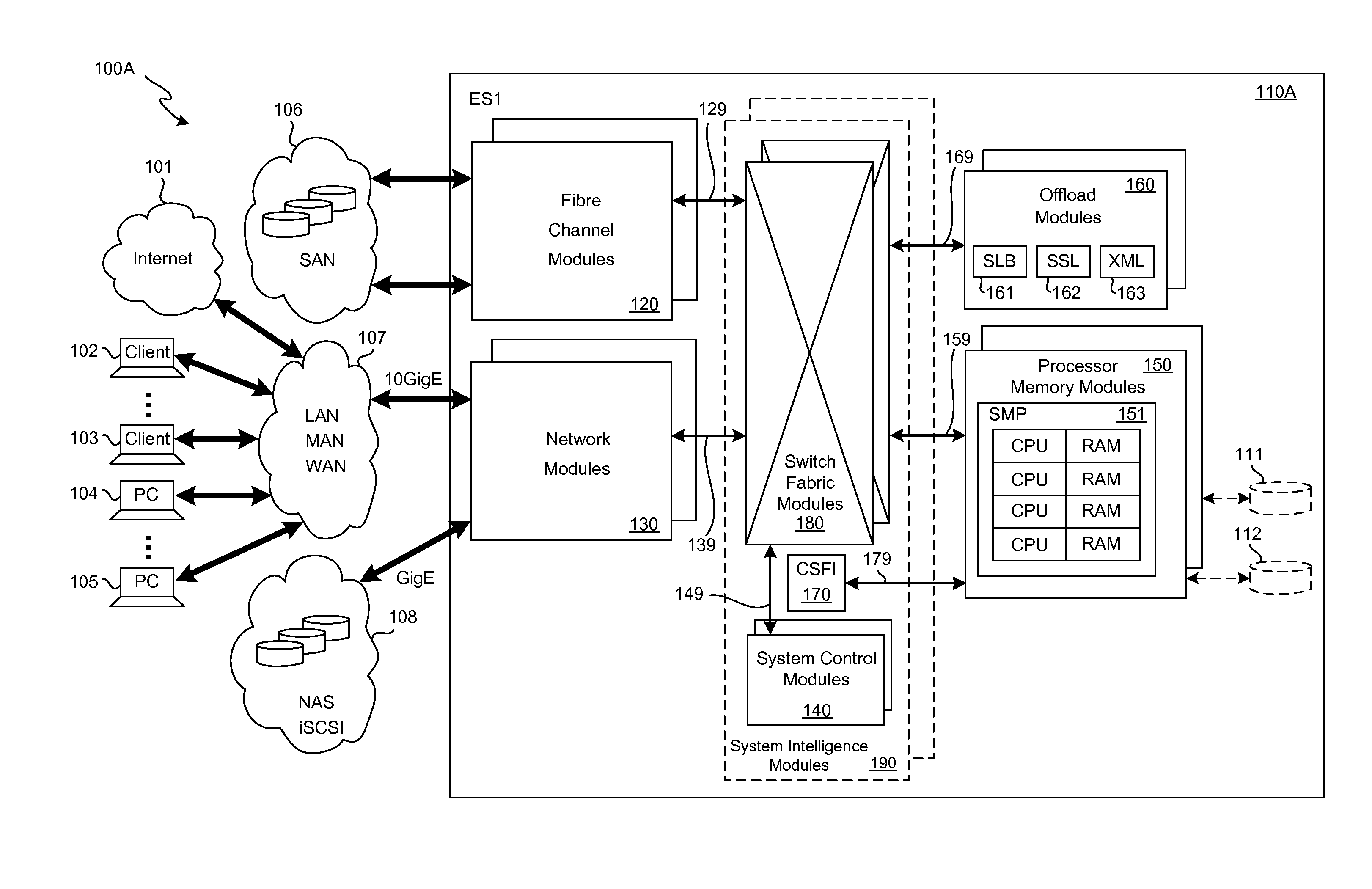

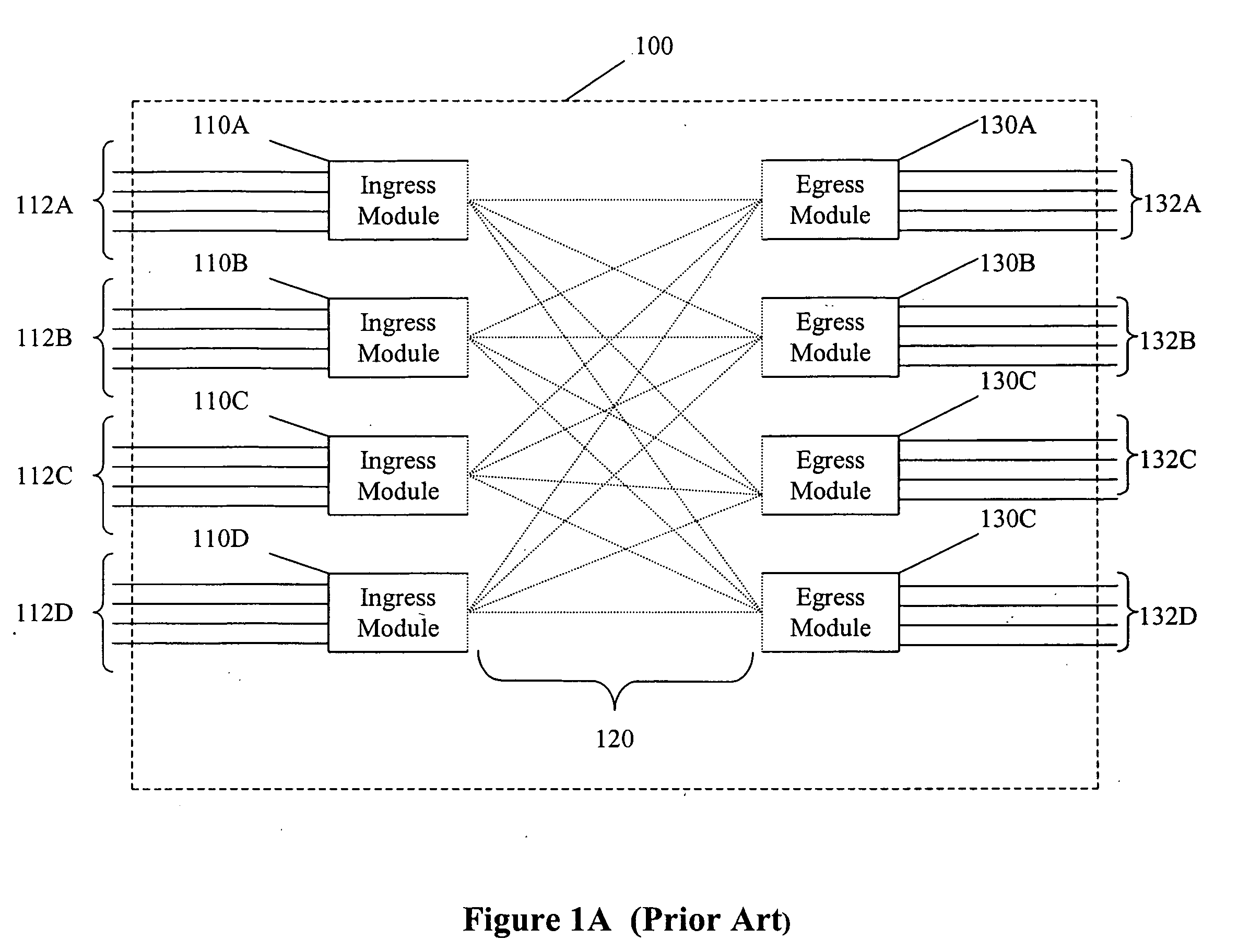

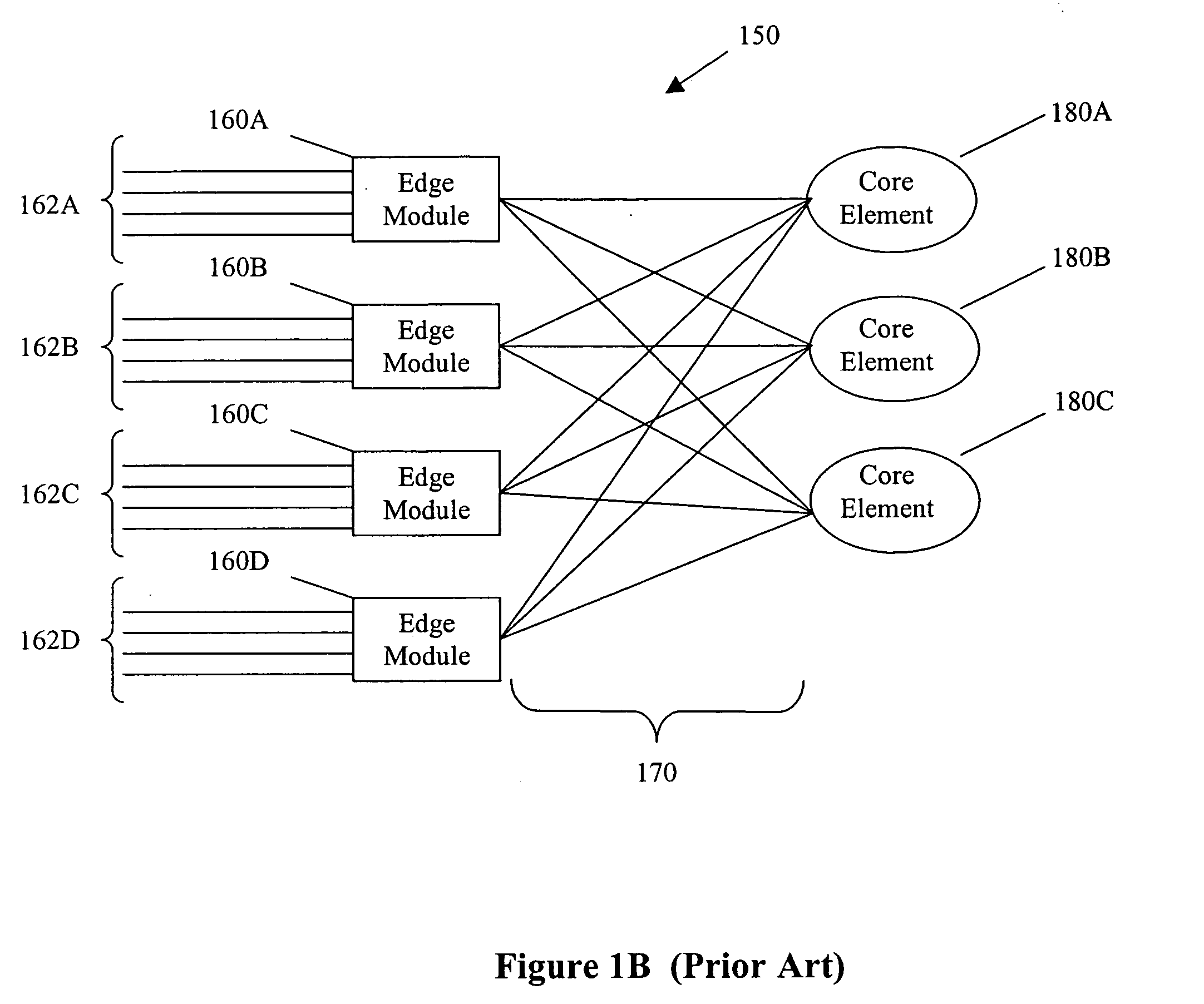

Inactive · US6259699B1 · Without impacting performance aspect · Promote results · Data switching by path configuration · Store-and-forward switching systems · ATM switching · QoS management

A novel networking architecture and technique for transmitting both cells and packets or frames across a common switch fabric, effected, at least in part, by utilizing a common set of algorithms for the forwarding engine (the ingress side) and a common set of algorithms for the QoS management (the egress part) that are provided for each I/O module to process packet/cell information without impacting the correct operation of ATM switching and without transforming packets into cells for transfer across the switch fabric.

Owner:WSOU INVESTMENTS LLC +1

Storage traffic communication via a switch fabric in accordance with a VLAN

Active · US20130151646A1 · Improve performance · Improve efficiency · Digital computer details · Transmission · Traffic capacity · Control store

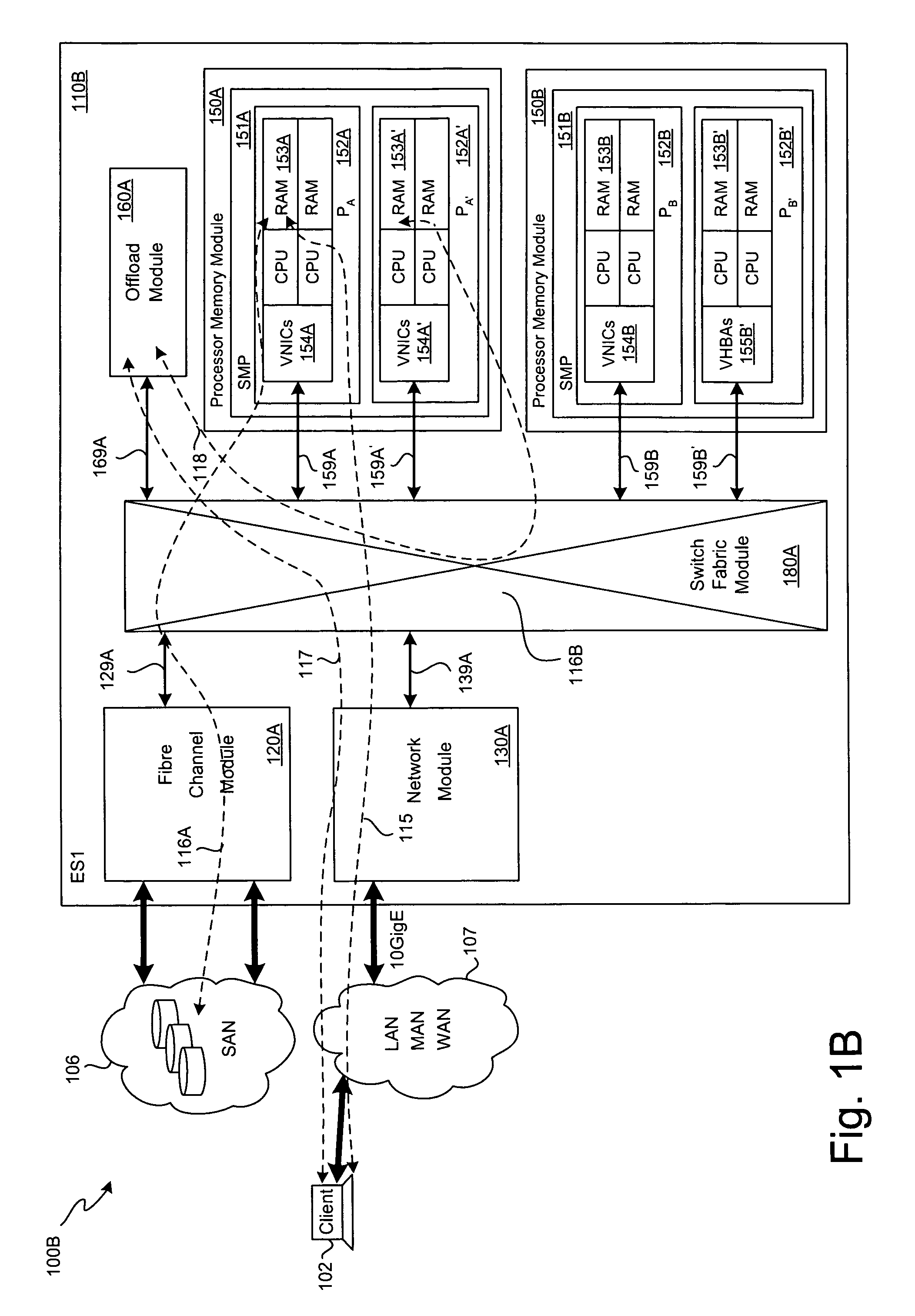

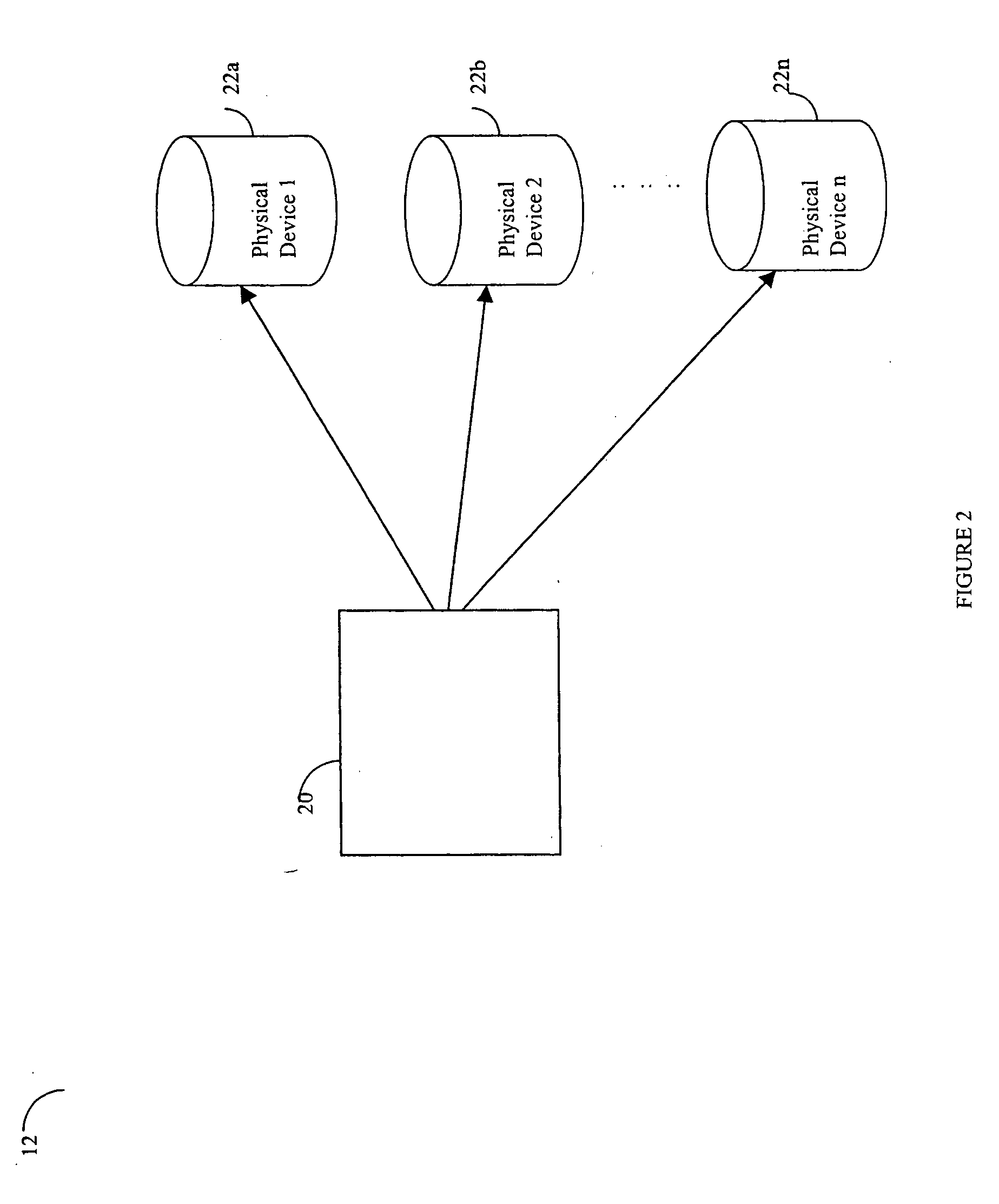

A plurality of SMP modules and an IOP module communicate storage traffic via respective corresponding I/O controllers coupled to respective physical ports of a switch fabric by addressing cells to physical port addresses corresponding to the physical ports. One of the SMPs executes initiator software to partially manage the storage traffic and the IOP executes target software to partially manage the storage traffic. Storage controllers are coupled to the IOP, enabling communication with storage devices, such as disk drives, tape drives, and/or networks of same. Respective network identification registers are included in each of the I/O controller corresponding to the SMP executing the initiator software and the I/O controller corresponding to the IOP. Transport of the storage traffic in accordance with a particular VLAN is enabled by writing a same particular value into each of the network identification registers.

Owner:ORACLE INT CORP

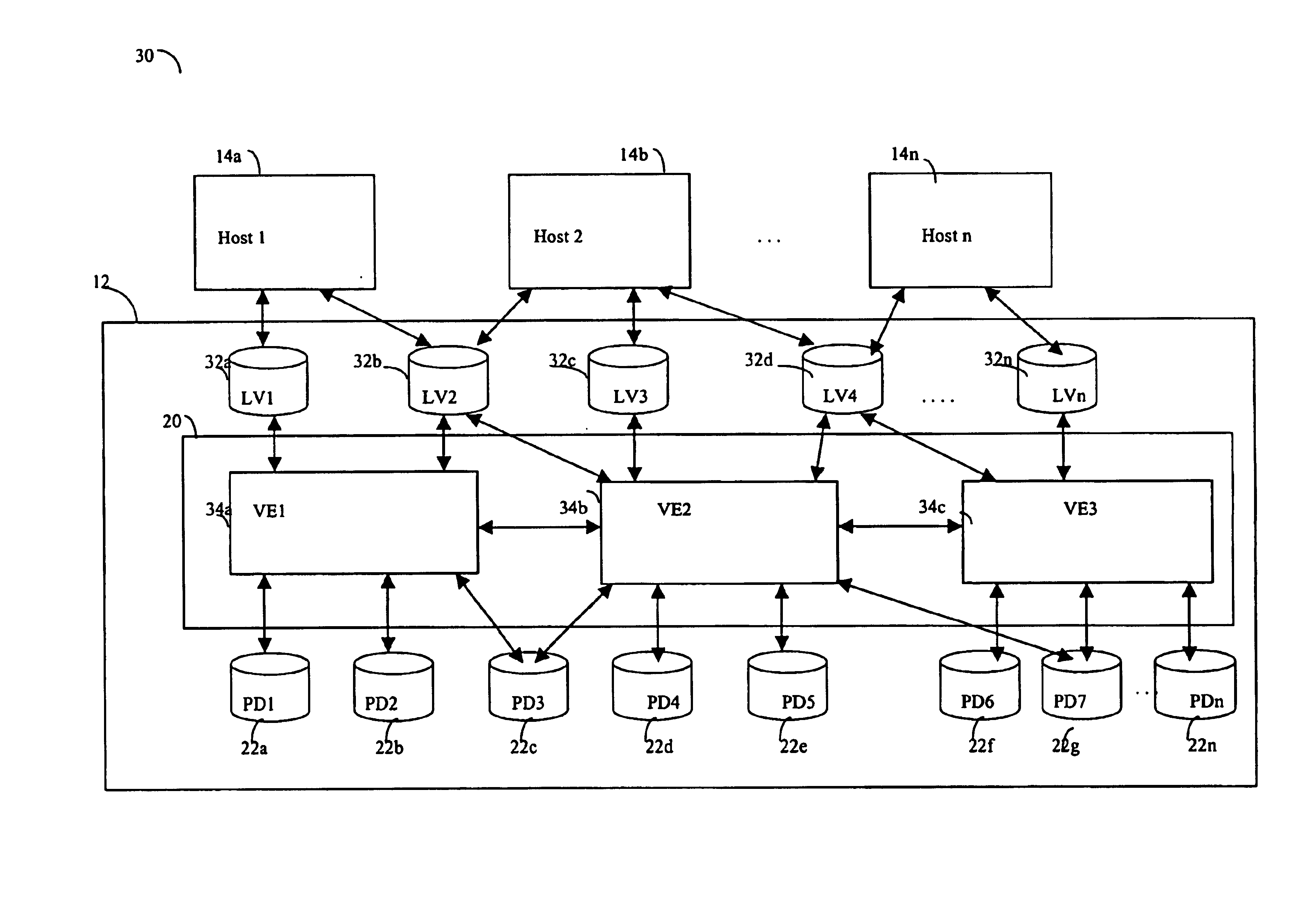

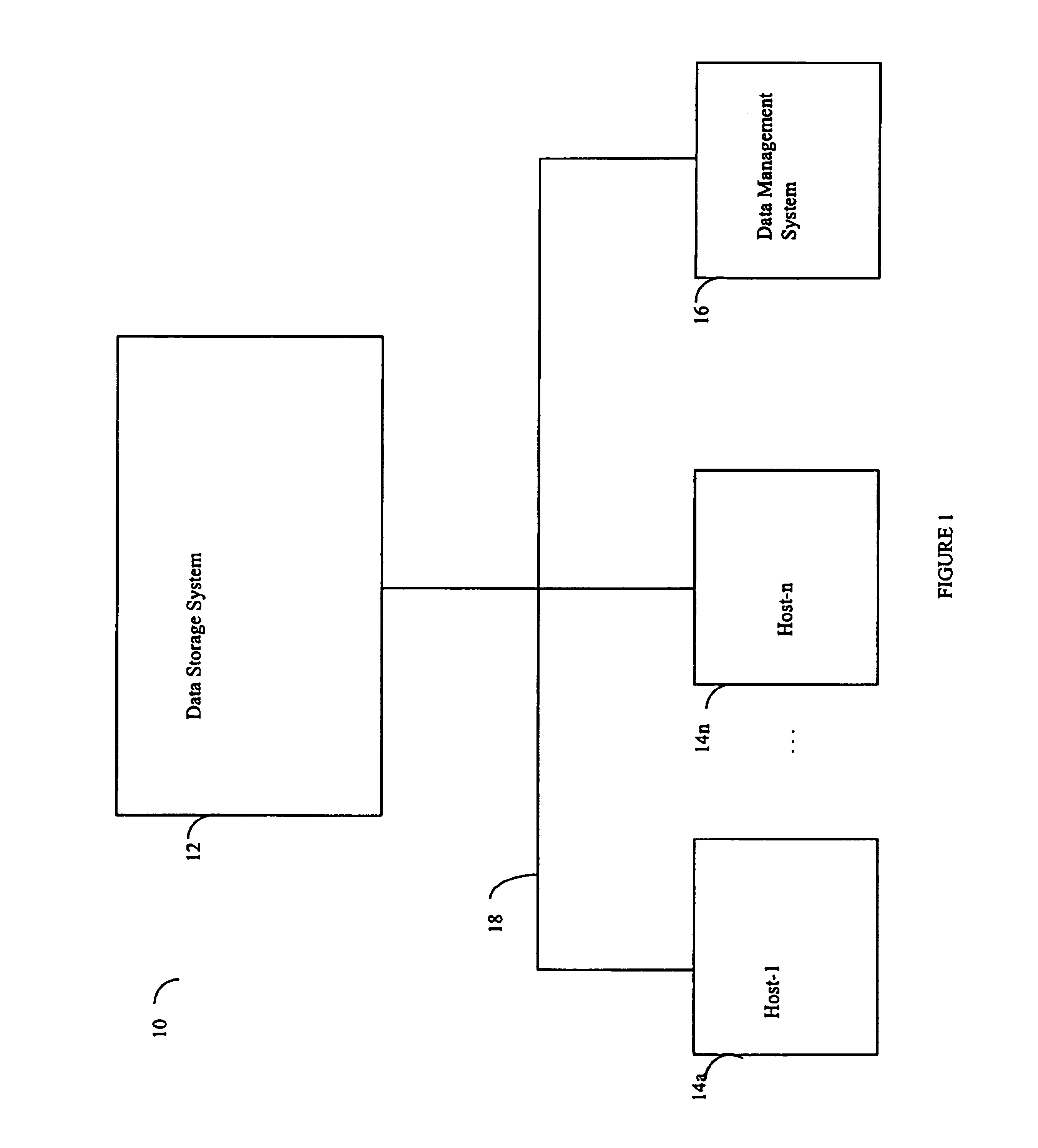

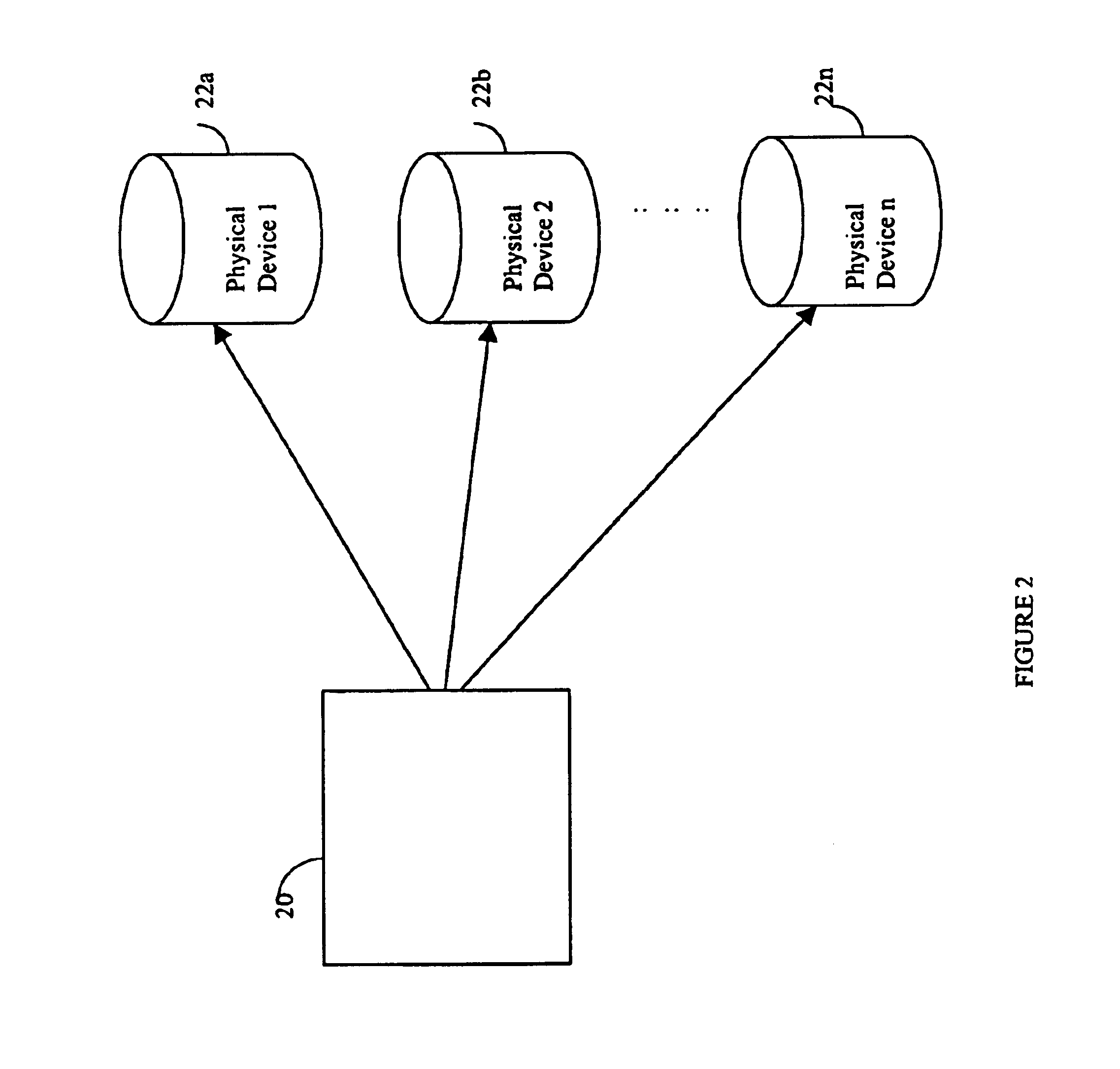

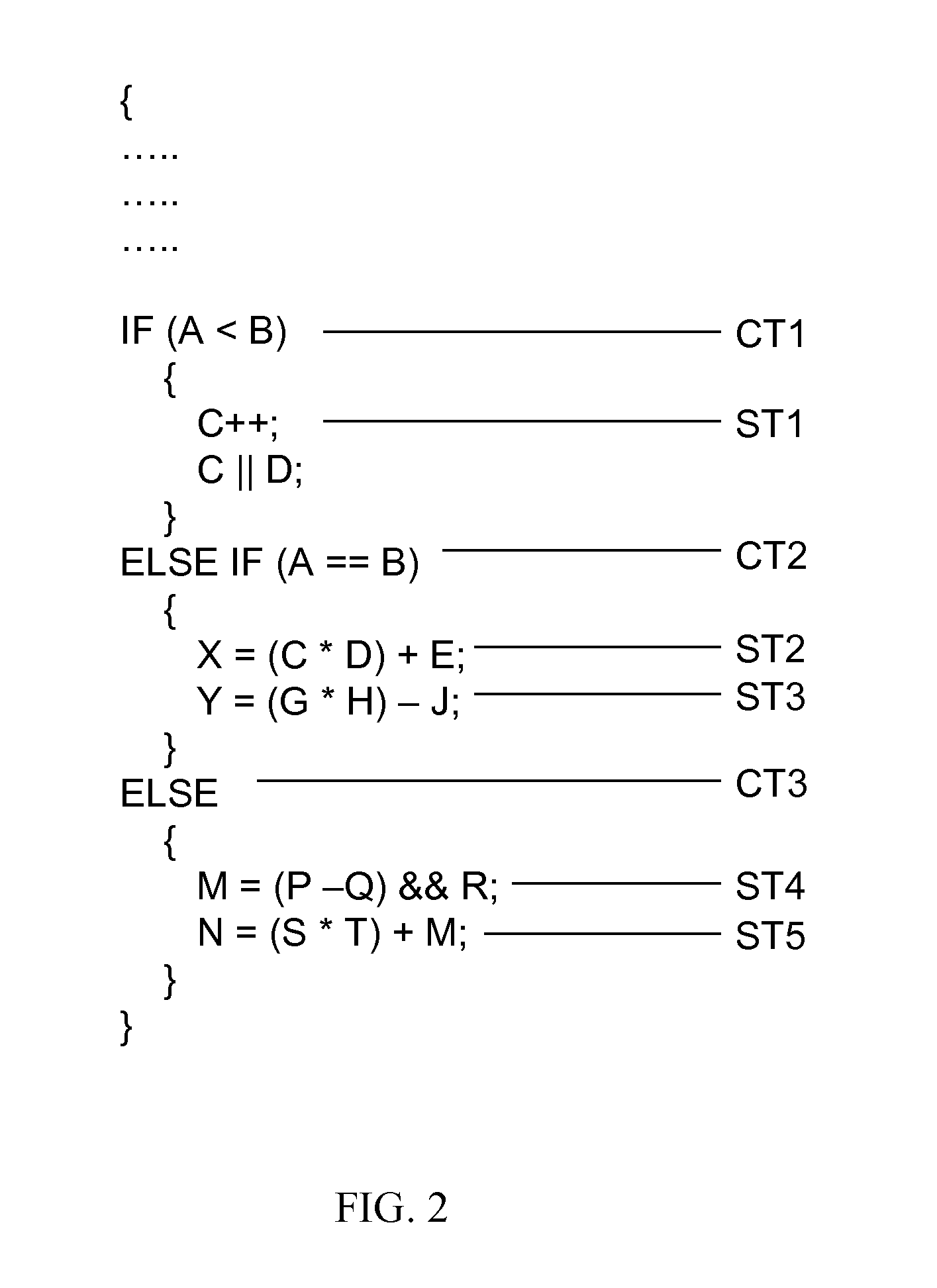

Locking technique for control and synchronization

Inactive · US6973549B1 · Digital data information retrieval · Multiprogramming arrangements · Fast path · Locking mechanism

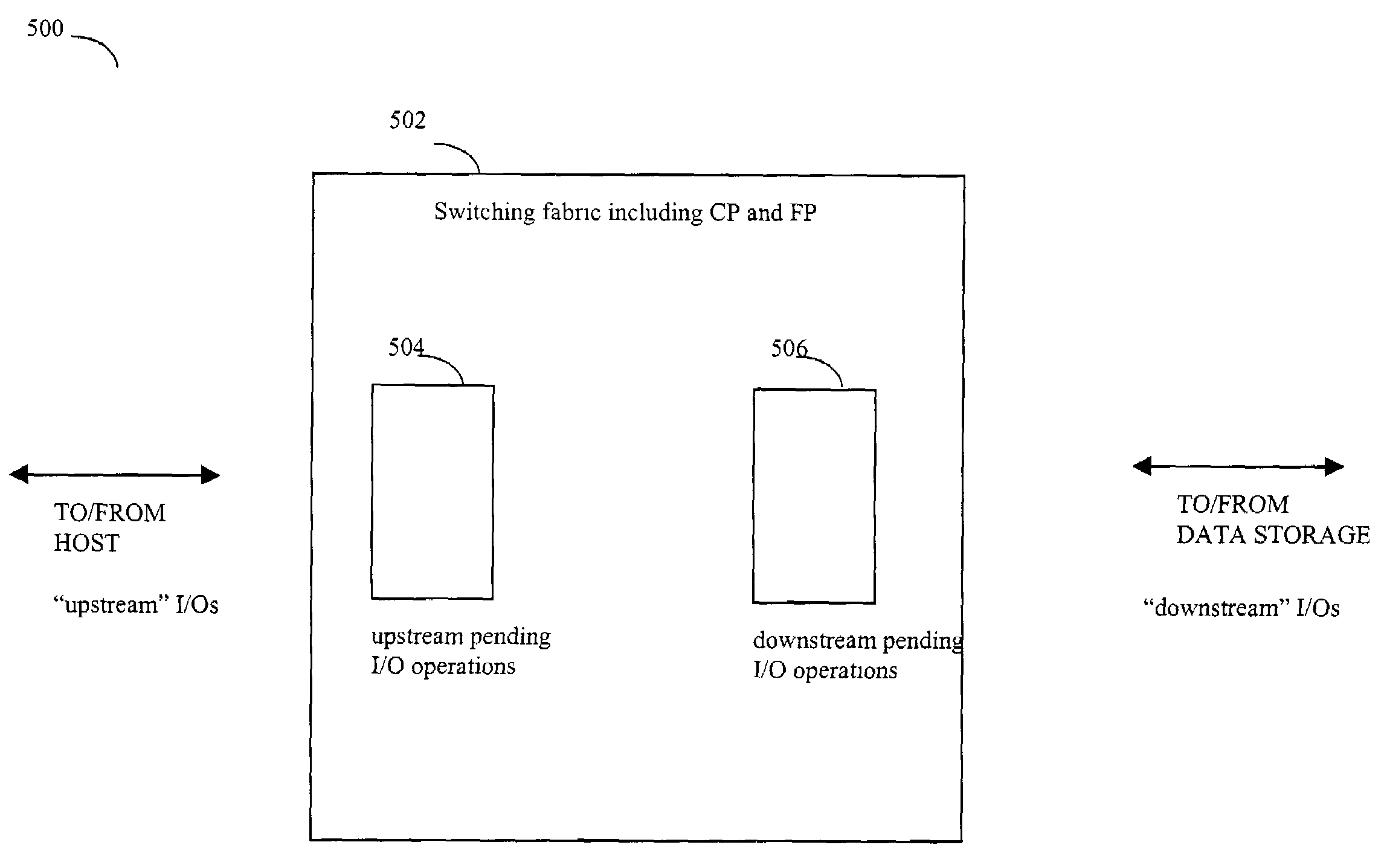

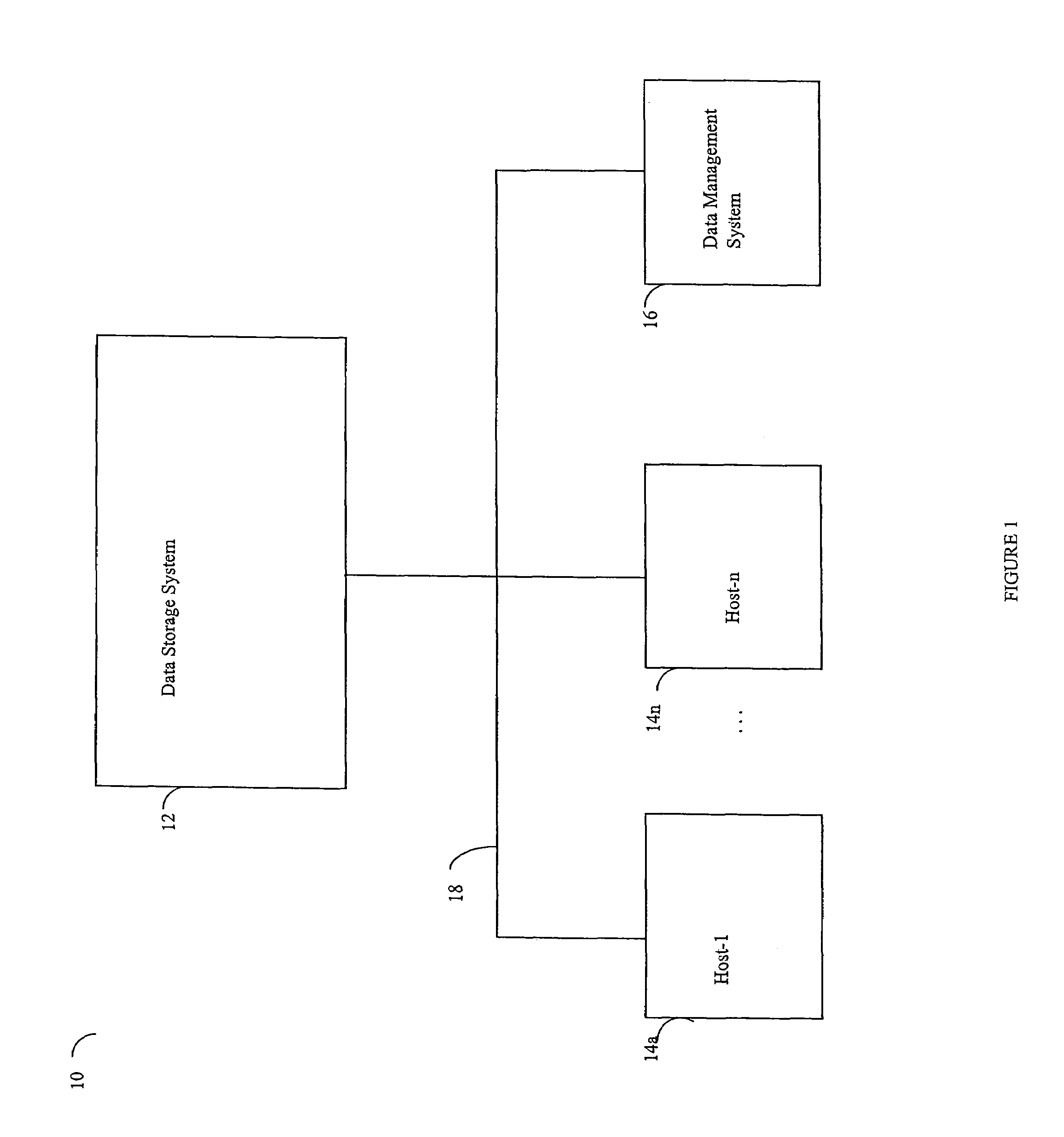

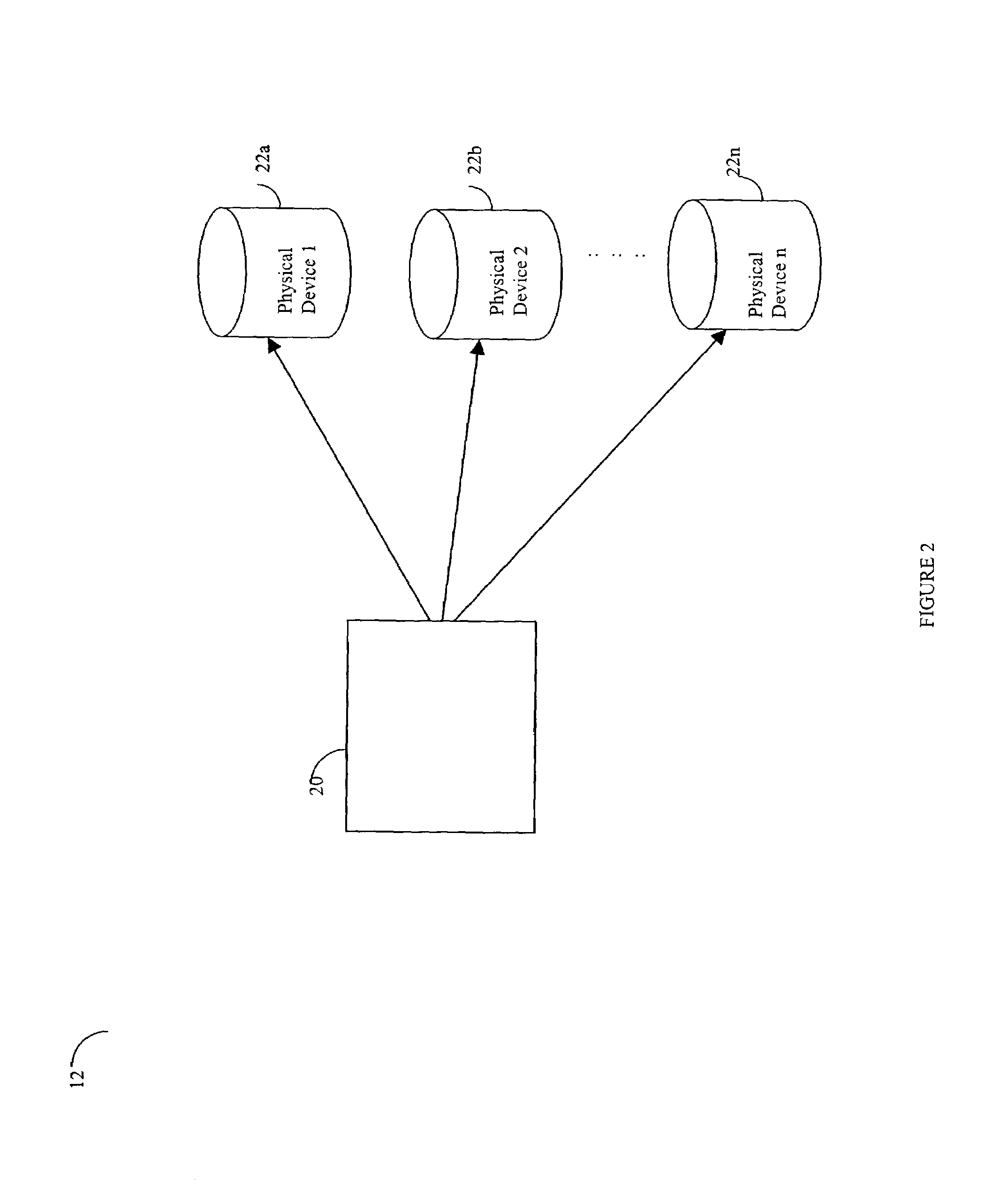

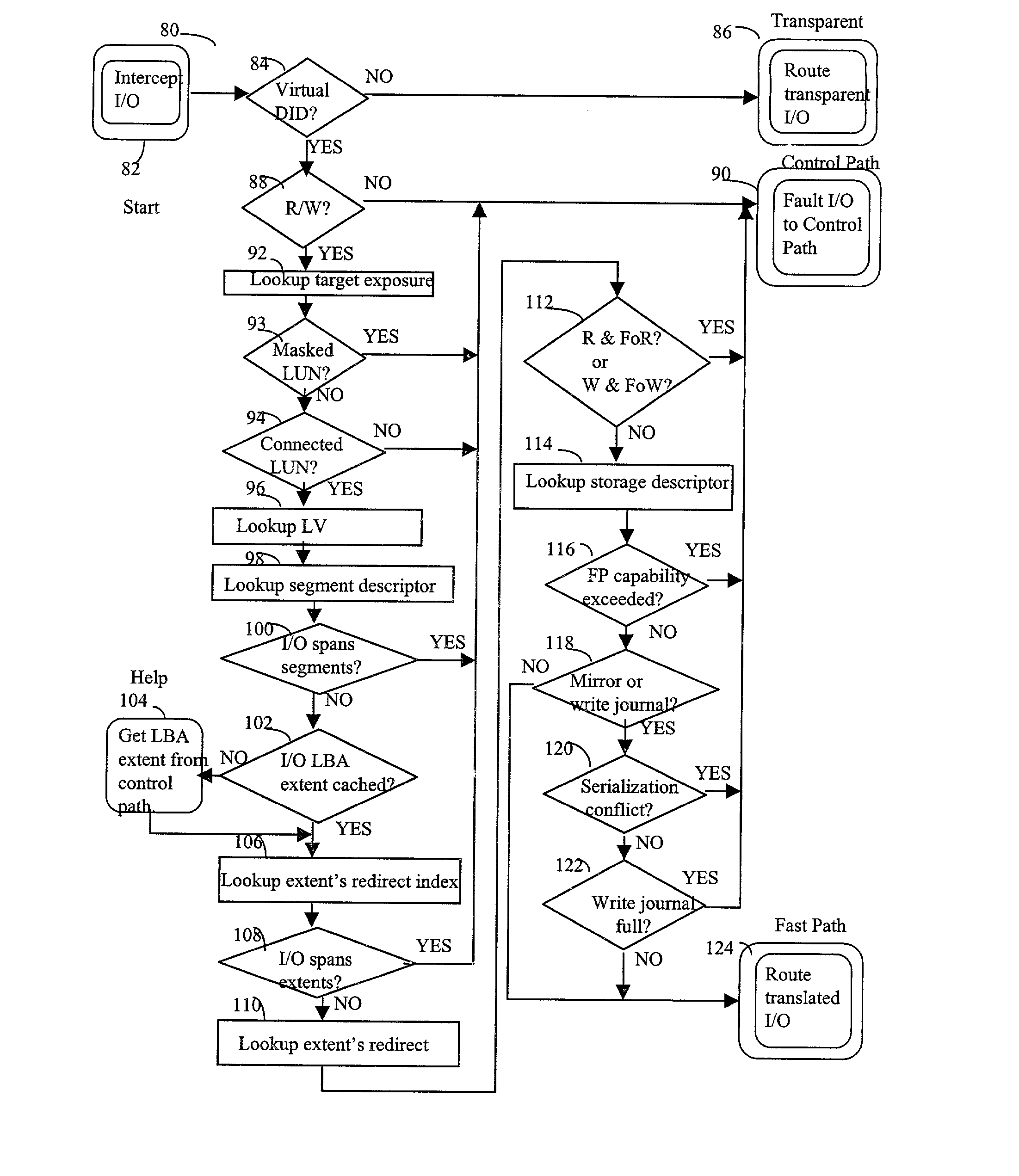

Described are techniques used in a computer system for handling data operations to storage devices. A switching fabric includes one or more fast paths for handling lightweight, common data operations and at least one control path for handling other data operations. A control path manages one or more fast paths. The fast path and the control path are utilized in mapping virtual to physical addresses using mapping tables. The mapping tables include an extent table of one or more entries corresponding to varying address ranges. The size of an extent may be changed dynamically in accordance with a corresponding state change of physical storage. The fast path may cache only portions of the extent table as needed in accordance with a caching technique. The fast path may cache a subset of the extent table stored within the control path. A set of primitives may be used in performing data operations. A locking mechanism is described for controlling access to data shared by the control paths.

Owner:IBM CORP
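
The virtual-to-physical mapping in the abstract above can be pictured with a small Python sketch. It is only an illustration under stated assumptions: the names `Extent`, `ControlPath`, and `FastPath`, the sorted-list layout, and the cache-on-miss policy are hypothetical and are not taken from the patent.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Extent:
    virt_start: int  # first virtual block covered by this extent
    length: int      # number of blocks; extents may differ in size
    phys_start: int  # physical block that virt_start maps to


class ControlPath:
    """Holds the full extent table (assumed layout: sorted by virt_start)."""

    def __init__(self, extents: List[Extent]):
        self.extents = sorted(extents, key=lambda e: e.virt_start)
        self._starts = [e.virt_start for e in self.extents]

    def find_extent(self, virt: int) -> Optional[Extent]:
        # Binary search for the last extent starting at or before virt.
        i = bisect_right(self._starts, virt) - 1
        if i < 0:
            return None
        e = self.extents[i]
        return e if virt < e.virt_start + e.length else None


class FastPath:
    """Caches only the extents it has needed so far (a subset of the table)."""

    def __init__(self, control: ControlPath):
        self.control = control
        self.cache: Dict[int, Extent] = {}  # keyed by virt_start
        self.misses = 0

    def translate(self, virt: int) -> Optional[int]:
        # Try the locally cached extents first.
        for e in self.cache.values():
            if e.virt_start <= virt < e.virt_start + e.length:
                return e.phys_start + (virt - e.virt_start)
        # Cache miss: ask the control path, which owns the full table.
        self.misses += 1
        e = self.control.find_extent(virt)
        if e is None:
            return None
        self.cache[e.virt_start] = e
        return e.phys_start + (virt - e.virt_start)
```

In this sketch a second lookup inside an already cached extent is served without contacting the control path, which is the intent of caching "only portions of the extent table as needed".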

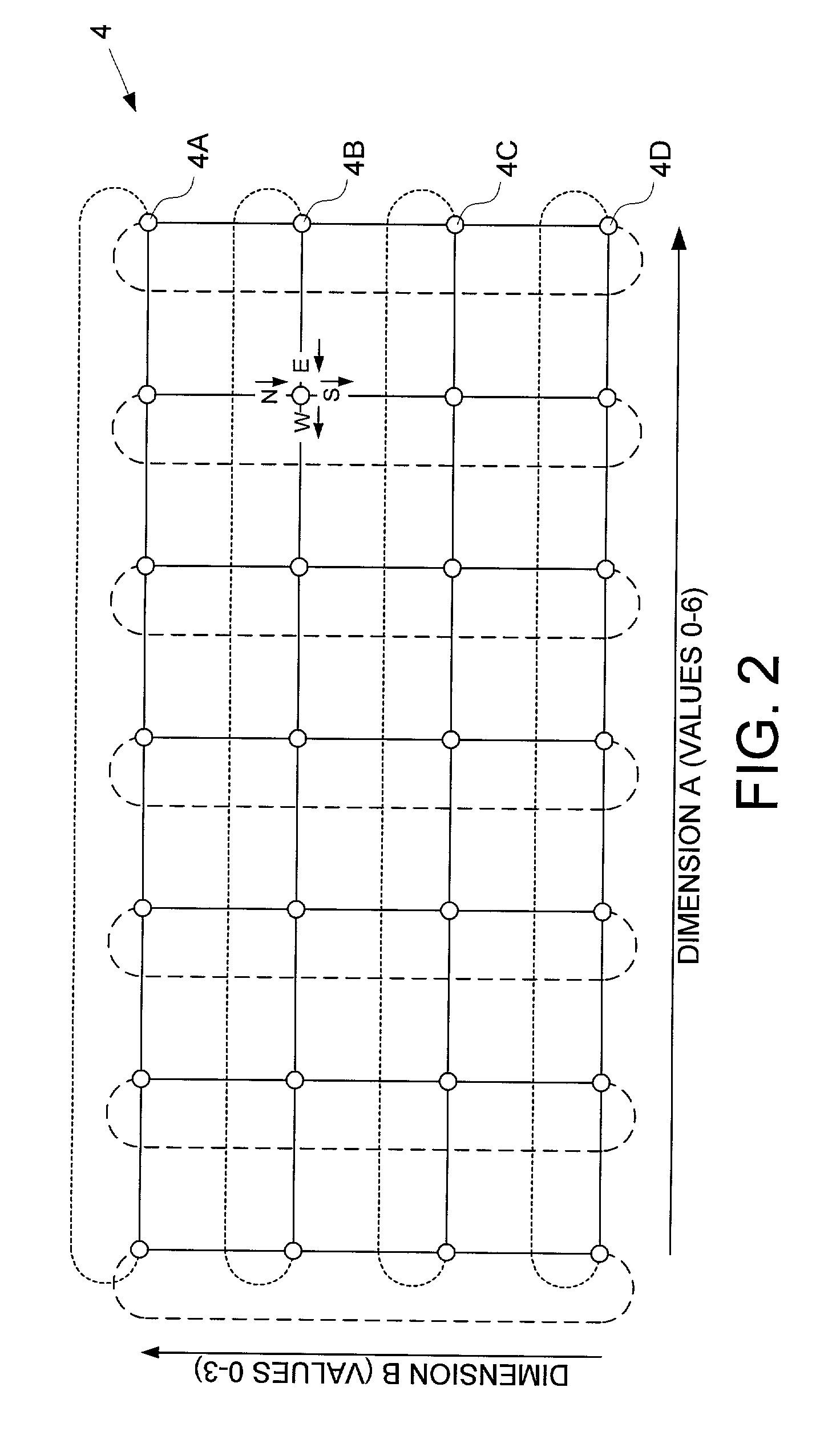

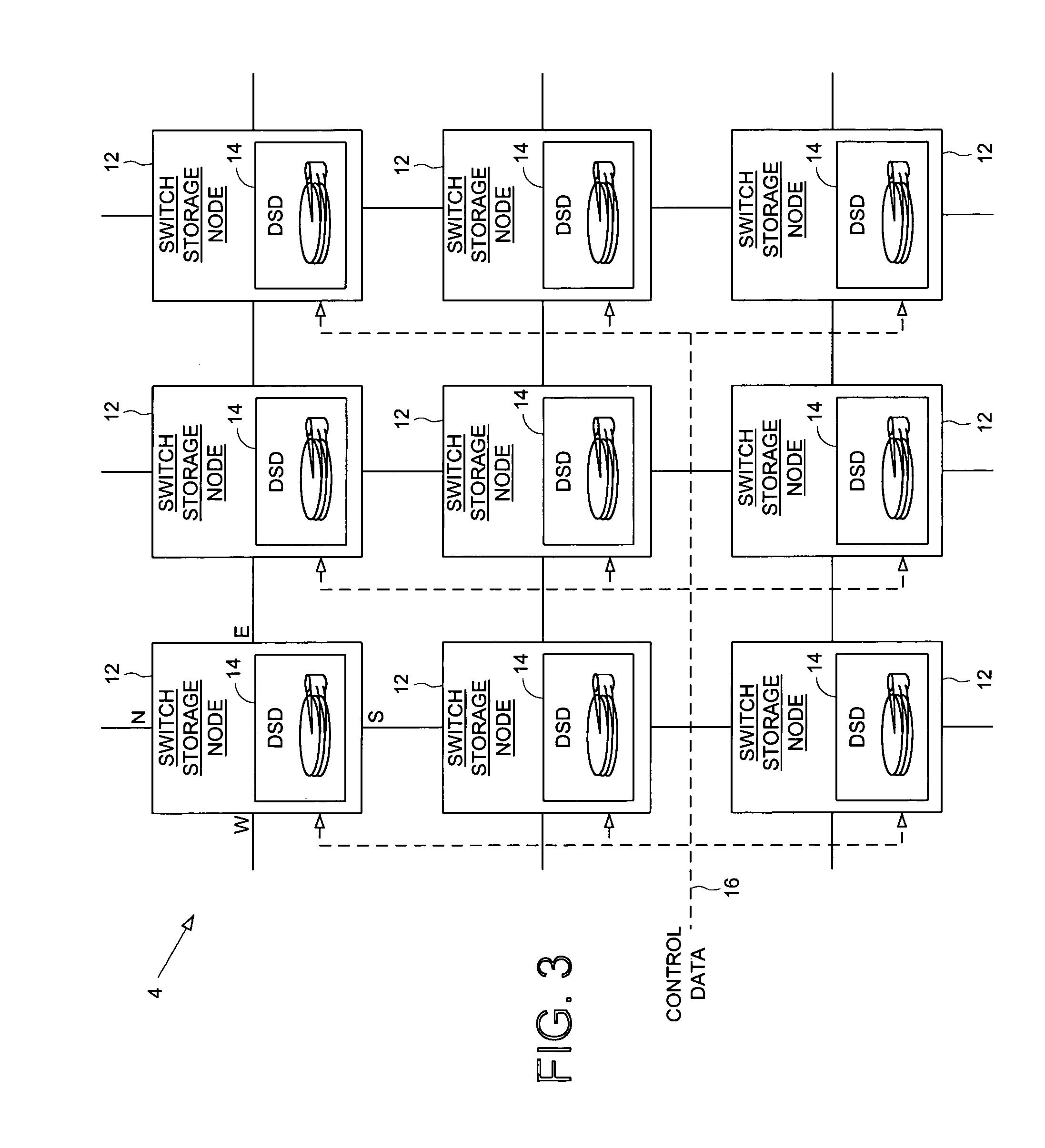

Isochronous switched fabric network

An isochronous switched fabric network is disclosed comprising a plurality of interconnected switched nodes forming multiple dimensions, each switched node comprising an upstream port and a downstream port for each dimension, each upstream and downstream port comprising an input port and an output port. A discovery facility discovers a depth of each dimension, and discovers resources within each switched node. An addressing facility assigns a matrix address to each switched node, a resource reservation facility reserves resources within each switched node to establish a path through the switched fabric network for transmitting an isochronous data stream, and a scheduling facility schedules isochronous data transmitted through the switched fabric network.

Owner:WESTERN DIGITAL TECH INC

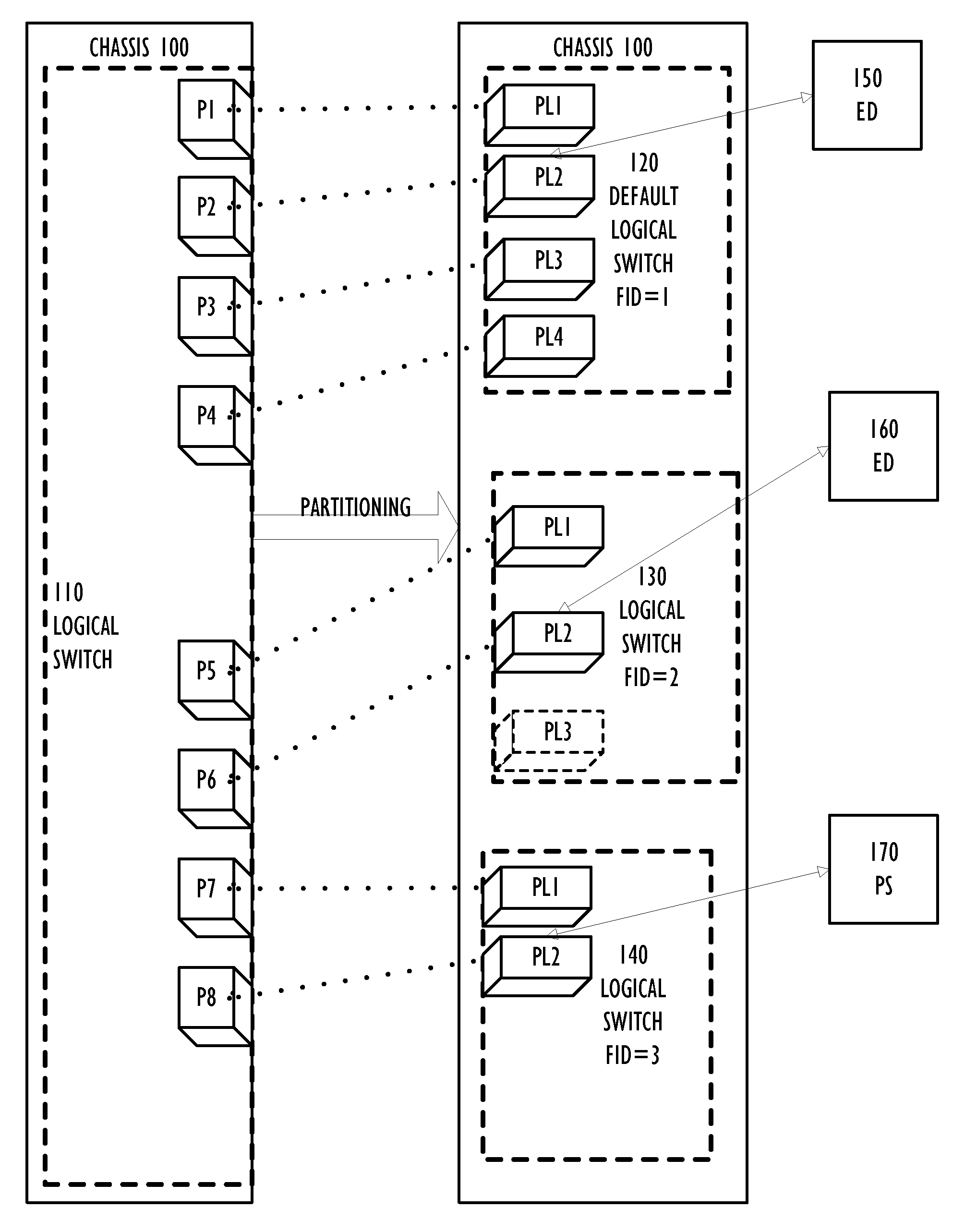

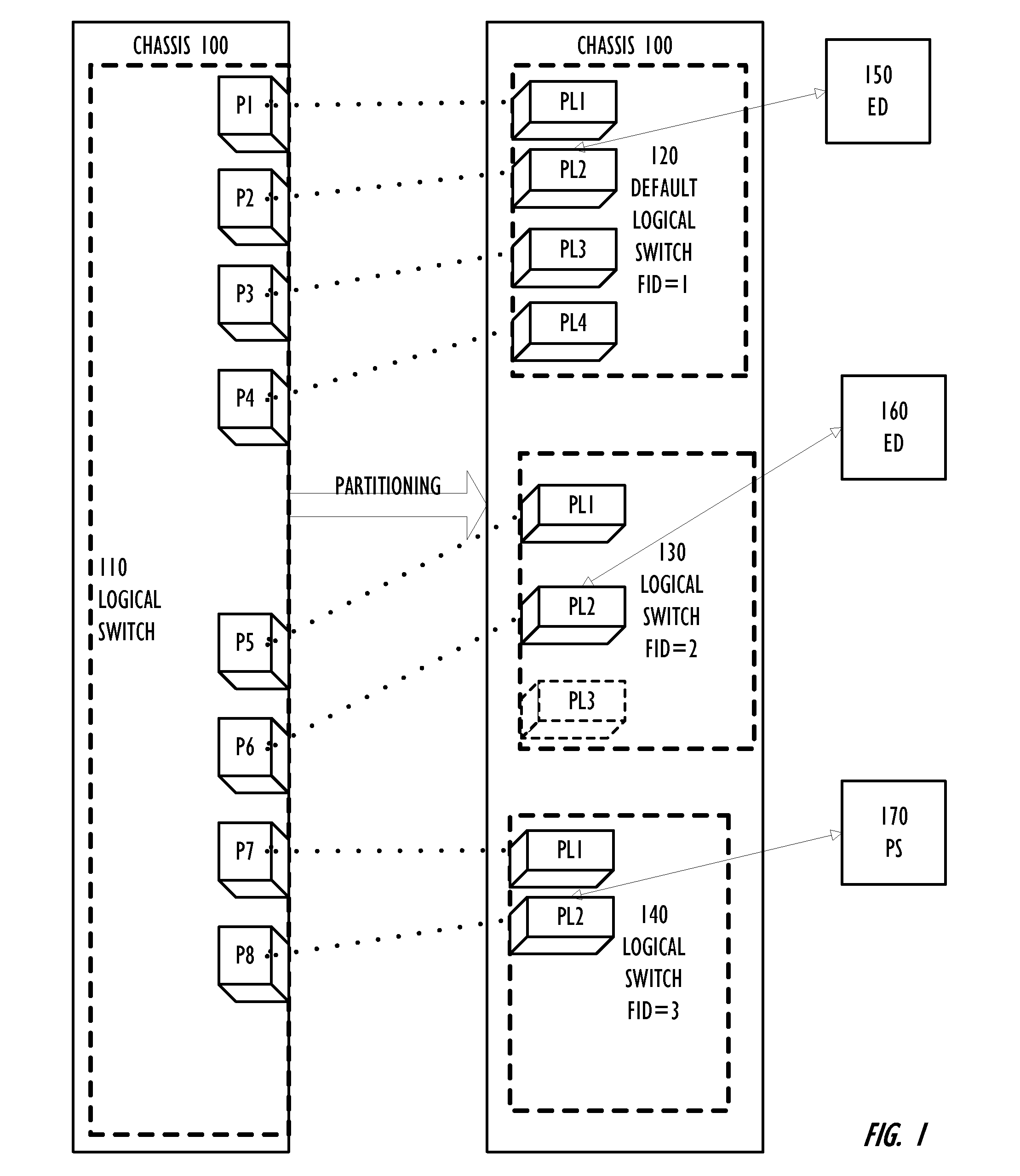

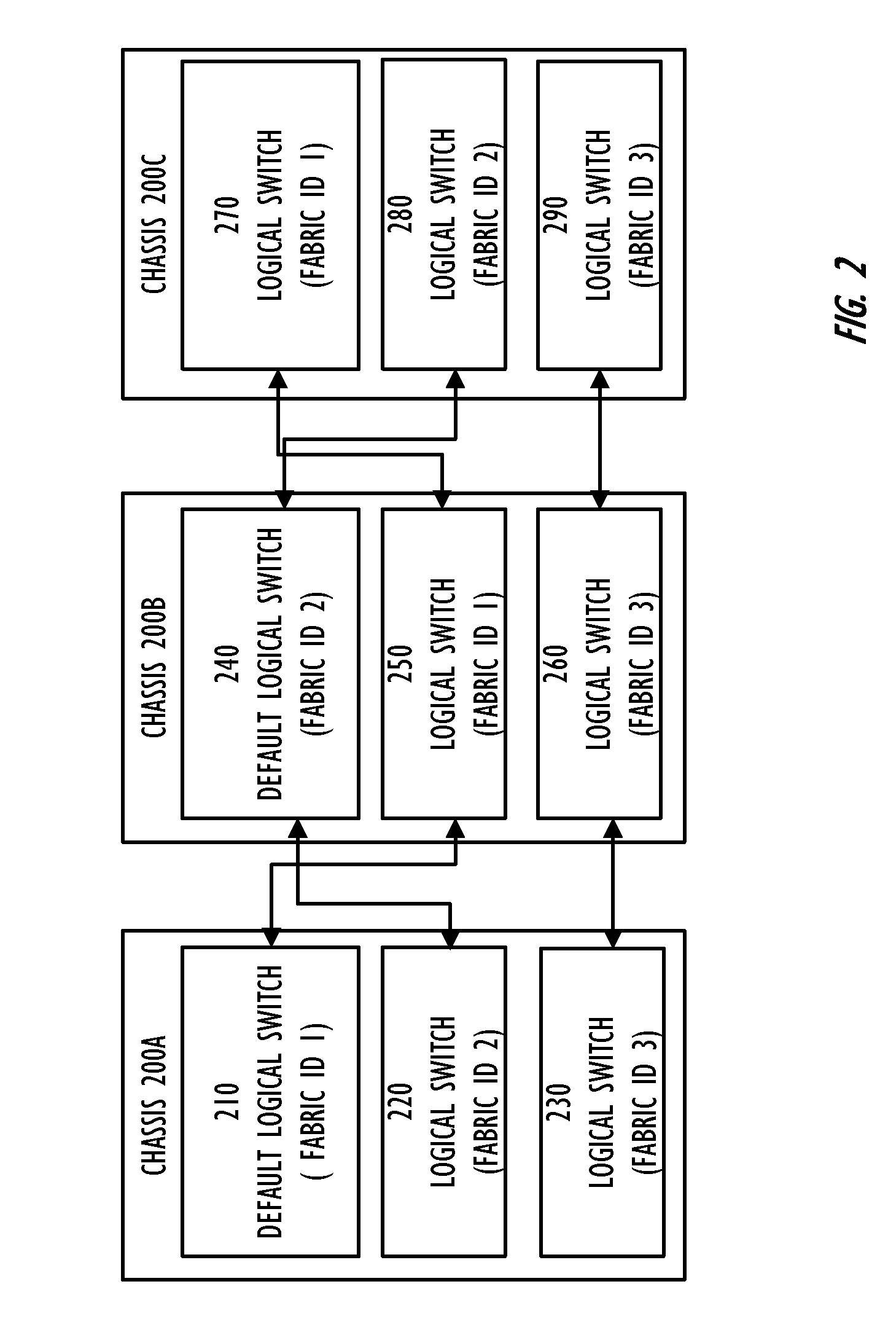

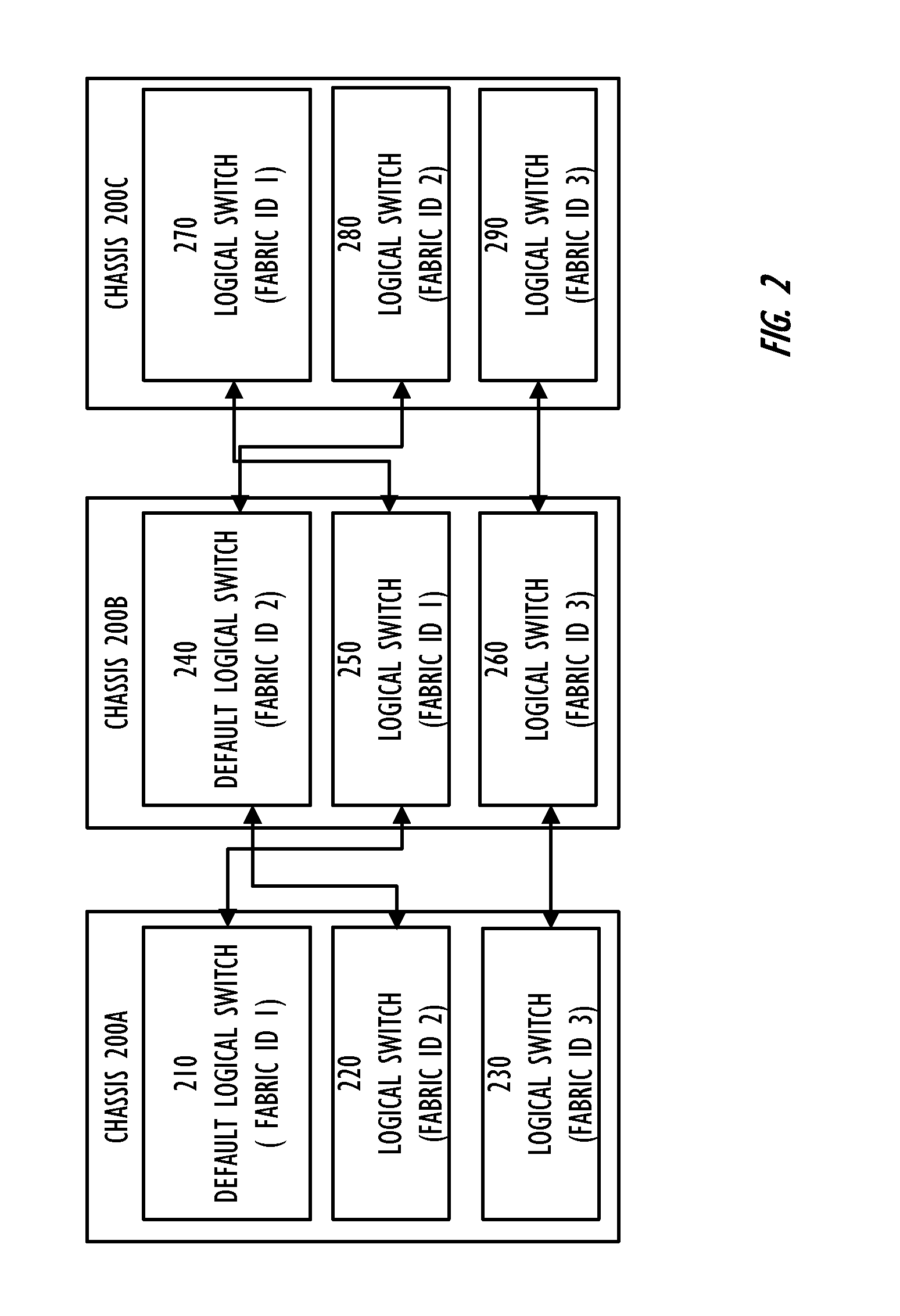

Partitioning of Switches and Fabrics into Logical Switches and Fabrics

A Layer 2 network switch is partitionable into a plurality of switch fabrics. The single-chassis switch is partitionable into a plurality of logical switches, each associated with one of the virtual fabrics. The logical switches behave as complete and self-contained switches. A logical switch fabric can span multiple single-chassis switch chassis. Logical switches are connected by inter-switch links that can be either dedicated single-chassis links or logical links. An extended inter-switch link can be used to transport traffic for one or more logical inter-switch links. Physical ports of the chassis are assigned to logical switches and are managed by the logical switch. Legacy switches that are not partitionable into logical switches can serve as transit switches between two logical switches.

Owner:AVAGO TECH INT SALES PTE LTD

Transit Switches in a Network of Logical Switches

Active · US20110085559A1 · Data switching by path configuration · Wireless communication services · Computer architecture · Tier 2 network

A Layer 2 network switch is partitionable into a plurality of switch fabrics. The single-chassis switch is partitionable into a plurality of logical switches, each associated with one of the virtual fabrics. The logical switches behave as complete and self-contained switches. A logical switch fabric can span multiple single-chassis switch chassis. Logical switches are connected by inter-switch links that can be either dedicated single-chassis links or logical links. An extended inter-switch link can be used to transport traffic for one or more logical inter-switch links. Physical ports of the chassis are assigned to logical switches and are managed by the logical switch. Legacy switches that are not partitionable into logical switches can serve as transit switches between two logical switches.

Owner:AVAGO TECH INT SALES PTE LTD

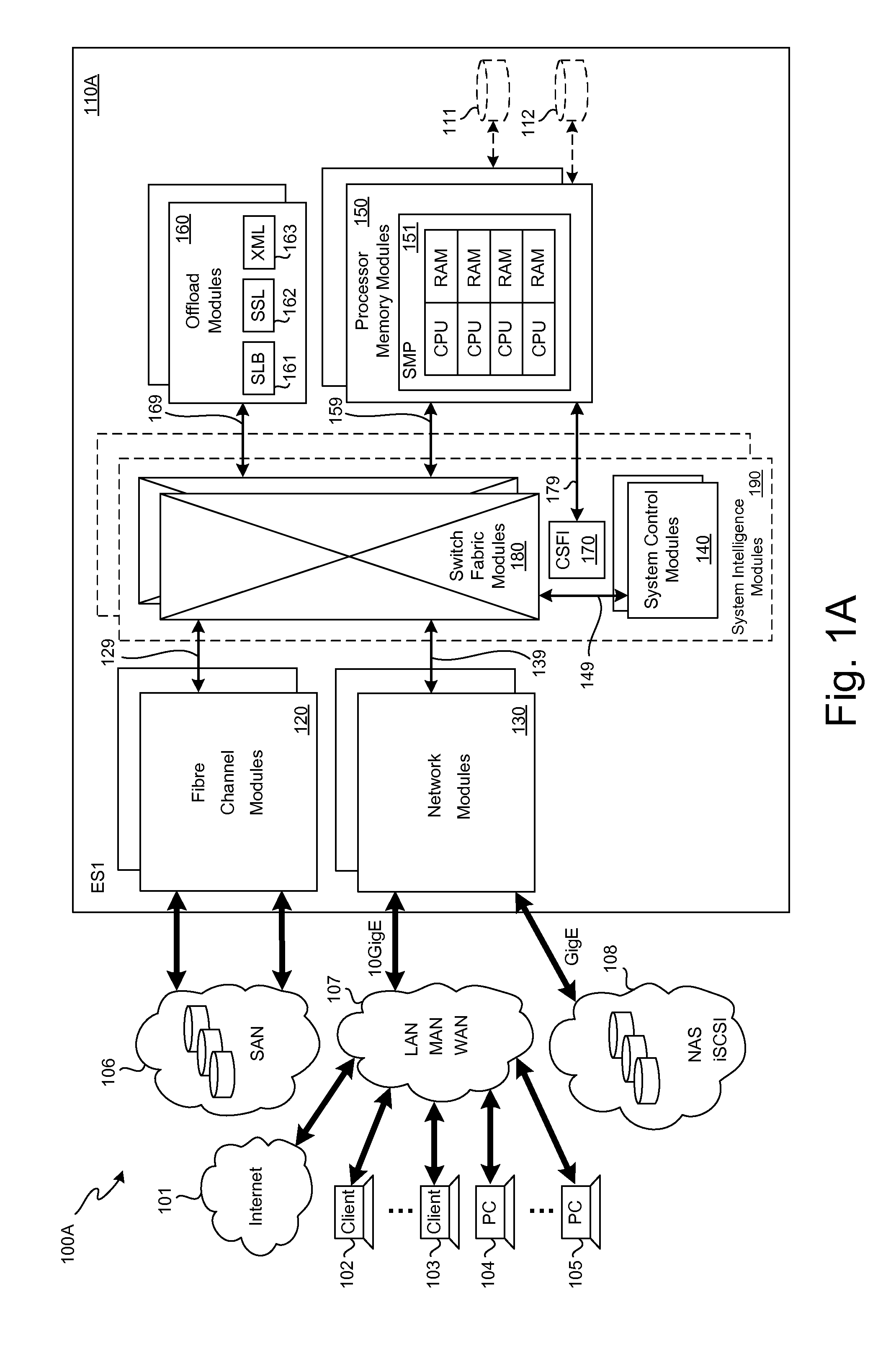

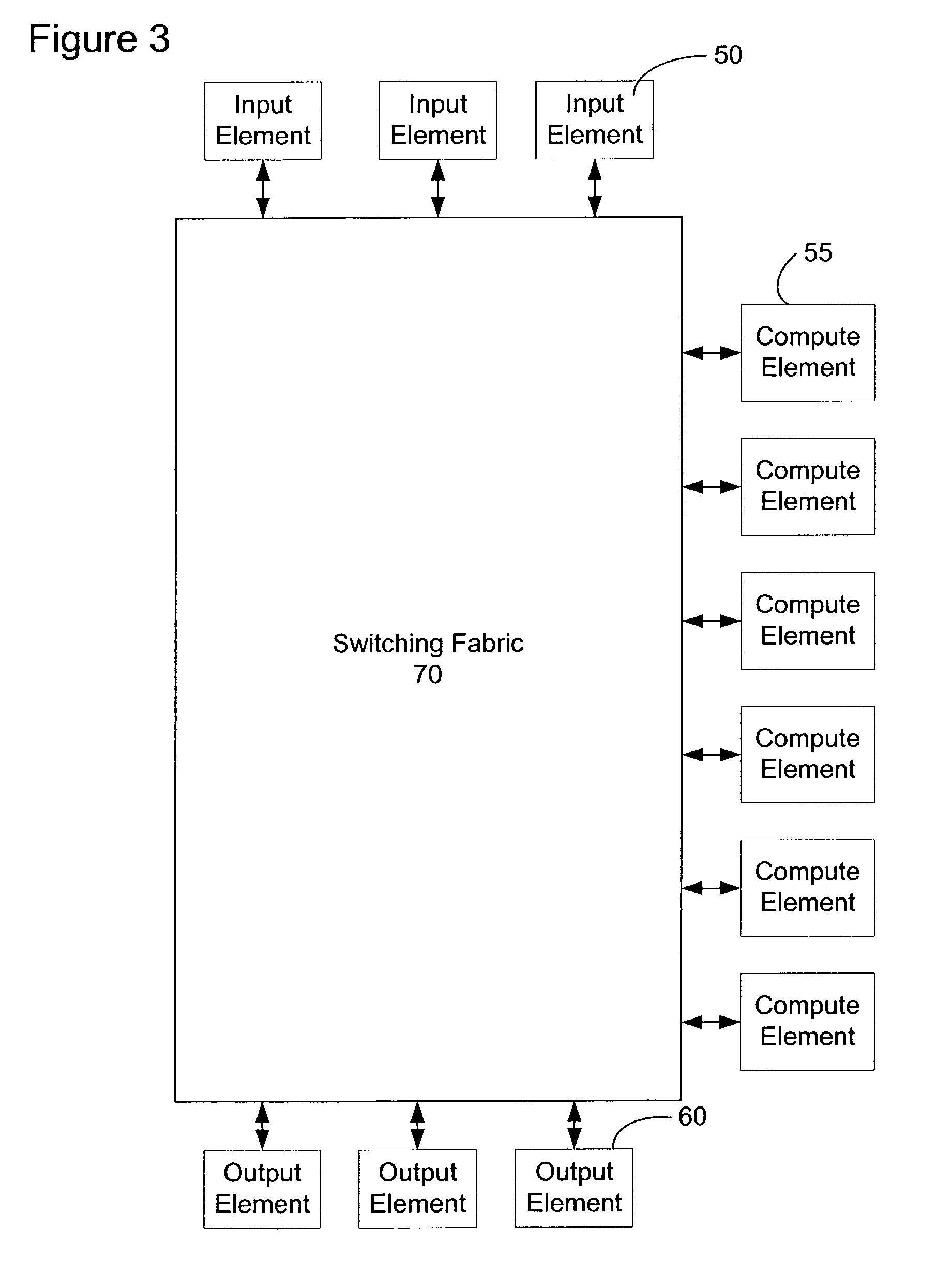

Highly scalable architecture for application network appliances

A highly scalable application network appliance is described herein. According to one embodiment, a network element includes a switch fabric, a first service module coupled to the switch fabric, and a second service module coupled to the first service module over the switch fabric. In response to packets of a network transaction received from a client over a first network to access a server of a data center having multiple servers over a second network, the first service module is configured to perform a first portion of OSI (open system interconnection) compatible layers of network processes on the packets while the second service module is configured to perform a second portion of the OSI compatible layers of network processes on the packets. The first portion includes at least one OSI compatible layer that is not included in the second portion. Other methods and apparatuses are also described.

Owner:CISCO TECH INC

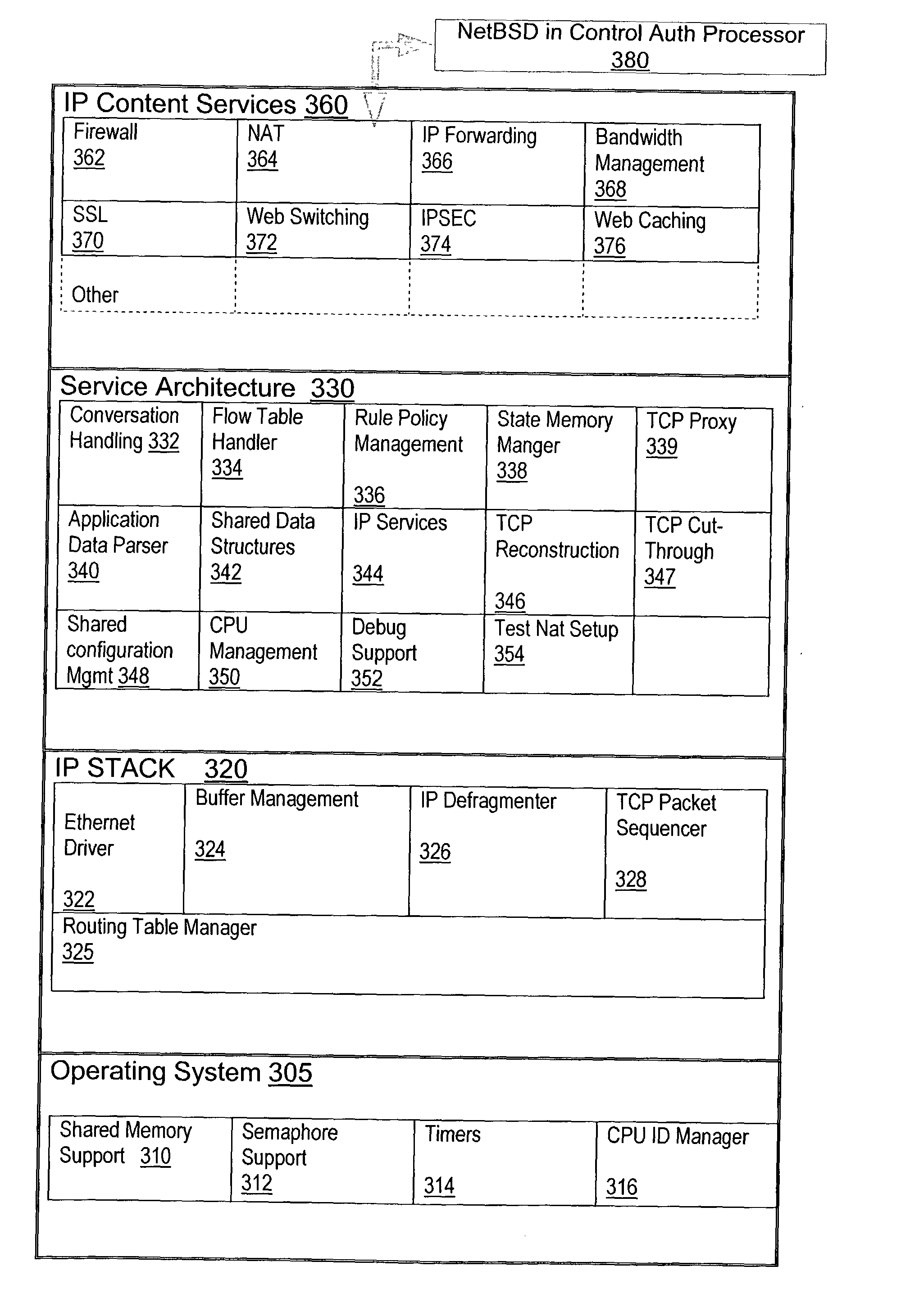

Content service aggregation system

Active · US20030126233A1 · Easy to process · Multiple digital computer combinations · Electric digital data processing · Private network · Structure of Management Information

A network content service apparatus includes a set of compute elements adapted to perform a set of network services; and a switching fabric coupling compute elements in said set of compute elements. The set of network services includes firewall protection, Network Address Translation, Internet Protocol forwarding, bandwidth management, Secure Sockets Layer operations, Web caching, Web switching, and virtual private networking. Code operable on the compute elements enables the network services, and the compute elements are provided on blades which further include at least one input/output port.

Owner:NEXSI SYST +1

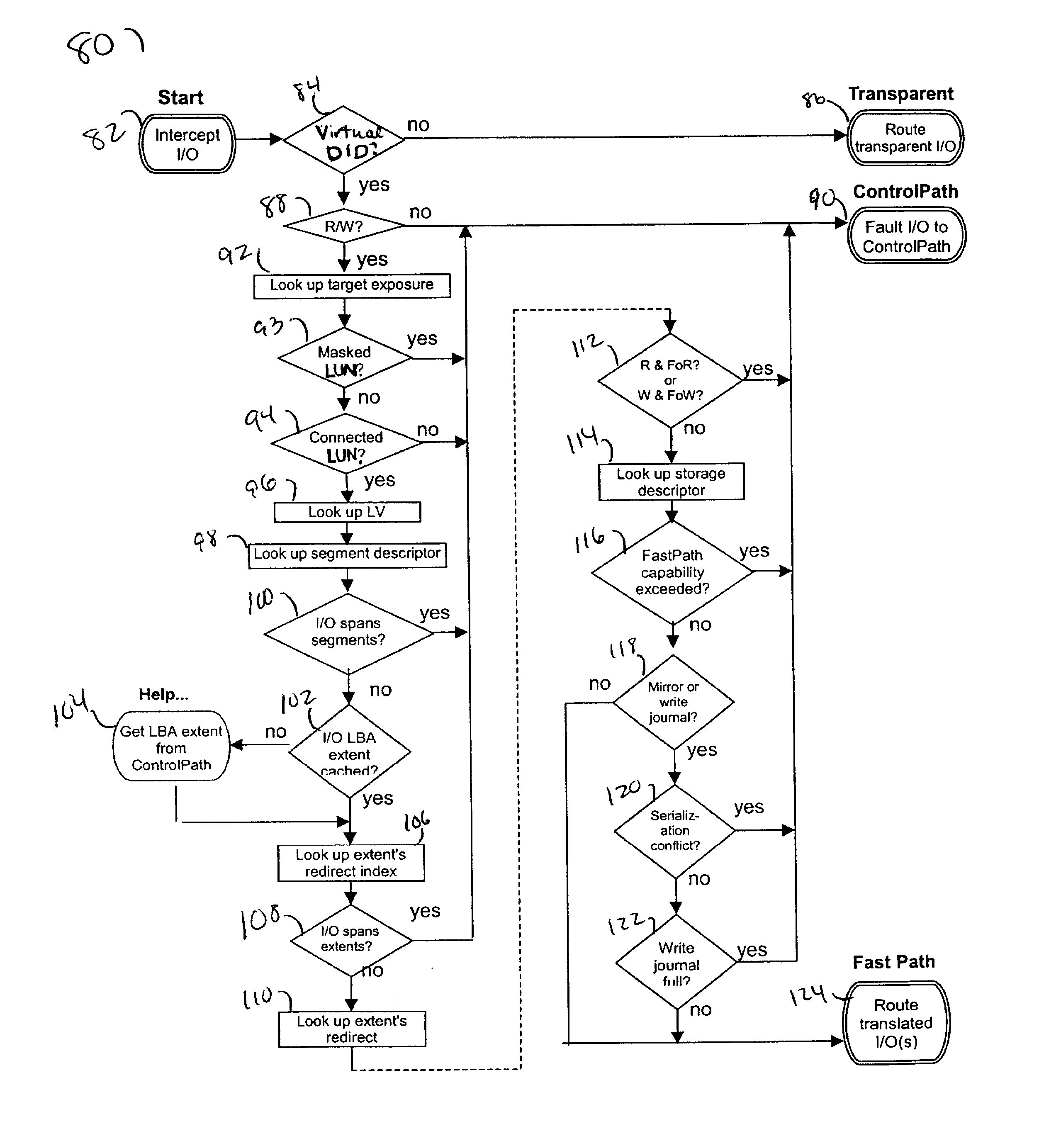

Fast path caching

Inactive · US20030140209A1 · Input/output to record carriers · Memory addressing/allocation/relocation · Fast path · Data operations

Described are techniques used in a computer system for handling data operations to storage devices. A switching fabric includes one or more fast paths for handling lightweight, common data operations and at least one control path for handling other data operations. A control path manages one or more fast paths. The fast path and the control path are utilized in mapping virtual to physical addresses using mapping tables. The mapping tables include an extent table of one or more entries corresponding to varying address ranges. The size of an extent may be changed dynamically in accordance with a corresponding state change of physical storage. The fast path may cache only portions of the extent table as needed in accordance with a caching technique. The fast path may cache a subset of the extent table stored within the control path. A set of primitives may be used in performing data operations. A locking mechanism is described for controlling access to data shared by the control paths.

Owner:IBM CORP

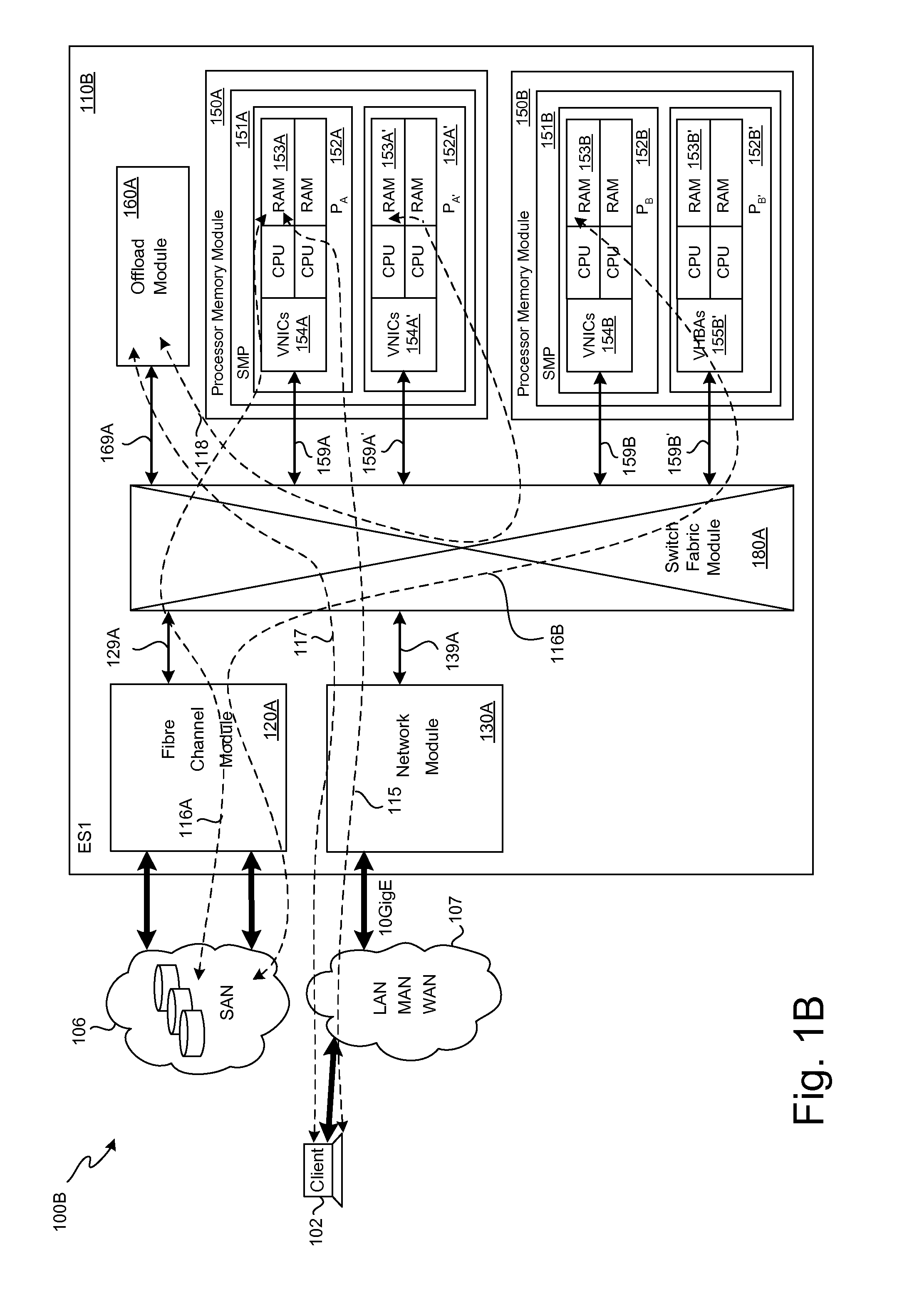

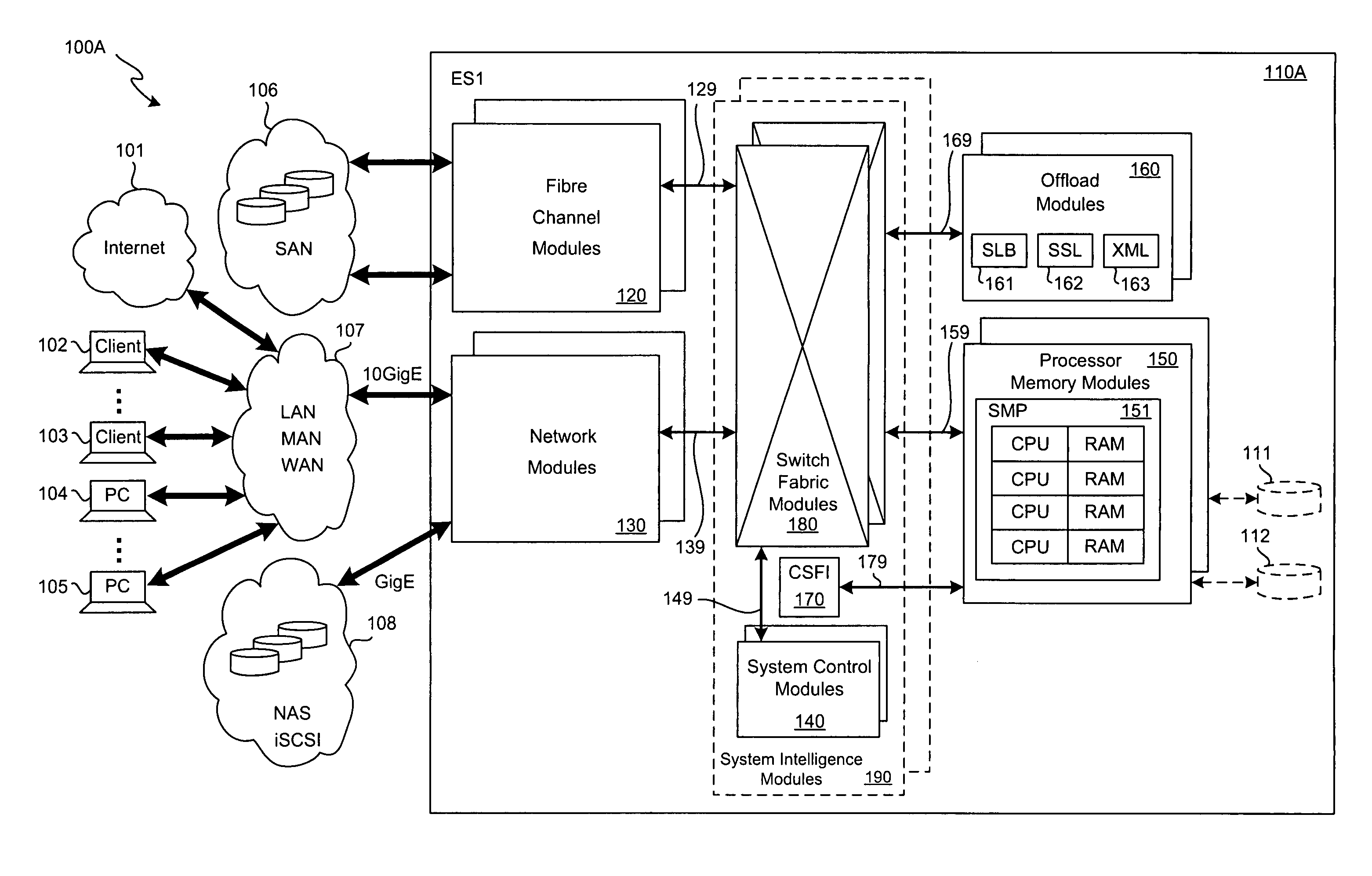

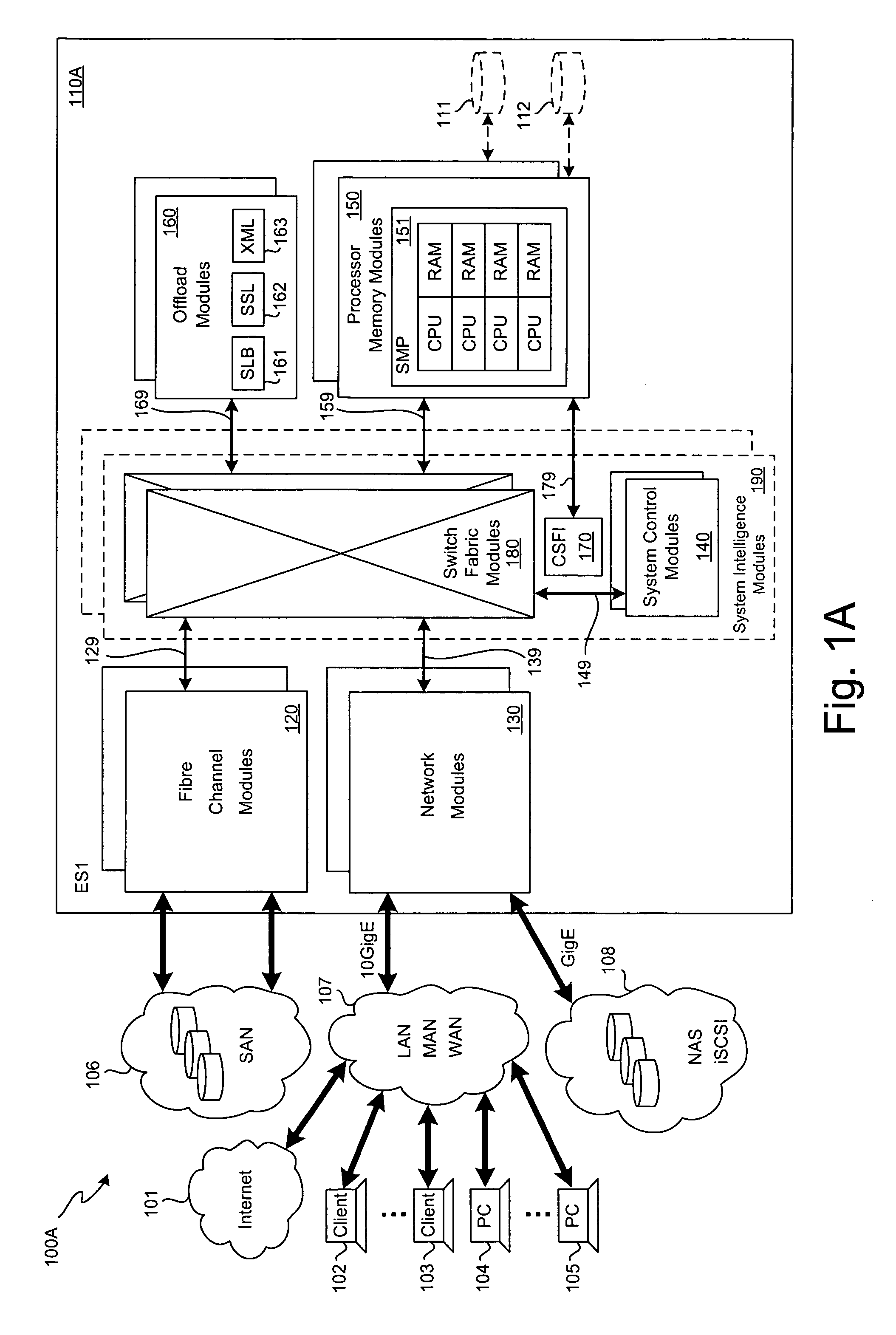

SCSI transport for fabric-backplane enterprise servers

Active · US7633955B1 · Improve performance · Improve efficiency · Digital computer details · Data switching by path configuration · SCSI initiator and target · Fiber

A Small Computer System Interface (SCSI) transport for fabric backplane enterprise servers provides for local and remote communication of storage system information between storage sub-system elements of an ES system and other elements of an ES system via a storage interface. The transport includes encapsulation of information for communication via a reliable transport implemented in part across a cellifying switch fabric. The transport may optionally include communication via Ethernet frames over any of a local network or the Internet. Remote Direct Memory Access (RDMA) and Direct Data Placement (DDP) protocols are used to communicate the information (commands, responses, and data) between SCSI initiator and target end-points. A Fibre Channel Module (FCM) may be operated as a SCSI target providing a storage interface to any of a Processor Memory Module (PMM), a System Control Module (SCM), and an OffLoad Module (OLM) operated as a SCSI initiator.

Owner:ORACLE INT CORP

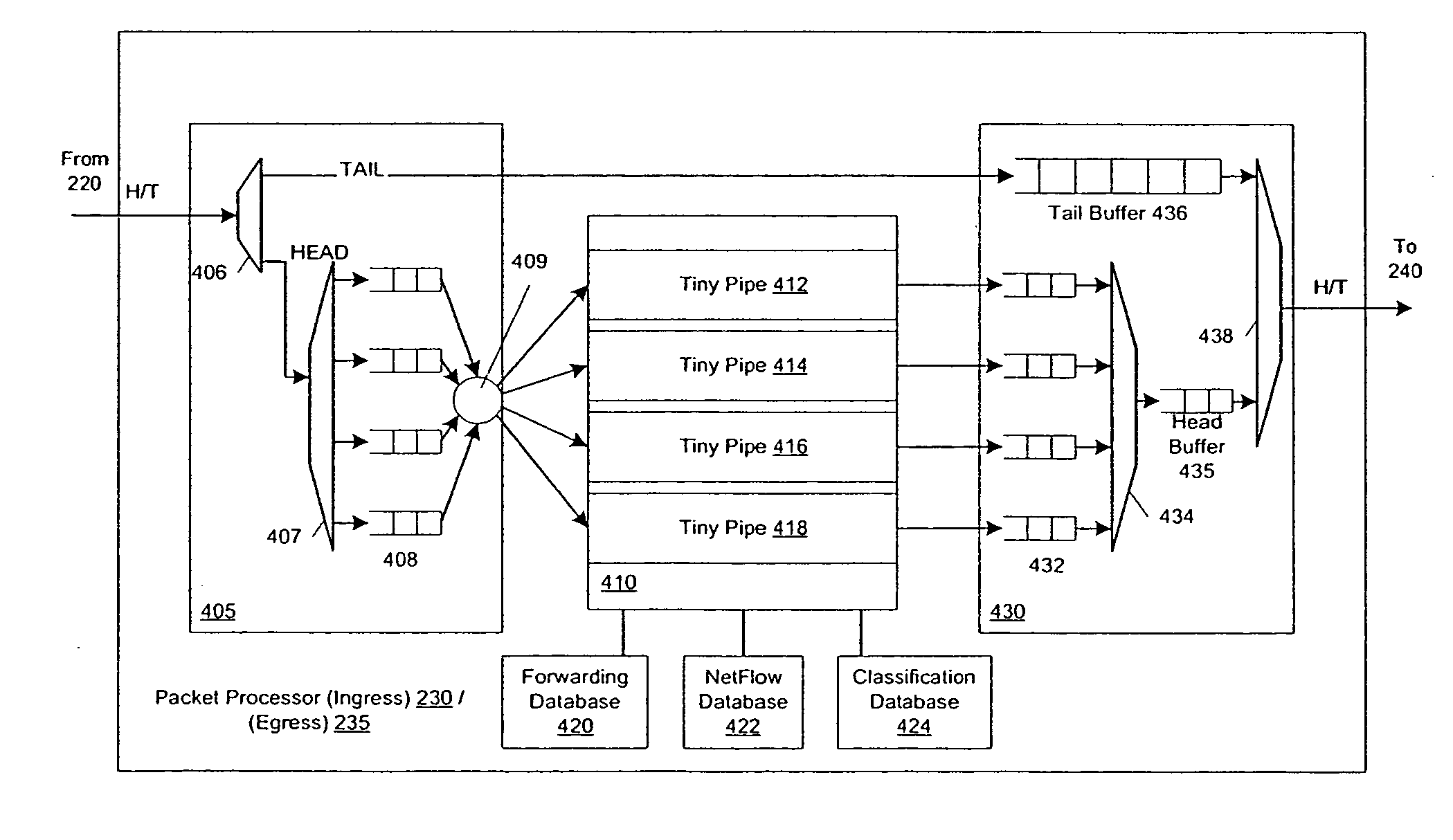

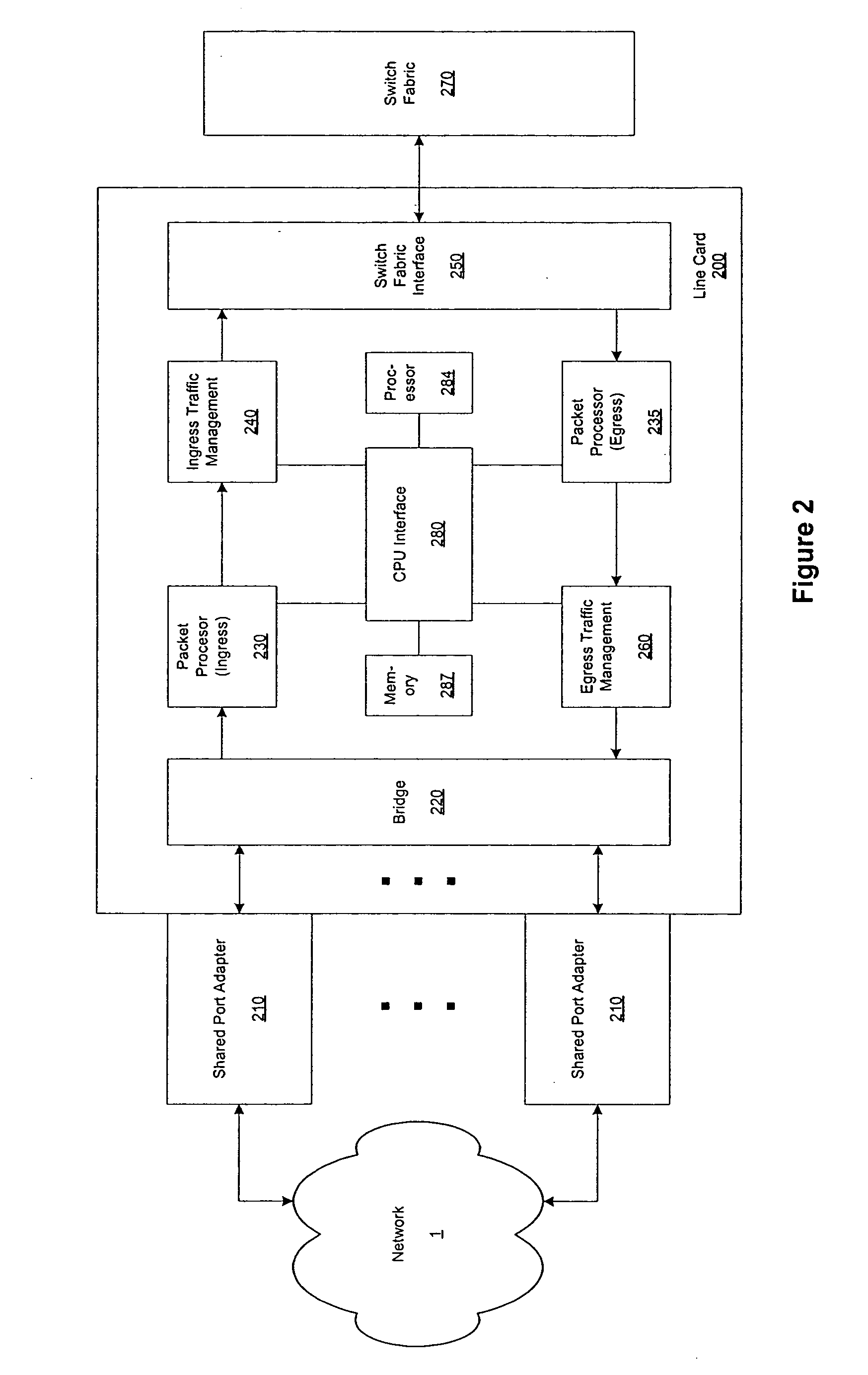

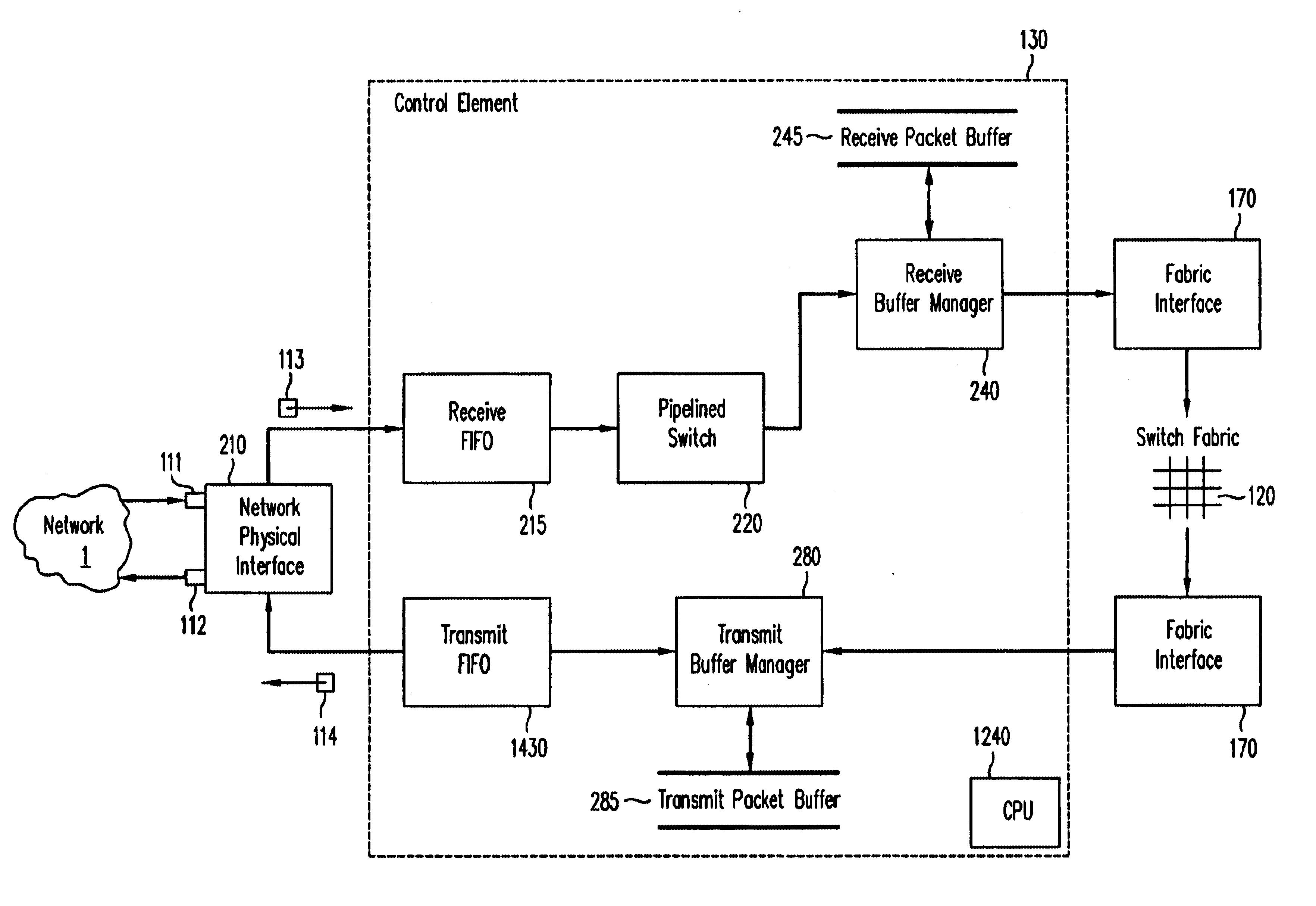

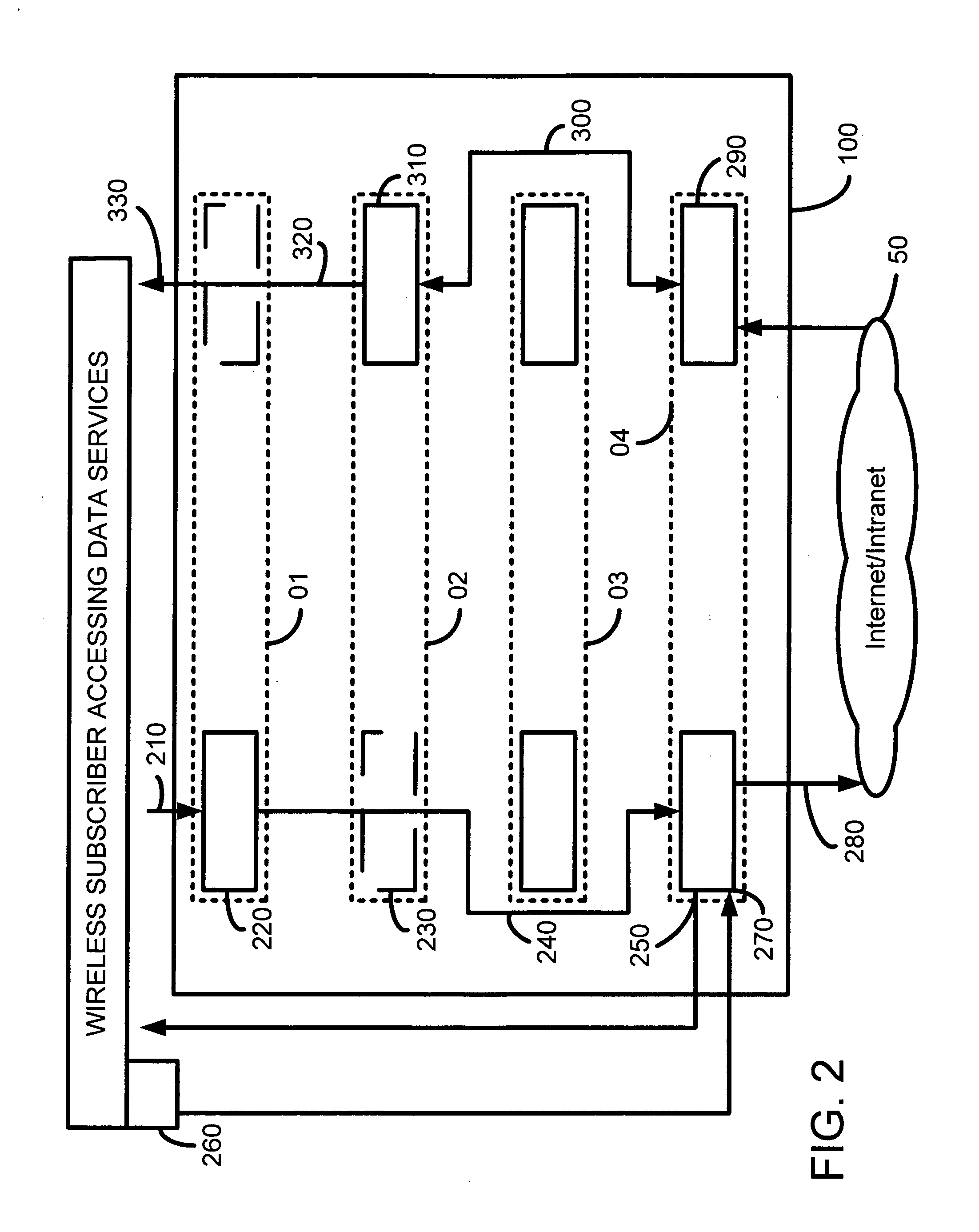

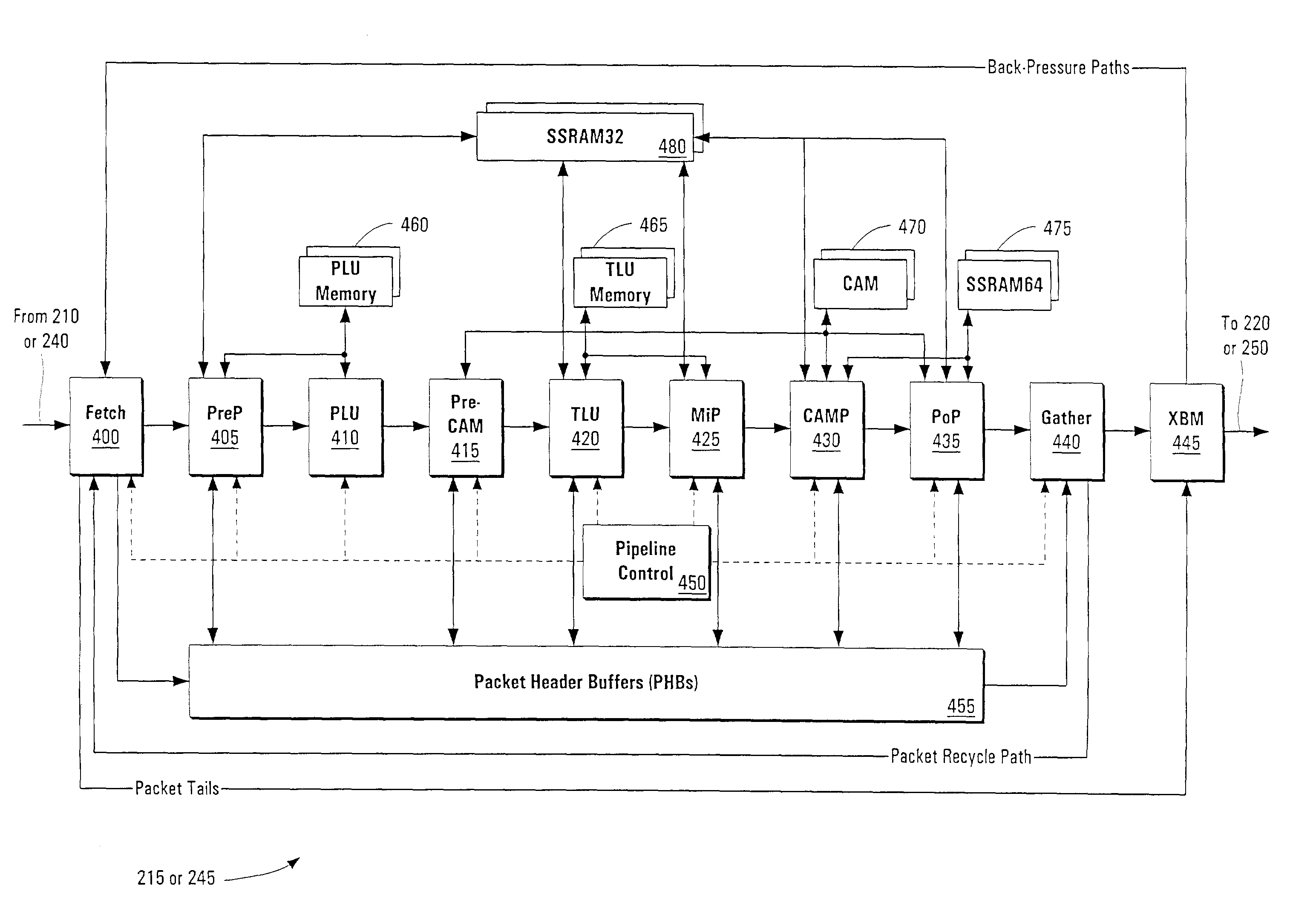

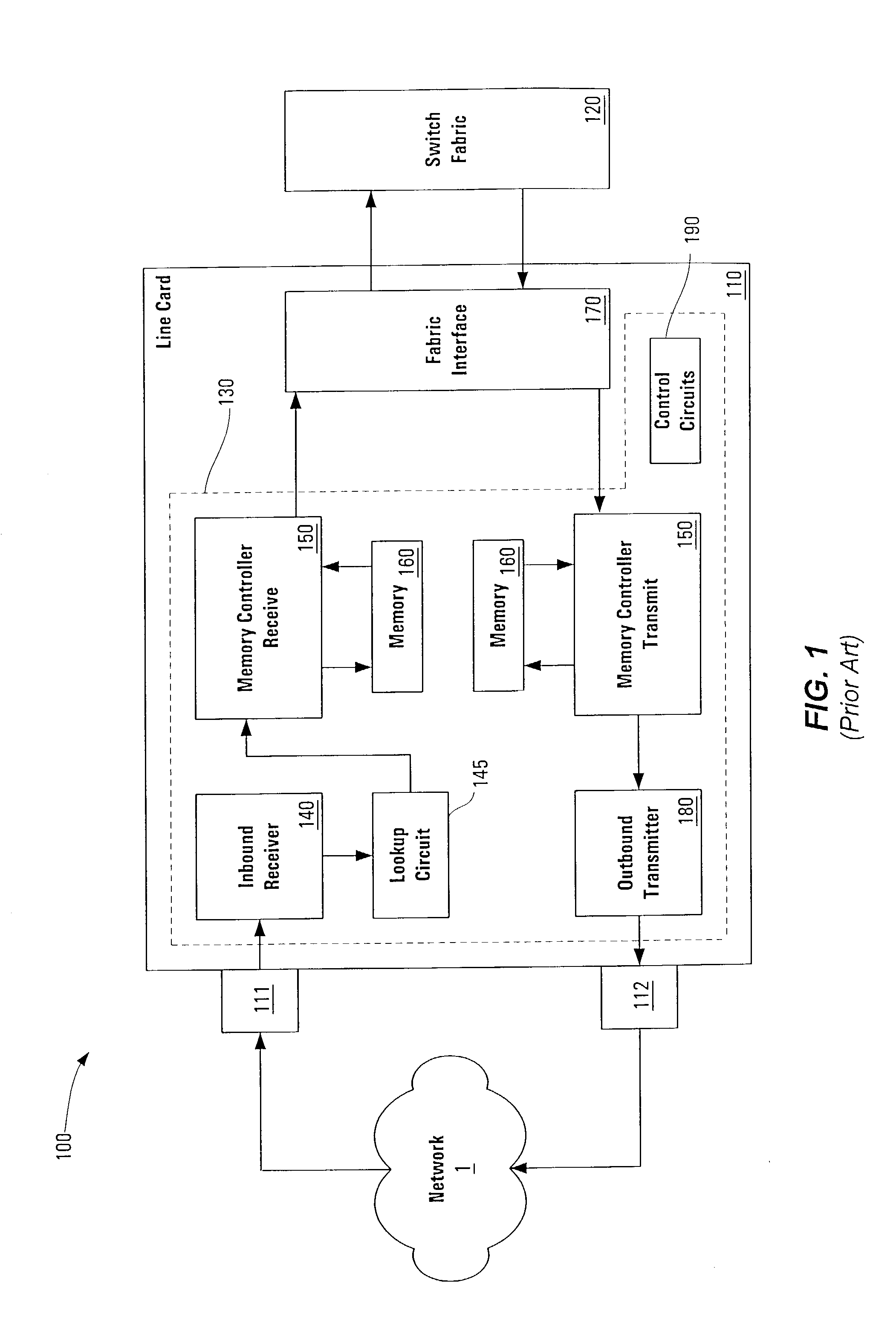

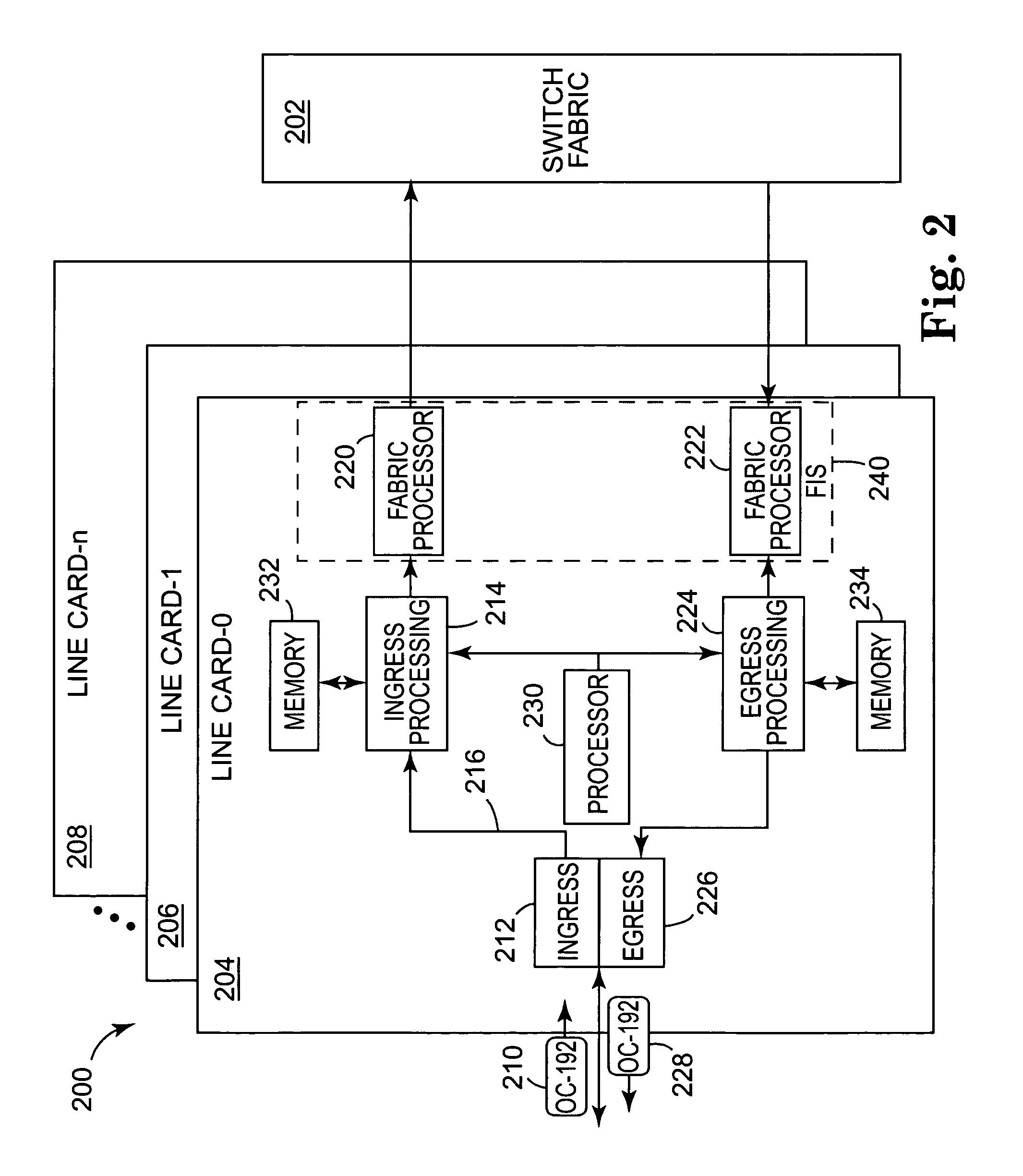

Pipelined packet switching and queuing architecture

An architecture for a line card in a network routing device is provided. The line card architecture provides a bi-directional interface between the routing device and a network, both receiving packets from the network and transmitting the packets to the network through one or more connecting ports. In both the receive and transmit path, packets processing and routing in a multi-stage, parallel pipeline that can operate on several packets at the same time to determine each packet's routing destination is provided. Once a routing destination determination is made, the line card architecture provides for each received packet to be modified to contain new routing information and additional header data to facilitate packet transmission through the switching fabric. The line card architecture further provides for the use of bandwidth management techniques in order to buffer and enqueue each packet for transmission through the switching fabric to a corresponding destination port. The transmit path of the line card architecture further incorporates additional features for treatment and replication of multicast packets.

Owner:CISCO TECH INC

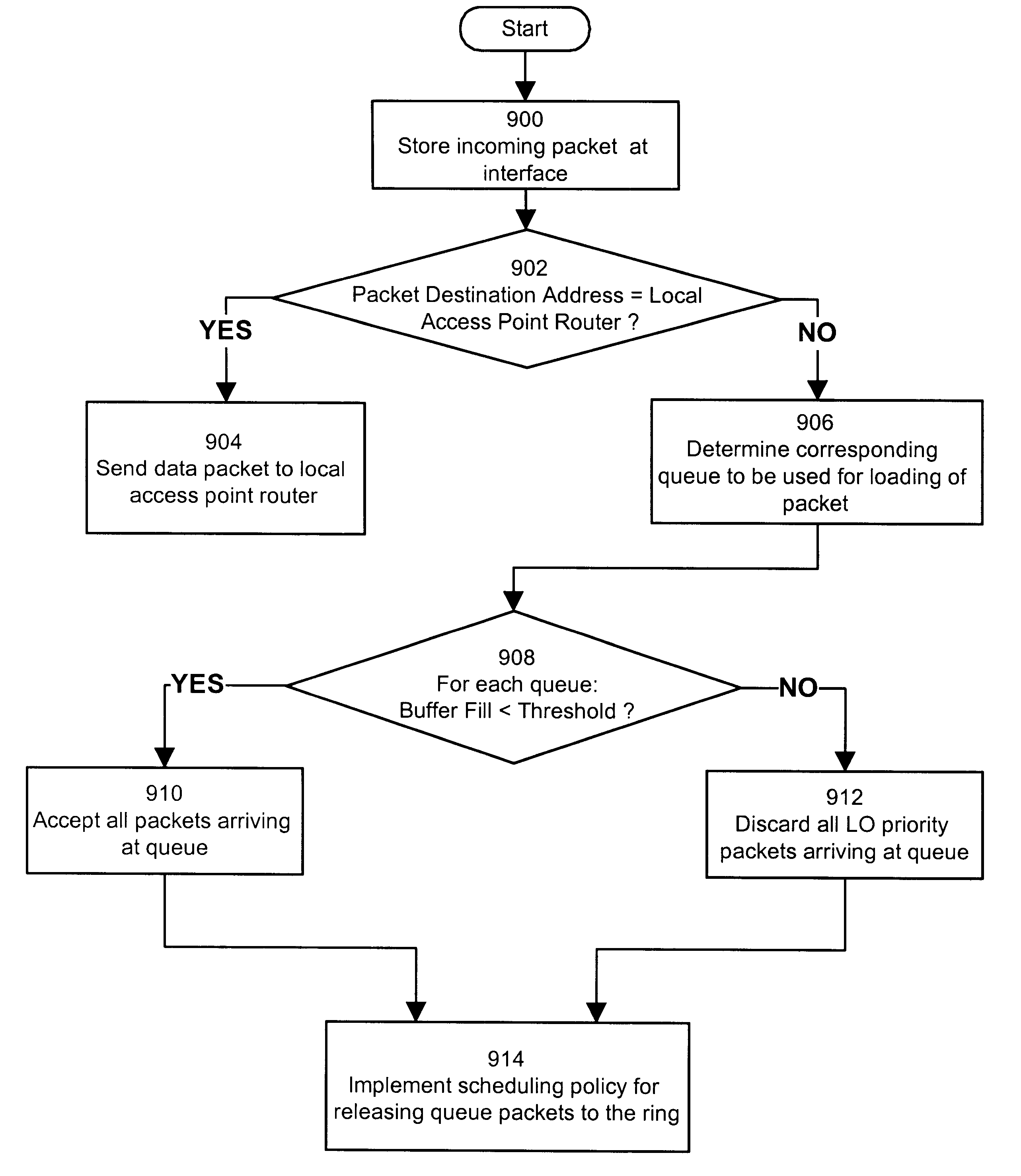

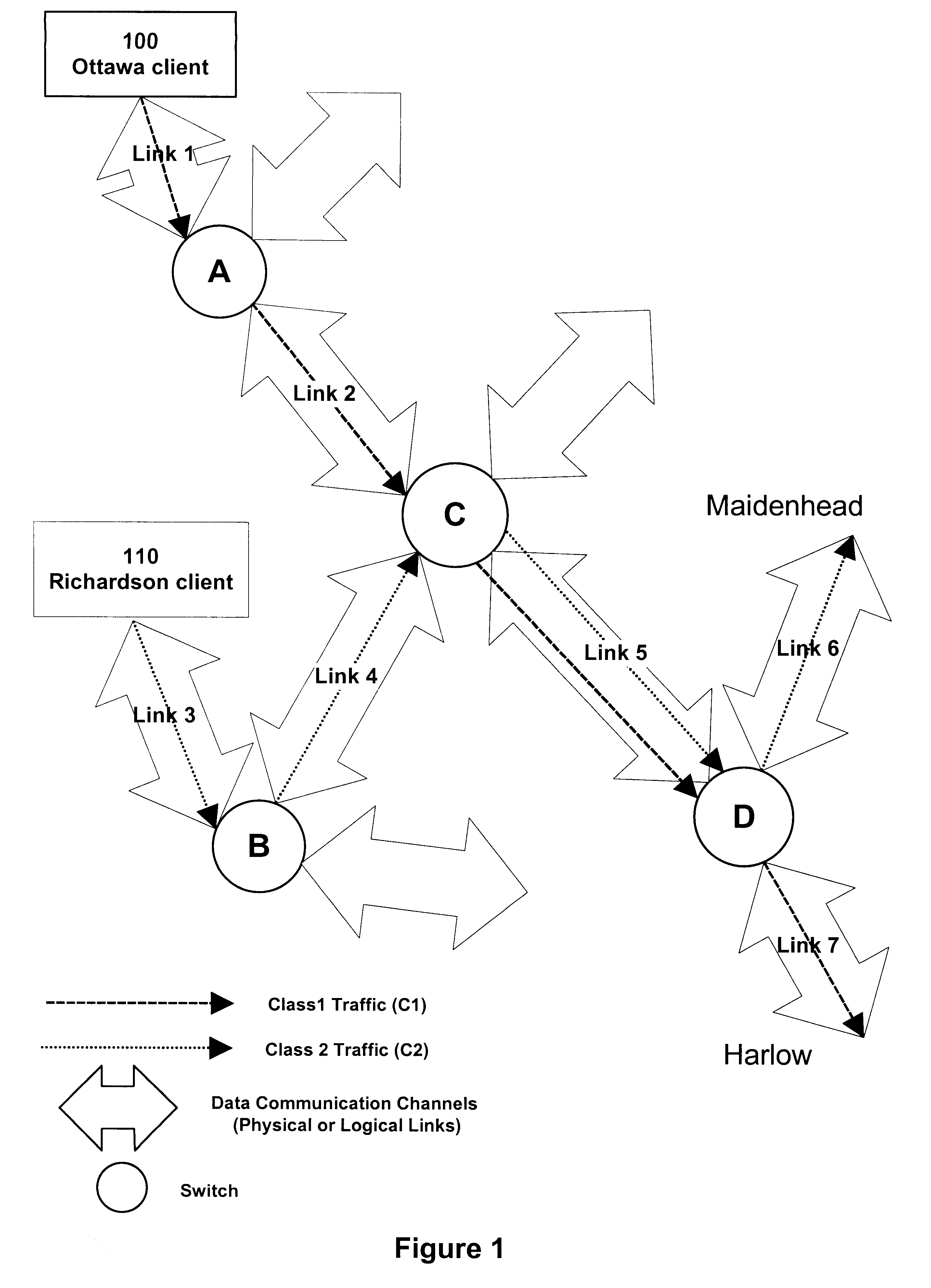

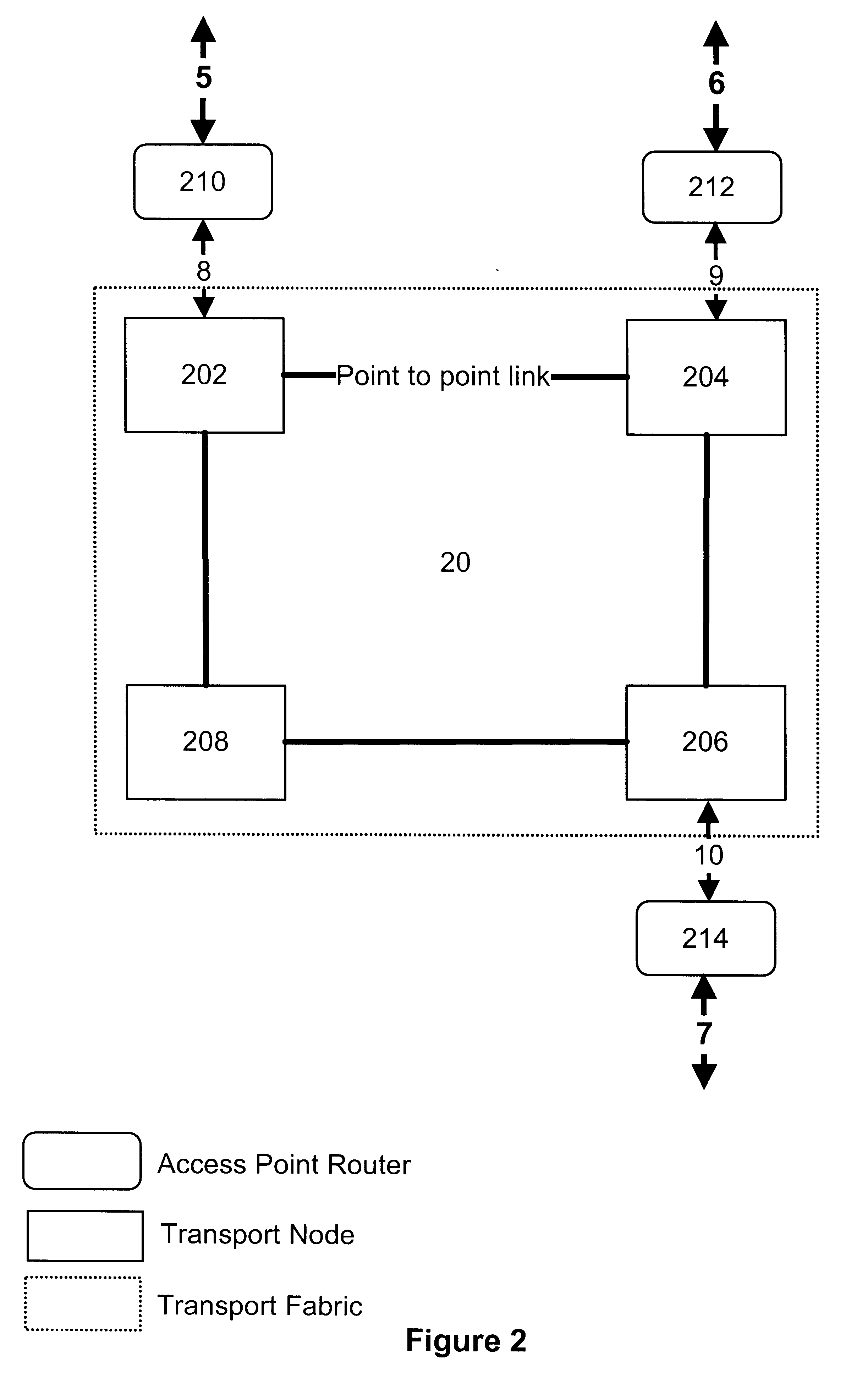

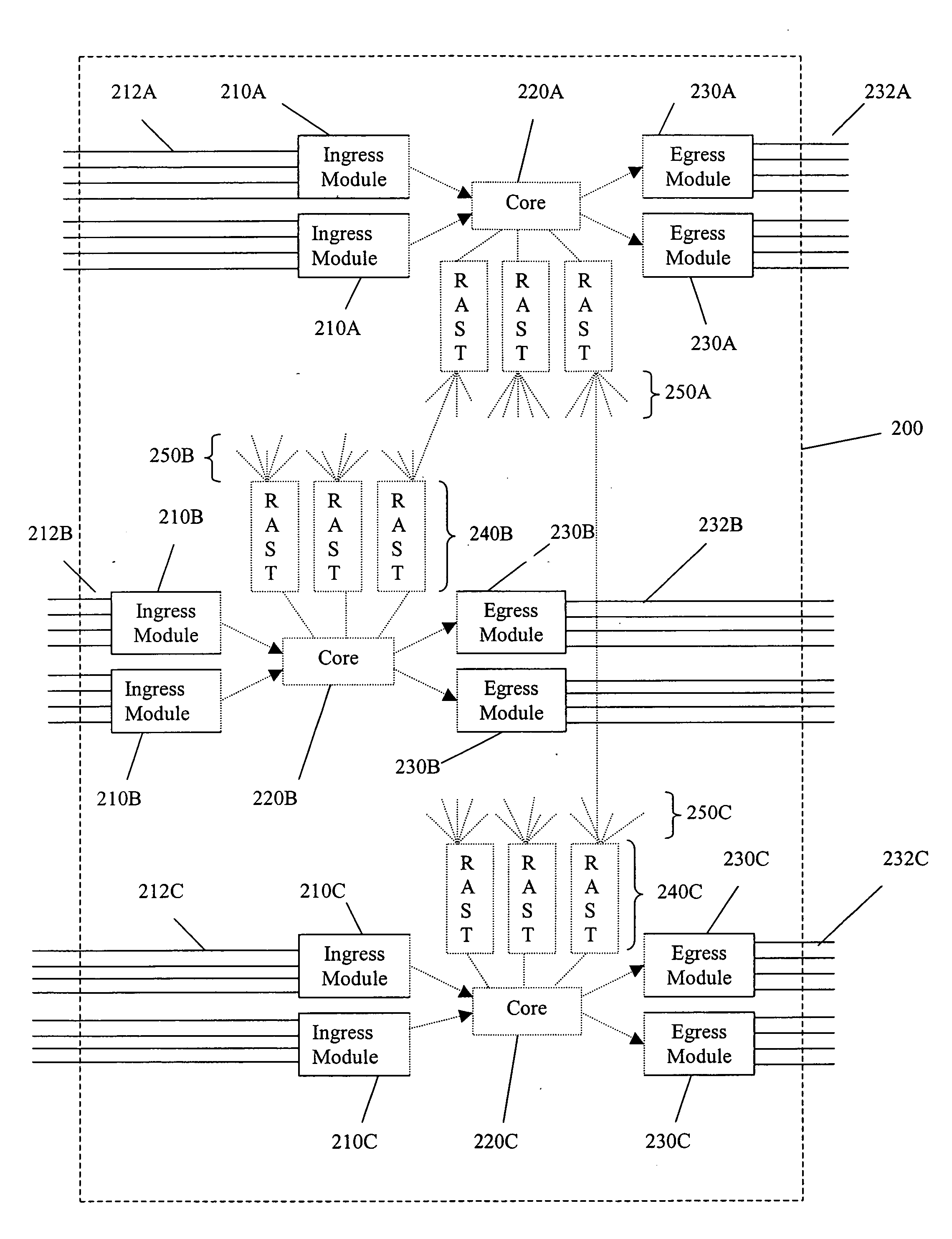

Method and apparatus for input based control of discards in a lossy packet network

Inactive · US6304552B1 · Error prevention · Frequency-division multiplex details · Real-time computing · Switched fabric

The present invention relates to a lossy switch for processing data units, for example IP data packets. The switch can be implemented as a contained network that includes a plurality of input ports, a plurality of output ports and a lossy switch fabric capable of establishing logical pathways to interconnect a certain input port with a certain output port. A characterizing element of the switch is its ability to control the discard of data packets at a transport point within the switch. This control mechanism prevents and reduces congestion which may occur within the switch fabric and at the level of the input and output ports. The system also supports priorities, routing HI priority request data packets over the switch fabric before LO priority request data packets, and discarding LO priority data packets first when controlling congestion.

Owner:AVAYA INC
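
The priority handling in the abstract above (HI before LO on the fabric, LO discarded first under congestion) might look roughly like the following Python sketch. The class `LossyPort`, its fixed `capacity`, and the choice to evict the newest queued LO packet are assumptions made for illustration, not details from the patent.

```python
from collections import deque
from typing import Deque, List, Optional

HI, LO = "HI", "LO"


class LossyPort:
    """A bounded port buffer that sheds LO-priority packets first."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.hi: Deque[str] = deque()
        self.lo: Deque[str] = deque()
        self.discarded: List[str] = []

    def __len__(self) -> int:
        return len(self.hi) + len(self.lo)

    def enqueue(self, priority: str, packet: str) -> bool:
        """Return True if the packet was accepted, False if it was discarded."""
        if len(self) >= self.capacity:
            if priority == HI and self.lo:
                # Make room for HI traffic by discarding a queued LO packet.
                self.discarded.append(self.lo.pop())
            else:
                # No LO packet to evict (or the arrival is LO): drop the arrival.
                self.discarded.append(packet)
                return False
        (self.hi if priority == HI else self.lo).append(packet)
        return True

    def dequeue(self) -> Optional[str]:
        """Send HI packets across the fabric before any LO packet."""
        if self.hi:
            return self.hi.popleft()
        if self.lo:
            return self.lo.popleft()
        return None
```

With a capacity of two, queuing two LO packets and then one HI packet evicts the second LO packet, and the HI packet is dequeued first.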

Virtual machine task management system

Inactive · US20060029056A1 · Multiplex system selection arrangements · Circuit switching systems · Auto-configuration · Connection table

A switch encapsulates incoming information using a header, and removes the header upon egress. The header is used by both distributed ingress nodes and within a distributed core to facilitate switching. The ingress and egress elements preferably support Ethernet or other protocol providing connectionless media with a stateful connection. Preferred switches include management protocols for discovering which elements are connected, for constructing appropriate connection tables, for designating a master element, and for resolving failures and off-line conditions among the switches. Secure data protocol (SDP), port to port (PTP) protocol, and active/active protection service (AAPS) are all preferably implemented. Systems and methods contemplated herein can advantageously use Strict Ring Topology (SRT), and configure the topology automatically. Components of a distributed switching fabric can be geographically separated by at least one kilometer, and in some cases by over 150 kilometers.

Owner:RAPTOR NETWORKS TECH

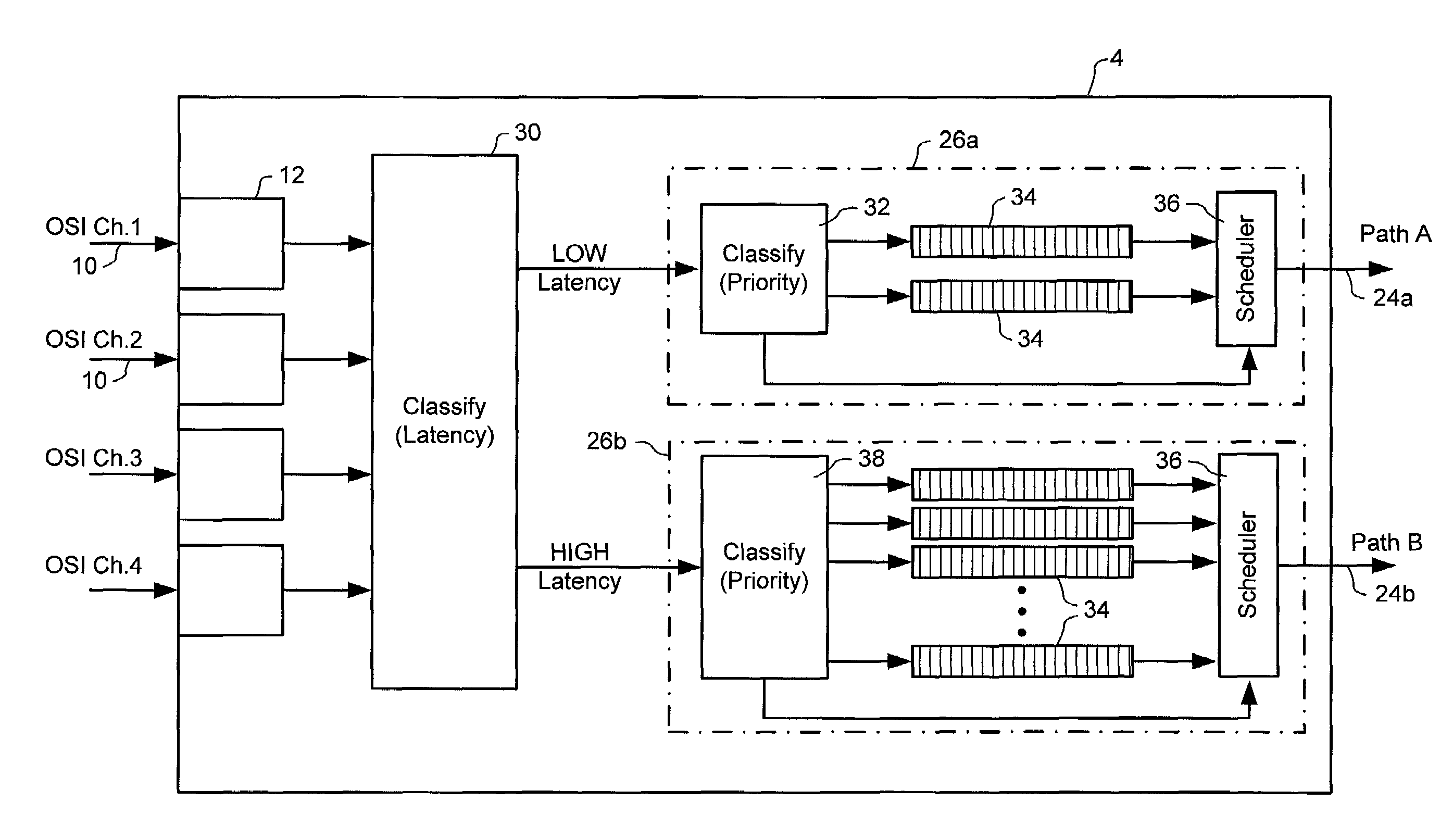

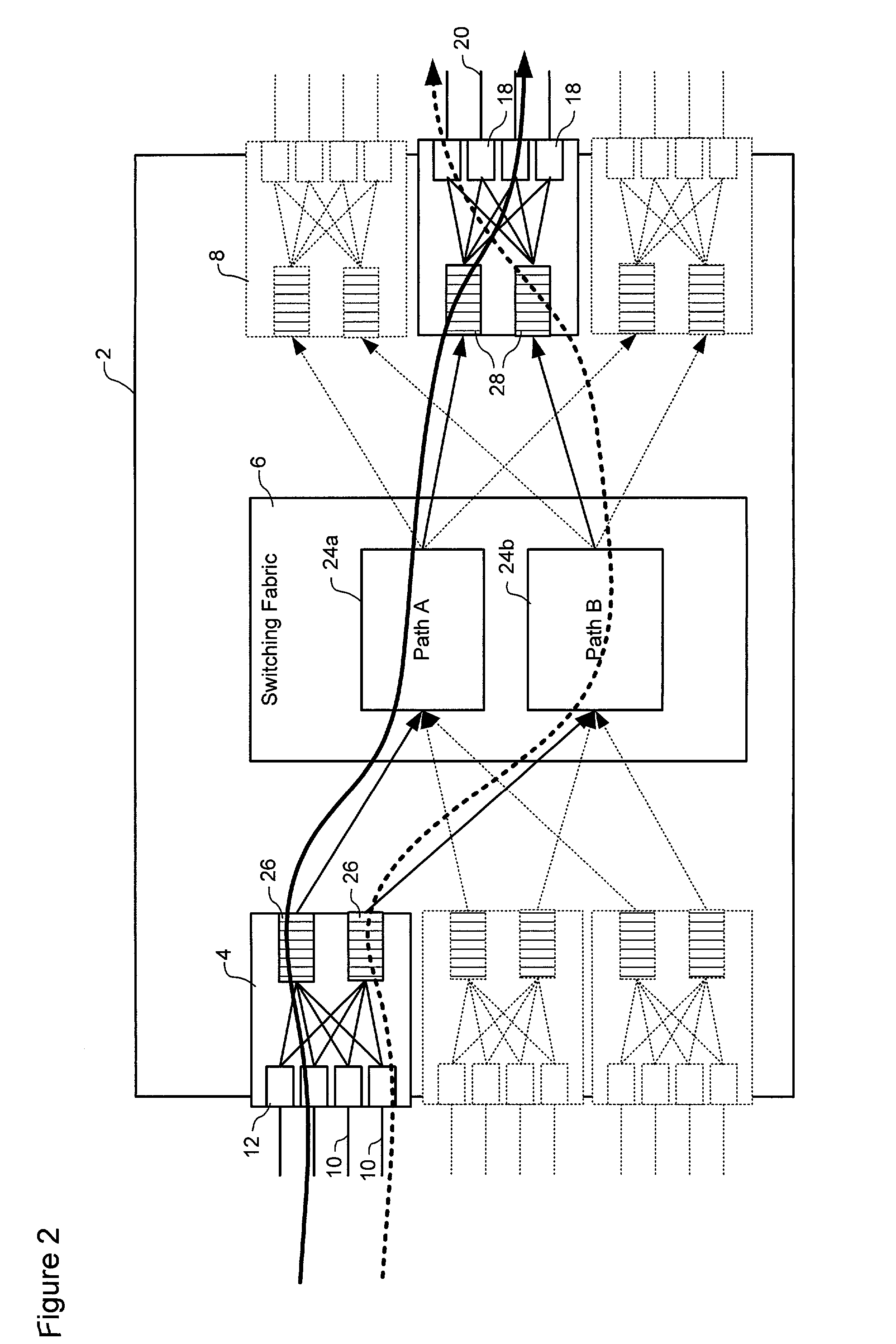

Traffic switching using multi-dimensional packet classification

Active · US7260102B2 · Efficient transport · Multiplex system selection arrangements · Error prevention · Traffic capacity · Latency (engineering)

A method and system for conveying an arbitrary mixture of high and low latency traffic streams across a common switch fabric implements a multi-dimensional traffic classification scheme, in which multiple orthogonal traffic classification methods are successively implemented for each traffic stream traversing the system. At least two diverse paths are mapped through the switch fabric, each path being optimized to satisfy respective different latency requirements. A latency classifier is adapted to route each traffic stream to a selected path optimized to satisfy latency requirements most closely matching a respective latency requirement of the traffic stream. A prioritization classifier independently prioritizes traffic streams in each path. A fairness classifier at an egress of each path can be used to enforce fairness between responsive and non-responsive traffic streams in each path. This arrangement enables traffic streams having similar latency requirements to traverse the system through a path optimized for those latency requirements.

Owner:CIENA
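
To make the successive classification steps in the abstract above concrete, here is a hedged Python sketch of two of them: a latency classifier that picks a path, followed by independent prioritization within each path. The names `Stream`, `select_path`, and `classify`, the microsecond units, and the "closest latency that does not exceed the requirement" rule are all assumptions; the abstract says only that the selected path most closely matches the stream's latency requirement.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Stream:
    name: str
    max_latency_us: int  # latency requirement of the stream
    priority: int        # lower value = more urgent (assumed convention)


def select_path(stream: Stream, path_latency_us: Dict[str, int]) -> str:
    """Latency classifier: pick the path whose latency best fits the stream."""
    feasible = {p: lat for p, lat in path_latency_us.items()
                if lat <= stream.max_latency_us}
    if feasible:
        # Closest match that still meets the requirement.
        return max(feasible, key=feasible.get)
    # Nothing meets the requirement: fall back to the fastest path.
    return min(path_latency_us, key=path_latency_us.get)


def classify(streams: List[Stream],
             path_latency_us: Dict[str, int]) -> Dict[str, List[Stream]]:
    """Assign each stream to a path, then prioritize within each path."""
    per_path: Dict[str, List[Stream]] = {p: [] for p in path_latency_us}
    for s in streams:
        per_path[select_path(s, path_latency_us)].append(s)
    for members in per_path.values():
        # Prioritization classifier, applied independently per path.
        members.sort(key=lambda s: s.priority)
    return per_path
```

The fairness classifier at the egress of each path is left out of this sketch.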

Switching system

Active · US7313614B2 · Accelerates TCP processing · Robust system · Input/output to record carriers · Error detection/correction · Processor element · Computer architecture

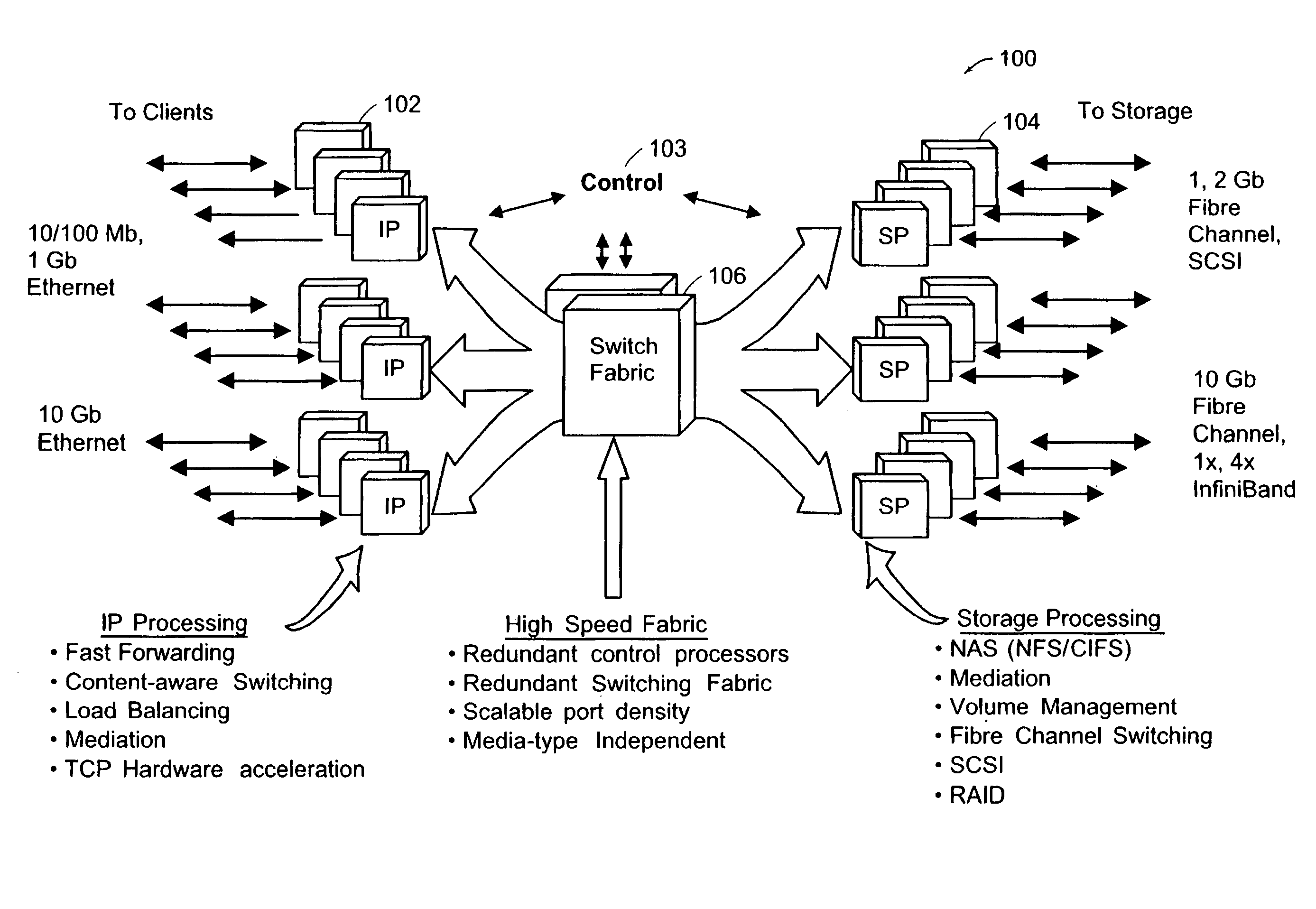

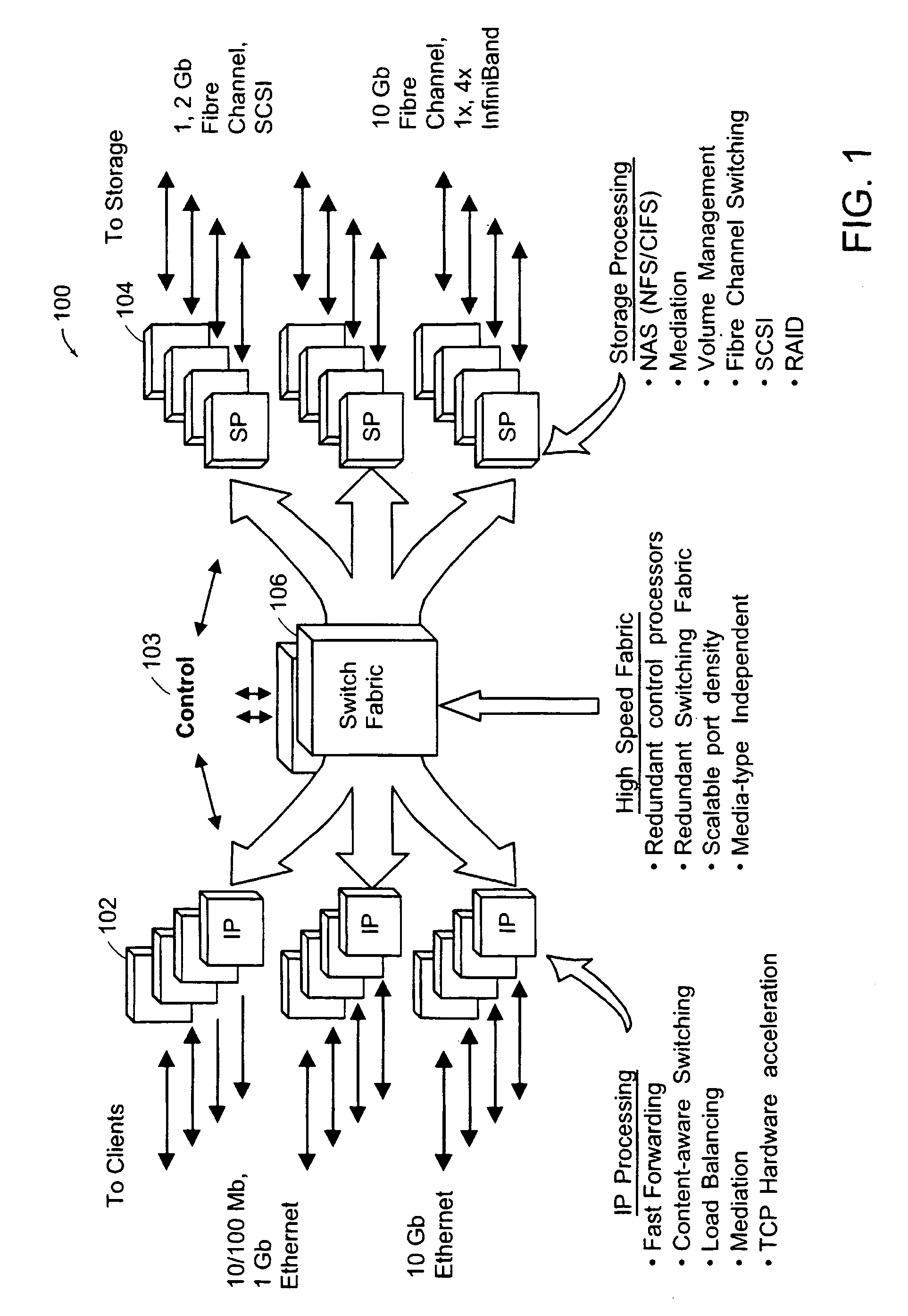

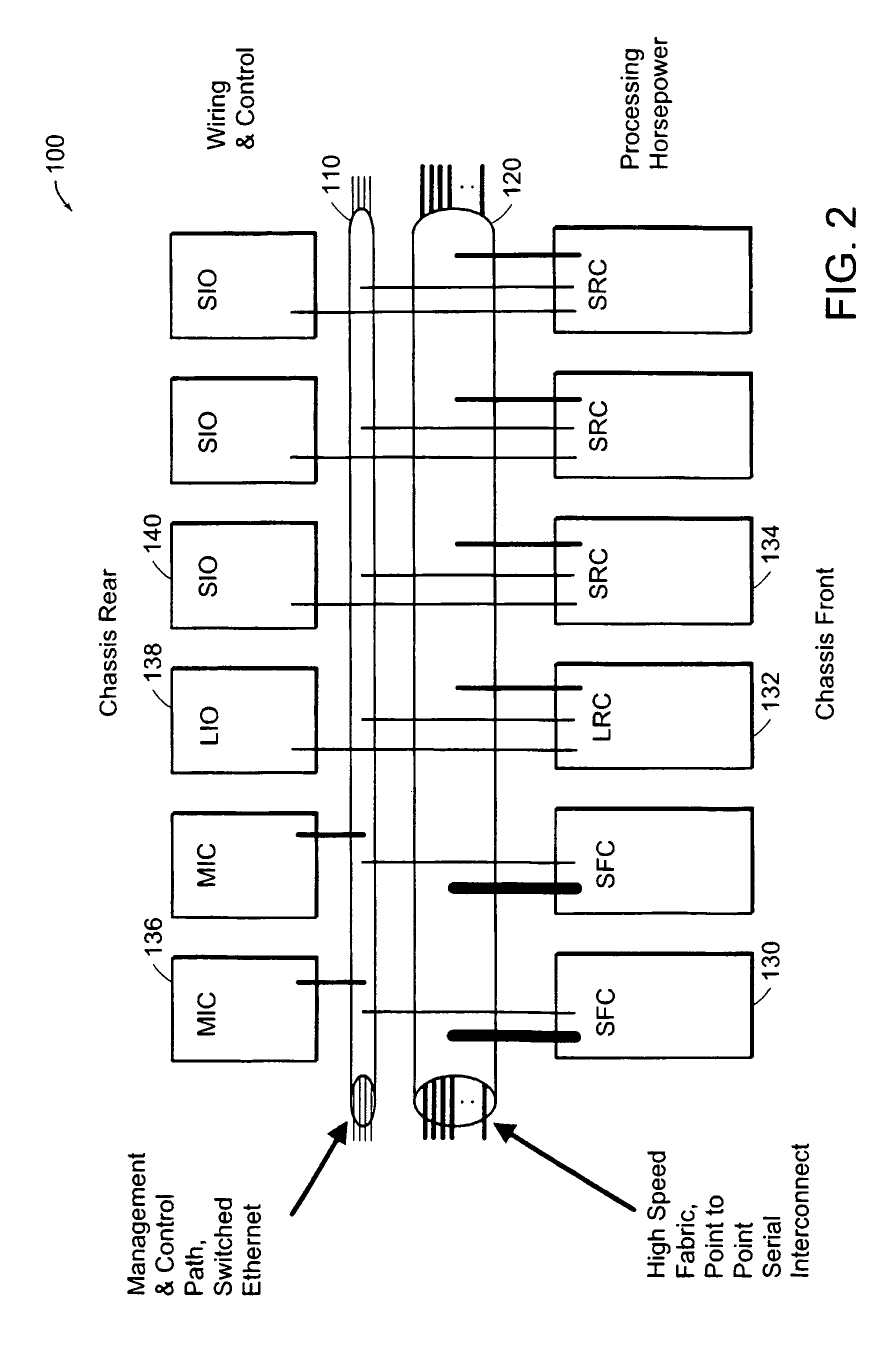

A system and method for providing a switch system (100) having a first configurable set of processor elements (102) to process storage resource connection requests (104), a second configurable set of processor elements capable of communications with the first configurable set of processor elements (102) to receive, from the first configurable set of processor elements, storage connection requests representative of client requests, and to route the requests to at least one of the storage elements (104), and a configurable switching fabric (106) interconnected between the first and second sets of processor elements (102), for receiving at least a first storage connection request (104) from one of the first set of processor elements (102), determining an appropriate one of the second set of processors for processing the storage connection request (104), automatically configuring the storage connection request in accordance with a protocol utilized by the selected one of the second set of processors, and forwarding the storage connection request to the selected one of the second set of processors for routing to at least one of the storage elements.

Owner:ORACLE INT CORP

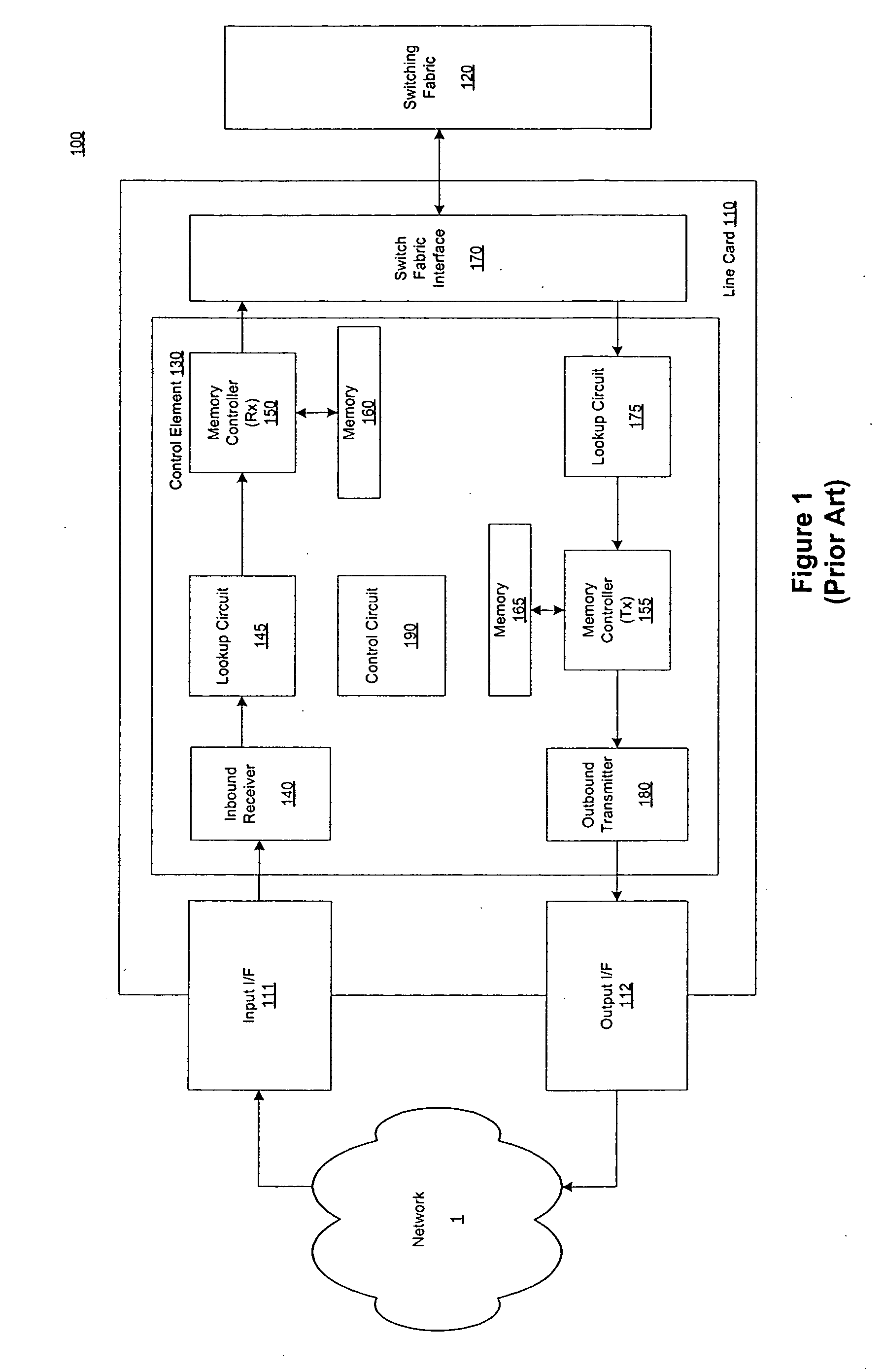

Flexible engine and data structure for packet header processing

Inactive · US6721316B1 · Multiplex system selection arrangements · Data switching by path configuration · Web transport · Linked list

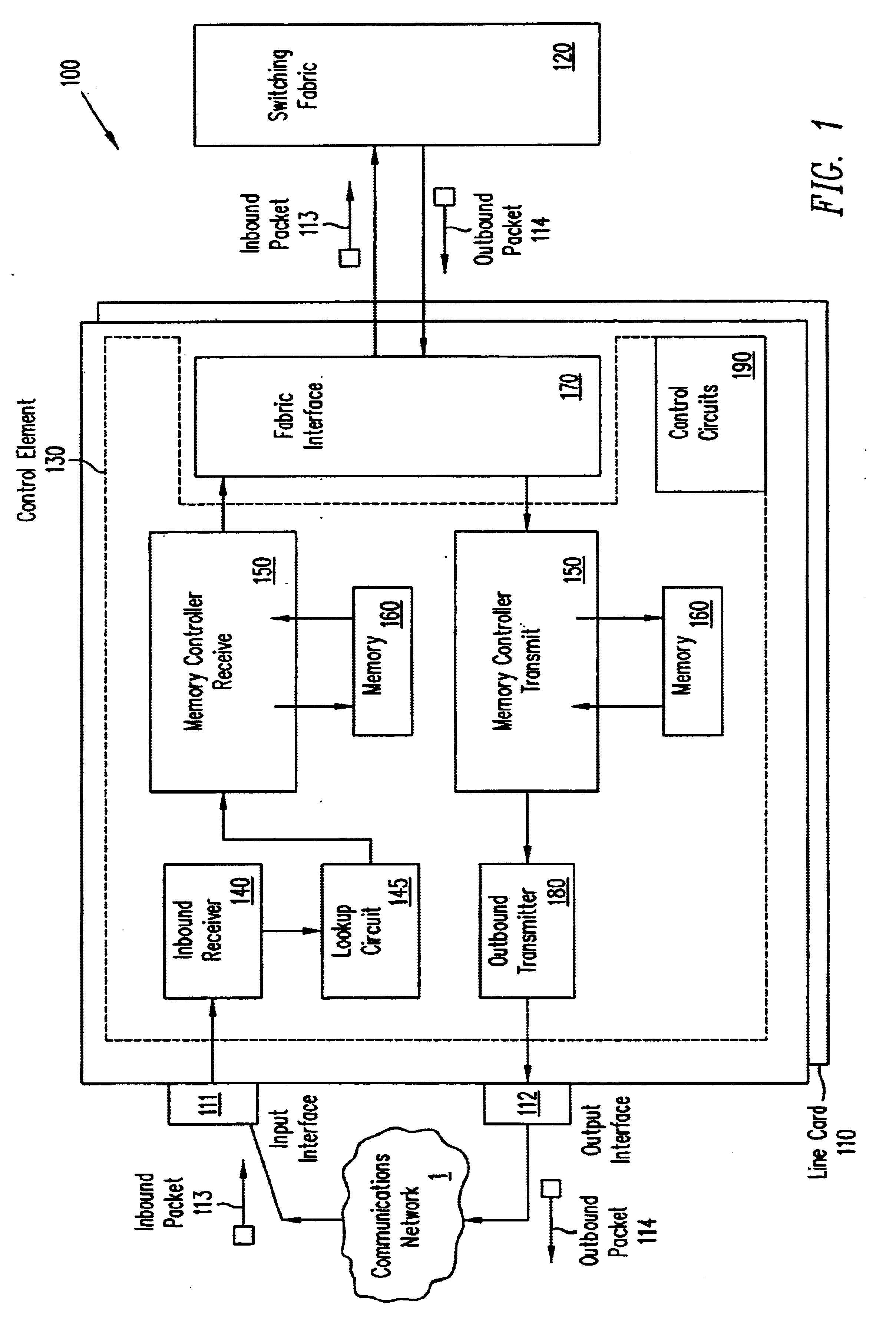

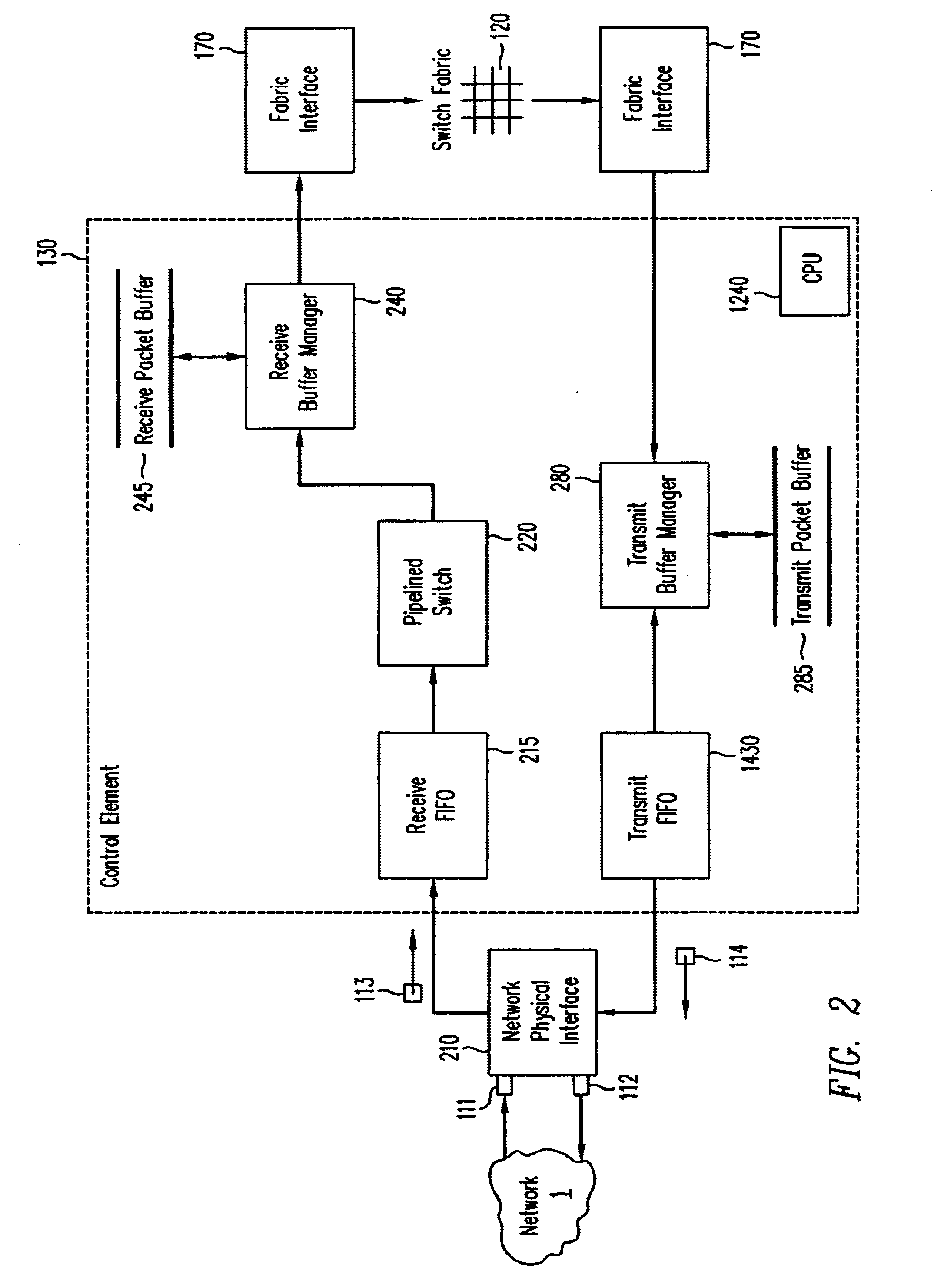

A pipelined linecard architecture for receiving, modifying, switching, buffering, queuing and dequeuing packets for transmission in a communications network. The linecard has two paths: the receive path, which carries packets into the switch device from the network, and the transmit path, which carries packets from the switch to the network. In the receive path, received packets are processed and switched in an asynchronous, multi-stage pipeline utilizing programmable data structures for fast table lookup and linked list traversal. The pipelined switch operates on several packets in parallel while determining each packet's routing destination. Once that determination is made, each packet is modified to contain new routing information as well as additional header data to help speed it through the switch. Each packet is then buffered and enqueued for transmission over the switching fabric to the linecard attached to the proper destination port. The destination linecard may be the same physical linecard as that receiving the inbound packet or a different physical linecard. The transmit path consists of a buffer/queuing circuit similar to that used in the receive path. Both enqueuing and dequeuing of packets is accomplished using CoS-based decision making apparatus and congestion avoidance and dequeue management hardware. The architecture of the present invention has the advantages of high throughput and the ability to rapidly implement new features and capabilities.

Owner:CISCO TECH INC

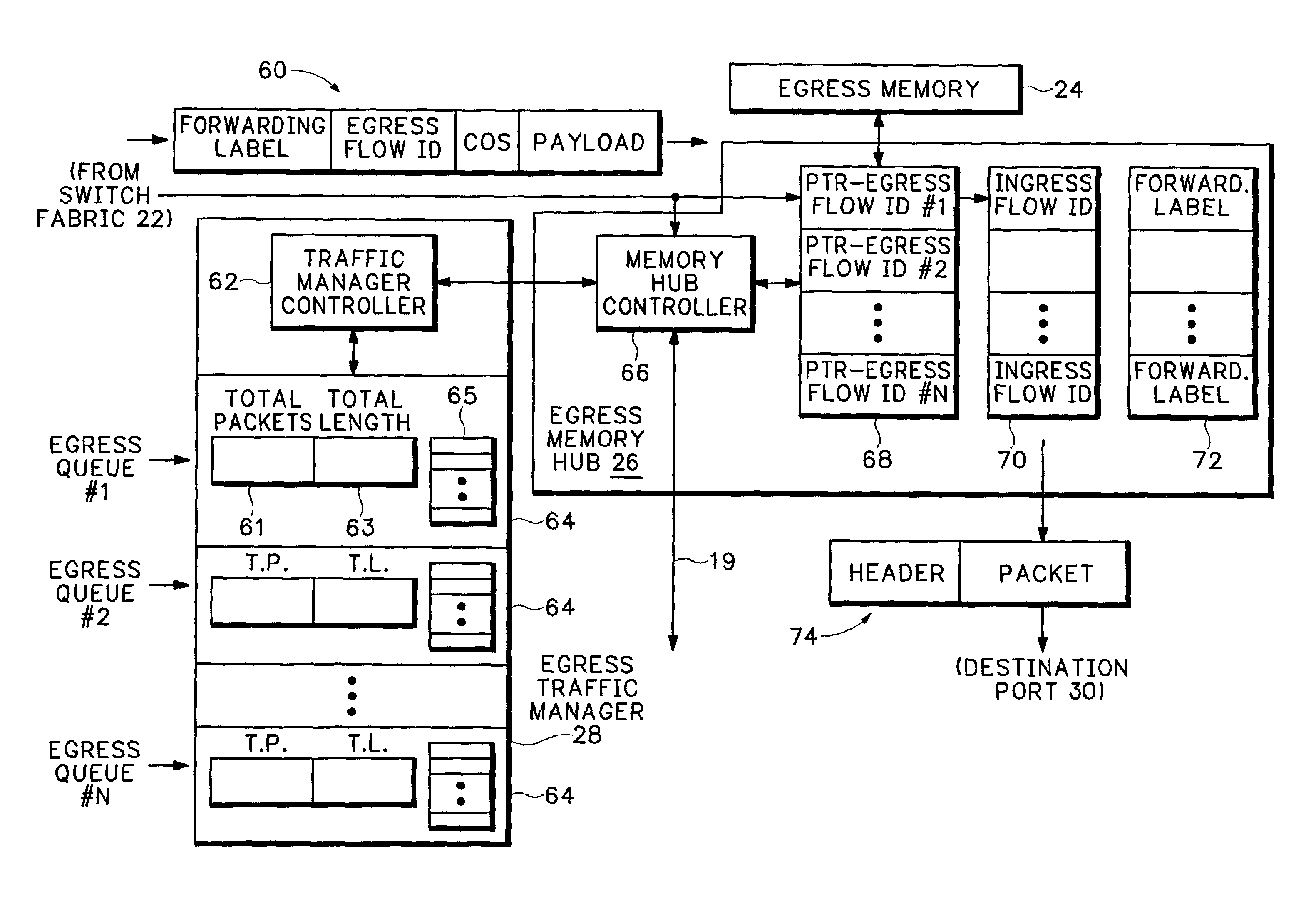

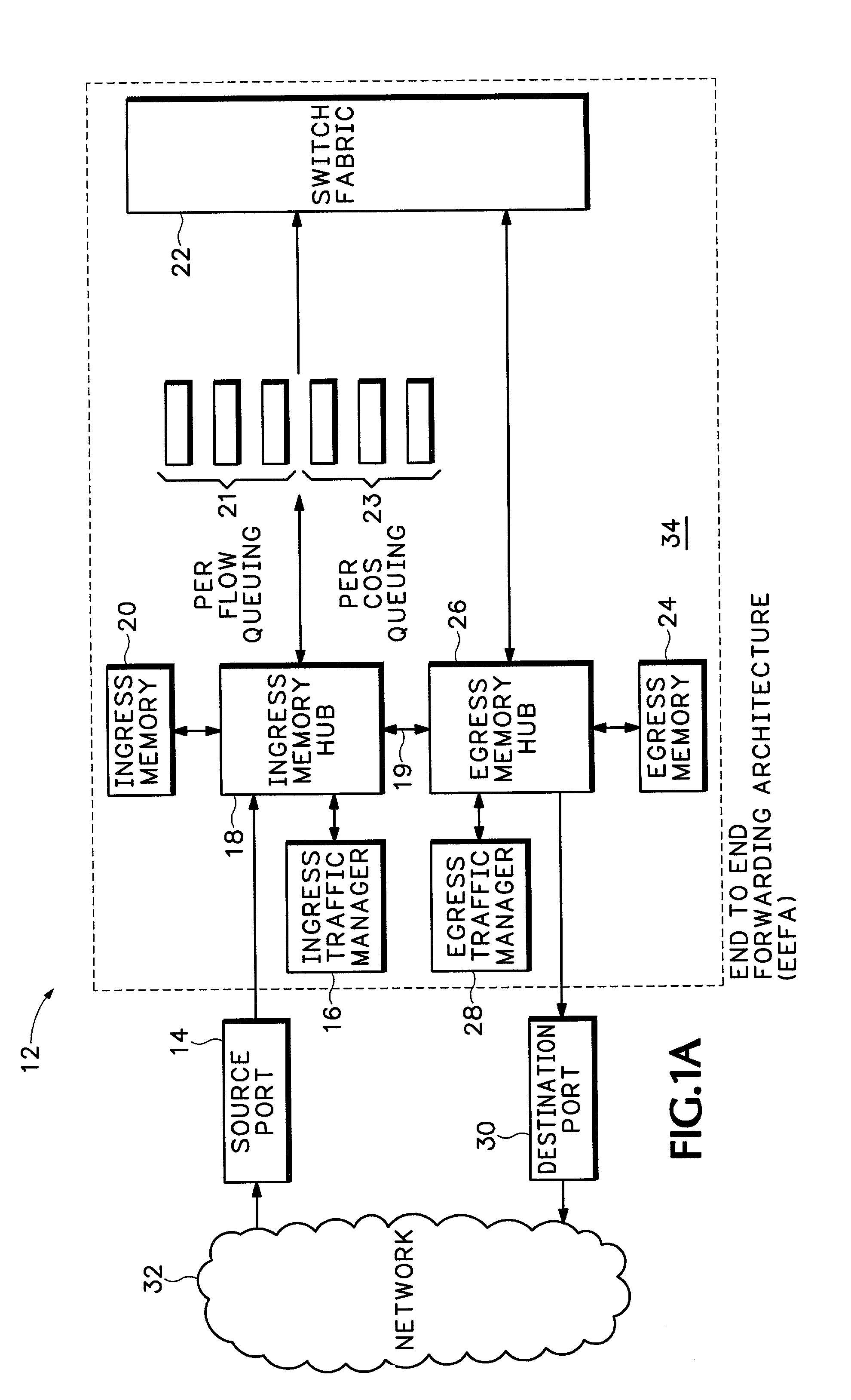

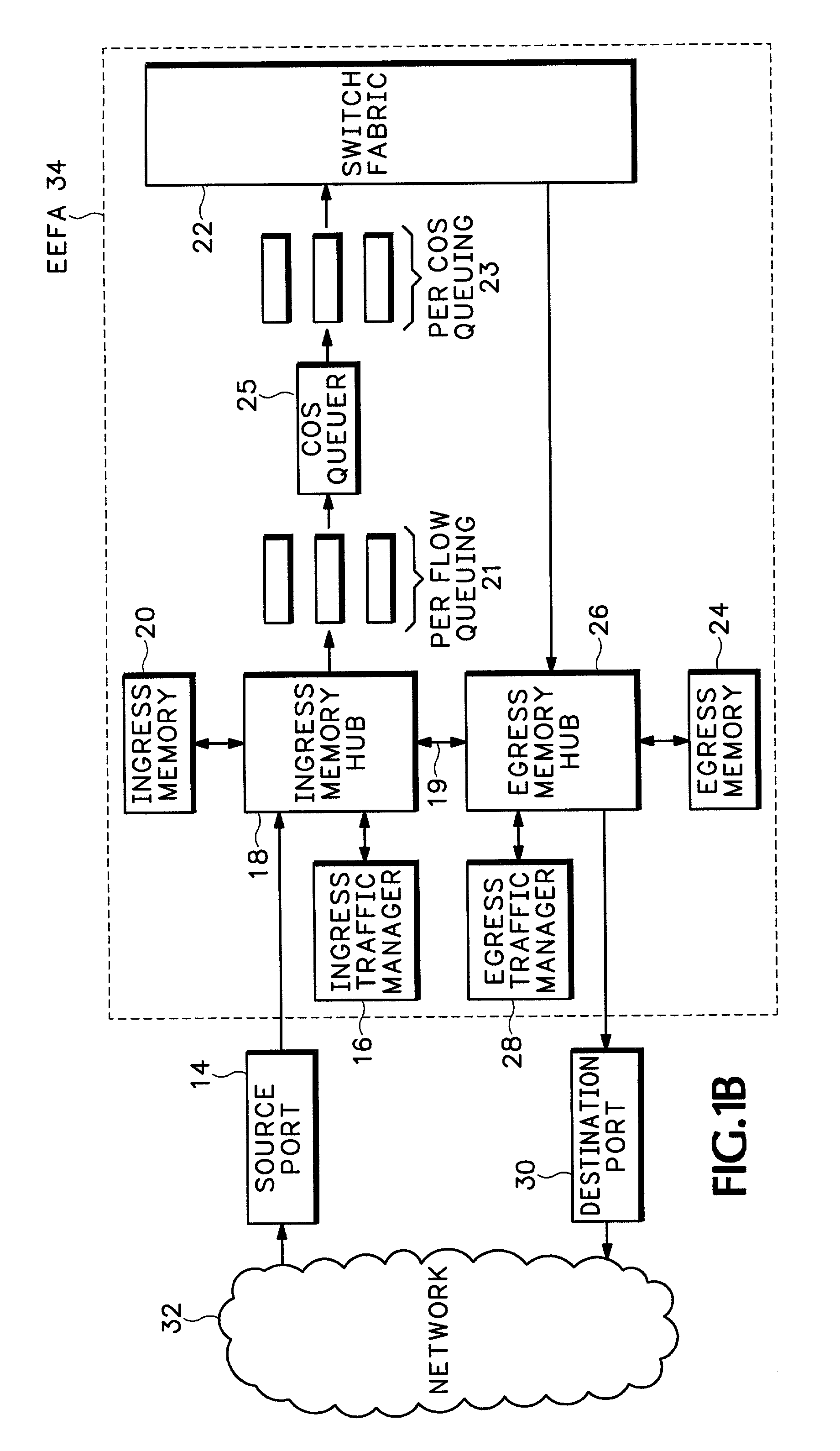

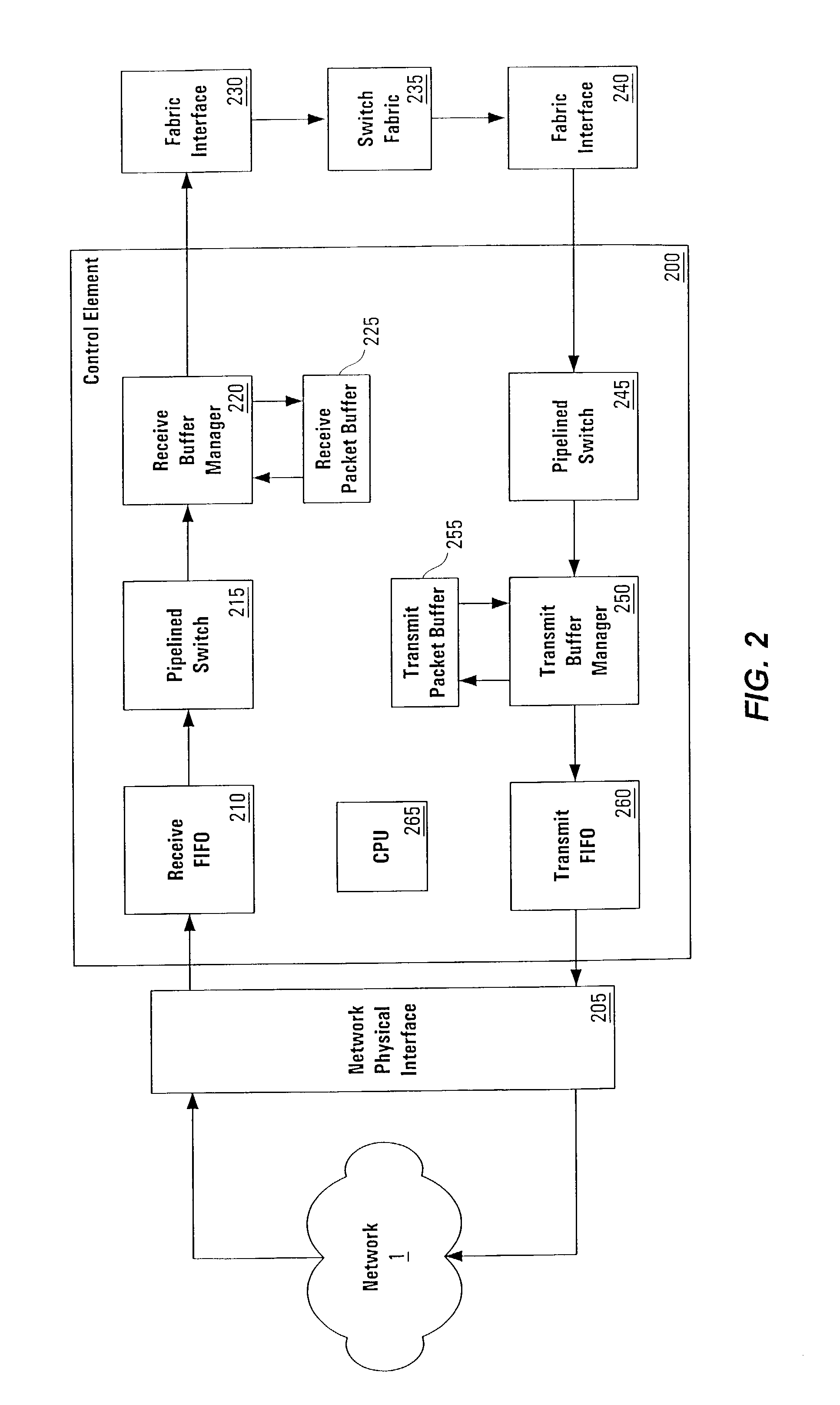

Method and apparatus for end to end forwarding architecture

An end to end forwarding architecture includes a memory hub having a first ingress interface for receiving packets from a source port. The packets have associated ingress flow identifiers. A second ingress interface outputs the packets to a switch fabric. An ingress controller manages how the packets are queued and output to the switch fabric. The same memory hub can be used for both per flow queuing and per Class of Service (CoS) queuing. A similar structure is used on the egress side of the switch fabric. The end to end forwarding architecture separates per flow traffic scheduling operations performed in a traffic manager from the per flow packet storage operations performed by the memory hub.

Owner:RPX CORP +1

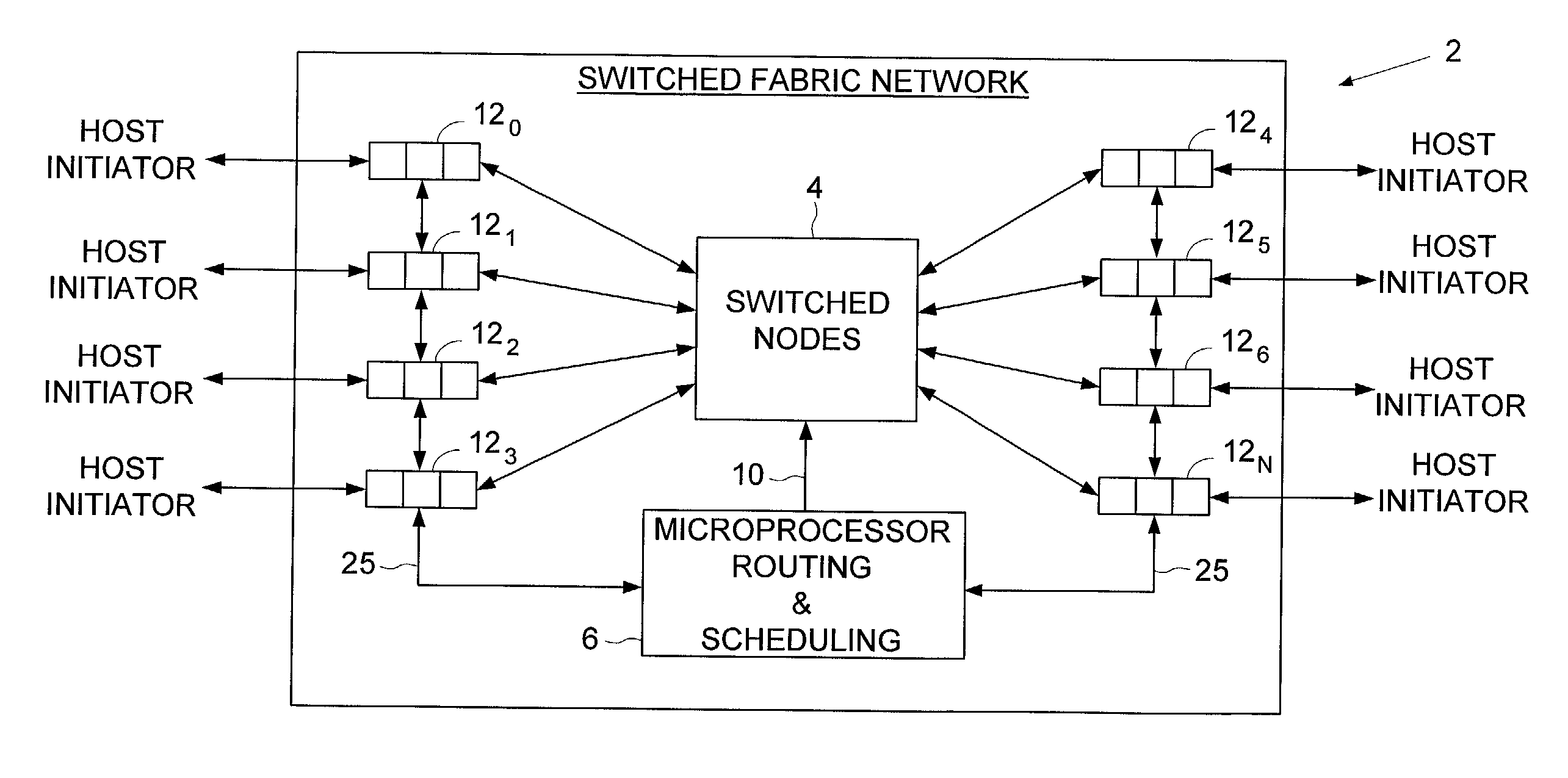

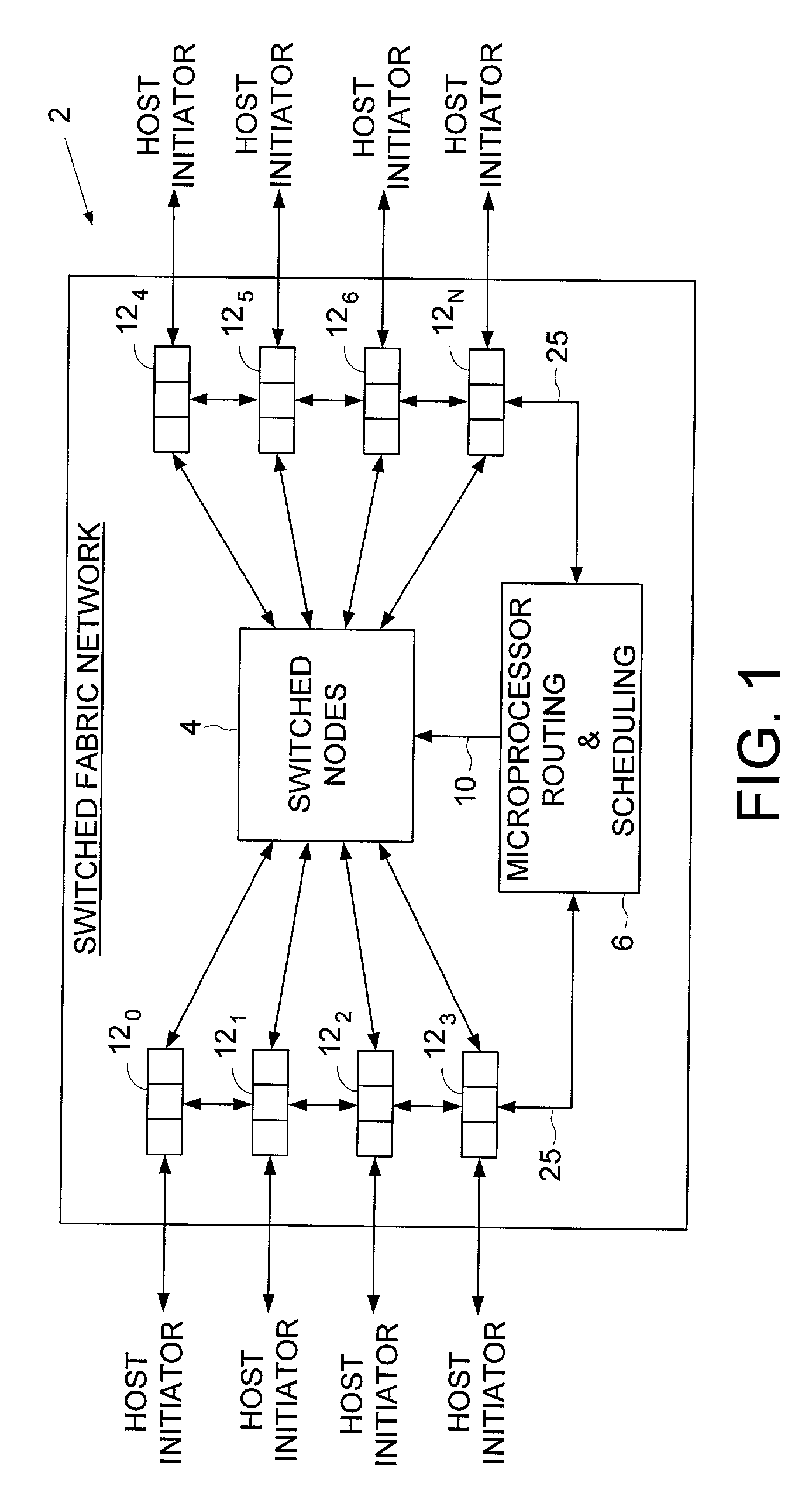

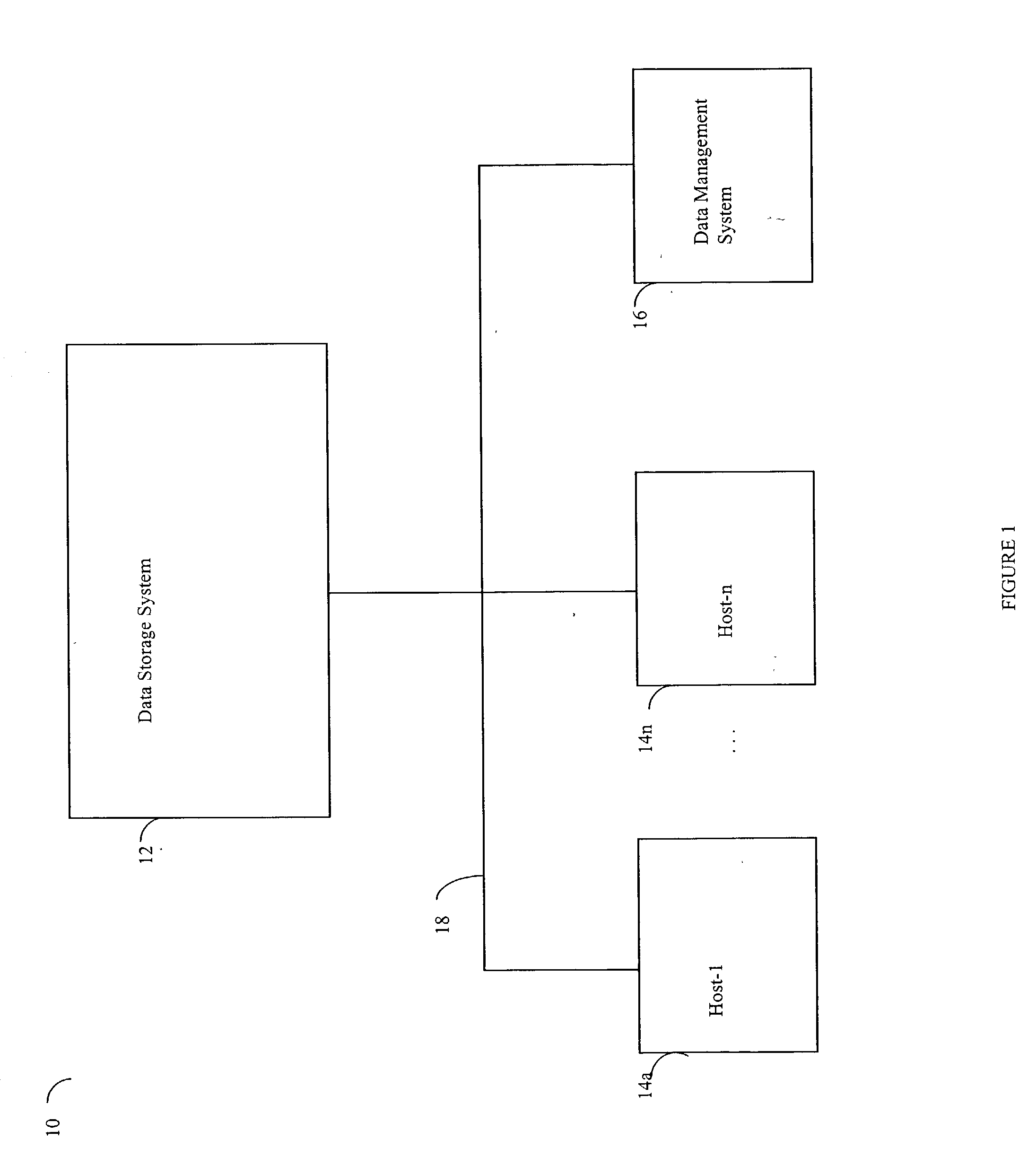

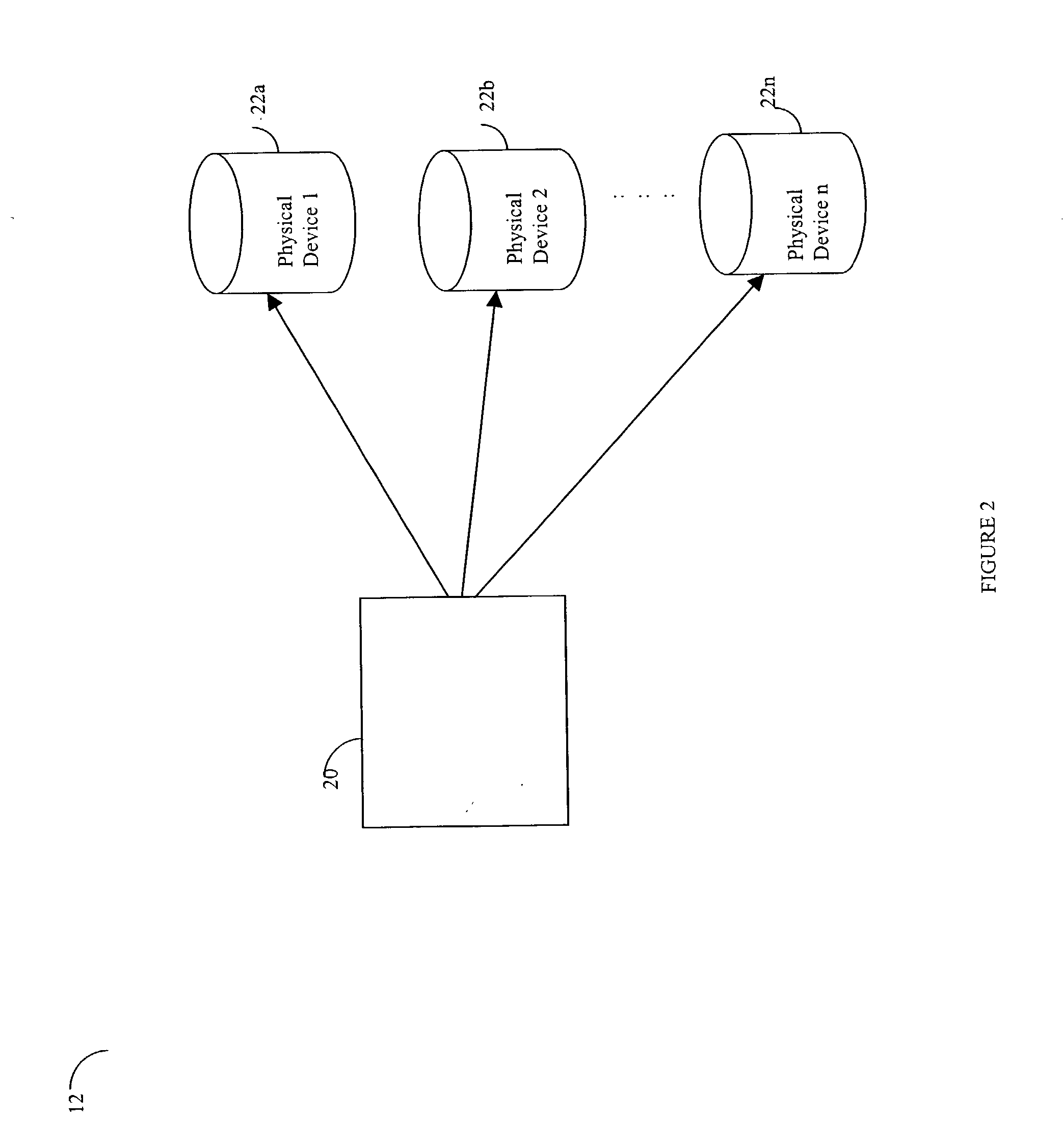

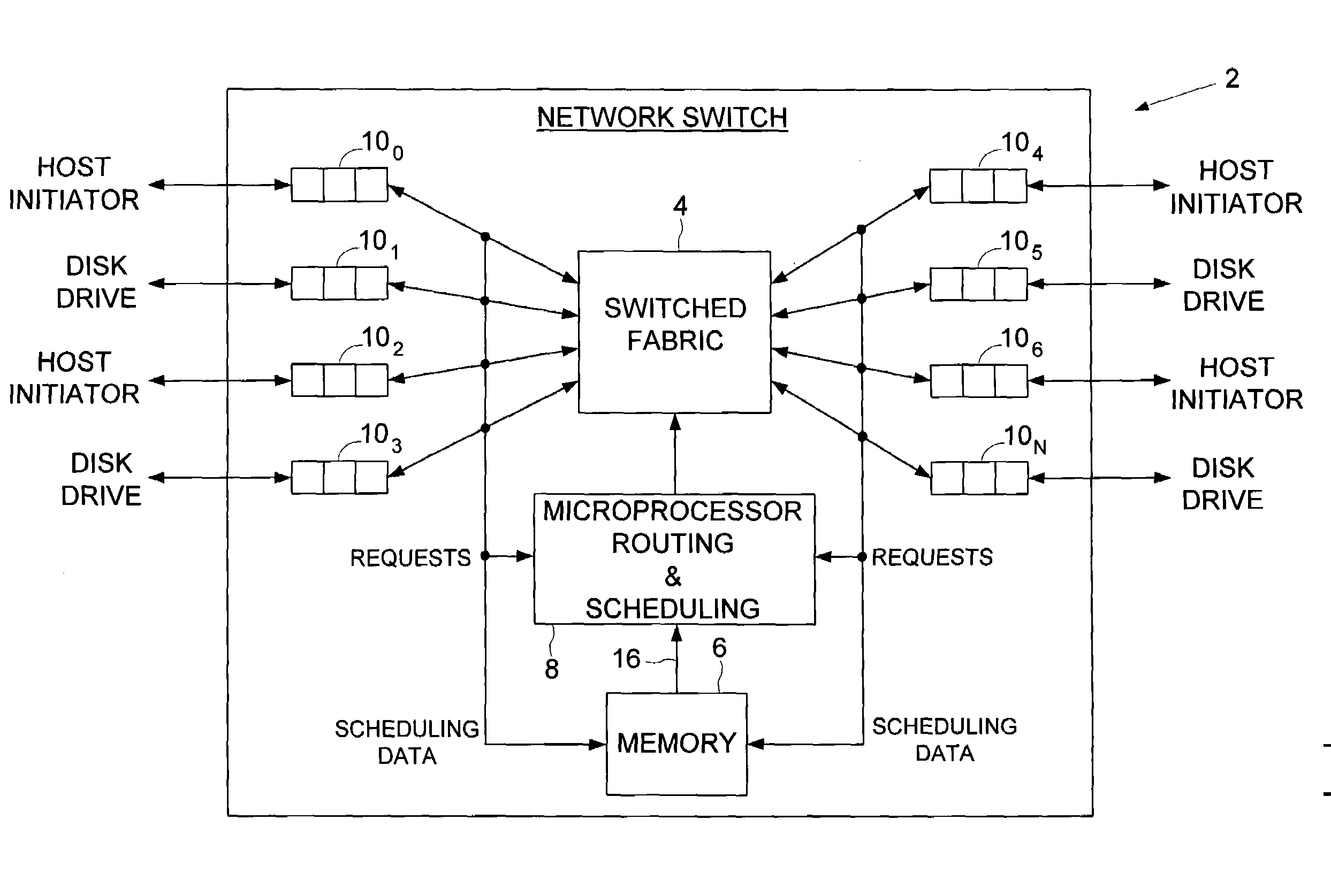

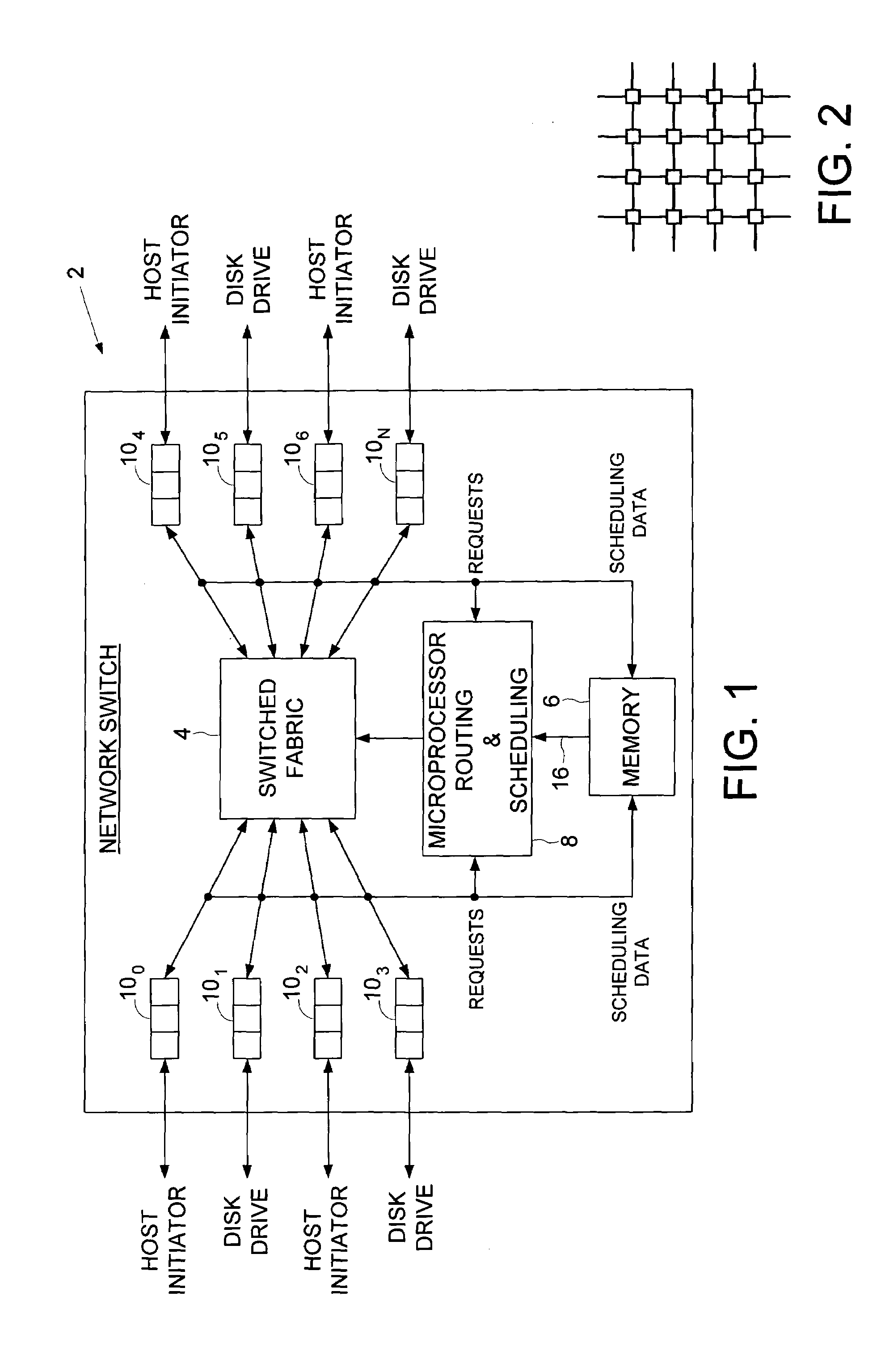

Transferring scheduling data from a plurality of disk storage devices to a network switch before transferring data associated with scheduled requests between the network switch and a plurality of host initiators

Inactive · US6928470B1 · Input/output to record carriers · Multiple digital computer combinations · Control data · Network switch

A network switch is disclosed for resolving requests from a plurality of host initiators by scheduling access to a plurality of disk storage devices. The network switch comprises a switched fabric comprising a plurality of switching elements. Each switching element comprises a plurality of bi-directional switched fabric ports, and a control input connected to receive switch control data for selectively configuring the switching element in order to interconnect the bi-directional switched fabric ports. The network switch further comprises a memory for storing a routing and scheduling program, and a microprocessor, responsive to the requests, for executing the steps of the routing and scheduling program to generate the switch control data to transmit scheduled requests through the bi-directional switched fabric ports. At least one of the plurality of switching elements comprises a disk storage interface for connecting to a selected one of the disk storage devices. The microprocessor schedules access to the plurality of disk storage devices through the disk storage interface. The disk storage interface receives scheduling data from the selected one of the storage devices, and the memory stores the scheduling data received via the bi-directional switched fabric ports of a selected number of the switching elements. The scheduling data is processed according to a priority such that the selected switching elements transfer the scheduling data through the bi-directional switched fabric ports before transferring data associated with the scheduled requests.

Owner:WESTERN DIGITAL TECH INC

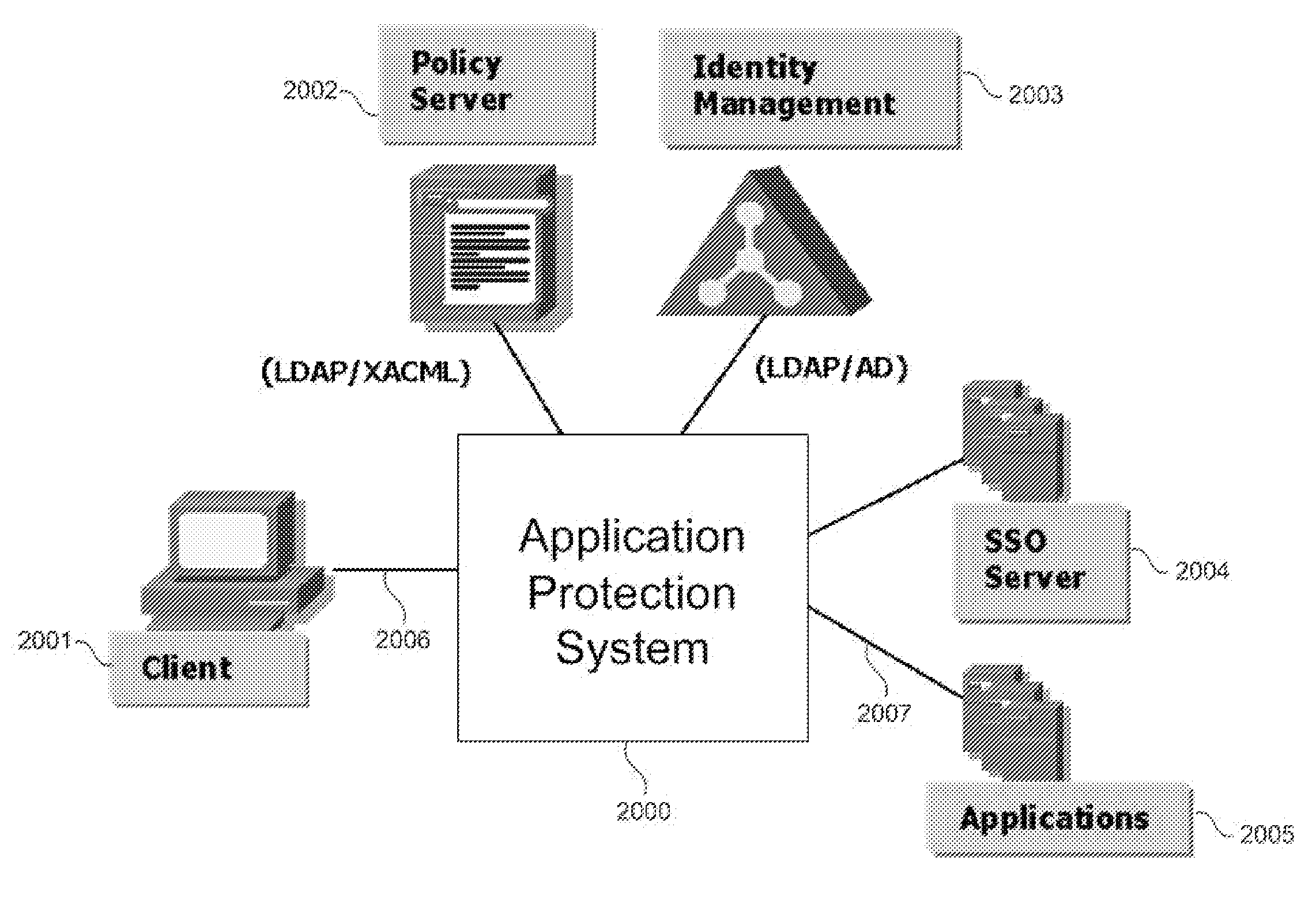

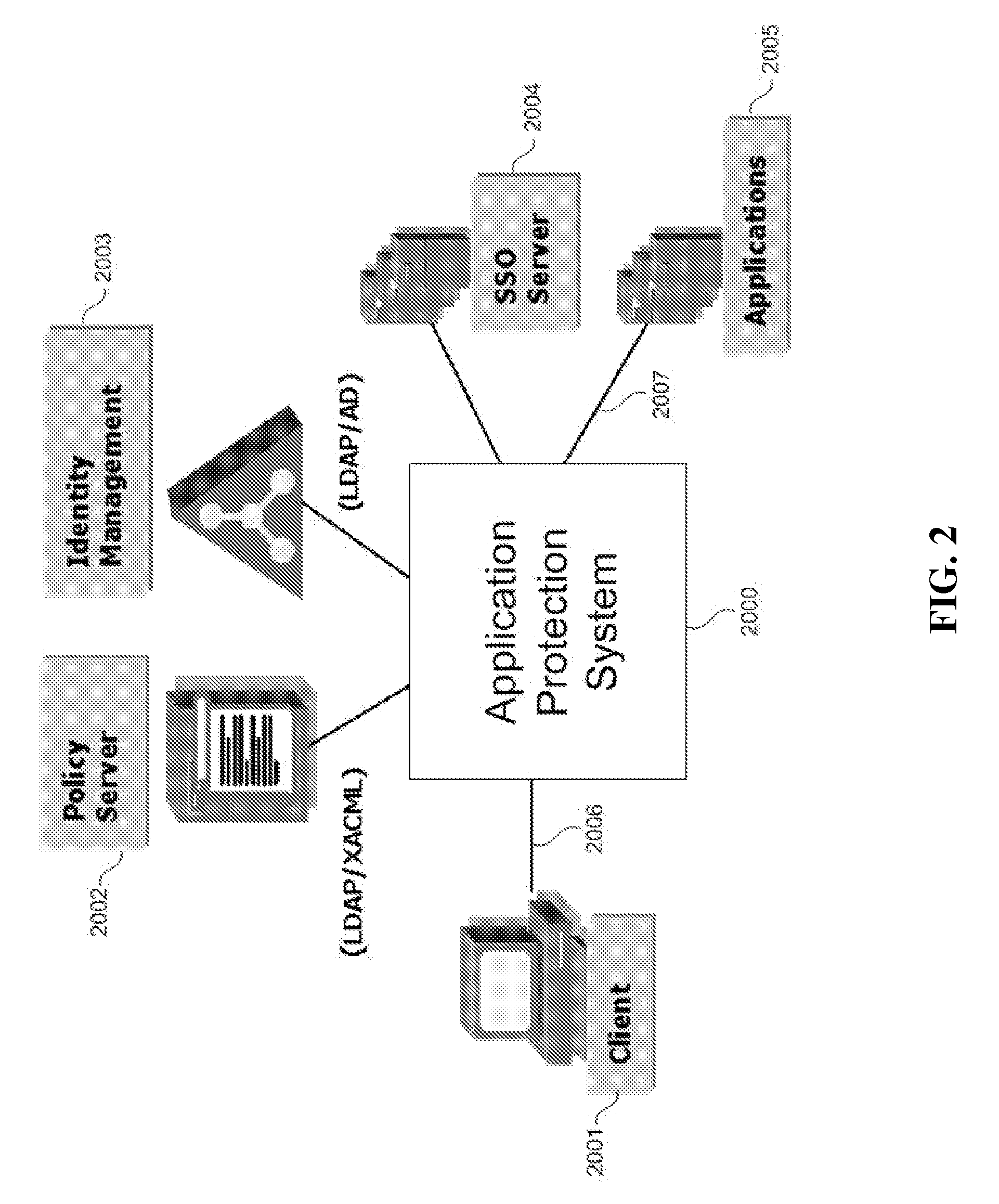

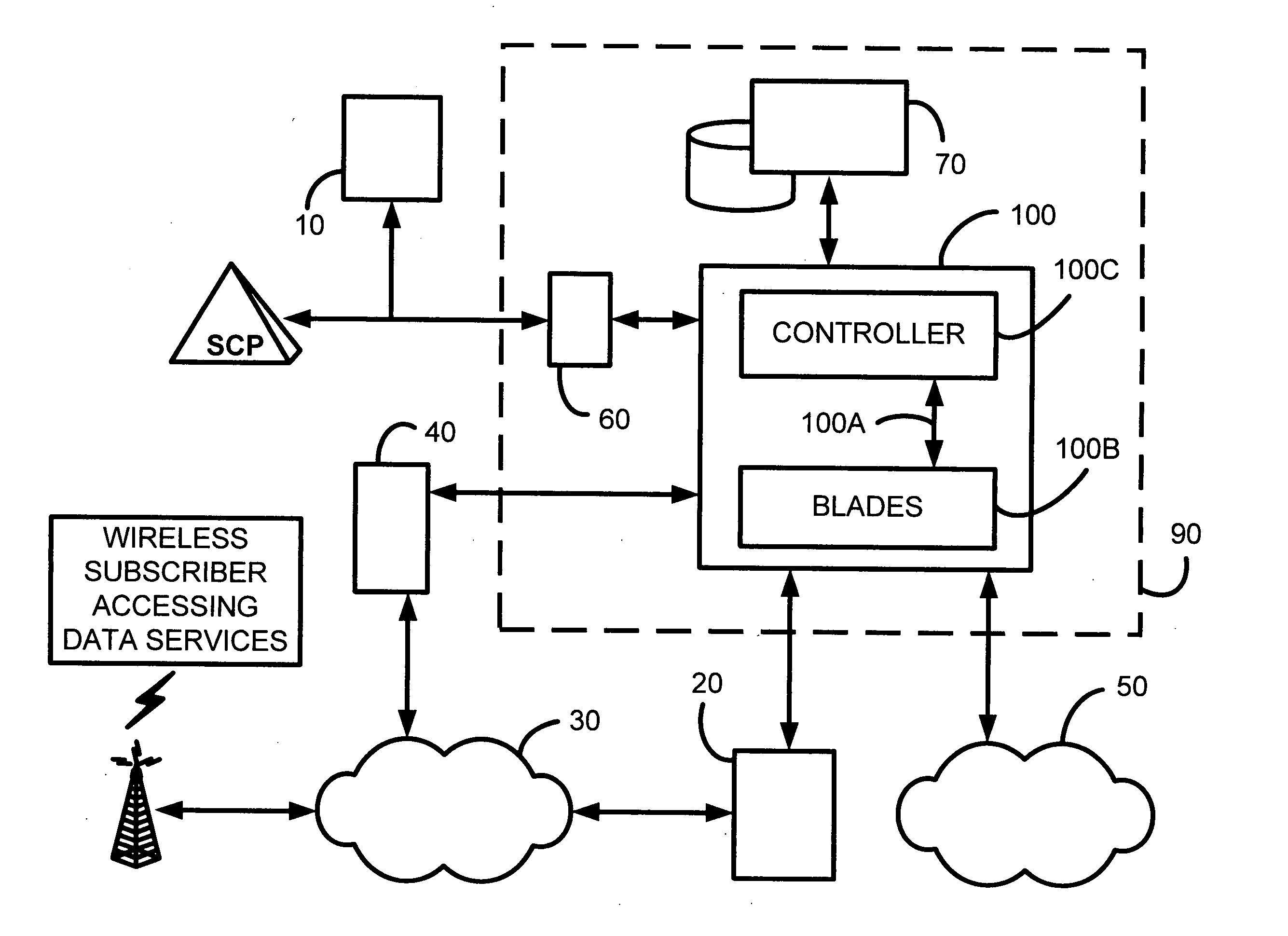

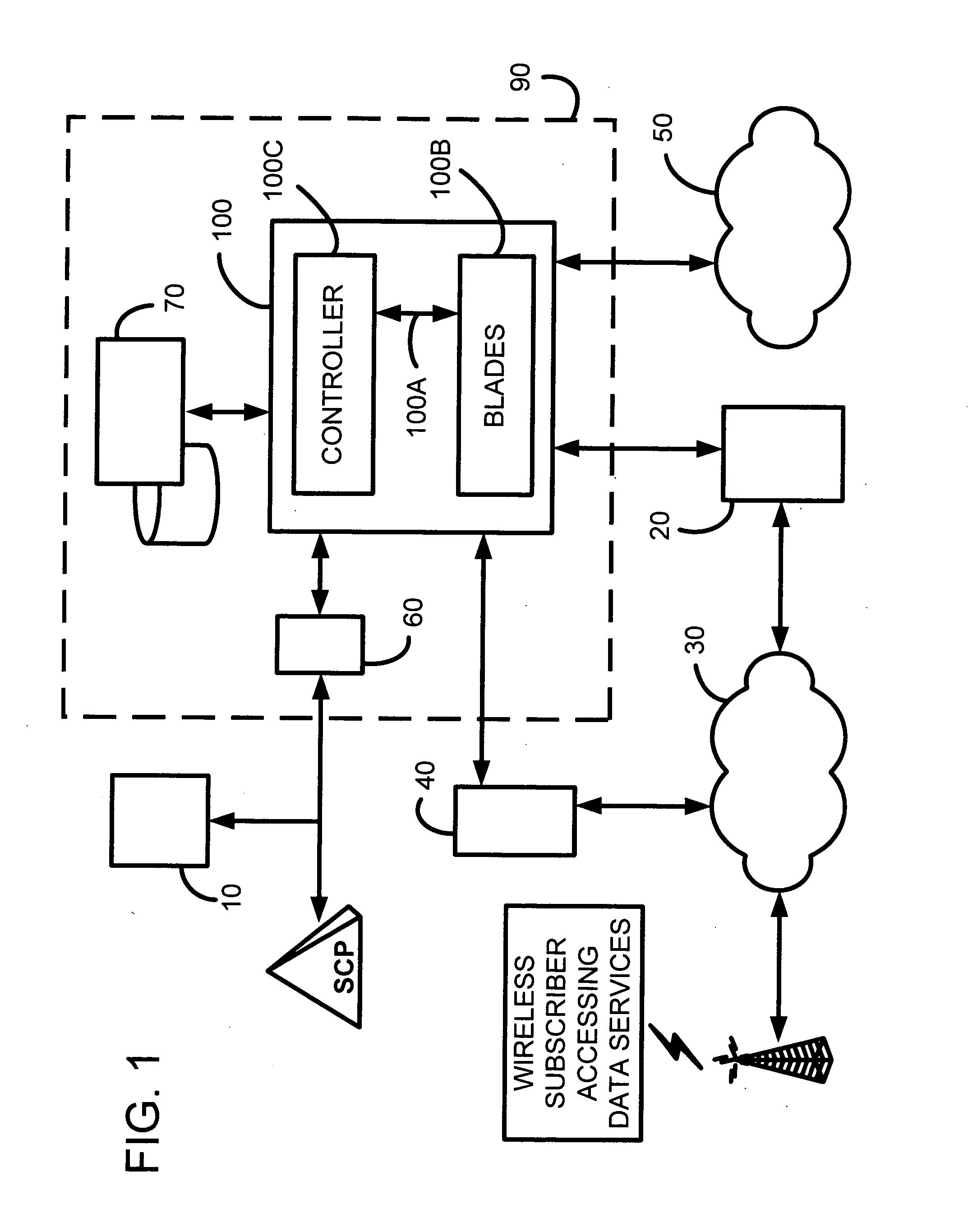

Method for implementing an intelligent content rating middleware platform and gateway system

Inactive · US20050135264A1 · Highly scalable, manageable and reliable · Metering/charging/billing arrangements · Error prevention · Cyber operations · Middleware

The method for implementing an Intelligent Content Rating middleware platform and gateway system disclosed herewith provides telecommunications carriers and network operators with the ability to define routing and actions based on HTTP/SIP based content and subscriber context through a powerful, extensible Layer 4-7 switching fabric technology. The invention mediates communications between applications and networks for IP packet flows, personal messaging, location-based services and billing. Furthermore, it enables advanced context-sensitive dialogue scenarios with the wireless subscriber such as, but not limited to, Advice-of-Charge dialogues. The art permits telecommunications network operators and like entities to introduce real-time rating of data services for both prepaid and post-paid subscribers. Further advances in the art include the validation of digital signatures, combined with authentication and non-repudiation techniques to ensure subscriber privacy remains protected.

Owner:REDKNEE INC

Pipelined packet switching and queuing architecture

Inactive · US6980552B1 · Quick implementation · Improve throughput · Data switching by path configuration · Parallel processing · Bandwidth management

A pipelined linecard architecture for receiving, modifying, switching, buffering, queuing and dequeuing packets for transmission in a communications network. The linecard has two paths: the receive path, which carries packets into the switch device from the network, and the transmit path, which carries packets from the switch to the network. In the receive path, received packets are processed and switched in a multi-stage pipeline utilizing programmable data structures for fast table lookup and linked list traversal. The pipelined switch operates on several packets in parallel while determining each packet's routing destination. Once that determination is made, each packet is modified to contain new routing information as well as additional header data to help speed it through the switch. Using bandwidth management techniques, each packet is then buffered and enqueued for transmission over the switching fabric to the linecard attached to the proper destination port. The destination linecard may be the same physical linecard as that receiving the inbound packet or a different physical linecard. The transmit path includes a buffer/queuing circuit similar to that used in the receive path and can include another pipelined switch. Both enqueuing and dequeuing of packets is accomplished using CoS-based decision making apparatus, congestion avoidance, and bandwidth management hardware.

Owner:CISCO TECH INC

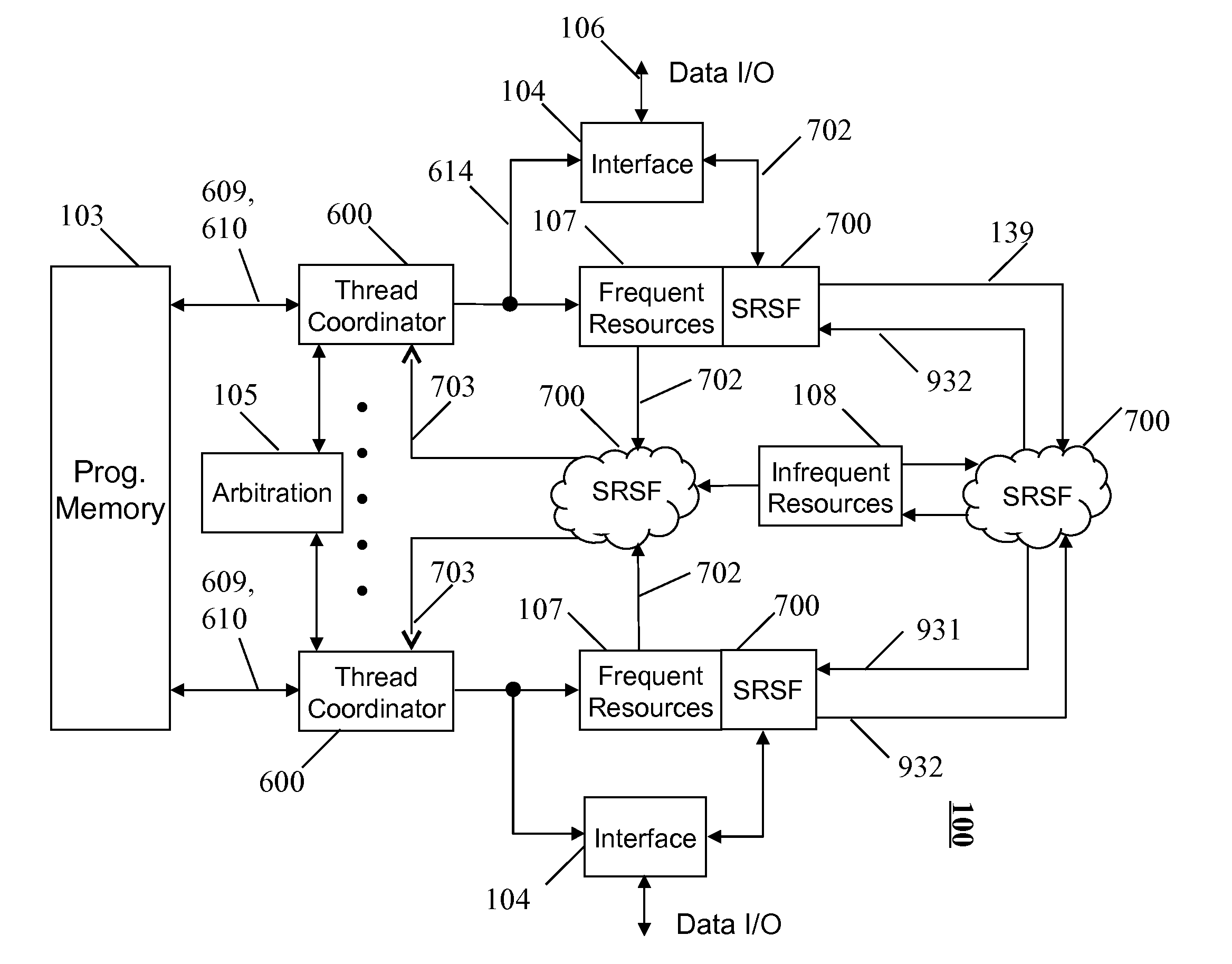

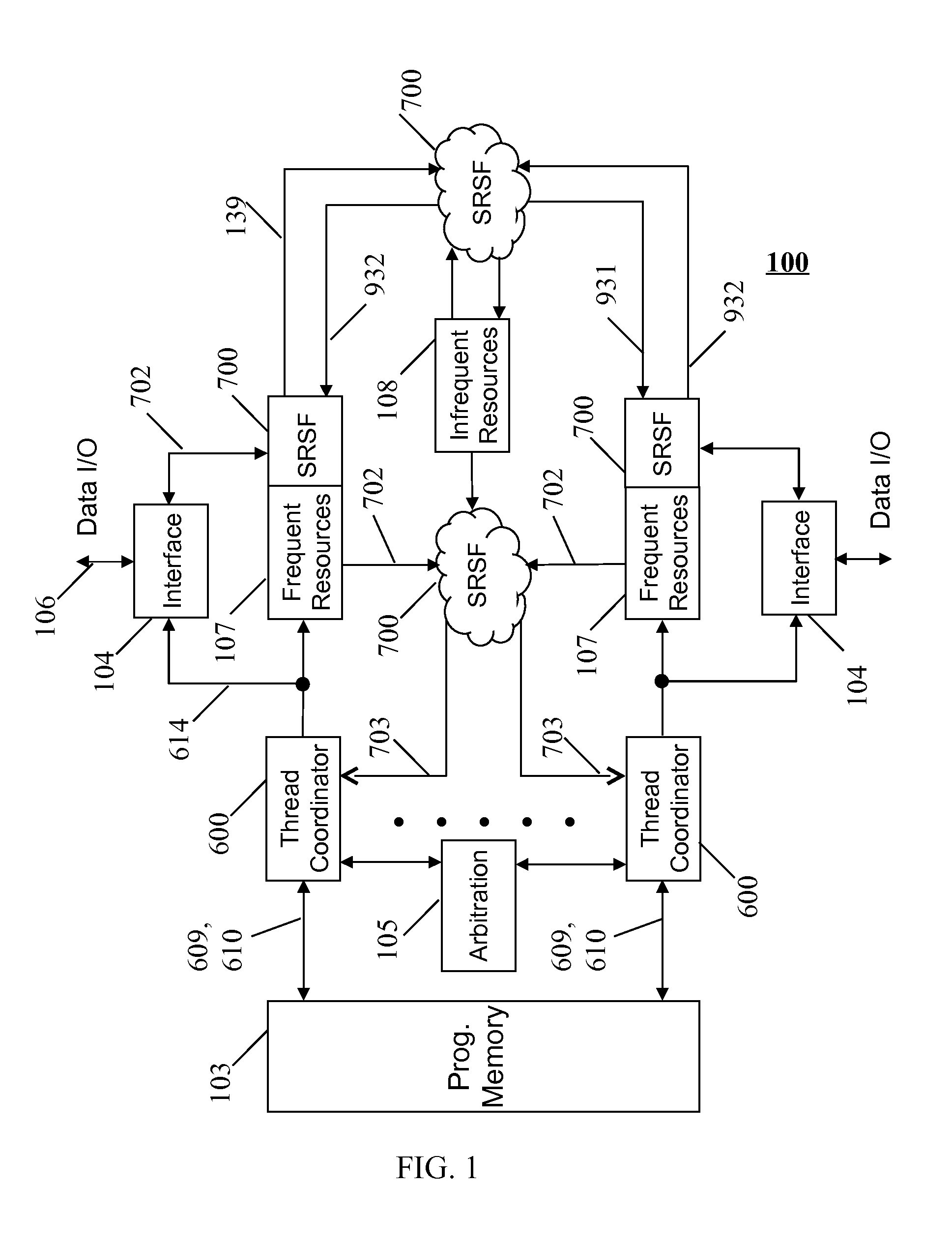

Shared resource multi-thread processor array

Active · US20120089812A1 · Dataflow computers · Program initiation/switching · Programmable circuits · Switched fabric

A shared resource multi-thread processor array wherein an array of heterogeneous function blocks are interconnected via a self-routing switch fabric, in which the individual function blocks have an associated switch port address. Each switch output port comprises a FIFO style memory that implements a plurality of separate queues. Thread queue empty flags are grouped using programmable circuit means to form self-synchronised threads. Data from different threads are passed to the various addressable function blocks in a predefined sequence in order to implement the desired function. The separate port queues allows data from different threads to share the same hardware resources and the reconfiguration of switch fabric addresses further enables the formation of different data-paths allowing the array to be configured for use in various applications.

Owner:SMITH GRAEME ROY

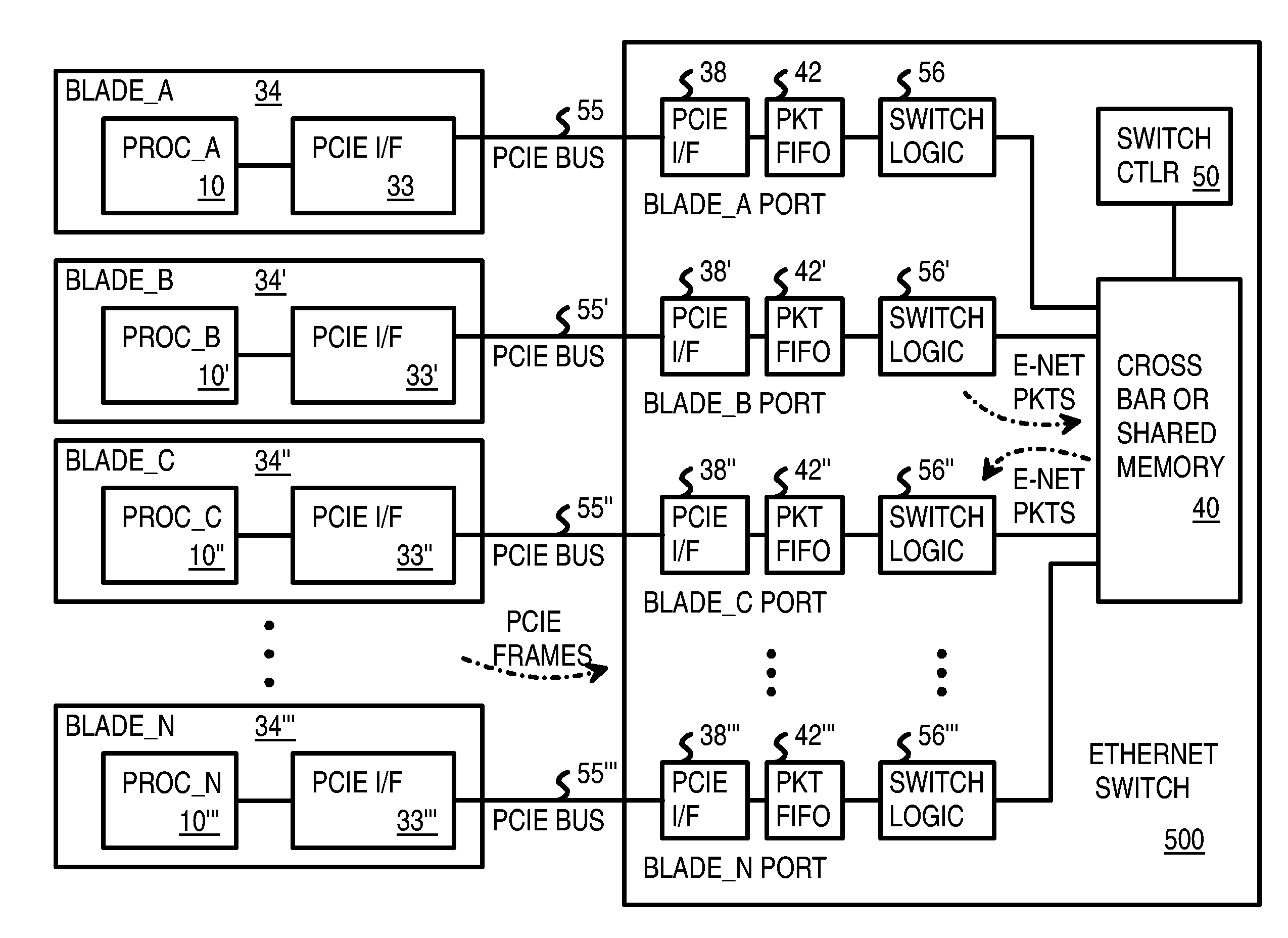

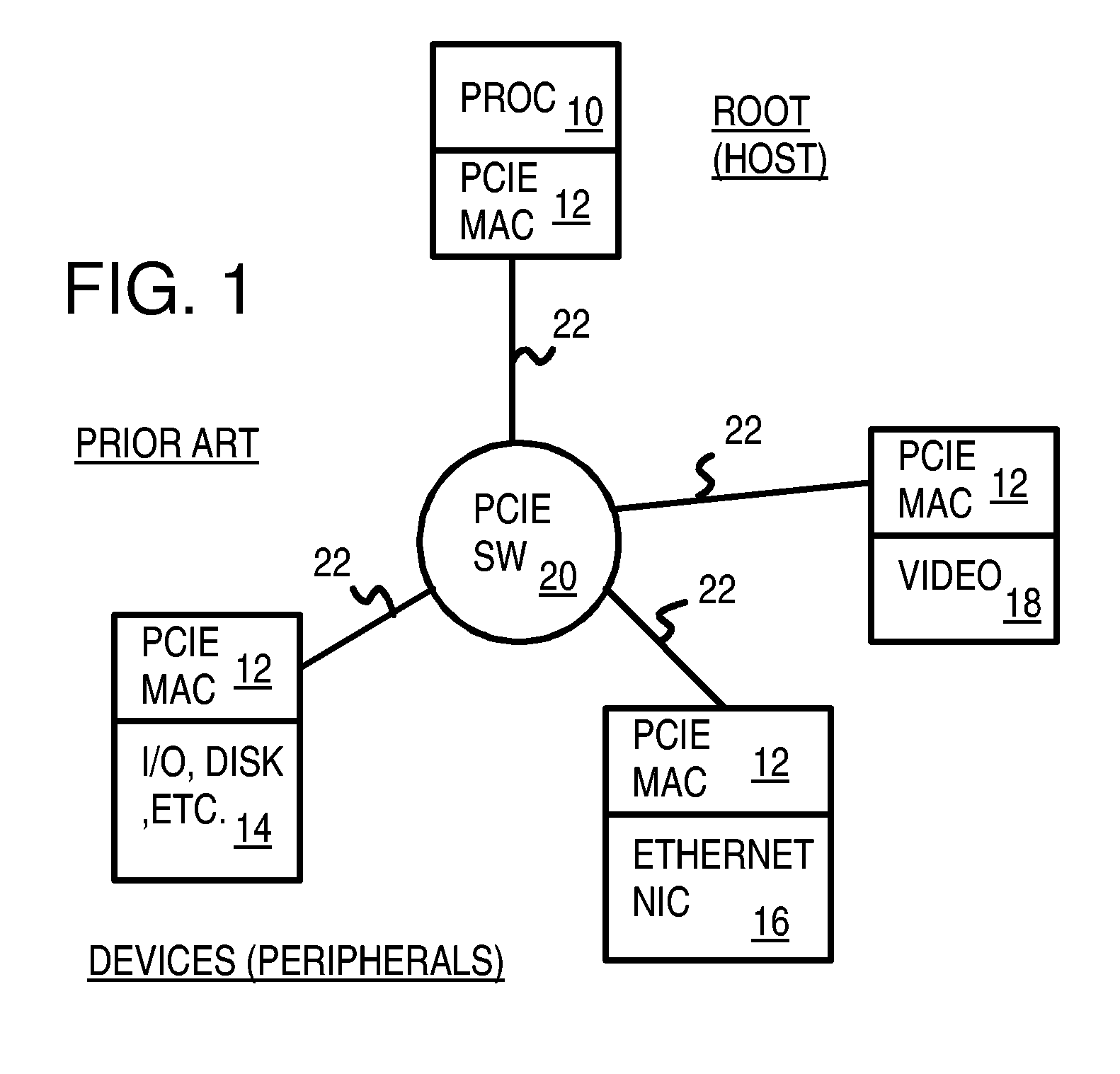

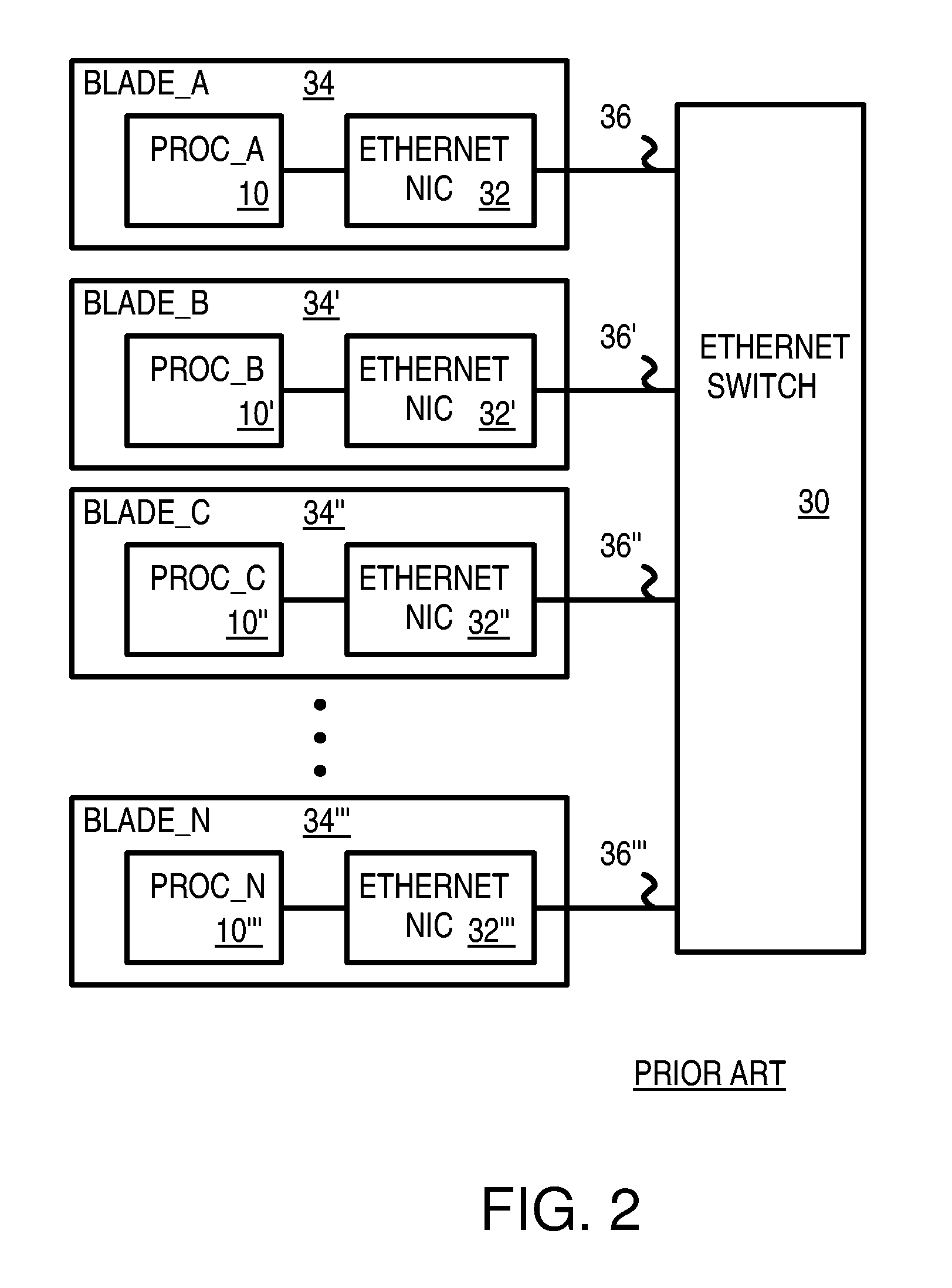

Pseudo-ethernet switch without ethernet media-access-controllers (MAC's) that copies ethernet context registers between PCI-express ports

A Pseudo-Ethernet switch has a routing table that uses Ethernet media-access controller (MAC) addresses to route Ethernet packets through a switch fabric between an input port and an output port. However, the input port and output port have Peripheral Component Interconnect Express (PCIE) interfaces that read and write PCI-Express packets to and from host-processor memories. When used in a blade system, host processor boards have PCIE physical links that connect to the PCIE ports on the Pseudo-Ethernet switch. The Pseudo-Ethernet switch does not have Ethernet MAC and Ethernet physical layers, saving considerable hardware. The switch fabric can be a cross-bar switch or can be a shared memory that stores Ethernet packet data embedded in the PCIE packets. Write and read pointers for a buffer storing an Ethernet packet in the shared memory can be passed from input to output port to perform packet switching.

Owner:DIODES INC
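
The forwarding idea in the abstract above (look up the destination MAC address, then pass a buffer pointer from input to output port rather than copying the packet) can be sketched in Python as follows. The class `PseudoEthernetSwitch`, the list standing in for shared memory, and the drop-on-unknown-destination behavior are assumptions for illustration; the only outside fact relied on is that an Ethernet frame begins with the 6-byte destination MAC address.

```python
from typing import Dict, List, Optional


class PseudoEthernetSwitch:
    """Routes Ethernet frames by destination MAC via a shared buffer pool."""

    def __init__(self, mac_to_port: Dict[bytes, int], num_ports: int):
        self.mac_to_port = mac_to_port        # routing table: MAC -> output port
        self.shared_memory: List[bytes] = []  # stands in for shared packet memory
        # Each output port holds indices ("pointers") into shared_memory.
        self.port_queues: List[List[int]] = [[] for _ in range(num_ports)]

    def ingress(self, frame: bytes) -> Optional[int]:
        """Store the frame once and hand its pointer to the output port."""
        dst_mac = frame[:6]  # destination MAC is the first 6 bytes of the frame
        port = self.mac_to_port.get(dst_mac)
        if port is None:
            return None  # unknown destination: this sketch simply drops it
        self.shared_memory.append(frame)
        self.port_queues[port].append(len(self.shared_memory) - 1)
        return port

    def egress(self, port: int) -> Optional[bytes]:
        """Read the next frame for this port through its stored pointer."""
        if not self.port_queues[port]:
            return None
        return self.shared_memory[self.port_queues[port].pop(0)]
```

The sketch never frees buffers and models no PCI Express traffic; it shows only the table lookup and pointer handoff.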

I/O primitives

Inactive · US7013379B1 · Error detection/correction · Memory addressing/allocation/relocation · Fast path · Locking mechanism

Described are techniques used in a computer system for handling data operations to storage devices. A switching fabric includes one or more fast paths for handling lightweight, common data operations and at least one control path for handling other data operations. A control path manages one or more fast paths. The fast path and the control path are utilized in mapping virtual to physical addresses using mapping tables. The mapping tables include an extent table of one or more entries corresponding to varying address ranges. The size of an extent may be changed dynamically in accordance with a corresponding state change of physical storage. The fast path may cache only portions of the extent table as needed in accordance with a caching technique. The fast path may cache a subset of the extent table stored within the control path. A set of primitives may be used in performing data operations. A locking mechanism is described for controlling access to data shared by the control paths.

Owner:IBM CORP

Dynamic and variable length extents

Inactive · US20030140210A1 · Input/output to record carriers · Memory addressing/allocation/relocation · Variable-length code · Fast path

Described are techniques used in a computer system for handling data operations to storage devices. A switching fabric includes one or more fast paths for handling lightweight, common data operations and at least one control path for handling other data operations. A control path manages one or more fast paths. The fast path and the control path are utilized in mapping virtual to physical addresses using mapping tables. The mapping tables include an extent table of one or more entries corresponding to varying address ranges. The size of an extent may be changed dynamically in accordance with a corresponding state change of physical storage. The fast path may cache only portions of the extent table as needed in accordance with a caching technique. The fast path may cache a subset of the extent table stored within the control path. A set of primitives may be used in performing data operations. A locking mechanism is described for controlling access to data shared by the control paths.

Owner:IBM CORP
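The extent table in the abstract above can be illustrated with a small sketch. This is not the patented implementation: the `Extent` and `ExtentTable` names, the `state` labels, and the block-address arithmetic are all assumptions made for illustration. It shows a table of variable-length address ranges, a virtual-to-physical lookup, and an extent whose size changes when part of its range changes state.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Extent:
    virt_start: int         # first virtual block covered by this extent
    length: int             # number of blocks covered (varies per extent)
    phys_start: int         # physical block that virt_start maps to
    state: str = "mapped"   # hypothetical state label, not from the patent


class ExtentTable:
    def __init__(self, extents):
        # Keep extents sorted by starting virtual address for binary search.
        self.extents = sorted(extents, key=lambda e: e.virt_start)

    def _index(self, vaddr):
        starts = [e.virt_start for e in self.extents]
        i = bisect_right(starts, vaddr) - 1
        if i < 0 or vaddr >= self.extents[i].virt_start + self.extents[i].length:
            raise KeyError(vaddr)
        return i

    def translate(self, vaddr):
        """Map a virtual block address to a physical block address."""
        e = self.extents[self._index(vaddr)]
        return e.phys_start + (vaddr - e.virt_start)

    def split(self, vaddr, new_state):
        """Shrink the extent containing vaddr so it ends just before vaddr,
        and give the remainder its own extent carrying new_state."""
        i = self._index(vaddr)
        e = self.extents[i]
        offset = vaddr - e.virt_start
        if offset == 0:
            e.state = new_state
            return
        upper = Extent(vaddr, e.length - offset, e.phys_start + offset, new_state)
        e.length = offset
        self.extents.insert(i + 1, upper)


table = ExtentTable([Extent(0, 100, 1000), Extent(100, 50, 5000)])
print(table.translate(10), table.translate(120))
table.split(40, "migrating")   # a state change resizes the first extent
print([(e.virt_start, e.length, e.state) for e in table.extents])
```

Splitting leaves every address translating to the same physical block as before; only the granularity of the table changes.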

Fast path for performing data operations

Inactive | US20070016754A1 | Multiplex system selection arrangements | Unauthorized memory use protection | Fast path | Data operations

Described are techniques used in a computer system for handling data operations to storage devices. A switching fabric includes one or more fast paths for handling lightweight, common data operations and at least one control path for handling other data operations. A control path manages one or more fast paths. The fast path and the control path are utilized in mapping virtual to physical addresses using mapping tables. The mapping tables include an extent table of one or more entries corresponding to varying address ranges. The size of an extent may be changed dynamically in accordance with a corresponding state change of physical storage. The fast path may cache only portions of the extent table as needed in accordance with a caching technique. The fast path may cache a subset of the extent table stored within the control path. A set of primitives may be used in performing data operations. A locking mechanism is described for controlling access to data shared by the control paths.

Owner:IBM CORP
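The split between a fast path and a control path described above can be sketched as follows. The abstract only says the fast path caches portions of the extent table "in accordance with a caching technique"; the LRU policy, the class names, and the `capacity` parameter here are assumptions chosen for illustration, not details from the patent.

```python
from collections import OrderedDict


class ControlPath:
    """Holds the full extent table: virt_start -> (length, phys_start)."""

    def __init__(self, extents):
        self.extents = dict(extents)
        self.lookups = 0   # how often the fast path had to ask for help

    def find_extent(self, vaddr):
        self.lookups += 1
        for start, (length, phys) in self.extents.items():
            if start <= vaddr < start + length:
                return start, length, phys
        raise KeyError(vaddr)


class FastPath:
    """Caches a subset of the control path's extent table (LRU, assumed)."""

    def __init__(self, control, capacity=2):
        self.control = control
        self.capacity = capacity
        self.cache = OrderedDict()   # virt_start -> (length, phys_start)

    def _cached_extent(self, vaddr):
        for start, (length, phys) in self.cache.items():
            if start <= vaddr < start + length:
                return start, length, phys
        return None

    def translate(self, vaddr):
        hit = self._cached_extent(vaddr)
        if hit is None:
            # Miss: fall back to the control path and cache the extent.
            hit = self.control.find_extent(vaddr)
            self.cache[hit[0]] = (hit[1], hit[2])
            if len(self.cache) > self.capacity:
                self.cache.popitem(last=False)   # evict least recently used
        else:
            self.cache.move_to_end(hit[0])       # mark as recently used
        start, _length, phys = hit
        return phys + (vaddr - start)


control = ControlPath({0: (100, 1000), 100: (50, 5000), 150: (50, 9000)})
fast = FastPath(control, capacity=2)
for vaddr in (10, 20, 120, 160, 10):
    print(vaddr, fast.translate(vaddr), "control lookups:", control.lookups)
```

Repeated accesses to a cached extent are served without involving the control path, which is the point of keeping the common case lightweight.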

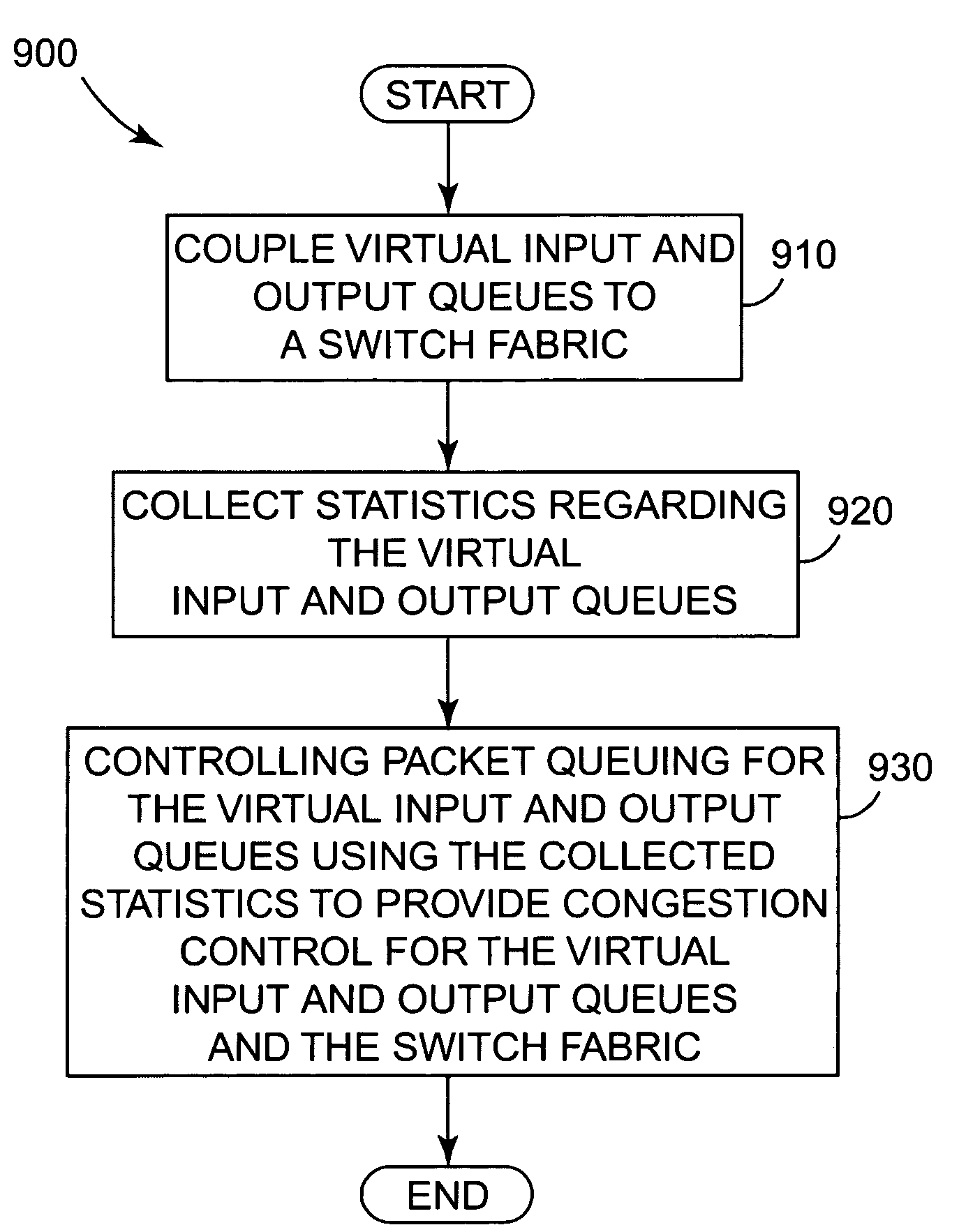

Multi-service queuing method and apparatus that provides exhaustive arbitration, load balancing, and support for rapid port failover

Inactive | US7151744B2 | Efficient multicasting | Easy to handle | Error prevention | Transmission systems | Traffic capacity | Failover

The present invention provides a multi-service queuing method and apparatus that provides exhaustive arbitration, load balancing, and support for rapid port failover. Routers and switches according to the present invention can instantaneously direct the flow of traffic to another port should there be a failure on a link, efficiently handle multicast traffic and provide multiple service classes. The fabric interface interfaces the switch fabric with the ingress and egress functions provided at a network node and provides virtual input and output queuing with backpressure feedback, redundancy for high availability applications, and packet segmentation and reassembly into variable-length cells. The user configures fixed and variable-length cells. Virtual input and output queues are coupled to a switch fabric. Statistics regarding the virtual input and output queues are collected, and packet queuing for the virtual input and output queues is controlled using the collected statistics to provide congestion control for the virtual input and output queues and the switch fabric.

Owner:RPX CORP
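A rough sketch of the queuing behaviour the abstract describes: per-port, per-class virtual queues, a depth statistic that drives backpressure, and redirection of traffic when a port fails. The class name, the `high_watermark` threshold, and the strict-priority dequeue order are hypothetical choices for illustration; the patent's own arbitration scheme is not reproduced here.

```python
from collections import defaultdict, deque


class VirtualOutputQueues:
    def __init__(self, high_watermark=4):
        # (egress_port, service_class) -> queued packets
        self.queues = defaultdict(deque)
        self.high_watermark = high_watermark   # assumed congestion threshold
        self.failover = {}                     # failed port -> backup port

    def depth(self, port, service_class):
        """Collected statistic: current queue occupancy."""
        return len(self.queues[(port, service_class)])

    def backpressured(self, port, service_class):
        return self.depth(port, service_class) >= self.high_watermark

    def enqueue(self, packet, port, service_class):
        port = self.failover.get(port, port)   # redirect if the port failed
        if self.backpressured(port, service_class):
            return False                       # signal the sender to hold off
        self.queues[(port, service_class)].append(packet)
        return True

    def fail_port(self, port, backup):
        """Redirect future and already-queued traffic to a backup port."""
        self.failover[port] = backup
        for key in [k for k in self.queues if k[0] == port]:
            self.queues[(backup, key[1])].extend(self.queues.pop(key))

    def dequeue(self, port):
        # Strict priority (assumed): lower class number is served first.
        for cls in sorted(c for (p, c) in self.queues if p == port):
            if self.queues[(port, cls)]:
                return self.queues[(port, cls)].popleft()
        return None


voq = VirtualOutputQueues(high_watermark=2)
print(voq.enqueue("a", 1, 0), voq.enqueue("b", 1, 0), voq.enqueue("c", 1, 0))
voq.fail_port(1, backup=2)
print(voq.dequeue(2), voq.dequeue(2))
```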

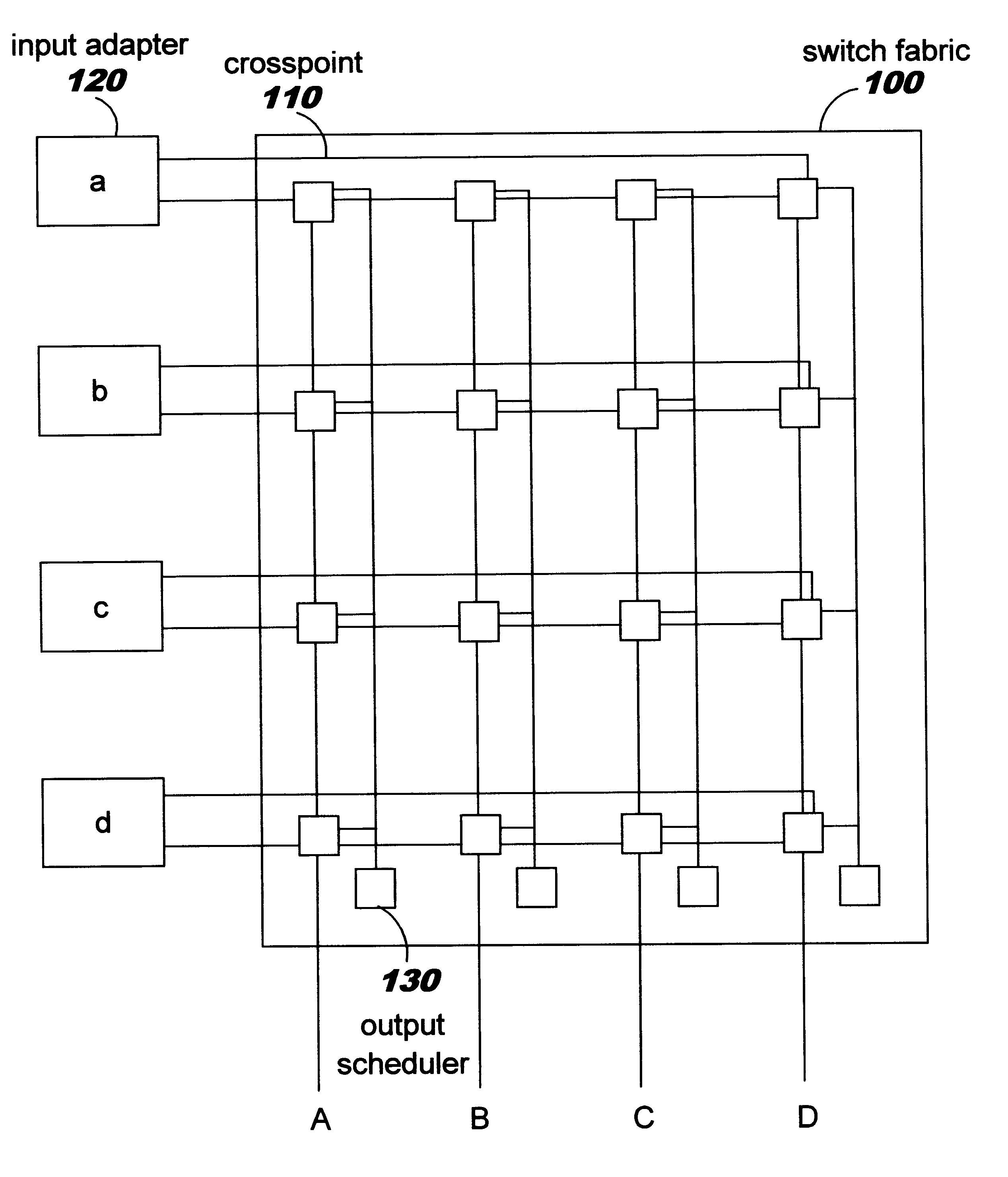

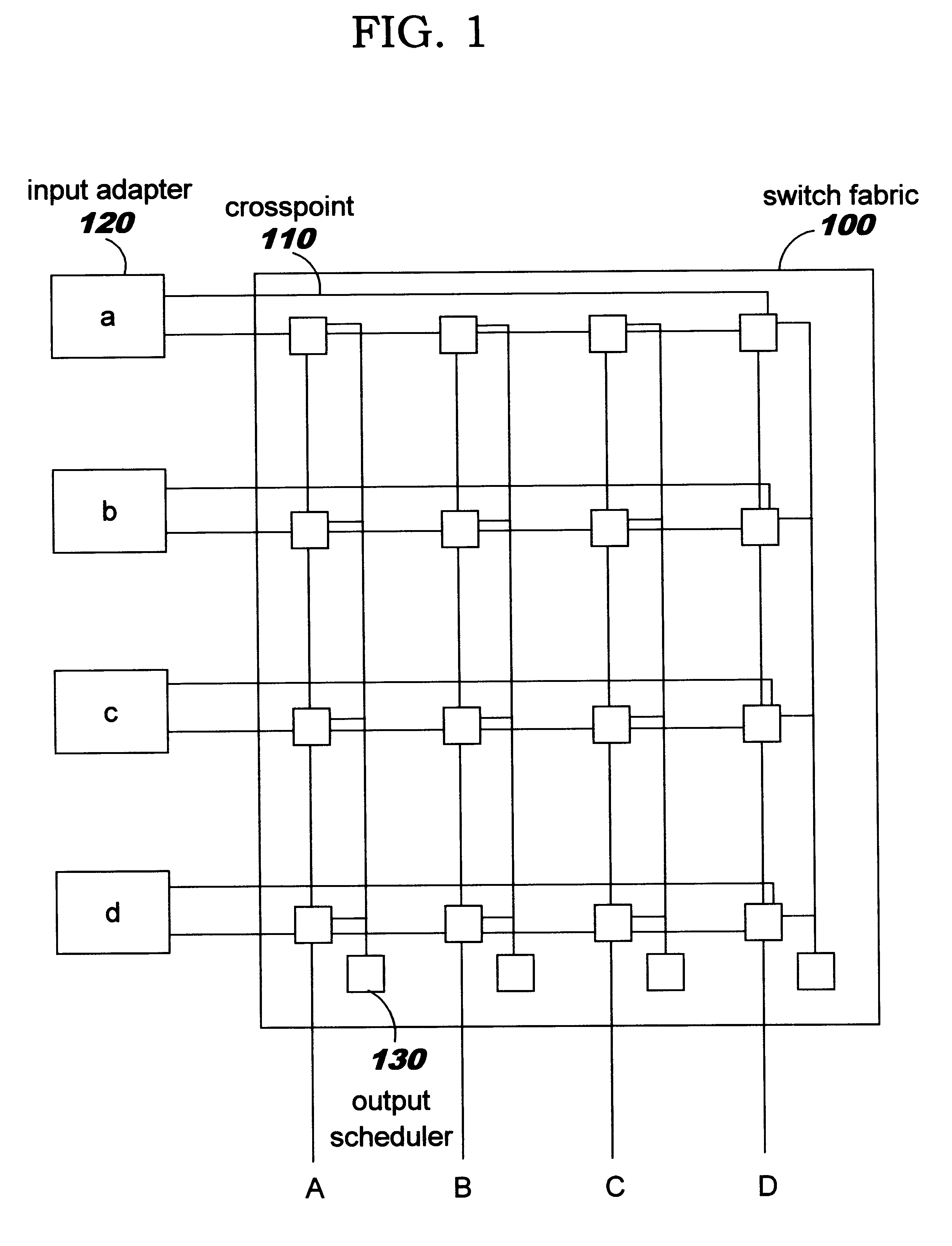

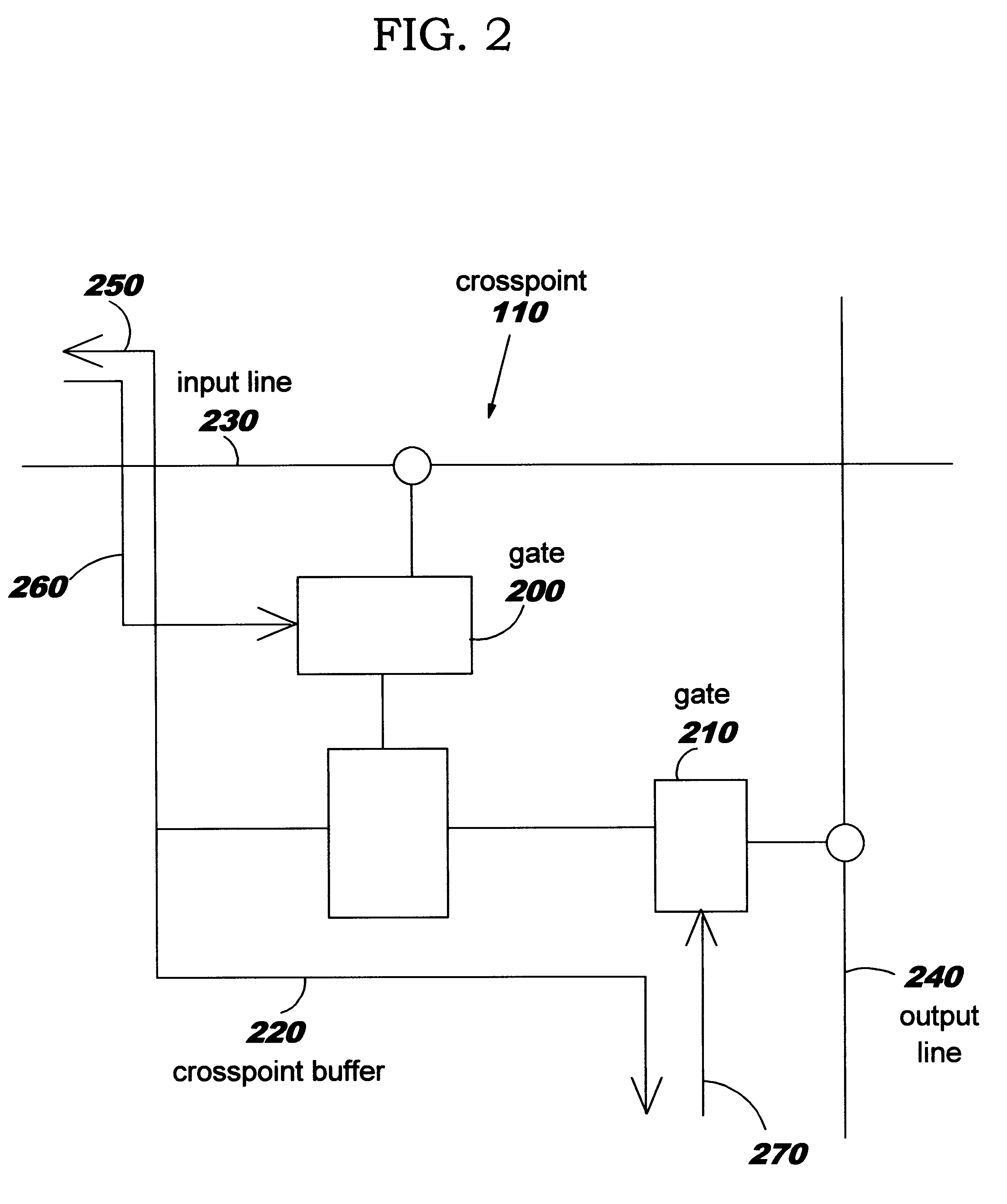

Data switch

A packet data switch is described comprising a crossbar switch fabric including a set of crosspoint buffers, one for each input-output pair, each for storing at least one data packet. An input queue is provided for each input-output pair and means are provided for storing incoming data packets in one of the queues corresponding to an input-output routing for the data packet. An input scheduler repeatedly selects one queue from the plurality of queues at each input, and a data packet is transferred from the queue selected by the input scheduler to the crosspoint buffer corresponding to the input-output routing for the data packet. A back pressure mechanism is arranged to inhibit selection by the input scheduler of queues corresponding to input-output pairs for which the respective crosspoint buffer is full. Finally, an output scheduler repeatedly selects for each output one of the crosspoint buffers corresponding to the output, and the switch is responsive to the output scheduler to complete the transmission through the switch fabric of the data packet stored in the crosspoint buffer selected by the output scheduler.

Owner:IBM CORP
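The buffered-crossbar arrangement in the abstract above can be simulated in a few lines. This is a minimal sketch under stated assumptions: each crosspoint buffer holds exactly one packet, and both schedulers use round-robin selection, which the abstract does not specify. The `BufferedCrossbar` name and its methods are hypothetical.

```python
from collections import deque


class BufferedCrossbar:
    def __init__(self, n_inputs, n_outputs):
        self.n_in, self.n_out = n_inputs, n_outputs
        # One input queue per input-output pair.
        self.voq = [[deque() for _ in range(n_outputs)] for _ in range(n_inputs)]
        # One single-packet crosspoint buffer per pair (None means empty).
        self.xpoint = [[None] * n_outputs for _ in range(n_inputs)]
        self.in_ptr = [0] * n_inputs     # round-robin pointers (assumed policy)
        self.out_ptr = [0] * n_outputs

    def arrive(self, i, o, packet):
        self.voq[i][o].append(packet)

    def step(self):
        """Run one scheduling cycle; return {output: delivered packet}."""
        # Input scheduler: each input moves one packet into a crosspoint.
        for i in range(self.n_in):
            for k in range(self.n_out):
                o = (self.in_ptr[i] + k) % self.n_out
                # Back pressure: skip queues whose crosspoint buffer is full.
                if self.voq[i][o] and self.xpoint[i][o] is None:
                    self.xpoint[i][o] = self.voq[i][o].popleft()
                    self.in_ptr[i] = (o + 1) % self.n_out
                    break
        # Output scheduler: each output drains one of its crosspoints.
        delivered = {}
        for o in range(self.n_out):
            for k in range(self.n_in):
                i = (self.out_ptr[o] + k) % self.n_in
                if self.xpoint[i][o] is not None:
                    delivered[o] = self.xpoint[i][o]
                    self.xpoint[i][o] = None
                    self.out_ptr[o] = (i + 1) % self.n_in
                    break
        return delivered


sw = BufferedCrossbar(2, 2)
sw.arrive(0, 0, "p1")
sw.arrive(1, 0, "p2")
sw.arrive(0, 1, "p3")
for cycle in range(3):
    print(cycle, sw.step())
```

Because the crosspoint buffers decouple the two schedulers, each input and each output can make its choice independently within a cycle.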

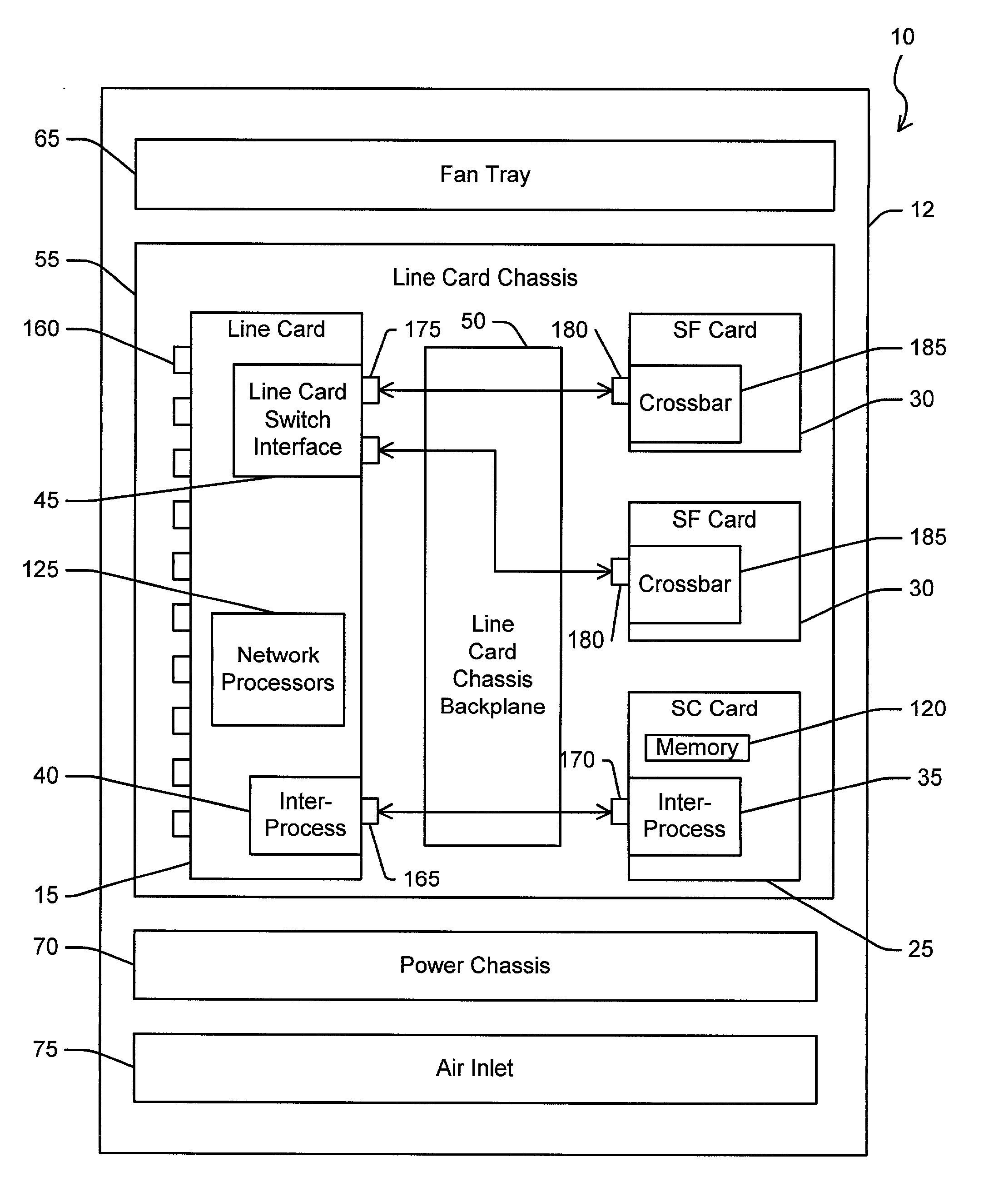

System and method for load-sharing computer network switch

A computer network switch system is disclosed. A switch system may be configured as a single chassis system that has at least one line card, a set of active switch fabric cards to concurrently carry network traffic, and a first system control card to provide control functionality for the line card. The switch system may be configured as a multiple chassis system that has at least one line card chassis containing several line cards, and a switch fabric chassis (or a second line card chassis) that contains several switch fabric cards to provide a switching fabric with multiple ports. Load-sharing is accomplished primarily at the chip level, although card-level load-sharing is possible.

Owner:CIPHERMAX
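As a loose illustration of several active switch fabric cards carrying traffic concurrently, the sketch below spreads cells across the active cards and rebalances when one is removed. It models card-level sharing only, uses a round-robin policy that the abstract does not specify, and the `FabricLoadSharer` name and card identifiers are made up for the example.

```python
from collections import Counter


class FabricLoadSharer:
    def __init__(self, card_ids):
        self.active = list(card_ids)   # all listed cards carry traffic
        self._next = 0

    def pick_card(self):
        """Choose the fabric card for the next cell (round-robin, assumed)."""
        if not self.active:
            raise RuntimeError("no active switch fabric cards")
        card = self.active[self._next % len(self.active)]
        self._next += 1
        return card

    def mark_failed(self, card_id):
        # Remaining cards absorb the failed card's share of the traffic.
        self.active.remove(card_id)


sharer = FabricLoadSharer(["sfc0", "sfc1", "sfc2"])
print(Counter(sharer.pick_card() for _ in range(6)))
sharer.mark_failed("sfc1")
print(Counter(sharer.pick_card() for _ in range(4)))
```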

Apparatus and method for modular dynamically power managed power supply and cooling system for computer systems, server applications, and other electronic devices

Inactive | US20090144568A1 | Save energy | Conserving method | Energy efficient ICT | Power supply for data processing | Network architecture | Energy expenditure

Network architecture, computer system and/or server, circuit, device, apparatus, method, and computer program and control mechanism for managing power consumption and workload in computer systems and data and information servers. Further provides power and energy consumption and workload management and control systems and architectures for high-density and modular multi-server computer systems that maintain performance while conserving energy, and a method for power management and workload management. Dynamic server power management and optional dynamic workload management for multi-server environments are provided by aspects of the invention. Modular network devices and an integrated server system, including modular servers, management units, switches and switching fabrics, modular power supplies and modular fans, and a special backplane architecture are provided, as well as dynamically reconfigurable multi-purpose modules and servers. Also provided are a backplane architecture, structure, and method that has no active components and has separate power supply lines and protection to provide high reliability in a server environment.

Owner:HURON IP
