136 results about "Processor array" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

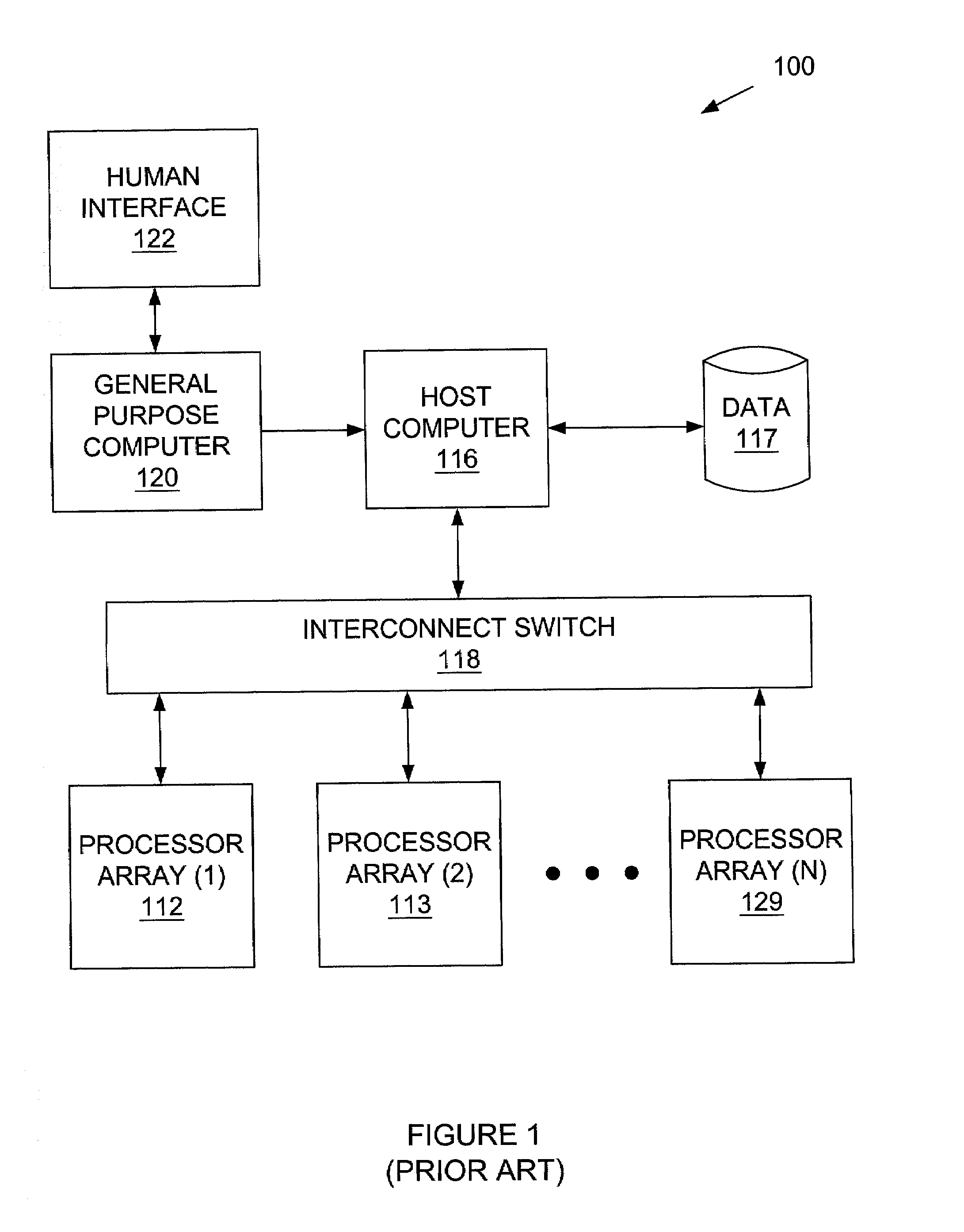

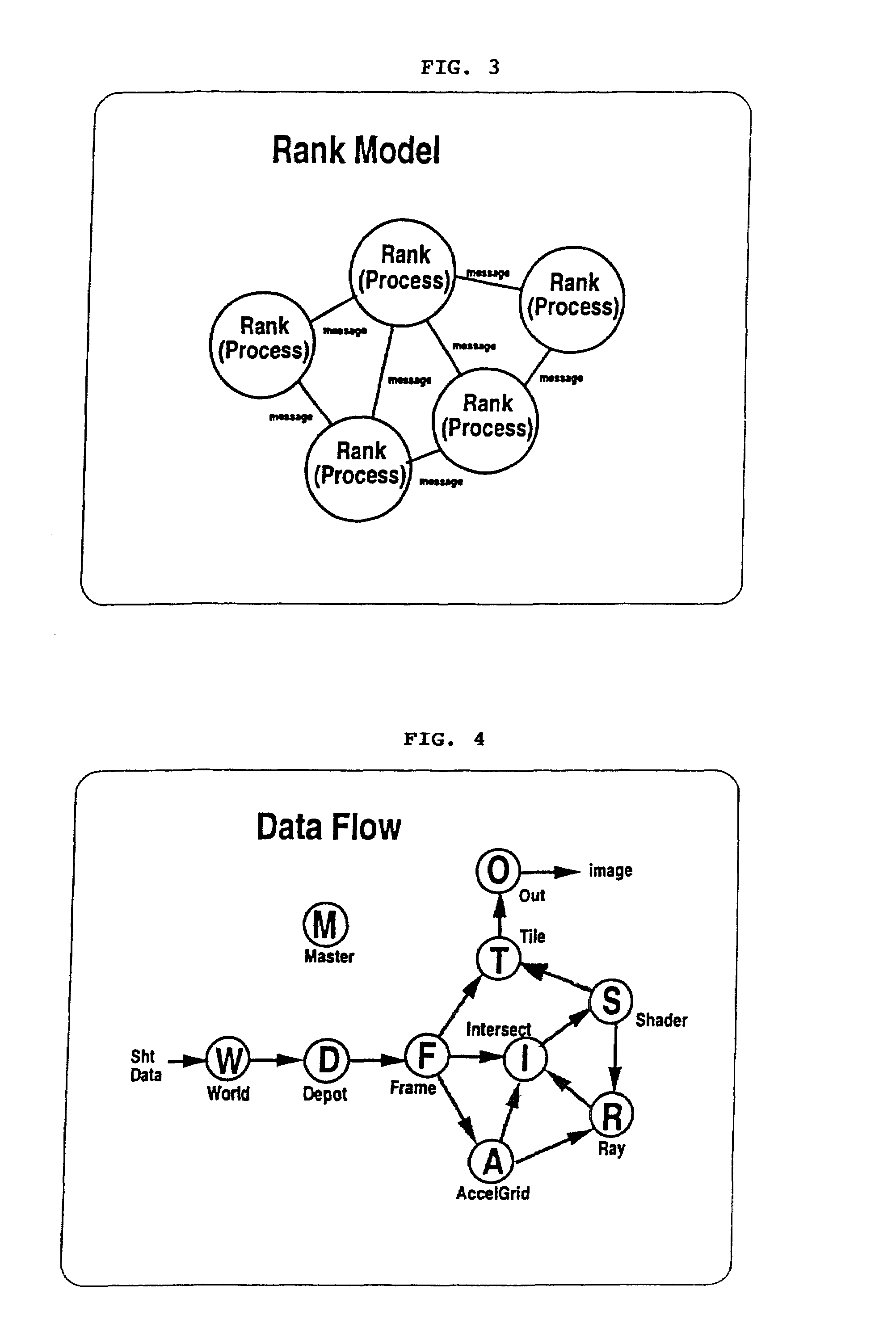

A processor array is like a storage array but contains and manages processing elements instead of storage elements.

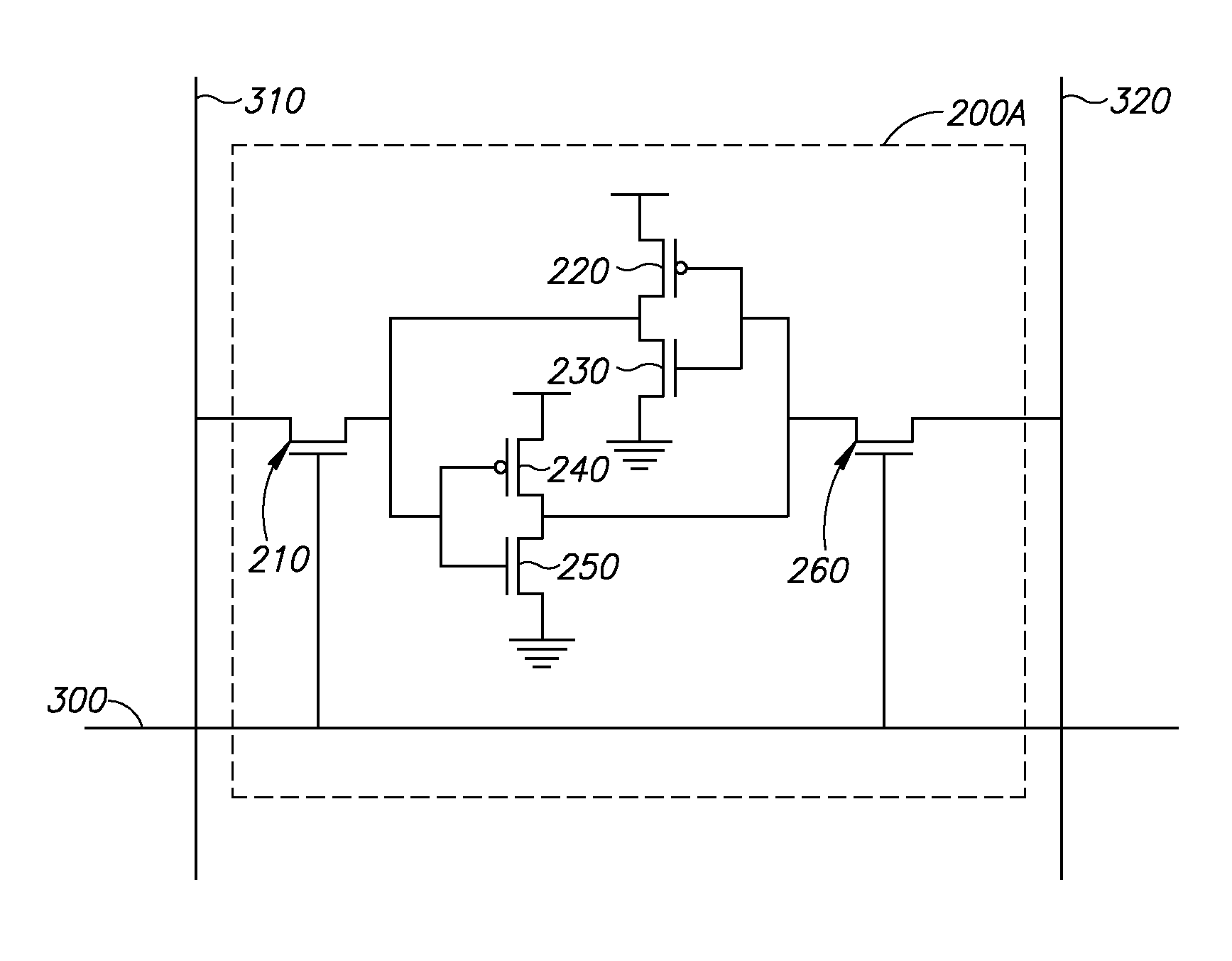

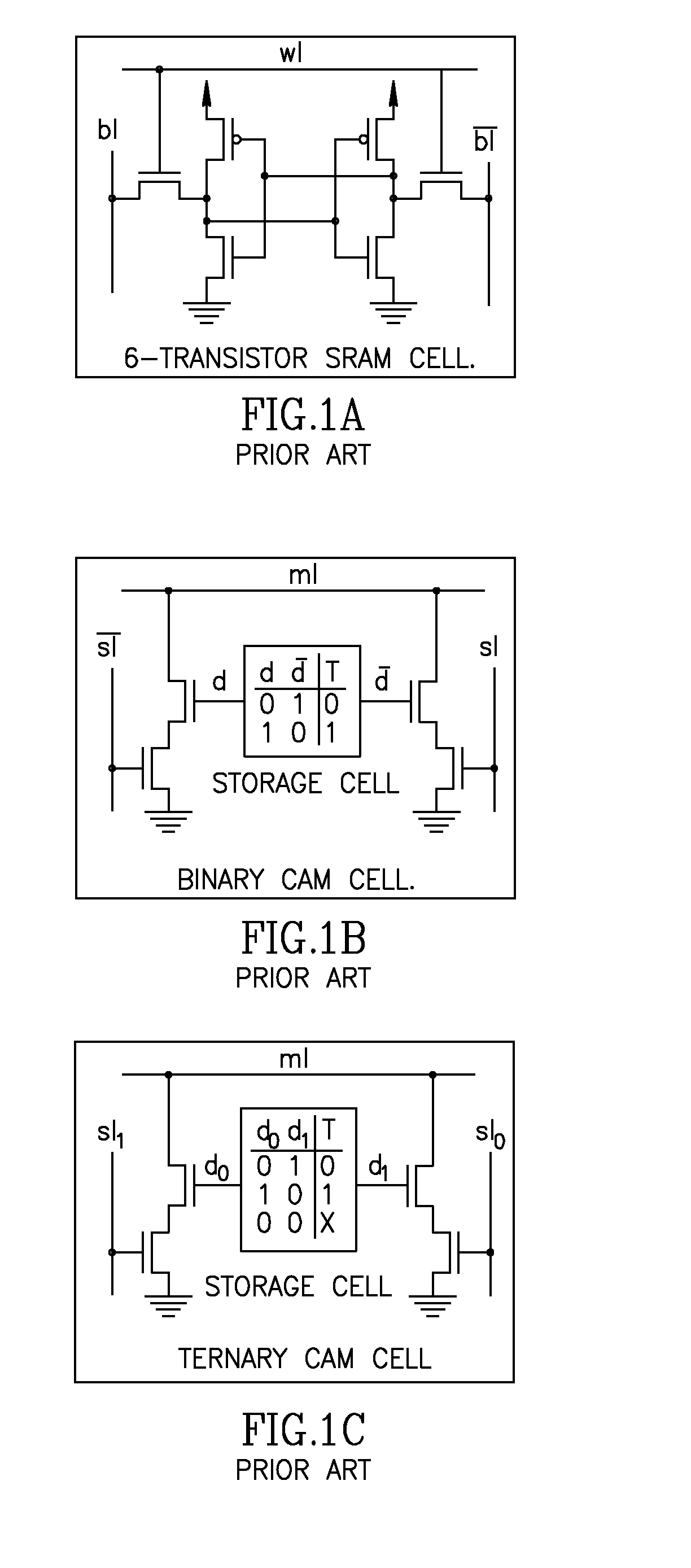

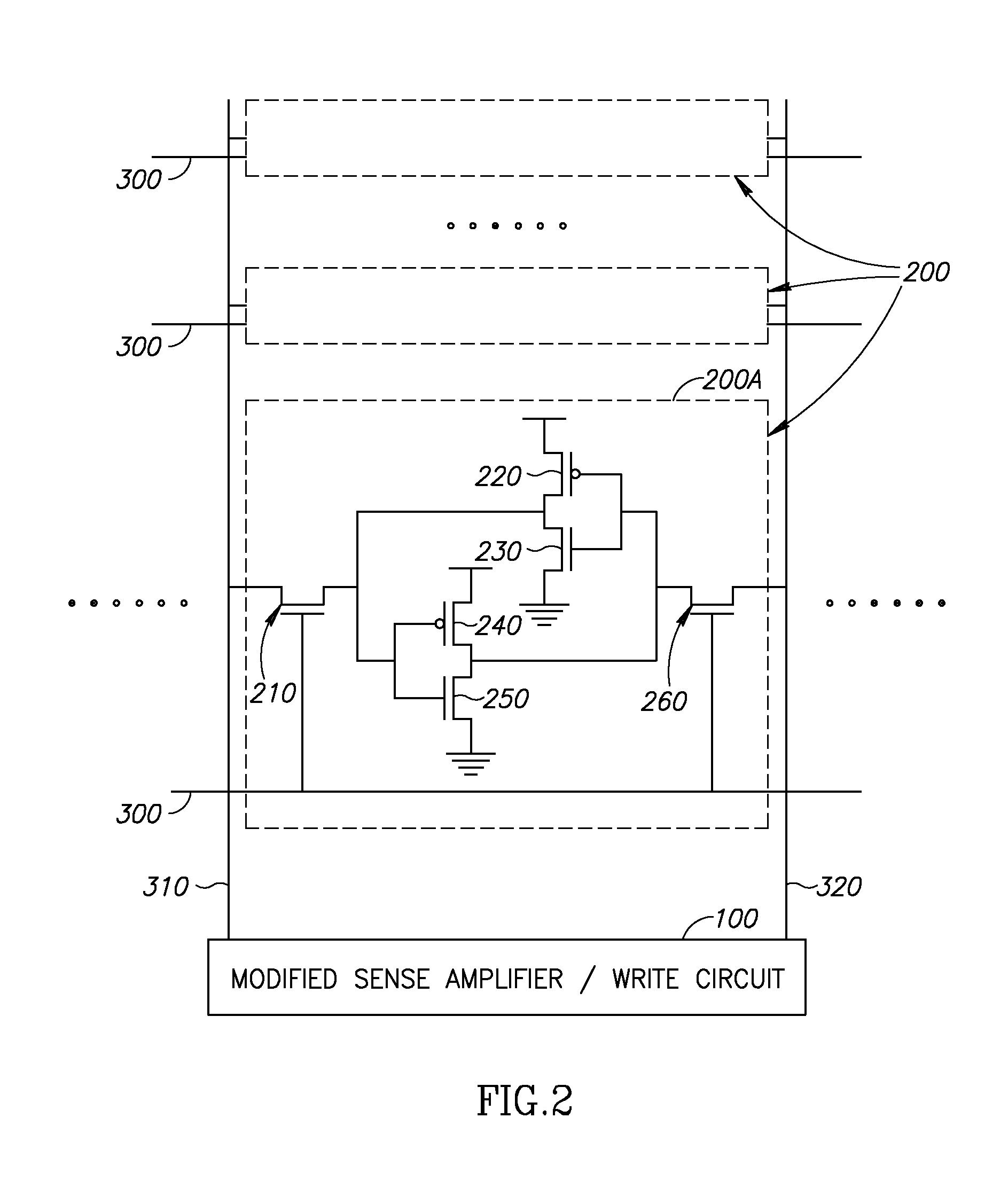

Processor Arrays Made of Standard Memory Cells

Owner:GSI TECH

Barrier synchronization mechanism for processors of a systolic array

Inactive · US7100021B1 · Tags: Without consuming substantial memory resource; Improve latency; Program synchronisation; General purpose stored program computer; Systolic array; Common point

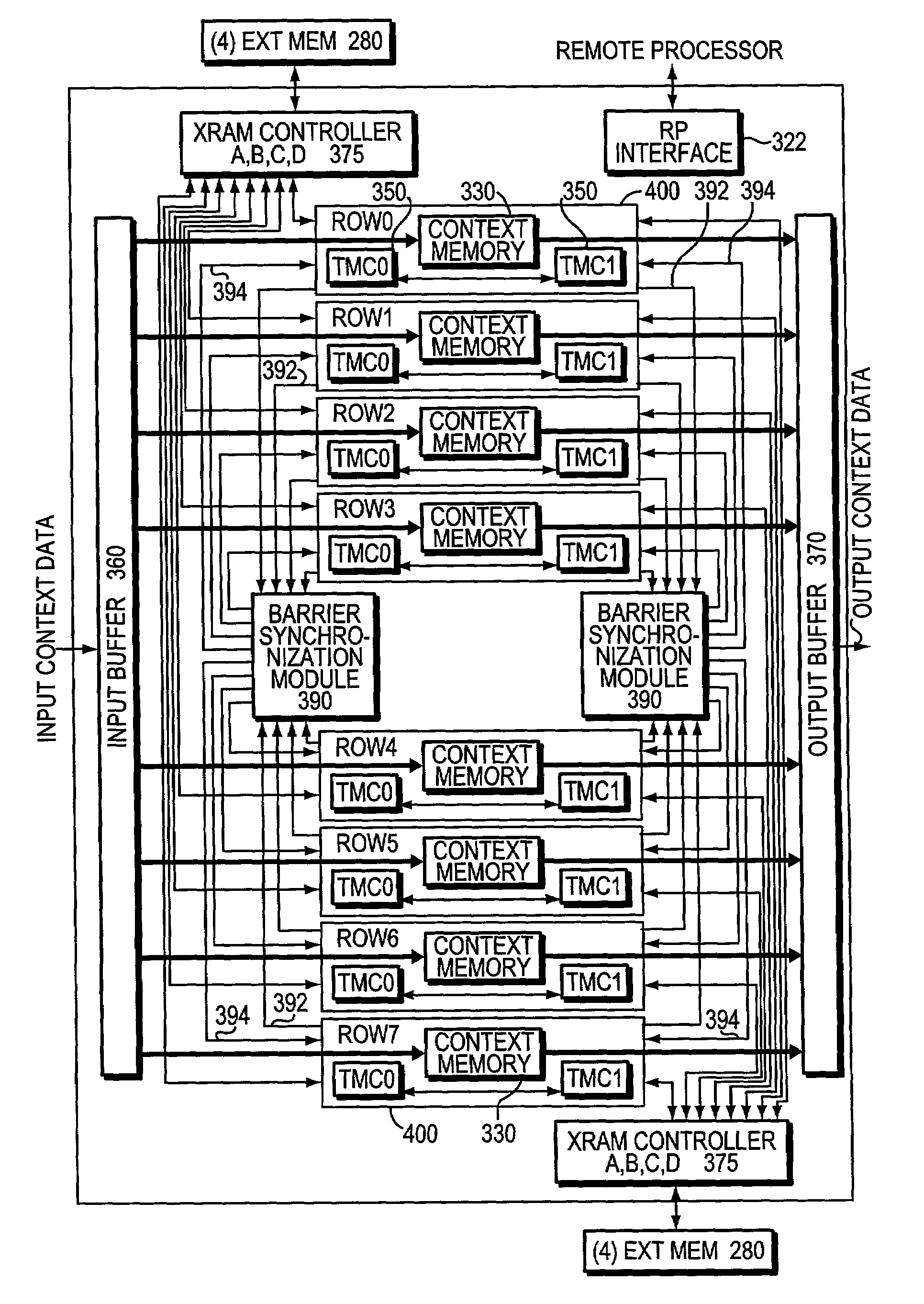

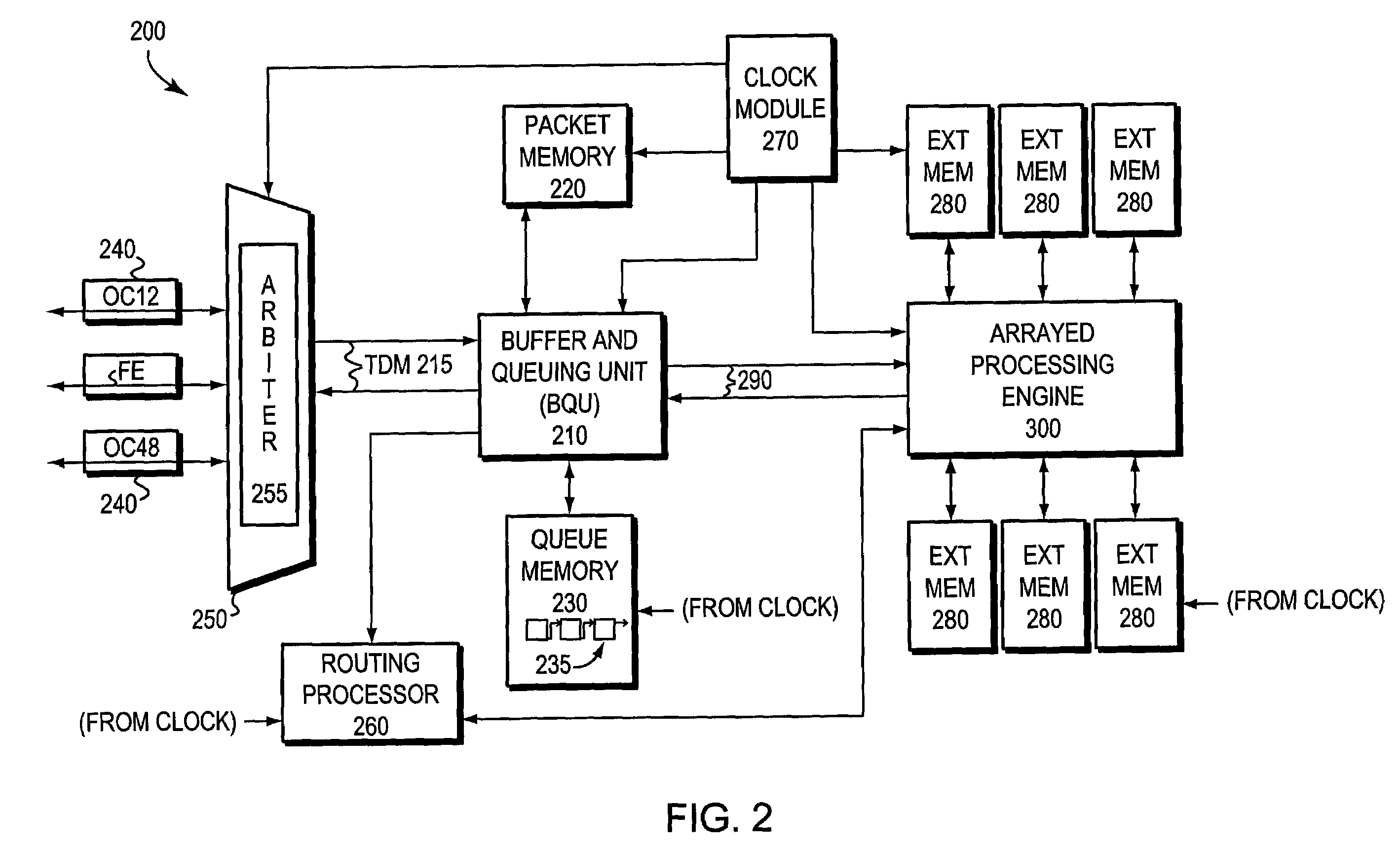

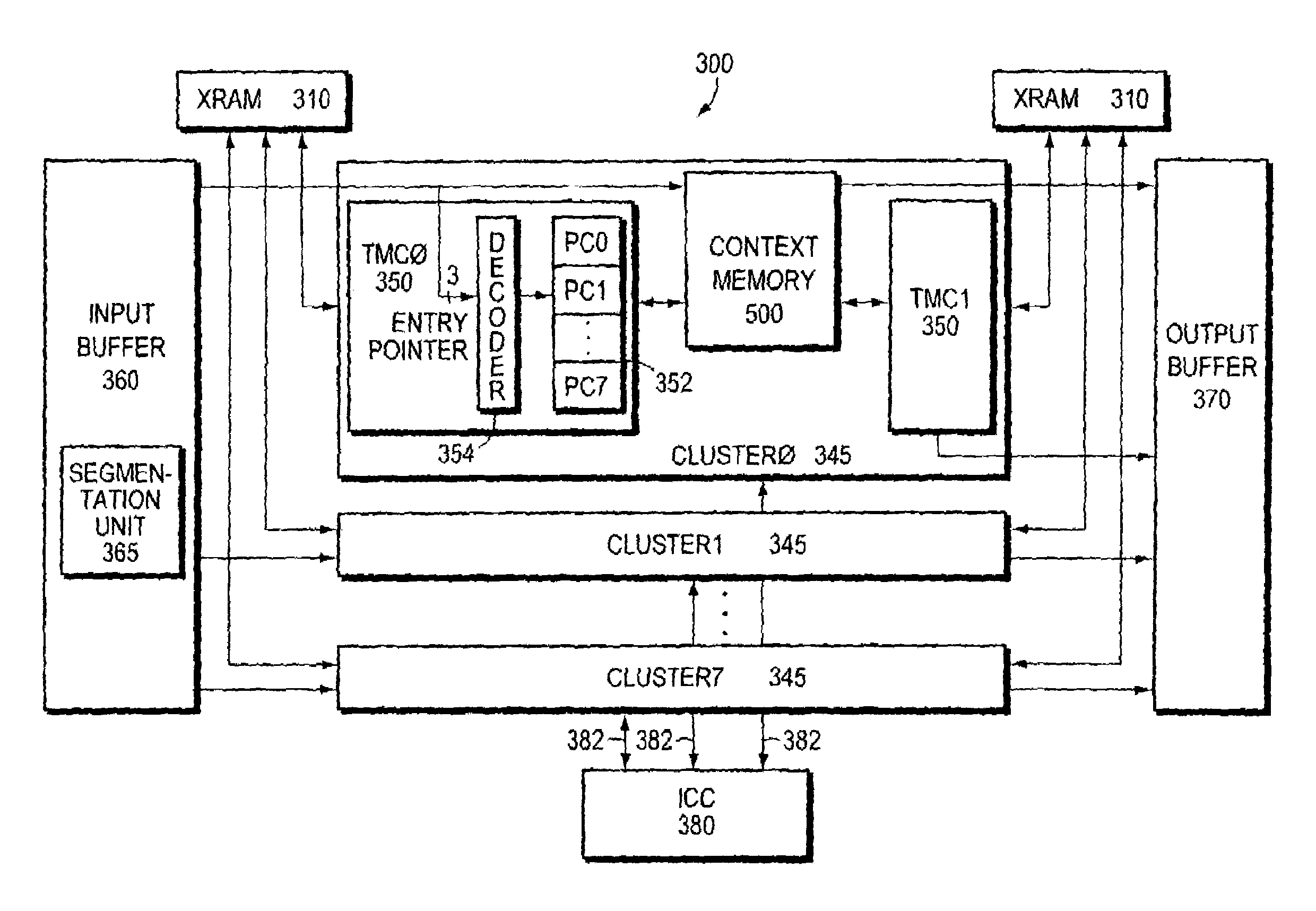

A mechanism synchronizes among processors of a processing engine in an intermediate network station. The processing engine is configured as a systolic array having a plurality of processors arrayed as rows and columns. The mechanism comprises a barrier synchronization mechanism that enables synchronization among processors of a column (i.e., different rows) of the systolic array. That is, the barrier synchronization function allows all participating processors within a column to reach a common point within their instruction code sequences before any of the processors proceed.

Owner:CISCO TECH INC
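
As a software analogy for the barrier synchronization described in the entry above, the sketch below models one column of the systolic array as a set of threads, one per row, that share a standard-library `threading.Barrier`. The threads, the log, and `run_column` are illustrative assumptions rather than the patented hardware mechanism; the point is only that no row proceeds past the common point until every participating row has reached it.

```python
import threading


def run_column(num_rows: int) -> list:
    """Simulate one systolic-array column: one thread per row, one shared barrier."""
    barrier = threading.Barrier(num_rows)  # every row in the column participates
    lock = threading.Lock()
    log = []

    def processor(row: int) -> None:
        # Row-specific work before the common point would go here.
        with lock:
            log.append(f"arrive {row}")
        barrier.wait()  # blocks until all participating rows have arrived
        with lock:
            log.append(f"proceed {row}")

    threads = [threading.Thread(target=processor, args=(r,)) for r in range(num_rows)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


log = run_column(4)
arrivals = [i for i, entry in enumerate(log) if entry.startswith("arrive")]
departures = [i for i, entry in enumerate(log) if entry.startswith("proceed")]
# Every "arrive" entry is logged before any "proceed" entry.
```

Because each "arrive" entry is written before its thread calls `wait()`, and no thread returns from `wait()` until all have called it, every arrival is guaranteed to precede every departure in the log.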

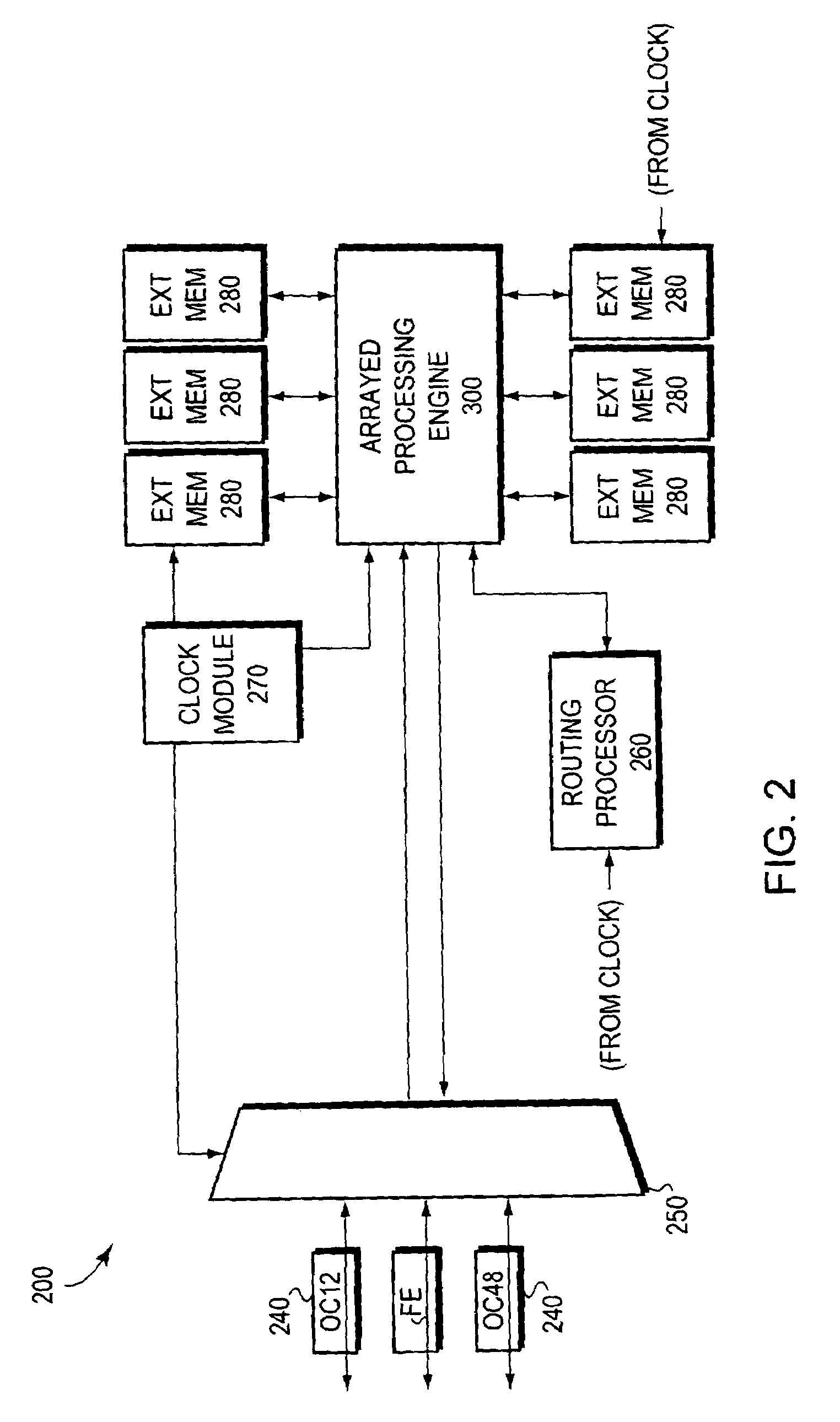

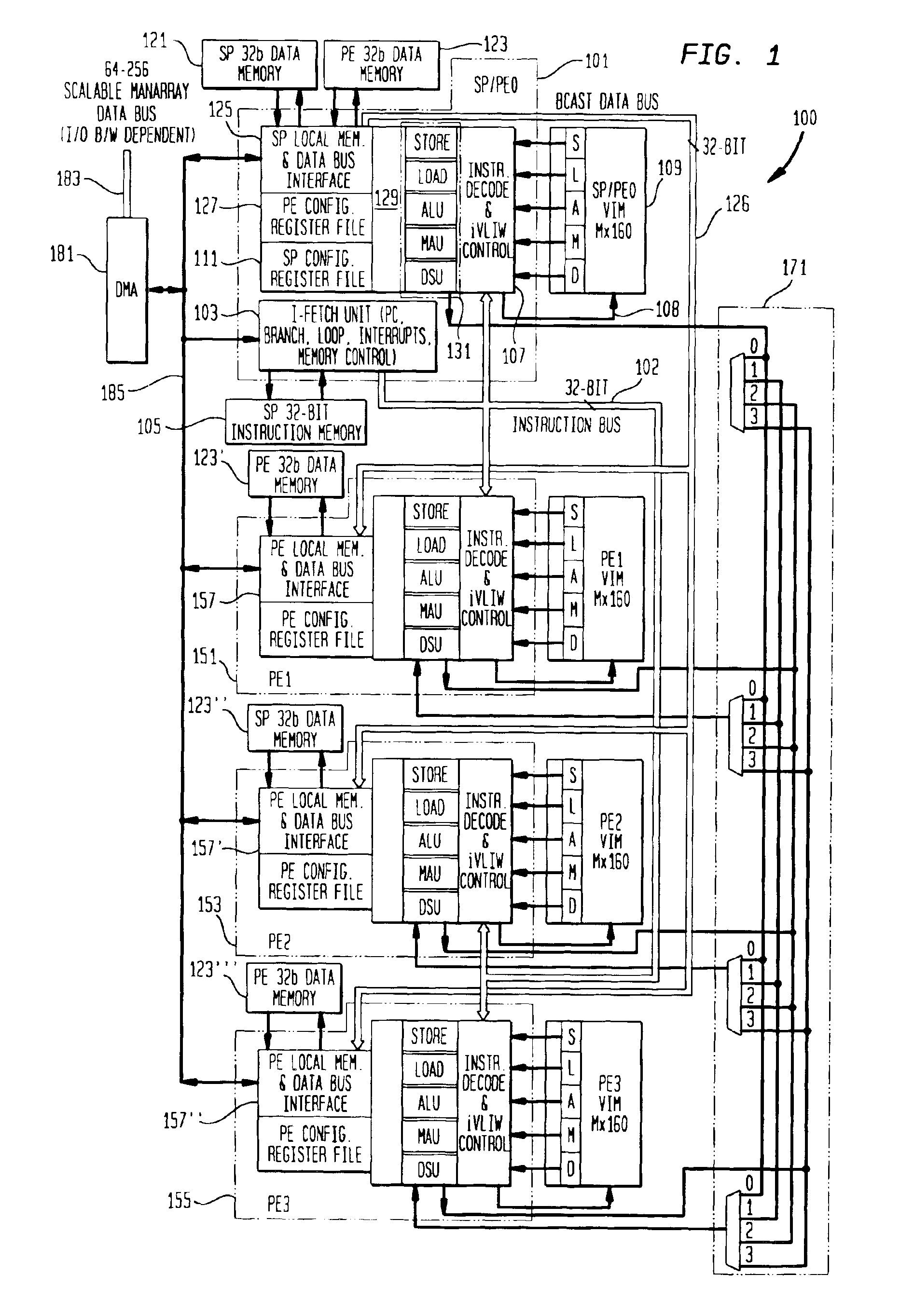

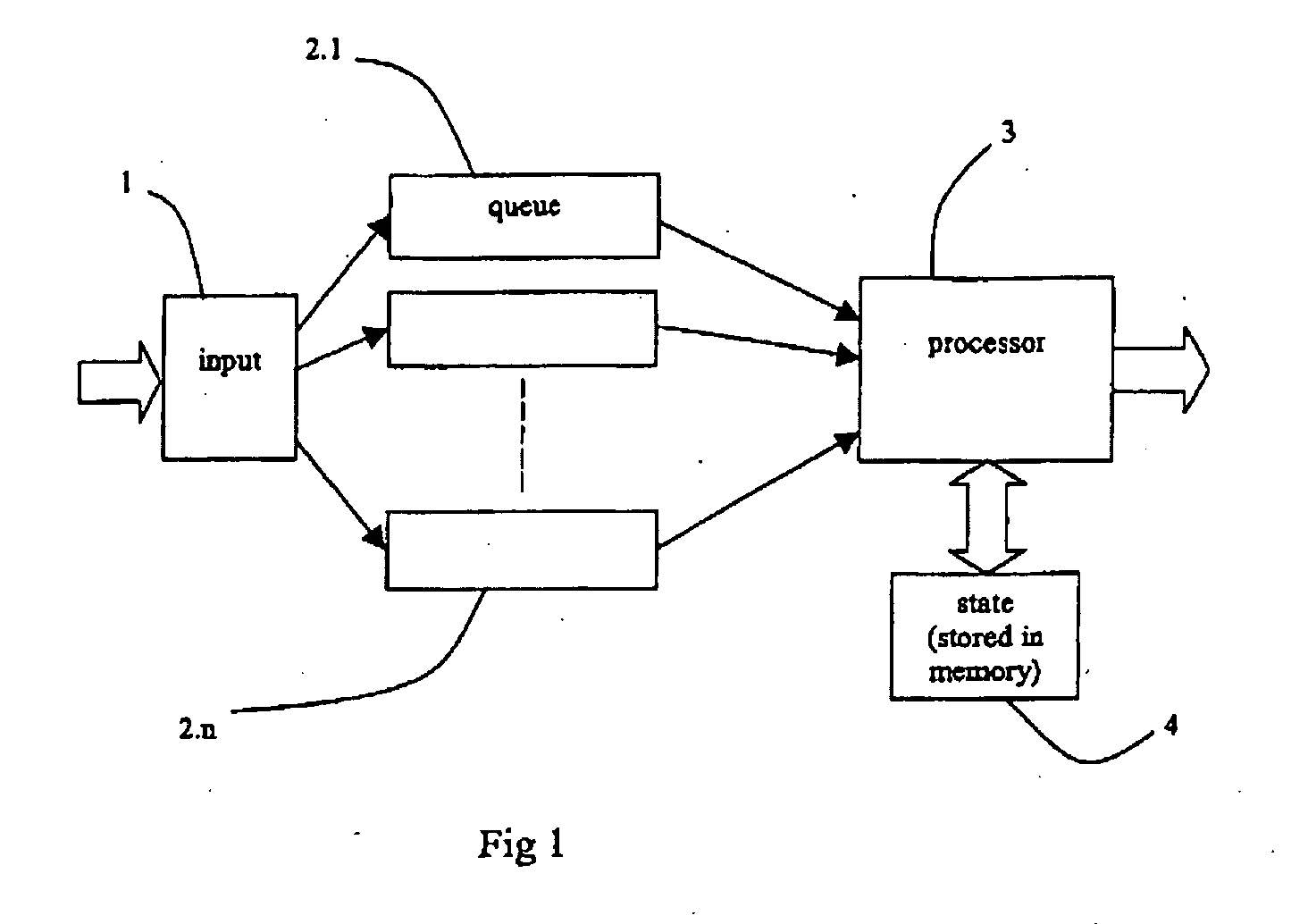

Packet striping across a parallel header processor

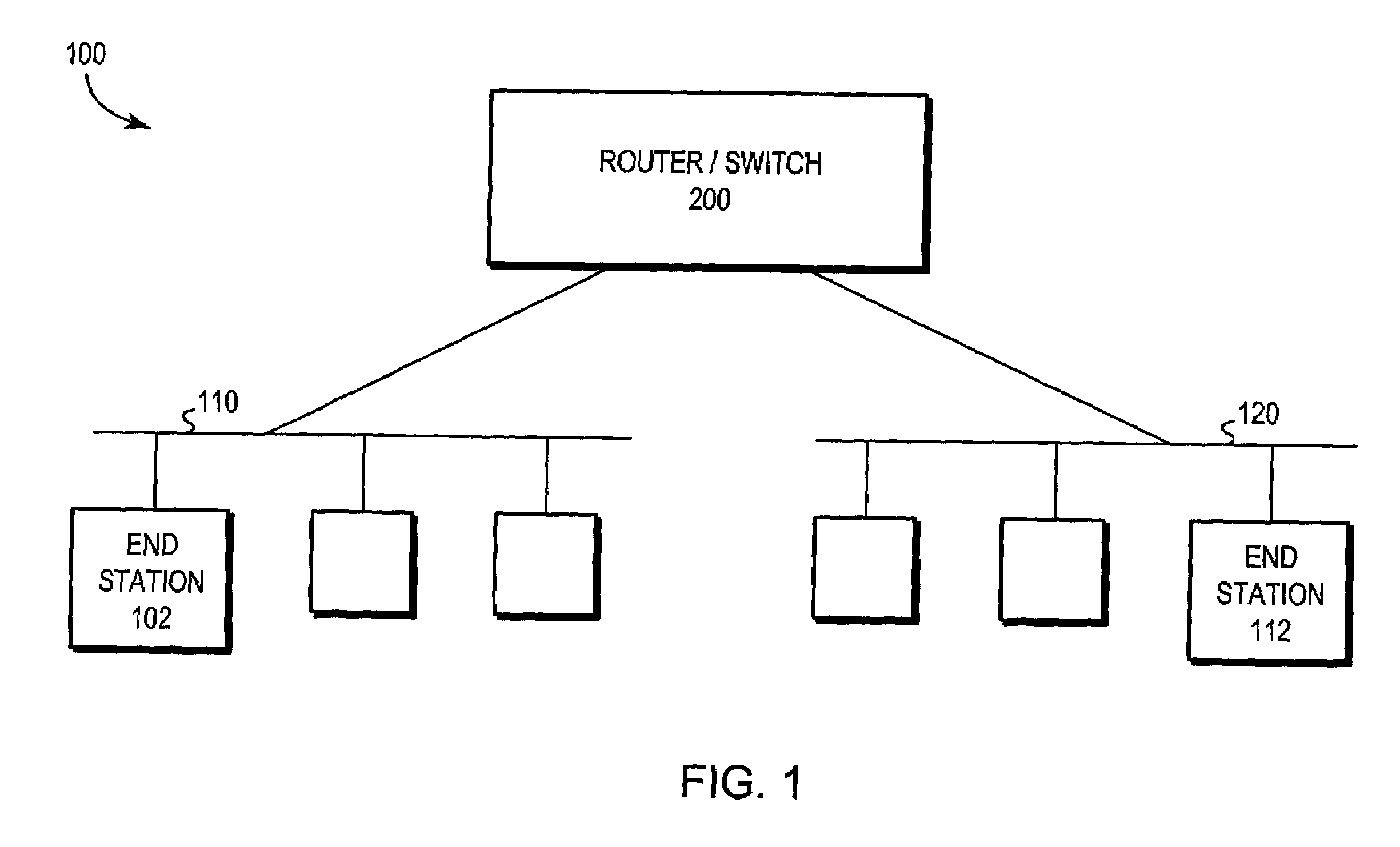

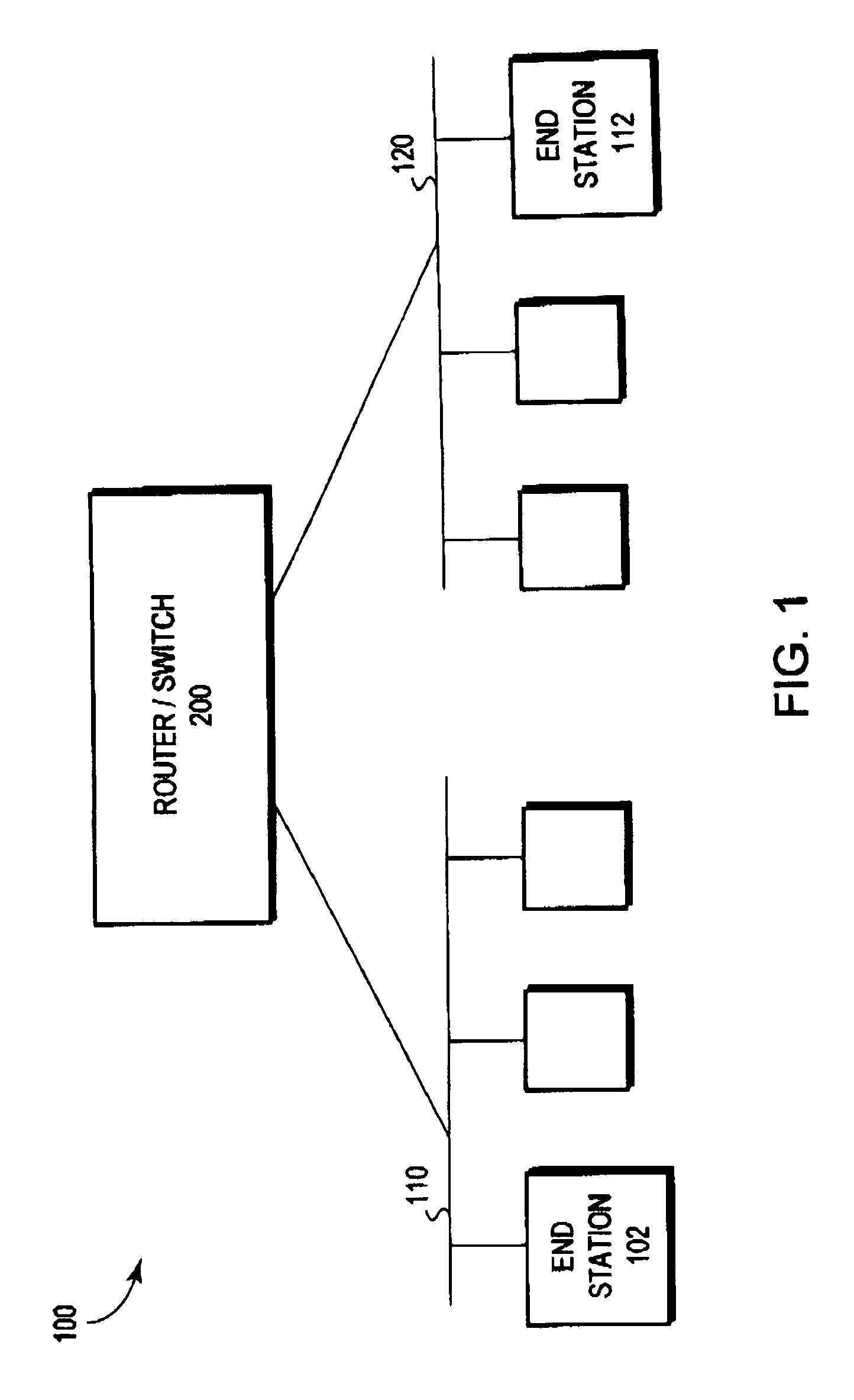

Inactive · US6965615B1 · Tags: Easy to process; Improve performance; Time-division multiplex; Data switching networks; Network switch; Data buffer

A technique is provided for striping packets across pipelines of a processing engine within a network switch. The processing engine comprises a plurality of processors arrayed as pipeline rows and columns embedded between input and output buffers of the engine. Each pipeline row or cluster includes a context memory having a plurality of window buffers of a defined size. Each packet is apportioned into fixed-sized contexts corresponding to the defined window size associated with each buffer of the context memory. The technique includes a mapping mechanism for correlating each context with a relative position within the packet, i.e., the beginning, middle and end contexts of a packet. The mapping mechanism facilitates reassembly of the packet at the output buffer, while obviating any out-of-order issues involving the particular contexts of a packet.

Owner:CISCO TECH INC
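
The striping scheme in the entry above can be sketched in a few lines, assuming a round-robin assignment of contexts to pipeline rows and an explicit sequence index on each context (position tags alone cannot order several "middle" contexts). The `Context` record, the `"only"` tag for single-context packets, and the function names are hypothetical; real hardware would also pad the last context to the full window size, which this sketch skips.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Context:
    packet_id: int
    seq: int        # assumed sequence index within the packet
    position: str   # "begin", "middle", "end", or hypothetical "only"
    data: bytes


def stripe(packet_id: int, packet: bytes, window: int) -> List[Context]:
    """Split a packet into window-sized contexts tagged with their relative position."""
    if window <= 0:
        raise ValueError("window size must be positive")
    chunks = [packet[i:i + window] for i in range(0, len(packet), window)] or [b""]
    last = len(chunks) - 1
    contexts = []
    for seq, chunk in enumerate(chunks):
        if last == 0:
            position = "only"
        elif seq == 0:
            position = "begin"
        elif seq == last:
            position = "end"
        else:
            position = "middle"
        contexts.append(Context(packet_id, seq, position, chunk))
    return contexts


def assign_rows(contexts: List[Context], num_rows: int) -> List[List[Context]]:
    """Round-robin the contexts across pipeline rows (assumed policy)."""
    rows: List[List[Context]] = [[] for _ in range(num_rows)]
    for i, ctx in enumerate(contexts):
        rows[i % num_rows].append(ctx)
    return rows


def reassemble(contexts: List[Context]) -> bytes:
    """Rebuild the packet at the output buffer regardless of arrival order."""
    ordered = sorted(contexts, key=lambda c: c.seq)
    if ordered[0].position not in ("begin", "only") or ordered[-1].position not in ("end", "only"):
        raise ValueError("missing begin or end context")
    return b"".join(c.data for c in ordered)
```

For example, a 200-byte packet with a 64-byte window yields four contexts tagged begin, middle, middle, end; collecting them from the rows in any order and calling `reassemble` recovers the original bytes.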

Method and apparatus for cycle-based computation

Active · US7036114B2 · Tags: Analogue computers for electric apparatus; CAD circuit design; Data connection; Computerized system

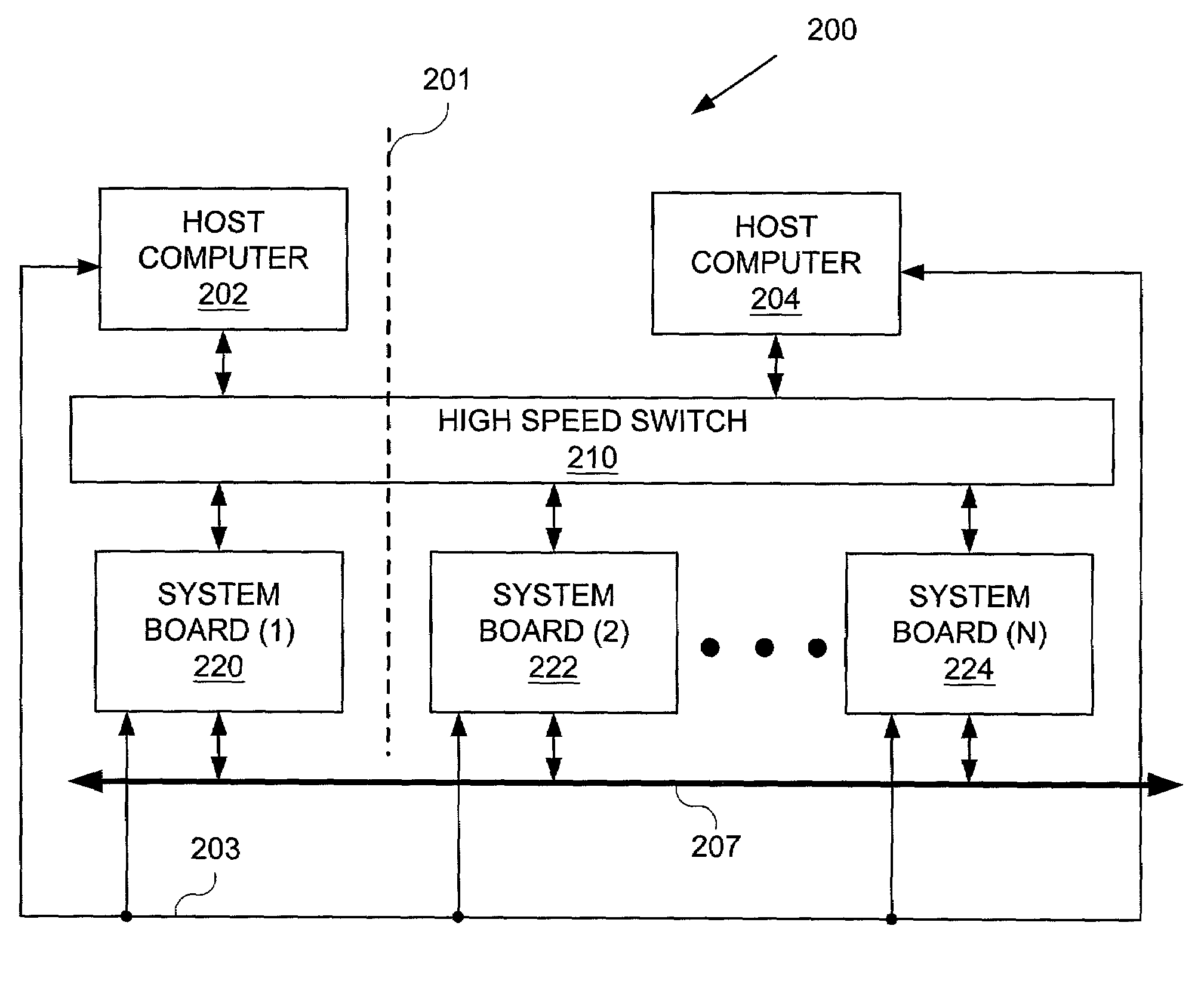

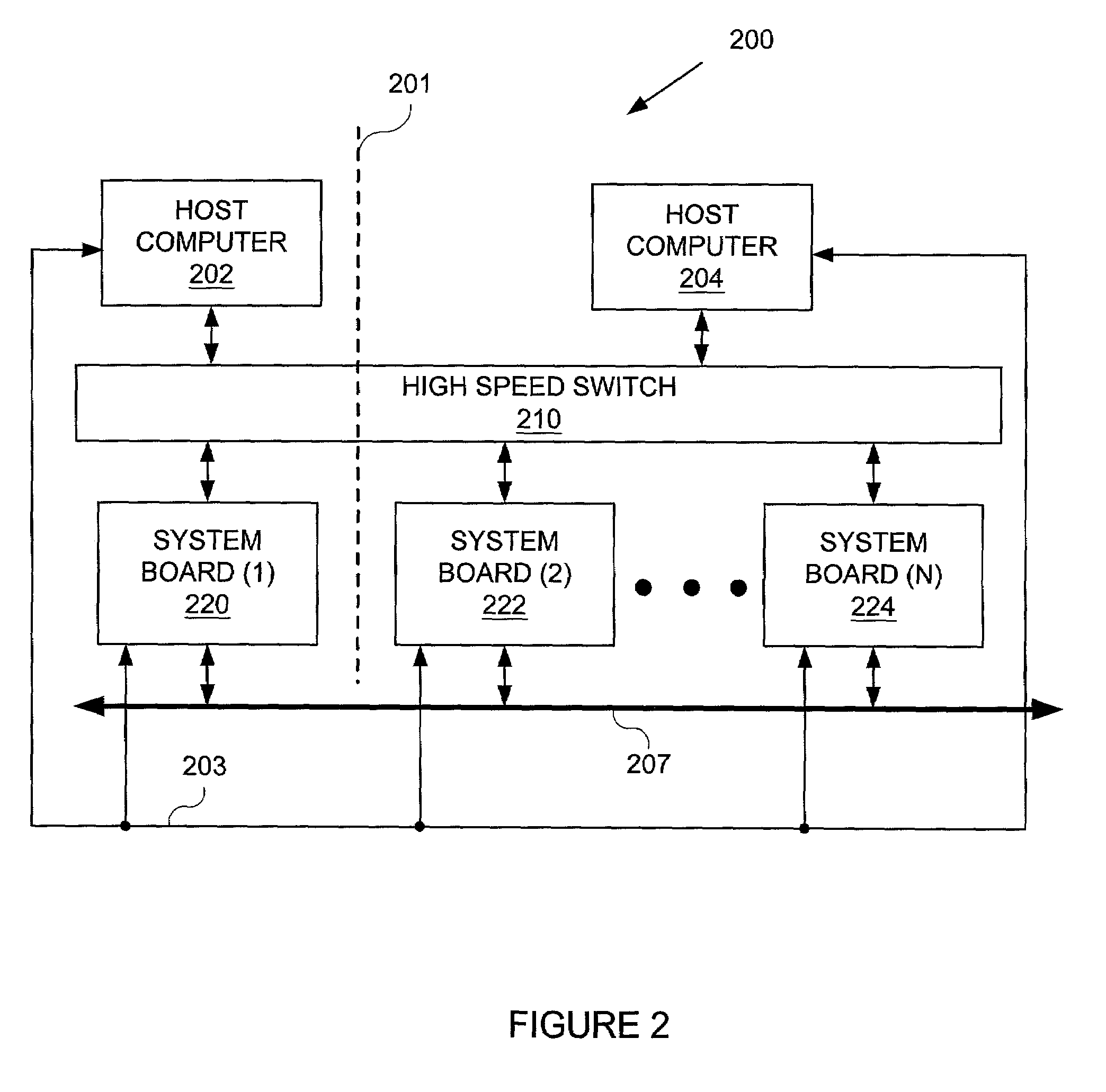

A computer system for cycle-based computation includes a processor array, a translation component adapted to translate a cycle-based design, a host computer operatively connected to the processor array and to the translation component, a data connection component interconnecting a plurality of members of the processor array using static routing, a synchronization component enabling known timing relationships among the plurality of members of the processor array, a host service request component adapted to send a host service request from a member of the processor array to the host computer, and an access component adapted to access a portion of a state of the processor array and a portion of a state of the data connection.

Owner:ORACLE INT CORP

System upgrade and processor service

Inactive · US6378027B1 · Tags: Volume/mass flow measurement; Multiple digital computer combinations; Computer architecture; Engineering

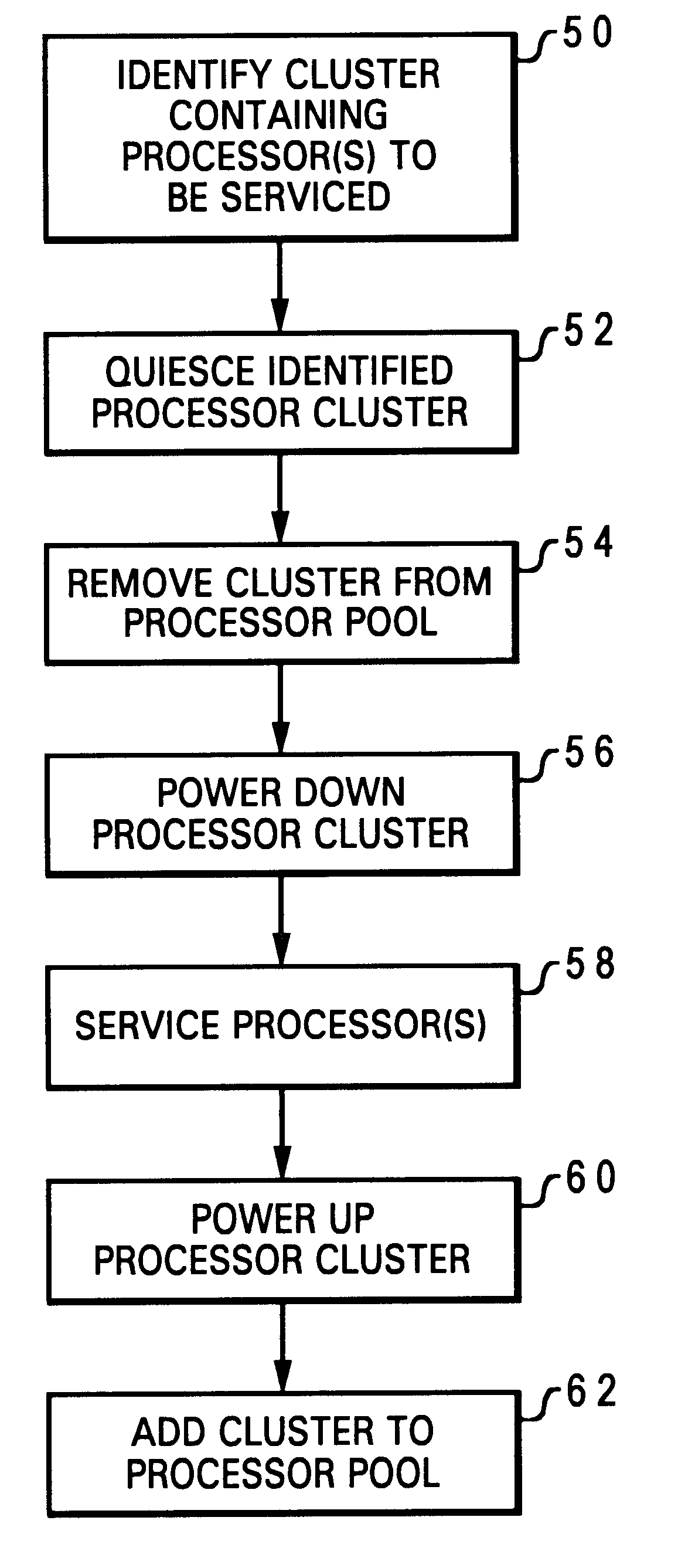

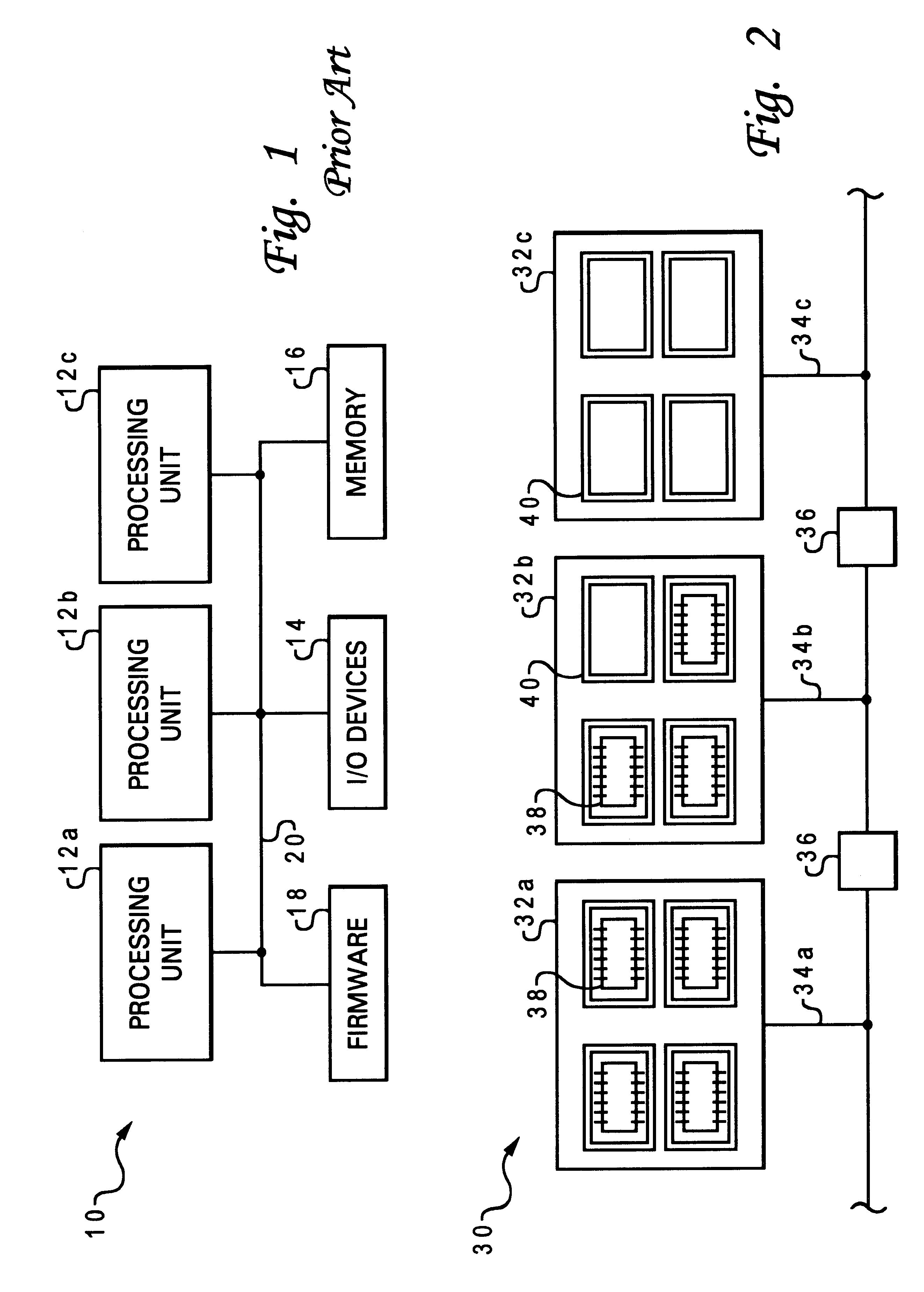

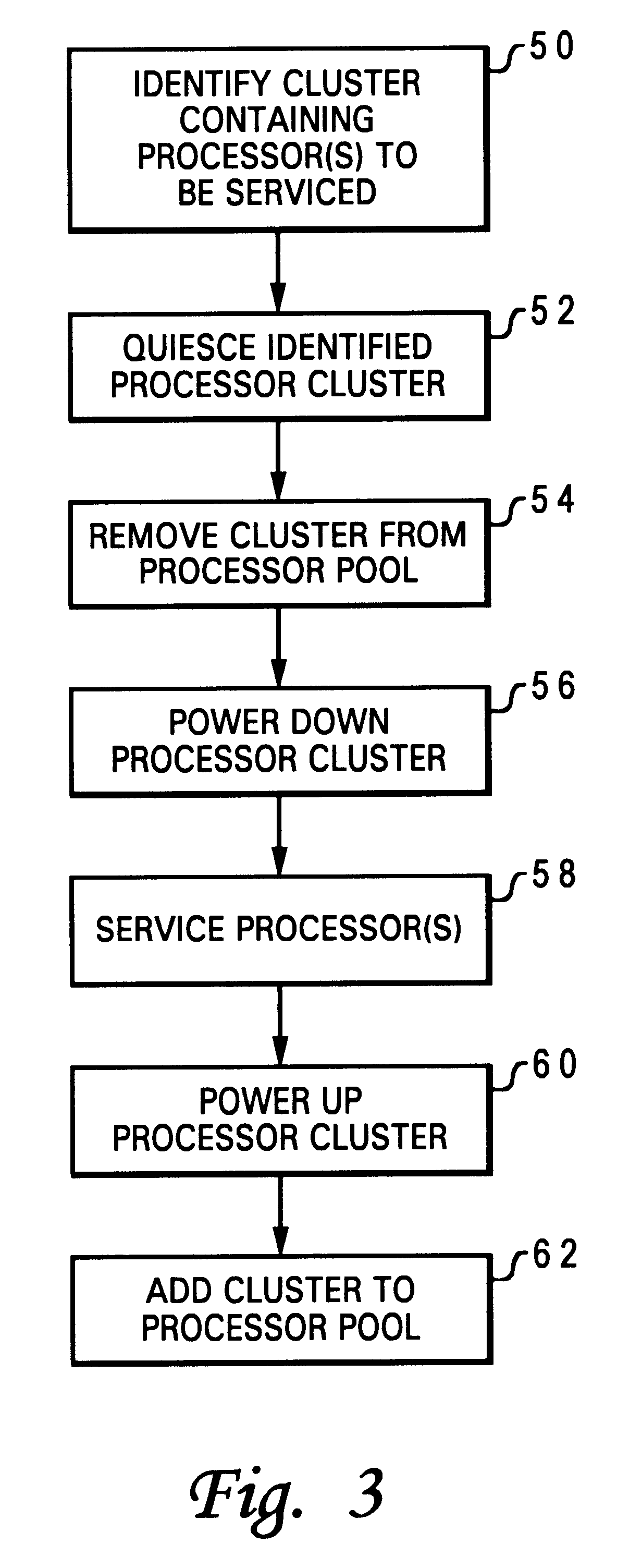

A method of servicing a processor array of a computer system by quiescing a processor selected for maintenance and removing the selected processor from a processor pool used by the computer's operating system. The selected processor is then powered down while maintaining power to and operation of other processors in the processor array. The selected processor may be identified as being defective, or may have been selected for upgrading. The processor array may include several processor clusters, such that the quiescing, removing and powering down steps apply to all processors in one of the processing clusters. The operating system assigns one of the processors in the processor array to be a service processor, and if the service processor is the processor selected for maintenance, the OS re-assigns the service processor functions to another processor in the processor array.

Owner:LENOVO PC INT
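
The servicing sequence in the entry above (quiesce, remove from the OS pool, power down, and hand off the service-processor role if needed) can be modeled as a toy state machine. The class, its field names, and the "lowest remaining ID" rule for choosing the new service processor are hypothetical; the abstract does not say how the replacement is chosen or exactly when the hand-off happens relative to power-down.

```python
from typing import List, Set


class ProcessorArray:
    """Toy model of the maintenance flow; all names here are hypothetical."""

    def __init__(self, cpus: List[int], service_cpu: int) -> None:
        self.pool: Set[int] = set(cpus)      # processors the OS may schedule on
        self.powered: Set[int] = set(cpus)   # processors currently powered
        self.quiesced: Set[int] = set()
        self.service_cpu = service_cpu

    def service(self, cpu: int) -> None:
        if cpu not in self.pool:
            raise ValueError(f"cpu {cpu} is not in the pool")
        self.quiesced.add(cpu)       # 1. stop dispatching new work to it
        self.pool.discard(cpu)       # 2. remove it from the OS processor pool
        if cpu == self.service_cpu:  # hand the service-processor role to a survivor
            if not self.pool:
                raise RuntimeError("no remaining processor can take the service role")
            self.service_cpu = min(self.pool)  # assumed selection policy
        self.powered.discard(cpu)    # 3. power down only this processor

    def service_cluster(self, cluster: List[int]) -> None:
        """Apply the same steps to every processor in one cluster."""
        for cpu in cluster:
            self.service(cpu)
```

The other processors stay in `pool` and `powered` throughout, which mirrors the abstract's requirement that the rest of the array keeps running while one processor or cluster is serviced.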

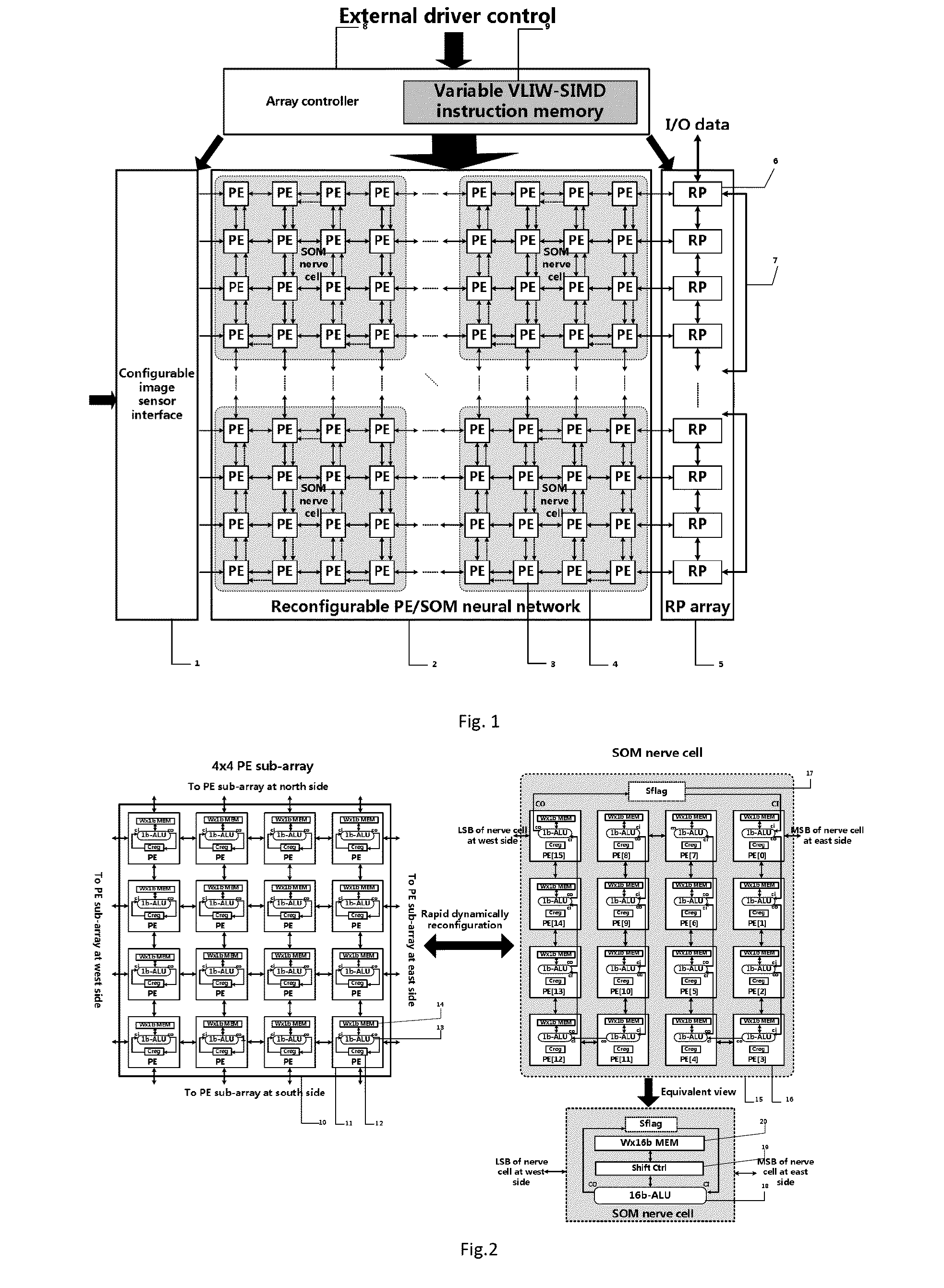

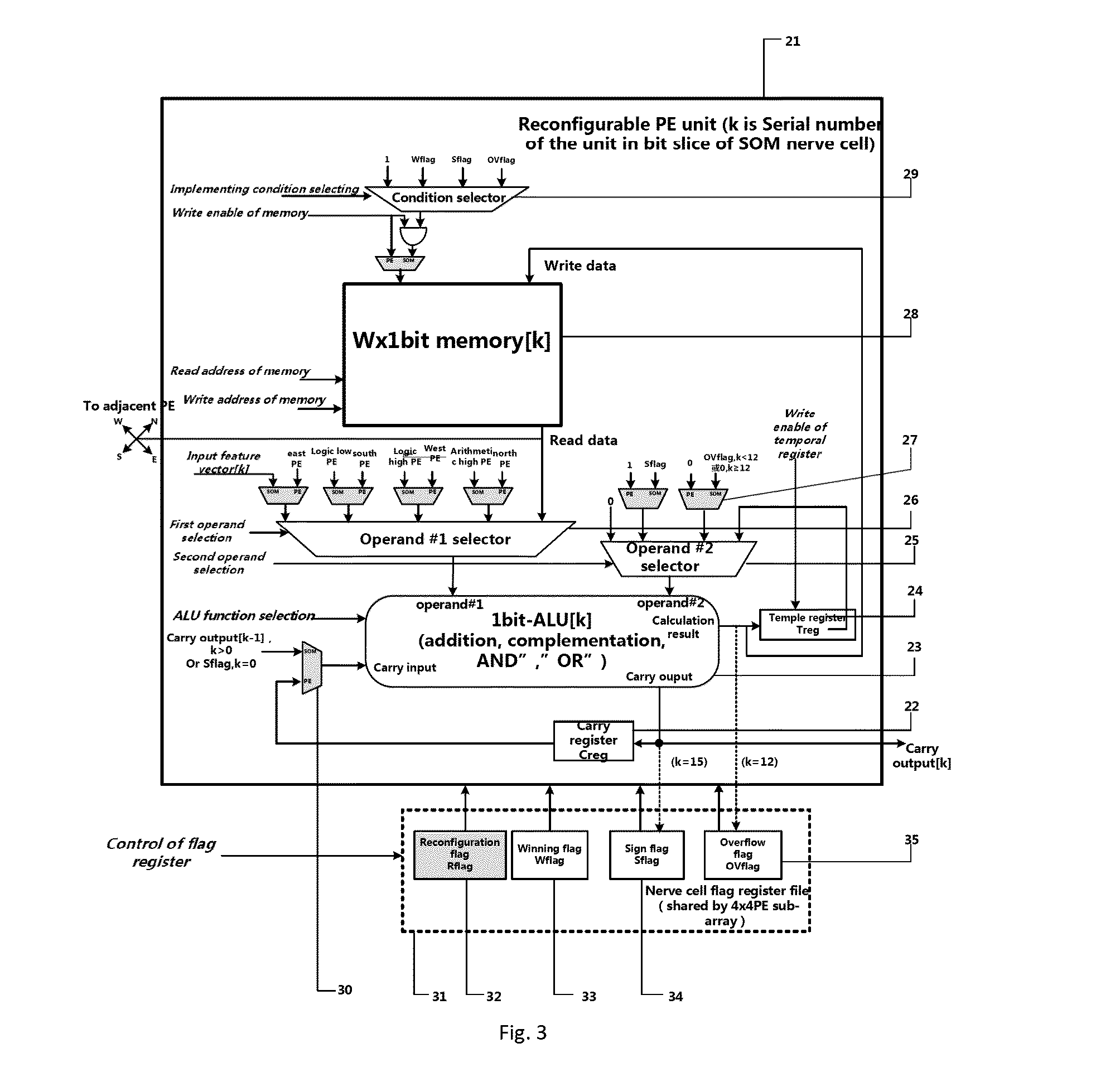

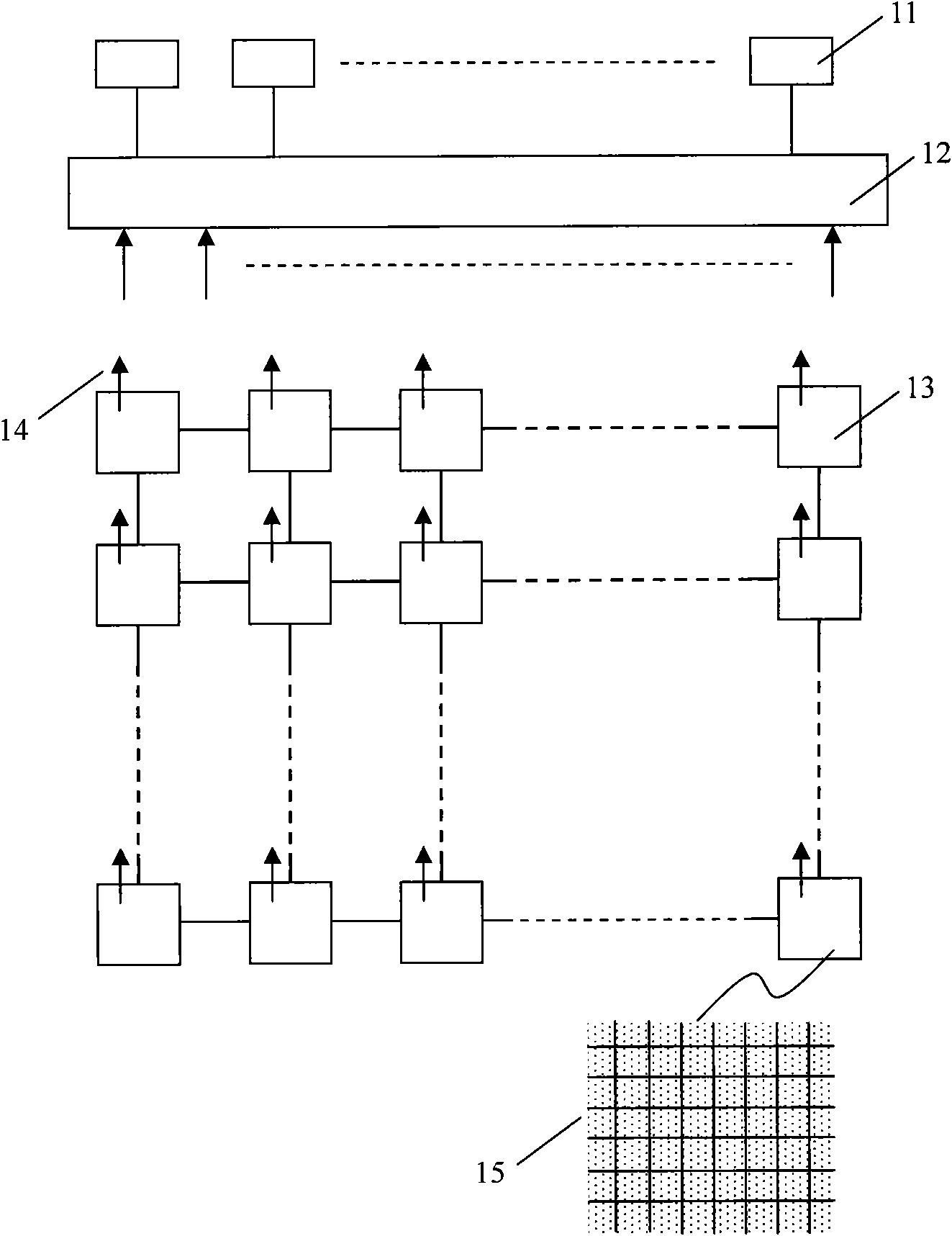

Dynamically reconstructable multistage parallel single instruction multiple data array processing system

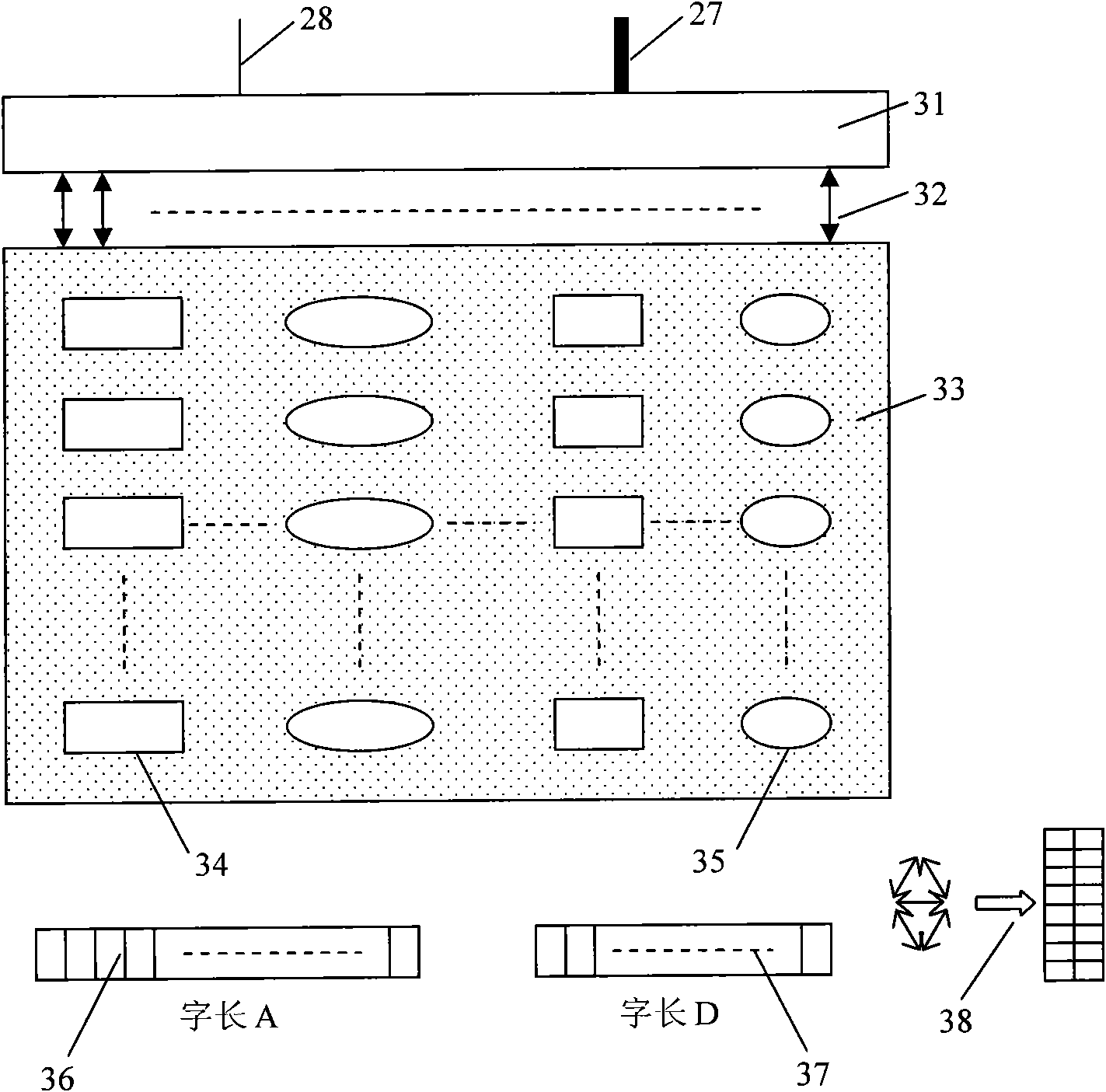

Active · US20150310311A1 · Tags: Single instruction multiple data multiprocessors; Character and pattern recognition; Handling system; Linearity

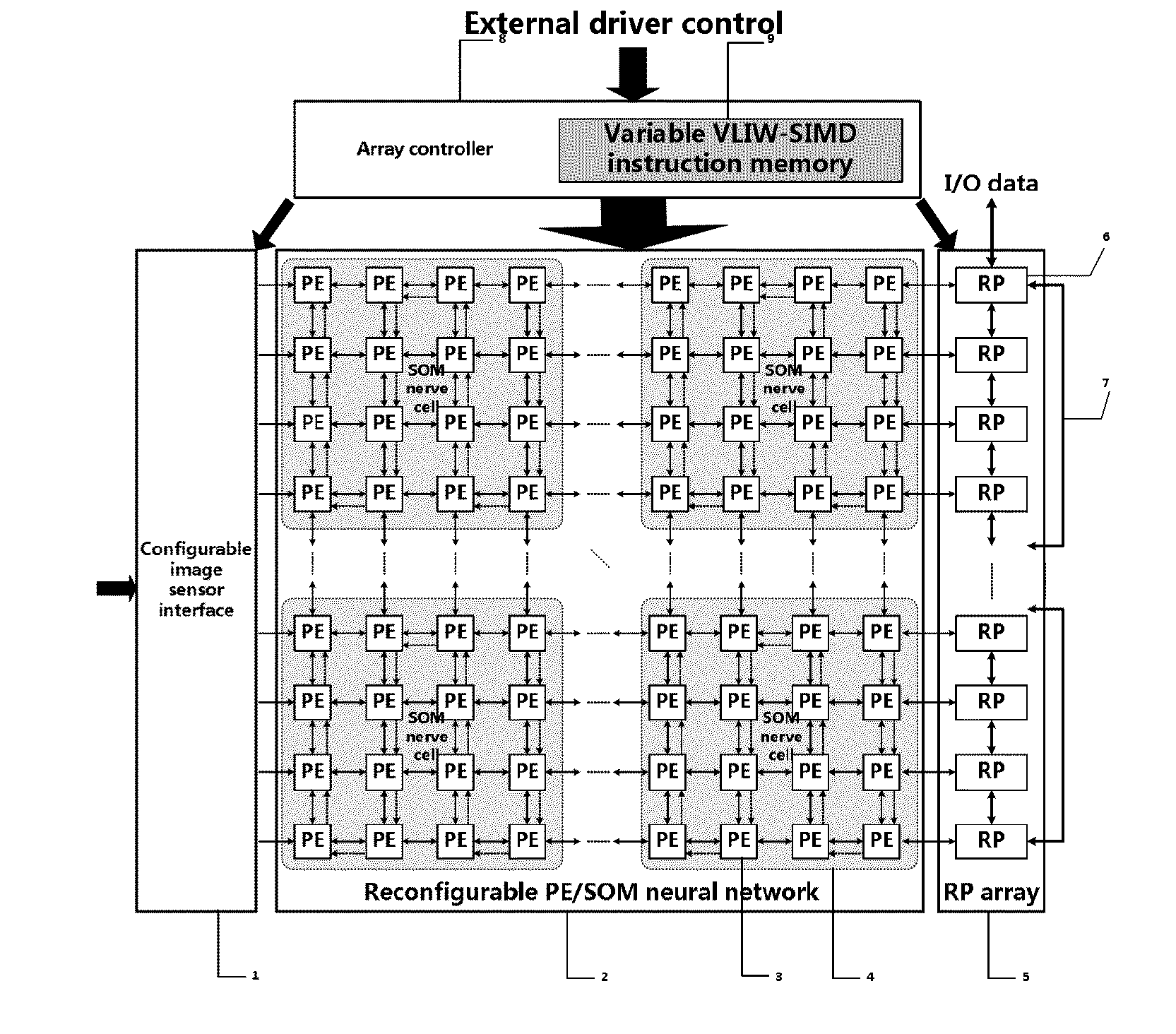

The present invention proposes a dynamically reconfigurable multistage parallel single instruction multiple data (SIMD) array processing system that has a pixel-level parallel image processing element (PE) array and a row-parallel row processor (RP) array. The PE array mainly implements linear operations suited to parallel execution in low- and mid-level image processing, while the RP array implements operations suited to row-parallel execution in low- and mid-level image processing, as well as more complex nonlinear operations. In particular, the system can be dynamically reconfigured into a self-organizing map (SOM) neural network at low performance and area cost, and this neural network supports high-level image processing such as high-speed online neural network training and image feature recognition. This overcomes the limitation that high-level image processing cannot be performed by the pixel-level parallel processing arrays in existing programmable vision chips and parallel vision processors, and enables a fully functional, intelligent, portable, real-time on-chip vision system with low device cost and low power consumption.

Owner:INST OF SEMICONDUCTORS - CHINESE ACAD OF SCI

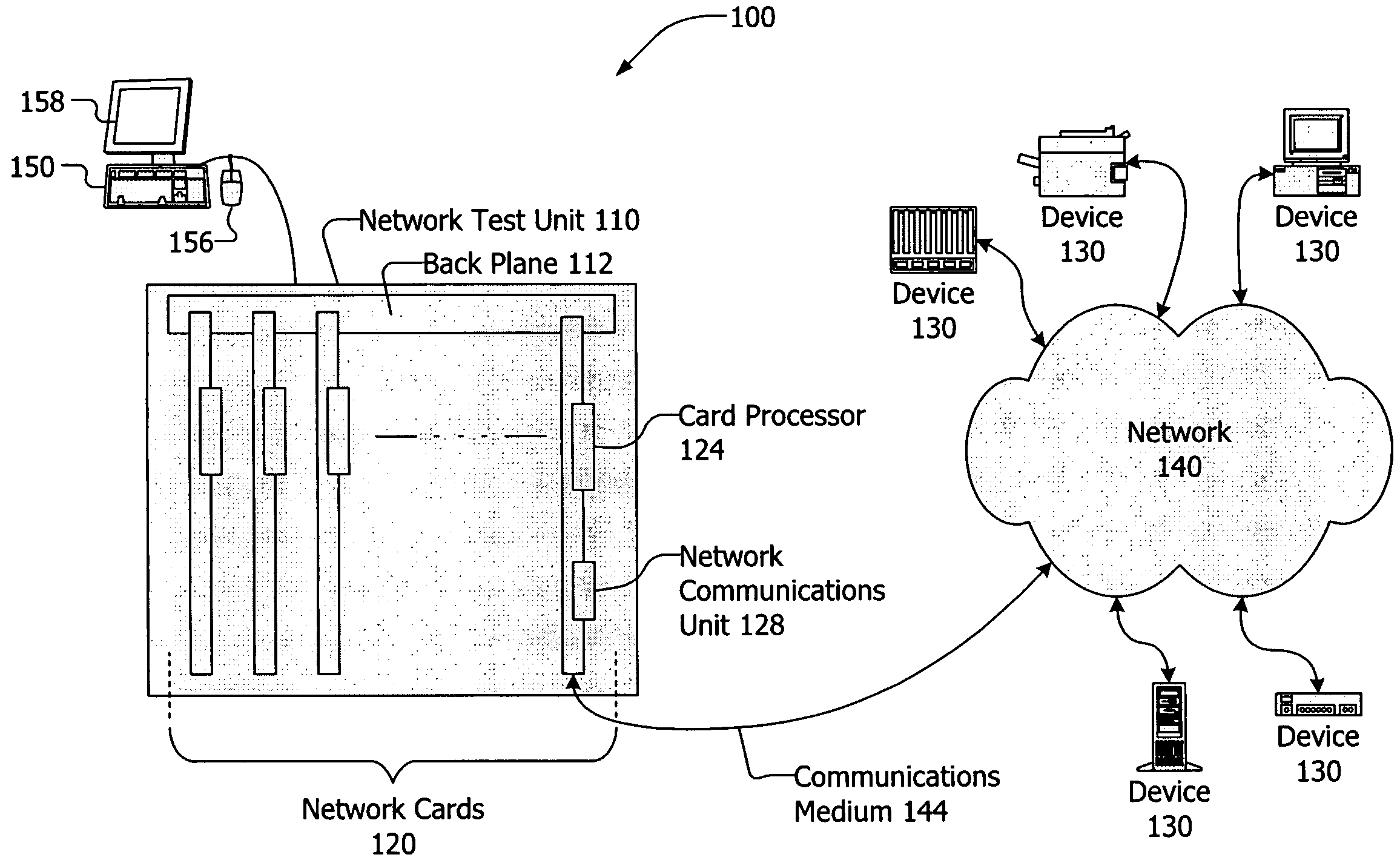

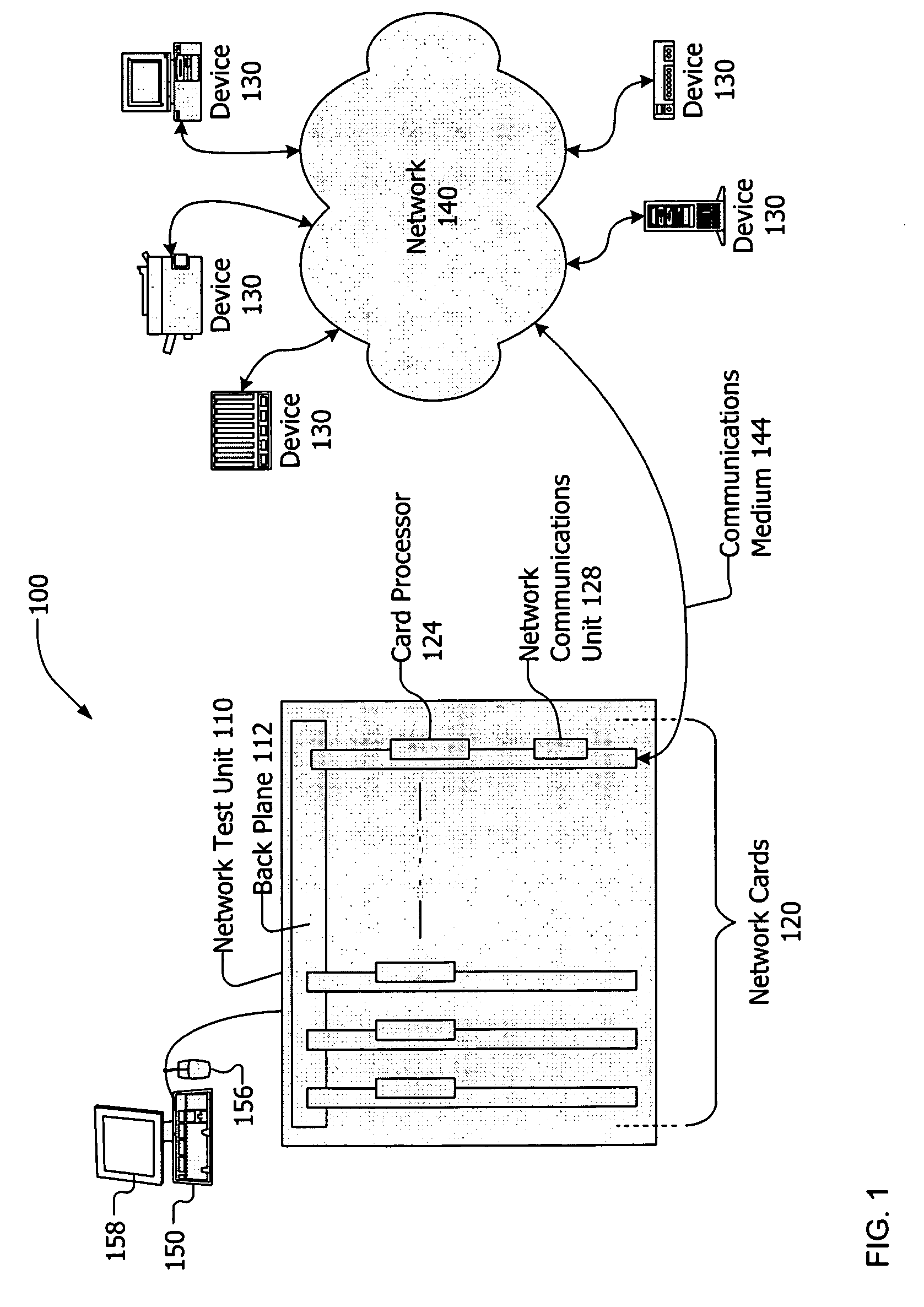

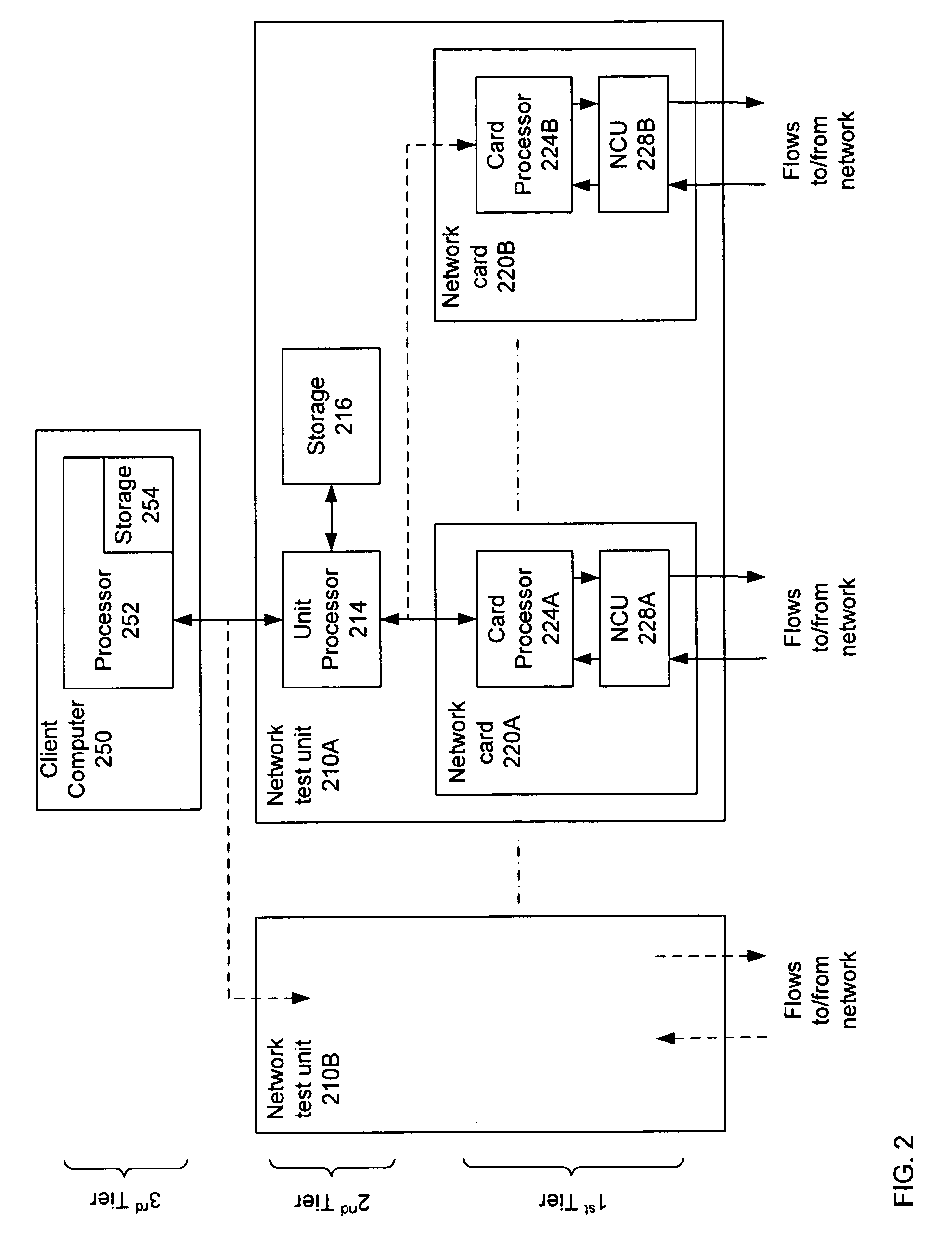

Distributed Flow Analysis

Active · US20090310491A1 · Tags: Error prevention; Frequency-division multiplex details; Traffic flow analysis; Streamflow

Owner:KEYSIGHT TECH SINGAPORE (SALES) PTE LTD

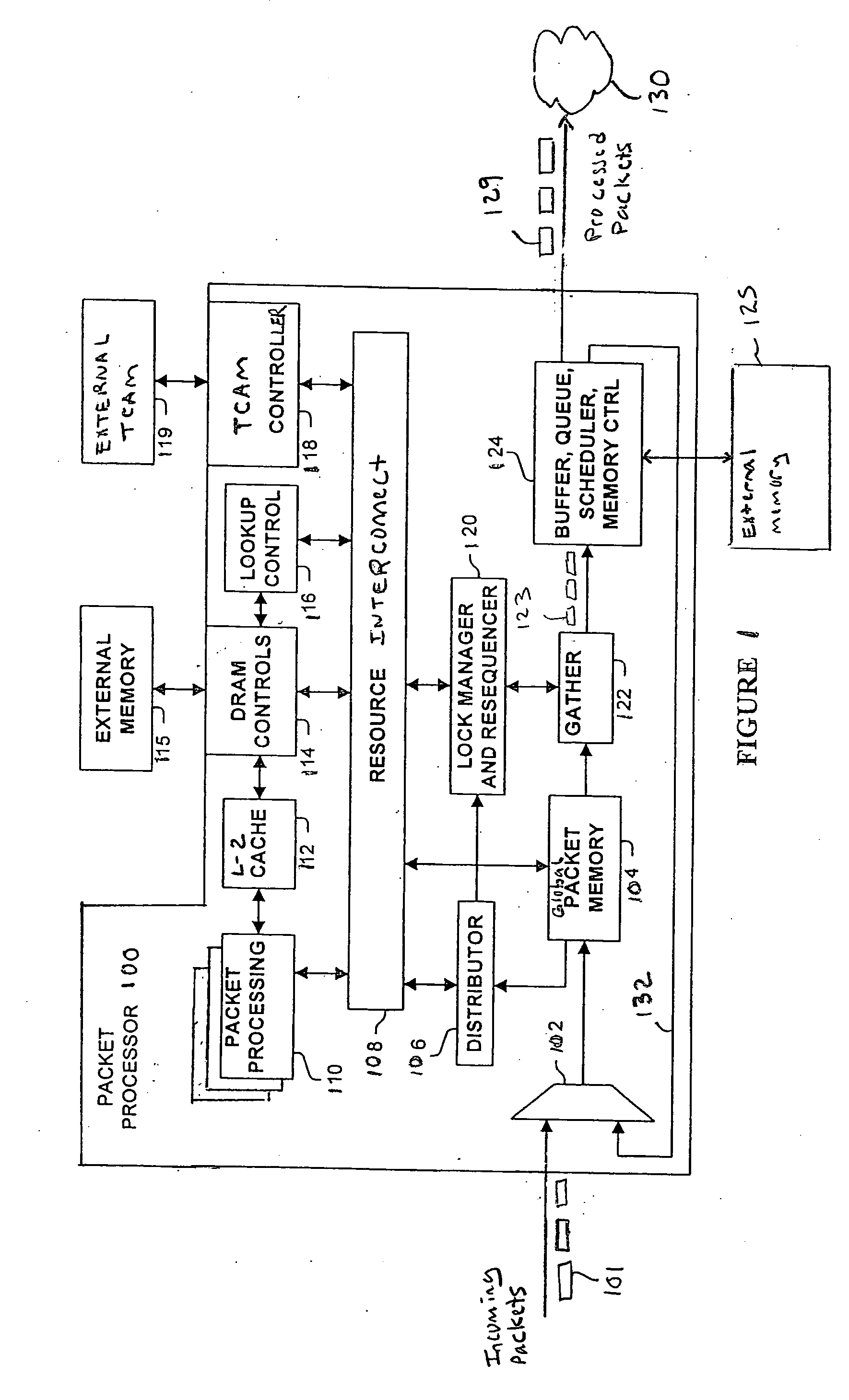

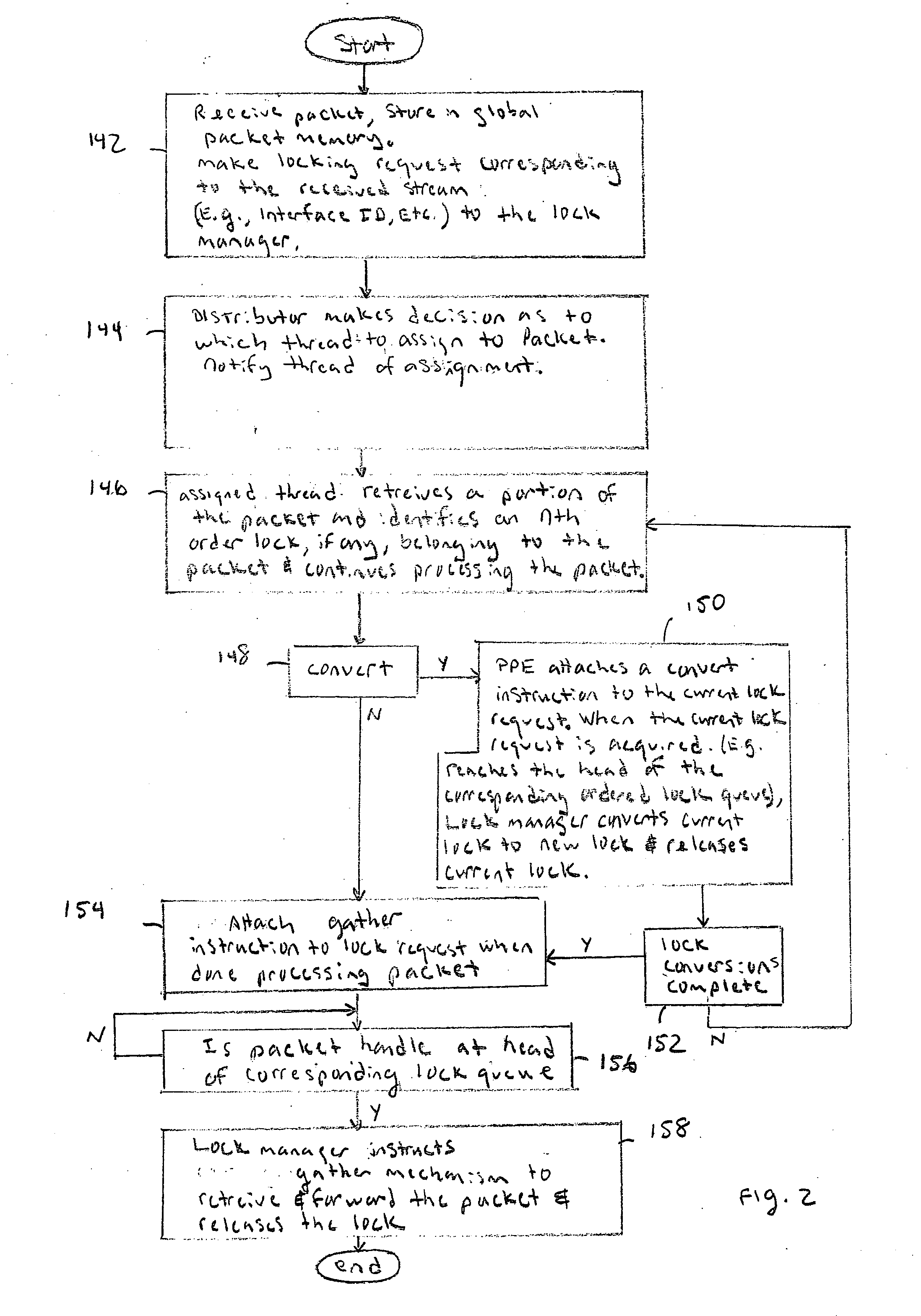

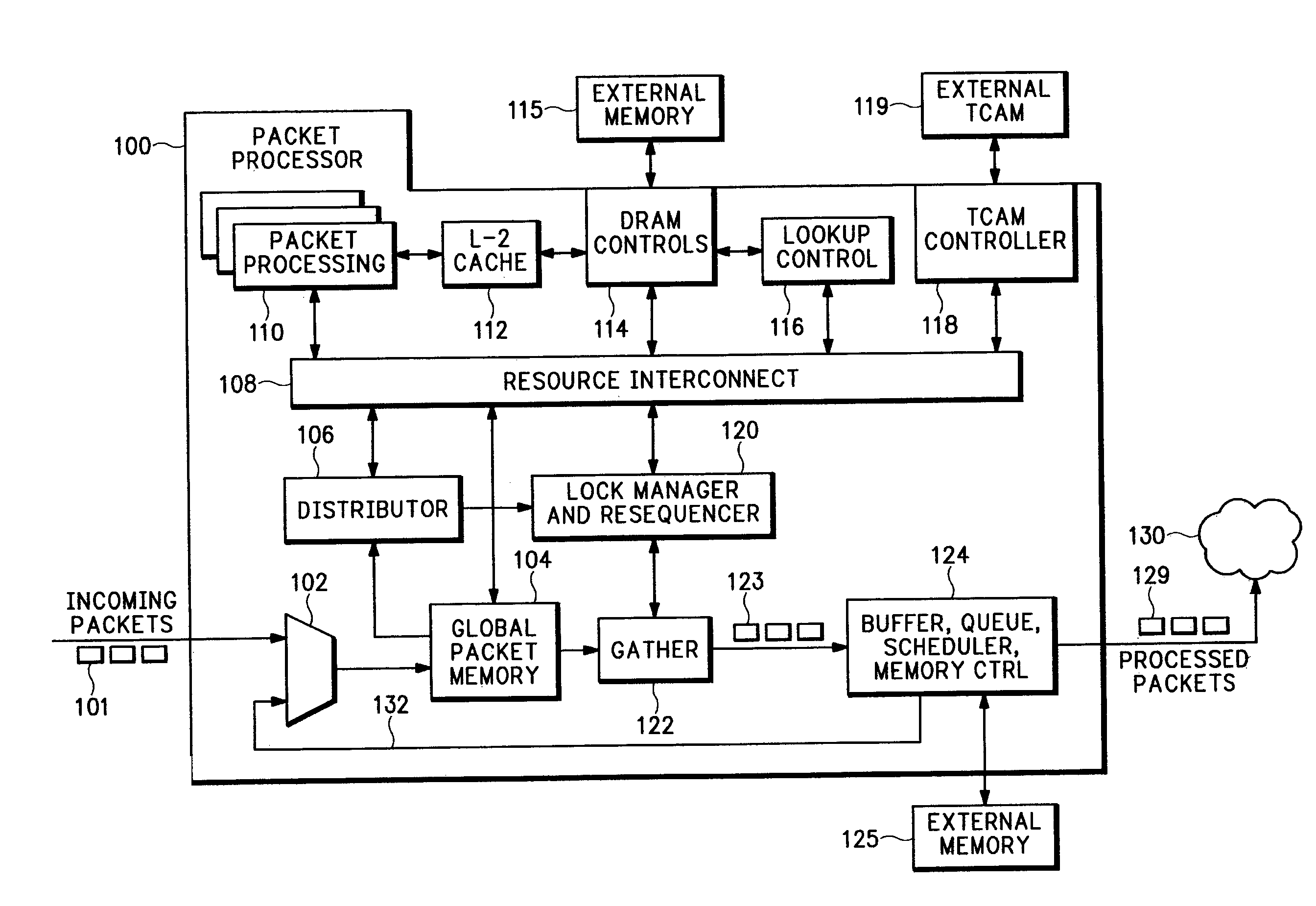

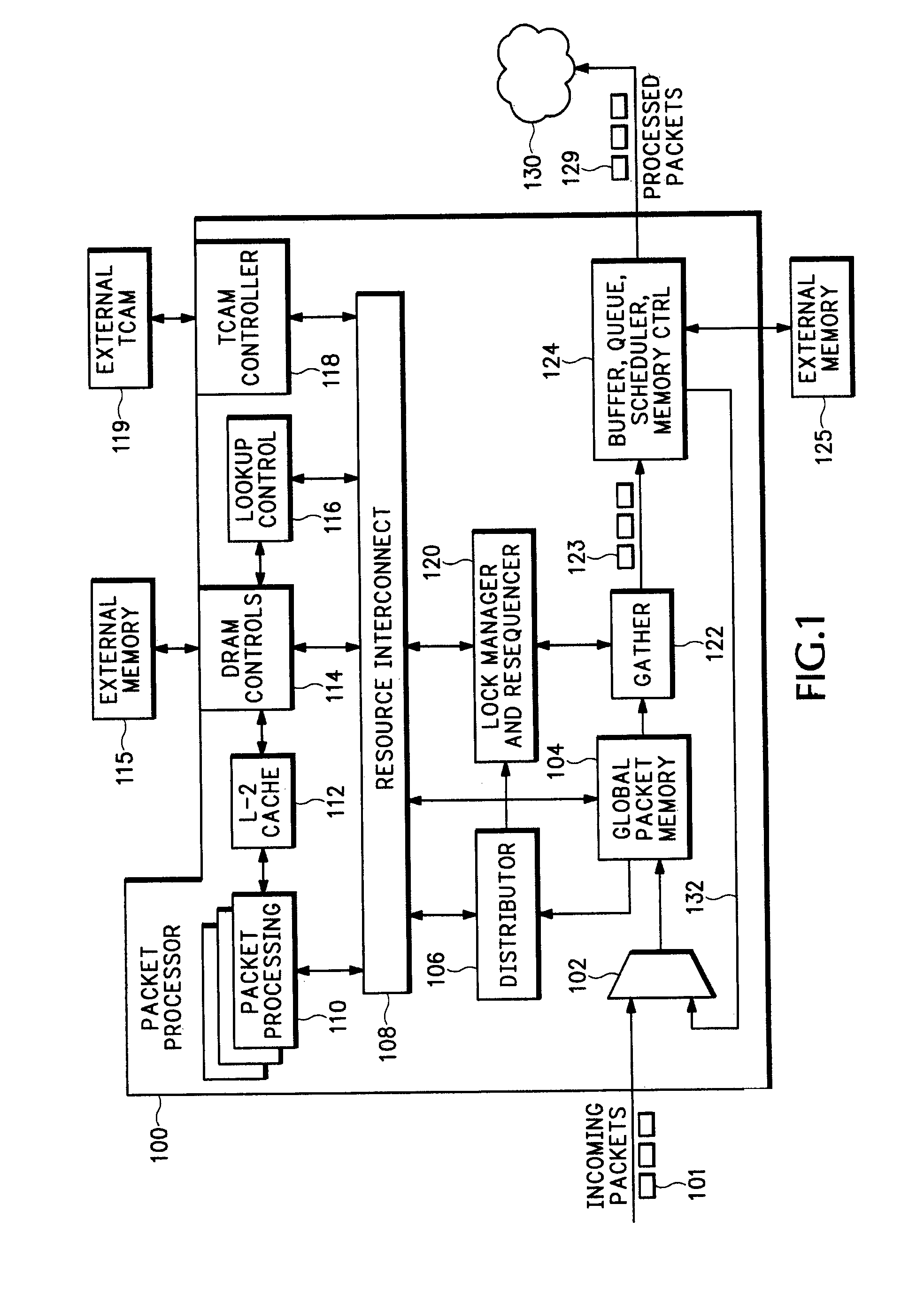

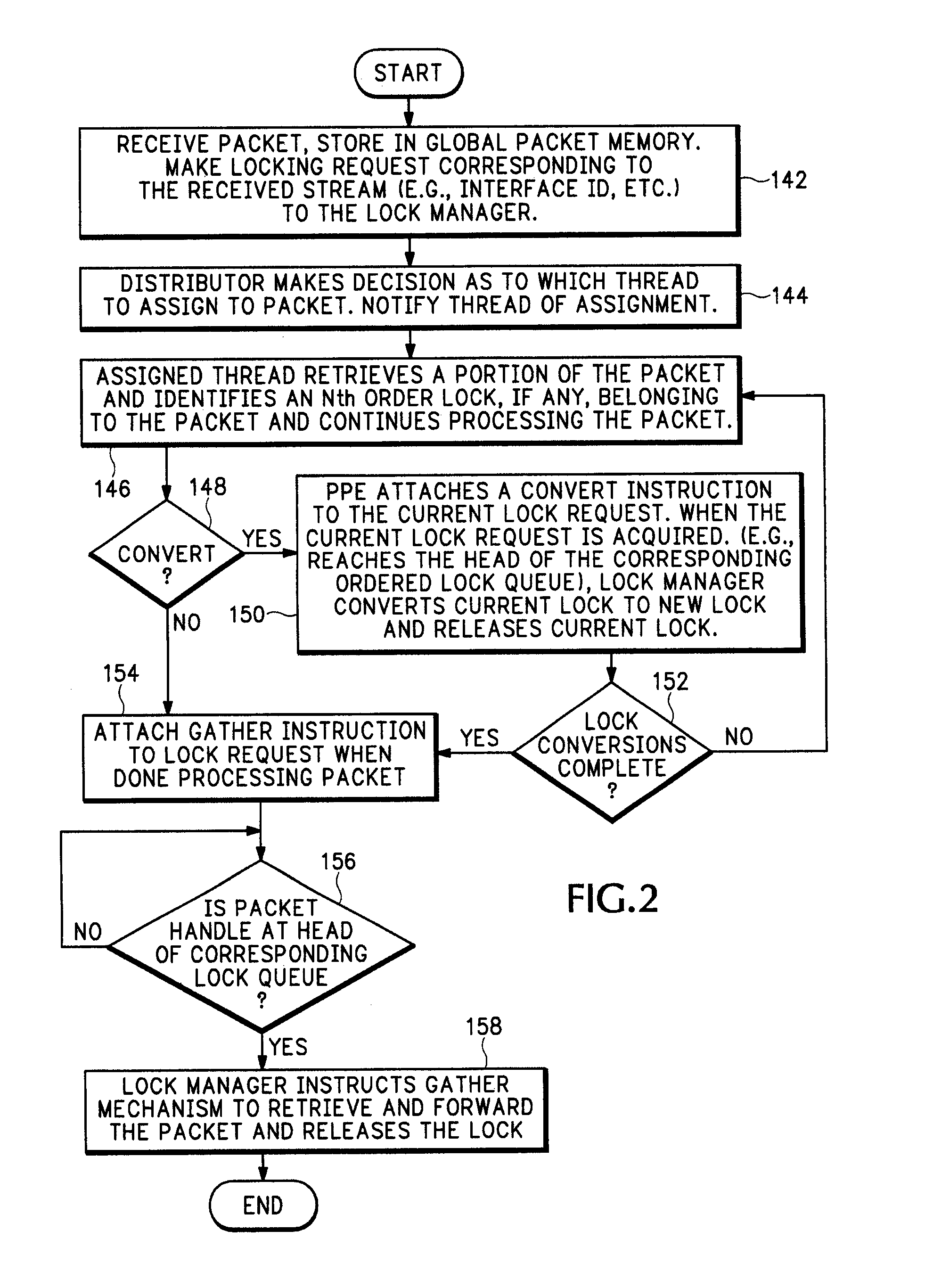

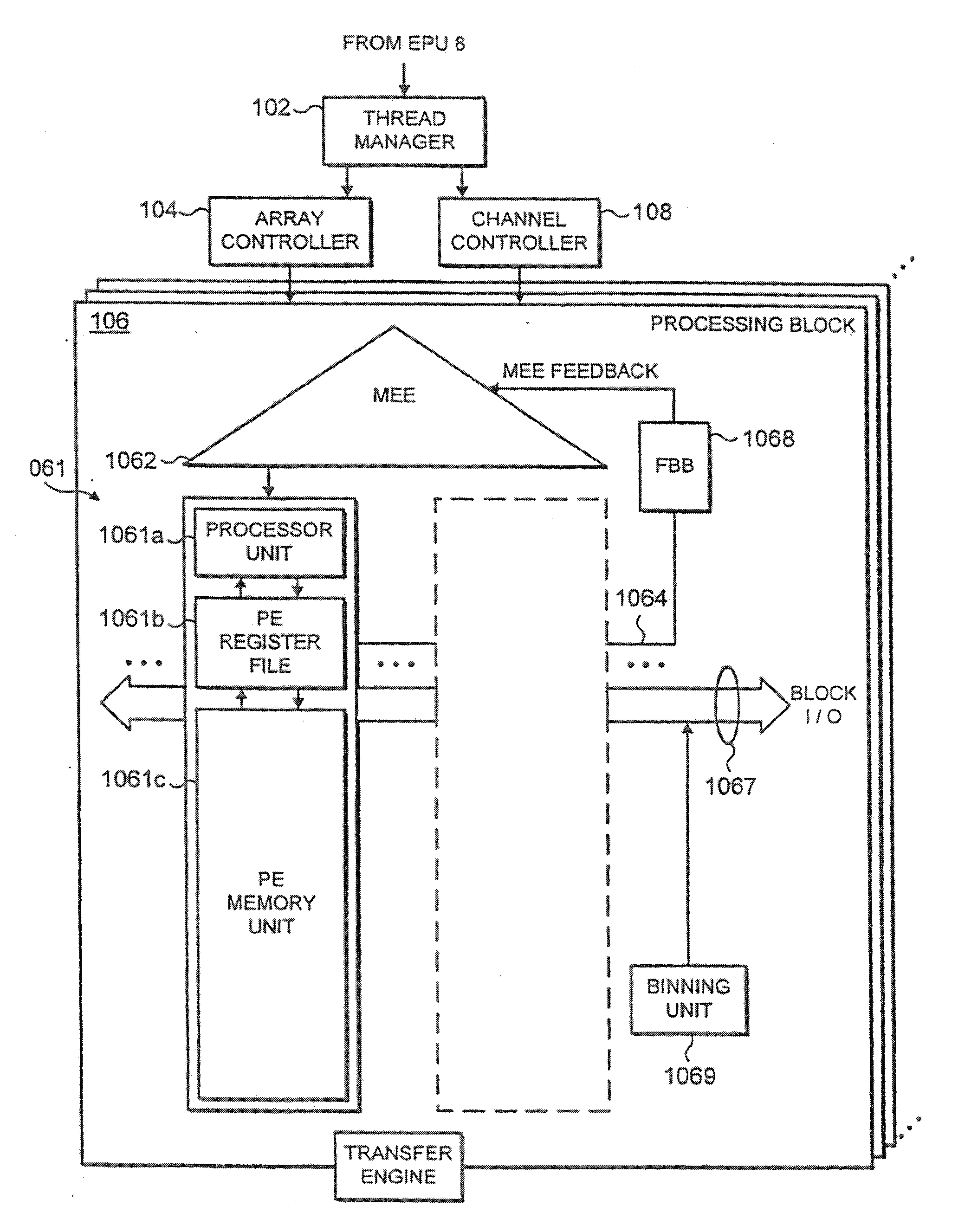

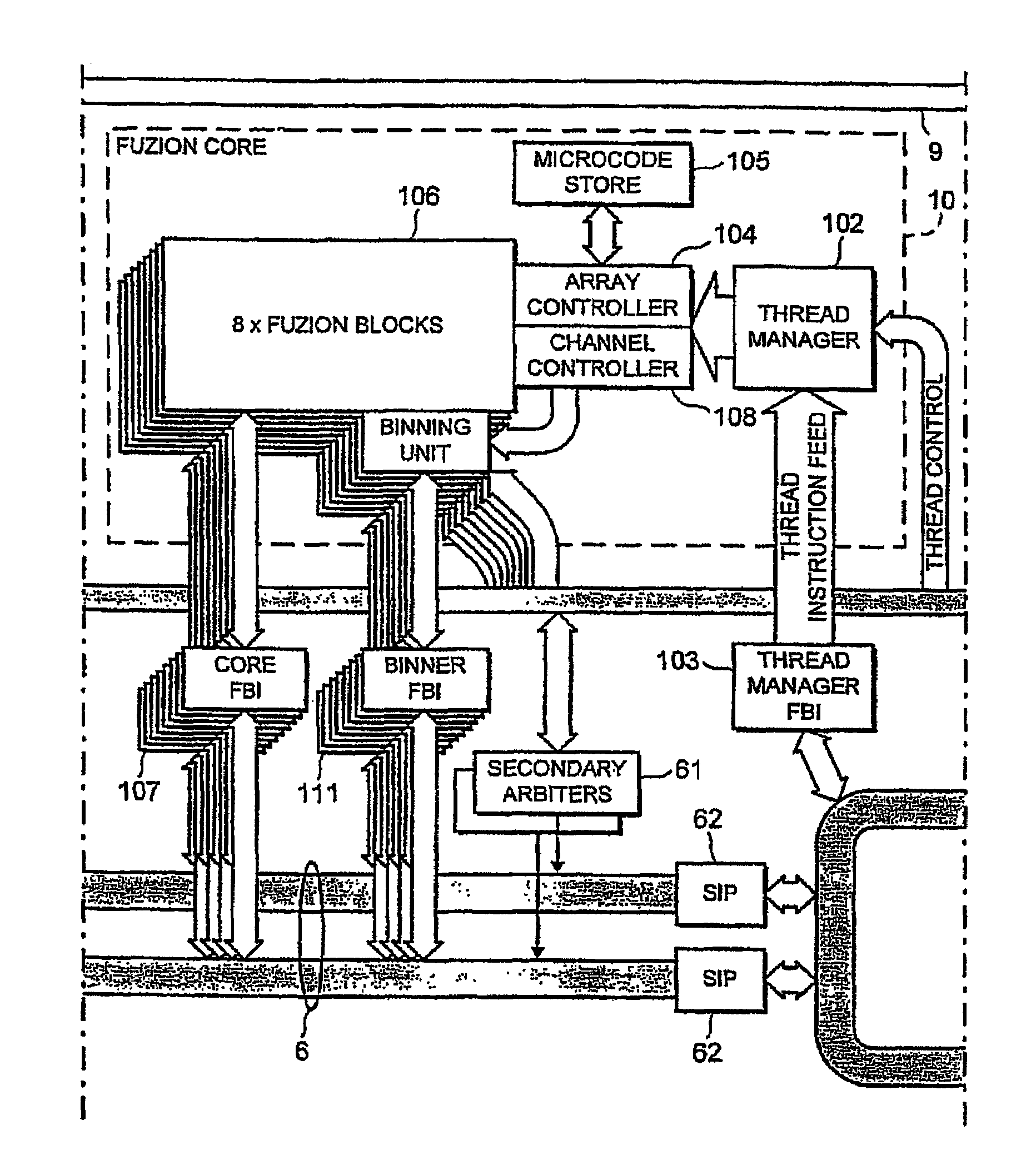

Multi-threaded packeting processing architecture

Inactive · US20060179156A1 · Tags: Quicker and high quality porting; Increase data rate; Digital computer details; Data switching by path configuration; Data pack; Hardware architecture

A network processor has numerous novel features including a multi-threaded processor array, a multi-pass processing model, and Global Packet Memory (GPM) with hardware managed packet storage. These unique features allow the network processor to perform high-touch packet processing at high data rates. The packet processor can also be coded using a stack-based high-level programming language, such as C or C++. This allows quicker and higher quality porting of software features into the network processor. Processor performance also does not severely drop off when additional processing features are added. For example, packets can be more intelligently processed by assigning processing elements to different bounded duration arrival processing tasks and variable duration main processing tasks. A recirculation path moves packets between the different arrival and main processing tasks. Other novel hardware features include a hardware architecture that efficiently intermixes co-processor operations with multi-threaded processing operations and improved cache affinity.

Owner:CISCO TECH INC

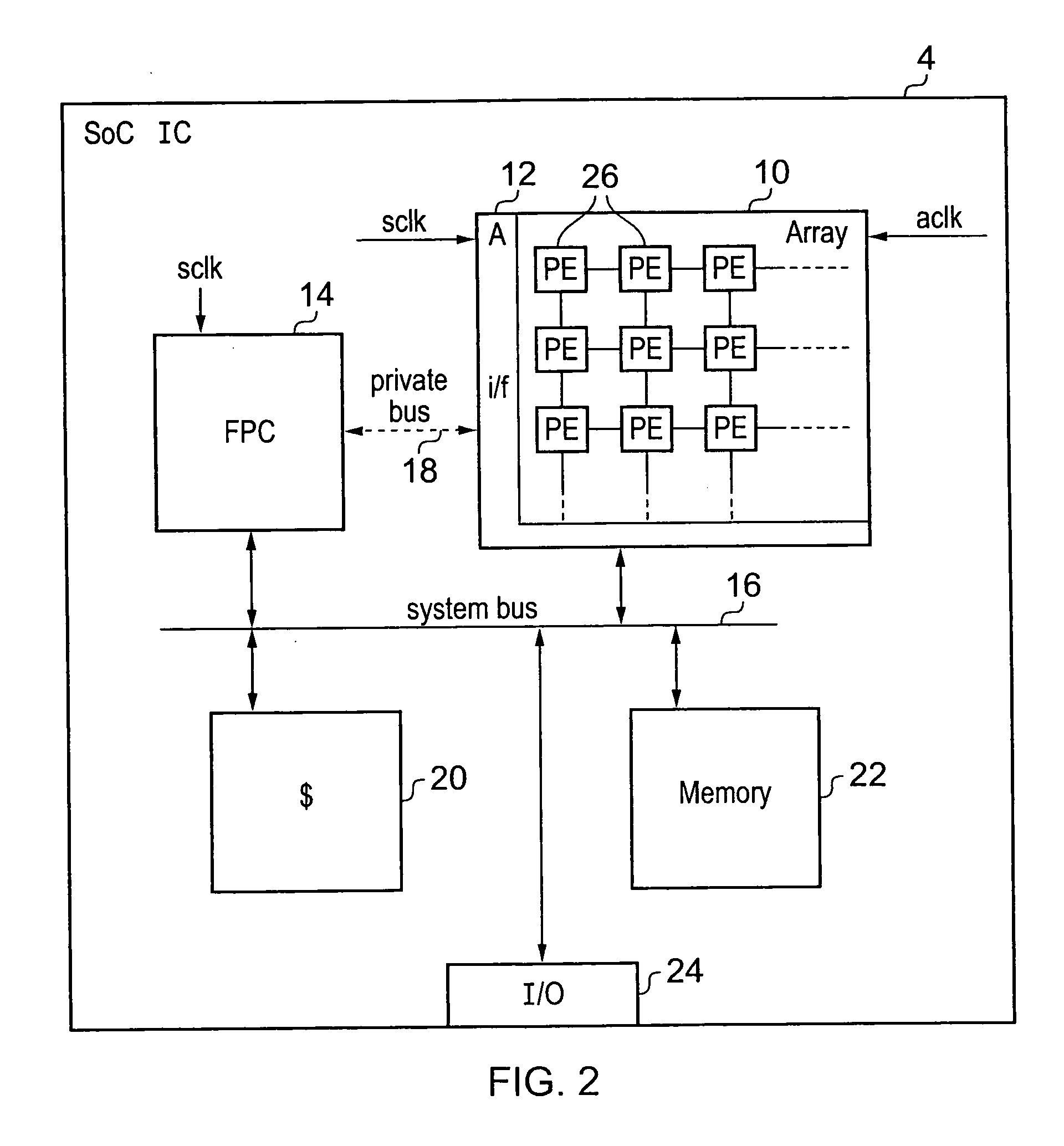

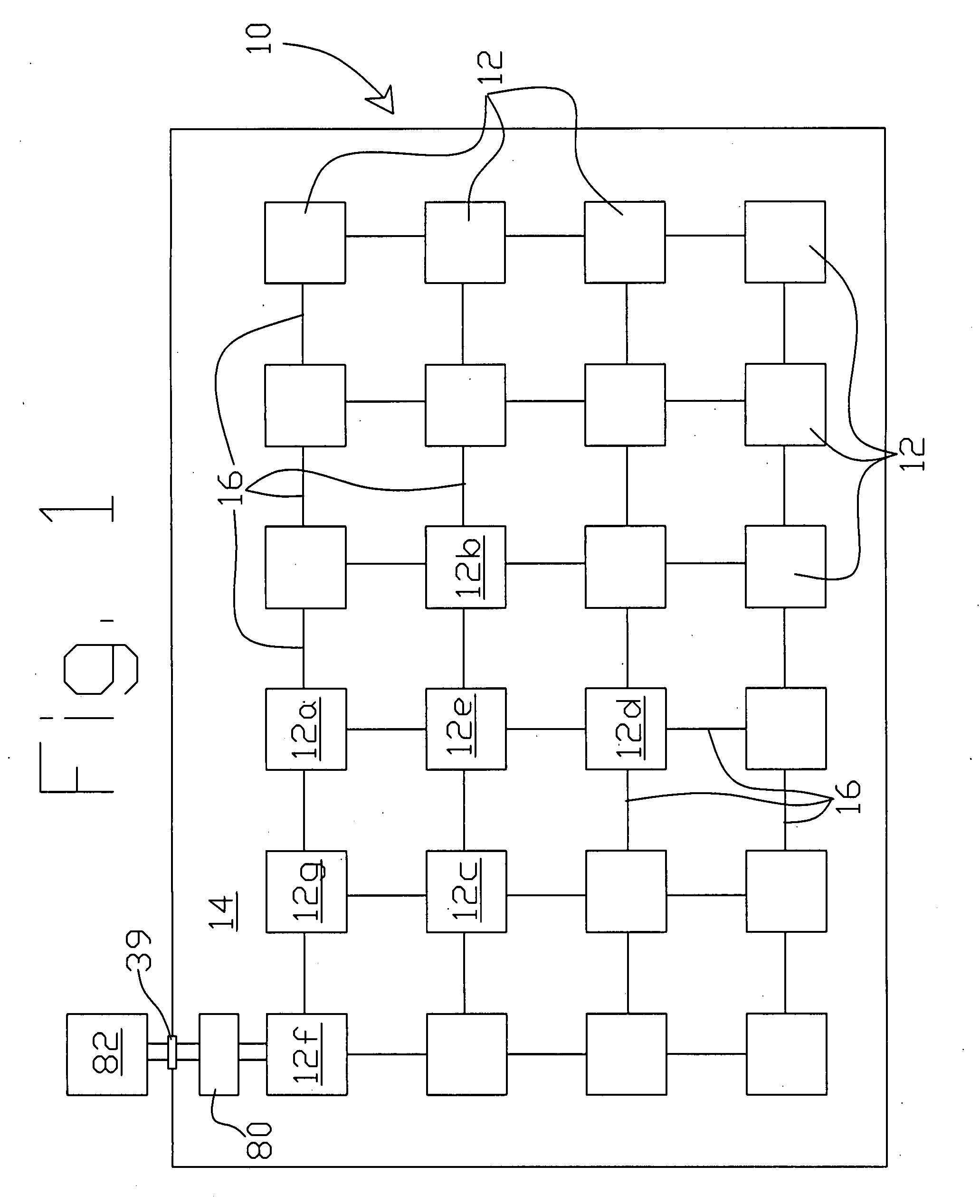

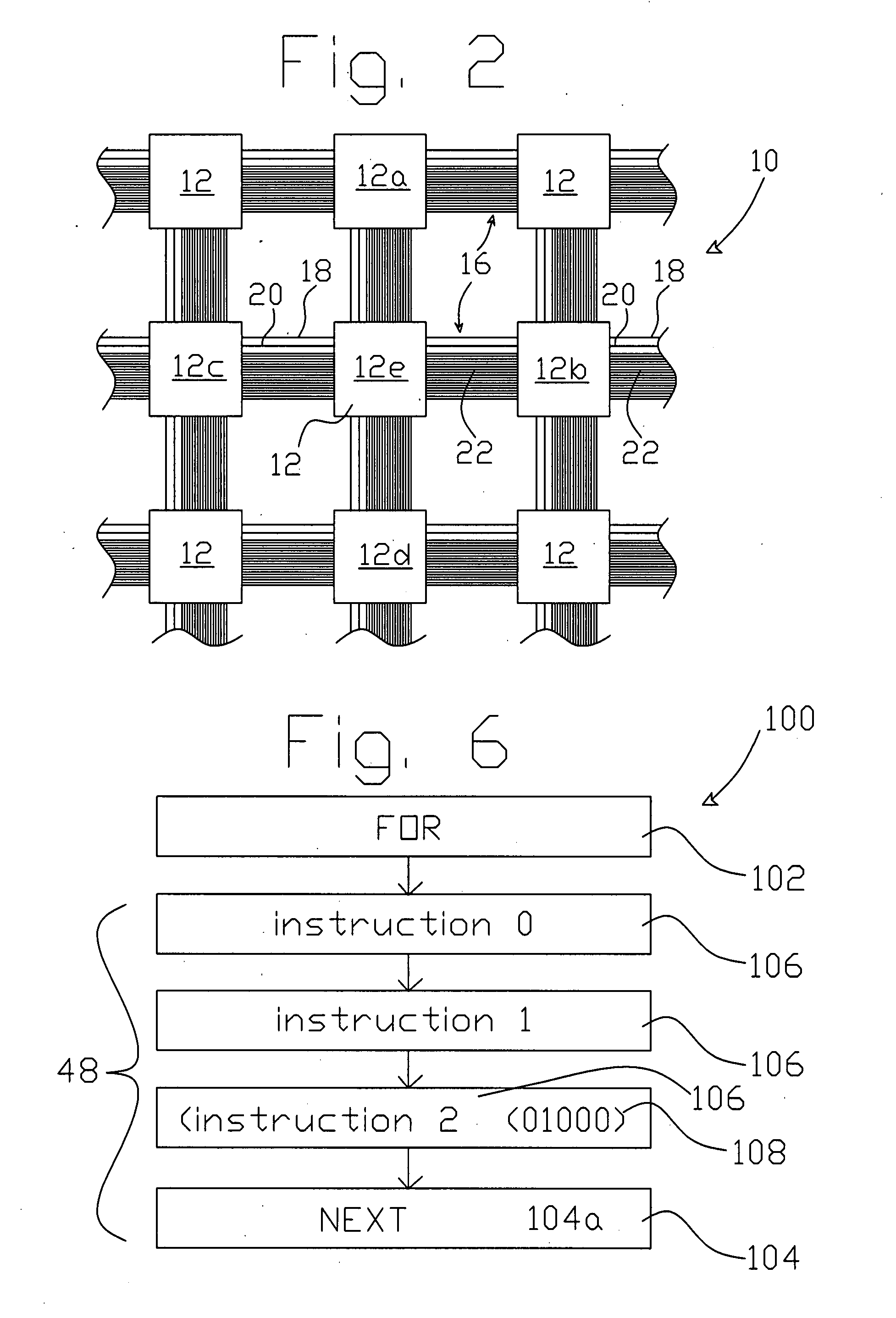

Integrated circuit incorporating an array of interconnected processors executing a cycle-based program

Inactive · US20100100704A1 · Tags: Facilitate communication; Improve performance; Single instruction multiple data multiprocessors; Program control using wired connections; Array data structure; State variable

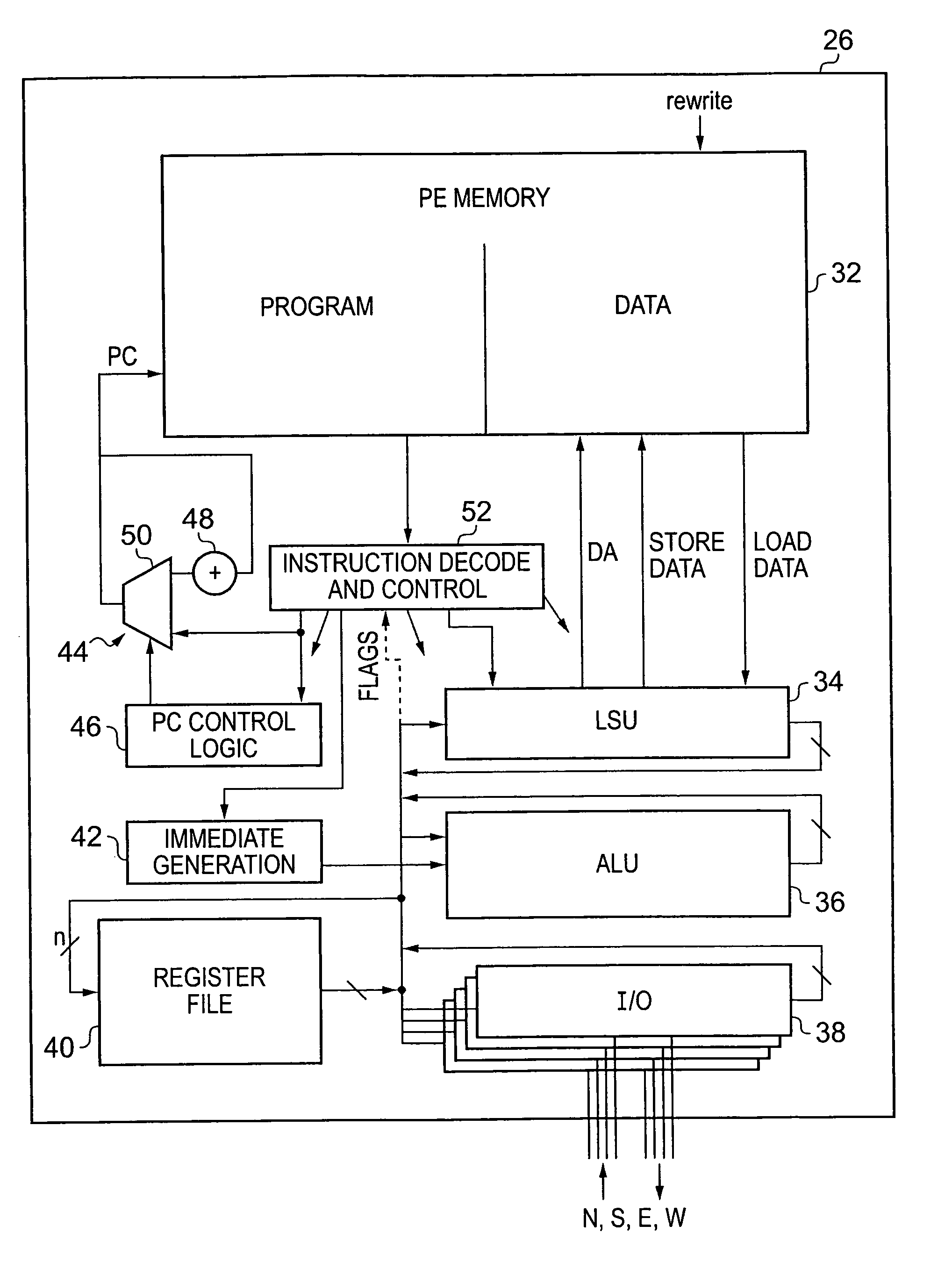

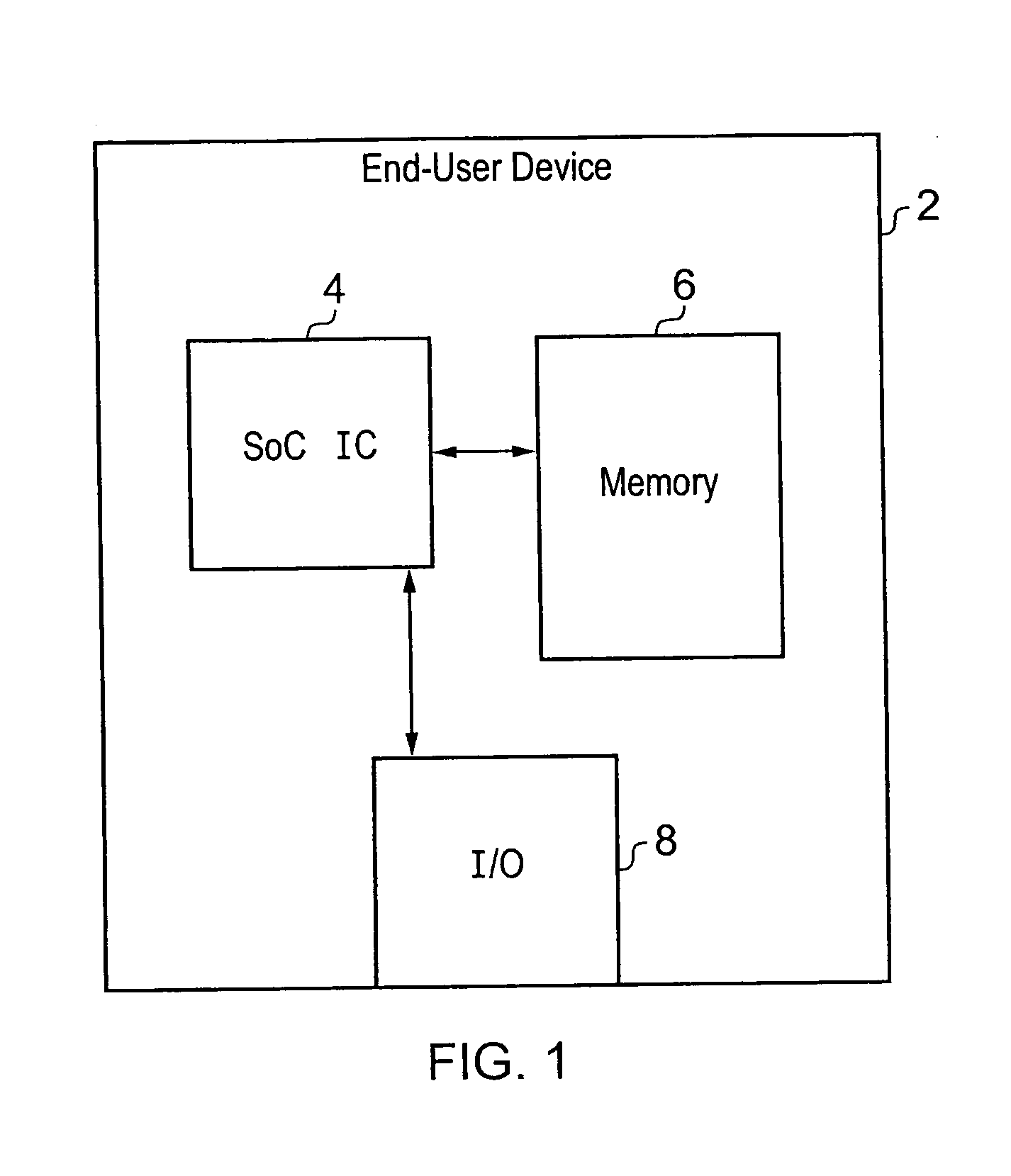

An integrated circuit 4 is provided including an array 10 of processors 26 with interface circuitry 12 providing communication with further processing circuitry 14. The processors 26 within the array 10 execute individual programs which together provide the functionality of a cycle-based program. During each program-cycle of the cycle-based program, each of the processors executes its respective program starting from a predetermined execution start point to evaluate a next state of at least some of the state variables of the cycle-based program. A boundary between program-cycles provides a synchronisation time (point) for processing operations performed by the array.

Owner:ARM LTD
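
The program-cycle model in the entry above can be illustrated with a double-buffered state update: every per-processor program reads only the current state, and all next-state values are committed together at the cycle boundary. The `State` and `Program` types and the example programs are hypothetical, and the programs run sequentially here even though on the array they would run in parallel.

```python
from typing import Callable, Dict, List

State = Dict[str, int]
Program = Callable[[State], State]  # returns next values for the variables it owns


def run_cycles(state: State, programs: List[Program], cycles: int) -> State:
    for _ in range(cycles):
        next_state = dict(state)
        for program in programs:  # conceptually, each runs on its own processor
            next_state.update(program(state))  # every program reads the current state only
        state = next_state  # program-cycle boundary: commit all updates at once
    return state


# Hypothetical split of a toggling input feeding a two-stage shift register.
def toggle(s: State) -> State:
    return {"a": 1 - s["a"]}


def shift(s: State) -> State:
    return {"b": s["a"], "c": s["b"]}


result = run_cycles({"a": 1, "b": 0, "c": 0}, [toggle, shift], 2)
```

Because the boundary commit is the only synchronisation point, the order in which the programs are listed does not affect the result; after two cycles the state is `{"a": 1, "b": 0, "c": 1}` either way.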

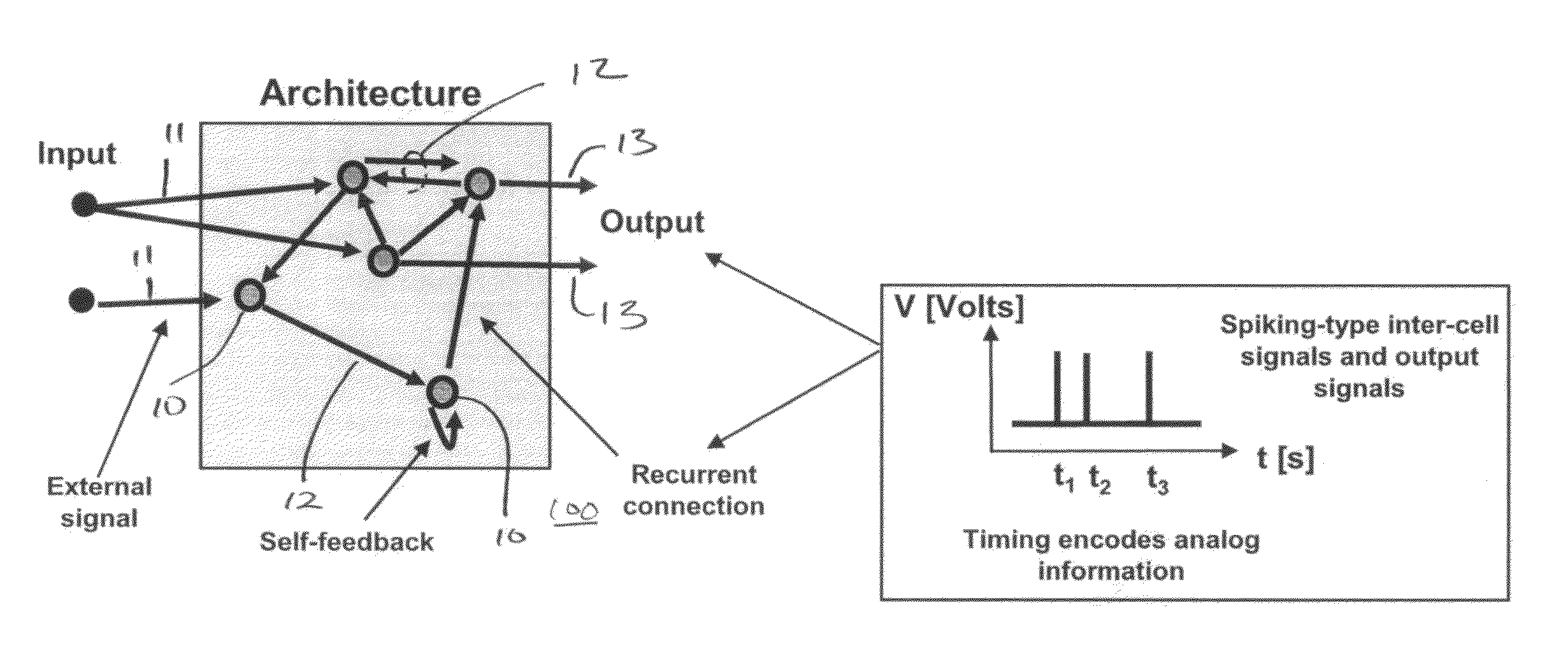

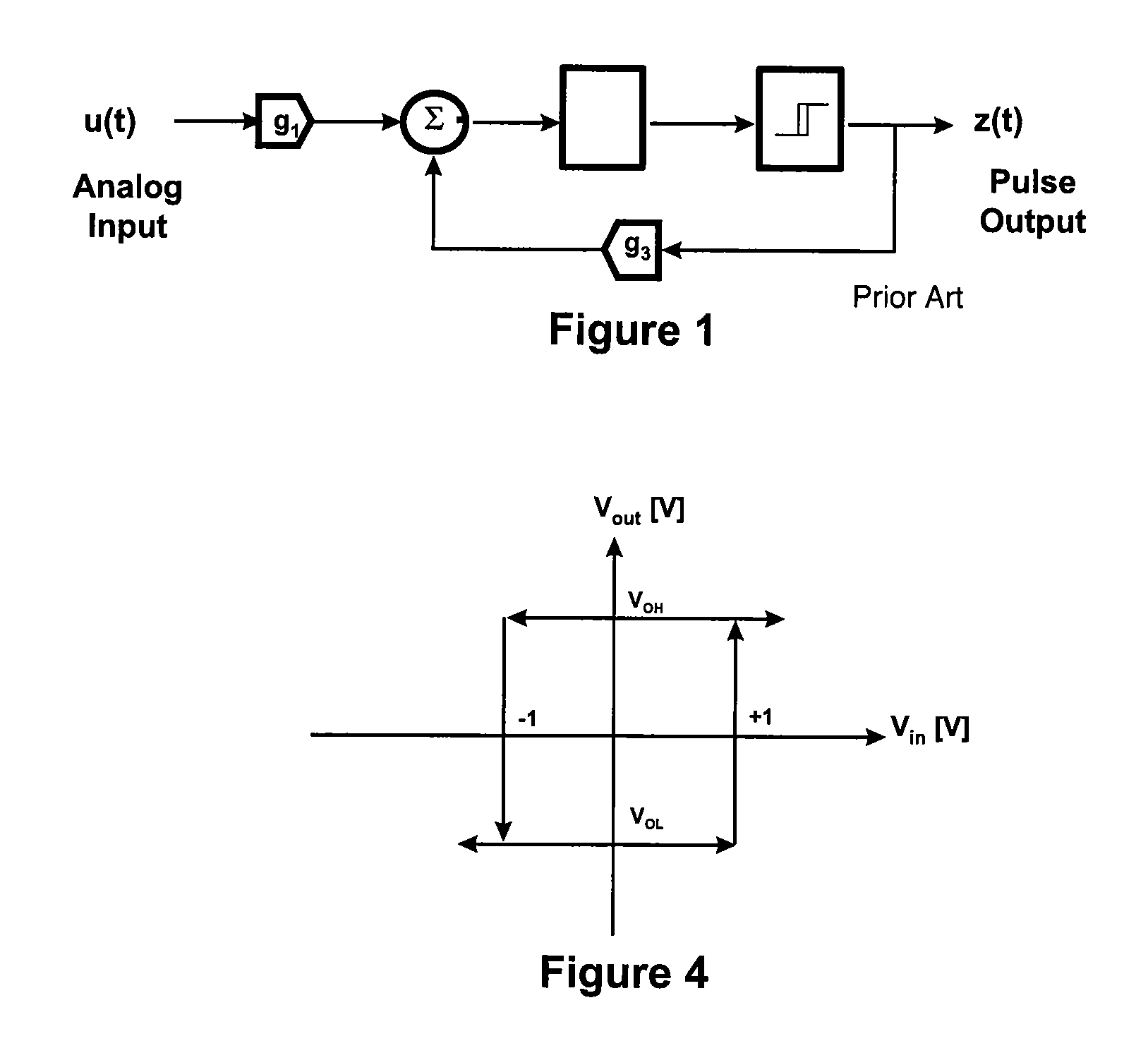

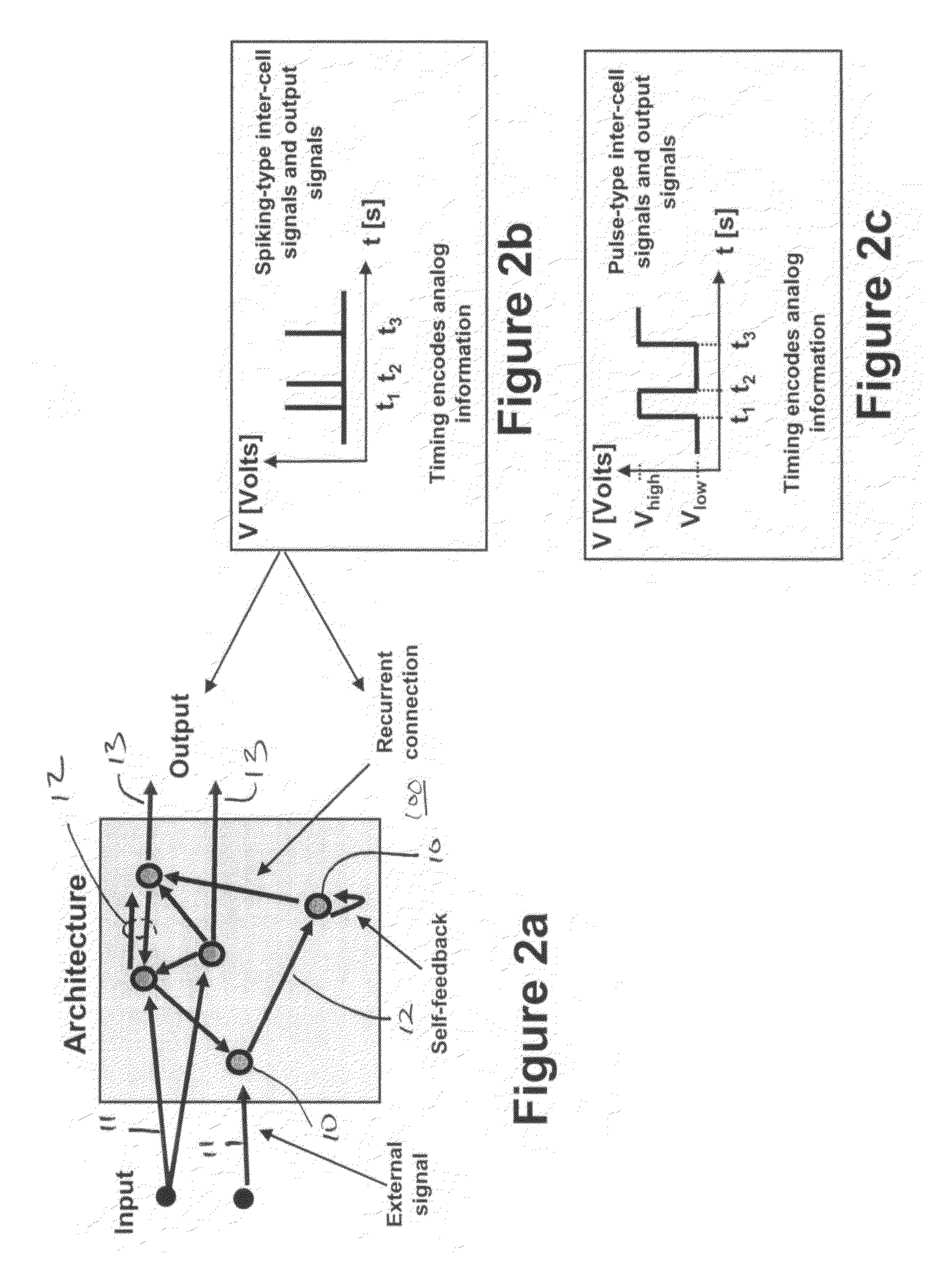

Spike domain and pulse domain non-linear processors

A neural network has an array of interconnected processors, each operating in either the pulse domain or the spike domain. Each processor has (i) first inputs selectively coupled to other processors in the array of processors, each first input having an associated 1-bit DAC coupled to a summing node, (ii) second inputs selectively coupled to inputs of the neural network, the second inputs having current generators associated therewith coupled to said summing node, (iii) a filter/integrator for generating an analog signal corresponding to current arriving at the summing node, (iv) an optional nonlinear element coupled to the filter/integrator, and (v) an analog-to-pulse converter (if the processors operate in the pulse domain) or an analog-to-spike converter (if the processors operate in the spike domain) for converting the analog signal output by the optional nonlinear element, or by the filter/integrator, to the pulse or spike domain and providing the converted signal as an unquantized pulse-domain or spike-domain signal at the output of the processor. The processors in the array are selectively interconnected with either unquantized pulse-domain or spike-domain signals.

Owner:HRL LAB

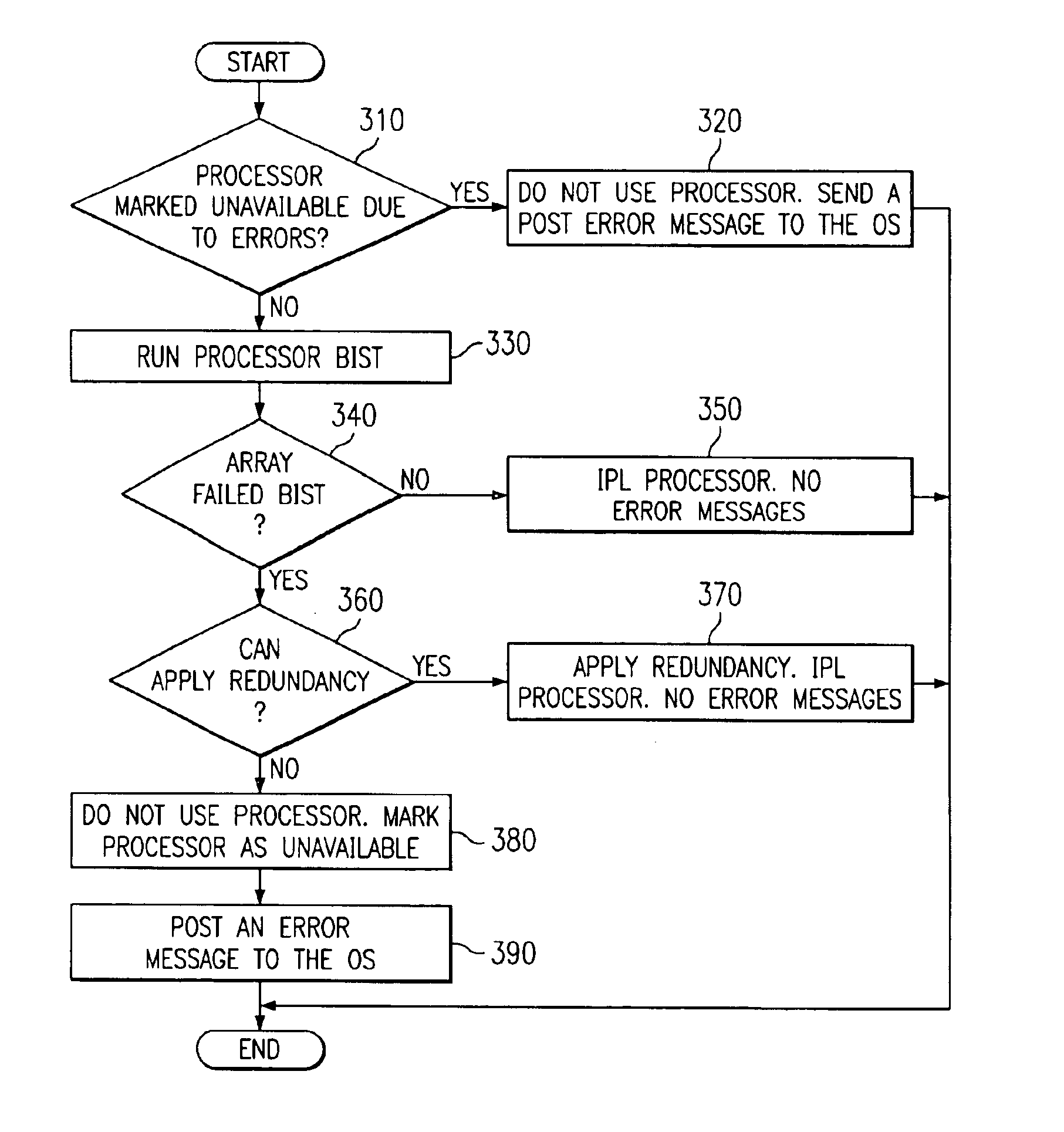

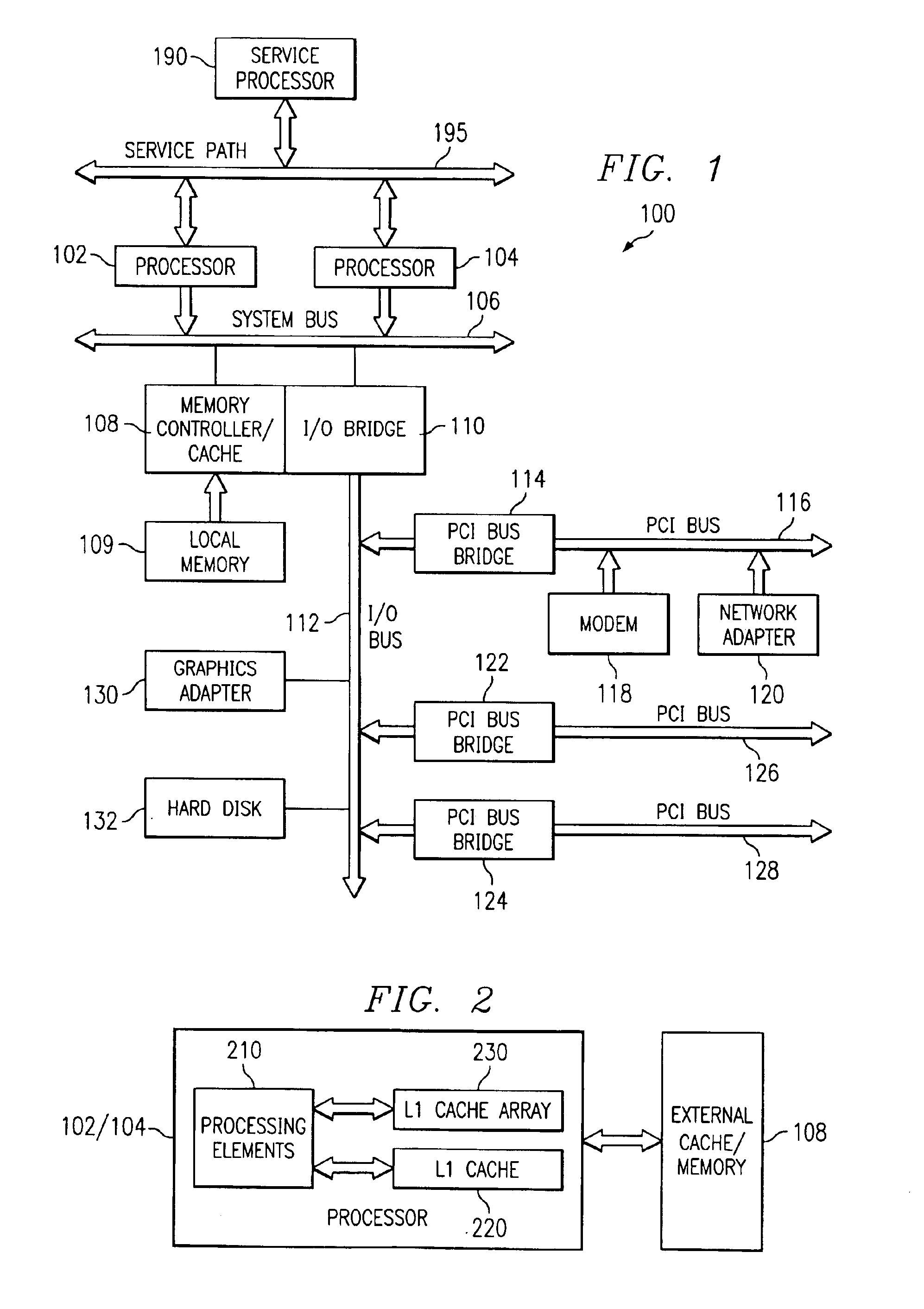

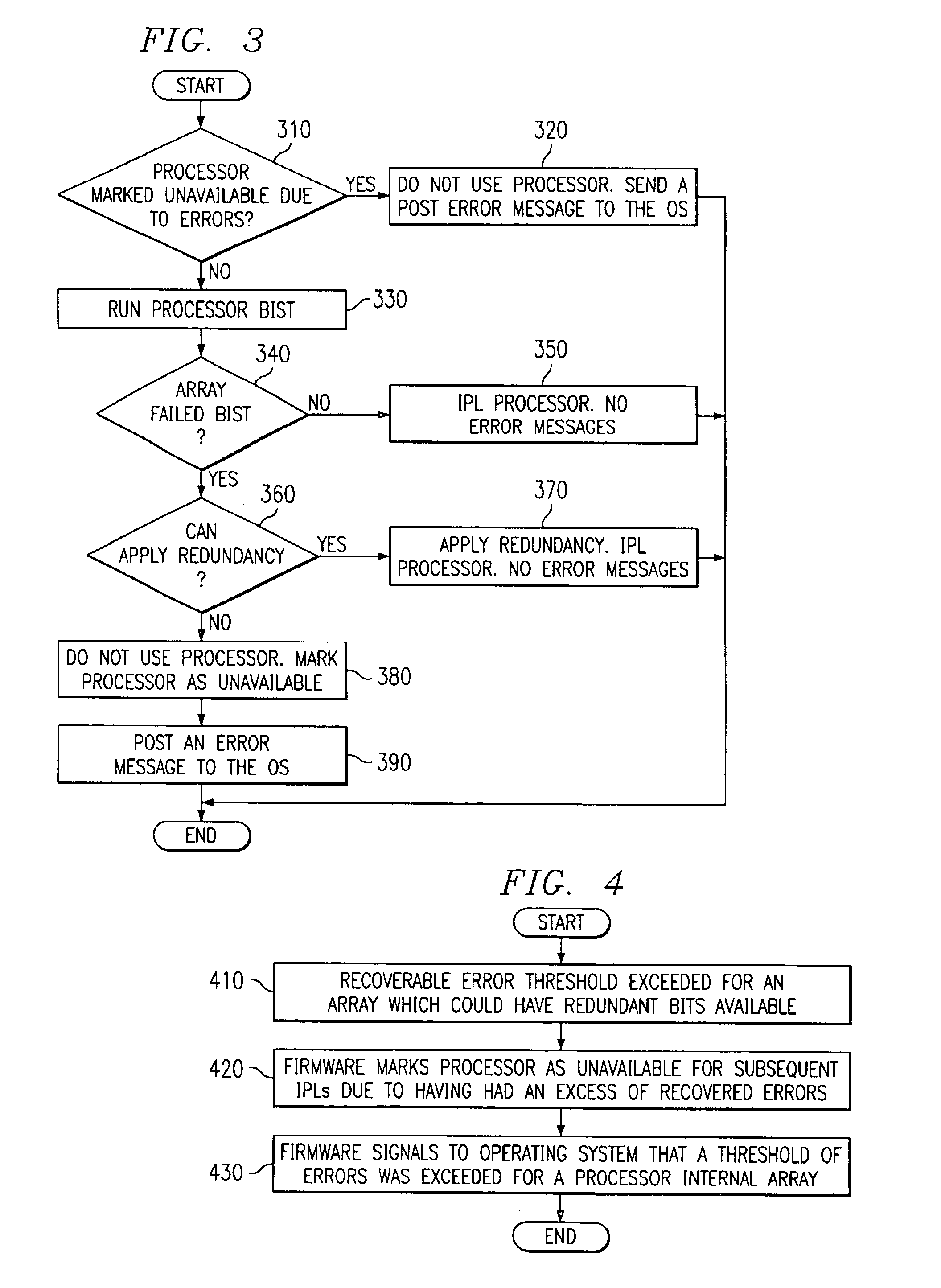

Apparatus and method of repairing a processor array for a failure detected at runtime

Inactive · US6851071B2 · Tags: Maintenance of such feature; Non-redundant fault processing; Static storage; Running time; Computer science

An apparatus and method of repairing a processor array for a failure detected at runtime in a system supporting persistent component deallocation are provided. The apparatus and method of the present invention allow redundant array bits to be used for recoverable faults detected in arrays during runtime, instead of only at system boot, while still maintaining the dynamic and persistent processor deallocation features of the computing system. With the apparatus and method of the present invention, a failure of a cache array is detected and a determination is made as to whether a repairable failure threshold is exceeded during runtime. If this threshold is exceeded, a determination is made as to whether cache array redundancy may be applied to correct the failure, i.e., a bit error. If so, the cache array redundancy is applied without marking the processor as unavailable. At some later time, the system undergoes a re-initial program load (re-IPL), at which time it is determined whether a second failure of the processor occurs. If a second failure occurs, a determination is made as to whether any status bits are set for arrays other than the cache array that experienced the present failure. If so, the processor is marked unavailable. If not, a determination is made as to whether cache redundancy can be applied to correct the failure. If so, the failure is corrected using the cache redundancy. If not, the processor is marked unavailable.

Owner:IBM CORP
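
The two decision points in the repair flow above (at runtime and at re-IPL) can be written as small pure functions. The enum and function names are hypothetical, and two branches are assumptions because the abstract does not describe them: what happens when the runtime threshold is not exceeded, and what happens at runtime when redundancy cannot fix the fault.

```python
from enum import Enum


class Outcome(Enum):
    KEEP = "keep processor available"
    REPAIR = "repair with cache redundancy, keep available"
    DEALLOCATE = "mark processor unavailable"


def at_runtime(threshold_exceeded: bool, redundancy_can_fix: bool) -> Outcome:
    if not threshold_exceeded:
        return Outcome.KEEP        # assumption: branch not described in the abstract
    if redundancy_can_fix:
        return Outcome.REPAIR      # applied without marking the processor unavailable
    return Outcome.DEALLOCATE      # assumption: falls back to persistent deallocation


def at_reipl(second_failure: bool, other_array_bits_set: bool, redundancy_can_fix: bool) -> Outcome:
    if not second_failure:
        return Outcome.KEEP
    if other_array_bits_set:       # another array on this processor has also failed
        return Outcome.DEALLOCATE
    return Outcome.REPAIR if redundancy_can_fix else Outcome.DEALLOCATE
```

Writing the re-IPL path this way makes the precedence explicit: failures recorded in other arrays override any possibility of a cache-redundancy repair.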

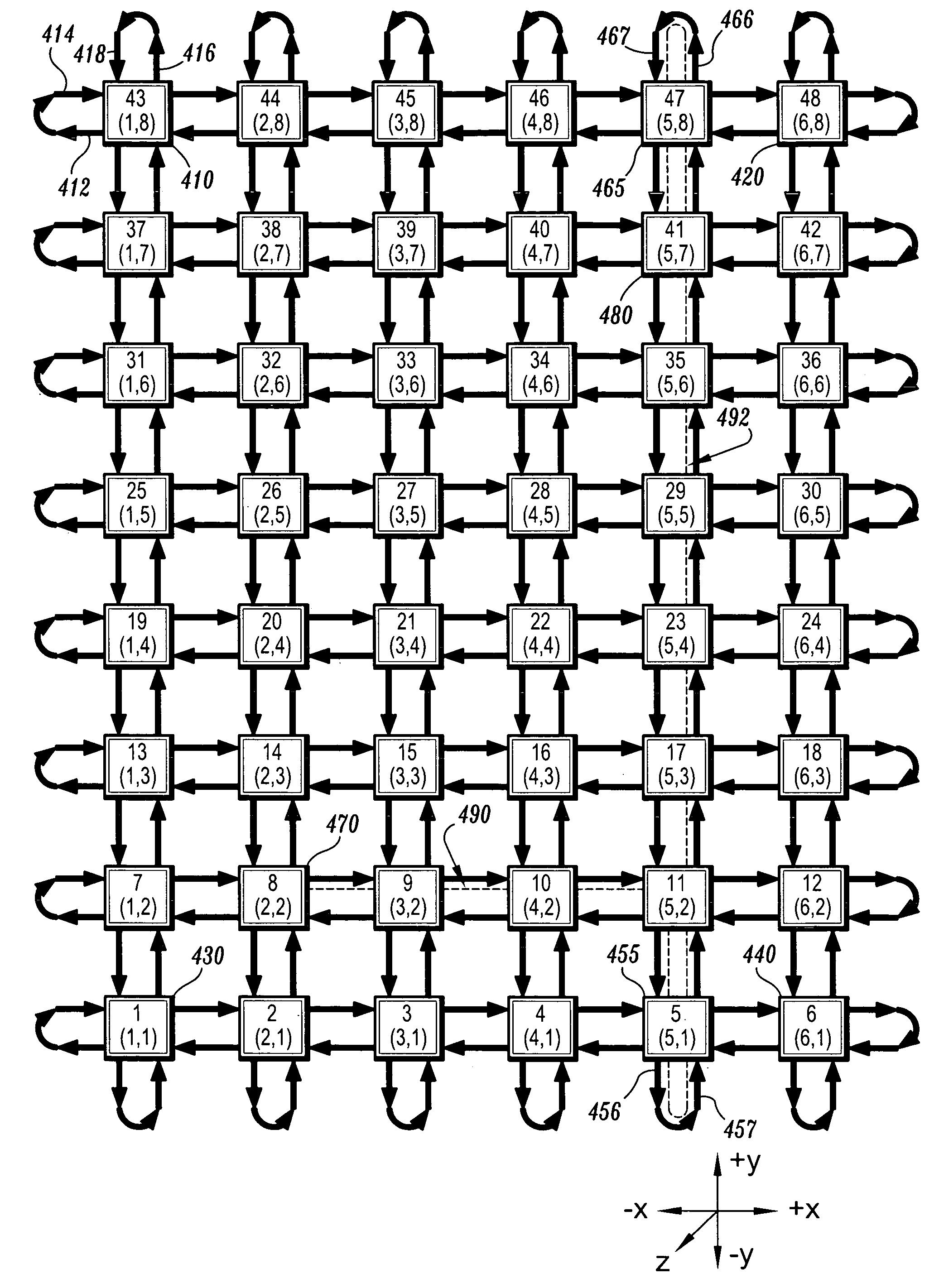

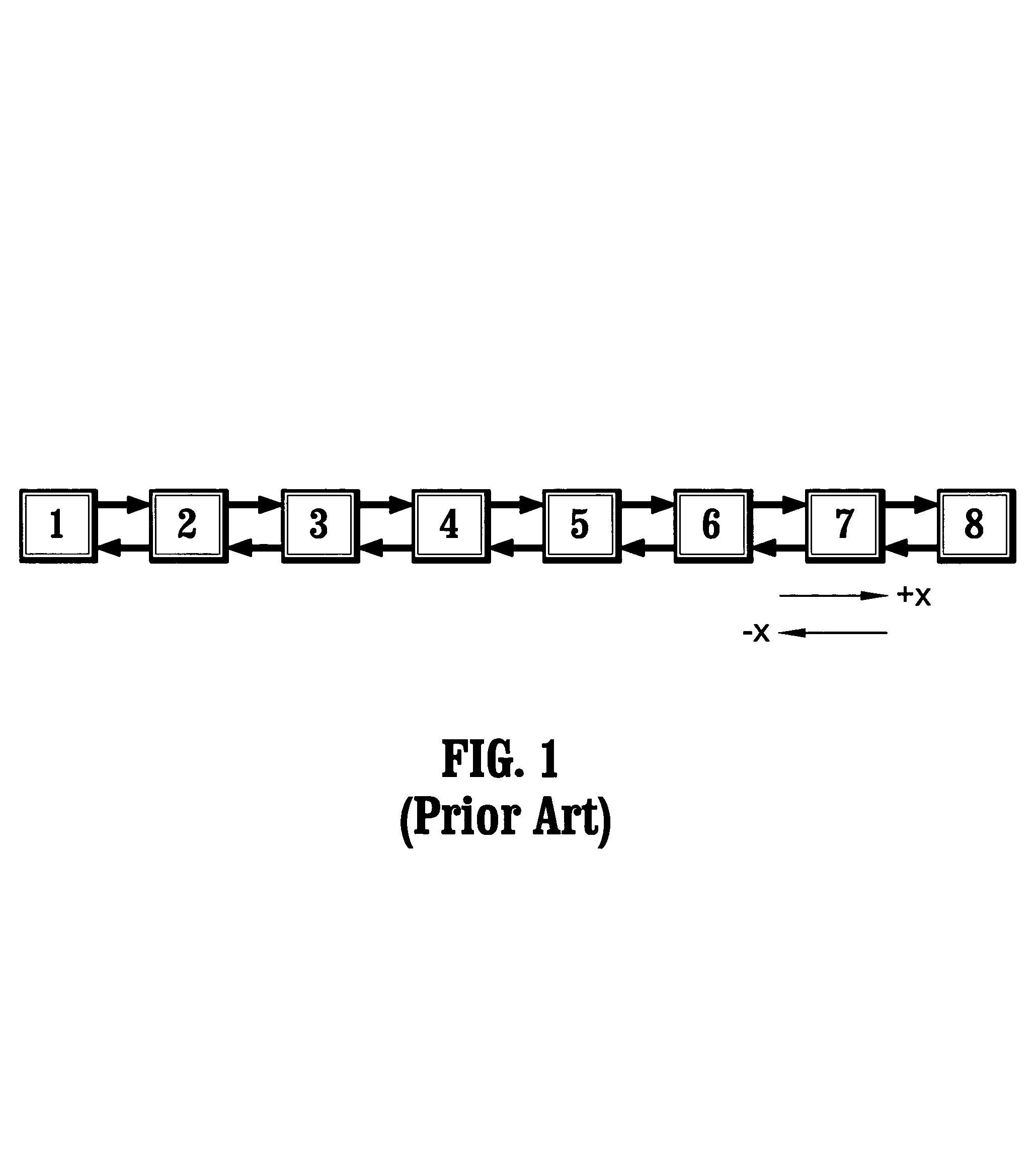

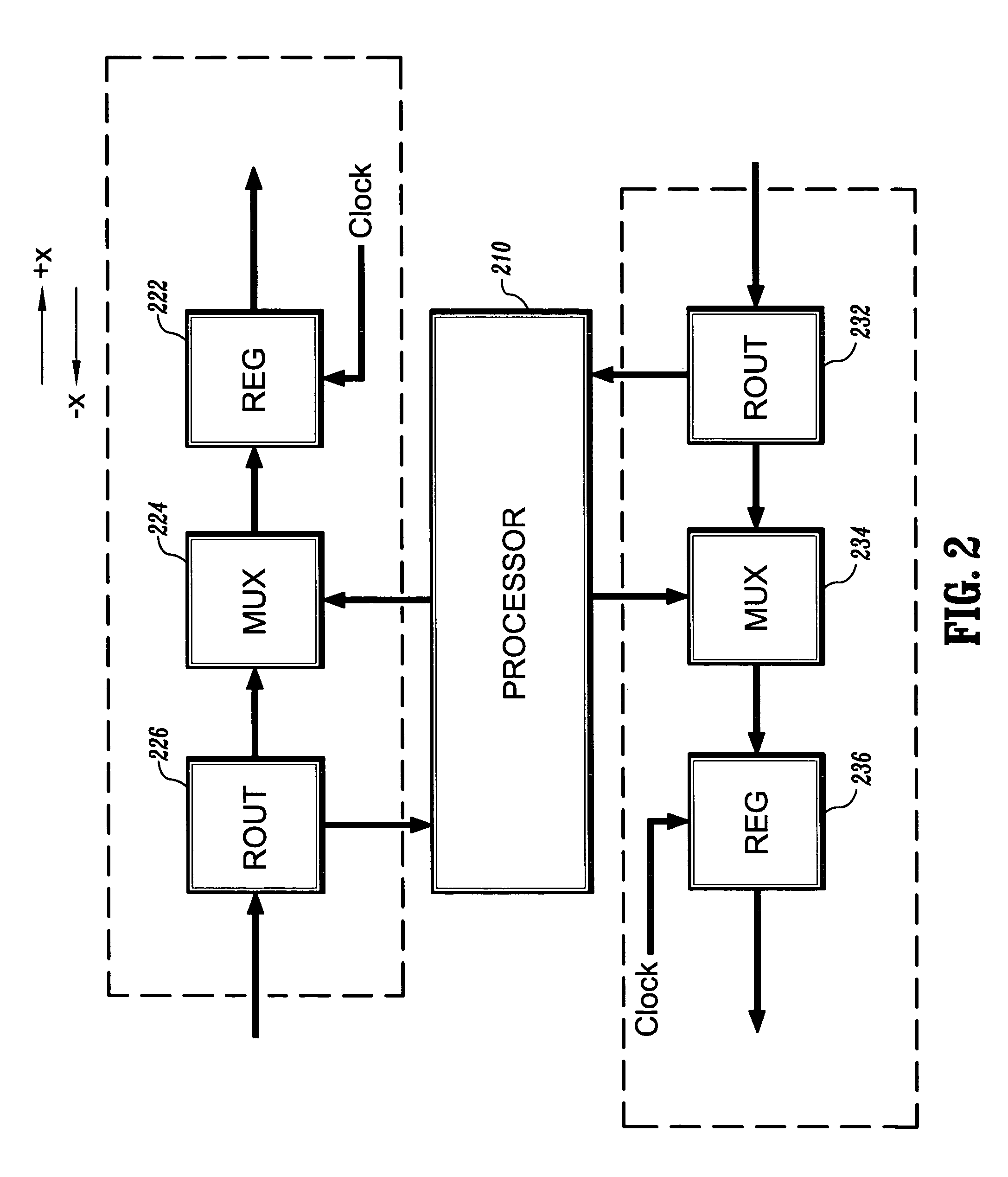

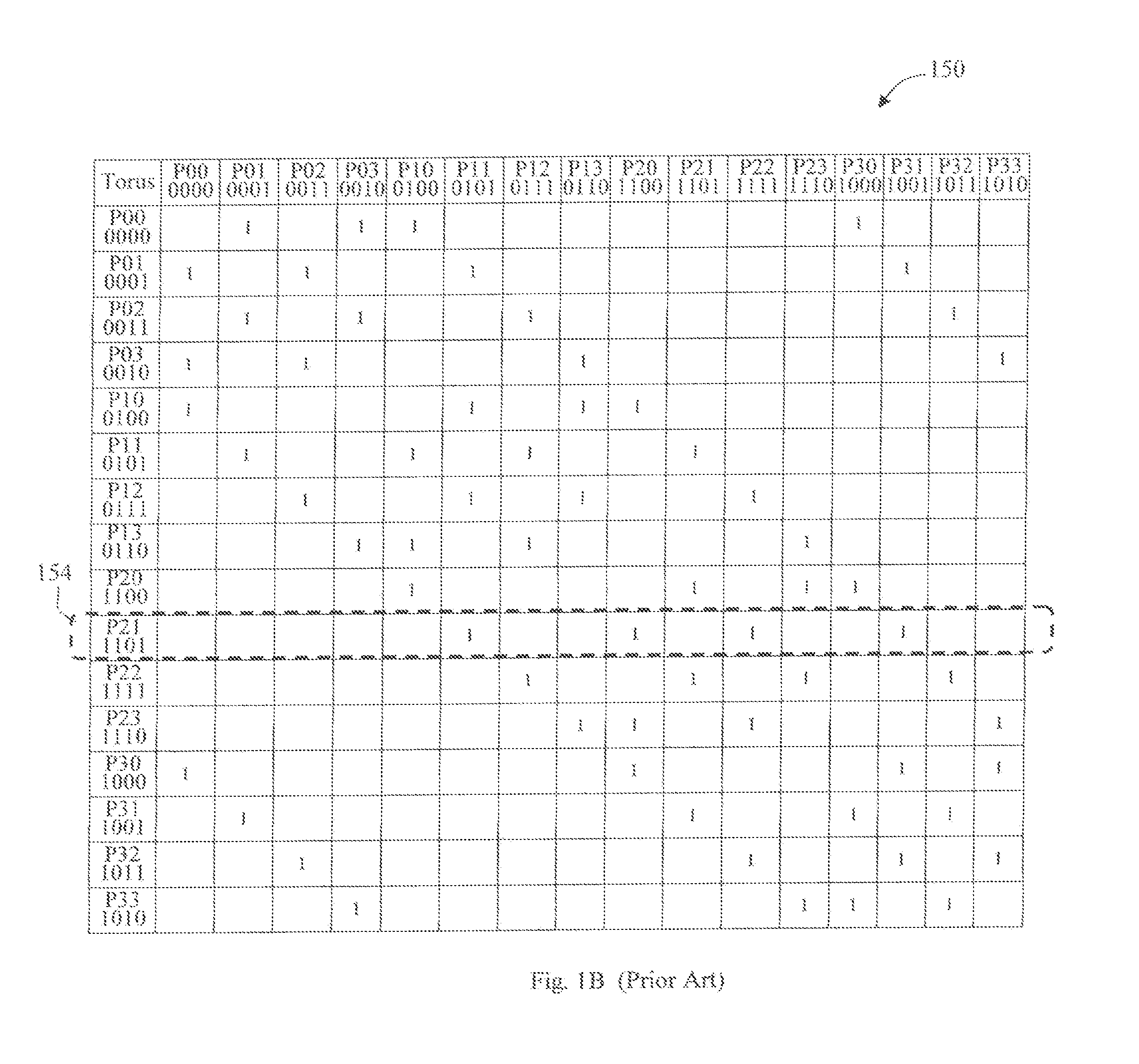

Methods for routing packets on a linear array of processors

Inactive · US6961782B1 · Tags: Reduce energy consumption; No reduction in bandwidth; Multiplex system selection arrangements; General purpose stored program computer; Network packet; Near neighbor

There is provided a method for routing packets on a linear array of N processors connected in a nearest-neighbor configuration. The method includes the step of, for each end processor of the array, connecting unused outputs to corresponding unused inputs. For each axis required to directly route a packet from a source to a destination processor, the following steps are performed. It is determined whether directly sending a packet from an initial processor to a target processor would take fewer or more than N/2 moves. The initial processor is the source processor in the first axis, and the target processor is the destination processor in the last axis. The packet is sent directly from the initial processor to the target processor when the result is less than N/2 moves. The packet is sent indirectly, so as to wrap around each end processor, when the result is greater than N/2 moves. The method may optionally include the step of randomly choosing either sending step when the result is equal to N/2 moves and N is an even number.

Owner:IBM CORP
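
The per-axis decision rule in the routing entry above can be sketched as follows, assuming that a direct send costs one move per nearest-neighbor hop, i.e. |dst − src| moves. Only the choice between direct and wraparound sending is modeled, not the wraparound path itself; `choose_route` and its string results are hypothetical names.

```python
import random
from typing import Optional


def choose_route(src: int, dst: int, n: int, rng: Optional[random.Random] = None) -> str:
    """Decide, for one axis, between direct and wraparound sending on n processors."""
    if not (0 <= src < n and 0 <= dst < n):
        raise ValueError("processor index out of range")
    direct_moves = abs(dst - src)  # assumed: one move per nearest-neighbor hop
    if 2 * direct_moves < n:       # fewer than N/2 moves
        return "direct"
    if 2 * direct_moves > n:       # more than N/2 moves
        return "wraparound"
    # Exactly N/2 moves, which is only possible when n is even: pick either at random.
    return (rng or random.Random()).choice(["direct", "wraparound"])
```

Comparing `2 * direct_moves` against `n` avoids floating-point N/2 and makes it clear that the tie case can only arise for even N.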

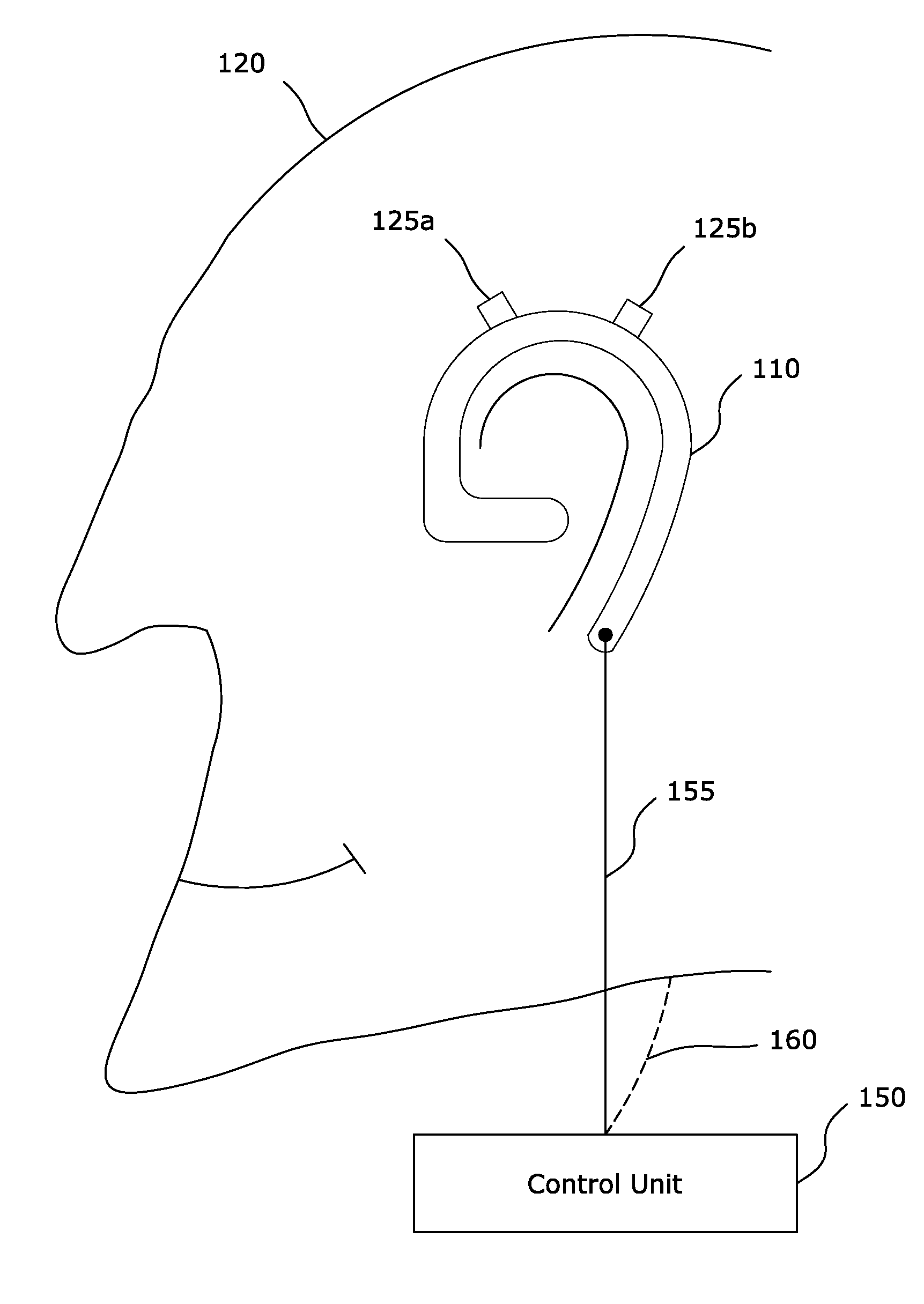

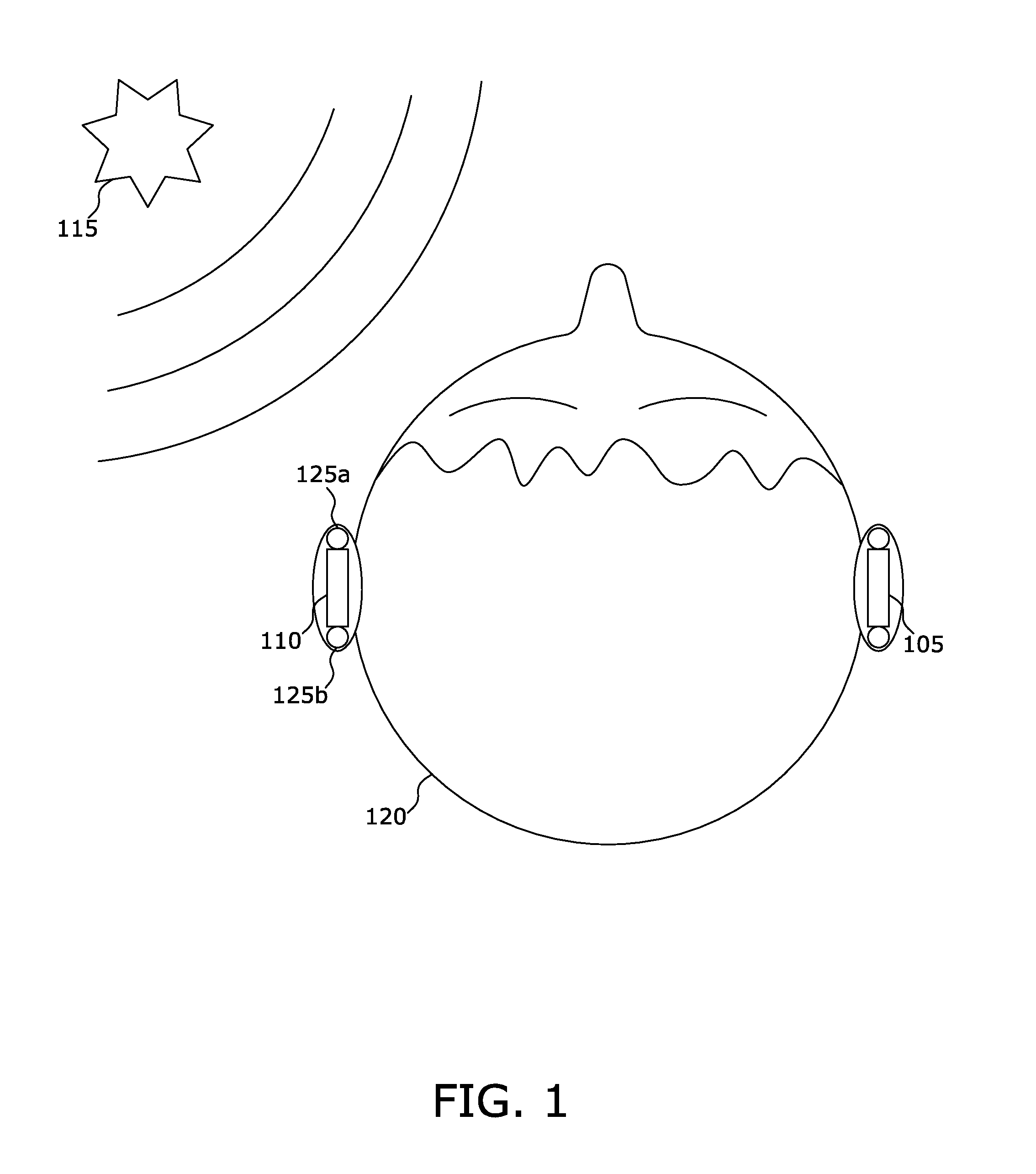

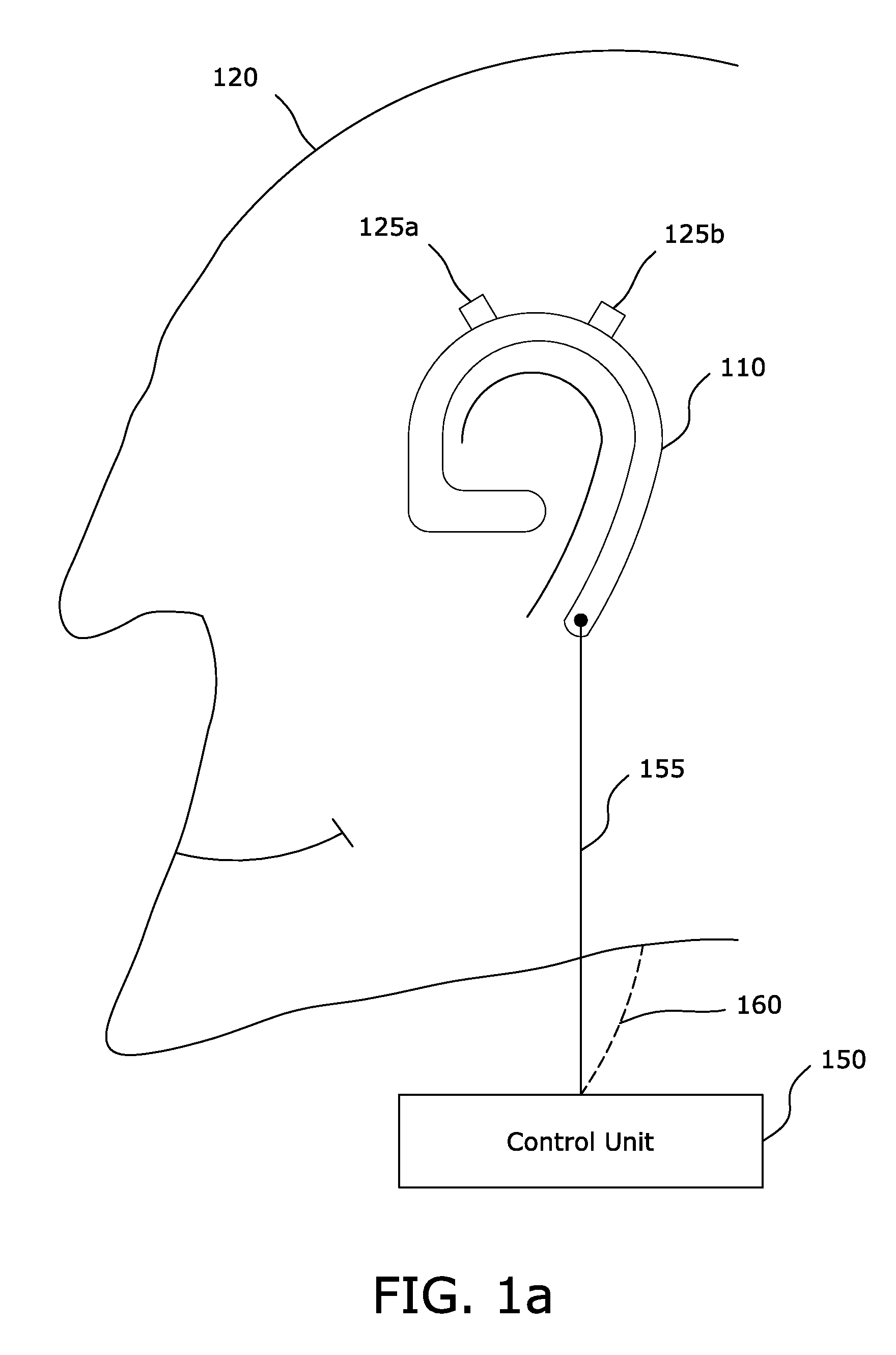

Method and Apparatus for Implementing Hearing Aid with Array of Processors

Inactive · US20100246866A1 · Tags: Good flexibility; Compact form; Hearing device energy consumption reduction; Deaf-aid sets; Digital data; Hearing aid

A method and apparatus for operating a hearing aid 205 in which signal processing functions are performed by an array processor 220. In one embodiment, a reconfiguration module 250 allows the processors 220 to be reconfigured in the field. Another embodiment provides wireless communication using earpieces 105, 110 equipped with antennas 235 that communicate with a user module 260. The method includes the steps of converting analog data into digital data 915, filtering out noise 920, processing the digital data in parallel 925 to compensate for the user's hearing deficiencies, and converting the digital data back into analog. Another embodiment adds the step of reconfiguring the processor in the field 1145. Yet another embodiment adds wireless communication 1040-1065.

Owner:SWATACR PORTFOLIO

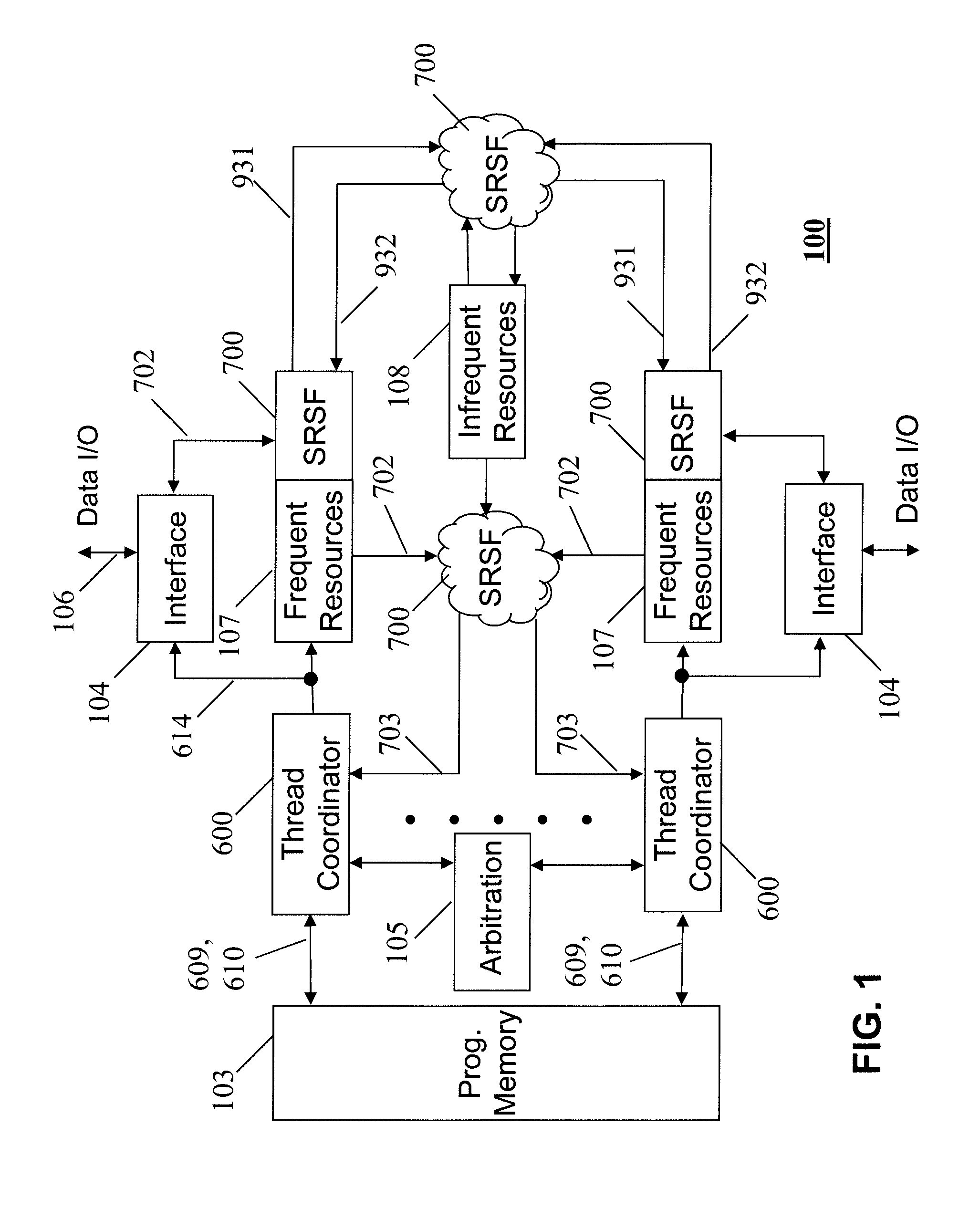

Multithreaded processor array with heterogeneous function blocks communicating tokens via self-routing switch fabrics

Active · US9158575B2 · Tags: Dataflow computers; Program initiation/switching; Self routing; Structure of Management Information

A shared resource multi-thread processor array wherein an array of heterogeneous function blocks is interconnected via a self-routing switch fabric, in which the individual function blocks have an associated switch port address. Each switch output port comprises a FIFO-style memory that implements a plurality of separate queues. Thread queue empty flags are grouped using programmable circuit means to form self-synchronised threads. Data from different threads are passed to the various addressable function blocks in a predefined sequence in order to implement the desired function. The separate port queues allow data from different threads to share the same hardware resources, and the reconfiguration of switch fabric addresses further enables the formation of different data-paths, allowing the array to be configured for use in various applications.

Owner:SMITH GRAEME ROY
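
A minimal model of the self-routing fabric described above: each token carries its destination port address and thread ID, each output port keeps one FIFO per thread, and a function block is treated as ready for a thread once all of its input queues for that thread are non-empty. That readiness rule is one plausible reading of "grouped empty flags" and is an assumption, as are the class names, the port addresses, and the token layout.

```python
from collections import defaultdict, deque
from typing import Any, Deque, Dict, Iterable, Tuple

Token = Tuple[str, int, Any]  # (destination port address, thread id, payload)


class OutputPort:
    """One switch output port with a separate FIFO queue per thread."""

    def __init__(self) -> None:
        self.queues: Dict[int, Deque[Any]] = defaultdict(deque)

    def is_empty(self, thread: int) -> bool:
        return not self.queues[thread]


class Fabric:
    def __init__(self, addresses: Iterable[str]) -> None:
        self.ports = {addr: OutputPort() for addr in addresses}

    def route(self, token: Token) -> None:
        address, thread, payload = token  # self-routing: the token carries its address
        self.ports[address].queues[thread].append(payload)

    def thread_ready(self, addresses: Iterable[str], thread: int) -> bool:
        """Treat the group of empty flags as satisfied when no input queue is empty."""
        return all(not self.ports[a].is_empty(thread) for a in addresses)
```

Because queues are keyed by thread, tokens from different threads share the same port hardware without blocking each other, which matches the abstract's claim about shared resources.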

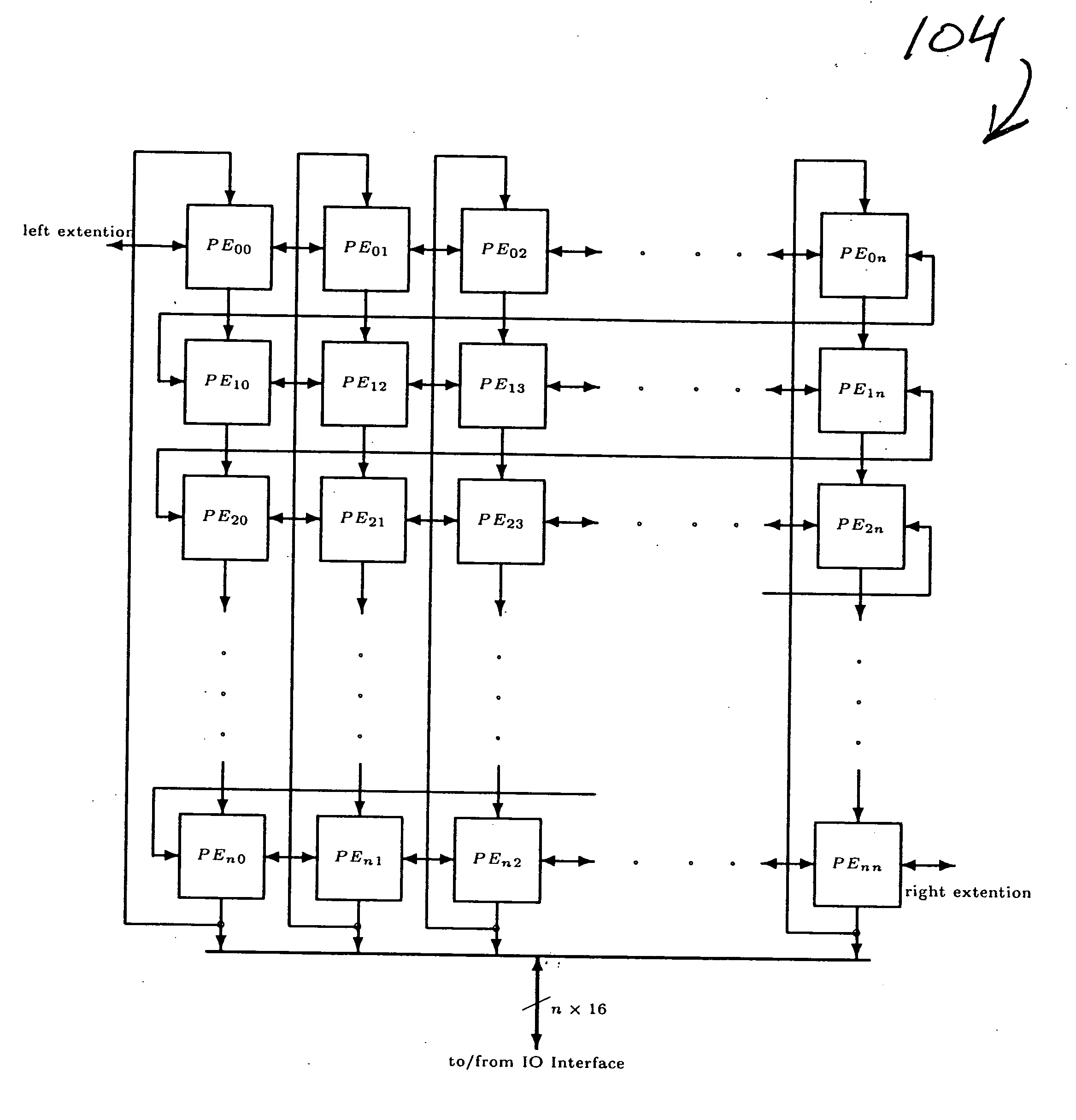

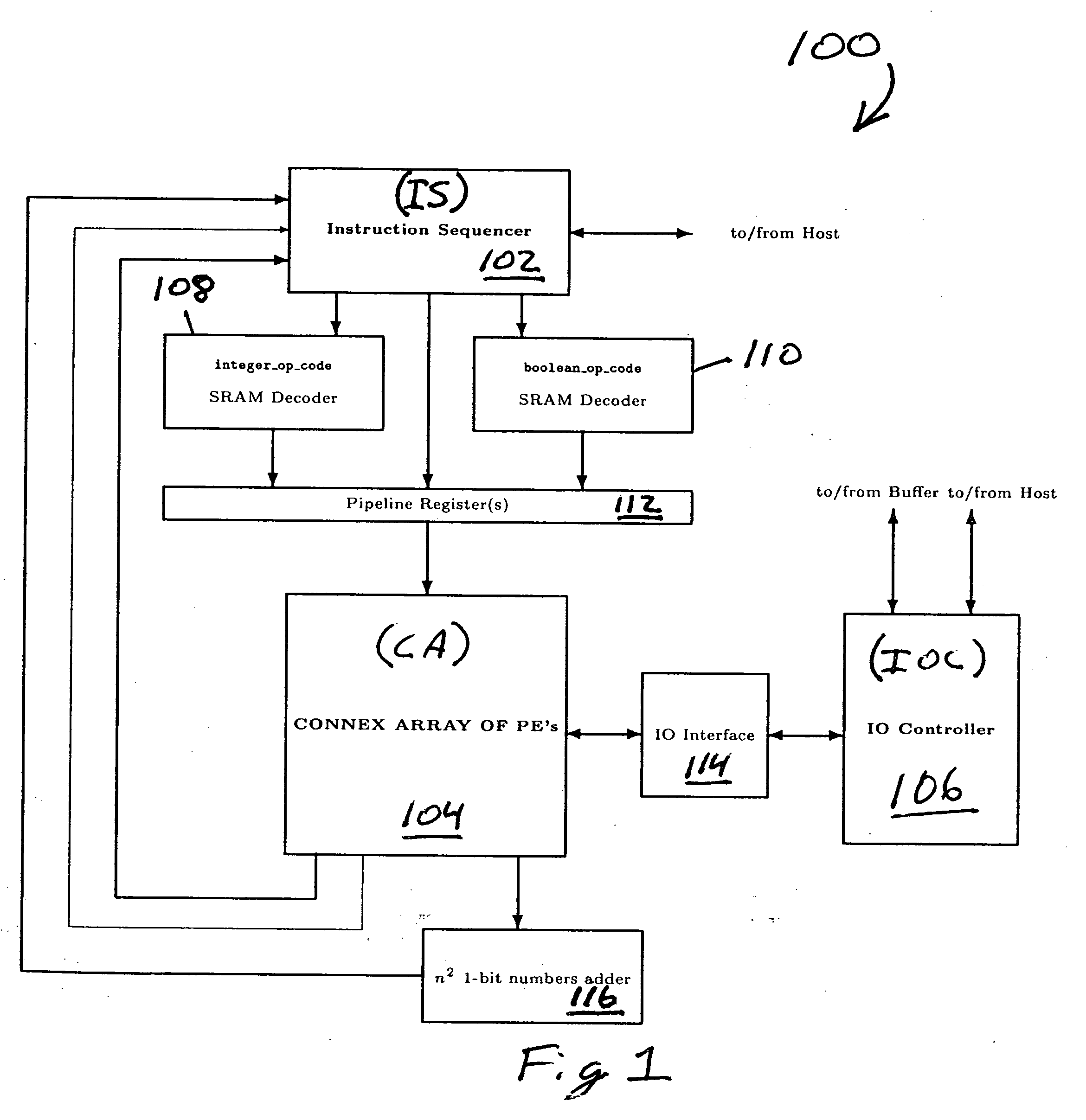

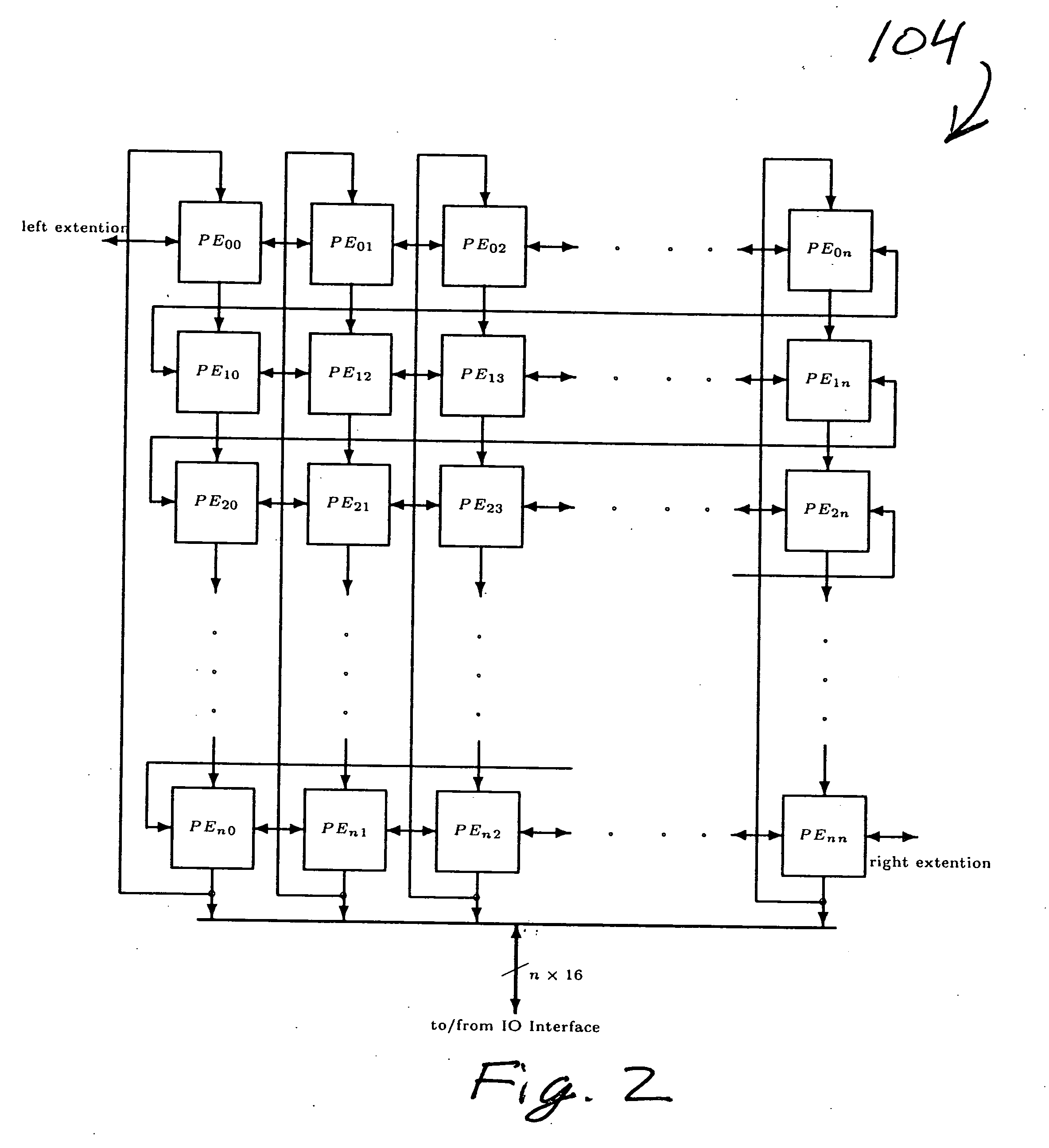

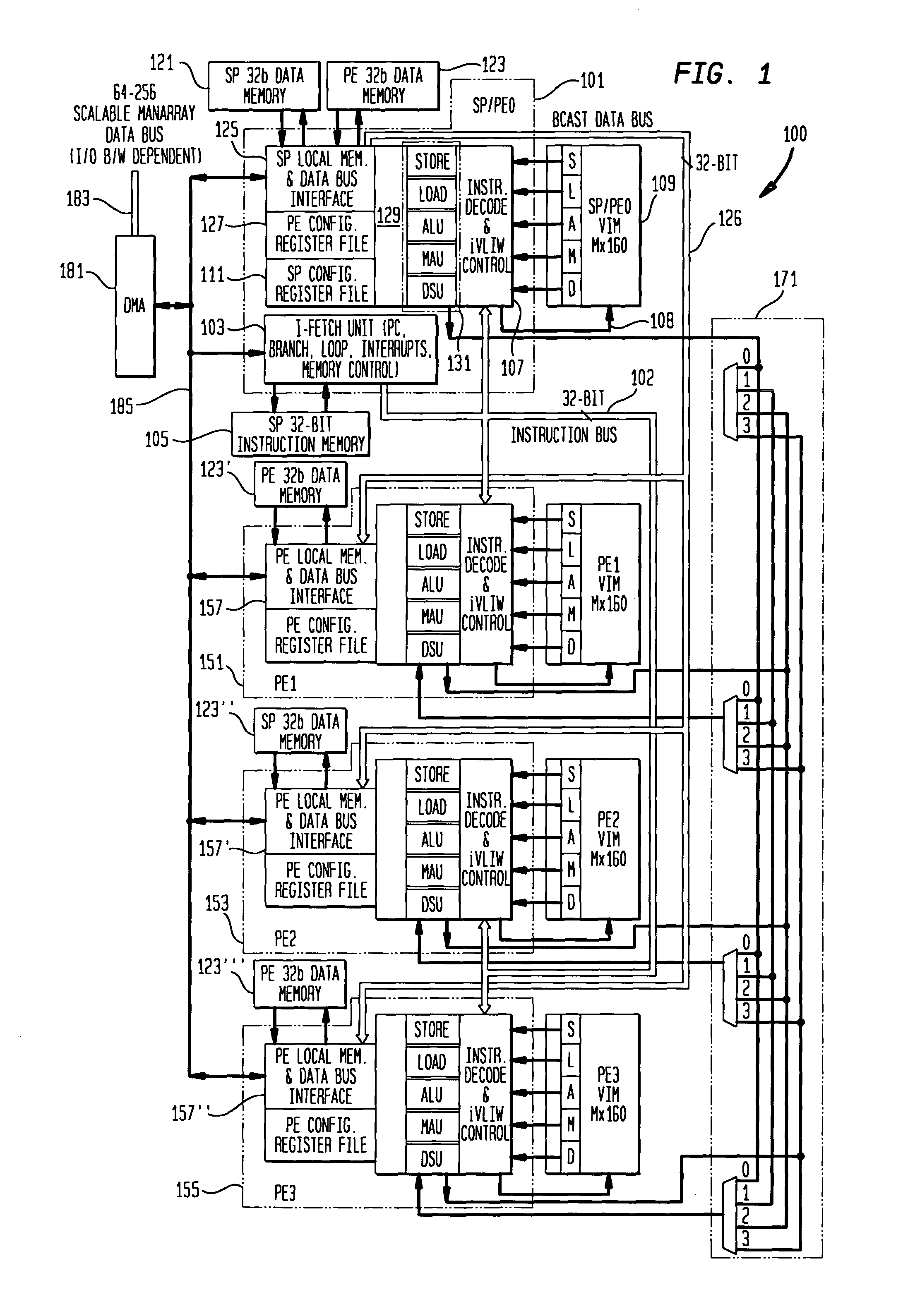

Integrated processor array, instruction sequencer and I/O controller

Inactive · US20070130444A1 · Tags: Single instruction multiple data multiprocessors; Specific program execution arrangements; Information processing; Logic state

A computer processor having an integrated instruction sequencer, array of processing engines, and I / O controller. The instruction sequencer sequences instructions from a host, and transfers these instructions to the processing engines, thus directing their operation. The I / O controller controls the transfer of I / O data to and from the processing engines in parallel with the processing controlled by the instruction sequencer. The processing engines themselves are constructed with an integer arithmetic and logic unit (ALU), a 1-bit ALU, a decision unit, and registers. Instructions from the instruction sequencer direct the integer ALU to perform integer operations according to logic states stored in the 1-bit ALU and data stored in the decision unit. The 1-bit ALU and the decision unit can modify their stored information in the same clock cycle as the integer ALU carries out its operation. The processing engines also contain a local memory for storing instructions and data.

Owner:ALLSEARCH SEMI

Concurrent computational system for multi-scale discrete simulation

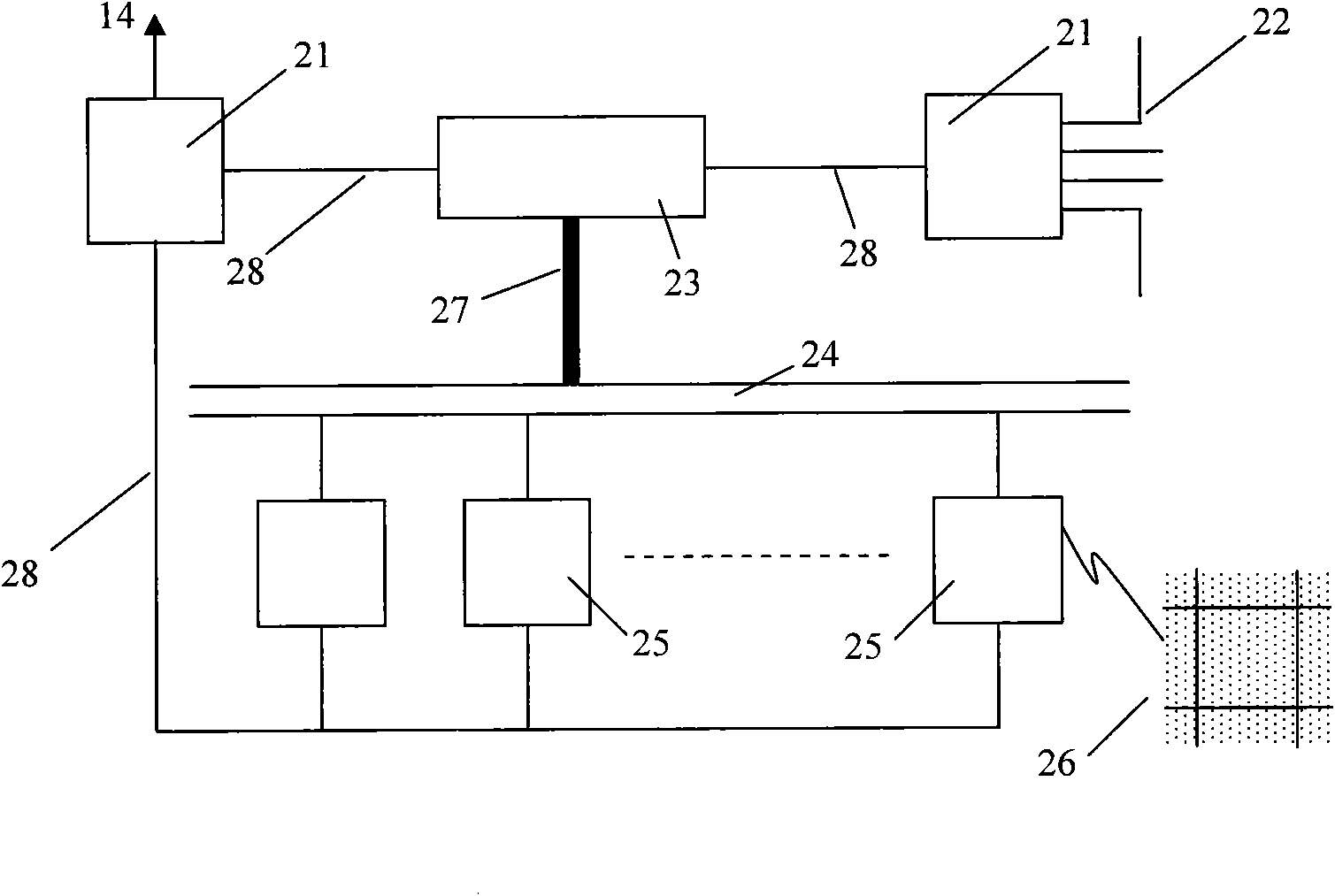

Active · CN102053945A · Tags: Improve general performance; Simple design; Digital computer details; Electric digital data processing; Scale structure; Complex system

The invention relates to the field of high-performance computers and discloses a concurrent computational system for multi-scale discrete simulation. To match the multi-scale structure and discrete character of complex real-world systems, the system keeps the simulated object, the computational model, the algorithmic framework, and the computer architecture consistent with and similar to one another. It describes the local behavior at each layer of the system with a large number of adjacent discrete units and the collective behavior at each layer with long-range constraints and correlations, with two-way feedback between upper-layer and lower-layer units. Correspondingly, a group of special-purpose reconfigurable vectorized accelerators serves as the bottom-layer computing hardware and a general-purpose processor array serves as the upper-layer computing hardware; adjacent processors or accelerators in the same layer can communicate directly or share memory with each other and with some of the processors or accelerators in adjacent layers. The system can therefore simulate complex processes and systems efficiently and faithfully.

Owner:INST OF PROCESS ENG CHINESE ACAD OF SCI

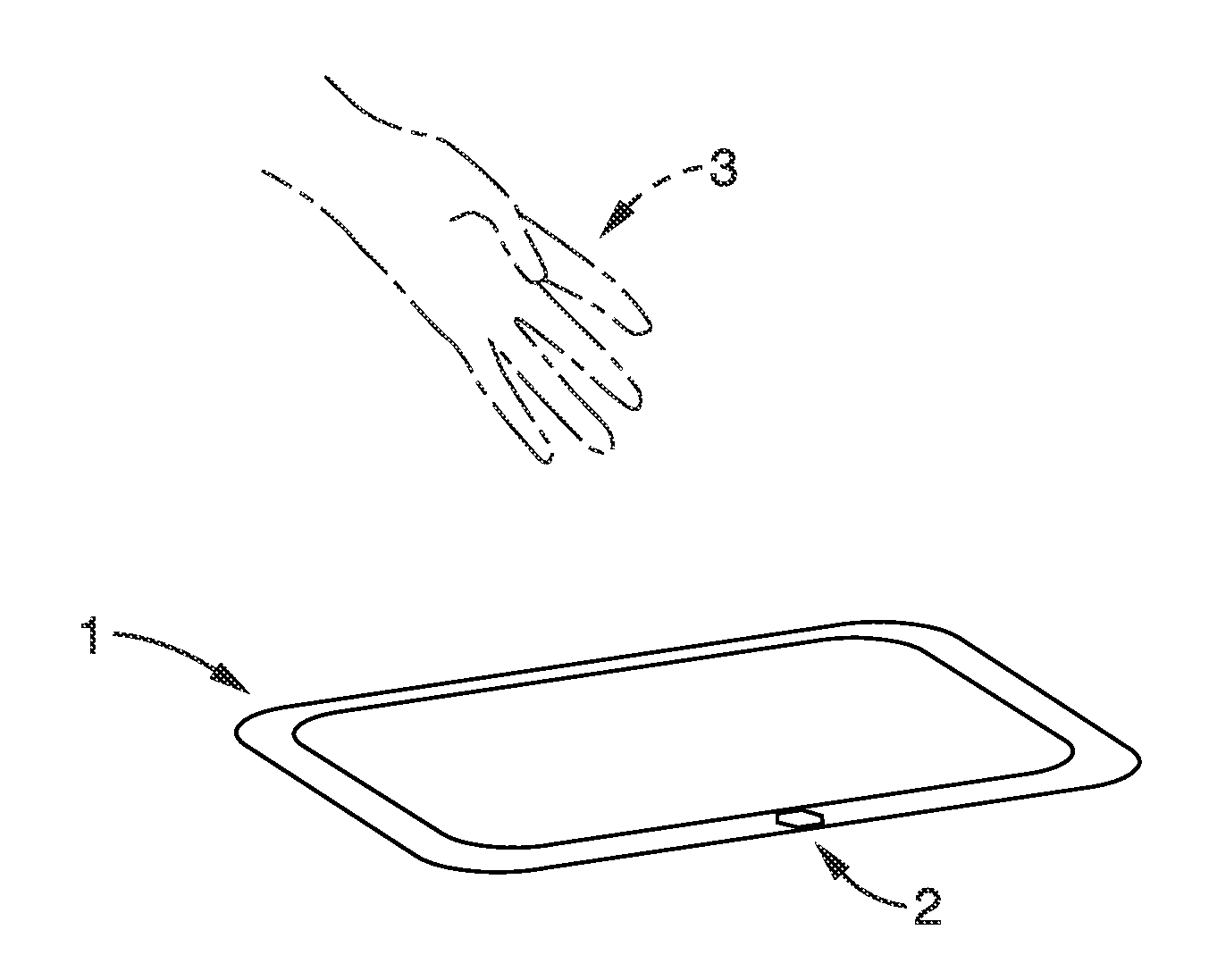

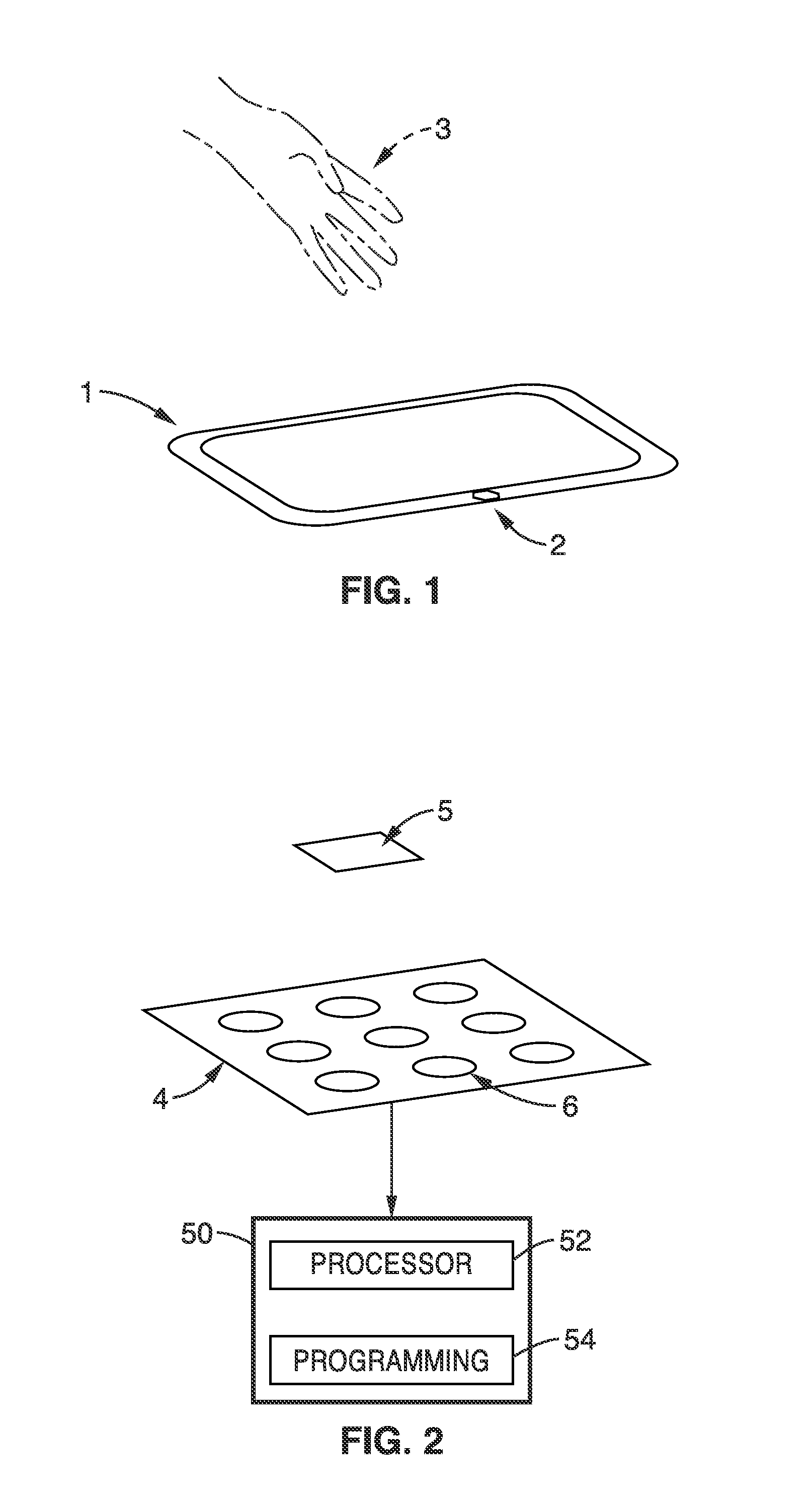

In-air ultrasonic rangefinding and angle estimation

Active · US20140253435A1 · Tags: Less power; Input/output for user-computer interaction; Cathode-ray tube indicators; Sonification; PMUT

An apparatus for determining the location of a moveable object in relation to an input device includes an array of one or more piezoelectric micromachined ultrasonic transducer (pMUT) elements and a processor. The array is formed from a common substrate. The one or more pMUT elements include one or more transmitters and one or more receivers. The processor is configured to determine the location of a moveable object in relation to an input device using sound waves that are emitted from the one or more transmitters, reflected from the moveable object, and received by the one or more receivers.

Owner:RGT UNIV OF CALIFORNIA

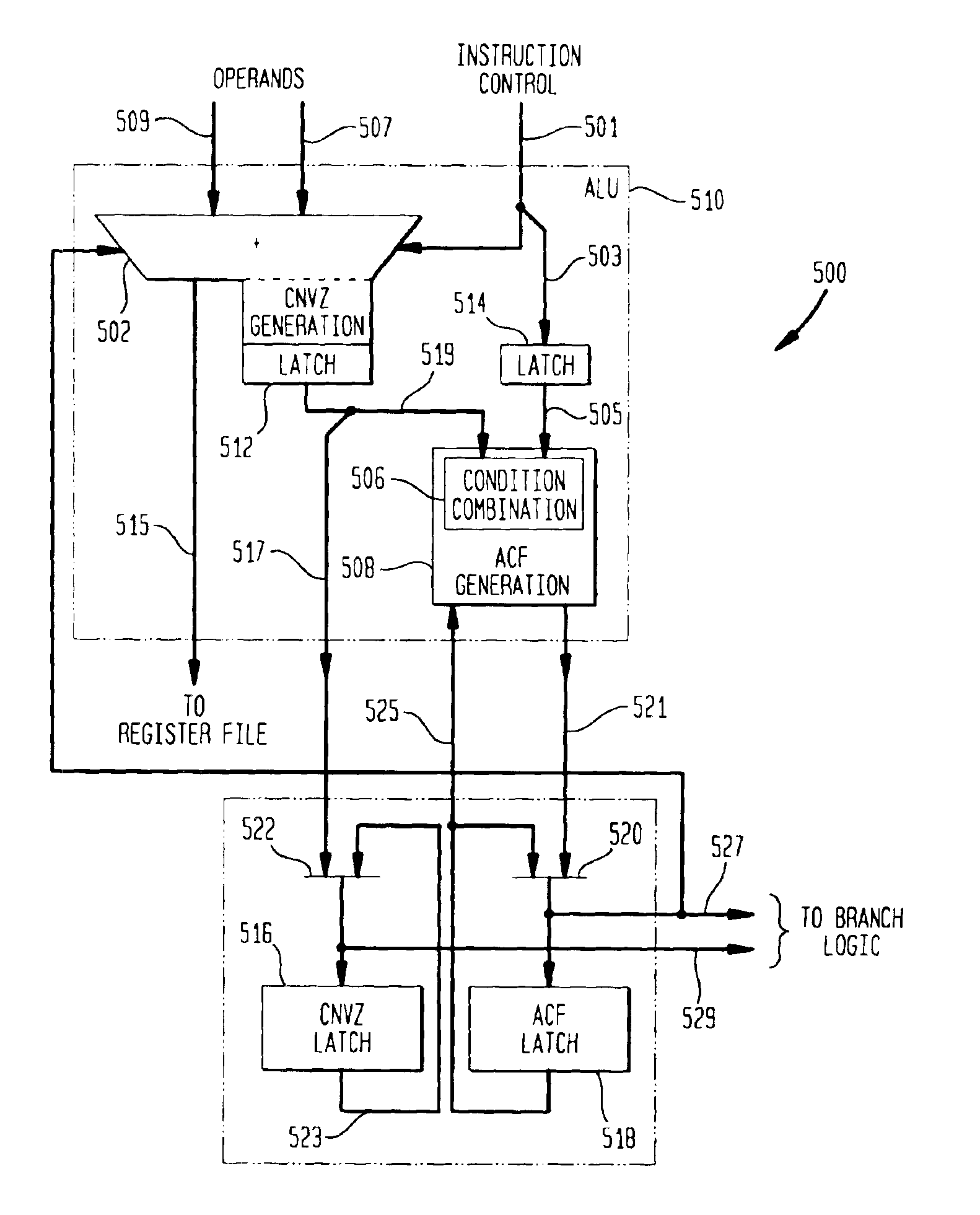

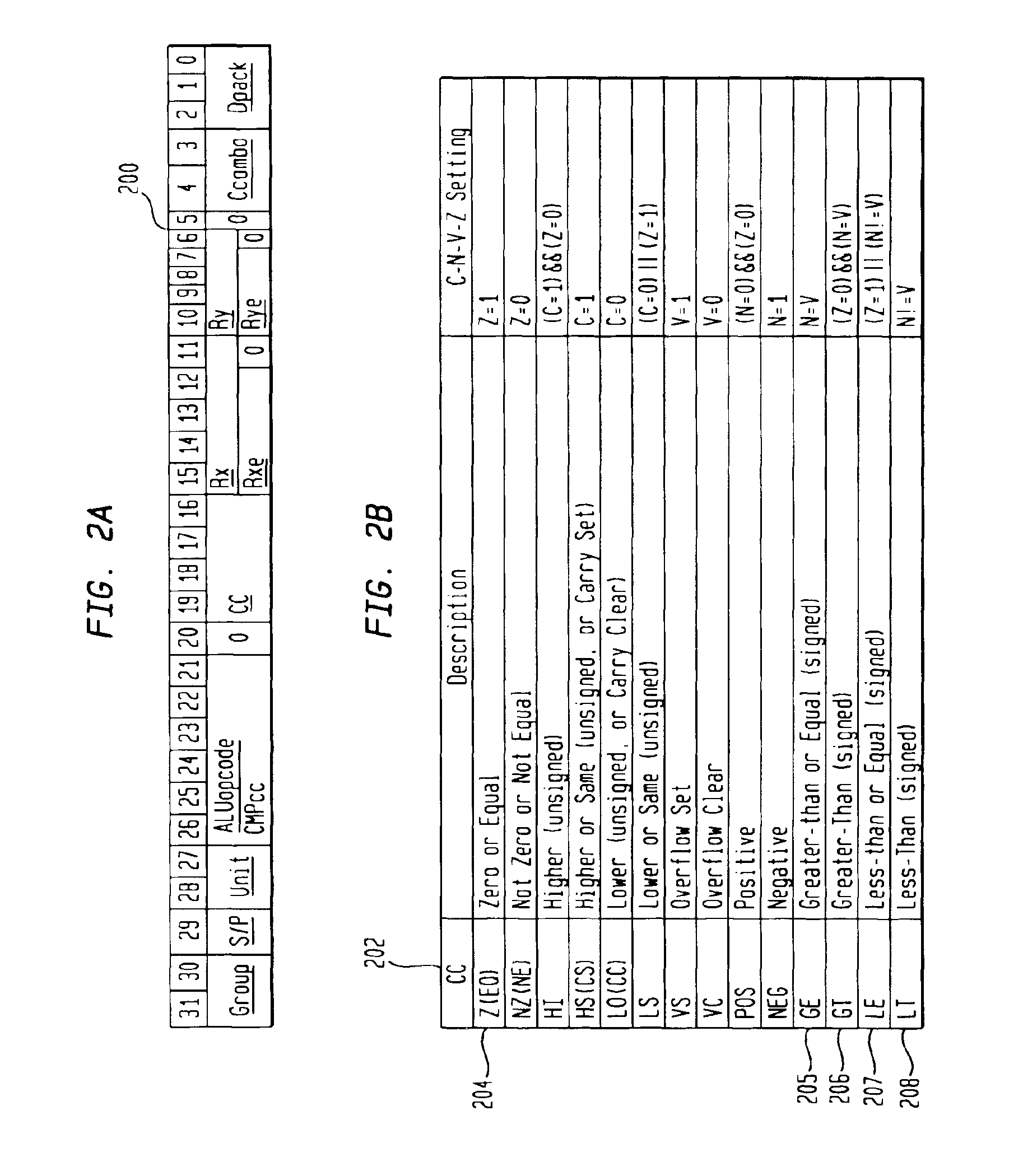

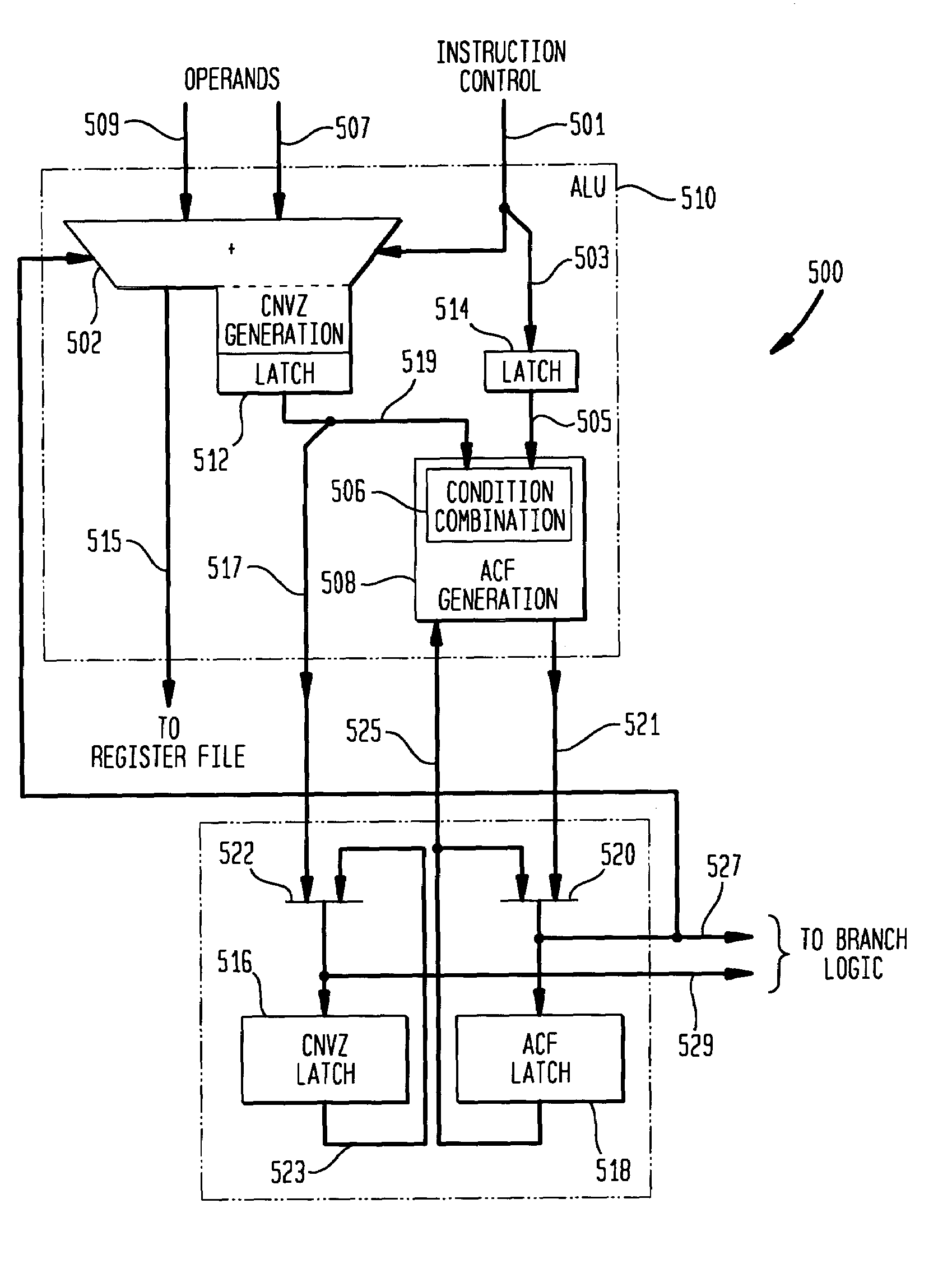

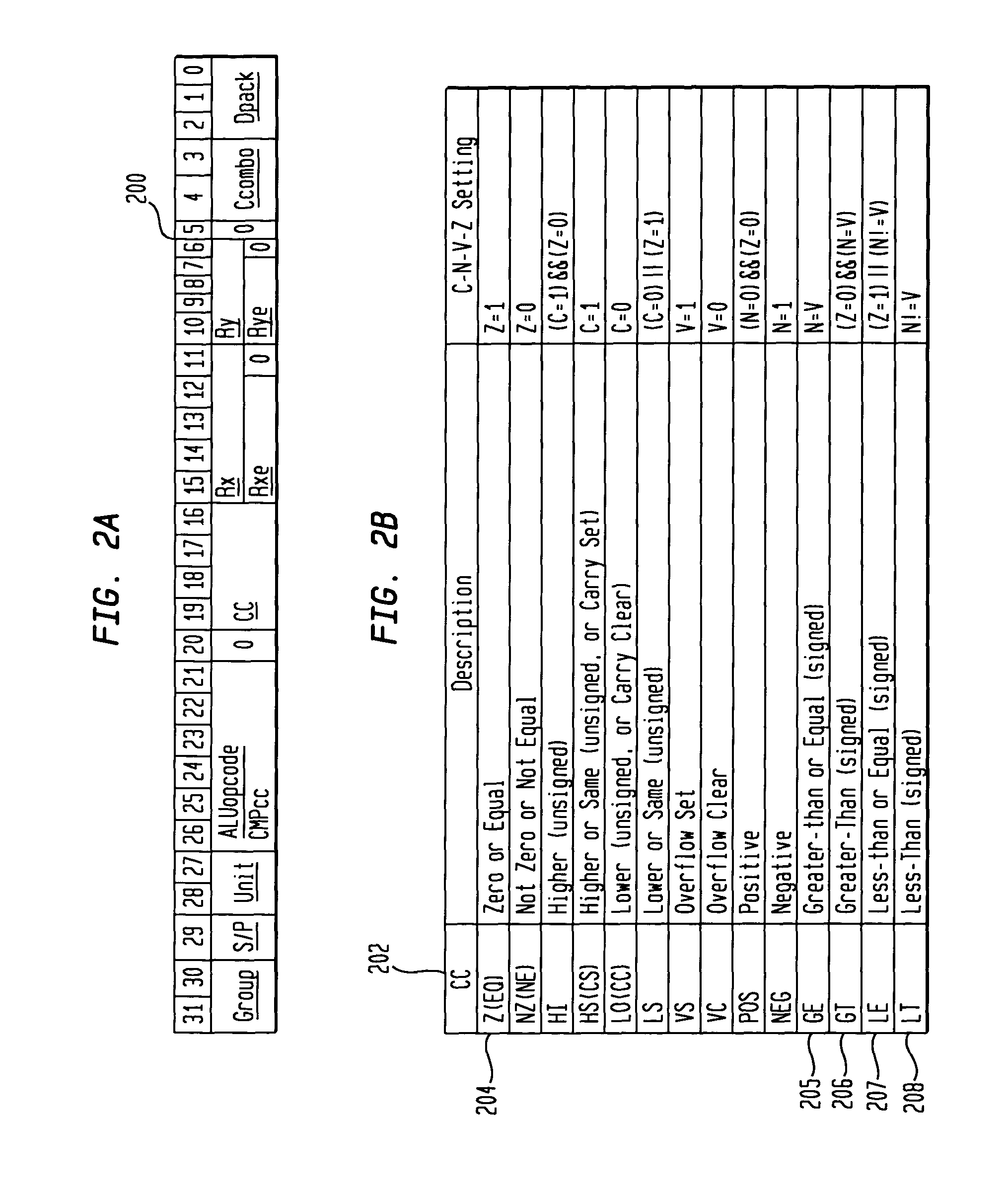

Methods and apparatus to support conditional execution in a VLIW-based array processor with subword execution

Inactive · US6954842B2 · Tags: Not affect; Facilitate communication; Single instruction multiple data multiprocessors; Conditional code generation; Communication interface; General purpose

General purpose flags (ACFs) are defined and encoded utilizing a hierarchical one-, two- or three-bit encoding. Each added bit provides a superset of the previous functionality. With condition combination, a sequential series of conditional branches based on complex conditions may be avoided and complex conditions can then be used for conditional execution. ACF generation and use can be specified by the programmer. By varying the number of flags affected, conditional operation parallelism can be widely varied, for example, from mono-processing to octal-processing in VLIW execution, and across an array of processing elements (PEs). Multiple PEs can generate condition information at the same time, with the programmer being able to specify a conditional execution in one processor based upon a condition generated in a different processor, using the communications interface between the processing elements to transfer the conditions. Each processor in a multiple processor array may independently have different units conditionally operate based upon their ACFs.

Owner:ALTERA CORP
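
The flag-based conditional execution in the entry above can be illustrated, in a simplified form, as predicated SIMD execution over a list of PEs. Each PE sets a flag from its own data, two conditions are combined instead of branching twice, and one PE can act on a flag produced by its neighbor. The ring-shaped neighbor transfer and every function name are hypothetical; this does not reproduce the hierarchical ACF bit encoding or the VLIW details.

```python
from typing import Callable, List

Flags = List[bool]


def set_flags(values: List[int], predicate: Callable[[int], bool]) -> Flags:
    """Each PE evaluates the condition on its own data."""
    return [predicate(v) for v in values]


def combine(a: Flags, b: Flags, op: str) -> Flags:
    """Condition combination replaces a chain of conditional branches."""
    if op == "and":
        return [x and y for x, y in zip(a, b)]
    if op == "or":
        return [x or y for x, y in zip(a, b)]
    raise ValueError(f"unsupported op: {op}")


def from_neighbor(flags: Flags) -> Flags:
    """Hypothetical ring interface: PE i receives the flag computed by PE i-1."""
    return flags[-1:] + flags[:-1]


def cond_exec(values: List[int], flags: Flags, op: Callable[[int], int]) -> List[int]:
    """Apply op only on PEs whose flag is set; the others keep their value."""
    return [op(v) if f else v for v, f in zip(values, flags)]
```

For values `[3, 8, 12, 5]`, combining `v > 4` with `v < 10` gives one flag per PE, `[False, True, False, True]`, so a single predicated add touches only the second and fourth PEs.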

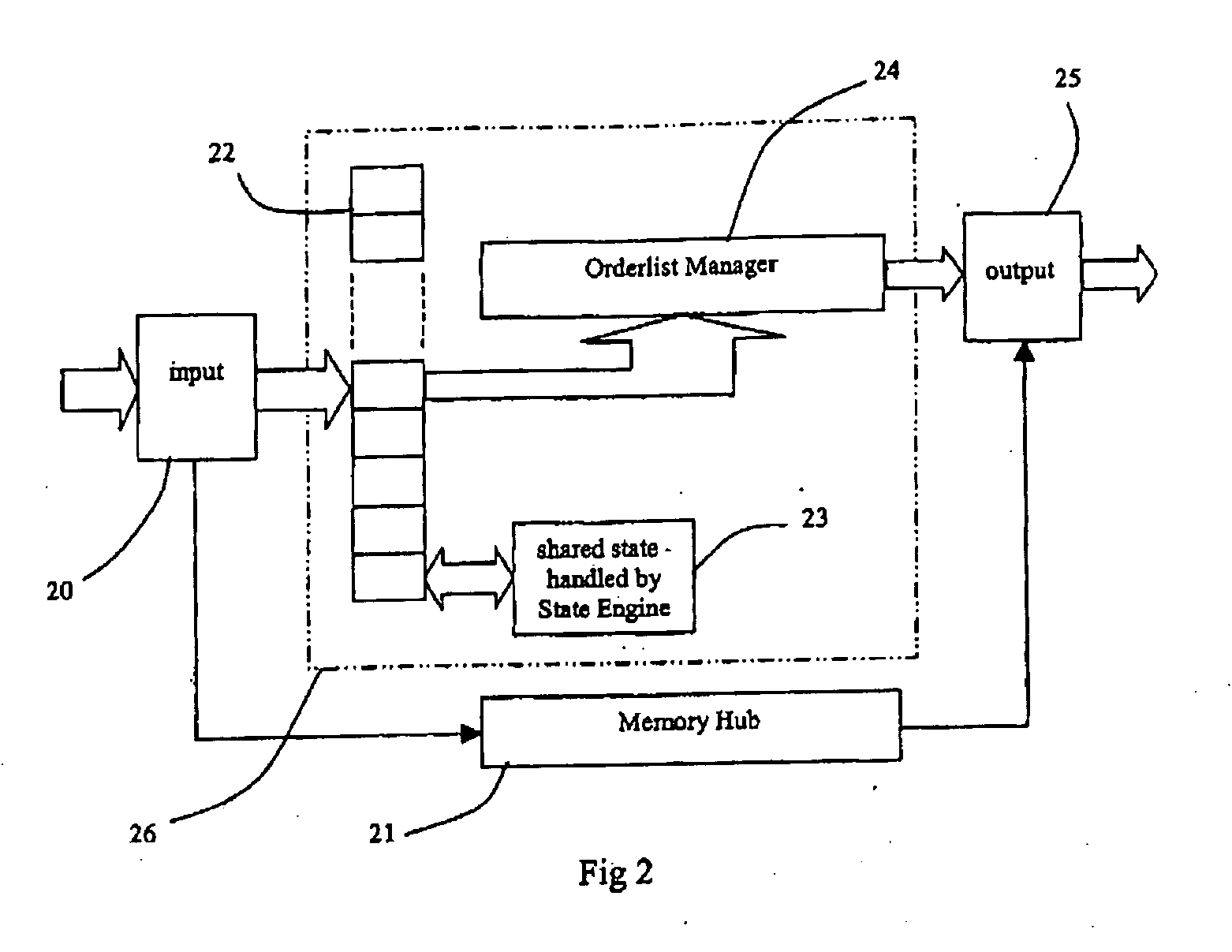

Traffic management architecture

Inactive · US20050243829A1 · Tags: Access control; Data switching by path configuration; Store-and-forward switching systems; Rapid processing; Rapid access

An architecture for sorting incoming data packets in real time, on the fly, processes the packets and places them into an exit order queue before storing the packets. This is in contrast to the traditional way of storing first then sorting later and provides rapid processing capability. A processor generates packet records from an input stream and determines an exit order number for the related packet. The records are stored in an orderlist manager while the data portions are stored in a memory hub for later retrieval in the exit order stored in the manager. The processor is preferably a parallel processor array using SIMD and is provided with rapid access to a shared state by a state engine.

Owner:RAMBUS INC
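
The "sort first, store later" idea in the traffic-management entry above can be sketched with the standard-library `heapq` module. A min-heap keyed on (exit order, arrival sequence) stands in for the order list manager, and a dictionary stands in for the memory hub. This is a single-threaded software analogy, not the SIMD processor array or state engine the abstract describes, and how the exit order number is computed is left to the caller.

```python
import heapq
from itertools import count
from typing import Dict, List, Tuple


class ExitOrderQueue:
    """Sort packet records on arrival; keep payloads in a separate store."""

    def __init__(self) -> None:
        self._order: List[Tuple[int, int]] = []  # stand-in order list: (exit order, arrival seq)
        self._hub: Dict[int, bytes] = {}         # stand-in memory hub, keyed by arrival seq
        self._seq = count()

    def enqueue(self, payload: bytes, exit_order: int) -> None:
        seq = next(self._seq)
        heapq.heappush(self._order, (exit_order, seq))  # the record is placed in exit order first
        self._hub[seq] = payload                        # then the data portion is stored

    def dequeue(self) -> bytes:
        _, seq = heapq.heappop(self._order)
        return self._hub.pop(seq)

    def __len__(self) -> int:
        return len(self._order)
```

The arrival sequence number breaks ties, so packets that share an exit order number leave in arrival order.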

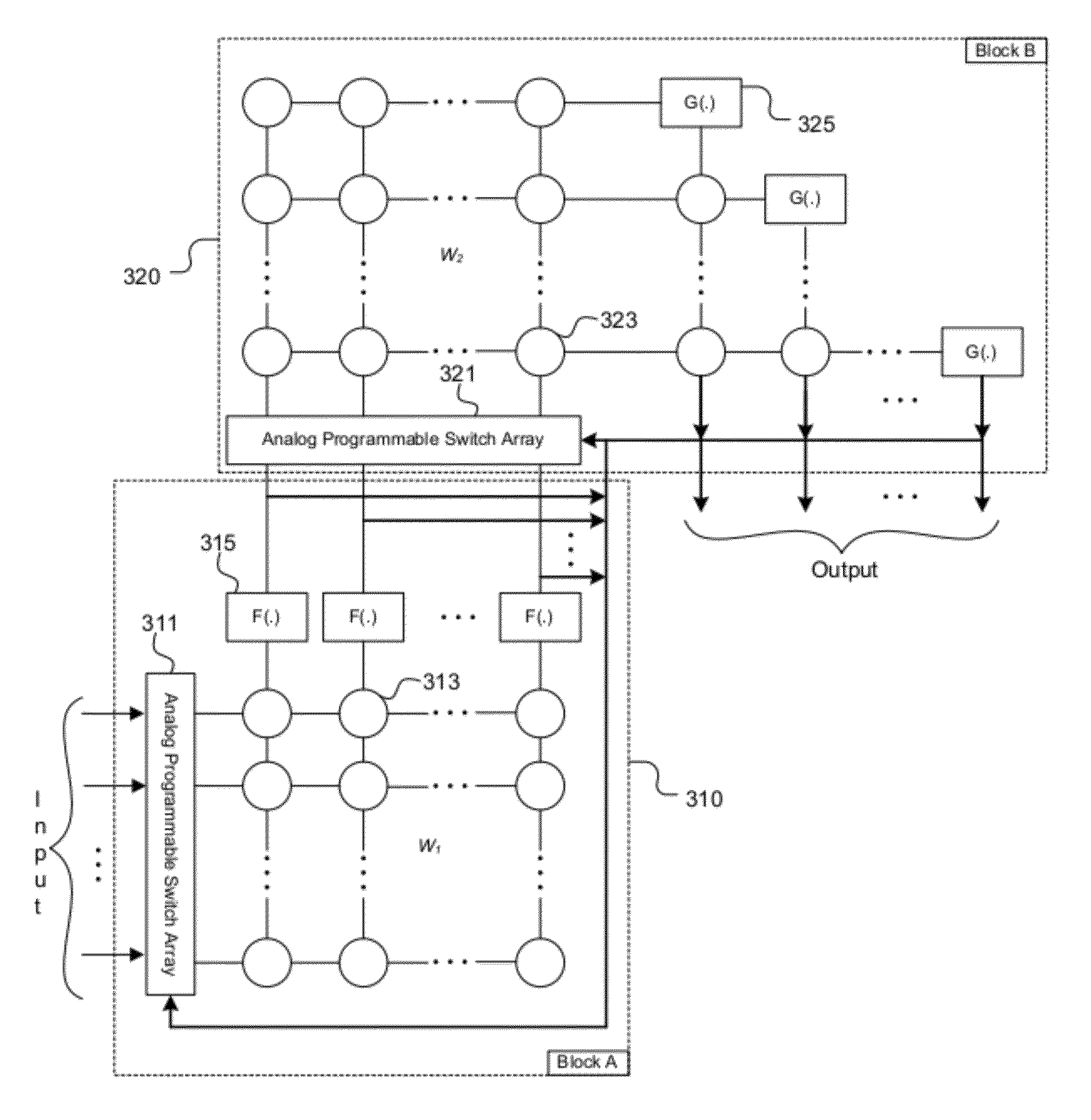

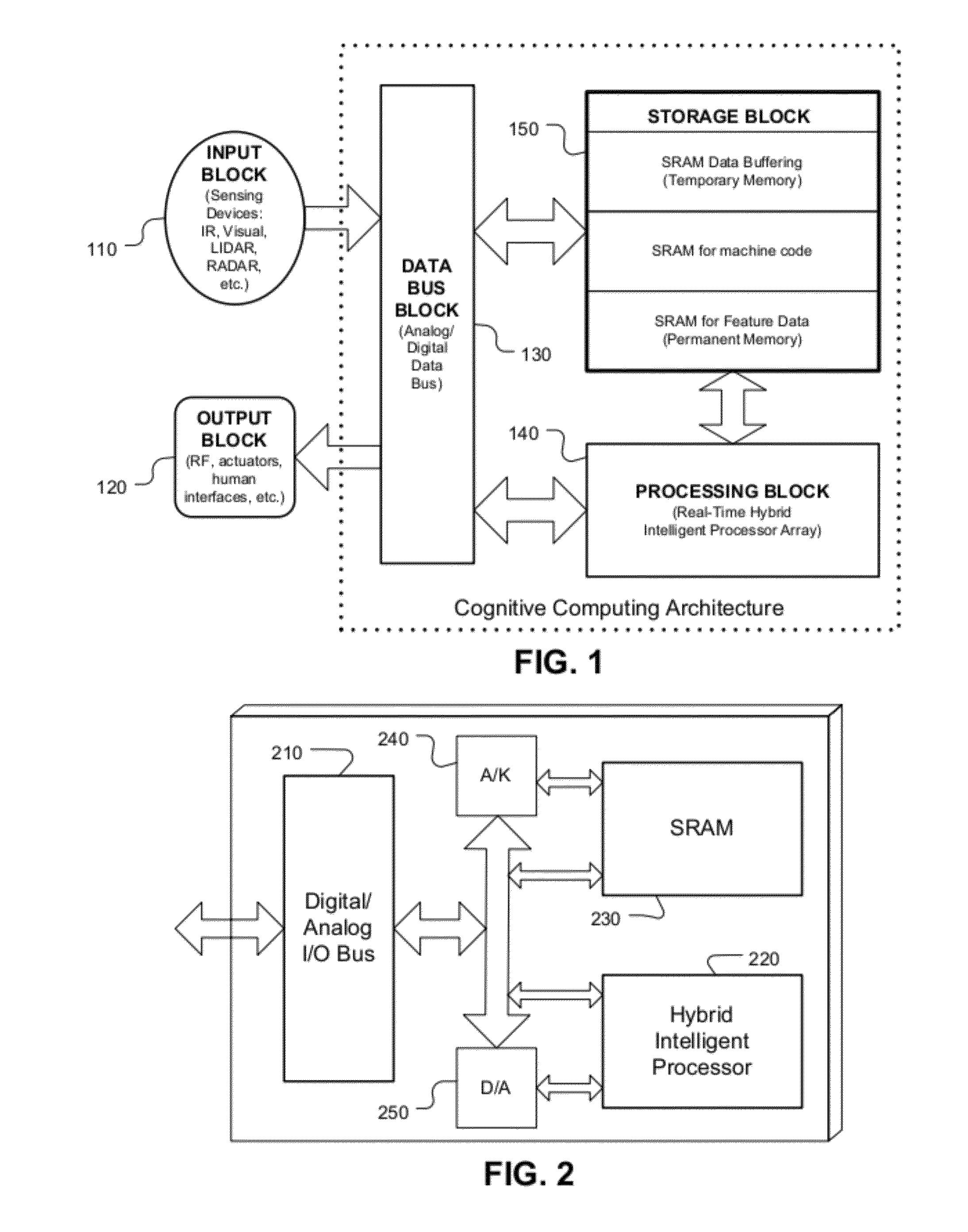

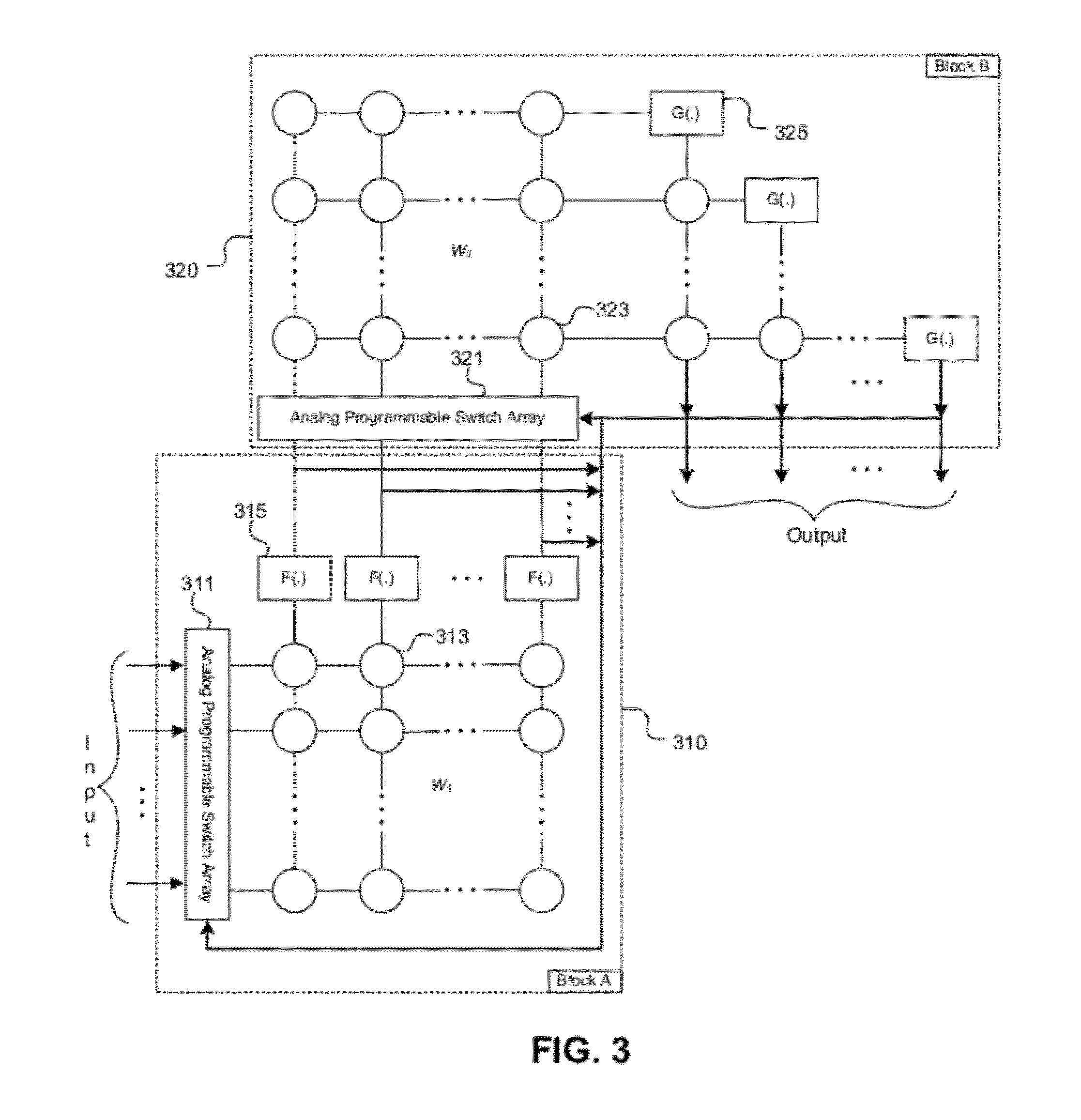

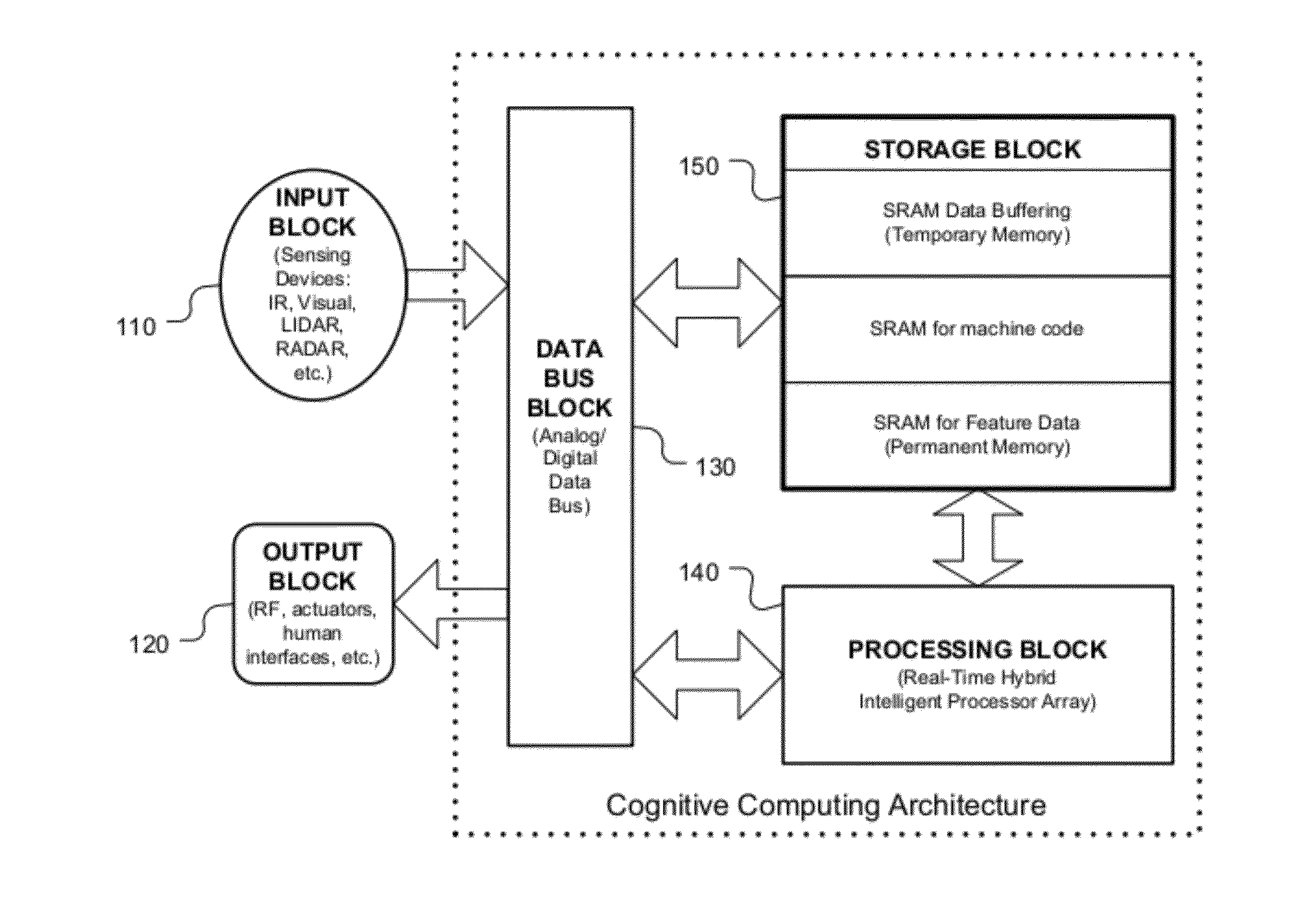

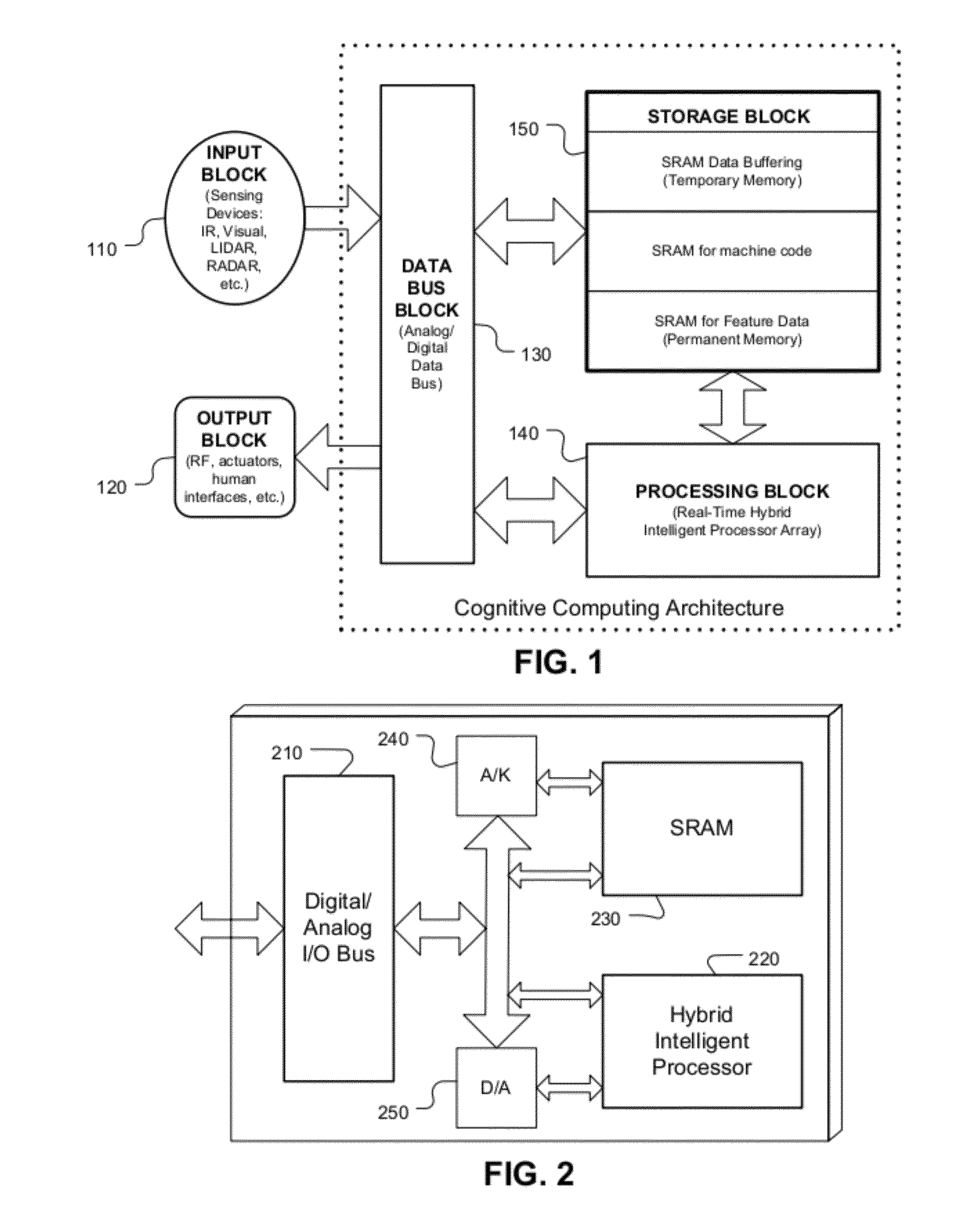

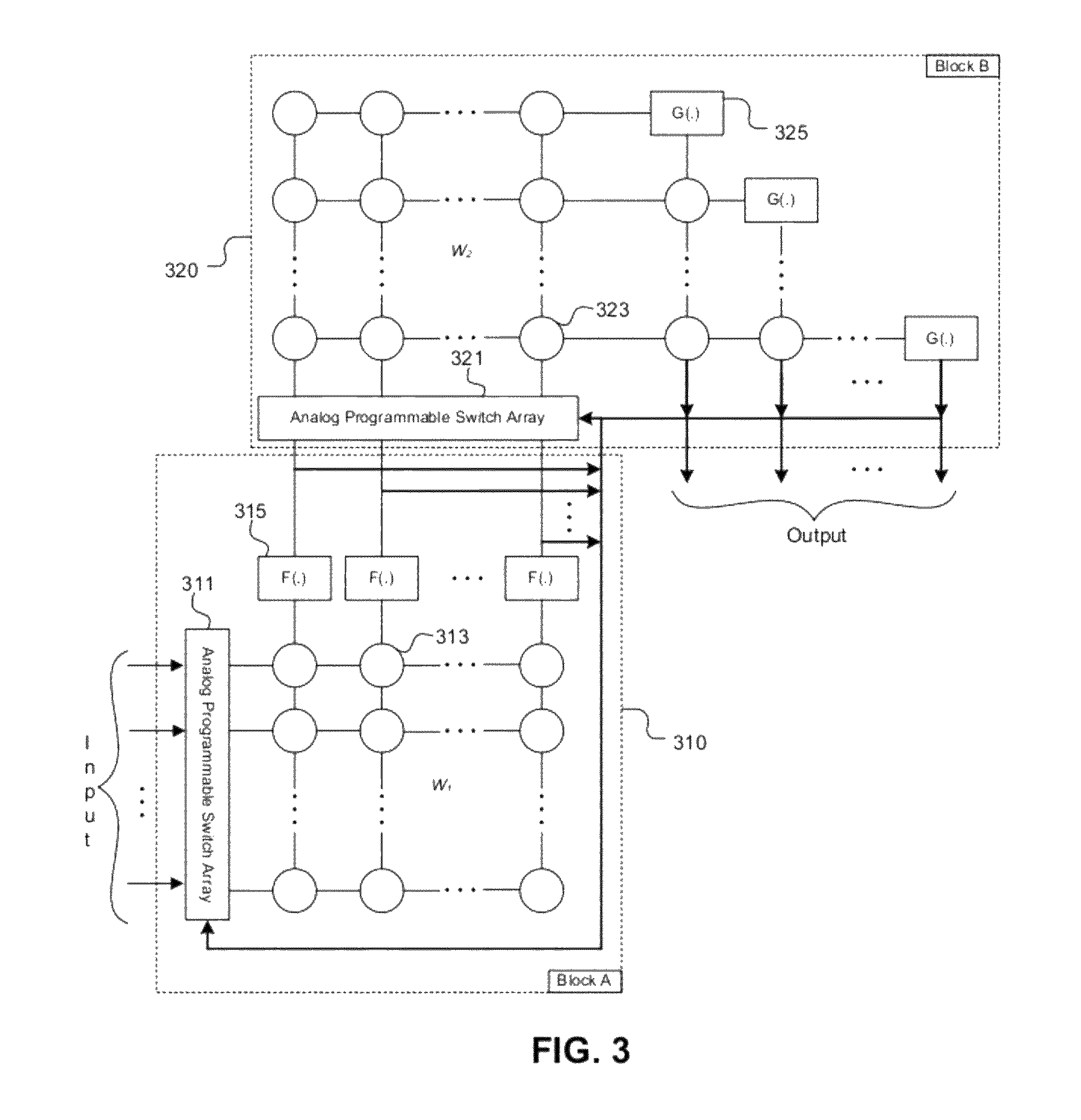

System and method for cognitive processing for data fusion

Active · US20120136913A1 · Tags: Computing operations for multiplication/division; Digital storage; Adaptive learning; Static random-access memory

A system and method for cognitive processing of sensor data. A processor array receiving analog sensor data and having programmable interconnects, multiplication weights, and filters provides for adaptive learning in real-time. A static random access memory contains the programmable data for the processor array and the stored data is modified to provide for adaptive learning.

Owner:CALIFORNIA INST OF TECH

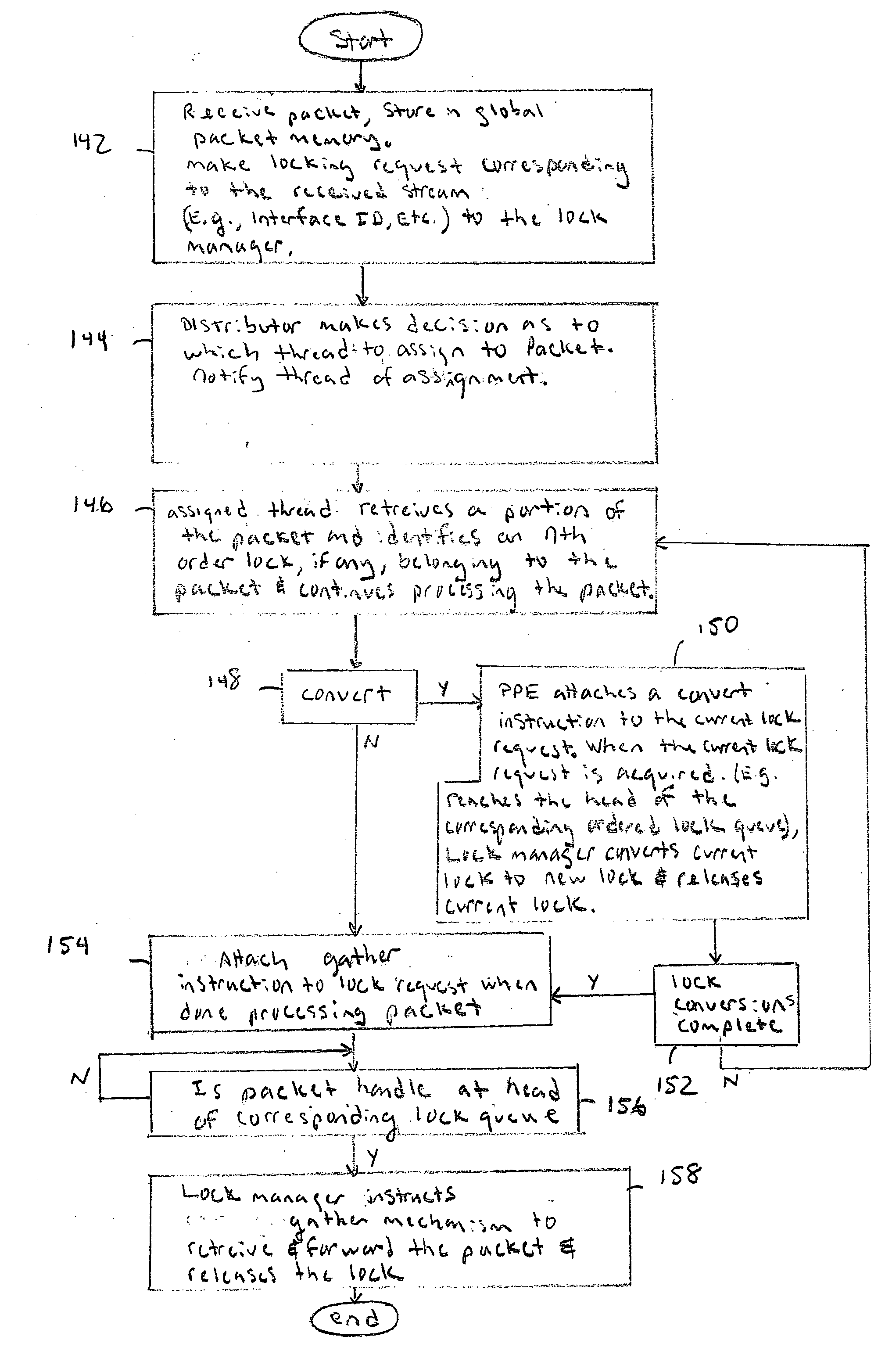

Multi-threaded packet processing architecture with global packet memory, packet recirculation, and coprocessor

Inactive · US7551617B2 · Tags: Quicker and high quality porting; Increase data rate; Digital computer details; Data switching by path configuration; Hardware architecture; Coprocessor

A network processor has numerous novel features including a multi-threaded processor array, a multi-pass processing model, and Global Packet Memory (GPM) with hardware managed packet storage. These unique features allow the network processor to perform high-touch packet processing at high data rates. The packet processor can also be coded using a stack-based high-level programming language, such as C or C++. This allows quicker and higher quality porting of software features into the network processor. Processor performance also does not severely drop off when additional processing features are added. For example, packets can be more intelligently processed by assigning processing elements to different bounded duration arrival processing tasks and variable duration main processing tasks. A recirculation path moves packets between the different arrival and main processing tasks. Other novel hardware features include a hardware architecture that efficiently intermixes co-processor operations with multi-threaded processing operations and improved cache affinity.

Owner:CISCO TECH INC

Methods and apparatus to support conditional execution in a VLIW-based array processor with subword execution

Inactive · US7010668B2 · Tags: Minimization requirements; Facilitate communication; Single instruction multiple data multiprocessors; Conditional code generation; Communication interface; General purpose

General purpose flags (ACFs) are defined and encoded utilizing a hierarchical one-, two- or three-bit encoding. Each added bit provides a superset of the previous functionality. With condition combination, a sequential series of conditional branches based on complex conditions may be avoided and complex conditions can then be used for conditional execution. ACF generation and use can be specified by the programmer. By varying the number of flags affected, conditional operation parallelism can be widely varied, for example, from mono-processing to octal-processing in VLIW execution, and across an array of processing elements (PEs). Multiple PEs can generate condition information at the same time, with the programmer being able to specify a conditional execution in one processor based upon a condition generated in a different processor, using the communications interface between the processing elements to transfer the conditions. Each processor in a multiple processor array may independently have different units conditionally operate based upon their ACFs.

Owner:ALTERA CORP

System and method for cognitive processing for data fusion

Active · US8204927B1 · Tags: Computing operations for multiplication/division; Digital storage; Adaptive learning; Static random-access memory

A system and method for cognitive processing of sensor data. A processor array receiving analog sensor data and having programmable interconnects, multiplication weights, and filters provides for adaptive learning in real-time. A static random access memory contains the programmable data for the processor array and the stored data is modified to provide for adaptive learning.

Owner:CALIFORNIA INST OF TECH

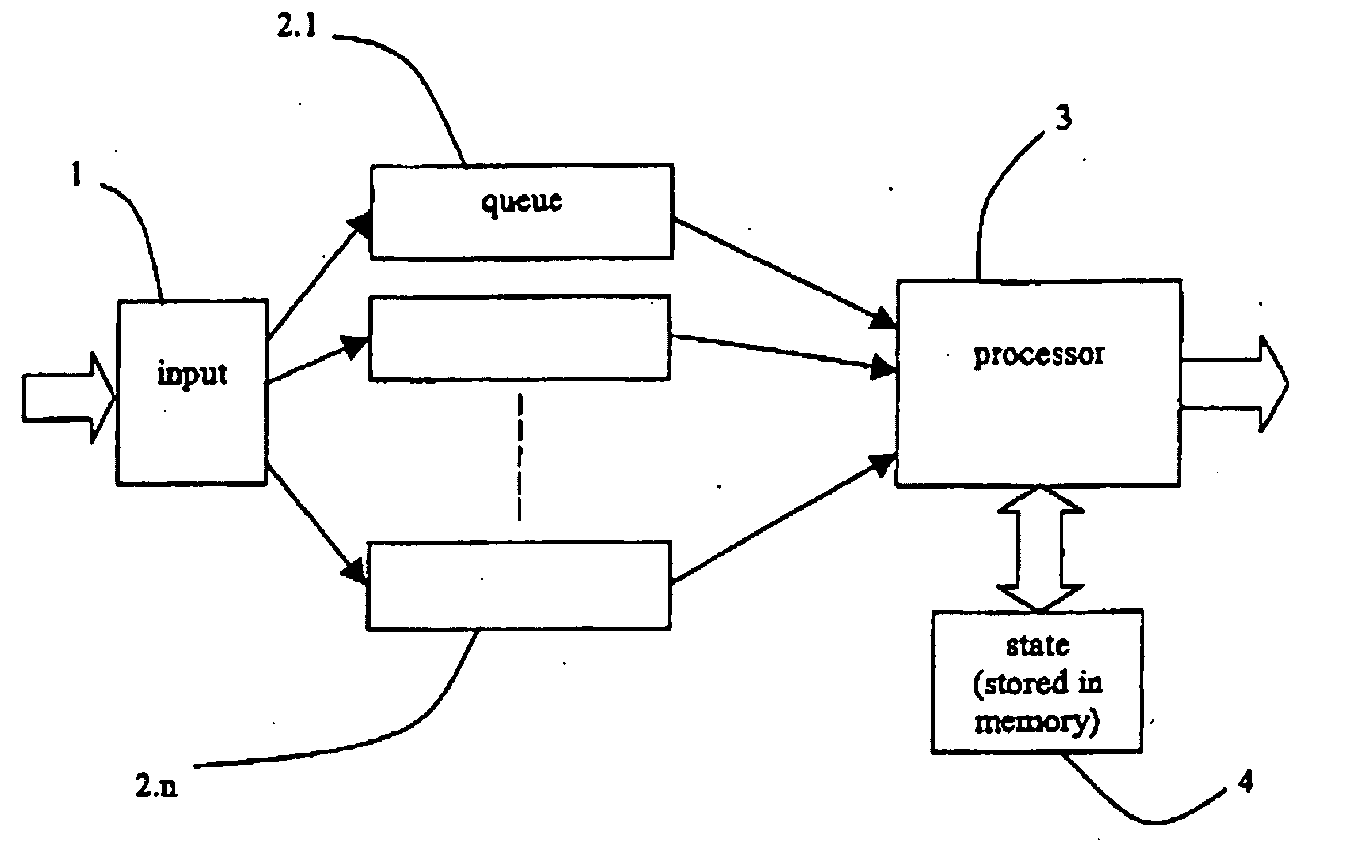

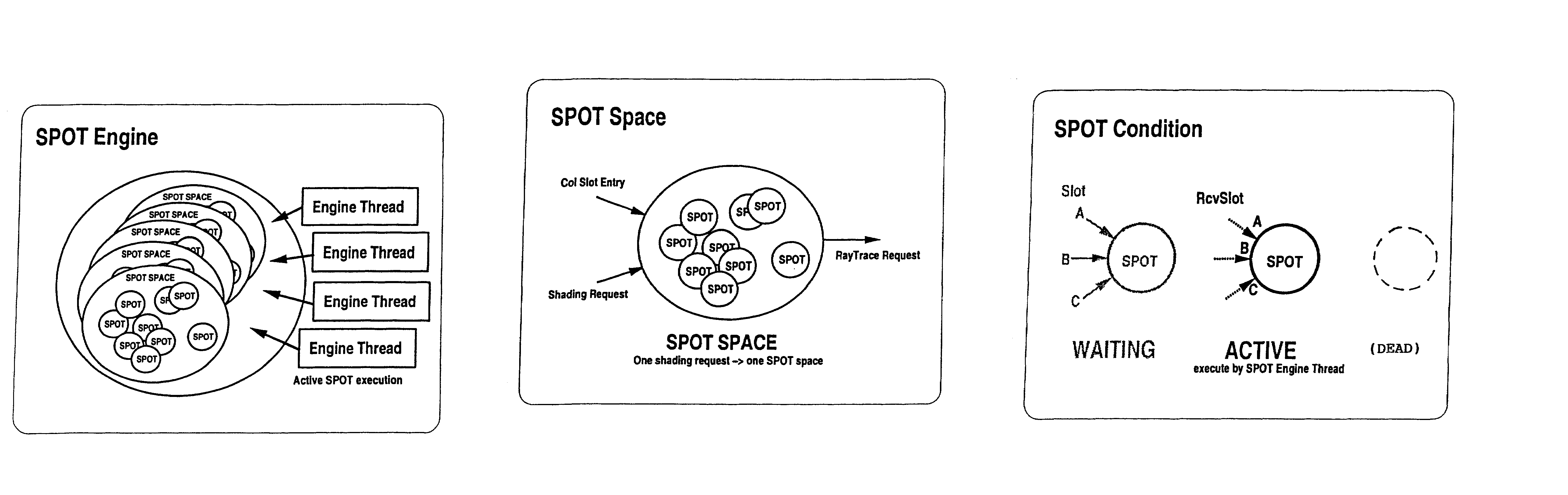

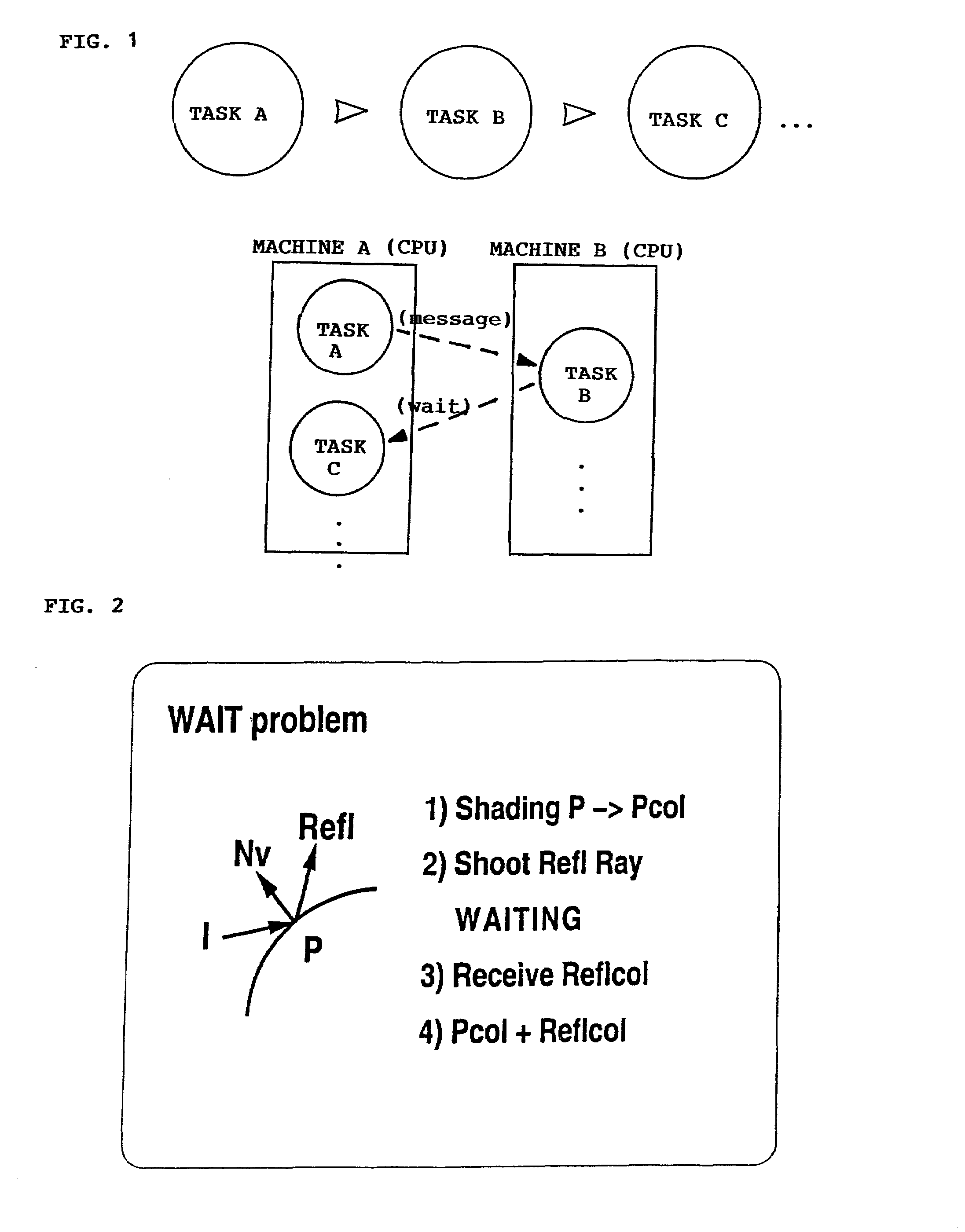

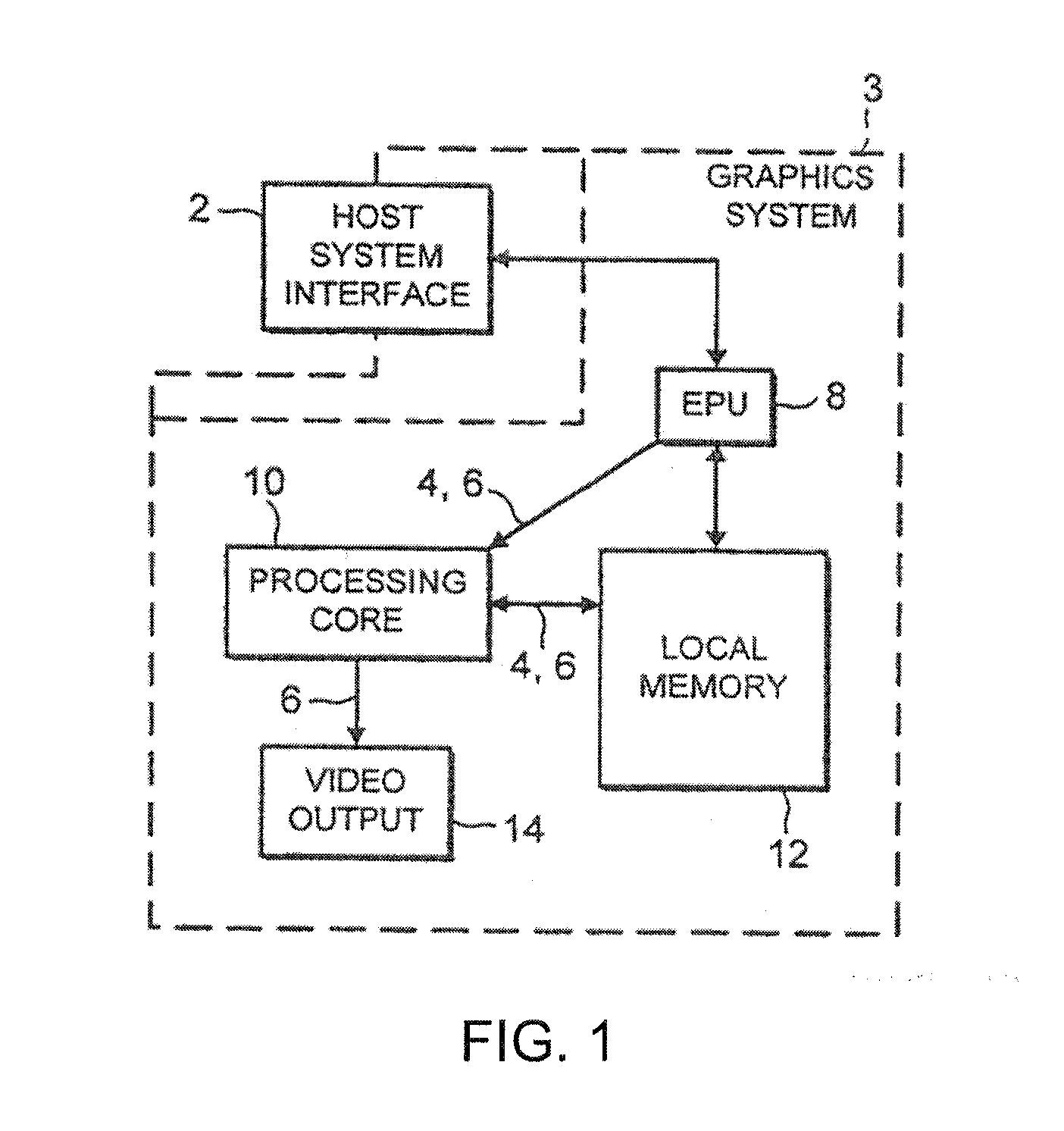

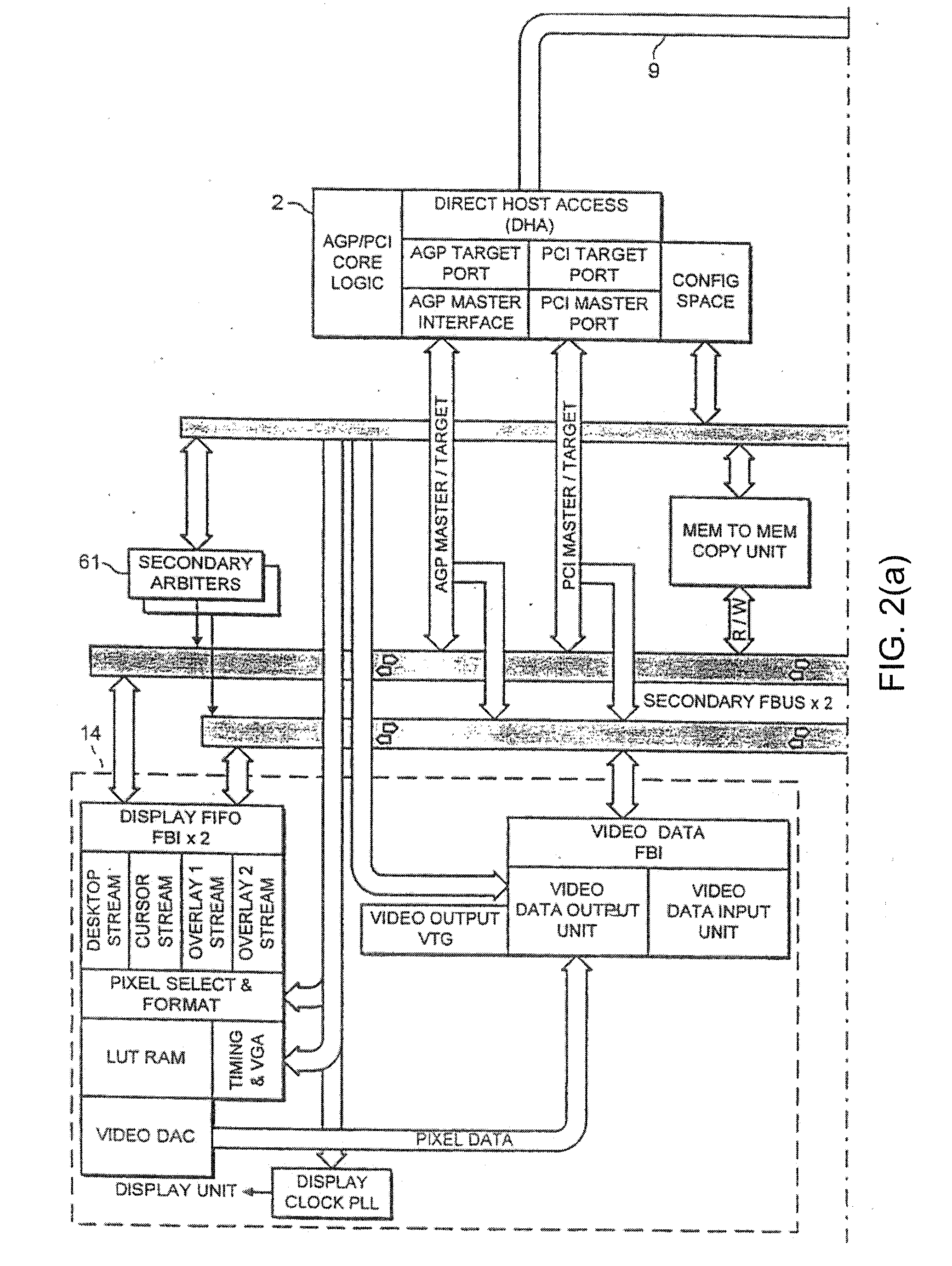

Parallel object task engine and processing method

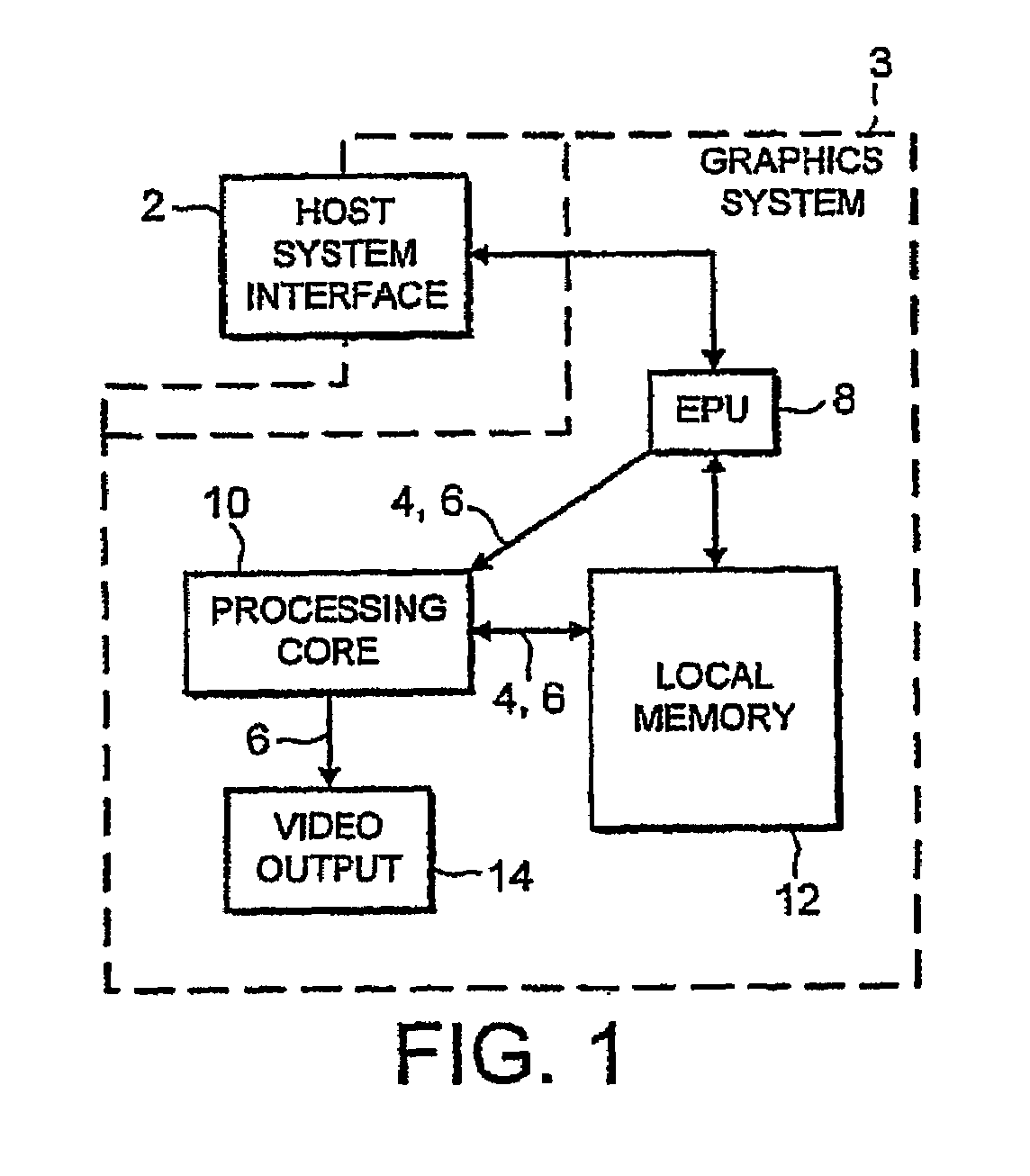

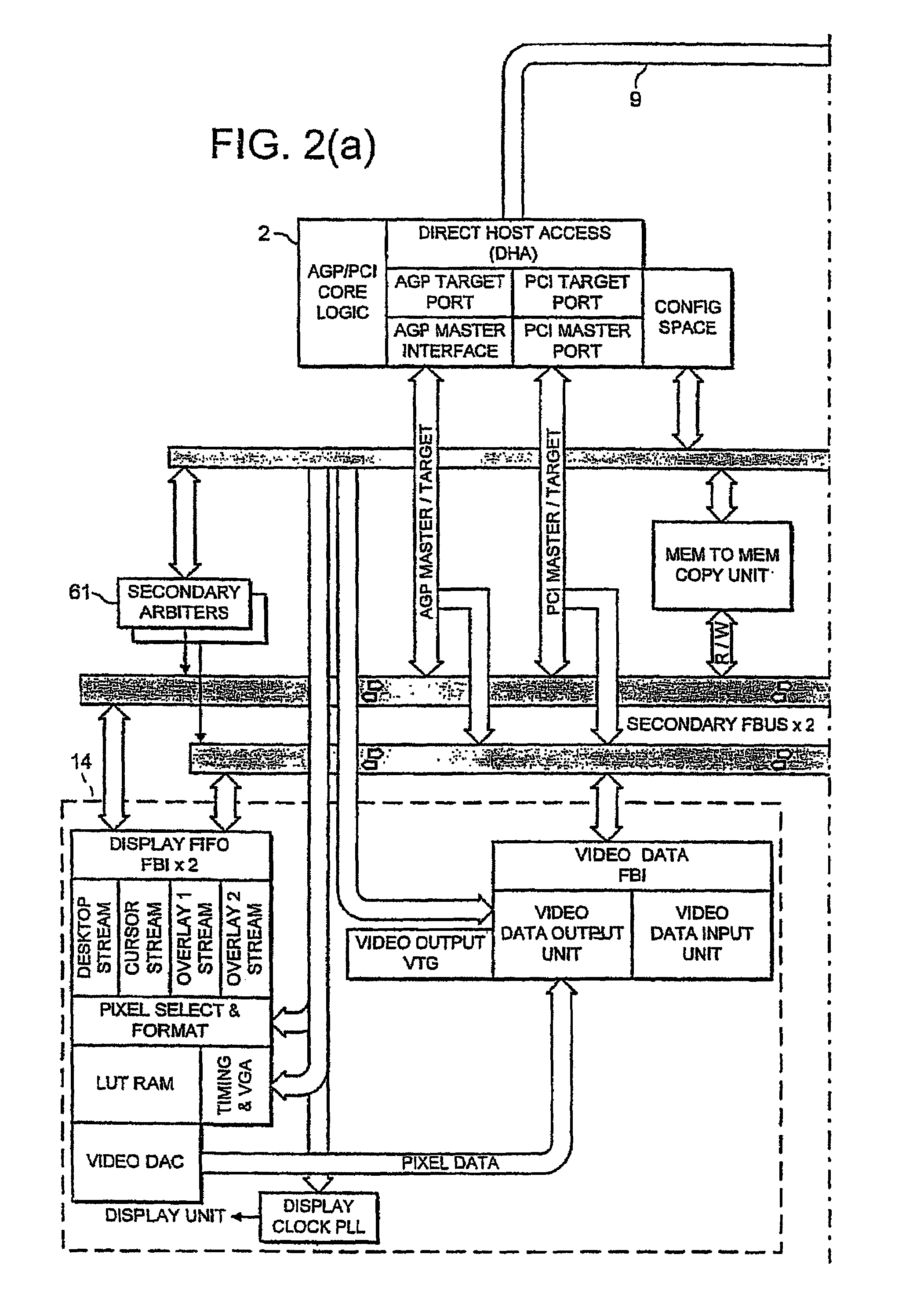

Inactive · US7233331B2 · Tags: Multiprogramming arrangements; Image data processing details; Graphics; Waste processing

A parallel processing system and method for performing processing tasks in parallel on a plurality of processors breaks down a processing task into a plurality of self-contained task objects, each of which has one or more “data-waiting” slots for receiving a respective data input required for performing a computational step. The task objects are maintained in a “waiting” state while awaiting one or more inputs to fill their slots. When all slots are filled, the task object is placed in an “active” state and can be performed on a processor without waiting for any other input. The “active” task objects are placed in a queue and assigned to the next available processor. The status of the task object is changed to a “dead” state when the computation has been completed, and dead task objects are removed from memory at periodic intervals. This method is well suited to computer graphics (CG) rendering, and particularly to the shading task, which can be broken up into task spaces of task objects for shading each pixel of an image frame based upon light sources in the scene. By allowing shading task objects to be defined with “data-waiting” slots for light/color data input, and placing task objects in the “active” state for processing by the next available one of an array of processors, the parallel processing efficiency can be kept high without wasting processing resources waiting for return of data.

Owner:SQUARE ENIX HLDG CO LTD
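
The waiting/active/dead lifecycle in the entry above maps naturally onto a small dataflow scheduler. In this sketch a task becomes active and joins a ready queue as soon as its last slot is filled, a `drain` loop plays the role of the "next available processor" (sequentially, for simplicity), and `reap` performs the periodic removal of dead tasks. Class and function names, the sentinel for empty slots, and the light-times-color shading example are all hypothetical.

```python
from collections import deque
from enum import Enum
from typing import Any, Callable, Deque, Dict, List

_EMPTY = object()  # marks an unfilled "data-waiting" slot


class TaskState(Enum):
    WAITING = "waiting"
    ACTIVE = "active"
    DEAD = "dead"


class TaskObject:
    def __init__(self, name: str, slots: List[str], compute: Callable[..., Any]) -> None:
        self.name = name
        self.slots: Dict[str, Any] = {s: _EMPTY for s in slots}
        self.compute = compute
        self.state = TaskState.WAITING
        self.result: Any = None

    def fill(self, slot: str, value: Any, active: Deque["TaskObject"]) -> None:
        self.slots[slot] = value
        if self.state is TaskState.WAITING and all(v is not _EMPTY for v in self.slots.values()):
            self.state = TaskState.ACTIVE  # every input present: runnable without waiting
            active.append(self)


def drain(active: Deque[TaskObject]) -> None:
    """Hand each active task to the next free processor (modeled here sequentially)."""
    while active:
        task = active.popleft()
        task.result = task.compute(**task.slots)
        task.state = TaskState.DEAD


def reap(tasks: List[TaskObject]) -> List[TaskObject]:
    """Periodic cleanup: drop dead task objects."""
    return [t for t in tasks if t.state is not TaskState.DEAD]
```

A pixel-shading task with `light` and `color` slots stays in the waiting state after receiving only `light`, becomes active once `color` arrives, and is dead (and reapable) after `drain` runs it.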

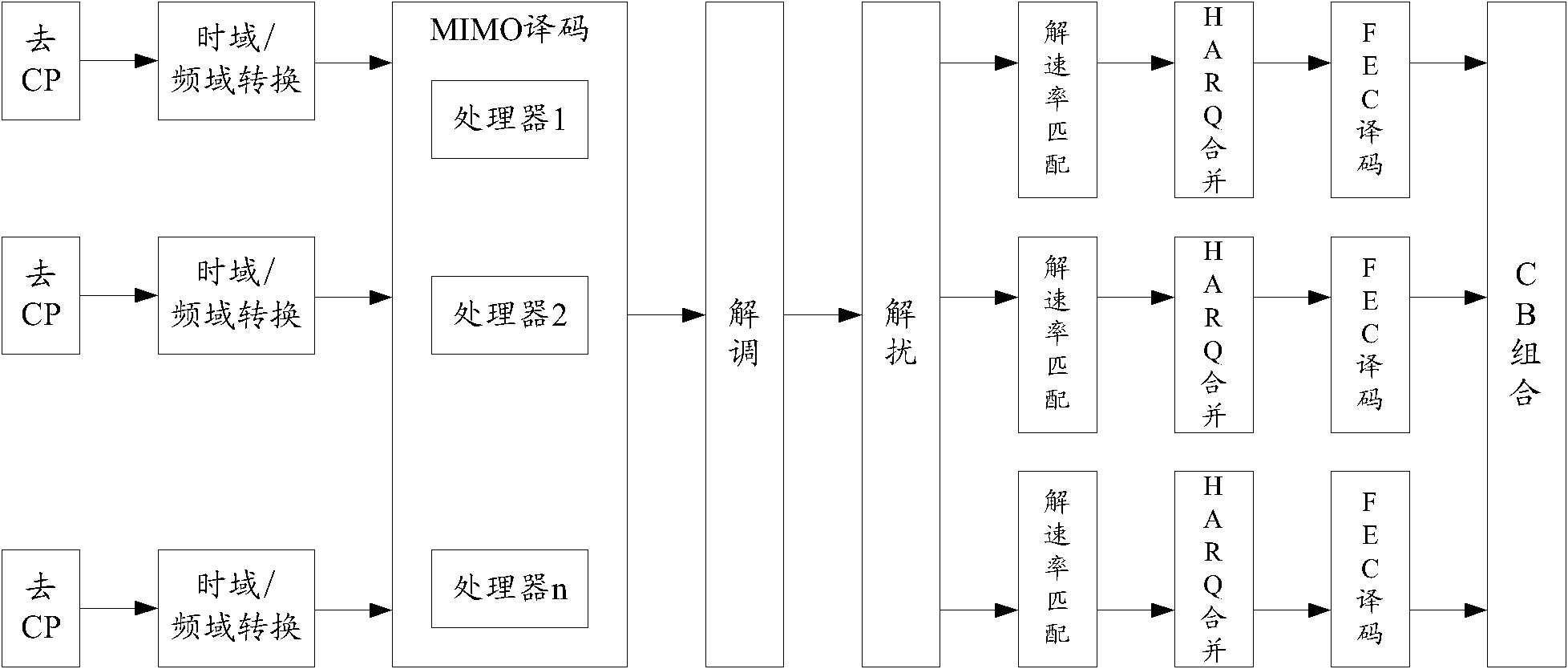

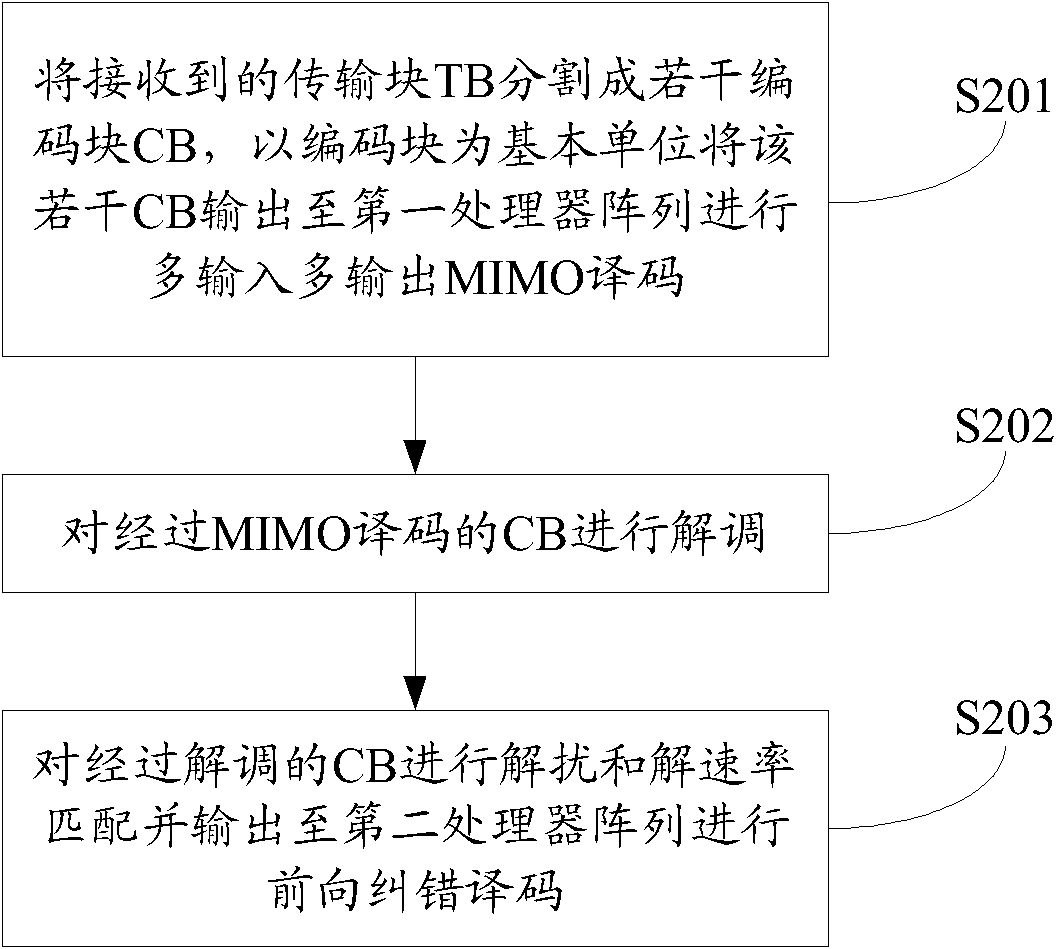

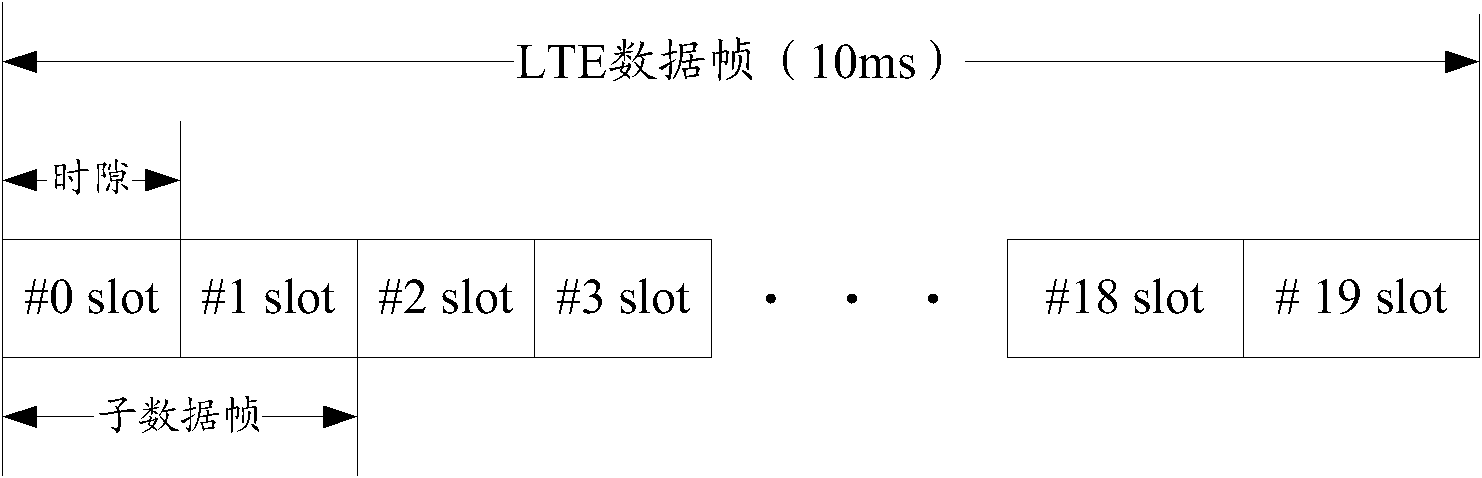

Method and device for segment decoding of transport block (TB) as well as multiple input multiple output (MIMO) receiver

Inactive · CN102055558A · Tags: Lower performance requirements; Low cost; Error prevention/detection by diversity reception; Decomposition; Forward error correction

The embodiments of the invention provide a method and device for segment decoding of a transport block (TB), as well as a multiple input multiple output (MIMO) receiver. The method comprises the following steps: cutting the received TB into a plurality of code blocks (CBs) and outputting them to a first processor array, using the CB as the basic unit for MIMO decoding; demodulating the MIMO-decoded CBs; performing descrambling and rate de-matching on the demodulated CBs; and outputting the descrambled, rate-de-matched CBs to a second processor array for forward error correction (FEC) decoding. The method reduces the interactions between processors (or cores) in the processor arrays and the complexity of scheduling. At the same time, scheduling by CB segment leaves more processing time for the stages after MIMO decoding, which lowers the performance requirements on the processor array used for FEC decoding and thereby reduces the implementation cost of user equipment (UE).

Owner:SHANGHAI HUAWEI TECH CO LTD
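
The CB-granular scheduling in the entry above can be illustrated by cutting a transport block into fixed-size code blocks and assigning whole blocks to cores round-robin, so that no two cores need to exchange data about the same block. The fixed `cb_size` and the round-robin policy are assumptions made for illustration; standard segmentation (for LTE, 3GPP TS 36.212) also attaches per-block CRCs and filler bits, which this sketch omits.

```python
from typing import List, Tuple


def segment_tb(tb: bytes, cb_size: int) -> List[bytes]:
    """Cut a transport block into code blocks of at most cb_size bytes."""
    if cb_size <= 0:
        raise ValueError("cb_size must be positive")
    return [tb[i:i + cb_size] for i in range(0, len(tb), cb_size)]


def dispatch(cbs: List[bytes], num_cores: int) -> List[List[Tuple[int, bytes]]]:
    """Round-robin whole code blocks to cores; the CB index travels with each block."""
    queues: List[List[Tuple[int, bytes]]] = [[] for _ in range(num_cores)]
    for index, cb in enumerate(cbs):
        queues[index % num_cores].append((index, cb))
    return queues
```

Keeping the CB index with each block lets the second (FEC) processor array restore the original order after decoding without any cross-core coordination.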

Parallel data processing apparatus

Inactive · US20090198898A1 · Tags: Single instruction multiple data multiprocessors; Program control using wired connections; Processing element; Data treatment

A controller for controlling a data processor having a plurality of processor arrays, each of which includes a plurality of processing elements, comprises a retrieval unit operable to retrieve a plurality of incoming instructions streams in parallel with one another, and a distribution unit operable to supply such incoming instruction streams to respective ones of the said plurality of processor arrays.

Owner:RAMBUS INC

Parallel data processing apparatus

Inactive · US7506136B2 · Tags: Single instruction multiple data multiprocessors; Processor architectures/configuration; Processing element; Data treatment

Owner:RAMBUS INC

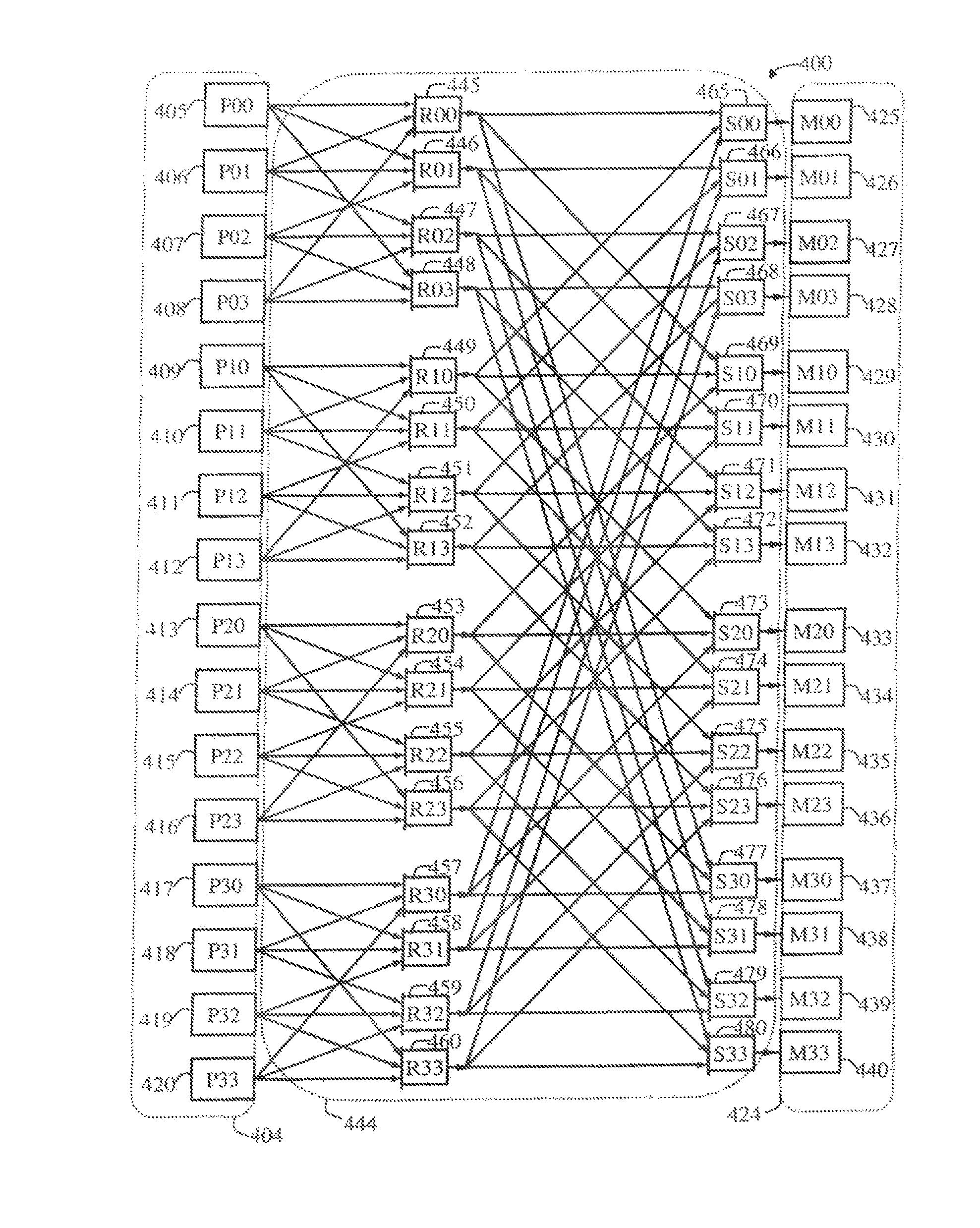

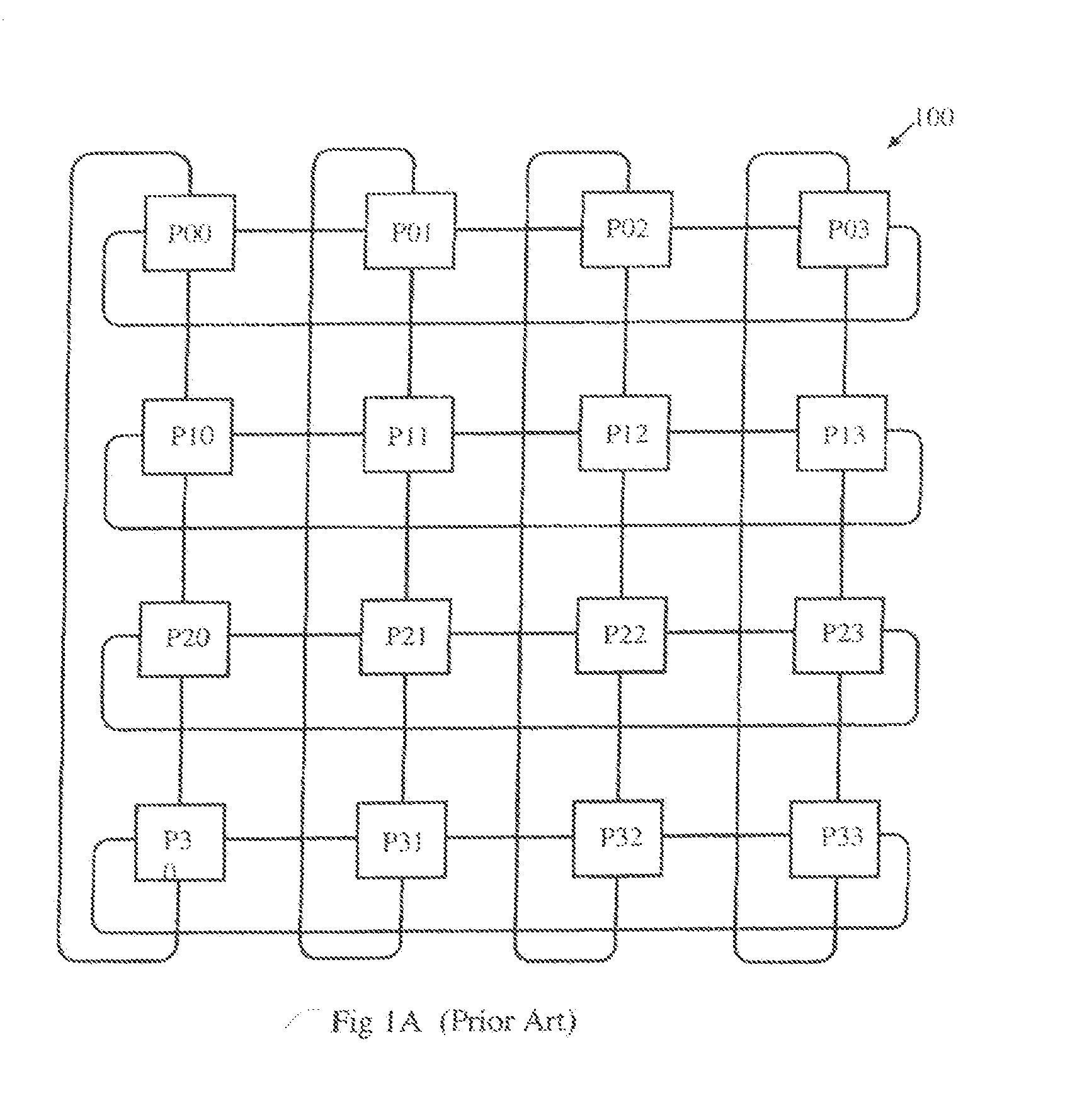

Interconnection network connecting operation-configurable nodes according to one or more levels of adjacency in multiple dimensions of communication in a multi-processor and a neural processor

Inactive · US20130198488A1 · Tags: Single instruction multiple data multiprocessors; Instruction analysis; NODAL; Synaptic weight

A Wings array system for communicating between nodes using store and load instructions is described. Couplings between nodes are made according to a 1 to N adjacency of connections in each dimension of a G×H matrix of nodes, where G≧N and H≧N and N is a positive odd integer. Also, a 3D Wings neural network processor is described as a 3D G×H×K network of neurons, each neuron with an N×N×N array of synaptic weight values stored in coupled memory nodes, where G≧N, H≧N, K≧N, and N is determined from a 1 to N adjacency of connections used in the G×H×K network. Further, a hexagonal processor array is organized according to an INFORM coordinate system having axes at 60 degree spacing. Nodes communicate on row paths parallel to an FM dimension of communication, column paths parallel to an IO dimension of communication, and diagonal paths parallel to an NR dimension of communication.

Owner:PECHANEK GERALD GEORGE

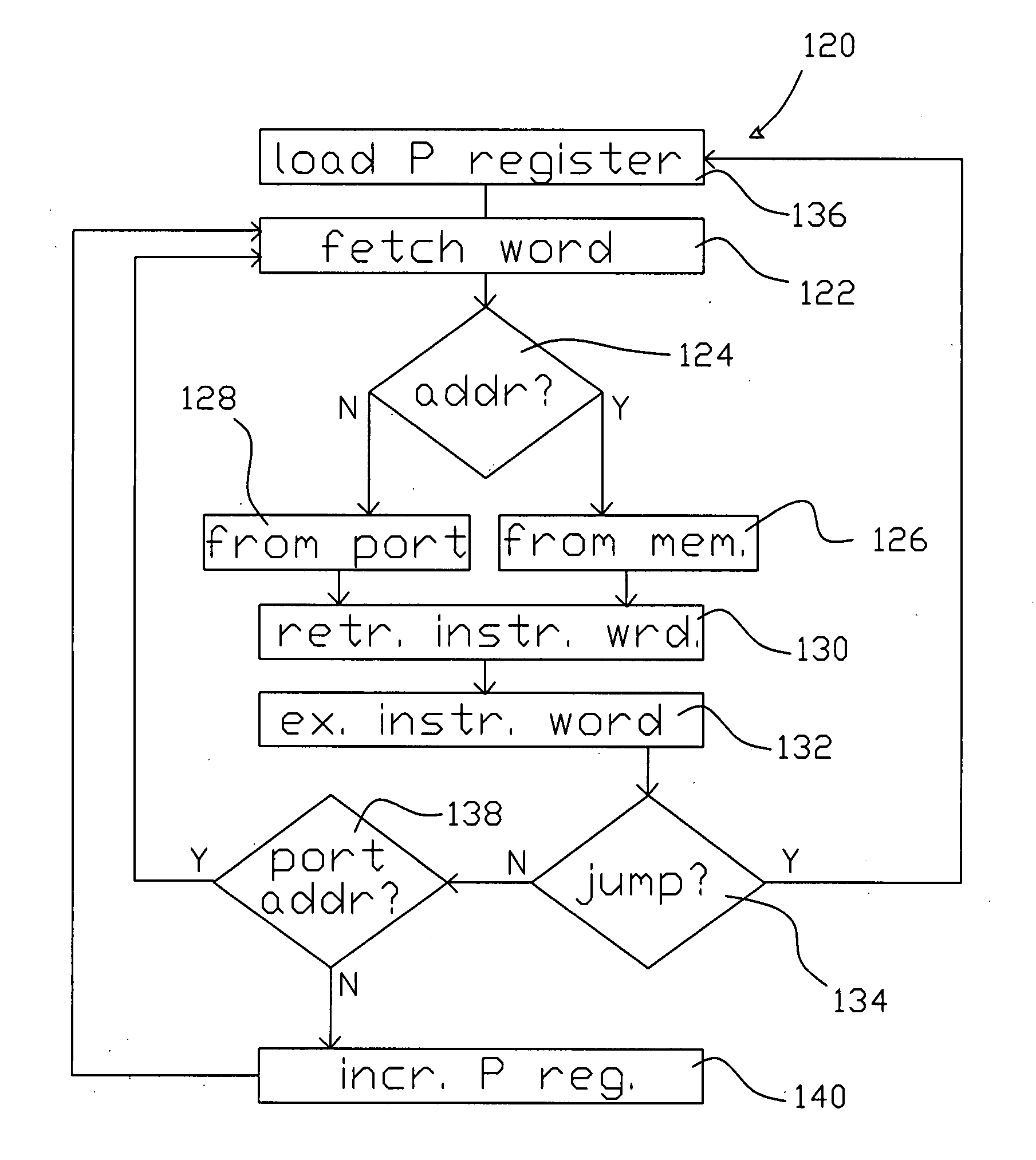

Method and apparatus for operating a computer processor array

Inactive · US20070250682A1 · Tags: Impact performance; Avoid reading; Single instruction multiple data multiprocessors; Multiple digital computer combinations; Specific function; Processor array

Owner:ARRAY PORTFOLIO

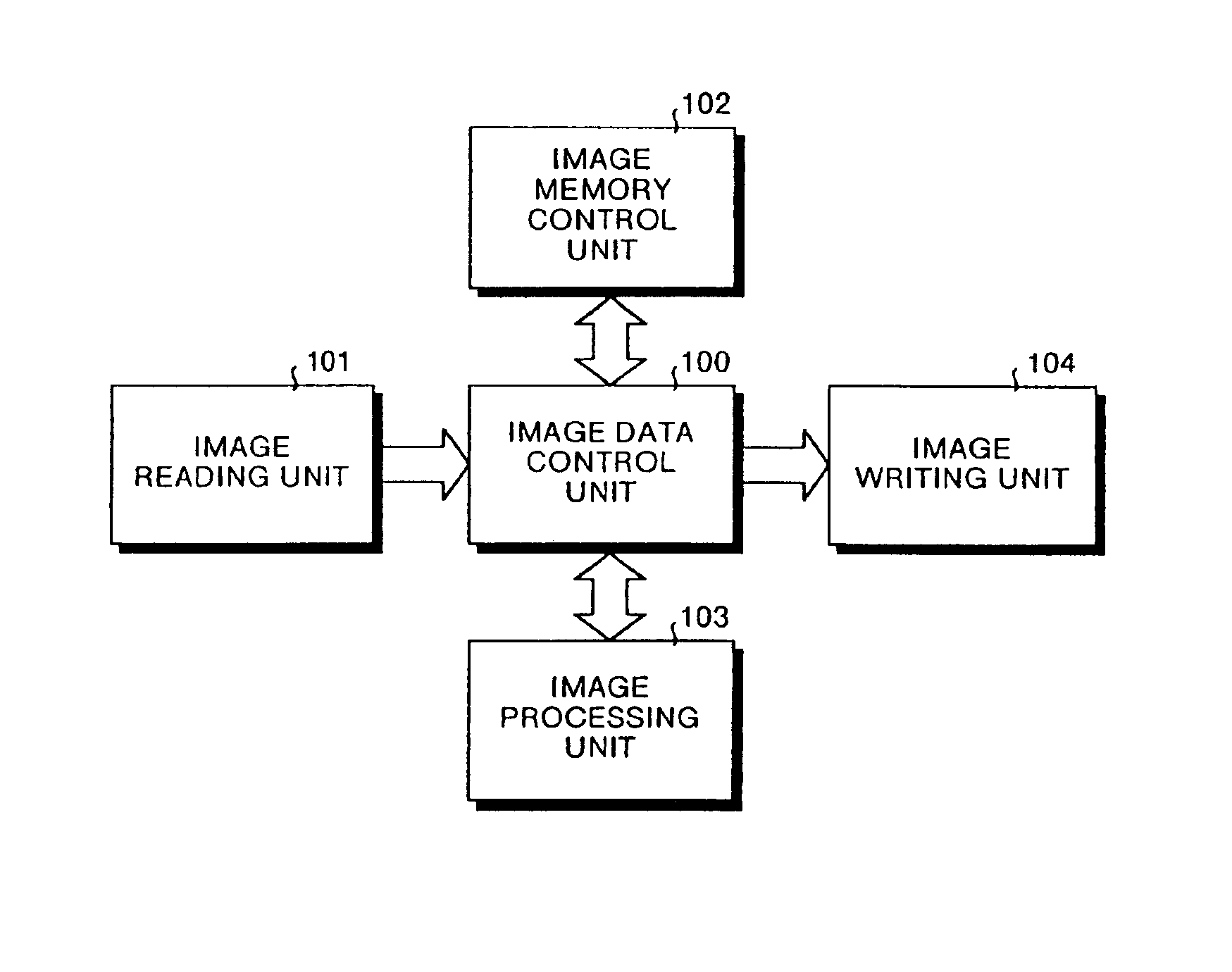

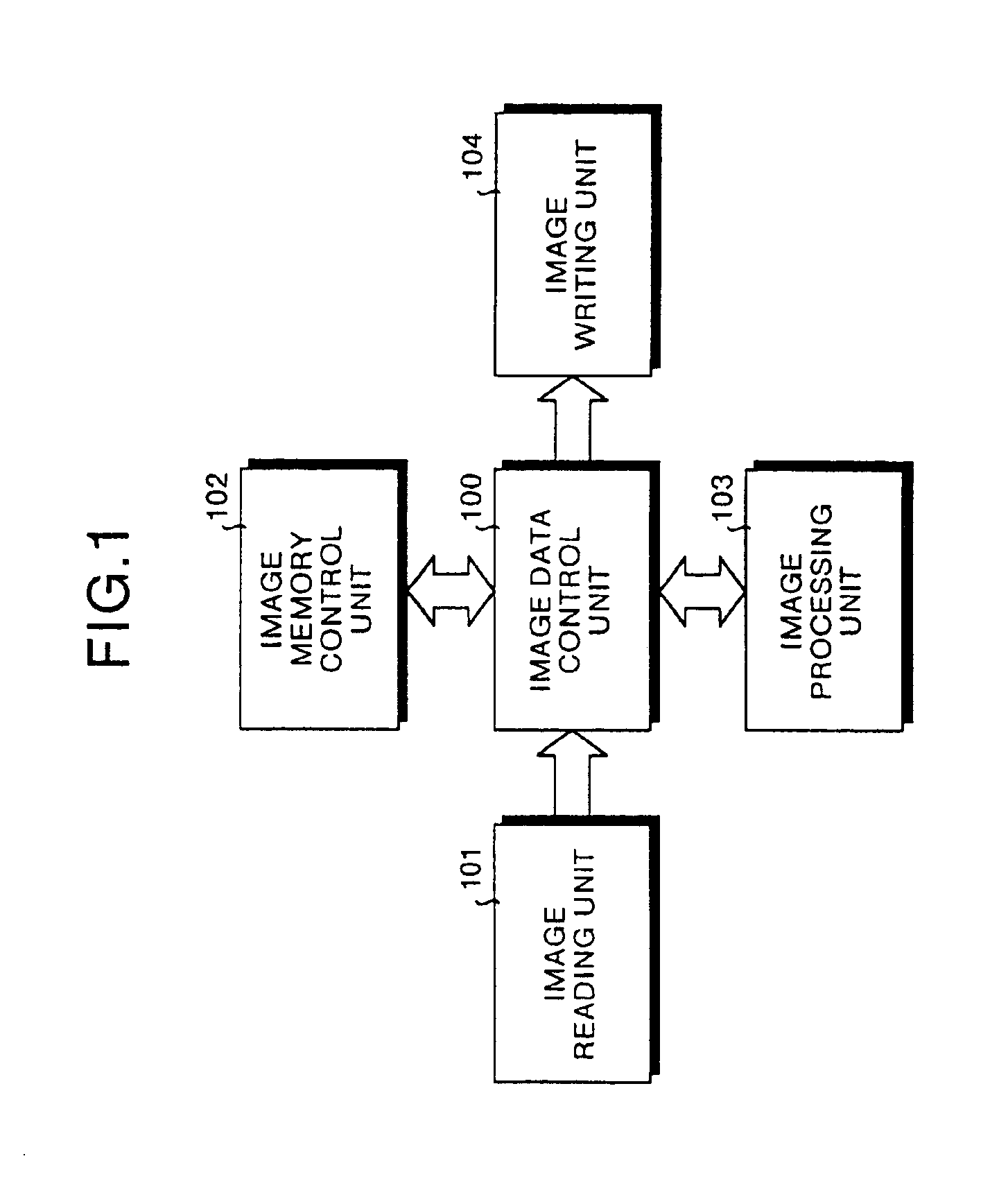

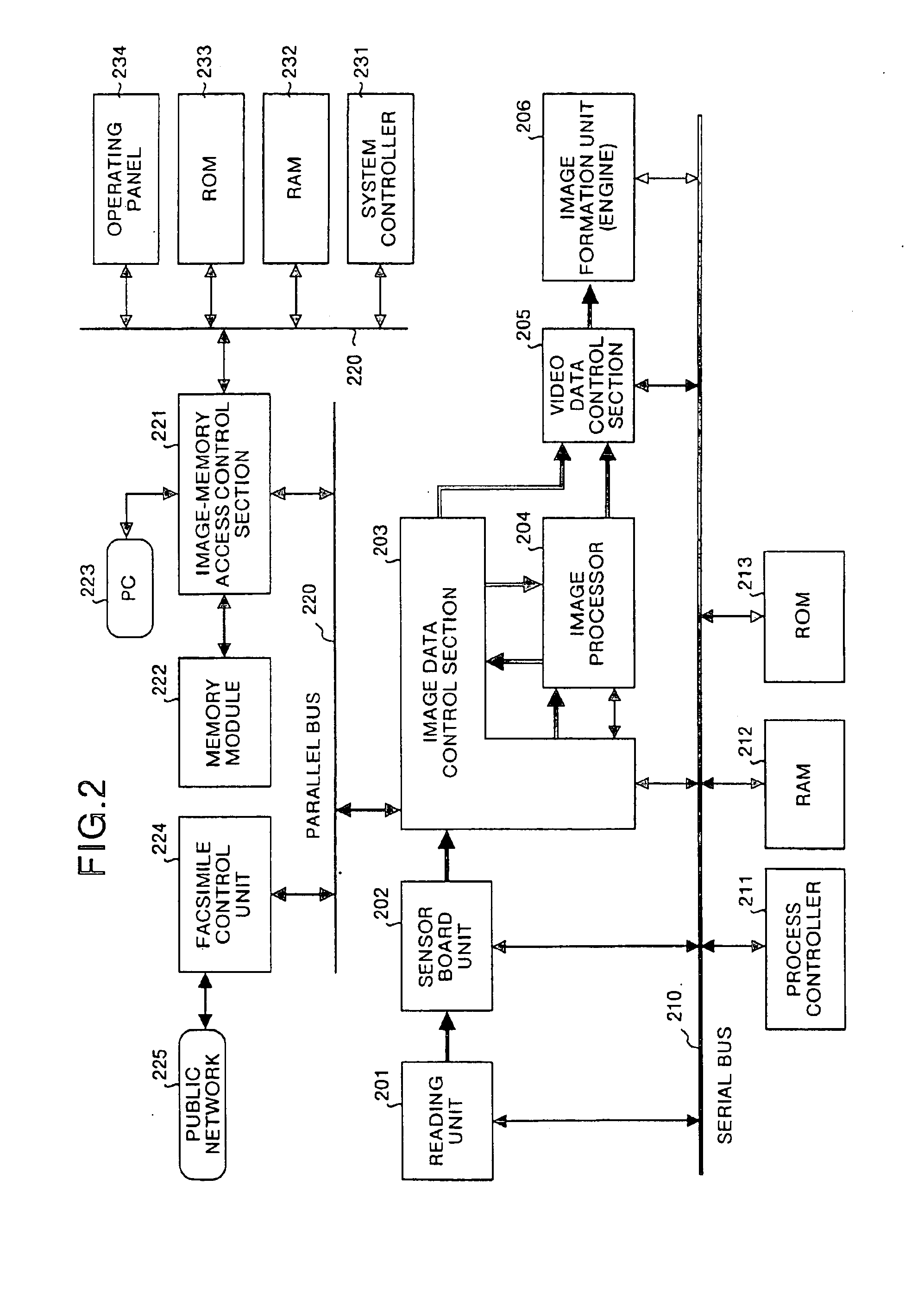

Image processing apparatus, method for adding or updating sequence of image processing and data for image processing in the image processing apparatus, and computer-readable recording medium where program for making computer execute the method is recorded

Inactive · US6862101B1 · Tags: High efficiency and availability; Efficient execution; Program control using stored programs; Digital computer details; Imaging processing; Computer graphics (images)

An image processor has a transfer control section that transfers an image processing sequence and image processing data to be added or updated from a host buffer to program RAM and data RAM. The host buffer receives, from a process controller, the image processing sequence and data to be added or updated, and temporarily stores them during idle cycles in which the processor array section is not executing image processing. The transfer control section controls the transfer so that the sequence and data to be added or updated are split into blocks, which are transferred from the host buffer to the program RAM and the data RAM over multiple transfers.

Owner:RICOH KK
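
The block-wise transfer in the entry above can be sketched as a scheduler that splits an update into fixed-size blocks and assigns one block to each cycle in which the processor array is idle. The `busy` schedule, the one-block-per-idle-cycle rule, and `plan_transfer` itself are assumptions for illustration; the abstract does not specify the block size or the exact timing policy.

```python
from typing import List, Tuple


def plan_transfer(update: bytes, block_size: int, busy: List[bool]) -> List[Tuple[int, bytes]]:
    """Assign one block of the update to each cycle in which the array is idle."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    blocks = [update[i:i + block_size] for i in range(0, len(update), block_size)]
    plan: List[Tuple[int, bytes]] = []
    for cycle, array_busy in enumerate(busy):
        if len(plan) == len(blocks):
            break
        if not array_busy:
            plan.append((cycle, blocks[len(plan)]))
    if len(plan) < len(blocks):
        raise RuntimeError("not enough idle cycles to finish the transfer")
    return plan
```

Splitting the update this way means that no single transfer has to fit into one long idle window; a seven-byte update with three-byte blocks, for instance, completes across three separate idle cycles.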

© 2025 PatSnap. All rights reserved.