12649 results about "Data buffer" patented technology

In computer science, a data buffer (or just buffer) is a region of physical memory used to temporarily store data while it is being moved from one place to another. Typically, the data is stored in a buffer as it is retrieved from an input device (such as a microphone) or just before it is sent to an output device (such as speakers). However, a buffer may also be used when moving data between processes within a computer. This is comparable to buffers in telecommunication. Buffers can be implemented in a fixed memory location in hardware, or by using a virtual data buffer in software that points at a location in physical memory. In all cases, the data stored in a data buffer is stored on a physical storage medium. The majority of buffers are implemented in software, which typically uses RAM to store temporary data because its access time is much faster than that of hard disk drives. Buffers are typically used when there is a difference between the rate at which data is received and the rate at which it can be processed, or when these rates are variable, for example in a printer spooler or in online video streaming. In a distributed computing environment, a data buffer is often implemented as a burst buffer that provides a distributed buffering service.
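
As a minimal sketch of the rate-mismatch case described above, a fixed-capacity ring buffer can sit between a producer and a slower consumer. The class name `RingBuffer` and its `put`/`get` methods are hypothetical names chosen for illustration; they are not taken from any of the patents listed here.

```python
class RingBuffer:
    """Fixed-capacity FIFO buffer (illustrative sketch, not a standard API)."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._slots = [None] * capacity
        self._head = 0    # index of the oldest stored item
        self._count = 0   # number of items currently stored

    def __len__(self):
        return self._count

    def put(self, item):
        """Store an item; return False (and drop nothing) if the buffer is full."""
        if self._count == len(self._slots):
            return False
        tail = (self._head + self._count) % len(self._slots)
        self._slots[tail] = item
        self._count += 1
        return True

    def get(self):
        """Remove and return the oldest item, or None if the buffer is empty."""
        if self._count == 0:
            return None
        item = self._slots[self._head]
        self._slots[self._head] = None
        self._head = (self._head + 1) % len(self._slots)
        self._count -= 1
        return item
```

A producer calls `put` as data arrives and a consumer calls `get` when it is ready; a `False` return from `put` signals that the producer has outrun the consumer and must wait or discard data.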

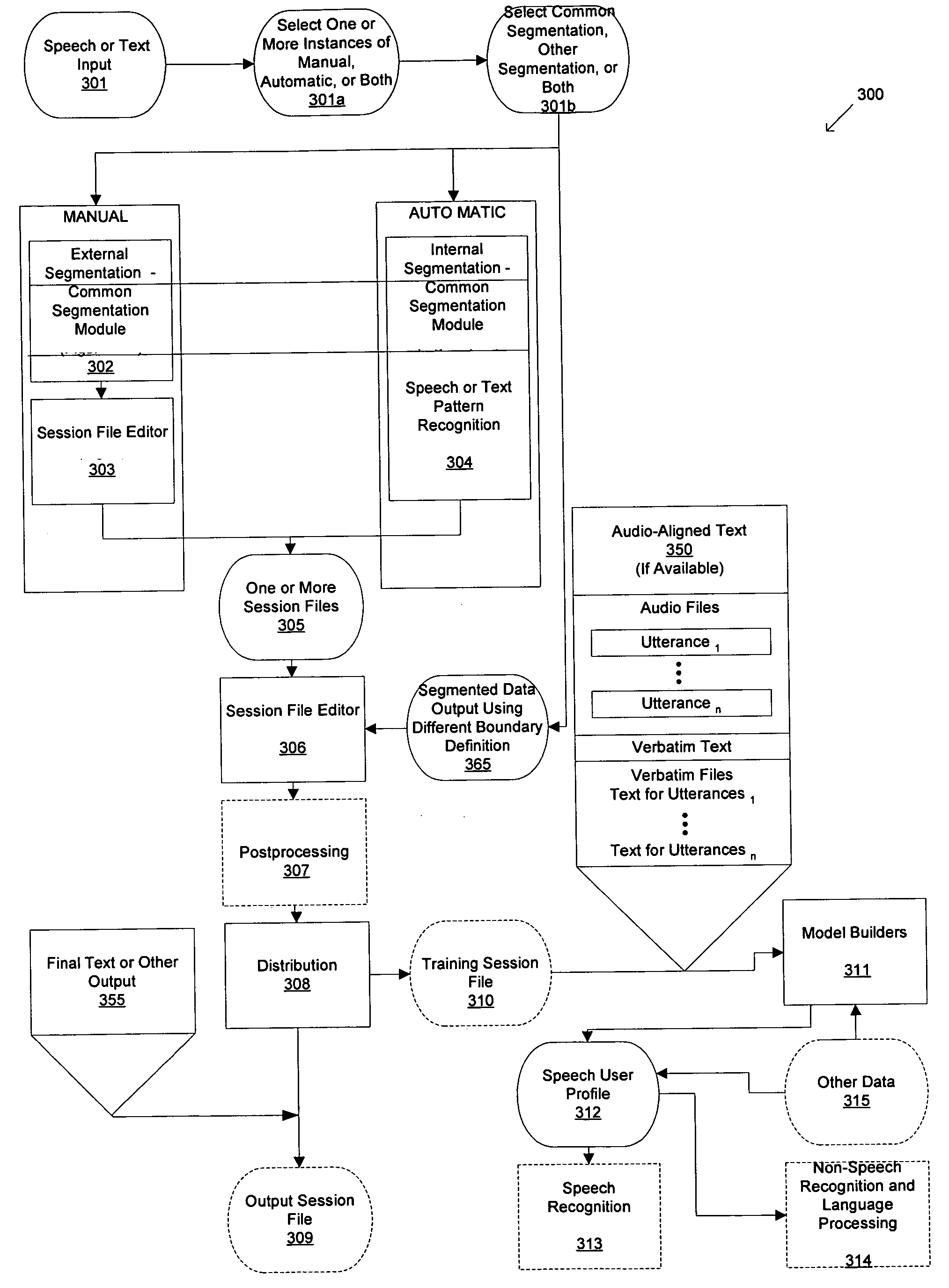

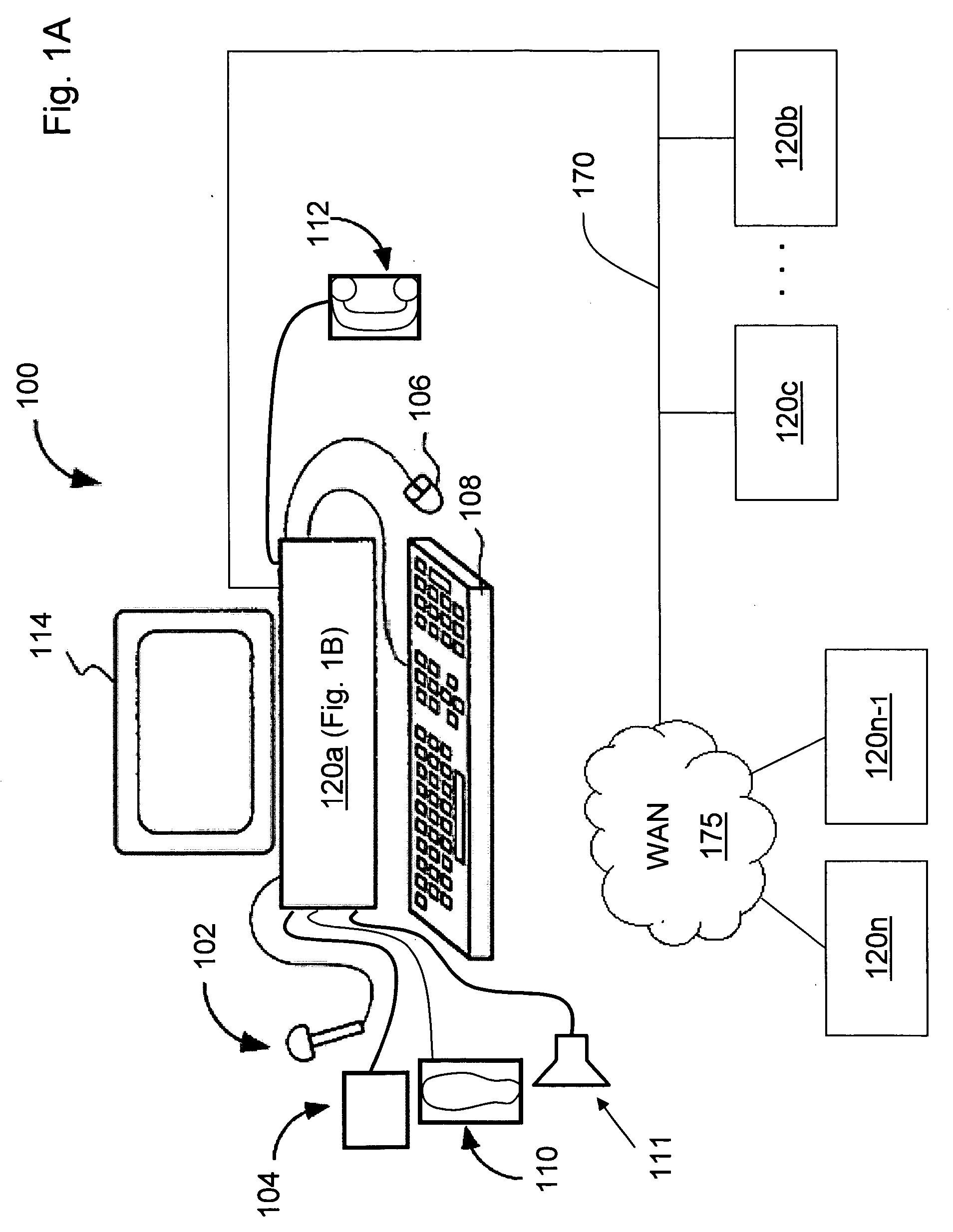

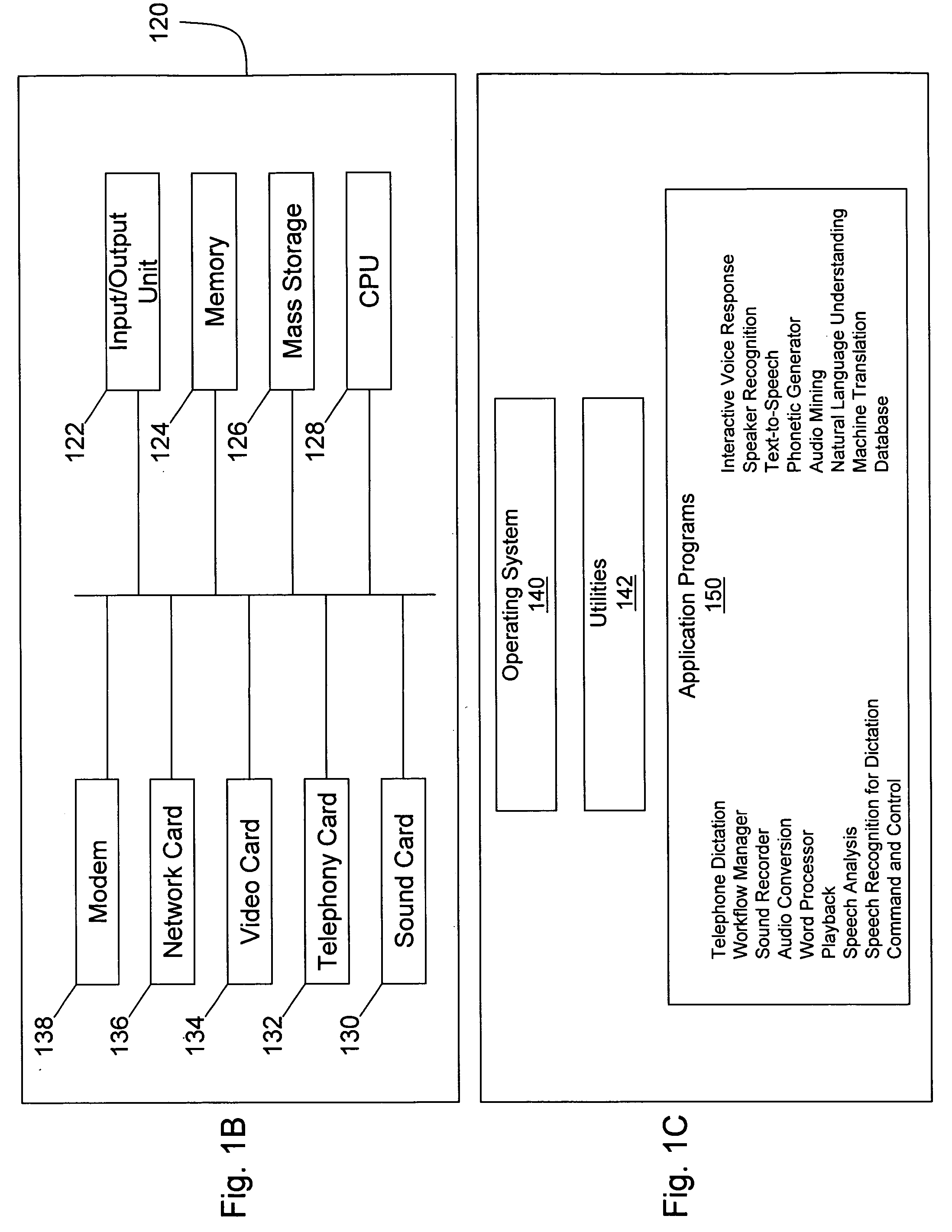

Synchronized pattern recognition source data processed by manual or automatic means for creation of shared speaker-dependent speech user profile

Inactive | US20060149558A1 | Avoids time-consuming generation | Maximize likelihood | Speech recognition | Graphics | Data segment

An apparatus for collecting data from a plurality of diverse data sources, the diverse data sources generating input data selected from the group including text, audio, and graphics, the diverse data sources selected from the group including real-time and recorded, human and mechanically-generated audio, single-speaker and multispeaker, the apparatus comprising: means for dividing the input data into one or more data segments, the dividing means acting separately on the input data from each of the plurality of diverse data sources, each of the data segments being associated with at least one respective data buffer such that each of the respective data buffers would have the same number of segments given the same data; means for selective processing of the data segments within each of the respective data buffers; and means for distributing at least one of the respective data buffers such that the collected data associated therewith may be used for further processing.

Owner:CUSTOM SPEECH USA

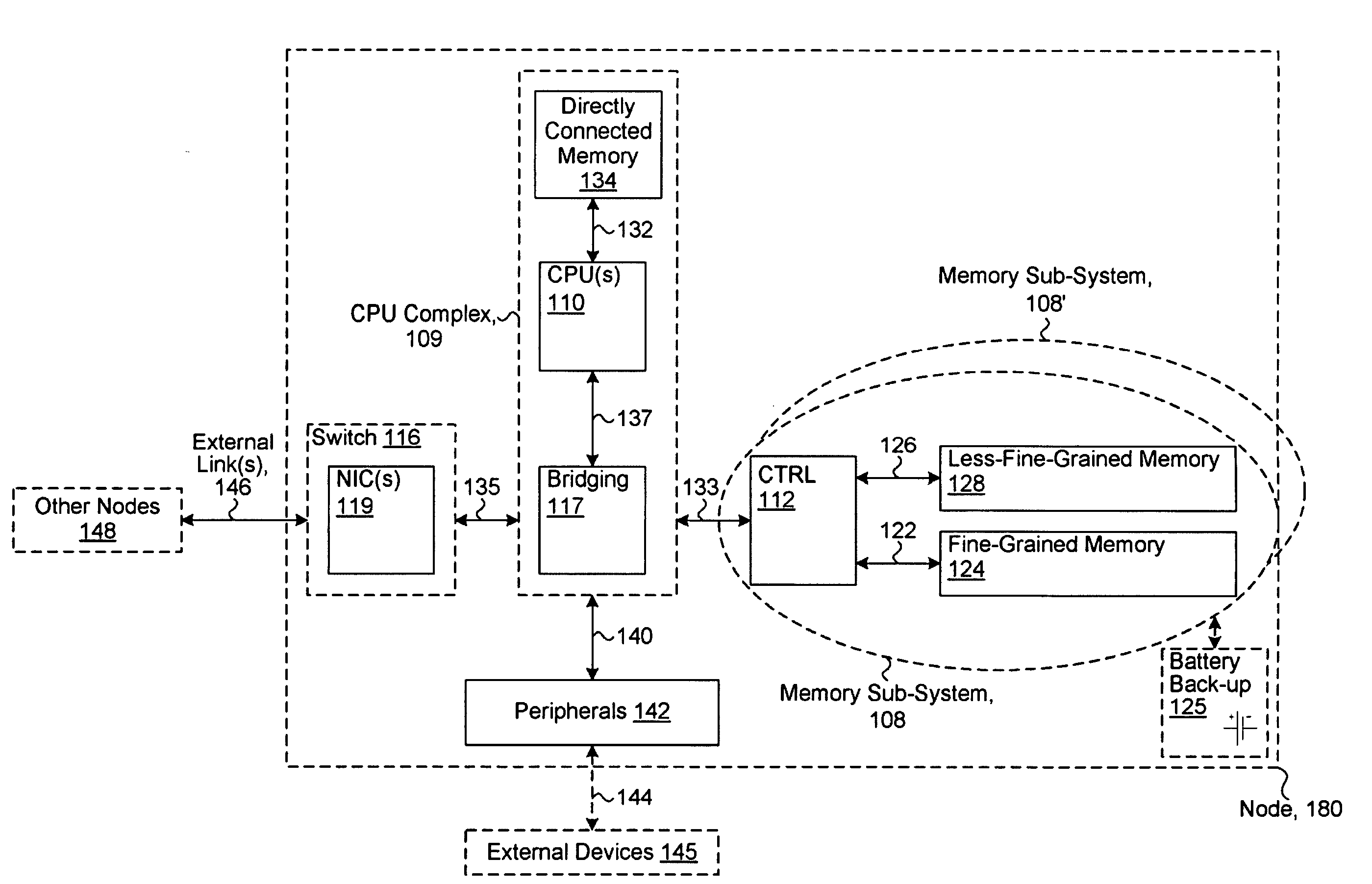

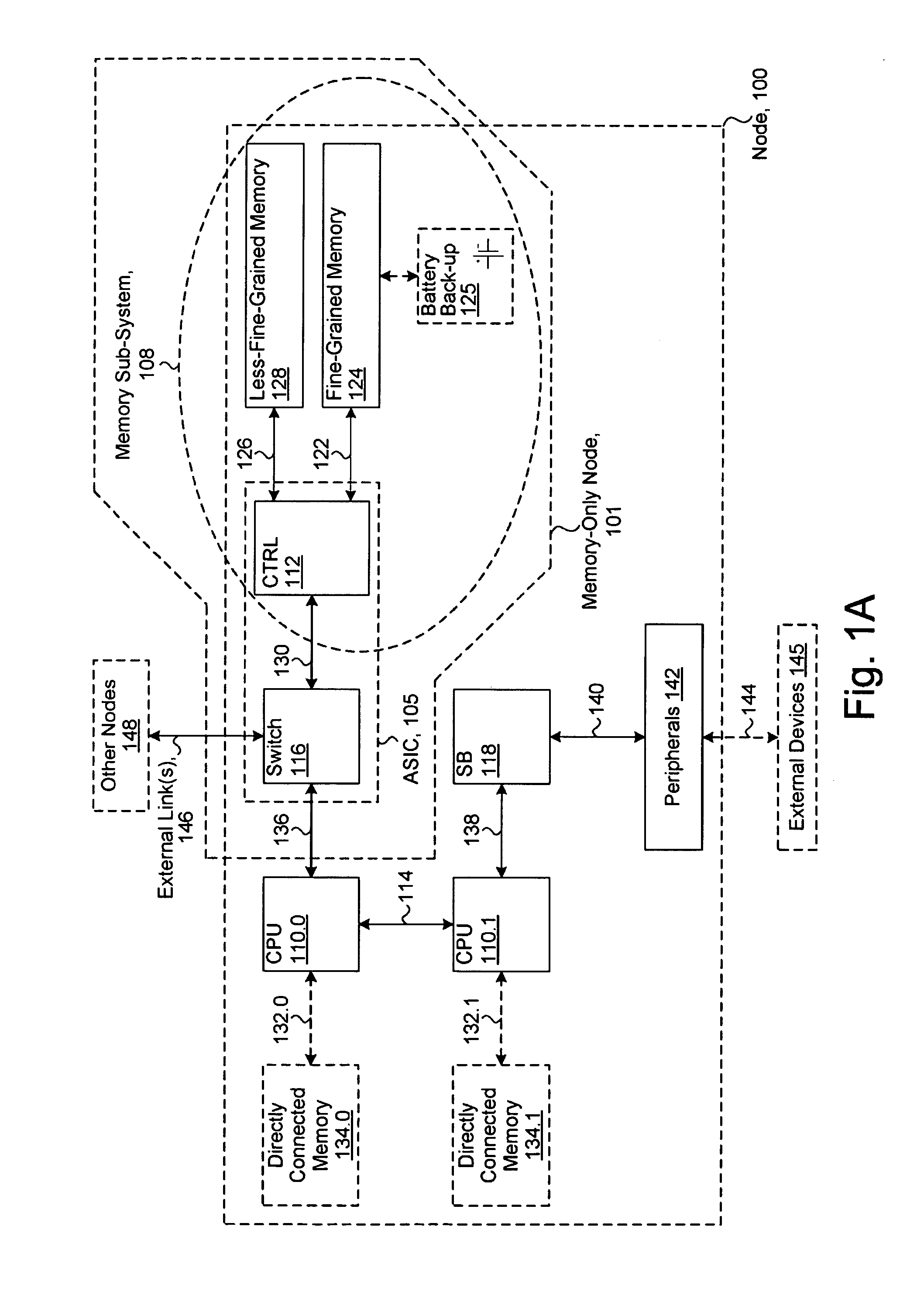

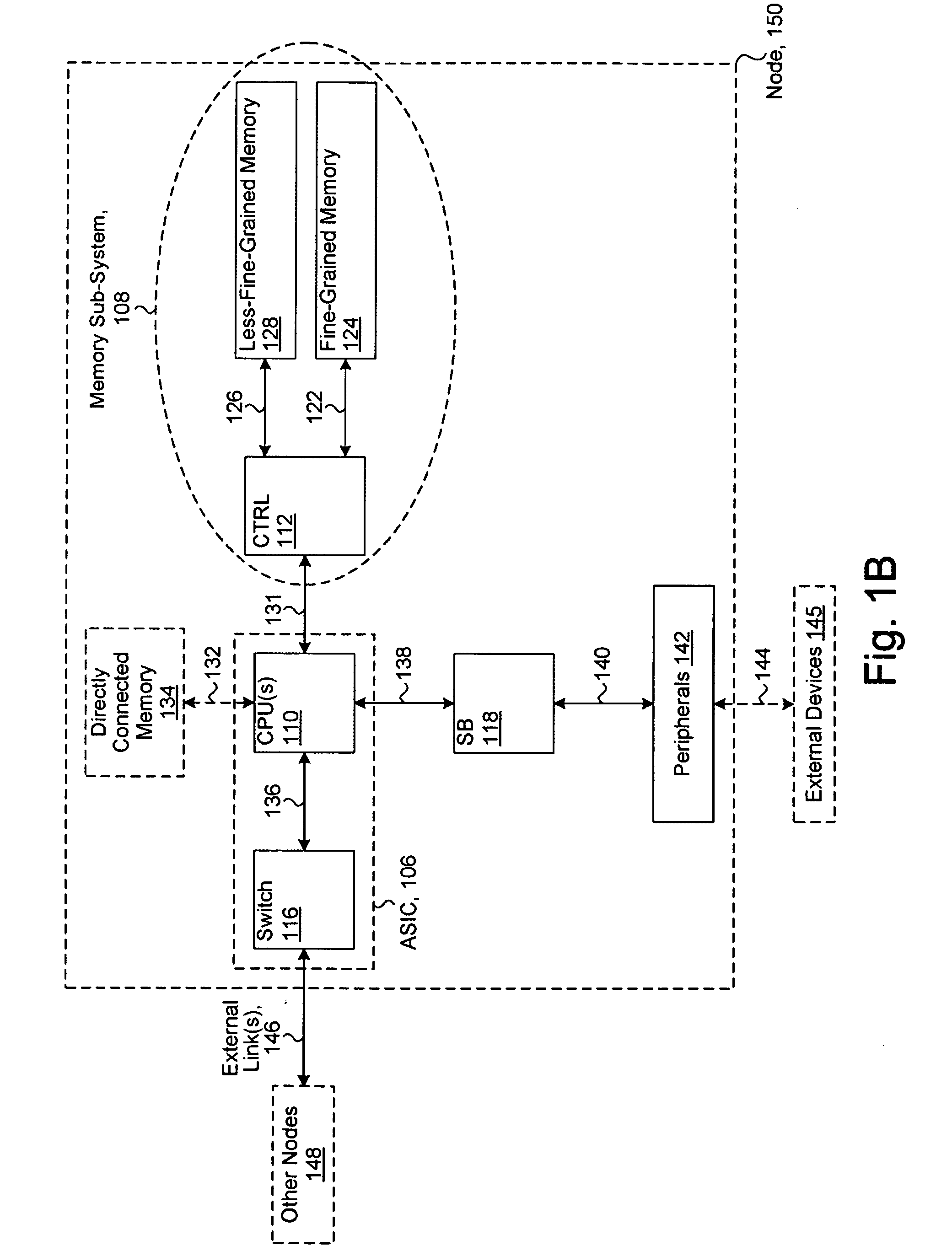

System including a fine-grained memory and a less-fine-grained memory

Active | US20080301256A1 | Memory architecture accessing/allocation | Energy efficient ICT | Data processing system | Write buffer

A data processing system includes one or more nodes, each node including a memory sub-system. The sub-system includes a fine-grained memory and a less-fine-grained (e.g., page-based) memory. The fine-grained memory optionally serves as a cache and/or as a write buffer for the page-based memory. Software executing on the system uses a node address space which enables access to the page-based memories of all nodes. Each node optionally provides ACID memory properties for at least a portion of the space. In at least a portion of the space, memory elements are mapped to locations in the page-based memory. In various embodiments, some of the elements are compressed, the compressed elements are packed into pages, the pages are written into available locations in the page-based memory, and a map maintains an association between those elements and the locations.

Owner:SANDISK TECH LLC

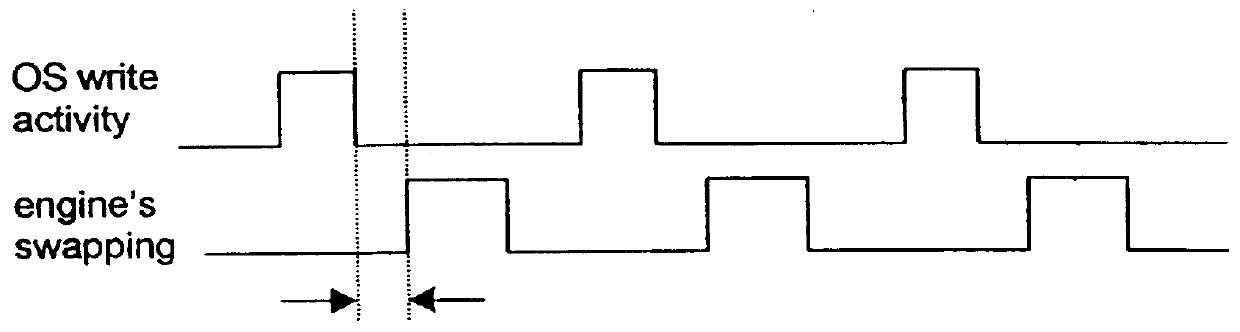

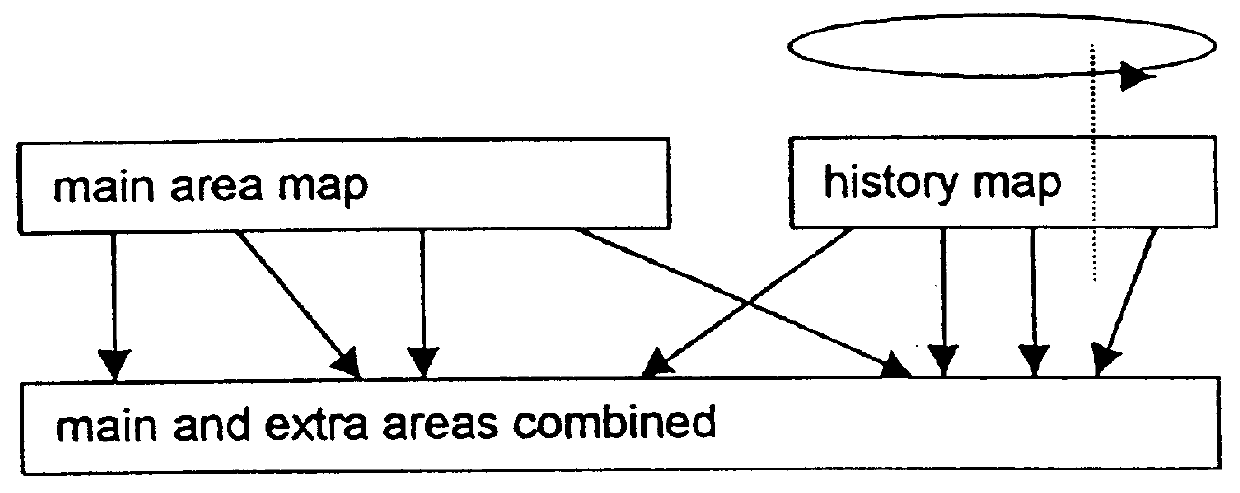

Method, software and apparatus for saving, using and recovering data

Inactive | US6016553A | Digital data information retrieval | Input/output to record carriers | Operational system | Original data

A method and apparatus for reverting a disk drive to an earlier point in time is disclosed. Changes made to the drive are saved in a circular history buffer which includes the old data, the time it was replaced by new data, and the original location of the data. The circular history buffer may also be implemented by saving new data elements into new locations and leaving the old data elements in their original locations. References to the new data elements are mapped to the new location. The disk drive is reverted to an earlier point in time by replacing the new data elements with the original data elements retrieved from the history buffer or, in the other embodiment, by mapping reads to the disk onto the old data elements still stored in their original locations. The method and apparatus may be implemented as part of an operating system, or as a separate program, or in the controller for the disk drive. The method and apparatus are applicable to other forms of data storage as well. Also disclosed are a method and apparatus for providing firewall protection to data in a data storage medium of a computer system.

Owner:POWER MANAGEMENT ENTERPRISES
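
The first embodiment in the abstract above (a circular history buffer holding the old data, its replacement time, and its original location) might be sketched roughly as follows. This is a toy in-memory model, not the patented implementation: `HistoryDisk`, its fields, and the integer timestamps are all assumptions, and writes are assumed to arrive in non-decreasing time order.

```python
from collections import deque


class HistoryDisk:
    """Toy block store that logs overwritten data (hypothetical names)."""

    def __init__(self, num_blocks, history_size):
        self.blocks = [b""] * num_blocks
        # Bounded deque: once full, the oldest history entry is discarded,
        # so the disk can only be reverted as far back as the buffer reaches.
        self.history = deque(maxlen=history_size)

    def write(self, location, data, timestamp):
        # Record the old data, when it was replaced, and where it lived.
        self.history.append((timestamp, location, self.blocks[location]))
        self.blocks[location] = data

    def revert(self, timestamp):
        """Undo every write made at or after `timestamp`, newest first."""
        while self.history and self.history[-1][0] >= timestamp:
            _, location, old_data = self.history.pop()
            self.blocks[location] = old_data
```

Undoing the newest entries first matters when one location was written several times: the last value restored is then the oldest one saved for that location.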

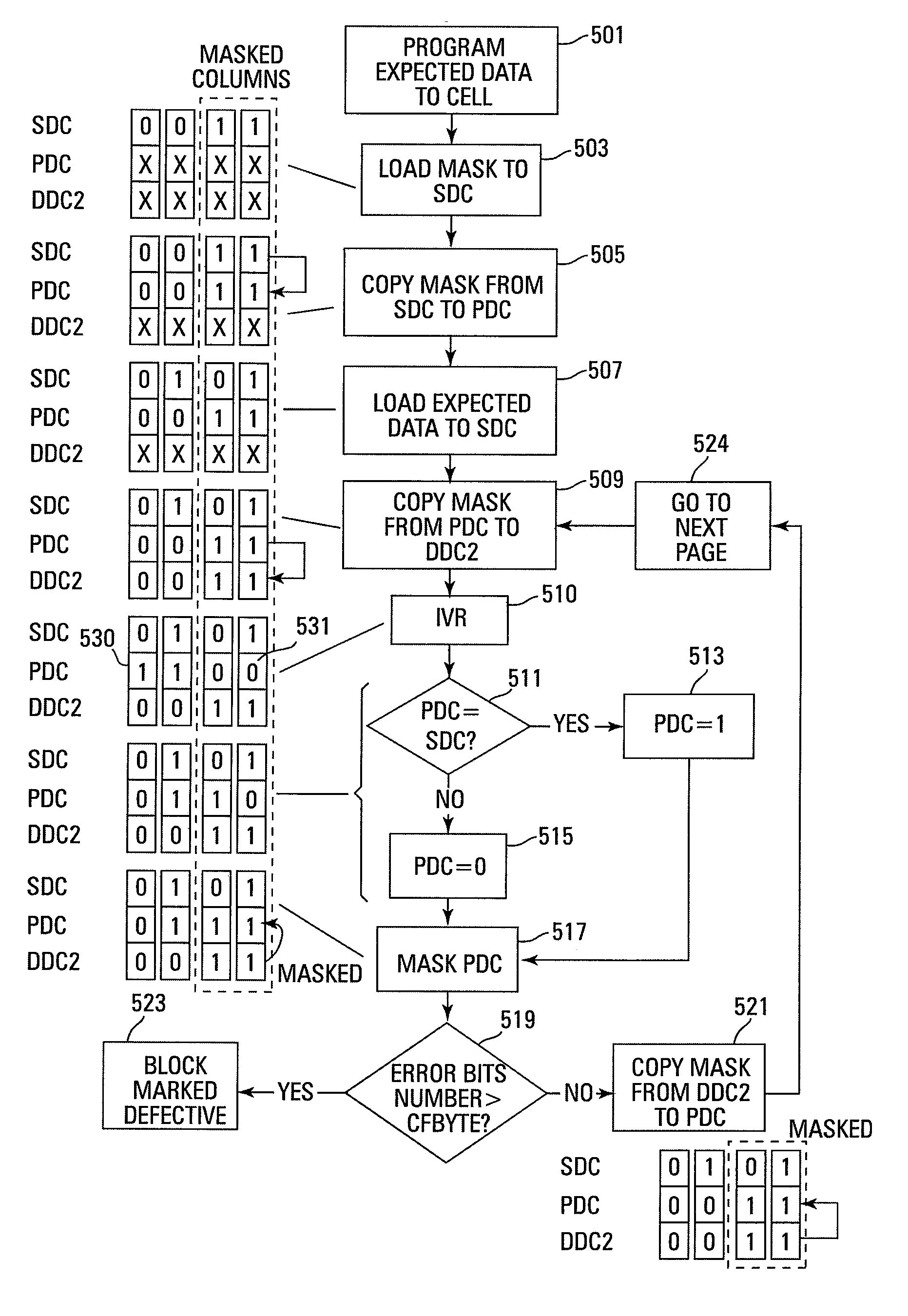

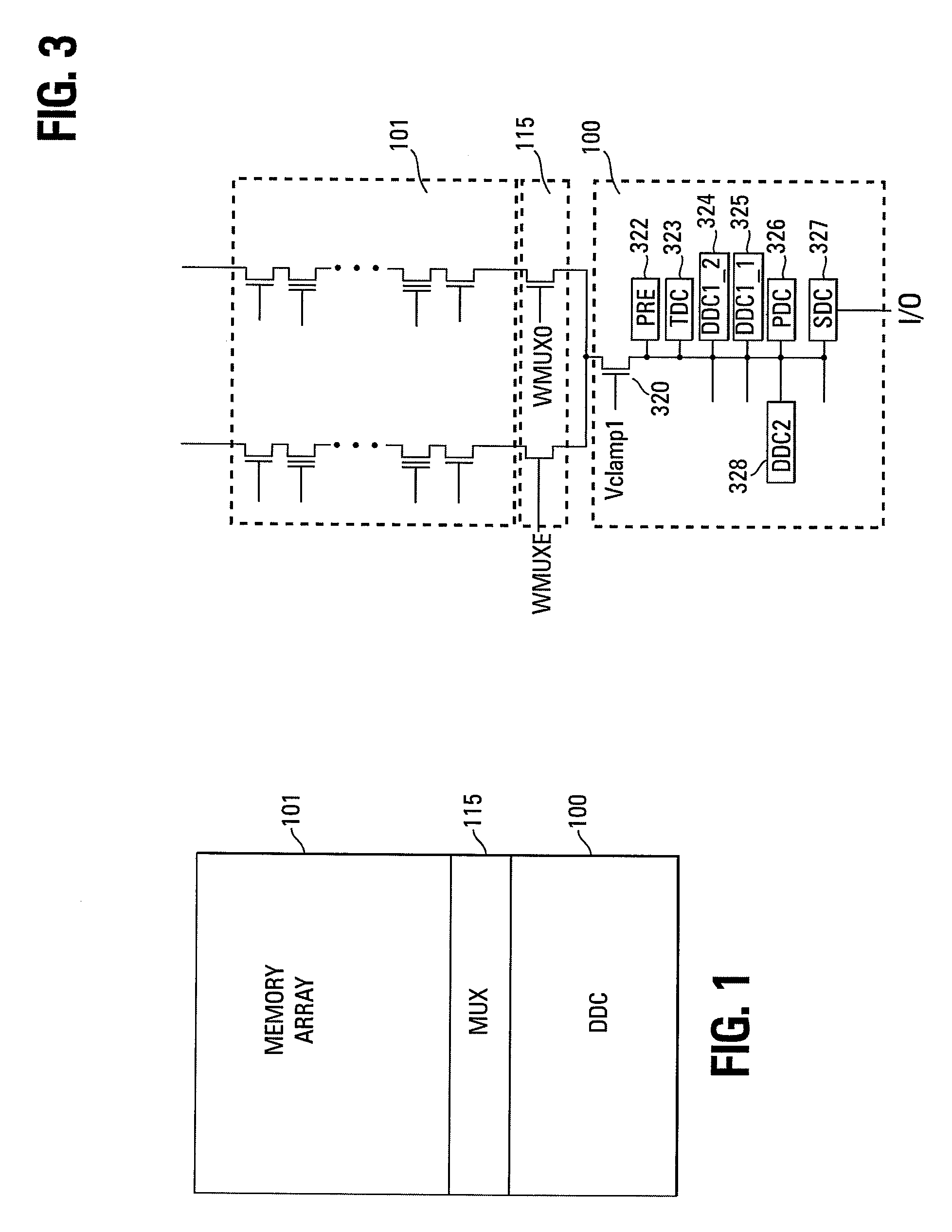

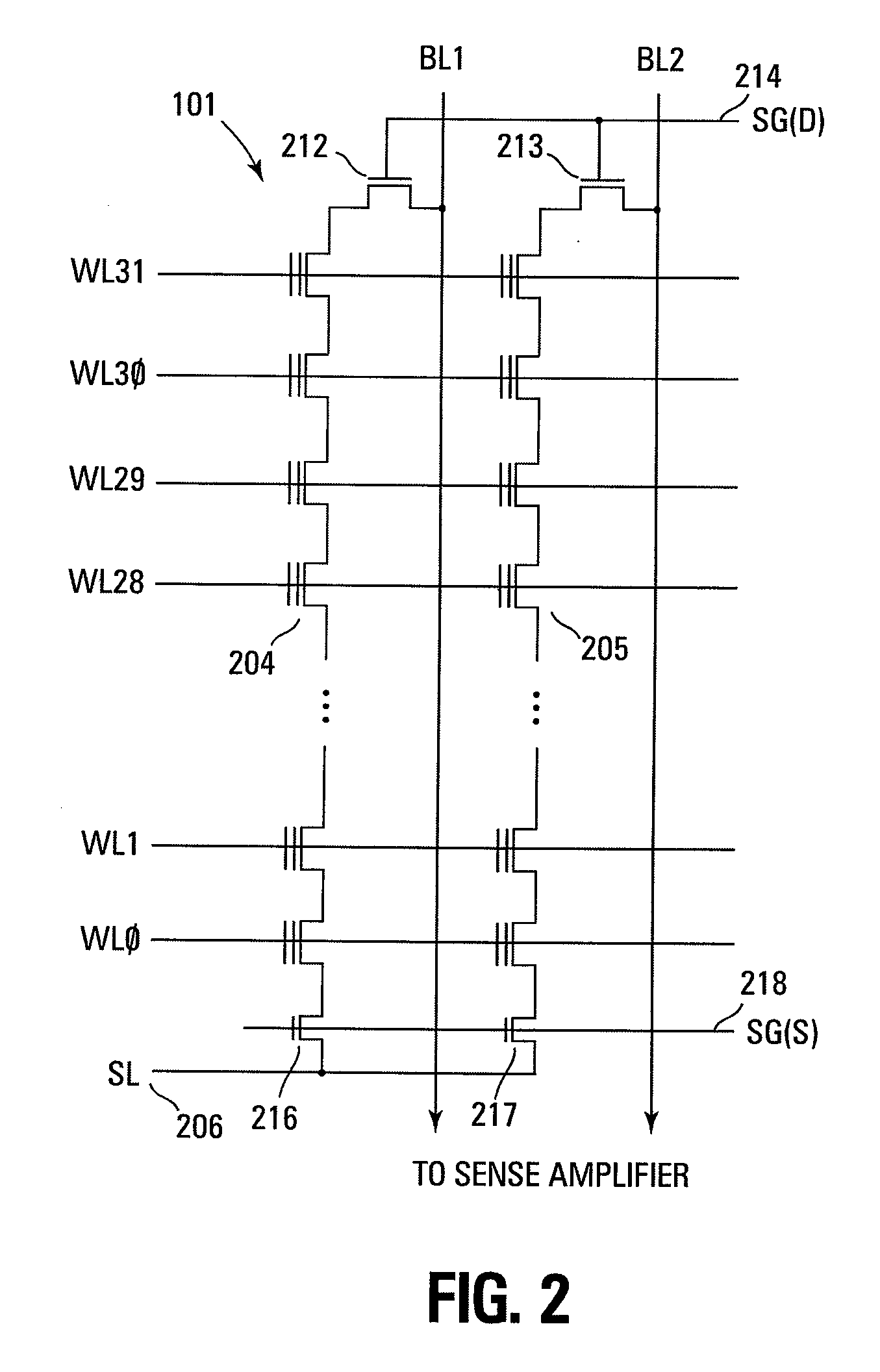

Small unit internal verify read in a memory device

Methods for small unit internal verify read operation and a memory device are disclosed. In one such method, expected data is programmed into a grouping of columns of memory cells (e.g., memory block). Mask data is loaded into a third dynamic data cache of three dynamic data caches. The expected data is loaded into a second data cache. After a read operation of programmed columns of memory cells, the read data is compared to the expected data and error bit indicators are stored in the second data cache in the error bit locations. The second data cache is masked with the mask data so that only those error bits that are unmasked are counted. If the number of unmasked error bit indicators is greater than a threshold, the memory block is marked as unusable.

Owner:MICRON TECH INC
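
The compare, mask, and count steps in the abstract above can be illustrated with plain integers used as bit vectors. This is only a sketch: the function name is hypothetical, and the mask polarity (a 1 bit means "count errors at this position") is an assumption the abstract does not specify.

```python
def block_is_usable(read_data, expected, mask, threshold):
    """Compare read bits with expected bits and count the unmasked errors.

    The three data arguments are equal-width integers used as bit vectors.
    Assumption: a 1 bit in `mask` means errors at that position are counted.
    """
    error_bits = read_data ^ expected   # 1 wherever read and expected differ
    unmasked = error_bits & mask        # discard errors in masked-out positions
    error_count = bin(unmasked).count("1")
    # The block is marked unusable only when the count exceeds the threshold.
    return error_count <= threshold
```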

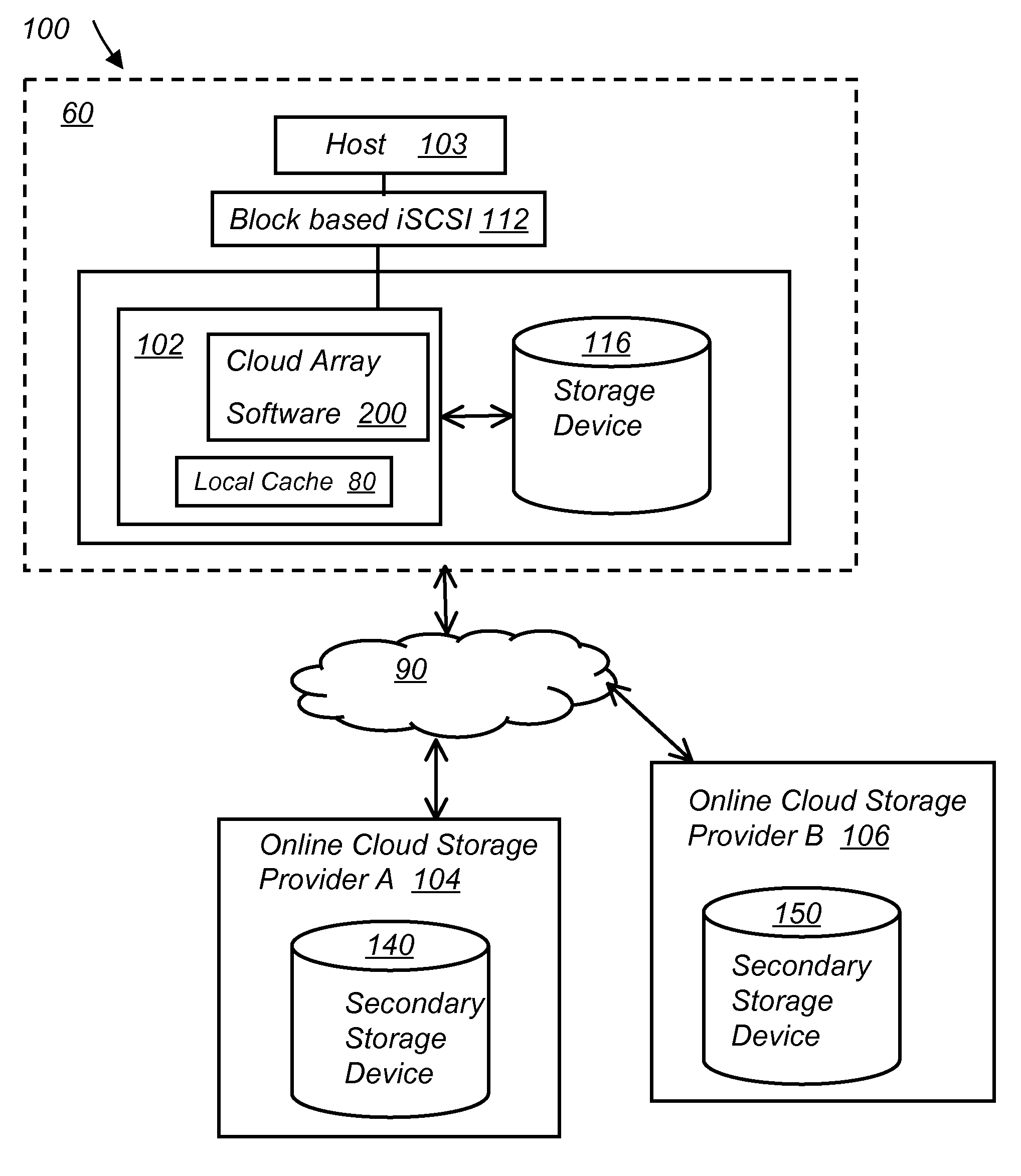

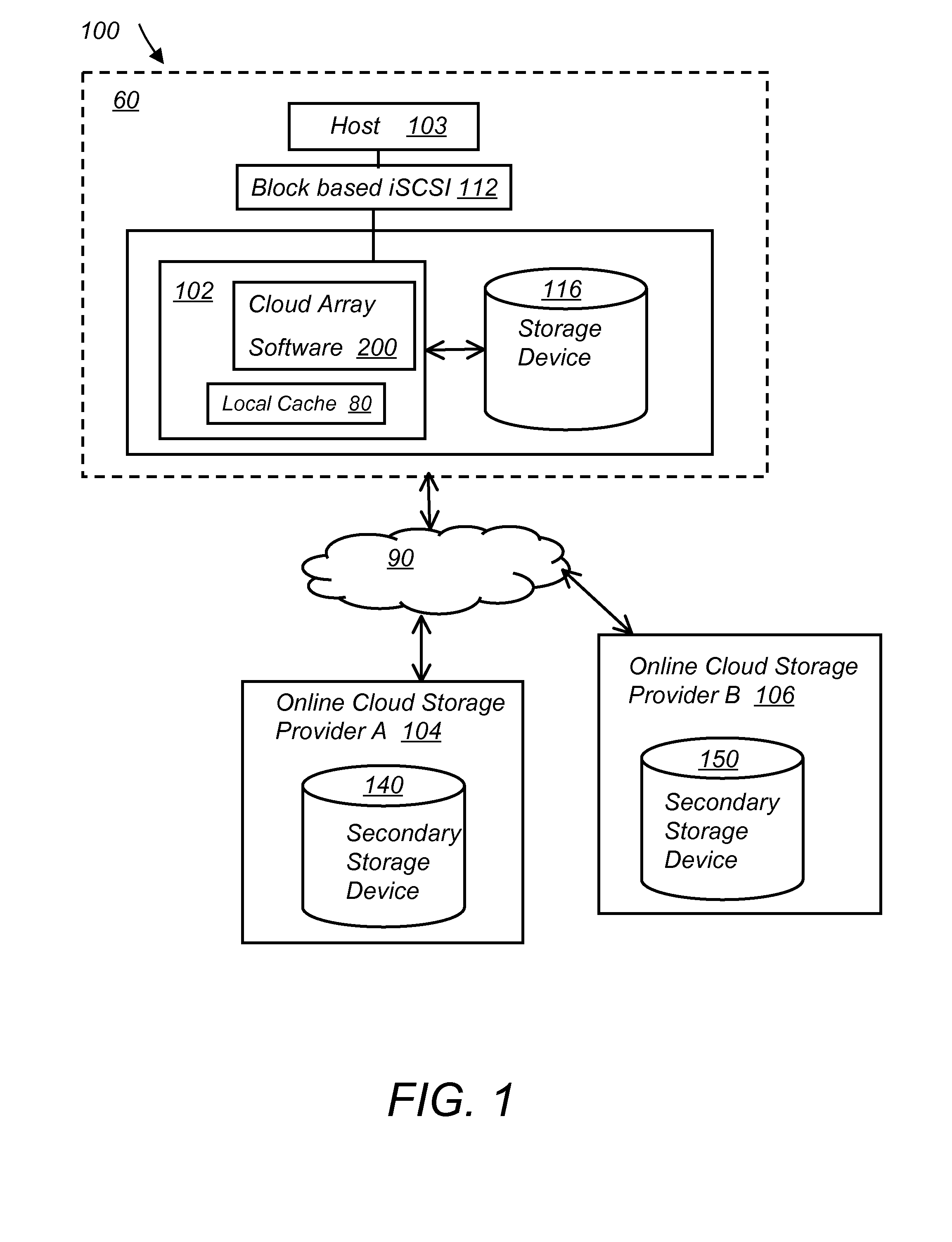

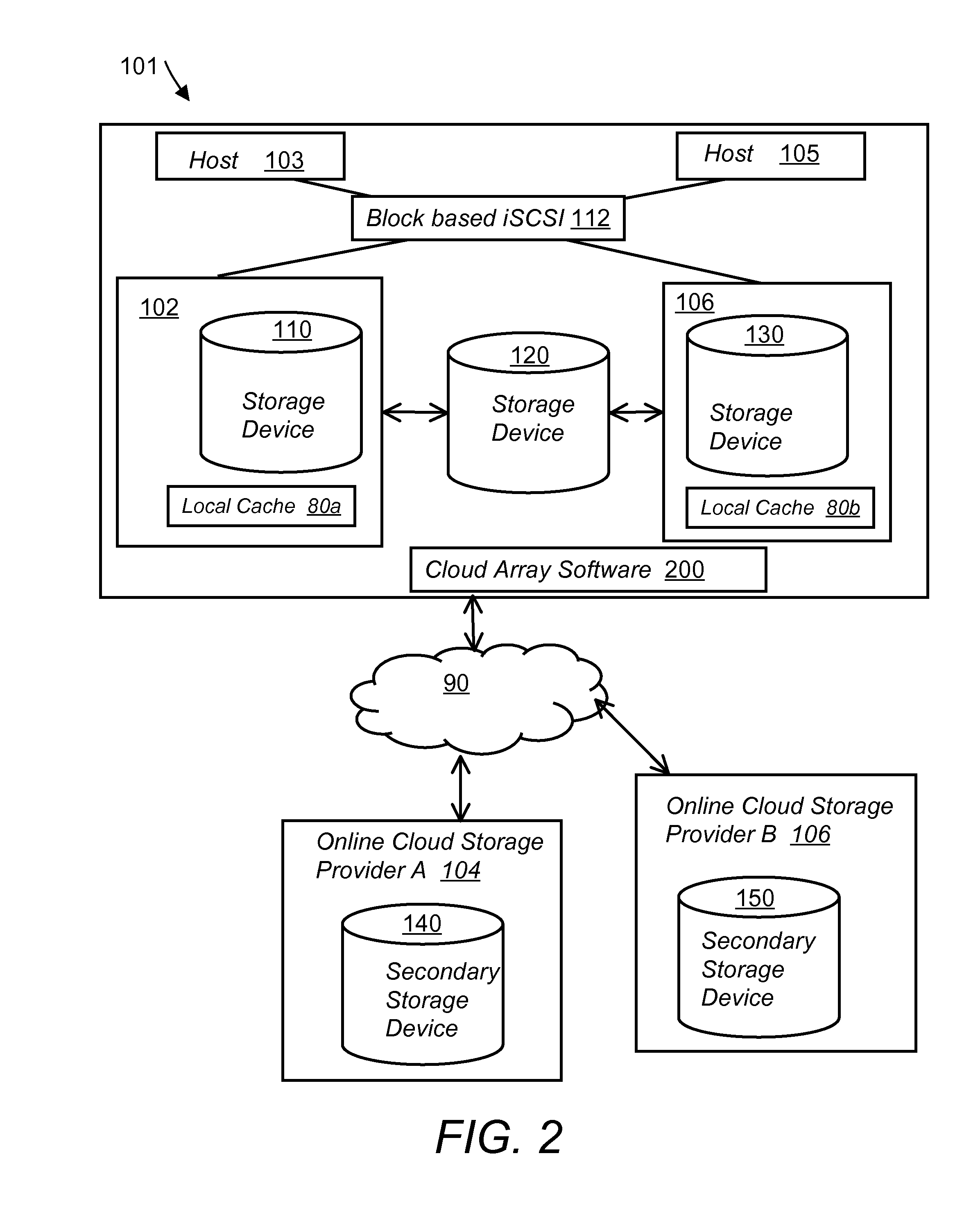

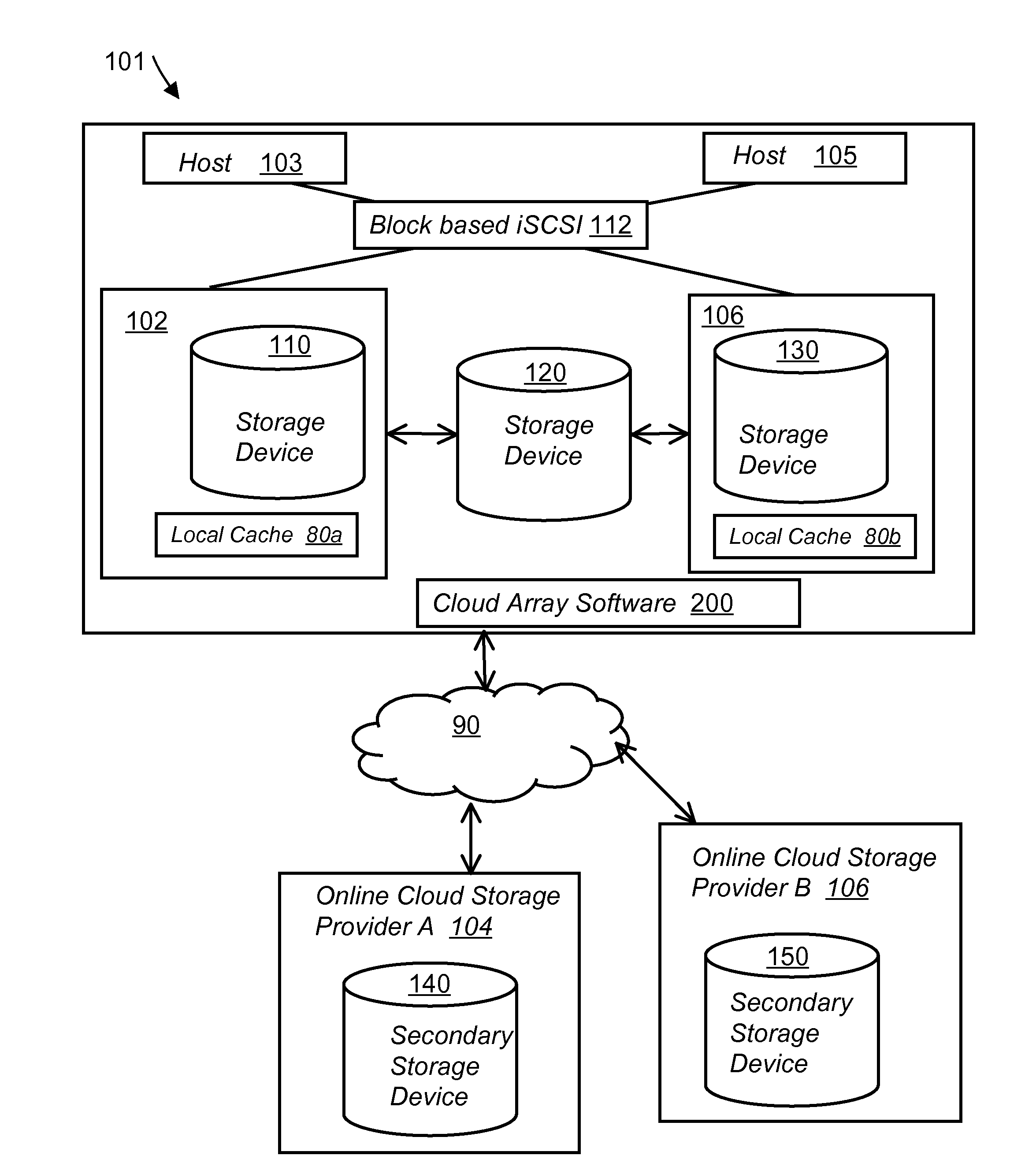

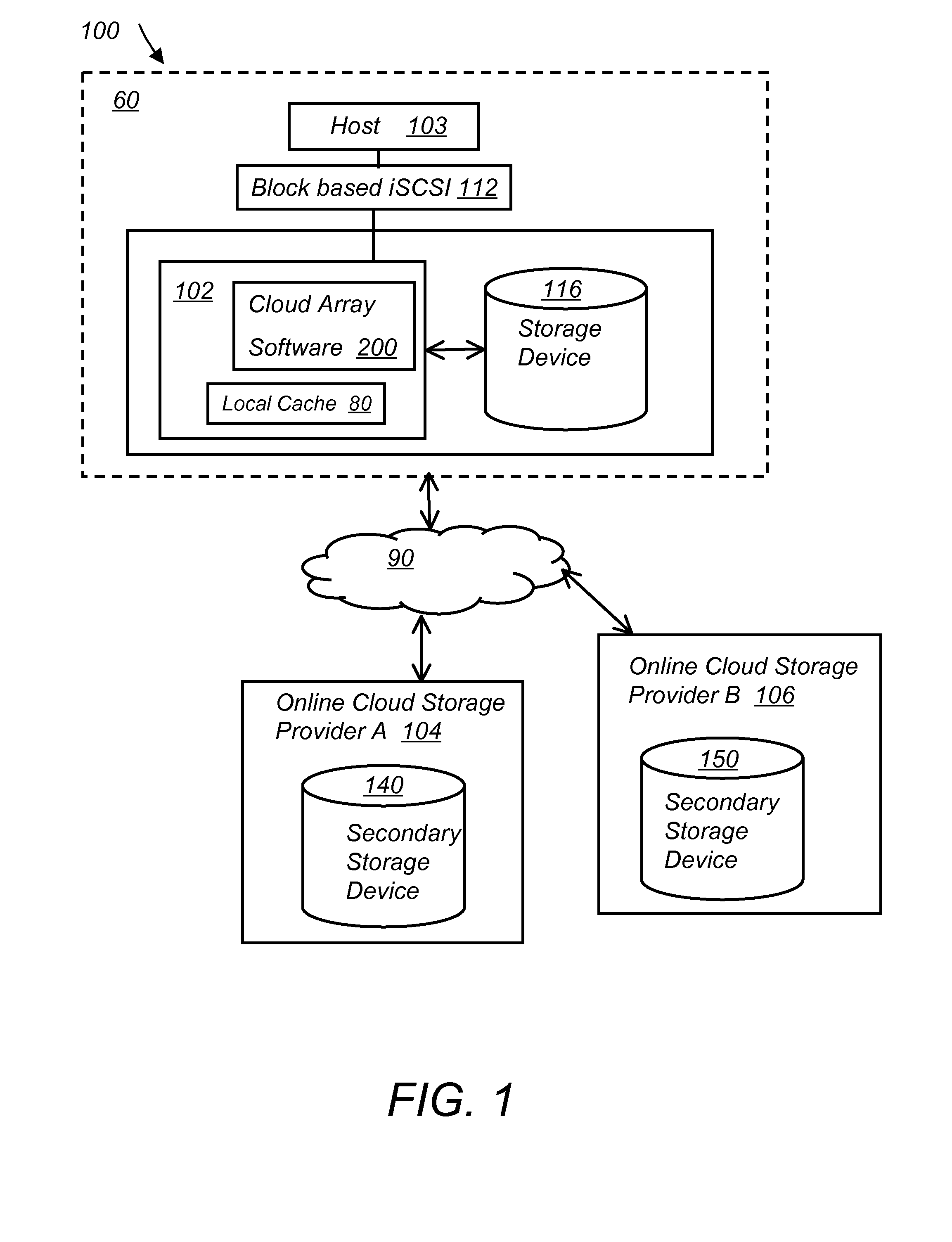

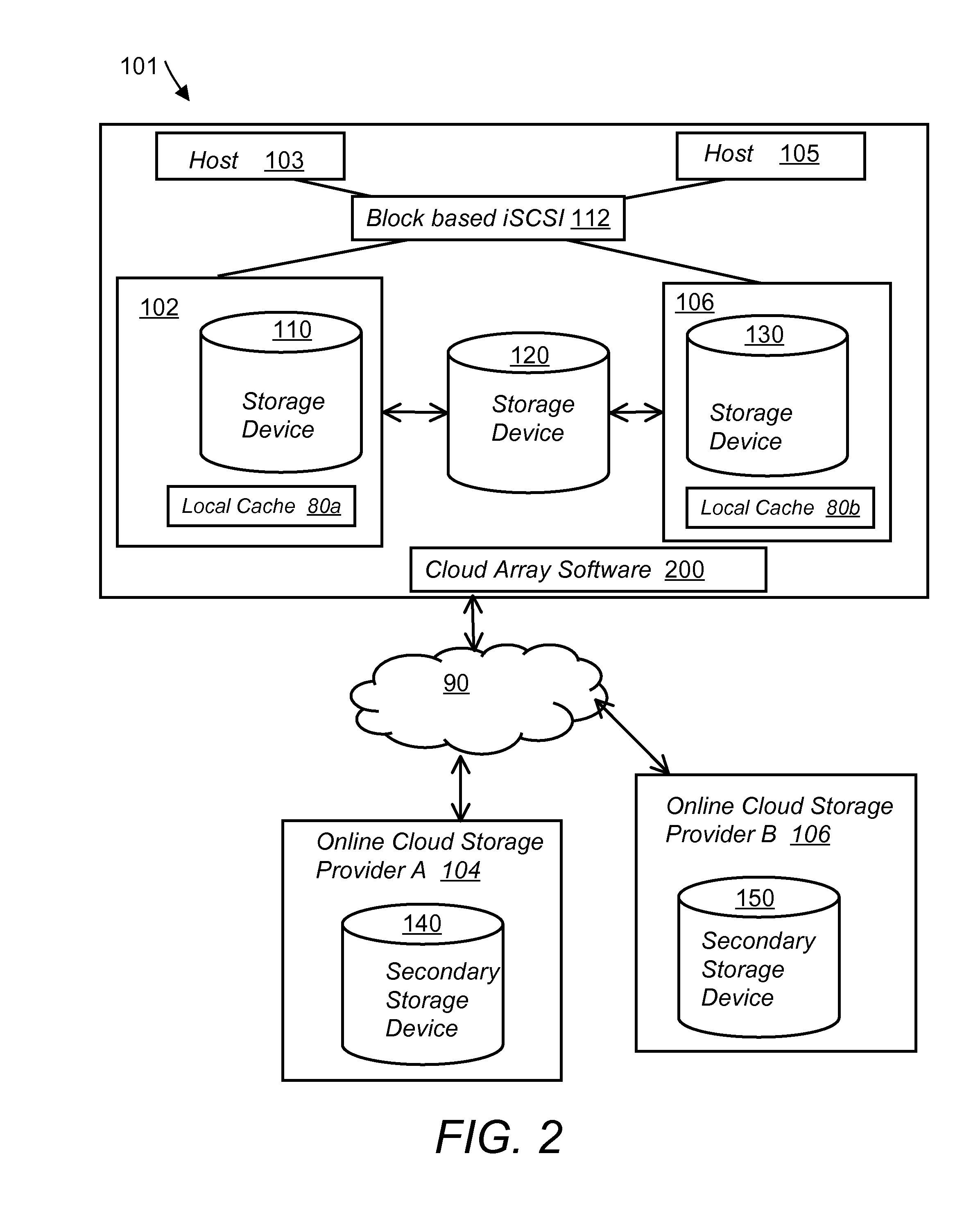

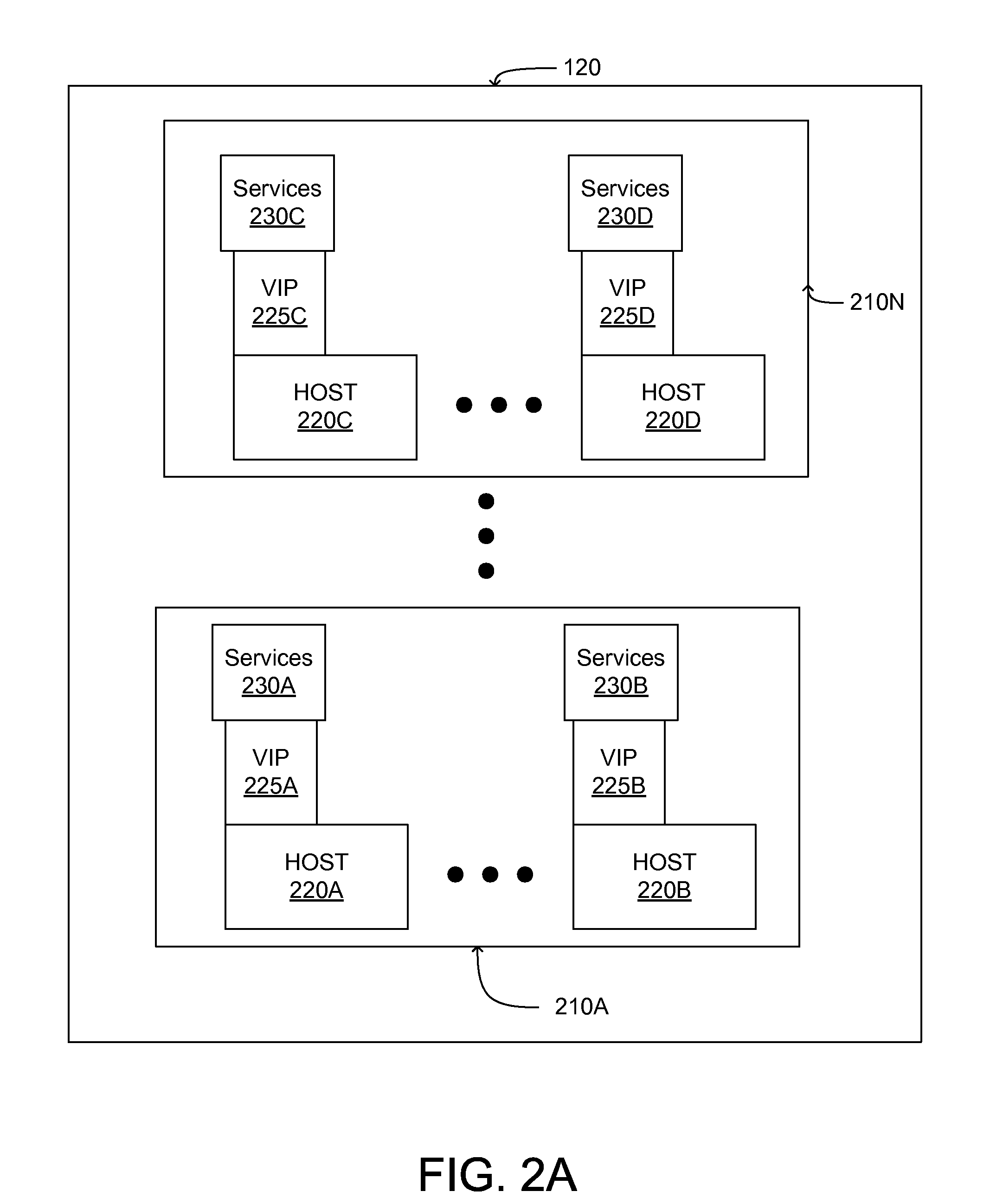

System and method for secure and reliable multi-cloud data replication

Active | US8762642B2 | Reduce the amount required | Reduce storage costs | Memory architecture accessing/allocation | Unauthorized memory use protection | Reliable computing | Network connection

Owner:EMC IP HLDG CO LLC

System and method for secure and reliable multi-cloud data replication

Active | US20100199042A1 | Reduce the amount required | Reduce storage costs | Memory architecture accessing/allocation | Memory loss protection | Reliable computing | Replication method

A multi-cloud data replication method includes providing a data replication cluster comprising at least a first host node and at least a first online storage cloud. The first host node is connected to the first online storage cloud via a network and comprises a server, a cloud array application and a local cache. The local cache comprises a buffer and a first storage volume comprising data cached in one or more buffer blocks of the local cache's buffer. The method then requests authorization to perform a cache flush of the cached first storage volume data to the first online storage cloud. Upon receiving approval of the authorization, it encrypts the cached first storage volume data in each of the one or more buffer blocks with a data private key. Next, it assigns metadata comprising at least a unique identifier to each of the one or more buffer blocks and encrypts the metadata with a metadata private key. It then transmits the one or more buffer blocks with the encrypted first storage volume data to the first online storage cloud. Finally, it creates a sequence of updates of the metadata, encrypts the sequence with the metadata private key, and transmits the sequence of metadata updates to the first online storage cloud.

Owner:EMC IP HLDG CO LLC

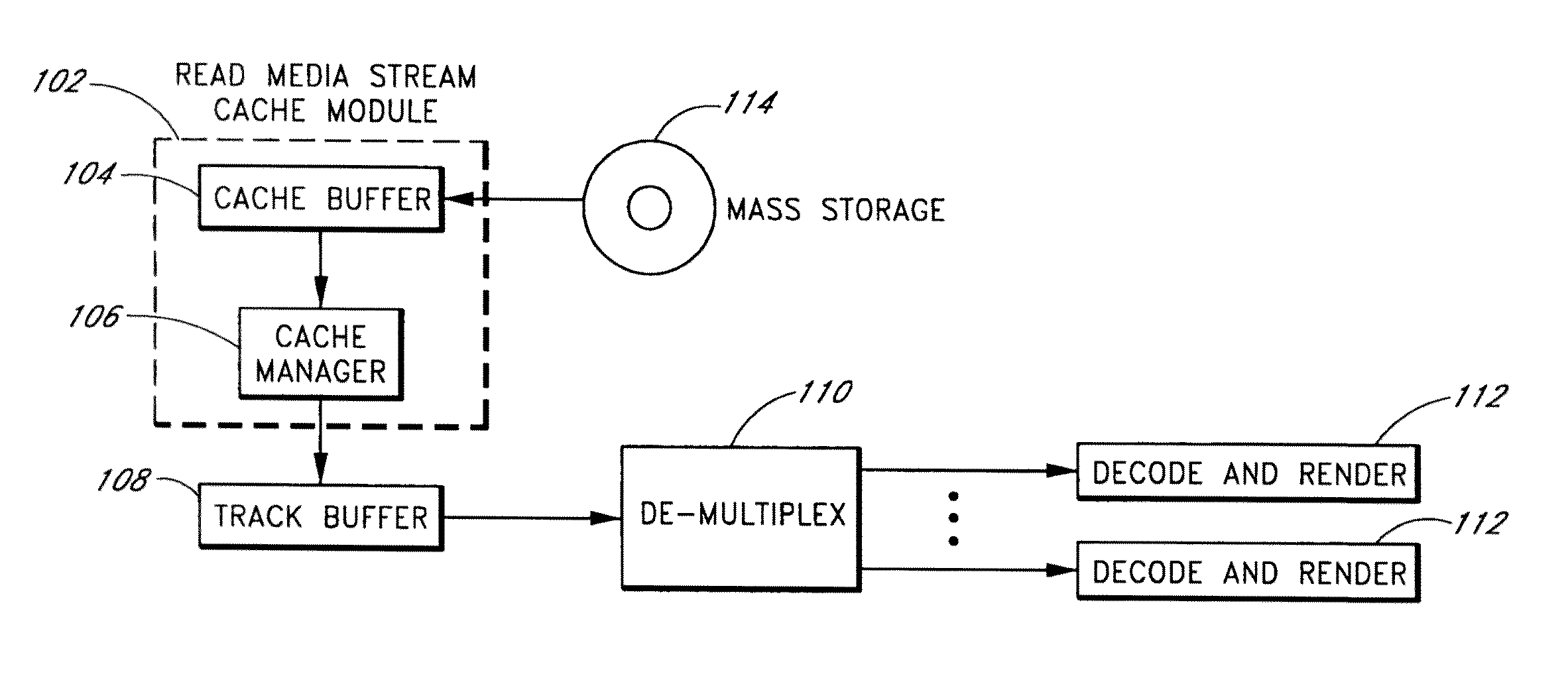

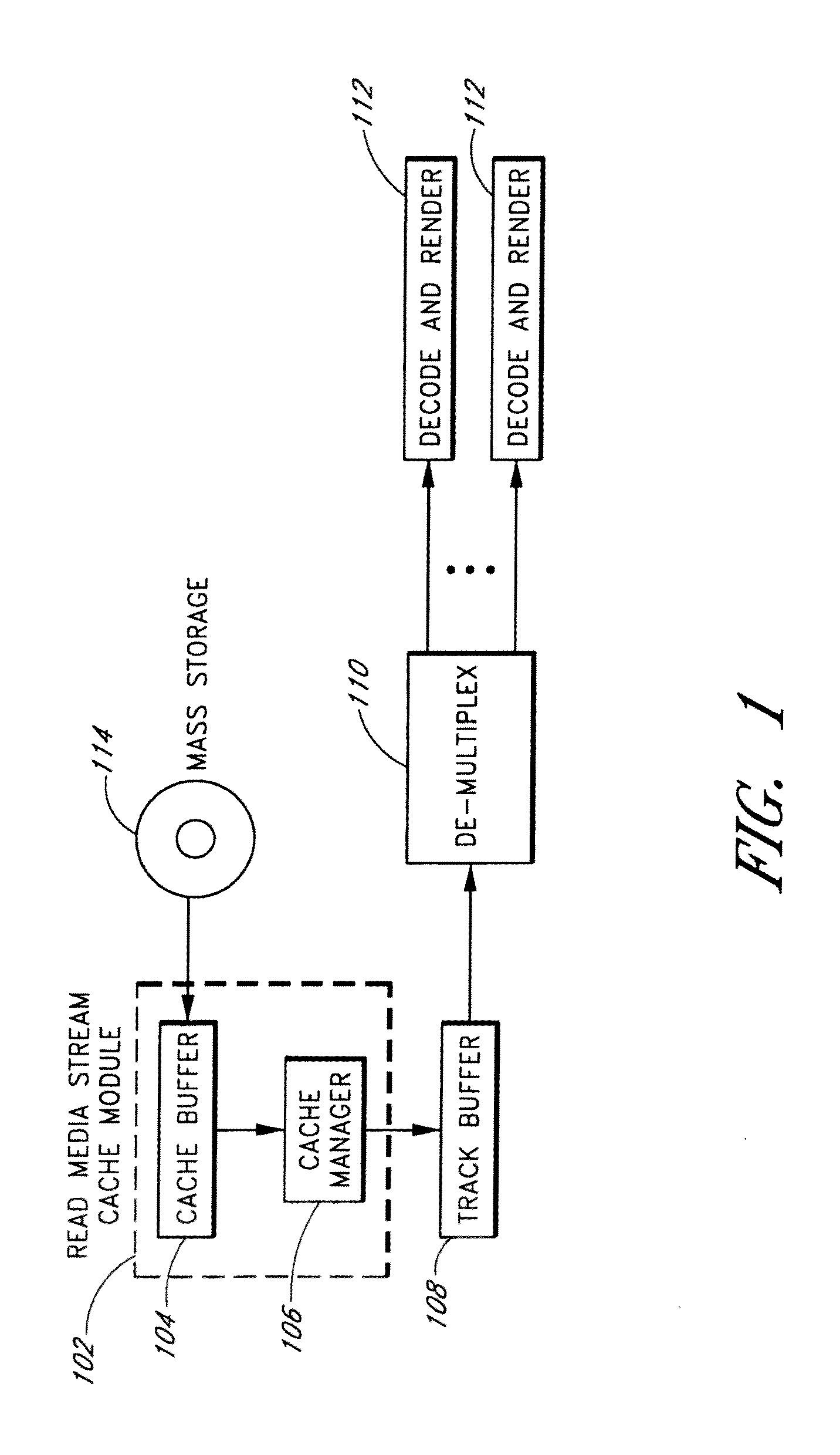

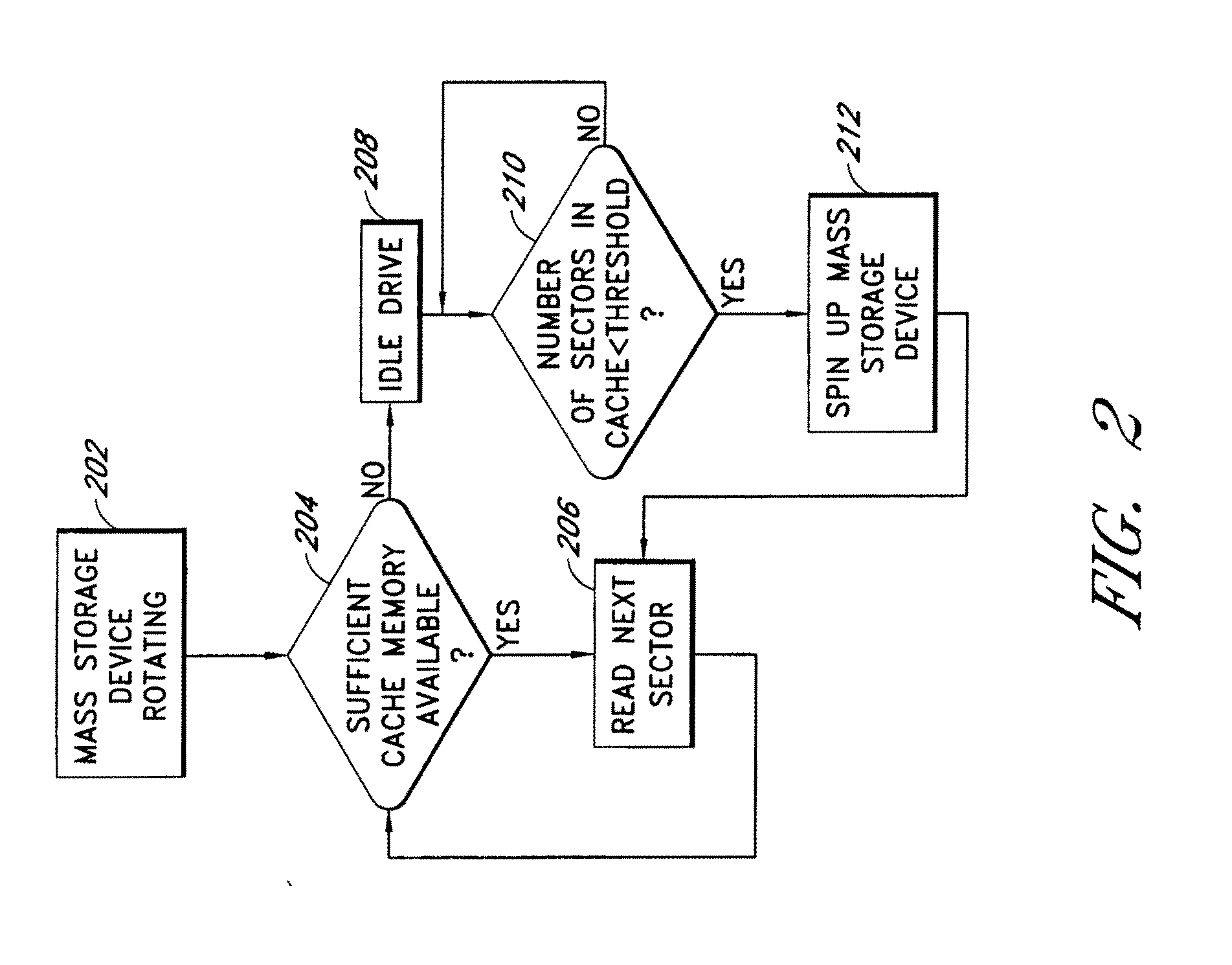

System and Method for Caching Multimedia Data

Inactive | US20100332754A1 | Data augmentation | Improve functionality | Memory architecture accessing/allocation | Energy efficient ICT | Parallel computing | Data system

Systems and methods are provided for caching media data to thereby enhance media data read and/or write functionality and performance. A multimedia apparatus comprises a cache buffer configured to be coupled to a storage device, wherein the cache buffer stores multimedia data, including video and audio data, read from the storage device. A cache manager is coupled to the cache buffer, wherein the cache buffer is configured to cause the storage device to enter into a reduced power consumption mode when the amount of data stored in the cache buffer reaches a first level.

Owner:COREL CORP

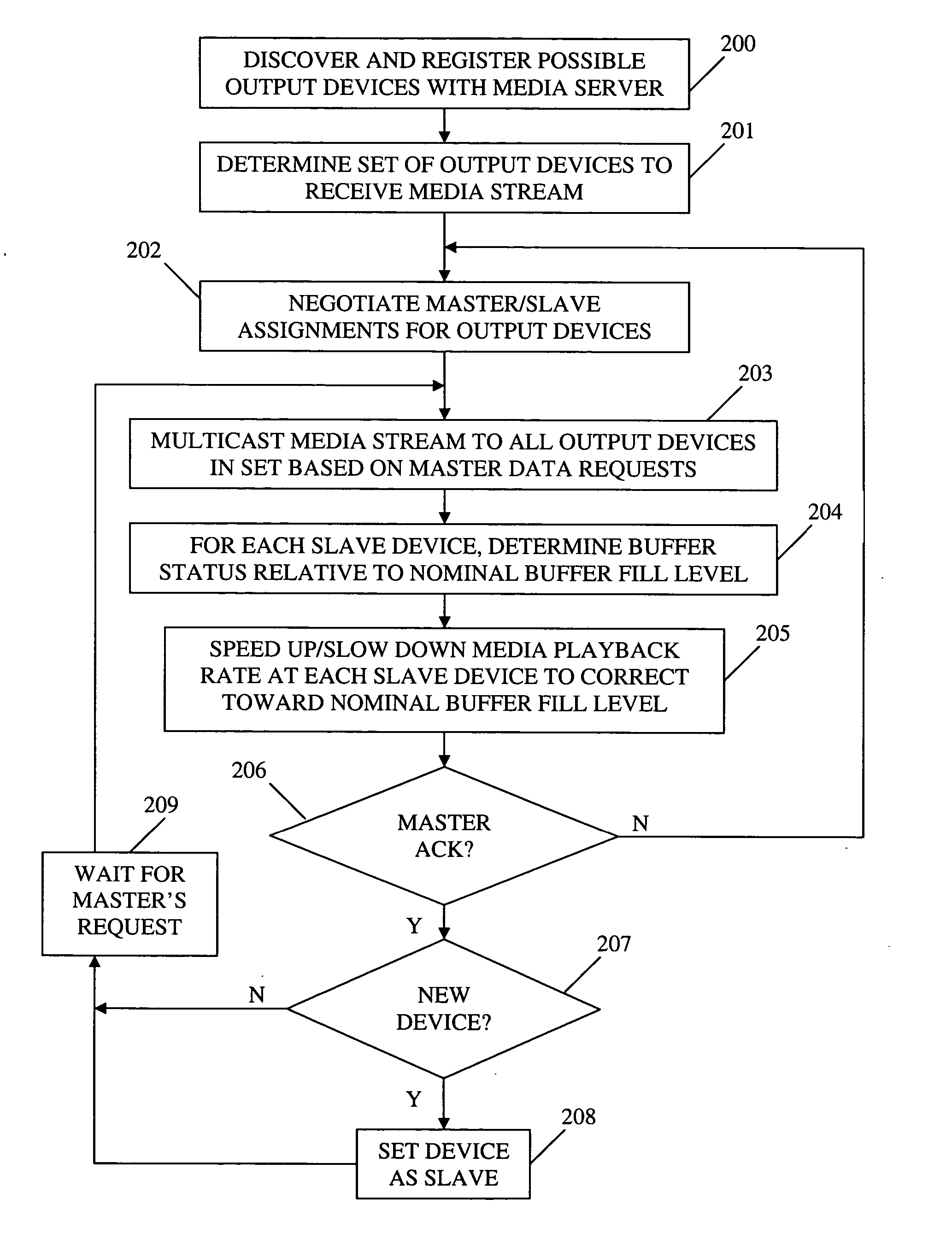

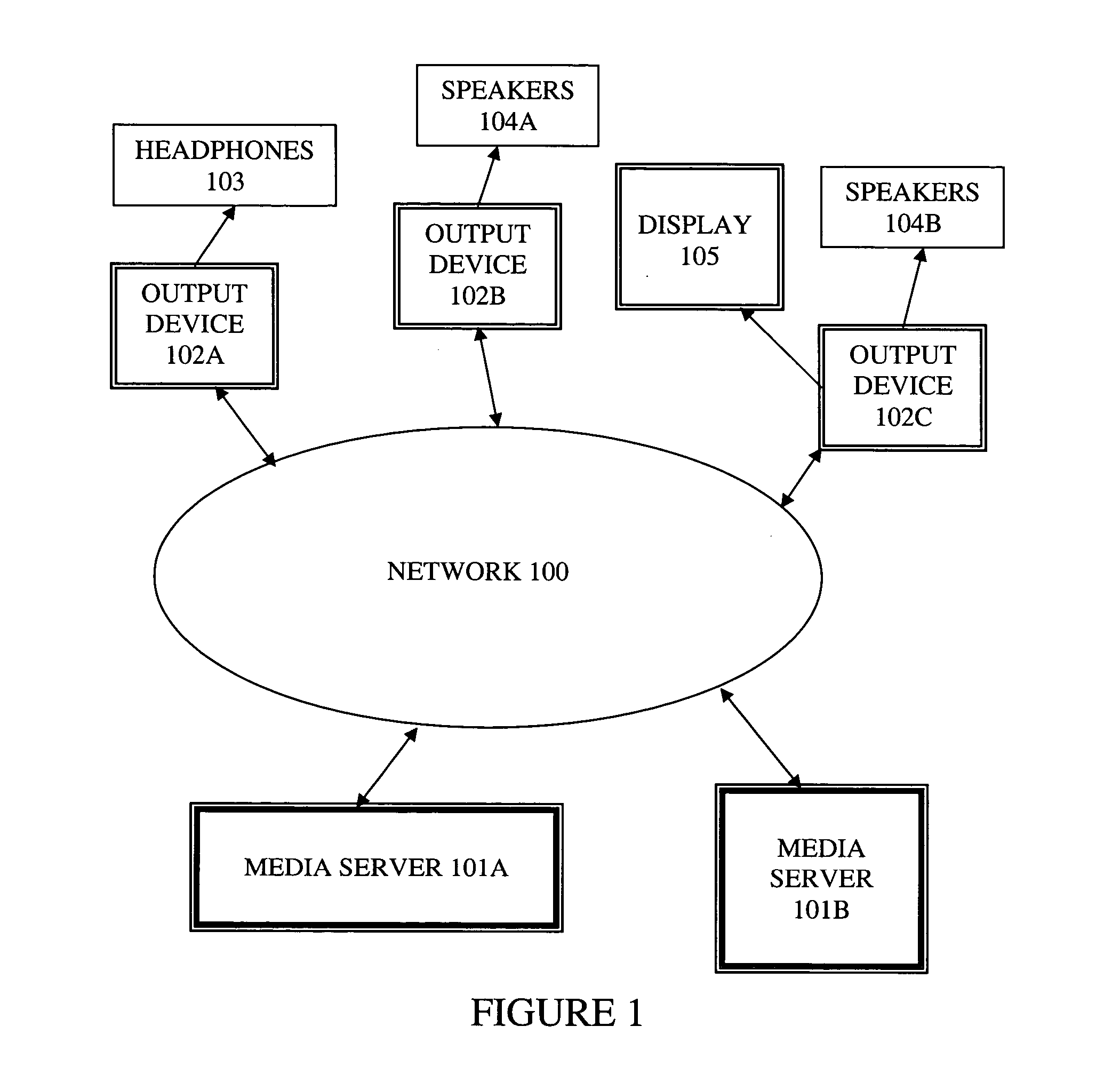

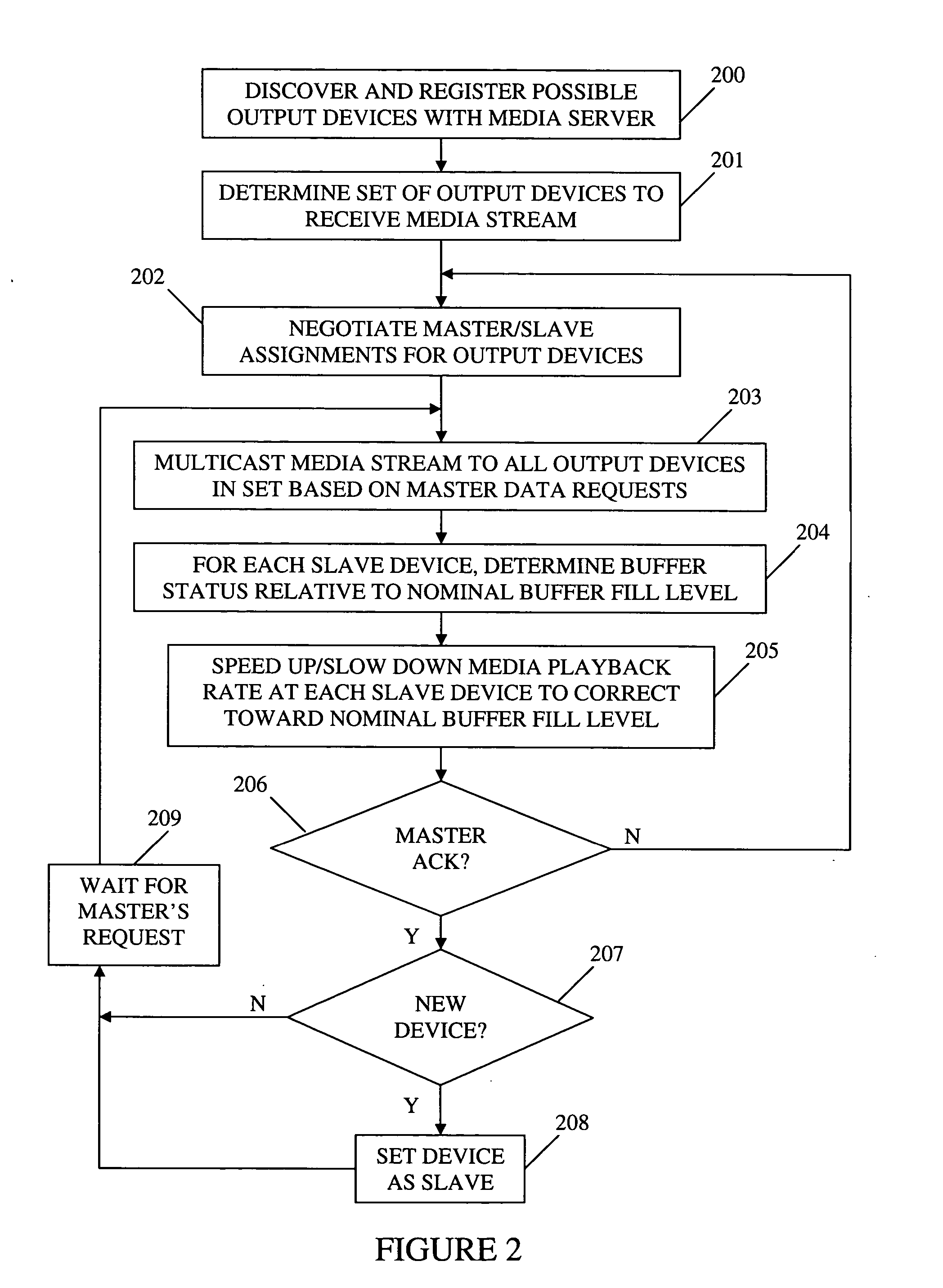

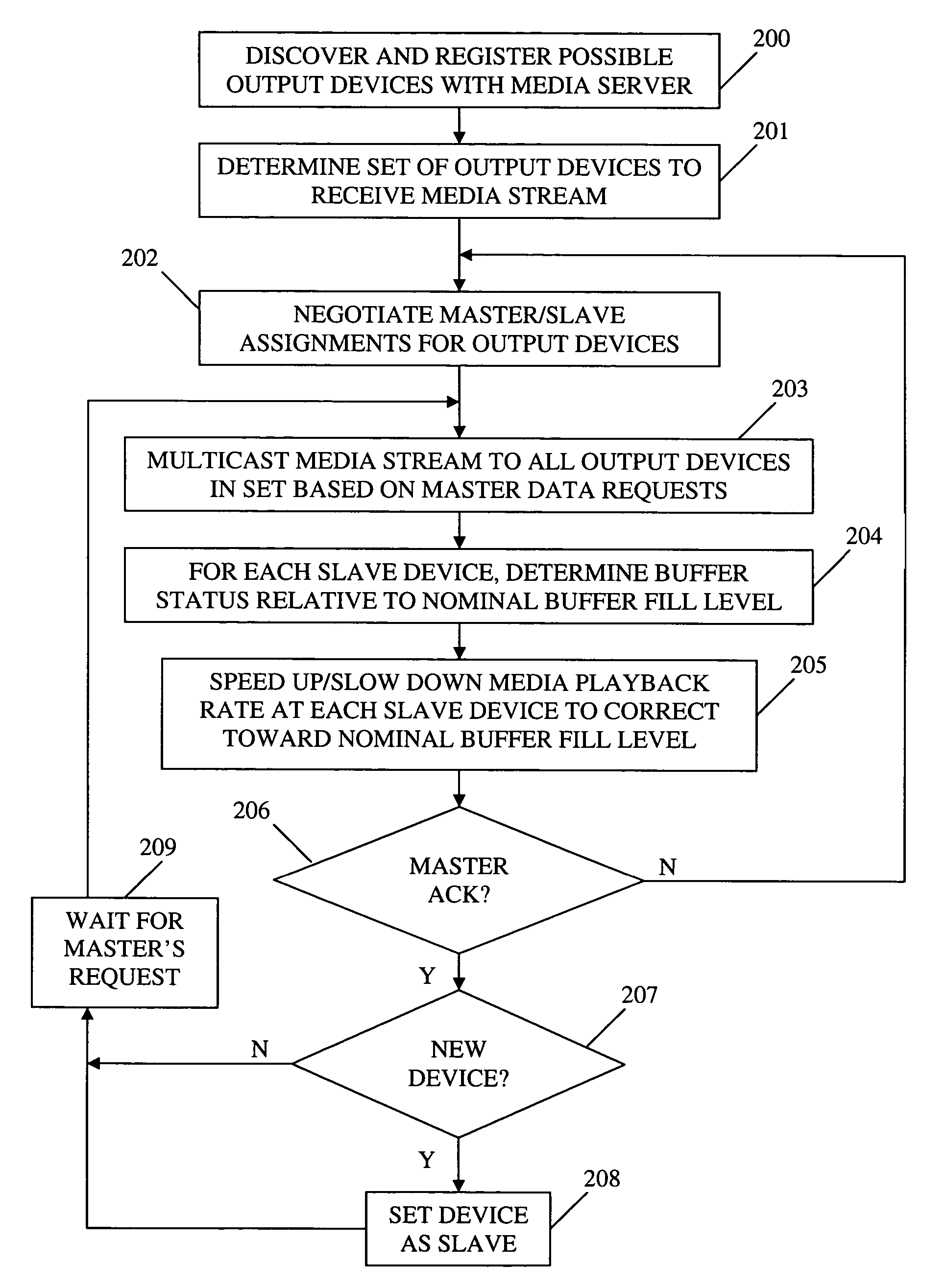

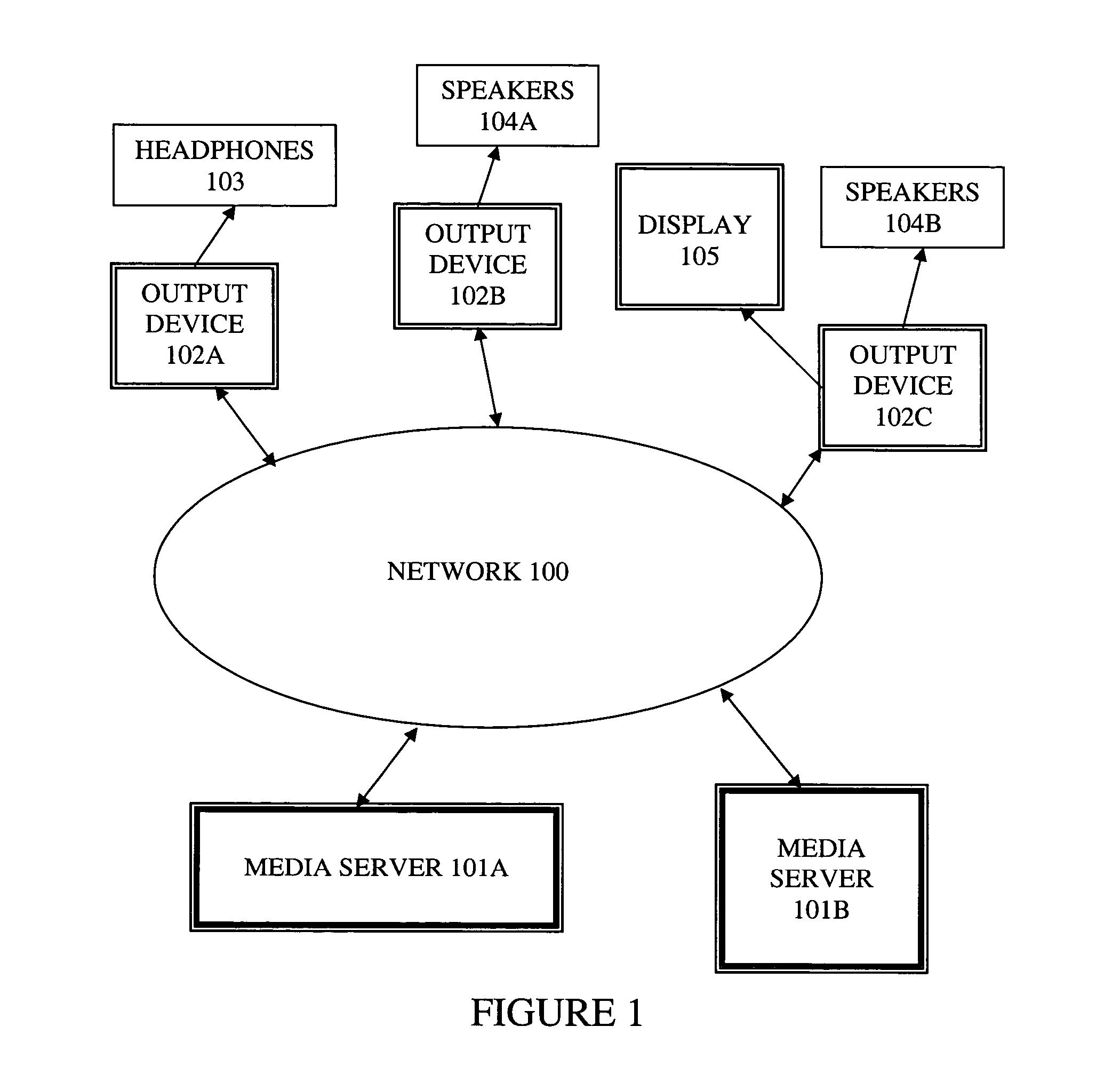

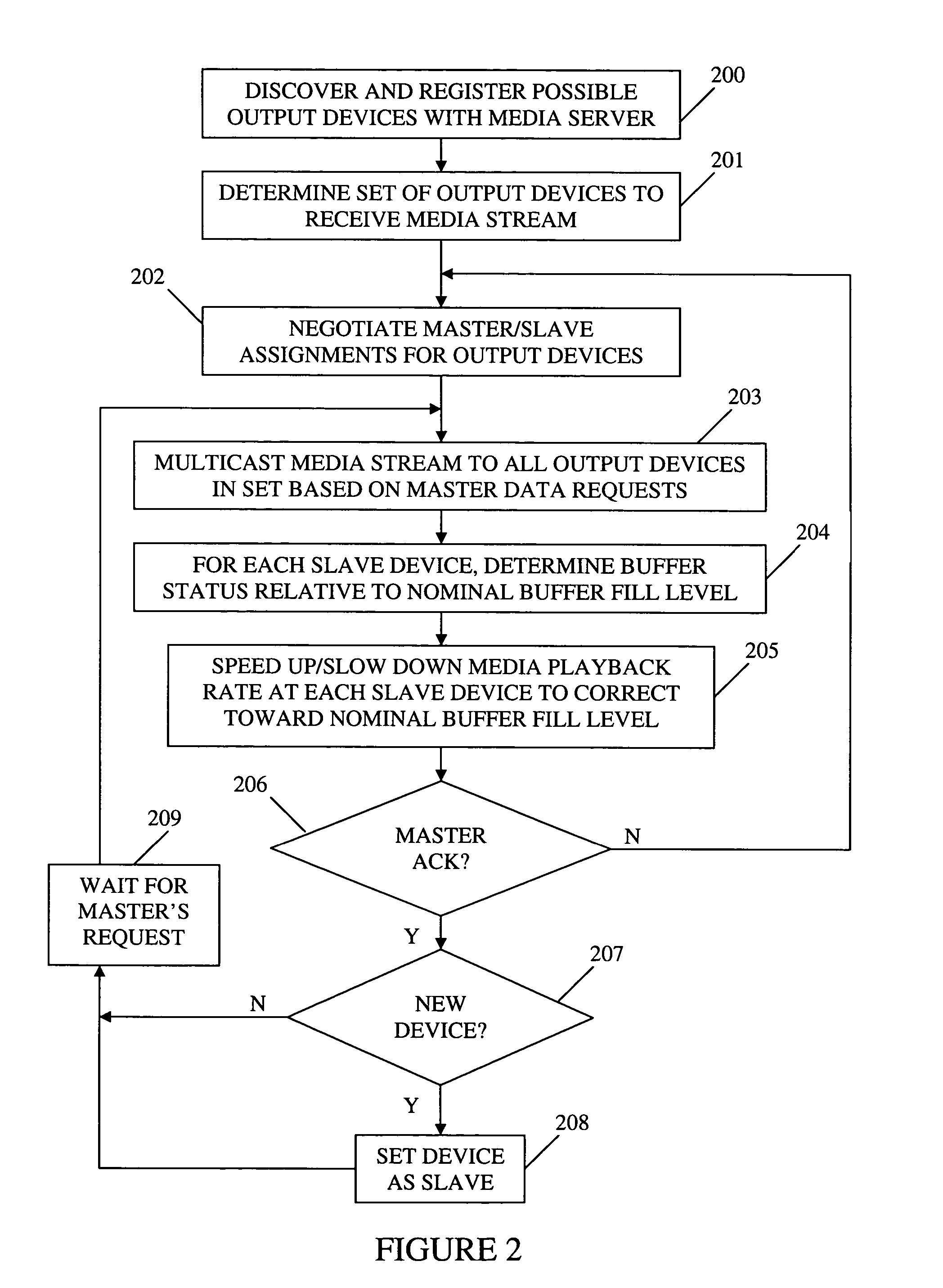

Method and apparatus for synchronizing playback of streaming media in multiple output devices

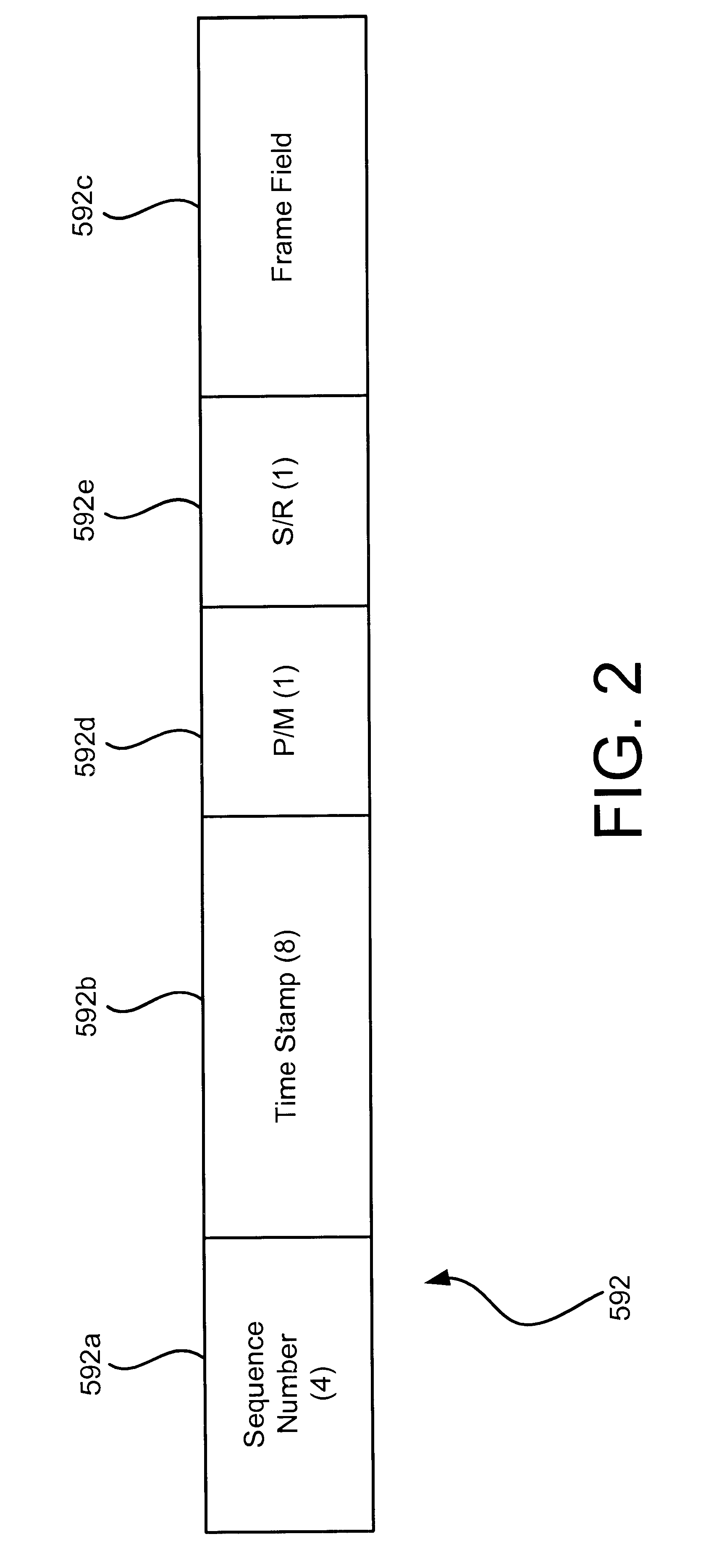

Active | US20060149850A1 | Maintaining average timing synchronization | Error prevention | Transmission systems | Streaming data | Timestamp

A method and apparatus for synchronizing streaming media with multiple output devices. One or more media servers serve media streams to one or more output devices (i.e., players). For playback synchronization, one output device is the “master”, whereas the remaining output devices are “slaves”. More data is requested from the media server by the “master” device to maintain a nominal buffer fill level over time. The “slave” devices receive streamed data from the media server at the rate determined by the master device's data requests, and the average rate of data flow over the streaming network is thus controlled by the frequency of the single “master” device's crystal. “Slave” devices make playback rate corrections to maintain respective buffer fill levels within upper and lower threshold levels. For slow networks, each media data packet timestamp is calculated from the time the master's buffer reaches nominal level.

Owner:SNAP ONE LLC
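
The slave-side behaviour in the abstract above (correcting the playback rate to keep the buffer fill level between an upper and a lower threshold) could look something like the sketch below. The function name, the multiplicative-factor interface, and the default `step` of 0.1% are illustrative assumptions, not values from the patent.

```python
def playback_rate_correction(fill_level, lower, upper, step=0.001):
    """Return a multiplicative playback-rate factor for a slave device.

    Assumption: the correction is a small fixed step; the abstract does not
    say how large the correction is or how it is computed.
    """
    if lower >= upper:
        raise ValueError("lower threshold must be below upper threshold")
    if fill_level > upper:
        return 1.0 + step   # buffer is filling up: play slightly faster
    if fill_level < lower:
        return 1.0 - step   # buffer is draining: play slightly slower
    return 1.0              # within thresholds: no correction needed
```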

Taint tracking mechanism for computer security

Active | US8510827B1 | Easy to track | Avoid modification | Memory loss protection | Digital data processing details | Information dispersal | Operation mode

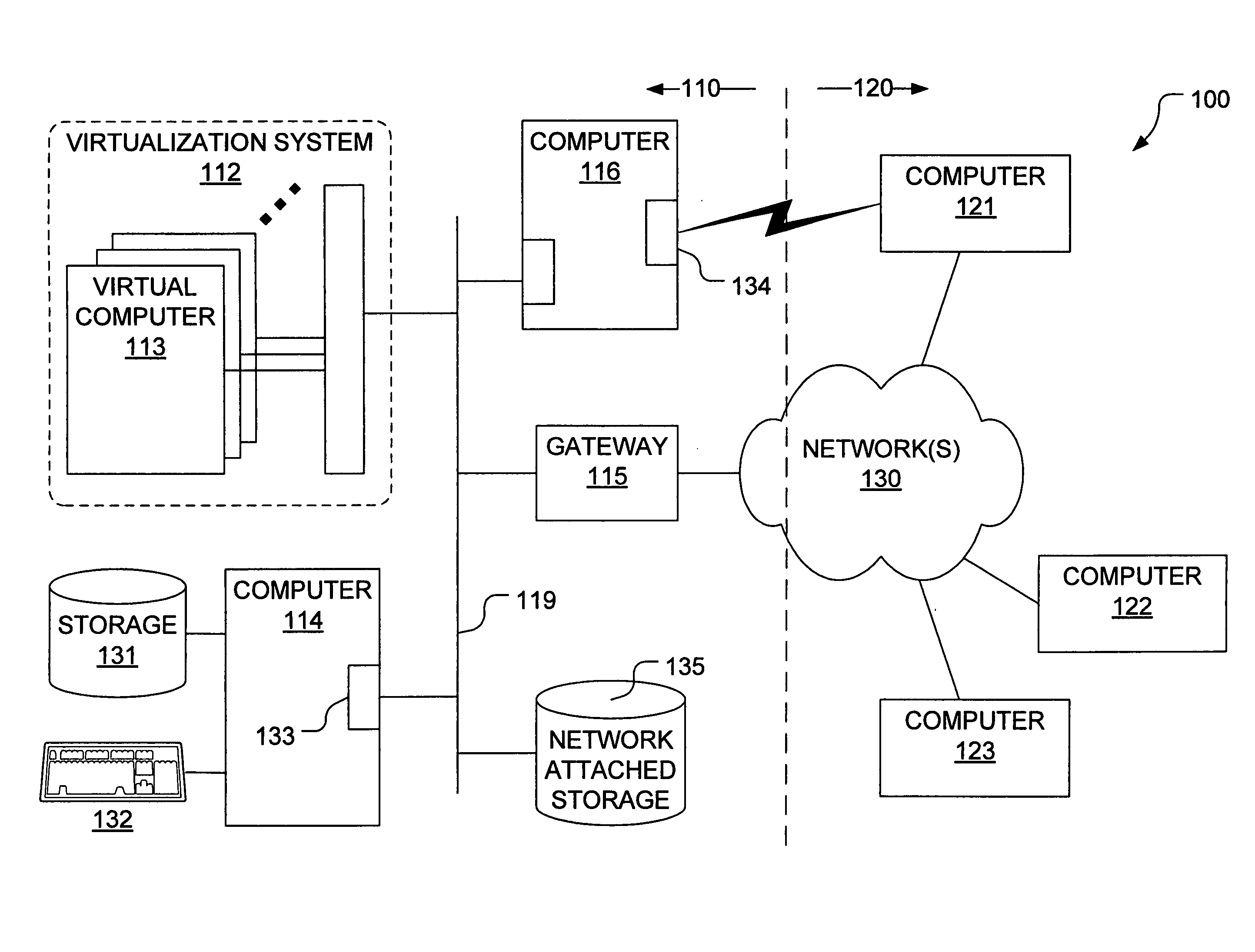

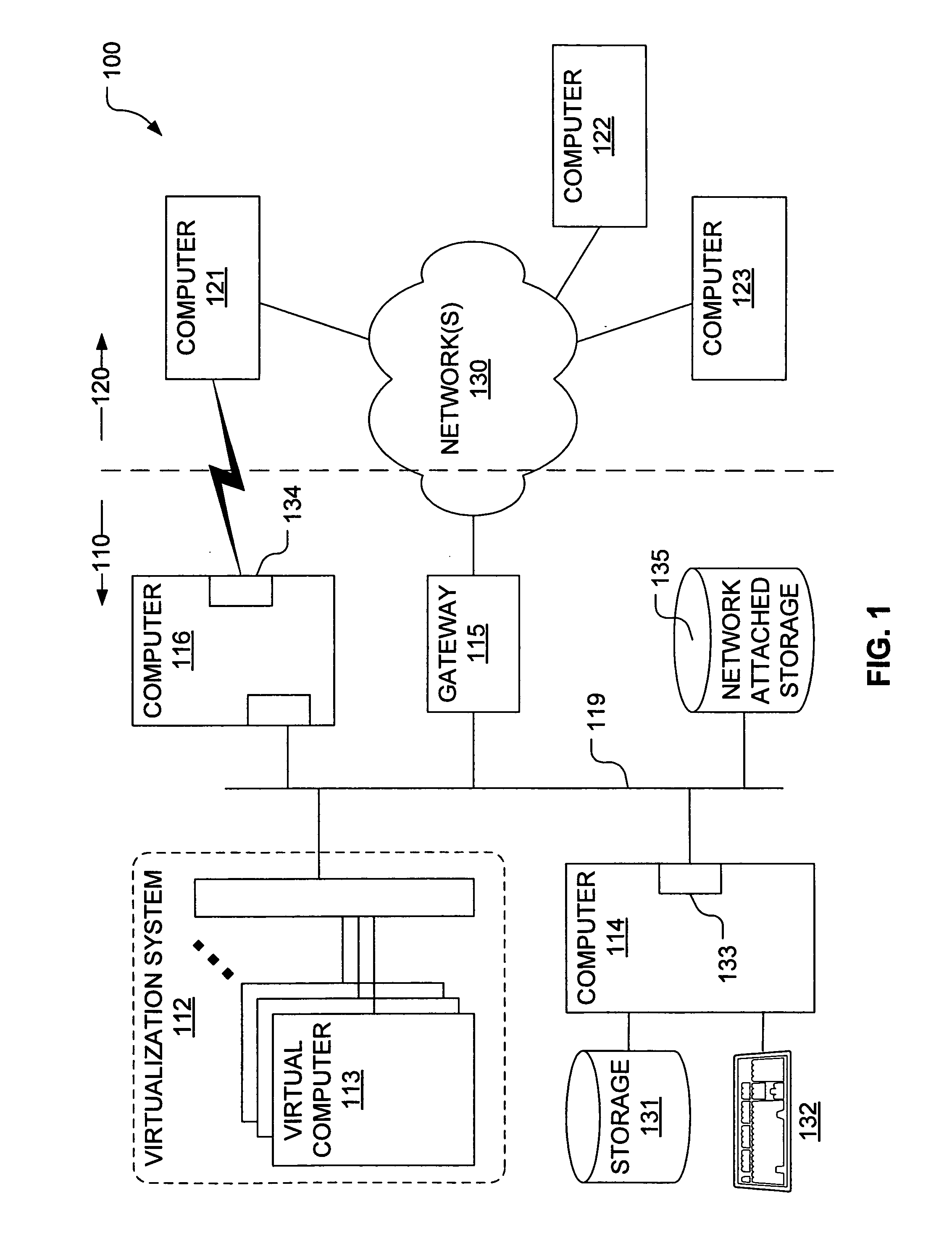

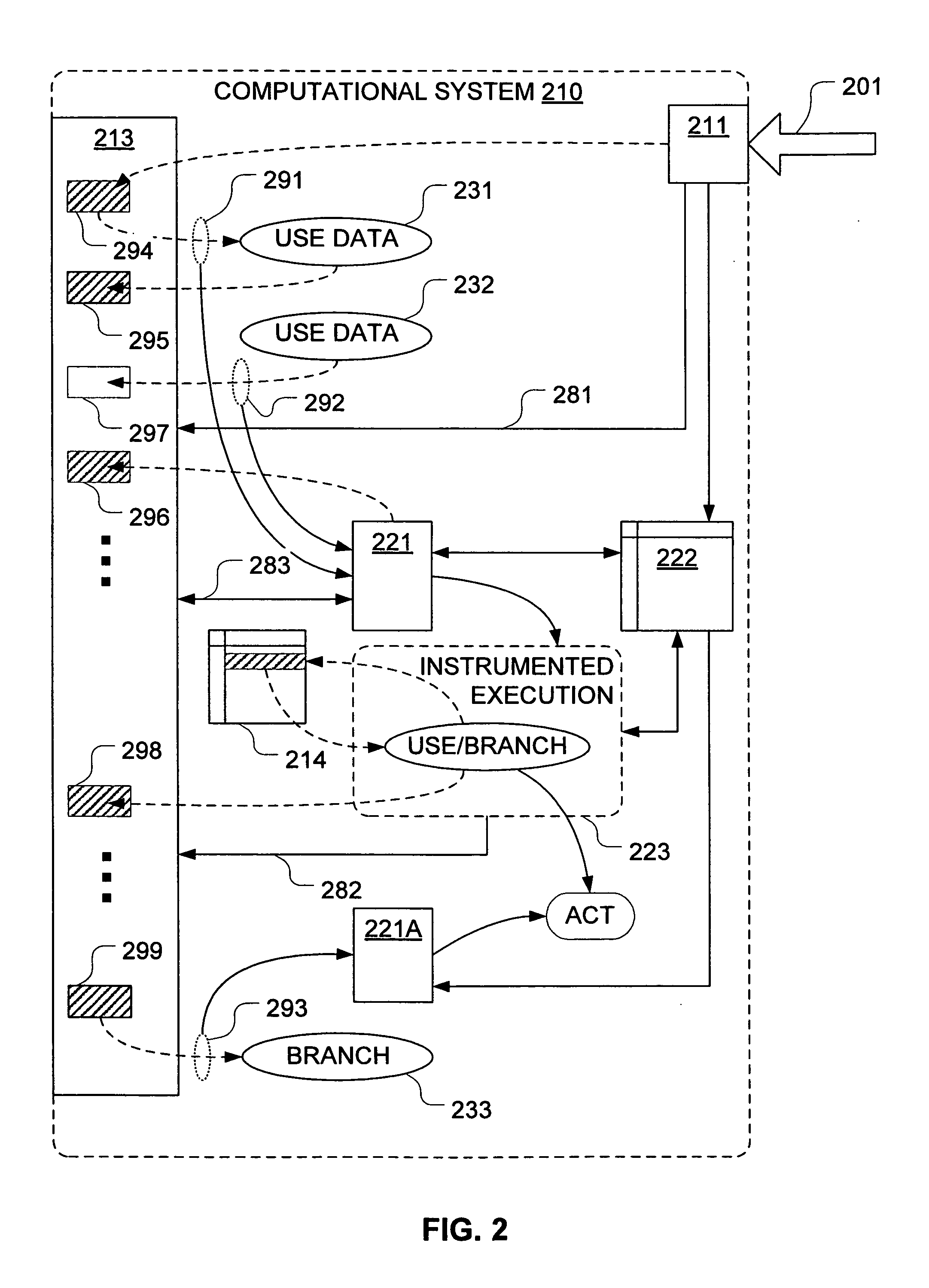

Mechanisms have been developed for securing computational systems against certain forms of attack. In particular, it has been discovered that, by maintaining and propagating taint status for memory locations in correspondence with information flows of instructions executed by a computing system, it is possible to provide a security response if and when a control transfer (or other restricted use) is attempted based on tainted data. In some embodiments, memory management facilities and related exception handlers can be exploited to facilitate taint status propagation and / or security responses. Taint tracking through registers of a processor (or through other storage for which access is not conveniently mediated using a memory management facility) may be provided using an instrumented execution mode of operation. For example, the instrumented mode may be triggered by an attempt to propagate tainted information to a register. In some embodiments, an instrumented mode of operation may be more generally employed. For example, data received from an untrusted source or via an untrusted path is often transferred into a memory buffer for processing by a particular service, routine, process, thread or other computational unit. Code that implements the computational unit may be selectively executed in an instrumented mode that facilitates taint tracking. In general, instrumented execution modes may be supported using a variety of techniques including a binary translation (or rewriting) mode, just-in-time (JIT) compilation / re-compilation, interpreted mode execution, etc. Using an instrumented execution mode and / or exception handler techniques, modifications to CPU hardware can be avoided if desirable.

Owner:VMWARE INC

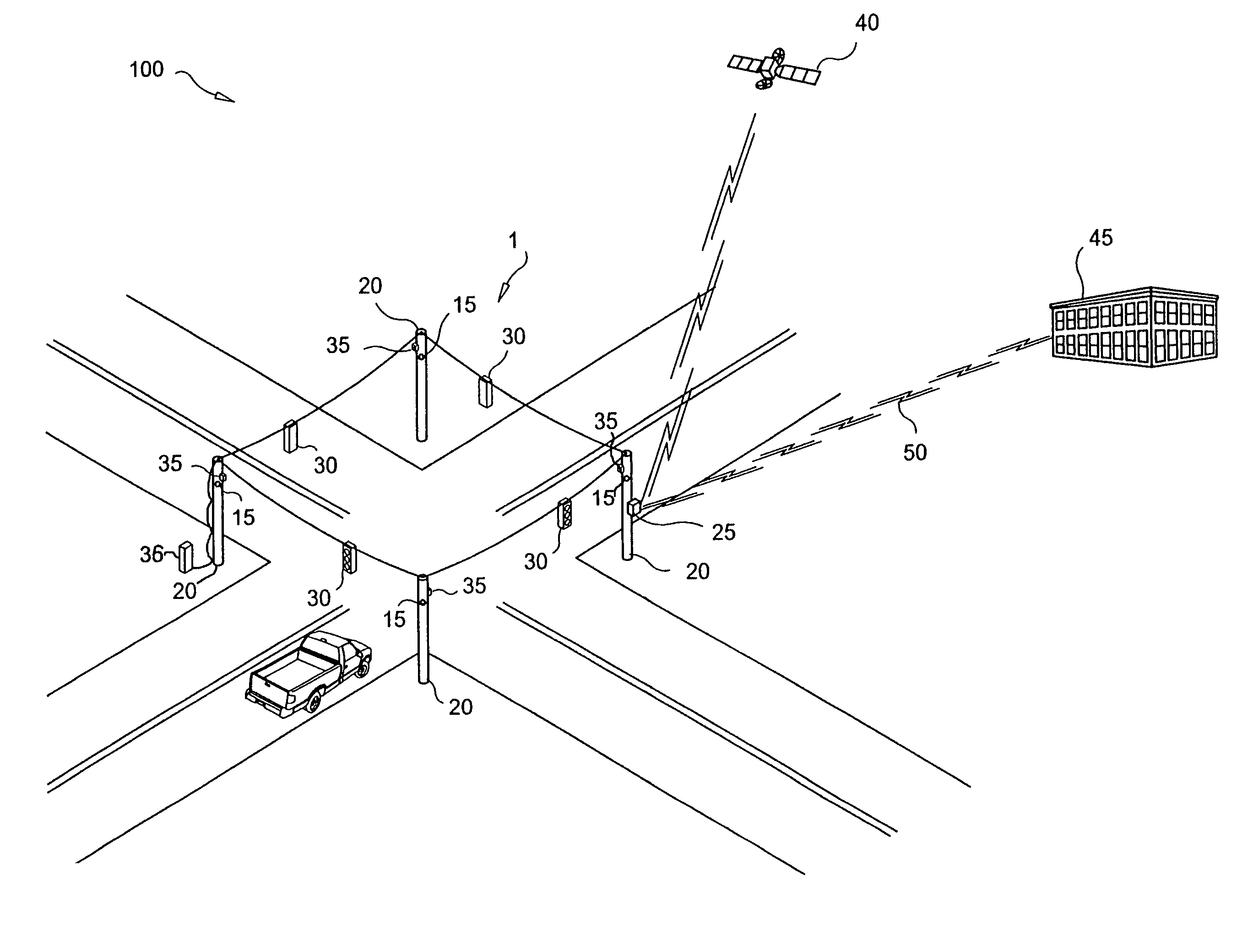

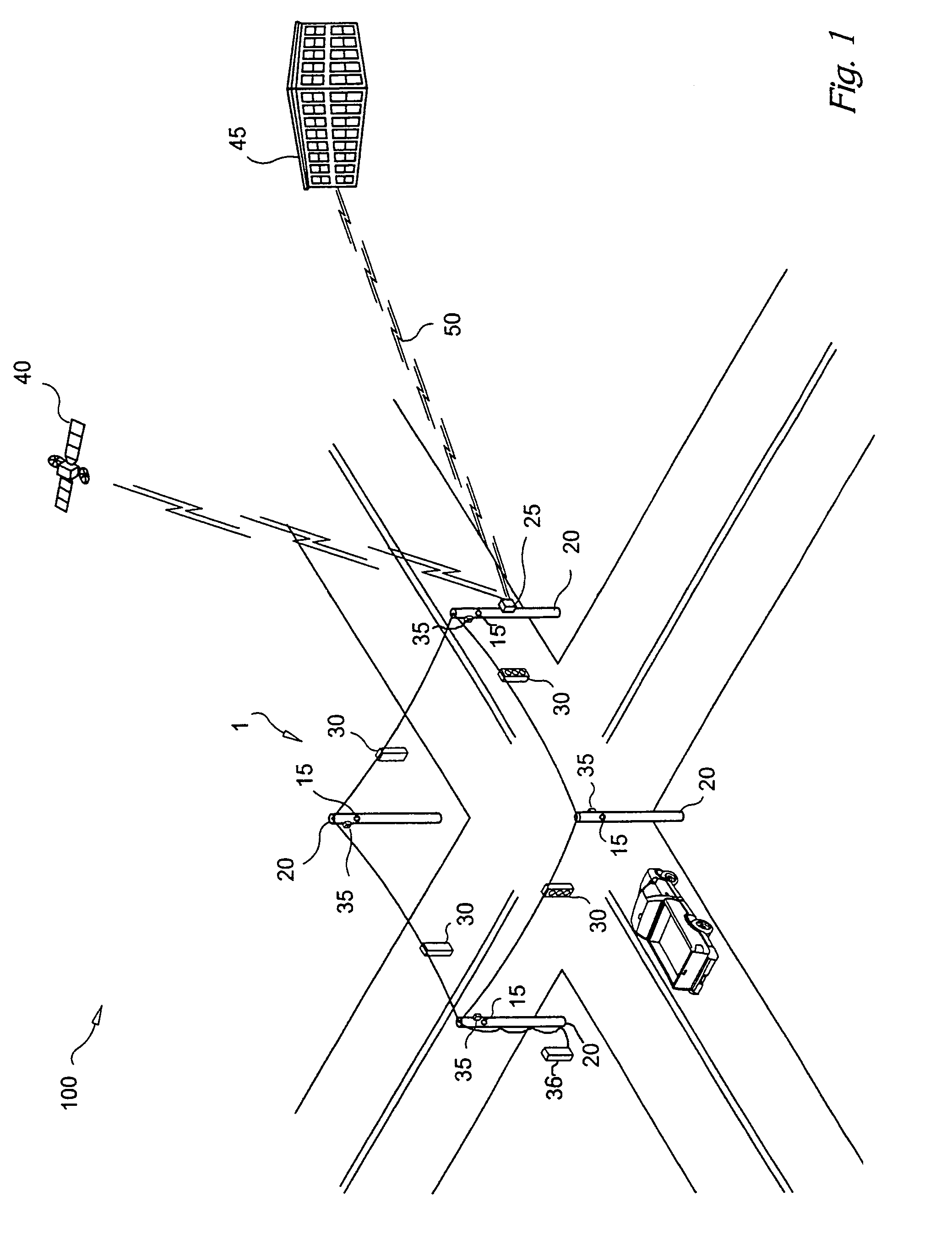

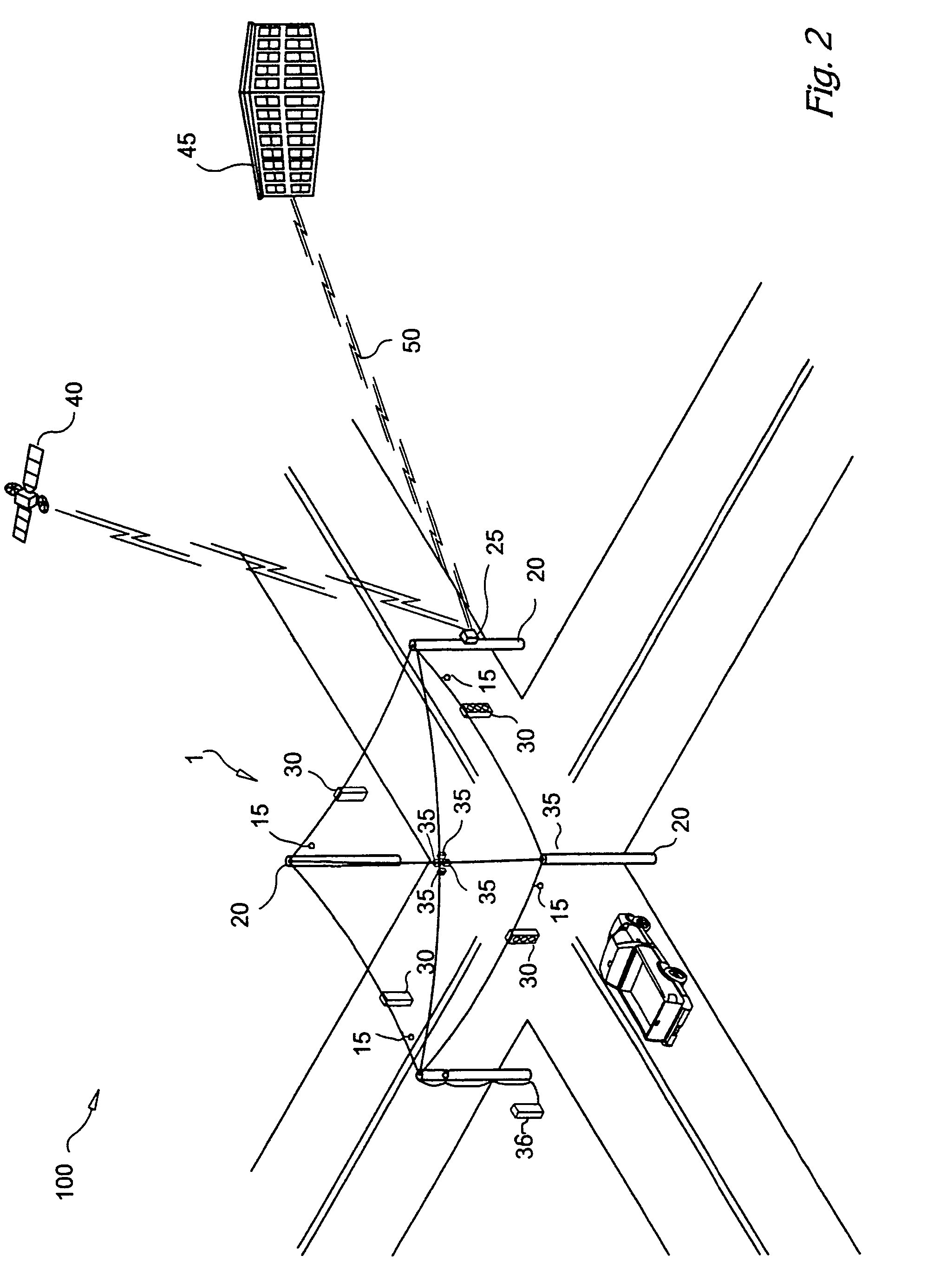

Advanced automobile accident detection, data recordation and reporting system

Inactive | US7348895B2 | Reduce the burden on | Cost effective | Controlling traffic signals | Registering/indicating working of vehicles | Successful transmission | Telecommunications link

A system for monitoring a location to detect and report a vehicular incident, comprising a transducer for detecting acoustic waves at the location, and having an audio output; a processor for determining a probable occurrence or impending occurrence of a vehicular incident, based at least upon said audio output; an imaging system for capturing images of the location, and having an image output; a buffer, receiving said image output, and storing at least a portion of said images commencing at or before said determination; and a communication link, for selectively communicating said portion of said images stored in said buffer with a remote location and at least information identifying the location, wherein information stored in said buffer is preserved at least until an acknowledgement of receipt is received representing successful transmission through said communication link with the remote location.

Owner:LAGASSEY PAUL J

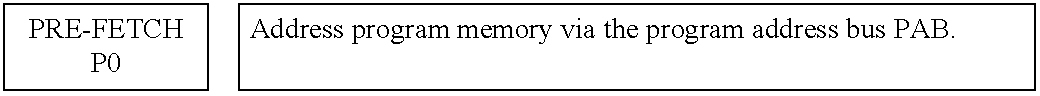

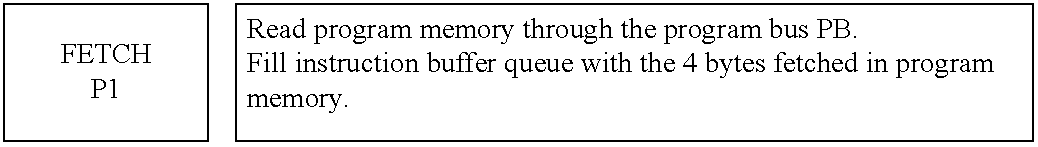

Microprocessors

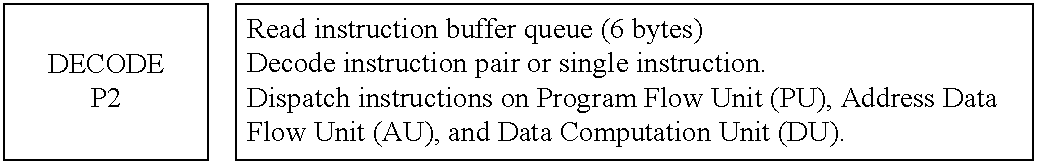

A processor (100) is provided that is a programmable fixed point digital signal processor (DSP) with variable instruction length, offering both high code density and easy programming. Architecture and instruction set are optimized for low power consumption and high efficiency execution of DSP algorithms, such as for wireless telephones, as well as pure control tasks. The processor includes an instruction buffer unit (106), a program flow control unit (108), an address / data flow unit (110), a data computation unit (112), and multiple interconnecting busses. Dual multiply-accumulate blocks improve processing performance. A memory interface unit (104) provides parallel access to data and instruction memories. The instruction buffer is operable to buffer single and compound instructions pending execution thereof. A decode mechanism is configured to decode instructions from the instruction buffer. The use of compound instructions enables effective use of the bandwidth available within the processor. A soft dual memory instruction can be compiled from separate first and second programmed memory instructions. Instructions can be conditionally executed or repeatedly executed. Bit field processing and various addressing modes, such as circular buffer addressing, further support execution of DSP algorithms. The processor includes a multistage execution pipeline with pipeline protection features. Various functional modules can be separately powered down to conserve power. The processor includes emulation and code debugging facilities with support for cache analysis.

Owner:TEXAS INSTR INC

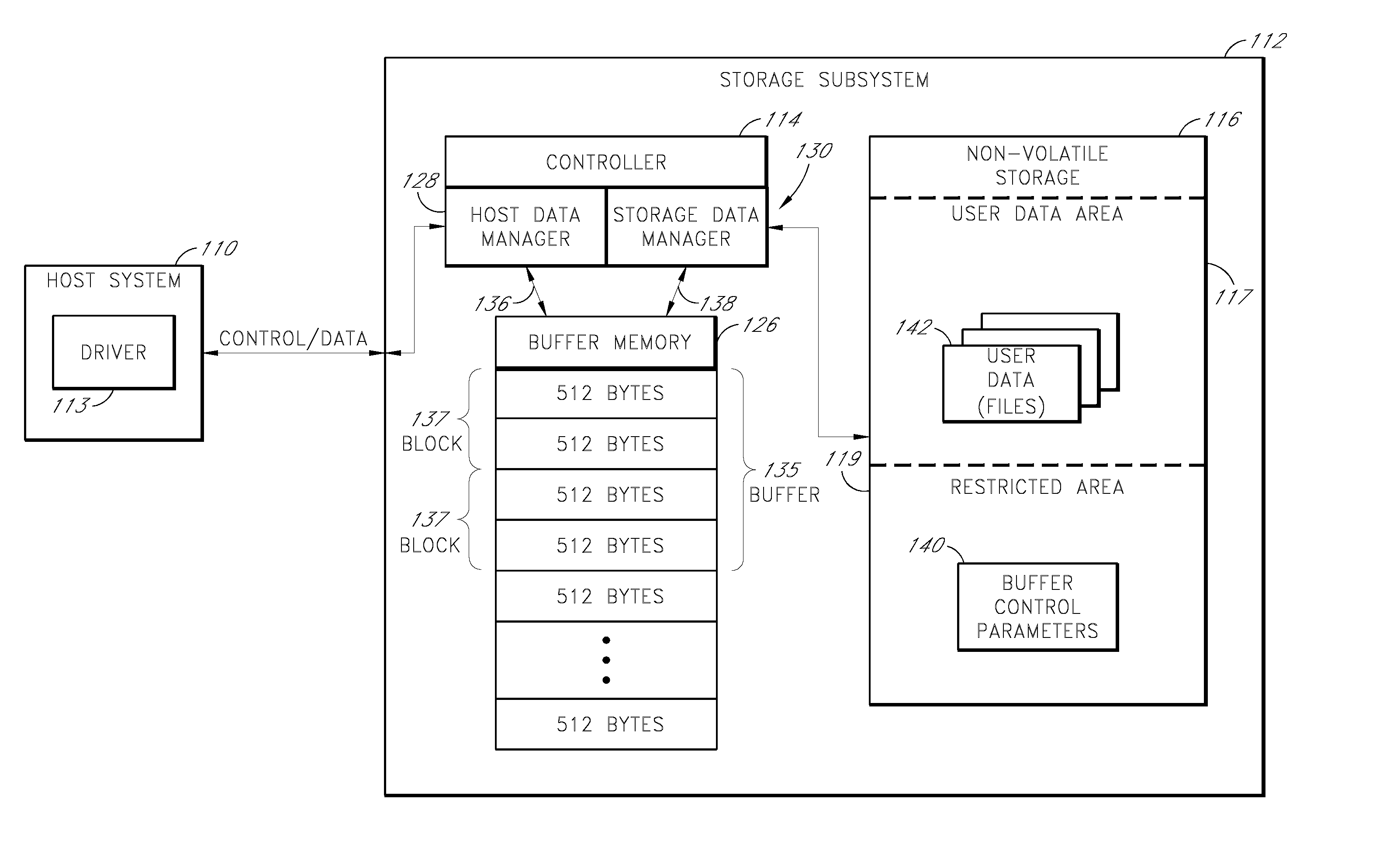

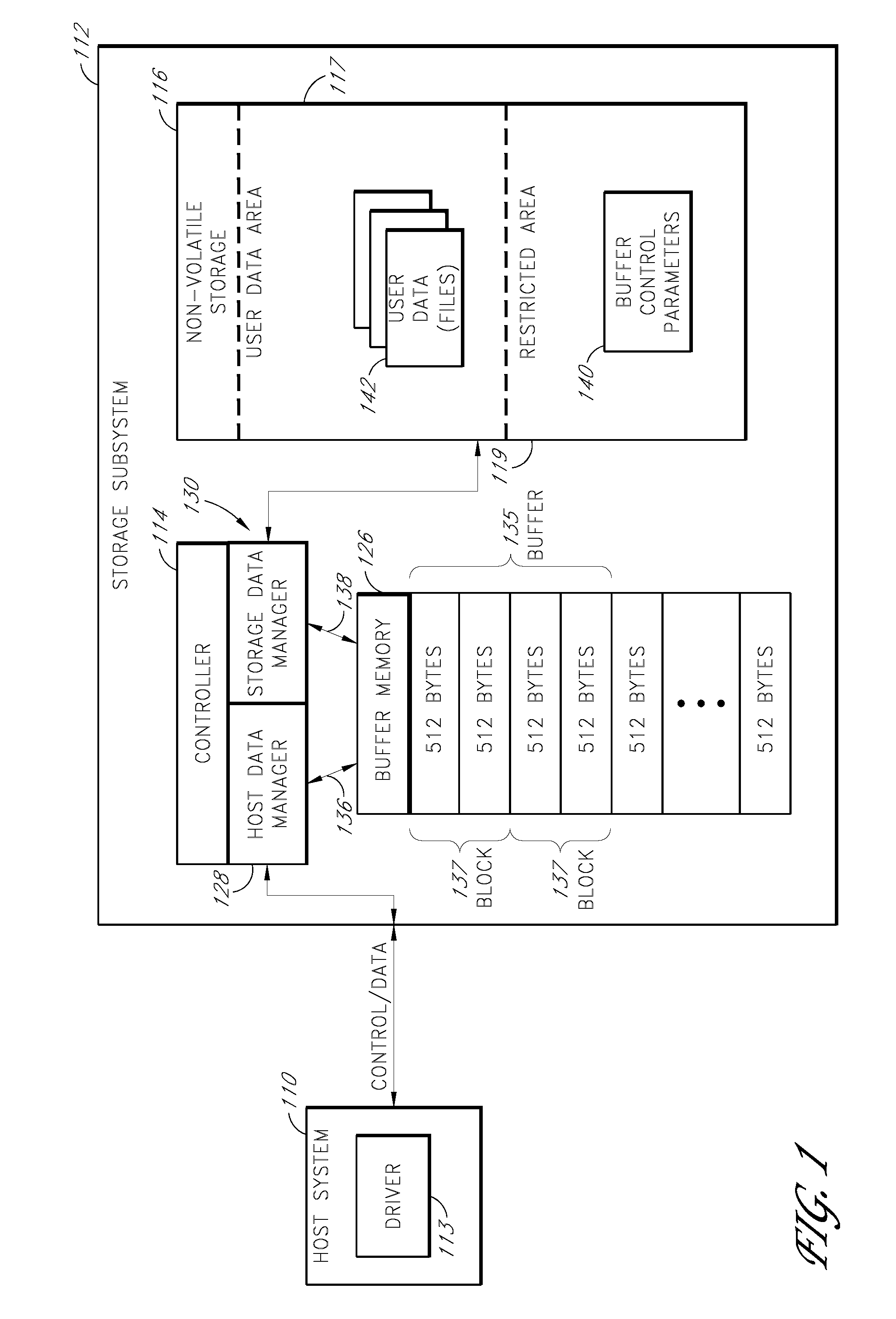

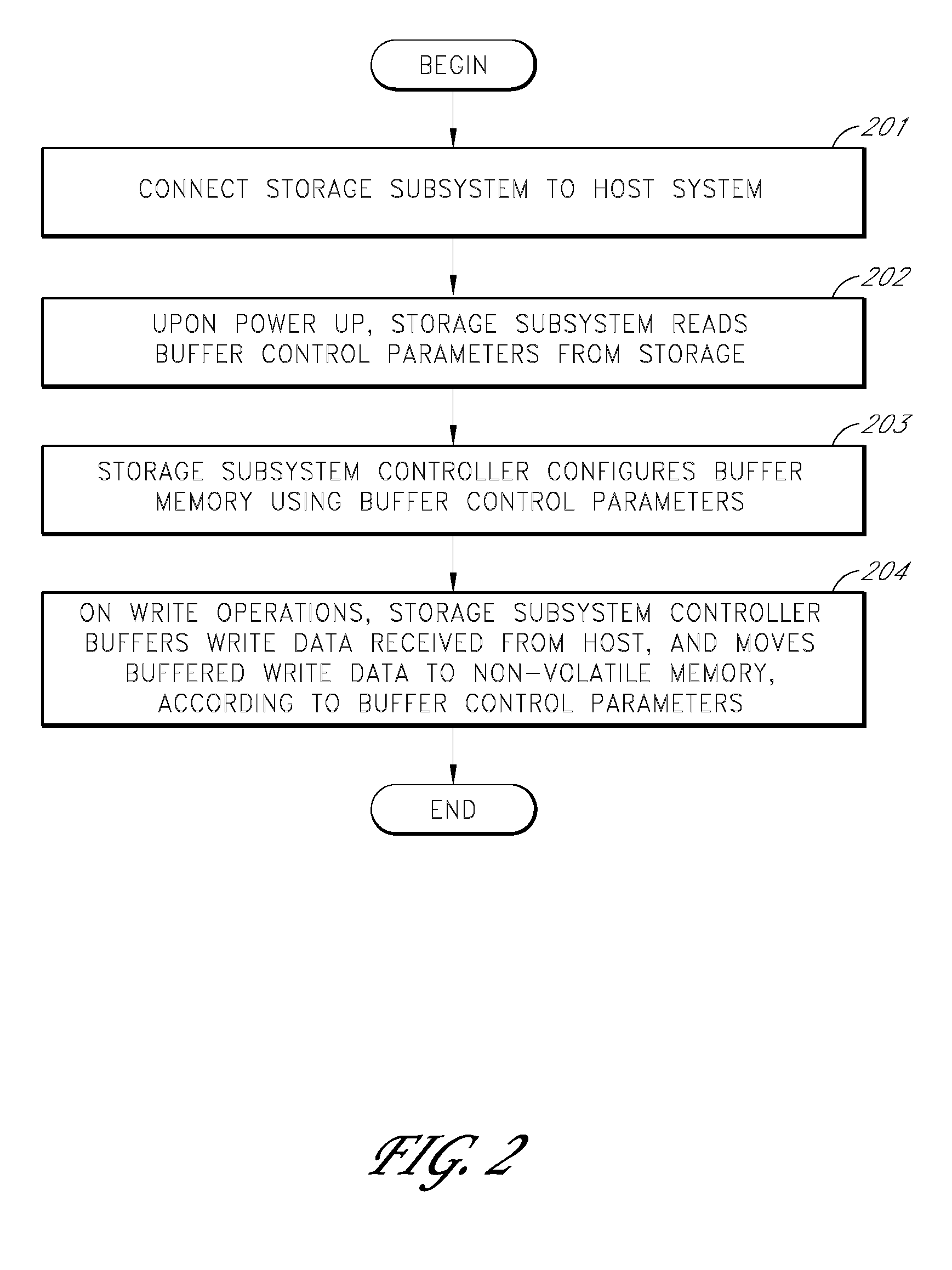

Storage subsystem with configurable buffer

Active | US7596643B2 | Reduce risk | Improve performance | Record information storage | Input/output processes for data processing | Write buffer | Data loss

Owner:WESTERN DIGITAL TECH INC

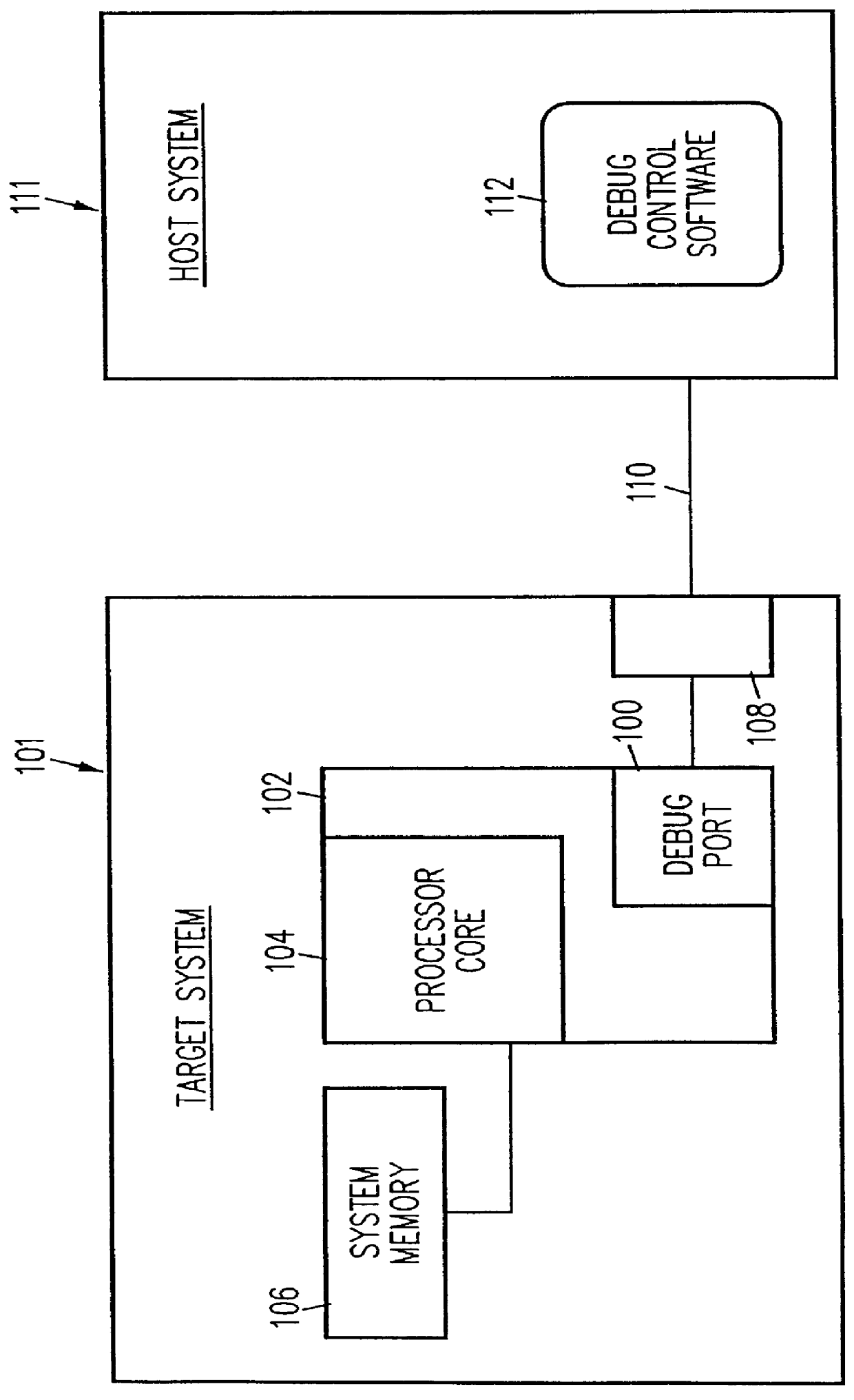

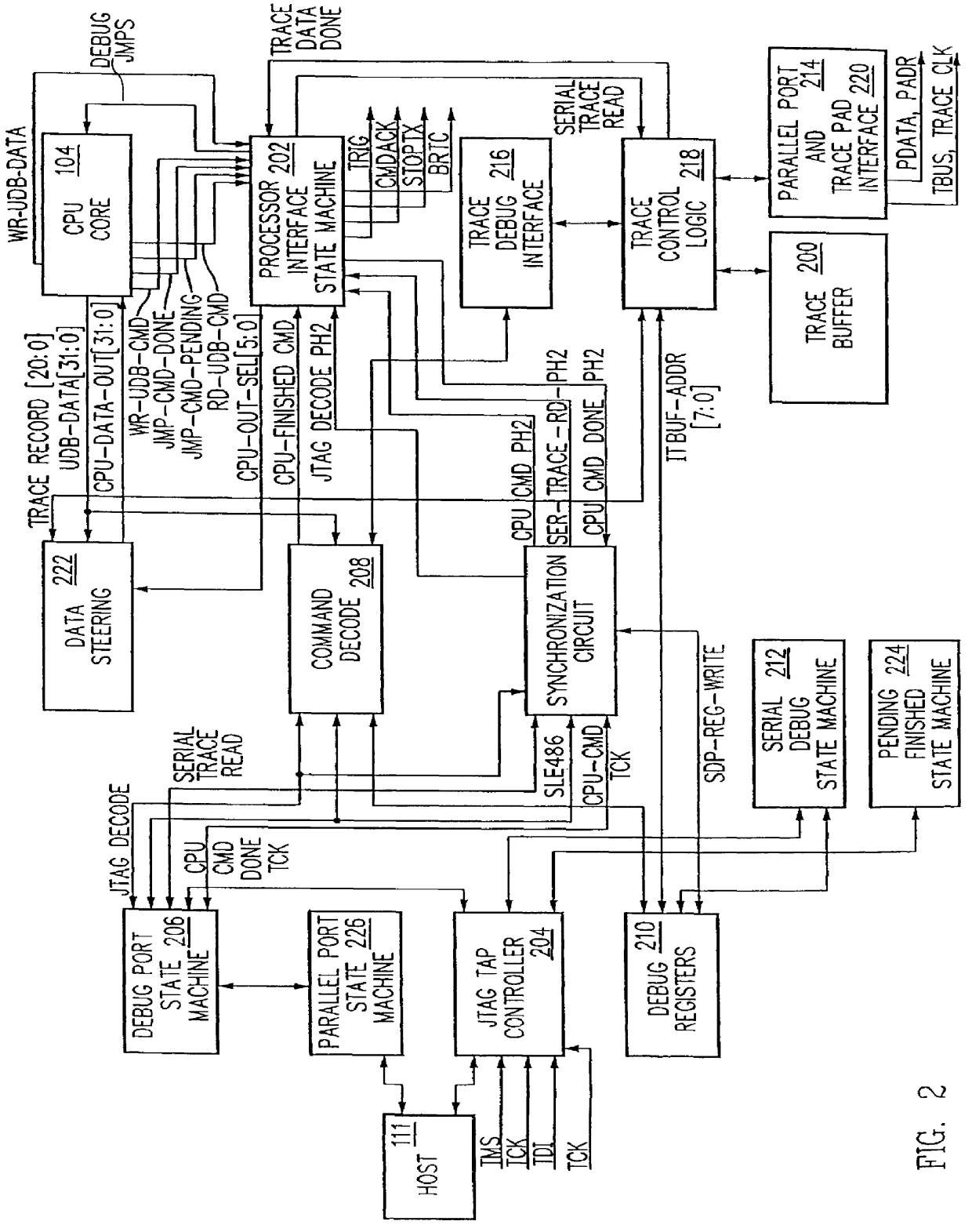

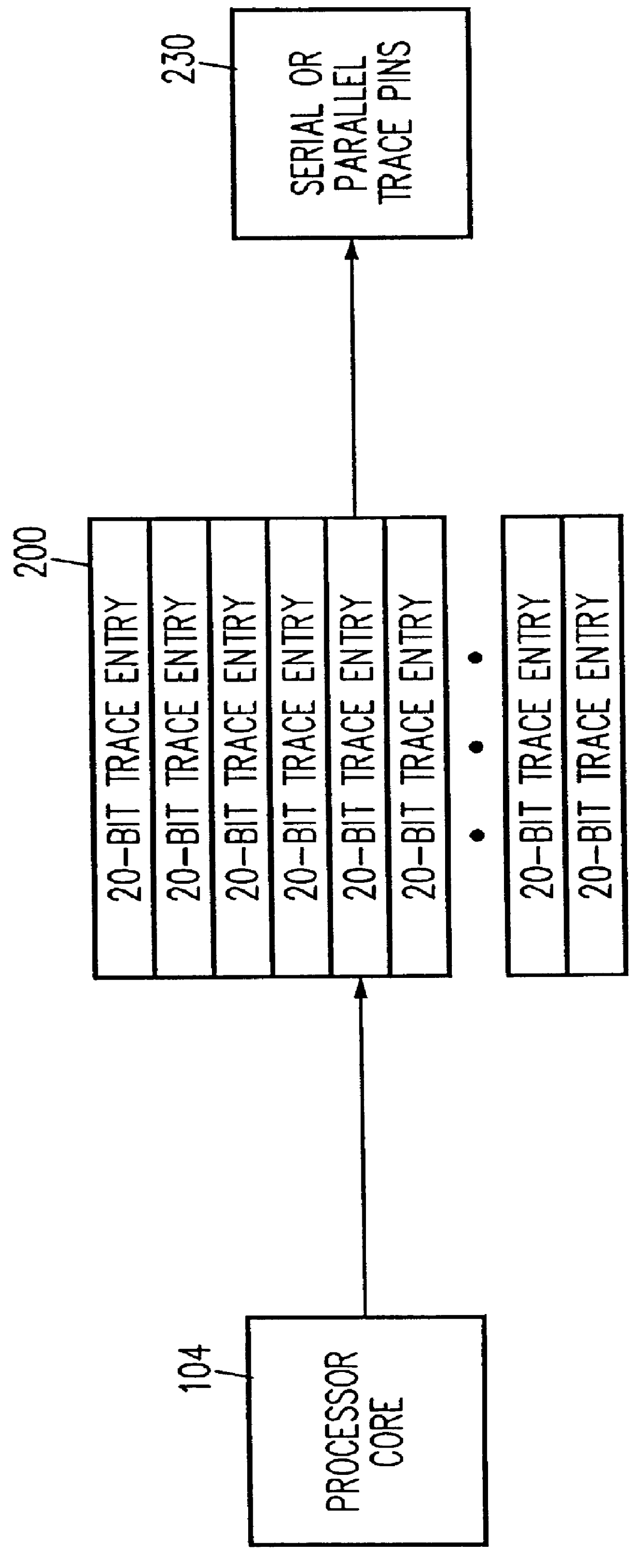

Debug interface including a compact trace record storage

Inactive | US6094729A | Reliability increasing modifications | Hardware monitoring | Information type | Parallel computing

In-circuit emulation (ICE) and software debug facilities are included in a processor via a debug interface that interfaces a target processor to a host system. The debug interface includes a trace controller that monitors signals produced by the target processor to detect specified conditions and produce a trace record of the specified conditions, including a notification of the conditions and selected information relating to the conditions. The trace controller formats a trace information record and stores the trace information record in a trace buffer in a plurality of trace data storage elements. The trace data storage elements have a format that includes a trace code (TCODE) field indicative of a type of trace information and a trace data (TDATA) field indicative of a type of trace information data.

Owner:GLOBALFOUNDRIES INC

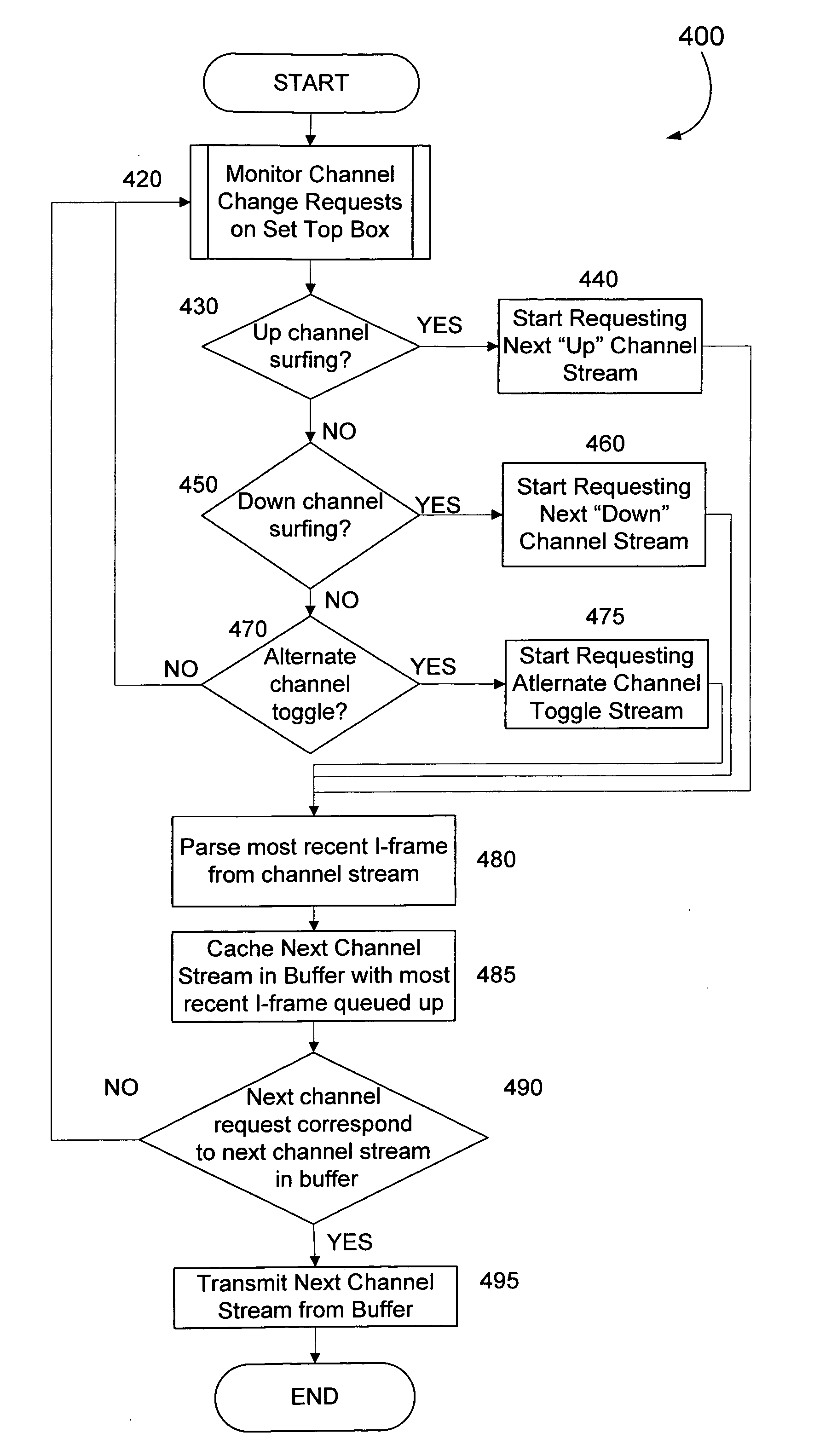

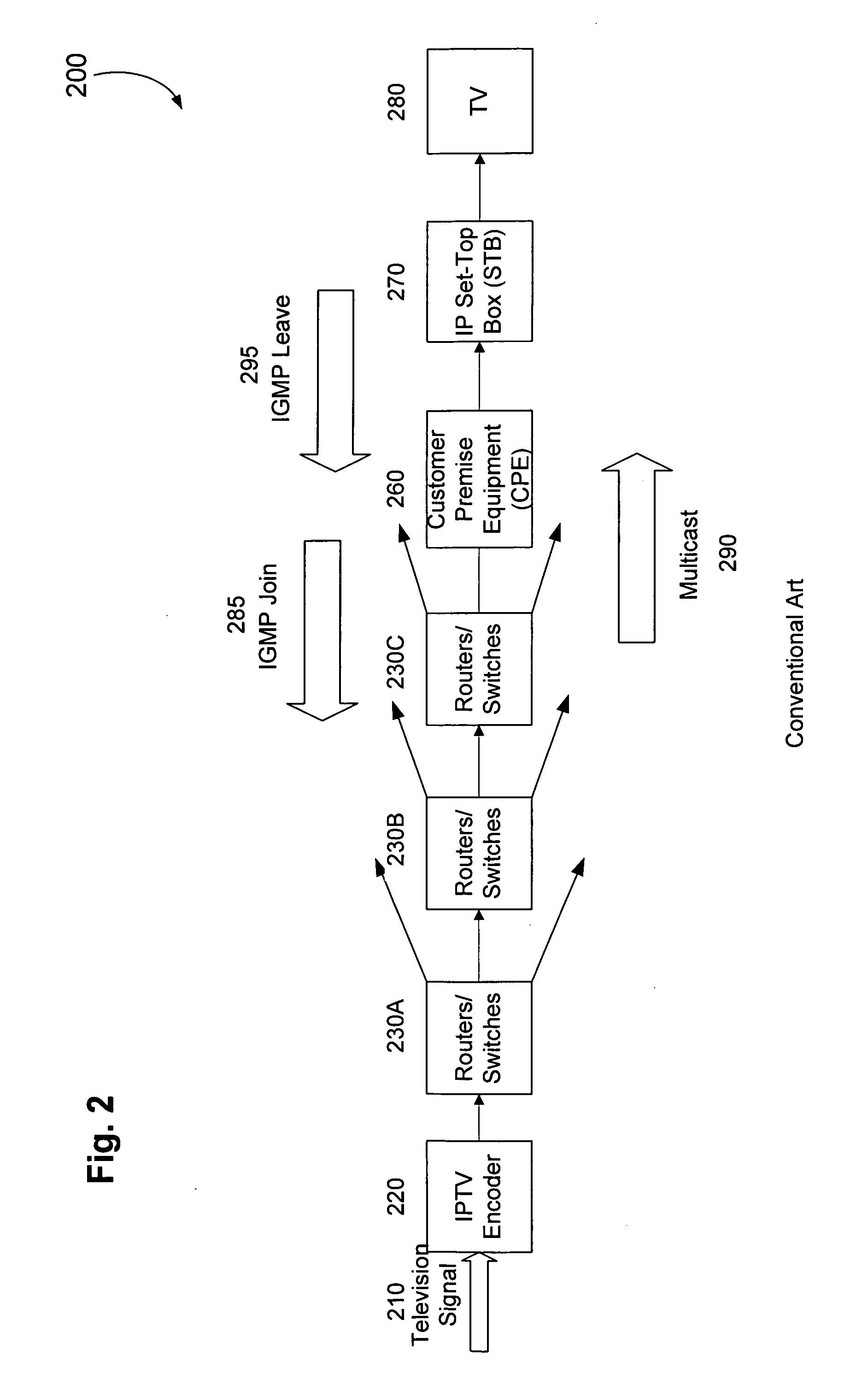

Minimizing channel change time for IP video

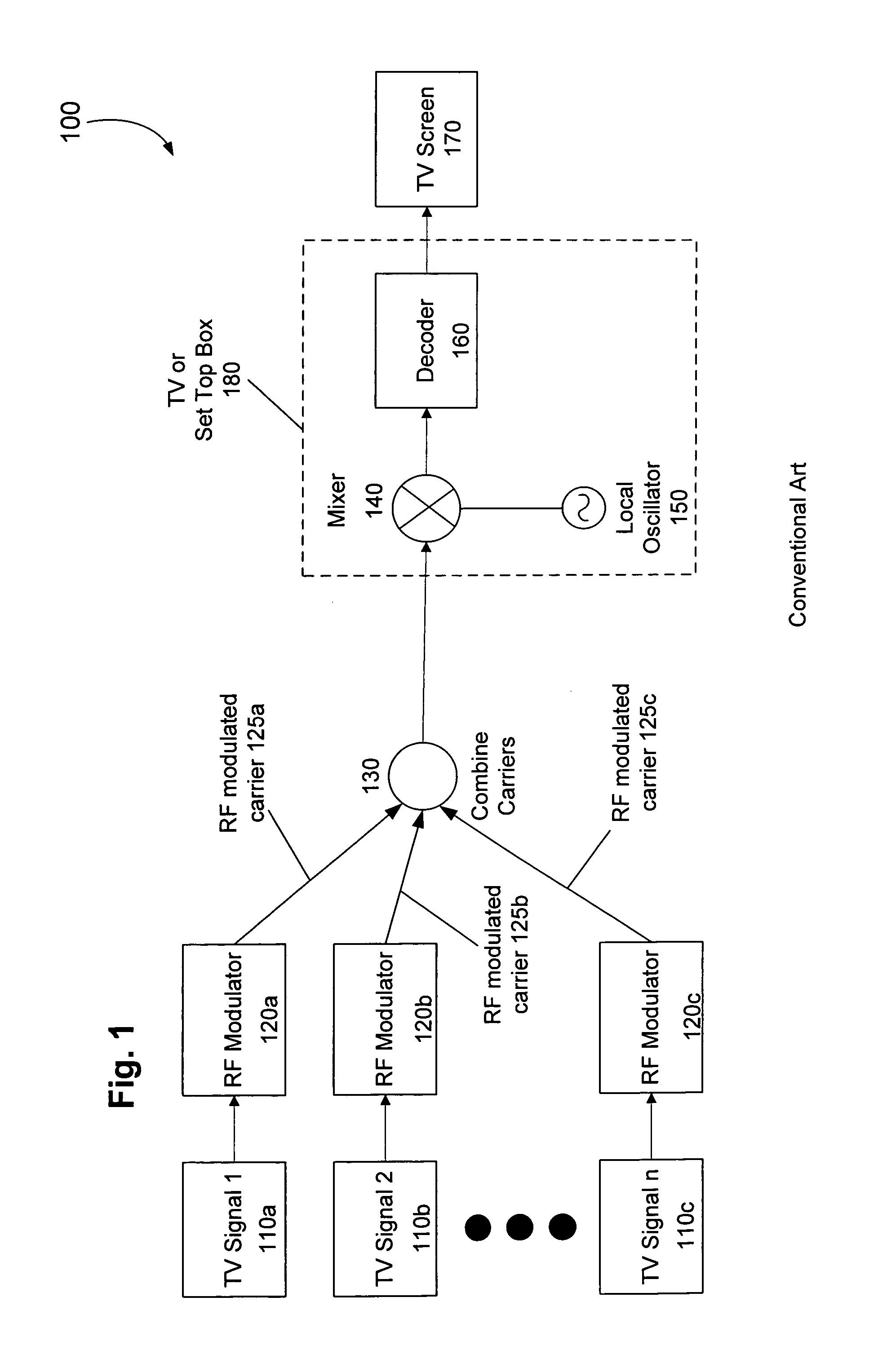

Inactive | US20060075428A1 | Reduce delays | Slow change | Television system details | Color television details | Digital video | Set top box

Subscribers to Internet Protocol TV services usually complain about one key characteristic: the additional delay digital video introduces when subscribers change channels, especially when subscribers “channel surf.” The problem is traced to at least three sources of delay in a conventional Internet Protocol video deployment system. The channel changing delay can be minimized by caching video packets for the most likely next channel in a buffer in anticipation of a television subscriber changing channels and/or by having an adaptable buffer length in the set top box.

Owner:ENABLENCE USA FTTX NETWORKS

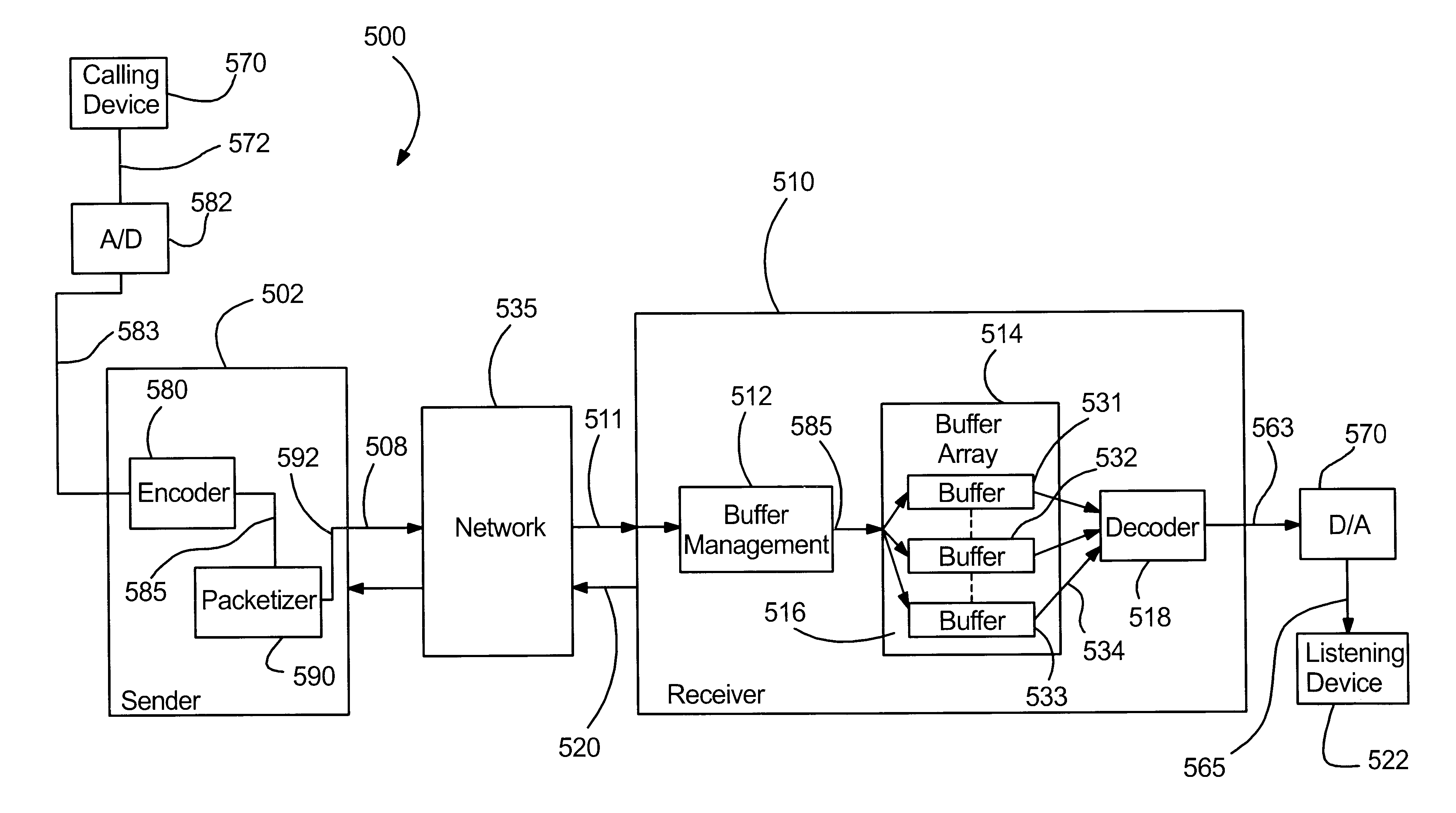

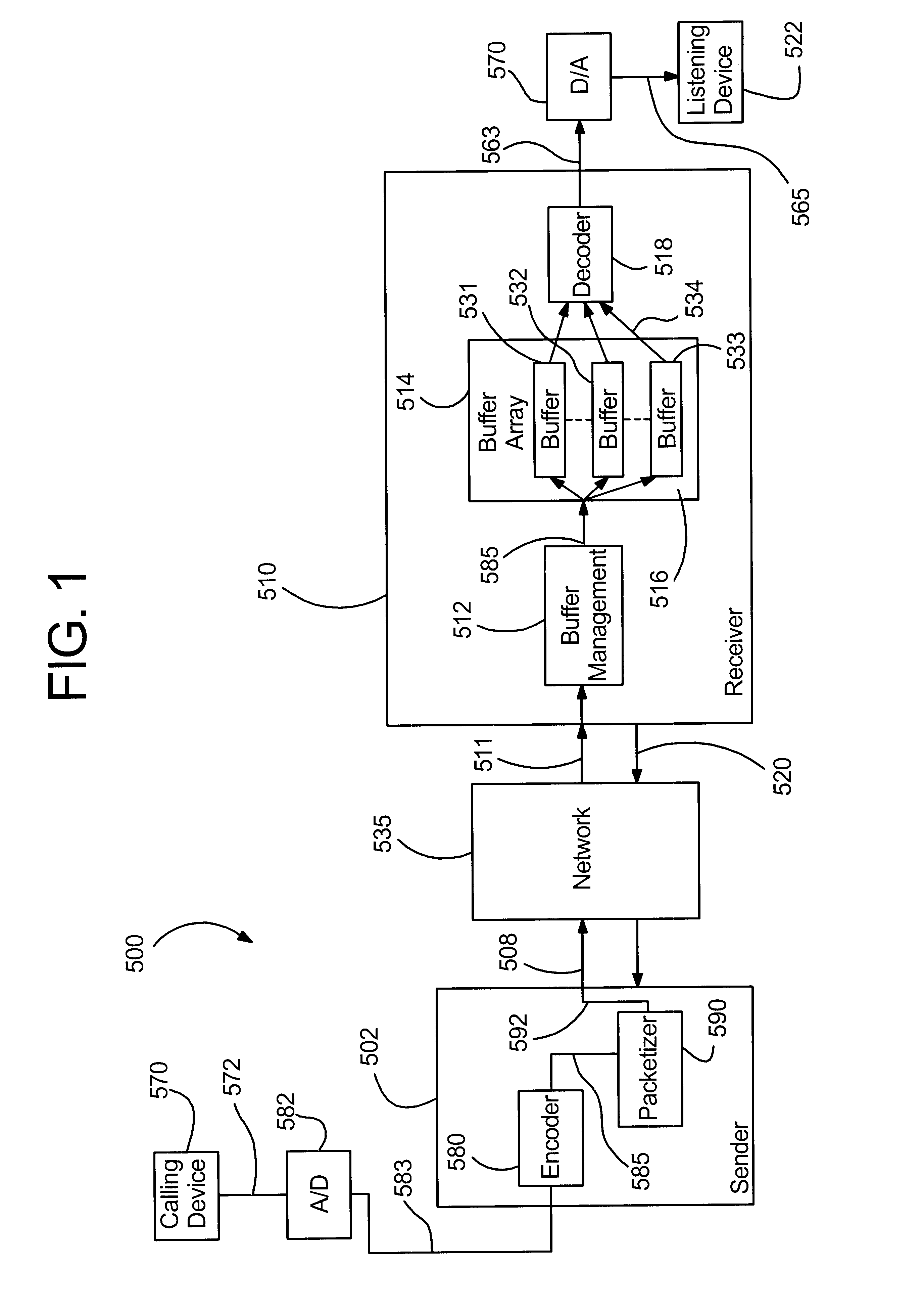

System for real time communication buffer management

A system and method for receiving a transported stream of data packets includes a buffer management device for receiving the data packets, unpacking the data packets, and forwarding a stream of data frames. The system and method further includes a first jitter buffer for receiving the data frames from the buffer management device and buffering the data frames, and a second jitter buffer for receiving the data frames from the buffer management device and buffering the data frames. In addition, the system and method includes a computationally-desirable jitter buffer selected from the first jitter buffer or the second jitter buffer by comparing a first jitter buffer quality and a second jitter buffer quality. The system and method also includes a decoder for receiving buffered data frames from the computationally-desirable jitter buffer.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

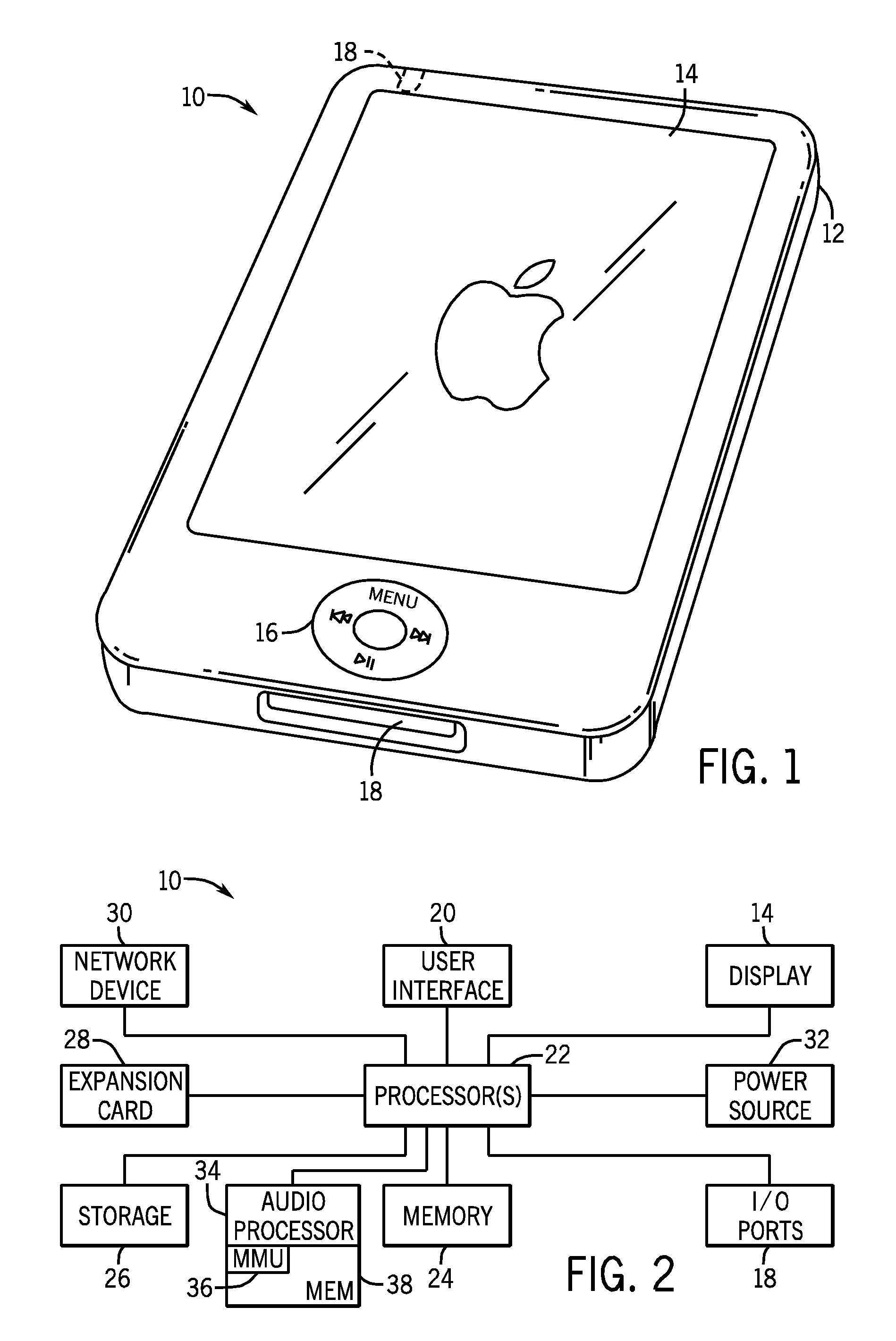

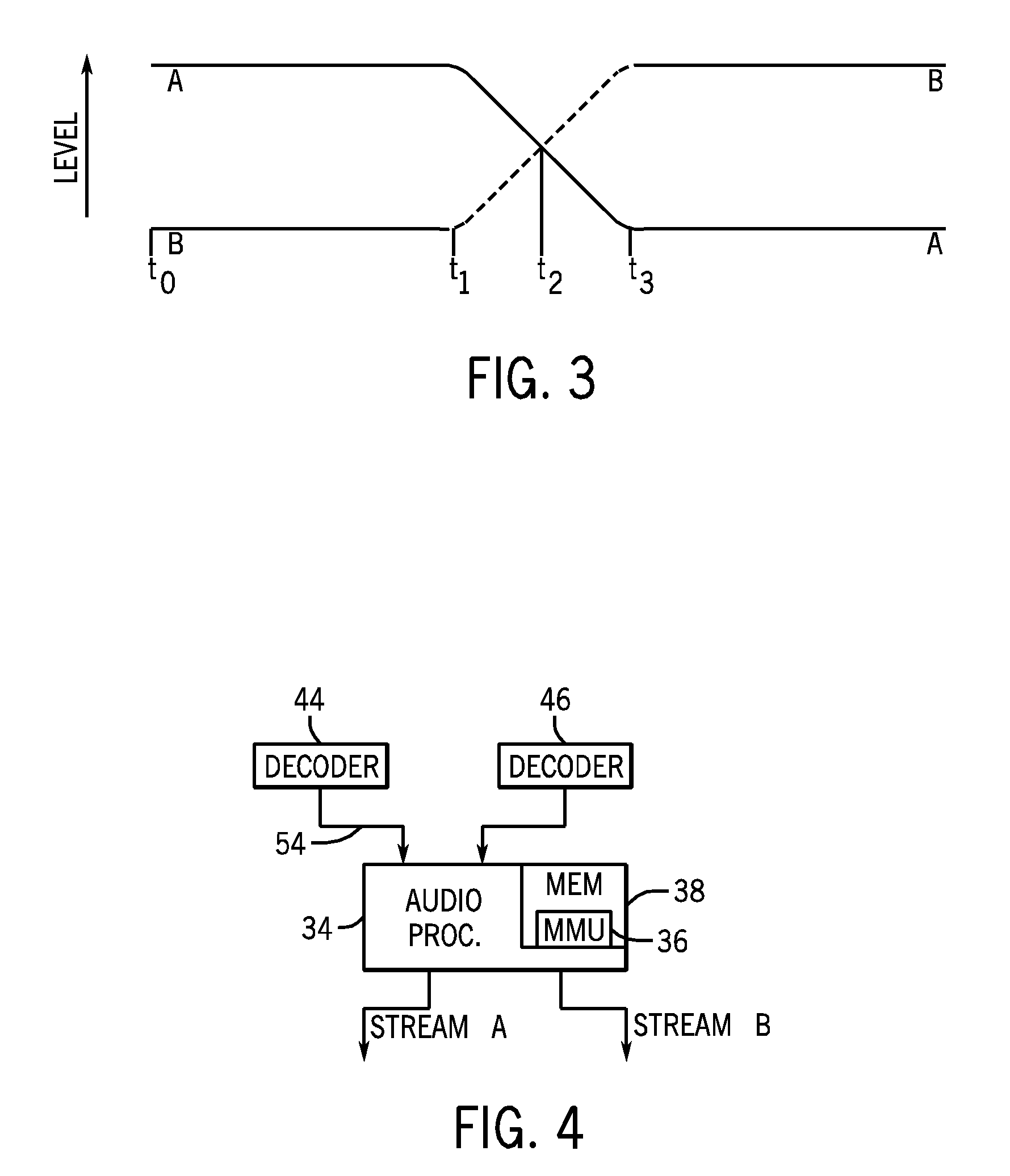

Systems and Methods for Memory Management and Crossfading in an Electronic Device

Inactive | US20100063825A1 | Sufficient data | Low cost | Electronic editing digitised analogue information signals | Speech analysis | Circular buffer | Audio frequency

Systems and methods are disclosed for the management of memory used in a crossfading operation in an electronic device. In one embodiment, a processor is used to alternately decode two audio streams, one which is being faded out and one which is being faded in to implement a crossfade. The two audio streams may be encoded in the same or different formats and may be alternately decoded such that resource usage is reduced. The amount of decoded data of both audio streams and other parameters may determine which audio stream is to be actively decoded. In certain embodiments, the decoded data may be stored in a circular buffer, and a delta is determined between the decoded data and the empty space of the buffer.

Owner:APPLE INC

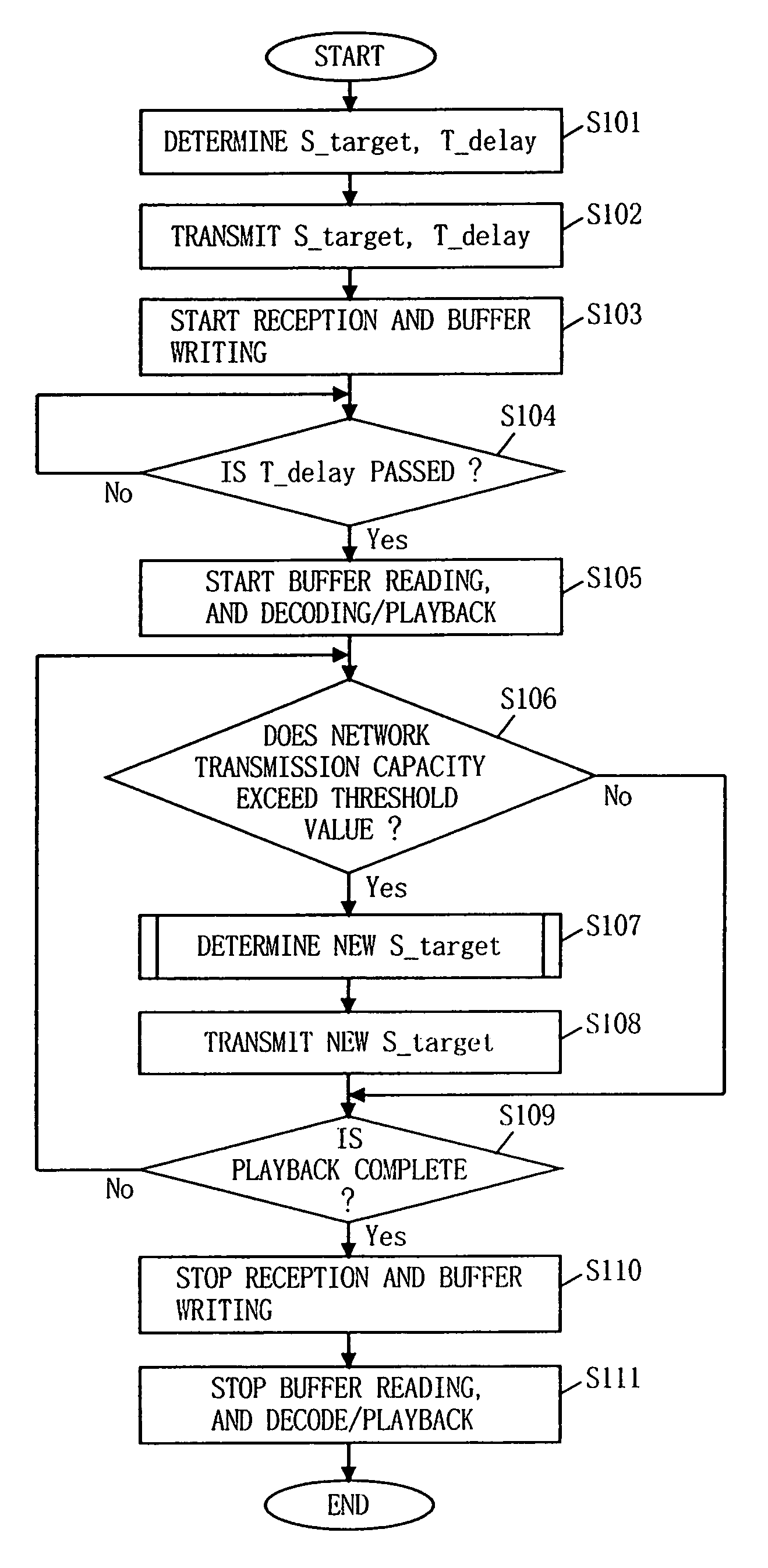

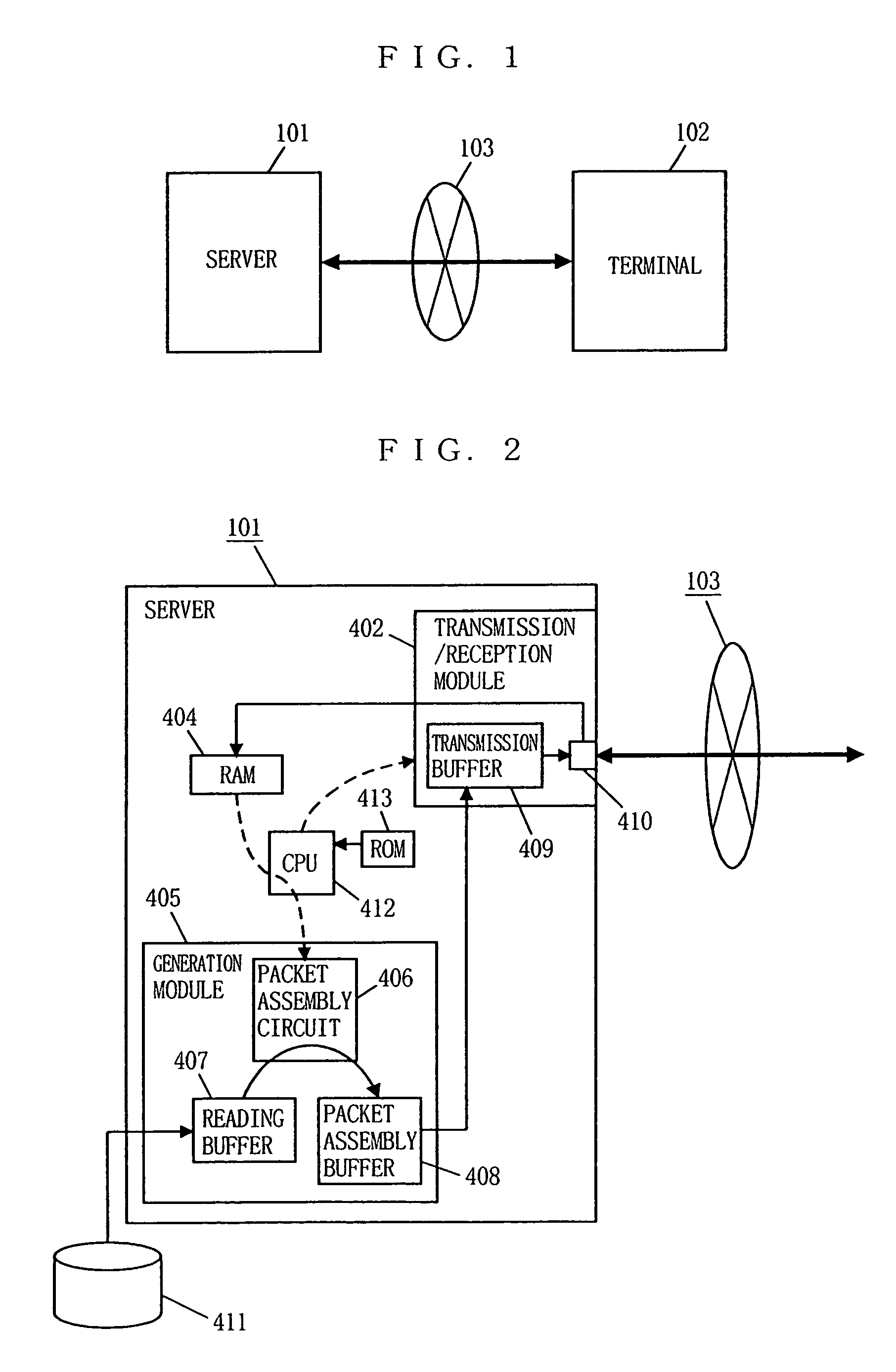

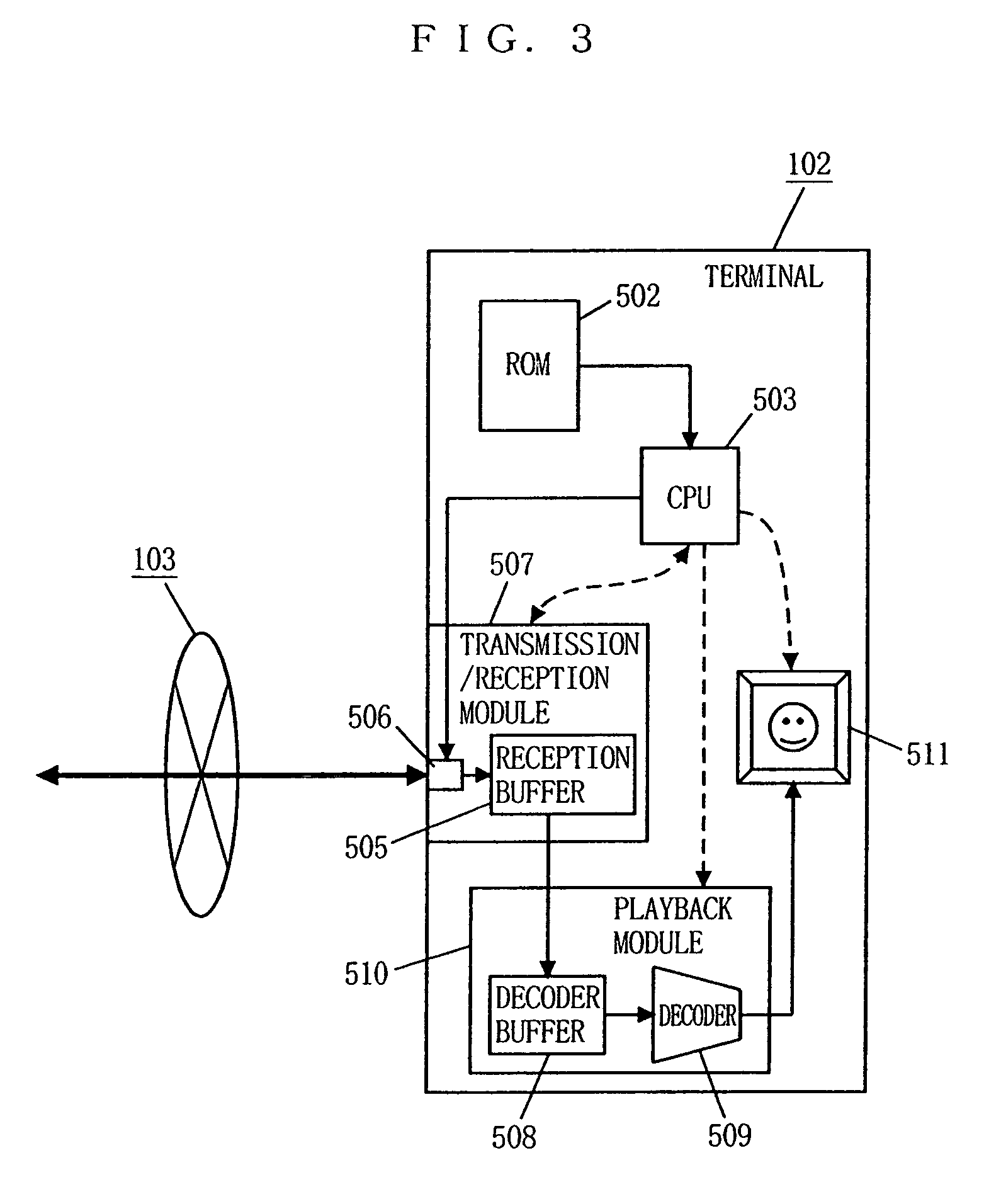

System for transmitting stream data from server to client based on buffer and transmission capacities and delay time of the client

Active | US7016970B2 | Shorten the time | Preventing streaming playback from being disturbed | Energy efficient ICT | Pulse modulation television signal transmission | Delayed time | Stream data

A terminal determines a target value of stream data to be stored in its buffer in relation to its buffer capacity and the transmission capacity of the network. Also, the terminal arbitrarily determines a delay time from when the terminal writes a head data of the stream data to the buffer to when the terminal reads the data to start playback in a range not exceeding a value obtained by dividing the buffer capacity by the transmission capacity. The target value and the delay time are then both notified to a server. Based on those notified values, the server controls the transmission speed so that the buffer occupancy of the terminal changes in the vicinity of the target value without exceeding the target value.

Owner:SUN PATENT TRUST
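
The bound on the delay time stated in the abstract above (at most the buffer capacity divided by the transmission capacity) is simple arithmetic, sketched here. The function names and the units (bits and bits per second) are assumptions for illustration; the abstract does not fix units.

```python
def max_start_delay(buffer_capacity_bits, transmission_capacity_bps):
    """Upper bound on the playback start delay, in seconds."""
    if transmission_capacity_bps <= 0:
        raise ValueError("transmission capacity must be positive")
    return buffer_capacity_bits / transmission_capacity_bps


def choose_delay(requested_delay_s, buffer_capacity_bits, transmission_capacity_bps):
    """Clamp a requested delay so that it does not exceed the bound."""
    bound = max_start_delay(buffer_capacity_bits, transmission_capacity_bps)
    return min(requested_delay_s, bound)
```

For example, with an 8,000,000-bit buffer on a 2,000,000 bit/s link, the bound is 4 seconds, so a requested delay of 10 seconds would be clamped to 4.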

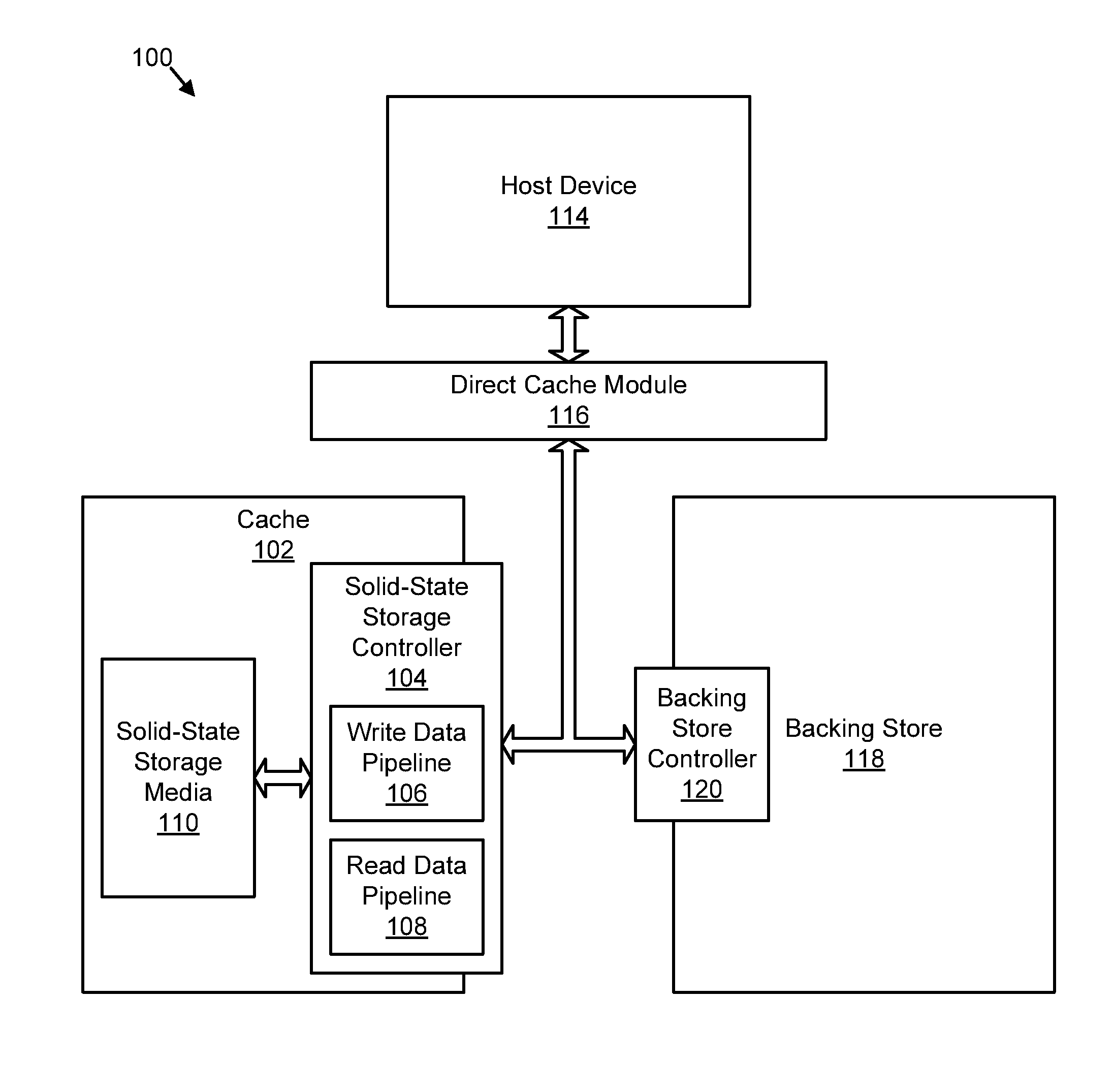

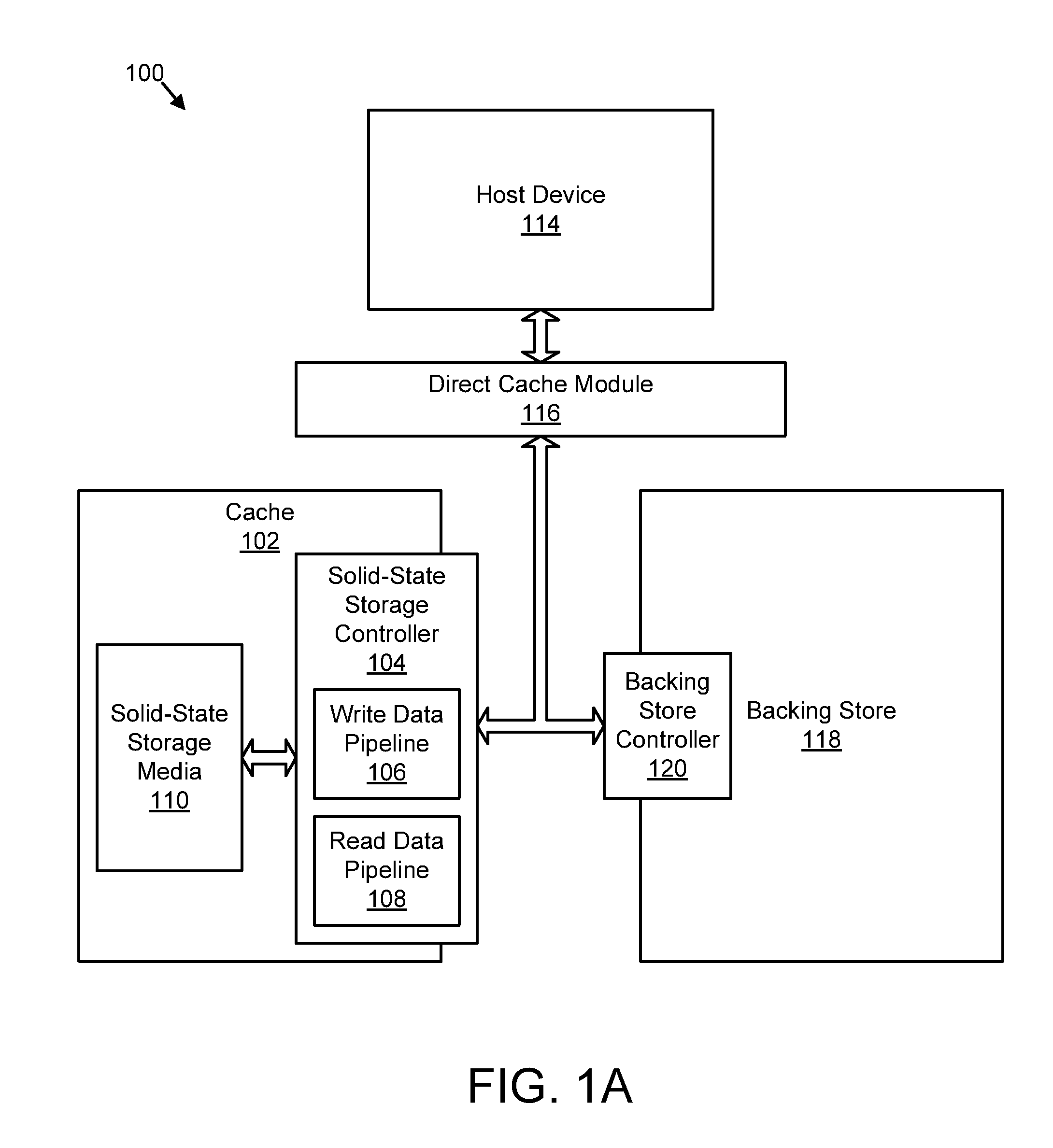

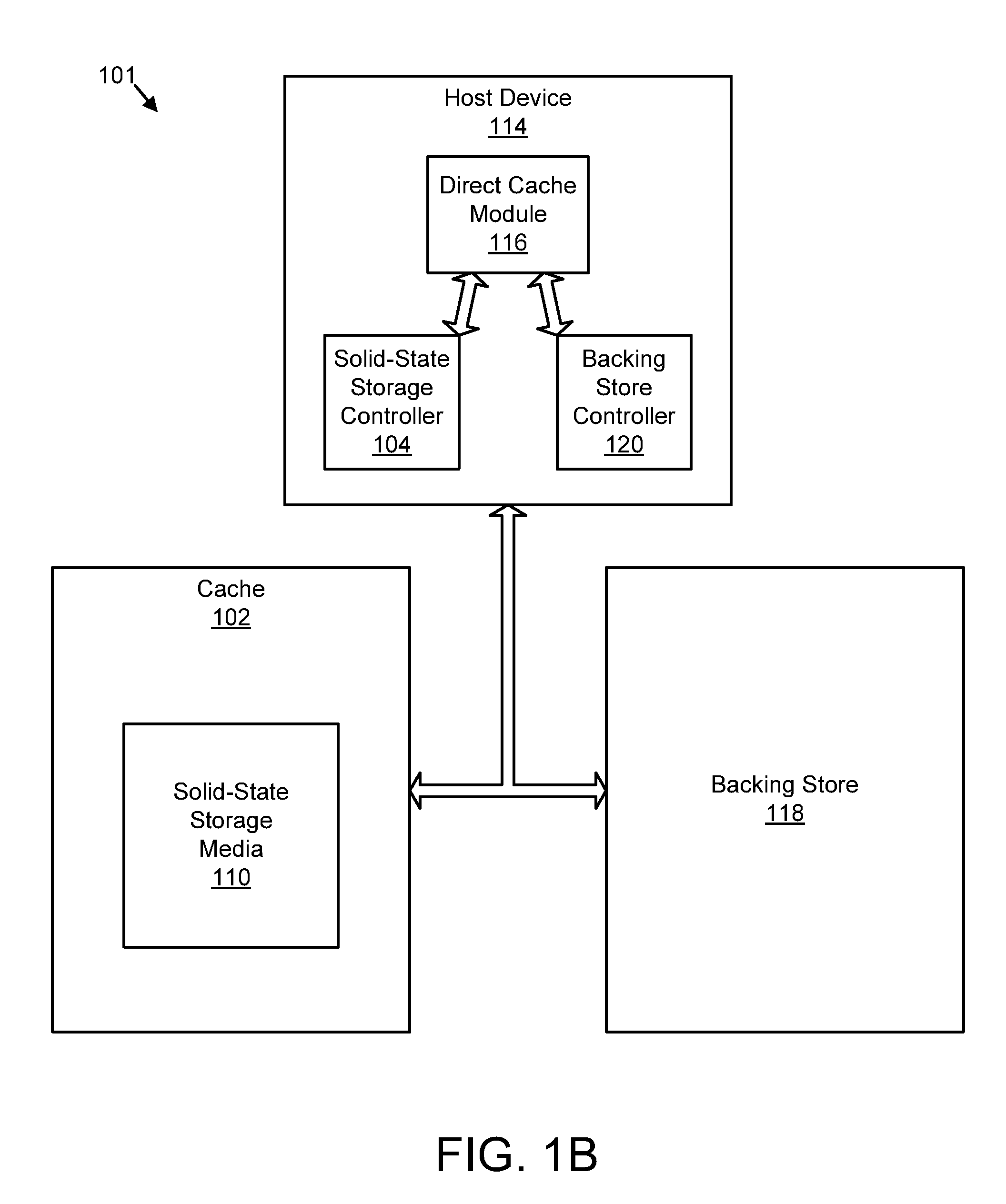

Apparatus, system, and method for destaging cached data

Inactive | US20110258391A1 | Memory architecture accessing/allocation | Error detection/correction | Solid-state storage | Control store

An apparatus, system, and method are disclosed for destaging cached data. A cache controller detects one or more write requests to store data in a backing store. The cache controller sends the write requests to a storage controller for a nonvolatile solid-state storage device. The storage controller receives the write requests and caches the data associated with the write requests in the nonvolatile solid-state storage device by appending the data to a log of the nonvolatile solid-state storage device. The log includes a sequential, log-based structure preserved in the nonvolatile solid-state storage device. The cache controller receives at least a portion of the data from the storage controller in a cache log order and destages the data to the backing store in the cache log order. The cache log order comprises an order in which the data was appended to the log of the nonvolatile solid-state storage device.

Owner:SANDISK TECH LLC

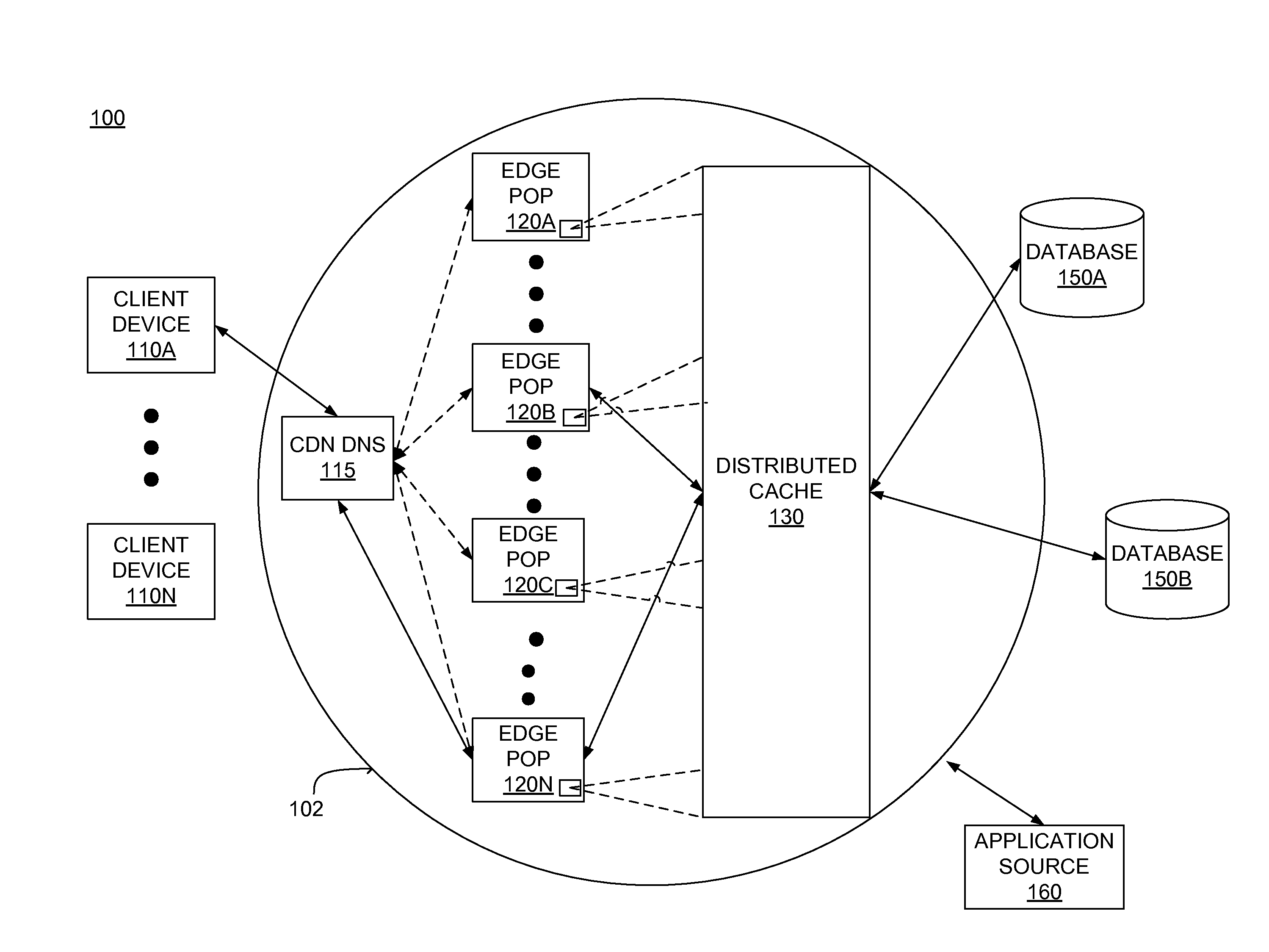

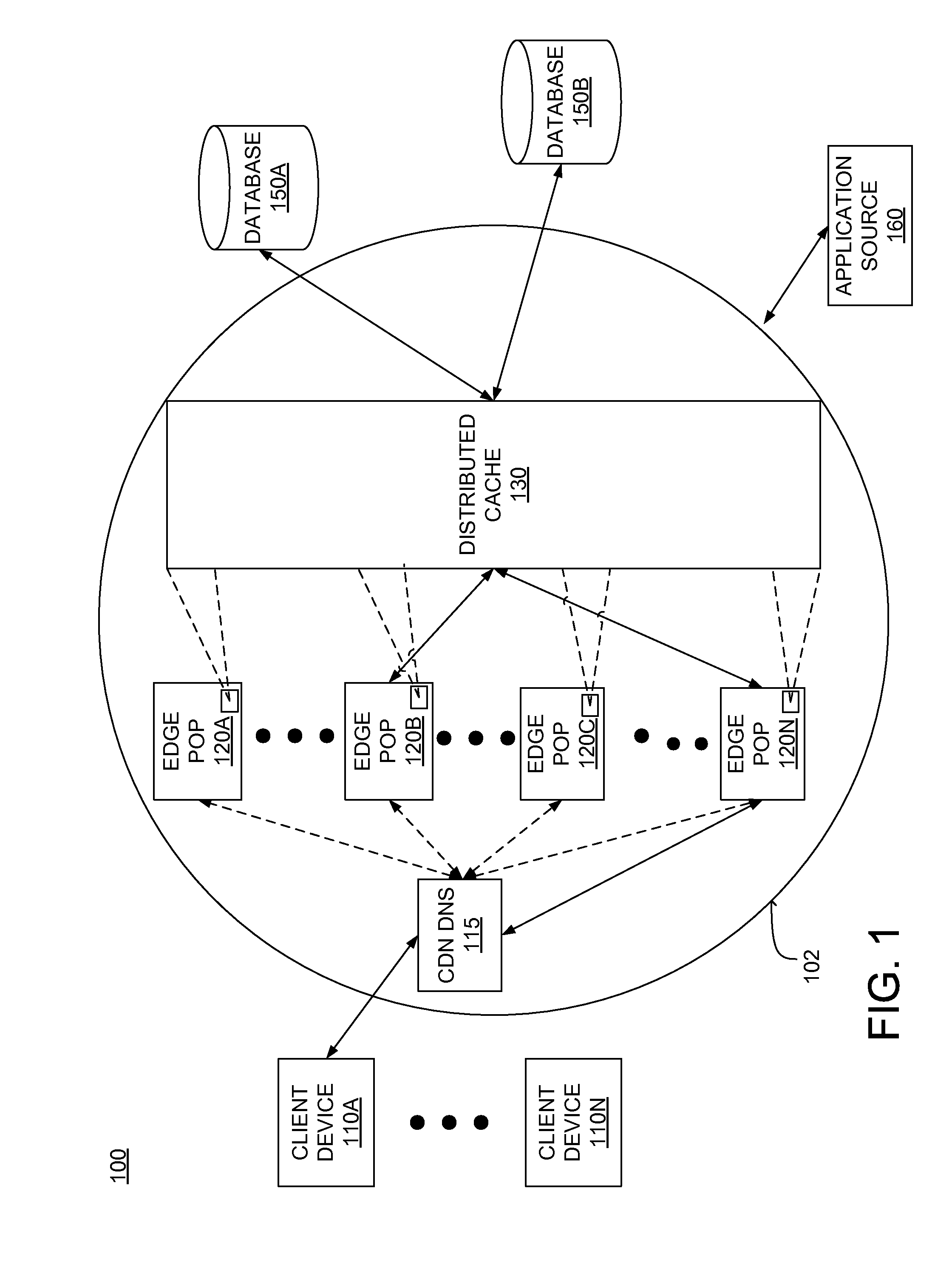

Distributed data cache for on-demand application acceleration

Active | US20120041970A1 | Decreasing application execution | Shorten the time | Digital data information retrieval | Digital data processing details | Application software | Distributed computing

A distributed data cache included in a content delivery network expedites retrieval of data for application execution by a server in a content delivery network. The distributed data cache is distributed across computer-readable storage media included in a plurality of servers in the content delivery network. When an application generates a query for data, a server in the content delivery network determines whether the distributed data cache includes data associated with the query. If data associated with the query is stored in the distributed data cache, the data is retrieved from the distributed data cache. If the distributed data cache does not include data associated with the query, the data is retrieved from a database and the query and associated data are stored in the distributed data cache to expedite subsequent retrieval of the data when the application issues the same query.

Owner:CDNETWORKS HLDG SINGAPORE PTE LTD
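
The lookup flow in the abstract above (check the cache for the query, fall back to the database on a miss, then store the query and its data) is sketched below. A single in-process dictionary stands in for the distributed cache, and `QueryCache`, `database_lookup`, and `misses` are hypothetical names; distribution across servers is not modelled.

```python
class QueryCache:
    """Query-keyed cache in front of a database (illustrative sketch)."""

    def __init__(self, database_lookup):
        self._lookup = database_lookup   # callable: query -> data
        self._store = {}                 # stand-in for the distributed cache
        self.misses = 0

    def get(self, query):
        # Hit: the data for this query is already cached.
        if query in self._store:
            return self._store[query]
        # Miss: fetch from the database, then cache query and data
        # so that the same query is served from the cache next time.
        self.misses += 1
        data = self._lookup(query)
        self._store[query] = data
        return data
```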

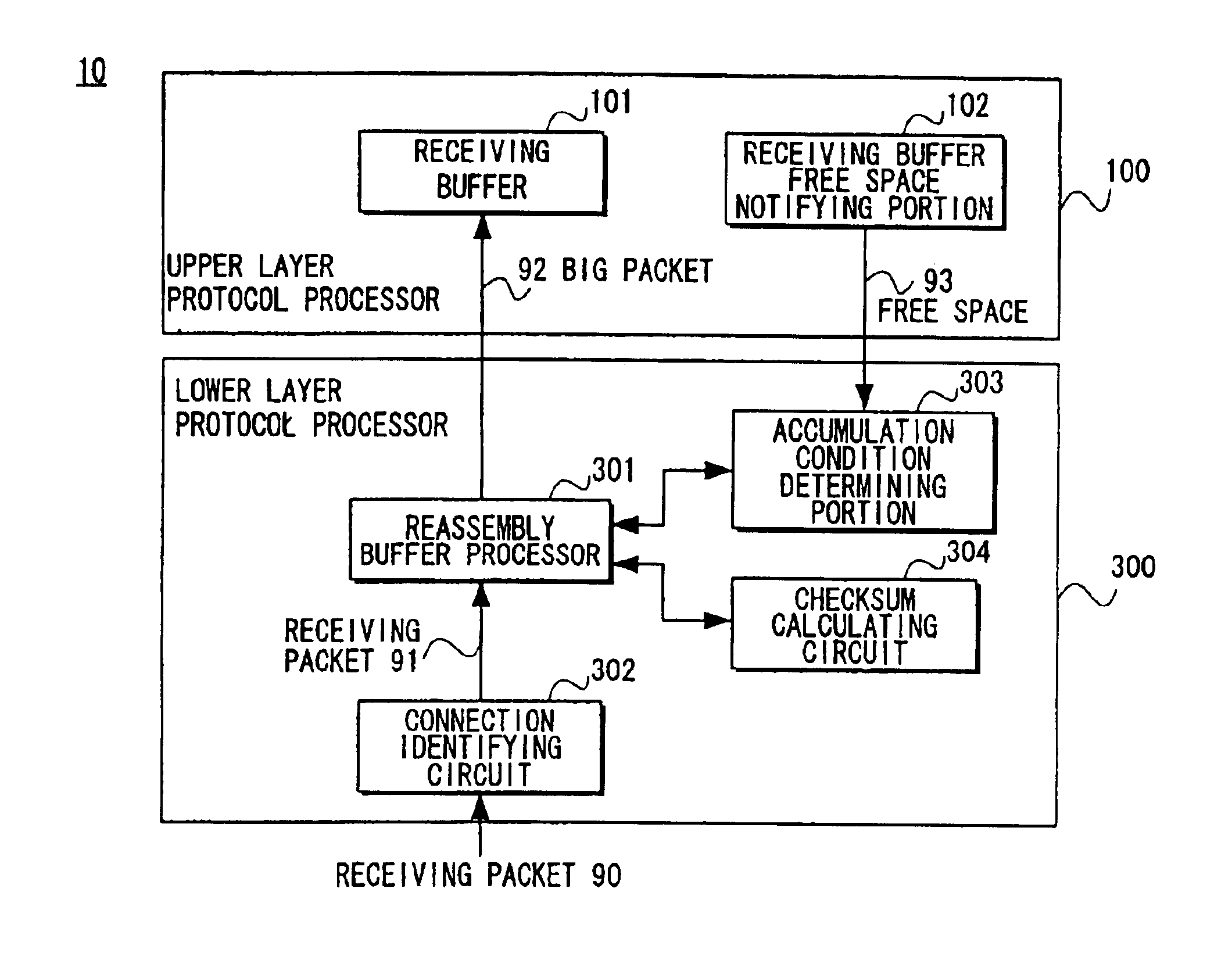

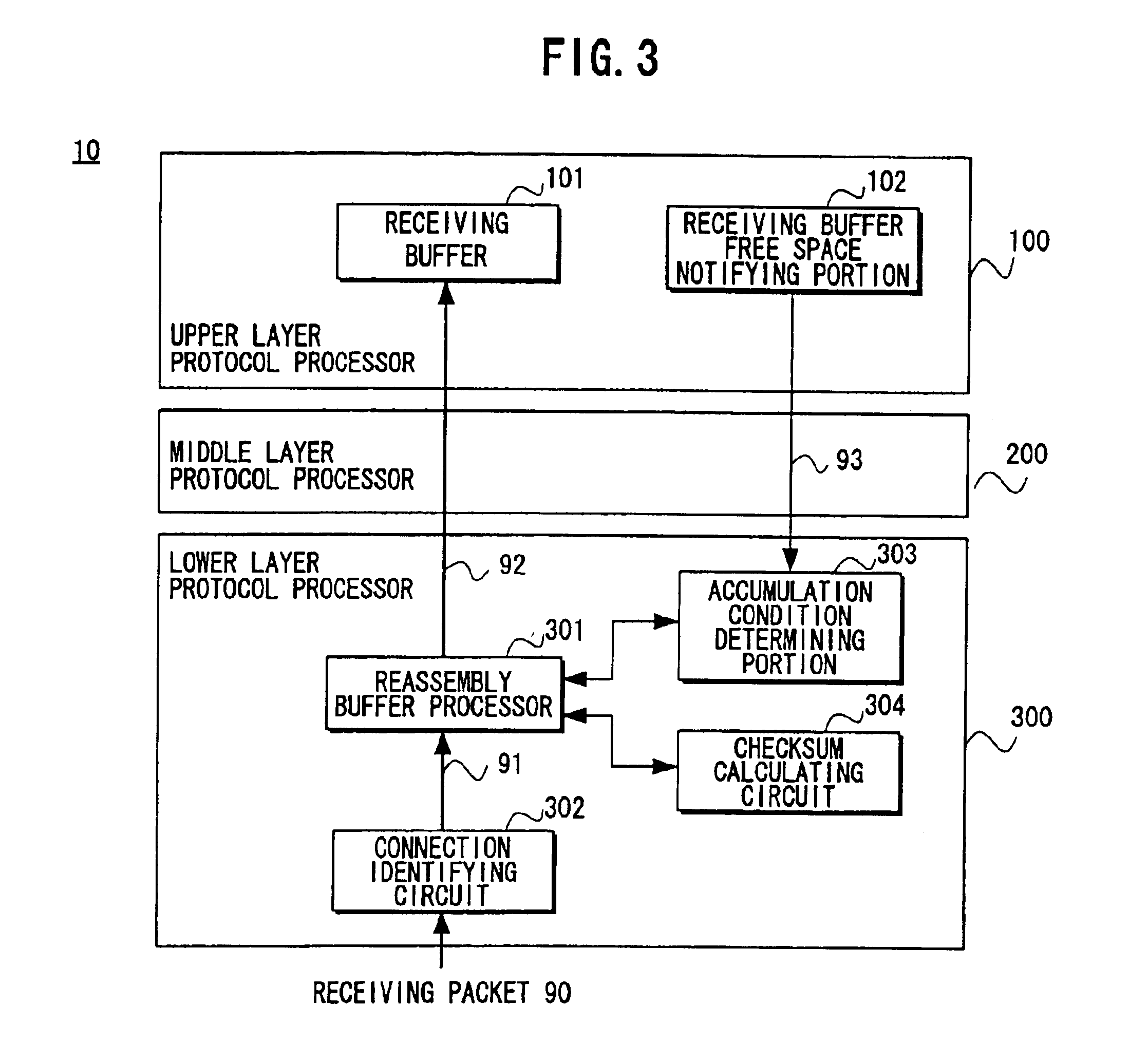

Packet processing device

Inactive | US6907042B1 | Reduce the number of processes | Eliminate overhead | Error prevention | Frequency-division multiplex details | Transport layer | Protocol Application

A packet processing device in which a receiving buffer free space notifying portion notifies a free space of a receiving buffer, an accumulation condition determining portion determines a size of a big packet based on the free space, and a reassembly buffer processor reassembles a plurality of receiving packets into a single big packet to be transmitted to the receiving buffer. A backward packet inclusive information reading circuit for detecting the free space based on information within a backward packet from the upper layer may be used as the receiving buffer free space notifying portion. Also, an application layer may be used as the upper layer so that the big packet is transmitted not through a buffer of a transport layer but directly to the receiving buffer.

Owner:FUJITSU LTD

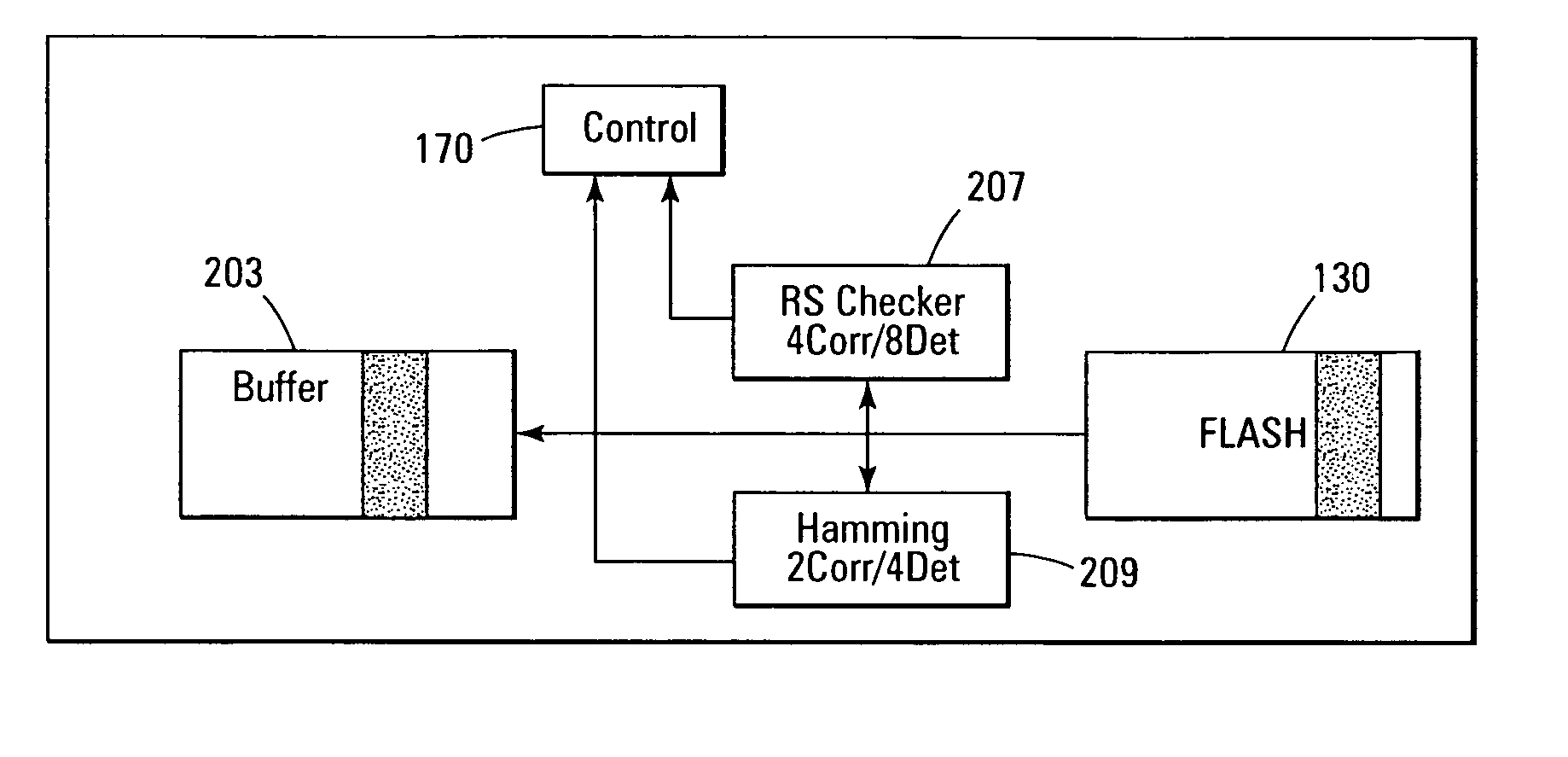

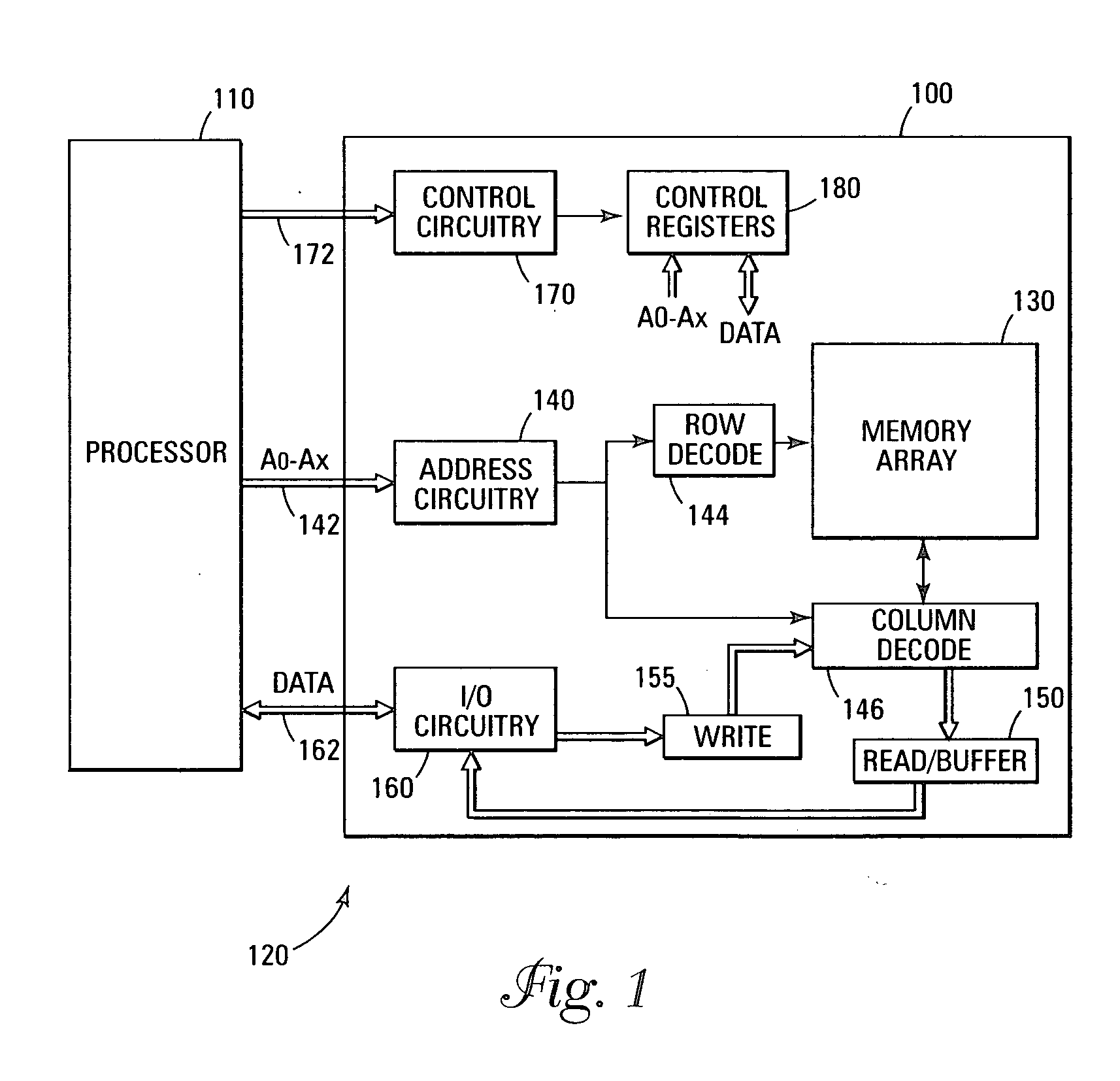

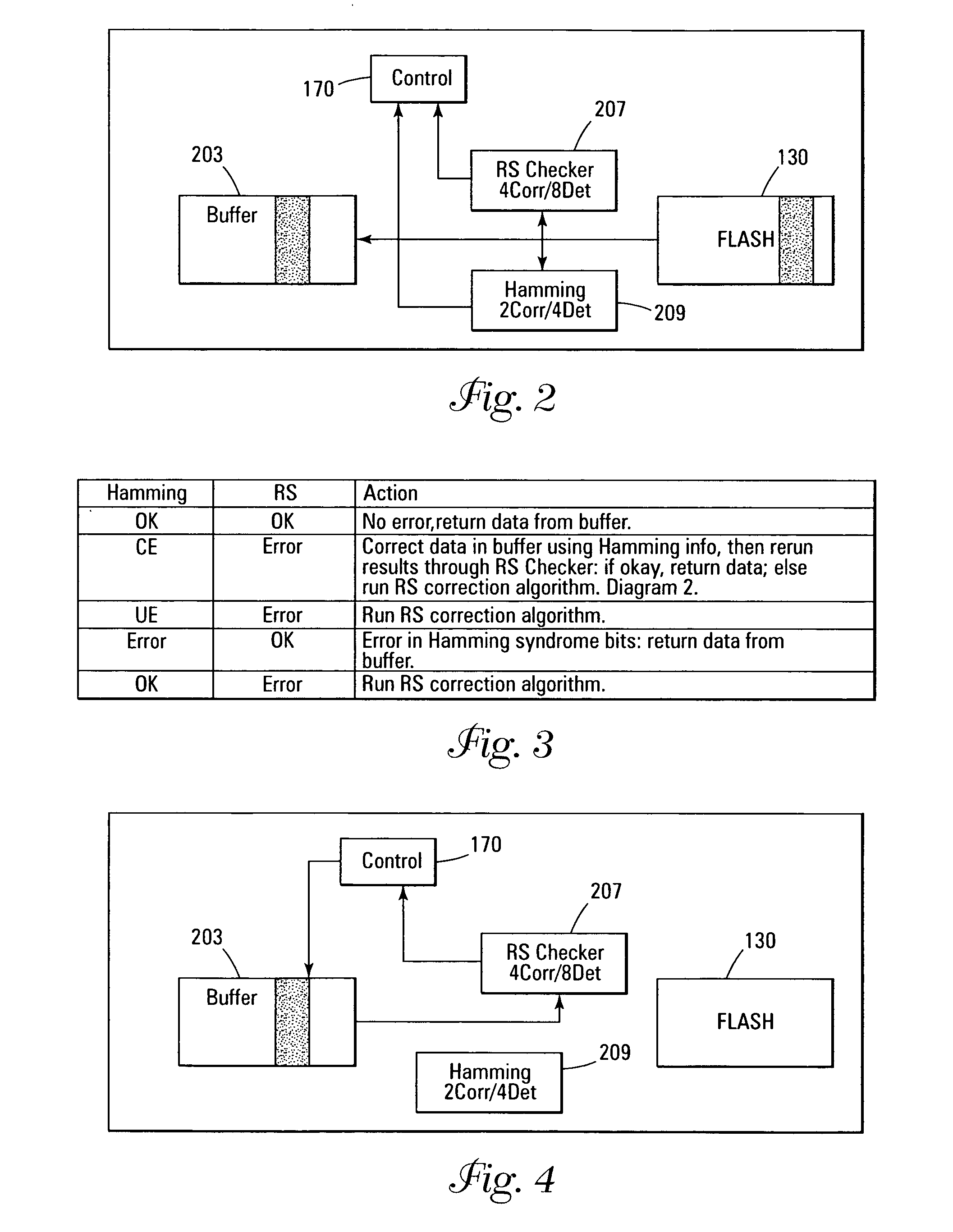

Error detection and correction scheme for a memory device

Active | US20050172207A1 | Code conversion | Error correction/detection by combining multiple code structures | Hamming code | Correction code

Data is read from a memory array. Before being stored in a data buffer, a Hamming code detection operation and a Reed-Solomon code detection operation are operated in parallel to determine if the data word has any errors. The results of the parallel detection operations are communicated to a controller circuit. If an error is present that can be corrected by the Hamming code correction operation, this is performed and the Reed-Solomon code detection operation is performed on the corrected word. If the error is uncorrectable by the Hamming code, the Reed-Solomon code correction operation is performed on the word.

Owner:MICRON TECH INC

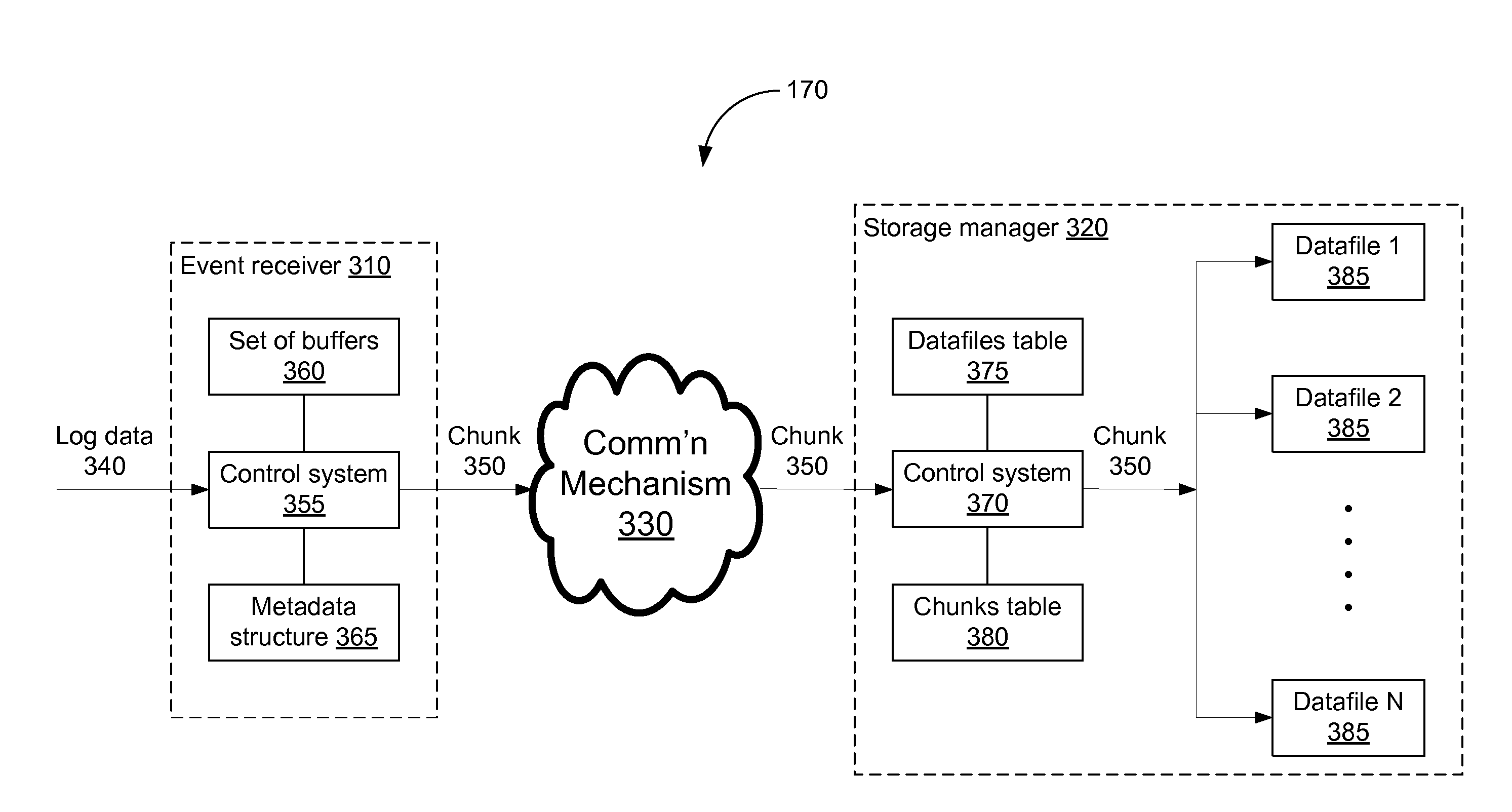

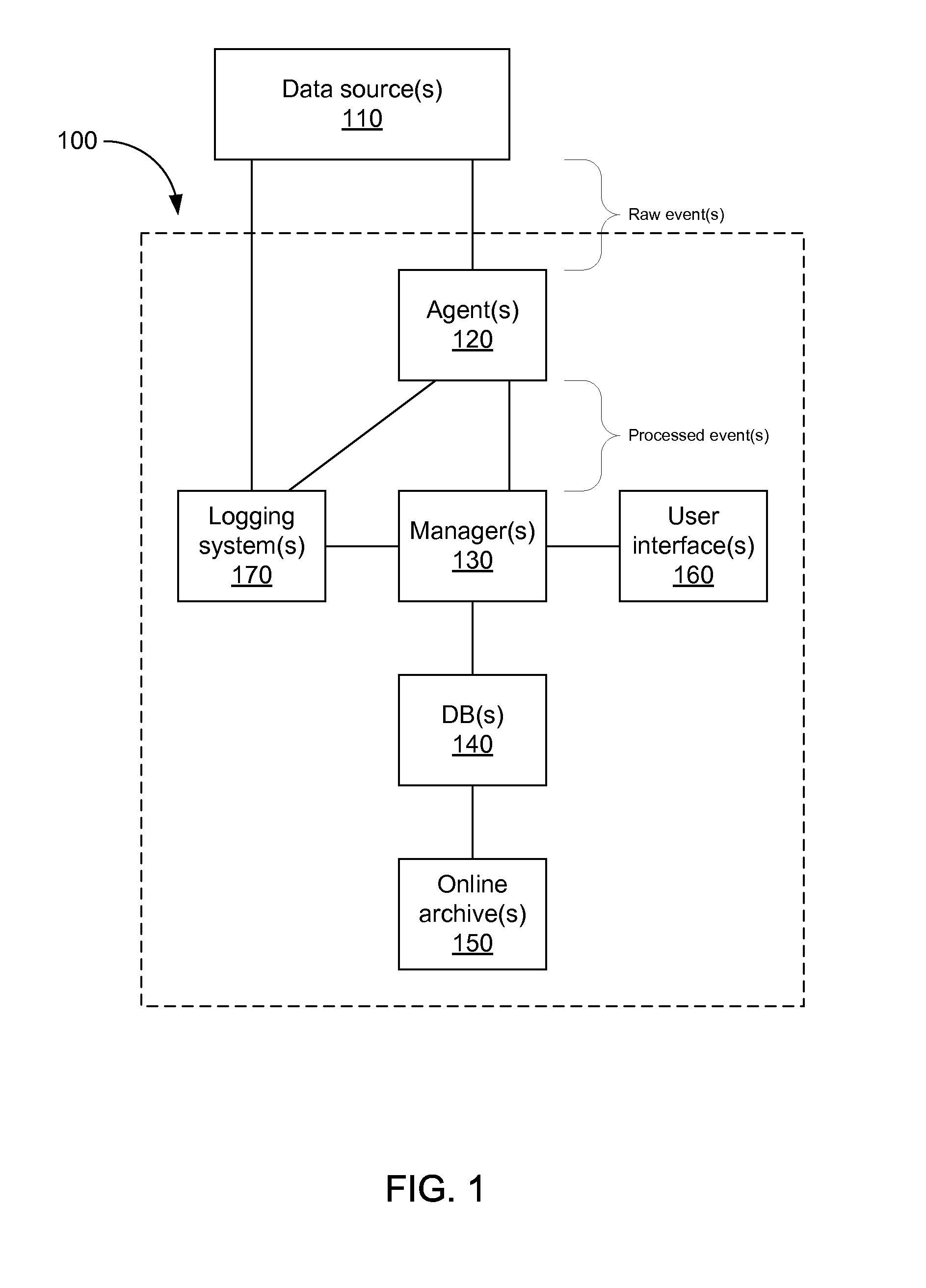

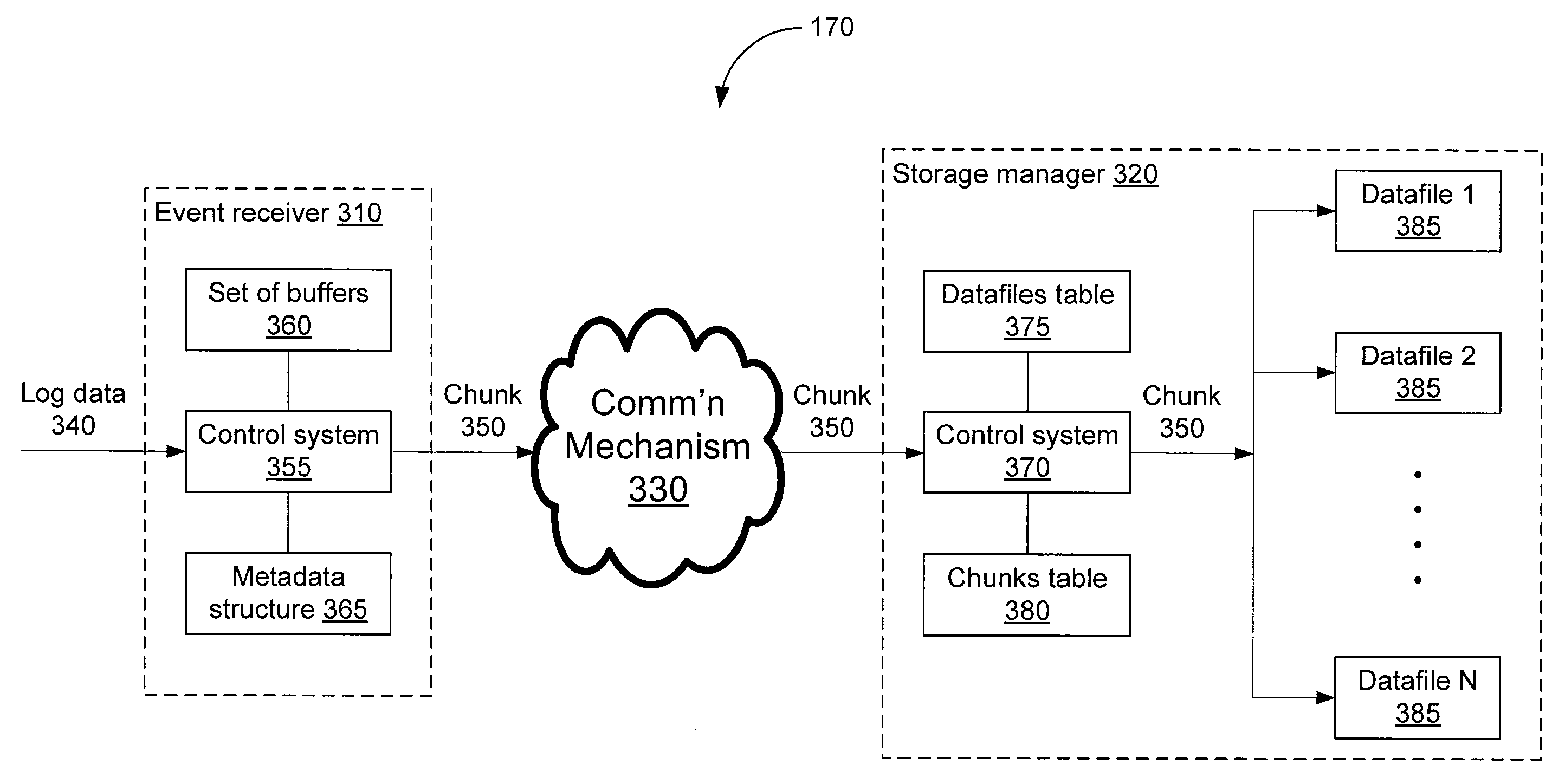

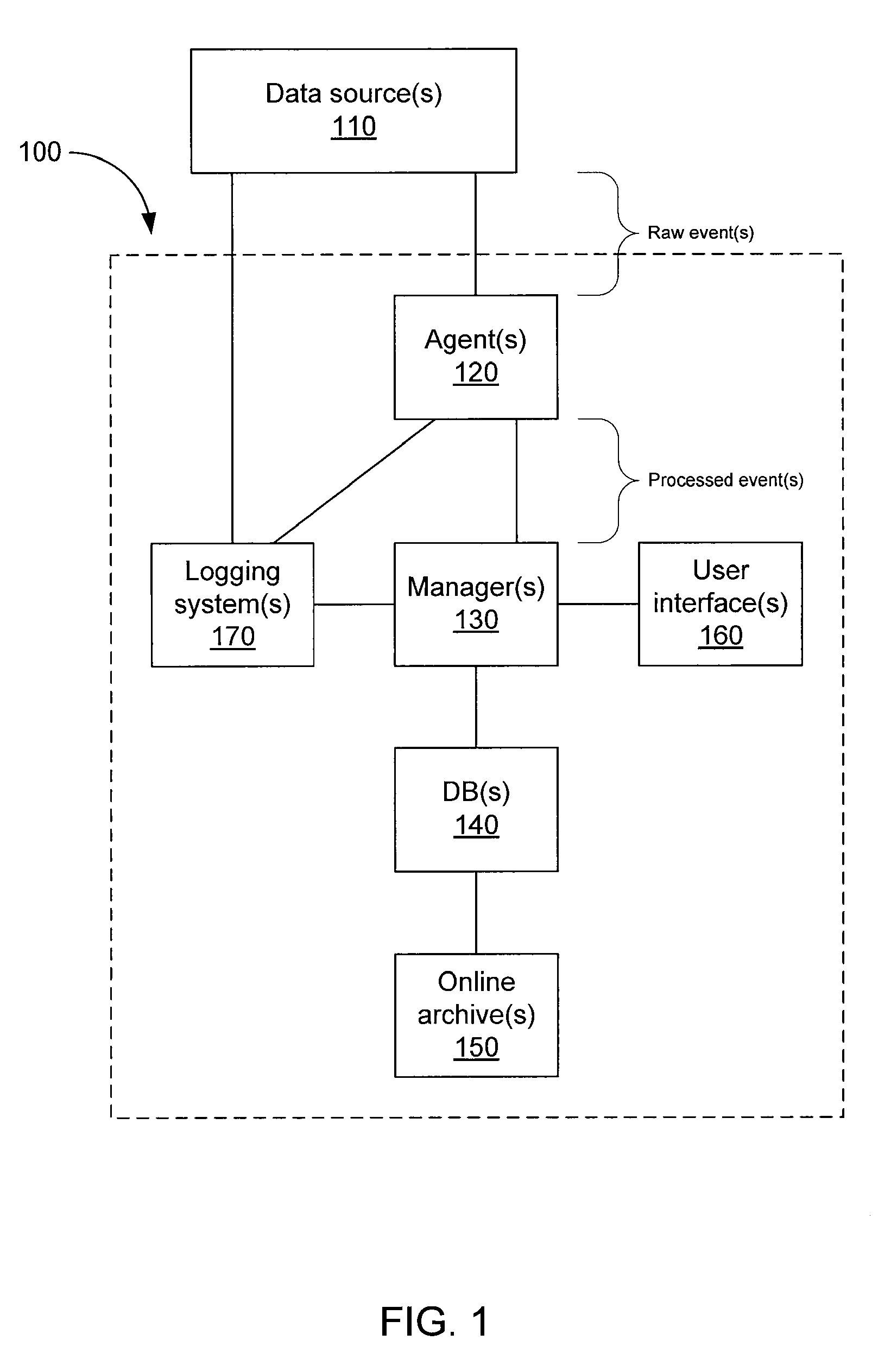

Storing log data efficiently while supporting querying

A logging system includes an event receiver and a storage manager. The receiver receives log data, processes it, and outputs a column-based data “chunk.” The manager receives and stores chunks. The receiver includes buffers that store events and a metadata structure that stores metadata about the contents of the buffers. Each buffer is associated with a particular event field and includes values from that field from one or more events. The metadata includes, for each “field of interest,” a minimum value and a maximum value that reflect the range of values of that field over all of the events in the buffers. A chunk is generated for each buffer and includes the metadata structure and a compressed version of the buffer contents. The metadata structure acts as a search index when querying event data. The logging system can be used in conjunction with a security information / event management (SIEM) system.

Owner:MICRO FOCUS LLC
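
The way the min/max metadata in the abstract above can act as a search index is sketched here for a single numeric field. The dictionary layout and the function names are assumptions, and compression of the buffer contents is left out to keep the example short.

```python
def make_chunk(values):
    """Bundle buffered field values with their min/max metadata."""
    return {"min": min(values), "max": max(values), "values": list(values)}


def query_range(chunks, low, high):
    """Return values in [low, high] and the number of chunks actually scanned."""
    hits = []
    scanned = 0
    for chunk in chunks:
        # The metadata alone rules out chunks whose range cannot overlap
        # the query range, so their contents are never read.
        if chunk["max"] < low or chunk["min"] > high:
            continue
        scanned += 1
        hits.extend(v for v in chunk["values"] if low <= v <= high)
    return hits, scanned
```

The benefit depends on how well the field's values cluster within chunks: a field such as an event timestamp, which tends to grow over time, lets most chunks be skipped for a narrow query.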

Method and apparatus for reducing inefficiencies in shared memory devices

Inactive | US20030043158A1 | Cathode-ray tube indicators | Digital output to display device | Graphics | Graphic system

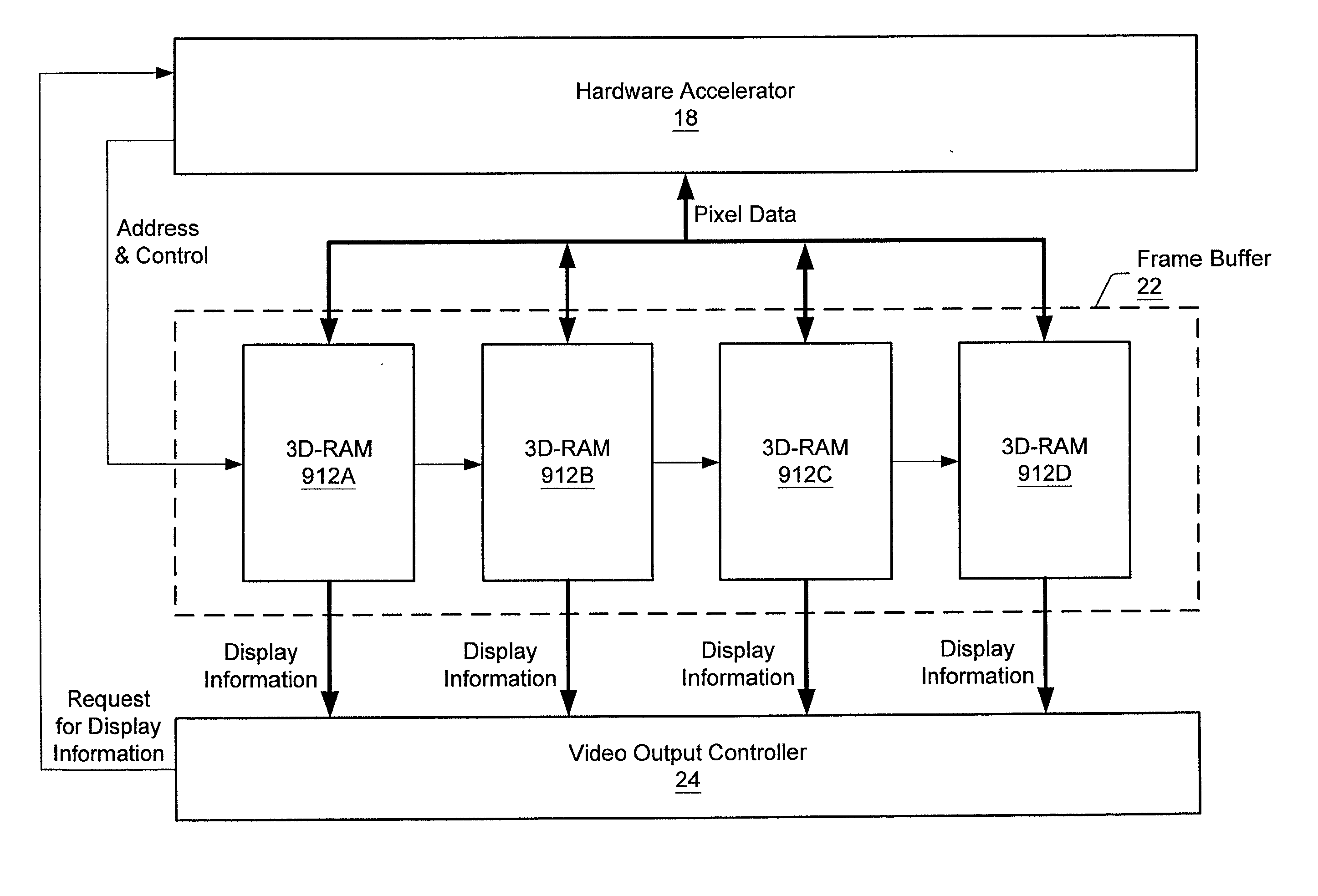

A graphics system that may be shared between multiple display channels includes a frame buffer, an arbiter, and two pixel output buffers. The arbiter arbitrates between the display channels' requests for display information from the frame buffer and forwards a selected request to the frame buffer. The frame buffer is divided into a first and a second portion. The arbiter alternates display channel requests for data between the first and second portions of the frame buffer. The frame buffer outputs display information in response to receiving the forwarded request, and pixels corresponding to this display information are stored in the output buffers. The arbiter selects which request to forward to the frame buffer based on a relative state of neediness of each of the requesting display channels.

Owner:ORACLE INT CORP

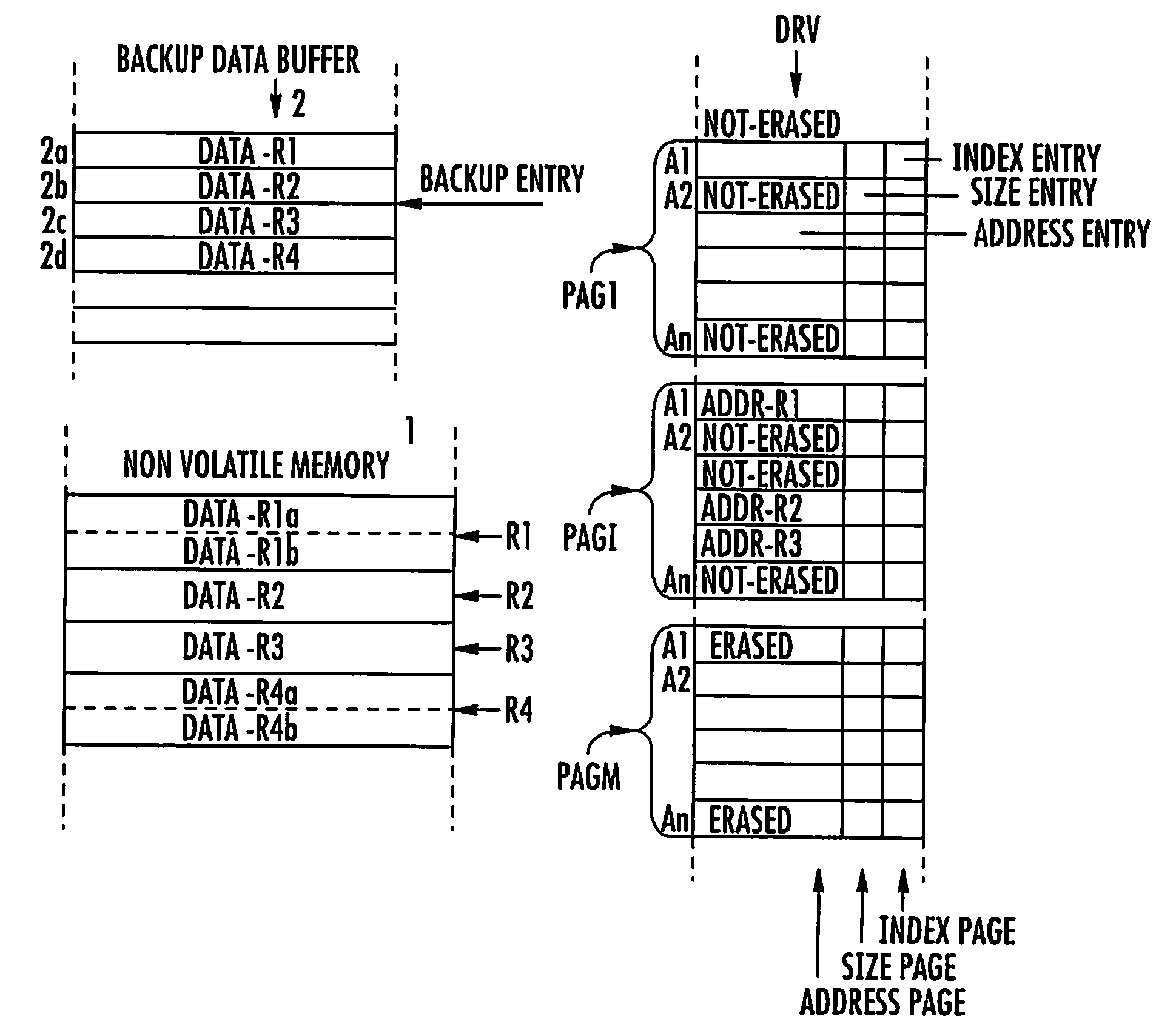

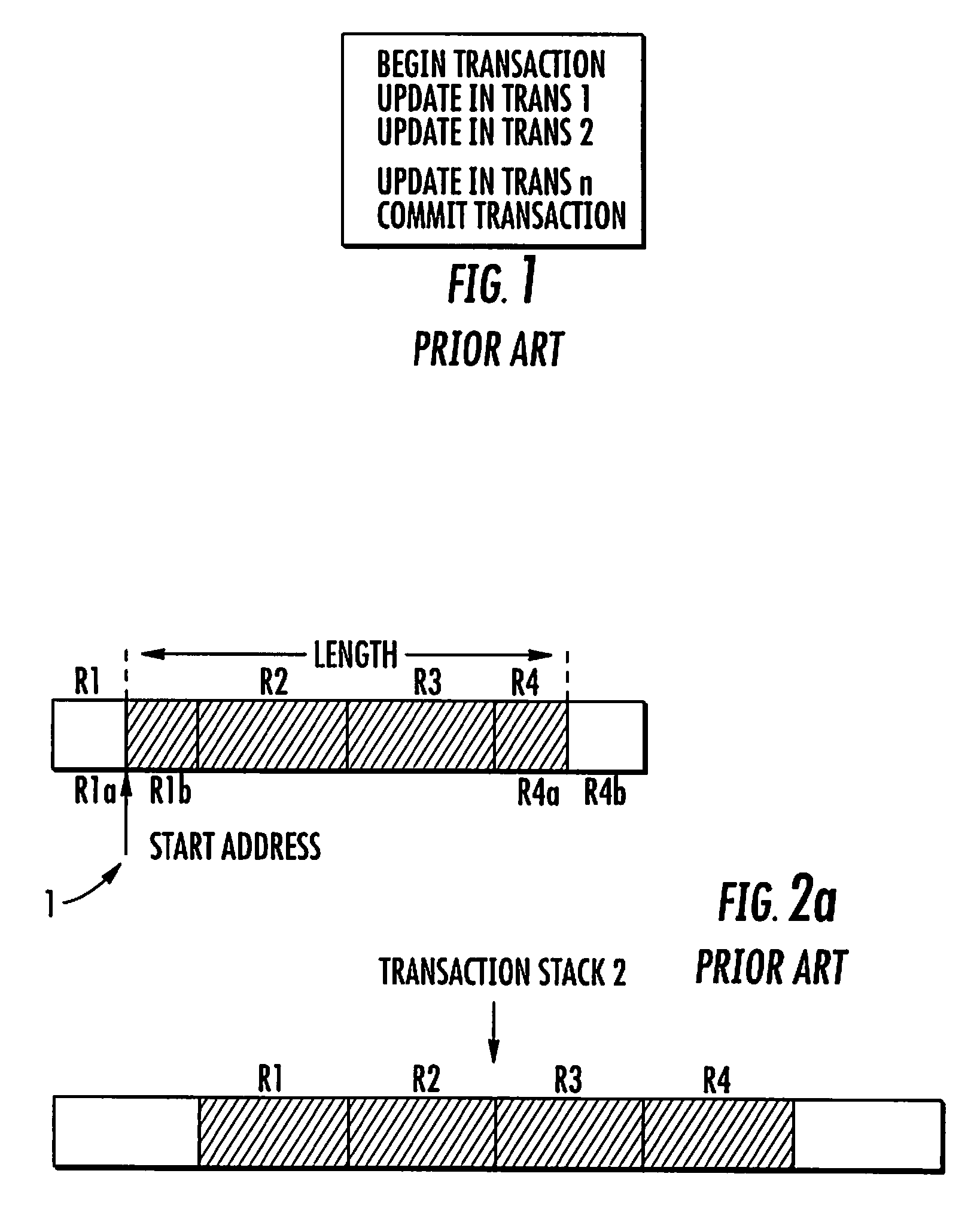

Compression Method for Managing the Storing of Persistent Data From a Non-Volatile Memory to a Backup Buffer

Inactive | US20080005510A1 | Avoid data | Prevent overflow | Memory loss protection | Error detection/correction | Compression method | Data store

A compression method for a backup data buffer includes a plurality of backup entries for storing persistent data of a non-volatile memory device during at least one update operation. An address of the persistent data in the non-volatile memory device is stored in a driver buffer including address pages. Each address page includes address entries. The compression method includes the functions for marking as erasable an address entry included in a first address page of the driver buffer when the at least one update operation on the persistent data is completed. Address entries not marked as erasable or non-erasable are copied from the first address page to a second address page of the driver buffer. The second address page contains address entries not marked as erasable. The first address page is erased for rendering it ready to be written. The content of the second address page is written to the first, and the second address page is for future writings.

Owner:STMICROELECTRONICS INT NV

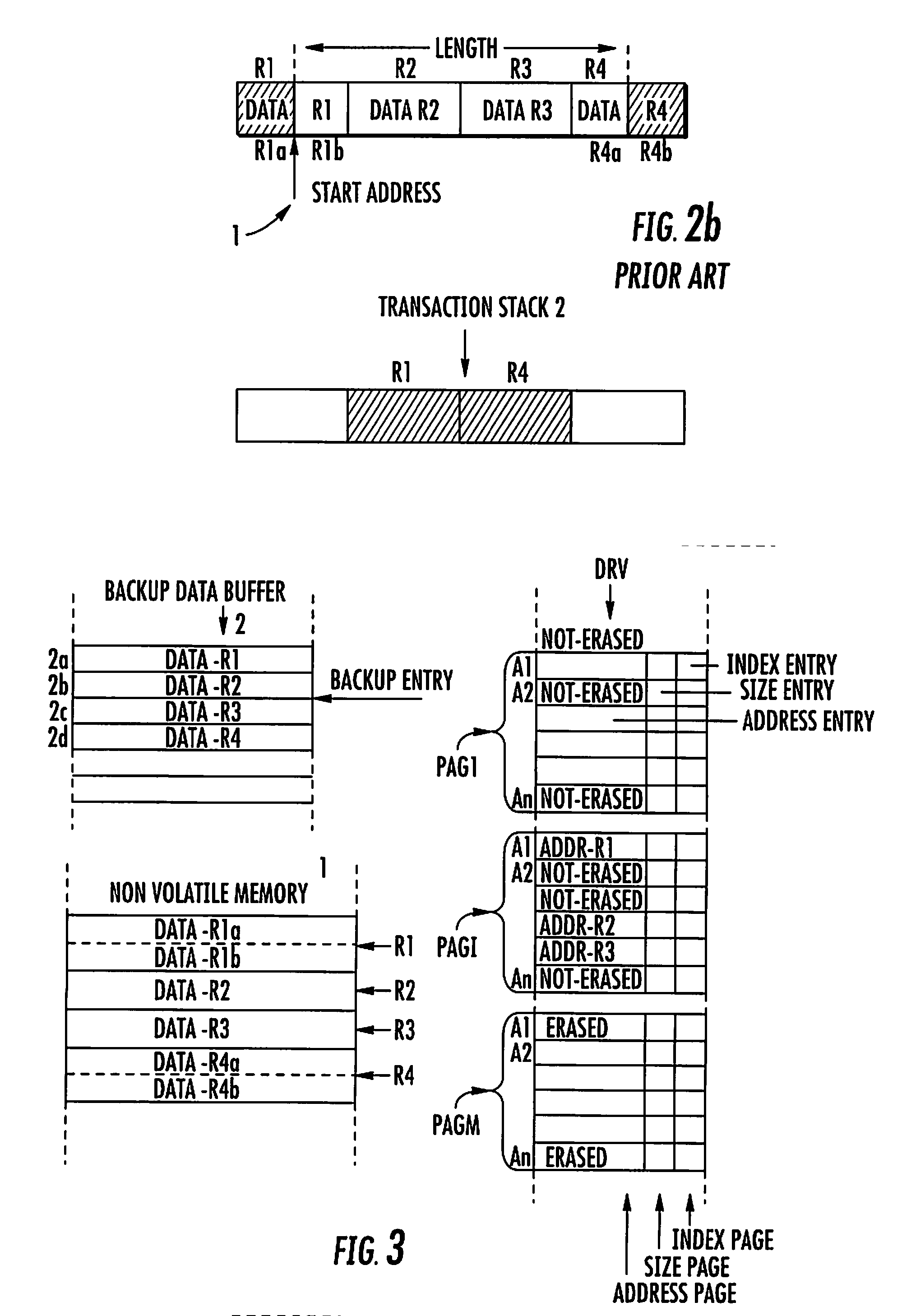

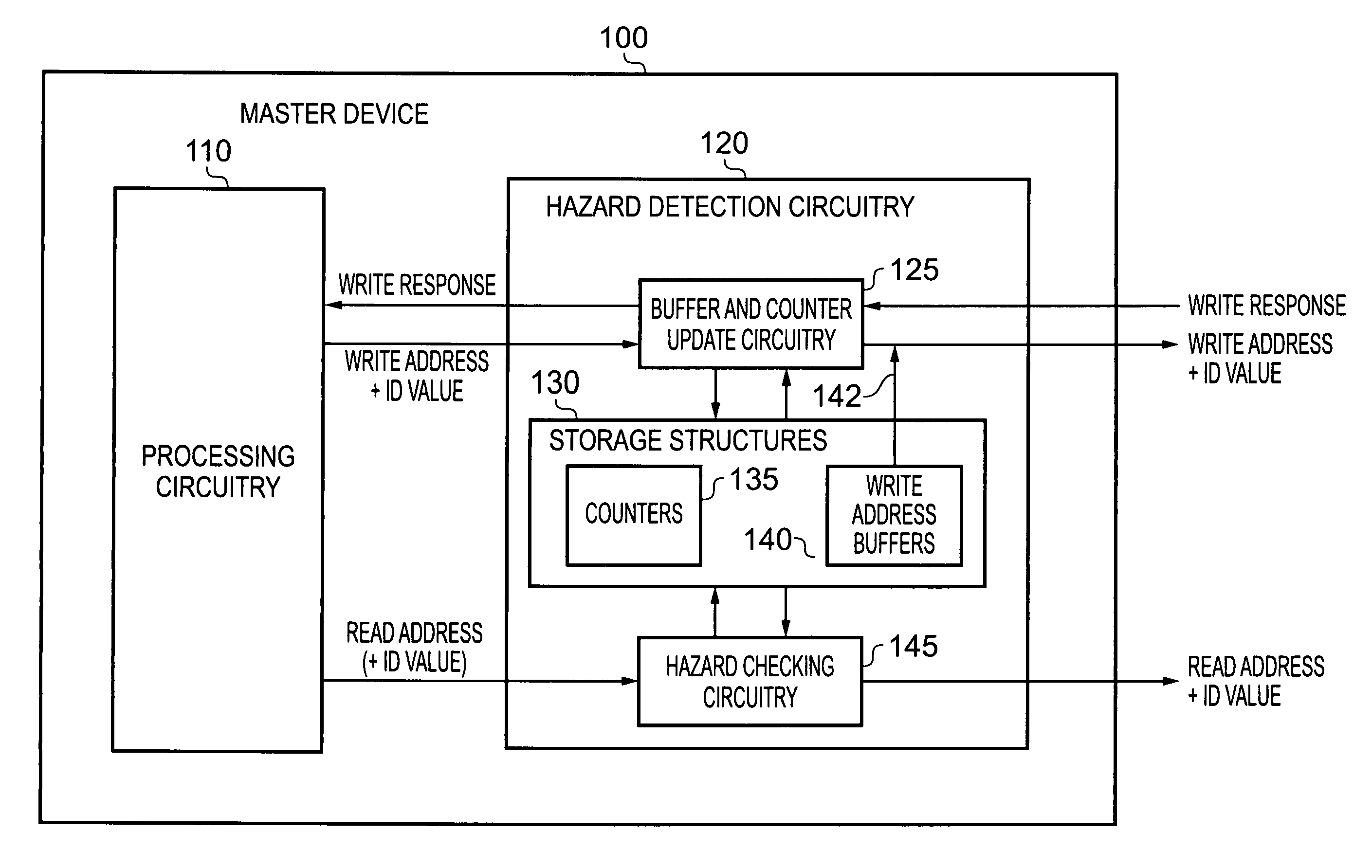

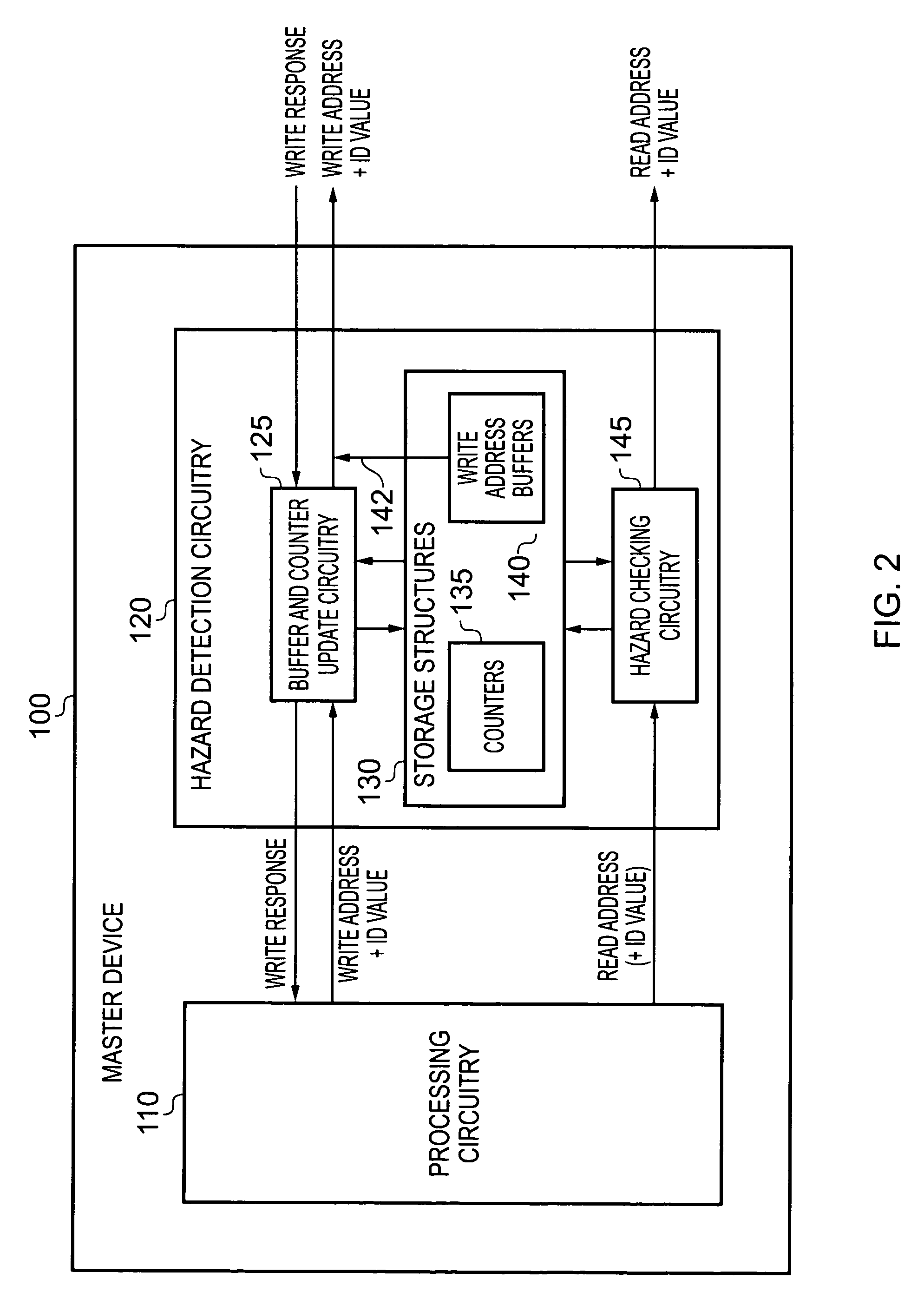

Data processing apparatus and method for performing hazard detection

Active | US20100250802A1 | Avoid possibility | Avoiding WAW hazard | Memory systems | Input/output processes for data processing | Access history | Data buffer

A data processing apparatus and method are provided for performing hazard detection in respect of a series of access requests issued by processing circuitry for handling by one or more slave devices. The series of access requests include one or more write access requests, each write access request specifying a write operation to be performed by an addressed slave device, and each issued write access request being a pending write access request until the write operation has been completed by the addressed slave device. Hazard detection circuitry comprises a pending write access history storage having at least one buffer and at least one counter for keeping a record of each pending write access request. Update circuitry is responsive to receipt of a write access request to be issued by the processing circuitry, to perform an update process to identify that write access request as a pending write access request in one of the buffers, and if the identity of another pending write access request is overwritten by that update process, to increment a count value in one of the counters. On completion of each write access request by the addressed slave device, the update circuitry performs a further update process to remove the record of that completed write access request from the pending write access history storage. Hazard checking circuitry is then responsive to at least a subset of the access requests to be issued by the processing circuitry, to reference the pending write access history storage in order to determine whether a hazard condition occurs. The manner in which the update circuitry uses a combination of buffers and counters to keep a record of each pending write access request provides improved performance with respect to known prior art techniques, without the hardware cost that would be associated with increasing the number of buffers.

Owner:ARM LTD

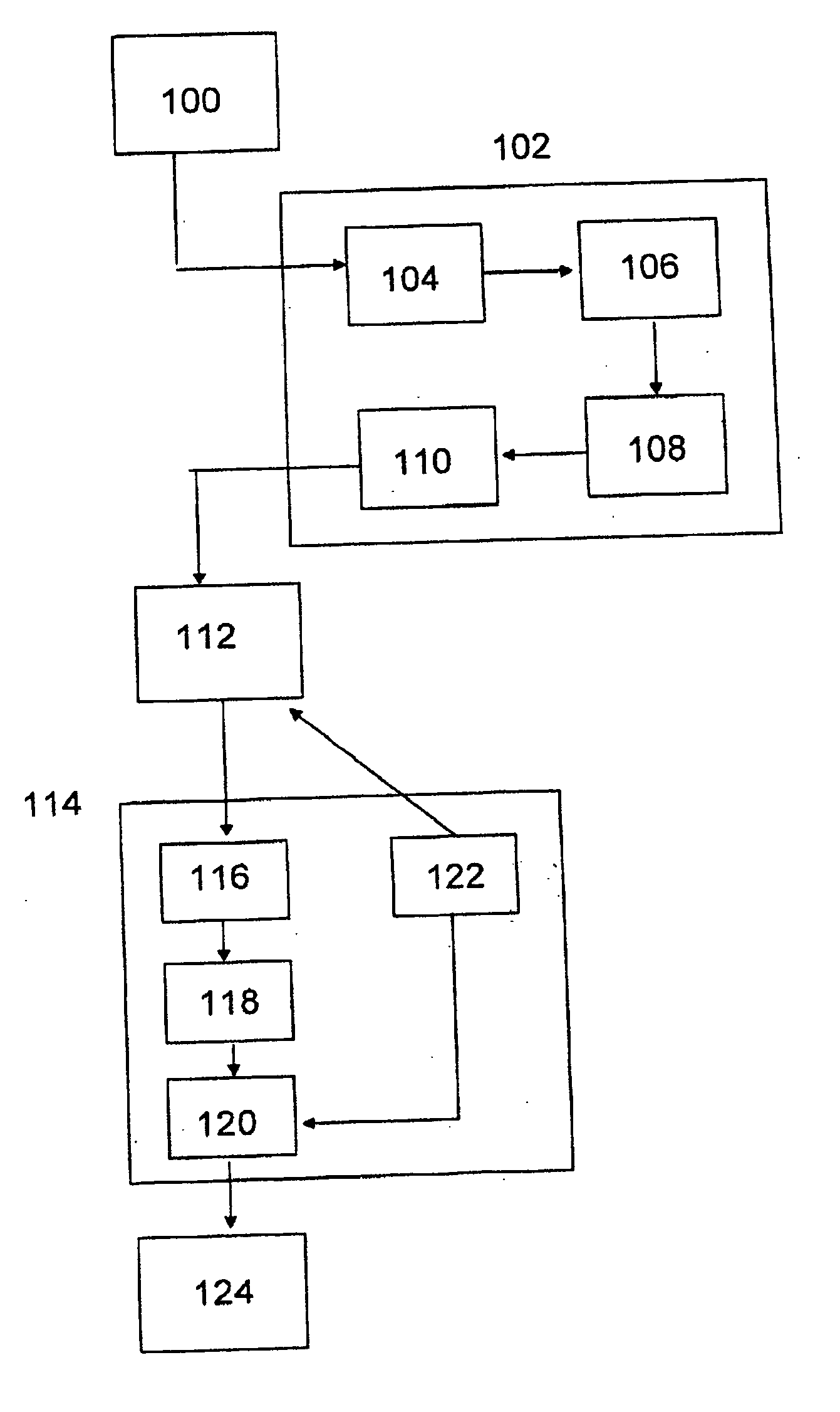

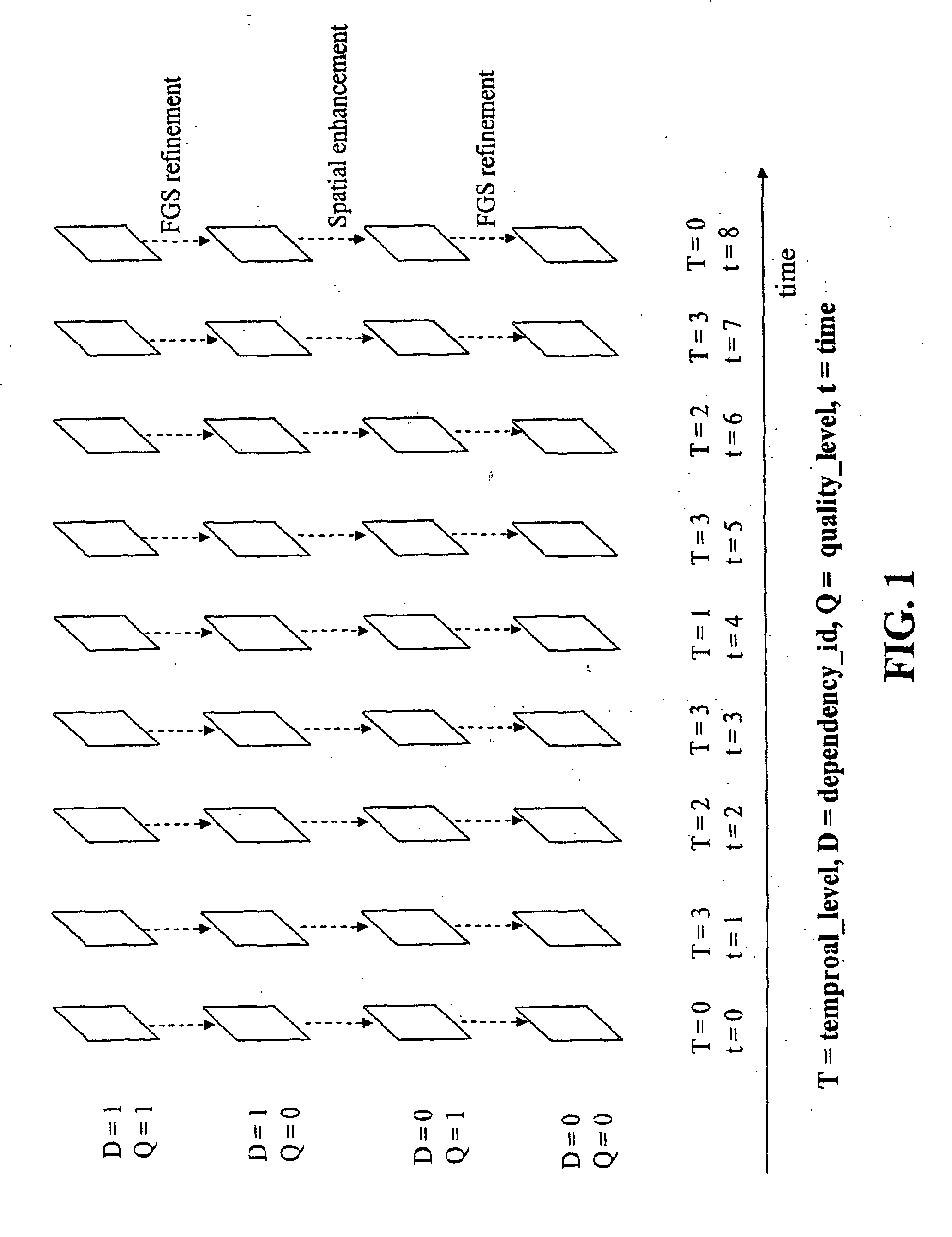

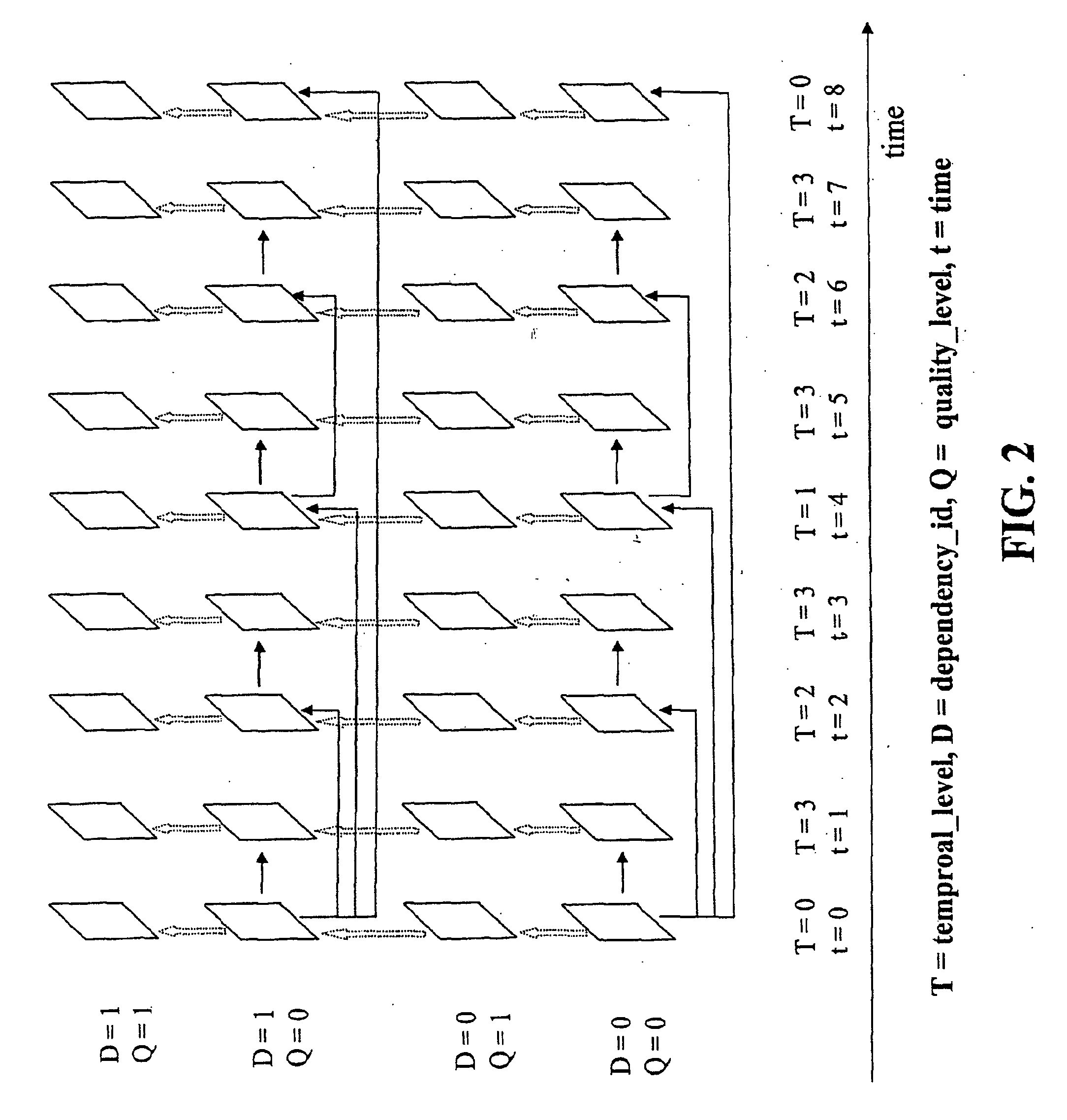

Efficient decoded picture buffer management for scalable video coding

Inactive | US20070086521A1 | Color television with pulse code modulation | Color television with bandwidth reduction | Inter layer | Computer graphics (images)

A system and method for enabling the removal of decoded pictures from a decoded picture buffer as soon as the decoded pictures are no longer needed for prediction reference and future output. An indication is introduced into the bitstream as to whether a picture may be used for inter-layer prediction reference, as well as a decoded picture buffer management method which uses the indication. The present invention includes a process for marking a picture as being used for inter-layer reference or unused for inter-layer reference, a storage process of decoded pictures into the decoded picture buffer, a marking process of reference pictures, and output and removal processes of decoded pictures from the decoded picture buffer.

Owner: NOKIA CORP
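The removal rule in this abstract (a picture leaves the buffer once it is needed neither for reference nor for output) can be sketched as follows. The field names and the `prune_dpb` helper are hypothetical, chosen only to mirror the markings the abstract mentions. They are not syntax elements from any codec specification.

```python
from dataclasses import dataclass


@dataclass
class DecodedPicture:
    pic_id: int
    used_for_reference: bool        # ordinary prediction reference marking
    used_for_inter_layer_ref: bool  # the inter-layer indication from the bitstream
    needed_for_output: bool         # not yet output for display


def is_removable(pic):
    # A picture can go once no marking keeps it alive.
    return not (
        pic.used_for_reference
        or pic.used_for_inter_layer_ref
        or pic.needed_for_output
    )


def prune_dpb(dpb):
    # Return the decoded picture buffer with removable pictures dropped.
    return [pic for pic in dpb if not is_removable(pic)]
```

The point of the extra marking is visible in `is_removable`: a picture that is marked as unused for inter-layer reference can be dropped as soon as the other two conditions clear.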

Storing log data efficiently while supporting querying to assist in computer network security

Active | US20080162592A1 | Data processing applications | Error detection/correction | System of record | Event management

A logging system includes an event receiver and a storage manager. The receiver receives log data, processes it, and outputs a data “chunk.” The manager receives data chunks and stores them so that they can be queried. The receiver includes buffers that store events and a metadata structure that stores metadata about the contents of the buffers. The metadata includes a unique identifier associated with the receiver, the number of events in the buffers, and, for each “field of interest,” a minimum value and a maximum value that reflect the range of values of that field over all of the events in the buffers. A chunk includes the metadata structure and a compressed version of the contents of the buffers. The metadata structure acts as a search index when querying event data. The logging system can be used in conjunction with a security information / event management (SIEM) system.

Owner: MICRO FOCUS LLC
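To show how per-field minimum and maximum values can act as a search index, here is a minimal sketch of a chunk builder and a query that skips chunks whose range cannot contain the requested value. The function names, the dictionary layout, and the use of JSON with zlib are assumptions for illustration. The abstract does not specify a serialization or compression format.

```python
import json
import zlib


def build_chunk(receiver_id, events, fields_of_interest):
    # events: non-empty list of dicts; each dict has every field of interest.
    metadata = {
        "receiver_id": receiver_id,
        "event_count": len(events),
        "ranges": {
            field: (min(e[field] for e in events), max(e[field] for e in events))
            for field in fields_of_interest
        },
    }
    payload = zlib.compress(json.dumps(events).encode("utf-8"))
    return {"metadata": metadata, "payload": payload}


def query(chunks, field, value):
    hits = []
    for chunk in chunks:
        low, high = chunk["metadata"]["ranges"][field]
        if not (low <= value <= high):
            continue  # the metadata rules this chunk out without decompressing it
        events = json.loads(zlib.decompress(chunk["payload"]).decode("utf-8"))
        hits.extend(e for e in events if e[field] == value)
    return hits
```

Only chunks whose range brackets the value are decompressed, which is what lets the metadata structure serve as a coarse index.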

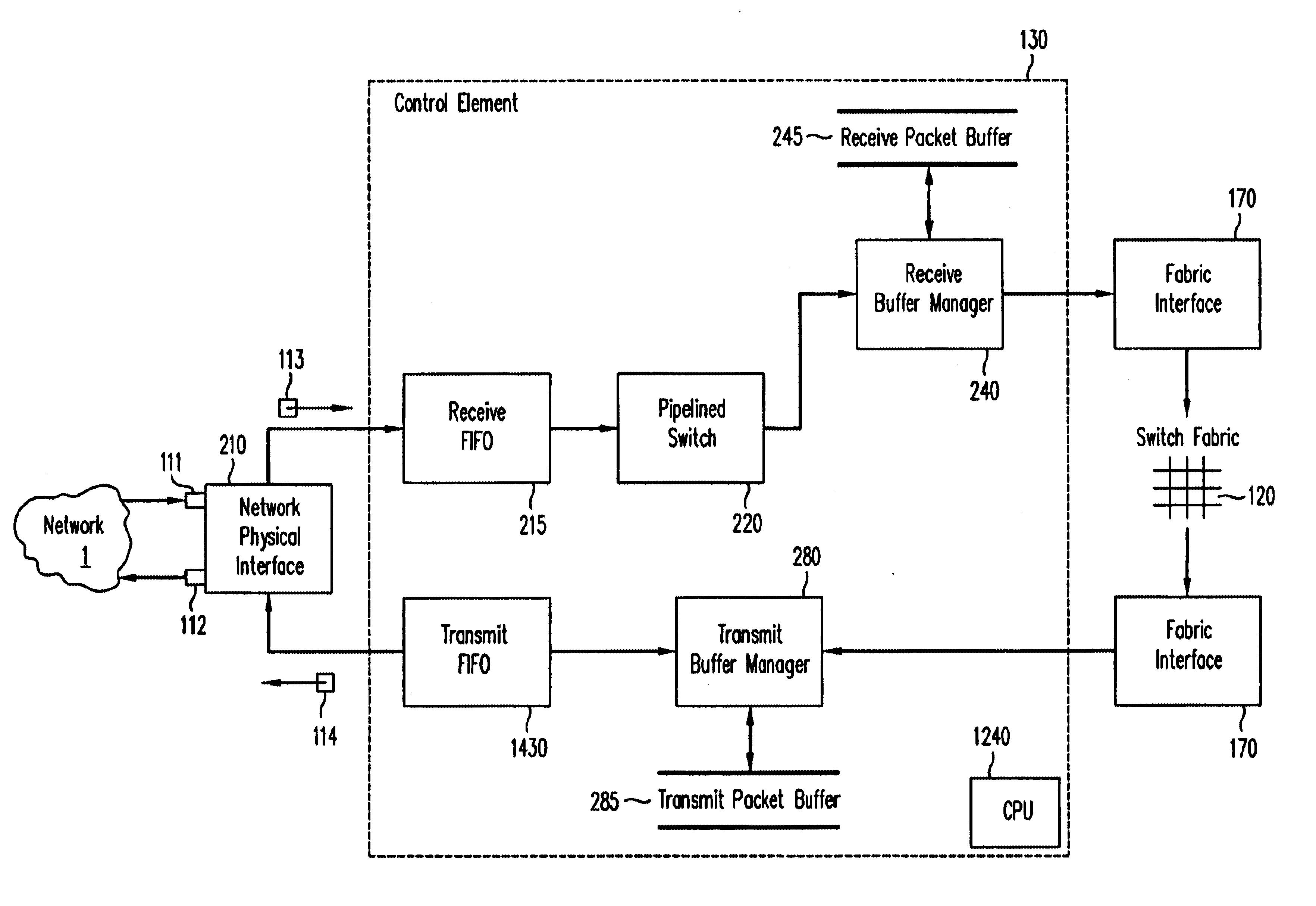

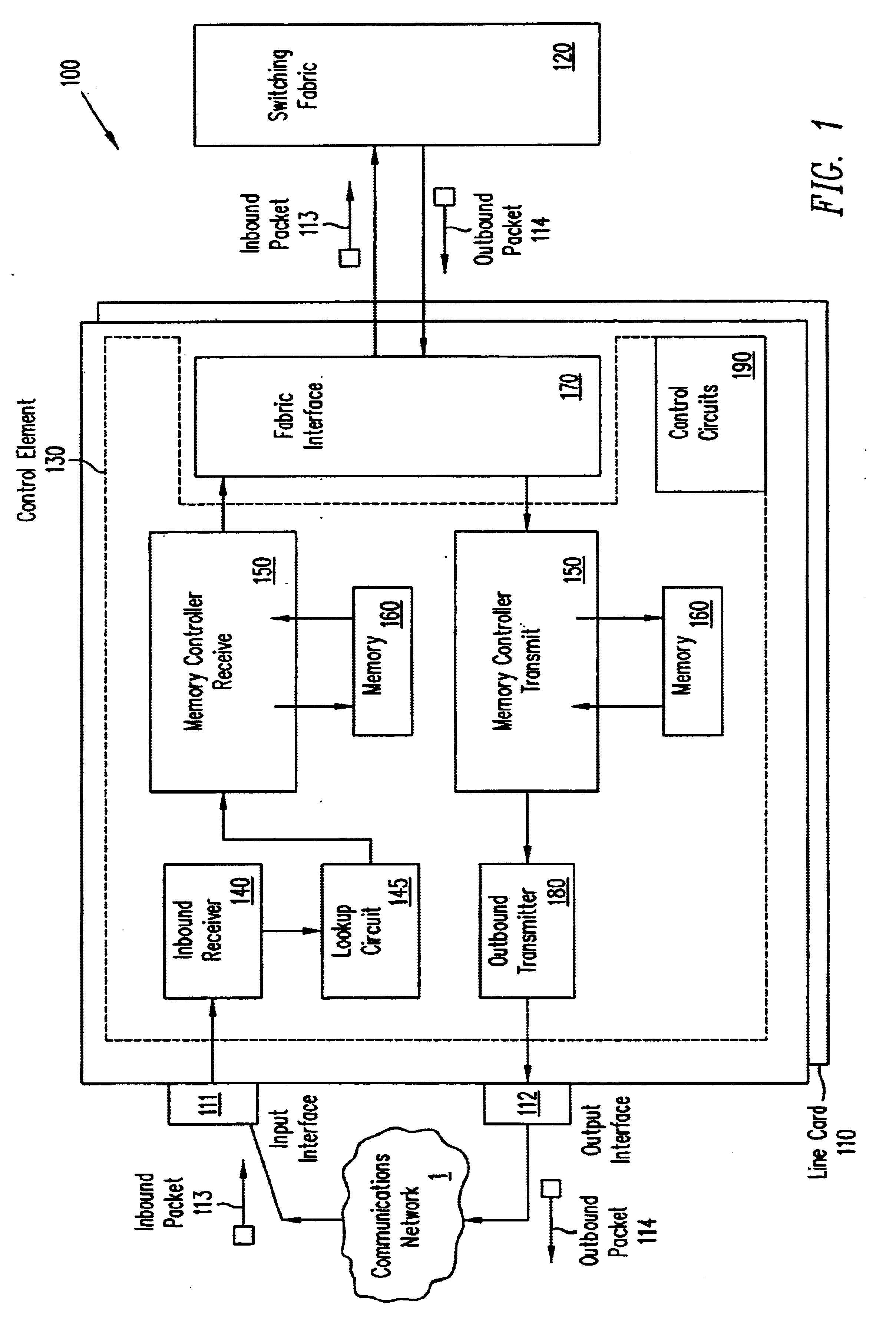

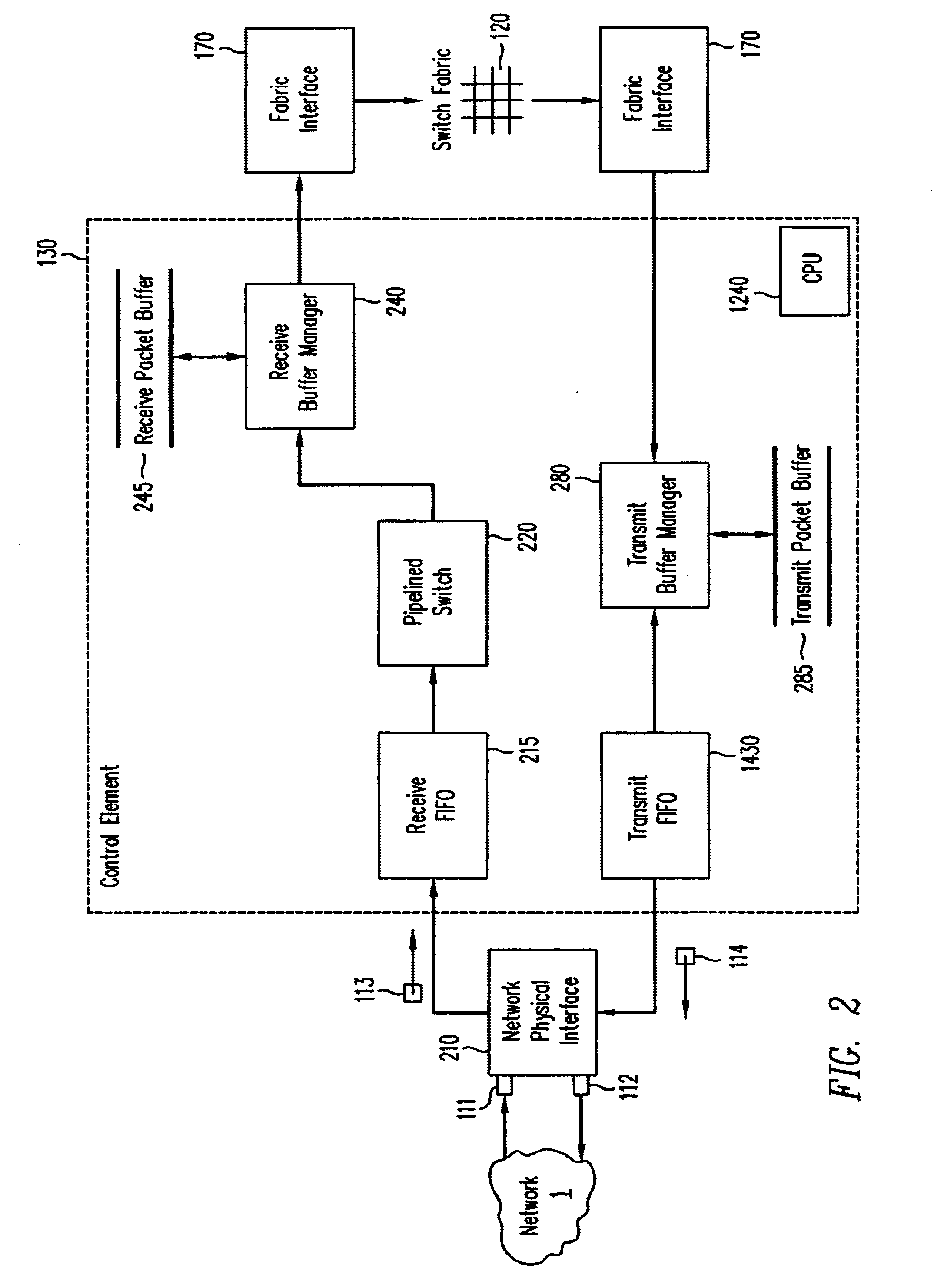

Flexible engine and data structure for packet header processing

Inactive | US6721316B1 | Multiplex system selection arrangements | Data switching by path configuration | Web transport | Linked list

A pipelined linecard architecture for receiving, modifying, switching, buffering, queuing and dequeuing packets for transmission in a communications network. The linecard has two paths: the receive path, which carries packets into the switch device from the network, and the transmit path, which carries packets from the switch to the network. In the receive path, received packets are processed and switched in an asynchronous, multi-stage pipeline utilizing programmable data structures for fast table lookup and linked list traversal. The pipelined switch operates on several packets in parallel while determining each packet's routing destination. Once that determination is made, each packet is modified to contain new routing information as well as additional header data to help speed it through the switch. Each packet is then buffered and enqueued for transmission over the switching fabric to the linecard attached to the proper destination port. The destination linecard may be the same physical linecard as that receiving the inbound packet or a different physical linecard. The transmit path consists of a buffer/queuing circuit similar to that used in the receive path. Both enqueuing and dequeuing of packets are accomplished using CoS-based decision-making apparatus and congestion avoidance and dequeue management hardware. The architecture of the present invention has the advantages of high throughput and the ability to rapidly implement new features and capabilities.

Owner: CISCO TECH INC

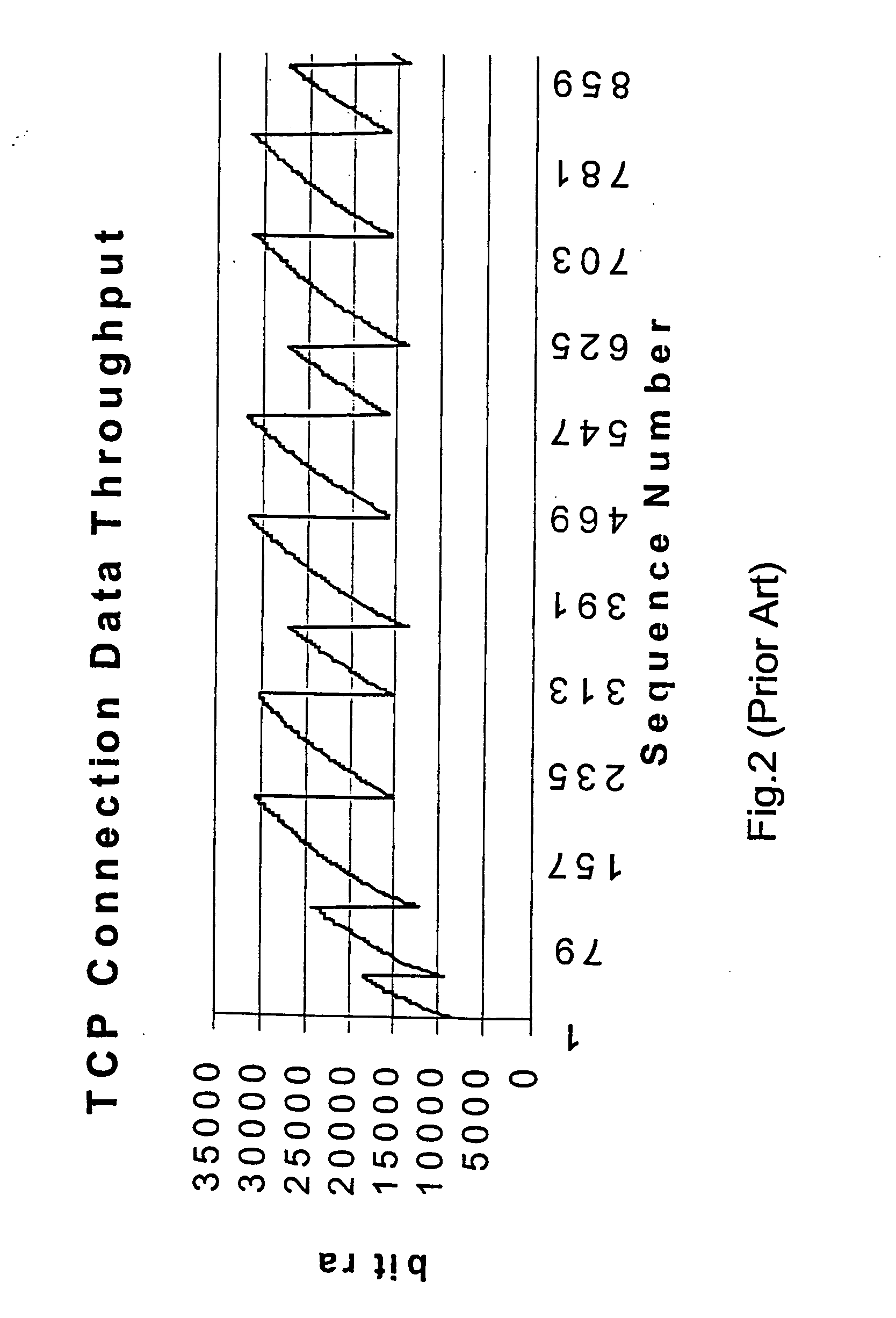

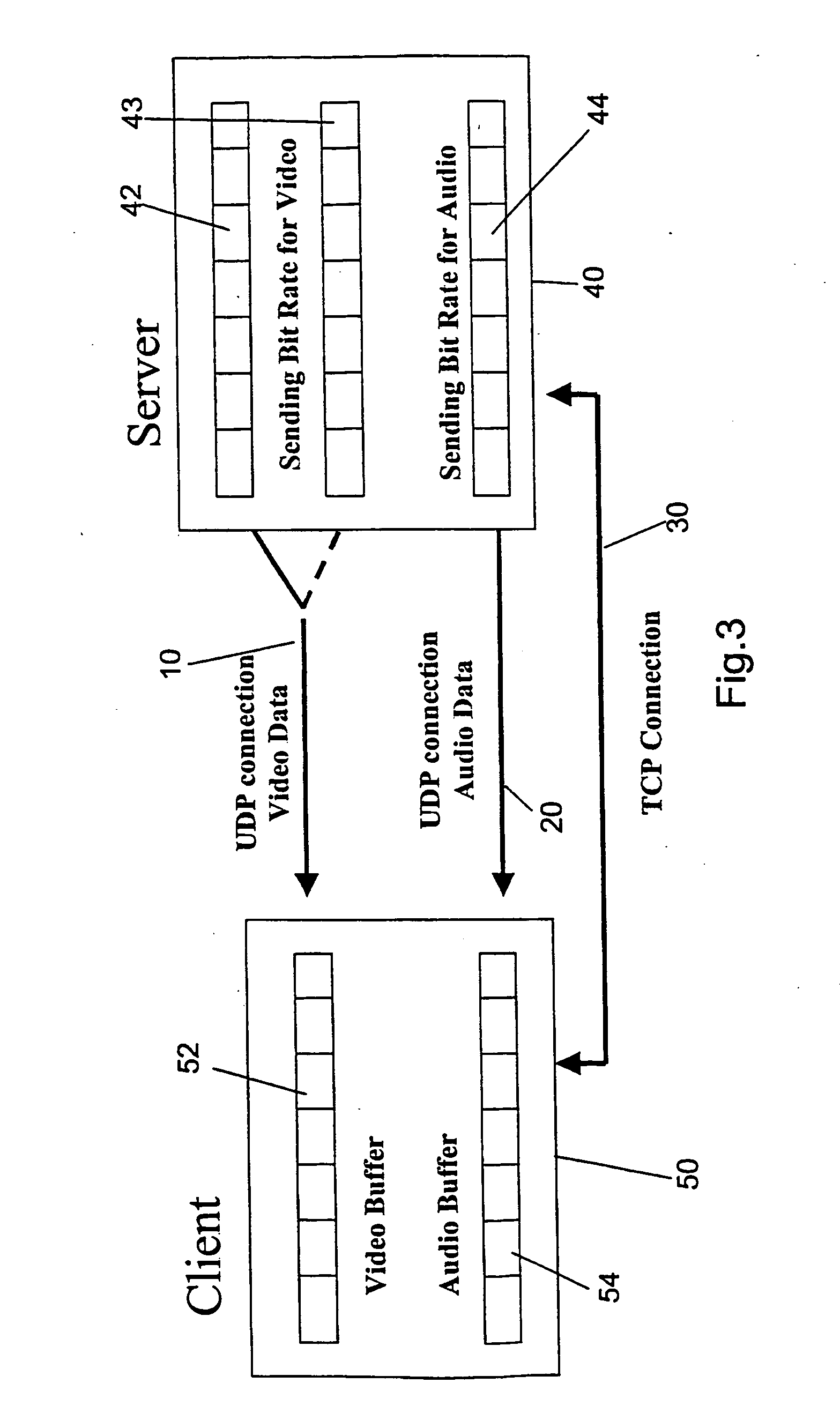

Data communications method and system using buffer size to calculate transmission rate for congestion control

Inactive | US20050021830A1 | Multiple digital computer combinations | Data switching networks | Data stream | Client-side

A data transmission method and system are disclosed in which one or more data streams are transmitted at respective transmission rates which are controlled to prevent data buffers in the receiver from overflowing. In some embodiments feedback data concerning the state of each buffer in a receiving client is received at the transmitting server, and used to adapt the sending rates to achieve this effect. Information indicative of the data decode rates or the fill extent of each buffer is communicated to the server as the feedback data. In other embodiments the server makes an open-loop estimate of the remaining space in the buffer, and controls the transmission rate accordingly. A data receiving method and system adapted to receive the data streams are also disclosed.

Owner: BRITISH TELECOMM PLC
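The two control modes in this abstract can be sketched with simple arithmetic. The formulas below are assumptions chosen for illustration, not the patent's method: the closed-loop bound follows from requiring that the fill level after one interval stays within capacity, and the open-loop estimate assumes the client decodes at a known constant rate.

```python
def max_send_rate(buffer_capacity, buffer_fill, decode_rate, interval):
    """Closed loop: highest rate that cannot overflow the buffer in one interval.

    Requires fill + (rate - decode_rate) * interval <= capacity.
    Units are hypothetical (e.g. bytes and seconds).
    """
    free_space = buffer_capacity - buffer_fill
    return max(0.0, decode_rate + free_space / interval)


def estimate_free_space(buffer_capacity, bytes_sent, decode_rate, elapsed):
    """Open loop: server-side guess at remaining space, with no client feedback."""
    estimated_fill = bytes_sent - decode_rate * elapsed
    estimated_fill = min(max(estimated_fill, 0.0), buffer_capacity)
    return buffer_capacity - estimated_fill
```

For example, a 1000-byte buffer holding 600 bytes, draining at 100 bytes per second, can accept up to 300 bytes per second over a 2-second interval under these assumptions.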

Method and apparatus for synchronizing playback of streaming media in multiple output devices

Active | US8015306B2 | Maintaining average timing synchronization | Error prevention | Transmission systems | Timestamp | Network packet

A method and apparatus for synchronizing streaming media with multiple output devices. One or more media servers serve media streams to one or more output devices (i.e., players). For playback synchronization, one output device is the “master”, whereas the remaining output devices are “slaves”. More data is requested from the media server by the “master” device to maintain a nominal buffer fill level over time. The “slave” devices receive streamed data from the media server at the rate determined by the master device's data requests, and the average rate of data flow over the streaming network is thus controlled by the frequency of the single “master” device's crystal. “Slave” devices make playback rate corrections to maintain respective buffer fill levels within upper and lower threshold levels. For slow networks, each media data packet timestamp is calculated from the time the master's buffer reaches nominal level.

Owner: SNAP ONE LLC
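The slave-side correction described in this abstract amounts to nudging the playback rate whenever the buffer fill level leaves a threshold band. The sketch below assumes a fixed correction step and a rate expressed as a multiplier of nominal speed. Both the function name and the `step` parameter are hypothetical.

```python
def slave_playback_rate(fill_level, lower_threshold, upper_threshold, step=0.001):
    """Return a playback-rate multiplier for a slave device (illustrative only)."""
    if fill_level > upper_threshold:
        return 1.0 + step  # buffer filling up: play slightly faster to drain it
    if fill_level < lower_threshold:
        return 1.0 - step  # buffer running low: play slightly slower
    return 1.0             # within the band: keep the nominal rate
```

Because the master's data requests set the average delivery rate, small corrections like these are enough to keep each slave's buffer inside its band.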

© 2025 PatSnap. All rights reserved.