
222 results about "Program control using wired connections" patented technology


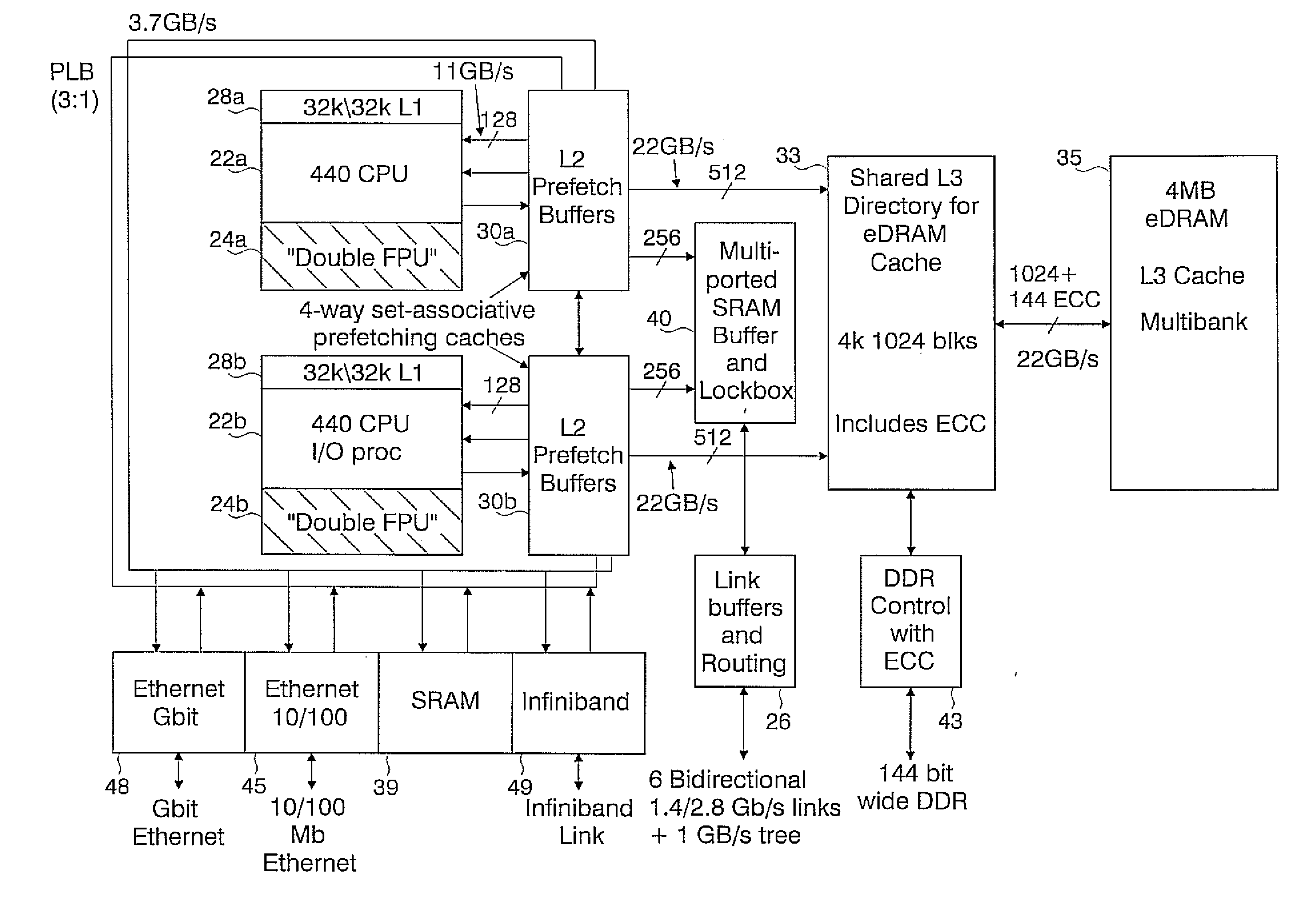

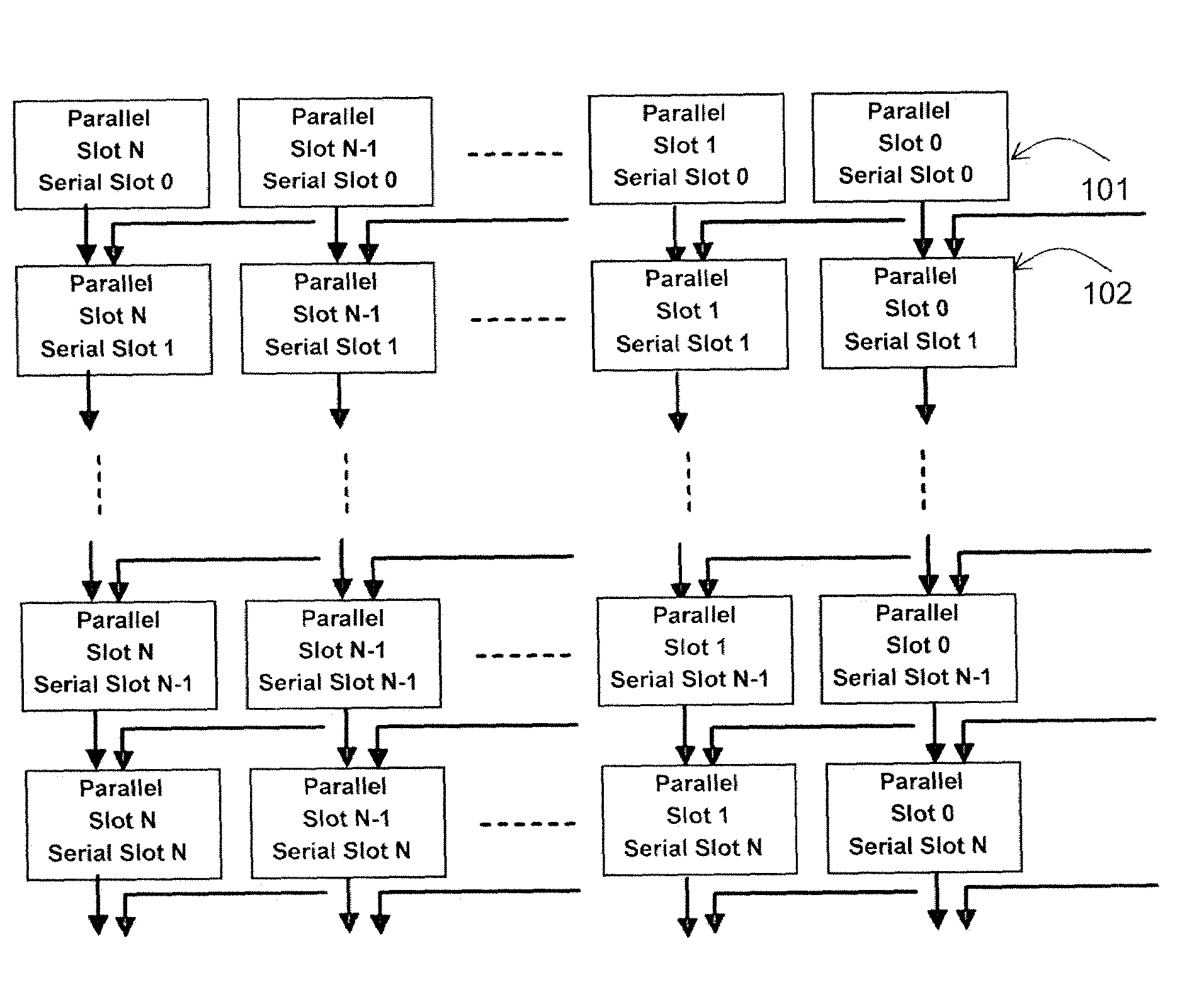

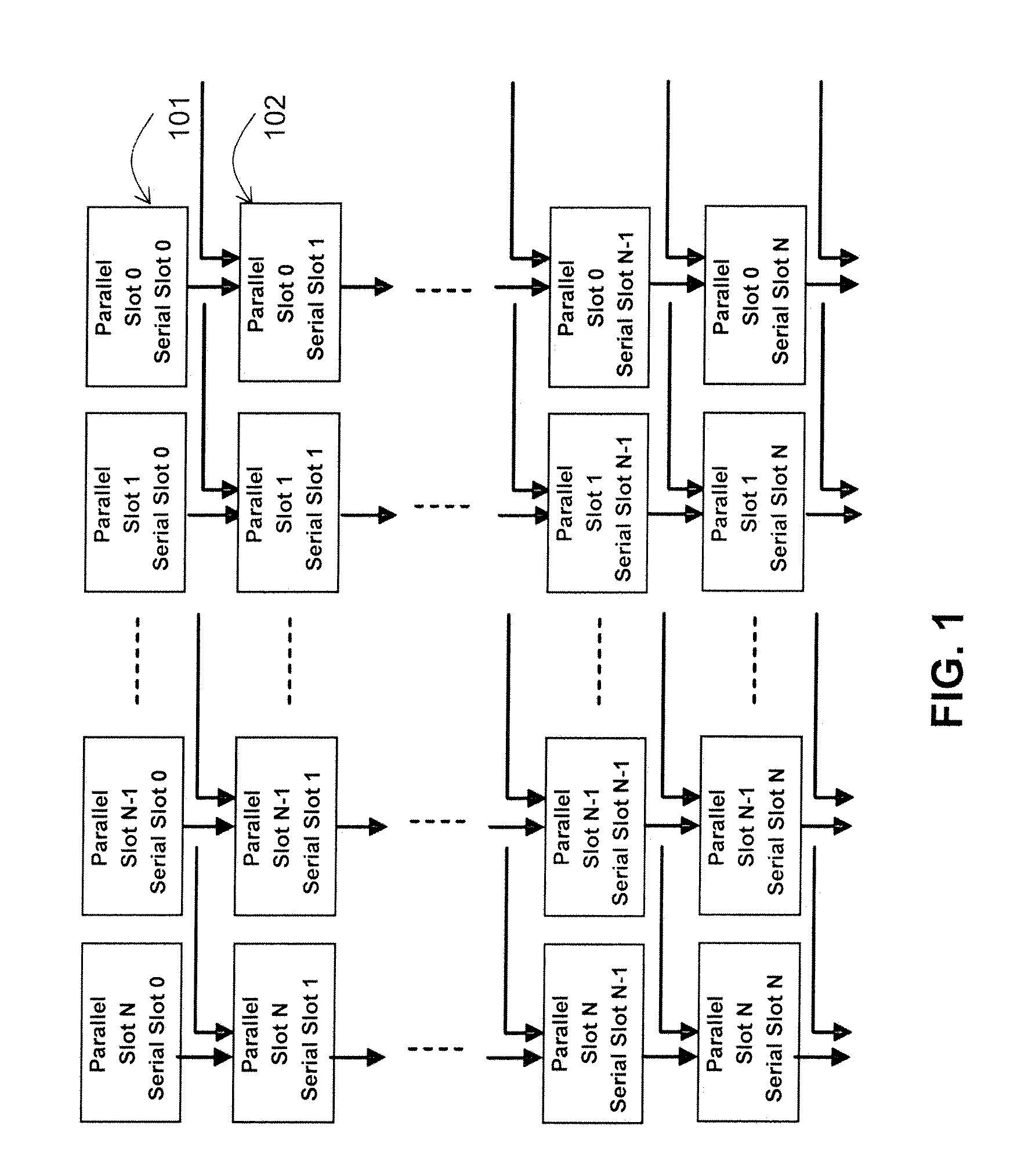

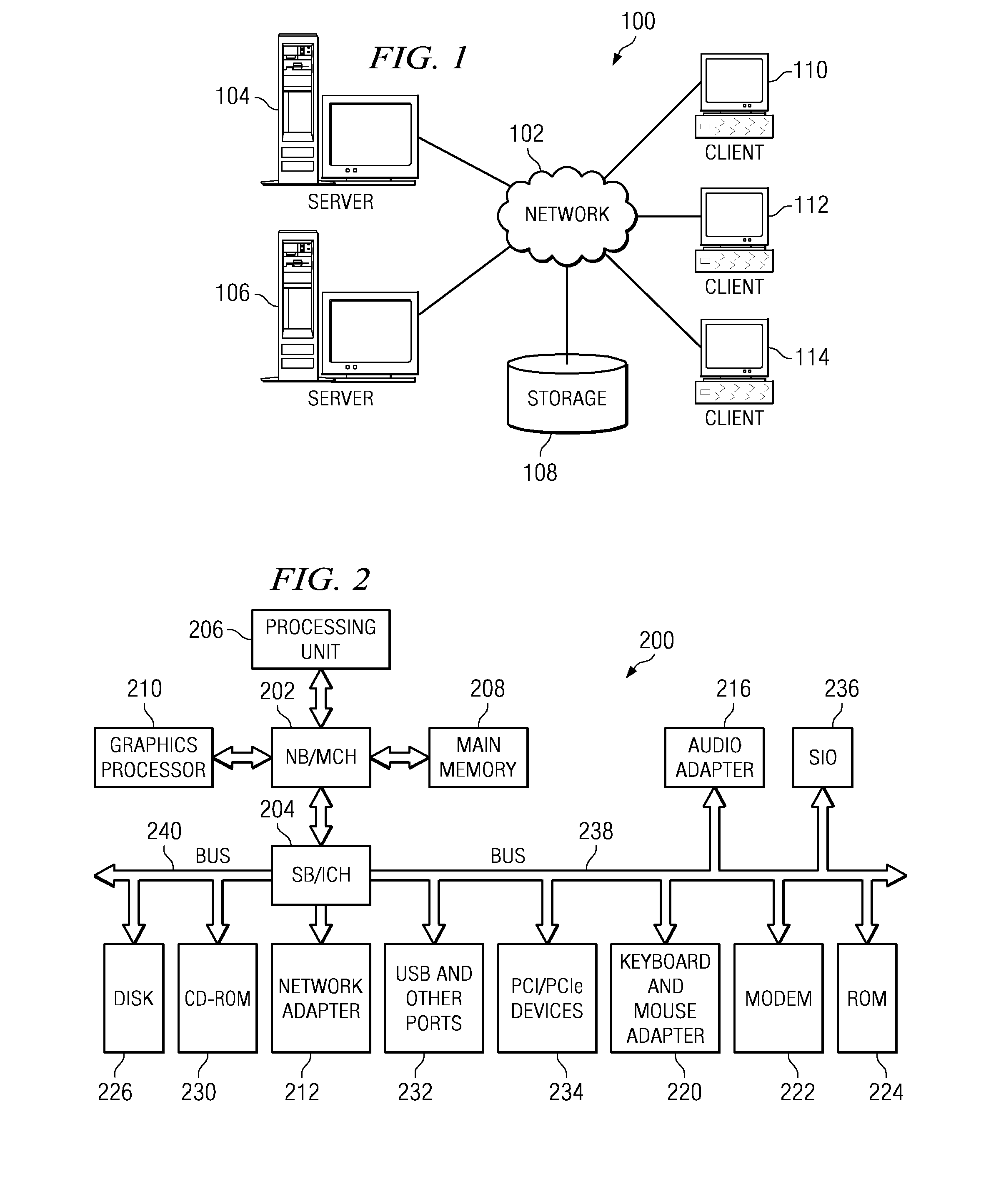

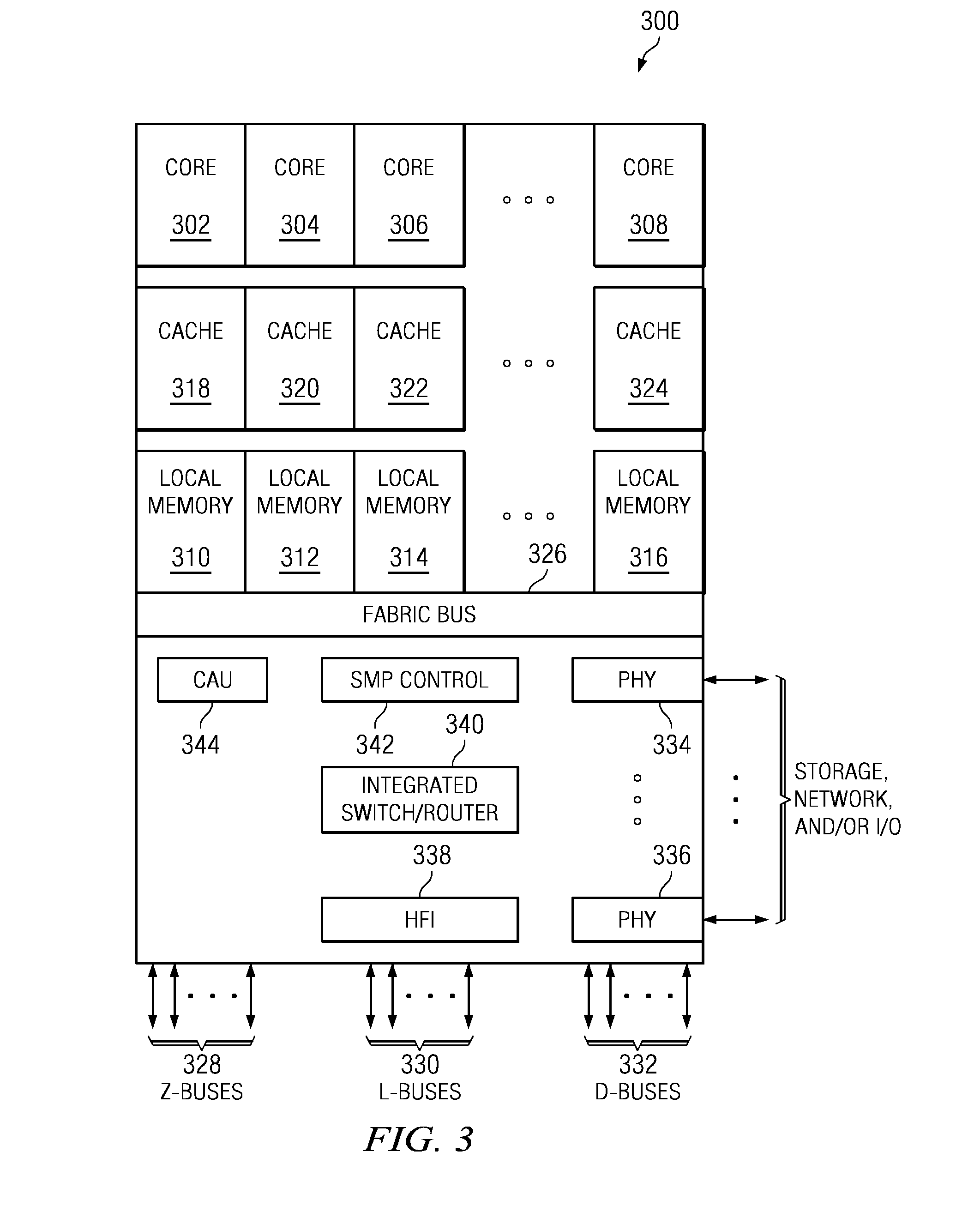

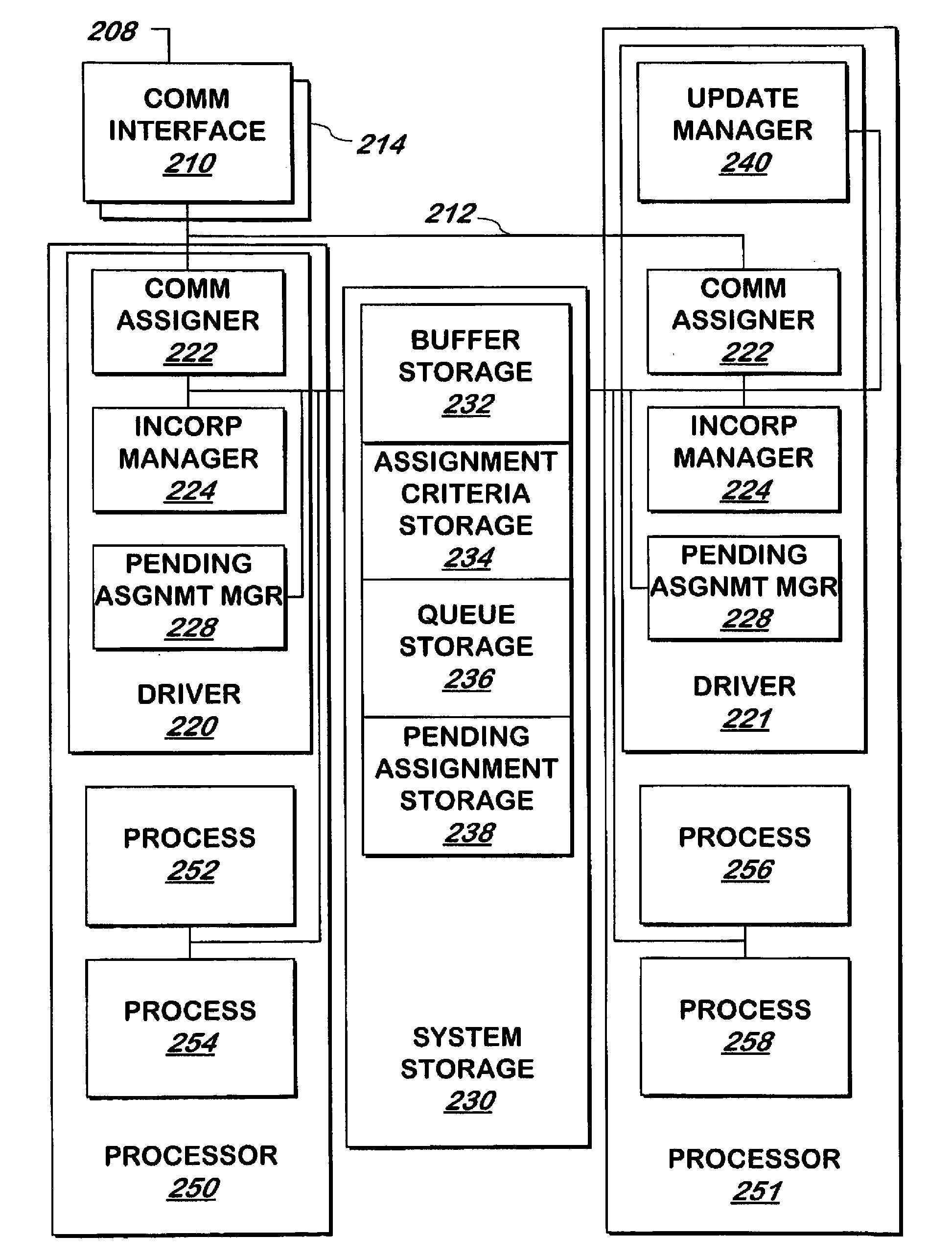

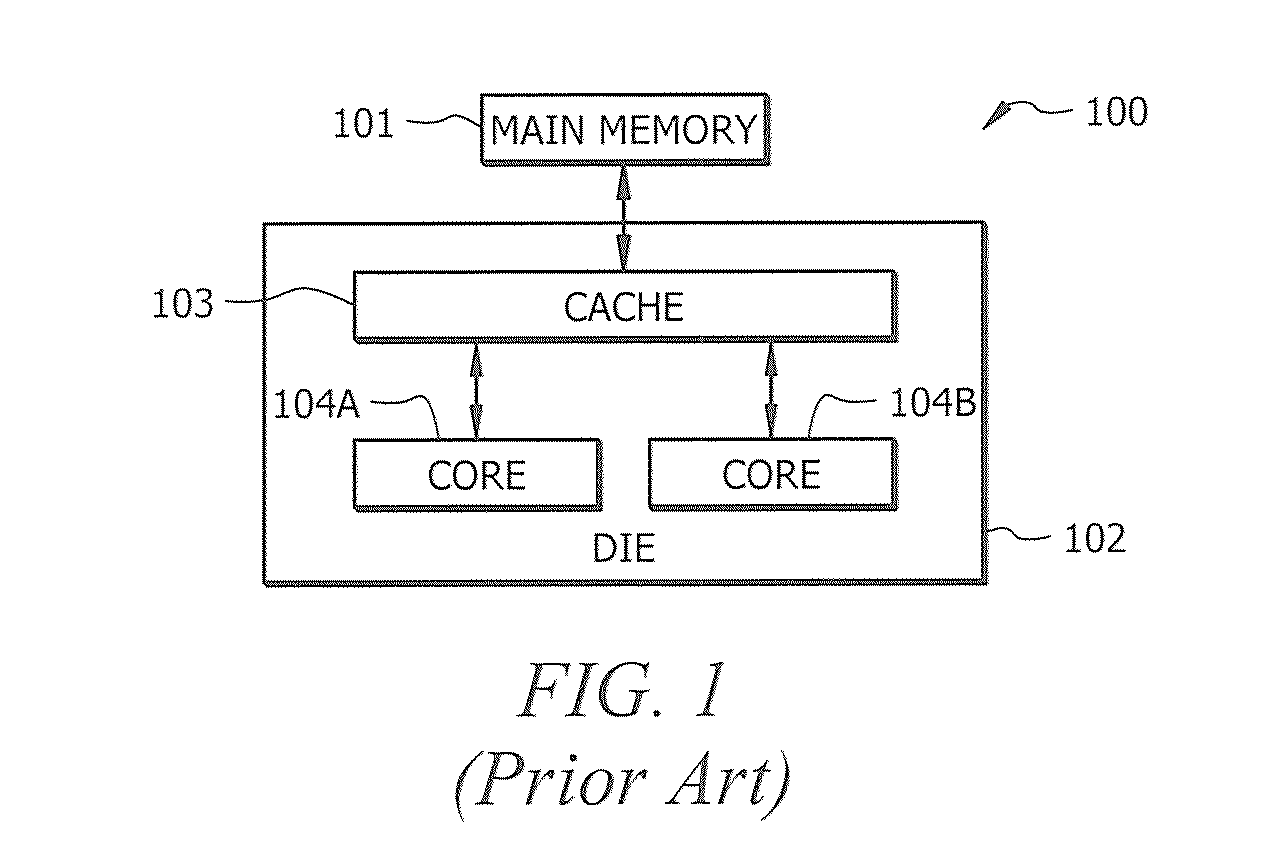

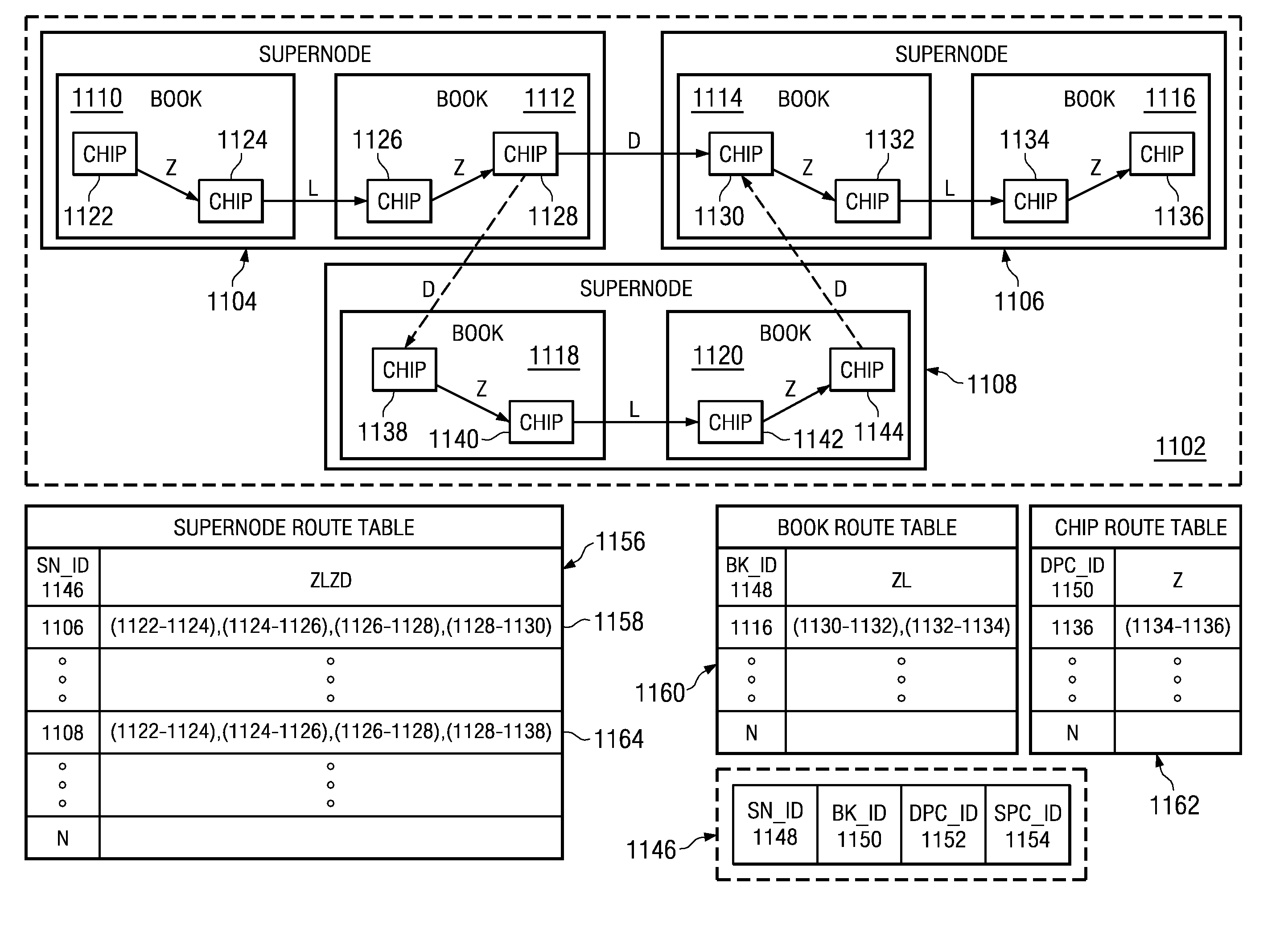

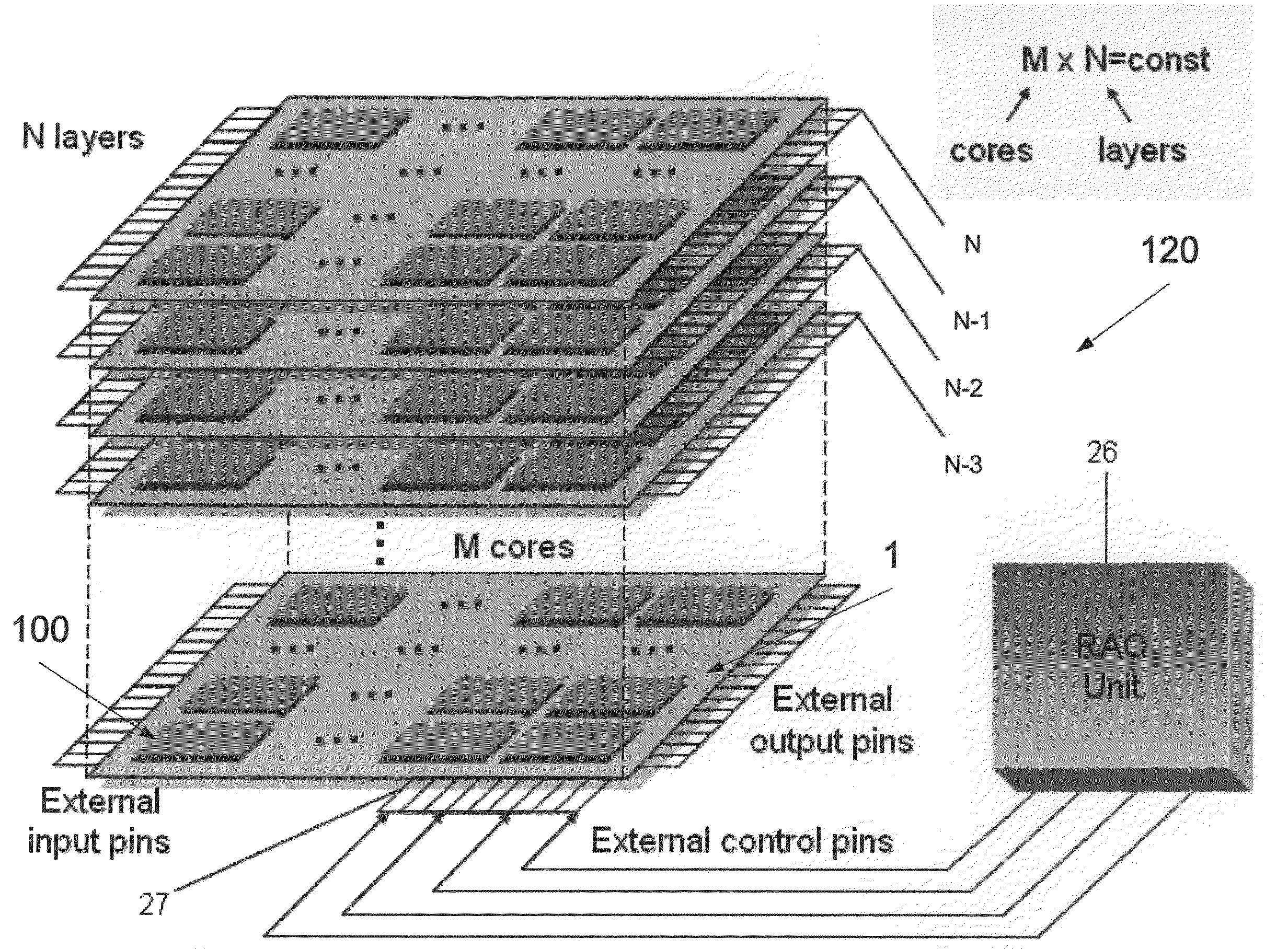

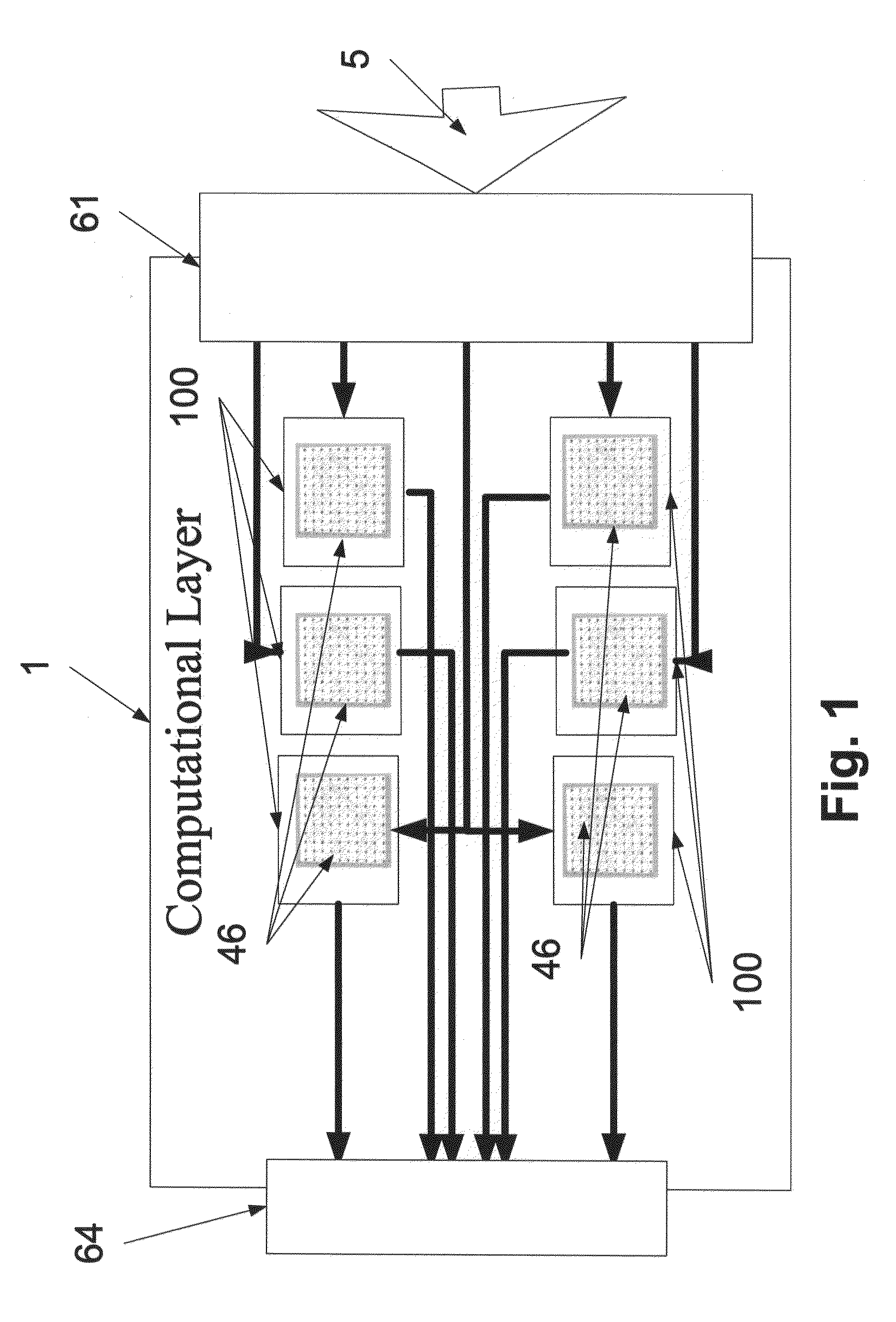

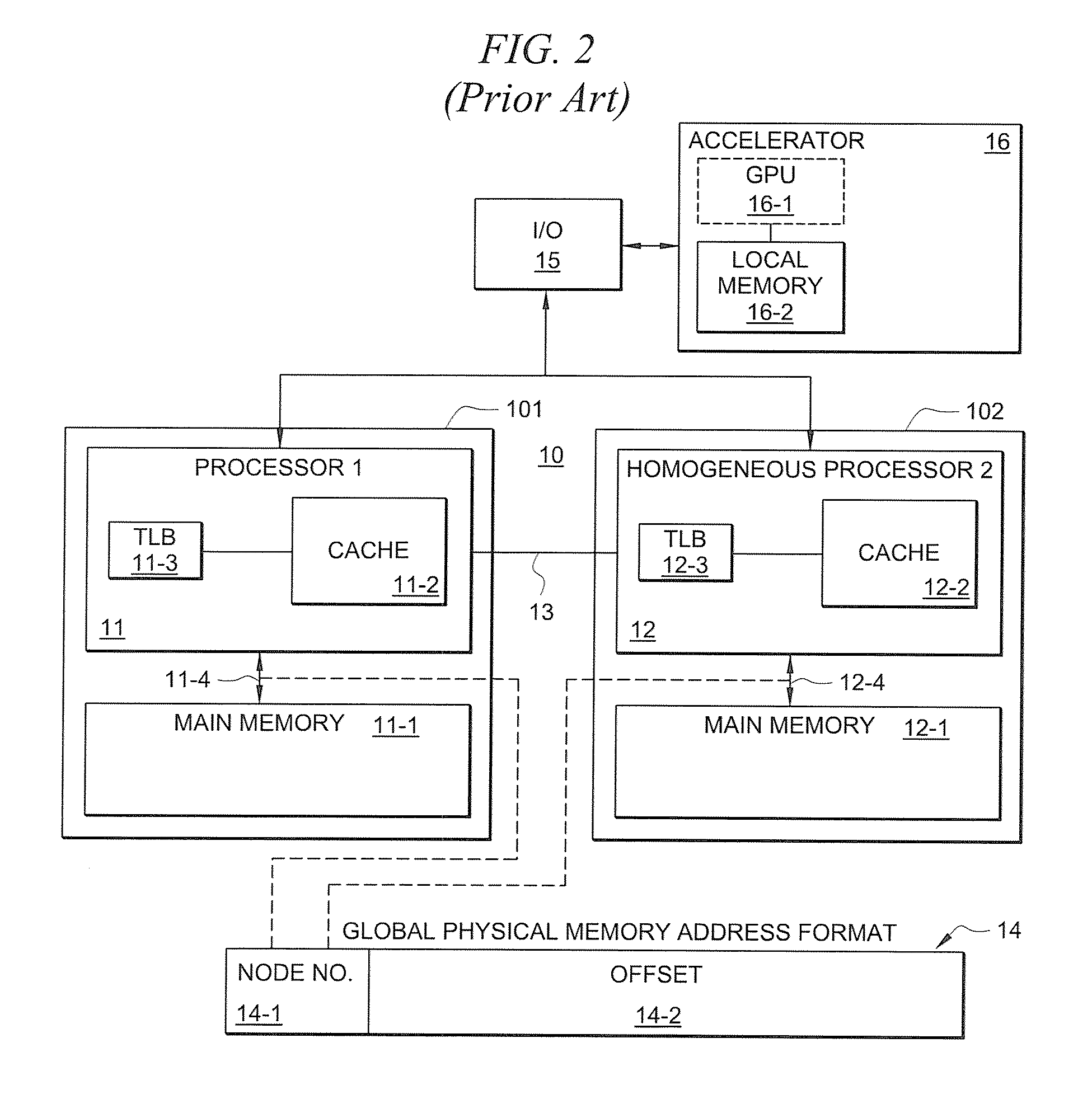

Novel massively parallel supercomputer

Inactive | US20090259713A1 | Low cost | Reduced footprint | Error prevention | Program synchronisation | Supercomputer | Packet communication

Owner:INT BUSINESS MASCH CORP

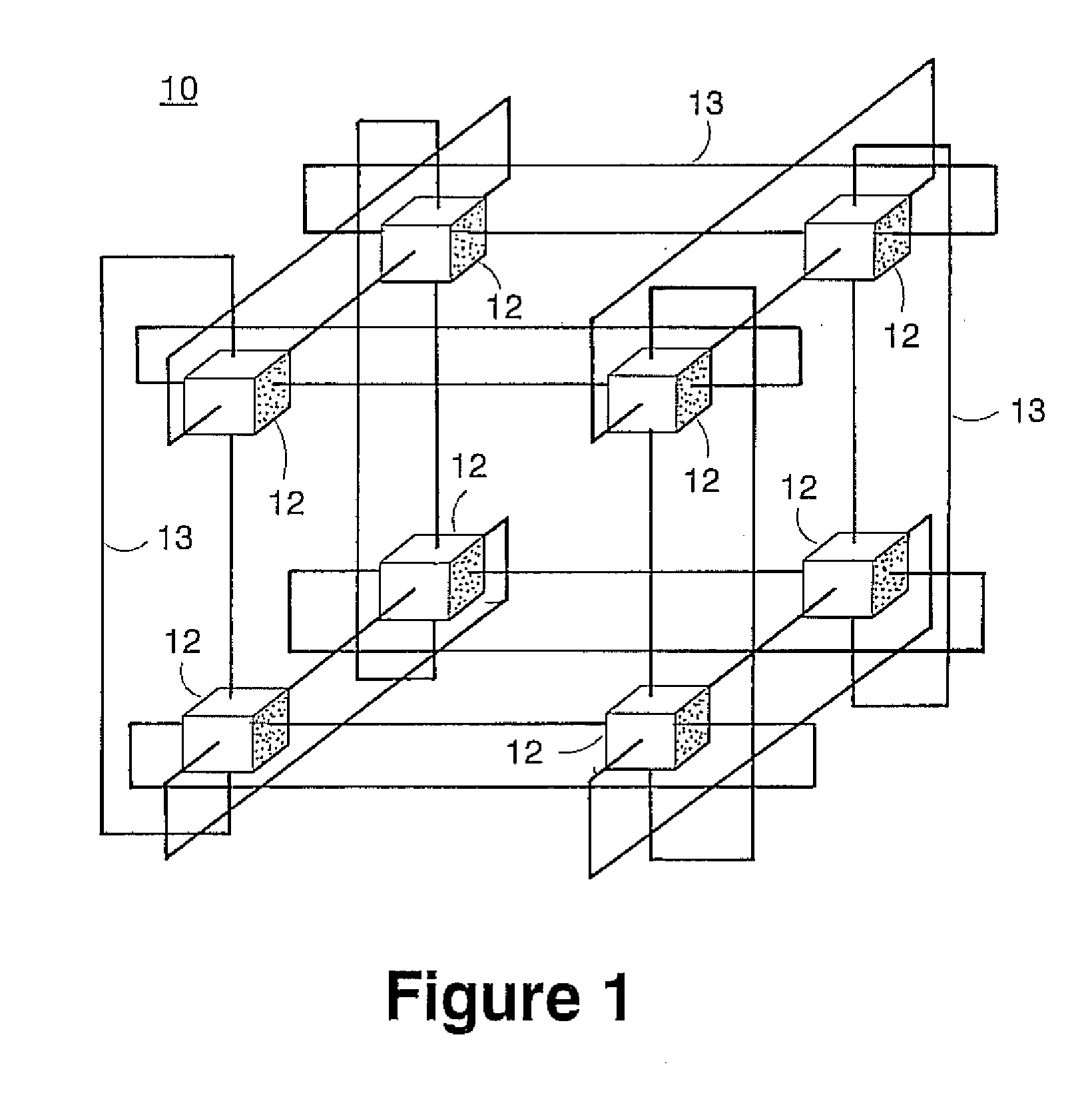

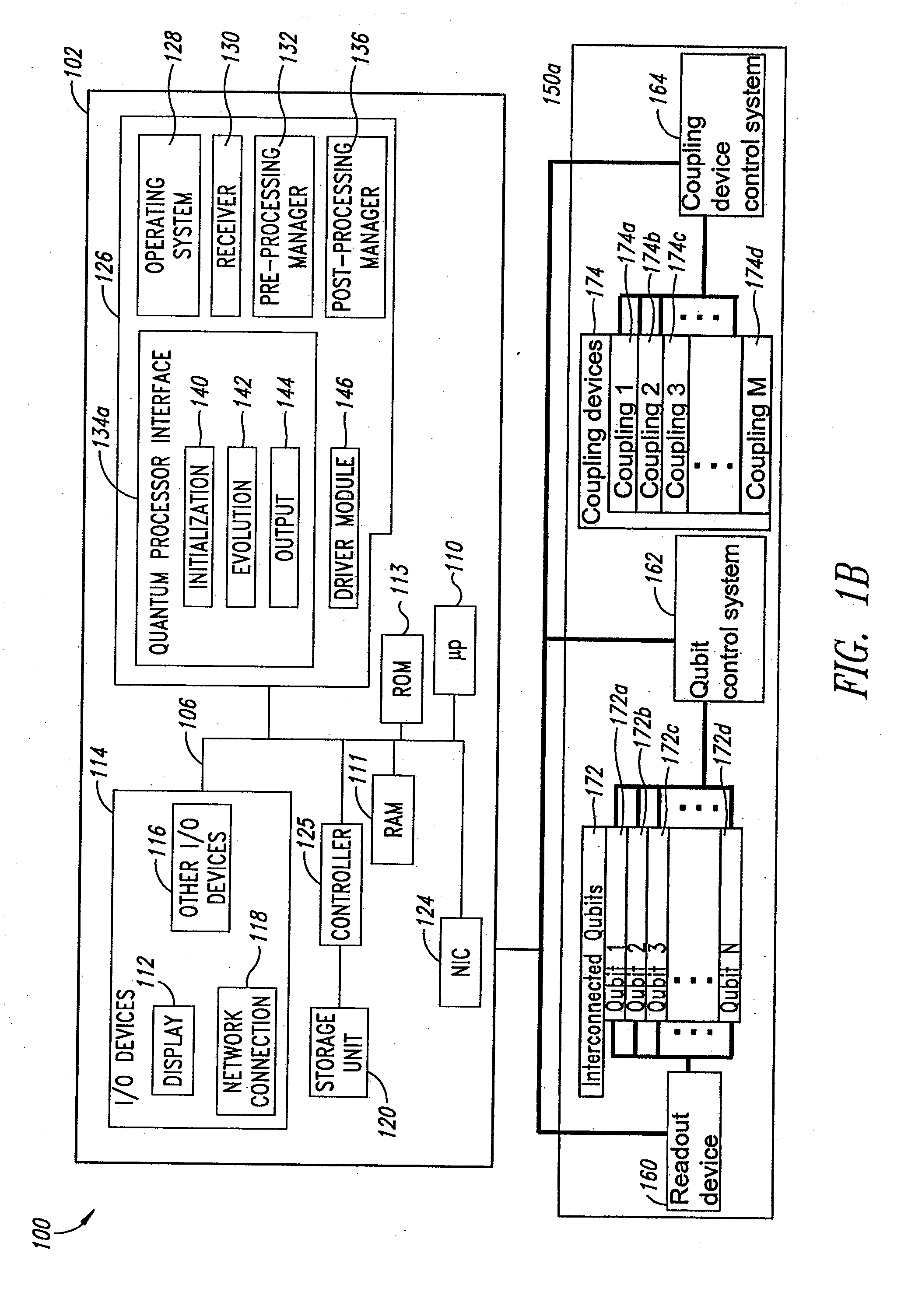

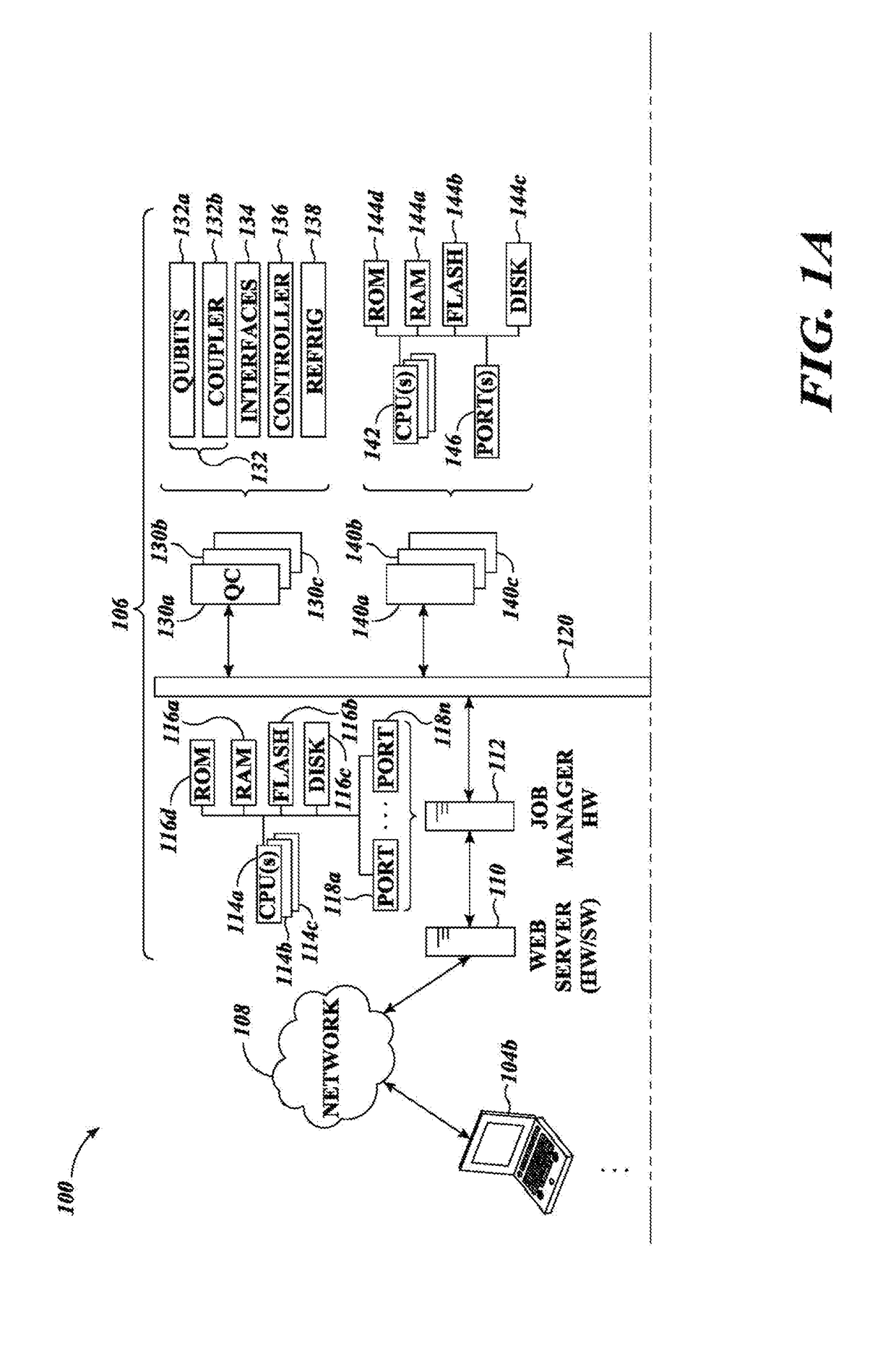

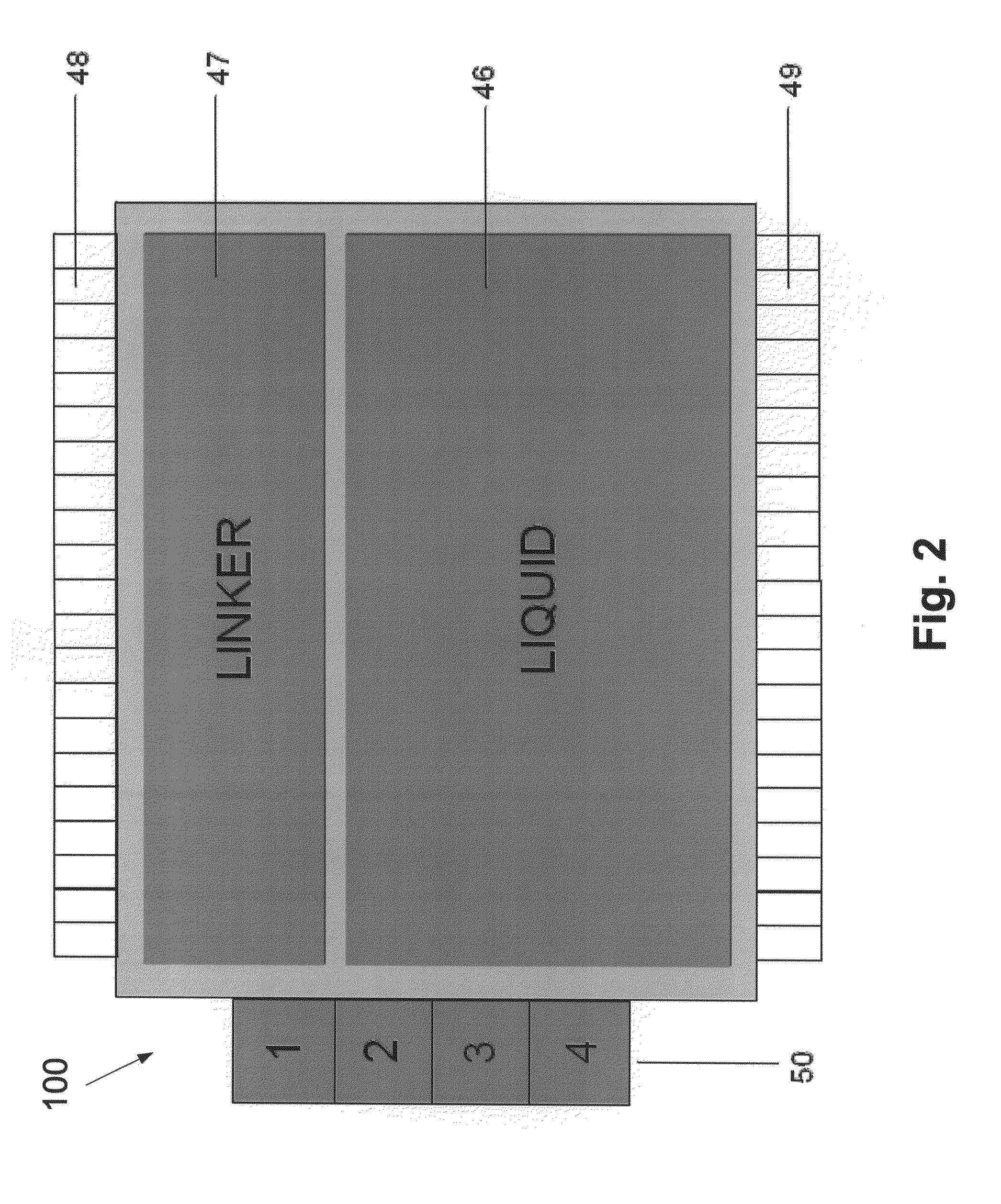

Systems, devices, and methods for interconnected processor topology

Active | US20080176750A1 | Improve legibility | Quantum computers | Program control using wired connections | Analog processor | Coupling

An analog processor, for example a quantum processor, may include a plurality of elongated qubits that are disposed with respect to one another such that each qubit may selectively be directly coupled to each of the other qubits via a single coupling device. Such an arrangement may provide a fully interconnected topology.

Owner:D WAVE SYSTEMS INC

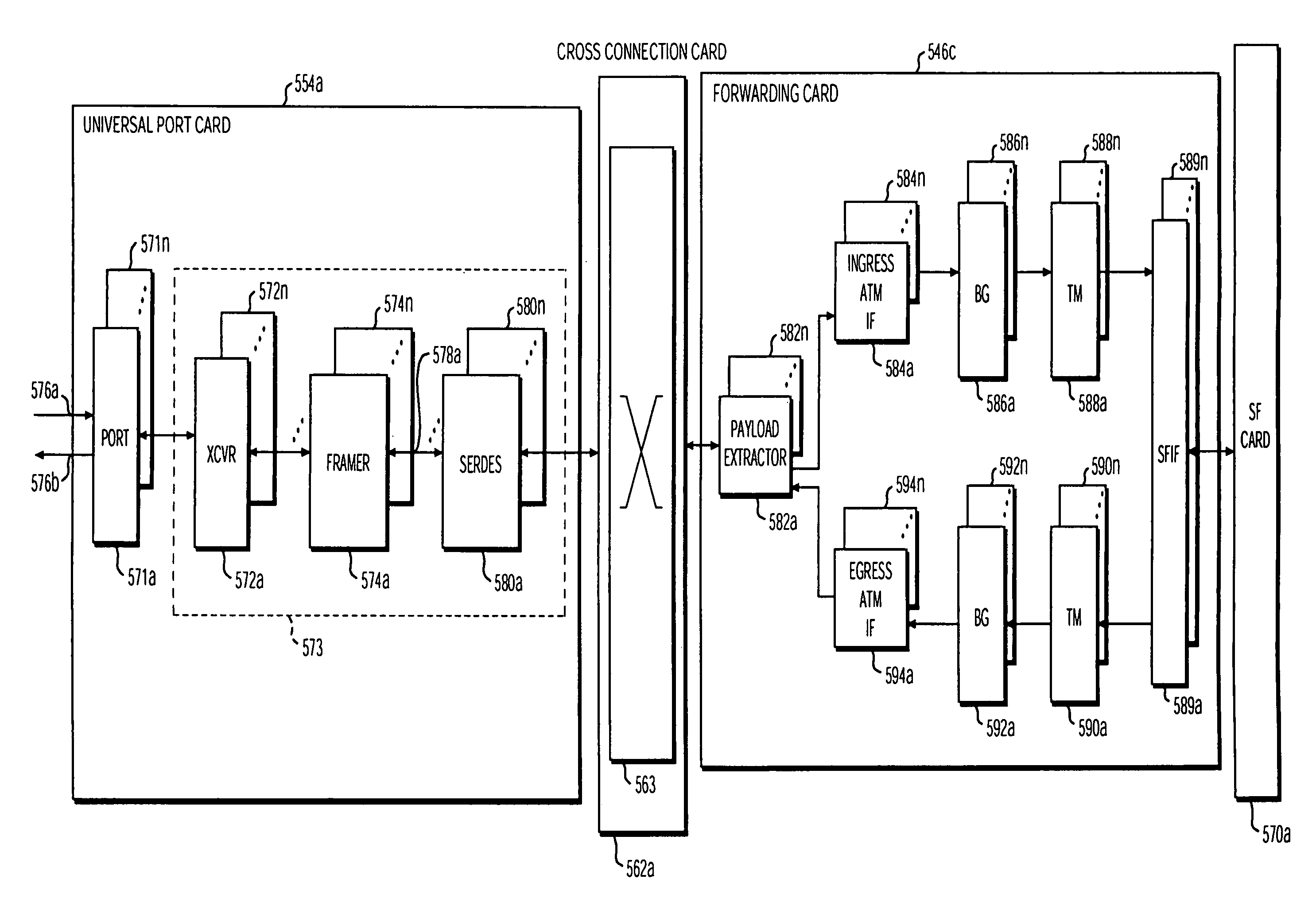

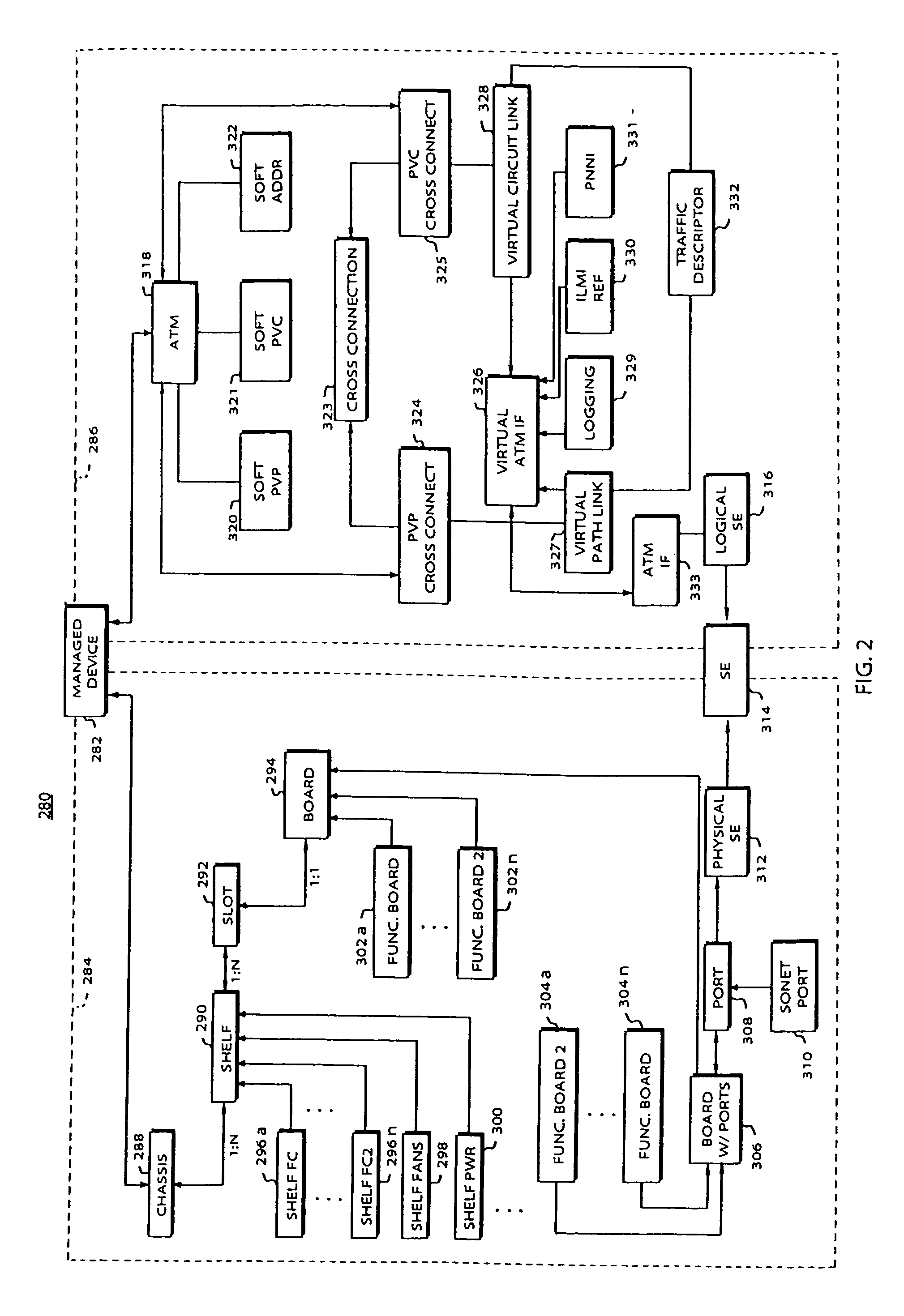

Policy based provisioning of network device resources

Inactive | US7062642B1 | Reduce administrative workload | Program control using wired connections | Data resetting | Quality of service | Data transmission

Methods are disclosed for establishing a path for data transmissions in a system having a plurality of possible paths by creating a configuration database and establishing internal connection paths based upon a configuration policy and the configuration database. The configuration policy can be based on available system resources and needs at a given time. In one embodiment, one or more tables are initiated in the configuration database to provide connection information to the system. For example, a path table and a service endpoint table can be employed to establish a partial record in the configuration database whenever a user connects to a particular port on a universal port card in the system. The method can further include periodically polling records in the path table and transmitting data from the partial records to a policy provisioning manager (PPM). The PPM then implements a connection policy by comparing one or more of the new path characteristics to the available forwarding card resources in the quadrant containing the universal port card port and path. The path characteristics can include the protocol, the desired number of time slots, the desired number of virtual circuits, and any virtual circuit scheduling restrictions. The PPM can also take other factors into consideration, including quality of service (for example, redundancy requirements or dedicated resource requirements) and balancing resource usage (i.e., load balancing) evenly within a quadrant.

Owner:CIENA

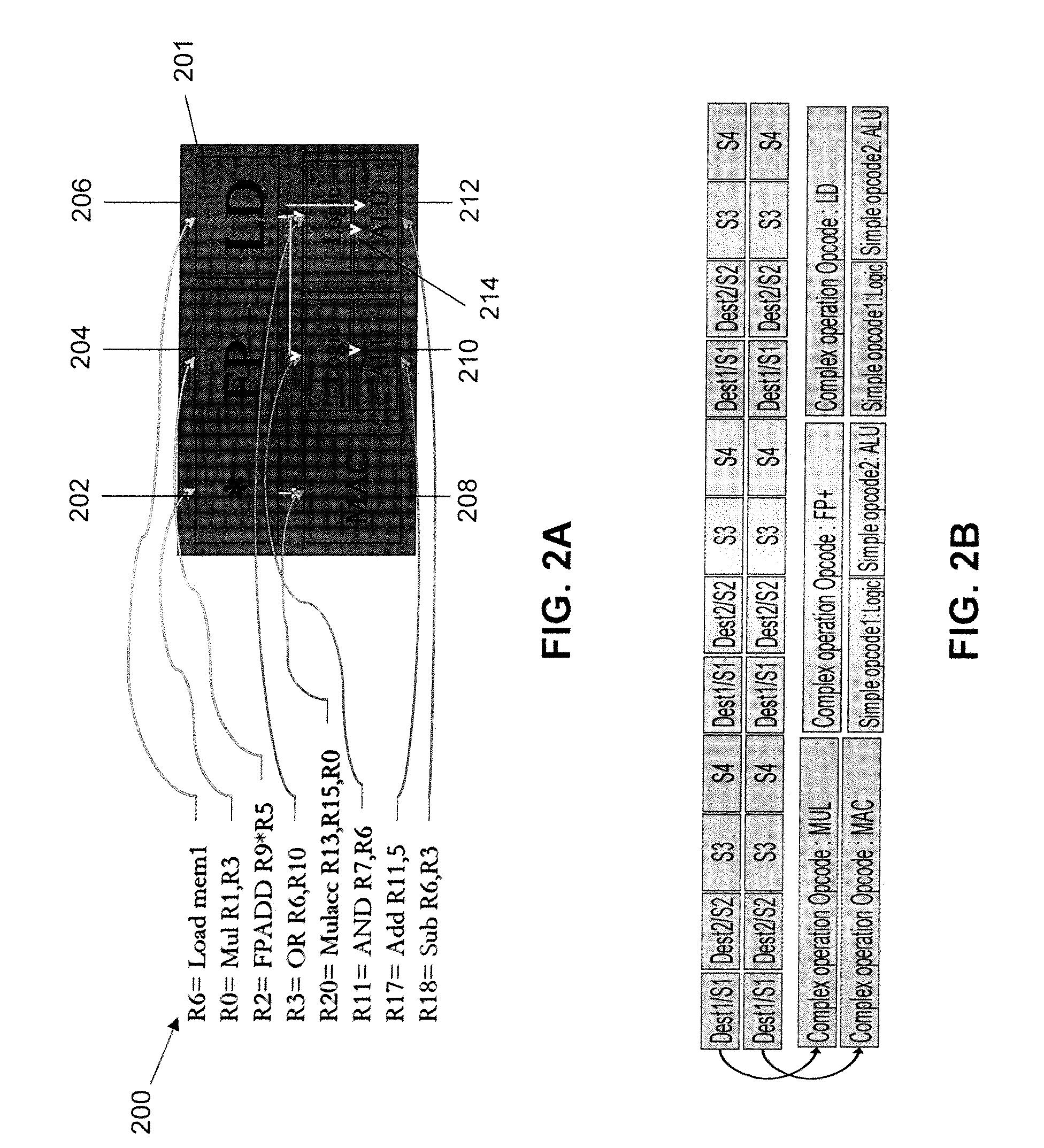

Apparatus and Method for Processing an Instruction Matrix Specifying Parallel and Dependent Operations

Active | US20090113170A1 | Single instruction multiple data multiprocessors | Register arrangements | Parallel computing | Matrix manipulation

Owner:INTEL CORP

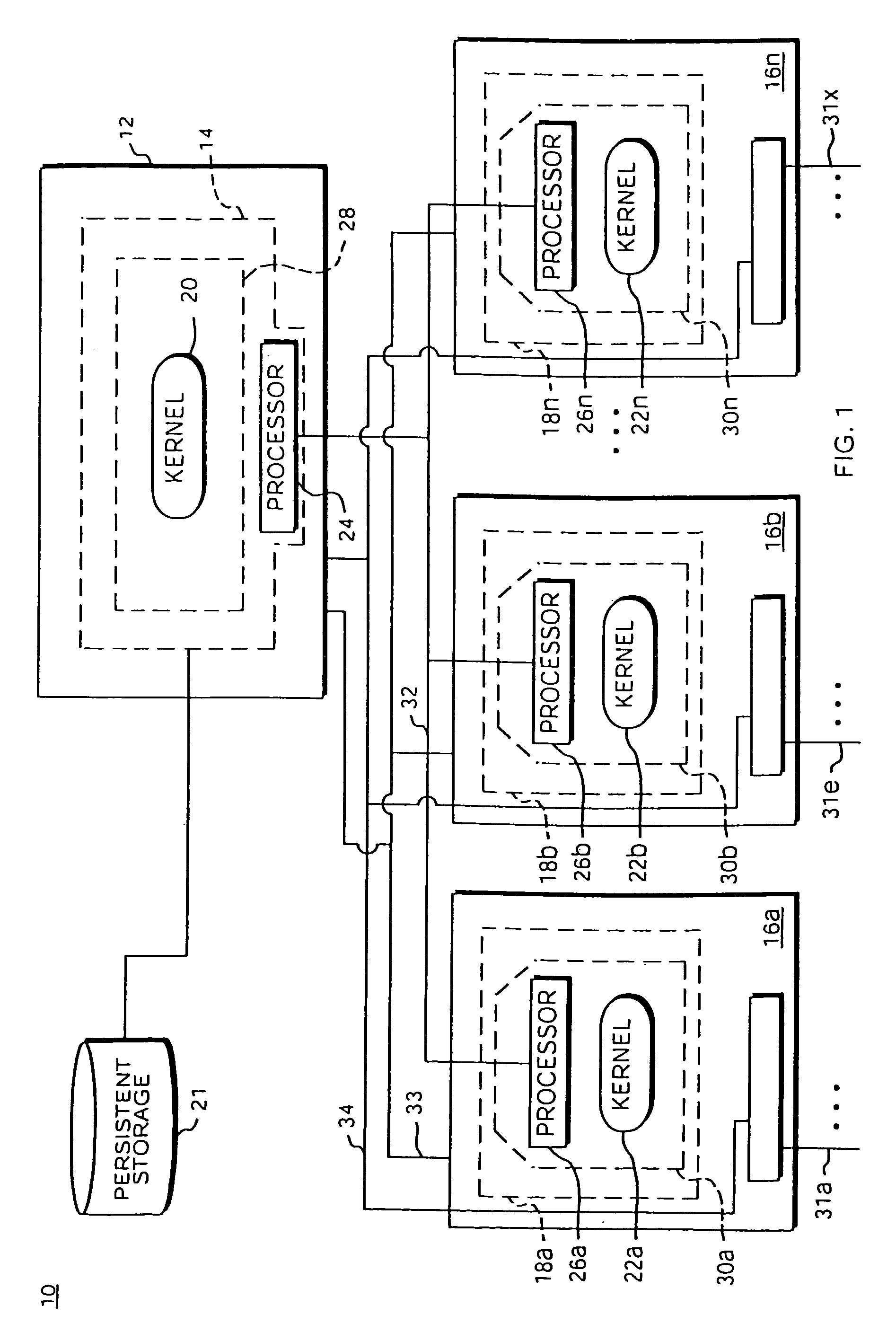

System and Method for Performing Dynamic Request Routing Based on Broadcast Source Request Information

Inactive | US20090198958A1 | Small amount | Reduction of wasted processor cycle | Program control using wired connections | General purpose stored program computer | Distributed computing

A system and method for performing dynamic request routing based on broadcast source request information are provided. Each processor chip in the system may use a synchronized heartbeat signal it generates to provide source request information to each of the other processor chips in the system. The source request information identifies the number of active source requests sent by the processor chip that originated the heartbeat signal. The source request information from each of the processor chips in the system may be used by the processor chips in determining optimal routing paths for data from a source processor chip to a destination processor chip. As a result, the congestion of data for processing at each of the processor chips along each possible routing path may be taken into account when selecting to which processor chip to forward data.

Owner:IBM CORP
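
The routing idea in the abstract above (each chip broadcasts its number of active source requests, and senders take that congestion into account when choosing a path) can be illustrated with a minimal Python sketch. This is not the patented mechanism. It assumes that a path's congestion is simply the sum of the counts reported by the chips on it, and the names `pick_least_congested_path`, `heartbeat_counts` and `candidate_paths` are hypothetical.

```python
from typing import Dict, List, Sequence


def pick_least_congested_path(
    paths: Sequence[List[str]],
    active_requests: Dict[str, int],
) -> List[str]:
    """Return the candidate path whose chips report the fewest active source requests."""

    def congestion(path: List[str]) -> int:
        # Assumption: congestion of a path is the sum of the per-chip counts
        # carried in the broadcast heartbeat; unknown chips count as idle.
        return sum(active_requests.get(chip, 0) for chip in path)

    return min(paths, key=congestion)


# Hypothetical heartbeat data: chip id -> active source requests it reported.
heartbeat_counts = {"A": 1, "B": 7, "C": 2, "D": 0}

# Two hypothetical routes from chip A to chip D.
candidate_paths = [["A", "B", "D"], ["A", "C", "D"]]

best = pick_least_congested_path(candidate_paths, heartbeat_counts)
print(best)
```

With these made-up counts the route through chip C is preferred, because chip B reports far more outstanding requests.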

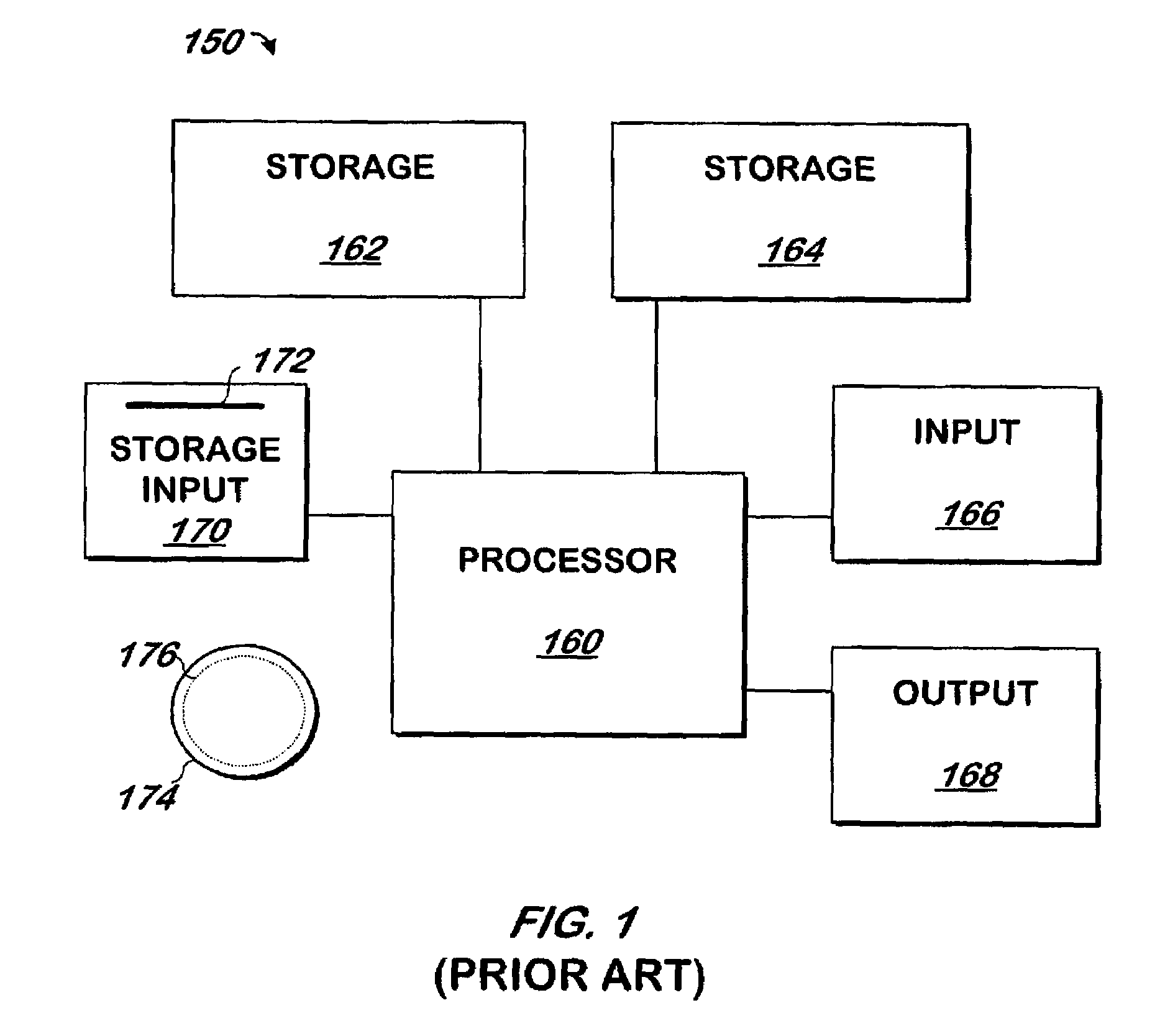

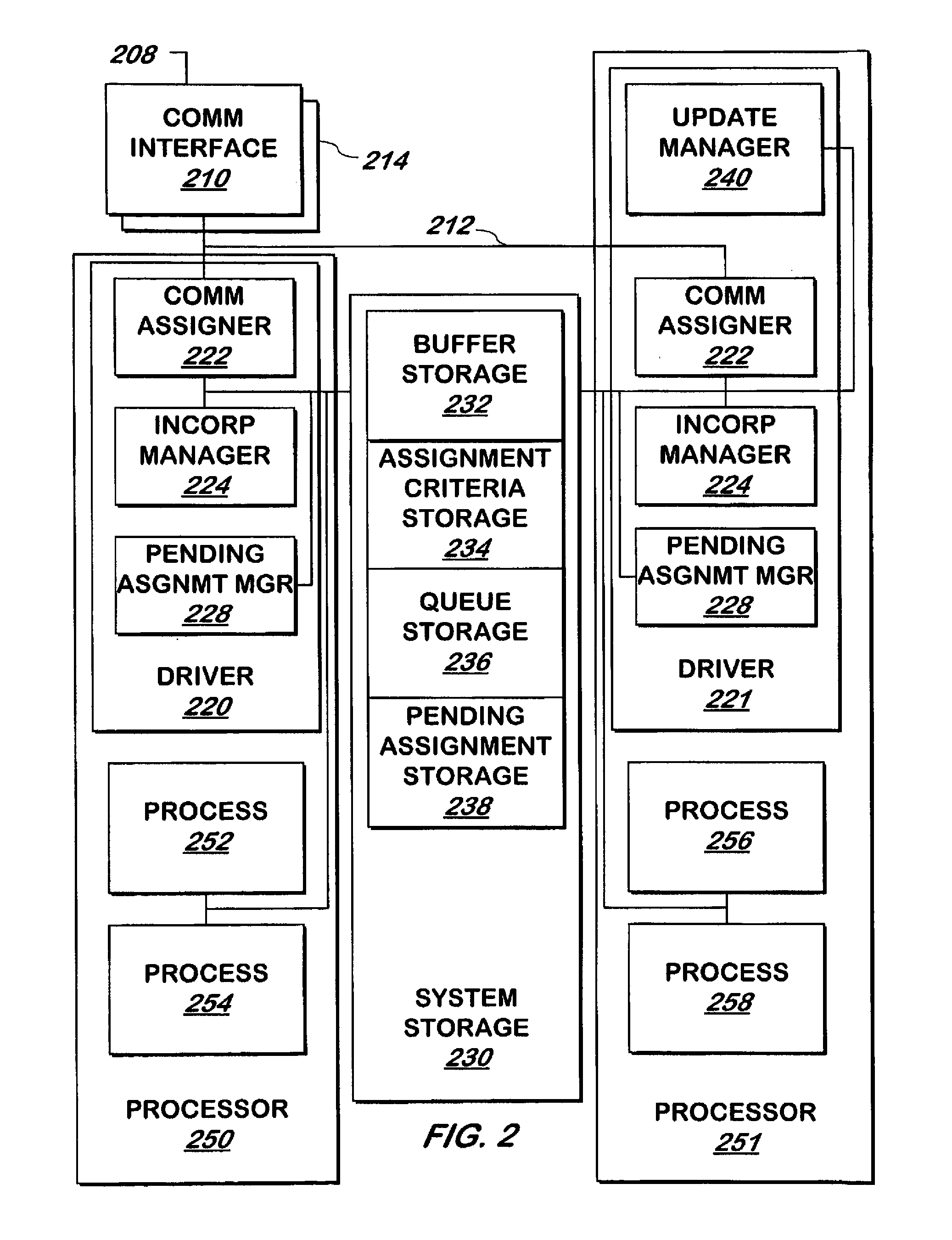

System and method for allocating communications to processors in a multiprocessor system

Inactive | US20080301406A1 | Easy to handle | Program control using wired connections | General purpose stored program computer | Locking mechanism | Multi processor

In a multiprocessor system, a system and method assigns communications to processors, processes, or subsets of types of communications to be processed by a specific processor without using a locking mechanism specific to the resources required for assignment.

Owner:JACOBSON VAN +3

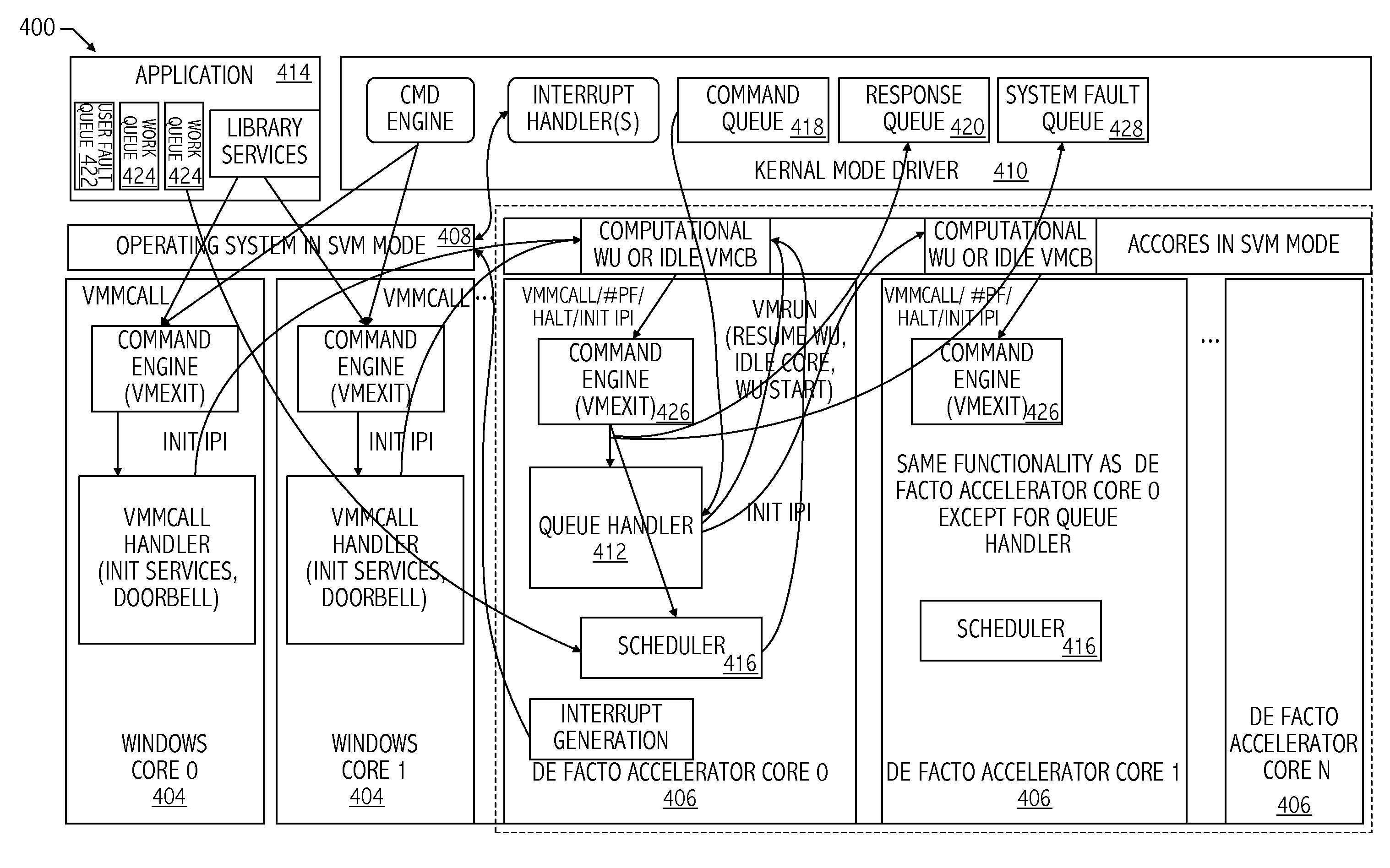

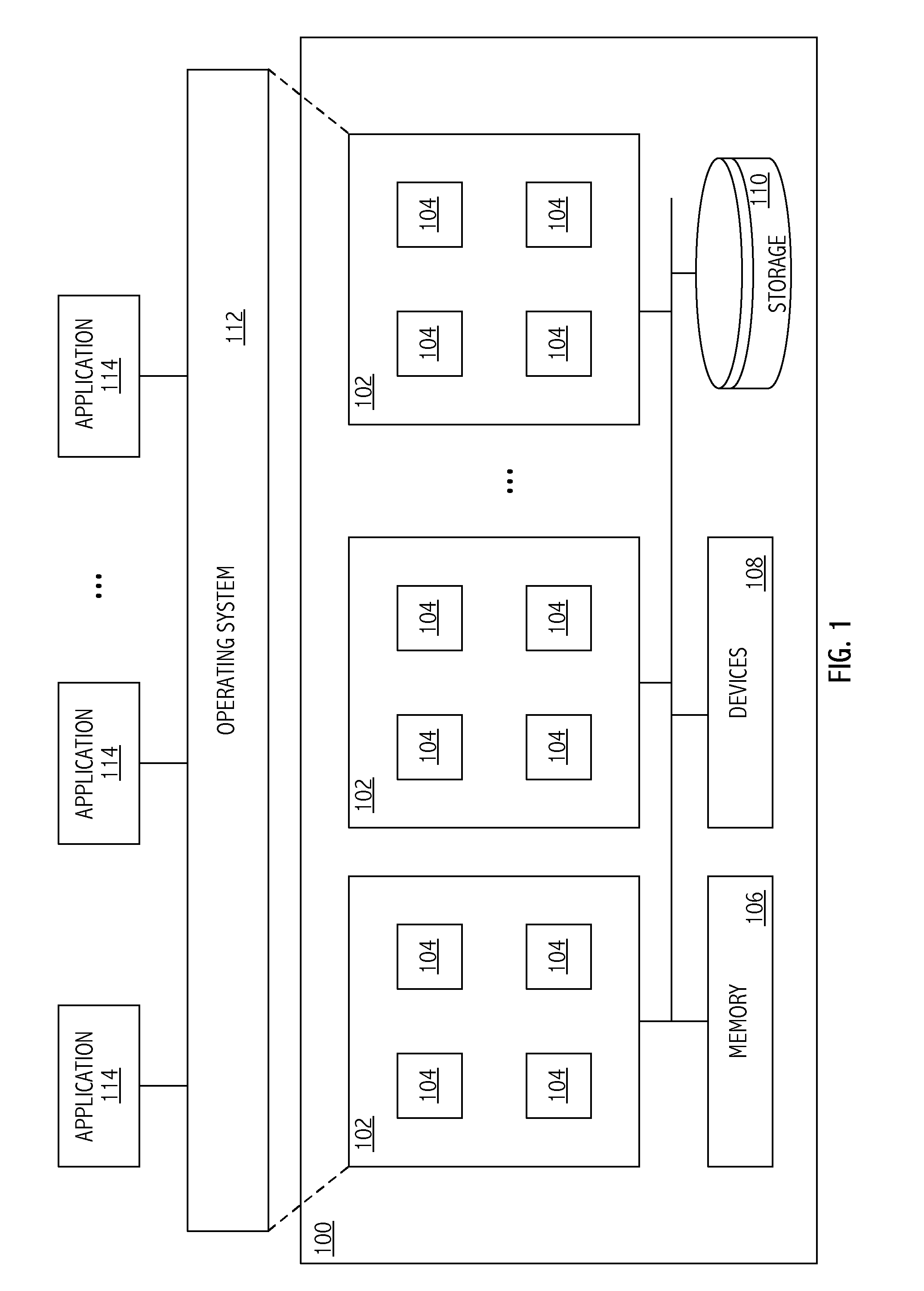

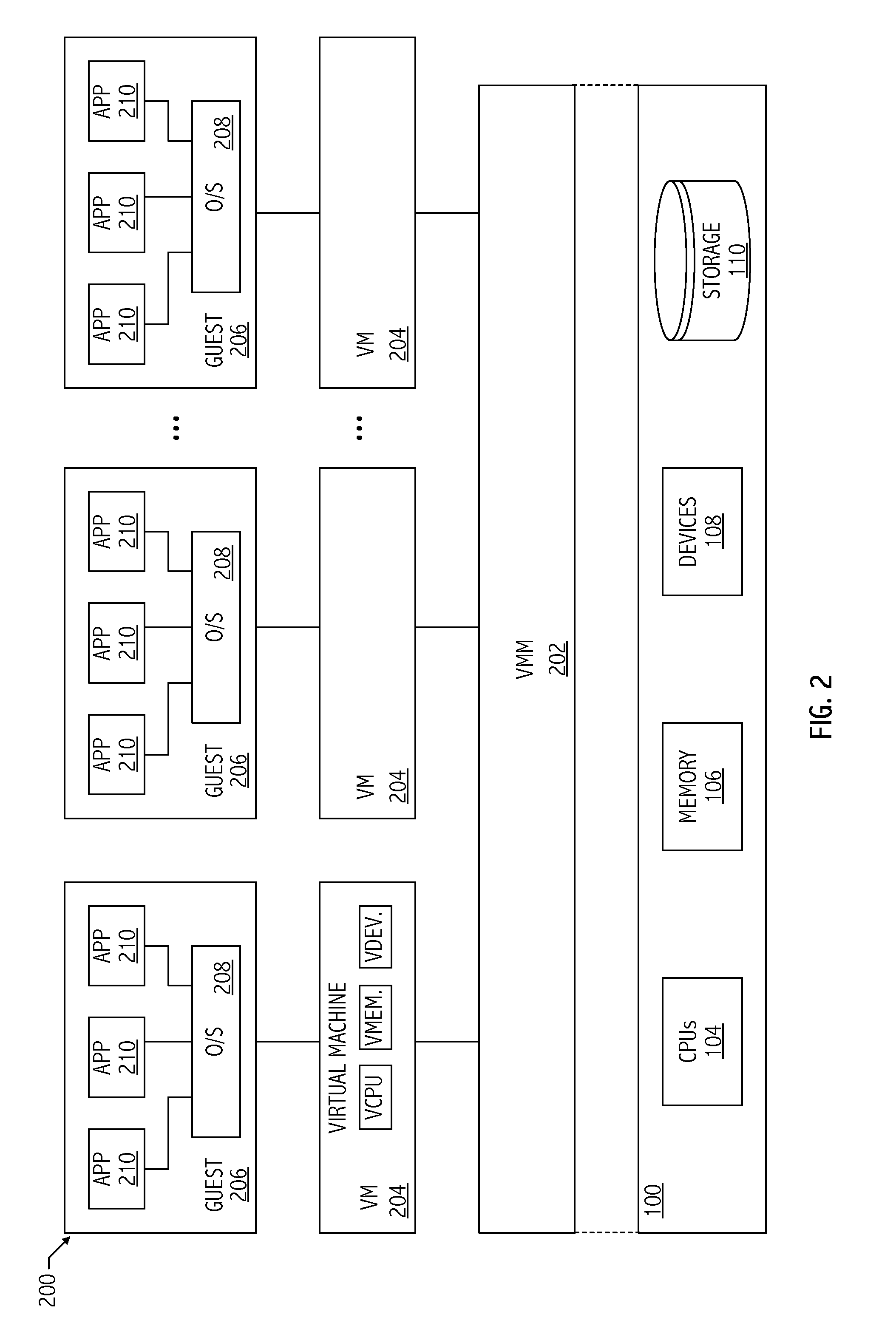

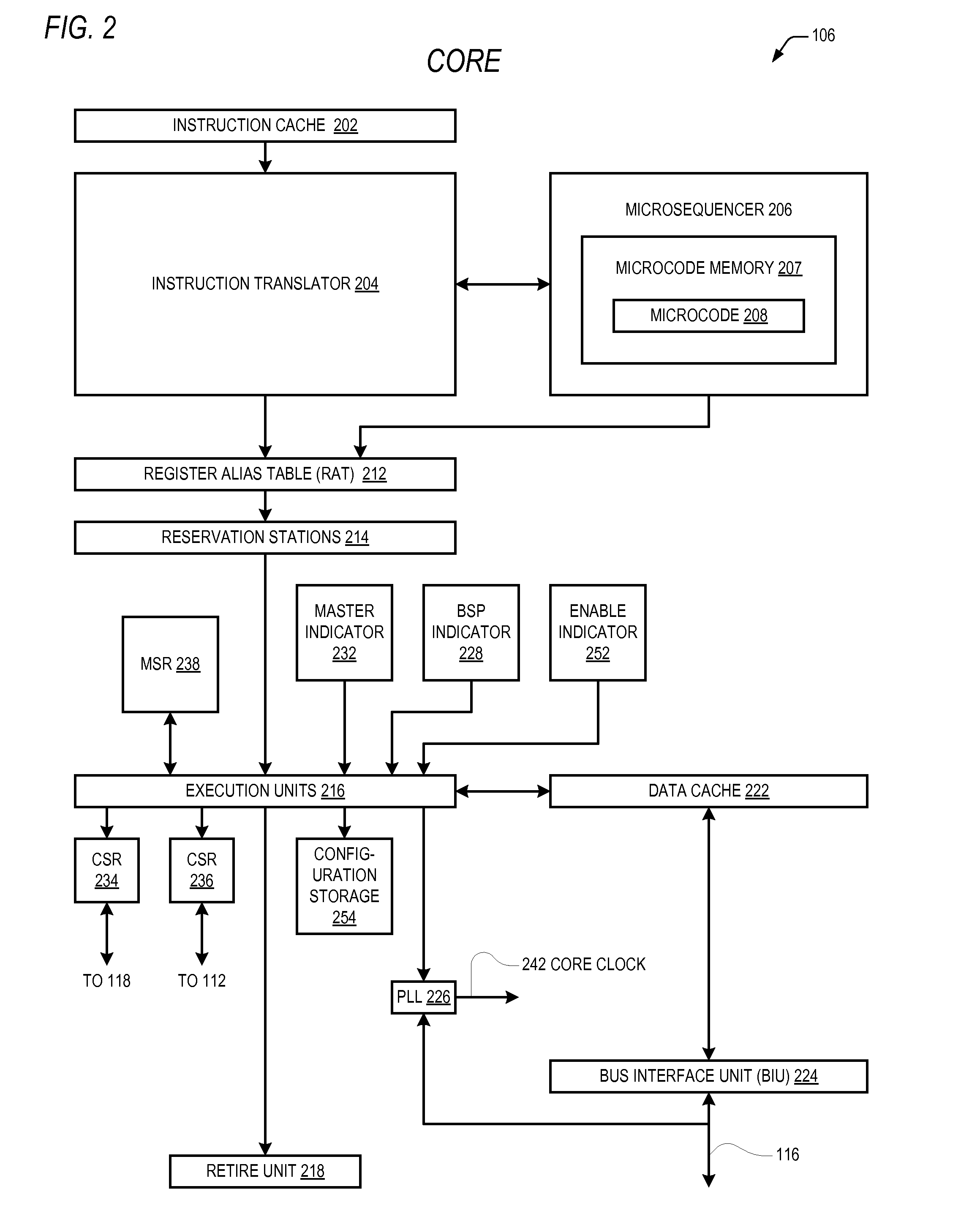

Hypervisor isolation of processor cores

Active | US20110161955A1 | Program control using wired connections | General purpose stored program computer | Computerized system | Parallel computing

Techniques for utilizing processor cores include sequestering processor cores for use independently from an operating system. In at least one embodiment of the invention, a method includes executing an operating system on a first subset of cores including one or more cores of a plurality of cores of a computer system. The operating system executes as a guest under control of a virtual machine monitor. The method includes executing work for an application on a second subset of cores including one or more cores of the plurality of cores. The first and second subsets of cores are mutually exclusive and the second subset of cores is not visible to the operating system. In at least one embodiment, the method includes sequestering the second subset of cores from the operating system.

Owner:ADVANCED MICRO DEVICES INC

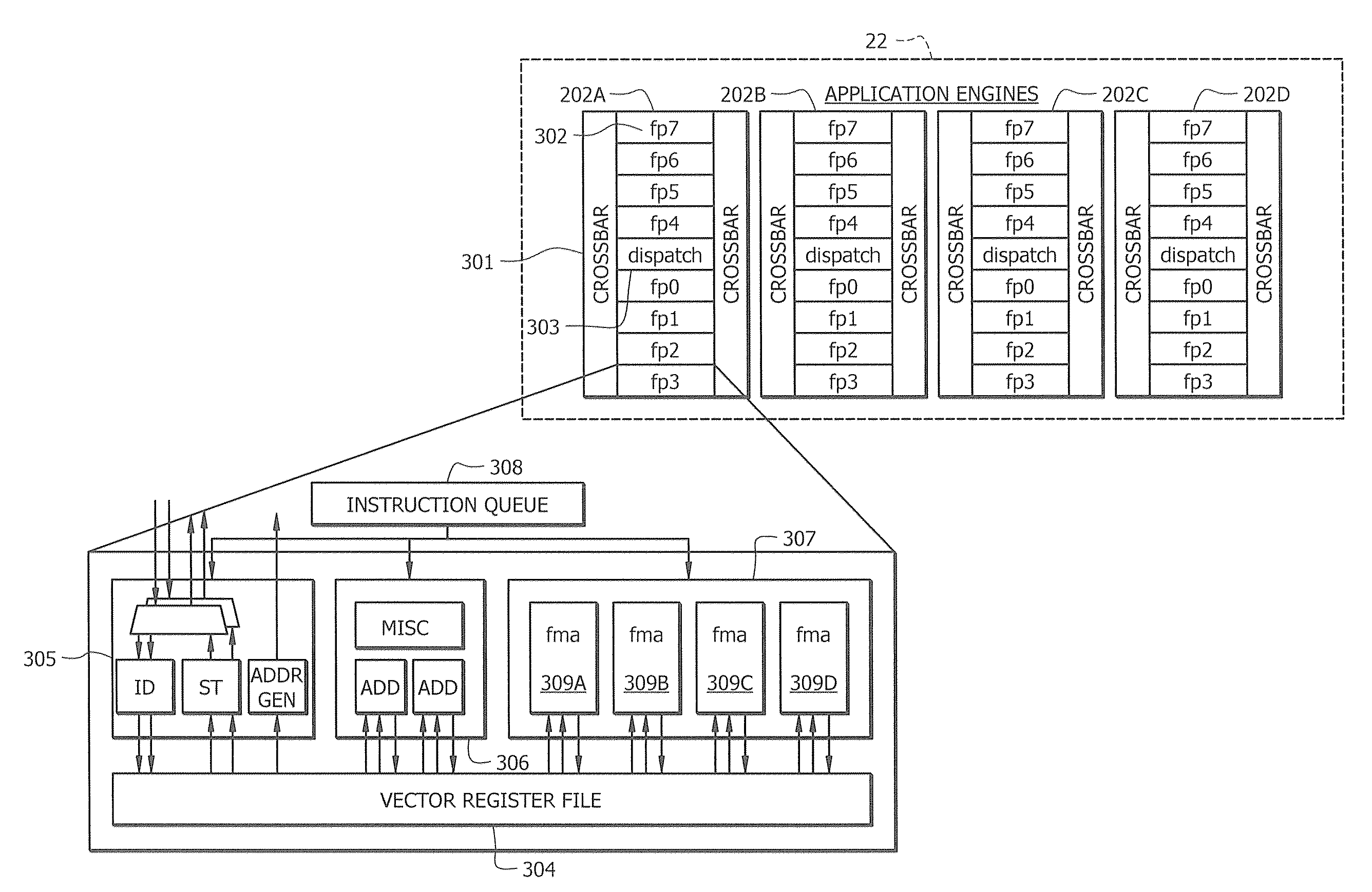

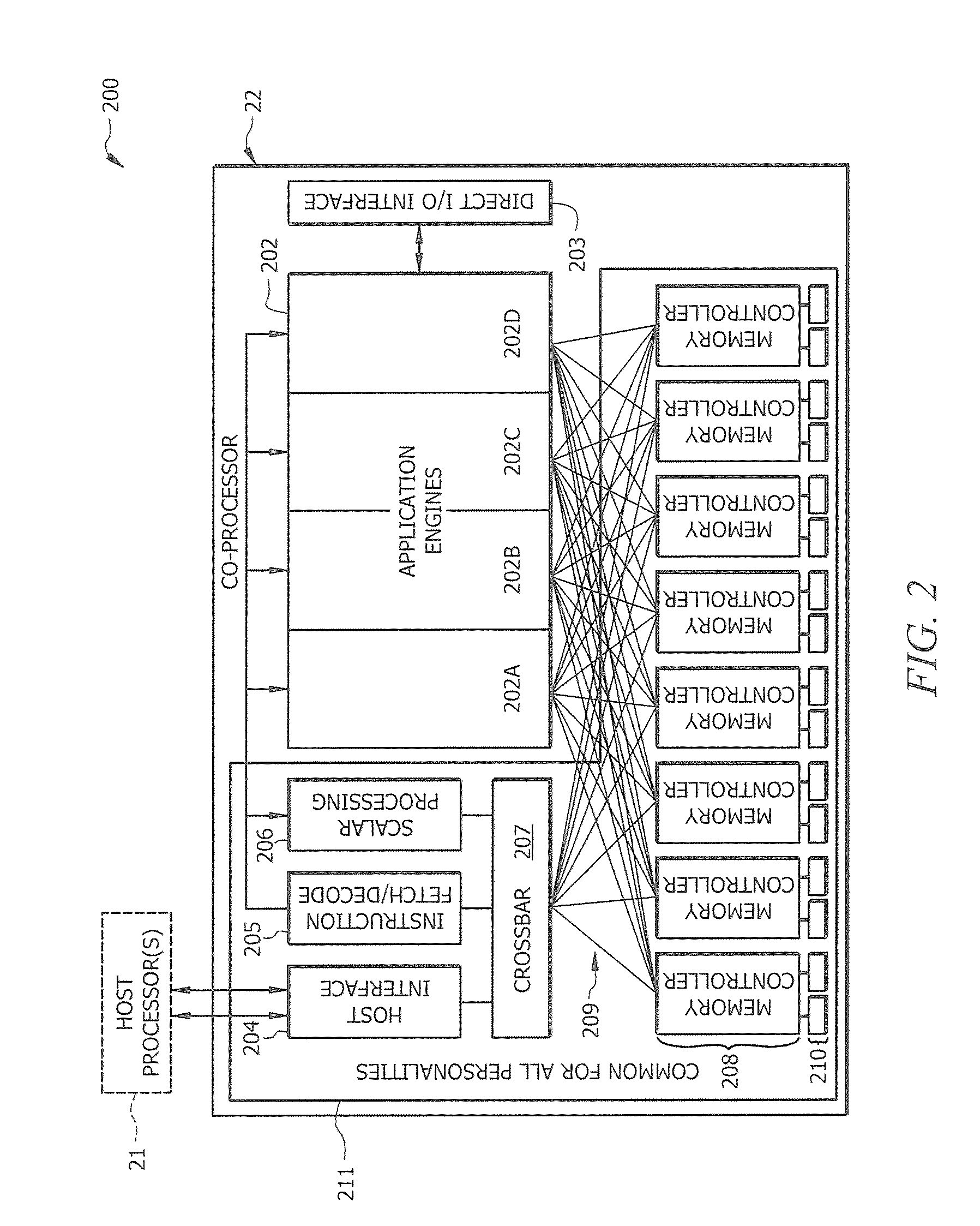

Dynamically-selectable vector register partitioning

Inactive | US20100115233A1 | Register arrangements | Program control using wired connections | Processor register | Multi processor

The present invention is directed generally to dynamically-selectable vector register partitioning, and more specifically to a processor infrastructure (e.g., co-processor infrastructure in a multi-processor system) that supports dynamic setting of vector register partitioning to any of a plurality of different vector partitioning modes. Thus, rather than being restricted to a fixed vector register partitioning mode, embodiments of the present invention enable a processor to be dynamically set to any of a plurality of different vector partitioning modes. For instance, different vector register partitioning modes may be employed for different applications being executed by the processor, and/or different vector register partitioning modes may even be employed for processing different vector oriented operations within a given application being executed by the processor, in accordance with certain embodiments of the present invention.

Owner:CONVEY COMP

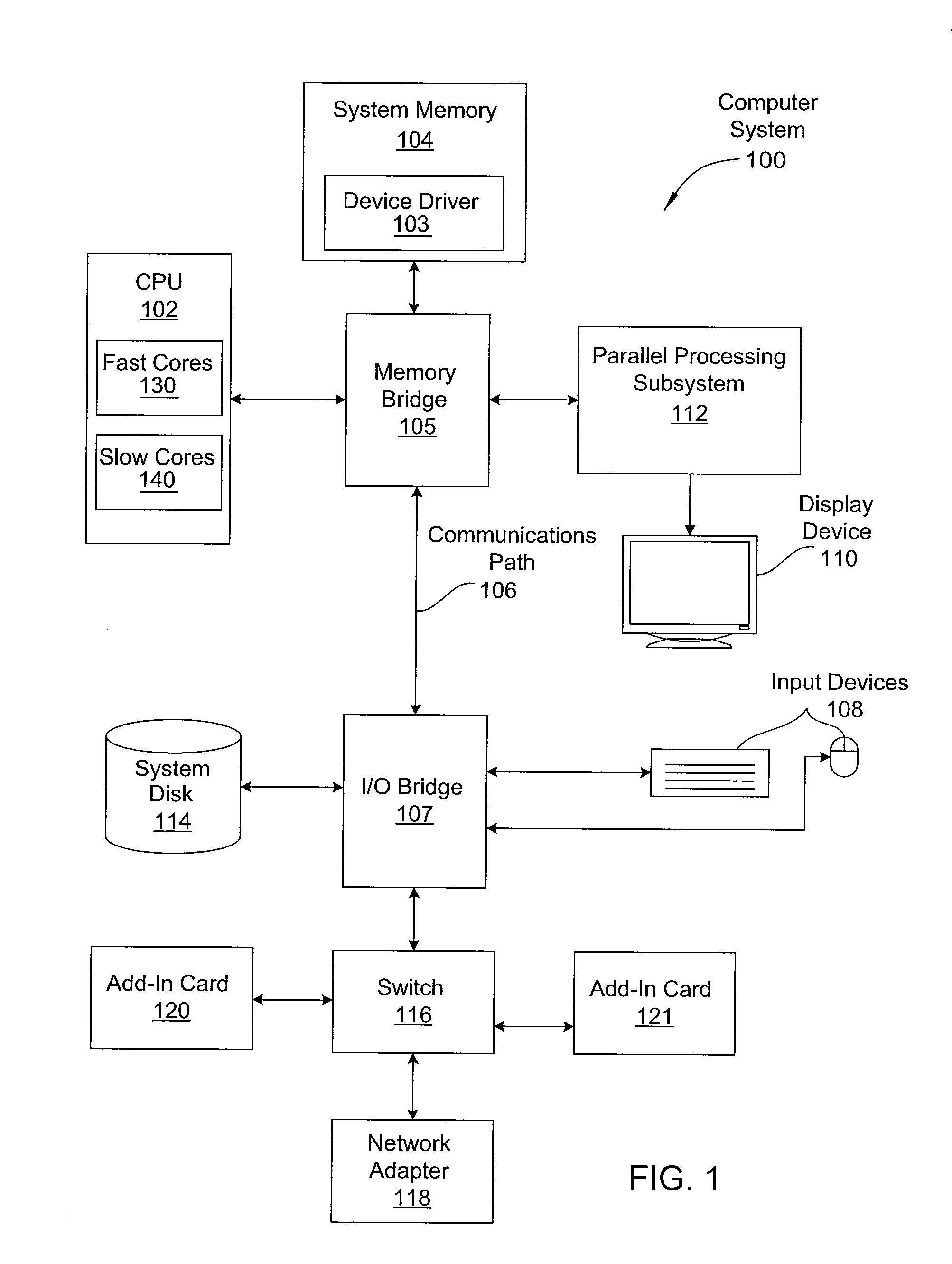

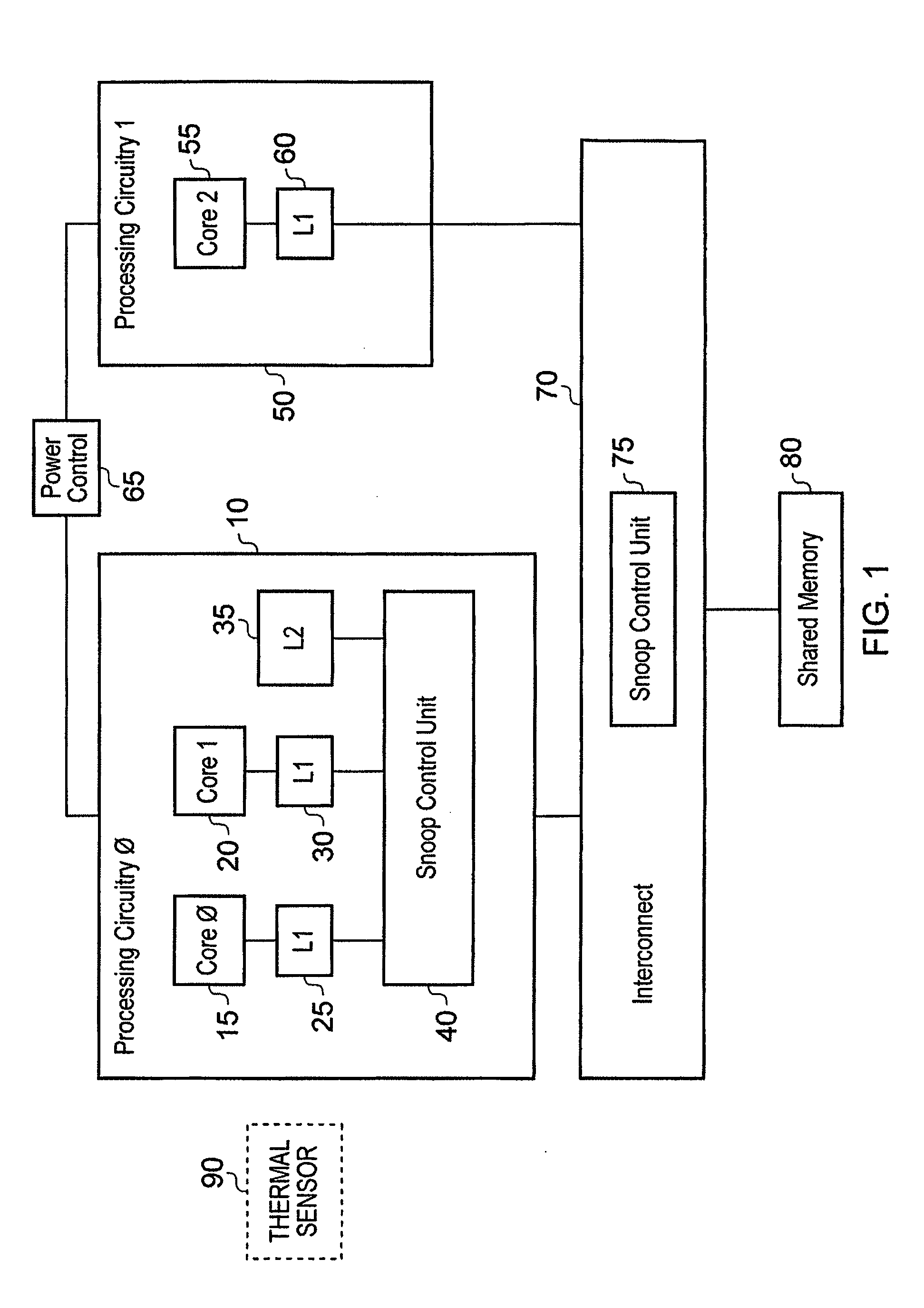

System and Method for Power Optimization

Inactive | US20110213950A1 | Reduce power consumption | Energy efficient ICT | Single instruction multiple data multiprocessors | Parallel computing | Operation mode

A technique for reducing the power consumption required to execute processing operations. A processing complex, such as a CPU or a GPU, includes a first set of cores comprising one or more fast cores and a second set of cores comprising one or more slow cores. A processing mode of the processing complex can switch between a first mode of operation and a second mode of operation based on one or more of the workload characteristics, performance characteristics of the first and second sets of cores, power characteristics of the first and second sets of cores, and operating conditions of the processing complex. A controller causes the processing operations to be executed by either the first set of cores or the second set of cores to achieve the lowest total power consumption.

Owner:NVIDIA CORP

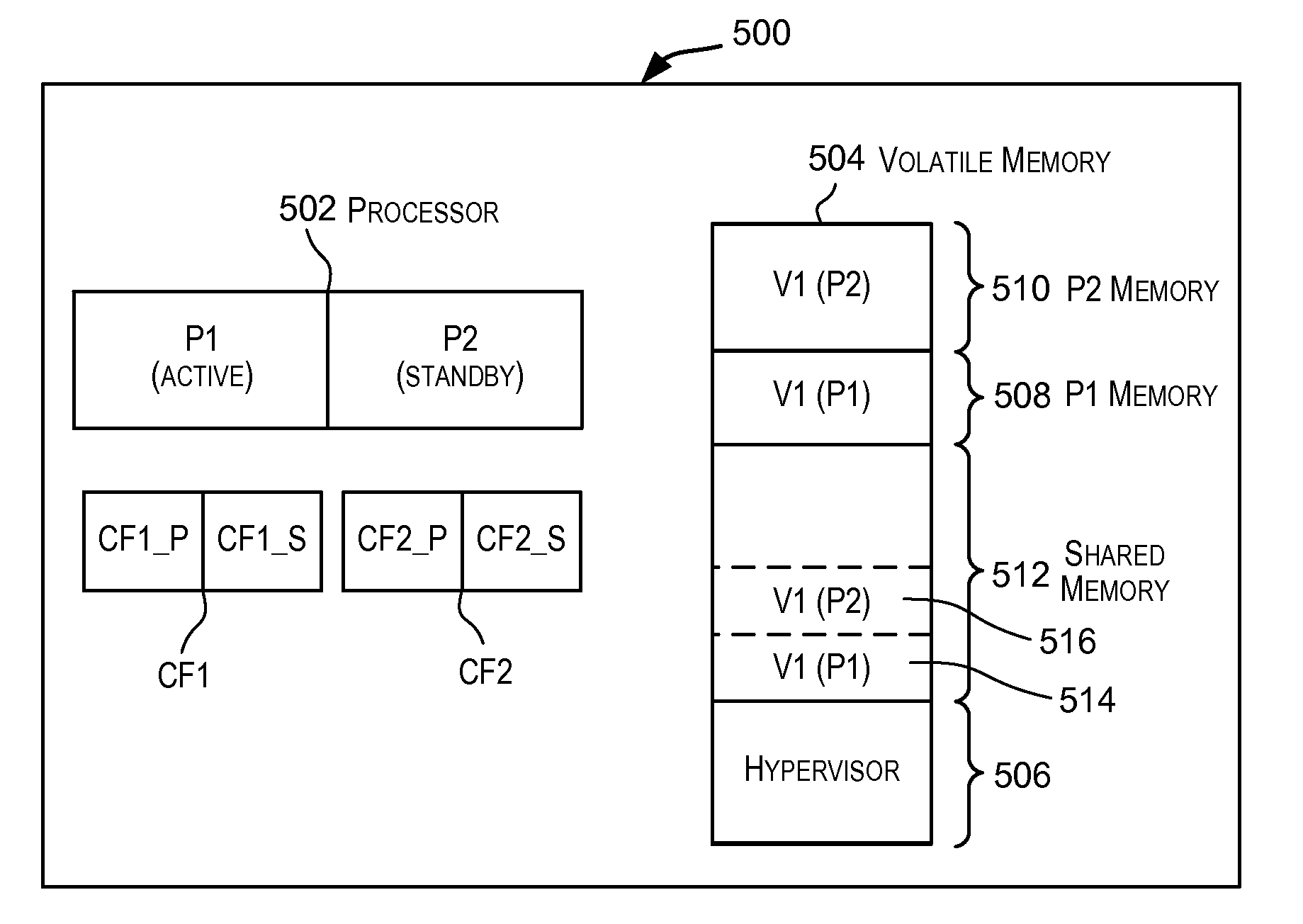

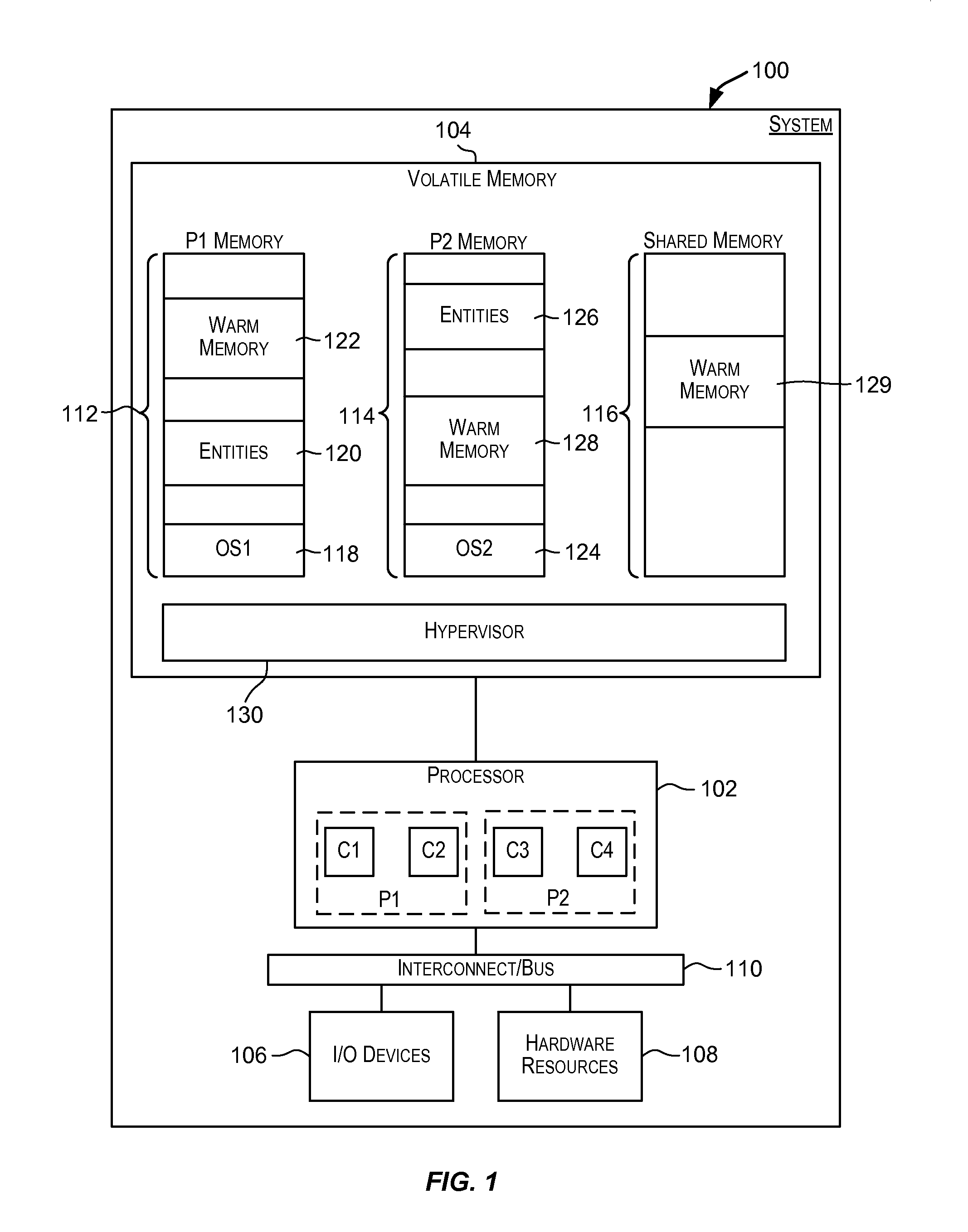

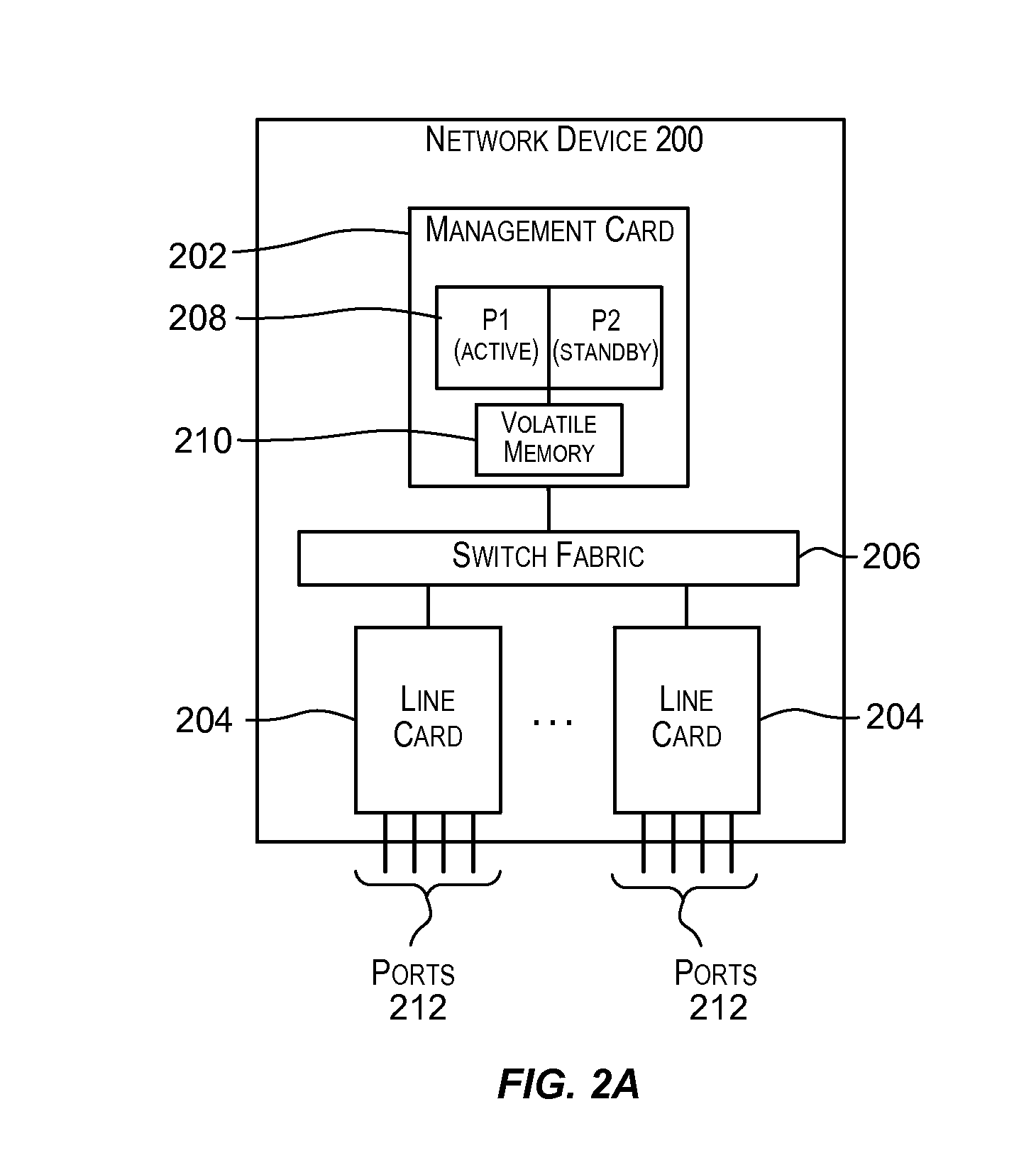

Achieving ultra-high availability using a single CPU

Active | US20120023309A1 | Improve usability | Program control using wired connections | General purpose stored program computer | Computer architecture | High availability

Techniques for achieving high-availability using a single processor (CPU). In a system comprising a multi-core processor, at least two partitions may be configured with each partition being allocated one or more cores of the multiple cores. The partitions may be configured such that one partition operates in active mode while another partition operates in standby mode. In this manner, a single processor is able to provide active-standby functionality, thereby enhancing the availability of the system comprising the processor.

Owner:AVAGO TECH INT SALES PTE LTD

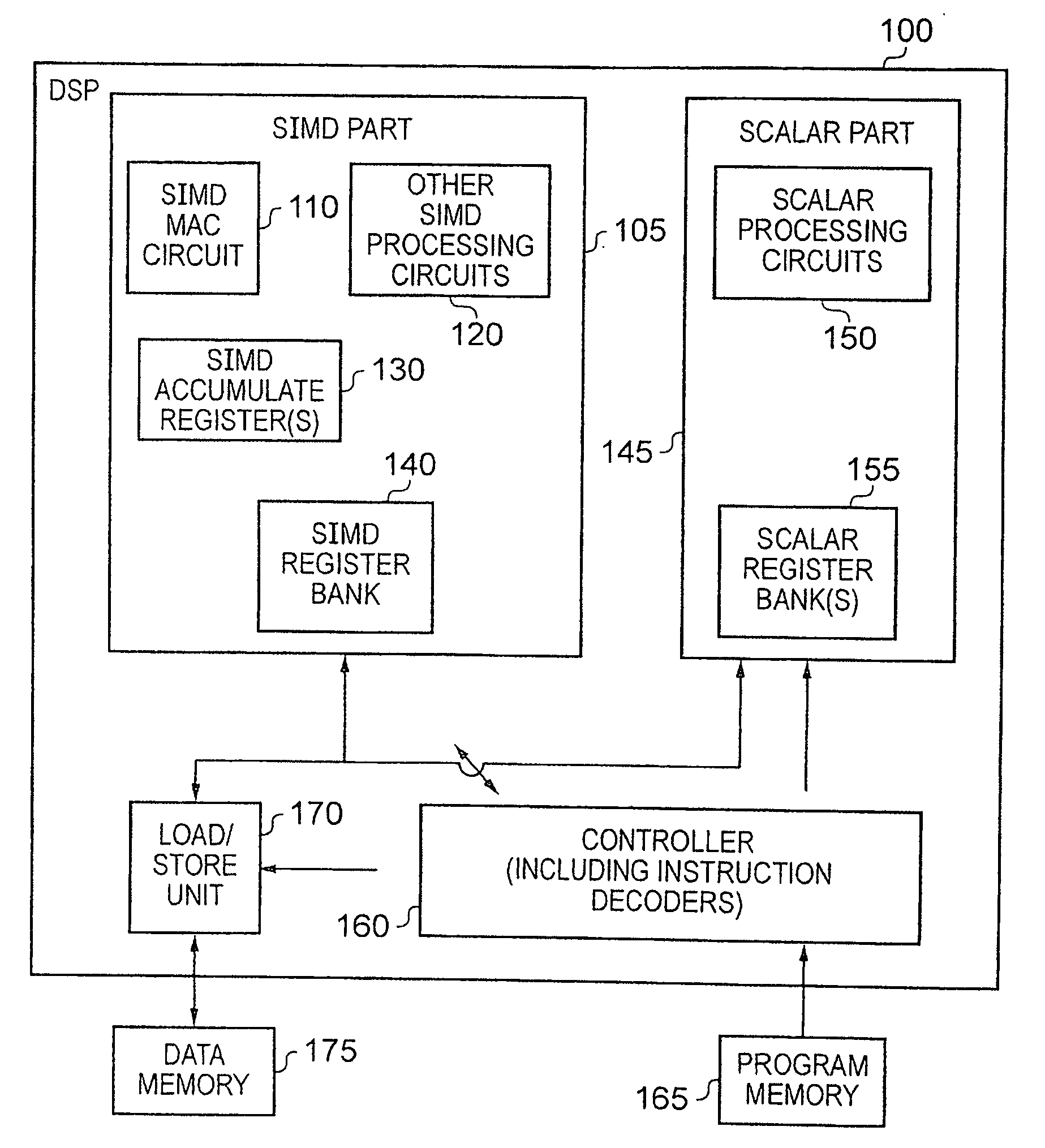

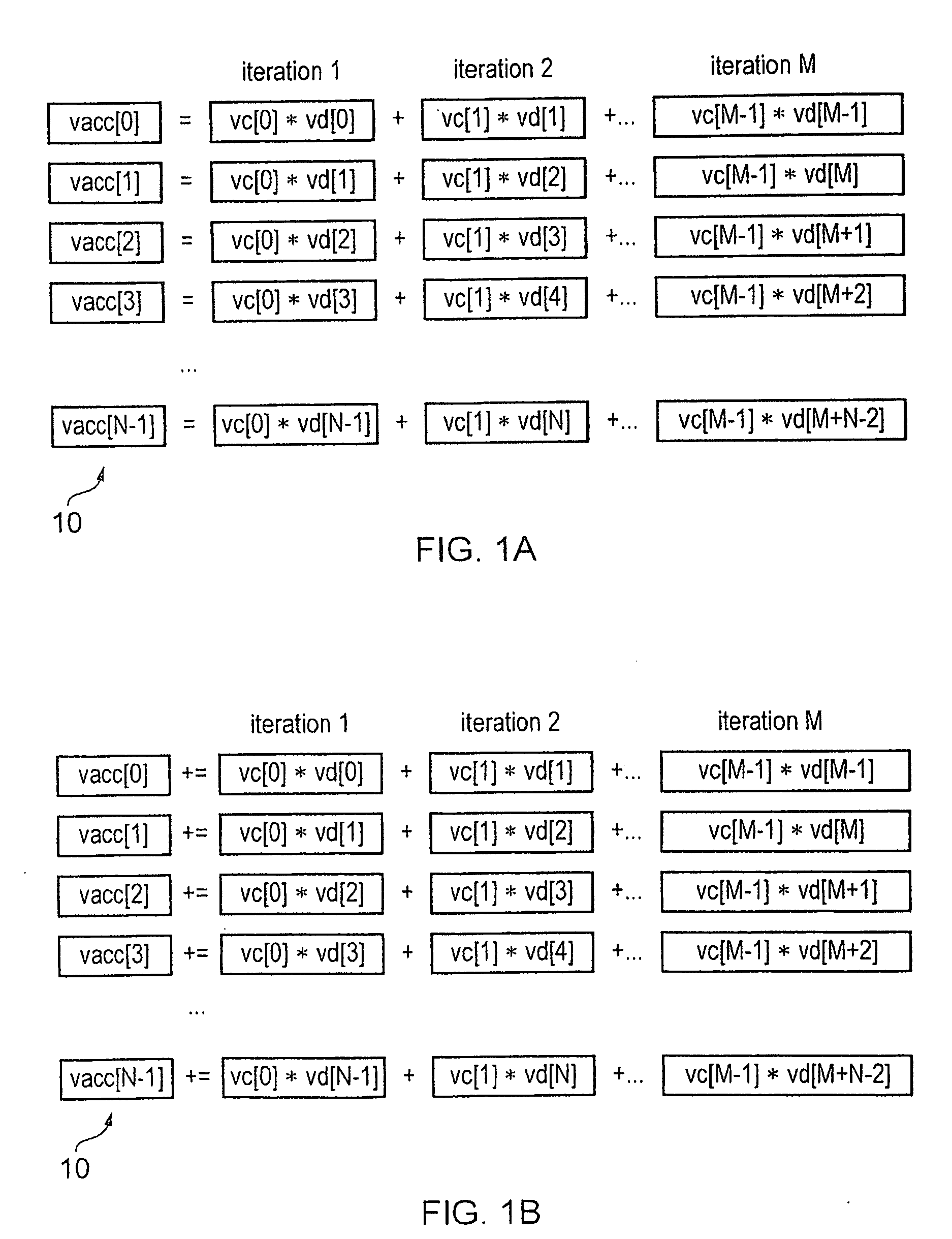

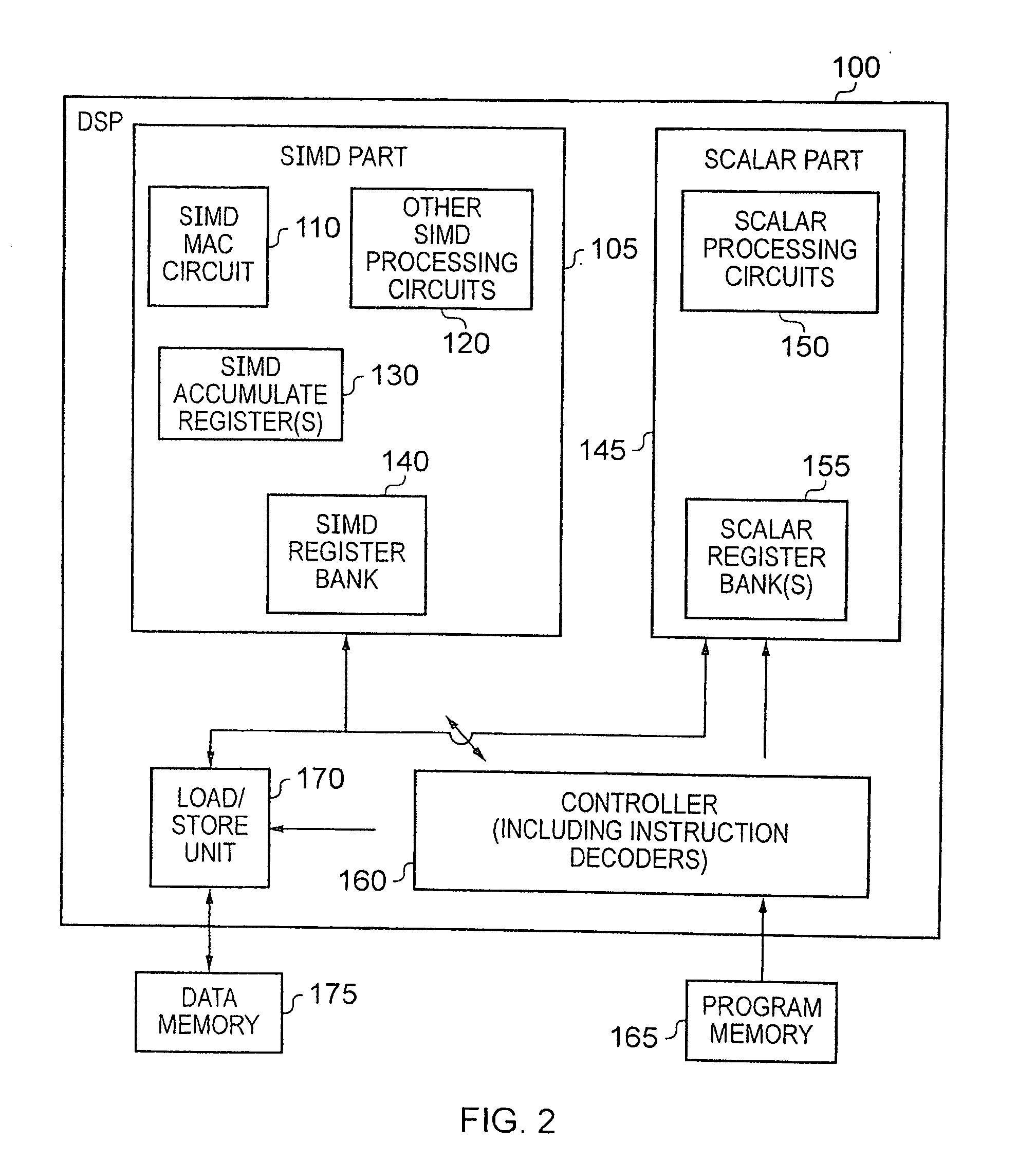

Apparatus and Method for Performing SIMD Multiply-Accumulate Operations

Active | US20100274990A1 | Reduce unnecessary power consumption | Significant power saving | Associative processors | Program control using wired connections | Program instruction | Scalar Value

An apparatus and method for performing SIMD multiply-accumulate operations includes SIMD data processing circuitry responsive to control signals to perform data processing operations in parallel on multiple data elements. Instruction decoder circuitry is coupled to the SIMD data processing circuitry and is responsive to program instructions to generate the required control signals. The instruction decoder circuitry is responsive to a single instruction (referred to herein as a repeating multiply-accumulate instruction) having as input operands a first vector of input data elements, a second vector of coefficient data elements, and a scalar value indicative of a plurality of iterations required, to generate control signals to control the SIMD processing circuitry. In response to those control signals, the SIMD data processing circuitry performs the plurality of iterations of a multiply-accumulate process, each iteration involving performance of N multiply-accumulate operations in parallel in order to produce N multiply-accumulate data elements. For each iteration, the SIMD data processing circuitry determines N input data elements from said first vector and a single coefficient data element from the second vector to be multiplied with each of the N input data elements. The N multiply-accumulate data elements produced in a final iteration of the multiply-accumulate process are then used to produce N multiply-accumulate results. This mechanism provides a particularly energy efficient mechanism for performing SIMD multiply-accumulate operations, as for example are required for FIR filter processes.

Owner:U-BLOX
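
The repeating multiply-accumulate instruction described in the abstract above can be illustrated with a scalar Python sketch. It models the N parallel lanes as a list and is not the patented circuit. The abstract does not say how the N input elements are chosen in each iteration, so the sliding window used here (the usual FIR-filter pattern) is an assumption, as are the names `repeating_mac`, `samples` and `taps`.

```python
from typing import List, Sequence


def repeating_mac(
    inputs: Sequence[int],
    coeffs: Sequence[int],
    iterations: int,
    n: int,
) -> List[int]:
    """Run `iterations` rounds of N-lane multiply-accumulate and return the N results."""
    if len(coeffs) < iterations or len(inputs) < iterations + n - 1:
        raise ValueError("not enough input or coefficient elements")

    acc = [0] * n  # one accumulator per SIMD lane
    for i in range(iterations):
        coeff = coeffs[i]          # a single coefficient shared by all lanes
        window = inputs[i:i + n]   # assumed: window slides by one element per iteration
        acc = [a + x * coeff for a, x in zip(acc, window)]
    return acc


# Hypothetical data: six input samples, three filter taps, four lanes.
samples = [1, 2, 3, 4, 5, 6]
taps = [1, 10, 100]
result = repeating_mac(samples, taps, iterations=3, n=4)
print(result)  # [321, 432, 543, 654]
```

Each output lane ends up holding one FIR-style sum, for example lane 0 is 1·1 + 2·10 + 3·100 = 321.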

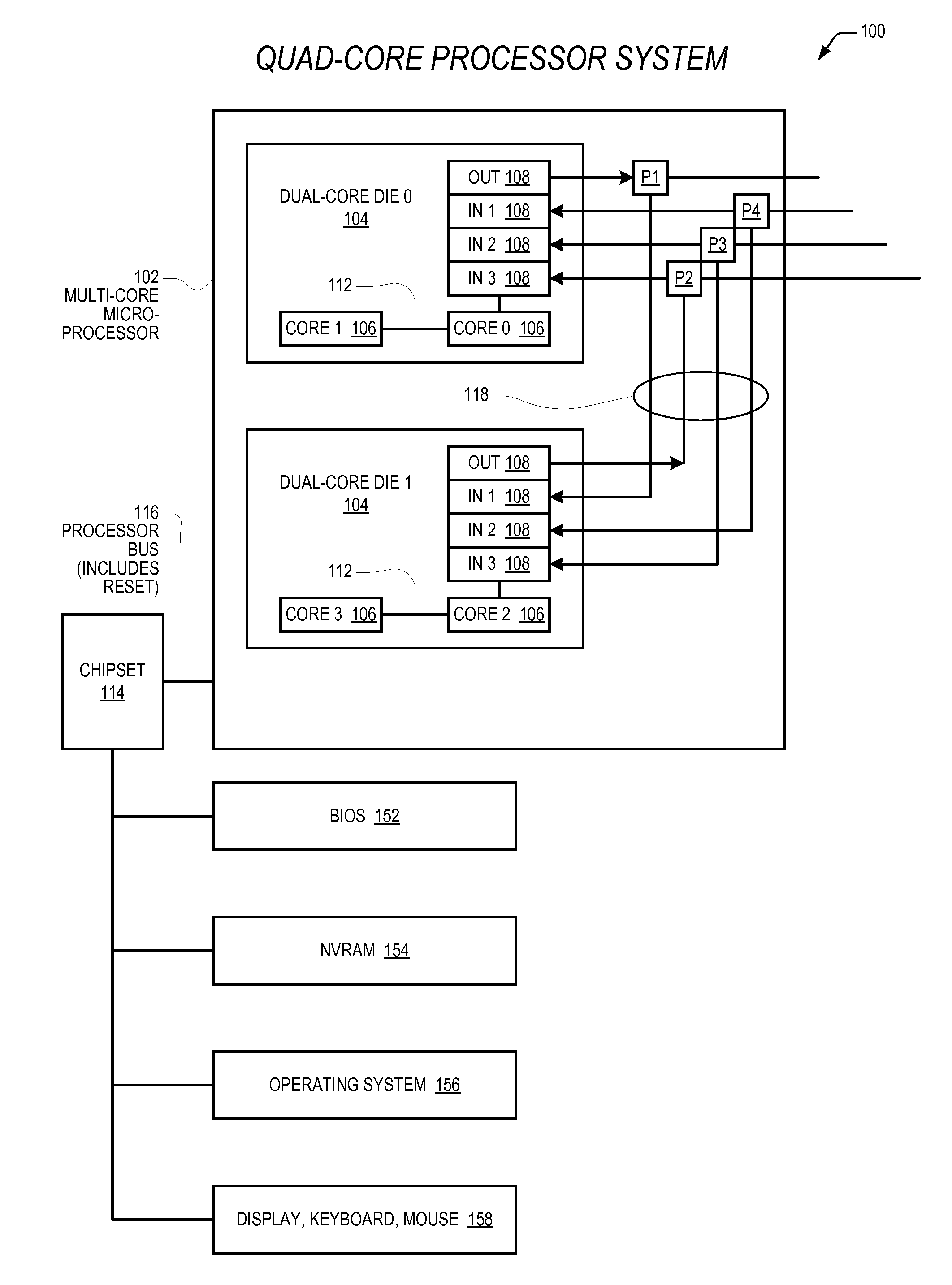

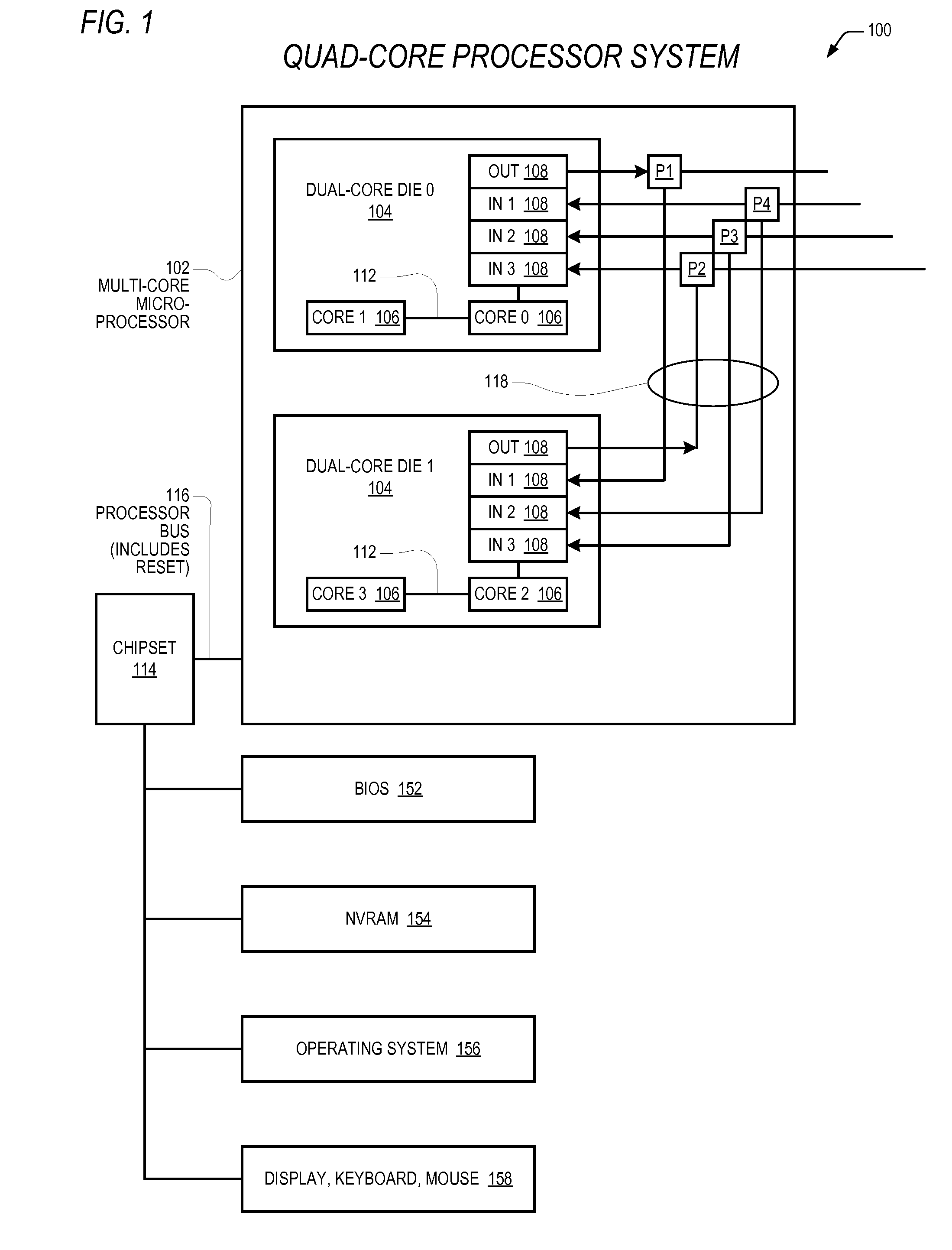

Dynamic and selective core disablement and reconfiguration in a multi-core processor

Active | US20120166764A1 | Not impose an associated drag on the processor bus | Energy efficient ICT | Error detection/correction | Multi-core processor | Support system

Dynamically reconfigurable multi-core microprocessors and associated methods are provided. A multi-core microprocessor is provided that supports the ability of system software to disable, or kill, selected cores in such a way that they do not cause drag on the processor bus shared with the other cores. Another multi-core microprocessor is provided that supports reconfiguration of an inter-core coordination system of the microprocessor, wherein cores may be selectively designated as masters for purposes of driving signals onto an inter-core communication wire.

Owner:VIA TECH INC

Ceiling tile loudspeaker

Inactive | US6215881B1 | Sustain and propagate | Ceilings | Program control using wired connections | Brick | Transducer

A ceiling tile adapted to be supported in an overhead opening and function as a loudspeaker. The tile is in the form of a distributed mode acoustic radiator with a transducer mounted wholly and exclusively on the radiator at a location for coupling to resonant bending wave modes so as to vibrate the radiator and cause it to resonate.

Owner:NEW TRANSDUCERS LTD

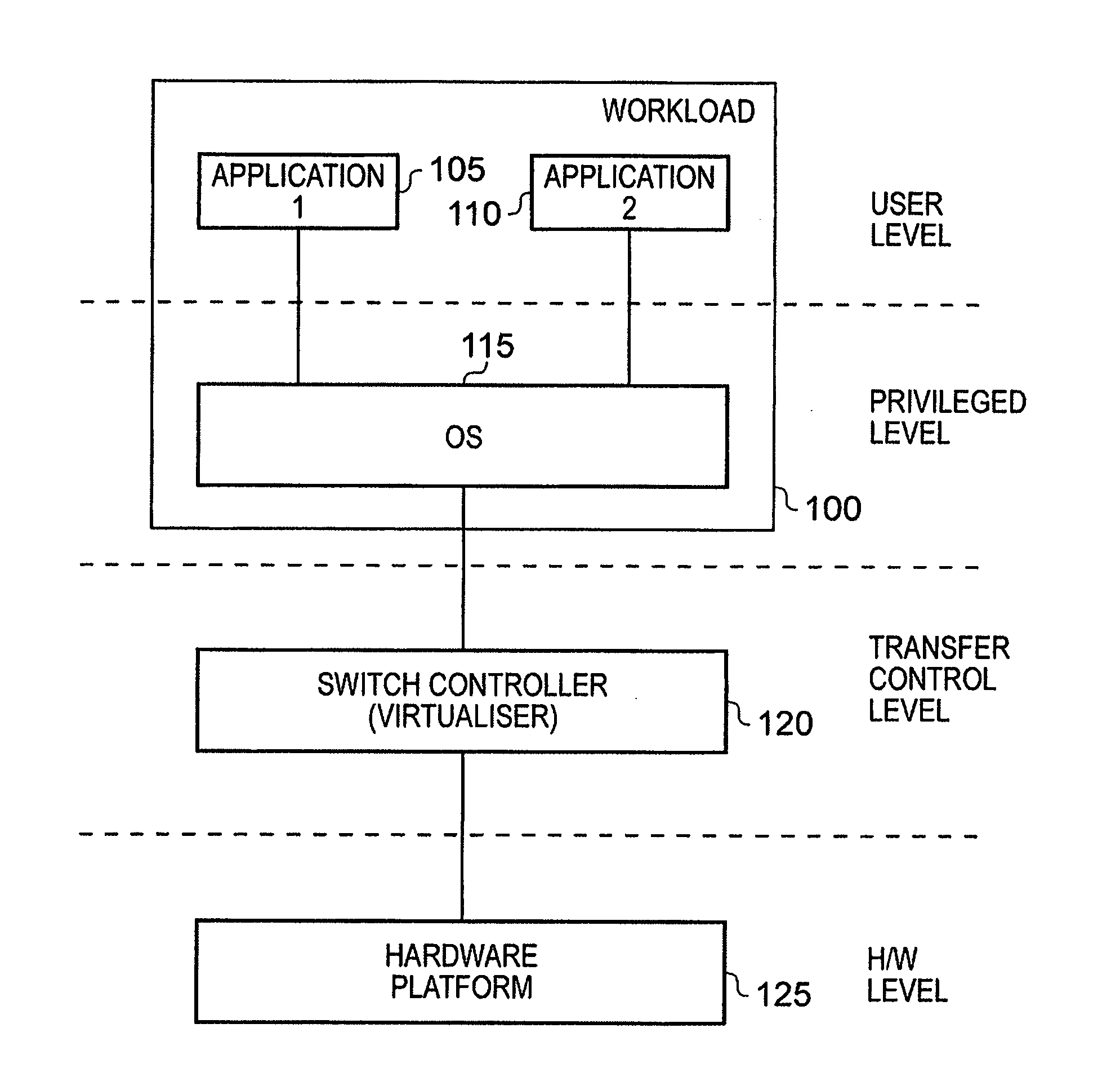

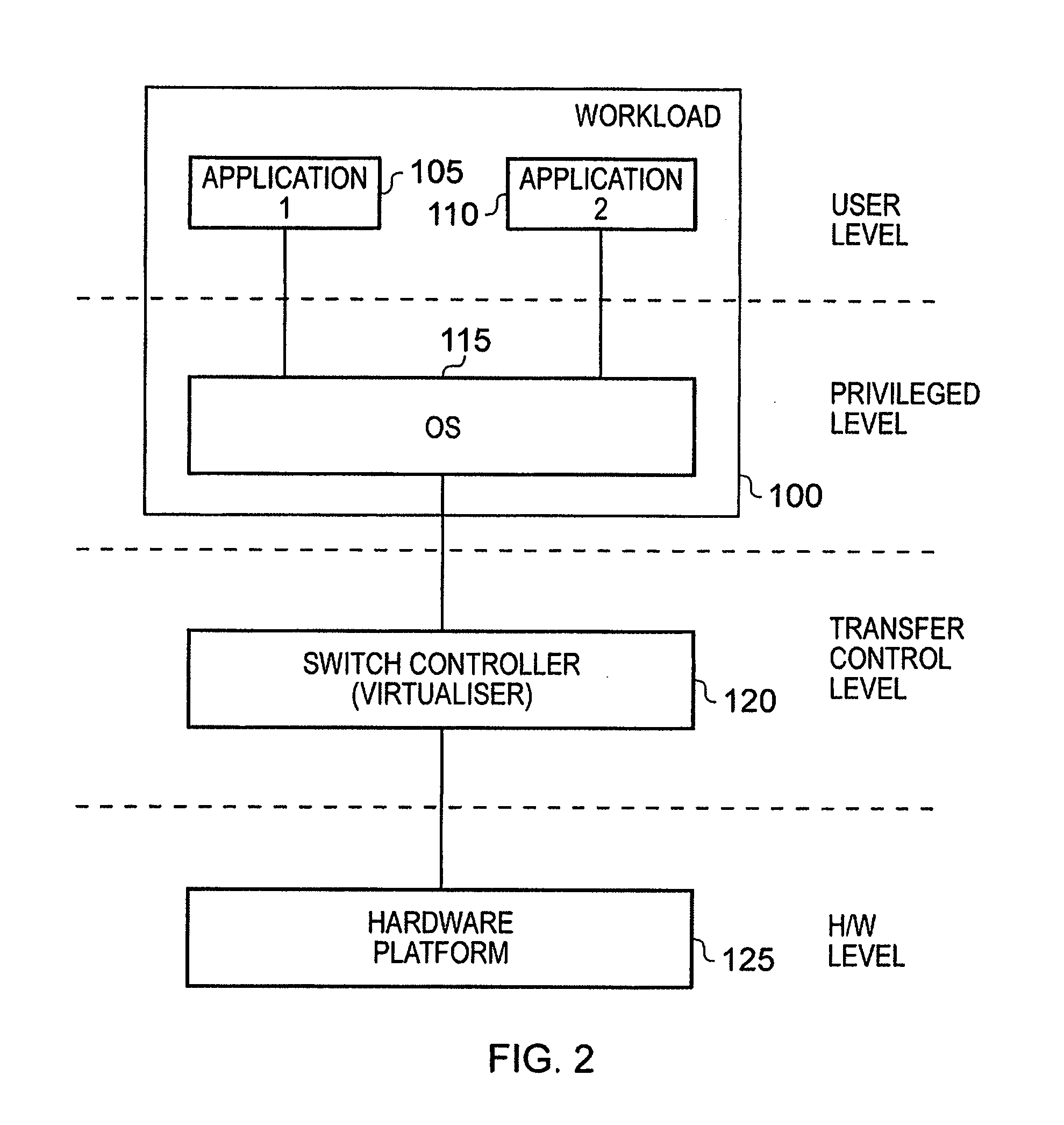

Data processing apparatus and method for switching a workload between first and second processing circuitry

Active | US20110213934A1 | Different performance | Successfully takeover performance | Energy efficient ICT | Program control using wired connections | Structure of Management Information | Energy expenditure

A data processing apparatus and method are provided for switching performance of a workload between two processing circuits. The data processing apparatus has first processing circuitry which is architecturally compatible with second processing circuitry, but with the first processing circuitry being micro-architecturally different from the second processing circuitry. At any point in time, a workload consisting of at least one application and at least one operating system for running that application is performed by one of the first processing circuitry and the second processing circuitry. A switch controller is responsive to a transfer stimulus to perform a handover operation to transfer performance of the workload from source processing circuitry to destination processing circuitry, with the source processing circuitry being one of the first and second processing circuitry and the destination processing circuitry being the other of the first and second processing circuitry. During the handover operation, the switch controller causes the source processing circuitry to make its current architectural state available to the destination processing circuitry, the current architectural state being that state not available from shared memory at a time the handover operation is initiated, and that is necessary for the destination processing circuitry to successfully take over performance of the workload from the source processing circuitry. In addition, the switch controller masks predetermined processor specific configuration information from the at least one operating system such that the transfer of the workload is transparent to that operating system. Such an approach has been found to yield significant energy consumption benefits whilst avoiding complexities associated with providing operating systems with the capability for switching applications between processing circuits.

Owner:ARM LTD

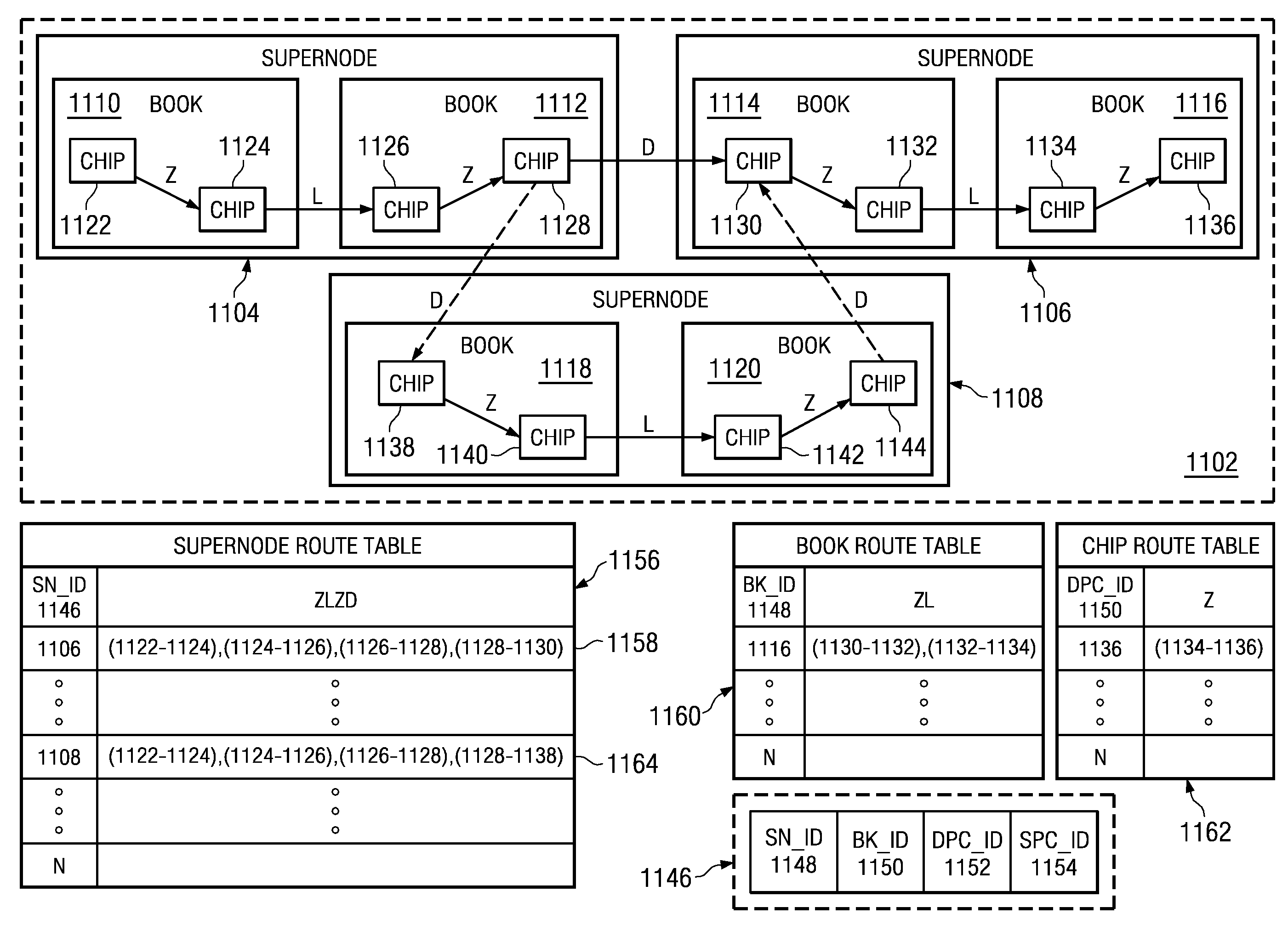

System and Method for Providing Full Hardware Support of Collective Operations in a Multi-Tiered Full-Graph Interconnect Architecture

Inactive | US20090063815A1 | Improve communication performance | Improve productivity | Program control using wired connections | General purpose stored program computer | Data processing system | Collective operation

A method, computer program product, and system are provided for performing collective operations. In hardware of a parent processor in a first processor book, a number of other processors are determined in a same or different processor book of the data processing system that is needed to execute the collective operation, thereby establishing a plurality of processors comprising the parent processor and the other processors. In hardware of the parent processor, the plurality of processors are logically arranged as a plurality of nodes in a hierarchical structure. The collective operation is transmitted to the plurality of processors based on the hierarchical structure. In hardware of the parent processor, results are received from the execution of the collective operation from the other processors, a final result is generated of the collective operation based on the received results, and the final result is output.

Owner:IBM CORP

SIMD permutations with extended range in a data processor

Inactive | US20090100247A1 | Instruction analysis | Program control using wired connections | Data processing system | Processor register

A processor in a data processing system executes a permutation instruction which identifies a first source register, at least one other source register, and a destination register. The first source register stores at least one in-range index value for the at least one other source register and at least one out-of-range index value for the at least one other source register. The at least one other source register stores a plurality of vector element values, wherein each in-range index value indicates which vector element value of the at least one other source register is to be stored into a corresponding vector element of the destination register. Each out-of-range index value is used to indicate which one of at least two predetermined constant values is to be stored into a corresponding vector element of the destination register. Partial table lookups using a permutation instruction shorten the time required to retrieve data.

Owner:NORTH STAR INNOVATIONS
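
The behaviour described in the abstract above (in-range indices select table elements, out-of-range indices select one of at least two predetermined constants) can be sketched in Python as follows. This is an illustration under stated assumptions rather than the patented instruction. The two constants `0x00` and `0xFF`, the rule that the lowest bit of an out-of-range index picks between them, and the name `permute_extended` are all hypothetical.

```python
from typing import List, Sequence, Tuple


def permute_extended(
    indices: Sequence[int],
    table: Sequence[int],
    constants: Tuple[int, int] = (0x00, 0xFF),
) -> List[int]:
    """Build the destination vector from an index vector and a source table."""
    out: List[int] = []
    for idx in indices:
        if 0 <= idx < len(table):
            # In-range index: copy the selected table element.
            out.append(table[idx])
        else:
            # Out-of-range index: assumed encoding, the lowest index bit
            # selects which predetermined constant is written.
            out.append(constants[idx & 1])
    return out


# Hypothetical four-element source register and index register.
source_register = [10, 20, 30, 40]
destination = permute_extended([3, 0, 4, 5, 1], source_register)
print(destination)  # [40, 10, 0, 255, 20]
```

A partial table lookup can then be chained across several such calls, since lanes whose index misses the current table segment are marked with a known constant instead of undefined data.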

System and Method for Performing Collective Operations Using Software Setup and Partial Software Execution at Leaf Nodes in a Multi-Tiered Full-Graph Interconnect Architecture

Inactive | US20090063816A1 | Improve communication performance | Improve productivity | Program control using wired connections | General purpose stored program computer | Data processing system | Collective operation

A method, computer program product, and system are provided for performing collective operations. In software executing on a parent processor in a first processor book, a number of other processors are determined in a same or different processor book of the data processing system that is needed to execute the collective operation, thereby establishing a plurality of processors comprising the parent processor and the other processors. In software executing on the parent processor, the plurality of processors are logically arranged as a plurality of nodes in a hierarchical structure. The collective operation is transmitted to the plurality of processors based on the hierarchical structure. In hardware of the parent processor, results are received from the execution of the collective operation from the other processors, a final result is generated of the collective operation based on the received results, and the final result is output.

Owner:IBM CORP

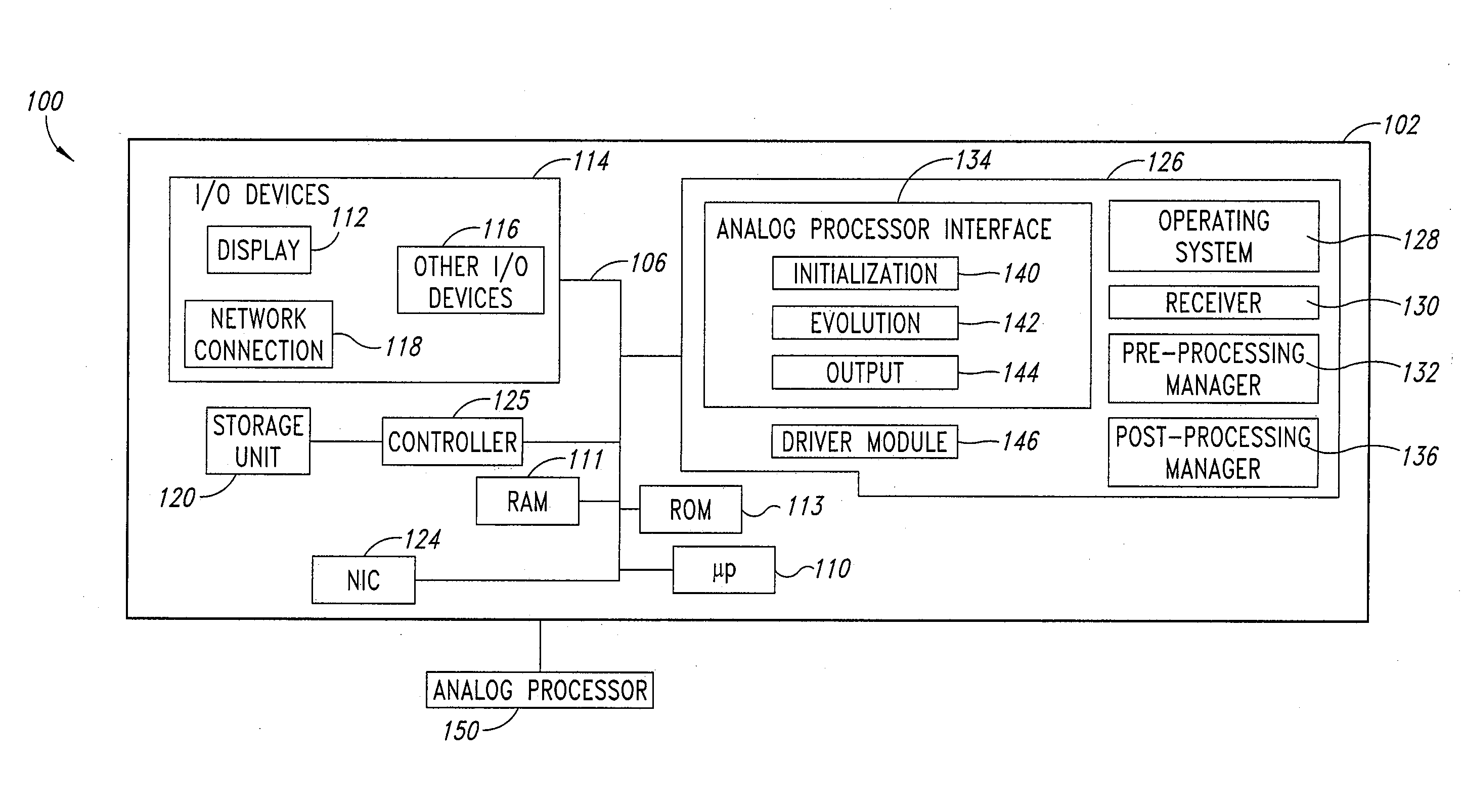

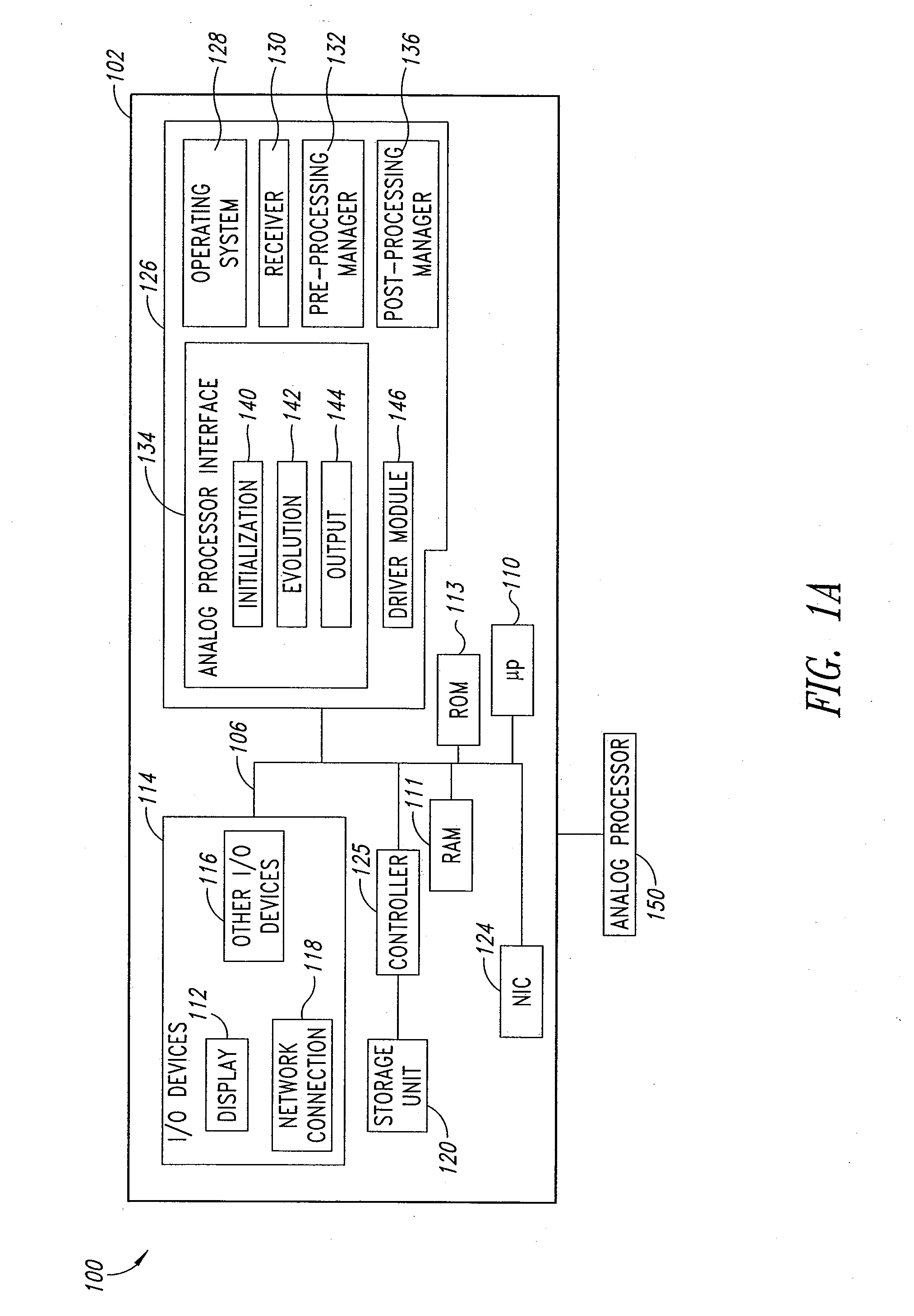

Systems and methods for problem solving, useful for example in quantum computing

Active | US20170255872A1 | Solve quality problems | Quantum computers | Program control using wired connections | Quantum computer | Computing systems

Computational systems implement problem solving using heuristic solvers or optimizers. Such may iteratively evaluate a result of processing, and modify the problem or representation thereof before repeating processing on the modified problem, until a termination condition is reached. Heuristic solvers or optimizers may execute on one or more digital processors and/or one or more quantum processors. The system may autonomously select between types of hardware devices and/or types of heuristic optimization algorithms. Such may coordinate or at least partially overlap post-processing operations with processing operations, for instance performing post-processing on an ith batch of samples while generating an (i+1)th batch of samples, e.g., so that the post-processing operation on the ith batch of samples does not extend in time beyond the generation of the (i+1)th batch of samples. Heuristic optimizer selection is based on a pre-processing assessment of the problem, e.g., based on features extracted from the problem and, for instance, on predicted success.

Owner:D-WAVE SYSTEMS

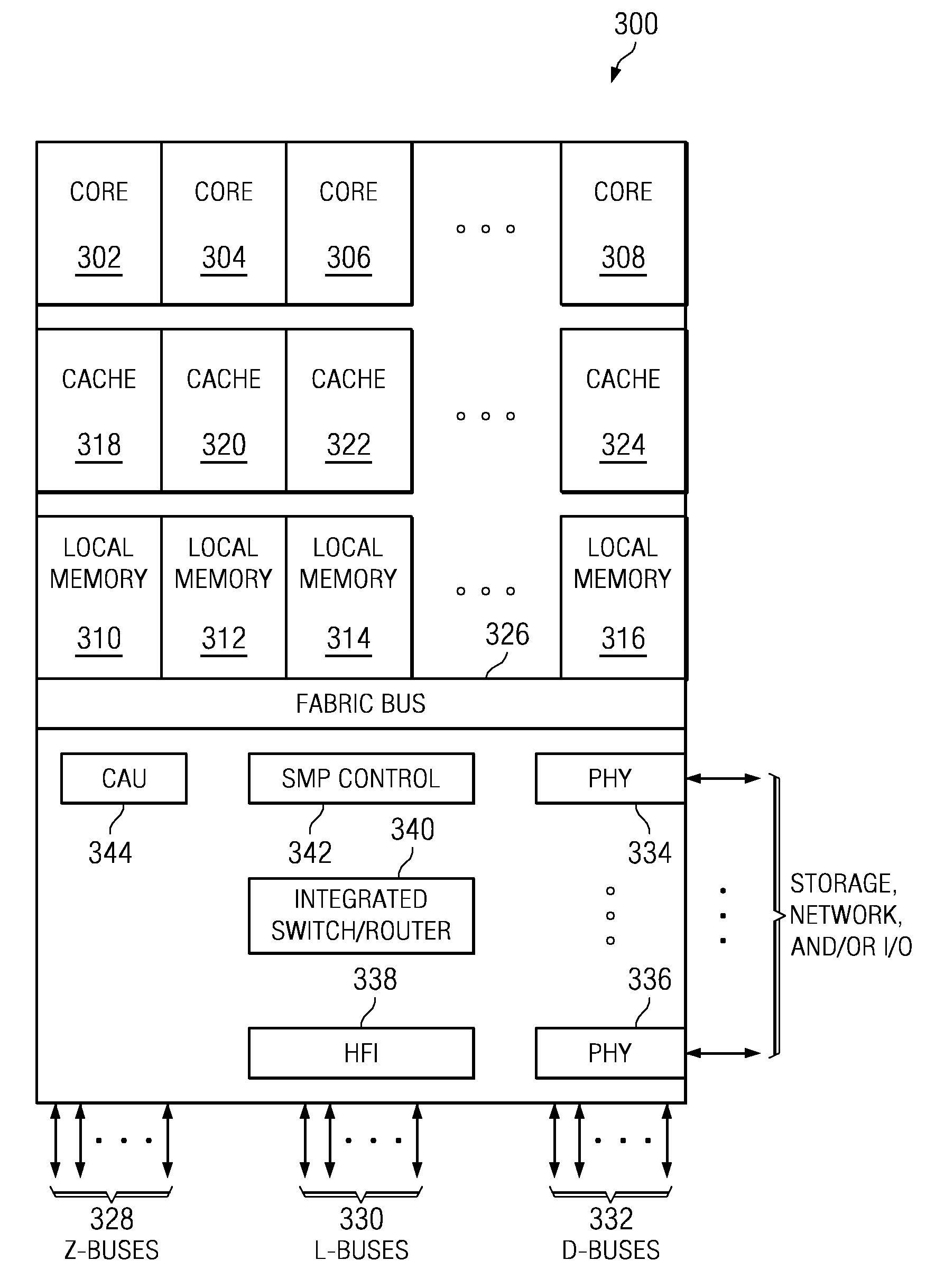

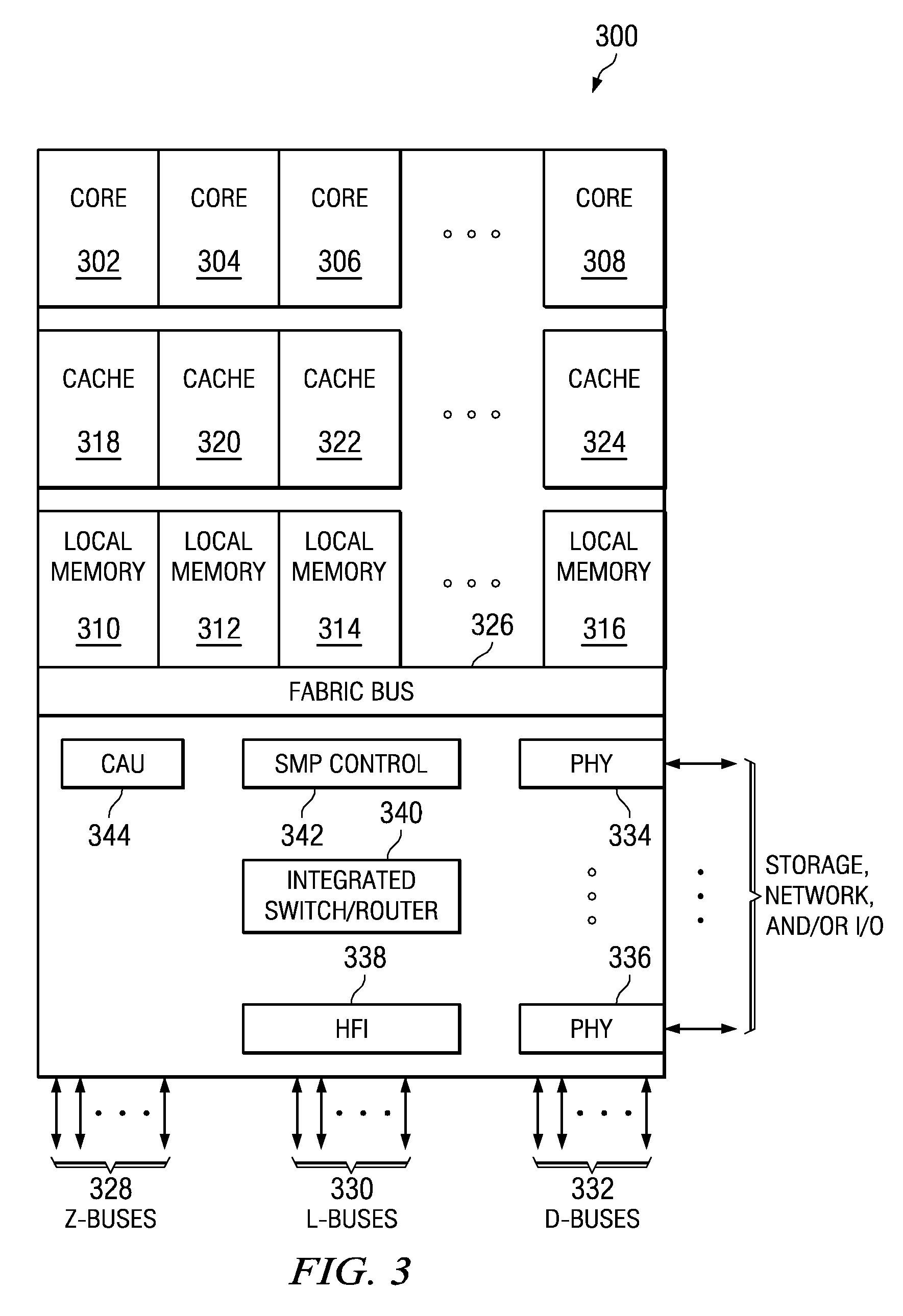

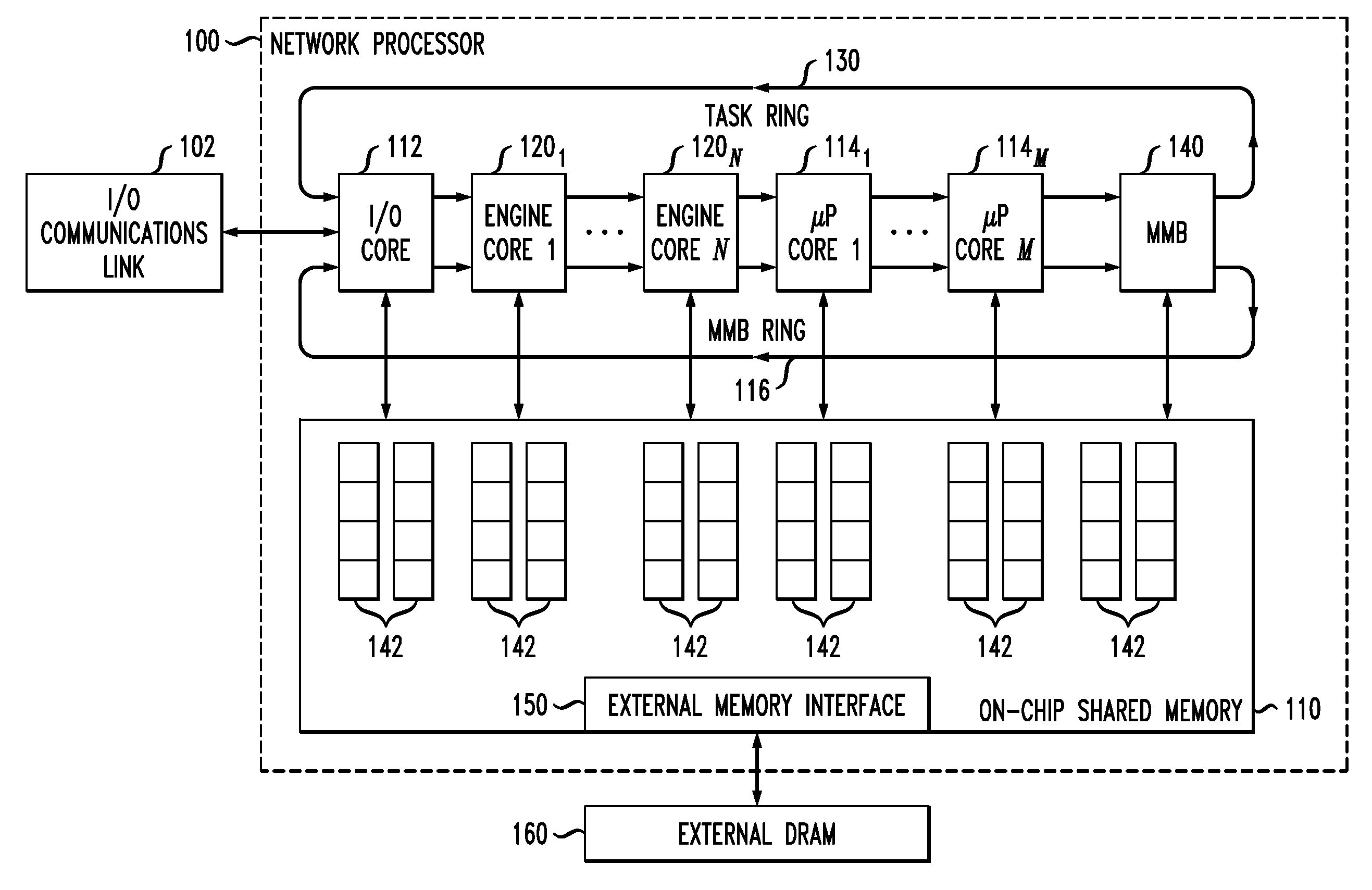

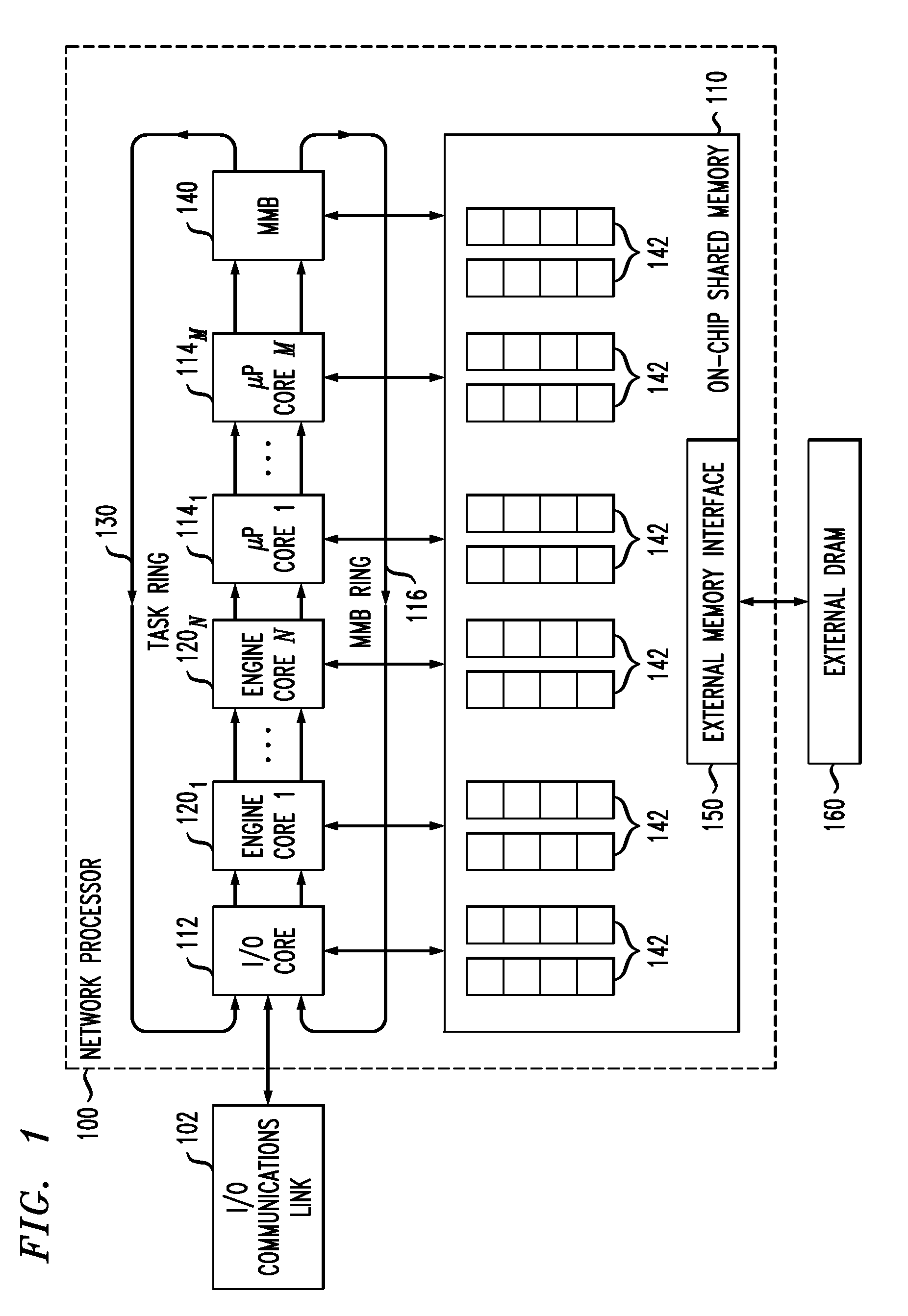

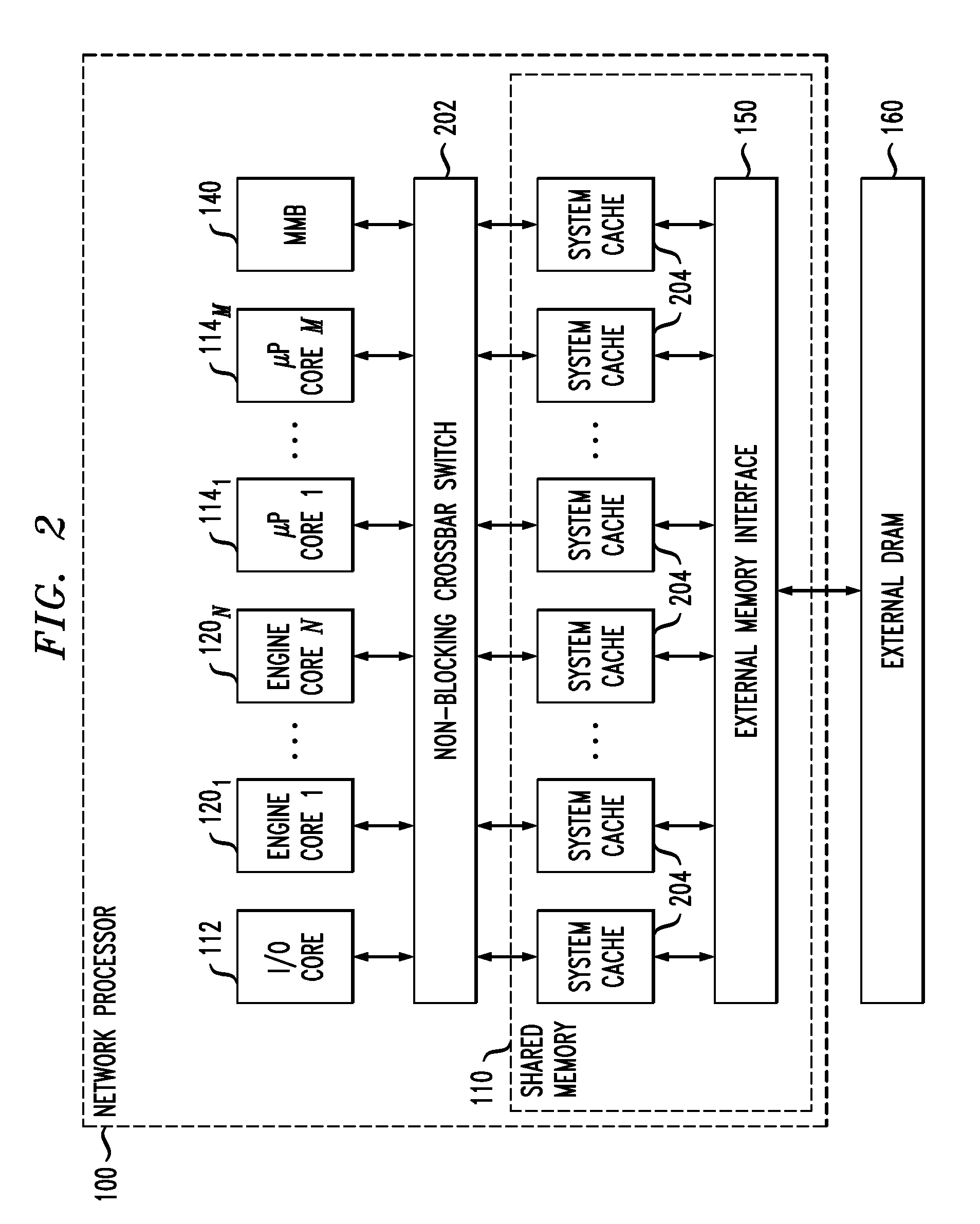

Network Communications Processor Architecture

Inactive | US20100293312A1 | Well formed | Program control using wired connections | Memory addressing/allocation/relocation | Processing core | Parallel computing

Described embodiments provide a system having a plurality of processor cores and common memory in direct communication with the cores. A source processing core communicates with a task destination core by generating a task message for the task destination core. The task source core transmits the task message directly to a receiving processing core adjacent to the task source core. If the receiving processing core is not the task destination core, the receiving processing core passes the task message unchanged to a processing core adjacent the receiving processing core. If the receiving processing core is the task destination core, the task destination core processes the message.

Owner:INTEL CORP
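
The hop-by-hop forwarding described in the abstract above (a task message is handed to an adjacent core and passed on unchanged until it reaches the task destination core) can be sketched in Python. The sketch assumes the cores form a one-directional ring numbered `0..num_cores-1`, which the abstract does not state, and the function name `route_task` is hypothetical.

```python
from typing import List


def route_task(source: int, destination: int, num_cores: int) -> List[int]:
    """Return the cores that receive the task message, in order, ending at the destination."""
    if not (0 <= source < num_cores and 0 <= destination < num_cores):
        raise ValueError("core id out of range")

    hops: List[int] = []
    current = source
    while True:
        # Assumed topology: each core's adjacent core is the next id on a ring.
        current = (current + 1) % num_cores
        hops.append(current)
        if current == destination:
            # The destination core processes the message; forwarding stops.
            break
    return hops


# Hypothetical four-core ring: core 2 sends a task to core 0.
path = route_task(source=2, destination=0, num_cores=4)
print(path)  # [3, 0]
```

Intermediate cores in `path` only relay the message; under this assumption the hop count depends on ring distance, not on any routing table.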

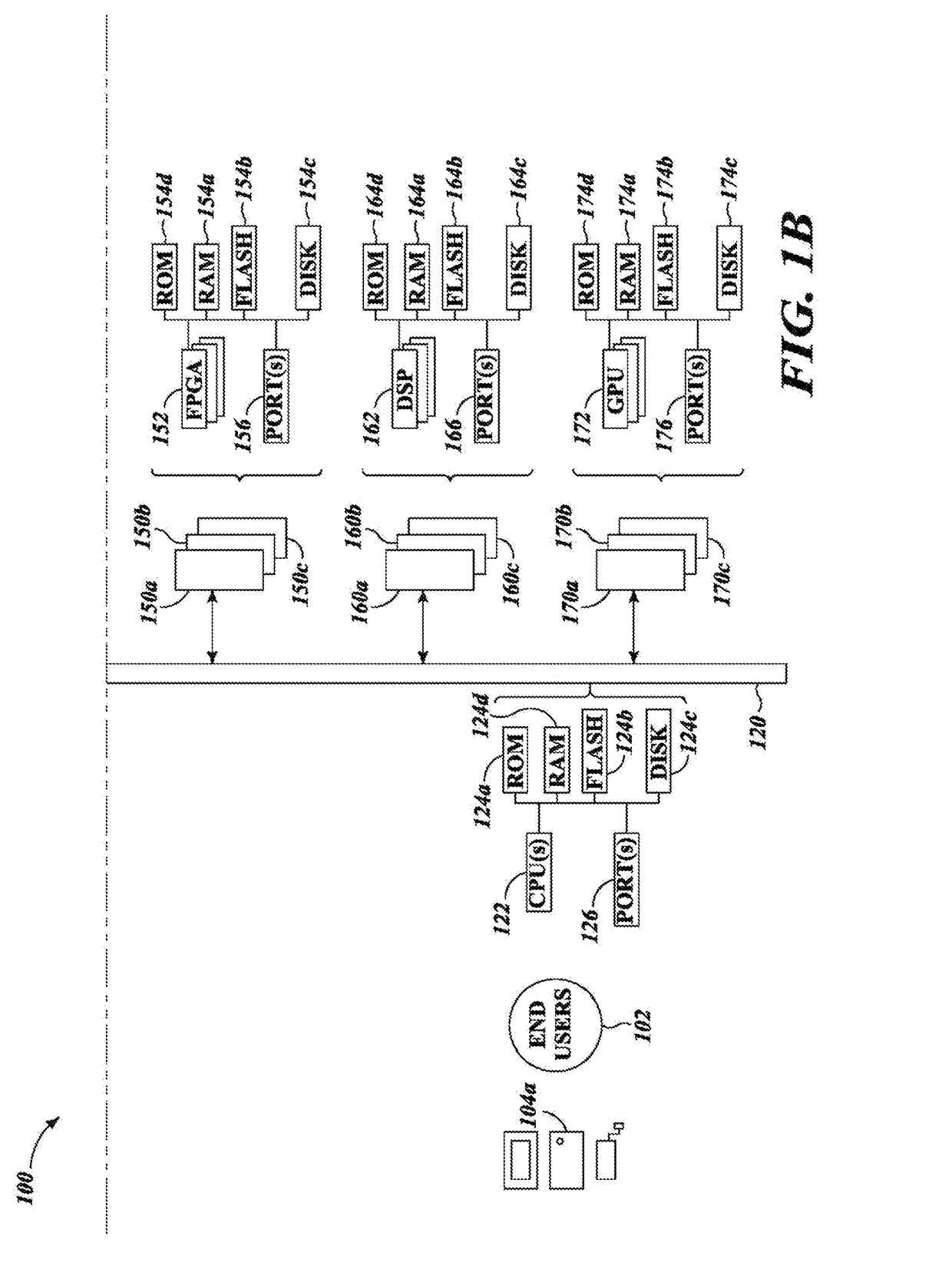

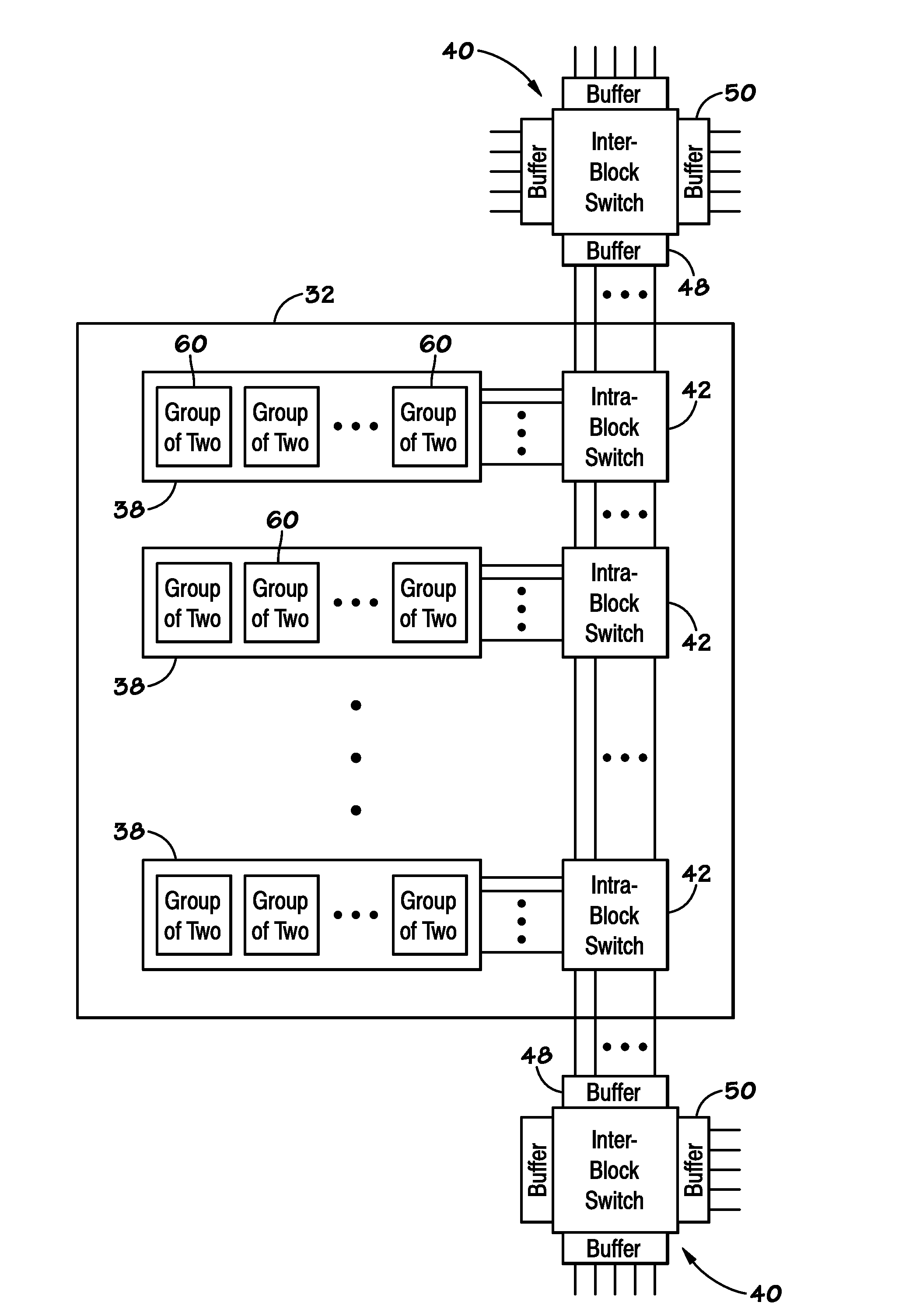

Hierarchical Reconfigurable Computer Architecture

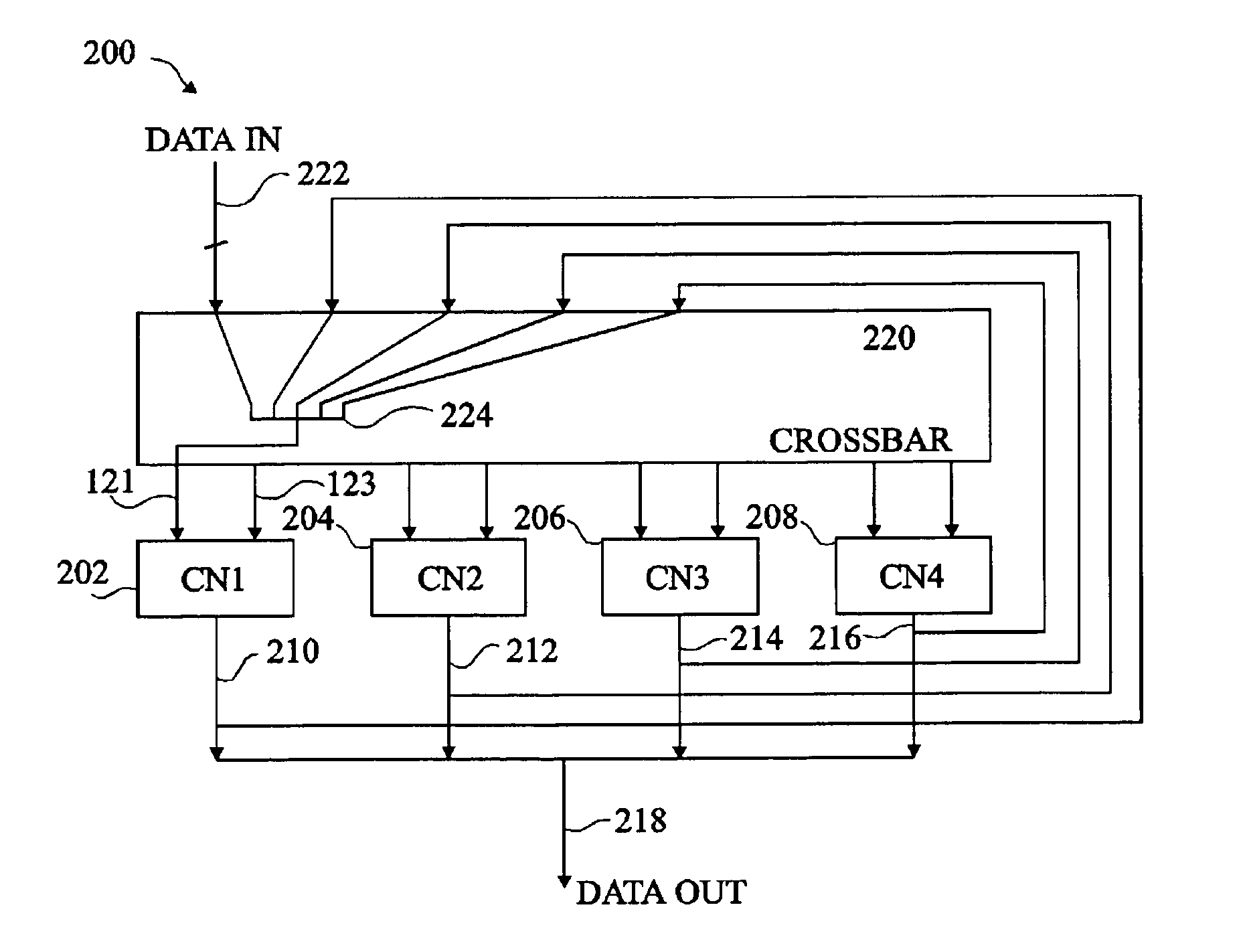

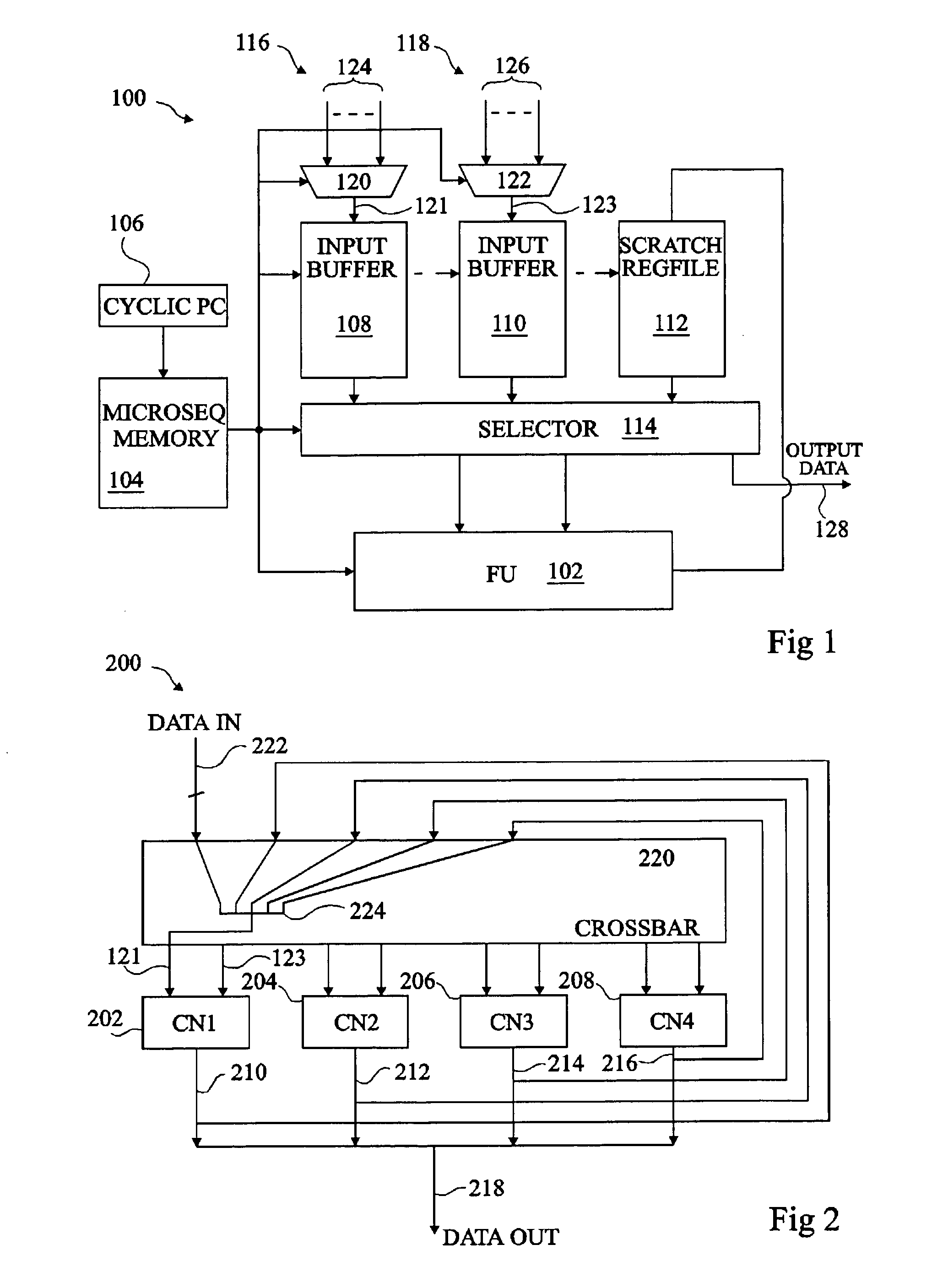

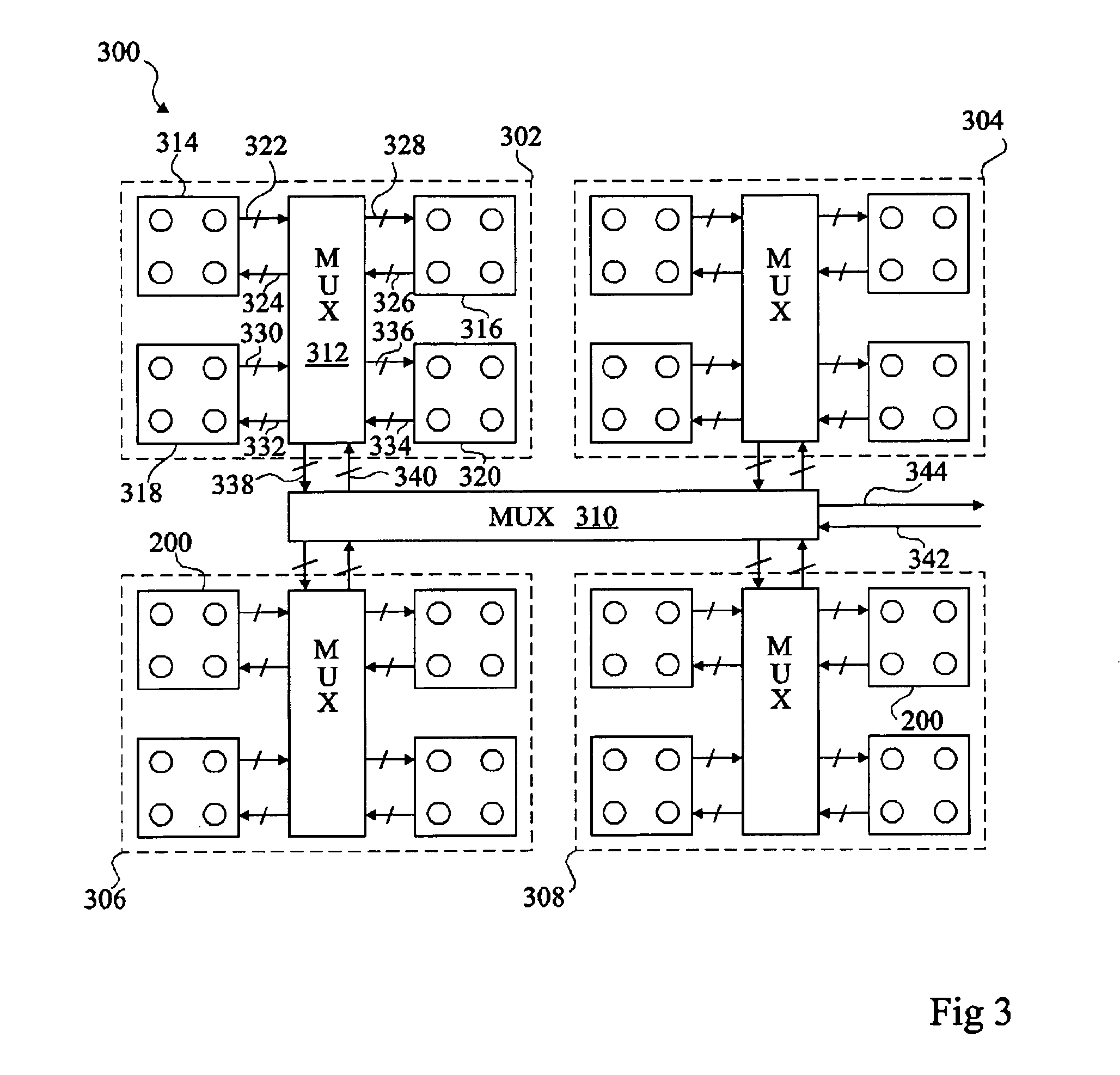

Active | US20110107337A1 | Program control using wired connections | General purpose stored program computer | Parallel computing | Data input

A reconfigurable hierarchical computer architecture having N levels, where N is an integer value greater than one, wherein said N levels include a first level including a first computation block including a first data input, a first data output and a plurality of computing nodes interconnected by a first connecting mechanism, each computing node including an input port, a functional unit and an output port, the first connecting mechanism capable of connecting each output port to the input port of each other computing node; and a second level including a second computation block including a second data input, a second data output and a plurality of the first computation blocks interconnected by a second connecting means for selectively connecting the first data output of each of the first computation blocks and the second data input to each of the first data inputs and for selectively connecting each of the first data outputs to the second data output.

Owner:STMICROELECTRONICS SRL

Computing Device, a System and a Method for Parallel Processing of Data Streams

Active | US20090187736A1 | Multimedia data querying | Program control using wired connections | Data stream | Parallel processing

A computing arrangement for identification of a current temporal input against one or more learned signals. The arrangement comprises a number of computational cores; each core comprises properties having at least some statistical independency from the other computational cores, the properties being set independently of each other core, each core being able to independently produce an output indicating recognition of a previously learned signal; and at least one decision unit for receiving the produced outputs from the number of computational cores and making an identification of the current temporal input based on the produced outputs.

Owner:CORTICA LTD

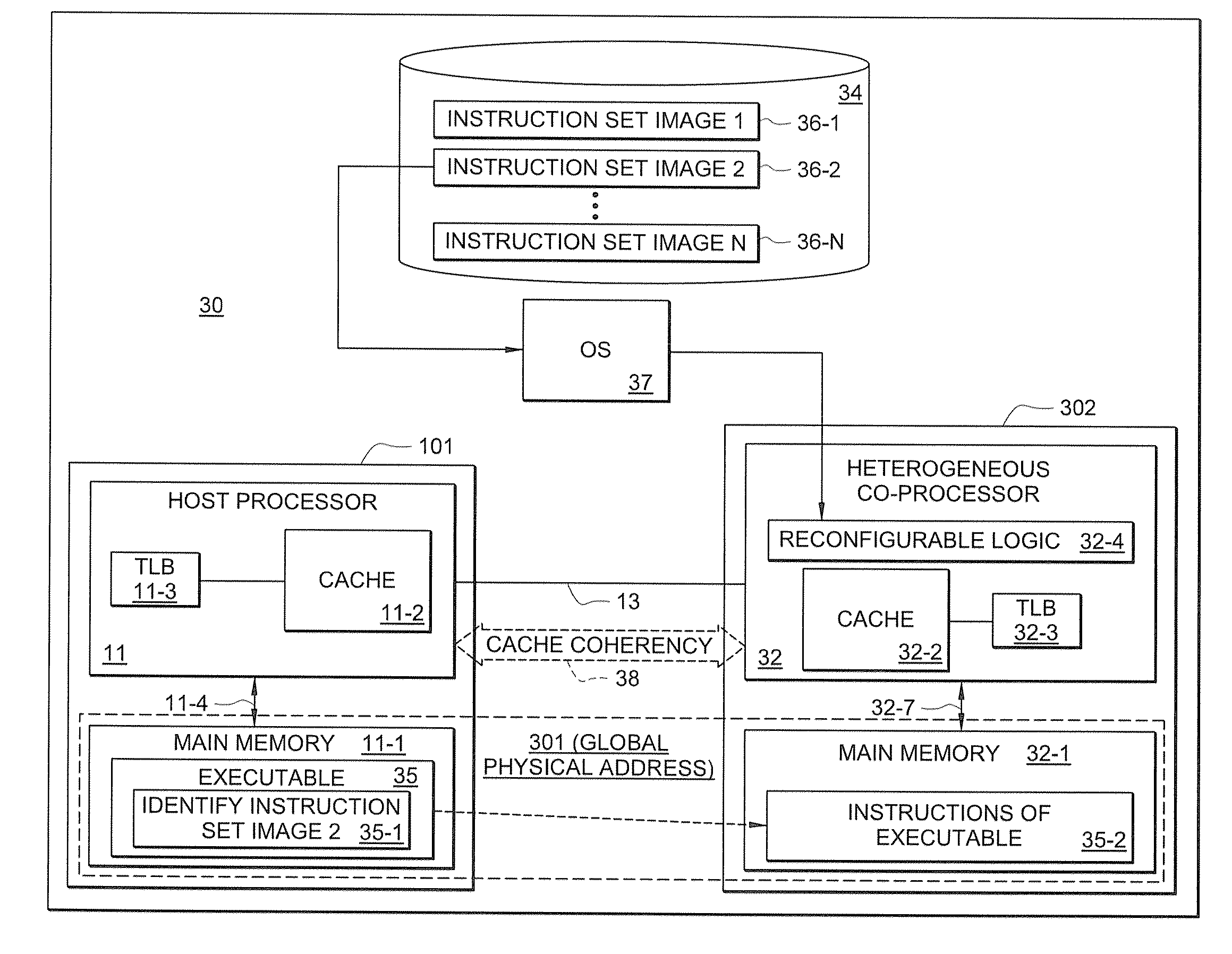

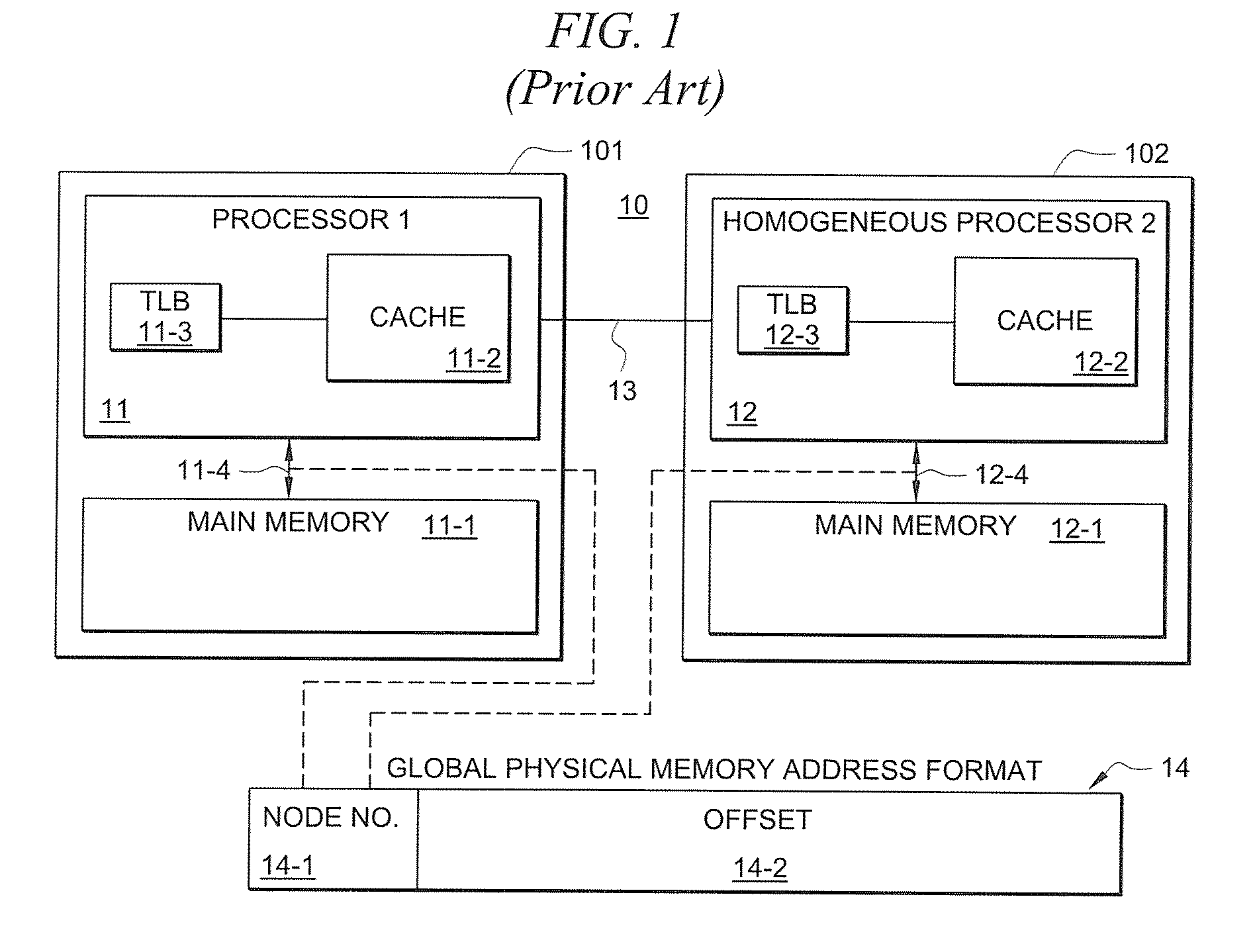

Dispatch mechanism for dispatching instructions from a host processor to a co-processor

Active | US20090070553A1 | Program control using wired connections | Memory addressing/allocation/relocation | Coprocessor | Master processor

A dispatch mechanism is provided for dispatching instructions of an executable from a host processor to a heterogeneous co-processor. According to certain embodiments, cache coherency is maintained between the host processor and the heterogeneous co-processor, and such cache coherency is leveraged for dispatching instructions of an executable that are to be processed by the co-processor. For instance, in certain embodiments, a designated portion of memory (e.g., “UCB”) is utilized, wherein a host processor may place information in such UCB and the co-processor can retrieve information from the UCB (and vice-versa). The UCB may thus be used to dispatch instructions of an executable for processing by the co-processor. In certain embodiments, the co-processor may comprise dynamically reconfigurable logic which enables the co-processor's instruction set to be dynamically changed, and the dispatching operation may identify one of a plurality of predefined instruction sets to be loaded onto the co-processor.

Owner:MICRON TECH INC

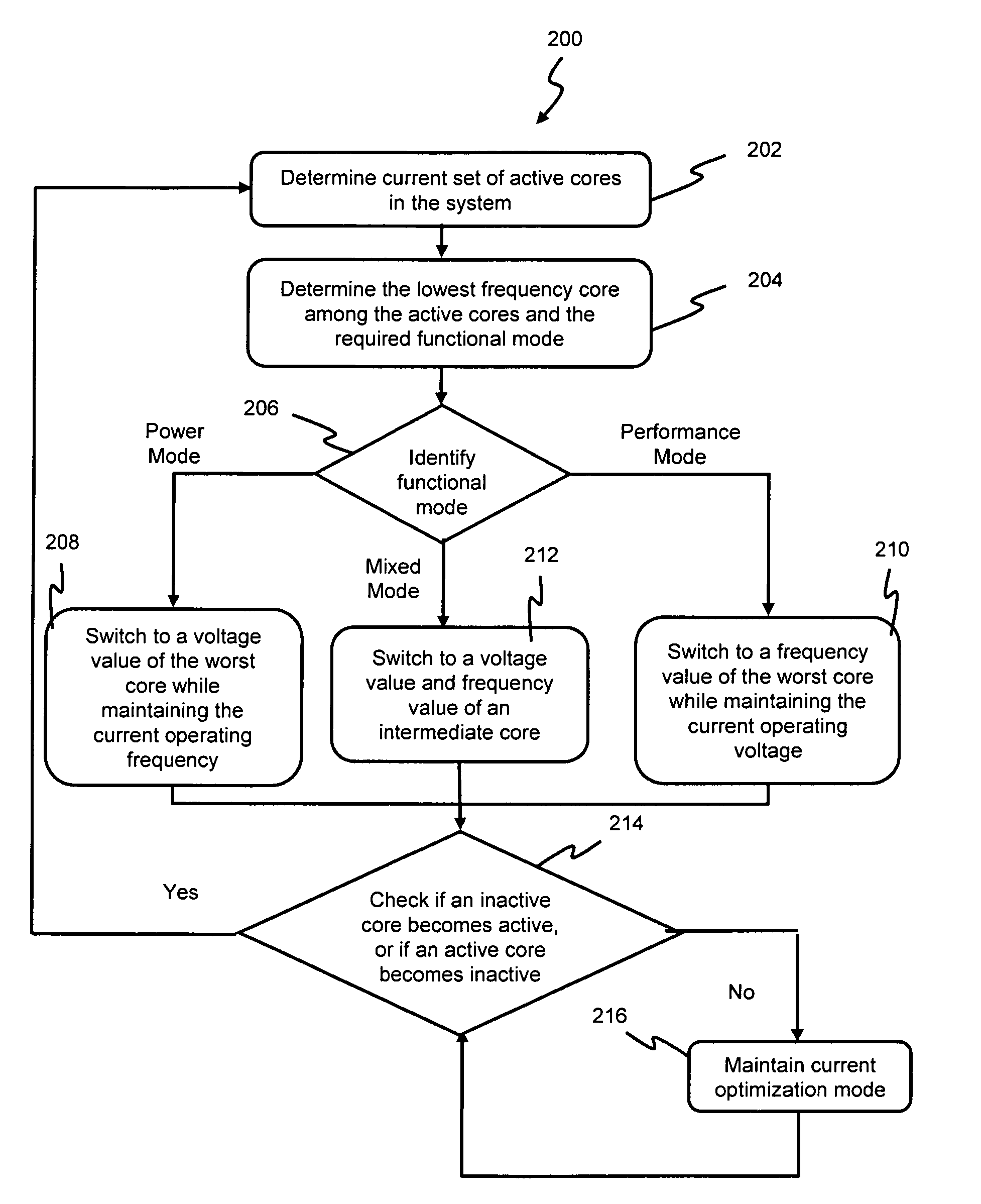

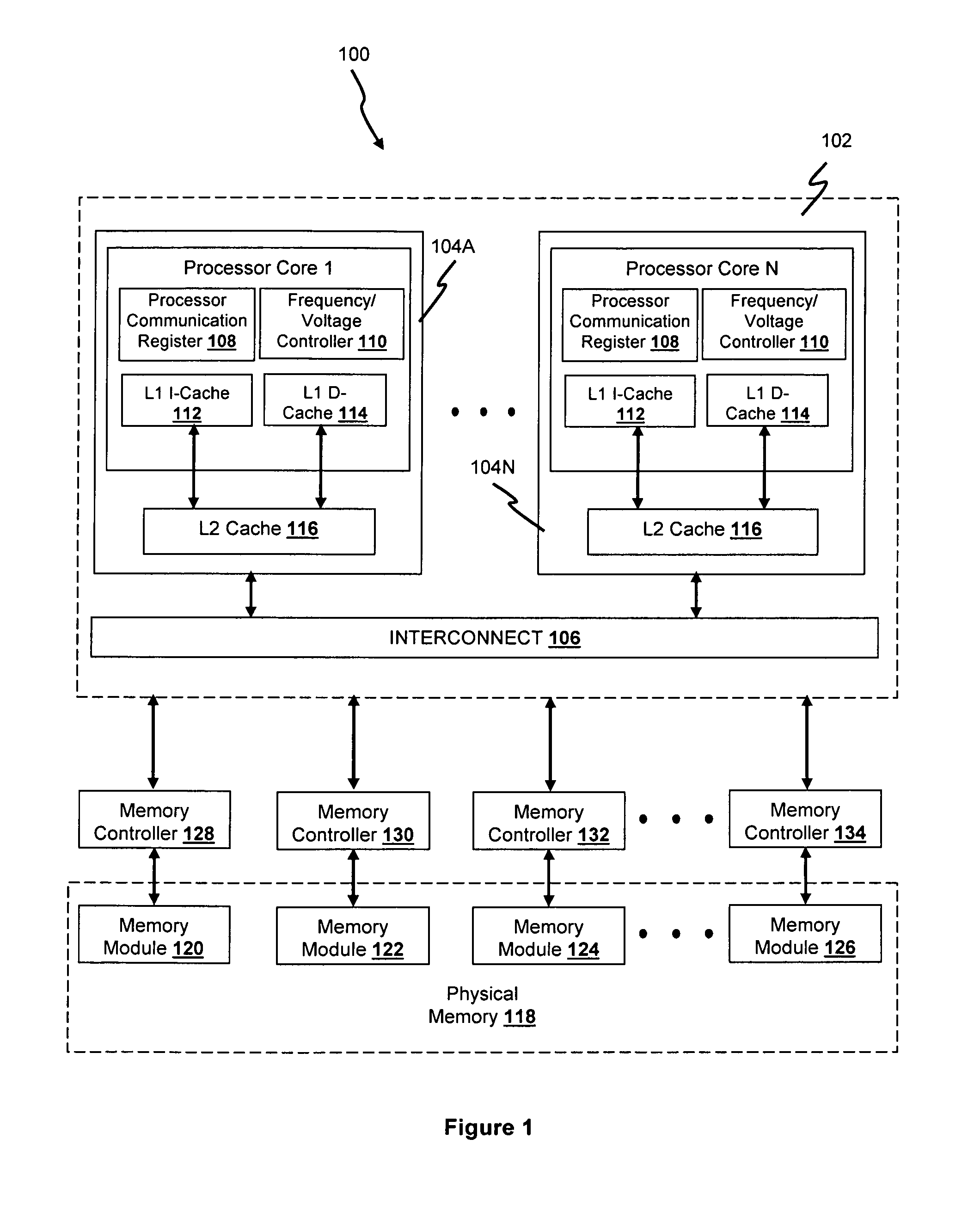

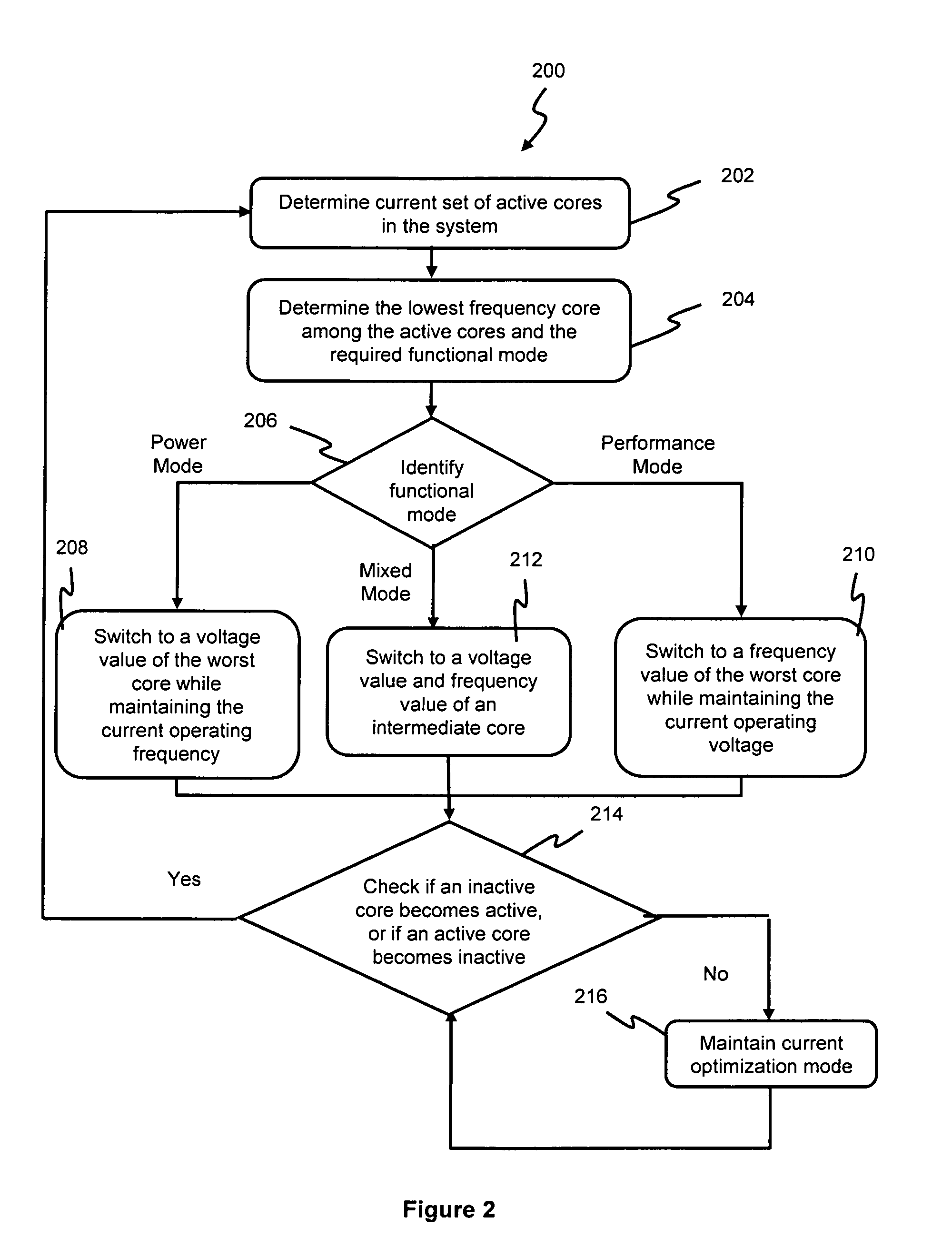

Method for optimizing voltage-frequency setup in multi-core processor systems

Inactive | US20100169609A1 | Program control using wired connections | Volume/mass flow measurement | Frequency characteristic | Mixed mode

A method for dynamically operating a multi-core processor system is provided. The method involves ascertaining currently active processor cores, identifying a currently active processor core having a lowest operating frequency, and adjusting at least one operational parameter according to voltage-frequency characteristics corresponding to the identified processor core to fulfill a predefined functional mode, e.g. power optimization mode, performance optimization mode and mixed mode.

Owner:INTEL CORP

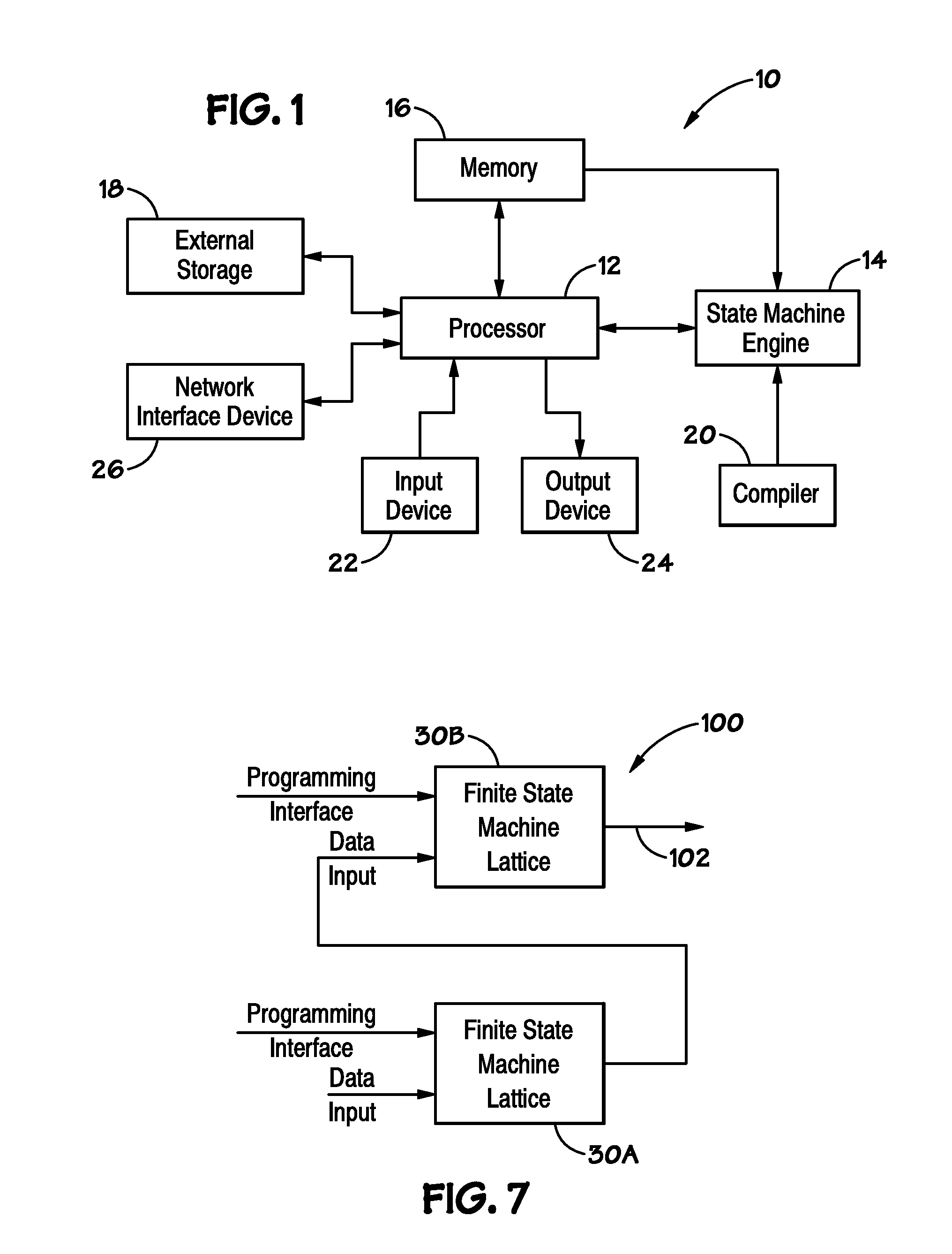

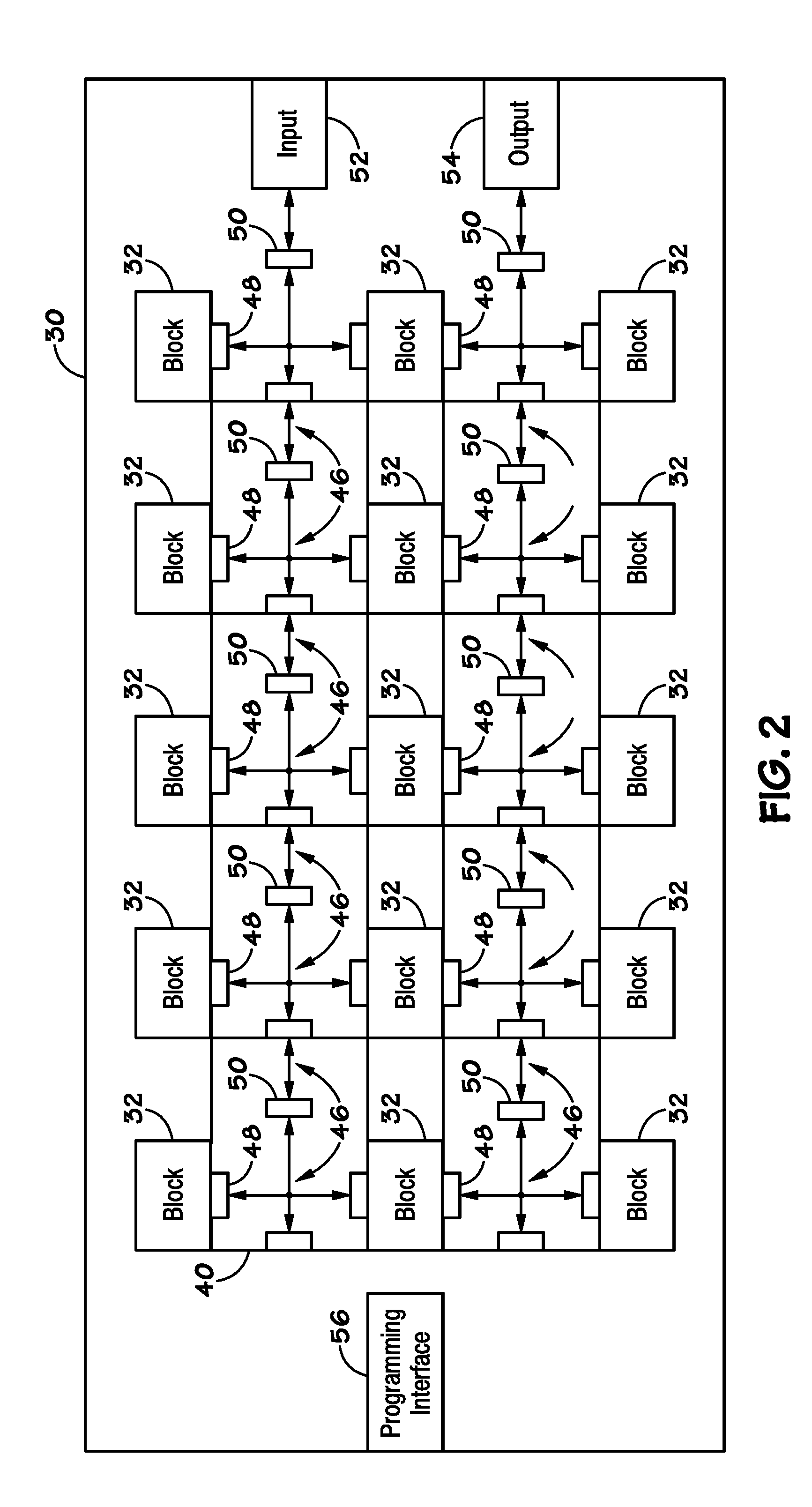

Counter operation in a state machine lattice

Active | US20130159670A1 | Programme control | Program control using wired connections | Finite-state machine | Computer science

Disclosed are methods and devices, among which is a device that includes a finite state machine lattice. The lattice may include a counter suitable for counting a number of times a programmable element in the lattice detects a condition. The counter may be configured to output in response to counting that the condition was detected a certain number of times. For example, the counter may be configured to output in response to determining a condition was detected at least (or no more than) the certain number of times, determining the condition was detected exactly the certain number of times, or determining the condition was detected within a certain range of times. The counter may be coupled to other counters in the device for determining high-count operations and/or certain quantifiers.

Owner:MICRON TECH INC
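
The counter output modes listed in the abstract above (at least, no more than, exactly, or within a range of detections) can be modelled in software with a small Python sketch. It is only an illustration, not the hardware counter from the patent. The class name `ConditionCounter`, the mode strings and the inclusive range bounds are assumptions.

```python
from dataclasses import dataclass


@dataclass
class ConditionCounter:
    mode: str        # "at_least", "at_most", "exactly" or "range" (hypothetical names)
    target: int      # the "certain number of times"; lower bound in "range" mode
    upper: int = 0   # inclusive upper bound, used only in "range" mode
    count: int = 0   # detections seen so far

    def detect(self) -> None:
        """Record one detection of the condition by a programmable element."""
        self.count += 1

    def output(self) -> bool:
        """Report whether the counter's output is currently asserted."""
        if self.mode == "at_least":
            return self.count >= self.target
        if self.mode == "at_most":
            return self.count <= self.target
        if self.mode == "exactly":
            return self.count == self.target
        if self.mode == "range":
            return self.target <= self.count <= self.upper
        raise ValueError(f"unknown mode: {self.mode}")


# Hypothetical use: assert the output only when the condition was seen exactly twice.
exact = ConditionCounter(mode="exactly", target=2)
exact.detect()
first = exact.output()   # one detection so far
exact.detect()
second = exact.output()  # two detections
print(first, second)     # False True
```

Chaining several such counters, as the abstract mentions, would amount to feeding one counter's `output()` into another counter's `detect()`.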

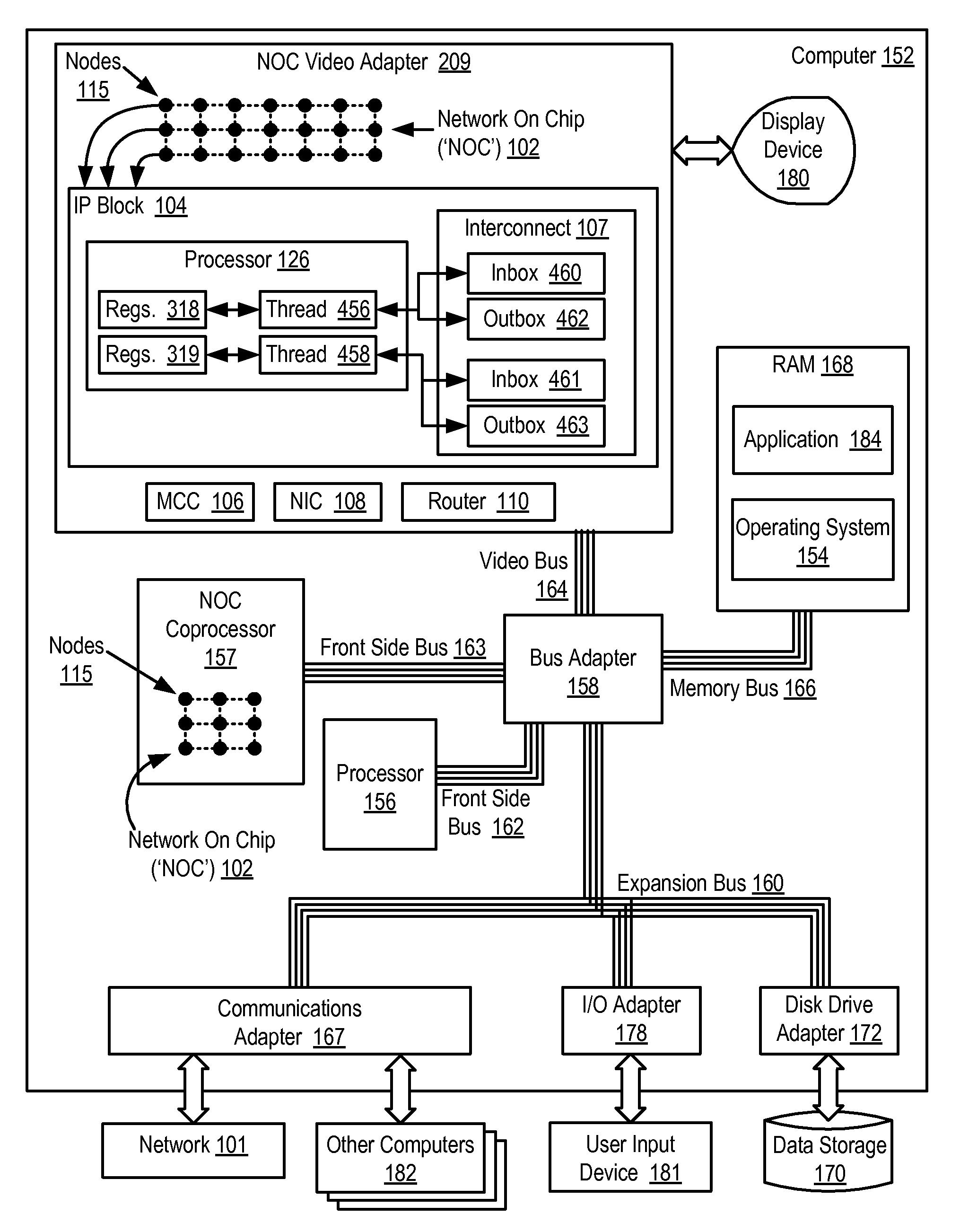

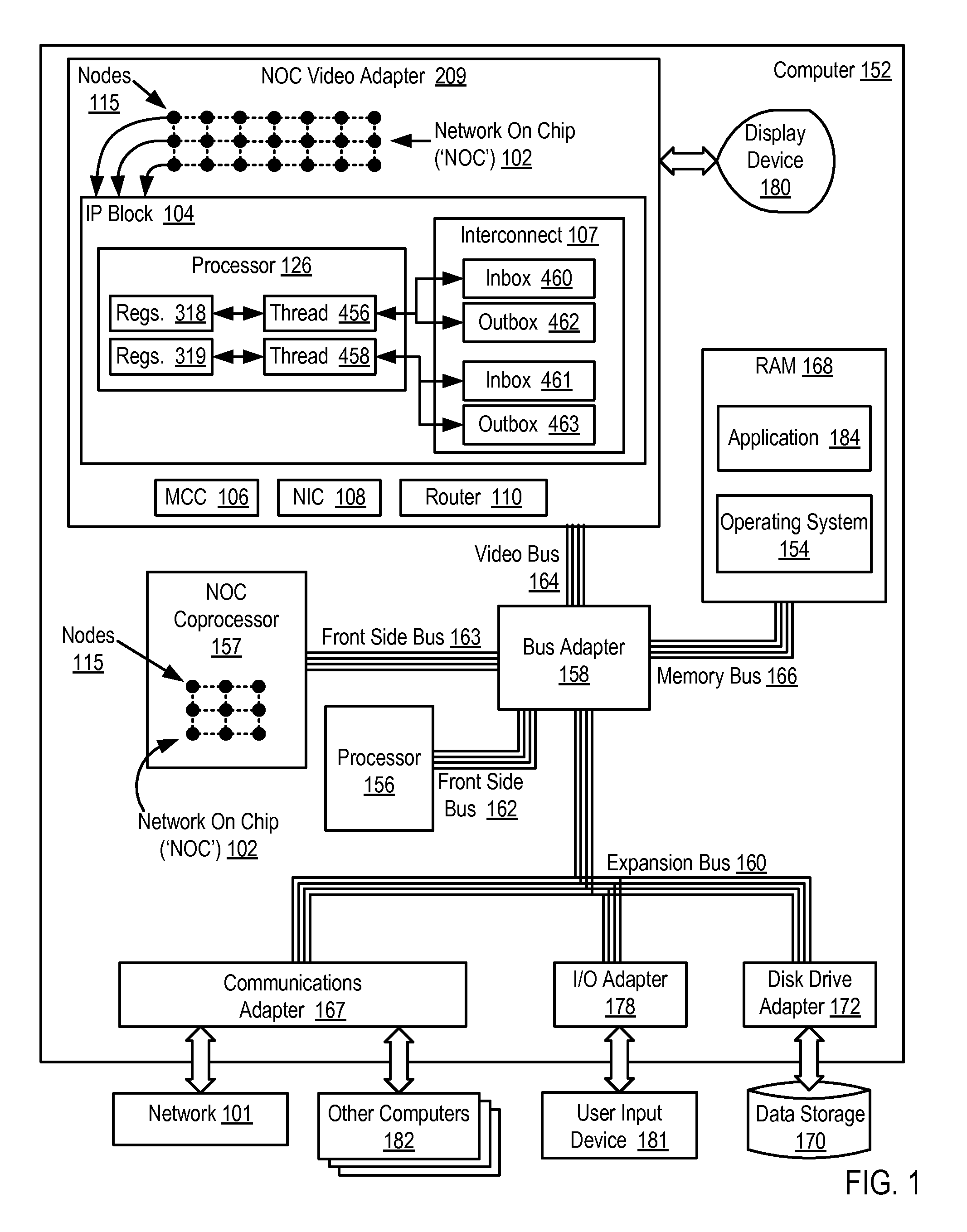

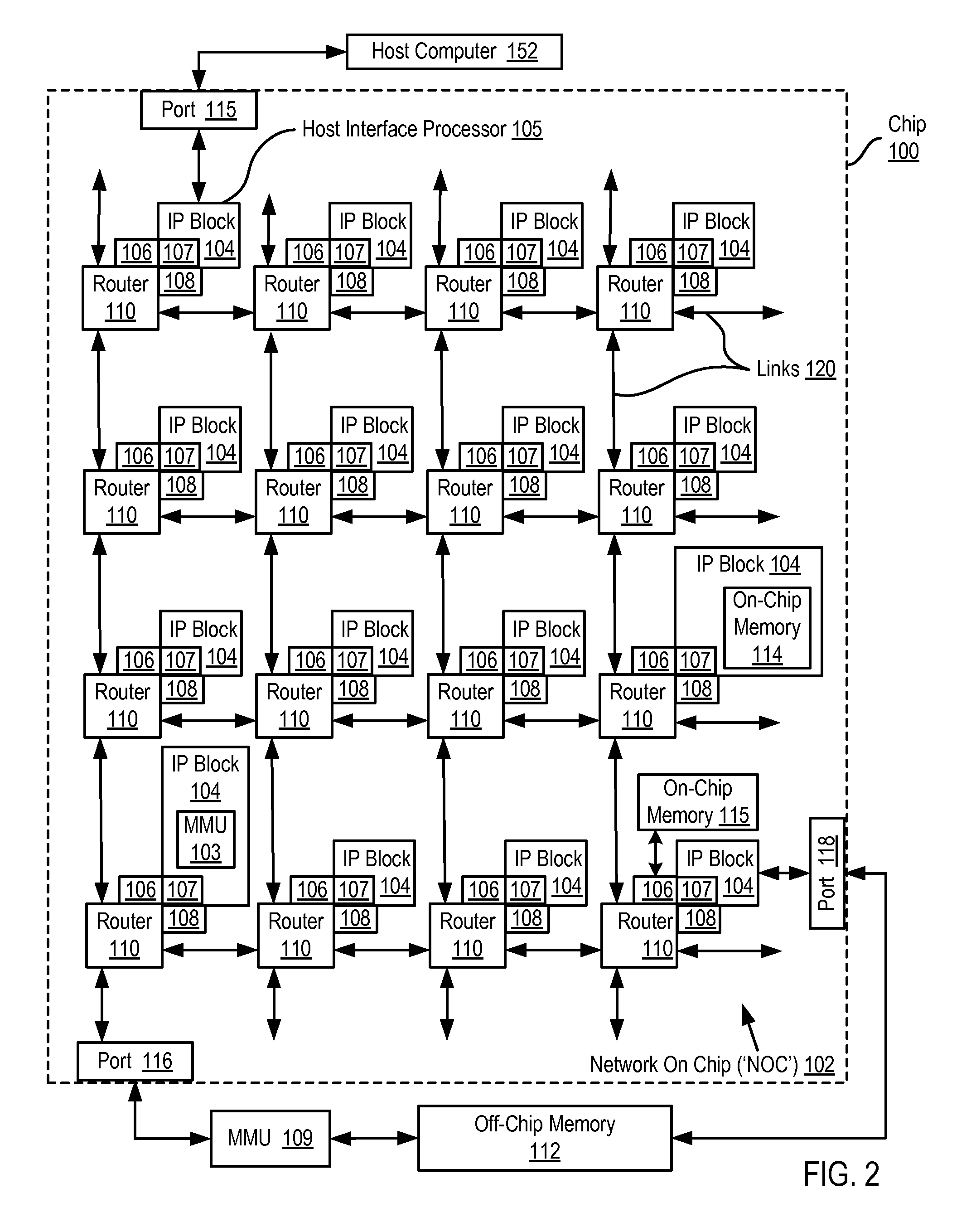

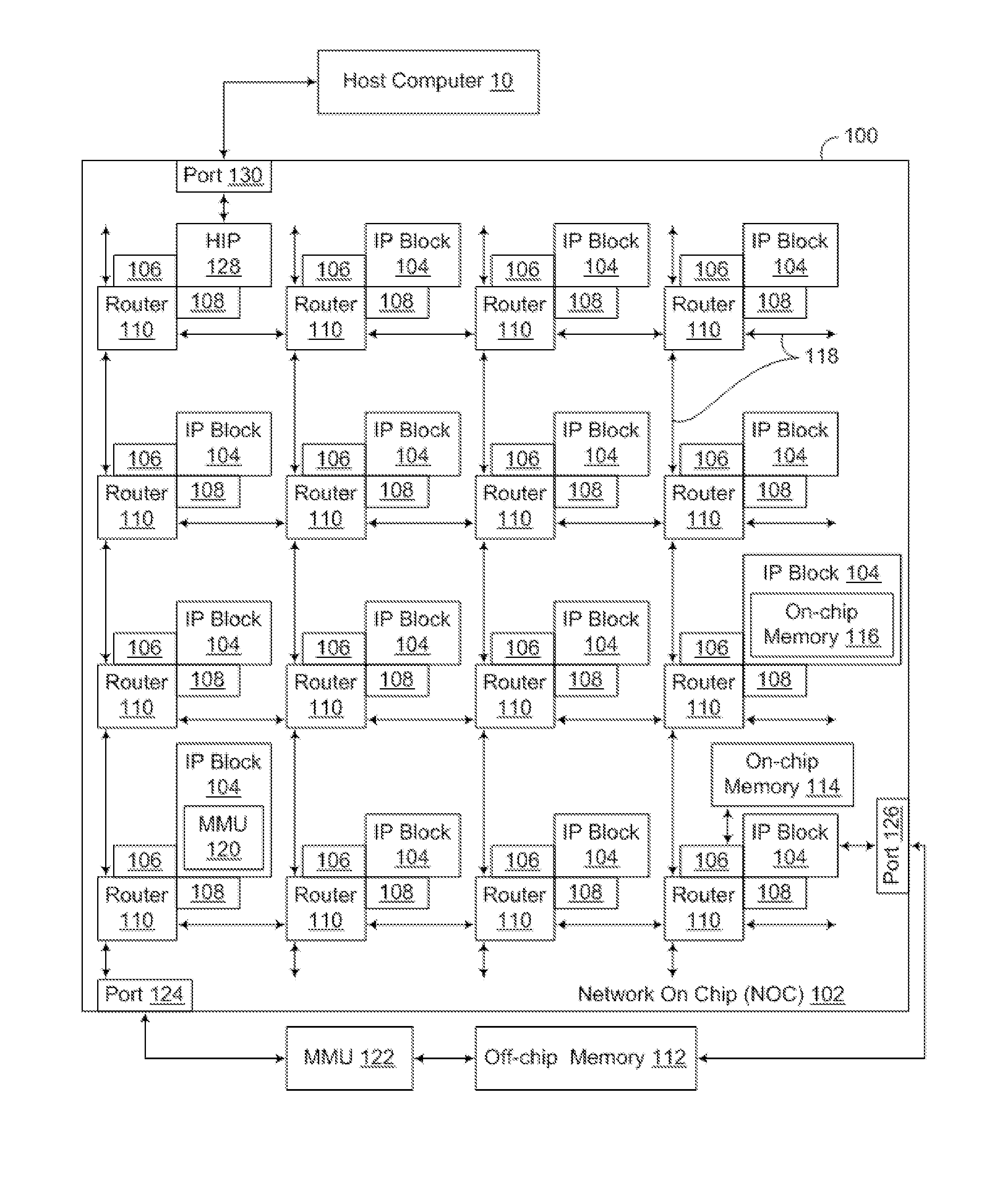

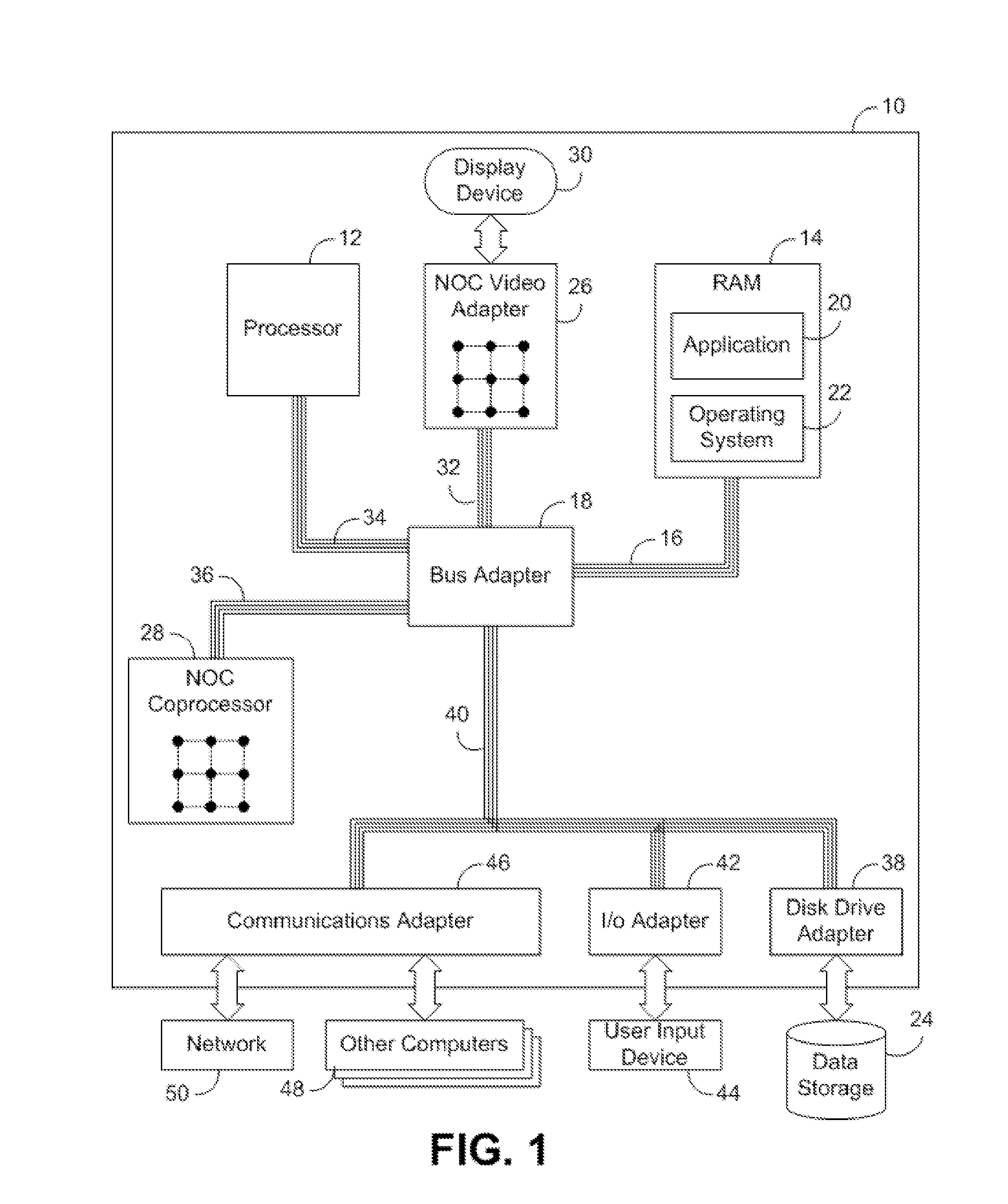

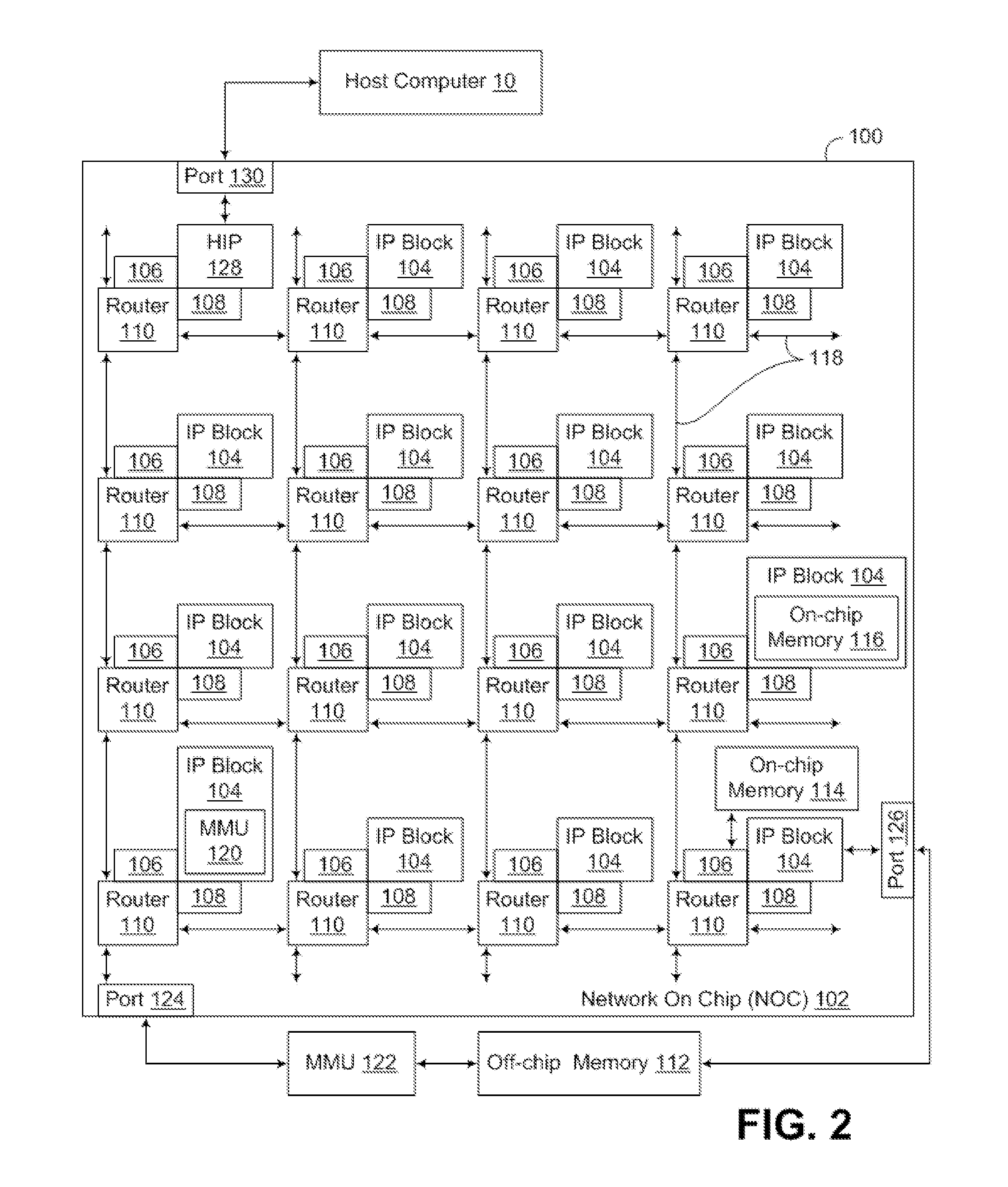

Network On Chip With Low Latency, High Bandwidth Application Messaging Interconnects That Abstract Hardware Inter-Thread Data Communications Into An Architected State of A Processor

Active | US20090282214A1 | Lower latency | High bandwidth | Program control using wired connections | General purpose stored program computer | Hardware thread | High bandwidth

Data processing on a network on chip (‘NOC’) that includes integrated processor (‘IP’) blocks, each of a plurality of the IP blocks including at least one computer processor, each such computer processor implementing a plurality of hardware threads of execution; low latency, high bandwidth application messaging interconnects; memory communications controllers; network interface controllers; and routers; each of the IP blocks adapted to a router through a separate one of the low latency, high bandwidth application messaging interconnects, a separate one of the memory communications controllers, and a separate one of the network interface controllers; each application messaging interconnect abstracting into an architected state of each processor, for manipulation by computer programs executing on the processor, hardware inter-thread communications among the hardware threads of execution; each memory communications controller controlling communication between an IP block and memory; each network interface controller controlling inter-IP block communications through routers.

Owner:IBM CORP

Low latency variable transfer network for fine grained parallelism of virtual threads across multiple hardware threads

Inactive | US20130159669A1 | Reduce transfer | Program control using wired connections | General purpose stored program computer | Hardware thread | Processing core

A method and circuit arrangement utilize a low latency variable transfer network between the register files of multiple processing cores in a multi-core processor chip to support fine grained parallelism of virtual threads across multiple hardware threads. The communication of a variable over the variable transfer network may be initiated by a move from a local register in a register file of a source processing core to a variable register that is allocated to a destination hardware thread in a destination processing core, so that the destination hardware thread can then move the variable from the variable register to a local register in the destination processing core.

Owner:IBM CORP

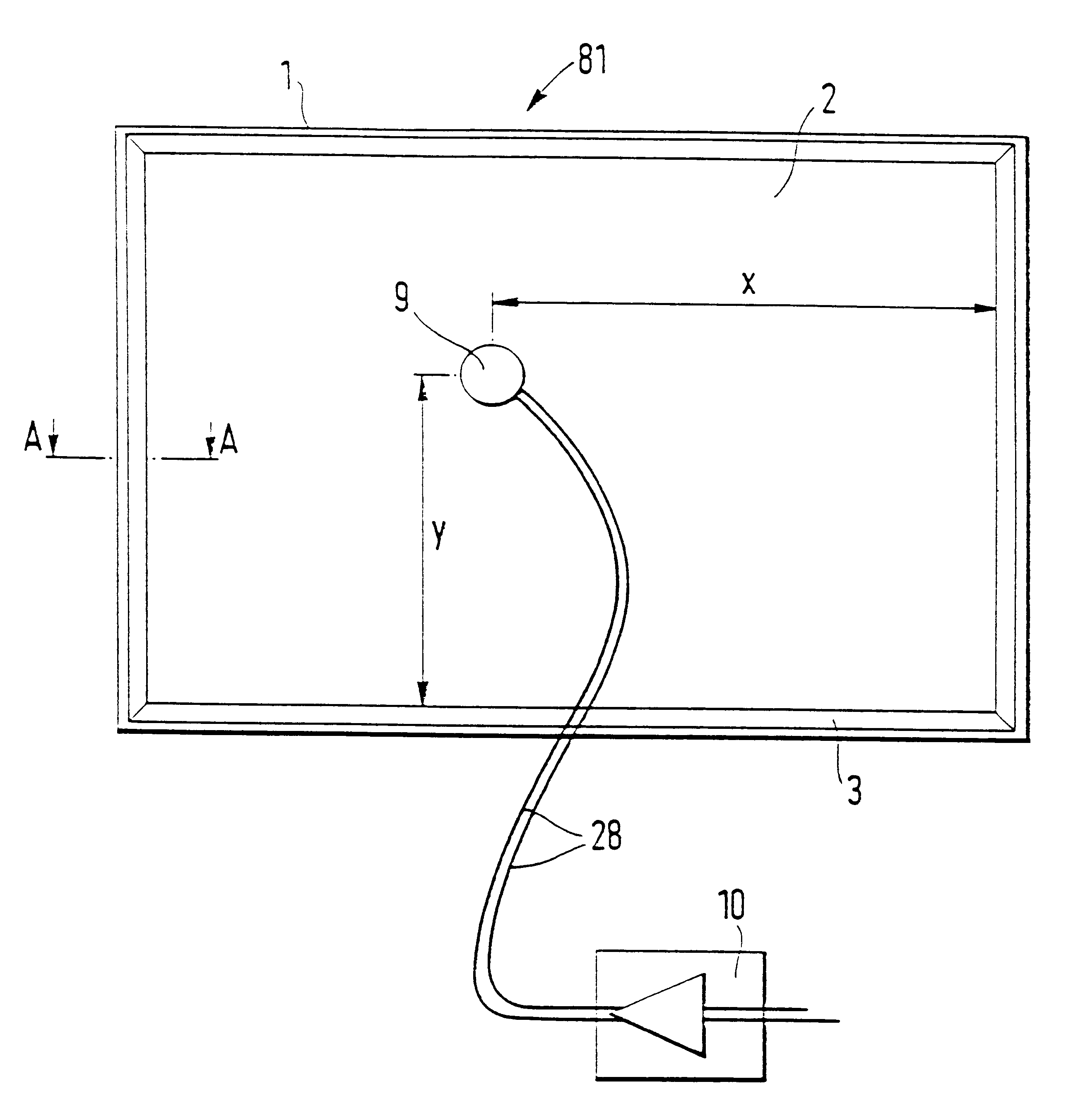

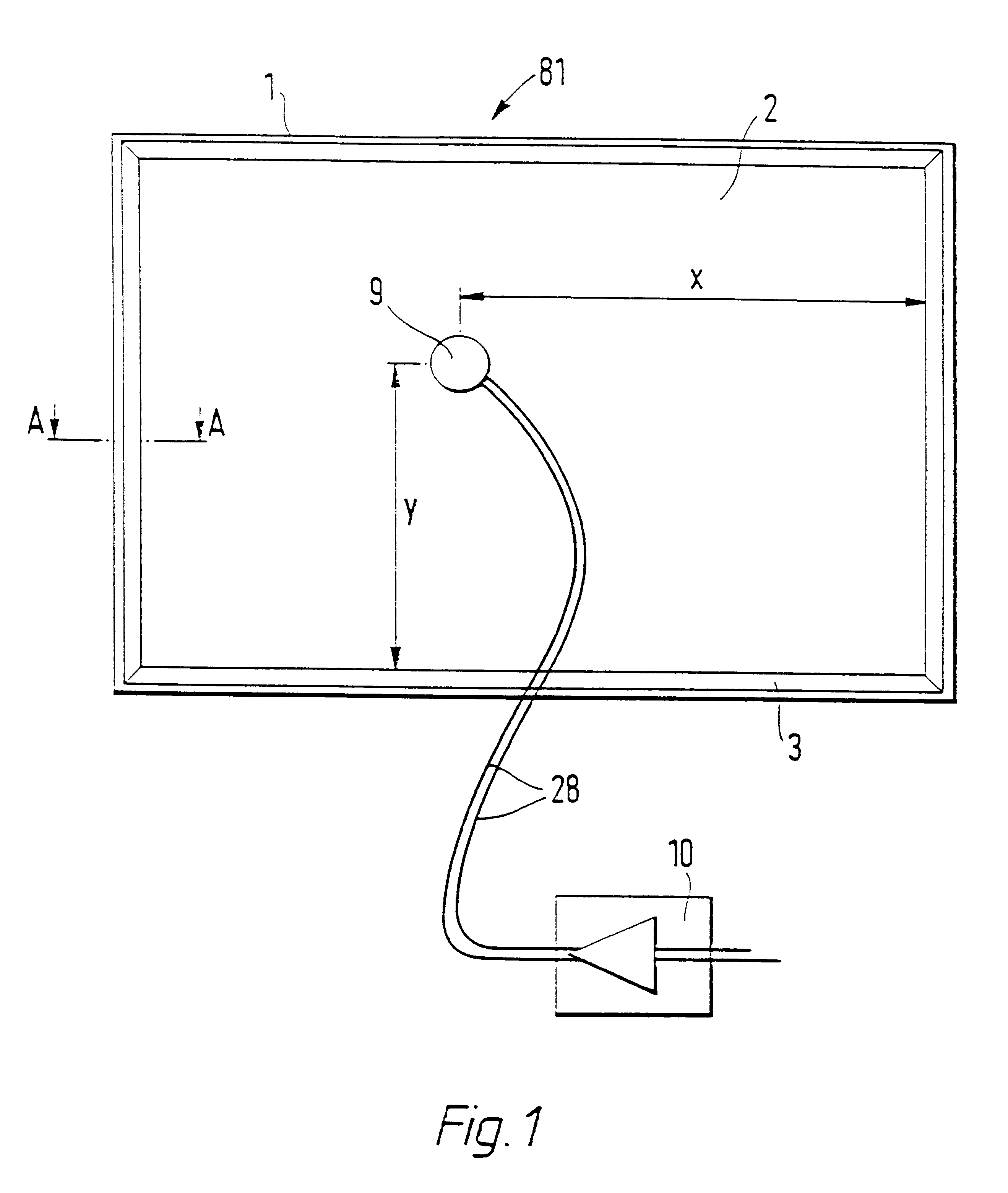

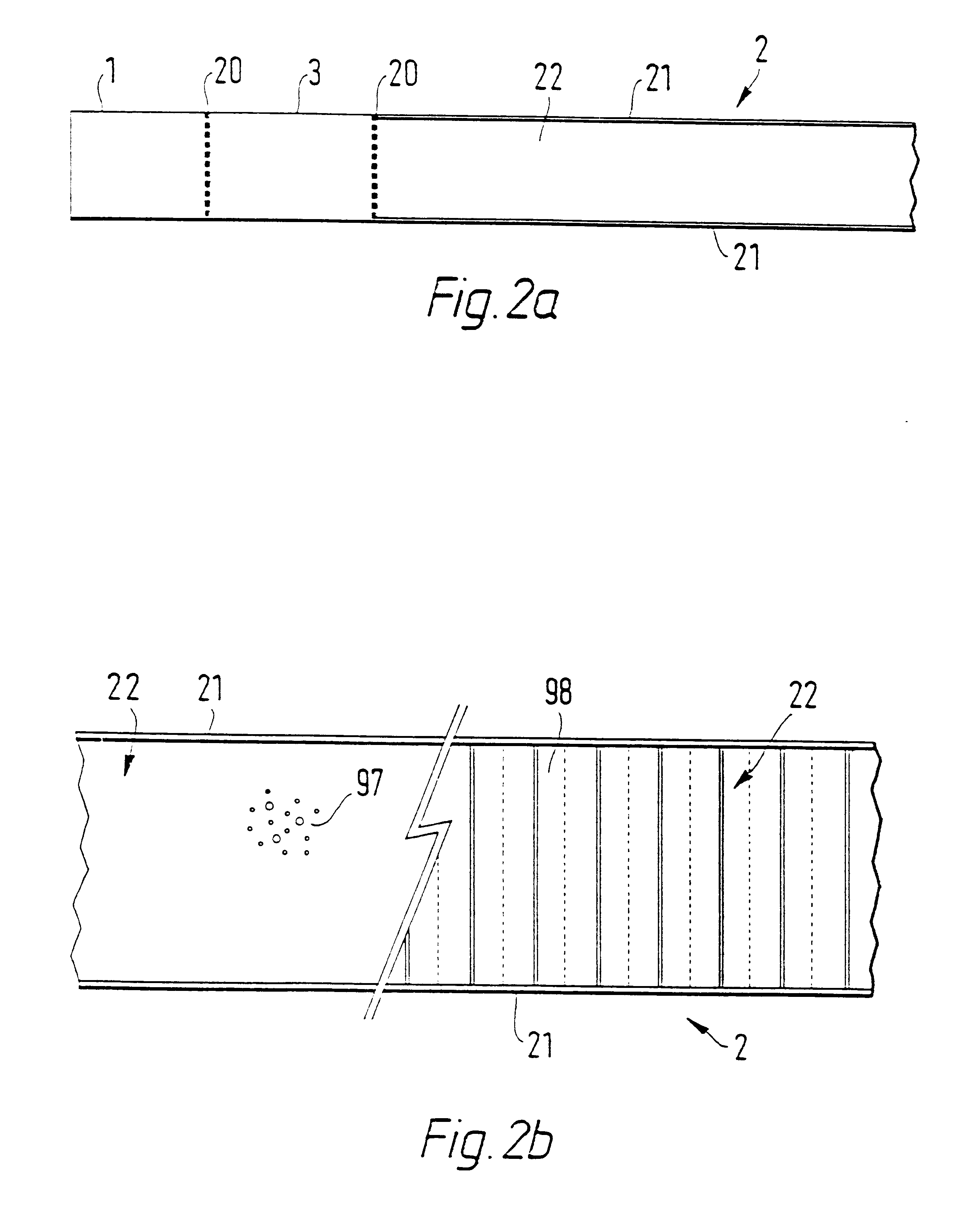

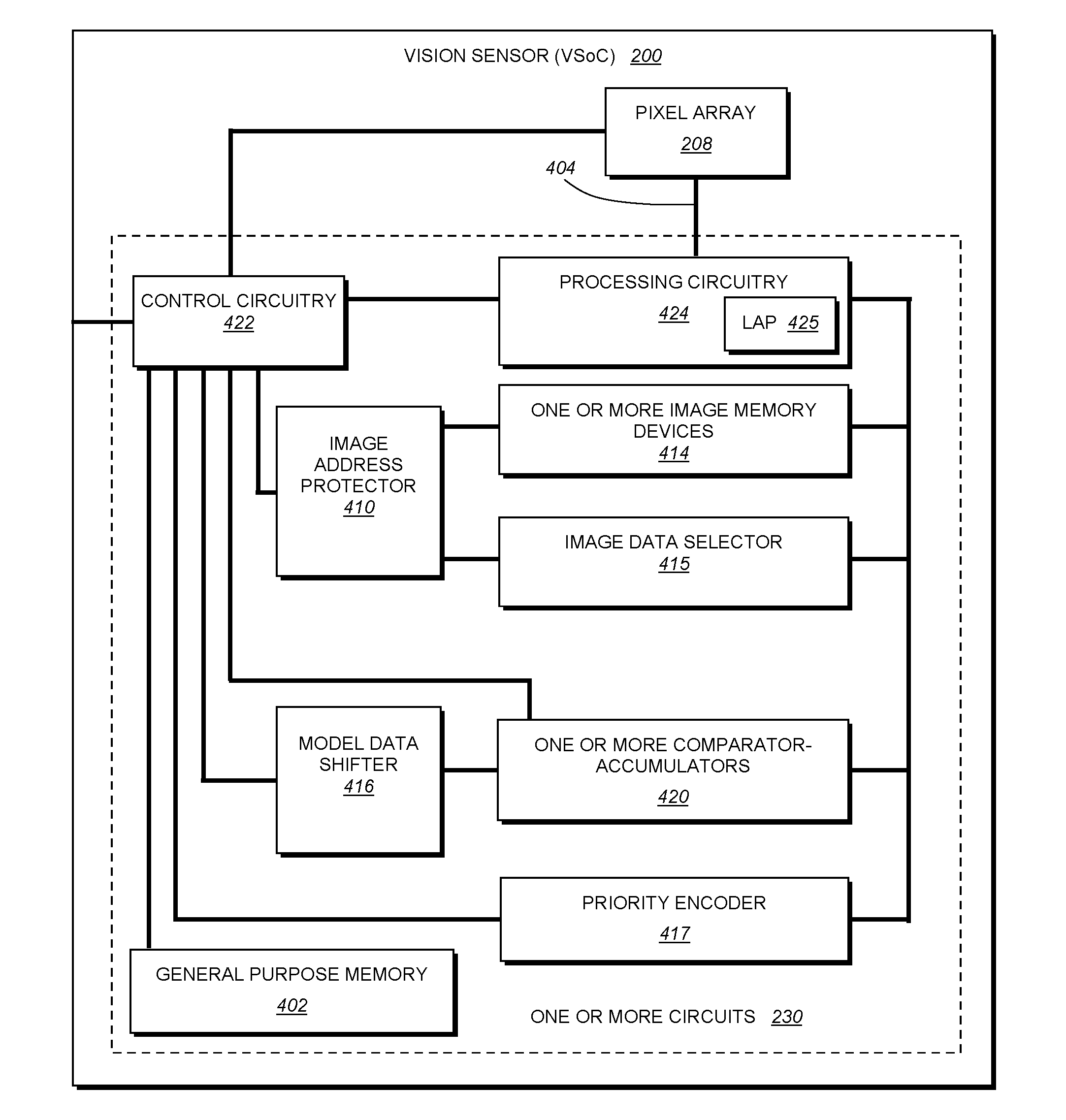

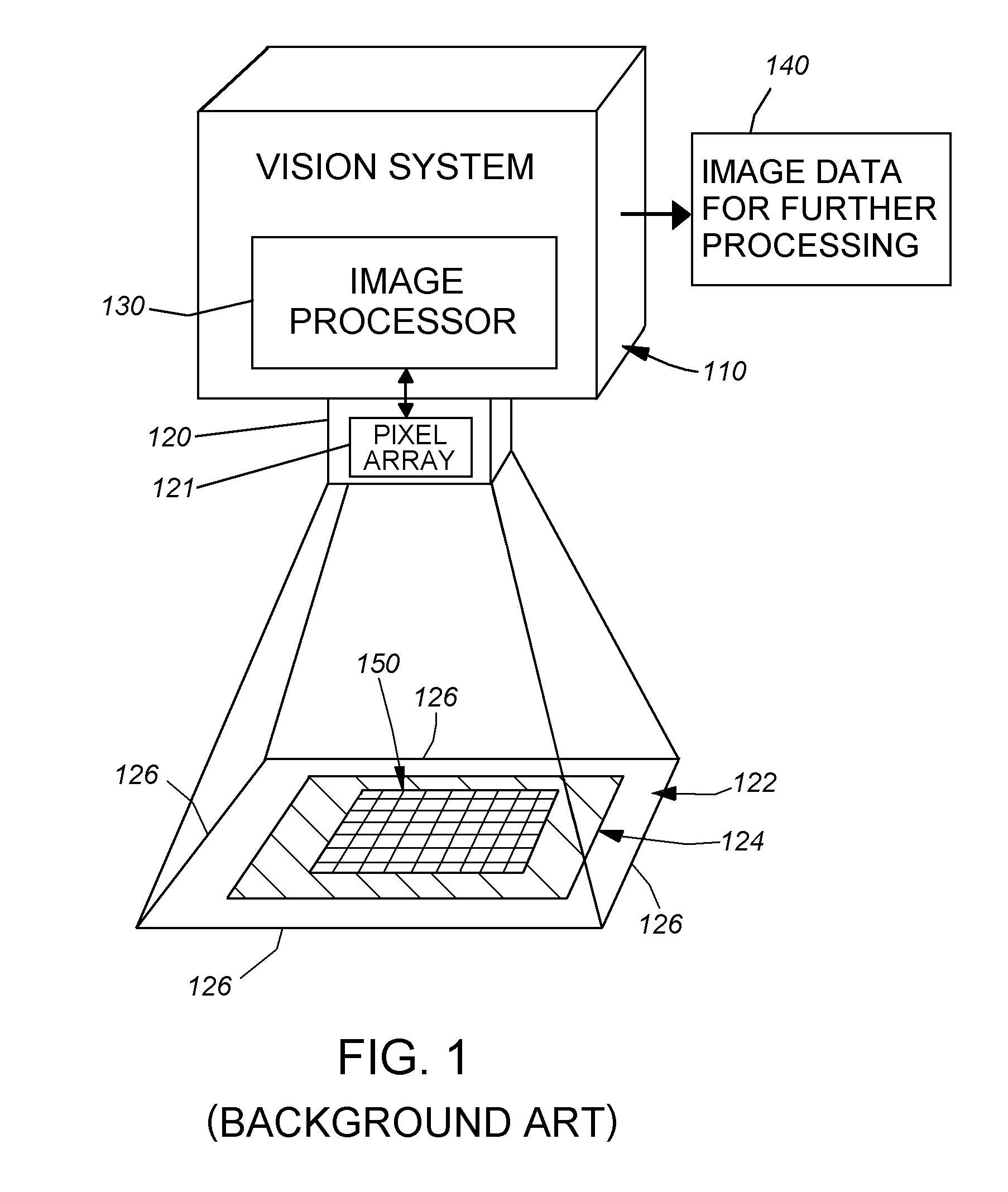

System and method for processing image data relative to a focus of attention within the overall image

Inactive | US20110211726A1 | Process time saving | Save data storage overhead | Television system details | Program control using wired connections | Sensor array | Digital data

This invention provides a system and method for processing discrete image data within an overall set of acquired image data based upon a focus of attention within that image. The result of such processing is to operate upon a more limited subset of the overall image data to generate output values required by the vision system process. Such output value can be a decoded ID or other alphanumeric data. The system and method is performed in a vision system having two processor groups, along with a data memory that is smaller in capacity than the amount of image data to be read out from the sensor array. The first processor group is a plurality of SIMD processors and at least one general purpose processor, co-located on the same die with the data memory. A data reduction function operates within the same clock cycle as data-readout from the sensor to generate a reduced data set that is stored in the on-die data memory. At least a portion of the overall, unreduced image data is concurrently (in the same clock cycle) transferred to the second processor while the first processor transmits at least one region indicator with respect to the reduced data set to the second processor. The region indicator represents at least one focus of attention for the second processor to operate upon.

Owner:COGNEX CORP

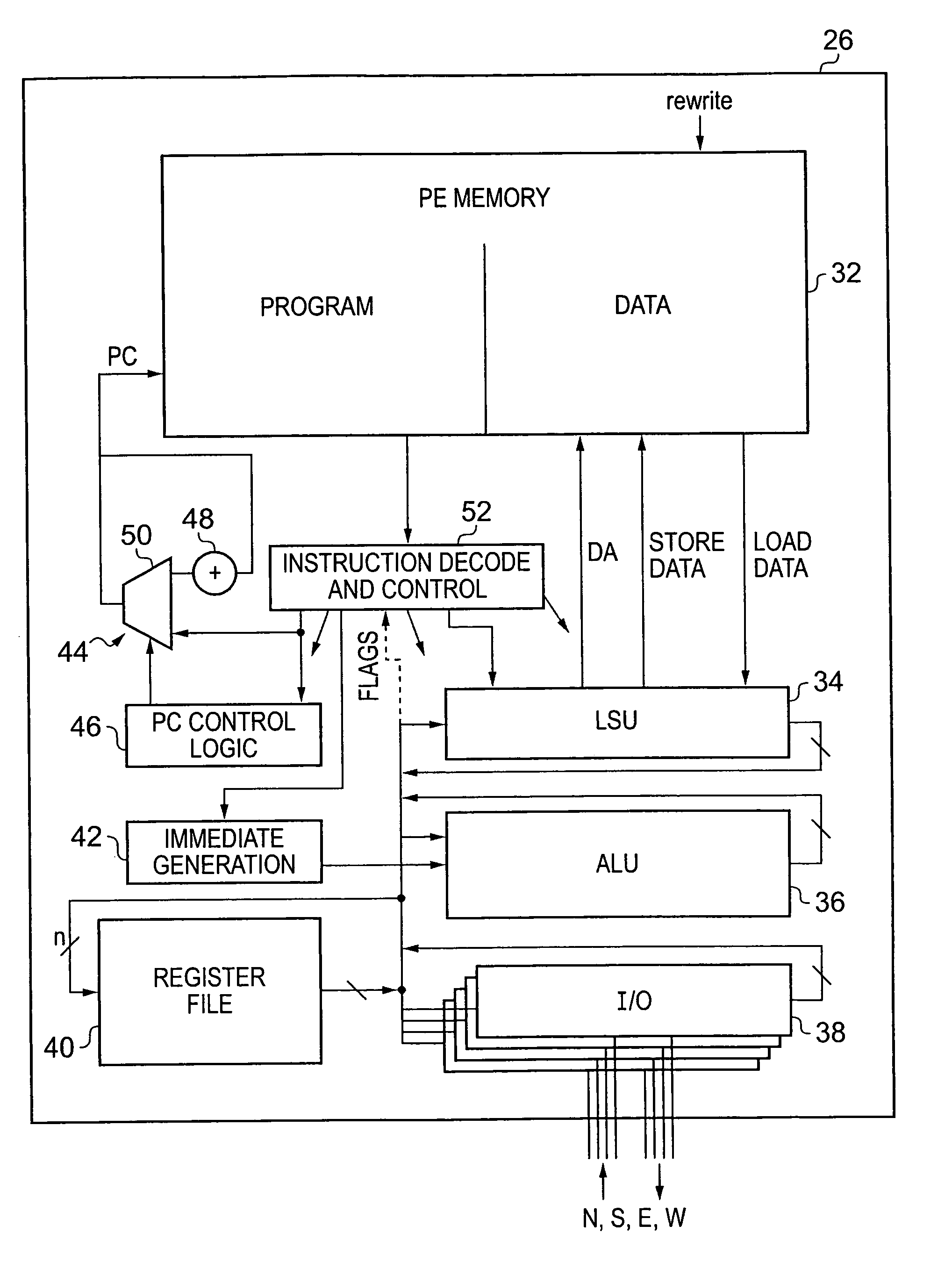

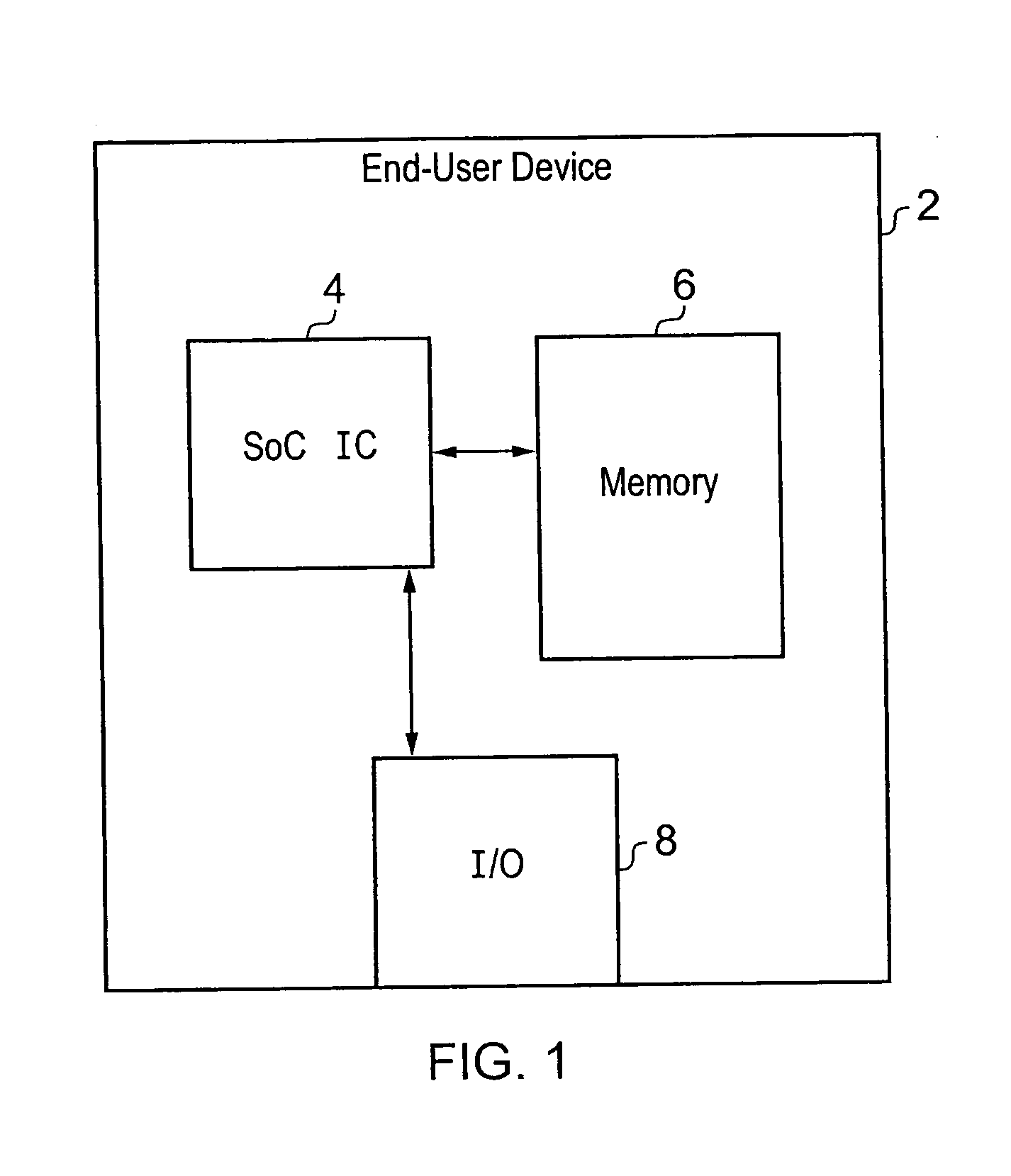

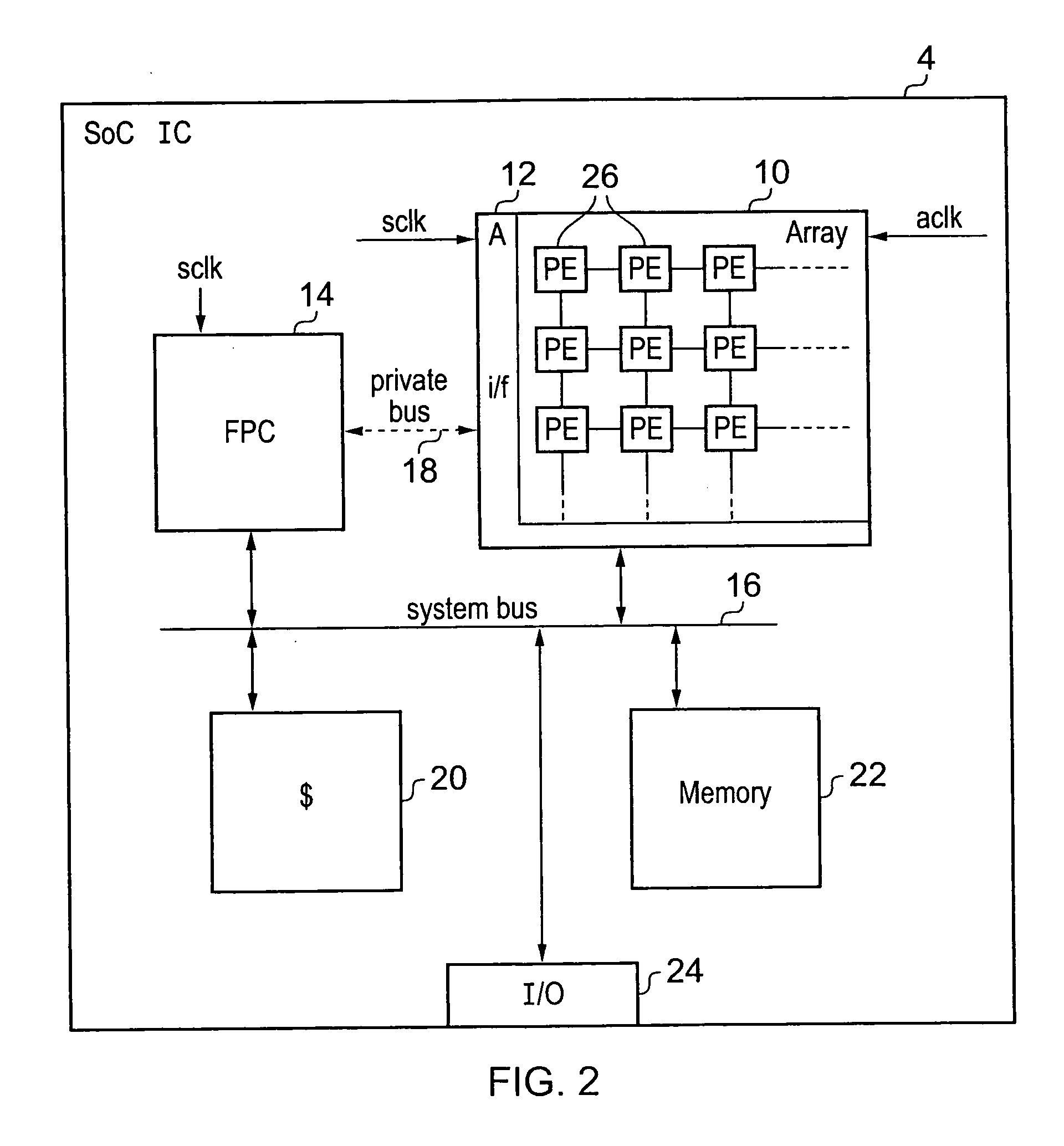

Integrated circuit incorporating an array of interconnected processors executing a cycle-based program

Inactive | US20100100704A1 | Facilitate communication | Improve performance | Single instruction multiple data multiprocessors | Program control using wired connections | Array data structure | State variable

An integrated circuit 4 is provided including an array 10 of processors 26 with interface circuitry 12 providing communication with further processing circuitry 14. The processors 26 within the array 10 execute individual programs which together provide the functionality of a cycle-based program. During each program-cycle of the cycle based program, each of the processors executes its respective program starting from a predetermined execution start point to evaluate a next state of at least some of the state variables of the cycle-based program. A boundary between program-cycles provides a synchronisation time (point) for processing operations performed by the array.

Owner:ARM LTD

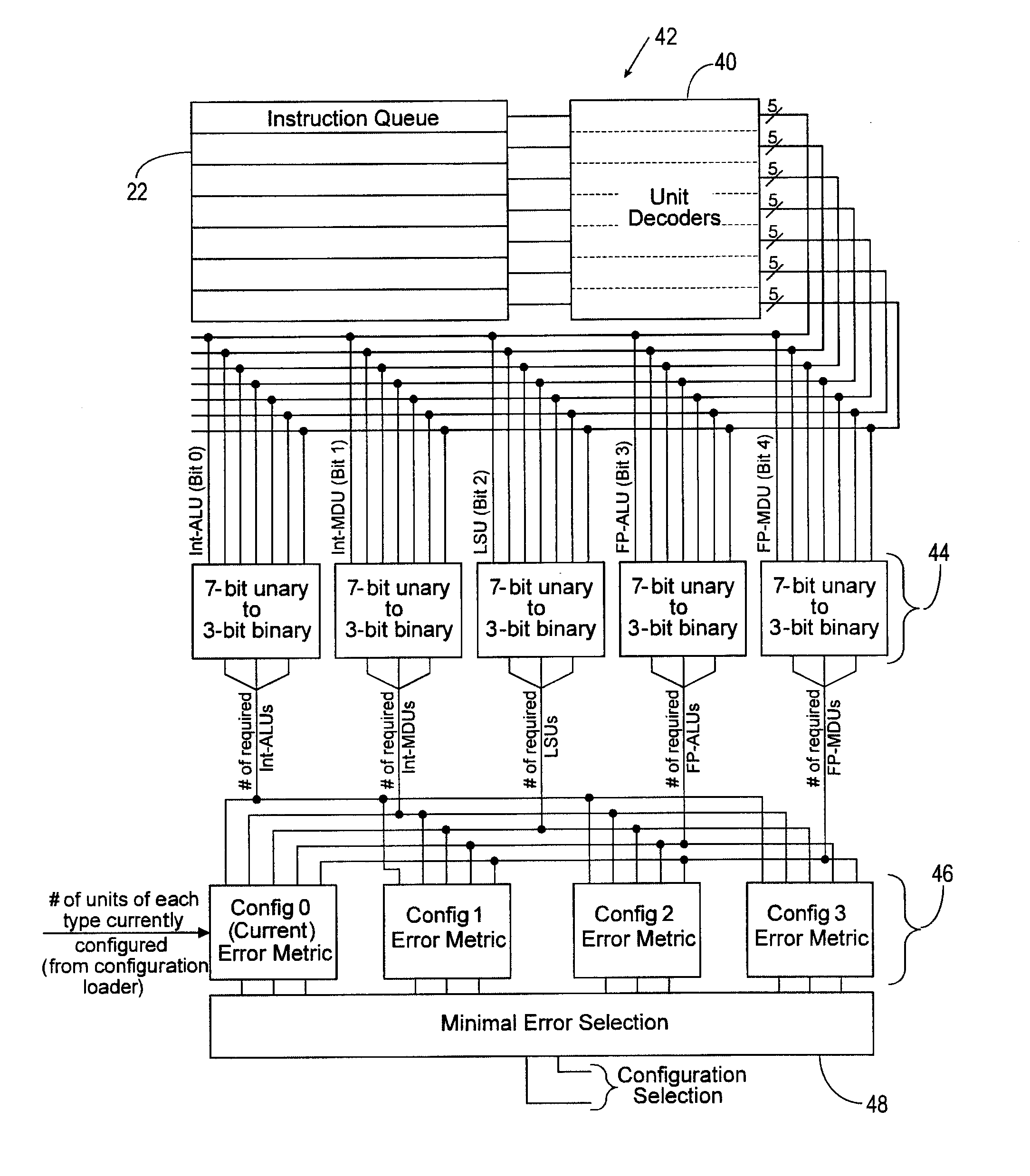

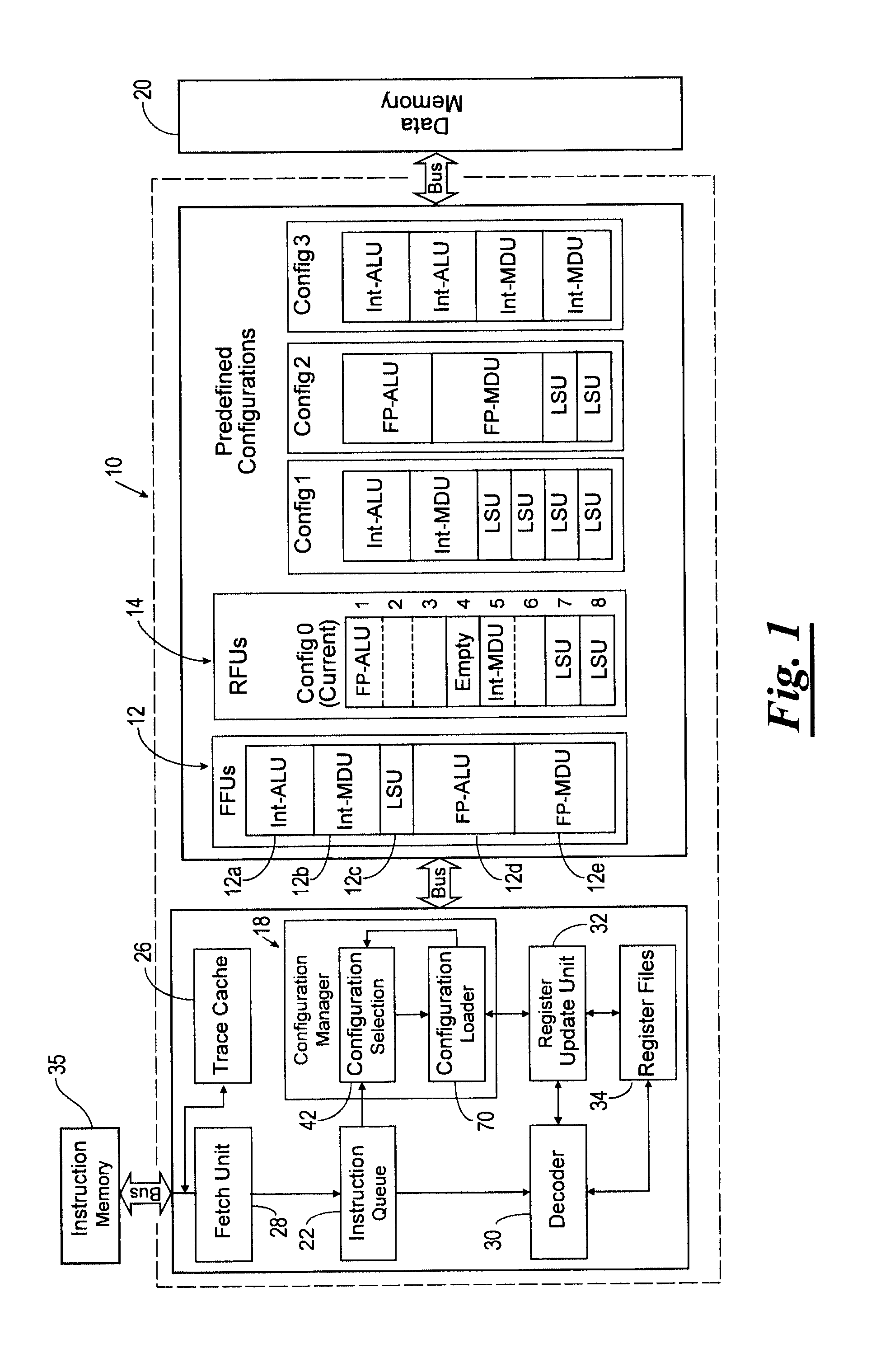

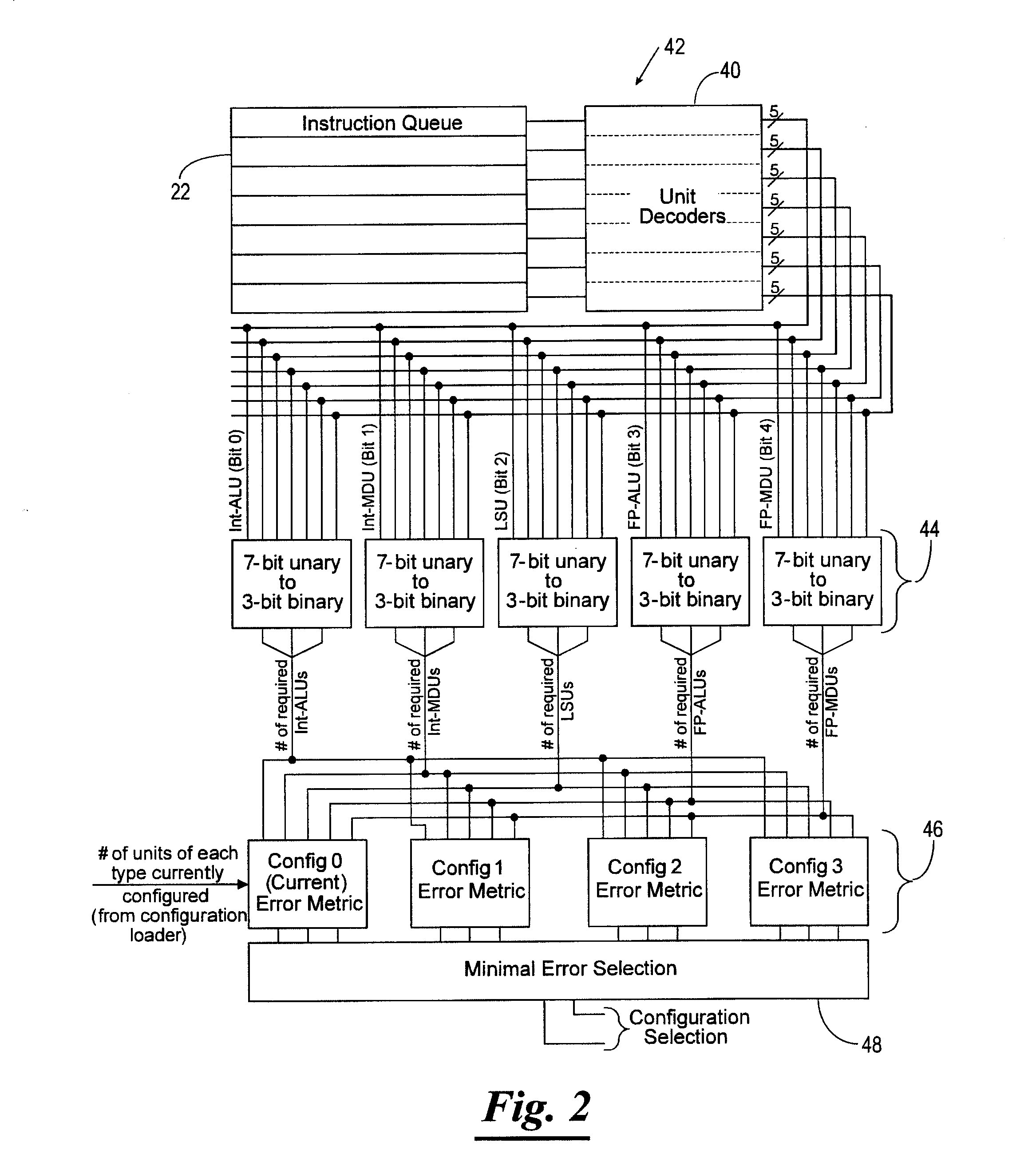

Reconfigurable Computing Architectures: Dynamic and Steering Vector Methods

Inactive | US20080263323A1 | Future usability | Efficient loading | Program control using wired connections | General purpose stored program computer | Configuration selection | Reconfigurable computing architecture

A reconfigurable processor including a plurality of reconfigurable slots, a memory, an instruction queue, a configuration selection unit, and a configuration loader. The plurality of reconfigurable slots are capable of forming reconfigurable execution units. The memory stores a plurality of steering vector processing hardware configurations for configuring the reconfigurable execution units. The instruction queue stores a plurality of instructions to be executed by at least one of the reconfigurable execution units. The configuration selection unit analyzes the dependency of instructions stored in the instruction queue to determine an error metric value for each of the steering vector processing hardware configurations indicative of an ability of a reconfigurable slot configured with the steering vector processing hardware configuration to execute the instructions in the instruction queue, and chooses one of the steering vector processing hardware configurations based upon the error metric values. The configuration loader determines whether one or more of the reconfigurable slots are available and reconfigures at least one of the reconfigurable slots with at least a part of the chosen steering vector processing hardware configuration responsive to at least one of the reconfigurable slots being available.

Owner:THE BOARD OF RGT UNIV OF OKLAHOMA

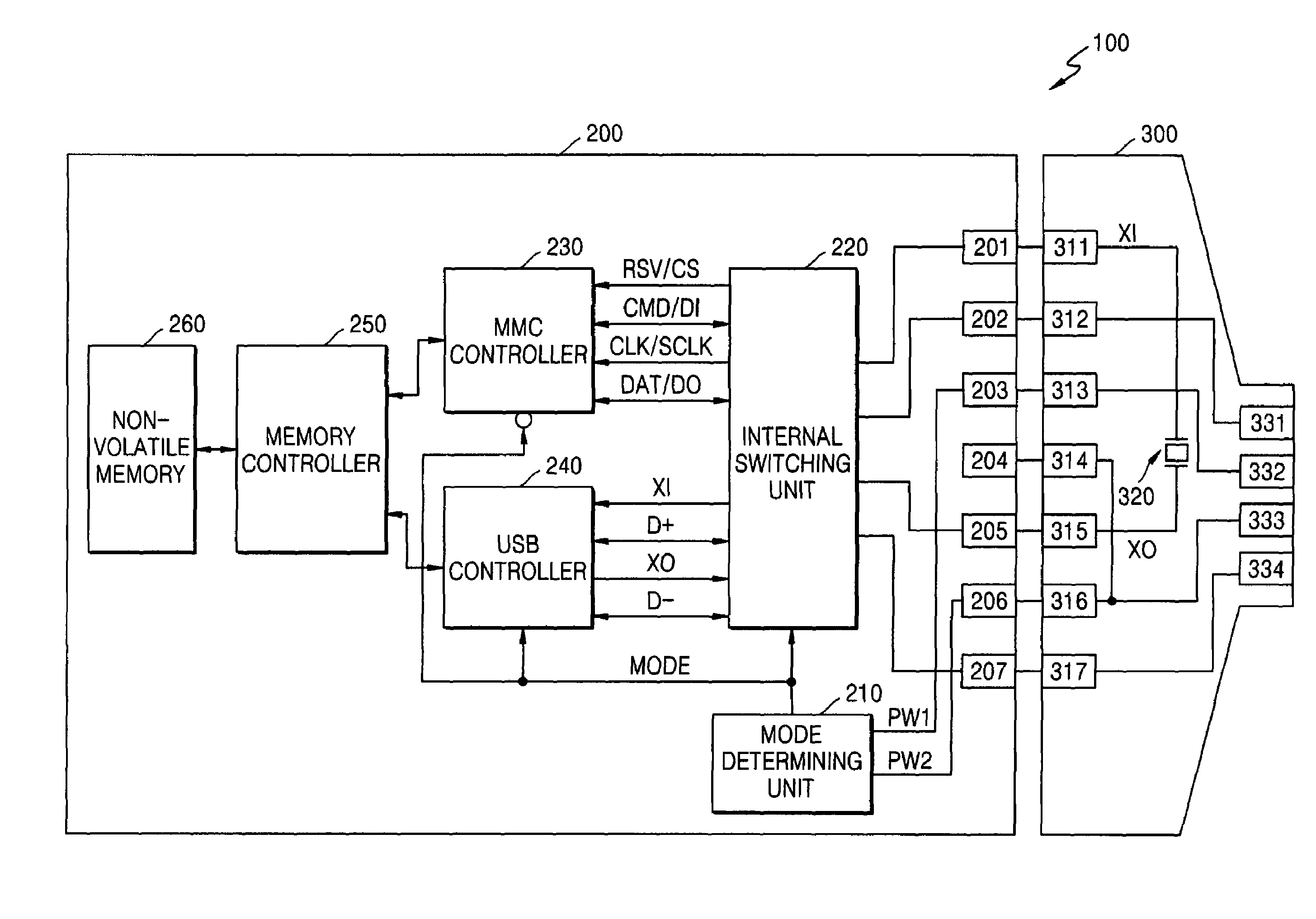

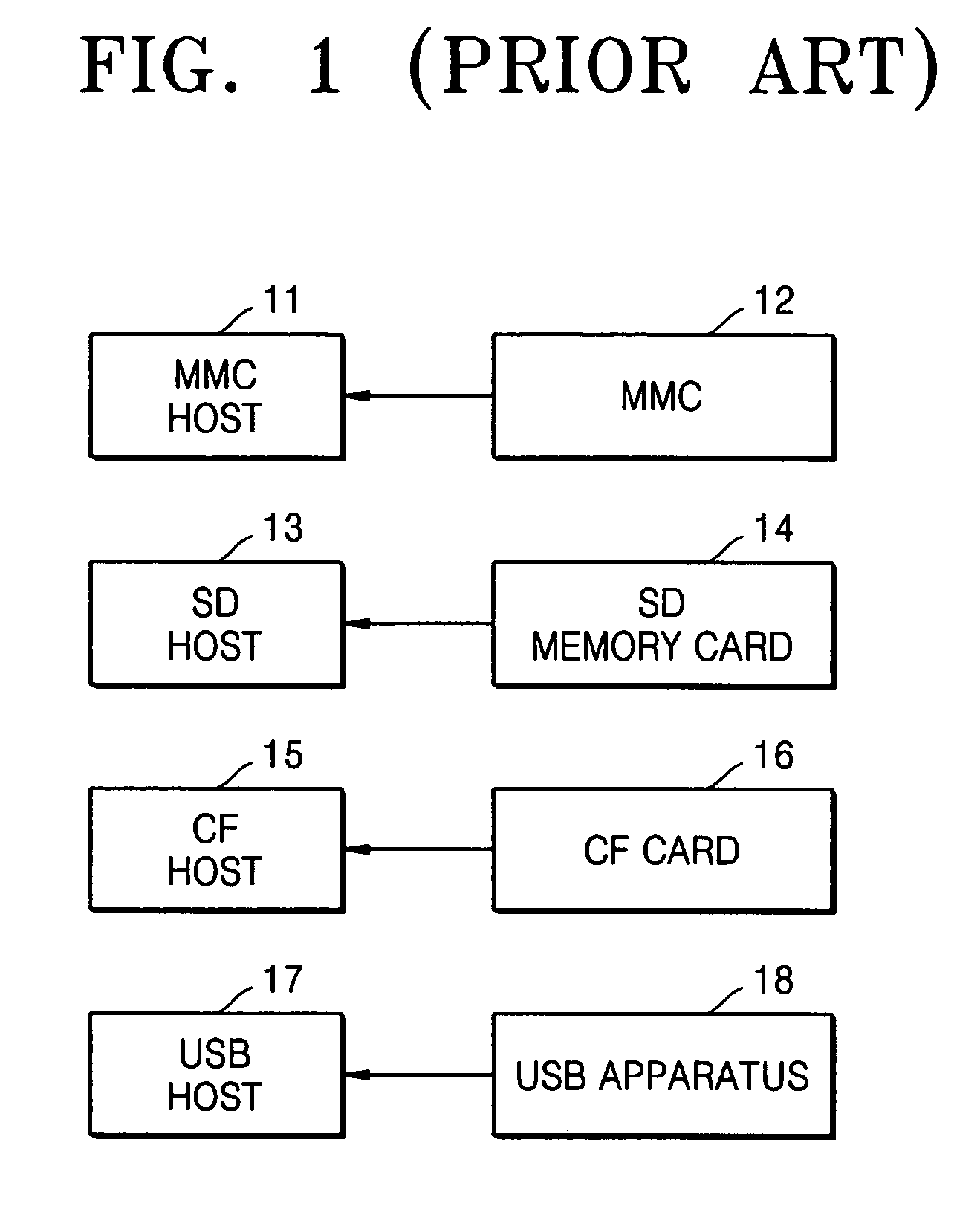

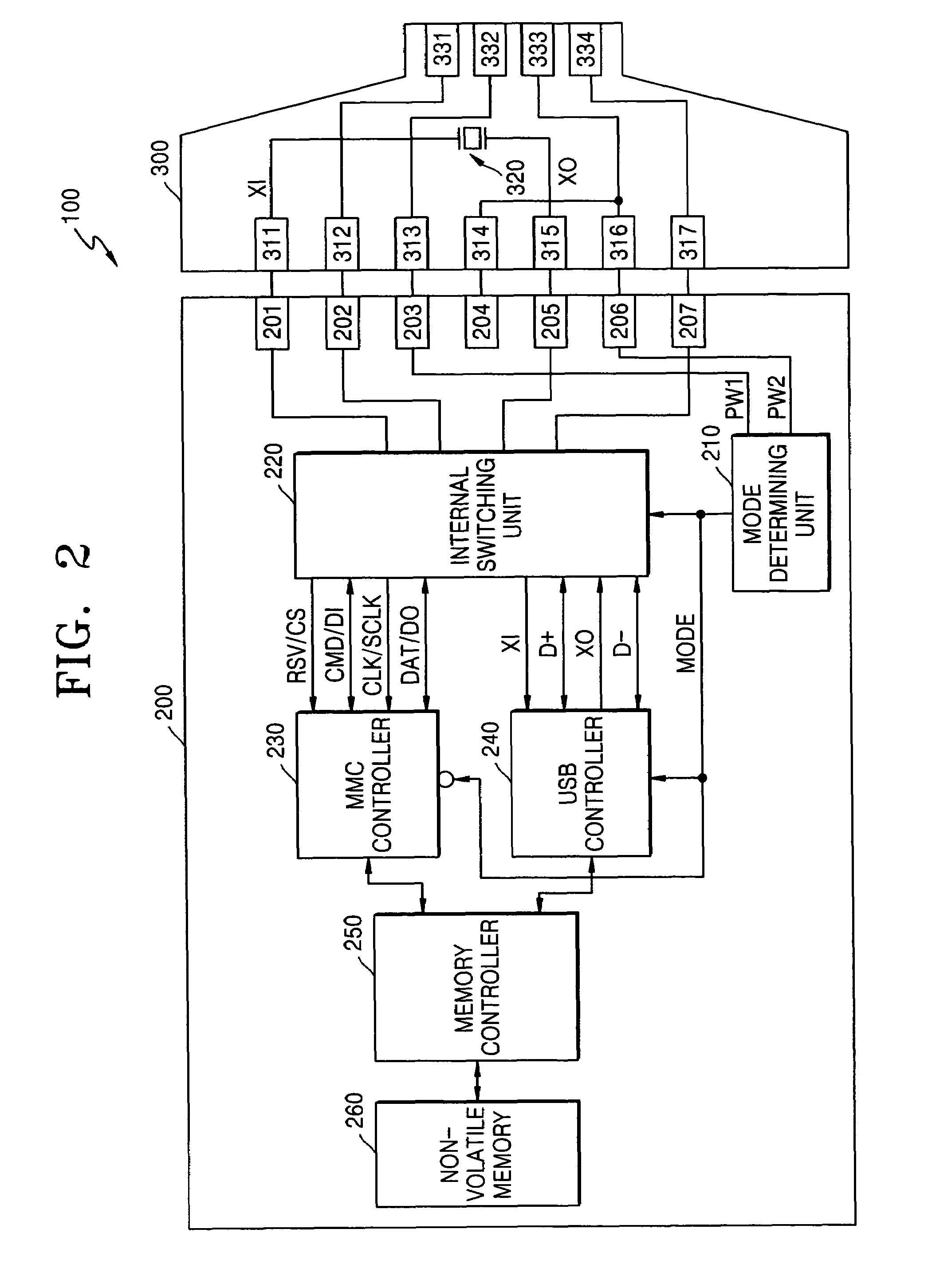

Multimedia/secure digital cards and adapters for interfacing using voltage levels to determine host types and methods of operating

Inactive | US7237049B2 | Program control using wired connections | Co-operative working arrangements | Engineering | Voltage

A Multimedia (MMC) / Secure Digital (SD) form-factor compliant card apparatus can include a mode determining circuit connected to first and second pins of the card apparatus and configured to determine a type of host connected to the first and second pins based on comparing voltages at the first and second pins to an internal voltage level. Related adaptors and methods are also disclosed.

Owner:SAMSUNG ELECTRONICS CO LTD


© 2025 PatSnap. All rights reserved.