484 results about "Multi server" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

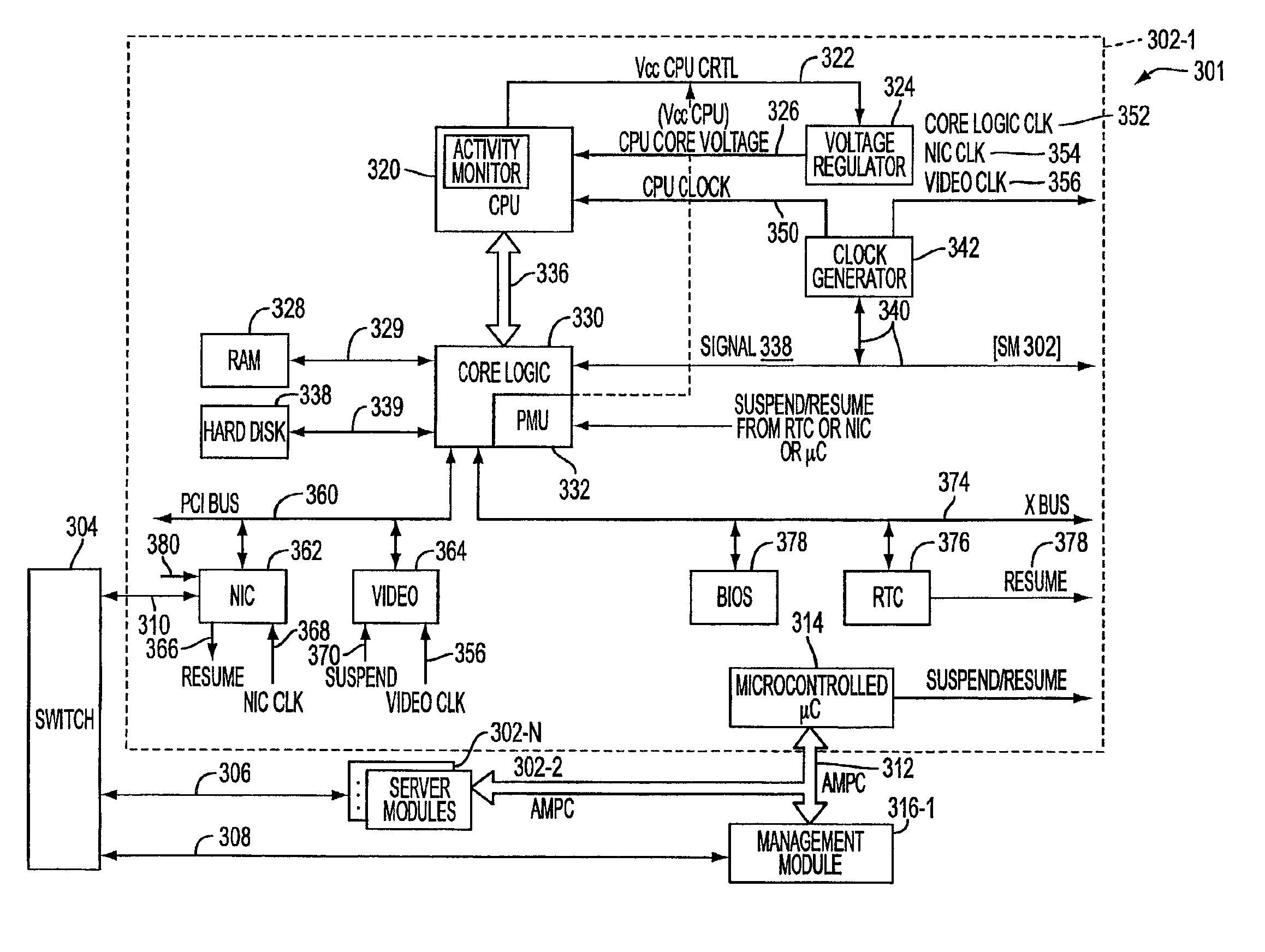

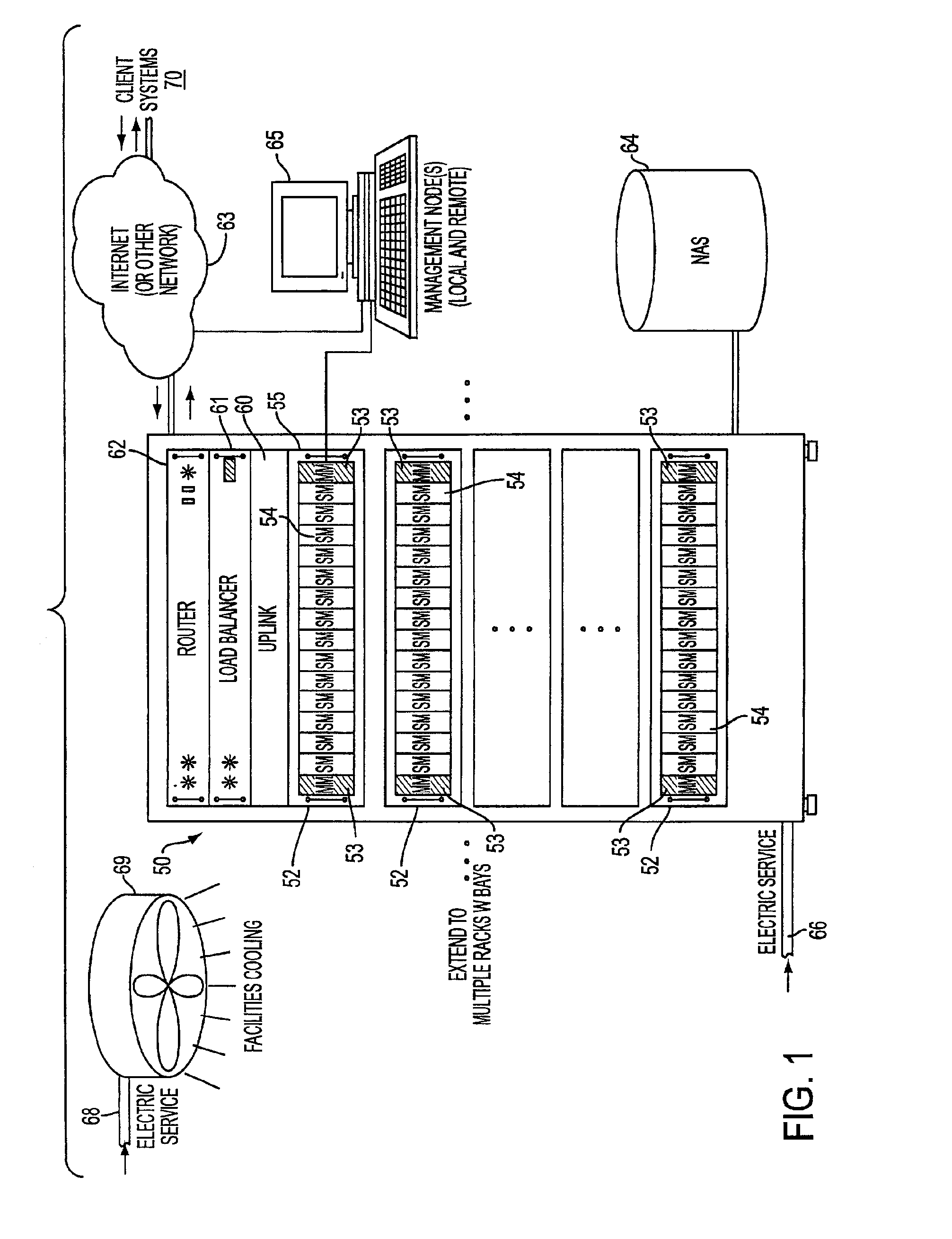

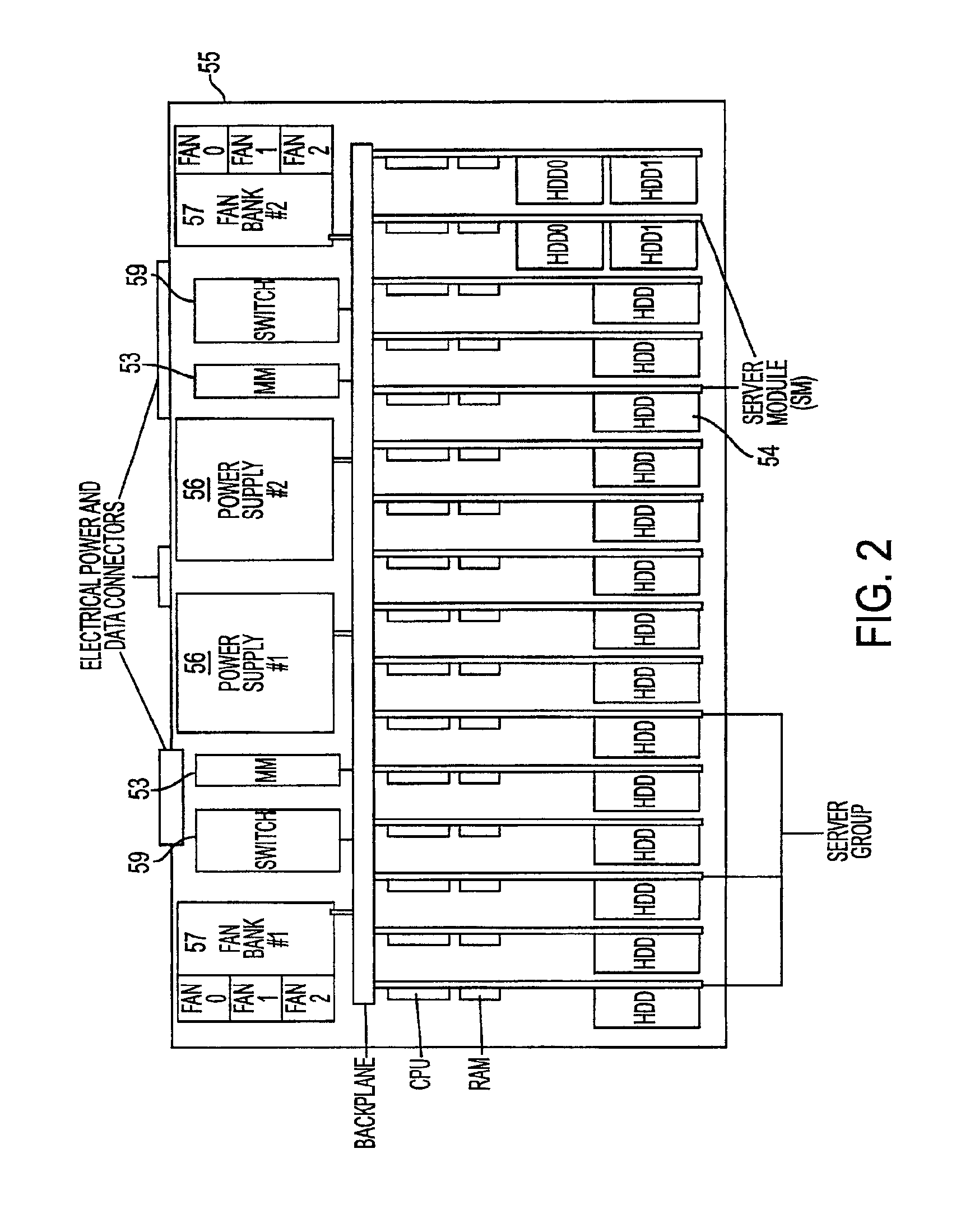

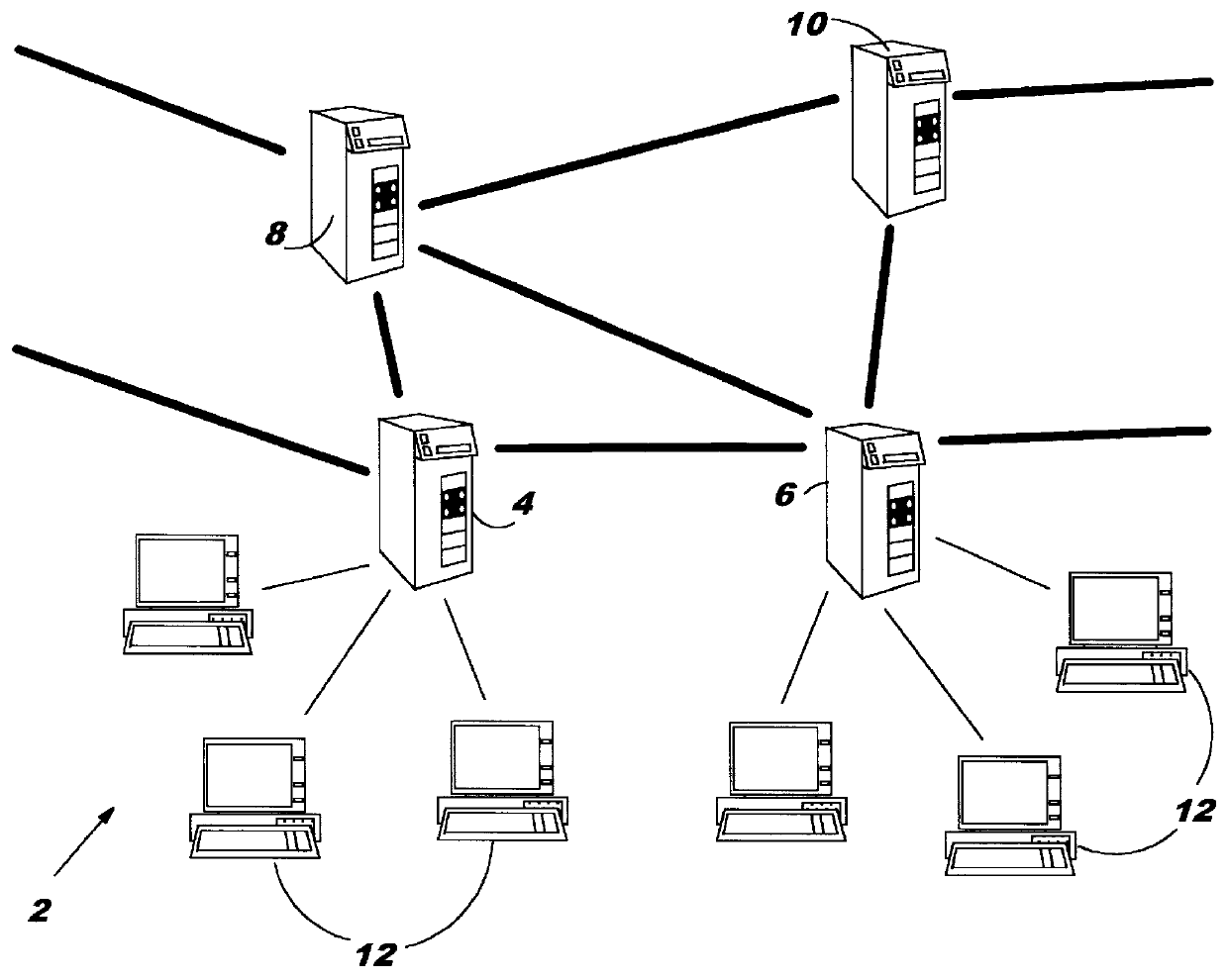

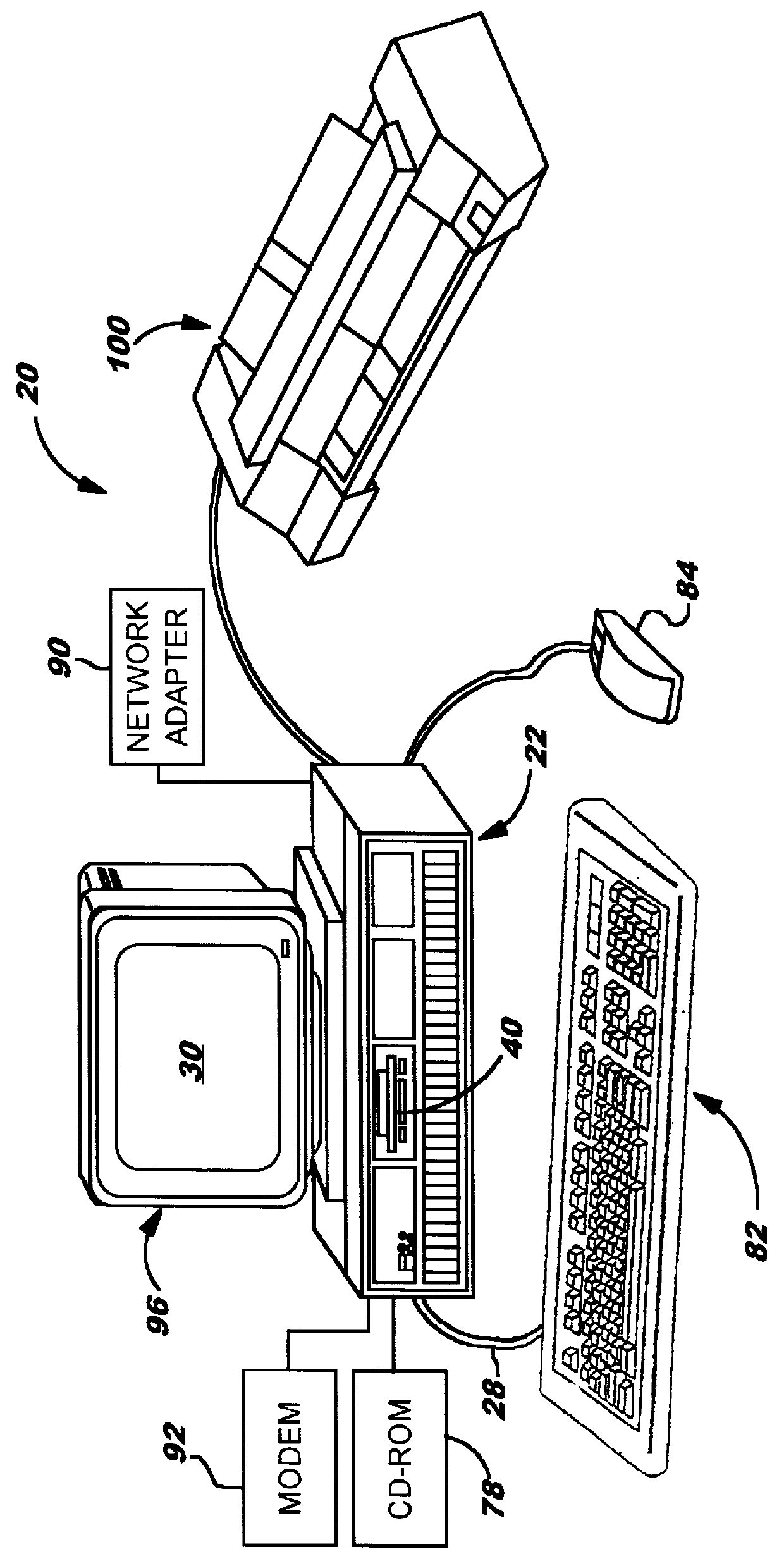

System, method, and architecture for dynamic server power management and dynamic workload management for multi-server environment

Inactive | US6859882B2 | Save energy, Conserving method, Energy efficient ICT, Volume/mass flow measurement, Network architecture, Workload management

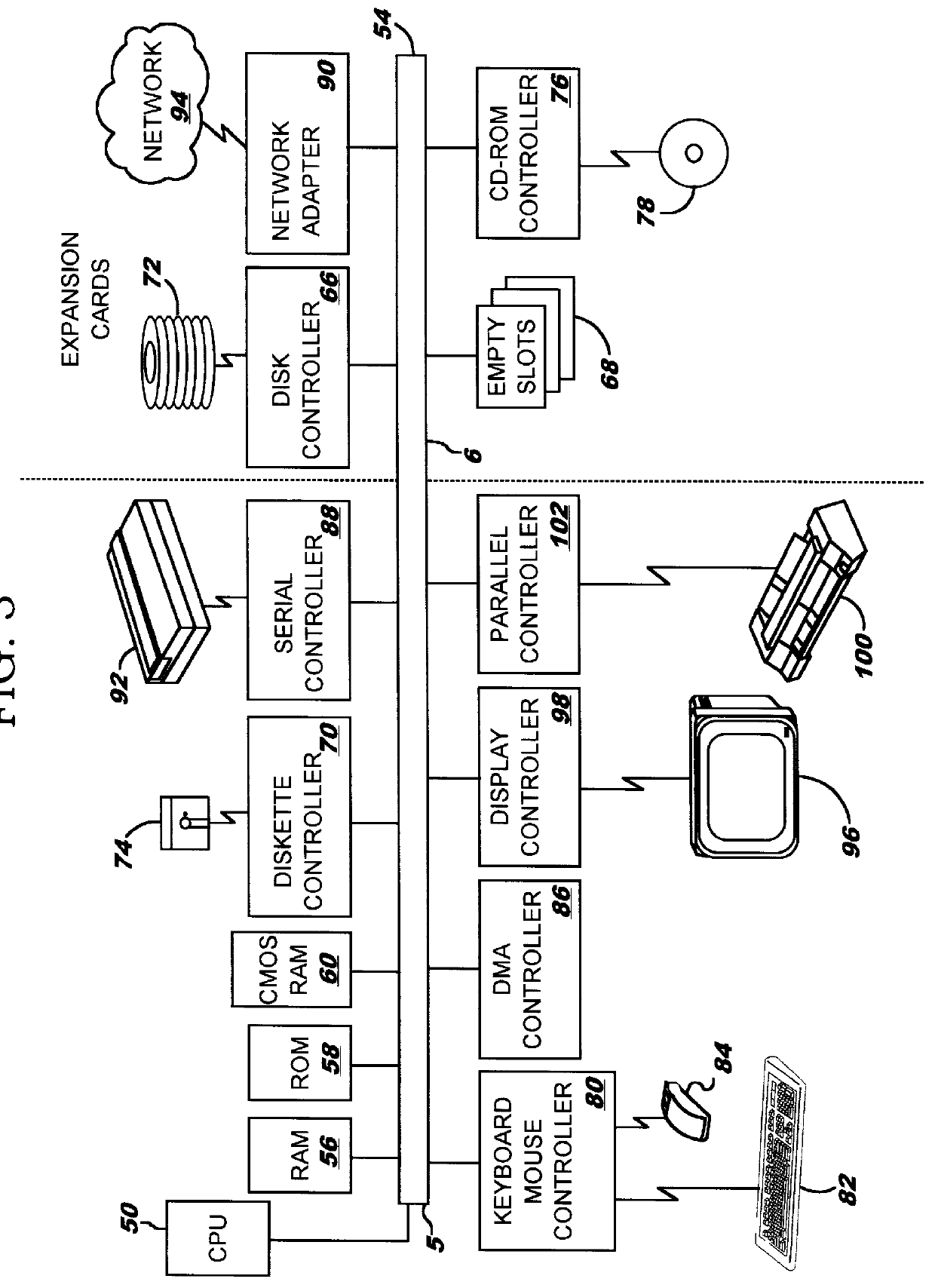

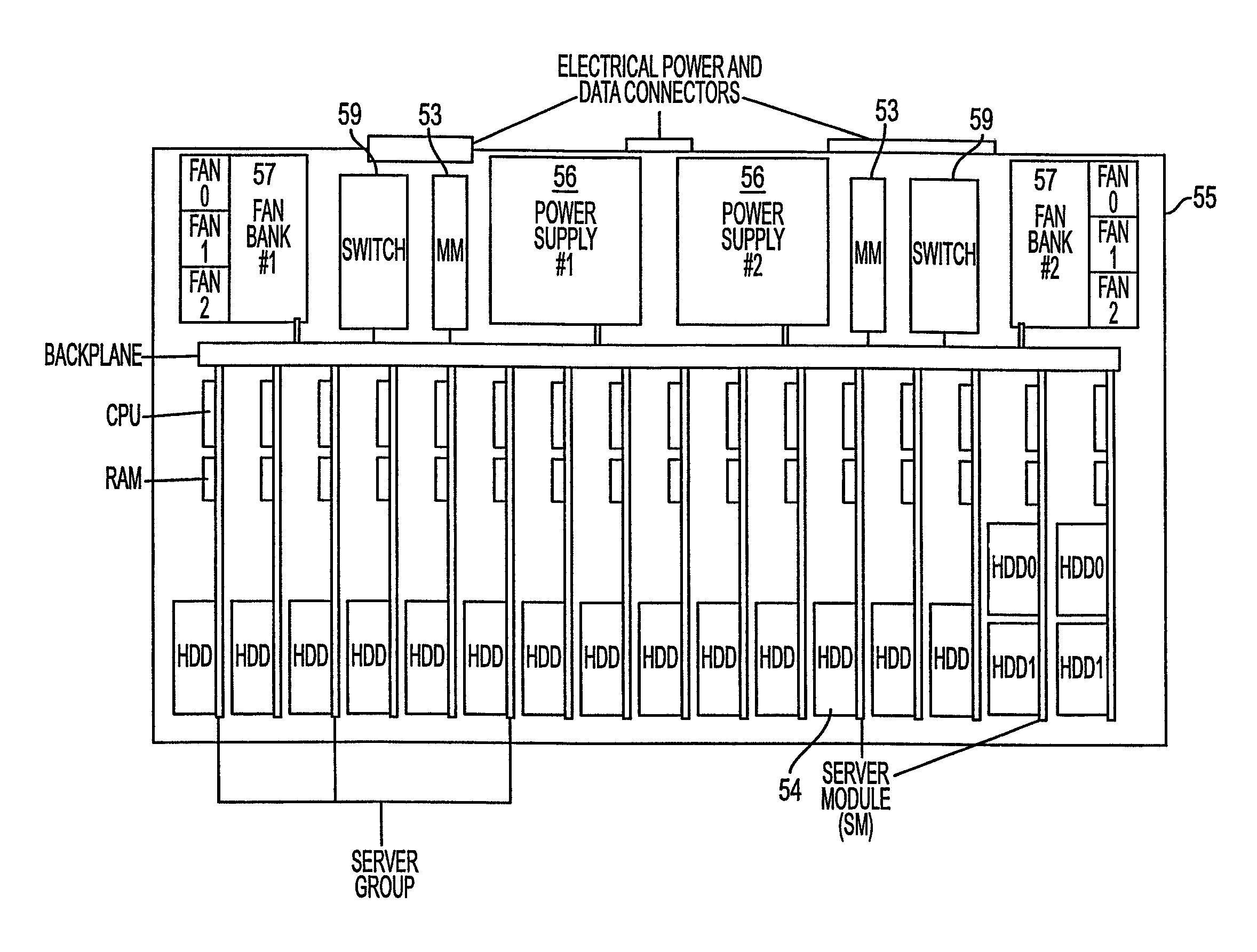

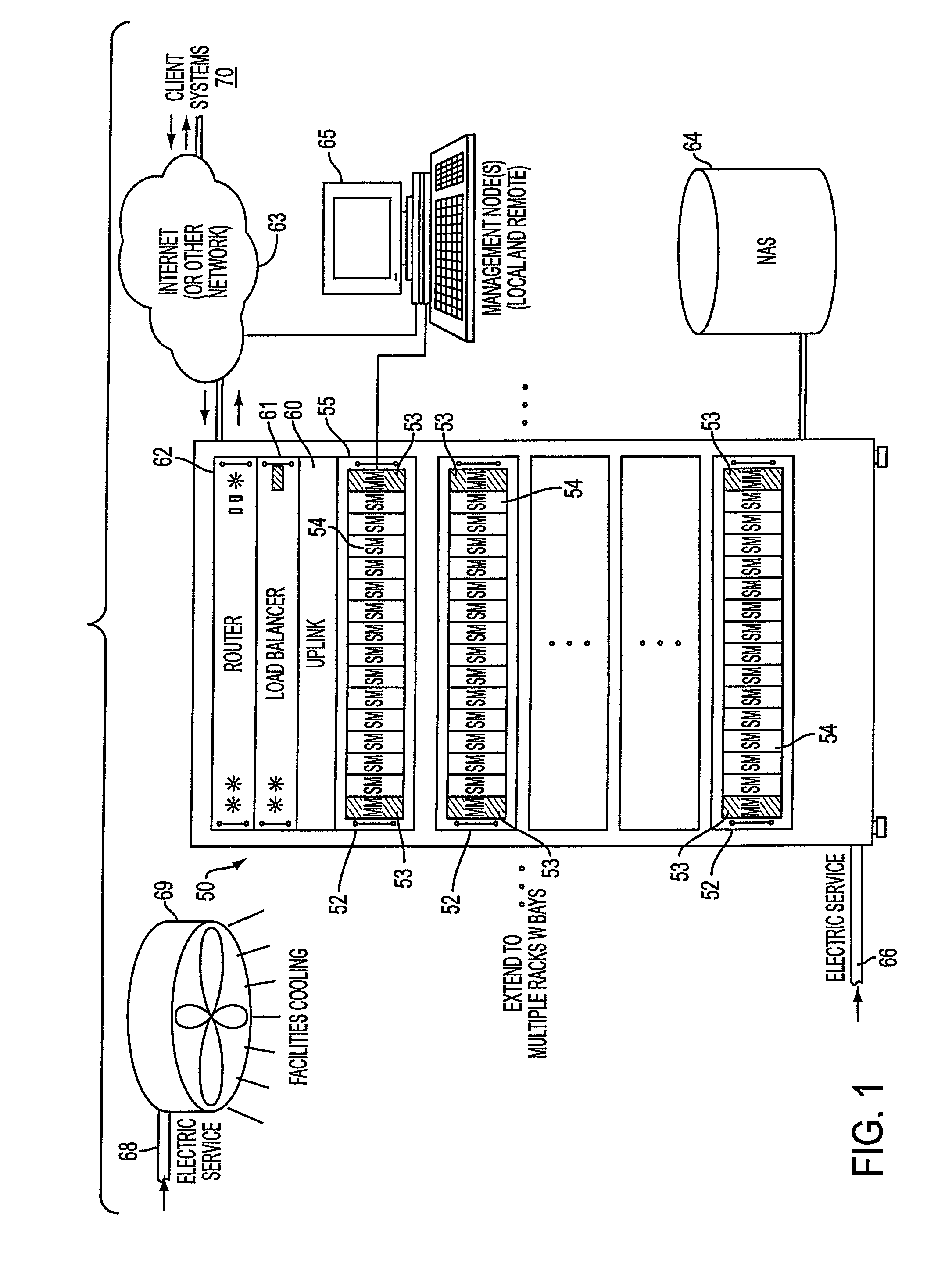

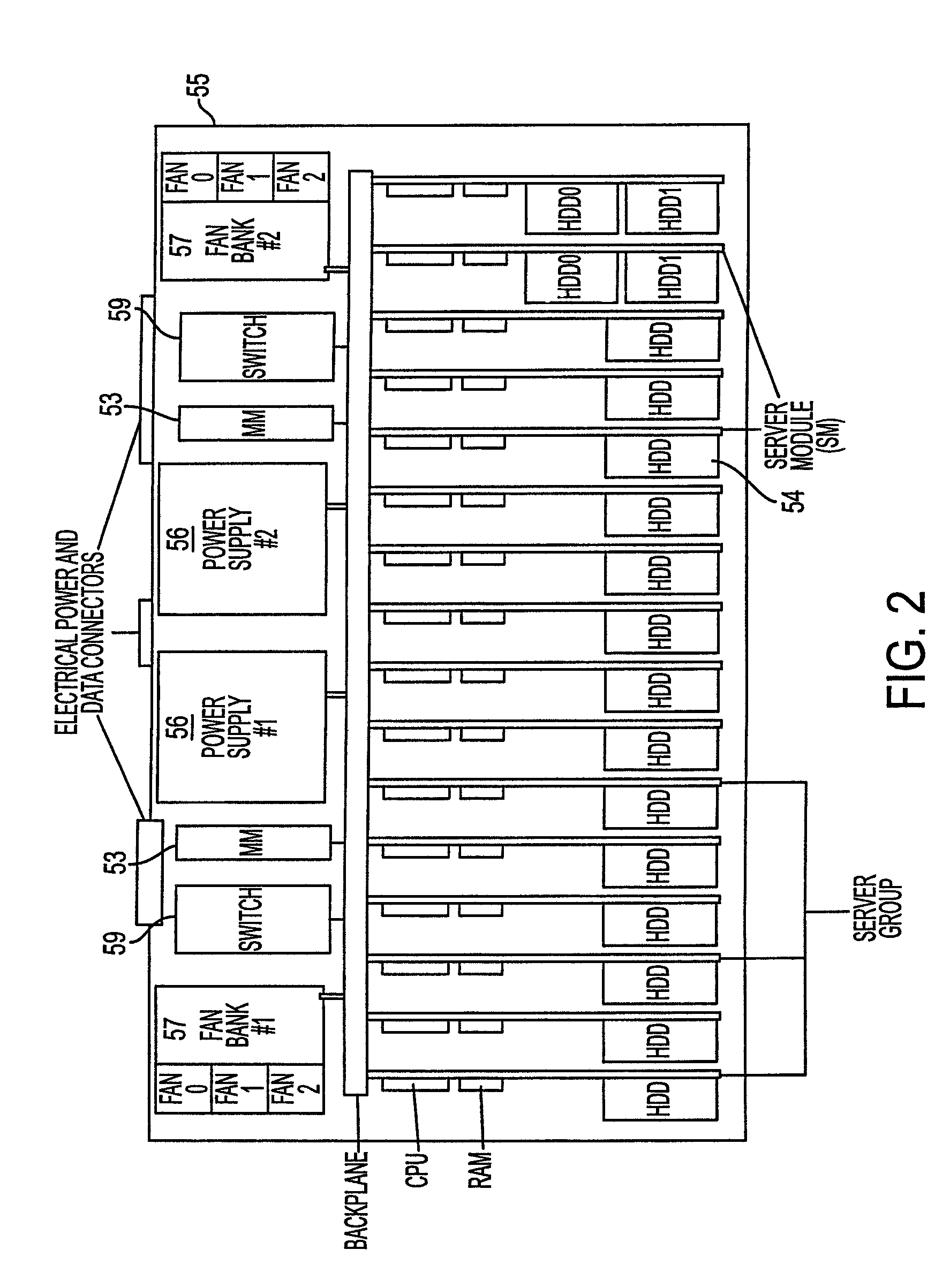

Network architecture, computer system and/or server, circuit, device, apparatus, method, and computer program and control mechanism for managing power consumption and workload in computer systems and data and information servers. It further provides power and energy consumption and workload management and control systems and architectures for high-density and modular multi-server computer systems that maintain performance while conserving energy, and a method for power management and workload management. Aspects of the invention provide dynamic server power management and optional dynamic workload management for multi-server environments. Modular network devices and an integrated server system, including modular servers, management units, switches and switching fabrics, modular power supplies, modular fans, and a special backplane architecture, are provided, as well as dynamically reconfigurable multi-purpose modules and servers. Also provided is a backplane architecture, structure, and method that has no active components and uses separate power supply lines and protection to provide high reliability in a server environment.

Owner:HURON IP

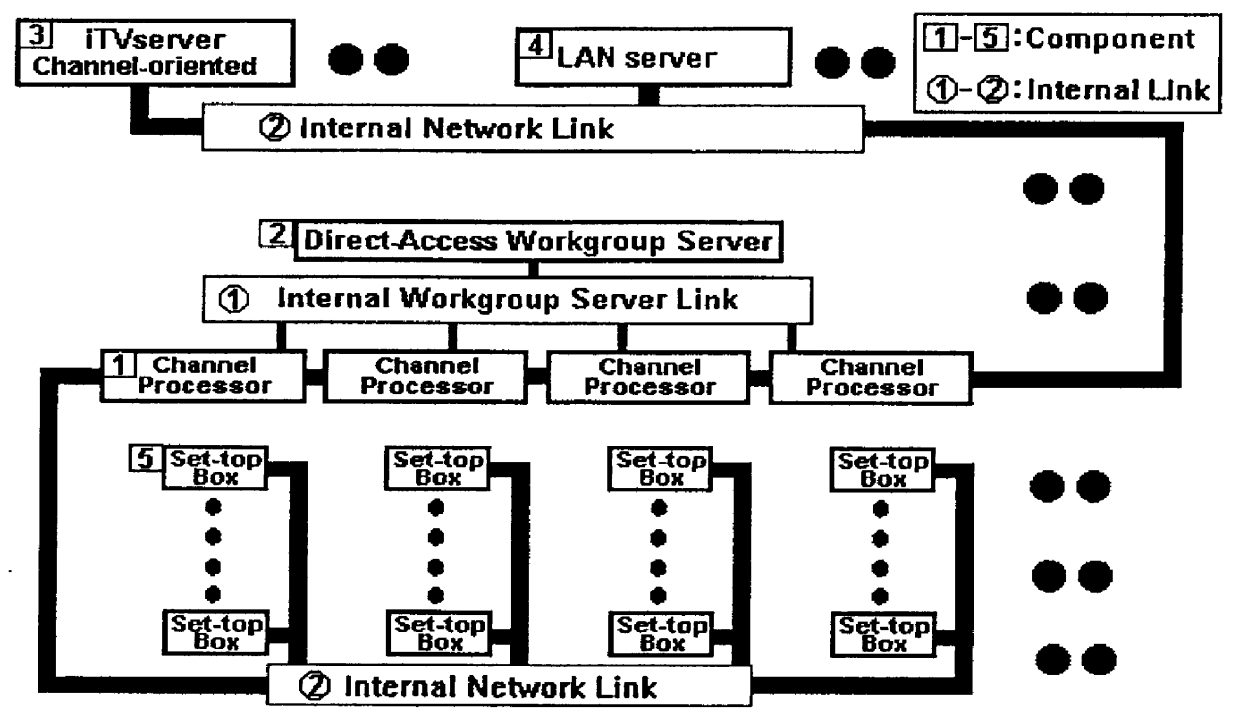

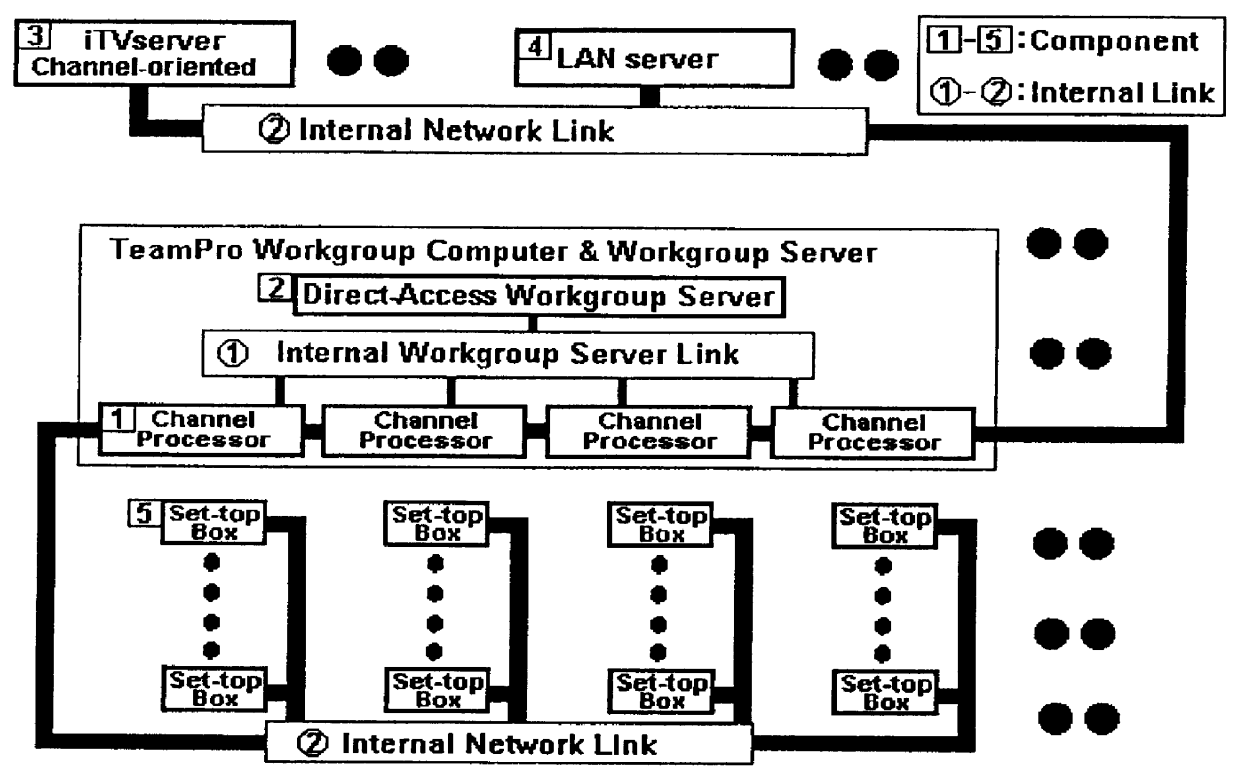

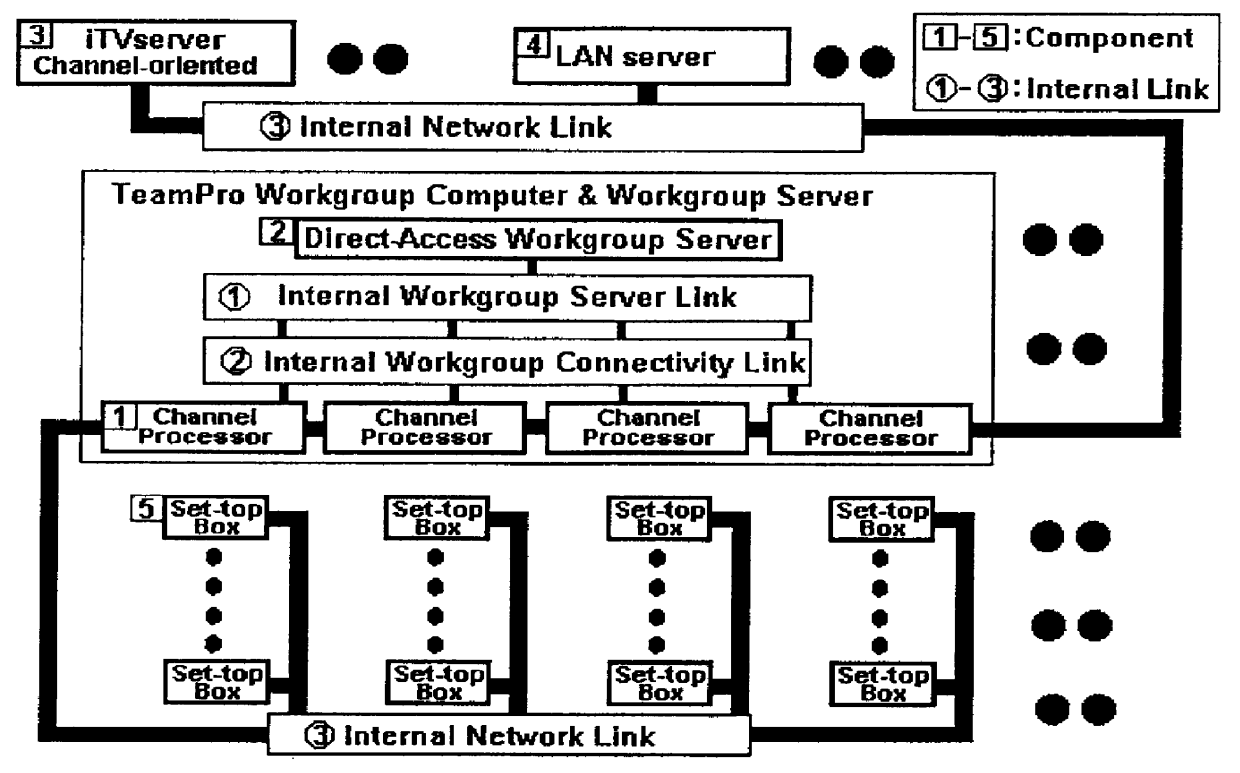

Multi server, interactive, video-on-demand television system utilizing a direct-access-on-demand workgroup

Inactive | US6049823A | Broadcast transmission systems, Multiple digital computer combinations, Workstation, Peer-to-peer

An interactive television system that renders on-demand interactive multimedia services for a community of users. The interactive multimedia is delivered to each user on a TV or on a LAN-node (Local-Area-Network) computer through an "interactive TV channel" created and controlled by a Channel-processor, which can be implemented as either a PC or a high-end workstation. The system employs a direct-access on-demand workgroup server, equipped with the primary on-demand multimedia database stored on a hard disk subsystem that is connected directly to the Channel-processors through an internal workgroup link. Using a no-overhead server technology, the workgrouped Channel-processors can all concurrently retrieve and process data directly from the hard disk subsystem without resorting to a stand-alone server system for data retrieval and downloading. The system also employs peer-to-peer workgroup connectivity, so that all of the workgrouped Channel-processors connected to the workgroup server through the internal workgroup link can communicate with one another.

Owner:HWANG IVAN CHUNG SHUNG

Performance/capacity management framework over many servers

Inactive | US6148335A | Digital computer details, Data switching networks, Application programming interface, Colour coding

A method of monitoring a computer network by collecting resource data from a plurality of network nodes, analyzing the resource data to generate historical performance data, and reporting the historical performance data to another network node. The network nodes can be servers operating on different platforms, and resource data is gathered using separate programs having different application programming interfaces for the respective platforms. The analysis can generate daily, weekly, and monthly historical performance data, based on a variety of resources including CPU utilization, memory availability, I/O usage, and permanent storage capacity. The report may be constructed using a plurality of documents related by hypertext links. The hypertext links can be color-coded in response to at least one performance parameter in the historical performance data surpassing an associated threshold. An action list can also be created in response to such an event.

Owner:IBM CORP
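
The reporting step lends itself to a small illustration. Below is a minimal Python sketch, assuming raw samples arrive as `(day, node, resource, value)` tuples and that each resource has a fixed percentage threshold; the threshold values, function names, and the plain `"red"`/`"green"` labels (standing in for colour-coded hypertext links) are all hypothetical. Only daily history is computed; weekly and monthly roll-ups would group by a coarser key.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical thresholds in percent; the abstract does not give values.
THRESHOLDS = {"cpu": 85.0, "memory": 90.0, "io": 75.0, "disk": 80.0}


def daily_history(samples):
    """Average raw (day, node, resource, value) samples into daily history."""
    buckets = defaultdict(list)
    for day, node, resource, value in samples:
        buckets[(day, node, resource)].append(value)
    return {key: mean(values) for key, values in buckets.items()}


def build_report(history, thresholds=THRESHOLDS):
    """Colour-code each history row and collect an action list for breaches."""
    rows, actions = [], []
    for (day, node, resource), avg in sorted(history.items()):
        breached = avg > thresholds[resource]
        rows.append((day, node, resource, avg, "red" if breached else "green"))
        if breached:
            actions.append(f"{day}: investigate {resource} on {node} ({avg:.1f}%)")
    return rows, actions


samples = [
    ("2024-01-01", "srv-a", "cpu", 80),
    ("2024-01-01", "srv-a", "cpu", 96),
    ("2024-01-01", "srv-b", "disk", 40),
]
rows, actions = build_report(daily_history(samples))
```

Here srv-a's daily CPU average of 88% crosses the assumed 85% threshold, so its row is flagged and an action item is generated, while srv-b's disk row stays green.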

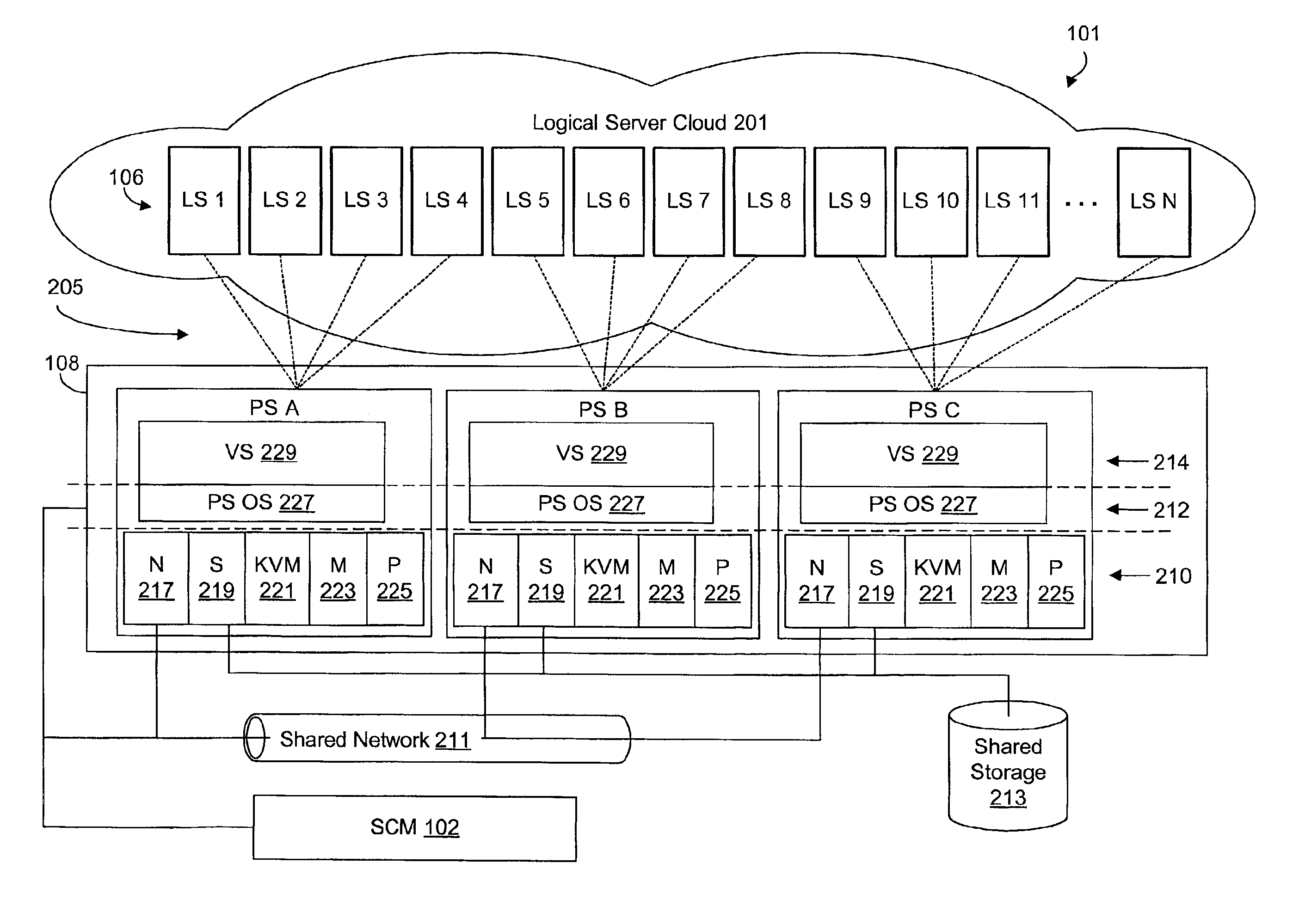

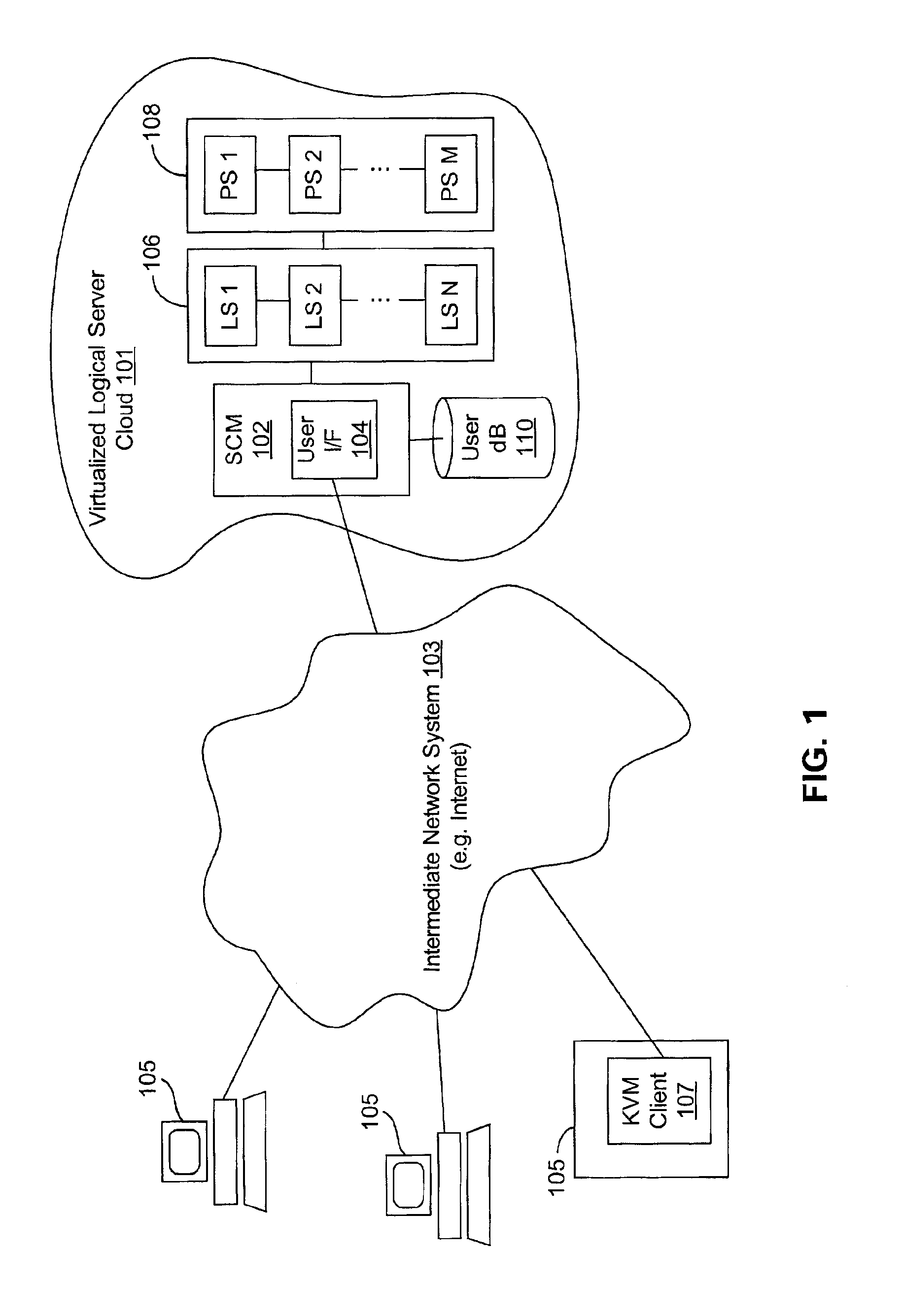

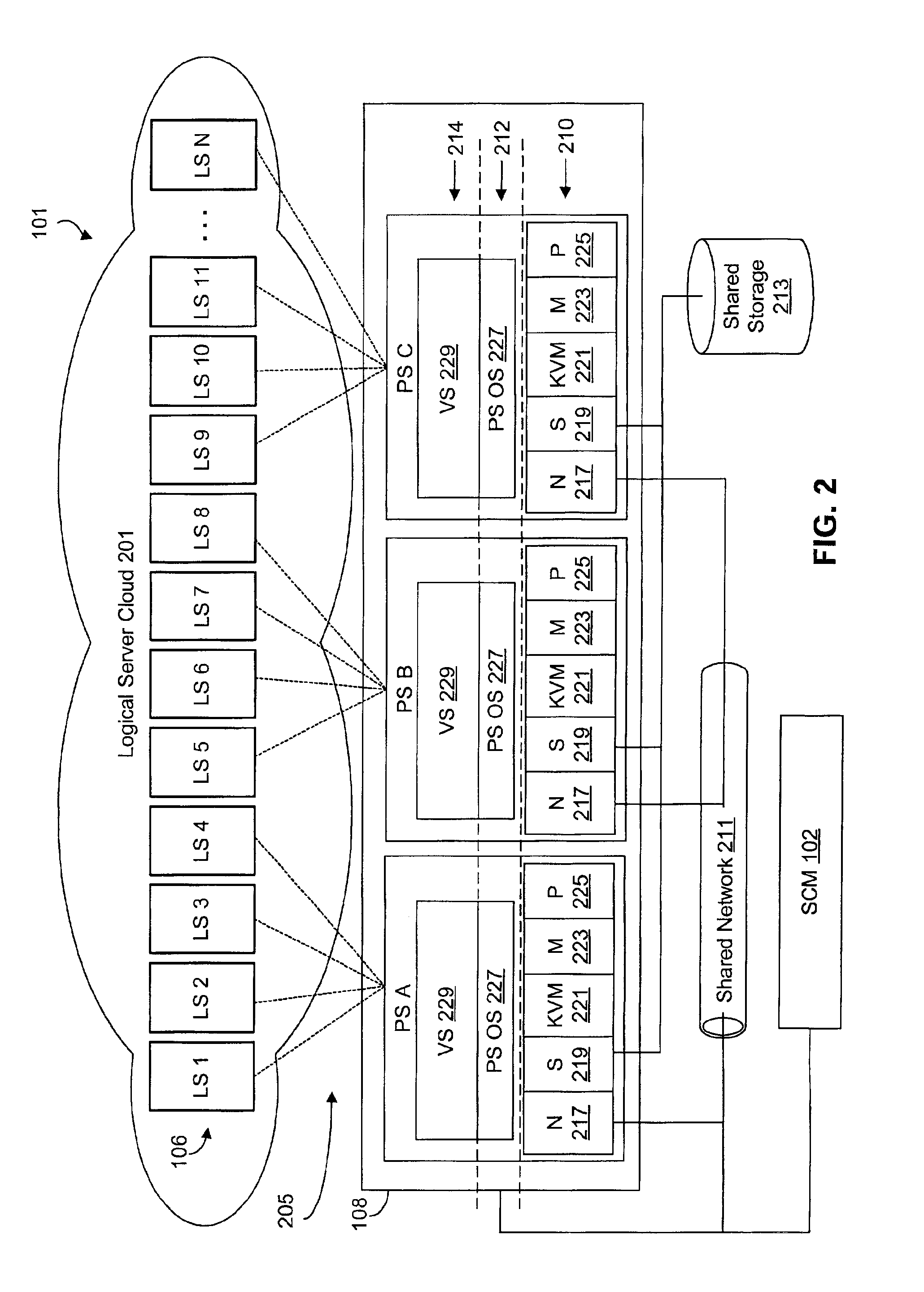

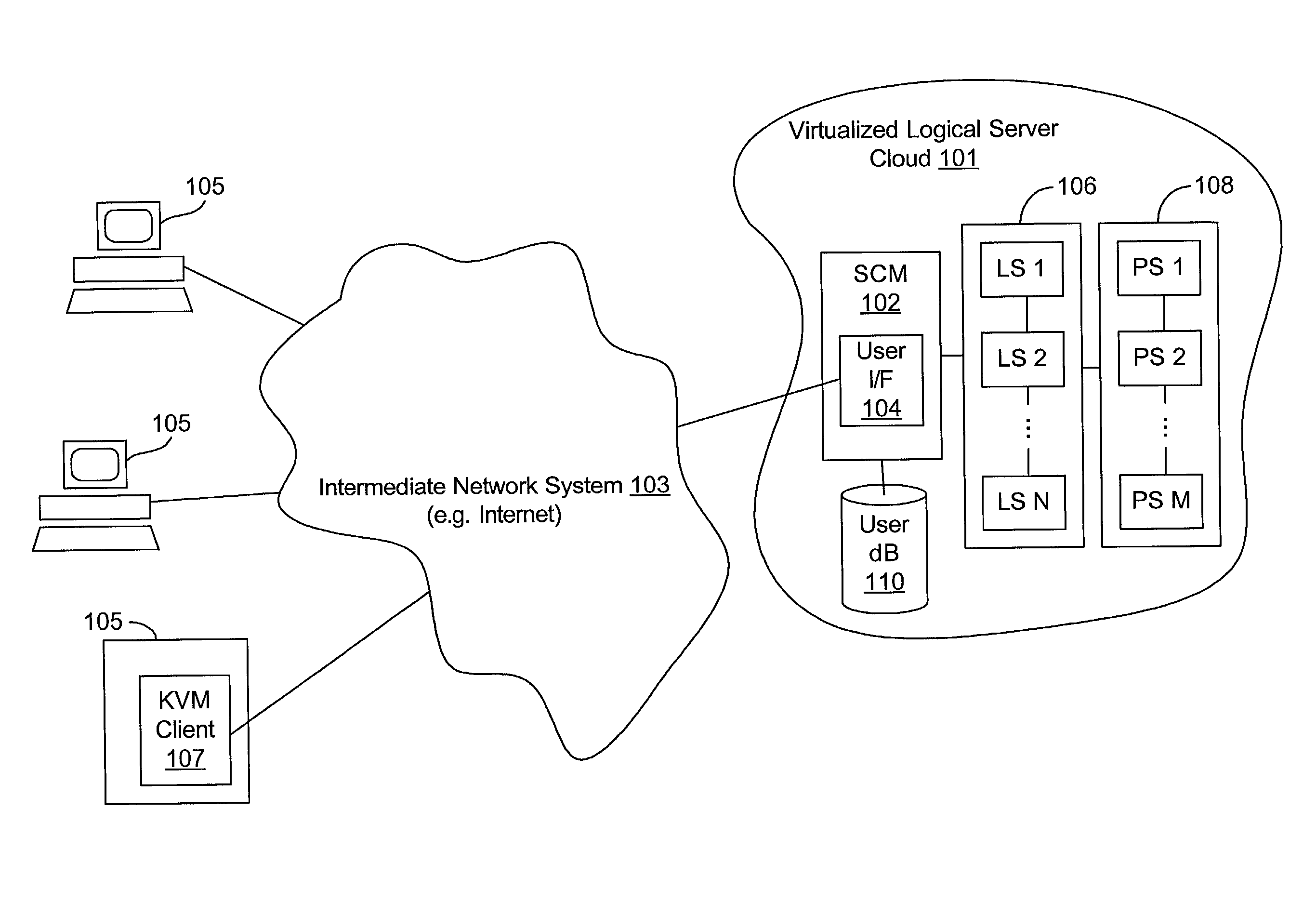

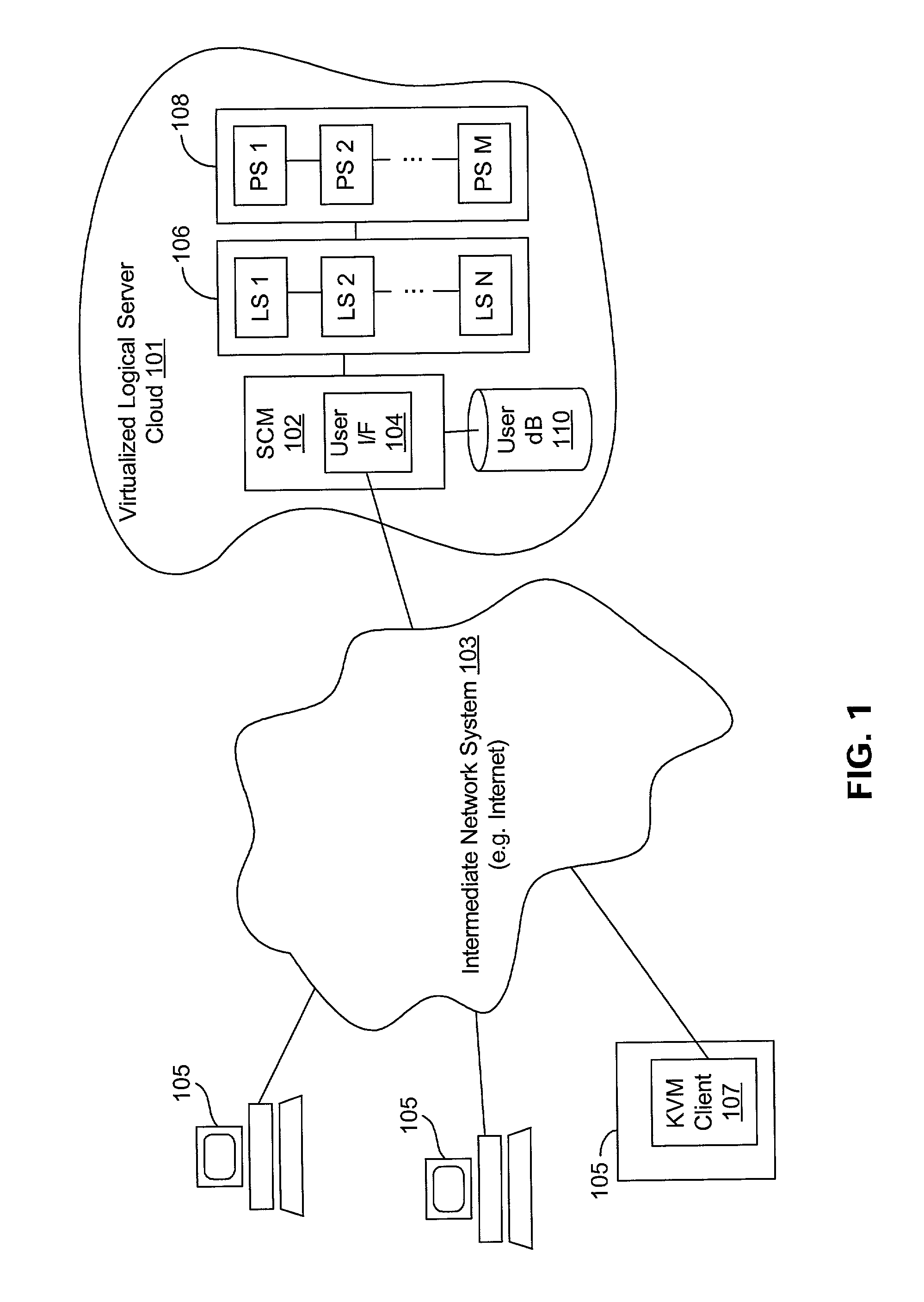

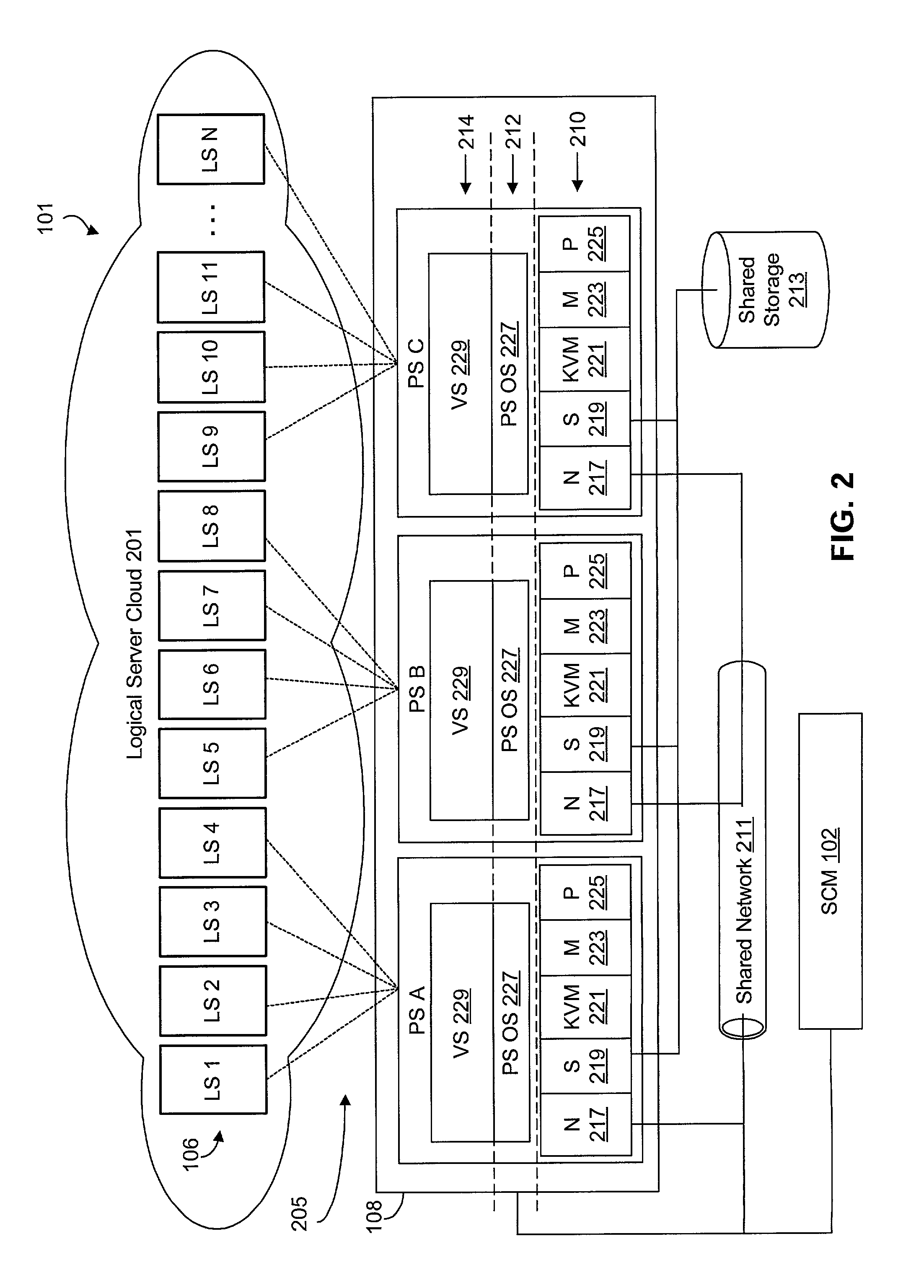

Virtualized logical server cloud providing non-deterministic allocation of logical attributes of logical servers to physical resources

Inactive | US6880002B2 | Resource allocation, Multiple digital computer combinations, Virtualization, Data store

A virtualized logical server cloud that enables logical servers to exist independent of physical servers that instantiate the logical servers. Servers are treated as logical resources in order to create a logical server cloud. The logical attributes of a logical server are non-deterministically allocated to physical resources creating a cloud of logical servers over the physical servers. Logical separation is facilitated by the addition of a server cloud manager, which is an automated multi-server management layer. Each logical server has persistent attributes that establish its identity. Each physical server includes or is coupled to physical resources including a network resource, a data storage resource and a processor resource. At least one physical server executes virtualization software that virtualizes physical resources for logical servers. The server cloud manager maintains status and instance information for the logical servers including persistent and non-persistent attributes that link each logical server with a physical server.

Owner:DELL PROD LP
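
As a rough illustration of the server cloud manager, here is a minimal Python sketch, assuming each logical server's persistent attributes are just a name and a CPU requirement and that "non-deterministic allocation" means picking any physical server with spare capacity at random. The class and method names are hypothetical, and real virtualization software is not modelled.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class LogicalServer:
    name: str                   # persistent attribute: identity
    cpus: int                   # persistent attribute: resource requirement
    host: Optional[str] = None  # non-persistent attribute: current physical host


@dataclass
class PhysicalServer:
    name: str
    cpus: int
    used: int = 0


class ServerCloudManager:
    """Automated management layer that binds logical servers to physical ones."""

    def __init__(self, physical: List[PhysicalServer], seed: Optional[int] = None):
        self.physical = {p.name: p for p in physical}
        self.logical: Dict[str, LogicalServer] = {}
        self.rng = random.Random(seed)

    def instantiate(self, ls: LogicalServer) -> str:
        """Place a logical server on any physical server with spare capacity,
        chosen at random rather than by a fixed mapping."""
        fits = [p for p in self.physical.values() if p.cpus - p.used >= ls.cpus]
        if not fits:
            raise RuntimeError(f"no capacity for {ls.name}")
        host = self.rng.choice(fits)
        host.used += ls.cpus
        ls.host = host.name
        self.logical[ls.name] = ls
        return host.name

    def evacuate(self, physical_name: str) -> Dict[str, str]:
        """Retire a physical server; its logical servers keep their identity
        and are re-instantiated elsewhere."""
        retired = self.physical[physical_name]
        retired.cpus = retired.used = 0
        moved = [ls for ls in self.logical.values() if ls.host == physical_name]
        for ls in moved:
            self.instantiate(ls)
        return {ls.name: ls.host for ls in moved}
```

The logical server object (its identity) survives the move; only the non-persistent `host` link changes.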

Virtualized logical server cloud

Inactive | US20030051021A1 | Resource allocation, Multiple digital computer combinations, Virtualization, Data store

A virtualized logical server cloud that enables logical servers to exist independent of physical servers that instantiate the logical servers. Servers are treated as logical resources in order to create a logical server cloud. The logical attributes of a logical server are non-deterministically allocated to physical resources creating a cloud of logical servers over the physical servers. Logical separation is facilitated by the addition of a server cloud manager, which is an automated multi-server management layer. Each logical server has persistent attributes that establish its identity. Each physical server includes or is coupled to physical resources including a network resource, a data storage resource and a processor resource. At least one physical server executes virtualization software that virtualizes physical resources for logical servers. The server cloud manager maintains status and instance information for the logical servers including persistent and non-persistent attributes that link each logical server with a physical server.

Owner:DELL PROD LP

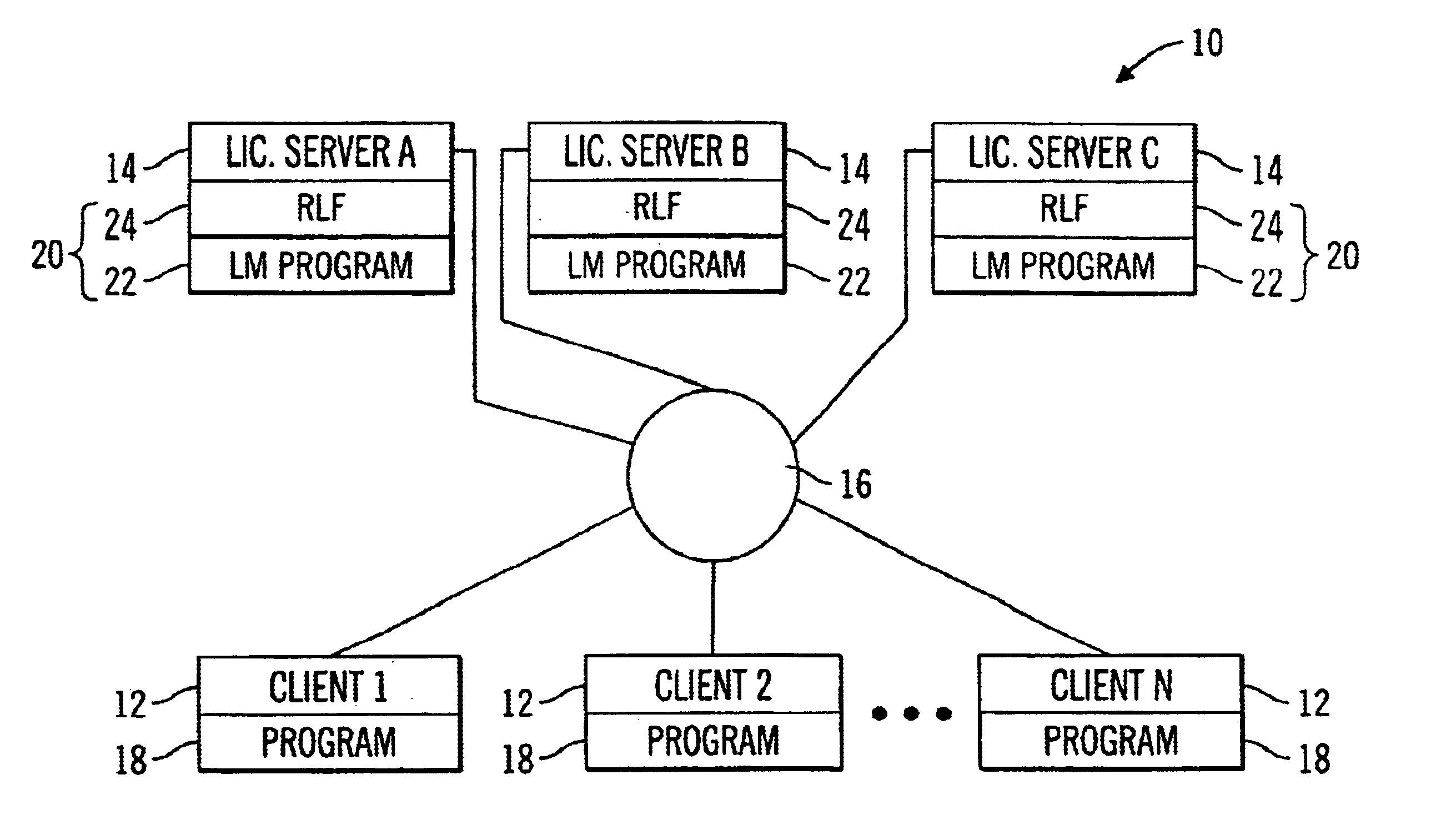

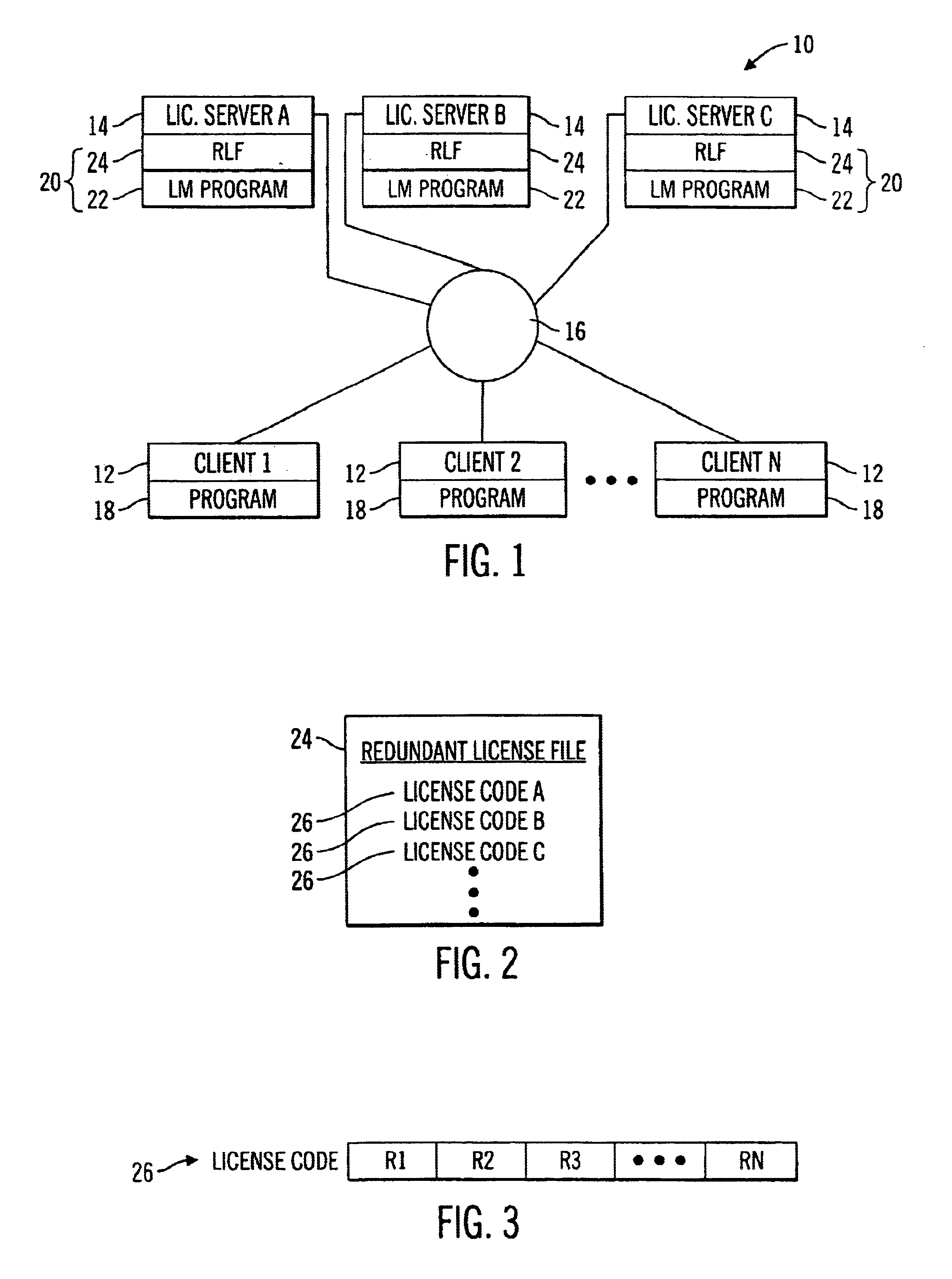

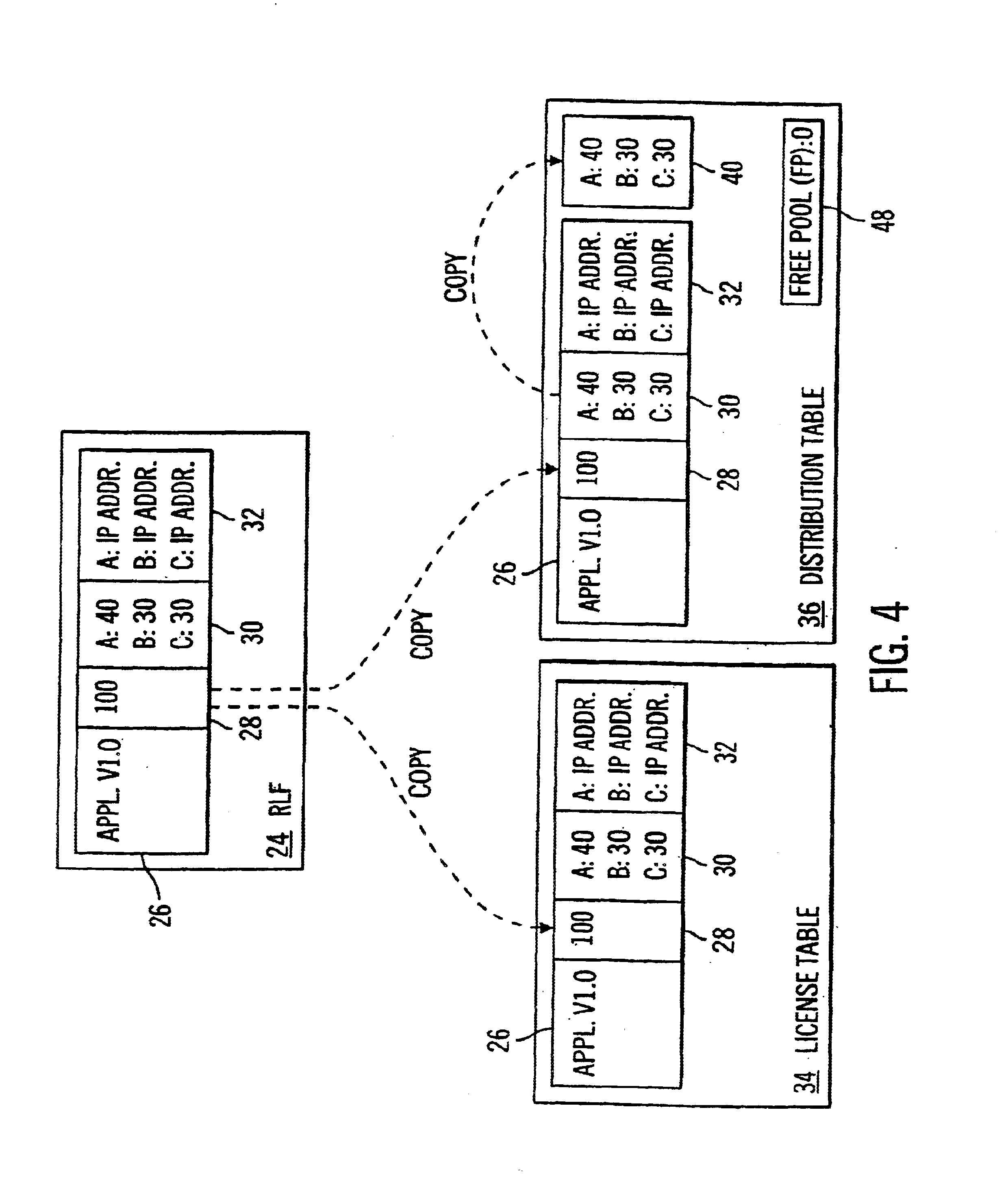

System and method for selecting a server in a multiple server license management system

Inactive | US6842896B1 | Improve distribution, Computer security arrangements, Office automation, Client-side, Client machine

A system for managing licenses for protected software on a communication network is disclosed. The system comprises at least one client computer and a pool of license servers coupled to the communication network. The client computers request authorization to use the protected software, and the license servers manage a distribution of allocations to use the protected software. The pool of license servers includes a selected current leader server for managing the distribution of allocations for all license servers in the pool. The first license server to be started is selected as the current leader server. However, if no license server is started first, the license server with the highest priority according to a leader priority list is selected as the current leader server. In addition, client computers seeking authorization to use the protected software may receive information from the server pool and select a particular license server from which to request authorization to use the protected software based on the received information.

Owner:SAFENET
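
The leader rule can be sketched in a few lines of Python. This assumes "no license server is started first" means several servers share the earliest start time, and that servers missing from the priority list rank last; both readings, and the `select_leader` name, are assumptions rather than details from the abstract.

```python
from typing import Dict, List, Optional


def select_leader(start_times: Dict[str, float], priority: List[str]) -> Optional[str]:
    """Return the current leader of a license-server pool.

    start_times maps each running server to its start time. A single
    earliest-started server wins; if several tie for earliest (no server
    started first), the tied server ranked highest in `priority` wins.
    """
    if not start_times:
        return None
    earliest = min(start_times.values())
    first = [s for s, t in start_times.items() if t == earliest]
    if len(first) == 1:
        return first[0]
    rank = {name: i for i, name in enumerate(priority)}
    # Servers absent from the priority list sort after every listed server.
    return min(first, key=lambda s: rank.get(s, len(priority)))
```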

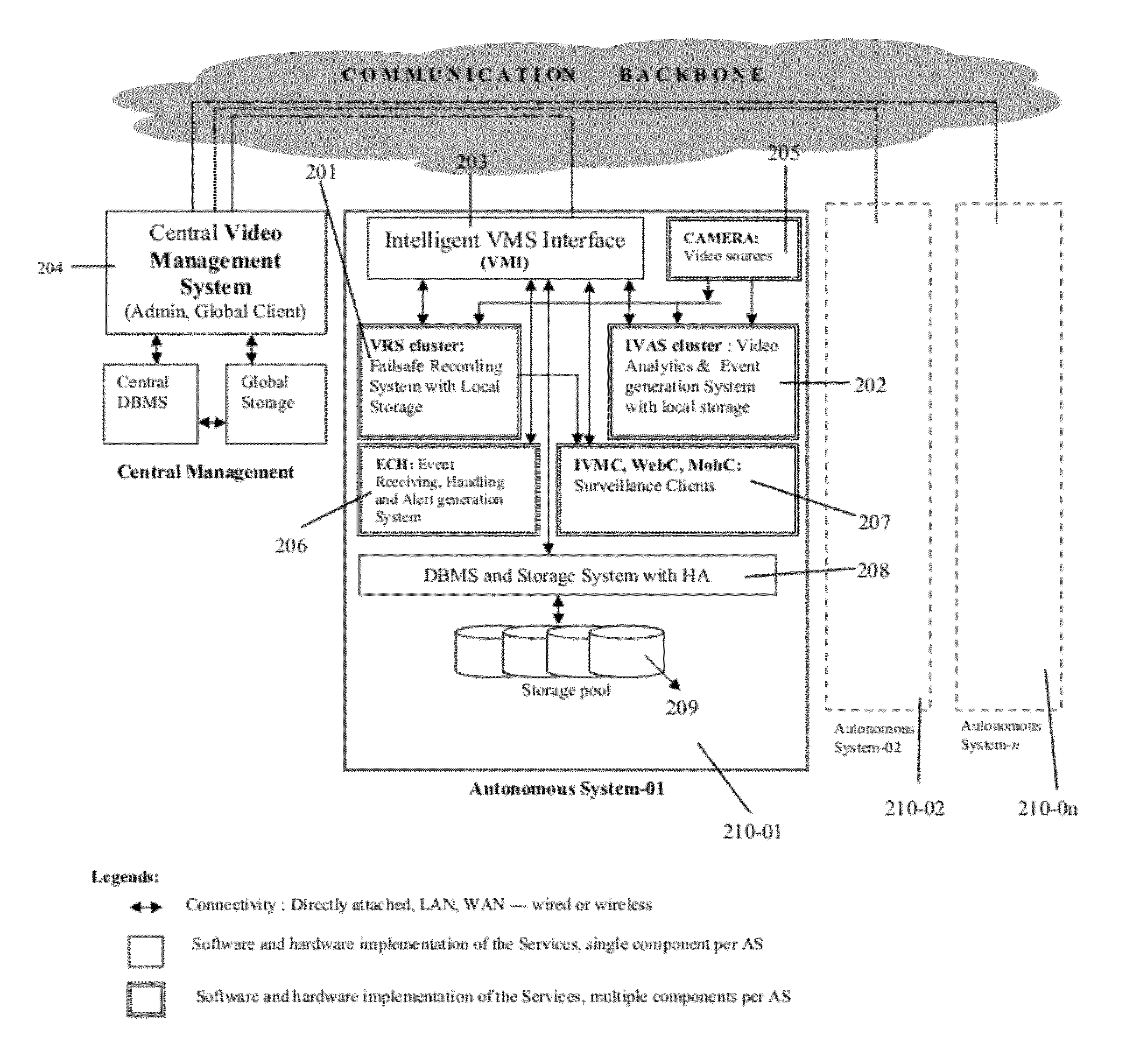

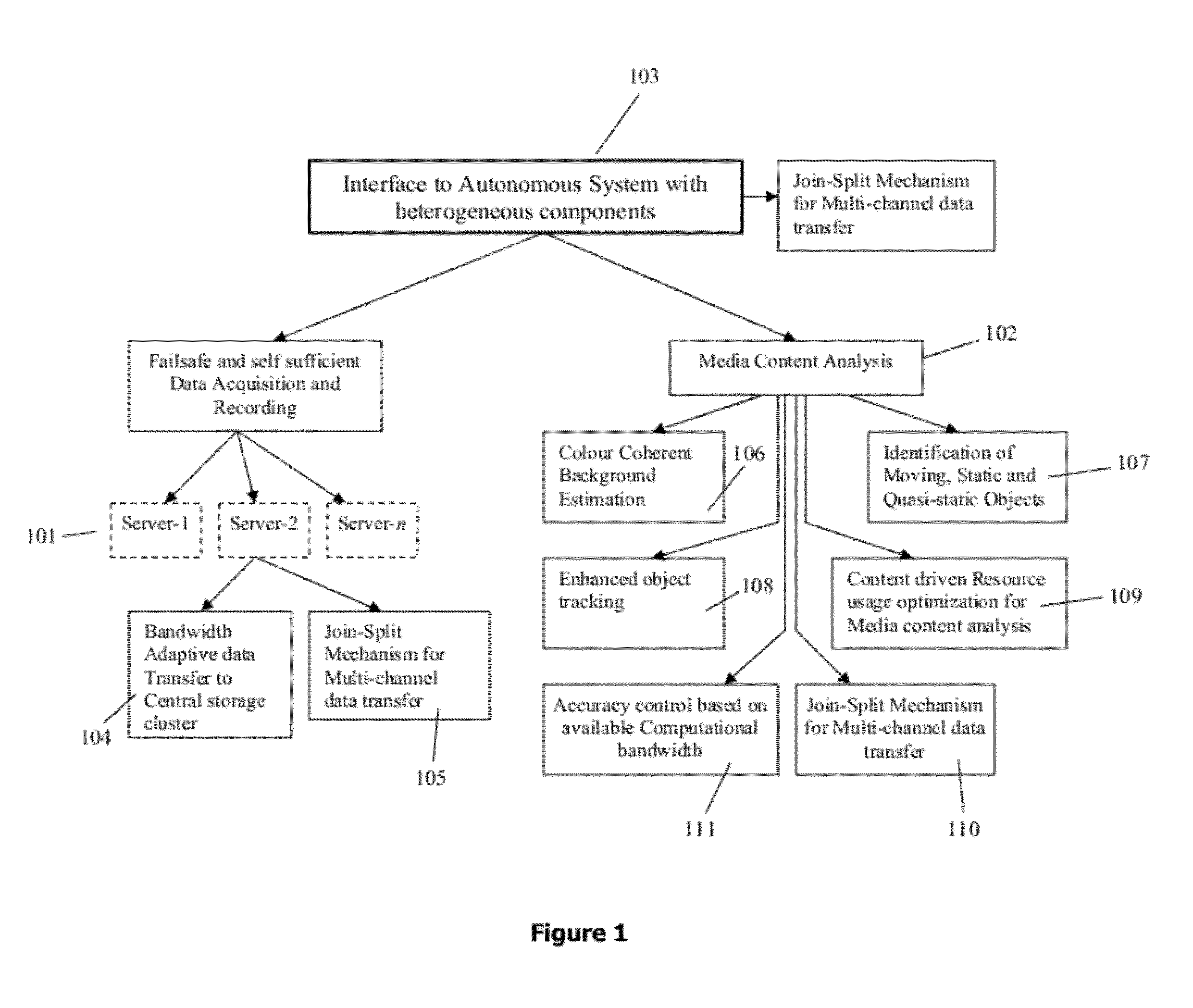

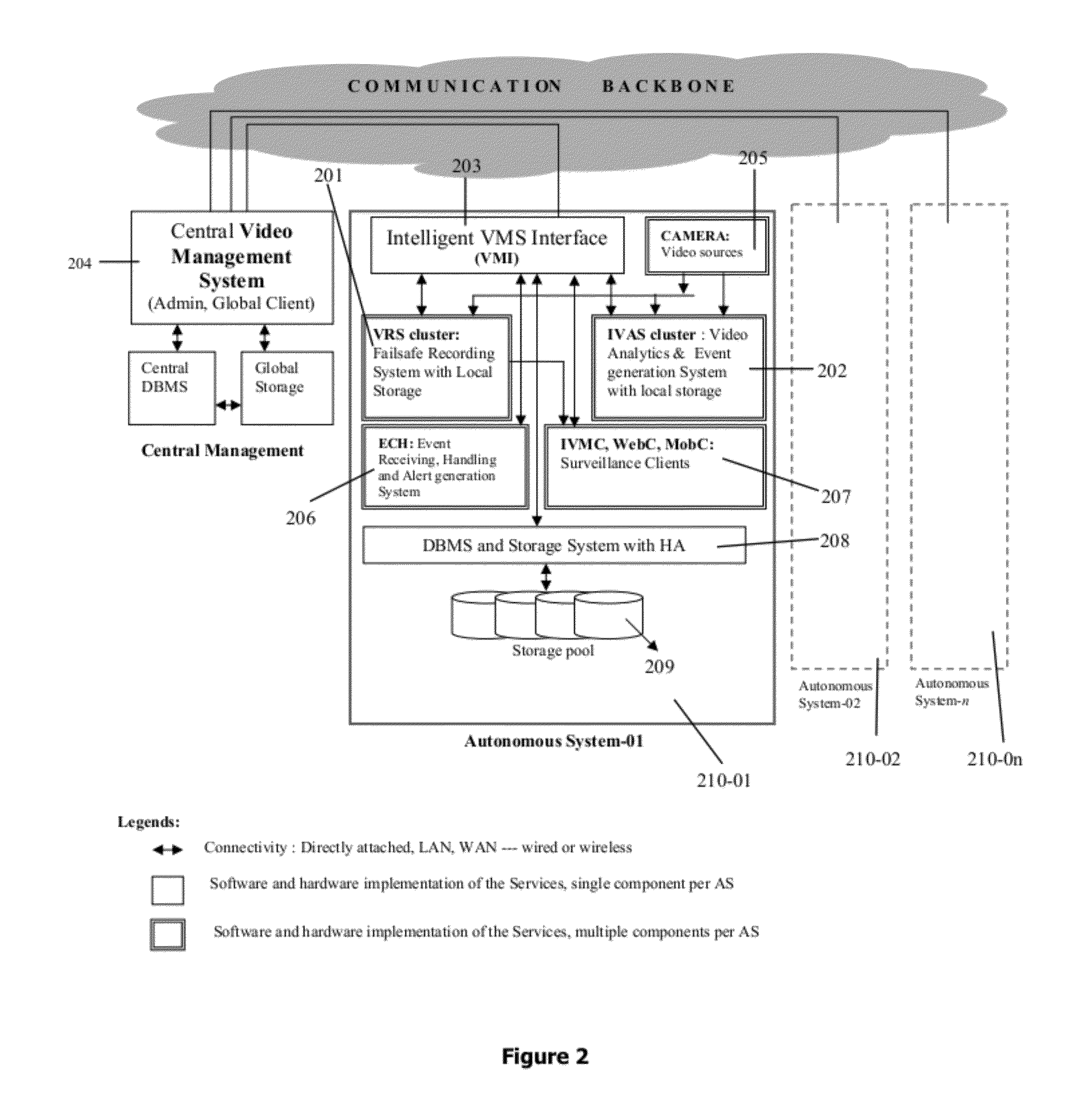

Integrated intelligent server based system and method/systems adapted to facilitate fail-safe integration and/or optimized utilization of various sensory inputs

Active | US20120179742A1 | Accurate predictive colour background estimation, Precise positioning, Television system details, Road vehicles traffic control, Face detection, Operational system

An integrated intelligent system, adapted for any operating system and/or multi-OS computing environment, that seamlessly provides a sensory input/data acquisition-cum-recording server group and/or an analytics server group, enabling fail-safe integration and/or optimized utilization of various sensory inputs for various utility applications. Further disclosed advancements include: an intelligent method/system for cost-effective and efficient band-adaptive transfer/recording of sensory data from single or multiple data sources to network-accessible storage devices; a fail-safe, self-sufficient, server-group-based method for sensory input recording and live streaming in a multi-server environment; an intelligent, unified method of colour-coherent object analysis, face detection in video images, and the like; resource allocation for analytical processing in a multi-channel environment; a multi-channel join-split mechanism adapted for low and/or variable bandwidth network links; enhanced multi-colour and/or mono-colour object tracking; and an intelligent automated traffic enforcement system.

Owner:VIDEONETICS TECH PRIVATE

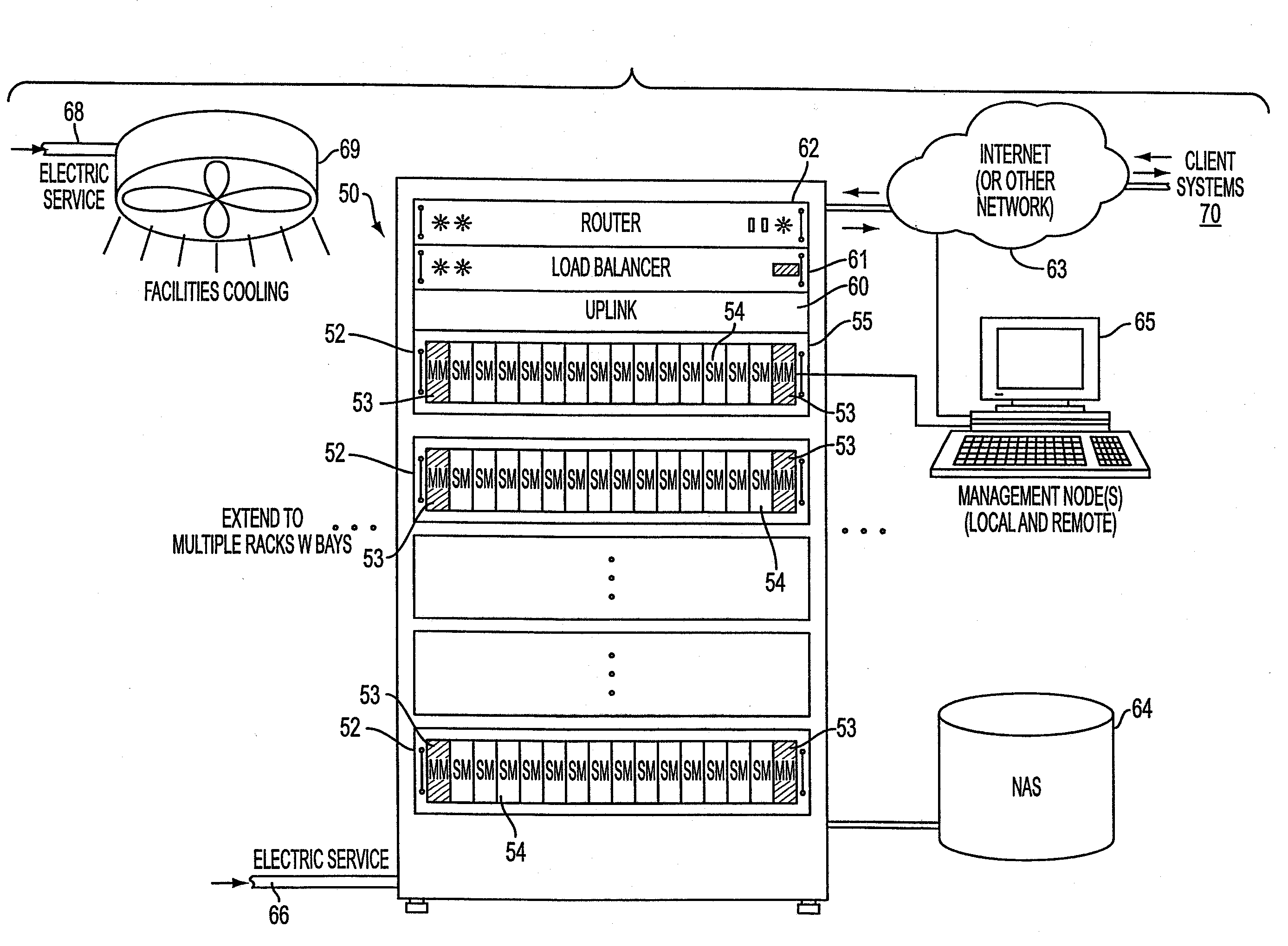

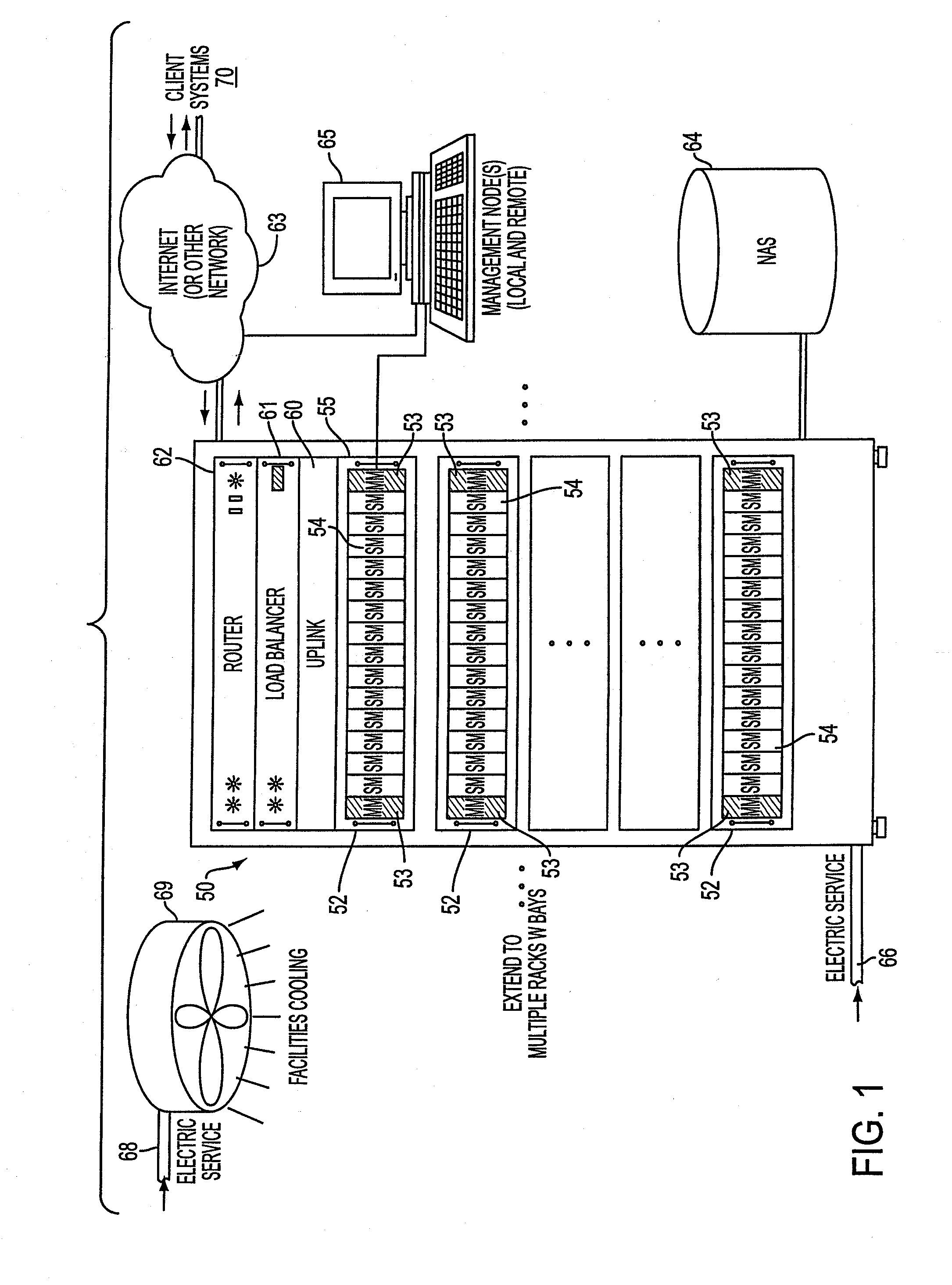

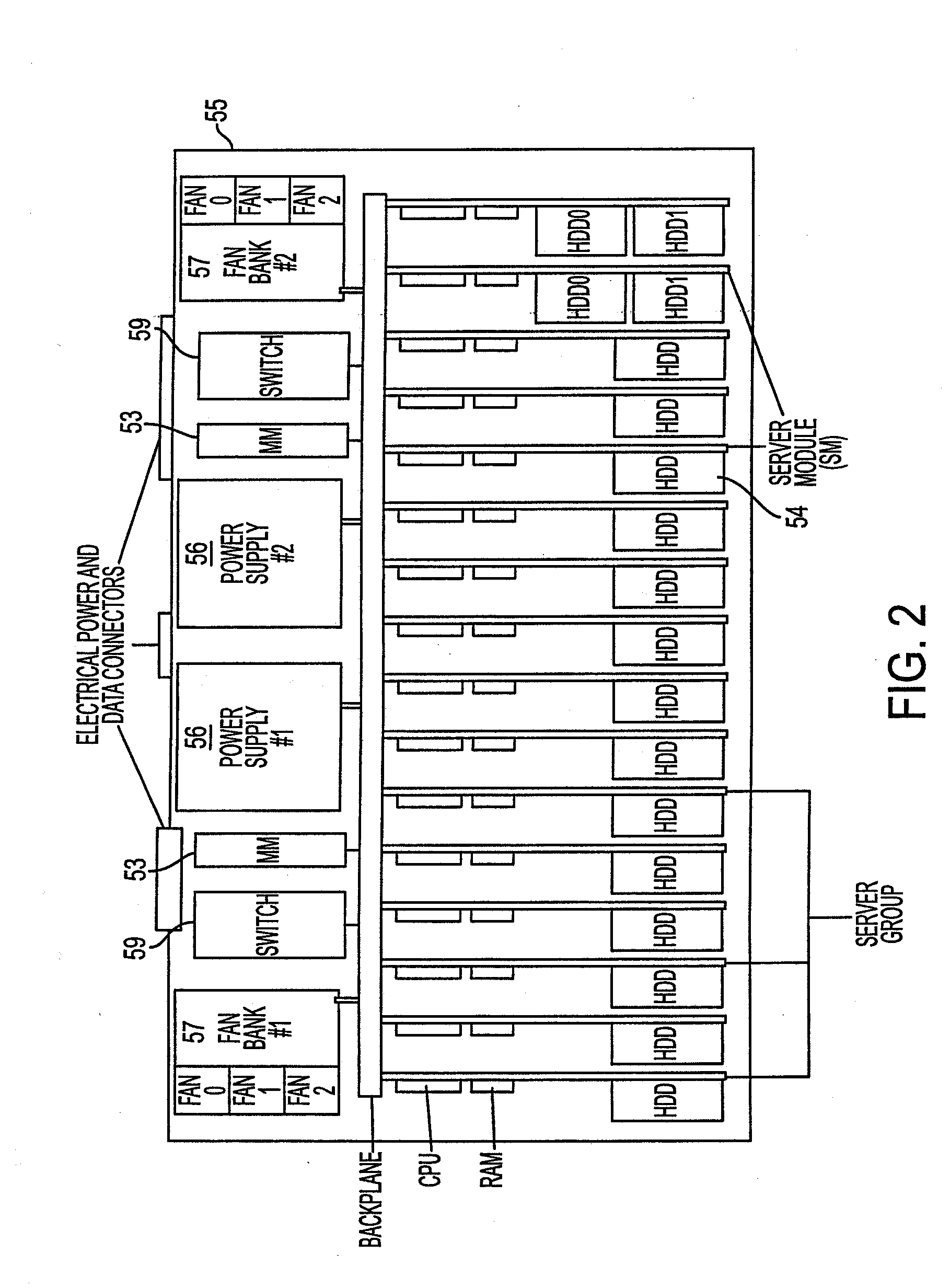

Apparatus and method for modular dynamically power managed power supply and cooling system for computer systems, server applications, and other electronic devices

Inactive | US20090144568A1 | Save energy, Conserving method, Energy efficient ICT, Power supply for data processing, Network architecture, Energy expenditure

Network architecture, computer system and/or server, circuit, device, apparatus, method, and computer program and control mechanism for managing power consumption and workload in computer systems and data and information servers. It further provides power and energy consumption and workload management and control systems and architectures for high-density and modular multi-server computer systems that maintain performance while conserving energy, and a method for power management and workload management. Aspects of the invention provide dynamic server power management and optional dynamic workload management for multi-server environments. Modular network devices and an integrated server system, including modular servers, management units, switches and switching fabrics, modular power supplies, modular fans, and a special backplane architecture, are provided, as well as dynamically reconfigurable multi-purpose modules and servers. Also provided is a backplane architecture, structure, and method that has no active components and uses separate power supply lines and protection to provide high reliability in a server environment.

Owner:HURON IP

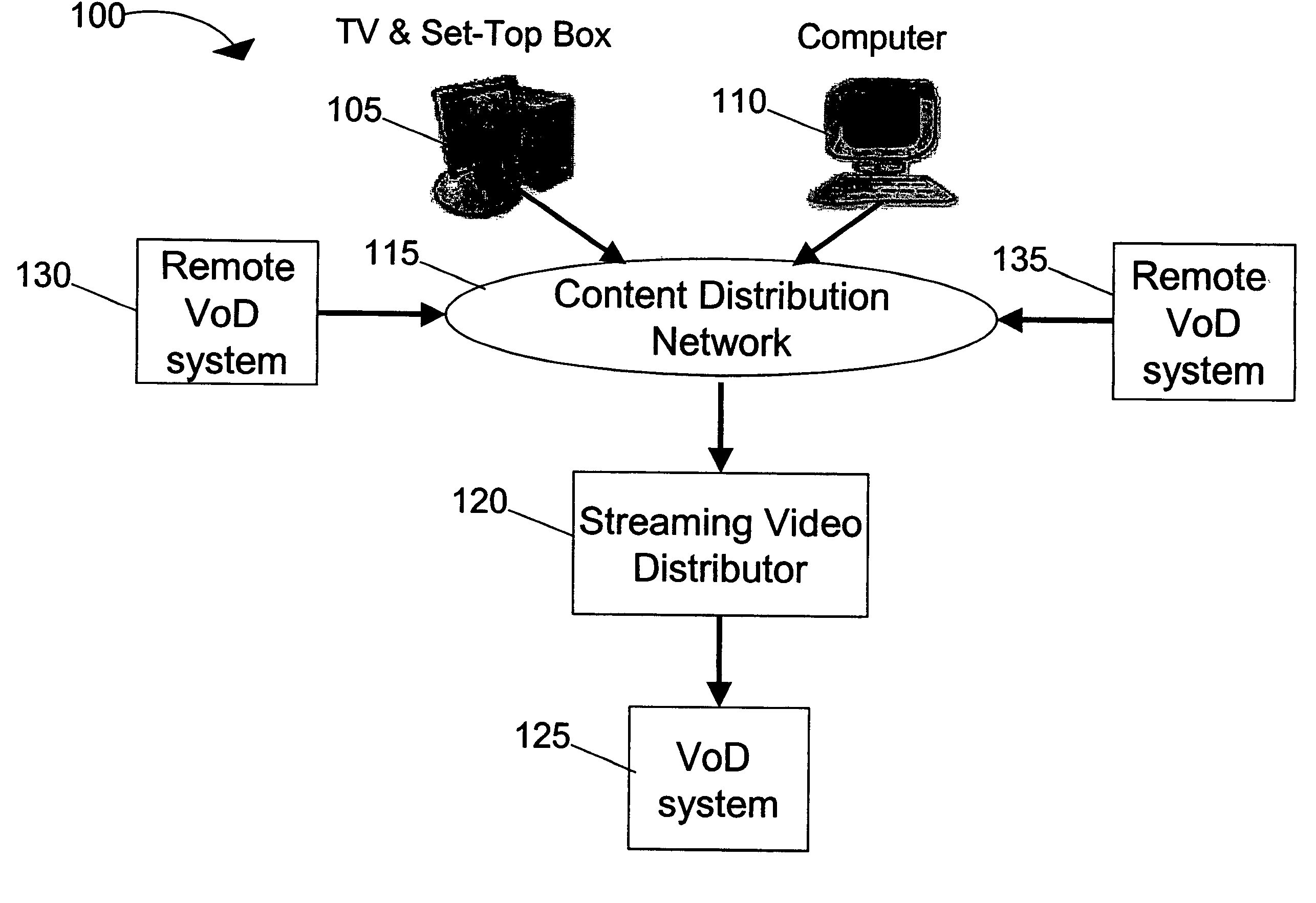

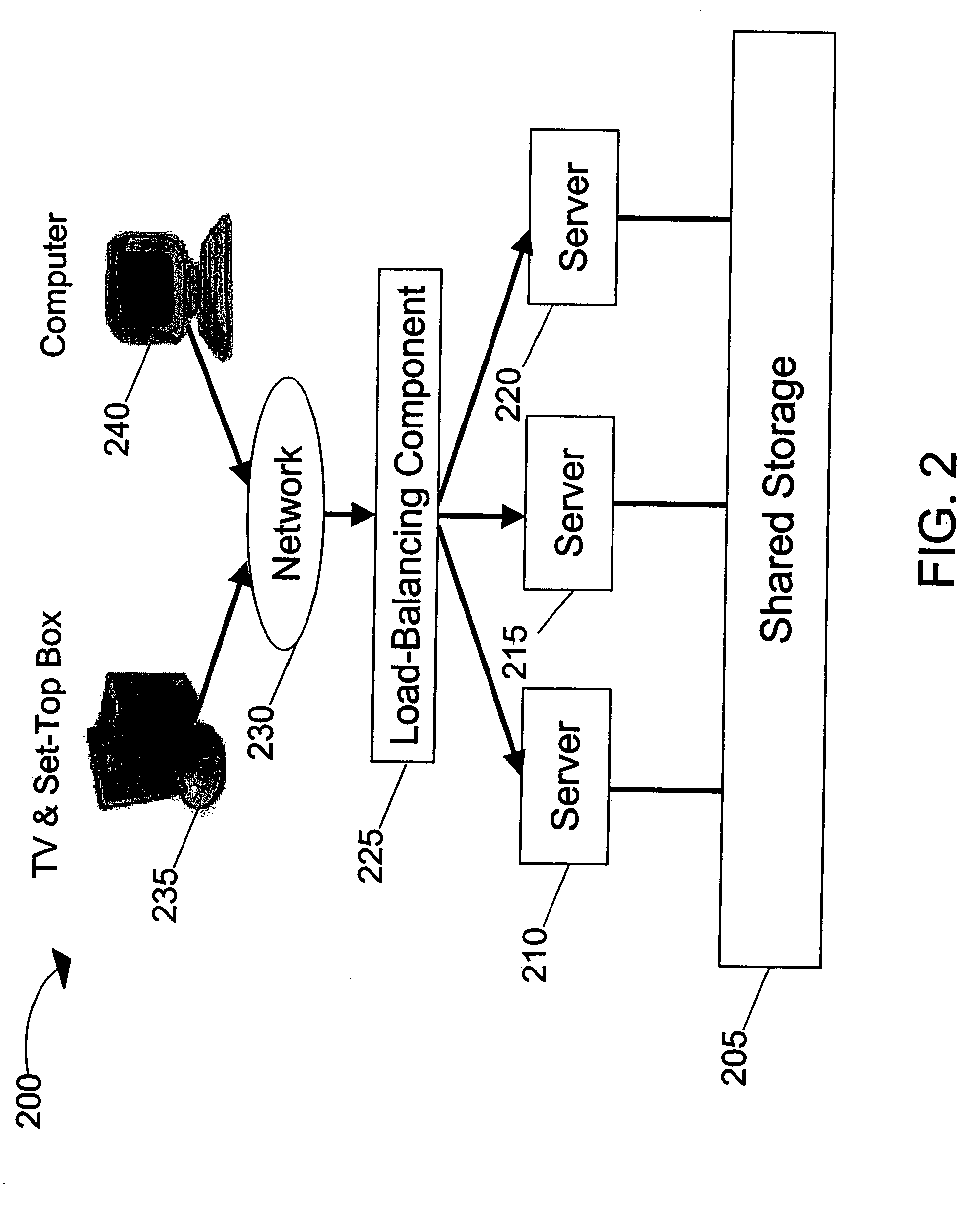

Systems and methods for load balancing storage and streaming media requests in a scalable, cluster-based architecture for real-time streaming

Inactive | US20050262246A1 | Large capacity, Cost-effectively, Television system details, Digital computer details, Real time services, Cluster based

A scalable, cluster-based VoD system implemented with a multi-server, multi-storage architecture to serve large-scale real-time ingest and streaming requests for content assets is provided. The scalable, cluster-based VoD system implements sophisticated load balancing algorithms for distributing the load among the servers in the cluster, achieving a cost-effective solution with high streaming and storage capacity that can serve multiple usage patterns and large-scale real-time service demands. The VoD system is designed with a highly scalable and failure-resistant architecture for streaming content assets in real time in various network configurations.

Owner:KASENNA

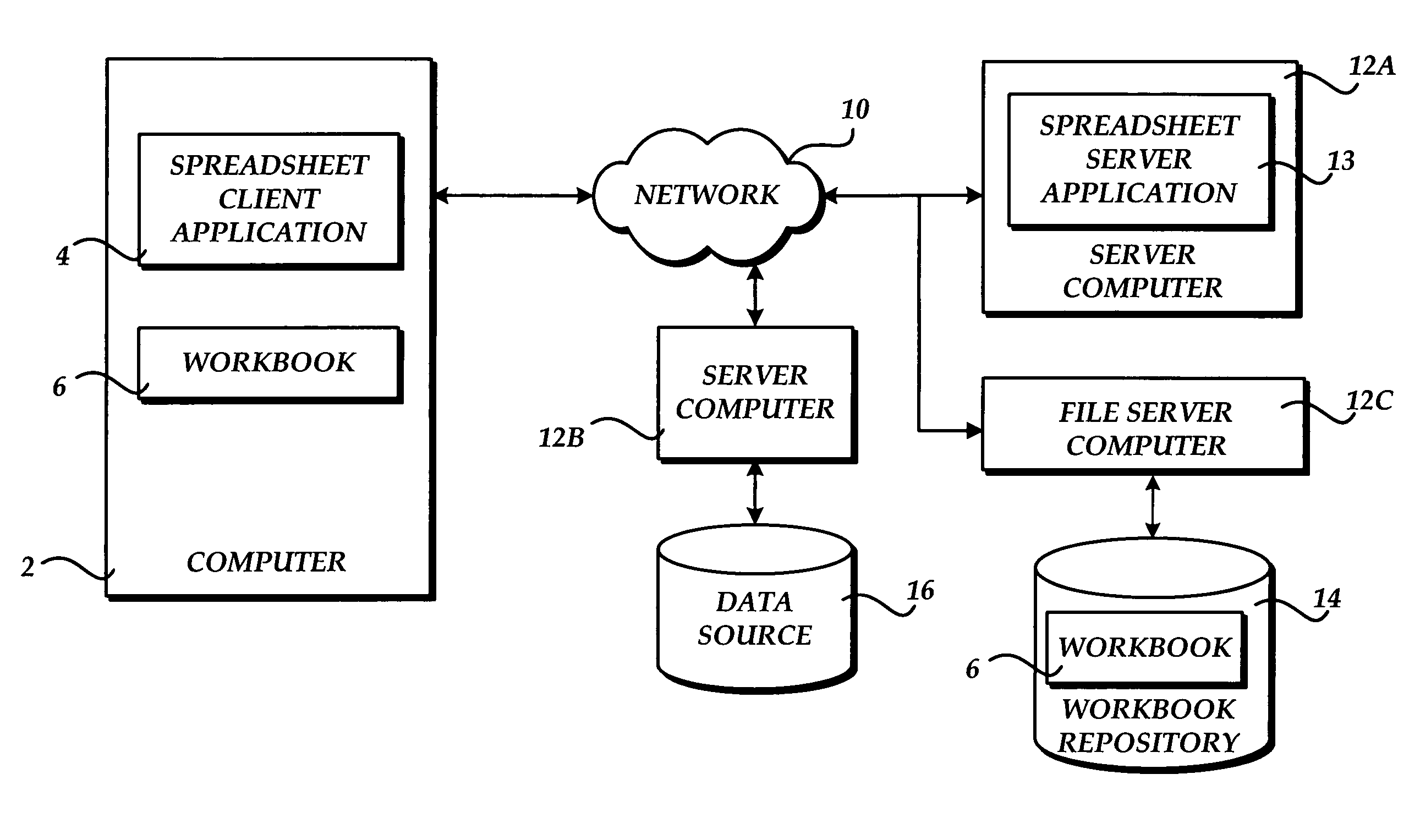

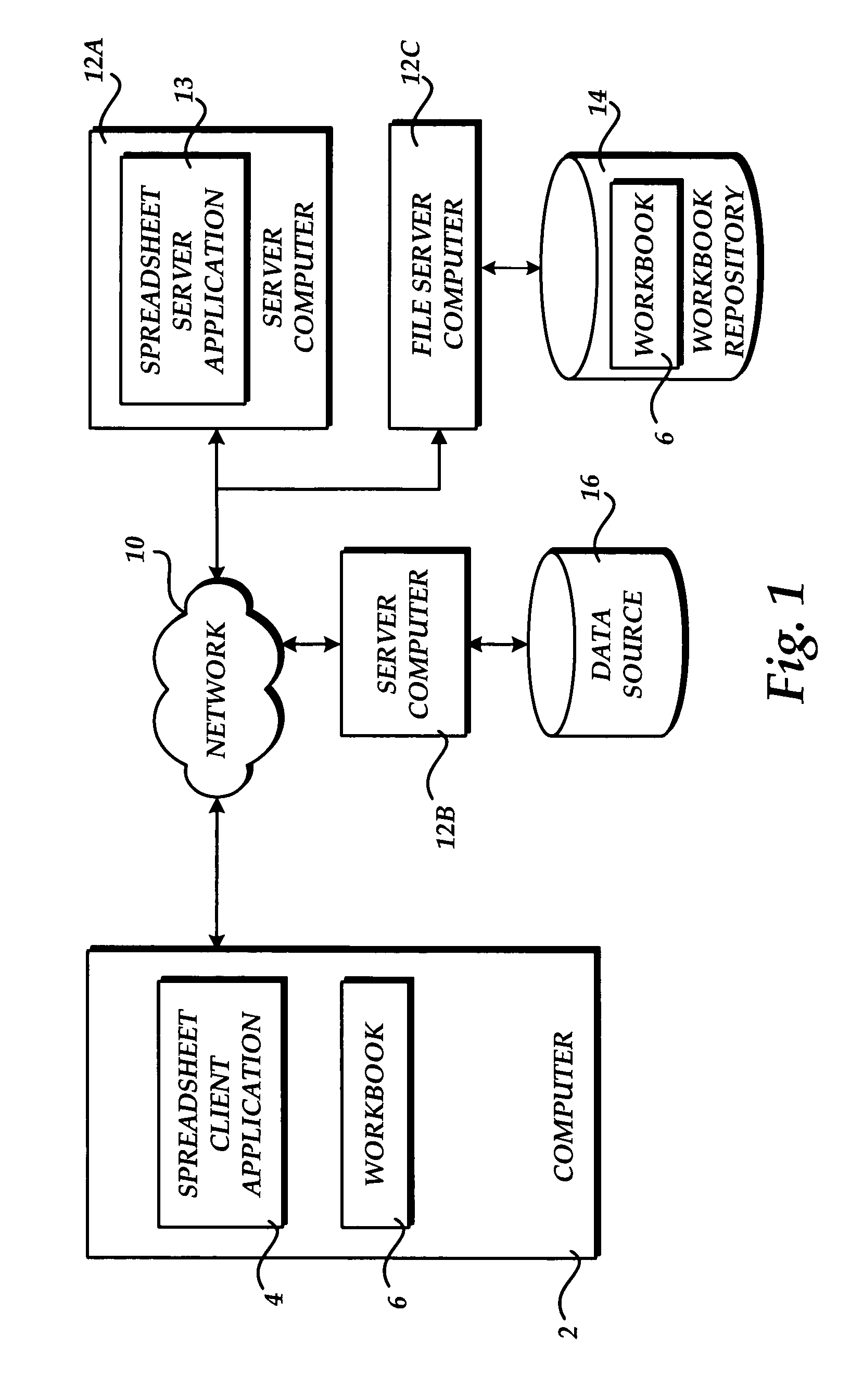

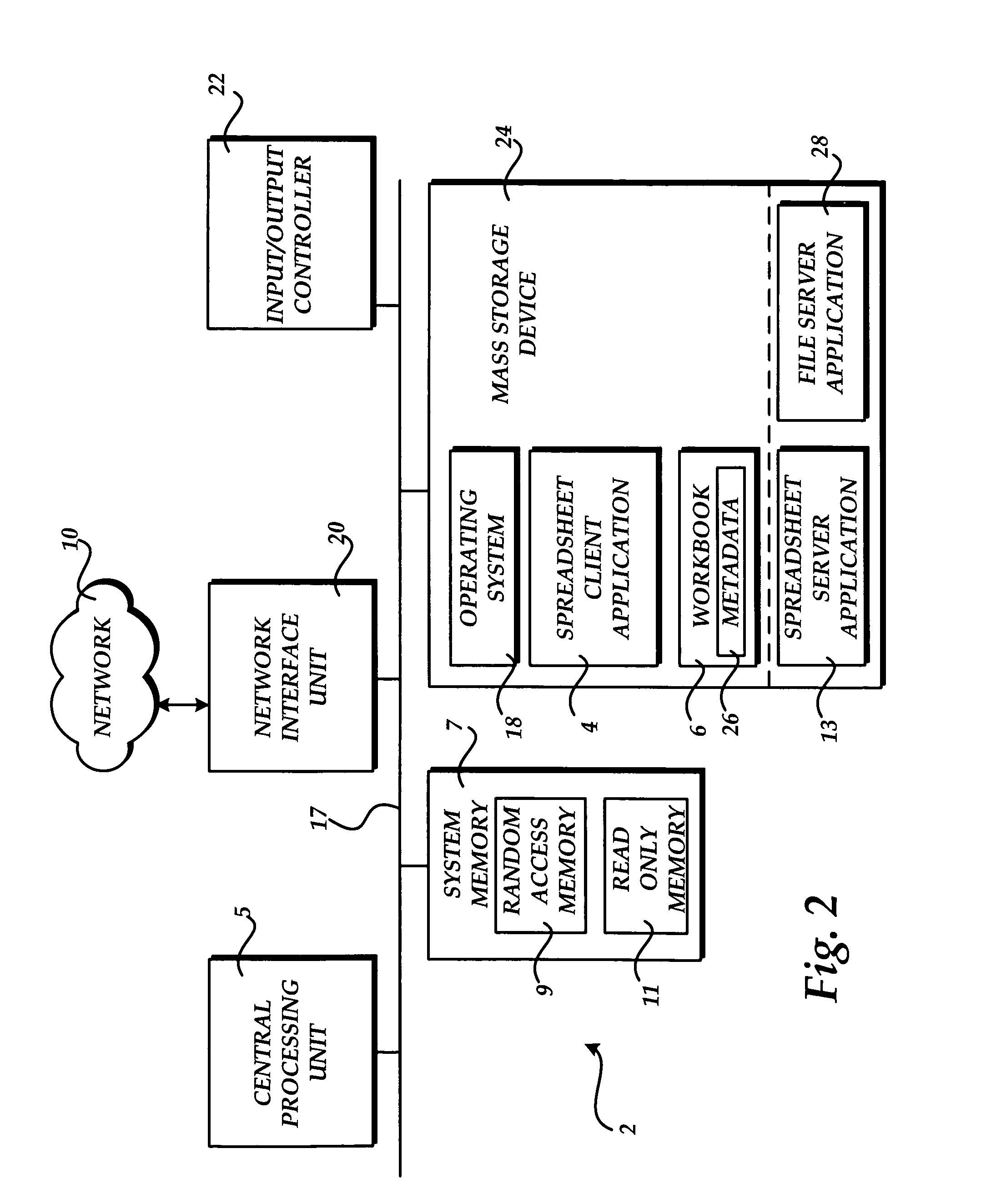

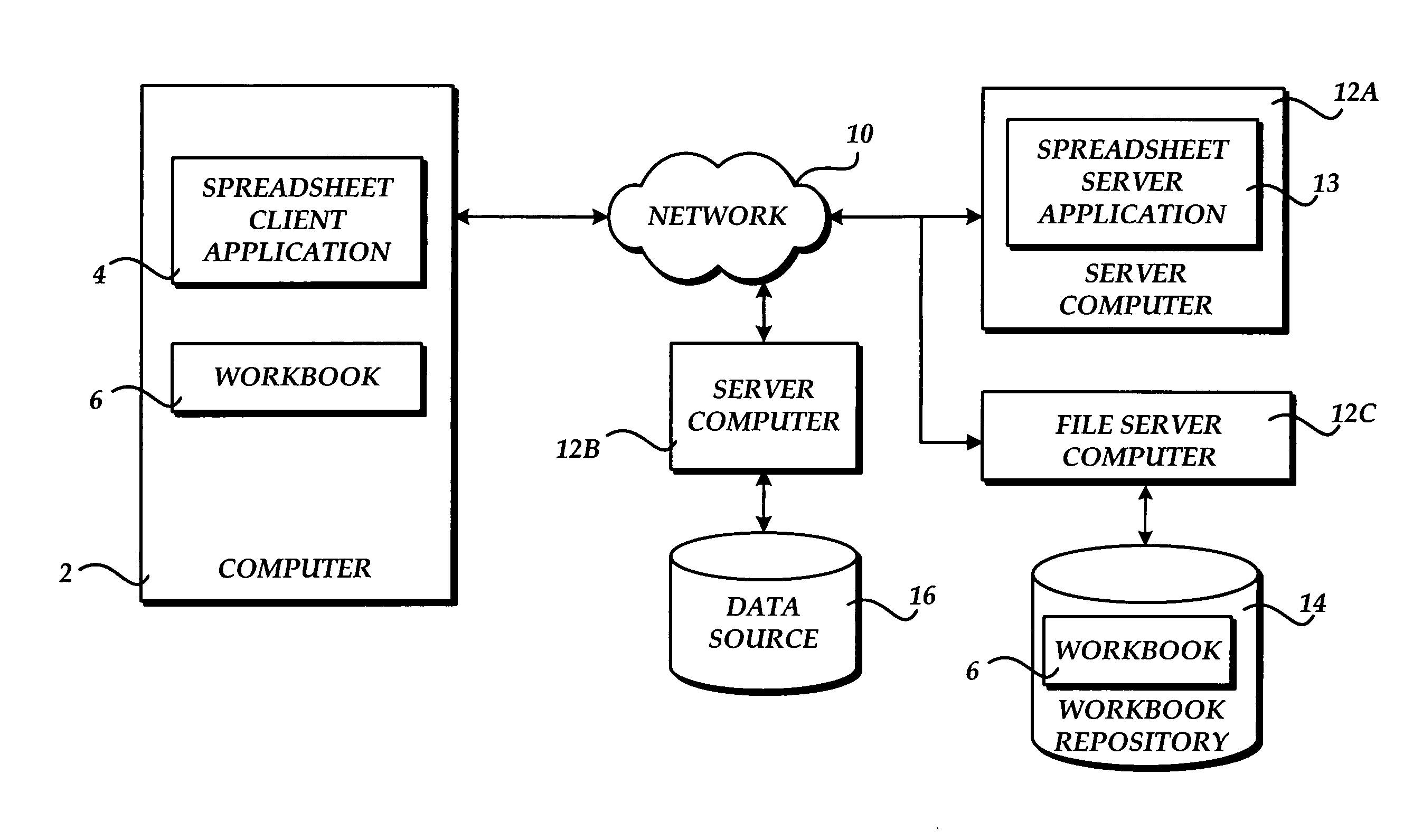

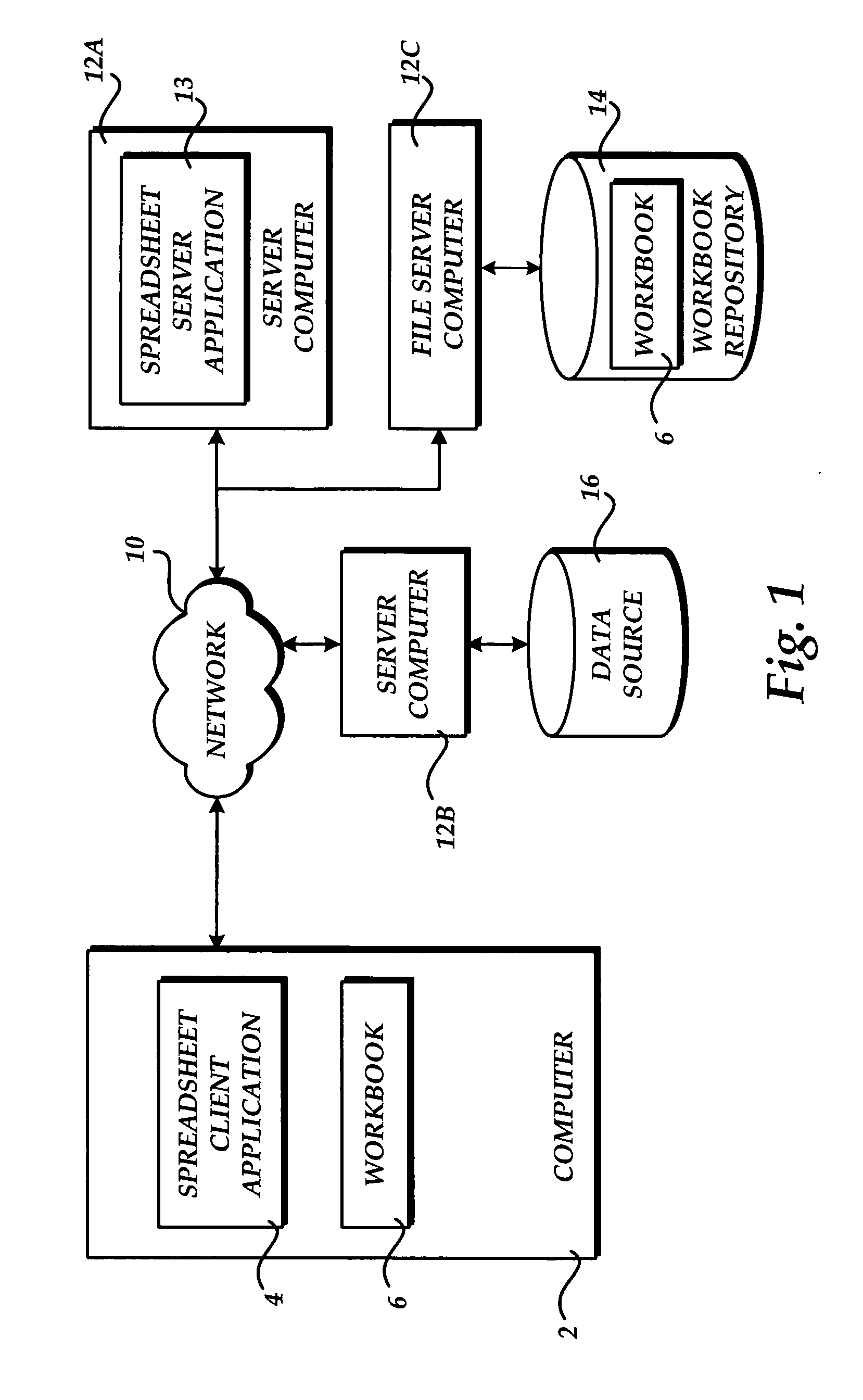

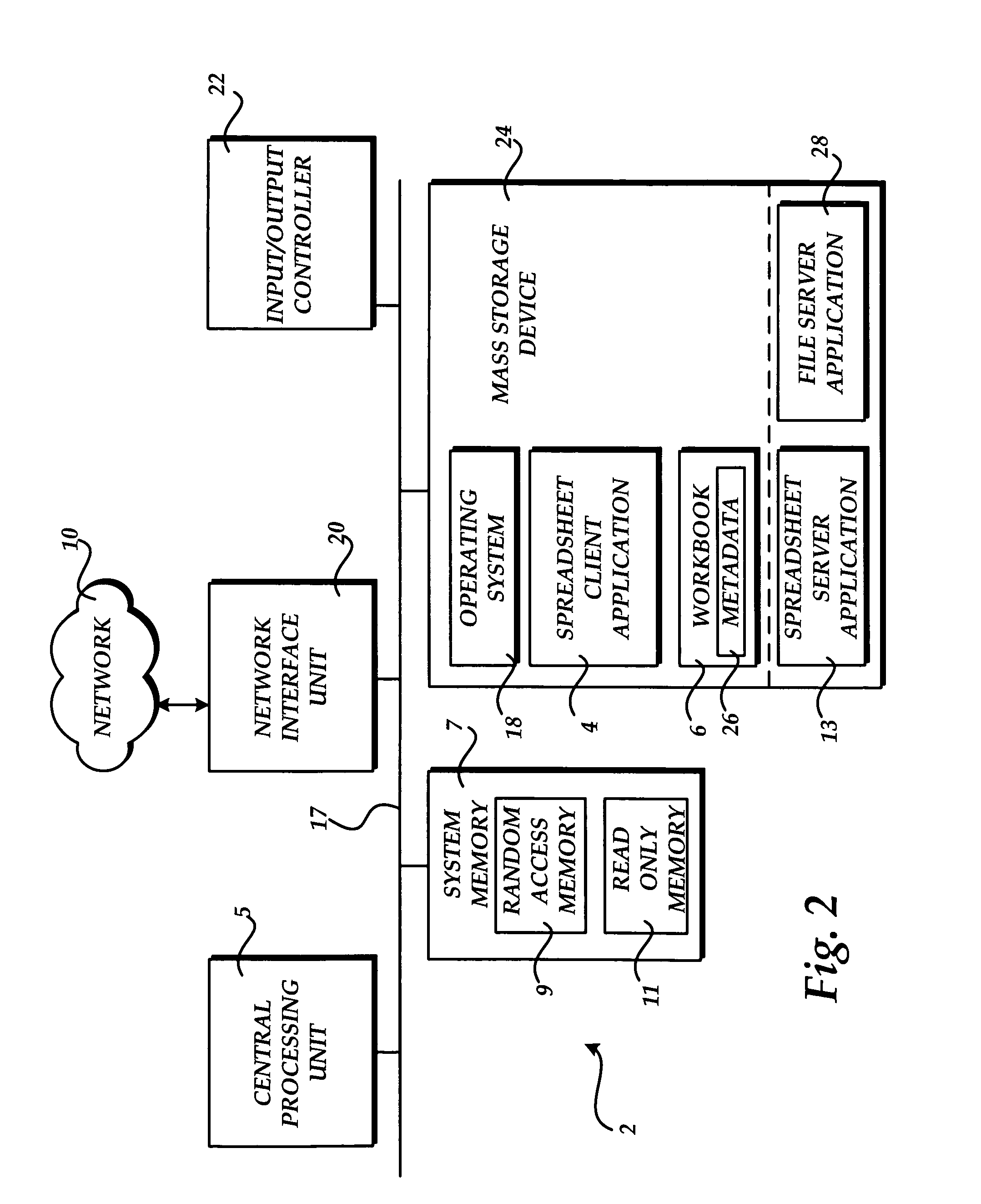

Load balancing based on cache content

A method, schema, and computer-readable medium provide various means for load balancing computing devices in a multi-server environment. The method, schema, and computer-readable medium for load balancing computing devices in a multi-server environment may be utilized in a networked server environment, implementing a spreadsheet application for manipulating a workbook, for example. The method, schema, and computer-readable medium operate to load balance computing devices in a multi-server environment including determining whether a file, such as a spreadsheet application workbook, resides in the cache of a particular server, such as a calculation server. Upon meeting certain conditions, the user request may be directed to the particular server.

Owner:MICROSOFT TECH LICENSING LLC
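
A minimal Python sketch of cache-aware routing follows. The abstract only says the request is directed to the caching server "upon meeting certain conditions"; here that condition is assumed to be a hypothetical load ceiling (`max_load`), with least-loaded fallback when no warm server qualifies. `CalcServer` and `route` are illustrative names.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class CalcServer:
    name: str
    load: float                                    # utilisation, 0.0 to 1.0
    cached: Set[str] = field(default_factory=set)  # workbook ids in local cache


def route(workbook: str, servers: List[CalcServer], max_load: float = 0.8) -> str:
    """Send the request to a server that already caches the workbook, provided
    it is below max_load; otherwise fall back to the least-loaded server."""
    warm = [s for s in servers if workbook in s.cached and s.load < max_load]
    target = min(warm or servers, key=lambda s: s.load)
    target.cached.add(workbook)  # the chosen server now holds the workbook
    return target.name
```

Preferring the warm server avoids reloading the workbook; the load ceiling keeps one popular workbook from pinning all its traffic to an overloaded server.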

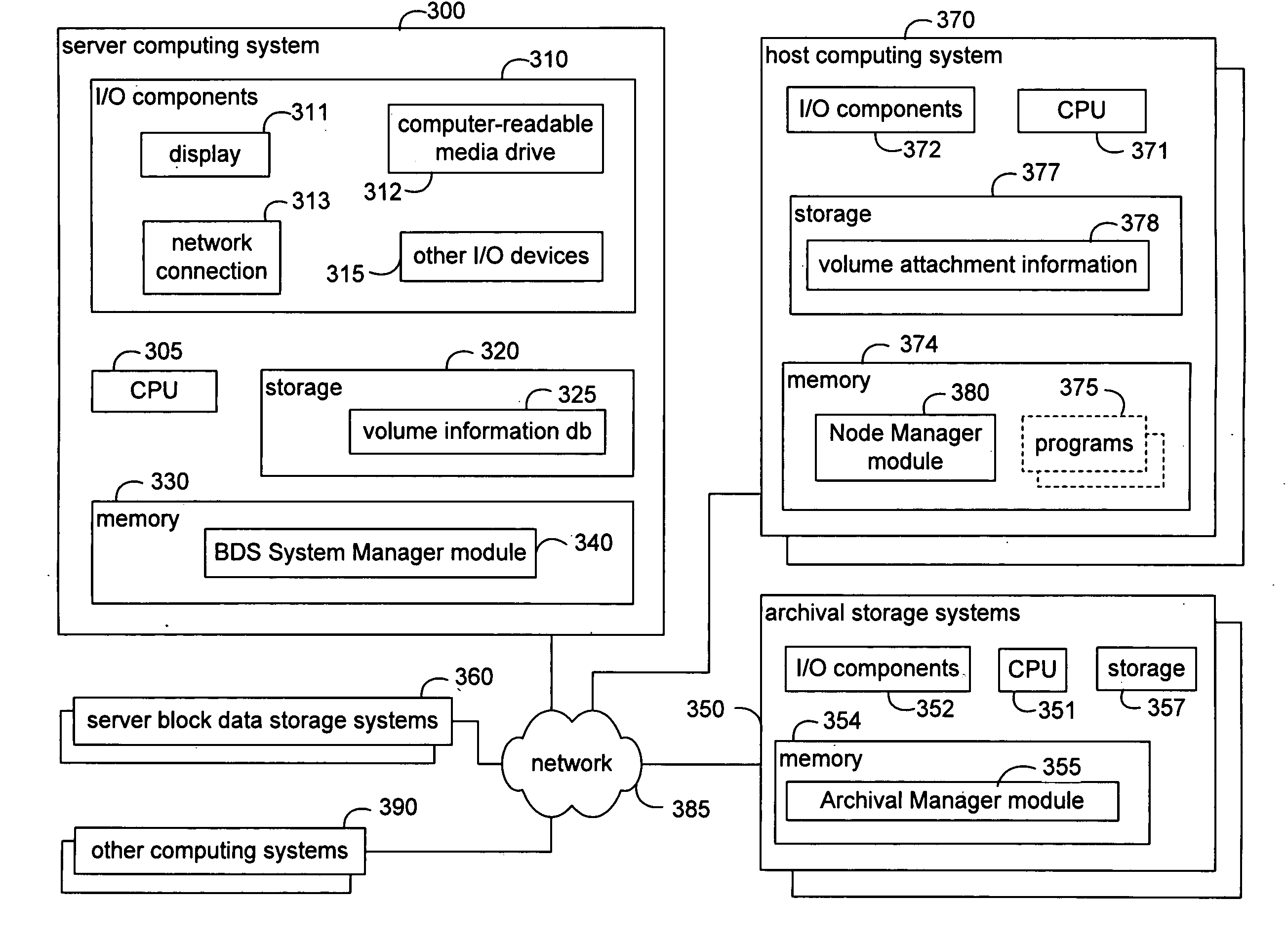

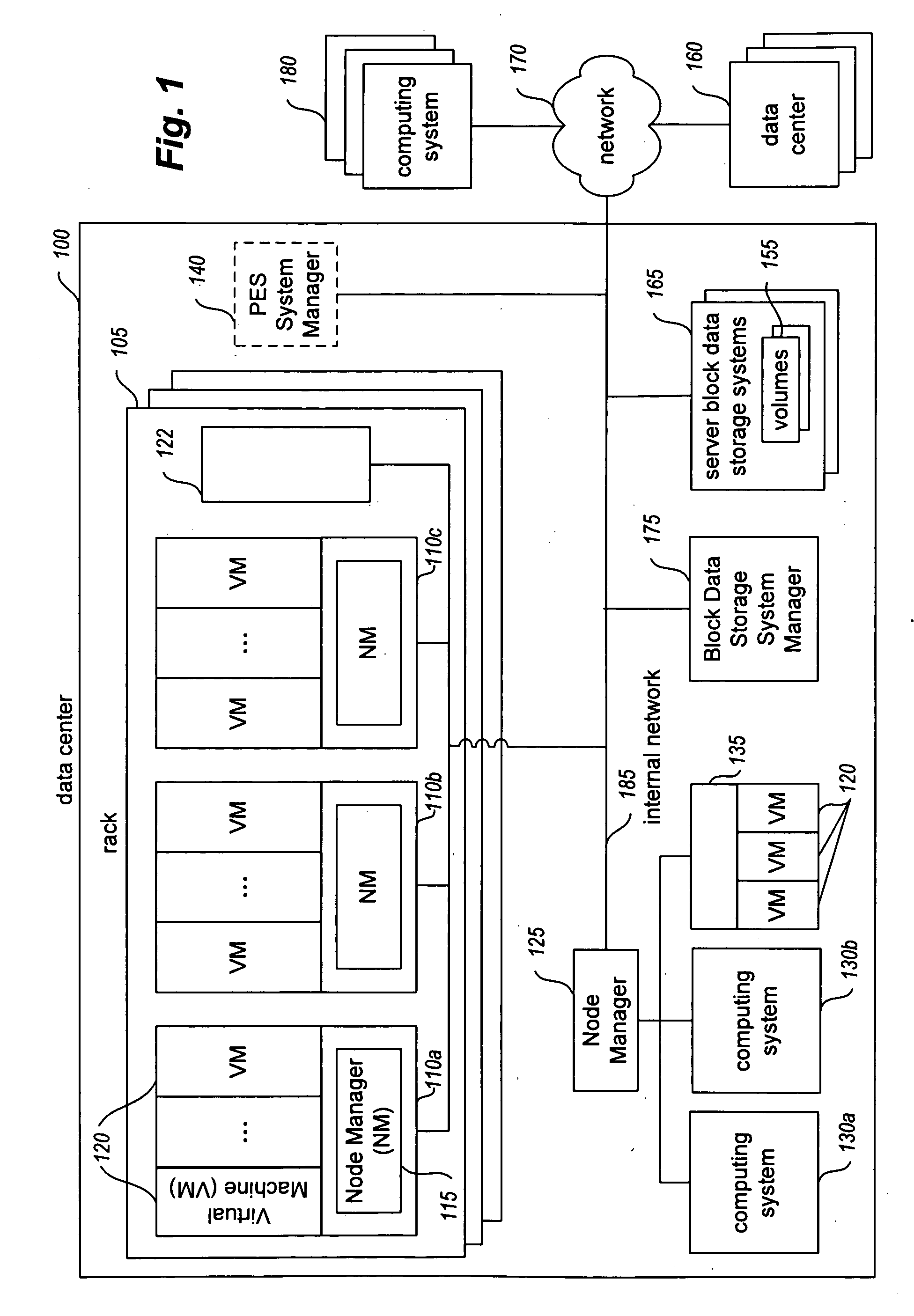

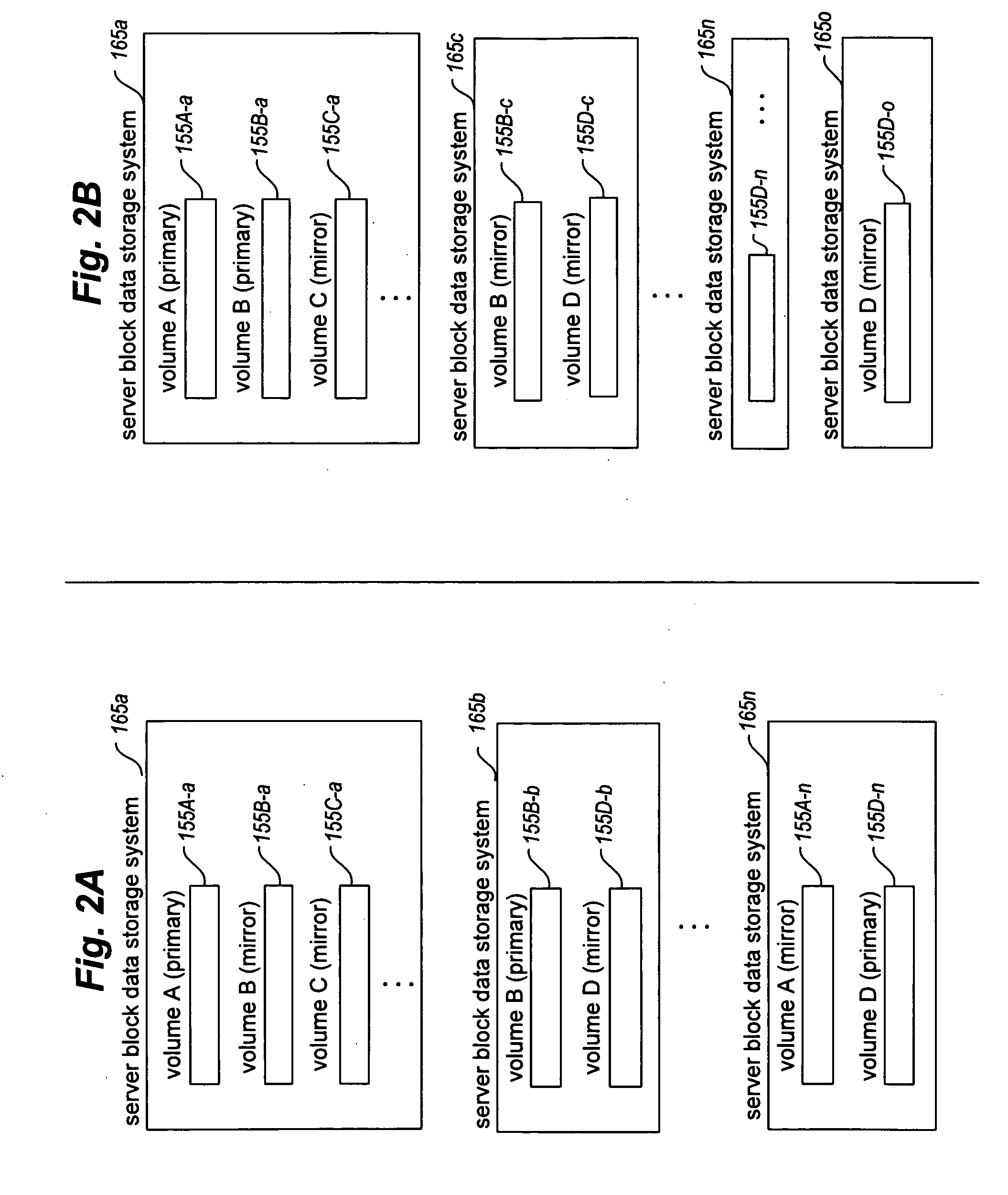

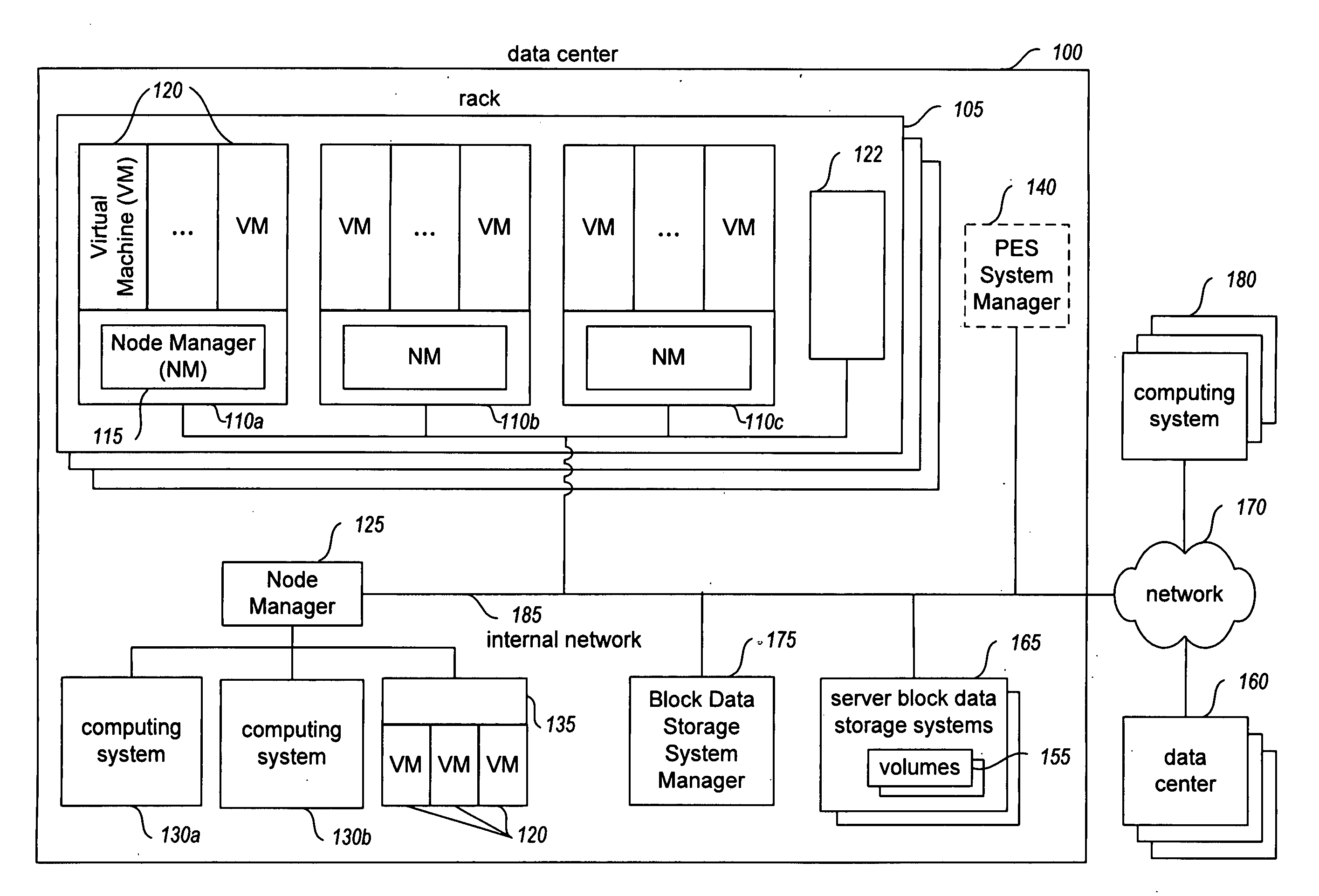

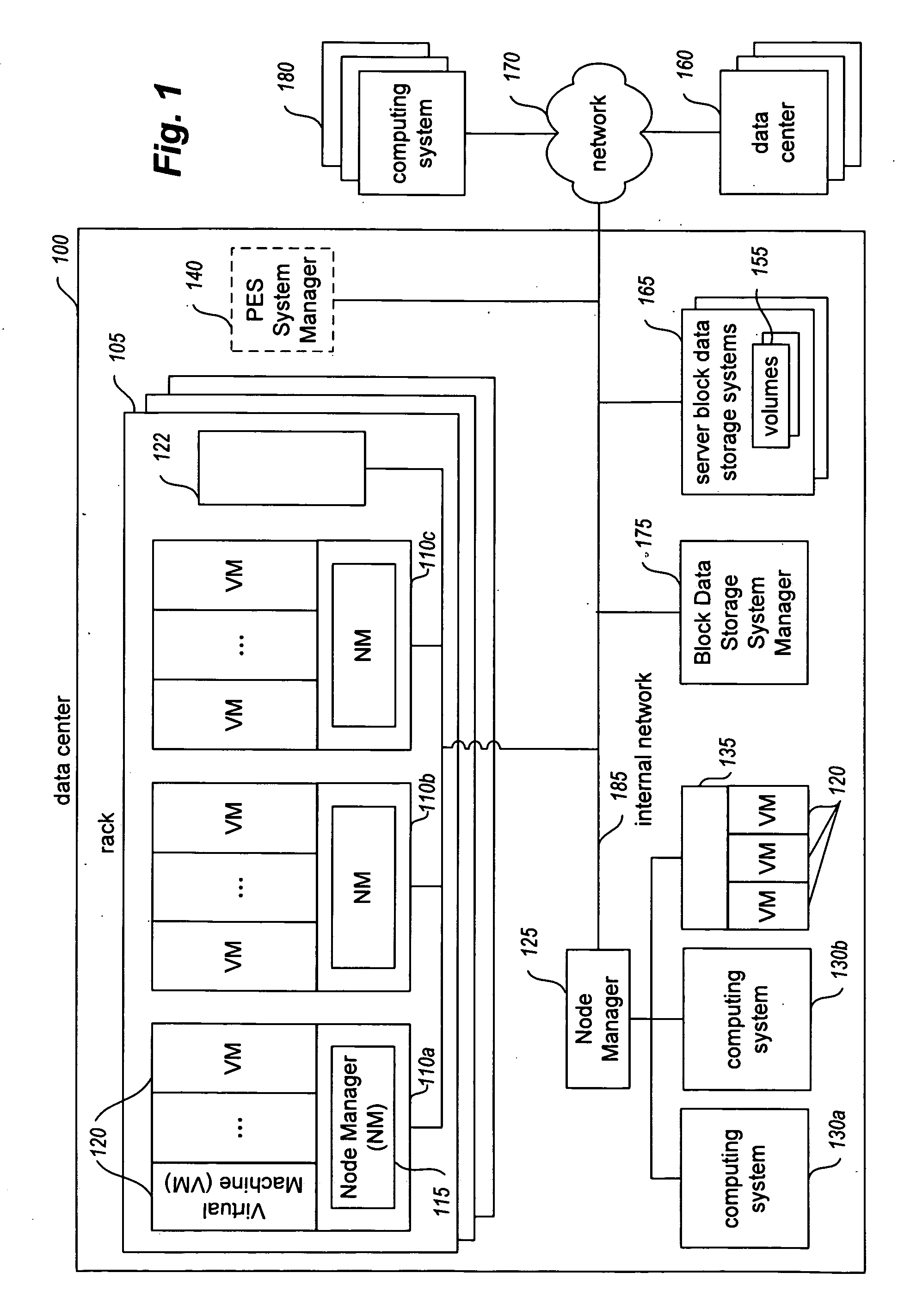

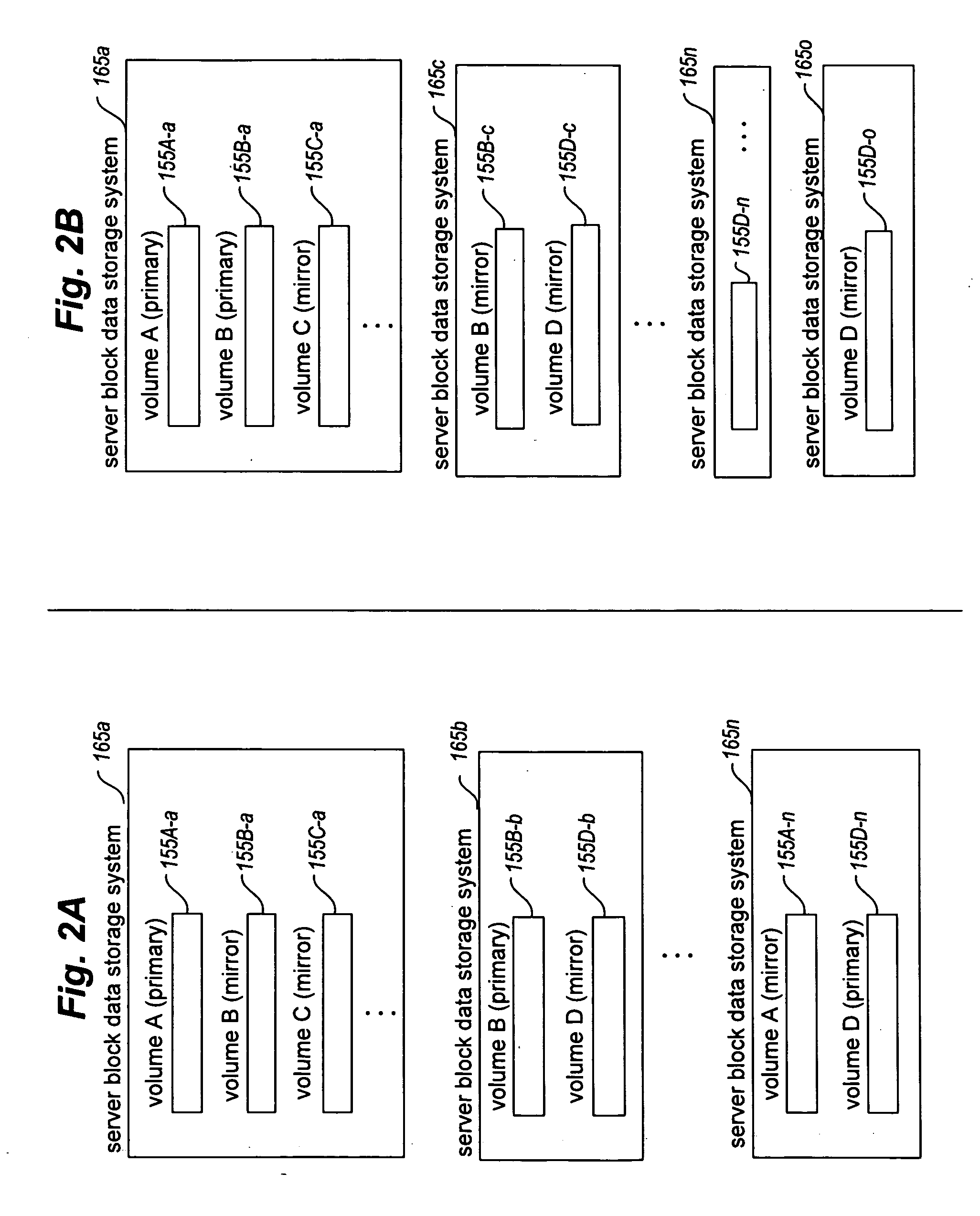

Providing a reliable backing store for block data storage

Active | US20100036931A1 | Memory loss protection, Error detection/correction, Data storage system, Archival storage

Techniques are described for managing access of executing programs to non-local block data storage. In some situations, a block data storage service uses multiple server storage systems to reliably store copies of network-accessible block data storage volumes that may be used by programs executing on other physical computing systems, and at least some stored data for some volumes may also be stored on remote archival storage systems. A group of multiple server block data storage systems that store block data volumes may in some situations be co-located at a data center, and programs that use volumes stored there may execute on other computing systems at that data center, while the archival storage systems may be located outside the data center. The data stored on the archival storage systems may be used in various ways, including to reduce the amount of data stored in at least some volume copies.

Owner:AMAZON TECH INC

Providing executing programs with access to stored block data of others

Active | US20100037031A1 | Memory loss protection, Error detection/correction, Data storage system, Archival storage

Techniques are described for managing access of executing programs to non-local block data storage. In some situations, a block data storage service uses multiple server storage systems to reliably store copies of network-accessible block data storage volumes that may be used by programs executing on other physical computing systems, and snapshot copies of some volumes may also be stored (e.g., on remote archival storage systems). A group of multiple server block data storage systems that store block data volumes may in some situations be co-located at a data center, and programs that use volumes stored there may execute on other computing systems at that data center, while the archival storage systems may be located outside the data center. The snapshot copies of volumes may be used in various ways, including to allow users to obtain their own copies of other users' volumes (e.g., for a fee).

Owner:AMAZON TECH INC

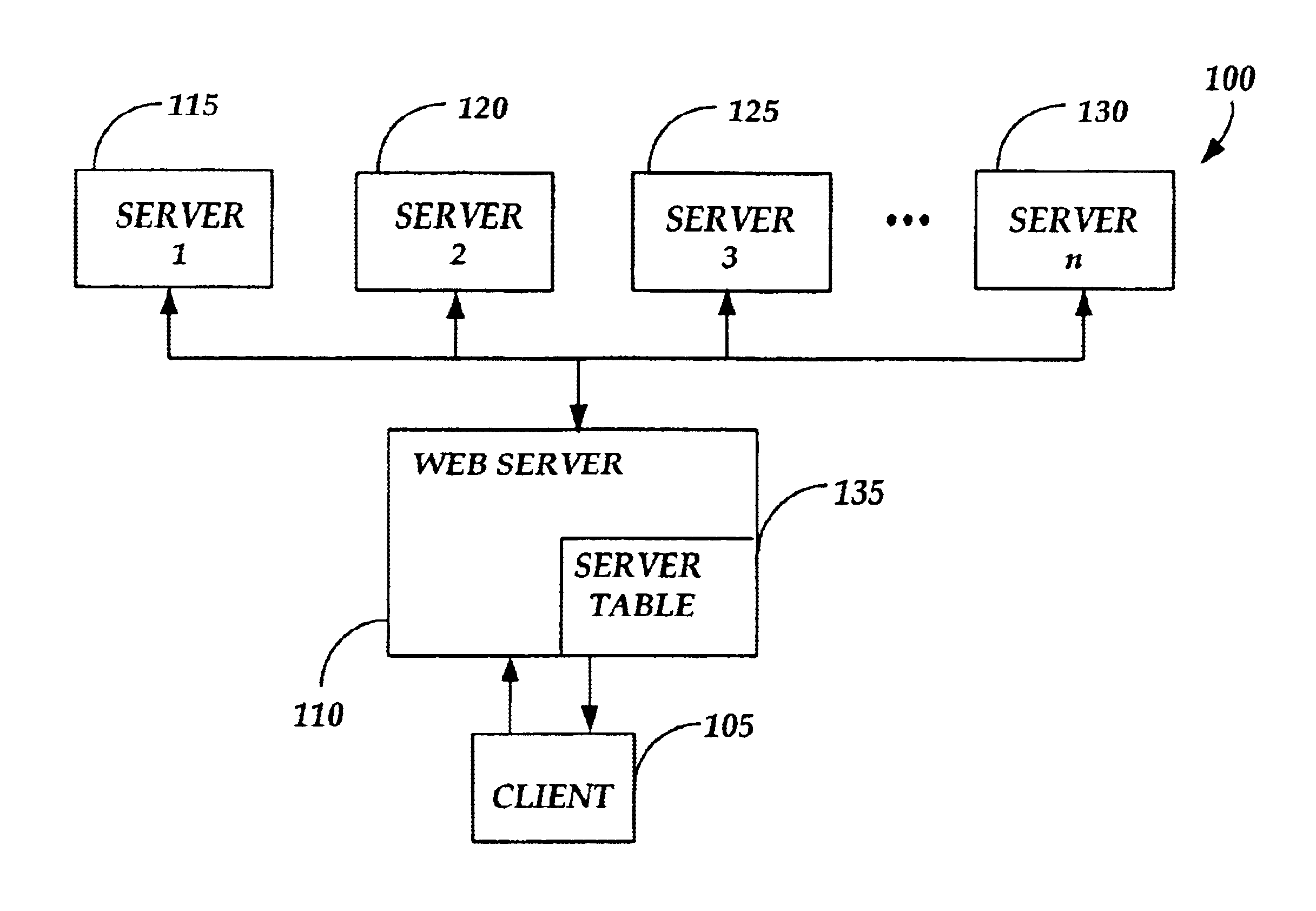

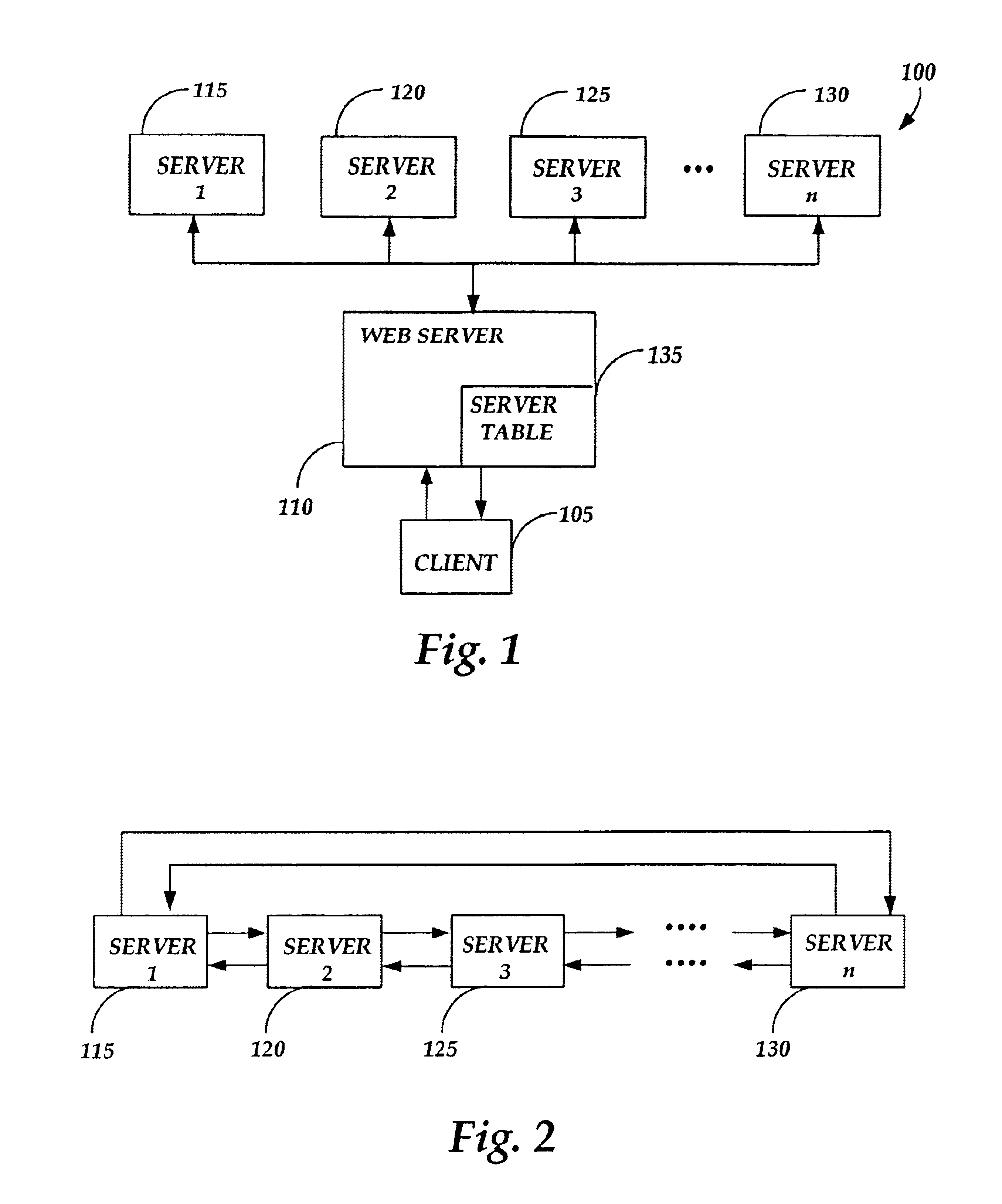

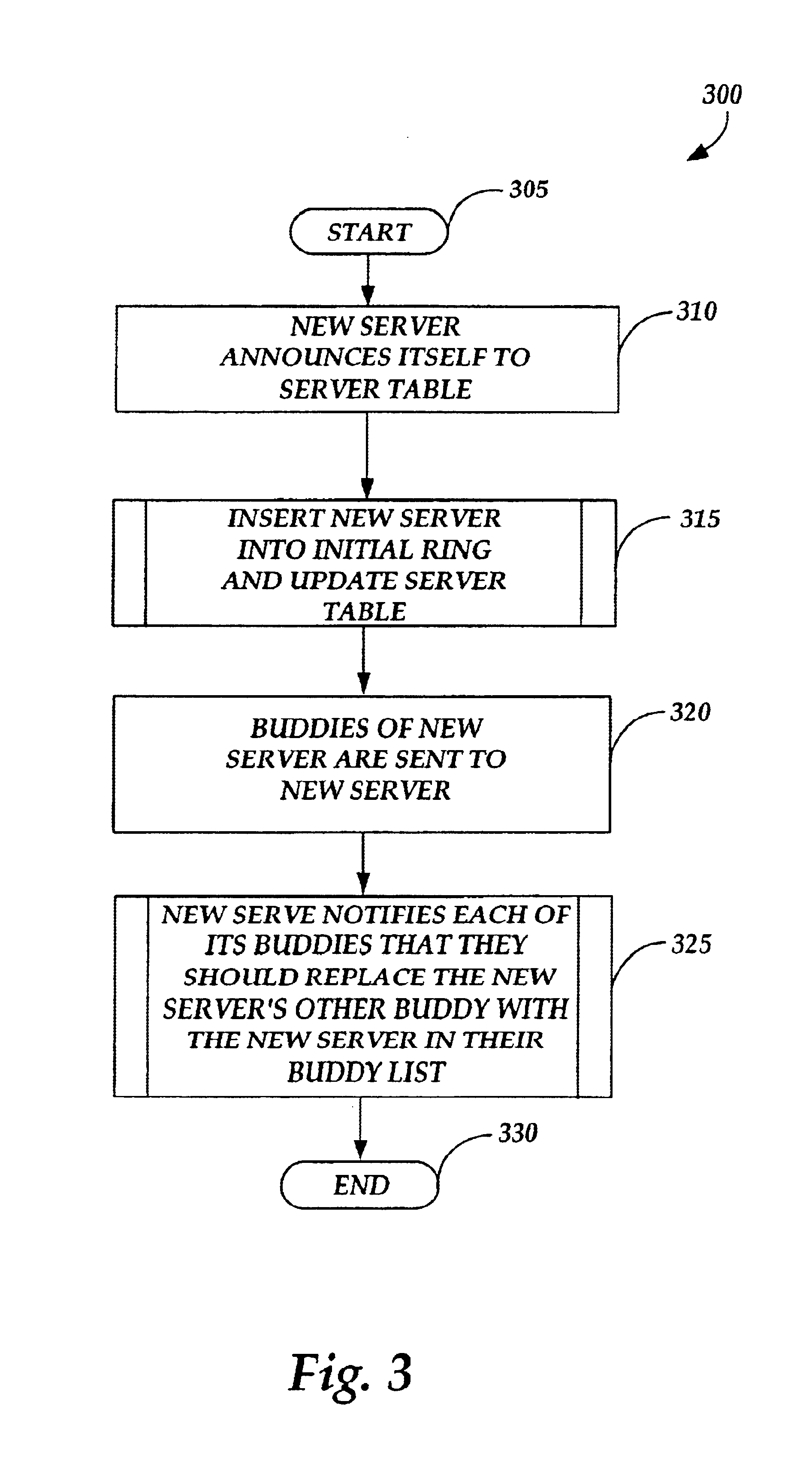

Method and system for detecting a dead server

Method and system for detecting a dead server in a multi-server environment. A virtual ring structure is used in which each server in a server pool is only required to monitor the status of two other servers in the server pool. Thus, a server need only transmit ping signals to two other servers (its buddies) in the server pool at any given time. Because each server maintains the status of only two other servers at any given time, the size of the server pool is not limited by the ability of each server to send and process ping signals. The two servers which are monitored by any given server in the server pool are referred to as the “buddy A” server and the “buddy B” server. When the monitoring server determines that one of its buddy servers is down, the monitoring server reports the status of the down server to a SQL server that maintains a server table. The server table maintains a list of each “live” server and the buddy servers assigned to that server. Down servers are removed from the server table. When a server determines that one of its buddies is down, the report to the SQL server results in a buddy reassignment. The buddies of the down server are made buddies of one another and the virtual server ring is once more intact. The SQL server then knows not to route any client to the down server.

Owner:MICROSOFT TECH LICENSING LLC
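
The buddy-ring bookkeeping can be sketched as follows, assuming each server's buddy A and buddy B are simply its neighbours on either side of the ring. An in-memory list stands in for the SQL server table, and `is_alive` stands in for a ping; all names here are hypothetical.

```python
from typing import Callable, List, Tuple


class ServerTable:
    """Stand-in for the SQL server's table of live servers, kept in ring order."""

    def __init__(self, servers: List[str]):
        self.ring = list(servers)

    def buddies(self, server: str) -> Tuple[str, str]:
        """Buddy A and buddy B are the neighbours on either side in the ring."""
        i = self.ring.index(server)
        n = len(self.ring)
        return self.ring[(i - 1) % n], self.ring[(i + 1) % n]

    def report_down(self, reporter: str, down: str) -> None:
        """Drop a dead buddy; its two buddies become buddies of one another."""
        if down in self.ring and down in self.buddies(reporter):
            self.ring.remove(down)

    def routable(self) -> List[str]:
        """Clients are only routed to servers still in the table."""
        return list(self.ring)


def monitor(table: ServerTable, server: str, is_alive: Callable[[str], bool]) -> None:
    """Ping only this server's two buddies and report any that do not answer."""
    for buddy in set(table.buddies(server)):
        if buddy != server and not is_alive(buddy):
            table.report_down(server, buddy)
```

Because each call pings at most two servers, the per-server monitoring cost stays constant as the pool grows; removing the dead entry from the ring list is what makes its former neighbours adjacent.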

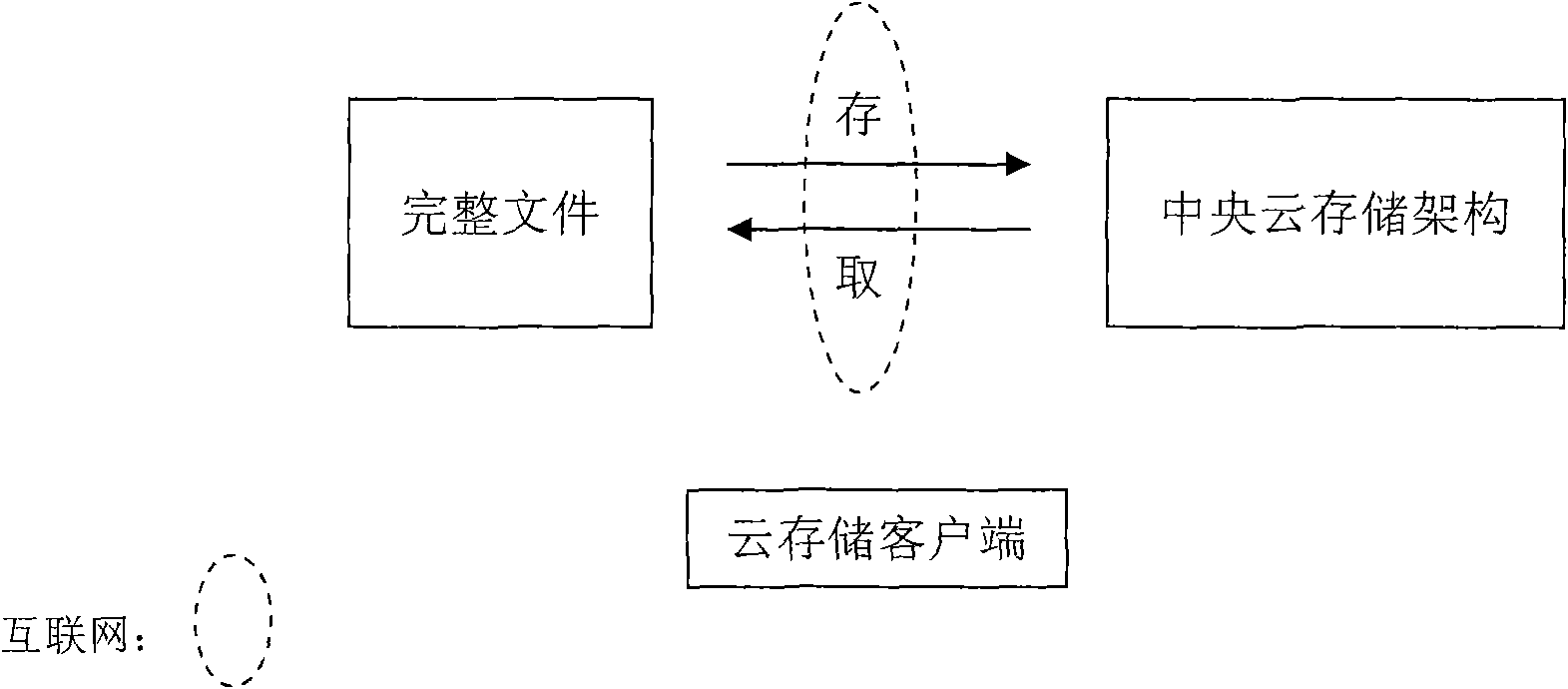

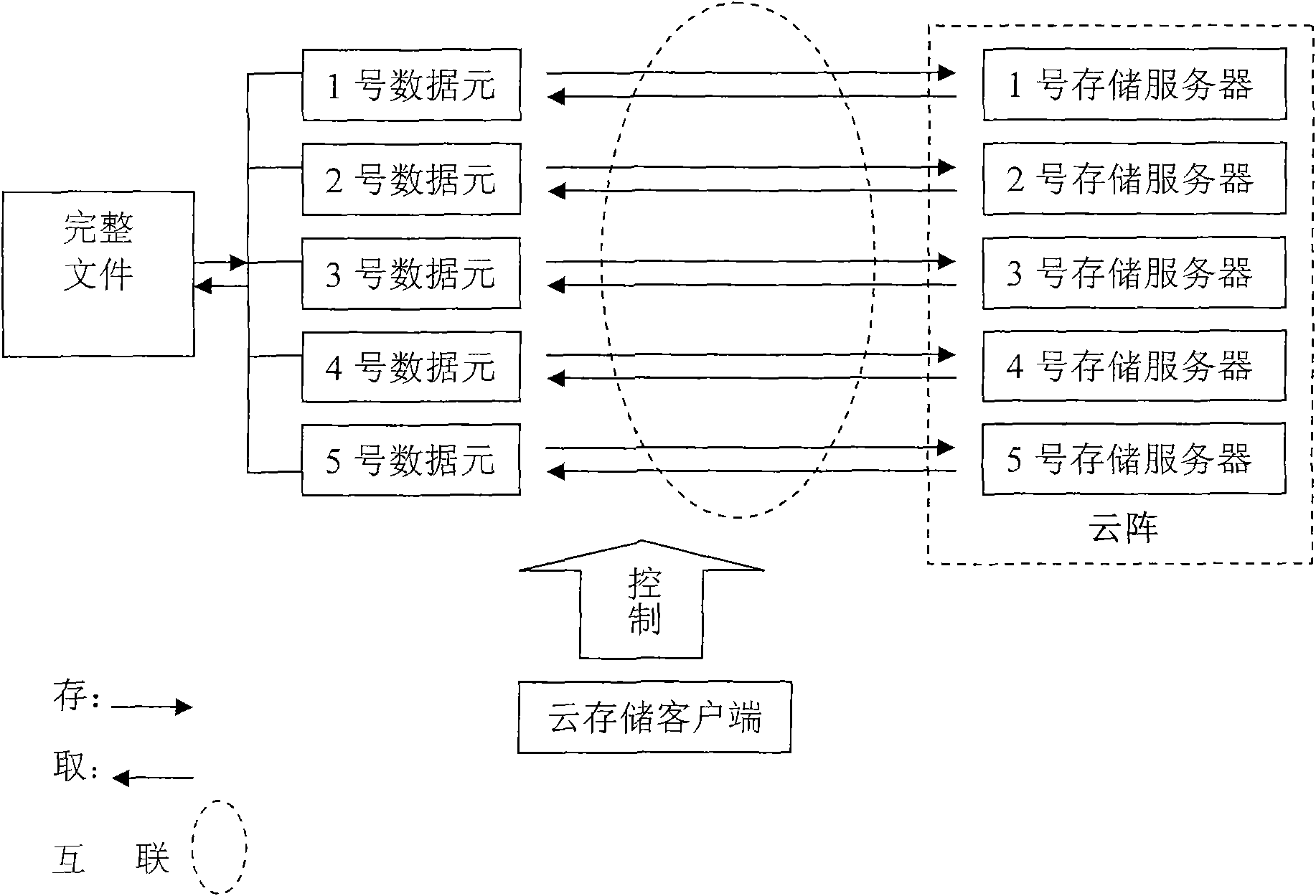

Method for structuring parallel system for cloud storage

The invention is mainly characterized as follows: (1) a novel technical approach to decentralizing network central storage is established: before storage, a program breaks a master file into a plurality of sub-files, known as data elements, which are the smallest units of storage; (2) the conventional network central storage method is replaced by cloud array storage technology, in which a cloud array consists of a plurality of servers identified by special serial numbers; an access relation between the data elements and the corresponding servers is established according to the serial numbers, and the original file is broken into data elements that are stored separately across the cloud array; (3) the data elements are stored in a multi-process parallel mode, that is, one process stores one data element and N processes store N data elements, and the parallel processes improve storage speed; (4) the data elements are encrypted and compressed, and the original password is not permitted to be saved; and (5) the servers run the Unix operating system. The method for structuring a parallel system for cloud storage can effectively address the security of cloud storage data and is of significant value for cloud storage architecture and application development.

Owner:何吴迪
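
Steps (1) to (3) can be sketched in Python, with in-memory dictionaries standing in for the numbered servers and element `i` assigned to server `i % n`. Two substitutions are assumptions: a thread pool (capped at 32 workers) replaces one process per element, and only compression via `zlib` is shown, since the abstract names no encryption scheme.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

Server = Dict[int, bytes]  # in-memory stand-in: element index -> stored bytes


def split(data: bytes, size: int) -> List[bytes]:
    """Break a master file into fixed-size data elements."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def store_parallel(data: bytes, servers: List[Server], size: int = 4) -> int:
    """Store element i on servers[i % n], one worker per element where possible."""
    elements = split(data, size)

    def put(i: int, chunk: bytes) -> None:
        servers[i % len(servers)][i] = zlib.compress(chunk)  # compress before storing

    with ThreadPoolExecutor(max_workers=max(1, min(len(elements), 32))) as pool:
        list(pool.map(put, range(len(elements)), elements))  # surface any errors
    return len(elements)


def load(count: int, servers: List[Server]) -> bytes:
    """Reassemble the original file from its data elements, in serial-number order."""
    return b"".join(zlib.decompress(servers[i % len(servers)][i]) for i in range(count))
```

No single server holds the whole file, which is the property the abstract relies on for security; adding per-element encryption would slot in next to the `zlib.compress` call.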

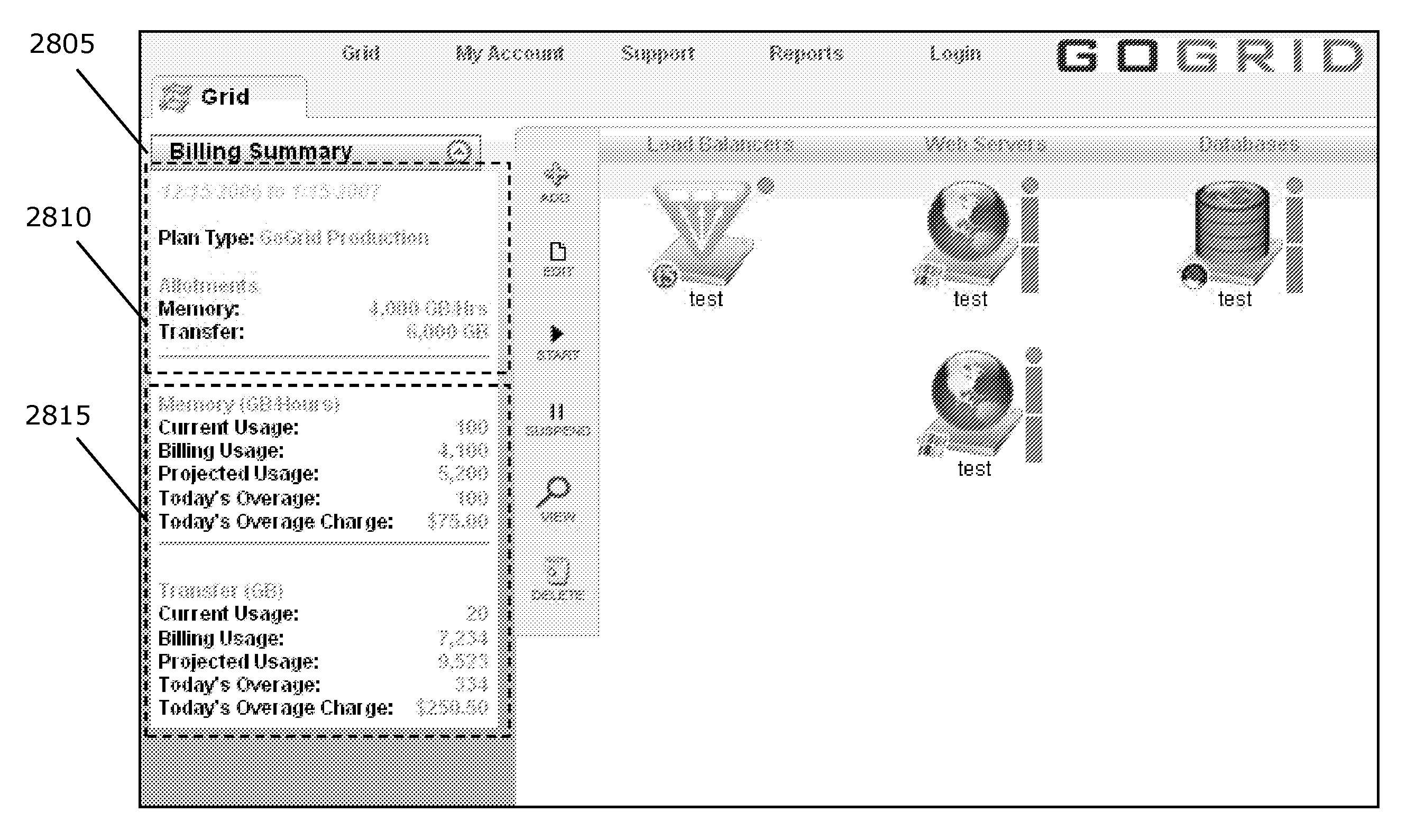

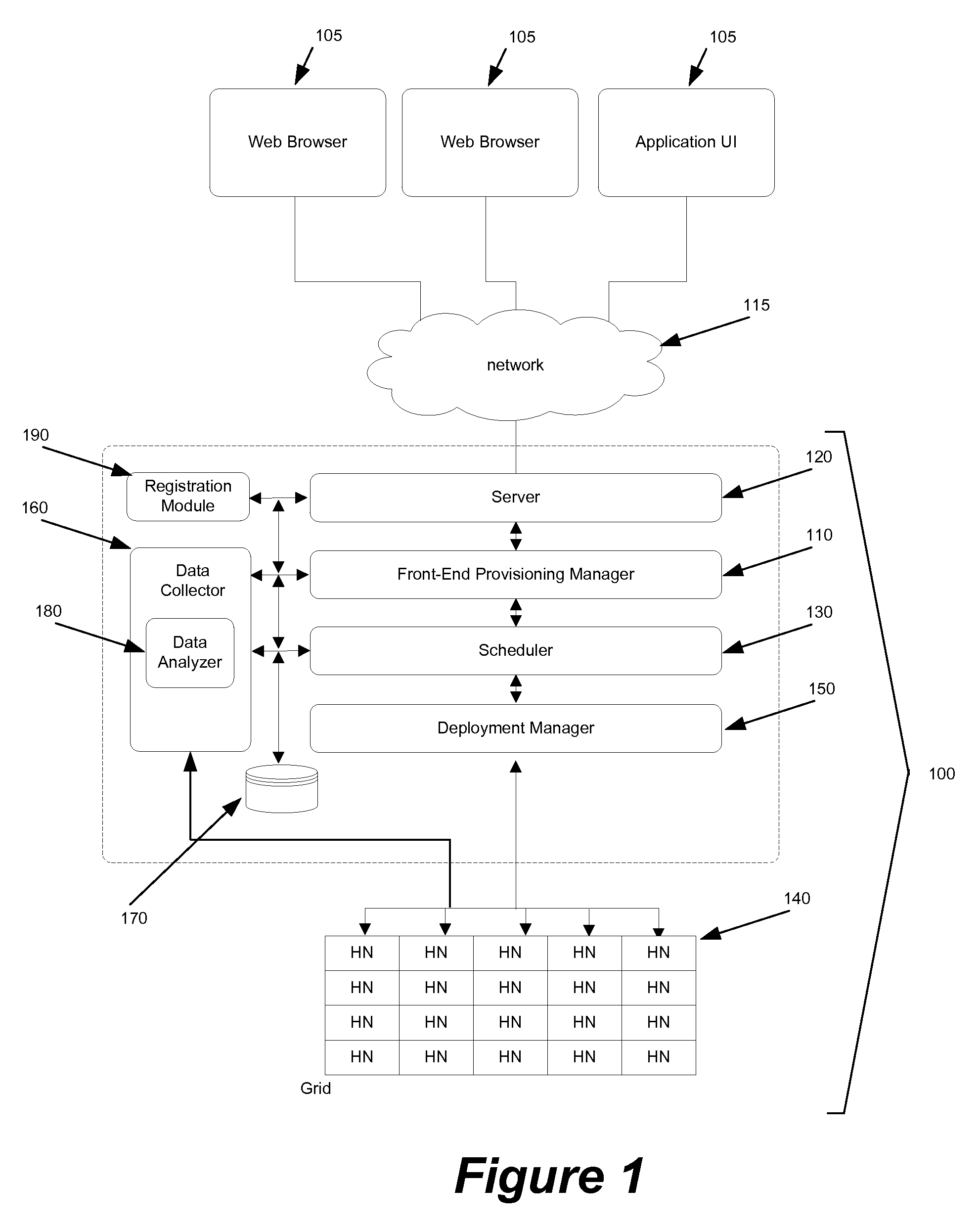

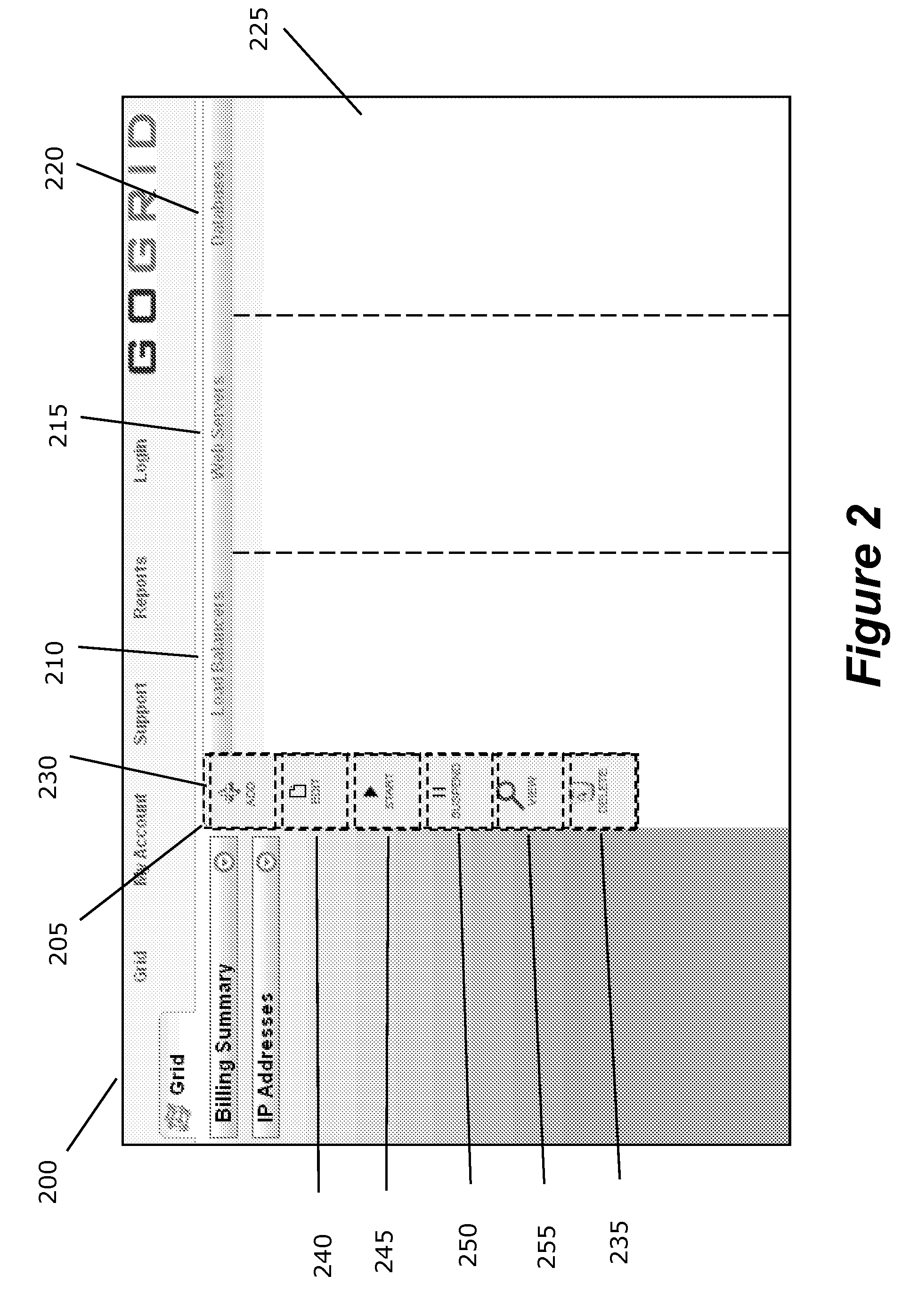

Multi-server control panel

Some embodiments of the invention provide a graphical user interface for receiving a server configuration (e.g., receiving a new configuration or a modification to an existing configuration). The graphical user interface (UI) includes several UI control elements for defining components of the server configuration. It also includes a display area for displaying graphical representations of the defined components of the server configuration. Examples of control elements in some embodiments include control elements for adding, deleting, and modifying servers. In some embodiments, at least one control element is displayed when a cursor control operation is performed on the UI. The cursor control operation (e.g., a right hand click operation) in some embodiments opens a display area that shows the control element. In some embodiments, at least two different components in the server configuration correspond to two different layers (e.g., a web server layer and a data storage layer) in the server configuration. The display area of some embodiments includes multiple tiers, where each tier is for displaying graphical representation of components in a particular layer of the server configuration. At least two tiers in some embodiments are displayed simultaneously in the display area.

Owner:GOOGLE LLC

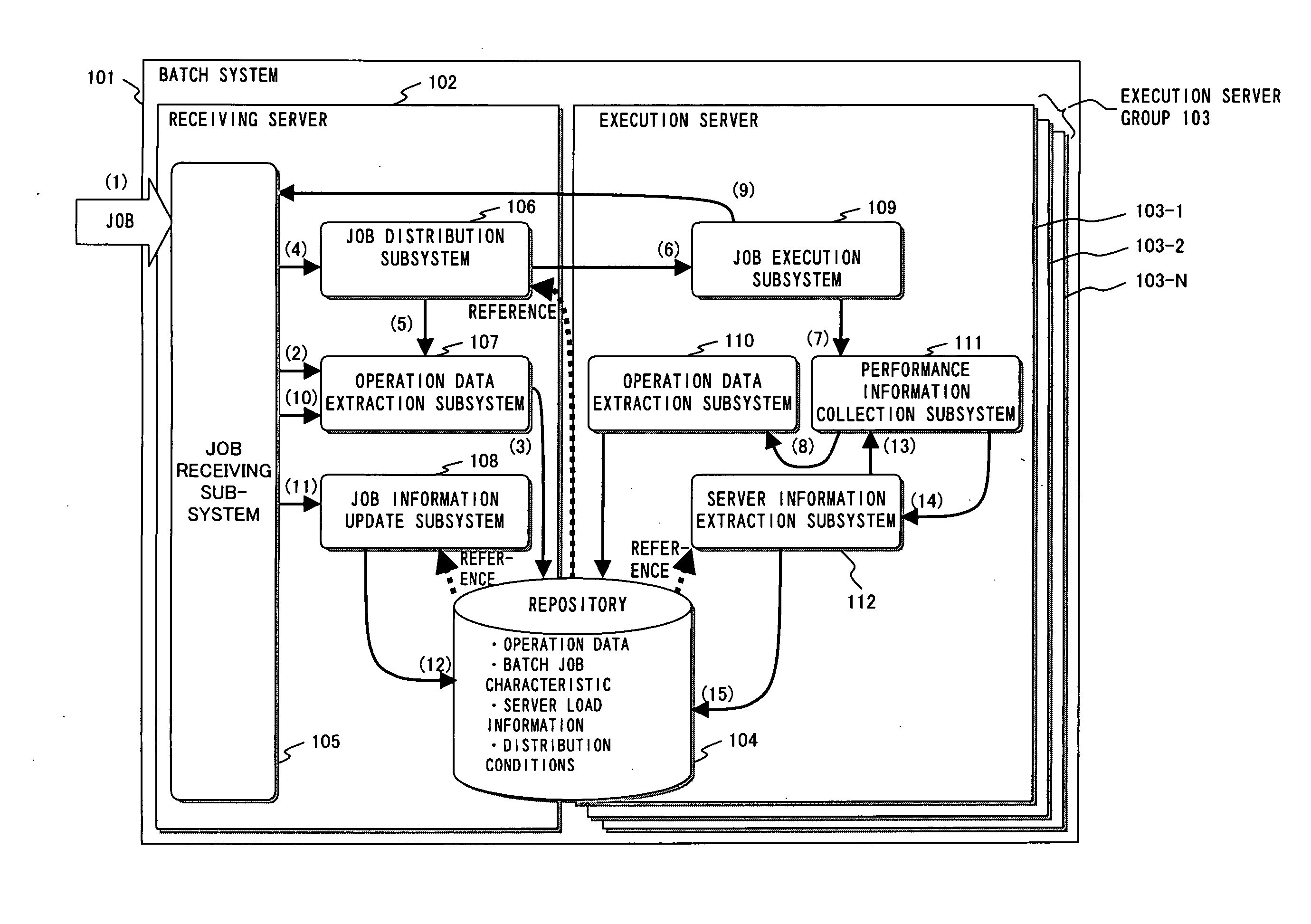

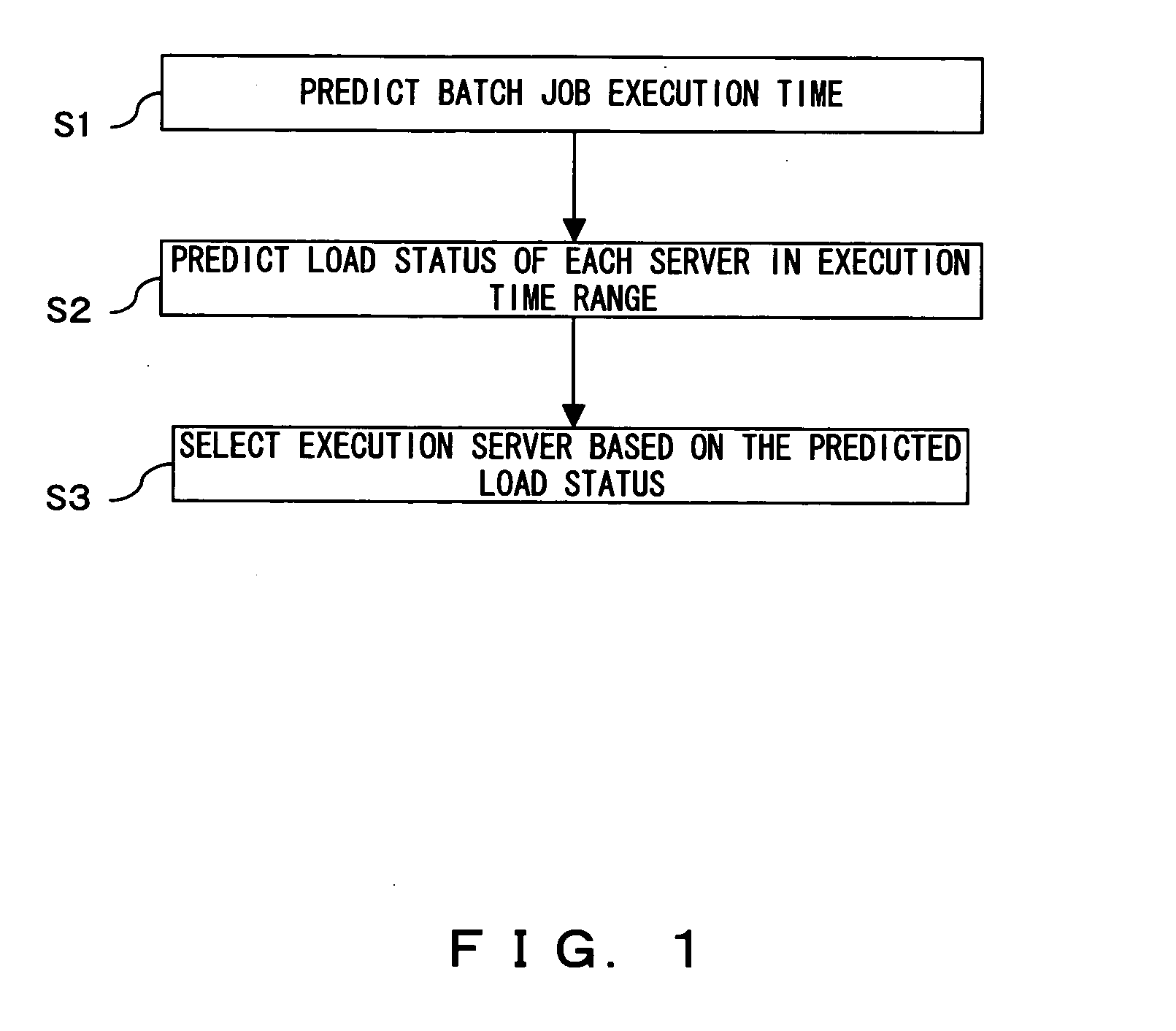

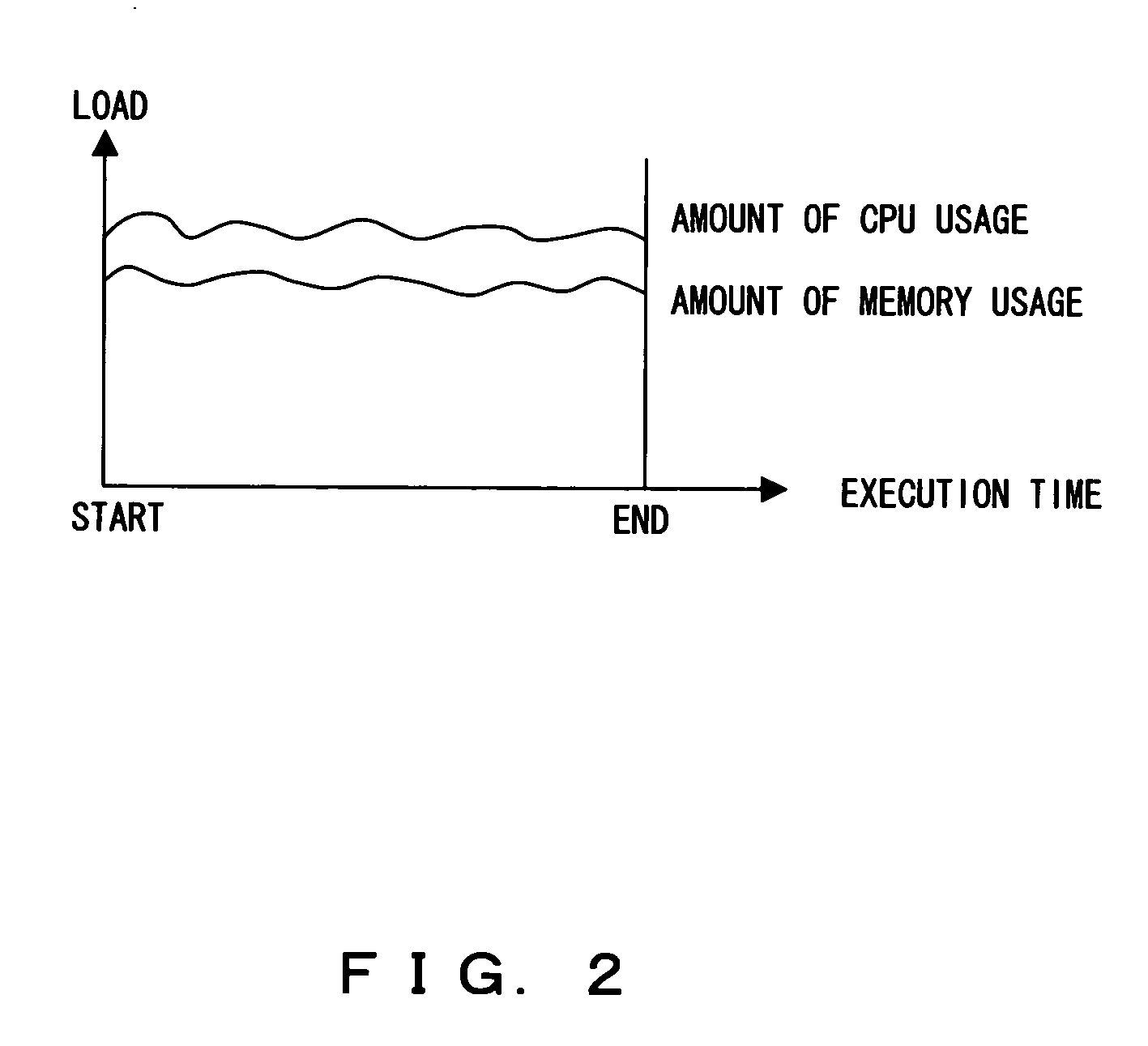

Program, apparatus and method for distributing batch job in multiple server environment

Inactive | US20070220516A1 | Reduce difficulty, Good choice, Multiprogramming arrangements, Memory systems, Time range, Computer science

Using a batch job characteristic and the input data volume, the time required to execute the batch job is predicted, the load status of each execution server over that time range is predicted, and an execution server to execute the batch job is selected based on these predictions. Additionally, for every execution of the batch job, the load incurred by the batch job execution is measured and the batch job characteristic is updated based on the measurement. This measurement and update can improve the reliability of the batch job characteristic and the accuracy of execution server selection.

Owner:FUJITSU LTD
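
The selection and feedback loop might look like this in Python. The sketch assumes the batch job characteristic is a time-slots-per-MB figure plus the load the job adds, that each server has a per-slot load forecast, and that the update is an exponential moving average; the abstract specifies none of these, so the names and the blending rule are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class JobProfile:
    slots_per_mb: float  # characteristic: time slots needed per MB of input
    load: float          # load the job adds to a server while it runs


def pick_server(profile: JobProfile, input_mb: float,
                forecasts: Dict[str, List[float]], start: int = 0) -> str:
    """Predict the run time, then pick the server whose predicted peak load over
    that window (plus the job's own load) is lowest."""
    slots = max(1, math.ceil(profile.slots_per_mb * input_mb))

    def peak(server: str) -> float:
        window = forecasts[server][start:start + slots]
        return max(window, default=0.0) + profile.load

    return min(forecasts, key=peak)


def update_profile(profile: JobProfile, input_mb: float, measured_slots: float,
                   measured_load: float, alpha: float = 0.5) -> None:
    """Blend each run's measurement into the stored characteristic."""
    profile.slots_per_mb = (1 - alpha) * profile.slots_per_mb + alpha * measured_slots / input_mb
    profile.load = (1 - alpha) * profile.load + alpha * measured_load
```

A short job fits before server `x`'s forecast spike, while a longer one overlaps it and is better placed on the steadier server, which is why the prediction window matters.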

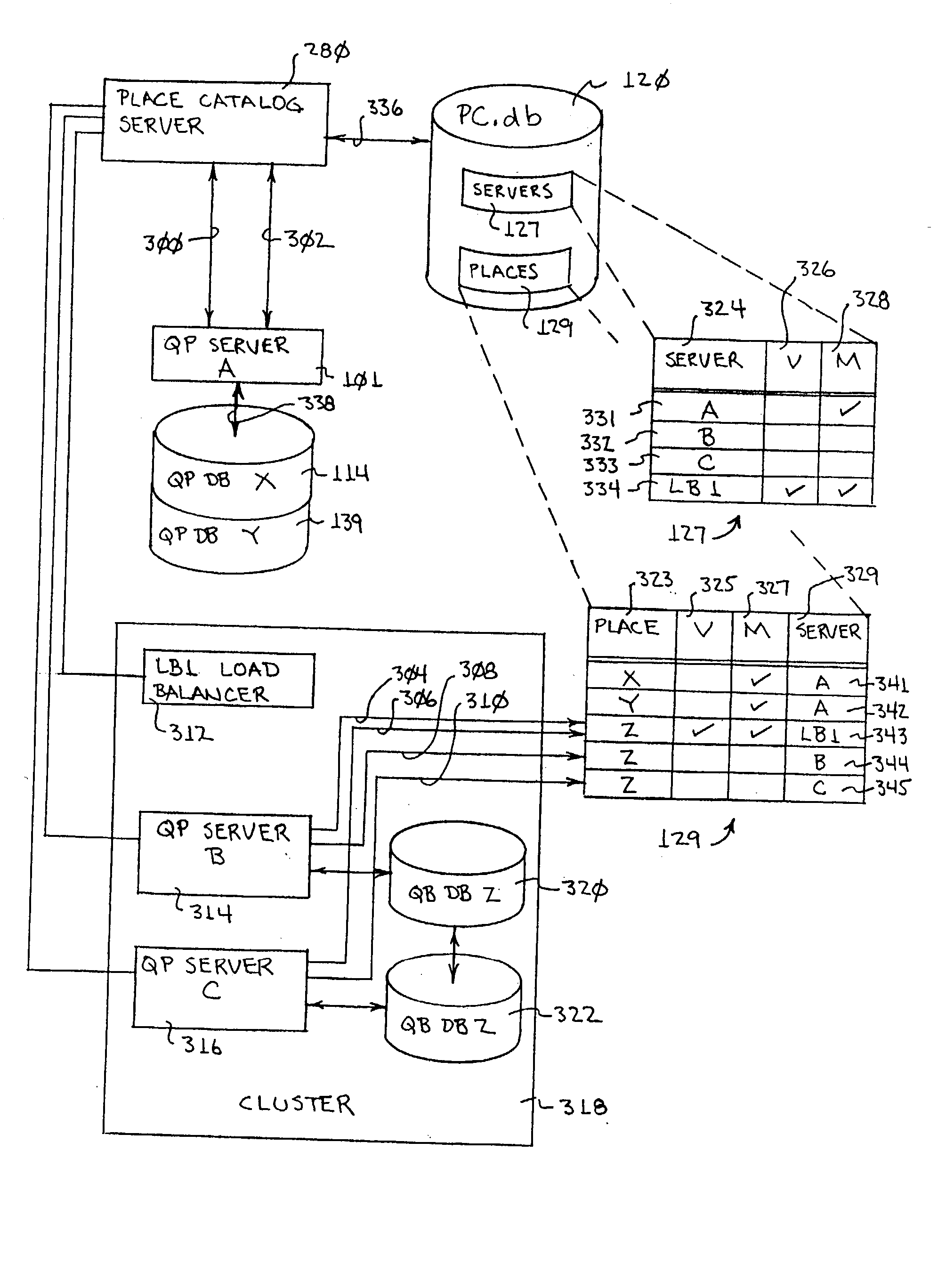

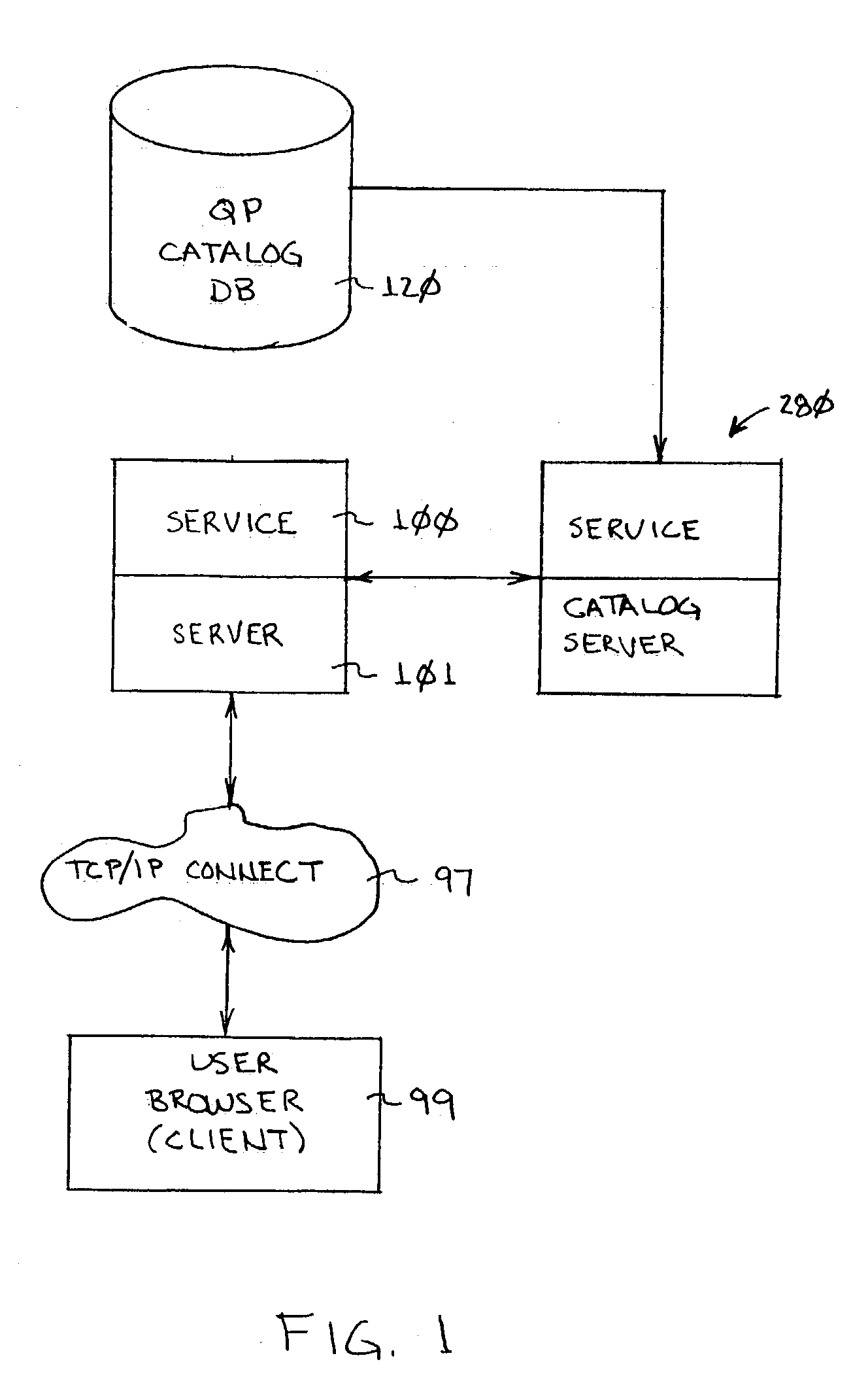

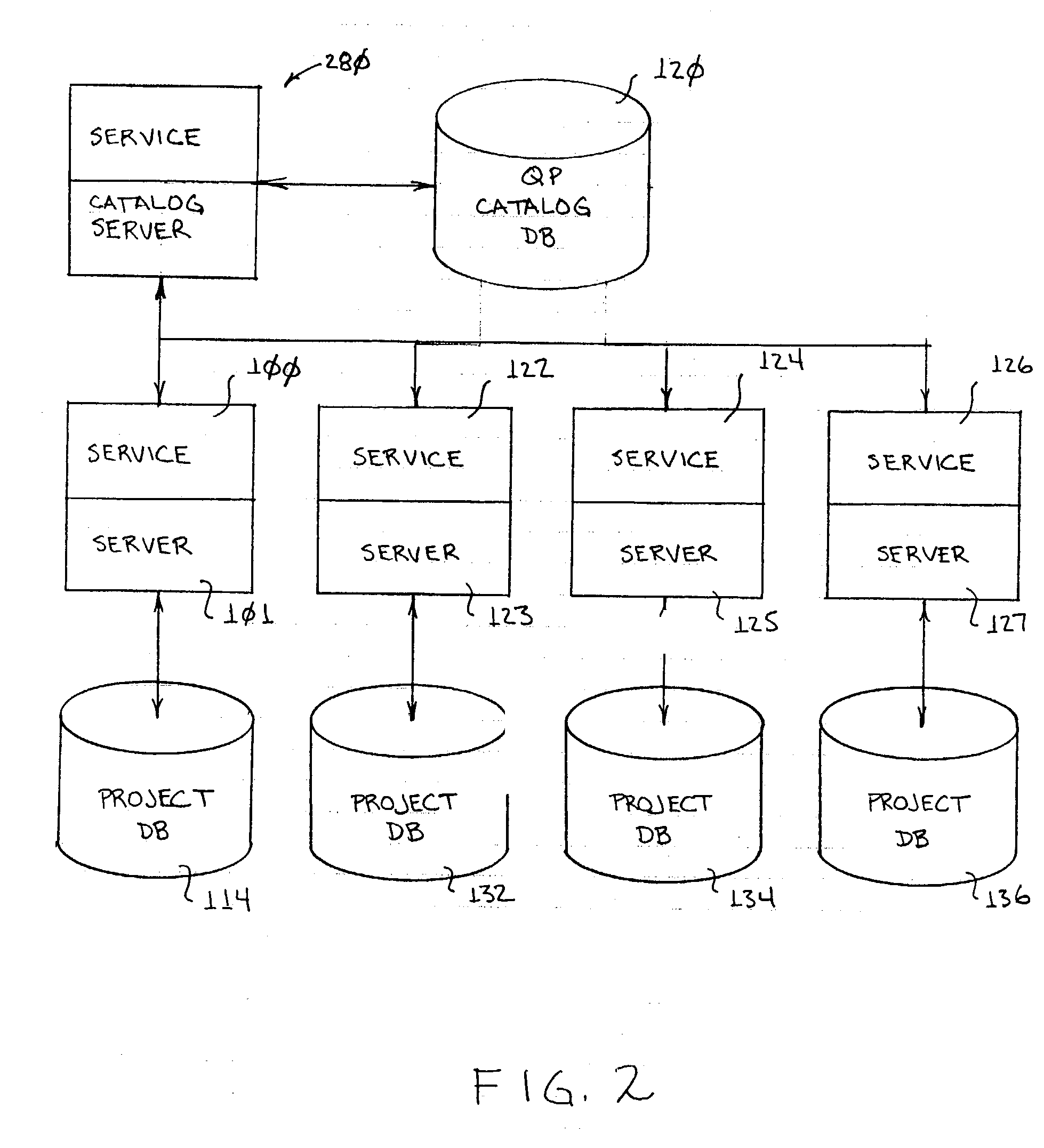

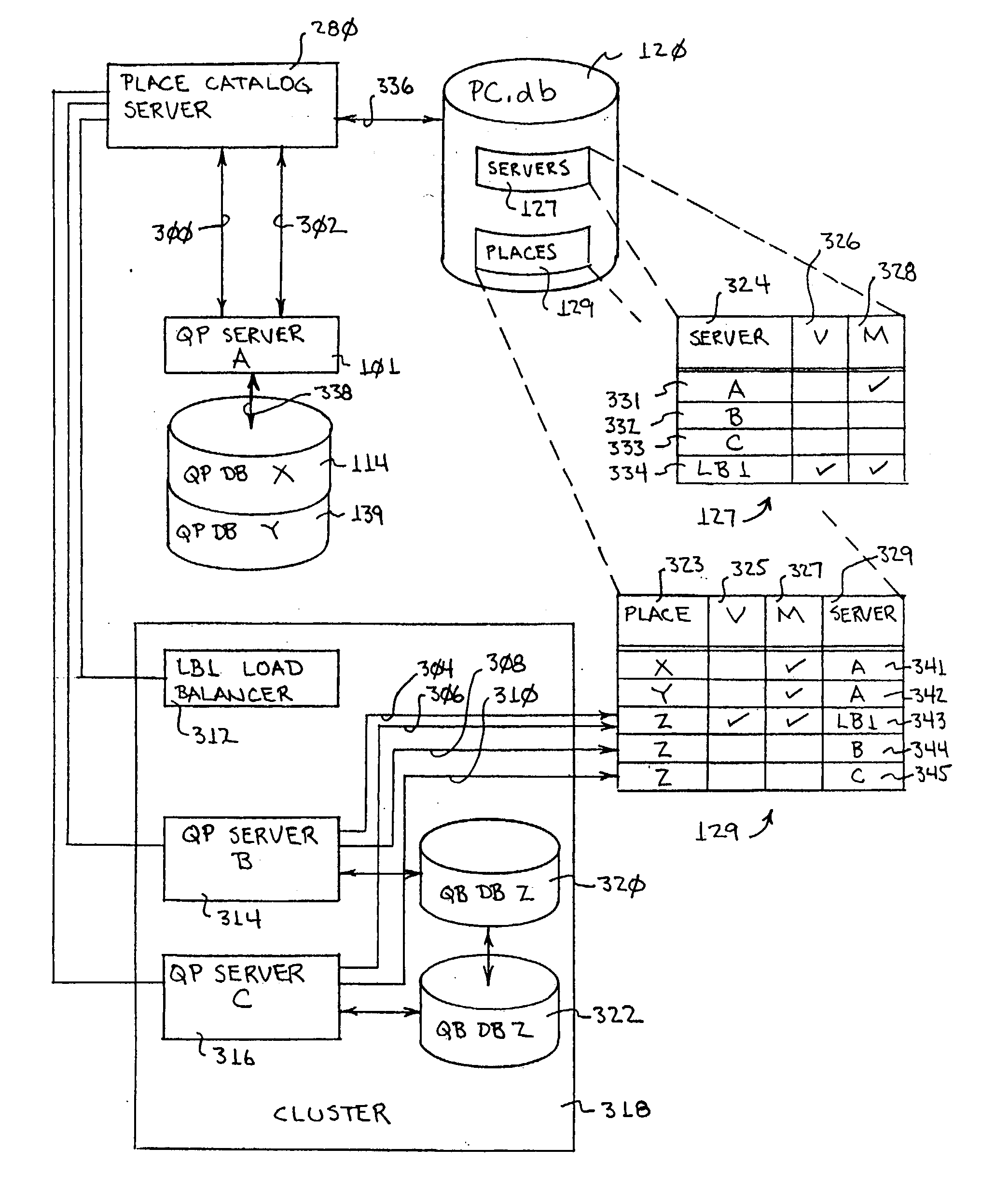

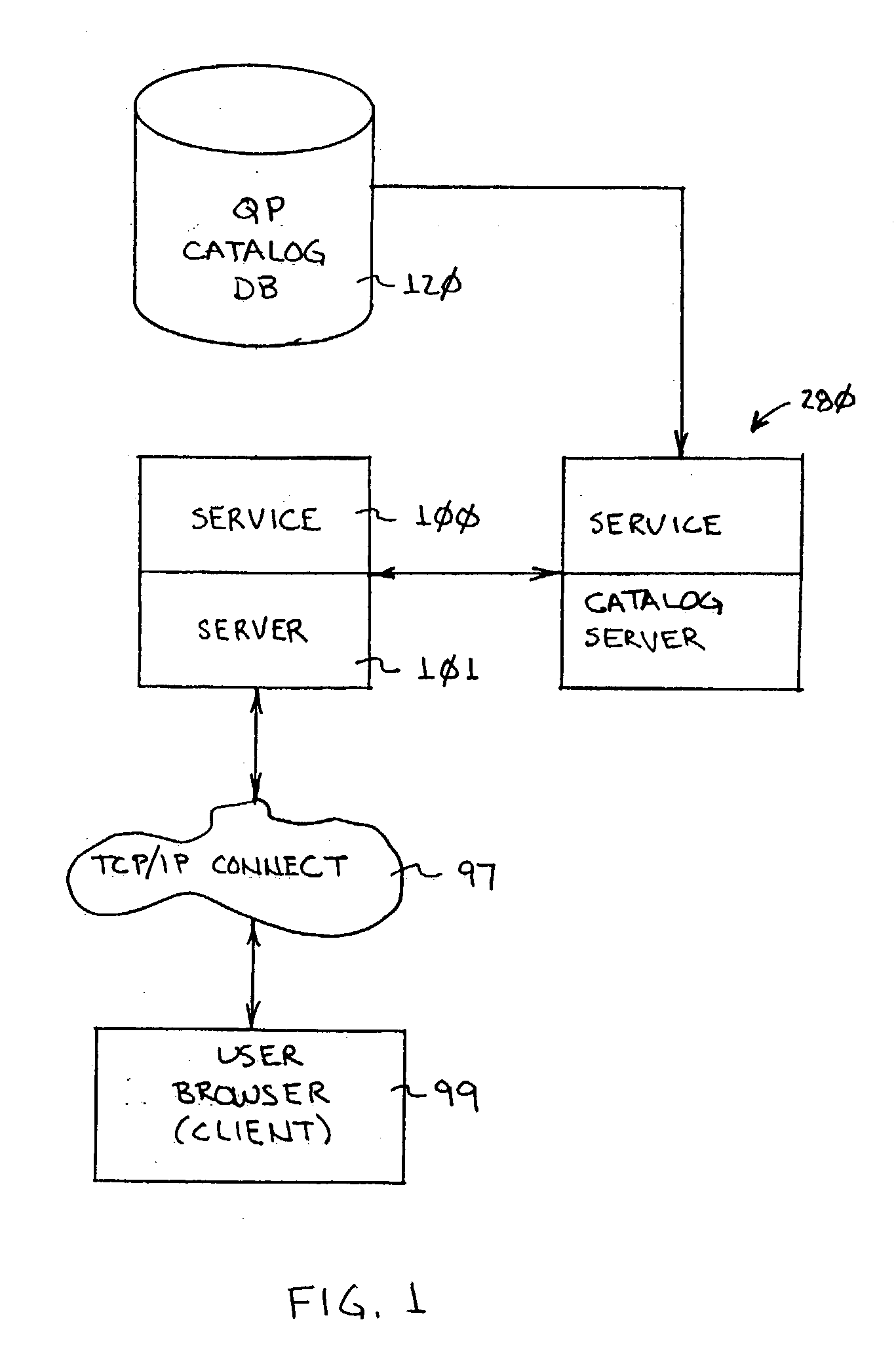

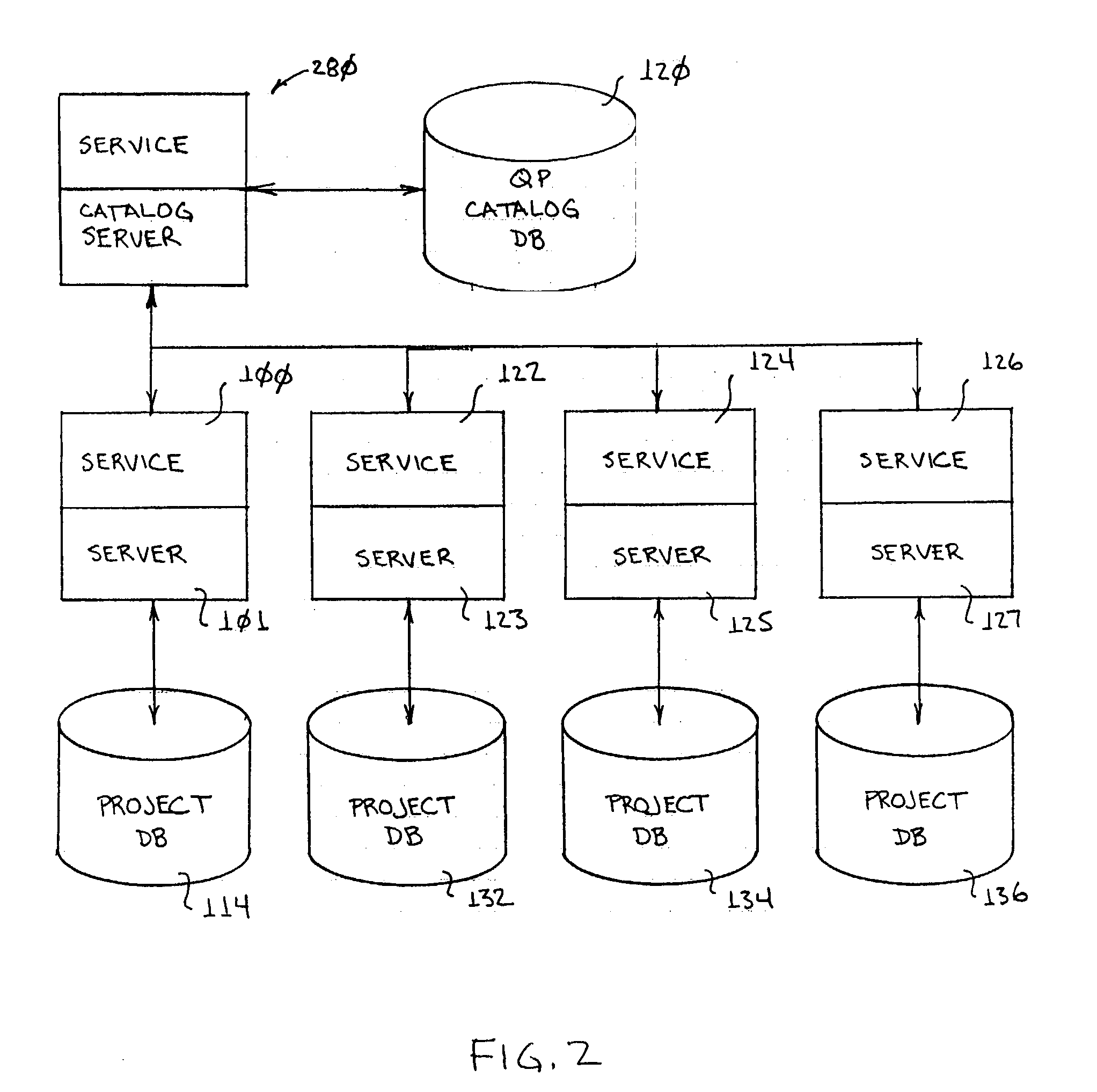

System and method for aggregating user project information in a multi-server system

Active | US20040139108A1 | Reduce in quantity, Guaranteed to continue to use, Digital data processing details, Office automation, Computer science, Directory service

A system for aggregating user information on a plurality of projects and servers includes a project catalog; a project catalog server; a plurality of project servers; a plurality of project databases, one project database being associated with each project server; an entry in the project catalog for each project server and each project database; and a "my projects" procedure, responsive to user entry of a "my projects" request, for accessing the project catalog server to obtain markup language representations of entries in the project catalog for the user, for display at a terminal.

Owner:GOOGLE LLC

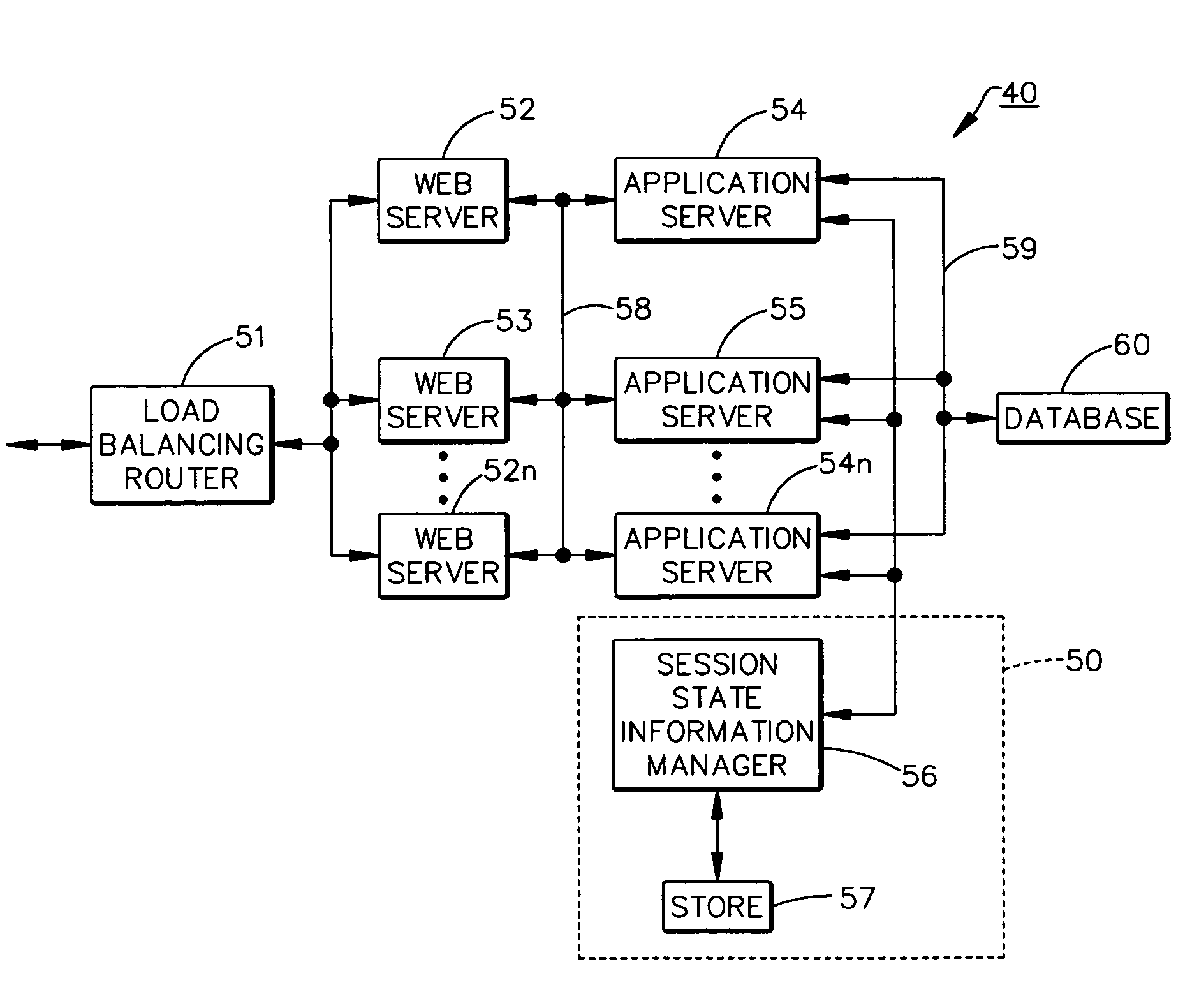

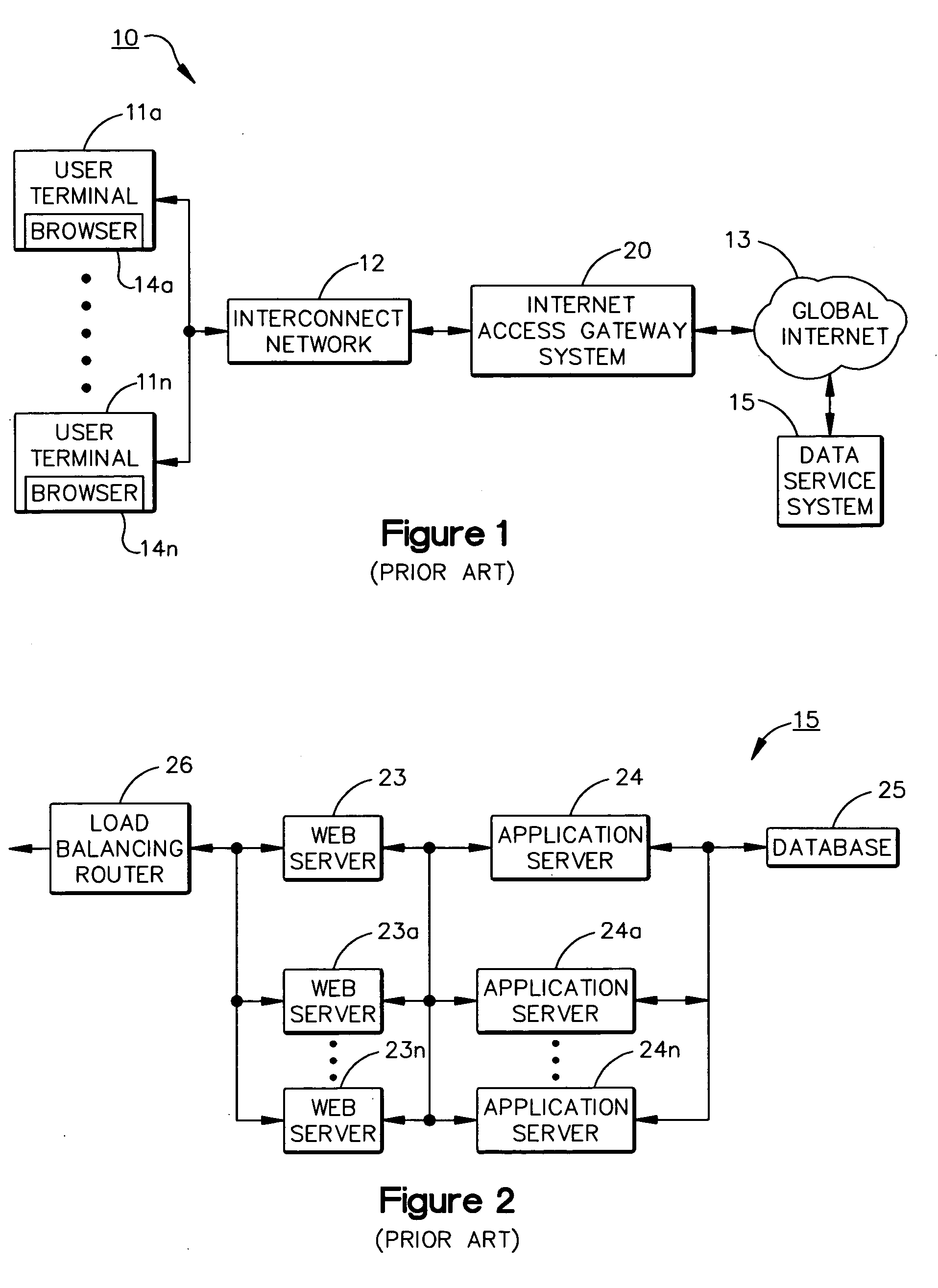

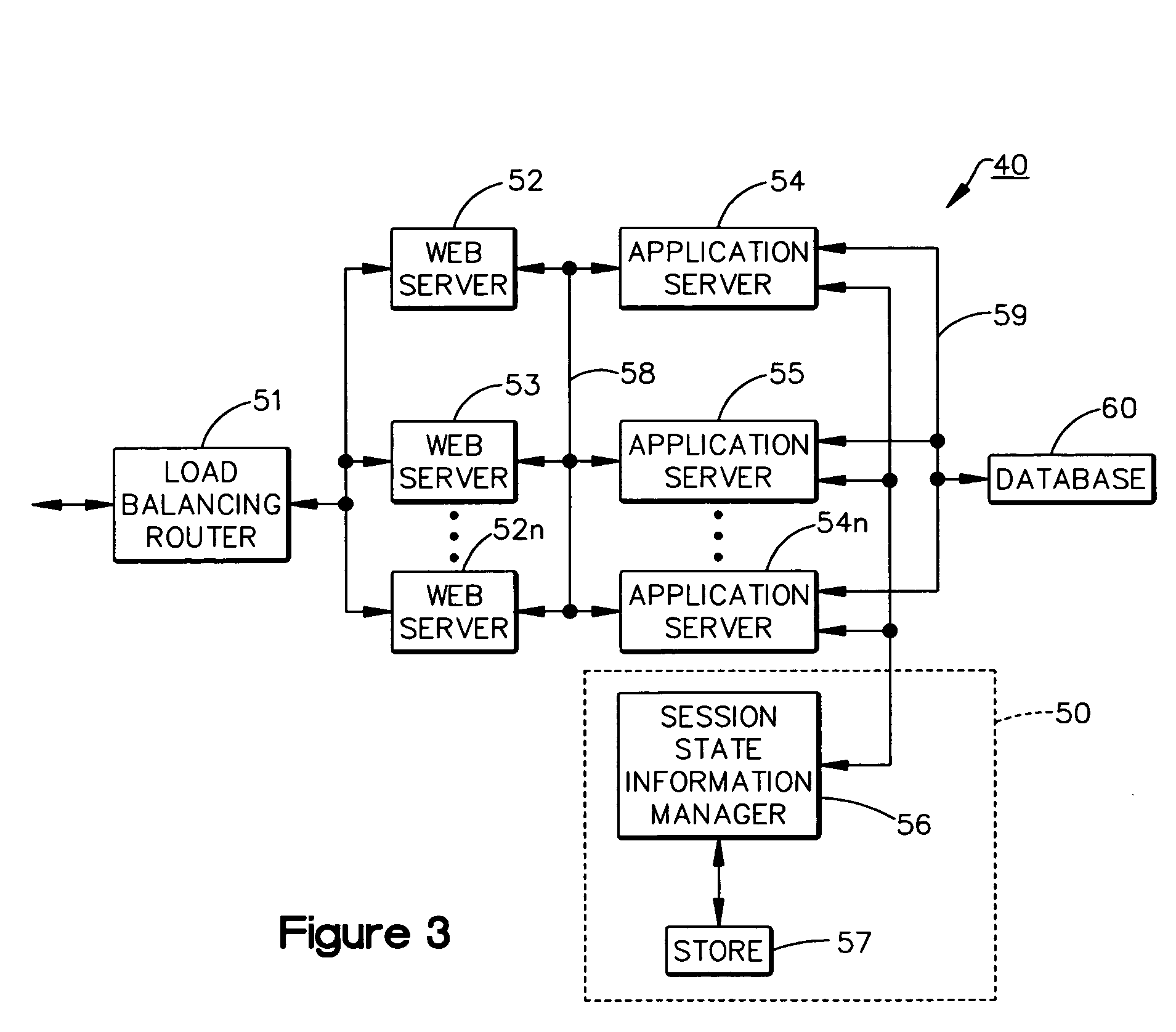

Allowing requests of a session to be serviced by different servers in a multi-server data service system

Active | US7200665B2 | Improve data performance, Improve performance, Multiple digital computer combinations, Securing communication, Single session, Application server

A data service system includes web servers, each servicing any access request received by the data service system. Duplicate application servers are also provided, each processing any request directed from any one of the web servers. A session state information managing system allows different application servers to process requests belonging to a single session without requiring the requests to carry their entire session state information. The managing system includes a session state information manager that, when called by an application server processing a request, (1) provides the session state information of the request to the application server, and (2) generates a state reference for the new session state information after the application server has processed the request and generated that new session state information. The managing system also includes a store that holds all session state information.

Owner:VALTRUS INNOVATIONS LTD +1
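
A minimal Python sketch of the state-reference idea, assuming an in-memory dictionary as the shared store and random hexadecimal references; `SessionStateManager` and `app_server` are hypothetical names. Each application server fetches the state by reference, updates it, and gets back a fresh reference for the new state, so requests carry only the reference.

```python
import uuid
from typing import Any, Dict, Optional


class SessionStateManager:
    """Shared store that lets any application server handle any request of a
    session; requests carry only a small state reference."""

    def __init__(self) -> None:
        self._store: Dict[str, Dict[str, Any]] = {}

    def fetch(self, ref: Optional[str]) -> Dict[str, Any]:
        """Hand the caller a copy of the state behind ref (empty for a new session)."""
        return dict(self._store.get(ref, {})) if ref else {}

    def commit(self, new_state: Dict[str, Any]) -> str:
        """Store the new state and return a fresh reference to it."""
        ref = uuid.uuid4().hex
        self._store[ref] = dict(new_state)
        return ref


def app_server(name: str, manager: SessionStateManager,
               ref: Optional[str], item: str) -> str:
    """Hypothetical application server that adds an item to a session's cart."""
    state = manager.fetch(ref)
    state["cart"] = state.get("cart", []) + [item]
    state["last_server"] = name
    return manager.commit(state)
```

Two different application servers can handle consecutive requests of the same session, since neither keeps any session state locally.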

Load balancing based on cache content

Inactive | US20060161577A1 | Data processing applications, Digital data processing details, Load Shedding, Electronic form

A method, schema, and computer-readable medium provide various means for load balancing computing devices in a multi-server environment. The method, schema, and computer-readable medium for load balancing computing devices in a multi-server environment may be utilized in a networked server environment, implementing a spreadsheet application for manipulating a workbook, for example. The method, schema, and computer-readable medium operate to load balance computing devices in a multi-server environment including determining whether a file, such as a spreadsheet application workbook, resides in the cache of a particular server, such as a calculation server. Upon meeting certain conditions, the user request may be directed to the particular server.

Owner:MICROSOFT TECH LICENSING LLC

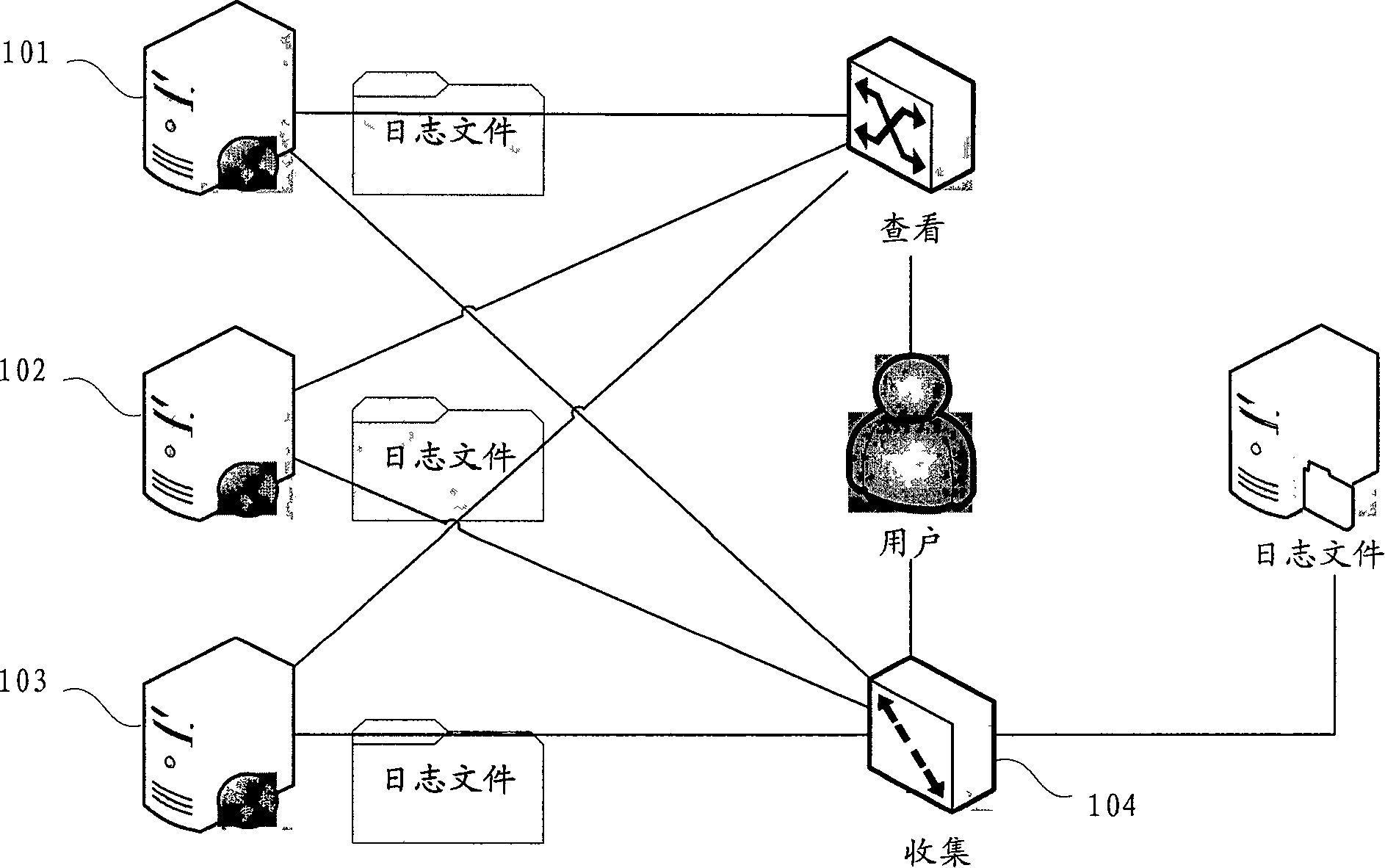

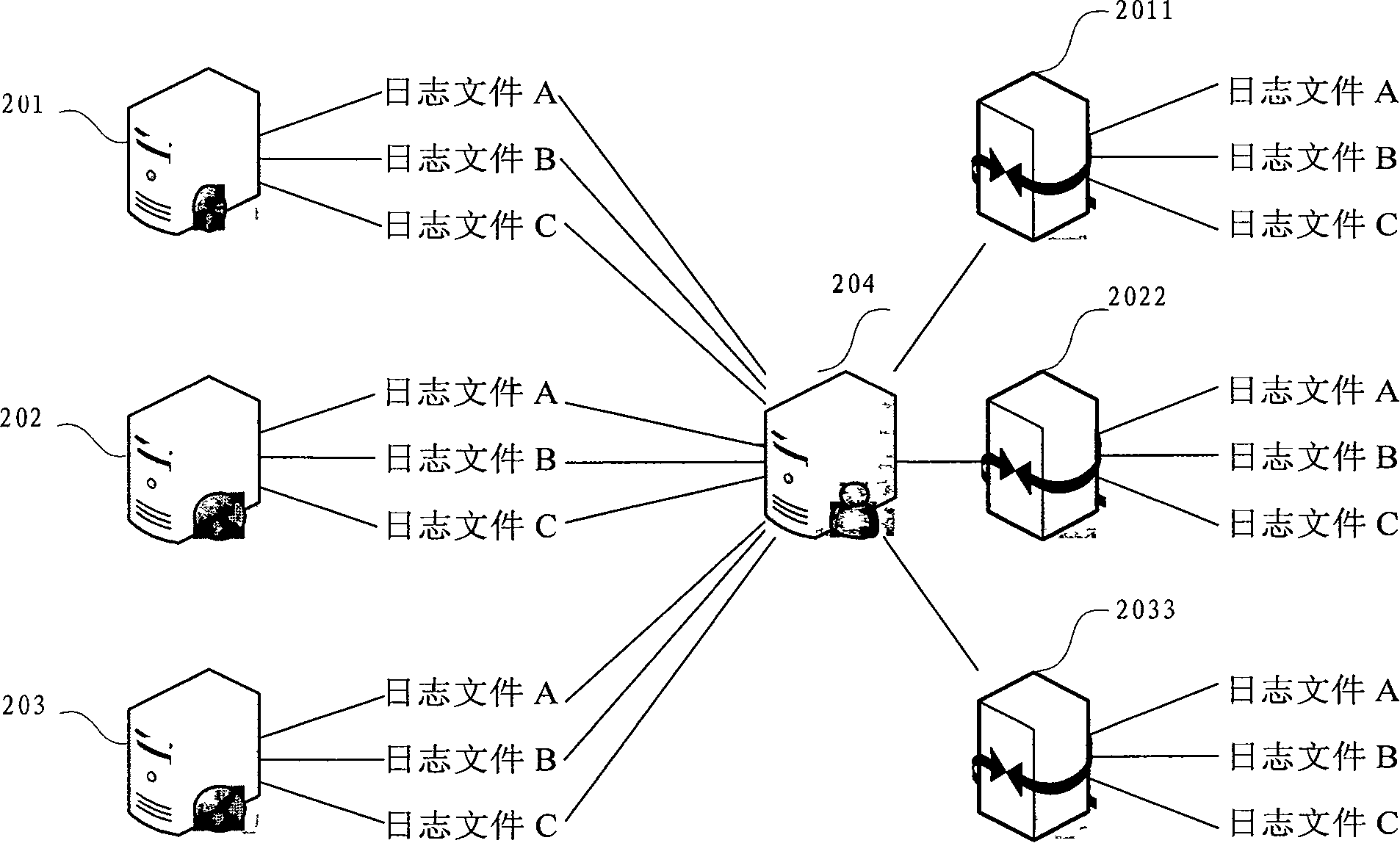

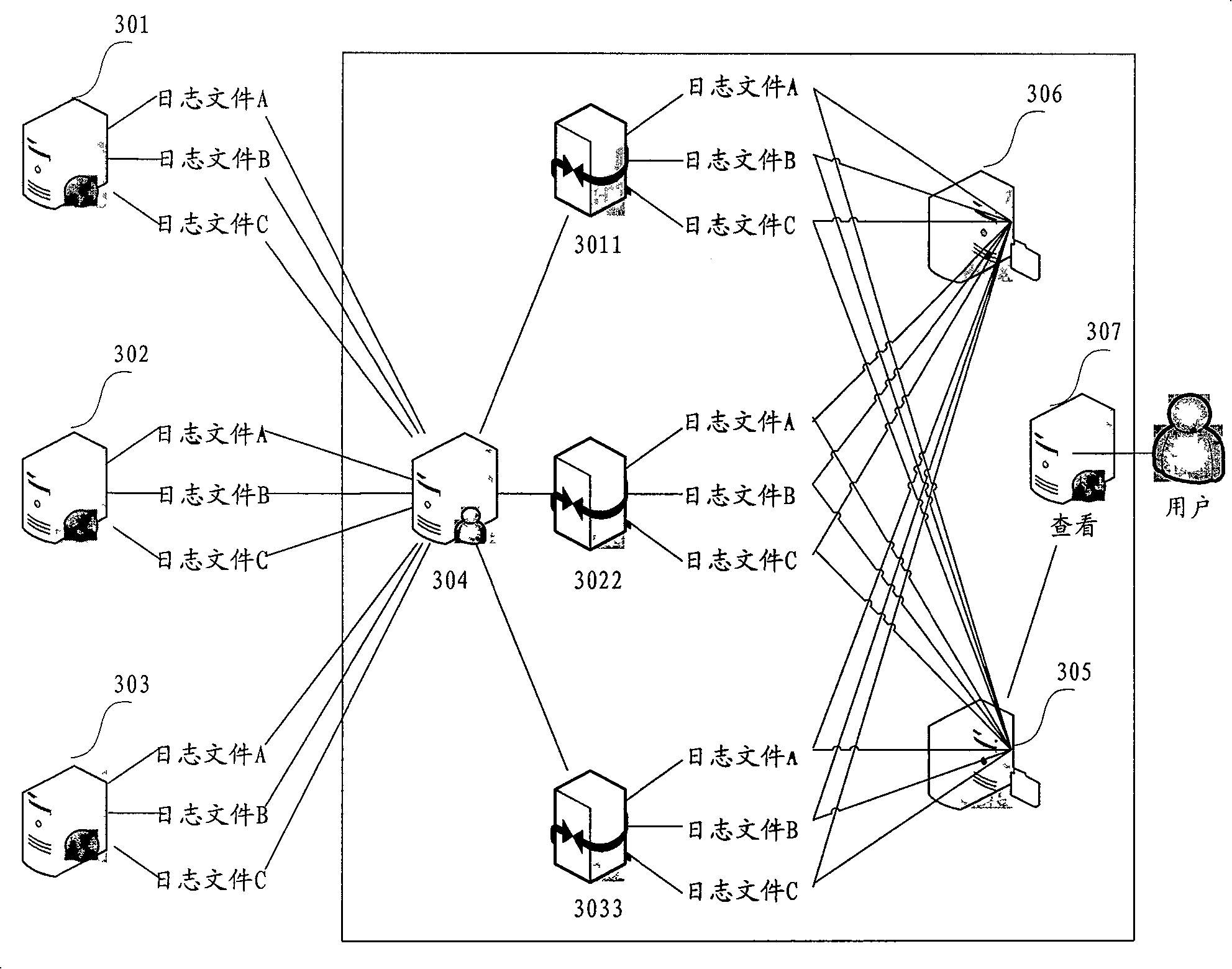

Method and system for providing log service

Active | CN101197700A | Sequential, Solve the problem of no timing, Data switching networks, Special data processing applications, Client-side, Database

The invention discloses a method and system for providing a log service. It relates to log processing technology and solves two problems in a multi-server environment: collected logs lack chronological order, and sorting logs wastes resources and time. The method comprises the following steps: index target category information is configured in the server configuration file; a client sends log information to a remote server; after receiving the log information, the server reads the index target category; the log information and the index target category are written into an index object, which is then placed in an index queue; the index queue is read cyclically; the index target category and the log information are retrieved from each index object; and a log index file is created and stored. Because the log index file is chronologically ordered, it solves the problem that a log file merged from multiple scattered files lacks chronological order. Furthermore, the invention supports efficient, secure, and convenient log operations by multiple users, and log operations can be controlled through different user permissions.

Owner:ADVANCED NEW TECH CO LTD
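
The enqueue-and-index flow can be sketched in Python with the standard `queue` module. This is an in-memory sketch under stated assumptions: a dictionary stands in for the configuration file, each log carries a numeric `ts` timestamp, and the "log index file" is a per-category list kept in time order rather than a file on disk. `drain` performs one pass of what would normally be a continuously running loop.

```python
import queue
from dataclasses import dataclass
from typing import Dict, List

# Stand-in for the "index target category" entry in the server configuration file.
CONFIG = {"index_target_category": "order"}


@dataclass
class IndexObject:
    category: str
    log: dict


def receive(q: queue.Queue, log: dict, config: dict = CONFIG) -> None:
    """Server side: read the configured category, wrap the log, and enqueue it."""
    q.put(IndexObject(config["index_target_category"], log))


def drain(q: queue.Queue, index: Dict[str, List[dict]]) -> Dict[str, List[dict]]:
    """One pass of the indexing loop: file each entry under its category,
    keeping every category in timestamp order."""
    while True:
        try:
            obj = q.get_nowait()
        except queue.Empty:
            return index
        entries = index.setdefault(obj.category, [])
        entries.append(obj.log)
        entries.sort(key=lambda e: e["ts"])
```

Logs that arrive out of order from different servers still end up in time order within their category, which is the property the abstract highlights.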

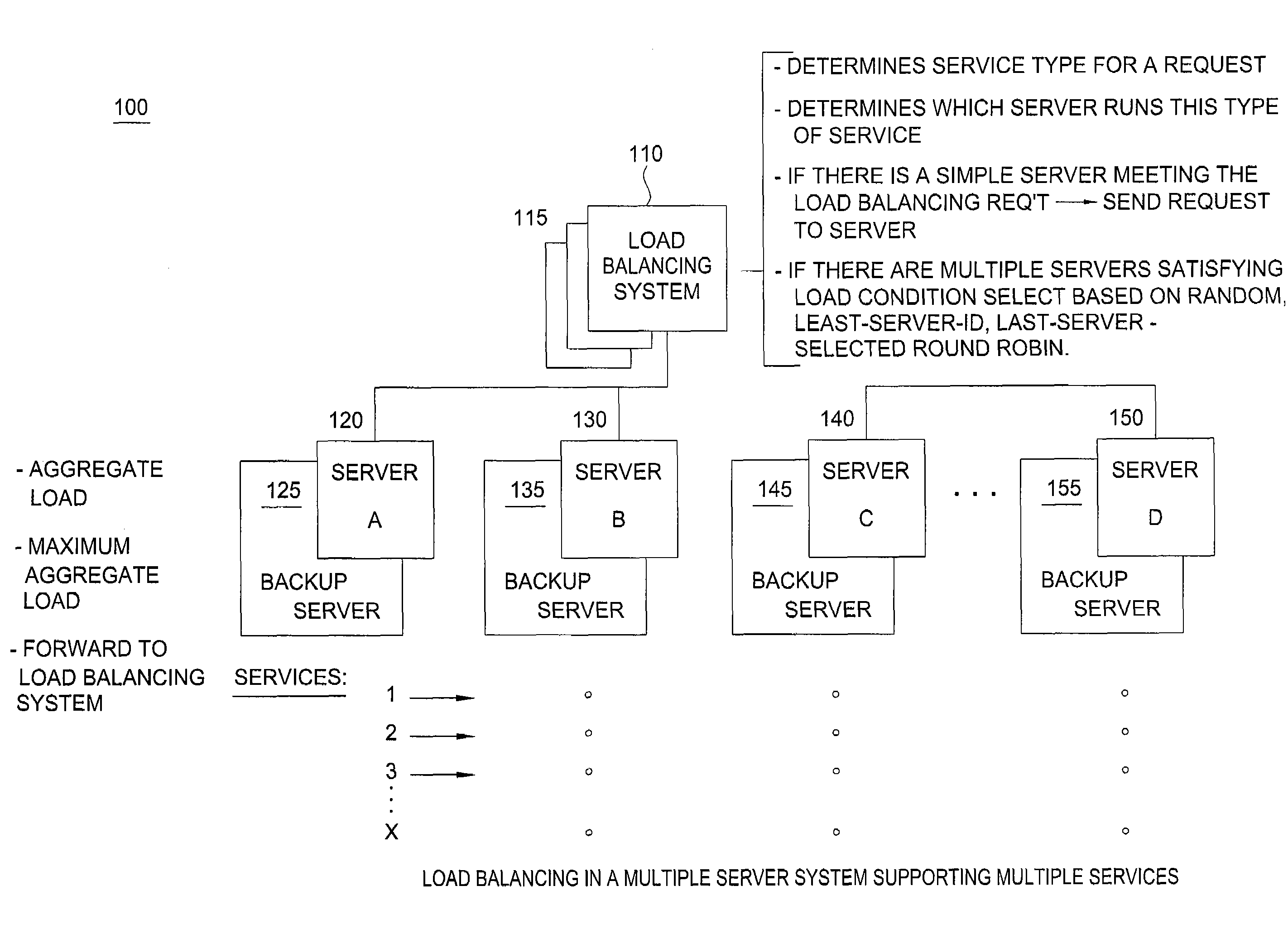

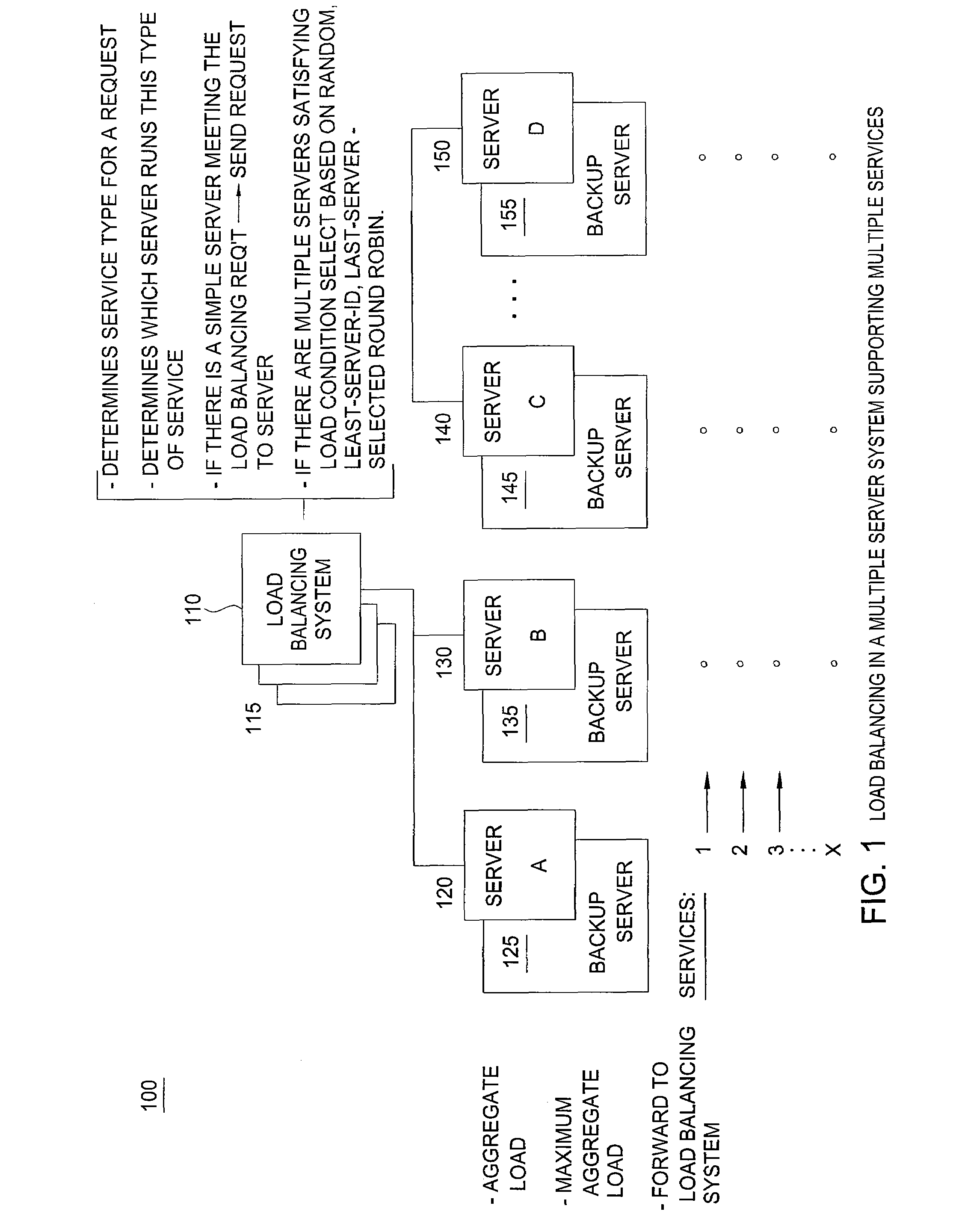

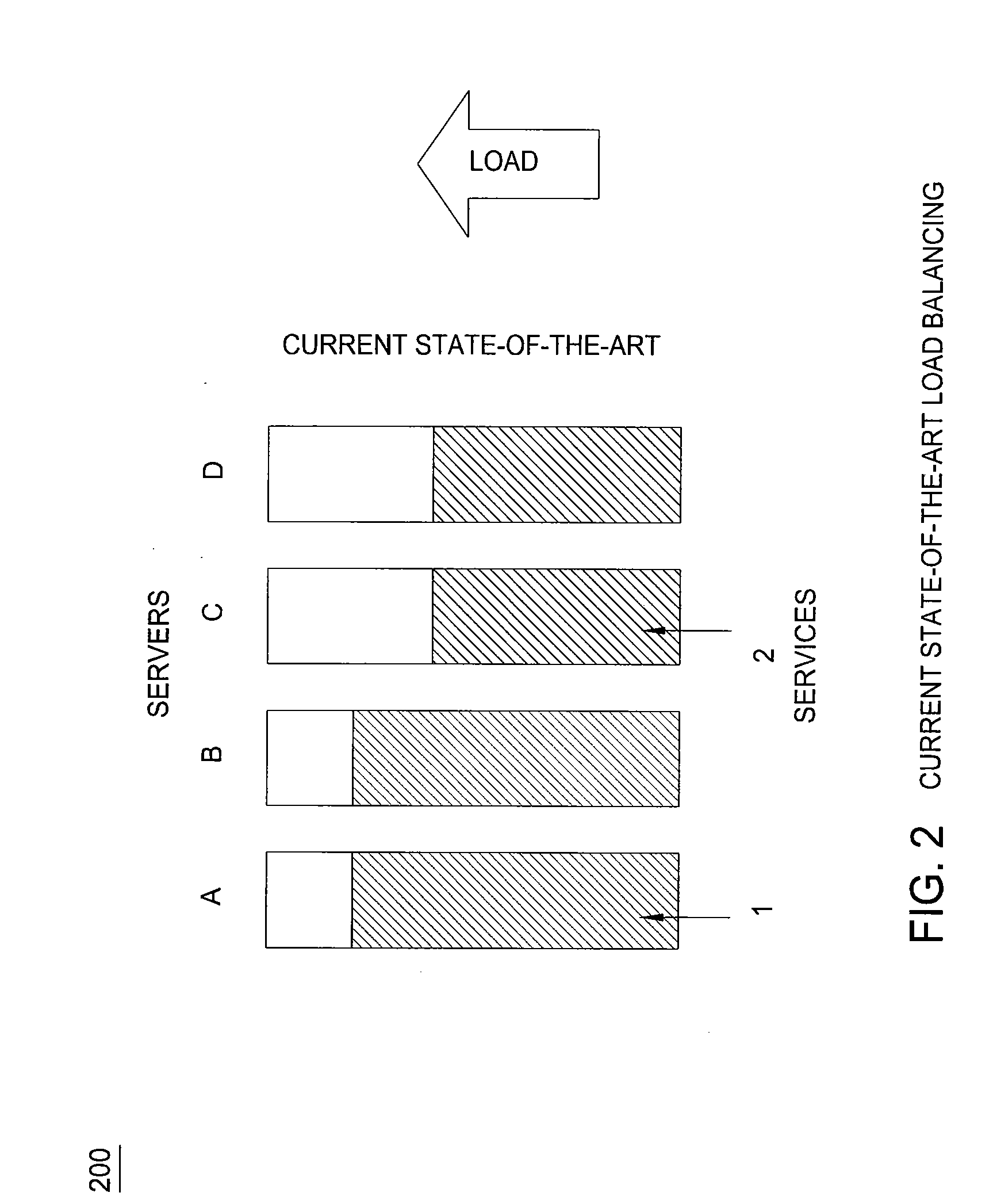

Load Balancing in a Multiple Server System Hosting an Array of Services

A method and system for load balancing in a multiple server system supporting multiple services are provided to determine the best server or servers supporting a service with the best response time. An induced aggregate load is determined for each of the multiple services in accordance with corresponding load metrics. A maximum induced aggregate load on a corresponding server that generates a substantially similar QoS for each of the plurality of services is determined. A load balancing server distributes the multiple services across the multiple servers in response to the determined induced aggregate and maximum induced aggregate loads, such that the QoS for each of the multiple services is substantially uniform across the servers.

Owner:ALCATEL LUCENT SAS
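
One way to read the distribution step is as a placement that keeps each server's utilisation (induced load divided by its maximum induced aggregate load) as even as possible. The Python sketch below assumes that reading and uses a largest-first greedy heuristic, which is a swapped-in technique, not necessarily the patented algorithm; the load numbers in the usage are illustrative.

```python
from typing import Dict, Tuple


def balance(service_loads: Dict[str, float],
            max_loads: Dict[str, float]) -> Tuple[Dict[str, str], Dict[str, float]]:
    """Place services on servers so utilisation stays as uniform as possible.

    service_loads: service -> induced aggregate load.
    max_loads: server -> maximum induced aggregate load that still meets the QoS target.
    """
    used = {server: 0.0 for server in max_loads}
    placement: Dict[str, str] = {}
    # Largest services first, each onto the server left least utilised by it.
    for svc, load in sorted(service_loads.items(), key=lambda kv: -kv[1]):
        target = min(max_loads, key=lambda s: (used[s] + load) / max_loads[s])
        used[target] += load
        placement[svc] = target
    utilisation = {s: used[s] / max_loads[s] for s in max_loads}
    return placement, utilisation


placement, util = balance({"video": 6, "voice": 3, "web": 3}, {"s1": 10, "s2": 10})
```

In this example the heavy video service gets one server to itself and the two lighter services share the other, leaving both at the same utilisation.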

Apparatus and method for modular dynamically power managed power supply and cooling system for computer systems, server applications, and other electronic devices

Inactive | US8700923B2 | Energy efficient ICT, Volume/mass flow measurement, Network architecture, Workload management

Network architecture, computer system and/or server, circuit, device, apparatus, method, and computer program and control mechanism for managing power consumption and workload in computer systems and data and information servers. It further provides power and energy consumption and workload management and control systems and architectures for high-density and modular multi-server computer systems that maintain performance while conserving energy, and a method for power management and workload management. Aspects of the invention provide dynamic server power management and optional dynamic workload management for multi-server environments. Modular network devices and an integrated server system, including modular servers, management units, switches and switching fabrics, modular power supplies, modular fans, and a special backplane architecture, are provided, as well as dynamically reconfigurable multi-purpose modules and servers. Also provided is a backplane architecture, structure, and method that has no active components and uses separate power supply lines and protection to provide high reliability in a server environment.

Owner:HURON IP

System and method for searching a plurality of databases distributed across a multi server domain

Inactive | US20040139057A1 | Improve usability, Data processing applications, Digital data processing details, Database server, Uniform resource locator

A system for searching a plurality of project databases includes: a service including a plurality of project database servers; a multi-server single server sign-on processor for authenticating access by a user to a plurality of the project databases; and a domain catalog server including a native server, a place server, and a domain catalog. The native server receives a search request from the user and accesses the domain catalog with respect to the search request to obtain URLs for a plurality of documents in the project databases that are served by project servers configured to the domain catalog to which the user is authenticated and that satisfy the search request. The place server, responsive to the URLs, builds an XML result tree for responding to the search request.

Owner:IBM CORP

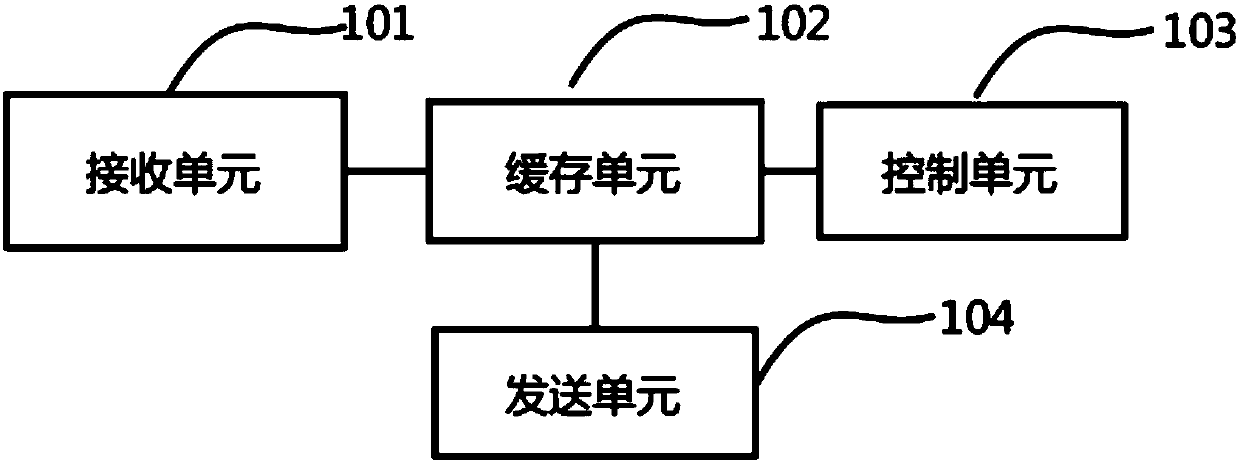

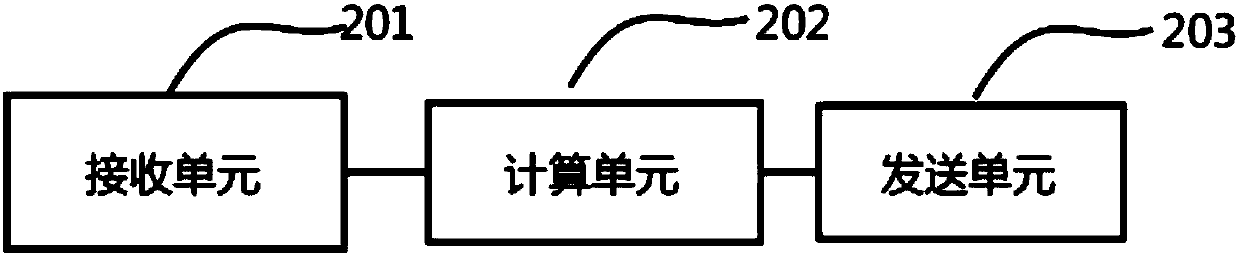

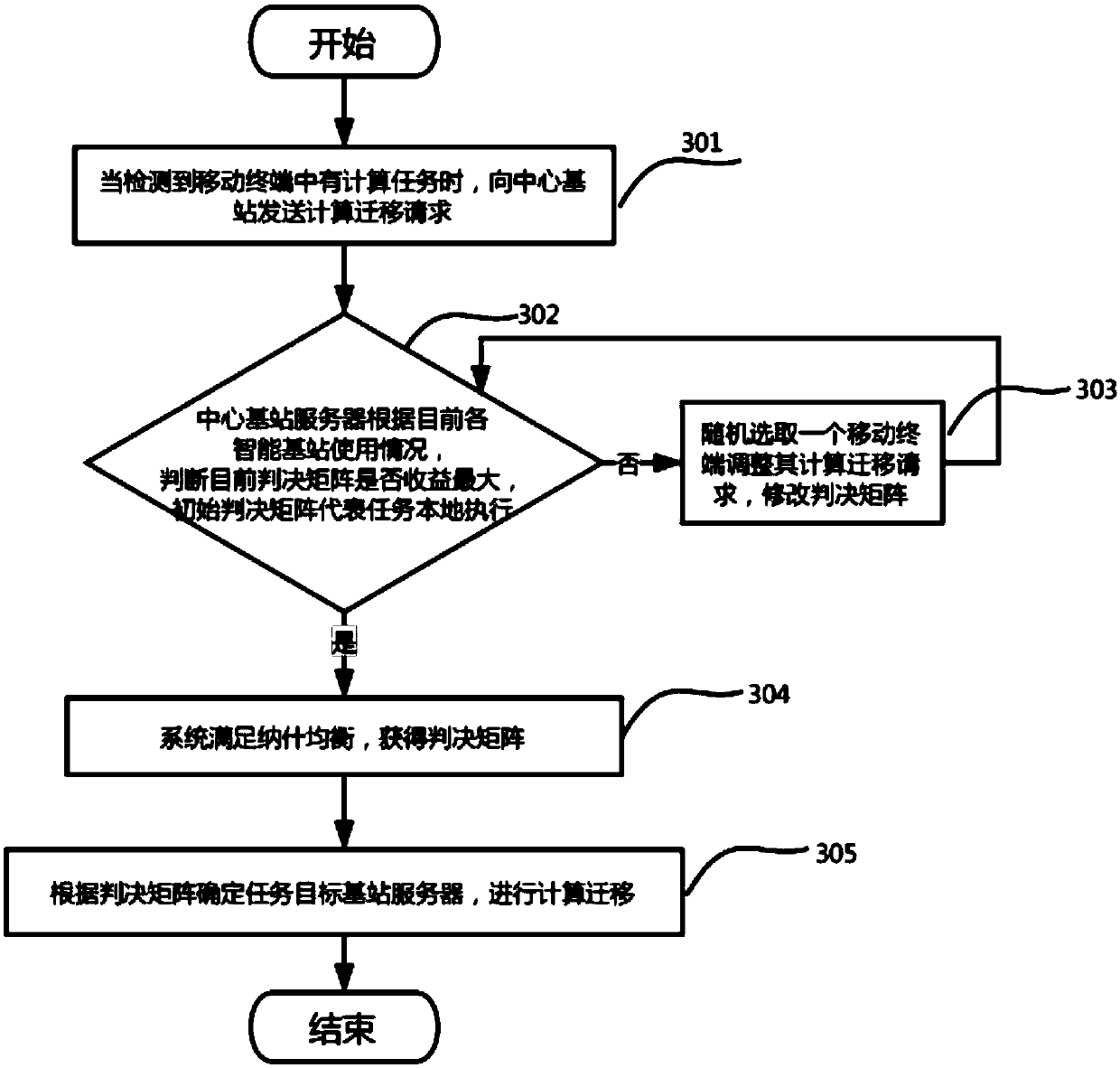

Multi-server mobile edge computing based control and resource scheduling method

Inactive | CN107734558A | Increase flexibility, Improve processing efficiency, Network traffic/resource management, Resource allocation, Time delays, Mobile edge computing

The invention discloses a resource allocation and base station service deployment method based on mobile edge computing. The method comprises the following steps: when a computing task is detected in a mobile terminal, sending a computing migration request to a smart base station; when a cache unit in the base station lacks the computing data needed for the task request, sending the needed task data requirement to the network side; receiving the needed task data returned by the network side; computing a time delay gain and an energy consumption gain according to the received task data; obtaining a computing migration decision matrix according to an experienced utility function; and performing computing migration according to the computing migration decision matrix. The base station deployment scheme comprises a cache unit, a computing unit, an acquisition processing unit, and a sending unit, and can provide both computing capability and data caching capability. Thus, the MEC-based resource scheduling method and base station service deployment scheme can realize computing migration in which terminals have multiple tasks, base stations are multifunctional, and targets are diversified.

Owner:BEIJING UNIV OF POSTS & TELECOMM

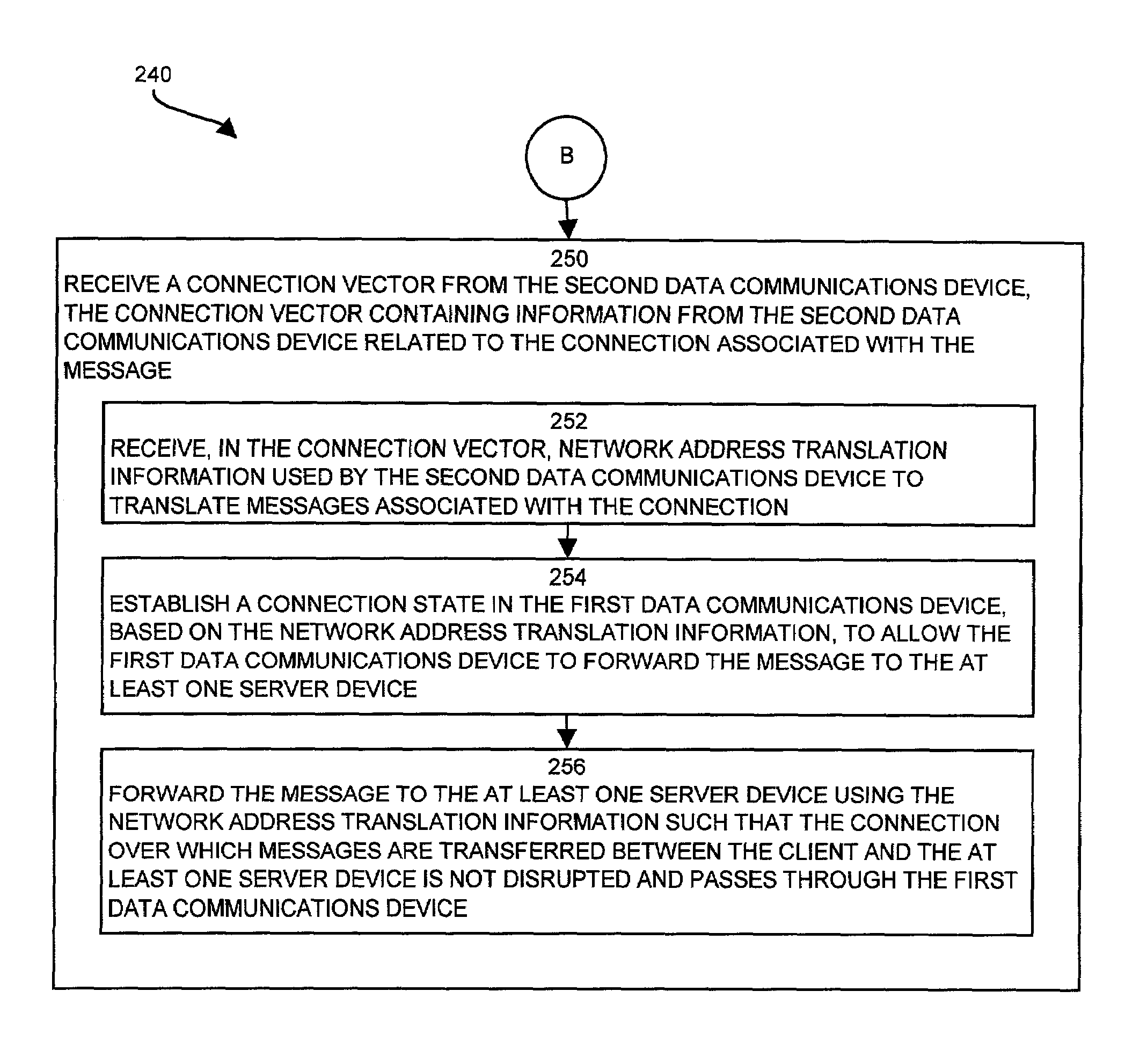

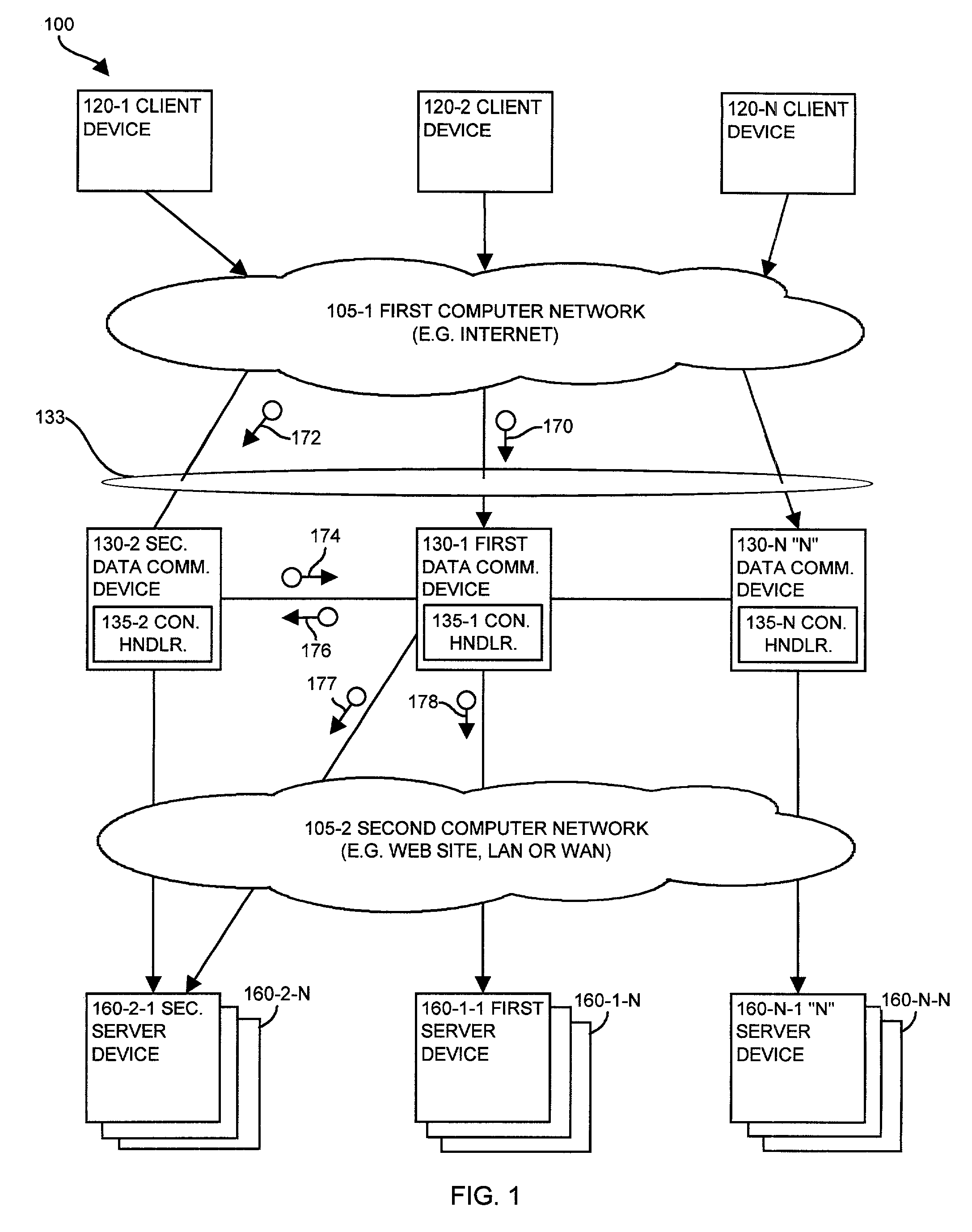

Methods and apparatus for providing multiple server address translation

Inactive | US7139840B1 | Fast transfer, Ensure complete, Multiple digital computer combinations, Transmission, Network addressing, Network address

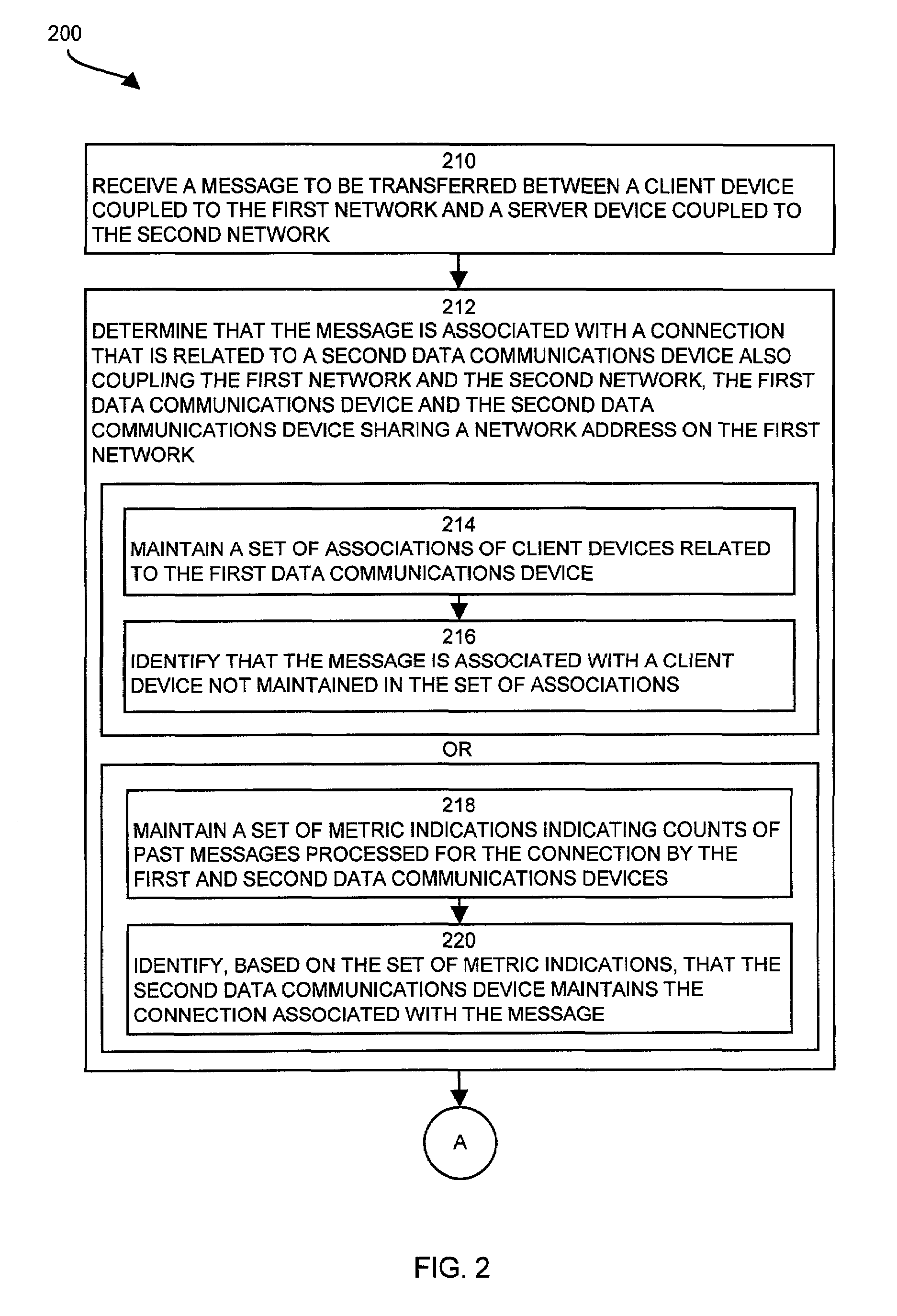

The invention is directed to techniques for coupling a first network and a second network, including a method for processing messages to be transferred from the first network to the second network. The method comprises receiving a message to be transferred between a client device coupled to the first network and a server device coupled to the second network. A first data communications device determines that the message is associated with a connection related to a second data communications device that also couples the first network and the second network, where the first and second data communications devices share a network address on the first network. The first data communications device processes the message to maintain the connection to the client device on the first network and to at least one server device on the second network.

Owner:CISCO TECH INC
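The abstract does not say how the first device recognizes a connection belonging to its peer. The following is a minimal sketch, assuming each device keeps a connection table keyed by the (client address, client port, server address, server port) tuple and can consult its peer's table; `DataCommDevice`, `handle` and the adopt-on-lookup behavior are hypothetical illustrations, not Cisco's implementation.

```python
from dataclasses import dataclass, field
from typing import Optional

# (client_ip, client_port, server_ip, server_port) identifies one connection
ConnKey = tuple[str, int, str, int]


@dataclass
class DataCommDevice:
    name: str
    shared_address: str  # address both devices present on the first (client) network
    connections: dict[ConnKey, str] = field(default_factory=dict)  # connection -> server id
    peers: list["DataCommDevice"] = field(default_factory=list)

    def handle(self, key: ConnKey) -> Optional[str]:
        """Return the server that should receive this message, or None if unknown."""
        if key in self.connections:
            return self.connections[key]
        for peer in self.peers:
            if key in peer.connections:
                # The connection belongs to a peer that shares our address:
                # adopt its state so the client-side connection is preserved.
                self.connections[key] = peer.connections[key]
                return self.connections[key]
        return None


a = DataCommDevice("A", "203.0.113.1")
b = DataCommDevice("B", "203.0.113.1")
a.peers.append(b)
conn = ("198.51.100.7", 40000, "10.0.0.21", 80)
b.connections[conn] = "server-21"   # B originally accepted this connection
print(a.handle(conn))               # server-21
```

Because both devices answer on the same address, a message for B's connection may arrive at A; A keeps the connection alive by looking up B's state instead of resetting it.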

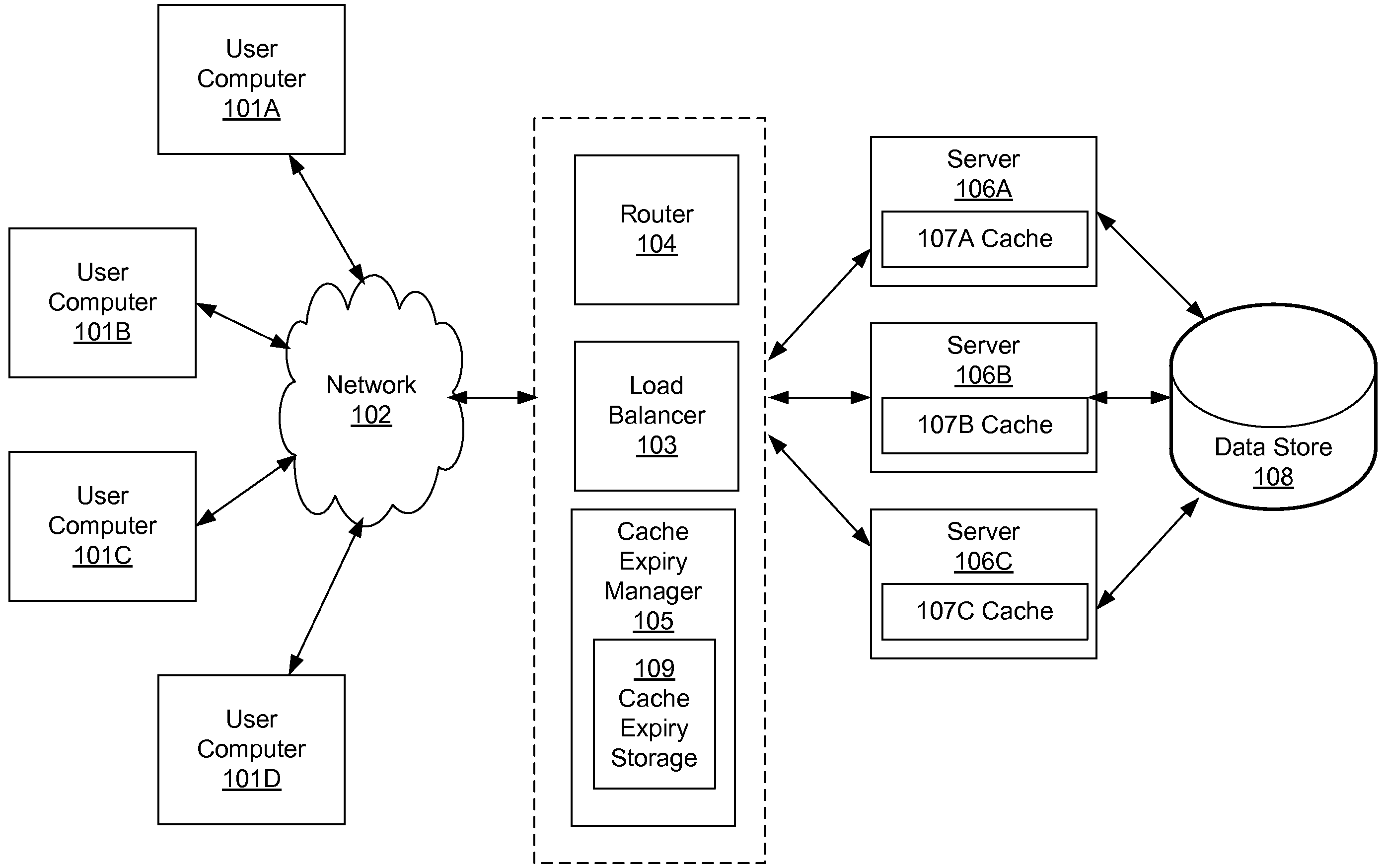

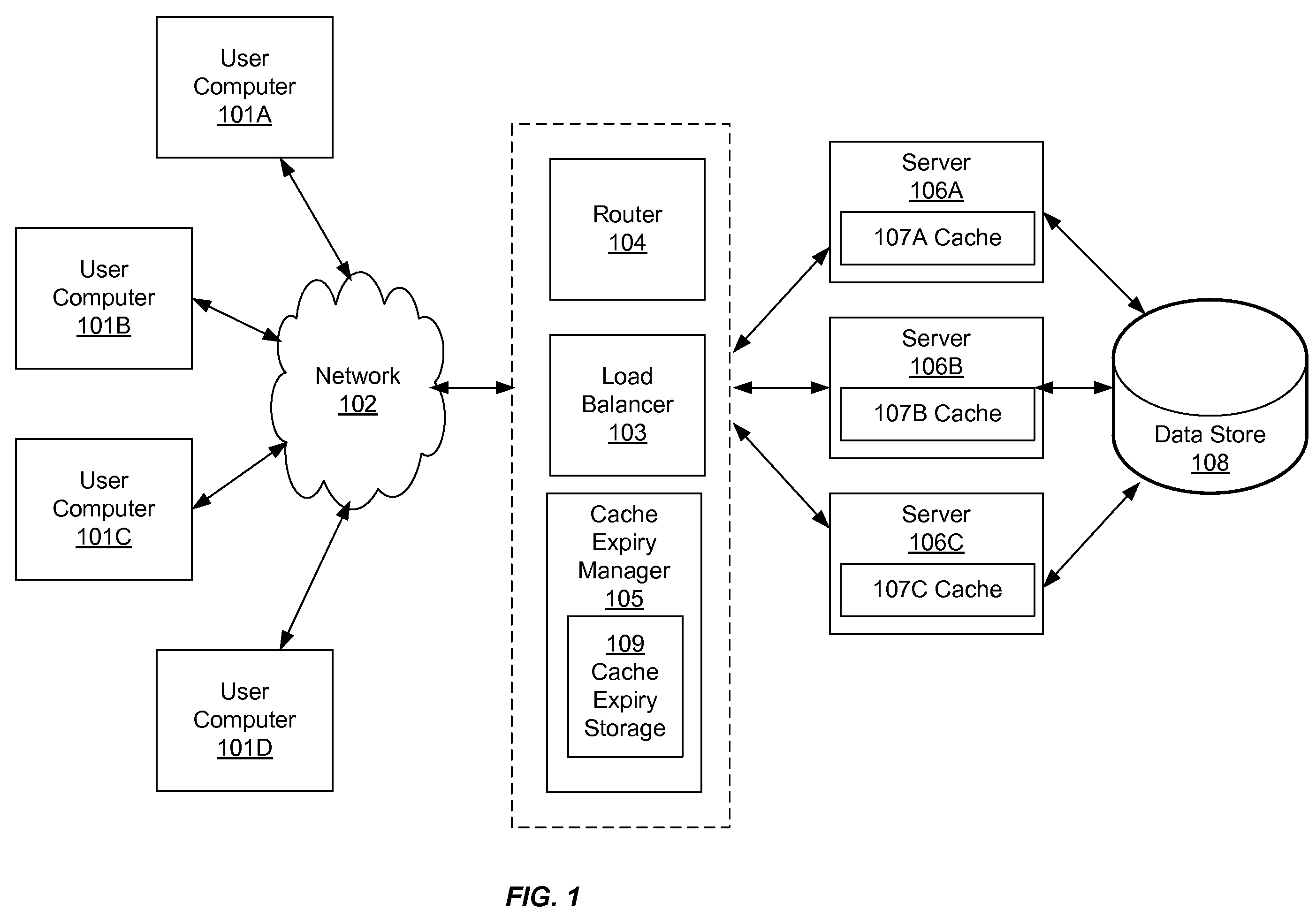

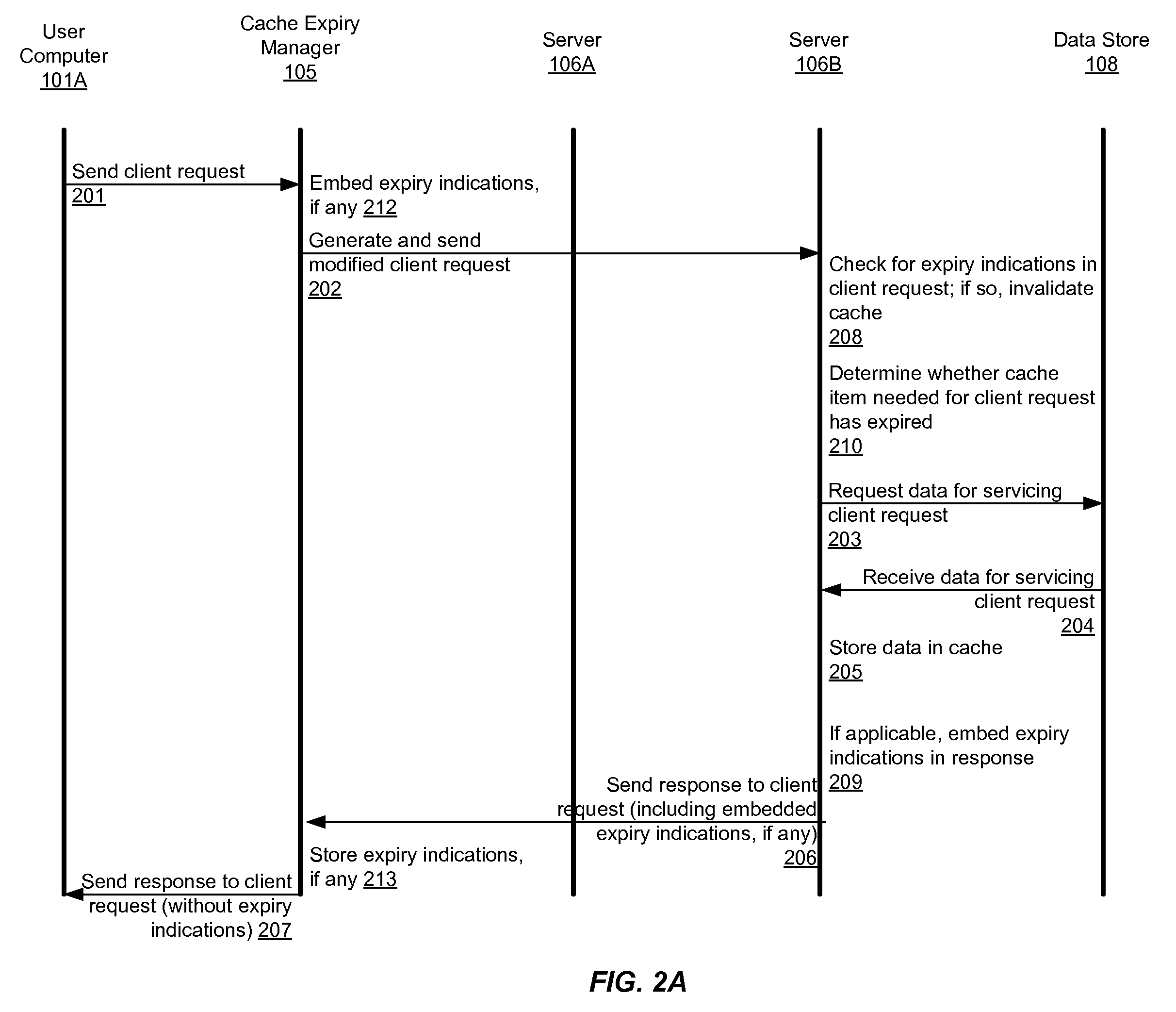

Cache expiry in multiple-server environment

Inactive | US20090043881A1 | Improve efficiency, Attenuation bandwidth, Energy efficient ICT, Digital computer details, Client-side, Broadcasting

In a multiple-server or multiple-process environment where each server has a local cache, data in one cache may become obsolete because another server or entity has changed the underlying data store. The present invention provides techniques for efficiently notifying servers through cache expiry indications, which indicate that their local cache data is out of date and should not be used. A cache expiry manager receives cache expiry indications from servers and sends cache expiry indications to servers in conjunction with client requests or in response to certain trigger events. Broadcasting cache expiry notifications to all servers becomes unnecessary, because a server can be informed of a cache expiry indication the next time it is given a client request that relates to the cache in question. Extraneous and duplicate cache expiry notifications are reduced or eliminated.

Owner:RADWARE
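The lazy-notification idea can be sketched as follows, assuming the manager tracks, per cache key, which servers still hold a stale copy and piggybacks the expiry indication on the next request it routes to each of them. `CacheExpiryManager` and its methods are hypothetical names for illustration; the trigger-event path mentioned in the abstract is not modeled.

```python
from collections import defaultdict


class CacheExpiryManager:
    """Delivers cache expiry indications lazily, piggybacked on the next client
    request routed to a server, instead of broadcasting them to every server."""

    def __init__(self) -> None:
        self._servers: set[str] = set()
        # cache key -> servers whose local copy is known to be out of date
        self._stale: defaultdict[str, set[str]] = defaultdict(set)

    def register(self, server: str) -> None:
        self._servers.add(server)

    def report_change(self, origin: str, cache_key: str) -> None:
        """`origin` changed the data store; every other server's copy is now stale."""
        self._stale[cache_key] |= self._servers - {origin}

    def dispatch(self, server: str, cache_key: str) -> bool:
        """Route a client request; True means the server must discard its cached entry."""
        stale = self._stale.get(cache_key)
        if stale is not None and server in stale:
            stale.discard(server)  # each server is told at most once per change
            return True
        return False


manager = CacheExpiryManager()
for name in ("s1", "s2", "s3"):
    manager.register(name)
manager.report_change("s1", "user:42")
print(manager.dispatch("s2", "user:42"))  # True: s2 must refresh
print(manager.dispatch("s2", "user:42"))  # False: already notified
print(manager.dispatch("s1", "user:42"))  # False: s1 made the change
```

A server that never receives a request for `user:42` is never notified, which is exactly the broadcast traffic the scheme avoids.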

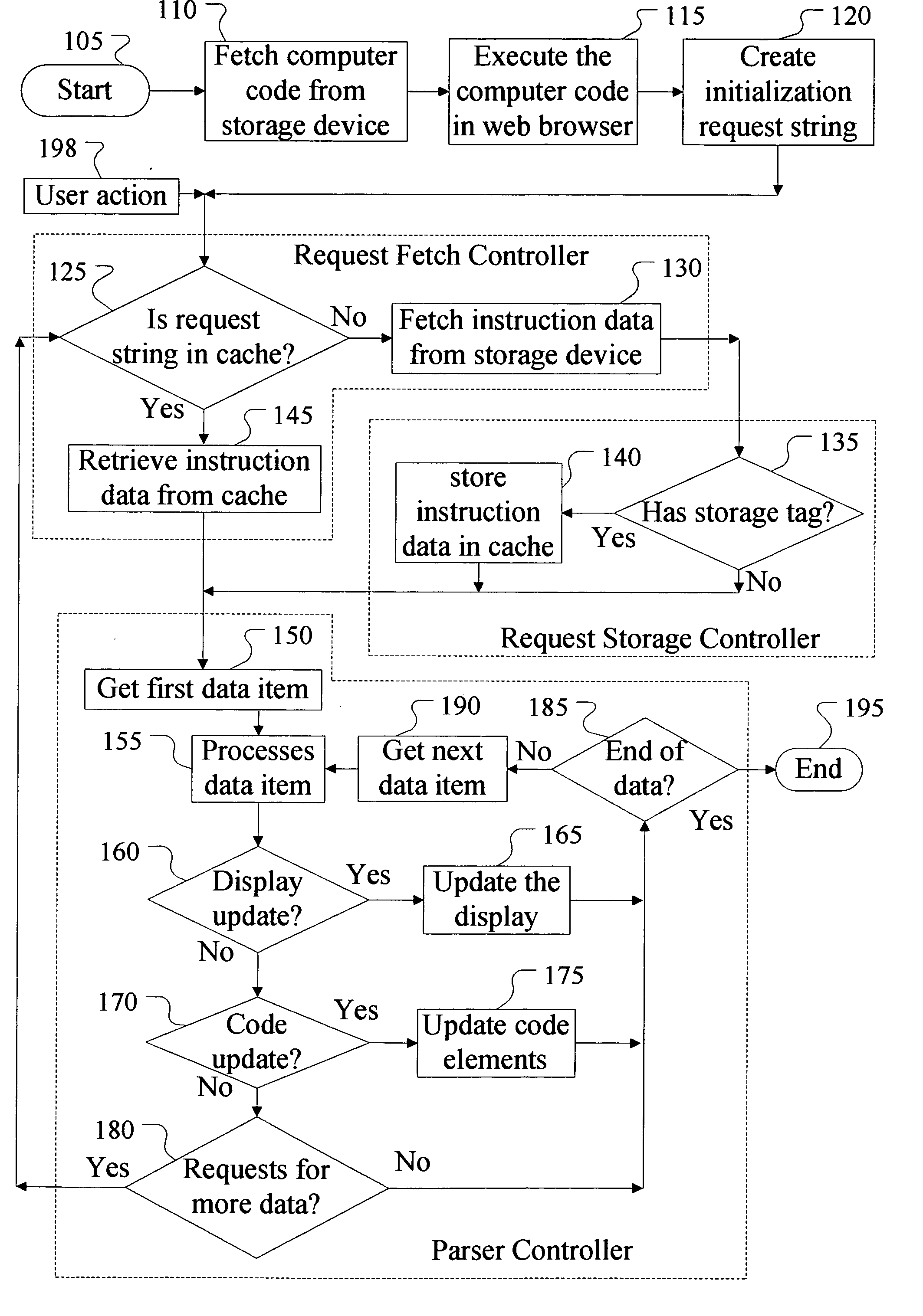

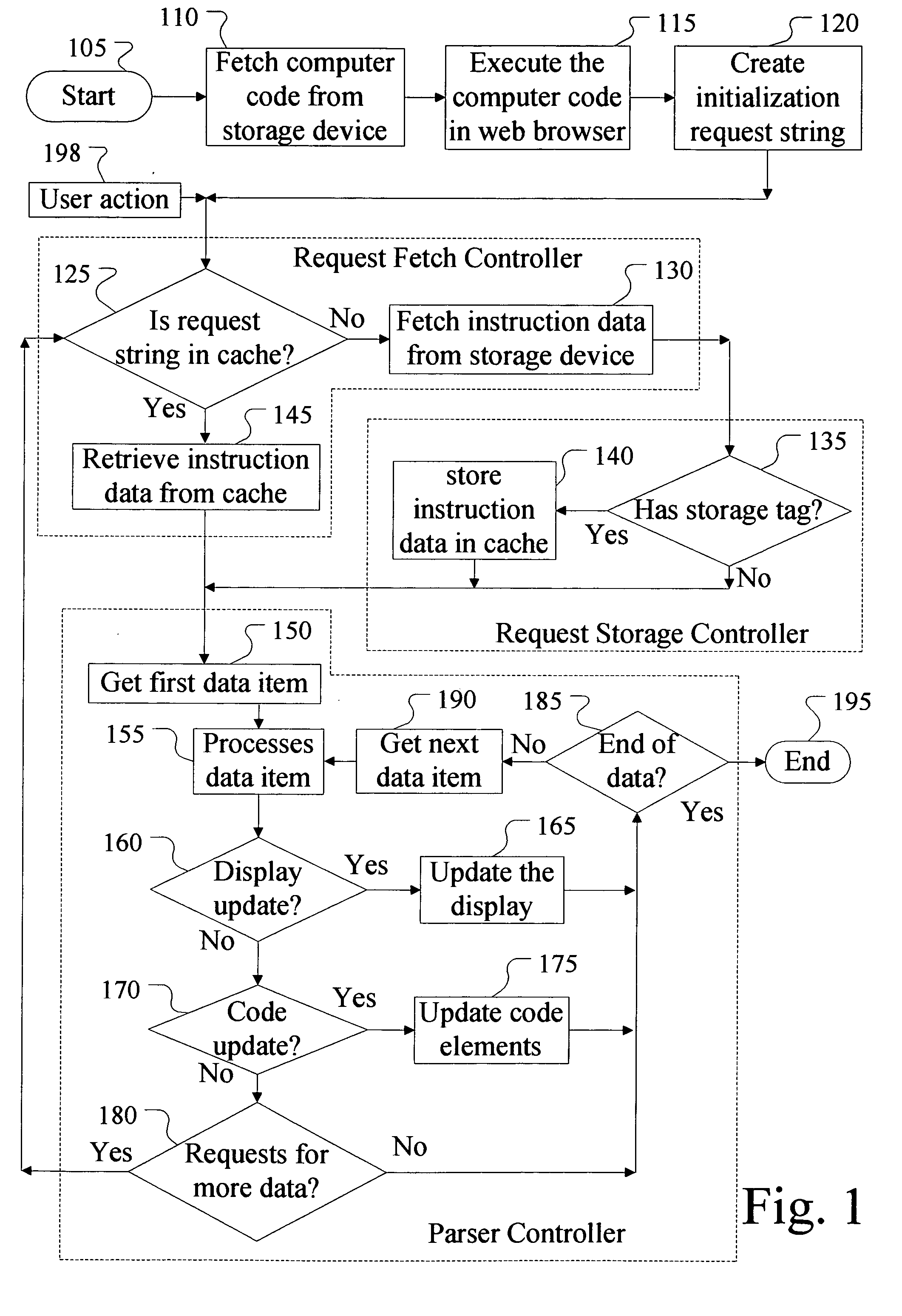

Method for creating editable web sites with increased performance & stability

Inactive | US20060031751A1 | Natural language data processing, Website content management, Web site, Client-side

A method, apparatus and computer program product for creating stable, editable web sites that use bandwidth efficiently and provide fast response times and smooth web page transitions. The method builds a web page in the client machine's browser by combining layout instructions with content data. When navigating to a web page, the browser first tries to fetch the layout or content data from the local cache; if the data is not cached, it is fetched from a web server. This allows cached layout data to be reused with different content data, and edited web pages to be saved as content data. The method is also suitable for mission-critical systems, because it lets the client computer recover smoothly from many server failures by redirecting the client to an alternative server.

Owner:EHUD SHAI
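A minimal sketch of the cache-then-server lookup with failover, assuming layouts are `str.format` templates and content data is JSON; the `get` transport, the resource names and the server list are hypothetical stand-ins for whatever the browser-side implementation actually uses.

```python
import json
from typing import Callable, Optional

# Hypothetical transport: returns the resource body, or None if the server is down.
Fetcher = Callable[[str, str], Optional[str]]


def fetch(resource: str, cache: dict[str, str], servers: list[str], get: Fetcher) -> str:
    """Serve from the local cache; otherwise try each server in order (primary first)."""
    if resource in cache:
        return cache[resource]
    for server in servers:
        body = get(server, resource)
        if body is not None:
            cache[resource] = body
            return body
    raise ConnectionError(f"no server could provide {resource}")


def render_page(layout_id: str, content_id: str, cache: dict[str, str],
                servers: list[str], get: Fetcher) -> str:
    layout = fetch(layout_id, cache, servers, get)                # template with {placeholders}
    content = json.loads(fetch(content_id, cache, servers, get))  # field values
    return layout.format(**content)


store = {
    "layout/article": "<h1>{title}</h1><p>{body}</p>",
    "content/1": '{"title": "Hello", "body": "First"}',
    "content/2": '{"title": "Again", "body": "Second"}',
}
calls = []


def get(server: str, resource: str) -> Optional[str]:
    calls.append((server, resource))
    return None if server == "primary" else store.get(resource)  # primary is down


cache: dict[str, str] = {}
print(render_page("layout/article", "content/1", cache, ["primary", "backup"], get))
# <h1>Hello</h1><p>First</p>
```

Rendering a second article afterwards fetches only its content; the cached layout is reused, which is where the bandwidth saving comes from.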

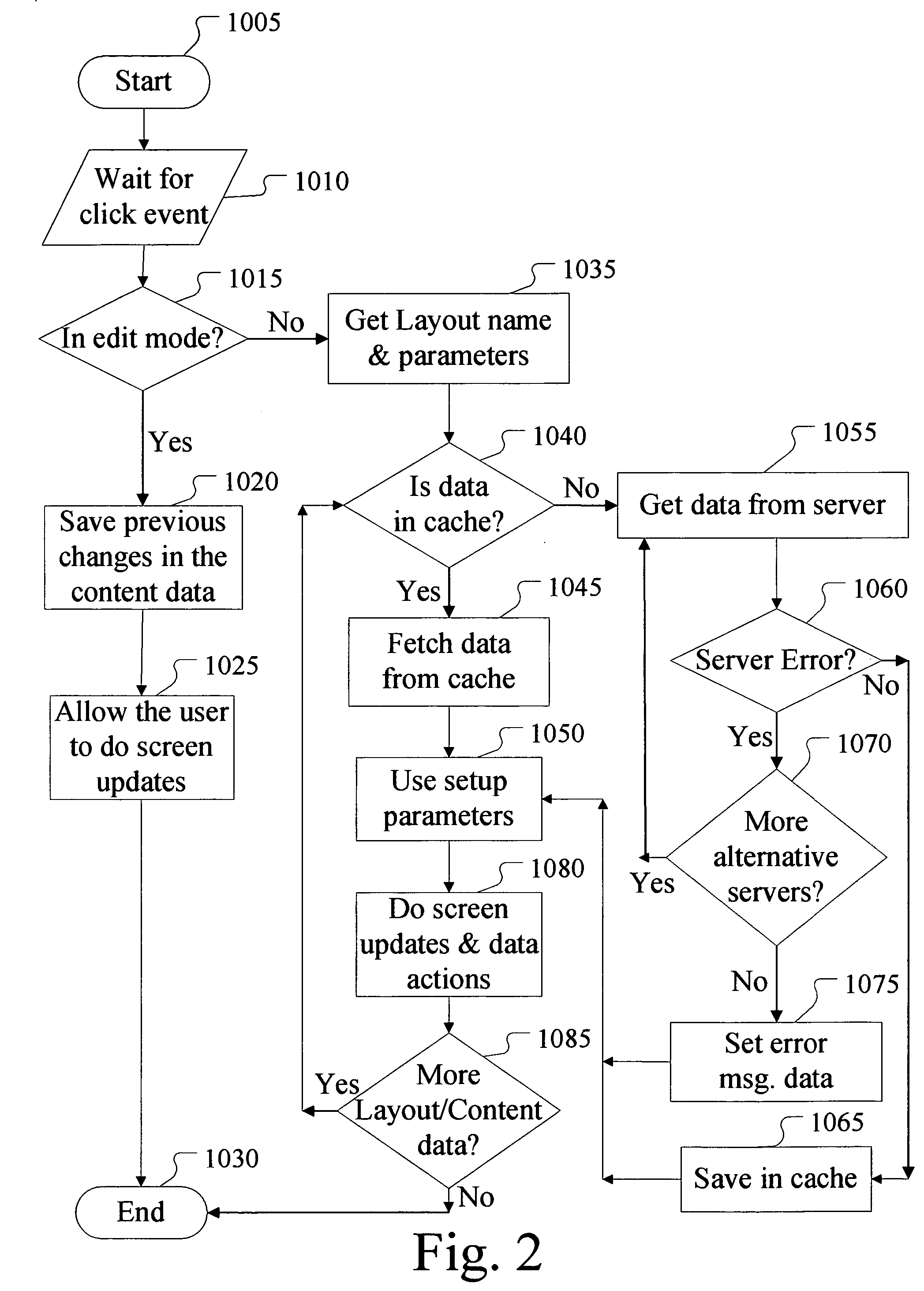

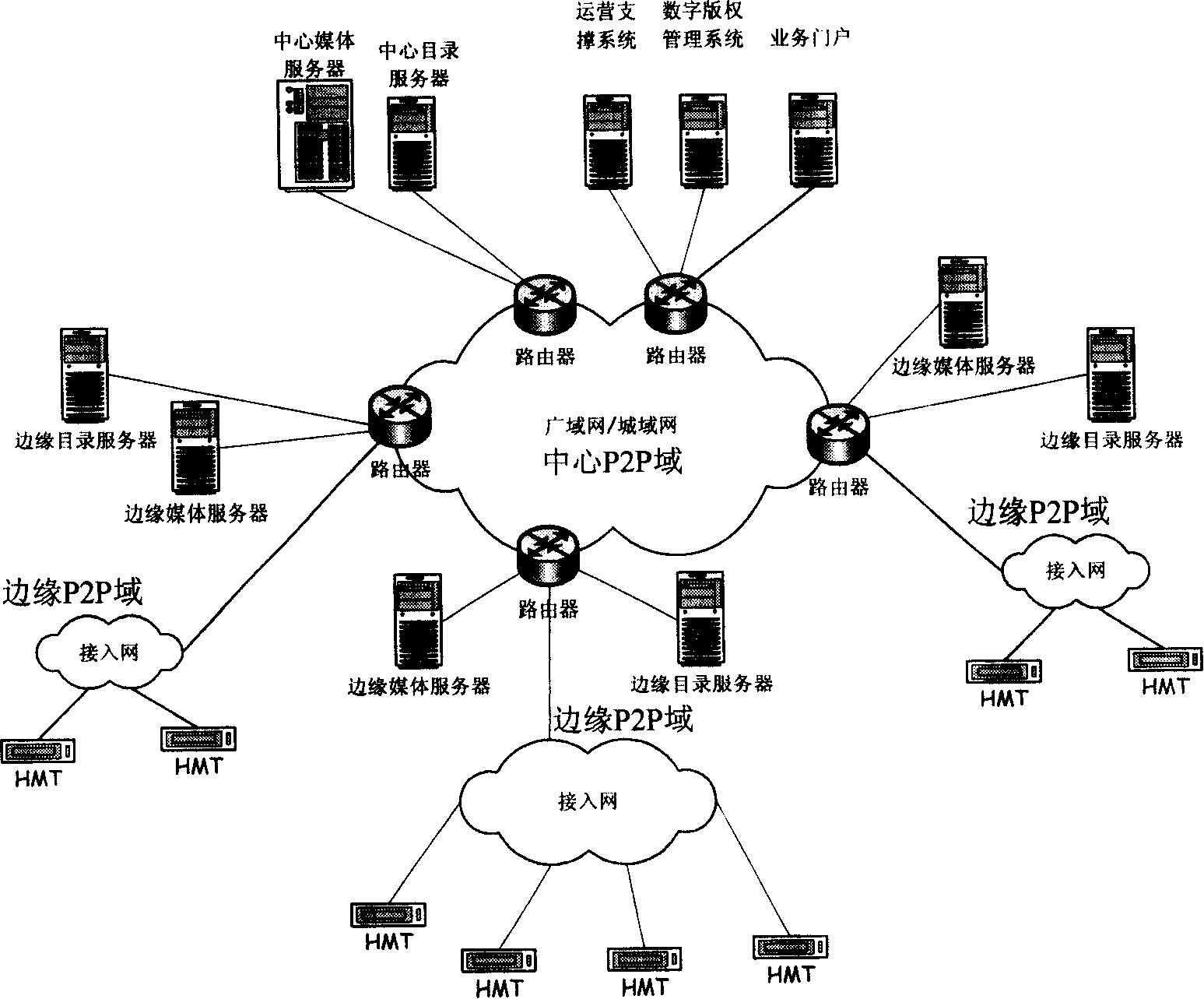

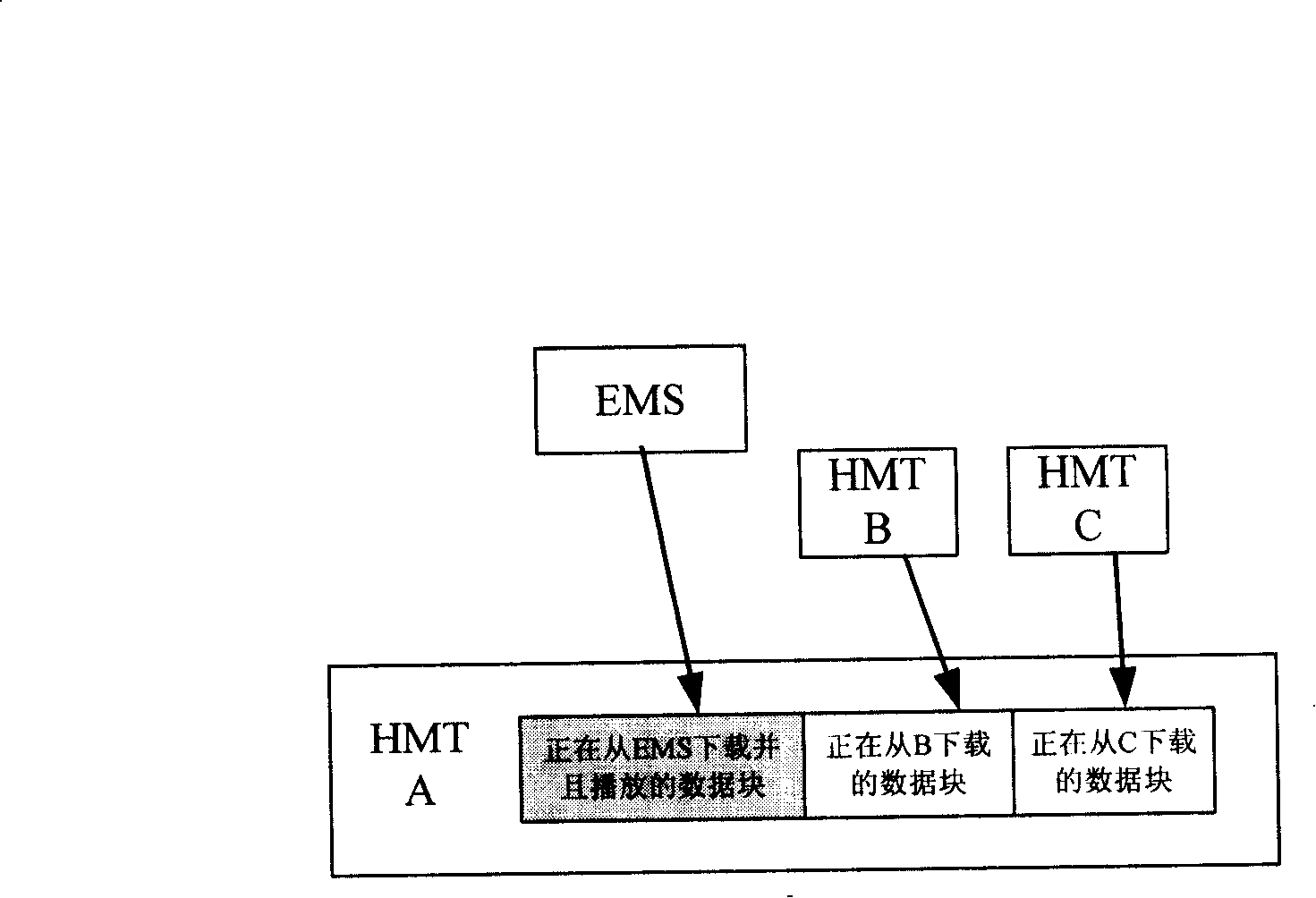

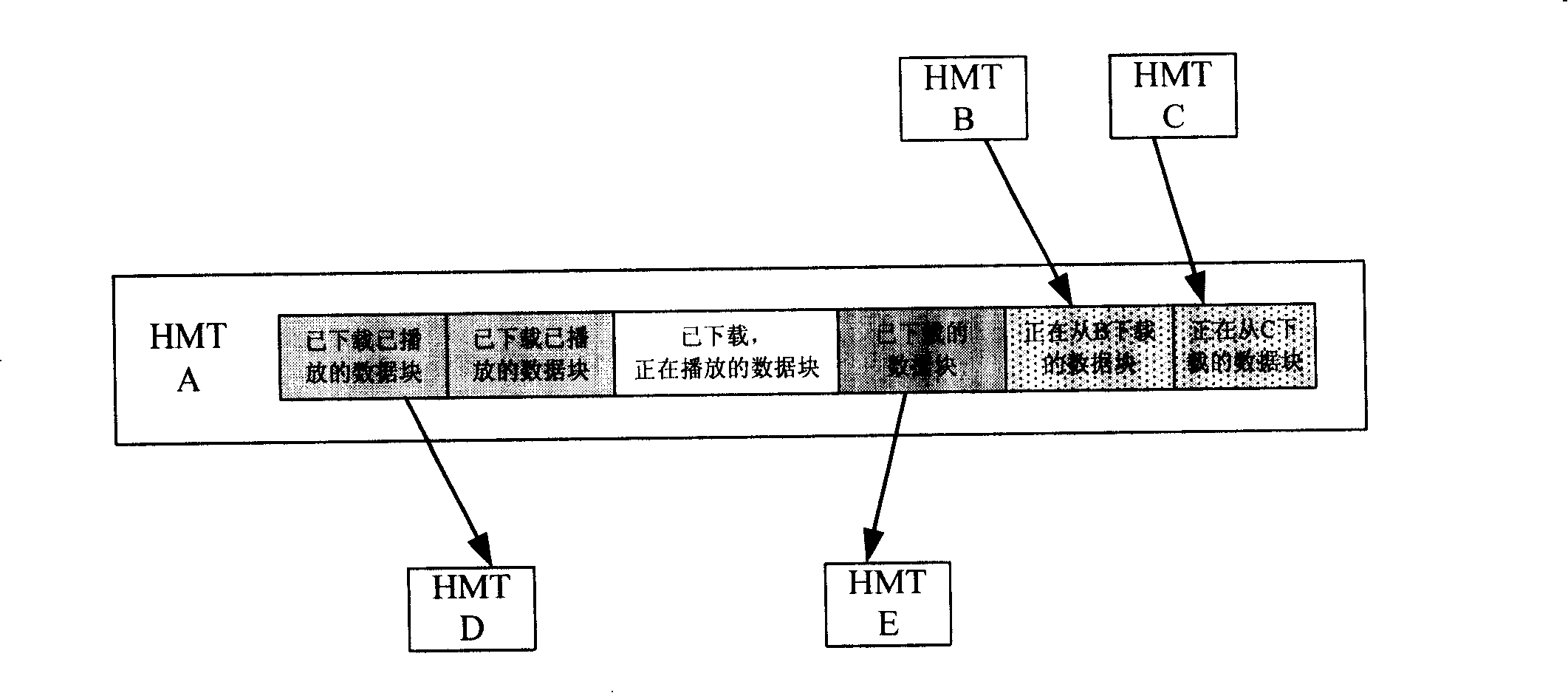

System and method for implementing video-on-demand with peer-to-peer network technique

Inactive | CN101212646A | Relieve pressure, Quality improvement, Data switching by path configuration, Two-way working systems, Access network, Extensibility

The invention relates to a system and method for realizing video-on-demand using peer-to-peer (P2P) network technology. In the system, a central media server and a central directory server are connected to a router of a metropolitan area / wide area network. An edge media server is connected to an edge router that links the metropolitan area / wide area network with an access network, forming a P2P-based content delivery network. An edge directory server is connected to the same edge router as the edge media server, and a home media terminal is connected to that edge router through the access network, forming a P2P-based video-on-demand network. In the method, the home media terminal first requests and downloads data via P2P from the edge P2P area; when the requested data is not in the edge P2P area, the corresponding data is downloaded by the edge media server in the central P2P area and supplied to the home media terminal. The system and method offer low cost and strong scalability, and the load borne by the server decreases as the number of users grows.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI
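The two-level lookup order can be sketched as below, assuming the edge directory server's knowledge is reduced to a map of which home terminals hold which segments. `locate_segment`, the segment names and the set-based model of the central P2P area are hypothetical simplifications, not the patented protocol.

```python
from typing import Optional


def locate_segment(
    segment: str,
    edge_peers: dict[str, set[str]],  # peer id -> segments it holds (edge P2P area)
    edge_server_cache: set[str],      # segments already held by the edge media server
    central_area: set[str],           # segments available in the central P2P area
) -> Optional[str]:
    """Return where the home media terminal should download `segment` from."""
    # 1. Prefer other home terminals in the edge P2P area.
    for peer, held in edge_peers.items():
        if segment in held:
            return f"peer:{peer}"
    # 2. Otherwise the edge media server obtains it from the central P2P area and serves it.
    if segment in edge_server_cache or segment in central_area:
        edge_server_cache.add(segment)
        return "edge-media-server"
    return None  # not available anywhere in this simplified model


peers = {"home-7": {"movie-1/seg-0", "movie-1/seg-1"}}
server_cache: set[str] = set()
central = {"movie-1/seg-2"}
print(locate_segment("movie-1/seg-1", peers, server_cache, central))  # peer:home-7
print(locate_segment("movie-1/seg-2", peers, server_cache, central))  # edge-media-server
```

Serving from neighboring peers first is what lets server load fall as more users join: each new viewer also becomes a potential source.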

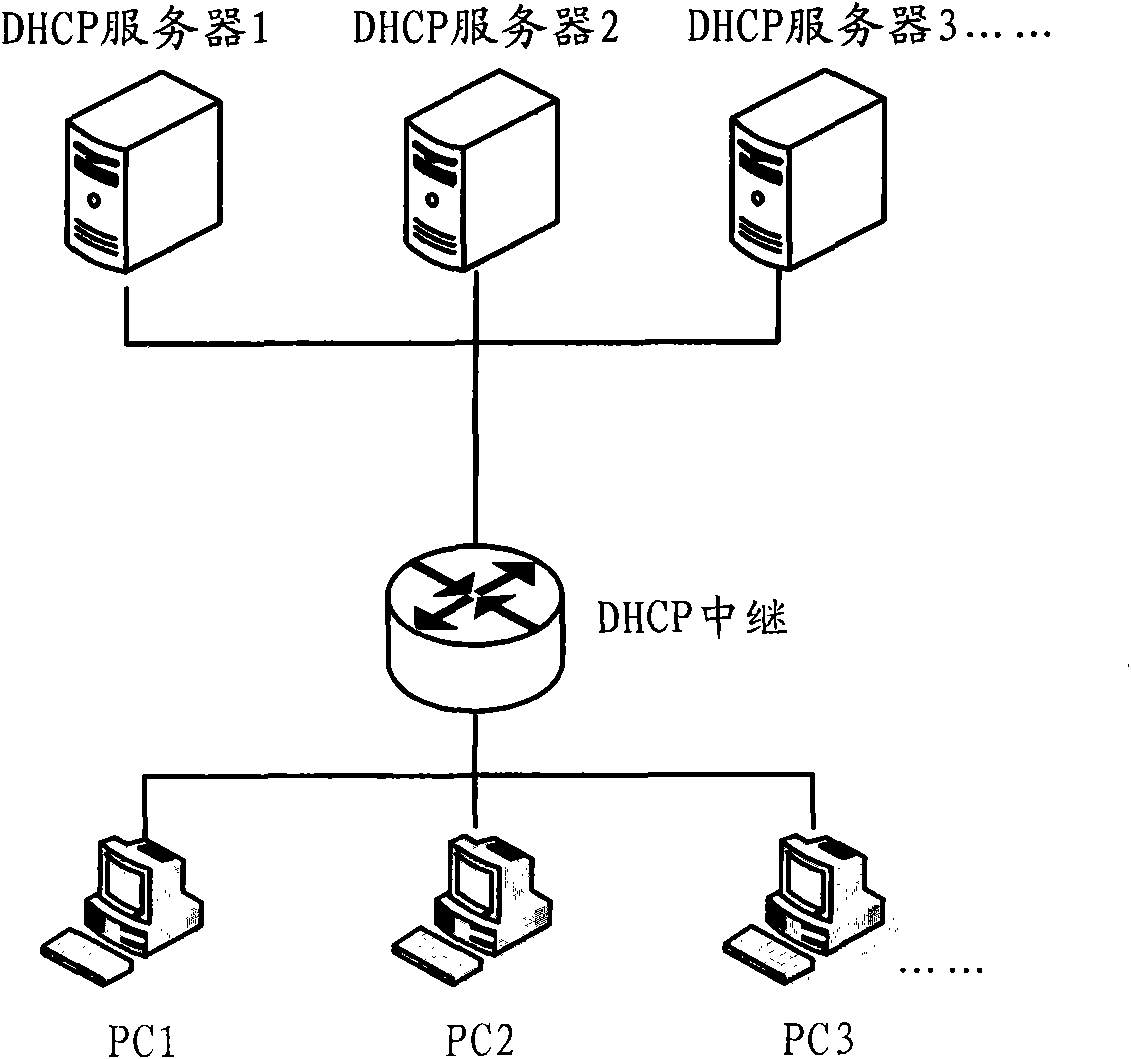

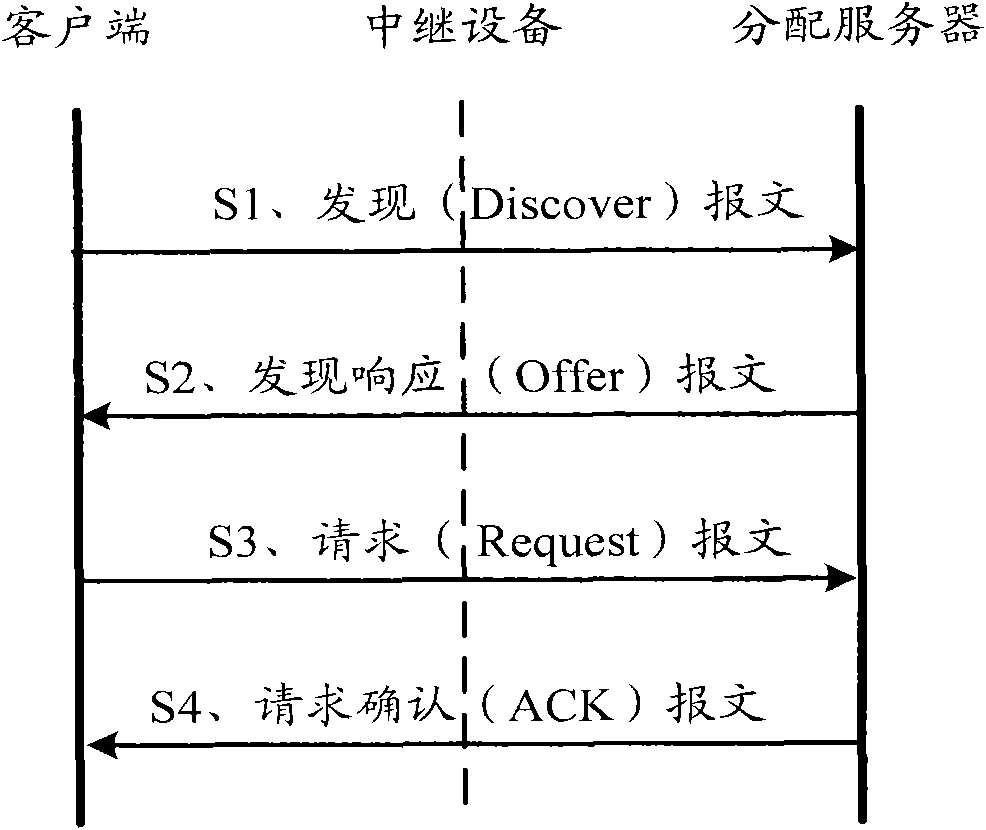

Method, system and trunk equipment for assigning multiple server addresses

Inactive | CN101951417A | Avoid allocation conflicts, Reduce latency, Transmission, Distribution method, Network addressing

The invention discloses a method, system and trunk equipment for assigning multiple server addresses. The method comprises the following steps: the trunk equipment forwards discovery messages received from a client to the servers; when the trunk equipment receives the discovery response messages returned by the servers, it parses the network addresses carried in the address option fields of those messages and checks whether they conflict with network addresses already stored as assigned; if they do, it returns conflict messages to the servers, requiring them to assign new network addresses; if not, it forwards the discovery response messages to the client, which then requests the servers to assign the network addresses carried in those messages. The method effectively avoids conflicts when multiple servers assign network addresses, reduces delay in the address assignment process, and improves system performance.

Owner:BEIJING XINWANG RUIJIE NETWORK TECH CO LTD
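The discovery / discovery-response exchange resembles a DHCP DISCOVER/OFFER relay, though the abstract does not name a protocol. A minimal sketch of the trunk equipment's conflict check, assuming an address is reserved as soon as its offer is forwarded (a simplification) and that a server answers a conflict by offering the next address in a hypothetical `pool`:

```python
from typing import Optional


class TrunkEquipment:
    """Checks each server's address offer against addresses already handed out."""

    def __init__(self) -> None:
        self.assigned: set[str] = set()

    def on_discovery_response(self, server: str, offered: str) -> tuple[str, str]:
        if offered in self.assigned:
            return ("conflict", server)  # the server must offer another address
        self.assigned.add(offered)       # simplification: reserve on forward
        return ("forward_to_client", offered)


def negotiate(trunk: TrunkEquipment, server: str, pool: list[str]) -> Optional[str]:
    """Simulate a server re-offering from its pool until the trunk accepts an address."""
    for offered in pool:
        action, _ = trunk.on_discovery_response(server, offered)
        if action == "forward_to_client":
            return offered
    return None


trunk = TrunkEquipment()
print(negotiate(trunk, "dhcp-a", ["10.0.0.10", "10.0.0.11"]))  # 10.0.0.10
print(negotiate(trunk, "dhcp-b", ["10.0.0.10", "10.0.0.11"]))  # 10.0.0.11 (first offer conflicts)
```

Catching the clash at the trunk means the client never receives a duplicate address, so it does not have to detect the conflict itself and restart discovery.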

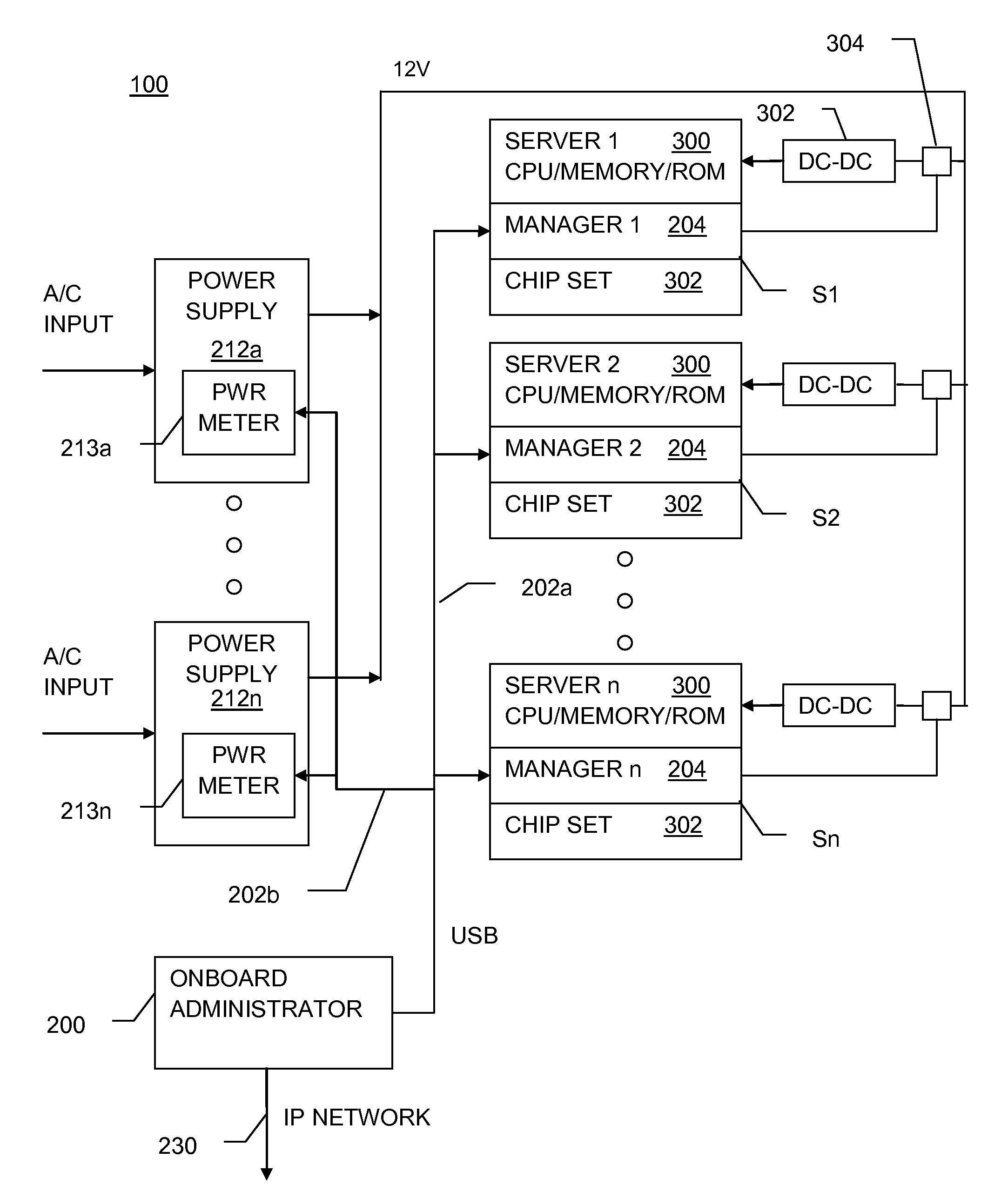

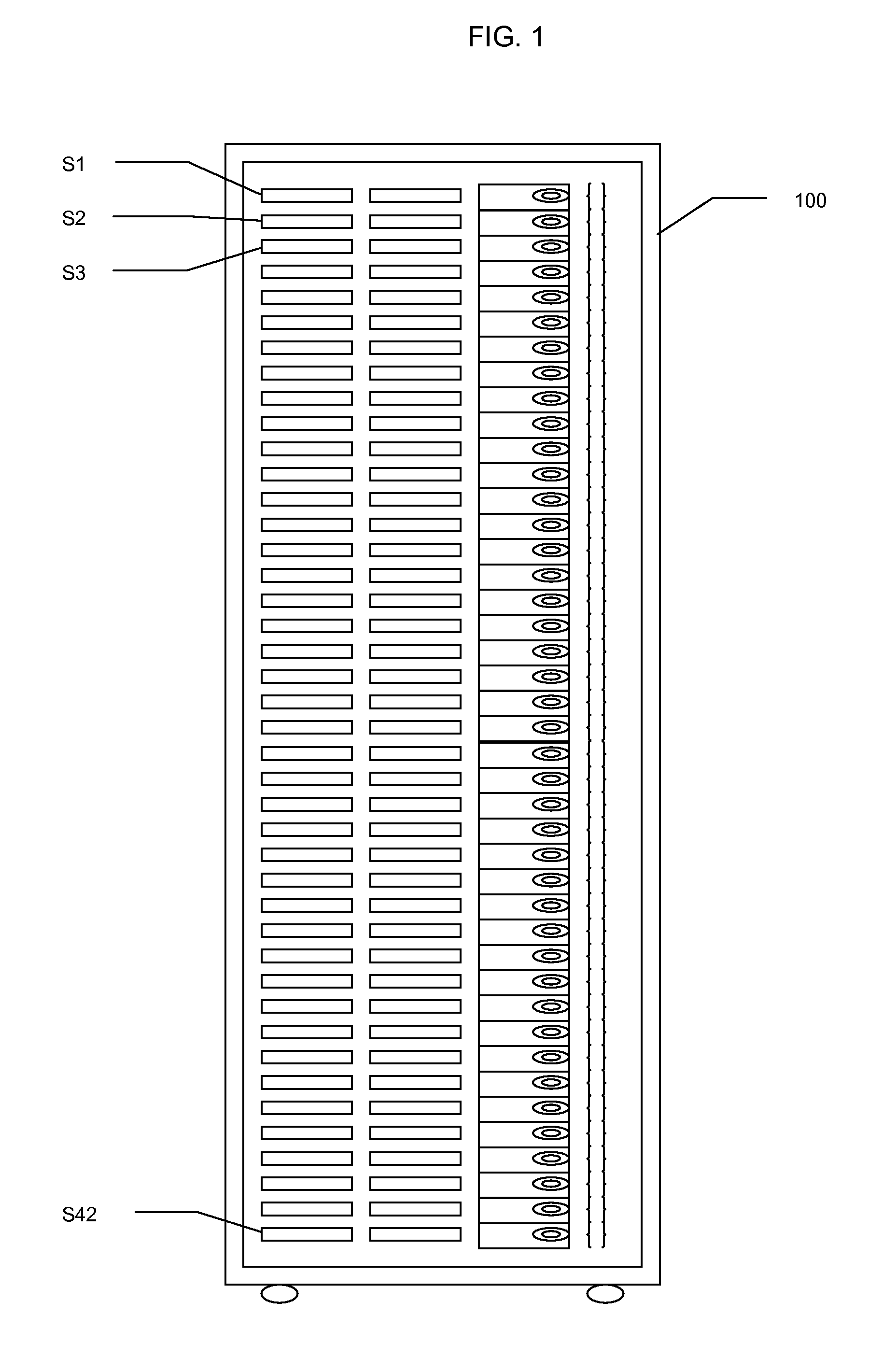

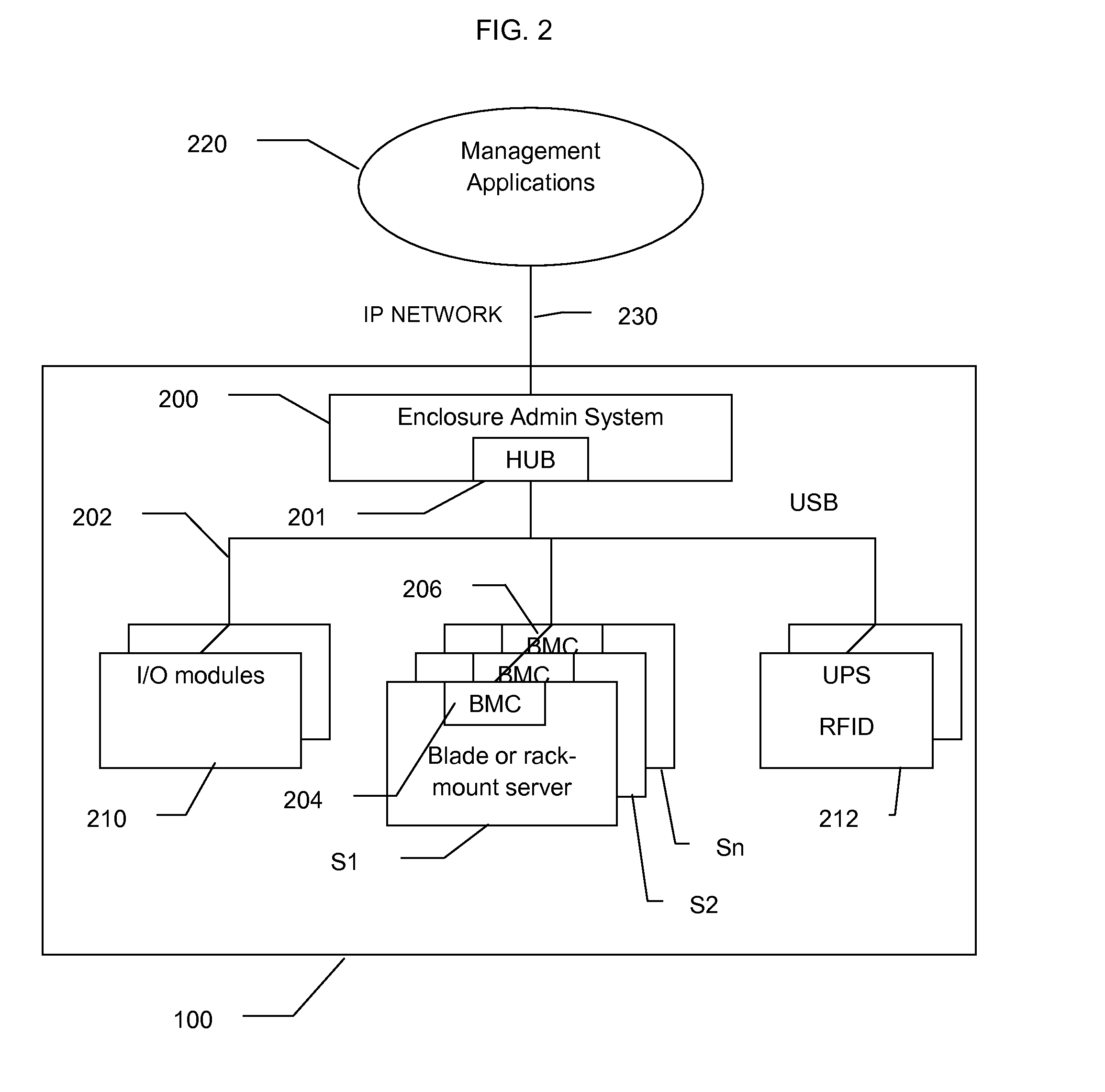

Centralized server rack management using USB

A multi-server computing system includes a plurality of server modules mounted in an enclosure; each server has a universal serial bus (USB) interface. An enclosure onboard administration (OA) module is also mounted in the enclosure and has an addressable communication interface for connection to a remote management system and a USB interface connected to each of the plurality of servers. The USB interface of the enclosure OA operates as a master and the USB interface of each of the plurality of servers acts as a slave to the enclosure OA, such that each of the server modules can be managed by the remote management system using a single communication address.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
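The single-address management model can be sketched as follows. This models only the addressing and command routing, not USB itself: the OA is assumed to hold one network address and relay each command to the server in the named slot. `ServerModule`, `OnboardAdministrator`, the slot numbering and the command names are hypothetical.

```python
class ServerModule:
    """A server blade whose USB interface acts as a device (slave) on the OA's bus."""

    def __init__(self, slot: int) -> None:
        self.slot = slot
        self.powered = True

    def handle(self, command: str) -> str:
        if command == "status":
            return "on" if self.powered else "off"
        if command == "power_off":
            self.powered = False
            return "ok"
        return "unsupported"


class OnboardAdministrator:
    """USB host (master) for every server in the enclosure, reachable by one address."""

    def __init__(self, address: str, servers: list[ServerModule]) -> None:
        self.address = address
        self.bus = {server.slot: server for server in servers}

    def manage(self, slot: int, command: str) -> str:
        # A remote manager talks only to self.address; the OA relays over its USB links.
        server = self.bus.get(slot)
        return server.handle(command) if server is not None else "no such slot"


oa = OnboardAdministrator("192.0.2.50", [ServerModule(slot) for slot in range(1, 5)])
print(oa.manage(2, "power_off"))  # ok
print(oa.manage(2, "status"))     # off
print(oa.manage(9, "status"))     # no such slot
```

The remote management system needs to know only `oa.address`; the individual servers need no management network address of their own.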

© 2025 PatSnap. All rights reserved.