313 results about "Parallel pipeline" patented technology

Foveated image coding system and method for image bandwidth reduction

Inactive | US6252989B1 | Increase in size | Quick implementation | Digitally marking record carriers | Picture reproducers using cathode ray tubes | Data compression | Imaging quality

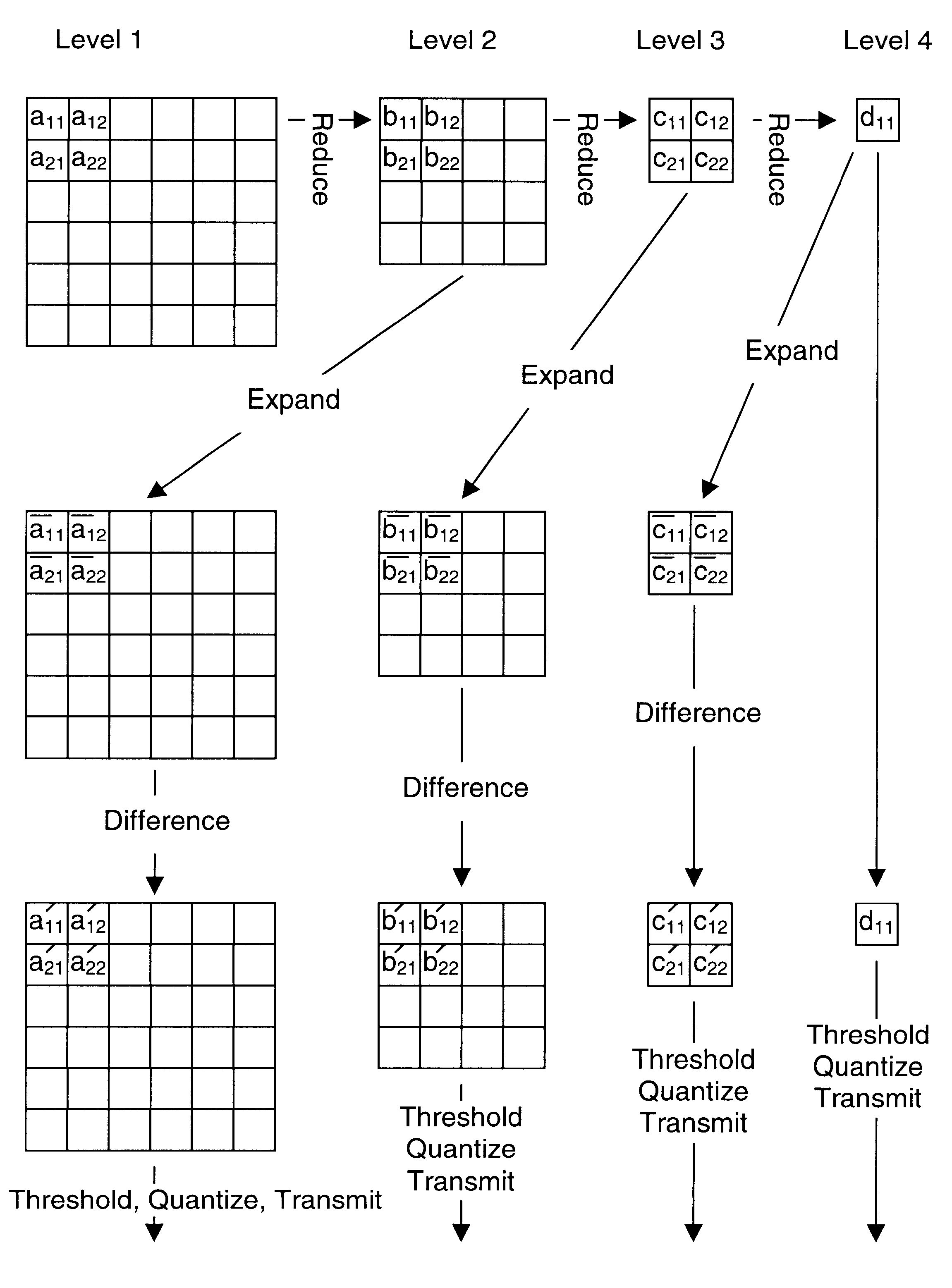

A foveated imaging system has been developed that can be implemented on a general-purpose computer and greatly reduces the transmission bandwidth of images. The system has demonstrated that significant reductions in bandwidth can be achieved while still maintaining access to high detail at any point in an image. It is implemented with conventional computer, display, and camera hardware. It uses novel image coding and decoding algorithms that are superior both in degree of compression and in perceived image quality, and it is more flexible and adaptable to different bandwidth requirements and communications applications than previous systems. The system incorporates human perceptual properties into the coding and decoding algorithms, providing superior foveation. One version of the system includes a simple, inexpensive, parallel pipeline architecture, which enhances the capability for conventional and foveated data compression. Also included are novel applications of foveated imaging in the transmission of pre-recorded video (without eye tracking) and in the use of alternate pointing devices for foveation.

Owner:BOARD OF RGT THE UNIV OF TEXAS SYST

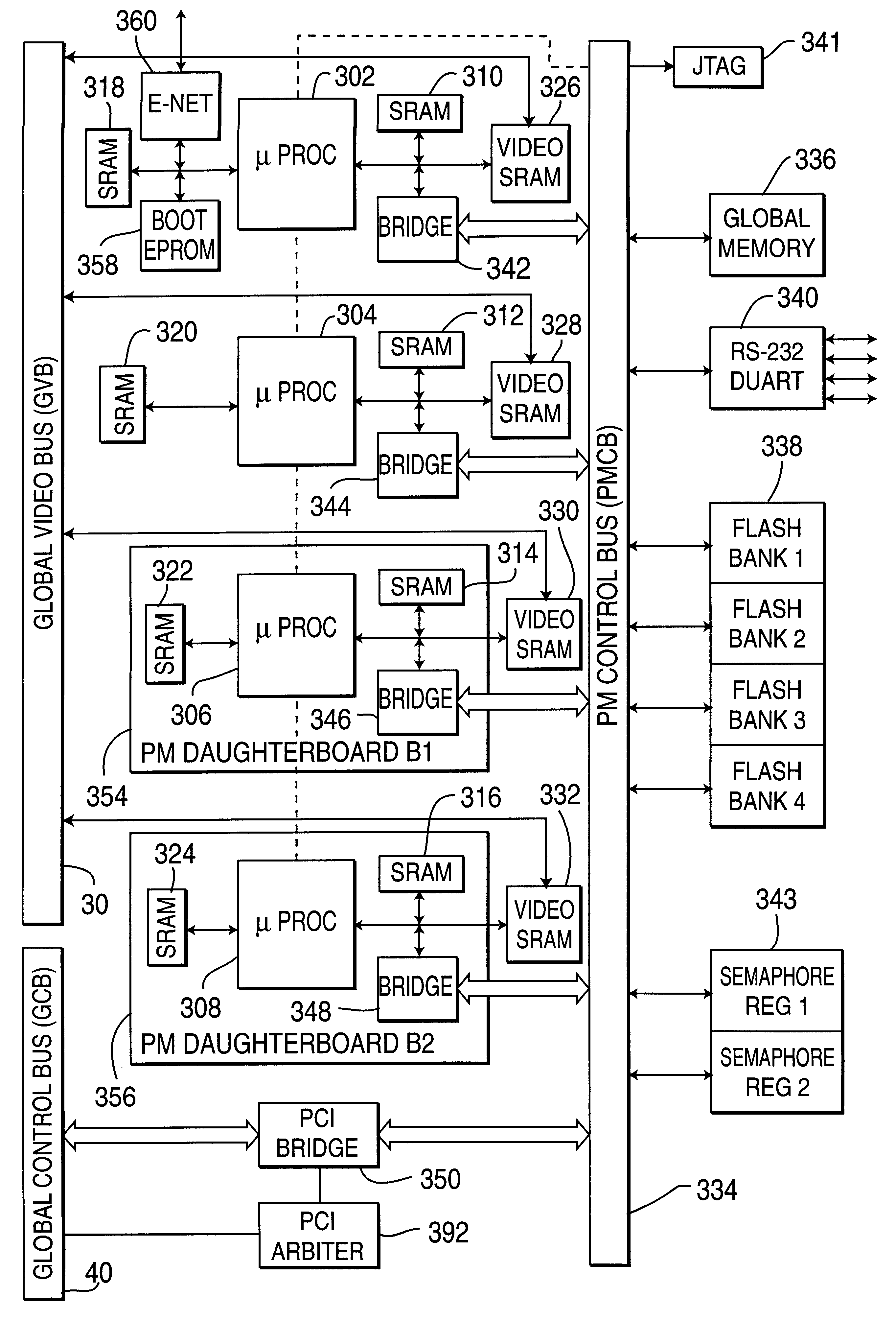

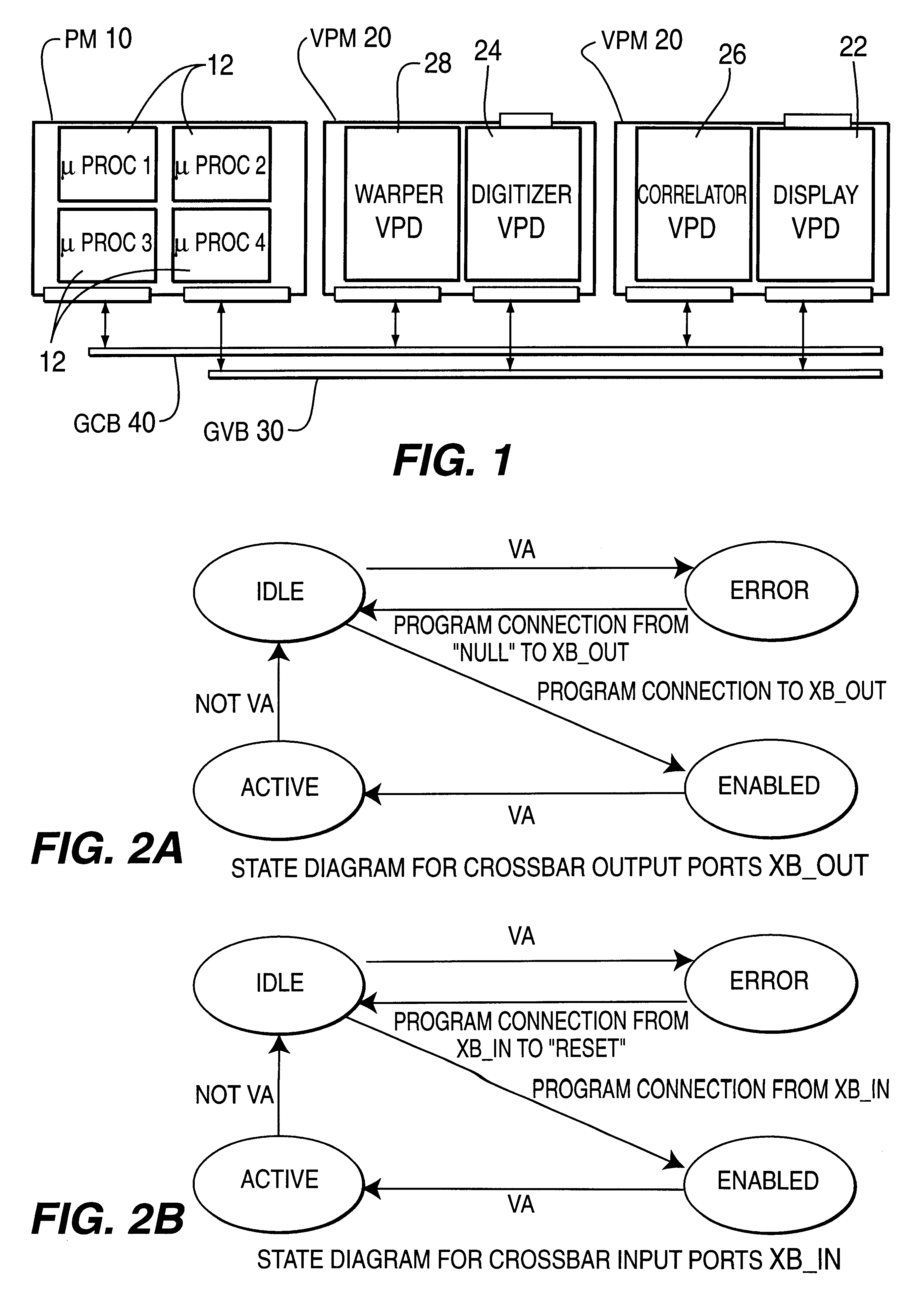

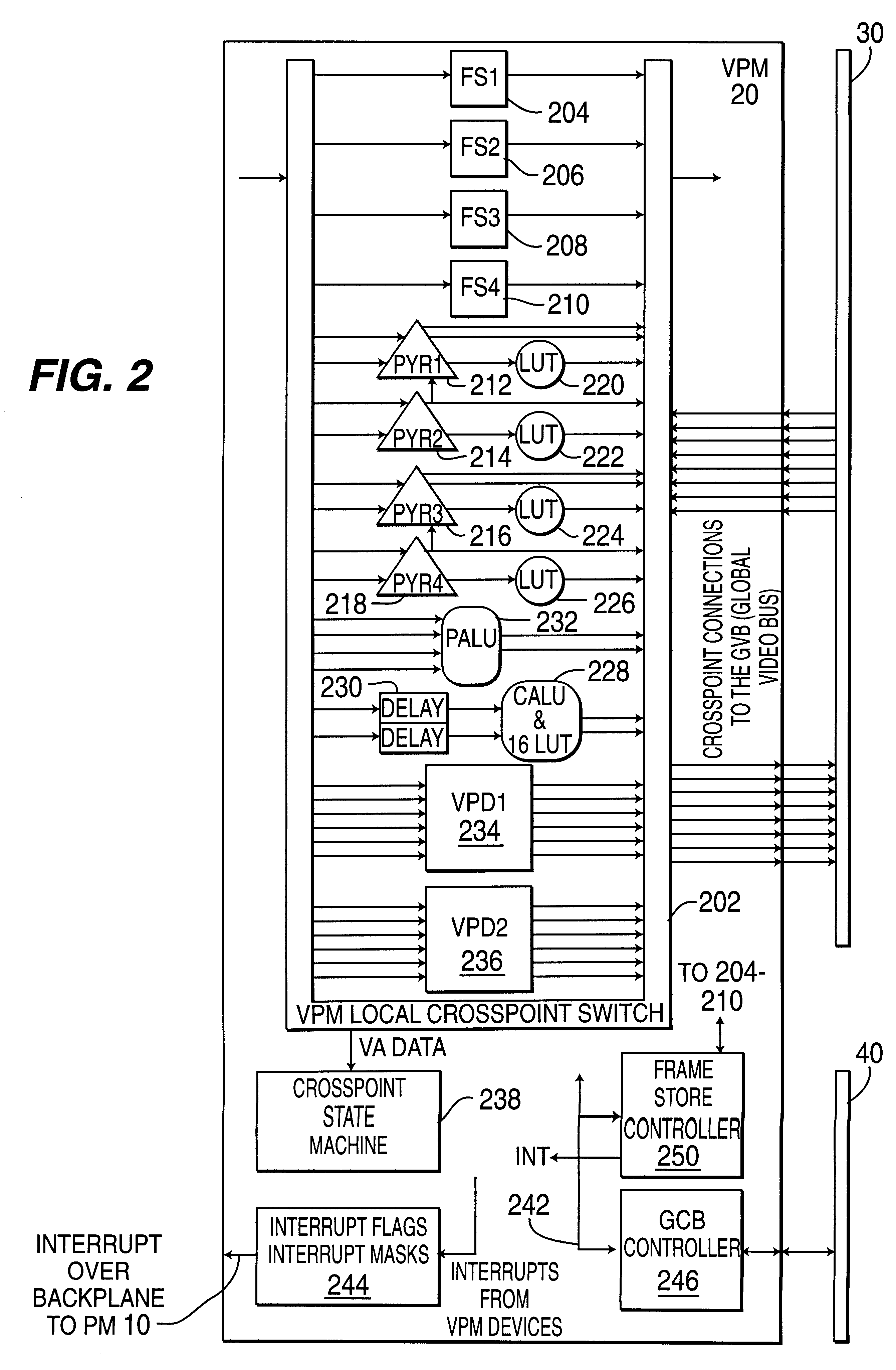

Modular parallel-pipelined vision system for real-time video processing

Inactive | US6188381B1 | Easy to operate | Reduce control overhead | Television system details | Color signal processing circuits | Process module | Handling system

A real-time modular video processing system (VPS) is provided which can be scaled smoothly from relatively small systems with modest amounts of hardware to very large, very powerful systems with significantly more hardware. The modular video processing system includes a processing module containing at least one general purpose microprocessor, which controls hardware and software operation of the video processing system using control data and also facilitates communications with external devices. One or more video processing modules are also provided, each containing parallel pipelined video hardware which is programmable by the control data to provide different video processing operations on an input stream of video data. Each video processing module also contains one or more connections for accepting one or more daughterboards, each of which performs a particular image processing task. A global video bus routes video data between the processing module and each video processing module and between respective processing modules, while a global control bus carries the control data in both directions between the processing module and the video processing modules, separate from the video data on the global video bus. A hardware control library loaded on the processing module provides an application programming interface including high-level C-callable functions, which allow programming of the video hardware as components are added to and removed from the video processing system for different applications.

Owner:SARNOFF CORP

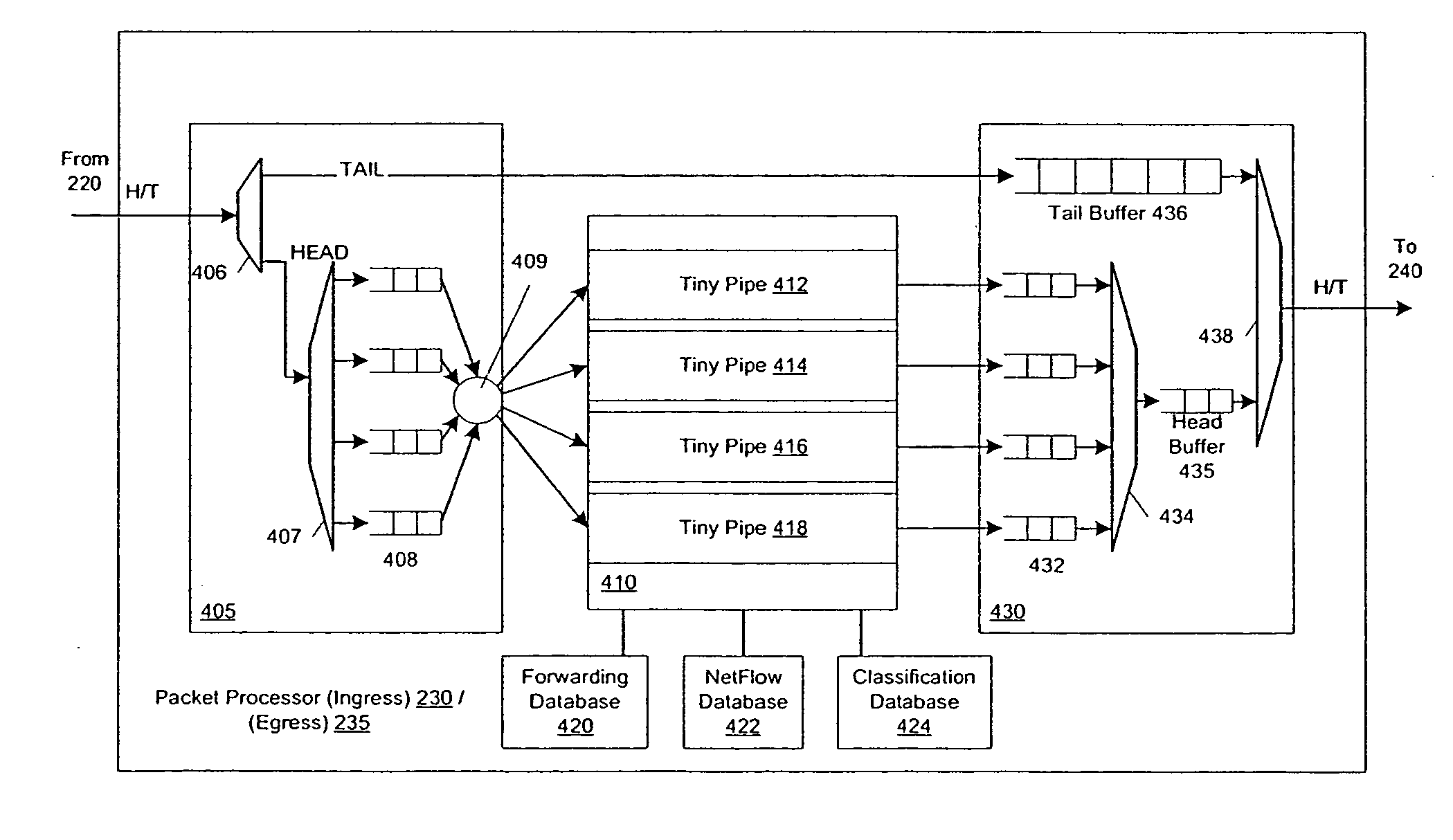

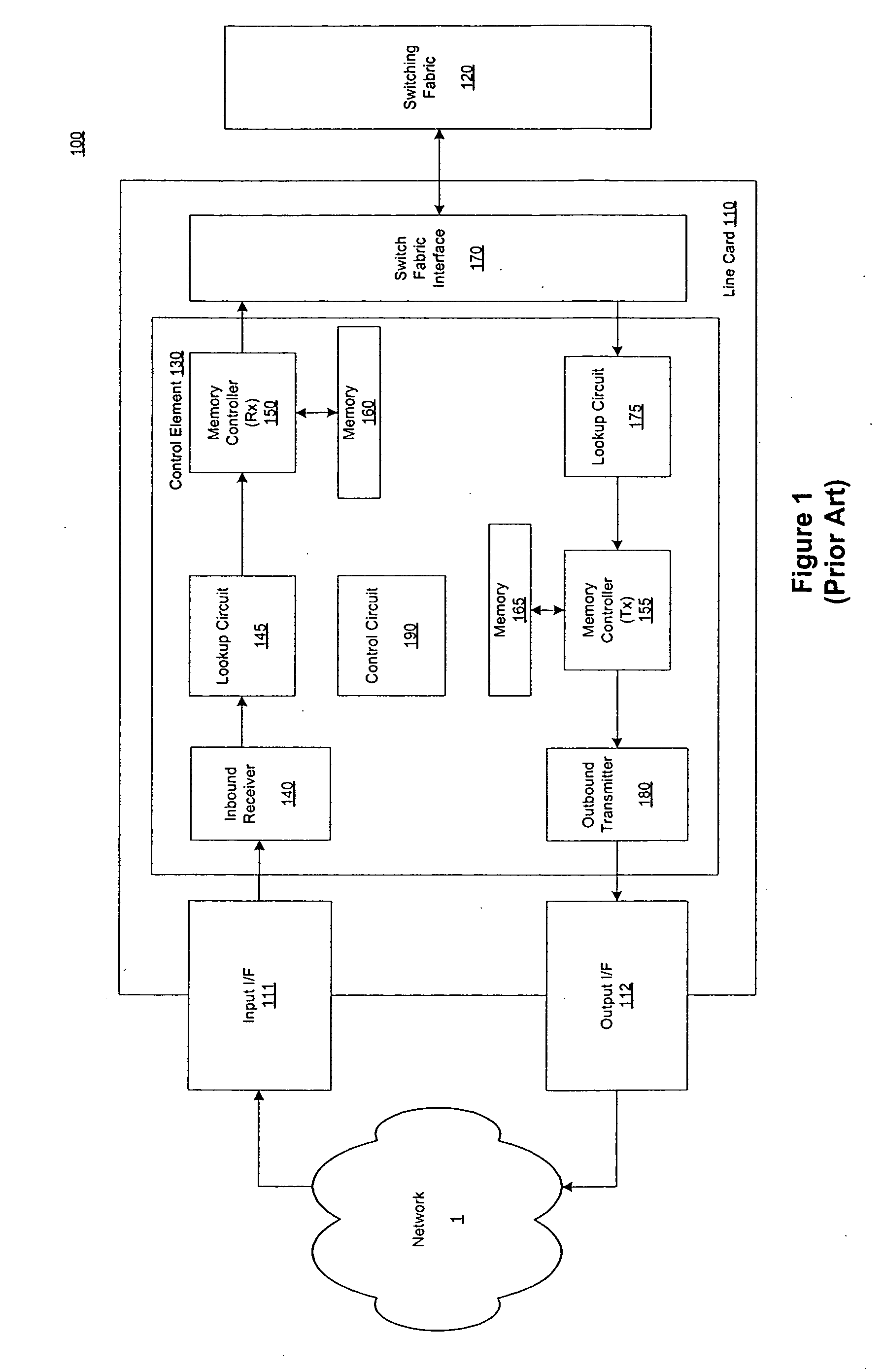

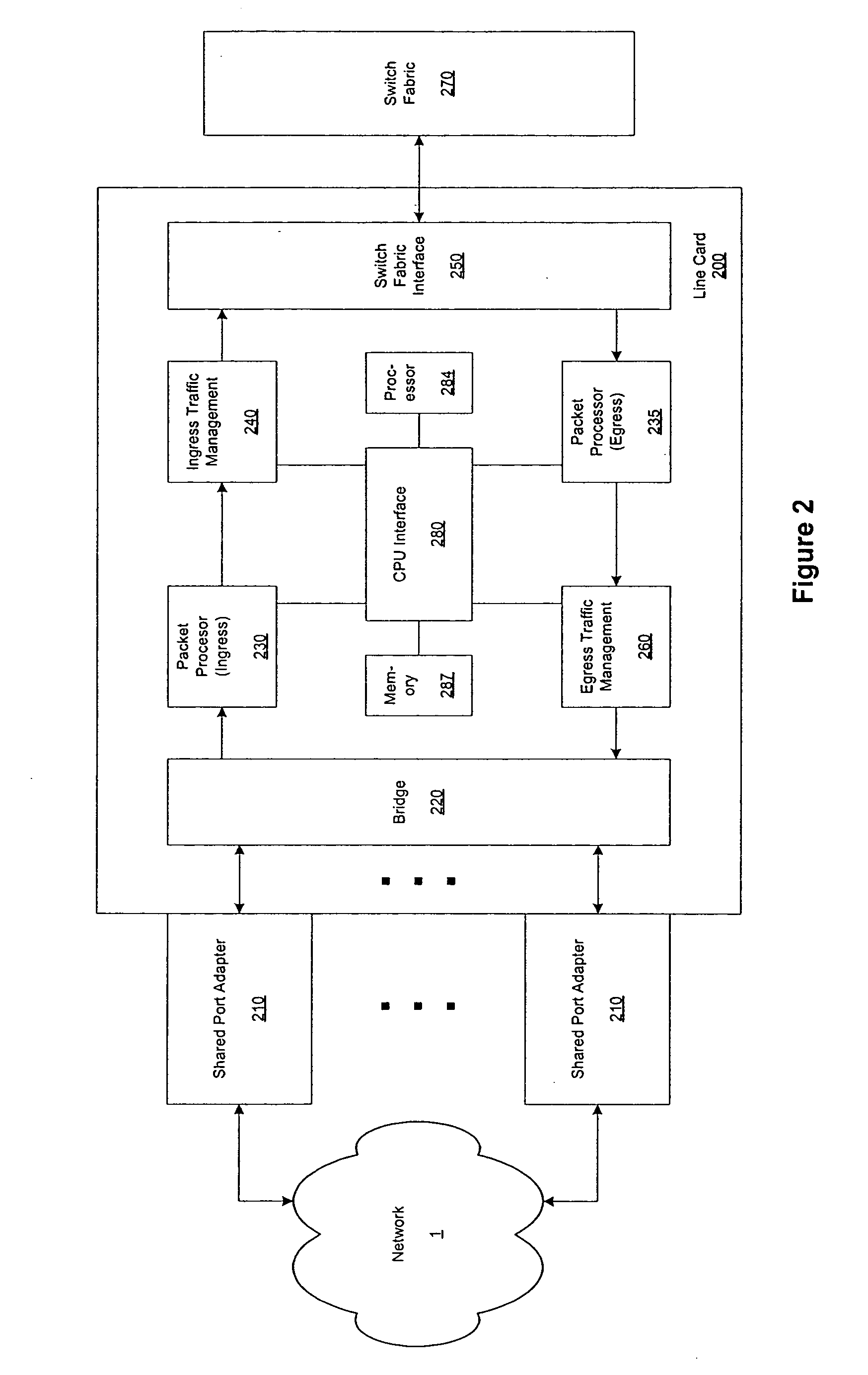

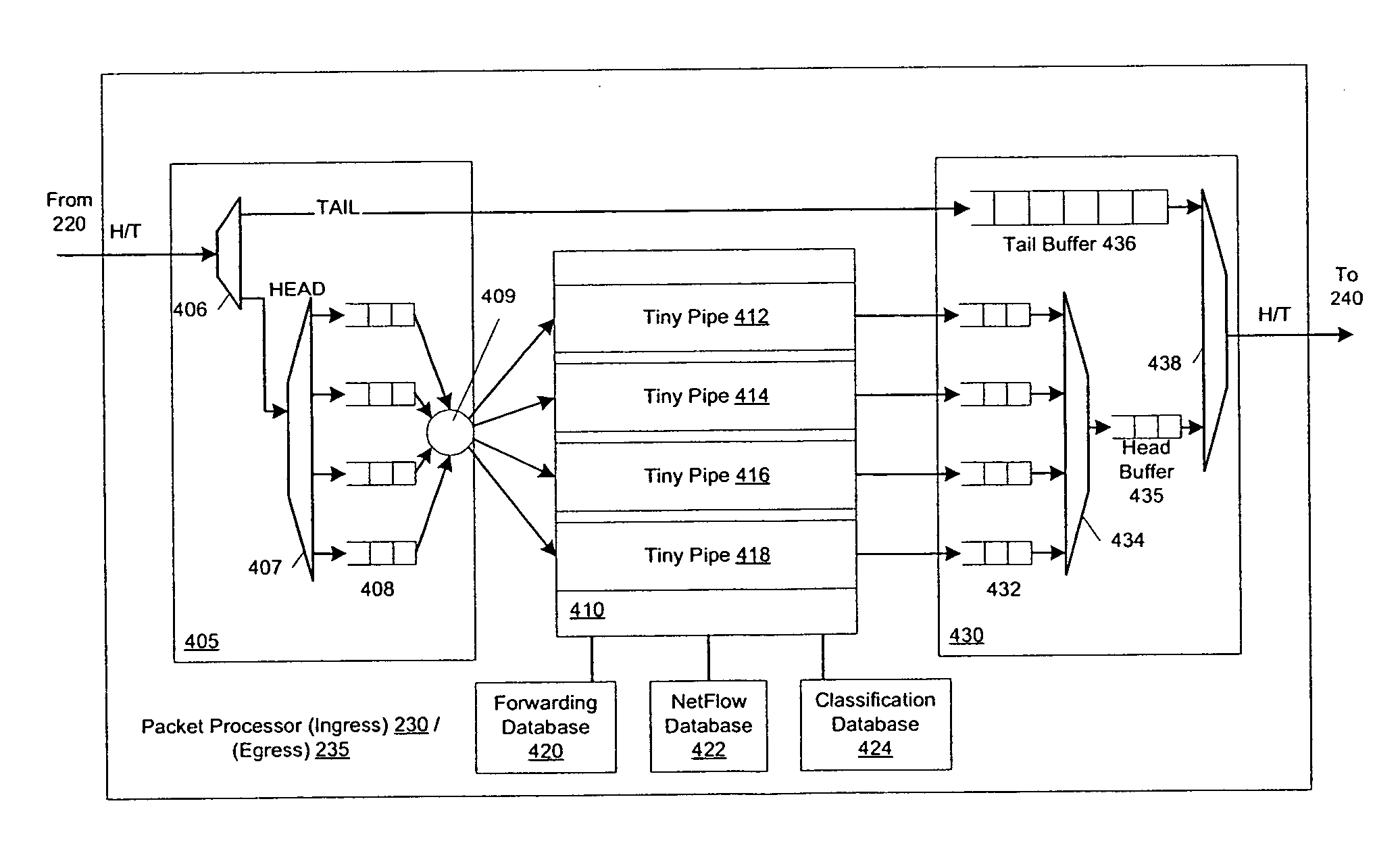

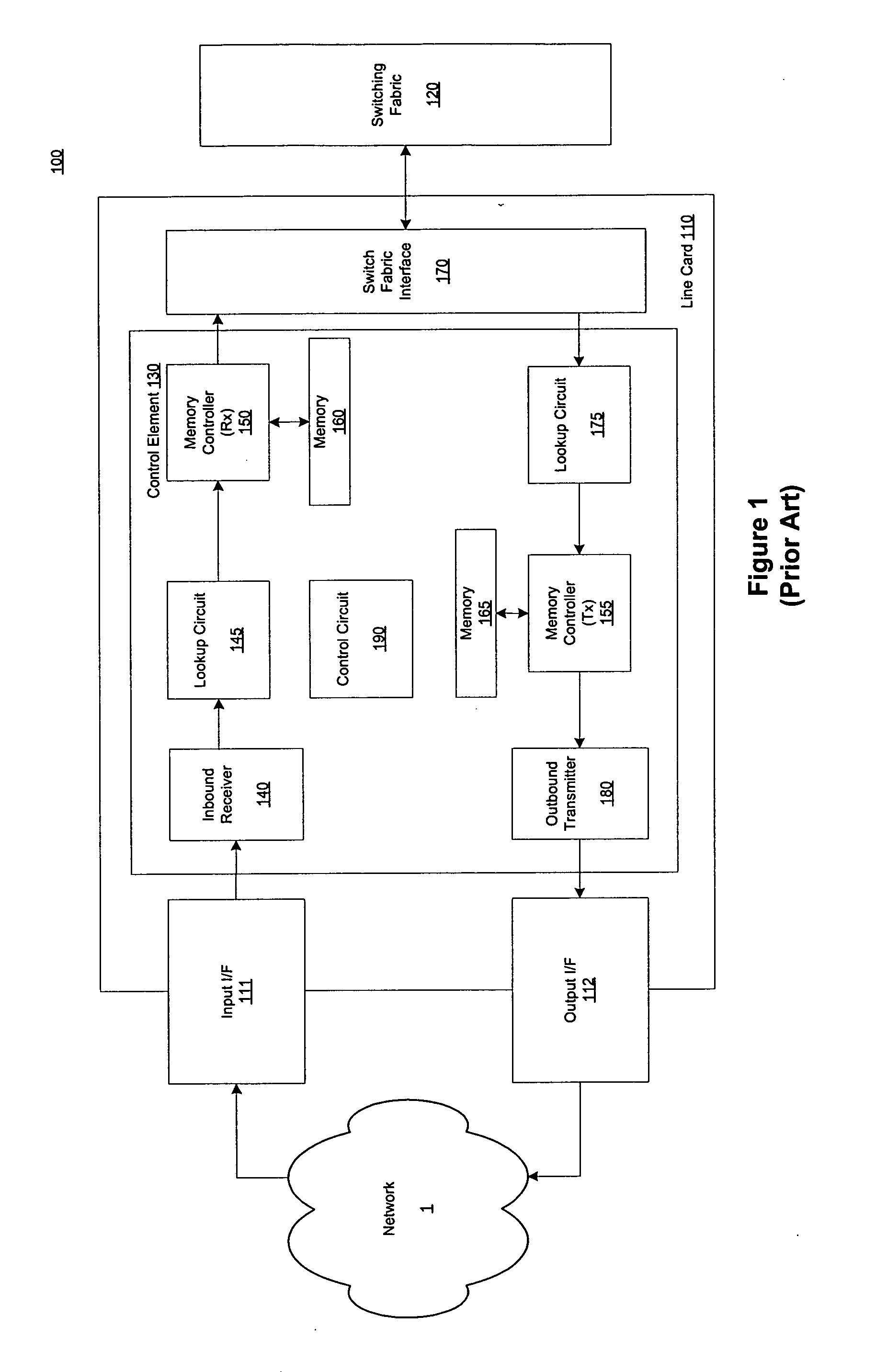

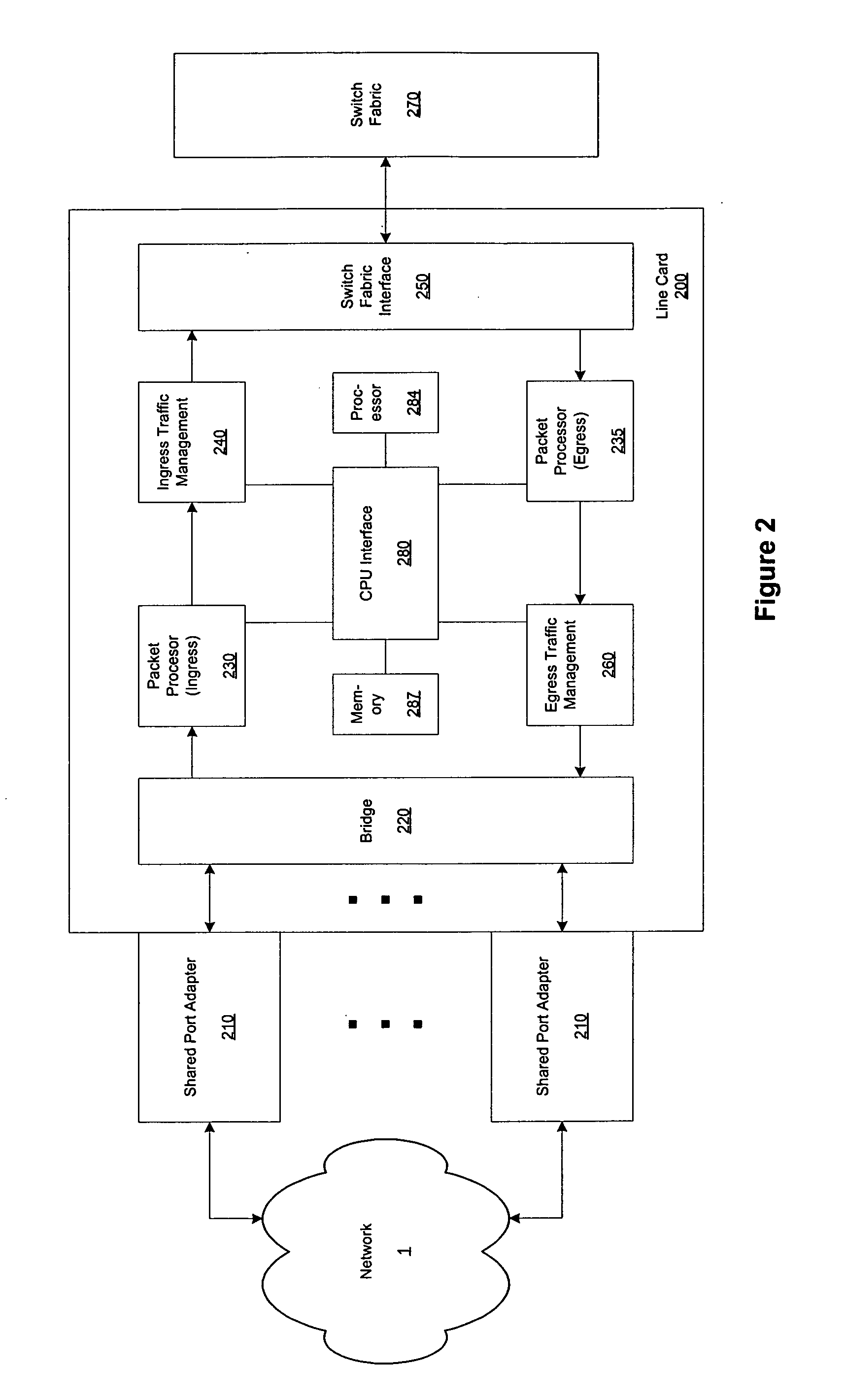

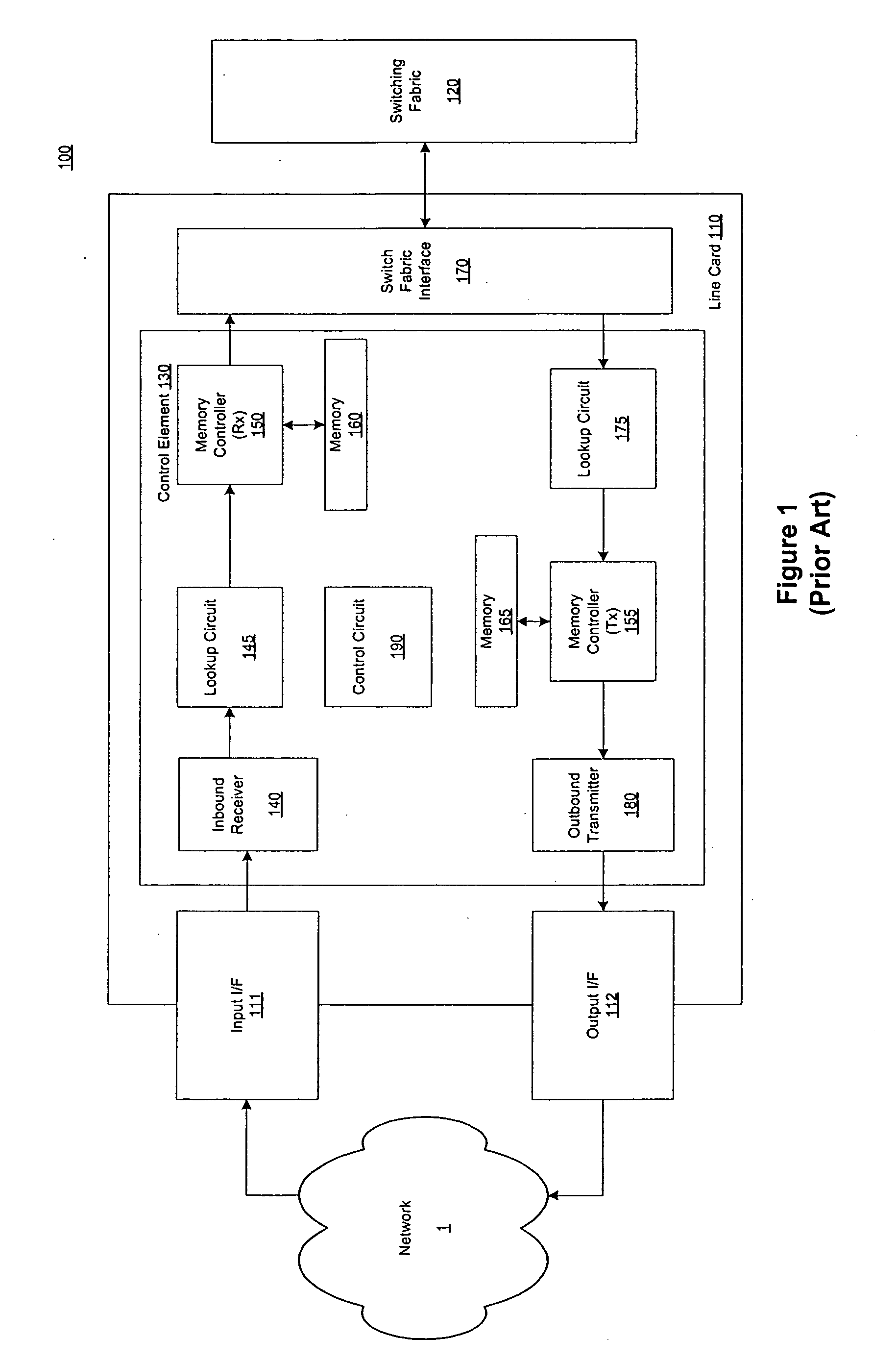

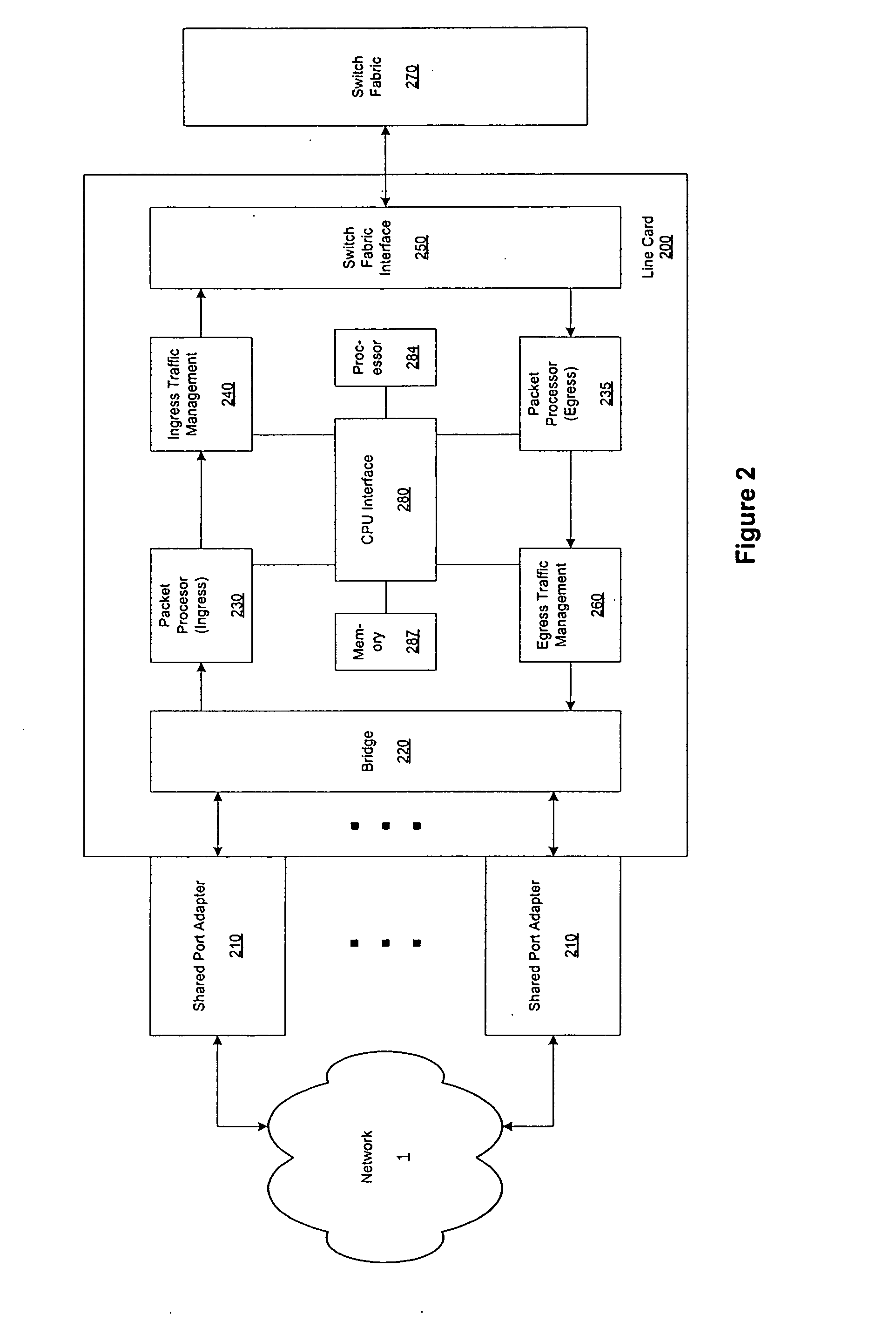

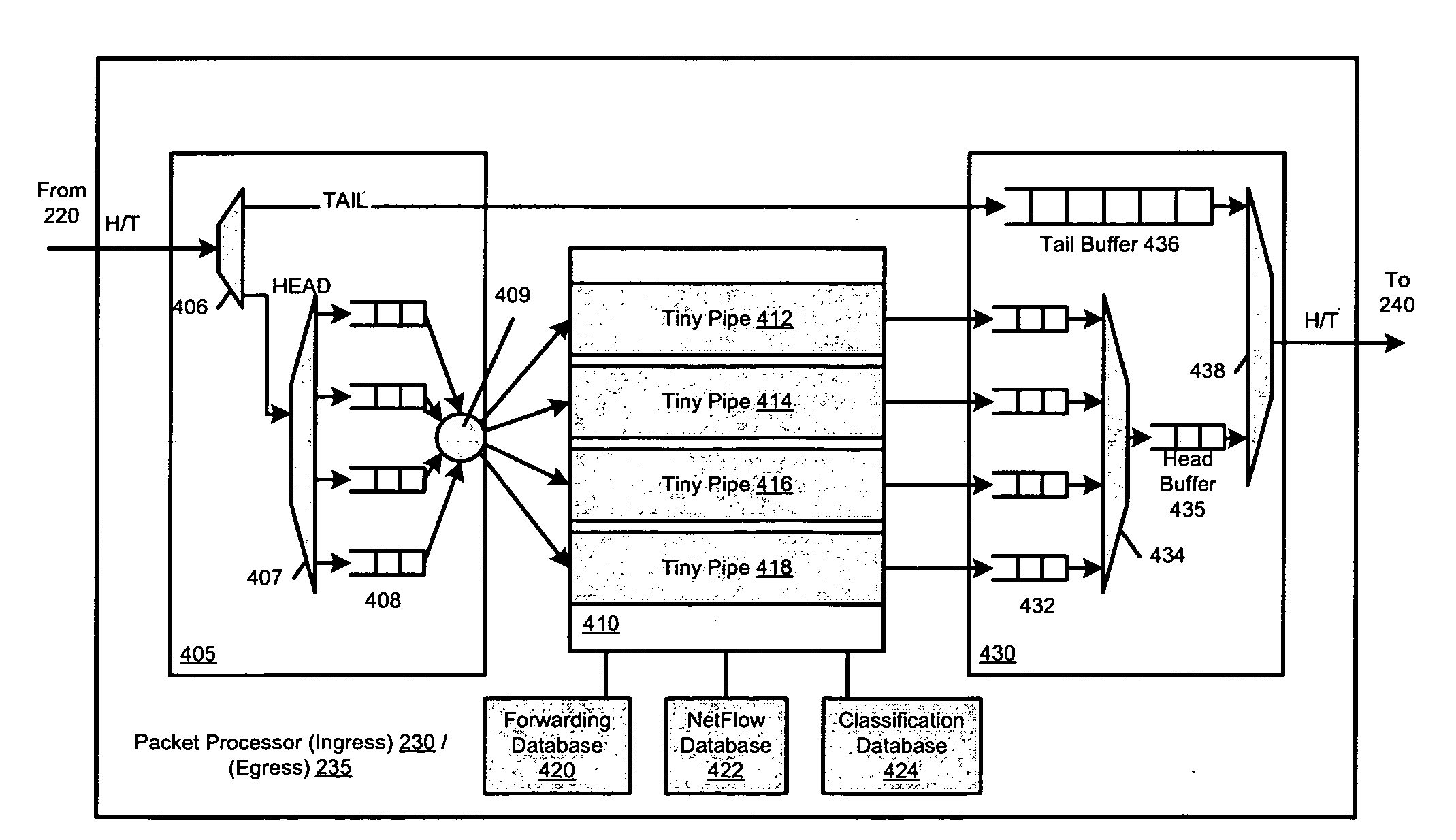

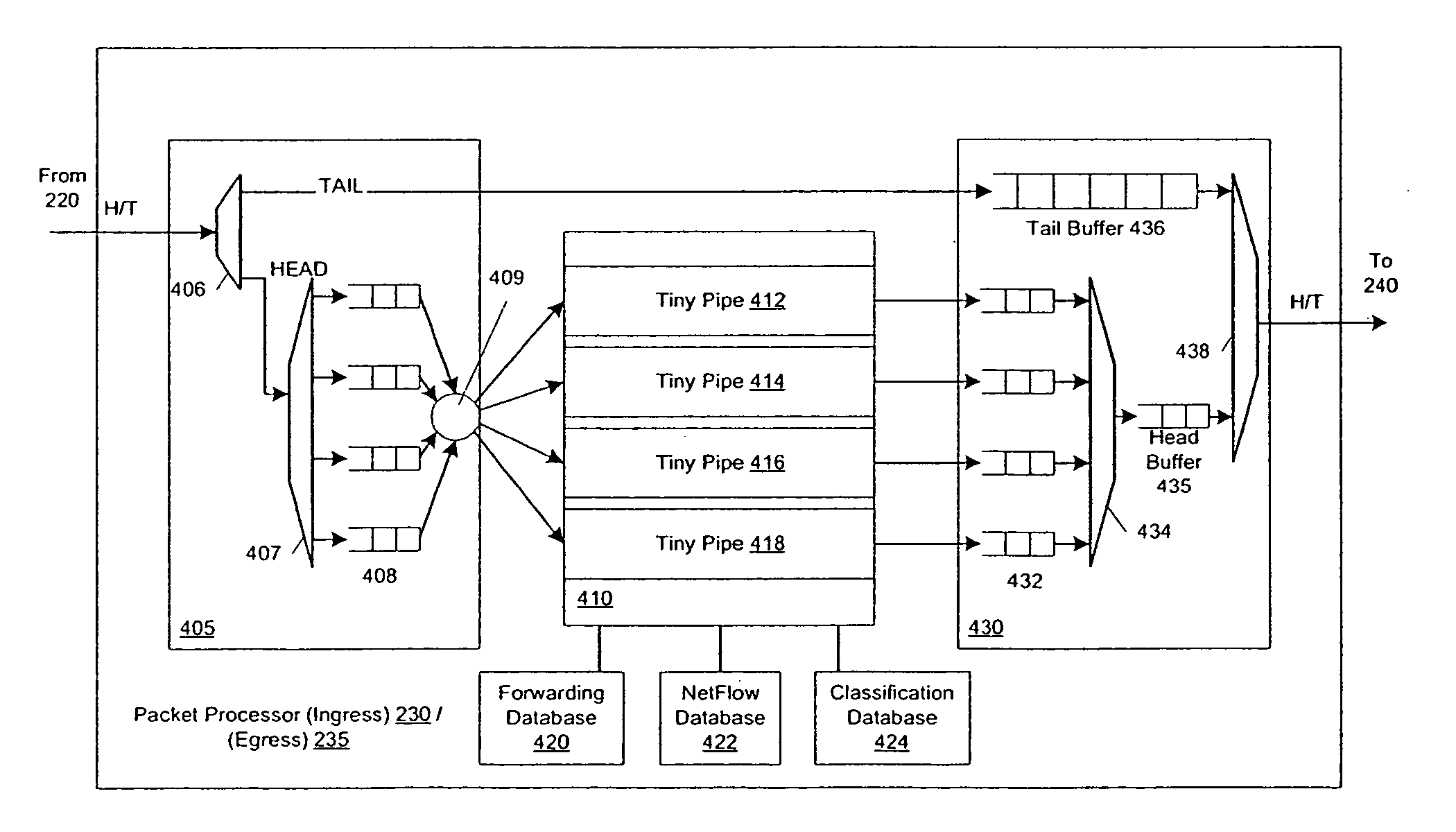

Pipelined packet switching and queuing architecture

An architecture for a line card in a network routing device is provided. The line card architecture provides a bi-directional interface between the routing device and a network, both receiving packets from the network and transmitting packets to the network through one or more connecting ports. In both the receive and transmit paths, packet processing and routing are performed in a multi-stage, parallel pipeline that can operate on several packets at the same time to determine each packet's routing destination. Once a routing destination determination is made, the line card architecture provides for each received packet to be modified to contain new routing information and additional header data to facilitate packet transmission through the switching fabric. The line card architecture further provides for the use of bandwidth management techniques in order to buffer and enqueue each packet for transmission through the switching fabric to a corresponding destination port. The transmit path of the line card architecture further incorporates additional features for treatment and replication of multicast packets.

Owner:CISCO TECH INC

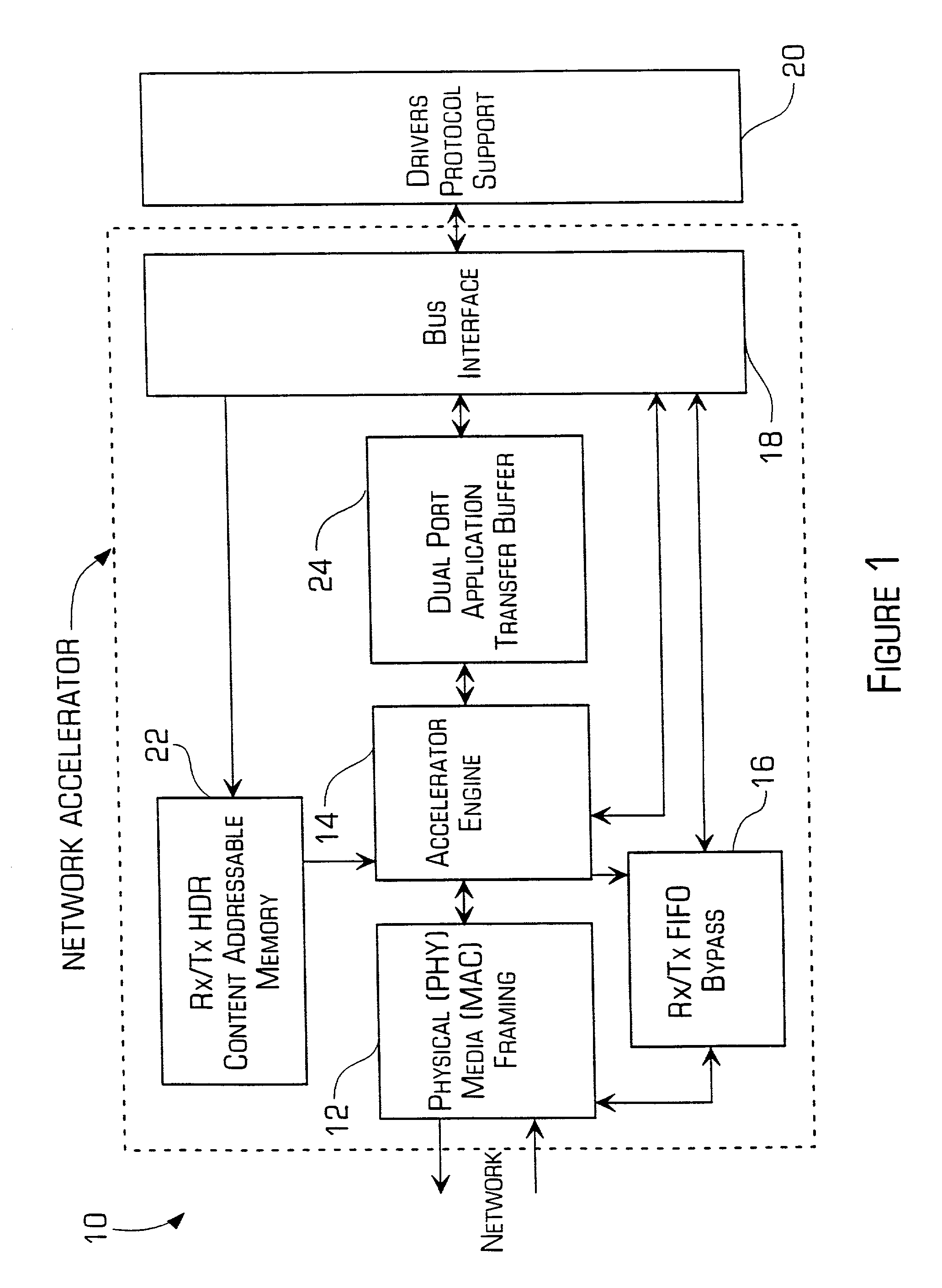

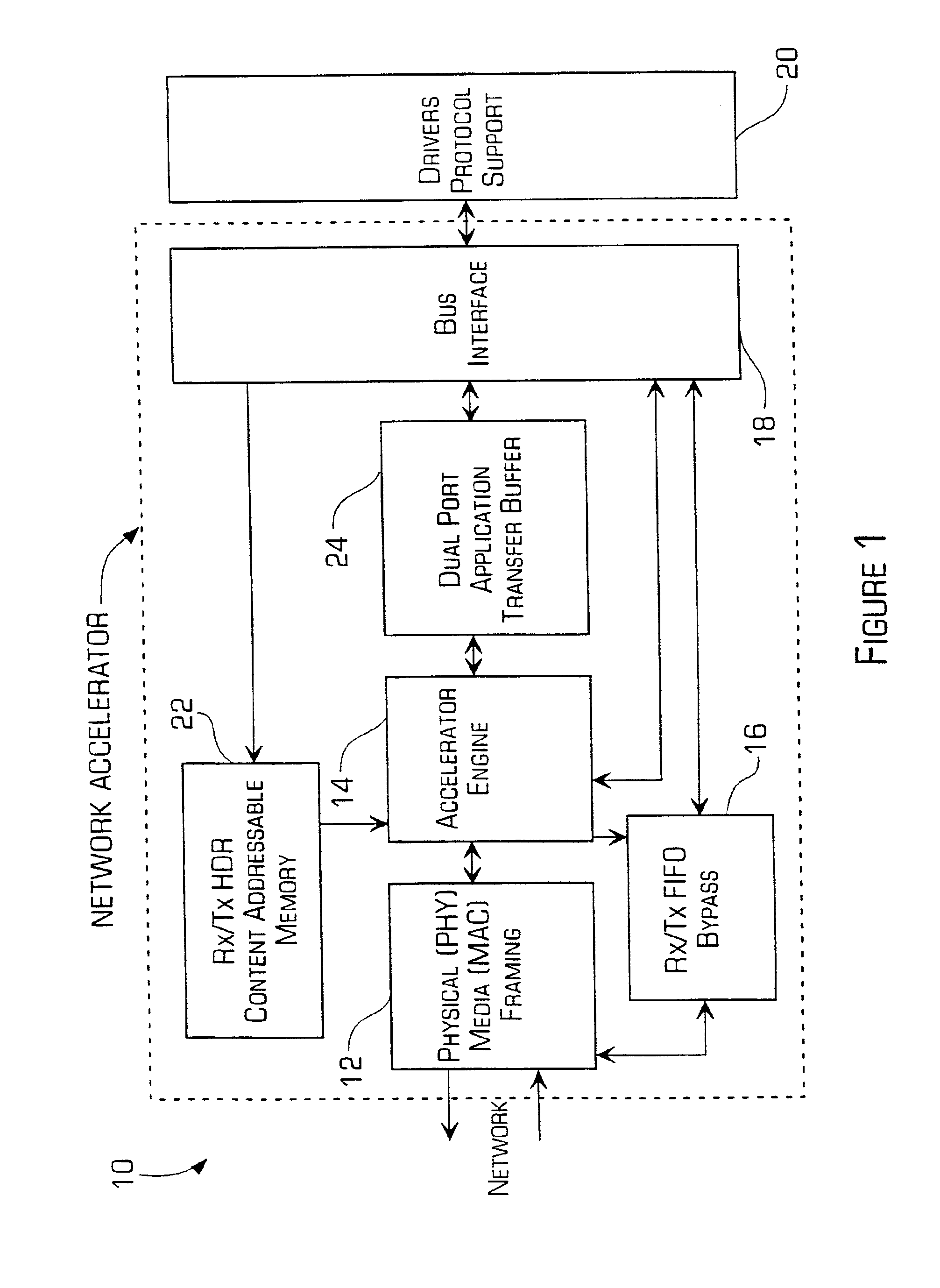

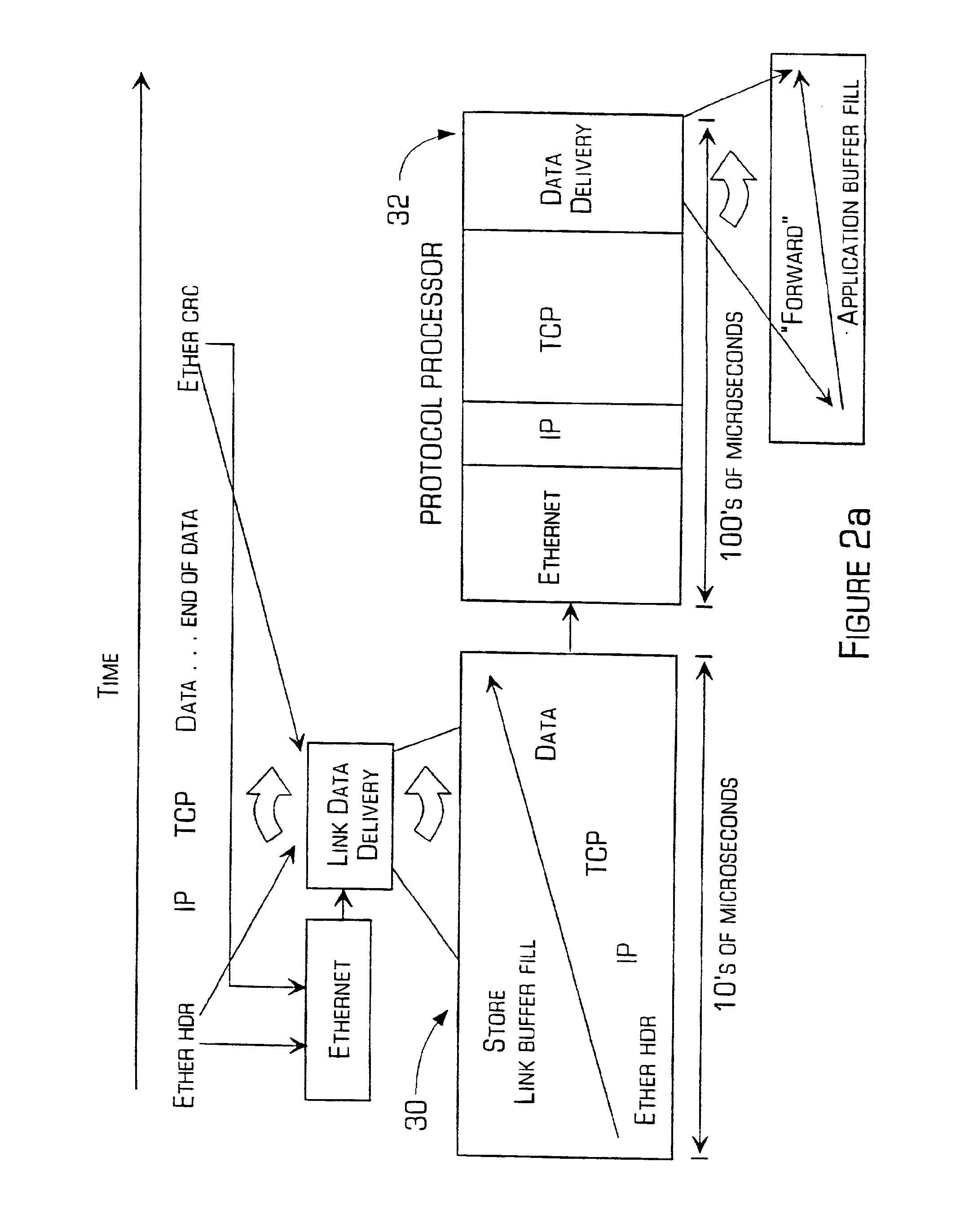

Accelerator system and method

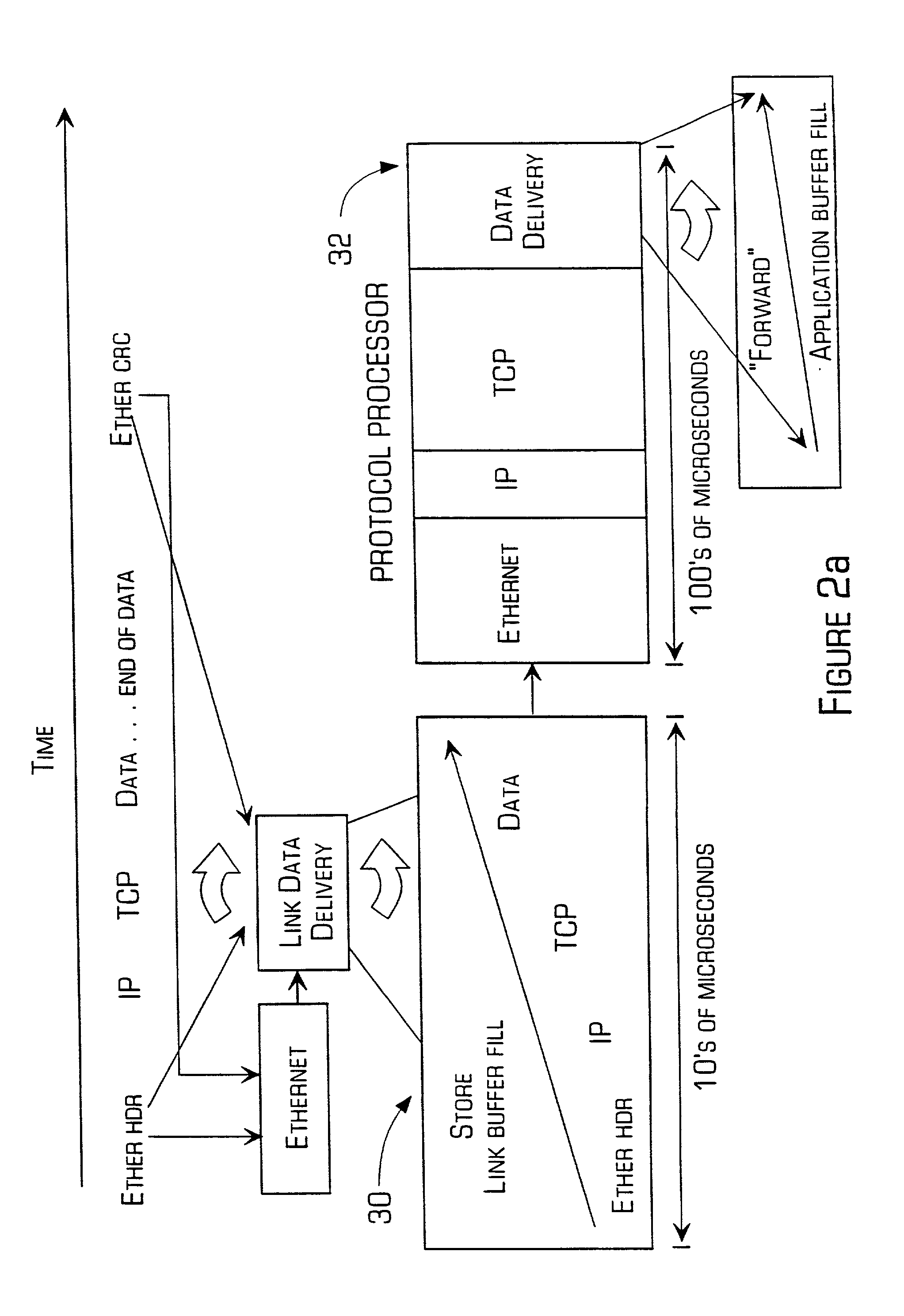

Inactive | US20010004354A1 | Improve throughput | Faster and less-expensive to construct | Time-division multiplex | Radio/inductive link selection arrangements | Transmission protocol | Computer hardware

A network accelerator for TCP/IP includes programmable logic for performing transparent protocol translation of streamed audio/video protocols at network signaling rates. The programmable logic is configured in a parallel pipelined architecture controlled by state machines. It implements processing for the predictable patterns that make up the majority of transmissions; these patterns are stored in a content addressable memory and simultaneously in a dual-port, dual-bank application memory. The invention allows raw Internet protocol communications by cell phones, and high-capacity cell-phone-to-Internet gateway transfer that scales independently of a translation application, by processing packet headers in parallel and during memory transfers, without the need for conventional store-and-forward techniques.

Owner:JOLITZ LYNNE G

Hardware parser accelerator

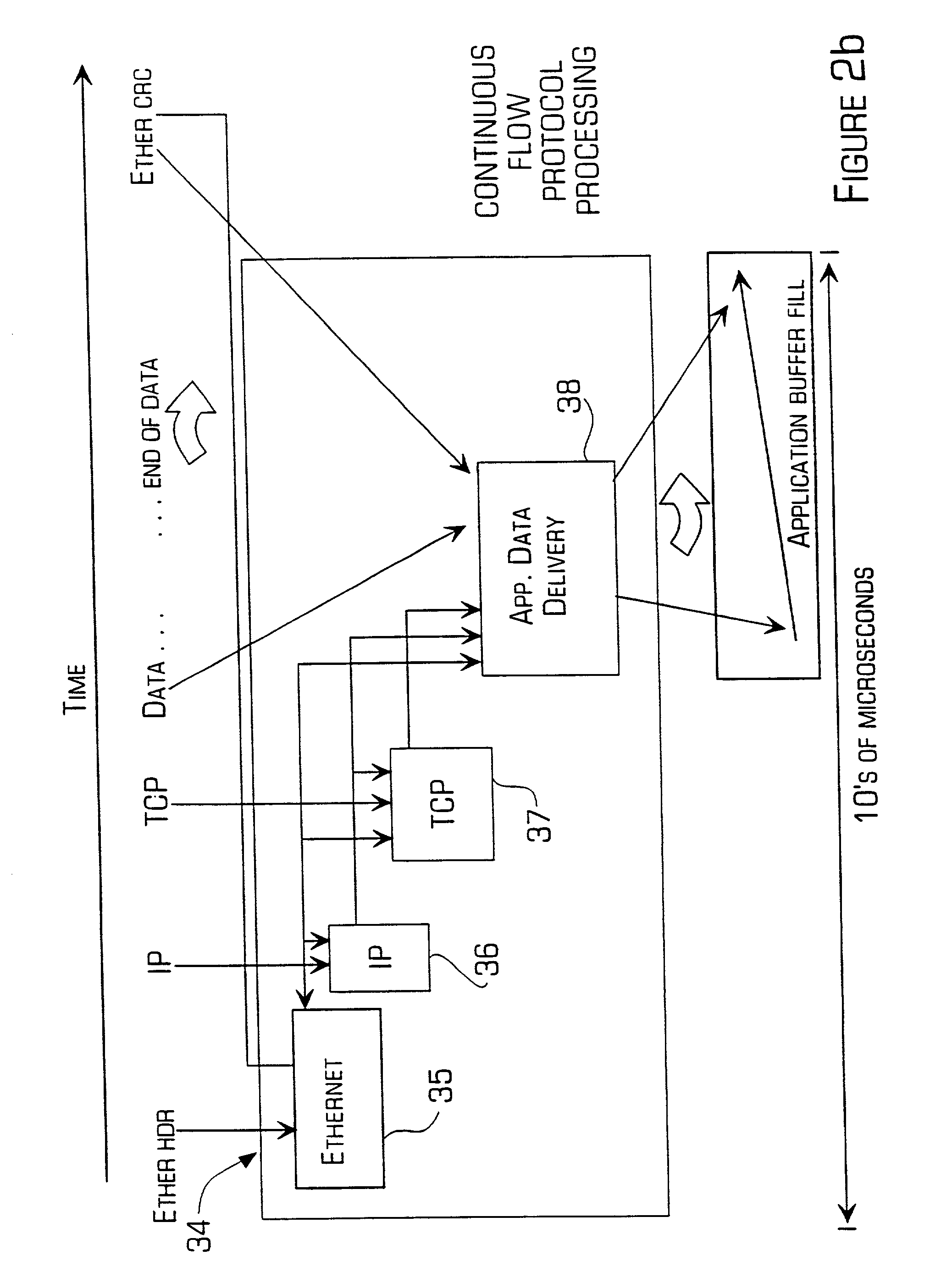

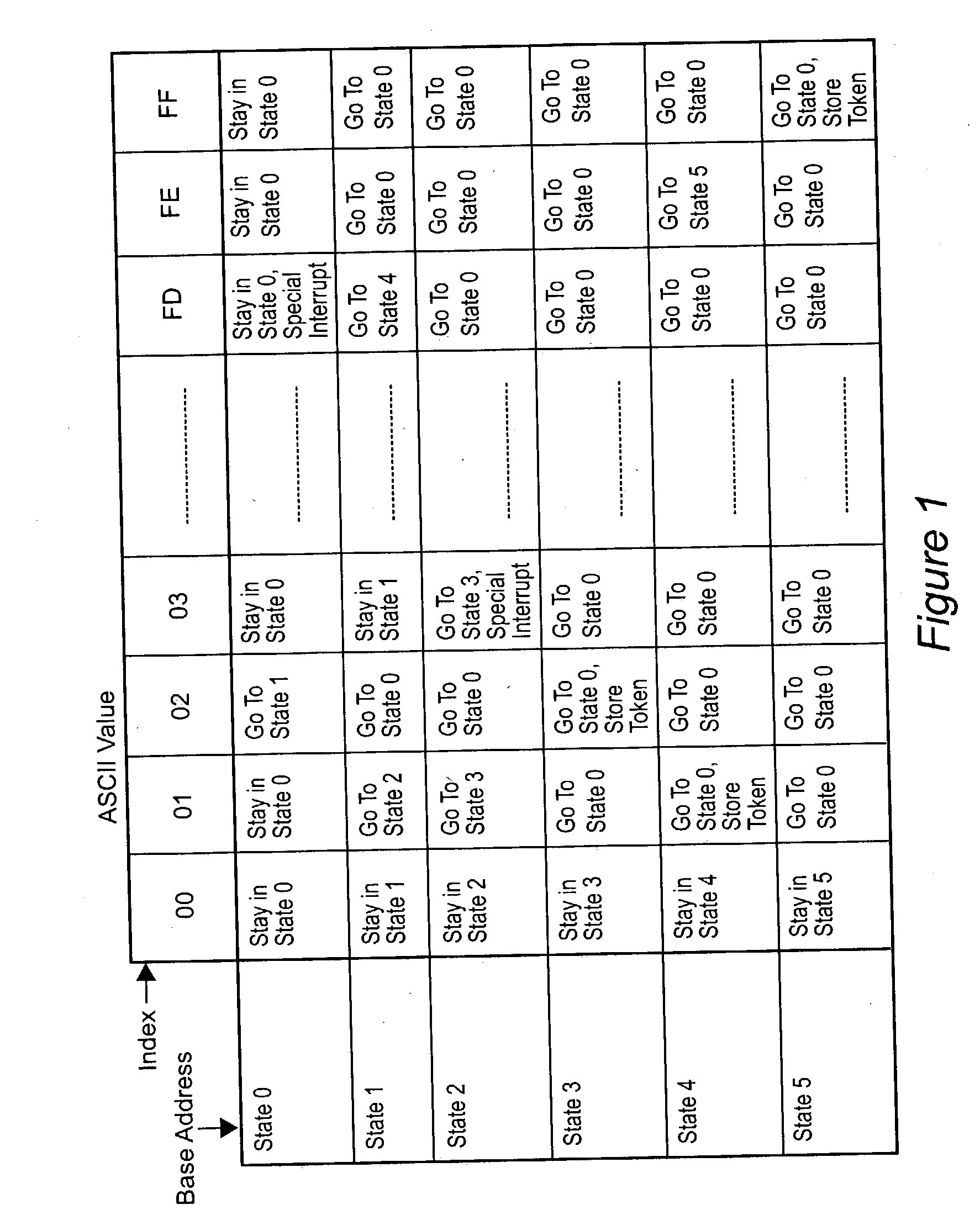

Inactive | US20040083466A1 | Software engineering | Natural language data processing | High speed memory | Trace table

Dedicated hardware is employed to parse documents such as XML(TM) documents in much reduced time while removing a substantial processing burden from the host CPU. The conventional state table is divided into a character palette, a state table in abbreviated form, and a next state palette. The palettes may be implemented in dedicated high speed memory, and a cache arrangement may be used to accelerate accesses to the abbreviated state table. Processing is performed in parallel pipelines, which may be partially concurrent. Dedicated registers may be updated in parallel as well, and strings of special characters of arbitrary length are accommodated by a character palette skip feature, under control of a flag bit, to further accelerate parsing of a document.

Owner:LOCKHEED MARTIN CORP
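
The abstract above does not spell out how the three tables interact, so the following is only one plausible reading, sketched in software: a character palette collapses each input character to a small class index, an abbreviated state table stores small indices per (state, class), and a next state palette expands those indices into actual states. The states, classes, and the `count_tags` helper are hypothetical and chosen purely for illustration; they are not taken from the patent.

```python
# Hypothetical three-table split of a parser state machine.
# Character palette: collapse every character to a small class index.
CLASS_OTHER, CLASS_LT, CLASS_GT = 0, 1, 2


def char_class(ch):
    """Look up the class index for one input character."""
    return {"<": CLASS_LT, ">": CLASS_GT}.get(ch, CLASS_OTHER)


# Next state palette: the distinct next states, referenced by small index.
NEXT_STATE_PALETTE = ["TEXT", "TAG"]

# Abbreviated state table: one row per state, one column per character
# class; each entry is an index into NEXT_STATE_PALETTE.
ABBREV_TABLE = {
    "TEXT": [0, 1, 0],  # other -> TEXT, '<' -> TAG, '>' -> TEXT
    "TAG": [1, 1, 0],   # other -> TAG,  '<' -> TAG, '>' -> TEXT
}


def count_tags(document):
    """Count completed '<...>' runs using the three-table lookup."""
    state = "TEXT"
    tags = 0
    for ch in document:
        nxt = NEXT_STATE_PALETTE[ABBREV_TABLE[state][char_class(ch)]]
        if state == "TAG" and nxt == "TEXT":
            tags += 1  # a '>' just closed an open tag
        state = nxt
    return tags
```

For example, `count_tags("<a>hi</a>")` walks nine characters with three small lookups each. The point of the split, under this reading, is that the wide per-character table is replaced by narrow tables that fit in fast memory.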

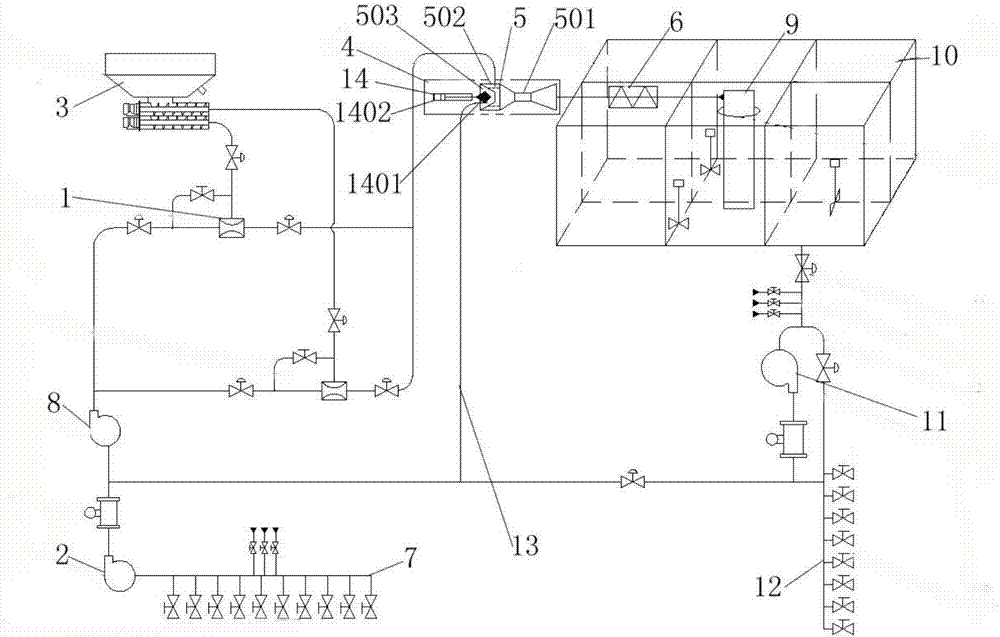

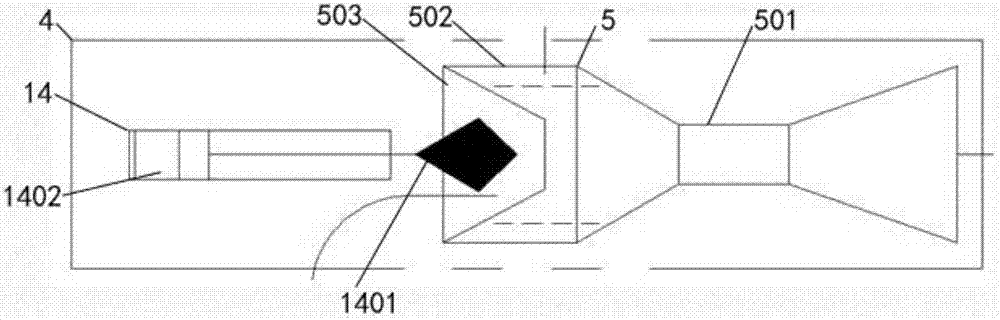

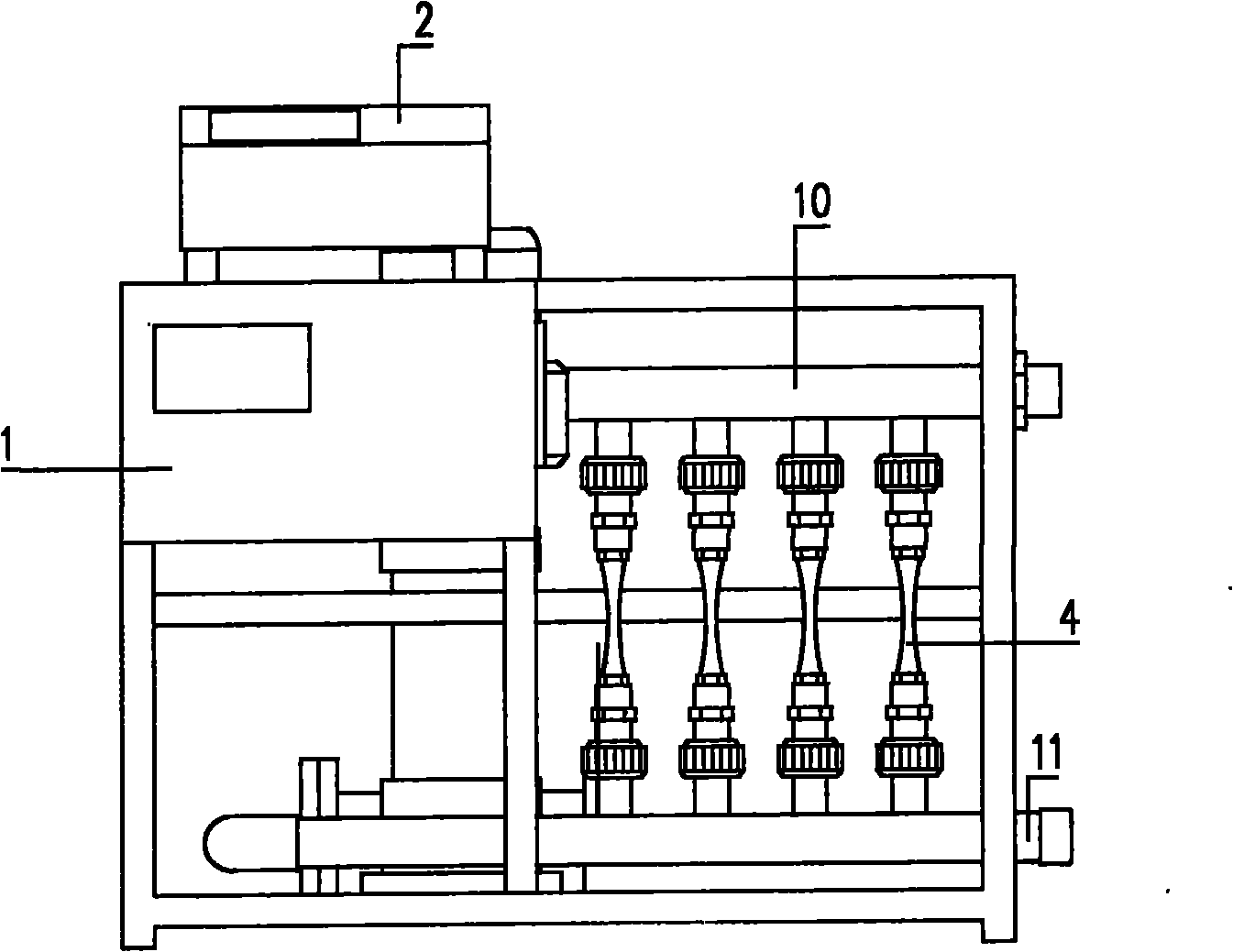

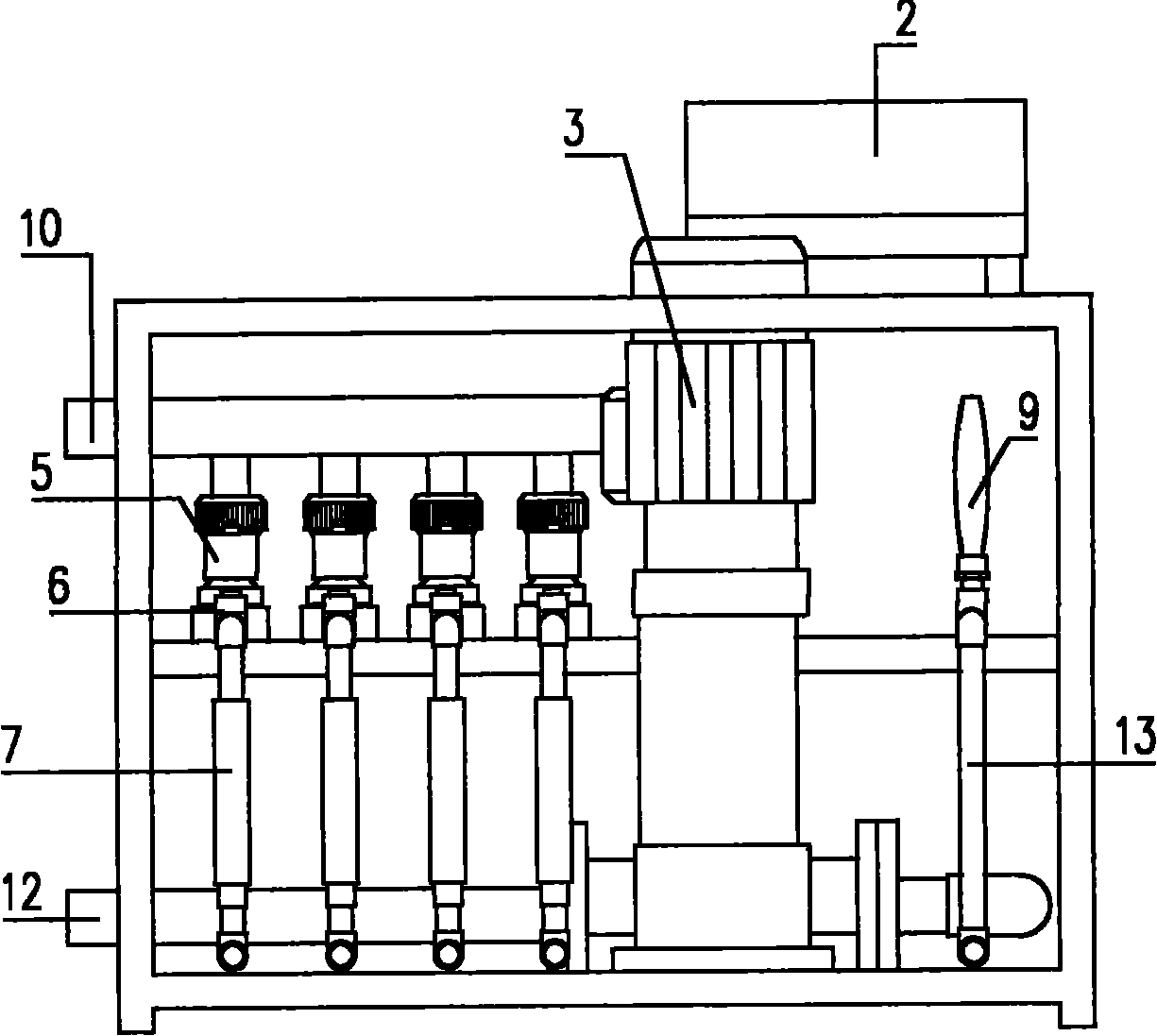

Mixing system of fracturing fluid and mixing method thereof

Active | CN107159046A | High work pressure | Reduce discharge pressure | Flow mixers | Transportation and packaging | Fluid viscosity | Fracturing fluid

The invention provides a mixing system for fracturing fluid, which comprises a first mixer, a base fluid supply piece, and a powder supply piece. The first mixer is a jet mixer; its base fluid input end is connected to the base fluid supply piece and its powder input end is connected to the powder supply piece. The first mixer is connected in parallel with a parallel pipeline capable of conveying the base fluid, and the base fluid input end of the parallel pipeline is connected to the base fluid output end of the base fluid supply piece. The first mixer is connected in series with a second mixer, which is a variable-flow jet mixer. The fracturing fluid input end of the second mixer is connected to the fracturing fluid output end of the first mixer, and the base fluid input end of the second mixer is connected to the base fluid output end of the parallel pipeline. The mixing system can simultaneously meet the discharge and viscosity requirements of the fracturing fluid.

Owner:YANTAI JEREH PETROLEUM EQUIP & TECH CO LTD +1

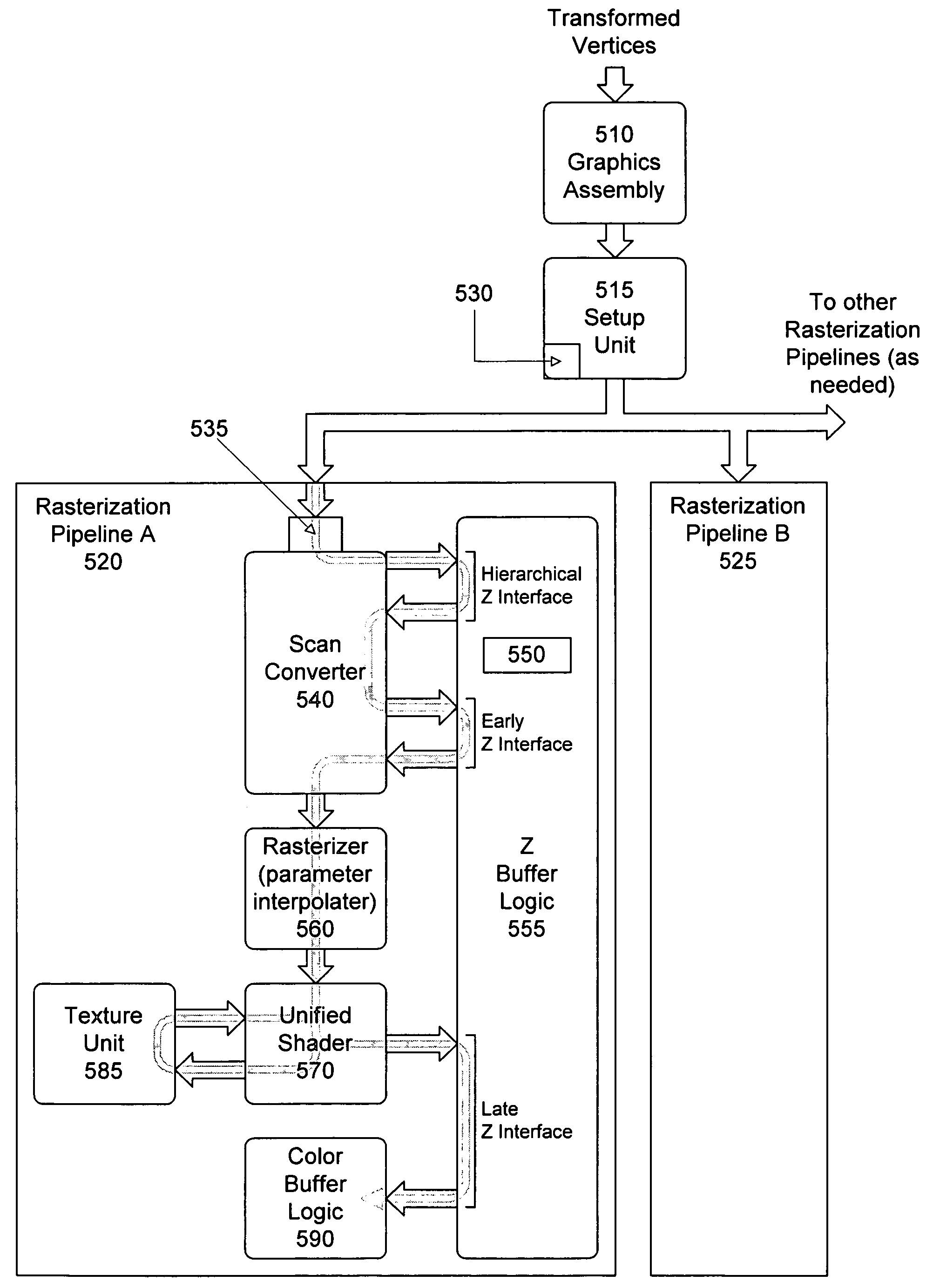

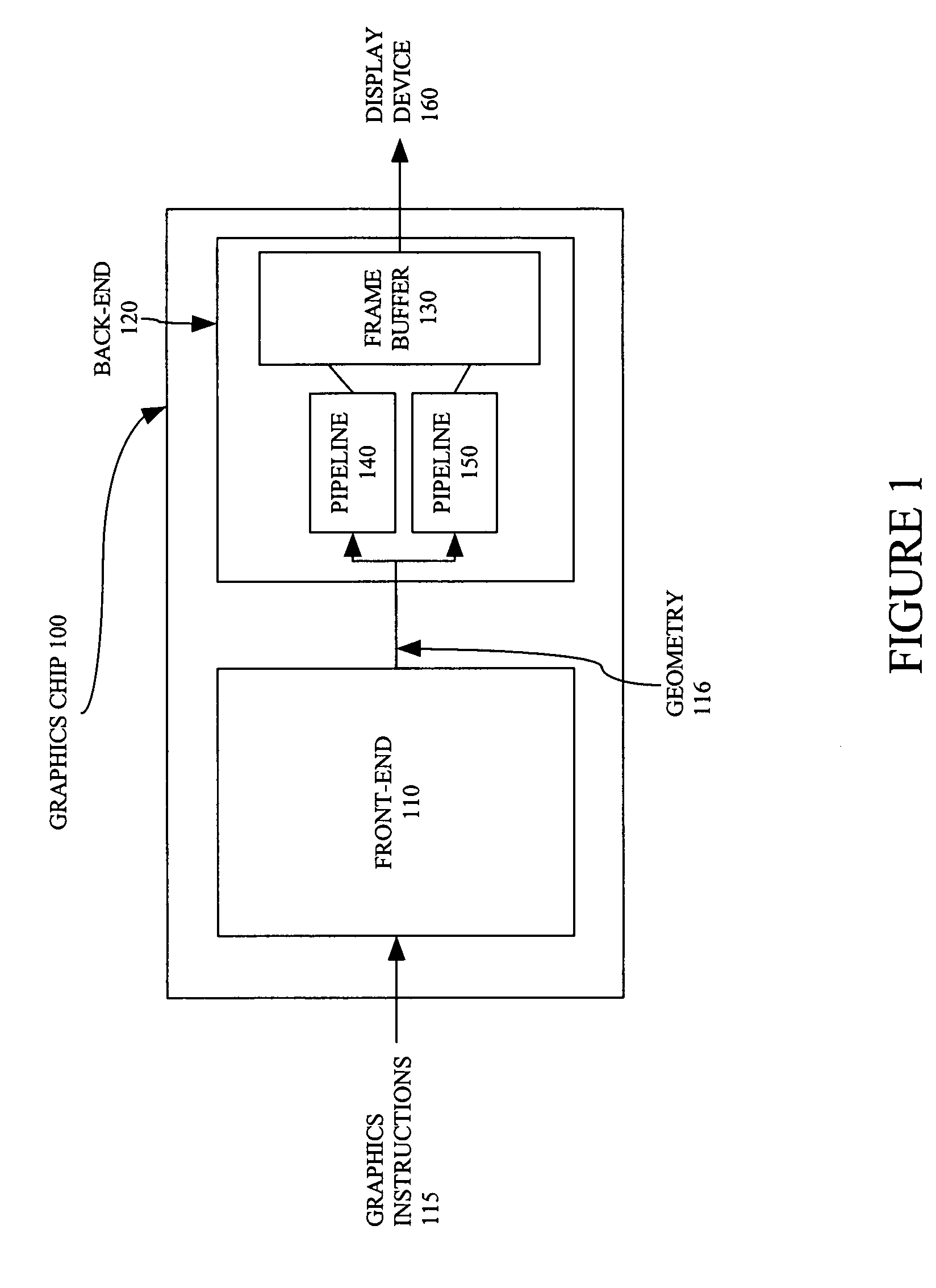

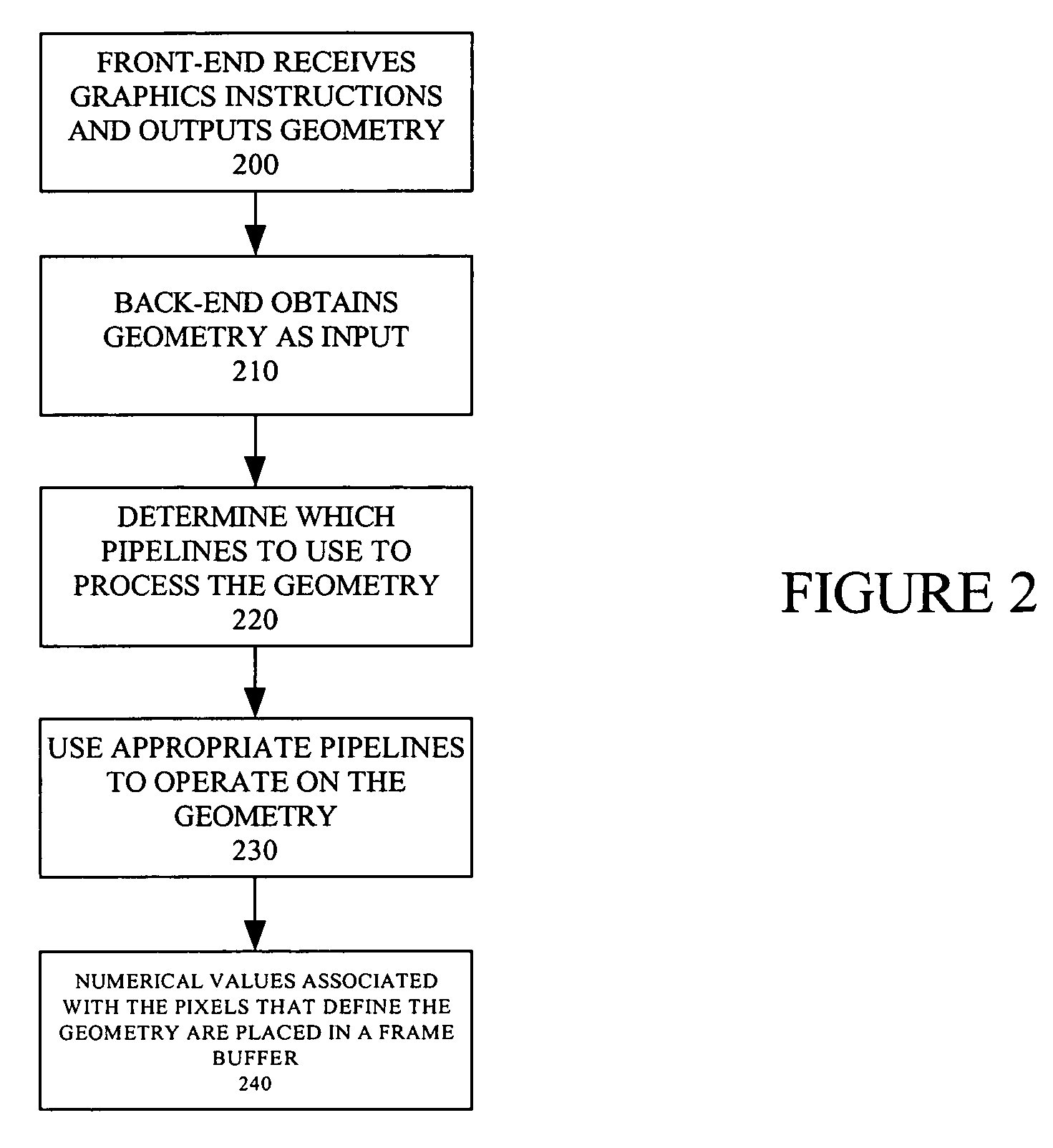

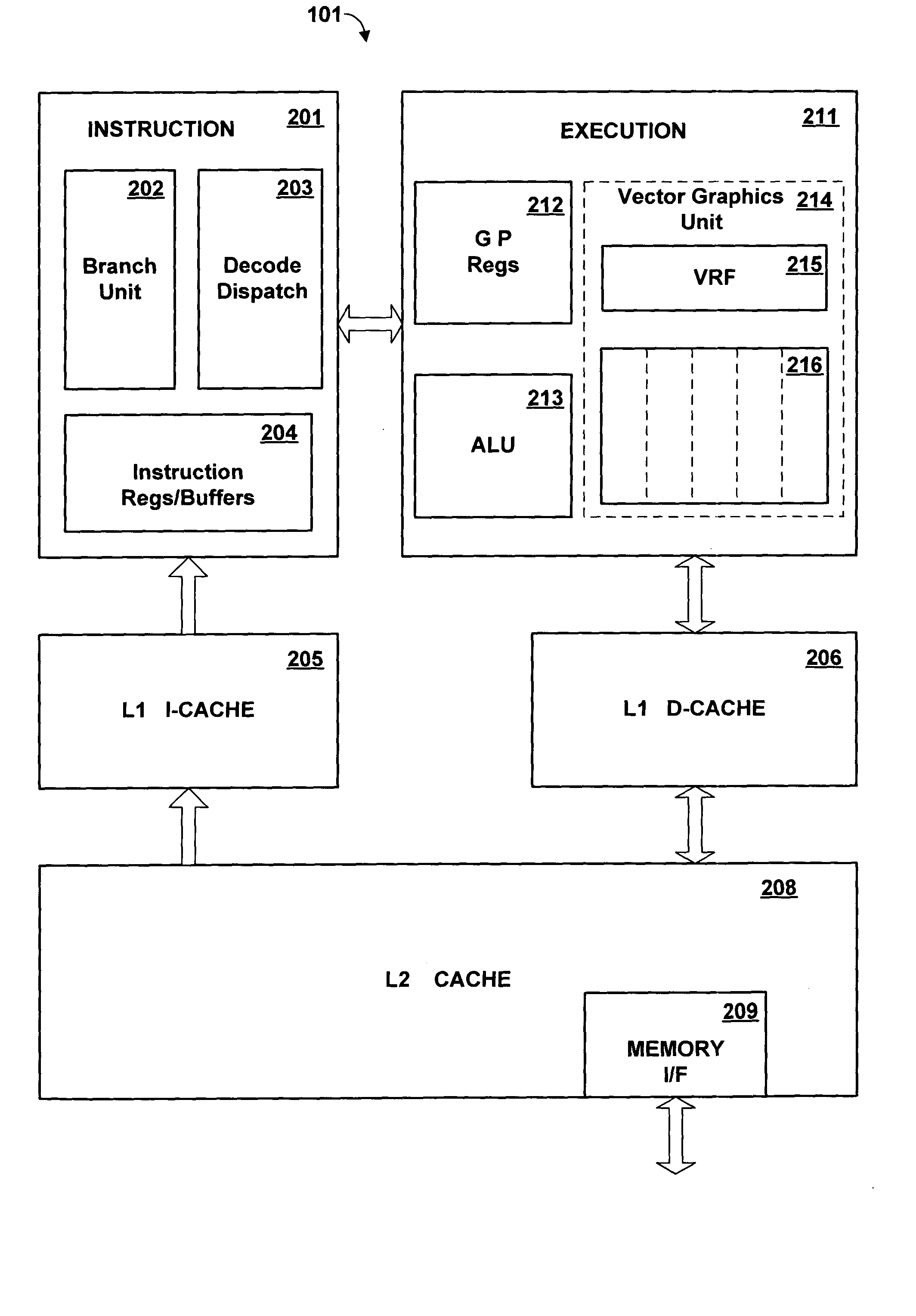

Parallel pipeline graphics system

Active | US7633506B1 | Precise definition | Cathode-ray tube indicators | Processor architectures/configuration | Computational science | Scan conversion

The present invention relates to a parallel pipeline graphics system. The parallel pipeline graphics system includes a back-end configured to receive primitives and combinations of primitives (i.e., geometry) and process the geometry to produce values to place in a frame buffer for rendering on screen. Unlike prior single-pipeline implementations, some embodiments use two or four parallel pipelines, though other configurations having 2^n pipelines may be used. When geometry data is sent to the back-end, it is divided up and provided to one of the parallel pipelines. Each pipeline is a component of a raster back-end, where the display screen is divided into tiles and a defined portion of the screen is sent through the pipeline that owns that portion of the screen's tiles. In one embodiment, each pipeline comprises a scan converter, a hierarchical-Z unit, z-buffer logic, a rasterizer, a shader, and color buffer logic.

Owner:ATI TECH INC
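
The abstract above says each pipeline owns a portion of the screen's tiles but does not give the assignment rule. A minimal sketch, assuming a simple interleaved pattern (checkerboard for two pipelines, a repeating 2x2 block for four) and a 16-pixel tile size; both choices, and the function names, are hypothetical:

```python
def owning_pipeline(x, y, num_pipes=4, tile_size=16):
    """Return the index of the pipeline that owns the tile containing (x, y).

    The interleave pattern and tile_size are illustrative assumptions,
    not details from the patent.
    """
    if num_pipes not in (2, 4):
        raise ValueError("this sketch covers 2 or 4 pipelines only")
    tx, ty = x // tile_size, y // tile_size
    if num_pipes == 2:
        return (tx + ty) % 2          # checkerboard of tiles
    return (tx % 2) + 2 * (ty % 2)    # repeating 2x2 block of tiles


def split_by_pipeline(pixels, num_pipes=4, tile_size=16):
    """Group pixel coordinates by the pipeline that owns their tile."""
    buckets = [[] for _ in range(num_pipes)]
    for x, y in pixels:
        buckets[owning_pipeline(x, y, num_pipes, tile_size)].append((x, y))
    return buckets
```

Interleaving tiles this way tends to spread a large primitive across all pipelines, which is one common motivation for tile ownership; whether the patented system uses this pattern is not stated in the abstract.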

Efficient packet processing pipeline device and method

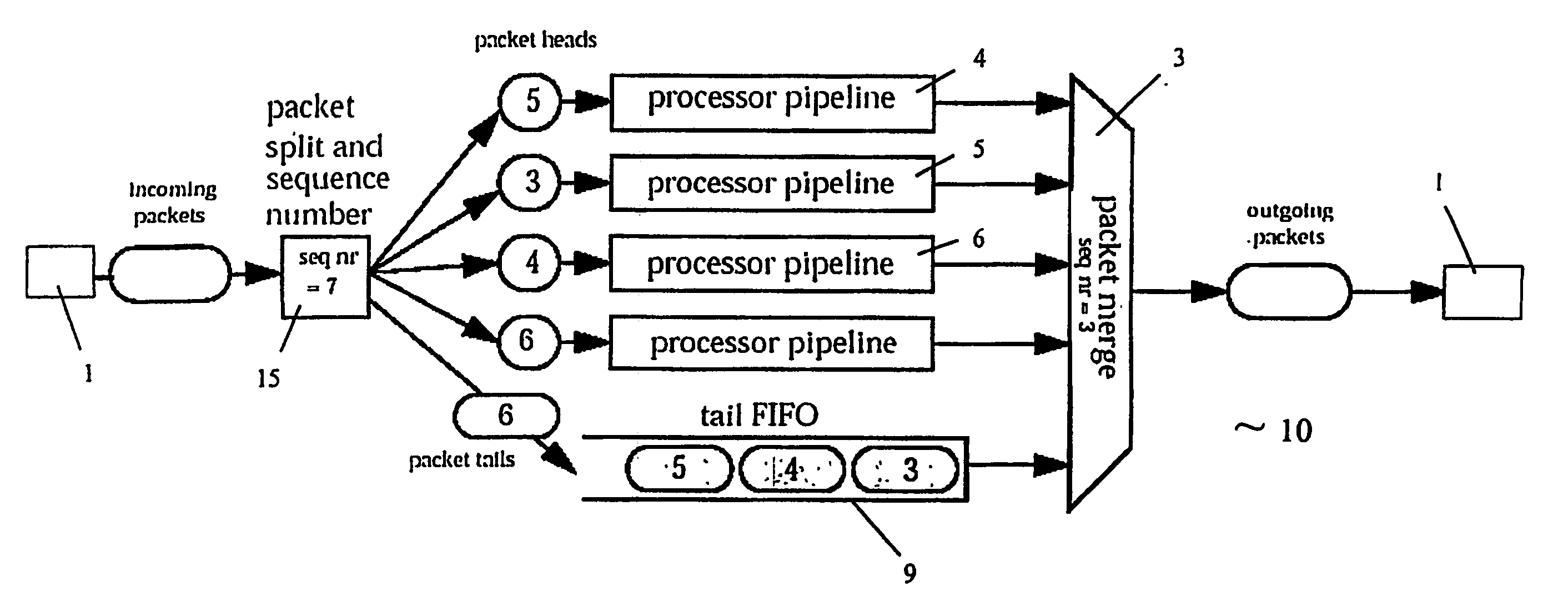

Inactive | US20050232303A1 | Improve efficiency | Reduce usage | Time-division multiplex | Data switching networks | Computer hardware | Packet switched

A packet processing apparatus for processing data packets for use in a packet switched network includes means for receiving a packet, means for adding administrative information to a first data portion of the packet, the administrative information including at least an indication of at least one process to be applied to the first data portion, and a plurality of parallel pipelines, each pipeline comprising at least one processing unit, wherein the processing unit carries out the process on the first data portion indicated by the administrative information to provide a modified first data portion. According to a method, the tasks performed by each processing unit are organized into a plurality of functions such that there are substantially only function calls and no interfunction calls and that at the termination of each function called by the function call for one processing unit, the only context is a first data portion.

Owner:TRANSWITCH
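
As a rough illustration of the idea in the abstract above, the sketch below attaches administrative information to a data portion and lets each processing unit apply whichever process that information names, passing only the data portion (plus remaining administrative information) to the next unit. The process names, the dictionary layout, and every function here are hypothetical stand-ins, not the patent's design.

```python
def decrement_ttl(data):
    """Example process: return a copy of the data with ttl reduced by one."""
    return {**data, "ttl": data["ttl"] - 1}


def mark_priority(data):
    """Example process: return a copy of the data flagged as high priority."""
    return {**data, "priority": "high"}


# Hypothetical registry of processes a processing unit can carry out.
PROCESSES = {"ttl": decrement_ttl, "prio": mark_priority}


def tag_packet(data, process_names):
    """Attach administrative information naming the processes to apply."""
    return {"admin": list(process_names), "data": data}


def processing_unit(packet):
    """Apply the next process named in the administrative information."""
    if not packet["admin"]:
        return packet
    name, *rest = packet["admin"]
    return {"admin": rest, "data": PROCESSES[name](packet["data"])}


def run_pipeline(packet, stages=2):
    """Pass the packet through a fixed number of processing units."""
    for _ in range(stages):
        packet = processing_unit(packet)
    return packet
```

Because each function returns only the modified data portion, nothing else has to be saved between stages, which loosely mirrors the abstract's statement that the only context at function termination is the first data portion.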

Pipelined packet switching and queuing architecture

An architecture for a line card in a network routing device is provided. The line card architecture provides a bi-directional interface between the routing device and a network, both receiving packets from the network and transmitting the packets to the network through one or more connecting ports. In both the receive and transmit path, packets processing and routing in a multi-stage, parallel pipeline that can operate on several packets at the same time to determine each packet's routing destination is provided. Once a routing destination determination is made, the line card architecture provides for each received packet to be modified to contain new routing information and additional header data to facilitate packet transmission through the switching fabric. The line card architecture further provides for the use of bandwidth management techniques in order to buffer and enqueue each packet for transmission through the switching fabric to a corresponding destination port. The transmit path of the line card architecture further incorporates additional features for treatment and replication of multicast packets.

Owner:CISCO TECH INC

Pipelined Packet Switching and Queuing Architecture

An architecture for a line card in a network routing device is provided. The line card architecture provides a bi-directional interface between the routing device and a network, both receiving packets from the network and transmitting the packets to the network through one or more connecting ports. In both the receive and transmit path, packets processing and routing in a multi-stage, parallel pipeline that can operate on several packets at the same time to determine each packet's routing destination is provided. Once a routing destination determination is made, the line card architecture provides for each received packet to be modified to contain new routing information and additional header data to facilitate packet transmission through the switching fabric. The line card architecture further provides for the use of bandwidth management techniques in order to buffer and enqueue each packet for transmission through the switching fabric to a corresponding destination port. The transmit path of the line card architecture further incorporates additional features for treatment and replication of multicast packets.

Owner:CISCO TECH INC

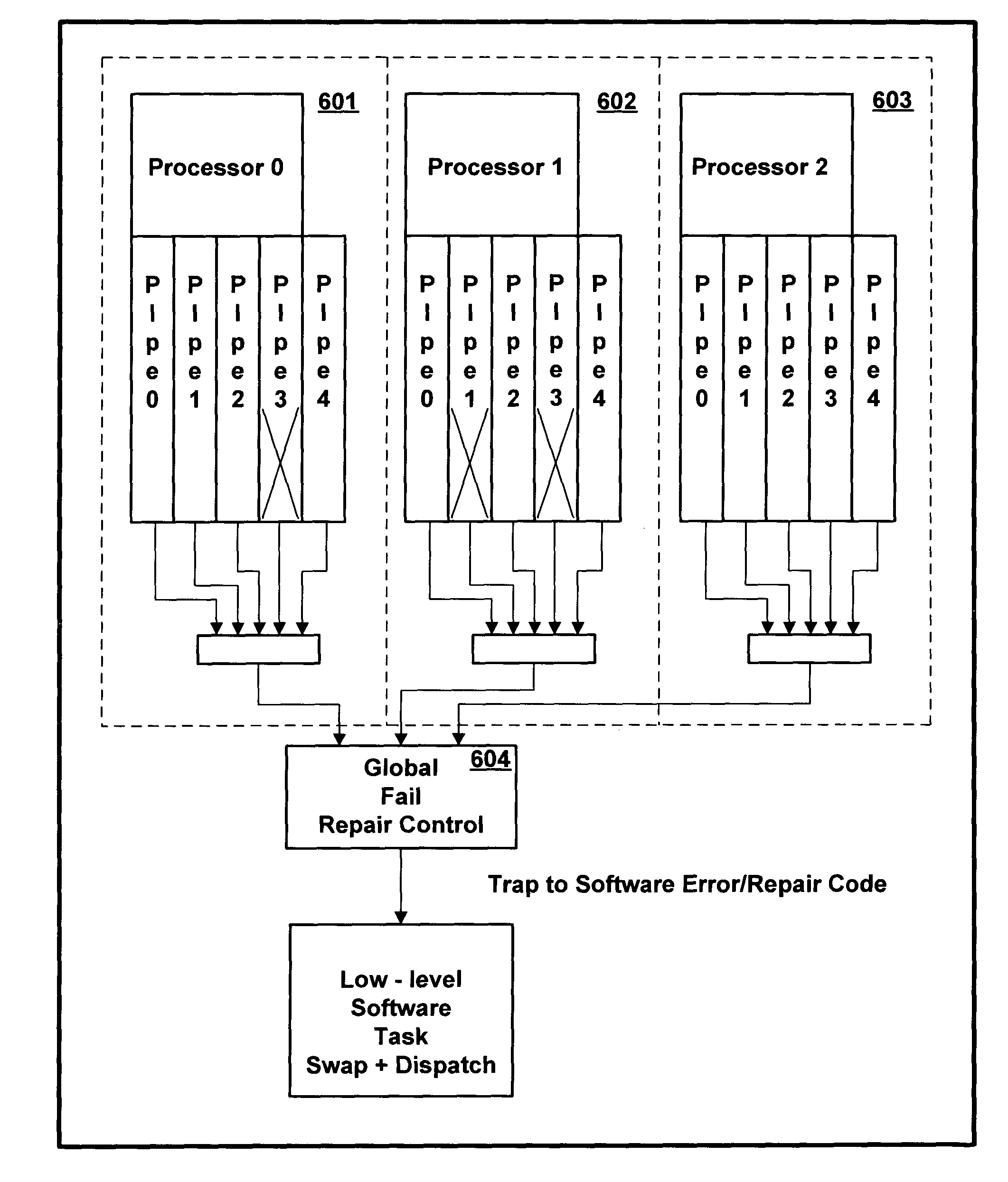

Multiple parallel pipeline processor having self-repairing capability

Inactive | US20050066148A1 | Error detection/correction | General purpose stored program computer | Data source | Operand

A multiple parallel pipeline digital processing apparatus has the capability to substitute a second pipeline for a first in the event that a failure is detected in the first pipeline. Preferably, a redundant pipeline is shared by multiple primary pipelines. Preferably, the pipelines are located physically adjacent one another in an array. A pipeline failure causes data to be shifted one position within the array of pipelines, to by-pass the failing pipeline, so that each pipeline has only two sources of data, a primary and an alternate. Preferably, selection logic controlling the selection between a primary and alternate source of pipeline data is integrated with other pipeline operand selection logic.

Owner:IBM CORP
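
The shift-by-one repair described in the abstract above can be sketched as a lane-to-pipeline mapping. This is a minimal software model, assuming one spare pipeline at the end of the array; the function name and the list representation are illustrative only.

```python
def route_lanes(num_lanes, failed=None):
    """Map each logical data lane to a physical pipeline index.

    Physical pipelines 0..num_lanes exist; the last one is the shared
    spare. Lanes below the failed pipeline keep their primary pipeline,
    and lanes at or above it shift one position toward the spare.
    """
    if failed is not None and not 0 <= failed <= num_lanes:
        raise ValueError("failed pipeline index out of range")
    mapping = []
    for lane in range(num_lanes):
        use_alternate = failed is not None and lane >= failed
        mapping.append(lane + 1 if use_alternate else lane)
    return mapping
```

In this model, physical pipeline `p` only ever receives data from lane `p` (primary) or lane `p - 1` (alternate), which matches the abstract's point that each pipeline needs just two data sources.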

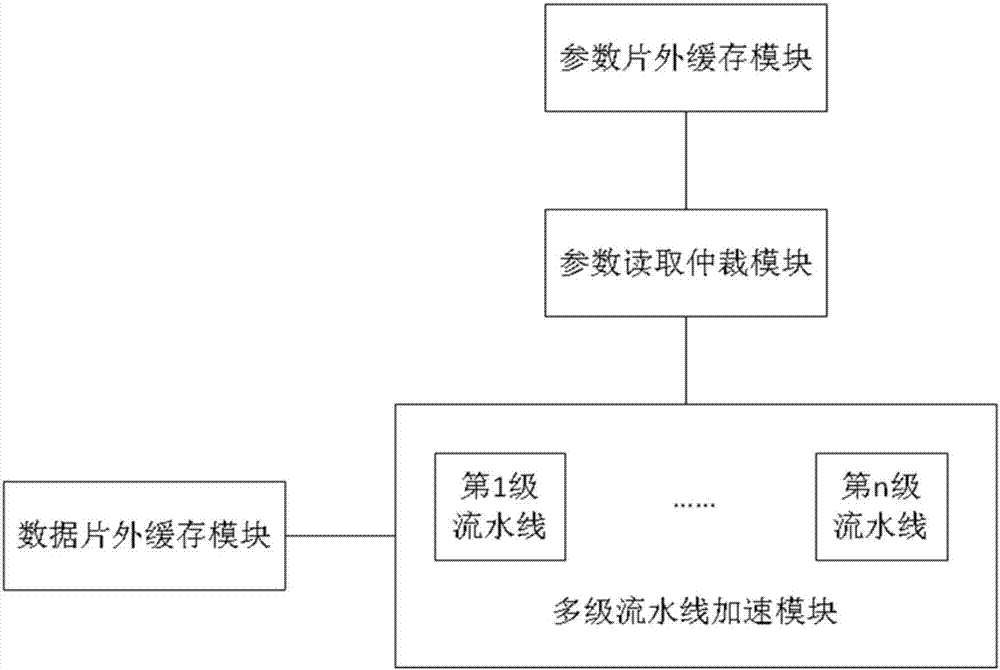

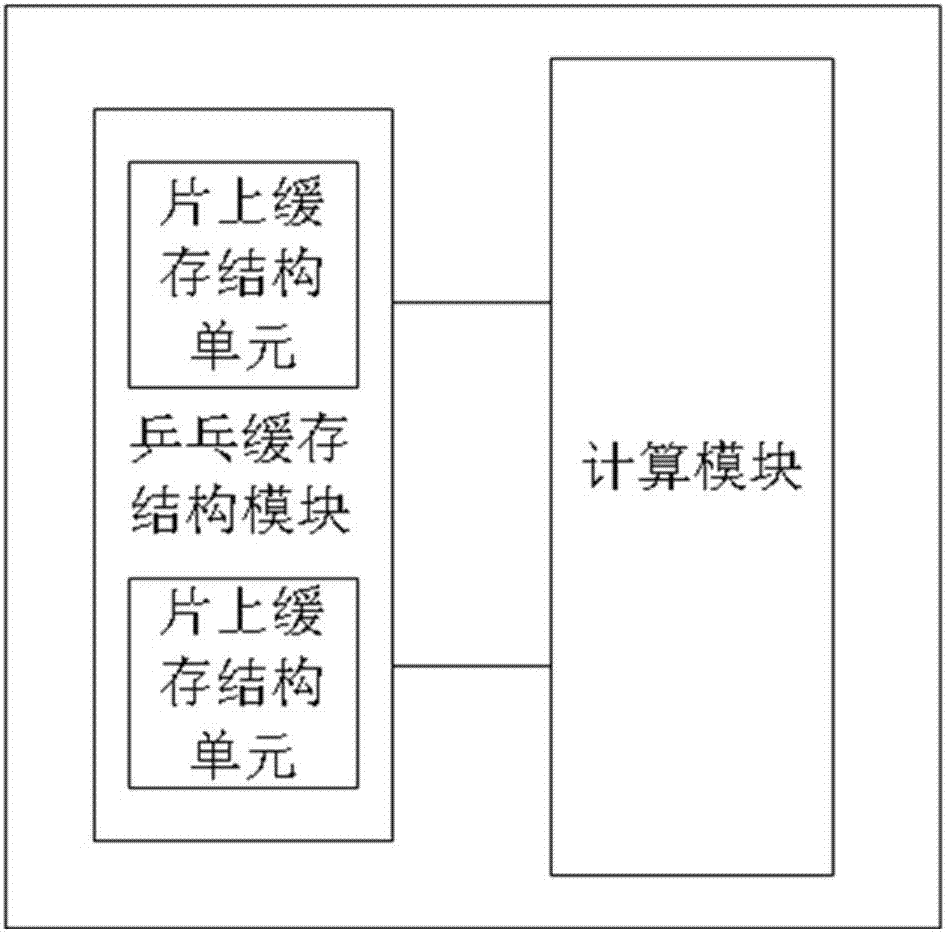

Hardware structure for realizing forward calculation of convolutional neural network

Inactive | CN107066239A | Reduce cache requirements | Improve parallelism | Concurrent instruction execution | Physical realisation | Hardware structure | Hardware architecture

The present application discloses a hardware structure for realizing forward calculation of a convolutional neural network. The hardware structure comprises: a data off-chip caching module, used for caching the parameter data in each to-be-processed picture that is input externally into the module, where the parameter data waits to be read by a multi-level pipeline acceleration module; the multi-level pipeline acceleration module, connected to the data off-chip caching module and used for reading parameters from the data off-chip caching module so as to realize the core calculation of the convolutional neural network; a parameter reading arbitration module, connected to the multi-level pipeline acceleration module and used for processing multiple parameter reading requests from the multi-level pipeline acceleration module, so that the multi-level pipeline acceleration module obtains the required parameters; and a parameter off-chip caching module, connected to the parameter reading arbitration module and used for storing the parameters required for forward calculation of the convolutional neural network. The present application implements the algorithms with a parallel pipeline hardware architecture, so that higher resource utilization and higher performance are achieved.

Owner:智擎信息系统(上海)有限公司

Pipelined packet switching and queuing architecture

An architecture for a line card in a network routing device is provided. The line card architecture provides a bi-directional interface between the routing device and a network, both receiving packets from the network and transmitting the packets to the network through one or more connecting ports. In both the receive and transmit path, packets processing and routing in a multi-stage, parallel pipeline that can operate on several packets at the same time to determine each packet's routing destination is provided. Once a routing destination determination is made, the line card architecture provides for each received packet to be modified to contain new routing information and additional header data to facilitate packet transmission through the switching fabric. The line card architecture further provides for the use of bandwidth management techniques in order to buffer and enqueue each packet for transmission through the switching fabric to a corresponding destination port. The transmit path of the line card architecture further incorporates additional features for treatment and replication of multicast packets.

Owner:CISCO TECH INC

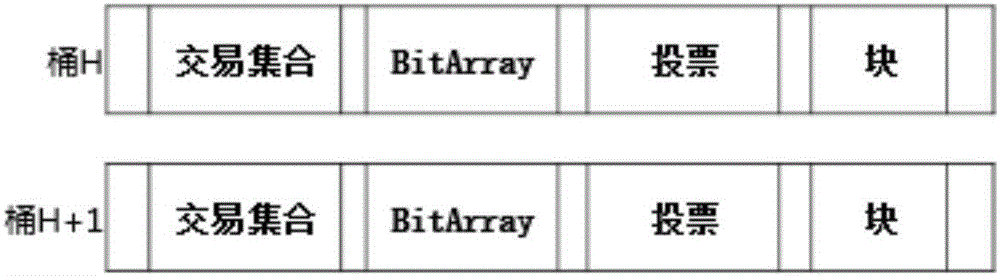

Block chain block-building method based on parallel PipeLine technology

Active | CN106406896A | Efficient use of | Take advantage of | Software design | Specific program execution arrangements | Computer resources | Relationship - Father

The invention provides a blockchain block-building method based on parallel pipeline technology. The method comprises the following steps: (1) storing data in buckets; (2) establishing a temporary data index; (3) changing the trigger point of a new round of block building; (4) processing remaining business; and (5) carrying out the father (parent) hash assignment operation. With this method, the computing resources on each node can be fully utilized, block-building efficiency and system scalability are improved, the CPU and memory of each node are put to fuller use, and the response speed of the system is further increased. The bucket model guarantees the integrity of block-building information and the block order, unaffected by network delay and the like. The method has good portability and can be applied in different block-building schemes.

Owner:ZEU CRYPTO NETWORKS INC

Accelerator system and method

Inactive | US6952409B2 | Improve throughput | Faster and less-expensive to construct | Time-division multiplex | Radio/inductive link selection arrangements | Transmission protocol | Computer hardware

A network accelerator for TCP/IP includes programmable logic for performing transparent protocol translation of streamed audio/video protocols at network signaling rates. The programmable logic is configured in a parallel pipelined architecture controlled by state machines. It implements processing for the predictable patterns that make up the majority of transmissions; these patterns are stored in a content addressable memory and simultaneously in a dual-port, dual-bank application memory. The invention allows raw Internet protocol communications by cell phones, and high-capacity cell-phone-to-Internet gateway transfer that scales independently of a translation application, by processing packet headers in parallel and during memory transfers, without the need for conventional store-and-forward techniques.

Owner:JOLITZ LYNNE G

Bypass automatic fertigation device and method thereof

Inactive | CN101803507A | Simple and fast connection | Reduce duplication of investment | Pressurised distribution of liquid fertiliser | Material resistance | Agricultural engineering | Fertigation

The invention provides a bypass automatic fertigation device which comprises a mixed fertilizer unit, an EC/pH detection unit, a control unit, and an execution unit. The mixed fertilizer unit comprises a plurality of fertilizer injection pipelines, a pH regulating pipeline connected in parallel with the fertilizer injection pipelines, and water inlet pipelines and mixed fertilizer pipelines arranged at the front end and the rear end of the parallel pipelines, respectively. The EC/pH detection unit detects the EC and the pH of the mixed fertilizer solution in the mixed fertilizer pipelines. The control unit controls the proportion of the fertilizer solution in the mixed fertilizer pipelines by separately controlling fertilizer injection through the fertilizer injection pipelines and conditioning solution injection through the pH regulating pipeline, so that the EC and pH meet the set values. The execution unit responds to the fertilizing instructions of the control unit and leads the fertilizer solution in the mixed fertilizer pipelines into a farmland irrigation network. The device can precisely control the fertilizing concentration, the fertilizing proportion, and the fertilization in the fertilizing process, and can be simply and quickly connected to an irrigation system of a certain scale and an irrigation head of a certain size, so as to reduce the equipment cost.

Owner:BEIJING RES CENT OF INTELLIGENT EQUIP FOR AGRI

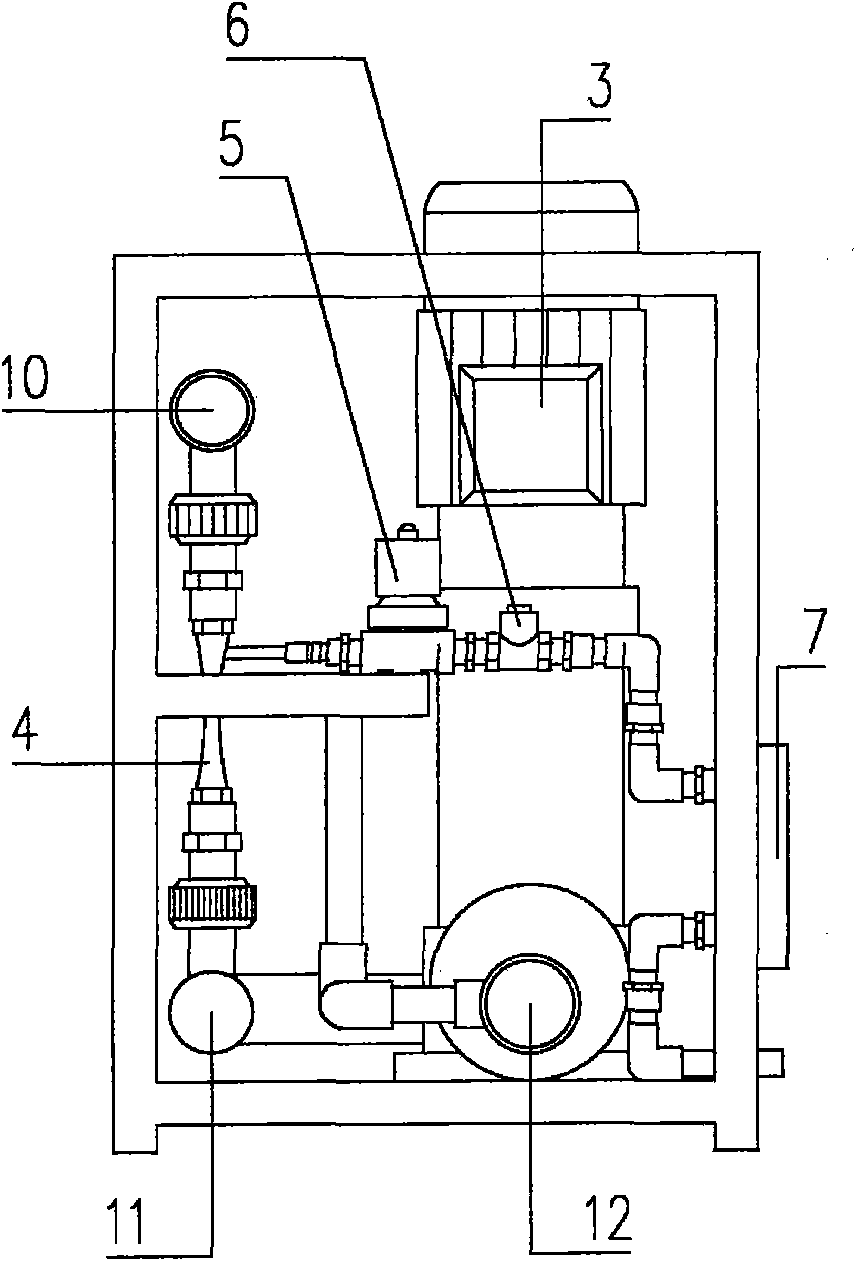

Stereoscopic vision optical tracking system aiming at multipoint targets

Inactive | CN101694716A | Track real-time without dropping frames | Get rid of the bondage | Image analysis | Processor architectures/configuration | Dual core | Workstation

The invention discloses a stereoscopic vision optical tracking system aimed at multipoint targets, which belongs to the field of machine vision and positioning and tracking technology. The system is implemented in hardware: it uses a binocular camera to acquire original images of the targets and the background, adopts a parallel pipeline to identify and mark the targets rapidly, and uses a dual-core digital signal processor to perform parallel operation, after which spatial positioning and tracking of the targets is completed. The parallel pipeline processing and the dual-core serial computing are combined, so that hardware resources are allocated reasonably according to the characteristics of the different algorithms. The system is free of the constraint of a PC or workstation and breaks through the bottleneck of the software computing resources of a PC or workstation. In addition, the system can perform real-time positioning and tracking of multipoint targets in space under different illumination environments and with different target illumination strengths, and can track over 100 point targets in space in real time without dropping frames. The tracking precision of the system can reach 0.5 mm RMS.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

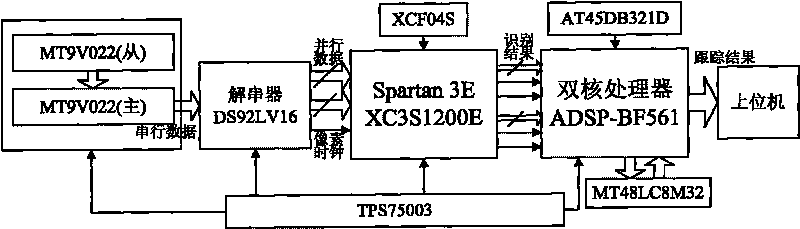

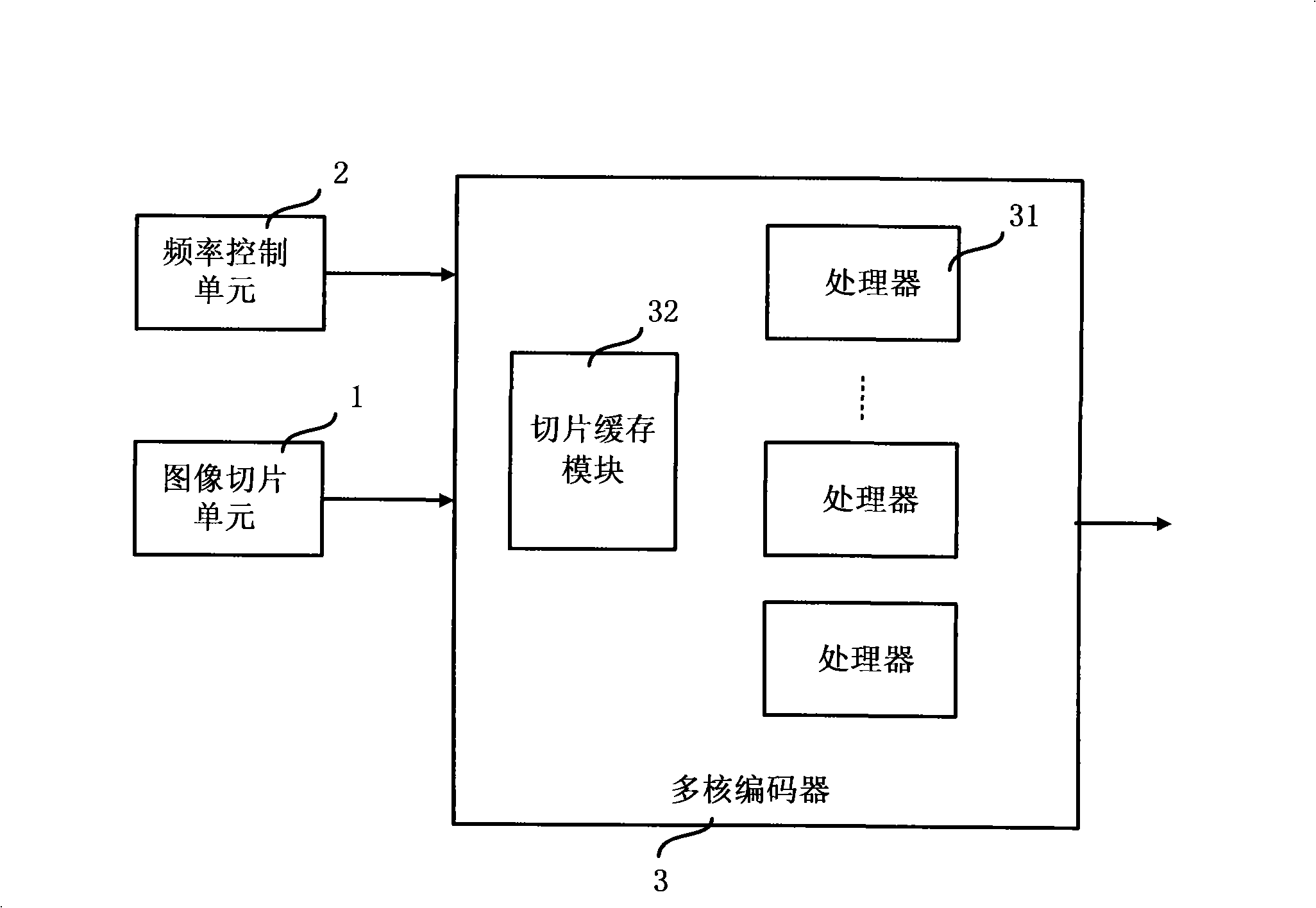

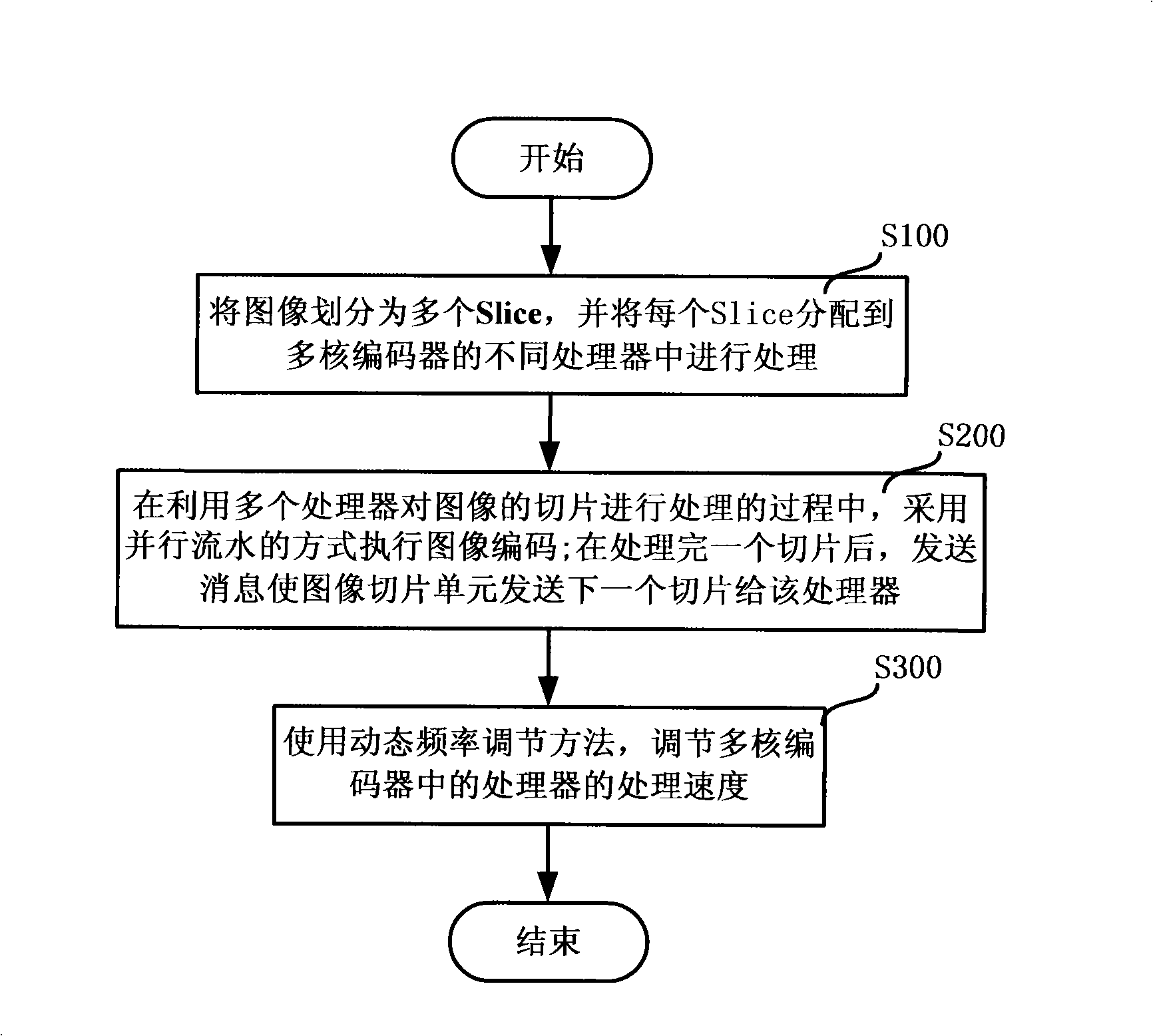

A multi-processor video coding chip device and method

Active | CN101267564A | Does not affect running speed | Run sync | Television systems | Digital video signal modification | Computer architecture | Multi processor

The invention discloses a device and a method for a multiprocessor video coding chip. The device comprises a multi-core coder including multiple processors, and an image slice unit. The image slice unit segments a video image into multiple slices and distributes the slices to different processors of the multi-core coder. The multi-core coder performs image coding in a parallel pipeline as its processors work on the image slices, and sends a message to the image slice unit after one of its processors finishes processing a slice, so that the image slice unit sends the next slice to that processor. The device also comprises a frequency control unit for adjusting the processing speeds of the processors of the multi-core coder using a dynamic frequency adjustment method. The invention can greatly reduce power consumption without affecting the running speed of the whole task.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
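
The finish-then-request handshake between the processors and the image slice unit, as described in the abstract above, resembles an on-demand work queue. A minimal sketch using Python threads as stand-ins for the processors; `encode_slices` and its `encode` callback are hypothetical names, and the frequency control unit is not modeled:

```python
import queue
import threading


def encode_slices(slices, num_workers, encode):
    """Hand slices to workers on demand and return results in slice order."""
    pending = queue.Queue()
    for index, data in enumerate(slices):
        pending.put((index, data))
    results = [None] * len(slices)

    def worker():
        while True:
            try:
                # Asking the queue for more work plays the role of the
                # "finished, send the next slice" message.
                index, data = pending.get_nowait()
            except queue.Empty:
                return
            results[index] = encode(data)  # each index is written once

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Pulling work on completion, rather than assigning slices up front, keeps faster workers busy when slices differ in cost, which is presumably why the abstract has each processor report back before receiving its next slice.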

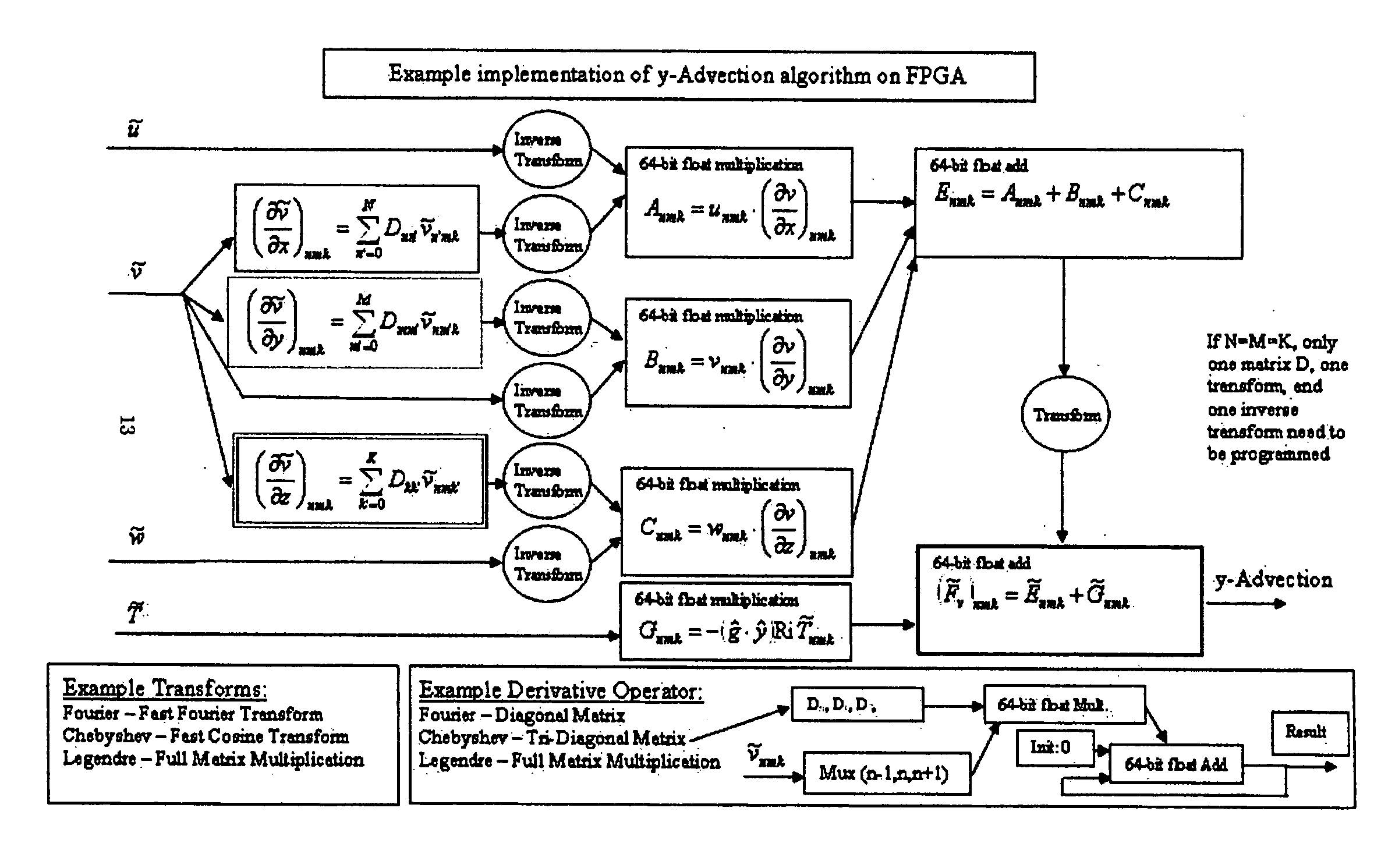

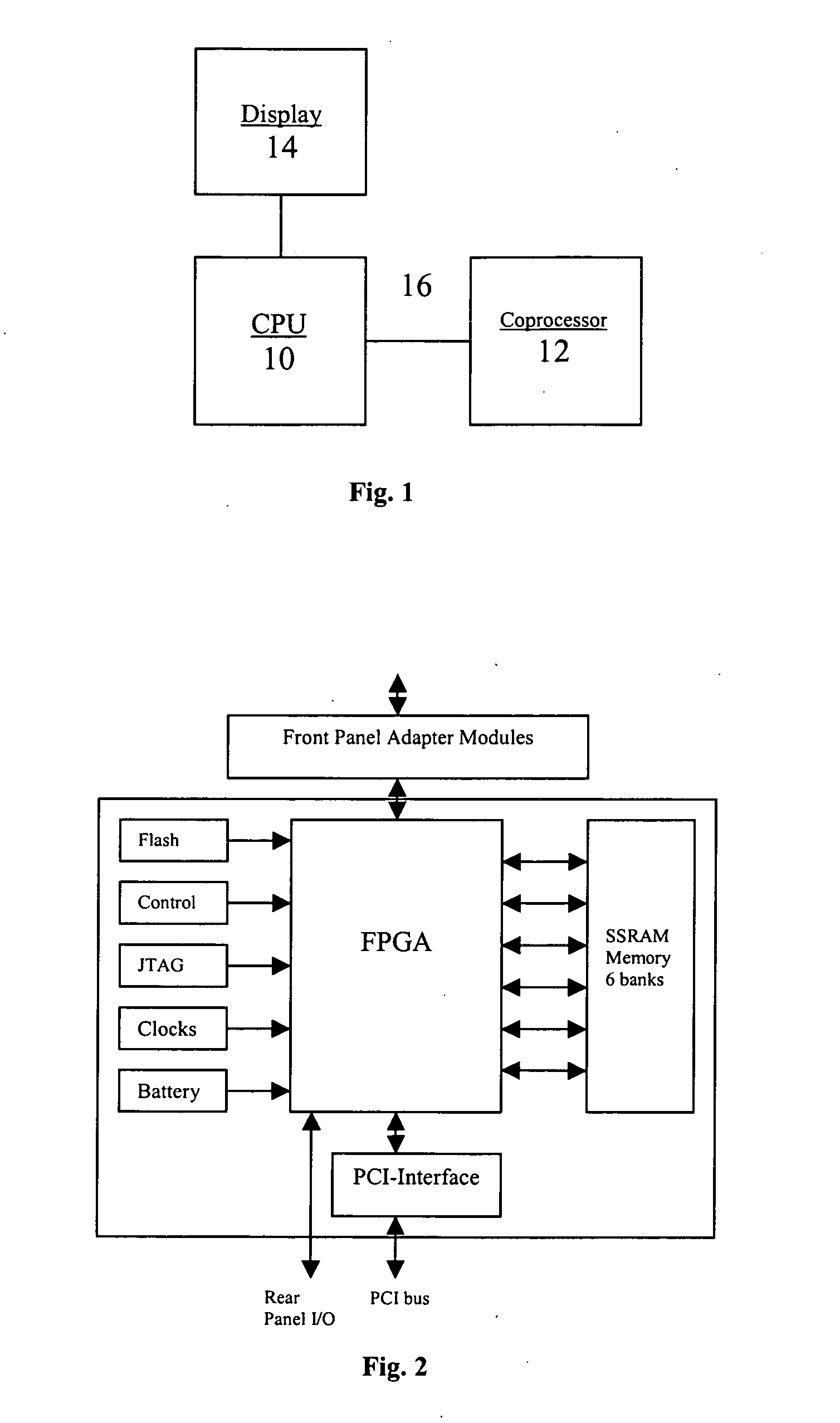

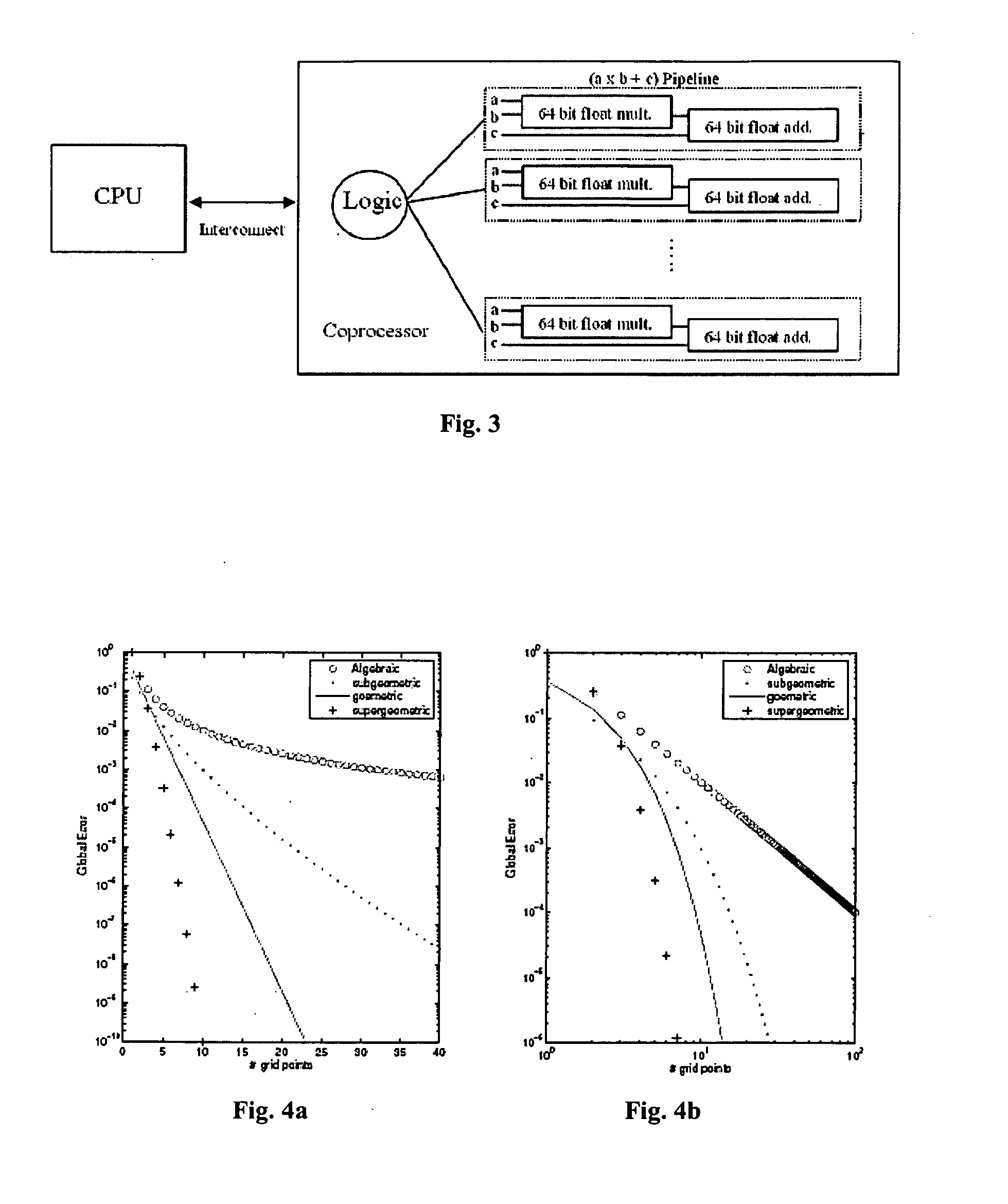

Computational fluid dynamics (CFD) coprocessor-enhanced system and method

Inactive | US20070219766A1 | Cost-effective | Wide variety of us | Design optimisation/simulation | Program control | Coprocessor | Display device

The present invention provides a system, method and product for porting computationally complex CFD calculations to a coprocessor in order to decrease overall processing time. The system comprises a CPU in communication with a coprocessor over a high speed interconnect. In addition, an optional display may be provided for displaying the calculated flow field. The system and method include porting variables of governing equations from a CPU to a coprocessor; receiving calculated source terms from the coprocessor; and solving the governing equations at the CPU using the calculated source terms. In a further aspect, the CPU compresses the governing equations into a combination of higher and/or lower order equations with fewer variables for porting to the coprocessor. The coprocessor receives the variables, iteratively solves for source terms of the equations using a plurality of parallel pipelines, and transfers the results to the CPU. In a further aspect, the coprocessor decompresses the received variables, solves for the source terms, and then compresses the results for transfer to the CPU. The CPU solves the governing equations using the calculated source terms. In a further aspect, the governing equations are compressed and solved using spectral methods. In another aspect, the coprocessor includes a reconfigurable computing device such as a Field Programmable Gate Array (FPGA). In yet another aspect, the coprocessor may be used for specific applications such as Navier-Stokes equations or Euler equations and may be configured to more quickly solve non-linear advection terms with efficient pipeline utilization.

Owner:VIRGINIA TECH INTPROP INC

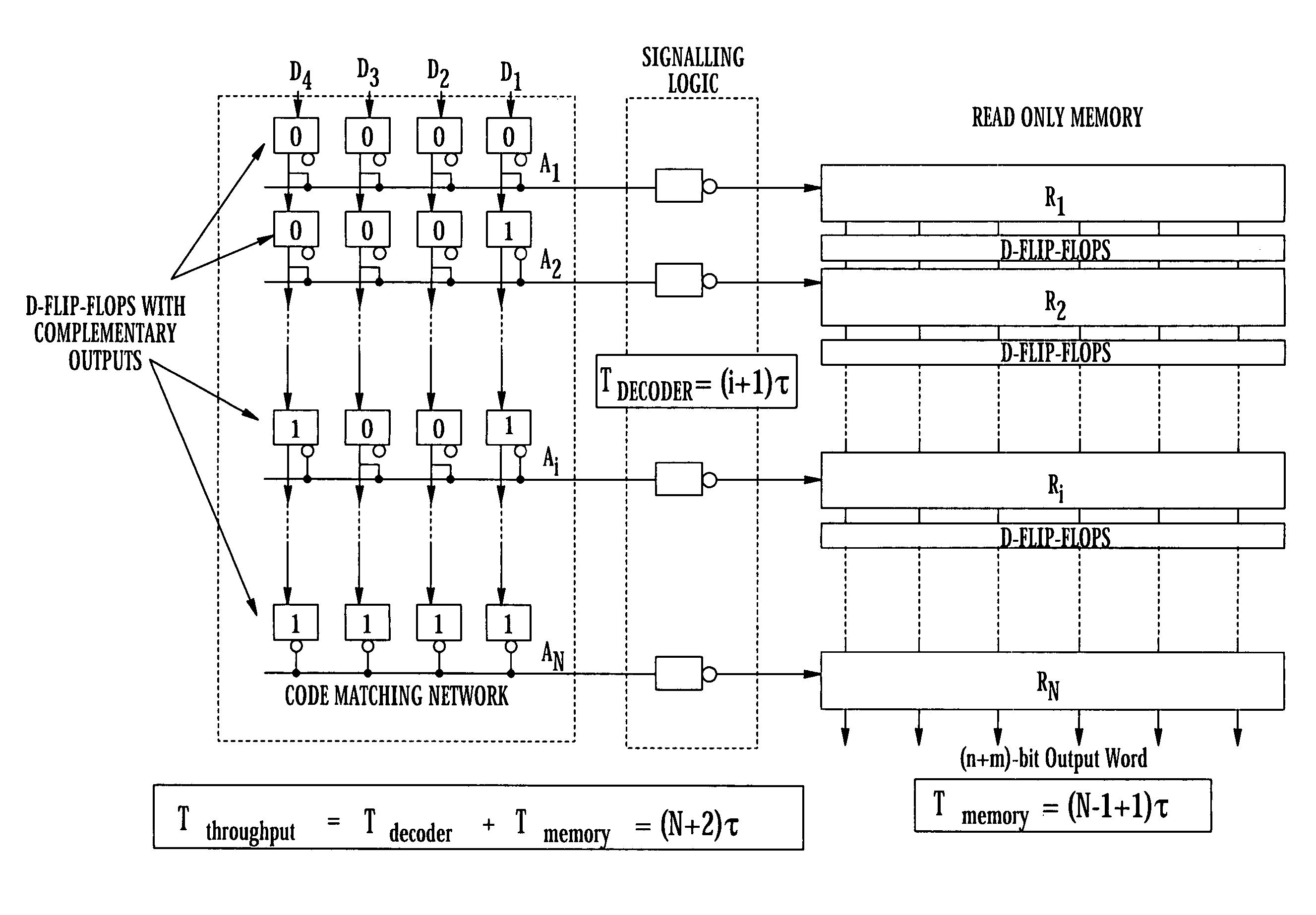

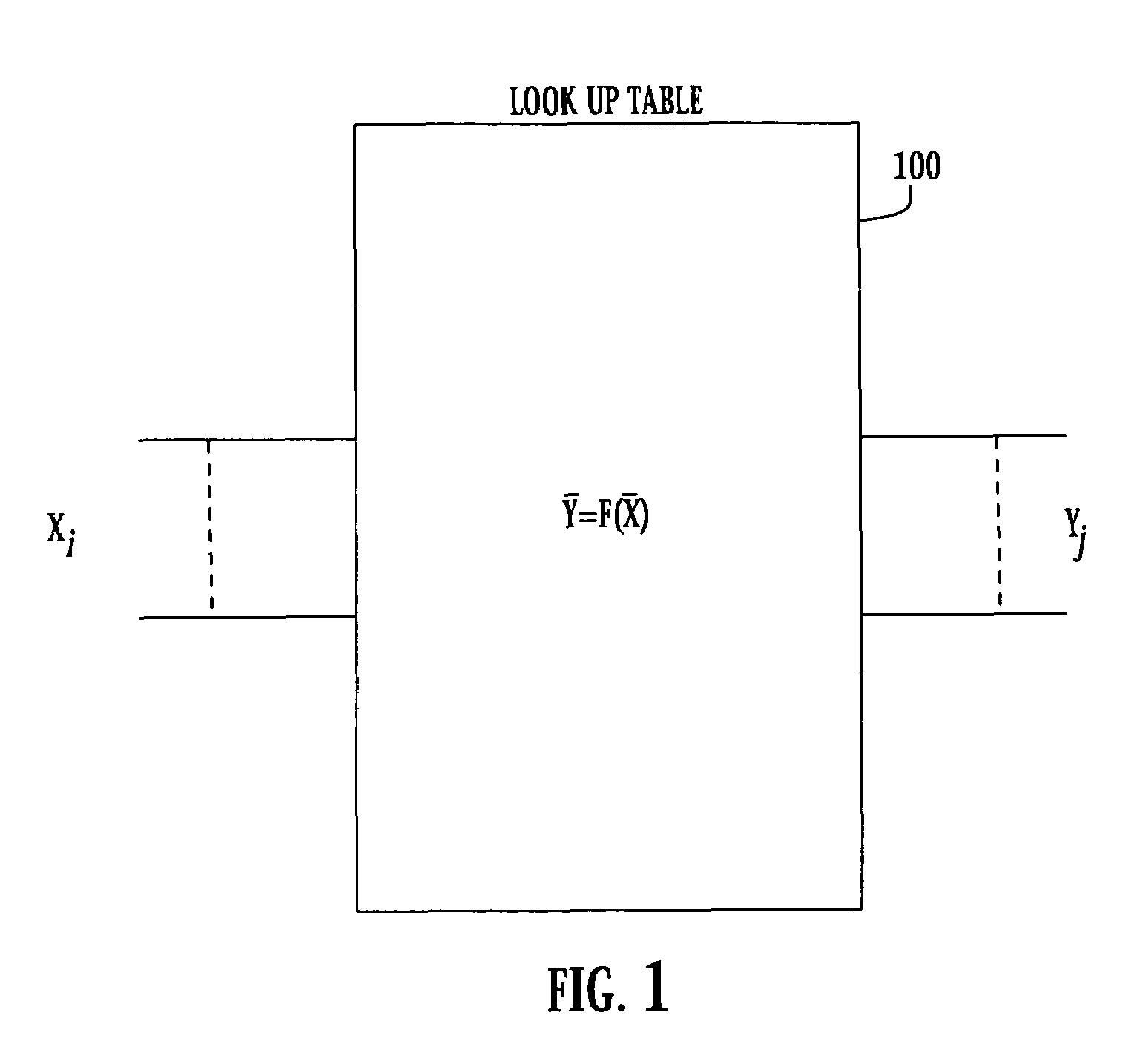

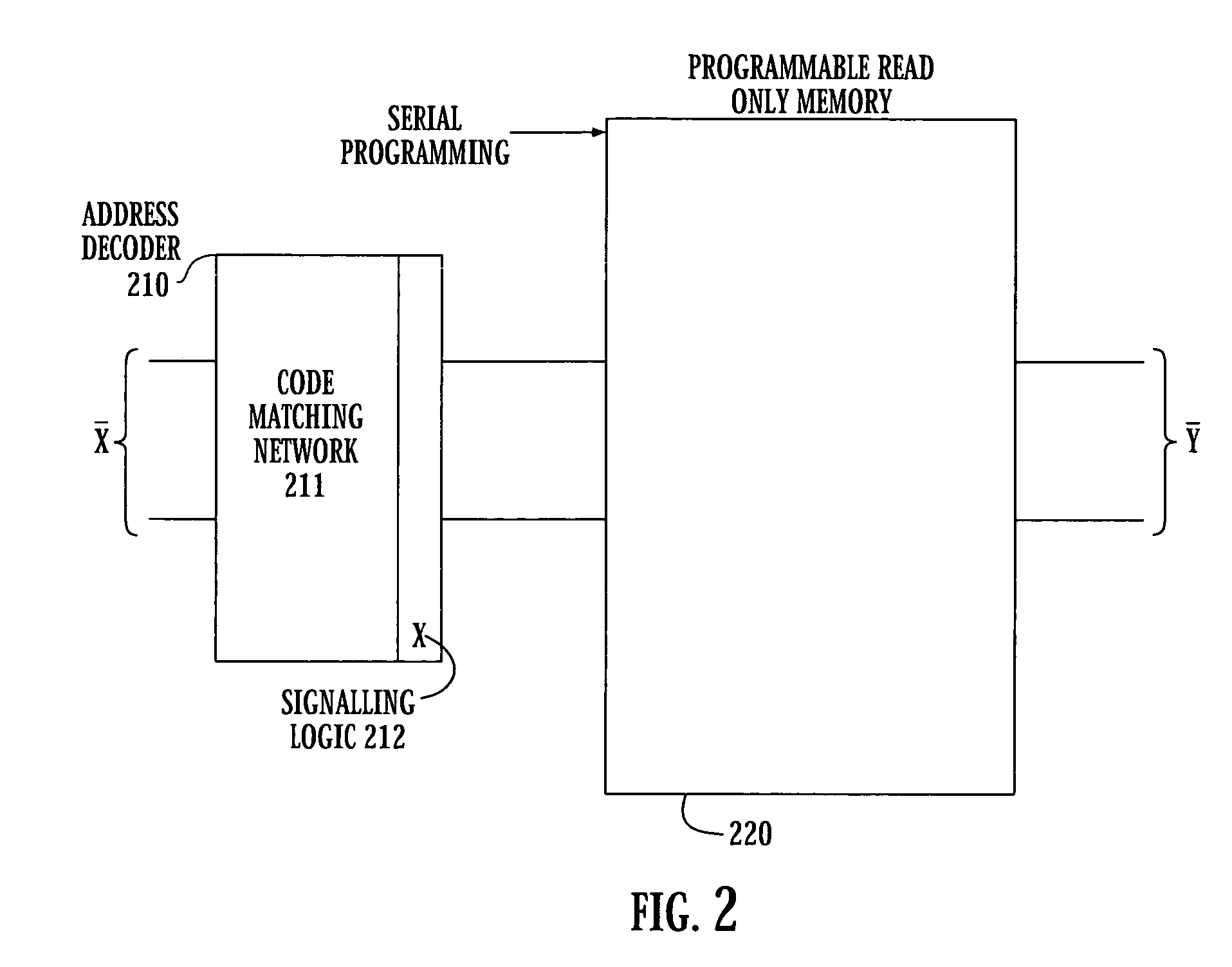

Superconducting circuit for high-speed lookup table

Active | US7443719B2 | Superconductors/hyperconductors | Read-only memories | Programmable read-only memory | Reprogramming

A high-speed lookup table is designed using Rapid Single Flux Quantum (RSFQ) logic elements and fabricated using superconducting integrated circuits. The lookup table is composed of an address decoder and a programmable read-only memory array (PROM). The memory array has rapid parallel pipelined readout and slower serial reprogramming of memory contents. The memory cells are constructed using standard non-destructive reset-set flip-flops (RSN cells) and data flip-flops (DFF cells). An n-bit address decoder is implemented in the same technology and closely integrated with the memory array to achieve high-speed operation as a lookup table. The circuit architecture is scalable to large two-dimensional data arrays.

Owner:SEEQC INC

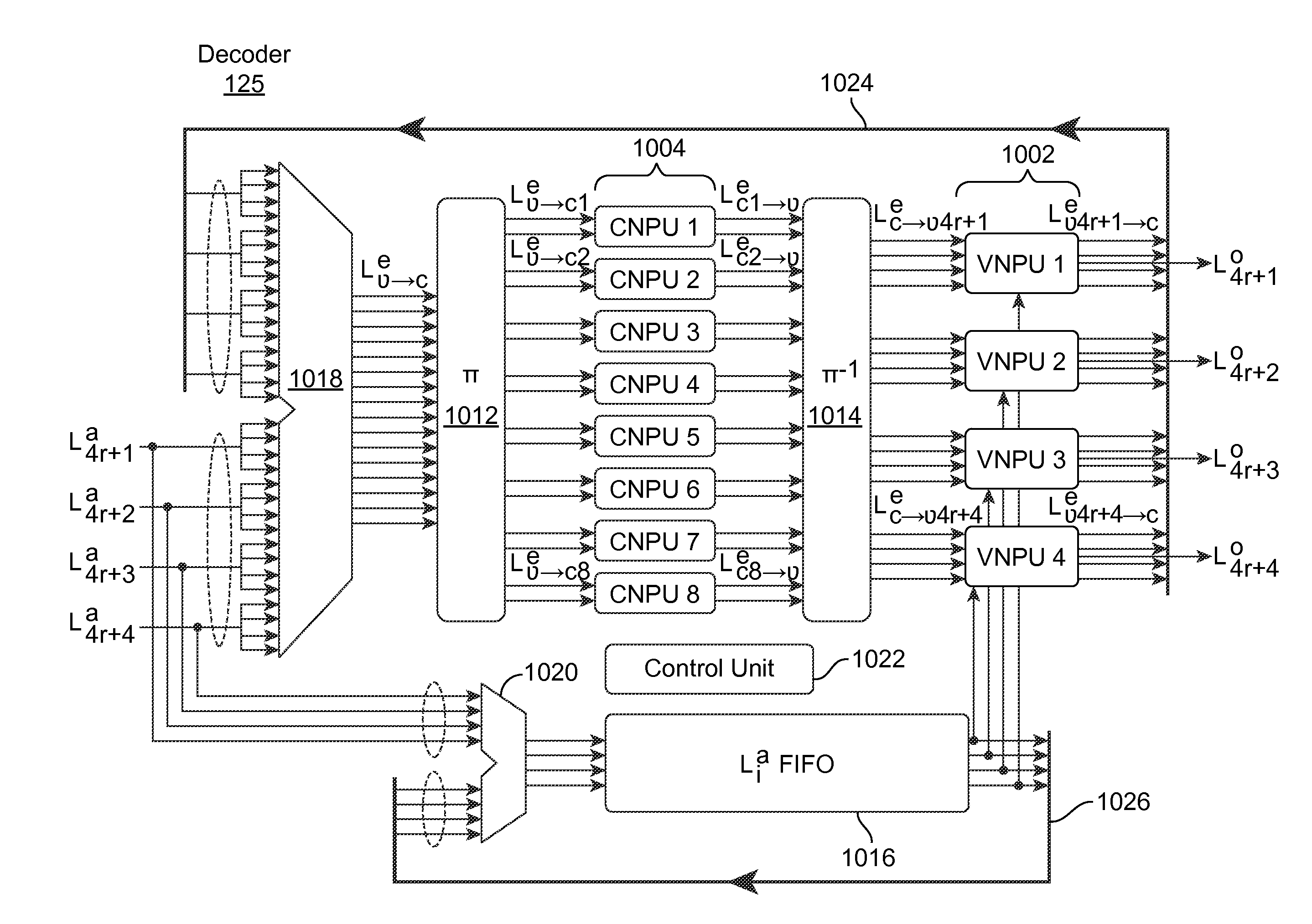

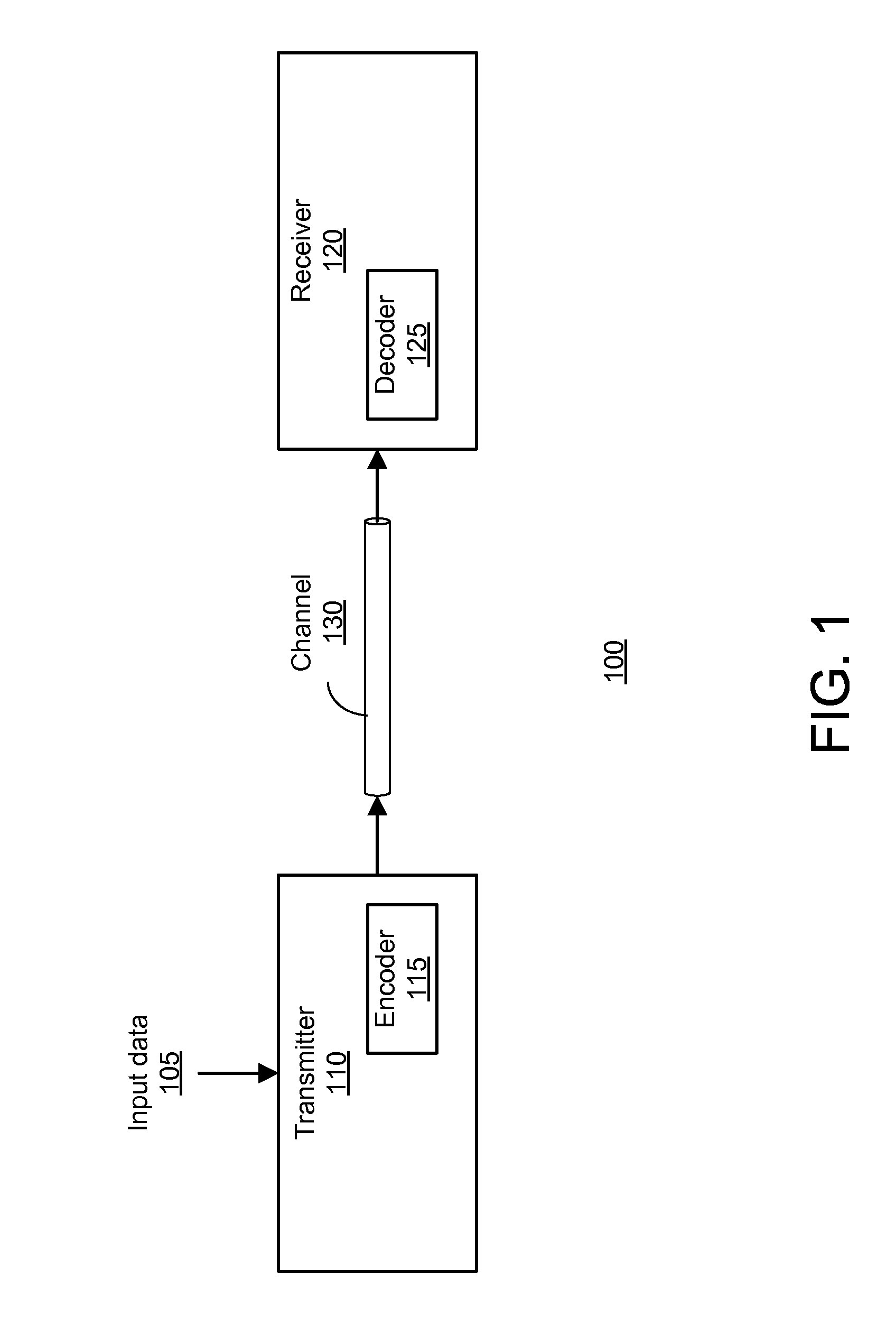

Non-Concatenated FEC Codes for Ultra-High Speed Optical Transport Networks

Active | US20120221914A1 | Improves decoding process | Avoid delay | Error prevention | Checking code calculations | Ultra high speed | Parallel computing

A decoder performs forward error correction based on quasi-cyclic regular column-partition low density parity check codes. A method for designing the parity check matrix reduces the number of short-cycles of the matrix to increase performance. An adaptive quantization post-processing technique further improves performance by eliminating error floors associated with the decoding. A parallel decoder architecture performs iterative decoding using a parallel pipelined architecture.

Owner:MARVELL ASIA PTE LTD

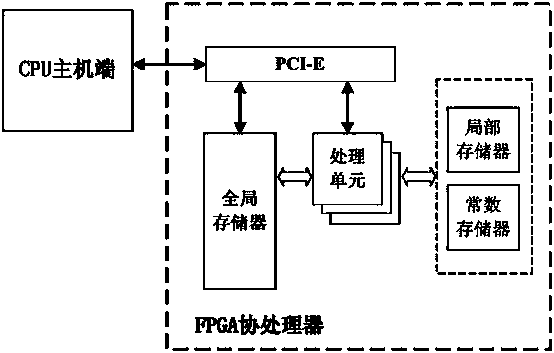

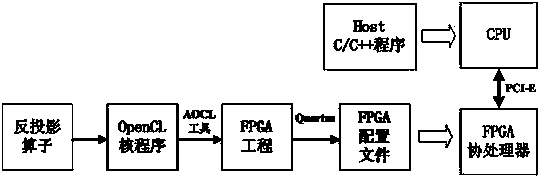

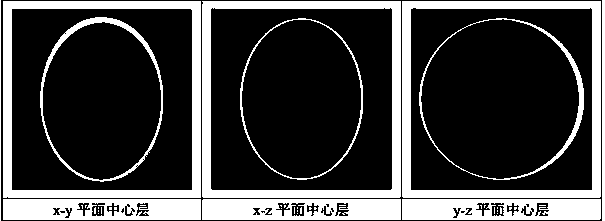

CT image reconstruction back projection acceleration method based on OpenCL-To-FPGA

Active | CN104142845A | Fast transplant | Improve development | Image enhancement | Geometric image transformation | Computer architecture | Coprocessor

The invention discloses a CT image reconstruction back projection acceleration method based on OpenCL-To-FPGA, in which the back projection step of CT image reconstruction is accelerated by an FPGA. Specifically, a CPU-FPGA heterogeneous computing mode, in which a CPU and the FPGA cooperate, is constructed in the OpenCL programming model. The CPU and the FPGA communicate through a PCI-E bus. The CPU serves as the host and is in charge of the serial tasks in the algorithm and of configuring and controlling the FPGA, while the FPGA serves as a coprocessor and achieves parallel pipeline acceleration of the back projection computation by loading an OpenCL kernel program. In this programming mode, the programs executed on the FPGA are all developed in the OpenCL language, which is similar in style to C/C++; development is easy and convenient, modification is flexible, the development cycle can be greatly shortened, and the development cost of product maintenance and upgrading is reduced. Moreover, because the method is based on the OpenCL framework, code can be ported quickly between platforms, and the method is suitable for extension to cooperative acceleration on multi-processor heterogeneous platforms.

Owner:THE PLA INFORMATION ENG UNIV

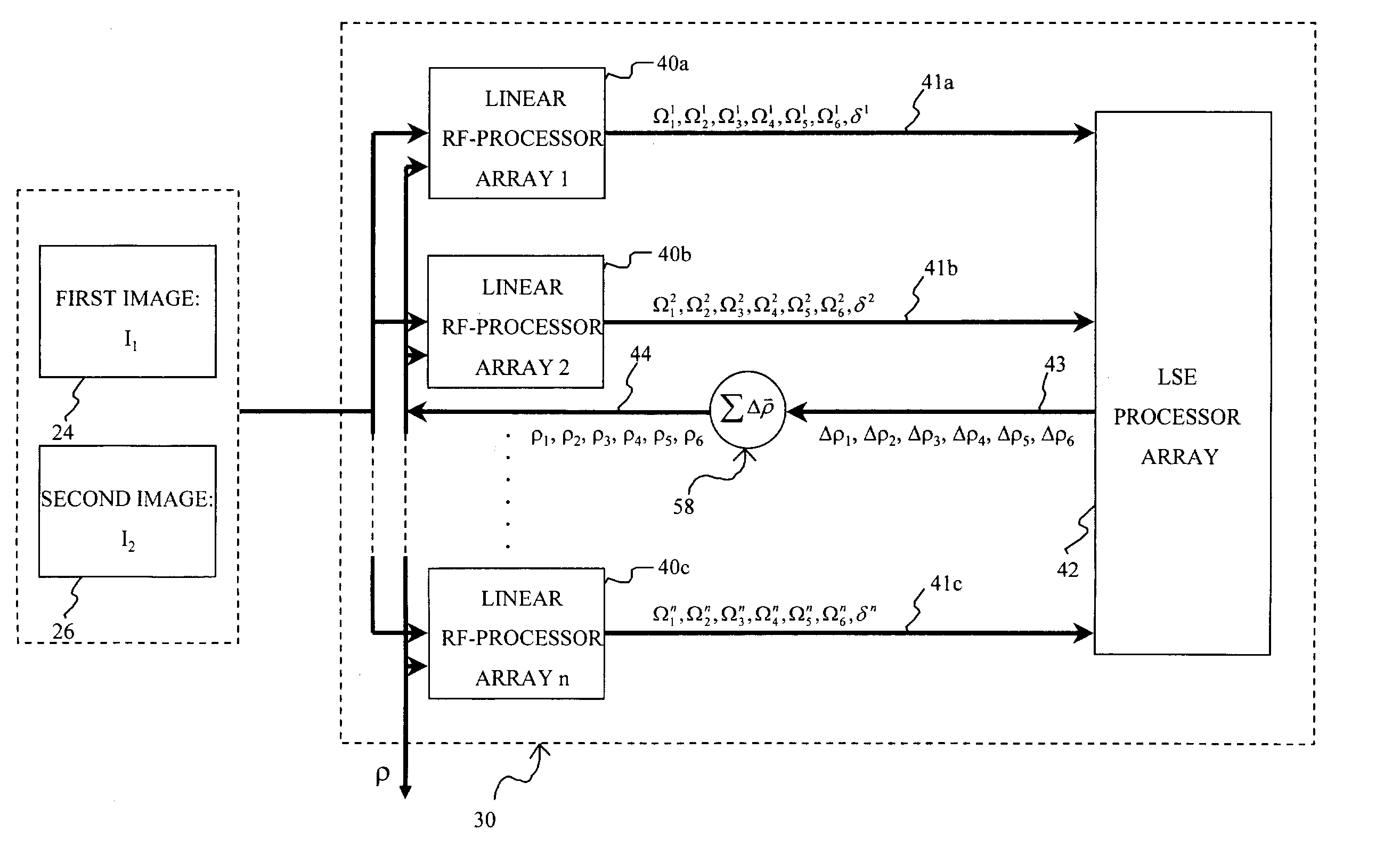

Affine transformation analysis system and method for image matching

Inactive | US20040175057A1 | Minimize the difference | Improve accuracy | Image analysis | General purpose stored program computer | Radio frequency | Visual perception

An affine transformation analysis system and method is provided for matching two images. The novel systolic array image affine transformation analysis system, comprising a linear rf-processing means, an affine parameter incremental updating means, and a least square error fitting means, is based on a Lie transformation group model of cortical visual motion and stereo processing. Image data is provided to a plurality of component linear rf-processing means, each comprising a Gabor receptive field, a dynamical Gabor receptive field, and six Lie germs. The Gabor coefficients of images and the affine Lie derivatives are extracted from the responses of the linear receptive fields, respectively. The differences and affine Lie derivatives of these Gabor coefficients, obtained from each parallel pipelined linear rf-processing component, are then input to a least square error fitting means, a systolic array comprising a QR decomposition means and a backward substitution means. The output signal from the least square error fitting means is then used to update the affine parameters until the difference signals between the Gabor coefficients from the static and dynamical Gabor receptive fields are substantially reduced.

Owner:TSAO THOMAS +1
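
The least square error fitting step in the entry above, QR decomposition followed by backward substitution, can be sketched in software as follows. This is a plain NumPy illustration, not the systolic array the patent describes. The matrix `A` (standing in for stacked affine Lie derivatives), the vector `d` (standing in for Gabor coefficient differences) and both function names are assumptions made for the example.

```python
import numpy as np

def back_substitute(R, b):
    """Solve R x = b for an upper-triangular, non-singular R."""
    n = R.shape[0]
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        # Subtract the already-solved unknowns, then divide by the pivot.
        x[i] = (b[i] - R[i, i + 1:] @ x[i + 1:]) / R[i, i]
    return x

def least_squares_qr(A, d):
    """Minimise ||A p - d||_2 using a reduced QR factorisation of A."""
    Q, R = np.linalg.qr(A)  # default mode is 'reduced'
    return back_substitute(R, Q.T @ d)
```

With six columns in `A`, one per Lie germ, the solution `p` would play the role of the six affine parameter increments applied on each update.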

Multiple parallel pipeline processor having self-repairing capability

Inactive · US7124318B2 · Error detection/correction · General purpose stored program computer · Computer architecture · Parallel computing

Owner:INT BUSINESS MASCH CORP

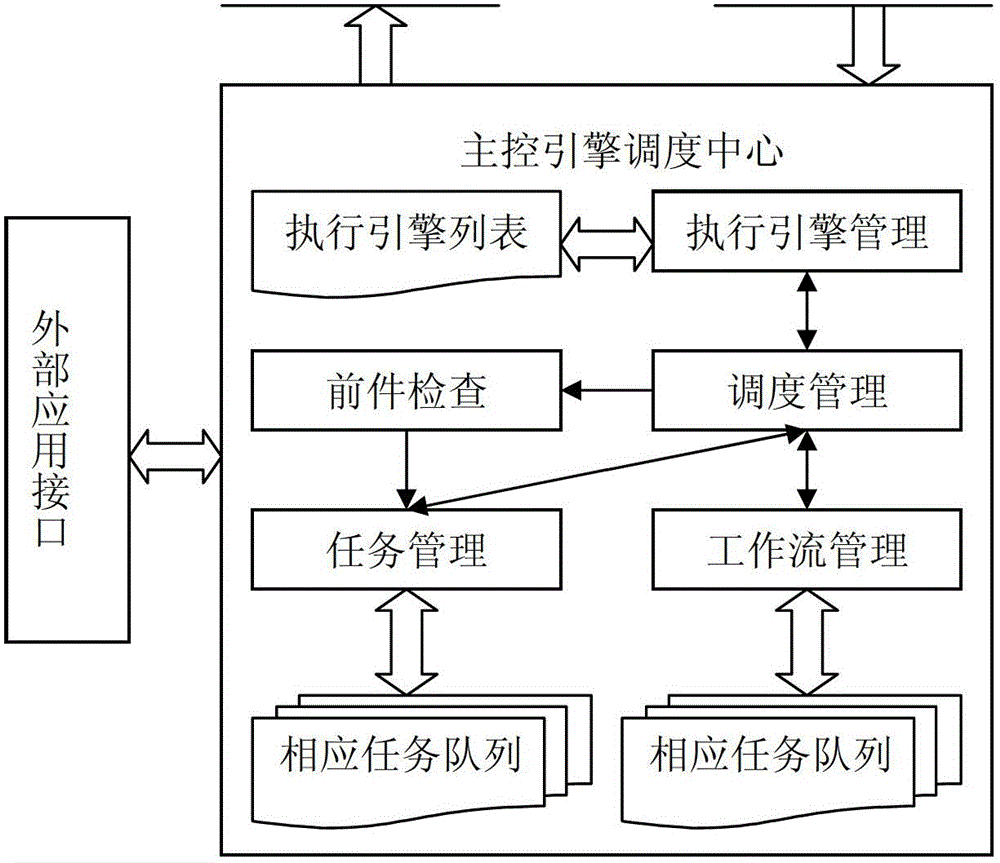

Workflow mechanism-based concurrent ETL (Extract, Transform and Load) conversion method

Inactive · CN102722355A · Improve extraction efficiency · Improve execution efficiency · Multiprogramming arrangements · Concurrent instruction execution · Data stream · Parallel processing

The invention discloses a workflow mechanism-based concurrent ETL (Extract, Transform and Load) conversion method. Using workflow technology and multi-threaded concurrency, the method executes multiple ETL tasks of an ETL workflow concurrently and executes multiple ETL behaviors within a single task concurrently. When several ETL workflows run simultaneously and the workflows and ETL operations contain many parallel branches, execution efficiency can be increased significantly. The method also builds distributed cluster processing and constructs parallel ETL data extraction engines using a parallel pipeline technique, so that data extraction efficiency can be greatly increased and both the parallel processing of multiple data flows and the conversion processing bottleneck are addressed.

Owner:WHALE CLOUD TECH CO LTD
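
The pipelined ETL idea in the entry above can be sketched with threads connected by queues, so that transformation of one row overlaps with loading of the previous one. This is a minimal illustration, not the patented engine. The names `stage` and `run_pipeline` and the single-worker-per-stage layout are assumptions made for the example.

```python
import queue
import threading

_DONE = object()  # sentinel marking the end of the stream

def stage(func, inbox, outbox):
    """Apply func to every item from inbox and forward the result to outbox."""
    while True:
        item = inbox.get()
        if item is _DONE:
            outbox.put(_DONE)  # propagate shutdown to the next stage
            return
        outbox.put(func(item))

def run_pipeline(rows, transform, load):
    """Run transform and load as concurrent stages fed by the extracted rows."""
    q_in, q_mid, q_out = queue.Queue(), queue.Queue(), queue.Queue()
    workers = [
        threading.Thread(target=stage, args=(transform, q_in, q_mid)),
        threading.Thread(target=stage, args=(load, q_mid, q_out)),
    ]
    for w in workers:
        w.start()
    for row in rows:  # the "extract" step feeds the first queue
        q_in.put(row)
    q_in.put(_DONE)
    for w in workers:
        w.join()
    results = []
    while True:
        item = q_out.get()
        if item is _DONE:
            return results
        results.append(item)
```

For example, `run_pipeline([1, 2, 3], lambda r: r * 10, lambda r: r + 1)` passes each row through both stages in order. In CPython the global interpreter lock limits speedup for CPU-bound stages, so the sketch shows the structure rather than the performance gain.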

Method and apparatus for graphic processing using parallel pipeline

Inactive · US20140049539A1 · Processor architectures/configuration · Image generation · Graphics · Parallel computing

Owner:KONGJU NAT UNIV IND UNIV COOPERATION FOUND +1

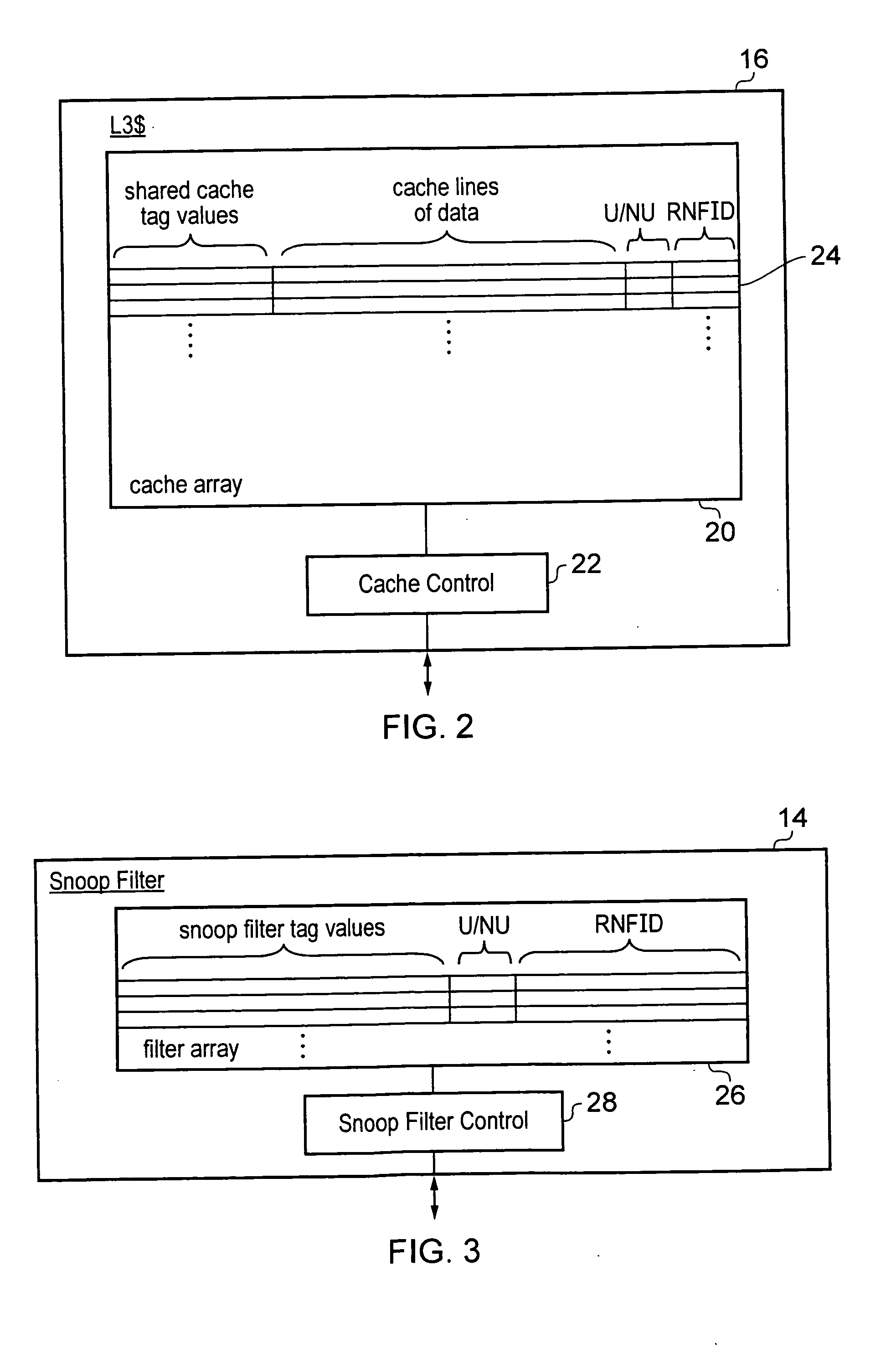

Snoop filter and non-inclusive shared cache memory

Active · US20130042078A1 · Save space · Lower the volume · Memory addressing/allocation/relocation · Parallel computing · Data treatment

A data processing apparatus 2 includes a plurality of transaction sources 8, 10 each including a local cache memory. A shared cache memory 16 stores cache lines of data together with shared cache tag values. Snoop filter circuitry 14 stores snoop filter tag values tracking which cache lines of data are stored within the local cache memories. When a transaction is received for a target cache line of data, the snoop filter circuitry 14 compares the target tag value with the snoop filter tag values and the shared cache circuitry 16 compares the target tag value with the shared cache tag values. The shared cache circuitry 16 operates in a default non-inclusive mode. The shared cache memory 16 and the snoop filter 14 accordingly behave non-inclusively in respect of data storage within the shared cache memory 16, but inclusively in respect of tag storage given the combined action of the snoop filter tag values and the shared cache tag values. Tag maintenance operations moving tag values between the snoop filter circuitry 14 and the shared cache memory 16 are performed atomically. The compare operations of the snoop filter circuitry 14 and the shared cache memory 16 are performed using interlocked parallel pipelines.

Owner:ARM LTD
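
The tag lookup described in the entry above can be modelled in a few lines. This is a simplified software model under stated assumptions, not the patented circuitry: the class `TagDirectory`, its method names, and the policy of moving a tag on local fill and local eviction are all hypothetical. The hardware performs the two compares in parallel pipelines, whereas this model checks them one after the other.

```python
from dataclasses import dataclass, field

@dataclass
class TagDirectory:
    # tag -> set of source ids whose local cache holds the line (assumed layout)
    snoop_filter: dict = field(default_factory=dict)
    # tag -> cache line data held in the shared cache (assumed layout)
    shared_cache: dict = field(default_factory=dict)

    def lookup(self, tag):
        """Compare the target tag against both tag stores."""
        holders = self.snoop_filter.get(tag)
        if holders:
            return ("snoop_hit", sorted(holders))
        if tag in self.shared_cache:
            return ("shared_hit", self.shared_cache[tag])
        return ("miss", None)

    def fill_local(self, tag, source):
        """A source caches the line: the tag moves to the snoop filter."""
        self.shared_cache.pop(tag, None)
        self.snoop_filter.setdefault(tag, set()).add(source)

    def evict_local(self, tag, source, data):
        """Last local copy evicted: the tag moves to the shared cache."""
        holders = self.snoop_filter.get(tag, set())
        holders.discard(source)
        if not holders:
            self.snoop_filter.pop(tag, None)
            self.shared_cache[tag] = data
```

Because each tag lives in one store or the other, the two stores together cover every tracked line even though the shared cache does not duplicate data held locally.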

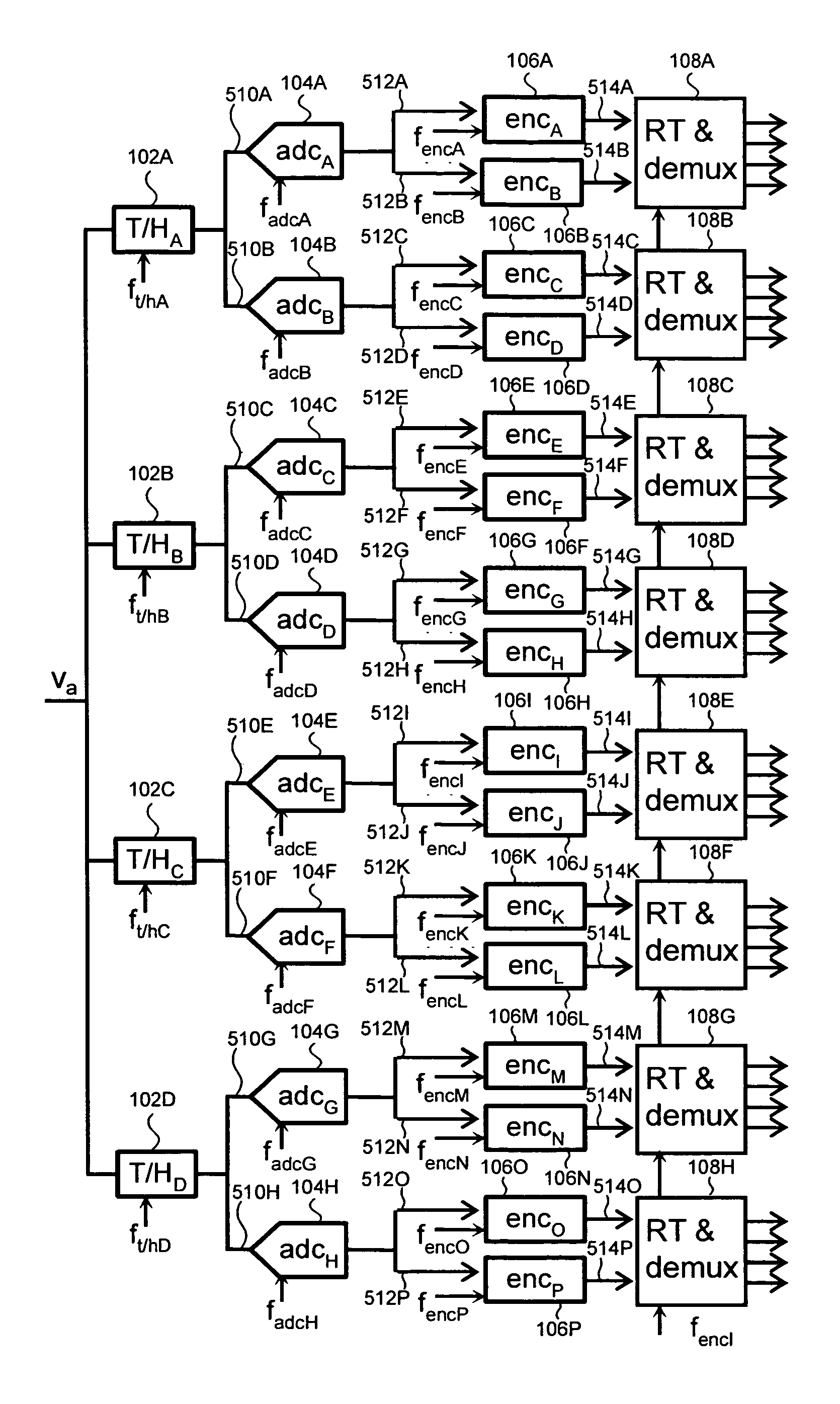

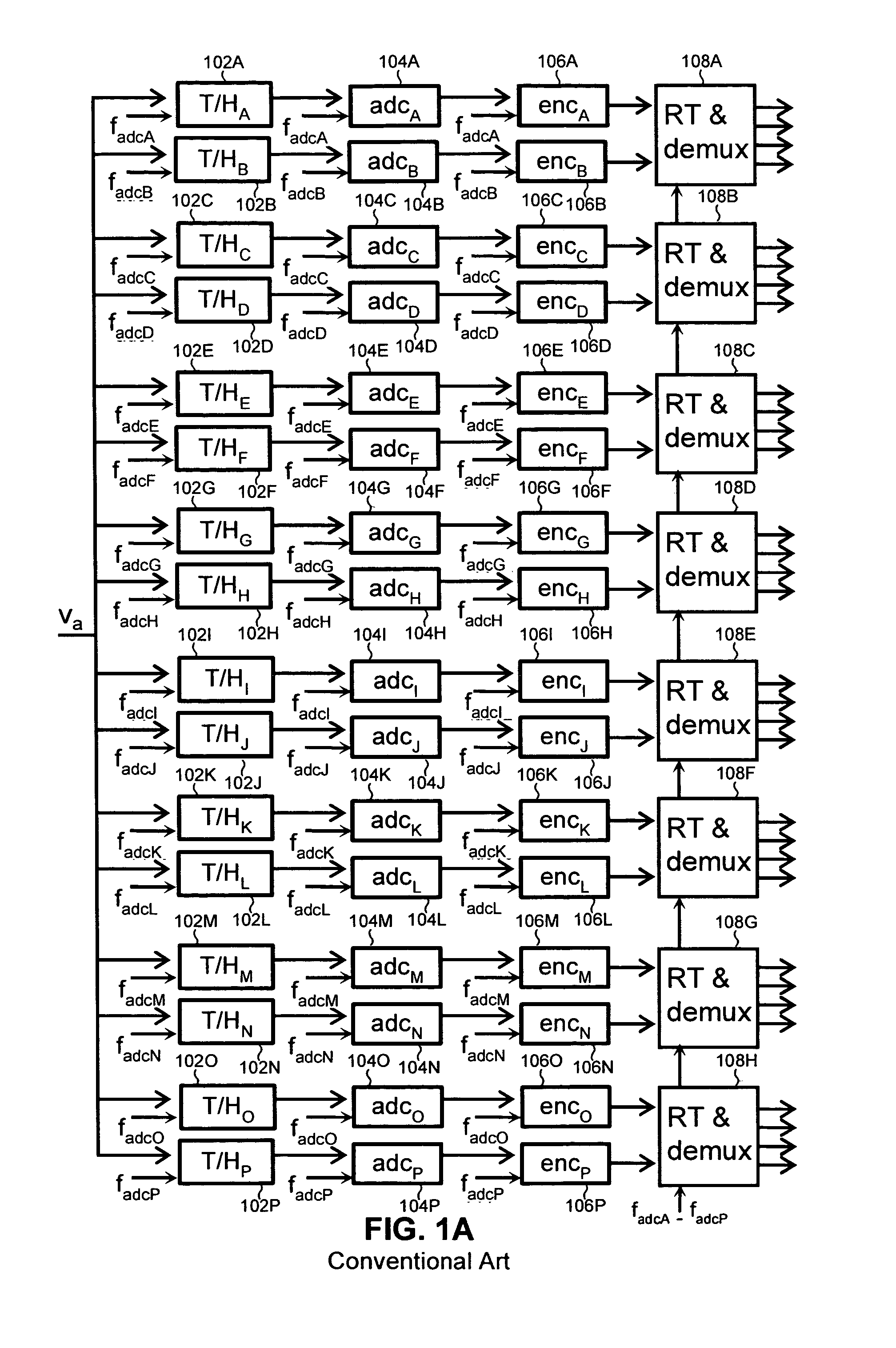

Hierarchical parallel pipelined operation of analog and digital circuits

Active · US20060066466A1 · Analogue/digital conversion · Electric signal transmission systems · Engineering · Multi phase

A hierarchical parallel pipelined circuit includes a first stage with a plurality of sampling circuits and a plurality of corresponding analog or digital circuits that receive an output from the plurality of sampling circuits. A second stage includes a second plurality of sampling circuits and a plurality of corresponding analog or digital circuits that receive an output from the plurality of sampling circuits. A multi-frequency, multi-phase clock clocks the first and second stages, providing a first clock having a first frequency and either a single phase or a plurality of phases, and a second clock having a second frequency and a plurality of phases. A first phase of a plurality of phases is phase locked to the first phase of the first clock. The throughput of the circuit is the clock frequency multiplied by the number of parallel devices in each stage, and it is kept constant across the stages.

Owner:AVAGO TECH INT SALES PTE LTD
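
The throughput relation stated in the entry above (clock frequency times the number of parallel devices, constant across stages) can be checked with a short calculation. The figures below are hypothetical, and the evenly spaced phase offsets are an assumption borrowed from common time-interleaved designs rather than something the abstract specifies.

```python
def stage_throughput_hz(clock_hz, parallel_devices):
    """Throughput of one stage: clock frequency times parallel device count."""
    return clock_hz * parallel_devices

def stage_plan(target_hz, parallel_devices):
    """Per-device clock and phase offsets (degrees) for a target throughput.

    Evenly spaced phases are an assumption made for this illustration.
    """
    clock_hz = target_hz / parallel_devices
    phases = [360.0 * k / parallel_devices for k in range(parallel_devices)]
    return clock_hz, phases
```

For a hypothetical 1 GHz target, a first stage with 2 devices would run at 500 MHz and a second stage with 8 devices at 125 MHz, and both products come back to the same throughput.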

Hierarchical parallel pipelined operation of analog and digital circuits

Active · US7012559B1 · Eliminate disadvantages · Analogue/digital conversion · Electric signal transmission systems · Clock rate · Multi phase

A hierarchical parallel pipelined circuit includes a first stage with a plurality of sampling circuits and a plurality of corresponding analog or digital circuits that receive an output from the plurality of sampling circuits. A second stage includes a second plurality of sampling circuits and a plurality of corresponding analog or digital circuits that receive an output from the plurality of sampling circuits. A multi-frequency, multi-phase clock clocks the first and second stages, providing a first clock having a first frequency and either a single phase or a plurality of phases, and a second clock having a second frequency and a plurality of phases. A first phase of a plurality of phases is phase locked to the first phase of the first clock. The throughput of the circuit is the clock frequency multiplied by the number of parallel devices in each stage, and it is kept constant across the stages.

Owner:AVAGO TECH INT SALES PTE LTD

