6493 results about "Computational science" patented technology

Computational science (also scientific computing or scientific computation (SC)) is a rapidly growing multidisciplinary field that uses advanced computing capabilities to understand and solve complex problems. It is an area of science which spans many disciplines, but at its core it involves the development of models and simulations to understand natural systems.

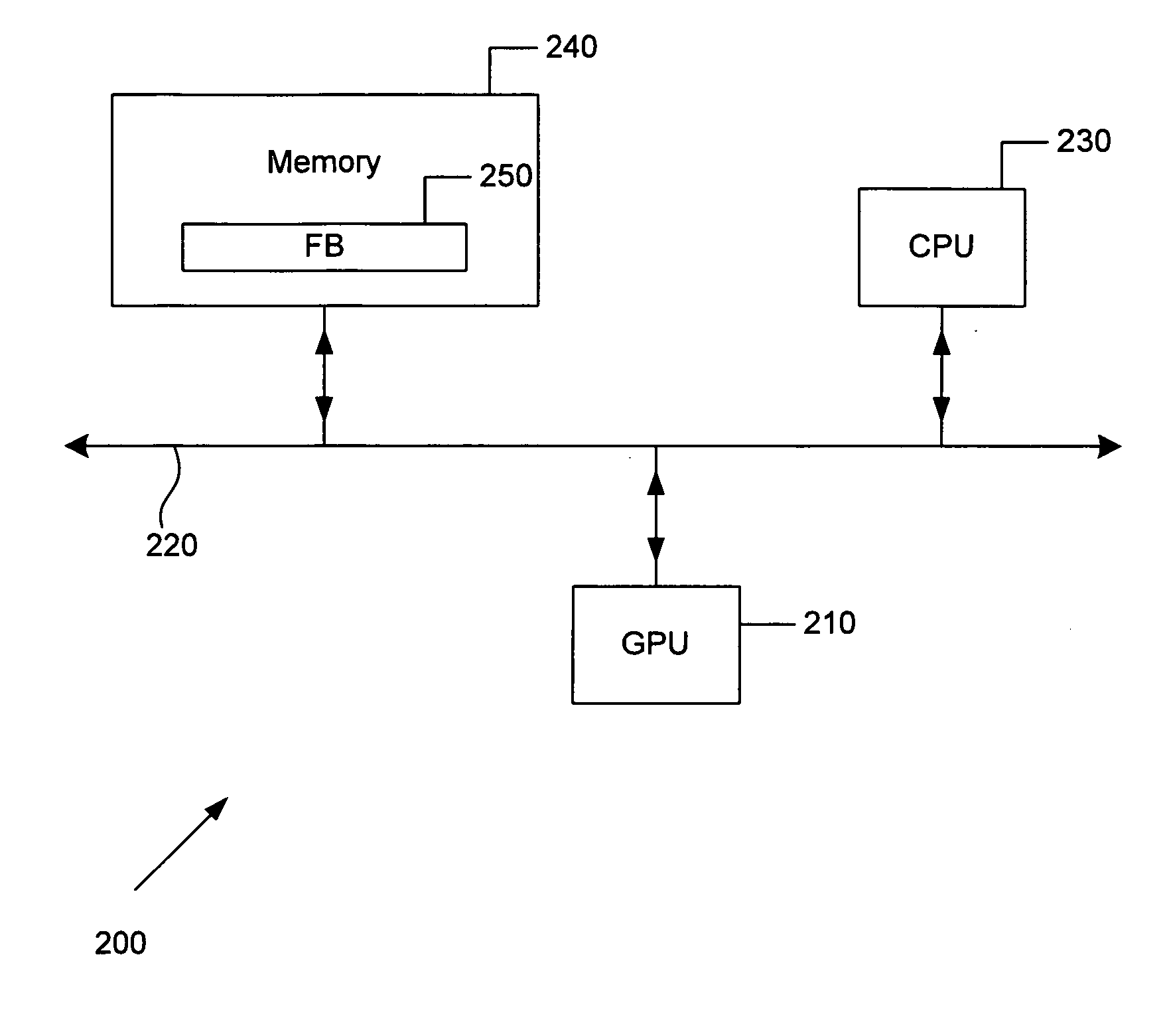

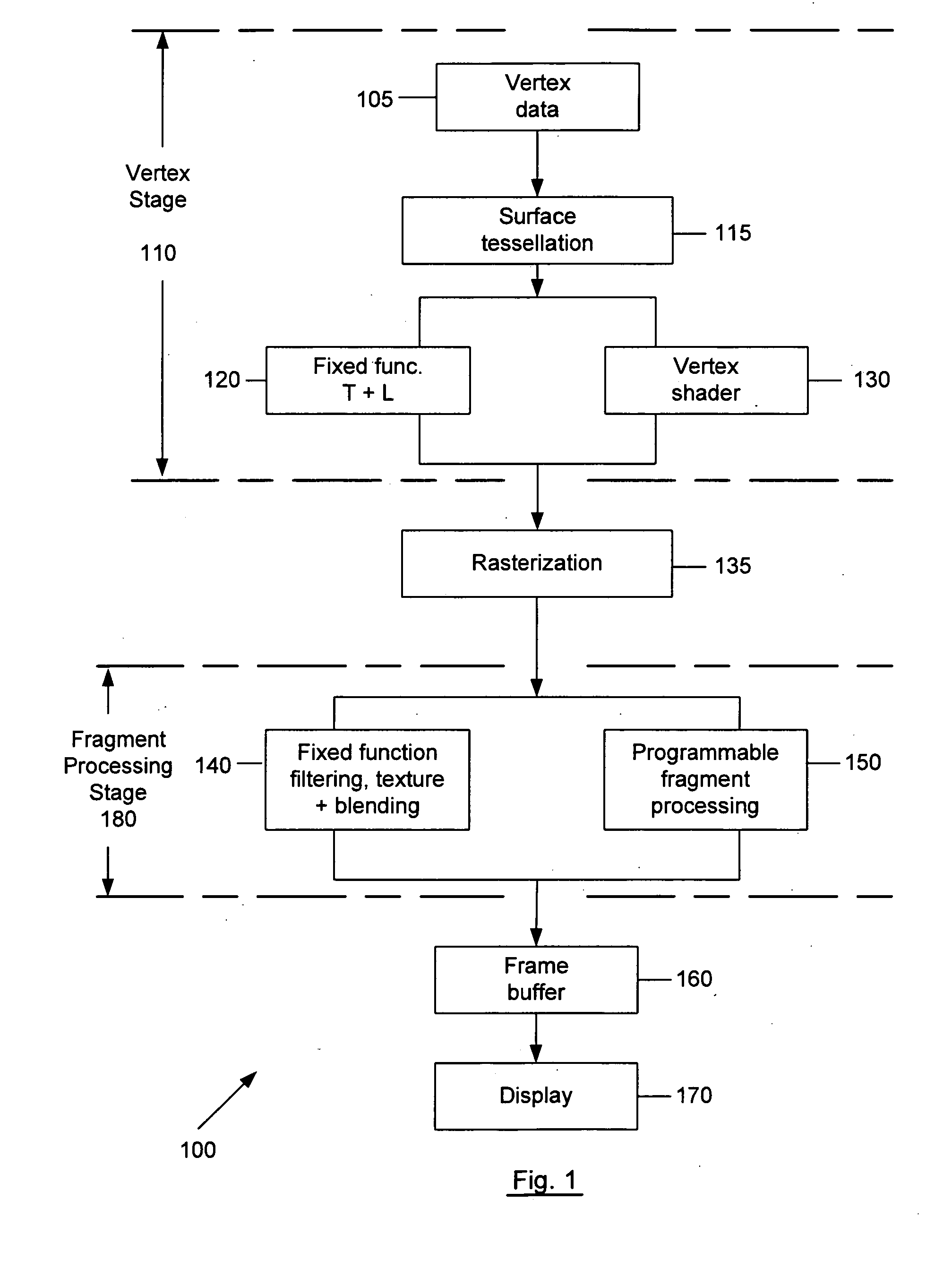

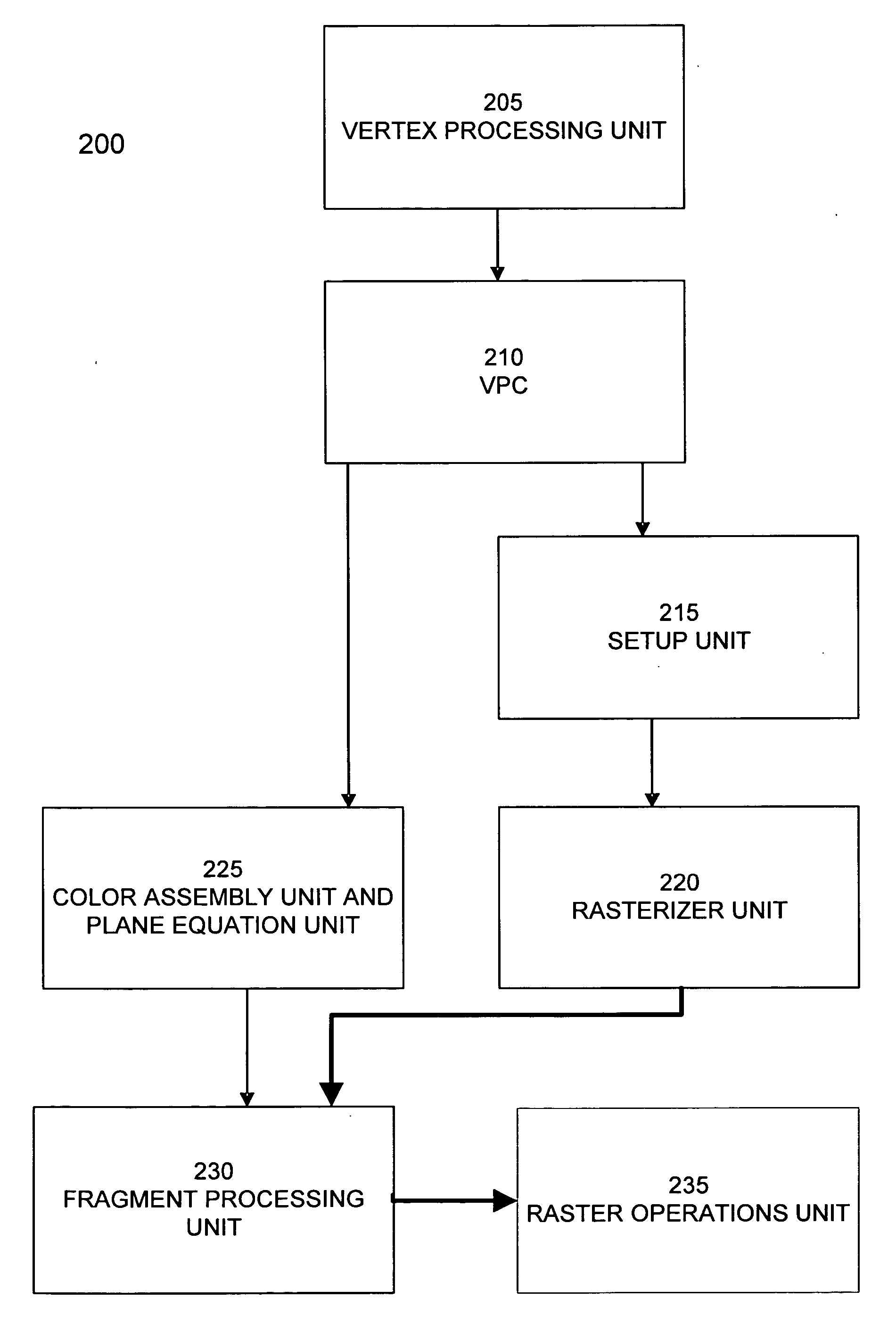

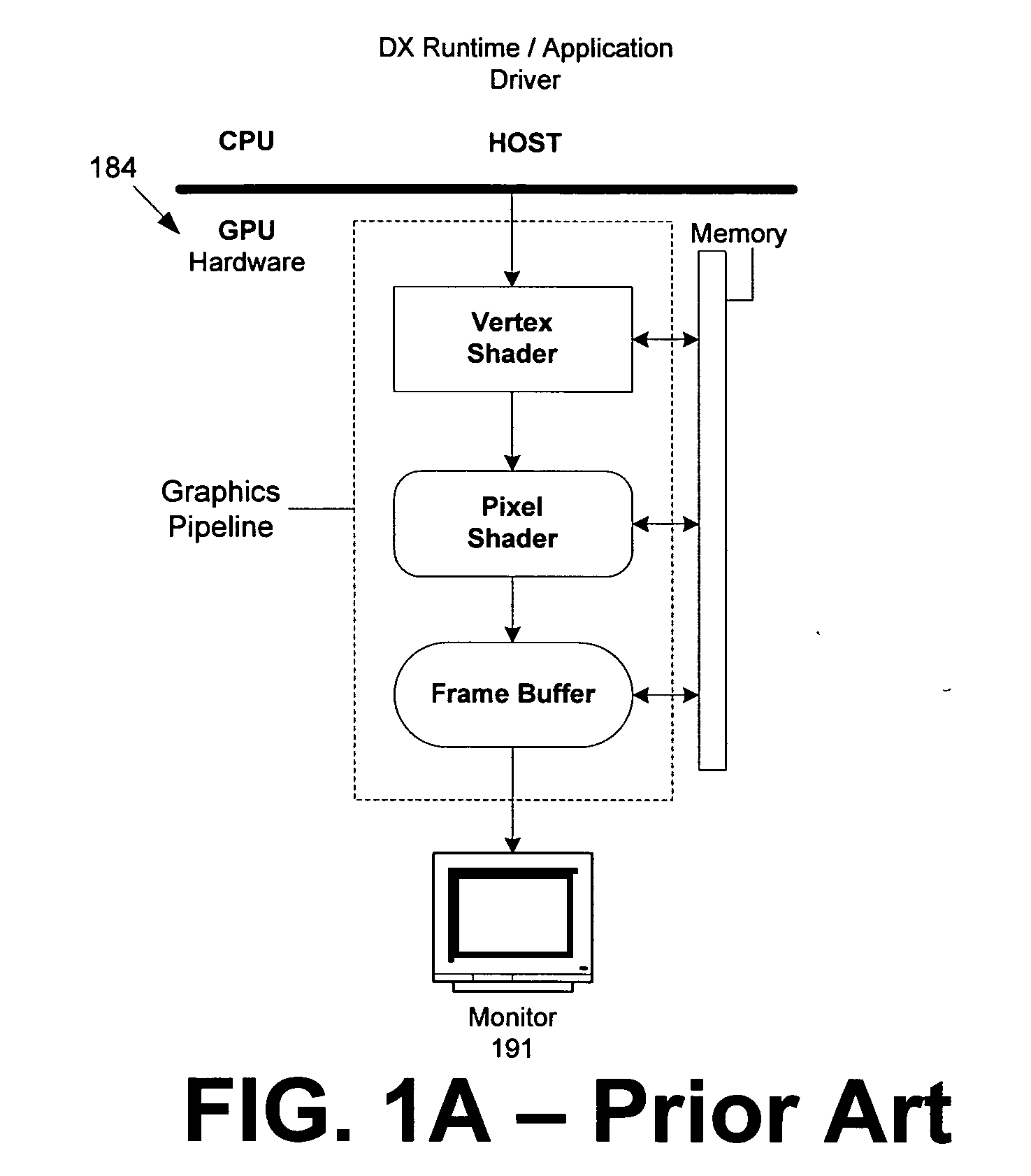

Rendering pipeline

Status: Inactive | Patent: US7170515B1 | Tags: Efficiently; Reduce memory bandwidth; 3D-image rendering; 3D modelling; Computational science; Visibility

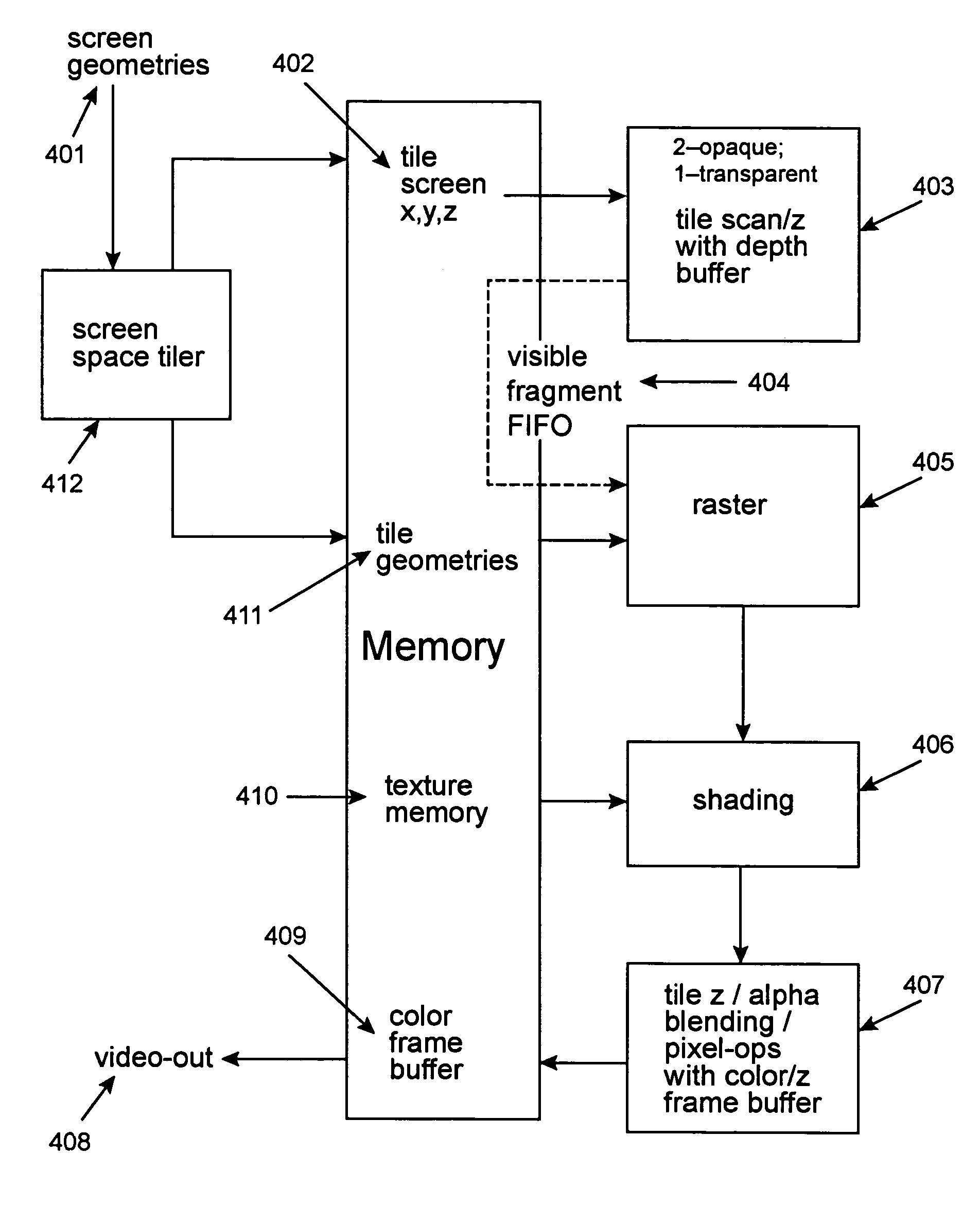

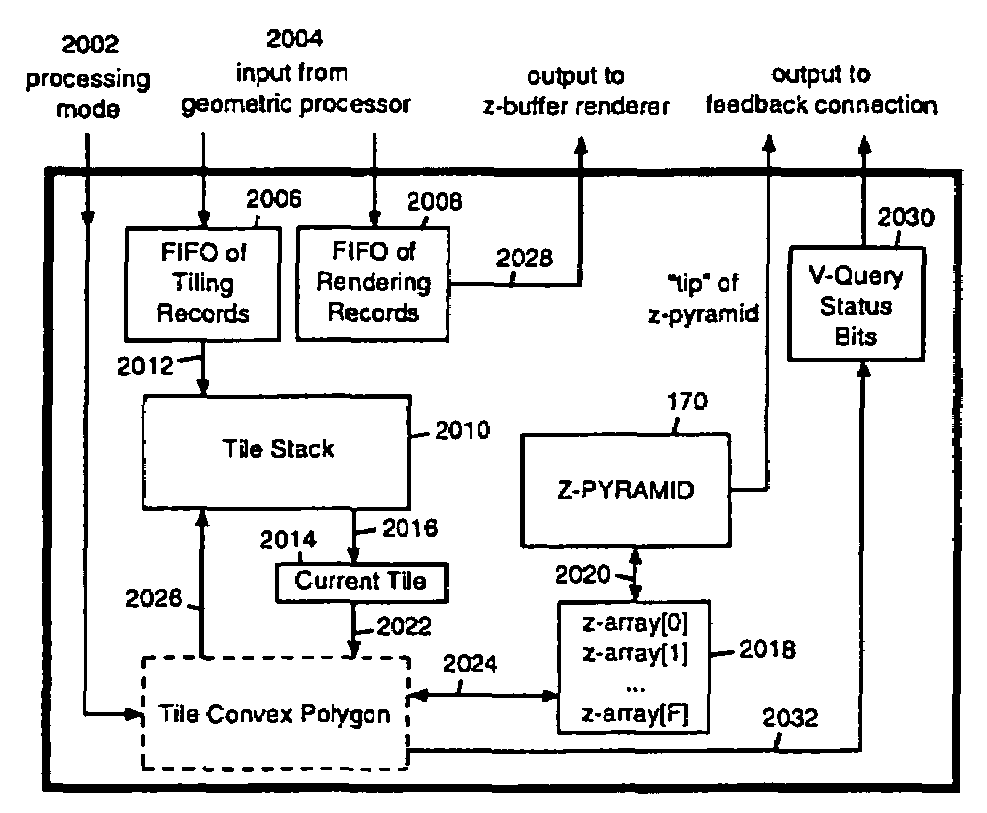

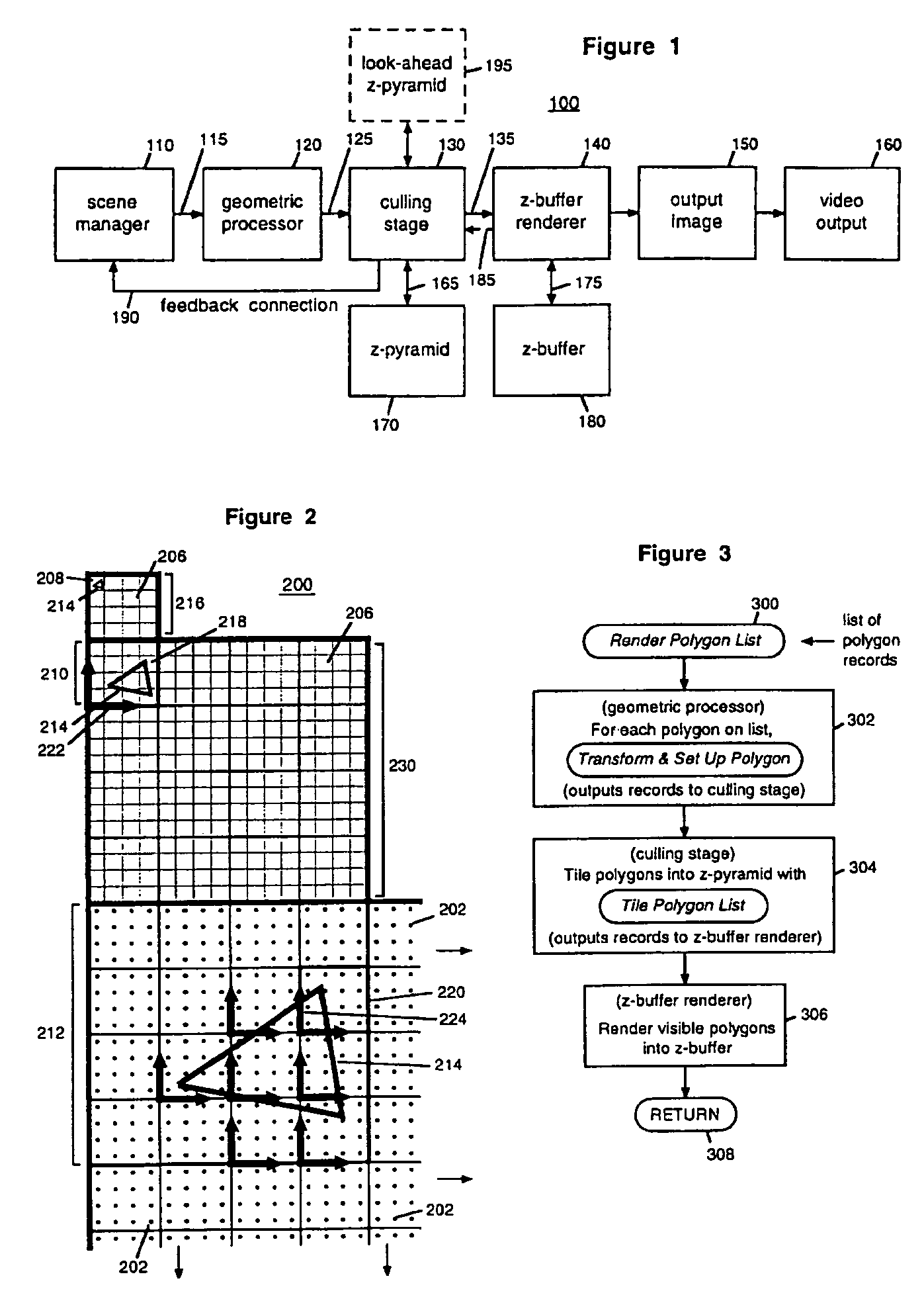

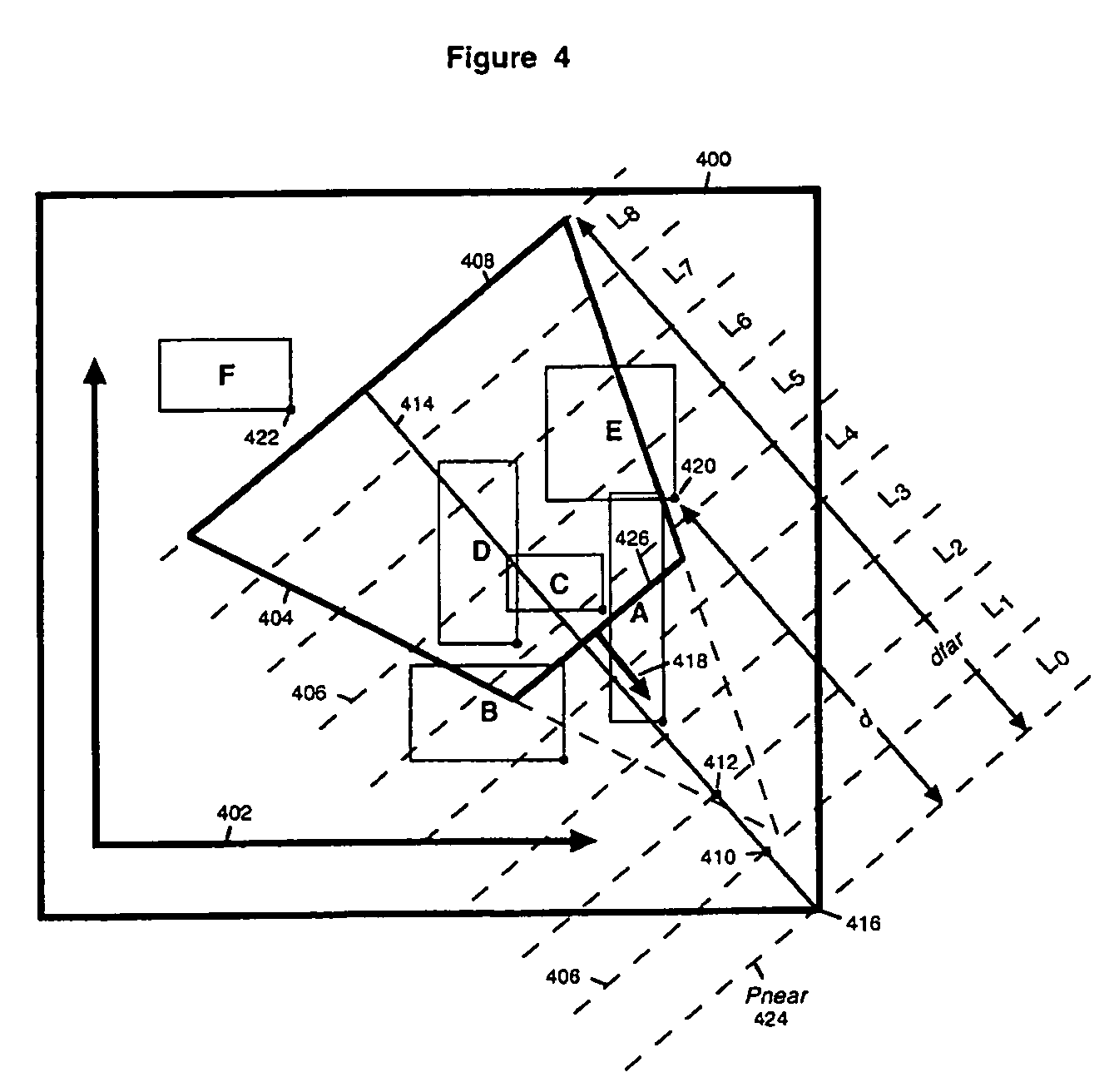

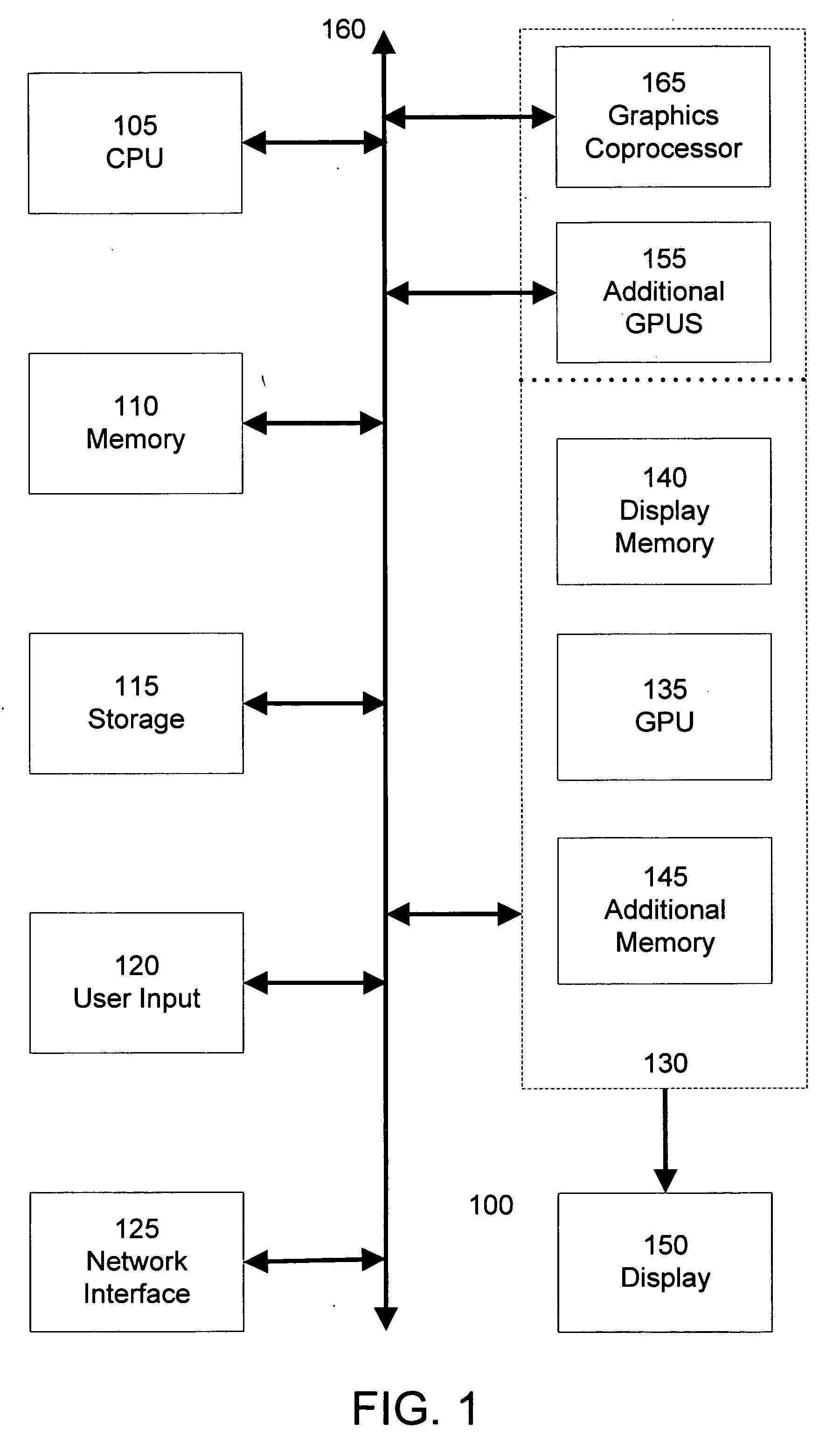

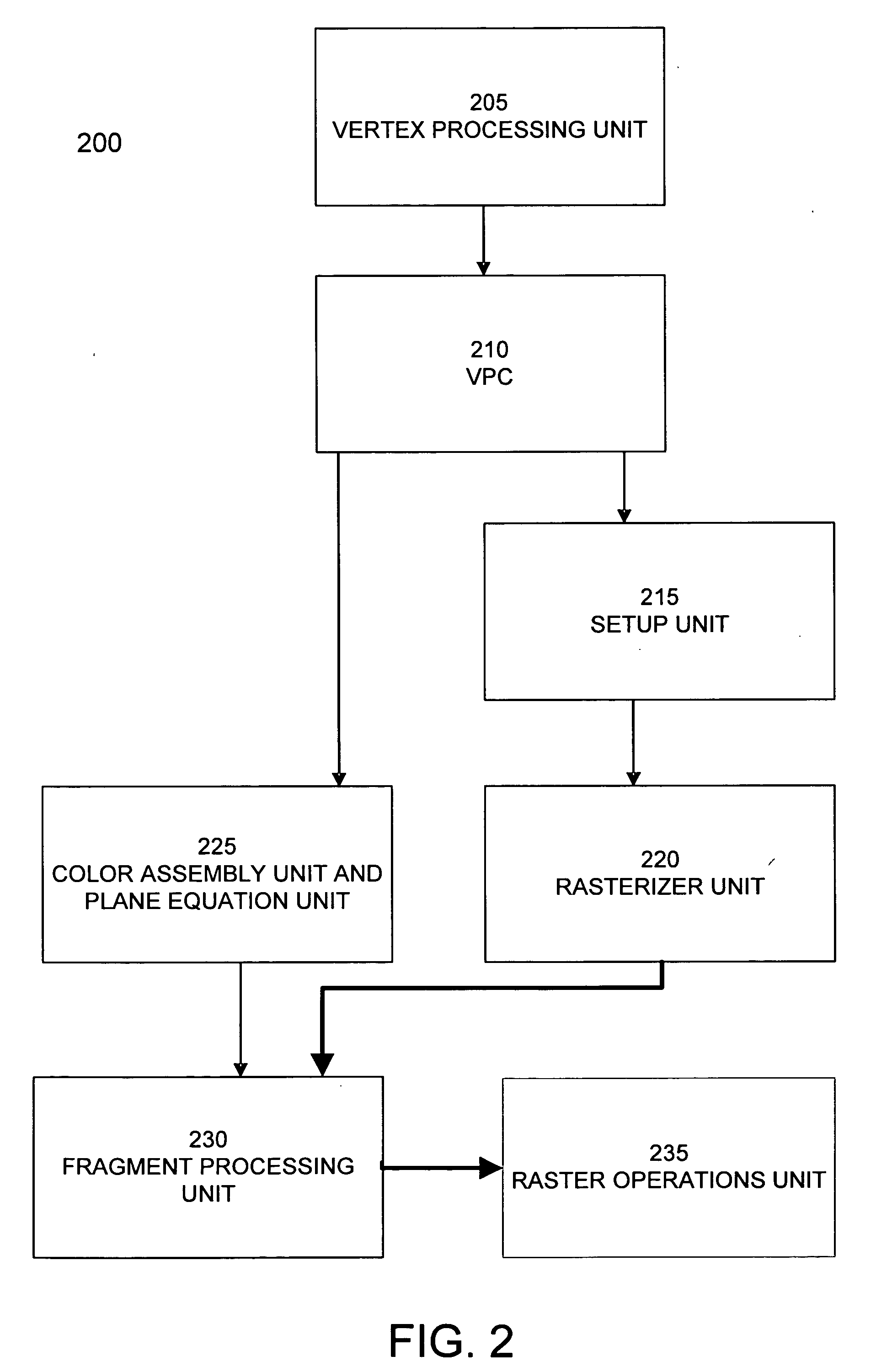

A rendering pipeline system for a computer environment uses screen space tiling (SST) to eliminate the memory bandwidth bottleneck due to frame buffer access and performs screen space tiling efficiently, while avoiding the breaking up of primitives. The system also reduces the buffering size required by SST. High quality, full-scene anti-aliasing is easily achieved because only the on-chip multi-sample memory corresponding to a single tile of the screen is needed. The invention uses a double-z scheme that decouples the scan conversion / depth-buffer processing from the more general rasterization and shading processing through a scan / z engine. The scan / z engine externally appears as a fragment generator but internally resolves visibility and allows the rest of the rendering pipeline to perform setup for only visible primitives and shade only visible fragments. The resulting reduced raster / shading requirements can lead to reduced hardware costs because one can process all parameters with generic parameter computing units instead of with dedicated parameter computing units. The invention processes both opaque and transparent geometries.

Owner:NVIDIA CORP
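
The screen space tiling step described above can be illustrated with a minimal Python sketch. It assigns a whole triangle to every tile its bounding box touches, so the primitive is never split. Bounding-box binning, the function name `bin_triangle`, and the integer pixel coordinates are assumptions made for illustration; the abstract does not describe the patent's actual binning logic.

```python
def bin_triangle(triangle, tile_size, screen_w, screen_h):
    """Return the (tile_x, tile_y) pairs whose tiles overlap the triangle's bounding box.

    triangle is a list of three (x, y) integer pixel coordinates.
    This is a conservative, hypothetical binning rule: some returned tiles
    may not actually be covered by the triangle itself.
    """
    xs = [x for x, _ in triangle]
    ys = [y for _, y in triangle]
    # Clamp the bounding box to the screen before converting to tile indices.
    tx0 = max(0, min(xs)) // tile_size
    tx1 = min(screen_w - 1, max(xs)) // tile_size
    ty0 = max(0, min(ys)) // tile_size
    ty1 = min(screen_h - 1, max(ys)) // tile_size
    return [(tx, ty) for ty in range(ty0, ty1 + 1) for tx in range(tx0, tx1 + 1)]


tiles = bin_triangle([(10, 10), (40, 10), (10, 40)], tile_size=32, screen_w=128, screen_h=128)
print(tiles)
```

Because each tile receives a reference to the whole primitive, only one tile's worth of multi-sample memory needs to be resident while that tile is rendered.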

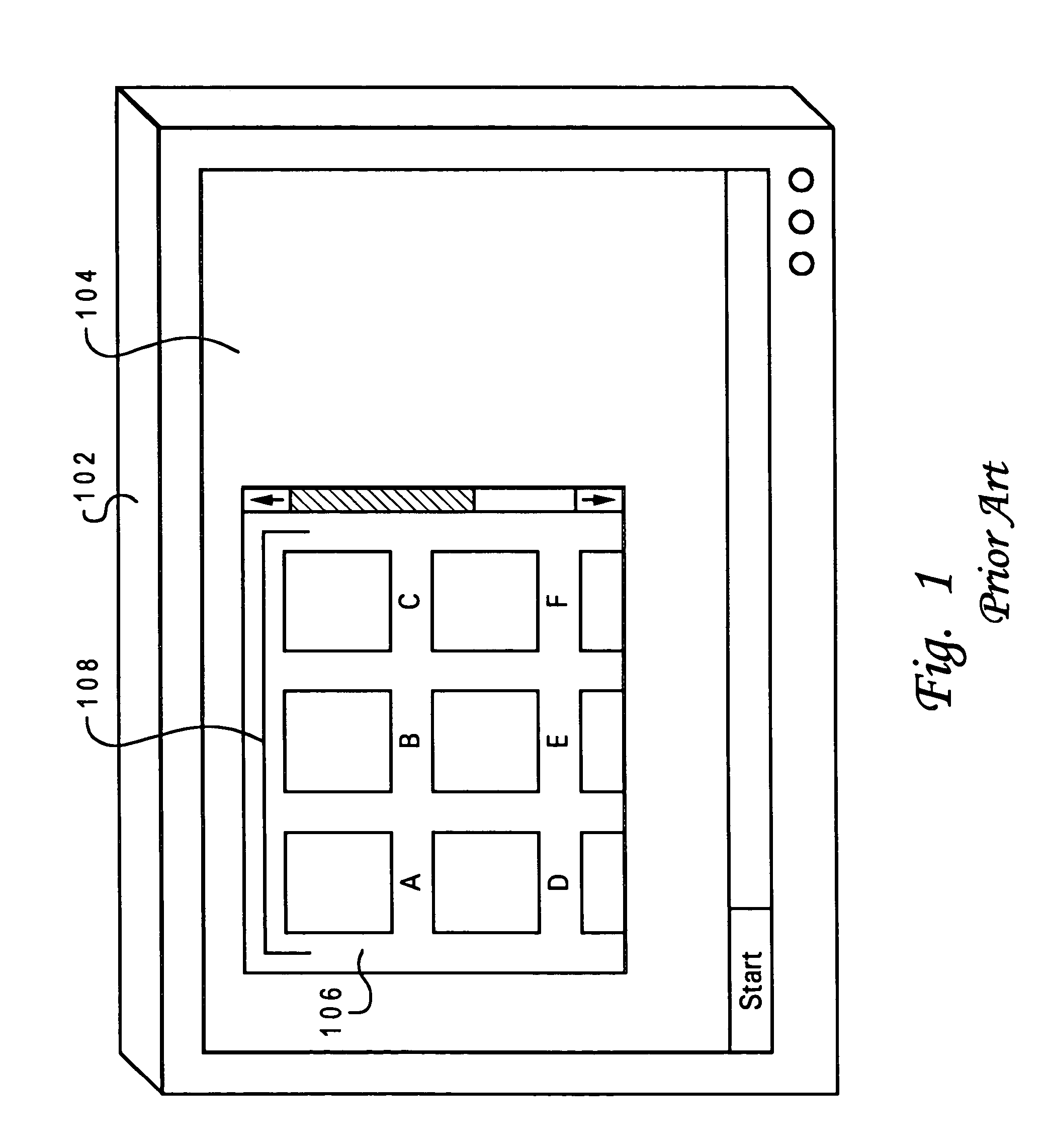

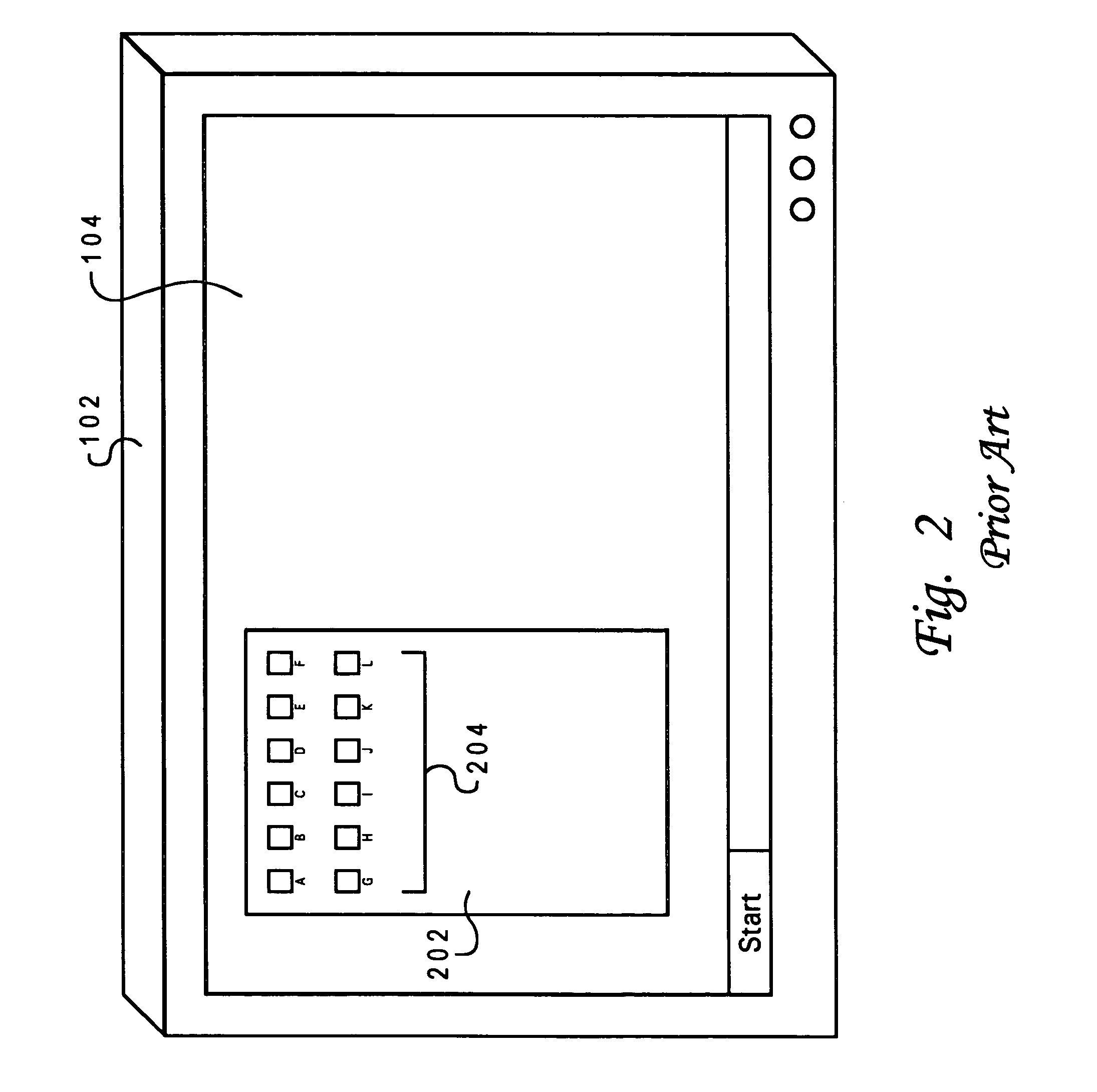

Automatically scaling icons to fit a display area within a data processing system

Status: Inactive | Patent: US6983424B1 | Tags: Visual presentation; Digital output to display device; Data processing; Computer graphics (images)

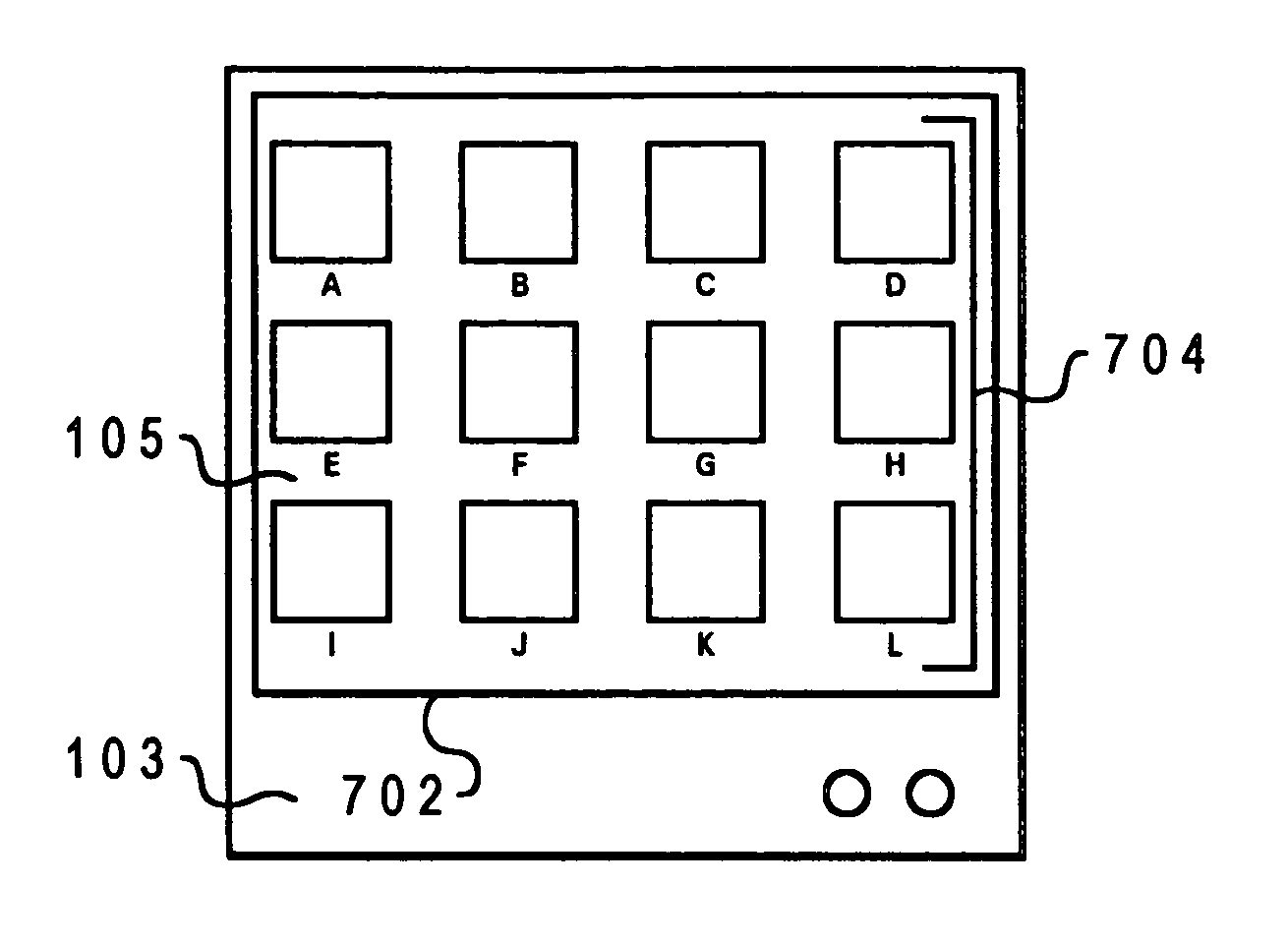

A method, system, and program are provided for displaying icons on a data processing system. The number of icons to be displayed on the computer screen is determined. The boundary area for displaying the icons on the computer screen is calculated. The icons are then scaled to a size that allows all icons to be displayed in the boundary area while utilizing all available display space. The minimum and maximum sizes of the icons can be limited based on user preferences. If the icons cannot be scaled to fit within the boundary area using the user-selected minimum size, then only a portion of the icon is displayed. In this manner, all icons are scaled and displayed at a size that utilizes the full boundary area of the display screen.

Owner:IBM CORP
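
The scaling procedure in this abstract can be sketched roughly as follows. The sketch assumes square icons on a simple grid and tries every column count to find the largest edge length that fits. The function name `fit_icon_size`, the grid layout, and the choice to report how many whole icons fit at the minimum size are all assumptions for illustration, not details taken from the patent.

```python
import math


def fit_icon_size(count, area_w, area_h, min_size, max_size):
    """Return (size, shown): the icon edge length and how many whole icons fit.

    Hypothetical model: square icons laid out on a grid inside an
    area_w x area_h boundary, clamped to user-chosen min/max sizes.
    """
    if min_size < 1:
        raise ValueError("min_size must be at least 1")
    if count <= 0:
        return max_size, 0
    best = 0
    for cols in range(1, count + 1):
        rows = math.ceil(count / cols)
        # Largest square edge that lets cols x rows icons fit the boundary.
        best = max(best, min(area_w // cols, area_h // rows))
    size = max(min_size, min(best, max_size))
    # At the clamped size, count how many whole icons the boundary can hold.
    shown = min(count, (area_w // size) * (area_h // size))
    return size, shown


print(fit_icon_size(12, 400, 300, 16, 128))
print(fit_icon_size(100, 100, 100, 32, 64))
```

In the second call the minimum size wins over the computed fit, so fewer icons than requested can be shown in full; how the remainder is presented is left open here.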

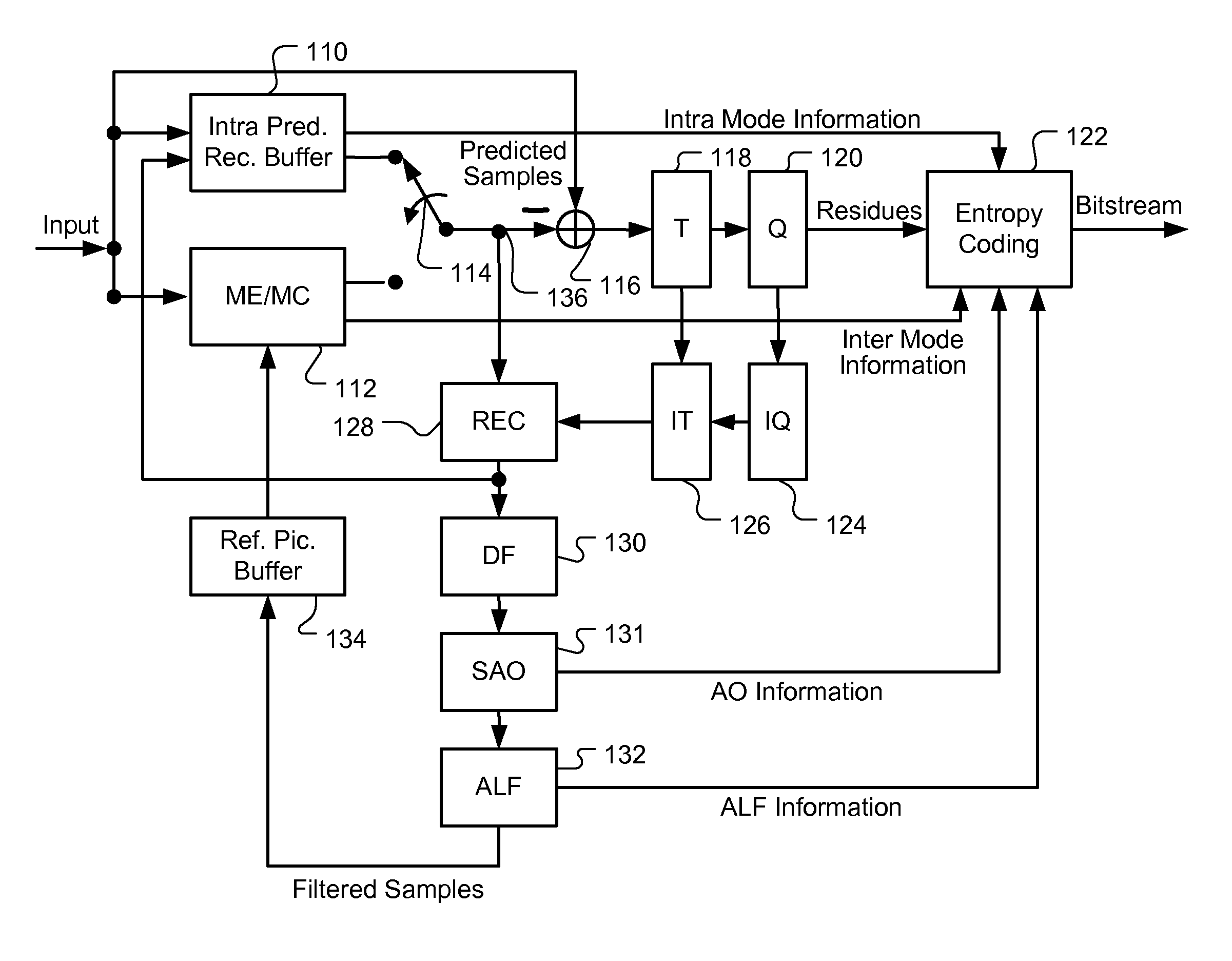

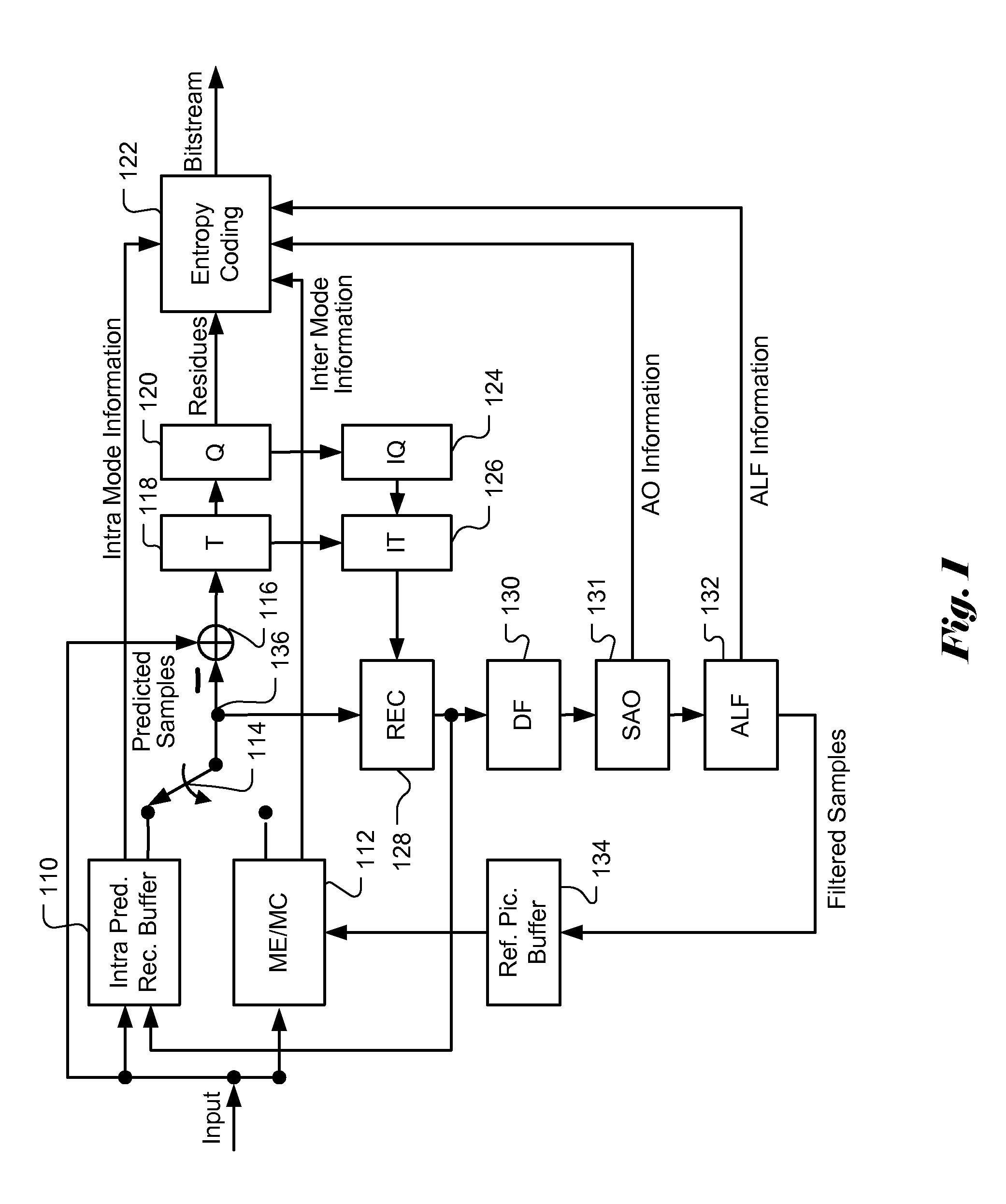

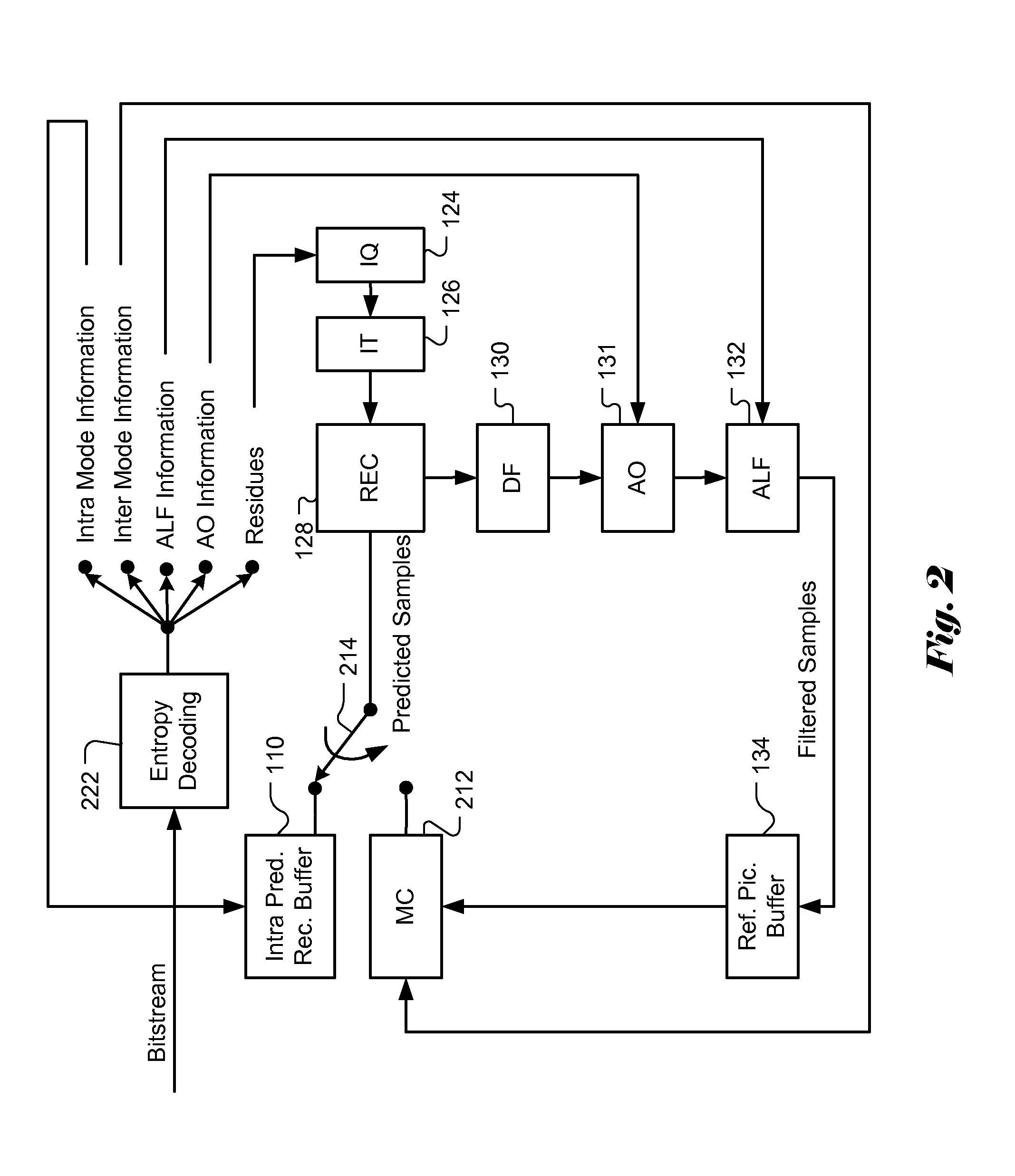

Apparatus and Method of Sample Adaptive Offset for Luma and Chroma Components

Status: Inactive | Patent: US20120294353A1 | Tags: Improve coding efficiency; Color television with pulse code modulation; Color television with bandwidth reduction; Computational science; Loop filter

A method and apparatus for processing reconstructed video using in-loop filter in a video coding system are disclosed. The method uses chroma in-loop filter indication to indicate whether chroma components are processed by in-loop filter when the luma in-loop filter indication indicates that in-loop filter processing is applied to the luma component. An additional flag may be used to indicate whether the in-loop filter processing is applied to an entire picture using same in-loop filter information or each block of the picture using individual in-loop filter information. Various embodiments according to the present invention to increase efficiency are disclosed, wherein various aspects of in-loop filter information are taken into consideration for efficient coding such as the property of quadtree-based partition, boundary conditions of a block, in-loop filter information sharing between luma and chroma components, indexing to a set of in-loop filter information, and prediction of in-loop filter information.

Owner:HFI INNOVATION INC

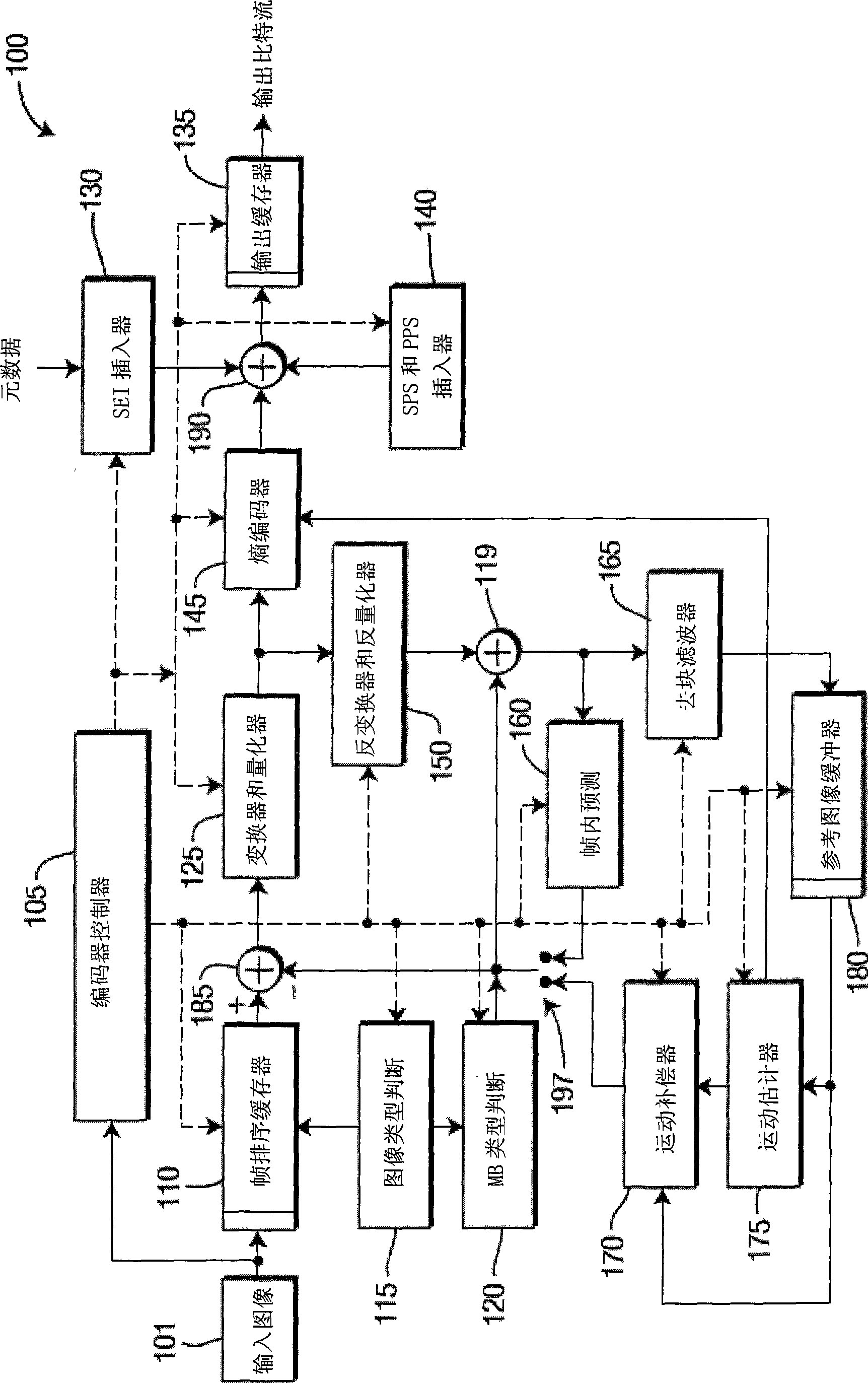

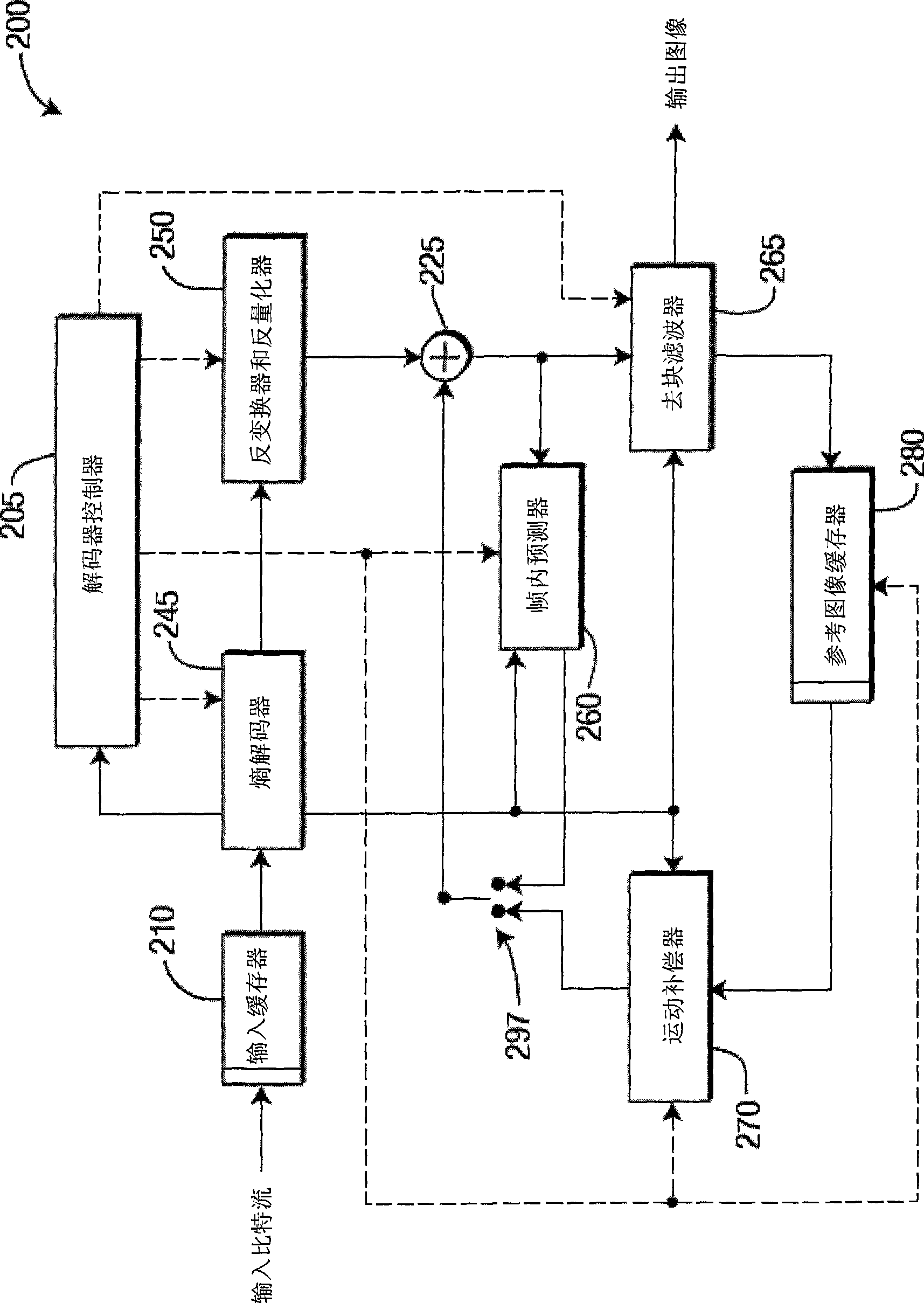

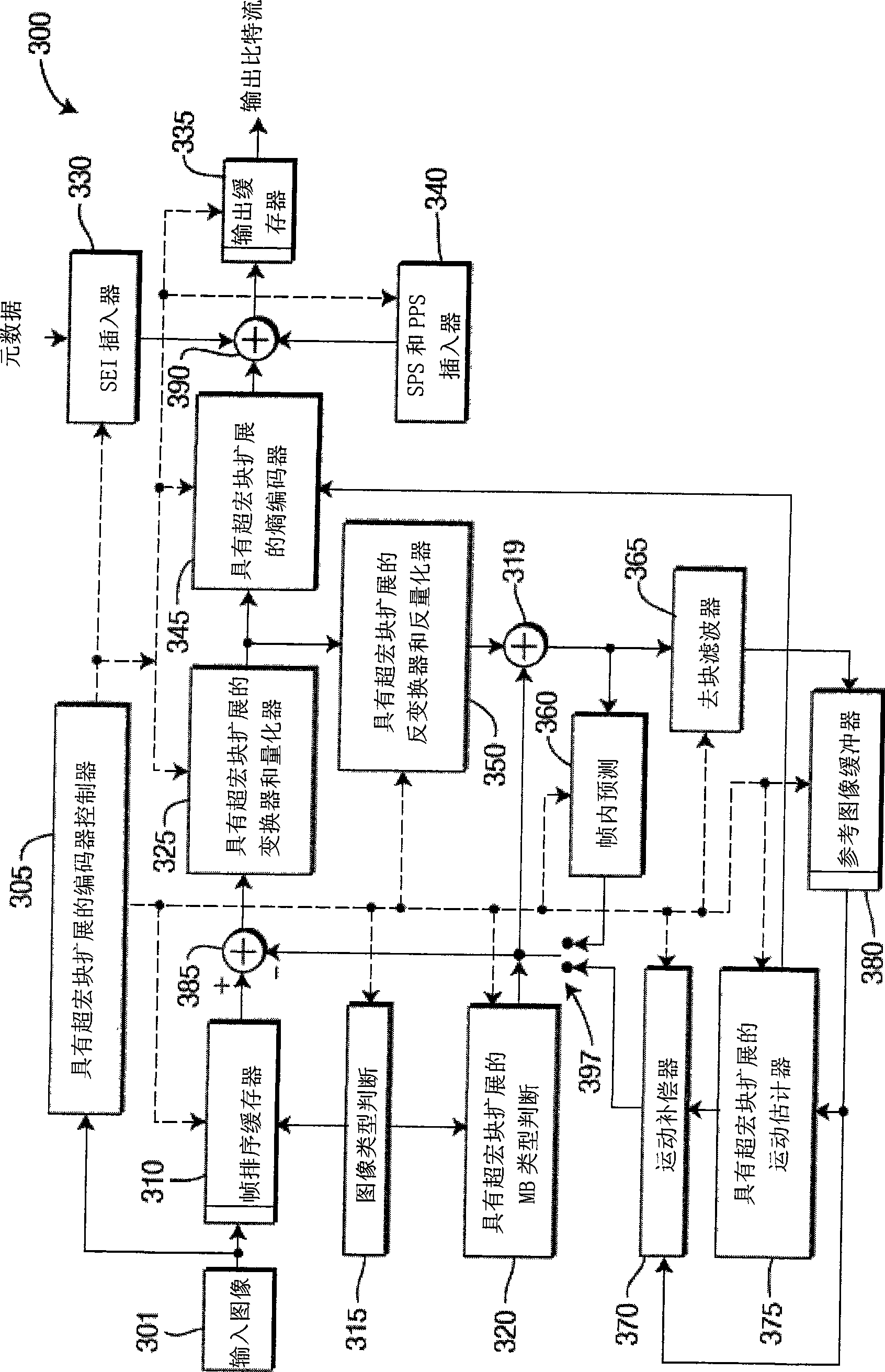

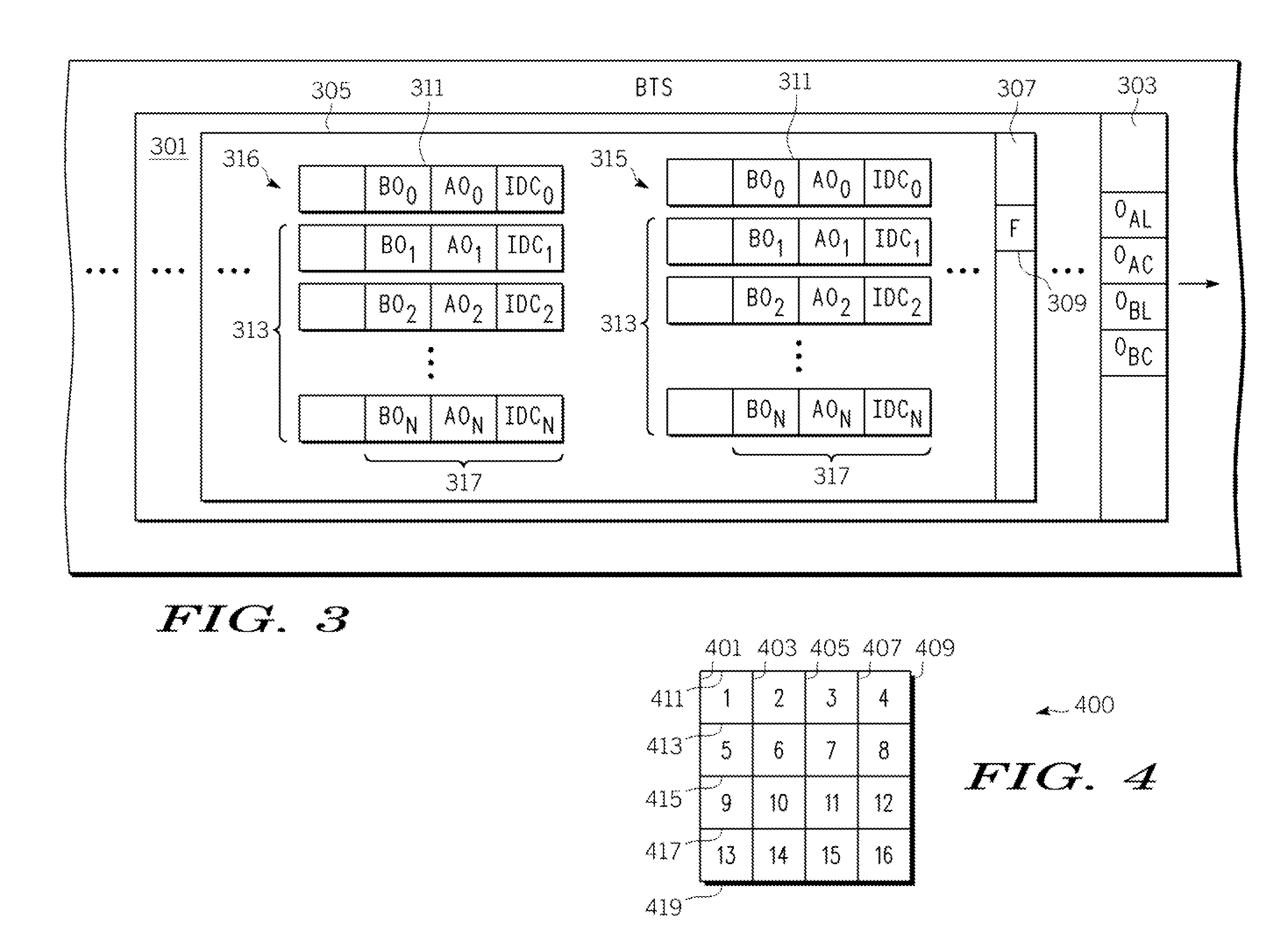

Methods and apparatus for reduced resolution partitioning

Status: Active | Patent: CN101507280A | Tags: Pulse modulation television signal transmission; Digital video signal modification; Image resolution; Theoretical computer science

There are provided methods and apparatus for reduced resolution partitioning. An apparatus includes an encoder (300) for encoding video data using adaptive tree-based frame partitioning, wherein partitions are obtained from a combination of top-down tree partitioning and bottom-up tree joining.

Owner:INTERDIGITAL VC HLDG INC

System and method for accelerating graphics processing using a post-geometry data stream during multiple-pass rendering

A system and method are provided for accelerating graphics processing utilizing multiple-pass rendering. Initially, geometry operations are performed on graphics data, and the graphics data is stored in memory. During a first rendering pass, various operations take place. For example, the graphics data is read from the memory, and the graphics data is rasterized. Moreover, first z-culling operations are performed utilizing the graphics data. Such first z-culling operations maintain a first occlusion image. During a second rendering pass, the graphics data is read from memory. Still yet, the graphics data is rasterized and second z-culling operations are performed utilizing the graphics data and the first occlusion image. Moreover, visibility operations are performed utilizing the graphics data and a second occlusion image. Raster-processor operations are also performed utilizing the graphics data, during the second rendering pass.

Owner:NVIDIA CORP

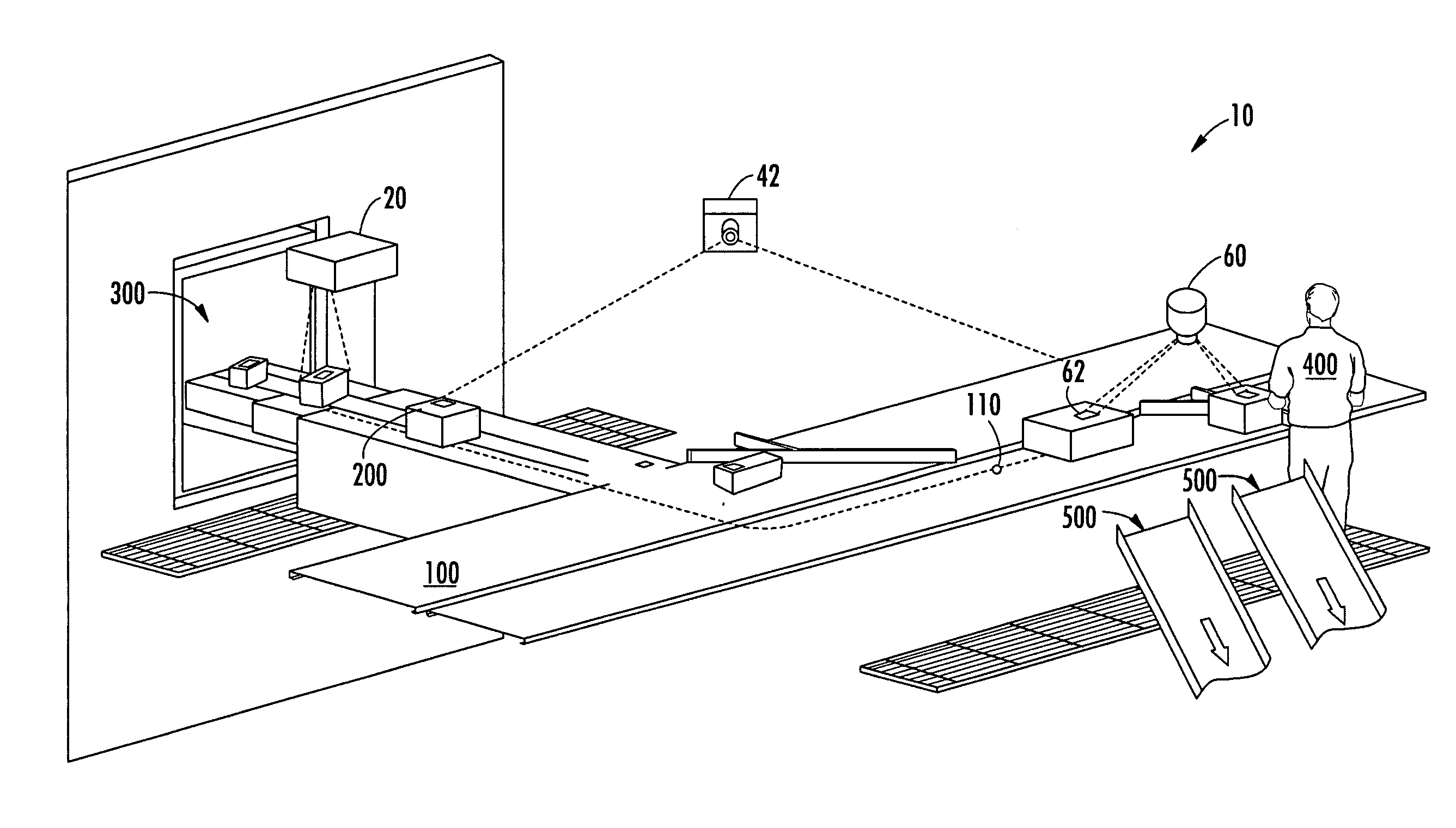

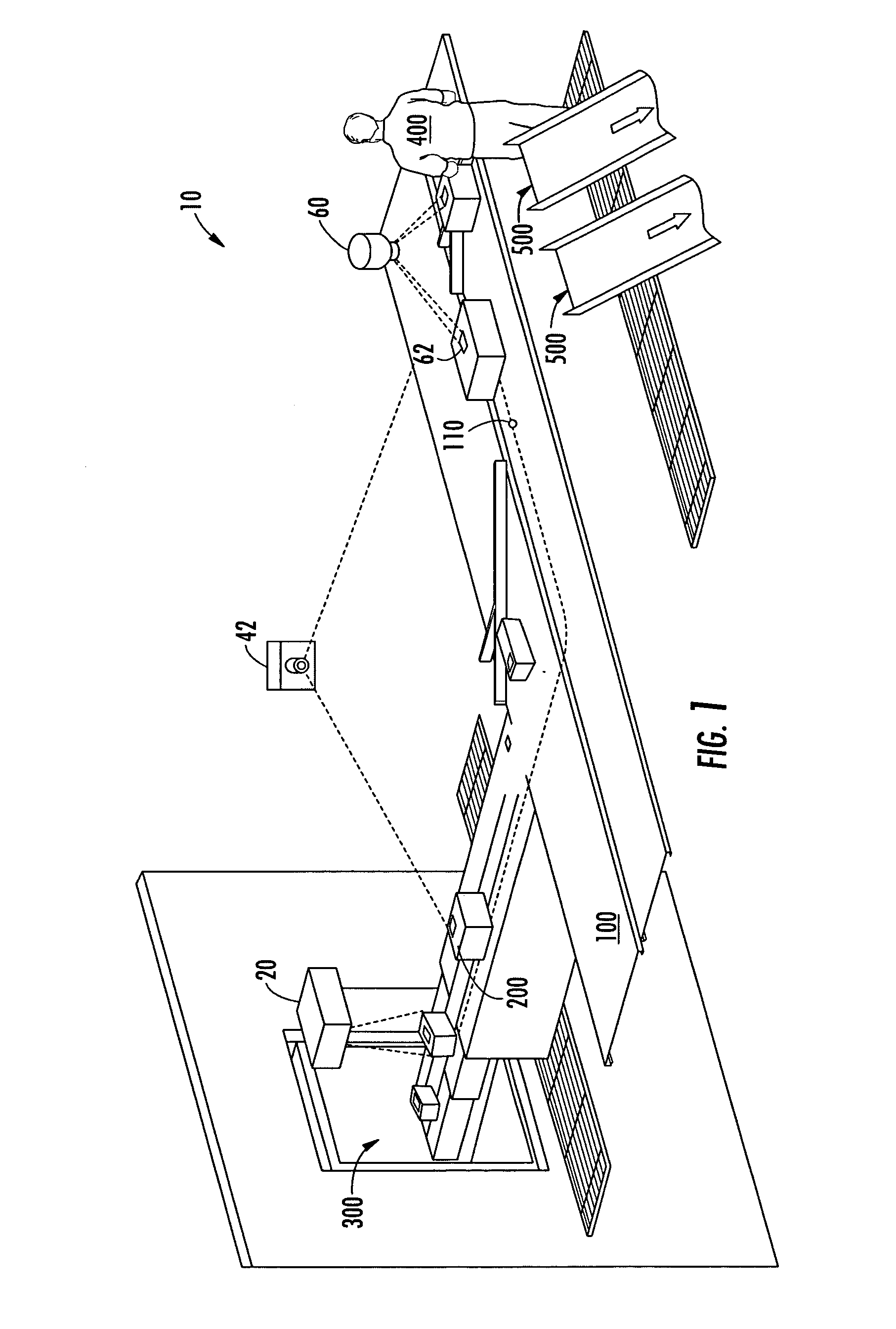

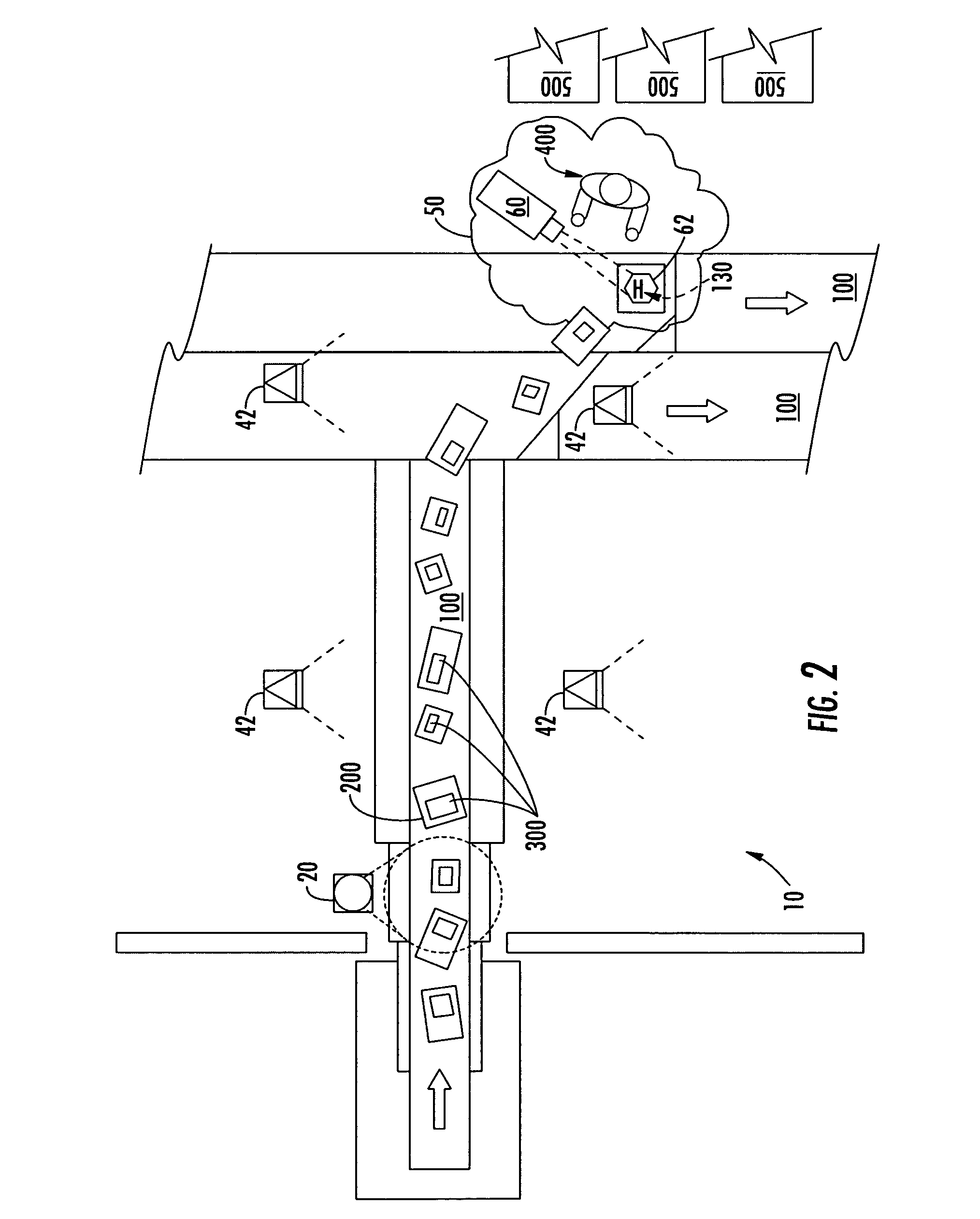

System for projecting a handling instruction onto a moving item or parcel

Status: Active | Patent: US7090134B2 | Tags: Co-operative working arrangements; Visual presentation; Processing Instruction; Computer graphics (images)

The invention includes a system for projecting a display onto an item or parcel using an acquisition device to capture indicia on each parcel, a tracking system, a controller or computer to select the display based on the indicia, and one or more display projectors. In one embodiment the display includes or connotes a handling instruction. The system in one embodiment includes a laser projection system to paint the selected display directly onto a selected exterior surface of the corresponding parcel, for multiple parcels simultaneously. The system may be configured to move each display in order to follow each moving parcel so that each display remains legible to a viewer in a display zone where handling takes place.

Owner:UNITED PARCEL SERVICE OF AMERICA INC

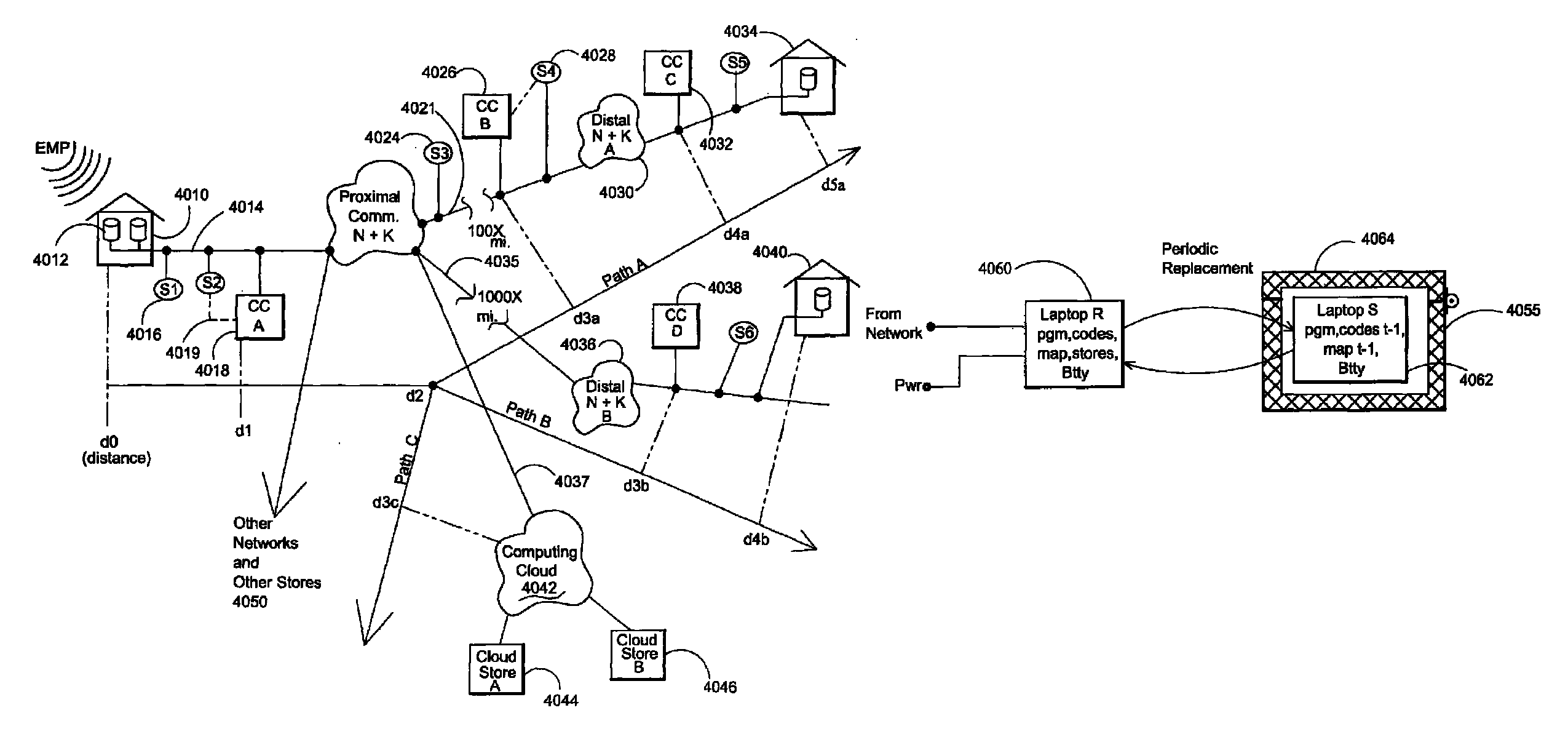

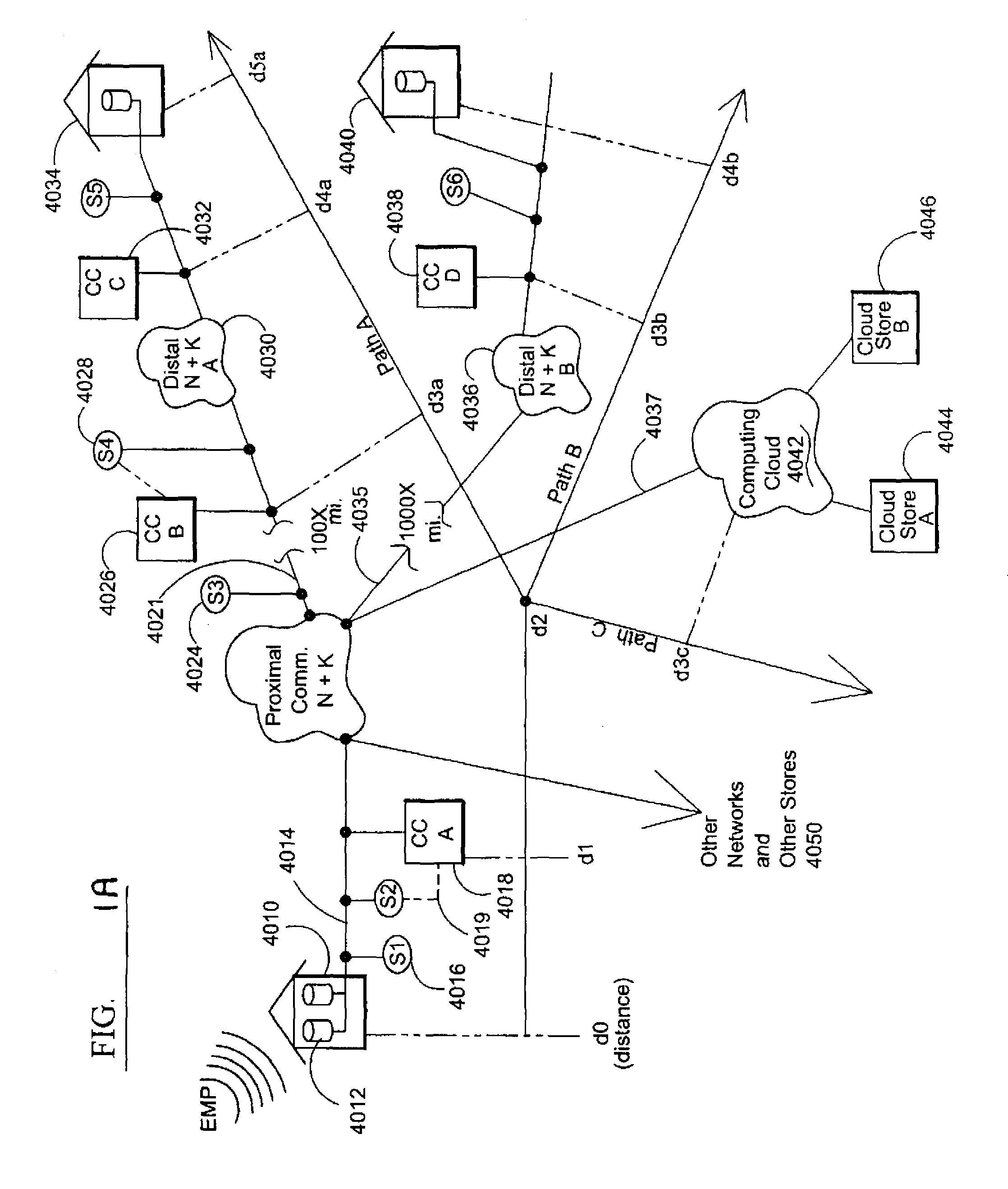

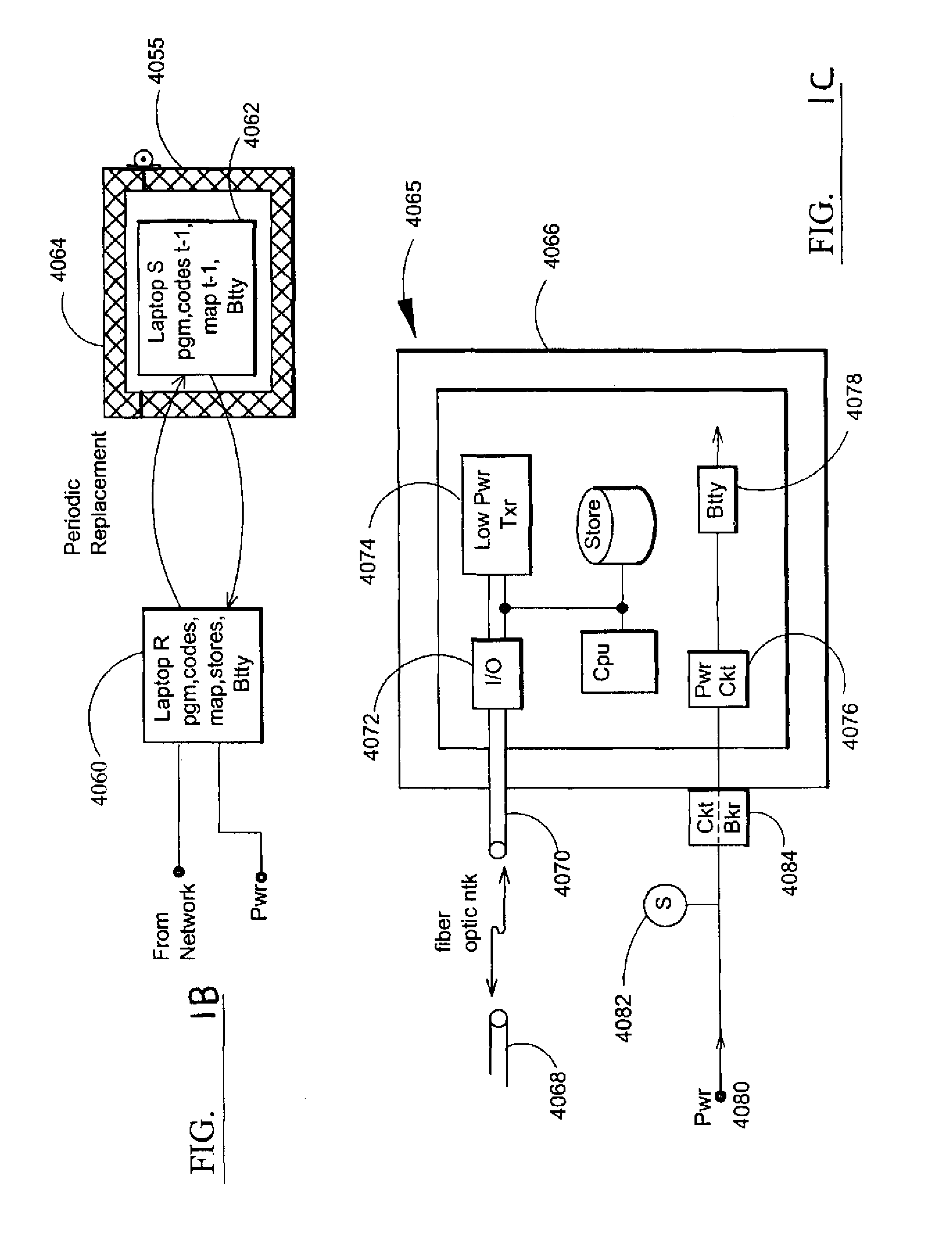

Electromagnetic pulse (EMP) hardened information infrastructure with extractor, cloud dispersal, secure storage, content analysis and classification and method therefor

Status: Active | Patent: US8655939B2 | Tags: Improve the level of; Improve security level; Digital data processing details; Error detection/correction; Electromagnetic pulse; Content analytics

A method and system process data in a distributed computing system to survive an electromagnetic pulse (EMP) attack. The computing system has proximal select content (SC) data stores and geographically distributed distal data stores, all with respective access controls. The data input or put through the computing system is processed to obtain the SC and other associated content. The process then extracts and stores such content in the proximal SC data stores and geographically distributed distal SC data stores. The system further processes data to geographically distribute the data with data processes including: copy, extract, archive, distribute, and a copy-extract-archive and distribute process with a sequential and supplemental data destruction process. In this manner, the data input is distributed or spread out over the geographically distributed distal SC data stores. The system and method permit reconstruction of the processed data only in the presence of a respective access control.

Owner:DIGITAL DOORS

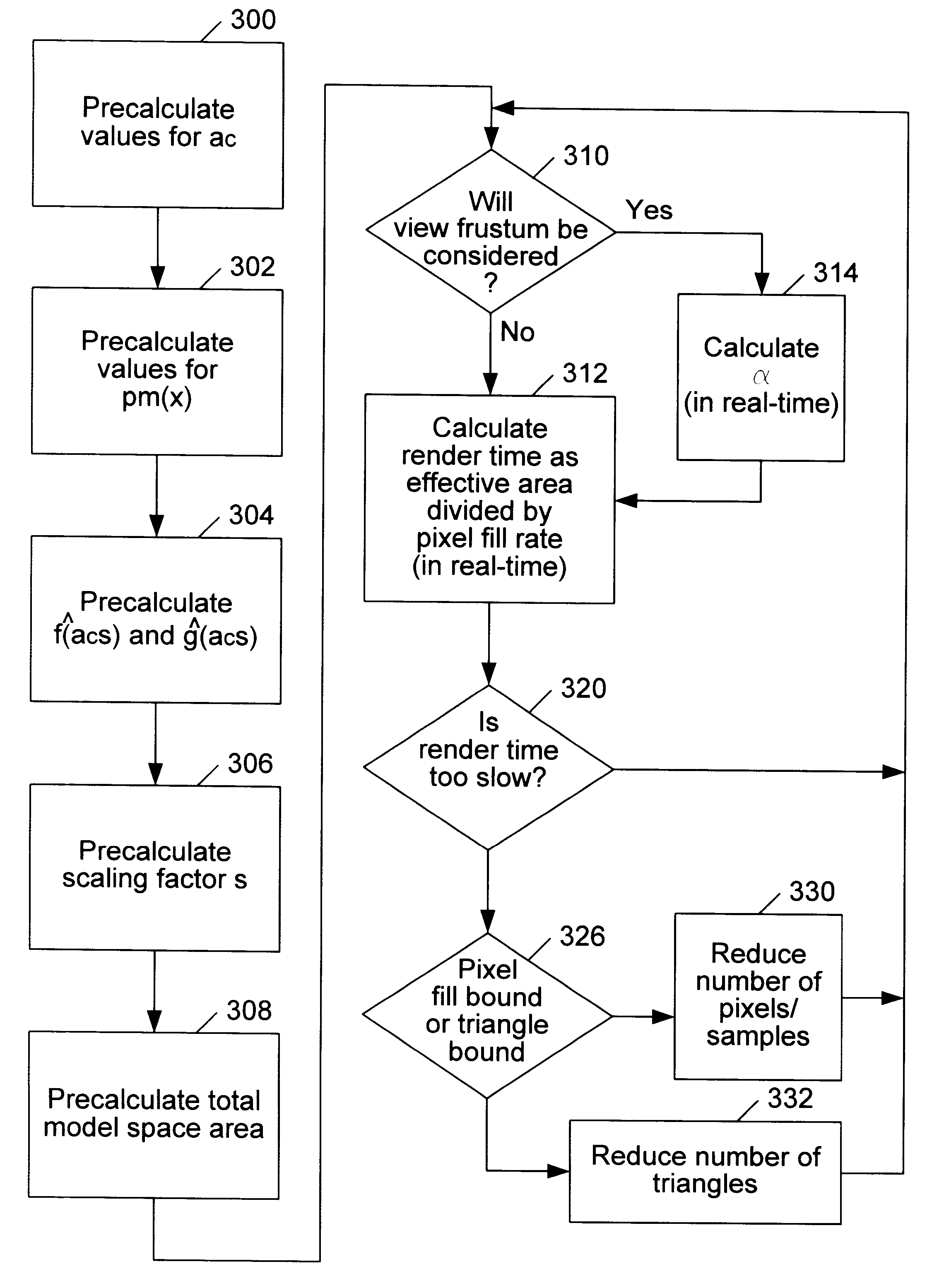

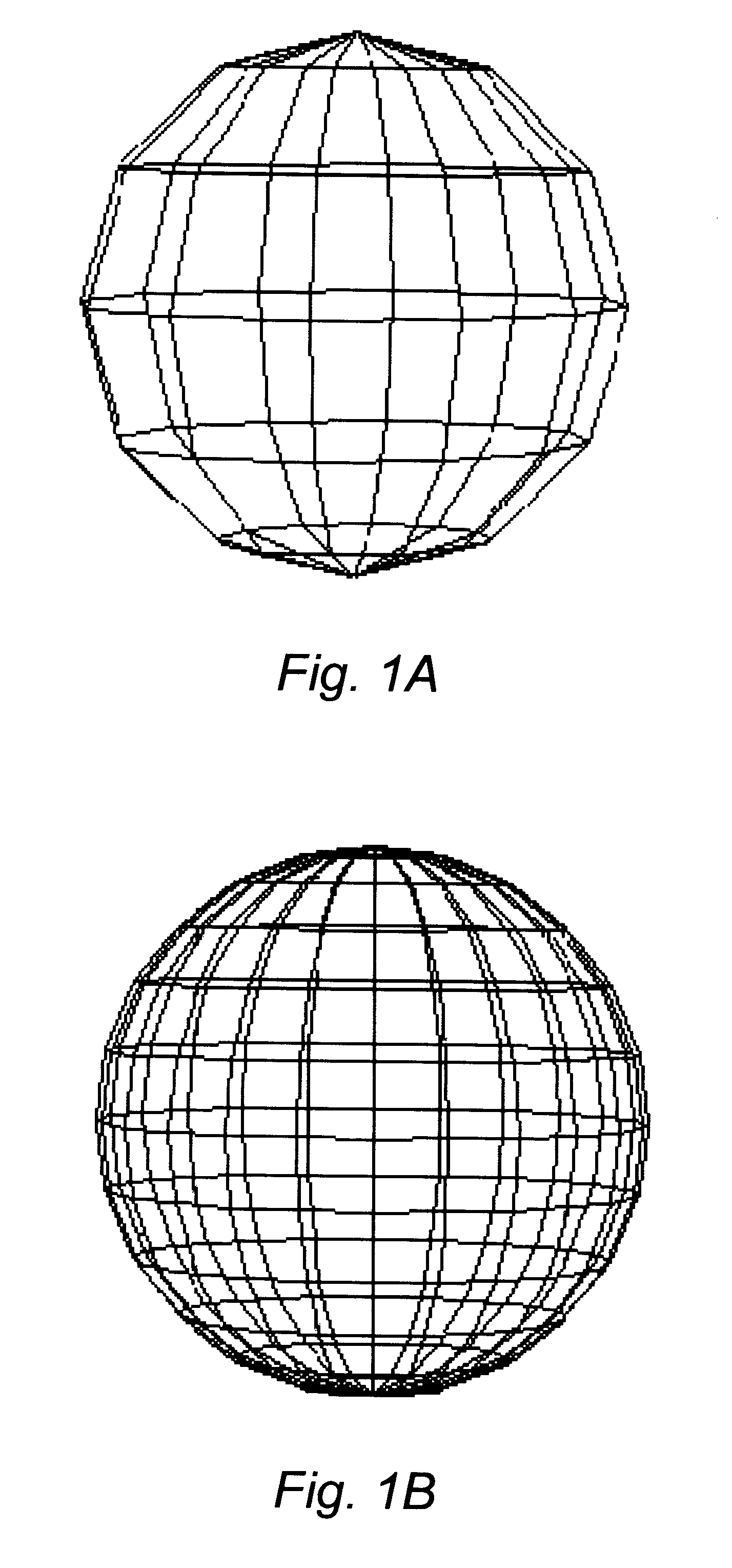

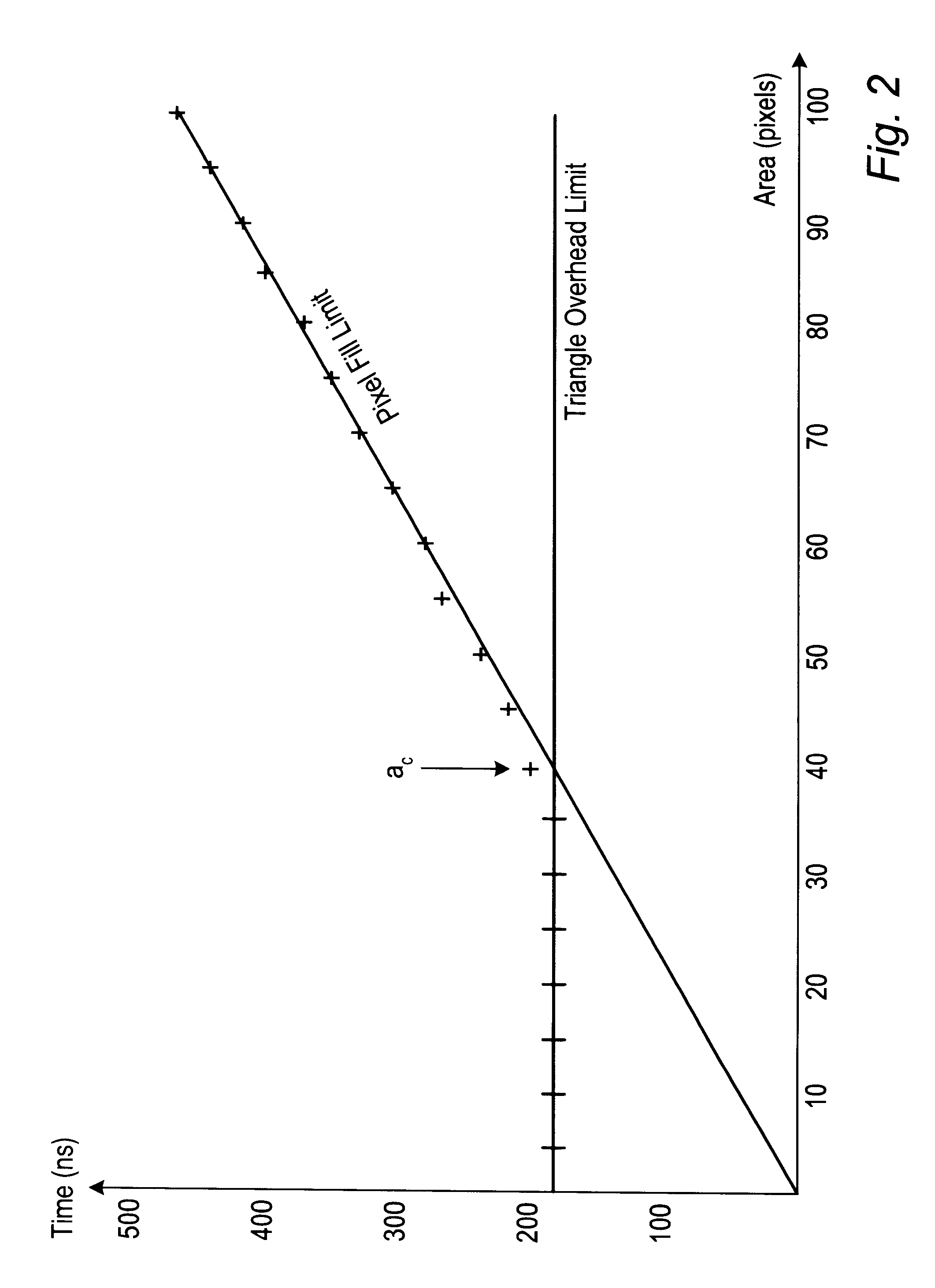

Estimating graphics system performance for polygons

Status: Inactive | Patent: US6313838B1 | Tags: Cathode-ray tube indicators; Image data processing details; Computational science; Graphic system

A method for estimating rendering times for three-dimensional graphics objects and scenes is disclosed. The rendering times may be estimated in real-time, thus allowing a graphics system to alter rendering parameters (such as level of detail and number of samples per pixel) to maintain a predetermined minimum frame rate. Part of the estimation may be performed offline to reduce the time required to perform the final estimation. The method may also detect whether the objects being rendered are pixel fill limited or polygon overhead limited. This information may allow the graphics system to make more intelligent choices as to which rendering parameters should be changed to achieve the desired minimum frame rate. A software program configured to efficiently estimate rendering times is also disclosed.

Owner:ORACLE INT CORP
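
As a rough illustration of the estimation idea, the sketch below models render time as the larger of a per-polygon overhead term and a per-pixel fill term, then lowers the sample count until the estimate fits a frame budget. The max-of-two-terms cost model, the function names, and the nanosecond cost figures are assumptions for illustration; the abstract does not give the patent's actual formula.

```python
def estimate_render_time(num_polygons, pixel_area, per_polygon_ns, per_pixel_ns):
    """Return (estimated_ns, limiting_factor) under a hypothetical two-term model."""
    overhead_ns = num_polygons * per_polygon_ns
    fill_ns = pixel_area * per_pixel_ns
    limit = "pixel fill" if fill_ns > overhead_ns else "polygon overhead"
    return max(overhead_ns, fill_ns), limit


def choose_samples_per_pixel(num_polygons, pixel_area, per_polygon_ns,
                             per_sample_ns, frame_budget_ns,
                             options=(16, 8, 4, 2, 1)):
    """Pick the highest sample count whose estimated time fits the frame budget."""
    for samples in options:
        estimate, _ = estimate_render_time(
            num_polygons, pixel_area, per_polygon_ns, per_sample_ns * samples)
        if estimate <= frame_budget_ns:
            return samples
    return options[-1]  # Nothing fits; fall back to the cheapest setting.


print(estimate_render_time(1000, 1_000_000, 2000, 10))
print(choose_samples_per_pixel(1000, 1_000_000, 2000, 4, 16_666_667))
```

Knowing which term dominates tells the system which parameter is worth changing: reducing samples helps a fill-limited object, while lowering the level of detail helps one that is overhead limited.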

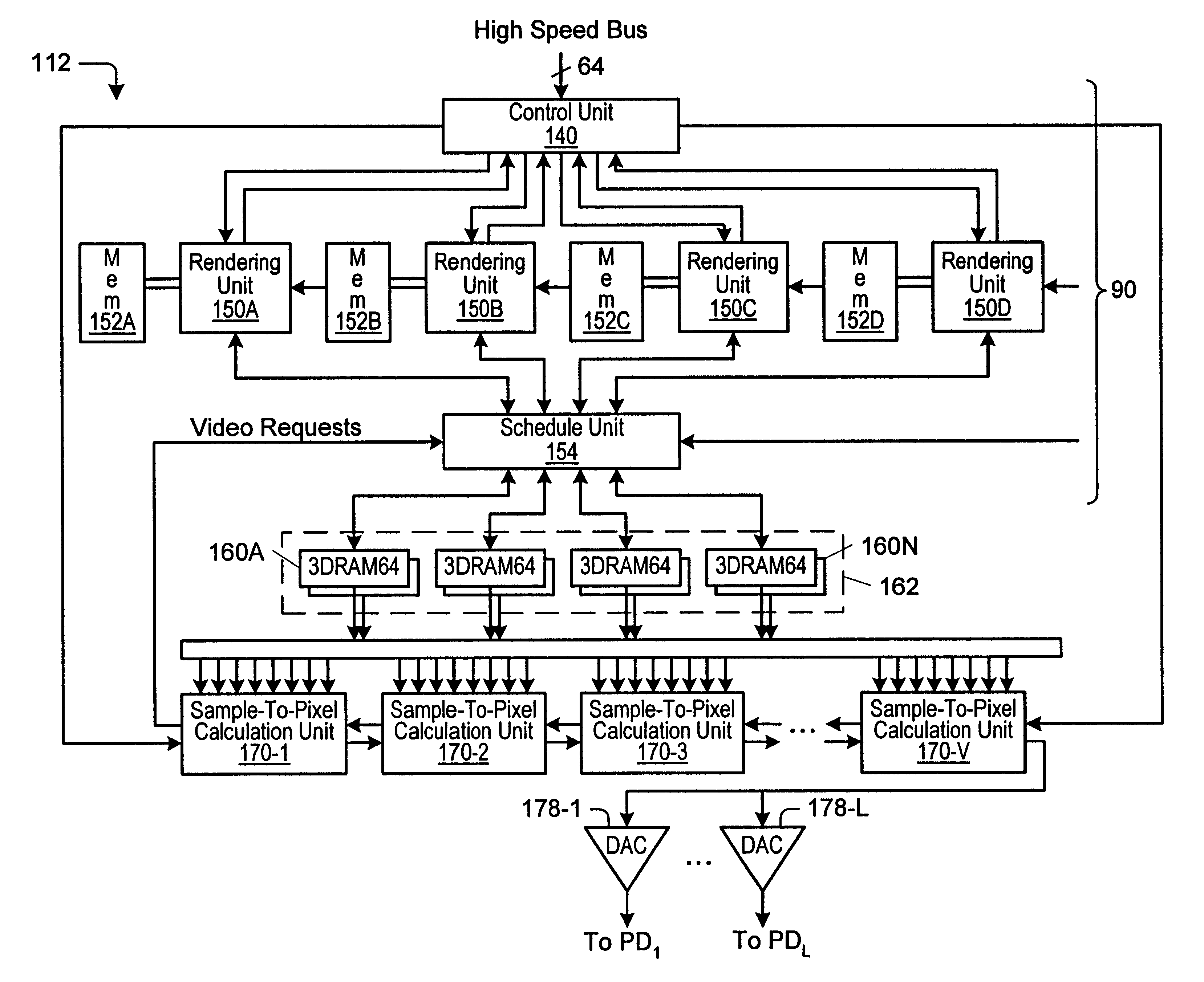

Graphics system configured to perform parallel sample to pixel calculation

A graphics system is configured to utilize a sample buffer and a plurality of parallel sample-to-pixel calculation units, wherein the sample-to-pixel calculation units are configured to access different portions of the sample buffer in parallel. The graphics system may include a graphics processor, a sample buffer, and a plurality of sample-to-pixel calculation units. The graphics processor is configured to receive a set of three-dimensional graphics data and render a plurality of samples based on the graphics data. The sample buffer is configured to store the plurality of samples for the sample-to-pixel calculation units, which are configured to receive and filter samples from the sample buffer to create output pixels. Each of the sample-to-pixel calculation units is configured to generate pixels corresponding to a different region of the image. The region may be a vertical or horizontal stripe of the image, or a rectangular portion of the image. Each region may overlap the other regions of the image to prevent visual aberrations.

Owner:ORACLE INT CORP
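
The region split described above can be sketched as follows for the vertical-stripe case. Each calculation unit gets a column range that is widened by a small overlap so that a filter near a stripe edge can still read neighbouring samples. The function name `vertical_stripes` and the fixed overlap width are assumptions for illustration.

```python
def vertical_stripes(image_width, num_units, overlap):
    """Return one (start, end) column range per unit, end exclusive.

    Hypothetical partitioning: equal-width stripes, each widened by
    `overlap` columns on both sides and clamped to the image.
    """
    base = image_width // num_units
    stripes = []
    for i in range(num_units):
        start = i * base
        # The last stripe absorbs any remainder columns.
        end = image_width if i == num_units - 1 else (i + 1) * base
        stripes.append((max(0, start - overlap), min(image_width, end + overlap)))
    return stripes


print(vertical_stripes(640, 4, 2))
```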

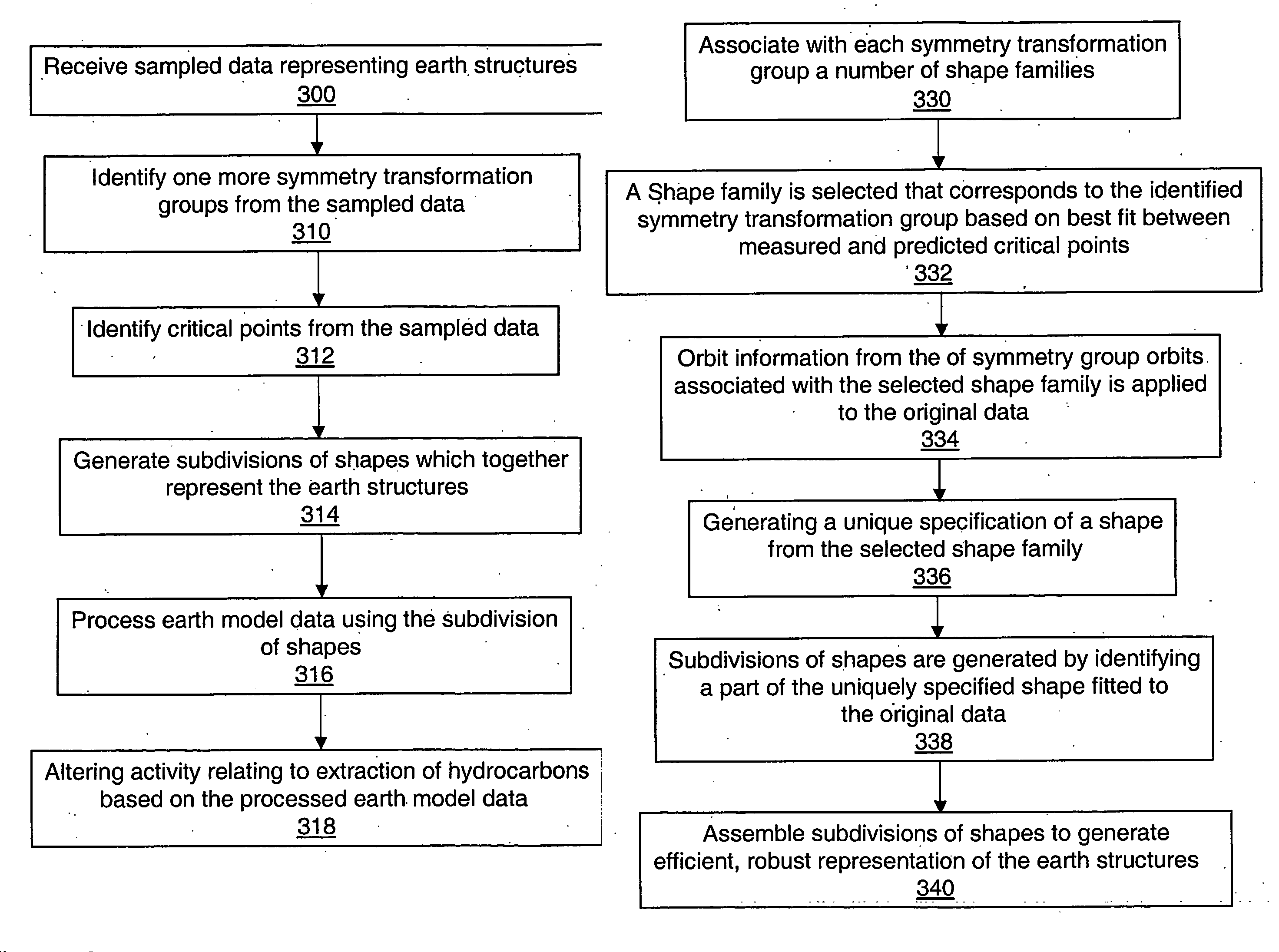

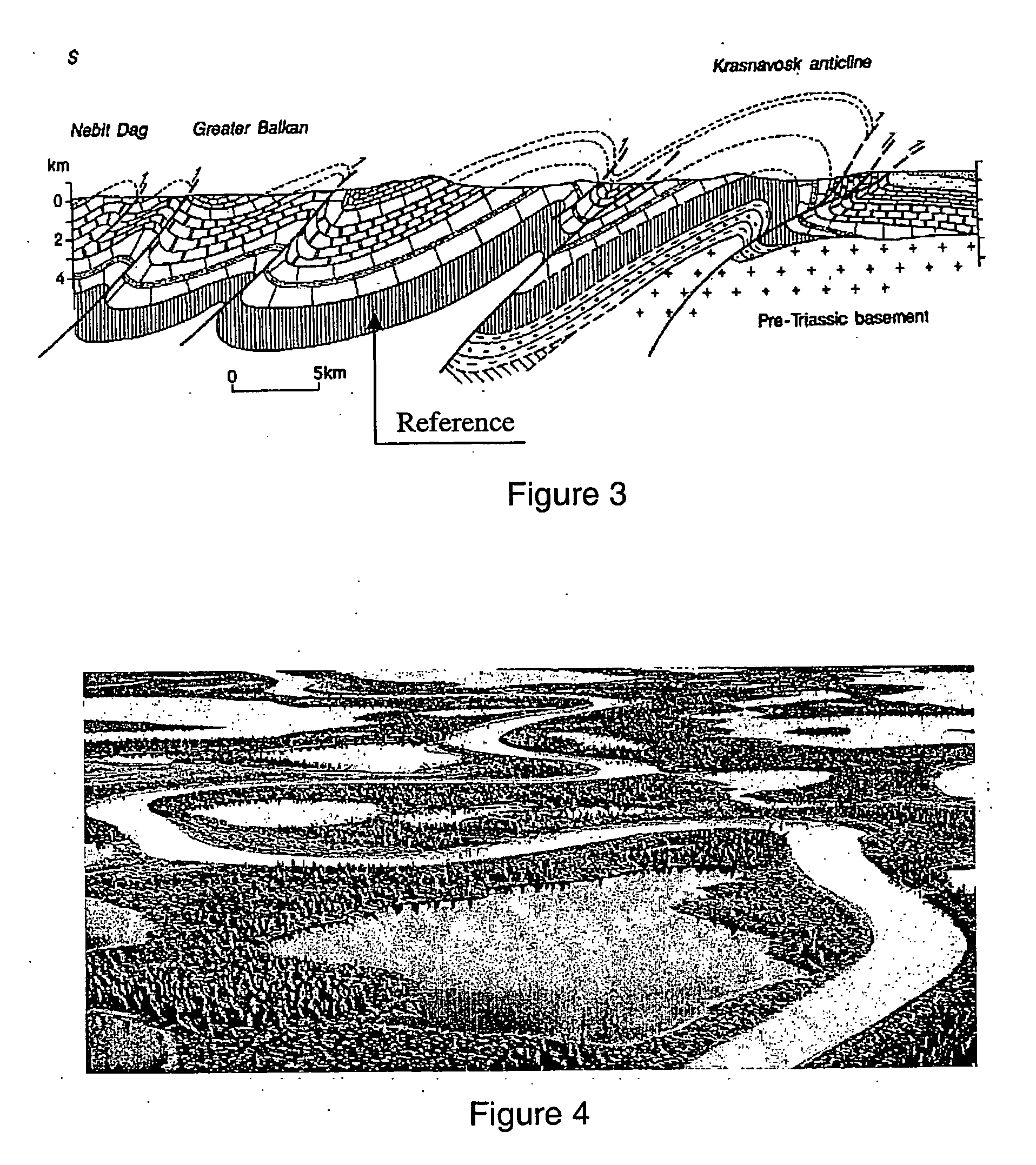

System and method for representing and processing and modeling subterranean surfaces

Status: Inactive | Patent: US20060235666A1 | Tags: Enhance memory; Improve efficiency; Geological measurements; Analogue processes for specific applications; Crucial point; Hydrocotyle bowlesioides

Methods and systems are disclosed for processing data used for hydrocarbon extraction from the earth. Symmetry transformation groups are identified from sampled earth structure data. A set of critical points is identified from the sampled data. Using the symmetry groups and the critical points, a plurality of subdivisions of shapes is generated, which together represent the original earth structures. The symmetry groups correspond to a plurality of shape families, each of which includes a set of predicted critical points. The subdivisions are preferably generated such that a shape family is selected according to a best fit between the critical points from the sampled data and the predicted critical points of the selected shape family.

Owner:SCHLUMBERGER TECH CORP

Load balancing

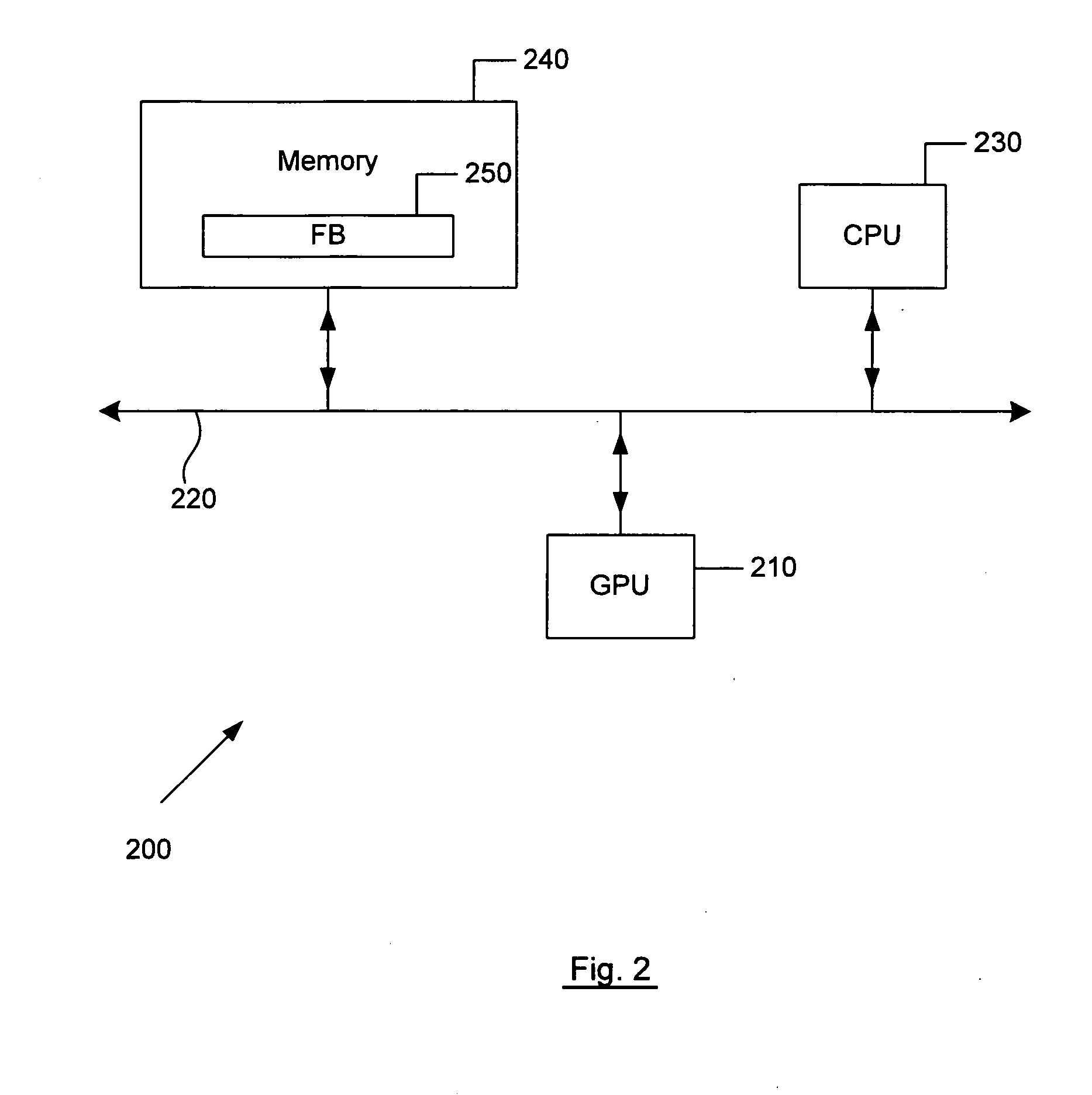

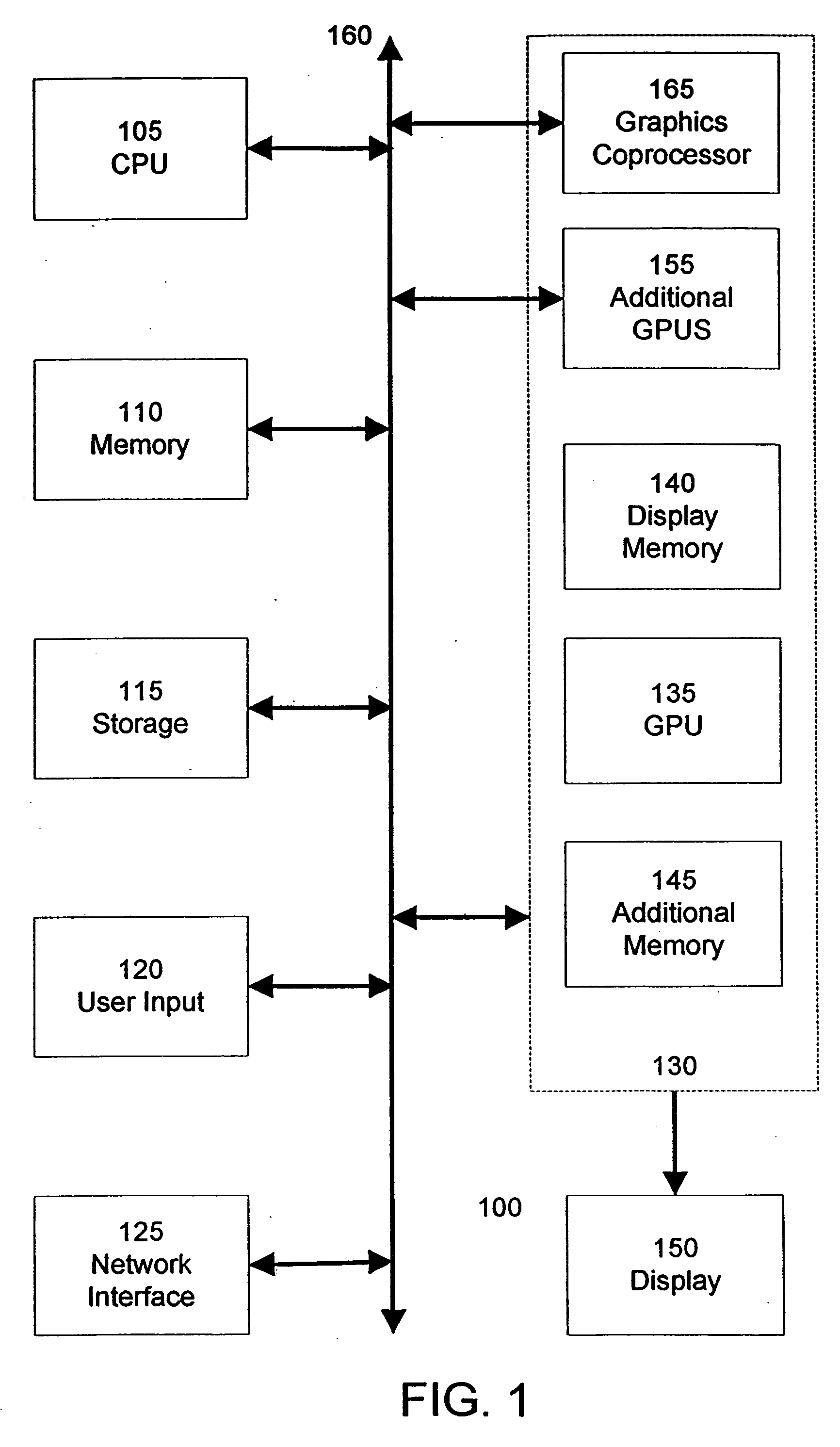

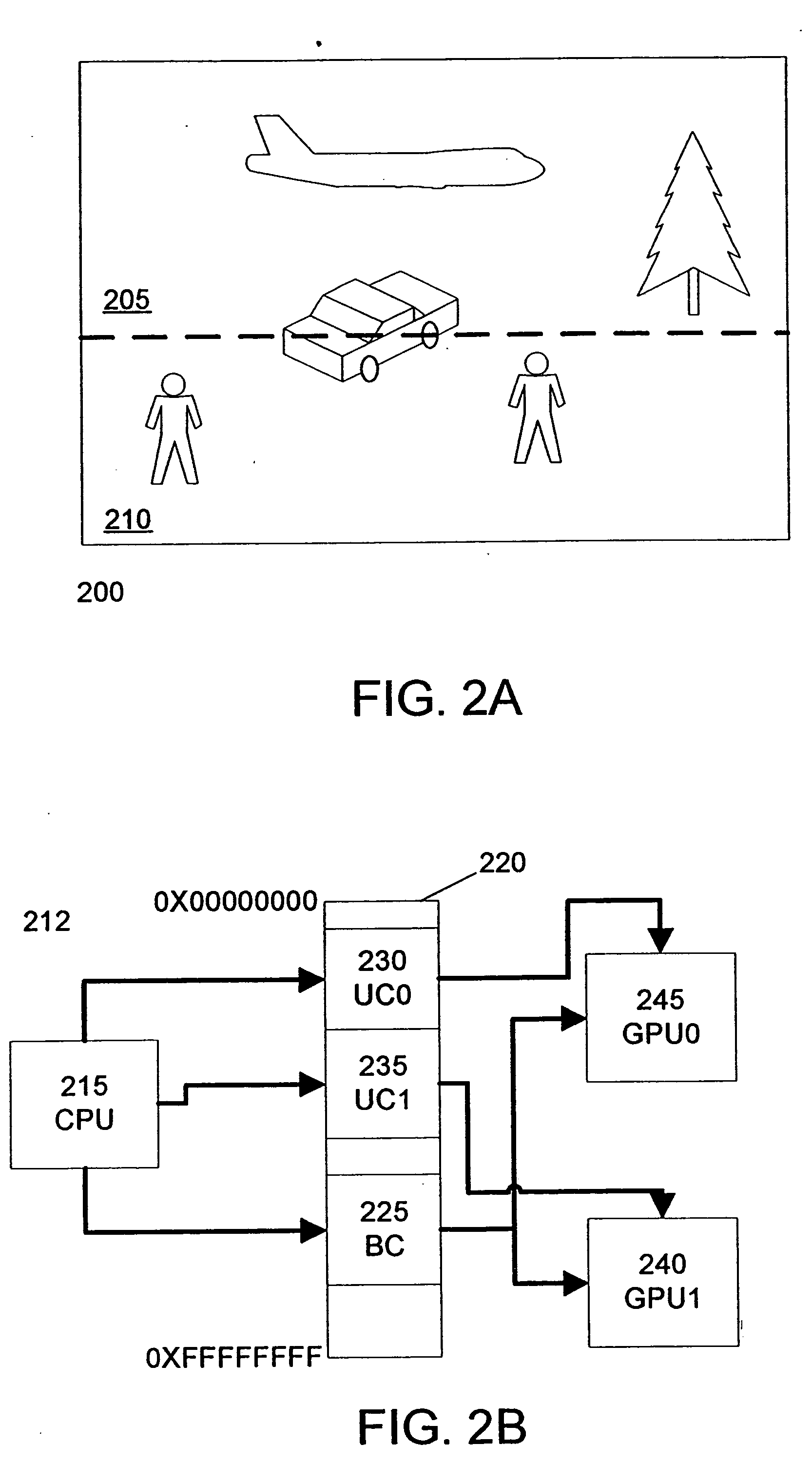

Status: Active | Patent: US20060059494A1 | Tags: Multiprogramming arrangements; Multiple digital computer combinations; Computational science; Graphics

Embodiments of methods, apparatuses, devices, and / or systems for load balancing two processors, such as for graphics and / or video processing, for example, are described.

Owner:NVIDIA CORP

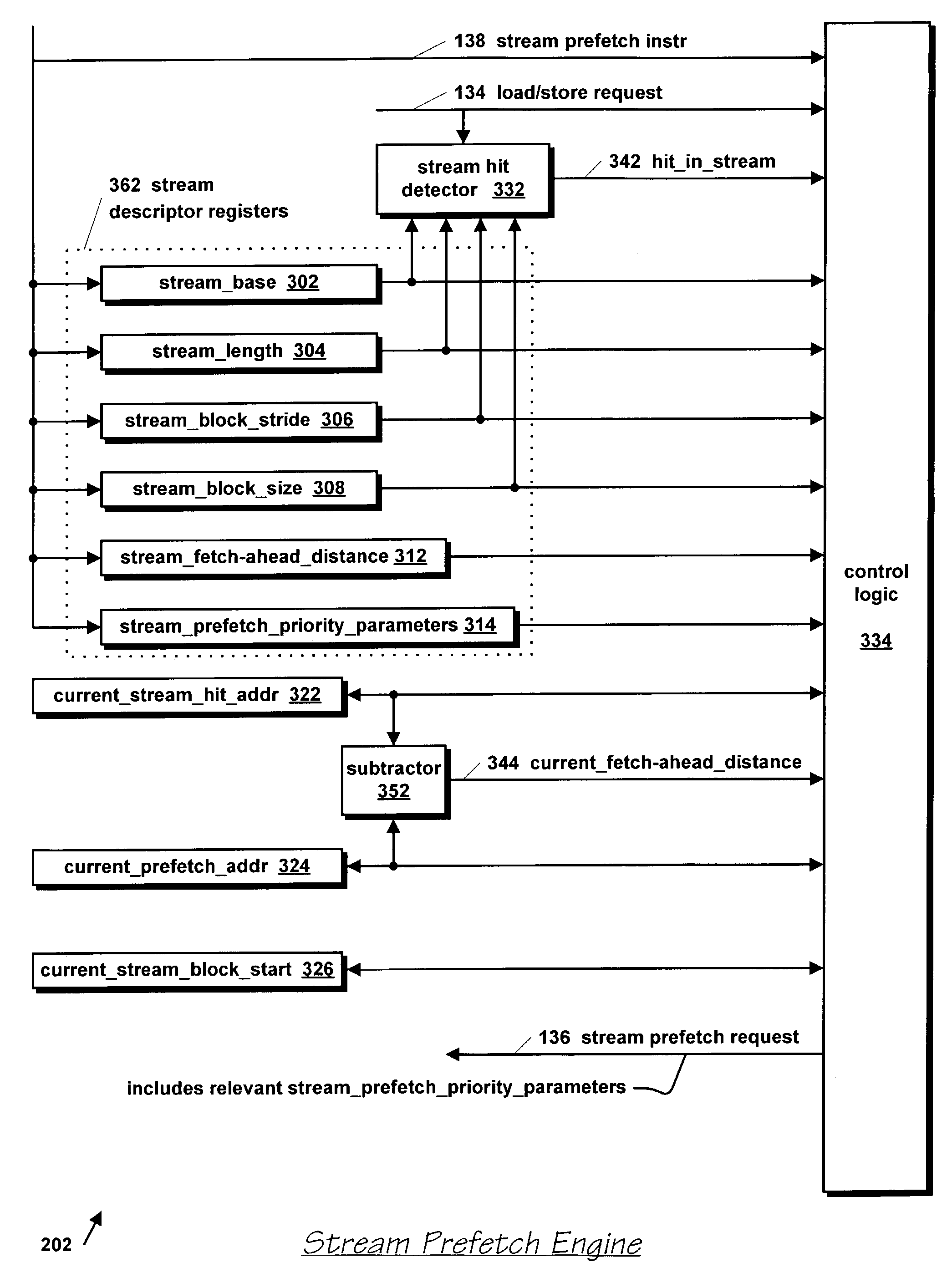

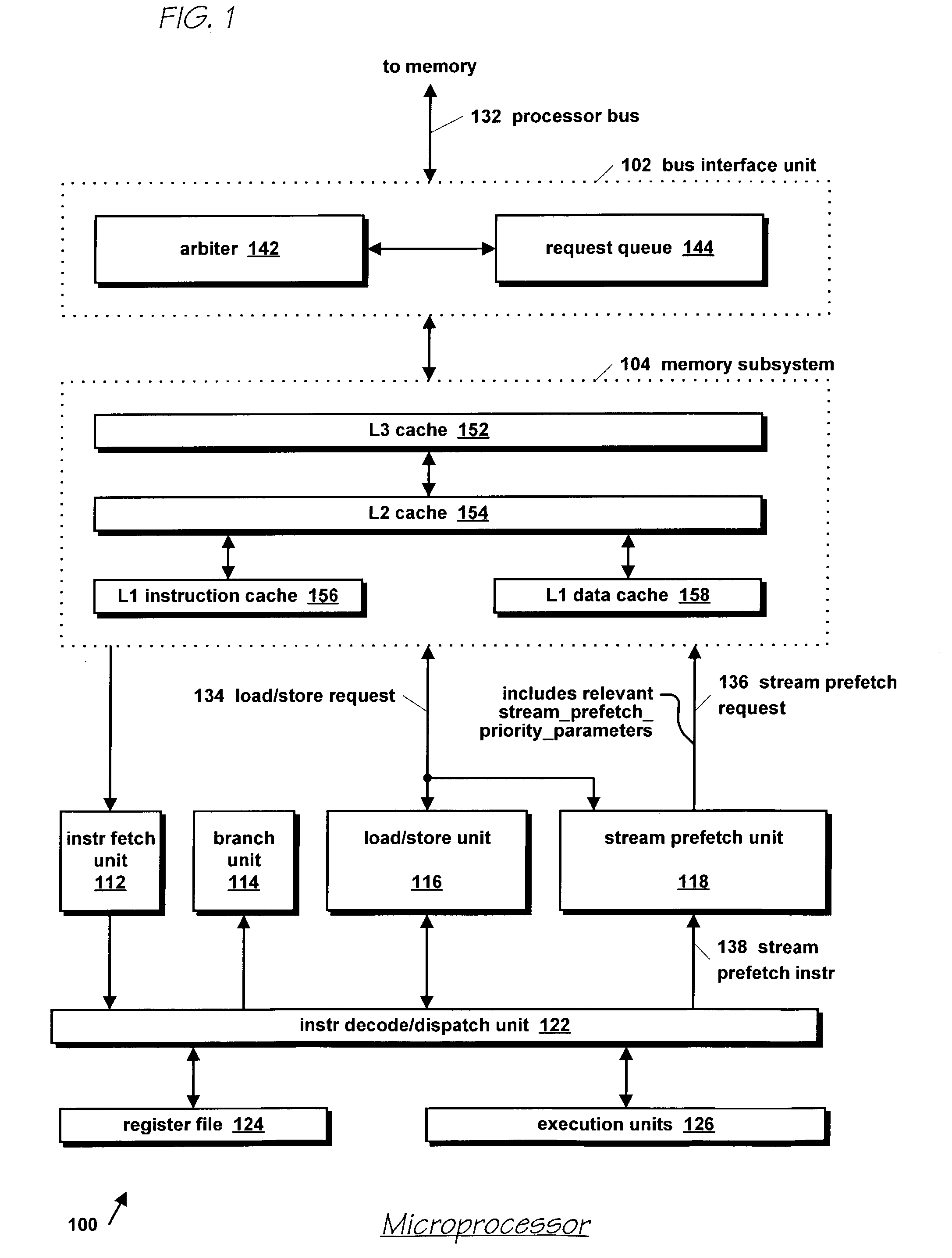

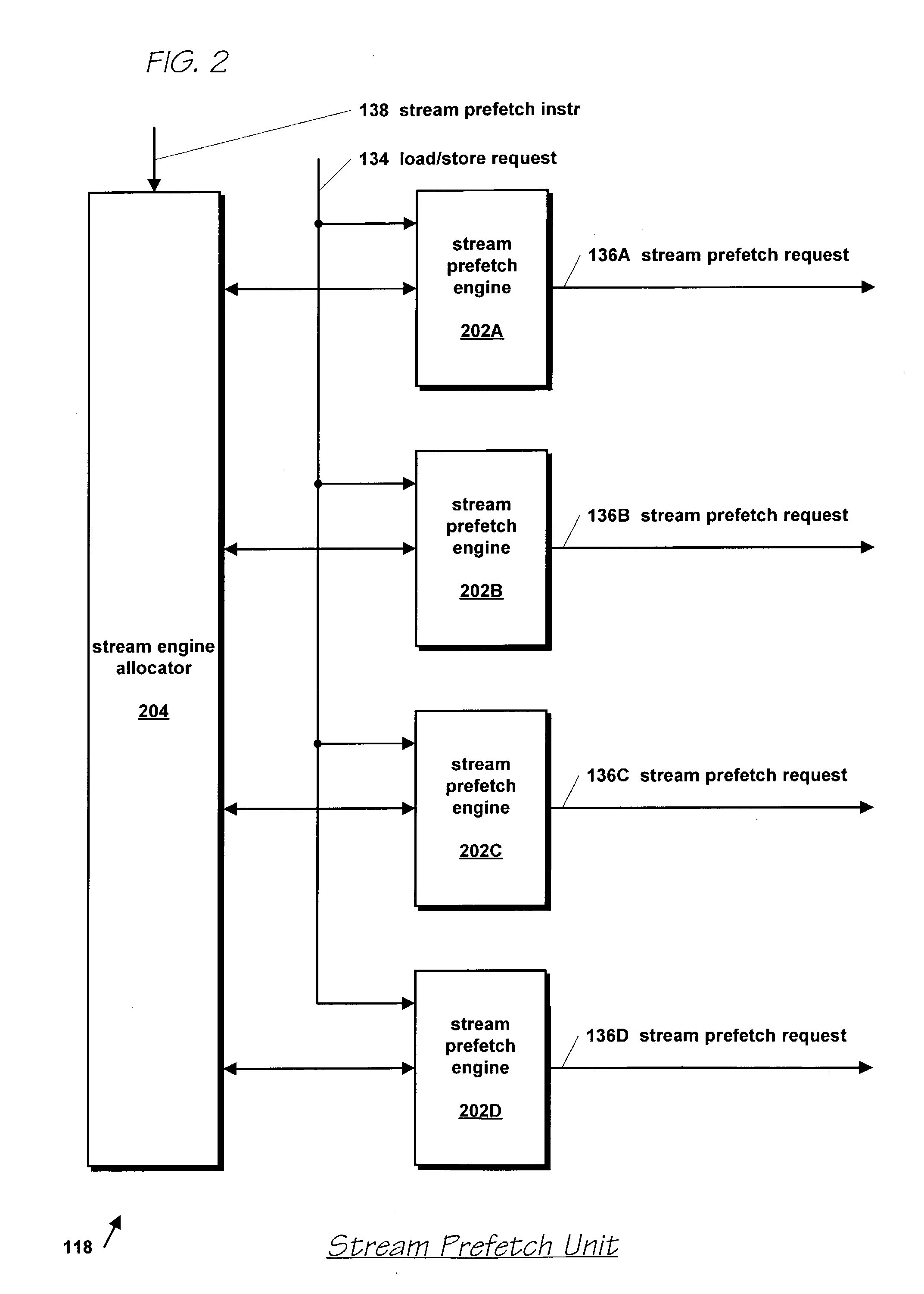

Microprocessor with improved data stream prefetching

Status: Active | Patent: US7177985B1 | Tags: Improving data stream prefetching; Memory architecture accessing/allocation; Digital computer details; Cache hierarchy; Page fault

A microprocessor with multiple stream prefetch engines each executing a stream prefetch instruction to prefetch a complex data stream specified by the instruction in a manner synchronized with program execution of loads from the stream is provided. The stream prefetch engine stays at least a fetch-ahead distance (specified in the instruction) ahead of the program loads, which may randomly access the stream. The instruction specifies a level in the cache hierarchy to prefetch into, a locality indicator to specify the urgency and ephemerality of the stream, a stream prefetch priority, a TLB miss policy, a page fault miss policy, a protection violation policy, and a hysteresis value, specifying a minimum number of bytes to prefetch when the stream prefetch engine resumes prefetching. The memory subsystem includes a separate TLB for stream prefetches; or a joint TLB backing the stream prefetch TLB and load / store TLB; or a separate TLB for each prefetch engine.

Owner:ARM FINANCE OVERSEAS LTD

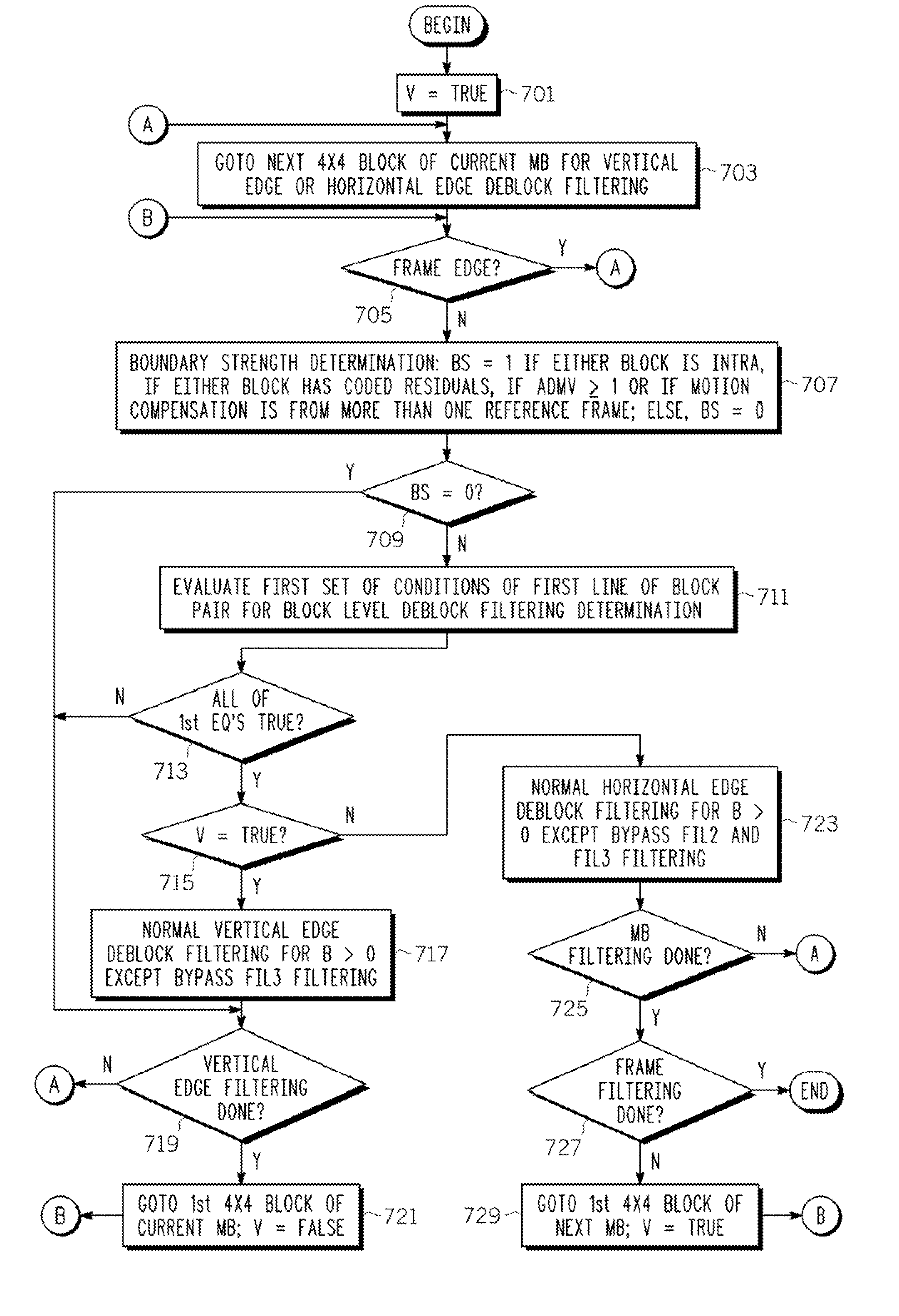

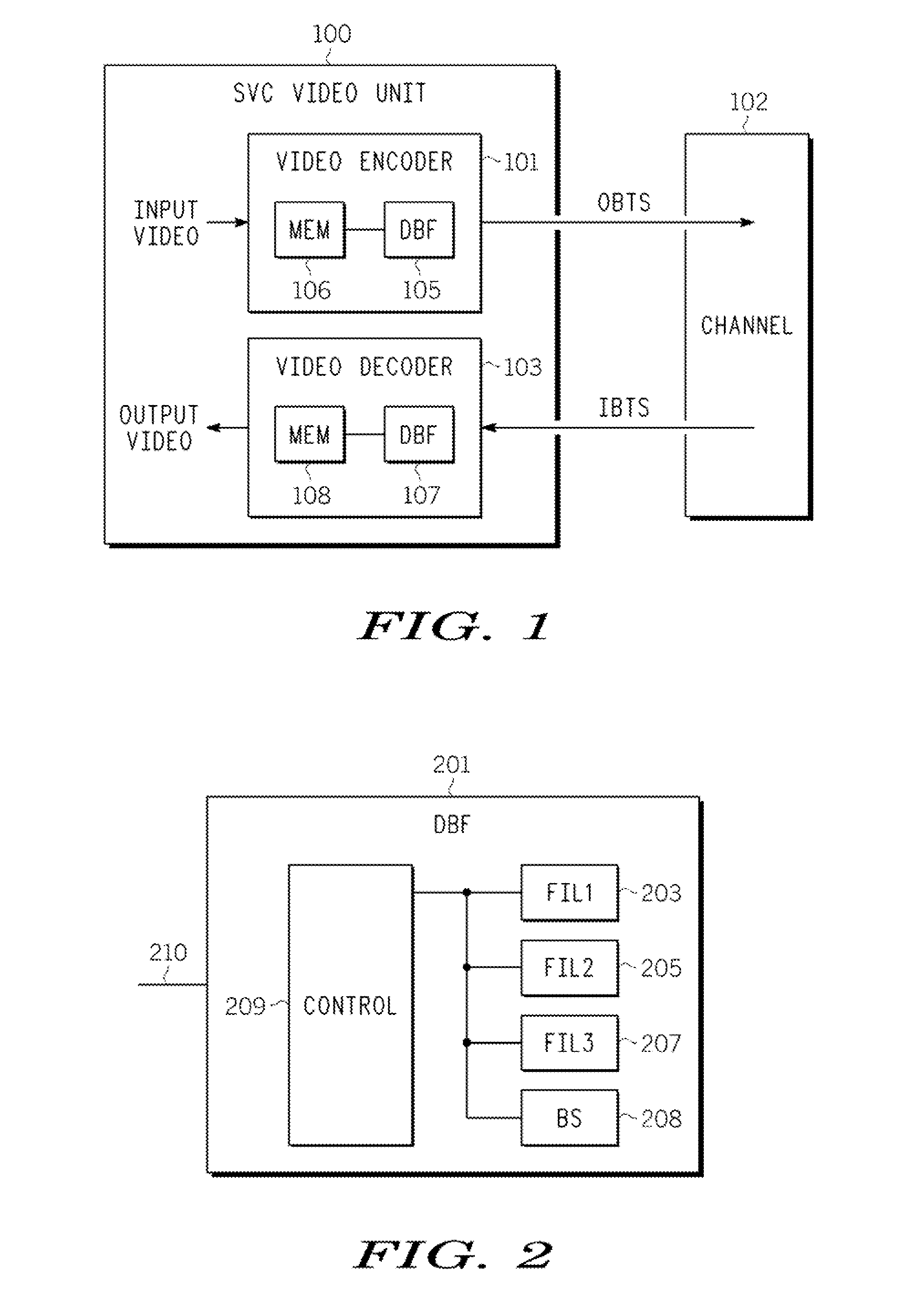

Simplified deblock filtering for reduced memory access and computational complexity

Status: Inactive | Patent: US20080240252A1 | Tags: Color television with pulse code modulation; Color television with bandwidth reduction; Computational science; Boundary strength

A method of simplifying deblock filtering of video blocks of an enhanced layer of scalable video information is disclosed which includes selecting an adjacent pair of video blocks, determining whether boundary strength of the video blocks is a first value, evaluating first conditions using component values of a first component line if the boundary strength is not the first value, and bypassing deblock filtering between the video blocks if the boundary strength is the first value or if any of the first conditions is false. The method may include bypassing evaluating conditions and deblock filtering associated with the maximum boundary strength. The method may include bypassing evaluating second conditions and bypassing corresponding deblock filtering if the intermediate edge is a horizontal edge. The method may include bypassing less efficient memory reads associated with component values used for evaluating the second conditions.

Owner:NORTH STAR INNOVATIONS
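
The bypass decision in this abstract can be illustrated with a small sketch. The edge is skipped when the boundary strength equals a designated value, or when any threshold test on one line of samples fails. The specific tests below follow the familiar H.264 edge-filter conditions and are borrowed for illustration; the abstract does not spell out the patent's own "first conditions". The names `should_filter`, `alpha`, and `beta` are likewise assumptions.

```python
def should_filter(boundary_strength, p1, p0, q0, q1, alpha, beta, skip_value=0):
    """Decide whether to deblock the edge between two adjacent blocks.

    p1, p0 are samples on one side of the edge and q0, q1 on the other,
    taken from a single component line. Thresholds are hypothetical inputs.
    """
    if boundary_strength == skip_value:
        return False  # Bypass without reading or testing any samples.
    return (abs(p0 - q0) < alpha
            and abs(p1 - p0) < beta
            and abs(q1 - q0) < beta)


print(should_filter(0, 101, 100, 103, 104, alpha=5, beta=3))
print(should_filter(2, 101, 100, 103, 104, alpha=5, beta=3))
print(should_filter(2, 101, 100, 120, 121, alpha=5, beta=3))
```

Checking the boundary strength first is what saves memory traffic: when it matches the skip value, the sample values never have to be fetched.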

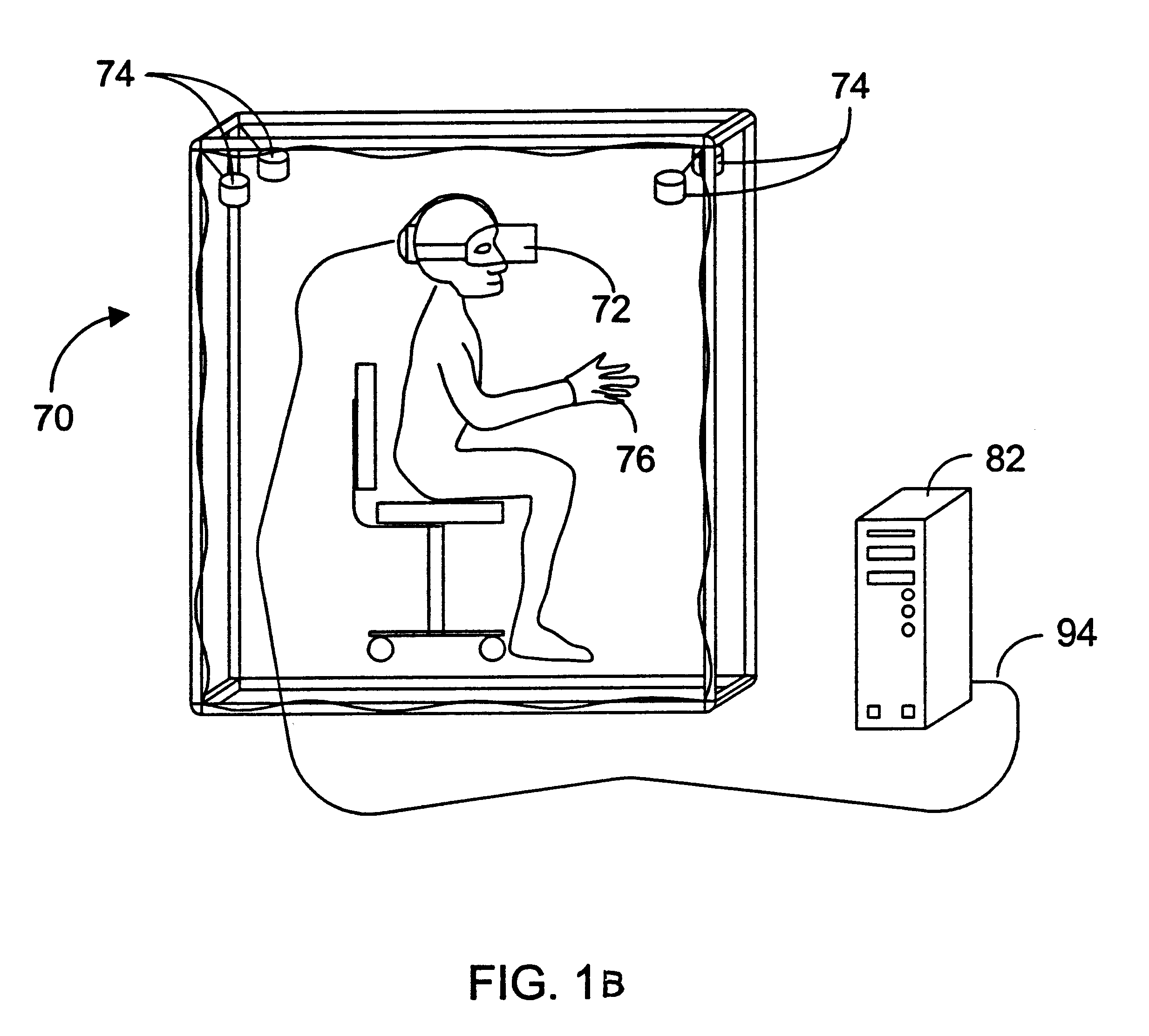

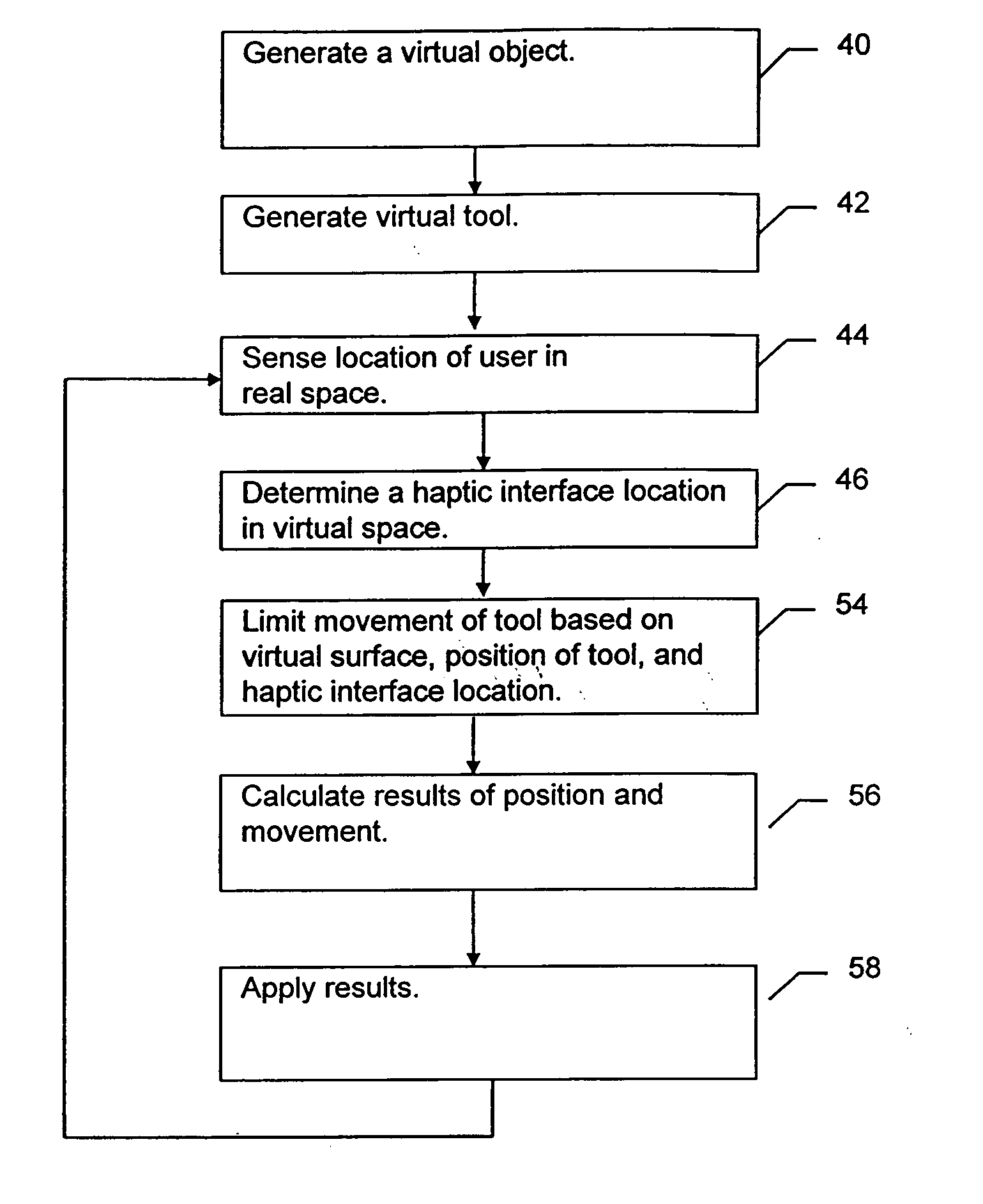

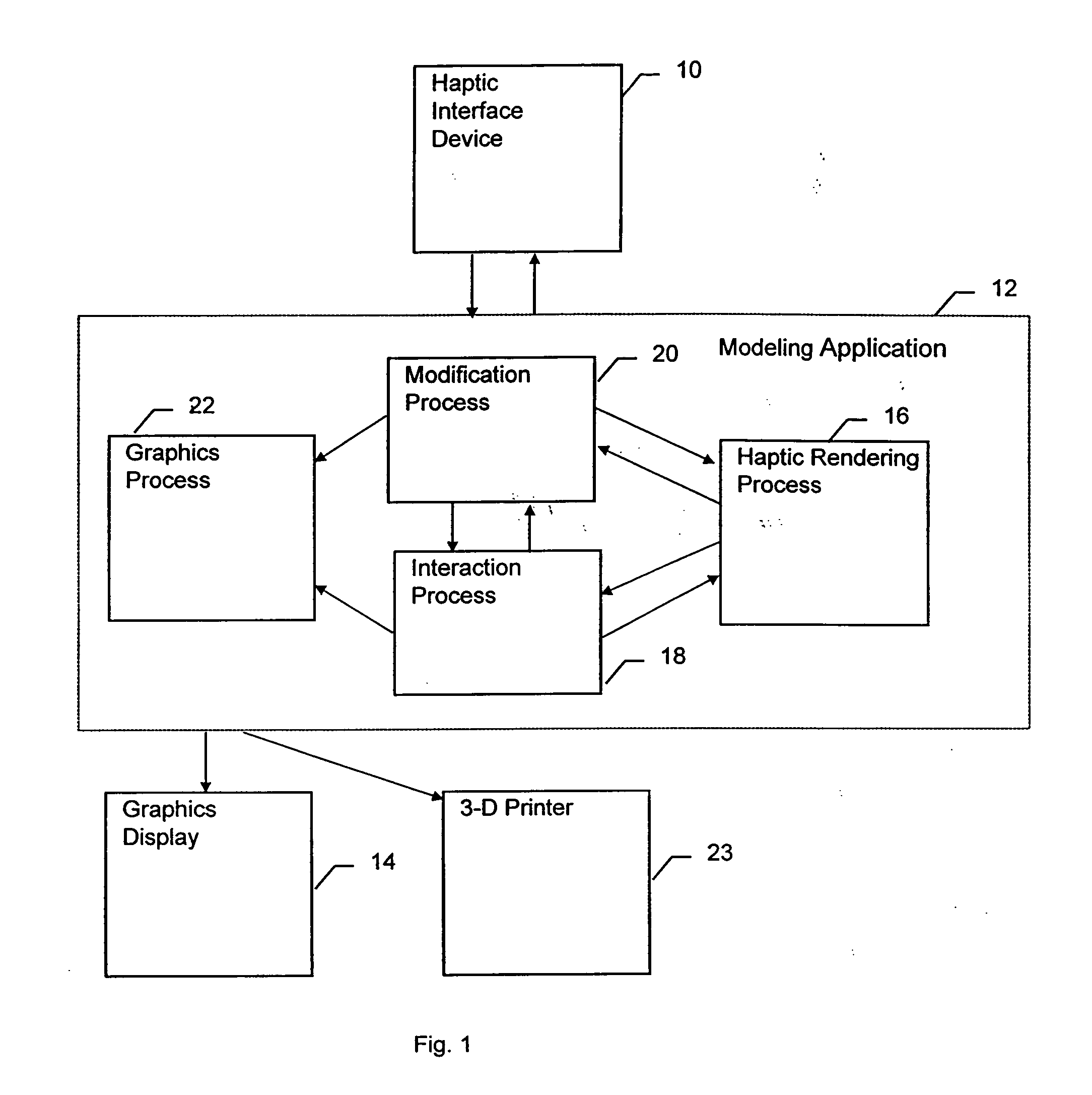

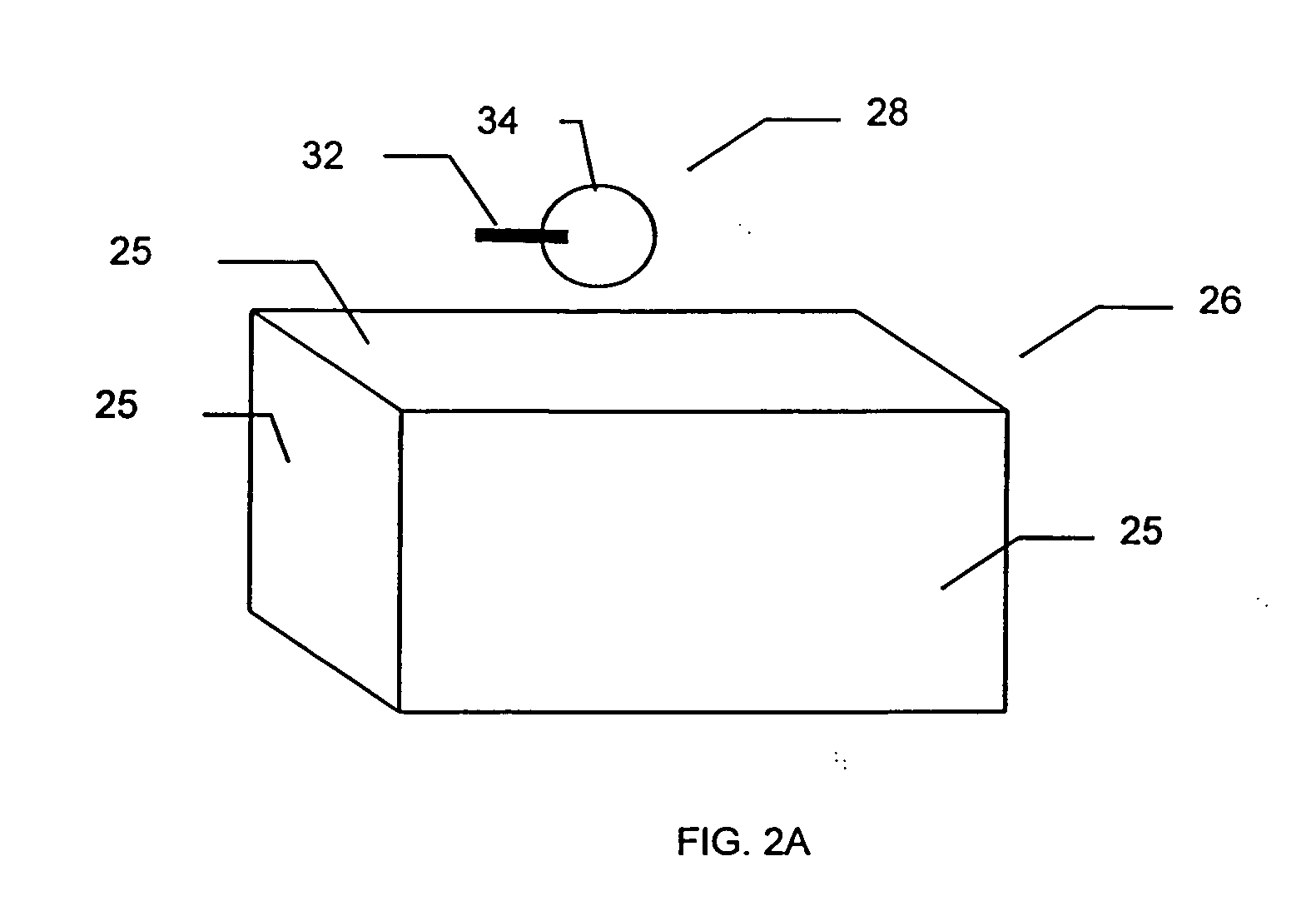

Systems and methods for creating virtual objects in a sketch mode in a haptic virtual reality environment

Status: Inactive | Patent: US20050062738A1 | Tags: Ease and expressiveness; Resolve ambiguity; Input/output for user-computer interaction; Additive manufacturing apparatus; Mirror image; Virtual reality

Owner:3D SYST INC

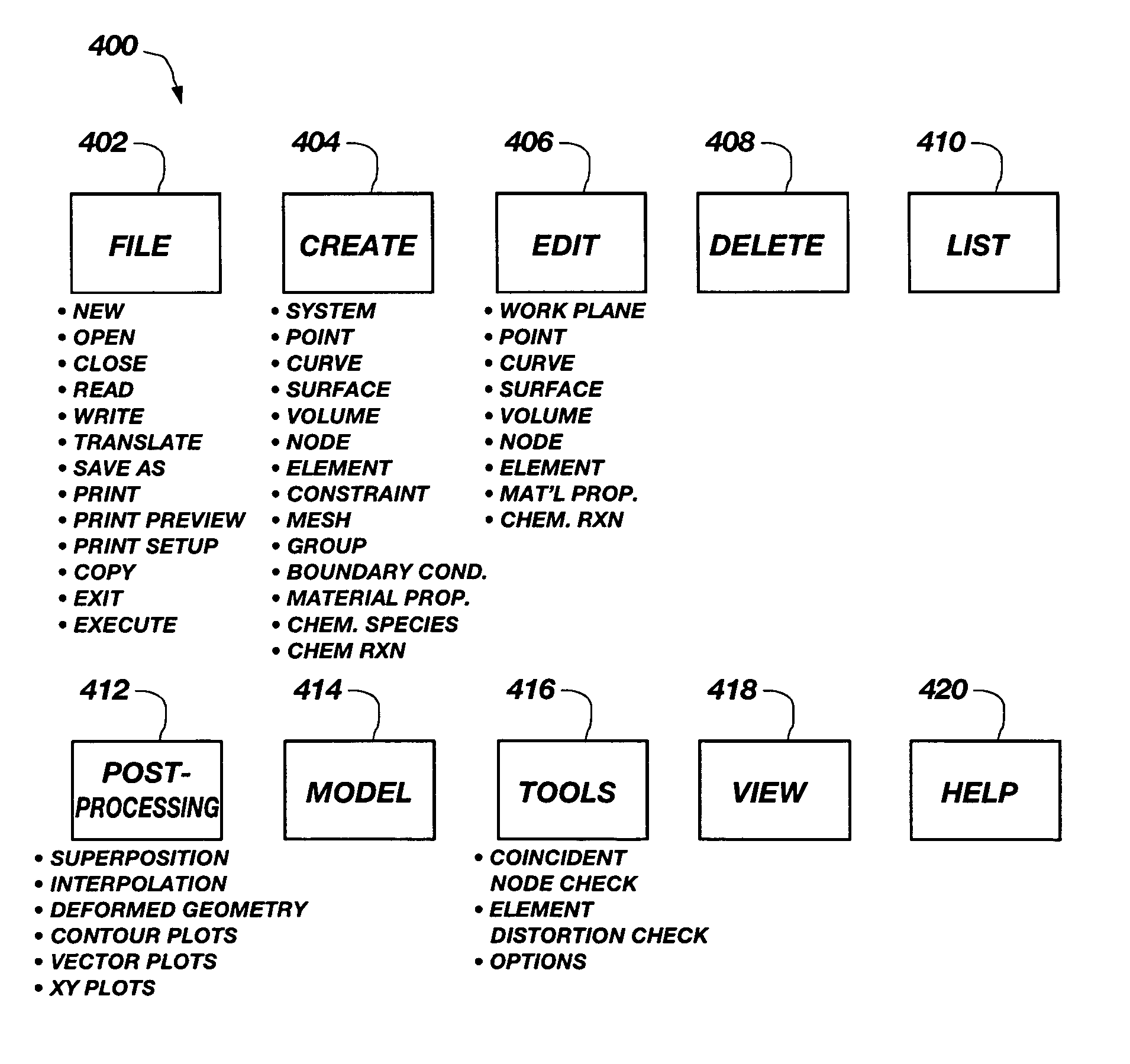

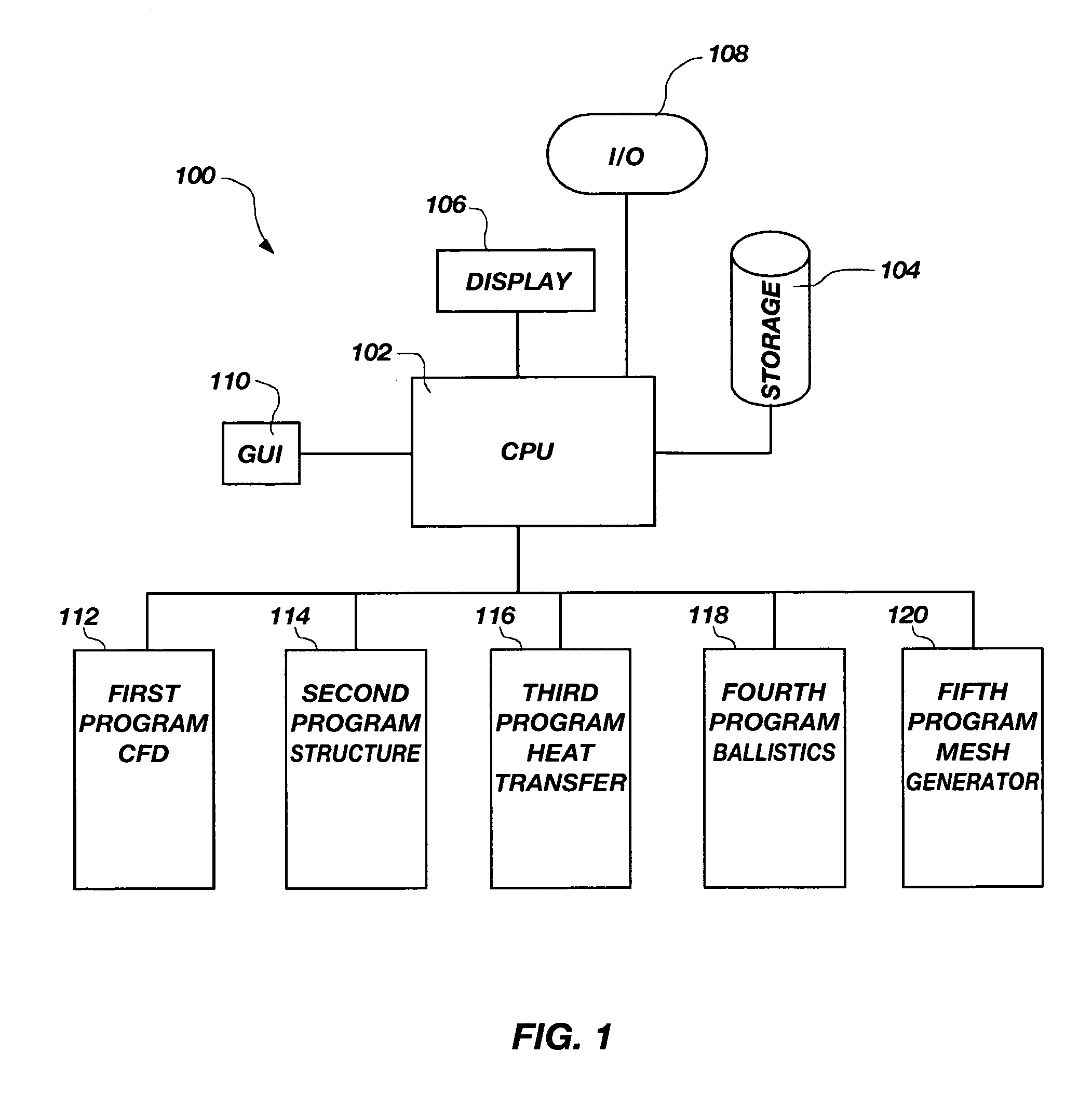

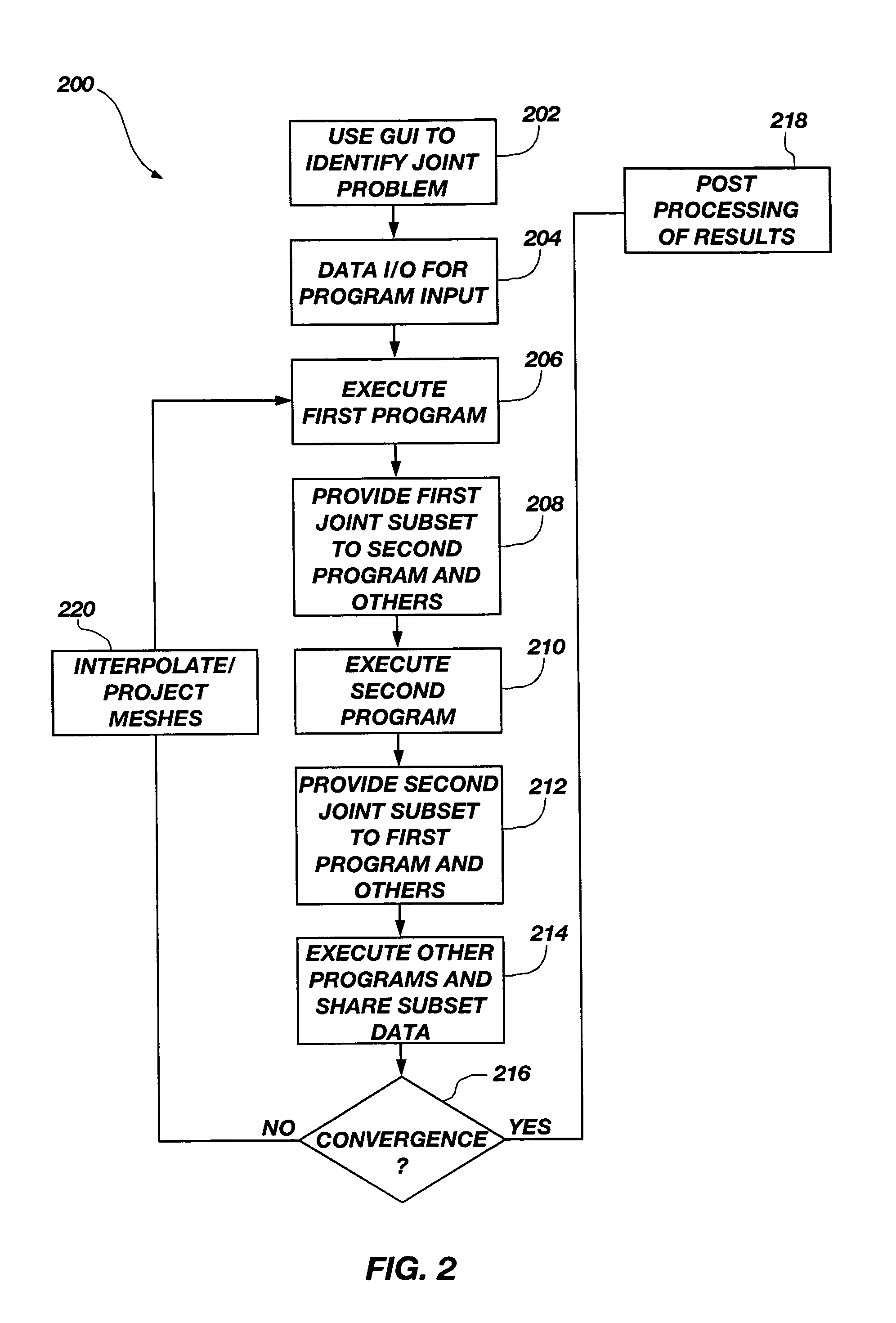

System for performing coupled finite analysis

Status: Active | Patent: US7127380B1 | Tags: Stay flexible; Accelerated settlement; Computation using non-denominational number representation; Design optimisation/simulation; Computational science; Graphics

A graphical user interface, together with a comparable scripting interface, couples a plurality of finite element, finite volume, or finite difference analytical programs and permits iterative convergence of multiple programs through one set of predefined commands. The user is permitted to select the joint problem for solution by choosing program selections. Data linkages that couple the program are predefined by an expert system administrator to permit less skilled modelers access to a comprehensive and multifaceted solution that would not be possible for the less skilled modelers to complete absent the graphical user interface.

Owner:NORTHROP GRUMMAN SYST CORP

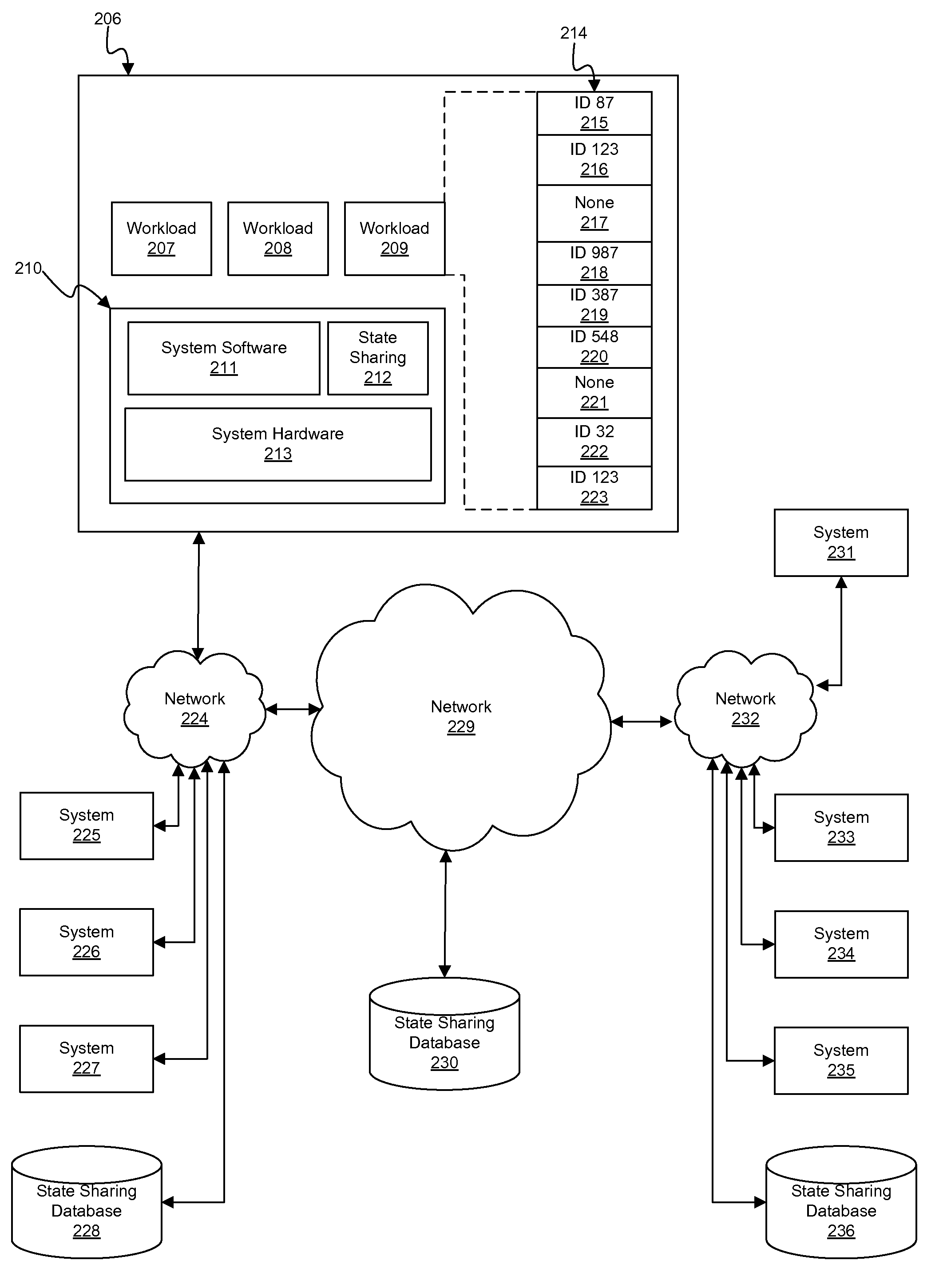

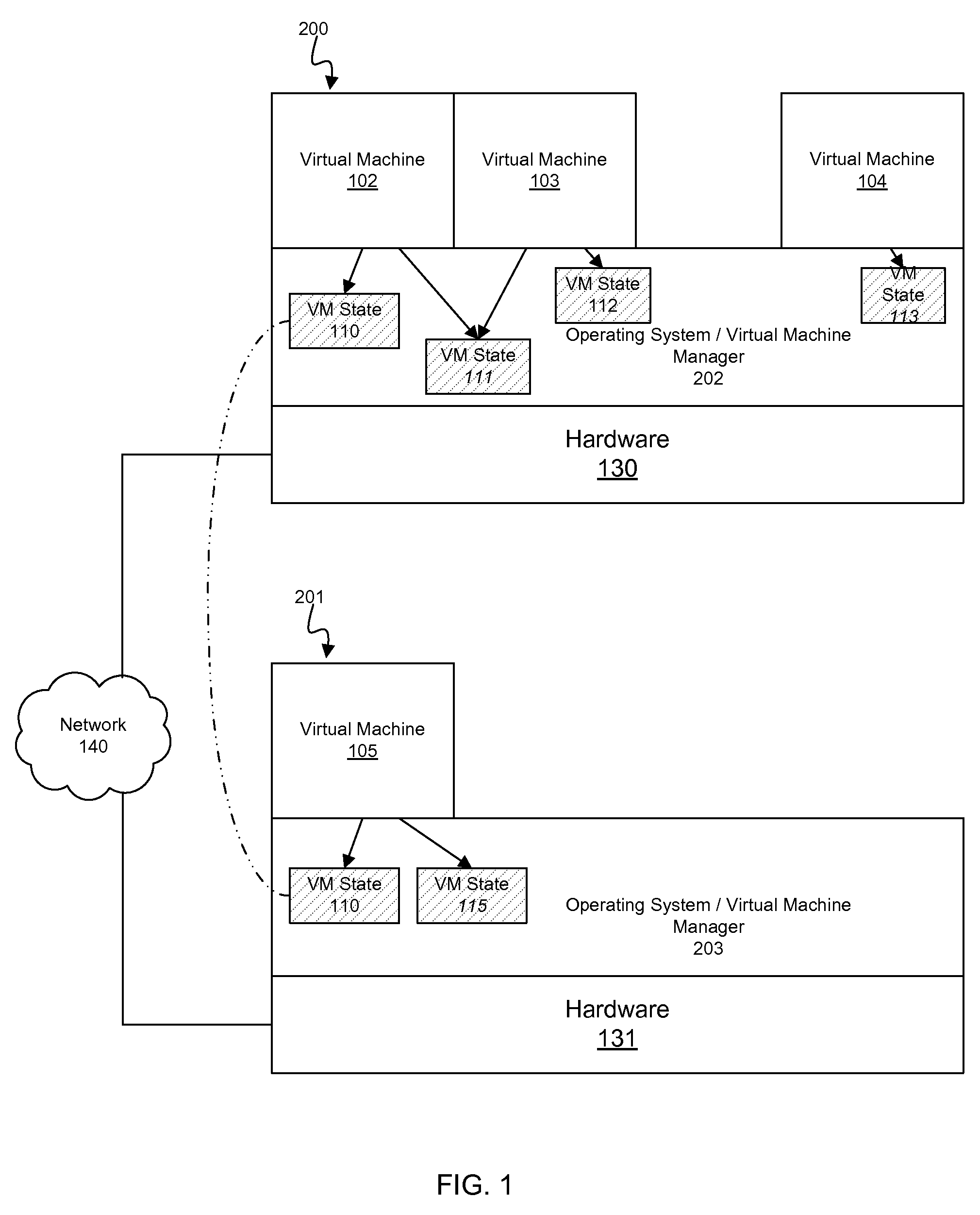

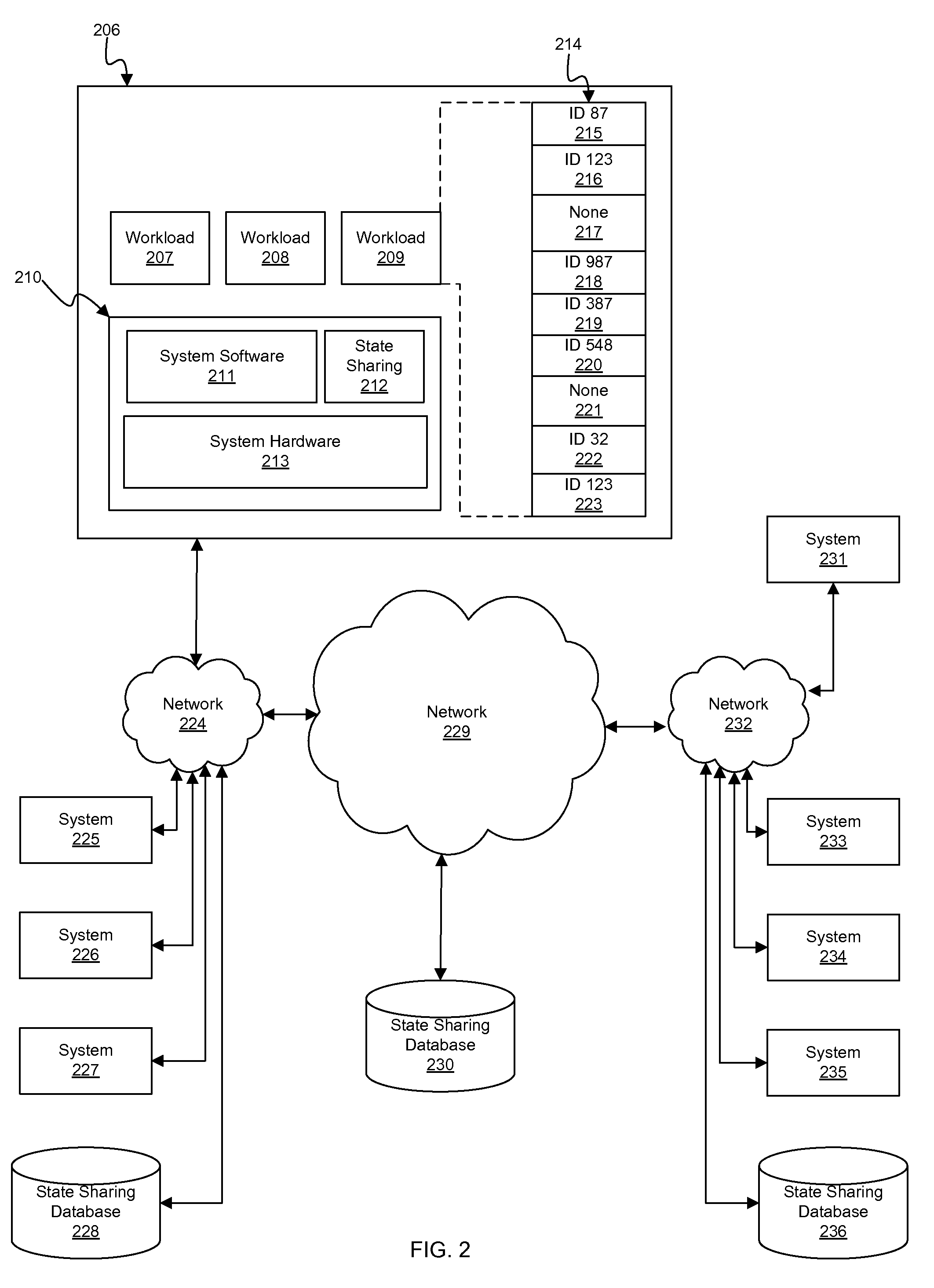

Using memory equivalency across compute clouds for accelerated virtual memory migration and memory de-duplication

Status: Inactive | Patent: US20090204718A1 | Tags: Memory addressing/allocation/relocation; Computer security arrangements; Computational science; Virtual memory

A memory state equivalency analysis fabric which notionally overlays a given compute cloud. Equivalent sections of memory state are identified, and that equivalency information is conveyed throughout the fabric. Such a compute cloud-wide memory equivalency fabric is utilized as a powerful foundation for numerous memory state management and optimization activities, such as workload live migration and memory de-duplication across the entire cloud.

Owner:LAWTON KEVIN P +1
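
One simple way to picture memory equivalency across hosts is to hash fixed-size pages and group identical digests. The abstract does not say how equivalence is detected, so content hashing with SHA-256, the page size, and the function name `find_equivalent_pages` are all assumptions in this sketch.

```python
import hashlib
from collections import defaultdict


def find_equivalent_pages(memories, page_size=4096):
    """Group pages with identical content across hosts.

    memories maps a host name to its memory image as bytes.
    Returns {digest: [(host, page_index), ...]} for digests seen more than once.
    """
    index = defaultdict(list)
    for host, data in memories.items():
        for offset in range(0, len(data), page_size):
            page = data[offset:offset + page_size]
            digest = hashlib.sha256(page).hexdigest()
            index[digest].append((host, offset // page_size))
    return {digest: locs for digest, locs in index.items() if len(locs) > 1}


images = {"vm1": b"A" * 8 + b"B" * 8, "vm2": b"B" * 8 + b"C" * 8}
print(list(find_equivalent_pages(images, page_size=8).values()))
```

Pages that share a digest are candidates for de-duplication, and during a live migration they would not need to be copied if the destination already holds an equivalent page.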

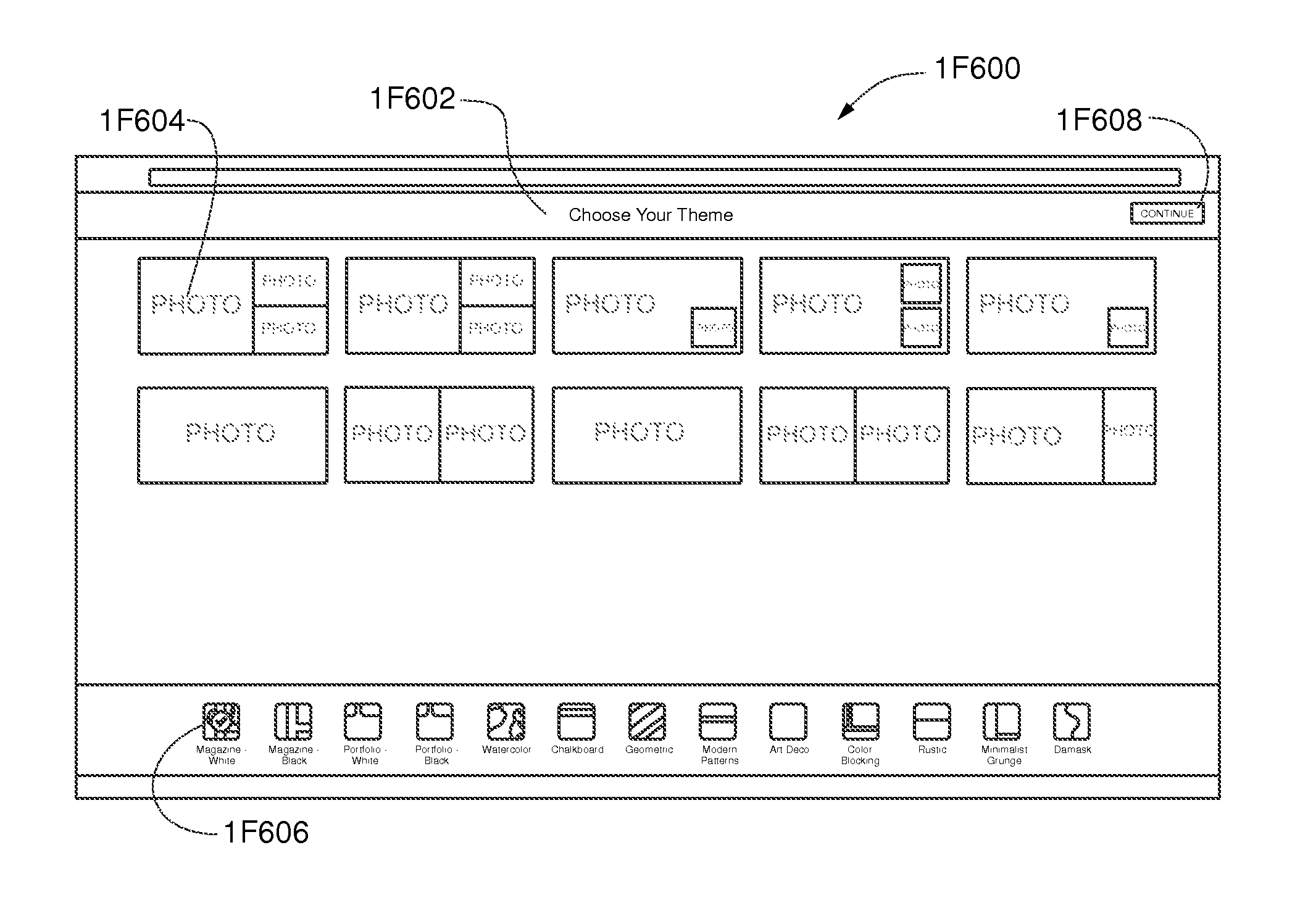

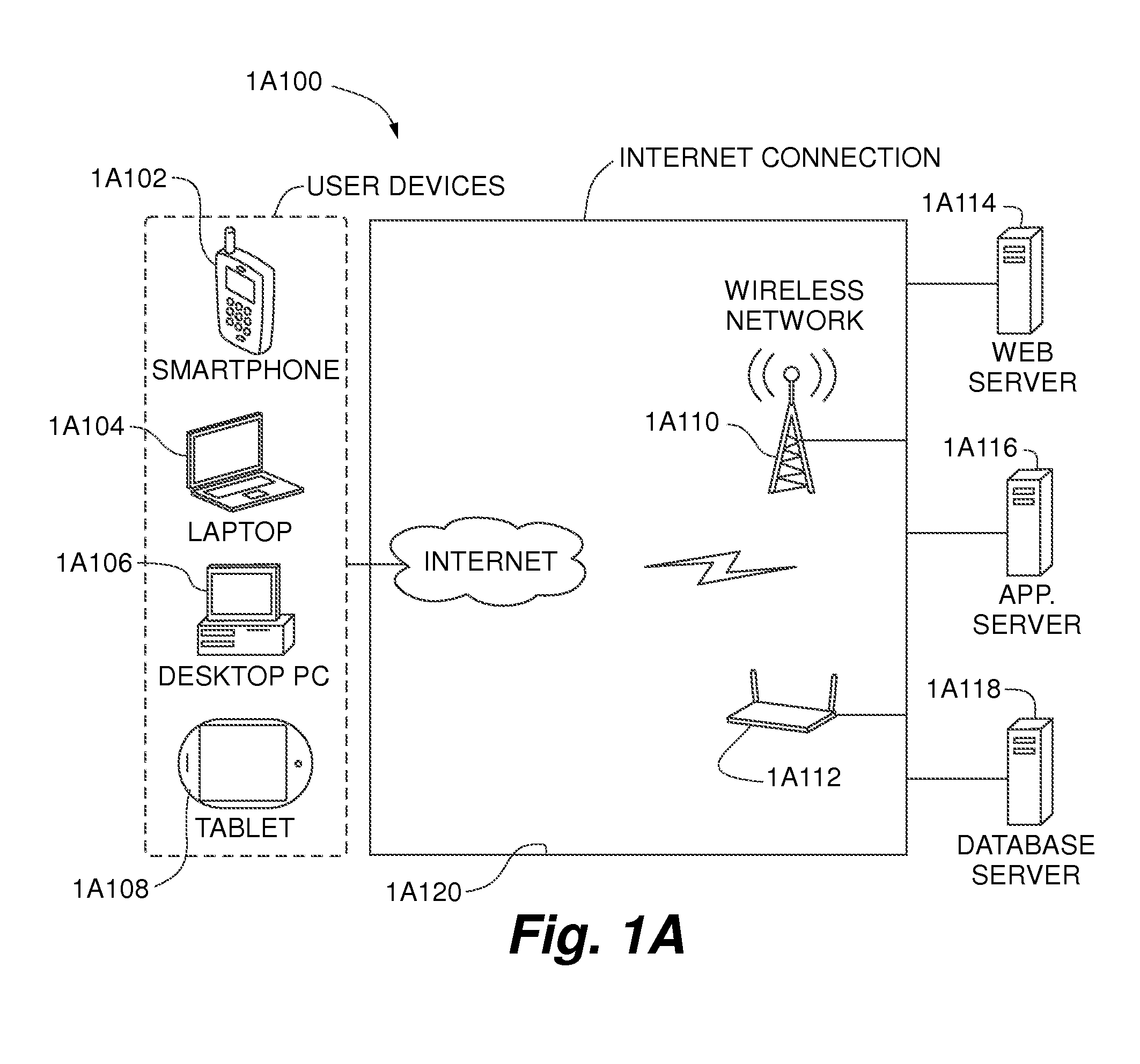

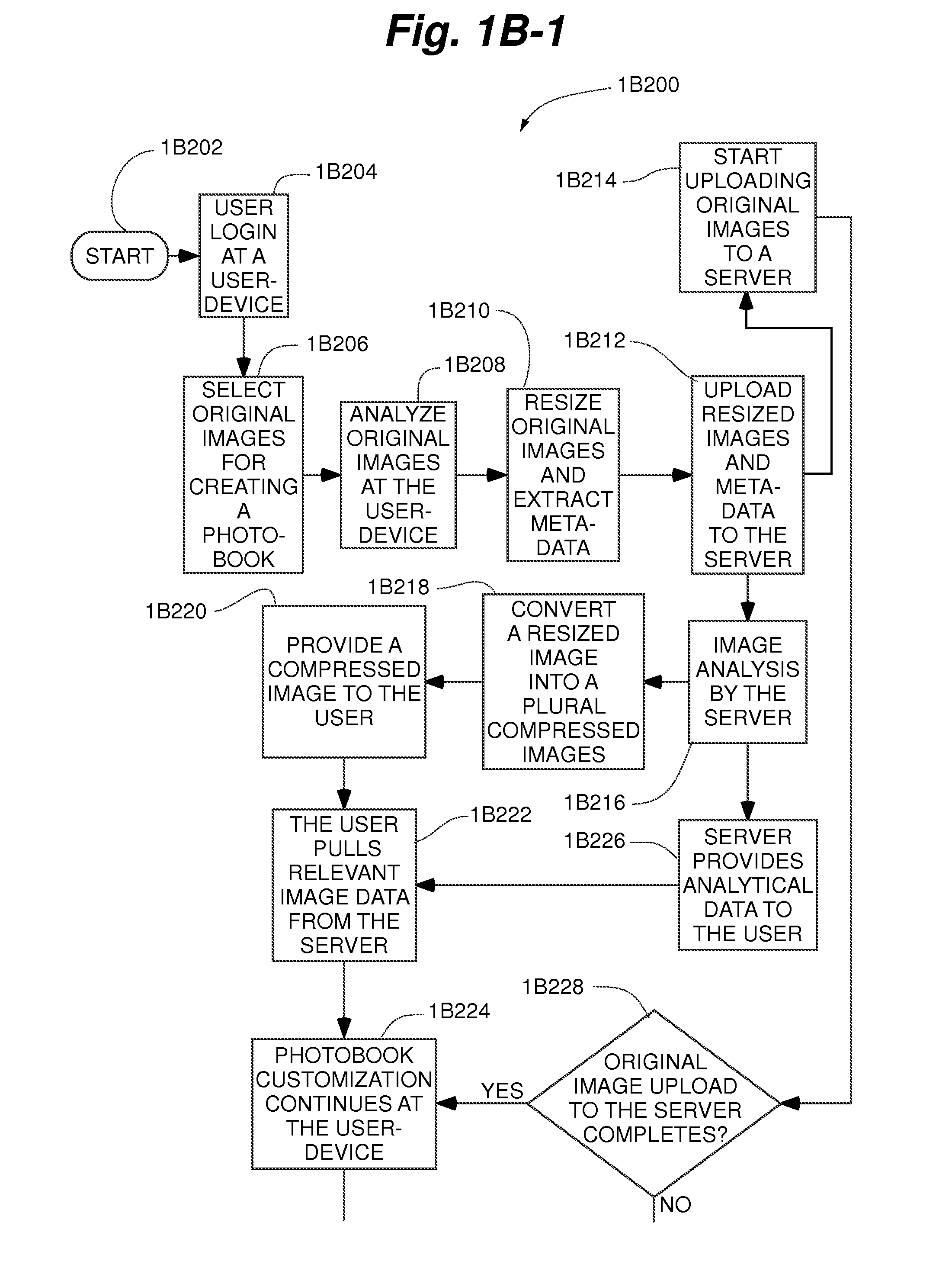

Automatic target box in methods and systems for editing content-rich layouts in media-based projects

Status: Active | Patent: US20160139761A1 | Tags: Raise count; Natural language data processing; Pictorial communication; Drag and drop; Animation

Methods and systems for editing media rich photo projects are disclosed. In one embodiment, the present invention uses drag and drop features to add a photo to a spread, to remove a photo from the spread, and / or to create a new spread. In another embodiment, drop areas are utilized to facilitate addition and removal of photos at an editor. In another embodiment, drop targets are determined by an animated highlight of a drop target, a time delay allowing a user to wait until a drop target is auto-selected by the pre-set rules of the editor, and pre-set rules of a drop target as determined by the location of a drop. Furthermore, drop targets are determined by location coordinates of a photo over the spread before being dropped, proximity of a dragged photo with a photo slot, or a pre-calculated photo slot based on pre-set rules.

Owner:INTERACTIVE MEMORIES

Increased scalability in the fragment shading pipeline

Status: Active | Patent: US20060055695A1 | Tags: Drawing from basic elements; Cathode-ray tube indicators; Computational science; Distributor

A fragment processor includes a fragment shader distributor, a fragment shader collector, and a plurality of fragment shader pipelines. Each fragment shader pipeline executes a fragment shader program on a segment of fragments. The plurality of fragment shader pipelines operate in parallel, executing the same or different fragment shader programs. The fragment shader distributor receives a stream of fragments from a rasterization unit and dispatches a portion of the stream of fragments to a selected fragment shader pipeline until the capacity of the selected fragment shader pipeline is reached. The fragment shader distributor then selects another fragment shader pipeline. The capacity of each of the fragment shader pipelines is limited by several different resources. As the fragment shader distributor dispatches fragments, it tracks the remaining available resources of the selected fragment shader pipeline. A fragment shader collector retrieves processed fragments from the plurality of fragment shader pipelines.

Owner:NVIDIA CORP
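
The dispatch policy described here (keep feeding one pipeline until a resource runs out, then move to the next) can be sketched as follows. Resource names such as `slots` and `registers`, the dictionary-based bookkeeping, and the function names are assumptions for illustration. The sketch also ignores pipelines draining over time, which real hardware would have to handle.

```python
def fits(needs, available):
    """True if every required resource amount is still available."""
    return all(available.get(name, 0) >= amount for name, amount in needs.items())


def distribute(fragments, pipelines):
    """Assign fragments to pipelines, switching only when the current one is full.

    fragments: list of (fragment_id, needs) with needs a {resource: amount} dict.
    pipelines: list of {resource: capacity} dicts.
    Returns one list of fragment ids per pipeline.
    """
    remaining = [dict(p) for p in pipelines]  # Track resources left per pipeline.
    assignment = [[] for _ in pipelines]
    current = 0
    for fragment_id, needs in fragments:
        tried = 0
        while tried < len(remaining) and not fits(needs, remaining[current]):
            current = (current + 1) % len(remaining)
            tried += 1
        if tried == len(remaining):
            raise RuntimeError("no pipeline has capacity for " + str(fragment_id))
        for name, amount in needs.items():
            remaining[current][name] -= amount
        assignment[current].append(fragment_id)
    return assignment


pipes = [{"slots": 2, "registers": 8}, {"slots": 2, "registers": 8}]
frags = [("a", {"slots": 1, "registers": 4}), ("b", {"slots": 1, "registers": 4}),
         ("c", {"slots": 1, "registers": 2}), ("d", {"slots": 1, "registers": 2})]
print(distribute(frags, pipes))
```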

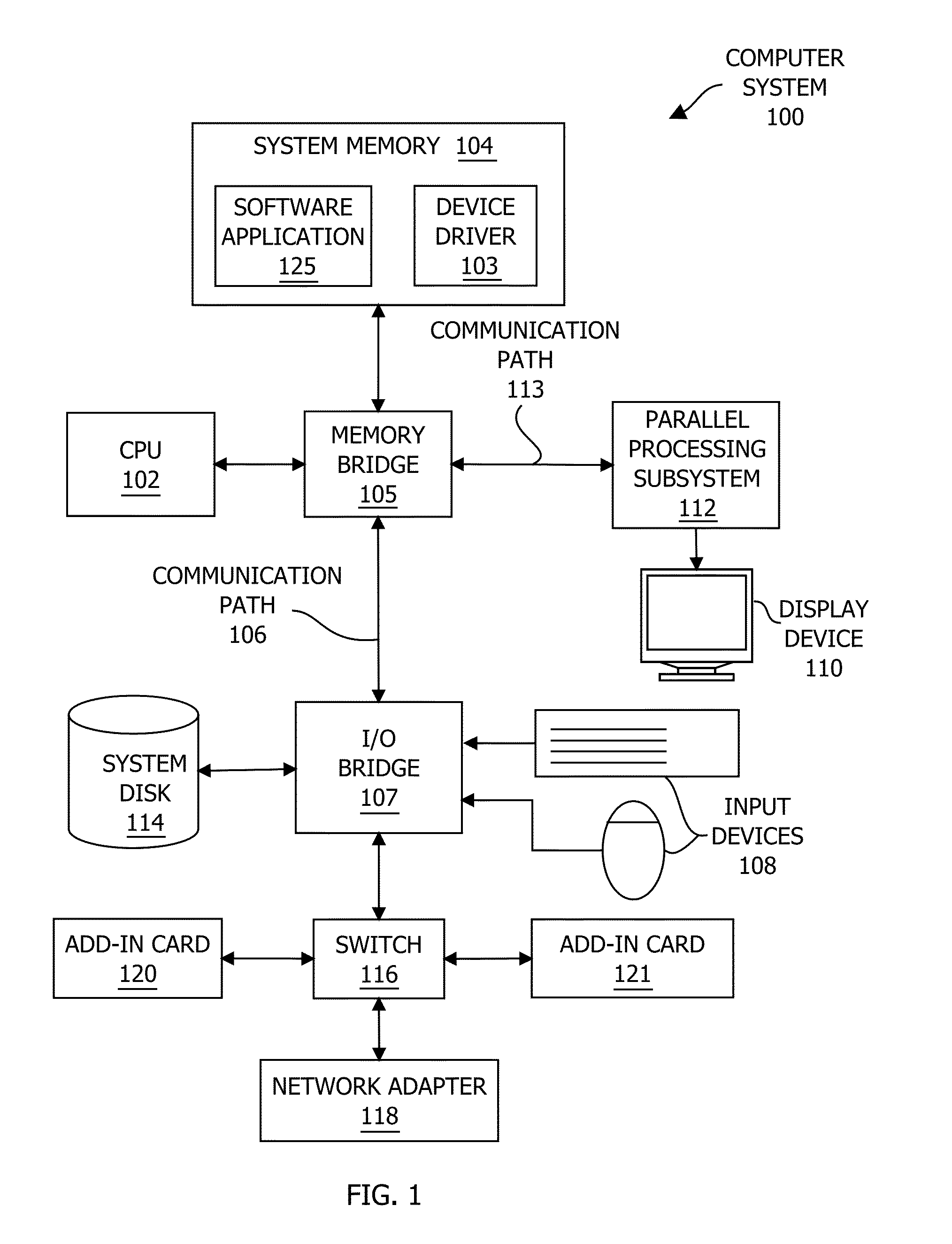

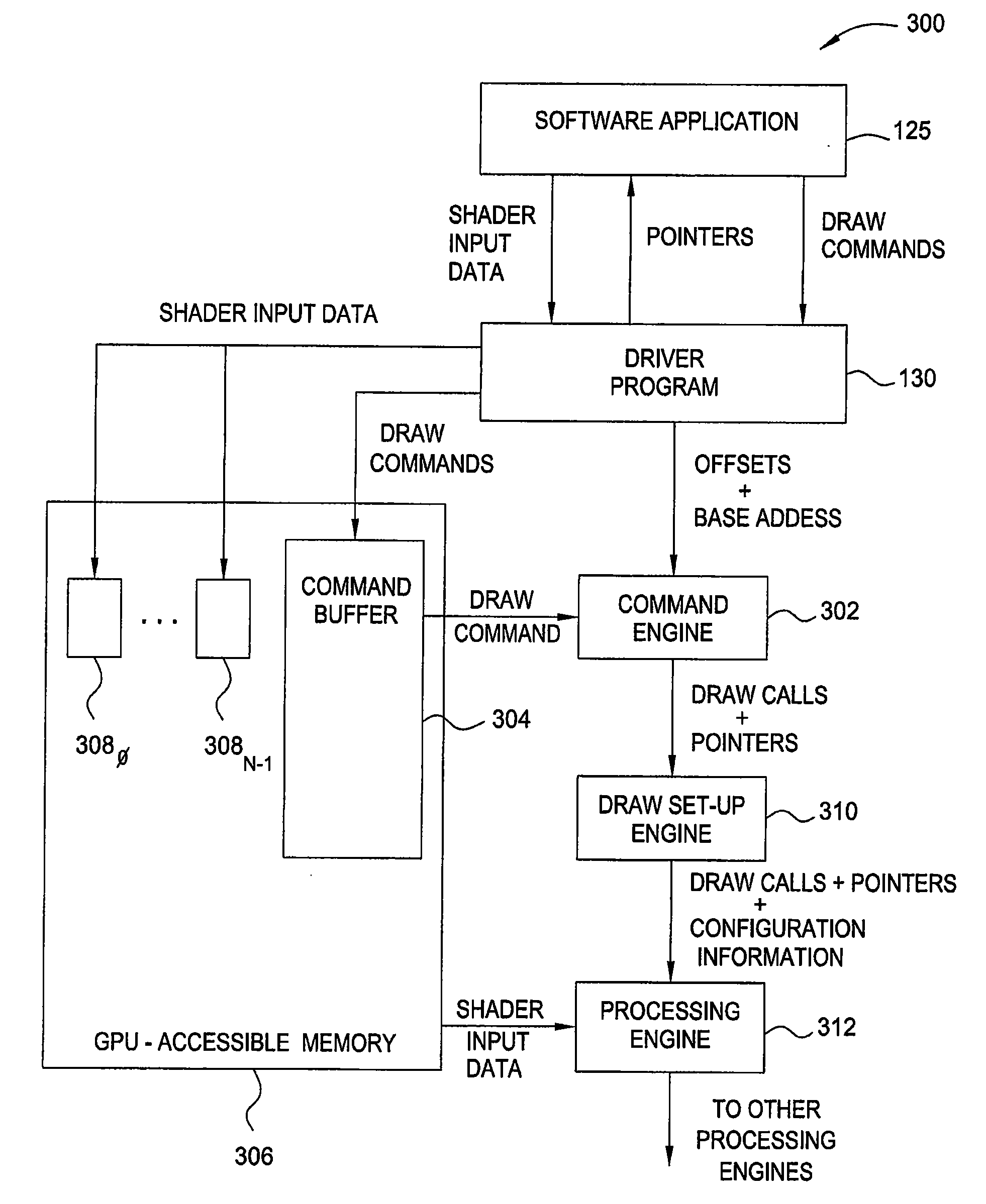

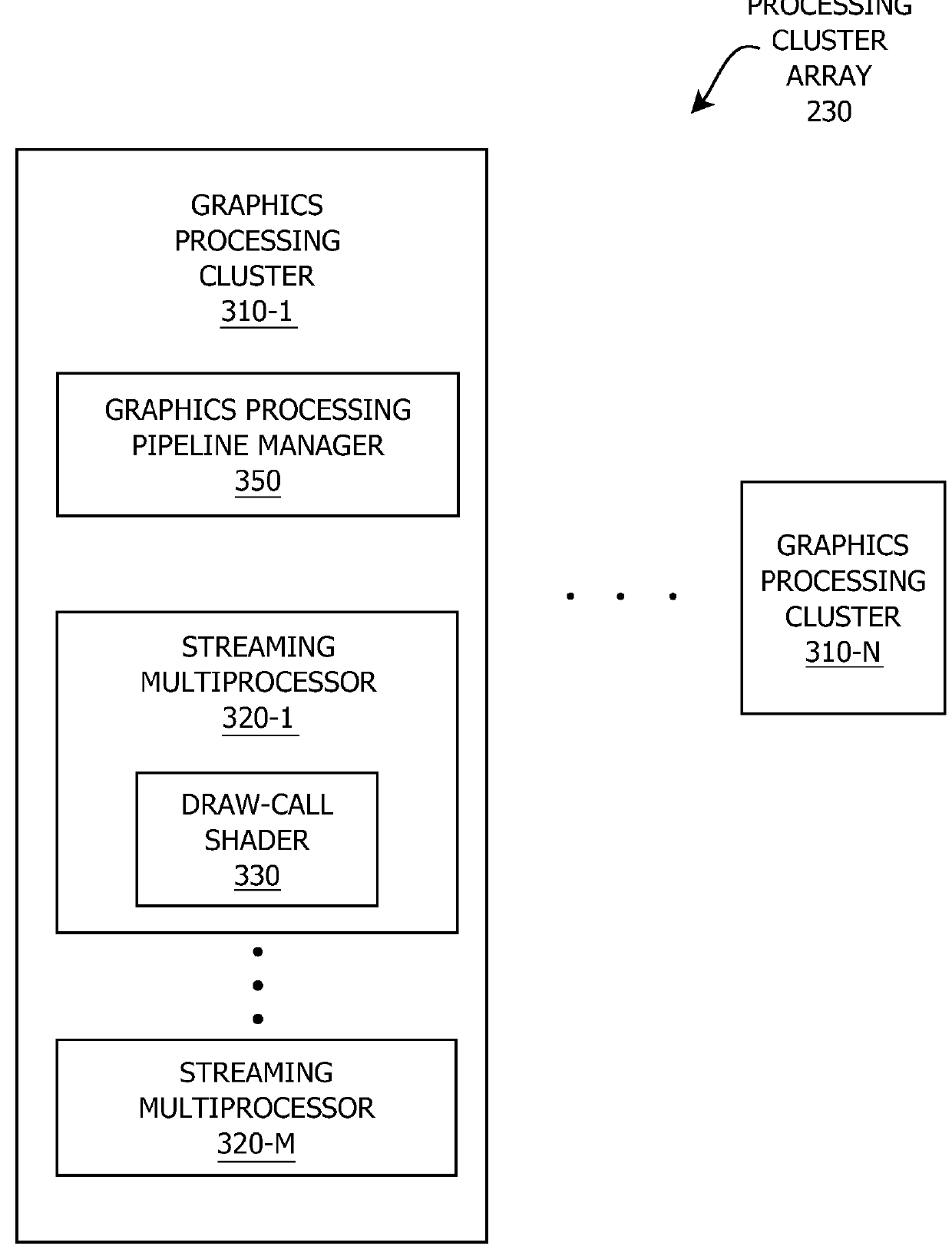

Techniques for locally modifying draw calls

Status: Active | Patent: US20140292771A1 | Tags: Reduce the amount required; Amount of data transferred; Digital computer details; Processor architectures/configuration; Computational science; Application software

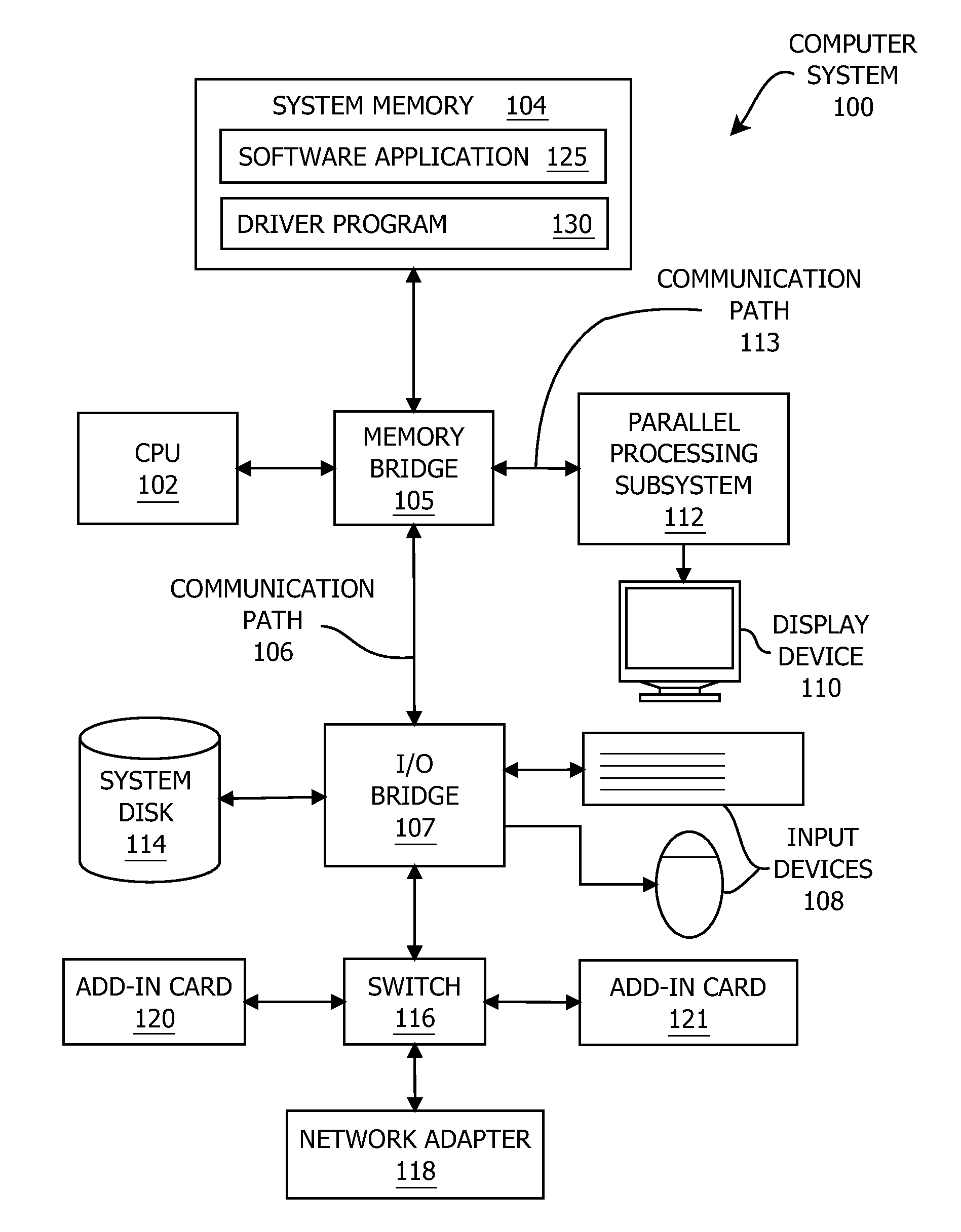

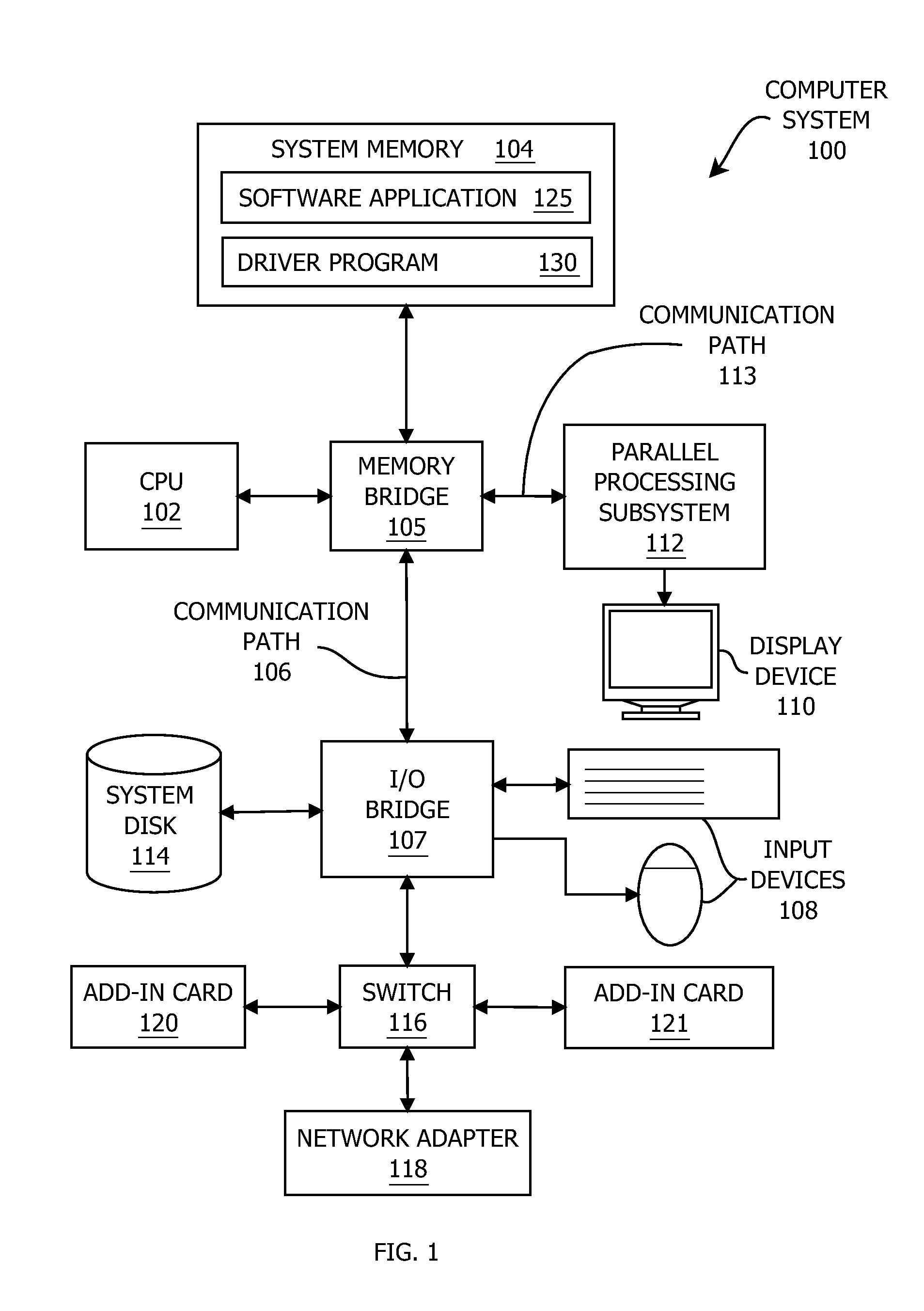

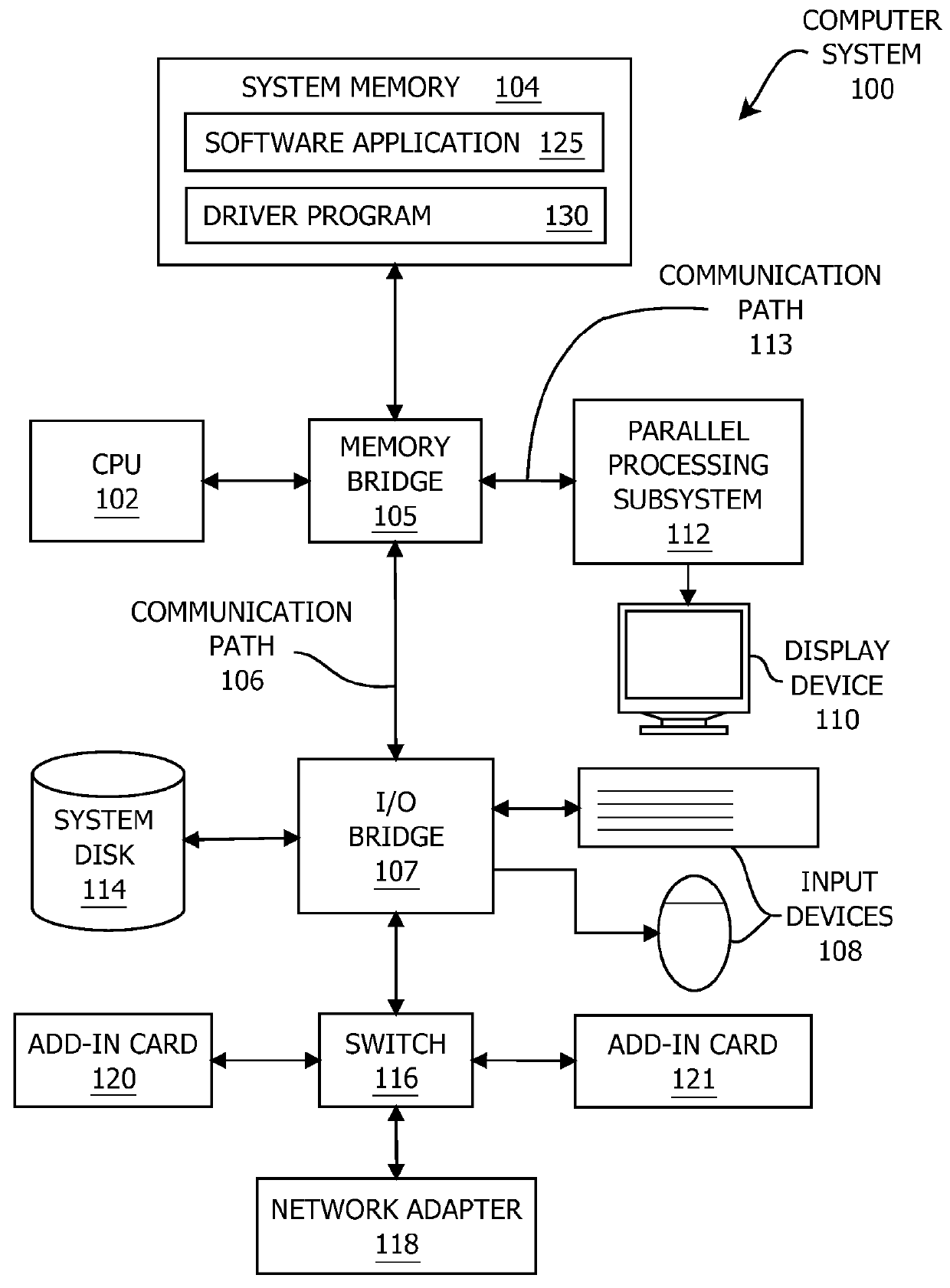

One embodiment sets forth a method for modifying draw calls using a draw-call shader program included in a processing subsystem configured to process draw calls. The draw call shader receives a draw call from a software application, evaluates graphics state information included in the draw call, generates modified graphics state information, and generates a modified draw call that includes the modified graphics state information. Subsequently, the draw-call shader causes the modified draw call to be executed within a graphics processing pipeline. By performing the computations associated with generating the modified draw call on-the-fly within the processing subsystem, the draw-call shader decreases the amount of system memory required to render graphics while increasing the overall processing efficiency of the graphics processing pipeline.

Owner:NVIDIA CORP

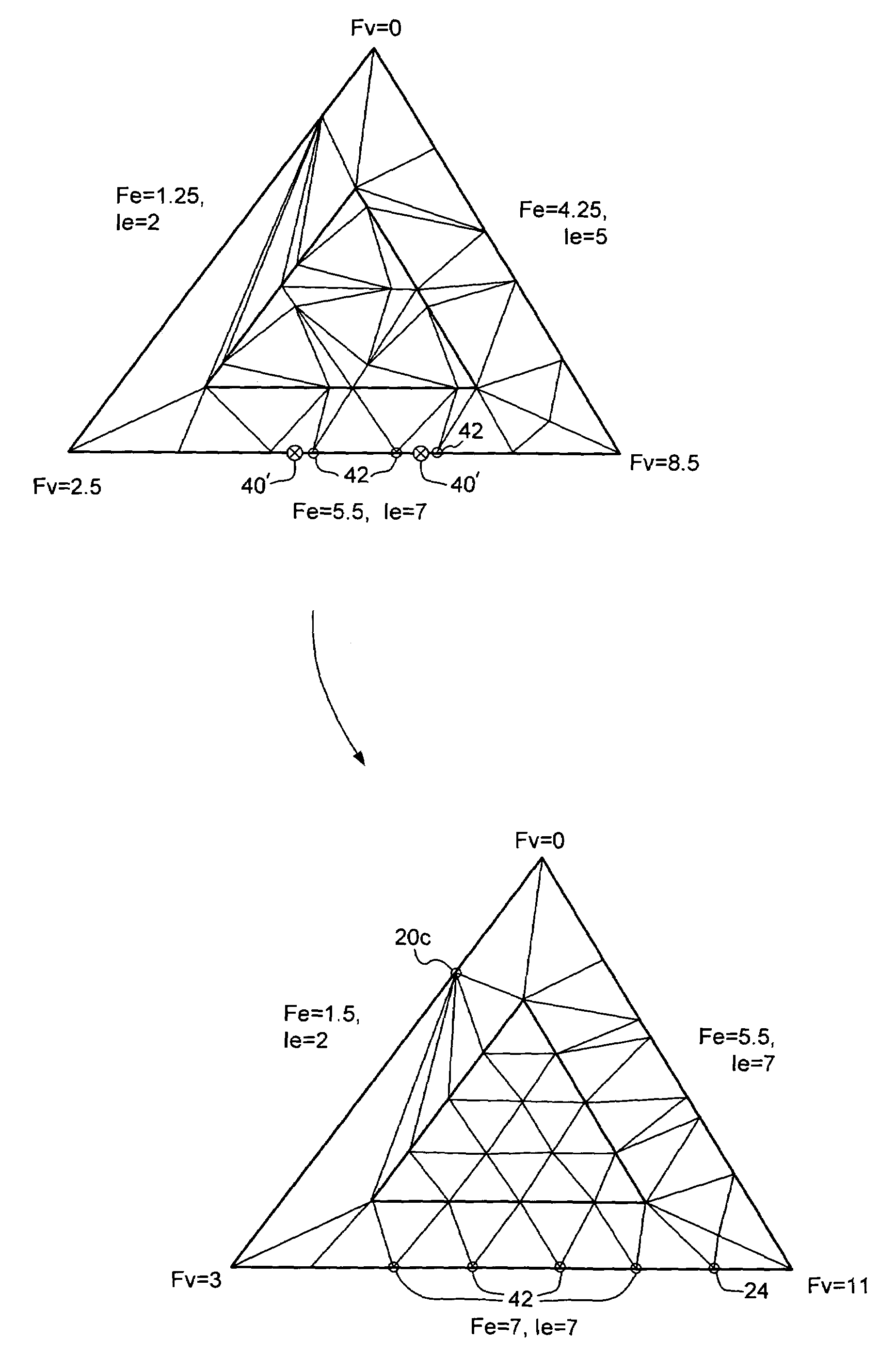

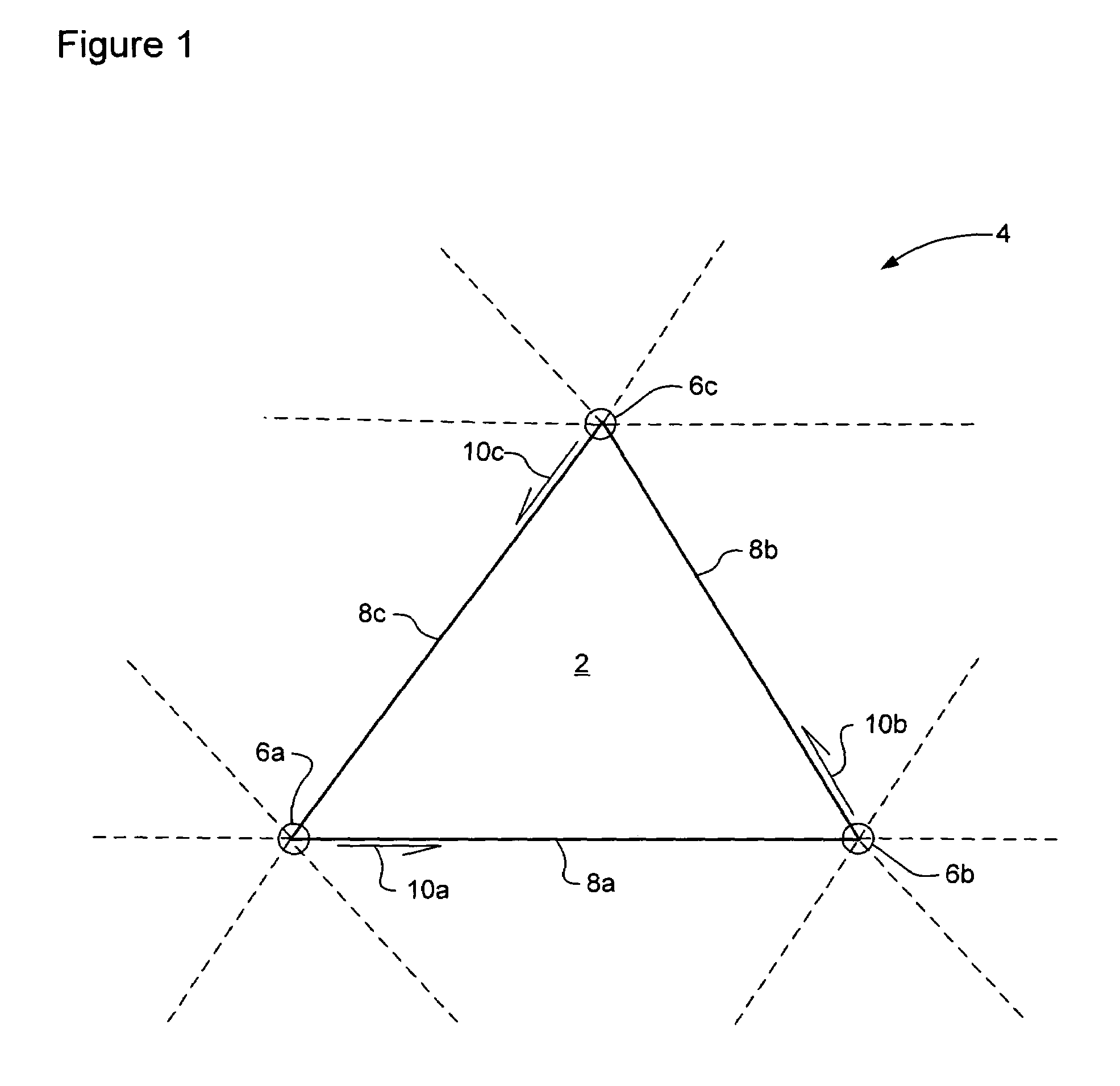

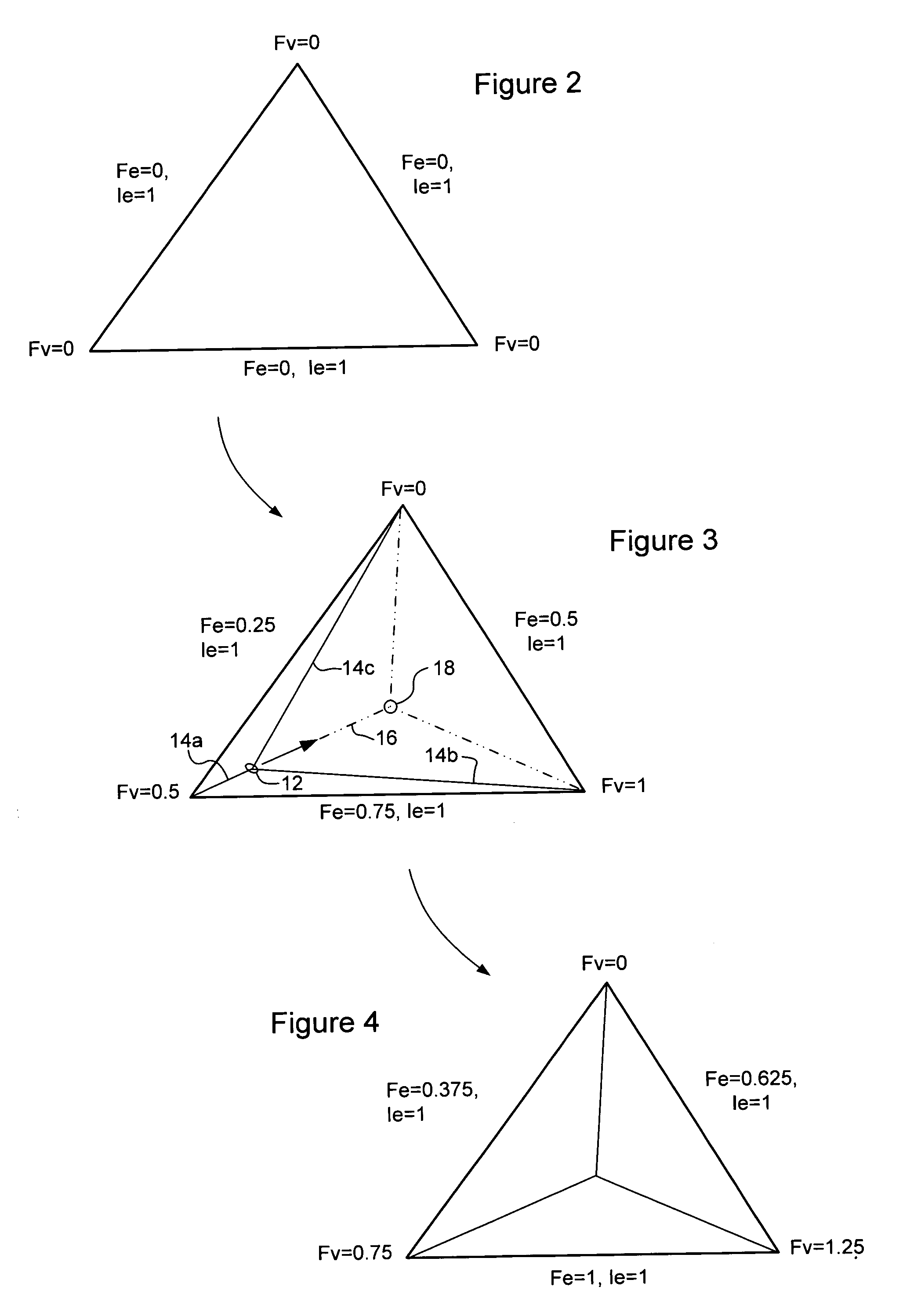

Dynamic tessellation of a base mesh

Status: Inactive | Patent: US6940505B1 | Tags: Avoid cracking; Efficient of processing resource; Drawing from basic elements; 3D-image rendering; Computational science; Level of detail

A primitive of a base mesh having at least three base vertices is dynamically tessellated to enable smooth changes in detail of an image rendered on a screen. A respective floating point vertex tessellation value (Fv) is assigned to each base vertex of the base mesh, based on a desired level of detail in the rendered image. For each edge of the primitive: a respective floating point edge tessellation rate (Fe) of the edge is calculated using the respective vertex tessellation values (Fv) of the base vertices terminating the edge. A position of at least one child vertex of the primitive is then calculated using the respective calculated edge tessellation rate (Fe). By this means, child vertices of the primitive can be generated coincident with a parent vertex, and smoothly migrate in response to changing vertex tessellation values of the base vertices of the primitive.

Owner:MATROX

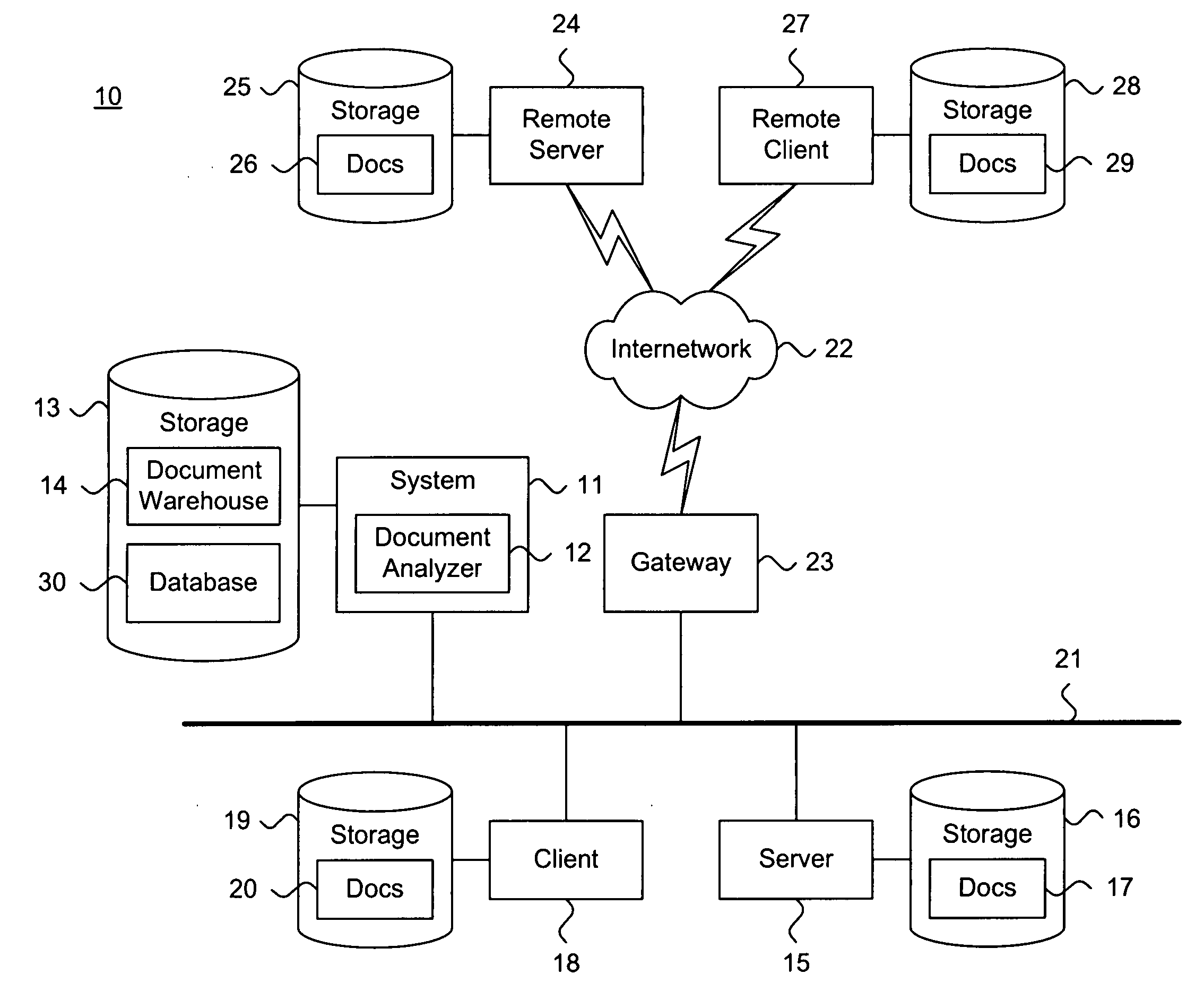

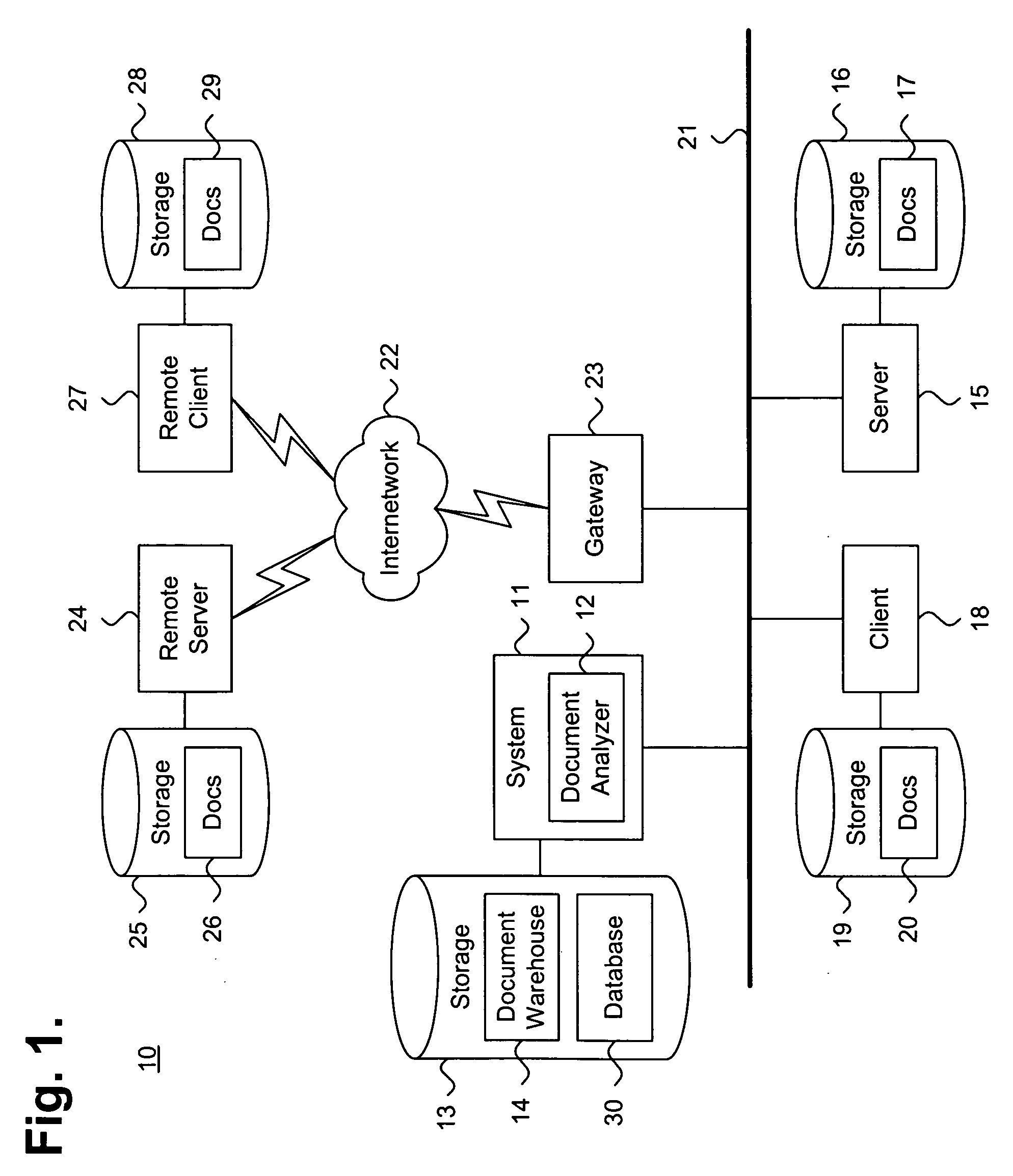

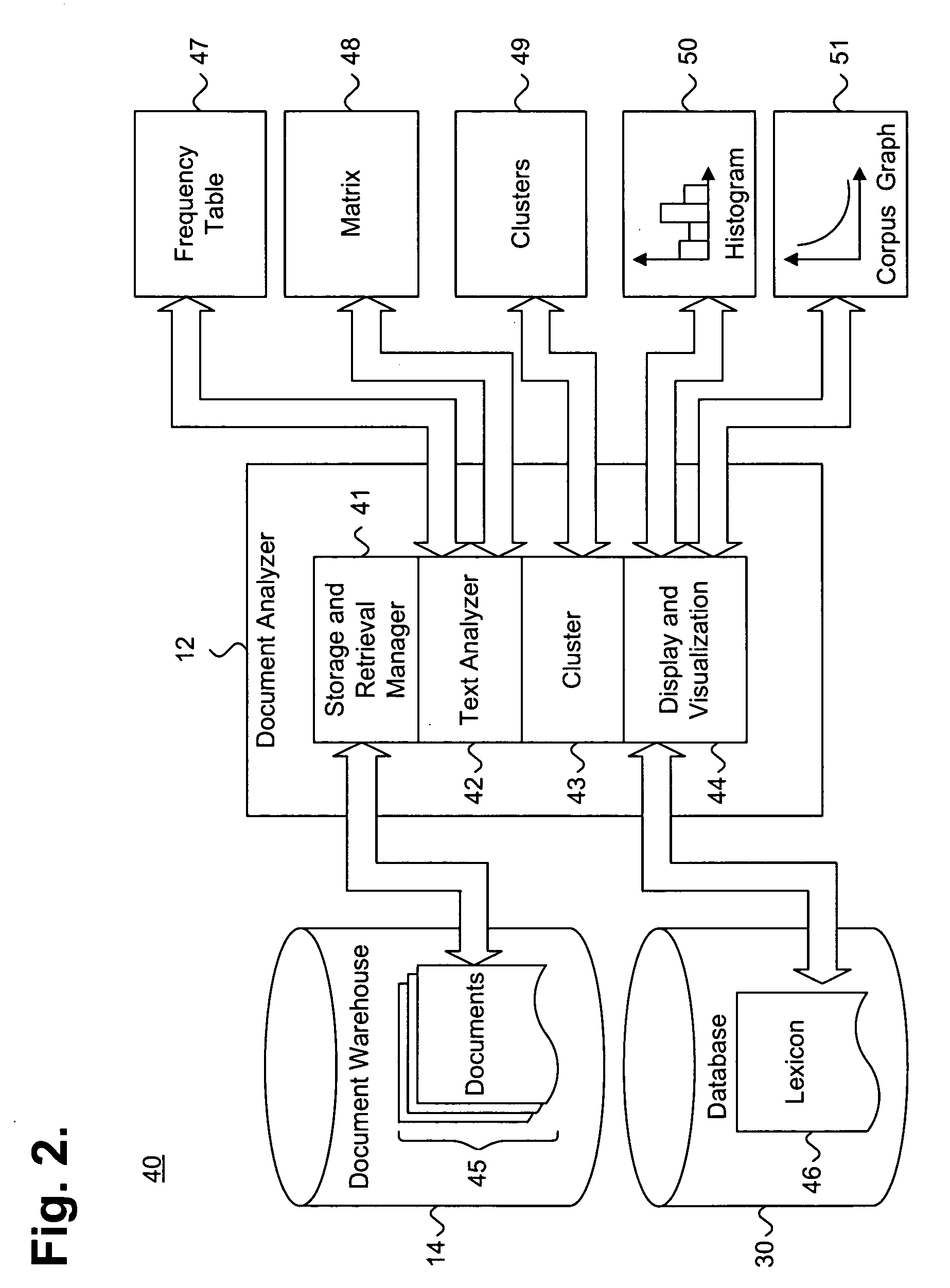

System and method for efficiently generating cluster groupings in a multi-dimensional concept space

Status: Active | Patent: US20050010555A1 | Tags: Generate efficiently; Data processing applications; Web data indexing; Computational science; Algorithm

A system and method for efficiently generating cluster groupings in a multi-dimensional concept space is described. A plurality of terms is extracted from each document in a collection of stored unstructured documents. A concept space is built over the document collection. Terms substantially correlated between a plurality of documents within the document collection are identified. Each correlated term is expressed as a vector mapped along an angle θ originating from a common axis in the concept space. A difference between the angle θ for each document and an angle σ for each cluster within the concept space is determined. Each such cluster is populated with those documents having such difference between the angle θ for each such document and the angle σ for each such cluster falling within a predetermined variance. A new cluster is created within the concept space for those documents having such difference between the angle θ for each such document and the angle σ for each such cluster falling outside the predetermined variance.

Owner:NUIX NORTH AMERICA
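
The assignment rule in this abstract can be sketched in a few lines. Each document carries an angle θ, each cluster an angle σ, and a document joins the first cluster whose σ lies within the allowed variance, otherwise it starts a new cluster. Using the first member's θ as the cluster's σ, a single θ per document in degrees, and the function name `cluster_by_angle` are assumptions for illustration.

```python
def cluster_by_angle(doc_angles, variance):
    """Group documents by angle.

    doc_angles: list of (doc_id, theta) pairs, theta in degrees.
    Returns a list of clusters, each {"sigma": angle, "docs": [doc ids]}.
    """
    clusters = []
    for doc_id, theta in doc_angles:
        for cluster in clusters:
            if abs(theta - cluster["sigma"]) <= variance:
                cluster["docs"].append(doc_id)
                break
        else:
            # No existing cluster is close enough, so seed a new one (assumed rule).
            clusters.append({"sigma": theta, "docs": [doc_id]})
    return clusters


print(cluster_by_angle([("d1", 10), ("d2", 12), ("d3", 40), ("d4", 43)], variance=5))
```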

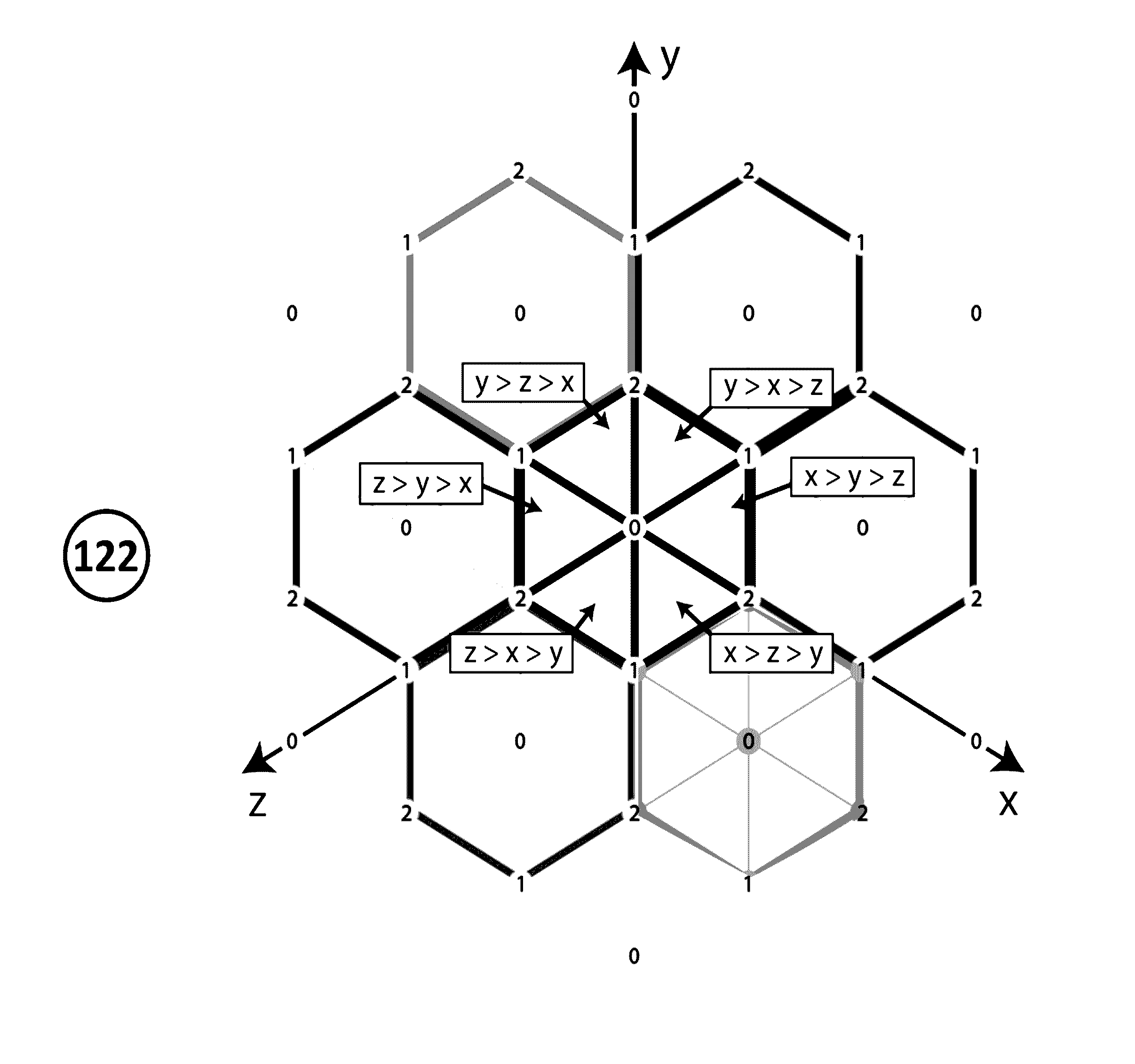

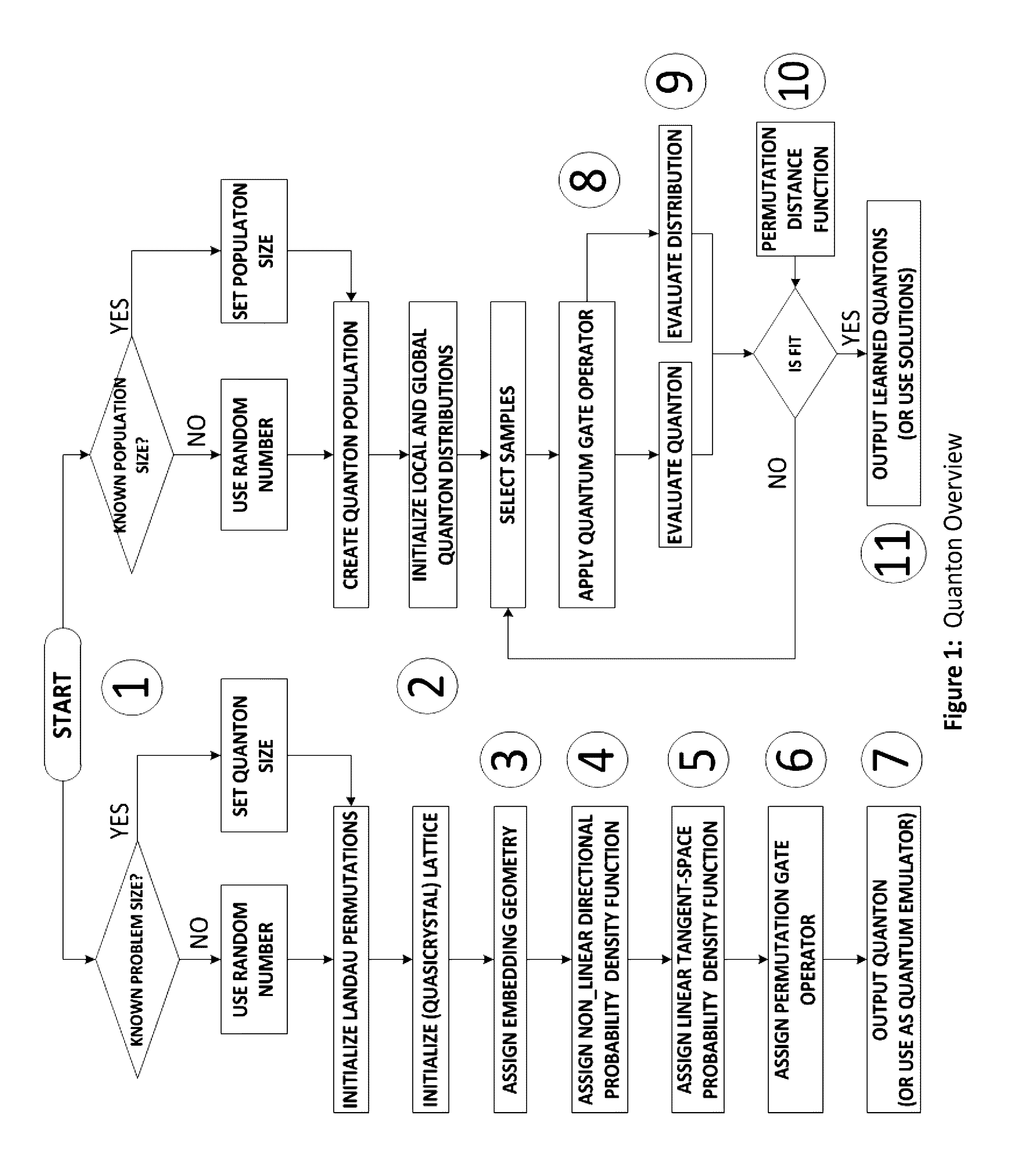

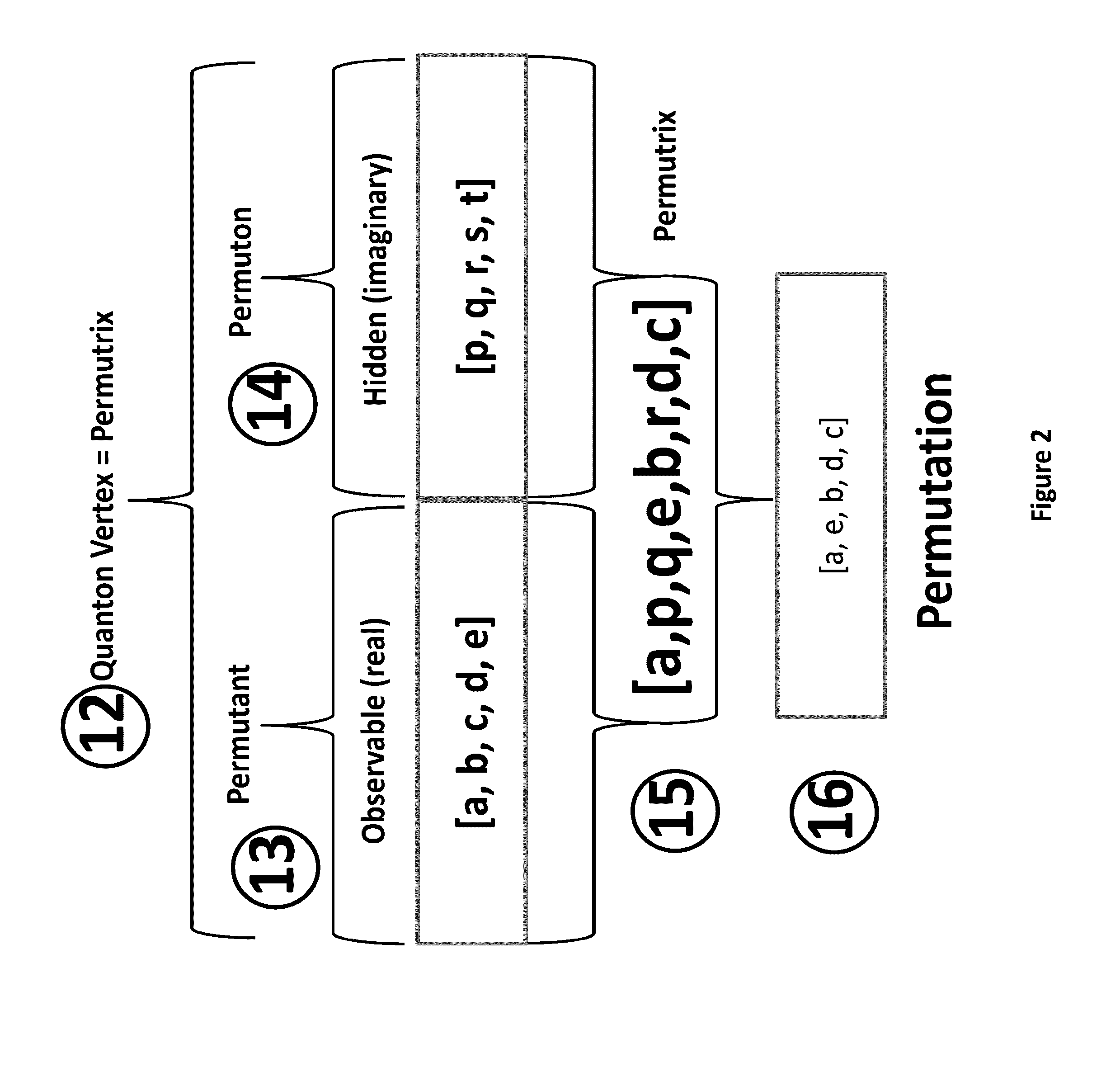

Quanton representation for emulating quantum-like computation on classical processors

Status: Active | Patent: US20160328253A1 | Tags: Quantum computers; Mathematical models; Computational science; Theoretical computer science

The Quanton virtual machine approximates solutions to NP-Hard problems in factorial spaces in polynomial time. The data representation and methods emulate quantum computing on classical hardware but also implement quantum computing if run on quantum hardware. The Quanton uses permutations indexed by Lehmer codes and permutation-operators to represent quantum gates and operations. A generating function embeds the indexes into a geometric object for efficient compressed representation. A nonlinear directional probability distribution is embedded in the manifold, and the tangent space at each index point also carries a linear probability distribution. Simple vector operations on the distributions correspond to quantum gate operations. The Quanton provides features of quantum computing: superpositioning, quantization and entanglement surrogates. Populations of Quantons are evolved as local evolving gate operations solving problems or as solution candidates in an Estimation of Distribution algorithm. The Quanton representation and methods are fully parallel on any hardware.

Owner:KYNDI
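
The abstract mentions permutations indexed by Lehmer codes. The sketch below shows only that standard indexing scheme, not the Quanton itself: a permutation is encoded as a Lehmer code, decoded back, and mapped to its position in lexicographic order. The function names are chosen for illustration.

```python
def lehmer_encode(perm):
    """Lehmer code: for each element, count the later elements that are smaller."""
    return [sum(1 for later in perm[i + 1:] if later < value)
            for i, value in enumerate(perm)]


def lehmer_decode(code):
    """Rebuild the permutation of 0..n-1 from its Lehmer code."""
    pool = list(range(len(code)))
    return [pool.pop(digit) for digit in code]


def lehmer_index(code):
    """Interpret the code as a factorial-base number (lexicographic rank)."""
    index = 0
    n = len(code)
    for i, digit in enumerate(code):
        index = index * (n - i) + digit
    return index


code = lehmer_encode([2, 0, 1])
print(code, lehmer_decode(code), lehmer_index(code))
```

Because every integer from 0 to n! - 1 corresponds to exactly one permutation, a single index is enough to address any point in the factorial search space.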

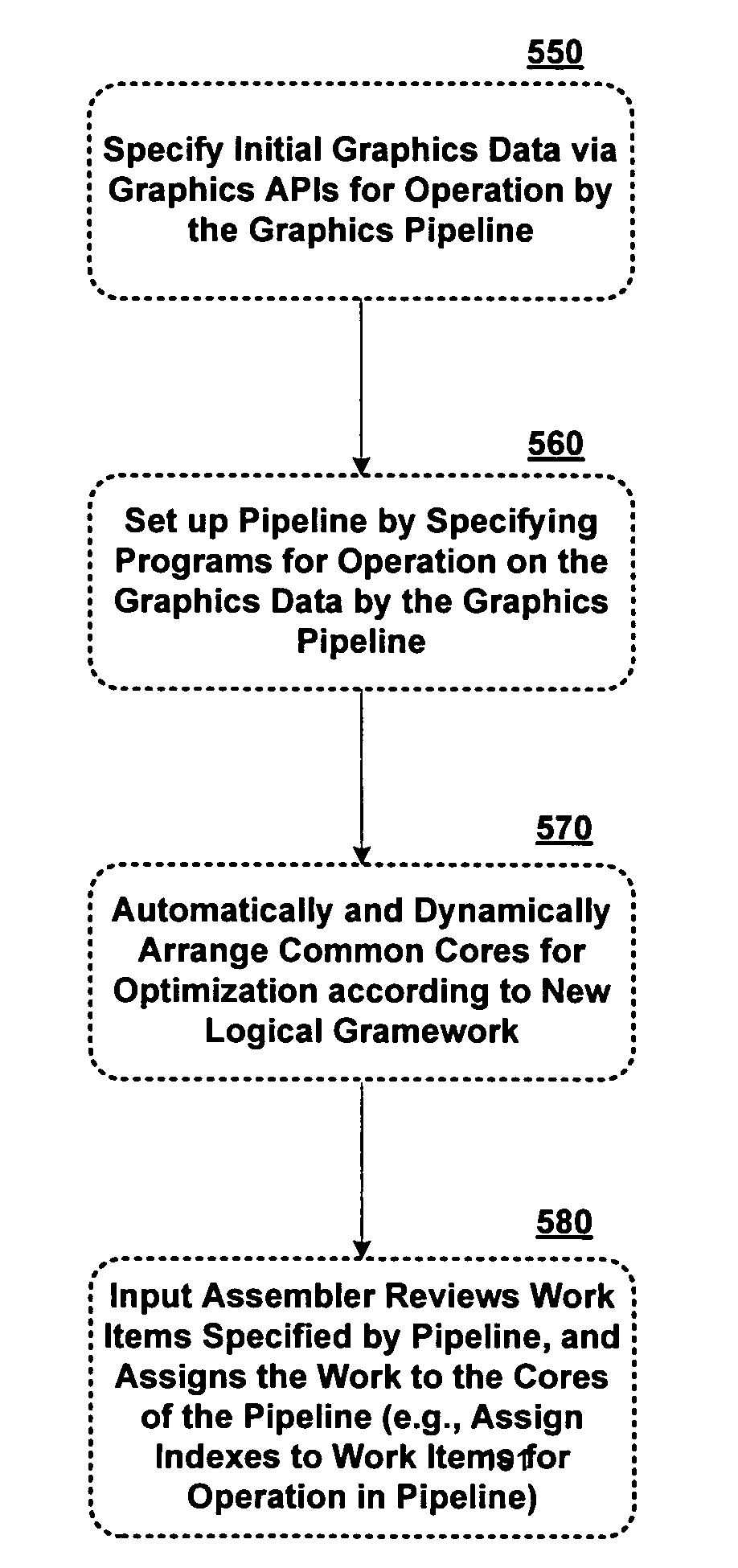

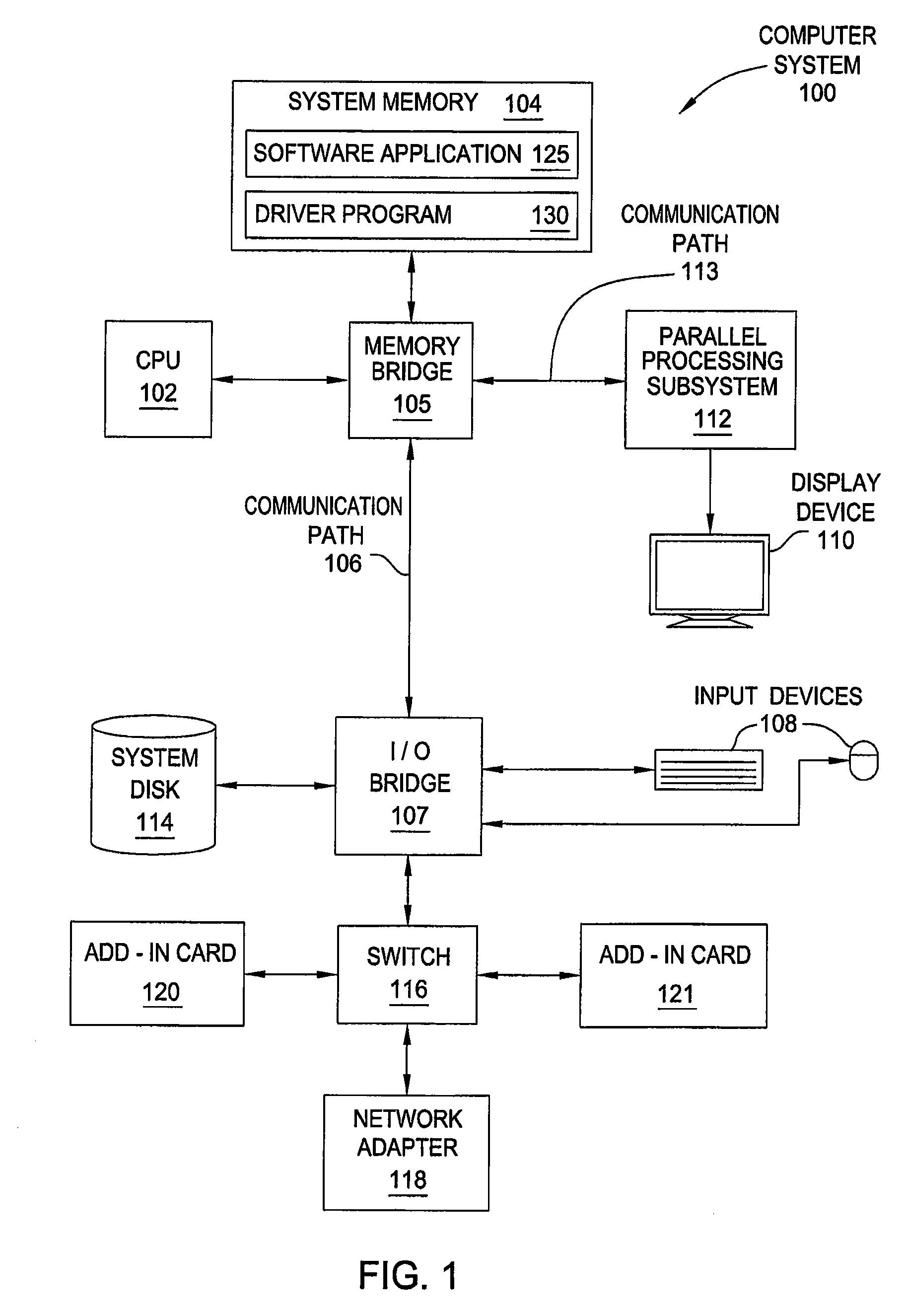

Systems and methods for providing an enhanced graphics pipeline

An enhanced graphics pipeline is provided that enables common core hardware to perform as different components of the graphics pipeline, programmability of primitives including lines and triangles by a component in the pipeline, and a stream output before or simultaneously with the rendering of a graphical display with the data in the pipeline. The programmer does not have to optimize the code, as the common core will balance the load of functions necessary and dynamically allocate those instructions on the common core hardware. The programmer may program primitives using algorithms to simplify all vertex calculations by substituting with topology made with lines and triangles. The programmer takes the calculated output data and can read it before or while it is being rendered. Thus, a programmer has greater flexibility in programming. By using the enhanced graphics pipeline, the programmer can optimize the usage of the hardware in the pipeline, program vertex, line or triangle topologies altogether rather than each vertex alone, and read any calculated data from memory where the pipeline can output the calculated information.

Owner:MICROSOFT TECH LICENSING LLC

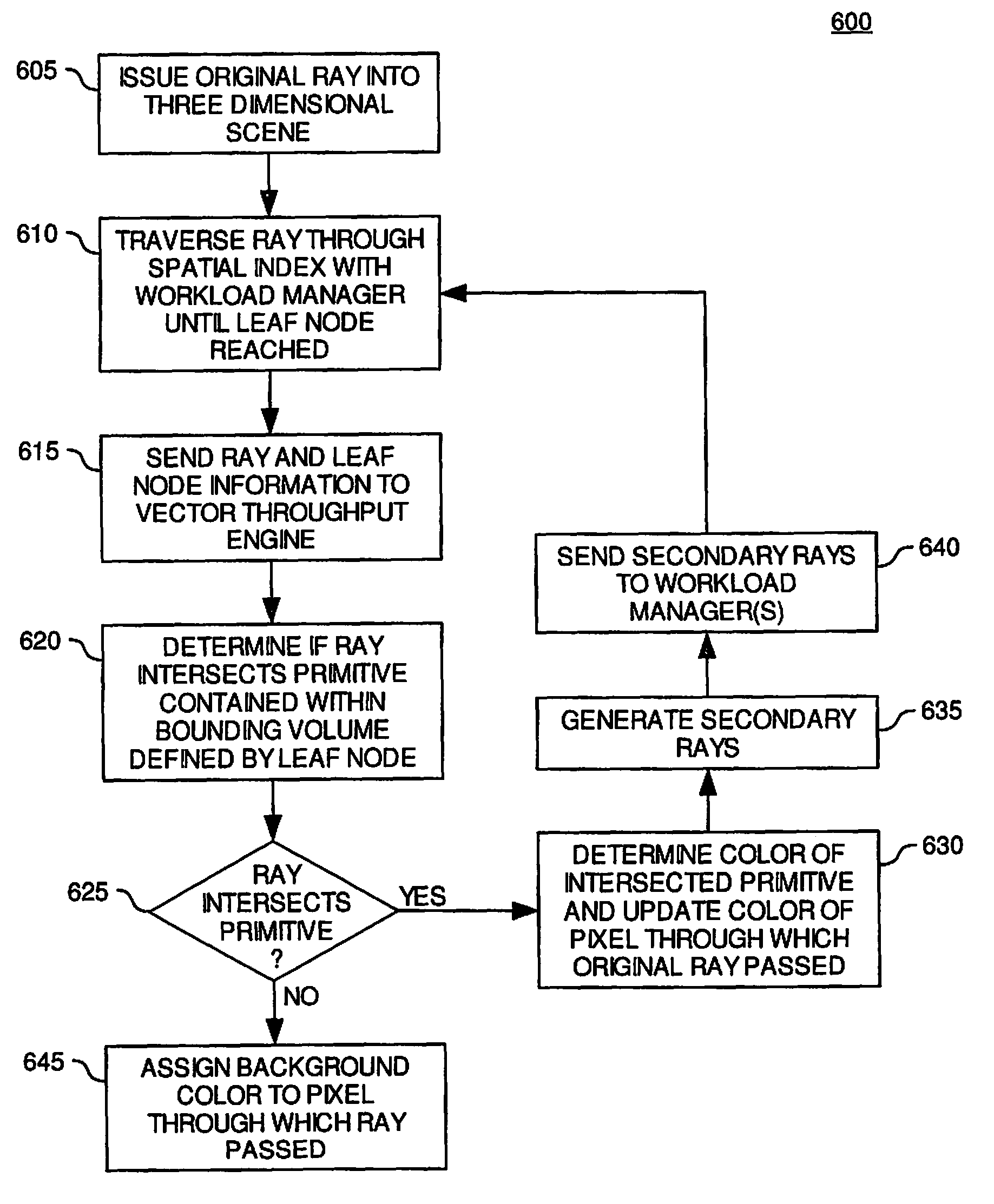

Method for reducing network bandwidth by delaying shadow ray generation

Status: Active | Patent: US7782318B2 | Tags: Character and pattern recognition; Cathode-ray tube indicators; Computational science; Imaging processing

The present invention provides methods and apparatus in a ray tracing image processing system to reduce the amount of information passed between processing elements. According to embodiments of the invention, in response to a ray-primitive intersection, a first processing element in the image processing system may generate a portion of secondary rays and a second processing element may generate a second portion of secondary rays. The first processing element may generate reflected and refracted rays and the second processing element may generate shadow rays. The first processing element may send a ray-primitive intersection point to the second processing element so that the second processing element may generate the shadow rays. By only sending the intersection point to the second processing element, in contrast to sending a plurality of shadow rays, the amount of information communicated between the two processing elements may be reduced.

Owner:ACTIVISION PUBLISHING
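
The bandwidth saving described above comes from sending one intersection point instead of one ray per light. A minimal sketch of the receiving side follows: given only the hit point, the second processing element builds the shadow rays itself. Point lights stored as 3-tuples, the `(origin, direction, distance)` ray layout, and the function name `shadow_rays` are assumptions for illustration.

```python
import math


def shadow_rays(hit_point, lights):
    """Build one shadow ray per light from a single intersection point.

    Returns a list of (origin, unit_direction, distance_to_light) tuples.
    """
    rays = []
    for light in lights:
        delta = tuple(l - h for l, h in zip(light, hit_point))
        distance = math.sqrt(sum(c * c for c in delta))
        if distance == 0.0:
            continue  # Light sits exactly on the hit point; no ray to cast.
        direction = tuple(c / distance for c in delta)
        rays.append((hit_point, direction, distance))
    return rays


print(shadow_rays((0.0, 0.0, 0.0), [(0.0, 3.0, 4.0)]))
```

With N lights in the scene, the message between the two elements stays the size of one point rather than growing to N rays.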

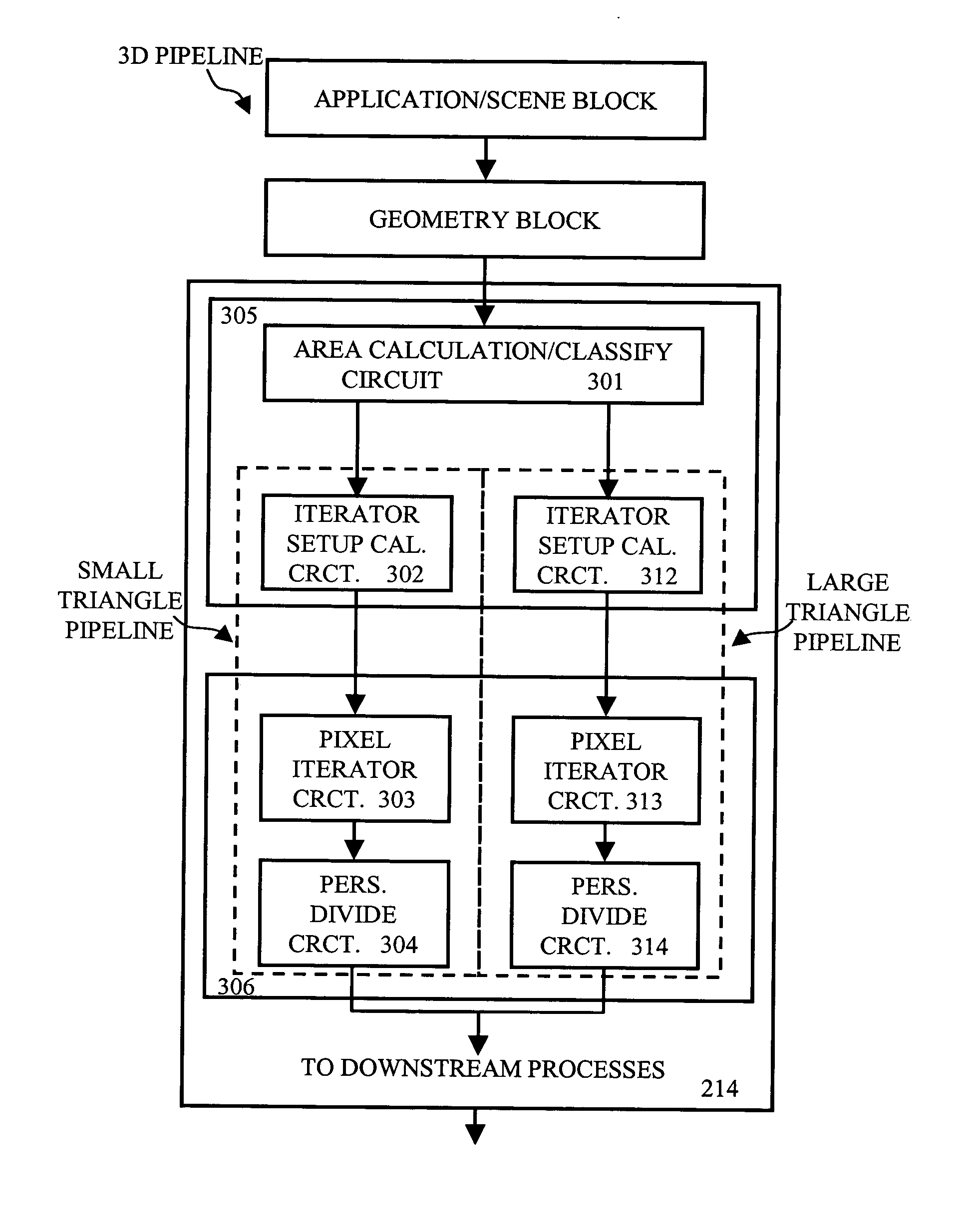

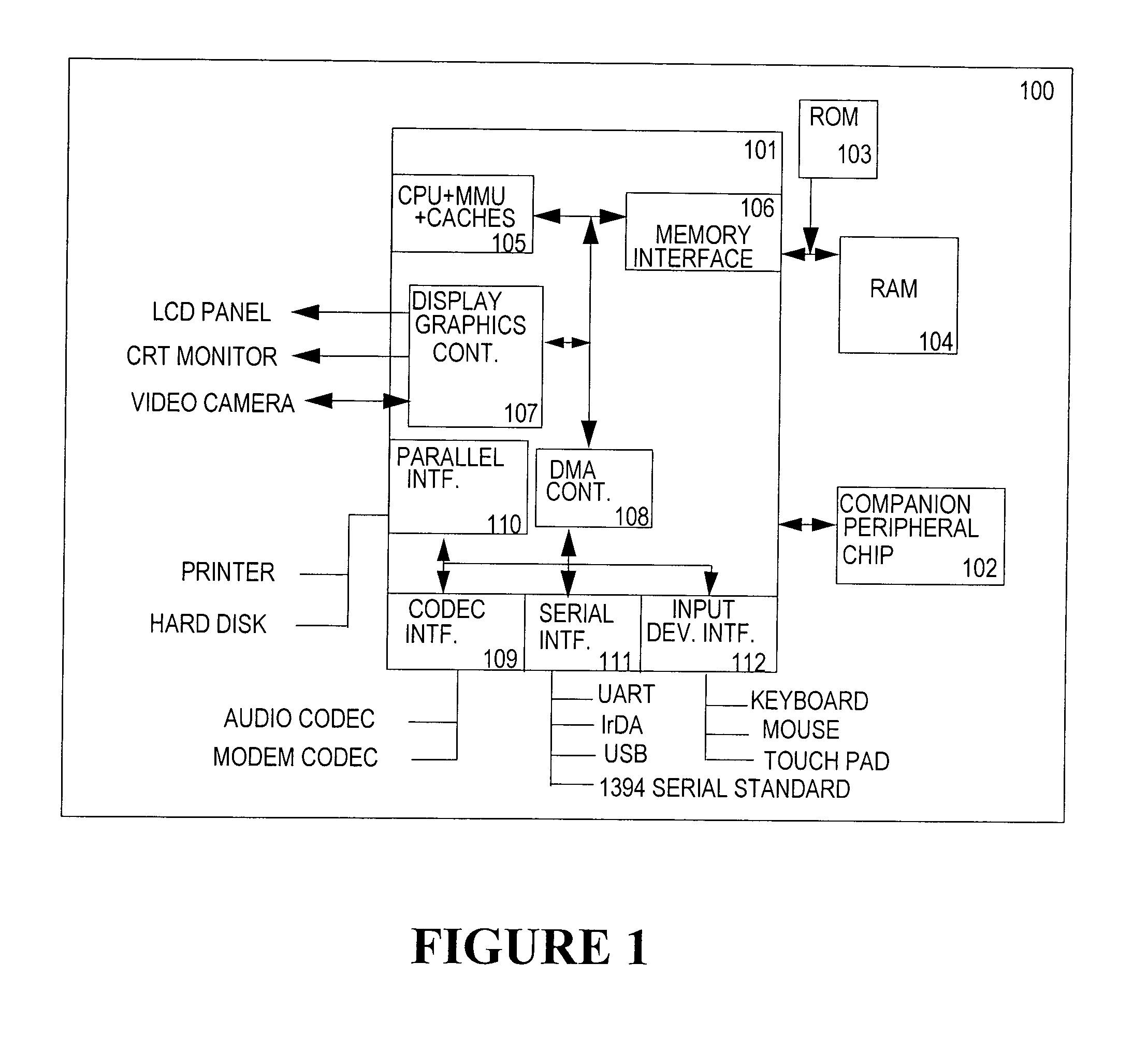

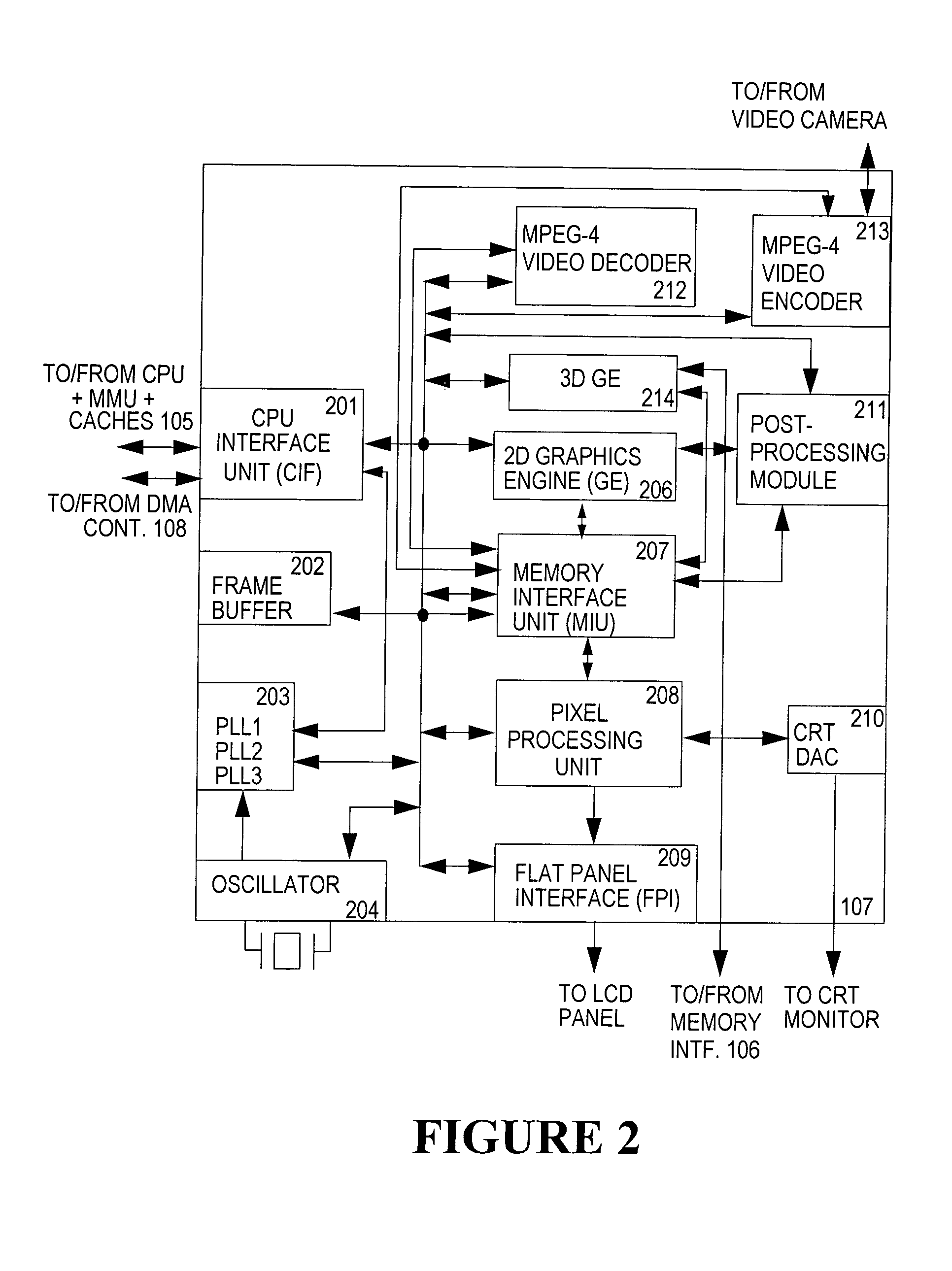

High quality and high performance three-dimensional graphics architecture for portable handheld devices

Status: Active | Patent: US20050066205A1 | Tags: Quality improvement; Improve performance; Energy efficient ICT; Volume/mass flow measurement; Computational science; Power efficient

A high quality and performance 3D graphics architecture suitable for portable handheld devices is provided. The 3D graphics architecture incorporates a module to classify polygons by size and other characteristics. In general, small and well-behaved triangles can be processed using “lower-precision” units with power efficient circuitry without any quality and performance sacrifice (e.g., realism, resolution, etc.). By classifying the primitives and selecting the more power-efficient processing unit to process the primitive, power consumption can be reduced without quality and performance sacrifice.

Owner:NVIDIA CORP

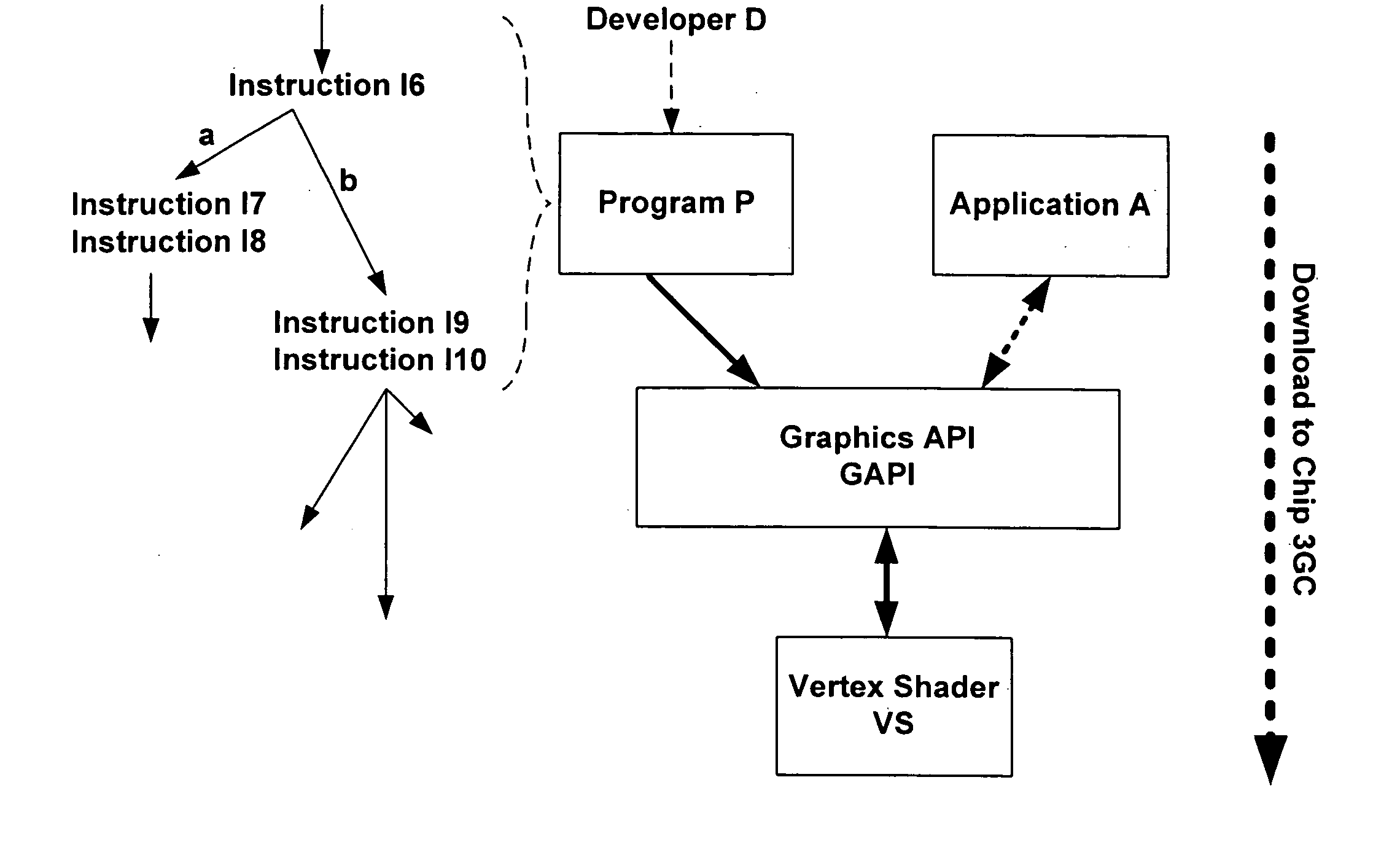

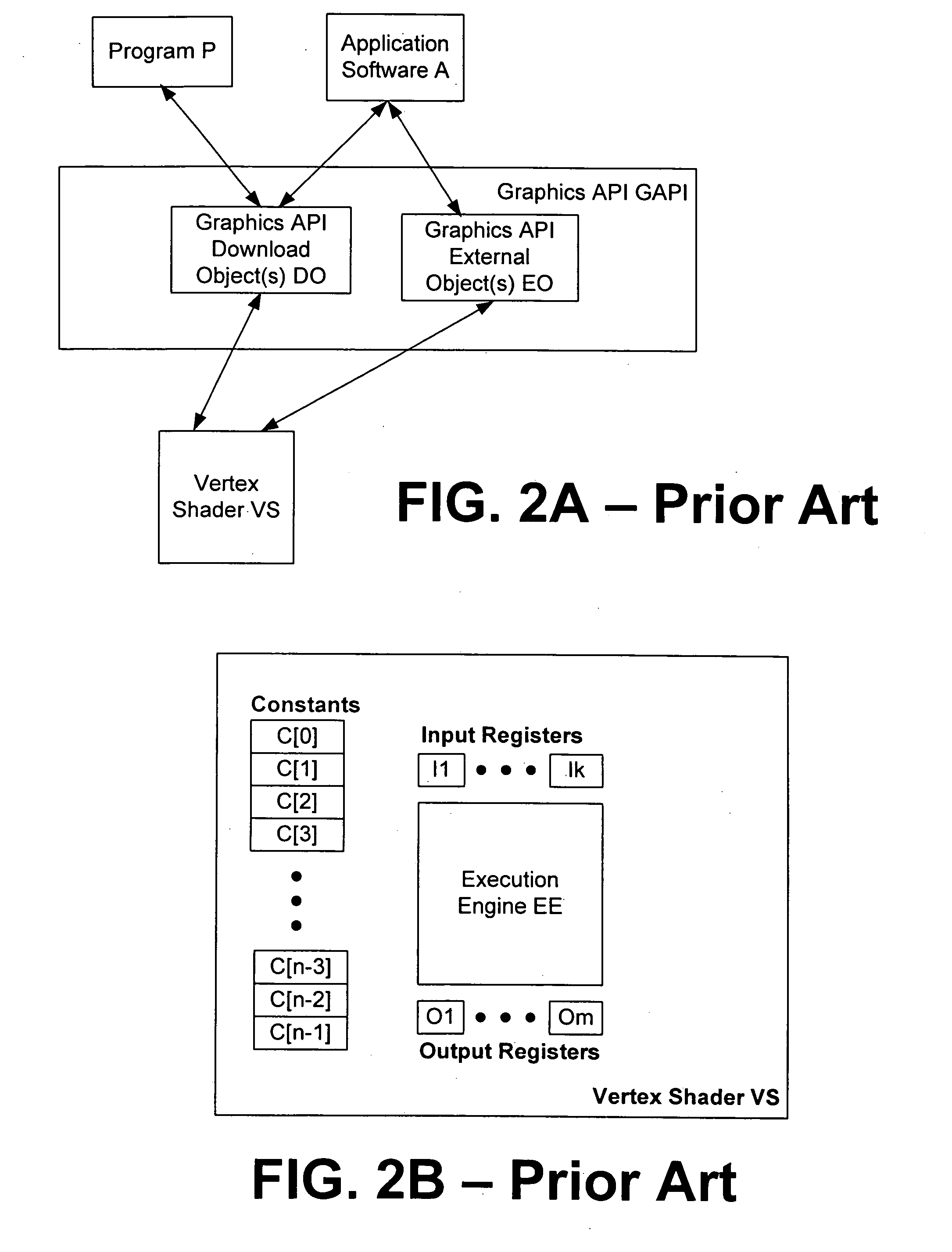

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding techniques

Status: Active | Patent: US20050122330A1 | Tags: Improve abilities; Sophisticated effect; Digital computer details; Next instruction address formation; Computational science; Coprocessor

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding processing and communication techniques are provided. For an improved graphics pipeline, the invention provides a class of co-processing device, such as a graphics processor unit (GPU), providing improved capabilities for an abstract or virtual machine for performing graphics calculations and rendering. The invention allows for runtime-predicated flow control of programs downloaded to coprocessors, enables coprocessors to include indexable arrays of on-chip storage elements that are readable and writable during execution of programs, provides native support for textures and texture maps and corresponding operations in a vertex shader, provides frequency division of vertex streams input to a vertex shader with optional support for a stream modulo value, provides a register storage element on a pixel shader and associated interfaces for storage associated with representing the “face” of a pixel, provides vertex shaders and pixel shaders with more on-chip register storage and the ability to receive larger programs than any existing vertex or pixel shaders and provides 32 bit float number support in both vertex and pixel shaders.

Owner:MICROSOFT TECH LICENSING LLC

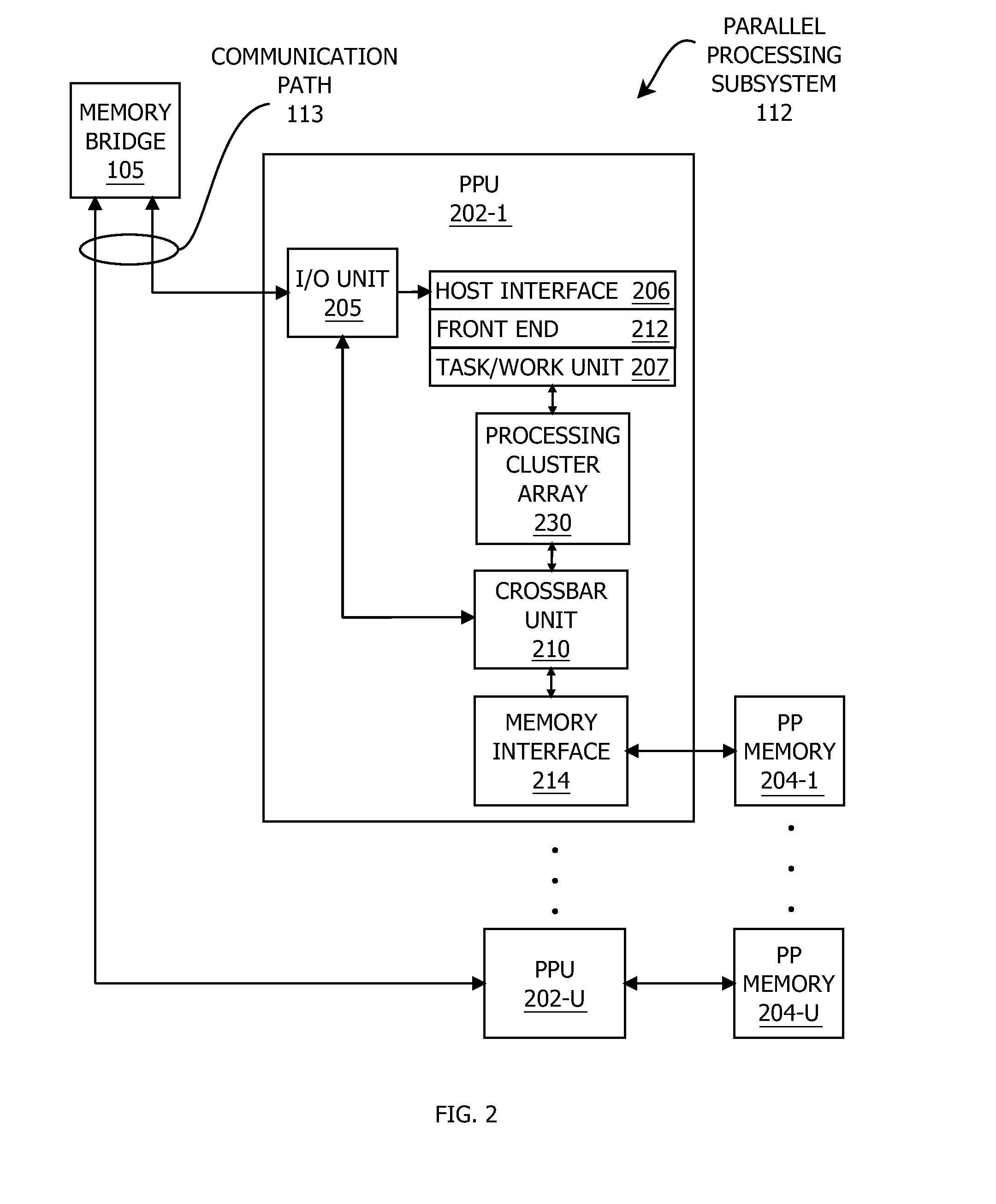

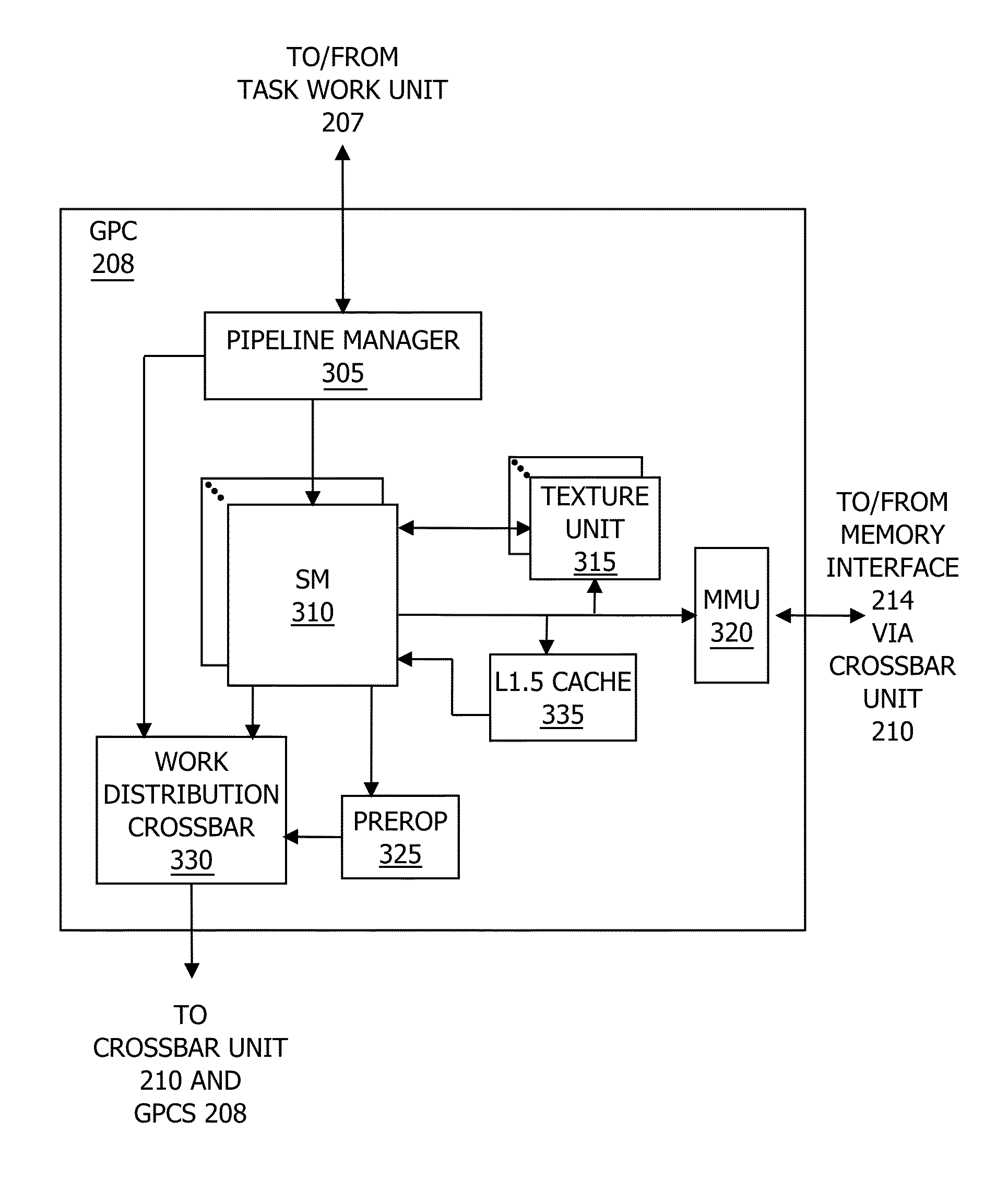

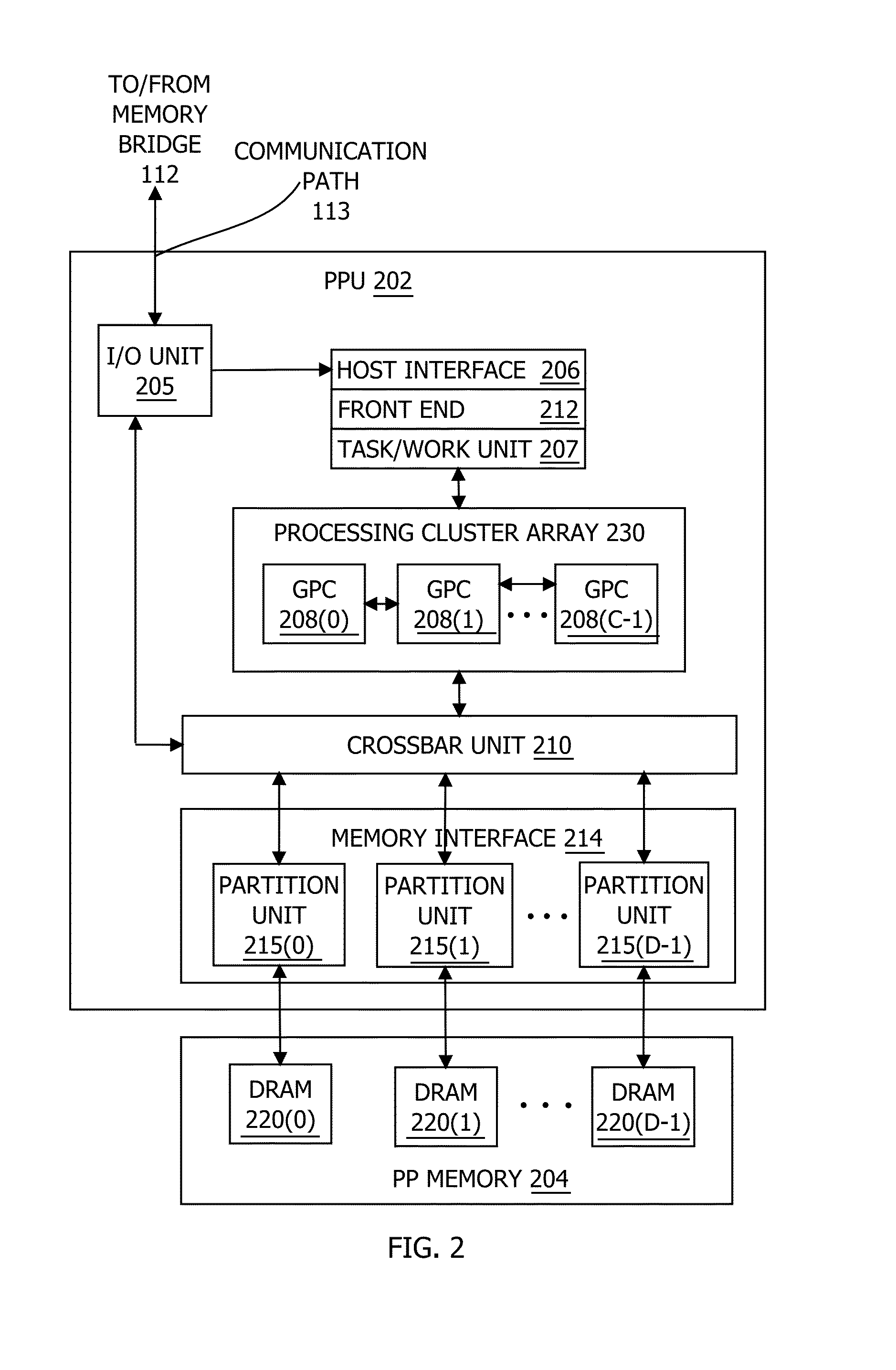

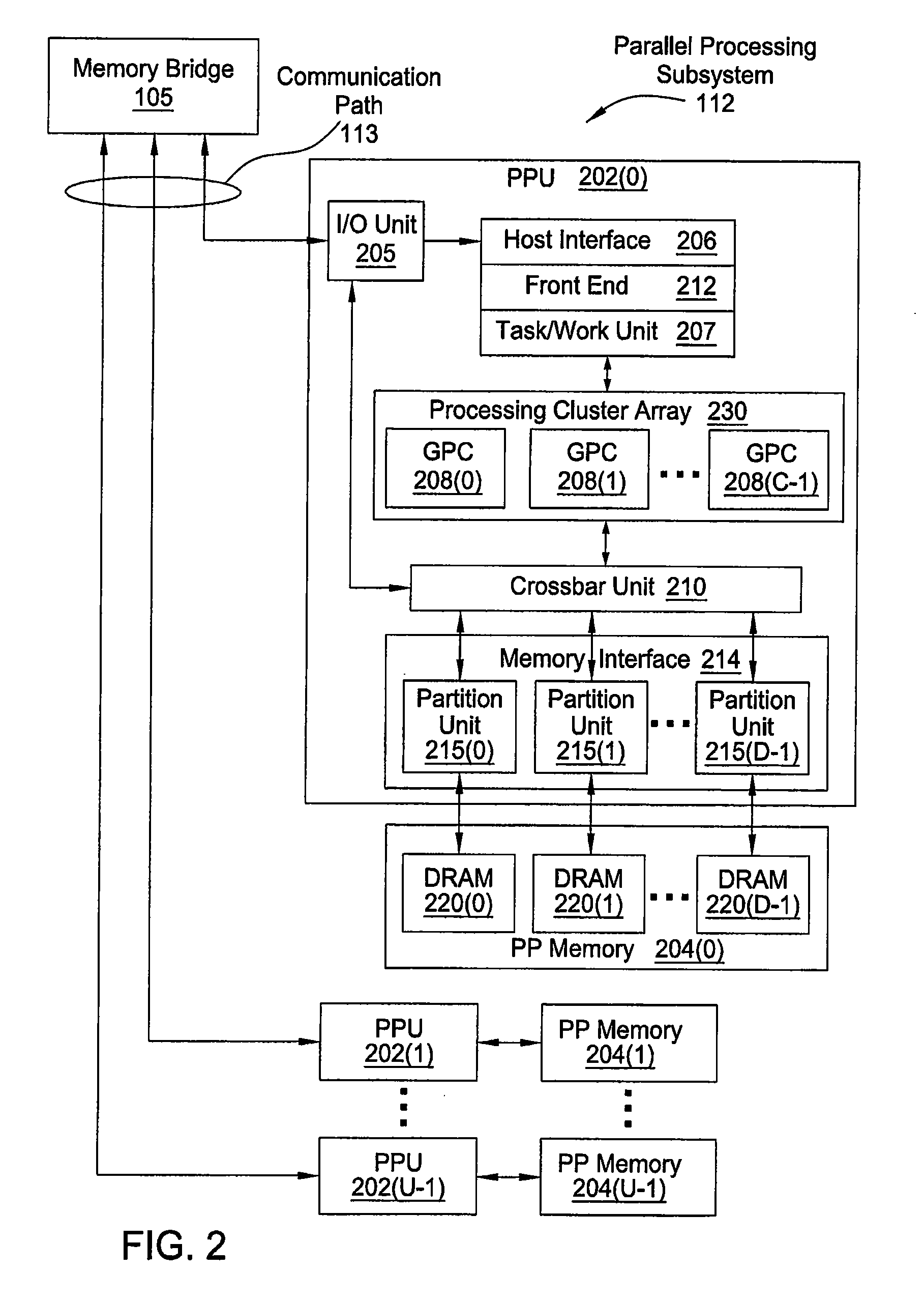

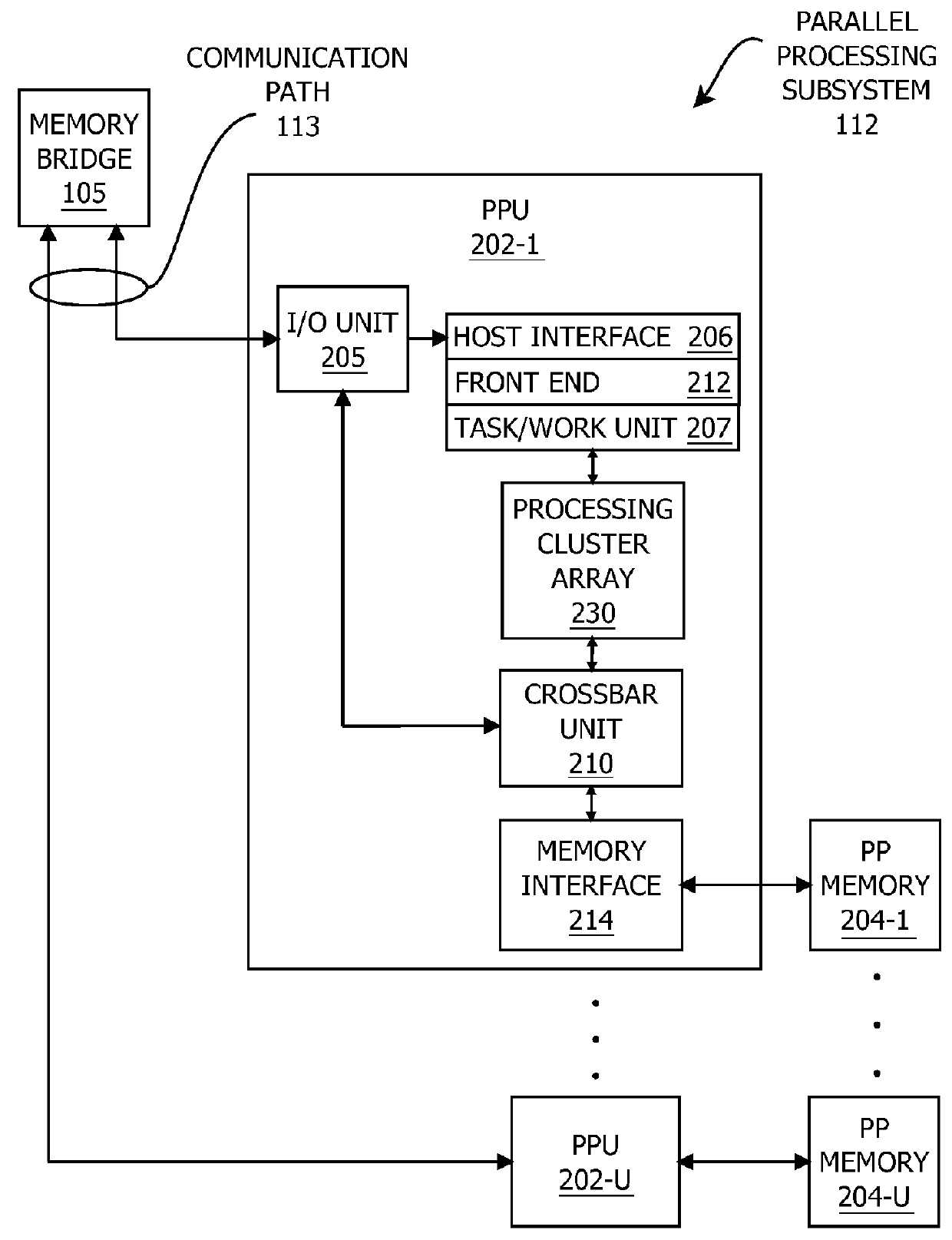

Adaptive shading in a graphics processing pipeline

Status: Active | Patent: US20150170409A1 | Tags: Fine granularity; Consumes less power; 3D-image rendering; Computational science; Graphics

One embodiment of the present invention includes a parallel processing unit (PPU) that performs pixel shading at variable granularities. For effects that vary at a low frequency across a pixel block, a coarse shading unit performs the associated shading operations on a subset of the pixels in the pixel block. By contrast, for effects that vary at a high frequency across the pixel block, fine shading units perform the associated shading operations on each pixel in the pixel block. Because the PPU implements coarse shading units and fine shading units, the PPU may tune the shading rate per-effect based on the frequency of variation across each pixel group. By contrast, conventional PPUs typically compute all effects per-pixel, performing redundant shading operations for low frequency effects. Consequently, to produce similar image quality, the PPU consumes less power and increases the rendering frame rate compared to a conventional PPU.

Owner:NVIDIA CORP

Techniques for setting up and executing draw calls

Status: Active | Patent: US20140168242A1 | Tags: Improve processing efficiency; Image memory management; Program control; Computational science; Application software

One embodiment sets forth a method for processing draw calls that includes setting up a plurality of shader input buffers in memory, receiving shader input data related to a graphics scene from a software application, storing the shader input data in the plurality of shader input buffers, computing a pointer to each shader input buffer included in the plurality of shader input buffers, and passing the pointers to the plurality of shader input buffers to the software application. By implementing the disclosed techniques, a shader program advantageously can access the shader input data associated with a graphics scene and stored in various shader input buffers without having to go through the central processing unit to have the shader input buffers bound to the shader program.

Owner:NVIDIA CORP

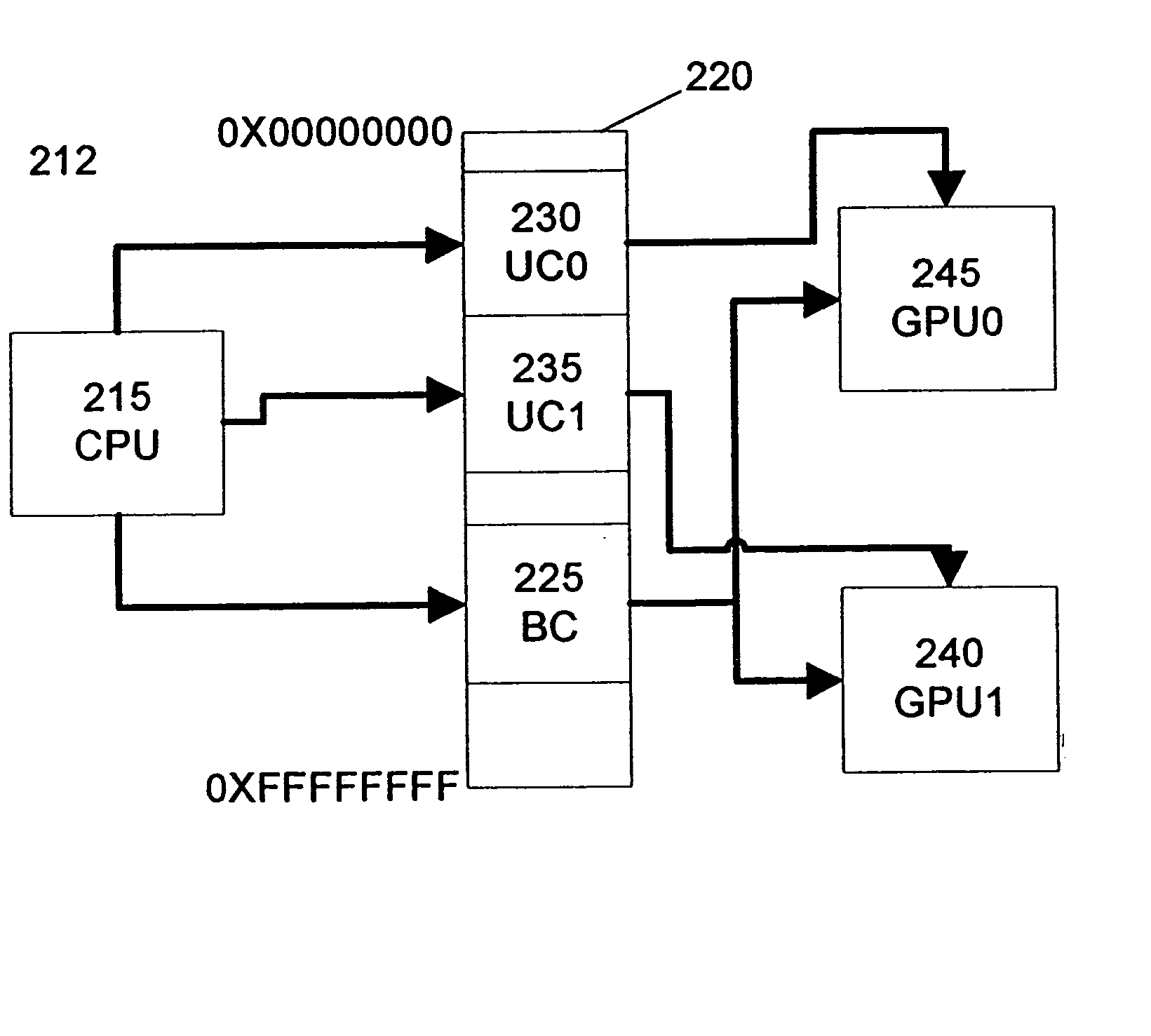

Programming multiple chips from a command buffer

Status: Active | Patent: US20060114260A1 | Tags: Single instruction multiple data multiprocessors; Processor architectures/configuration; Computational science; Graphics

Owner:NVIDIA CORP

Techniques for locally modifying draw calls

Status: Active | Patent: US9342857B2 | Tags: Reduce the amount required; Amount of data transferred; Processor architectures/configuration; Computational science; Software

One embodiment sets forth a method for modifying draw calls using a draw-call shader program included in a processing subsystem configured to process draw calls. The draw call shader receives a draw call from a software application, evaluates graphics state information included in the draw call, generates modified graphics state information, and generates a modified draw call that includes the modified graphics state information. Subsequently, the draw-call shader causes the modified draw call to be executed within a graphics processing pipeline. By performing the computations associated with generating the modified draw call on-the-fly within the processing subsystem, the draw-call shader decreases the amount of system memory required to render graphics while increasing the overall processing efficiency of the graphics processing pipeline.

Owner:NVIDIA CORP

© 2025 PatSnap. All rights reserved.