Hardware structure for realizing forward calculation of convolutional neural network

The technology relates to convolutional neural networks and forward computation, and is applied in the field of hardware structures for realizing the forward calculation of a convolutional neural network. It addresses the problems of reduced resource utilization and performance and of being unable to exploit the parallel resources on the board for acceleration, and achieves the effects of reduced on-chip cache requirements, high parallelism, and high processing performance.


Embodiment Construction

[0025] In order to make the purpose, technical solutions, and advantages of the application clearer, the application is described in further detail below with reference to the accompanying drawings. Obviously, the described embodiments are only some of the embodiments of the application, not all of them. All other embodiments obtained by persons of ordinary skill in the art on the basis of the embodiments in this application, without creative effort, fall within the scope of protection of this application.

[0026] The embodiments of the present application will be further described in detail below in conjunction with the accompanying drawings.

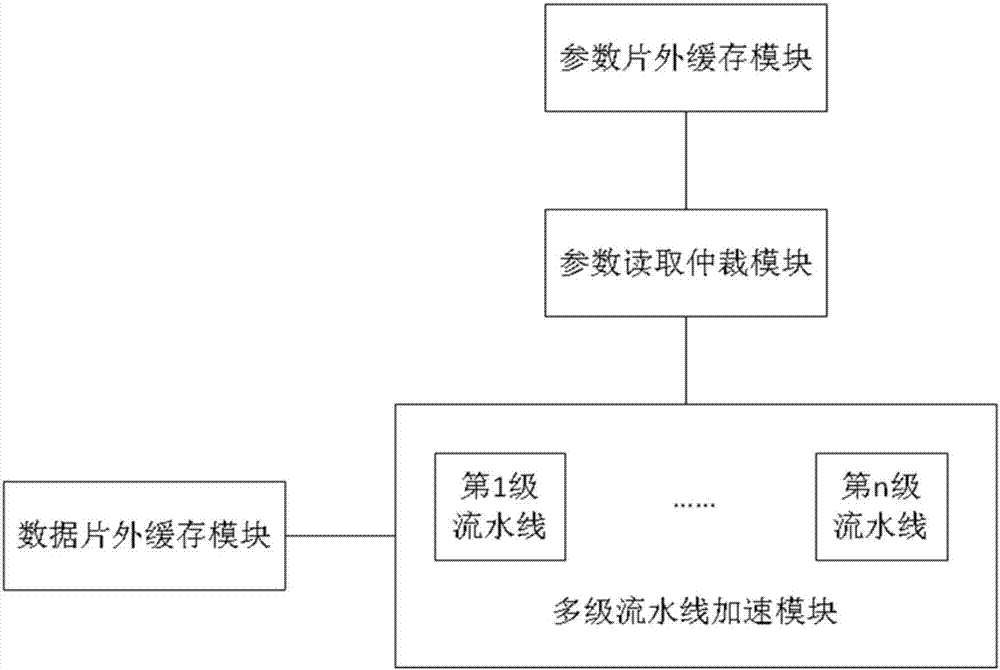

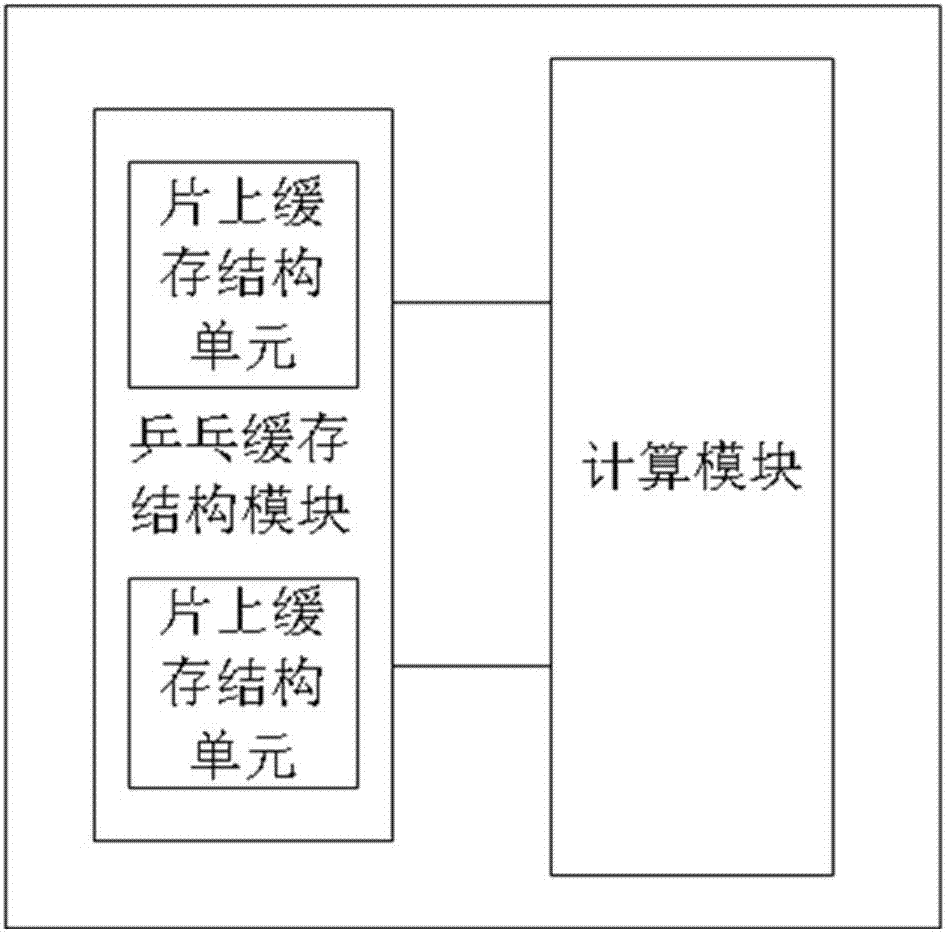

[0027] As shown in Figure 1, according to one aspect of the present application, a hardware structure for realizing the forward calculation of a convolutional neural network is provided. The hardware structure can be implemented using a field-programmable gate array (FPGA) chip or an application-specific integrated circuit (ASIC) ...
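For orientation, the arithmetic that a convolutional layer's forward calculation performs can be sketched in software as below. This is only a generic reference model of a direct convolution, not the hardware structure described in this application; the function name `conv2d_forward`, the nested-list data layout, and the absence of padding are assumptions made for illustration.

```python
def conv2d_forward(inputs, weights, biases, stride=1):
    """Reference forward pass of one convolutional layer (no padding).

    Hypothetical layout, chosen for illustration only:
      inputs[c][y][x]      input feature maps, one per input channel c
      weights[k][c][r][s]  one kernel per output channel k
      biases[k]            one bias per output channel k
    """
    in_ch = len(inputs)
    in_h, in_w = len(inputs[0]), len(inputs[0][0])
    out_ch = len(weights)
    k_h, k_w = len(weights[0][0]), len(weights[0][0][0])
    out_h = (in_h - k_h) // stride + 1
    out_w = (in_w - k_w) // stride + 1

    outputs = [[[0.0] * out_w for _ in range(out_h)] for _ in range(out_ch)]
    for k in range(out_ch):              # each output channel is independent
        for oy in range(out_h):          # each output pixel is independent
            for ox in range(out_w):
                acc = biases[k]
                for c in range(in_ch):   # accumulate over input channels
                    for r in range(k_h):
                        for s in range(k_w):
                            pixel = inputs[c][oy * stride + r][ox * stride + s]
                            acc += pixel * weights[k][c][r][s]
                outputs[k][oy][ox] = acc
    return outputs


if __name__ == "__main__":
    image = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]]]   # 1 channel, 3x3
    kernel = [[[[1, 1], [1, 1]]]]                 # 1 output channel, 2x2
    print(conv2d_forward(image, kernel, [0.0]))
```

The three outer loops carry no dependencies between iterations, which is the kind of parallelism a hardware implementation of the forward calculation can exploit; how this application actually organizes that parallelism is not shown here.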

