1431 results about "Hardware acceleration" patented technology

In computing, hardware acceleration is the use of computer hardware specially made to perform some functions more efficiently than is possible in software running on a general-purpose CPU. Any computable transformation of data or routine can be calculated purely in software running on a generic CPU, purely in custom-made hardware, or in some mix of both. An operation can often be computed faster in application-specific hardware designed or programmed for that operation than when it is specified in software and run on a general-purpose processor. Each approach has advantages and disadvantages. Implementing computing tasks in hardware to decrease latency and increase throughput is known as hardware acceleration.

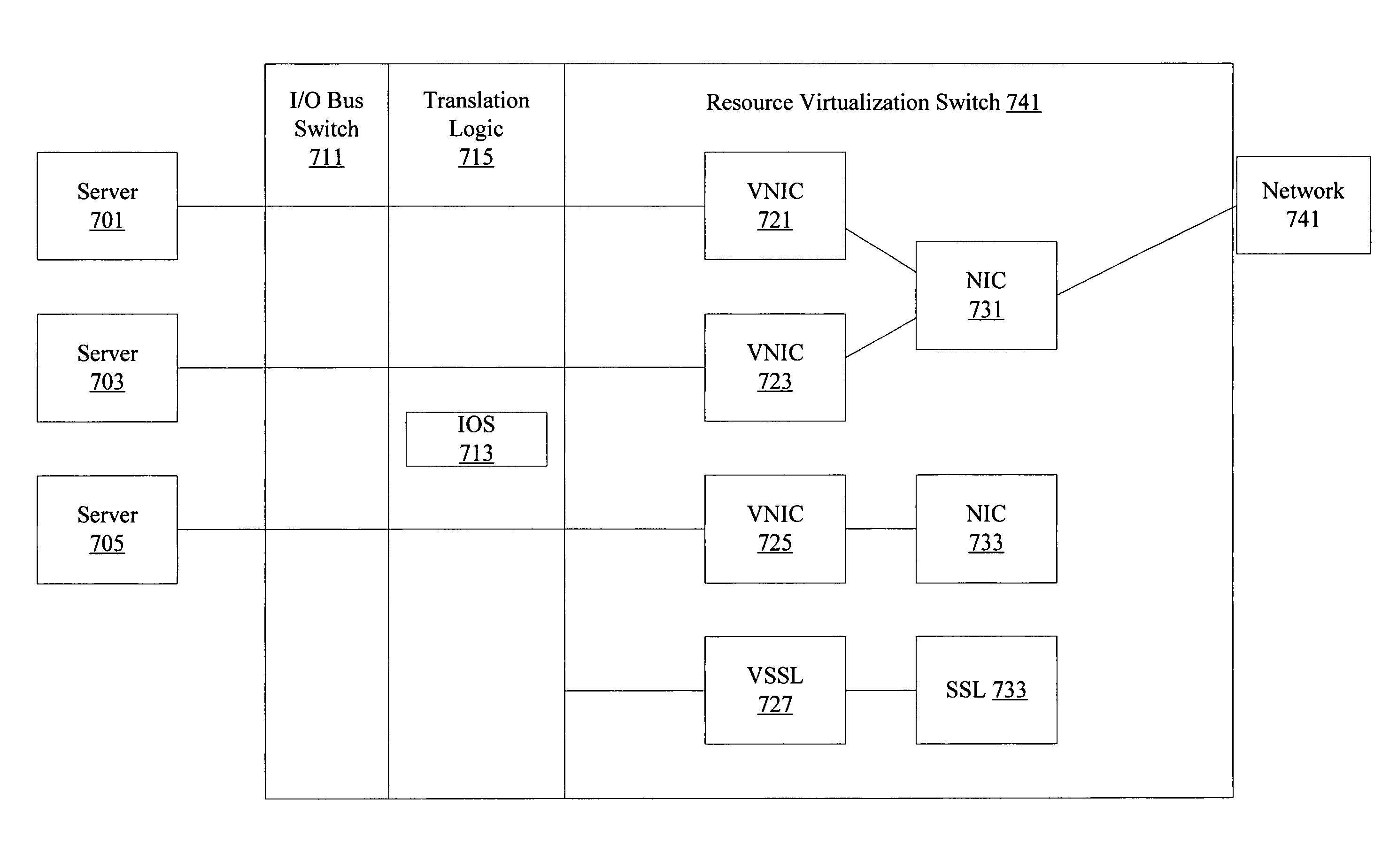

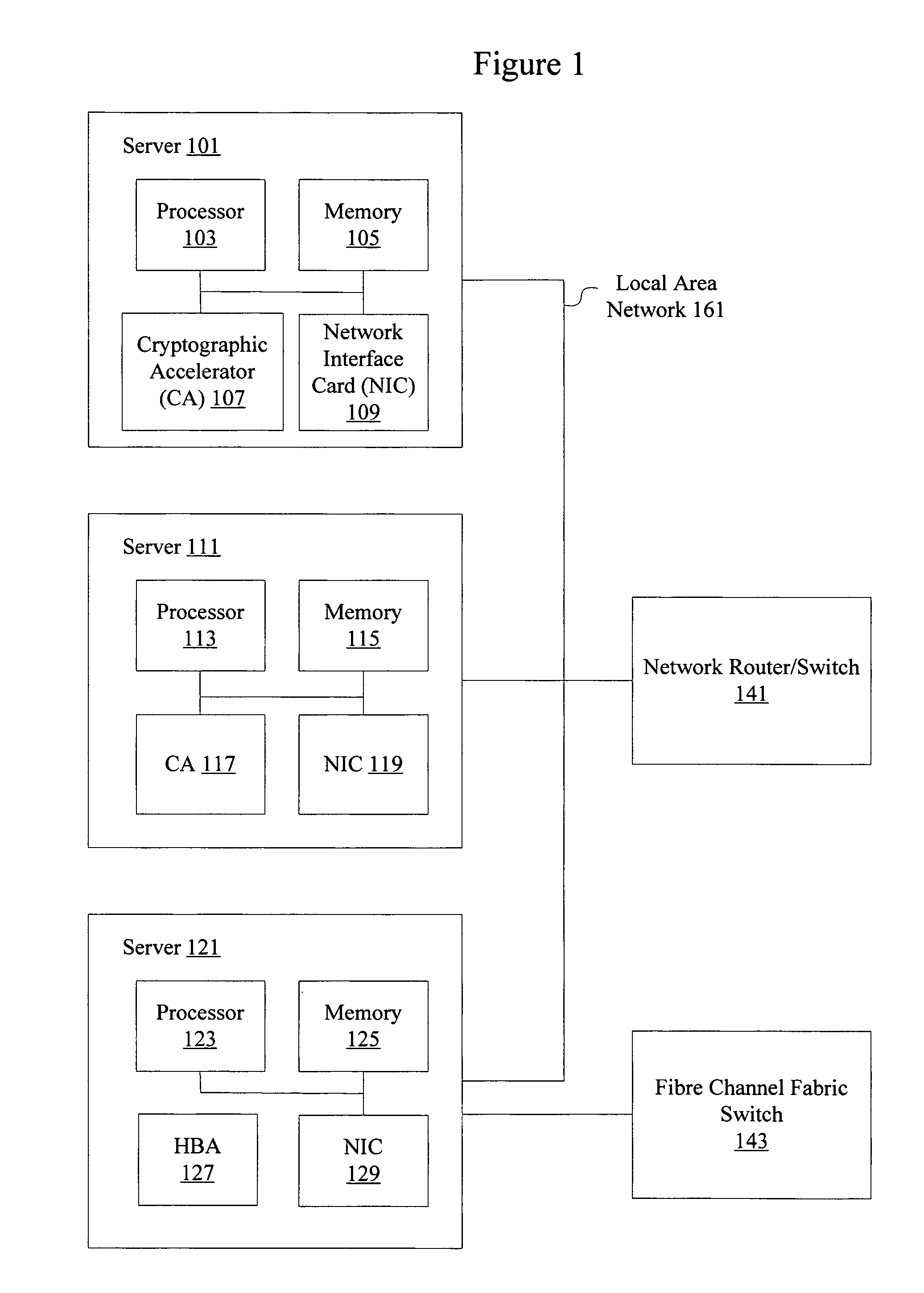

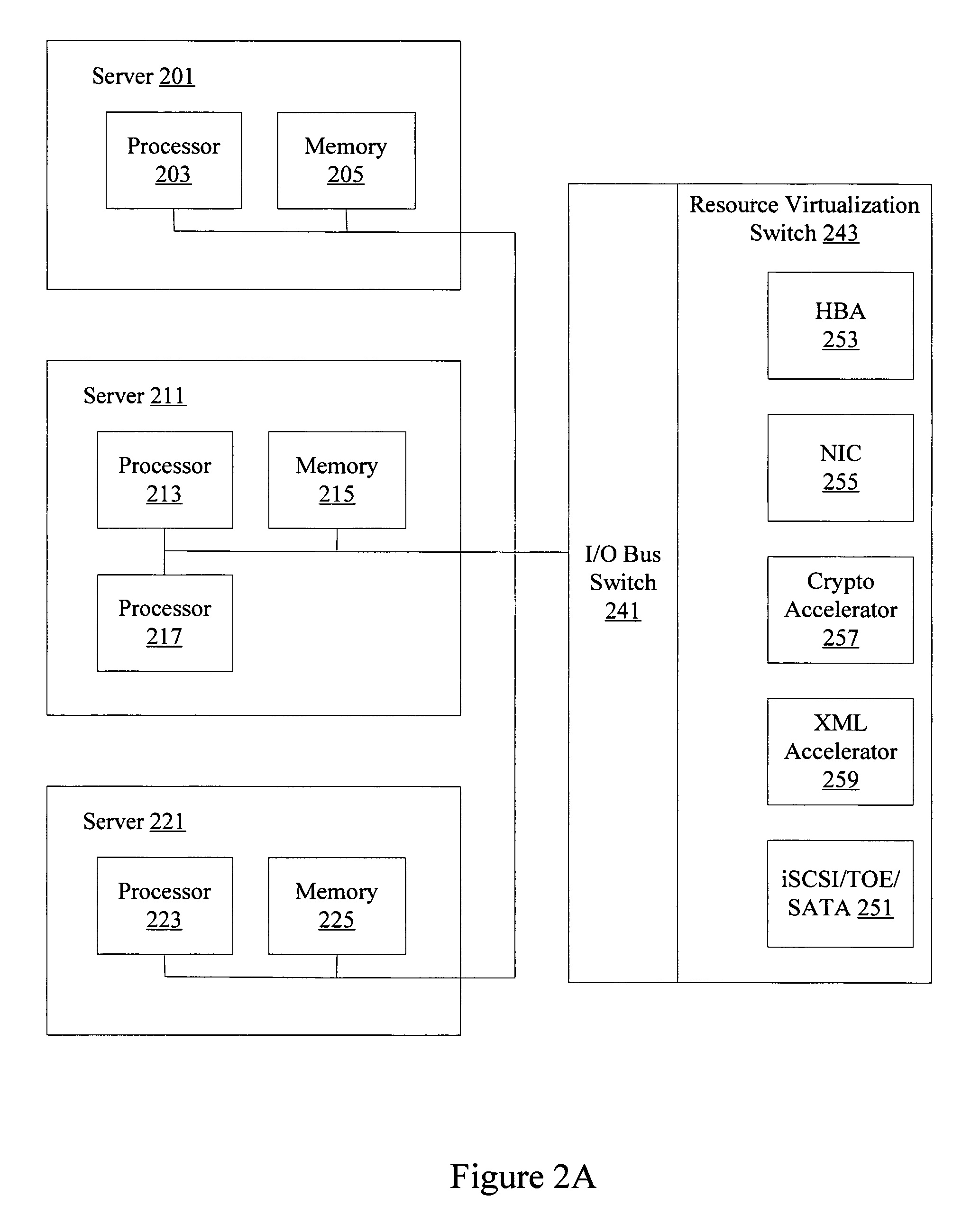

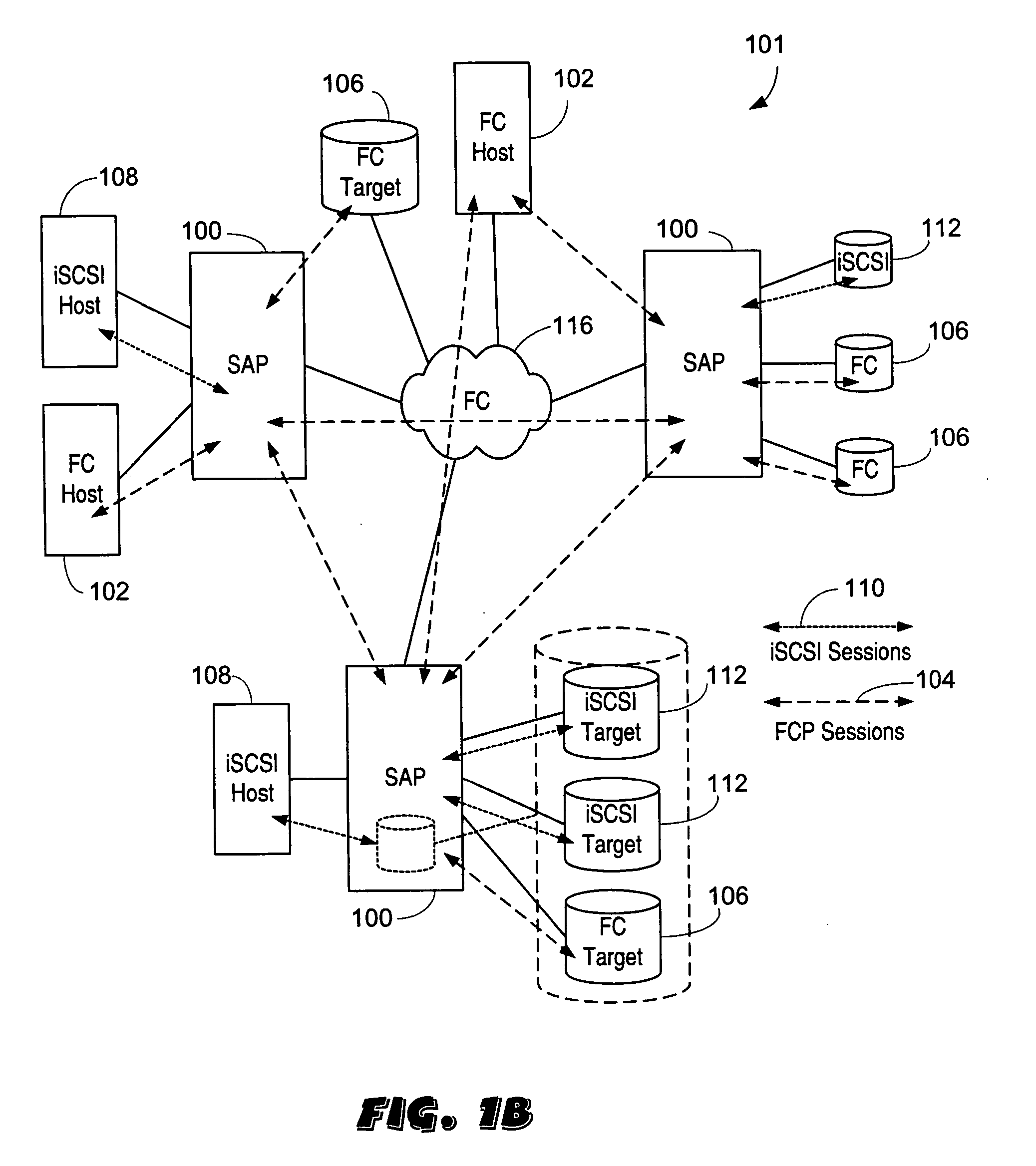

Resource virtualization switch

Active | US7502884B1 | Error detection/correction | Data switching by path configuration | Resource virtualization | PCI Express

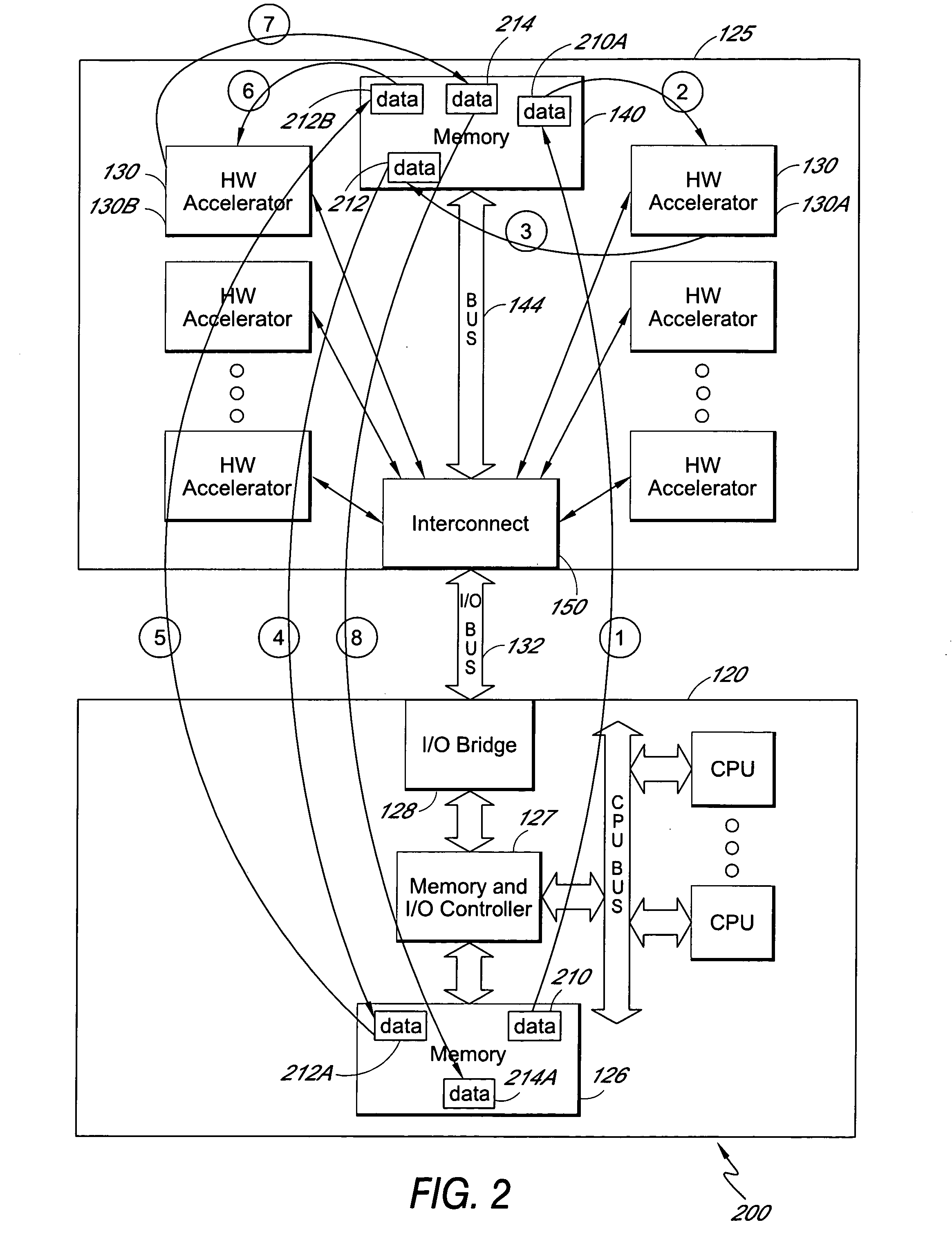

Methods and apparatus are provided for virtualizing resources including peripheral components and peripheral interfaces. Peripheral components such as hardware accelerators and peripheral interfaces such as port adapters are offloaded from individual servers onto a resource virtualization switch. Multiple servers are connected to the resource virtualization switch over an I/O bus fabric such as PCI Express or PCI-AS. The resource virtualization switch allows efficient access, sharing, management, and allocation of resources.

Owner:ORACLE INT CORP

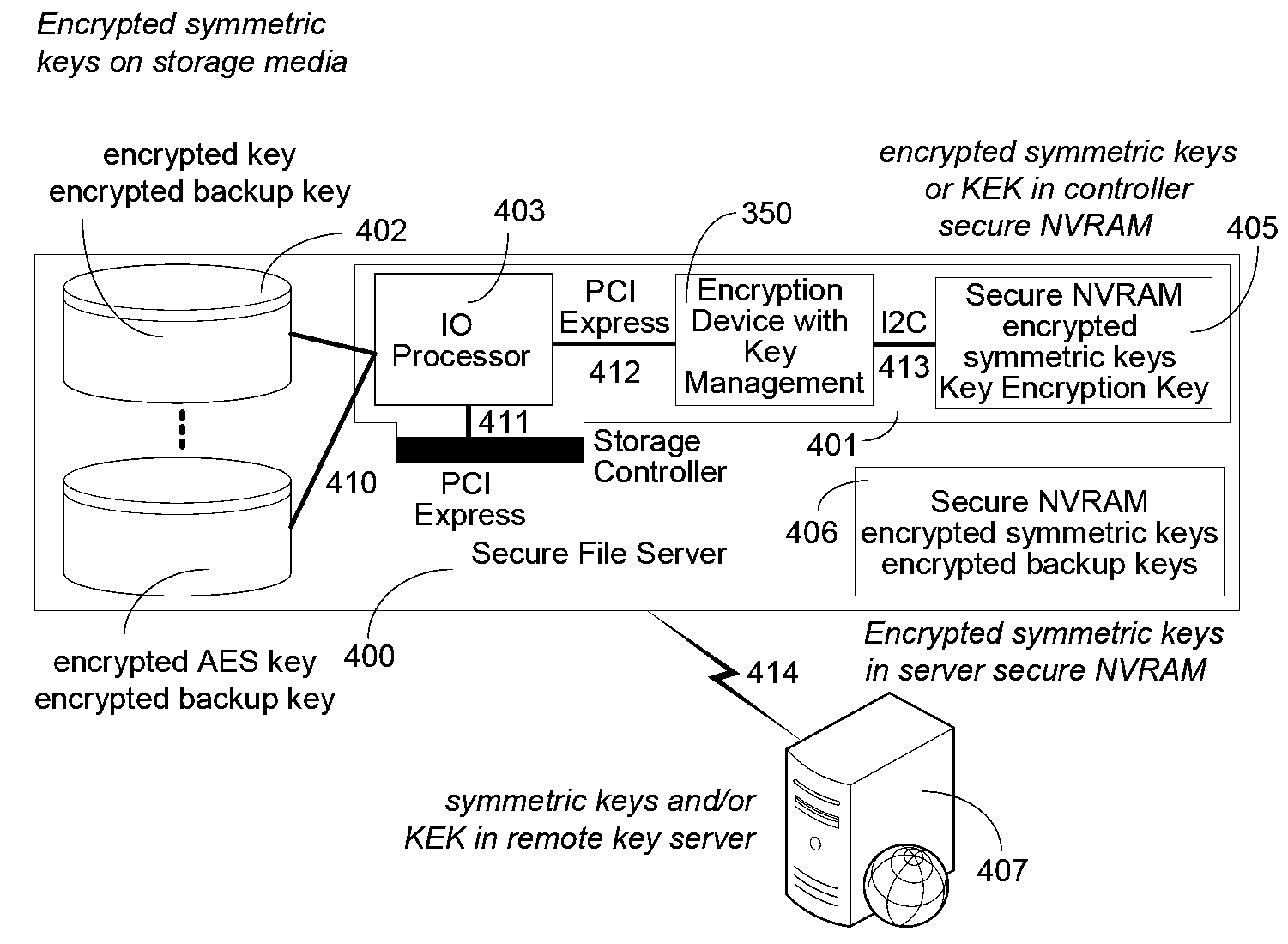

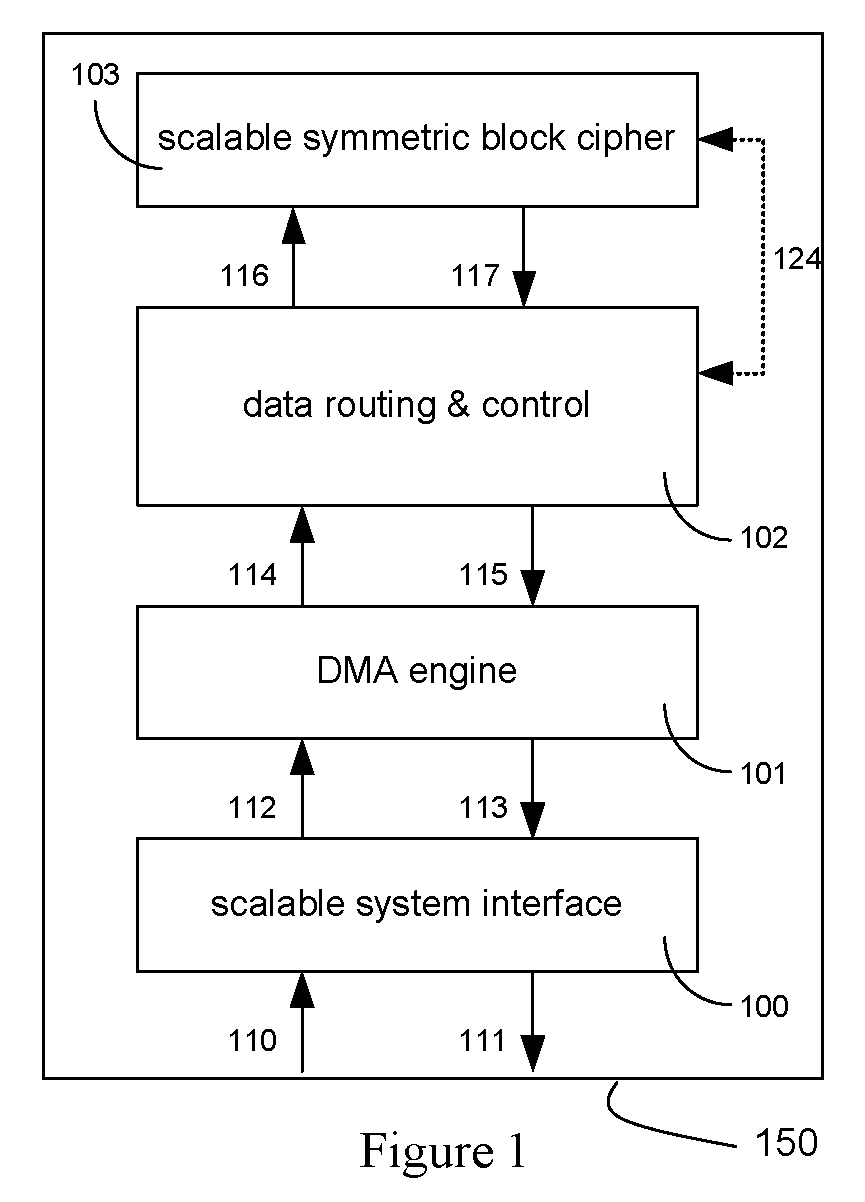

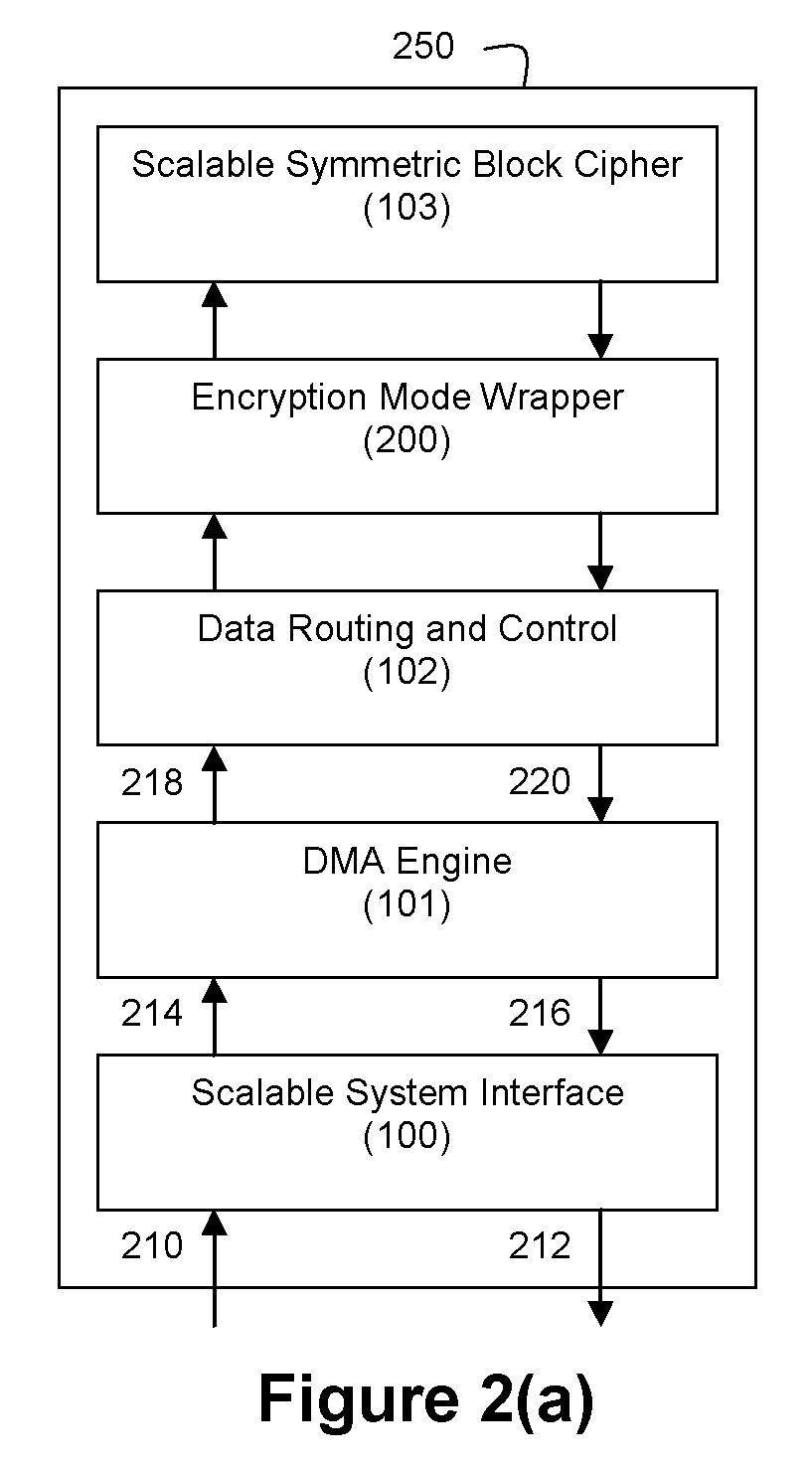

Method and Apparatus for Hardware-Accelerated Encryption/Decryption

Active | US20090060197A1 | Avoid contact | Maximize availability | Encryption apparatus with shift registers/memories | Secret communication | Multiple encryption | Computer hardware

An integrated circuit for data encryption/decryption and secure key management is disclosed. The integrated circuit may be used in conjunction with other integrated circuits, processors, and software to construct a wide variety of secure data processing, storage, and communication systems. A preferred embodiment of the integrated circuit includes a symmetric block cipher that may be scaled to strike a favorable balance between processing throughput and power consumption. The modular architecture also supports multiple encryption modes and key management functions such as one-way cryptographic hash and random number generator functions that leverage the scalable symmetric block cipher. The integrated circuit may also include a key management processor that can be programmed to support a wide variety of asymmetric key cryptography functions for secure key exchange with remote key storage devices and enterprise key management servers. Internal data and key buffers enable the device to re-key encrypted data without exposing data. The key management functions allow the device to function as a cryptographic domain bridge in a federated security architecture.

Owner:IP RESERVOIR

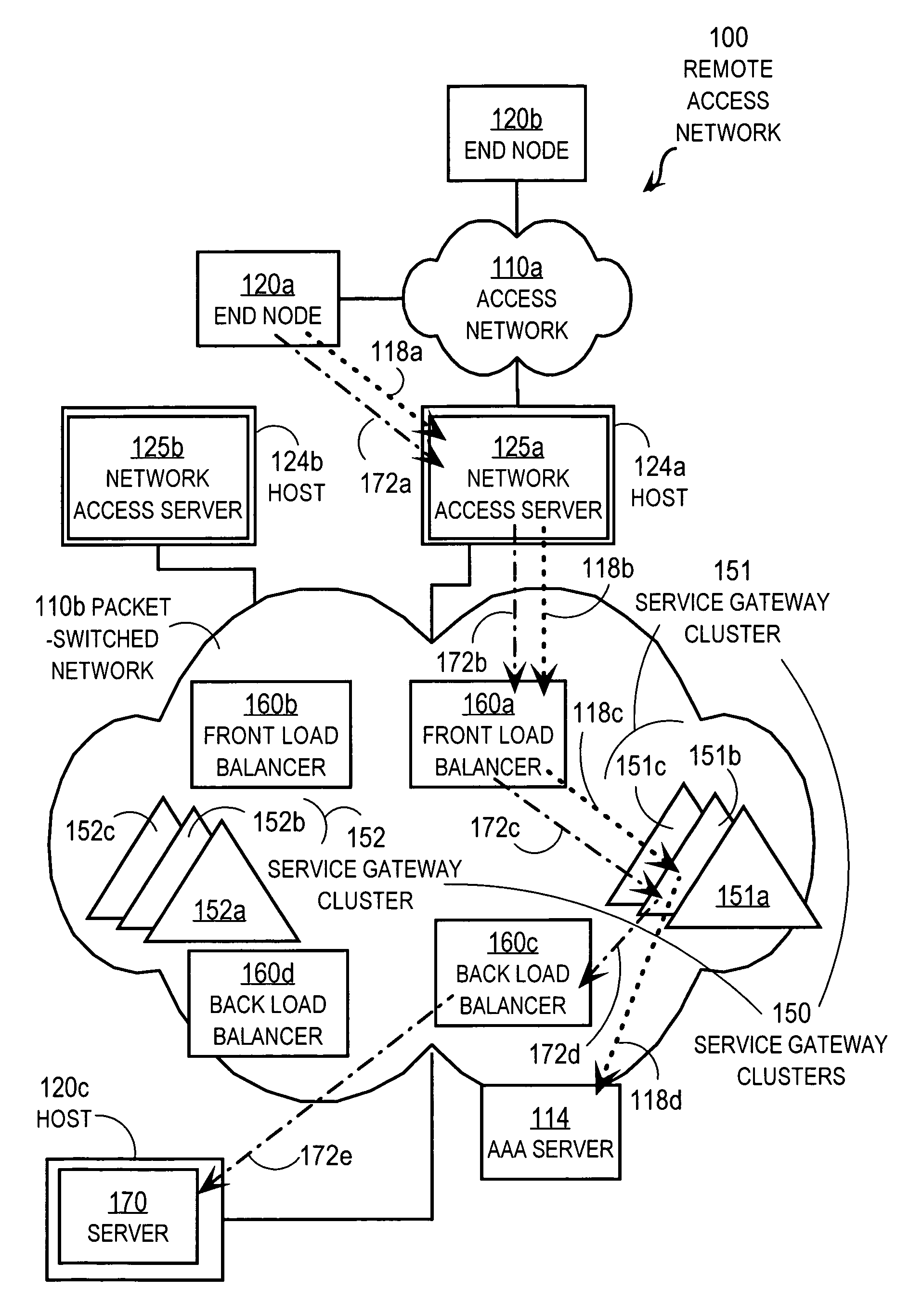

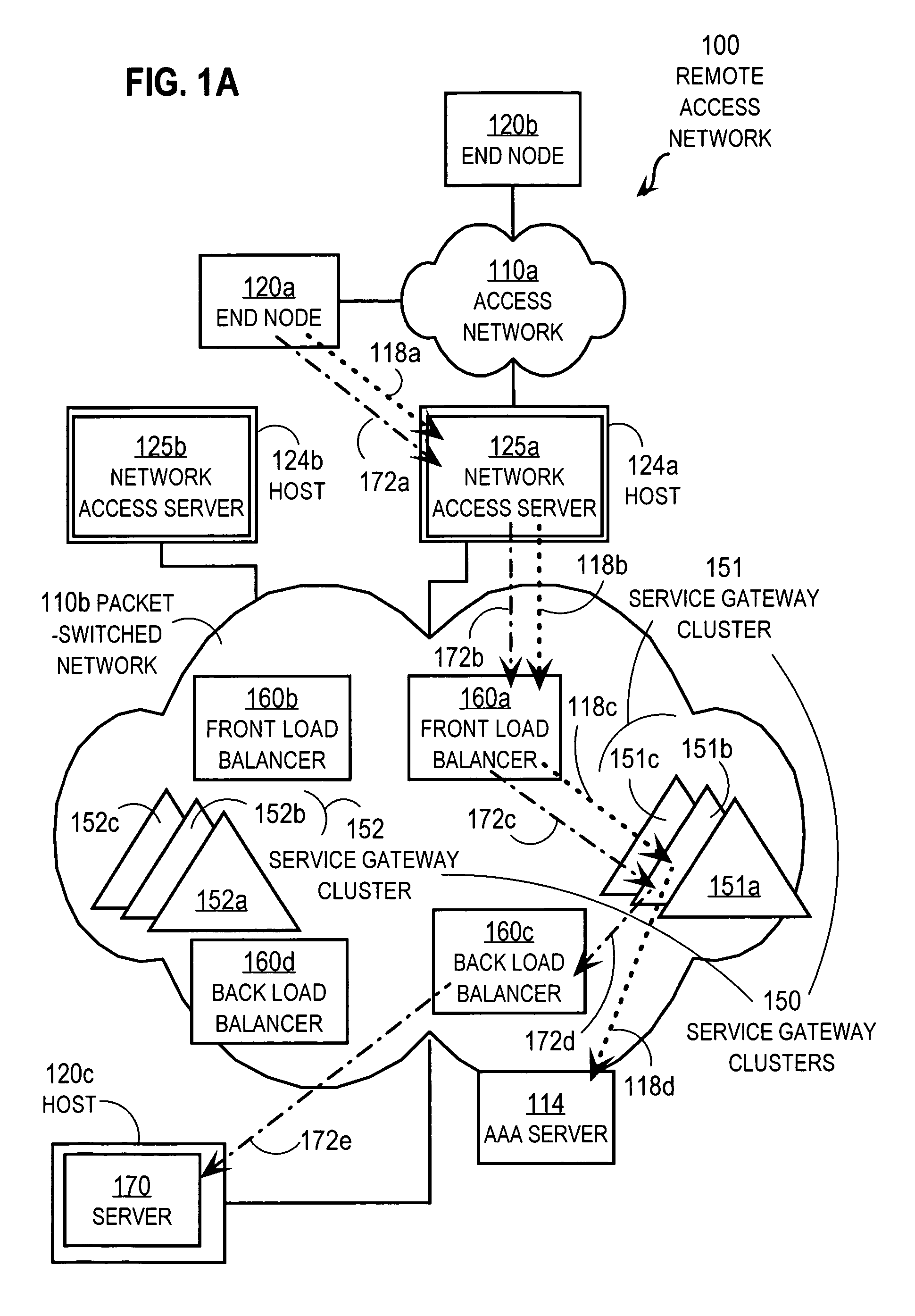

Techniques for load balancing over a cluster of subscriber-aware application servers

Inactive | US20070165622A1 | Digital computer details | Network connections | Application server | Distributed computing

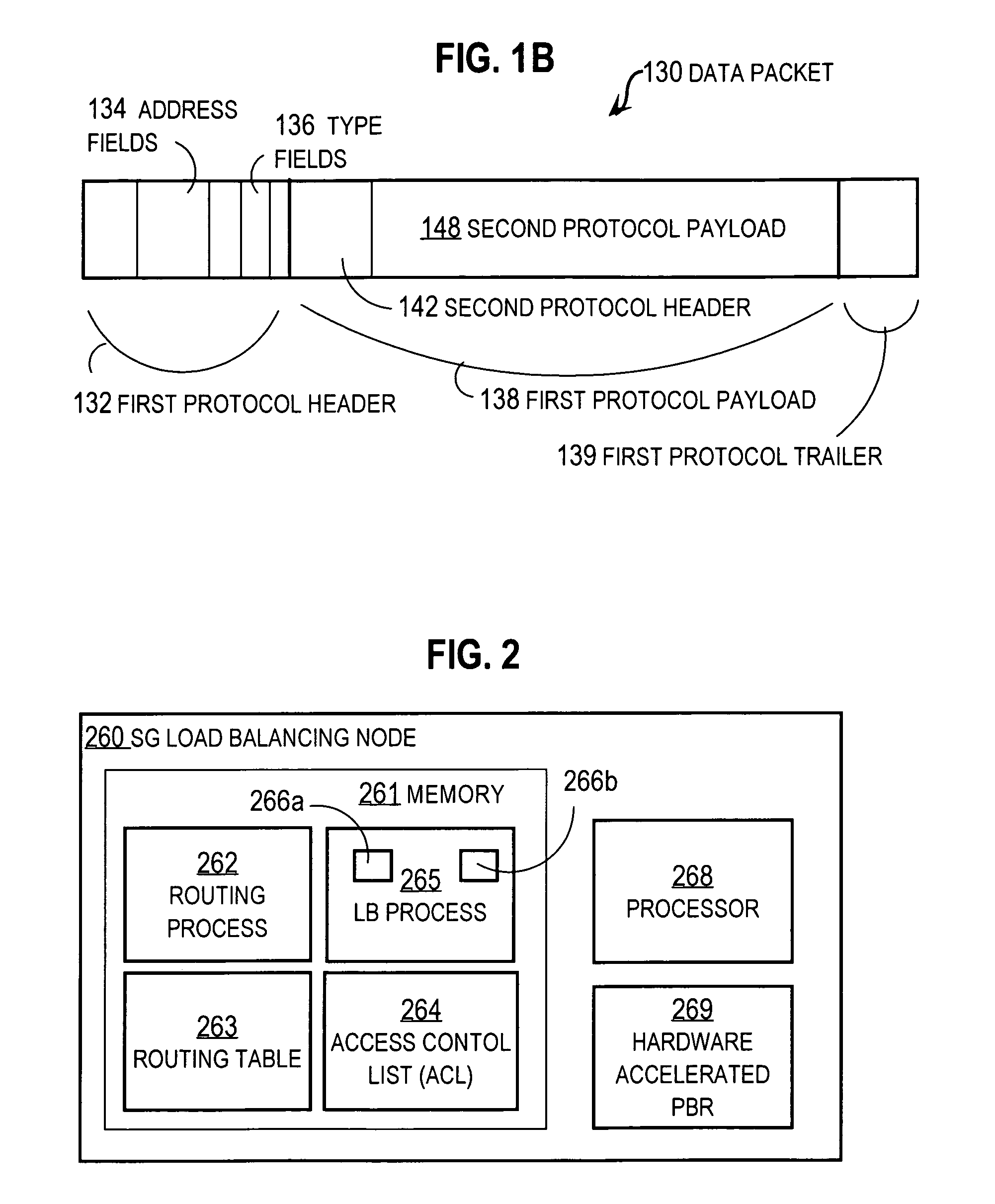

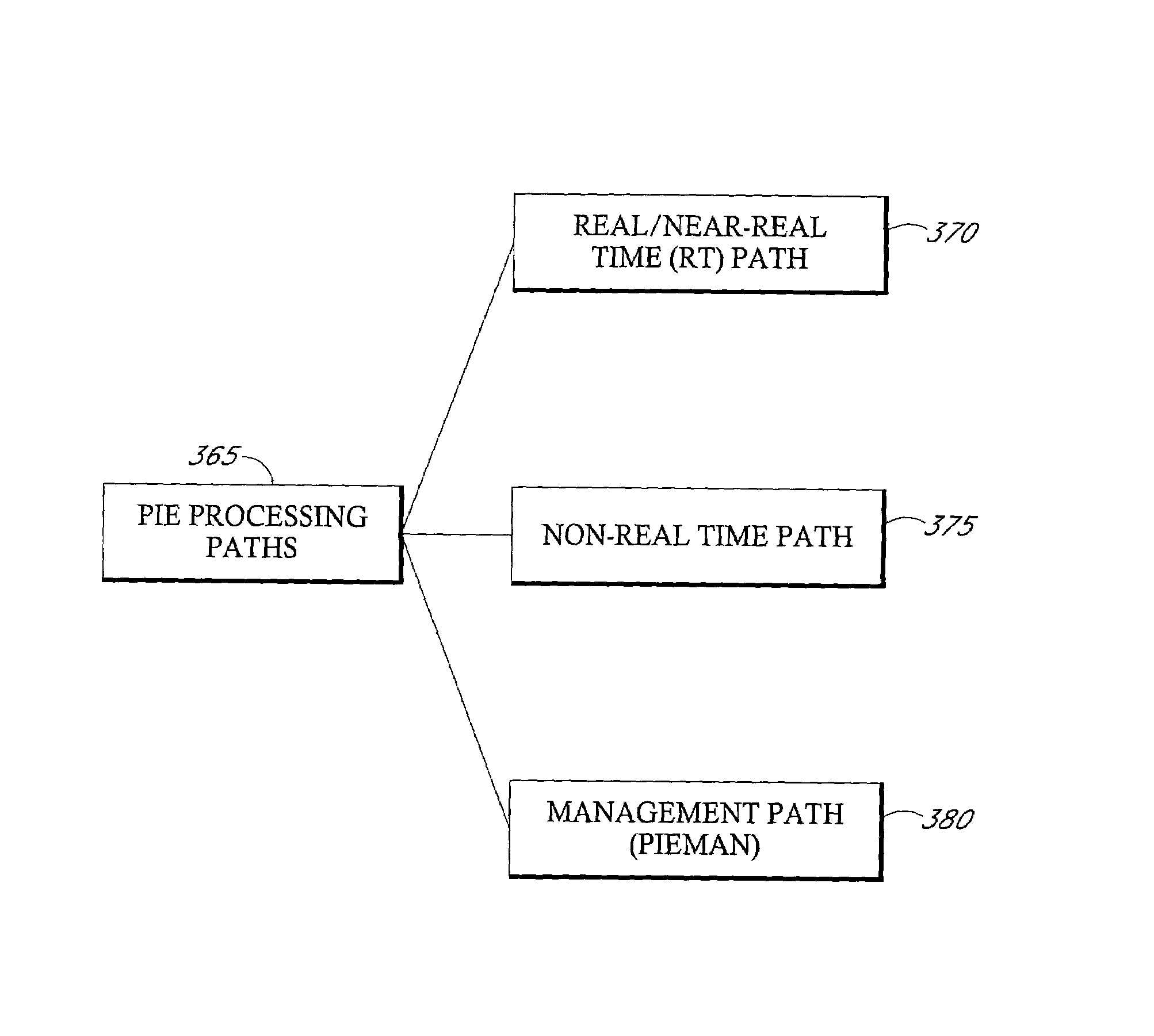

Techniques for distributing control plane traffic, from an end node in a packet switched network to a cluster of service gateway nodes that host subscriber-aware application servers, include receiving a control plane message for supporting data plane traffic from a particular subscriber. A particular service gateway node is determined among the cluster of service gateway nodes based on policy-based routing (PBR) for the data plane traffic from the particular subscriber. A message based on the control plane message is sent to a control plane process on the particular service gateway node. Thereby, data plane traffic and control plane traffic from the same subscriber are directed to the same gateway node, or otherwise related gateway nodes, of the cluster of service gateway nodes. This approach allows currently-available, hardware-accelerated PBR to be used with clusters of subscriber-aware service gateways that must also monitor control plane traffic from the same subscriber.

Owner:CISCO TECH INC
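
The key property in the abstract above, that control plane and data plane traffic from one subscriber reach the same gateway node, can be illustrated with a minimal Python sketch. It assumes that a hash of the subscriber address stands in for the policy-based routing decision; `gateway_for`, `route`, the node names, and the addresses are hypothetical and are not taken from the patent.

```python
import hashlib


def gateway_for(subscriber_ip: str, gateways: list) -> str:
    """Pick a gateway from the subscriber address only (stand-in for the PBR policy)."""
    digest = hashlib.sha256(subscriber_ip.encode("ascii")).digest()
    return gateways[int.from_bytes(digest[:4], "big") % len(gateways)]


def route(message: dict, gateways: list) -> str:
    """Apply the same policy to control plane and data plane messages."""
    return gateway_for(message["subscriber_ip"], gateways)


gateways = ["sgw-0", "sgw-1", "sgw-2"]  # hypothetical cluster
control = {"plane": "control", "subscriber_ip": "10.0.0.7"}
data = {"plane": "data", "subscriber_ip": "10.0.0.7"}
same_node = route(control, gateways) == route(data, gateways)
print(same_node)  # prints True: both planes are keyed on the same subscriber address
```

Because the decision depends only on the subscriber, any process that applies the same policy to a control plane message lands on the node that already handles that subscriber's data plane traffic.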

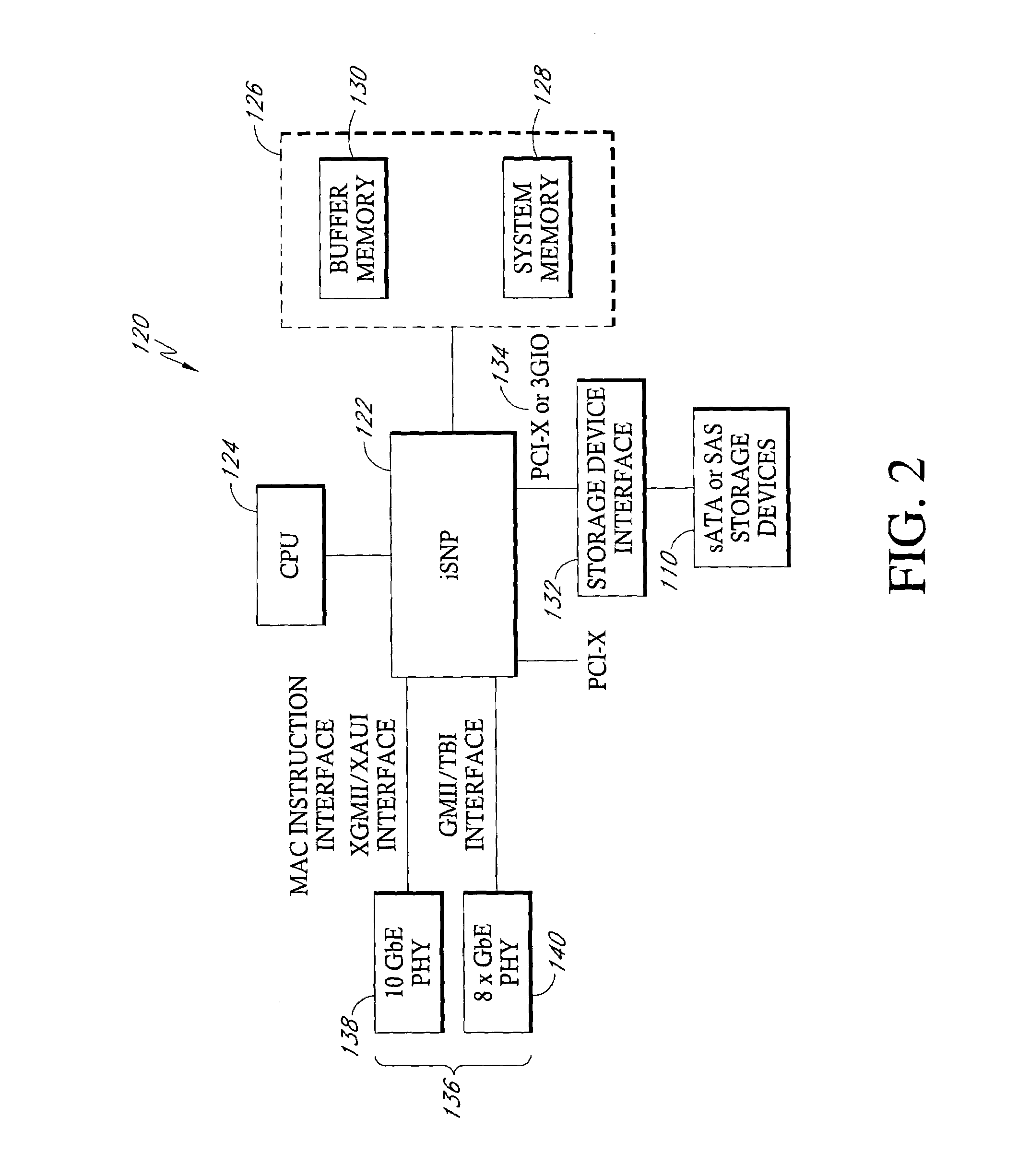

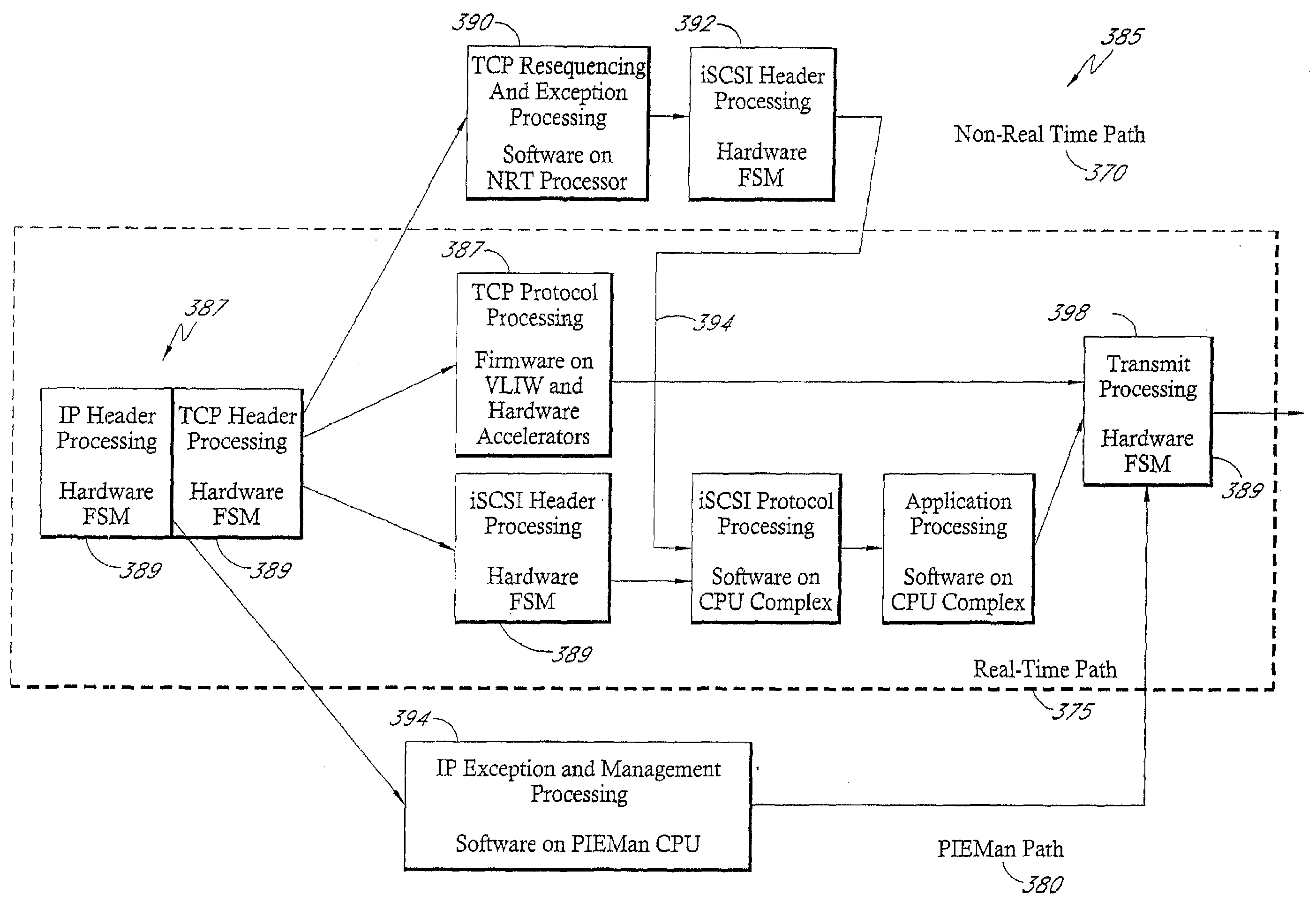

Network receive interface for high bandwidth hardware-accelerated packet processing

Inactive | US7460473B1 | Improve performance | Error prevention | Frequency-division multiplex details | Traffic capacity | High bandwidth

Disclosed is a system and methods for accelerating network packet processing for devices configured to process network traffic at relatively high data rates. The system incorporates a hardware-accelerated packet processing module that handles in-sequence network packets and a software-based processing module that handles out-of-sequence and exception case network packets.

Owner:PROMISE TECHNOLOGY
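
The split between a hardware fast path and a software slow path can be sketched in Python as a simple classifier. This is only an illustration under stated assumptions: packets are `(flow, seq, payload)` tuples with byte-based sequence numbers, the first packet seen on a flow is treated as in sequence, and `split_paths` is a hypothetical name. The sketch does not reorder or reassemble the slow-path packets.

```python
def split_paths(packets):
    """Return (fast, slow) lists of (flow, seq, payload) tuples."""
    next_seq = {}  # flow id -> next expected byte sequence number
    fast, slow = [], []
    for flow, seq, payload in packets:
        # Assumption: the first packet seen for a flow defines its starting sequence.
        expected = next_seq.get(flow, seq)
        if seq == expected:
            # In-sequence packet: would be handled by the hardware-accelerated module.
            fast.append((flow, seq, payload))
            next_seq[flow] = seq + len(payload)
        else:
            # Out-of-sequence or exception case: handed to the software module.
            slow.append((flow, seq, payload))
    return fast, slow


packets = [("a", 0, b"xx"), ("a", 2, b"yy"), ("a", 6, b"zz"), ("a", 4, b"ww")]
fast, slow = split_paths(packets)
```

With this input, the packet at sequence 6 arrives before the one at sequence 4, so it is the only one diverted to the slow path.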

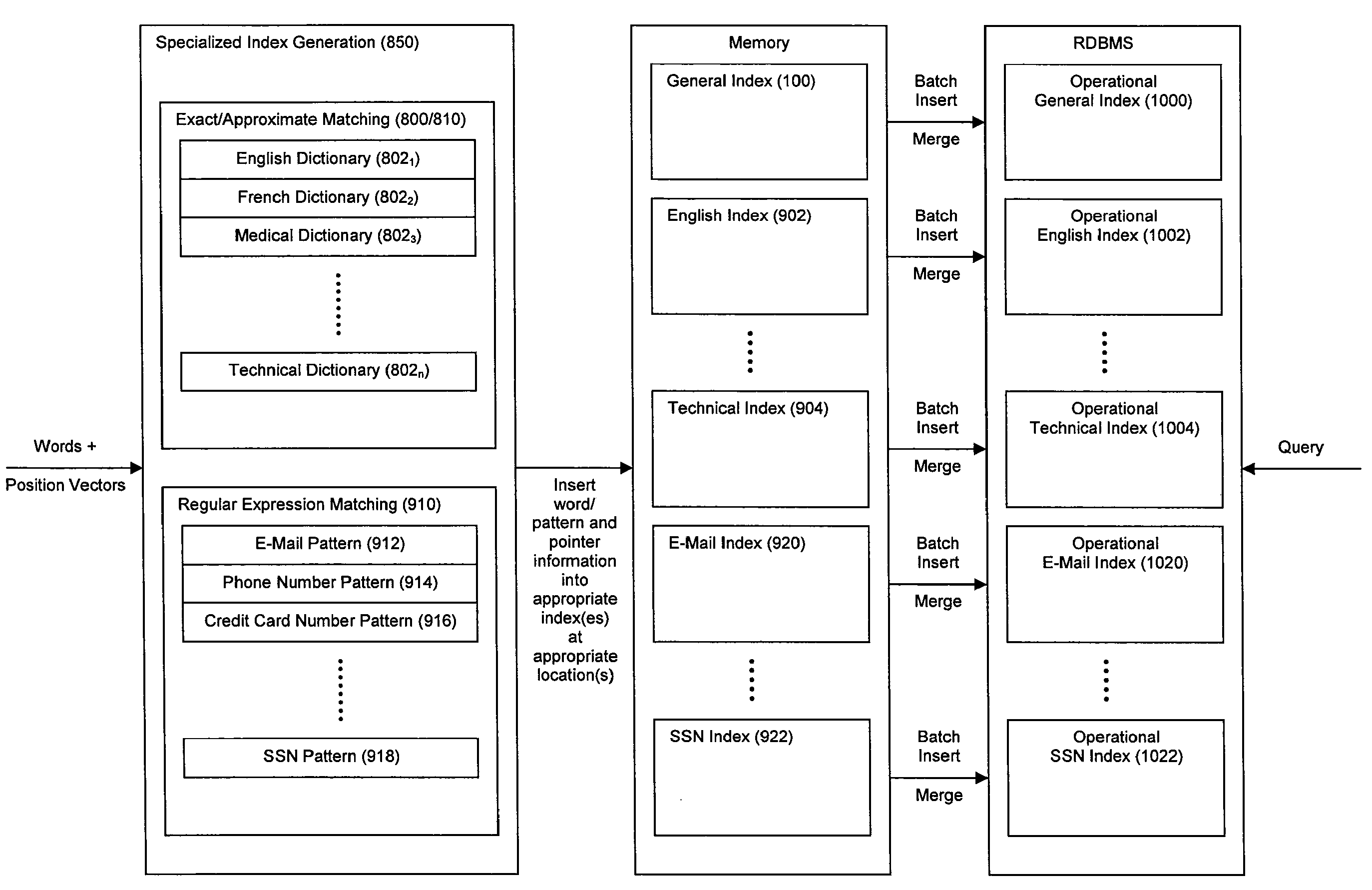

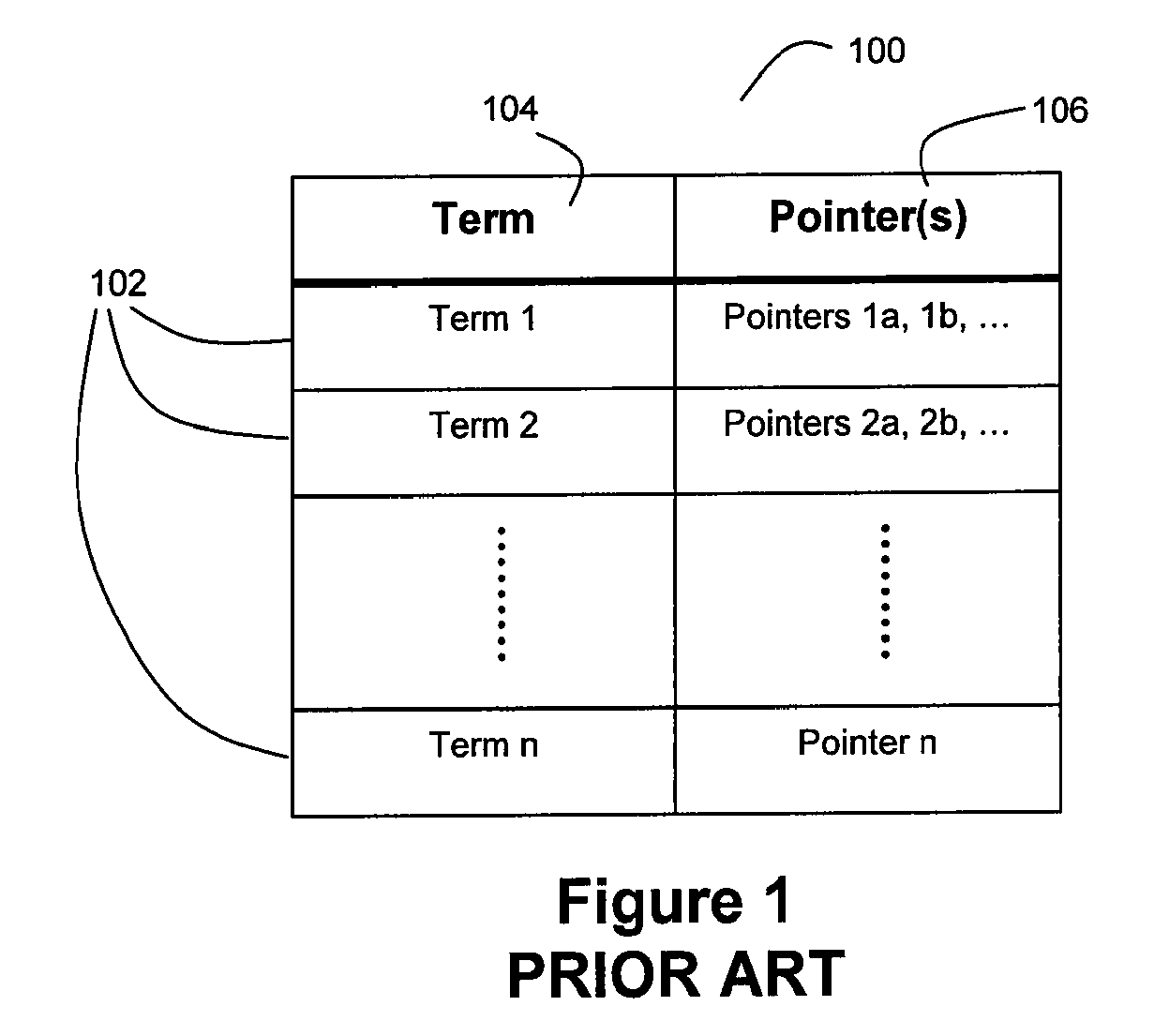

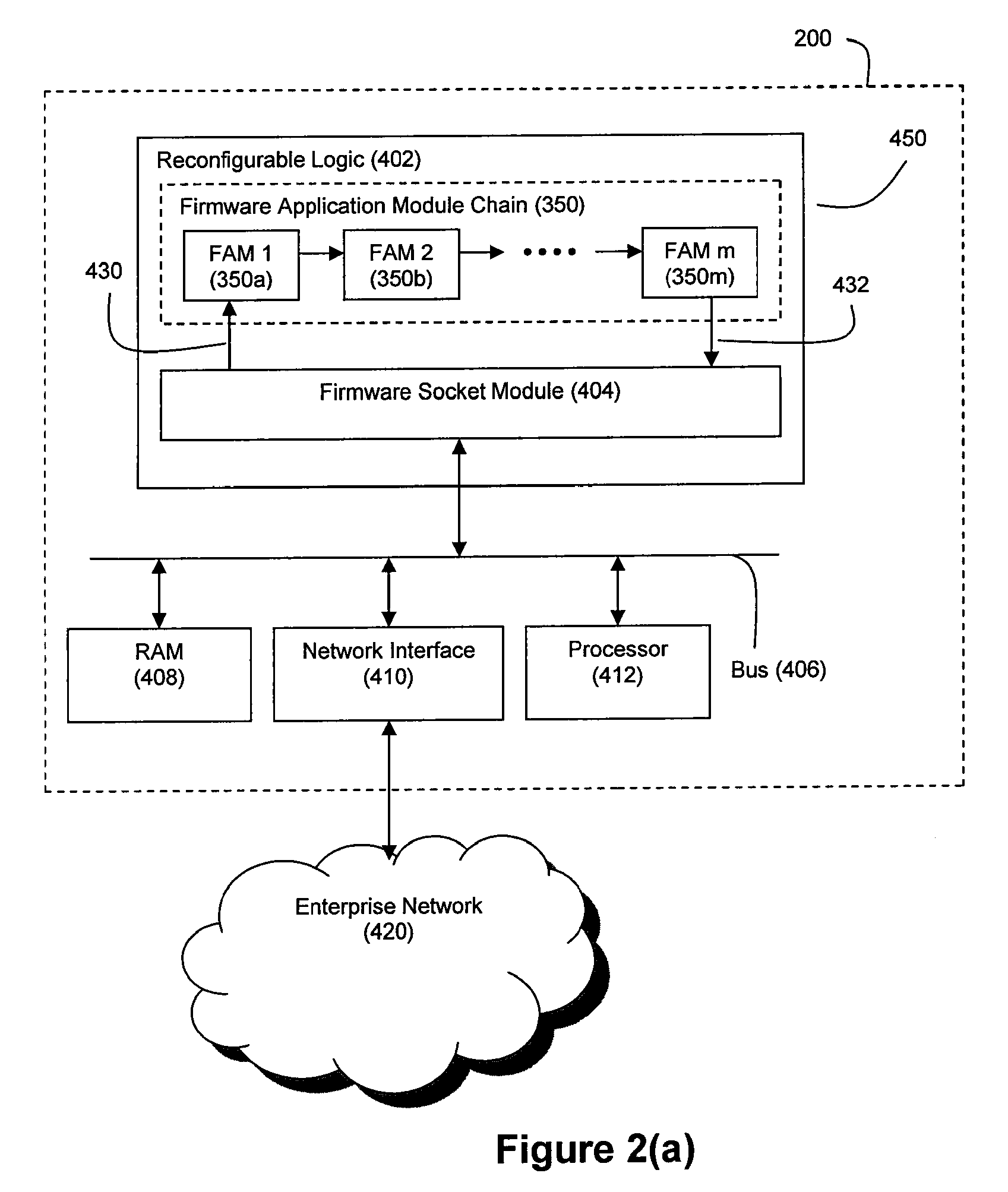

Method and System for High Performance Data Metatagging and Data Indexing Using Coprocessors

Active | US20080114725A1 | Robust and high performance data searching | High index | Web data indexing | File access structures | Data stream | Coprocessor

Disclosed herein is a method and system for hardware-accelerating the generation of metadata for a data stream using a coprocessor. Using these techniques, data can be richly indexed, classified, and clustered at high speeds. Reconfigurable logic such as field programmable gate arrays (FPGAs) can be used by the coprocessor for this hardware acceleration. Techniques such as exact matching, approximate matching, and regular expression pattern matching can be employed by the coprocessor to generate desired metadata for the data stream.

Owner:IP RESERVOIR

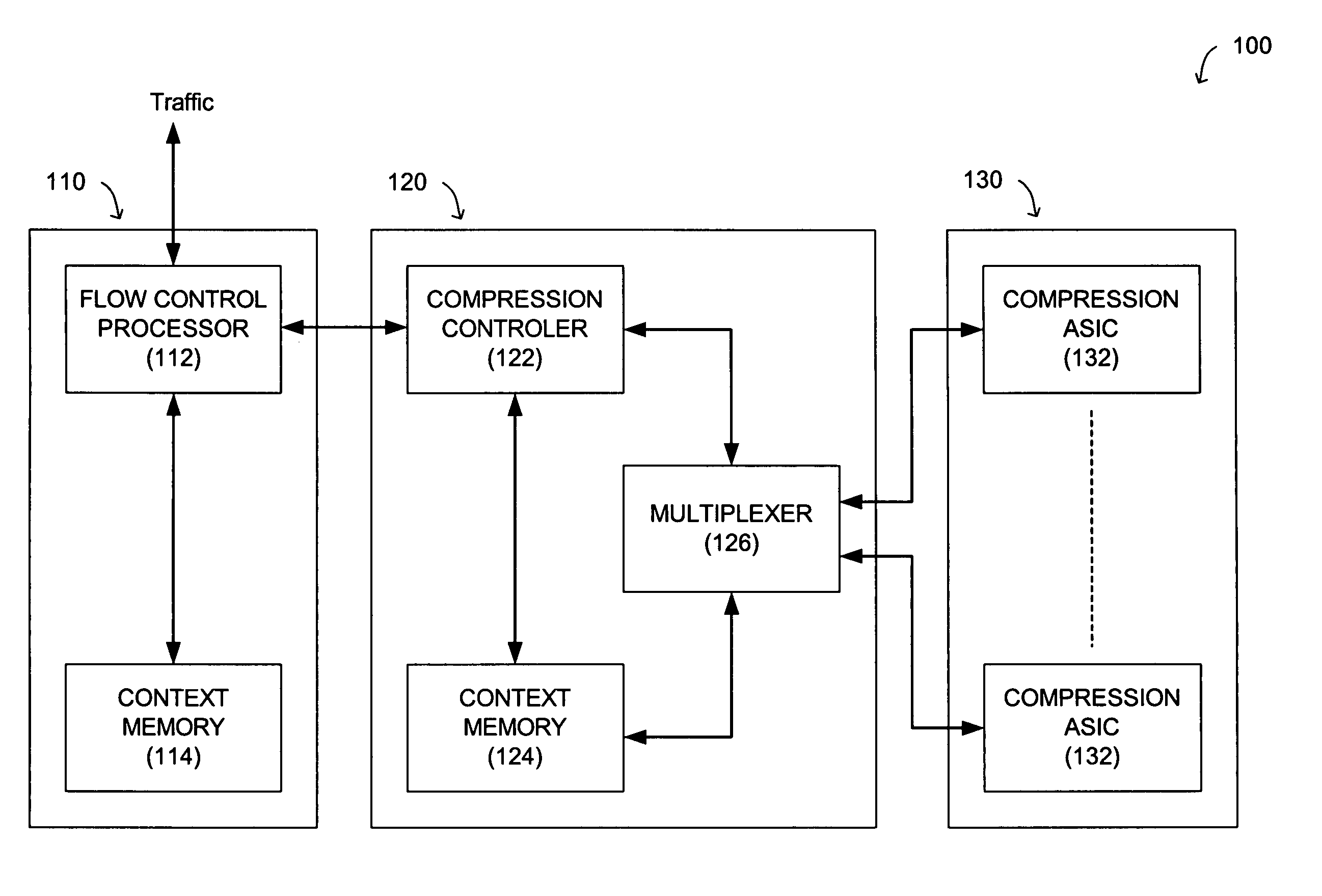

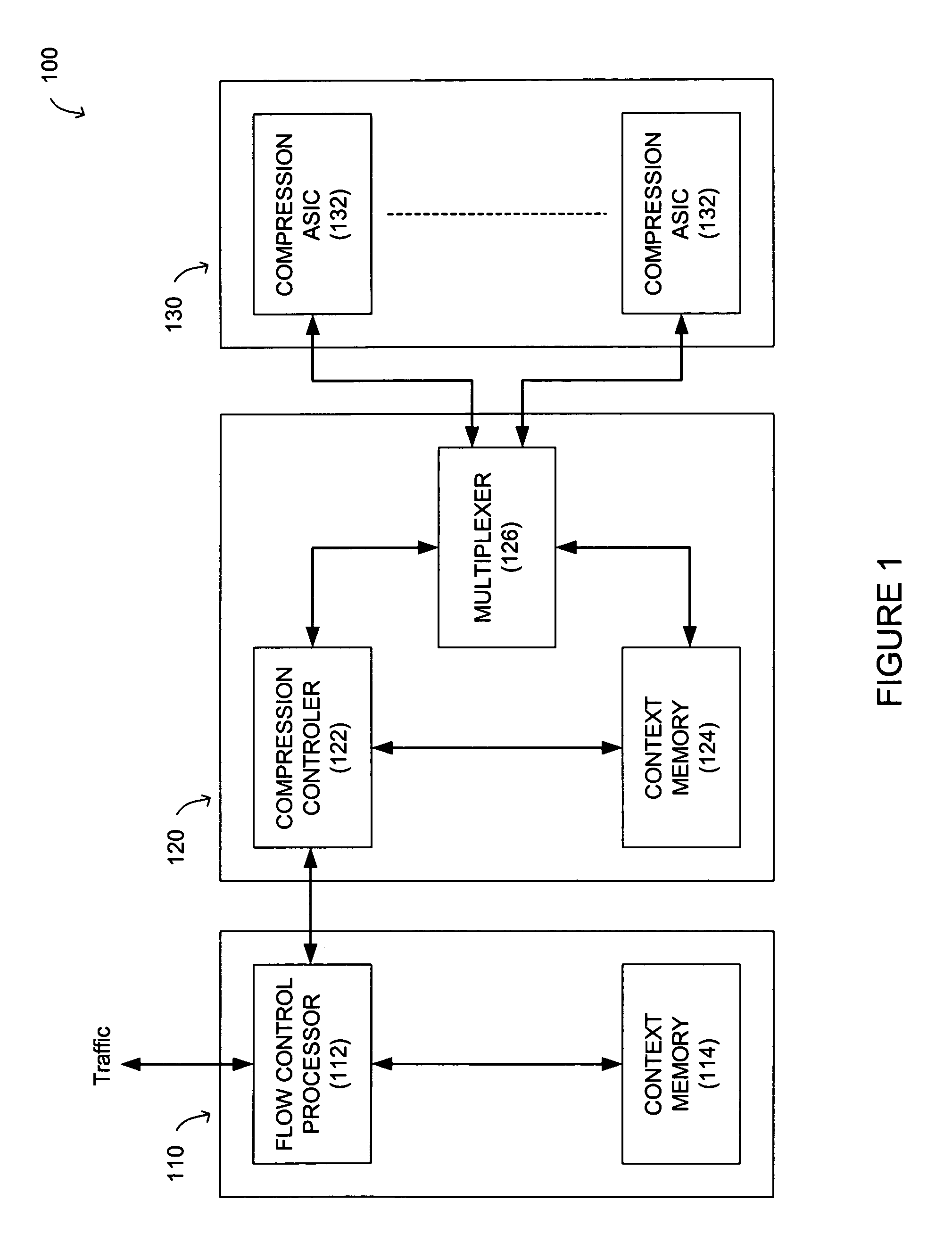

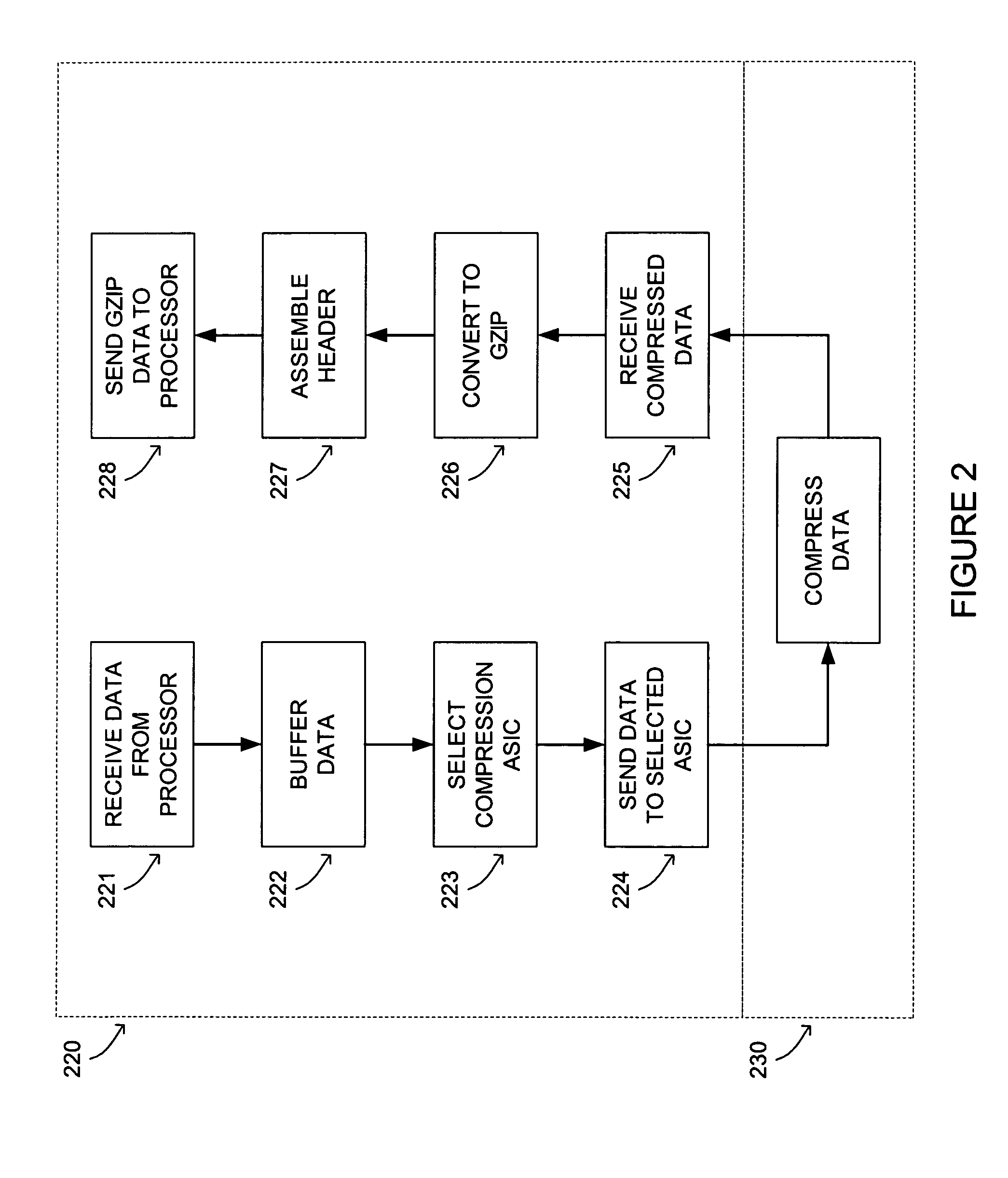

Hardware accelerated compression

Active | US7051126B1 | Improve compression speed | Improve data throughput | Code conversion | Multiple digital computer combinations | Data stream | Hardware acceleration

A compression system is arranged to use software and/or hardware accelerated compression techniques to increase compression speeds and enhance overall data throughput. A logic circuit is arranged to: receive a data stream from a flow control processor, buffer the data stream, select a hardware compressor (e.g., an ASIC), and forward the data to the selected hardware compressor. Each hardware compressor performs compression on the data (e.g., LZ77), and sends the compressed data back to the logic circuit. The logic circuit receives the compressed data, converts the data to another compressed format (e.g., GZIP), and forwards the converted and compressed data back to the flow control processor. History associated with the data stream can be stored in memory by the flow control processor, or in the logic circuit.

Owner:F5 NETWORKS INC
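
The two-stage flow in the abstract (a compressor produces compressed data, then a separate stage converts it to GZIP) can be approximated in software. The Python sketch below is an analogy rather than the patented design: it assumes the compressor stage emits a raw DEFLATE stream (produced here with `zlib`), and that the conversion stage only adds the gzip header and trailer defined in RFC 1952. The function names `raw_deflate` and `wrap_as_gzip` are hypothetical.

```python
import gzip
import struct
import zlib


def raw_deflate(data: bytes) -> bytes:
    """Stand-in for a hardware compressor: emit a raw DEFLATE stream (no header)."""
    compressor = zlib.compressobj(6, zlib.DEFLATED, -15)  # negative wbits = raw stream
    return compressor.compress(data) + compressor.flush()


def wrap_as_gzip(deflated: bytes, original: bytes) -> bytes:
    """Stand-in for the conversion stage: add a gzip header and trailer."""
    # Header: magic bytes, CM=8 (deflate), no flags, mtime=0, XFL=0, OS=255 (unknown).
    header = b"\x1f\x8b\x08\x00" + struct.pack("<I", 0) + b"\x00\xff"
    # Trailer: CRC-32 and length of the uncompressed data, both little-endian.
    trailer = struct.pack("<II", zlib.crc32(original), len(original) & 0xFFFFFFFF)
    return header + deflated + trailer


payload = b"hardware acceleration " * 64
converted = wrap_as_gzip(raw_deflate(payload), payload)
round_trip = gzip.decompress(converted)  # a standard gzip reader accepts the result
```

The conversion stage needs only the CRC-32 and length of the uncompressed data, which is why it can sit outside the compressor itself.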

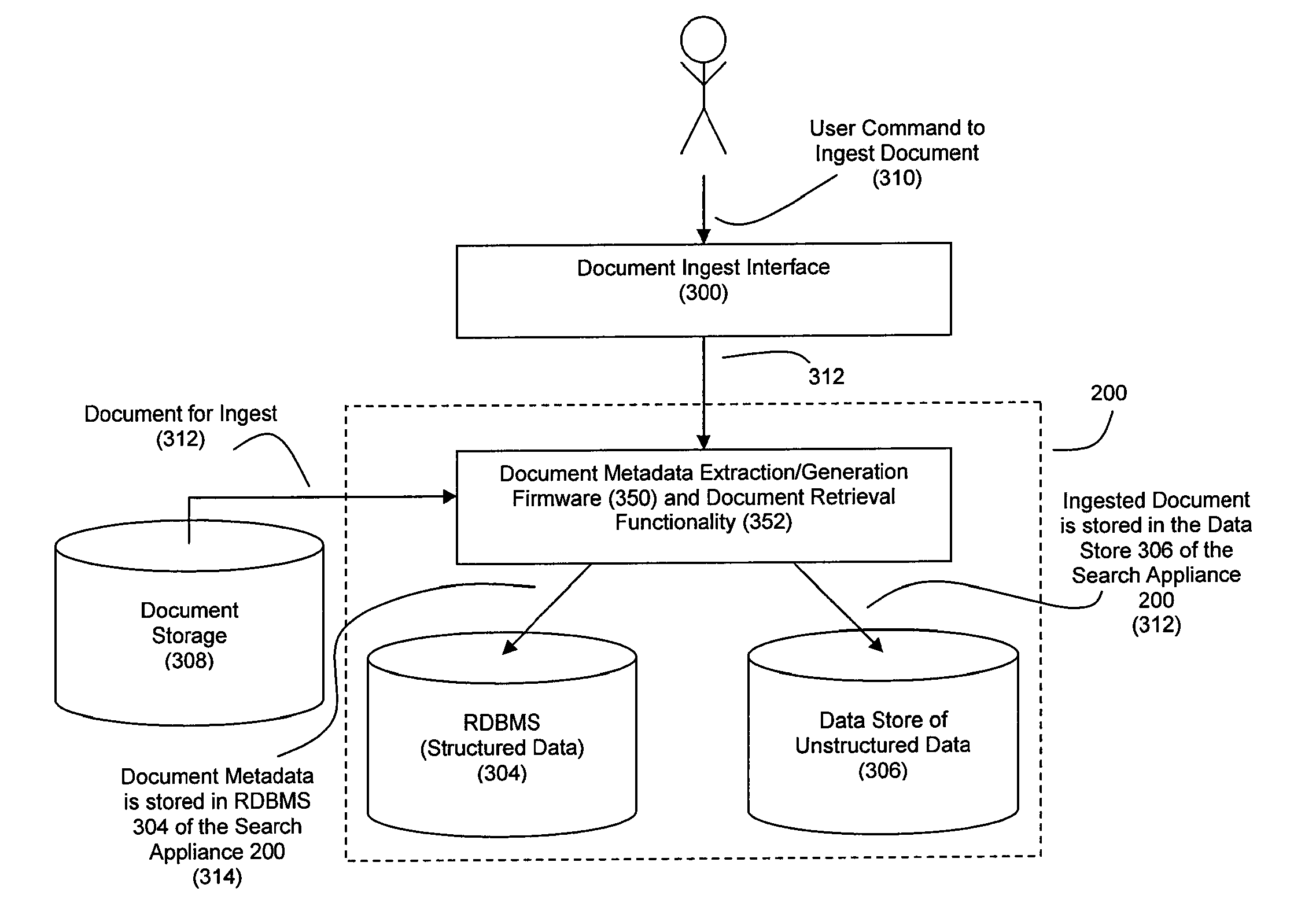

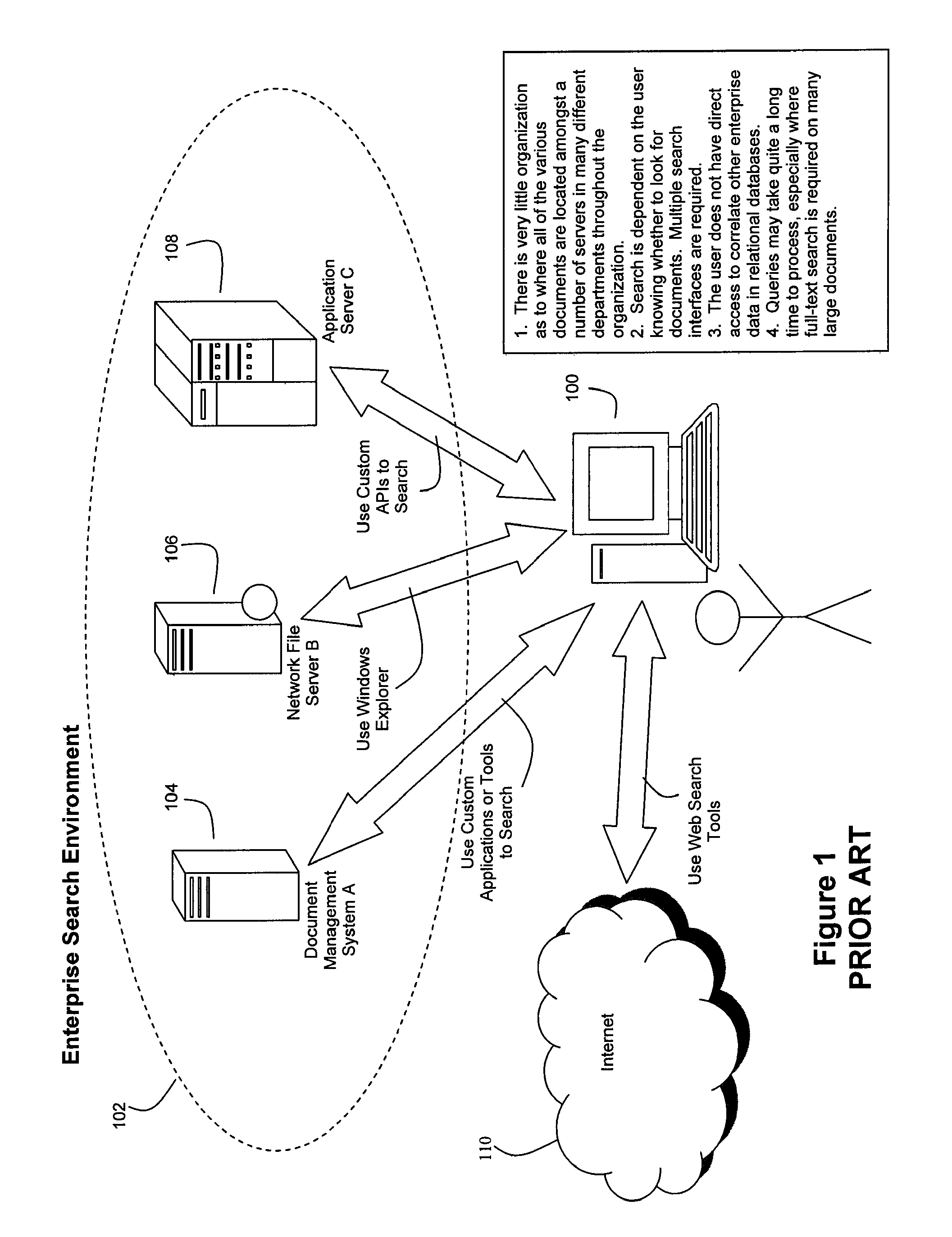

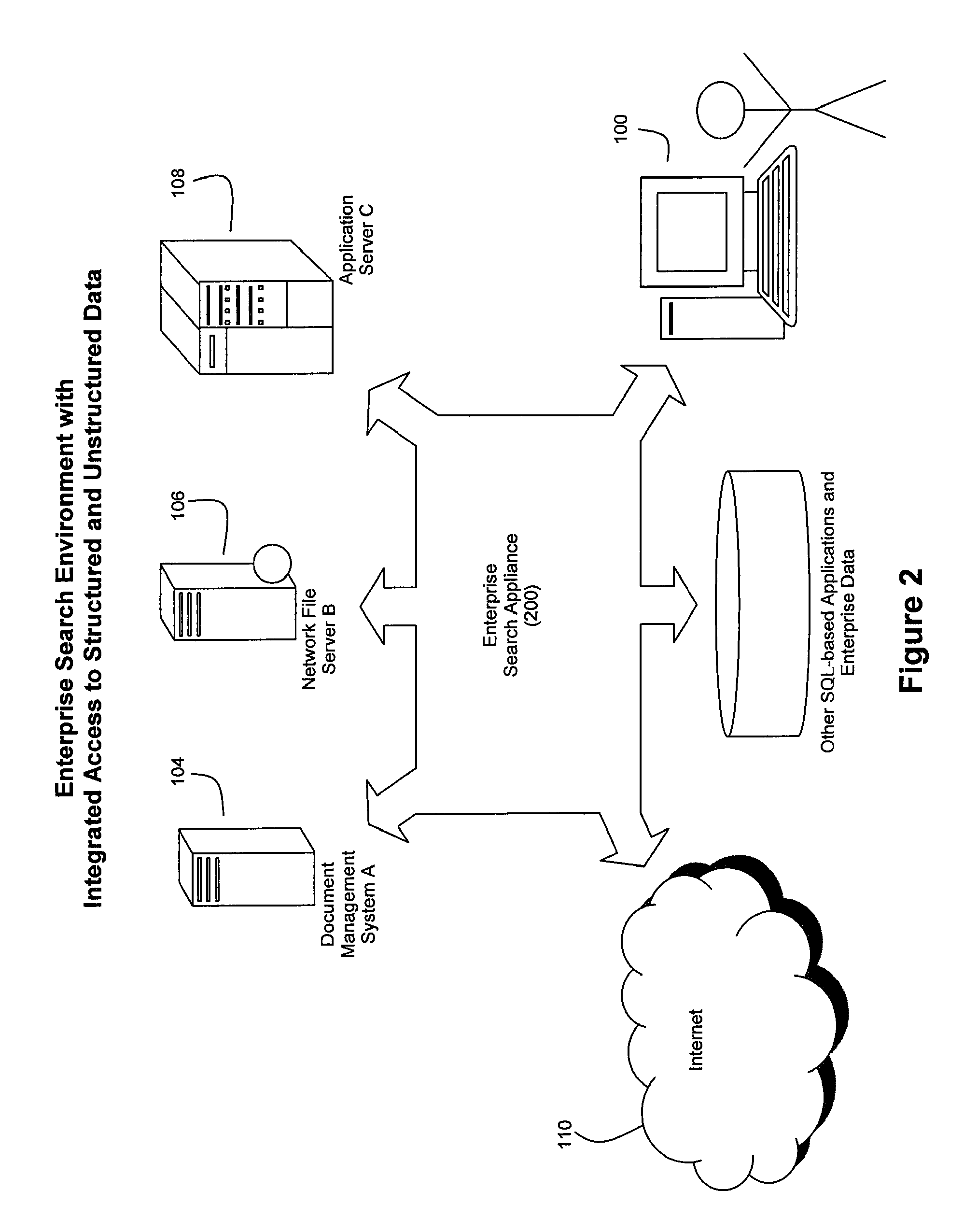

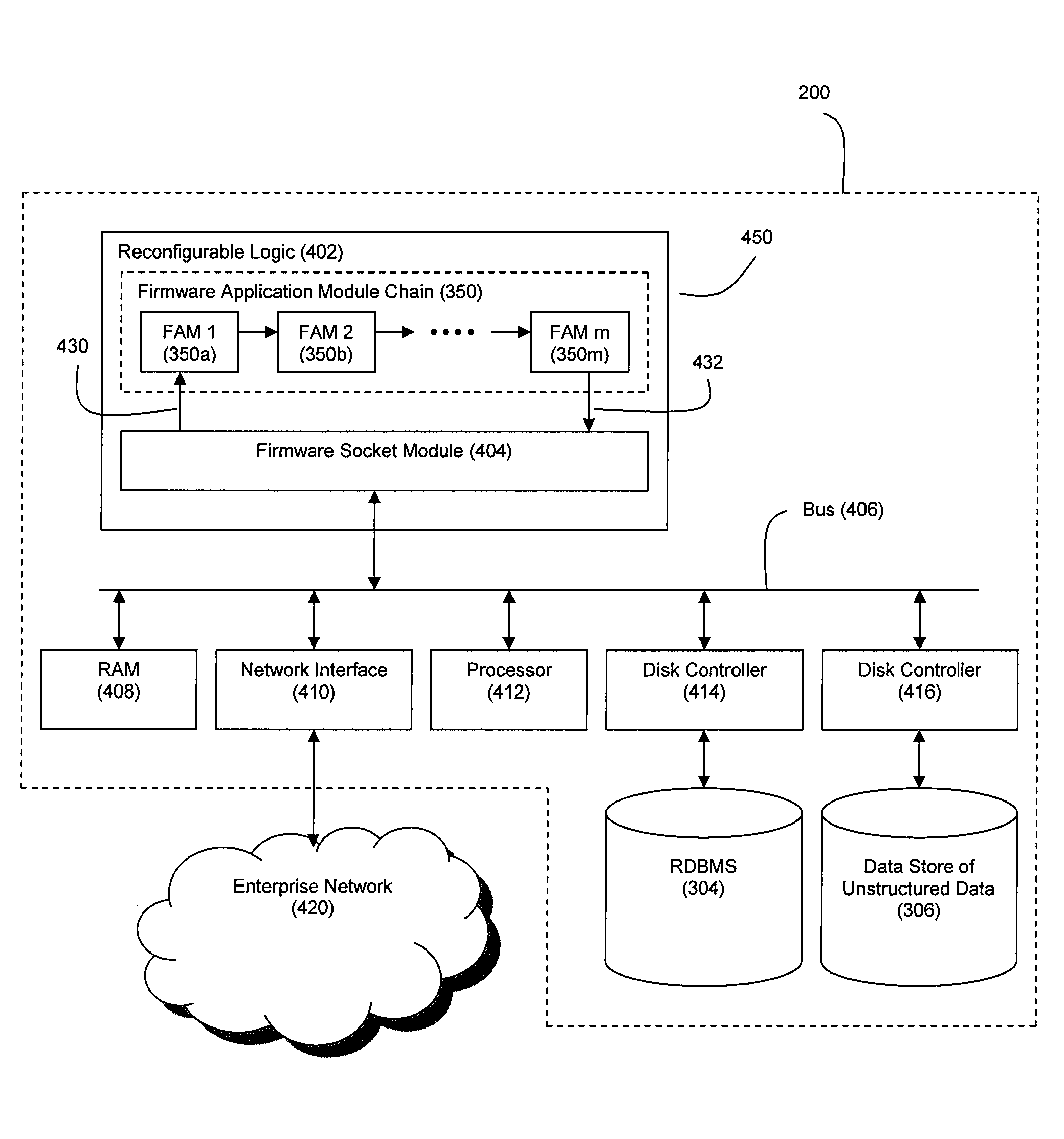

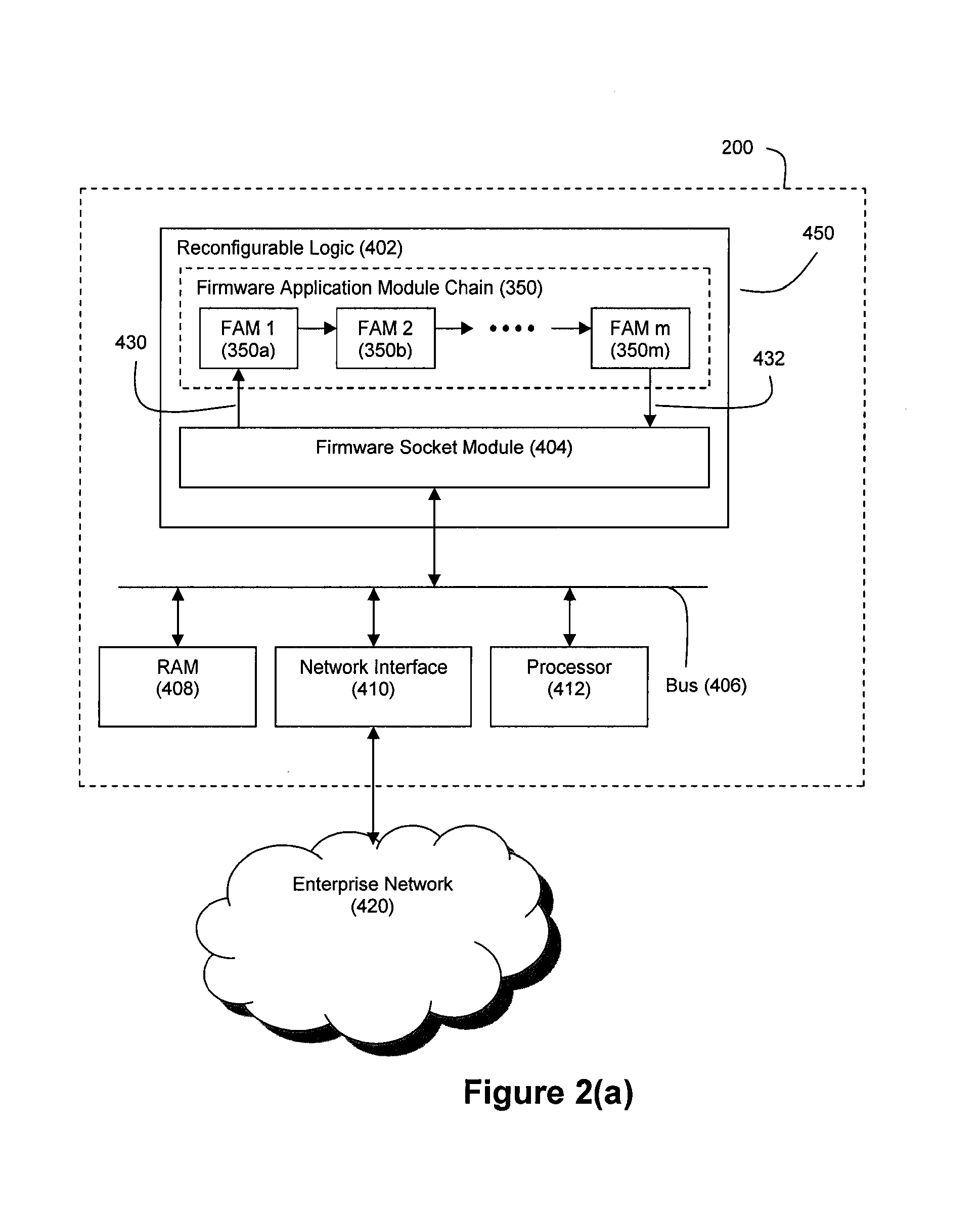

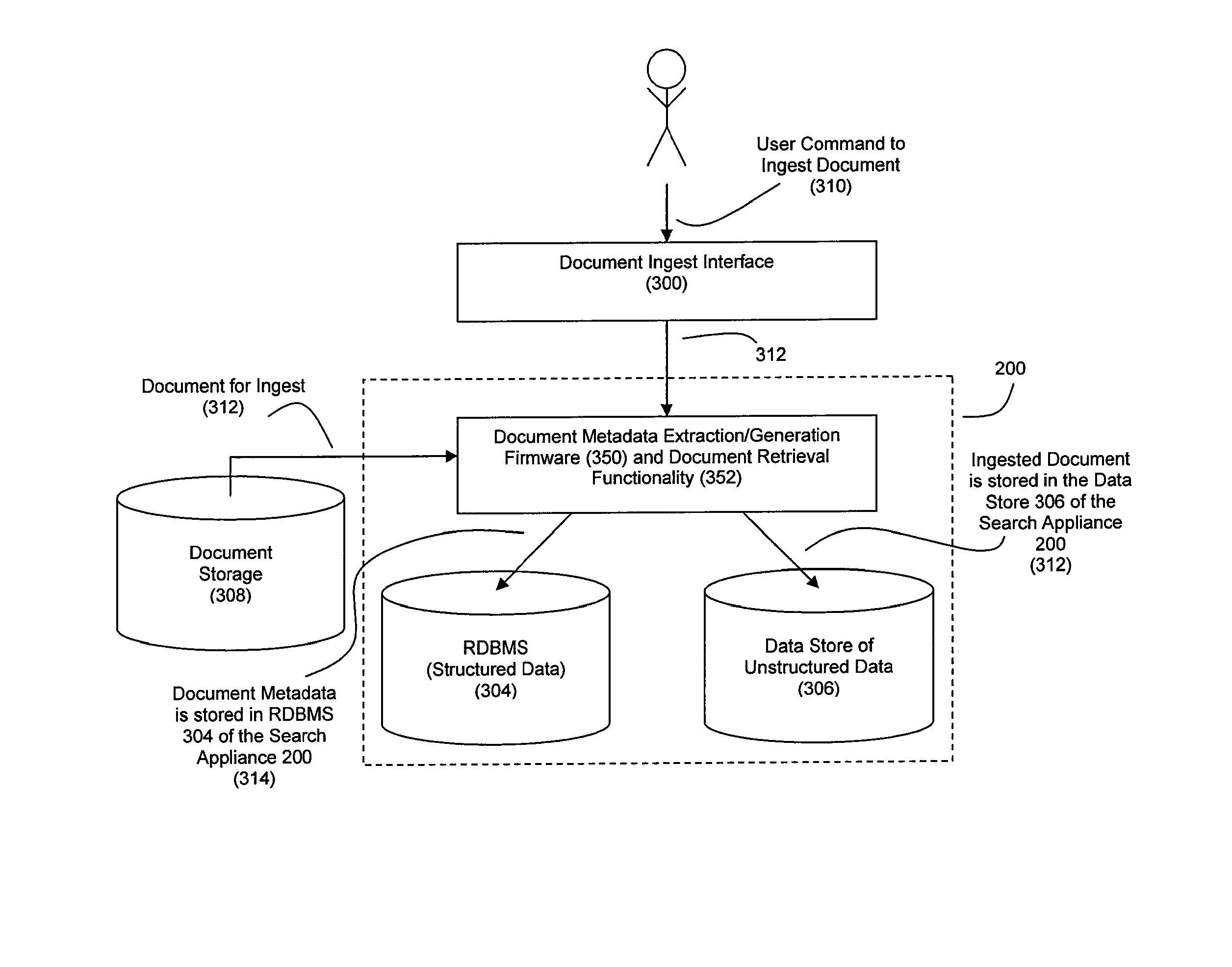

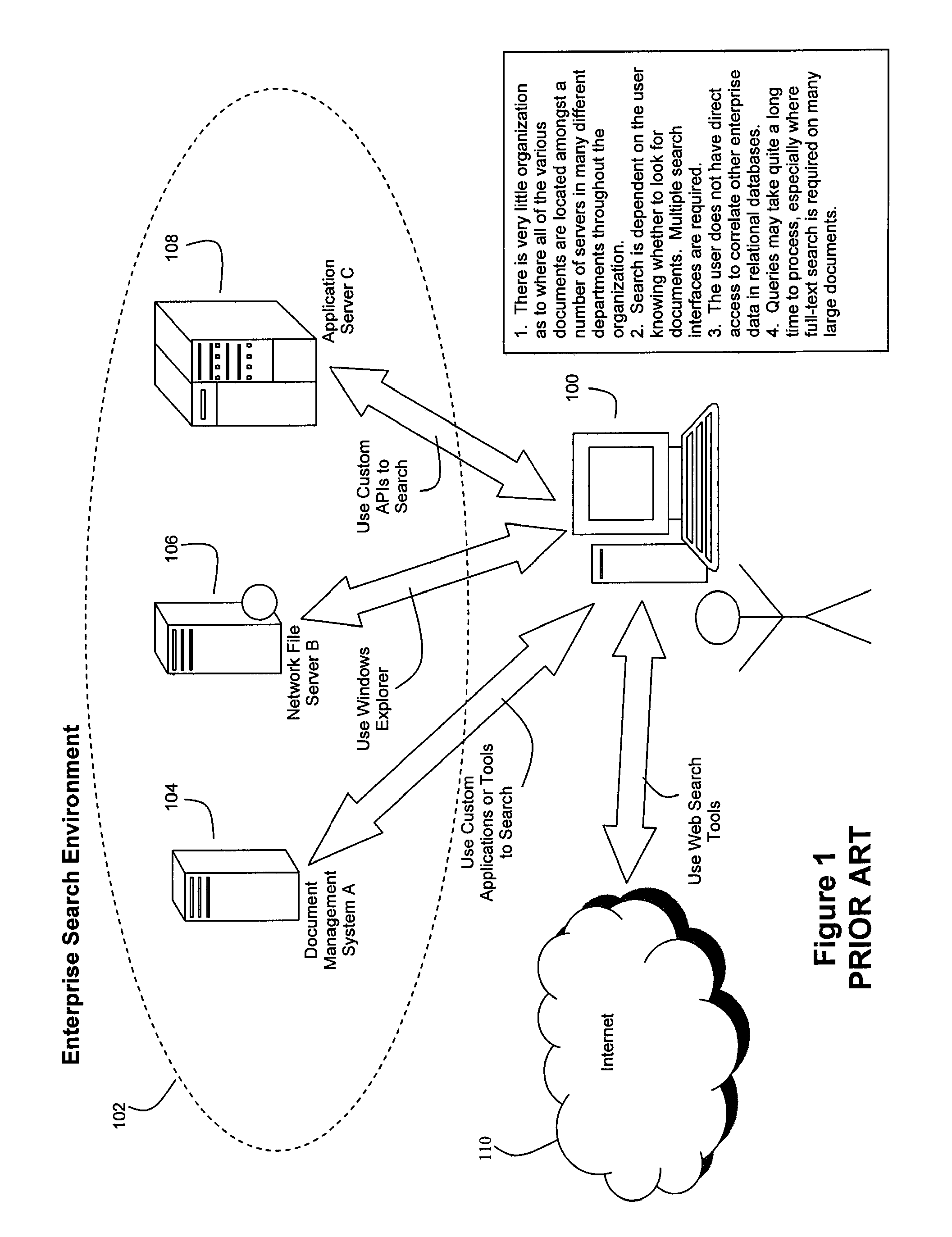

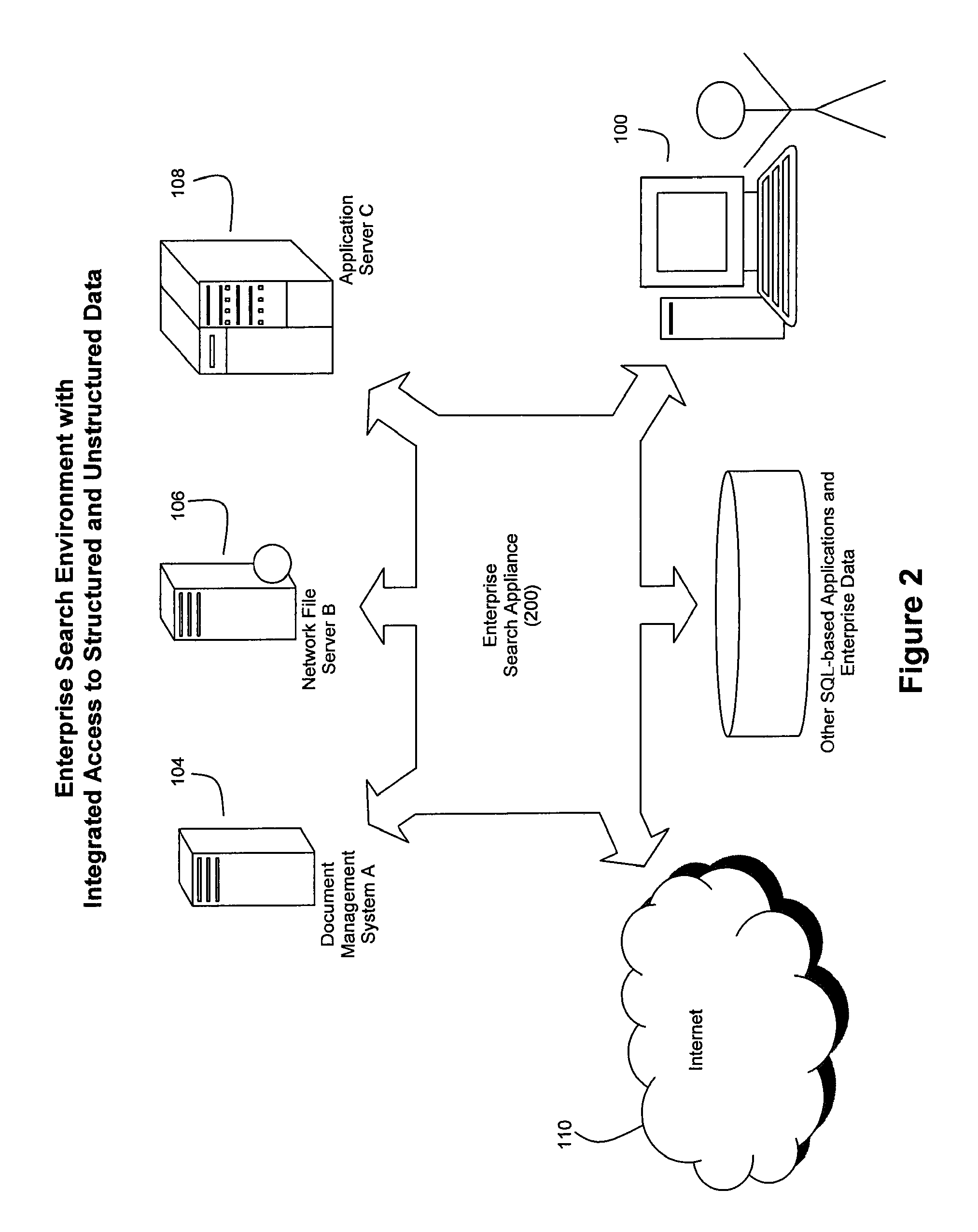

Method and System for High Performance Integration, Processing and Searching of Structured and Unstructured Data Using Coprocessors

Active | US20080114724A1 | Faster and more unified access | Effectively bifurcate query processing | Data processing applications | Digital data processing details | Full text search | Relational database

Disclosed herein is a method and system for integrating an enterprise's structured and unstructured data to provide users and enterprise applications with efficient and intelligent access to that data. Queries can be directed toward both an enterprise's structured and unstructured data using standardized database query formats such as SQL commands. A coprocessor can be used to hardware-accelerate data processing tasks (such as full-text searching) on unstructured data as necessary to handle a query. Furthermore, traditional relational database techniques can be used to access structured data stored by a relational database to determine which portions of the enterprise's unstructured data should be delivered to the coprocessor for hardware-accelerated data processing.

Owner:IP RESERVOIR

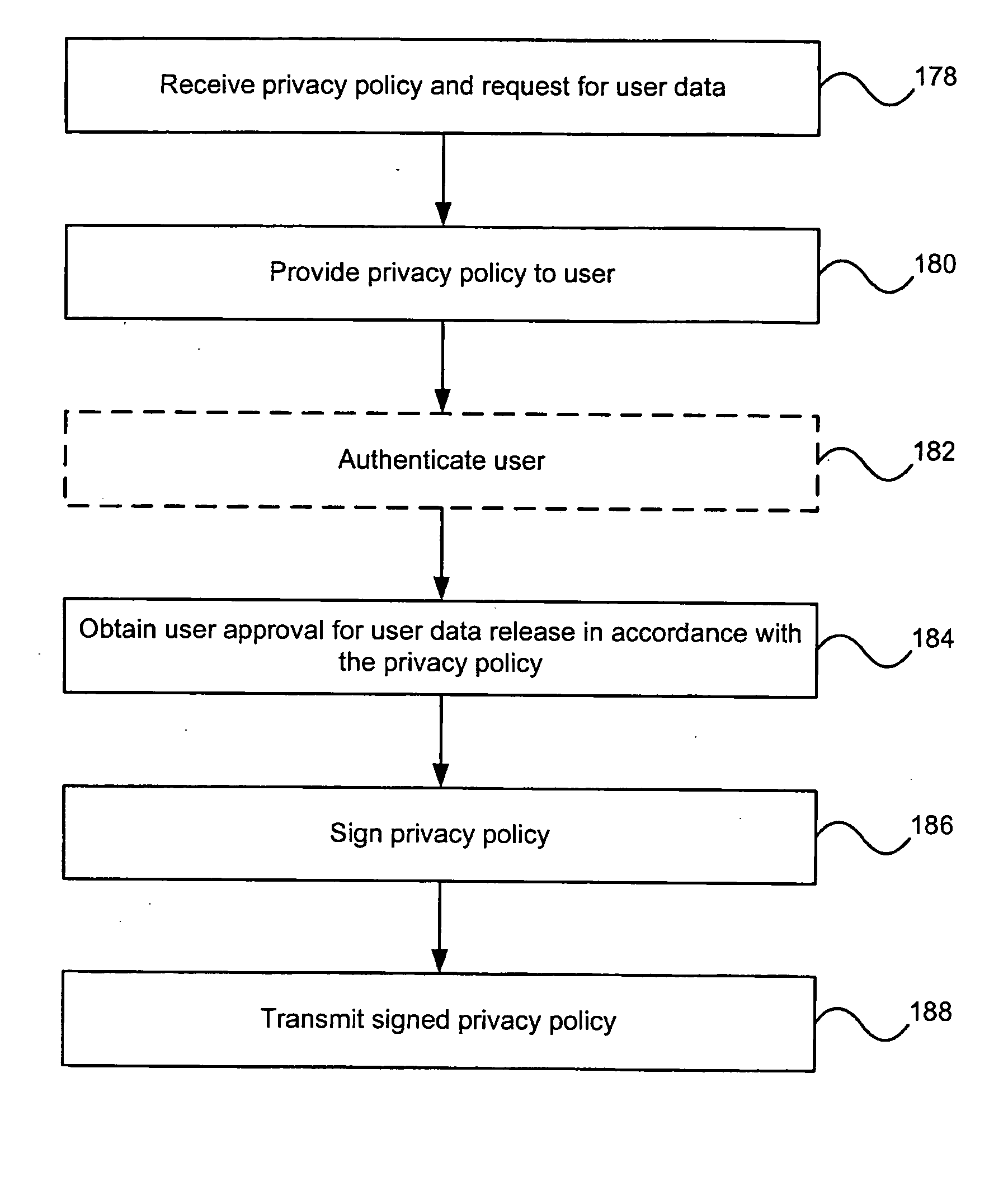

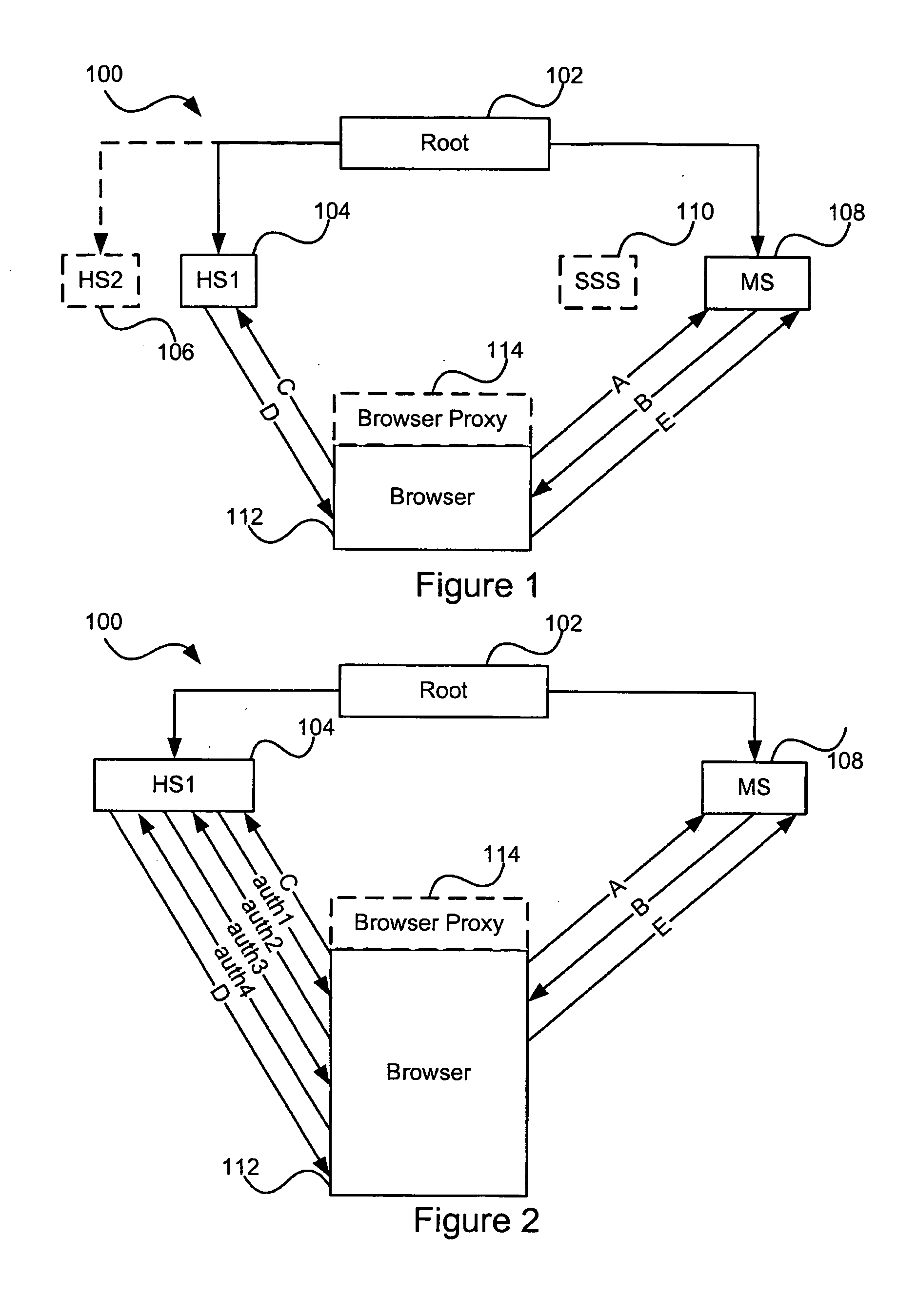

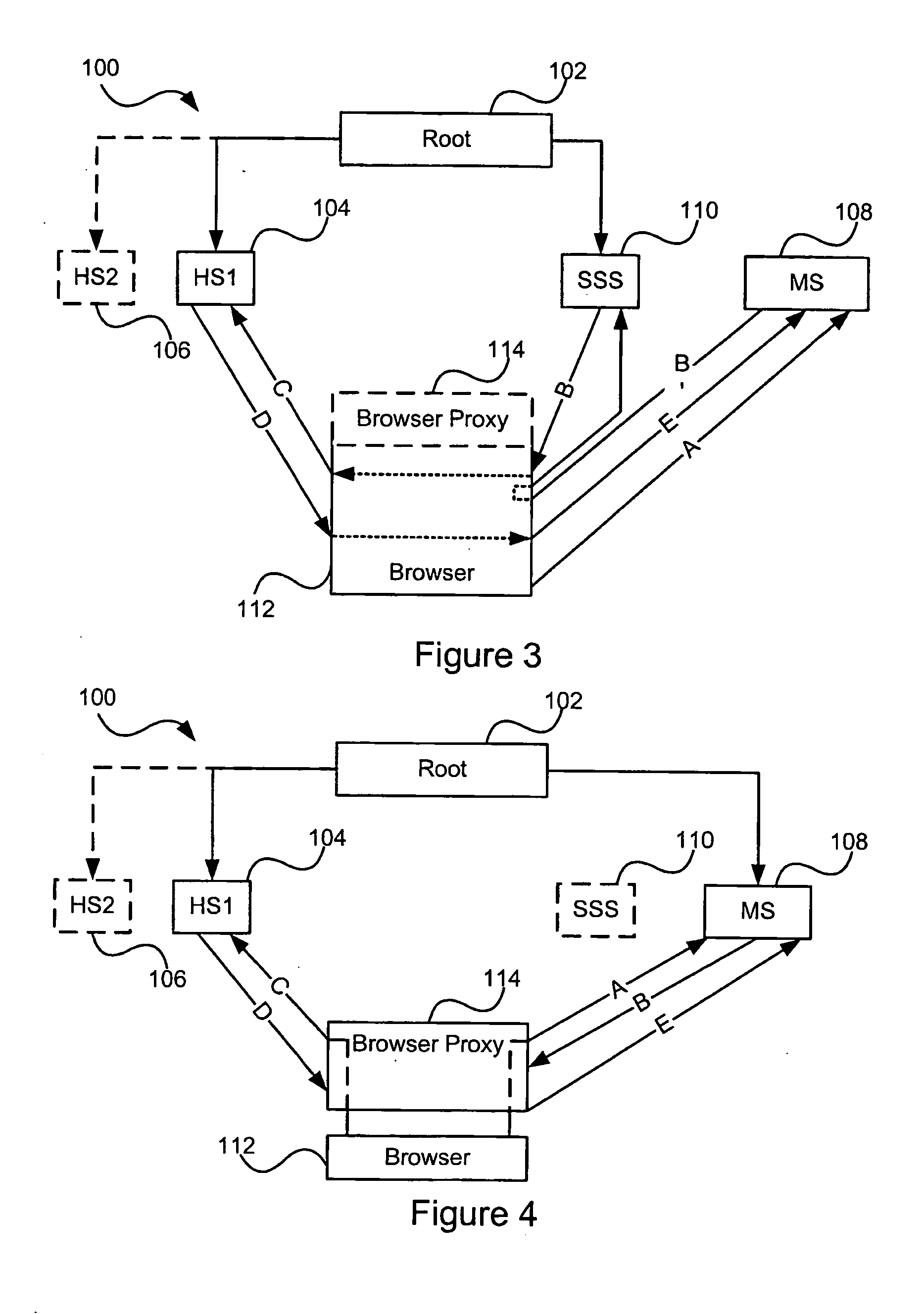

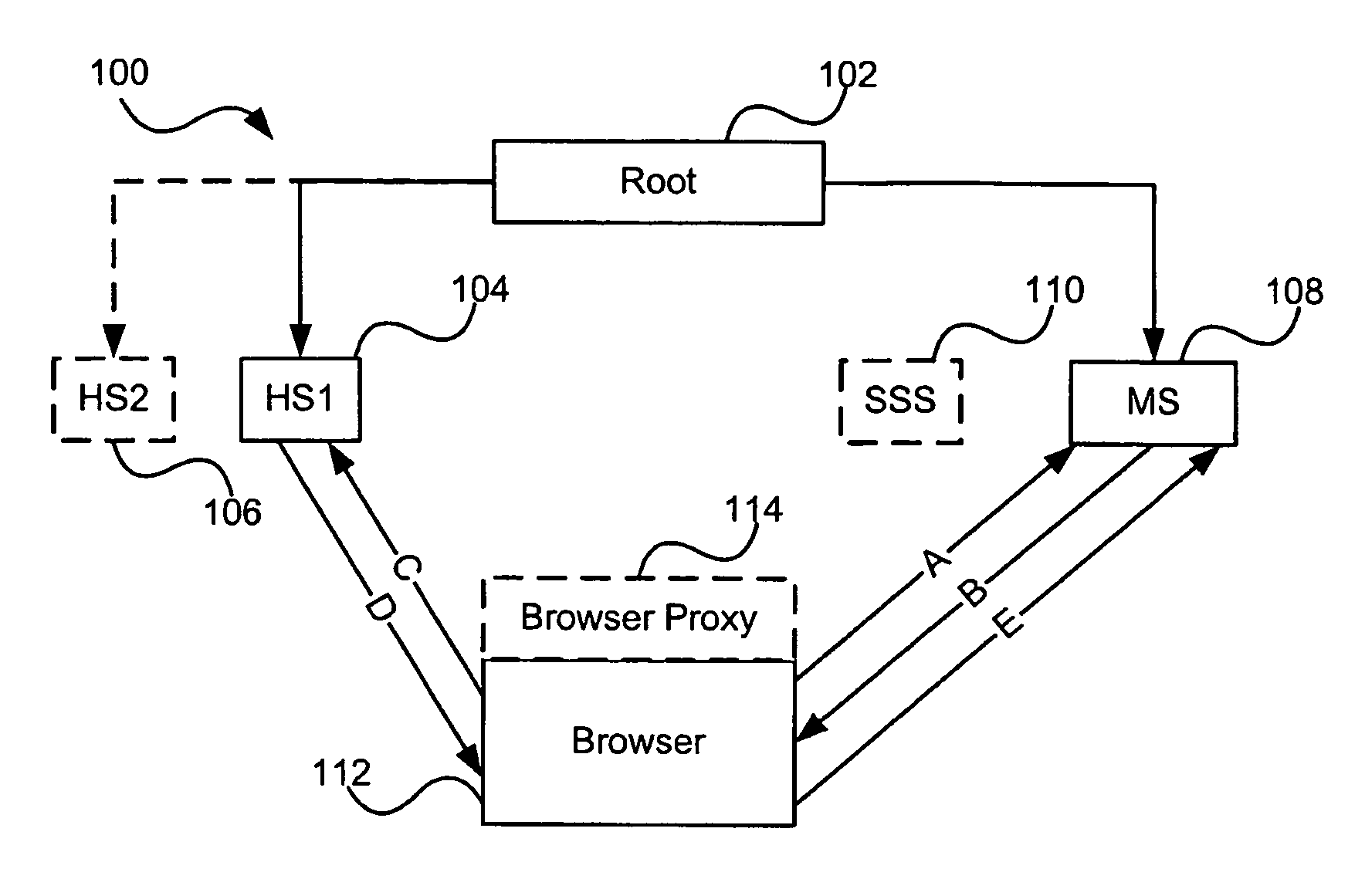

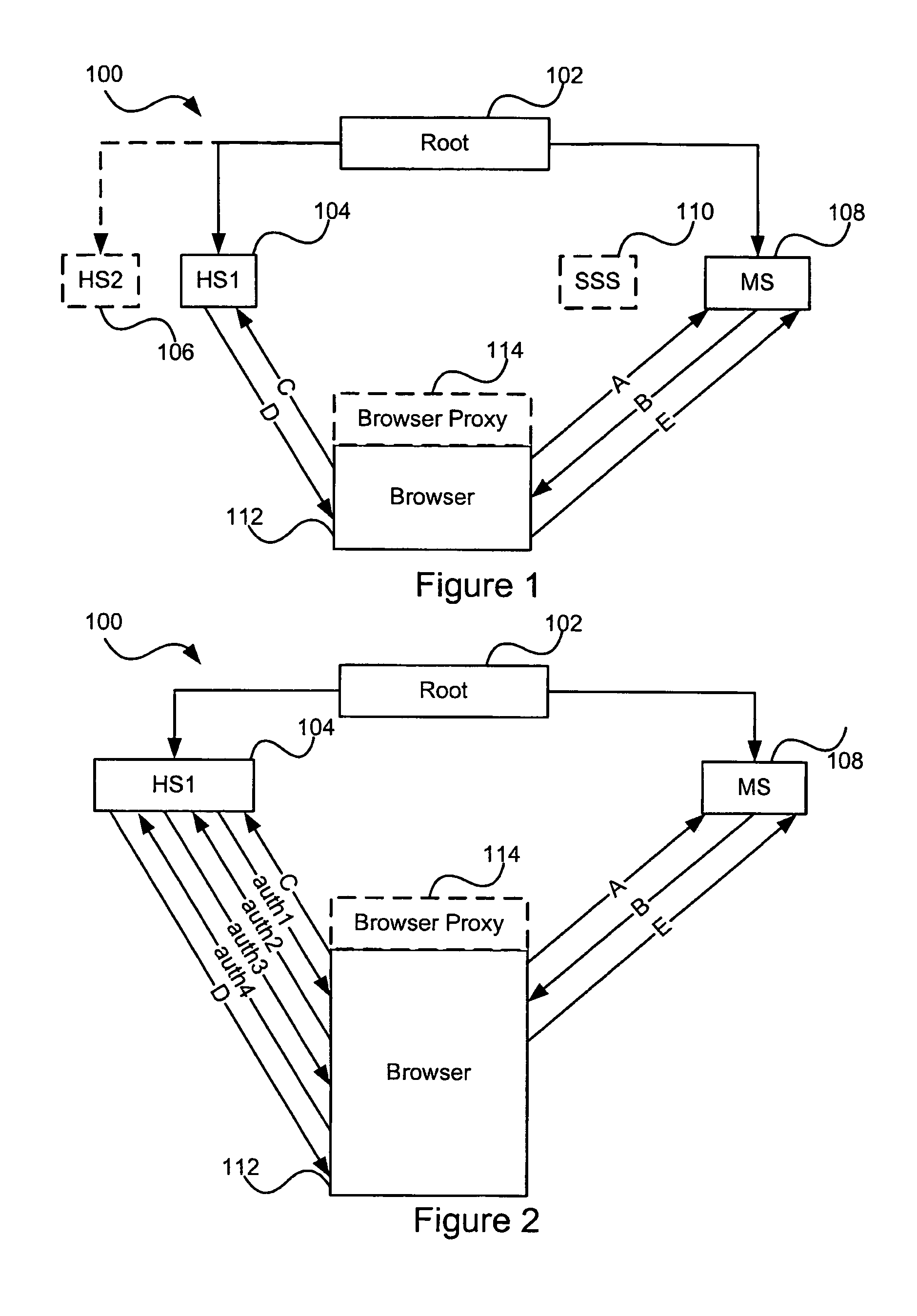

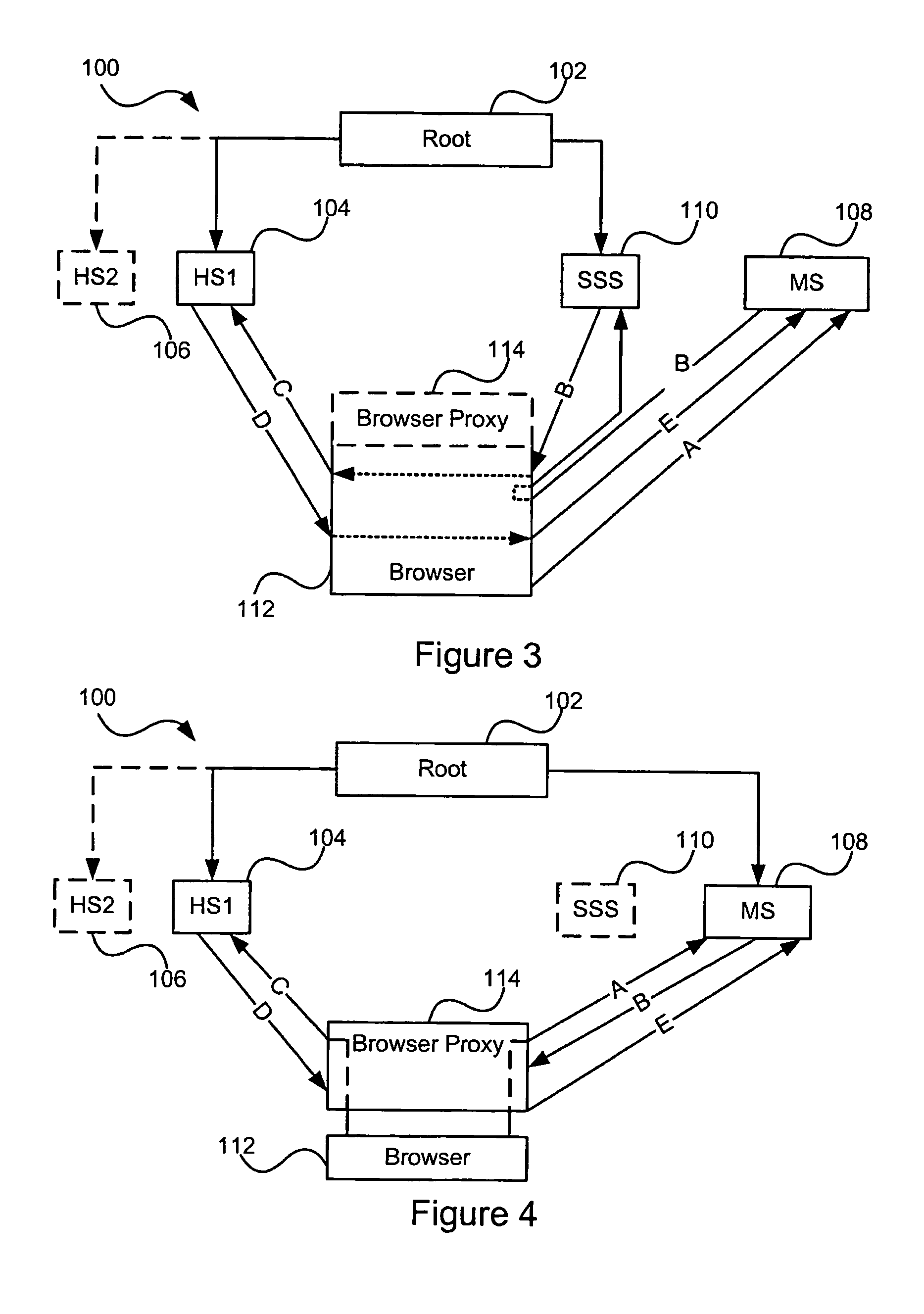

Distributed hierarchical identity management system authentication mechanisms

Active | US20050283614A1 | Data taking prevention | User identity/authority verification | Third party | Internet privacy

A set of methods, and systems, for use in an identity management system are disclosed herein. A modular user identity information datastore using hardware accelerated encryption for user data security operates in a network for receiving requests for, and issuing responses containing user information including third party accredited assertions.

Owner:CALLAHAN CELLULAR L L C

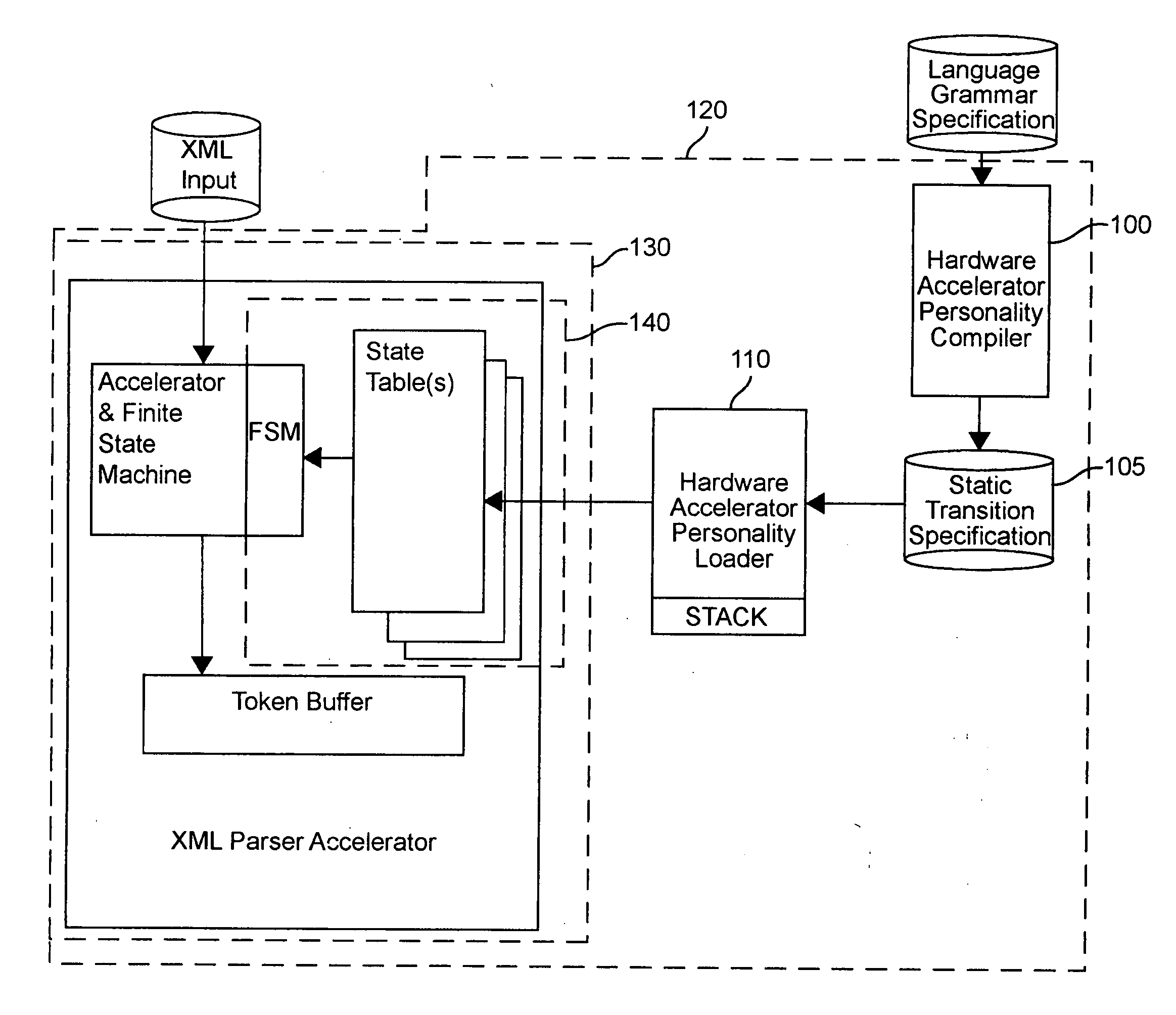

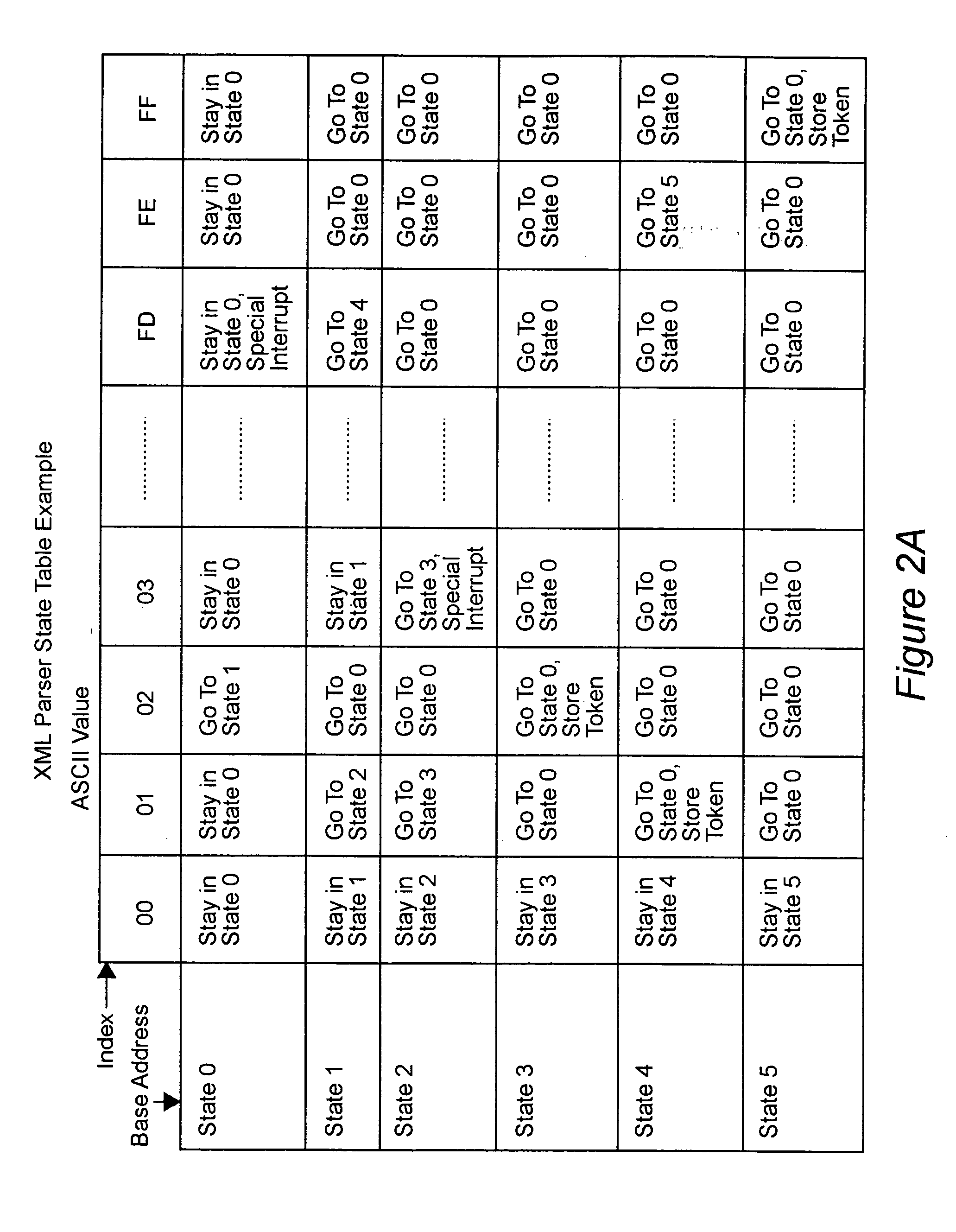

Hardware accelerator personality compiler

Inactive | US20040172234A1 | Software engineering | Special data processing applications | State switching | Automaton

Error-free state tables are automatically generated from a specification of a group of desired performable functions, such as are provided in a programming language in a formal notation such as Backus-Naur form or a derivative thereof, by discriminating tokens corresponding to respective performable functions, identifications, arguments, syntax, grammar rules, special symbols and the like. The tokens may be recursive (e.g. infinite), in which case they are transformed into finite automata, which may be deterministic or non-deterministic. Non-deterministic finite automata are transformed into deterministic finite automata and then into state transitions, which are used to build a state table that can then be stored or, preferably, loaded into a finite state machine of a hardware parser accelerator to define its personality.

Owner:LOCKHEED MARTIN CORP
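
The step from a non-deterministic finite automaton to a deterministic state table can be sketched with the textbook subset construction. The abstract does not say which algorithm the patent uses, so this Python sketch is an assumption; the NFA encoding, the function names, and the example language (strings over `a` and `b` that end in `ab`) are all illustrative.

```python
from collections import deque


def nfa_to_dfa(nfa, start, accepting):
    """Subset construction. nfa maps (state, symbol) -> set of states; symbol None is epsilon."""

    def closure(states):
        stack, seen = list(states), set(states)
        while stack:
            state = stack.pop()
            for nxt in nfa.get((state, None), ()):
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        return frozenset(seen)

    alphabet = sorted({sym for (_, sym) in nfa if sym is not None})
    start_set = closure({start})
    ids = {start_set: 0}  # DFA state 0 is the start state
    table = {}            # (dfa_state, symbol) -> dfa_state
    queue = deque([start_set])
    while queue:
        current = queue.popleft()
        for sym in alphabet:
            moved = set()
            for state in current:
                moved |= set(nfa.get((state, sym), ()))
            if not moved:
                continue
            target = closure(moved)
            if target not in ids:
                ids[target] = len(ids)
                queue.append(target)
            table[(ids[current], sym)] = ids[target]
    dfa_accepting = {i for subset, i in ids.items() if subset & accepting}
    return table, dfa_accepting


def run(table, dfa_accepting, text):
    """Drive the state table the way a table-loaded finite state machine would."""
    state = 0
    for ch in text:
        state = table.get((state, ch))
        if state is None:
            return False
    return state in dfa_accepting


# Example NFA for (a|b)*ab: state 0 loops, 0 -a-> 1, 1 -b-> 2 (accepting).
nfa = {(0, "a"): {0, 1}, (0, "b"): {0}, (1, "b"): {2}}
table, dfa_accepting = nfa_to_dfa(nfa, start=0, accepting={2})
```

The resulting `table` is a plain mapping from (state, symbol) to next state, which is the kind of structure that could be stored or loaded into a table-driven state machine.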

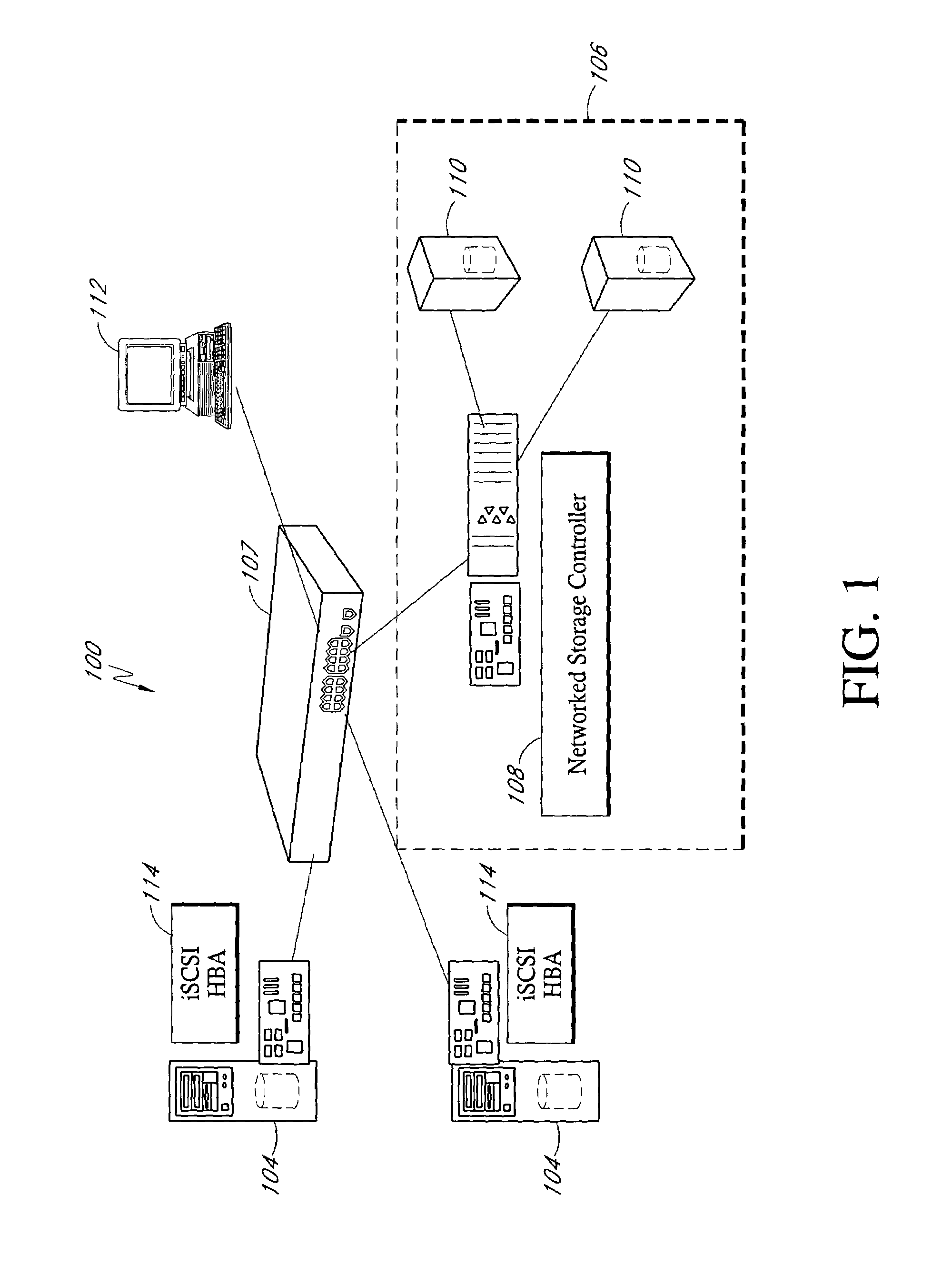

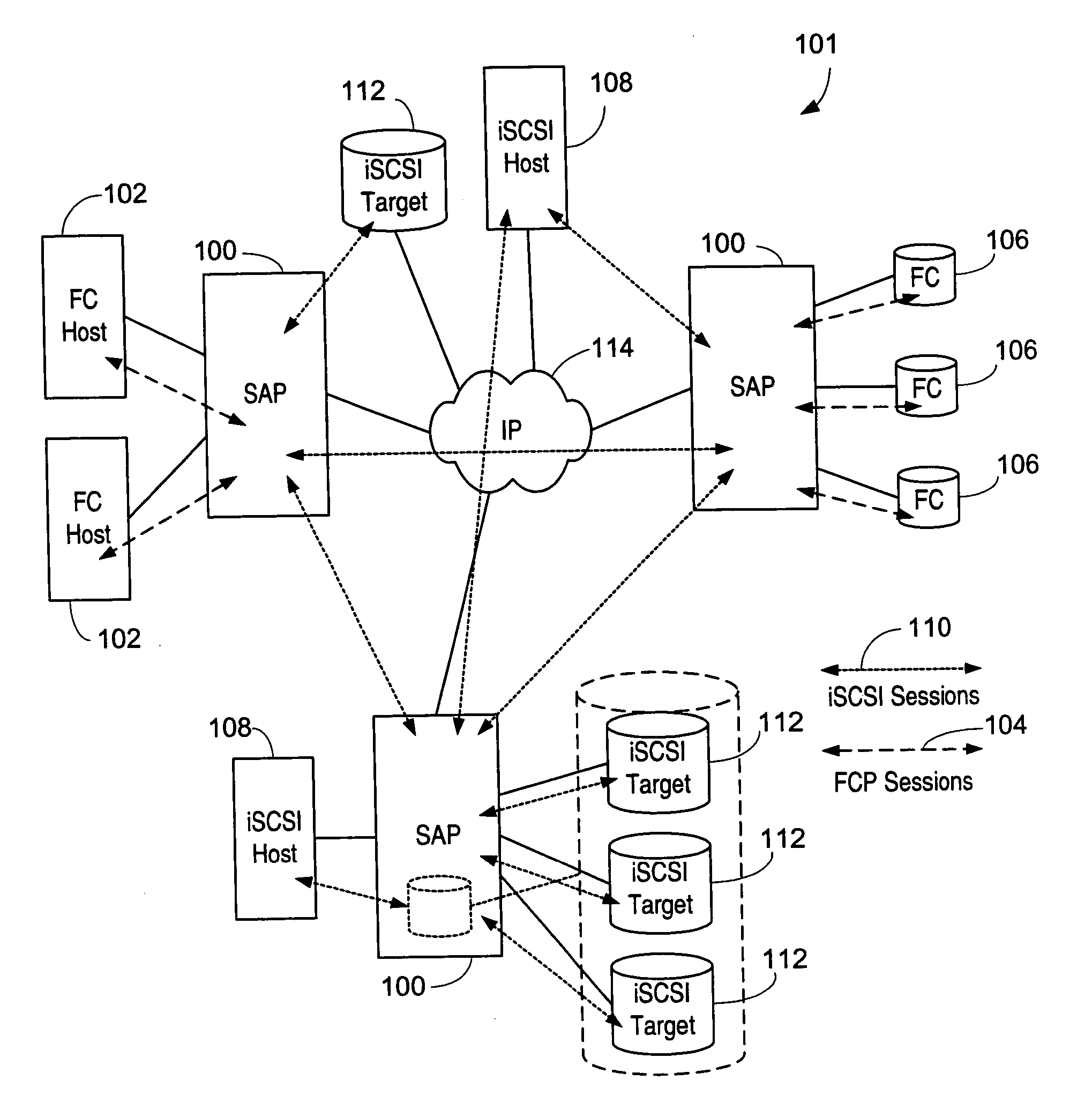

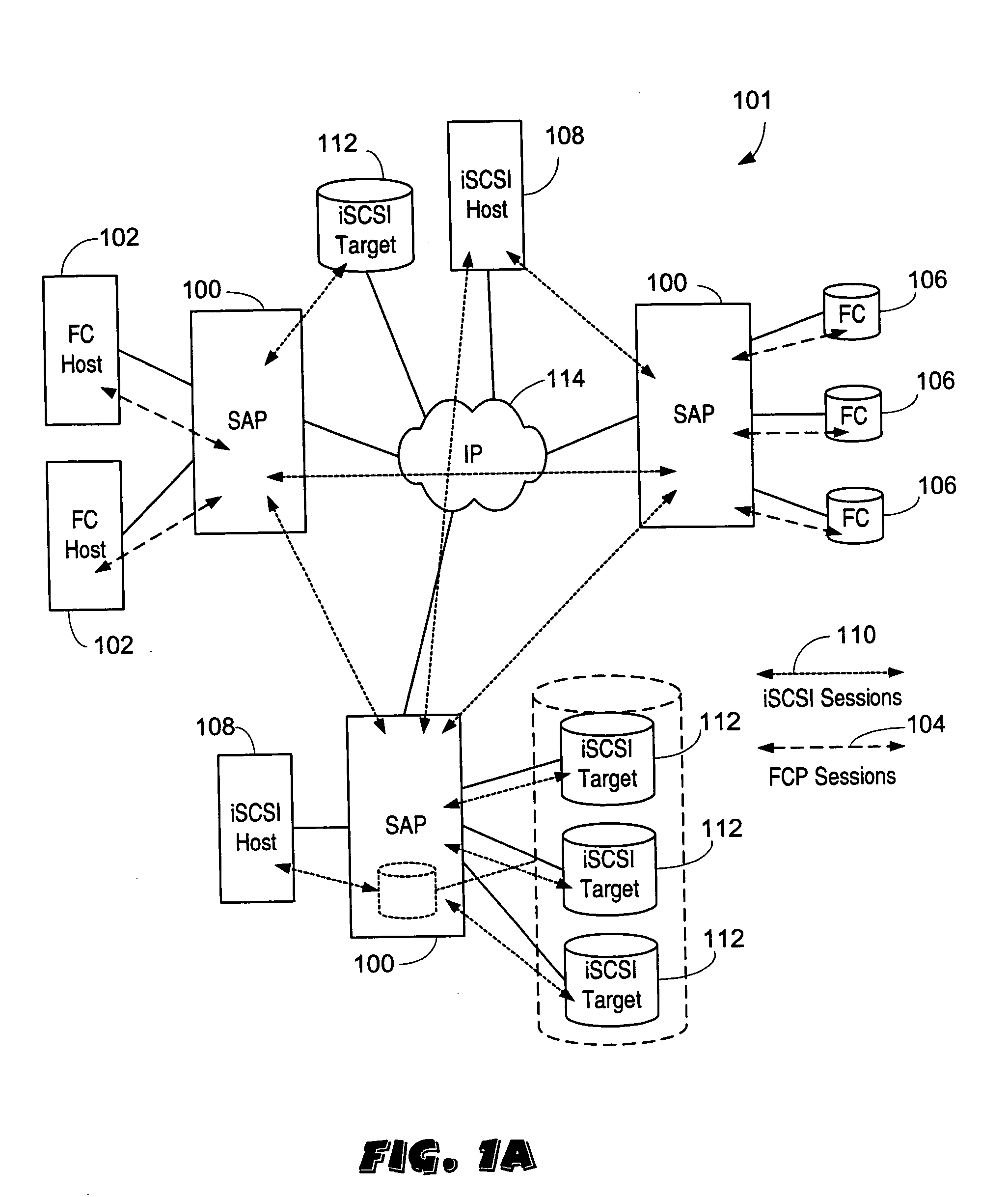

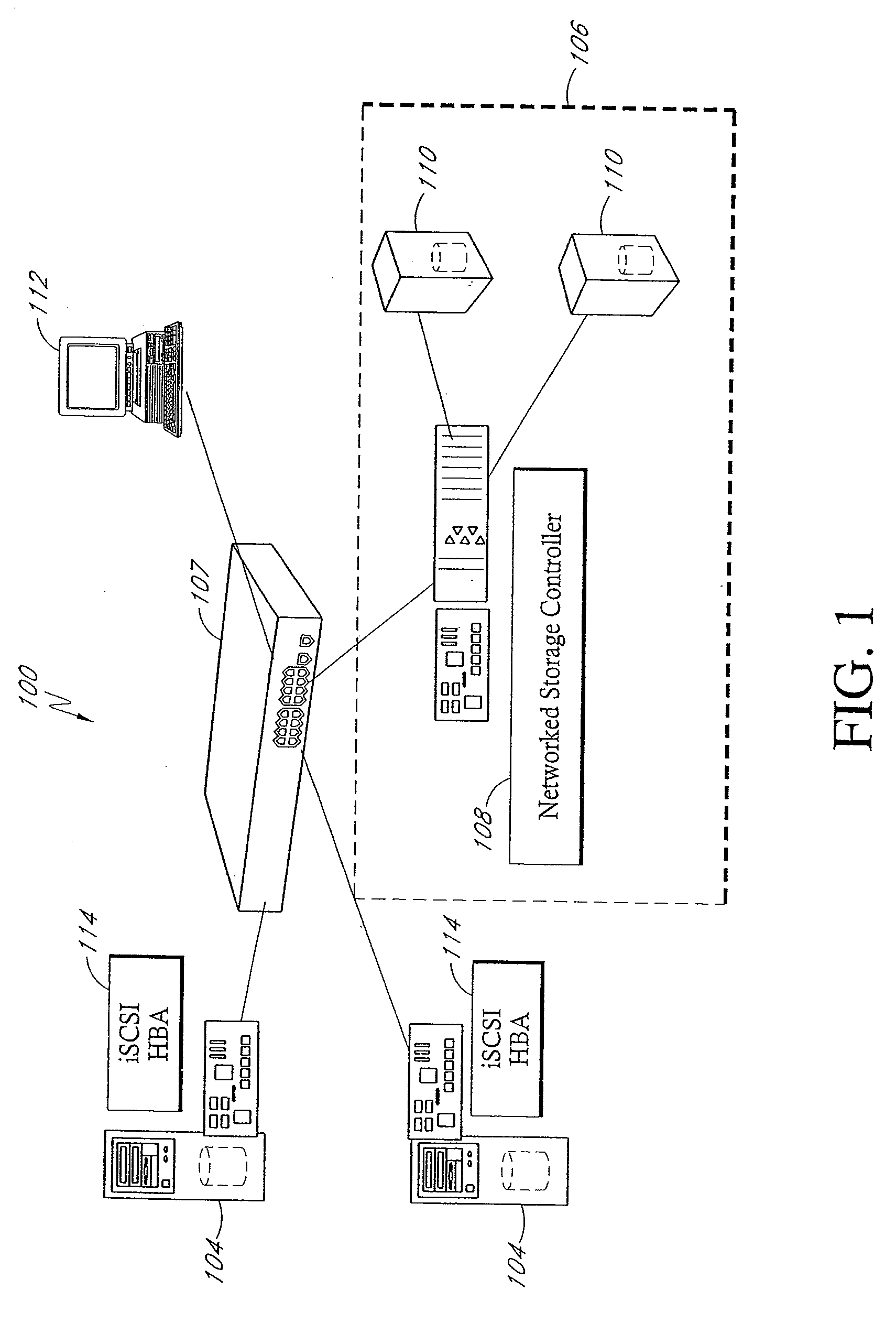

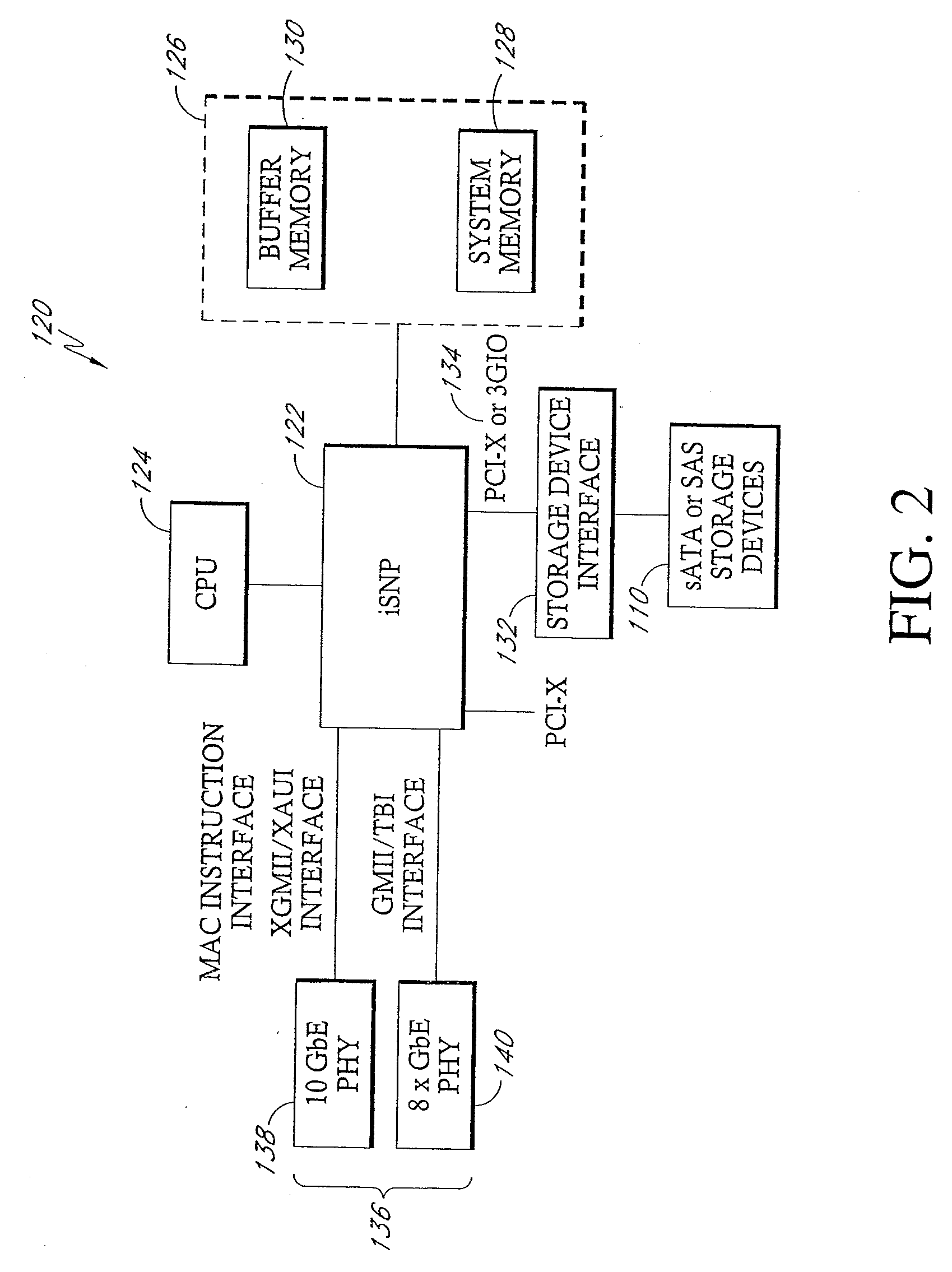

Apparatus and method for data virtualization in a storage processing device

Active | US20050033878A1 | Achieve scale | Provide flexibility | Multiplex system selection arrangements | Input/output to record carriers | Internet traffic | Multi protocol

A system including a storage processing device with an input/output module. The input/output module has port processors to receive and transmit network traffic. The input/output module also has a switch connecting the port processors. Each port processor categorizes the network traffic as fast path network traffic or control path network traffic. The switch routes fast path network traffic from an ingress port processor to a specified egress port processor. The storage processing device also includes a control module to process the control path network traffic received from the ingress port processor. The control module routes processed control path network traffic to the switch for routing to a defined egress port processor. The control module is connected to the input/output module. The input/output module and the control module are configured to interactively support data virtualization, data migration, data journaling, and snapshotting. The distributed control and fast path processors achieve scaling of storage network software. The storage processors provide line-speed processing of storage data using a rich set of storage-optimized hardware acceleration engines. The multi-protocol switching fabric provides a low-latency, protocol-neutral interconnect that integrally links all components with any-to-any non-blocking throughput.

Owner:AVAGO TECH INT SALES PTE LTD

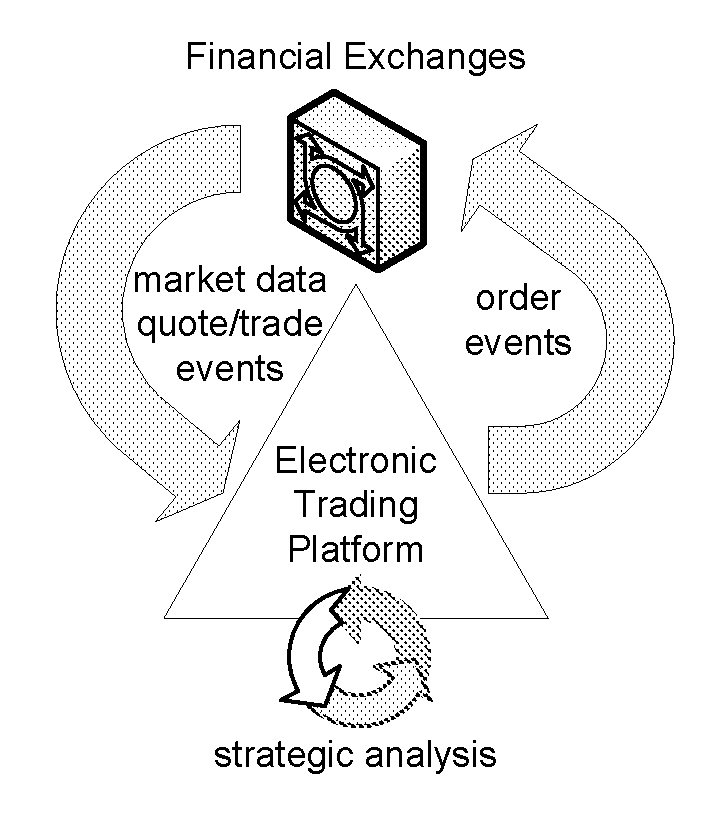

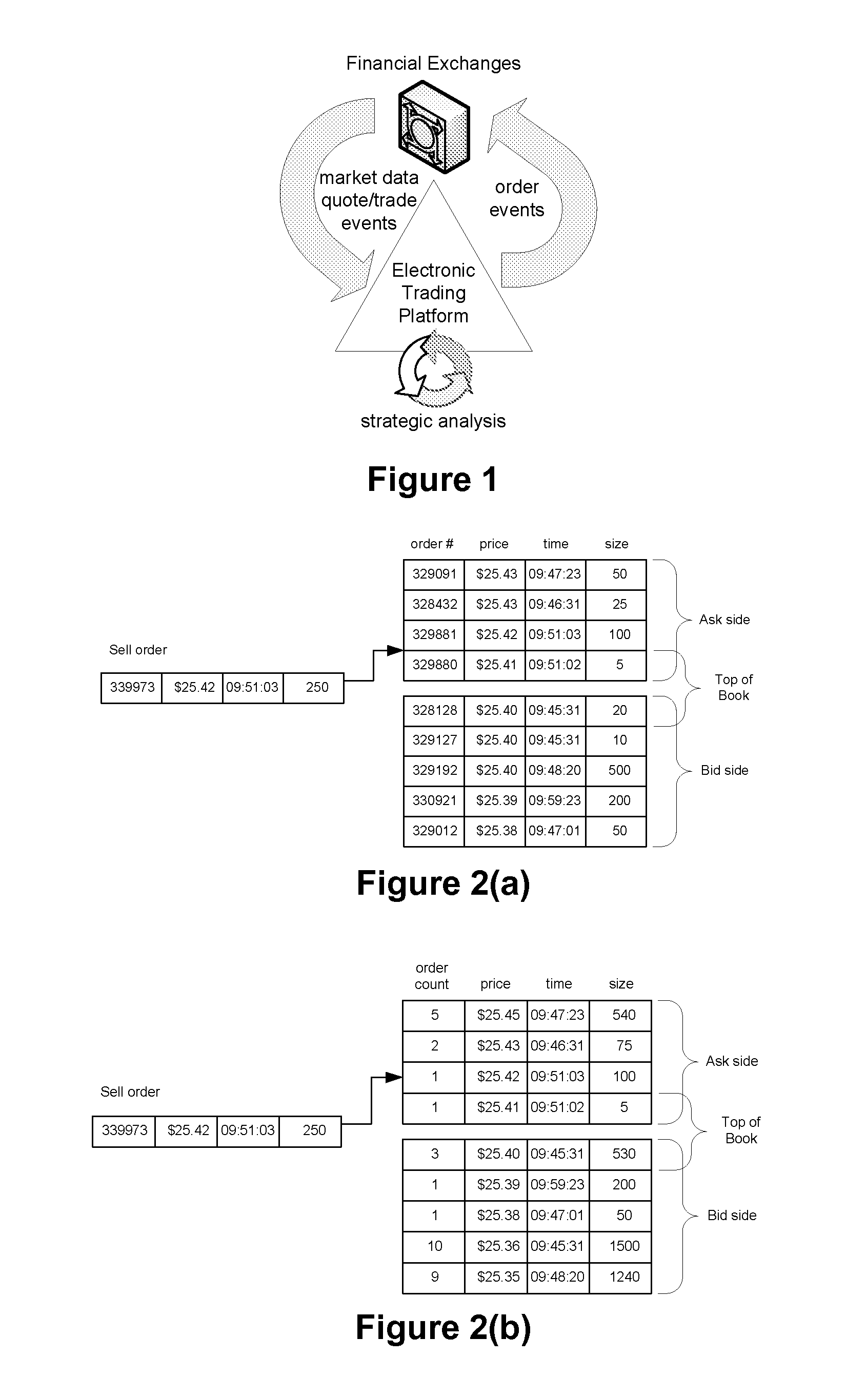

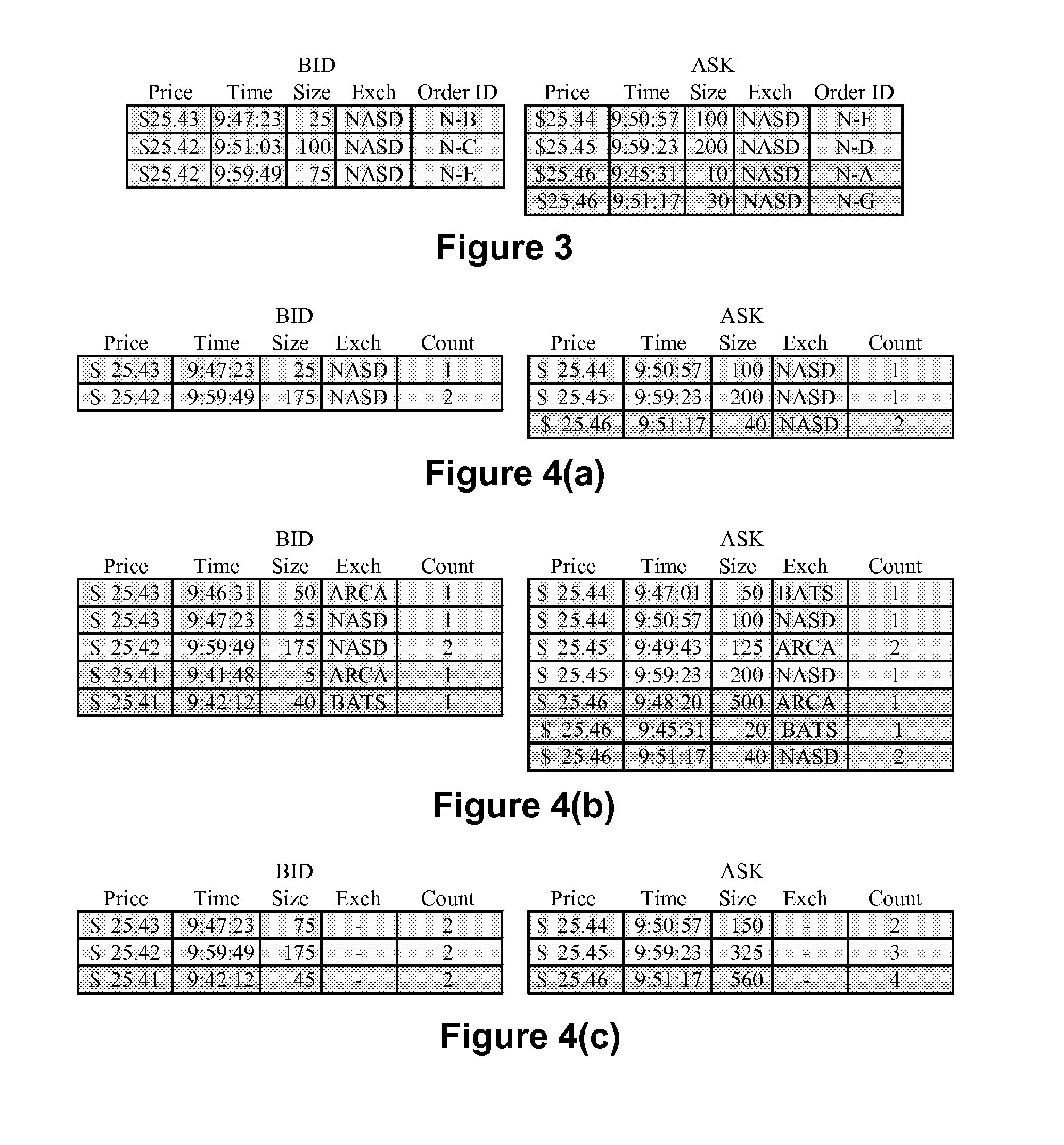

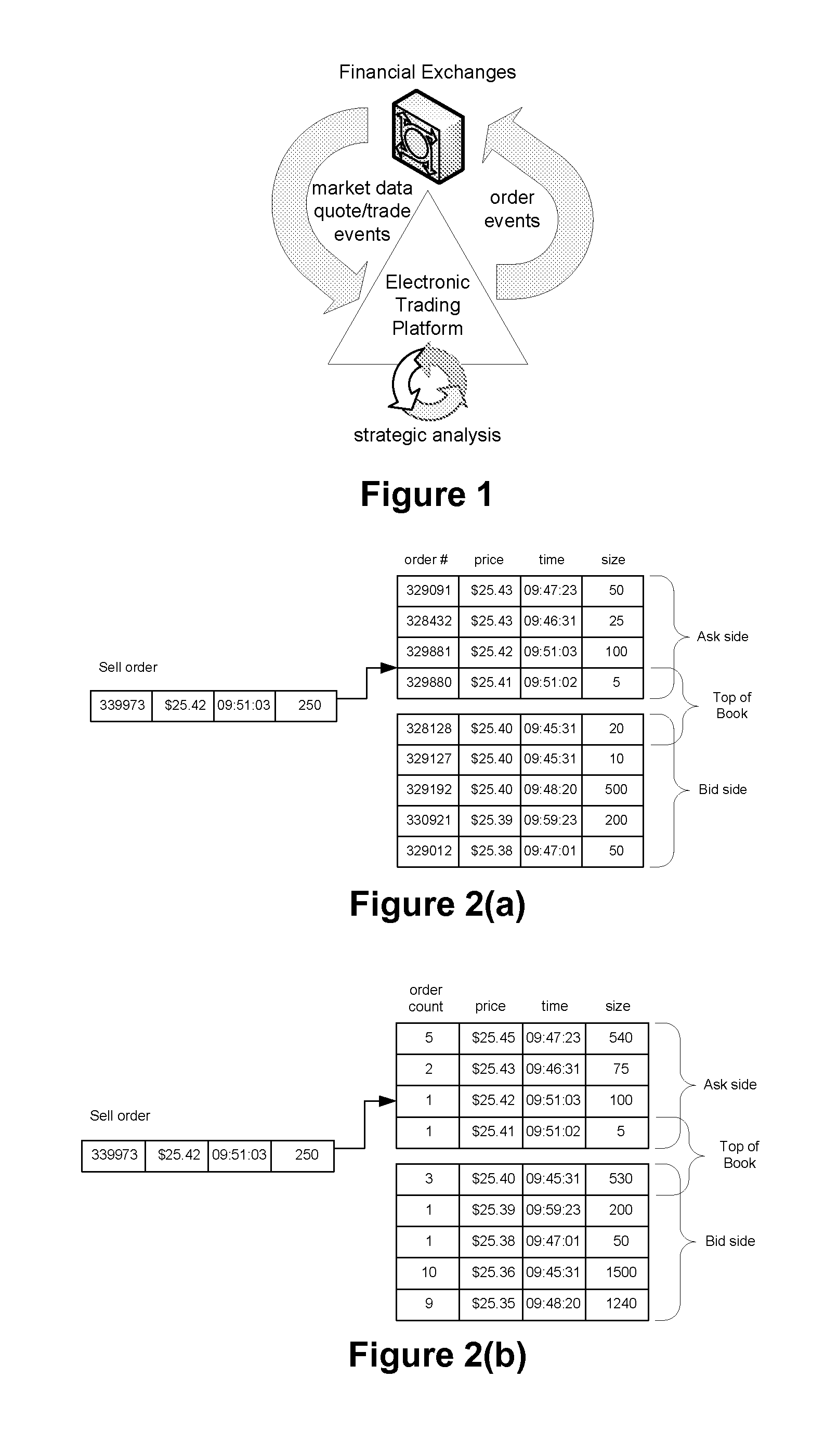

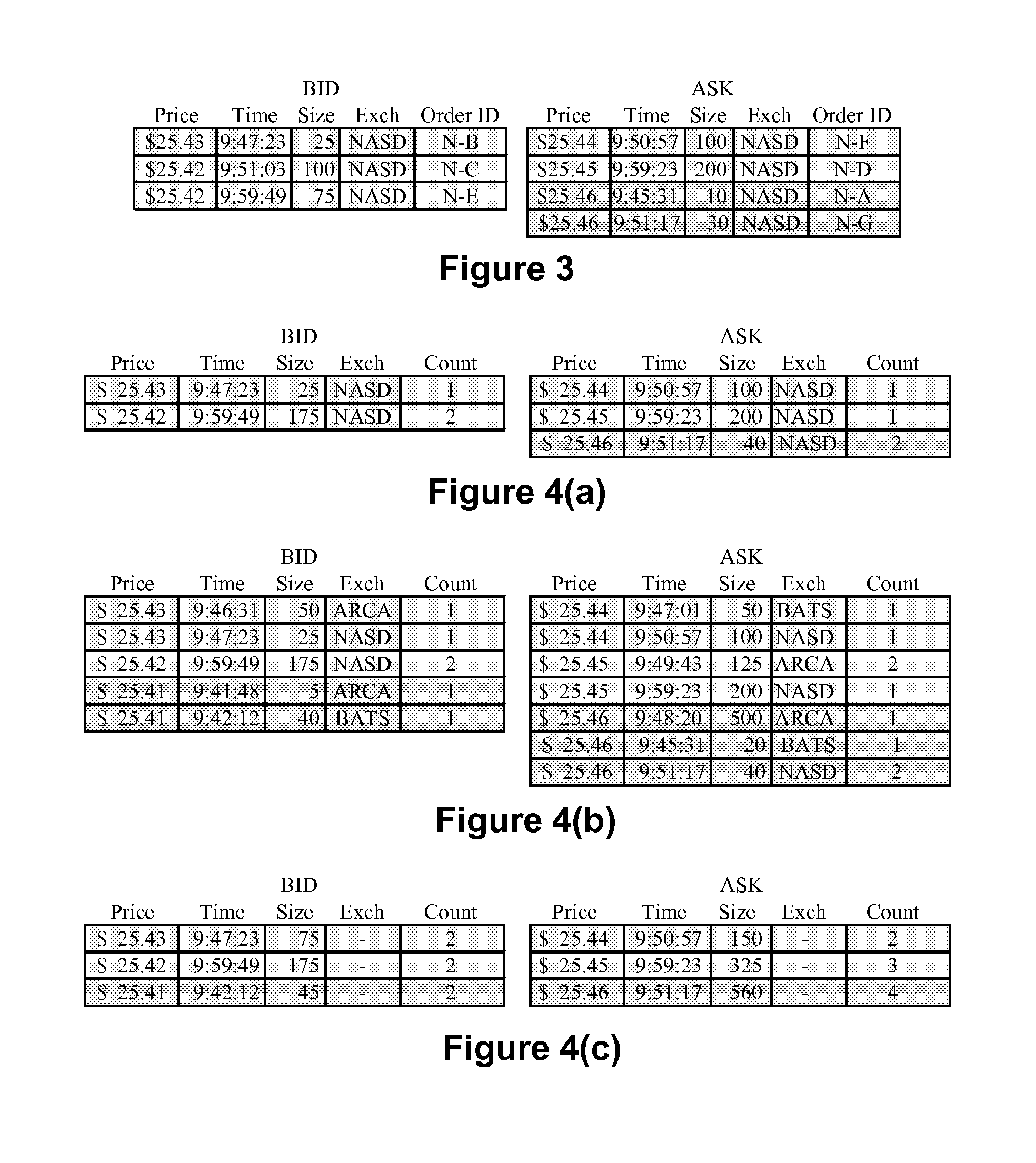

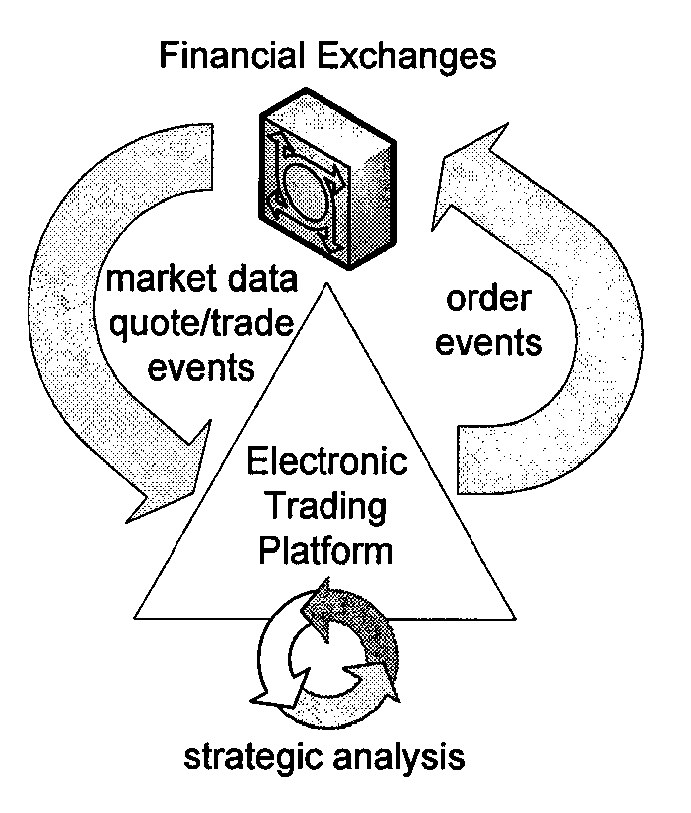

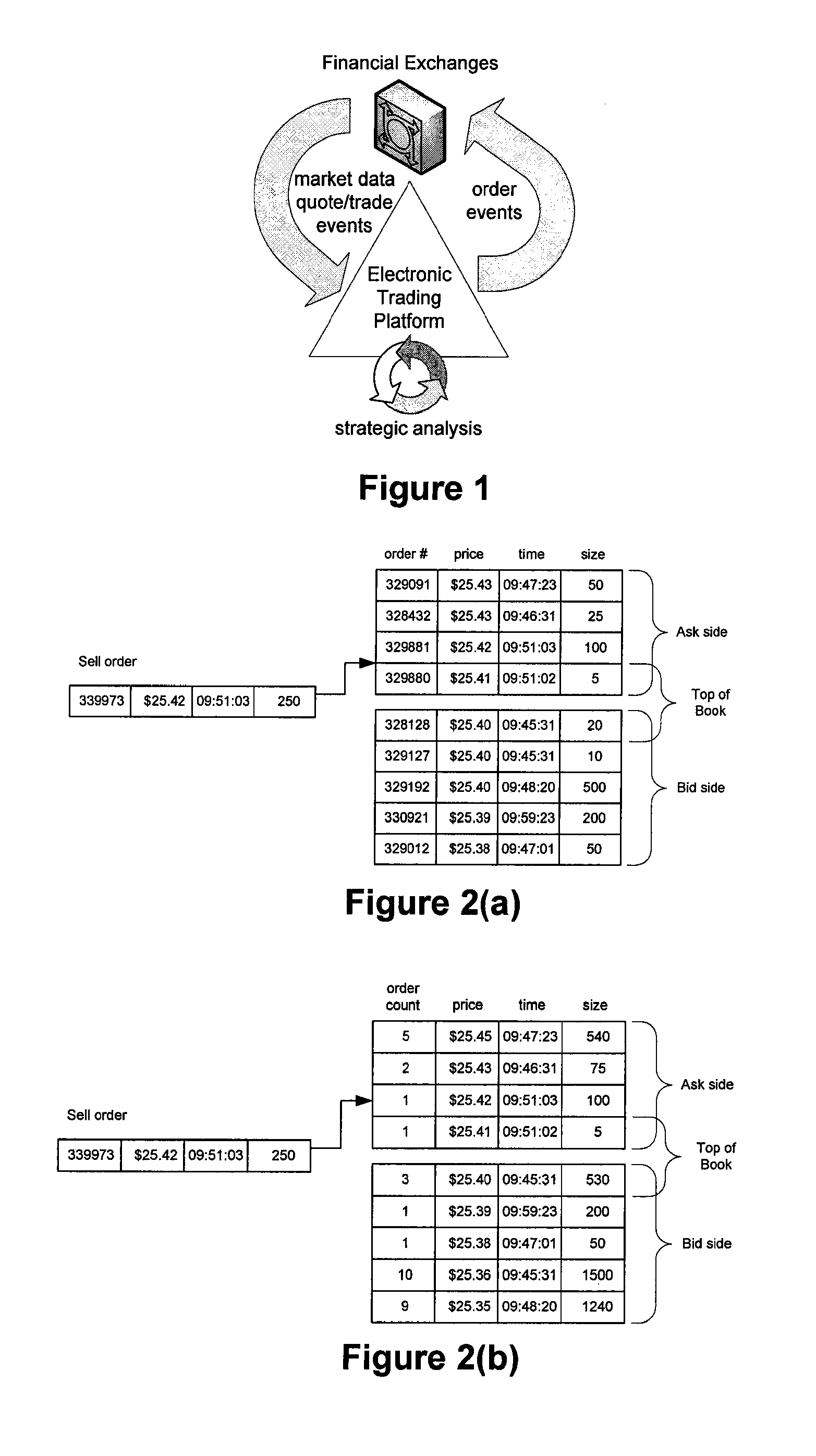

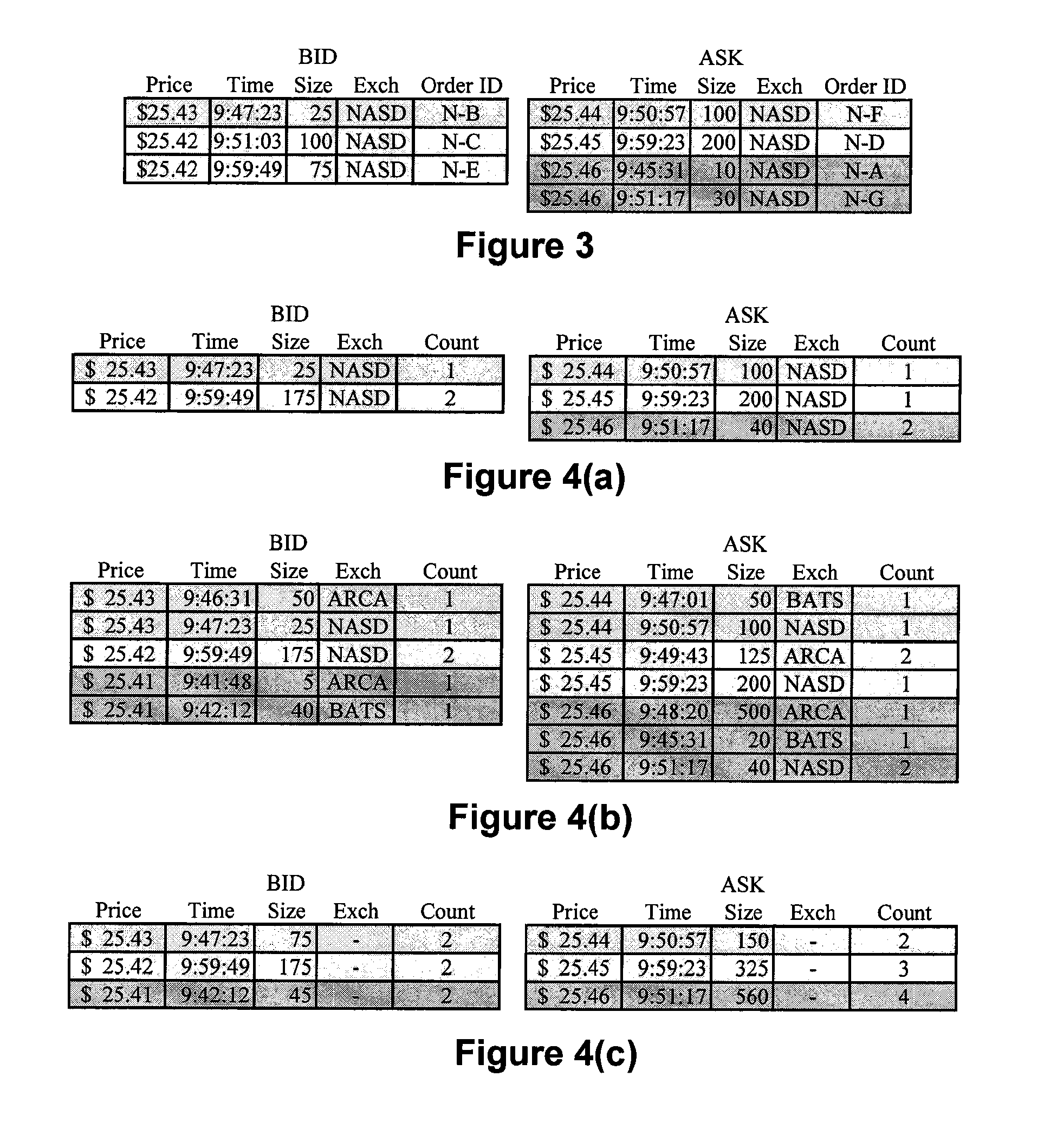

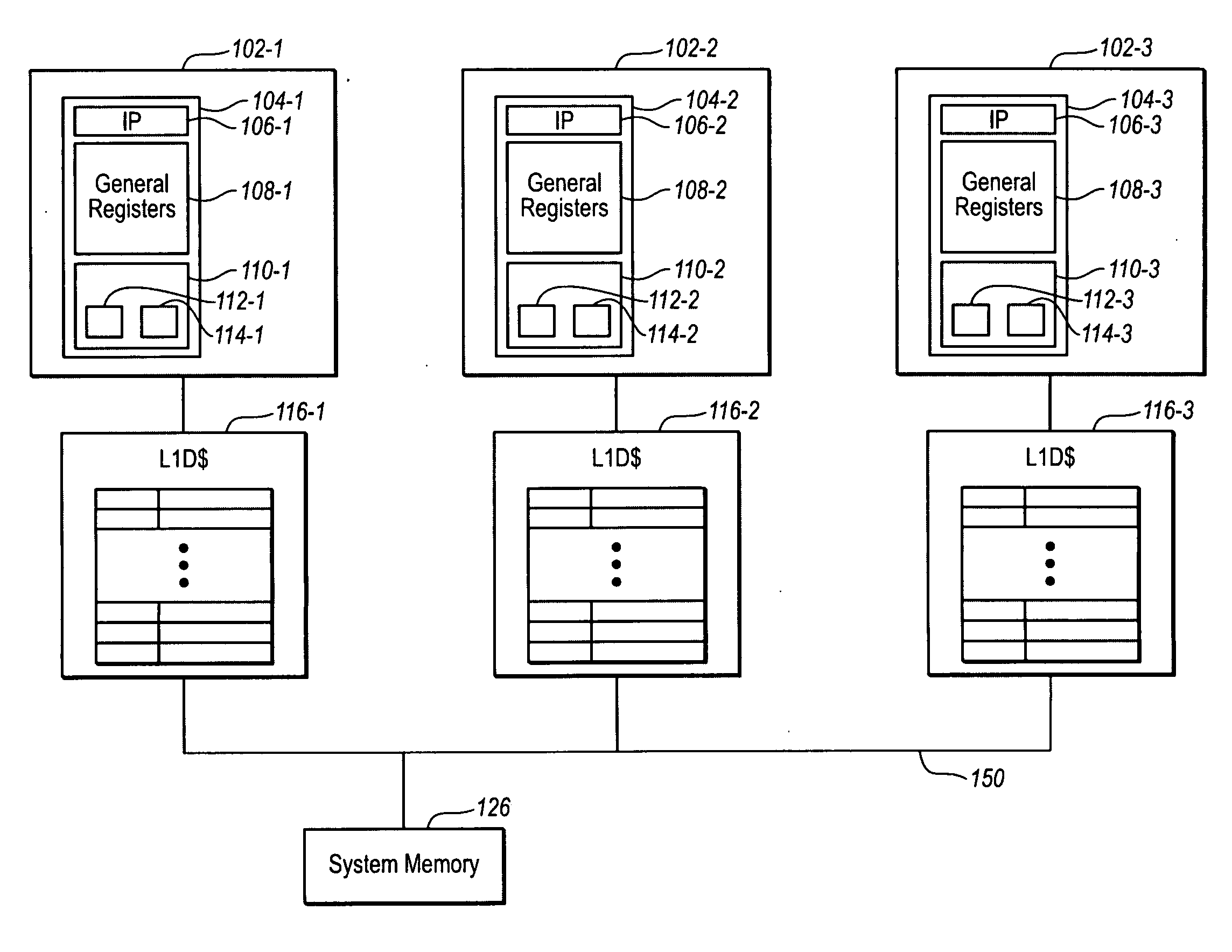

Method and Apparatus for High-Speed Processing of Financial Market Depth Data

Active | US20120089496A1 | Minimize total system latency | Processing latency | Finance | Coprocessor | Latency (engineering)

A variety of embodiments for hardware-accelerating the processing of financial market depth data are disclosed. A coprocessor, which may be resident in a ticker plant, can be configured to update order books based on financial market depth data at extremely low latency. Such a coprocessor can also be configured to enrich a stream of limit order events pertaining to financial instruments with data from a plurality of updated order books.

Owner:EXEGY INC

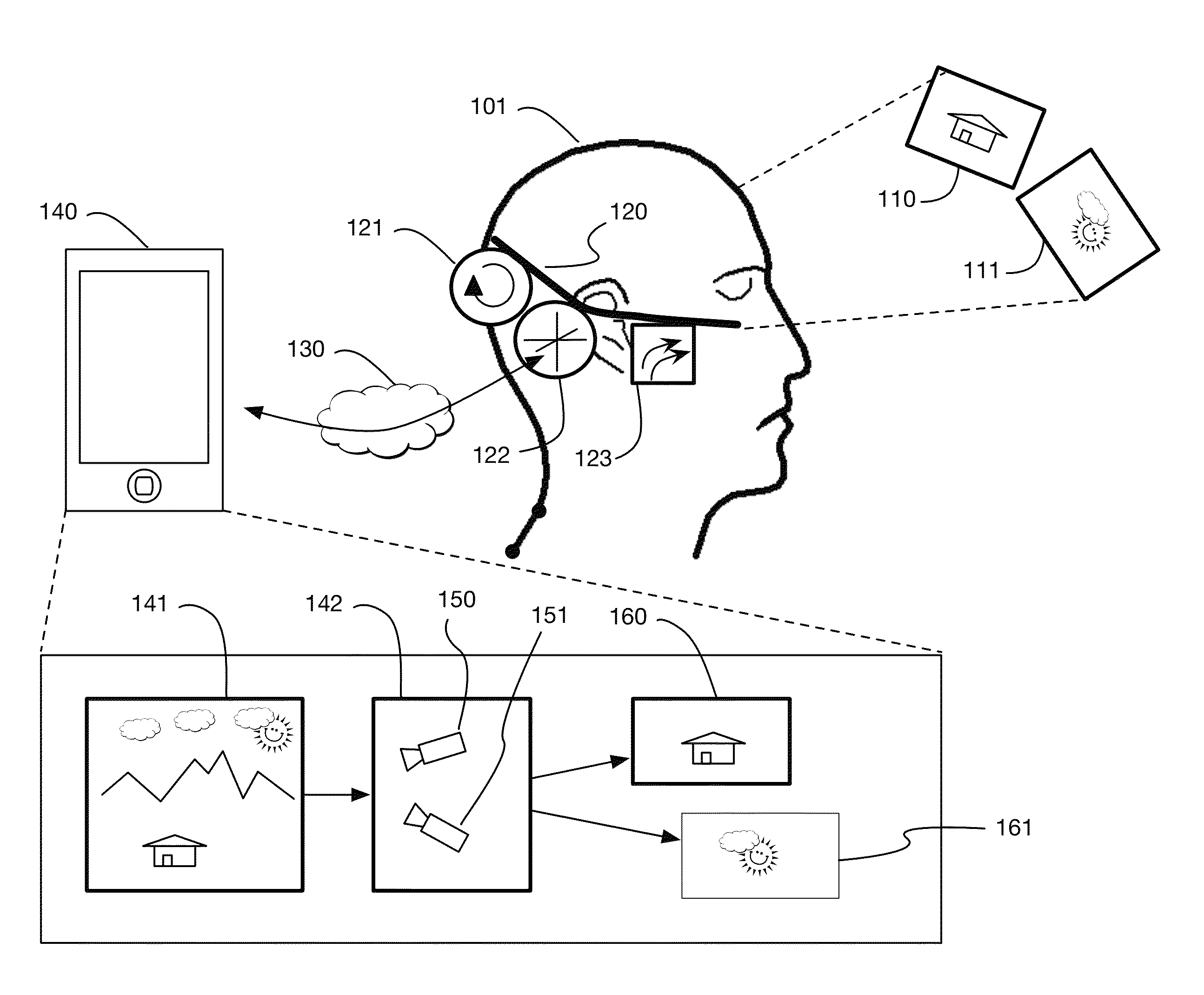

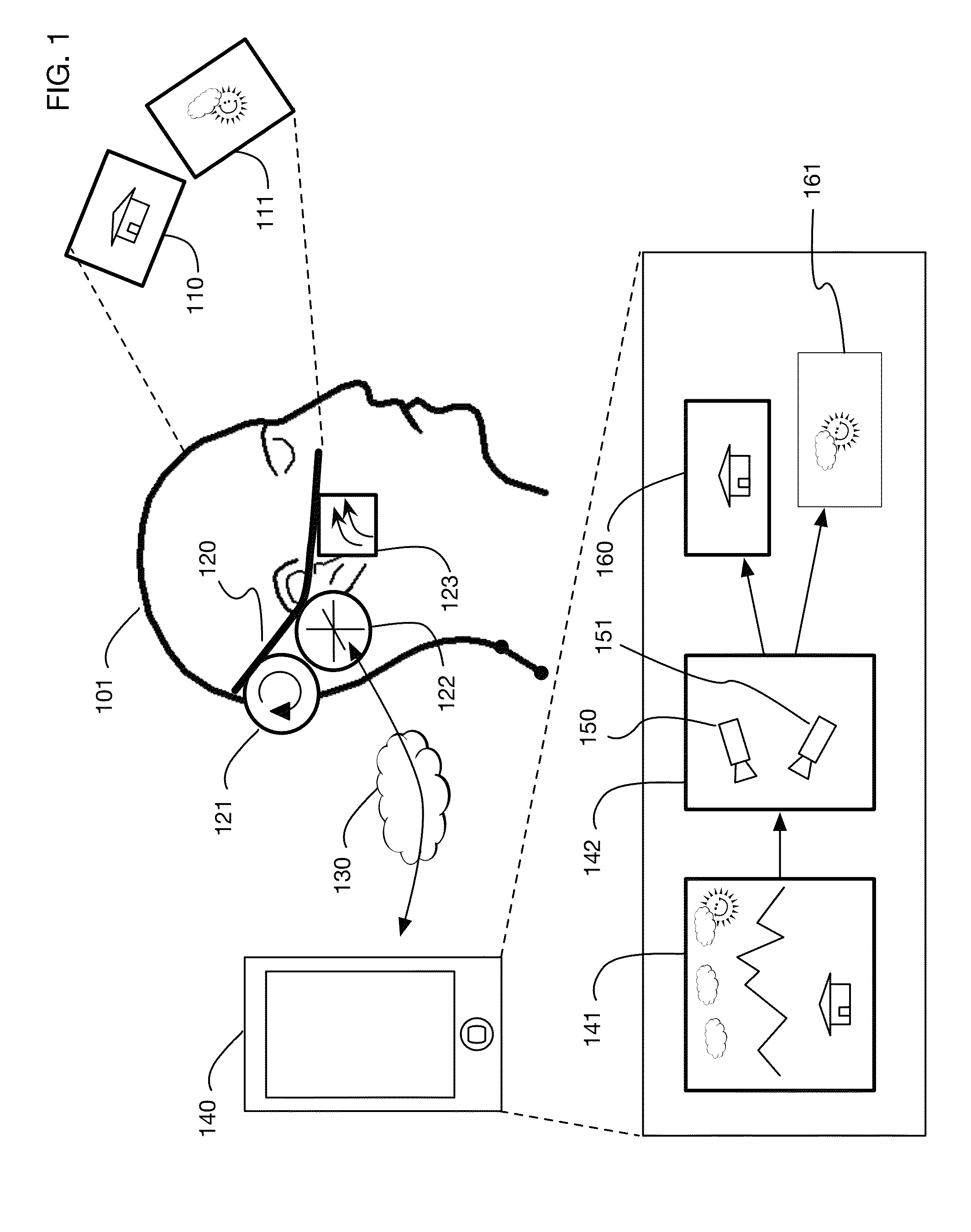

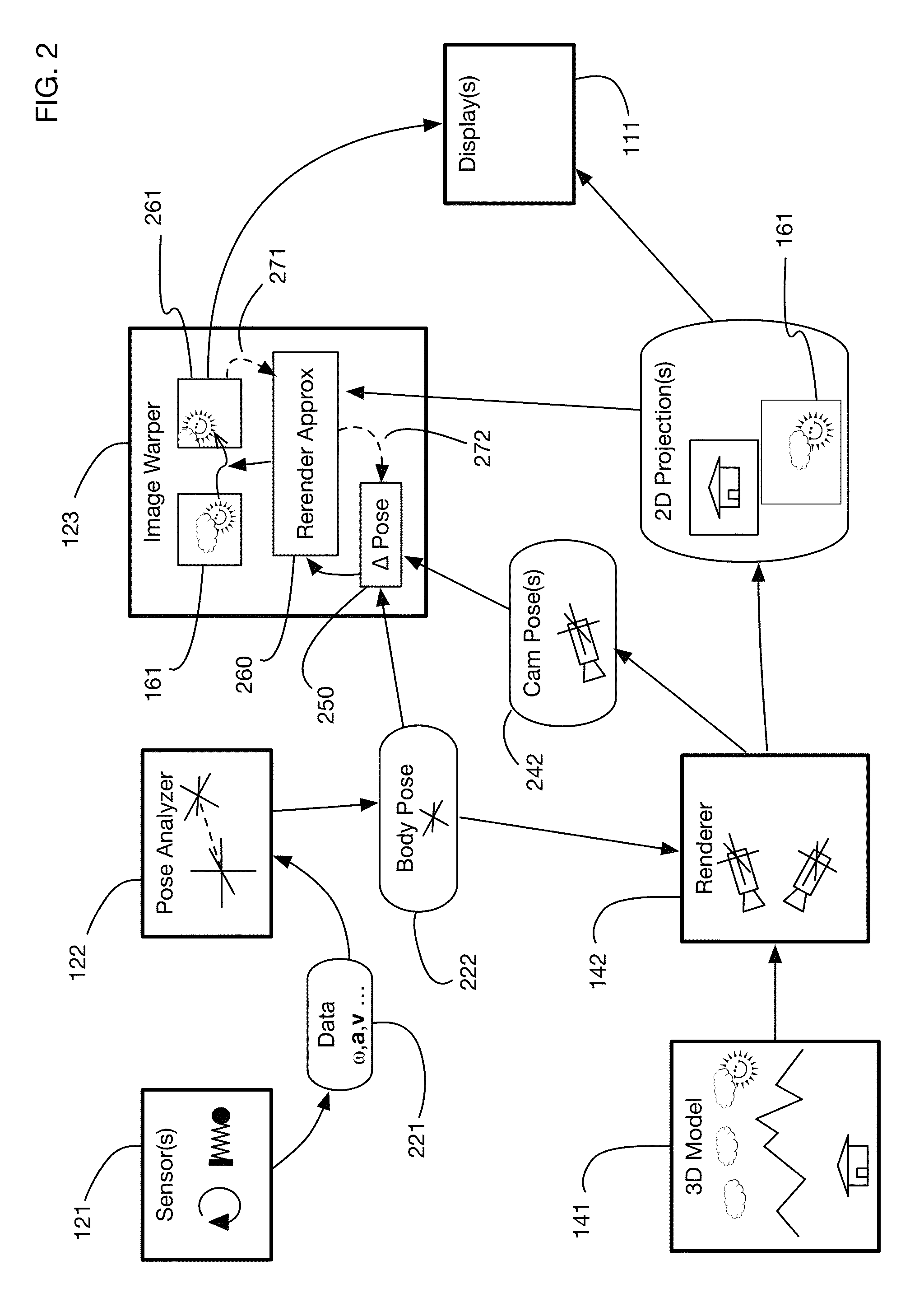

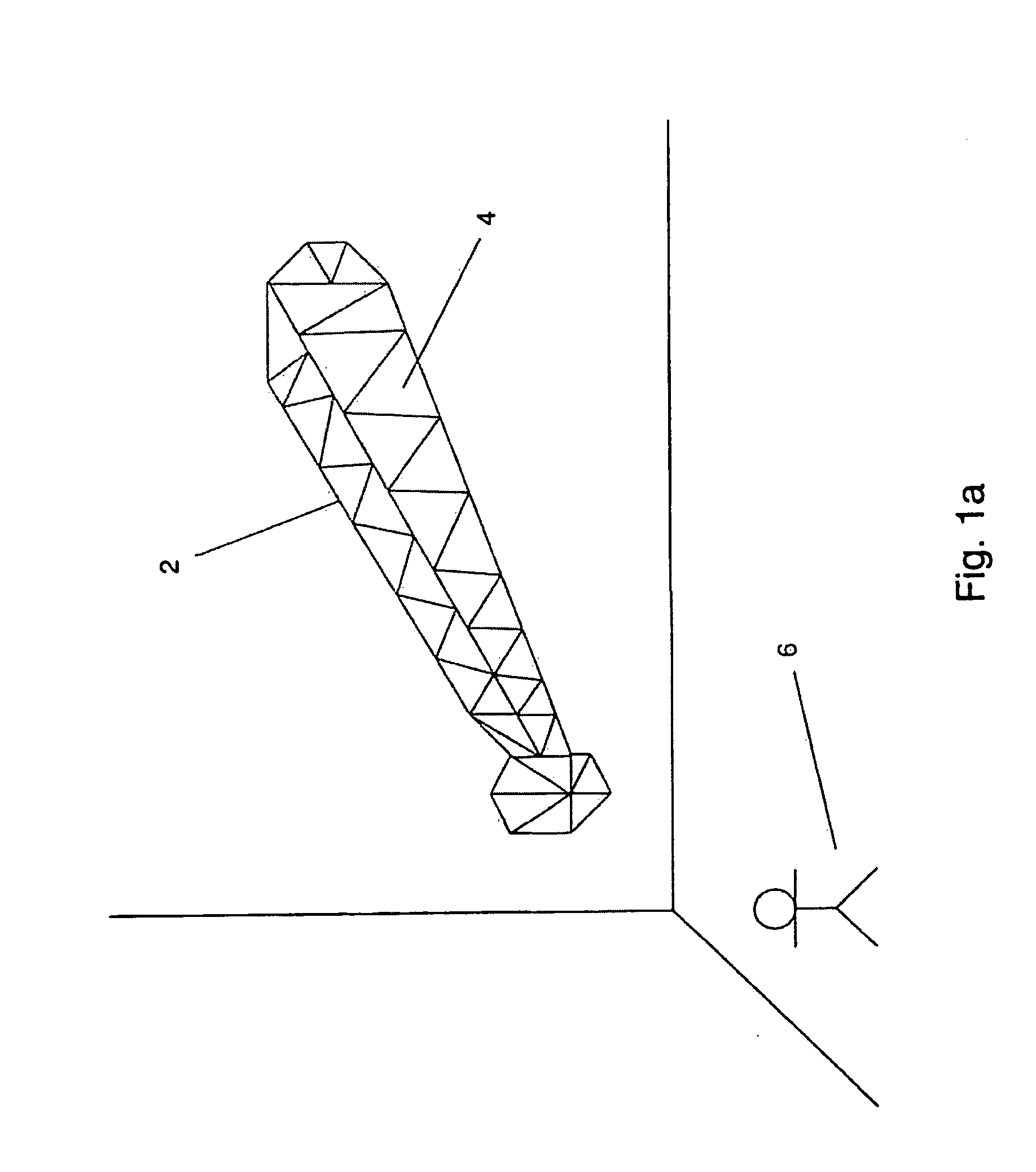

Low-latency virtual reality display system

Inactive | US9240069B1 | Enhance the virtual reality experience | Efficient and rapid rerendering reduces latency | Image rendering | Input/output processes for data processing | Computer graphics (images) | Display device

A low-latency virtual reality display system that provides rapid updates to virtual reality displays in response to a user's movements. Display images generated by rendering a 3D virtual world are modified using an image warping approximation, which allows updates to be calculated and displayed quickly. Image warping is performed using various rerendering approximations that use simplified 3D models and simplified 3D to 2D projections. An example of such a rerendering approximation is a shifting of all pixels in the display by a pixel translation vector that is calculated based on the user's movements; pixel translation may be done very quickly, possibly using hardware acceleration, and may be sufficiently realistic particularly for small changes in a user's position and orientation. Additional features include techniques to fill holes in displays generated by image warping, synchronizing full rendering with approximate rerendering, and using prediction of a user's future movements to reduce apparent latency.

Owner:LI ADAM
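
The pixel-translation approximation mentioned in the abstract amounts to shifting every pixel by one vector. The Python sketch below shows only that shift, under simple assumptions: the image is a list of rows, the translation vector `(dx, dy)` is already known (deriving it from the user's movement is outside this sketch), and pixels uncovered by the shift receive a placeholder `fill` value instead of the hole-filling techniques the abstract refers to. `translate_image` is a hypothetical name.

```python
def translate_image(image, dx, dy, fill=0):
    """Shift every pixel by (dx, dy); uncovered pixels are set to `fill`."""
    height = len(image)
    width = len(image[0]) if height else 0
    shifted = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            nx, ny = x + dx, y + dy
            if 0 <= nx < width and 0 <= ny < height:
                shifted[ny][nx] = image[y][x]
    return shifted


frame = [[1, 2, 3],
         [4, 5, 6]]
warped = translate_image(frame, dx=1, dy=0)
print(warped)  # prints [[0, 1, 2], [0, 4, 5]]
```

The left column of the output is the kind of hole that a full system would need to fill before display.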

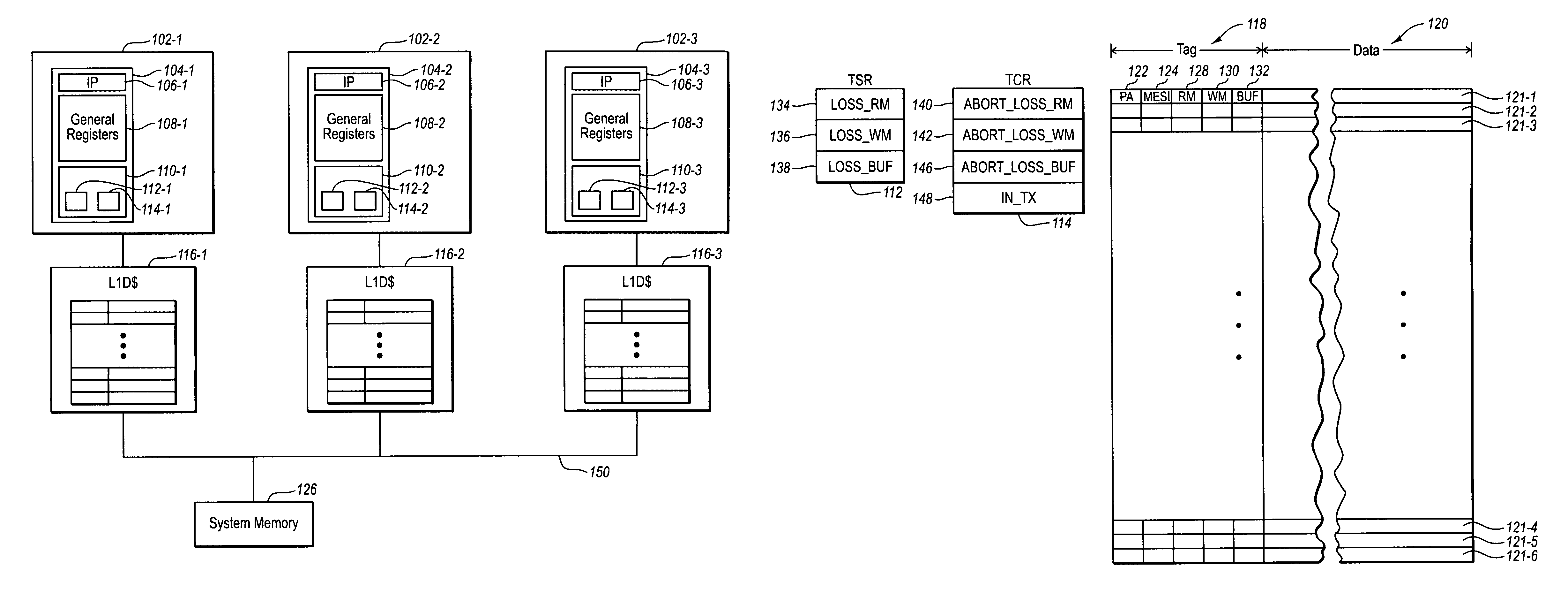

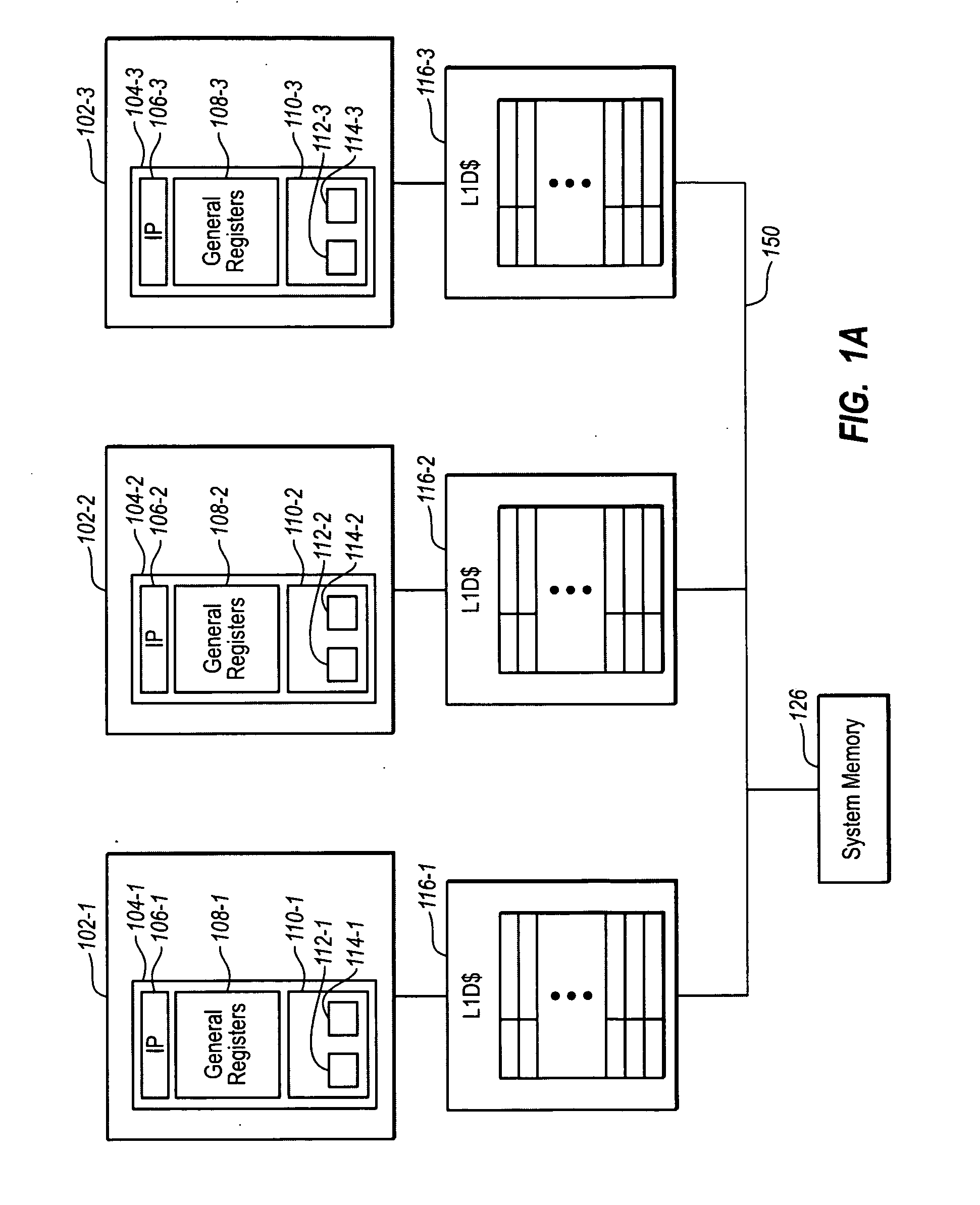

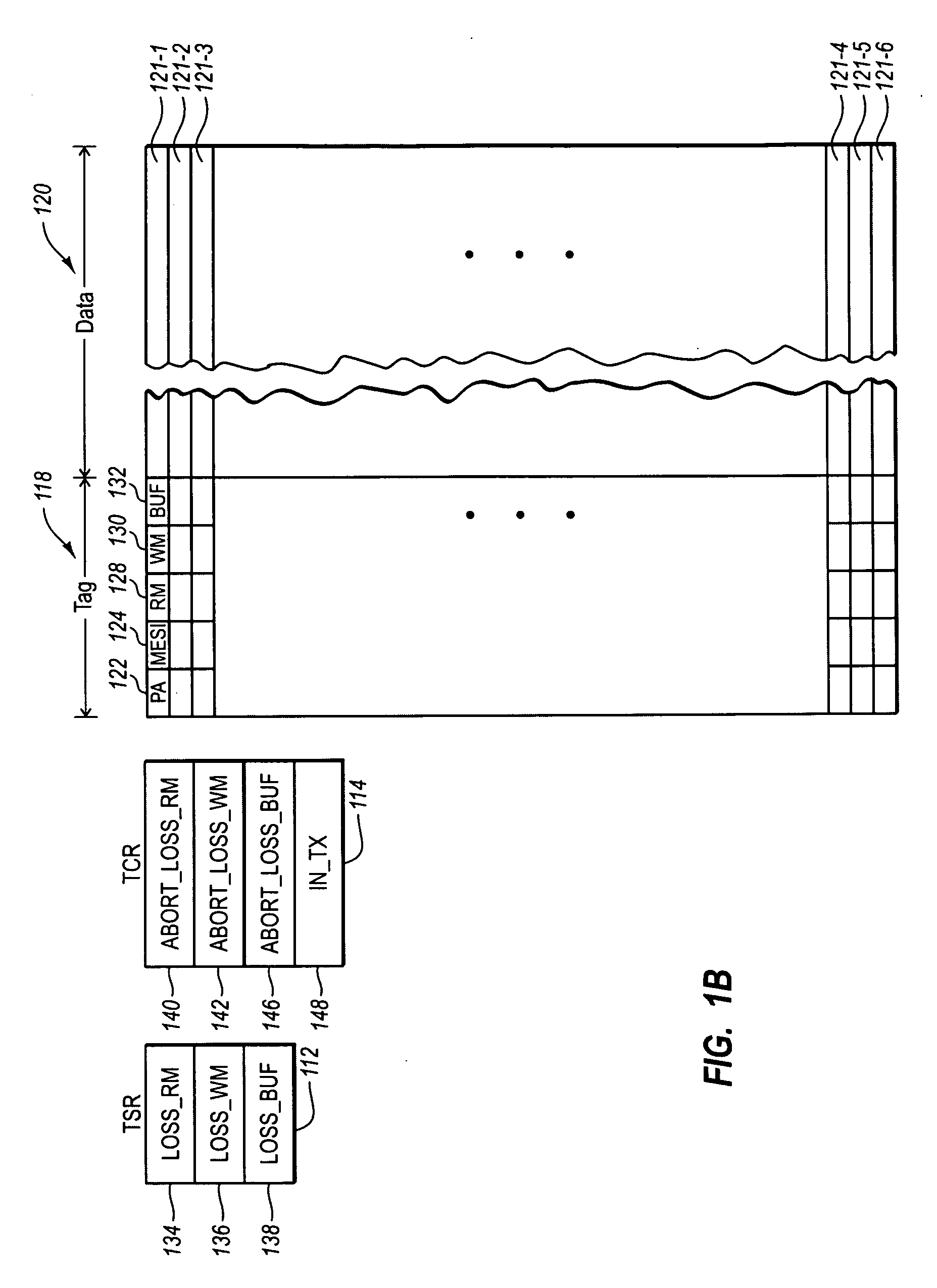

Hardware accelerated transactional memory system with open nested transactions

Active | US8229907B2 | Digital data information retrieval | Digital data processing details | Computer hardware | Hardware acceleration

Owner:MICROSOFT TECH LICENSING LLC

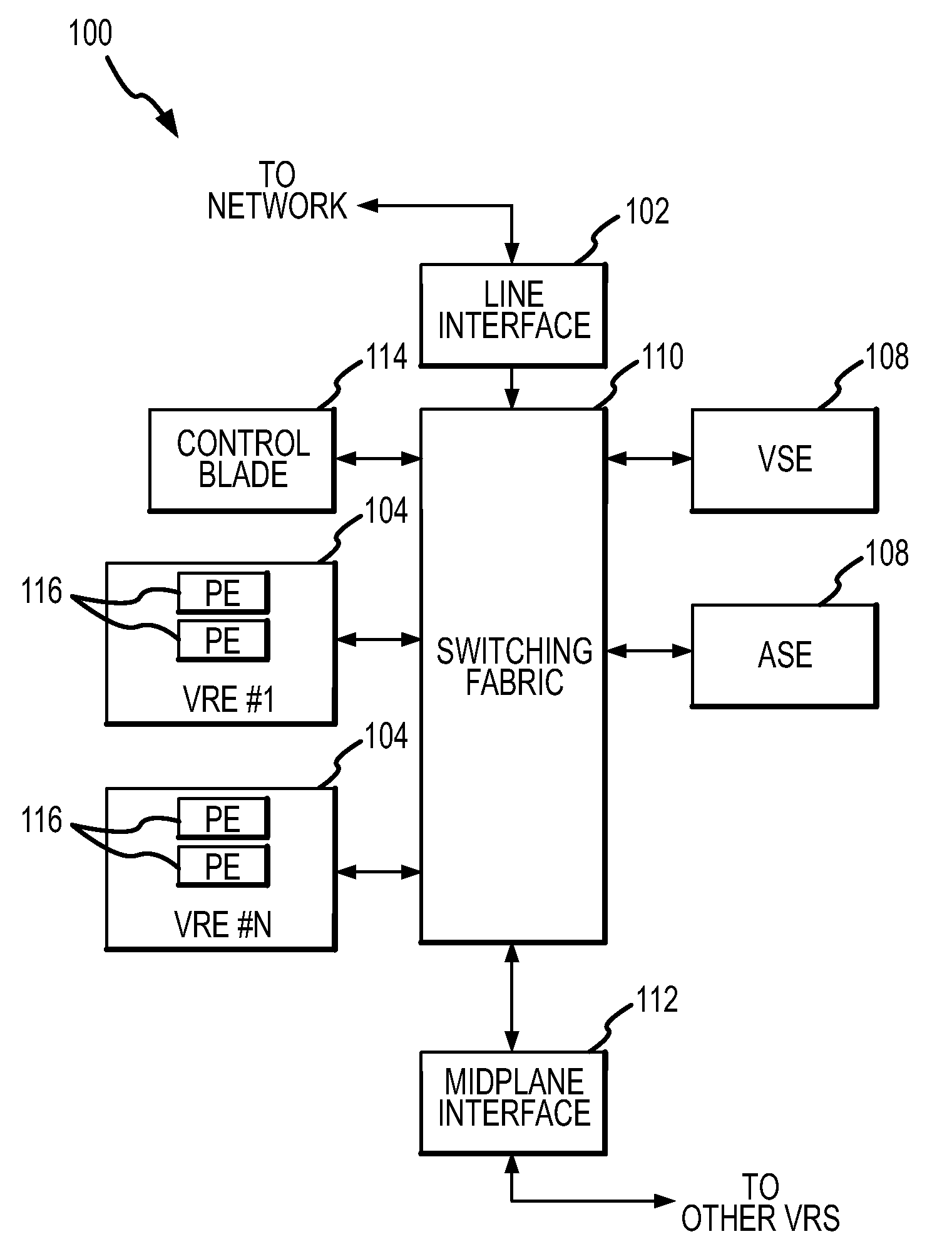

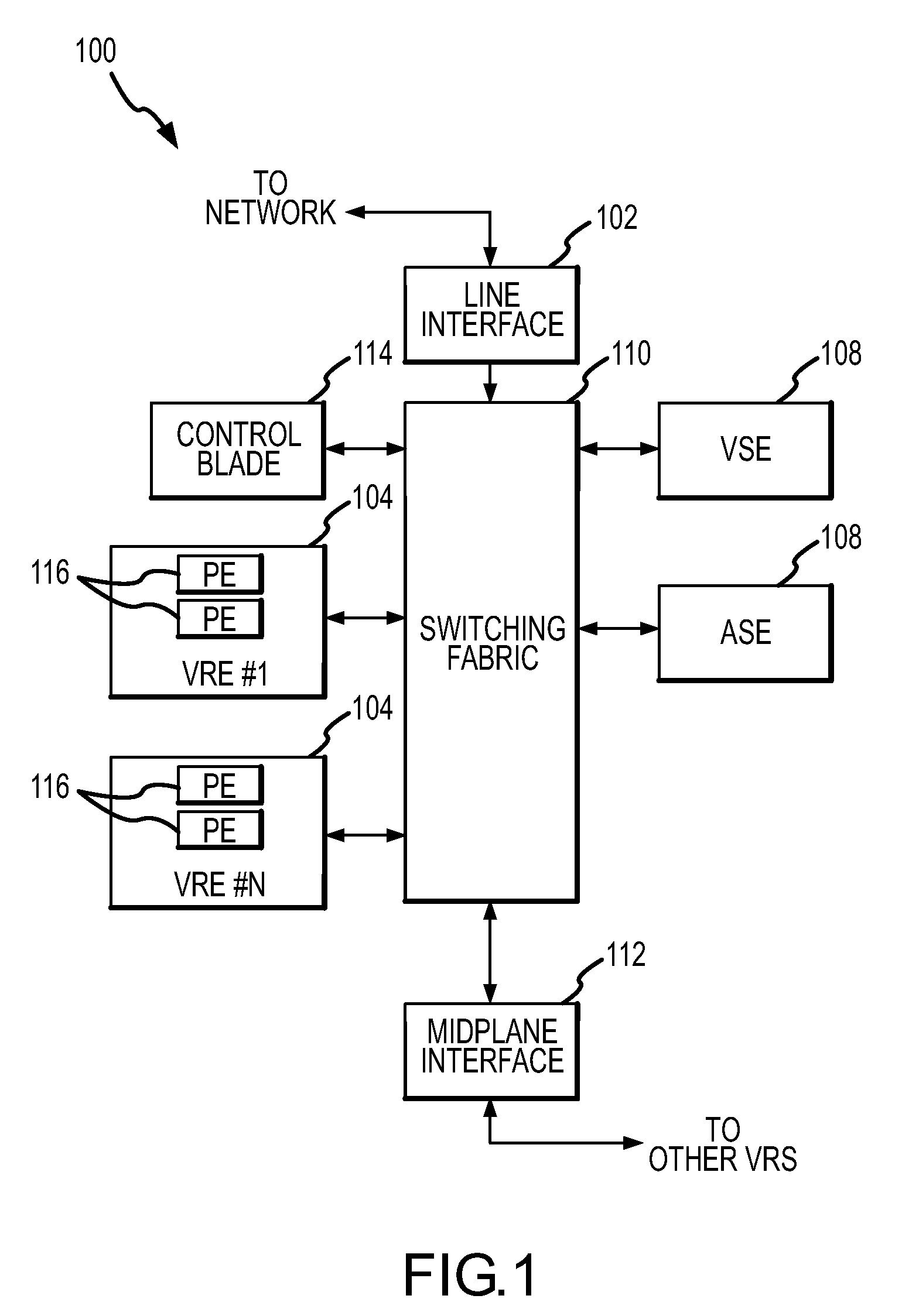

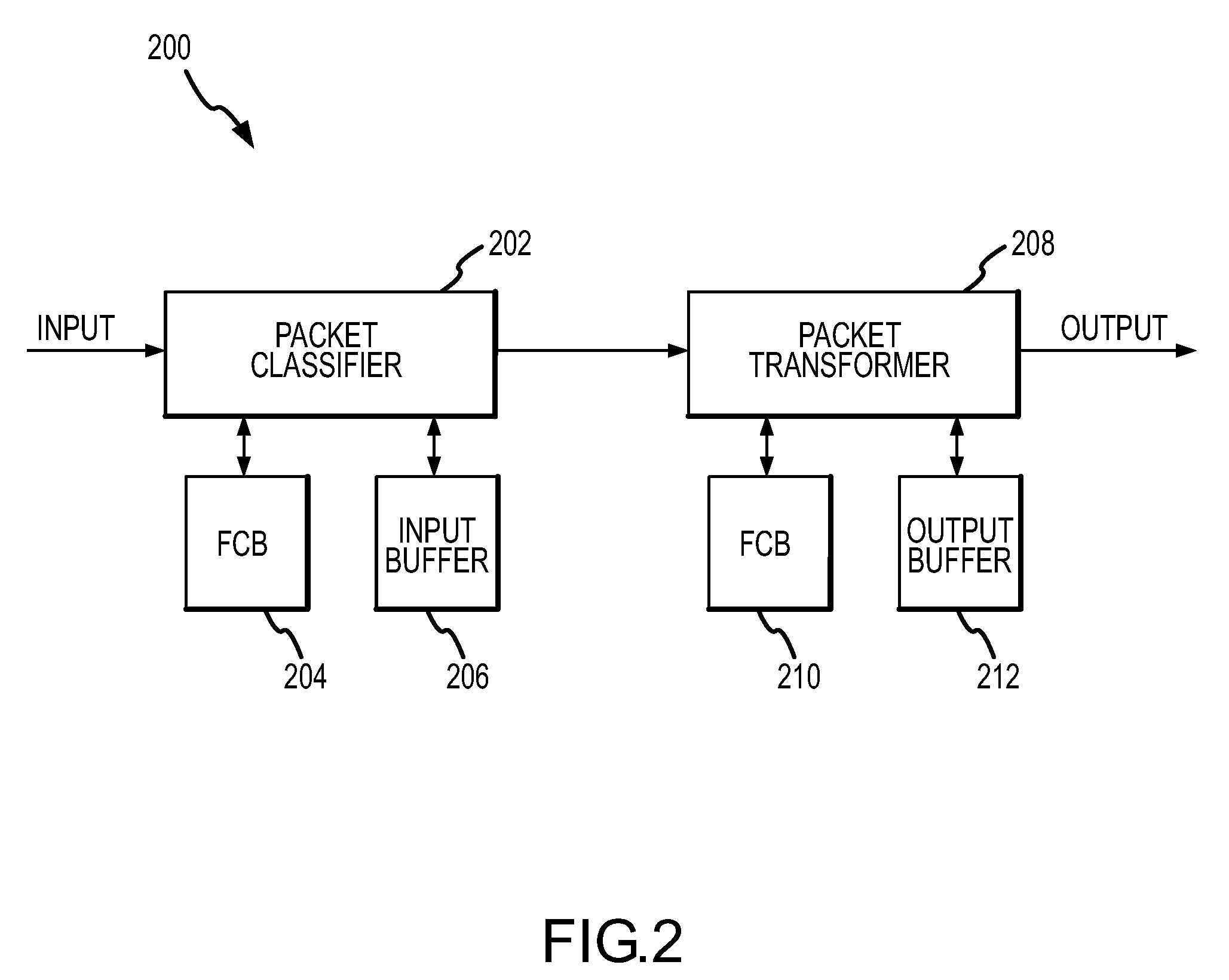

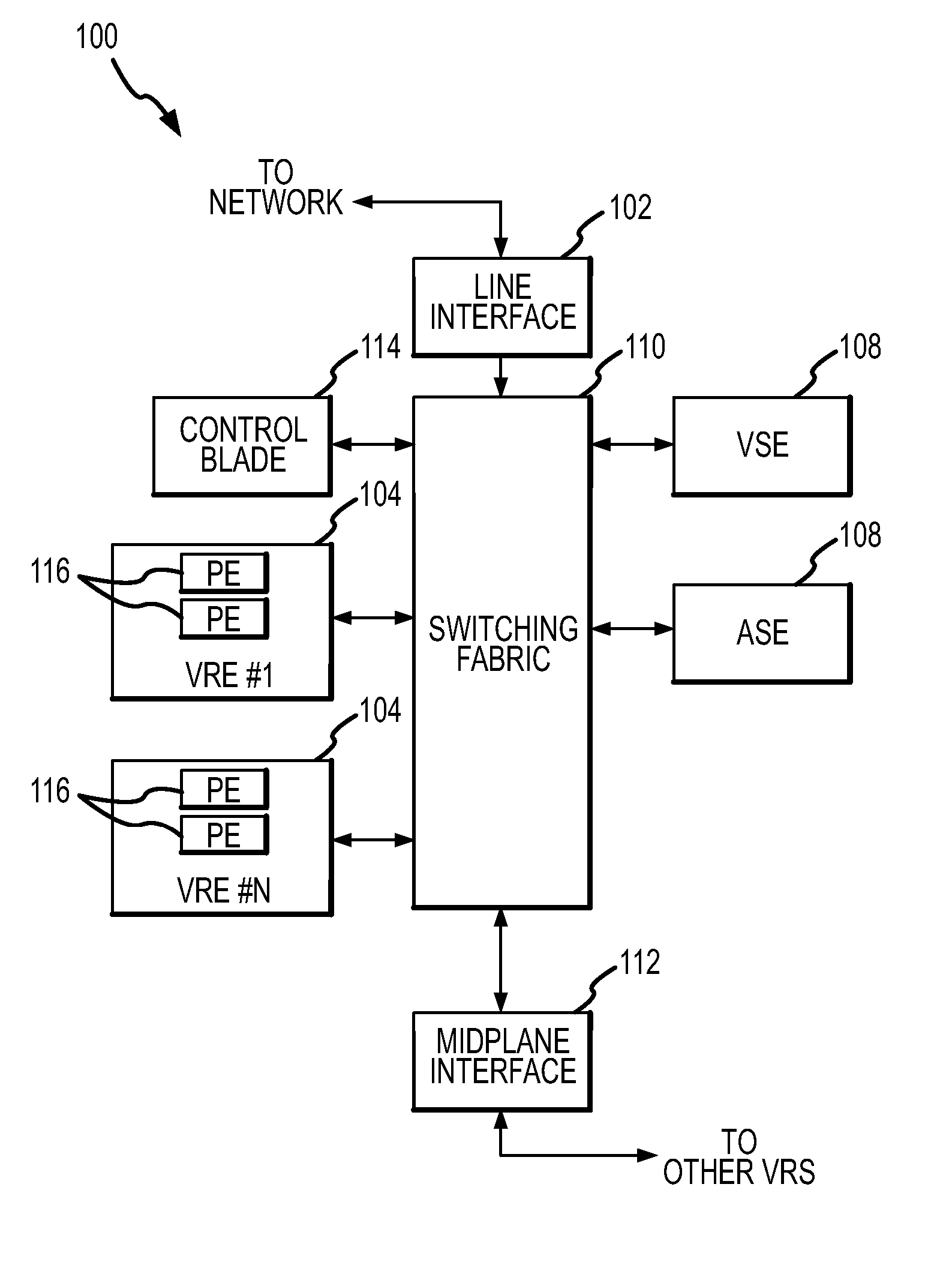

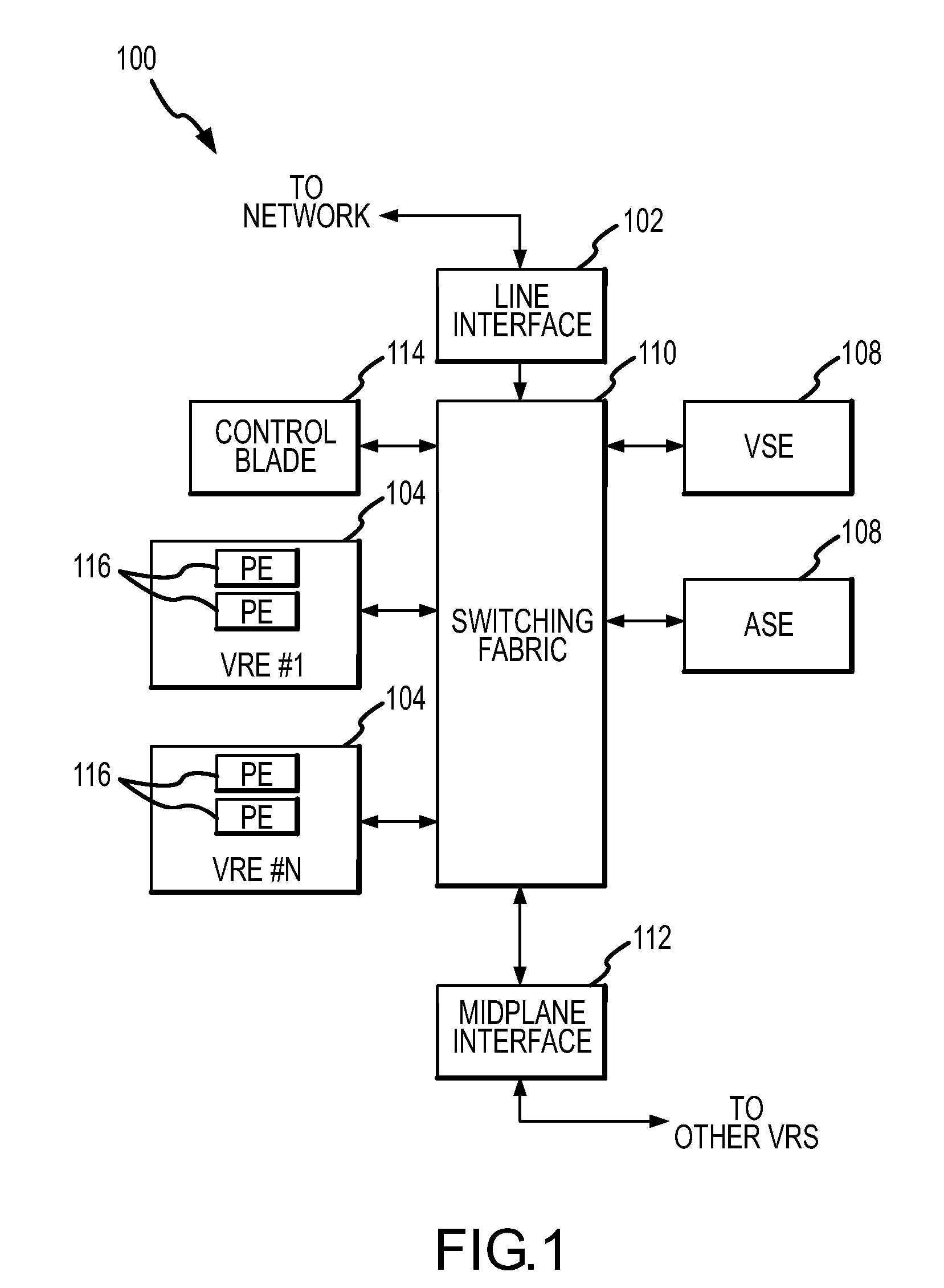

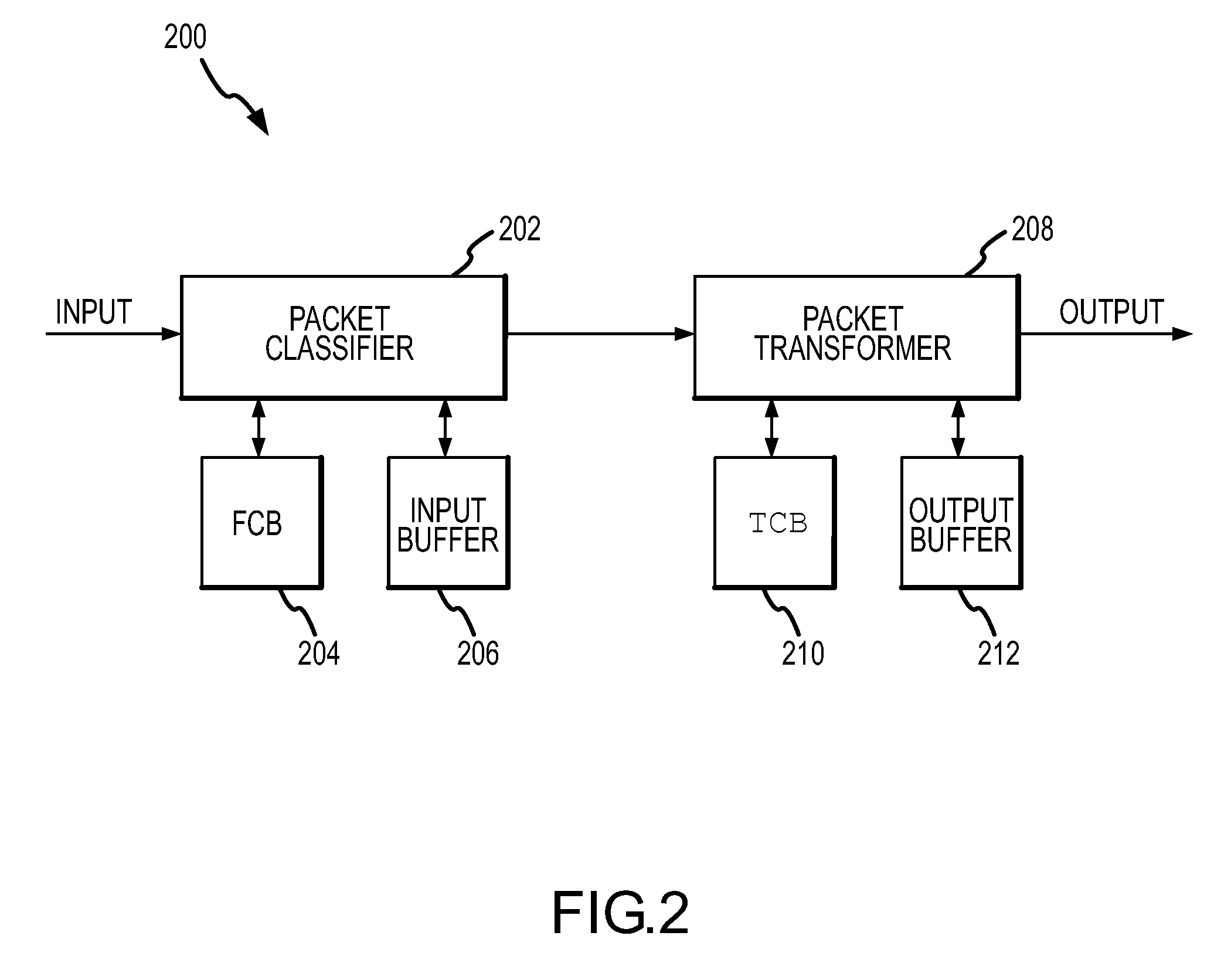

System and method for hardware accelerated packet multicast in a virtual routing system

Active | US7266120B2 | Special service provision for substation | Data switching by path configuration | External storage | Multi processor

A packet-forwarding engine (PFE) of a multiprocessor system uses an array of flow classification block (FCB) indices to multicast a packet. Packets are received and buffered in external memory. In one embodiment, when a multicast packet is identified, a bit is set in a packet descriptor and an FCB index is generated and sent with a null-packet to the egress processors which generate multiple descriptors with different indices for each instance of multicasting. All the descriptors may point to the same buffer in the external memory, which stores the multicast packet. A DMA engine reads from the same buffer multiple times and egress processors may access an appropriate transform control block (TCB) index so that the proper headers may be installed on the outgoing packet. The buffer may be released after the last time the packet is read by setting a particular bit of the FCB index.

Owner:FORTINET
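
The idea of several descriptors pointing at one stored copy of a multicast packet can be sketched in Python. This is a loose illustration, not the patented mechanism: a reader count stands in for the release bit described in the abstract, and `SharedBuffer`, `TCBS`, `multicast`, and the header strings are hypothetical.

```python
class SharedBuffer:
    """One stored copy of the packet, read once per multicast instance."""

    def __init__(self, payload: bytes, readers: int):
        self.payload = payload
        self.remaining = readers
        self.released = False

    def read(self) -> bytes:
        if self.released:
            raise RuntimeError("buffer already released")
        self.remaining -= 1
        if self.remaining == 0:
            self.released = True  # the last read frees the buffer
        return self.payload


# Hypothetical transform control blocks: index -> header to install.
TCBS = {0: b"to-vr-a|", 1: b"to-vr-b|", 2: b"to-vr-c|"}


def multicast(payload: bytes, tcb_indices: list):
    """Build one descriptor per instance; every descriptor shares the same buffer."""
    buffer = SharedBuffer(payload, readers=len(tcb_indices))
    descriptors = [(buffer, index) for index in tcb_indices]
    packets = [TCBS[index] + buf.read() for buf, index in descriptors]
    return packets, buffer.released


packets, released = multicast(b"data", [0, 2])
```

Only the headers differ between the outgoing packets; the payload is stored once and read once per instance.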

Method and Apparatus for High-Speed Processing of Financial Market Depth Data

Active | US20120089497A1 | Minimize total system latency | Processing latency | Finance | Coprocessor | Latency (engineering)

A variety of embodiments for hardware-accelerating the processing of financial market depth data are disclosed. A coprocessor, which may be resident in a ticker plant, can be configured to update order books based on financial market depth data at extremely low latency. Such a coprocessor can also be configured to generate a quote event in response to a limit order event being determined to modify the top of an order book.

Owner:EXEGY INC
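
Generating a quote event only when a limit order event changes the top of the book can be sketched in software. The Python example below is a minimal model under stated assumptions: prices are integer ticks, each event is a signed size change at one price level, and a "quote" is simply the new best bid and ask with their sizes. The `OrderBook` class and its method names are hypothetical.

```python
class OrderBook:
    """Price-aggregated book for one instrument."""

    def __init__(self):
        self.bids = {}  # price -> total size
        self.asks = {}  # price -> total size

    def top(self):
        """Return (best_bid, bid_size, best_ask, ask_size); None where a side is empty."""
        bid = max(self.bids) if self.bids else None
        ask = min(self.asks) if self.asks else None
        return (bid, self.bids.get(bid), ask, self.asks.get(ask))

    def apply(self, side, price, size_delta):
        """Apply a limit order event; return a quote only if the top of book changed."""
        levels = self.bids if side == "bid" else self.asks
        before = self.top()
        new_size = levels.get(price, 0) + size_delta
        if new_size > 0:
            levels[price] = new_size
        else:
            levels.pop(price, None)
        after = self.top()
        return after if after != before else None


book = OrderBook()
q1 = book.apply("bid", 100, 10)   # first bid sets the top of book
q2 = book.apply("bid", 99, 5)     # deeper level: no quote event
q3 = book.apply("ask", 101, 7)    # first ask changes the top of book
q4 = book.apply("bid", 100, -10)  # best bid removed: next level becomes the top
```

Events that touch only deeper levels update the book but produce no quote, which keeps the outgoing quote stream smaller than the incoming order stream.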

Method and apparatus for high-speed processing of financial market depth data

A variety of embodiments for hardware-accelerating the processing of financial market depth data are disclosed. A coprocessor, which may be resident in a ticker plant, can be configured to update order books based on financial market depth data at extremely low latency. Such a coprocessor can also be configured to enrich a stream of limit order events pertaining to financial instruments with data from a plurality of updated order books.

Owner:EXEGY INC

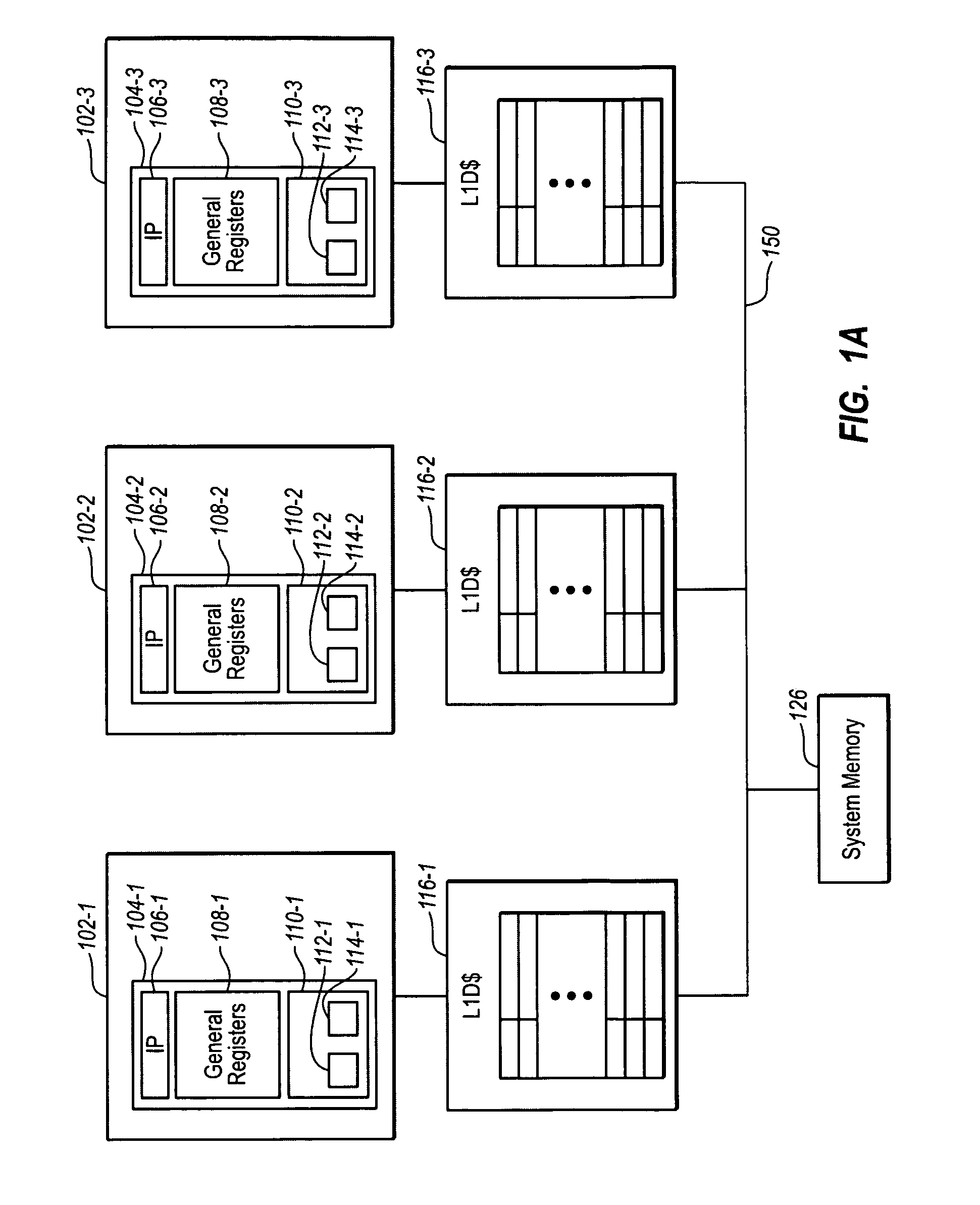

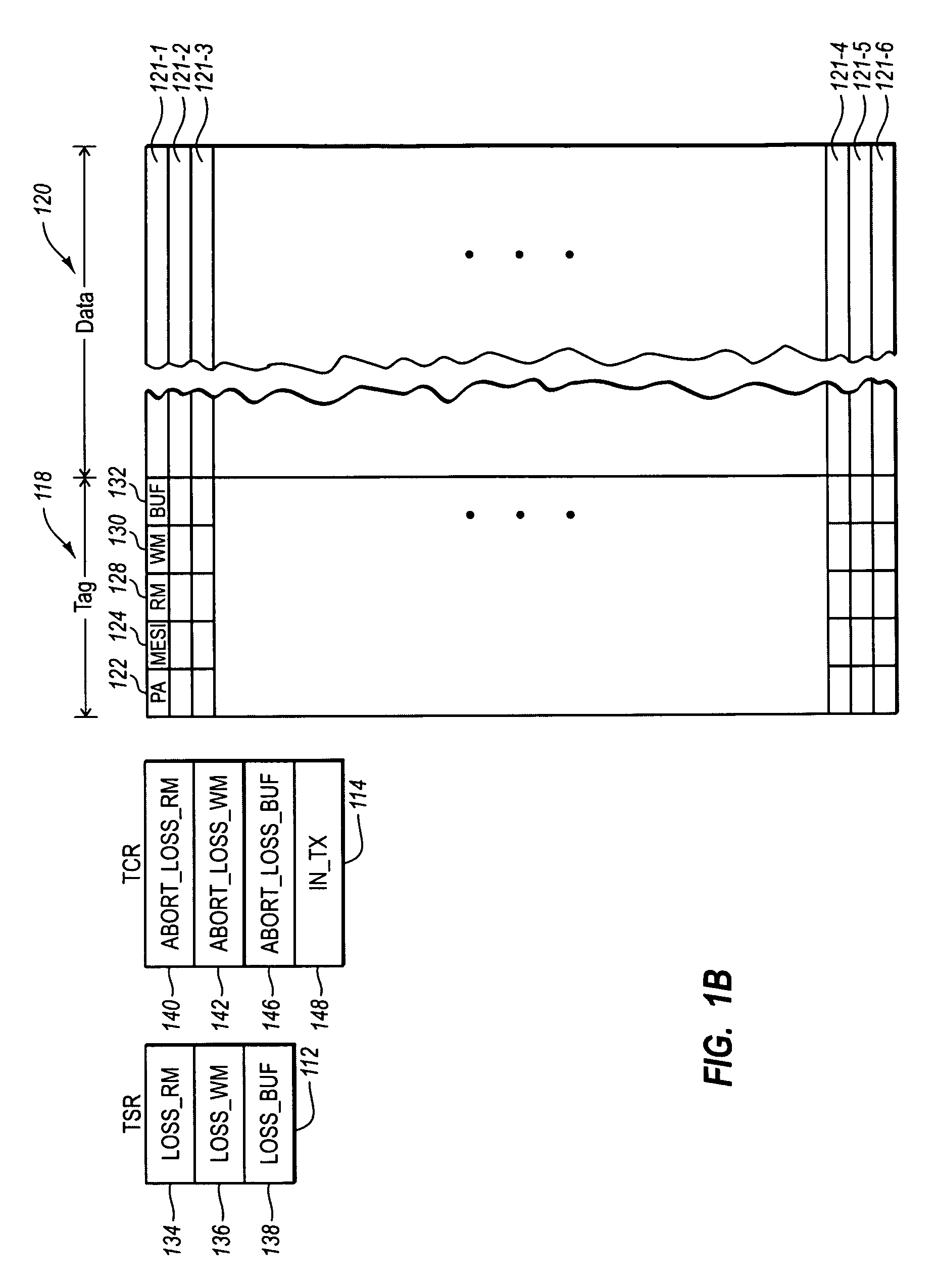

Hardware accelerated transactional memory system with open nested transactions

Active | US20100332538A1 | Well formed | Digital data information retrieval | Digital data processing details | Computer hardware | Software lockout

Hardware assisted transactional memory system with open nested transactions. Some embodiments described herein implement a system whereby hardware acceleration of transactions can be accomplished by implementing open nested transactions in hardware that respect software locks, such that a top level transaction can be implemented in software and thus not be limited by the hardware constraints typical of hardware transactional memory systems.

Owner:MICROSOFT TECH LICENSING LLC

System and methods for high rate hardware-accelerated network protocol processing

Inactive | US20090063696A1 | Improve performance | Error prevention/detection by using return channel | Transmission systems | Traffic capacity | High rate

Disclosed is a system and methods for accelerating network protocol processing for devices configured to process network traffic at relatively high data rates. The system incorporates a hardware-accelerated protocol processing module that handles steady state network traffic and a software-based processing module that handles infrequent and exception cases in network traffic processing.

Owner:PROMISE TECHNOLOGY

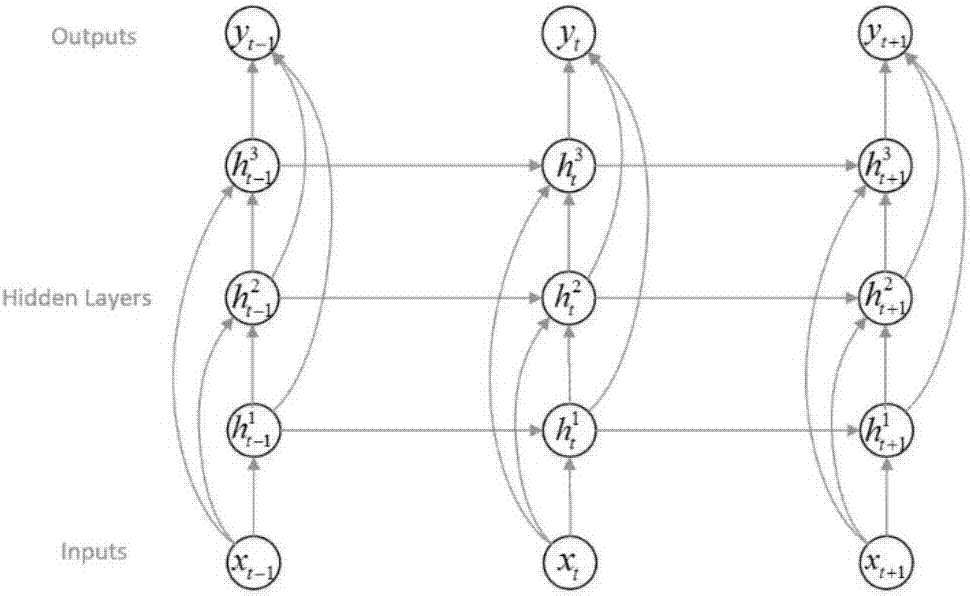

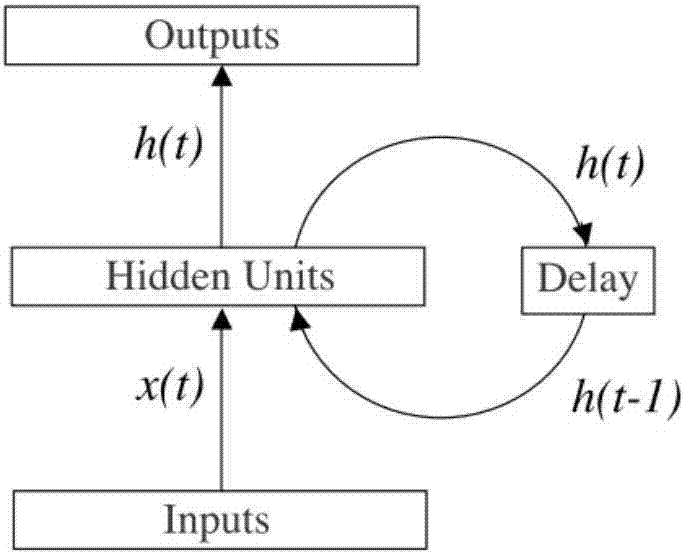

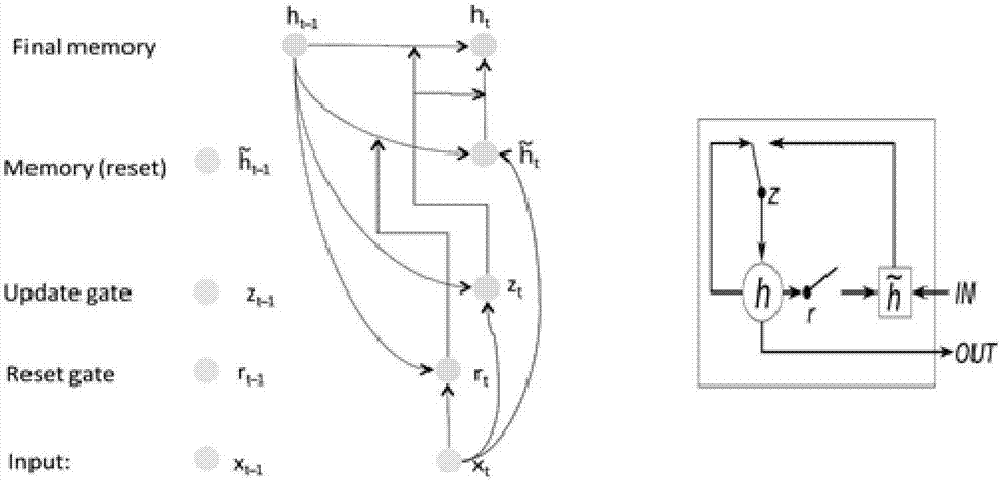

Hardware accelerator and method for realizing sparse GRU neural network based on FPGA

The invention provides a hardware accelerator and method for realizing a sparse GRU neural network based on FPGA. According to the invention, an apparatus for realizing a sparse GRU neural network includes: an input receiving unit, which receives a plurality of input vectors and distributes them to a plurality of computing units; a plurality of computing units (PEs), which acquire input vectors from the input receiving unit, read weight matrix data of a neural network, decode the weight matrix data, conduct matrix calculation on the decoded weight matrix data and the input vectors, and output the result of the matrix calculation to a hidden layer state computing module; a hidden layer state computing module, which acquires the result of the matrix calculation from the computing units and computes the state of the hidden layer; and a control unit for global control. In addition, the invention provides a method for realizing a sparse GRU neural network through iteration.

Owner:XILINX INC

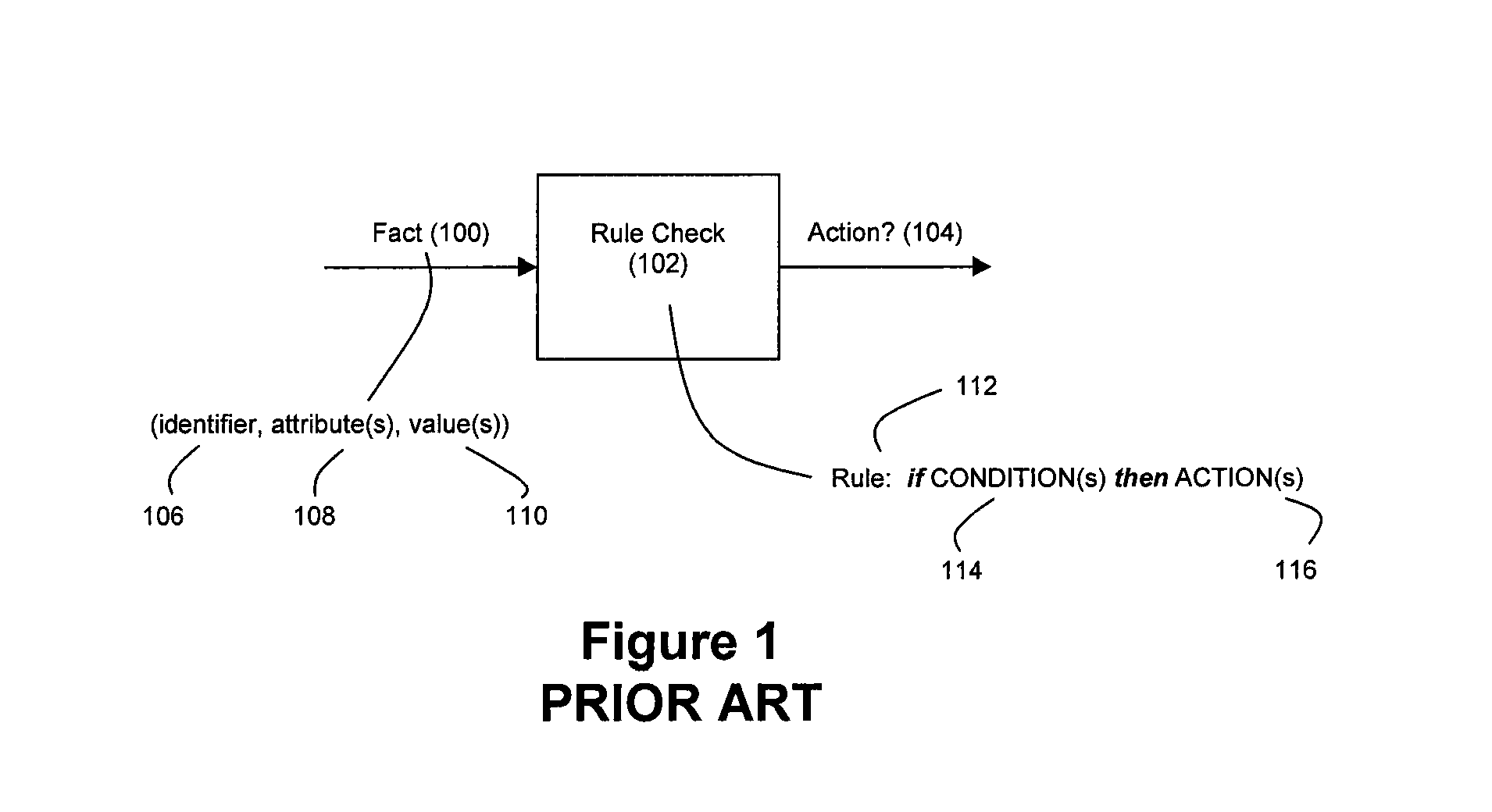

Method and system for accelerated stream processing

Active | US8374986B2 | Low degree | Improve latency | Digital computer details | Code conversion | Programming language | Data stream

Disclosed herein is a method and system for hardware-accelerating various data processing operations in a rule-based decision-making system such as a business rules engine, an event stream processor, and a complex event stream processor. Preferably, incoming data streams are checked against a plurality of rule conditions. The data processing operations that are hardware-accelerated include rule condition check operations, filtering operations, and path merging operations. The rule condition check operations generate rule condition check results for the processed data streams, wherein the rule condition check results are indicative of any rule conditions which have been satisfied by the data streams. The generation of such results with a low degree of latency provides enterprises with the ability to perform timely decision-making based on the data present in received data streams.

Owner:IP RESERVOIR

Acceleration of rendering of web-based content

Active | US20090228782A1 | Digital data information retrieval | Natural language data processing | Graphics | Application programming interface

Systems and methods for hardware accelerated presentation of web pages on mobile computing devices are presented. A plurality of web pages may be received by a computing device capable of processing and displaying web pages using layout engines and hardware accelerated graphics application programming interfaces (APIs). Upon receipt of the web pages, the web pages may be divided into a plurality of rendering layers, based upon stylesheets of the web pages. An algorithm walks through the rendering layers so as to select a plurality of layers that may receive compositing layers so as to take advantage of hardware acceleration when rendered. The web pages may be subsequently presented on a display of the mobile computing devices using the remaining rendering layers and compositing layers. In this manner, the visual representation of web content remains intact even when the displayed content was not originally designed for use with the layout engine.

Owner:APPLE INC

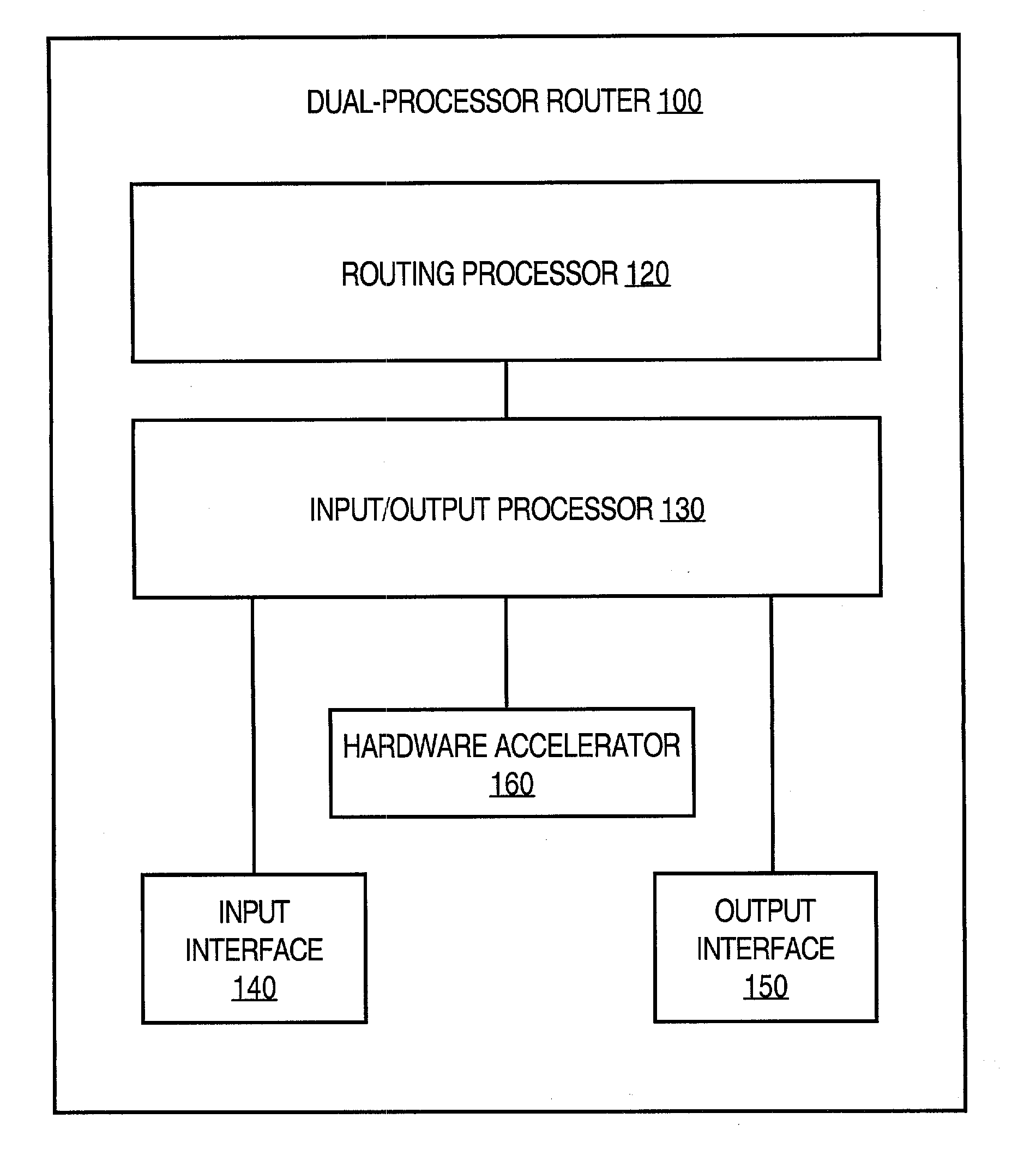

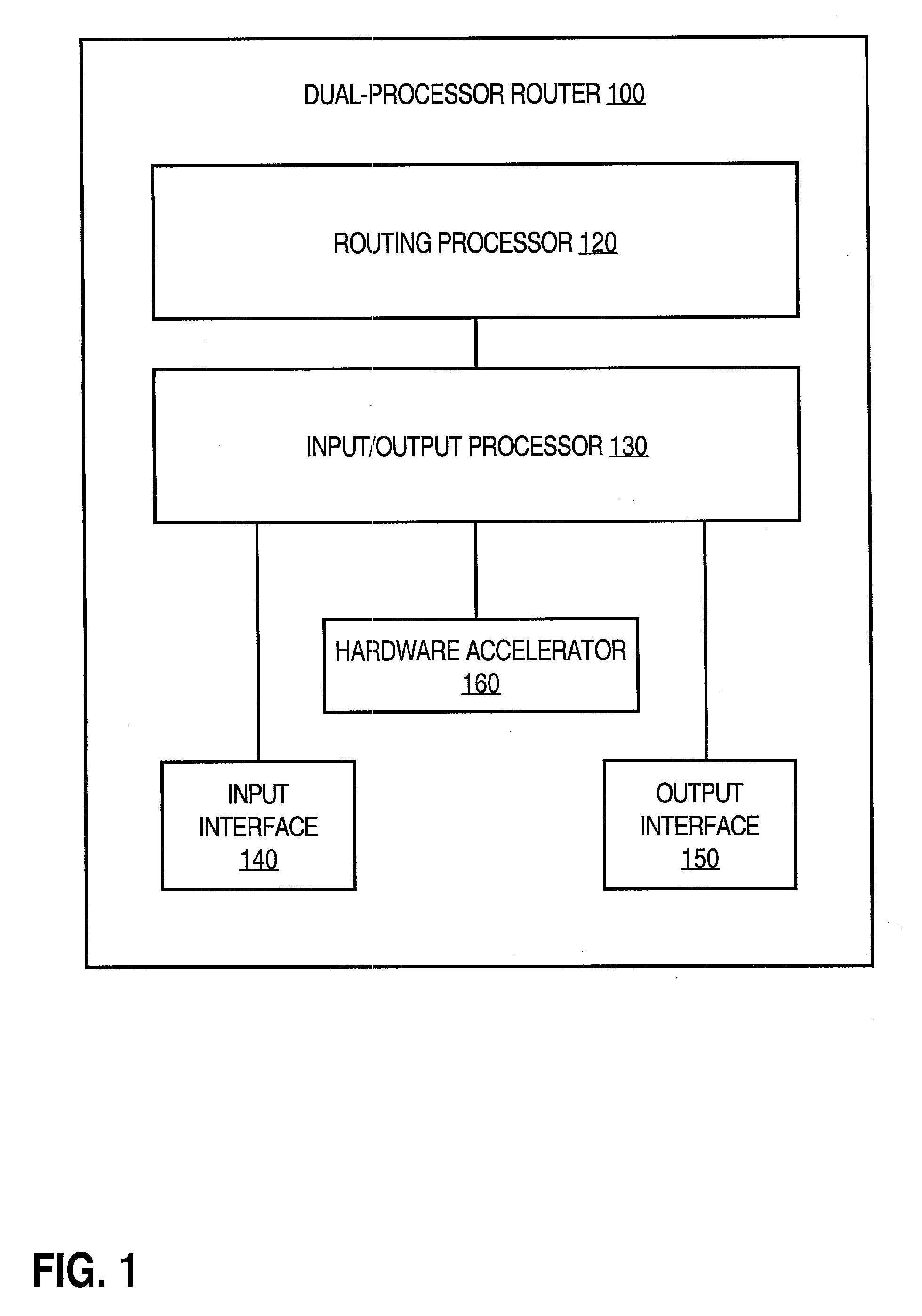

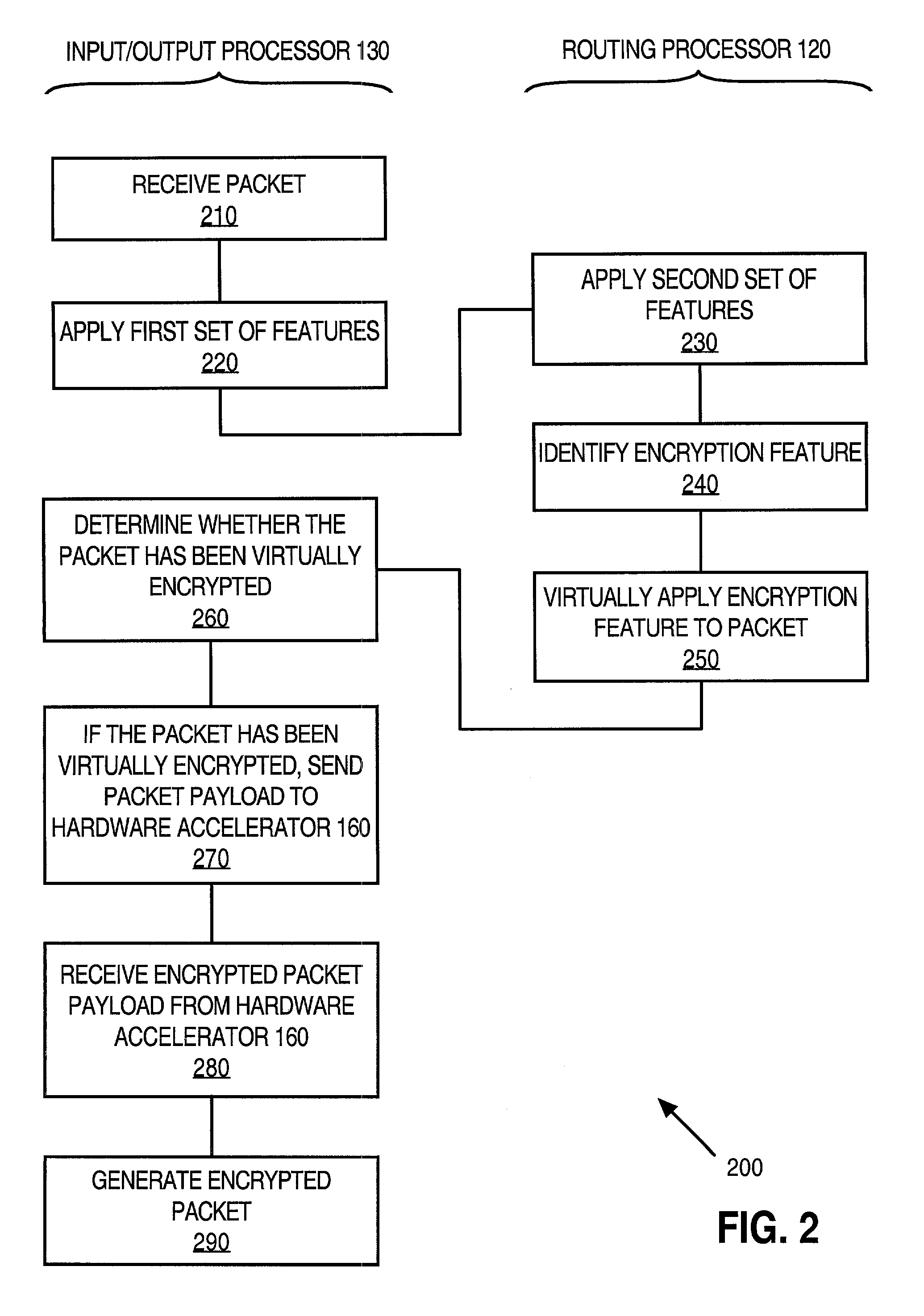

Virtual application of features to electronic messages

Inactive | US7082477B1 | Data switching by path configuration | Multiple digital computer combinations | Computer hardware | Hardware acceleration

A method and apparatus for virtual application of features to electronic messages is disclosed. When a device applies a set of features to an electronic message, one or more of the features may be virtually applied instead of actually applied. For example, instead of encrypting a payload portion of a packet and adding an encryption header, the packet may not be encrypted. However, an appropriate encryption header may still be included in the packet such that the packet appears to have been encrypted when other features are applied. Prior to sending the packet, the payload portion is actually encrypted, such as by using a hardware accelerator. Some implementations may use a dual processor router, in which the input/output processor controls the hardware accelerator, the routing processor performs the virtual application of a feature, and prior to sending the packet the input/output processor actually applies the virtually applied feature.

Owner:CISCO TECH INC

Method and Apparatus for Accelerated Data Quality Checking

Active | US20130151458A1 | Low degree | Improve latency | Digital computer details | Code conversion | Data stream | Data quality

Disclosed herein is a method and apparatus for hardware-accelerating various data quality checking operations. Incoming data streams can be processed with respect to a plurality of data quality check operations using offload engines (e.g., reconfigurable logic such as field programmable gate arrays (FPGAs)). Accelerated data quality checking can be highly advantageous for use in connection with Extract, Transfer, and Load (ETL) systems.

Owner:IP RESERVOIR

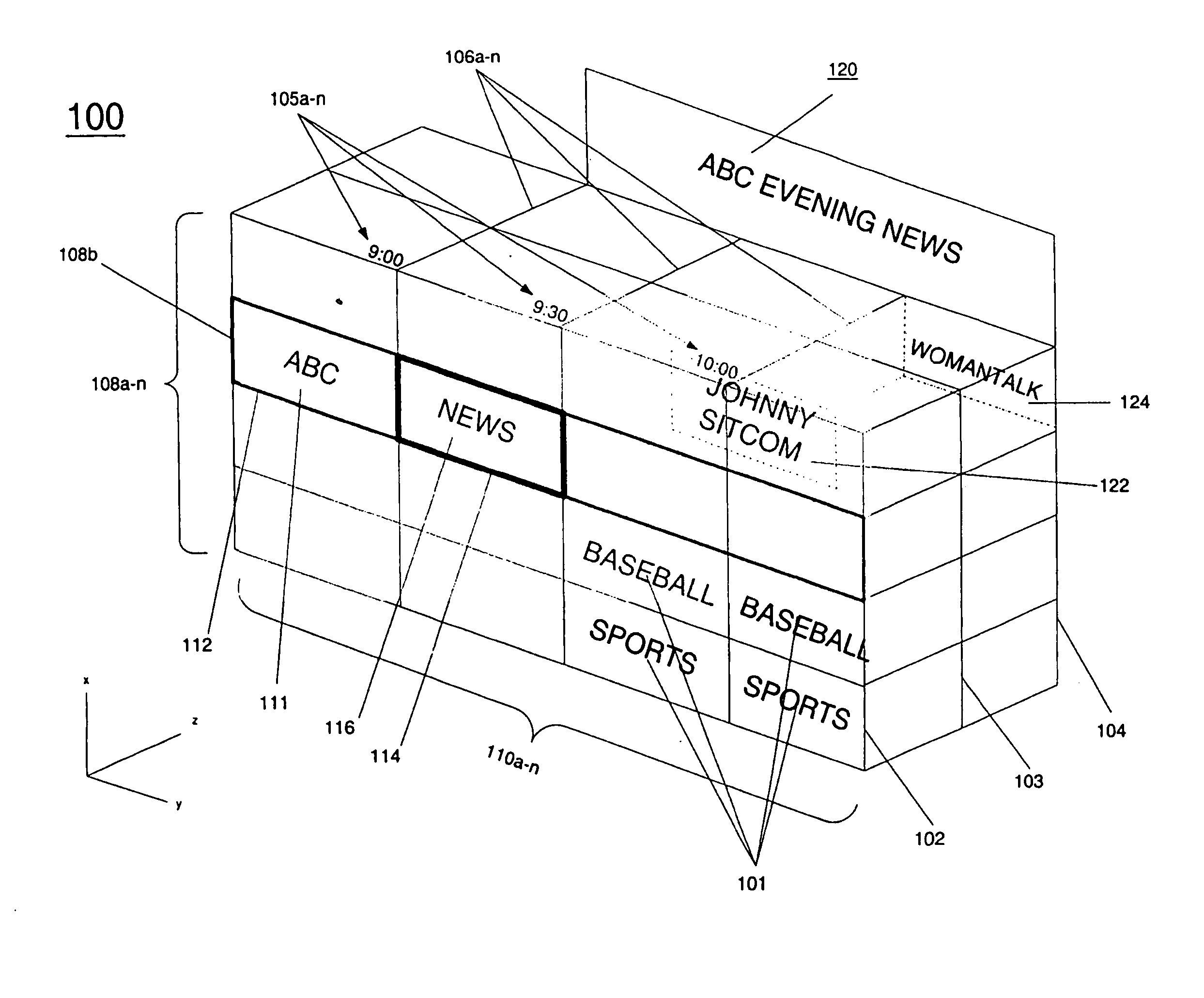

Three dimensional light electronic programming guide

Inactive | US20050097603A1 | Reduced graphic hardware requirement | Low hardware requirements | Television system details | Drawing from basic elements | Three dimensional graphics | Hardware acceleration

A method and apparatus of displaying an Electronic Programming Guide (EPG). In one embodiment, an EPG is displayed in a three dimensional virtual mesh, in which independent objects representing television programs are situated. The simplified nature of the three dimensional EPG reduces the amount of processing necessary to display it. In addition, the virtual mesh may be displayed isometrically, so that hardware requirements are further reduced and it may be possible to use a software only three dimensional graphics pipeline. If a user has a set top box (STB) with a hardware accelerated graphics pipeline, the EPG may be displayed in a full three dimensional perspective view. A user can navigate the mesh to find television programs that they wish to view. A user can assign values to types of television programs that they prefer, and these programs will be displayed more prominently.

Owner:JLB VENTURES LLC

Distributed hierarchical identity management system authentication mechanisms

Active | US7454623B2 | Data taking prevention | User identity/authority verification | Third party | Internet privacy

A set of methods, and systems, for use in an identity management system are disclosed herein. A modular user identity information datastore using hardware accelerated encryption for user data security operates in a network for receiving requests for, and issuing responses containing user information including third party accredited assertions.

Owner:CALLAHAN CELLULAR L L C

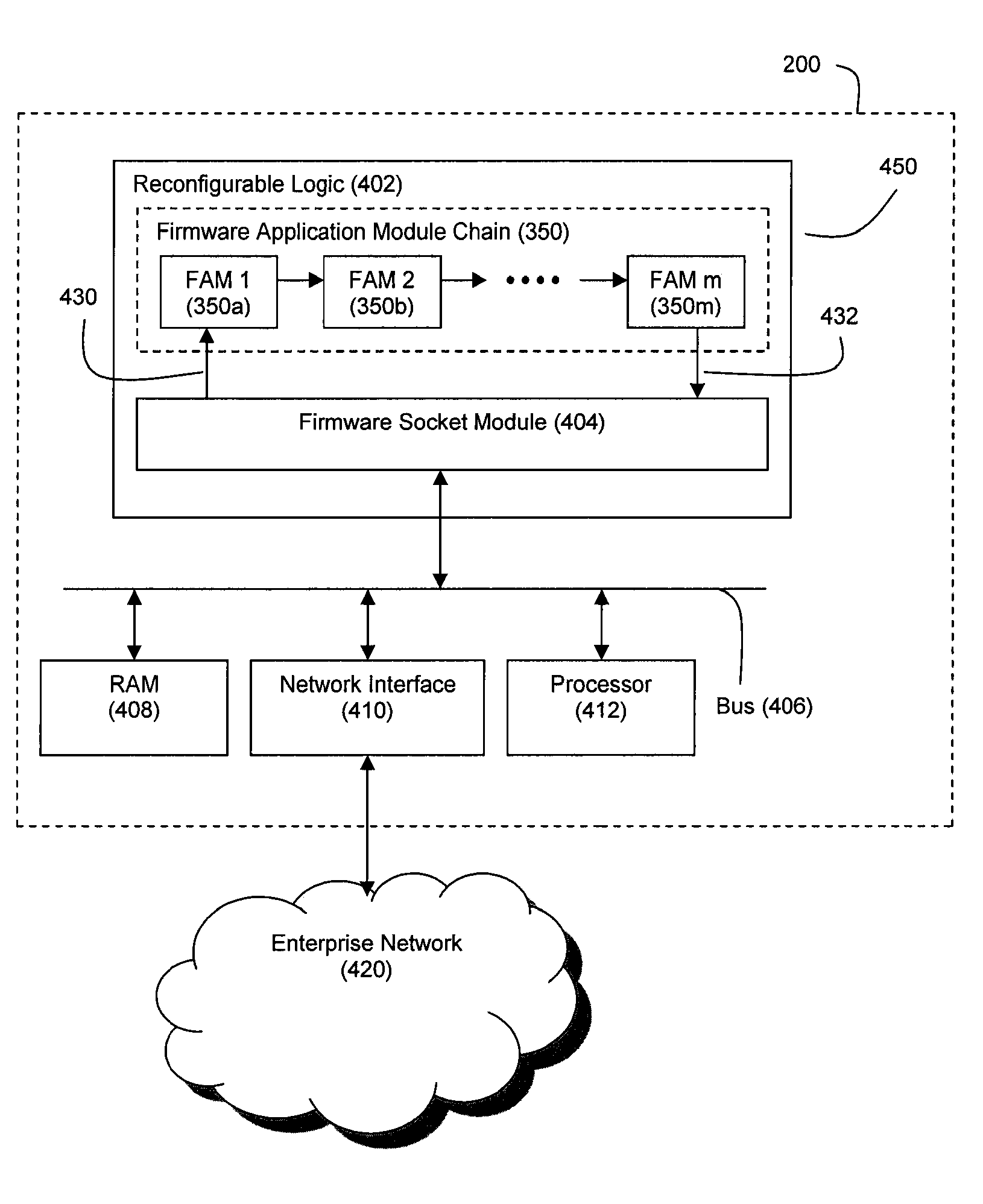

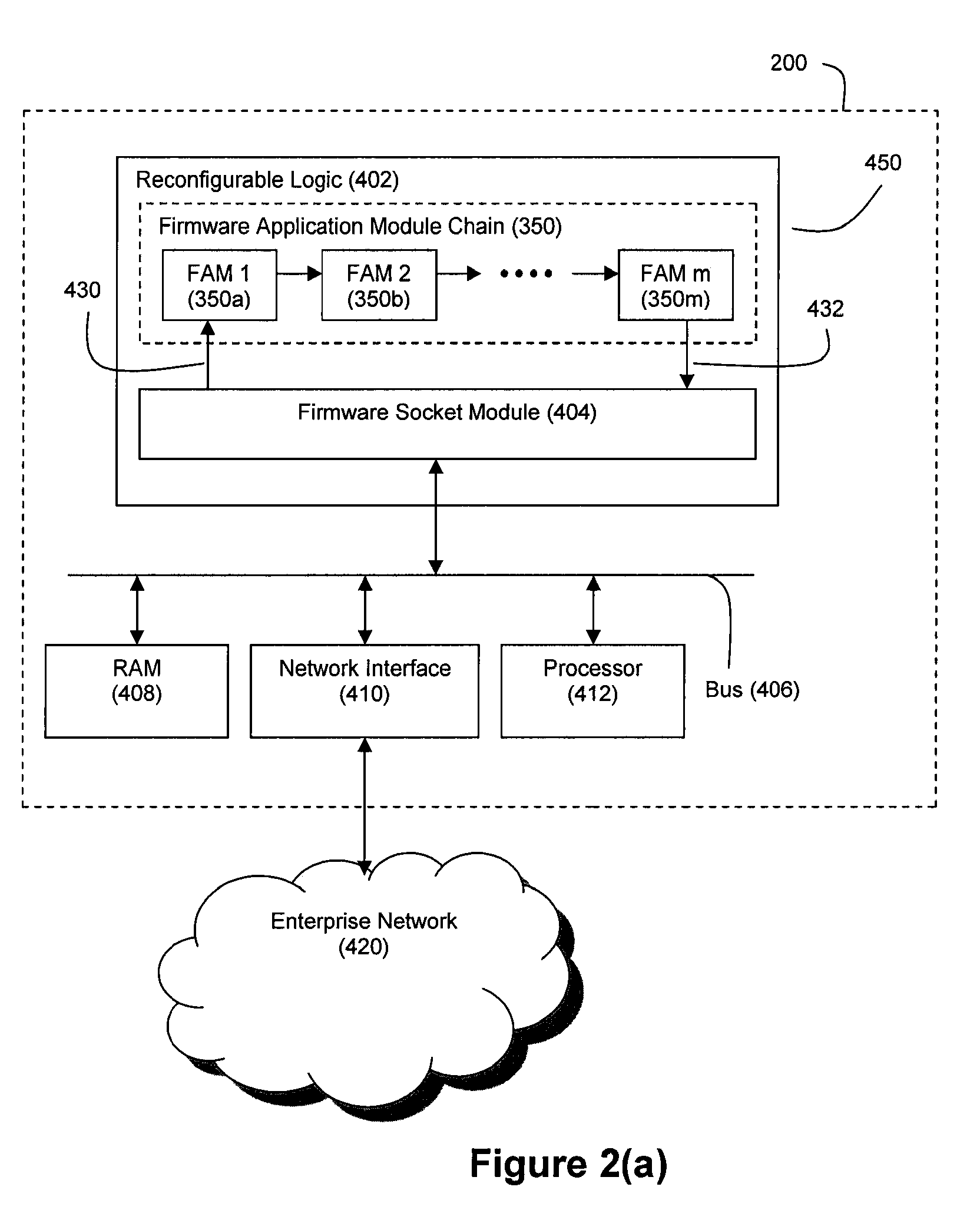

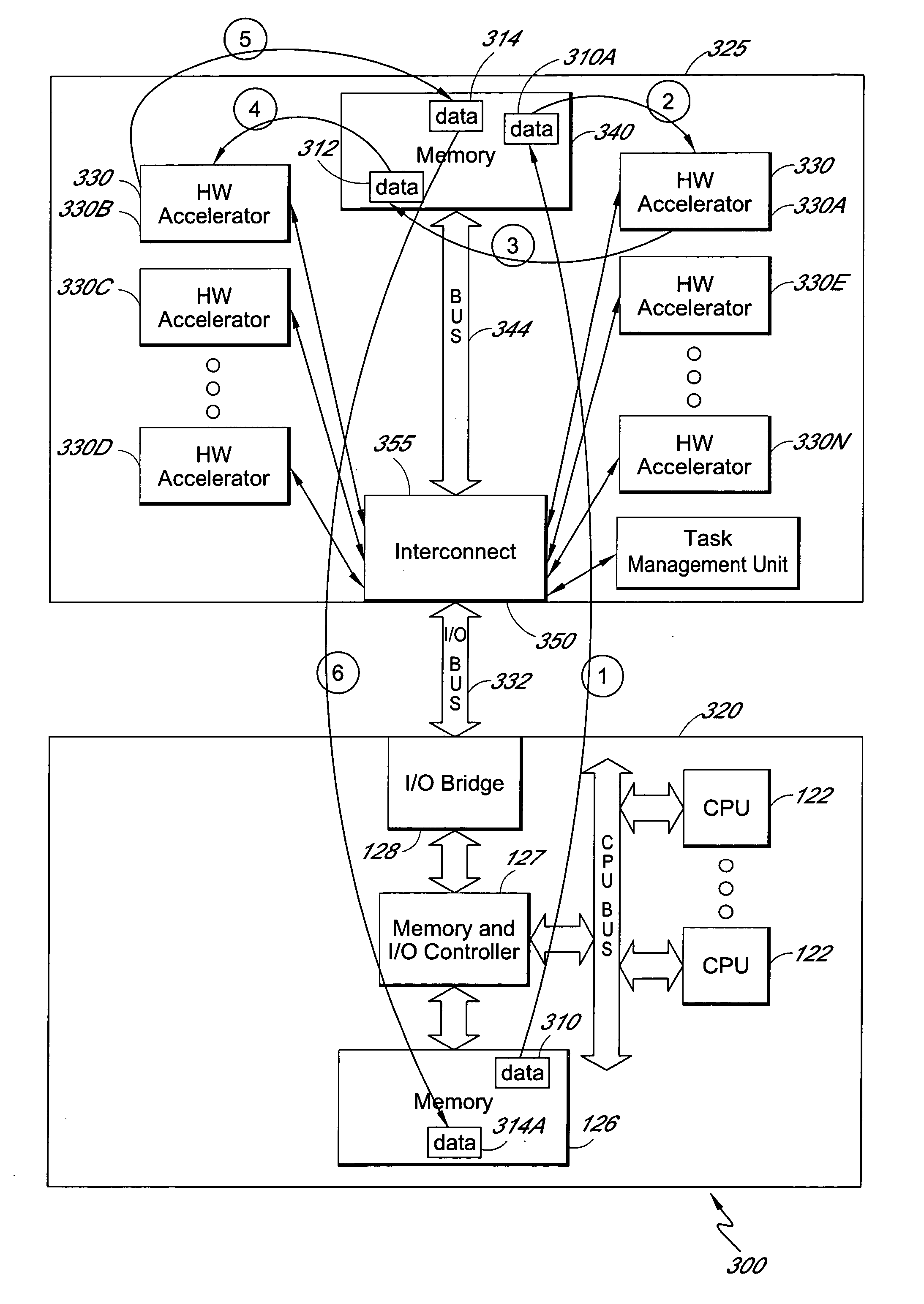

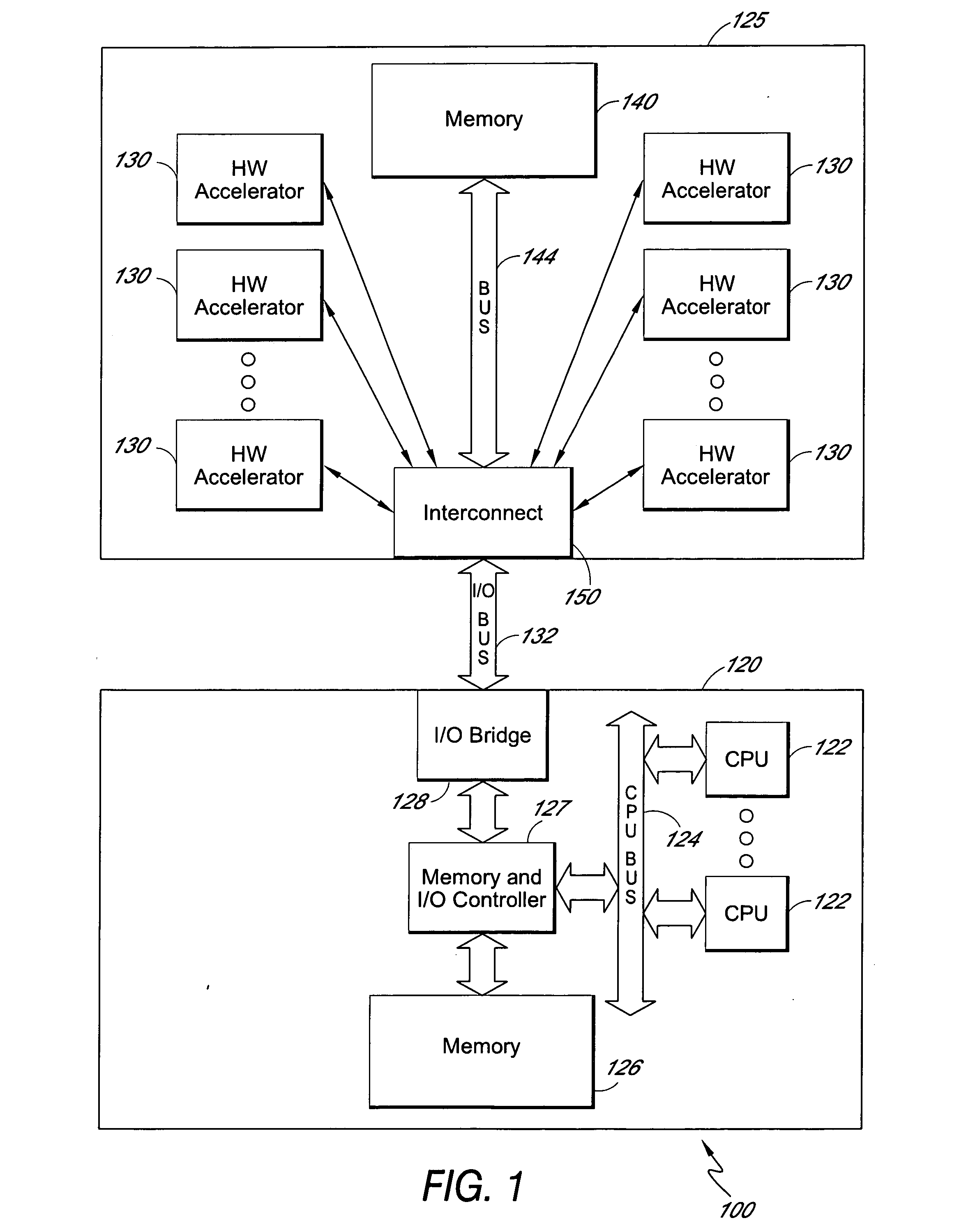

Method and apparatus for chaining multiple independent hardware acceleration operations

Inactive | US20050278502A1 | Reduce data | Preserving interconnect bandwidth | Program initiation/switching | General purpose stored program computer | Computer hardware | General purpose

Multiple hardware accelerators can be used to efficiently perform processes that would otherwise be performed by general purpose hardware running software. The software overhead and bus bandwidth associated with running multiple hardware acceleration processes can be reduced by chaining multiple independent hardware acceleration operations within a circuit card assembly. Multiple independent hardware accelerators can be configured on a single circuit card assembly that is coupled to a computing device. The computing device can generate a playlist of hardware acceleration operations identifying hardware accelerators and associated accelerator options. A task management unit on the circuit card assembly receives the playlist and schedules the hardware acceleration operations such that multiple acceleration operations may be successively chained together without intervening data exchanges with the computing device.

Owner:INTEL CORP
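
The playlist idea, where several acceleration operations run back to back without returning intermediate data to the host, can be sketched in Python. This is a software analogy under stated assumptions: ordinary functions stand in for hardware accelerators, a playlist is a list of `(name, options)` pairs, and `ACCELERATORS` and `run_playlist` are hypothetical names.

```python
import zlib

# Stand-ins for independent hardware accelerators on one card (assumption).
ACCELERATORS = {
    "compress": lambda data, opts: zlib.compress(data, opts.get("level", 6)),
    "decompress": lambda data, opts: zlib.decompress(data),
    "xor": lambda data, opts: bytes(b ^ opts["key"] for b in data),
}


def run_playlist(playlist, payload: bytes) -> bytes:
    """Task-management stand-in: feed each operation's output to the next one."""
    buffer = payload  # stays "on the card" between operations
    for name, opts in playlist:
        buffer = ACCELERATORS[name](buffer, opts)
    return buffer  # the only result handed back to the host


payload = b"chained hardware acceleration " * 32
forward = [("compress", {"level": 9}), ("xor", {"key": 0x5A})]
reverse = [("xor", {"key": 0x5A}), ("decompress", {})]
processed = run_playlist(forward, payload)
restored = run_playlist(reverse, processed)
```

The host submits one playlist and receives one result per chain, which is the source of the reduced software overhead and bus traffic that the abstract describes.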

Hardware-accelerated packet multicasting in a virtual routing system

Inactive | US20070291755A1 | Special service provision for substation | Data switching by path configuration | Multicast packets | Hardware acceleration

Methods and systems are provided for hardware-accelerated packet multicasting in a virtual routing system. According to one embodiment, a multicast packet is received at an ingress system of a packet-forwarding engine (PFE). The ingress system identifies flow classification indices for the multicast packet. Then, for each instance of multicasting, the ingress system sends a single copy of the multicast packet and the flow classification indices to an egress system of the PFE. The single copy of the multicast packet is buffered in a memory accessible by the egress system. The egress system prepares the multicast packet for transmission by, for each flow classification index, identifying corresponding transform control instructions based on the flow classification index, reading the single copy of the multicast packet from the memory, causing the multicast packet to be transformed in accordance with the identified transform control instructions, and outputting the transformed multicast packet.

Owner:FORTINET

Method and system for high performance integration, processing and searching of structured and unstructured data using coprocessors

Active | US7660793B2 | Effectively bifurcate query processing | Timely response | Data processing applications | Digital data processing details | Full text search | Relational database

Disclosed herein is a method and system for integrating an enterprise's structured and unstructured data to provide users and enterprise applications with efficient and intelligent access to that data. Queries can be directed toward both an enterprise's structured and unstructured data using standardized database query formats such as SQL commands. A coprocessor can be used to hardware-accelerate data processing tasks (such as full-text searching) on unstructured data as necessary to handle a query. Furthermore, traditional relational database techniques can be used to access structured data stored by a relational database to determine which portions of the enterprise's unstructured data should be delivered to the coprocessor for hardware-accelerated data processing.

Owner:IP RESERVOIR

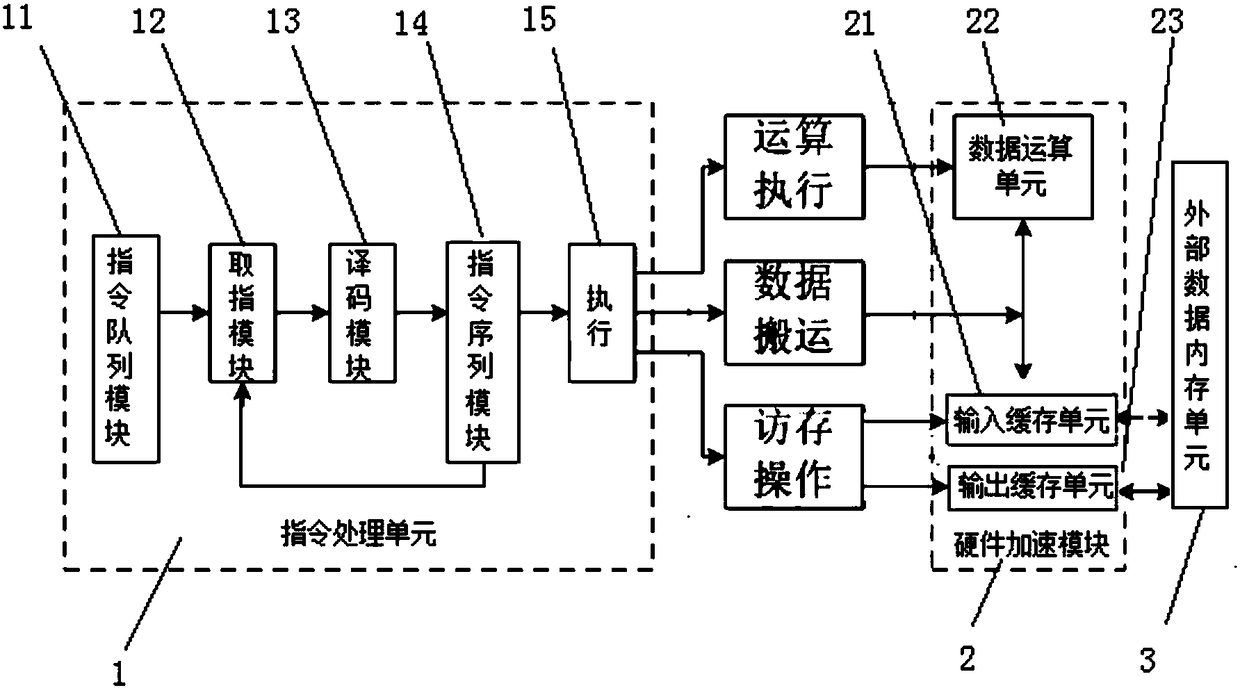

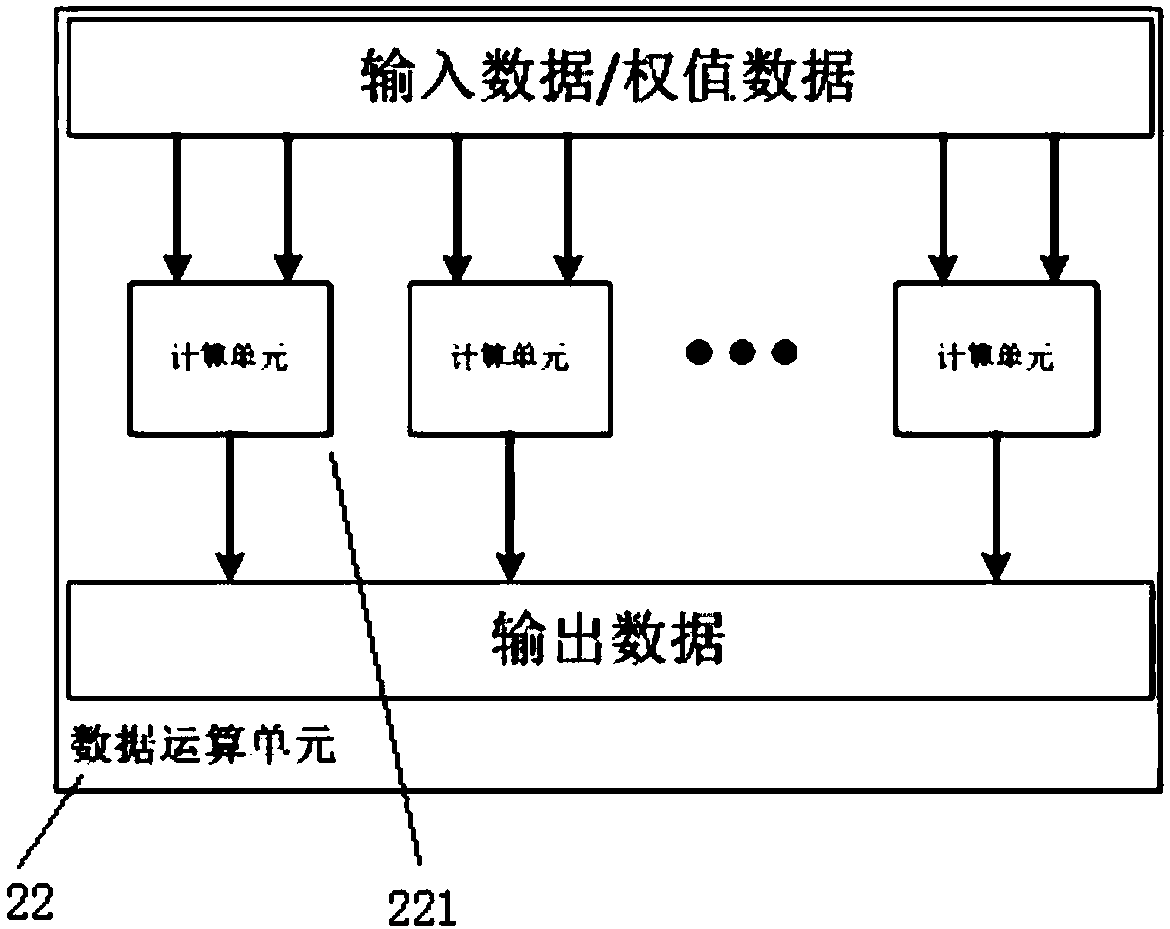

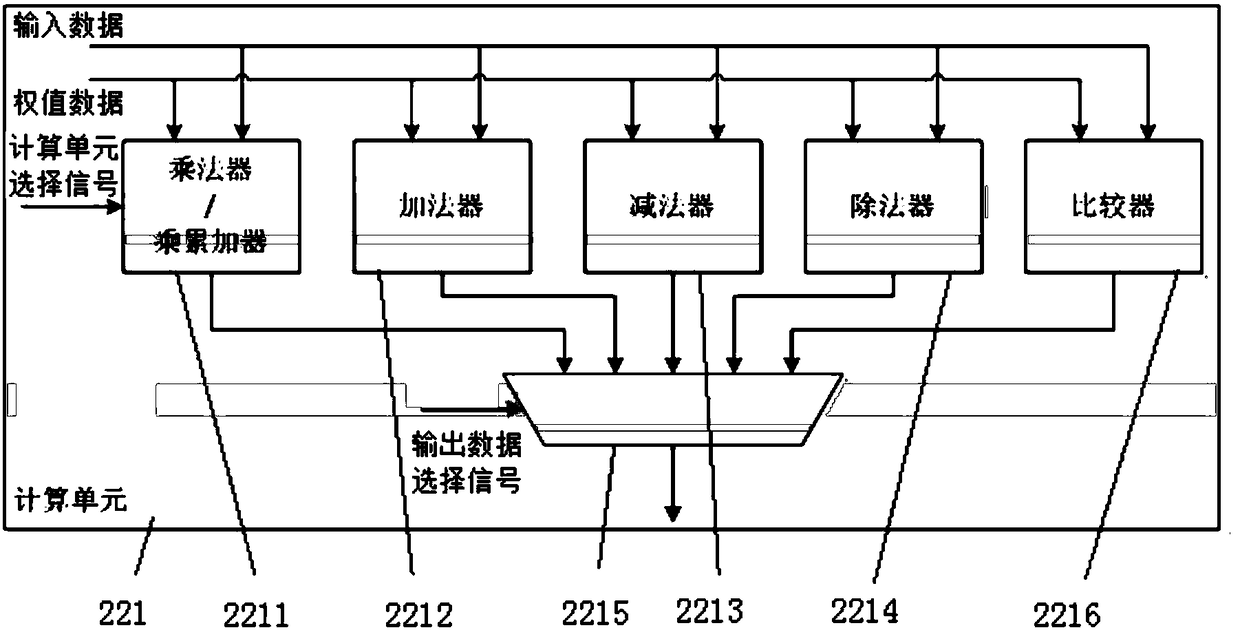

Convolutional neural network hardware acceleration device, convolution calculation method, and storage medium

Inactive | CN108197705A | Improve efficiency | Improve versatility | Instruction analysis | Physical realisation | Instruction processing unit | Data operations

The invention relates to a convolutional neural network hardware acceleration device, a convolution calculation method, and a storage medium. The device comprises an instruction processing unit, a hardware acceleration module and an external data memory unit, wherein the instruction processing unit decodes an instruction set to execute a corresponding operation so as to control the hardware acceleration module; the hardware acceleration module comprises an input caching unit, a data operation unit and an output caching unit, wherein the input caching unit executes the memory access operation of the instruction processing unit, and stores data read from the external data memory unit; the data operation unit executes the operation execution operation of the instruction processing unit, processes the data operation of the convolutional neural network, and controls a data operation process and a data flow direction according to an operation instruction set; the output caching unit executes the memory access operation of the instruction processing unit, and stores a calculation result which is output by the data operation unit and needs to be written into the external data memory unit; and the external data memory unit stores a calculation result output by the output caching unit and transmits data to the input caching unit according to the reading of the input caching unit.

Owner:NATIONZ TECH INC

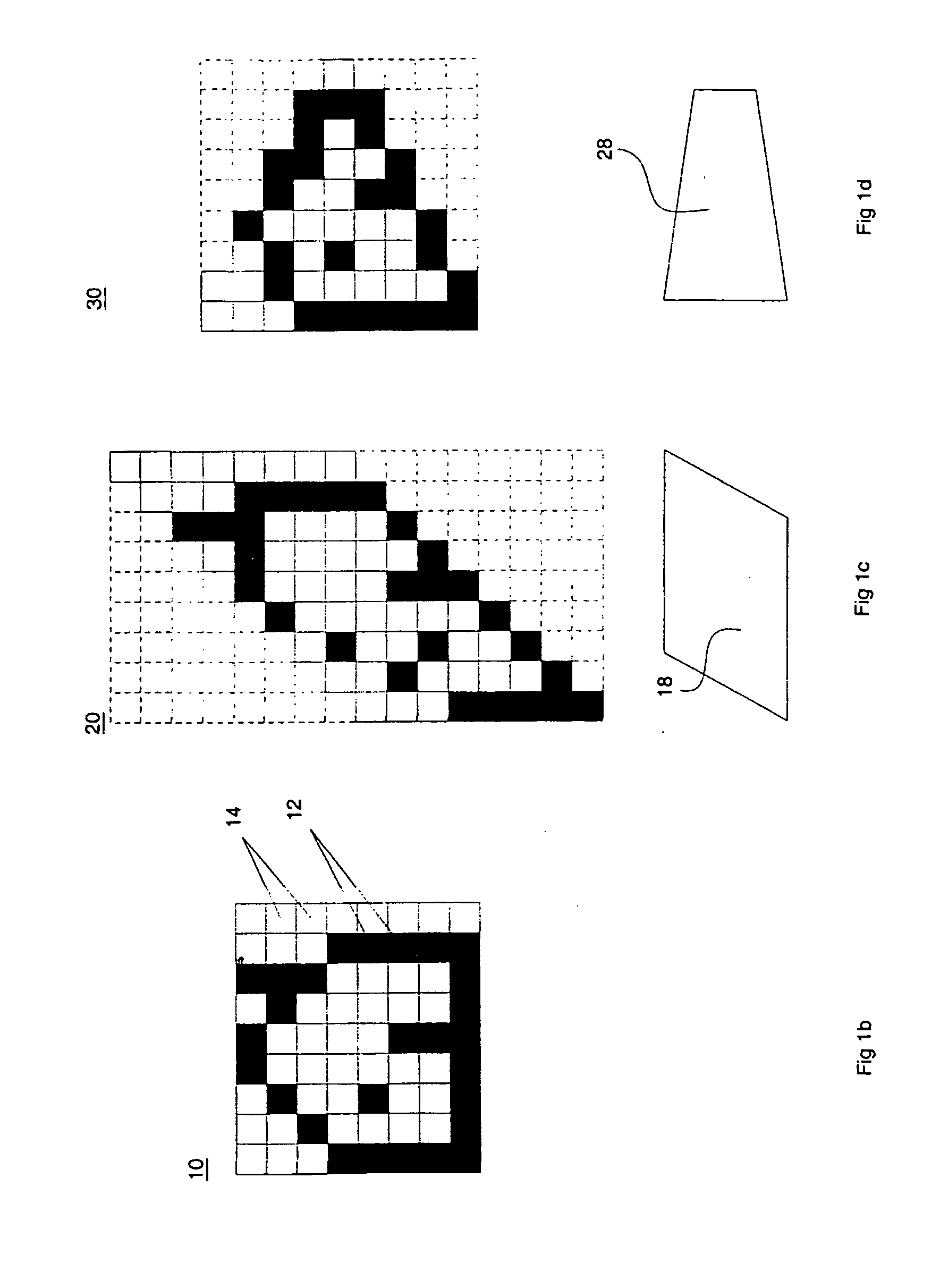

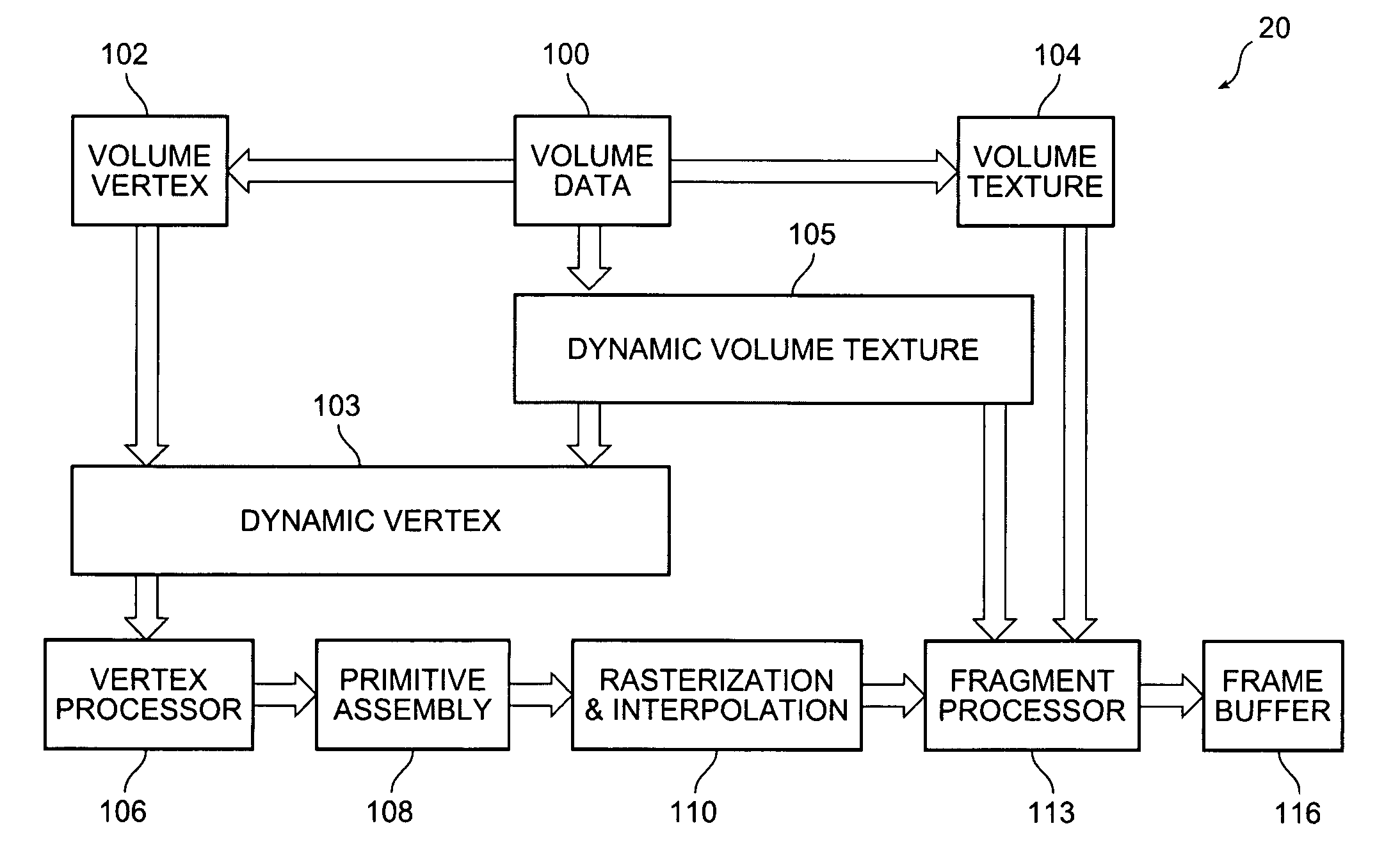

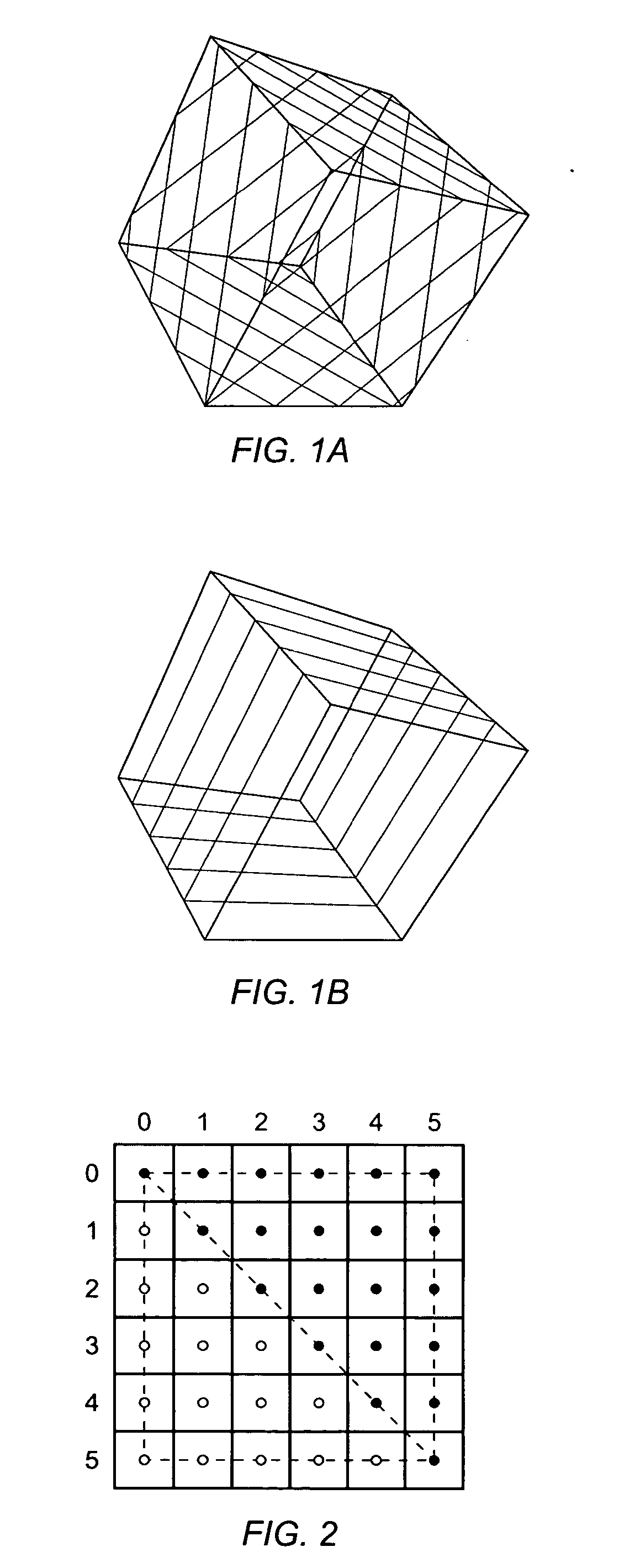

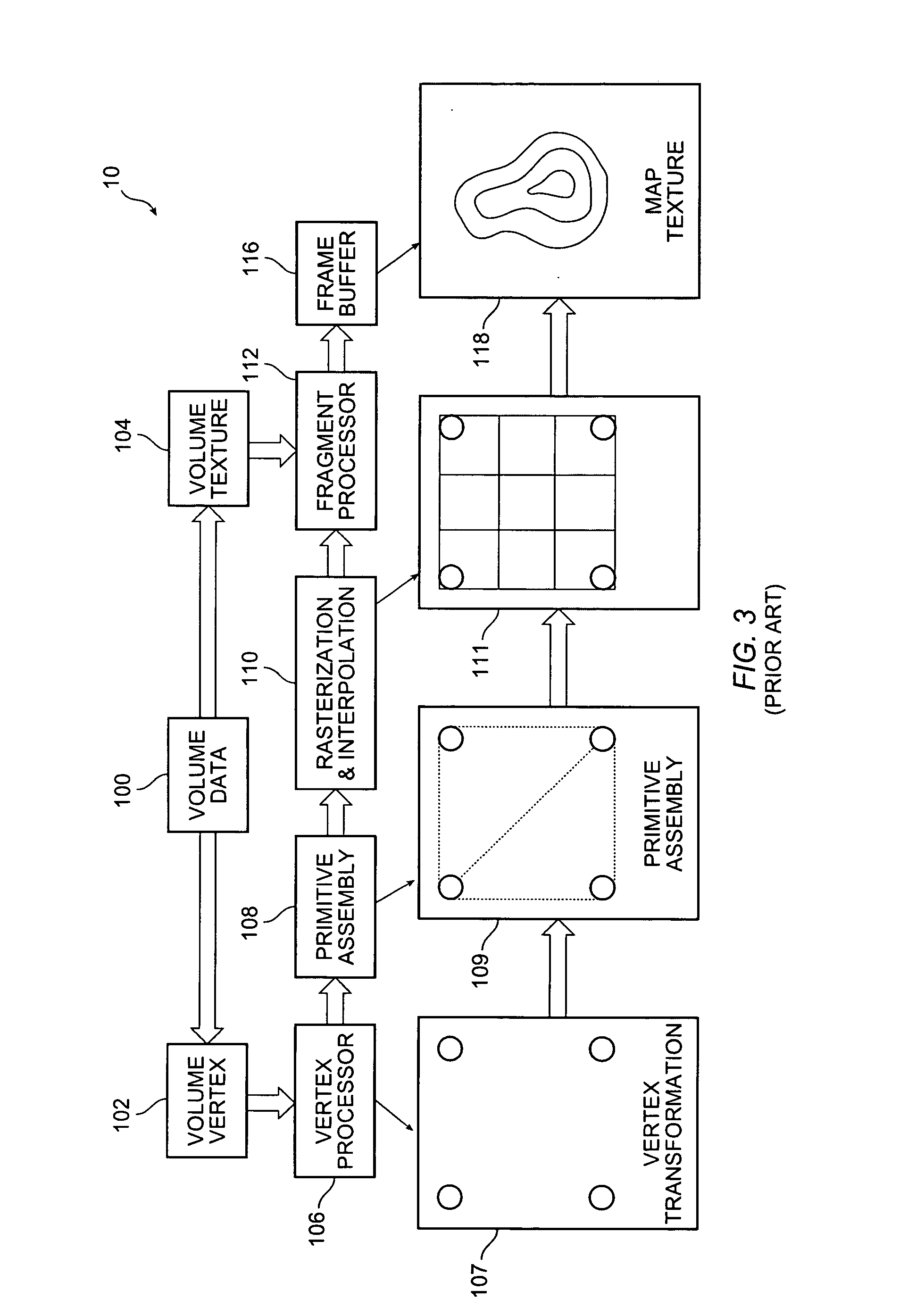

Block-based fragment filtration with feasible multi-GPU acceleration for real-time volume rendering on conventional personal computer

Active | US20050231503A1 | Reduce the burden on | Details involving 3D image data | Cathode-ray tube indicators | Voxel | Filtration

A computer-based method and system for interactive volume rendering of large volume data on a conventional personal computer, optimized by hardware-accelerated block filtration, uses 3D-textured axis-aligned slices and block filtration. Fragment processing in a rendering pipeline is lessened by passing fragments to various processors selectively in blocks of voxels based on a filtering process operative on slices. The process involves generating a corresponding image texture and performing two-pass rendering, namely a virtual rendering pass and a main rendering pass. Block filtration is divided into static block filtration and dynamic block filtration. The static block filtration locates any view-independent unused signal being passed to a rasterization pipeline. The dynamic block filtration determines any view-dependent unused block generated due to occlusion. Block filtration processes utilize the vertex shader and pixel shader of a GPU in conventional personal computer graphics hardware. The method supports multi-thread, multi-GPU operation.

Owner:THE CHINESE UNIVERSITY OF HONG KONG

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com