3,539 results about "Details involving 3D image data" patented technology

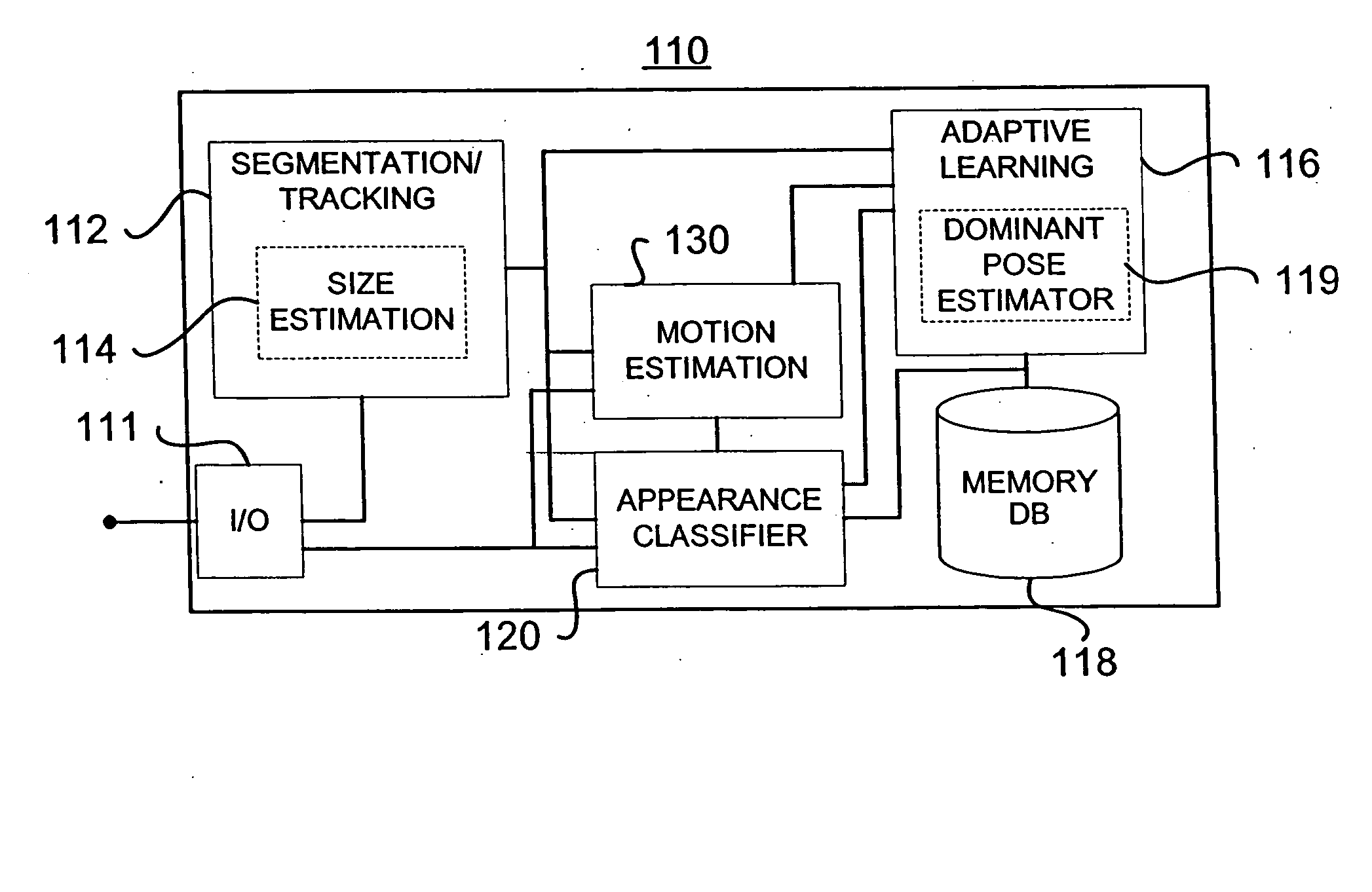

Target orientation estimation using depth sensing

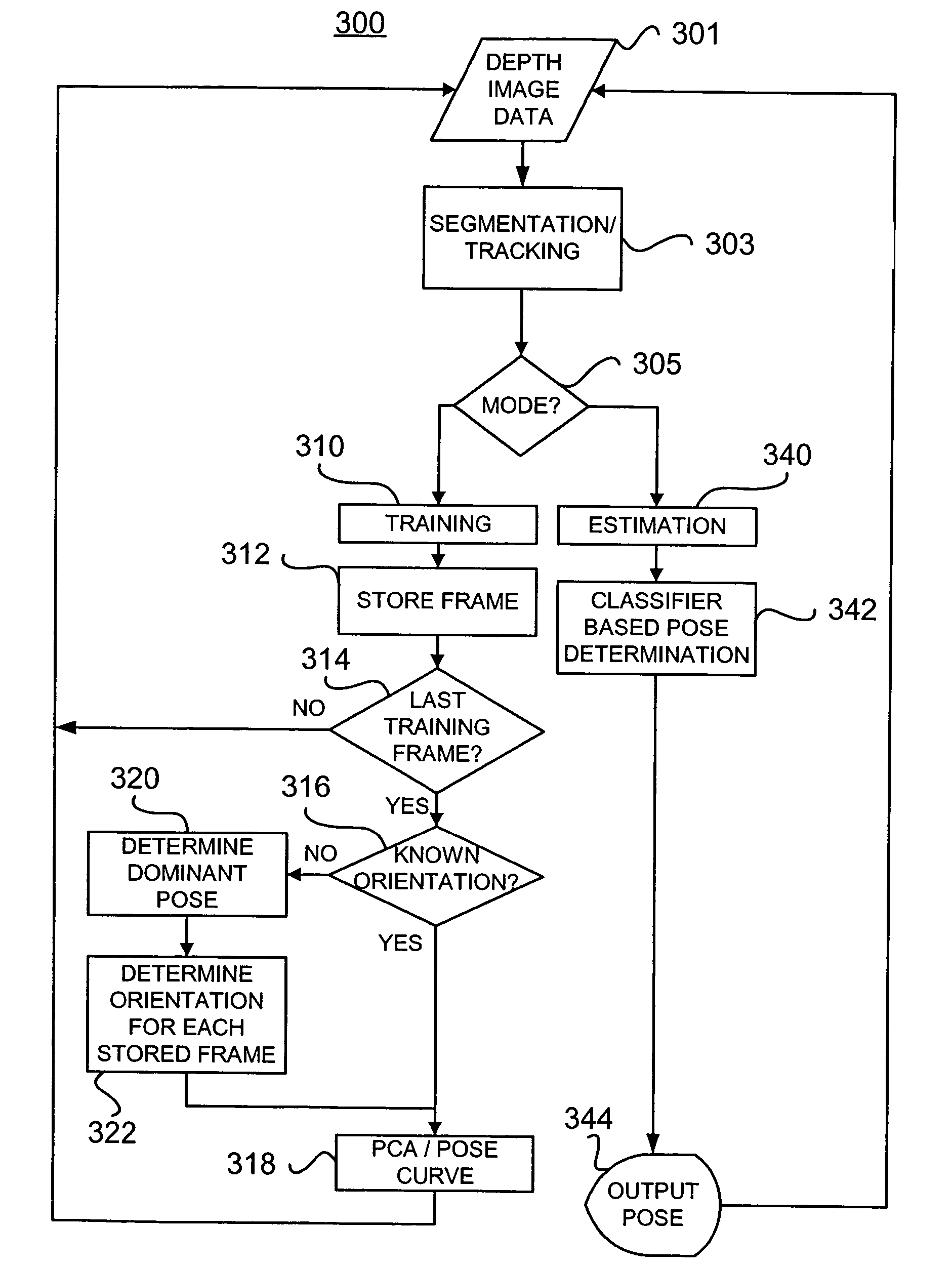

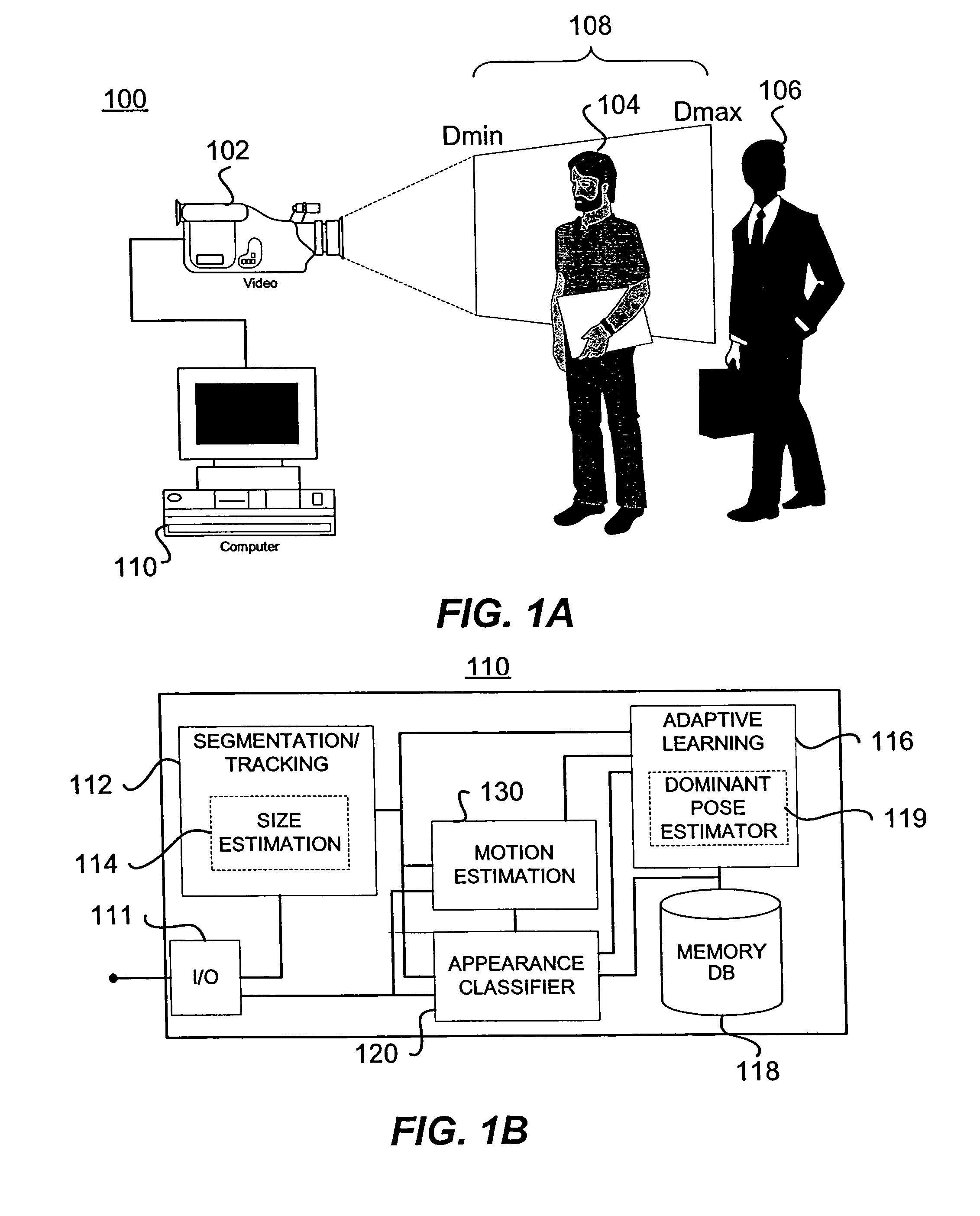

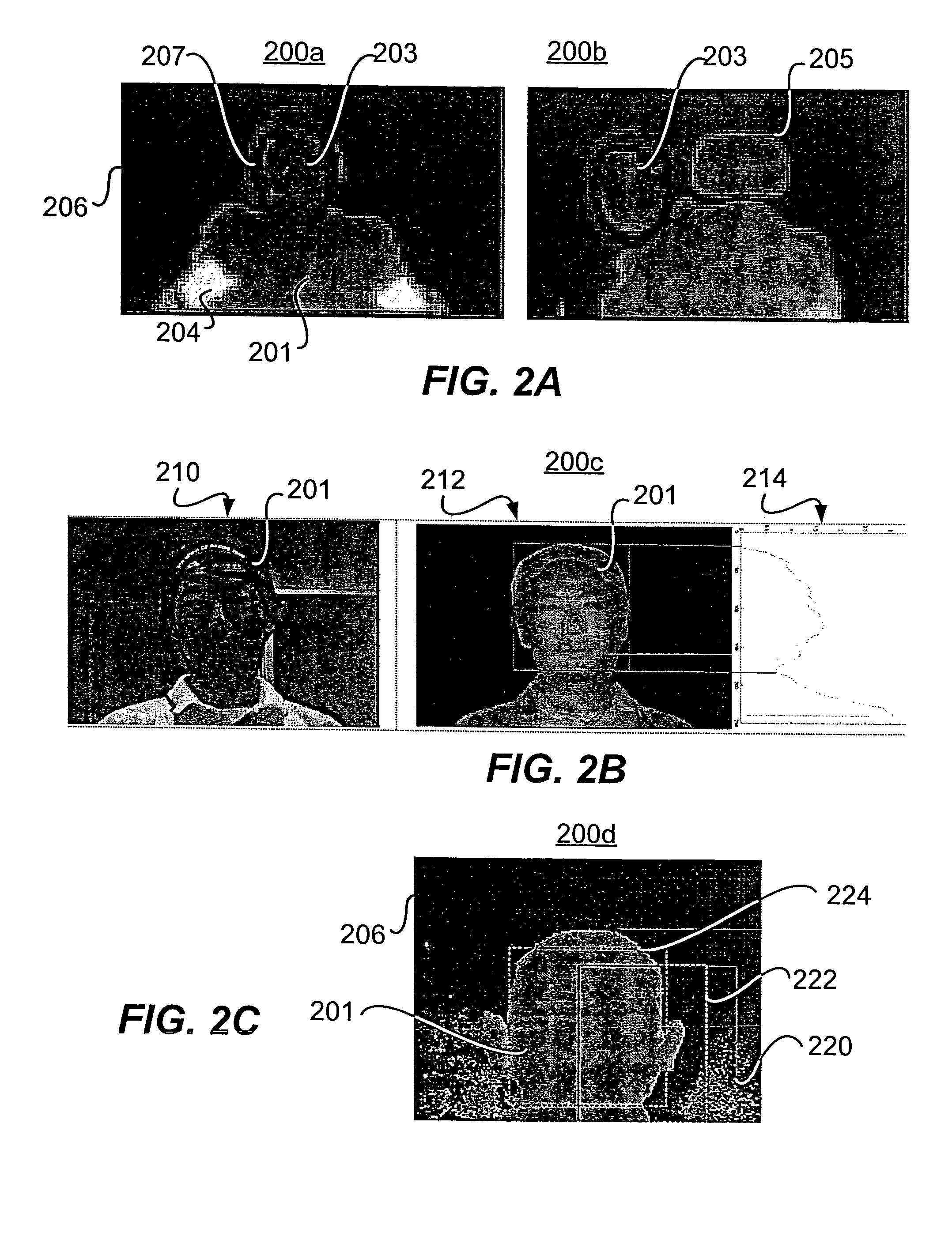

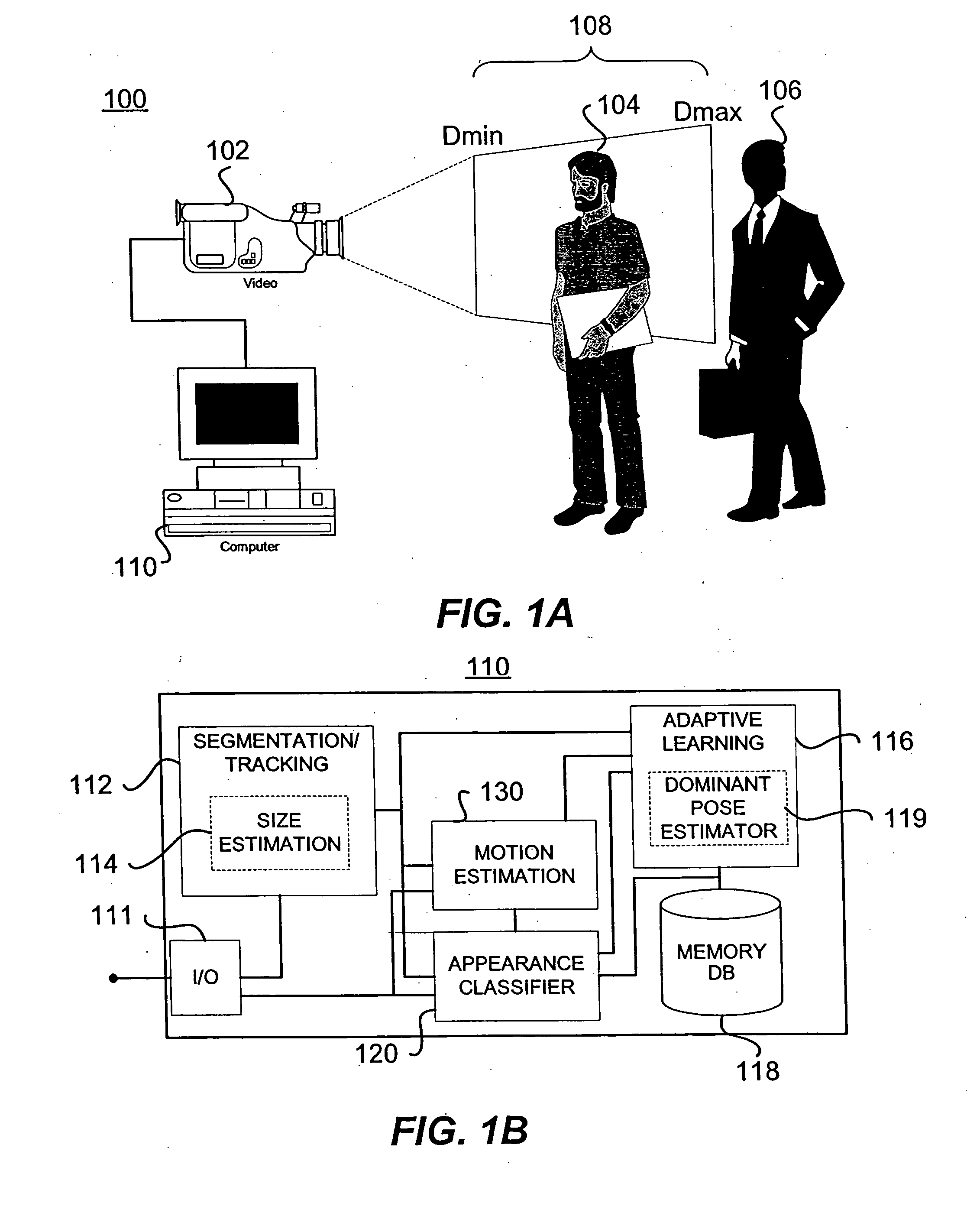

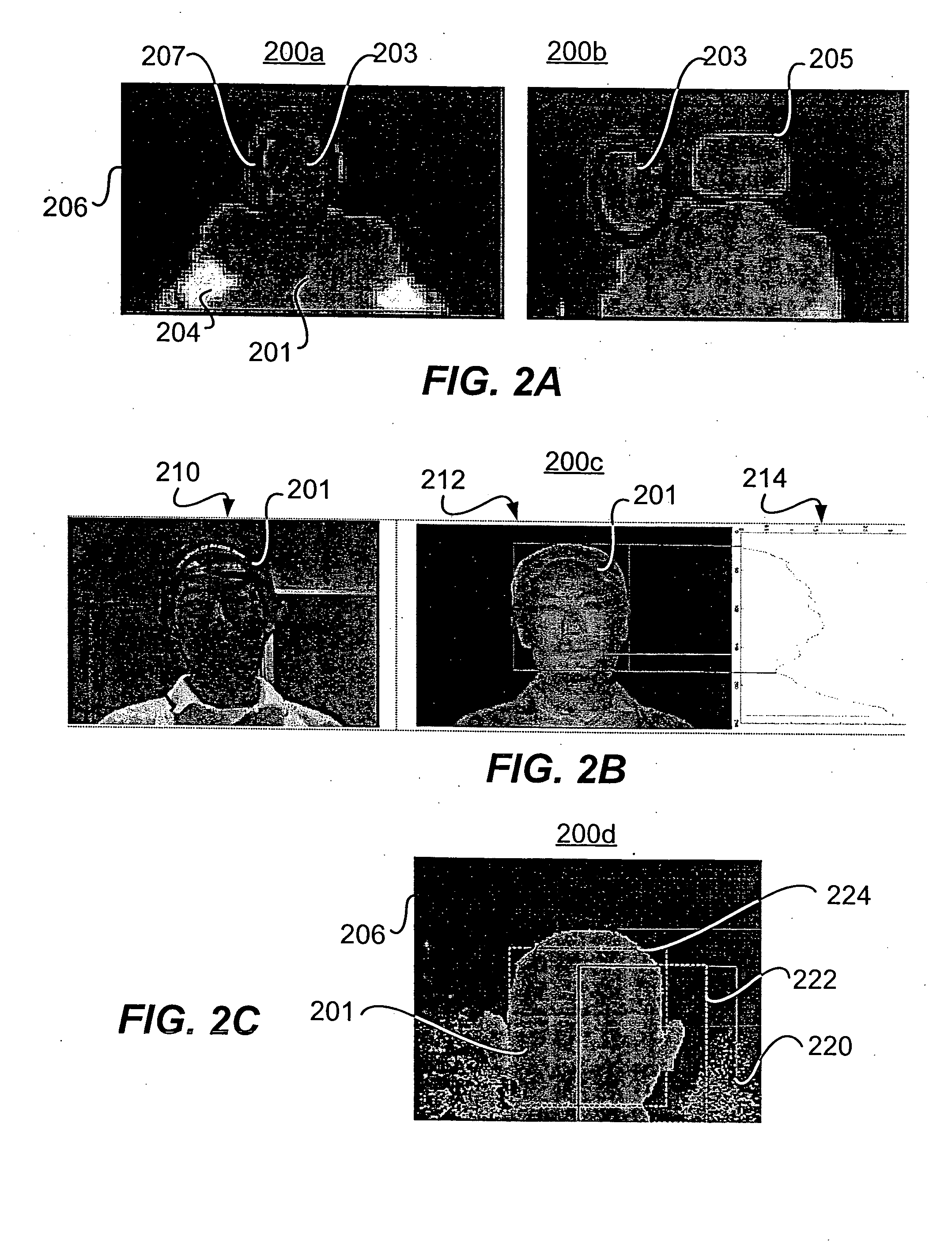

A system for estimating orientation of a target based on real-time video data uses depth data included in the video to determine the estimated orientation. The system includes a time-of-flight camera capable of depth sensing within a depth window. The camera outputs hybrid image data (color and depth). Segmentation is performed to determine the location of the target within the image. Tracking is used to follow the target location from frame to frame. During a training mode, a target-specific training image set is collected with a corresponding orientation associated with each frame. During an estimation mode, a classifier compares new images with the stored training set to determine an estimated orientation. A motion estimation approach uses an accumulated rotation/translation parameter calculation based on optical flow and depth constraints. The parameters are reset to a reference value each time the image corresponds to a dominant orientation.

Owner:HONDA MOTOR CO LTD +1
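
The estimation mode described in this entry (comparing a new frame with a stored, orientation-labeled training set) can be illustrated with a minimal sketch. The abstract does not name the classifier, so nearest-neighbor matching is used here as an assumed stand-in; the function name, the feature vectors, and the angles are all hypothetical.

```python
import math

def nearest_orientation(training_set, query):
    """Return the orientation of the stored training frame closest to `query`.

    training_set: list of (feature_vector, orientation_degrees) pairs
                  (hypothetical format, not taken from the patent).
    query: feature vector of the new frame, same length as the stored ones.
    """
    best_orientation = None
    best_distance = math.inf
    for features, orientation in training_set:
        # Euclidean distance between the stored frame and the new frame.
        distance = math.dist(features, query)
        if distance < best_distance:
            best_distance = distance
            best_orientation = orientation
    return best_orientation

# Three made-up training frames labeled with orientations in degrees.
training = [([0.0, 1.0], 0.0), ([1.0, 0.0], 90.0), ([0.0, -1.0], 180.0)]
estimate = nearest_orientation(training, [0.9, 0.1])
print(estimate)
```

A real system would derive the feature vectors from the segmented depth and color data; here they are plain two-element lists to keep the comparison step visible.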

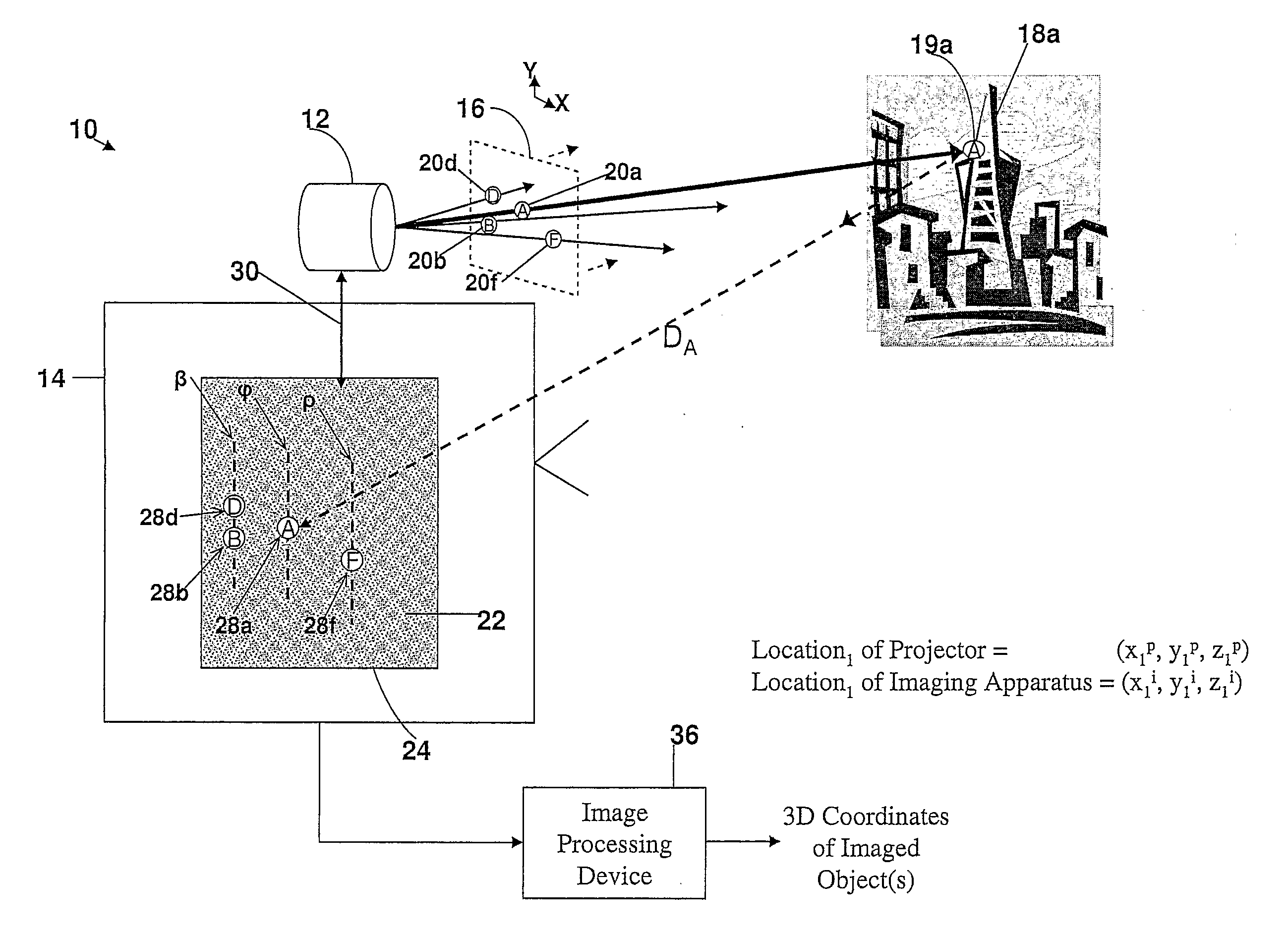

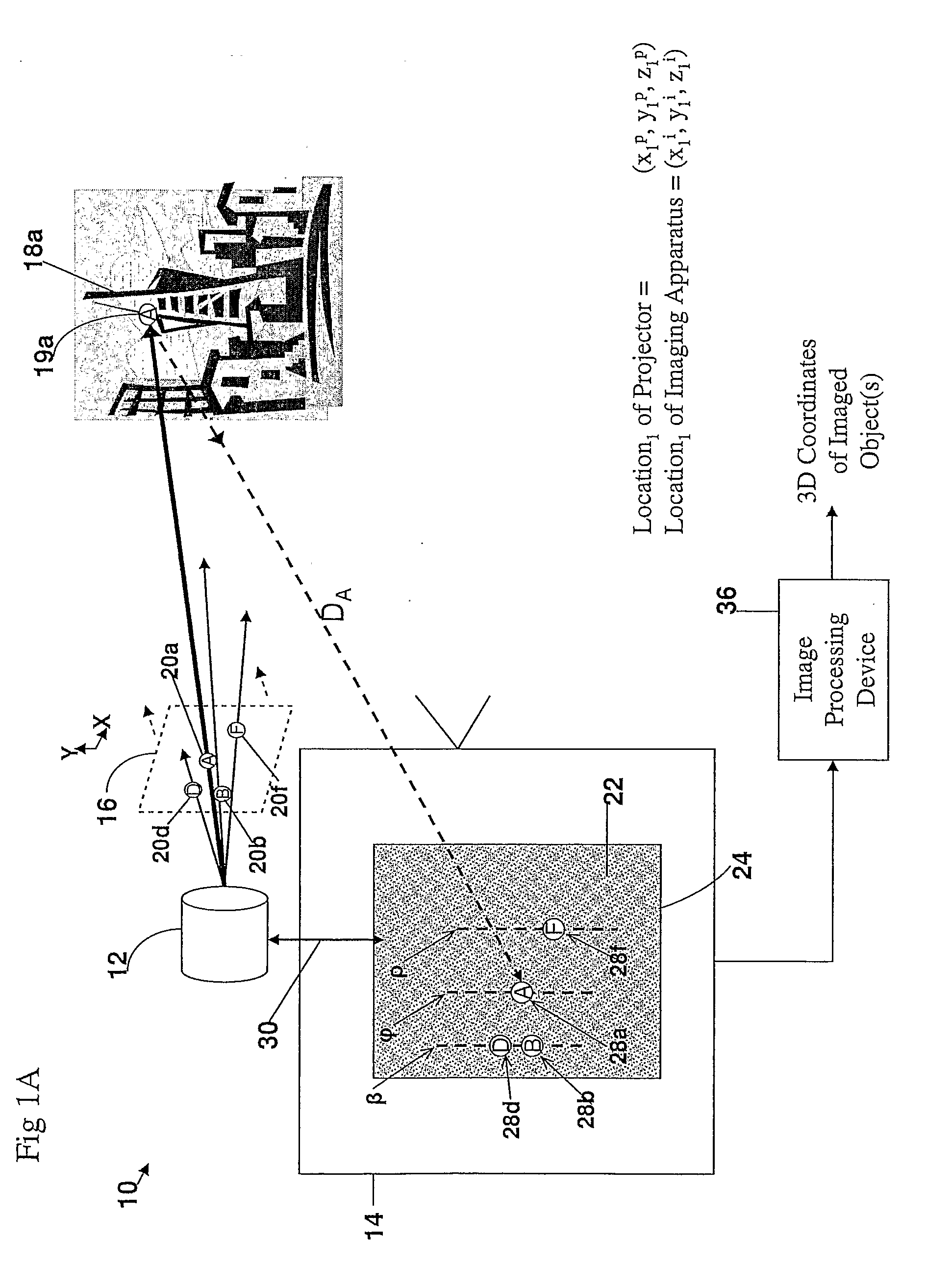

3D geometric modeling and 3D video content creation

A system, apparatus and method of obtaining data from a 2D image in order to determine the 3D shape of objects appearing in said 2D image, said 2D image having distinguishable epipolar lines, said method comprising: (a) providing a predefined set of types of features, giving rise to feature types, each feature type being distinguishable according to a unique bi-dimensional formation; (b) providing a coded light pattern comprising multiple appearances of said feature types; (c) projecting said coded light pattern on said objects such that the distance between epipolar lines associated with substantially identical features is less than the distance between corresponding locations of two neighboring features; (d) capturing a 2D image of said objects having said projected coded light pattern projected thereupon, said 2D image comprising reflected said feature types; and (e) extracting: (i) said reflected feature types according to the unique bi-dimensional formations; and (ii) locations of said reflected feature types on respective said epipolar lines in said 2D image.

Owner:MANTIS VISION

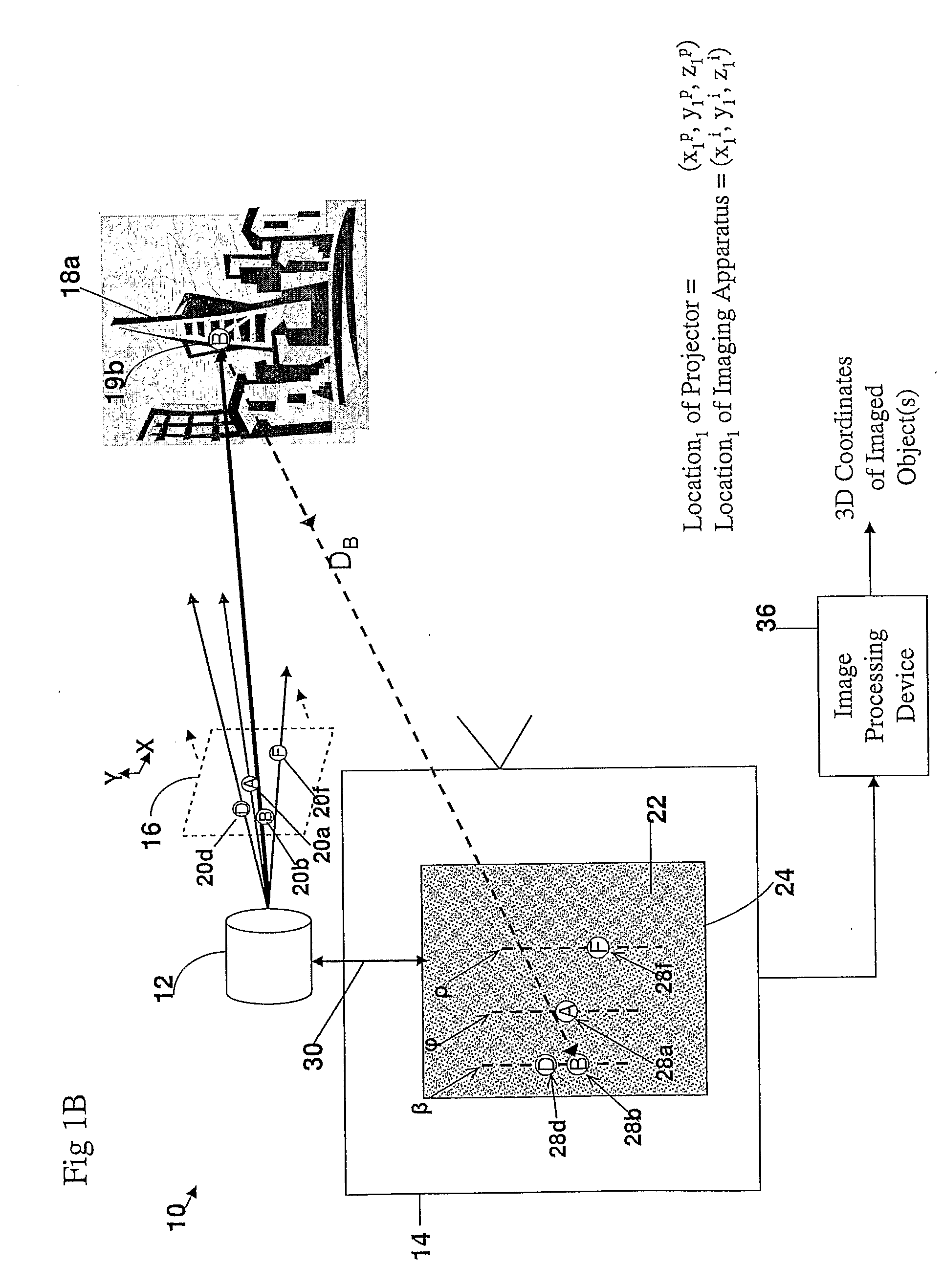

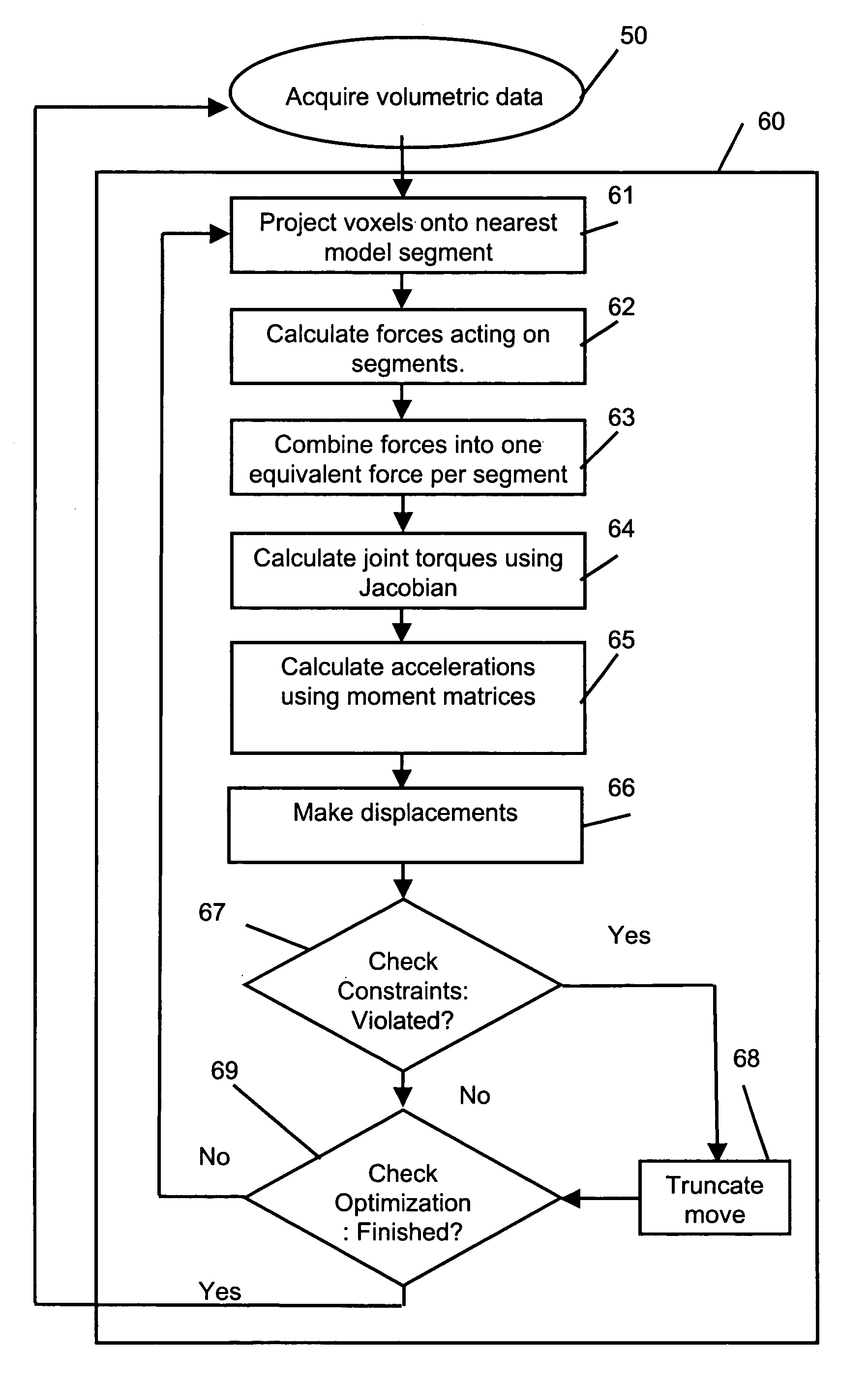

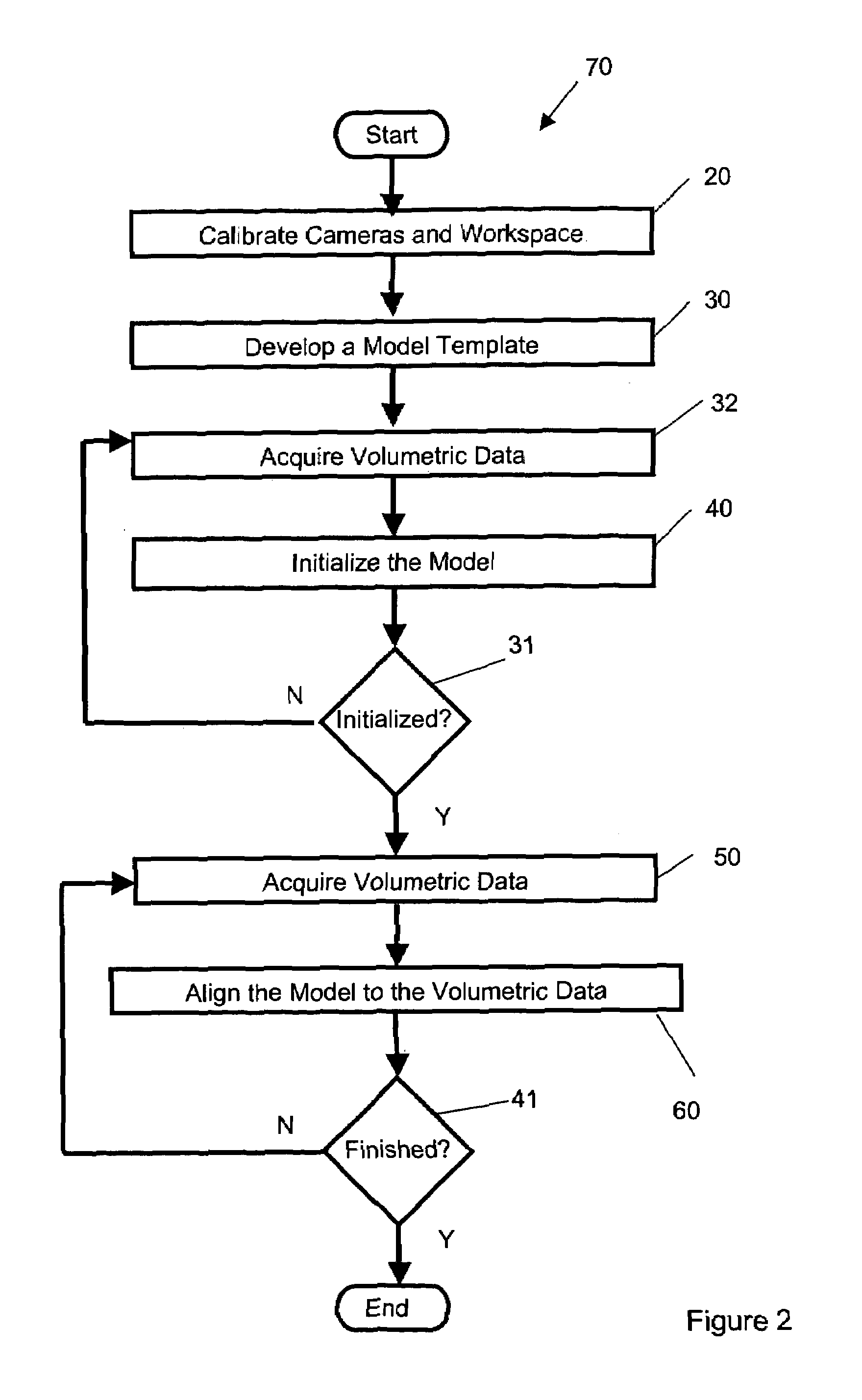

Real time markerless motion tracking using linked kinematic chains

A markerless method is described for tracking the motion of subjects in a three dimensional environment using a model based on linked kinematic chains. The invention is suitable for tracking robotic, animal or human subjects in real-time using a single computer with inexpensive video equipment, and does not require the use of markers or specialized clothing. A simple model of rigid linked segments is constructed of the subject and tracked using three dimensional volumetric data collected by a multiple camera video imaging system. A physics based method is then used to compute forces to align the model with subsequent volumetric data sets in real-time. The method is able to handle occlusion of segments and accommodates joint limits, velocity constraints, and collision constraints and provides for error recovery. The method further provides for elimination of singularities in Jacobian based calculations, which has been problematic in alternative methods.

Owner:NAT TECH & ENG SOLUTIONS OF SANDIA LLC

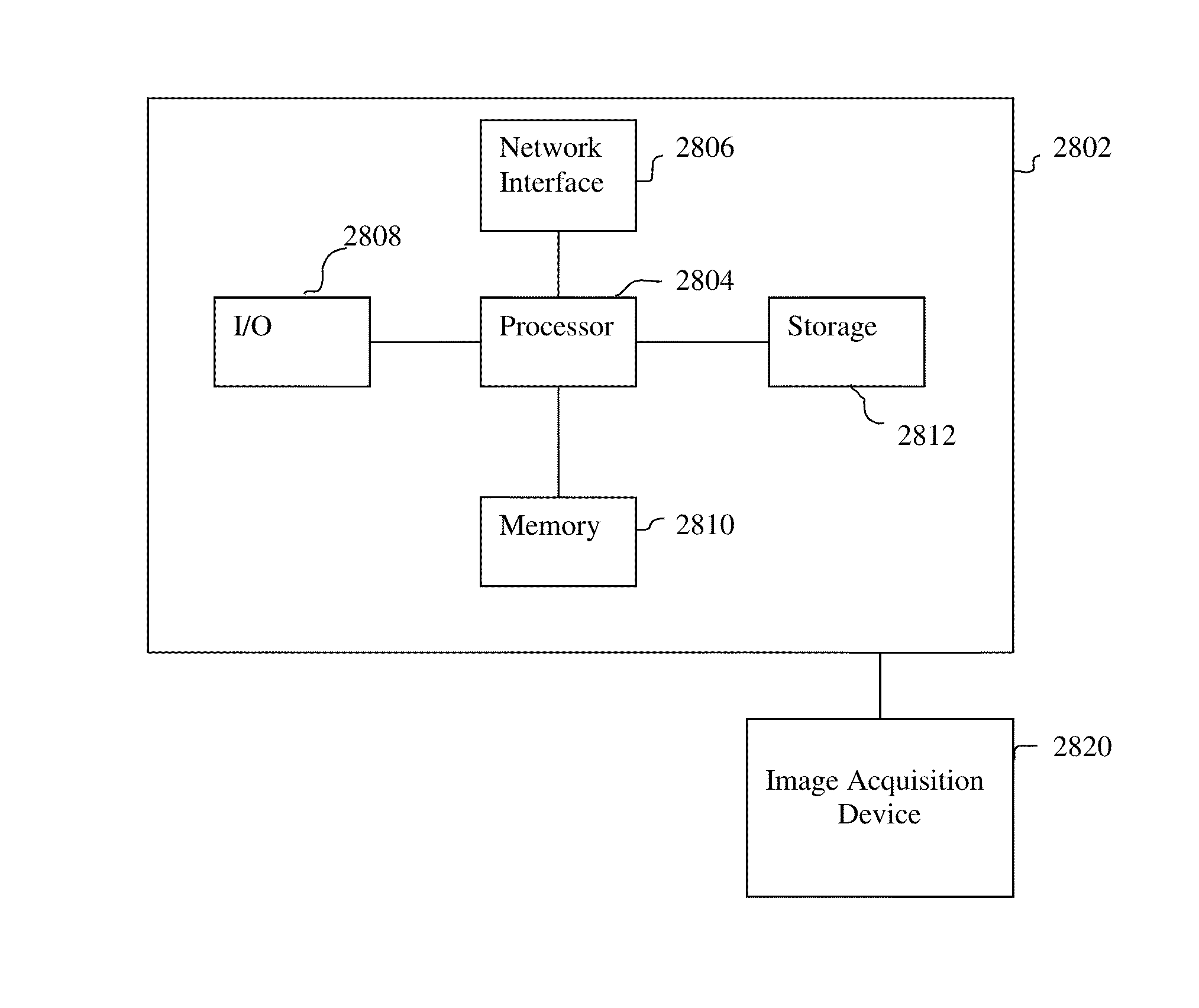

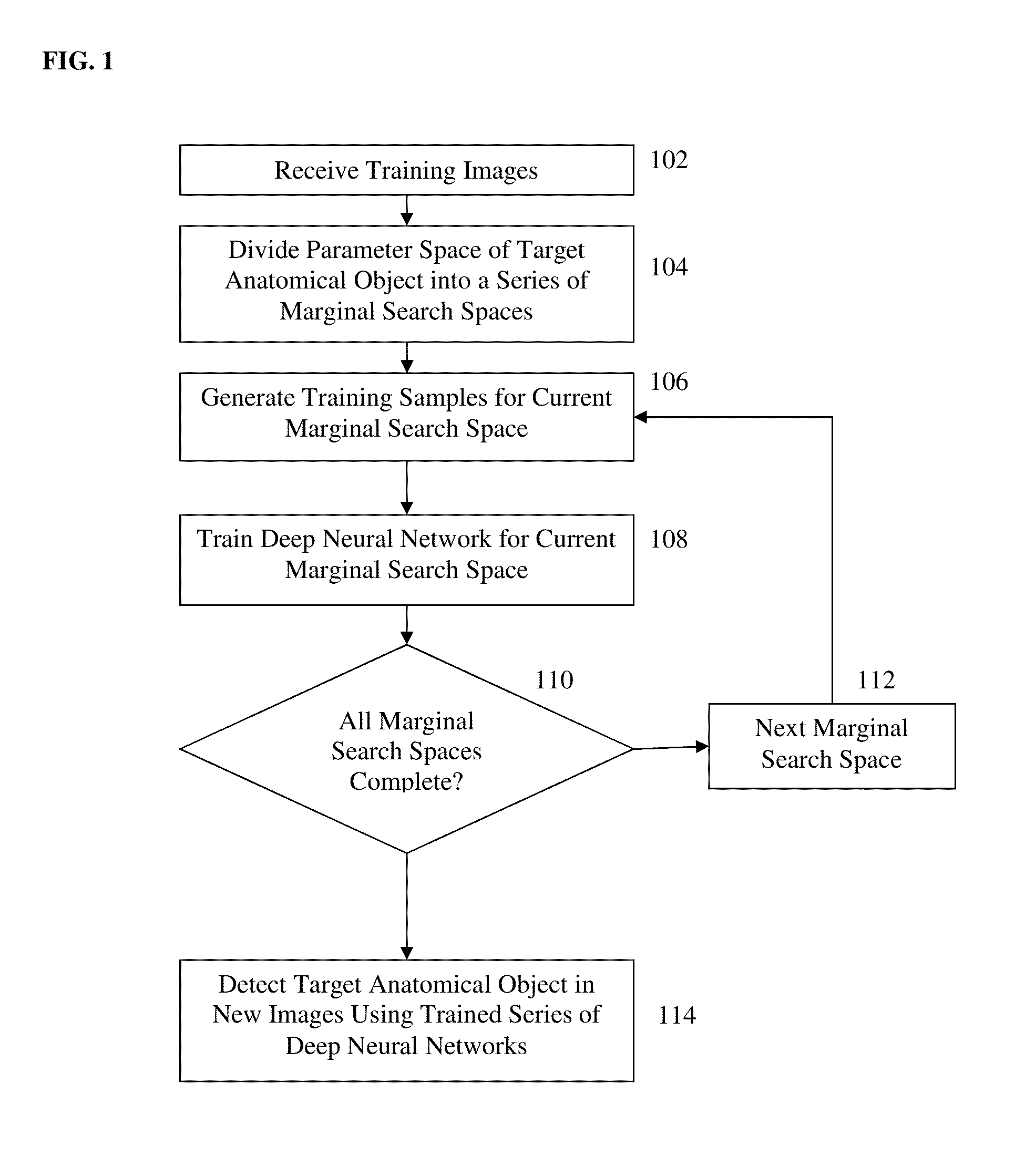

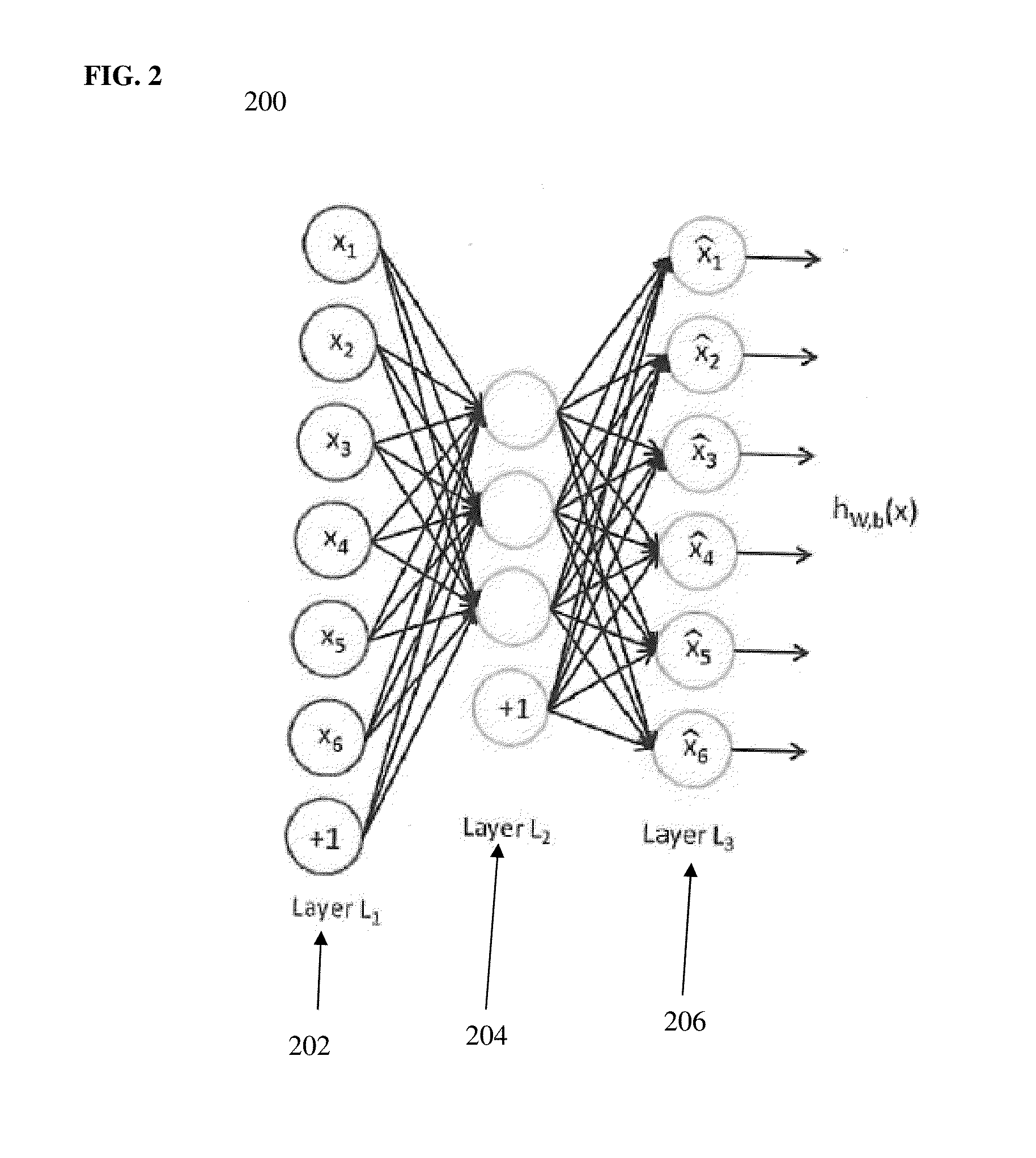

Method and System for Anatomical Object Detection Using Marginal Space Deep Neural Networks

Active | US20160174902A1 | Add dimension | Ultrasonic/sonic/infrasonic diagnostics | Image enhancement | Machine learning | Object detection

A method and system for anatomical object detection using marginal space deep neural networks is disclosed. The pose parameter space for an anatomical object is divided into a series of marginal search spaces with increasing dimensionality. A respective sparse deep neural network is trained for each of the marginal search spaces, resulting in a series of trained sparse deep neural networks. Each of the trained sparse deep neural networks is trained by injecting sparsity into a deep neural network by removing filter weights of the deep neural network.

Owner:SIEMENS HEALTHCARE GMBH
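
The idea of "injecting sparsity ... by removing filter weights" can be sketched as follows. The abstract does not say which weights are removed, so this example assumes a simple magnitude criterion (the smallest weights are zeroed); the function name and the numbers are hypothetical.

```python
def prune_smallest(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude.

    Magnitude-based selection is an assumption made for illustration;
    the source abstract does not specify the removal criterion.
    """
    count = int(len(weights) * fraction)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_remove = set(order[:count])
    return [0.0 if i in to_remove else w for i, w in enumerate(weights)]

filter_weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
sparse = prune_smallest(filter_weights, 0.5)
print(sparse)
```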

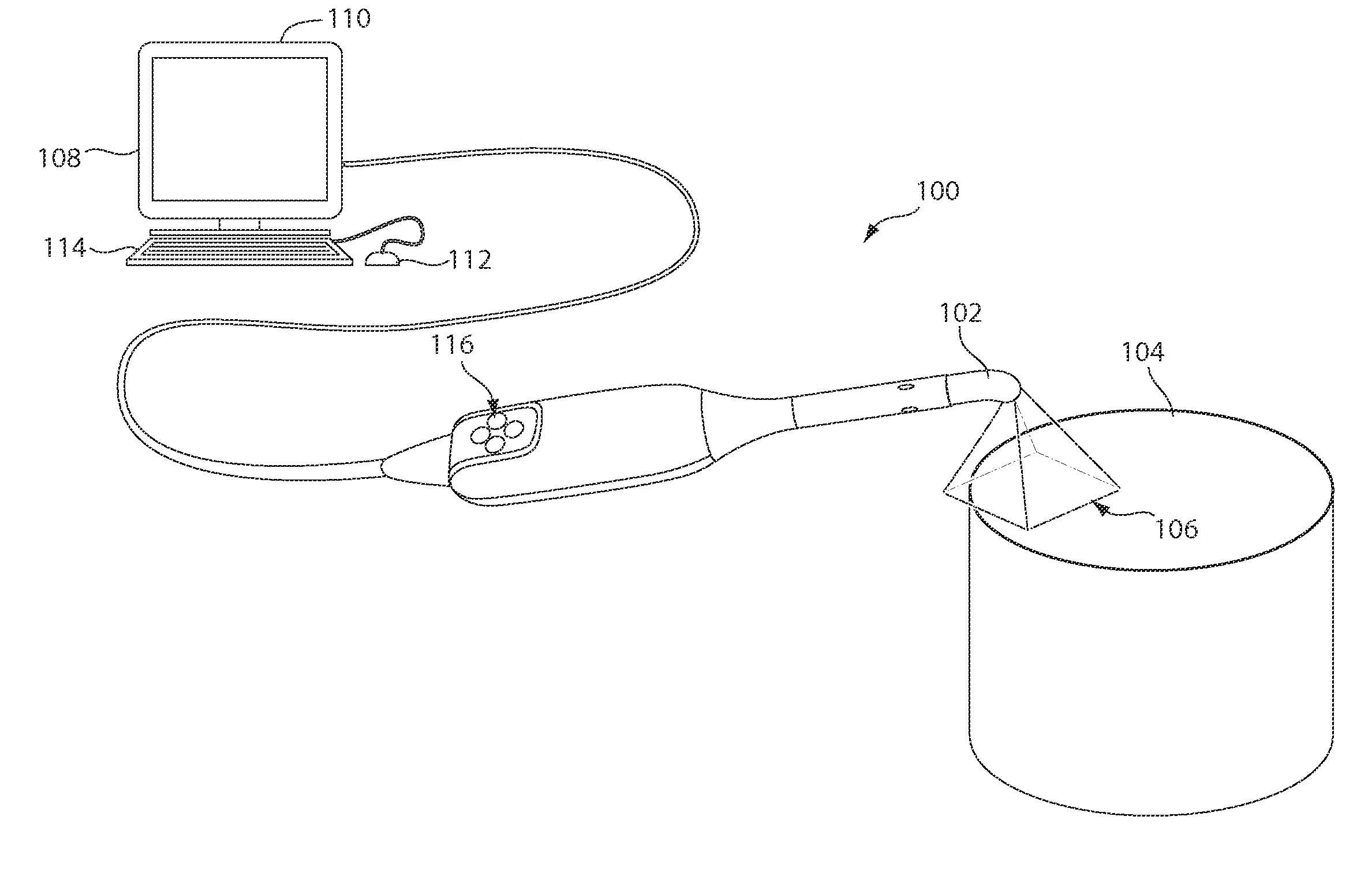

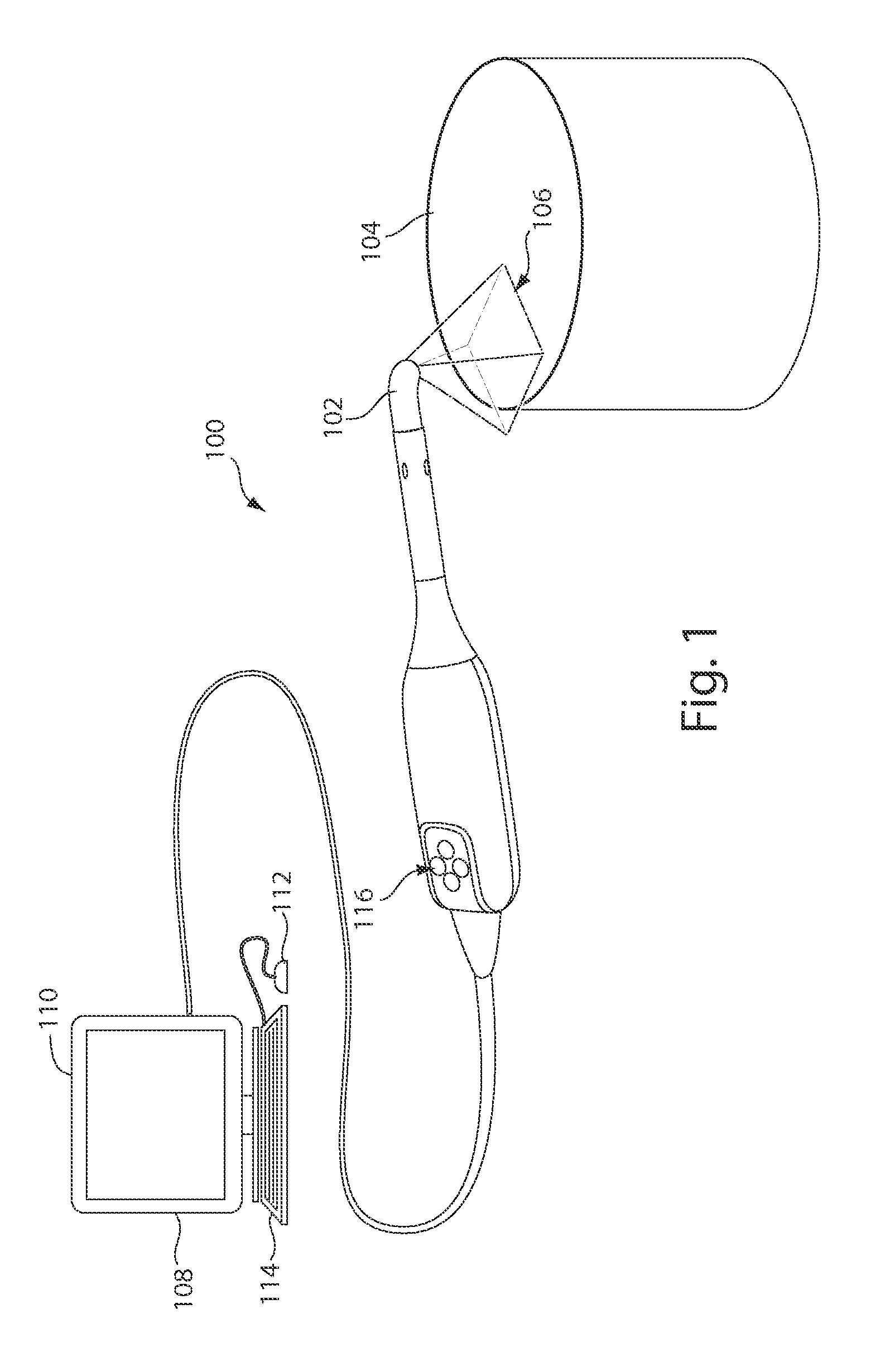

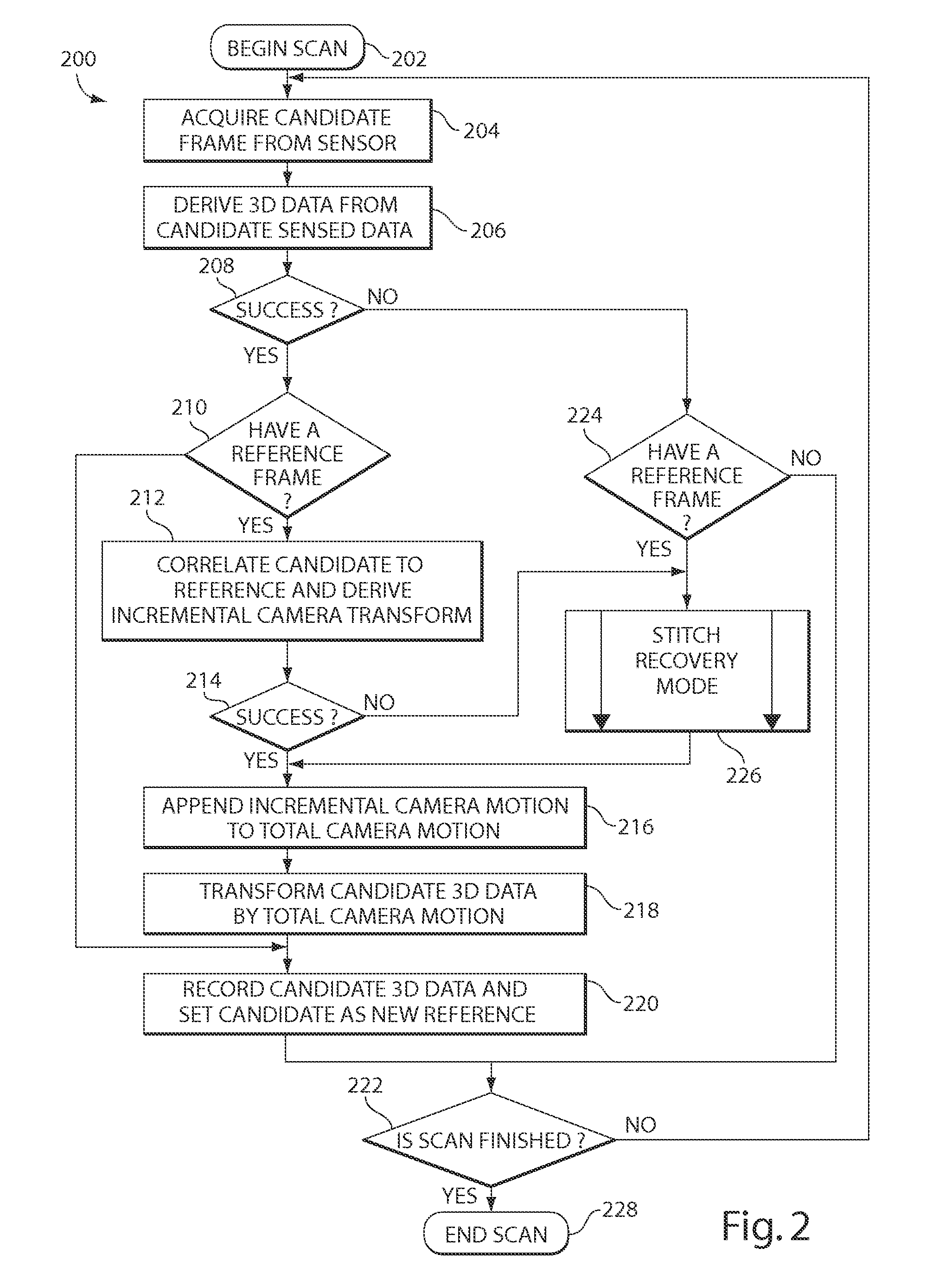

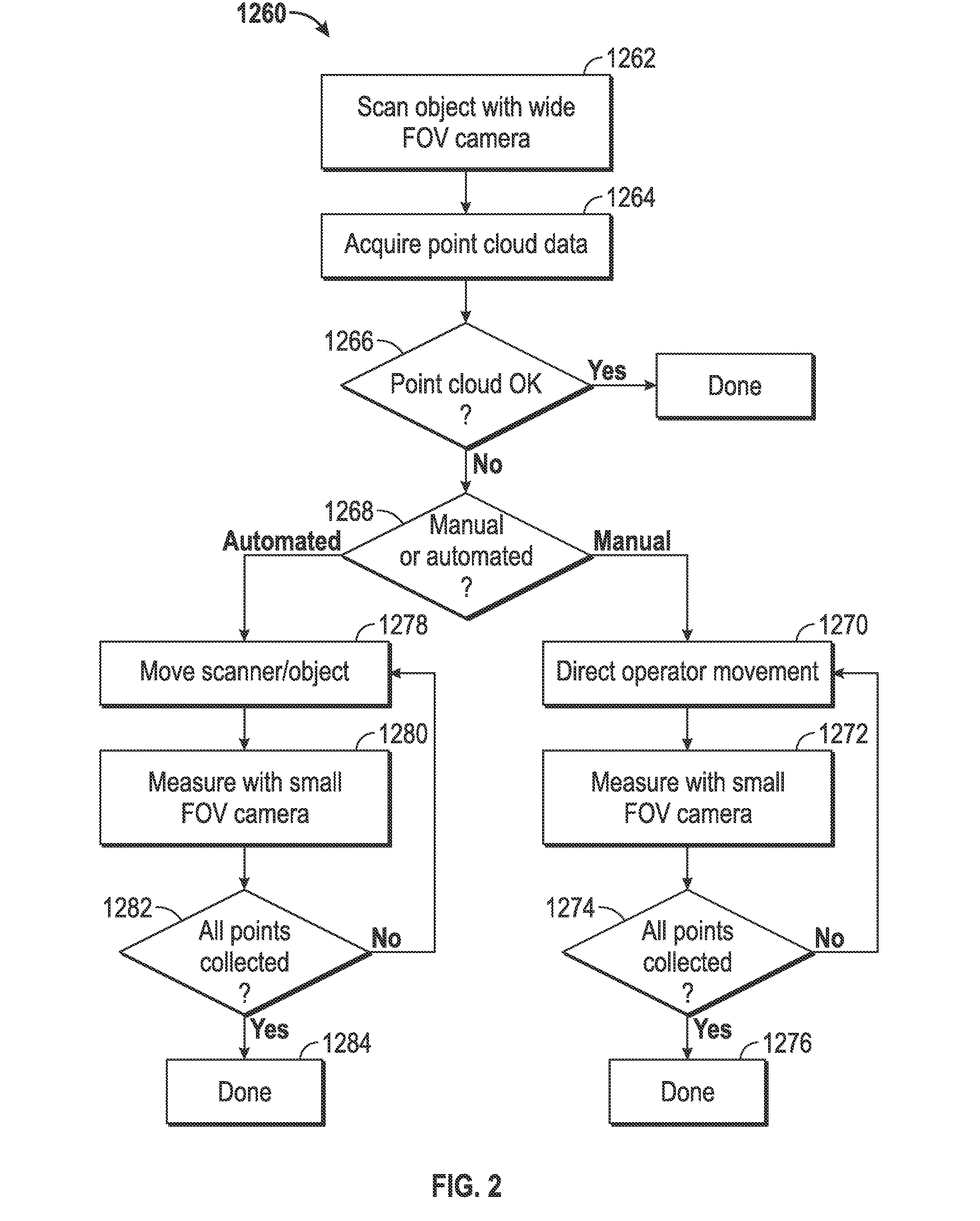

Three-dimensional scan recovery

A scanning system that acquires three-dimensional images as an incremental series of fitted three-dimensional data sets is improved by testing for successful incremental fits in real time and providing a variety of visual user cues and process modifications depending upon the relationship of newly acquired data to previously acquired data. The system may be used to aid in error-free completion of three-dimensional scans. The methods and systems described herein may also usefully be employed to scan complex surfaces including occluded or obstructed surfaces by maintaining a continuous three-dimensional scan across separated subsections of the surface. In one useful dentistry application, a full three-dimensional surface scan may be obtained for two dental arches in occlusion.

Owner:MEDIT CORP

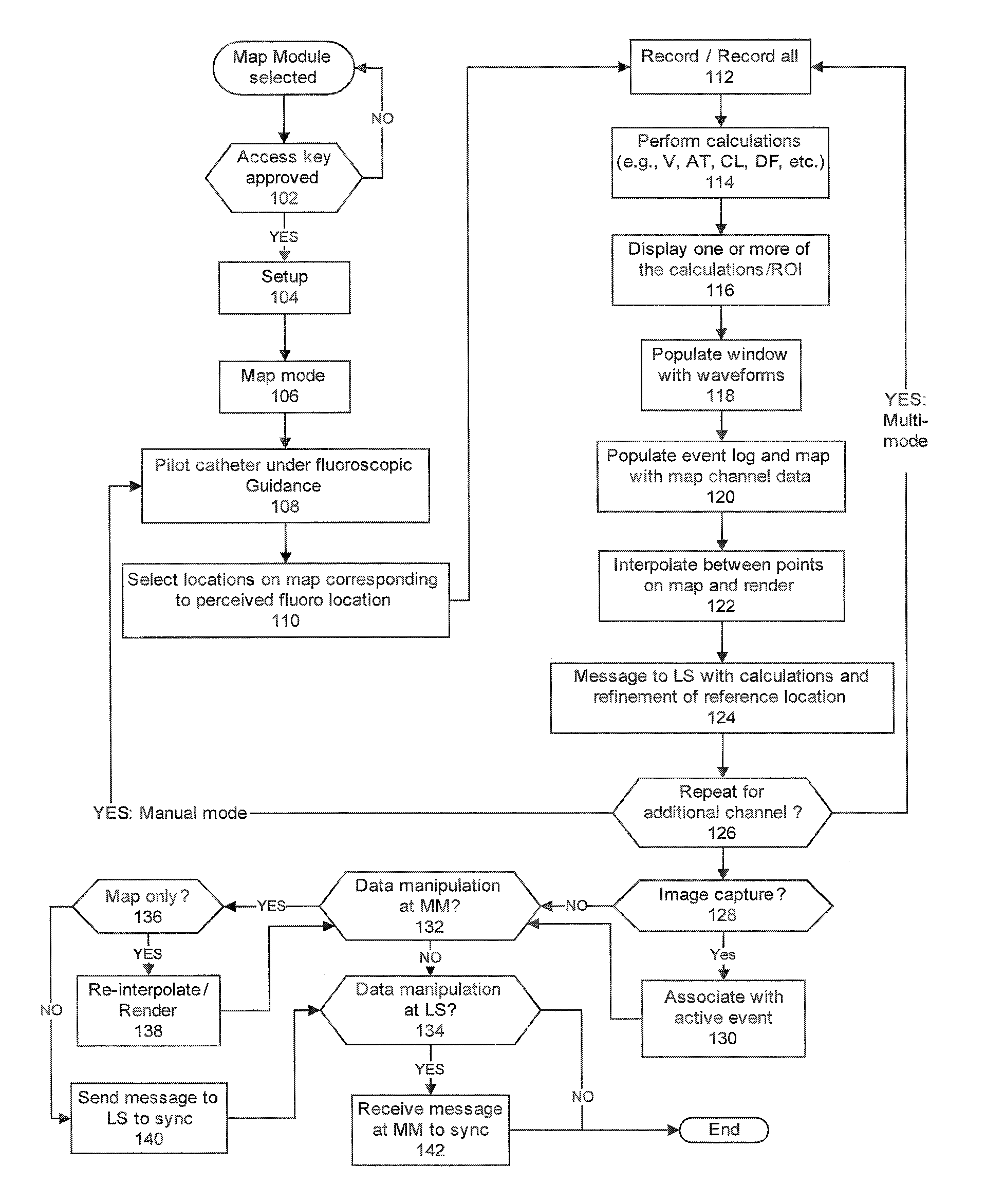

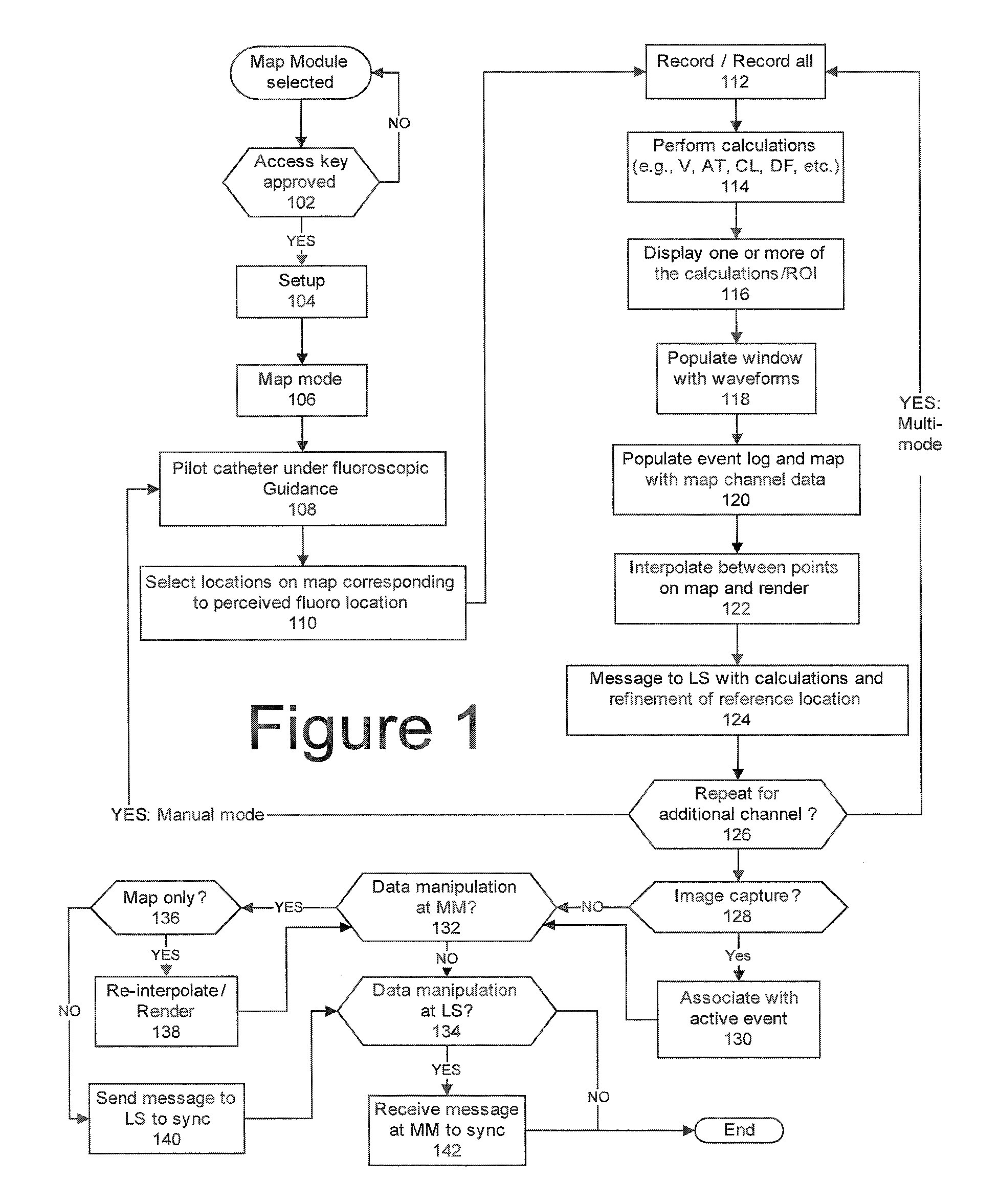

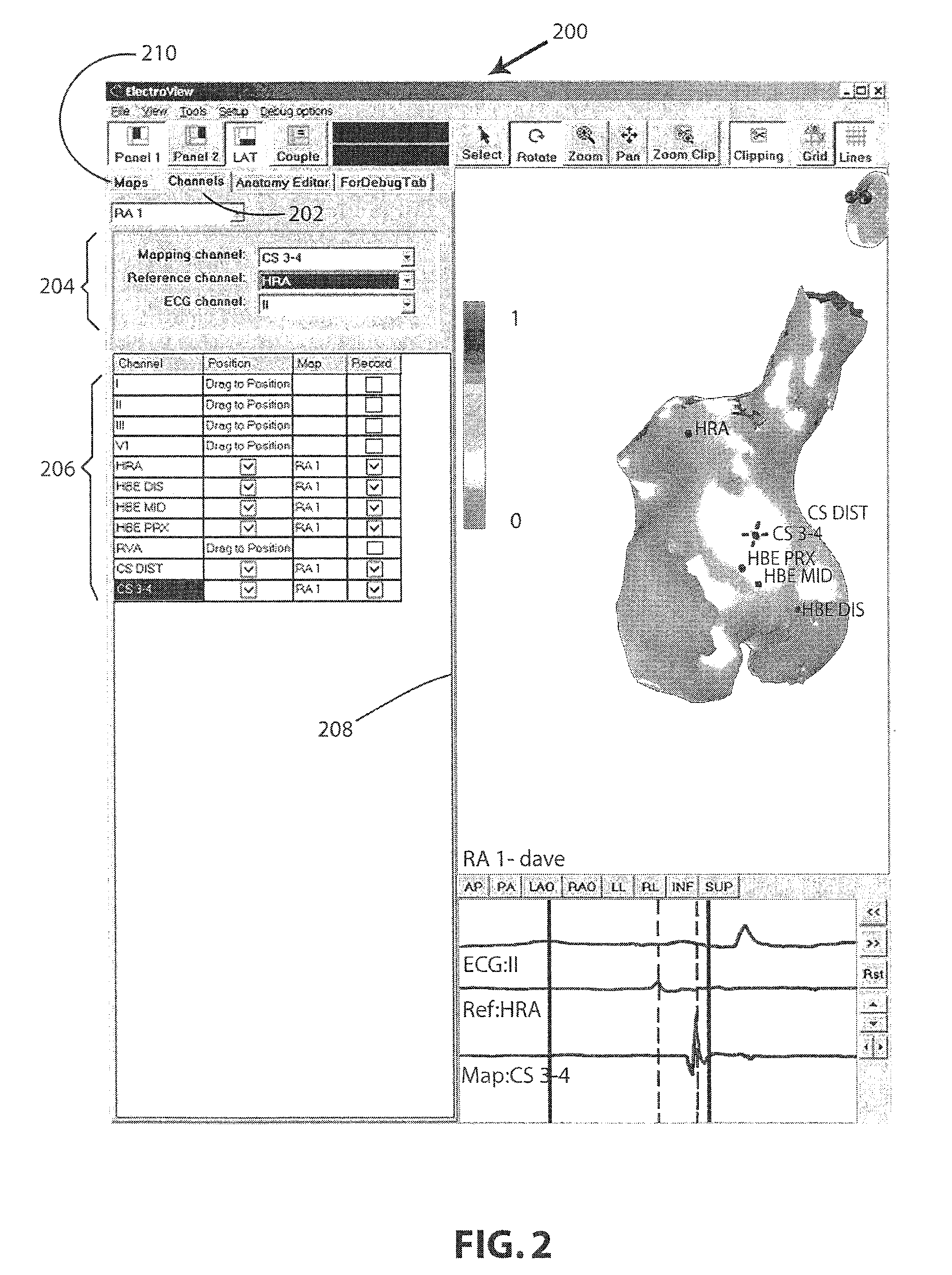

Rapid 3D mapping using multielectrode position data

An electrophysiology (EP) system includes an interface for operator-interaction with the results of code executing therein. A template model can have channels positioned or repositioned thereupon by the user to define a set-up useful in rapid catheter positioning. Mapping operations can be performed without the requirement for precise catheter location determinations. A map module coordinates EP data associated with each selected channel and its associated position on the template model to provide this result, and can update the resulting map in the event that the channel or location is changed. Messaging and other dynamic features enable synchronized presentation of a myriad of EP data. Additional systems and methods are disclosed herein.

Owner:BOSTON SCI SCIMED INC

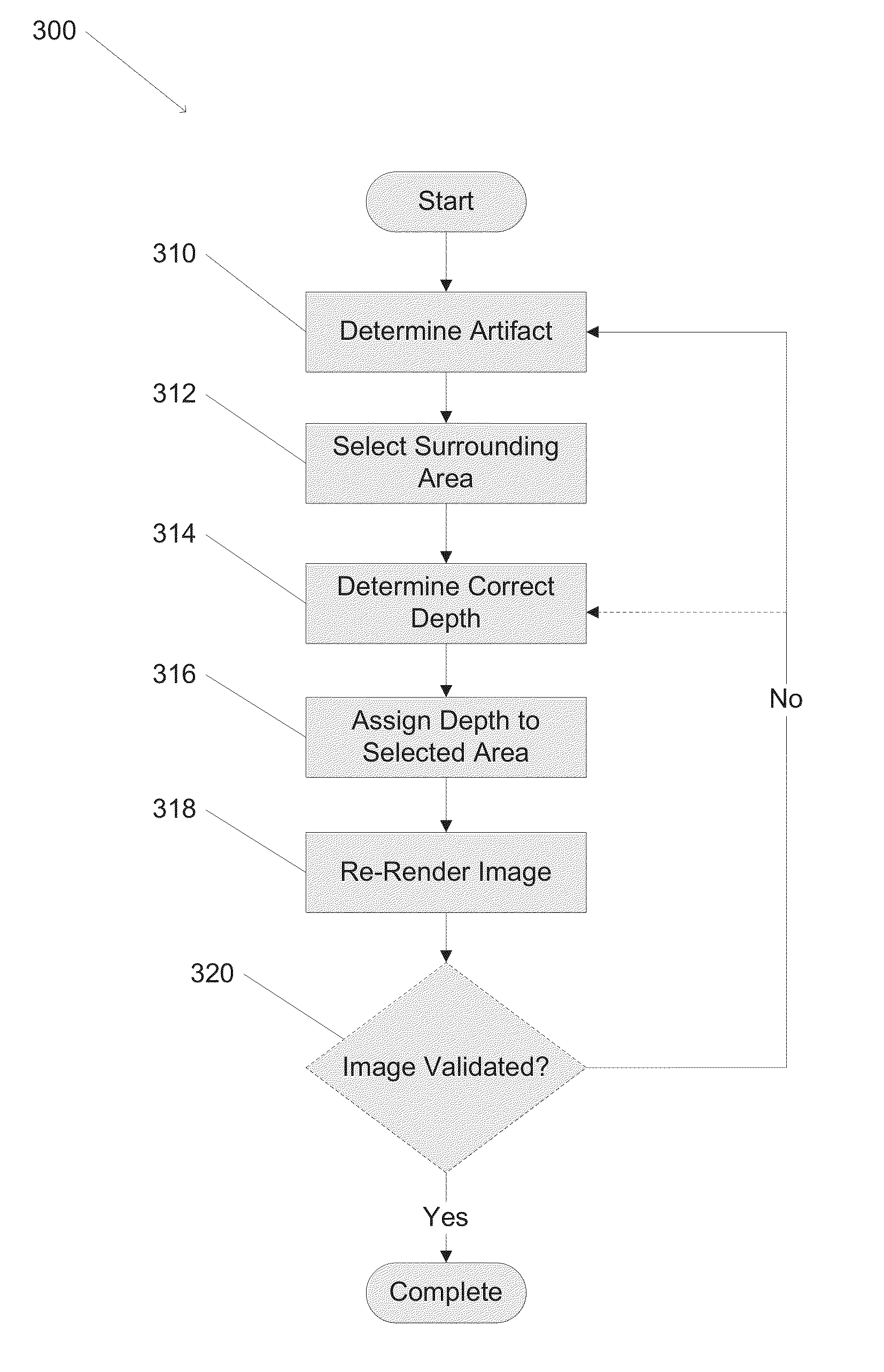

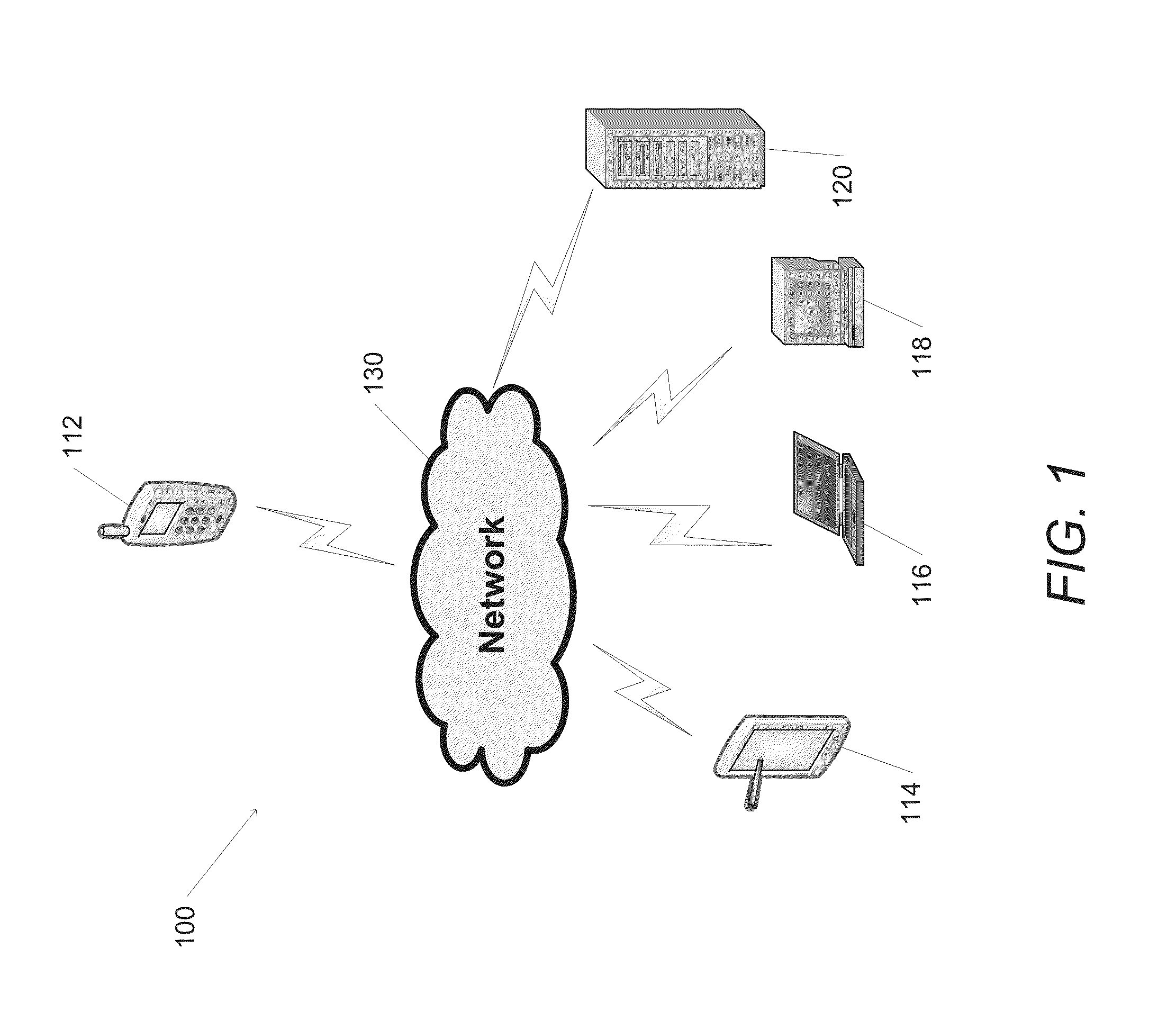

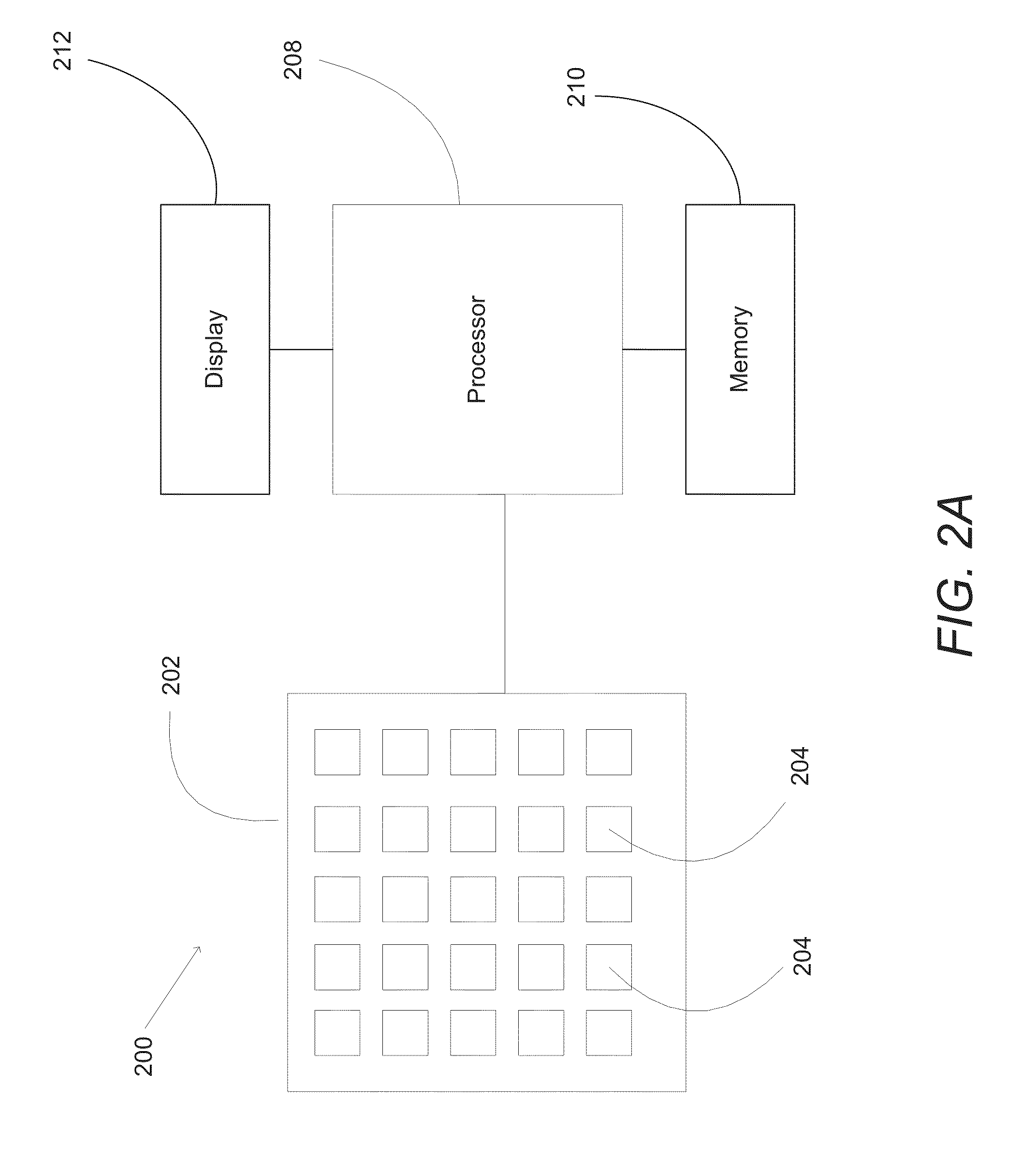

Systems and methods for correcting user identified artifacts in light field images

Systems and methods for correction of user identified artifacts in light field images are disclosed. One embodiment of the invention is a method for correcting artifacts in a light field image rendered from a light field obtained by capturing a set of images from different viewpoints and initial depth estimates for pixels within the light field using a processor configured by an image processing application, where the method includes: receiving a user input indicating the location of an artifact within said light field image; selecting a region of the light field image containing the indicated artifact; generating updated depth estimates for pixels within the selected region; and re-rendering at least a portion of the light field image using the updated depth estimates for the pixels within the selected region.

Owner:FOTONATION LTD

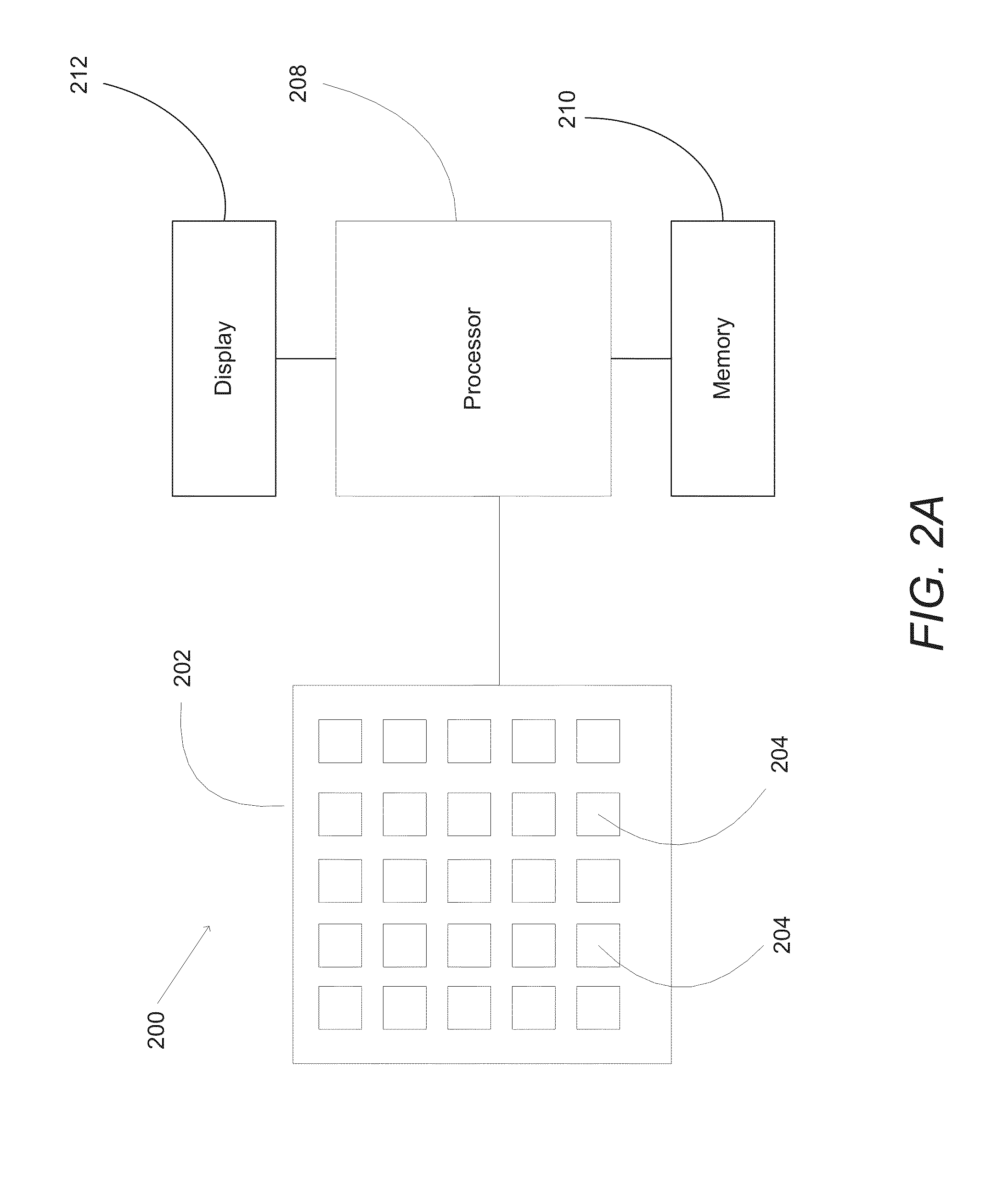

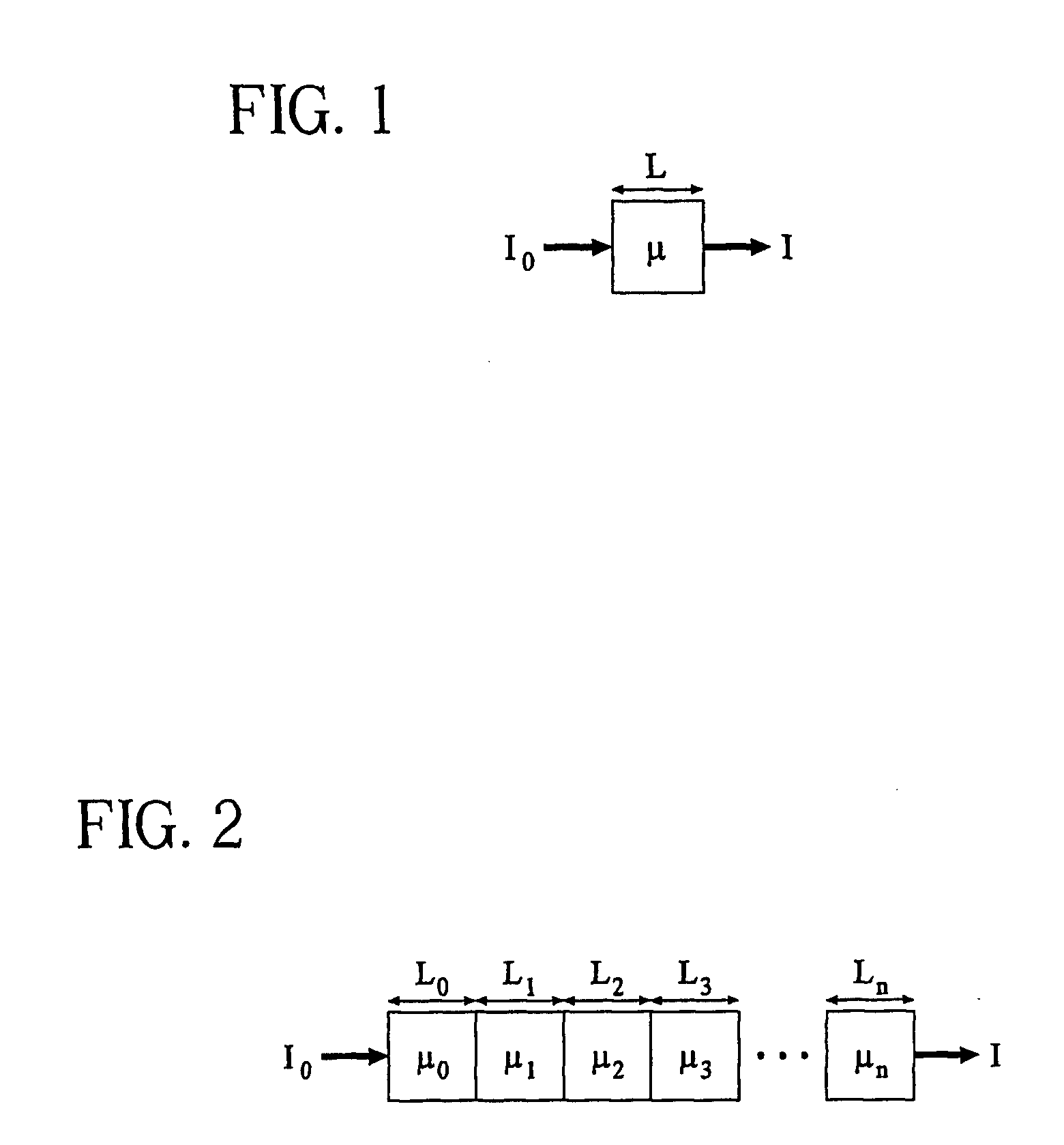

System and method for three-dimensional image rendering and analysis

Inactive | US20080205717A1 | Reduce decrease | Reduce contribution | Image enhancement | Reconstruction from projection | Imaging analysis | 3d image

The present invention relates to methods and systems for conducting three-dimensional image analysis and diagnosis and possible treatment relating thereto. The invention includes methods of handling signals containing information (data) relating to three-dimensional representation of objects scanned by a scanning medium. The invention also includes methods of making and analyzing volumetric measurements and changes in volumetric measurements which can be used for the purpose of diagnosis and treatment.

Owner:CORNELL RES FOUNDATION INC
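
As a rough illustration of a volumetric measurement and its change between two scans, the sketch below counts the voxels in a binary mask and multiplies by the volume of one voxel. The mask layout, the voxel spacing, and the function names are assumptions made for the example and do not come from the patent.

```python
def volume_mm3(mask, spacing_mm):
    """Volume of a binary voxel mask: voxel count times the volume of one voxel."""
    sx, sy, sz = spacing_mm
    voxel_count = sum(v for plane in mask for row in plane for v in row)
    return voxel_count * sx * sy * sz

def percent_change(before, after):
    """Relative change between two measurements, in percent."""
    return 100.0 * (after - before) / before

# Two tiny made-up masks (planes x rows x columns) of the same structure.
scan_1 = [[[1, 1], [0, 0]], [[1, 0], [0, 0]]]  # 3 voxels set
scan_2 = [[[1, 1], [1, 1]], [[1, 0], [1, 0]]]  # 6 voxels set
spacing = (0.5, 0.5, 2.0)                      # assumed voxel size in mm

v1 = volume_mm3(scan_1, spacing)
v2 = volume_mm3(scan_2, spacing)
growth = percent_change(v1, v2)
print(v1, v2, growth)
```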

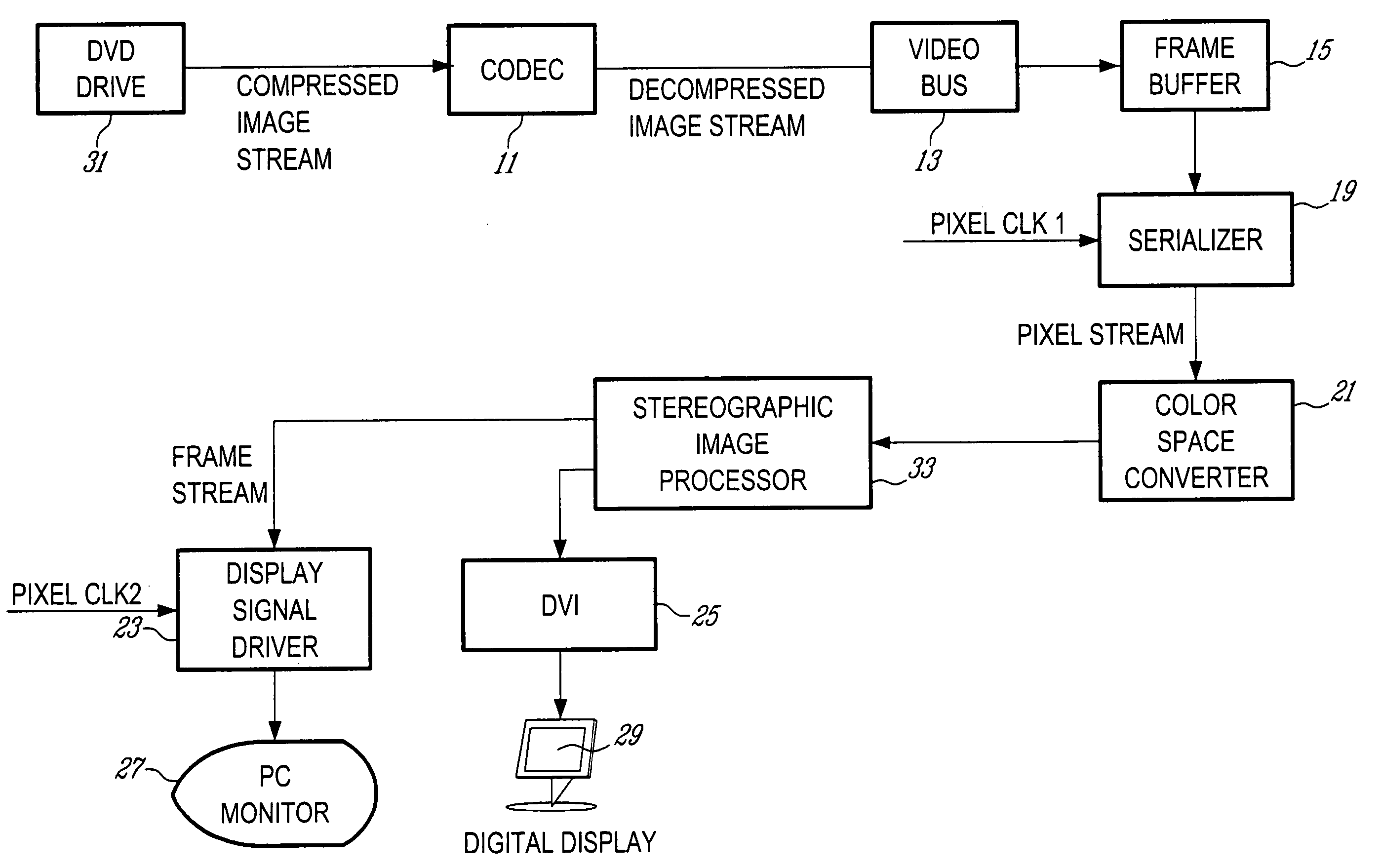

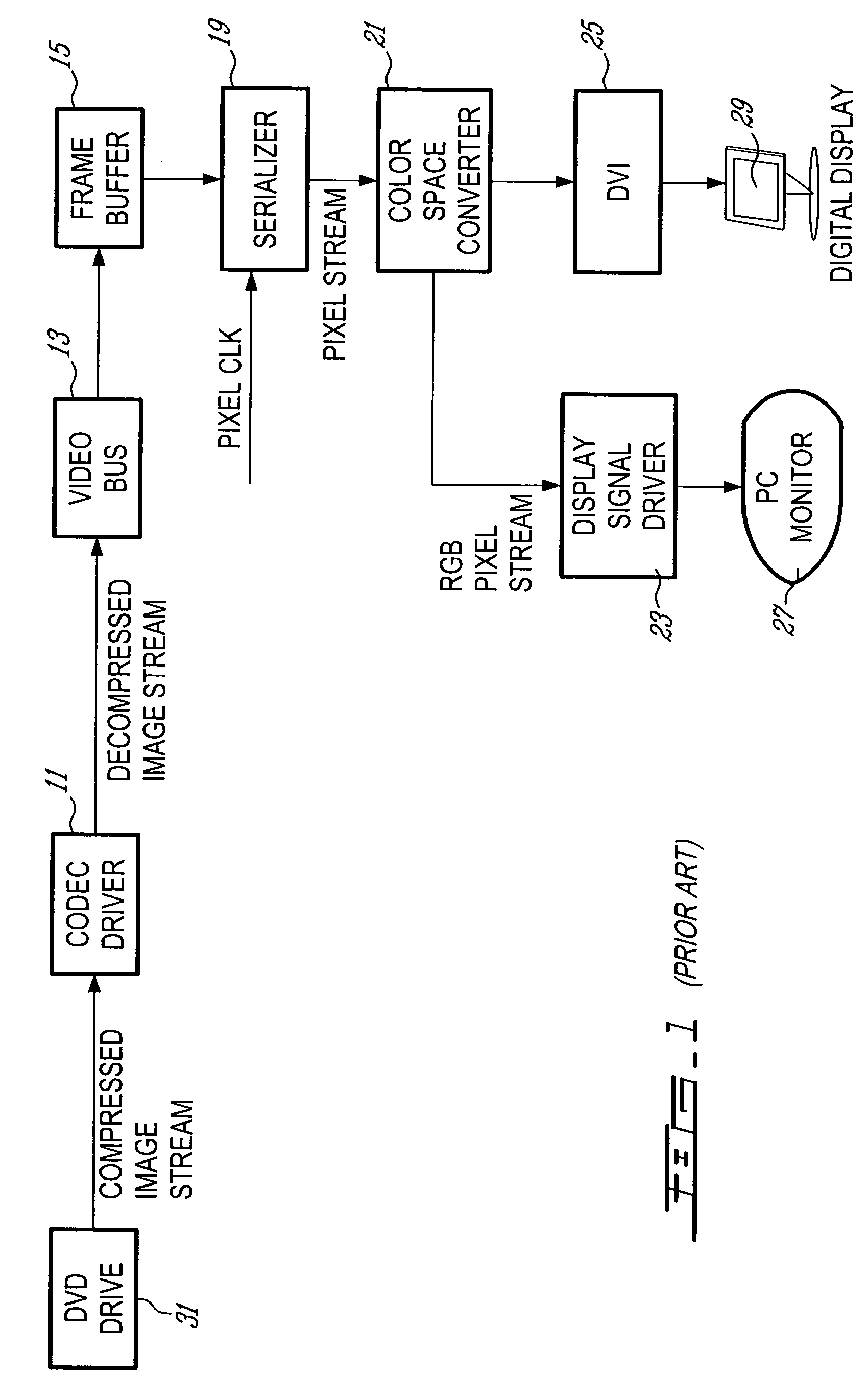

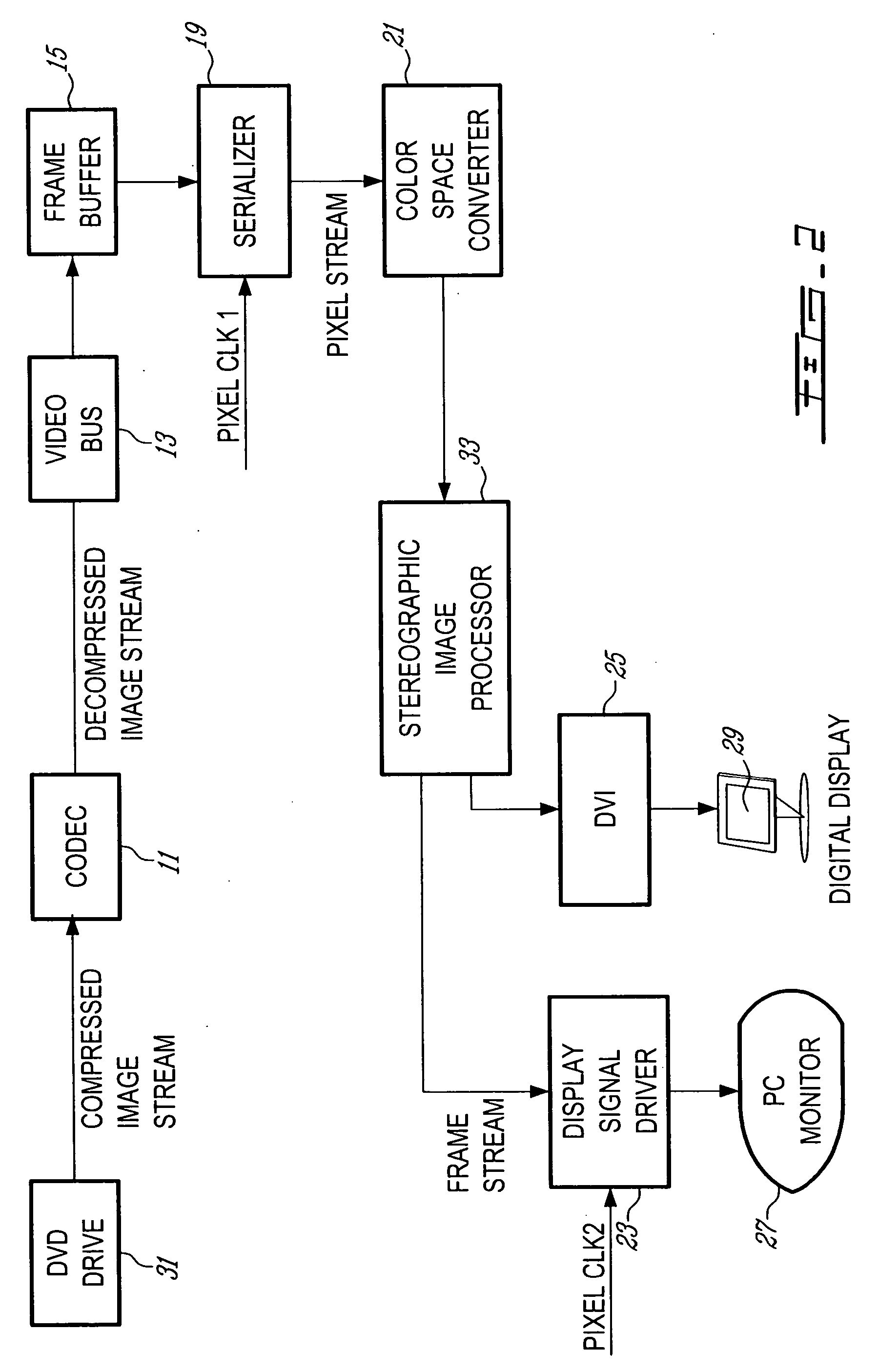

Apparatus for processing a stereoscopic image stream

Active | US20050117637A1 | High visual quality reproduction | Elimination substantial | Image analysis | Details involving 3D image data | Computer vision | Signal generator

A system is provided for processing a compressed image stream of a stereoscopic image stream, the compressed image stream having a plurality of frames in a first format, each frame consisting of a merged image comprising pixels sampled from a left image and pixels sampled from a right image. A receiver receives the compressed image stream and a decompressing module in communication with the receiver decompresses the compressed image stream. The left and right images of the decompressed image stream are stored in a frame buffer. A serializing unit reads pixels of the frames stored in the frame buffer and outputs a pixel stream comprising pixels of a left frame and pixels of a right frame. A stereoscopic image processor receives the pixel stream, buffers the pixels, performs interpolation in order to reconstruct pixels of the left and right images and outputs a reconstructed left pixel stream and a reconstructed right pixel stream, the reconstructed streams having a format different from the first format. A display signal generator receives the stereoscopic pixel stream to provide an output display signal.

Owner:3DN

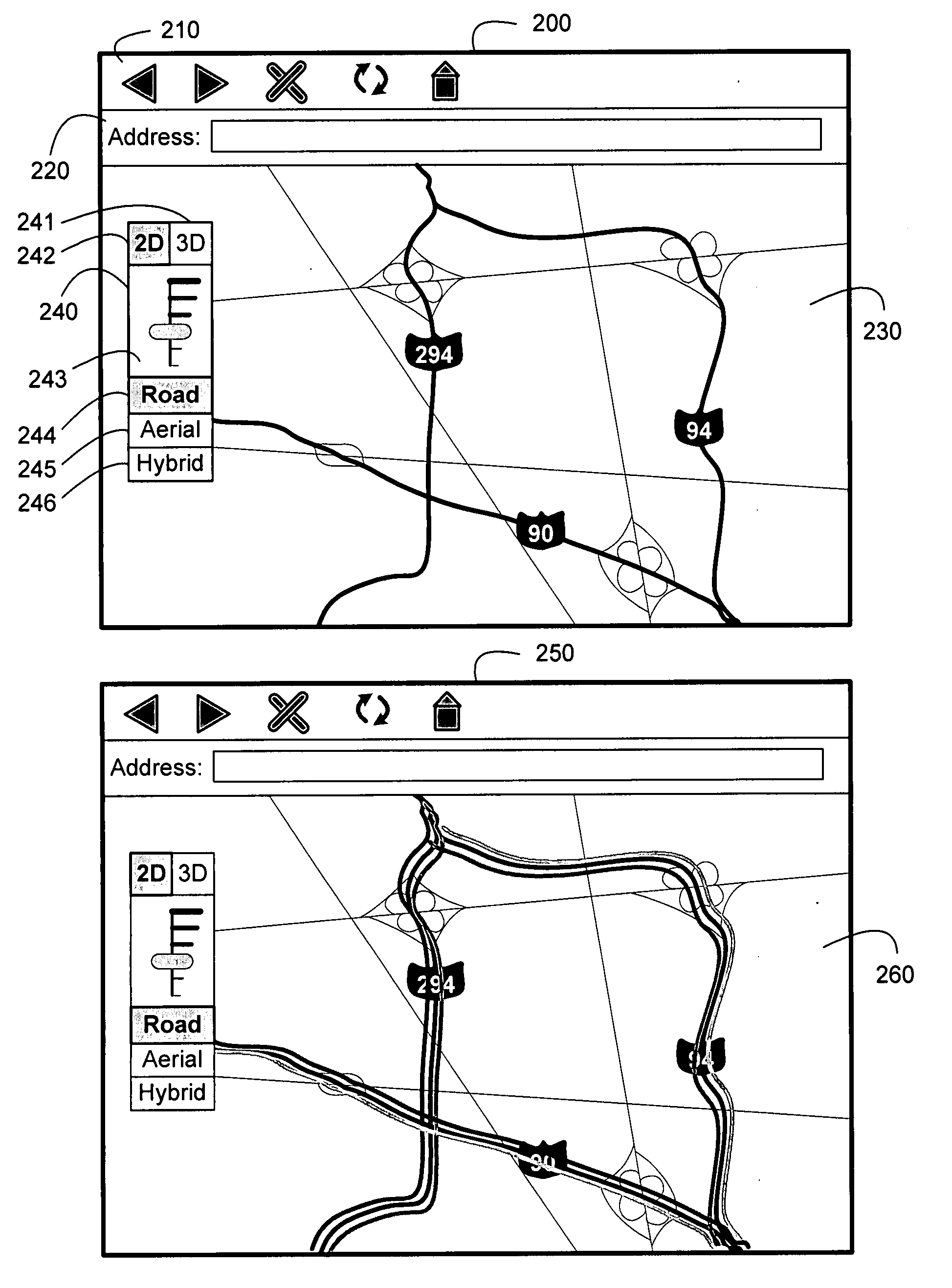

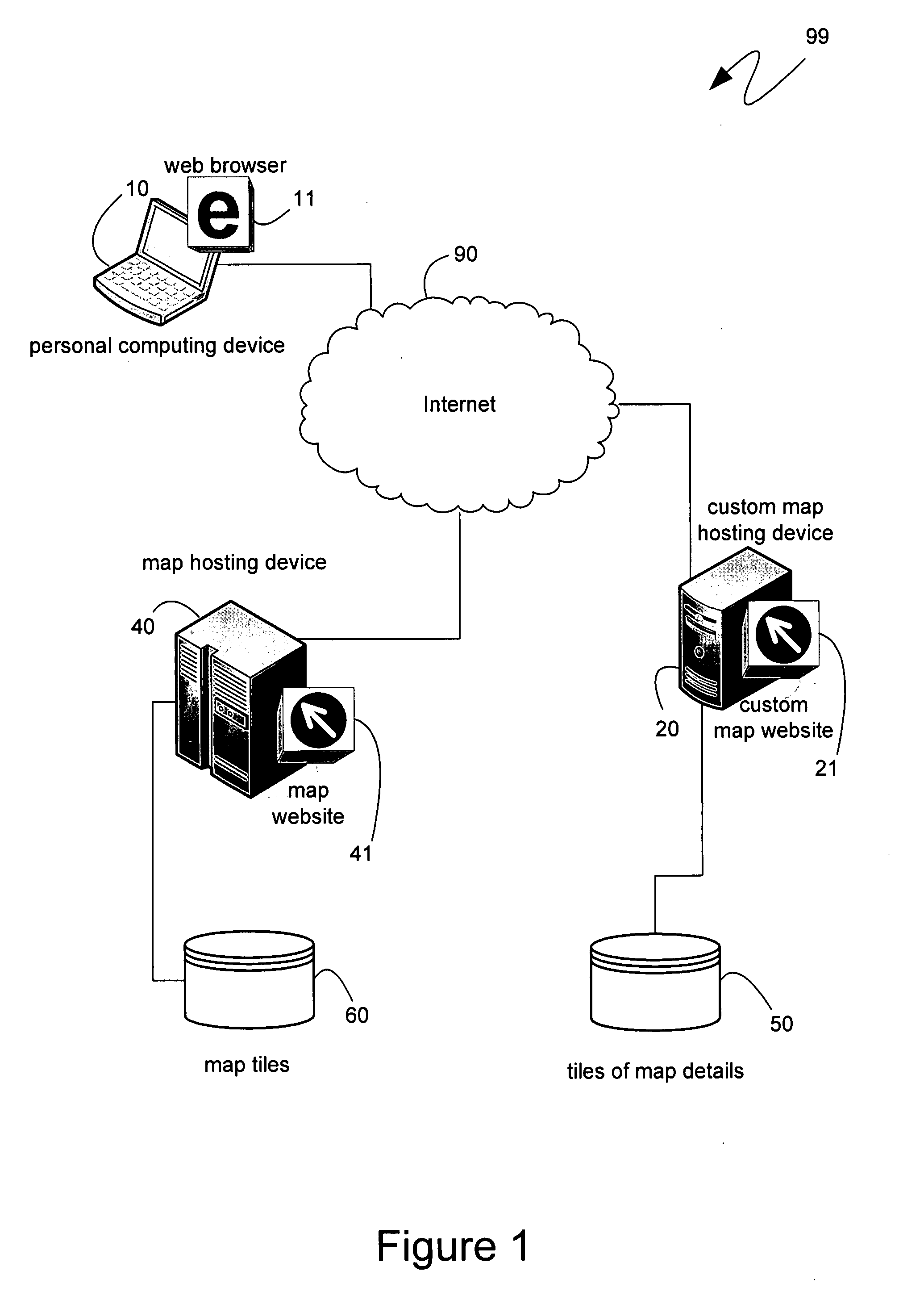

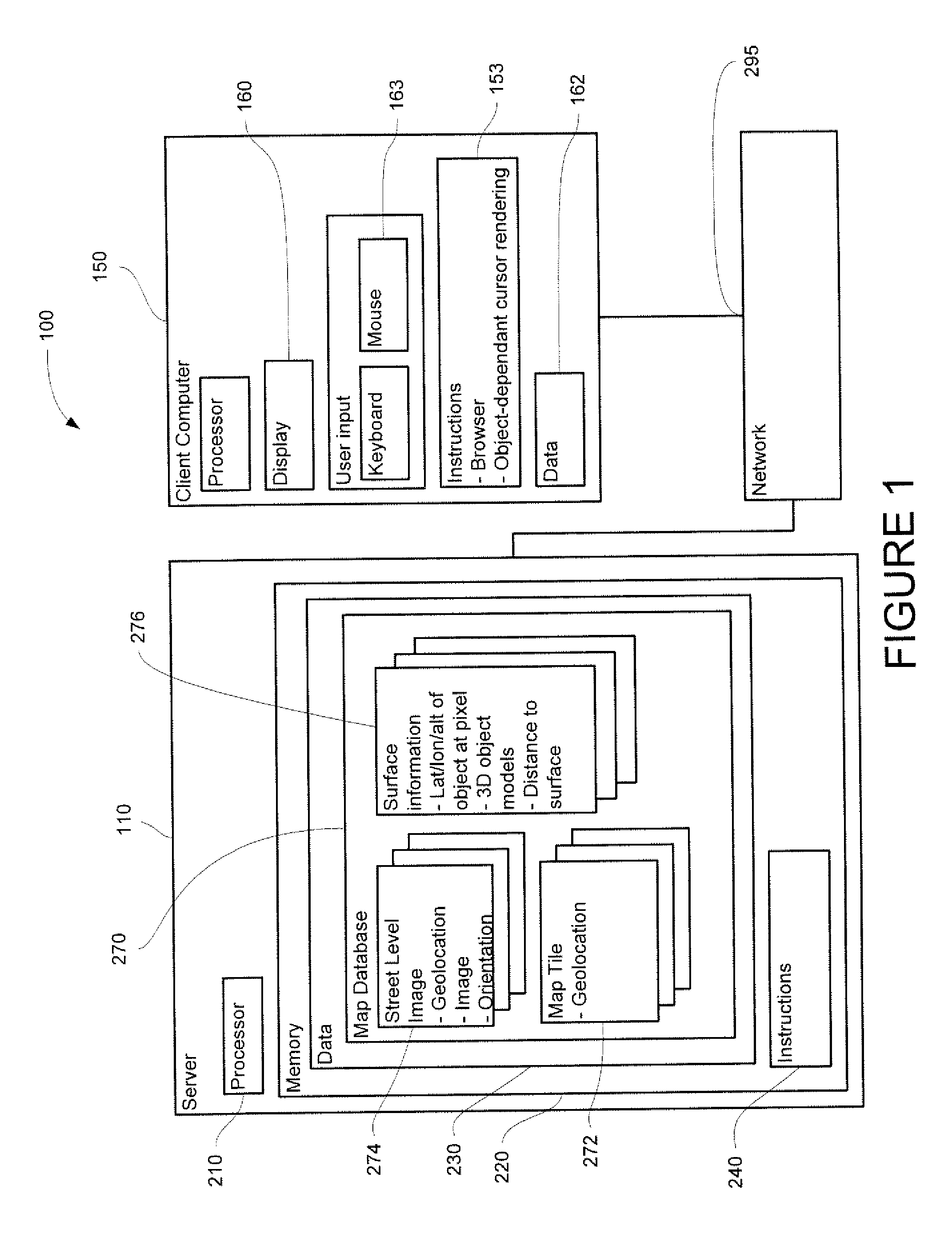

Adding custom content to mapping applications

Active | US20080238941A1 | Level of detail available | High bandwidth | Image enhancement | Image analysis | Application software | Computer science

Digital maps can be composed of a series of image tiles that are selected based on the context of the map to be presented. Independently hosted tiles can comprise additional details that can be added to the map. A manifest can be created that describes the layers of map details composed of such independently hosted tiles. Externally referable mechanisms can, based on the manifest and map context, select tiles, from among the independently hosted tiles, that correspond to map tiles being displayed to a user. Subsequently, the mechanisms can instruct a browser, as specified in the manifest, to combine the map tiles and the independently hosted tiles to generate a more detailed map. Alternatively, customized mechanisms can generate map detail tiles in real-time, based on an exported map context. Also, controls instantiated by the browser can render three-dimensional images based on the combined map tiles.

Owner:MICROSOFT TECH LICENSING LLC

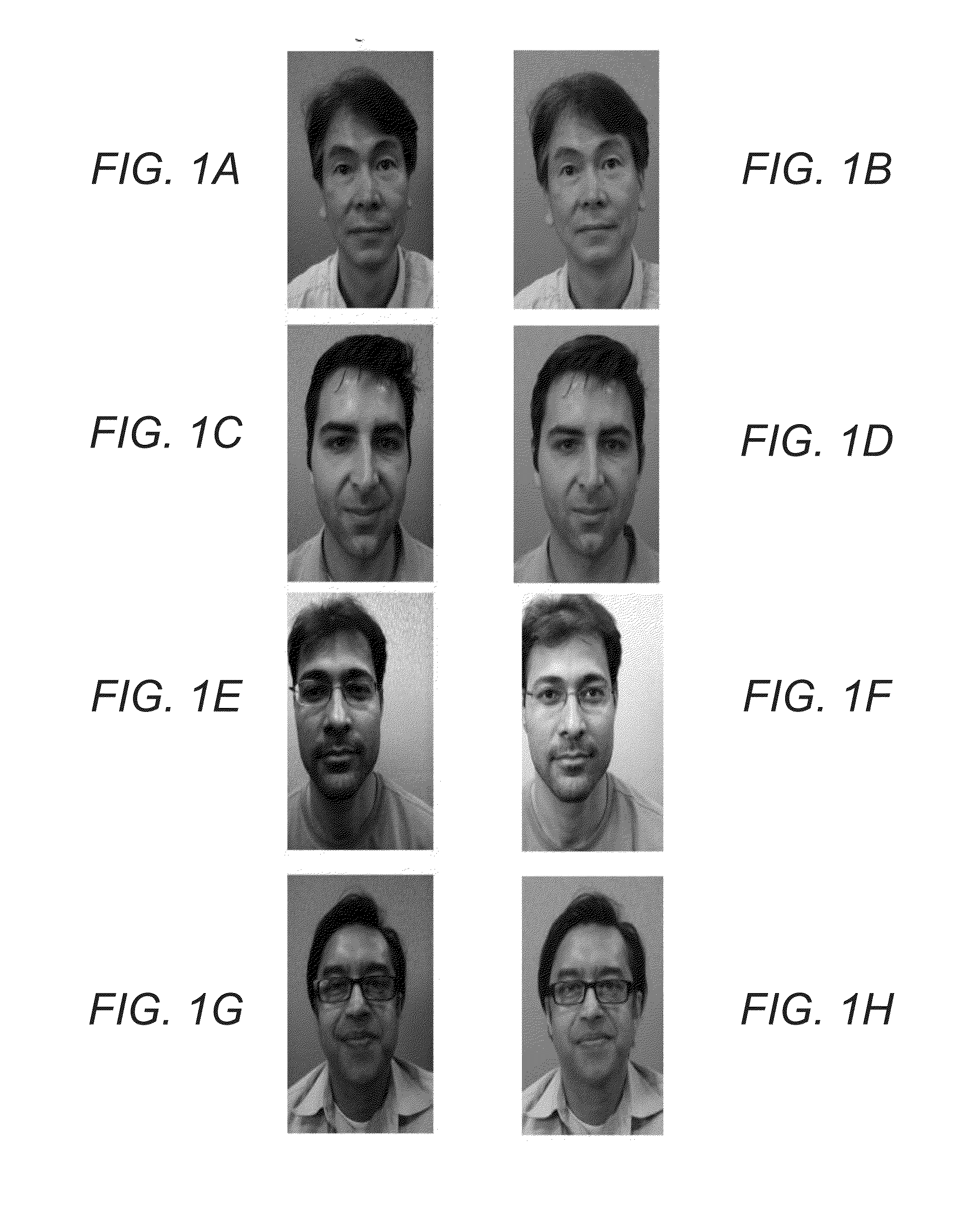

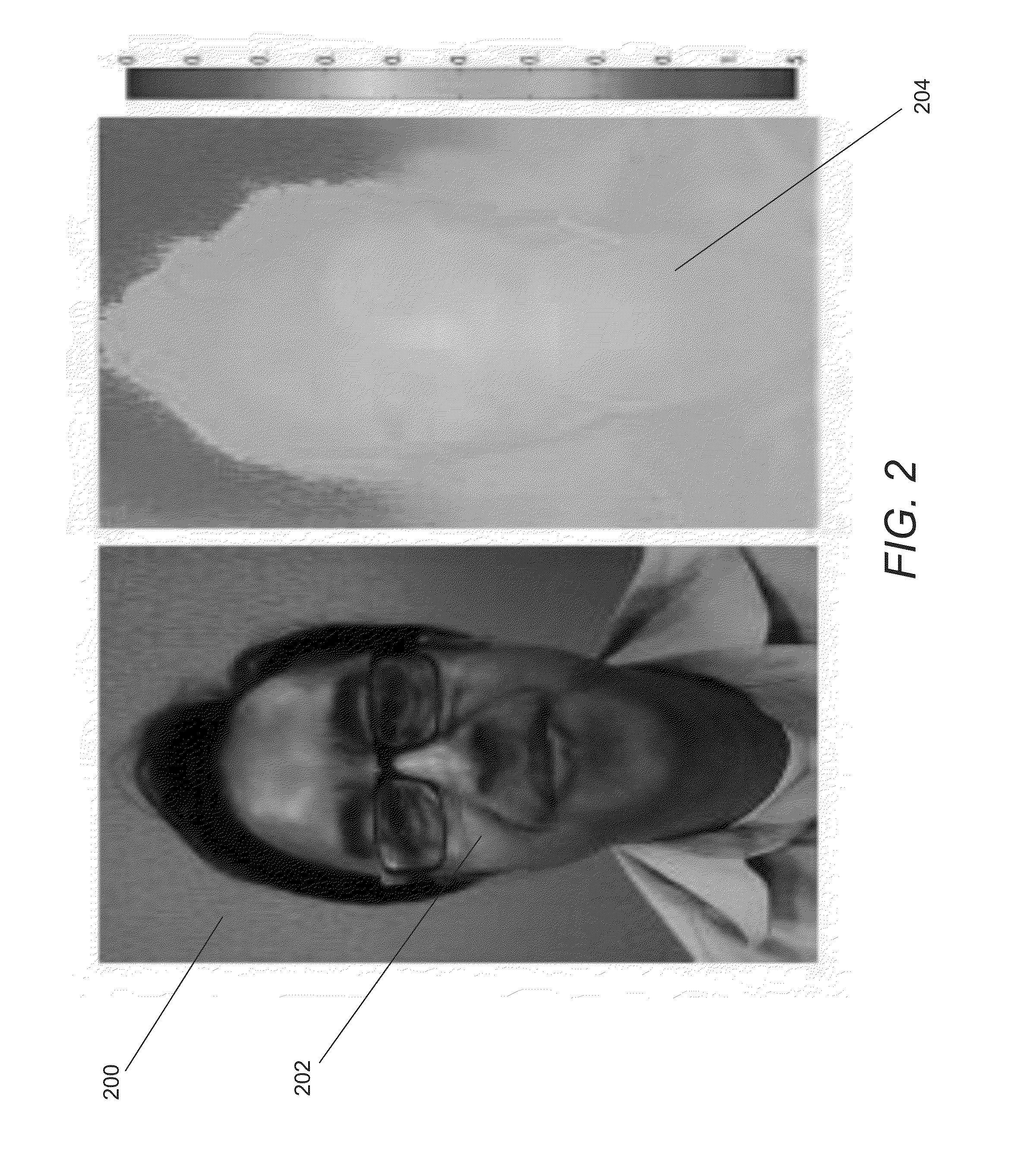

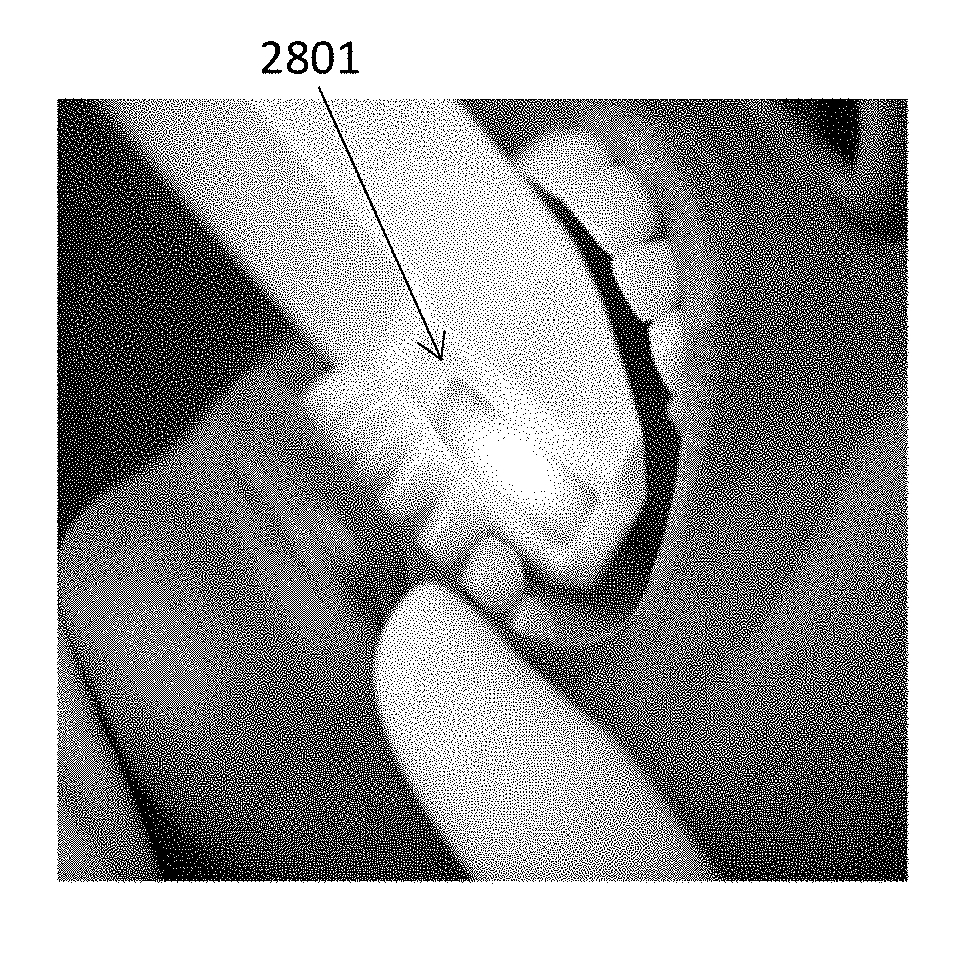

Systems and Methods for Depth-Assisted Perspective Distortion Correction

Active | US20150091900A1 | Reduce Perspective Distortion | Distortion in appearance | Image enhancement | Image analysis | Viewpoints | Angle of view

Systems and methods for automatically correcting apparent distortions in close range photographs that are captured using an imaging system capable of capturing images and depth maps are disclosed. In many embodiments, faces are automatically detected and segmented from images using a depth-assisted alpha matting. The detected faces can then be re-rendered from a more distant viewpoint and composited with the background to create a new image in which apparent perspective distortion is reduced.

Owner:FOTONATION LTD
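
Why re-rendering a face from a more distant viewpoint reduces apparent distortion follows from the pinhole camera model, where image size scales with the inverse of depth. The sketch below is a worked illustration of that relationship only, not the patented pipeline; the depths and the function name are hypothetical.

```python
def relative_magnification(near_depth, far_depth, camera_shift=0.0):
    """How much larger a near feature appears than an equally sized far feature.

    Under a pinhole model, image size is proportional to 1 / depth, so the
    ratio is far_depth / near_depth. Moving the camera back by `camera_shift`
    adds the same distance to both depths.
    """
    return (far_depth + camera_shift) / (near_depth + camera_shift)

# Nose tip at 0.30 m and ears at 0.40 m (made-up values for a close-up shot).
close_up = relative_magnification(0.30, 0.40)
# Same face seen from a virtual viewpoint 1 m further away.
distant = relative_magnification(0.30, 0.40, camera_shift=1.0)
print(close_up, distant)
```

The ratio moves toward 1 as the viewpoint recedes, which is the effect the abstract describes as reduced perspective distortion.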

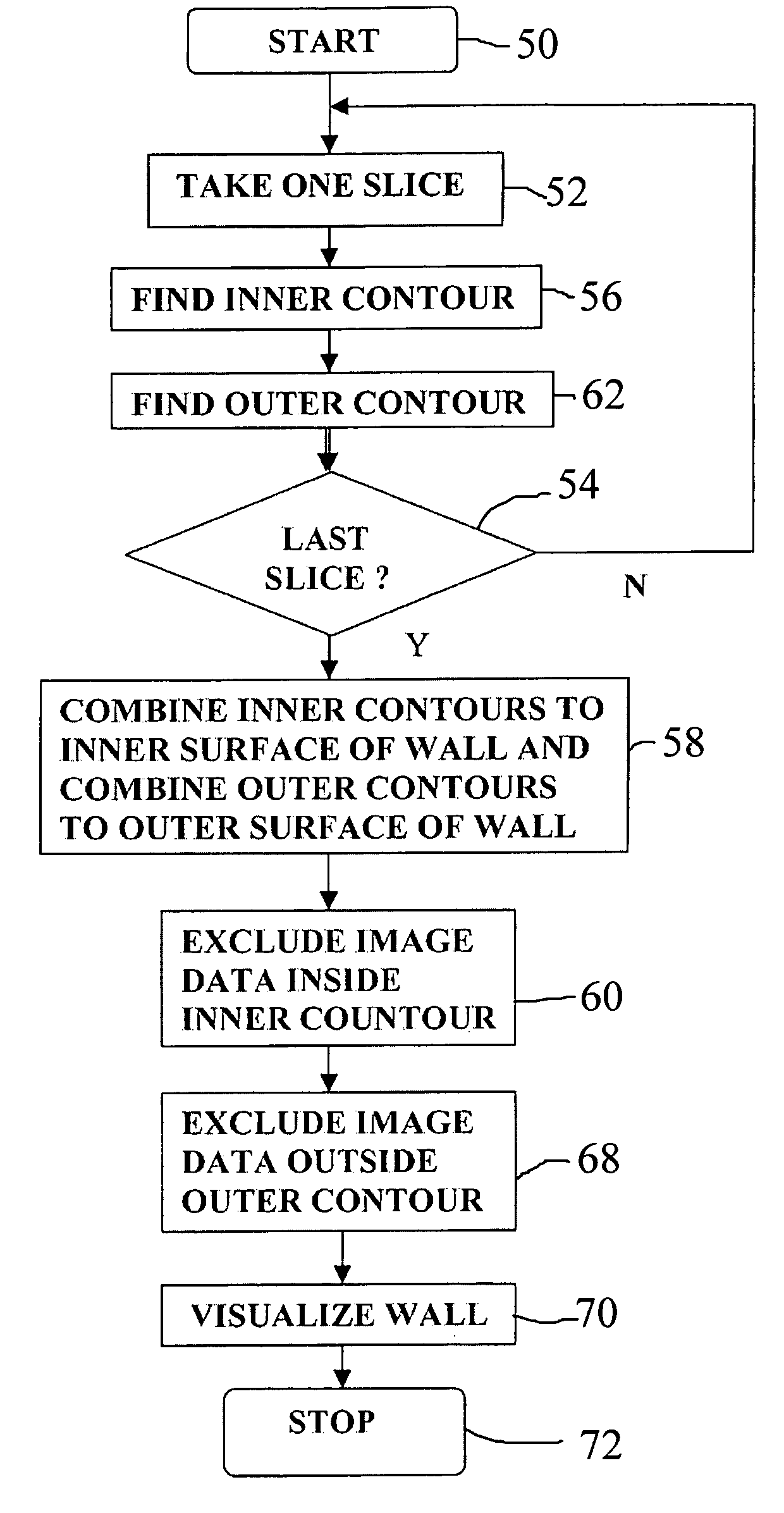

Method and apparatus for visualization of biological structures with use of 3D position information from segmentation results

A data processing methodology and corresponding apparatus for visualizing the characteristics of a particular object volume in an overall medical/biological environment receives a source image data set pertaining to the overall environment. First and second contour surfaces within the environment are established which collectively define a target object volume. By way of segmenting, all information pertaining to structures outside the target object volume is excluded from the image data. A visual representation of the target object based on nonexcluded information is displayed. In particular, the method establishes i) the second contour surface through combining both voxel intensities and relative positions among voxel subsets, and ii) a target volume by excluding all data outside the outer surface and inside the inner surface (which allows non-uniform spacing between the first and second contour surfaces). The second contour surface is used as a discriminative for the segmenting.

Owner:PIE MEDICAL IMAGING

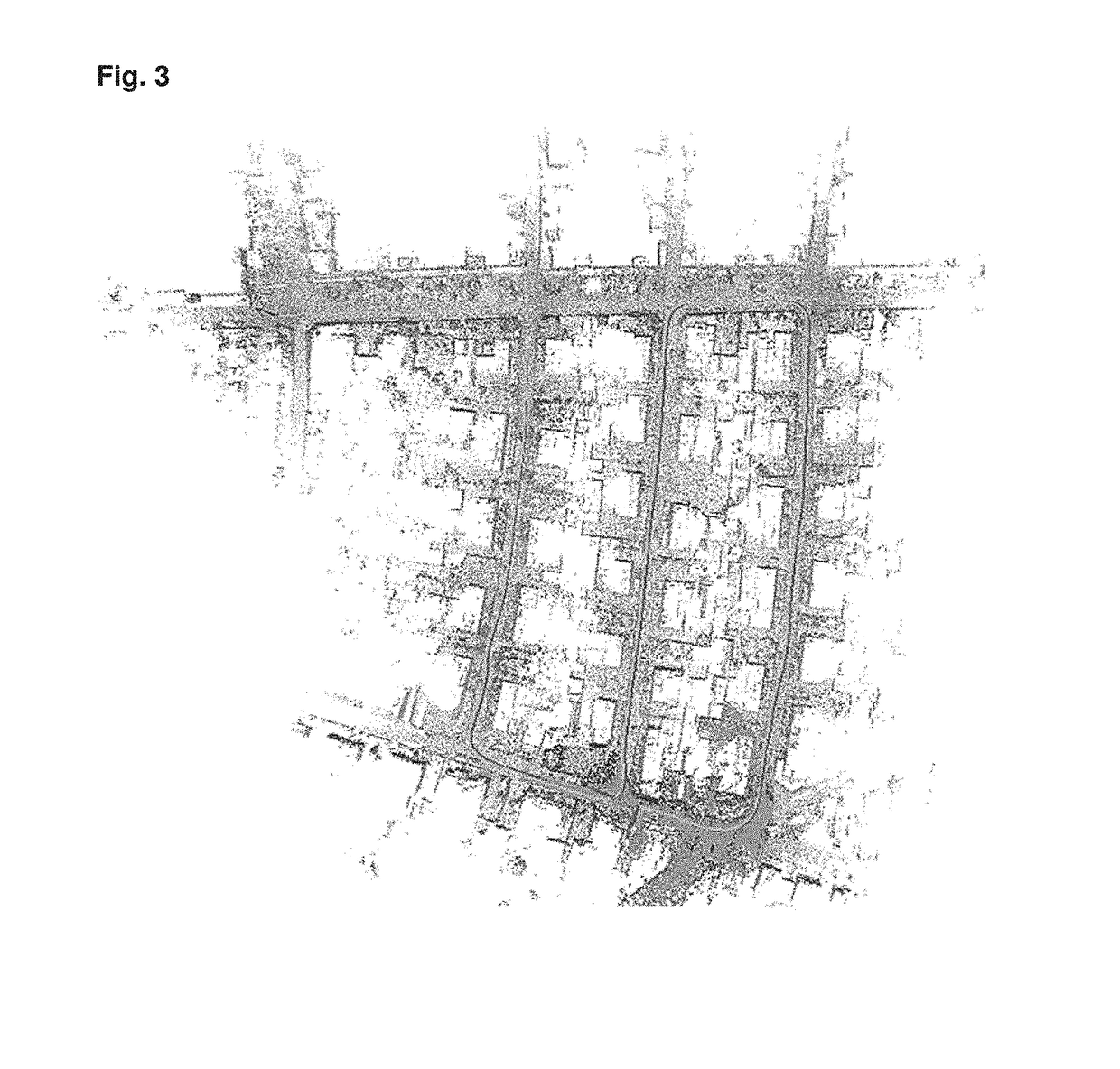

Method and device for real-time mapping and localization

Active | US20180075643A1 | Efficient storage | Improve system robustness | Image enhancement | Image analysis | Reference map | Laser ranging

A method for constructing a 3D reference map useable in real-time mapping, localization and/or change analysis, wherein the 3D reference map is built using a 3D SLAM (Simultaneous Localization And Mapping) framework based on a mobile laser range scanner. Also described are a method for real-time mapping, localization and change analysis, in particular in GPS-denied environments, and a mobile laser scanning device for implementing said methods.

Owner:EURATOM

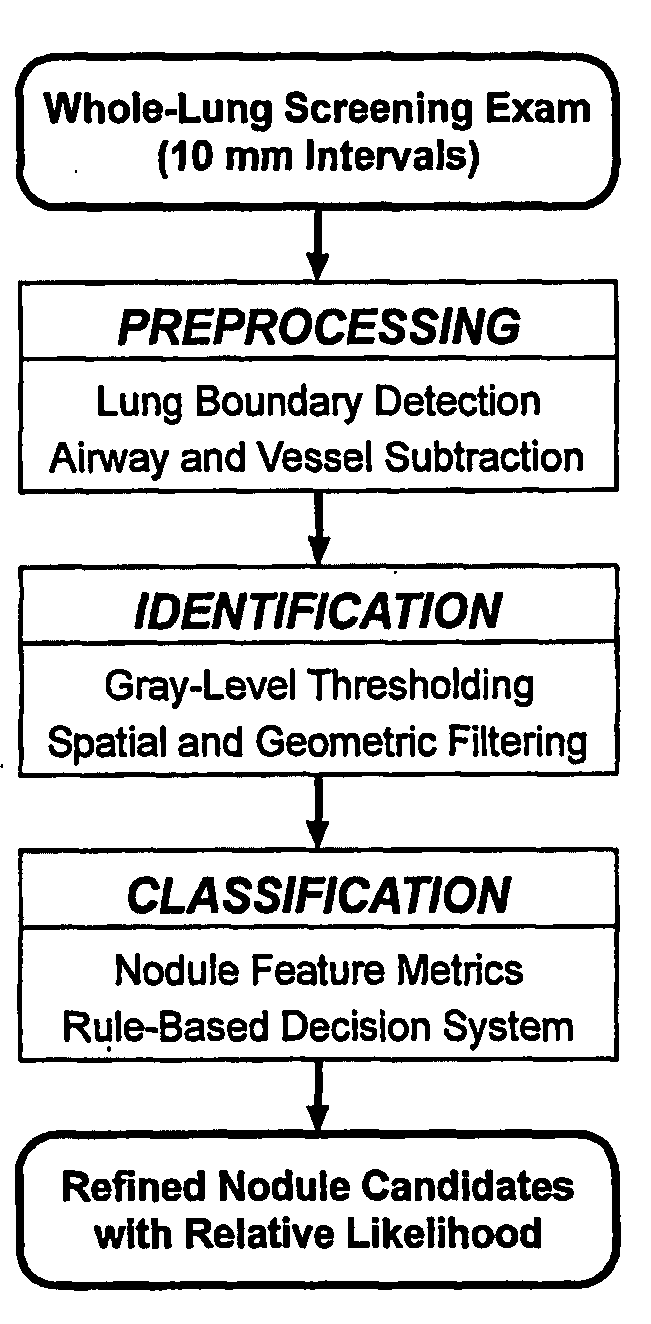

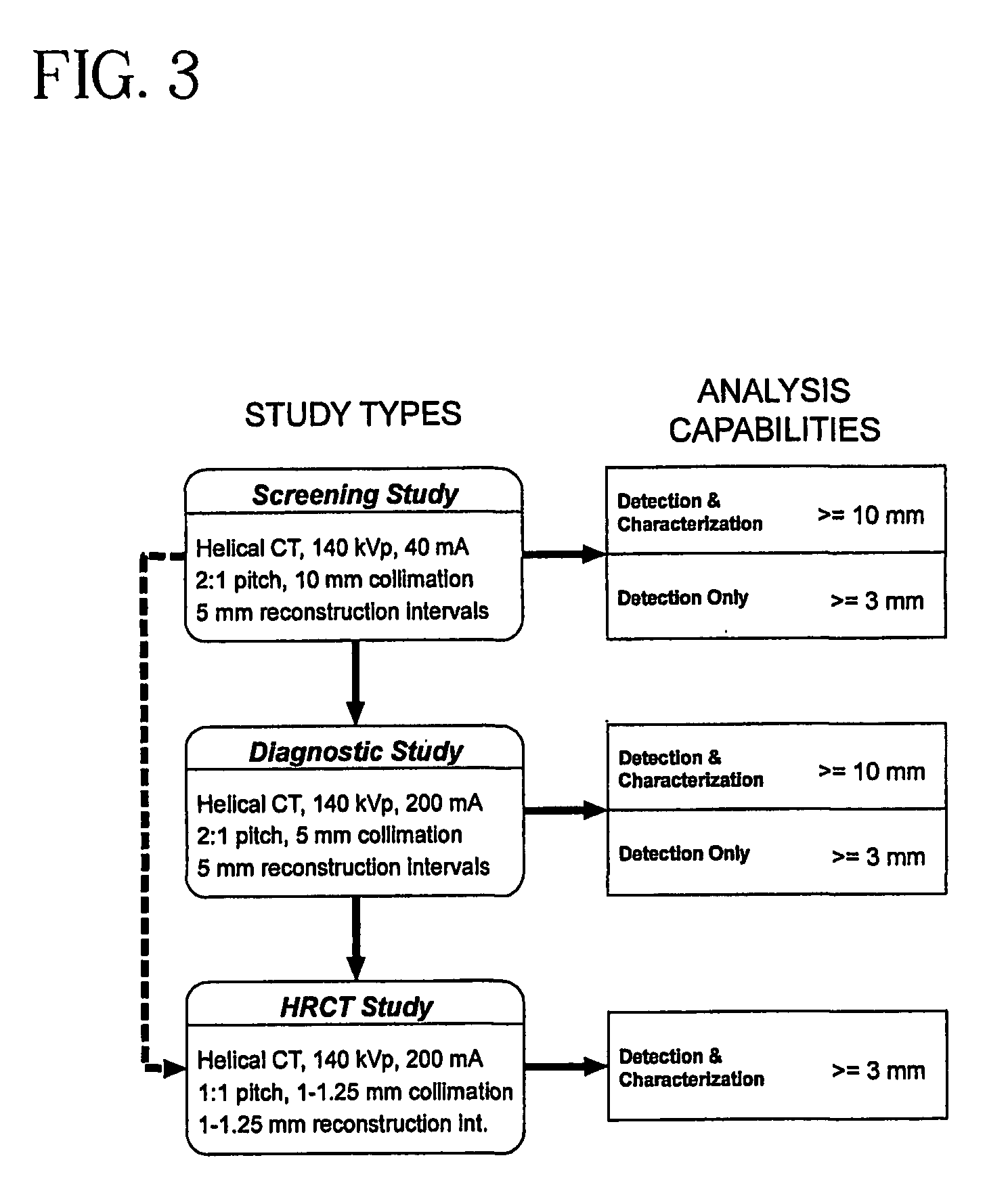

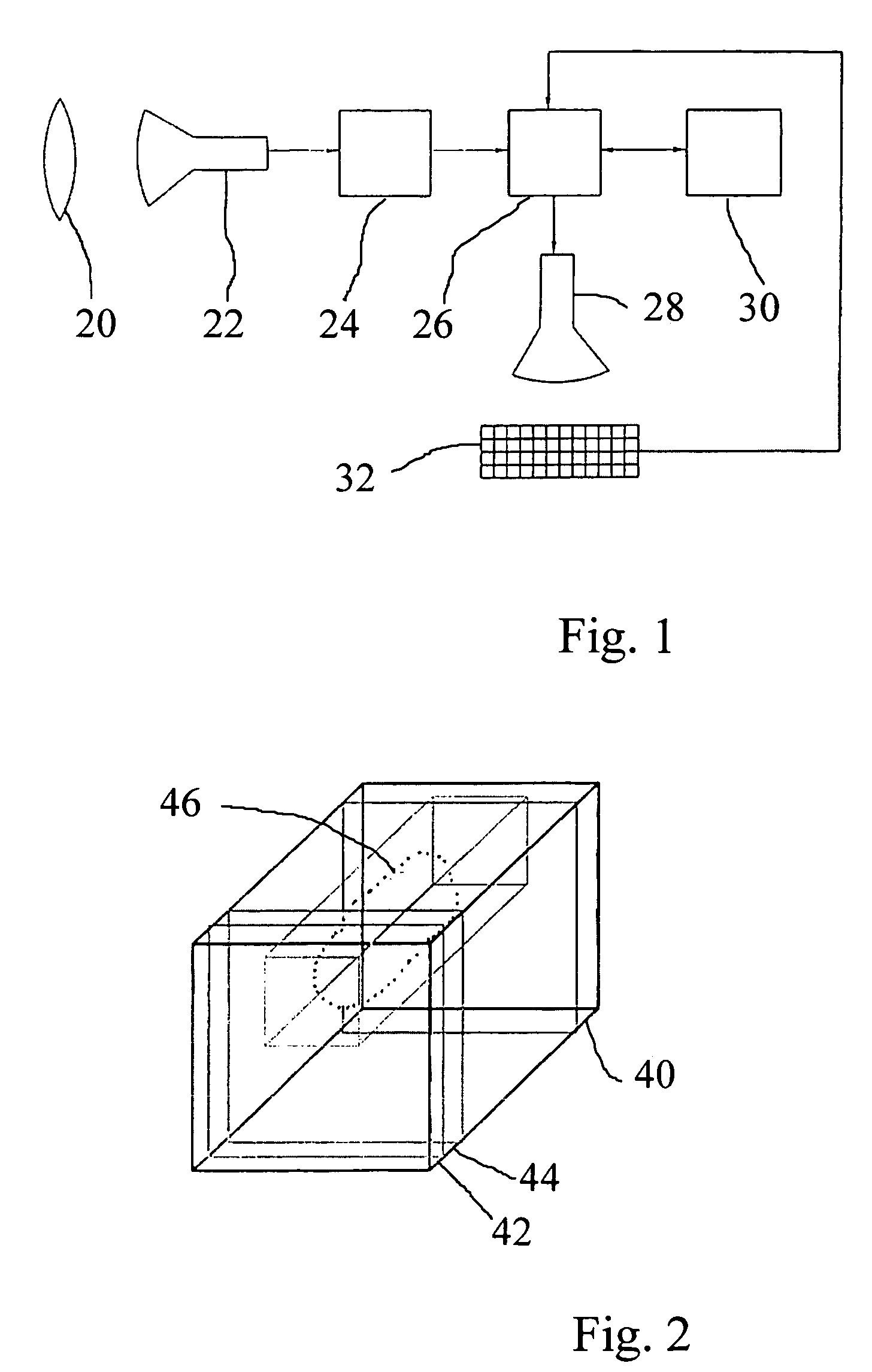

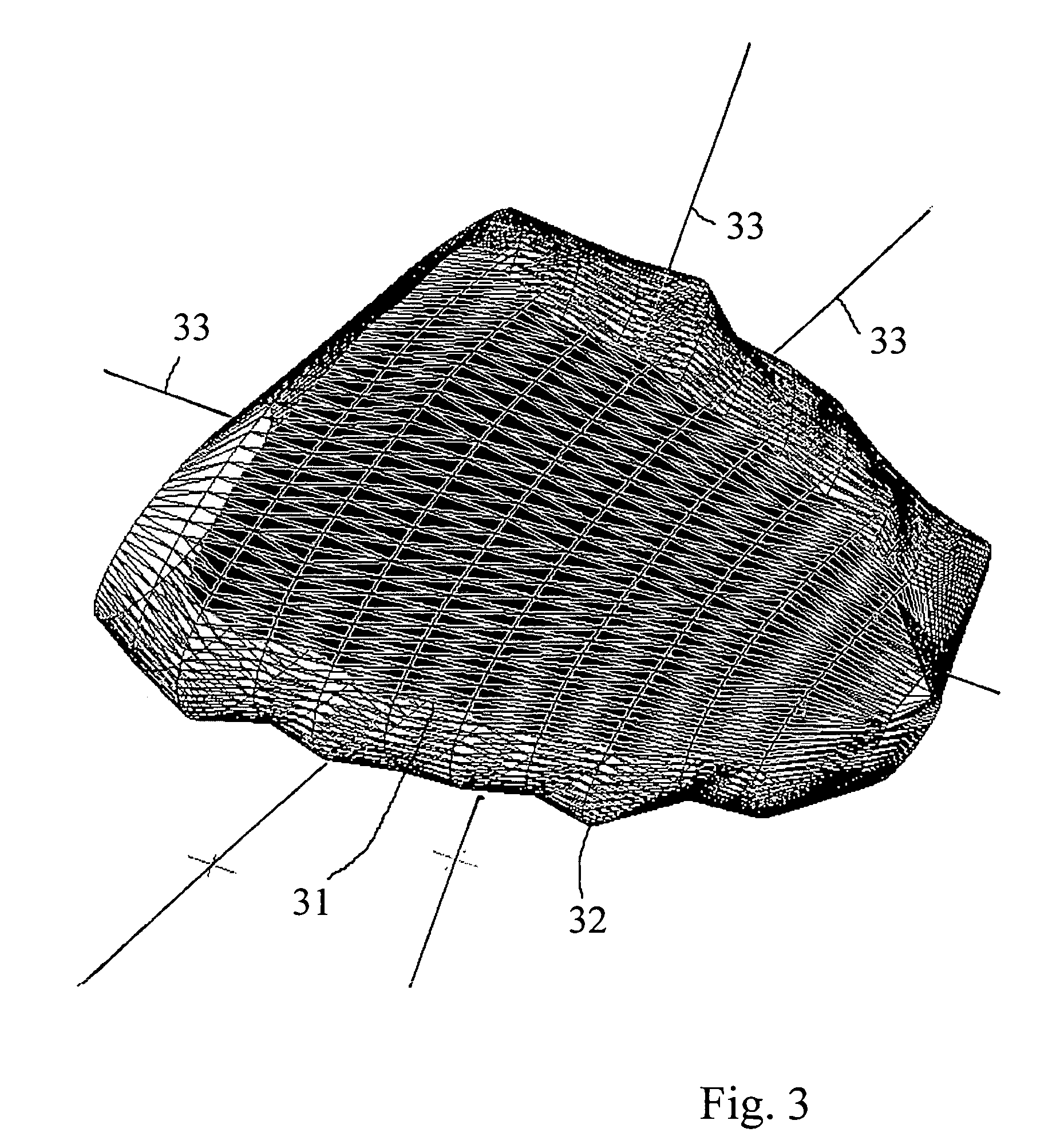

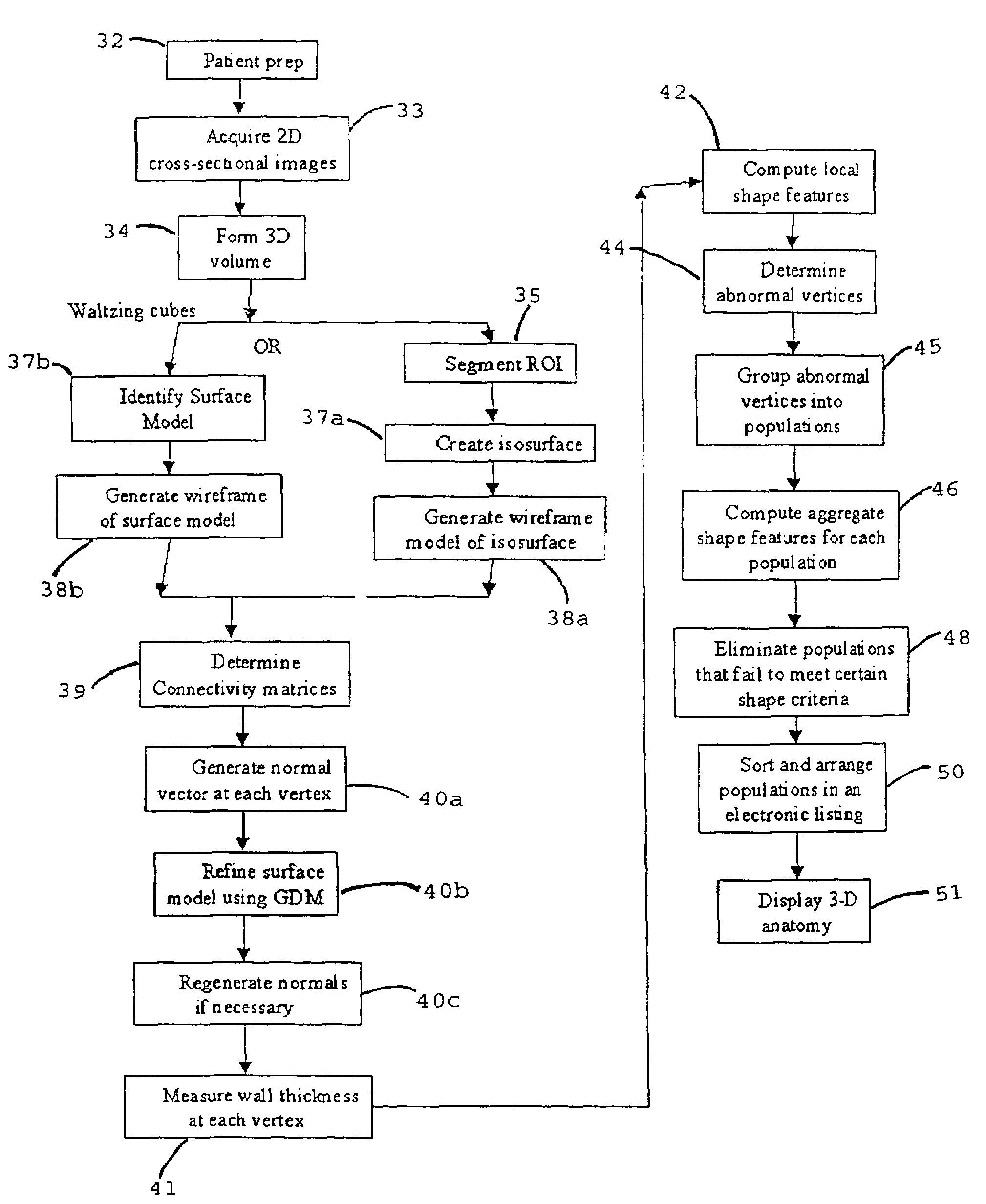

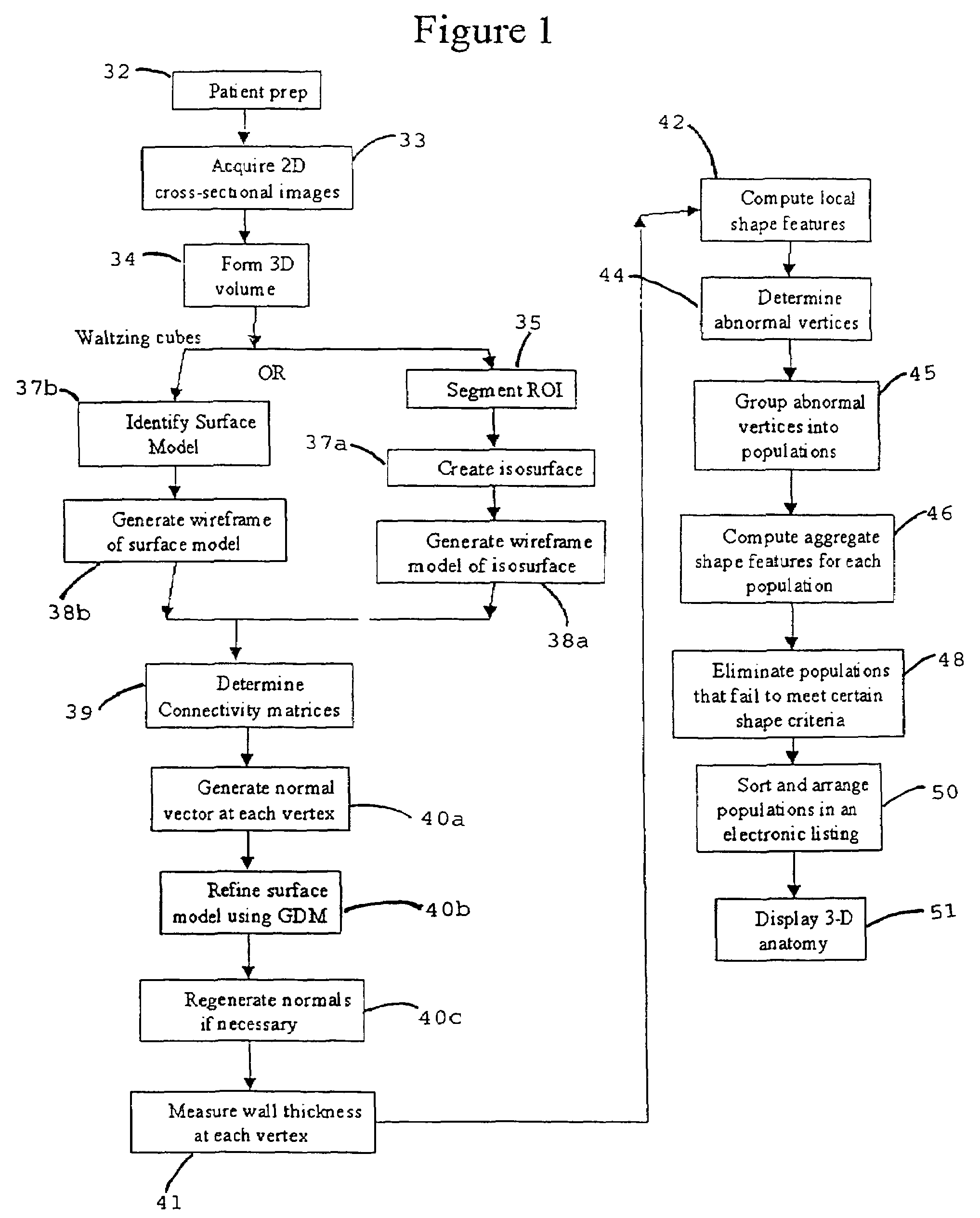

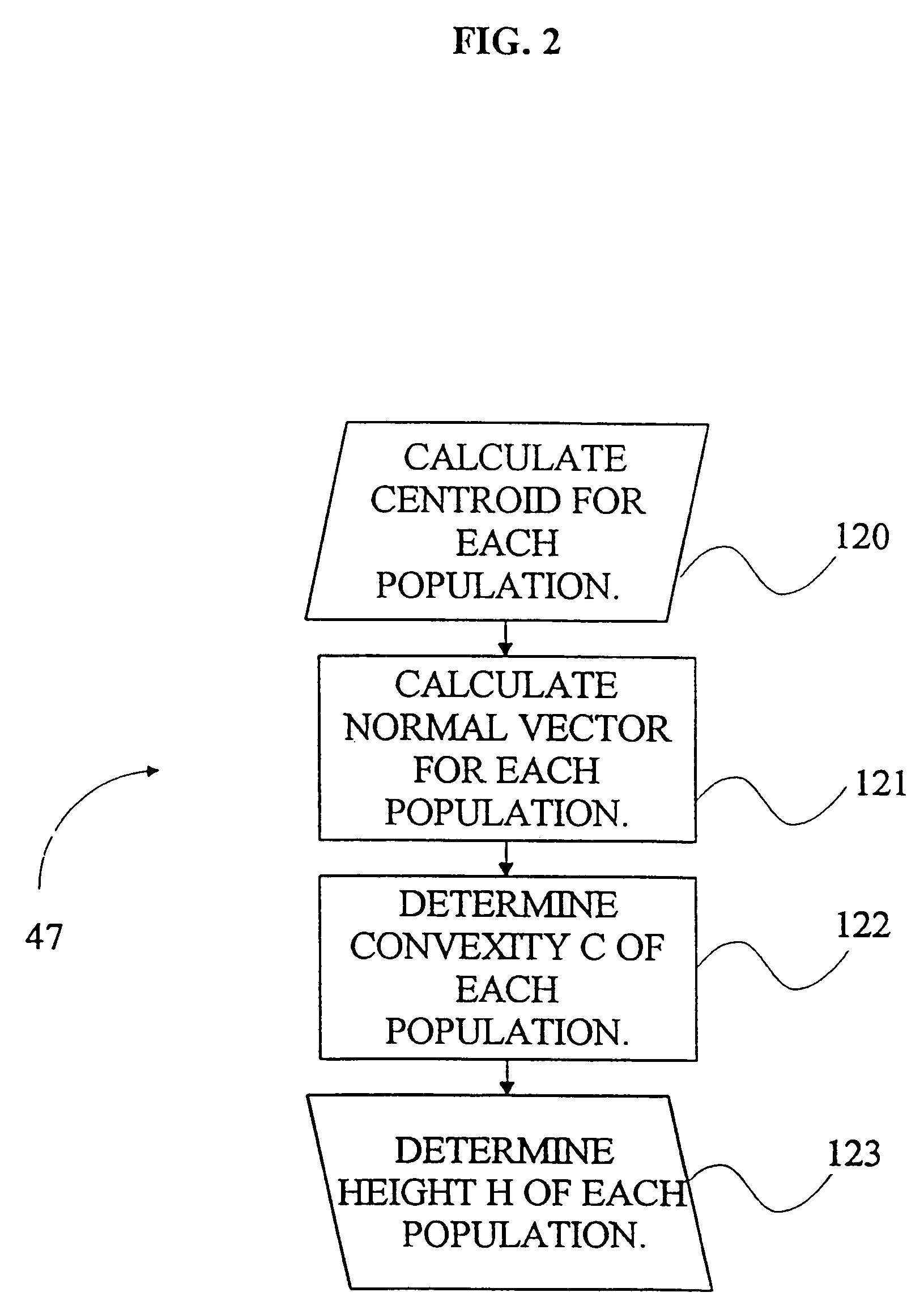

Virtual endoscopy with improved image segmentation and lesion detection

Inactive | US7747055B1 | Exact match | Accurate representation | Ultrasonic/sonic/infrasonic diagnostics | Image enhancement | Body organs | Lesion detection

A system and computer-implemented method are provided for interactively displaying three-dimensional structures. Three-dimensional volume data (34) is formed from a series of two-dimensional images (33) representing at least one physical property associated with the three-dimensional structure, such as a body organ having a lumen. A wire frame model of a selected region of interest is generated (38b). The wire frame model is then deformed or reshaped to more accurately represent the region of interest (40b). Vertices of the wire frame model may be grouped into regions having a characteristic indicating abnormal structure, such as a lesion. Finally, the deformed wire frame model may be rendered in an interactive three-dimensional display.

Owner:WAKE FOREST UNIV HEALTH SCI INC

Method and apparatus for increasing field of view in cone-beam computerized tomography acquisition

Active | US9795354B2 | Cost of component being equal | Increase in size | Reconstruction from projection | Radiation diagnostic device control | Tomography | Field of view

A method and apparatus for Cone-Beam Computerized Tomography, (CBCT) is configured to increase the maximum Field-Of-View (FOV) through a composite scanning protocol and includes acquisition and reconstruction of multiple volumes related to partially overlapping different anatomic areas, and the subsequent stitching of those volumes, thereby obtaining, as a final result, a single final volume having dimensions larger than those otherwise provided by the geometry of the acquisition system.

Owner:CEFLA SOC COOP
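
The stitching step in this entry (joining volumes of partially overlapping anatomic areas into one larger volume) can be sketched along a single axis. The example assumes the offset between the two volumes is already known and averages the values where they overlap; both choices, and the function name, are illustrative assumptions rather than the patented method.

```python
def stitch(volume_a, volume_b, offset):
    """Stitch two slice stacks along one axis.

    volume_b is assumed to start at index `offset` of volume_a. Values in the
    overlapping range are averaged (an assumed blending rule).
    """
    if offset > len(volume_a):
        raise ValueError("volumes do not touch or overlap")
    length = max(len(volume_a), offset + len(volume_b))
    result = []
    for i in range(length):
        in_a = i < len(volume_a)
        in_b = offset <= i < offset + len(volume_b)
        if in_a and in_b:
            result.append((volume_a[i] + volume_b[i - offset]) / 2)
        elif in_a:
            result.append(volume_a[i])
        else:
            result.append(volume_b[i - offset])
    return result

# Each number stands in for one reconstructed slice (made-up intensities).
upper = [10, 10, 12, 14]
lower = [12, 16, 18, 18]
combined = stitch(upper, lower, offset=2)
print(combined)
```

The combined stack is longer than either input, which mirrors the abstract's point that the final volume exceeds what a single acquisition geometry provides.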

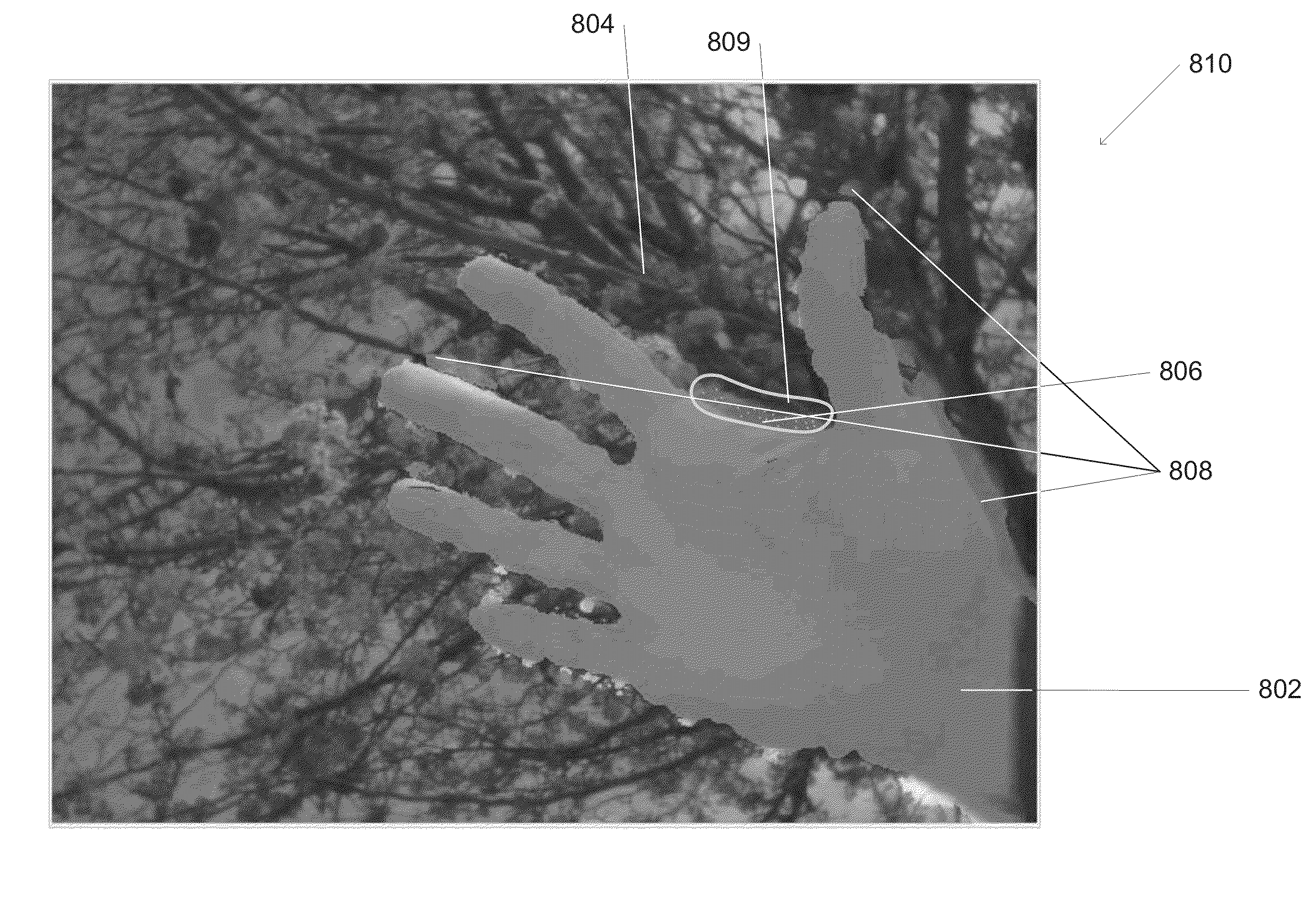

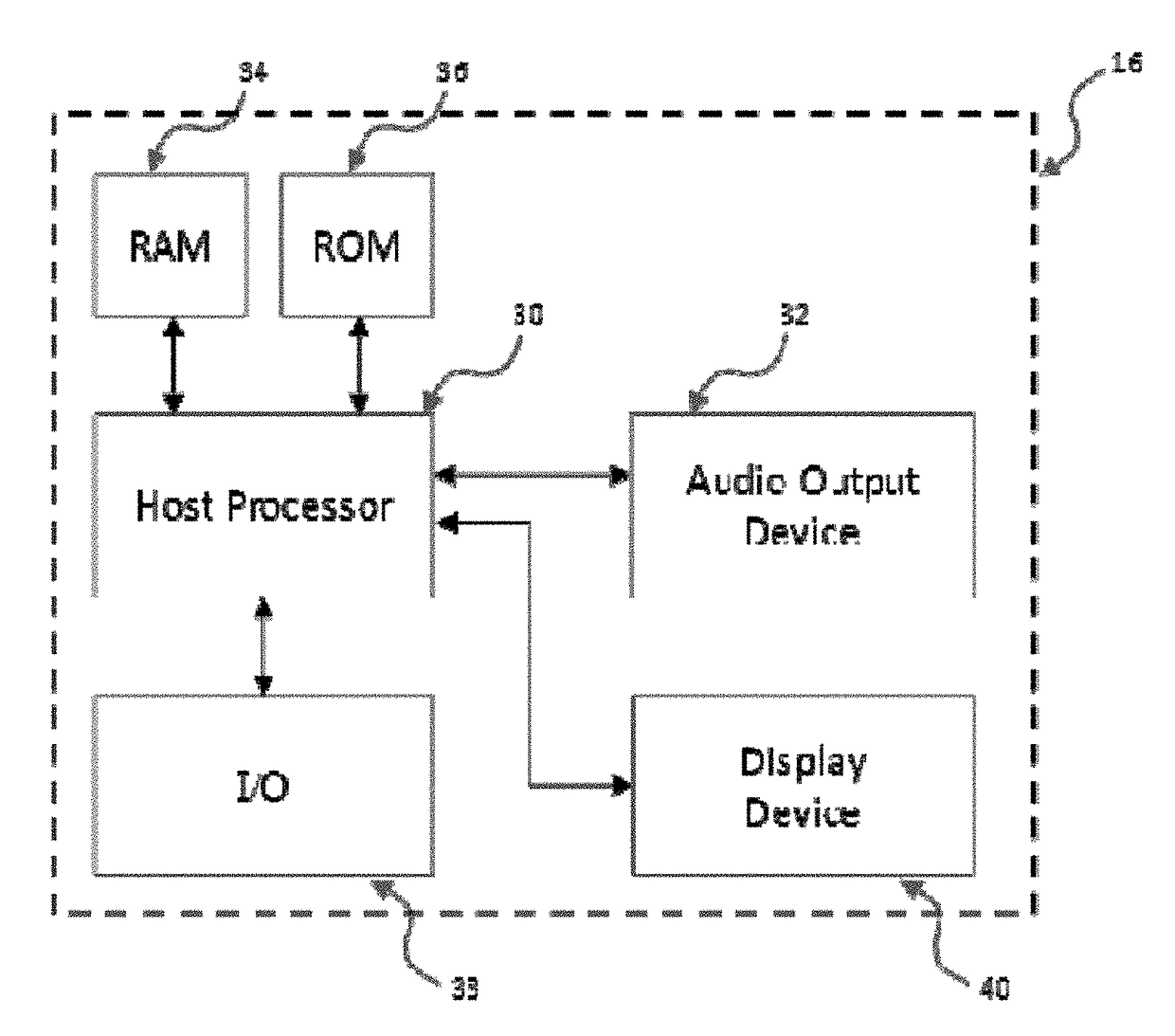

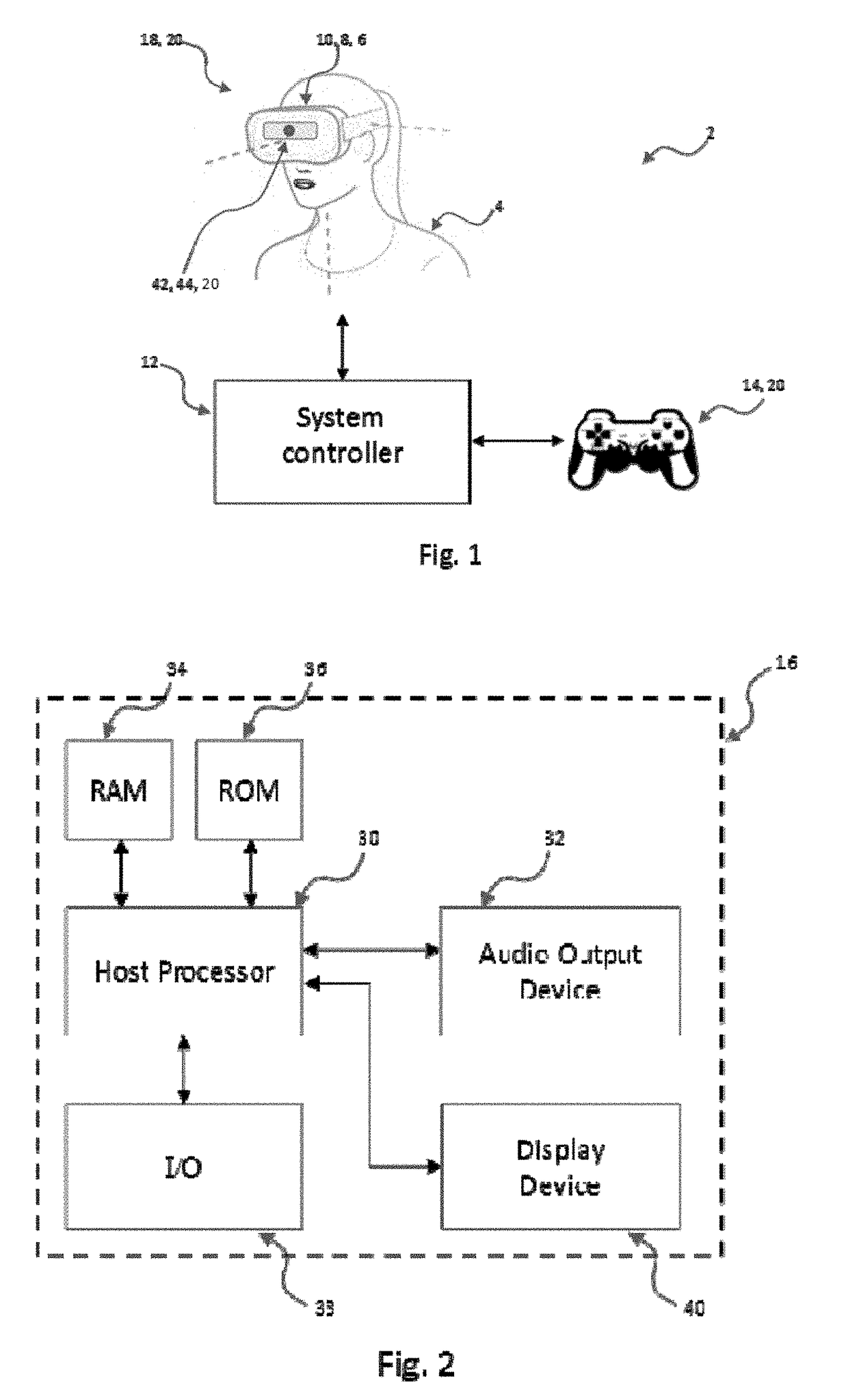

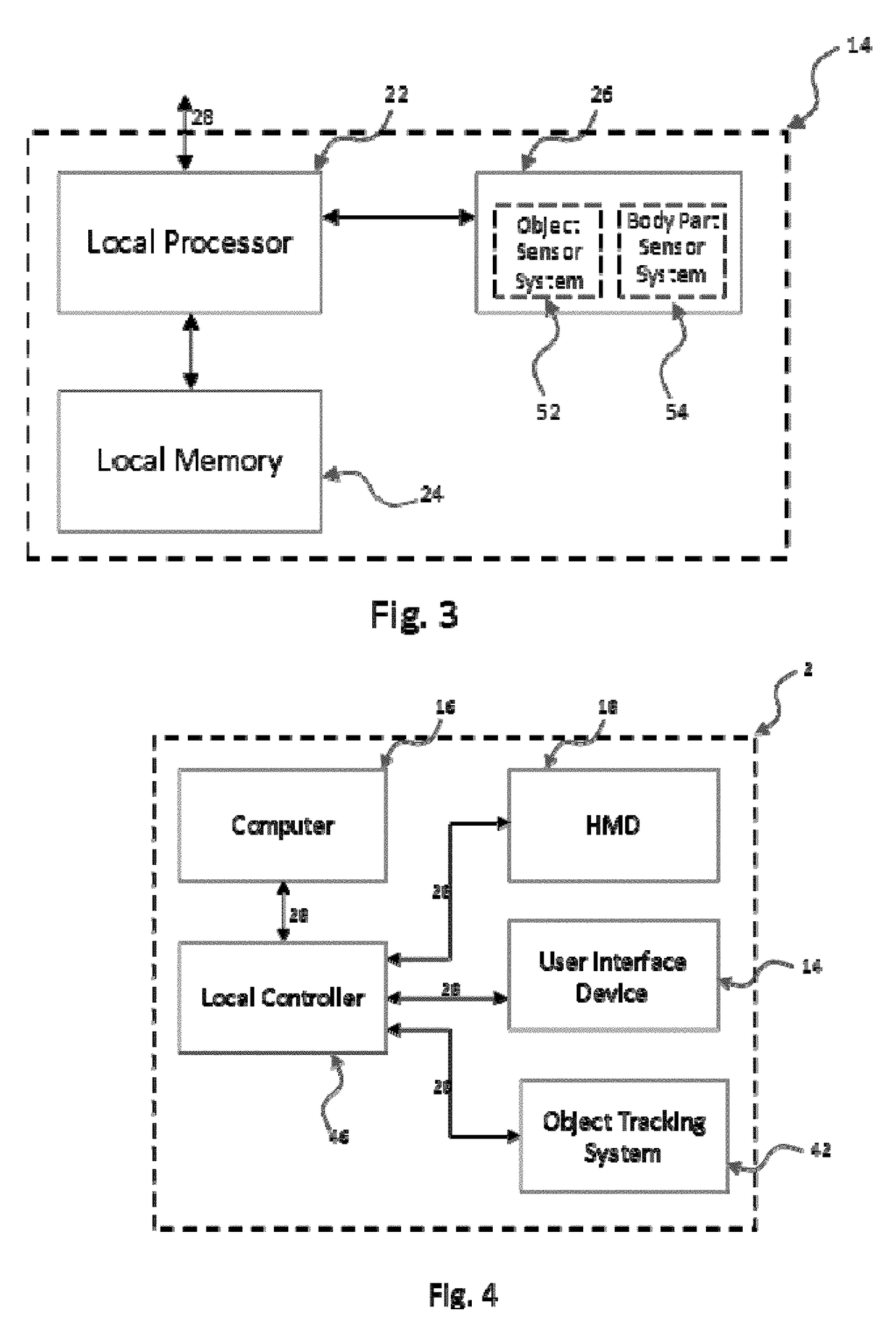

Transition between virtual and augmented reality

Active | US20170262045A1 | Input/output for user-computer interaction | Details involving 3D image data | Graphics | Biological activation

In an embodiment there is provided a user interface device for interfacing a user with a computer, the computer comprising at least one processor to generate instructions for display of a graphical environment of a virtual reality simulation. The user interface device includes a sensor for detecting activation and / or a sensor for detecting proximity of a body part of the user (e.g., hand). At least partially based on detection by one of these sensors, a transition between virtual reality states is triggered.

Owner:LOGITECH EURO SA

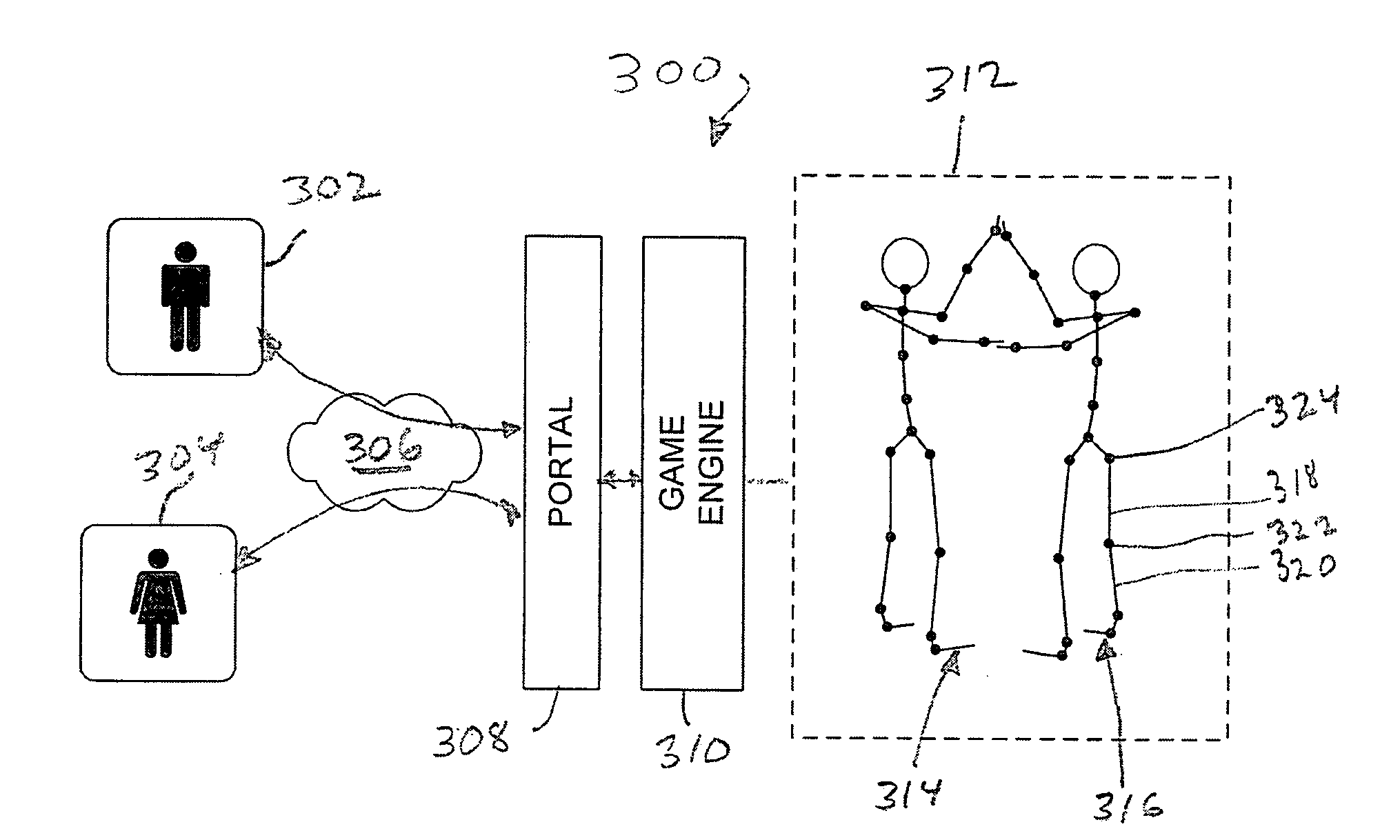

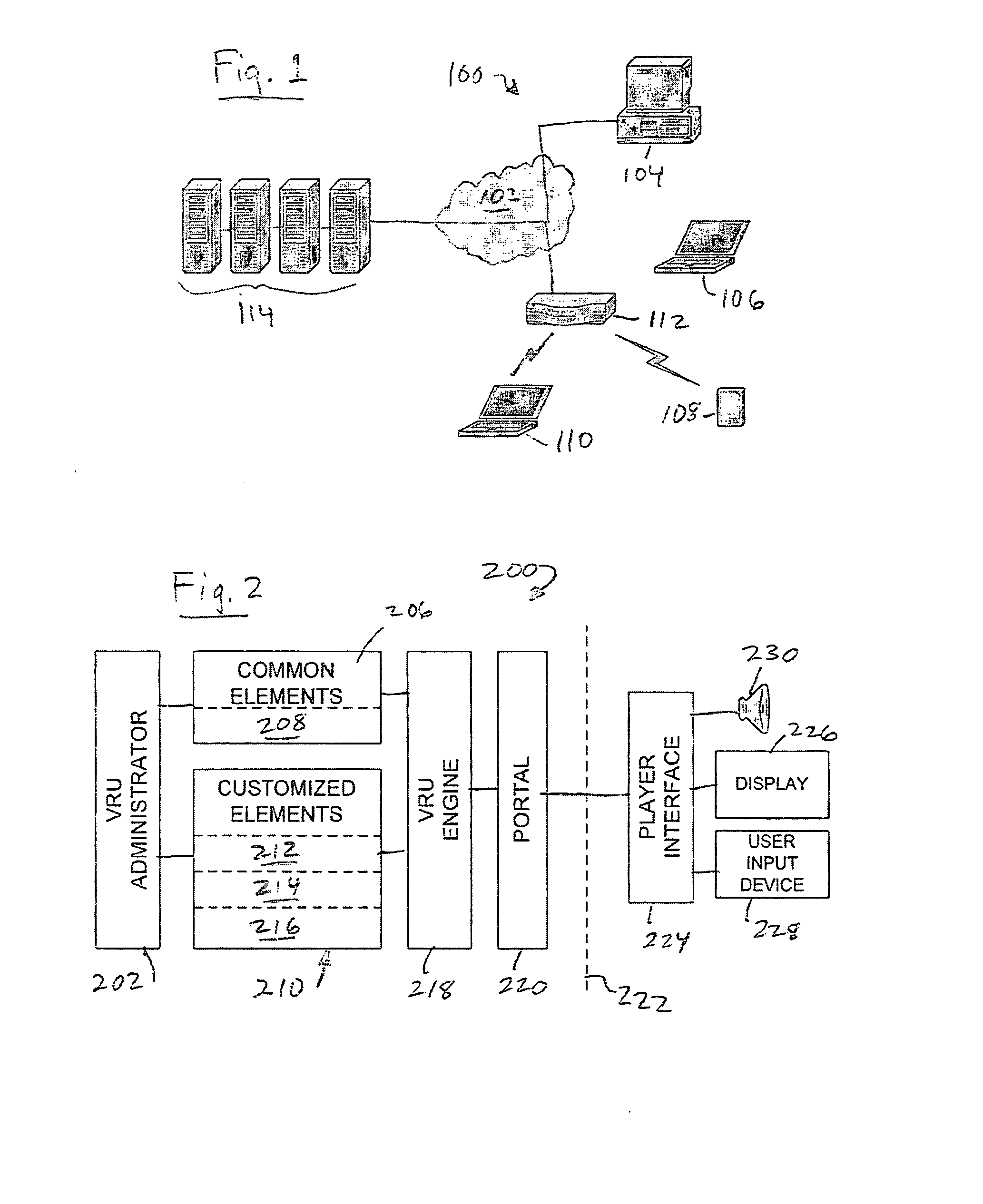

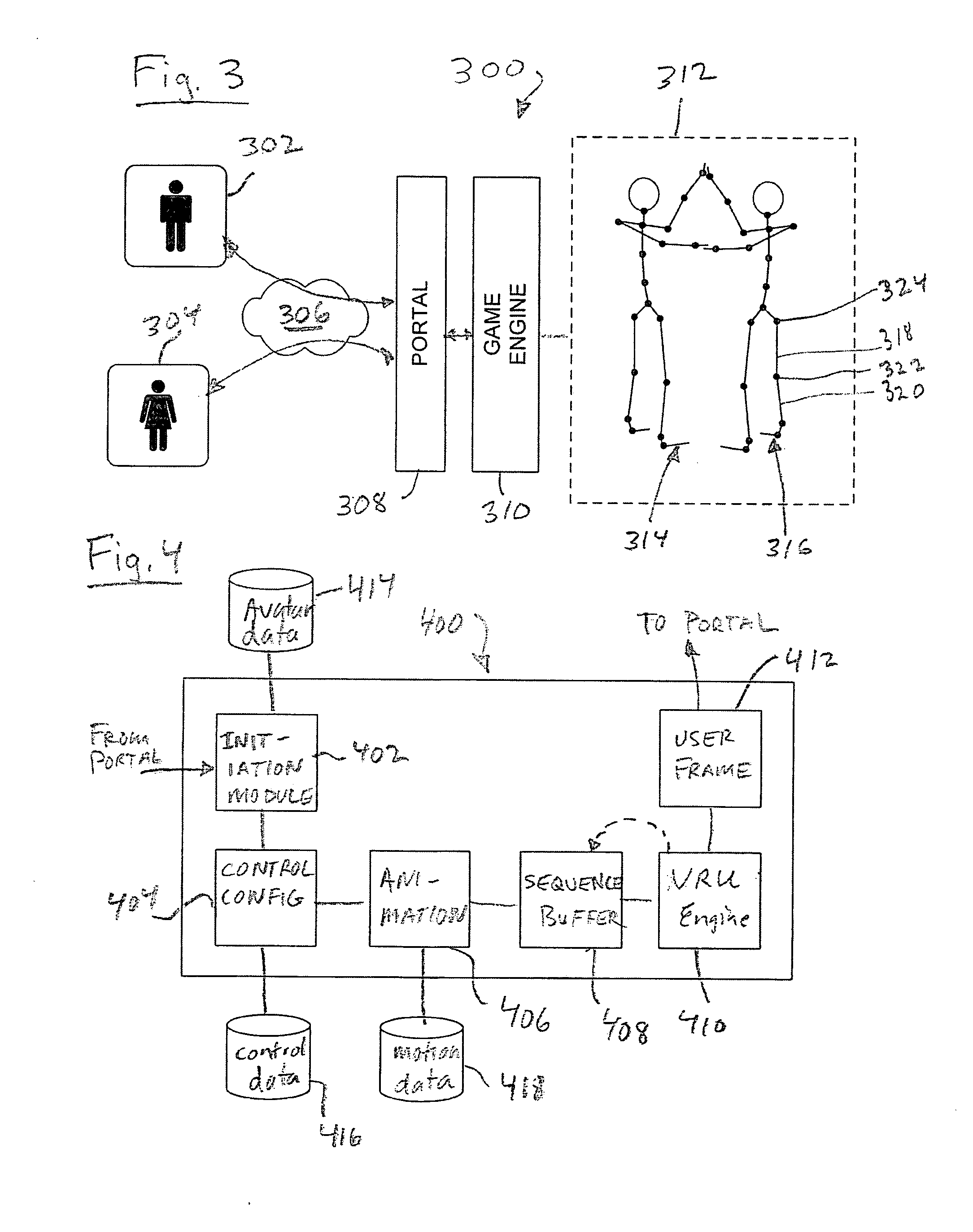

Animation control method for multiple participants

Active | US20080158232A1 | Complete understanding | Enhanced advantage | Details involving 3D image data | Animation | Animation | Client-side

A computer system is used to host a virtual reality universe process in which multiple avatars are independently controlled in response to client input. The host provides coordinated motion information for defining coordinated movement between designated portions of multiple avatars, and an application responsive to detect conditions triggering a coordinated movement sequence between two or more avatars. During coordinated movement, user commands for controlling avatar movement may be in part used normally and in part ignored or otherwise processed to cause the involved avatars to respond in part to respective client input and in part to predefined coordinated movement information. Thus, users may be assisted with executing coordinated movement between multiple avatars.

Owner:PFAQUTRUMA RES LLC

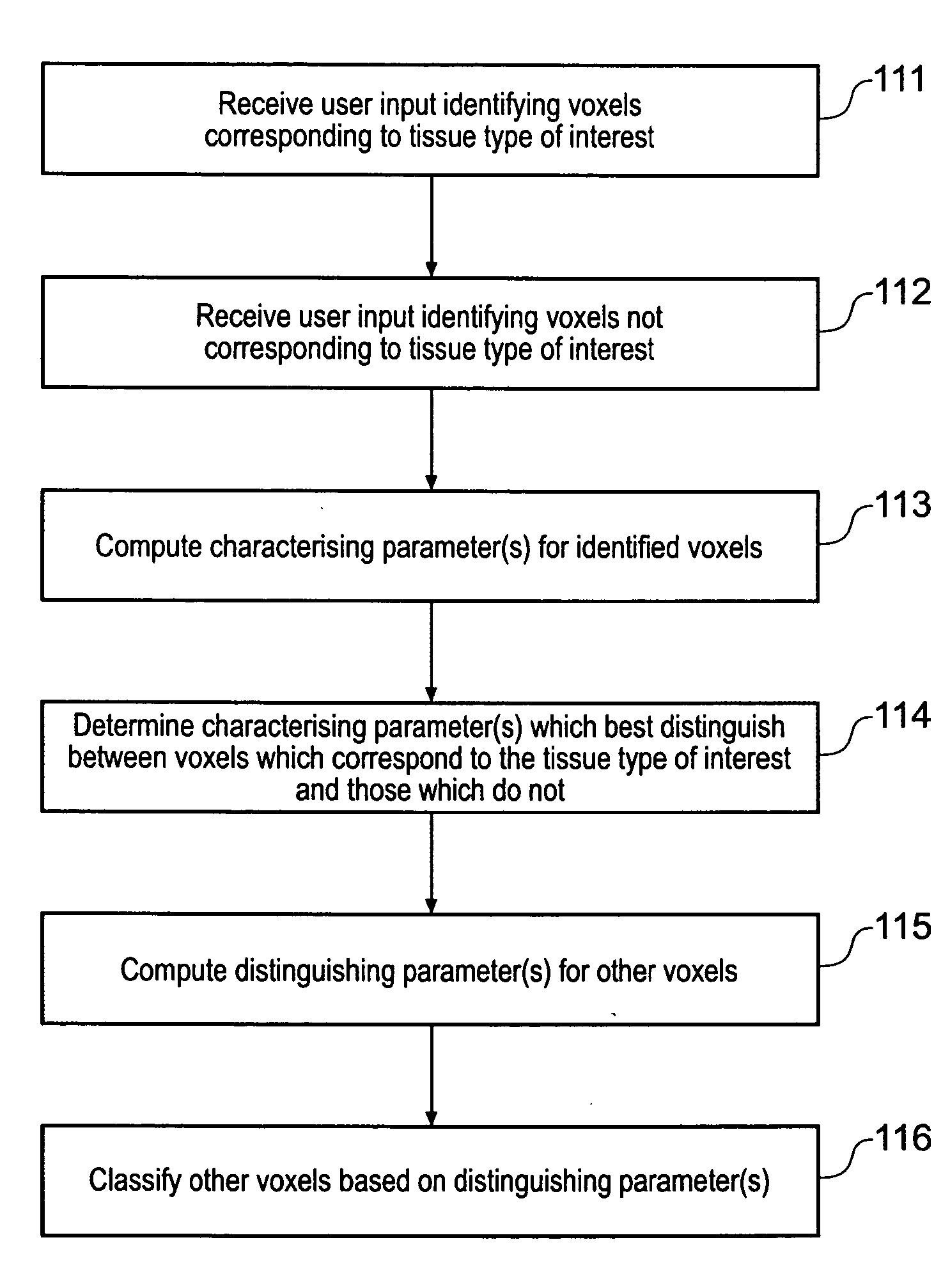

Displaying image data using automatic presets

Inactive | US20050017972A1 | Easy and intuitive | Accurate classification | Ultrasonic/sonic/infrasonic diagnostics | Image enhancement | Pattern recognition | Voxel

A computer automated method that applies supervised pattern recognition to classify whether voxels in a medical image data set correspond to a tissue type of interest is described. The method comprises a user identifying examples of voxels which correspond to the tissue type of interest and examples of voxels which do not. Characterizing parameters, such as voxel value, local averages and local standard deviations of voxel value, are then computed for the identified example voxels. From these characterizing parameters, one or more distinguishing parameters are identified. The distinguishing parameters are those parameters having values which depend on whether or not the voxel with which they are associated corresponds to the tissue type of interest. The distinguishing parameters are then computed for other voxels in the medical image data set, and these voxels are classified on the basis of the value of their distinguishing parameters. The approach allows tissue types which differ only slightly to be distinguished according to a user's wishes.

Owner:VOXAR
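
The workflow in this entry (user-supplied example voxels, characterizing parameters, selection of distinguishing parameters, then classification) can be sketched as below. The selection rule (a minimum gap between class means) and the nearest-class-mean classifier are assumptions chosen for brevity; the abstract does not specify either, and all names and values are hypothetical.

```python
from statistics import mean

def distinguishing_parameters(positive, negative, min_gap):
    """Names of parameters whose class means differ by at least `min_gap`."""
    return [
        name for name in positive[0]
        if abs(mean(v[name] for v in positive)
               - mean(v[name] for v in negative)) >= min_gap
    ]

def is_tissue_of_interest(voxel, positive, negative, names):
    """Classify a voxel by the closer class mean on the chosen parameters."""
    def squared_distance(examples):
        return sum((voxel[n] - mean(e[n] for e in examples)) ** 2 for n in names)
    return squared_distance(positive) < squared_distance(negative)

# Characterizing parameters already computed for the user's example voxels.
positive = [{"value": 100, "local_std": 5}, {"value": 110, "local_std": 6}]
negative = [{"value": 40, "local_std": 5}, {"value": 50, "local_std": 7}]

chosen = distinguishing_parameters(positive, negative, min_gap=10)
label_a = is_tissue_of_interest({"value": 95, "local_std": 6}, positive, negative, chosen)
label_b = is_tissue_of_interest({"value": 60, "local_std": 6}, positive, negative, chosen)
print(chosen, label_a, label_b)
```

In this made-up data the local standard deviation barely differs between the two classes, so only the voxel value is kept as a distinguishing parameter.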

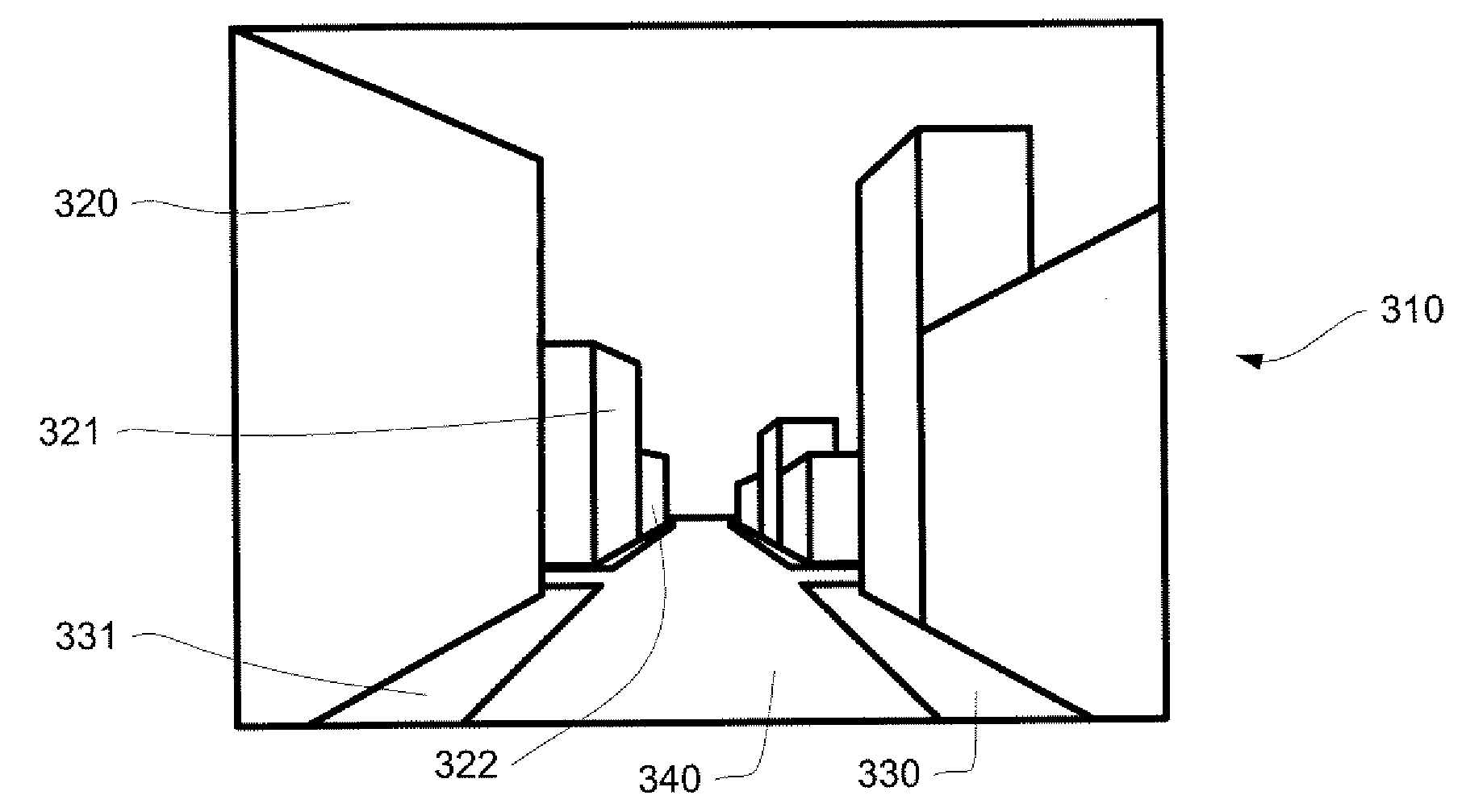

System and method of indicating transition between street level images

Inactive | US20100215250A1 | Details involving 3D image data | Character and pattern recognition | Computer graphics (images) | Angle of view

A system and method of displaying transitions between street level images is provided. In one aspect, the system and method creates a plurality of polygons that are both textured with images from a 2D street level image and associated with 3D positions, where the 3D positions correspond with the 3D positions of the objects contained in the image. These polygons, in turn, are rendered from different perspectives to convey the appearance of moving among the objects contained in the original image.

Owner:GOOGLE LLC

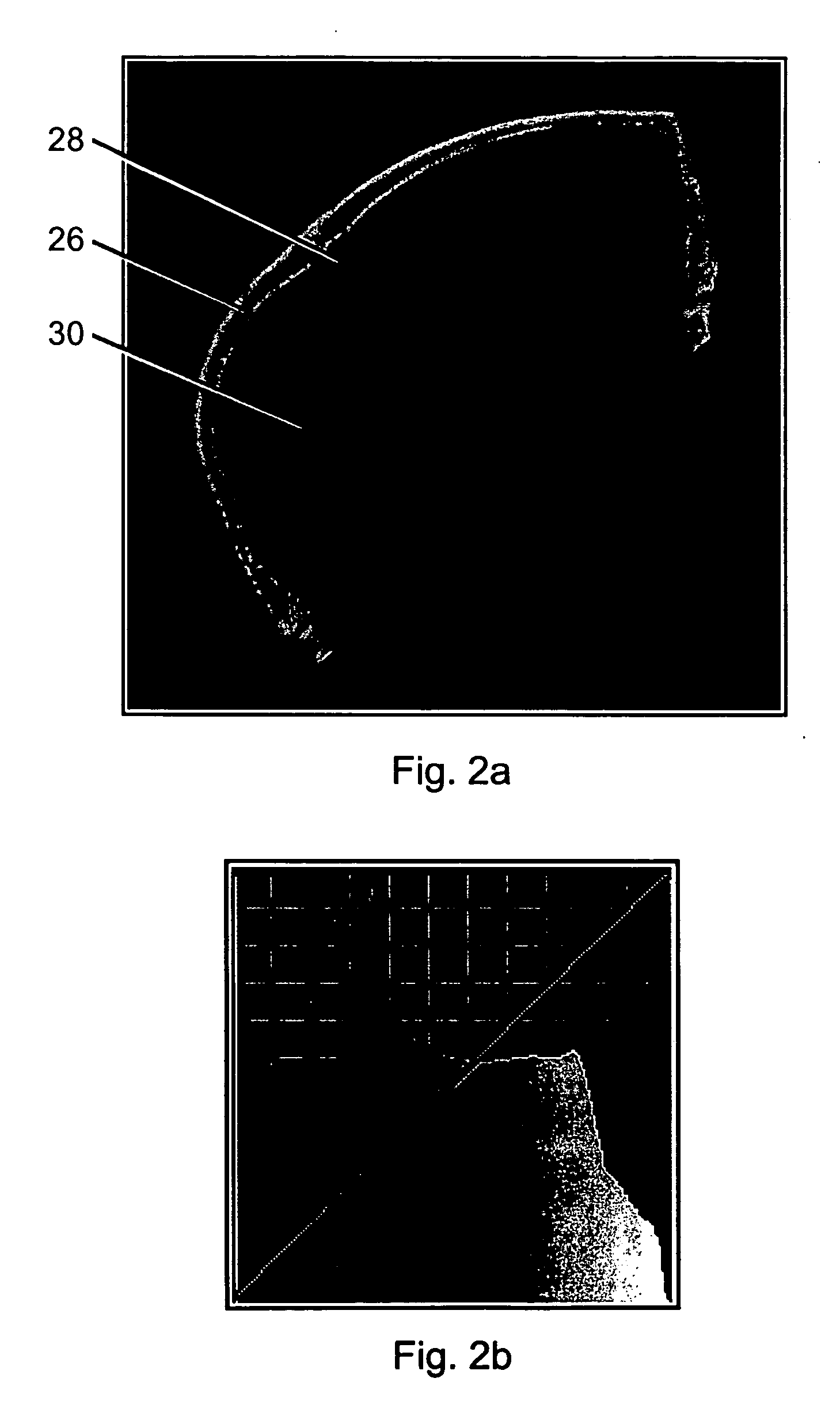

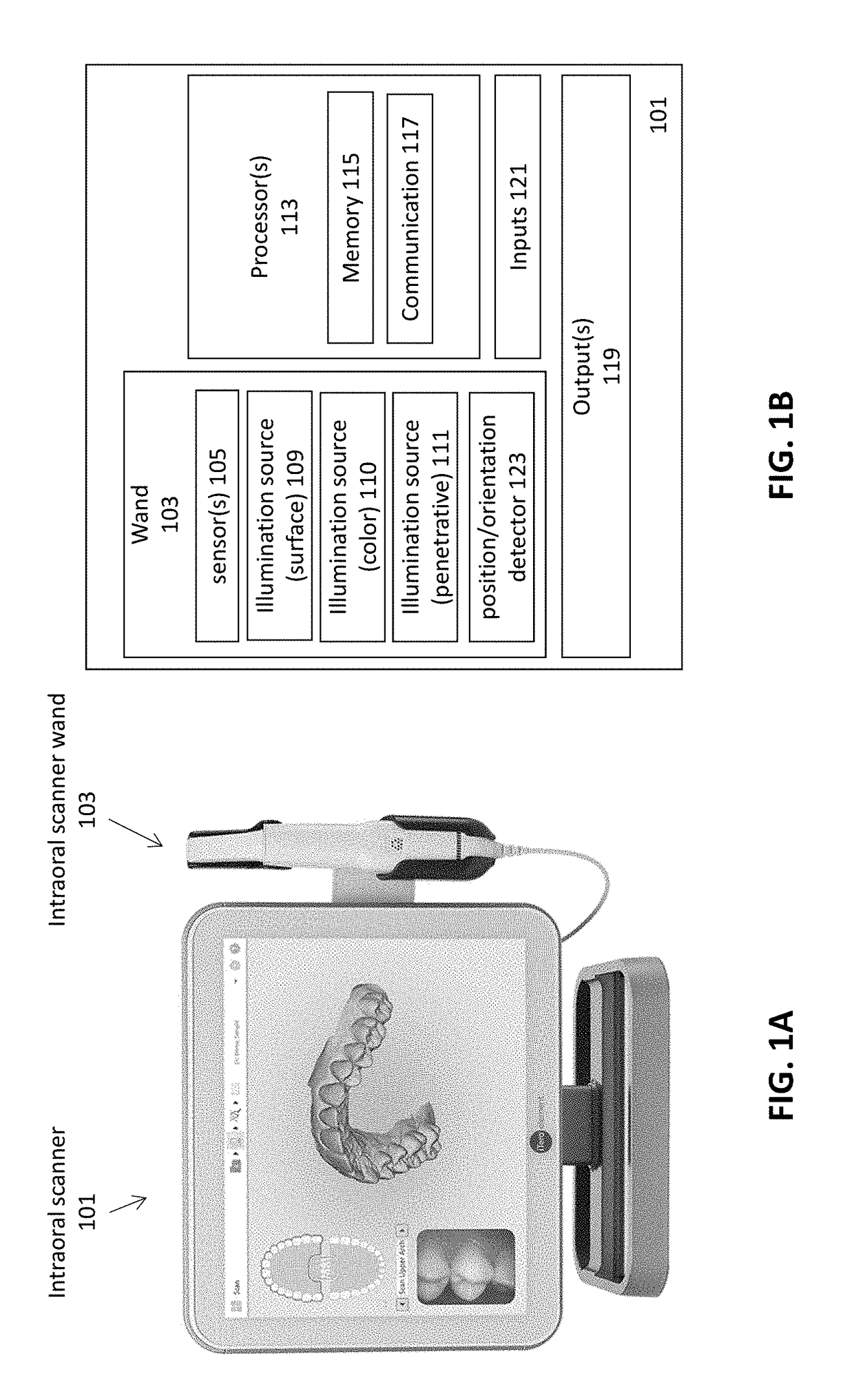

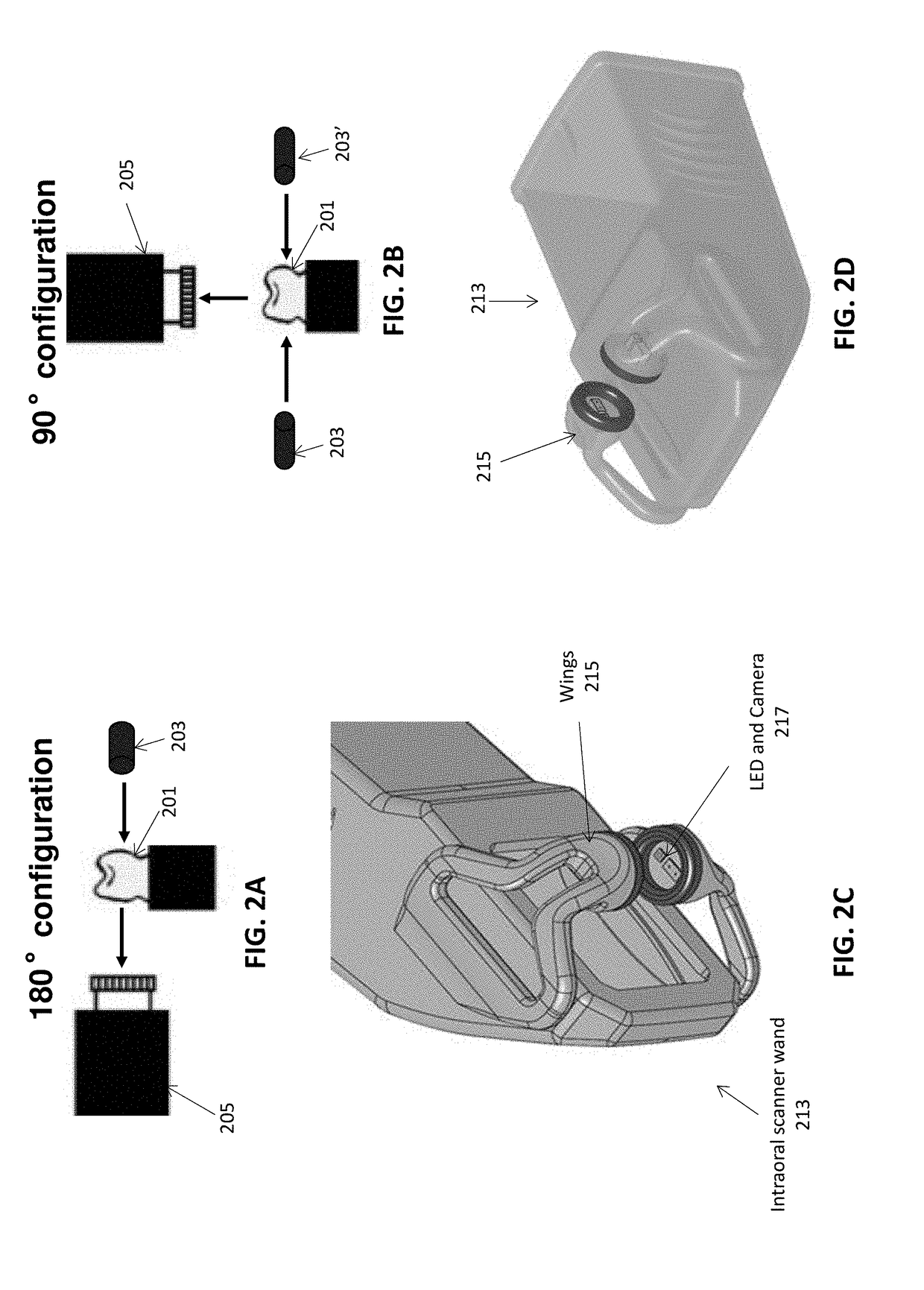

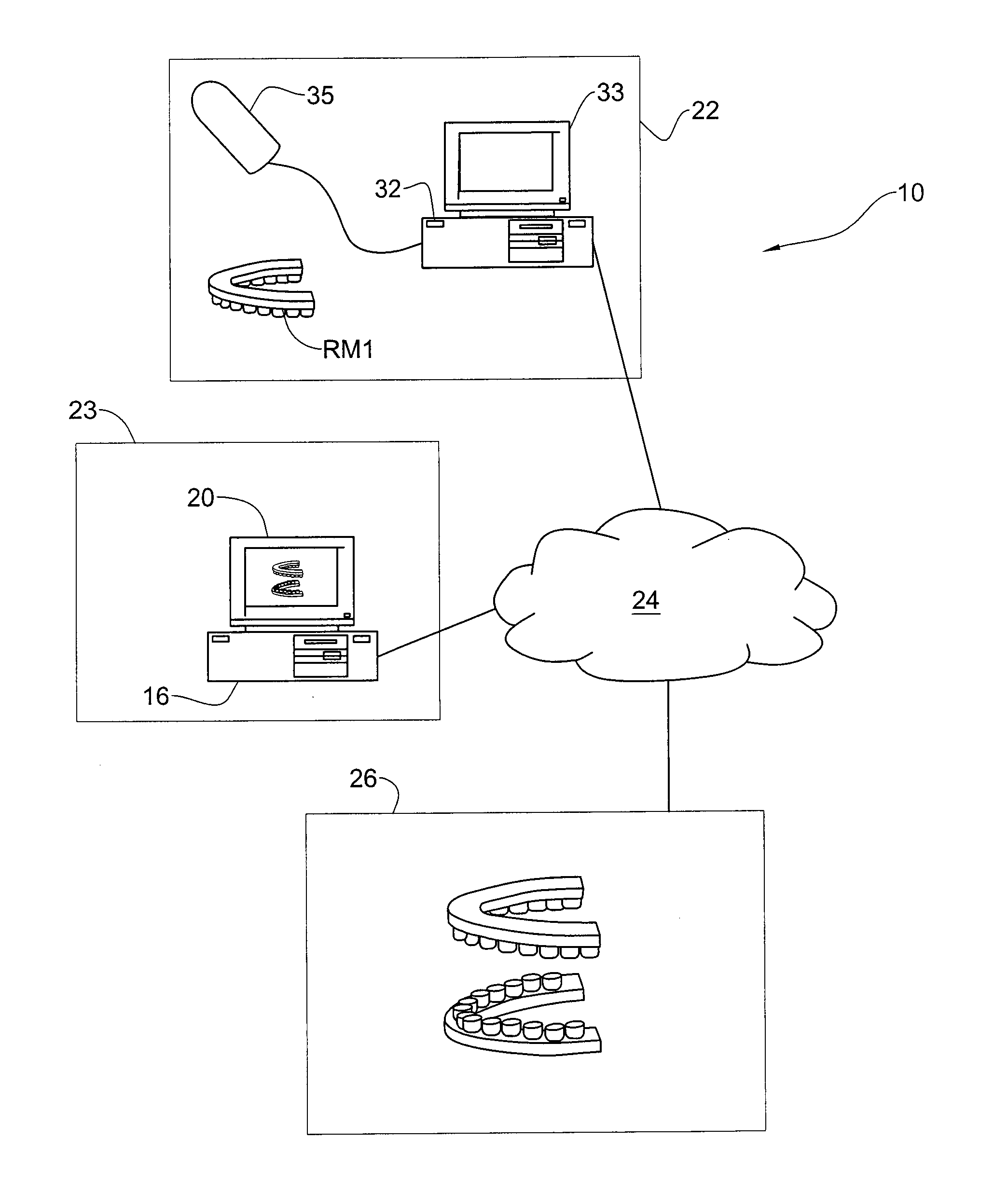

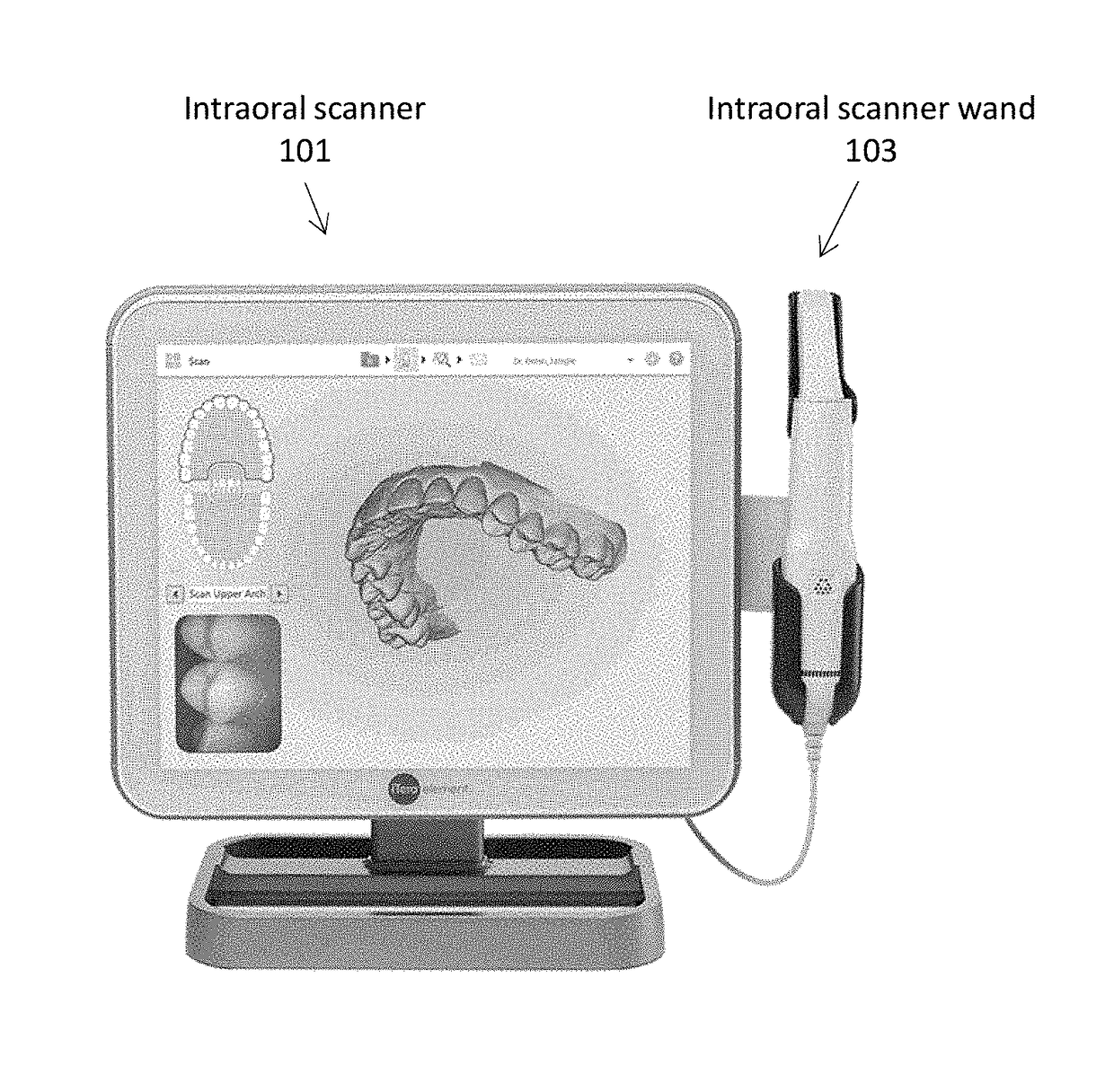

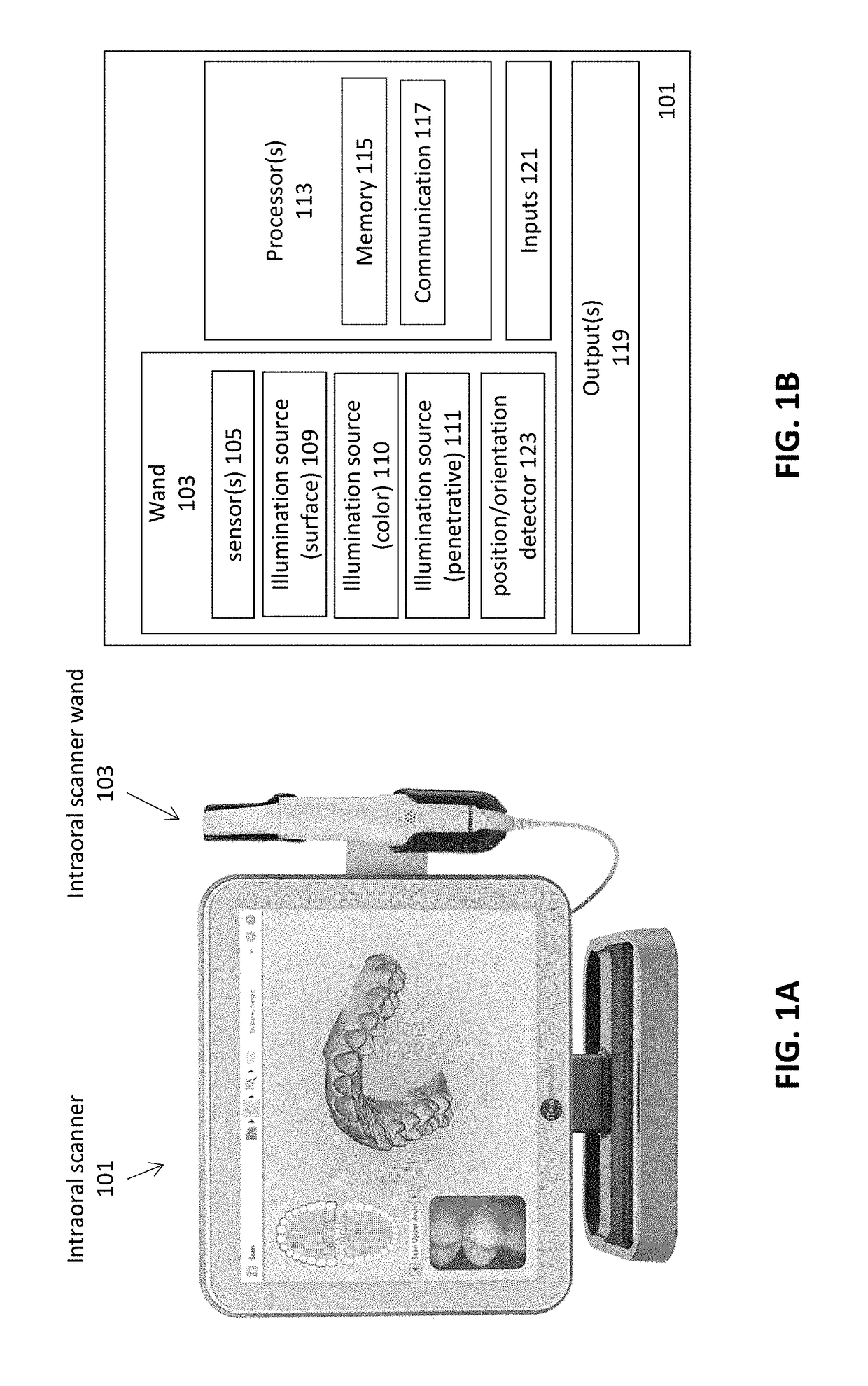

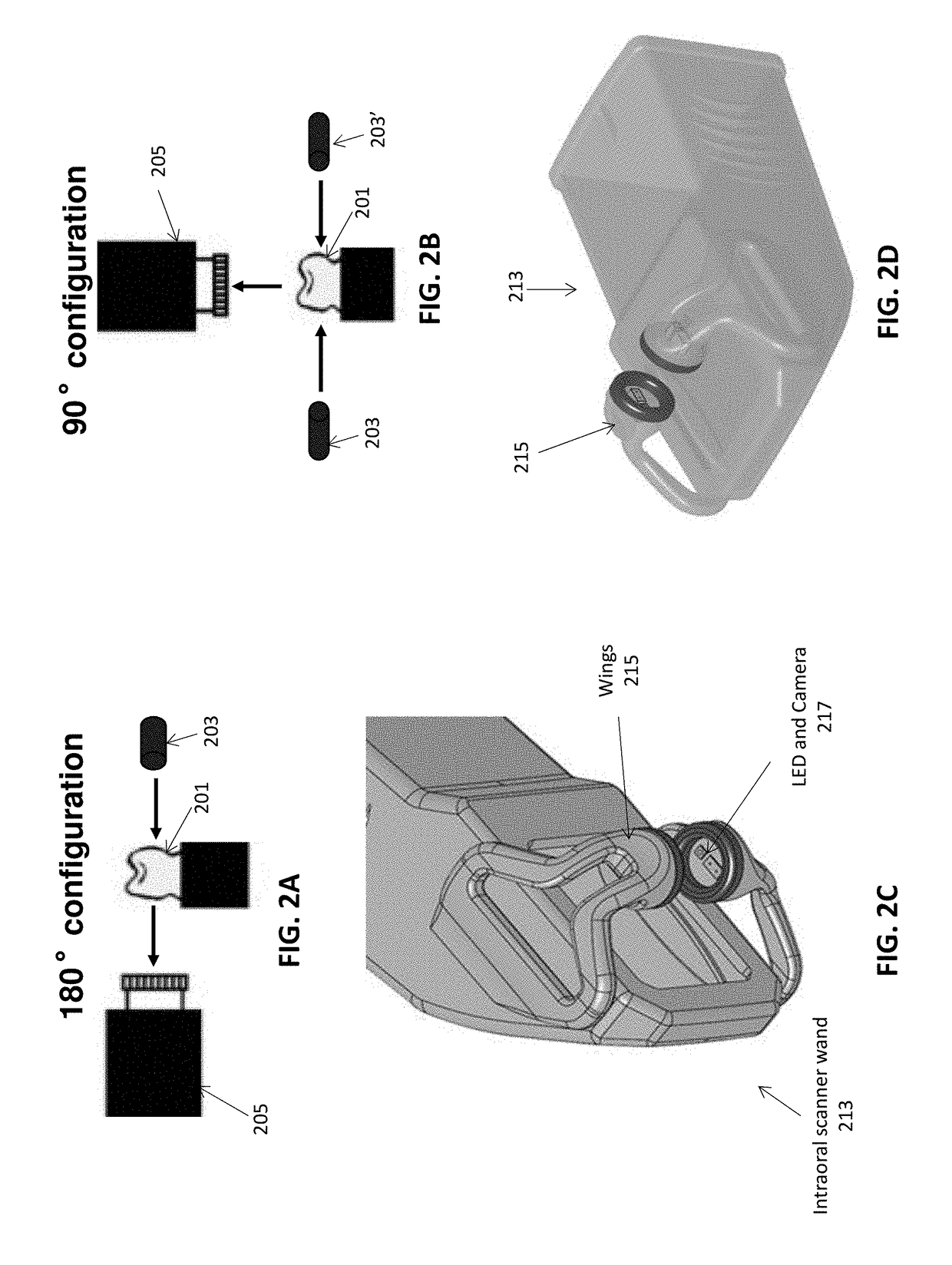

Methods and apparatuses for forming a three-dimensional volumetric model of a subject's teeth

Methods and apparatuses for generating a model of a subject's teeth. Described herein are intraoral scanning methods and apparatuses for generating a three-dimensional model of a subject's intraoral region (e.g., teeth) including both surface features and internal features. These methods and apparatuses may be used for identifying and evaluating lesions, caries and cracks in the teeth. Any of these methods and apparatuses may use minimum scattering coefficients and / or segmentation to form a volumetric model of the teeth.

Owner:ALIGN TECH

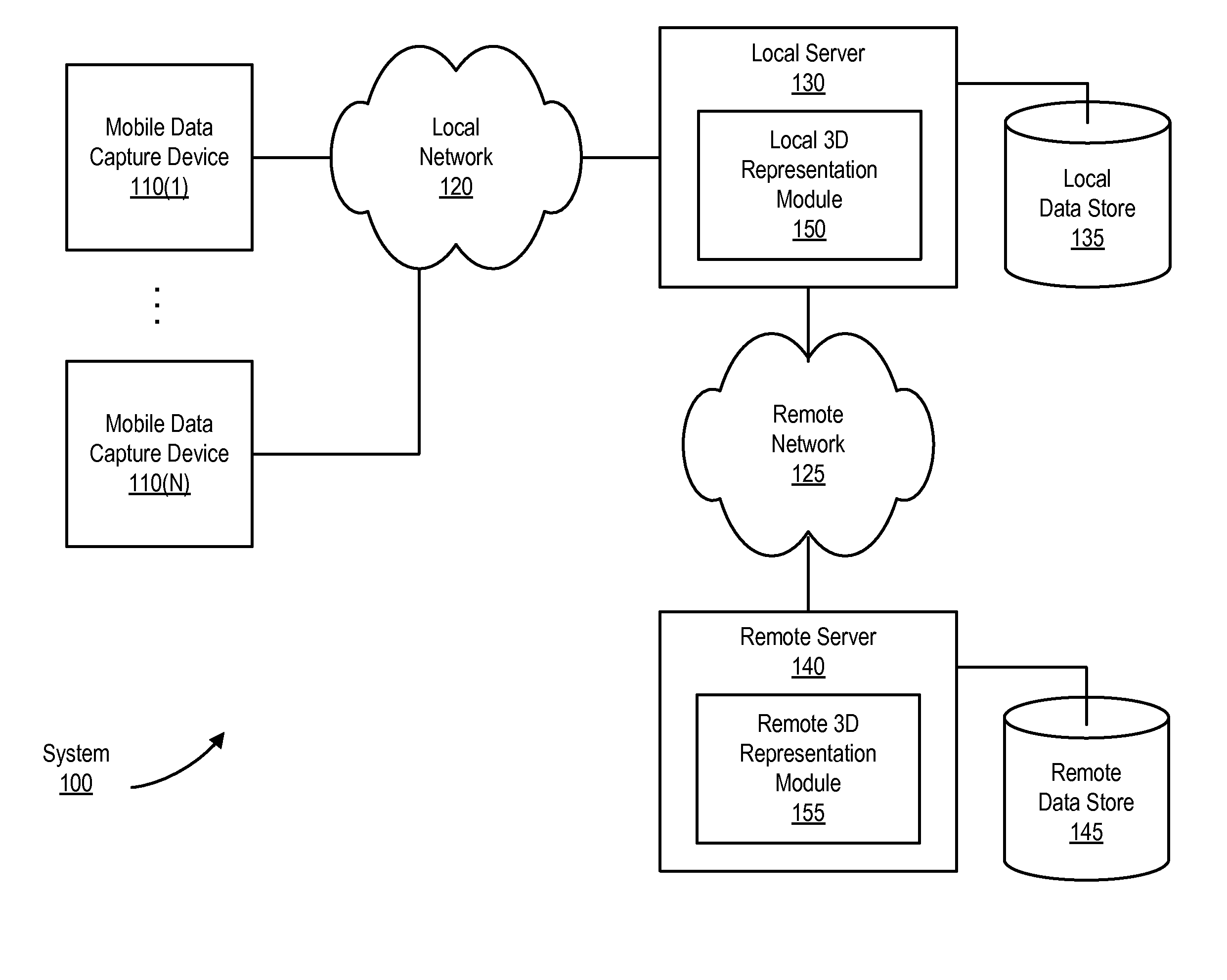

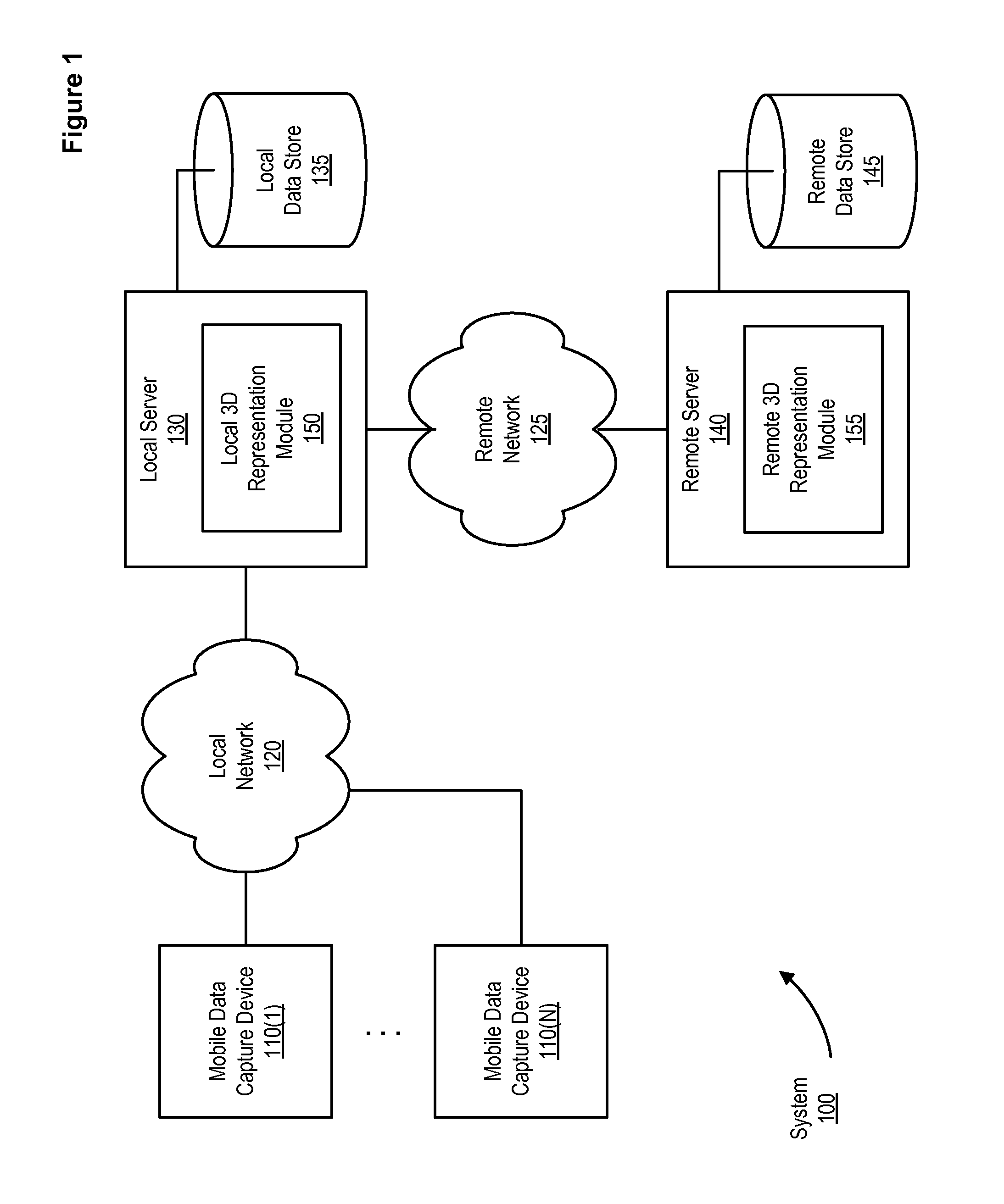

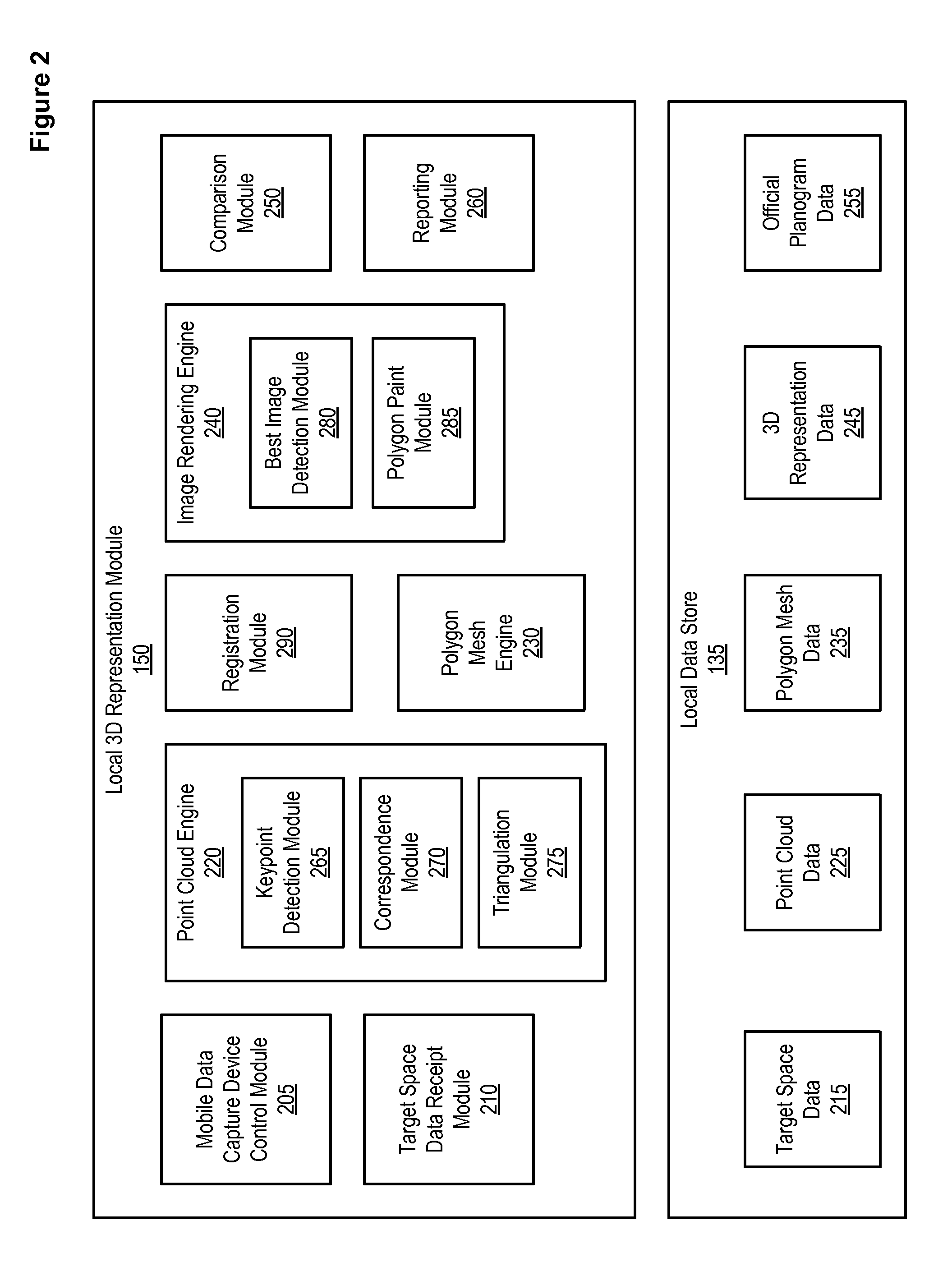

Automated generation of a three-dimensional space representation and planogram verification

The present disclosure provides an automated scheme for generating and verifying a three-dimensional (3D) representation of a target space. In one embodiment, the automatic generation of a 3D representation of a target space includes receiving target space data from one or more mobile data capture devices and generating a local point cloud from the target space data. In one embodiment, the local point cloud is incorporated into a master point cloud. In one embodiment, a polygon mesh is generated using the master point cloud and the polygon mesh is rendered, using a plurality of visual images captured from the target space, which generates the 3D representation. In one embodiment, the automatic verification includes comparing a portion of the 3D representation with a portion of an approved layout, and identifying one or more discrepancies between the portion of the 3D representation and the portion of the approved layout.

Owner:ORACLE INT CORP
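
The verification step in this entry (comparing part of the generated representation with an approved layout and listing discrepancies) can be sketched with plain dictionaries. Representing both layouts as a mapping from shelf slot to product is an assumption for illustration; the slot names and products are made up.

```python
def find_discrepancies(approved, observed):
    """List slots where the observed layout differs from the approved one.

    Each result is (slot, expected_item, observed_item); a missing entry on
    either side is reported as None.
    """
    issues = []
    for slot in sorted(set(approved) | set(observed)):
        expected = approved.get(slot)
        actual = observed.get(slot)
        if expected != actual:
            issues.append((slot, expected, actual))
    return issues

approved_layout = {"A1": "cereal", "A2": "oats", "B1": "rice"}
observed_layout = {"A1": "cereal", "A2": "rice", "B2": "pasta"}

issues = find_discrepancies(approved_layout, observed_layout)
for issue in issues:
    print(issue)
```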

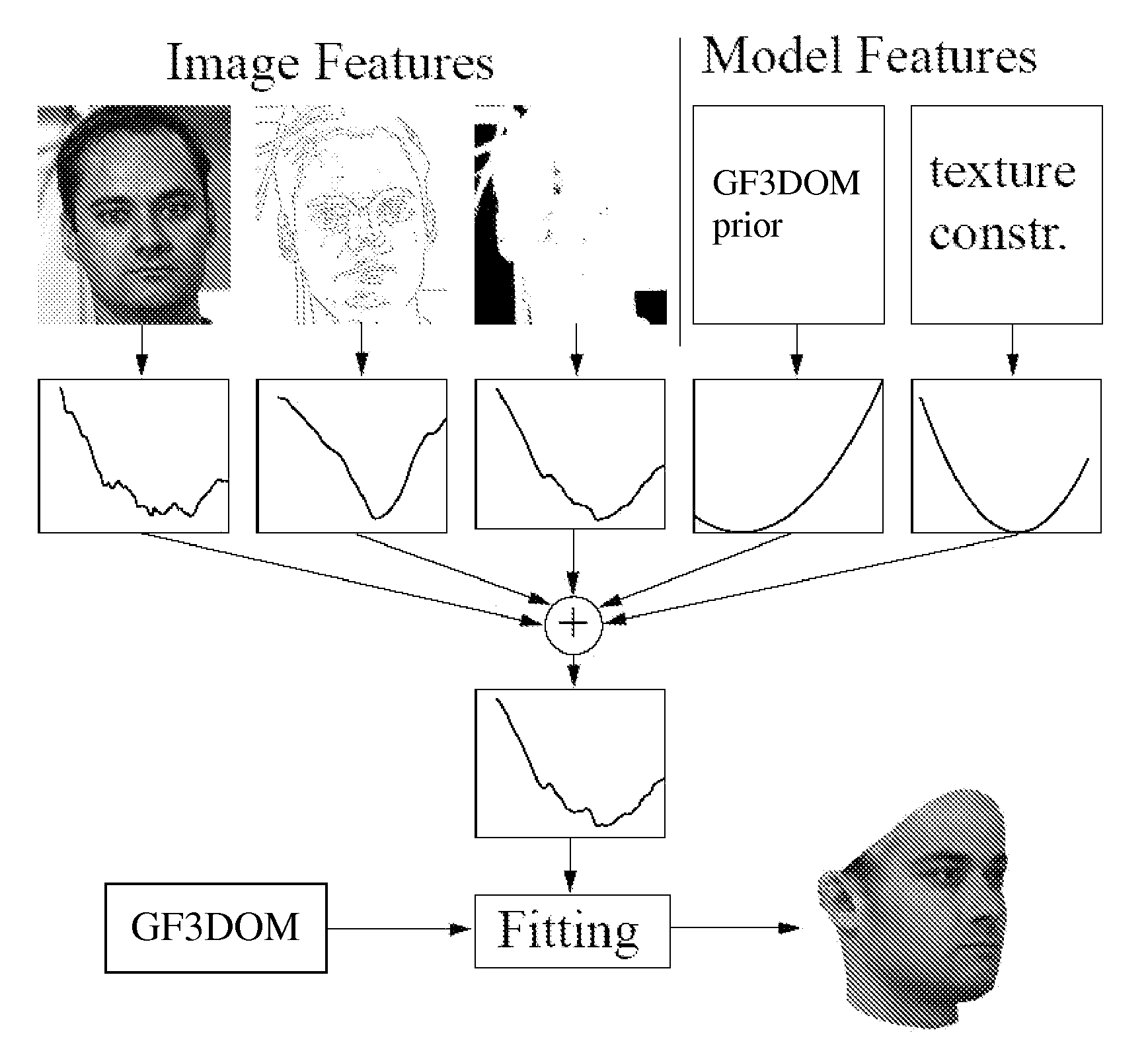

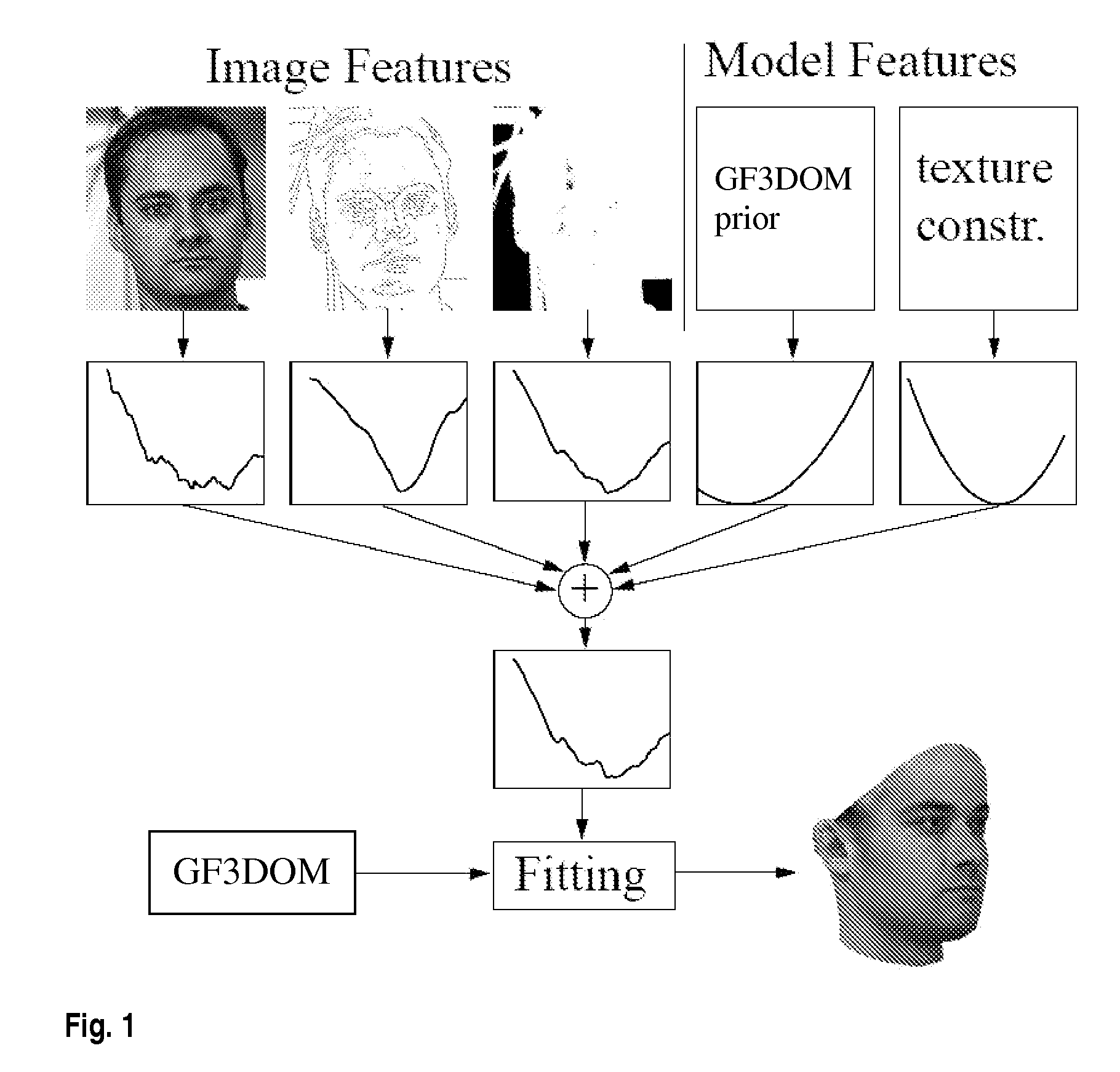

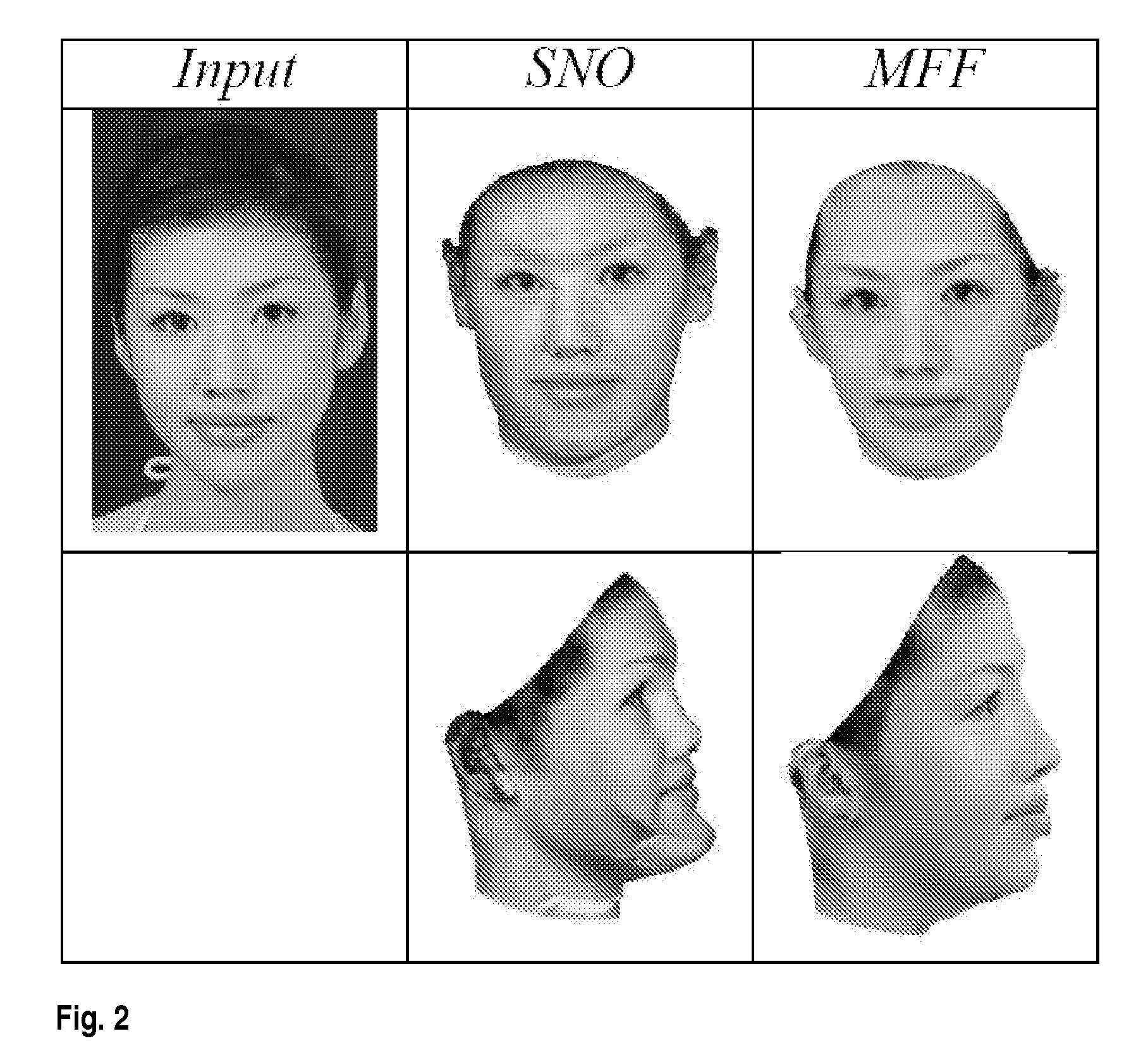

Estimating 3D shape and texture of a 3D object based on a 2D image of the 3D object

Inactive | US20070031028A1 | Maximizing posterior probability | Performance maximization | Additive manufacturing apparatus | Drawing from basic elements | 3d shapes | Object based

Disclosed is an improved algorithm for estimating the 3D shape of a 3-dimensional object, such as a human face, based on information retrieved from a single photograph by recovering parameters of a 3-dimensional model, and methods and systems using the same. Besides pixel intensity, the invention uses various image features in a multi-features fitting algorithm (MFF) that has a wider radius of convergence and a higher level of precision and thereby provides better results.

Owner:UNIVERSITY OF BASEL

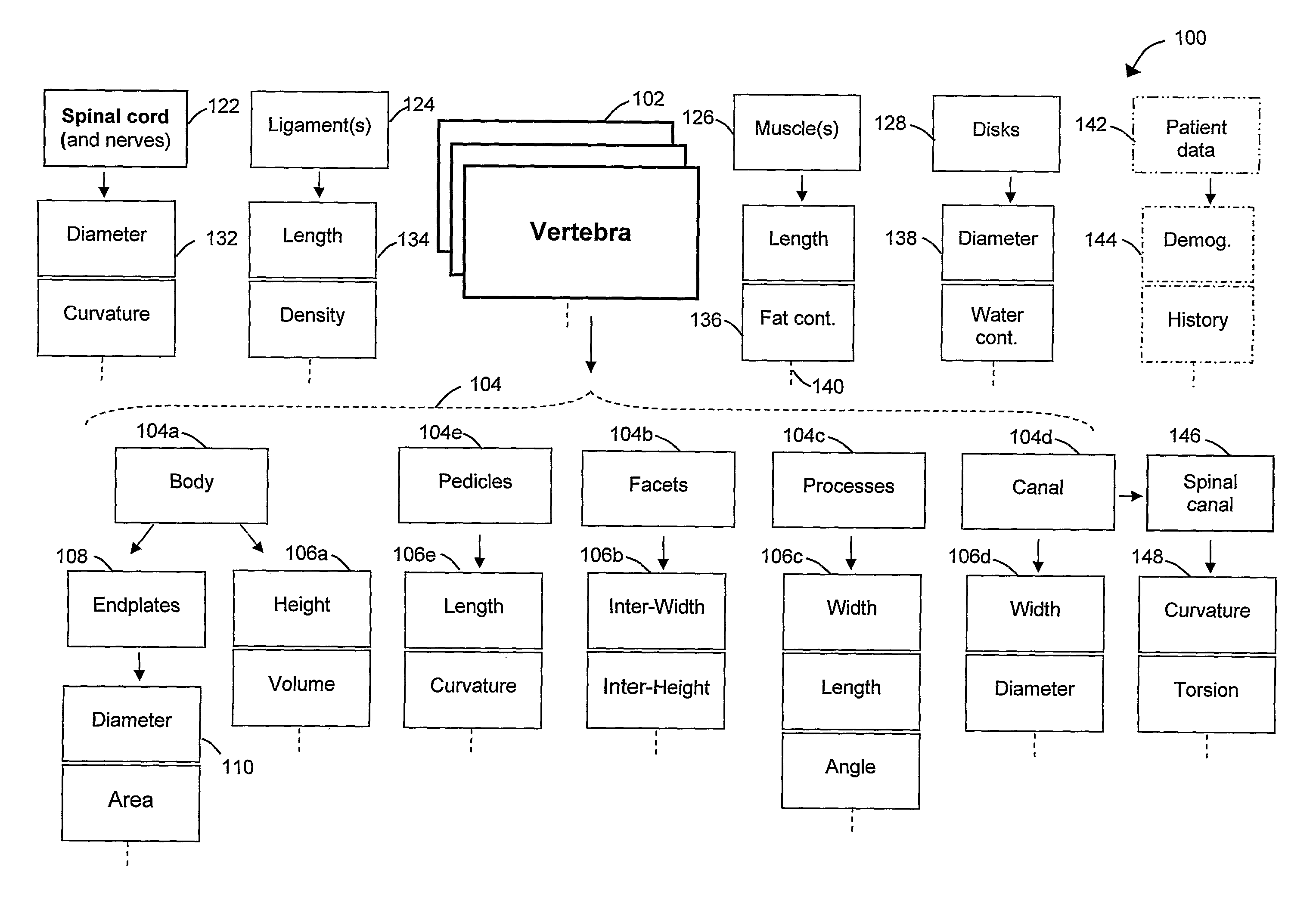

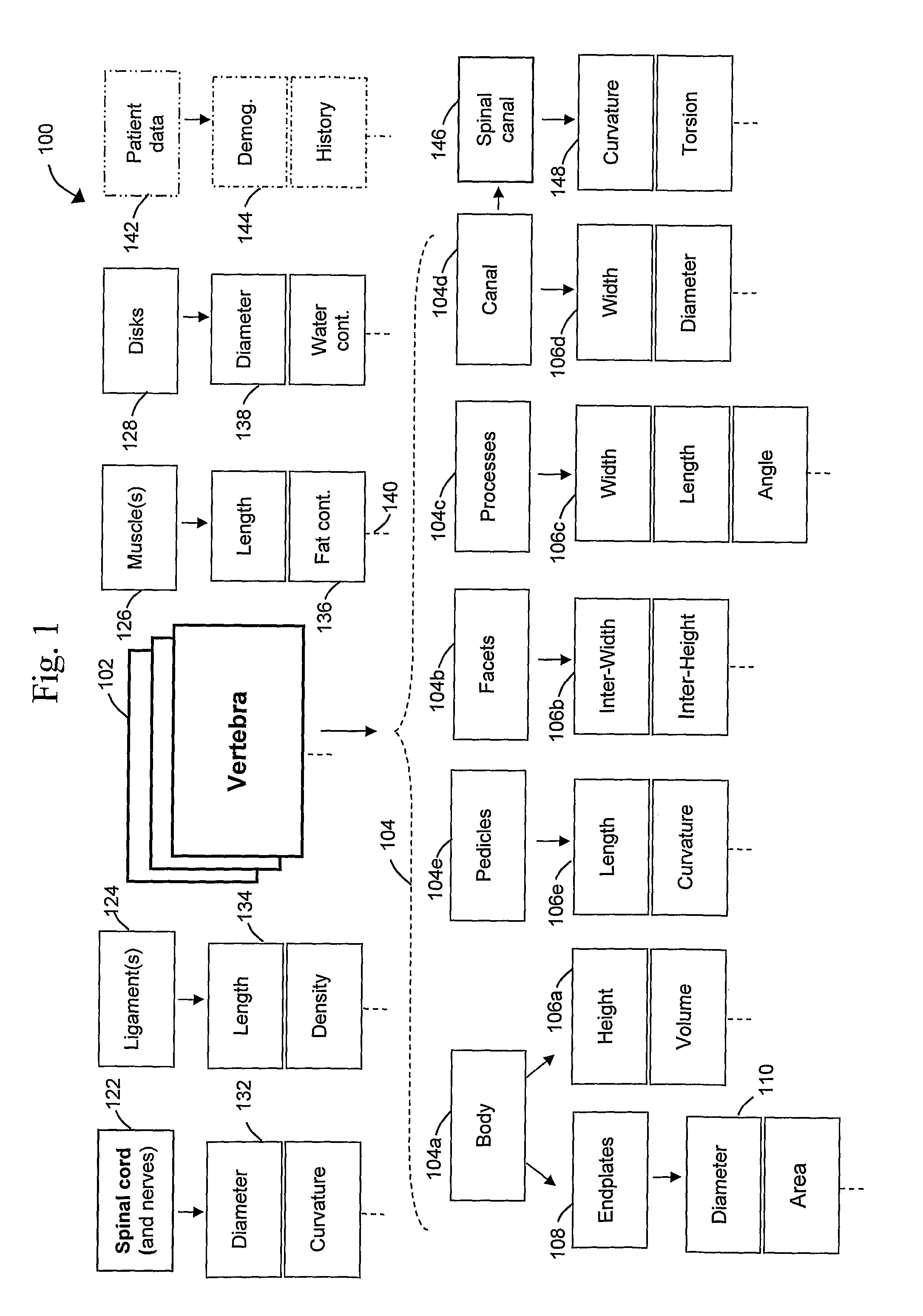

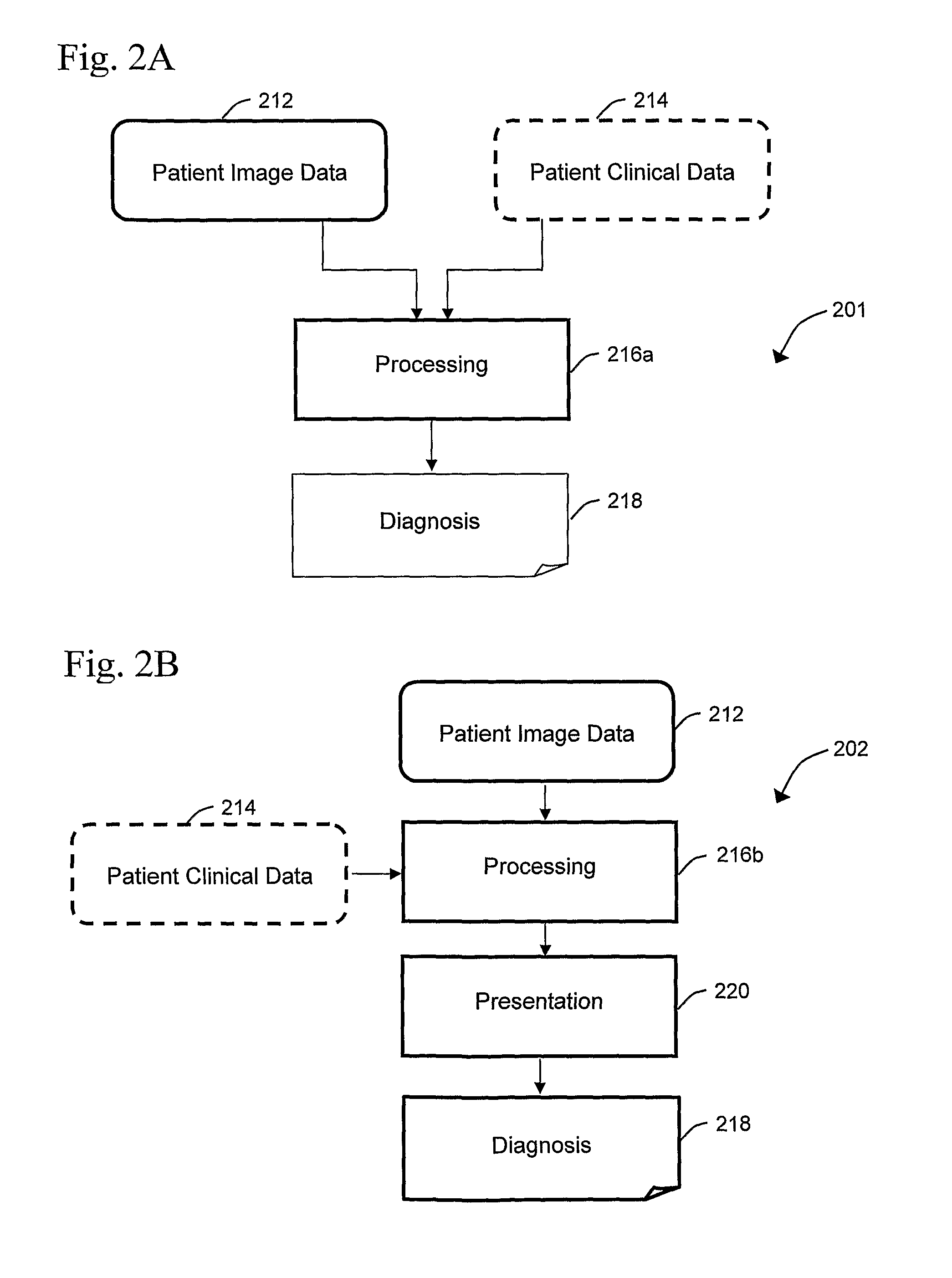

Assessment of Spinal Anatomy

A method for modeling a spine, comprising providing at least one image of the spine, extracting a plurality of anatomical elements of the spine from the at least one image, and constructing a model representing the anatomy of the spine using the anatomical elements.

Owner:HAY ORI +1

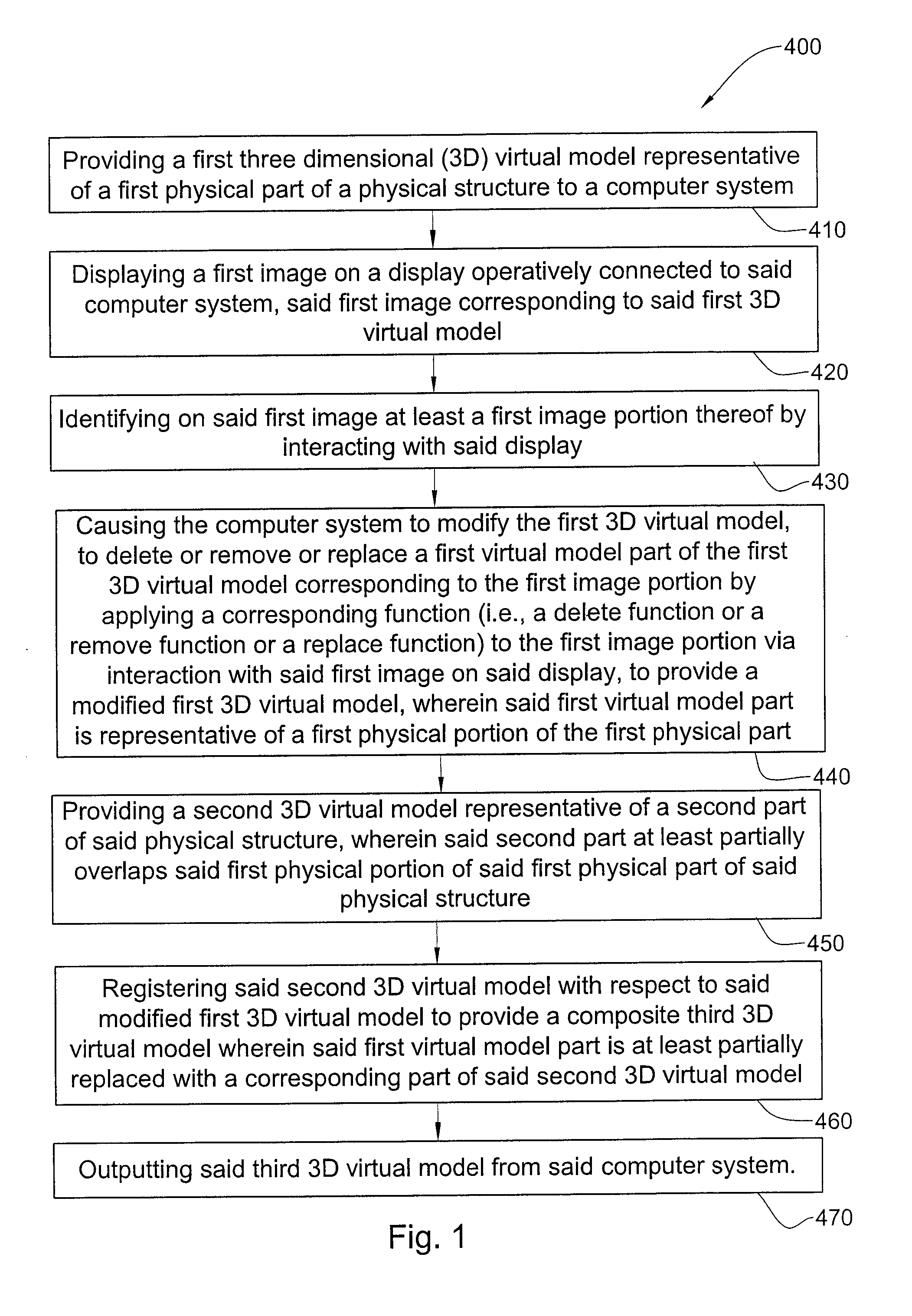

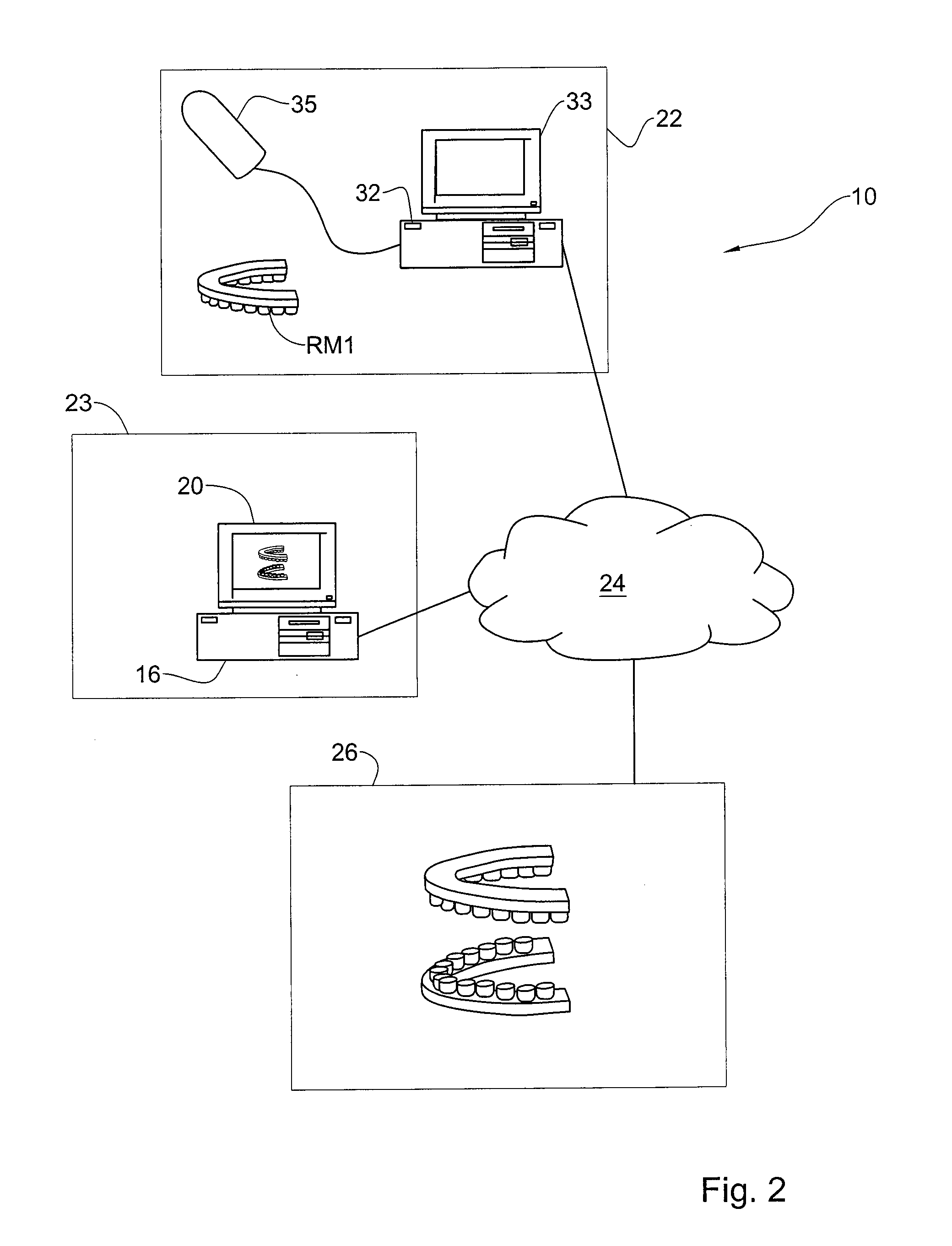

Methods and systems for creating and interacting with three dimensional virtual models

Active | US20130110469A1 | The process is convenient and fast | Image enhancement | Impression caps | Computer graphics (images) | Virtual model

Systems and methods are provided for modifying a virtual model of a physical structure with additional 3D data obtained from the physical structure to provide a modified virtual model.

Owner:ALIGN TECH

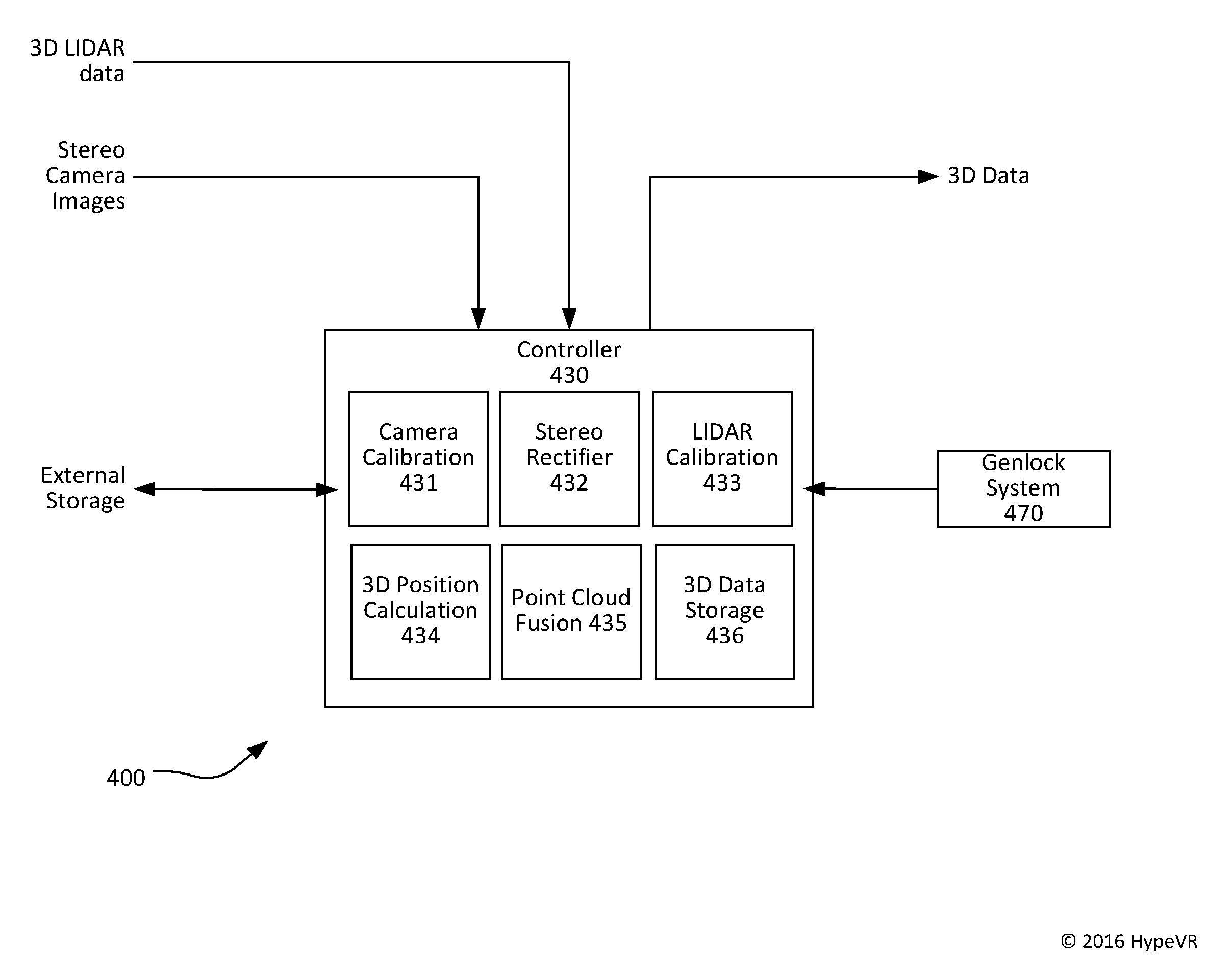

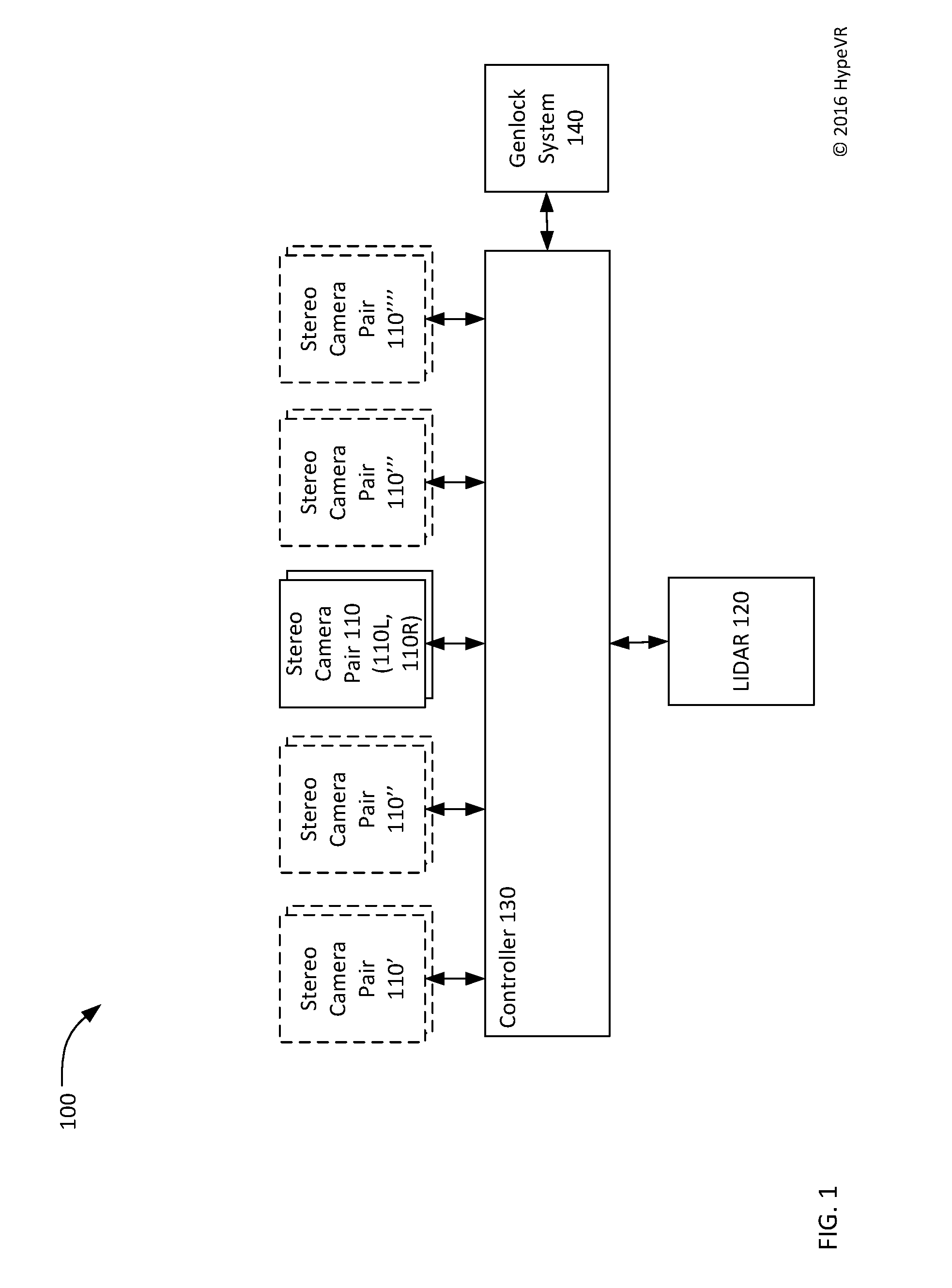

Lidar stereo fusion live action 3D model video reconstruction for six degrees of freedom 360° volumetric virtual reality video

A system for capturing live-action three-dimensional video is disclosed. The system includes pairs of stereo cameras and a LIDAR for generating stereo images and three-dimensional LIDAR data from which three-dimensional data may be derived. A depth-from-stereo algorithm may be used to generate the three-dimensional camera data for the three-dimensional space from the stereo images and may be combined with the three-dimensional LIDAR data taking precedence over the three-dimensional camera data to thereby generate three-dimensional data corresponding to the three-dimensional space.

Owner:HYPEVR
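
The fusion rule stated in this entry, where LIDAR data takes precedence over depth derived from stereo images, can be sketched per sample. Using `None` to mark a missing measurement and flat lists in place of depth maps are assumptions made to keep the example short.

```python
def fuse_depth(lidar, stereo):
    """Per-sample fusion: use the LIDAR depth where present, else the stereo depth."""
    return [l if l is not None else s for l, s in zip(lidar, stereo)]

# Depths in meters for four made-up samples; None means no measurement.
lidar_depth = [2.0, None, None, 5.1]
stereo_depth = [2.3, 3.1, None, 4.8]

fused = fuse_depth(lidar_depth, stereo_depth)
print(fused)
```

Samples that neither sensor covers stay empty, which leaves any hole filling to a later stage.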

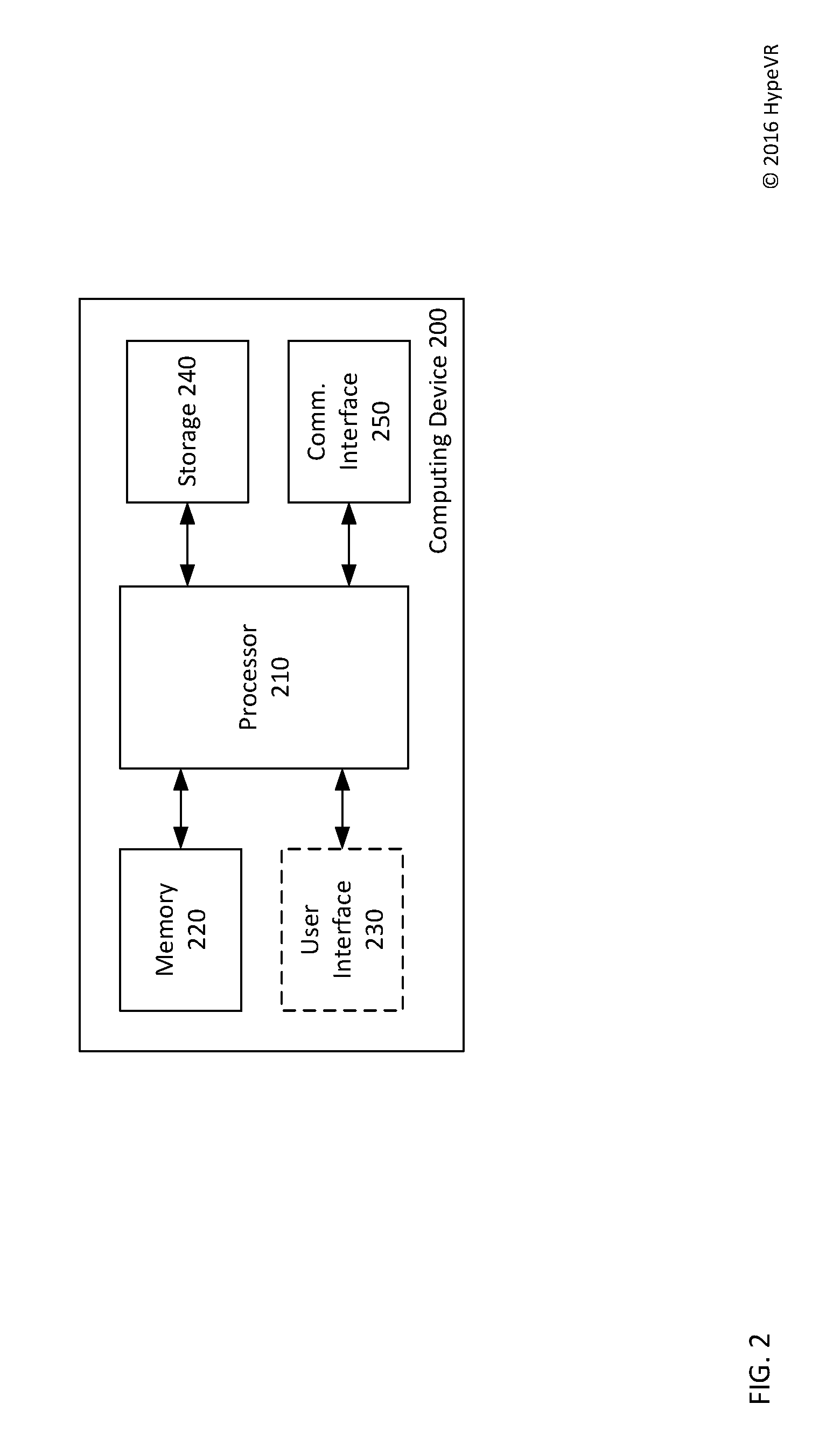

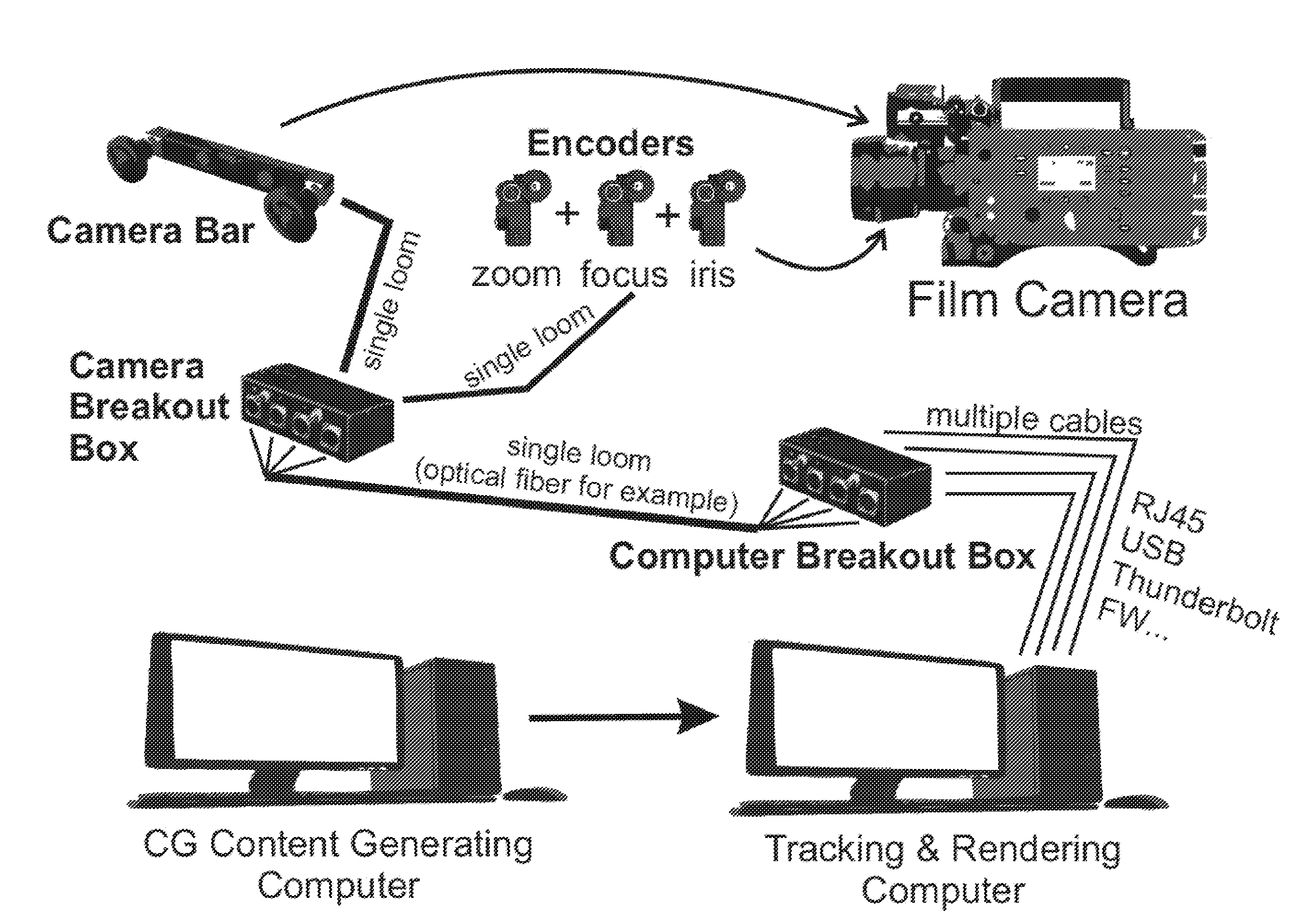

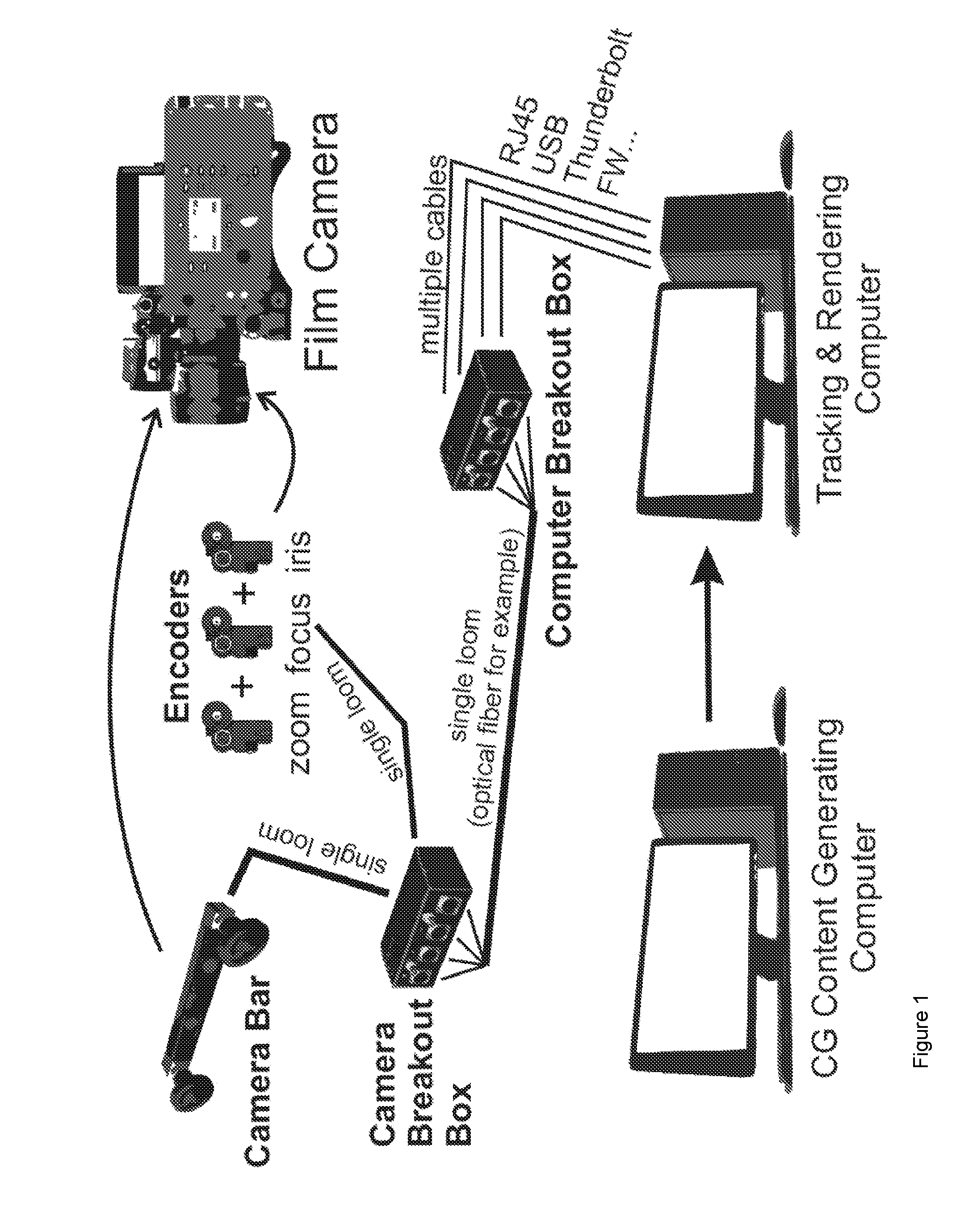

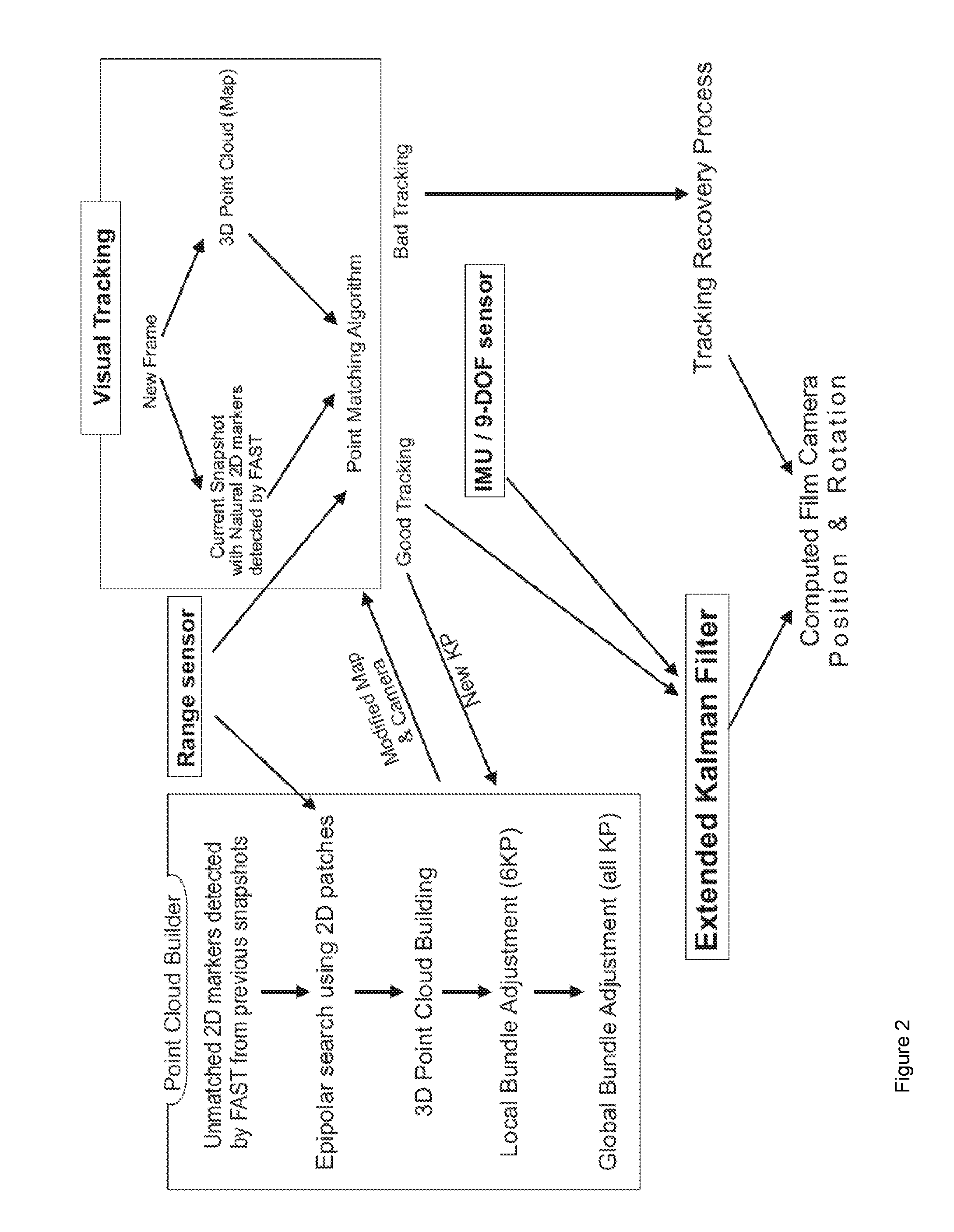

System for mixing or compositing in real-time, computer generated 3D objects and a video feed from a film camera

Active | US20150084951A1 | Lessen VFX design process | Saving wastage | Television system details | Image enhancement | Computer graphics (images) | Computer generation

A method of mixing or compositing in real-time, computer generated 3D objects and a video feed from a film camera in which the body of the film camera can be moved in 3D and sensors in or attached to the camera provide real-time positioning data defining the 3D position and 3D orientation of the camera, or enabling the 3D position to be calculated.

Owner:NCAM TECH

Intraoral scanner with dental diagnostics capabilities

Methods and apparatuses for generating a model of a subject's teeth. Described herein are intraoral scanning methods and apparatuses for generating a three-dimensional model of a subject's intraoral region (e.g., teeth) including both surface features and internal features. These methods and apparatuses may be used for identifying and evaluating lesions, caries and cracks in the teeth. Any of these methods and apparatuses may use minimum scattering coefficients and / or segmentation to form a volumetric model of the teeth.

Owner:ALIGN TECH

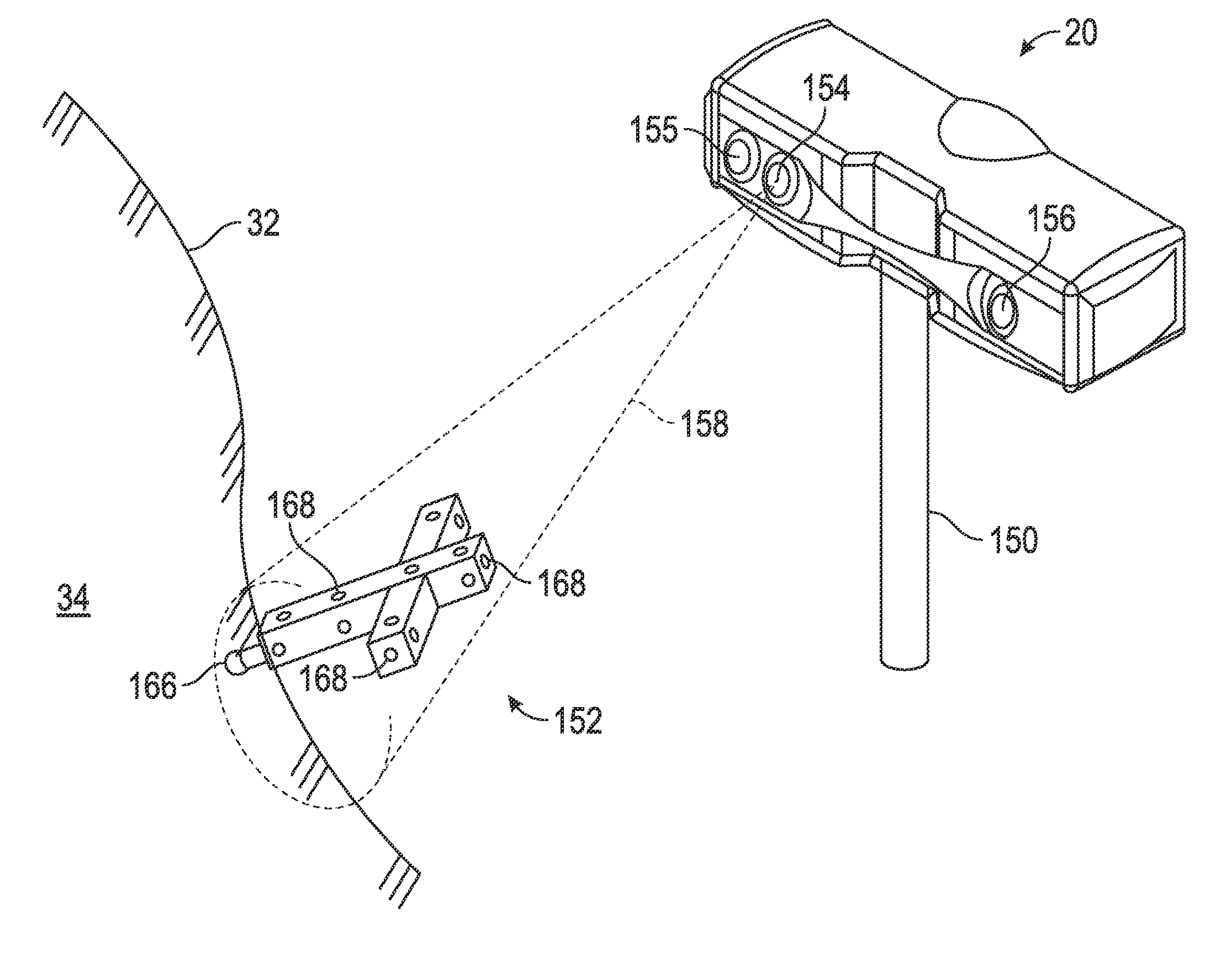

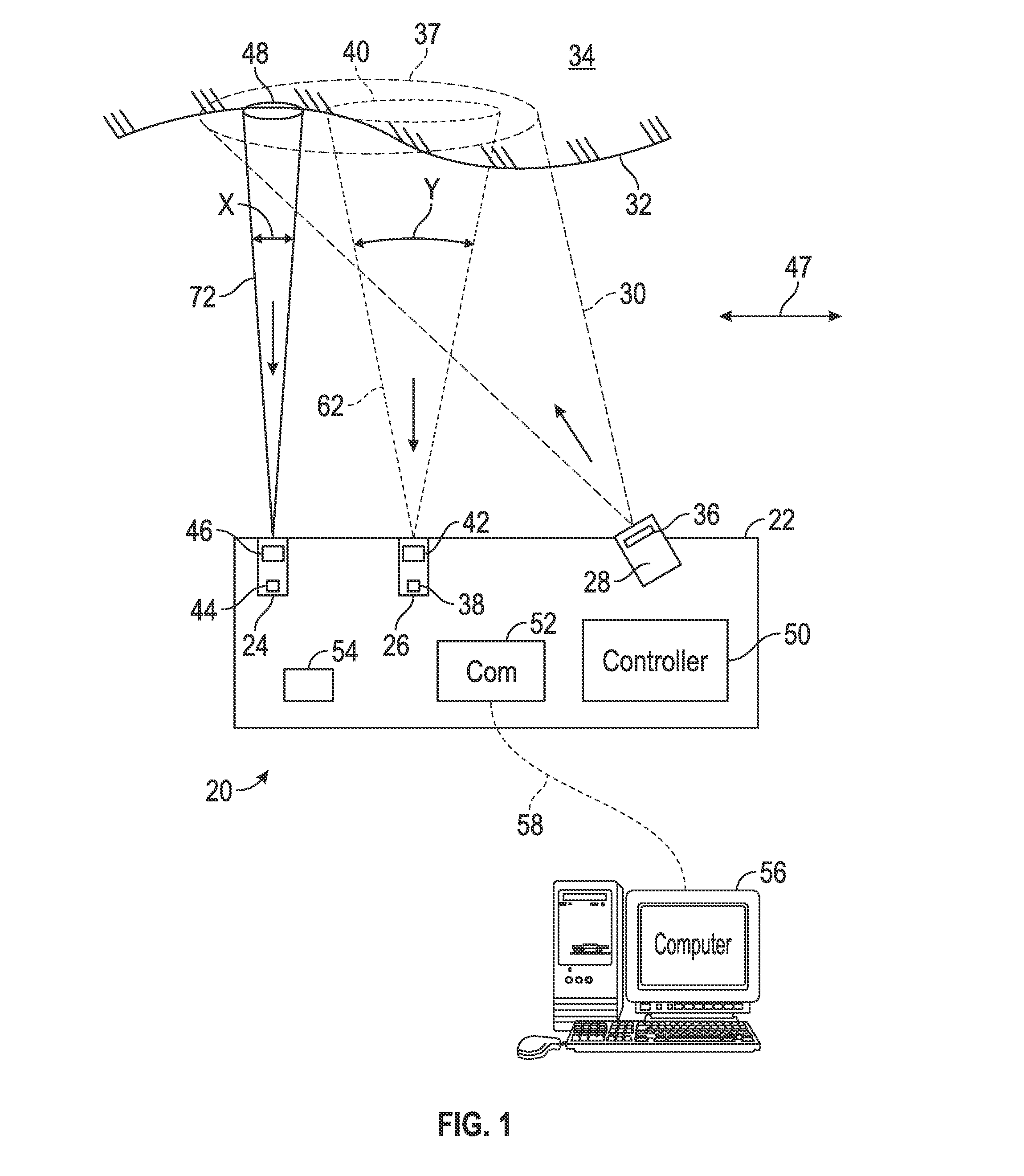

Three-dimensional coordinate scanner and method of operation

A noncontact optical three-dimensional measuring device that includes a projector, a first camera, and a second camera; a processor electrically coupled to the projector, the first camera and the second camera; and computer readable media which, when executed by the processor, causes the first digital signal to be collected at a first time and the second digital signal to be collected at a second time different than the first time and determines three-dimensional coordinates of a first point on the surface based at least in part on the first digital signal and the first distance and determines three-dimensional coordinates of a second point on the surface based at least in part on the second digital signal and the second distance.

Owner:FARO TECH INC

© 2025 PatSnap. All rights reserved.