Hardware accelerator and method for realizing sparse GRU neural network based on FPGA

A neural network and sparsification technique, applied in the field of hardware accelerators, that addresses problems such as the limited speedup achievable on processors and the inability of general-purpose processors to benefit substantially from sparsity.


Examples

Example 1

[0171] Next, two calculation units (Processing Elements, PEs for short), PE0 and PE1, are used to compute a matrix-vector multiplication. Compressed column storage (CCS) is taken as an example to briefly explain the basic idea of performing the corresponding operations on the hardware of the present invention.
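The column-storage idea can be sketched in software as follows. This is a minimal illustration, not the hardware design of the invention: the helper names `to_ccs` and `ccs_matvec` and the three-array layout (`values`, `row_idx`, `col_ptr`) are assumptions based on the common CCS convention, not details taken from the patent text.

```python
def to_ccs(dense):
    """Encode a dense row-major matrix as CCS arrays (values, row_idx, col_ptr)."""
    n_rows, n_cols = len(dense), len(dense[0])
    values, row_idx, col_ptr = [], [], [0]
    for c in range(n_cols):
        for r in range(n_rows):
            if dense[r][c] != 0:
                values.append(dense[r][c])  # non-zero weight
                row_idx.append(r)           # row it belongs to
        col_ptr.append(len(values))         # end of column c in `values`
    return values, row_idx, col_ptr


def ccs_matvec(values, row_idx, col_ptr, a, n_rows):
    """Compute y = W @ a column by column, touching only stored non-zeros."""
    y = [0] * n_rows
    for c, a_c in enumerate(a):
        if a_c == 0:
            continue  # a zero input element contributes nothing
        for k in range(col_ptr[c], col_ptr[c + 1]):
            y[row_idx[k]] += values[k] * a_c
    return y


# Hypothetical 3x2 weight matrix, chosen only for illustration.
W = [[1, 0],
     [0, 2],
     [3, 0]]
vals, rows, ptrs = to_ccs(W)
print(vals, rows, ptrs)                        # [1, 3, 2] [0, 2, 1] [0, 2, 3]
print(ccs_matvec(vals, rows, ptrs, [1, 2], 3))  # [1, 4, 3]
```

Processing one column per input element is what lets each input value a_j be broadcast once and multiplied against only the non-zero weights in column w[j].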

[0172] The matrix sparsity in the compressed GRU is unbalanced, which leads to lower utilization of computing resources.

[0173] As shown in Figure 11, suppose the input vector a contains 6 elements {a0, a1, a2, a3, a4, a5}, and the weight matrix contains 8×6 elements. Two PEs (PE0 and PE1) are responsible for calculating a3×w[3], where a3 is the fourth element of the input vector and w[3] is the fourth column of the weight matrix.

[0174] Figure 11 shows that the workloads of PE0 and PE1 differ: PE0 performs three multiplication operations, while PE1 performs only one.
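The imbalance can be made concrete with a small sketch. Figure 11 is not reproduced here, so the non-zero row positions below are hypothetical, as is the assumption that rows are interleaved across PEs (row i goes to PE i mod 2); they are chosen only so that the counts match the three-versus-one split described above.

```python
def pe_workload(nonzero_rows, num_pe=2):
    """Count multiplications per PE, assuming row i is handled by PE (i % num_pe)."""
    counts = [0] * num_pe
    for r in nonzero_rows:
        counts[r % num_pe] += 1
    return counts


def utilization(counts):
    """Fraction of PE cycles doing useful work when all PEs wait for the slowest."""
    busiest = max(counts)
    return sum(counts) / (len(counts) * busiest) if busiest else 1.0


# Hypothetical non-zero rows of column w[3] in an 8-row matrix.
w3_nonzero_rows = [0, 2, 5, 6]
counts = pe_workload(w3_nonzero_rows)
print(counts)  # [3, 1]
print(round(utilization(counts), 3))  # 0.667
```

Under these assumptions the column takes three cycles even though only four multiplications are needed, so a third of the available PE cycles are idle.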

[0175] In the prior art, th...

Example 2

[0188] This embodiment illustrates how the present invention balances IO bandwidth against the computing units.

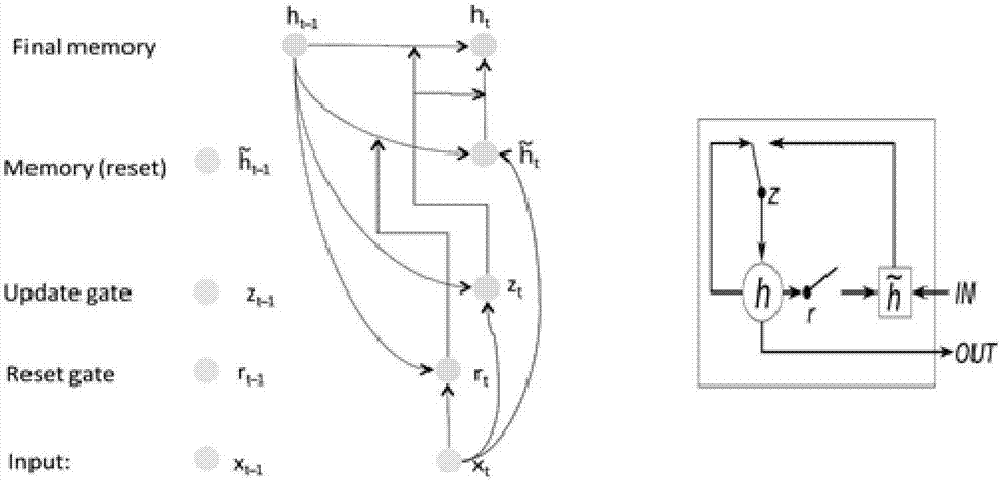

[0189] If the memory controller user interface is 512 bits wide and clocked at 250 MHz, the required PE concurrency satisfies 512 × 250 MHz = PE_num × freq_PE × data_bit. With 8-bit fixed-point weights and a PE calculation module clocked at 200 MHz, the number of PEs required is 80.
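The arithmetic can be checked with a short sketch. The function name `required_pe_count` is hypothetical, and the model assumes, as the formula implies, that each PE consumes one weight of `data_bits` bits per PE clock cycle.

```python
def required_pe_count(mem_bits, mem_mhz, pe_mhz, data_bits):
    """Smallest number of PEs whose combined consumption rate matches the
    memory interface, assuming one weight per PE per cycle."""
    supplied = mem_bits * mem_mhz   # Mbit/s delivered by the memory interface
    per_pe = pe_mhz * data_bits     # Mbit/s consumed by a single PE
    return -(-supplied // per_pe)   # integer ceiling division


# 512-bit interface at 250 MHz, PEs at 200 MHz, 8-bit weights.
print(required_pe_count(512, 250, 200, 8))  # 80
```

With fewer than 80 PEs the memory interface would deliver weights faster than they can be consumed; with more, some PEs would wait on IO.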

[0190] For a 2048×1024 network with an input of length 1024, the most time-consuming calculation at every sparsity level is still the matrix-vector multiplication. For a sparse GRU network, the calculations of z_t, r_t, and h_t can be hidden behind the matrix-vector multiplications Wx_t and Uh_{t-1}. Since the subsequent element-wise multiplication and addition operations are serially pipelined, the resources they require are relatively small. In summary, the present invention fully combines sparse matrix-vector multiplication, IO and calculation balance, and serial...
