313 results about "High speed memory" patented technology

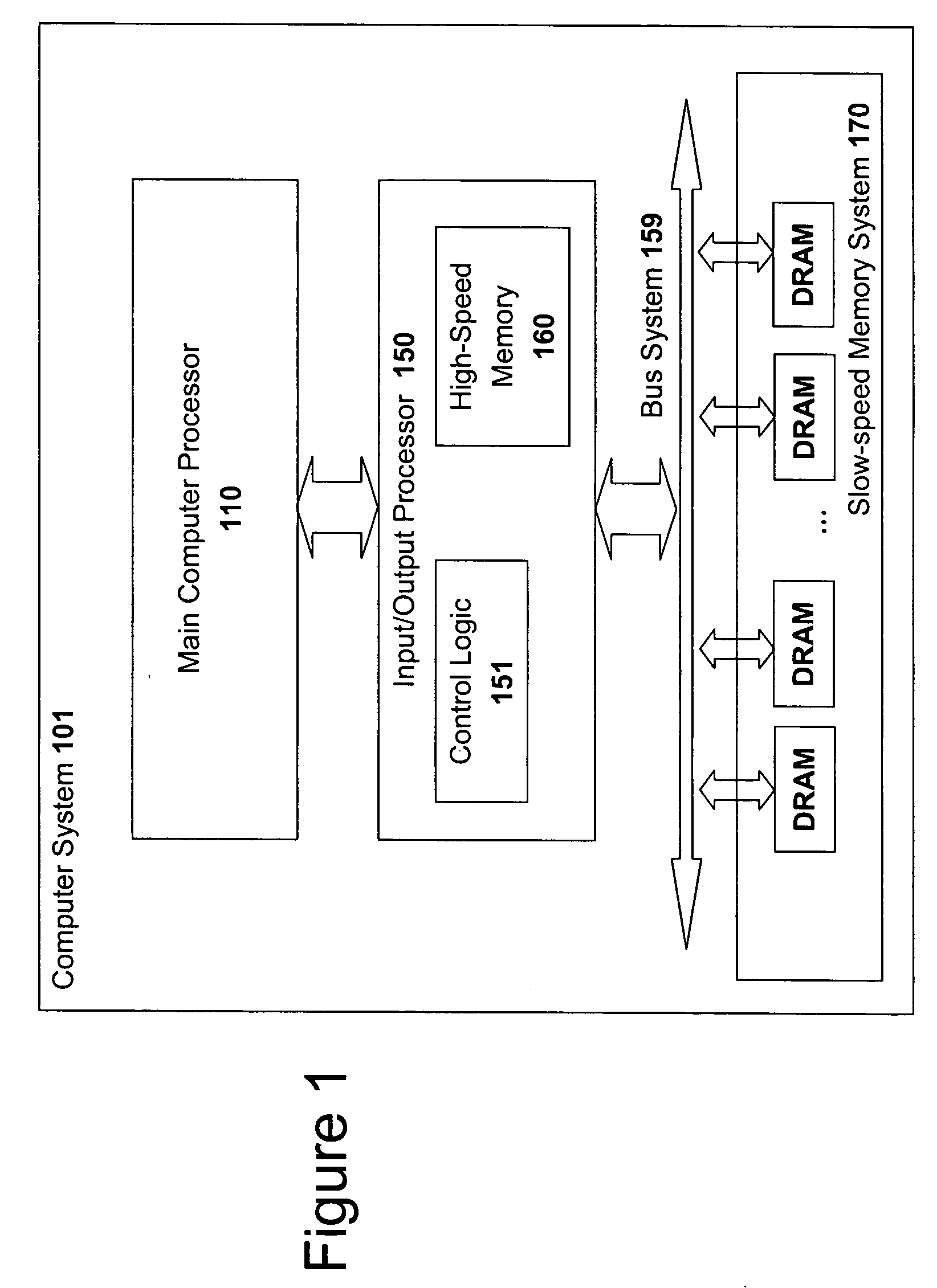

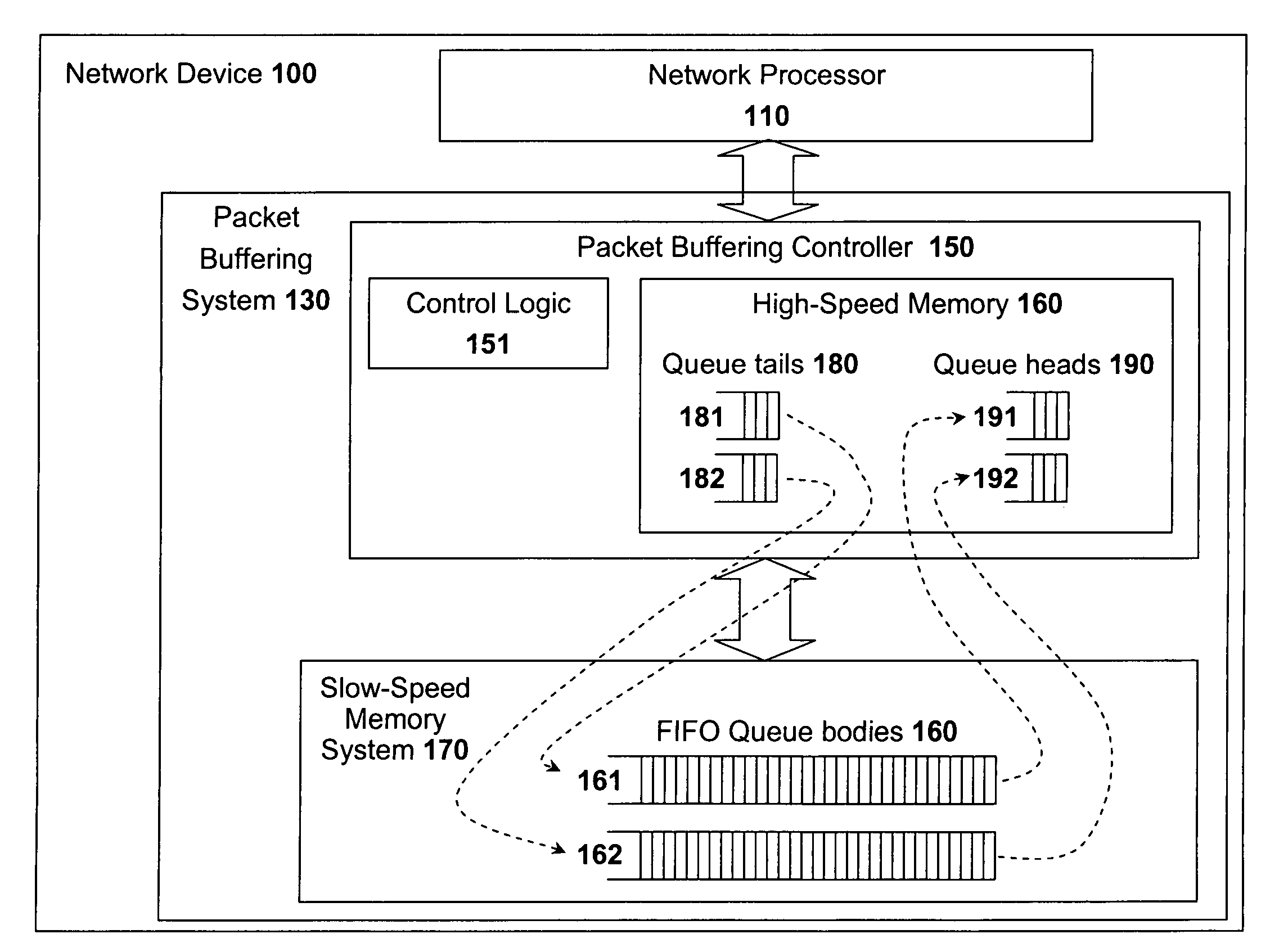

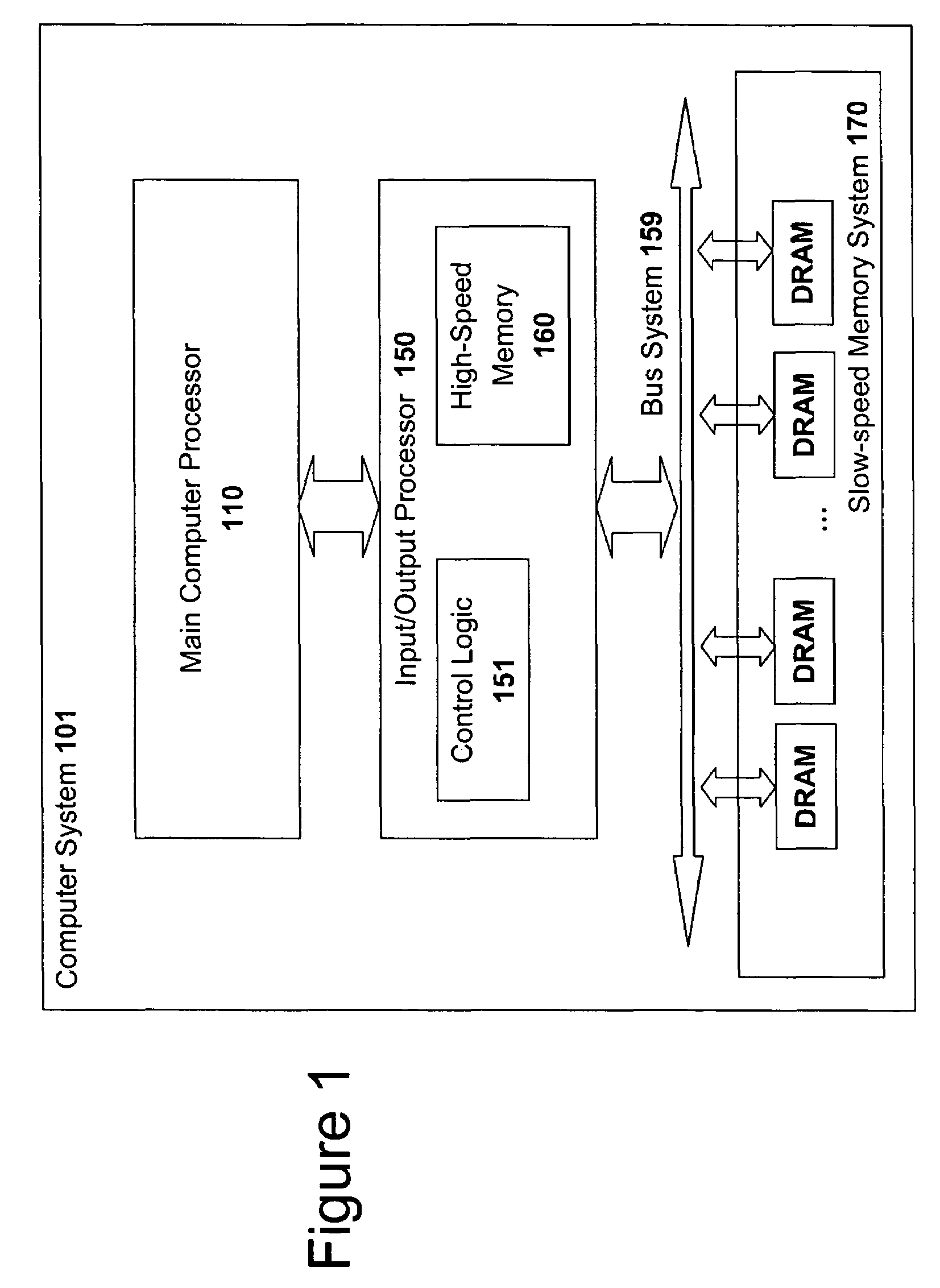

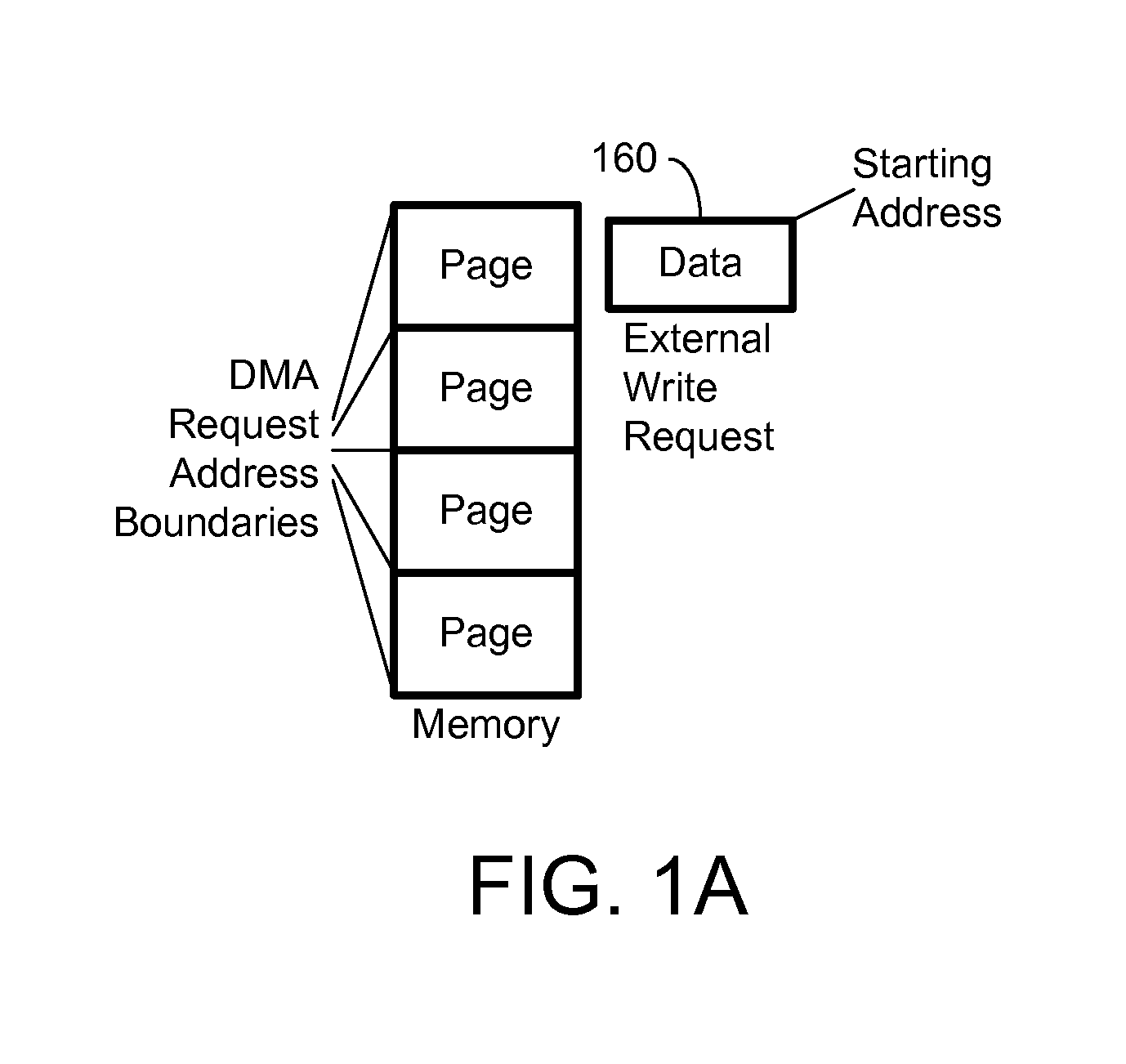

High speed memory control and I/O processor system

Active | US20050240745A1 | Easy to handle | Simplify memory access task | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | High speed memory | Tailored approach

An input/output processor for speeding the input/output and memory access operations for a processor is presented. The key idea of an input/output processor is to functionally divide input/output and memory access operations tasks into a compute intensive part that is handled by the processor and an I/O or memory intensive part that is then handled by the input/output processor. An input/output processor is designed by analyzing common input/output and memory access patterns and implementing methods tailored to efficiently handle those commonly occurring patterns. One technique that an input/output processor may use is to divide memory tasks into high frequency or high-availability components and low frequency or low-availability components. After dividing a memory task in such a manner, the input/output processor then uses high-speed memory (such as SRAM) to store the high frequency and high-availability components and a slower-speed memory (such as commodity DRAM) to store the low frequency and low-availability components. Another technique used by the input/output processor is to allocate memory in such a manner that all memory bank conflicts are eliminated. By eliminating any possible memory bank conflicts, the maximum random access performance of DRAM memory technology can be achieved.

Owner:CISCO TECH INC
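
The split between high-frequency and low-frequency memory components described in the abstract above can be illustrated with a toy counter array. This is only a sketch under assumptions: the abstract does not say that counters are the workload, and the class name `SplitCounterArray`, the `fast_bits` parameter, and the flush-on-overflow policy are all hypothetical choices made for illustration. Plain Python lists stand in for SRAM and DRAM.

```python
class SplitCounterArray:
    """Toy model of a two-tier counter store (hypothetical, for illustration).

    Narrow 'fast' counters absorb every update; wide 'slow' counters are
    written only when a fast counter reaches its limit.
    """

    def __init__(self, n, fast_bits=4):
        self.fast_limit = 1 << fast_bits
        self.fast = [0] * n      # stands in for SRAM: touched on every event
        self.slow = [0] * n      # stands in for DRAM: touched rarely
        self.slow_writes = 0     # how often the slow tier was written

    def increment(self, i, amount=1):
        self.fast[i] += amount
        if self.fast[i] >= self.fast_limit:
            # Move the accumulated count to the slow tier and reset.
            self.slow[i] += self.fast[i]
            self.fast[i] = 0
            self.slow_writes += 1

    def read(self, i):
        # The full value is the sum of both tiers.
        return self.slow[i] + self.fast[i]


counters = SplitCounterArray(n=2, fast_bits=4)
for _ in range(100):
    counters.increment(0)
print(counters.read(0), counters.slow_writes)
```

In this model, 100 updates reach the slow tier only a handful of times, which is the effect the abstract attributes to keeping frequently accessed components in faster memory.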

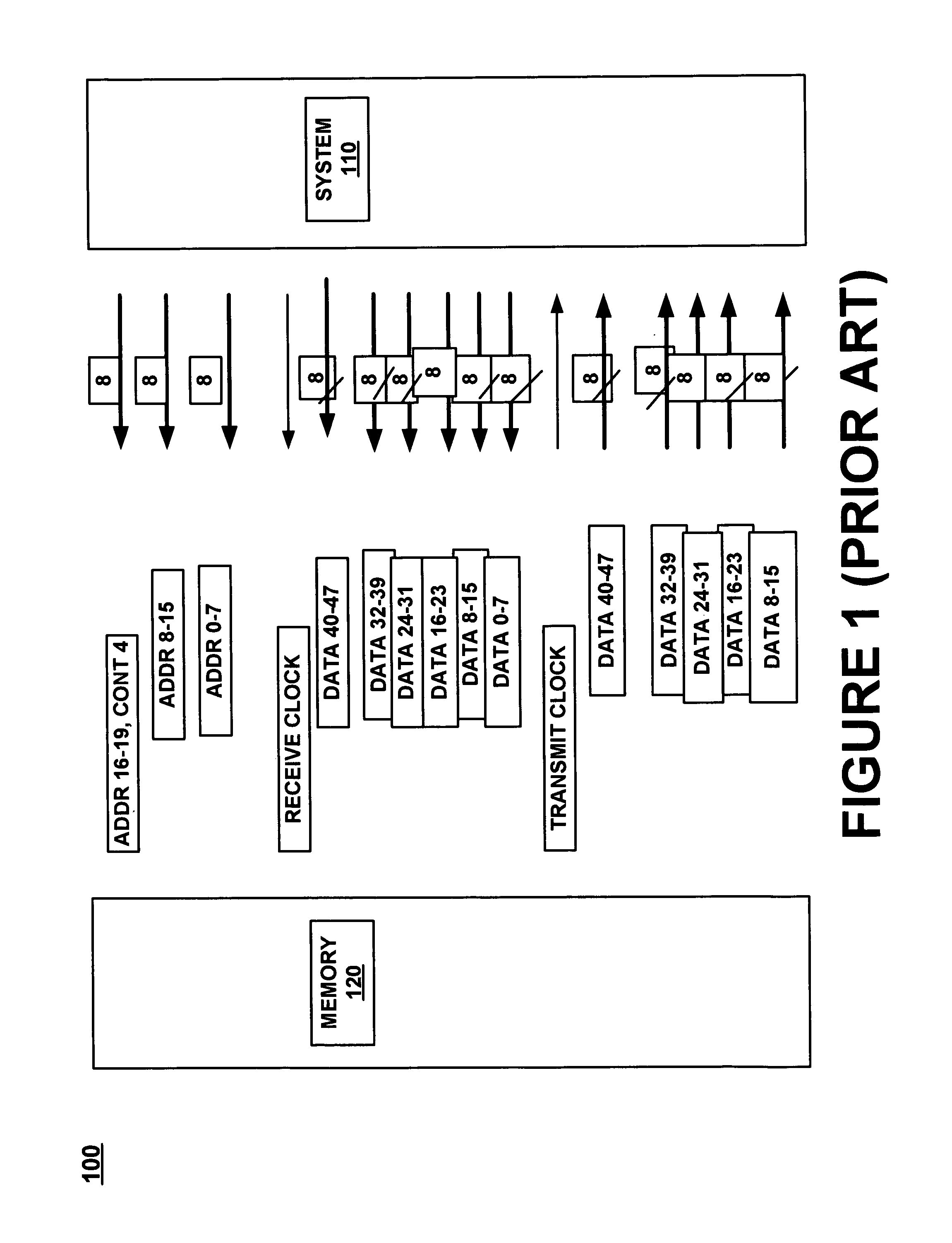

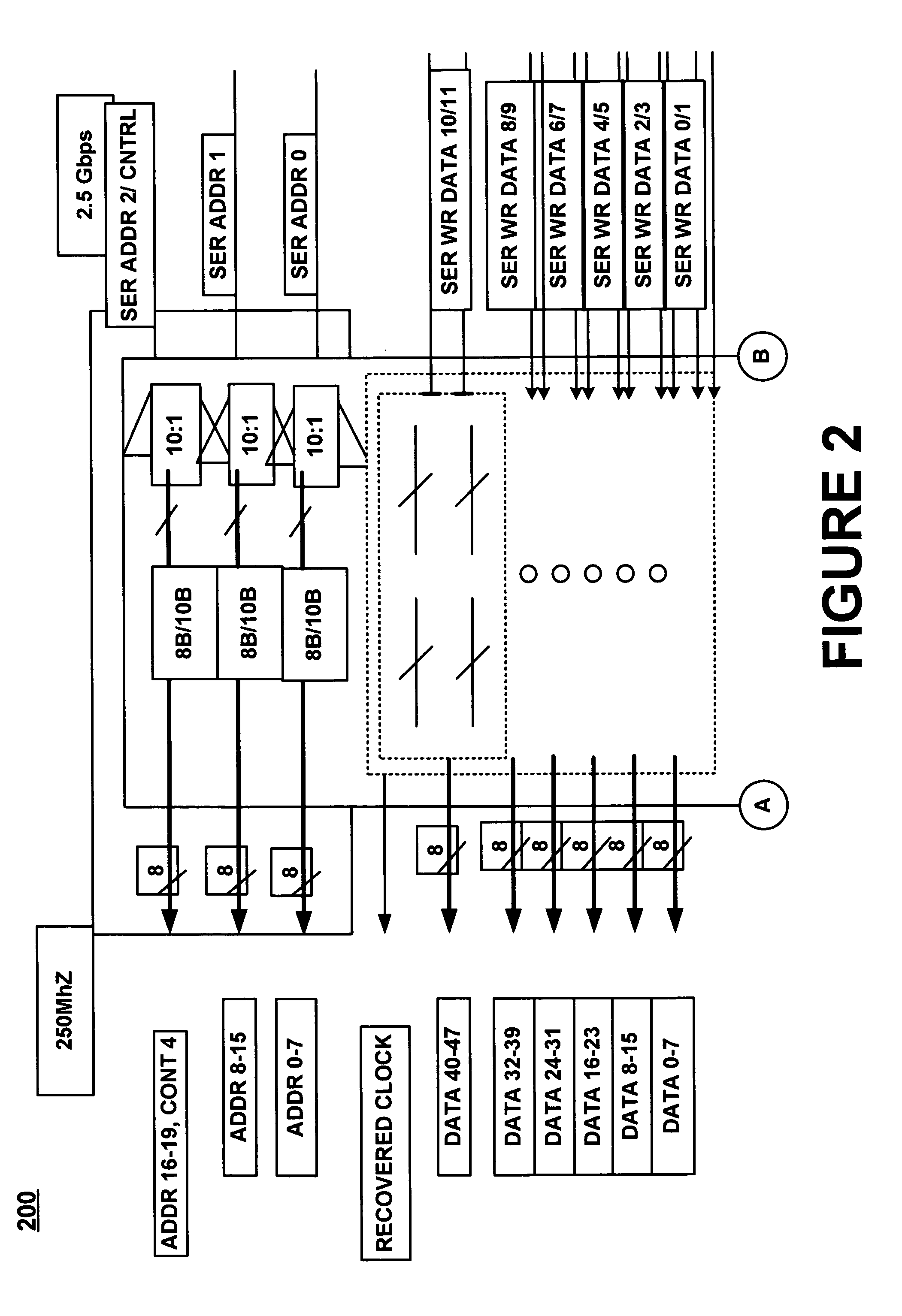

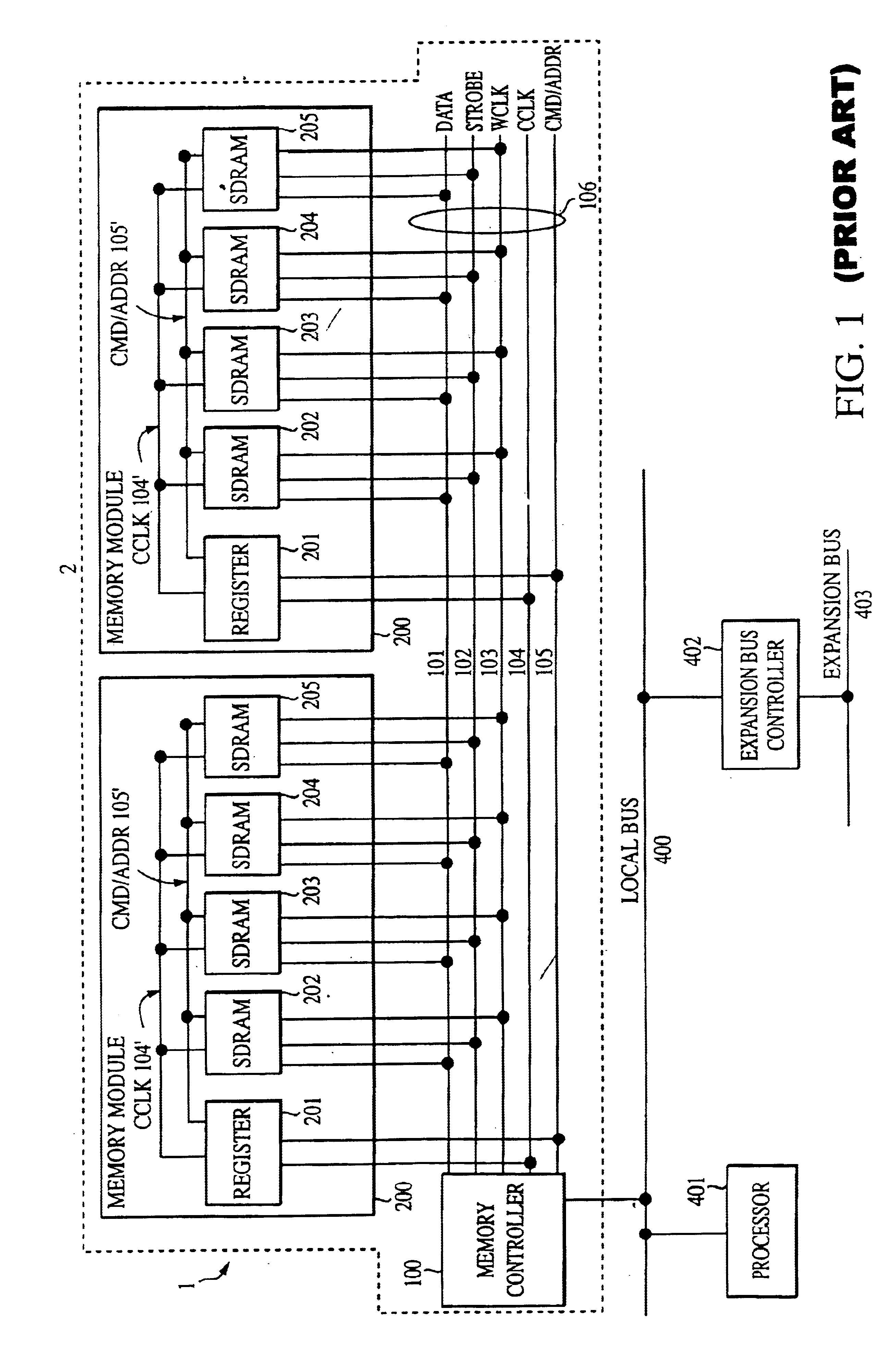

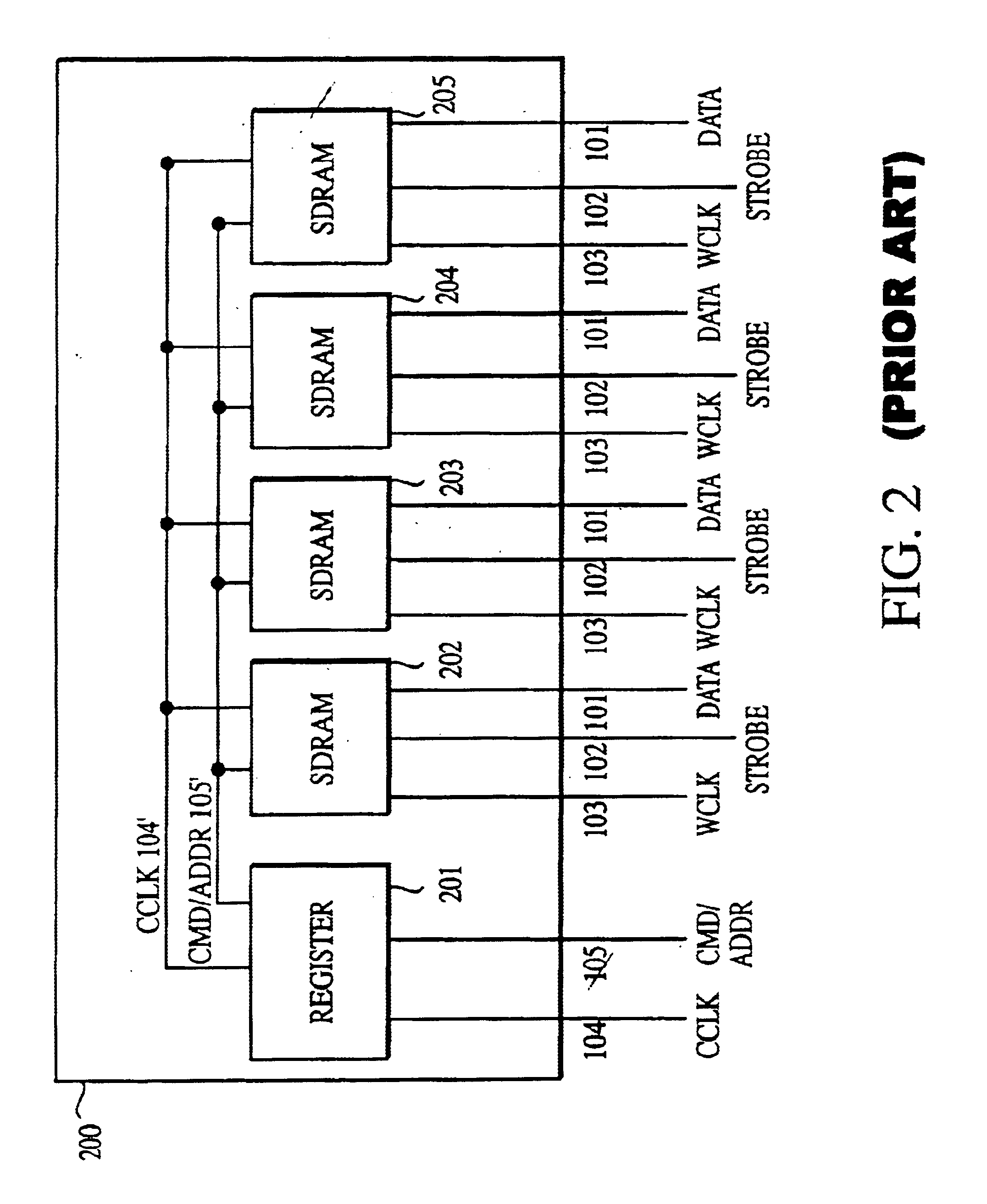

High speed memory interface system and method

Inactive | US7013359B1 | Facilitate efficient communication | Increase speed | Electric digital data processing | High speed memory | Memory interface

The present invention is a high speed serial memory interface system and method that facilitates efficient communication of information between a system controller operating at a relatively high speed serial communication rate and a memory array operating at a relatively slow speed serial communication rate. In one embodiment the present invention is a high speed serial memory interface system with an information configuration core for coordinating proper alignment of information communication signals, a system interface for communicating with a system controller, and a memory array interface for communicating with a memory array. A memory module array for storing information and a high speed serial memory interface system for providing interface configuration management are integrated on a single substrate.

Owner:LONGITUDE FLASH MEMORY SOLUTIONS LTD

Method and apparatus for protecting digital rights of copyright holders of publicly distributed multimedia files

Owner:CLEAR GOSPEL CAMPAIGN

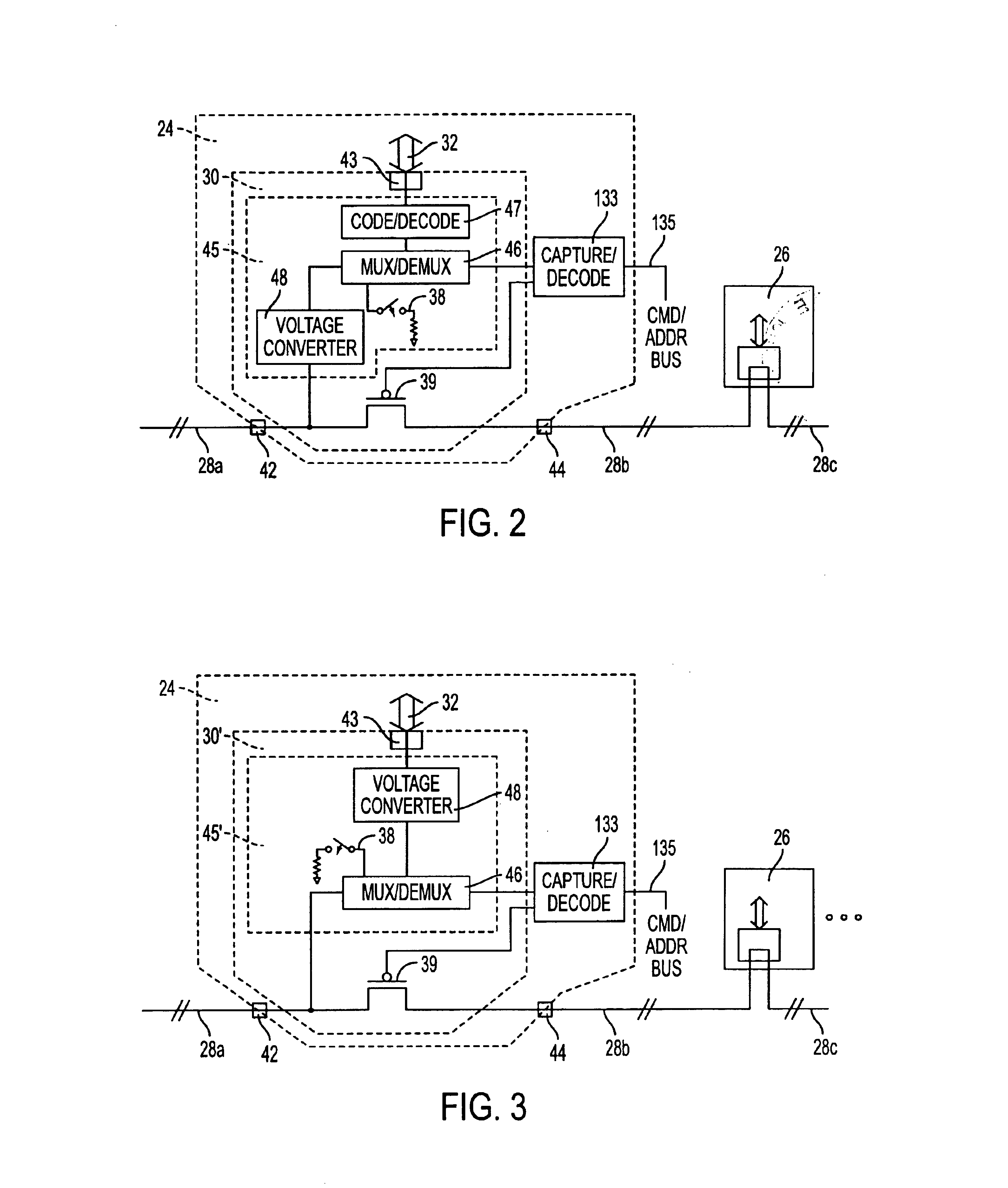

Data transmission circuit for memory subsystem, has switching circuit that selectively connects or disconnects two data bus segments to respectively enable data transmission or I/O circuit connection

Inactive | US6871253B2 | Improve acceleration performance | Reduce reflection | Energy efficient ICT | Digital data processing details | Electricity | High speed memory

A method and associated apparatus are provided for improving the performance of a high speed memory bus using switches. Bus reflections caused by electrical stubs are substantially eliminated by connecting system components in a substantially stubless configuration using a segmented bus wherein bus segments are connected through switches. The switches disconnect unused bus segments during operations so that communicating devices are connected in a substantially point-to-point communication path.

Owner:MICRON TECH INC

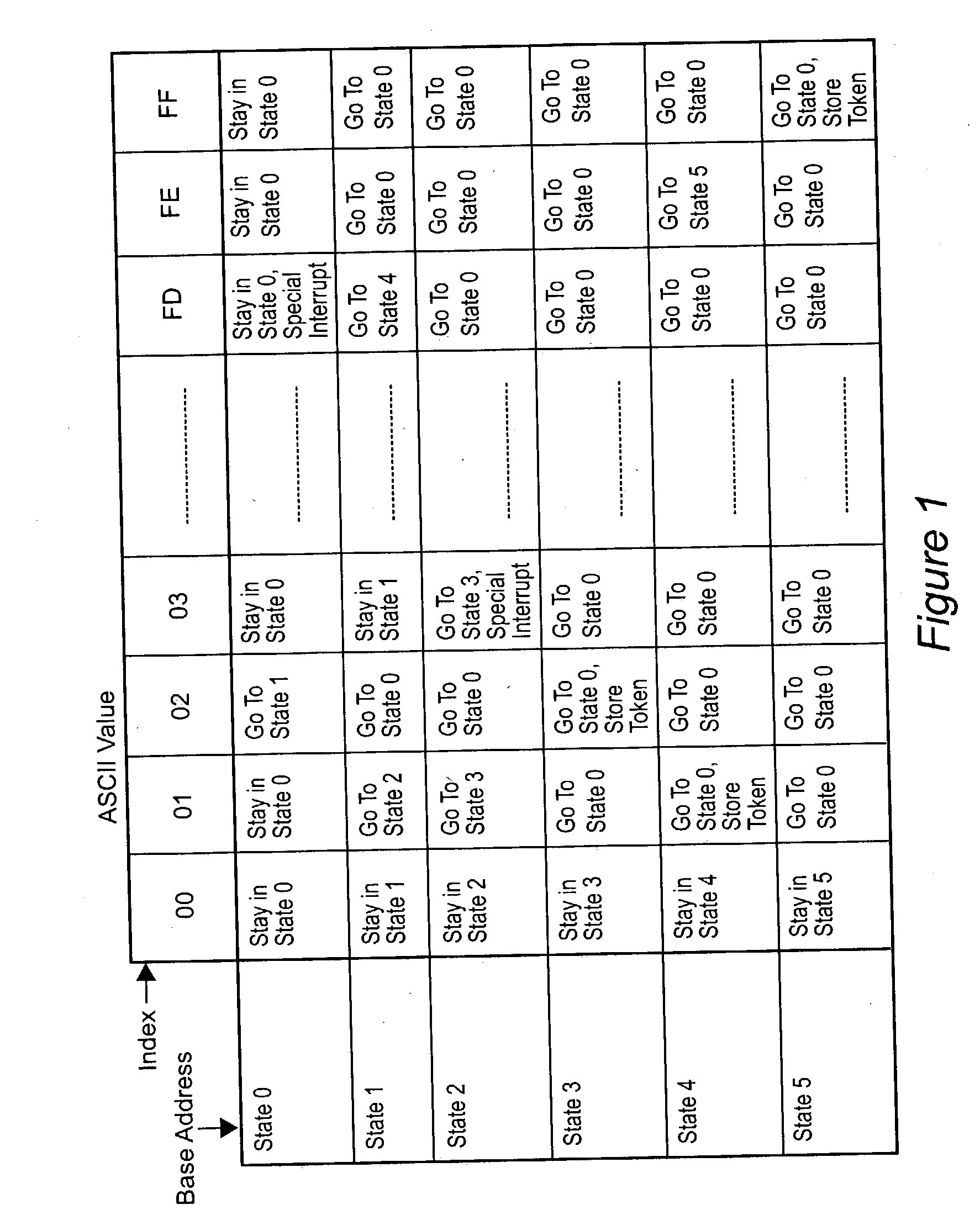

Hardware parser accelerator

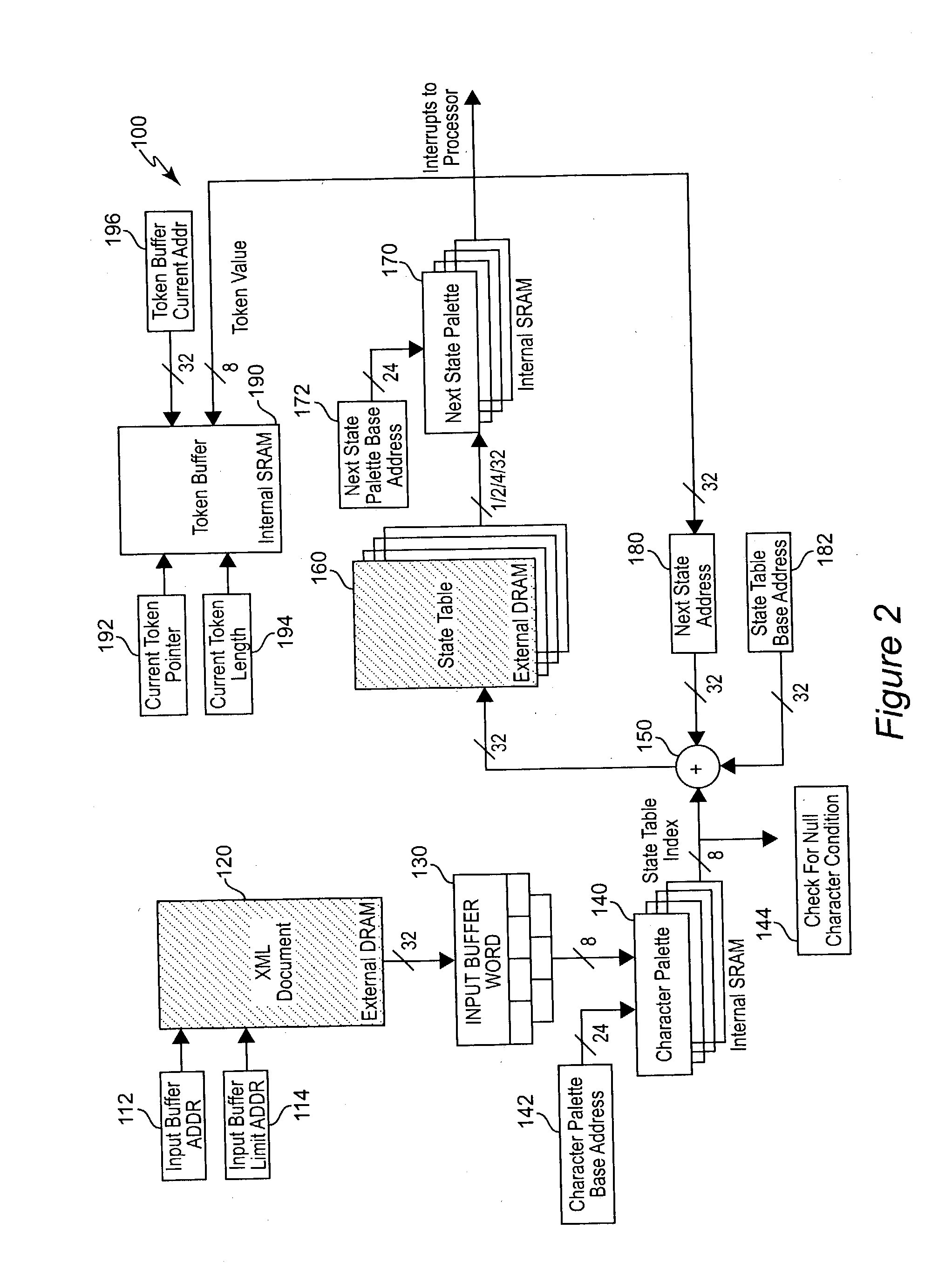

Inactive | US20040083466A1 | Software engineering | Natural language data processing | High speed memory | Trace table

Dedicated hardware is employed to perform parsing of documents such as XML(TM) documents in much reduced time while removing a substantial processing burden from the host CPU. The conventional use of a state table is divided into a character palette, a state table in abbreviated form, and a next state palette. The palettes may be implemented in dedicated high speed memory and a cache arrangement may be used to accelerate accesses to the abbreviated state table. Processing is performed in parallel pipelines which may be partially concurrent. Dedicated registers may be updated in parallel as well, and strings of special characters of arbitrary length are accommodated by a character palette skip feature under control of a flag bit to further accelerate parsing of a document.

Owner:LOCKHEED MARTIN CORP
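
The three-way split of a state table into a character palette, an abbreviated state table, and a next state palette can be sketched in software. The example below is an assumption-laden illustration, not the patented hardware: the two-state tag counter, the table contents, and the names `CHAR_PALETTE`, `STATE_TABLE`, `NEXT_STATE_PALETTE`, and `count_tags` are all hypothetical.

```python
# Character palette: maps each input character to a small class index.
# Any character not listed falls into class 0 ("other").
CHAR_PALETTE = {"<": 1, ">": 2}

# Next state palette: maps a compact table entry to a full state id.
TEXT, TAG = 0, 1
NEXT_STATE_PALETTE = (TEXT, TAG)

# Abbreviated state table: one row per state, one column per character class.
# Entries are indexes into NEXT_STATE_PALETTE.
STATE_TABLE = (
    (0, 1, 0),  # from TEXT: other -> TEXT, '<' -> TAG, '>' -> TEXT
    (1, 1, 0),  # from TAG:  other -> TAG,  '<' -> TAG, '>' -> TEXT
)


def count_tags(text):
    """Count TEXT -> TAG transitions, i.e. the number of tags opened."""
    state = TEXT
    tags = 0
    for ch in text:
        char_class = CHAR_PALETTE.get(ch, 0)
        next_state = NEXT_STATE_PALETTE[STATE_TABLE[state][char_class]]
        if state == TEXT and next_state == TAG:
            tags += 1
        state = next_state
    return tags


print(count_tags("<a><b>x</b></a>"))
```

Because the table is indexed by character class rather than by raw character, it has three columns instead of one per possible character, which is what makes it small enough to hold in fast memory.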

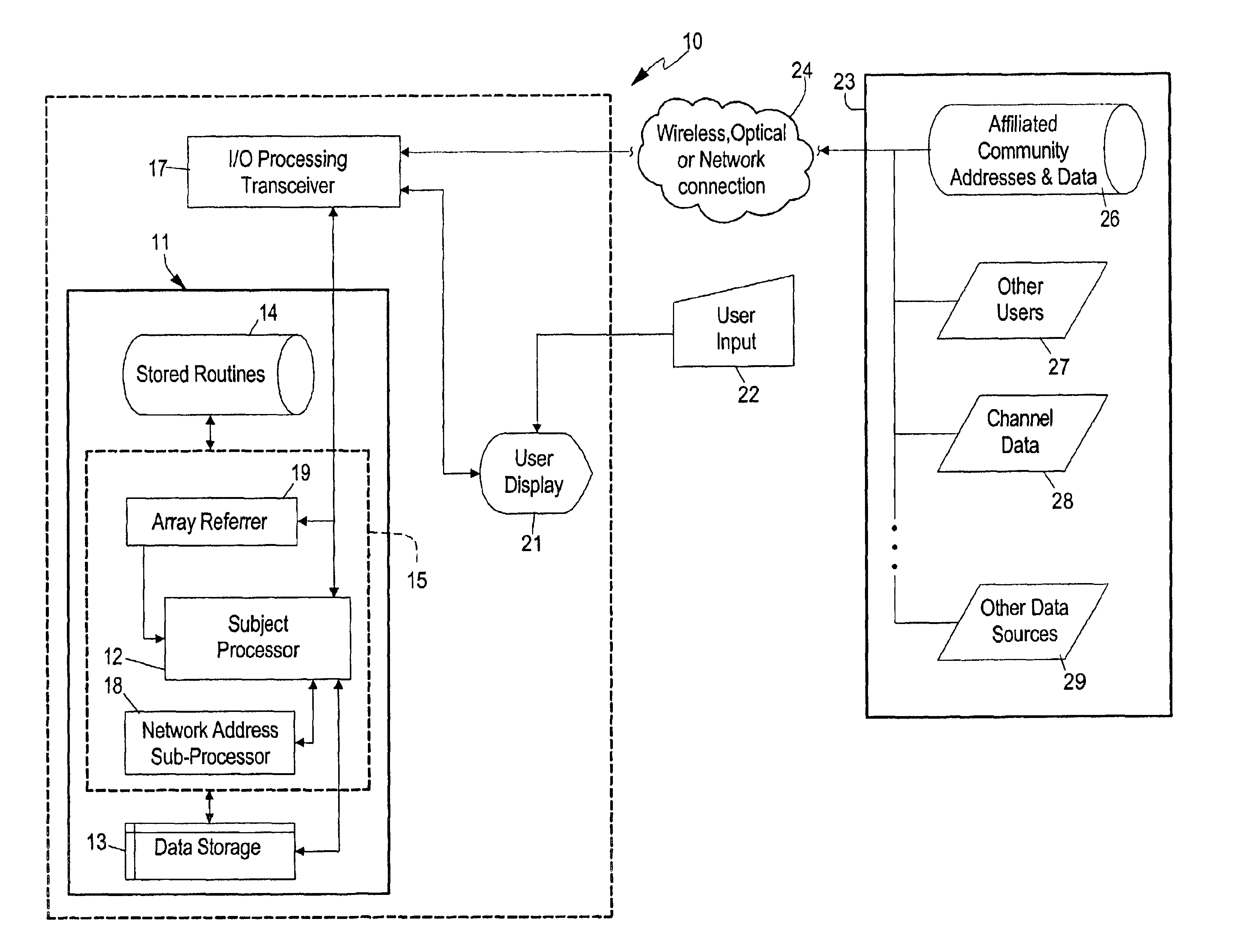

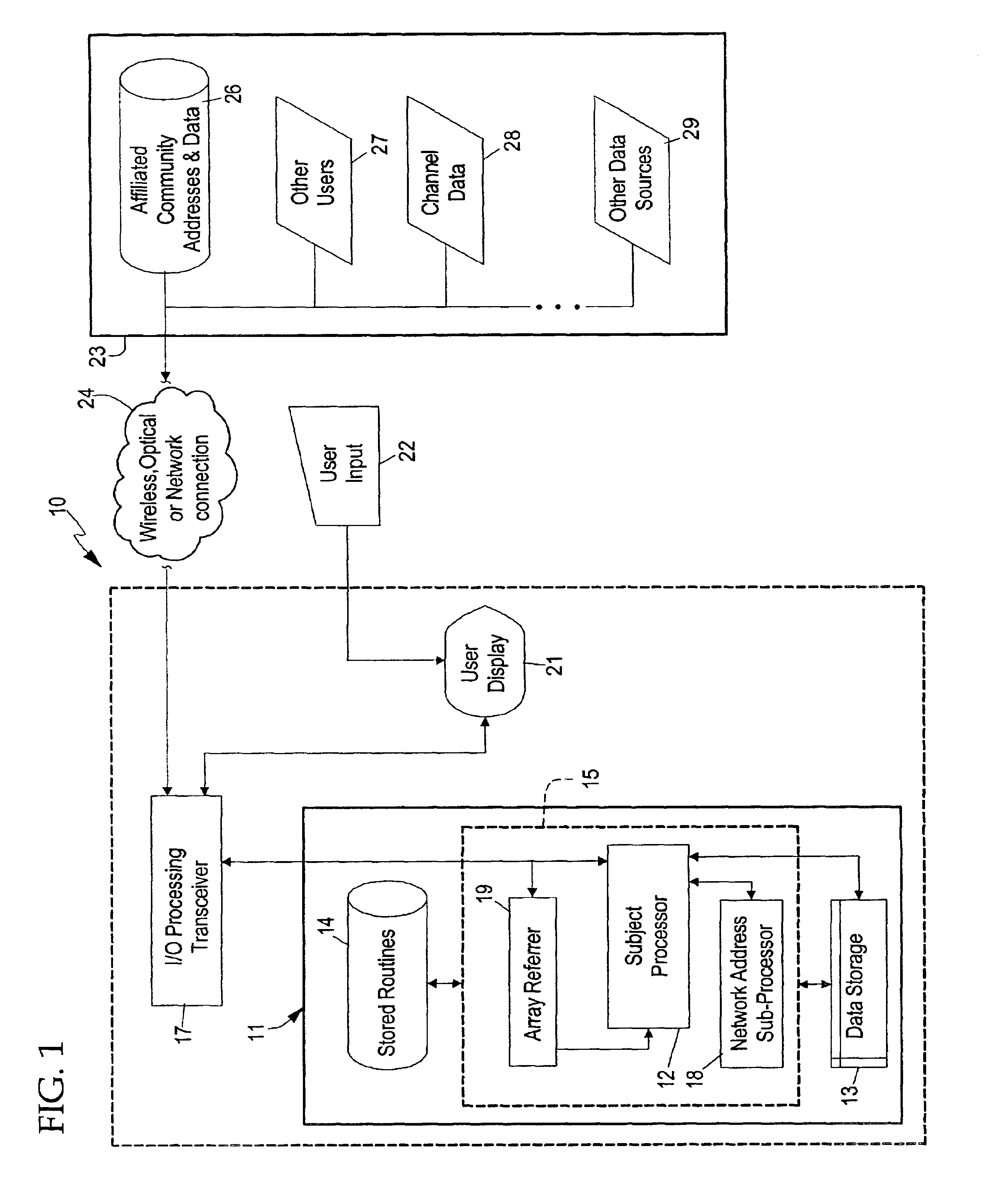

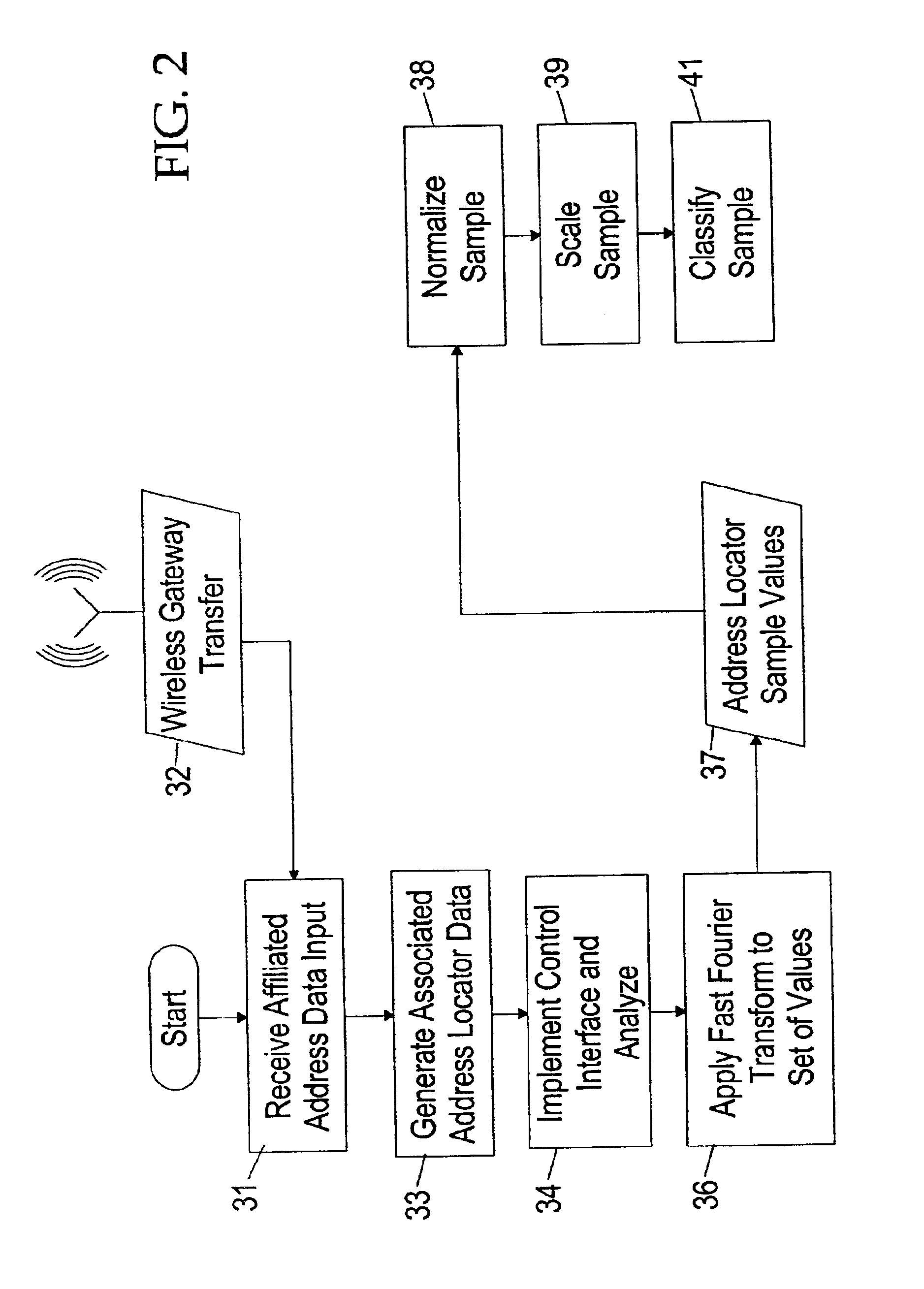

System and method for efficiently accessing affiliated network addresses from a wireless device

Inactive | US6873610B1 | Data switching by path configuration | Multiple digital computer combinations | High speed memory | Network addressing

A system and method for a wireless device to efficiently access affiliated addresses across linked topical communities, such as an Internet WebRing, through a wireless gateway. The invention includes a processing unit running on a wireless device controlled by an affiliated address control program. The processing unit includes a processing unit with a subject processor, a program store for holding an apparatus control program, a network address sub-processor, an address array referrer, an input mechanism, a display device for selecting retrieved affiliated addresses, and a high speed memory for holding site address selectors and associated content buffer. The wireless device communicates with a network via conventional wireless communication means which provides a path for updating the content buffer and array referrer, as well as transference of other types of sensory data. Means for predicting search failures is also integrated into the apparatus control program of the processing unit. Data received from the wireless gateway is statistically preprocessed then supplied to a processor called a network address sub-processor. The system then incorporates sorted affiliated addresses into the system on the wireless device to make possible a real-time detector system for a wireless device accessing content through a wireless gateway. The system may be offered as a service benefit for wireless device subscription or as a per occurrence chargeable item for a wireless subscriber. The system relieves the standard “hit-or-miss” method for affiliated address selection and site address storage and retrieval.

Owner:MOBULAR TECH

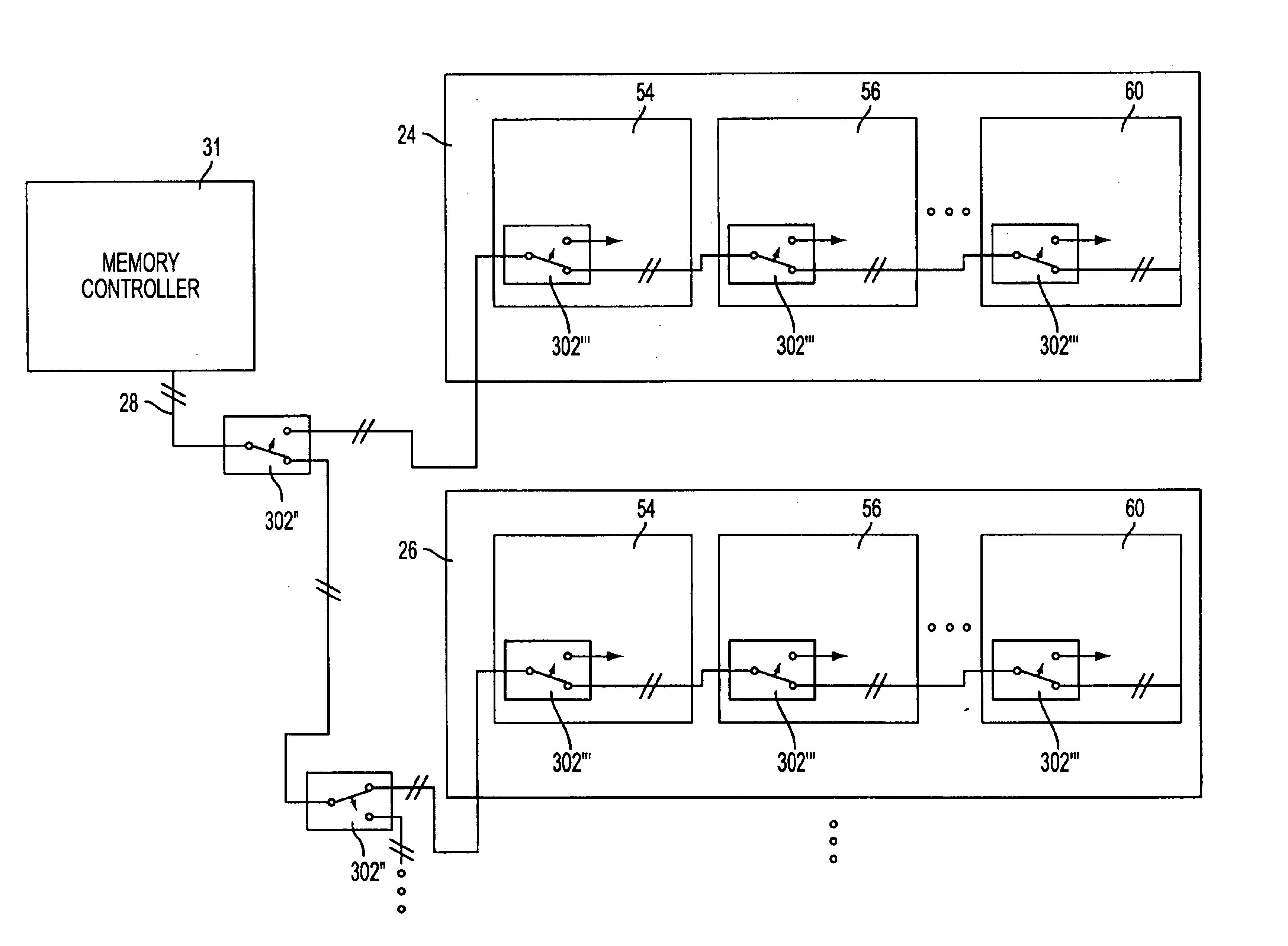

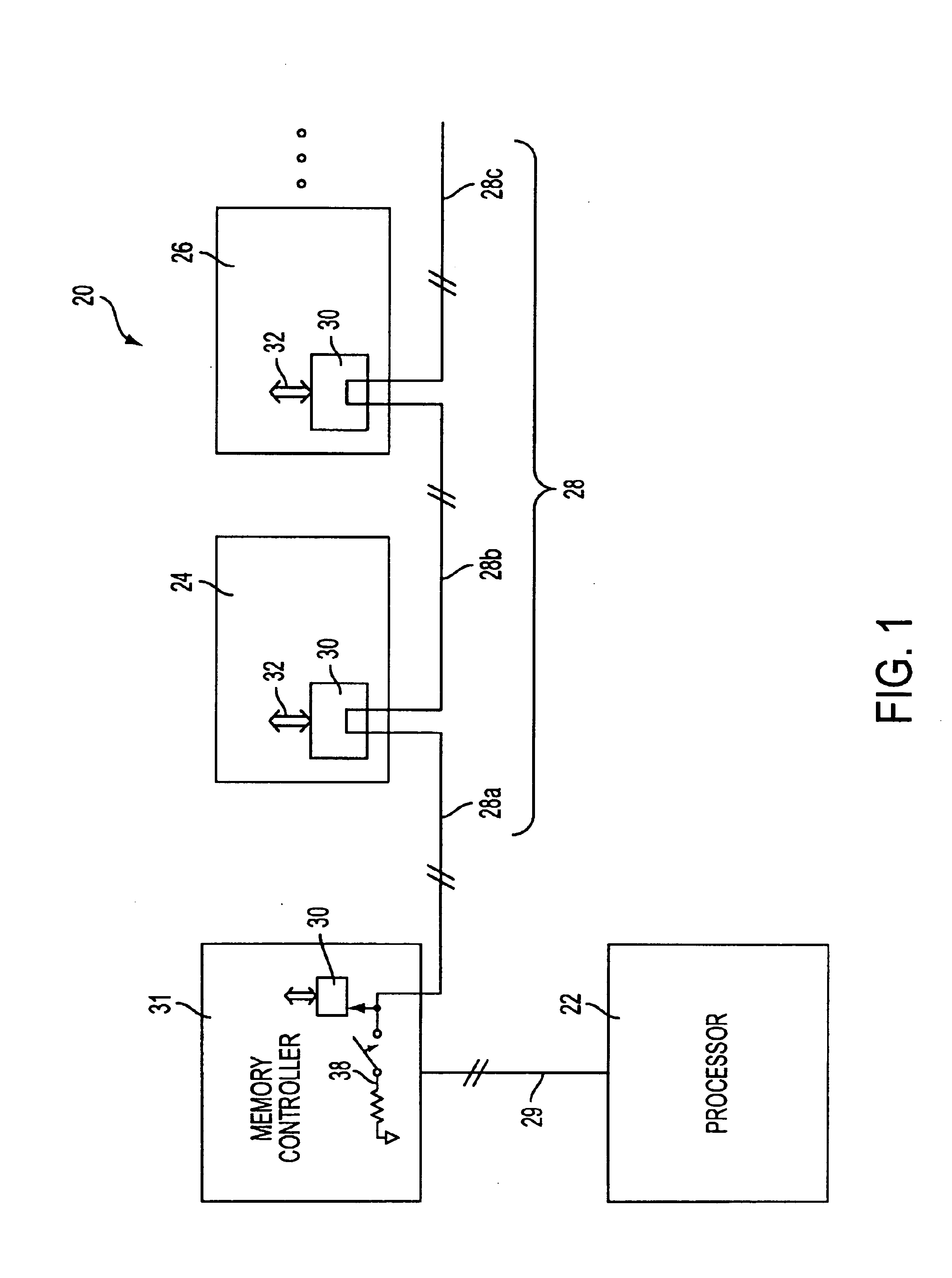

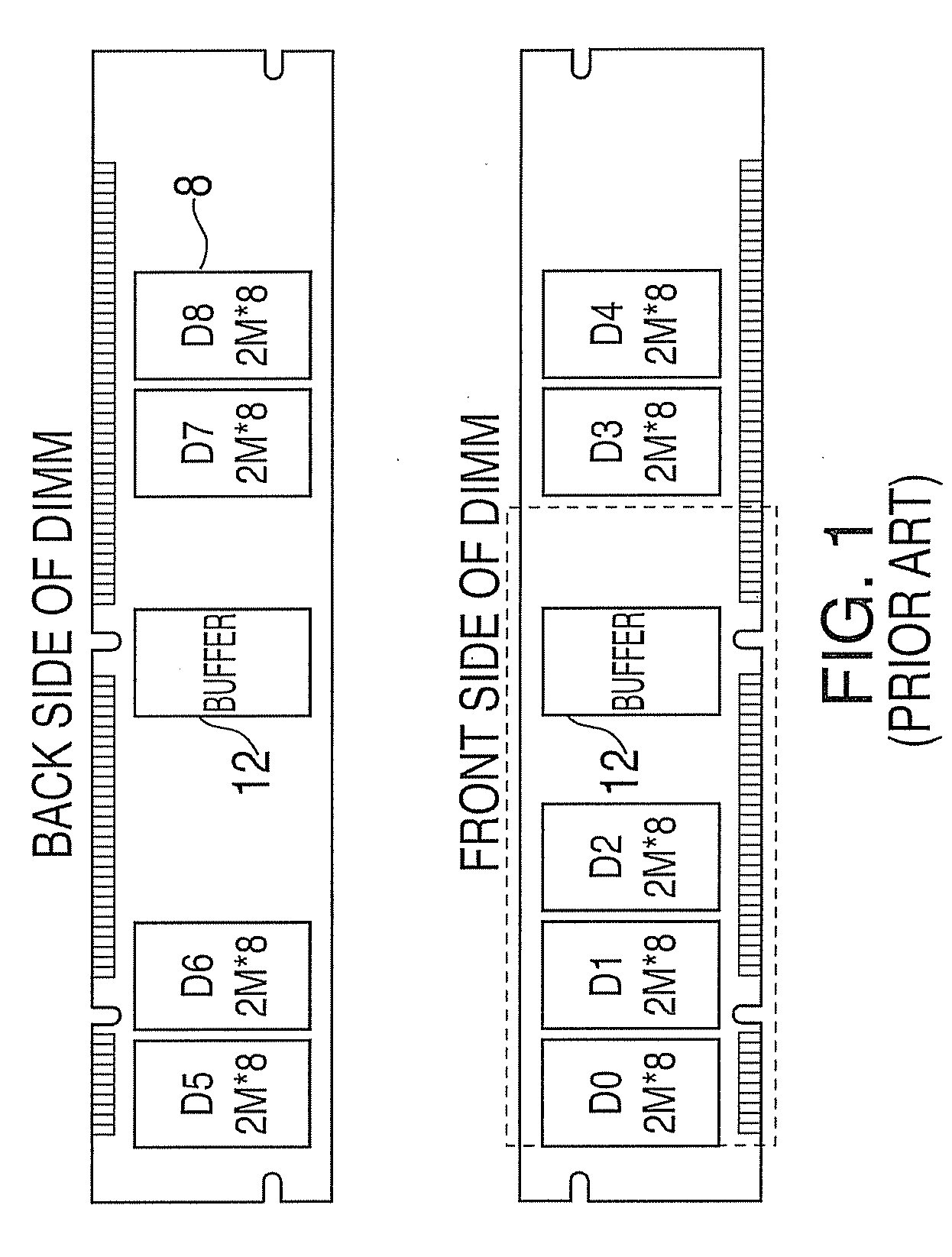

System and method for providing an adapter for re-use of legacy DIMMs in a fully buffered memory environment

A system and method for providing an adapter for re-use of legacy DIMMs in a fully buffered memory environment. The system includes a memory adapter card having two rows of contacts along a leading edge of a length of the card. The rows of contacts are adapted to be inserted into a socket that is connected to a daisy chain high-speed memory bus via a packetized multi-transfer interface. The memory adapter card also includes a socket installed on the trailing edge of the card. In addition, the memory adapter card includes a hub device for converting the packetized multi-transfer interface into a parallel interface having timings and interface levels that are operable with a memory module having a parallel interface that is inserted into the socket. The hub device also converts the parallel interface into the packetized multi-transfer interface.

Owner:IBM CORP

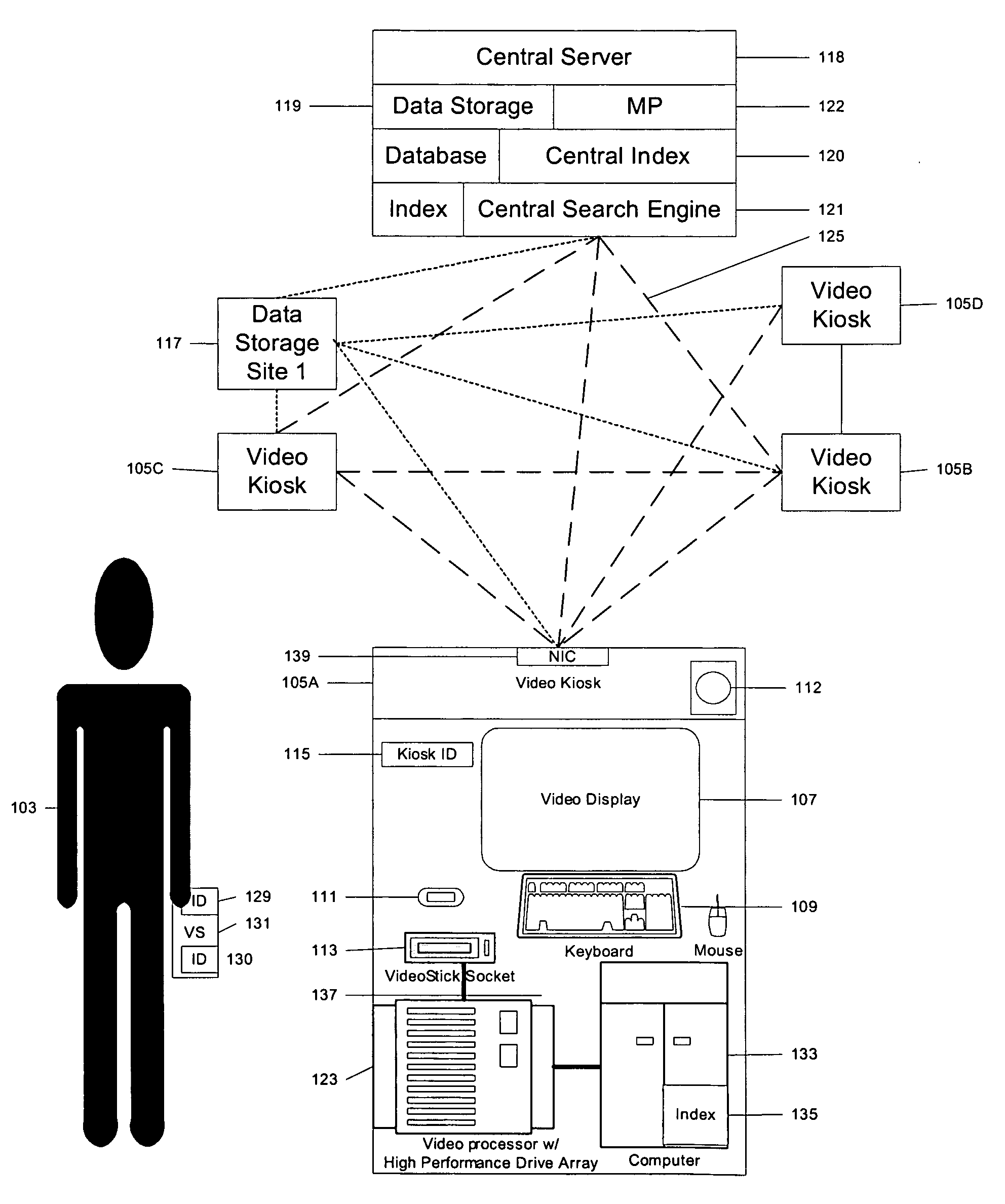

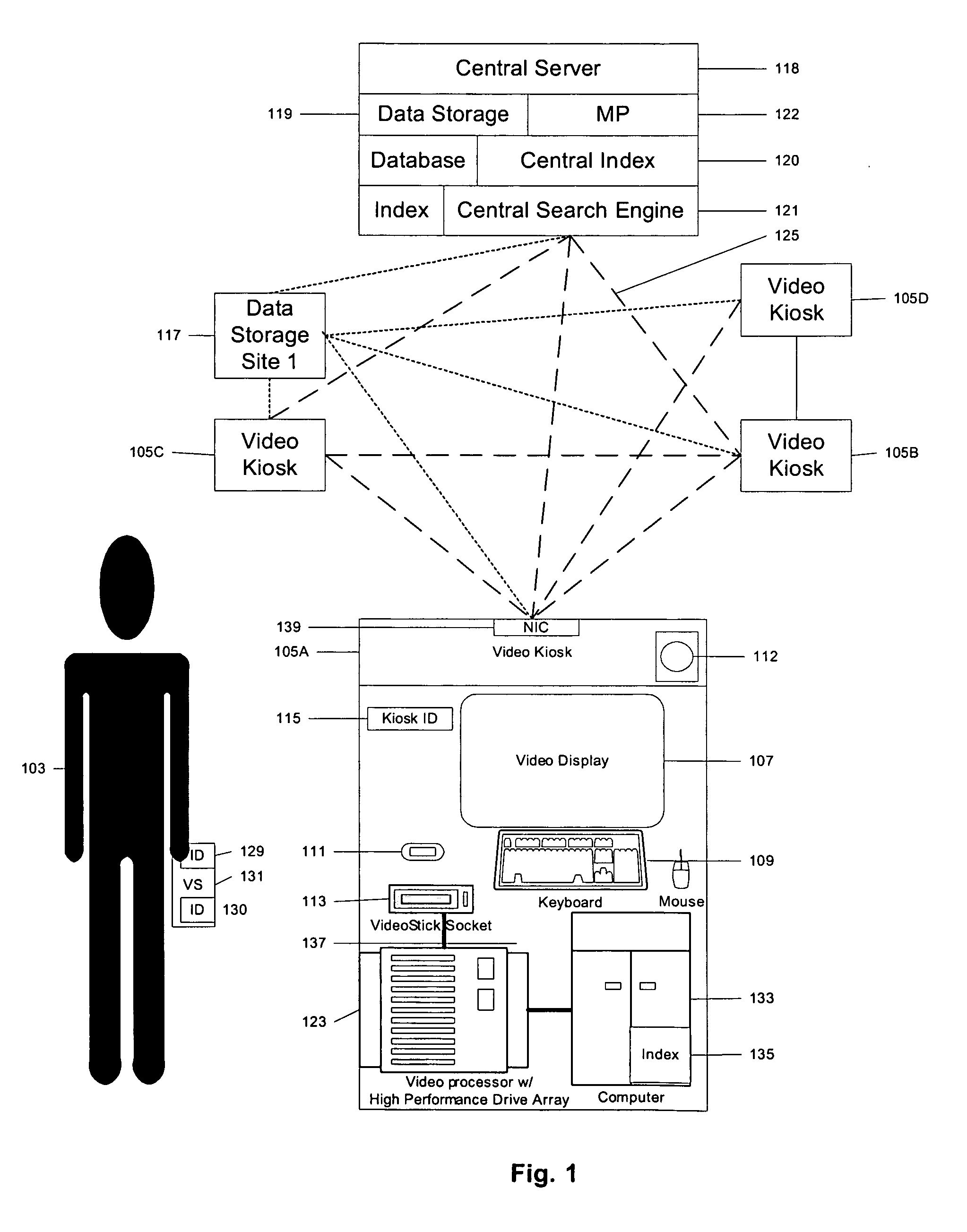

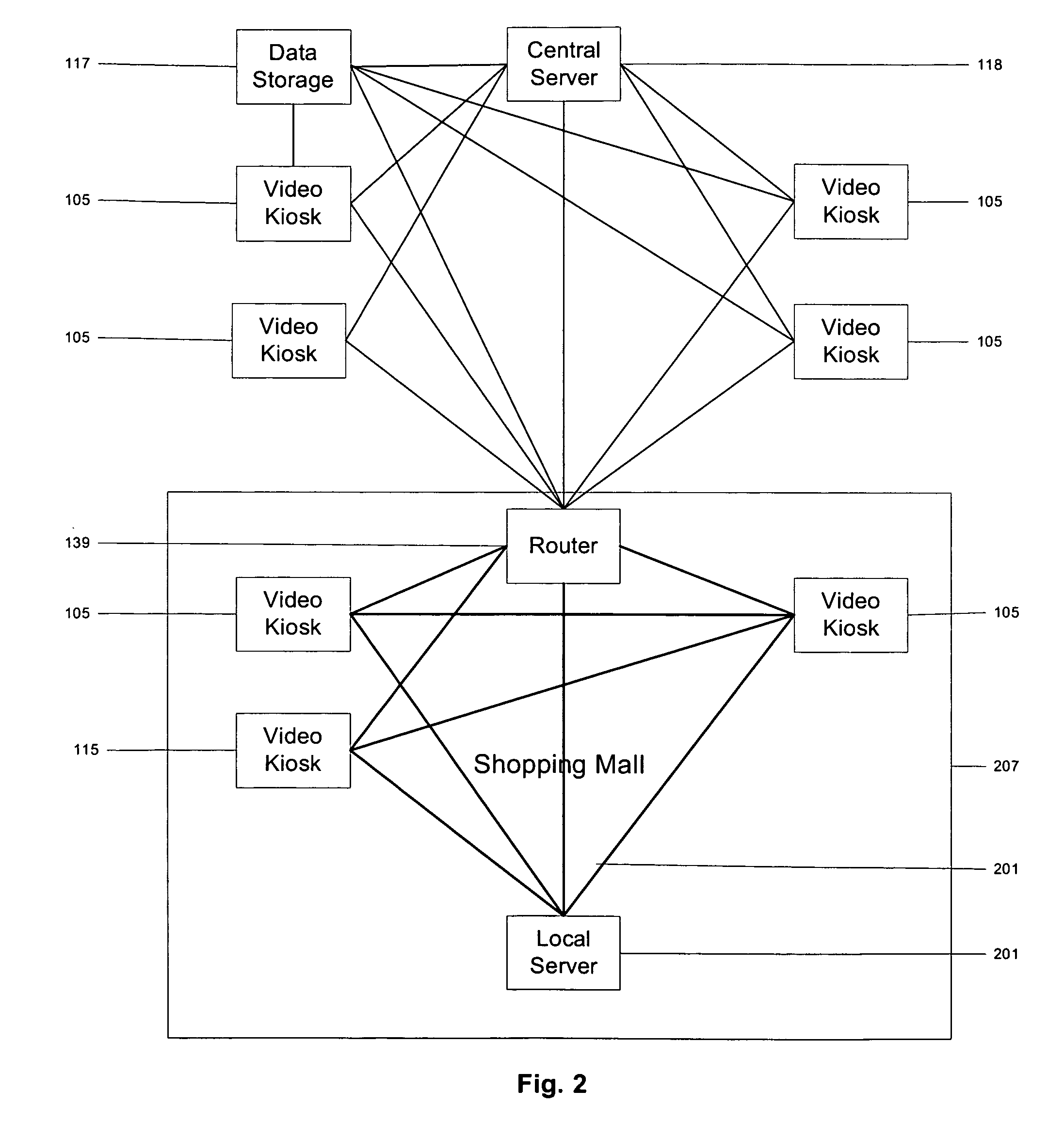

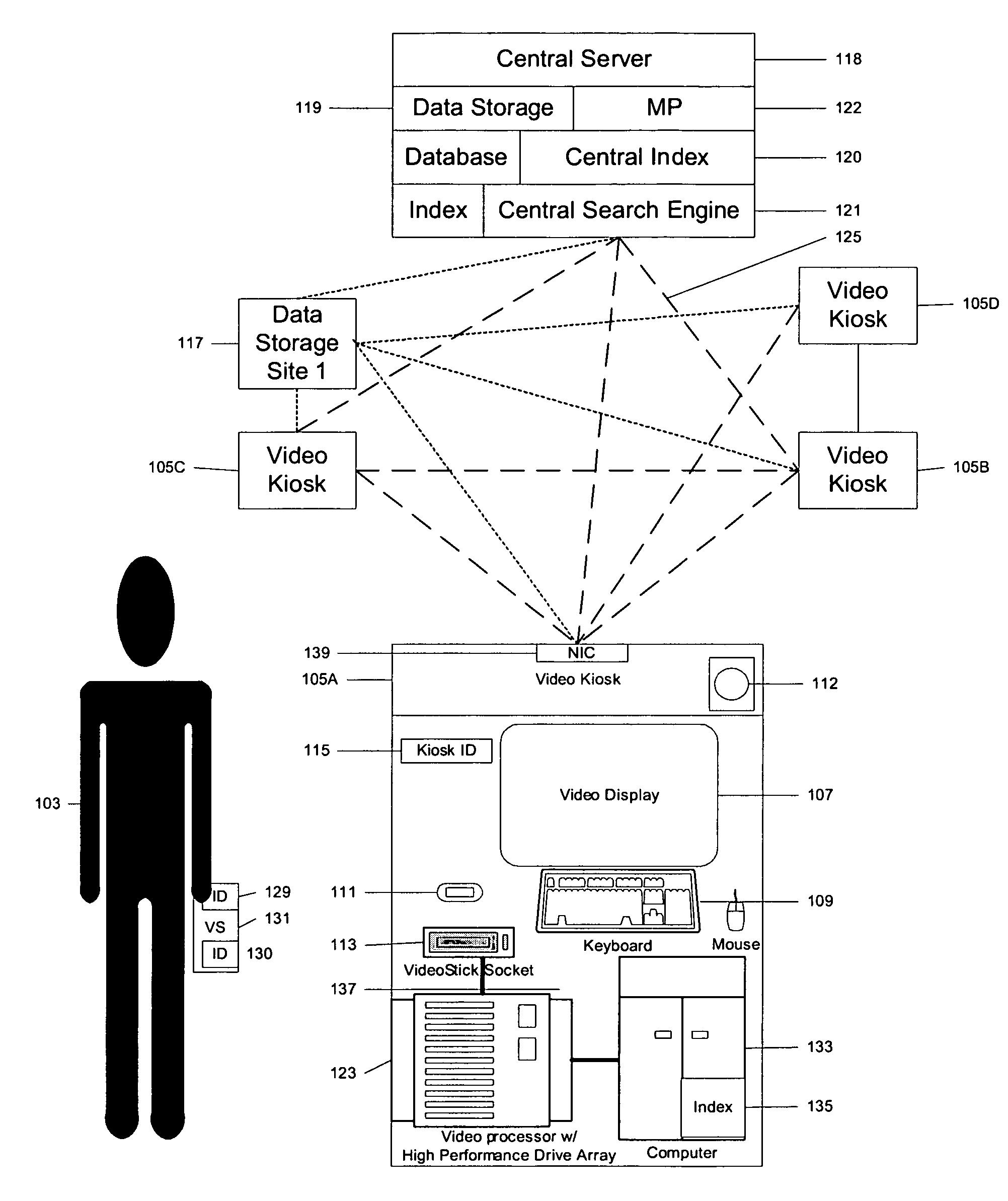

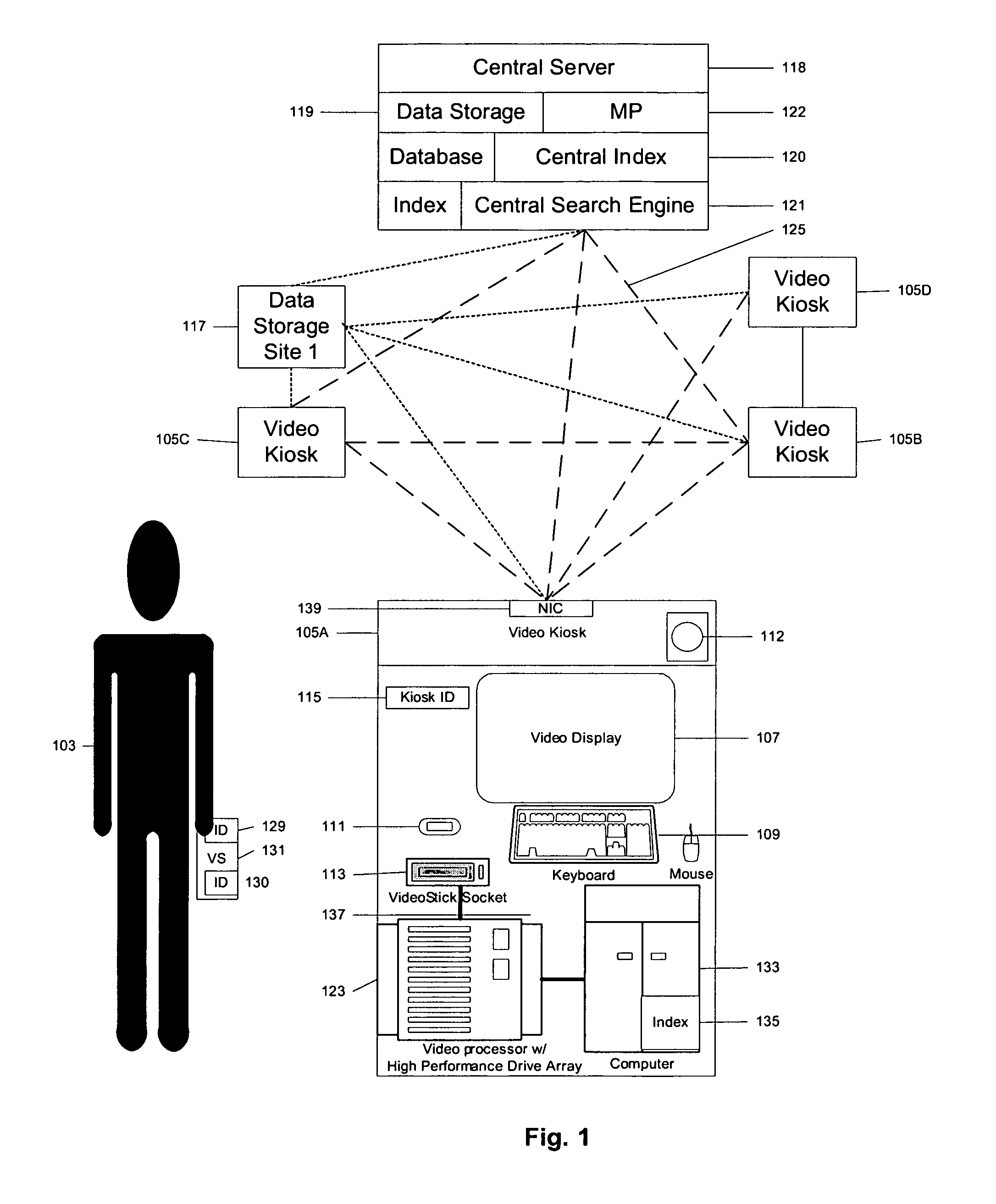

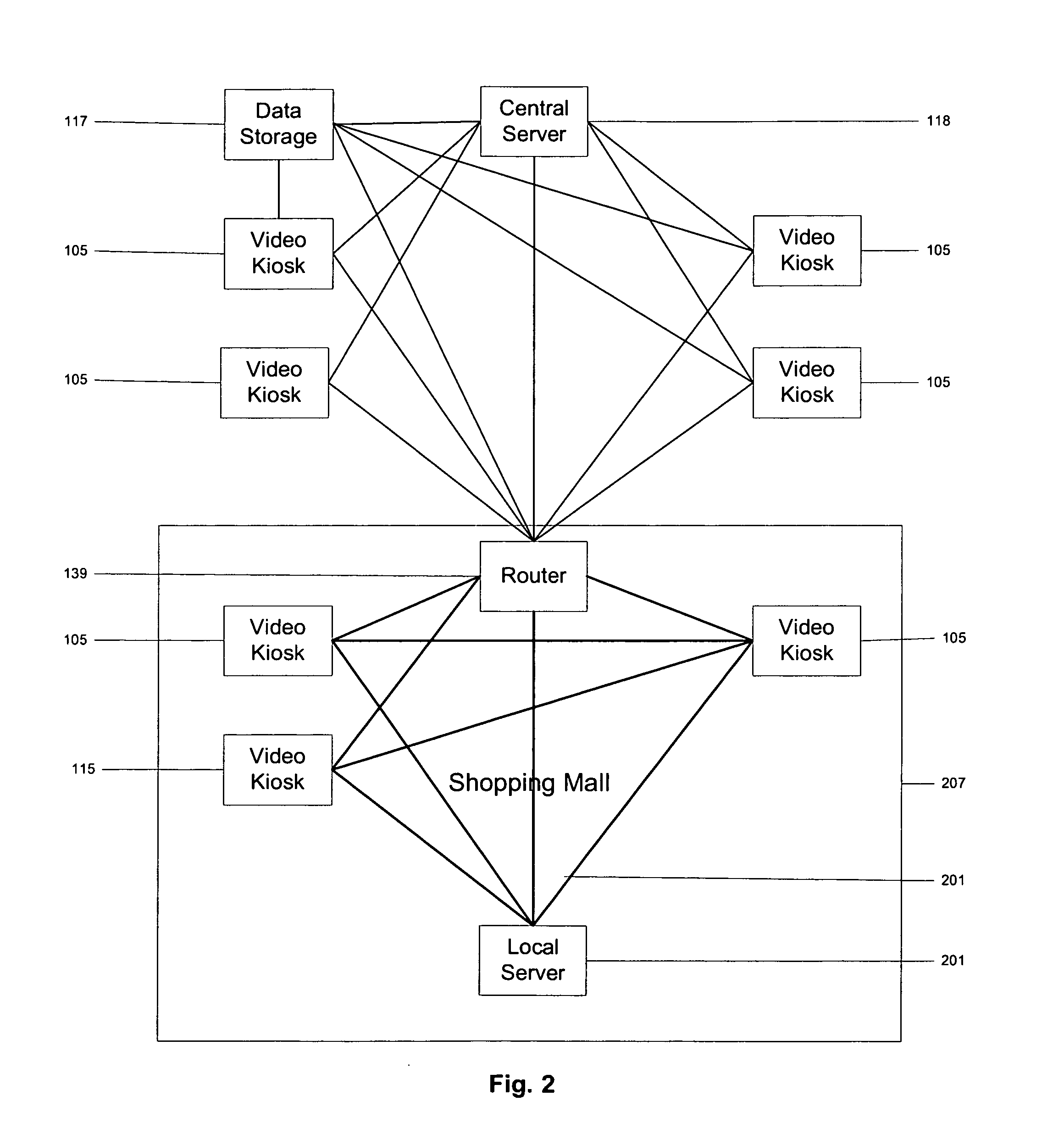

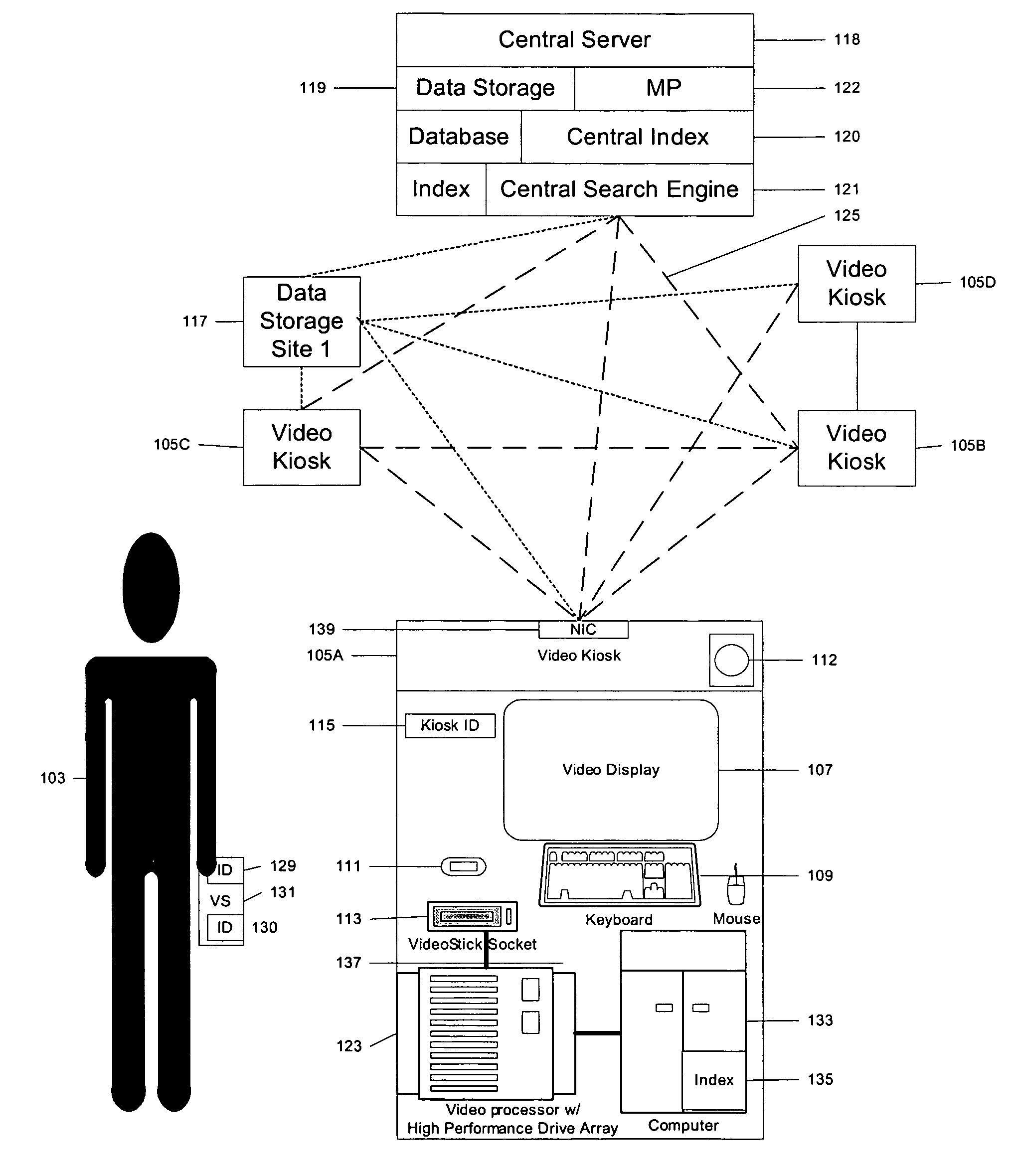

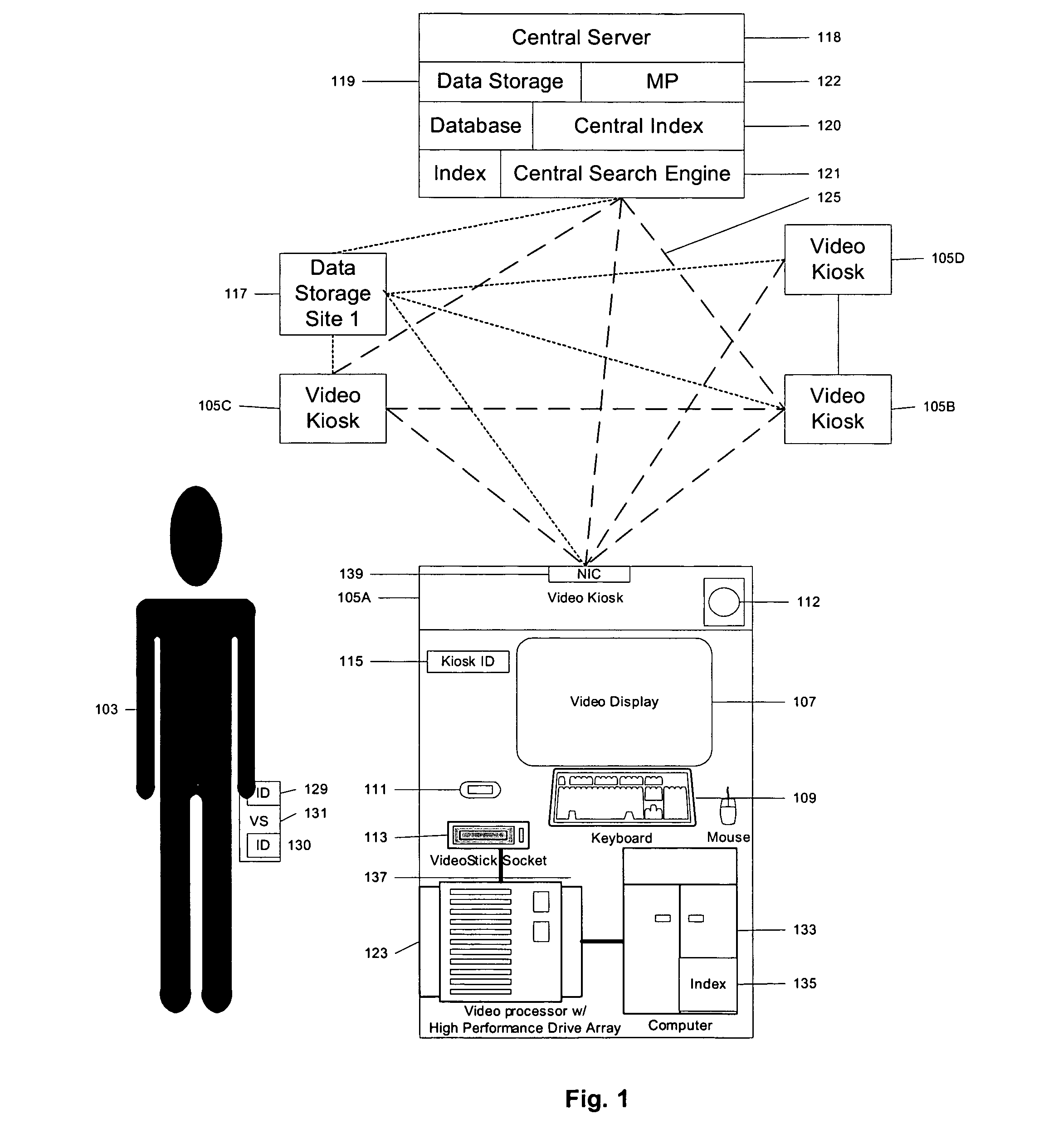

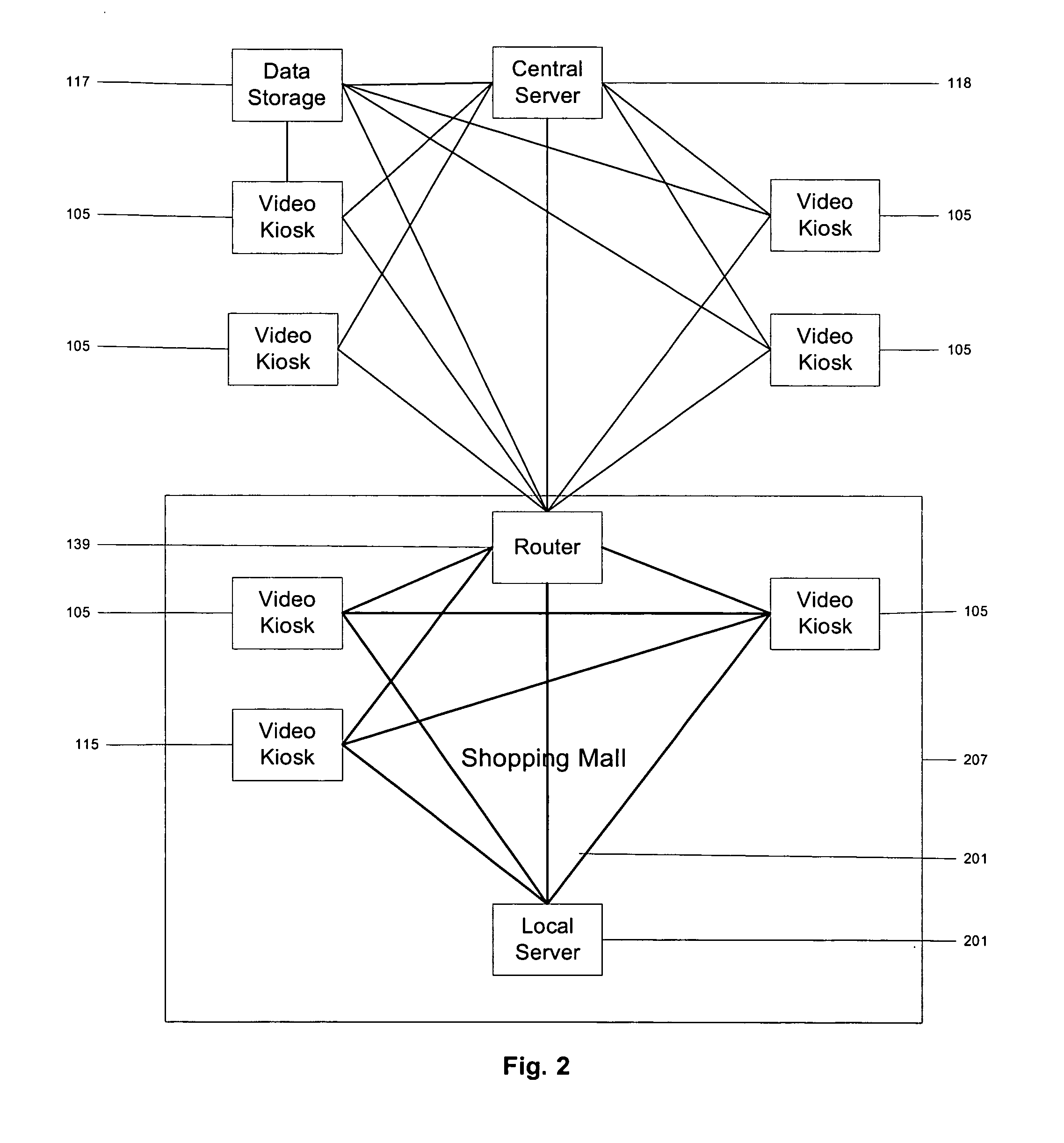

Method and apparatus for managing a digital inventory of multimedia files stored across a dynamic distributed network

Inactive | US20080228821A1 | Enhance reader comprehension | Data processing applications | Solid-state devices | Payment | High speed memory

A video network includes public kiosks having digital storage capacity. Centralized inventory control manages the video files stored at individual kiosks or network LANs. A user requests a multimedia file for download, and selects various ancillary files and control features, such as languages, subtitles, control of nudity, etc. The requested file is encrypted according to an encryption key, watermarked, and downloaded from a high-speed port of a public kiosk to a hand-held proprietary high speed memory device of a user. Payment is received at the time of request or at the time of download, and royalties are distributed by the video network to copyright holders. Computer applications or playback devices allow users to store and/or play video files that have been downloaded to a hand-held device while managing and enforcing digital rights of content providers through the watermarking and/or encryption.

Owner:CLEAR GOSPEL CAMPAIGN

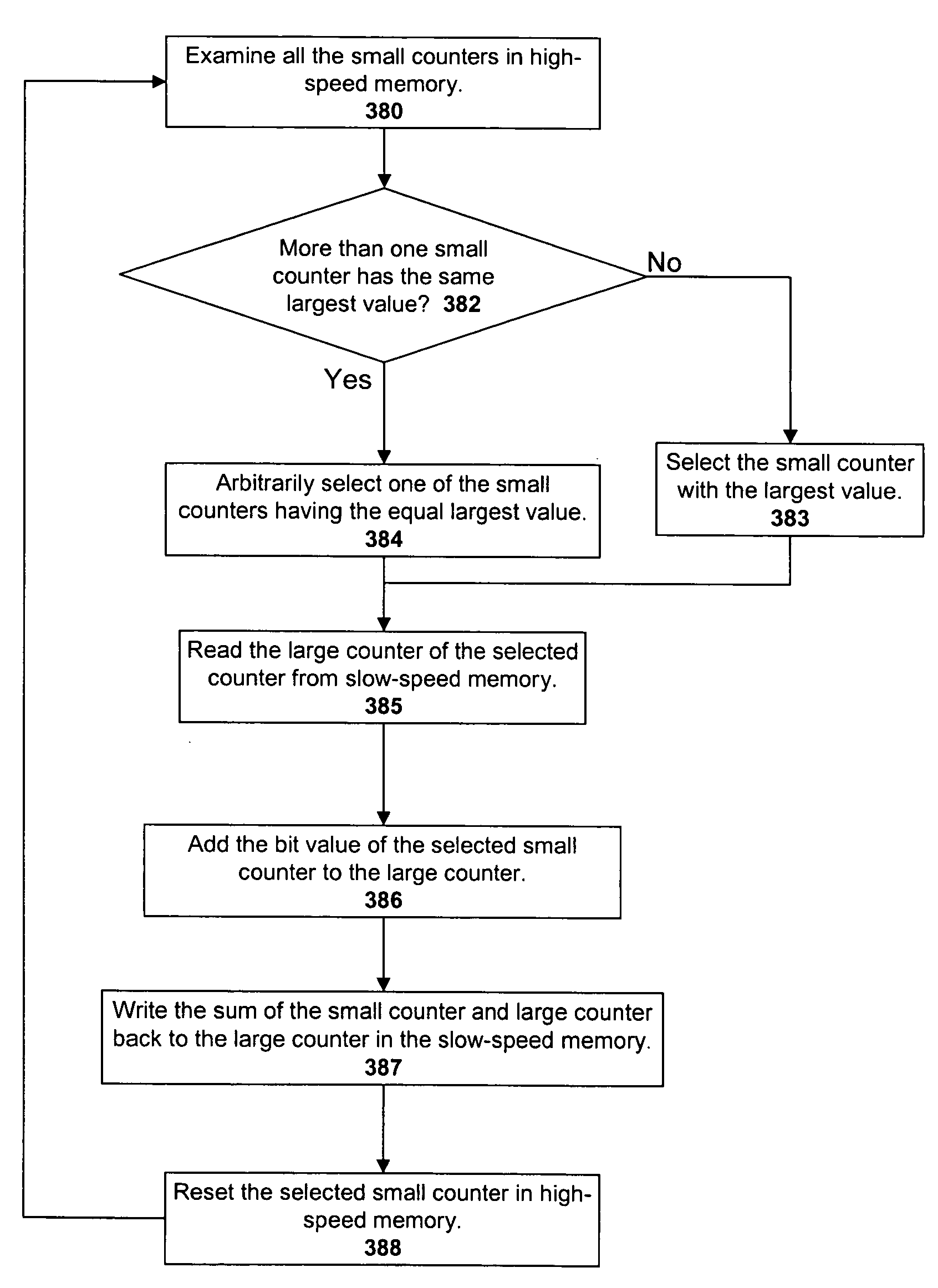

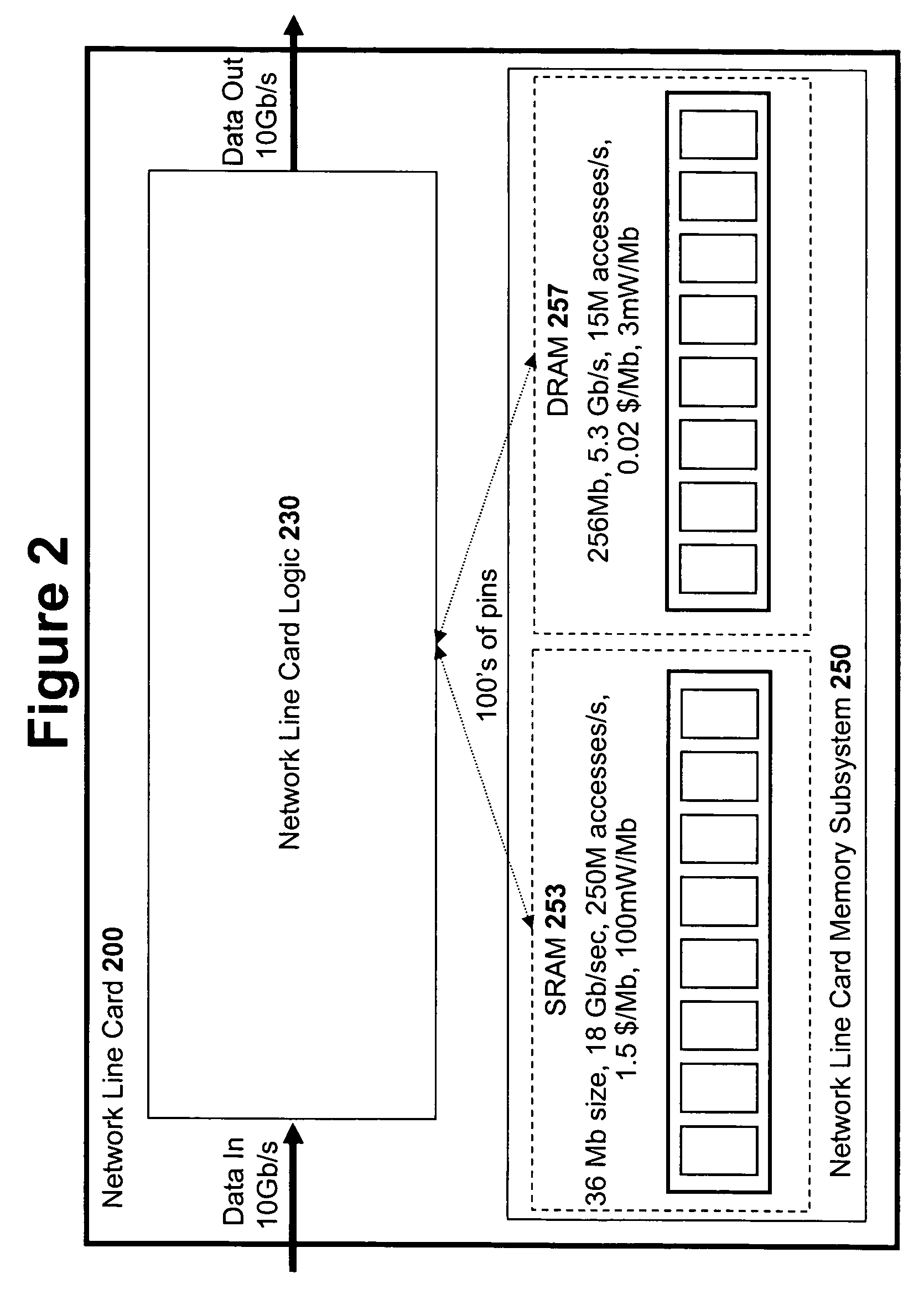

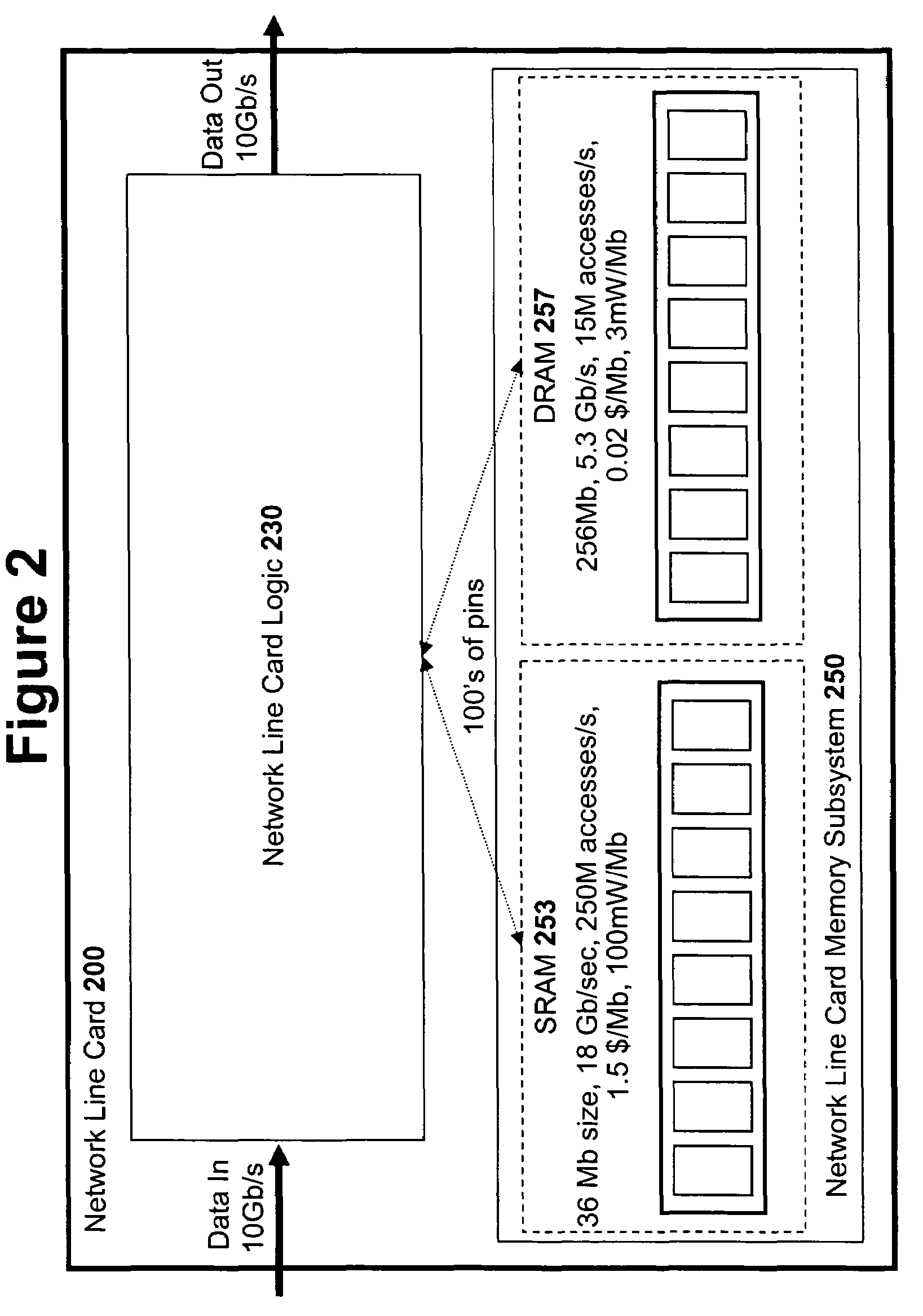

High speed memory and input/output processor subsystem for efficiently allocating and using high-speed memory and slower-speed memory

Active | US7657706B2 | Easy to handle | Simple task | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | High speed memory | Memory bank

An input/output processor for speeding the input/output and memory access operations for a processor is presented. The key idea of an input/output processor is to functionally divide input/output and memory access operations tasks into a compute intensive part that is handled by the processor and an I/O or memory intensive part that is then handled by the input/output processor. An input/output processor is designed by analyzing common input/output and memory access patterns and implementing methods tailored to efficiently handle those commonly occurring patterns. One technique that an input/output processor may use is to divide memory tasks into high frequency or high-availability components and low frequency or low-availability components. After dividing a memory task in such a manner, the input/output processor then uses high-speed memory (such as SRAM) to store the high frequency and high-availability components and a slower-speed memory (such as commodity DRAM) to store the low frequency and low-availability components. Another technique used by the input/output processor is to allocate memory in such a manner that all memory bank conflicts are eliminated. By eliminating any possible memory bank conflicts, the maximum random access performance of DRAM memory technology can be achieved.

Owner:CISCO TECH INC

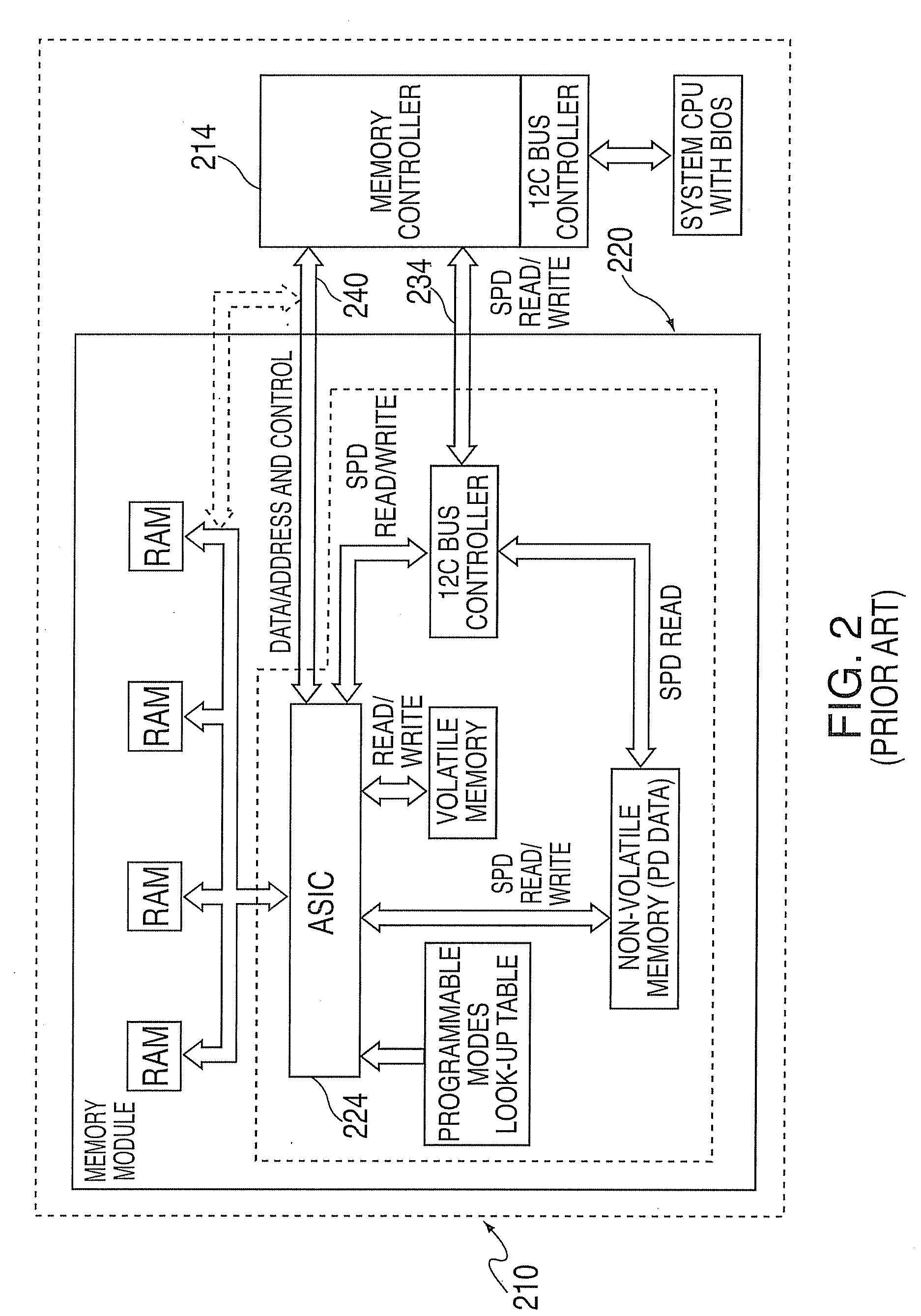

Method and system for resolving interoperability of multiple types of dual in-line memory modules

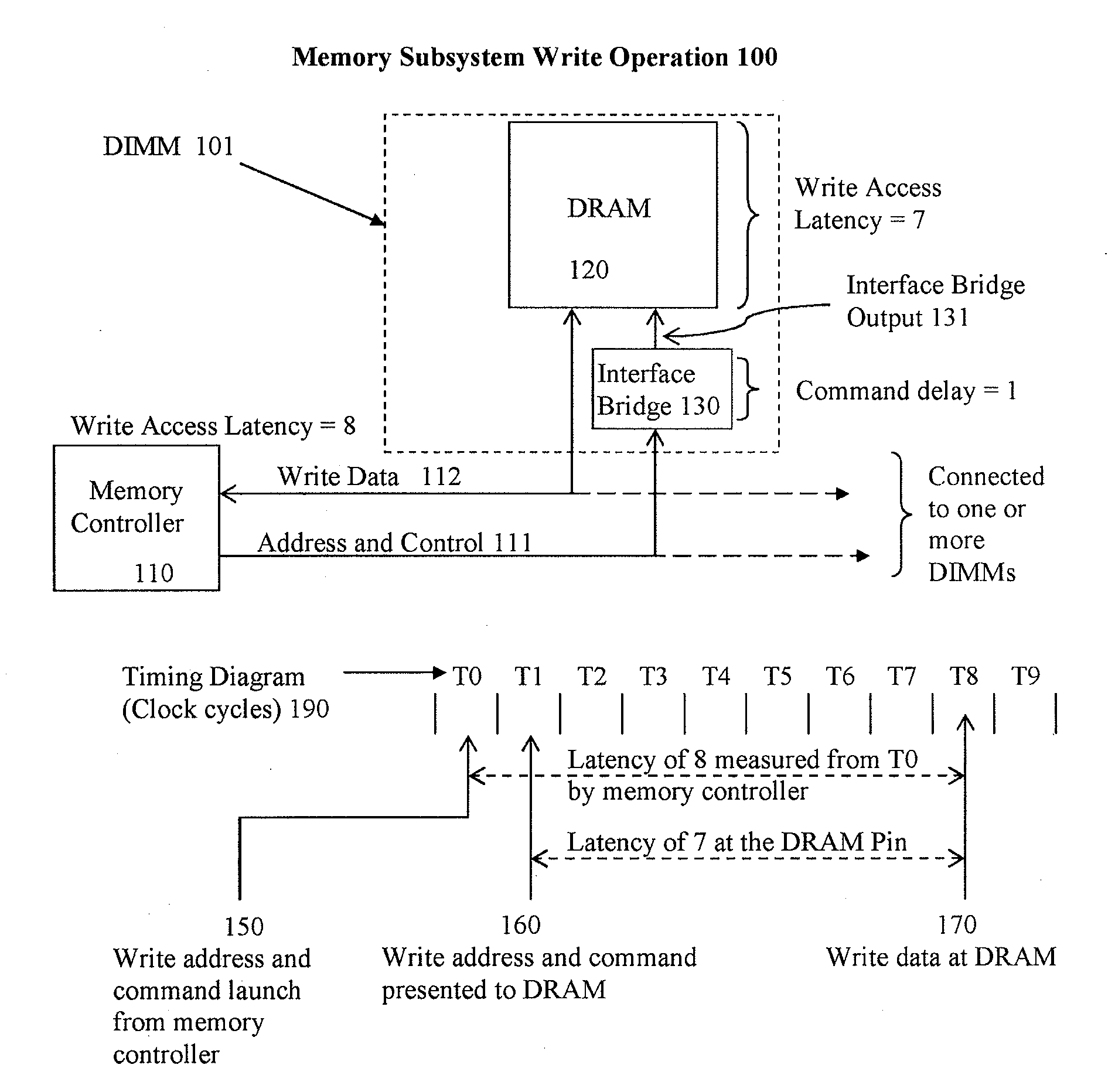

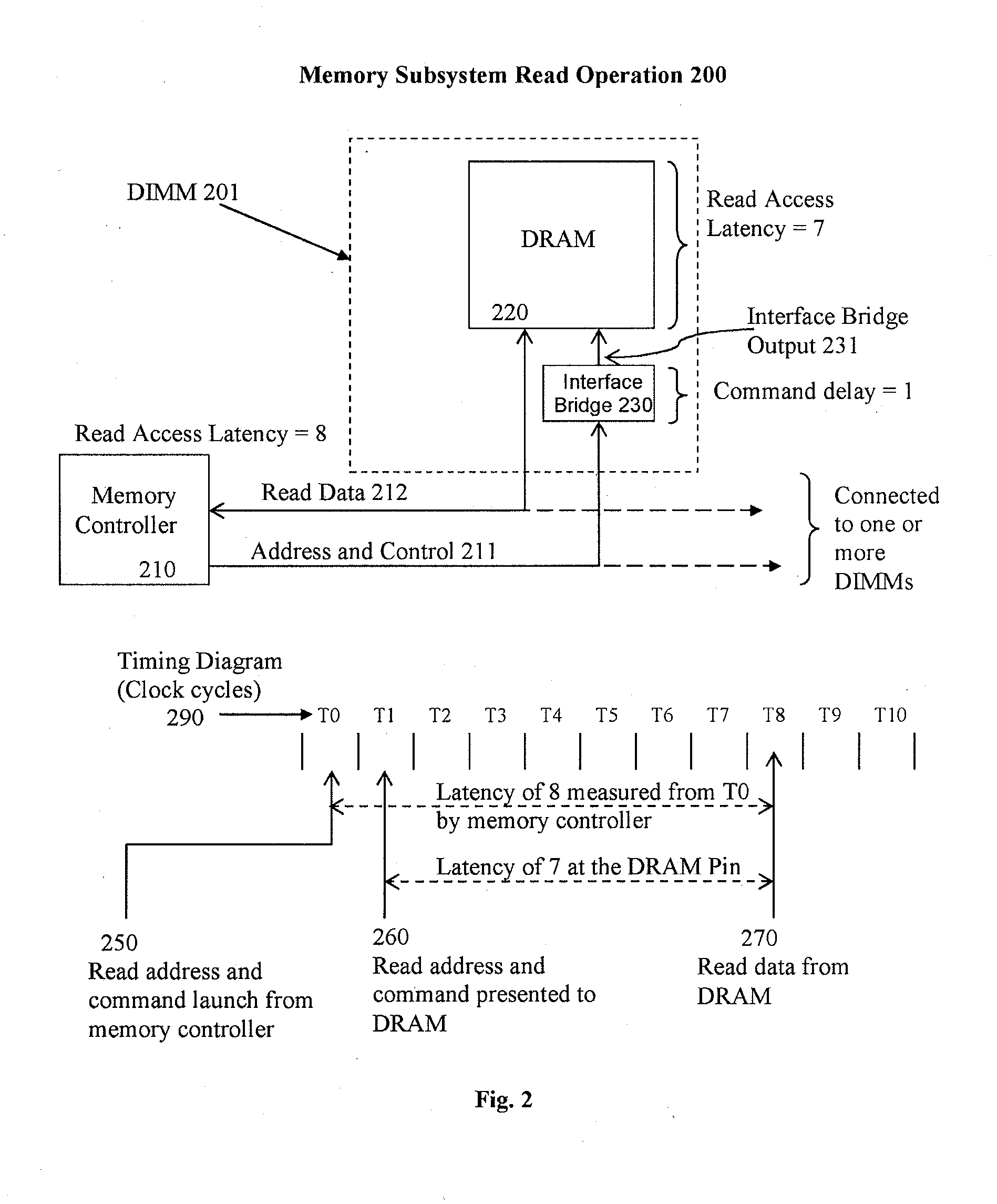

Systems and methods are described for resolving certain interoperability issues among multiple types of memory modules in the same memory subsystem. The system provides a single data load DIMM for constructing a high density and high speed memory subsystem that supports the standard JEDEC RDIMM interface while presenting a single load to the memory controller. At least one memory module includes one or more DRAMs, a bi-directional data buffer and an interface bridge with a conflict resolution block. The interface bridge translates the CAS latency (CL) programming value that a memory controller sends to program the DRAMs, modifies the latency value, and is used for resolving command conflicts between the DRAMs and the memory controller to ensure proper operation of the memory subsystem.

Owner:NETLIST INC

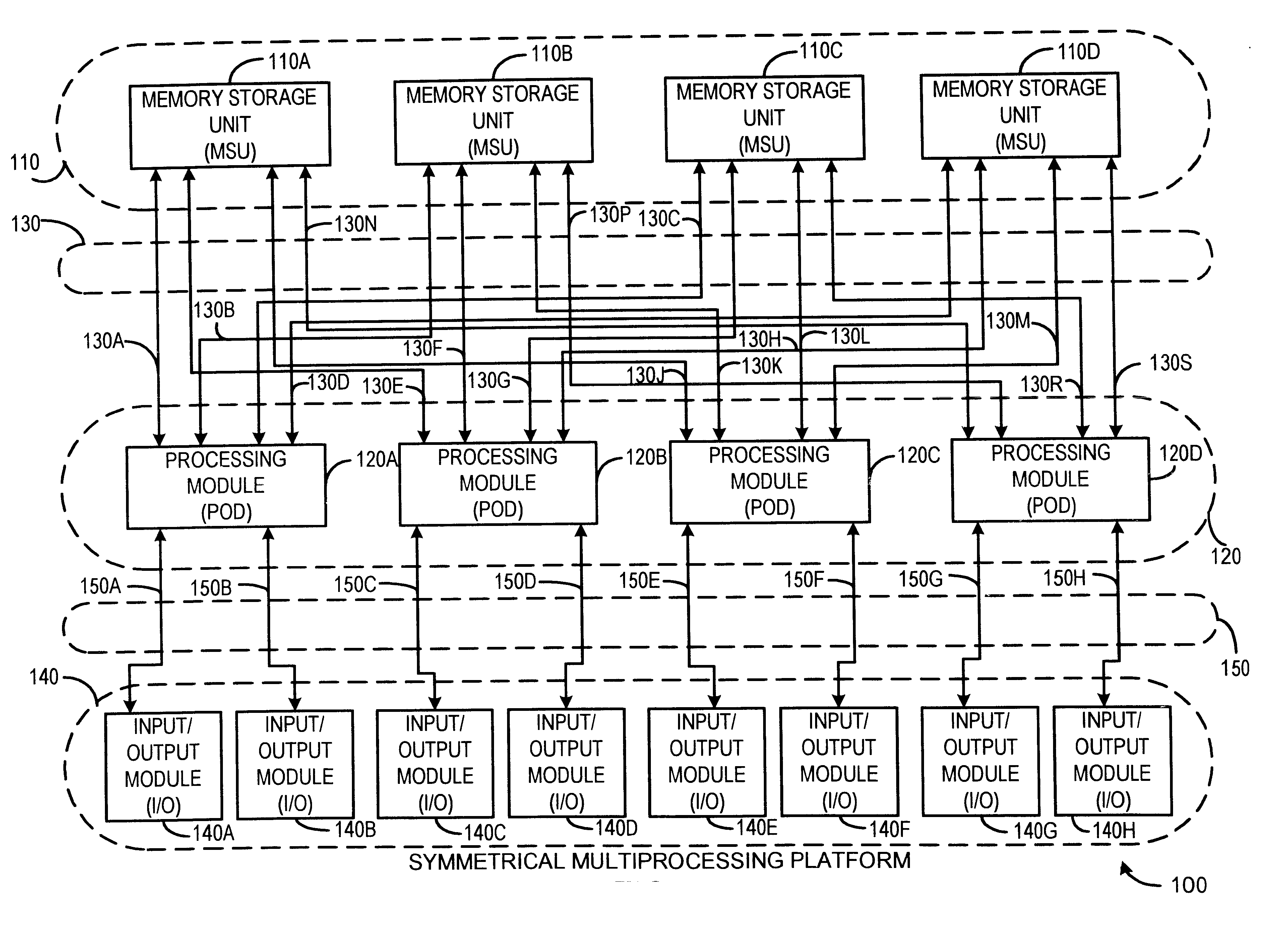

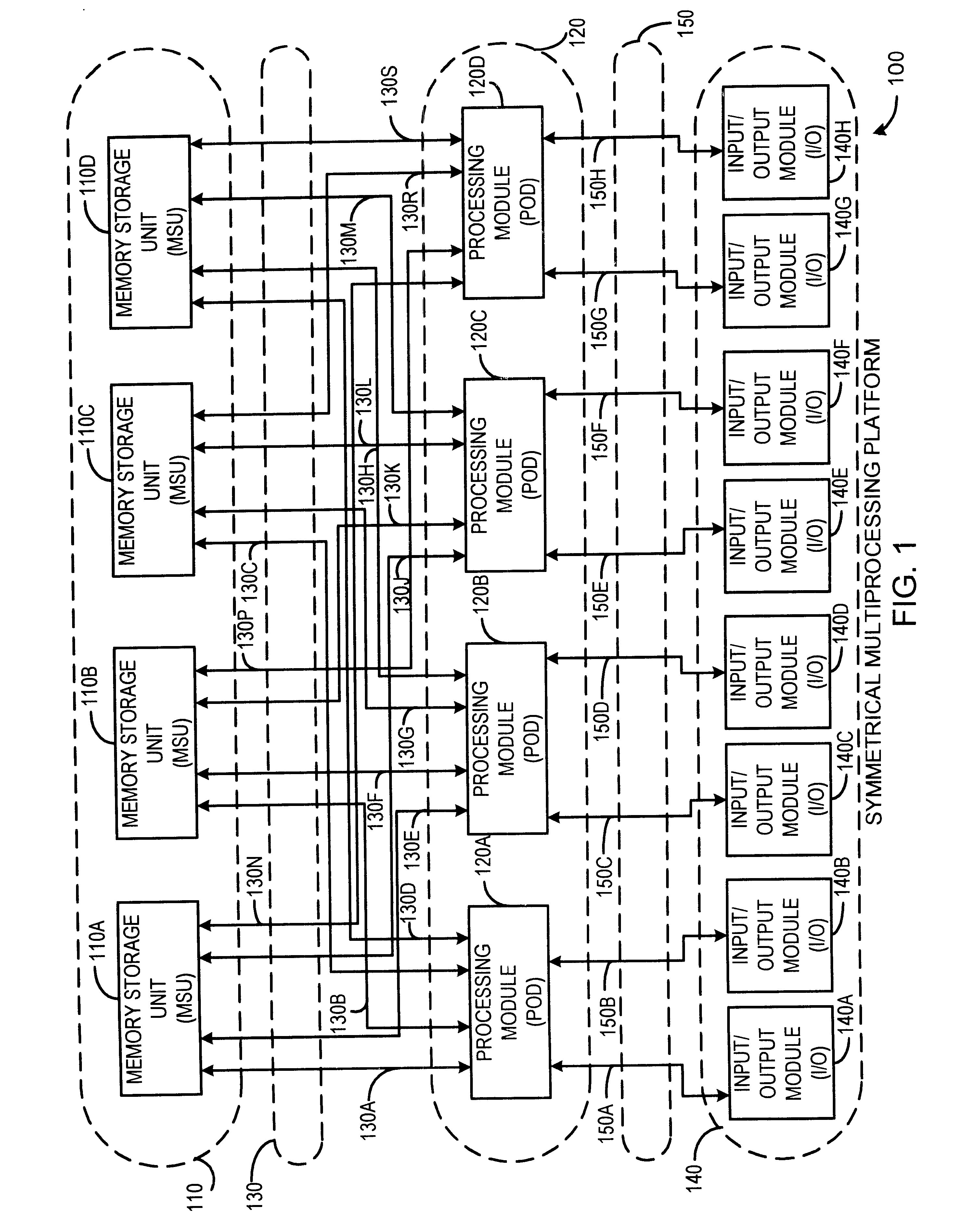

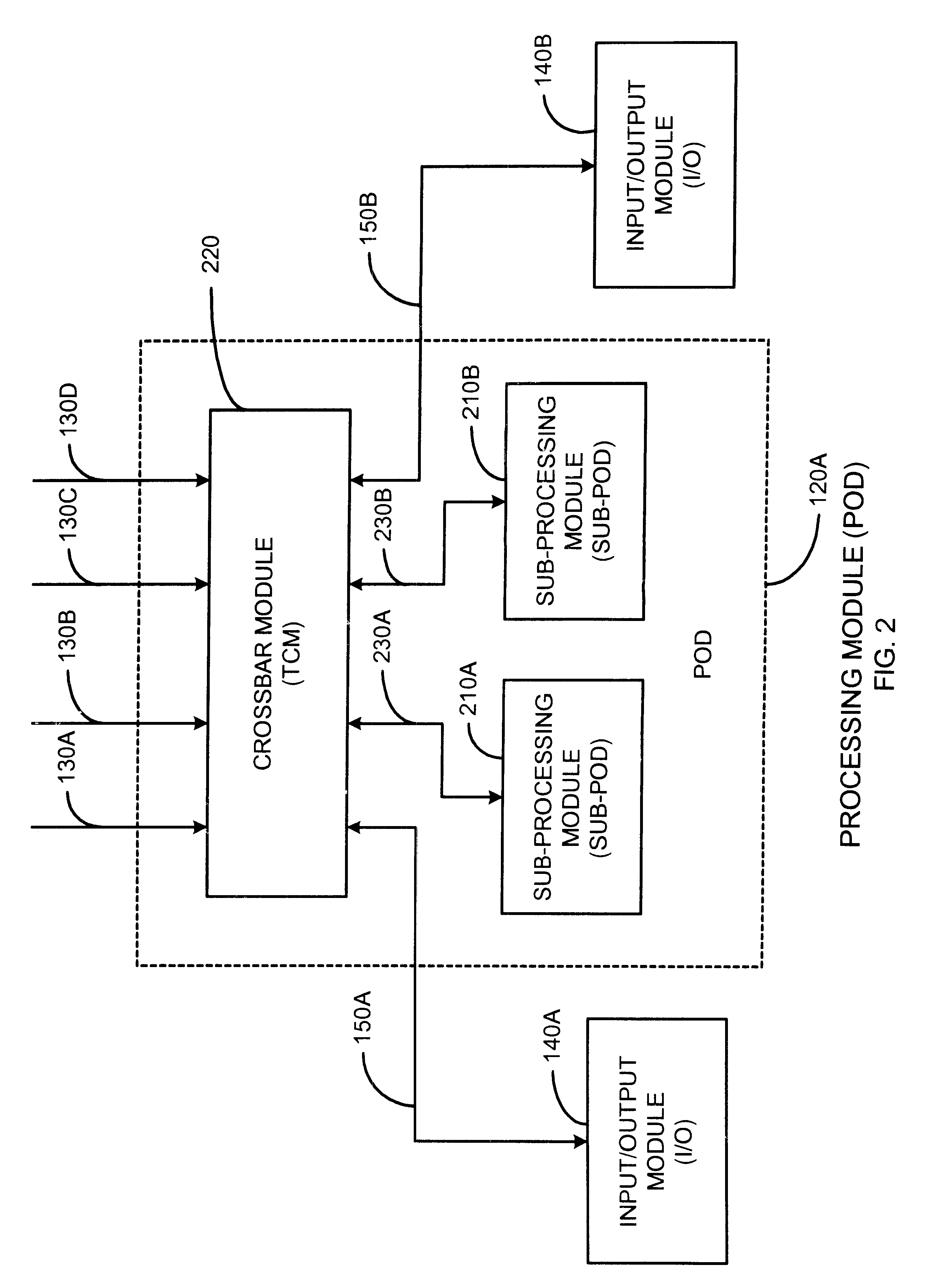

High-speed memory storage unit for a multiprocessor system having integrated directory and data storage subsystems

Inactive | US6415364B1 | Easy to manage | Improve system throughput | Memory addressing/allocation/relocation | Input/output processes for data processing | High speed memory | Impact system

A high-speed memory system is disclosed for use in supporting a directory-based cache coherency protocol. The memory system includes at least one data system for storing data, and a corresponding directory system for storing the corresponding cache coherency information. Each data storage operation involves a block transfer operation performed to multiple sequential addresses within the data system. Each data storage operation occurs in conjunction with an associated read-modify-write operation performed on cache coherency information stored within the corresponding directory system. Multiple ones of the data storage operations may be occurring within one or more of the data systems in parallel. Likewise, multiple ones of the read-modify-write operations may be performed to one or more of the directory systems in parallel. The transfer of address, control, and data signals for these concurrently performed operations occurs in an interleaved manner. The use of block transfer operations in combination with the interleaved transfer of signals to memory systems prevents the overhead associated with the read-modify-write operations from substantially impacting system performance. This is true even when data and directory systems are implemented using the same memory technology.

Owner:UNISYS CORP

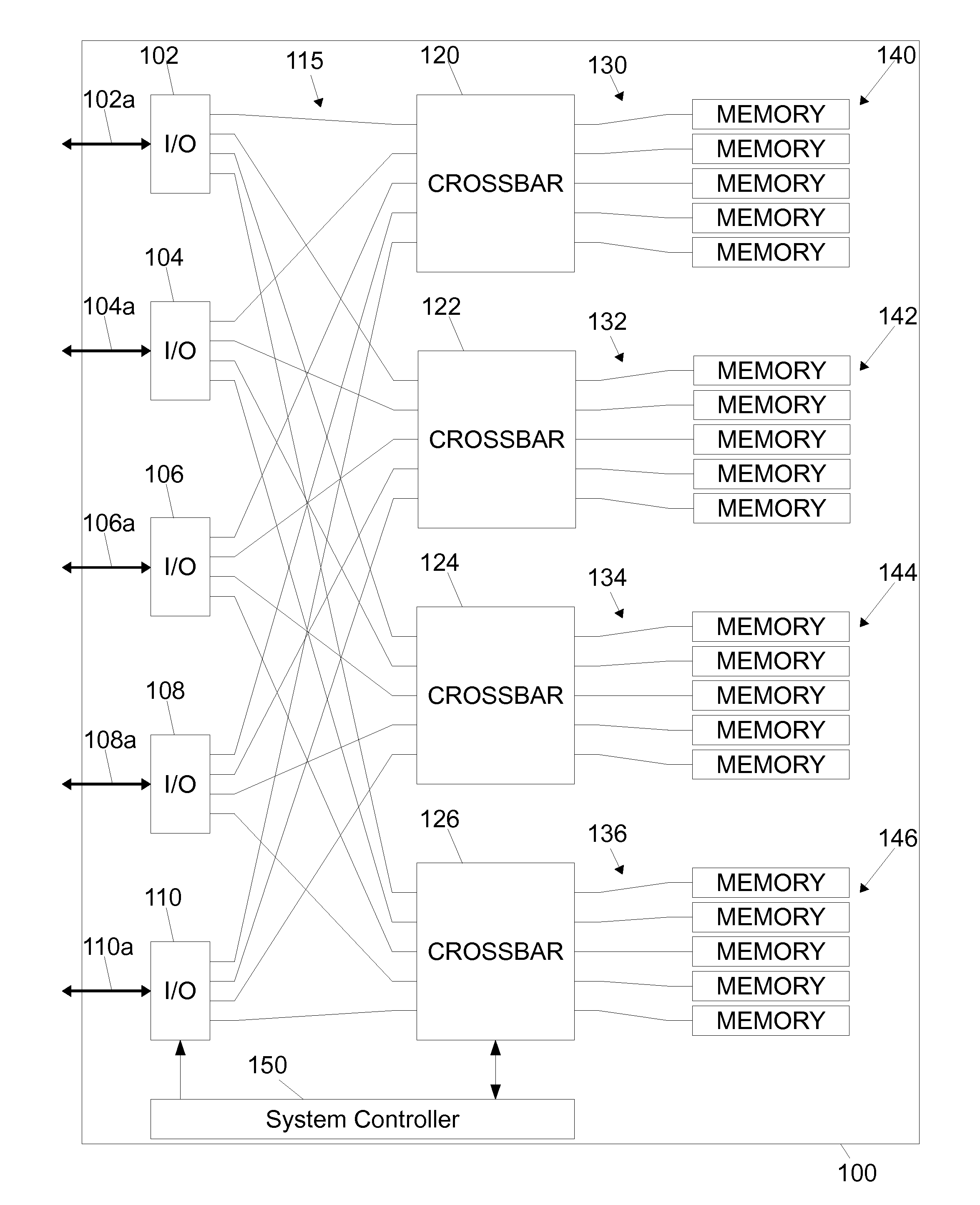

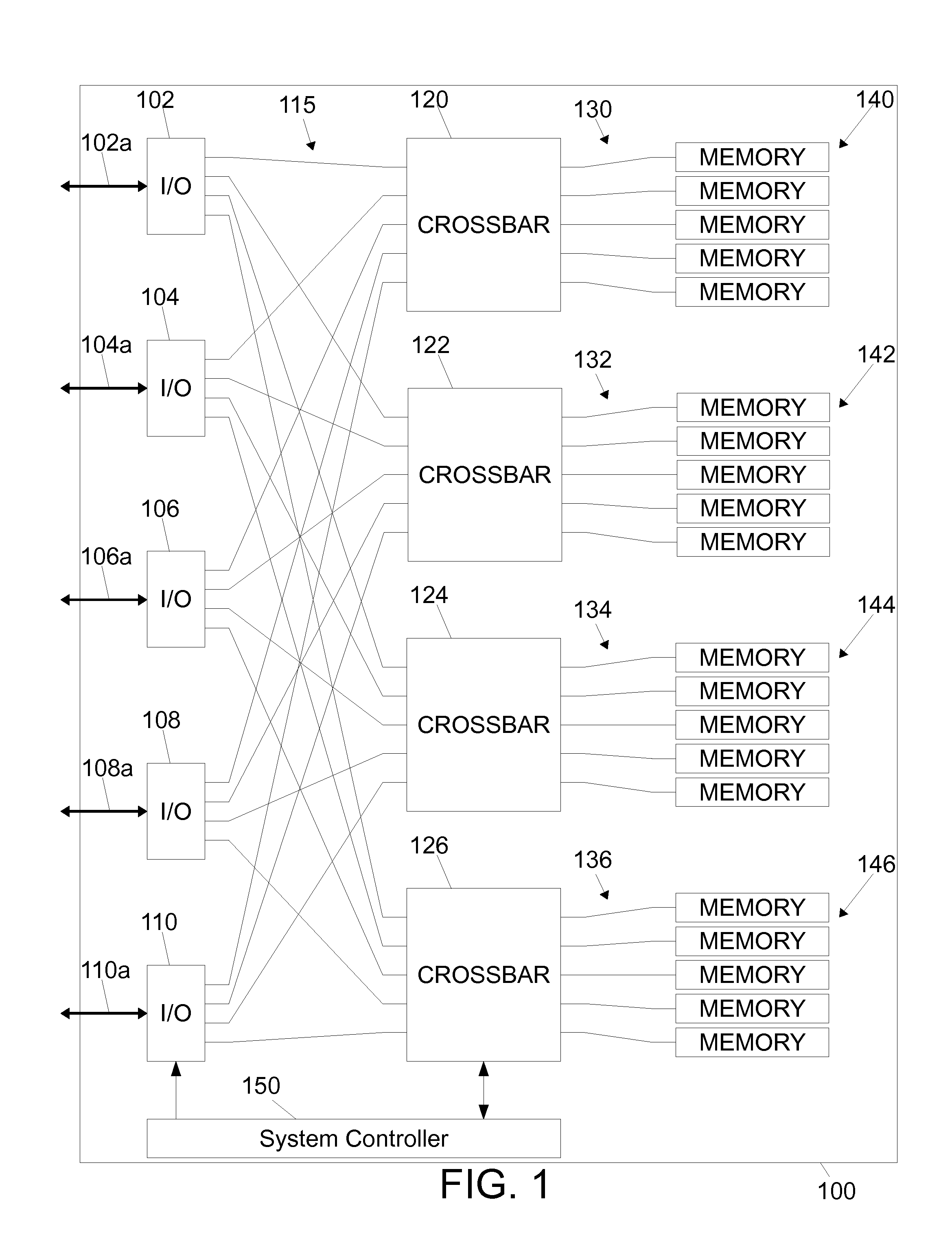

High-speed memory system

Inactive | US20120079352A1 | Effective and efficient and optimal | Memory architecture accessing/allocation | Digital storage | Telecommunications link | High speed memory

The disclosed embodiments relate to a Flash-based memory module having high-speed serial communication. The Flash-based memory module comprises, among other things, a plurality of I/O modules, each configured to communicate with an external device over one or more external communication links, a plurality of Flash-based memory cards, each comprising a plurality of Flash memory devices, and a plurality of crossbar switching elements, each being connected to a respective one of the Flash-based memory cards and configured to allow each one of the I/O modules to communicate with the respective one of the Flash-based memory cards. Each I/O module is connected to each crossbar switching element by a high-speed serial communication link, and each crossbar switching element is connected to the respective one of the Flash-based memory cards by a plurality of parallel communication links.

Owner:IBM CORP

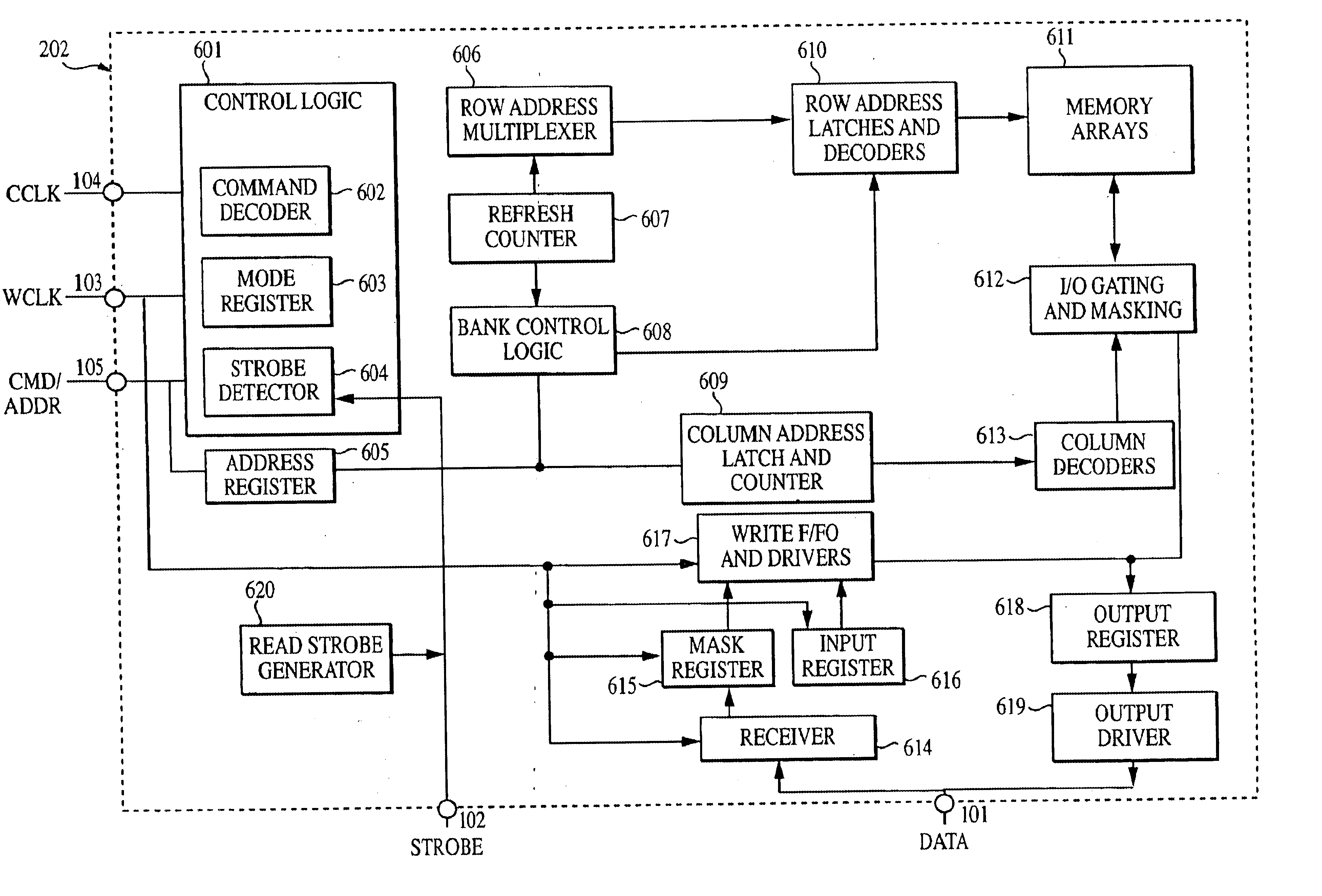

Synchronized write data on a high speed memory bus

Inactive | US6807613B1 | Memory addressing/allocation/relocation | Read-only memories | Phase shifted | High speed memory

Some synchronous semiconductor memory devices accept a command clock which is buffered and a write clock which is unbuffered. Write commands are synchronized to the command clock while the associated write data is synchronized to the write clock. Due to the use of the buffer, an arbitrary phase shift can exist between the command and write clocks. The presence of the phase shift between the two clocks makes it difficult to determine when a memory device should accept write data associated with a write command. A synchronous memory device in accordance with the present invention utilizes the unbuffered strobe signal, which is normally tristated during writes, as a flag to mark the start of write data. A preamble signal may be asserted on the strobe signal line prior to asserting the flag signal in order to simplify flag detection.

Owner:ROUND ROCK RES LLC

Method and apparatus for distributing a multimedia file to a public kiosk across a network

Owner:CLEAR GOSPEL CAMPAIGN

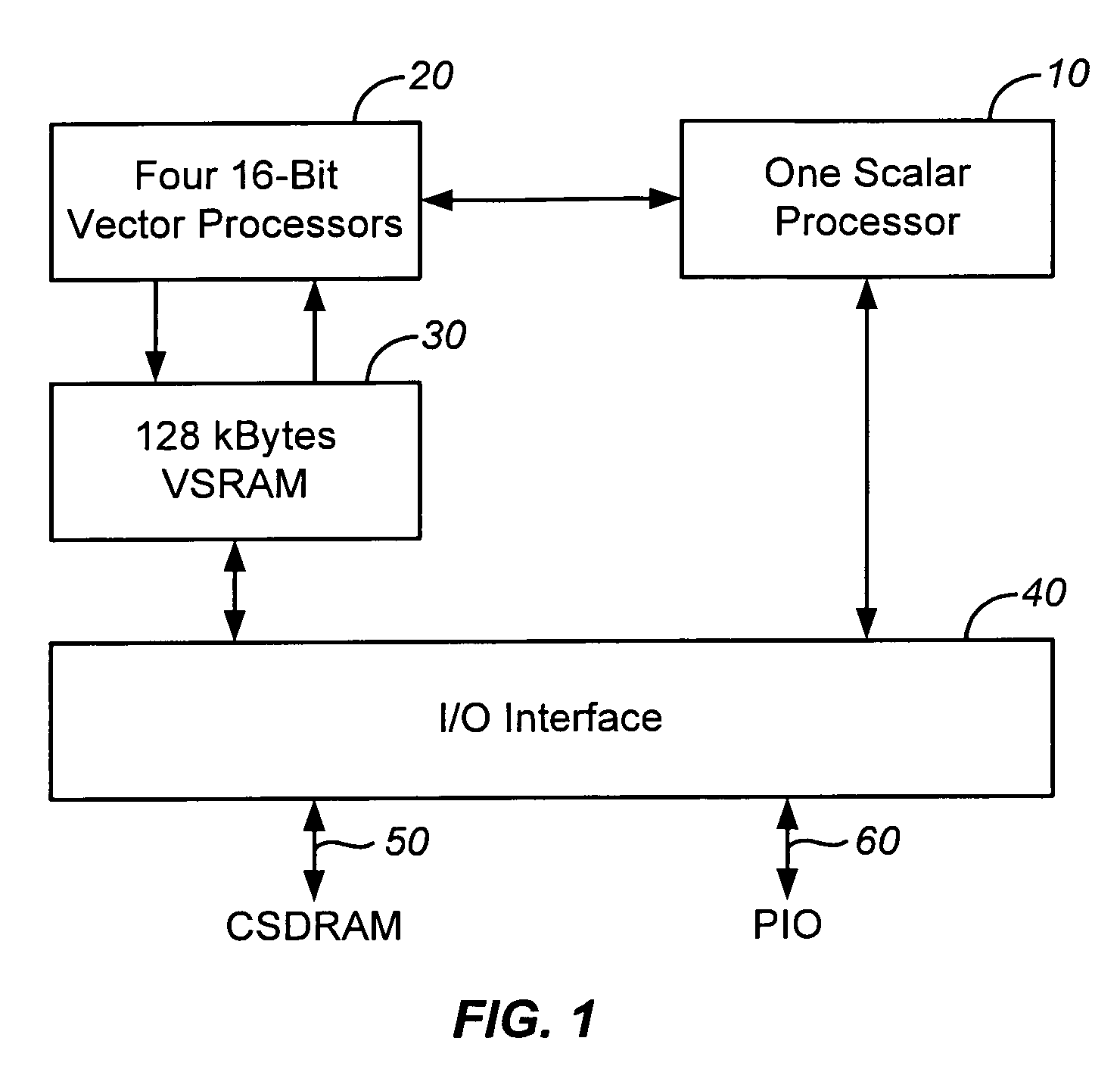

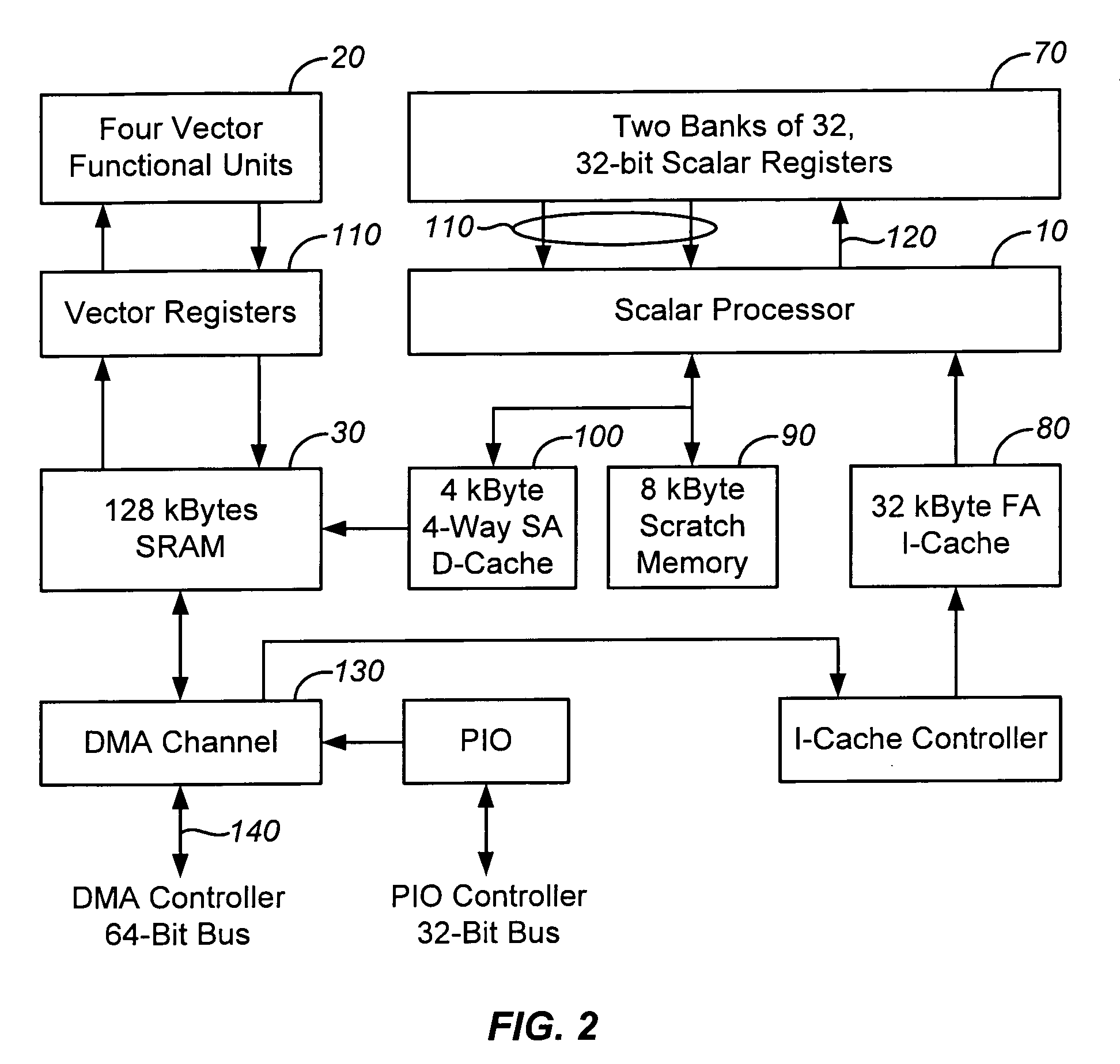

Vector processor with special purpose registers and high speed memory access

Inactive | US20060259737A1 | Limited instruction width | Great instruction width | Conditional code generation | Register arrangements | Vector processor | High speed memory

A vector processor includes a set of vector registers for storing data to be used in the execution of instructions and a vector functional unit coupled to the vector registers for executing instructions. The functional unit executes instructions using operation codes provided to it which operation codes include a field referencing a special register. The special register contains information about the length and starting point for each vector instruction. The processor includes a high speed memory access system to facilitate faster operation.

Owner:MEADLOCK JAMES W MEAD

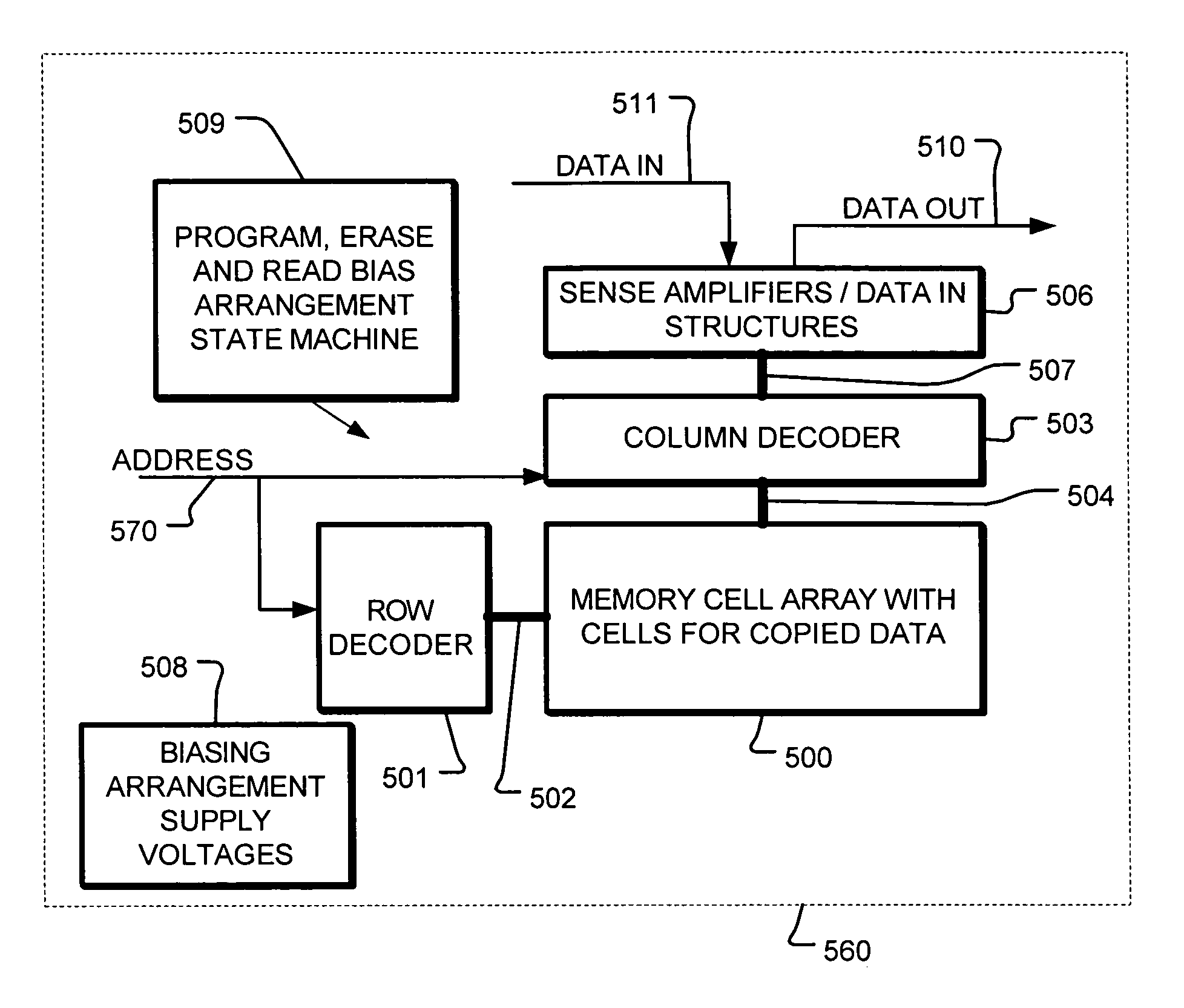

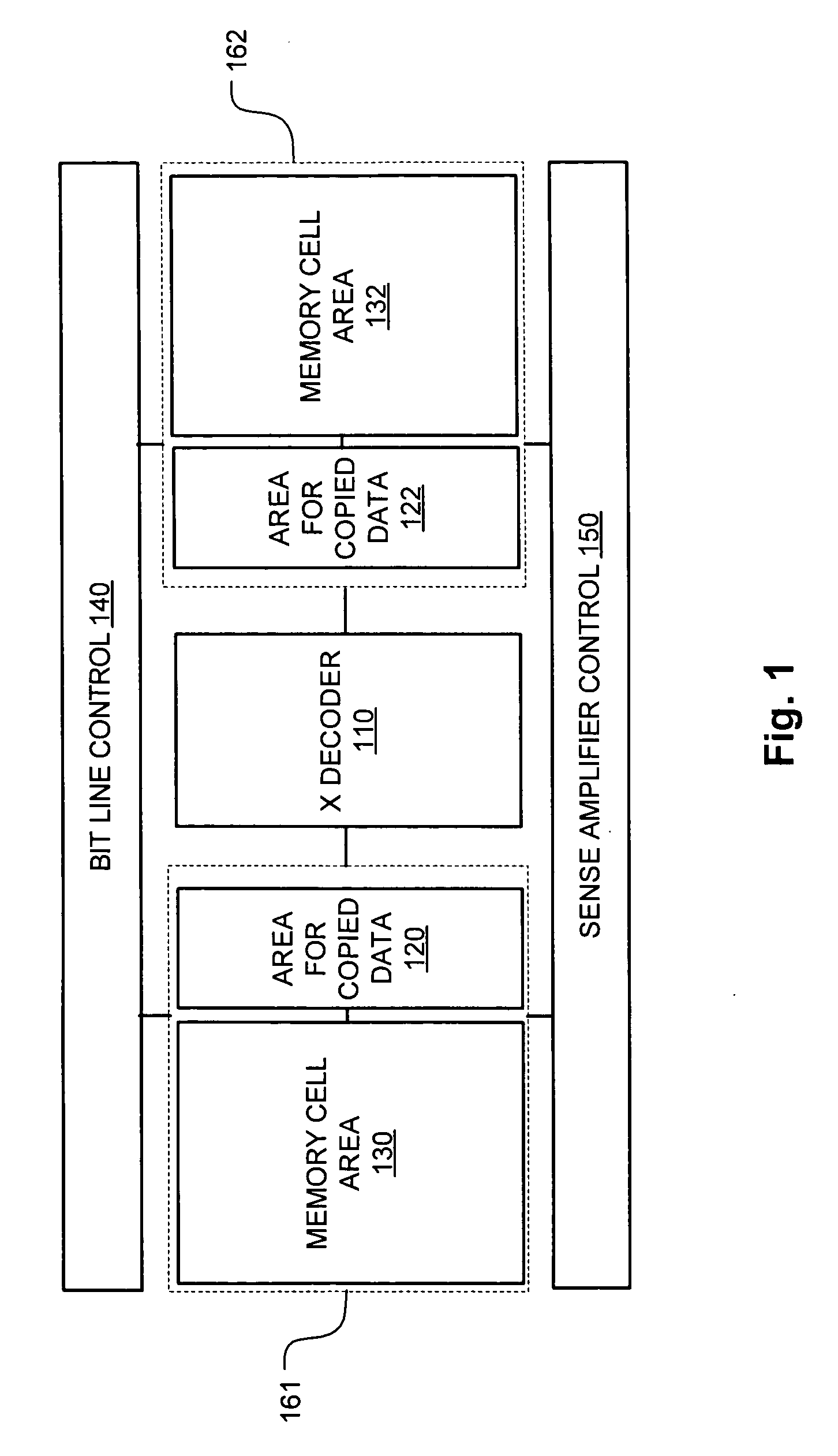

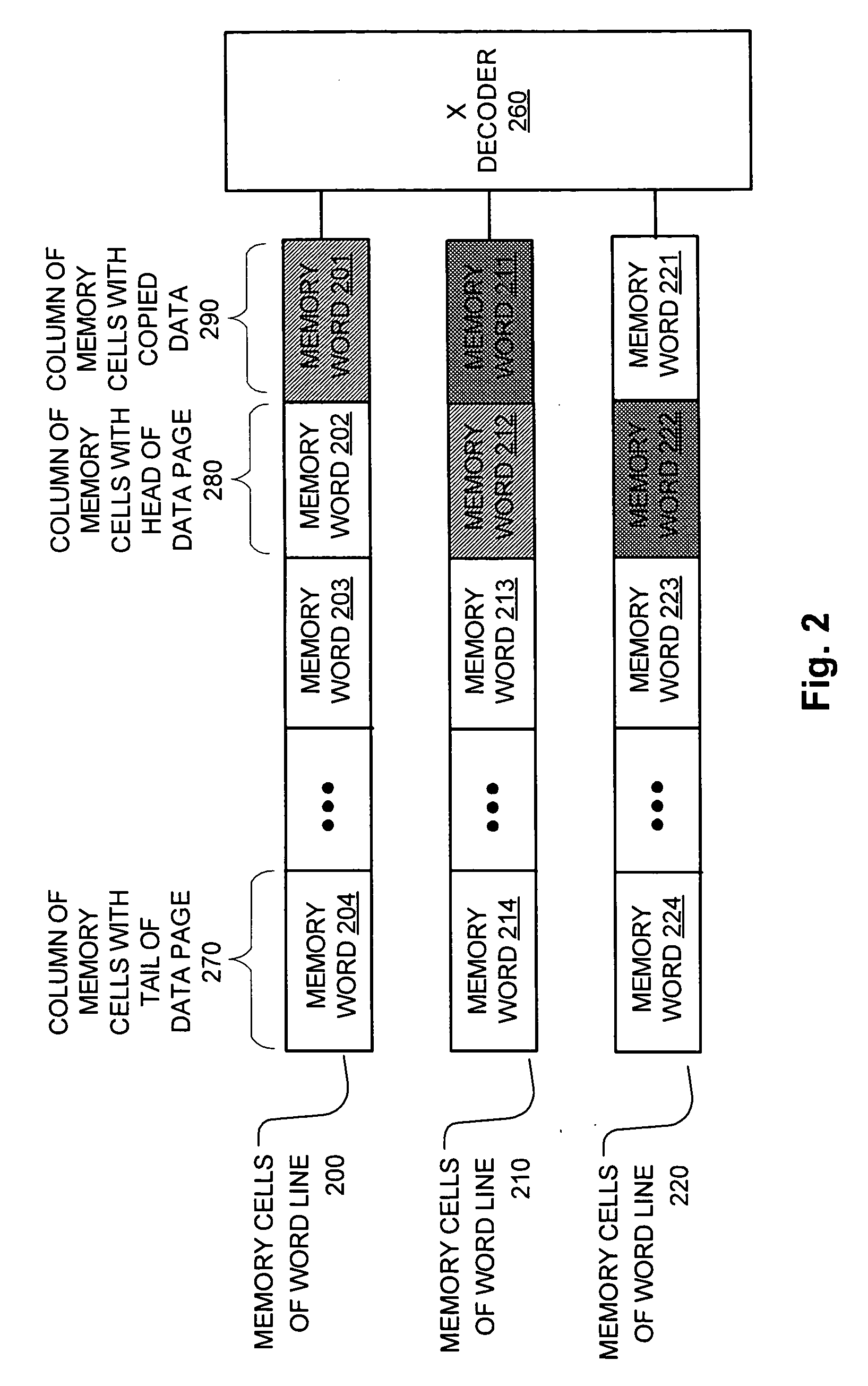

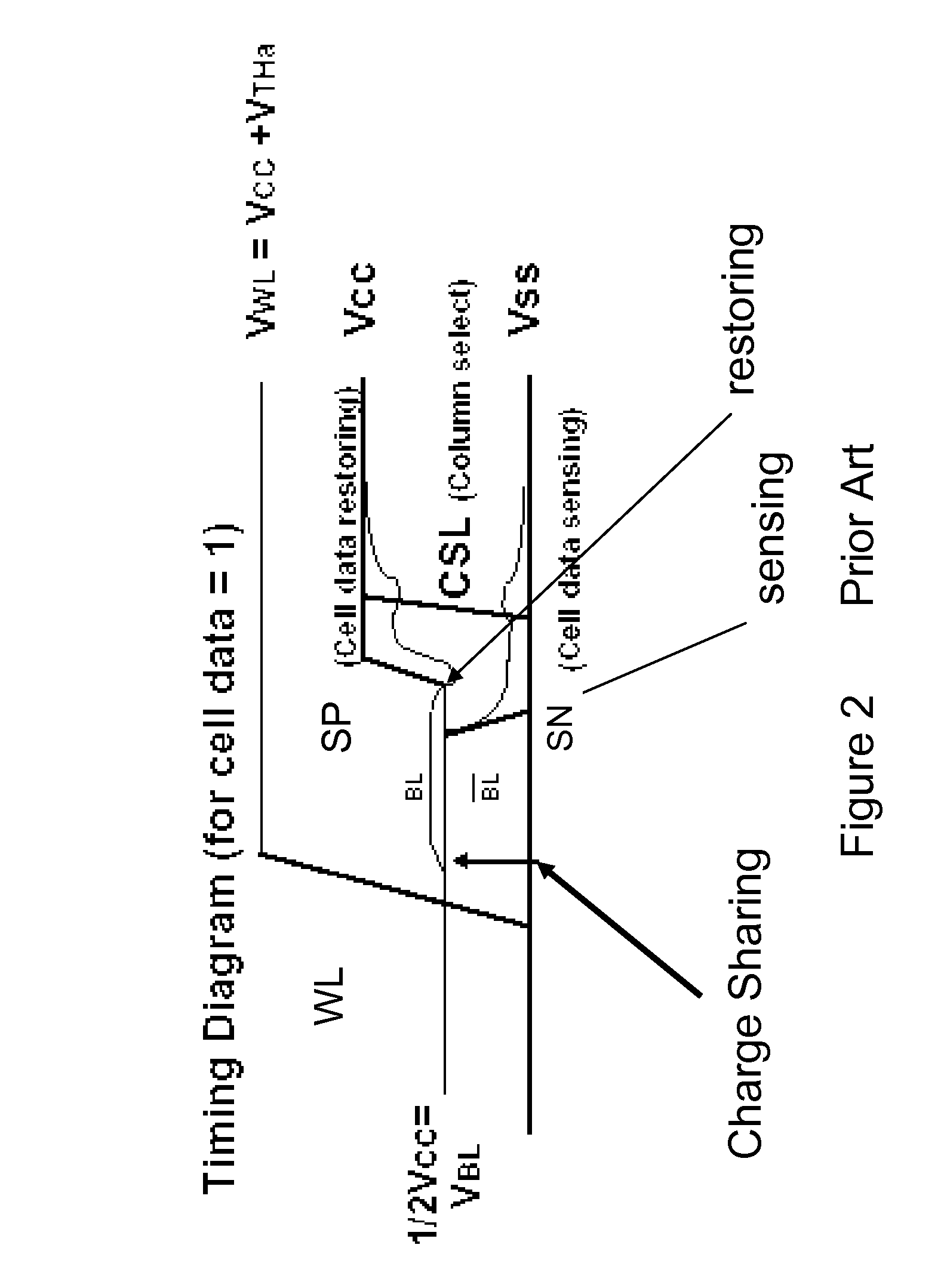

Method and apparatus for implementing high speed memory

Owner:MACRONIX INT CO LTD

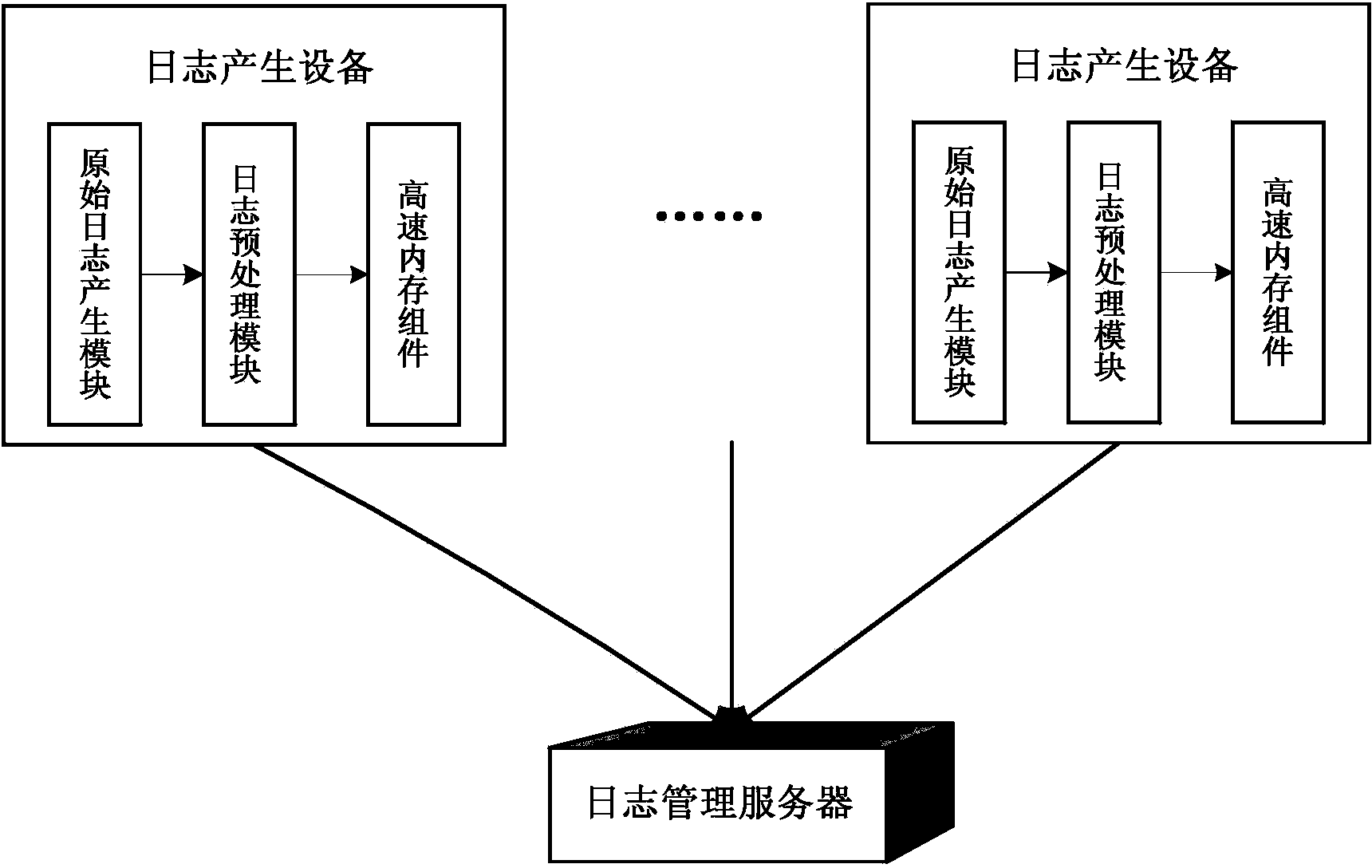

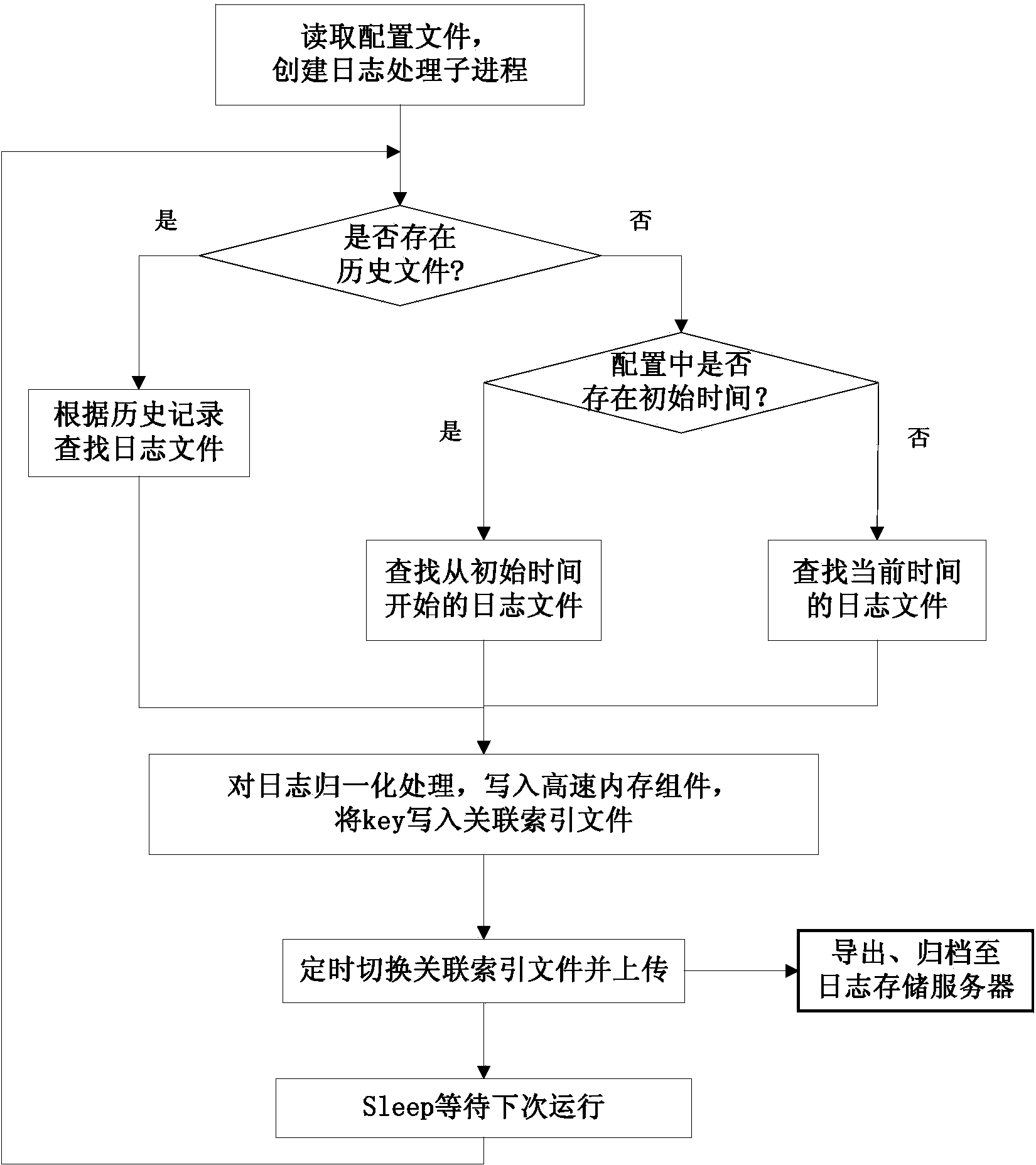

System and method for high-speed memory and distributed type processing of massive logs

Active | CN103532754A | Easy to handle | Real-time processing | Data switching networks | High speed memory | Log management

The invention discloses a system and a method for high-speed memory and distributed type processing of massive logs. The system comprises a plurality of log generating devices and a log management server. The log generating devices are dispersed across all network elements and are used for normalizing the format of various original logs, caching the original logs in high-speed memory components and uploading the original logs to the log management server periodically. Each log generating device has three parts: an original log generation module, a log pre-processing module and a high-speed memory component. The log management server is responsible for the unified export and archival storage of logs that are beyond the caching period in the high-speed memory components distributed across the log generating devices, so that other systems can query and analyze the logs. The system and the method disclosed by the invention have the beneficial effects that multiple types of logs can be processed in real time with high efficiency and at low cost, and both the expandability and the actionability are better. Compared with storing the logs in a relational database, the system and the method reduce the processing cost, solve the bottleneck problem of databases holding large data volumes, and improve the efficiency of querying and analyzing the logs.

Owner:BEIJING CAPITEK

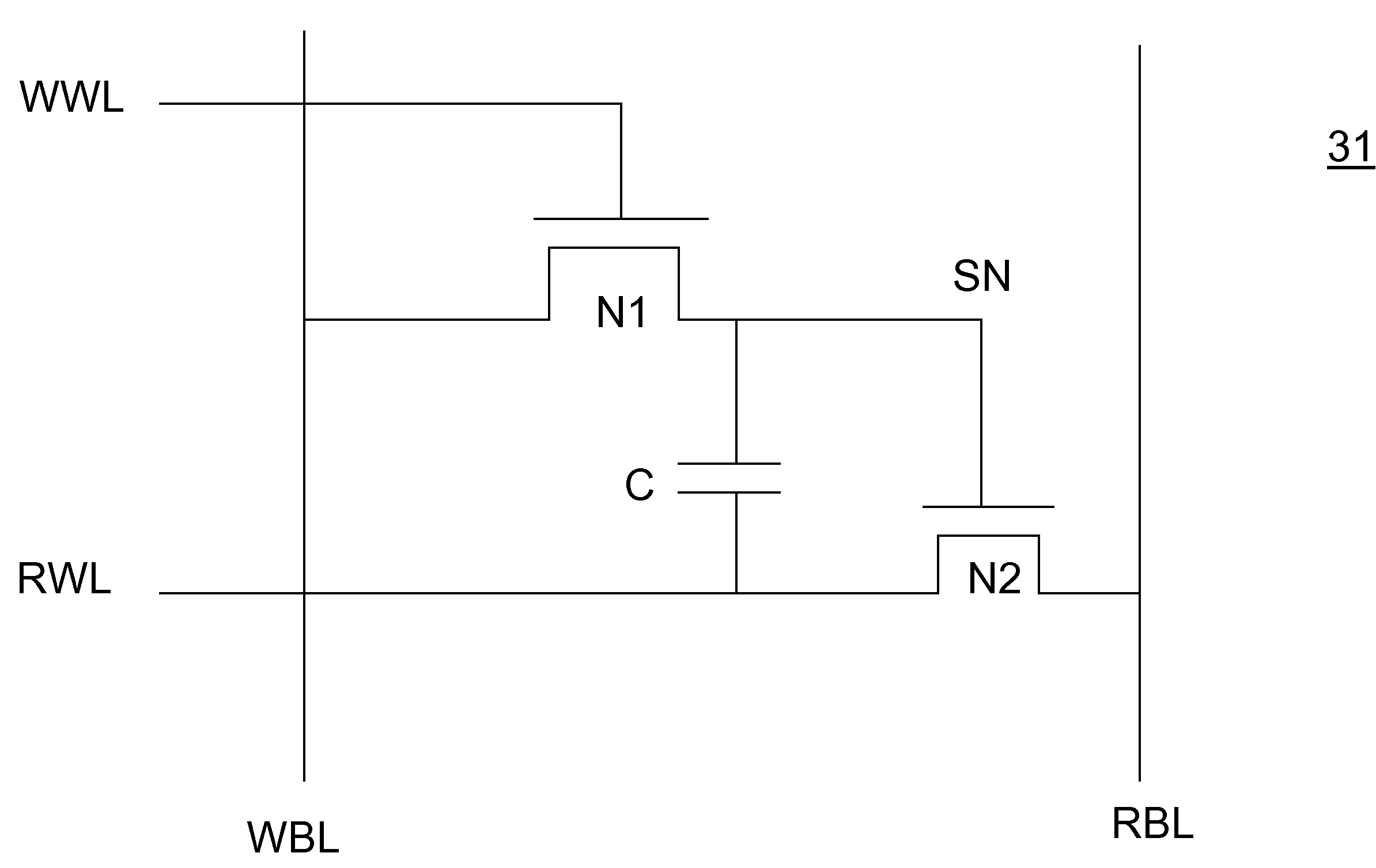

Circuit and Method for a High Speed Memory Cell

Inactive | US20100165704A1 | Easy to operate | High operating requirements | Digital storage | Write bit | High speed memory

A memory cell is disclosed, including a write access transistor coupled between a storage node and a write bit line, and active during a write cycle responsive to a voltage on a write word line; a read access transistor coupled between a read word line and a read bit line, and active during a read cycle responsive to a voltage at the storage node; and a storage capacitor coupled between the read word line and the storage node. Methods for operating the memory cell are also disclosed.

Owner:TAIWAN SEMICON MFG CO LTD

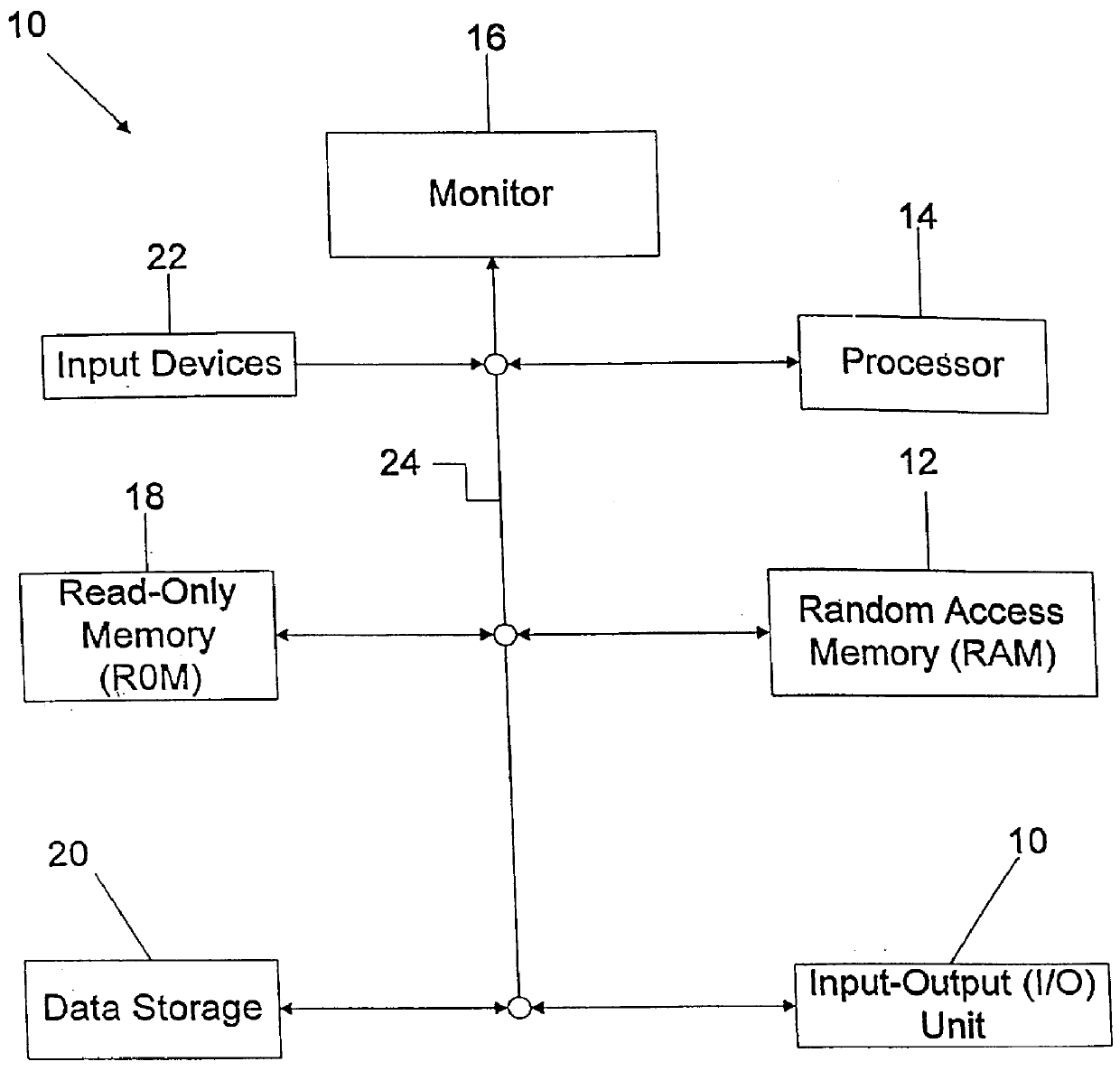

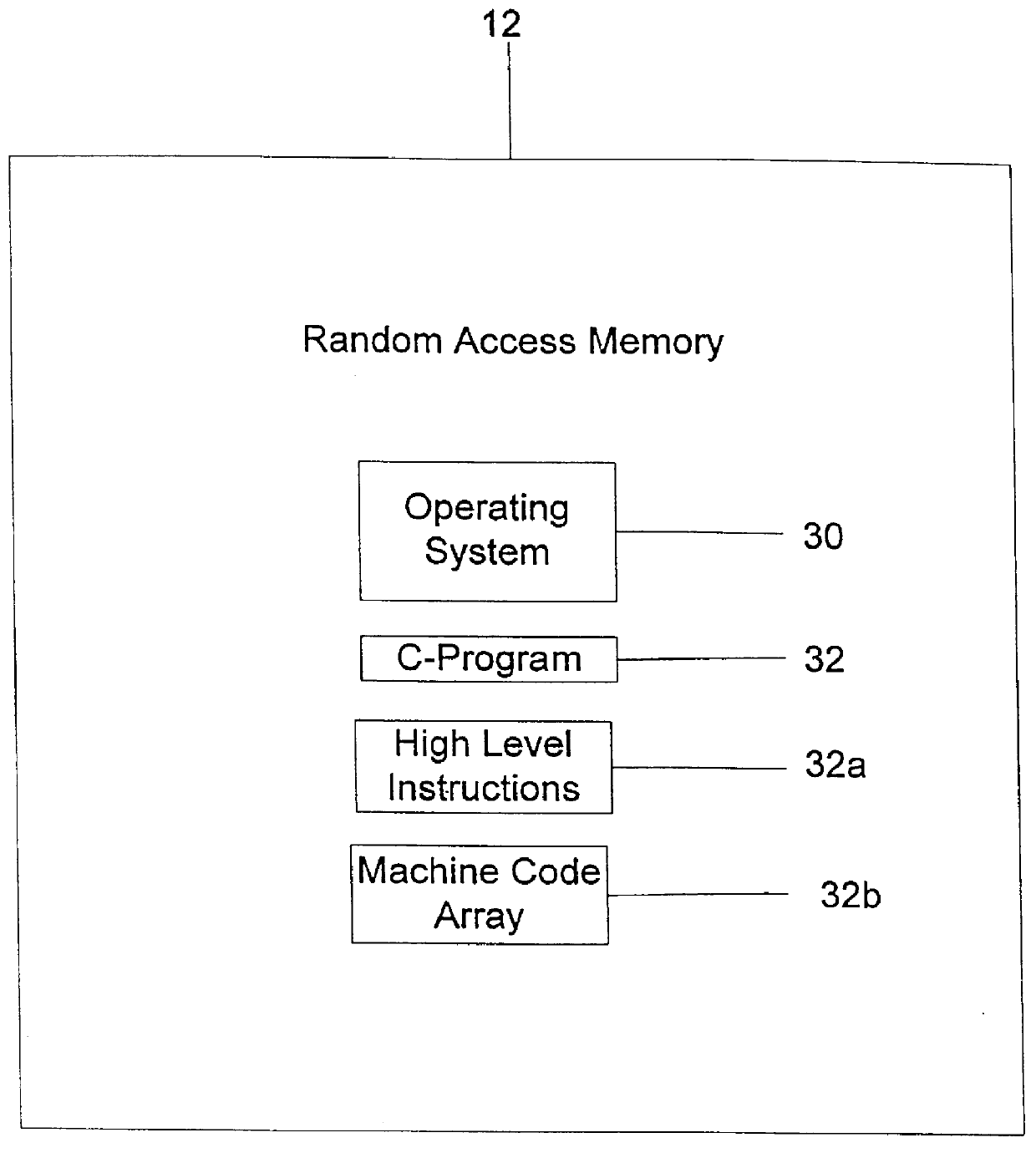

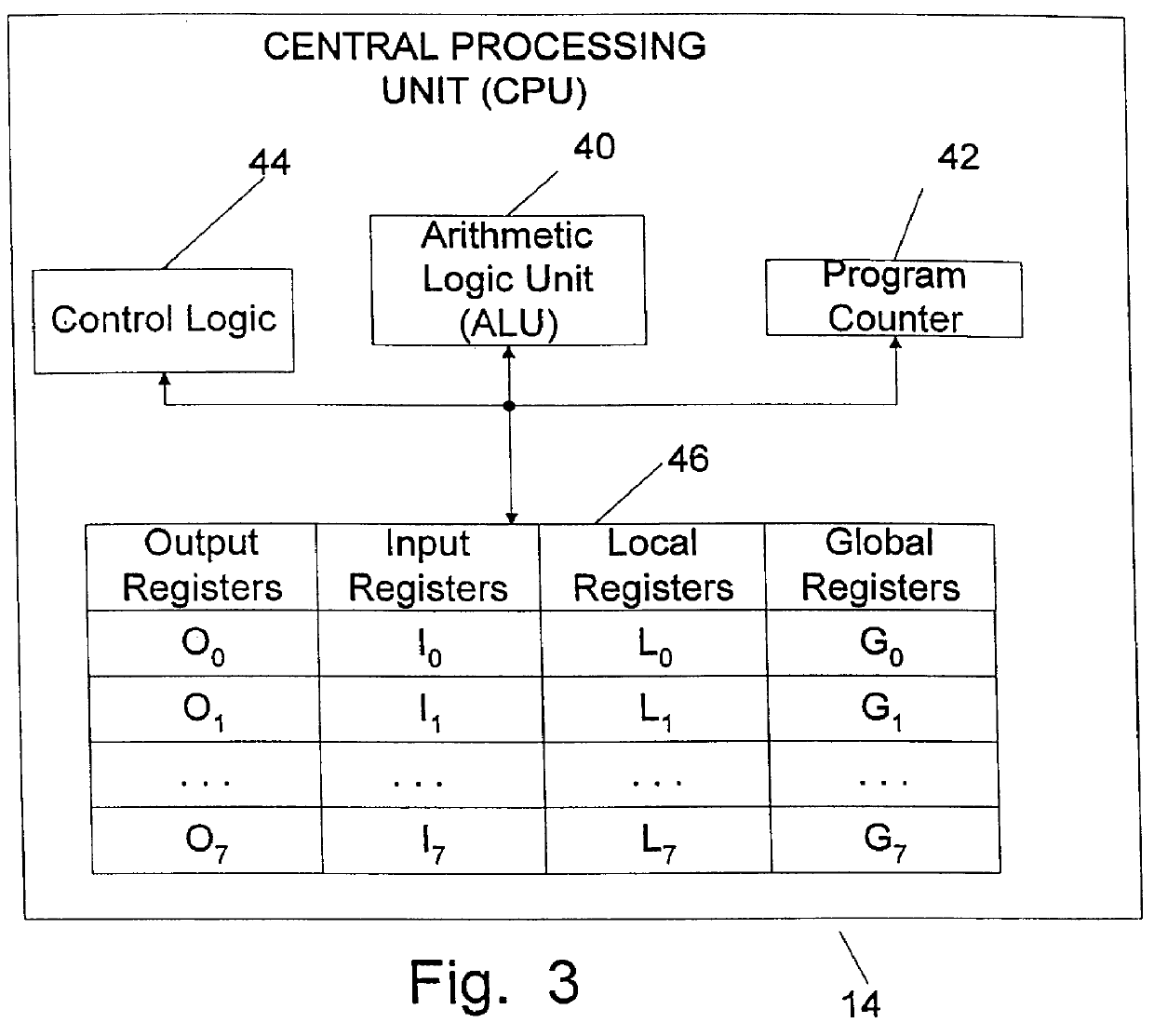

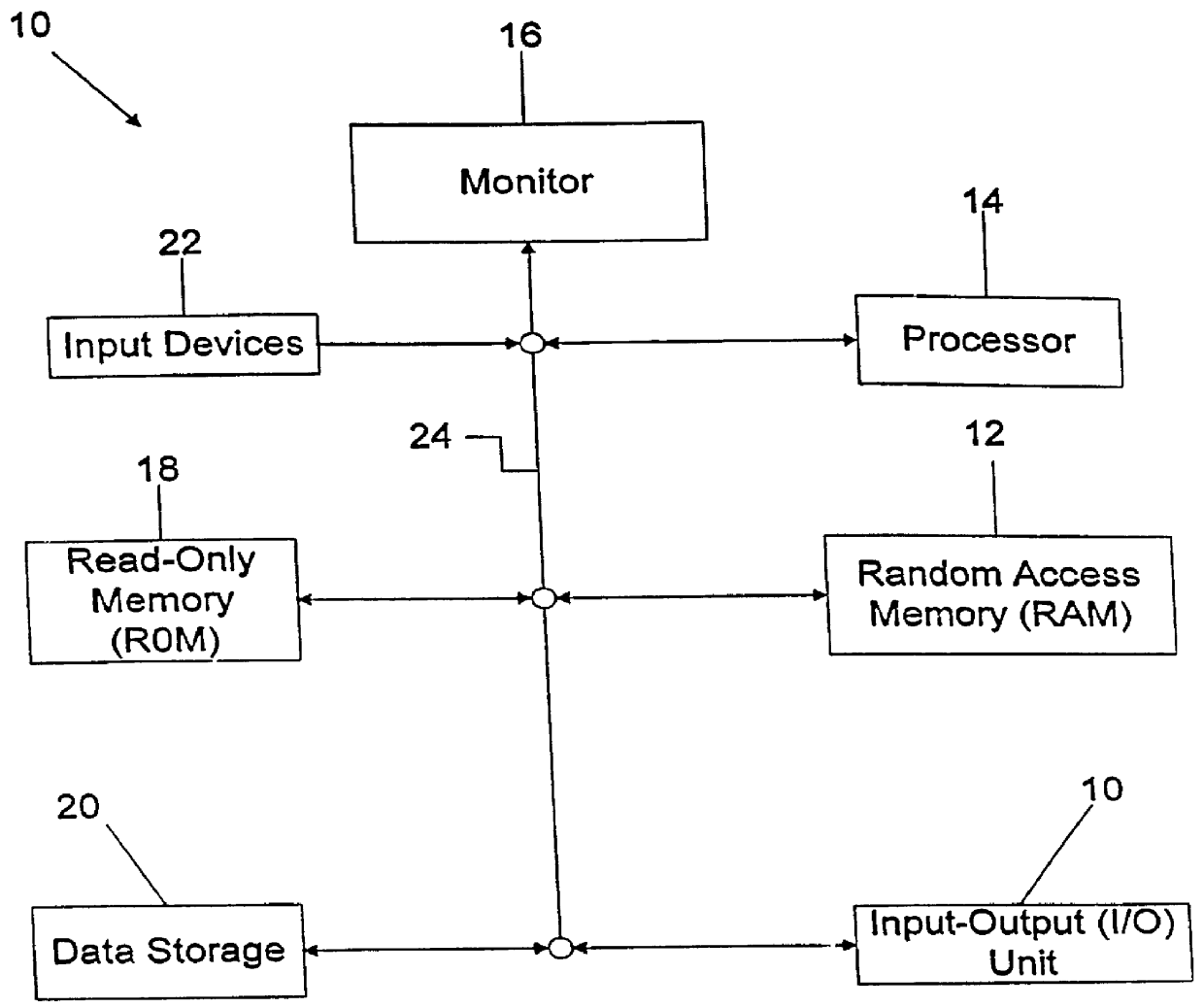

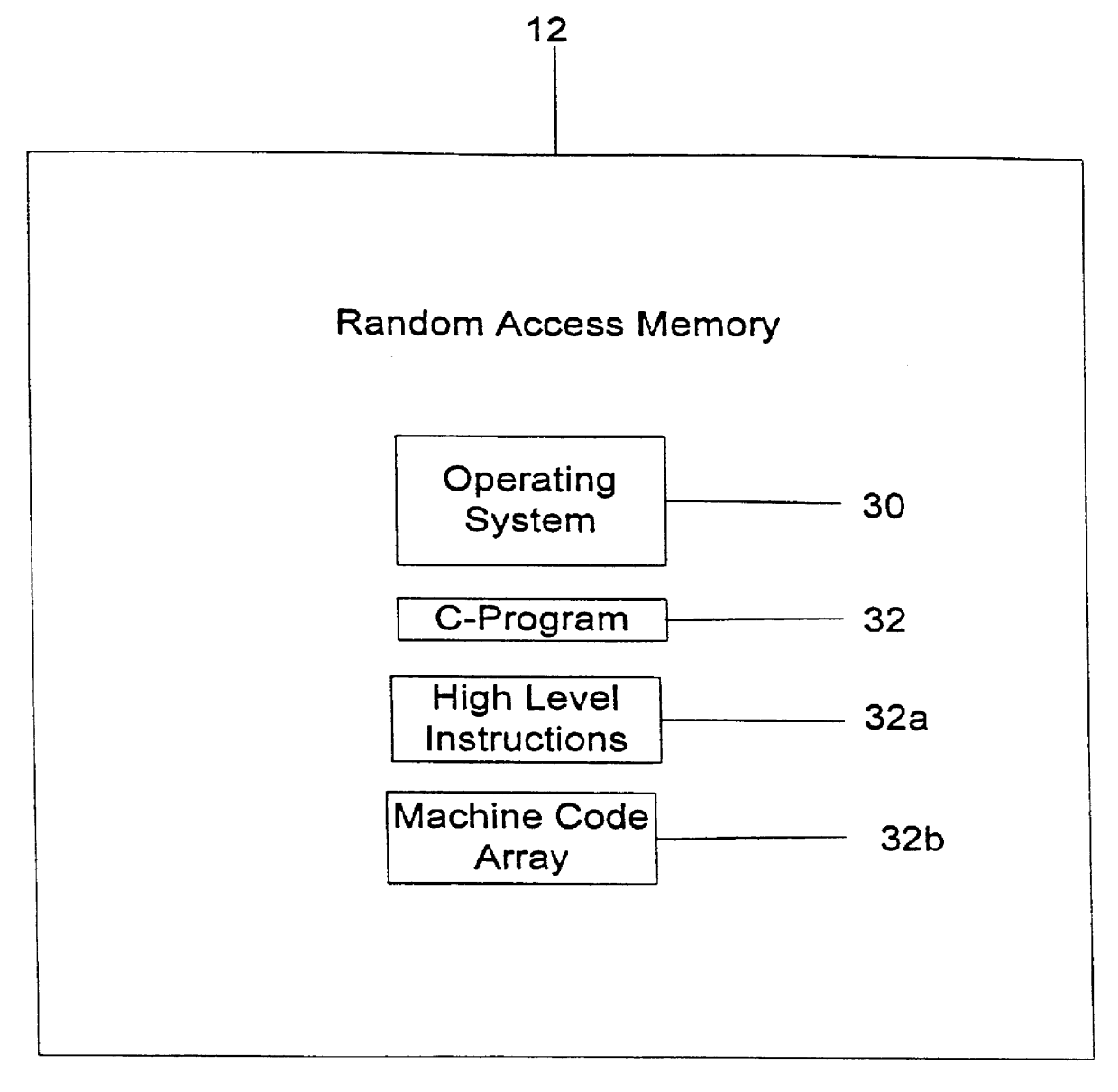

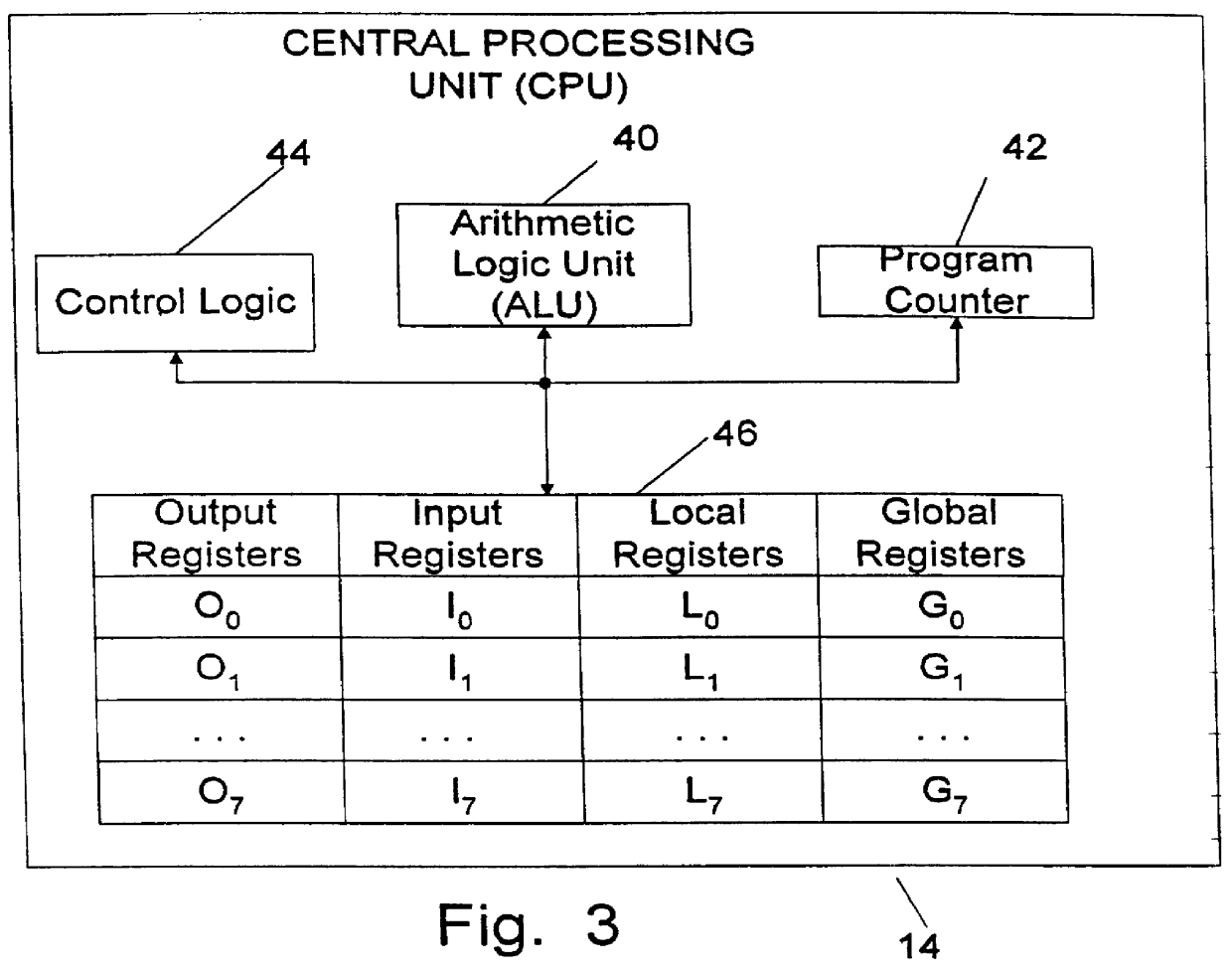

Computer implemented machine learning method and system

One or more machine code entities such as functions are created which represent solutions to a problem and are directly executable by a computer. The programs are created and altered by a program in a higher level language such as "C" which is not directly executable, but requires translation into executable machine code through compilation, interpretation, translation, etc. The entities are initially created as an integer array that can be altered by the program as data, and are executed by the program by recasting a pointer to the array as a function type. The entities are evaluated by executing them with training data as inputs, and calculating fitnesses based on a predetermined criterion. The entities are then altered based on their fitnesses using a machine learning algorithm by recasting the pointer to the array as a data (e.g. integer) type. This process is iteratively repeated until an end criterion is reached. The entities evolve in such a manner as to improve their fitness, and one entity is ultimately produced which represents an optimal solution to the problem. Each entity includes a plurality of directly executable machine code instructions, a header, a footer, and a return instruction. The instructions include branch instructions which enable subroutines, leaf functions, external function calls, recursion, and loops. The system can be implemented on an integrated circuit chip, with the entities stored in high speed memory in a central processing unit.

Owner:NORDIN PETER +1

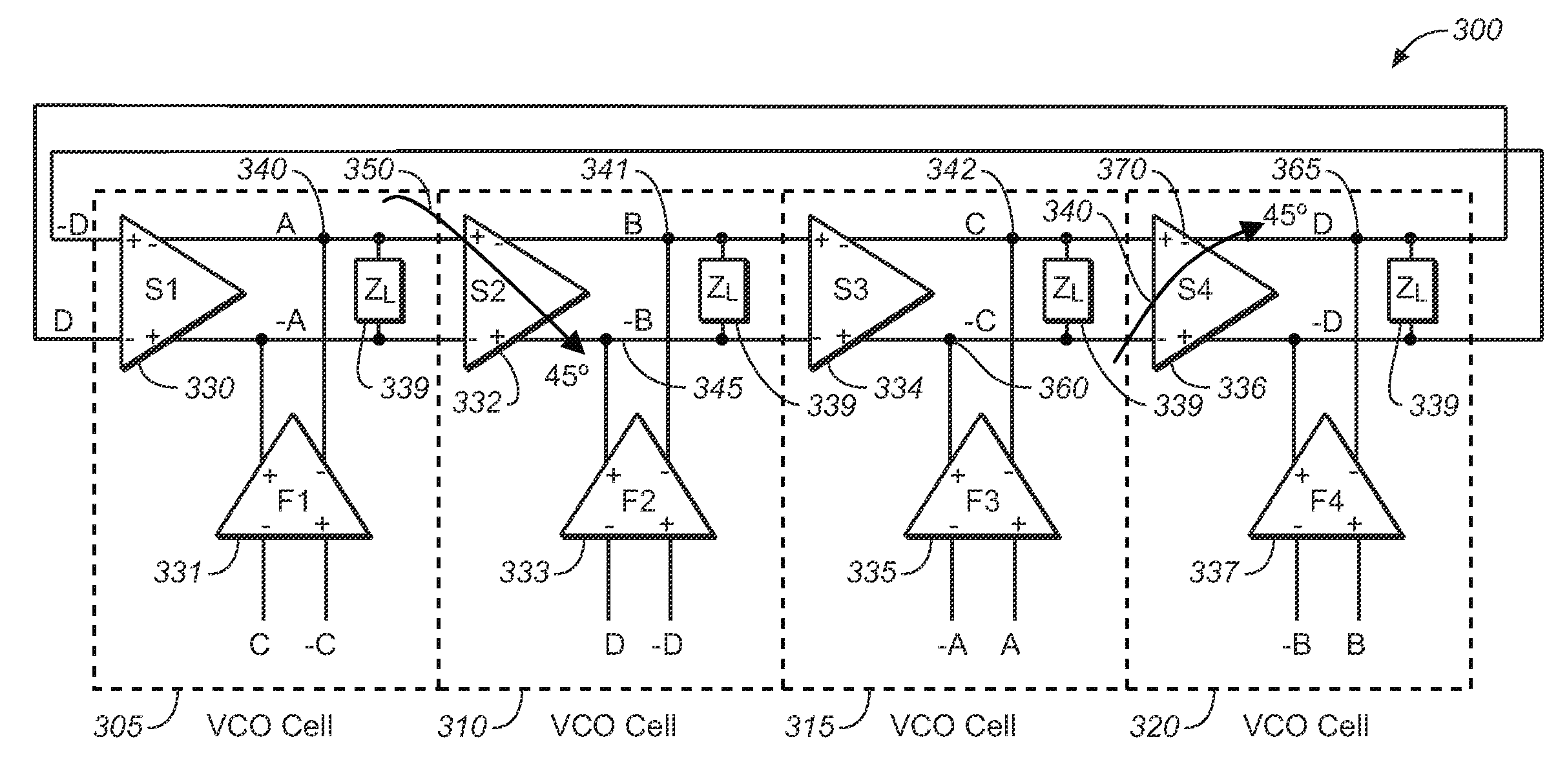

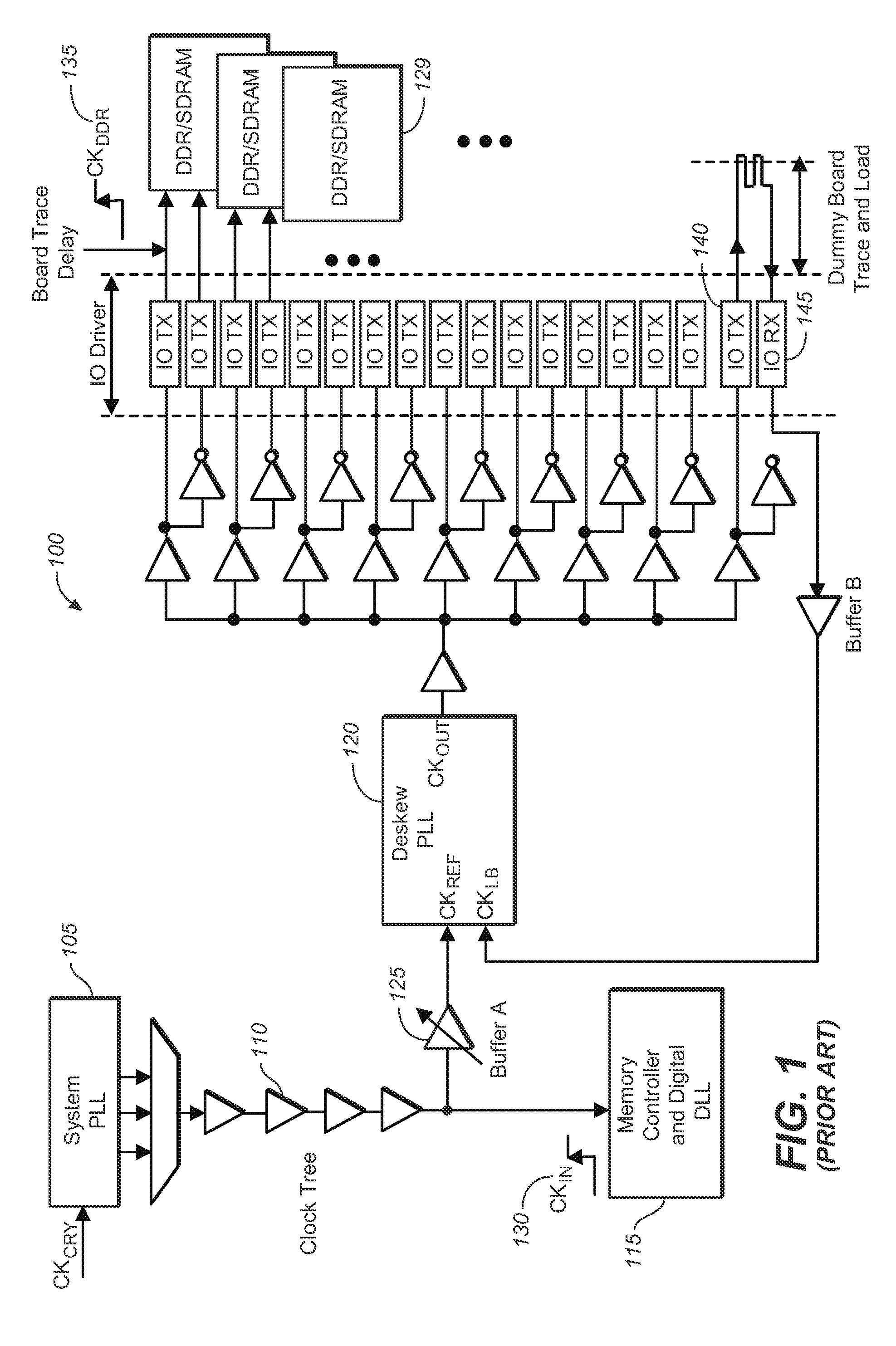

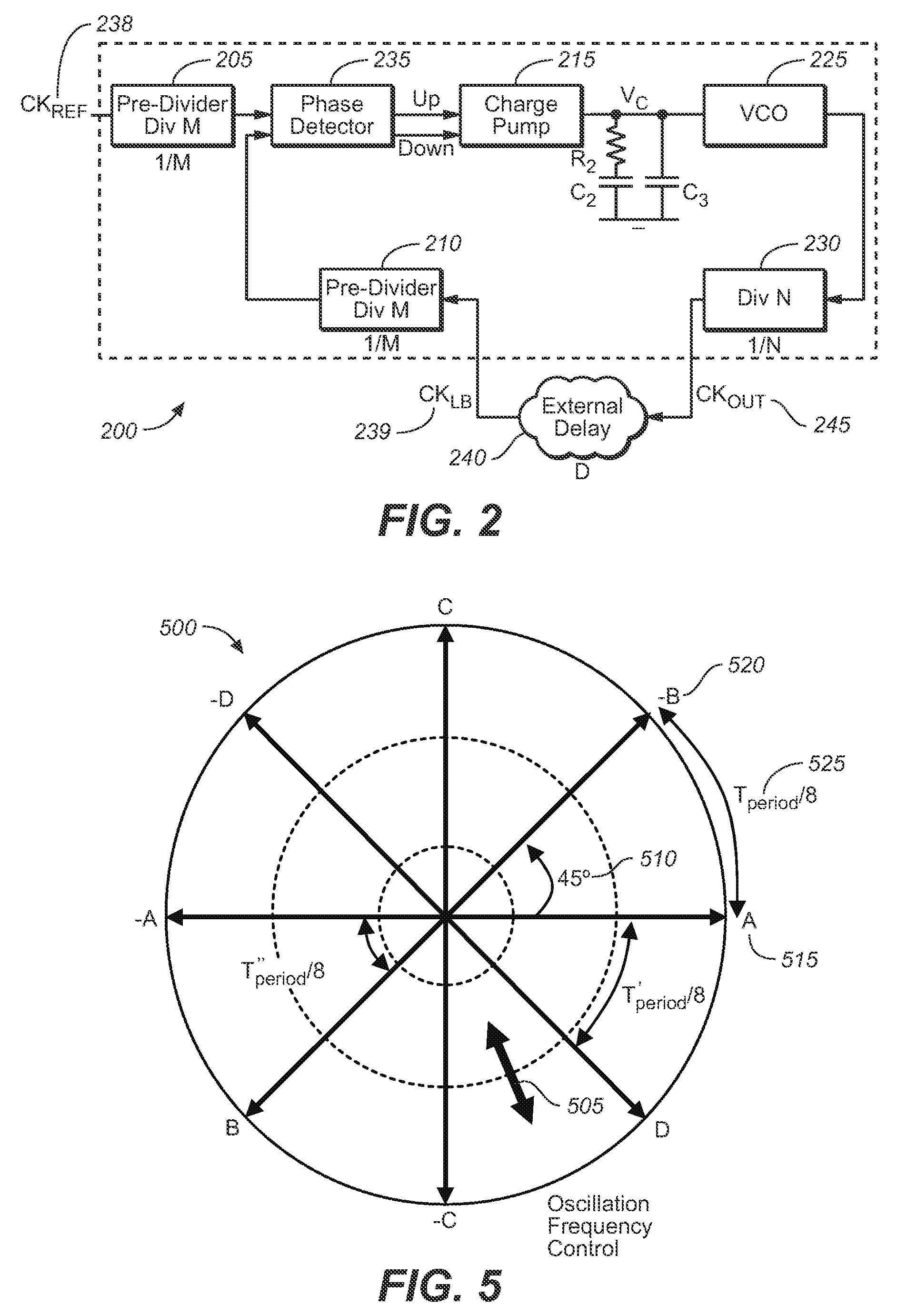

System and method for phase-locked loop (PLL) for high-speed memory interface (HSMI)

Active | US7659783B2 | Optimize allocation | Meet the blocking requirements | Pulse automatic control | Generator stabilization | Loop filter | Double data rate

A phase-locked loop (PLL) to provide clock generation for a high-speed memory interface is presented as the innovate PLL (IPLL). The IPLL architecture is able to tolerate a long external loop delay without deteriorating jitter performance. The IPLL comprises in part a common mode feedback circuit with a current mode approach, so as to minimize the effects of mismatch in the charge-pump circuit, for instance. The voltage-controlled oscillator (VCO) of the IPLL is designed using a mutually interpolating technique generating a 50% duty clock output, beneficial to high-speed double data rate applications. The IPLL further comprises loop filter voltages that are directly connected to each VCO cell of the IPLL. A conventional voltage-to-current (V-I) converter between the loop filter and the VCO is hence not required. A tight distribution of VCO gain curves is therefore obtained for the present invention across process corners and varied temperatures.

Owner:MICREL

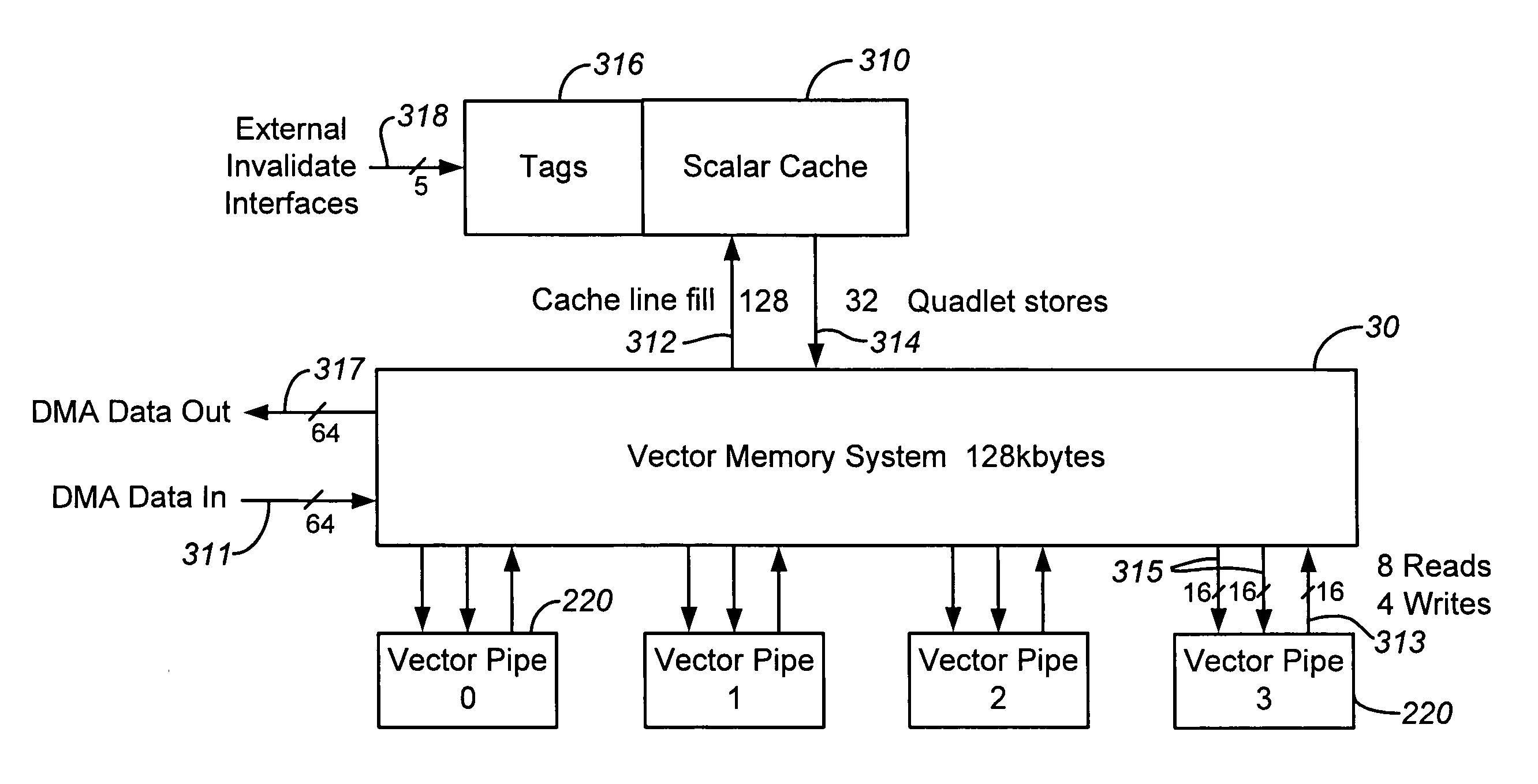

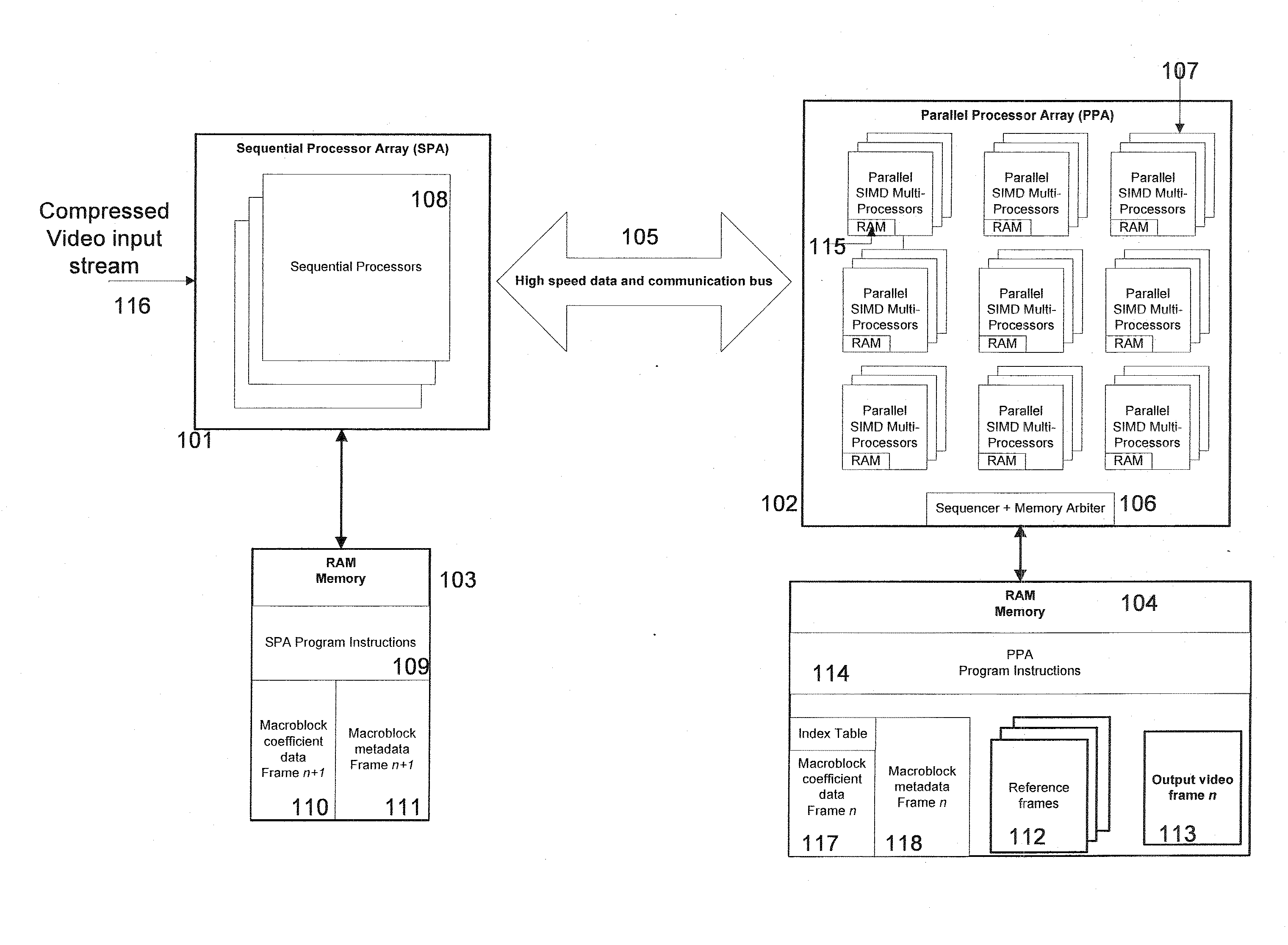

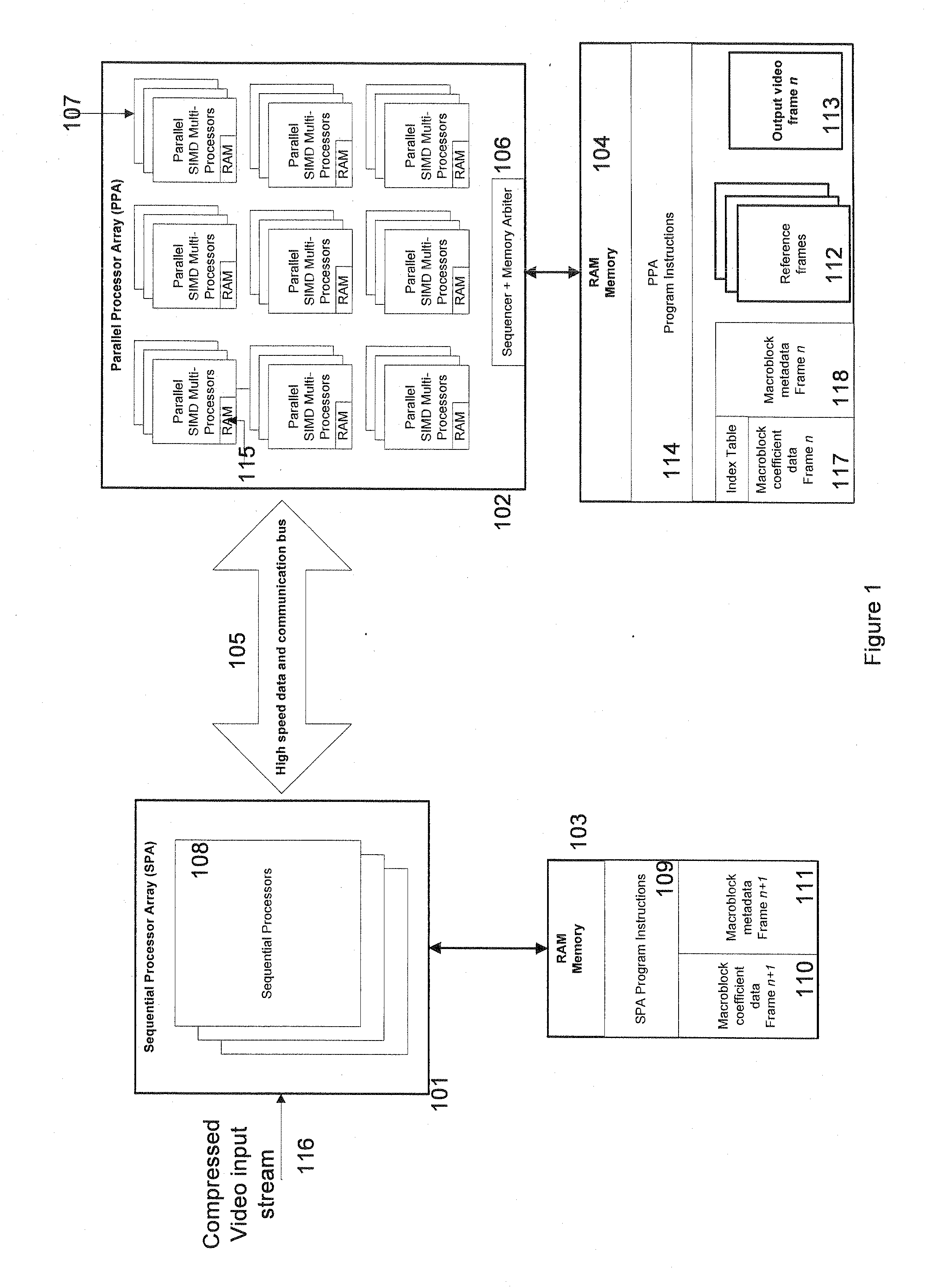

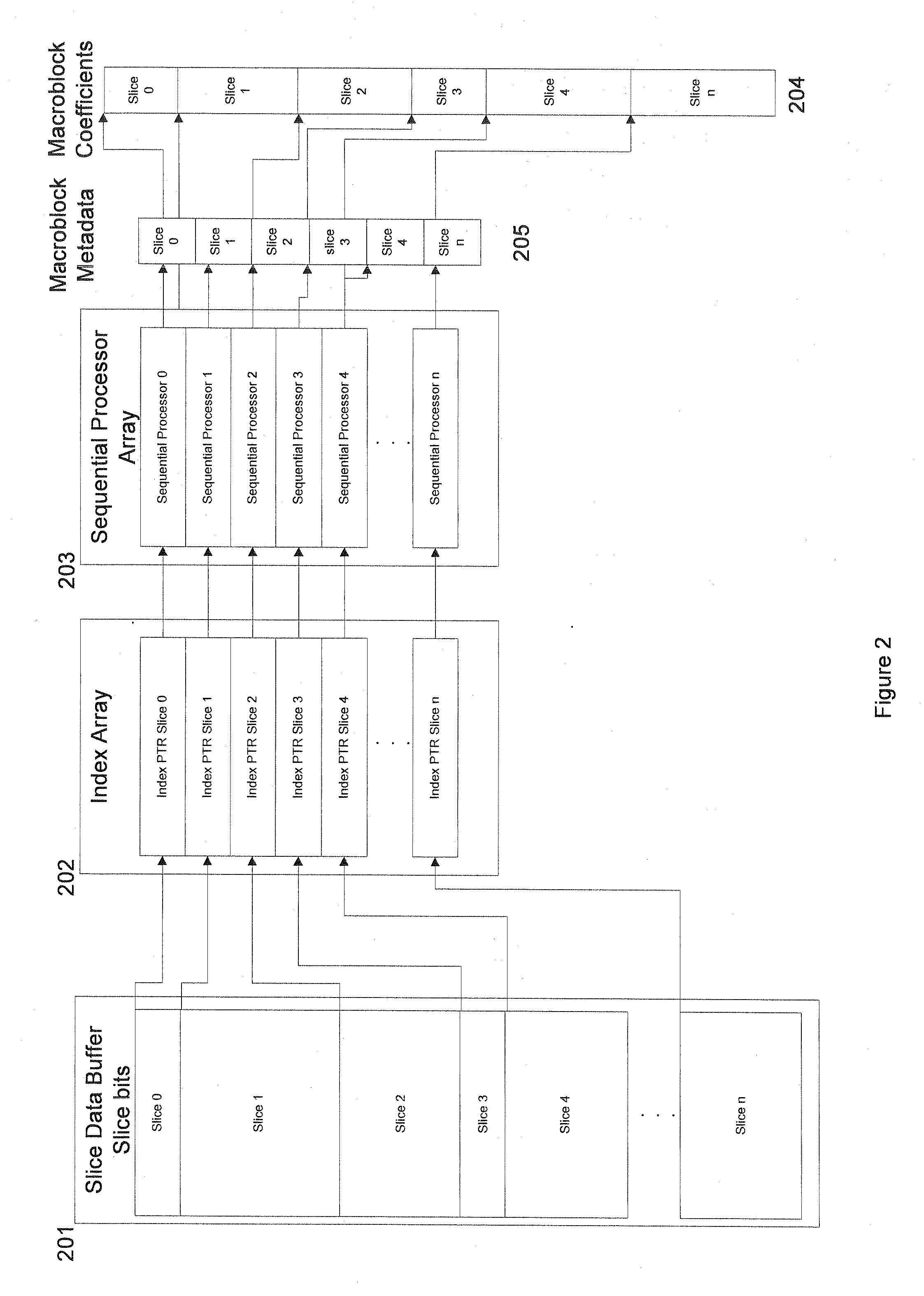

Video encoding and decoding using parallel processors

Active | US20090125538A1 | Single instruction multiple data multiprocessors | Picture reproducers using cathode ray tubes | Video bitstream | General purpose

A method is disclosed for the decoding and encoding of a block-based video bit-stream such as MPEG2, H.264-AVC, VC1, or VP6 using a system containing one or more high speed sequential processors, a homogenous array of software configurable general purpose parallel processors, and a high speed memory system to transfer data between processors or processor sets. This disclosure includes a method for load balancing between the two sets of processors.

Owner:ELEMENTAL TECH LLC

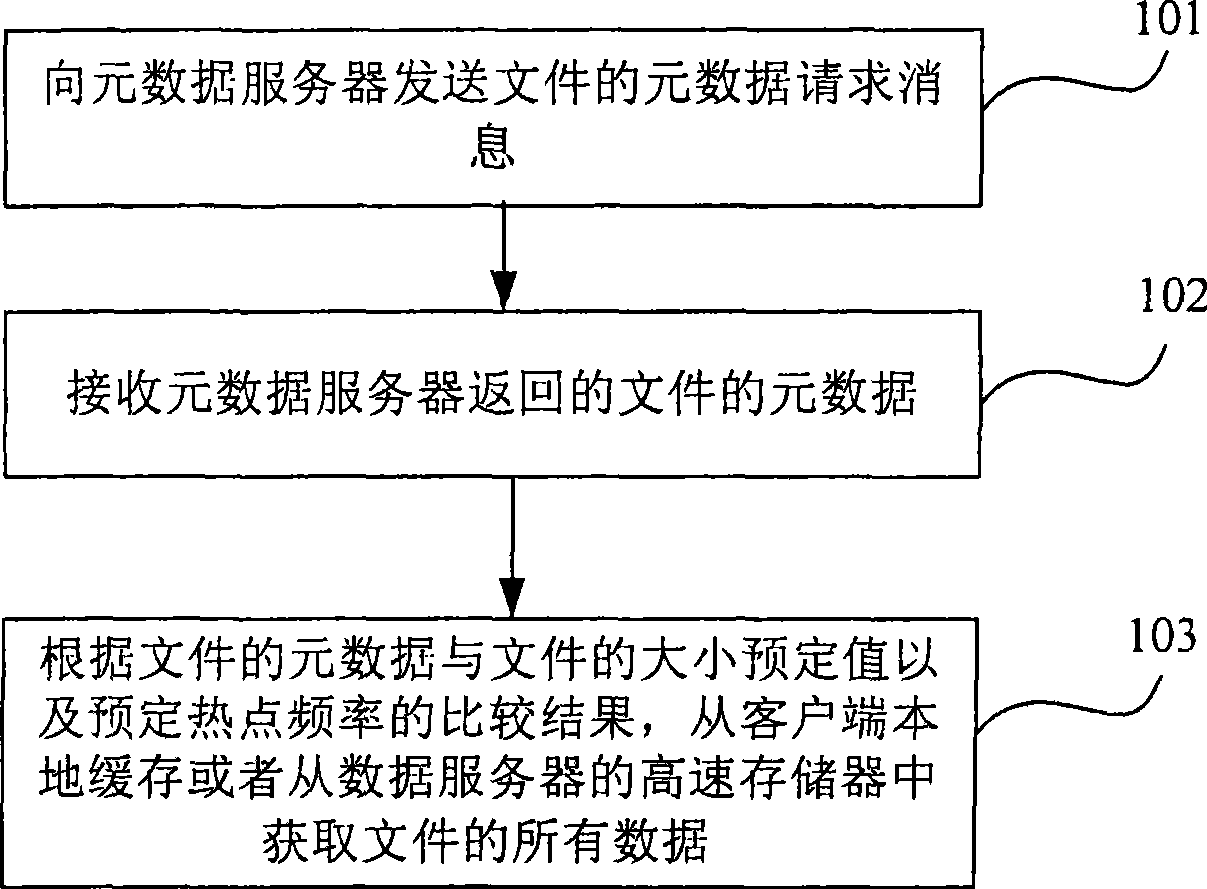

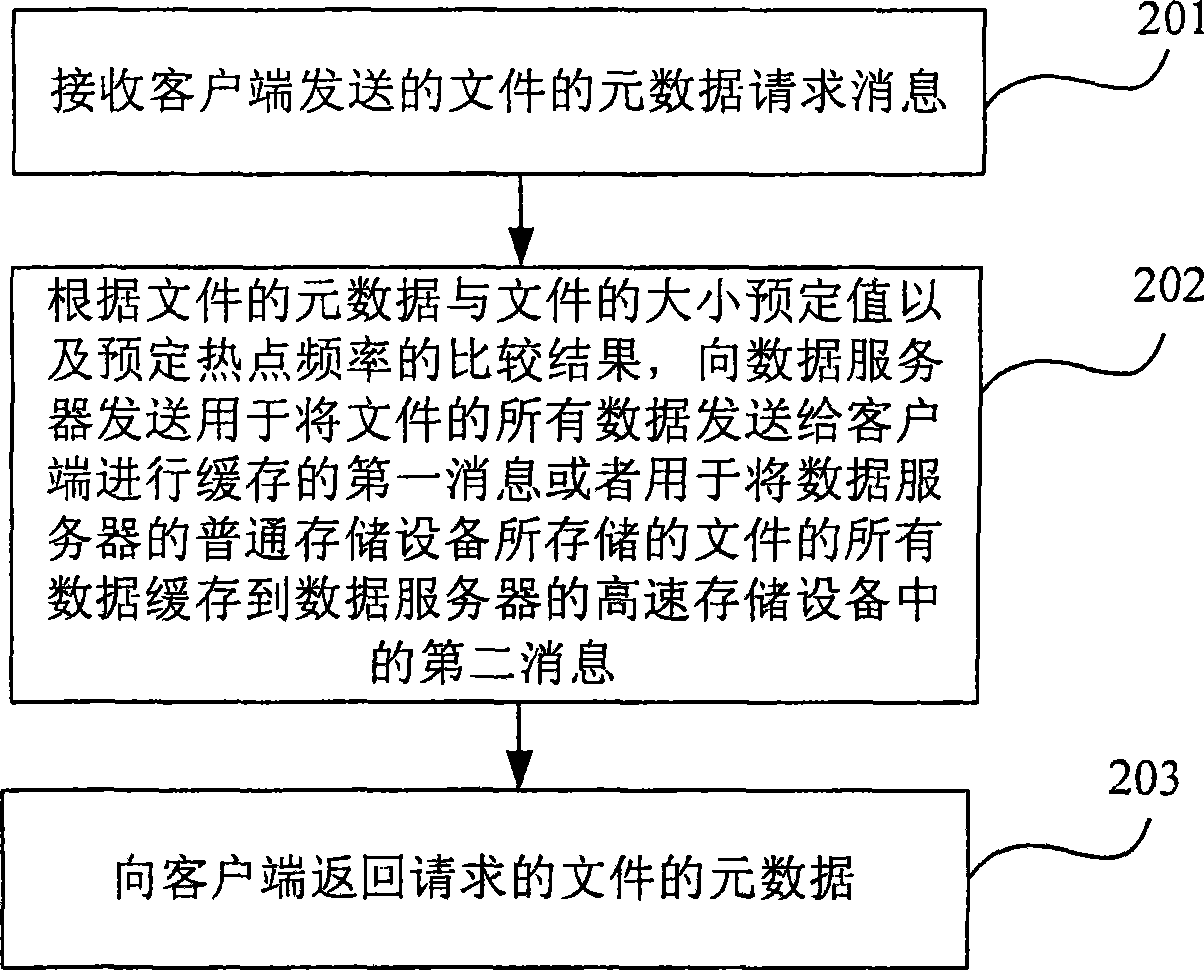

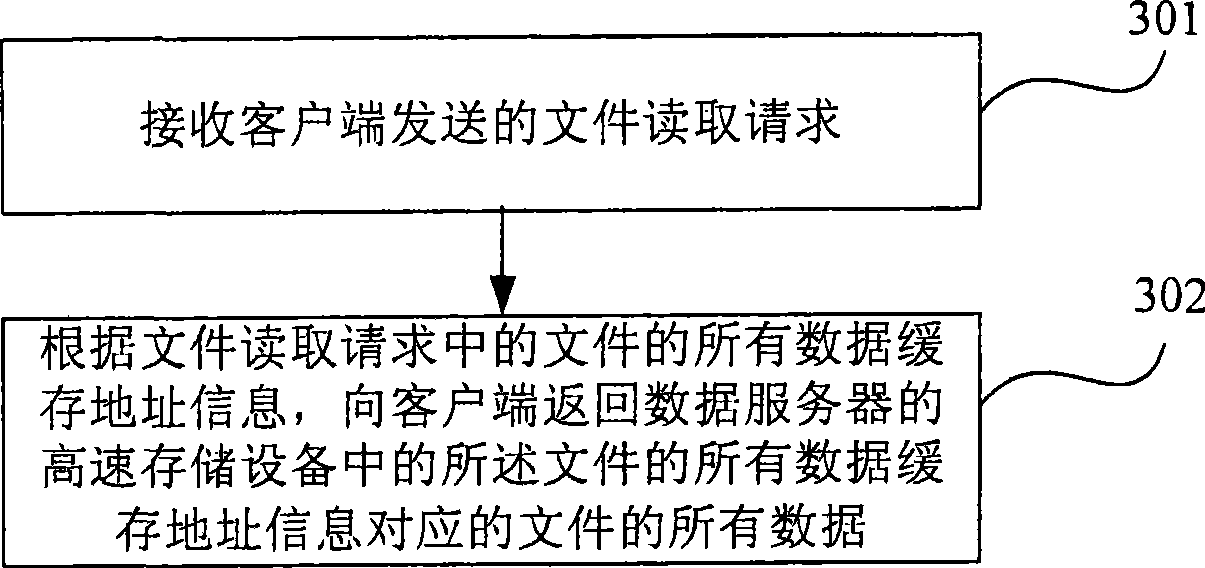

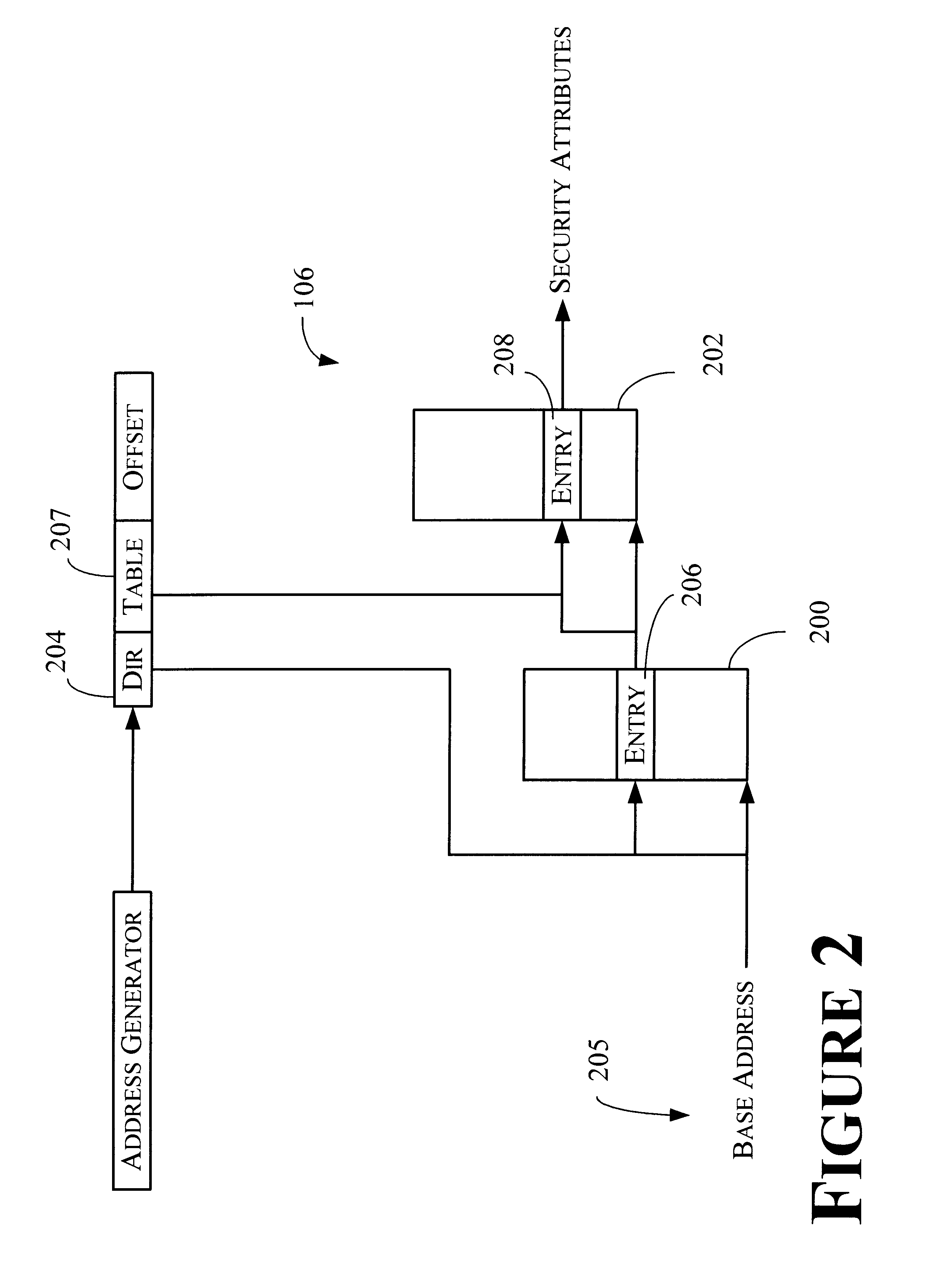

File data accessing method, apparatus and system

Active | CN101510219A | Implement multi-level caching | Improve reading efficiency | Transmission | Special data processing applications | Traffic capacity | High speed memory

The invention provides a method, a device and a system for file data access. The file data accessing method comprises the following steps: a file metadata request message is sent to a metadata server; file metadata returned by the metadata server is received; and, according to the result of comparing the file metadata with a preset file size value and a preset hotspot frequency, all the file data is obtained from a local cache of the client terminal or a high-speed memory of a data server. The method, the device and the system realize multilevel caching of small hotspot files, and can obtain all the file data for such files from the local cache or the high-speed cache of the data server, thus improving the reading efficiency of small hotspot files in network storage, raising their access speed and reducing network traffic.

Owner:CHENGDU HUAWEI TECH
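
The abstract above selects a data source by comparing file metadata against a preset size value and a preset hotspot frequency, but it does not spell out the comparison. The sketch below assumes one plausible rule: files at or below the size limit and at or above the frequency threshold are served from the client's local cache when present, otherwise from the data server's high-speed memory, and everything else takes an ordinary storage path. The names `FileMetadata`, `choose_source`, the default thresholds, and the returned labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FileMetadata:
    name: str
    size: int              # bytes
    access_frequency: int  # accesses in some recent window (assumed unit)


def choose_source(meta, local_cache, size_limit=64 * 1024, hot_threshold=10):
    """Pick where to read a file from, under the assumed rule described above."""
    small_and_hot = (
        meta.size <= size_limit and meta.access_frequency >= hot_threshold
    )
    if not small_and_hot:
        return "data_server_storage"
    if meta.name in local_cache:
        return "client_local_cache"
    return "data_server_high_speed_memory"


local_cache = {"logo.png"}
print(choose_source(FileMetadata("logo.png", 4_096, 50), local_cache))
print(choose_source(FileMetadata("icon.png", 2_048, 25), local_cache))
print(choose_source(FileMetadata("video.mp4", 700_000_000, 99), local_cache))
```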

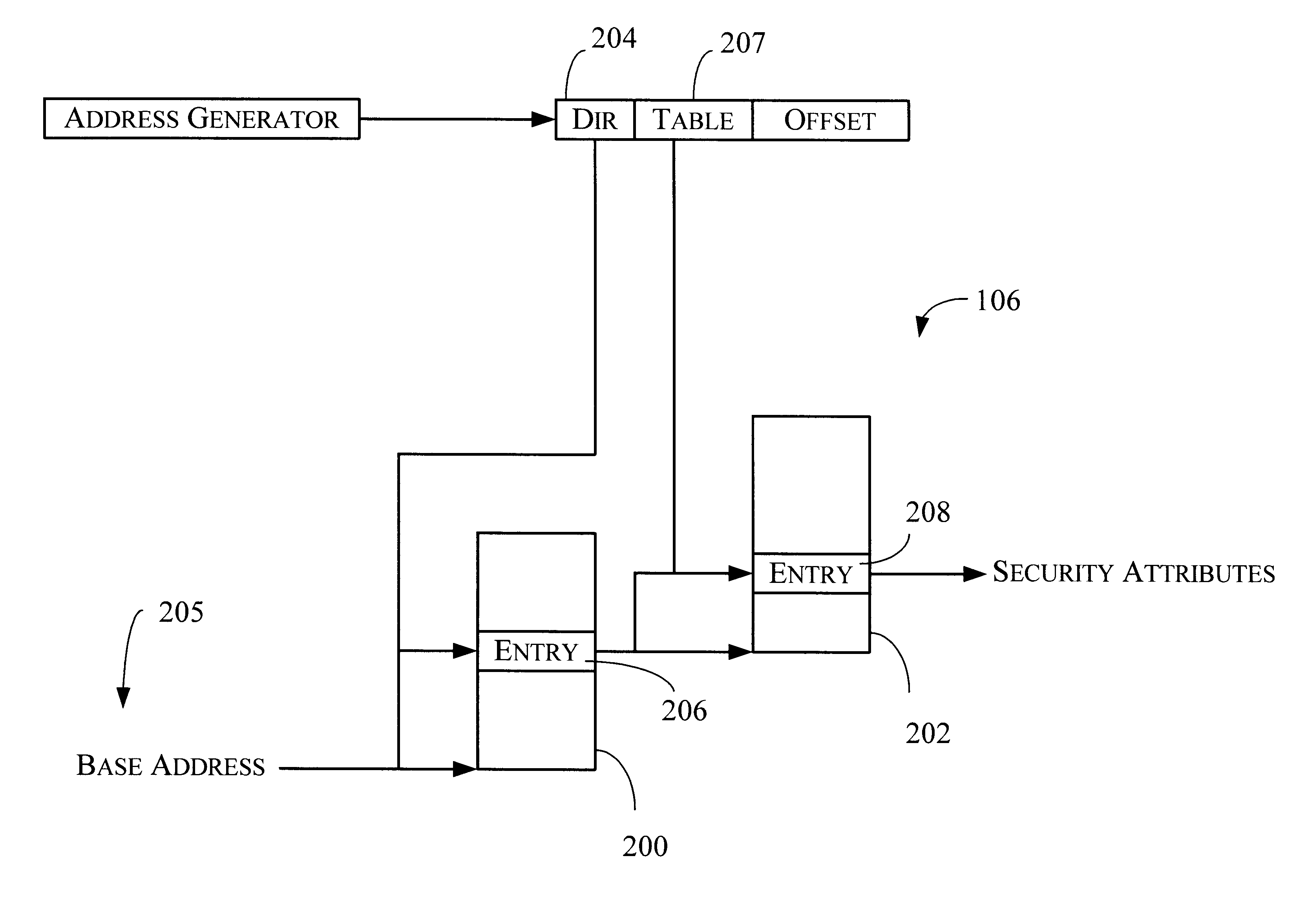

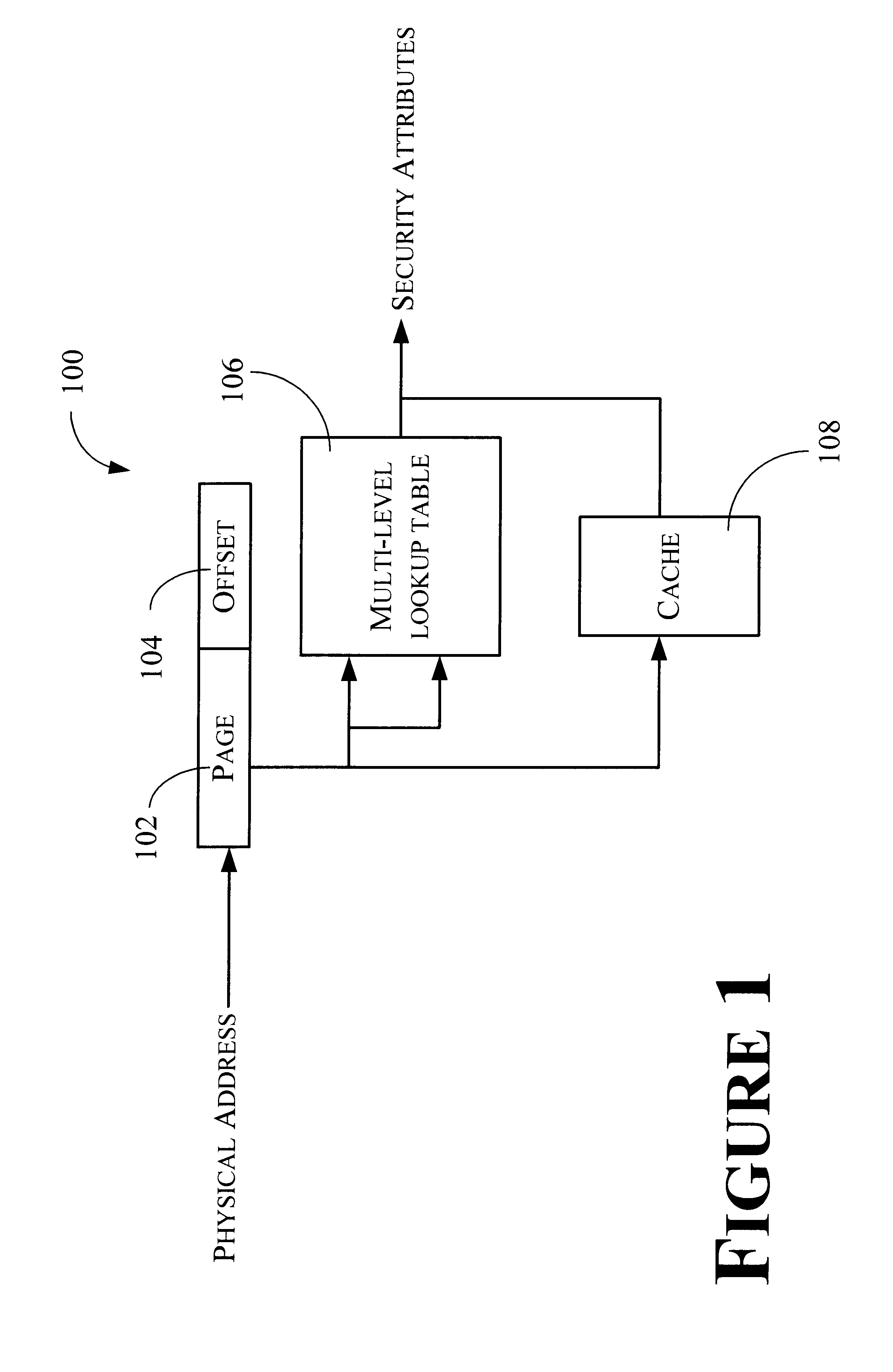

Method and apparatus for storing and retrieving security attributes

An apparatus is provided for providing security in a computer system. The apparatus comprises an address generator, a multi-level lookup table, and a cache. The address generator is adapted for producing an address associated with a memory location in the computer system. The multi-level lookup table is adapted for receiving at least a portion of said address and delivering security attributes stored therein associated with said address, wherein the security attributes are associated with each page of memory in the computer system. The cache is a high-speed memory that contains a subset of the information contained in the multi-level lookup table, and may be used to speed the overall retrieval of the requested security attributes when the requested information is present in the cache.

Owner:ADVANCED MICRO DEVICES INC

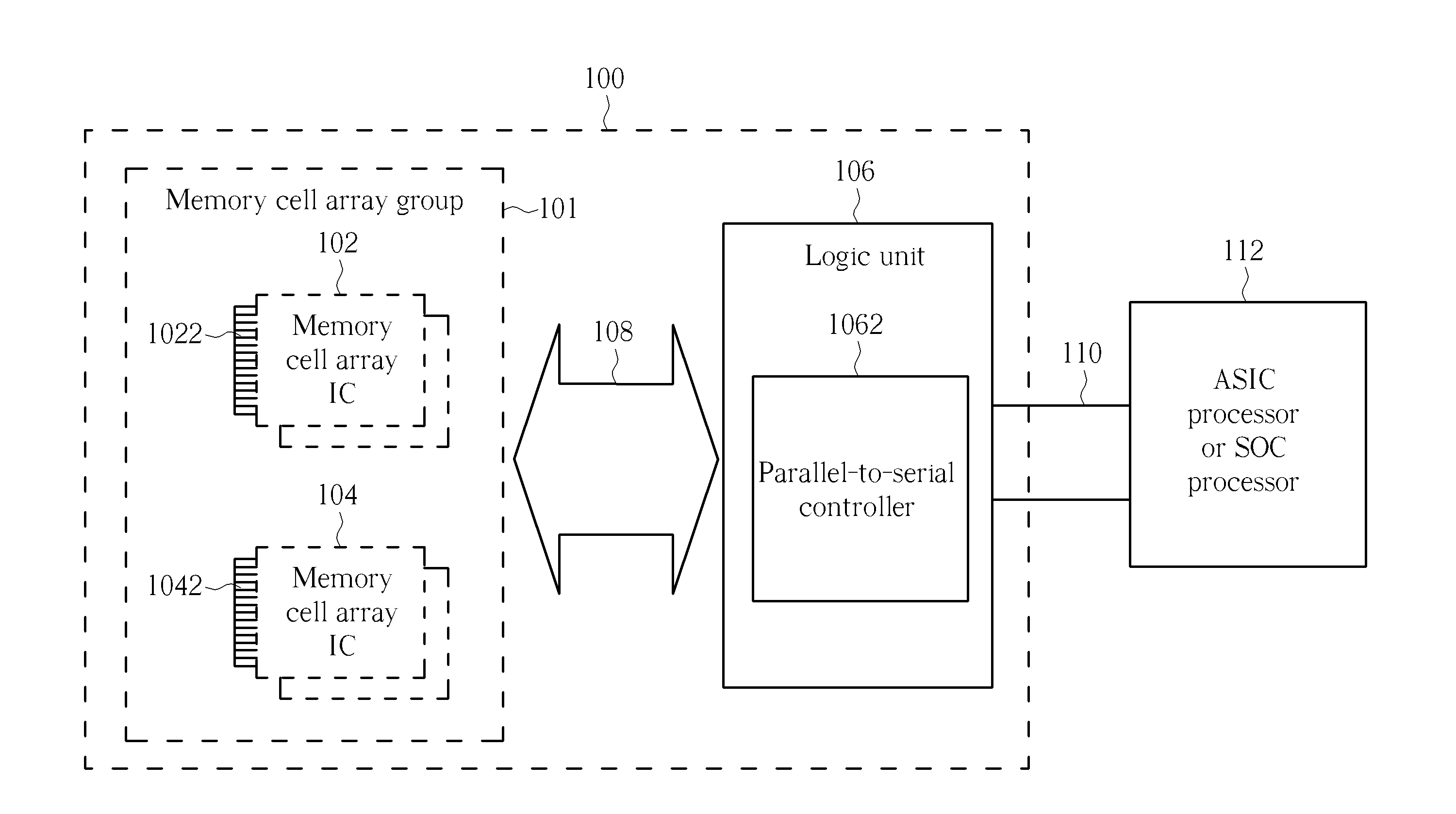

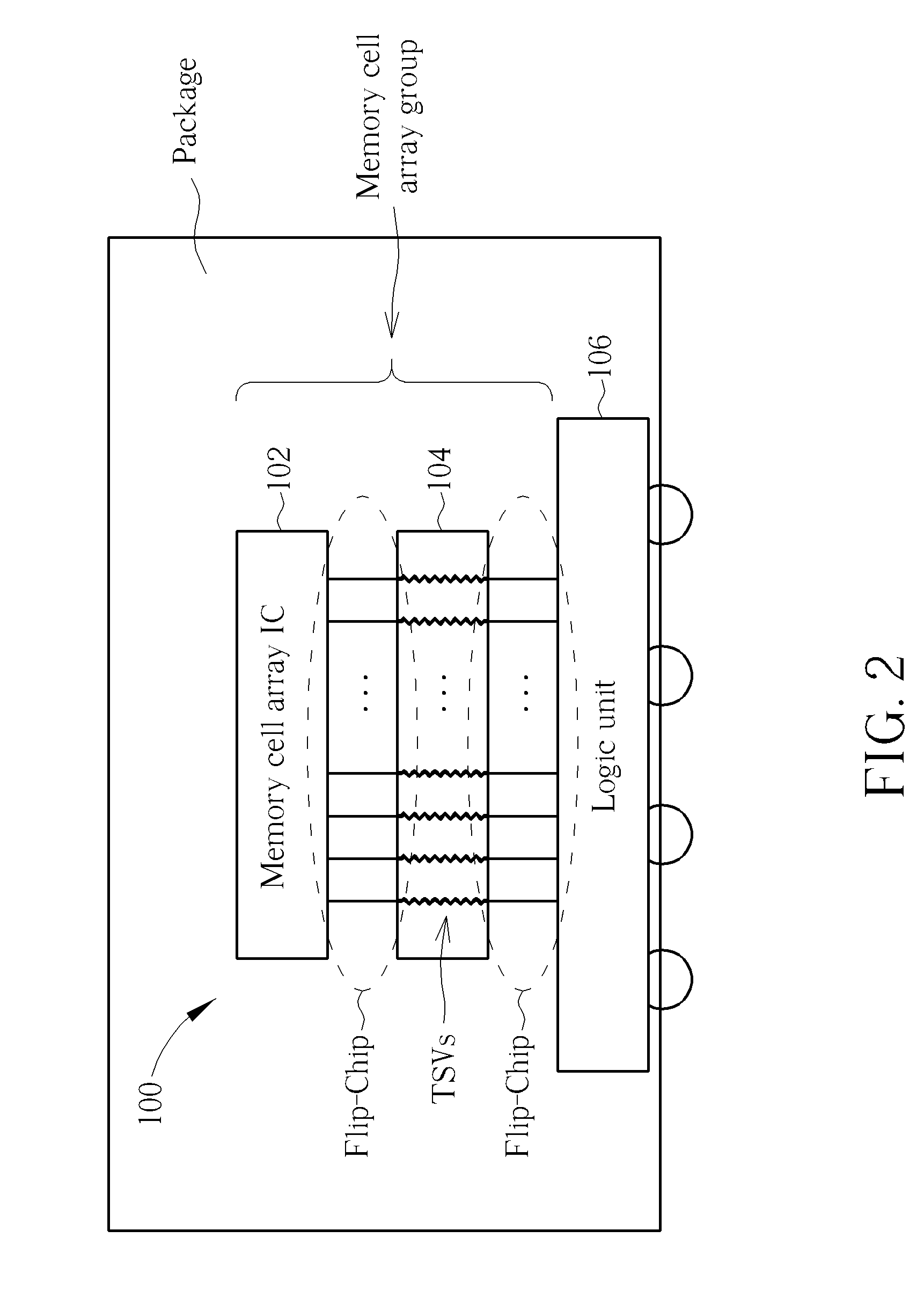

Reconfigurable high speed memory chip module and electronics system device

Active | US20130091312A1 | Improve shielding effect | Improve cooling effect | Semiconductor/solid-state device details | Solid-state devices | Electronic systems | High speed memory

A reconfigurable high speed memory chip module includes a type of memory cell array group, a first transmission bus, and a logic unit. The type memory cell array group includes multiple memory cell array integrated circuits (ICs). The first transmission bus coupled to the type memory cell array group has a first programmable transmitting or receiving data rate, a first programmable transmitting or receiving signal swing, a first programmable bus width, and a combination thereof. The logic unit is coupled to the first transmission bus for accessing the type memory cell array group through the first transmission bus.

Owner:ETRON TECH INC

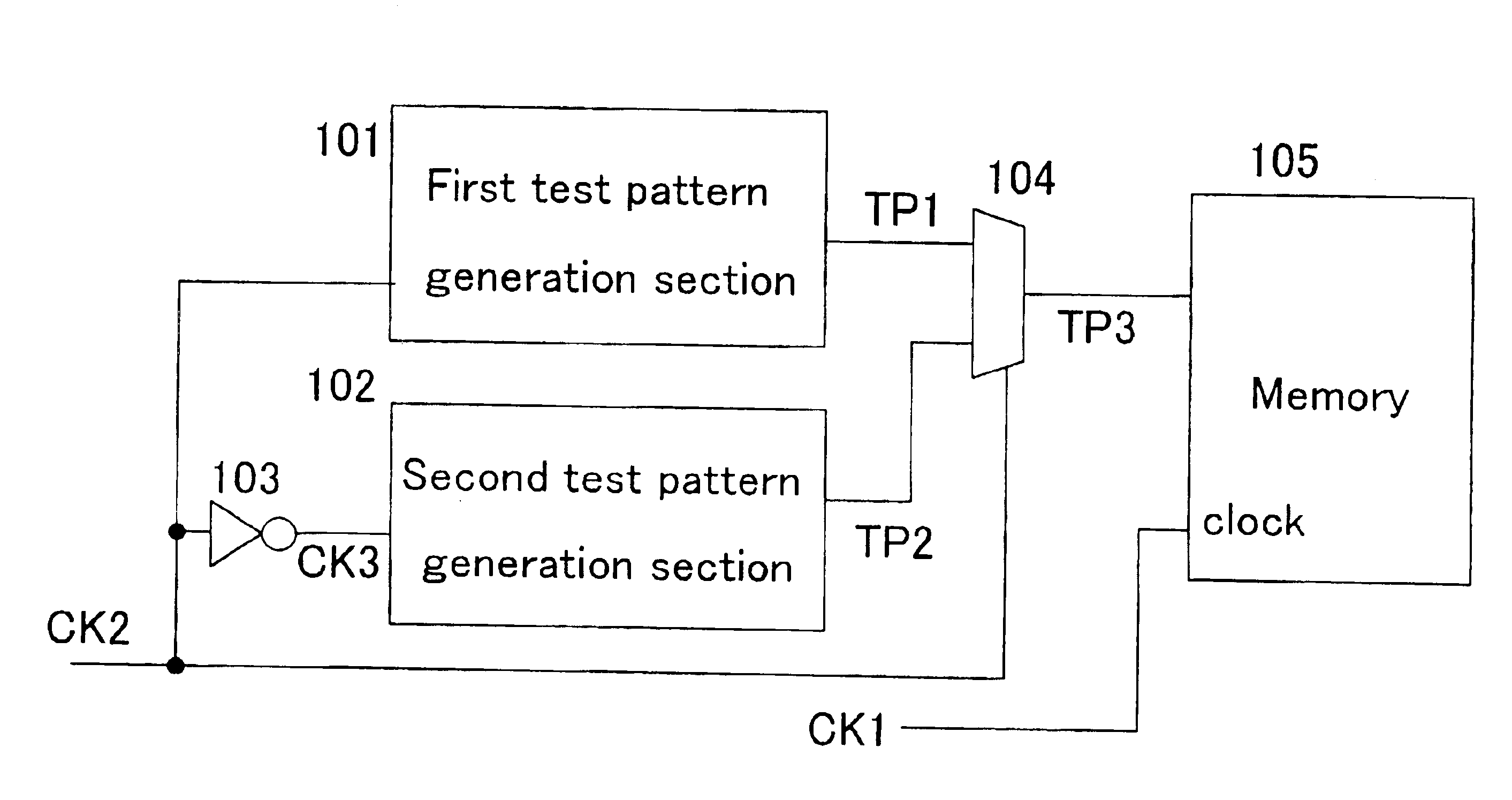

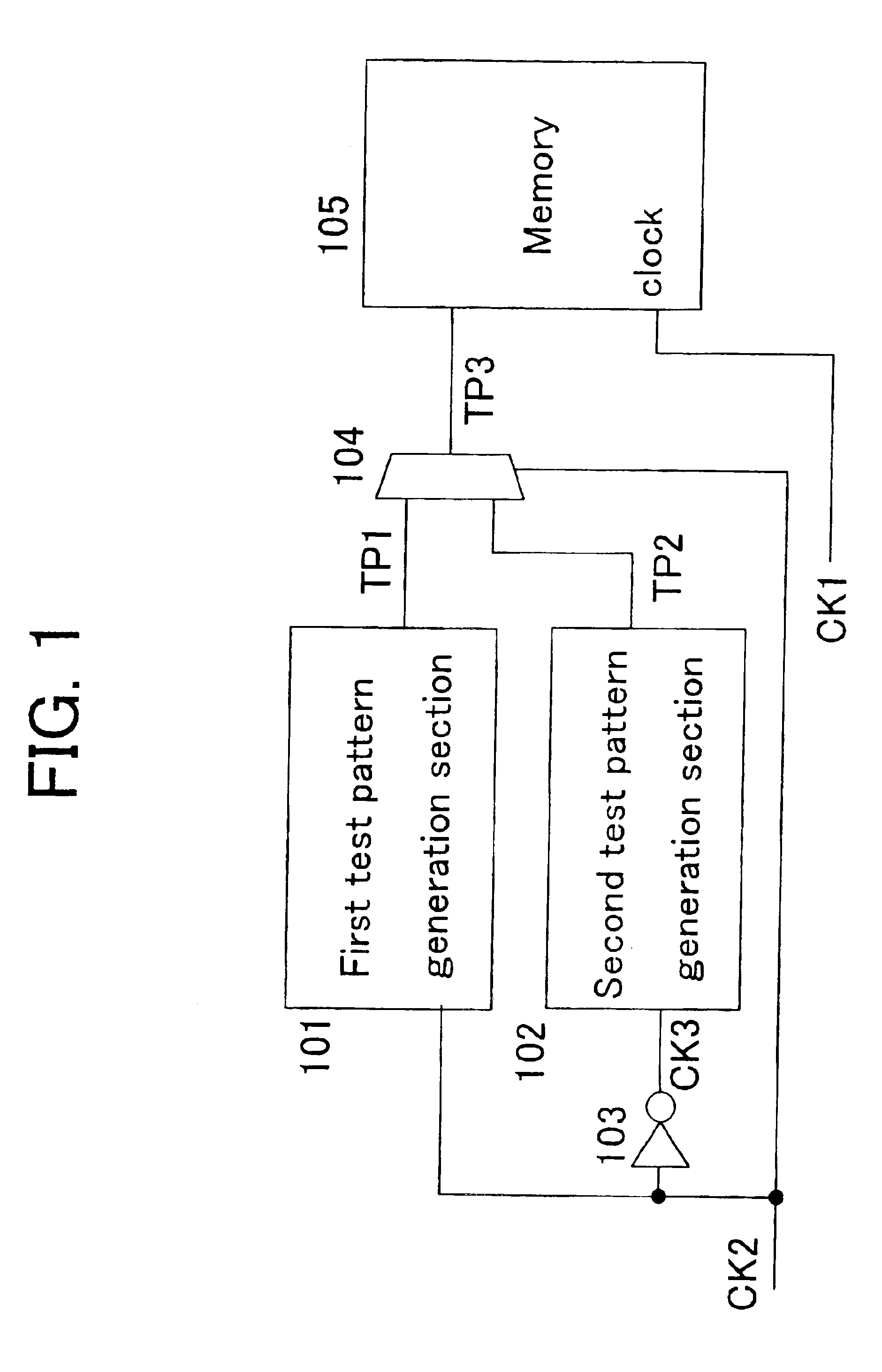

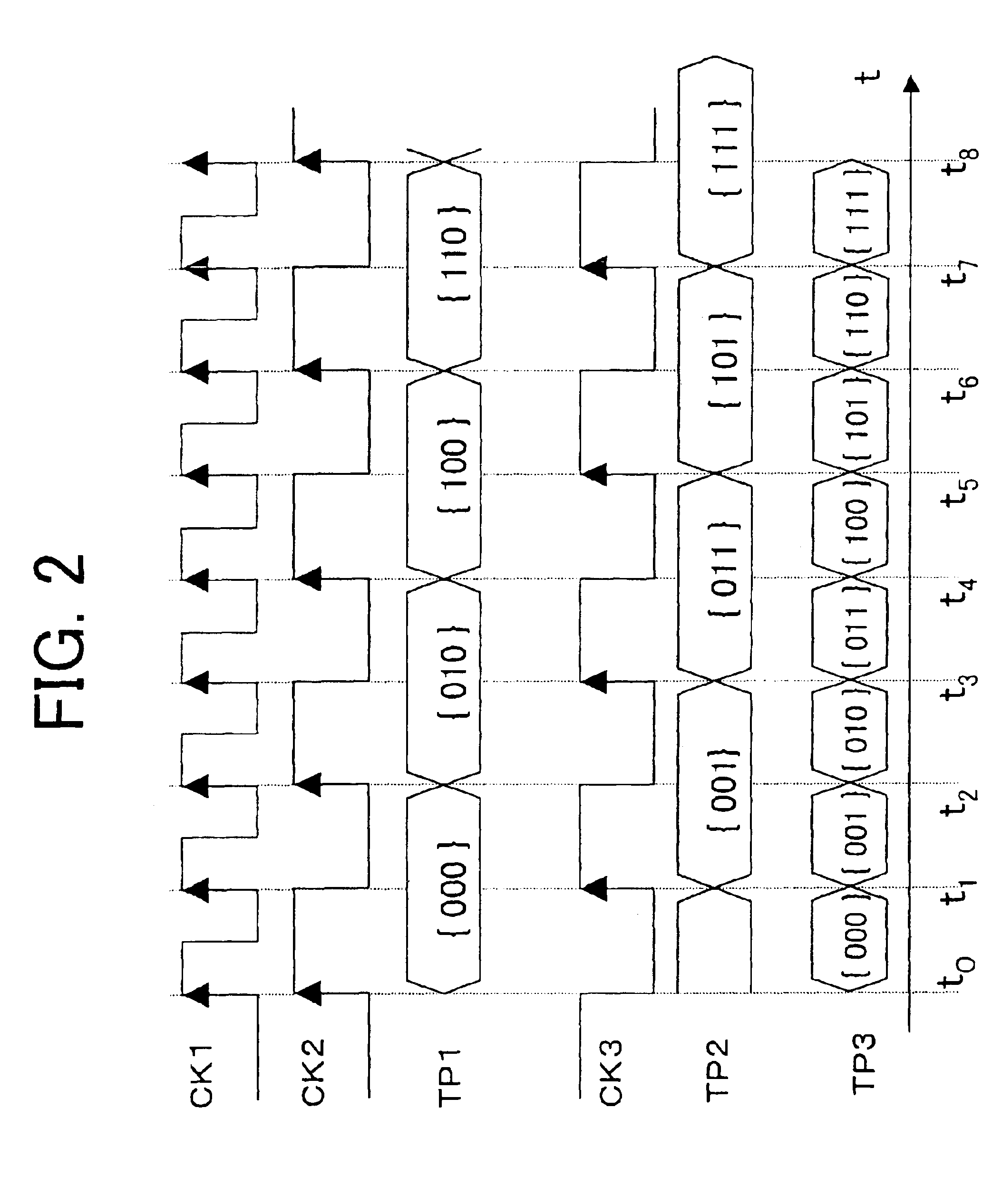

Semiconductor integrated circuit and memory test method

Inactive | US6917215B2 | Improve test quality | Quality improvement | Resistance/reactance/impedance | Static storage | High speed memory | Clock rate

The present invention provides a semiconductor integrated circuit capable of testing a high-speed memory at the actual operation speed of the memory even when the operation speed of the BIST circuit of the integrated circuit is restricted. In order to test a memory operating on a first clock, the integrated circuit is provided with a first test pattern generation section, operating on a second clock, for generating test data, and a second test pattern generation section, operating on a third clock, the inverted clock of the second clock, for generating test data. Furthermore, the integrated circuit is provided with a test data selection section for selectively outputting either the test data output from the first test pattern generation section or the test data output from the second test pattern generation section depending on the signal value of the second clock, thereby inputting the test data to the memory as test data. The frequency of the second clock is half the frequency of the first clock.

Owner:SOCIONEXT INC
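
The clocking scheme in the abstract above, two half-rate pattern generators on opposite clock phases merged by a selector, can be modelled as a cycle-by-cycle simulation. This is a behavioural sketch only: the function name `run_bist`, the choice of consecutive integers as test data, and the convention that the second clock is high on even fast-clock cycles are assumptions made for illustration.

```python
def run_bist(num_fast_cycles):
    """Simulate two half-rate generators feeding a memory at full rate.

    Each loop iteration is one cycle of the fast (first) clock. The second
    clock runs at half that rate; the third clock is its inverse.
    """
    next_a, next_b = 0, 1        # generator A emits evens, B emits odds
    held_a = held_b = None       # outputs currently held by each generator
    stream = []                  # data presented to the memory each fast cycle
    for t in range(num_fast_cycles):
        clk2_high = (t % 2 == 0)
        if clk2_high:
            # Second clock active: the first generator produces a new value.
            held_a = next_a
            next_a += 2
        else:
            # Third (inverted) clock active: the second generator updates.
            held_b = next_b
            next_b += 2
        # Selector: choose a generator based on the level of the second clock.
        stream.append(held_a if clk2_high else held_b)
    return stream


print(run_bist(6))
```

Each generator updates only once every two fast cycles, yet the memory sees a new value on every fast cycle, which is the point of the arrangement.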

Computer implemented machine learning method and system

One or more machine code entities such as functions are created which represent solutions to a problem and are directly executable by a computer. The programs are created and altered by a program in a higher level language such as "C" which is not directly executable, but requires translation into executable machine code through compilation, interpretation, translation, etc. The entities are initially created as an integer array that can be altered by the program as data, and are executed by the program by recasting a pointer to the array as a function type. The entities are evaluated by executing them with training data as inputs, and calculating fitnesses based on a predetermined criterion. The entities are then altered based on their fitnesses using a machine learning algorithm by recasting the pointer to the array as a data (e.g. integer) type. This process is iteratively repeated until an end criterion is reached. The entities evolve in such a manner as to improve their fitness, and one entity is ultimately produced which represents an optimal solution to the problem. Each entity includes a plurality of directly executable machine code instructions, a header, a footer, and a return instruction. The alteration process is controlled such that only valid instructions are produced. The headers, footers and return instructions are protected from alteration. The system can be implemented on an integrated circuit chip, with the entities stored in high speed memory in a central processing unit.

Owner:NORDIN PETER

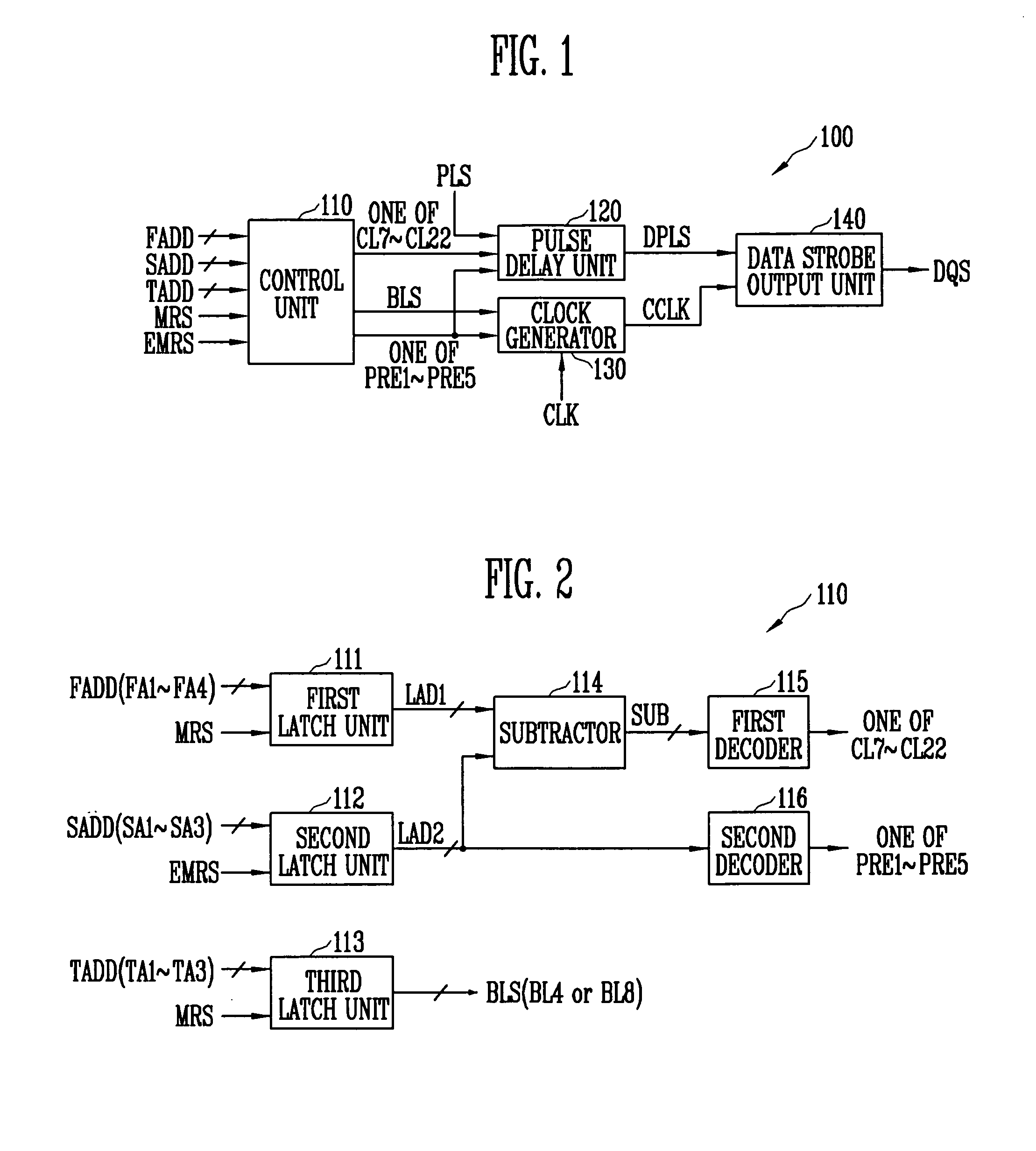

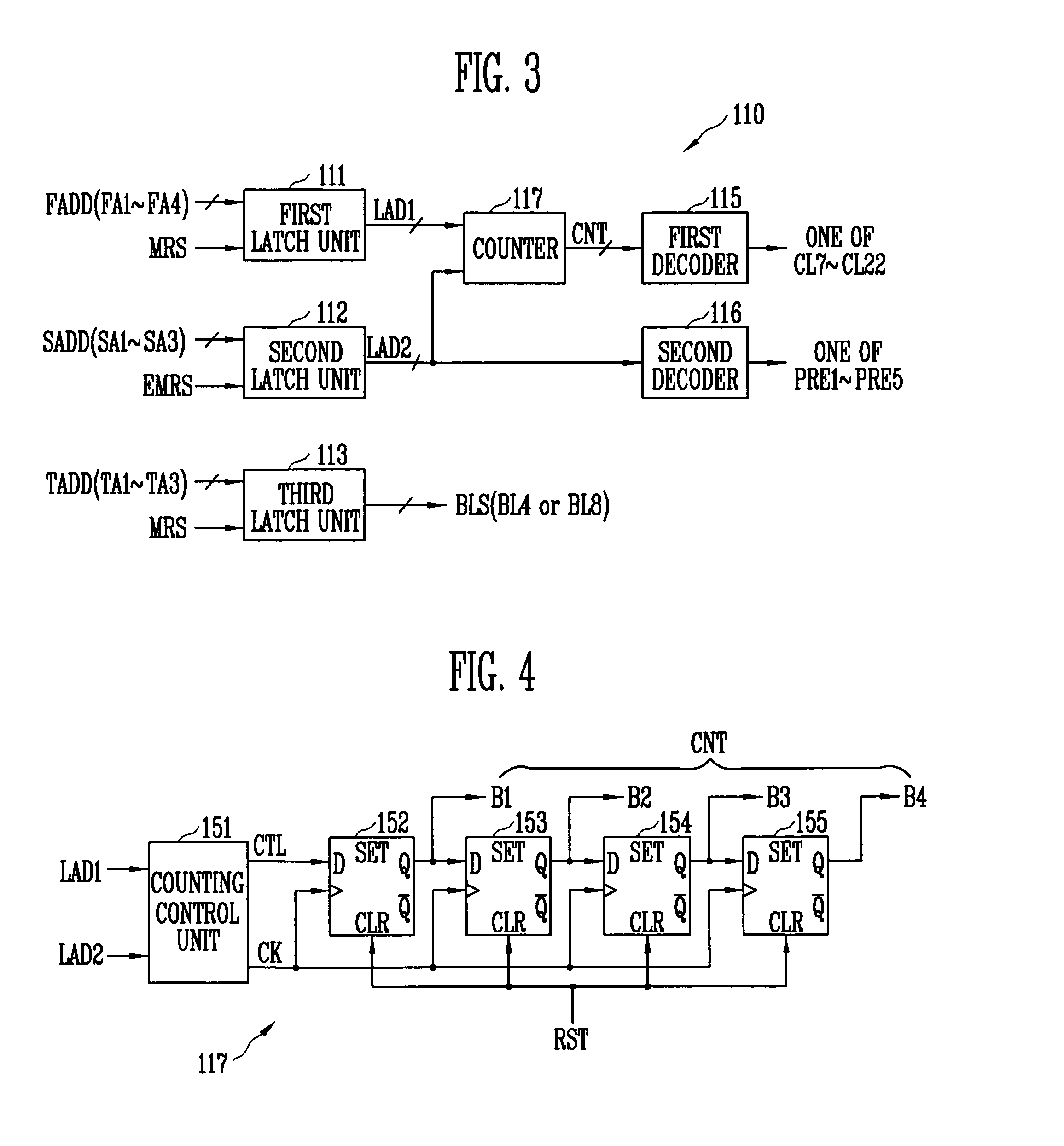

Data strobe signal generator for generating data strobe signal based on adjustable preamble value and semiconductor memory device with the same

Inactive | US20070291558A1 | Stabilized data output operation | Increase speed | Digital storage | High speed memory | Control signal

A data strobe signal generator according to the present invention includes a control unit, a pulse delay unit, a clock generator, and a data strobe output unit. The control unit generates a CAS latency signal and a preamble signal. The pulse delay unit delays a pulse signal for a predetermined time and outputs a delayed pulse signal. The clock generator outputs a control clock signal. The data strobe output unit outputs a data strobe signal. The data strobe signal generator and the semiconductor memory device having the same according to the present invention generate a data strobe signal based on an adjustable preamble value, thereby ensuring the stabilized data output operation of a high-speed memory device.

Owner:SK HYNIX INC

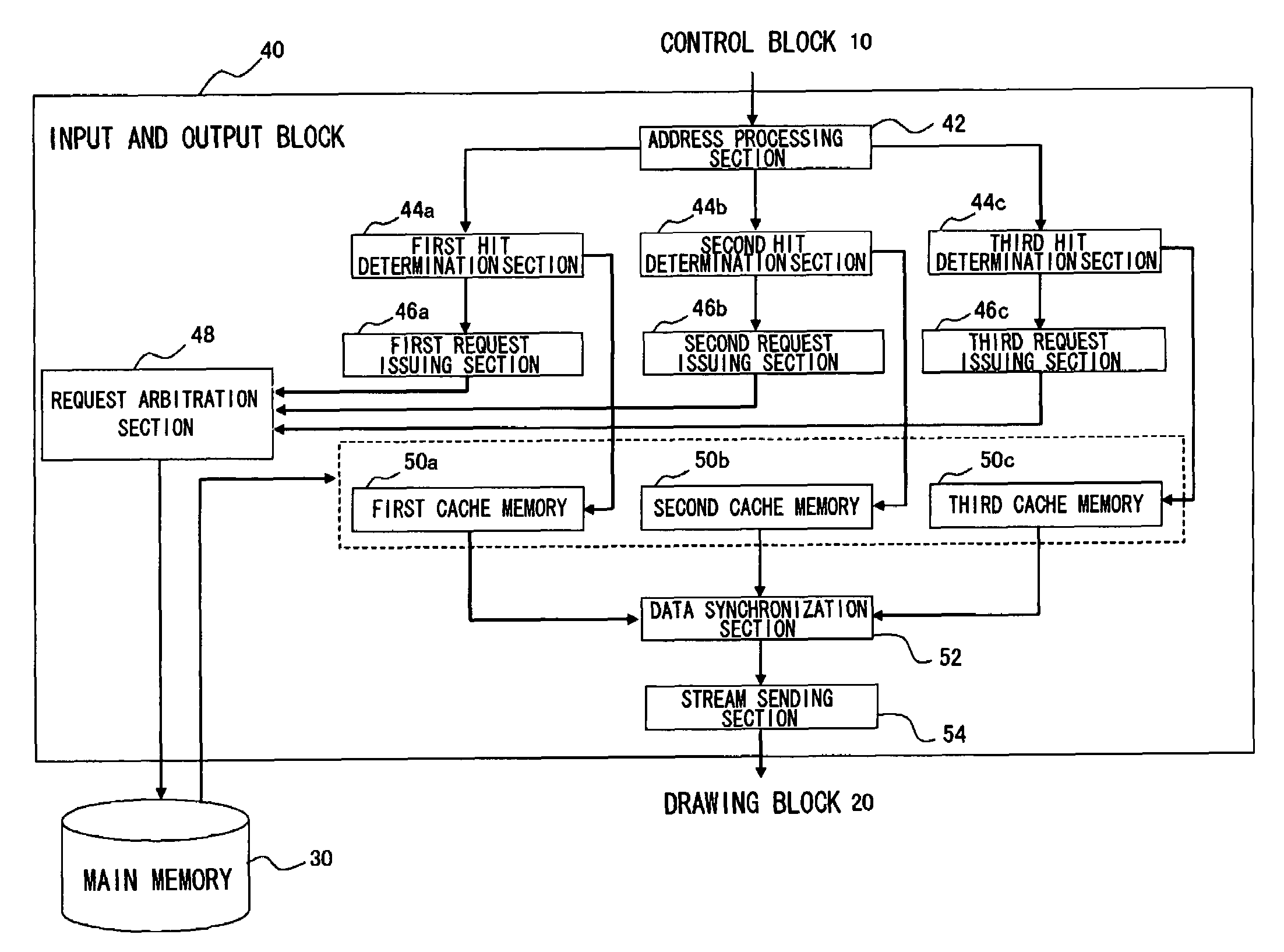

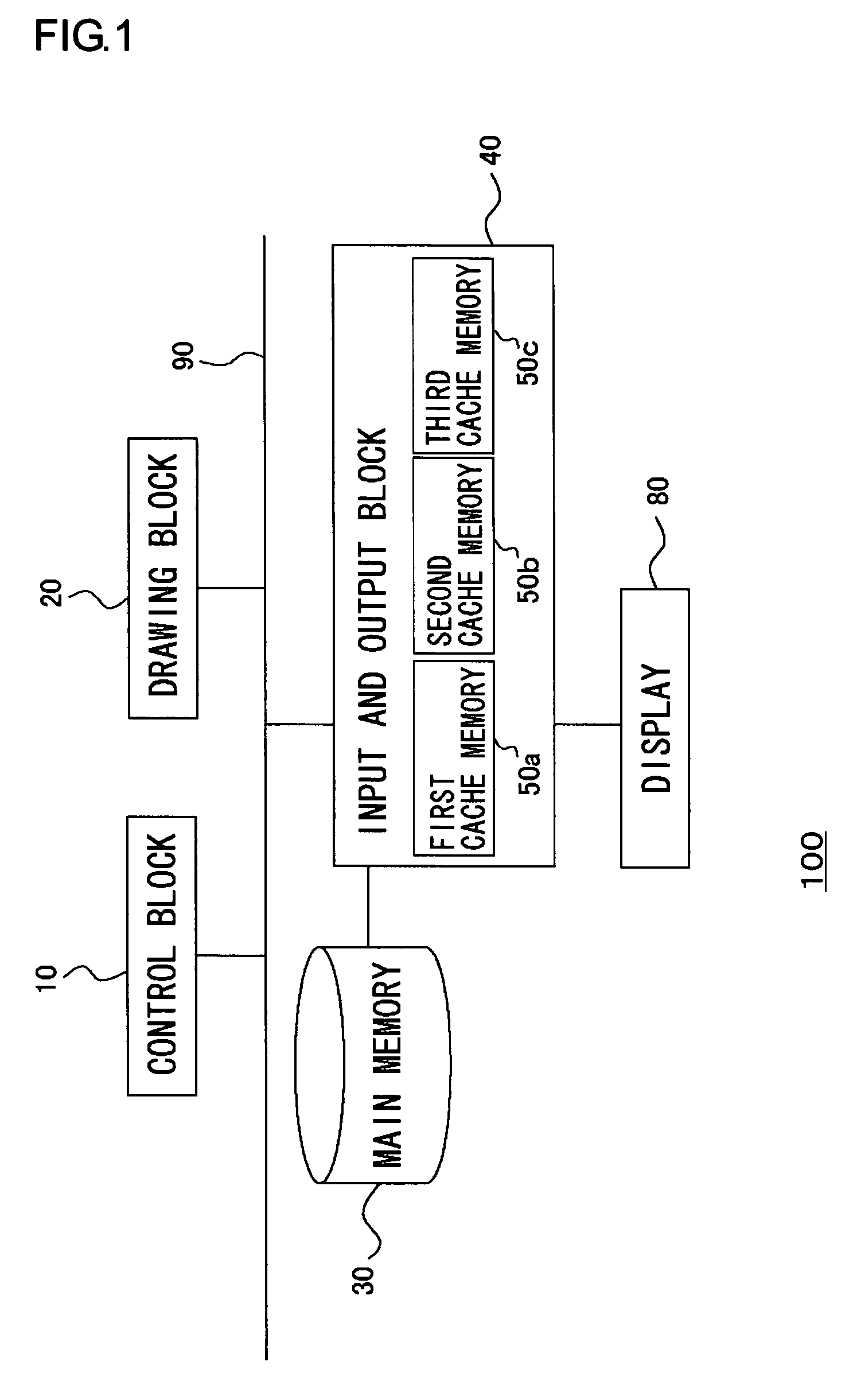

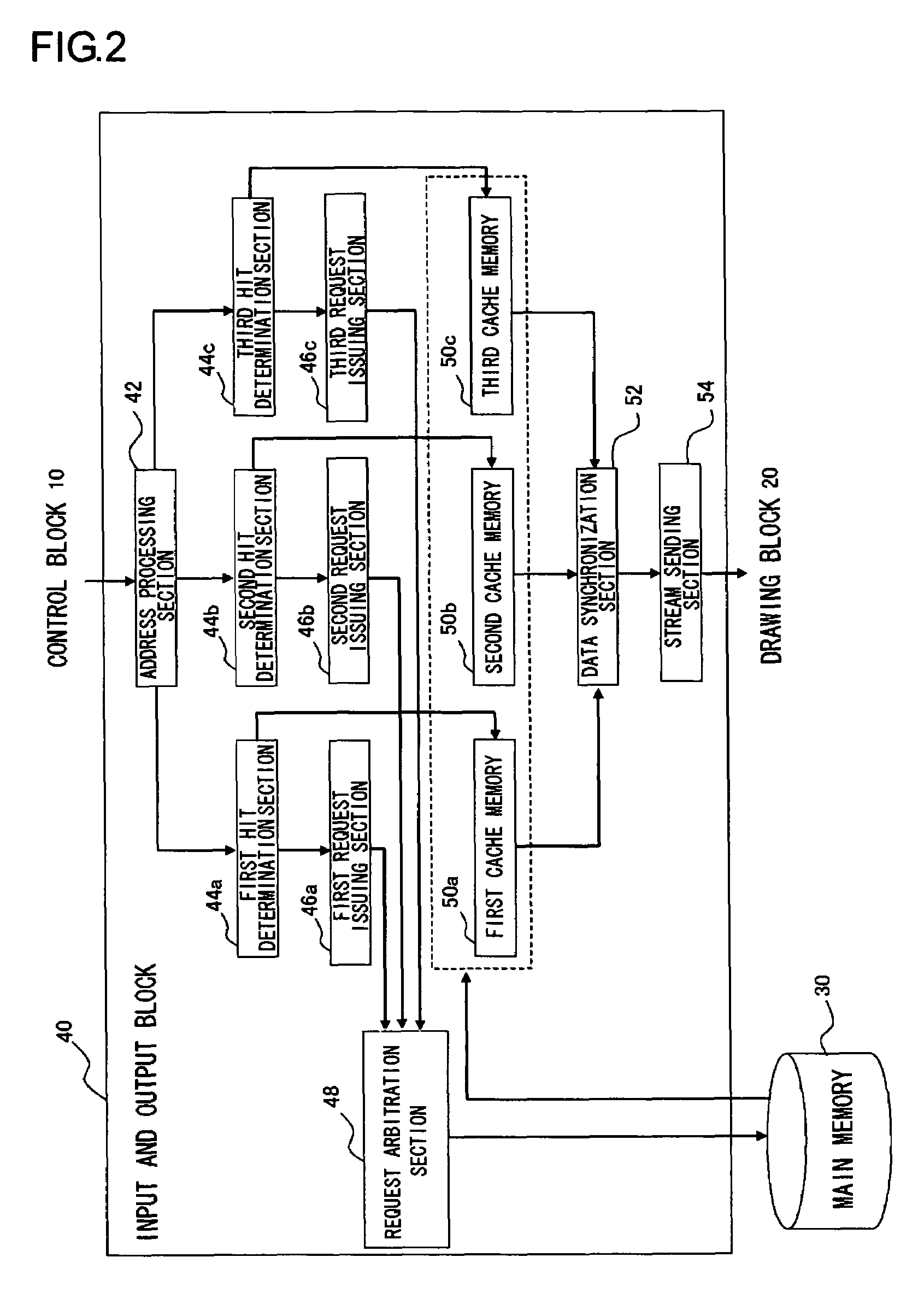

Data stream generation method for enabling high-speed memory access

Active | US7475210B2 | Lower latency | Cathode-ray tube indicators | Memory systems | Data synchronization | Data stream

An address processing section allocates addresses of desired data in a main memory, input from a control block, to any of three hit determination sections based on the type of the data. If the hit determination sections determine that the data stored in the allocated addresses does not exist in the corresponding cache memories, request issuing sections issue transfer requests for the data from the main memory to the cache memories, to a request arbitration section. The request arbitration section transmits the transfer requests to the main memory with priority given to data of greater sizes to transfer. The main memory transfers data to the cache memories in accordance with the transfer requests. A data synchronization section reads a plurality of read units of data from a plurality of cache memories, and generates a data stream for output by a stream sending section.

Owner:SONY COMPUTER ENTERTAINMENT INC

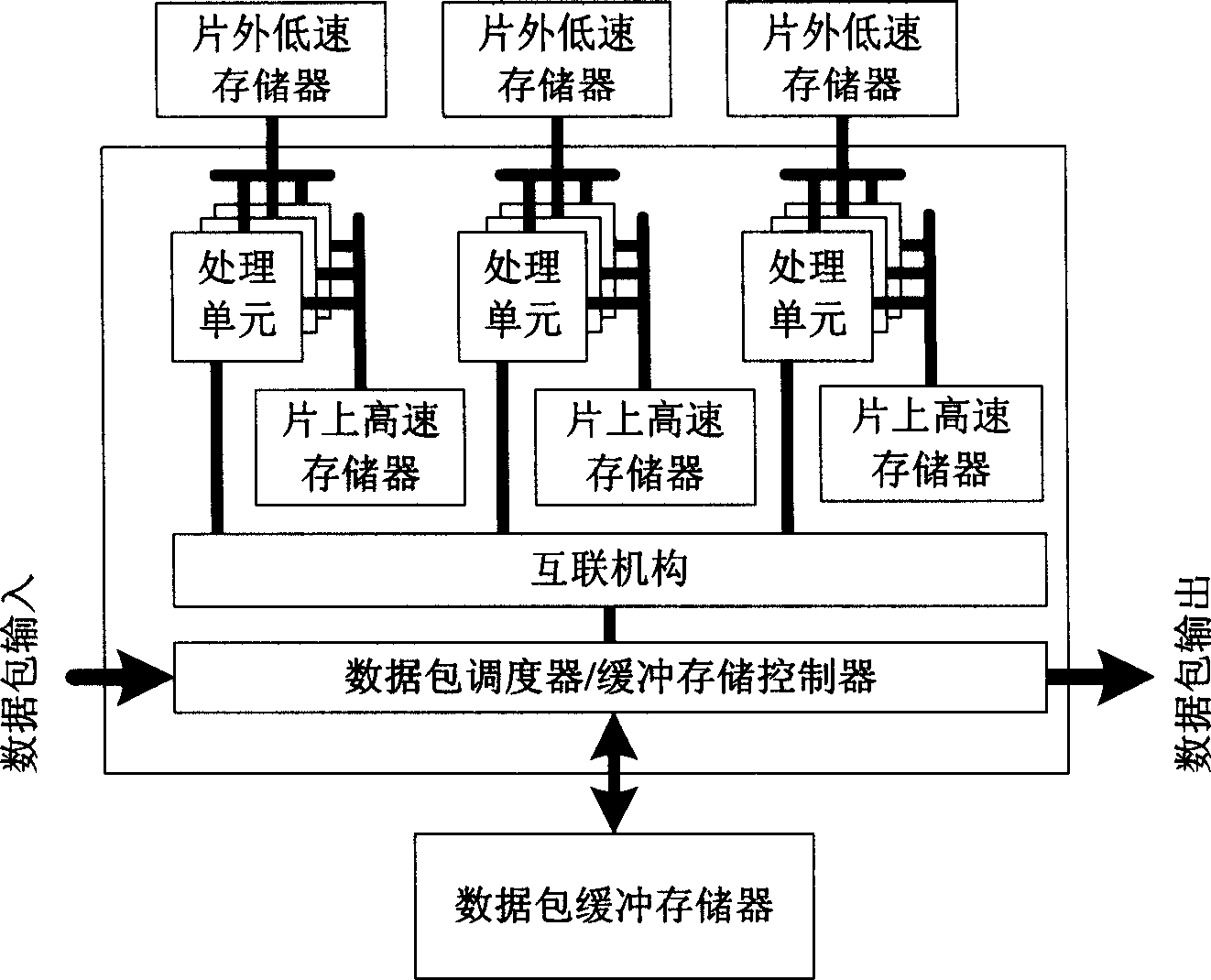

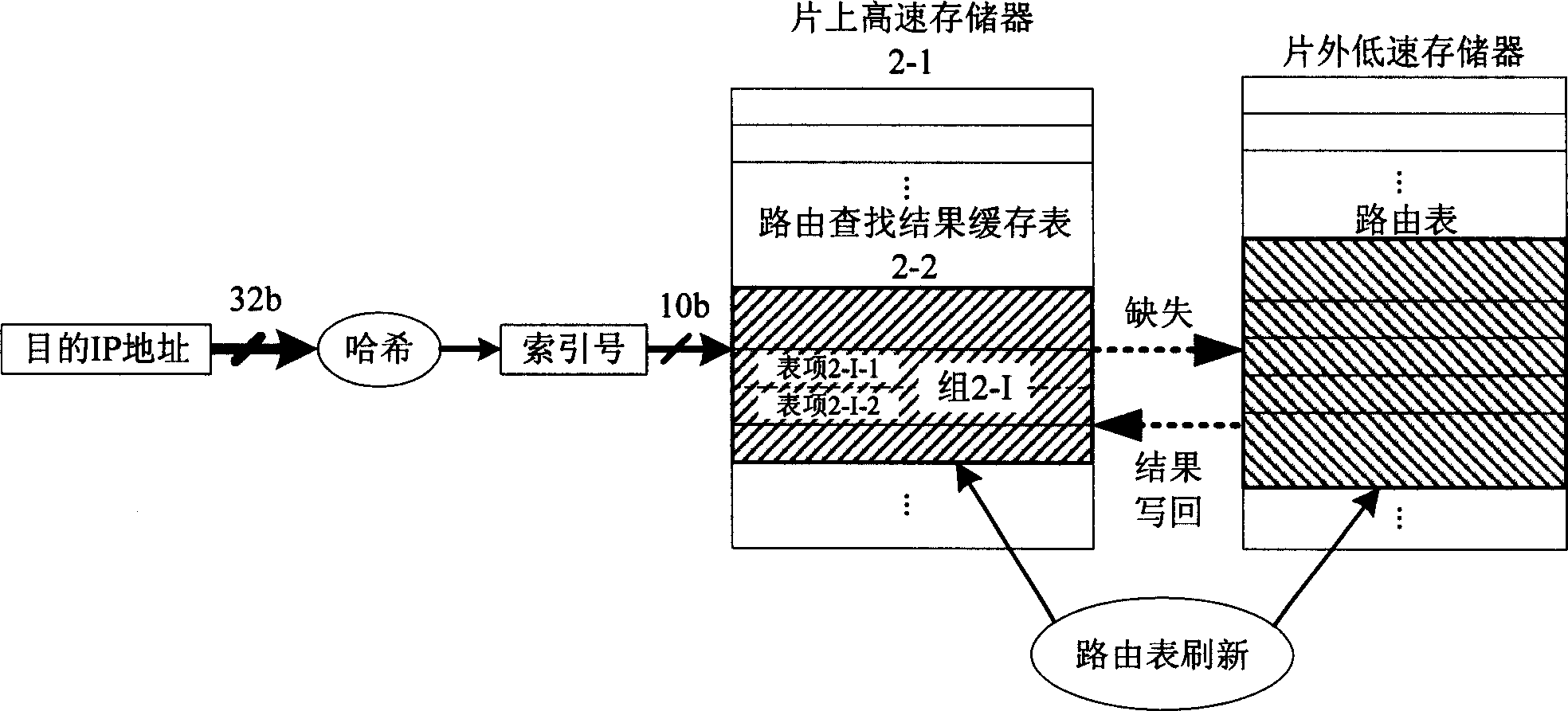

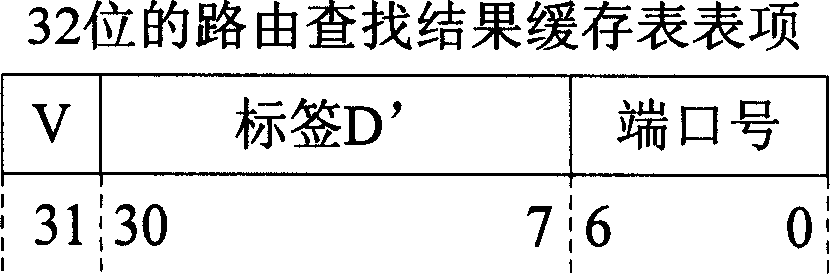

Route searching result cache method based on network processor

Inactive | CN1863169A | Improve search efficiency | Evenly distributed | Data switching networks | Low speed | Routing table

The invention is a network processor-based route search result caching method, belonging to the computer field. A route search result cache table is built and maintained in the on-chip high speed memory of the network processor. When the network processor receives a destination IP address to be looked up, it first performs a fast search of the cache table using a hash function. If the result already exists in the cache table, the route search result is returned directly and the order of the items in the cache table is adjusted according to a least-recently-used rule, so that the result can be found as quickly as possible in subsequent searches. Otherwise, the routing table stored in off-chip low speed memory is searched, the result is returned to the application program, and the result is written back into the cache table. The invention reduces the number of accesses to off-chip low speed memory for route searching and reduces memory bandwidth occupation.

Owner:TSINGHUA UNIV
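
The lookup flow in the abstract above (hash lookup in a small fast cache, fall back to the slow routing table on a miss, write the result back) can be sketched as follows. Several things here are assumptions: the reordering rule is read as least-recently-used, the routing table is simplified to an exact-match dictionary rather than a longest-prefix match, and the class name `RouteCache`, the `capacity` parameter, and the `slow_lookups` counter are hypothetical.

```python
from collections import OrderedDict


class RouteCache:
    """Small LRU cache in front of a slow routing table (illustrative only)."""

    def __init__(self, slow_table, capacity=2):
        self.slow_table = slow_table   # stands in for off-chip low speed memory
        self.capacity = capacity
        self.cache = OrderedDict()     # stands in for on-chip high speed memory
        self.slow_lookups = 0          # number of accesses to the slow table

    def lookup(self, dest_ip):
        if dest_ip in self.cache:
            # Hit: mark the entry as most recently used and return it.
            self.cache.move_to_end(dest_ip)
            return self.cache[dest_ip]
        # Miss: consult the slow table, then write the result back.
        self.slow_lookups += 1
        next_hop = self.slow_table.get(dest_ip)
        self.cache[dest_ip] = next_hop
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used entry
        return next_hop


table = {"10.0.0.1": "A", "10.0.0.2": "B", "10.0.0.3": "C"}
rc = RouteCache(table, capacity=2)
for ip in ["10.0.0.1", "10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.1"]:
    rc.lookup(ip)
print(rc.slow_lookups)
```

Python's `dict` and `OrderedDict` are hash tables, so the hit path matches the hash-based fast search the abstract describes; a real network processor would use a fixed-size table with its own hash function.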

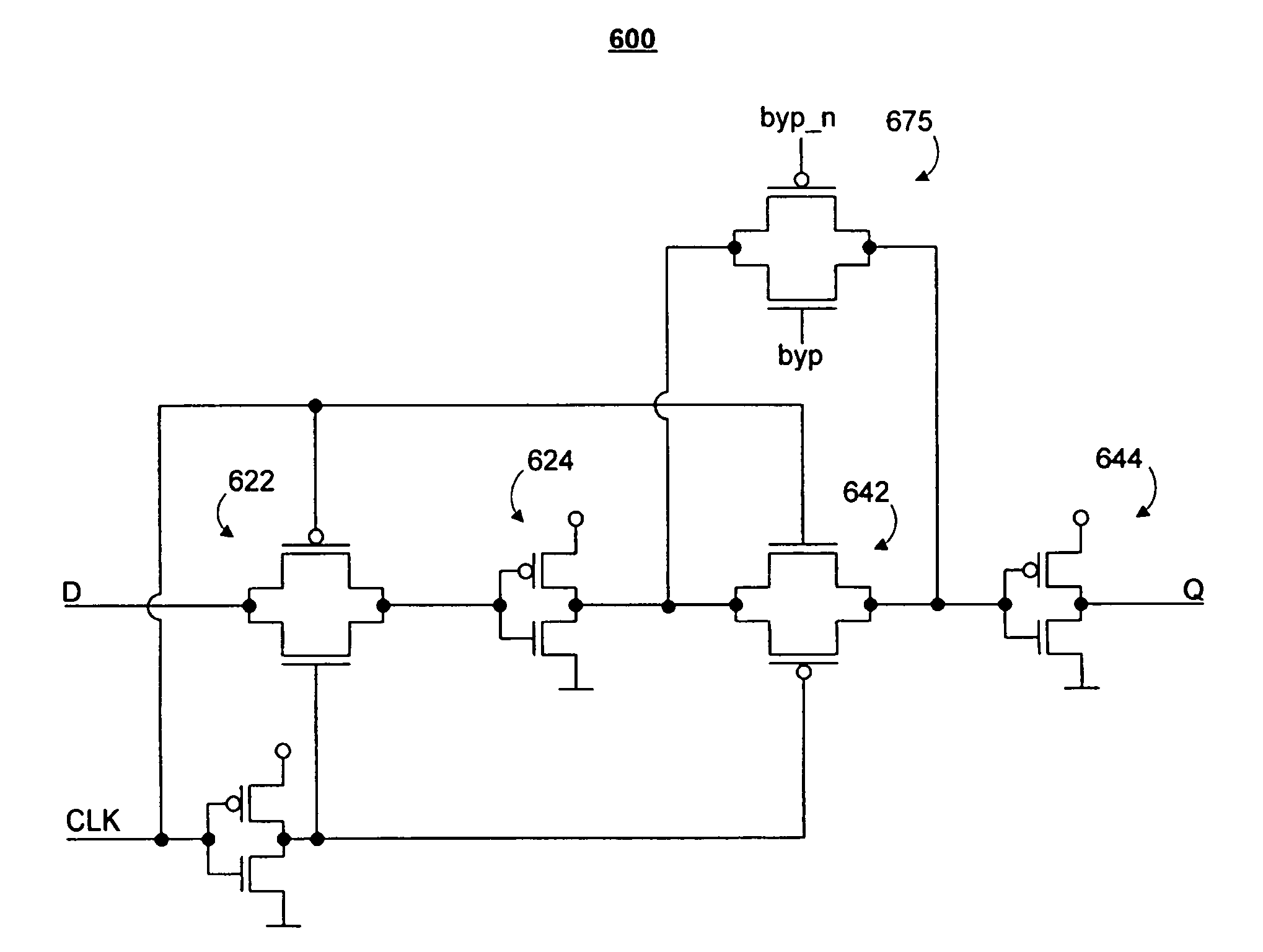

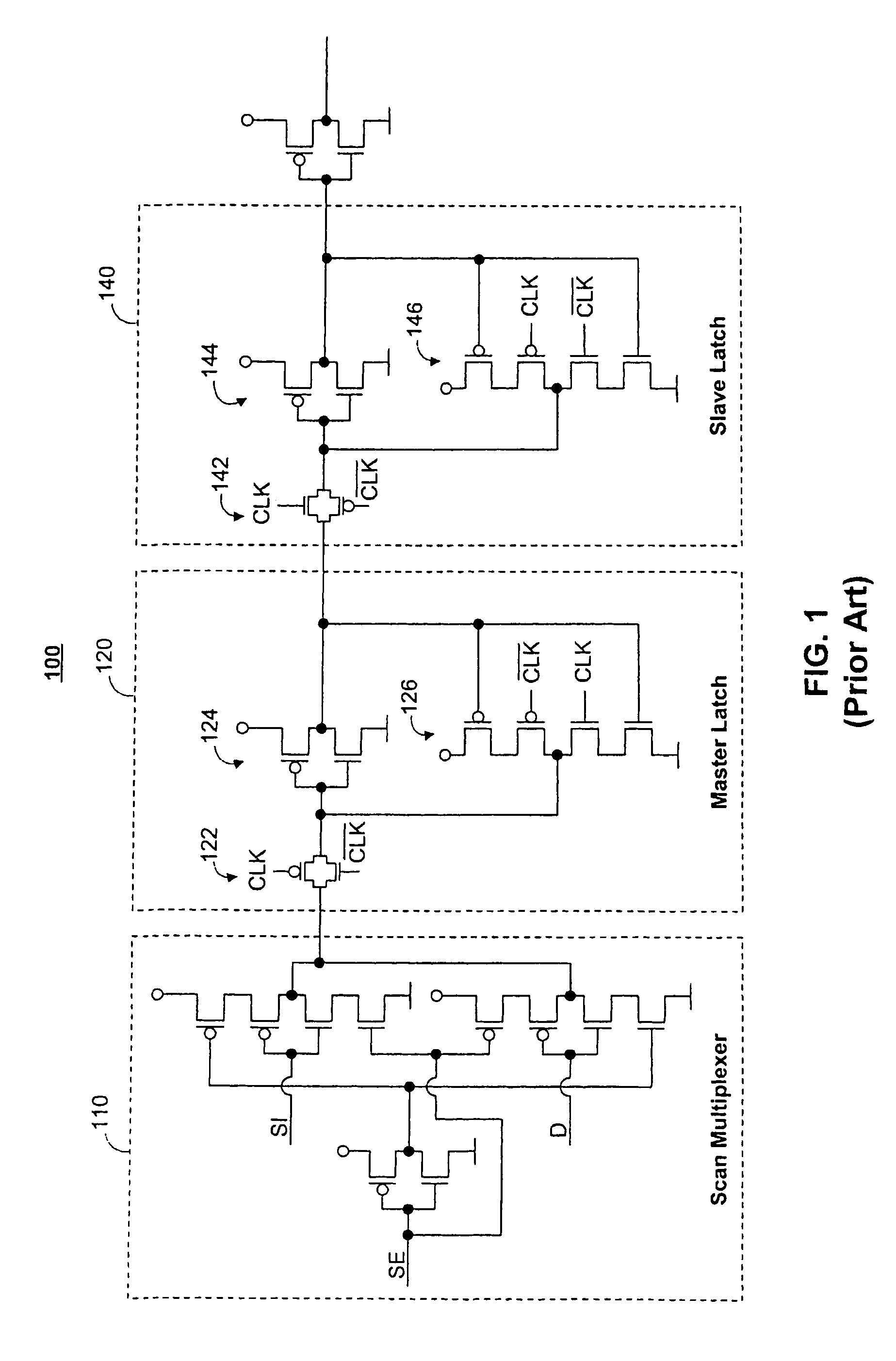

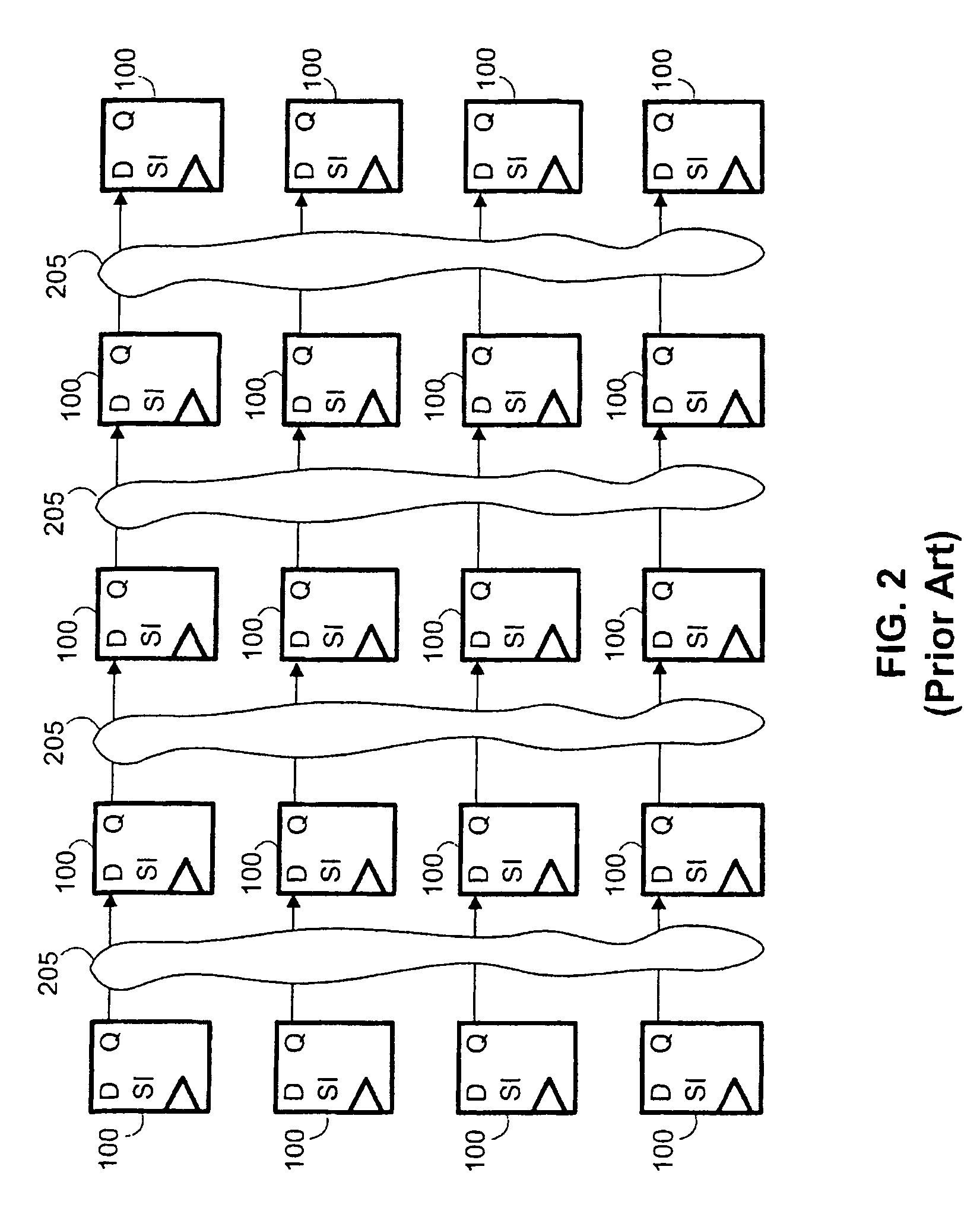

Area optimized edge-triggered flip-flop for high-speed memory dominated design

An area optimized edge-triggered flip-flop for high-speed memory dominated design is provided. The area optimized flip-flop also provides a bypass mode. The bypass mode allows the area optimized flip-flops to act like a buffer. This allows the area optimized flip-flop to provide the basic functionality of a flip-flop during standard operation, but also allows the area optimized flip-flop to act like a buffer when desirable, such as during modes of testing of the design. The area optimized flip-flop provides most of the functionality of a typical flip-flop, while reducing the total area and power consumption of the design.

Owner:MARVELL INT LTD
