124 results about "Memory access pattern" patented technology

In computing, a memory access pattern or I/O access pattern is the pattern with which a system or program reads and writes memory or secondary storage. These patterns differ in their locality of reference and drastically affect cache performance; they also shape the approach to parallelism and workload distribution in shared-memory systems. Further, cache coherency issues can affect multiprocessor performance, which means that certain memory access patterns place a ceiling on parallelism (which manycore approaches seek to break).
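To make the effect of locality on cache performance concrete, here is a minimal sketch of a direct-mapped cache simulator. The function name `hit_rate` and the cache geometry (64 lines of 64 bytes) are illustrative assumptions, not taken from any system described on this page.

```python
def hit_rate(addresses, num_lines=64, line_size=64):
    """Simulate a direct-mapped cache and return the fraction of hits.

    num_lines and line_size are illustrative values only.
    """
    tags = [None] * num_lines          # one stored block number per cache line
    hits = 0
    for addr in addresses:
        block = addr // line_size      # memory block that holds this byte
        index = block % num_lines      # cache line the block maps to
        if tags[index] == block:
            hits += 1
        else:
            tags[index] = block        # miss: fill the line with this block
    return hits / len(addresses)


# Sequential pattern: consecutive 8-byte words, so 8 accesses share a line.
sequential = [i * 8 for i in range(4096)]
# Strided pattern: one access every 4096 bytes, so every access maps to line 0.
strided = [i * 4096 for i in range(4096)]

print(hit_rate(sequential))  # 0.875
print(hit_rate(strided))     # 0.0
```

In this toy model the sequential scan misses once per 64-byte line and hits on the remaining seven words, while the strided scan evicts its own line on every access.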

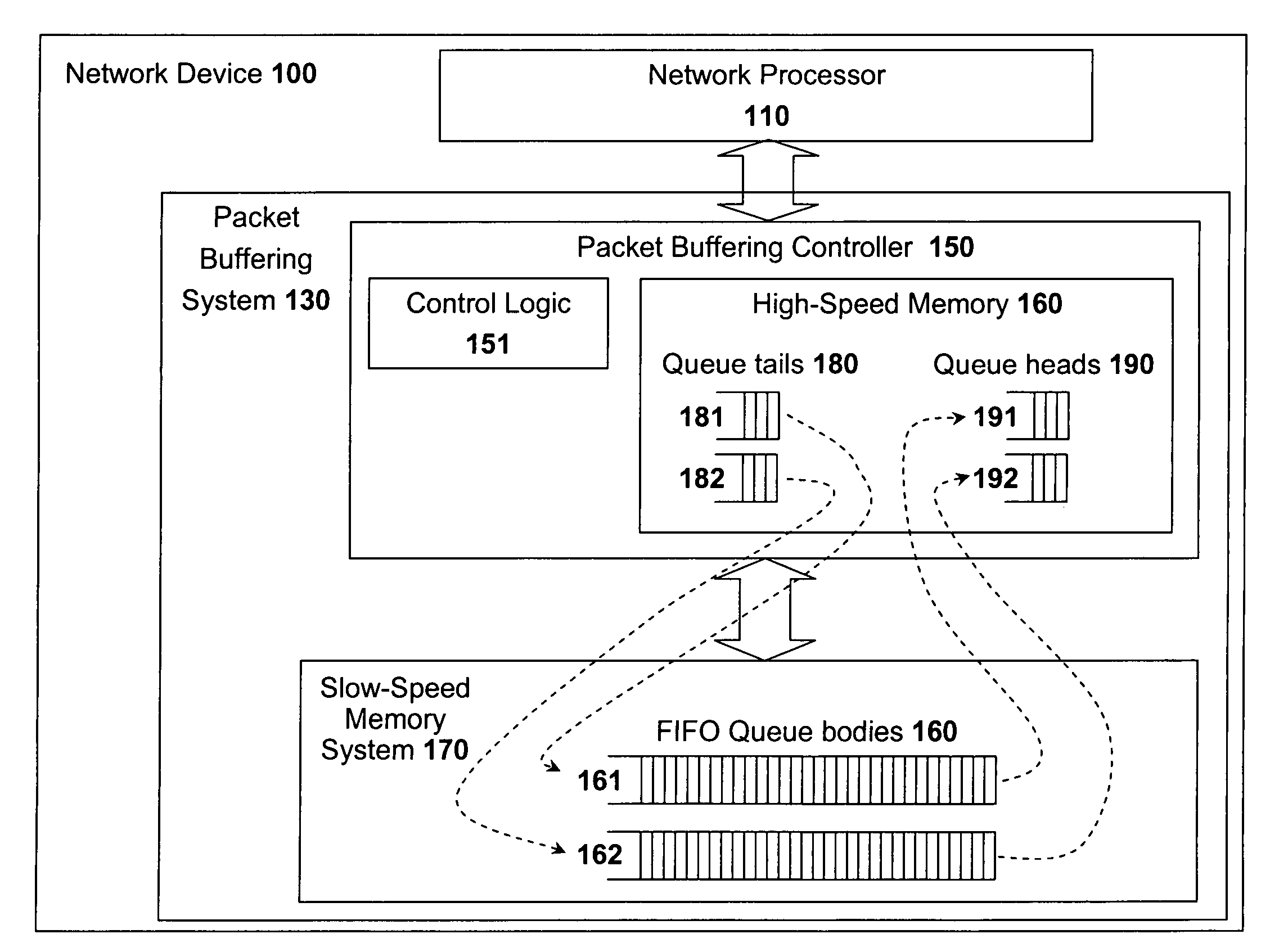

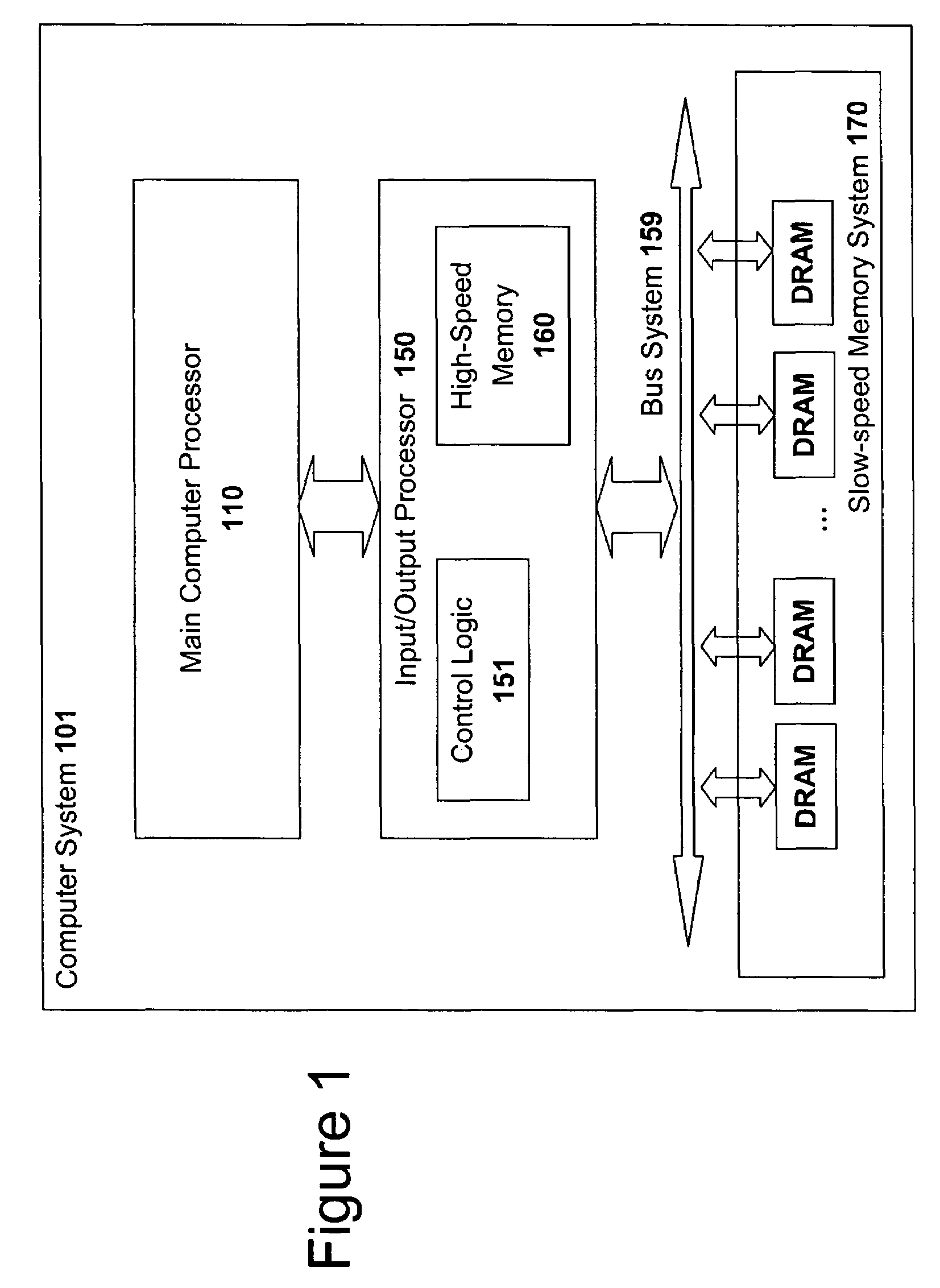

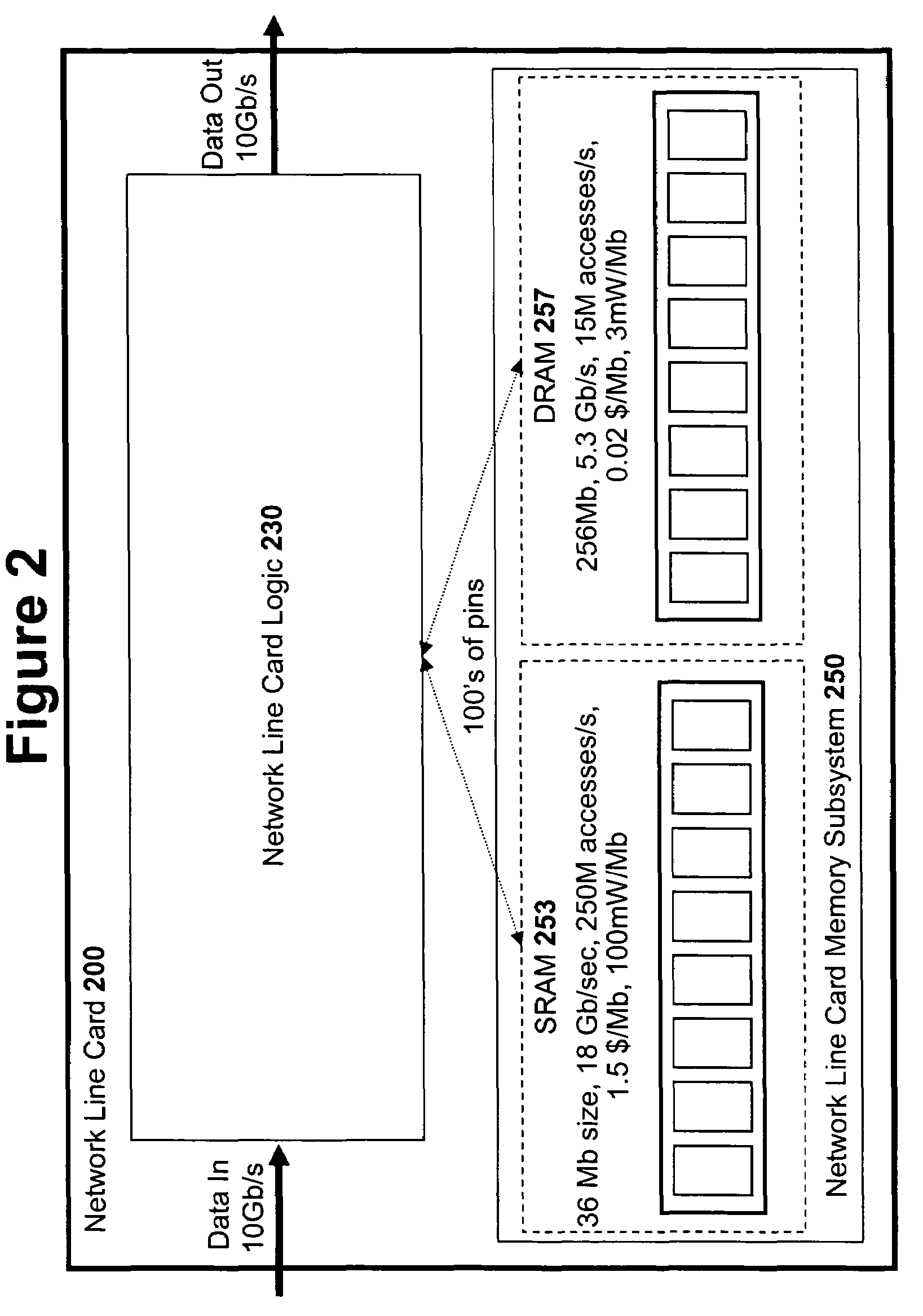

High speed memory control and I/O processor system

Active | US20050240745A1 | Easy to handle | Simplify memory access task | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | High speed memory | Tailored approach

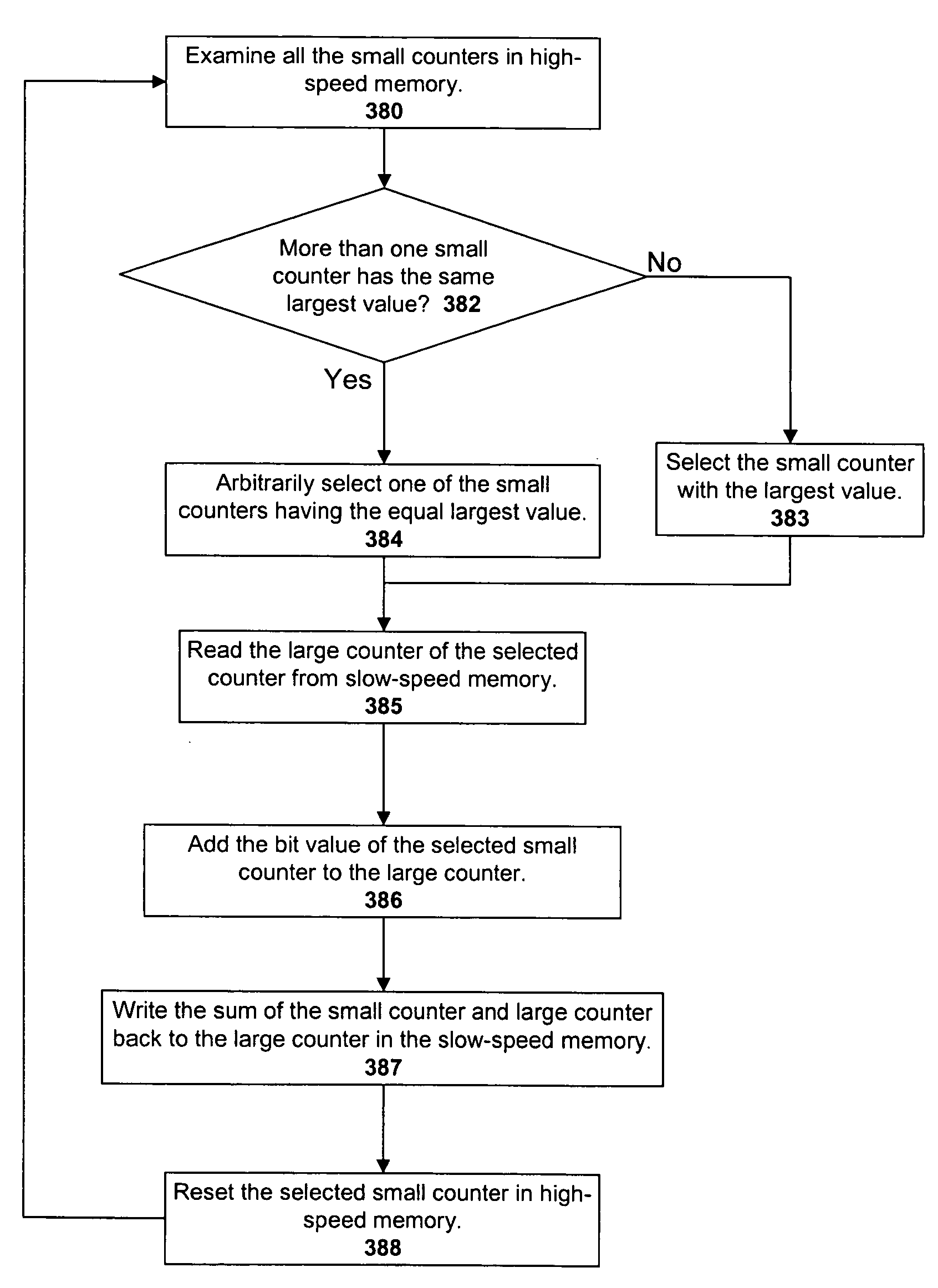

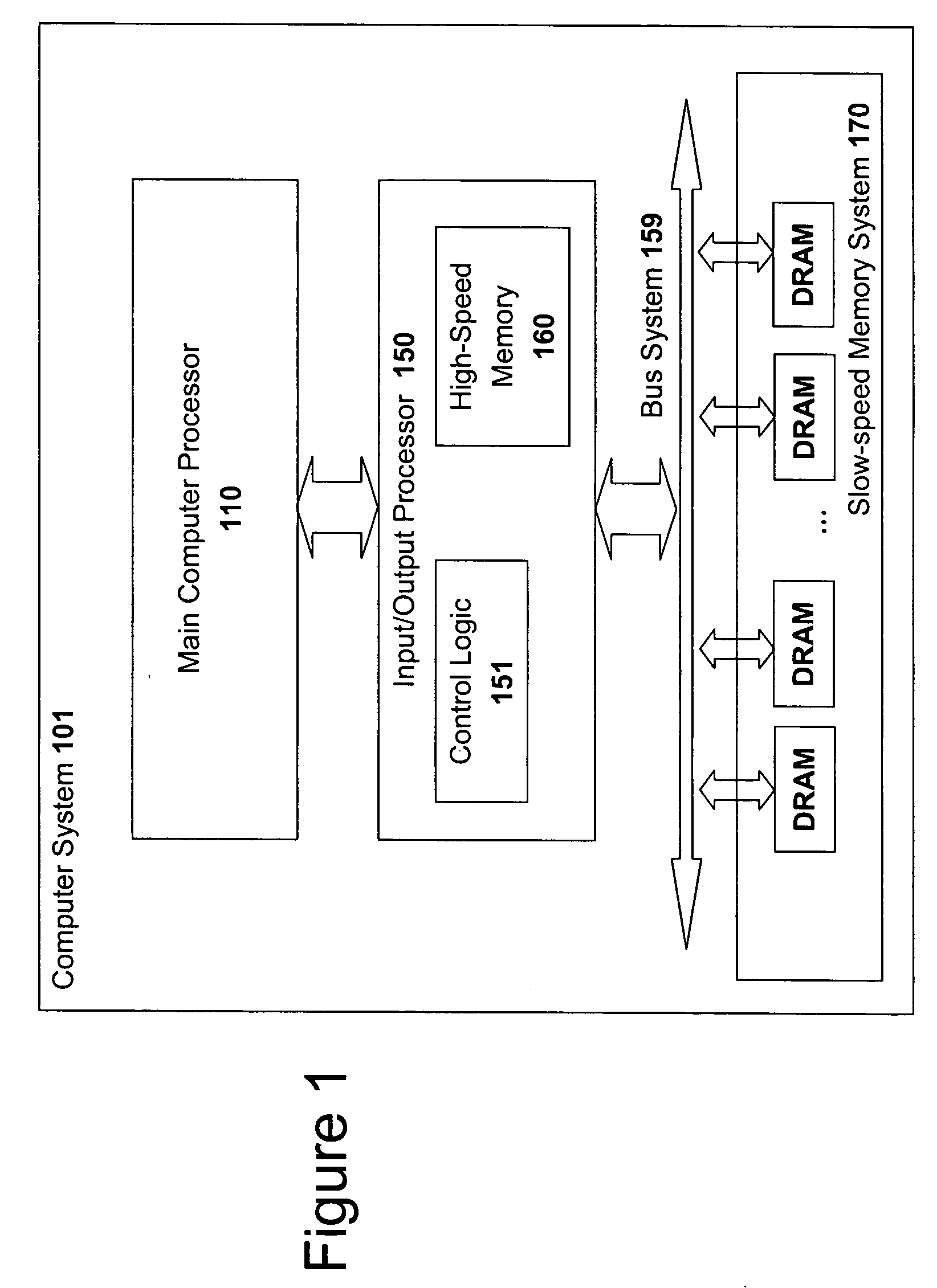

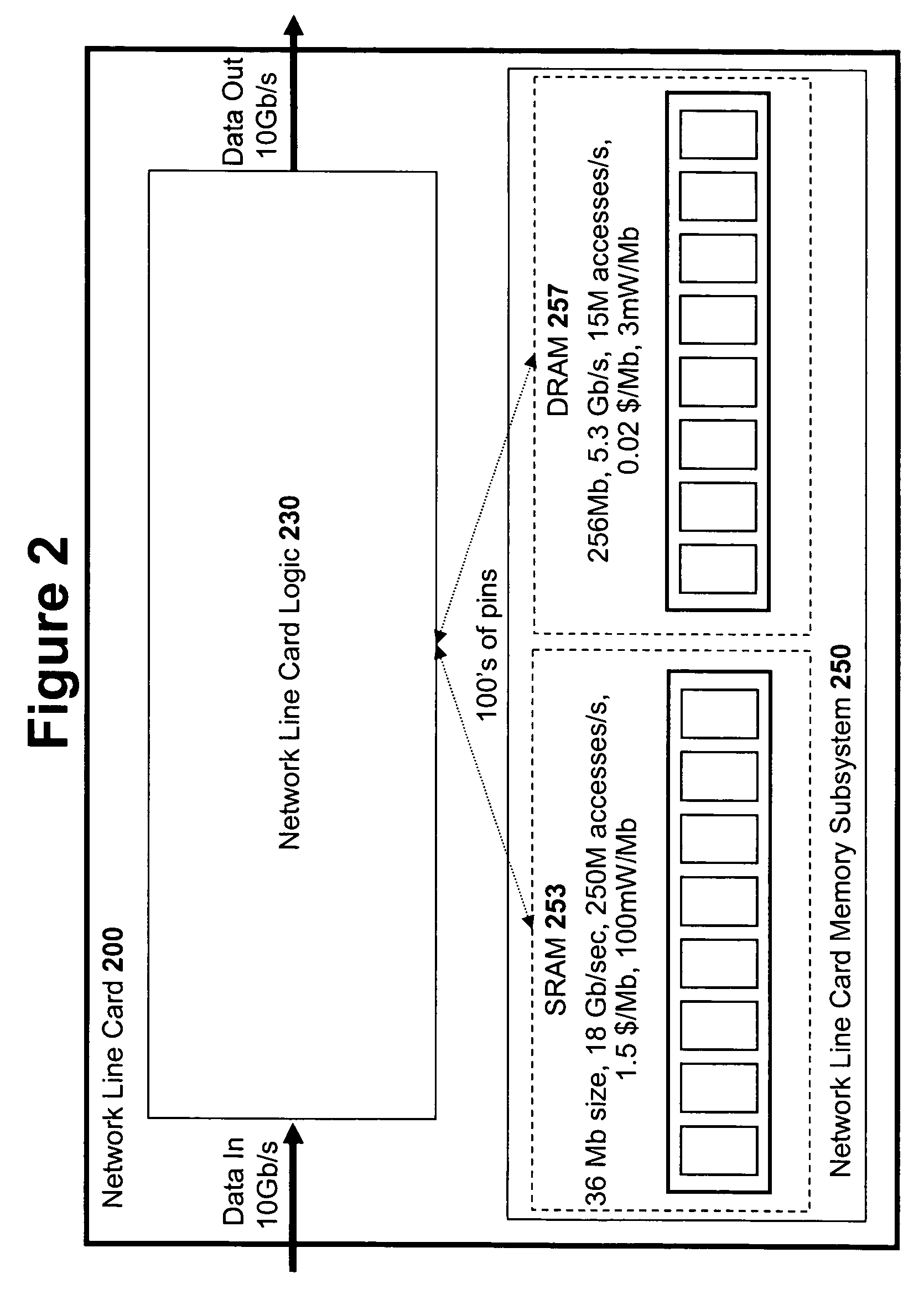

An input/output processor for speeding the input/output and memory access operations for a processor is presented. The key idea of an input/output processor is to functionally divide input/output and memory access tasks into a compute-intensive part that is handled by the processor and an I/O- or memory-intensive part that is then handled by the input/output processor. An input/output processor is designed by analyzing common input/output and memory access patterns and implementing methods tailored to efficiently handle those commonly occurring patterns. One technique that an input/output processor may use is to divide memory tasks into high frequency or high-availability components and low frequency or low-availability components. After dividing a memory task in such a manner, the input/output processor then uses high-speed memory (such as SRAM) to store the high frequency and high-availability components and a slower-speed memory (such as commodity DRAM) to store the low frequency and low-availability components. Another technique used by the input/output processor is to allocate memory in such a manner that all memory bank conflicts are eliminated. By eliminating any possible memory bank conflicts, the maximum random access performance of DRAM memory technology can be achieved.

Owner:CISCO TECH INC

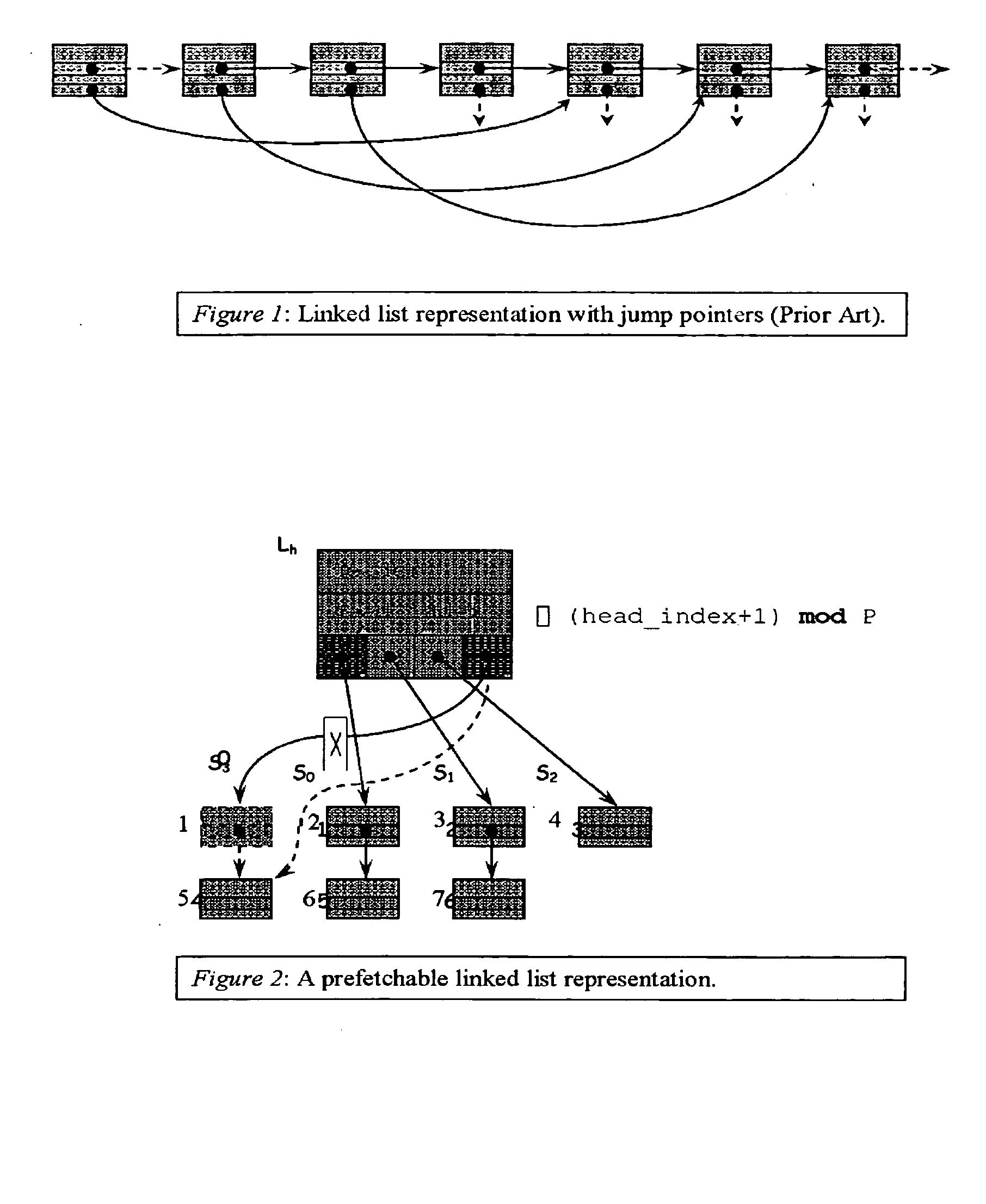

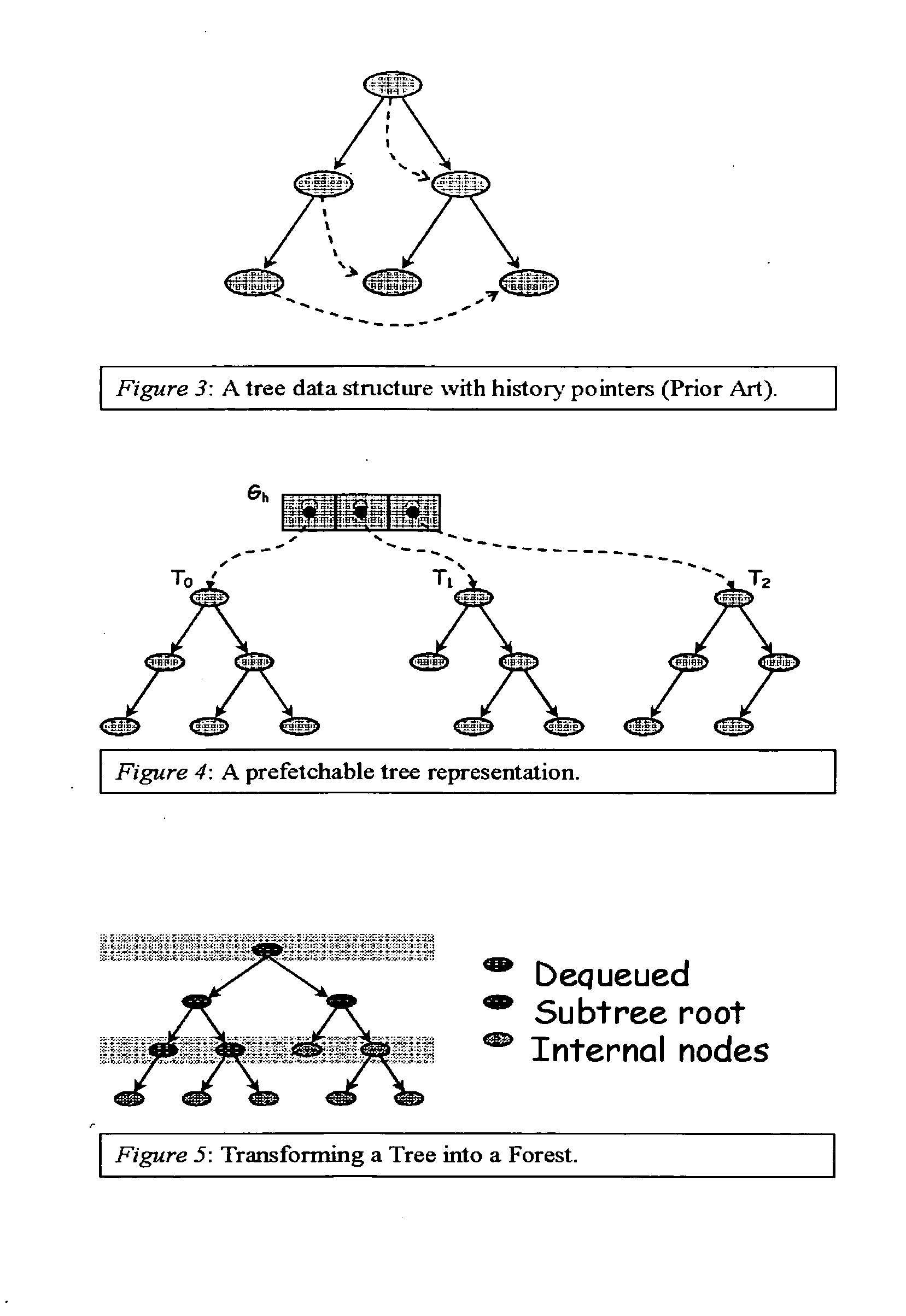

Method and apparatus for prefetching recursive data structures

Inactive | US6848029B2 | Improve cache hit ratio | Potential throughput of the computer system | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Application software | Cache hit rate

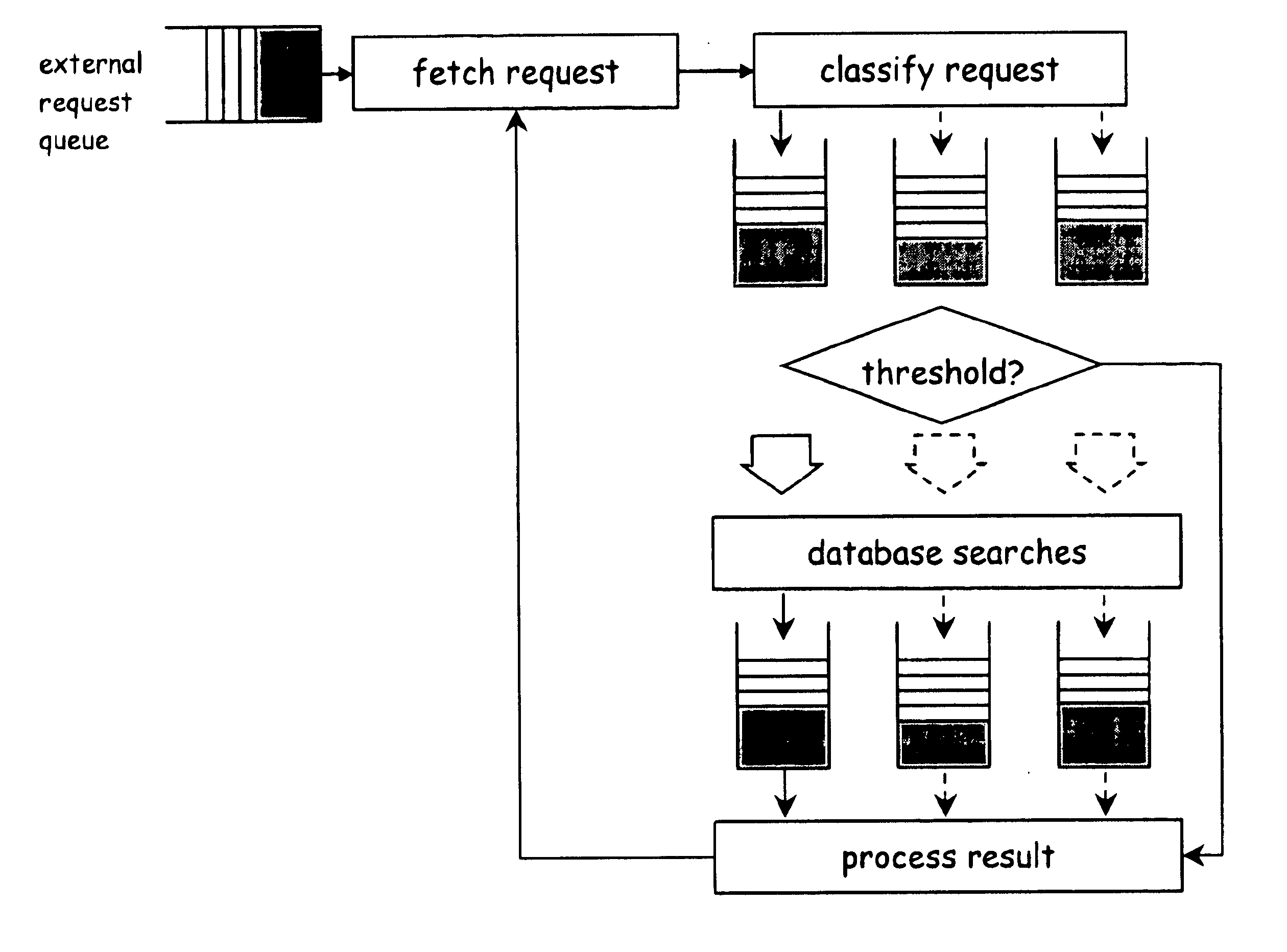

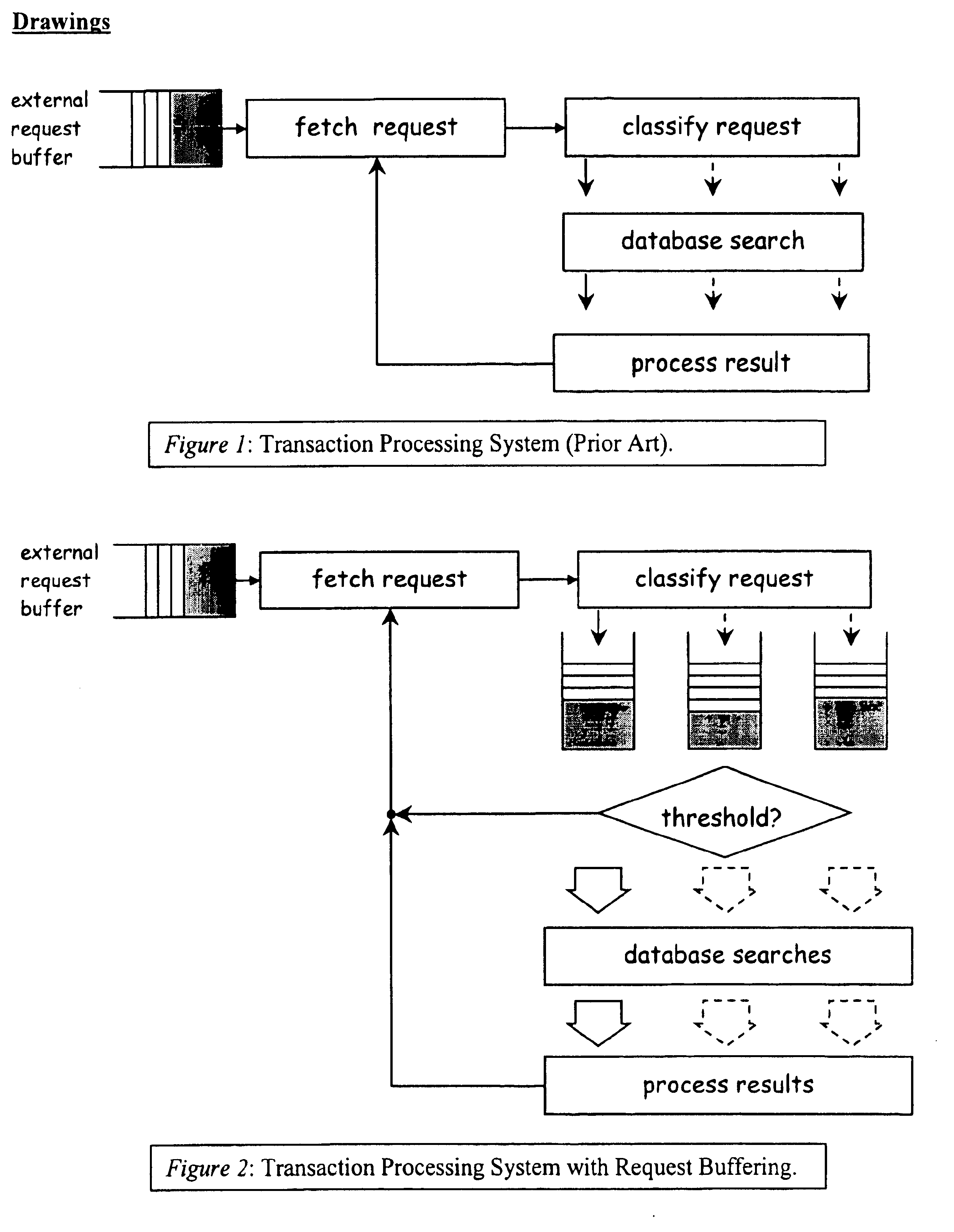

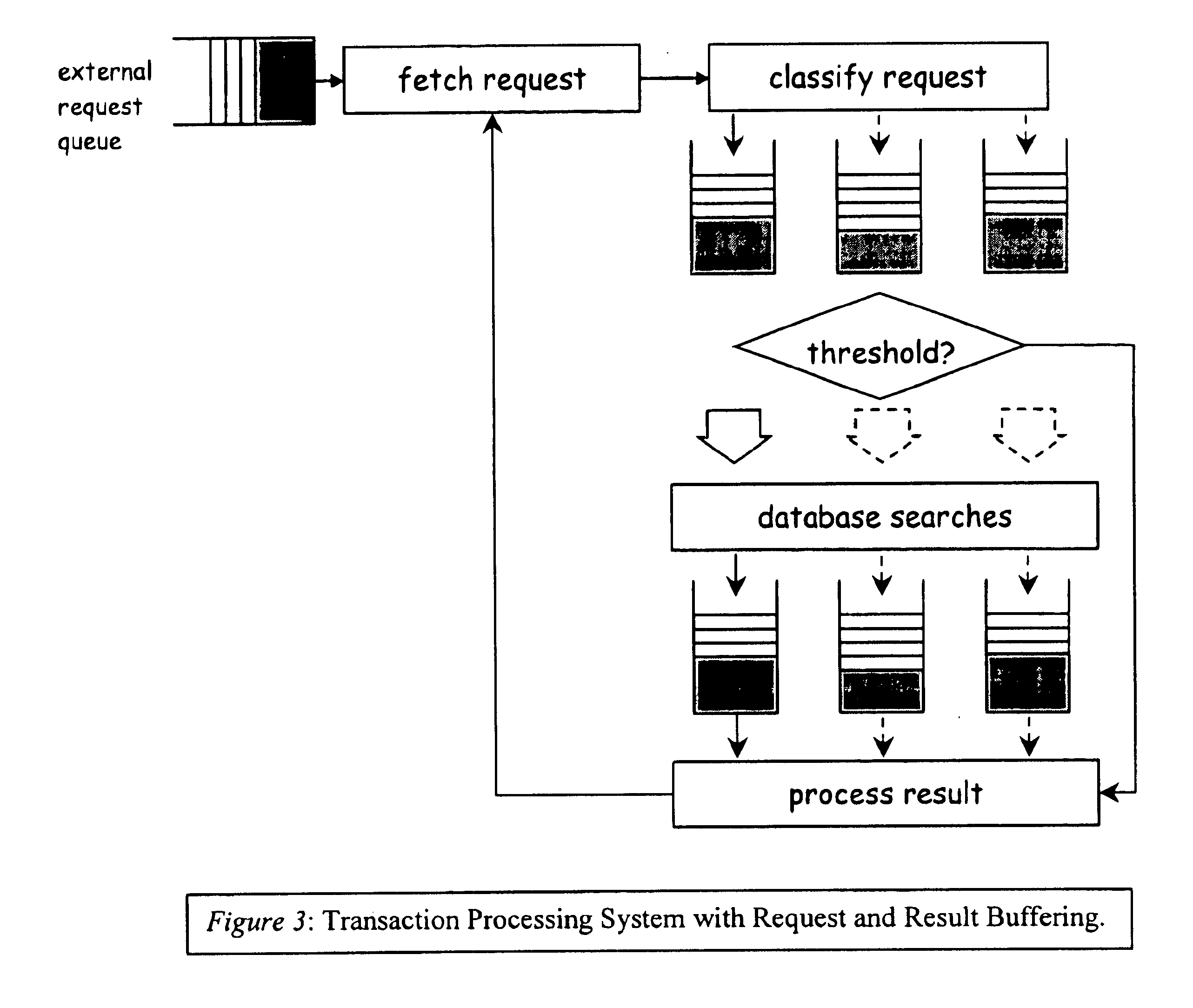

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. The invention is applicable to those data structure accesses in which the traversal path is dynamically determined. The invention does this by aggregating traversal requests and then pipelining the traversal of aggregated requests on the data structure. Once enough traversal requests have been accumulated so that most of the memory latency can be hidden by prefetching the accumulated requests, the data structure is traversed by performing software pipelining on some or all of the accumulated requests. As requests are completed and retired from the set of requests that are being traversed, additional accumulated requests are added to that set. This process is repeated until either an upper threshold of processed requests or a lower threshold of residual accumulated requests has been reached. At that point, the traversal results may be processed.
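The idea of accumulating traversal requests and advancing them together can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the patented method: the names `Node`, `insert`, `batched_lookup` and the `in_flight` parameter are hypothetical, the thresholds mentioned in the abstract are omitted, and plain Python cannot issue hardware prefetches, so the prefetch point is only marked with a comment.

```python
from collections import deque


class Node:
    def __init__(self, key):
        self.key, self.left, self.right = key, None, None


def insert(root, key):
    """Insert key into a binary search tree and return the (possibly new) root."""
    if root is None:
        return Node(key)
    if key < root.key:
        root.left = insert(root.left, key)
    elif key > root.key:
        root.right = insert(root.right, key)
    return root


def batched_lookup(root, keys, in_flight=4):
    """Look up many keys, advancing up to in_flight traversals one node per round."""
    pending = deque(keys)   # accumulated requests not yet started
    active = []             # (key, current node) pairs being traversed
    results = {}
    while pending or active:
        # Refill the active set as earlier requests retire.
        while pending and len(active) < in_flight:
            active.append((pending.popleft(), root))
        still_active = []
        for key, node in active:
            if node is None:
                results[key] = False          # fell off the tree: not found
            elif node.key == key:
                results[key] = True           # request completes and retires
            else:
                child = node.left if key < node.key else node.right
                # A native implementation would issue a prefetch for `child`
                # here, then work on the other requests while it arrives.
                still_active.append((key, child))
        active = still_active
    return results


root = None
for k in [8, 3, 10, 1, 6, 14]:
    root = insert(root, k)
print(batched_lookup(root, [6, 7, 14, 1, 2]))
```

Interleaving several independent traversals is what gives each outstanding memory request time to complete before its node is needed again.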

Owner:DIGITAL CACHE LLC +1

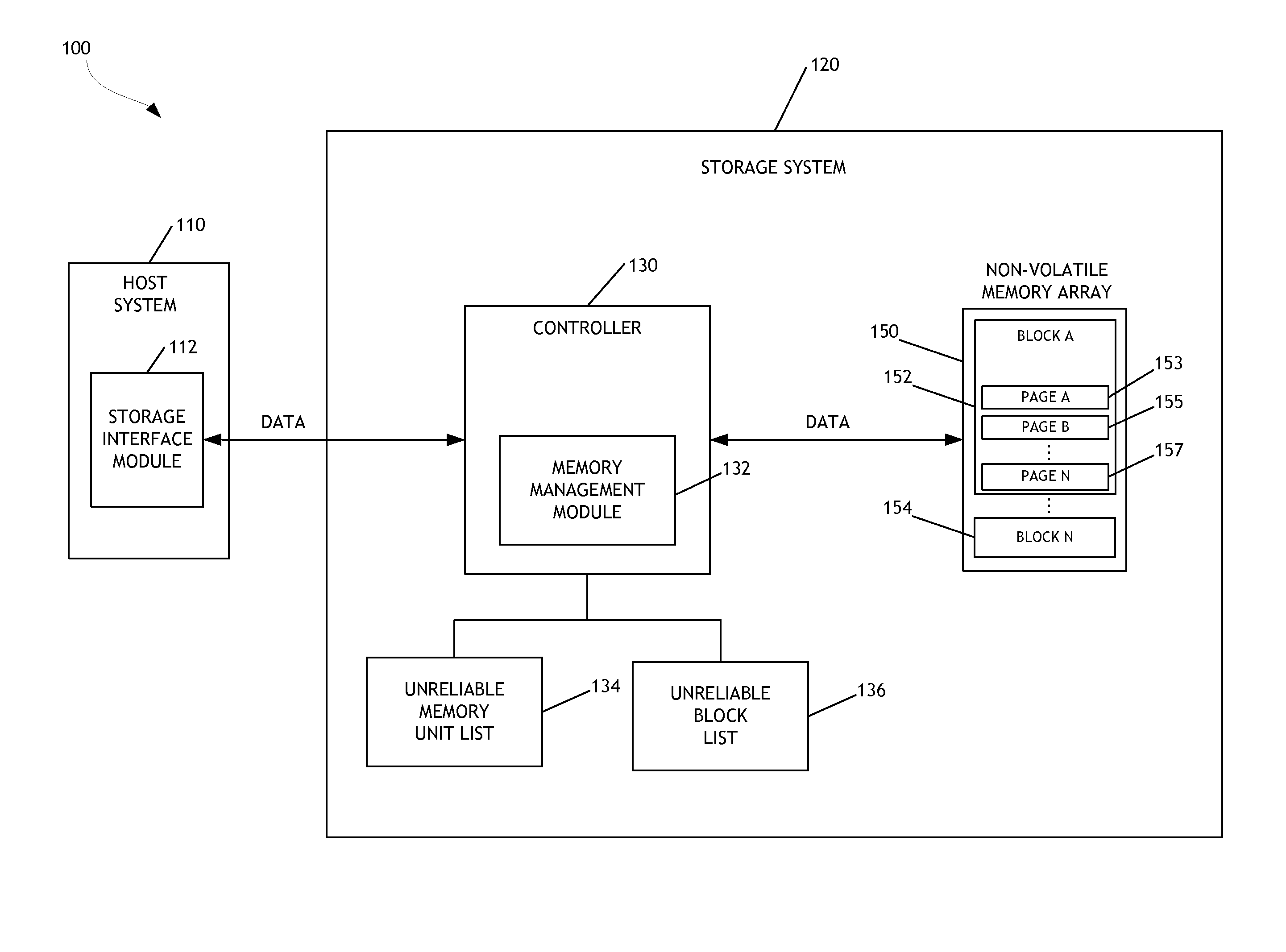

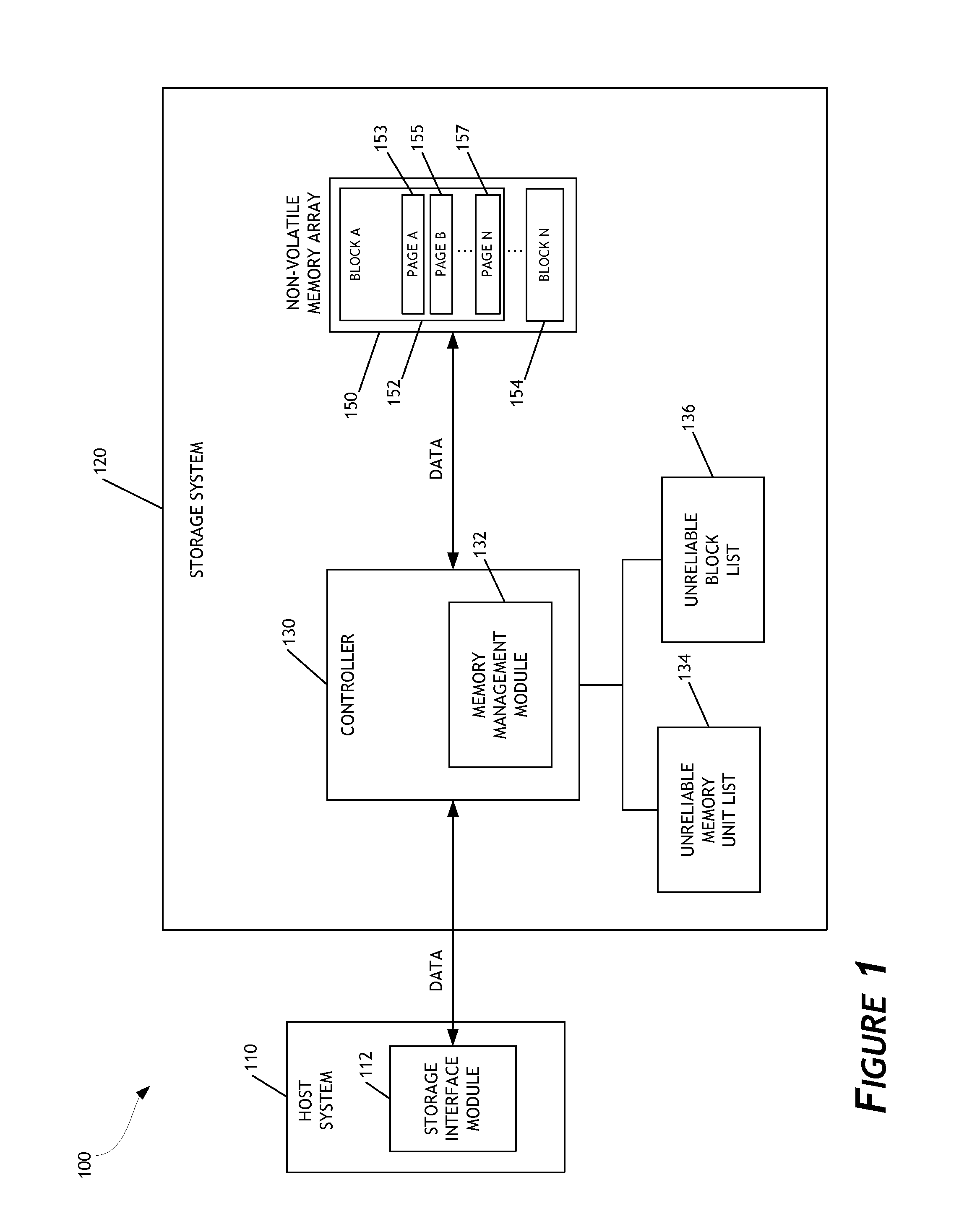

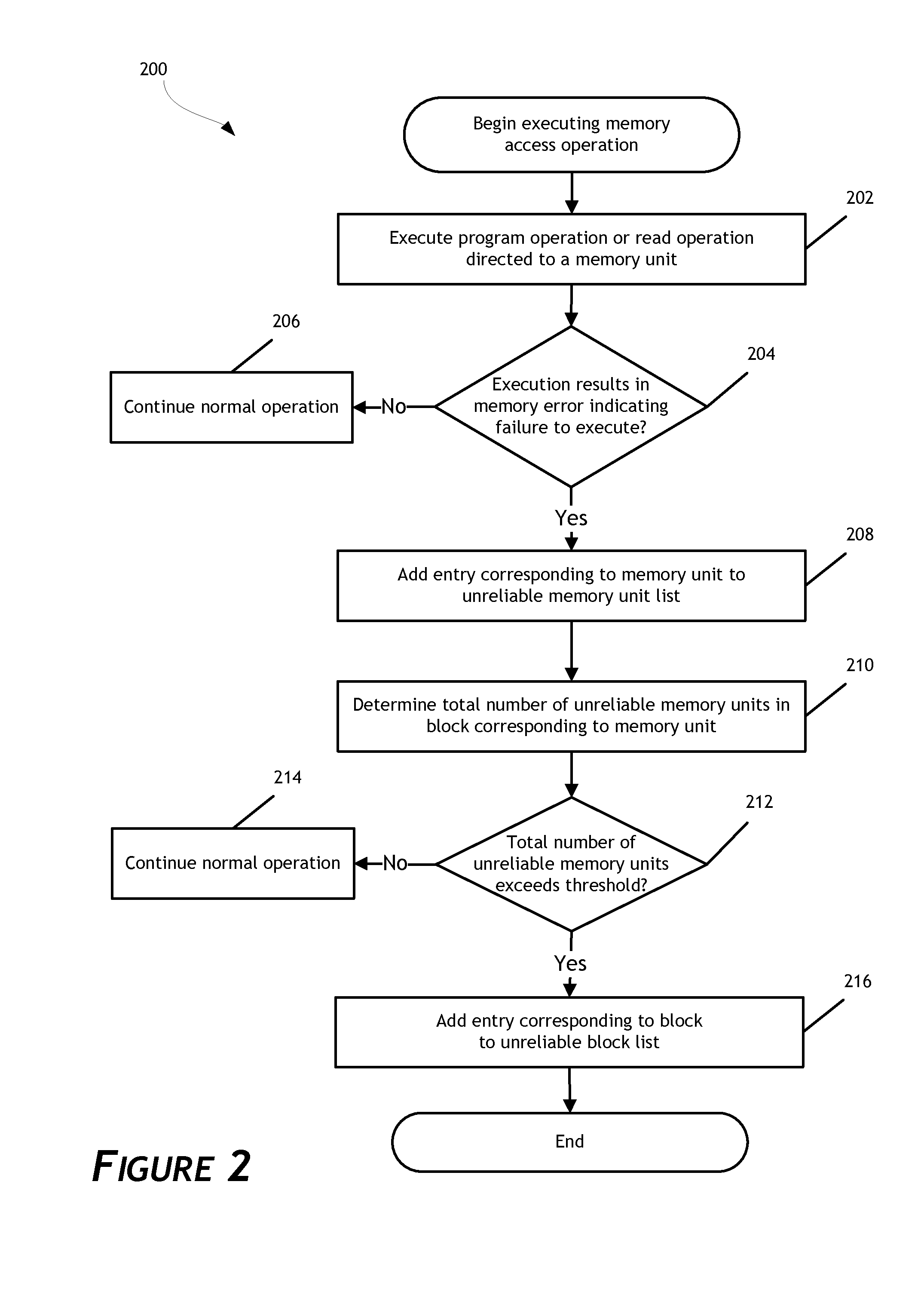

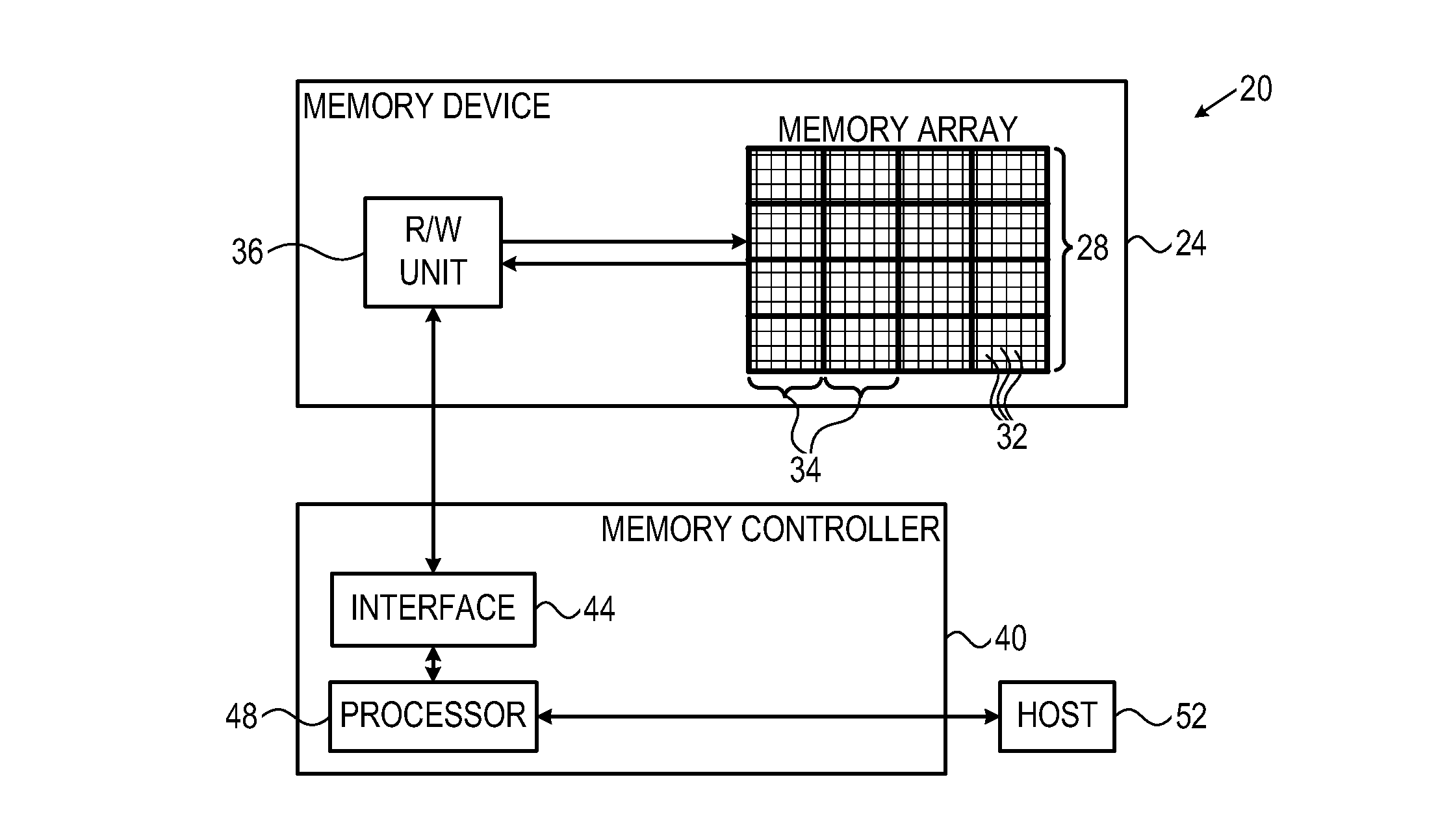

Managing unreliable memory in data storage systems

A data storage system configured to manage unreliable memory units is disclosed. In one embodiment, the data storage system maintains an unreliable memory unit list designating memory units in a non-volatile memory array as reliable or unreliable. The unreliable memory unit list facilitates management of unreliable memory at a granularity level finer than the granularity of a block of memory. The data storage system can add entries to the unreliable memory unit list as unreliable memory units are discovered. Further, the data storage system can continue to perform memory access operations directed to reliable memory units in blocks containing other memory units determined to be unreliable. As a result, the operational life of the data storage system is extended.

Owner:WESTERN DIGITAL TECH INC

High speed memory and input/output processor subsystem for efficiently allocating and using high-speed memory and slower-speed memory

Active | US7657706B2 | Easy to handle | Simple task | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | High speed memory | Memory bank

An input/output processor for speeding the input/output and memory access operations for a processor is presented. The key idea of an input/output processor is to functionally divide input/output and memory access tasks into a compute-intensive part that is handled by the processor and an I/O- or memory-intensive part that is then handled by the input/output processor. An input/output processor is designed by analyzing common input/output and memory access patterns and implementing methods tailored to efficiently handle those commonly occurring patterns. One technique that an input/output processor may use is to divide memory tasks into high frequency or high-availability components and low frequency or low-availability components. After dividing a memory task in such a manner, the input/output processor then uses high-speed memory (such as SRAM) to store the high frequency and high-availability components and a slower-speed memory (such as commodity DRAM) to store the low frequency and low-availability components. Another technique used by the input/output processor is to allocate memory in such a manner that all memory bank conflicts are eliminated. By eliminating any possible memory bank conflicts, the maximum random access performance of DRAM memory technology can be achieved.

Owner:CISCO TECH INC

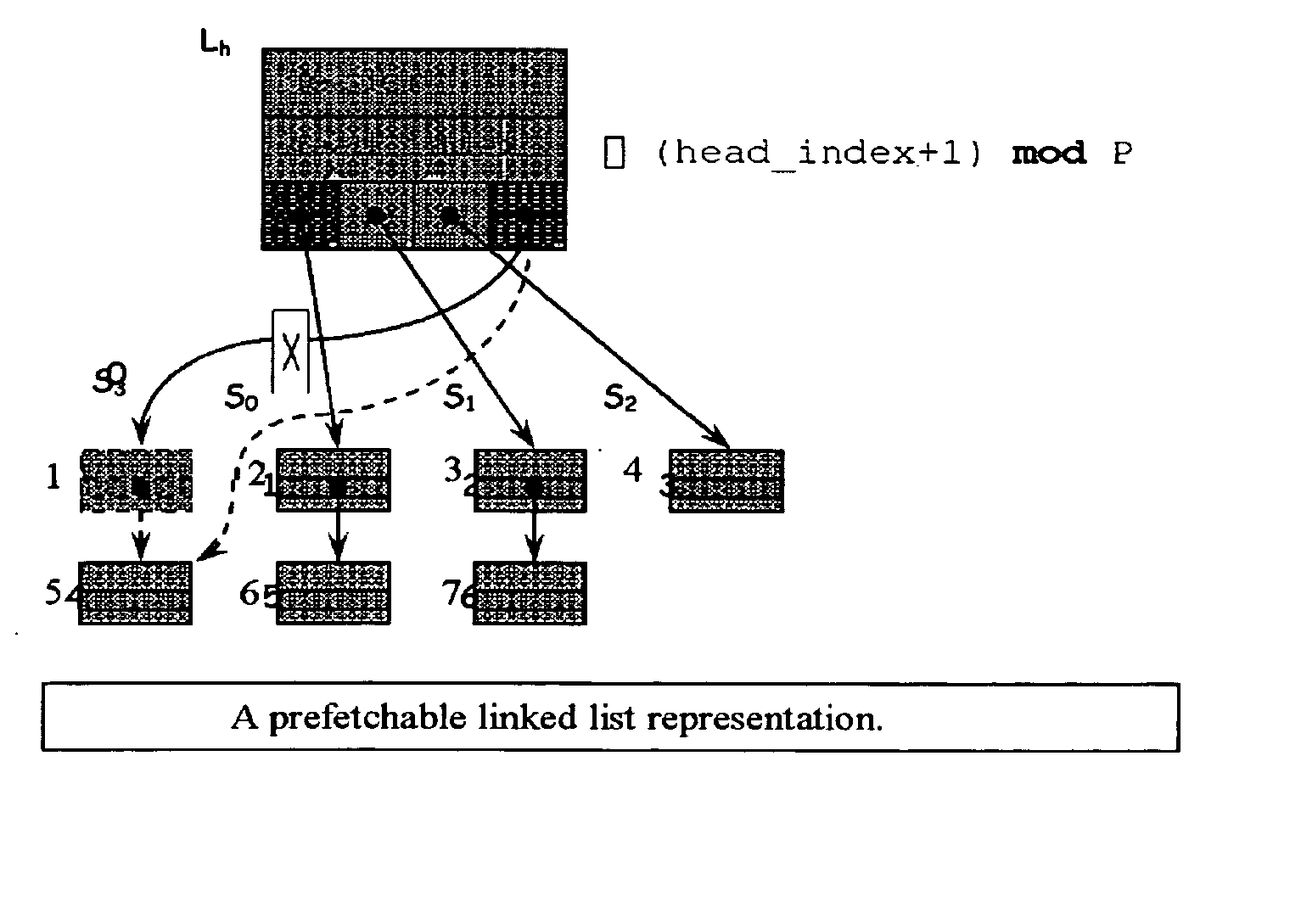

Method for prefetching recursive data structure traversals

Inactive | US20050102294A1 | Improve cache hit ratio | Potential throughput of the computer system | Digital data information retrieval | Data processing applications | Operational system | Term memory

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. In modern transaction processing systems, database servers, operating systems, and other commercial and engineering applications, information is frequently organized in trees, graphs, and linked lists. Lack of spatial locality results in a high probability that a miss will be incurred at each cache in the memory hierarchy. Each cache miss causes the processor to stall while the referenced value is fetched from lower levels of the memory hierarchy. Because this is likely to be the case for a significant fraction of the nodes traversed in the data structure, processor utilization suffers. The inability to compute the next address to be referenced makes prefetching difficult in such applications. The invention allows compilers and/or programmers to restructure data structures and traversals so that pointers are dereferenced in a pipelined manner, thereby making it possible to schedule prefetch operations in a consistent fashion. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. For data structure traversals in which the traversal path may be predetermined, a transformation is performed on the data structure that permits references to nodes that will be traversed in the future to be computed sufficiently far in advance to prefetch the data into cache.

Owner:DIGITAL CACHE LLC

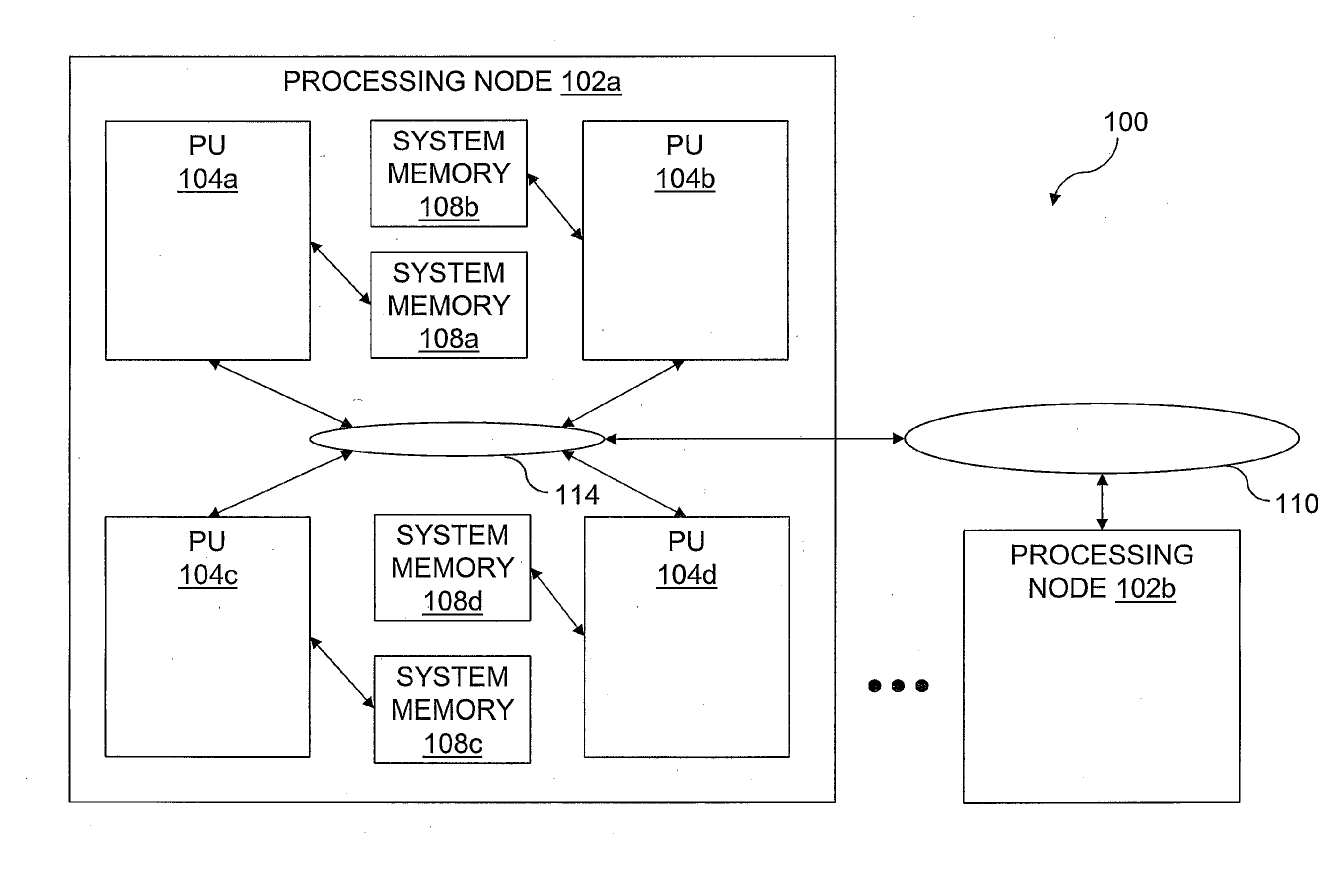

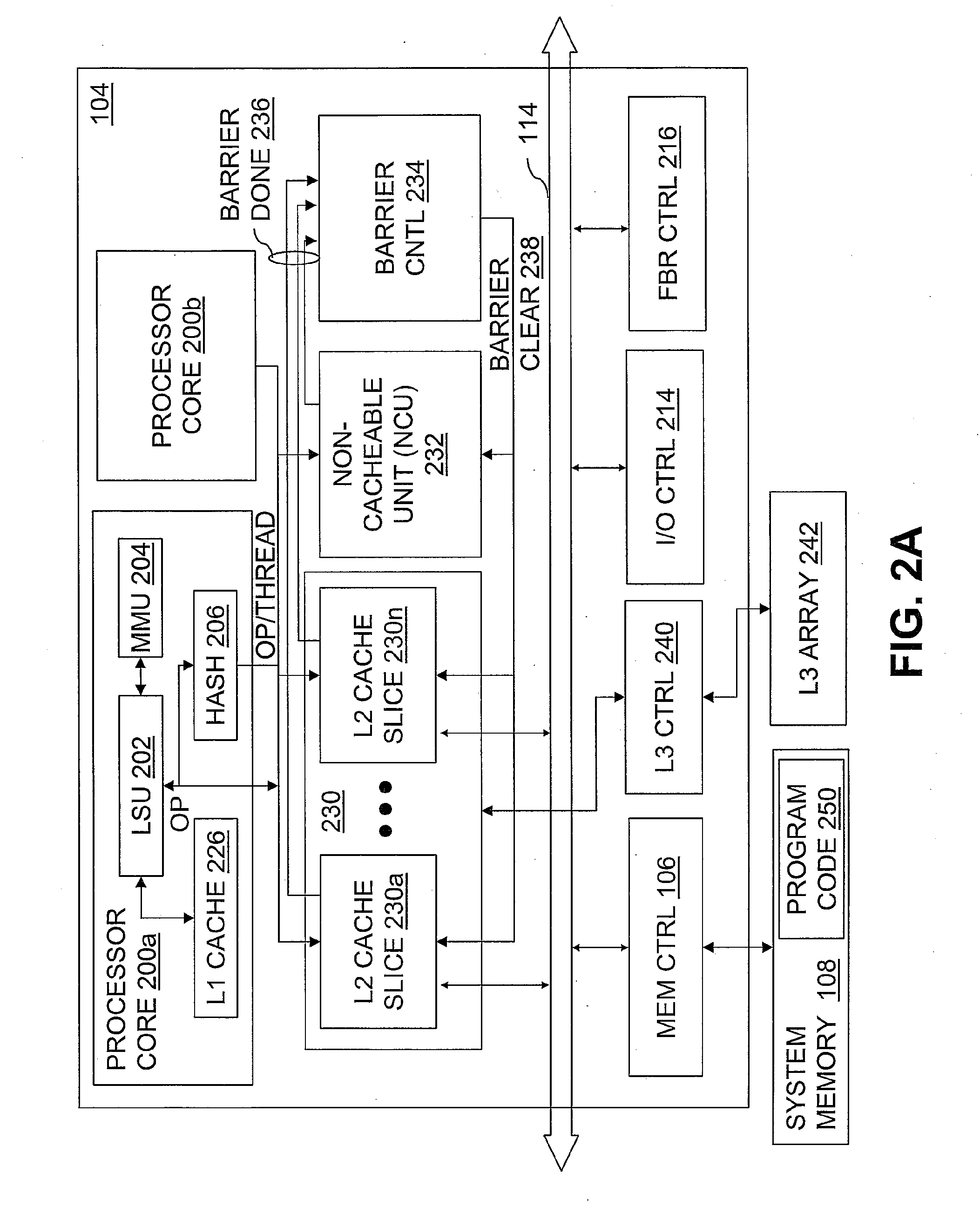

Data Processing System and Method for Reducing Cache Pollution by Write Stream Memory Access Patterns

Inactive | US20080046736A1 | User identity/authority verification | Memory systems | Data processing system | Cache hierarchy

A data processing system includes a system memory and a cache hierarchy that caches contents of the system memory. According to one method of data processing, a storage modifying operation having a cacheable target real memory address is received. A determination is made whether or not the storage modifying operation has an associated bypass indication. In response to determining that the storage modifying operation has an associated bypass indication, the cache hierarchy is bypassed, and an update indicated by the storage modifying operation is performed in the system memory. In response to determining that the storage modifying operation does not have an associated bypass indication, the update indicated by the storage modifying operation is performed in the cache hierarchy.
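The bypass decision described above can be modeled in a few lines. This is a toy sketch only: the class `WriteBypassMemory`, its dictionaries, and the `bypass` flag are hypothetical names, and dropping a stale cached copy on a bypassing store is an added assumption that the abstract does not state.

```python
class WriteBypassMemory:
    """Toy model of a cache hierarchy in front of system memory."""

    def __init__(self):
        self.cache = {}    # address -> value held in the cache hierarchy
        self.memory = {}   # address -> value held in system memory

    def store(self, addr, value, bypass=False):
        if bypass:
            # Bypass indication present: update system memory directly.
            # Assumption (not in the abstract): drop any cached copy so a
            # later load cannot observe a stale value.
            self.cache.pop(addr, None)
            self.memory[addr] = value
        else:
            # No bypass indication: perform the update in the cache hierarchy.
            self.cache[addr] = value

    def load(self, addr):
        if addr in self.cache:
            return self.cache[addr]
        return self.memory.get(addr)


m = WriteBypassMemory()
m.store(0x10, 1)                 # ordinary store lands in the cache
m.store(0x20, 2, bypass=True)    # streaming store skips the cache
print(sorted(m.cache), sorted(m.memory))
```

Keeping write-stream data out of the cache leaves its lines available for data that is more likely to be reused.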

Owner:IBM CORP

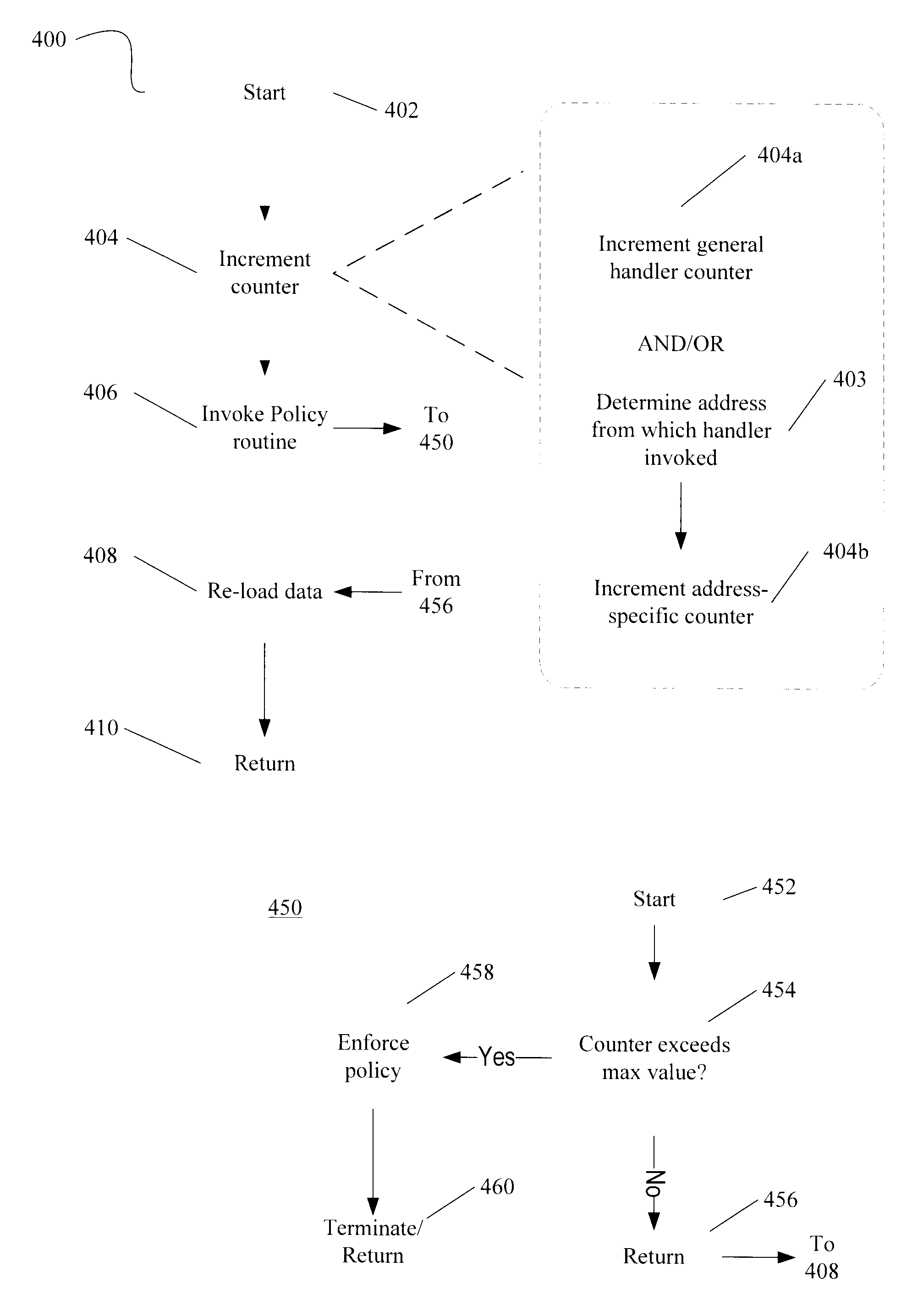

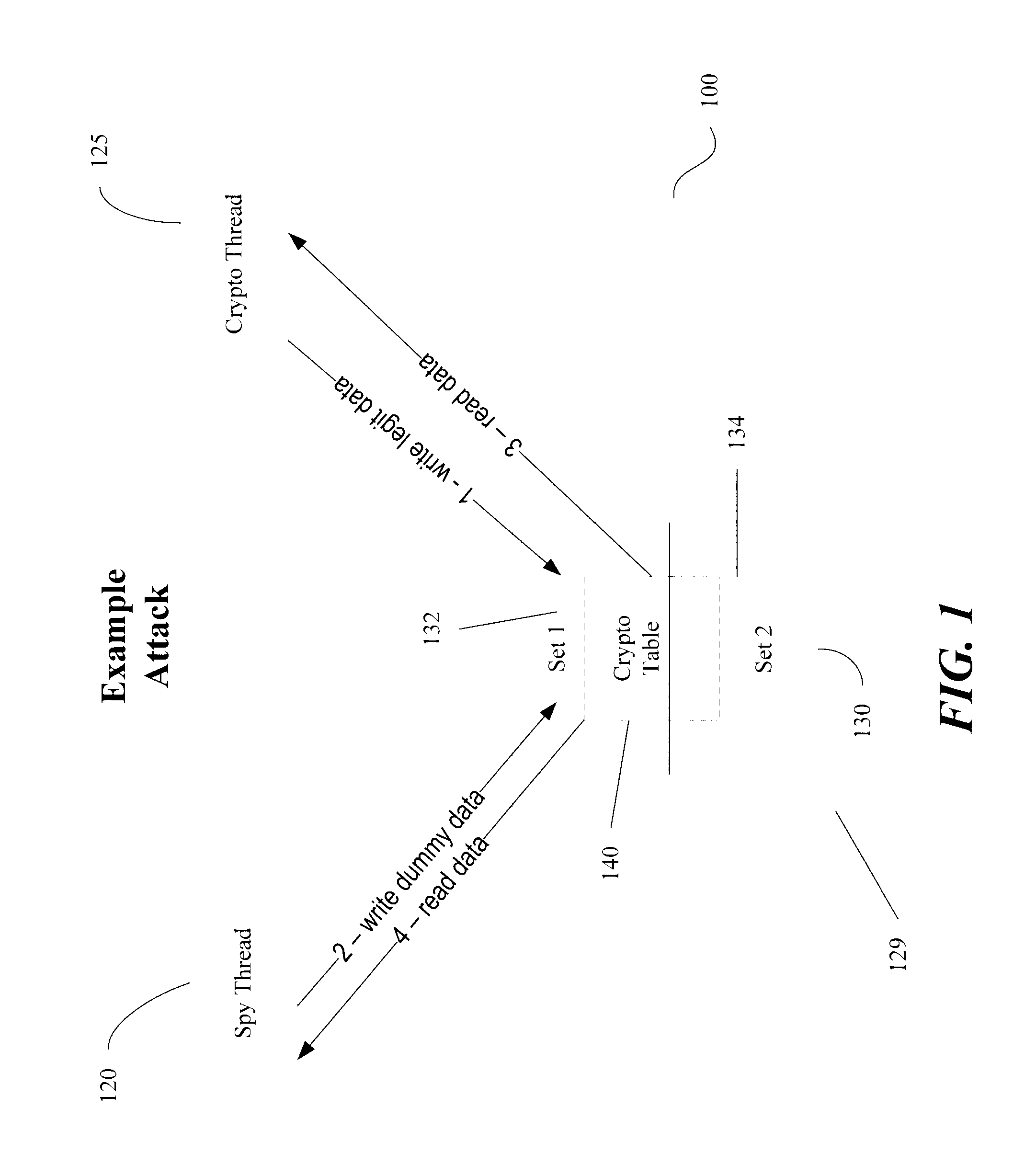

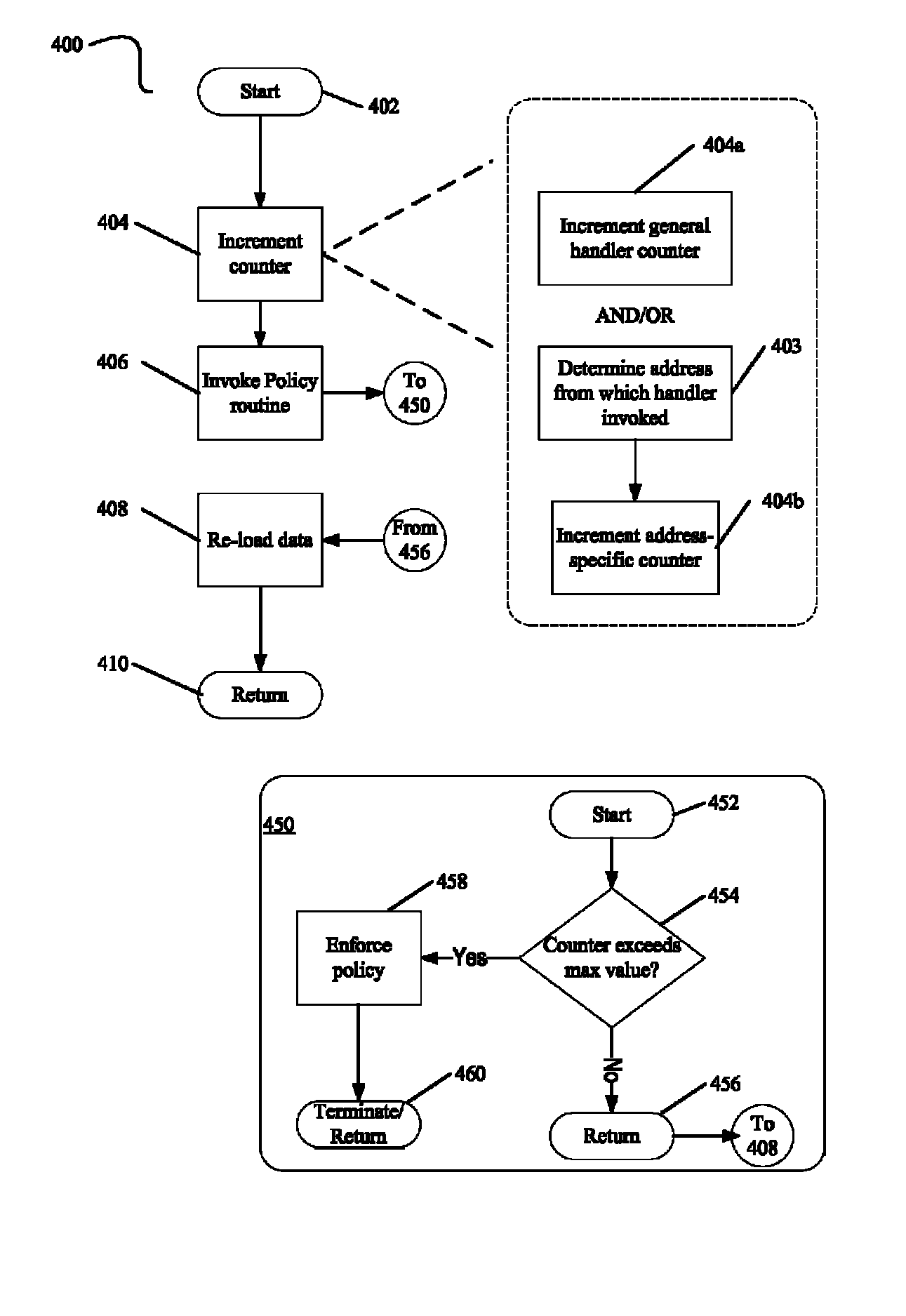

Obscuring Memory Access Patterns in Conjunction with Deadlock Detection or Avoidance

Active | US20090172304A1 | Memory architecture accessing/allocation | Computer security arrangements | Monitoring features | Deadlock

Methods, apparatus and systems for memory access obscuration are provided. A first embodiment provides memory access obscuration in conjunction with deadlock avoidance. Such embodiment utilizes processor features including an instruction to enable monitoring of specified cache lines and an instruction that sets a status bit responsive to any foreign access (e.g., write or eviction due to a read) to the specified lines. A second embodiment provides memory access obscuration in conjunction with deadlock detection. Such embodiment utilizes the monitoring feature, as well as handler registration. A user-level handler may be asynchronously invoked responsive to a foreign write to any of the specified lines. Invocation of the handler more frequently than expected indicates that a deadlock may have been encountered. In such case, a deadlock policy may be enforced. Other embodiments are also described and claimed.

Owner:INTEL CORP

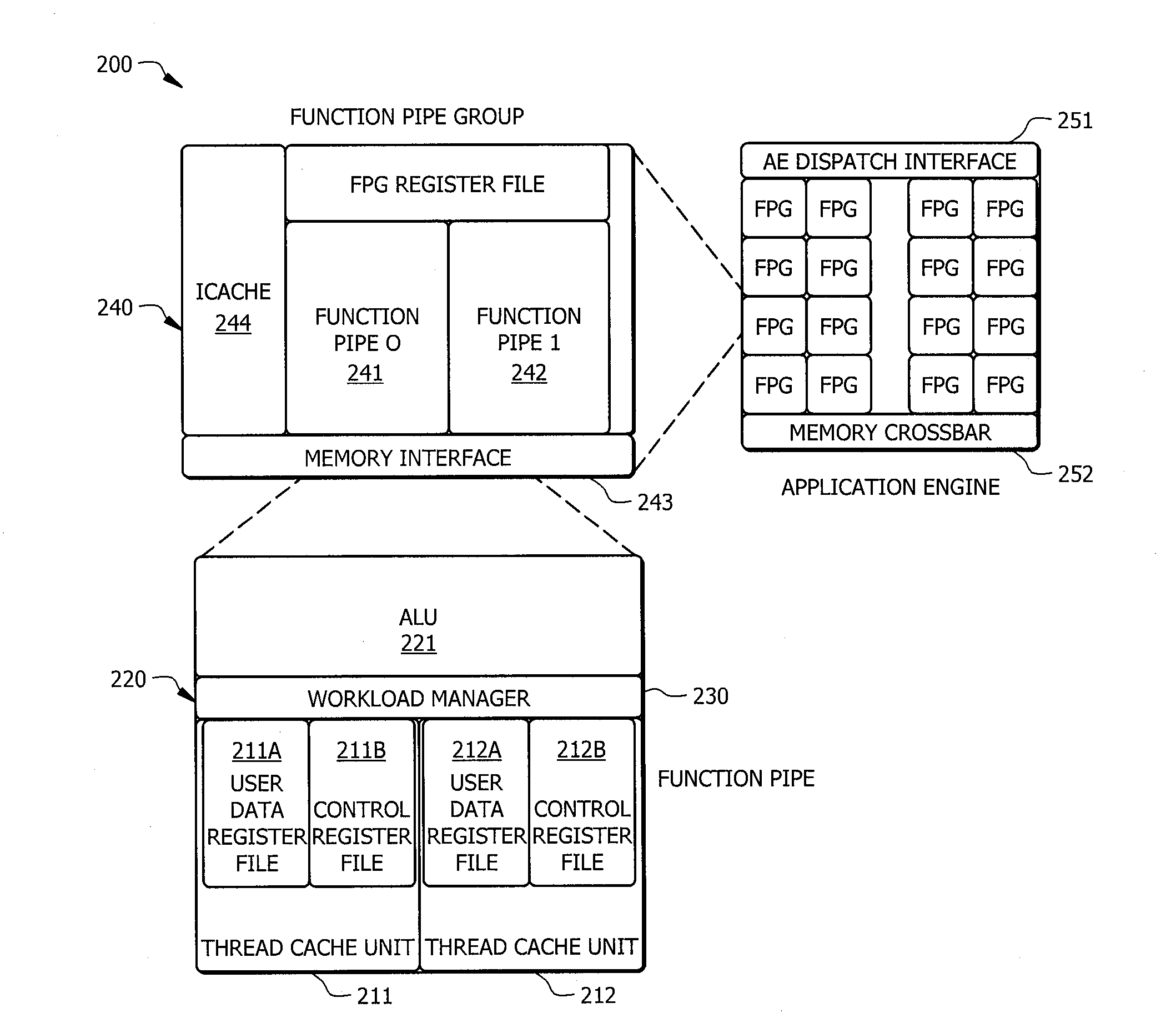

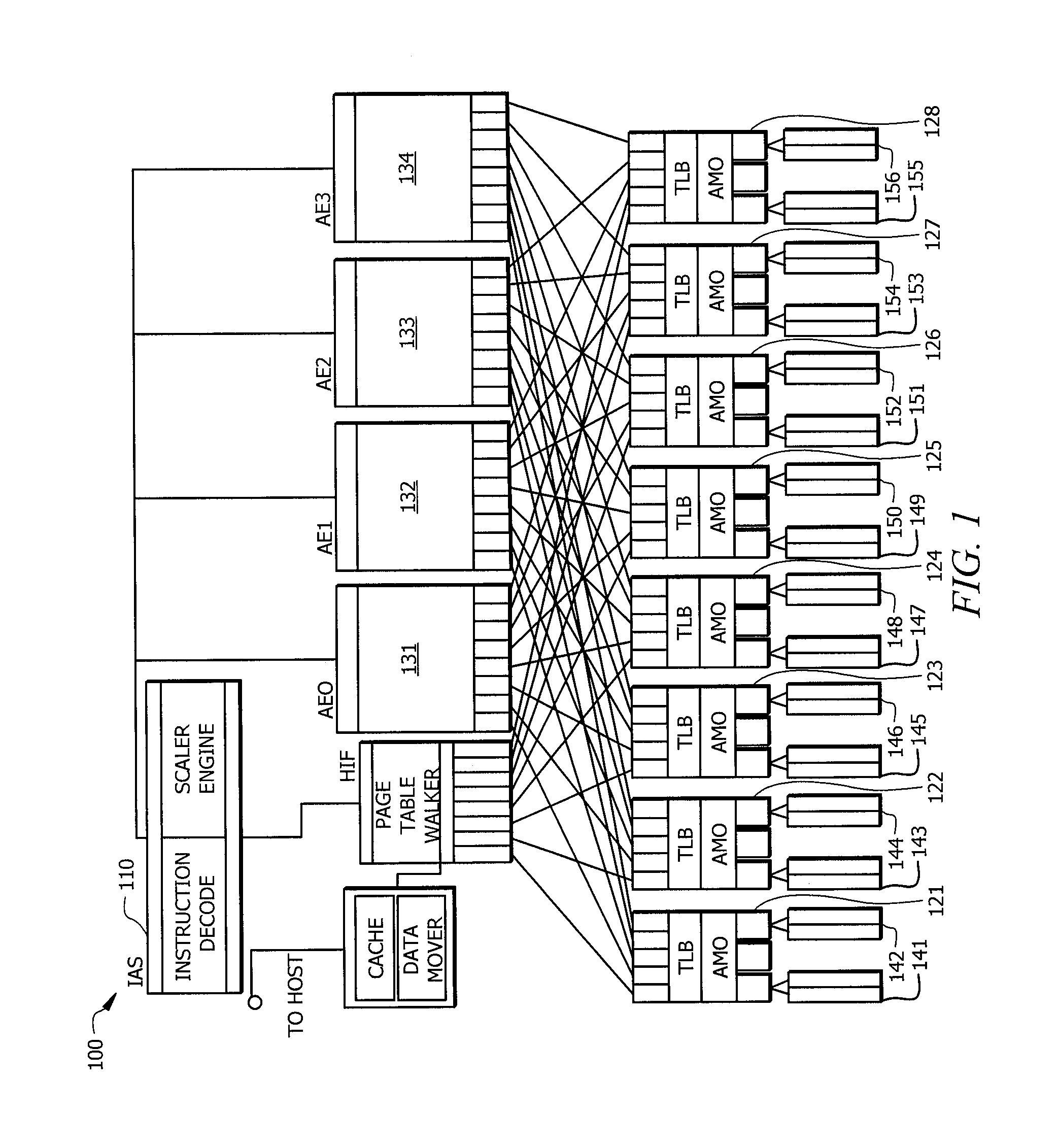

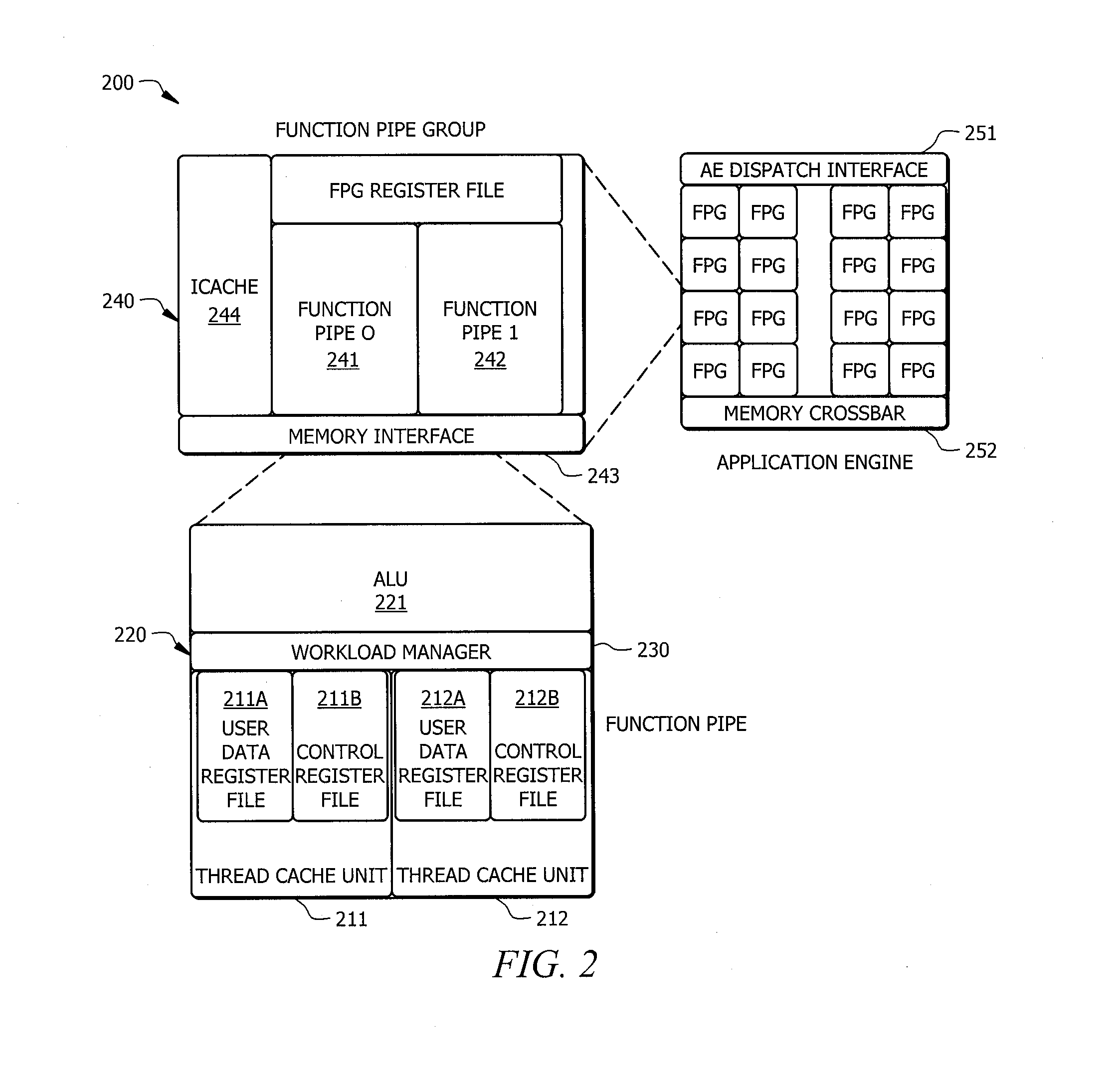

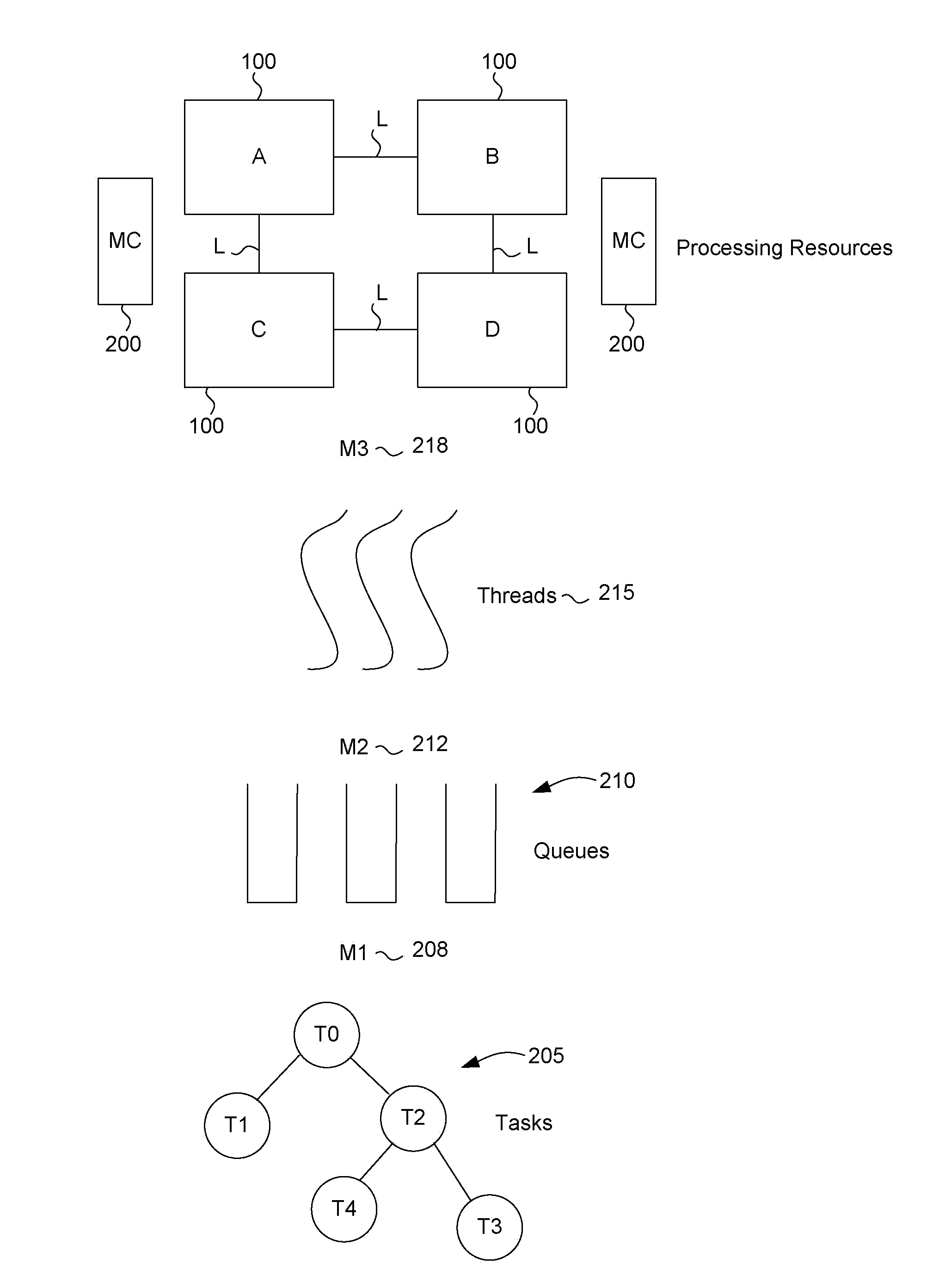

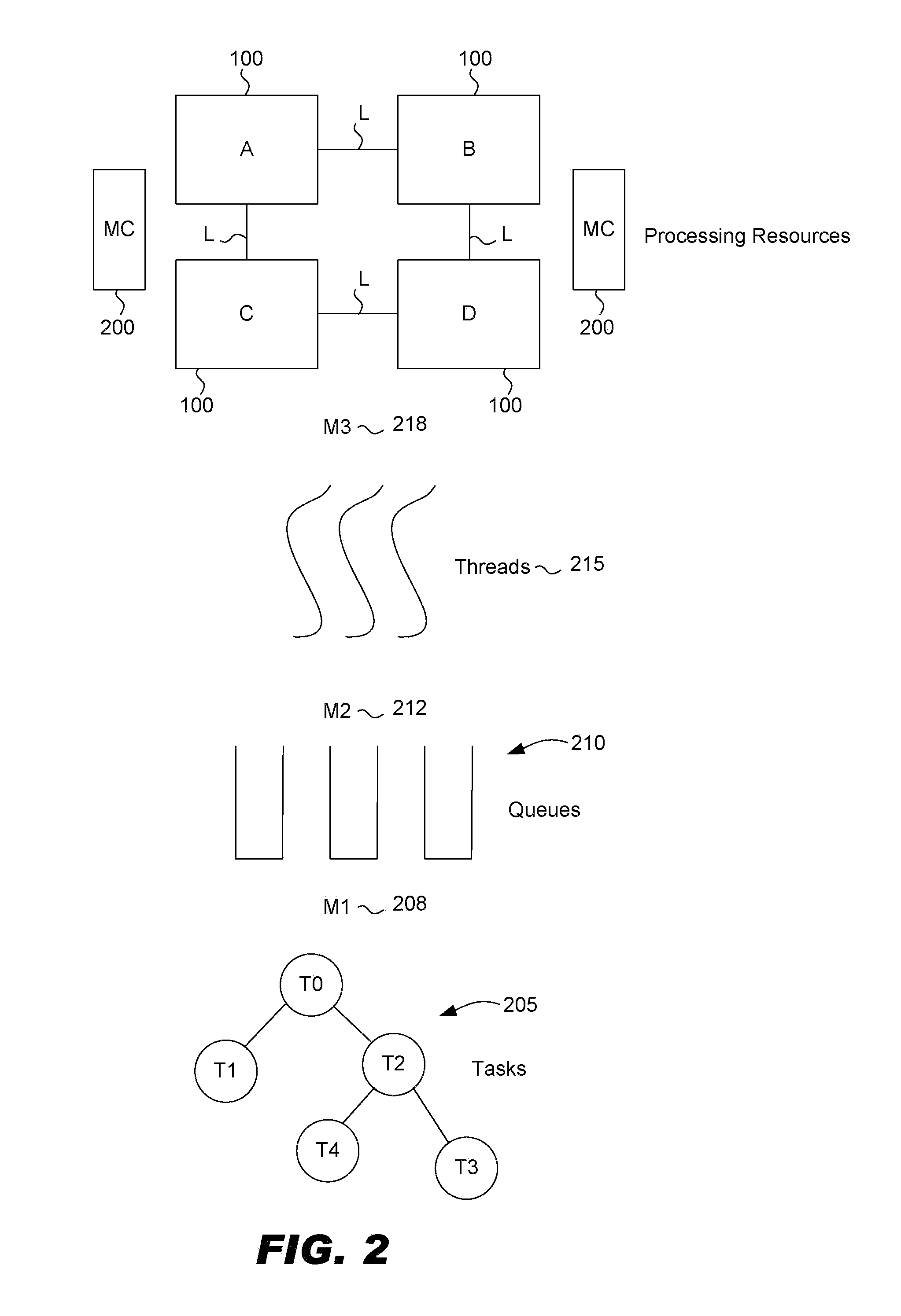

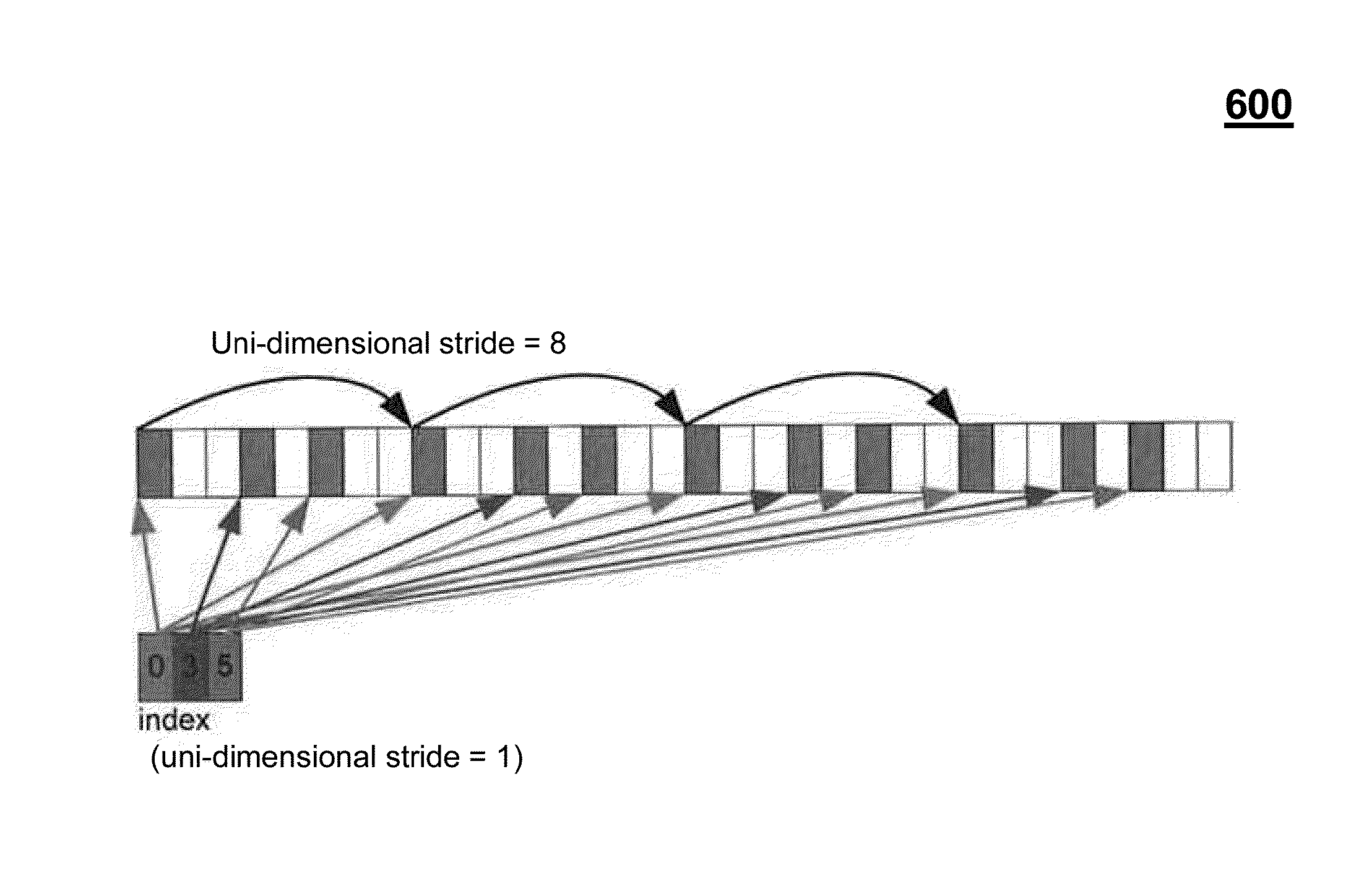

Systems and methods for efficient scheduling of concurrent applications in multithreaded processors

Active | US20130332711A1 | Efficient execution | Easy to add | Instruction analysis | Digital computer details | Instruction set design | Concurrency control

Systems and methods which provide a modular processor framework and instruction set architecture designed to efficiently execute applications whose memory access patterns are irregular or non-unit stride are disclosed. A hybrid multithreading framework (HMTF) of embodiments provides a framework for constructing tightly coupled, chip-multithreading (CMT) processors that contain specific features well-suited to hiding latency to main memory and executing highly concurrent applications. The HMTF of embodiments includes an instruction set designed specifically to exploit the high degree of parallelism and concurrency control mechanisms present in the HMTF hardware modules. The instruction format implemented by a HMTF of embodiments is designed to give the architecture, the runtime libraries, and/or the application ultimate control over how and when concurrency between thread cache units is initiated. For example, one or more bits of the instruction payload may be designated as a context switch bit (CTX) for expressly controlling context switching.

Owner:MICRON TECH INC

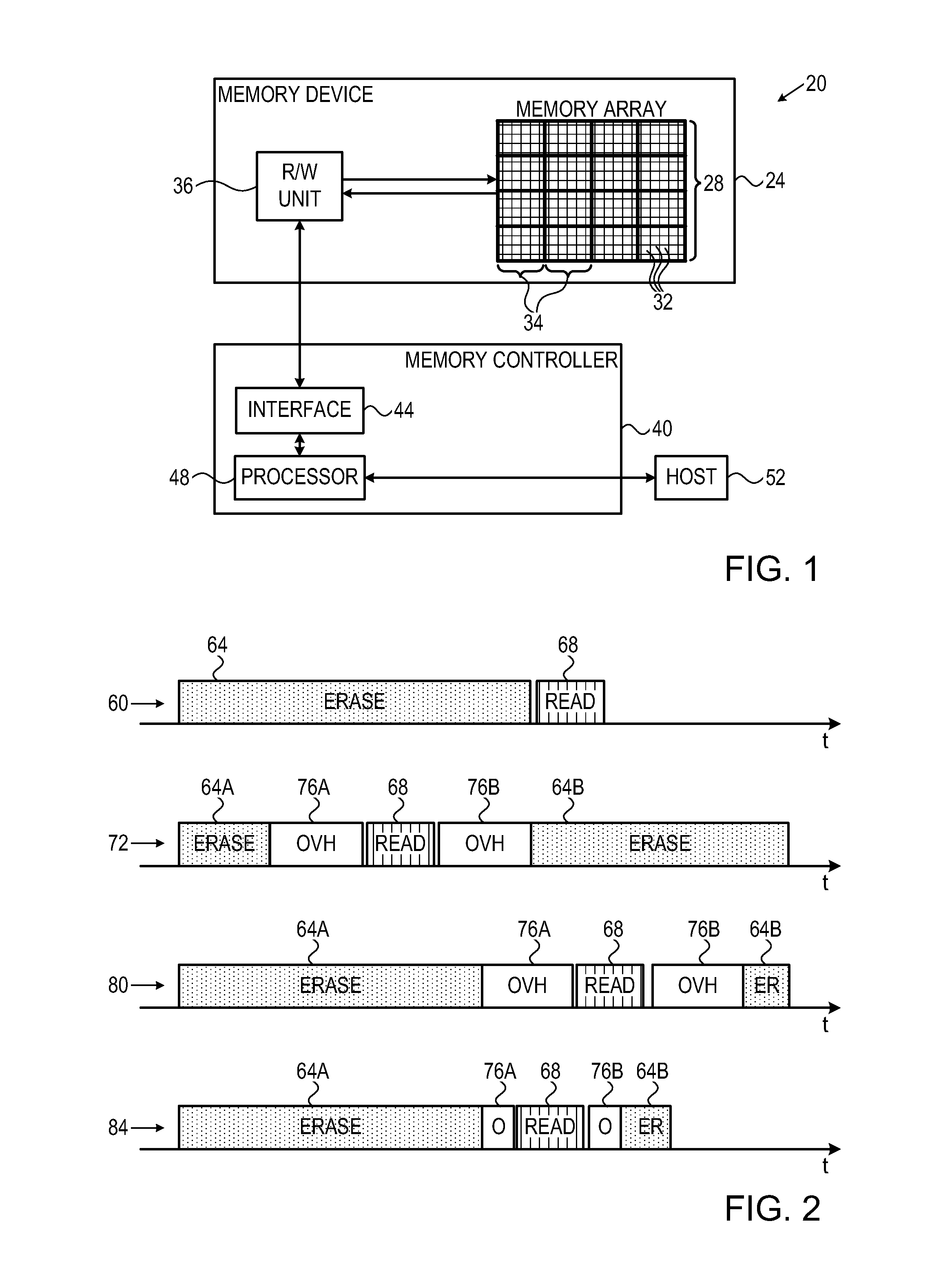

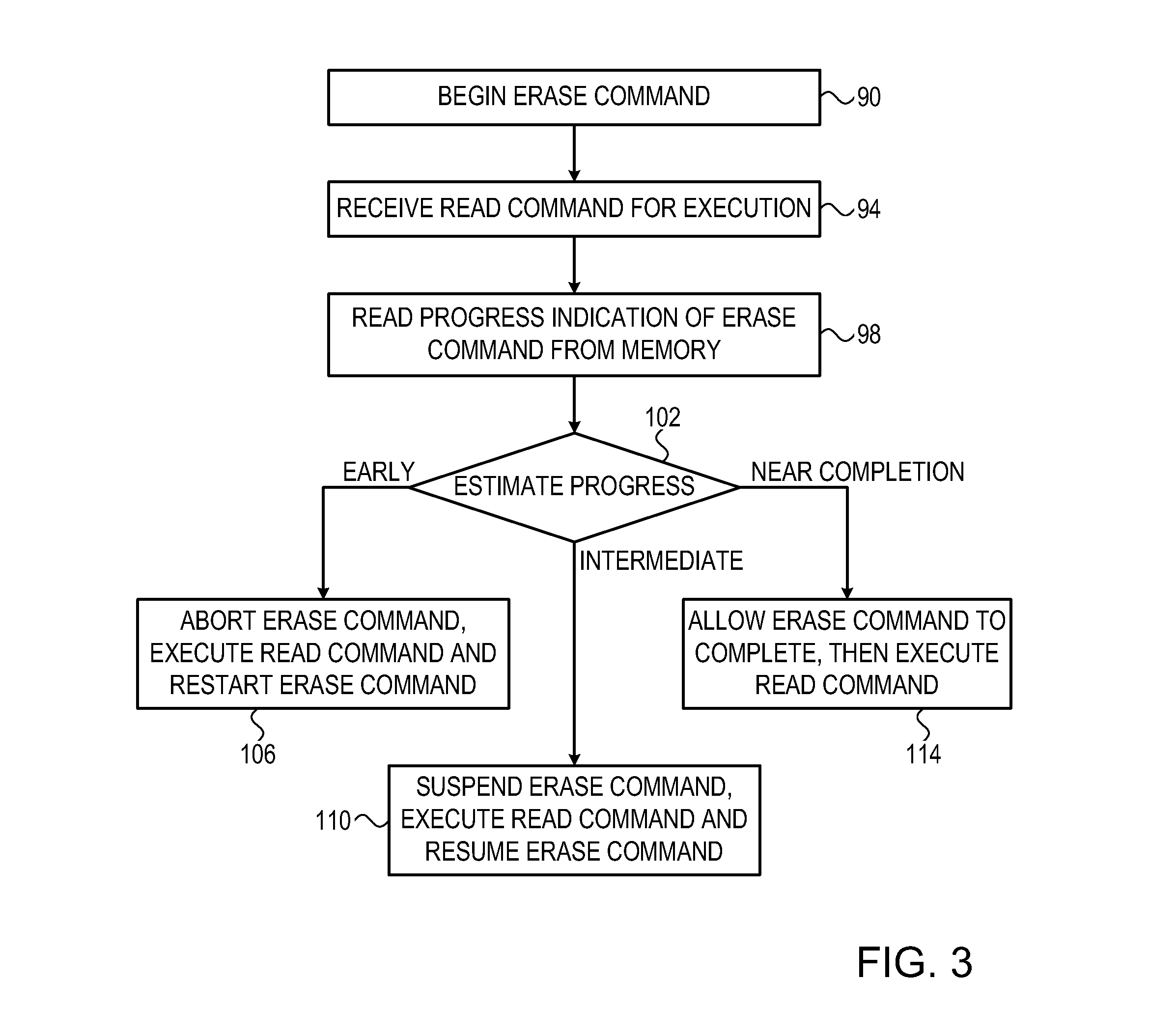

Efficient suspend-resume operation in memory devices

Owner:APPLE INC

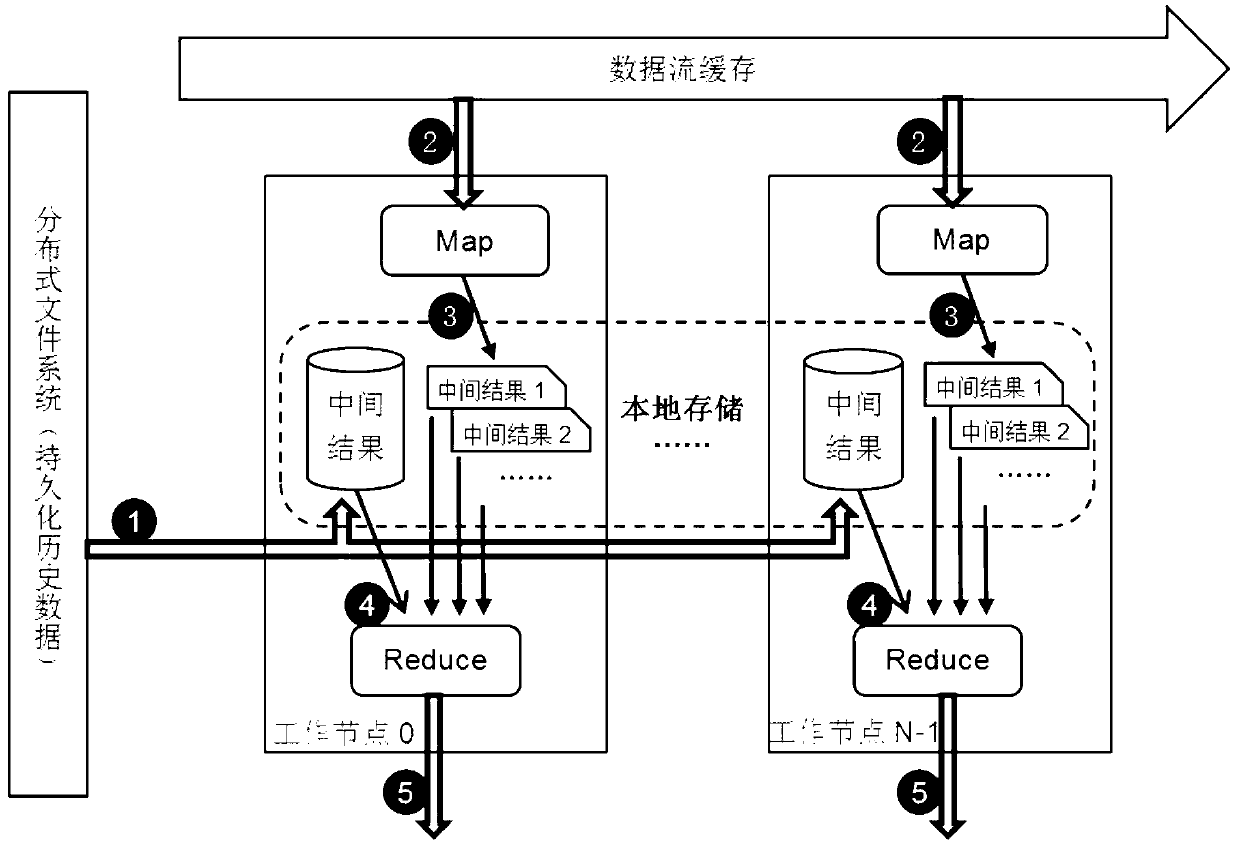

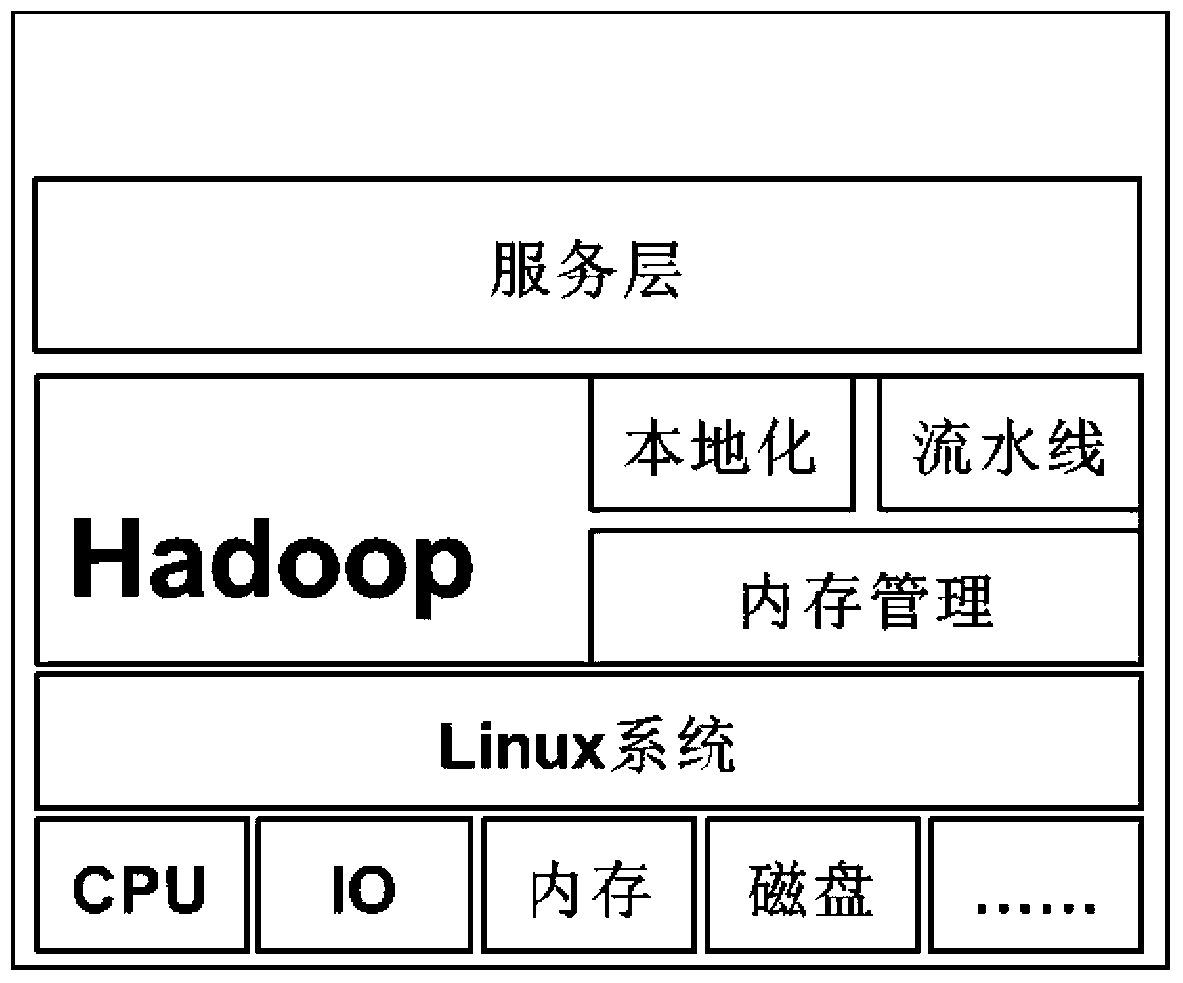

Streamed data processing method in big data environment

Inactive | CN103345514A | Easy to handle | Uniform load | Special data processing applications | Specific program execution arrangements | Process mechanism | Stream data

Owner:FOCUS TECH +1

Obscuring memory access patterns in conjunction with deadlock detection or avoidance

Active | US8407425B2 | Memory architecture accessing/allocation | Computer security arrangements | Monitoring features | Deadlock

Methods, apparatus and systems for memory access obscuration are provided. A first embodiment provides memory access obscuration in conjunction with deadlock avoidance. Such embodiment utilizes processor features including an instruction to enable monitoring of specified cache lines and an instruction that sets a status bit responsive to any foreign access (e.g., write or eviction due to a read) to the specified lines. A second embodiment provides memory access obscuration in conjunction with deadlock detection. Such embodiment utilizes the monitoring feature, as well as handler registration. A user-level handler may be asynchronously invoked responsive to a foreign write to any of the specified lines. Invocation of the handler more frequently than expected indicates that a deadlock may have been encountered. In such case, a deadlock policy may be enforced. Other embodiments are also described and claimed.

Owner:INTEL CORP

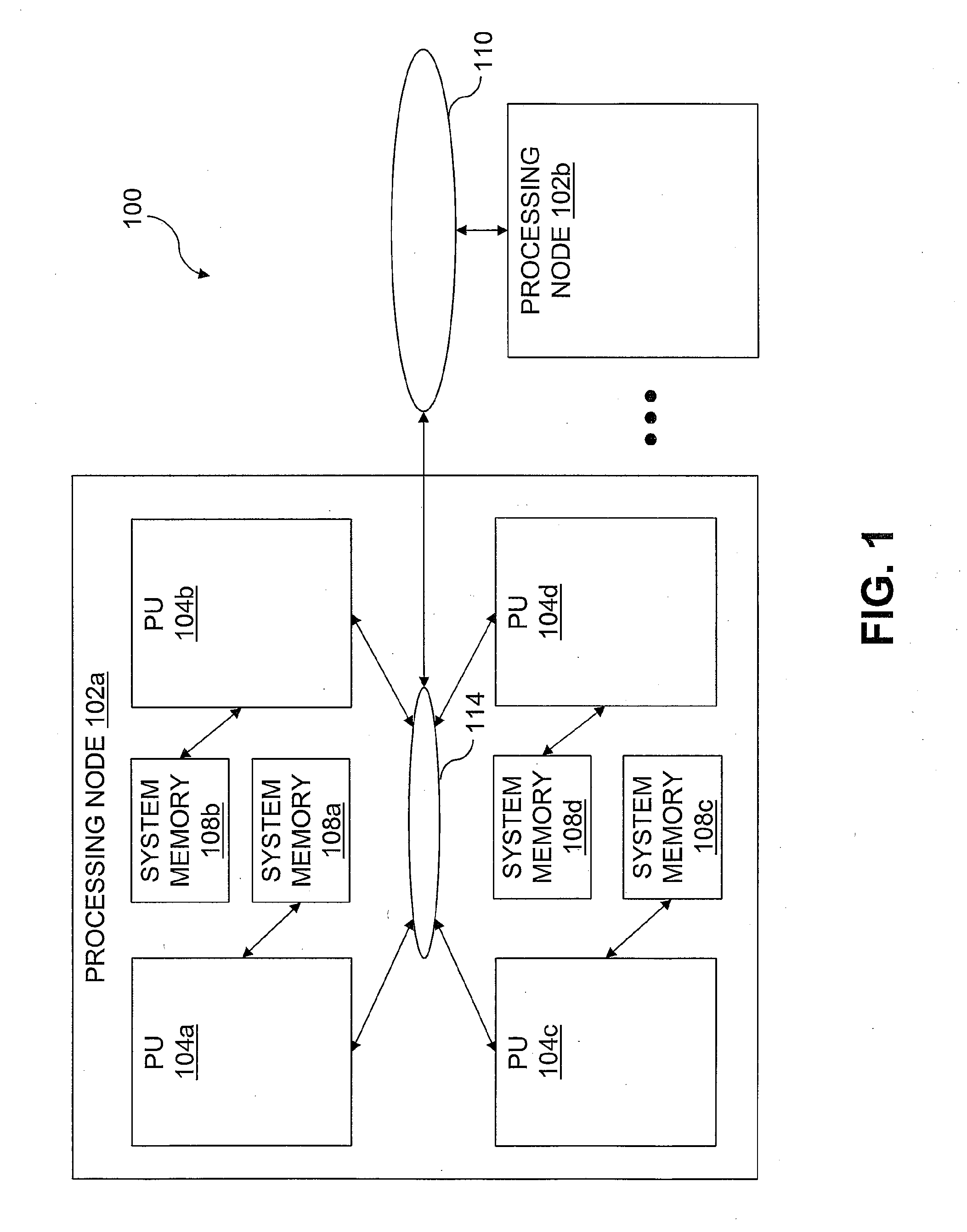

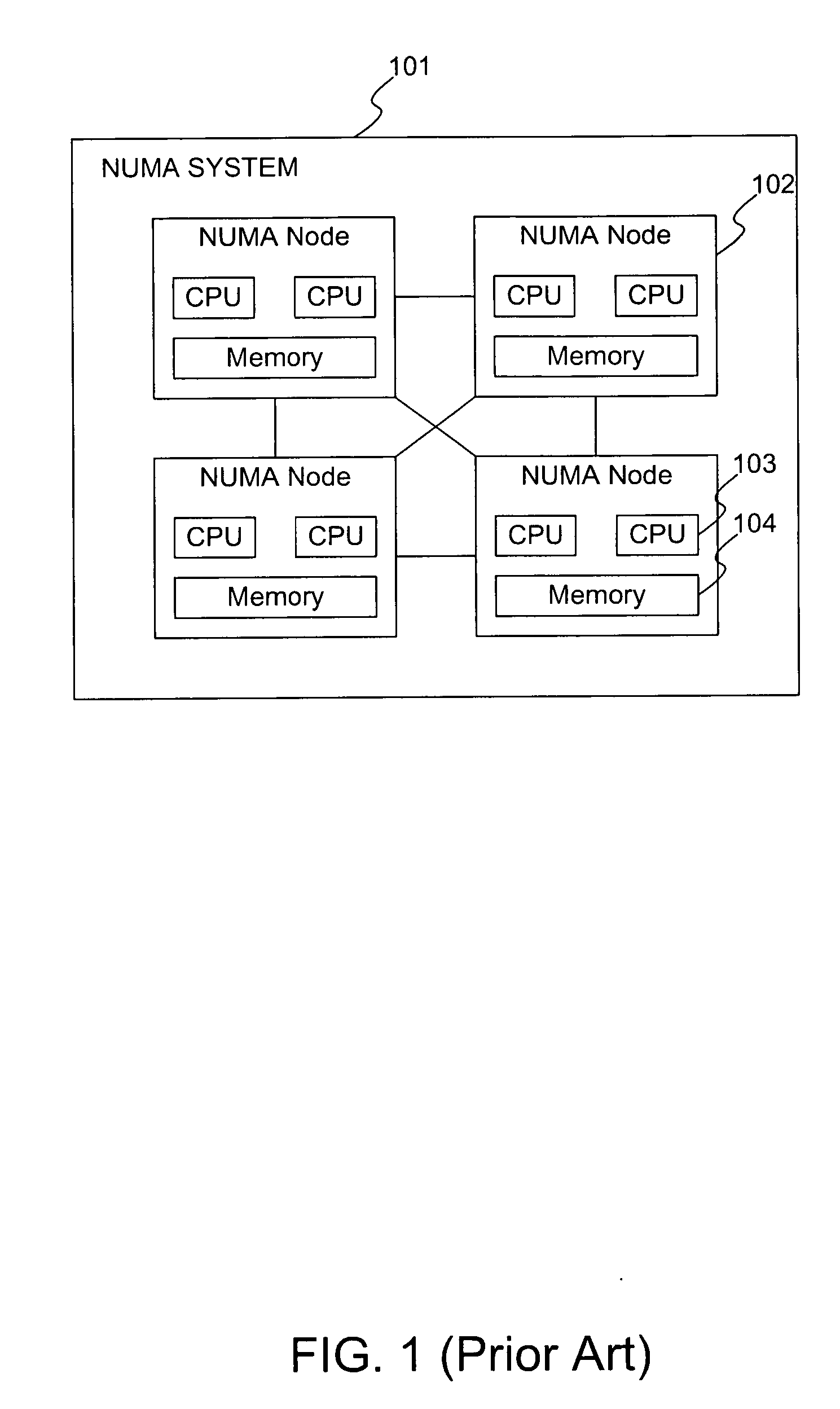

NUMA aware system task management

Active | US20120102500A1 | Reduces memory access cost | Minimal cost | Resource allocation | Memory systems | Memory controller | Majorization minimization

Task management in a Non-Uniform Memory Access (NUMA) architecture having multiple processor cores is aware of the NUMA topology in task management. As a result memory access penalties are reduced. Each processor is assigned to a zone allocated to a memory controller. The zone assignment is based on a cost function. In a default mode a thread of execution attempts to perform work in a queue of the same zone as the processor to minimize memory access penalties. Additional work stealing rules may be invoked if there is no work for a thread to perform from its default zone queue.
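A rough sketch of zone-local queues with a fallback stealing rule follows. The class `ZoneScheduler`, the fixed core-to-zone table, and the "steal from the longest other queue" rule are all assumptions made for illustration; the abstract says only that zones are assigned by a cost function and that additional stealing rules may apply.

```python
from collections import deque


class ZoneScheduler:
    """Per-zone work queues; a core prefers work from its own zone."""

    def __init__(self, core_to_zone):
        # core_to_zone is assumed to be precomputed (e.g. by a cost function).
        self.core_to_zone = dict(core_to_zone)
        zones = sorted(set(self.core_to_zone.values()))
        self.queues = {zone: deque() for zone in zones}

    def submit(self, zone, task):
        self.queues[zone].append(task)

    def next_task(self, core):
        """Return (zone, task) for this core, or None if no work exists."""
        home = self.core_to_zone[core]
        if self.queues[home]:
            # Default mode: take local work to avoid remote memory access.
            return home, self.queues[home].popleft()
        # Hypothetical stealing rule: take from the tail of the longest queue.
        victim = max(self.queues, key=lambda zone: len(self.queues[zone]))
        if self.queues[victim]:
            return victim, self.queues[victim].pop()
        return None


sched = ZoneScheduler({0: 0, 1: 0, 2: 1, 3: 1})
sched.submit(0, "a")
sched.submit(0, "b")
first = sched.next_task(0)    # core 0 takes local work from zone 0
second = sched.next_task(2)   # core 2 has no local work and steals from zone 0
third = sched.next_task(3)    # nothing left anywhere
print(first, second, third)
```

Stealing from the tail while the owner takes from the head is a common convention in work-stealing schedulers; it is used here only to keep the two kinds of access apart.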

Owner:SAMSUNG ELECTRONICS CO LTD

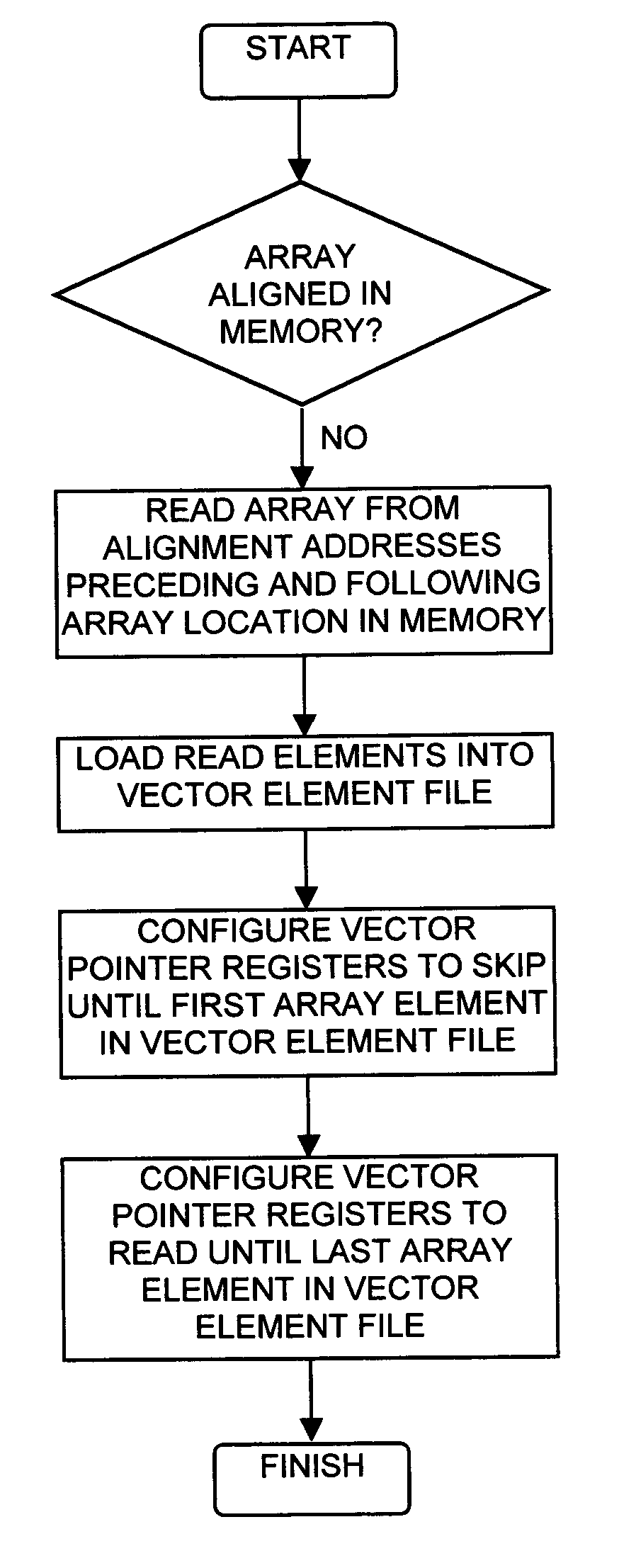

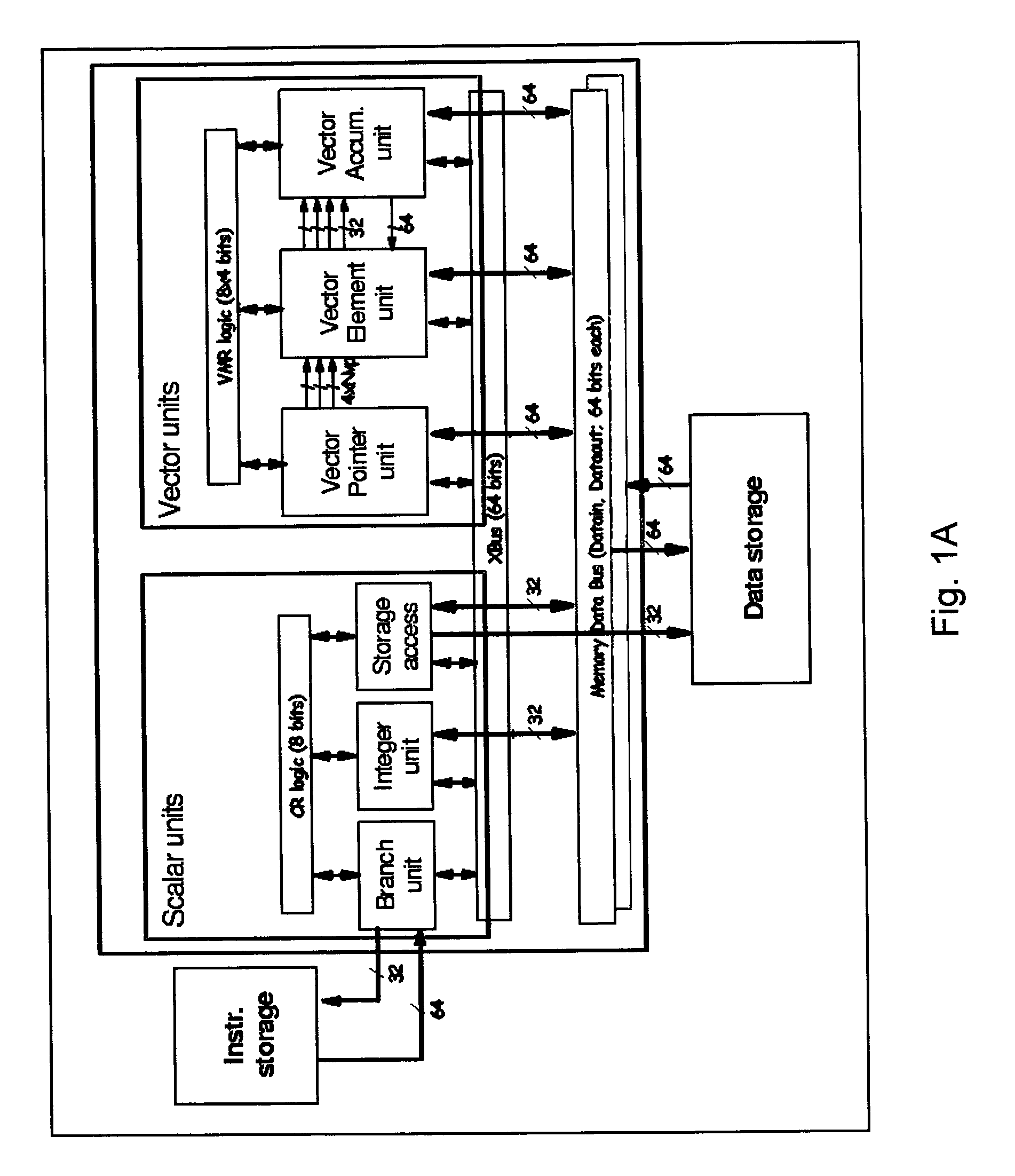

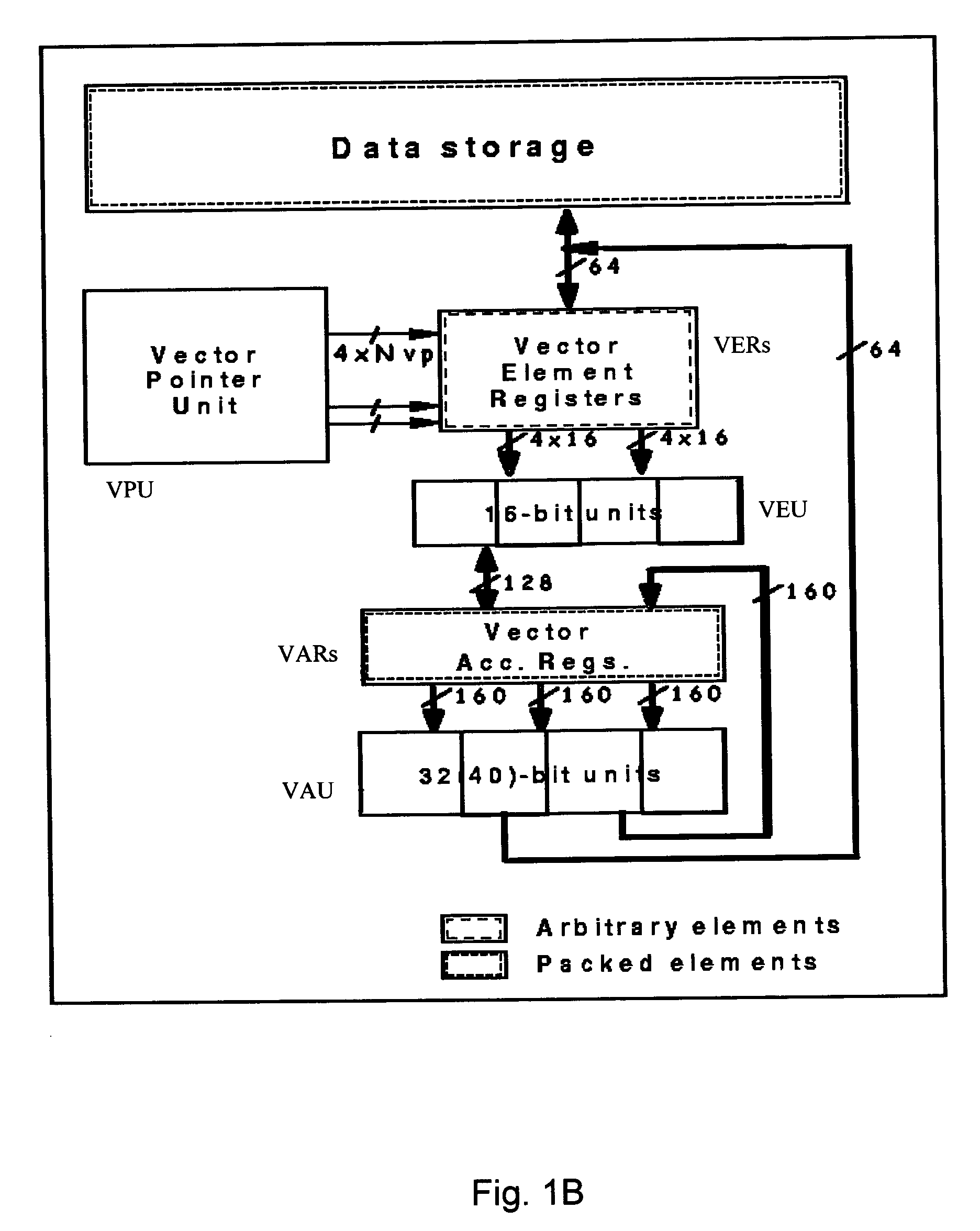

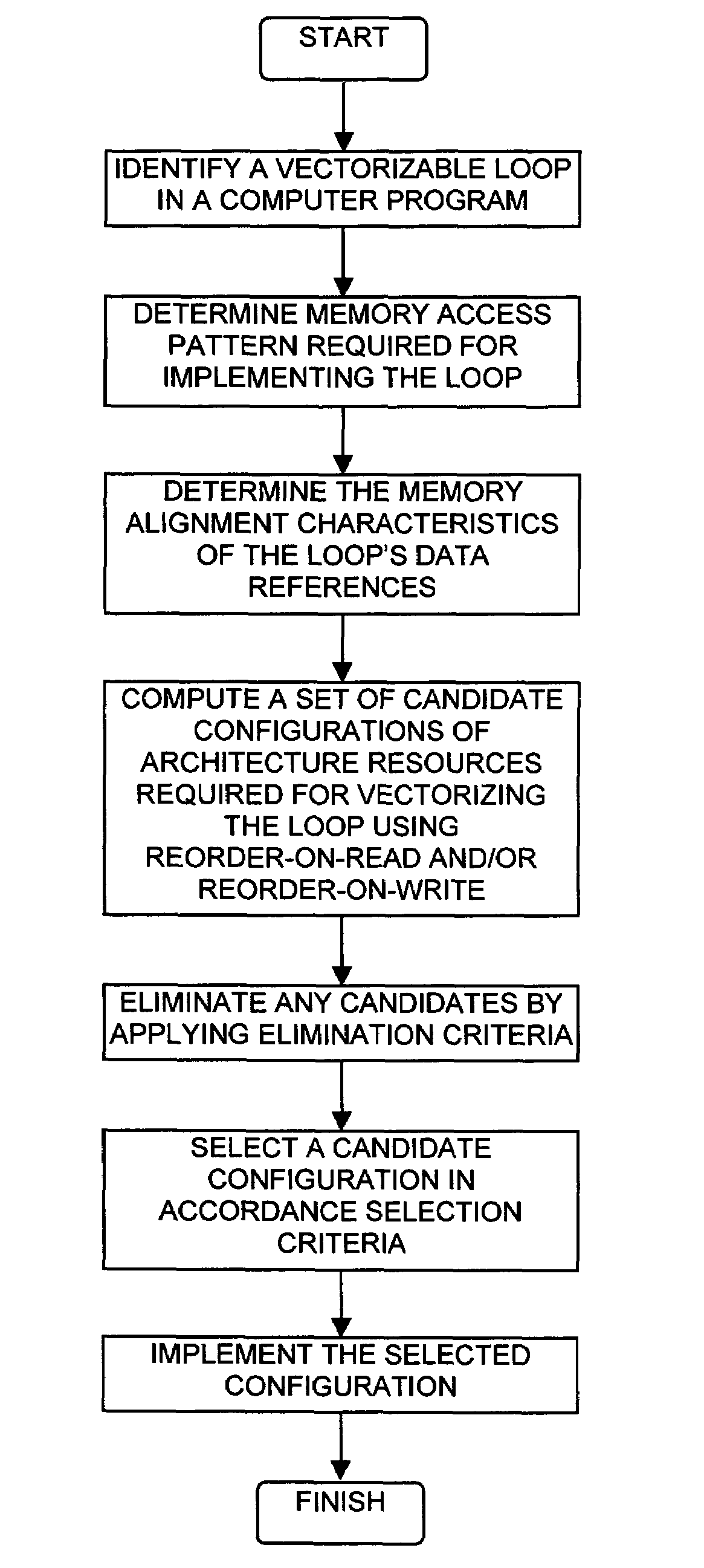

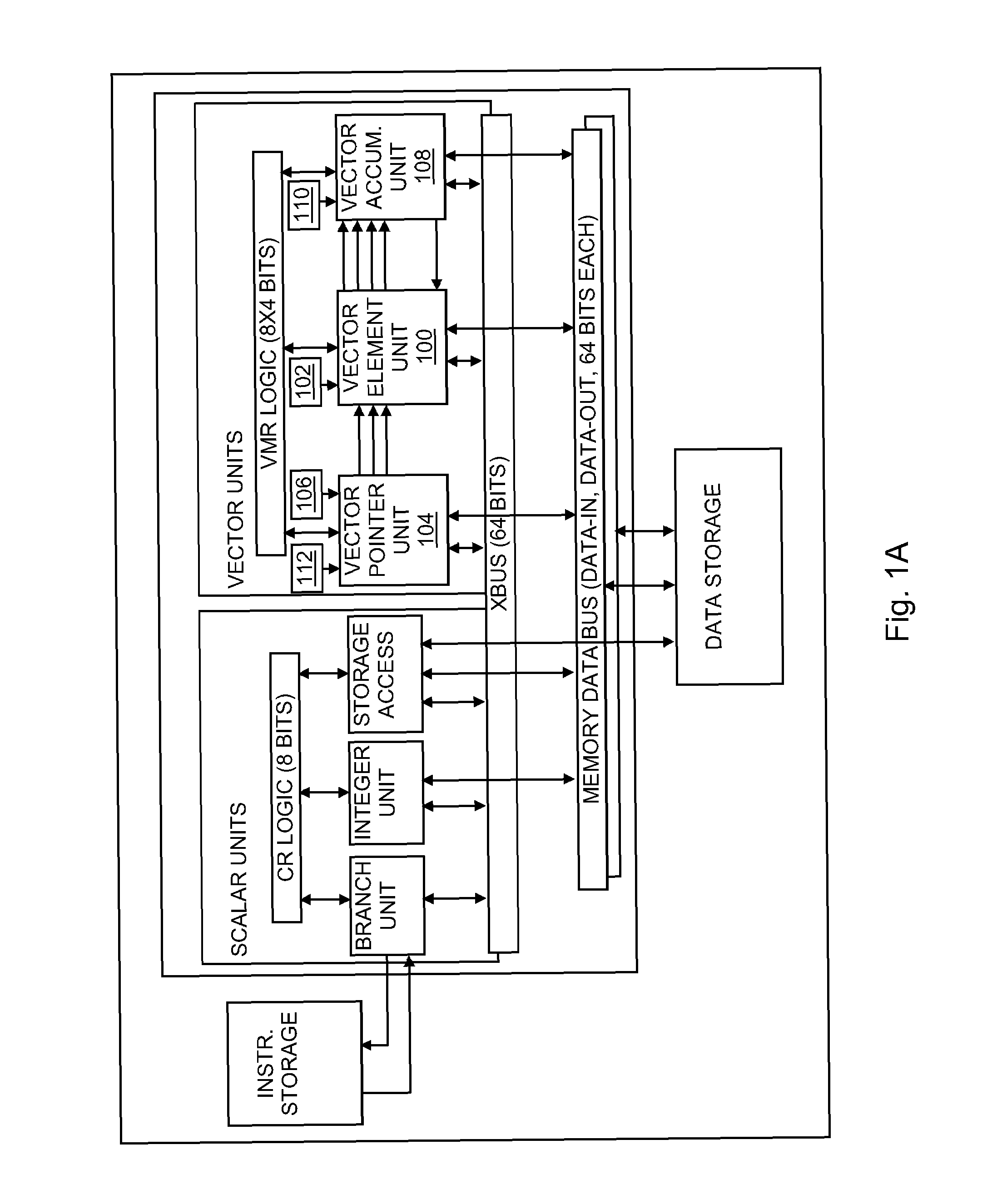

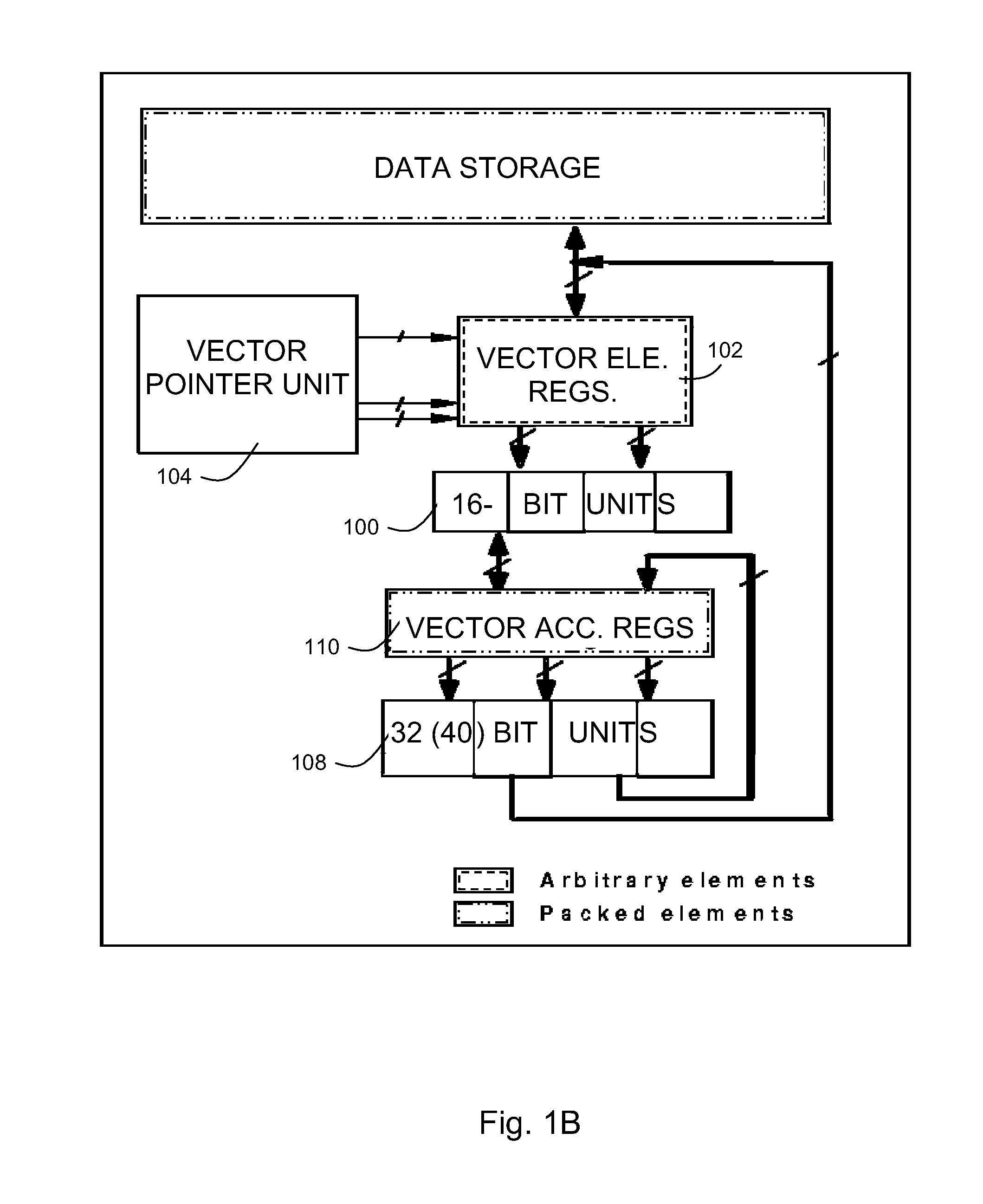

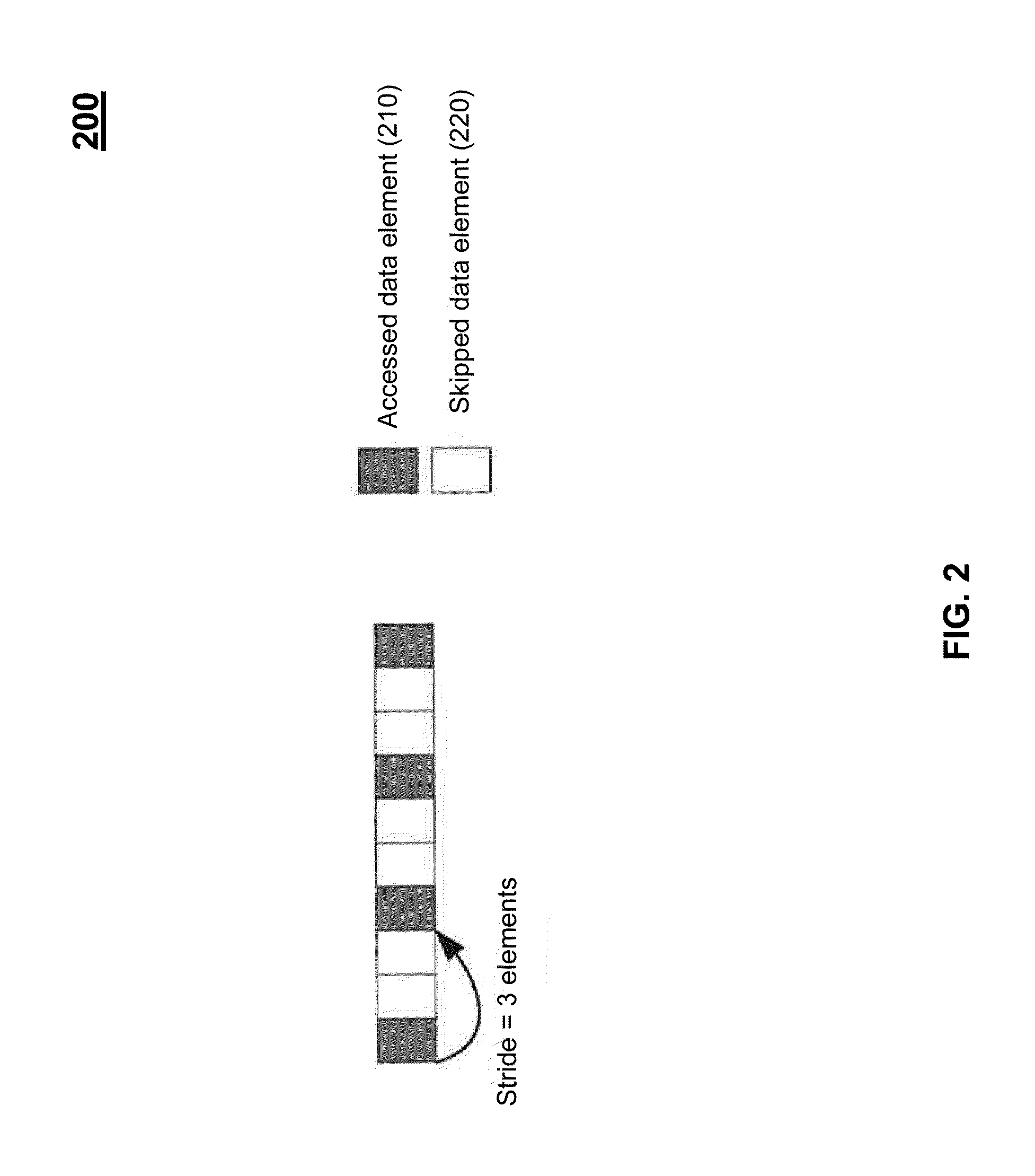

Vectorization in a SIMdD DSP architecture

Inactive | US20050097301A1 | Simple technology | General purpose stored program computer | Program control | DSP architecture | Vector element

A method for determining vectorization configurations in a computer processor architecture, the method including identifying a vectorizable loop in a computer program, identifying a memory access pattern of data required for implementing the loop in the architecture, computing a set of candidate configurations of resources required for vectorizing the data in the architecture, where the computing step includes configuring a vector pointer register of the architecture in support of either of reorder-on-read use and reorder-on-write use of a vector element file of the architecture, selecting one of the candidates in accordance with predefined selection criteria, and implementing the selected vectorization configuration in the architecture.

Owner:IBM CORP

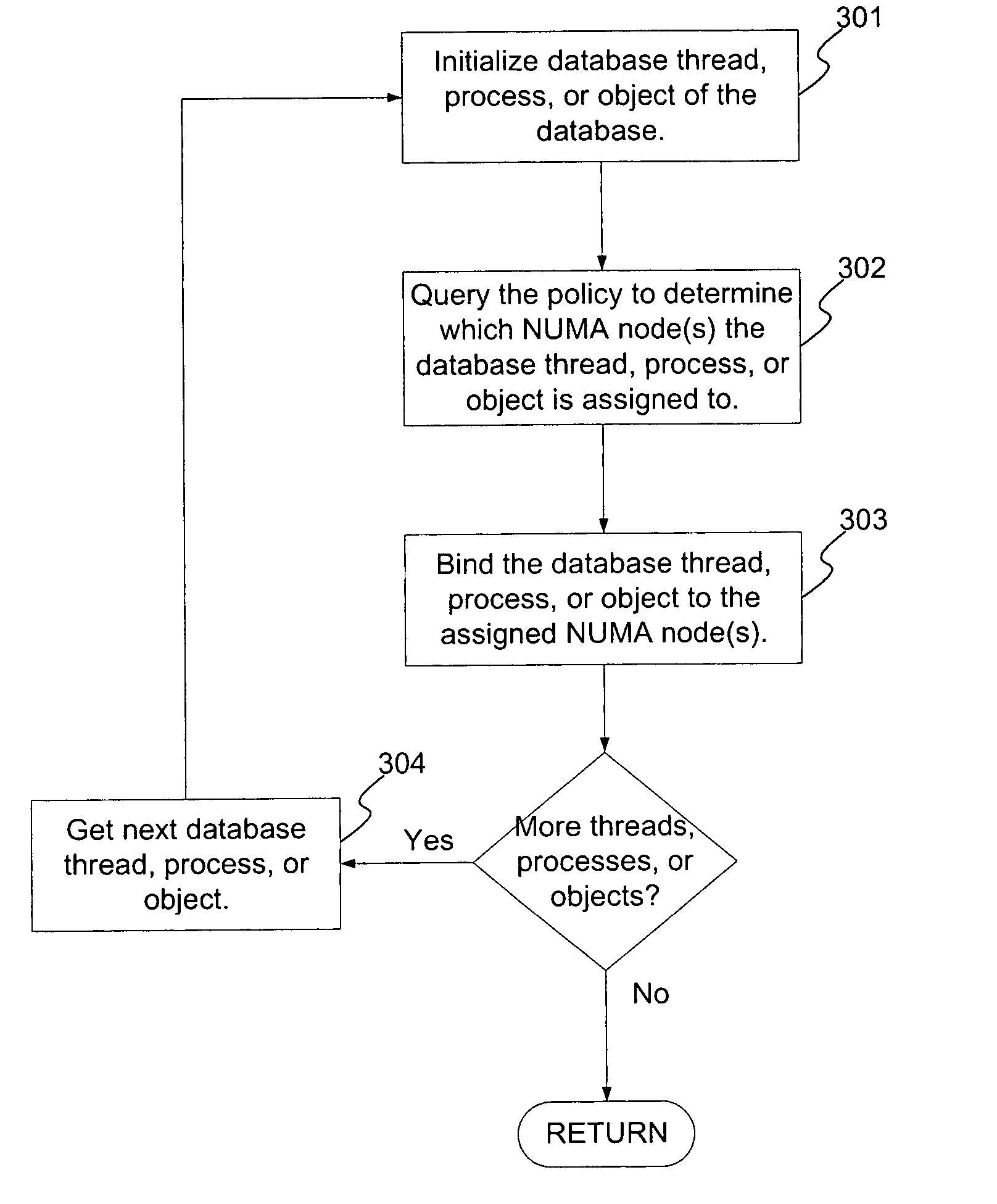

System and method for optimally configuring software systems for a NUMA platform

Active | US20060206489A1 | Expedites memory access | Reduce the amount required | Program control | Special data processing applications | Remote memory access | Software system

A method and system for improving memory access patterns of software systems on NUMA systems discovers NUMA system resources, where the NUMA system resources comprise a plurality of NUMA nodes; determines a plurality of database threads, processes, and objects for a database configuration; and generates a policy which assigns the plurality of database threads, processes, and objects to the plurality of NUMA nodes, wherein the generating is performed prior to initialization of the plurality of database threads, processes, and objects. The assignment of the database threads, processes, or objects to NUMA nodes is such that the amount of remote memory accesses is reduced. When the database thread, process, or object initializes, the database server queries the policy for its assigned NUMA node(s). The database thread, process, or object is then bound to the assigned NUMA node(s). In this manner, the costs from remote memory accesses are significantly reduced.

Owner:GOOGLE LLC

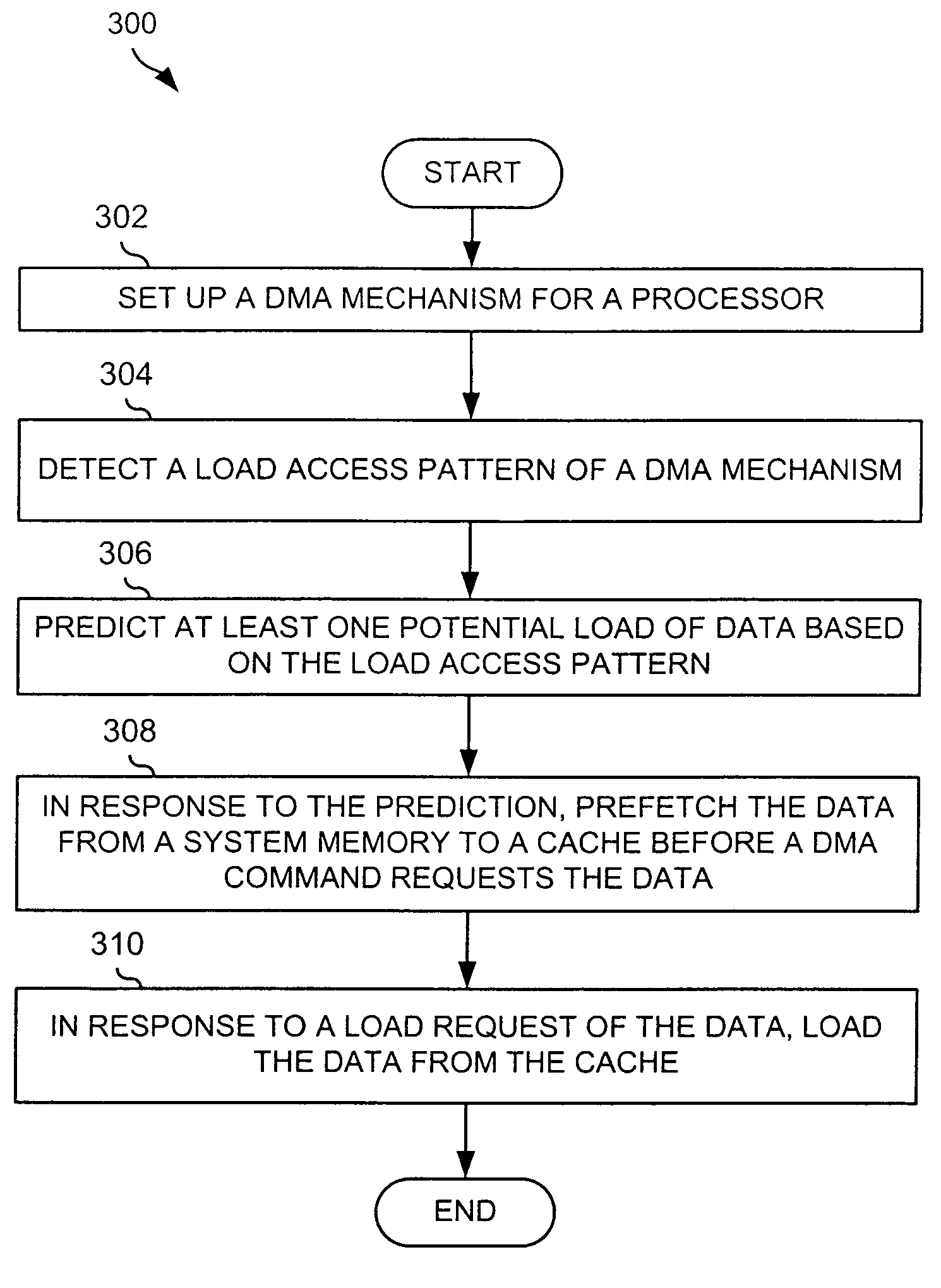

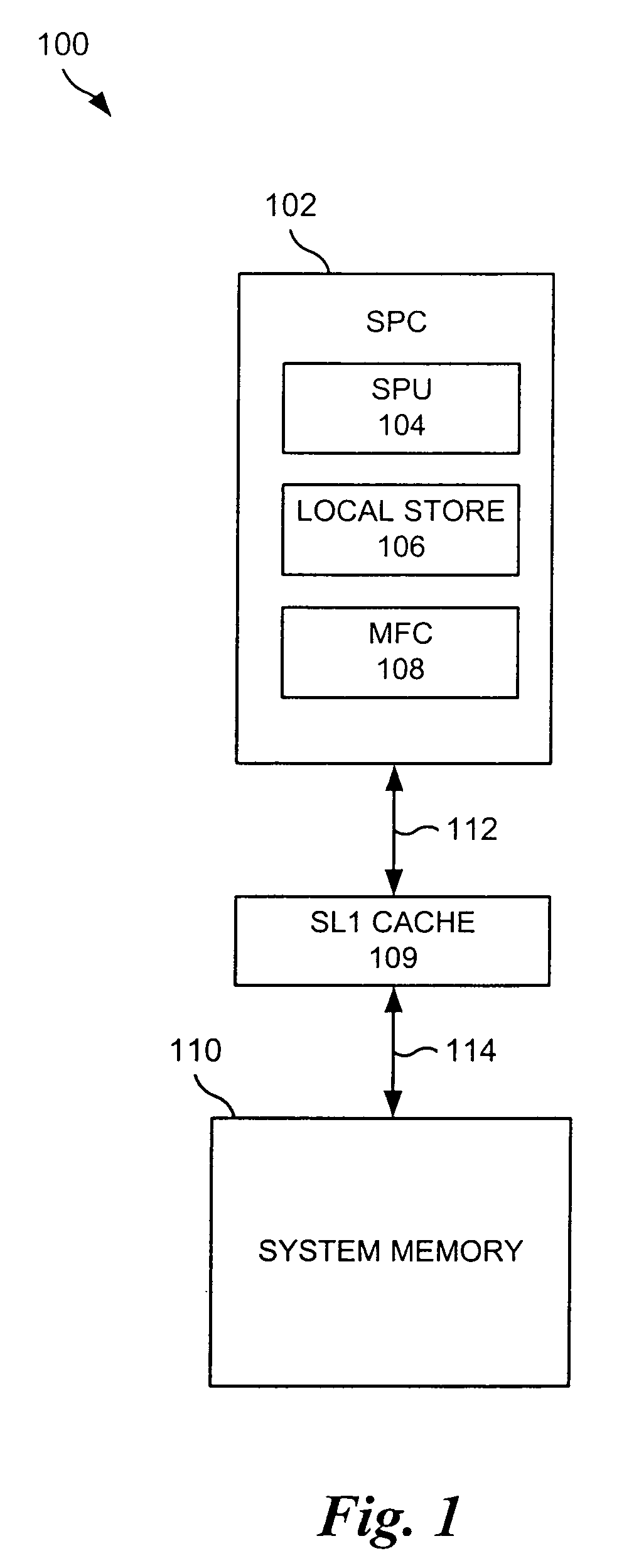

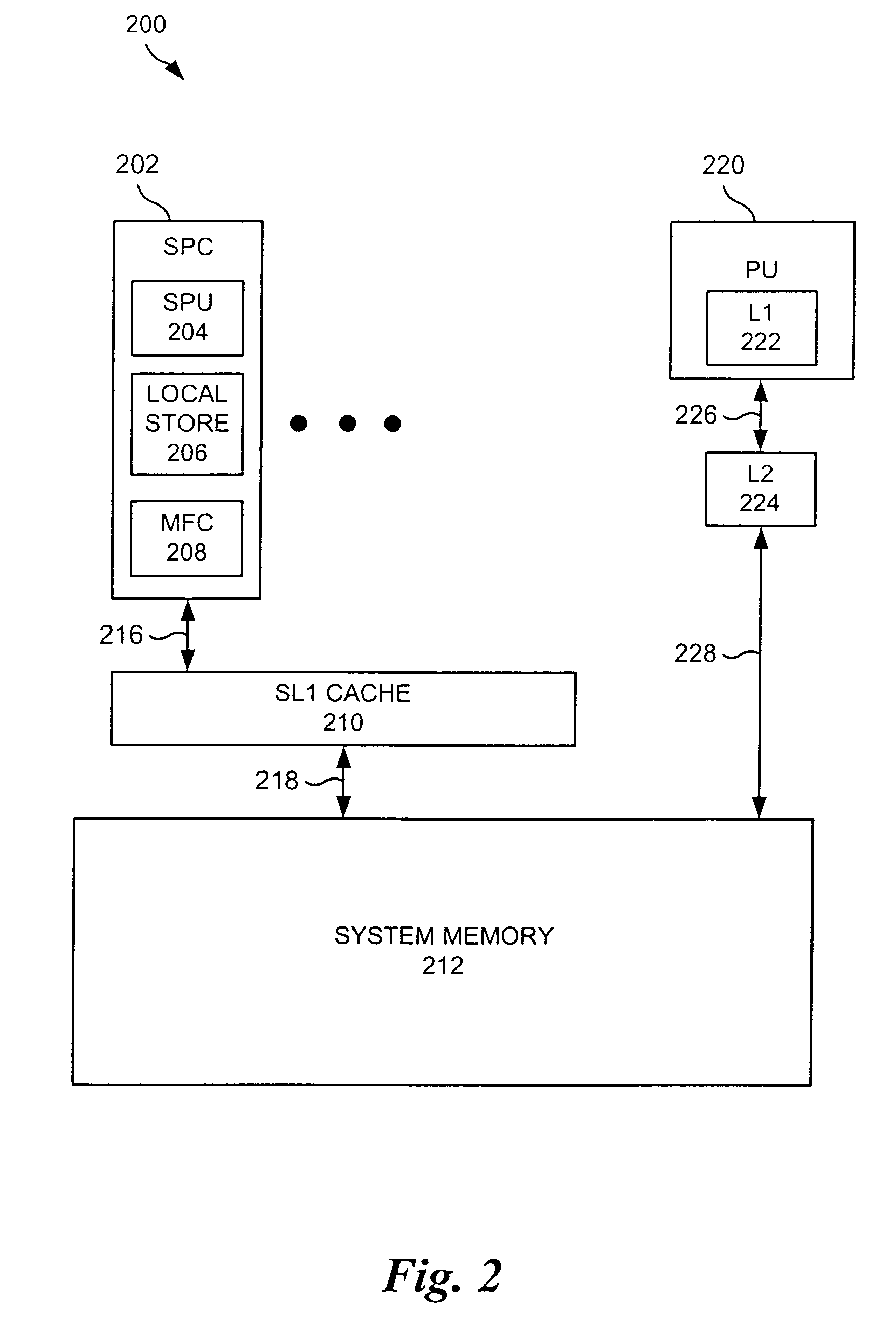

DMA prefetch

Inactive | US7010626B2 | General purpose stored program computer | Memory systems | Direct memory access | Computerized system

A method and an apparatus are provided for prefetching data from a system memory to a cache for a direct memory access (DMA) mechanism in a computer system. A DMA mechanism is set up for a processor. A load access pattern of the DMA mechanism is detected. At least one potential load of data is predicted based on the load access pattern. In response to the prediction, the data is prefetched from a system memory to a cache before a DMA command requests the data.
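One simple way to detect a load access pattern and predict the next load is constant-stride detection. The abstract does not say which predictor is used, so the sketch below is an assumed stand-in; `predict_next_load` and `min_repeats` are hypothetical names.

```python
def predict_next_load(addresses, min_repeats=2):
    """Predict the next load address from a constant stride, or return None.

    The stride must repeat min_repeats times in the most recent loads
    before a prediction is made.
    """
    if len(addresses) < min_repeats + 1:
        return None                       # not enough history yet
    recent = addresses[-(min_repeats + 1):]
    strides = [b - a for a, b in zip(recent, recent[1:])]
    if strides[0] != 0 and all(s == strides[0] for s in strides):
        return recent[-1] + strides[0]    # candidate address to prefetch
    return None


print(predict_next_load([0x1000, 0x1080, 0x1100]))  # 4480, i.e. 0x1180
print(predict_next_load([0x1000, 0x1080, 0x1200]))  # None
```

A prefetcher built on such a predictor would fetch the predicted address into the cache before the DMA command asks for it, and would do nothing when no stable stride is seen.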

Owner:INTEL CORP

Vectorization in a SIMdD DSP architecture

Owner:INT BUSINESS MASCH CORP

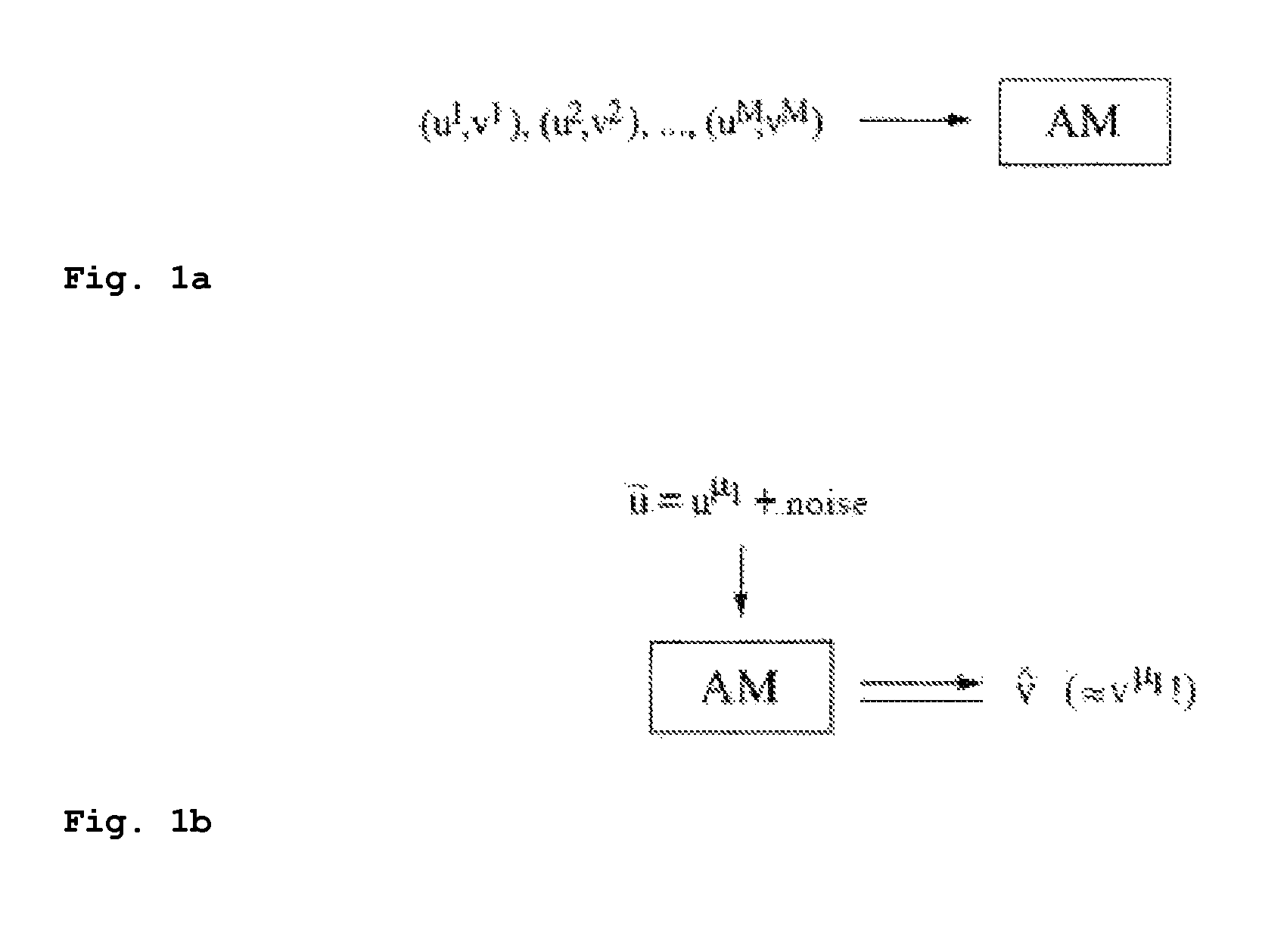

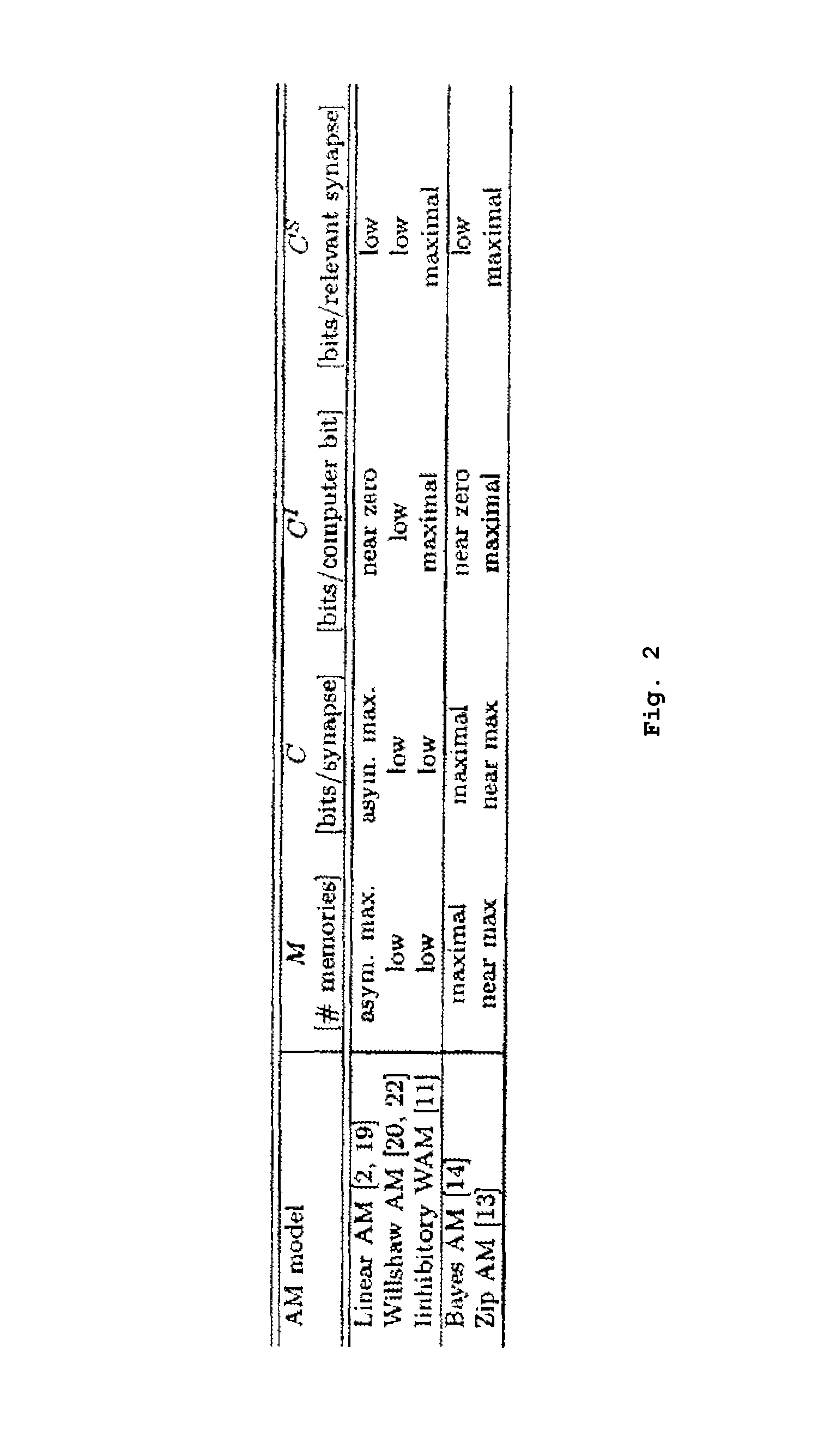

Neural associative memories based on optimal Bayesian learning

This invention is in the field of machine learning and neural associative memory. In particular the invention discloses a neural associative memory structure for storing and maintaining associations between memory address patterns and memory content patterns using a neural network, as well as methods for storing and retrieving such associations. Bayesian learning is applied to achieve non-linear learning.

Owner:HONDA RES INST EUROPE

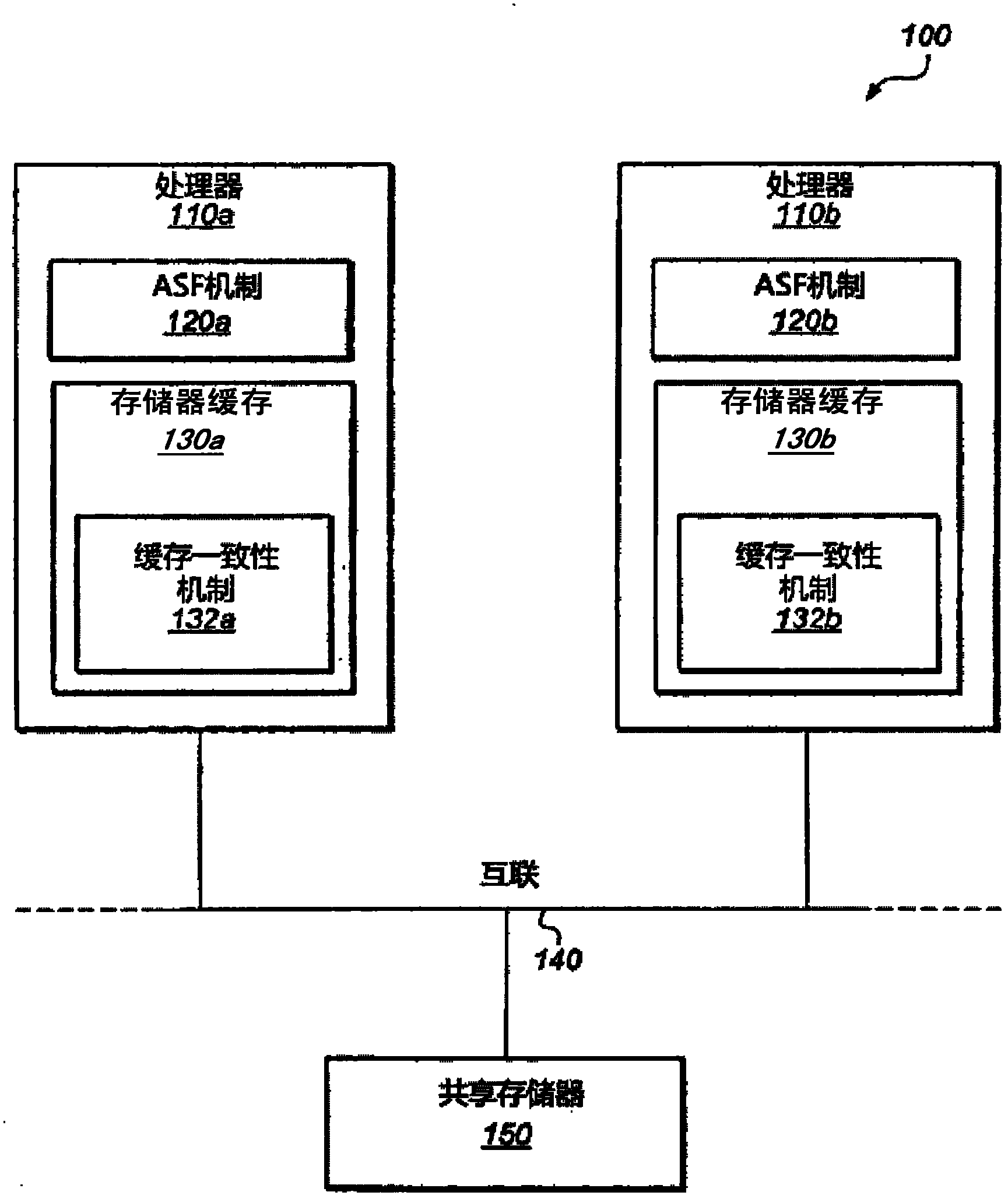

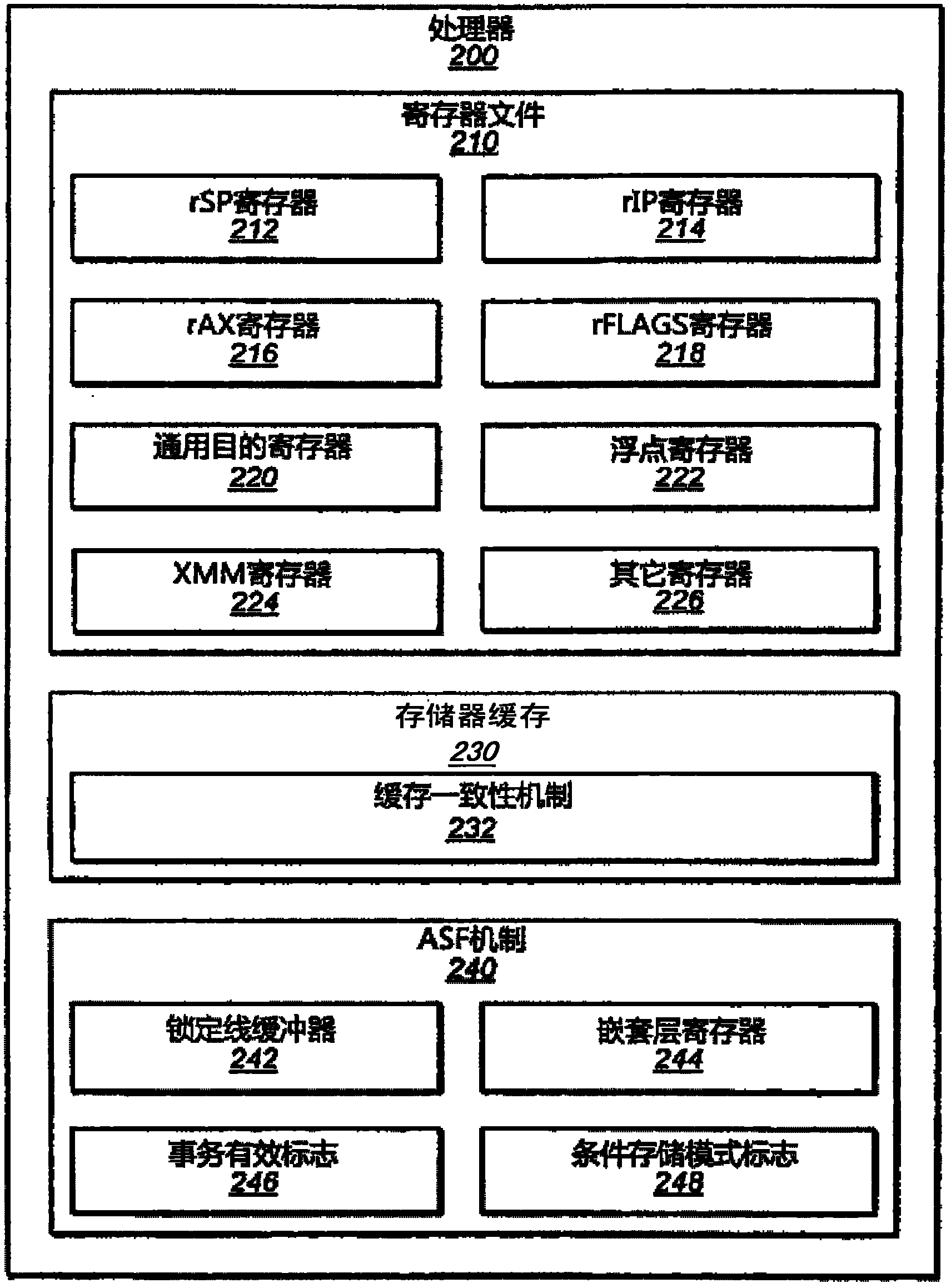

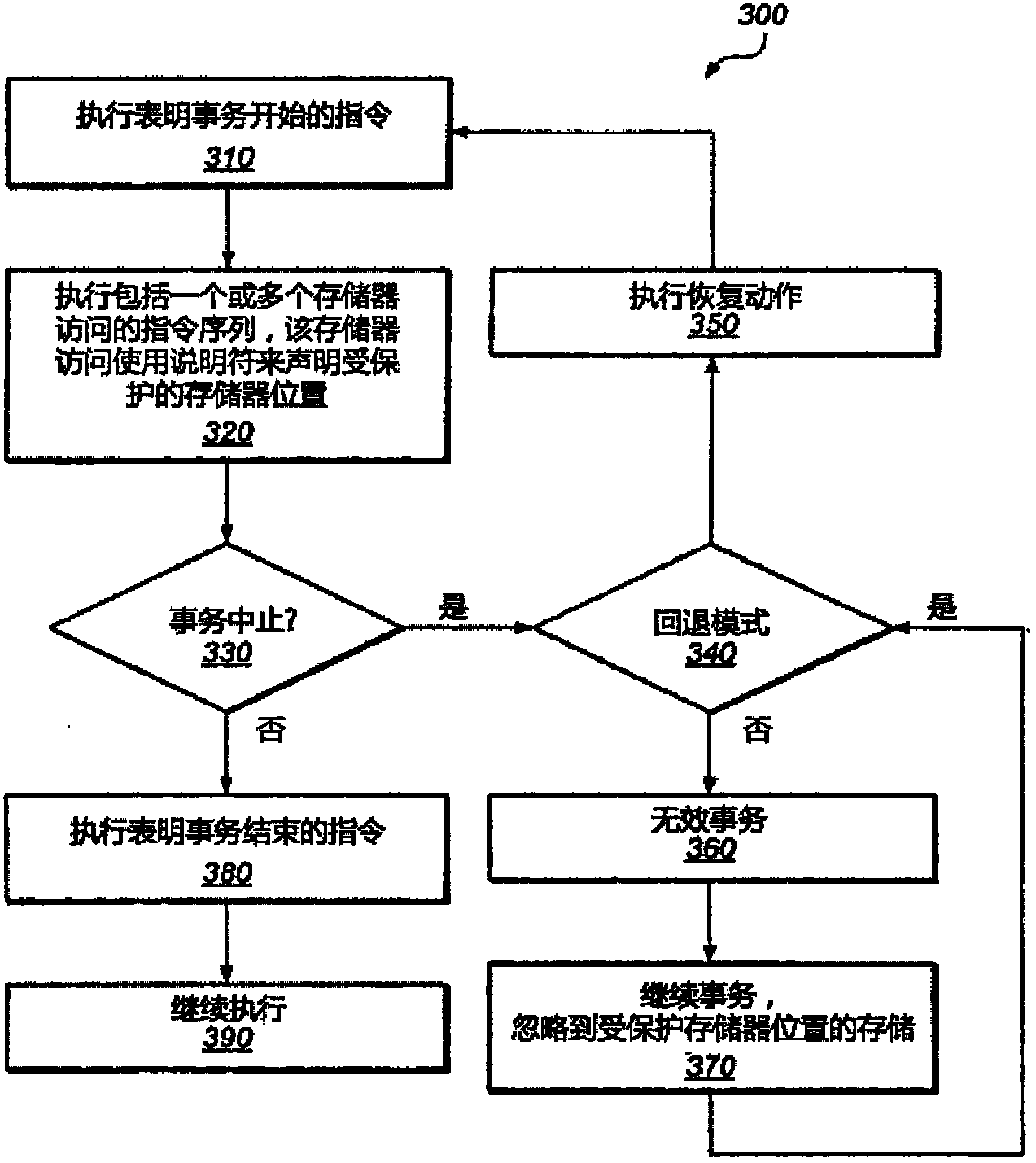

Virtualizable advanced synchronization facility

Inactive | CN102144218A | Specific access rights | Program synchronisation | Virtualization | Advanced Synchronization Facility

A system and method for executing a transaction in a transactional memory system is disclosed. The system includes a processor of a plurality of processors coupled to shared memory, wherein the processor is configured to execute a section of code, including a plurality of memory access operations to the shared memory, as an atomic transaction relative to the execution of the plurality of processors. According to embodiments, the processor is configured to determine whether the memory access operations include any of a set of disallowed instructions, wherein the set includes one or more instructions that operate differently in a virtualized computing environment than in a native computing environment. If any of the memory access operations are ones of the disallowed instructions, then the processor aborts the transaction.

Owner:ADVANCED MICRO DEVICES INC

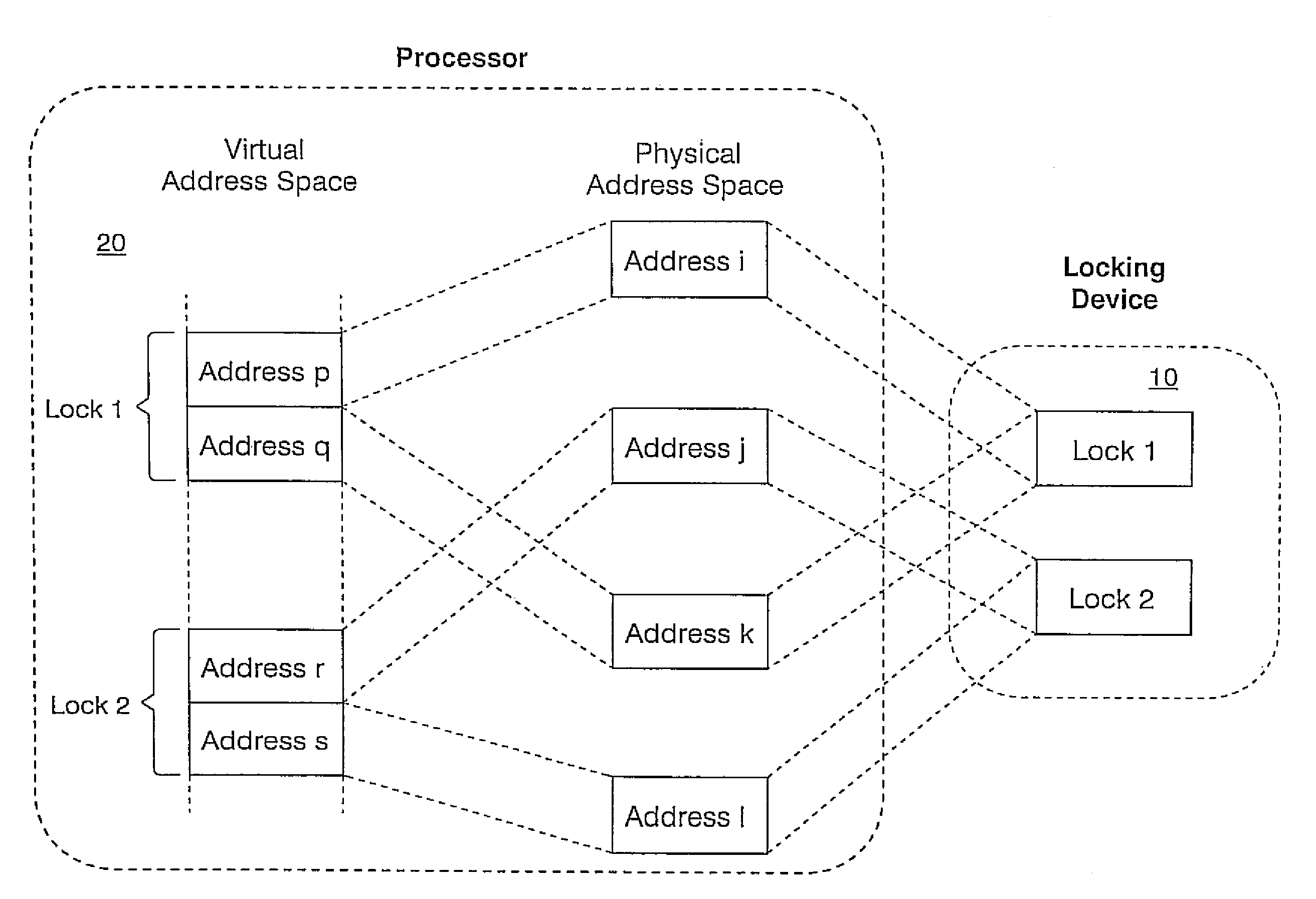

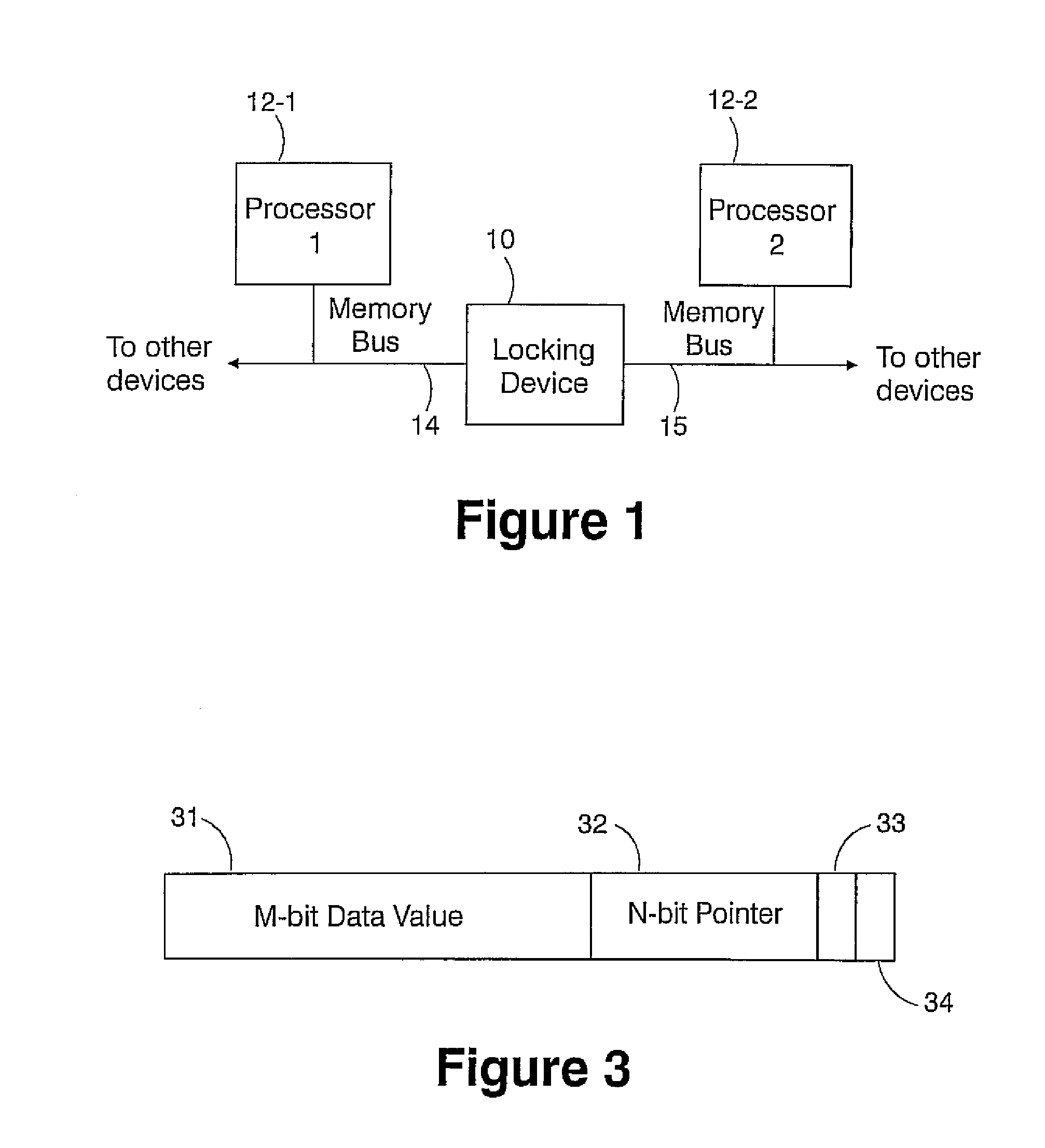

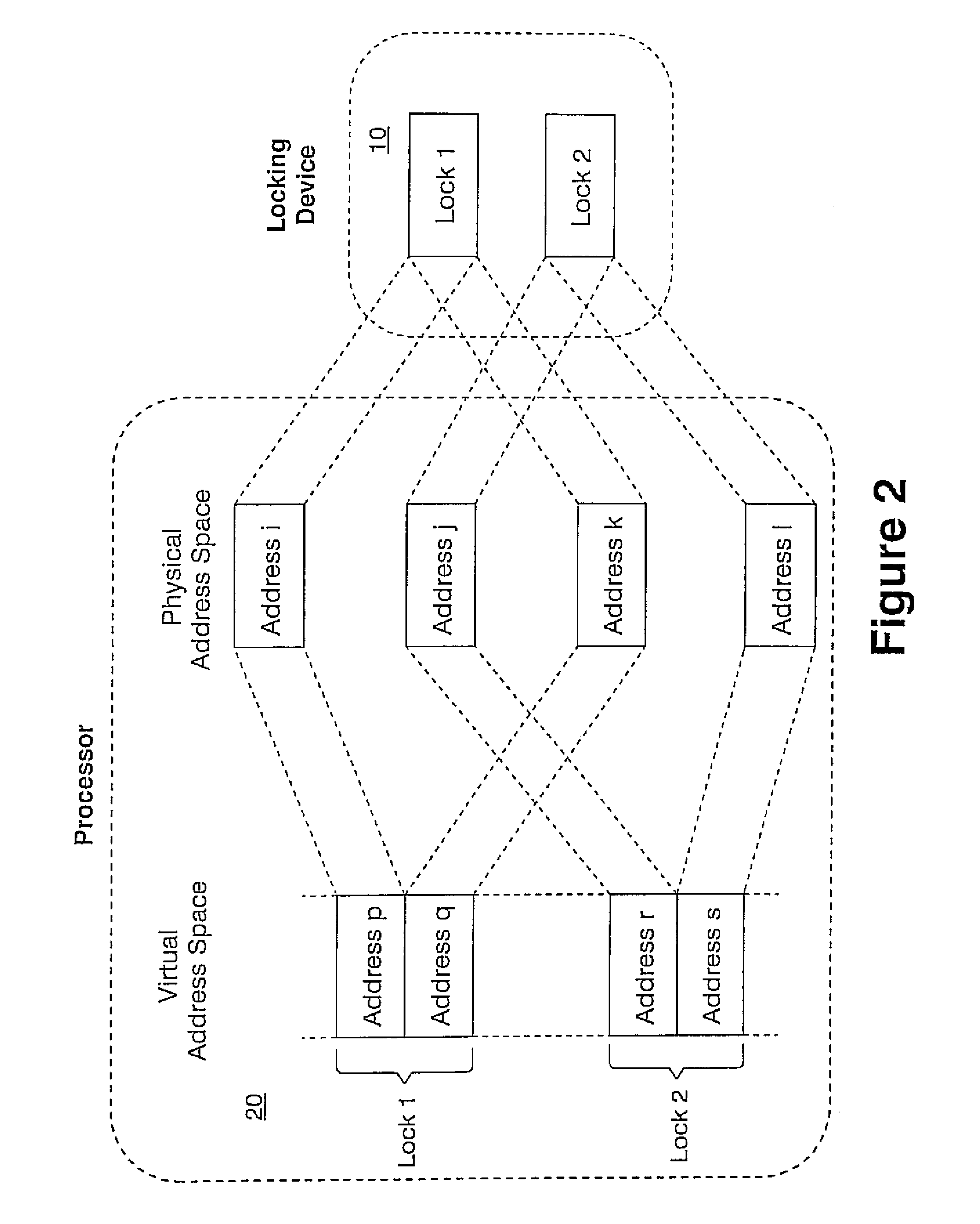

Method for prefetching non-contiguous data structures

Inactive | US7529895B2 | Efficient access | Memory architecture accessing/allocation | Program synchronisation | Multi processor | Latency (engineering)

A low latency memory system access is provided in association with a weakly-ordered multiprocessor system. Each processor in the multiprocessor shares resources, and each shared resource has an associated lock within a locking device that provides support for synchronization between the multiple processors in the multiprocessor and the orderly sharing of the resources. A processor only has permission to access a resource when it owns the lock associated with that resource, and an attempt by a processor to own a lock requires only a single load operation, rather than a traditional atomic load followed by store, such that the processor only performs a read operation and the hardware locking device performs a subsequent write operation rather than the processor. A simple prefetching scheme for non-contiguous data structures is also disclosed. A memory line is redefined so that in addition to the normal physical memory data, every line includes a pointer that is large enough to point to any other line in the memory, wherein the pointers determine which memory line to prefetch rather than some other predictive algorithm. This enables hardware to effectively prefetch memory access patterns that are non-contiguous, but repetitive.

Owner:INT BUSINESS MASCH CORP

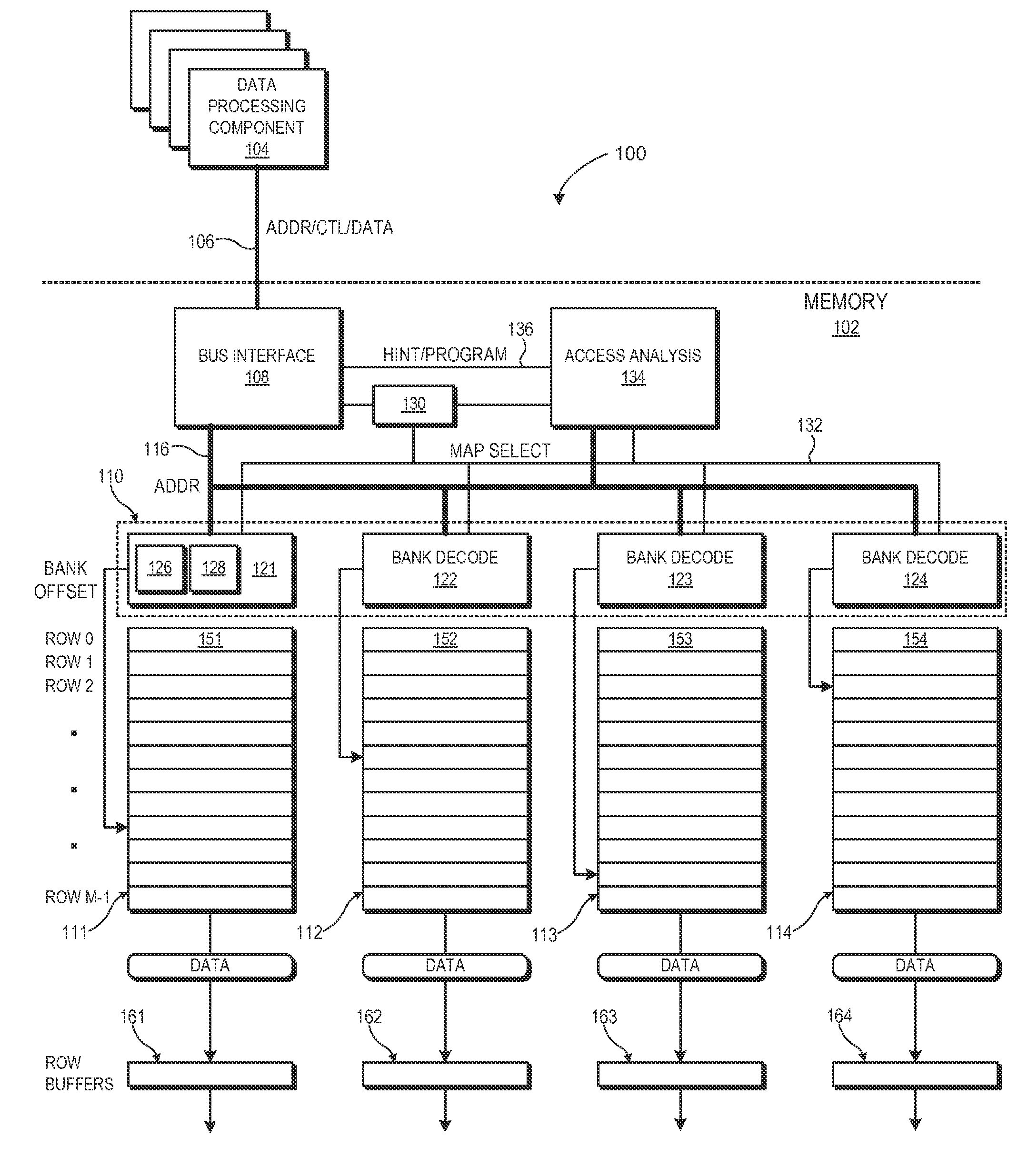

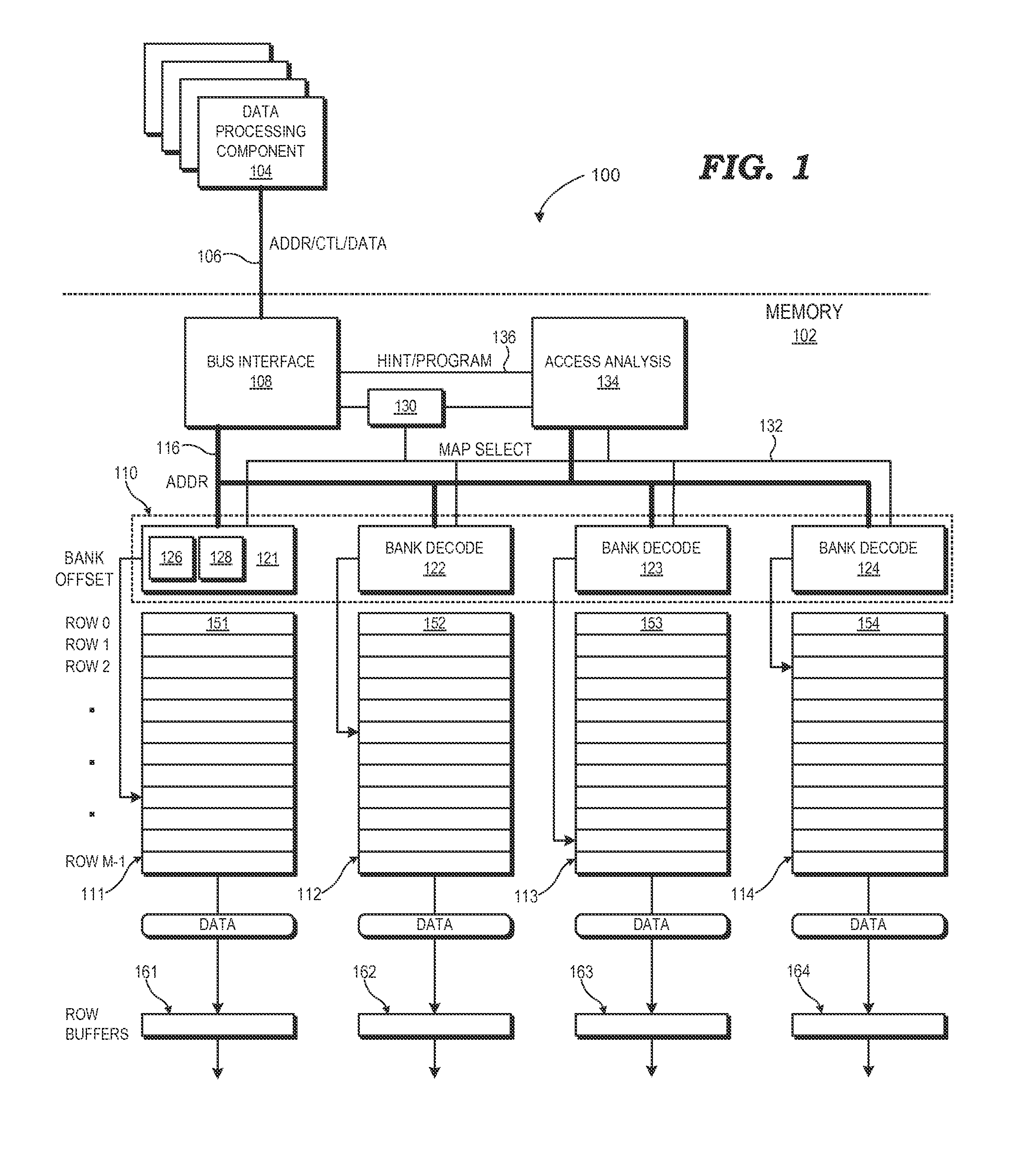

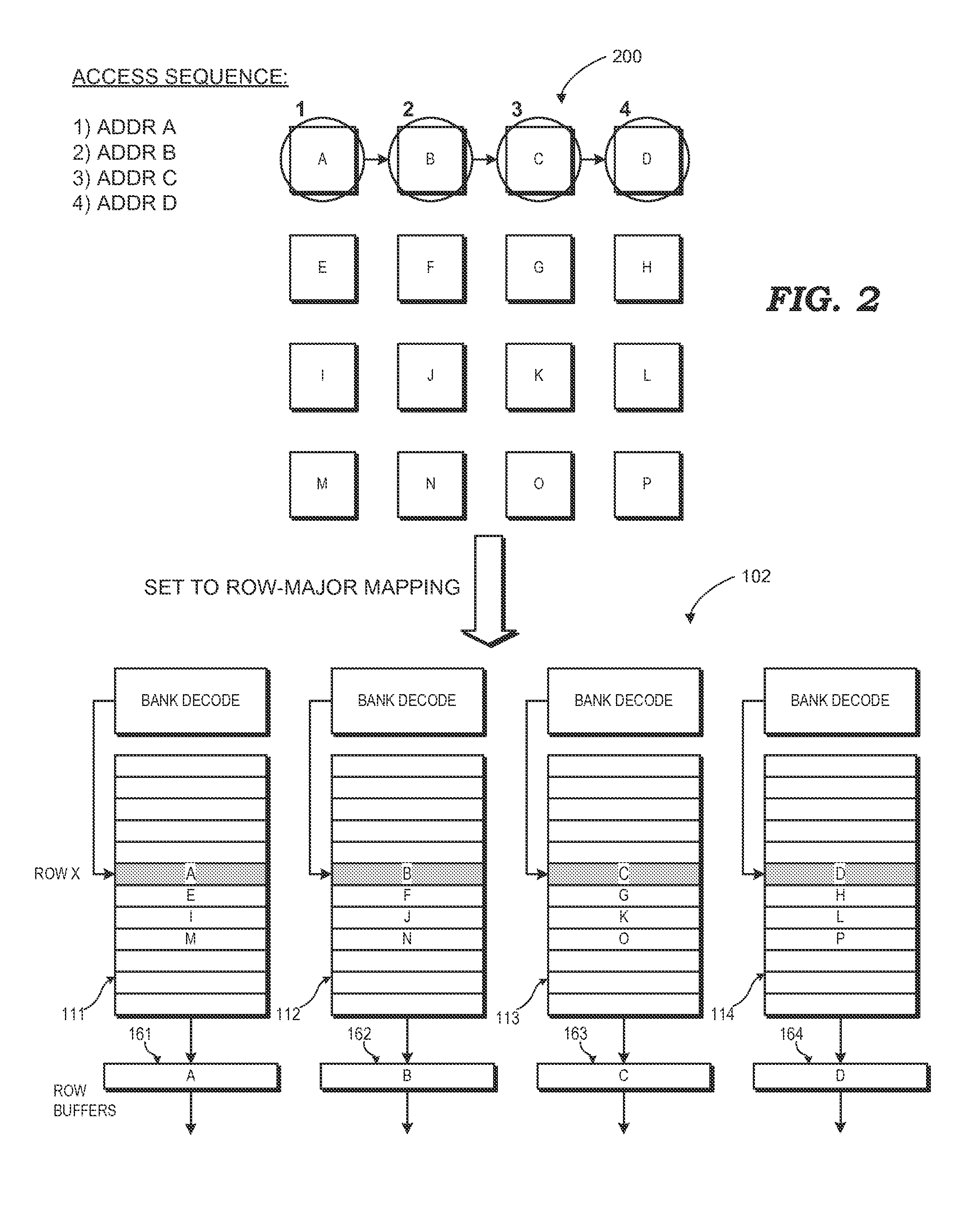

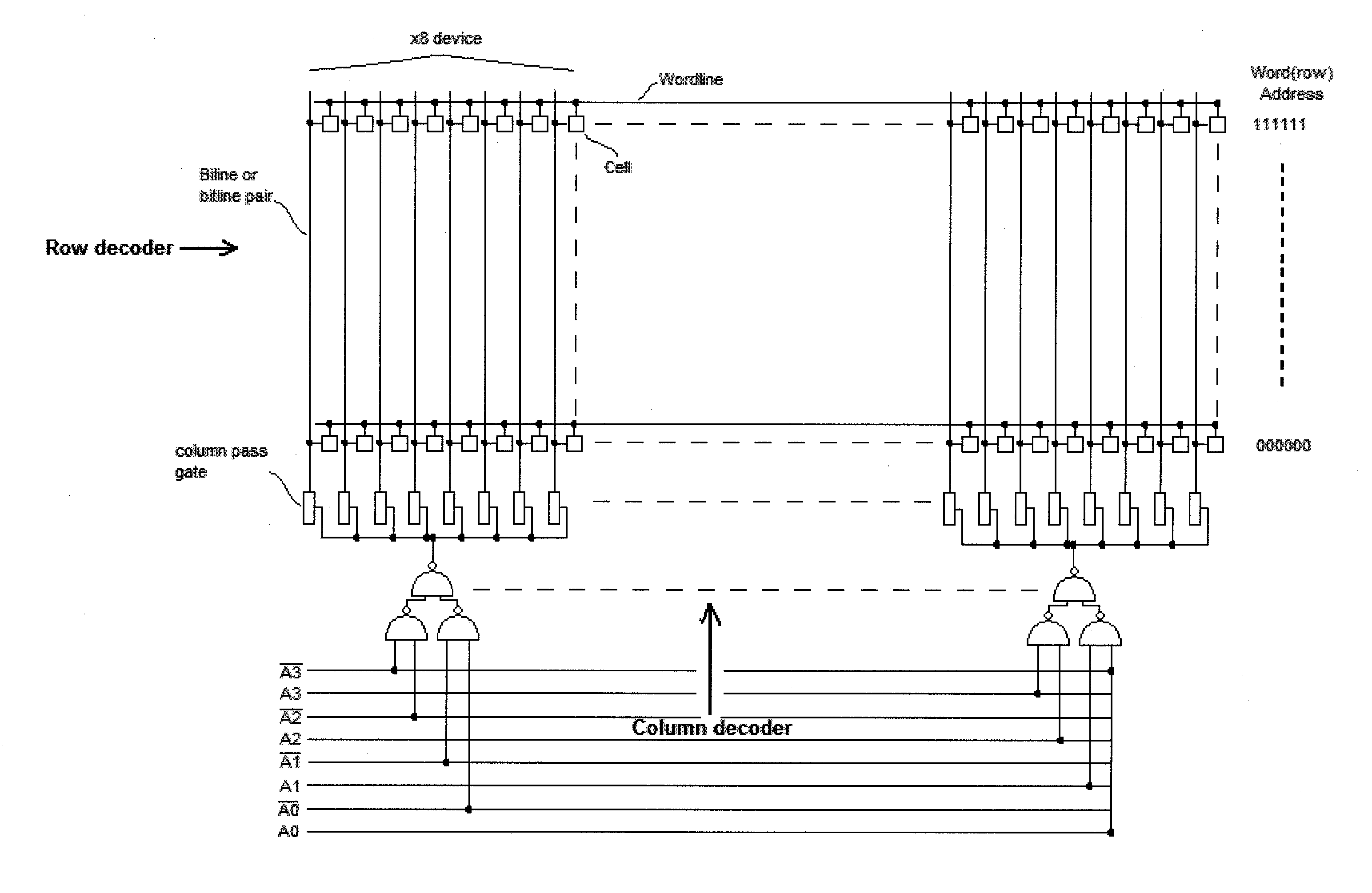

Programmable physical address mapping for memory

Active | US20140082322A1 | Memory architecture accessing/allocation | Memory systems | Computer architecture | Memory bank

A memory implements a programmable physical address mapping that can change to reflect changing memory access patterns, observed or anticipated, to the memory. The memory employs address decode logic that can implement any of a variety of physical address mappings between physical addresses and corresponding memory locations. The physical address mappings may locate the data within one or more banks and rows of the memory so as to facilitate more efficient memory accesses for a given access pattern. The programmable physical address mapping employed by the hardware of the memory can include, but is not limited to, hardwired logic gates, programmable look-up tables or other mapping tables, reconfigurable logic, or combinations thereof. The physical address mapping may be programmed for the entire memory or on a per-memory region basis.
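As a hedged illustration of how two different physical address mappings place the same addresses in banks and rows, consider the sketch below. The function `make_mapping`, its parameters, and the two bit layouts are assumptions chosen for clarity; real address decode logic is hardware and typically involves more fields (channel, rank, column).

```python
def make_mapping(bank_bits, row_bits, bank_low=True):
    """Return a function mapping a line-granular address to (bank, row)."""
    bank_mask = (1 << bank_bits) - 1
    row_mask = (1 << row_bits) - 1
    if bank_low:
        # Bank taken from the low bits: consecutive addresses rotate banks.
        return lambda addr: (addr & bank_mask, (addr >> bank_bits) & row_mask)
    # Bank taken from the high bits: consecutive addresses stay in one bank.
    return lambda addr: ((addr >> row_bits) & bank_mask, addr & row_mask)


interleaved = make_mapping(bank_bits=2, row_bits=4, bank_low=True)
blocked = make_mapping(bank_bits=2, row_bits=4, bank_low=False)

print([interleaved(a) for a in range(4)])  # banks 0, 1, 2, 3 in turn
print([blocked(a) for a in range(4)])      # all in bank 0, rows 0..3
```

Under these assumptions a sequential scan spreads across all banks with the first mapping and stays within one bank with the second, which is the kind of trade-off a programmable mapping lets the system choose per access pattern or per memory region.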

Owner:ADVANCED MICRO DEVICES INC

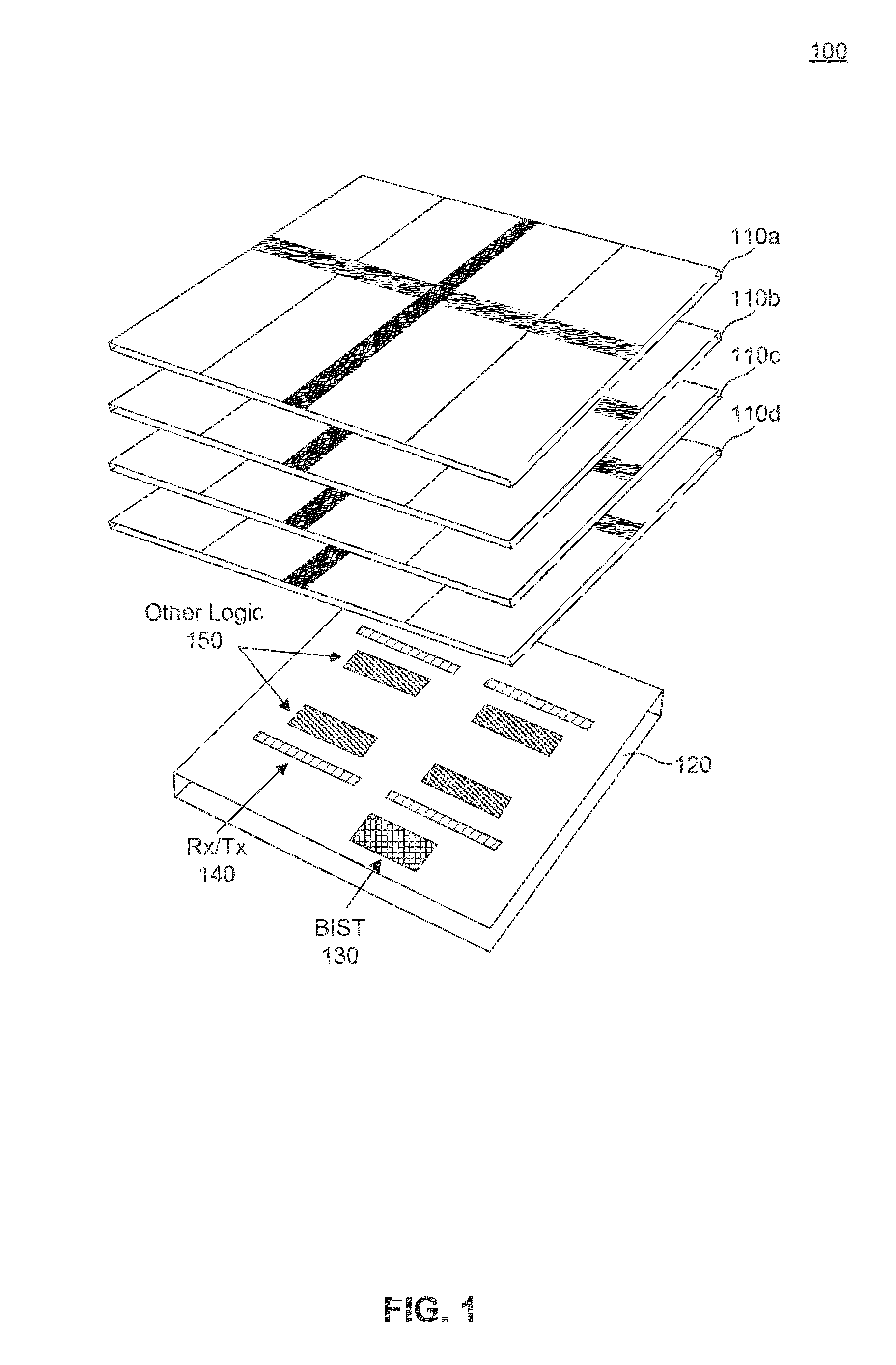

Compound Memory Operations in a Logic Layer of a Stacked Memory

Inactive | US20140181427A1 | Lower performance requirements | Reduce energy overhead | Digital computer details | Program control | Silicon interposer | Visibility

Some die-stacked memories will contain a logic layer in addition to one or more layers of DRAM (or other memory technology). This logic layer may be a discrete logic die or logic on a silicon interposer associated with a stack of memory dies. Additional circuitry is placed on the logic layer to perform various data movement and address calculation operations. This functionality would allow compound memory operations: a single request communicated to the memory that characterizes the accesses and movement of many data items. This eliminates the performance and power overheads associated with communicating address and control information on a fine-grain, per-data-item basis from a host processor (or other device) to the memory. This approach also provides better visibility of macro-level memory access patterns to the memory system and may enable additional optimizations in scheduling memory accesses.

Owner:ADVANCED MICRO DEVICES INC

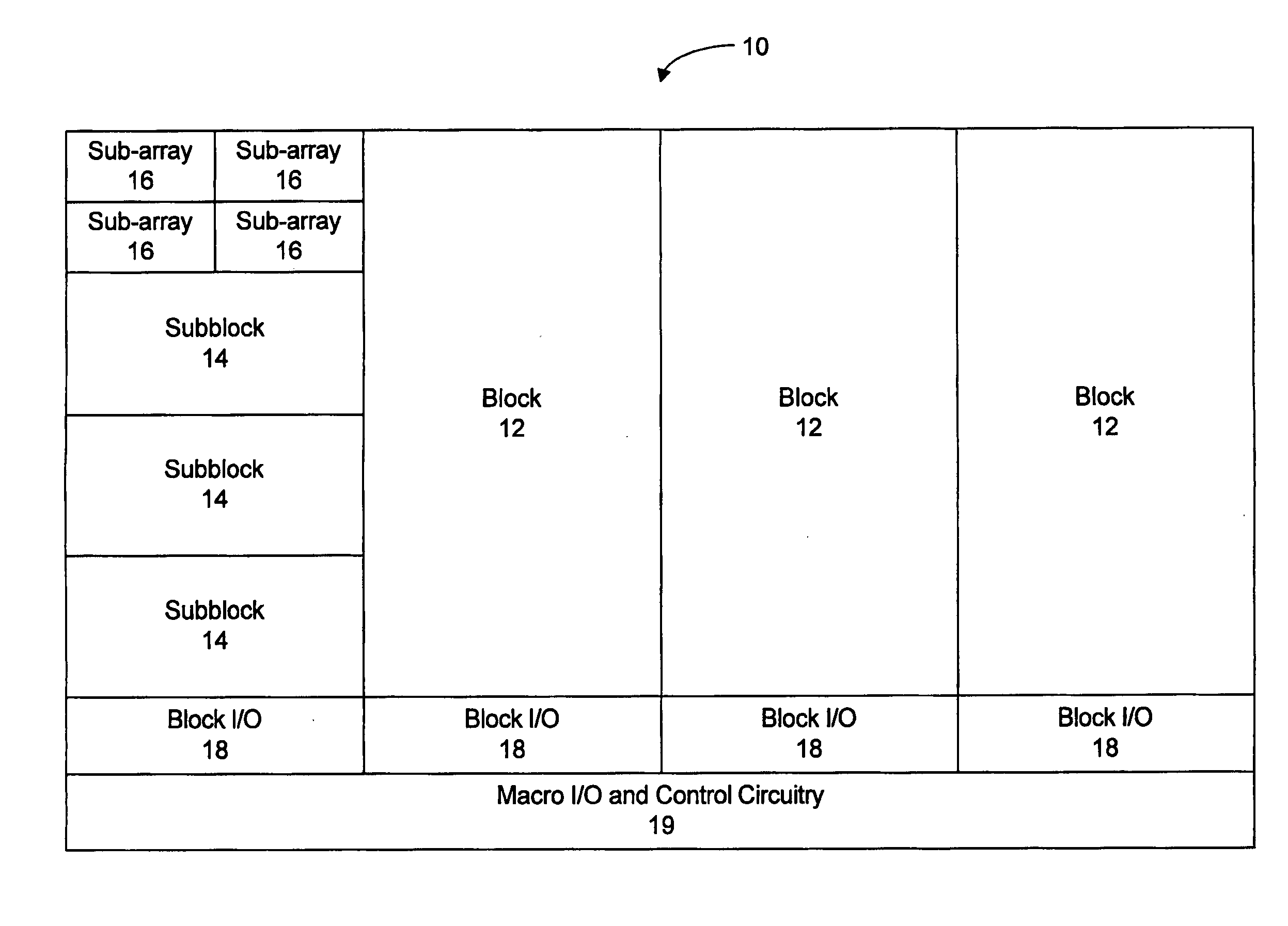

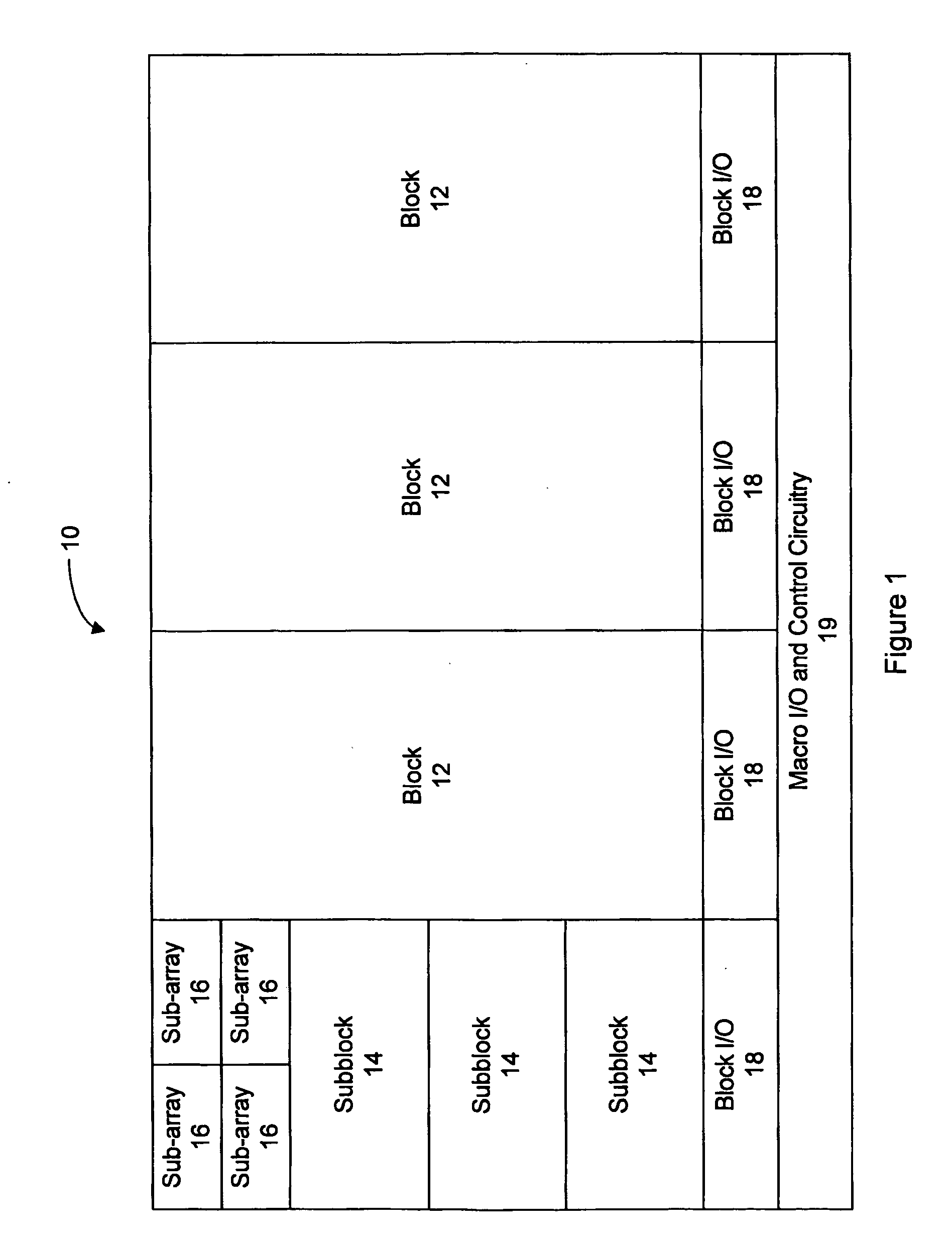

Low power memory architecture

Active | US20070201295A1 | Minimizing current leakage | Minimize current leakage | Digital data processing details | Digital storage | Datapath circuits | Power grid

A memory architecture and circuits for minimizing current leakage in the memory array. Subdivisions of the memory array each have local power grids that can be selectively connected to power supplies, such that only an accessed subdivision will receive power to execute the memory access operation. The memory array can further include databuses which are precharged to one voltage during idle times and a second voltage during active read cycles, which reduces leakage current in datapath circuitry connected to the databuses within the memory array blocks.

Owner:CONVERSANT INTPROP MANAGEMENT INC

System and method for performing shaped memory access operations

Active | CN103218208A | No resource conflicts | Register arrangements | Concurrent instruction execution | Array data structure | Processor register

One embodiment of the present invention sets forth a technique that provides an efficient way to retrieve operands from a register file. Specifically, the instruction dispatch unit receives one or more instructions, each of which includes one or more operands. Collectively, the operands are organized into one or more operand groups from which a shaped access may be formed. The operands are retrieved from the register file and stored in a collector. Once all operands are read and collected in the collector, the instruction dispatch unit transmits the instructions and corresponding operands to functional units within the streaming multiprocessor for execution. One advantage of the present invention is that multiple operands are retrieved from the register file in a single register access operation without resource conflict. Performance in retrieving operands from the register file is improved by forming shaped accesses that efficiently retrieve operands exhibiting recognized memory access patterns.

Owner:NVIDIA CORP

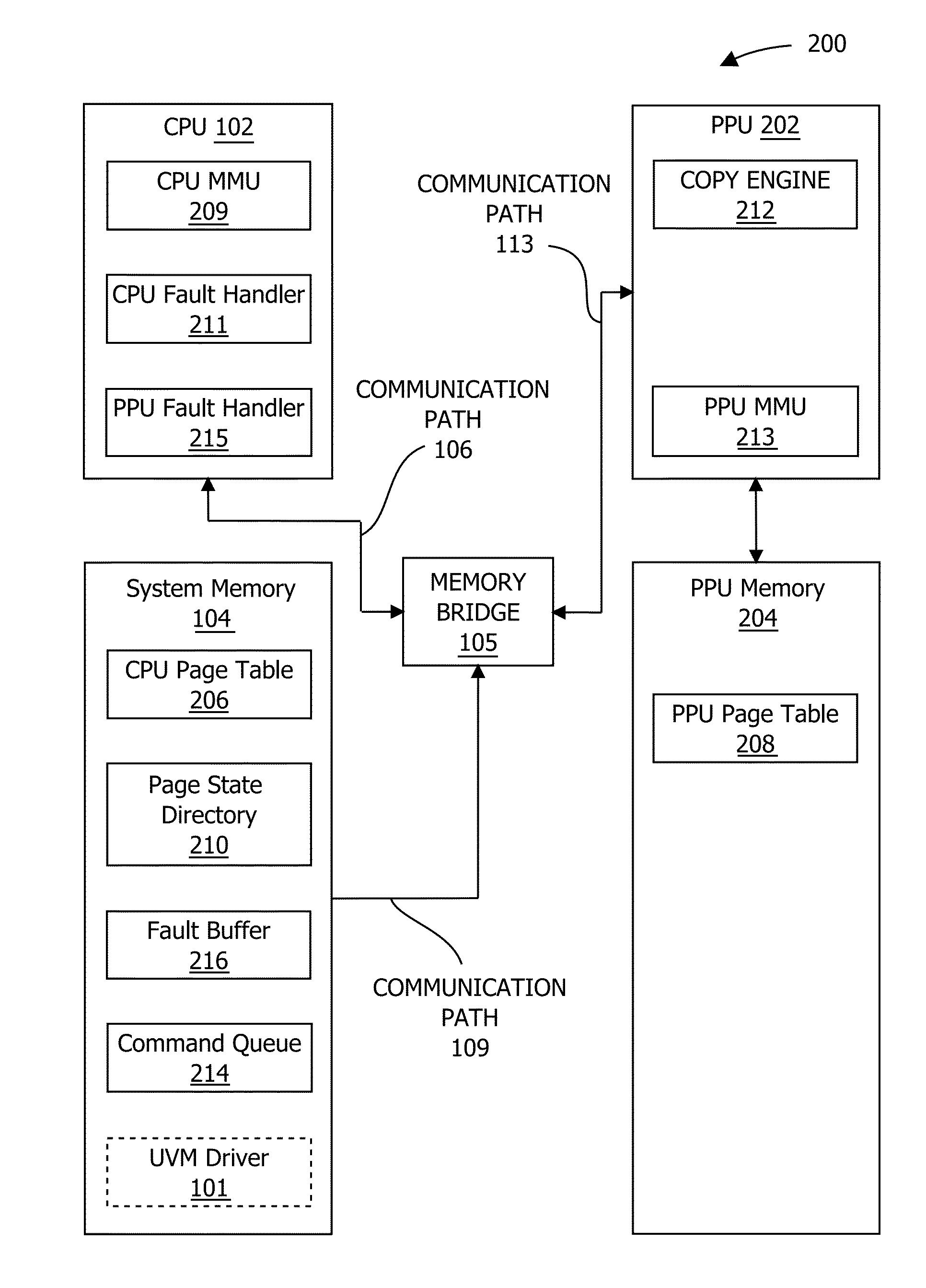

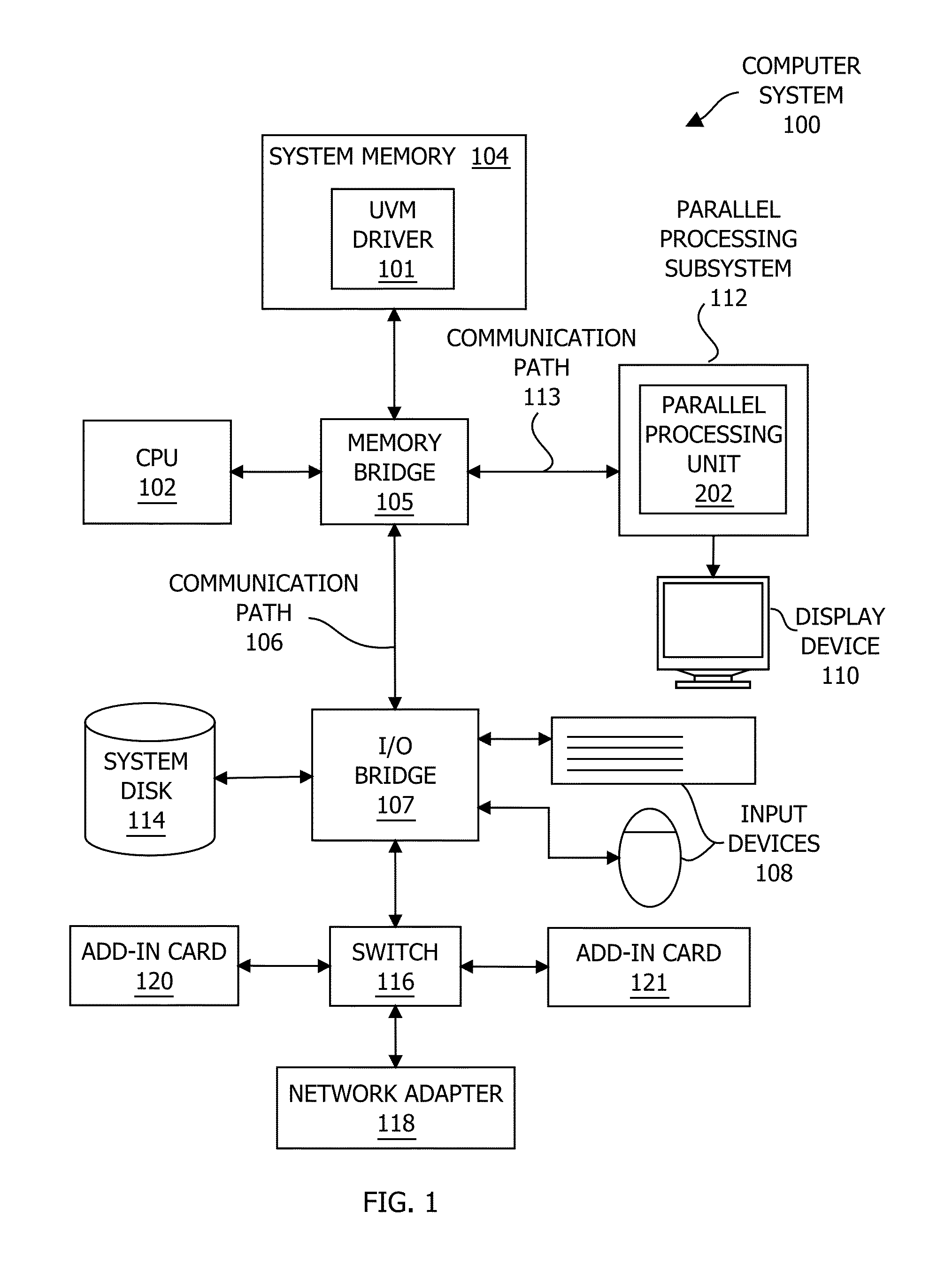

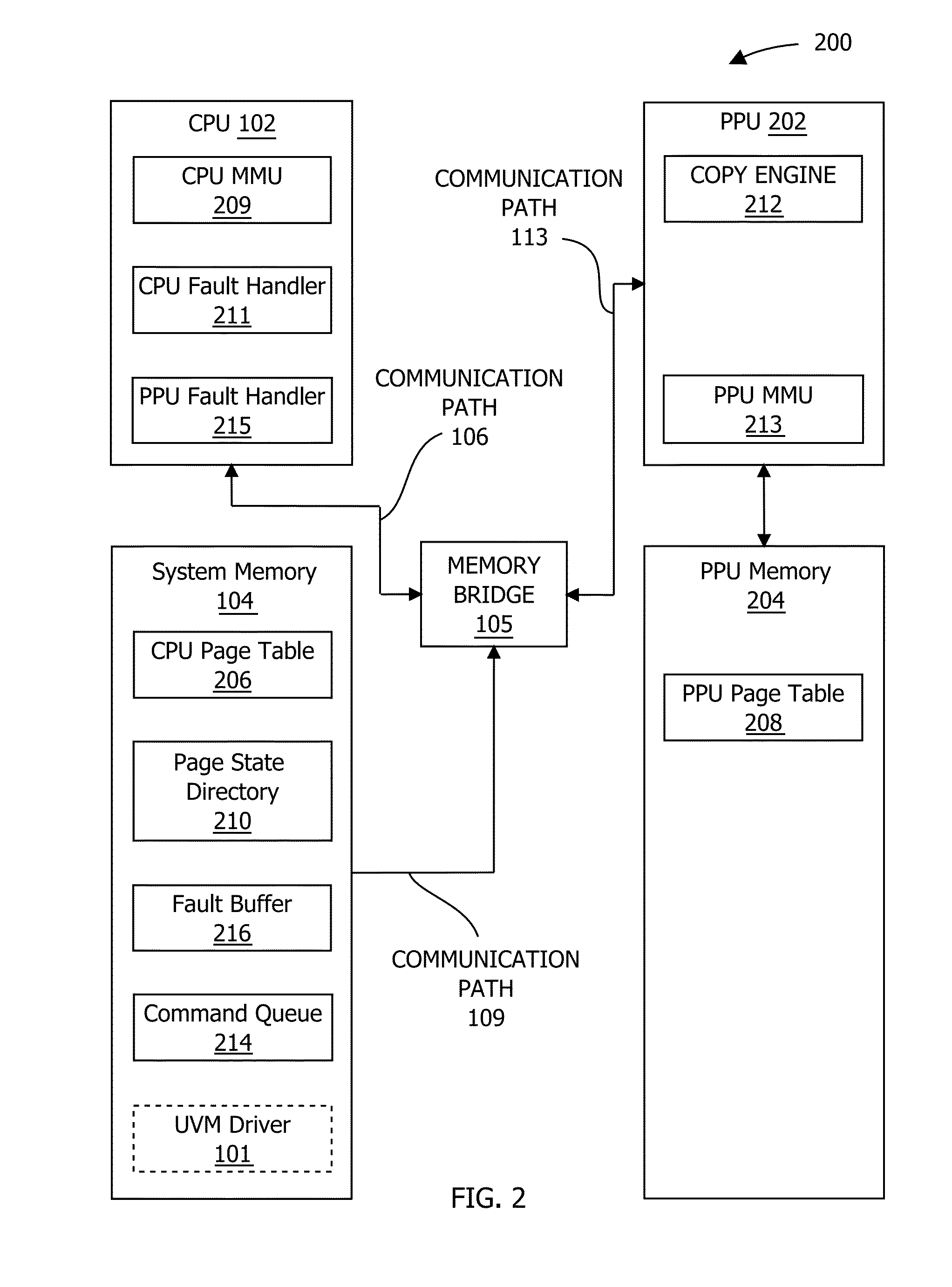

Frame buffer access tracking via a sliding window in a unified virtual memory system

Active | US20140281365A1 | Improve memory performance | Improve performance | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Virtual memory | Computer hardware

One embodiment of the present invention is a memory subsystem that includes a sliding window tracker that tracks memory accesses associated with a sliding window of memory page groups. When the sliding window tracker detects an access operation associated with a memory page group within the sliding window, the sliding window tracker sets a reference bit that is associated with the memory page group and is included in a reference vector that represents accesses to the memory page groups within the sliding window. Based on the values of the reference bits, the sliding window tracker causes the selection of a memory page, in a memory page group that has fallen into disuse, for migration from a first memory to a second memory. Because the sliding window tracker tunes the memory pages that are resident in the first memory to reflect memory access patterns, the overall performance of the memory subsystem is improved.

Owner:NVIDIA CORP

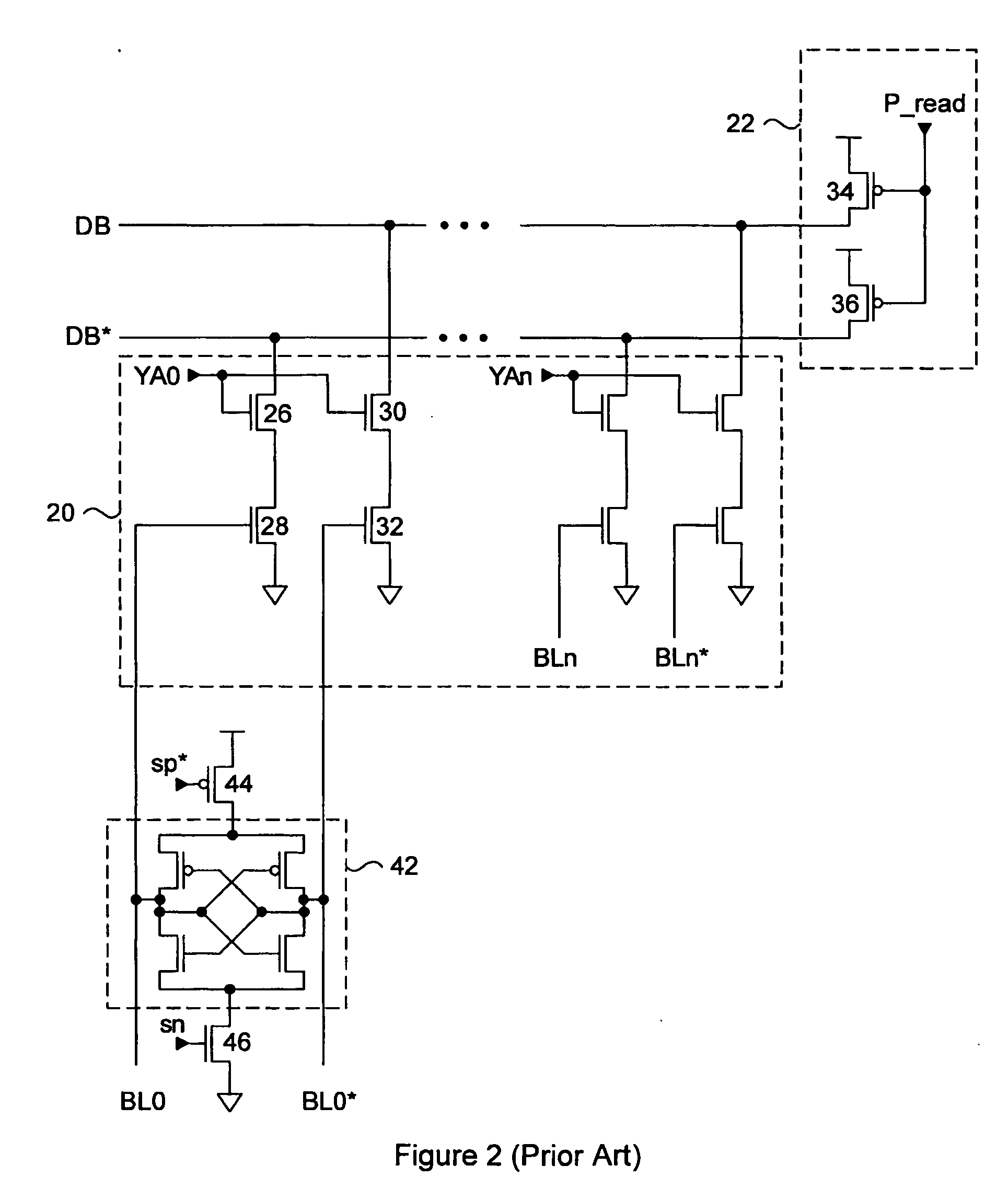

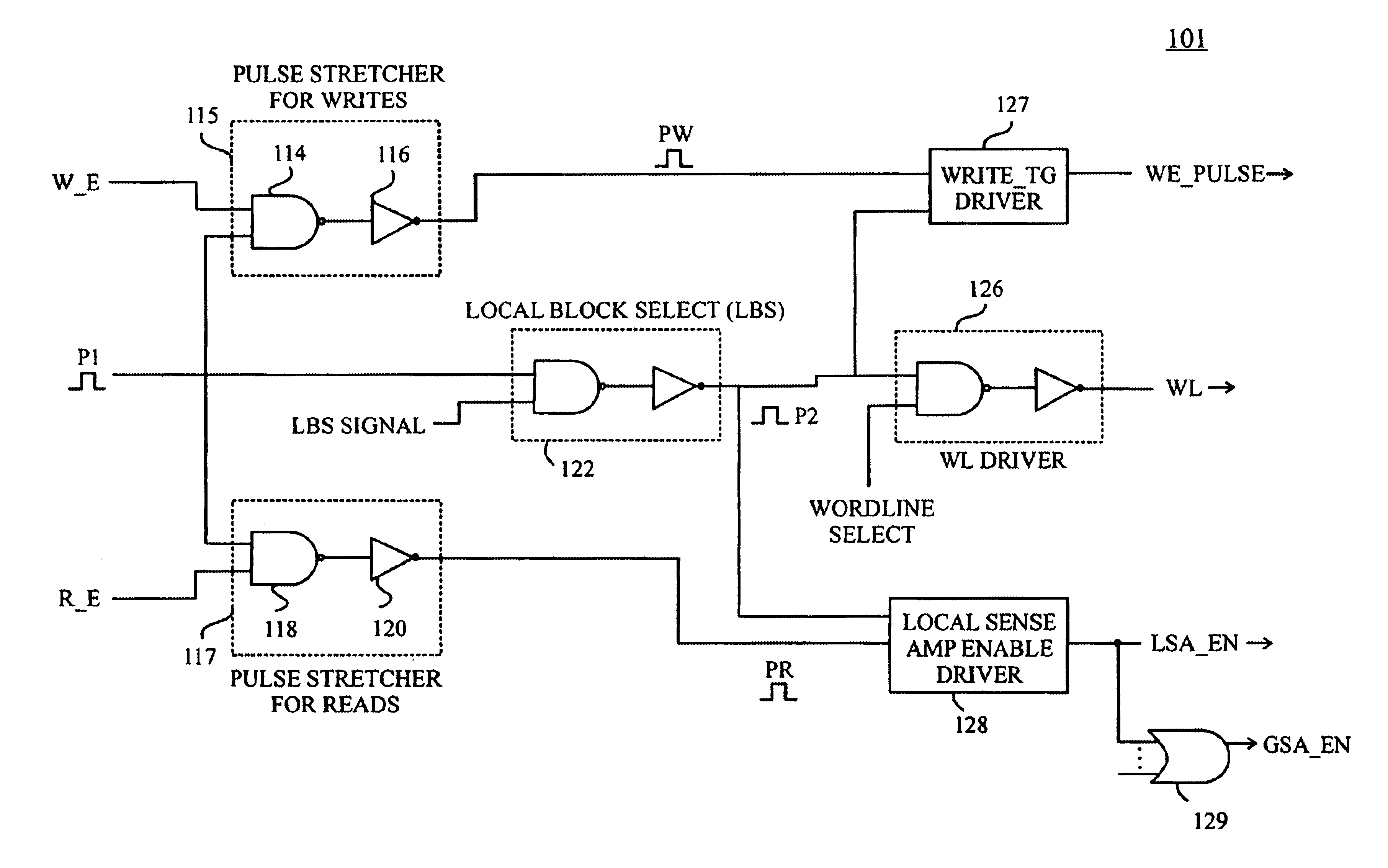

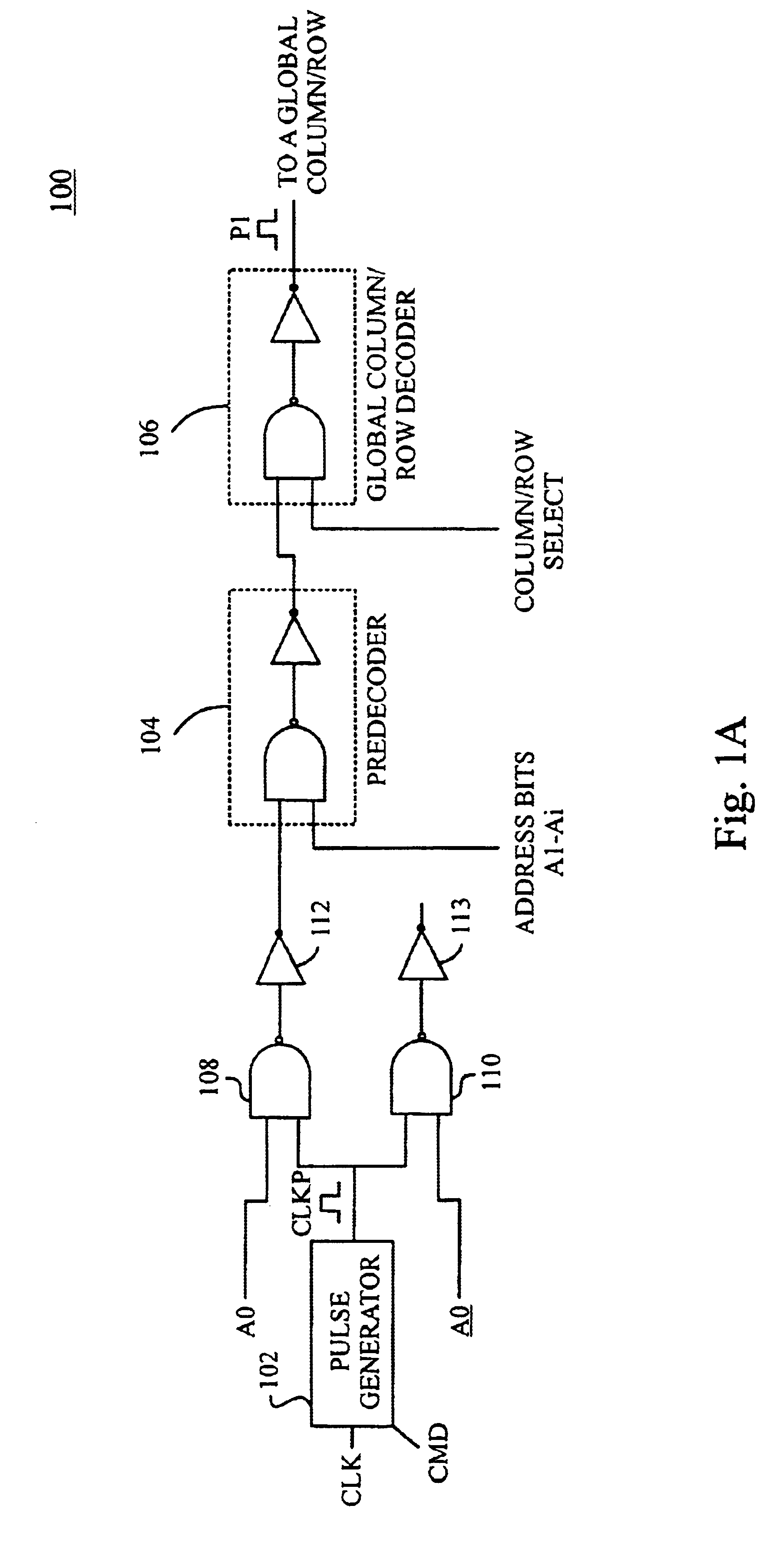

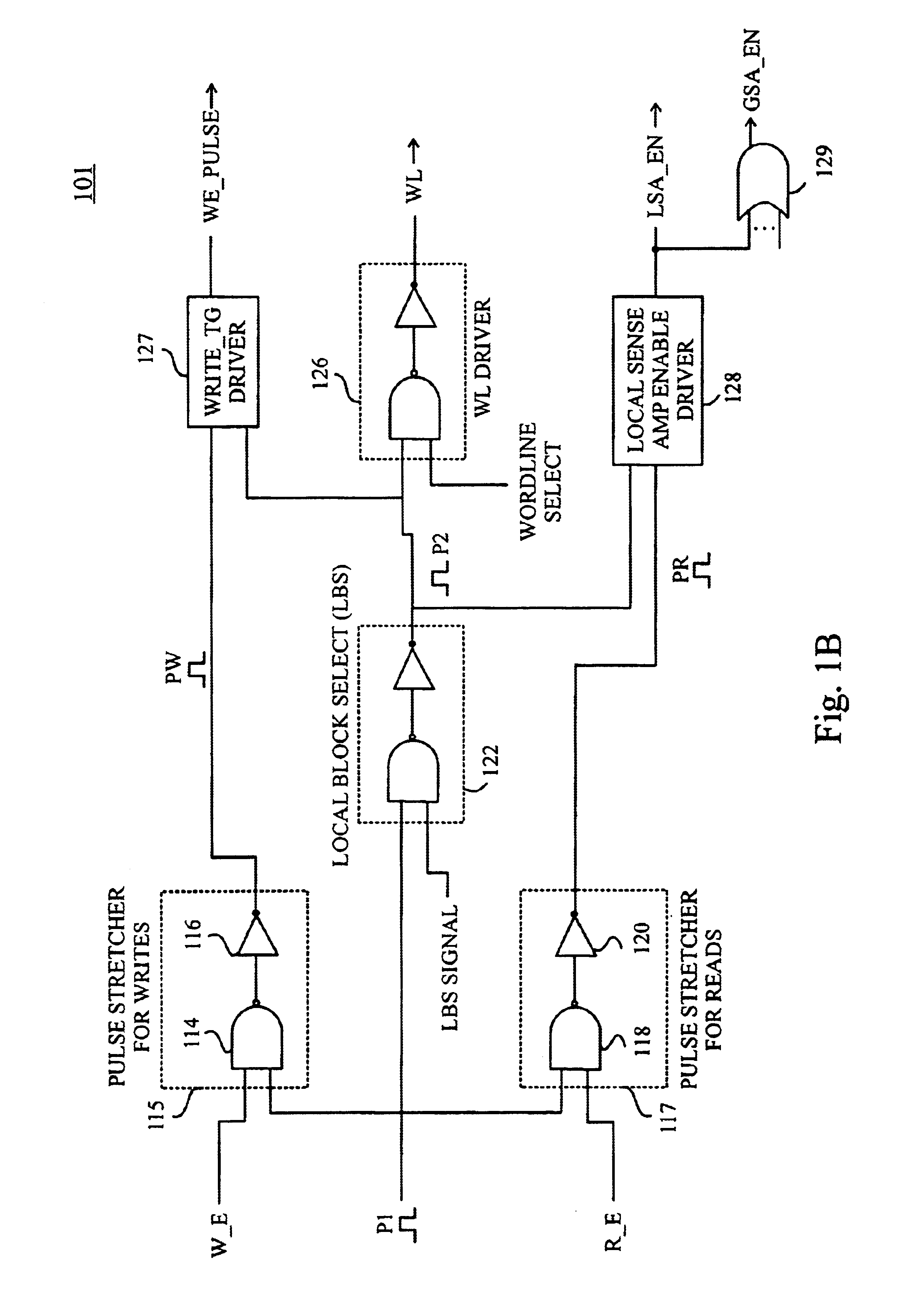

Decode path gated low active power SRAM

Circuits and methods for controlling data access operations in memory devices such as SRAM (static random access memory) devices. The circuits and methods provide timing and control for memory access operations by propagating a control pulse along a decode path from which a sequence of control pulses are generated at points in the decode path to synchronize activation of wordlines and sense amplifiers and precharge/equalization of bit lines. Preferably, an address gated pulse schema is implemented to synchronize and restrict switching activity spatially and temporally to only regions of a memory array that are being accessed, and for limited periods of time just sufficient to generate signals for read or write operations. Advantageously, the circuits and methods enable SRAM cell delays to track CMOS gate delays more closely at low voltages and reduce switching power by restricting switching transitions only to the regions of memory that are accessed.

Owner:GLOBALFOUNDRIES US INC

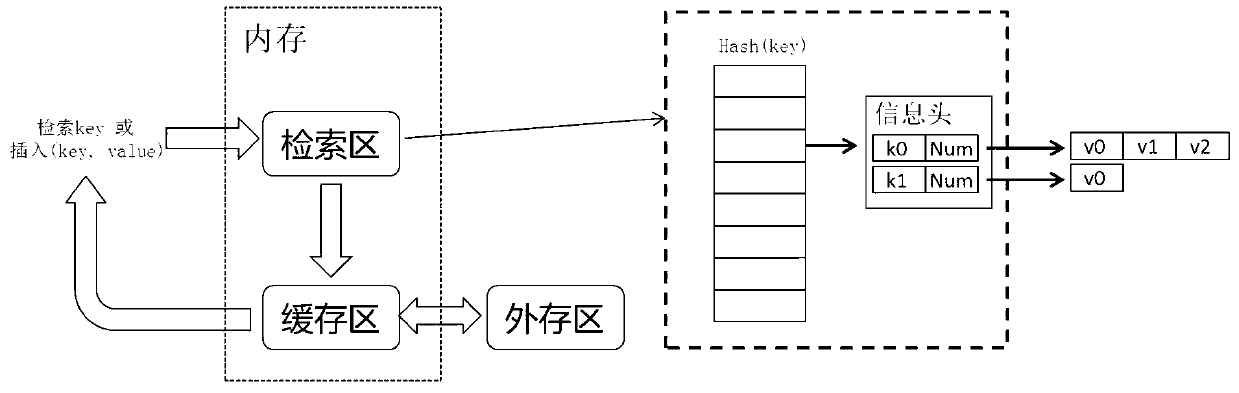

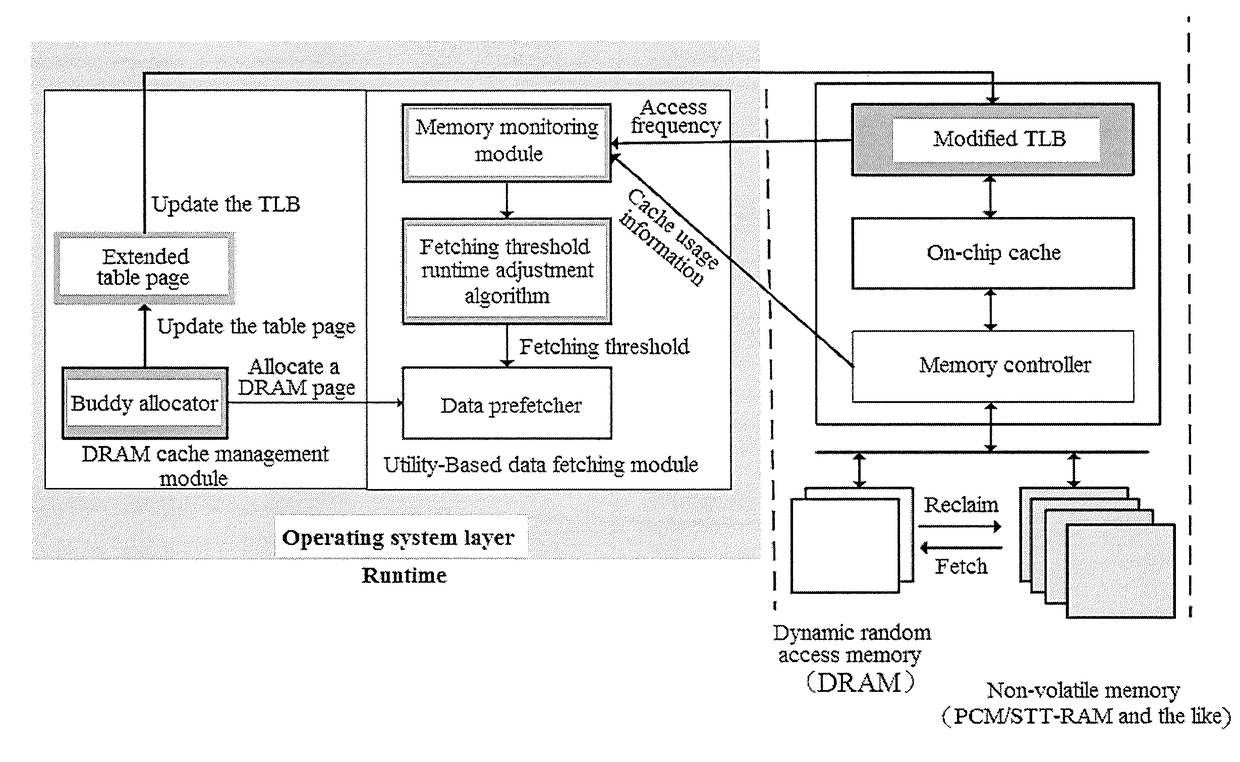

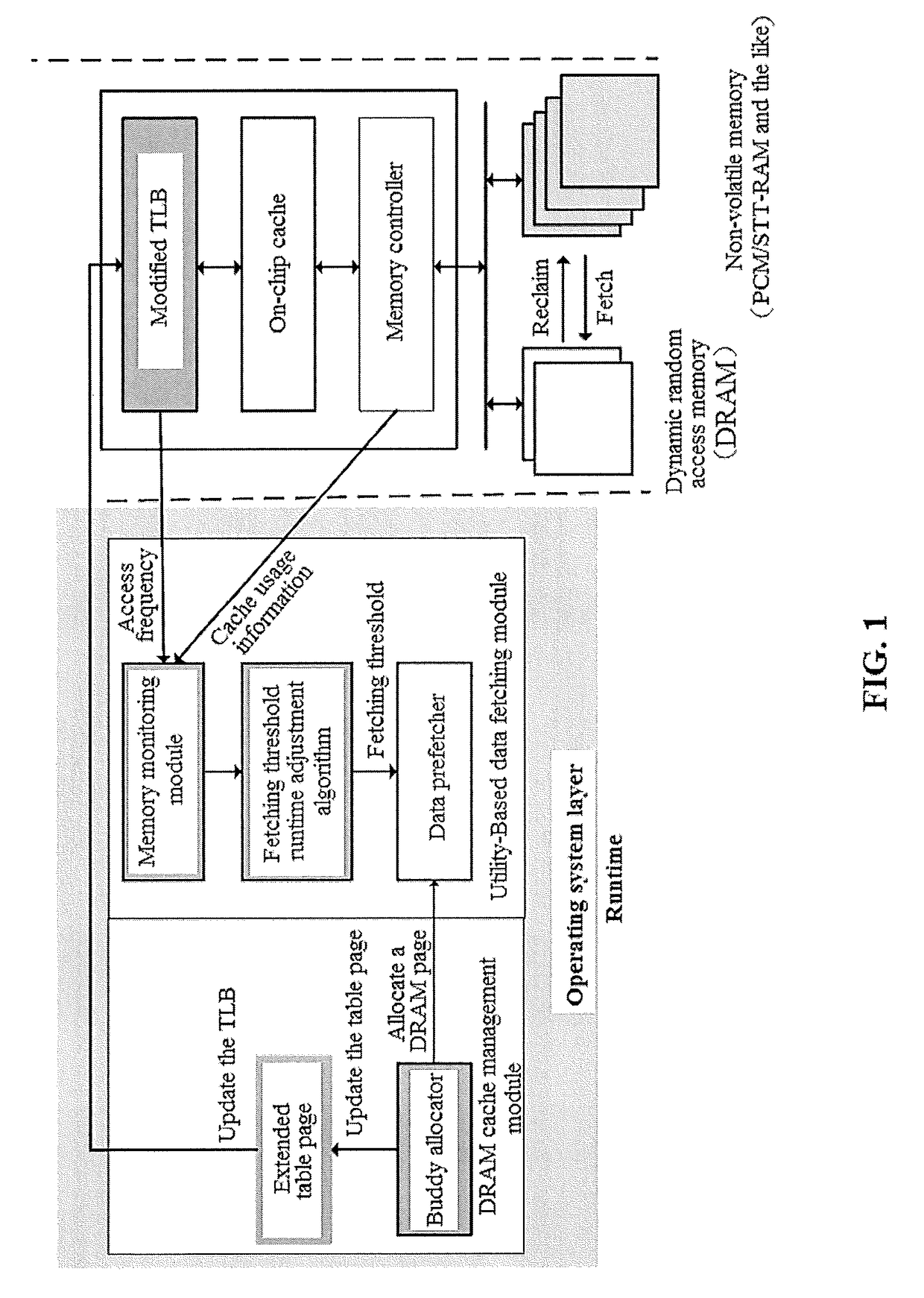

DRAM/NVM hierarchical heterogeneous memory access method and system with software-hardware cooperative management

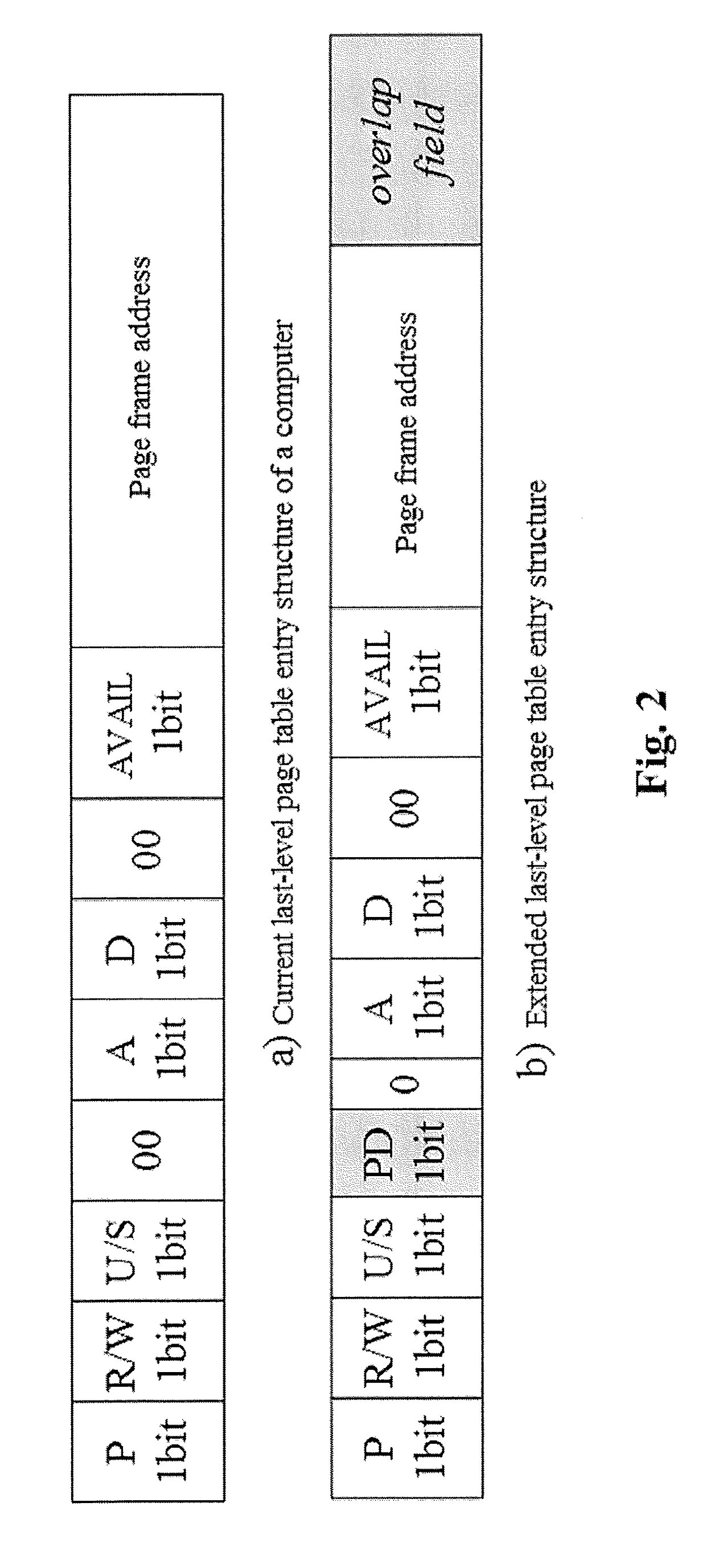

Active | US20170277640A1 | Eliminates hardware | Reducing memory access delay | Memory architecture accessing/allocation | Memory systems | Term memory | Page table

The present invention provides a DRAM/NVM hierarchical heterogeneous memory system with software-hardware cooperative management schemes. In the system, NVM is used as large-capacity main memory, and DRAM is used as a cache to the NVM. Some reserved bits in the data structure of the TLB and last-level page table are employed effectively to eliminate hardware costs in the conventional hardware-managed hierarchical memory architecture. The cache management in such a heterogeneous memory system is pushed to the software level. Moreover, the invention is able to reduce memory access latency in case of last-level cache misses. Considering that many applications have relatively poor data locality in big data application environments, the conventional demand-based data fetching policy for DRAM cache can aggravate cache pollution. In the present invention, a utility-based data fetching mechanism is adopted in the DRAM/NVM hierarchical memory system, and it determines whether data in the NVM should be cached in the DRAM according to current DRAM memory utilization and application memory access patterns. It improves the efficiency of the DRAM cache and bandwidth usage between the NVM main memory and the DRAM cache.

Owner:HUAZHONG UNIV OF SCI & TECH

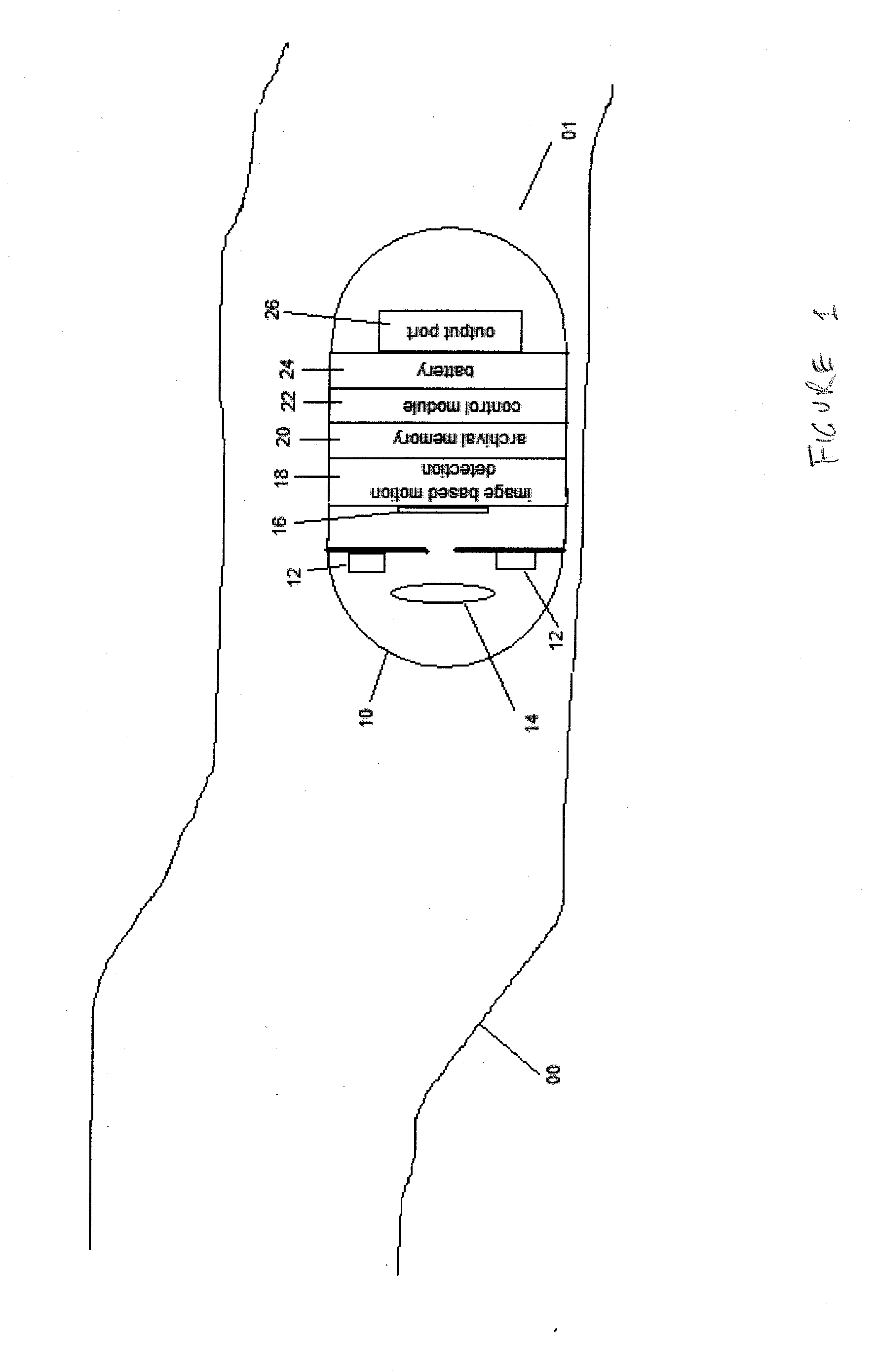

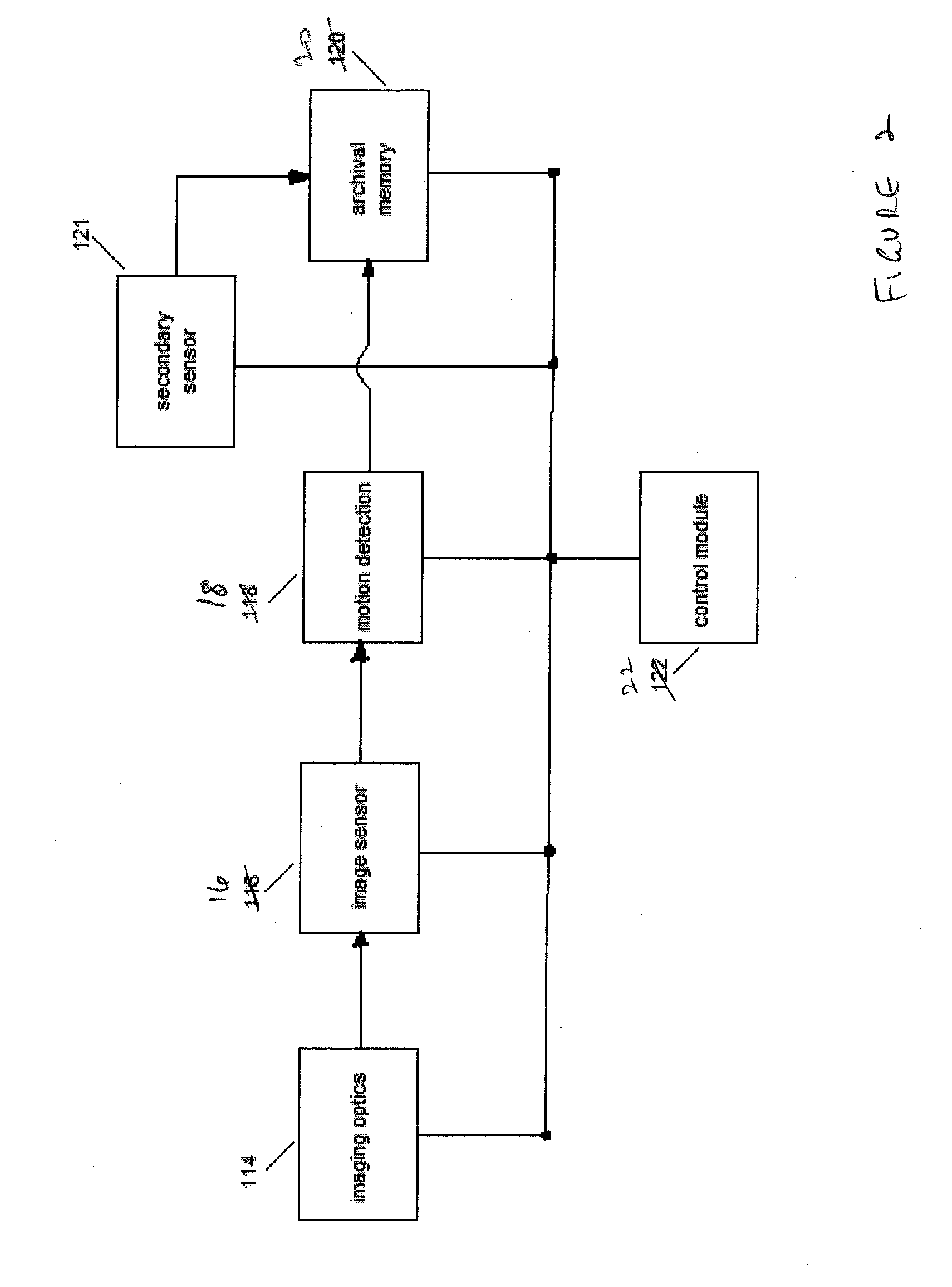

Onboard data storage and method

Active | US20070091713A1 | Simple process | Save power | Television system details | Printers | Device material | Memory circuits

A semiconductor memory device and an associated method suitable for use in specific applications with a predictable memory access pattern, such as in a capsule camera. The memory device takes advantage of the memory access pattern to simplify the address processing circuit and so realize savings in power and silicon area. Because random access to the semiconductor device is not required, the external interface to the semiconductor device is also simplified by eliminating at least the address port that is used to specify the memory locations accessed. The method is applicable not only to non-volatile memory technologies (e.g., flash memory) but also to volatile memory technologies, such as transient charge storage-based memory circuits (e.g., DRAMs) and metastable states-based memory circuits (e.g., SRAMs).

Owner:CAPSO VISION INC

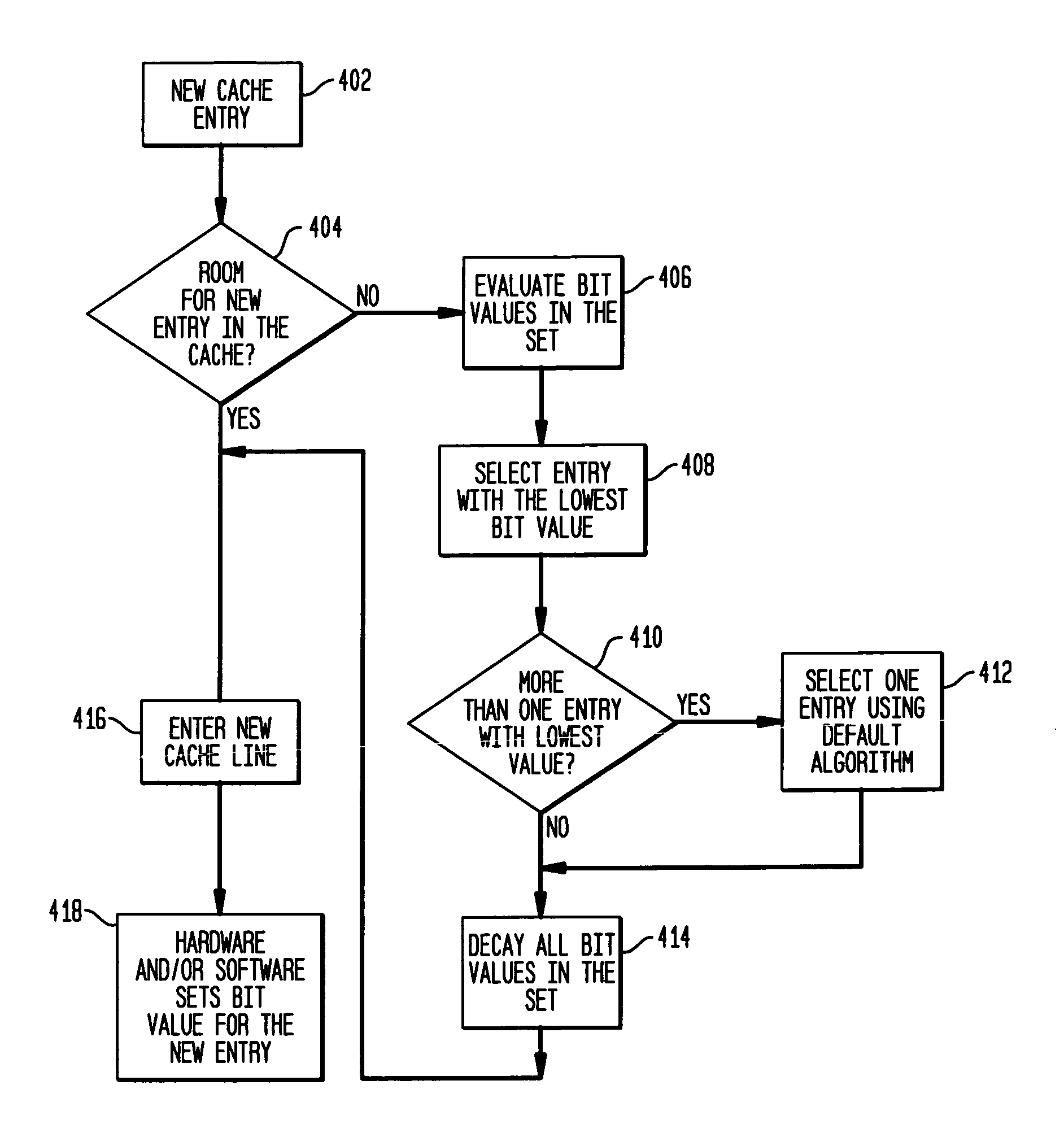

Intelligent cache replacement mechanism with varying and adaptive temporal residency requirements

A method for replacing cached data is disclosed. The method in one aspect associates an importance value with each block of data in the cache. When a new entry needs to be stored in the cache, a cache block for replacement is selected based on the importance values associated with cache blocks. In another aspect, the importance values are set according to the hardware's and/or software's knowledge of the memory access patterns. The method in one aspect may also include varying the importance value over time and across different processing requirements.
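Importance-driven replacement can be sketched as a small cache that evicts the block with the lowest importance value. The class `ImportanceCache`, the integer importance scale, and the `age` method (one possible way to vary importance over time) are illustrative assumptions rather than details from the patent.

```python
class ImportanceCache:
    """Fixed-capacity cache that evicts the least important block."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = {}   # key -> [value, importance]

    def put(self, key, value, importance):
        if key not in self.blocks and len(self.blocks) >= self.capacity:
            # Select the victim by lowest importance value.
            victim = min(self.blocks, key=lambda k: self.blocks[k][1])
            del self.blocks[victim]
        self.blocks[key] = [value, importance]

    def get(self, key):
        entry = self.blocks.get(key)
        return None if entry is None else entry[0]

    def age(self, amount=1):
        """Lower every block's importance, never below zero."""
        for entry in self.blocks.values():
            entry[1] = max(0, entry[1] - amount)


cache = ImportanceCache(capacity=2)
cache.put("a", 1, importance=5)
cache.put("b", 2, importance=1)
cache.put("c", 3, importance=3)   # cache is full: "b" is least important
print(cache.get("a"), cache.get("b"), cache.get("c"))  # 1 None 3
```

In a real design the importance values would come from hardware or software hints about the access pattern; here the caller supplies them directly.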

Owner:INT BUSINESS MASCH CORP

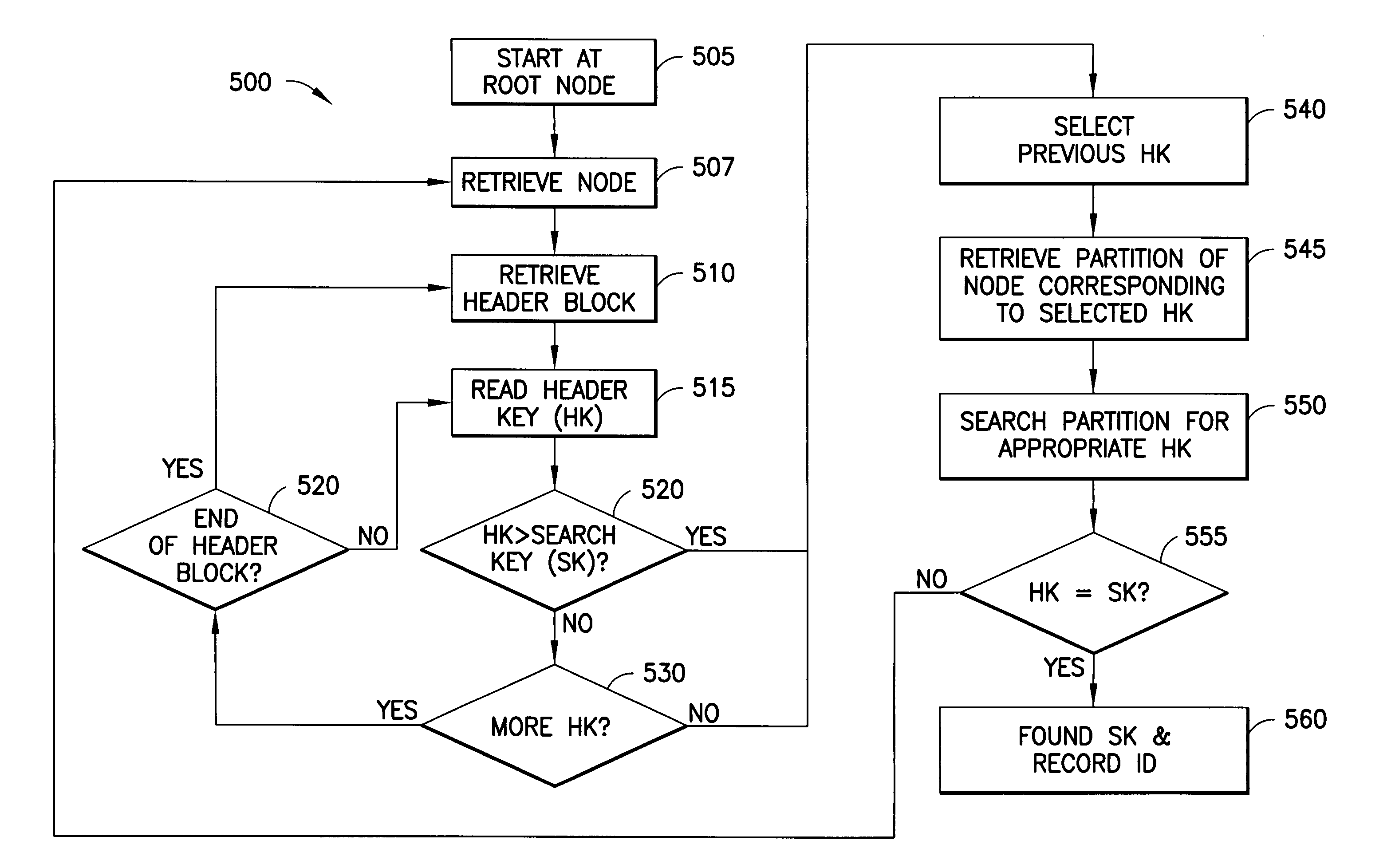

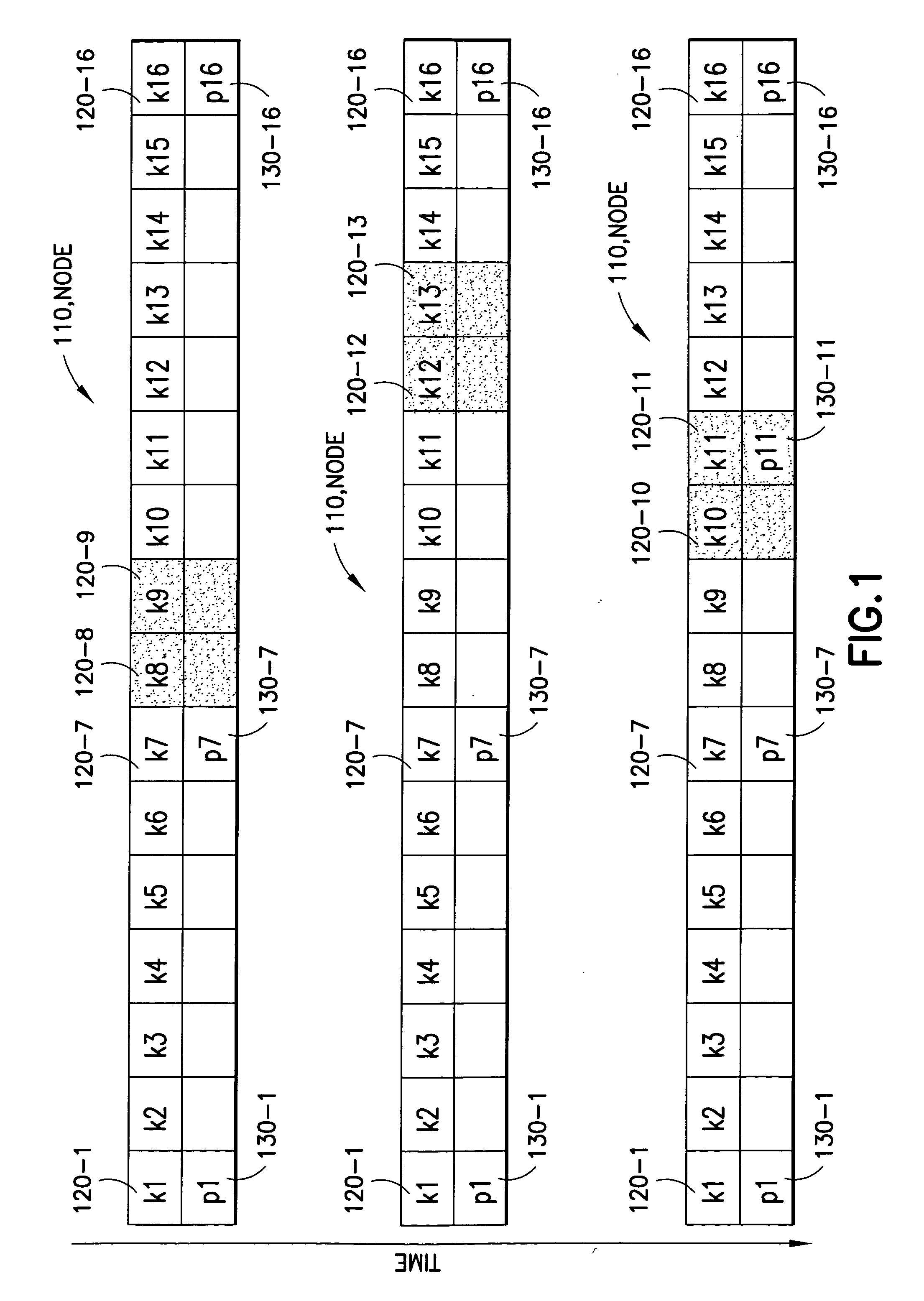

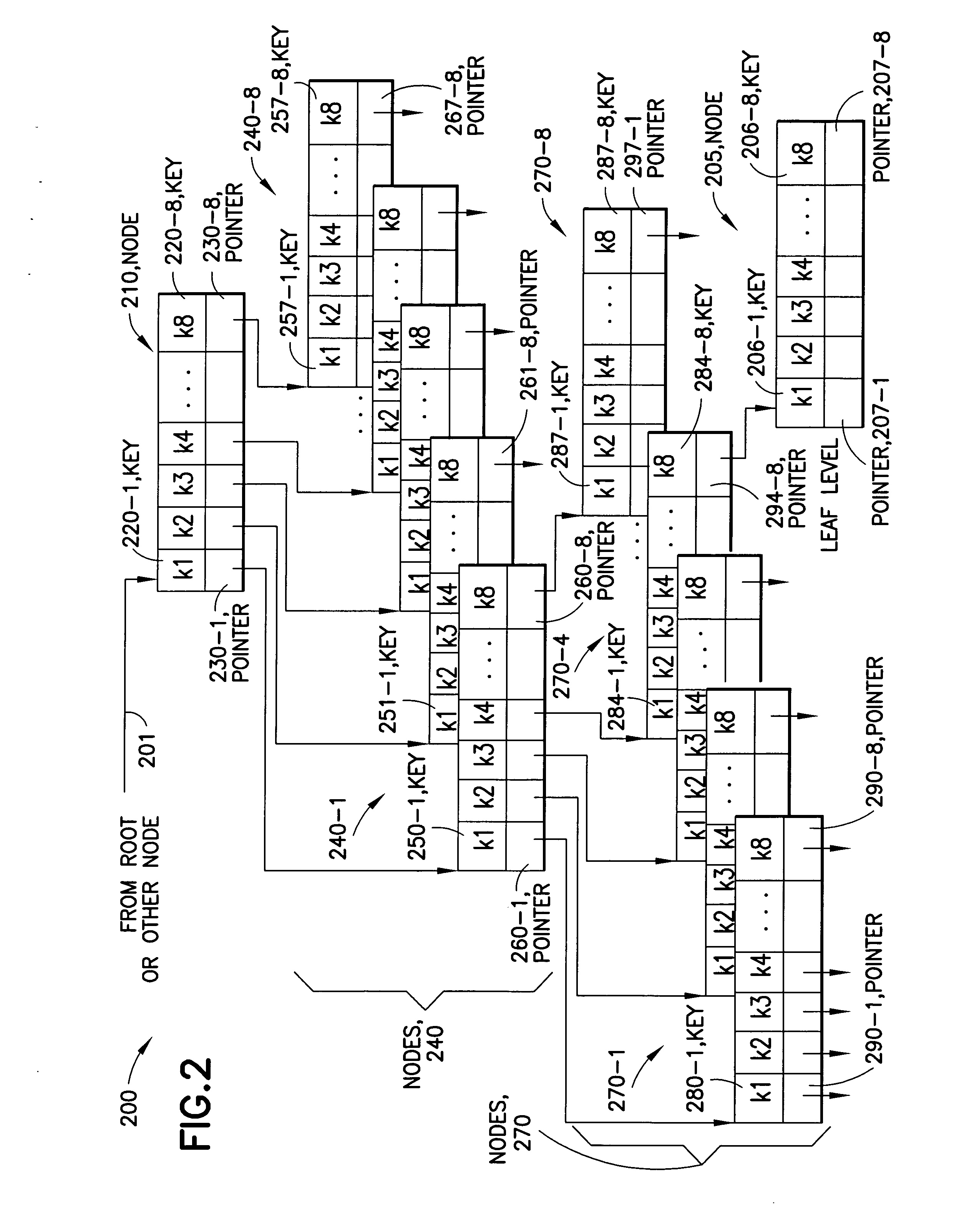

Techniques for improving memory access patterns in tree-based data index structures

Active | US20060190468A1 | Improving memory access pattern | Data processing applications | Digital data information retrieval | Theoretical computer science | Memory access pattern

Owner:SAP AG

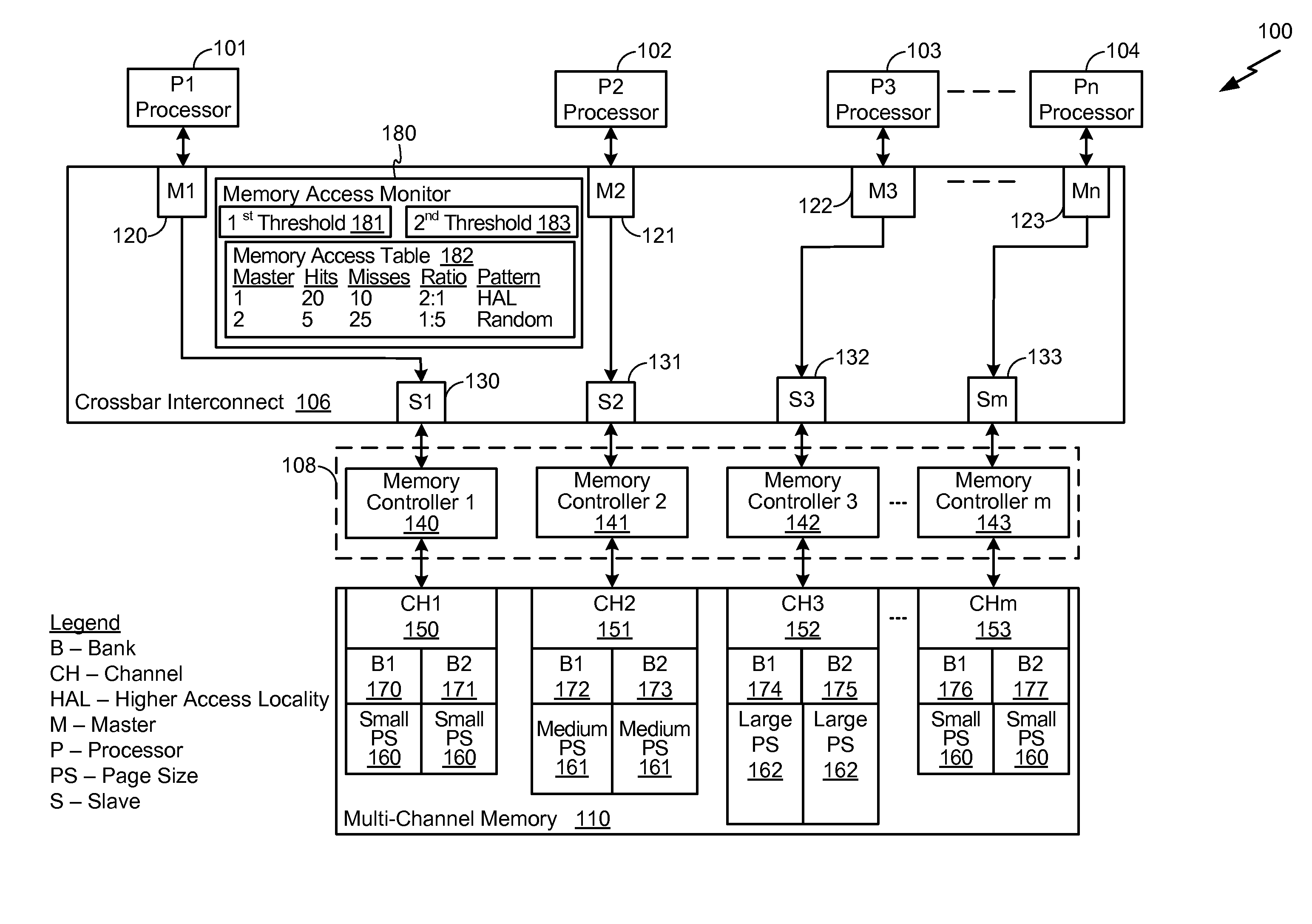

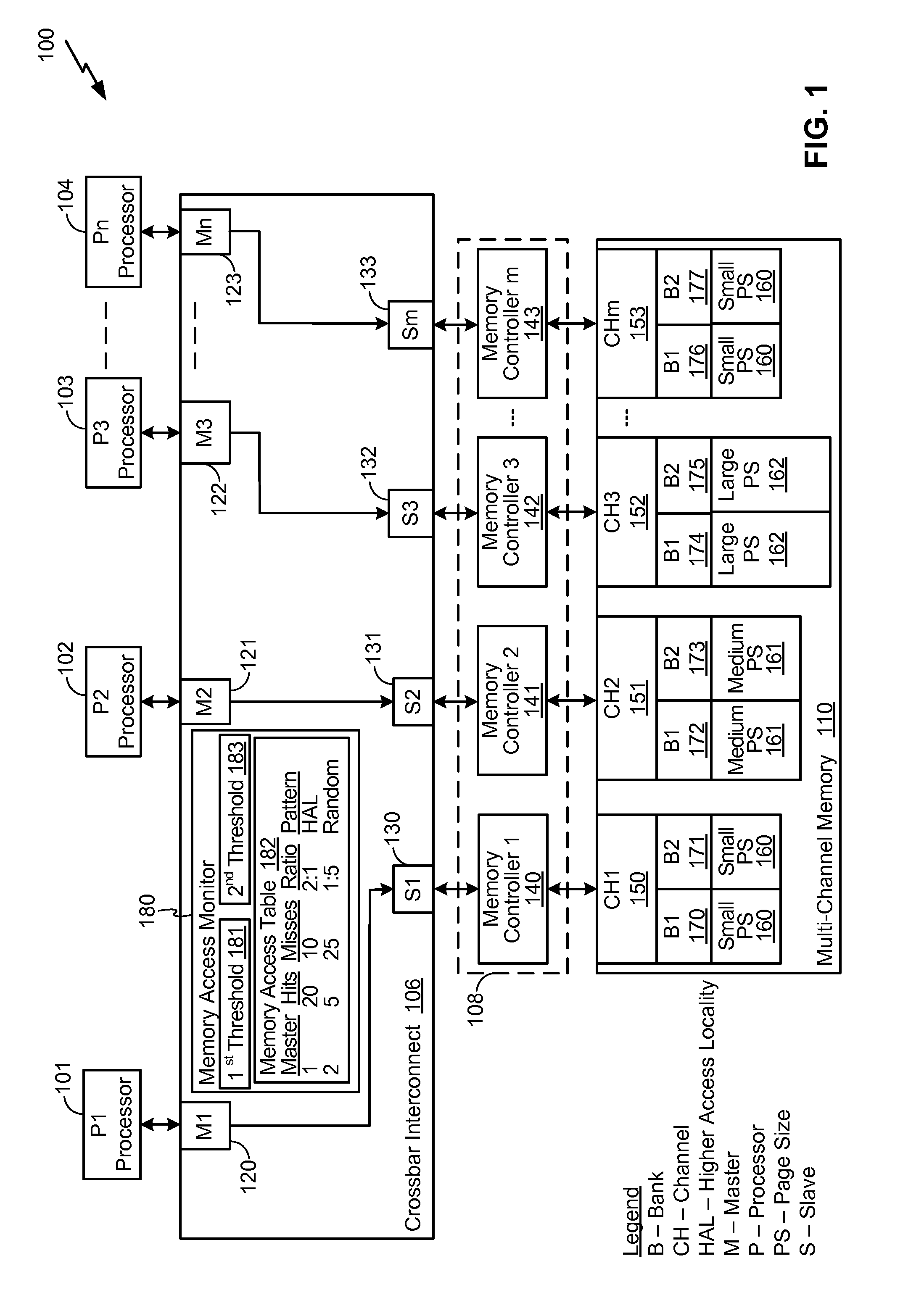

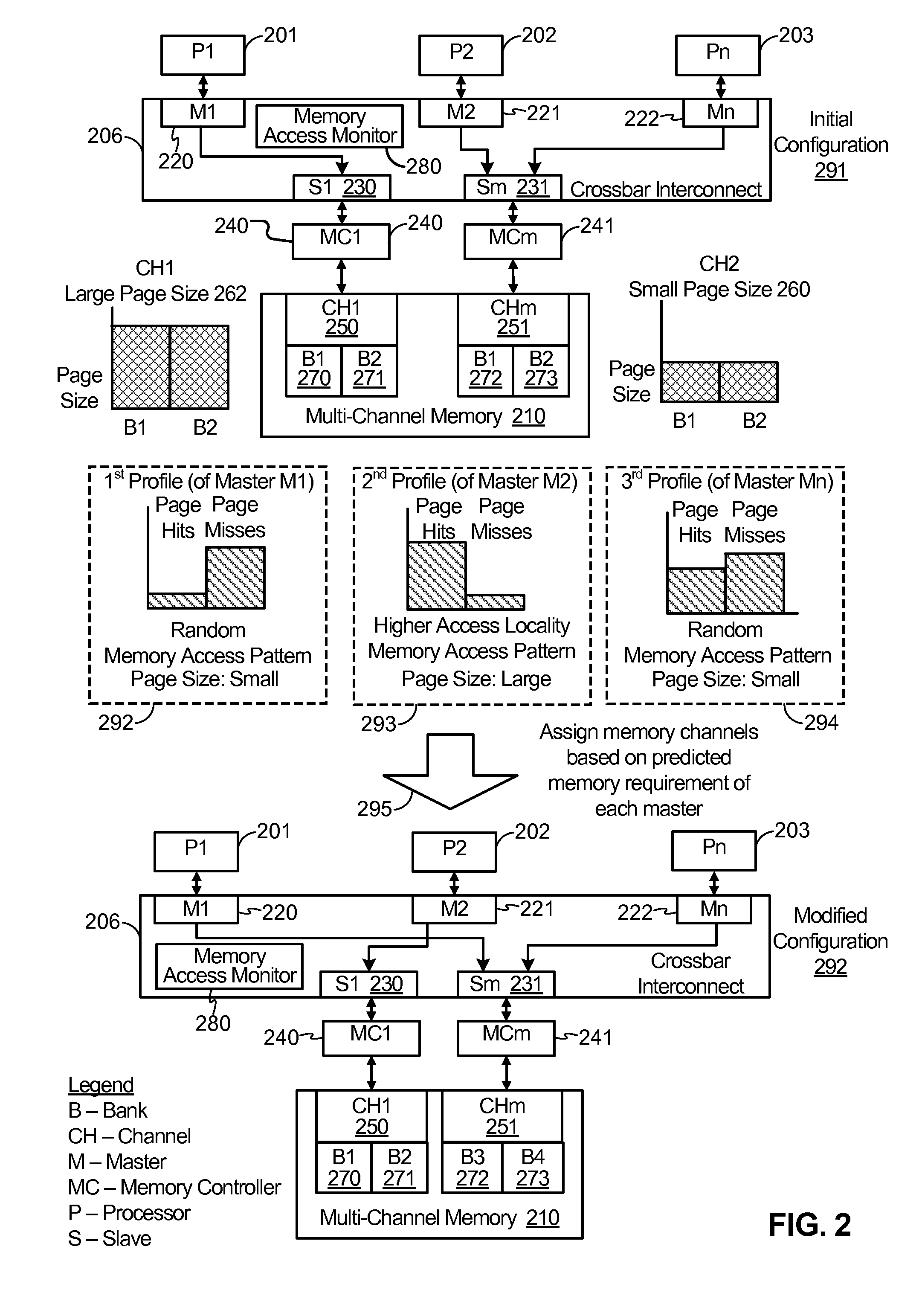

Accessing a Multi-Channel Memory System Having Non-Uniform Page Sizes

Active | US20110087846A1 | Increase page hit rate | Reduce page open power | Energy efficient ICT | Memory addressing/allocation/relocation | Crossbar switch | Memory bank

A method includes predicting a memory access pattern of each master of a plurality of masters. The plurality of masters can access a multi-channel memory via a crossbar interconnect, where the multi-channel memory has a plurality of banks. The method includes identifying a page size associated with each bank of the plurality of banks. The method also includes assigning at least one bank of the plurality of banks to each master of the plurality of masters based on the memory access pattern of each master.

Owner:QUALCOMM INC
