81 patent results for "DRAM cache"

DRAM typically serves as a system's main memory. Cache memory, by contrast, is typically a small amount of expensive, high-performance SRAM that the CPU can read and write much faster than main memory.

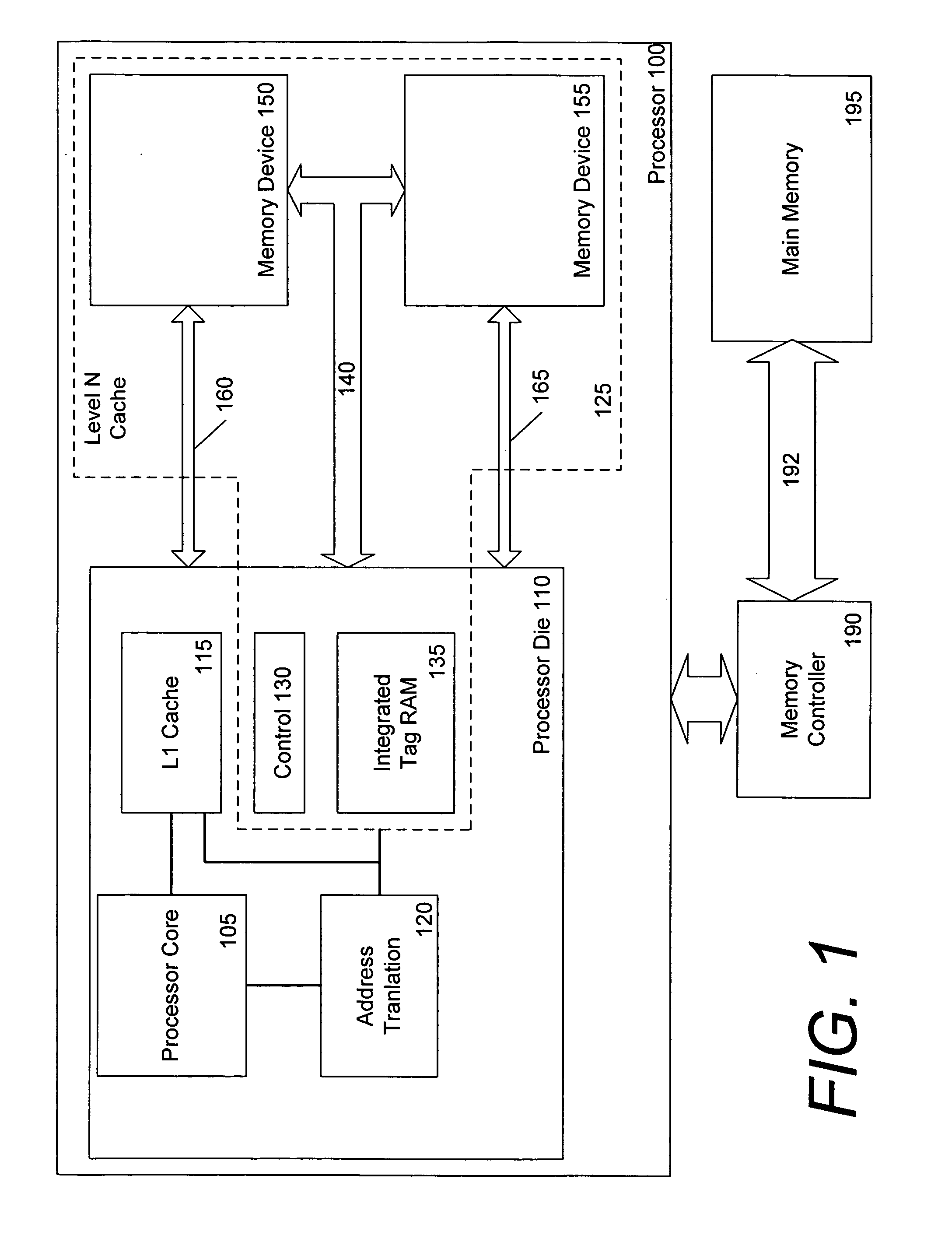

DRAM cache with tags and data jointly stored in physical rows

Status: Active | Publication: US20130138892A1 | Tags: Reduce latency; Reduce power consumption; Energy efficient ICT; Memory addressing/allocation/relocation; Parallel computing; Data access

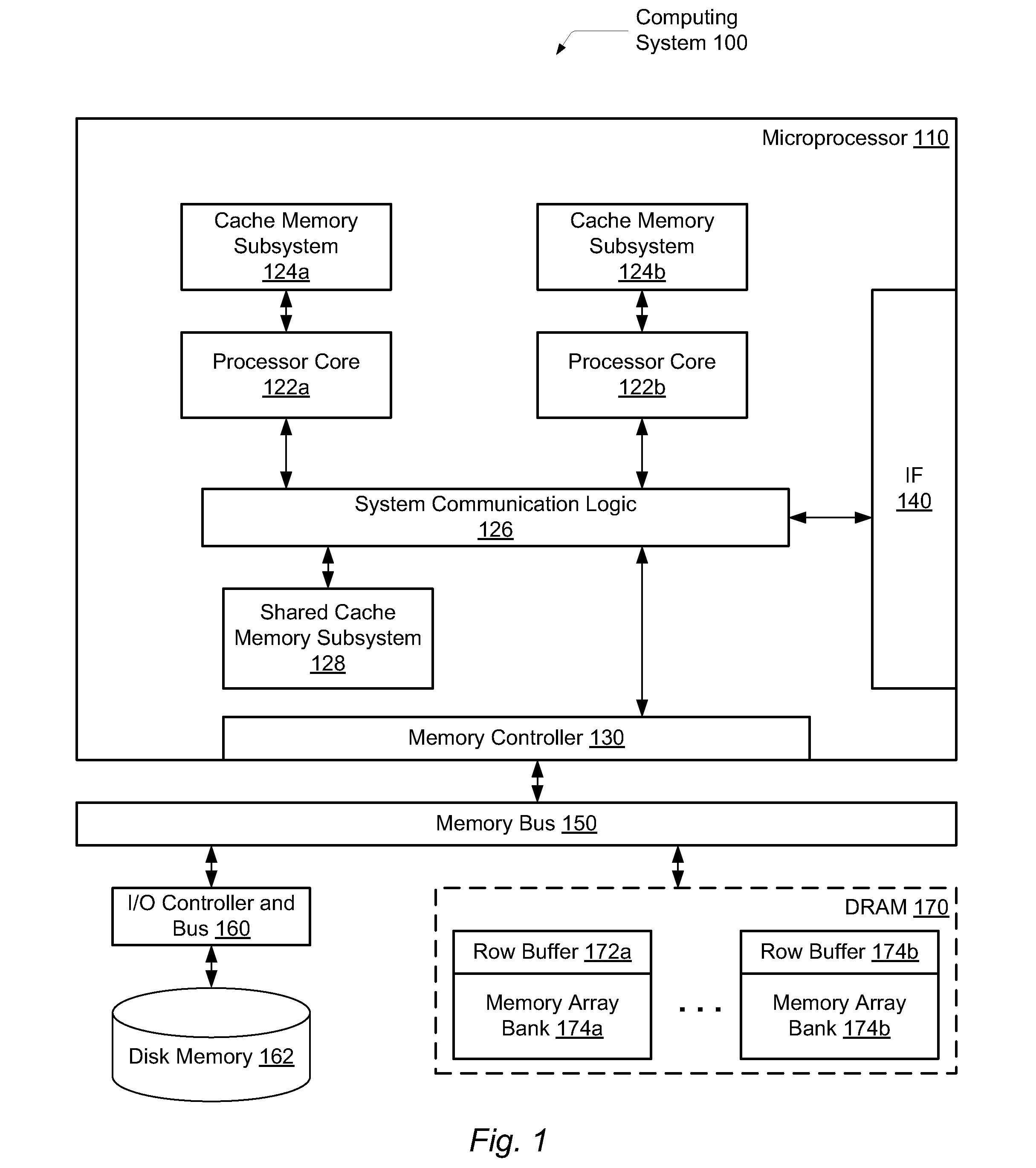

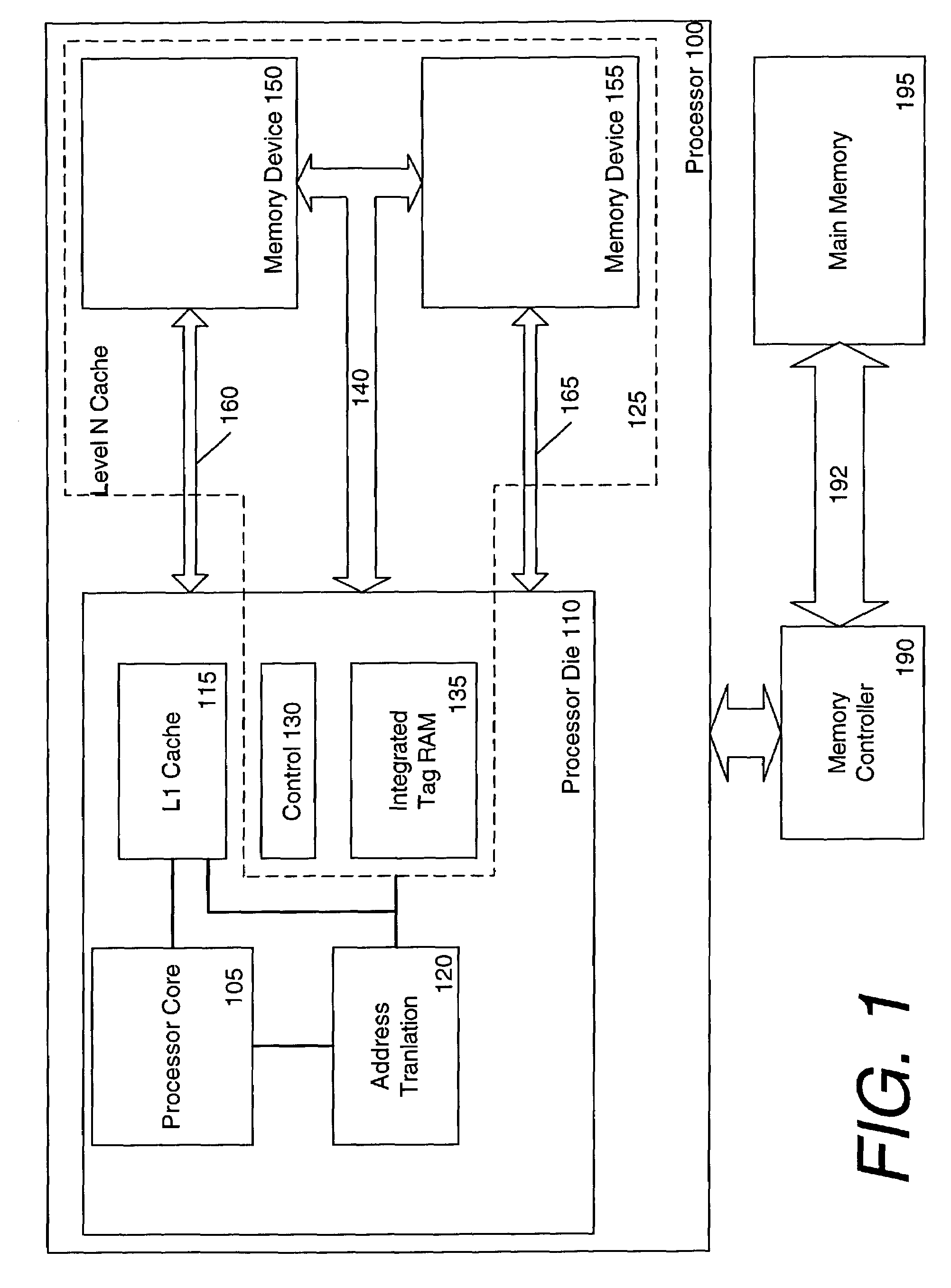

A system and method for efficient cache data access in a large row-based memory of a computing system. A computing system includes a processing unit and an integrated three-dimensional (3D) dynamic random access memory (DRAM). The processing unit uses the 3D DRAM as a cache. Each row of the multiple rows in the memory array banks of the 3D DRAM stores at least multiple cache tags and multiple corresponding cache lines indicated by the multiple cache tags. In response to receiving a memory request from the processing unit, the 3D DRAM performs a memory access according to the received memory request on a given cache line indicated by a cache tag within the received memory request. Rather than utilizing multiple DRAM transactions, a single, complex DRAM transaction may be used to reduce latency and power consumption.

Owner:ADVANCED MICRO DEVICES INC
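
The key idea above is that the tag check and the data access can share one row activation because the tags and their cache lines sit in the same DRAM row. The following is a minimal sketch under hypothetical assumptions (one 4-way set per row, 8 rows, 64-byte lines; `Row` and `TagsInRowCache` are illustrative names, not from the patent) that counts row activations to make that saving visible.

```python
from dataclasses import dataclass, field

# Hypothetical geometry (not from the patent): one 4-way set per DRAM row.
WAYS_PER_ROW = 4
NUM_ROWS = 8
LINE_BYTES = 64


@dataclass
class Row:
    """One physical DRAM row holding both the tags and the lines of a set."""
    tags: list = field(default_factory=lambda: [None] * WAYS_PER_ROW)
    lines: list = field(default_factory=lambda: [b""] * WAYS_PER_ROW)


class TagsInRowCache:
    def __init__(self):
        self.rows = [Row() for _ in range(NUM_ROWS)]
        self.activations = 0  # number of row activations issued

    @staticmethod
    def _split(addr):
        line = addr // LINE_BYTES
        return line % NUM_ROWS, line // NUM_ROWS  # (row index, tag)

    def read(self, addr):
        row_idx, tag = self._split(addr)
        row = self.rows[row_idx]
        self.activations += 1  # a single activation exposes tags and data
        for way, stored_tag in enumerate(row.tags):
            if stored_tag == tag:
                return row.lines[way]  # hit: served from the already-open row
        return None  # miss

    def fill(self, addr, data, way=0):
        row_idx, tag = self._split(addr)
        self.activations += 1
        self.rows[row_idx].tags[way] = tag
        self.rows[row_idx].lines[way] = data
```

In this model a read costs one activation whether it hits or misses. A layout with tags held in a separate structure would need a tag lookup before the data row could be opened.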

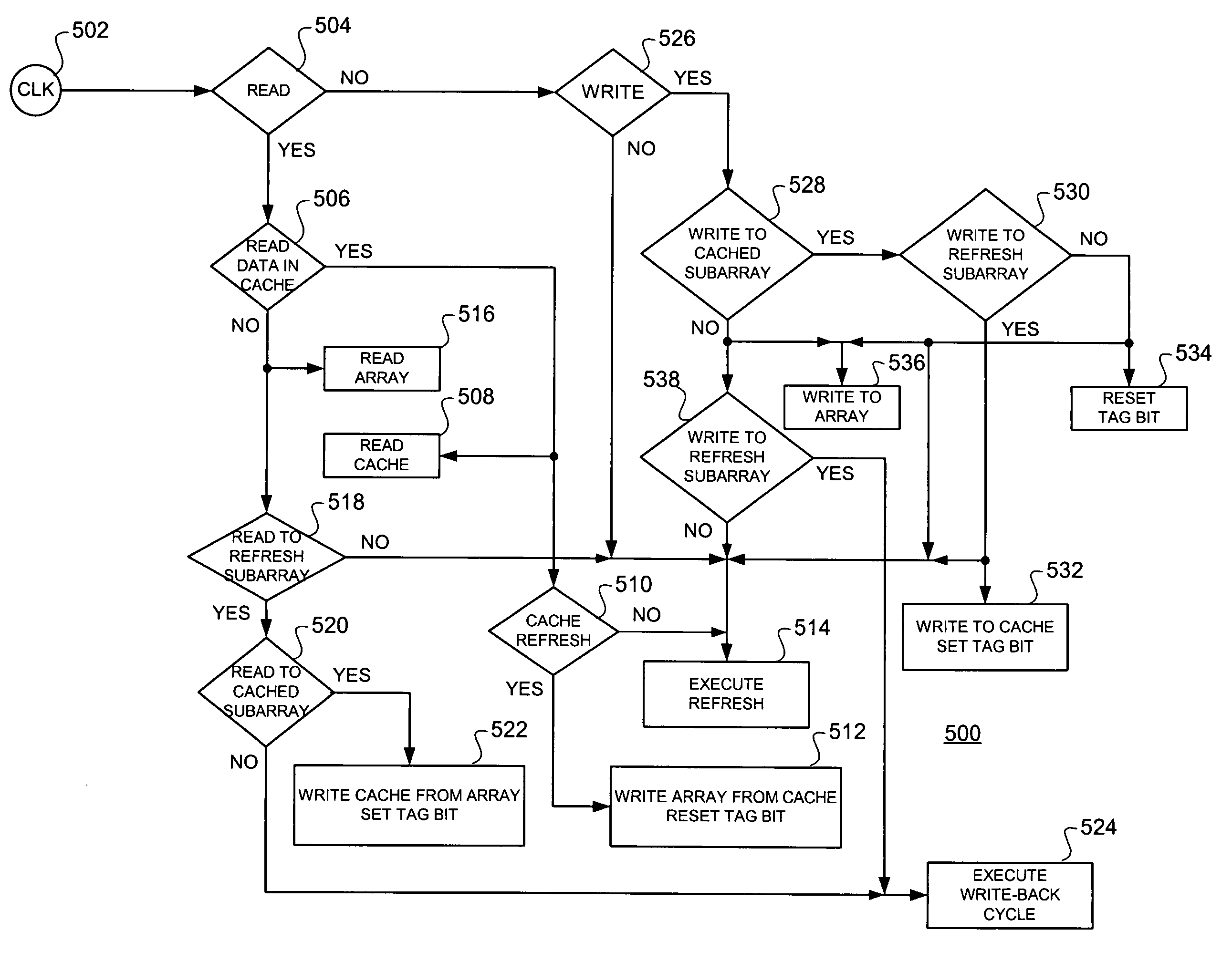

Method and apparatus for performing data access and refresh operations in different sub-arrays of a DRAM cache memory

Status: Inactive | Publication: US6697909B1 | Tags: Memory addressing/allocation/relocation; Digital storage; Data access; Computerized system

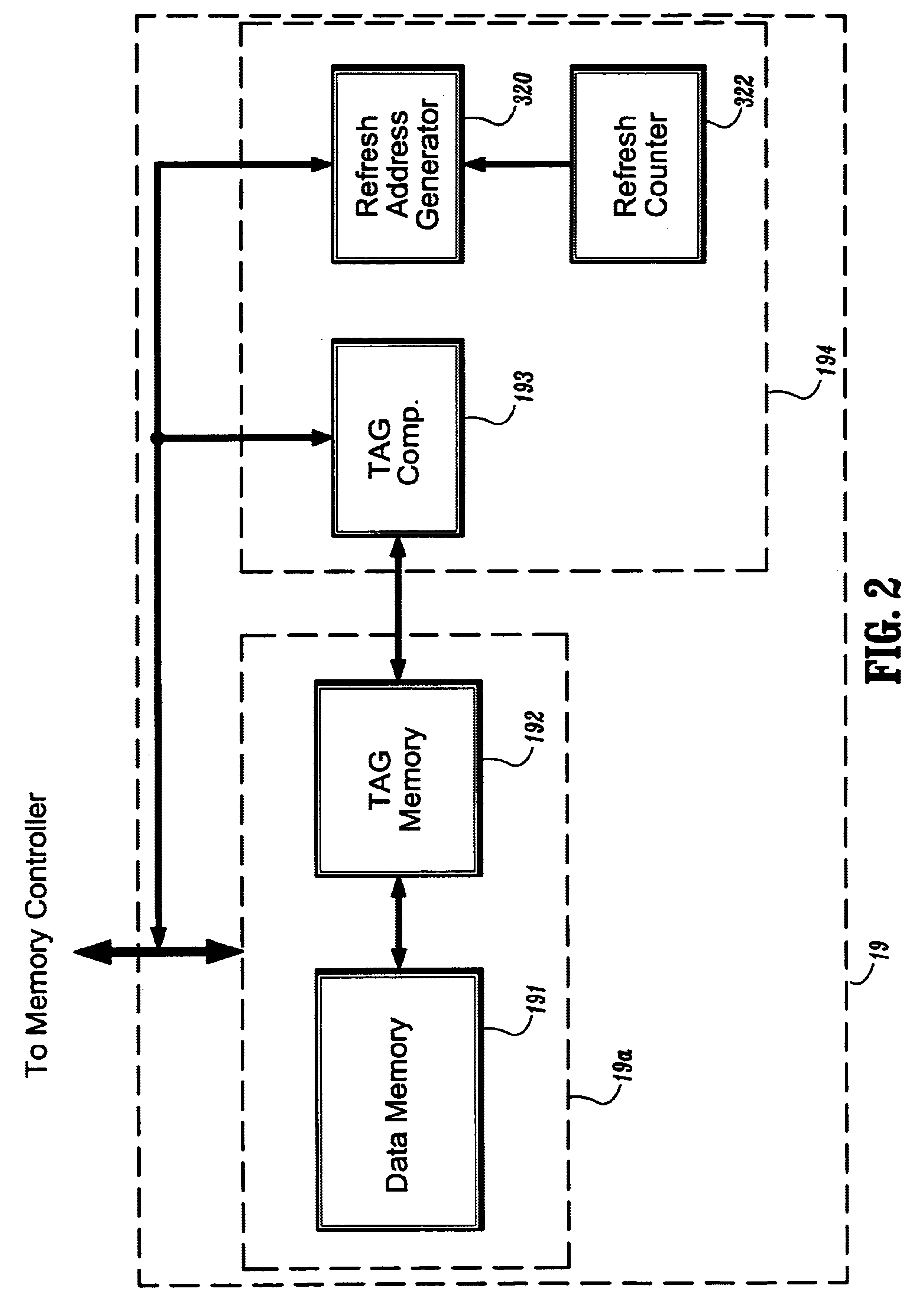

A method and apparatus are provided for refreshing data in a dynamic random access memory (DRAM) cache memory in a computer system without incurring a refresh penalty (e.g., a processor delay). The data refresh operation proceeds as follows: a request address from the processor is detected; the normal refresh operation is stopped when the request address is detected; the request address is compared with the tag addresses stored in a tag memory; refresh addresses are generated for the data stored in the cache memory, each based on the age of the data at that address; and a read/write operation is performed on the wordline accessed by the request address while the wordlines accessed by the refresh addresses are refreshed. The read/write operation and the refresh are performed simultaneously.

Owner:GOOGLE LLC
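
The simultaneous access-and-refresh scheme above can be illustrated with a small simulation. This sketch rests on assumptions of our own: the cache is split into sub-arrays, each row's "age" counts cycles since it was last refreshed or accessed, and in every cycle the oldest row of each sub-array not targeted by the processor is refreshed. The tag comparison step is omitted, and `SubArrayCache` and `cycle` are hypothetical names.

```python
class SubArrayCache:
    """Hypothetical model: rows grouped into sub-arrays, each row with an age
    (cycles since its last refresh or access)."""

    def __init__(self, subarrays=4, rows=8):
        self.age = [[0] * rows for _ in range(subarrays)]

    def tick(self):
        for ages in self.age:
            for r in range(len(ages)):
                ages[r] += 1

    def cycle(self, request=None):
        """Serve one processor request (subarray, row) and, in the same cycle,
        refresh the oldest row of every other sub-array."""
        self.tick()
        busy = request[0] if request else None
        if request:
            sa, row = request
            self.age[sa][row] = 0  # an access also restores the row's charge
        refreshed = []
        for sa, ages in enumerate(self.age):
            if sa == busy:
                continue  # normal refresh is stopped where the request lands
            oldest = max(range(len(ages)), key=ages.__getitem__)
            ages[oldest] = 0
            refreshed.append((sa, oldest))
        return refreshed
```

Picking the oldest row per sub-array is one simple way to make the refresh address depend on data age, as the abstract describes; the patent's actual address generator may differ.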

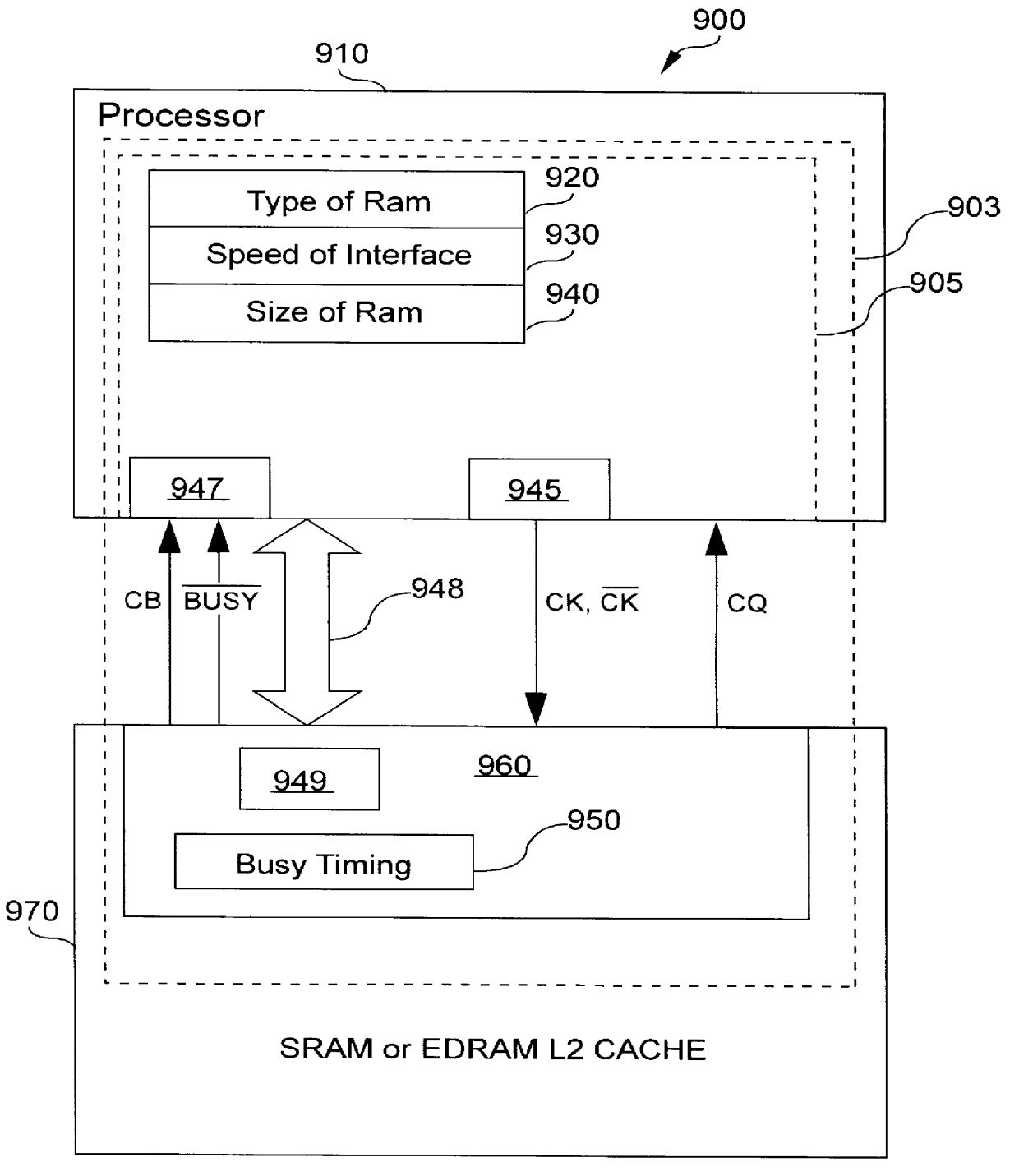

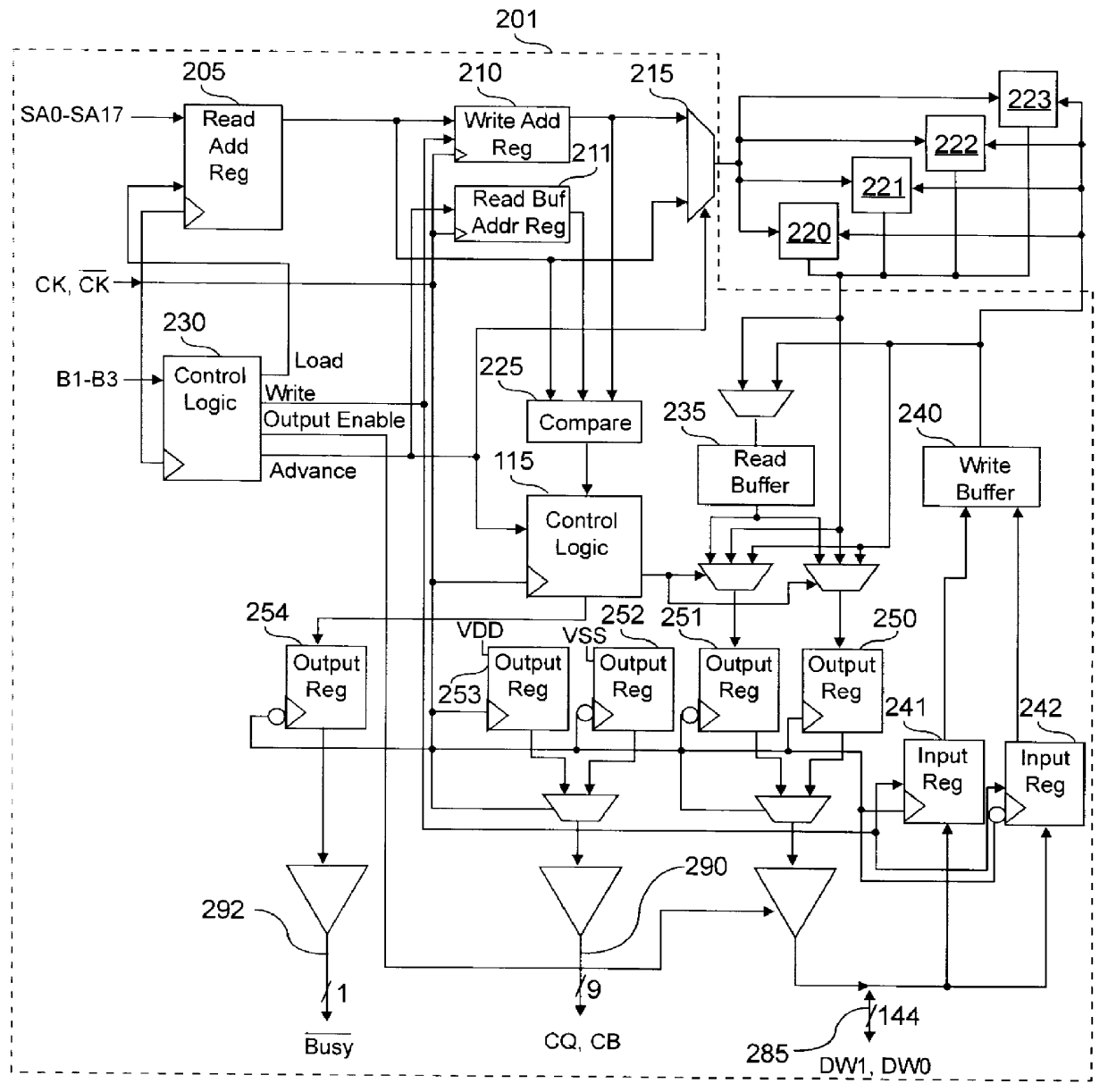

Programmable SRAM and DRAM cache interface with preset access priorities

Status: Inactive | Publication: US6151664A | Tags: Cache interface portion; Memory addressing/allocation/relocation; Static random-access memory; Parallel computing

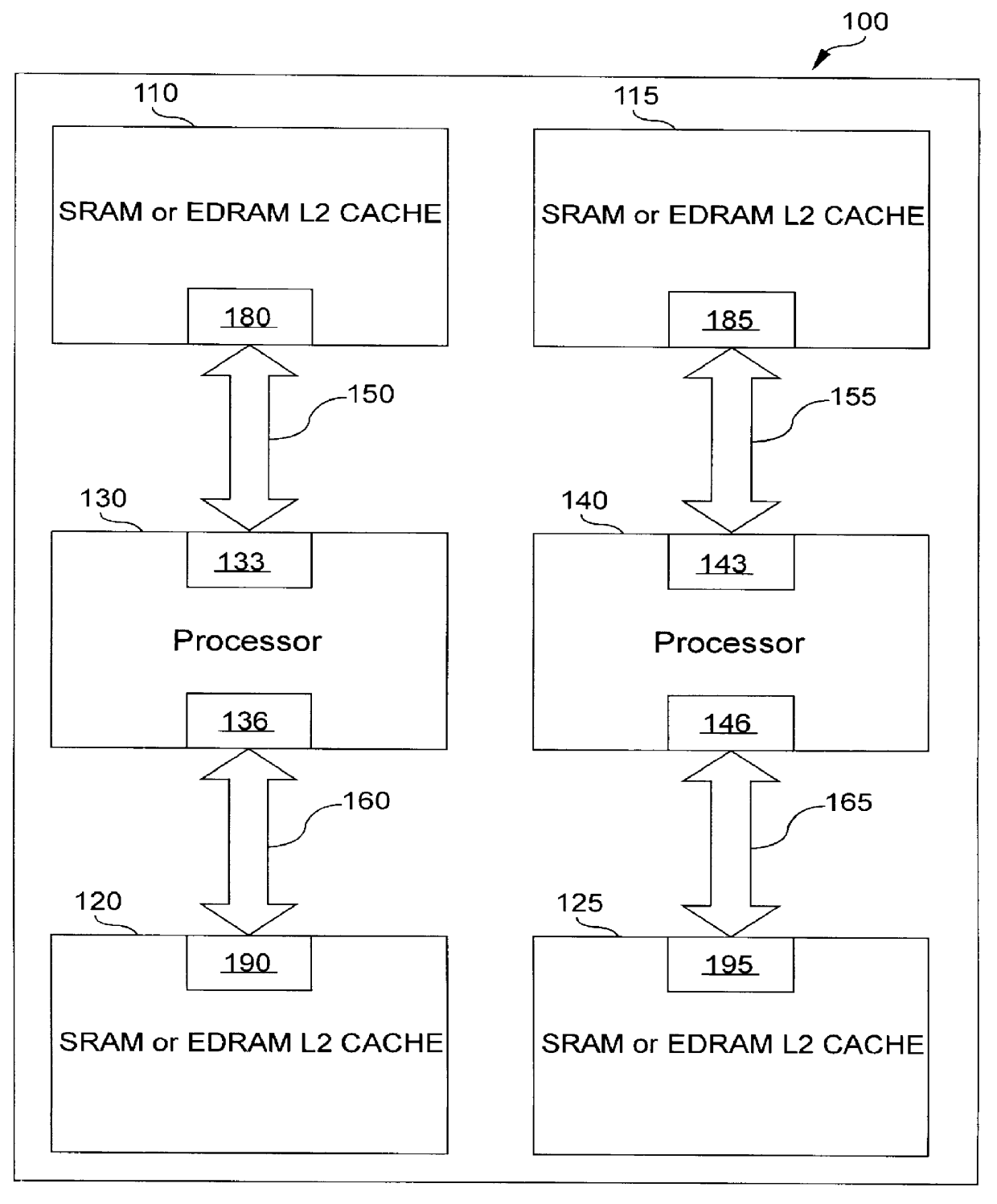

A cache interface that supports both static random access memory (SRAM) and dynamic random access memory (DRAM) is disclosed. The cache interface preferably comprises two portions, one on the processor and one on the cache. A designer can simply select which type of RAM to use for the cache, and the cache controller interface portion on the processor configures the processor to use that type of RAM. When used with DRAM, the cache-side interface portion is simple: it asserts a busy indication so that the processor knows when an access collision occurs between a processor-generated access and the DRAM cache. An access collision occurs when the DRAM cache cannot read or write data because it is in a precharge, initialization, refresh, or standby state. When the cache interface is used with an SRAM cache, the busy indication is preferably ignored by the processor and its cache interface portion. Additionally, the disclosed cache interface allows the speed and size requirements of the cache to be programmed into the interface, so the interface does not have to be redesigned for caches of different sizes or speeds.

Owner:IBM CORP
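
The processor-side rule described above (honor the busy indication for a DRAM cache, ignore it for SRAM, and take size and speed from programmed settings) can be sketched as follows. The enum values, `CacheConfig` fields, and function names are hypothetical; the abstract does not specify a register layout.

```python
from dataclasses import dataclass
from enum import Enum


class RamType(Enum):
    SRAM = "sram"
    DRAM = "dram"


class DramState(Enum):
    IDLE = "idle"
    PRECHARGE = "precharge"
    INITIALIZATION = "initialization"
    REFRESH = "refresh"
    STANDBY = "standby"


@dataclass
class CacheConfig:
    ram_type: RamType
    size_kib: int       # programmed size: no interface redesign per cache size
    access_cycles: int  # programmed speed


def dram_busy(state: DramState) -> bool:
    """Cache-side portion: BUSY is asserted in any state that causes a collision."""
    return state is not DramState.IDLE


def processor_may_access(cfg: CacheConfig, busy: bool) -> bool:
    """Processor-side portion: honor BUSY only when the cache is DRAM."""
    if cfg.ram_type is RamType.SRAM:
        return True  # the busy indication is ignored for SRAM
    return not busy
```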

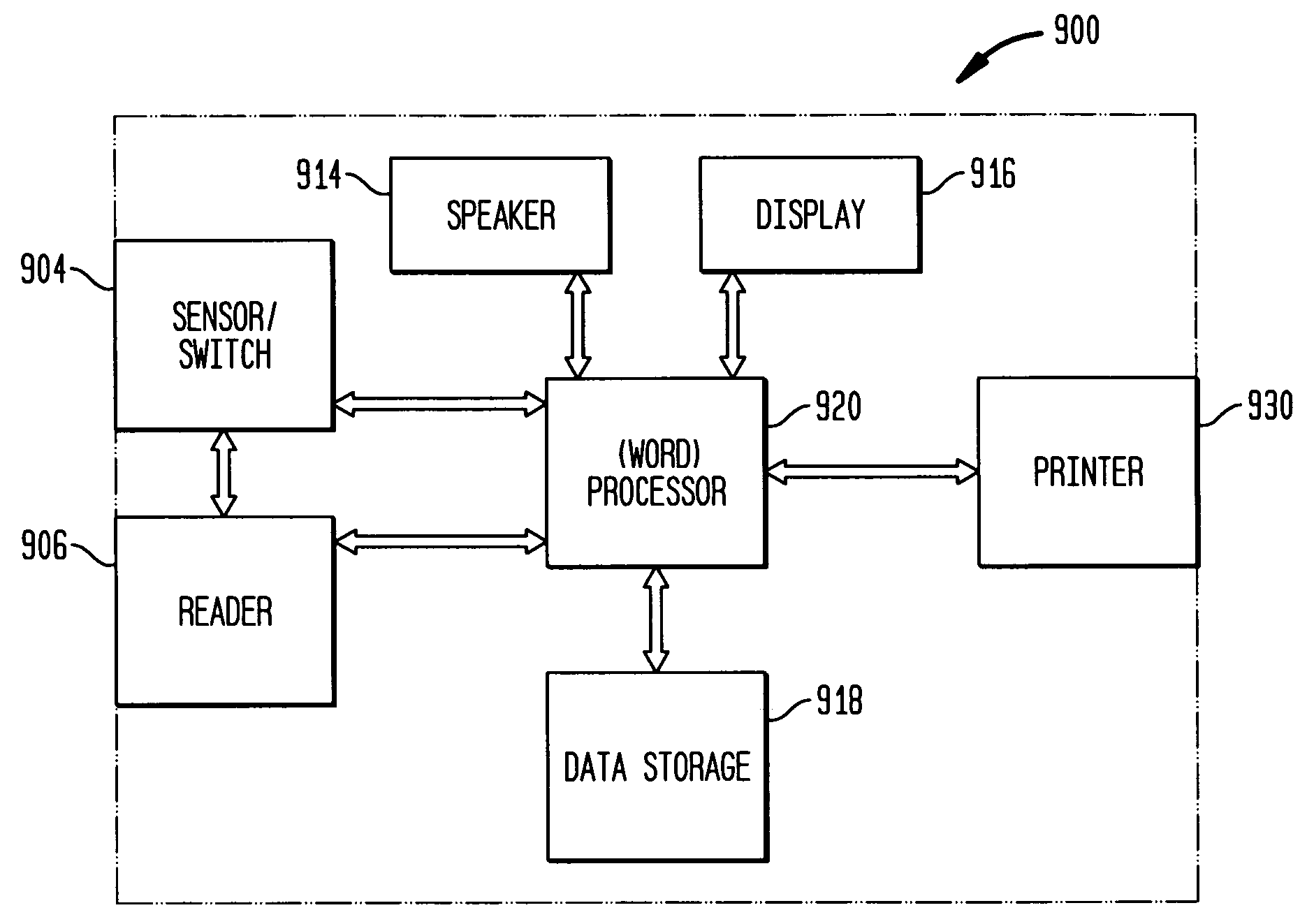

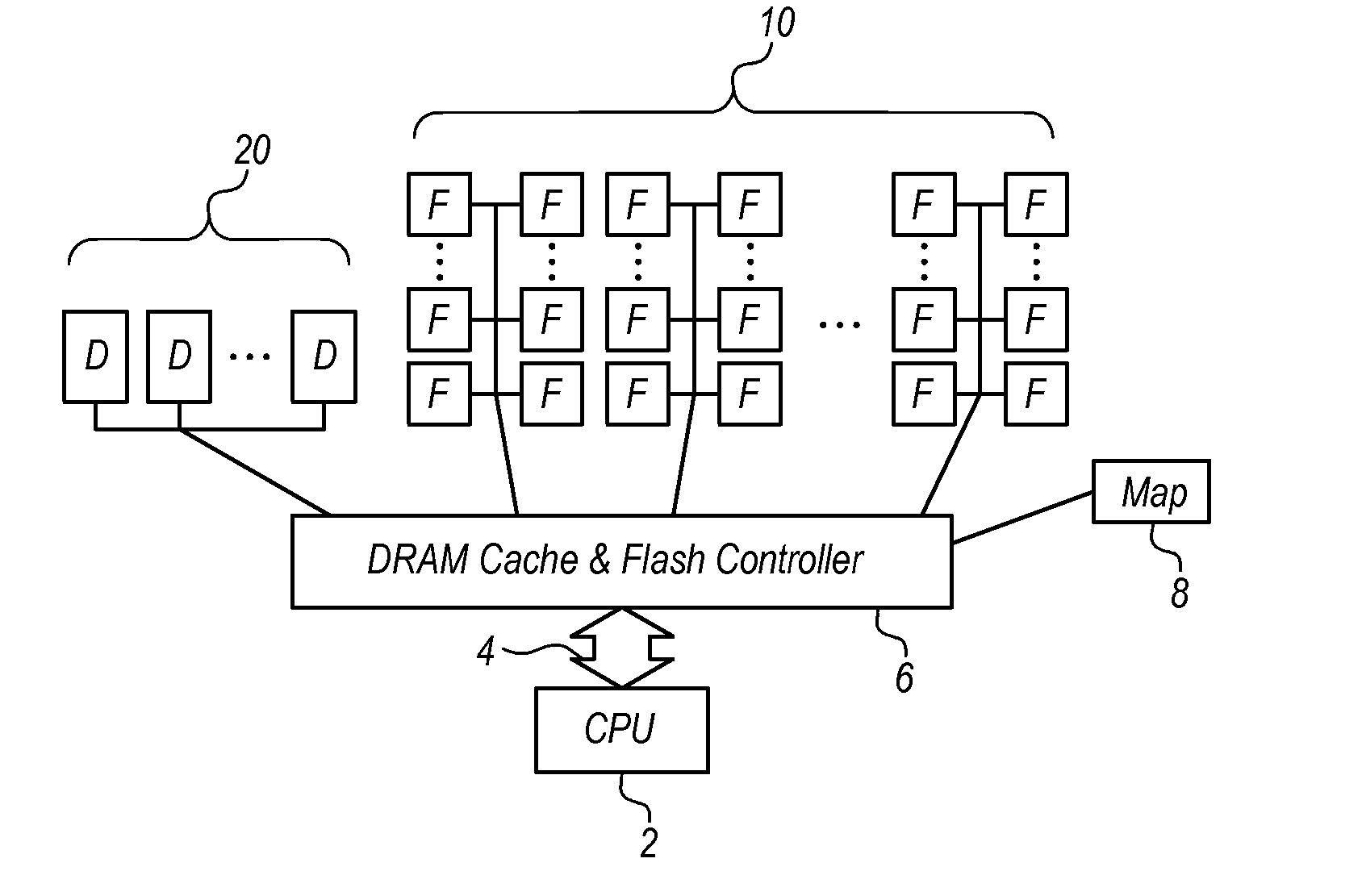

Method of managing a large array of non-volatile memories

Status: Inactive | Publication: US20080155183A1 | Tags: Efficient management; Increased longevity; Memory architecture accessing/allocation; Memory systems; Parallel computing; Modularity

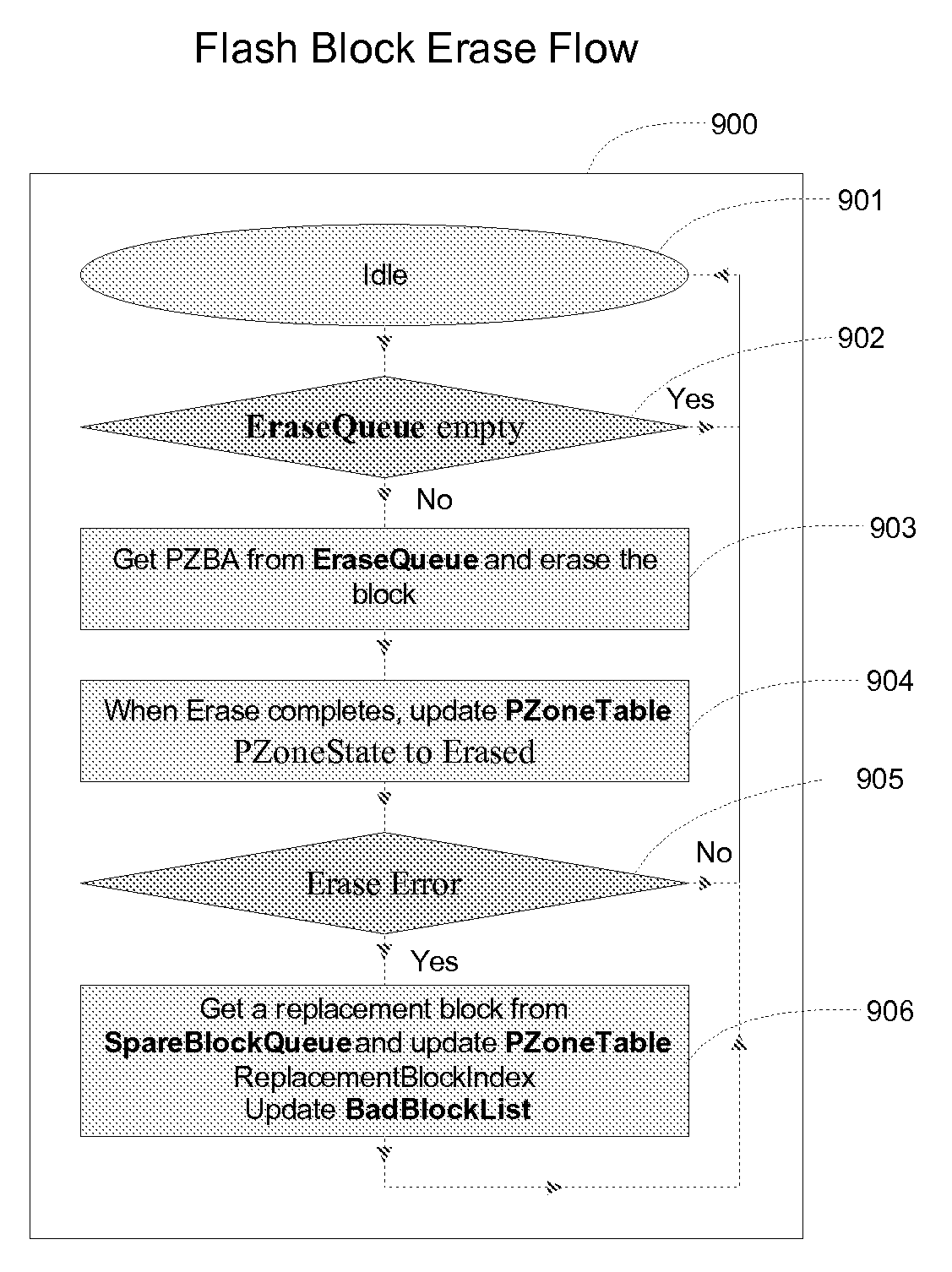

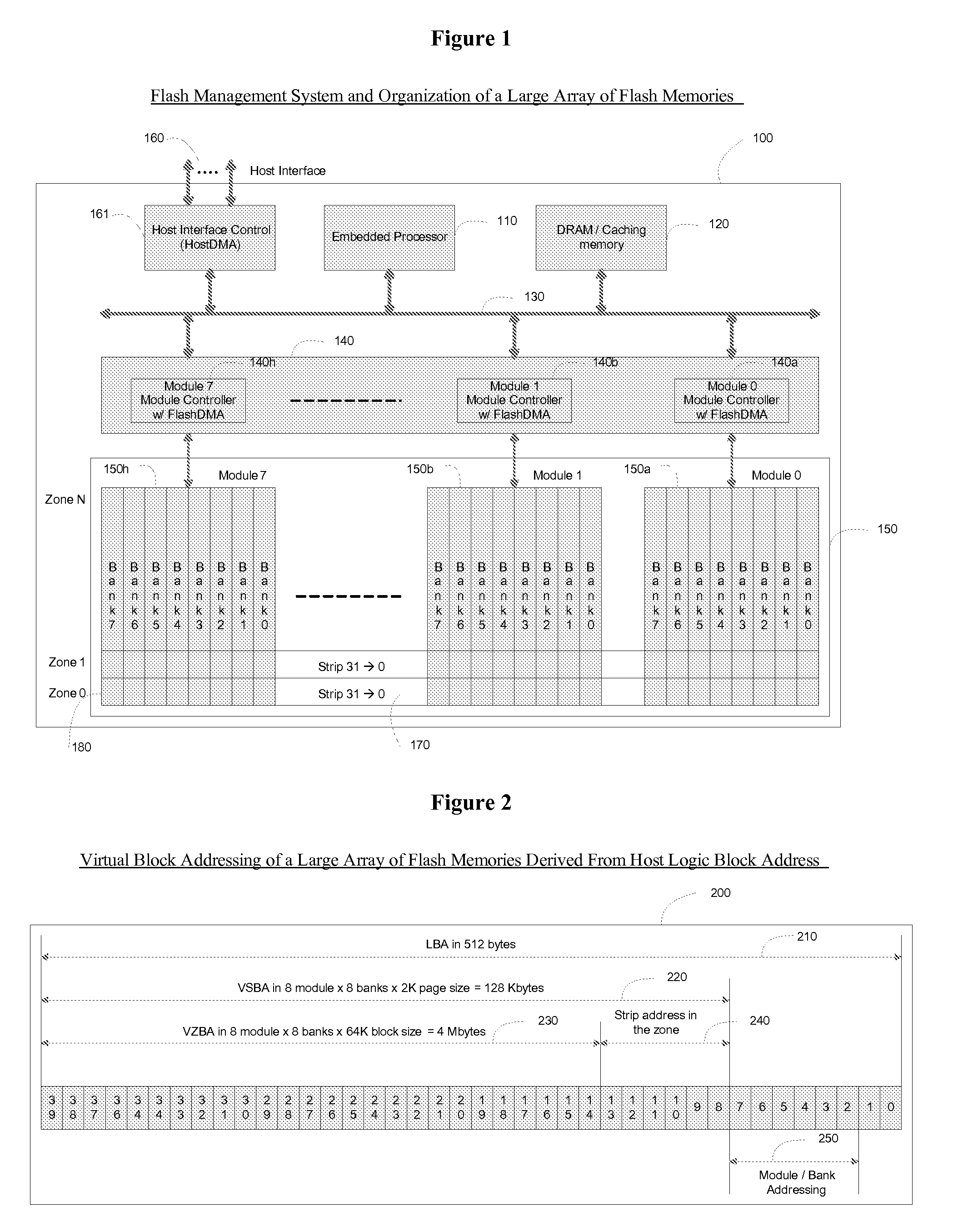

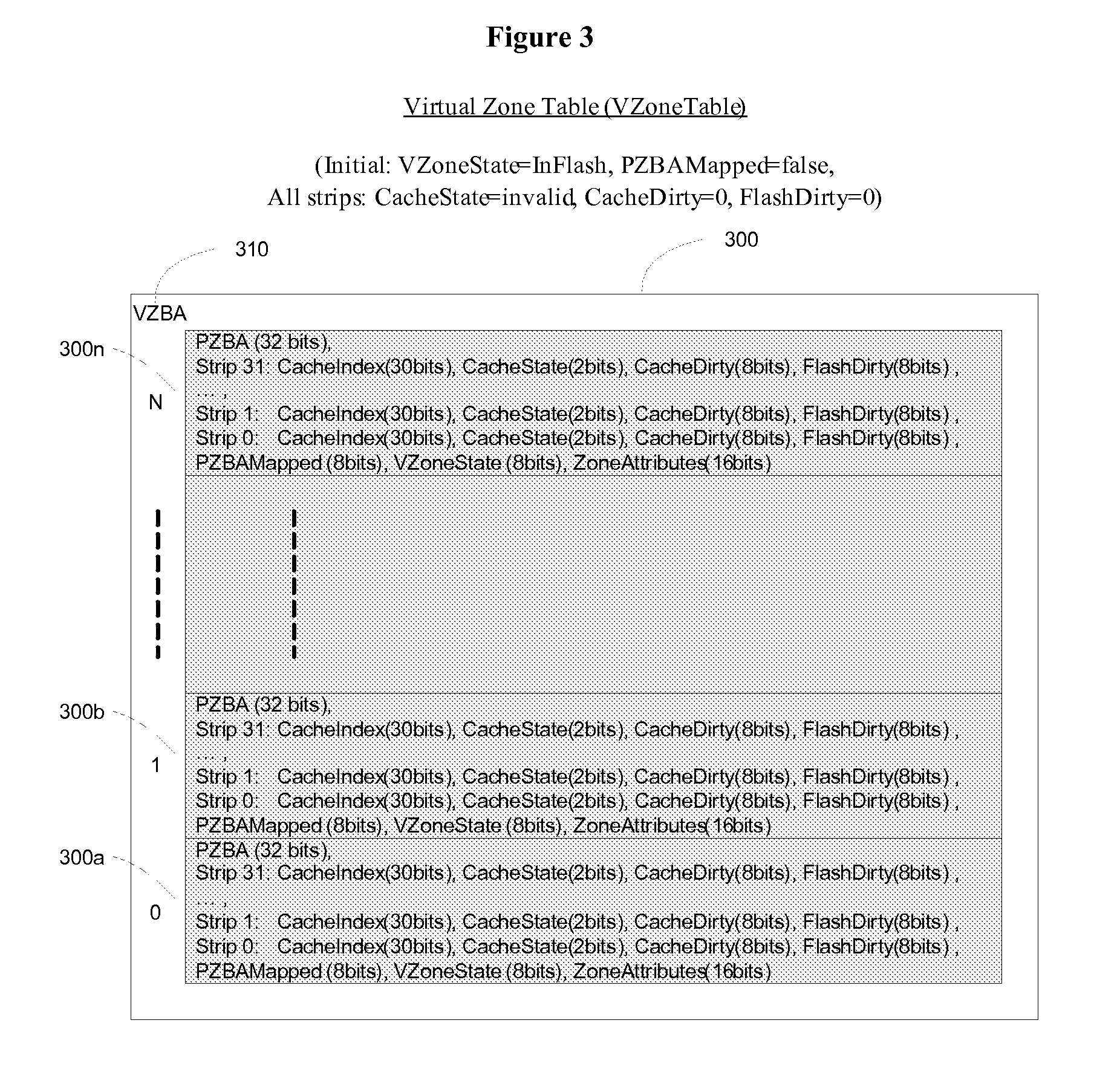

The present invention provides a non-volatile flash memory management system and method that can efficiently manage a large array of flash devices and allocate flash memory use in a way that improves reliability and longevity while maintaining excellent performance. The invention consists mainly of a processor, an array of modularly organized flash memories, an array of module flash controllers, and a DRAM cache. The processor manages this large flash array and its cache memory mainly through two tables (the Virtual Zone Table and the Physical Zone Table), several queues (the Cache Line Queue, Evict Queue, Erase Queue, and Free Block Queue), and two lists (the Spare Block List and the Bad Block List).

Owner:ZHUANG ZHIQING +1
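
To show how the tables, queues, and lists named above might interact, here is a minimal sketch. The remap-on-write and spare-replacement policies are assumptions for illustration, `FlashArrayManager` and its methods are hypothetical, and the Cache Line Queue and Evict Queue (the DRAM caching side) are not modeled.

```python
from collections import deque


class FlashArrayManager:
    """Hypothetical sketch of the zone tables, queues and lists in the abstract."""

    def __init__(self, num_blocks, spare_count):
        usable = num_blocks - spare_count
        self.virtual_zone_table = {}   # virtual zone -> physical block
        self.physical_zone_table = {}  # physical block -> virtual zone
        self.free_block_queue = deque(range(usable))
        self.spare_block_list = list(range(usable, num_blocks))
        self.bad_block_list = []
        self.erase_queue = deque()

    def write_zone(self, vzone):
        """Map a virtual zone to a fresh block and queue the old block for erase."""
        old = self.virtual_zone_table.get(vzone)
        new = self.free_block_queue.popleft()
        self.virtual_zone_table[vzone] = new
        self.physical_zone_table[new] = vzone
        if old is not None:
            del self.physical_zone_table[old]
            self.erase_queue.append(old)
        return new

    def erase_next(self, failed=False):
        """Erase one queued block; retire it and bring in a spare if it failed."""
        blk = self.erase_queue.popleft()
        if failed:
            self.bad_block_list.append(blk)
            if self.spare_block_list:
                self.free_block_queue.append(self.spare_block_list.pop())
        else:
            self.free_block_queue.append(blk)
```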

Method of managing a large array of non-volatile memories

Status: Inactive | Publication: US20100115175A9 | Tags: Efficient management; Increased longevity; Memory architecture accessing/allocation; Memory systems; Parallel computing; Modularity

Abstract identical to that of US20080155183A1.

Owner:ZHUANG ZHIQING +1

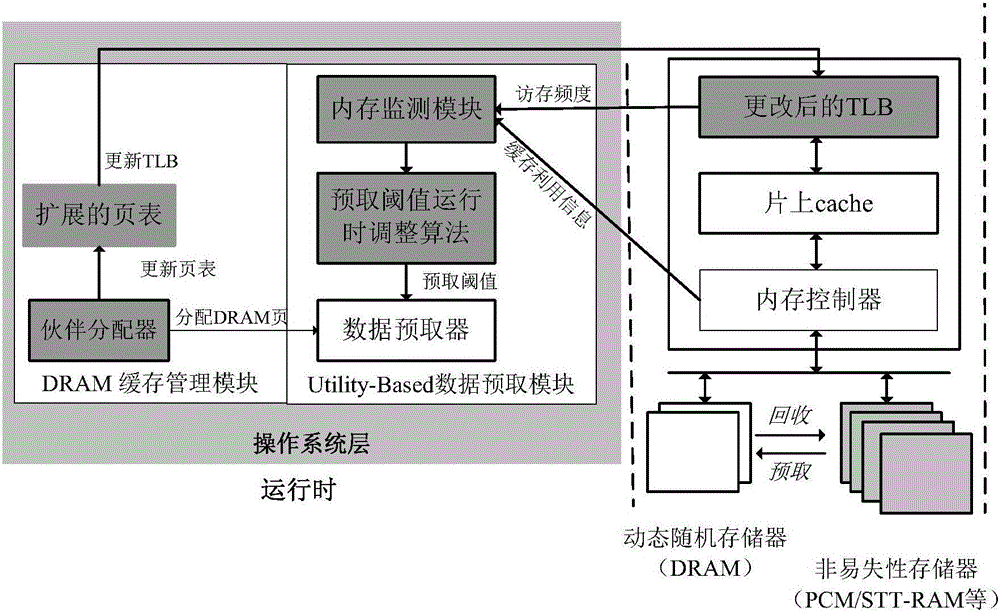

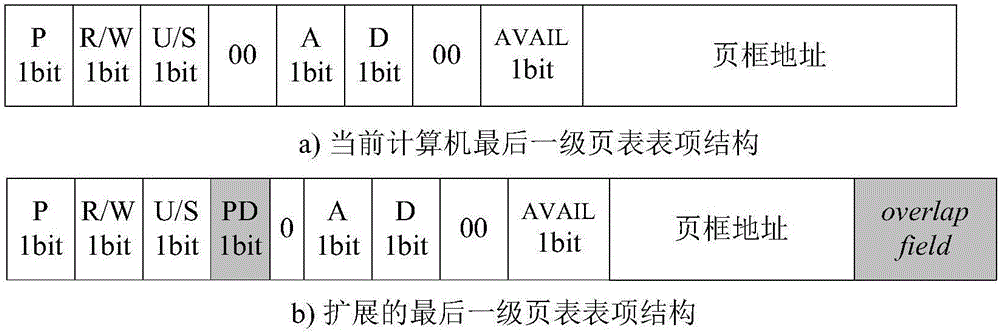

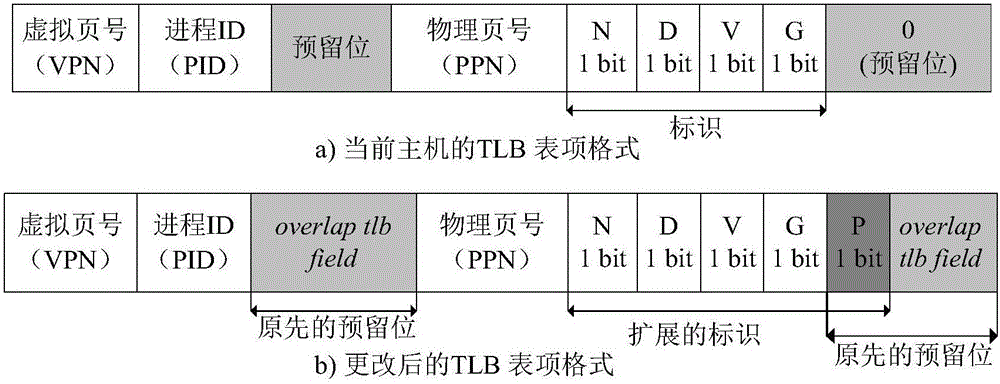

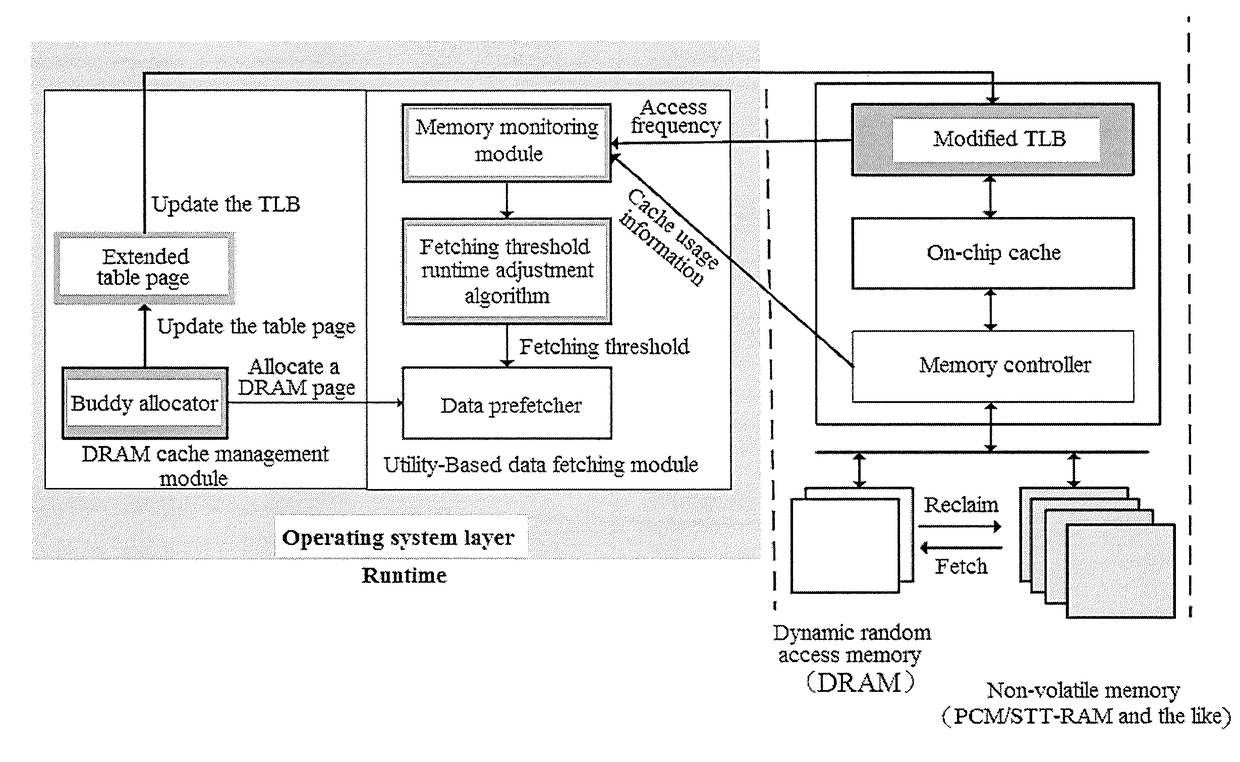

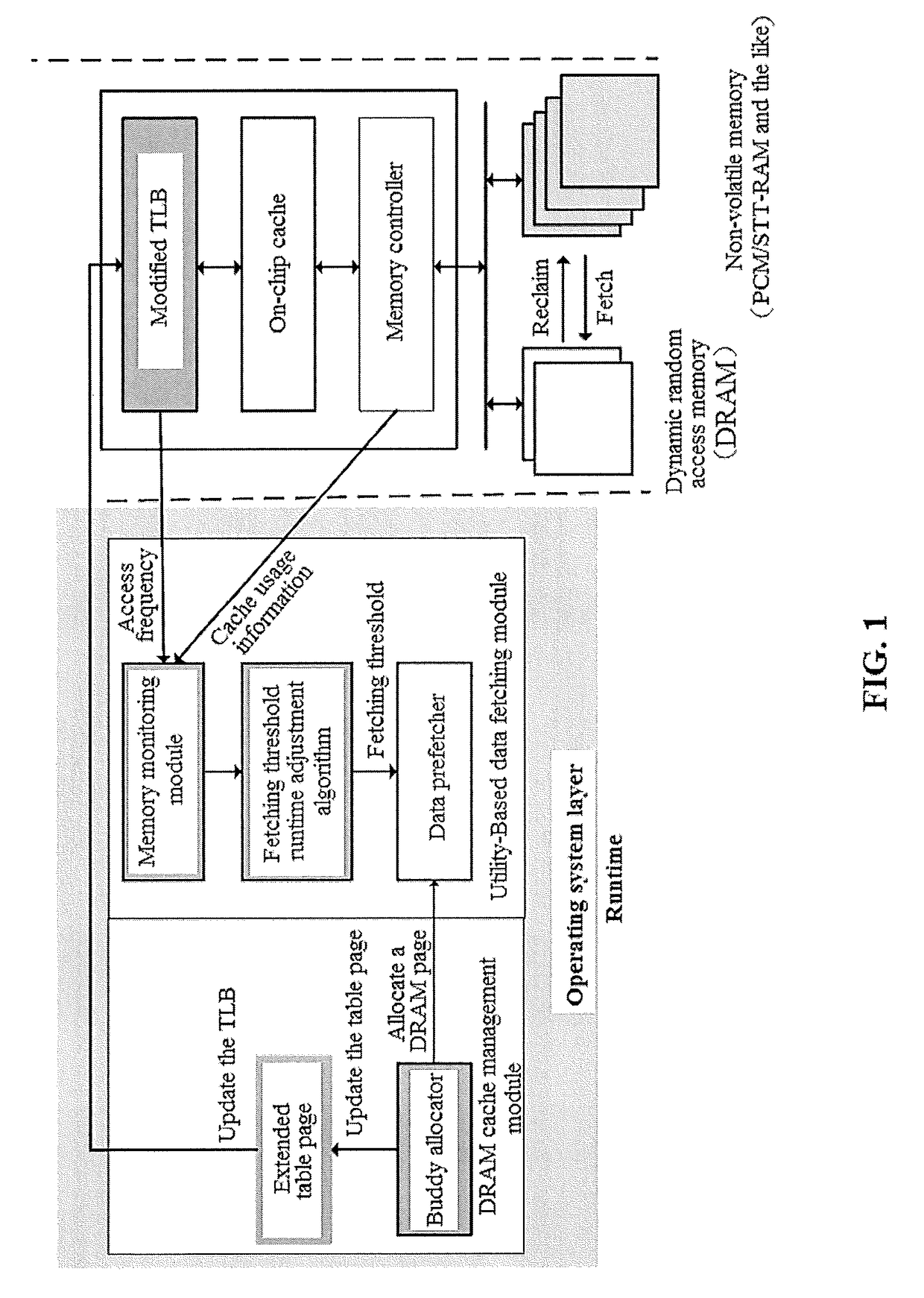

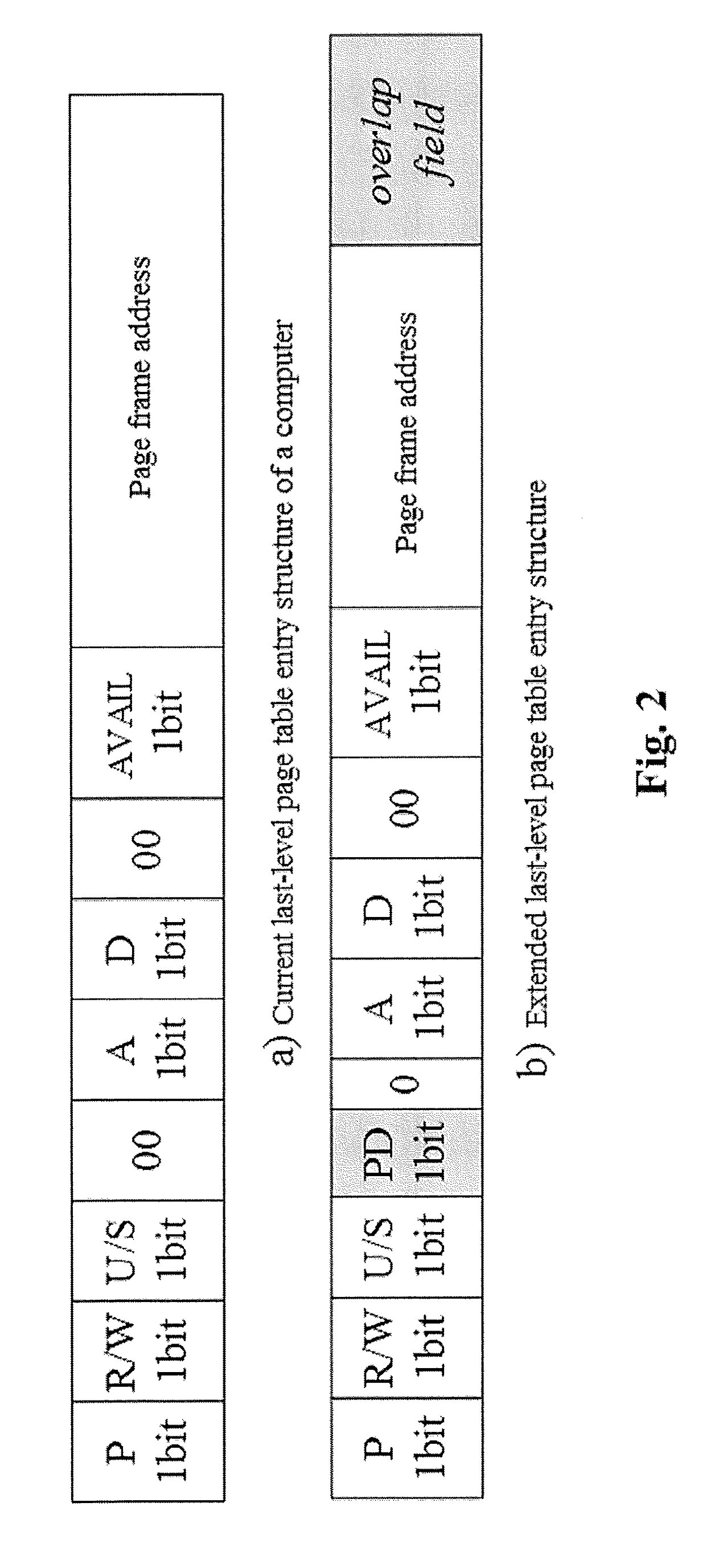

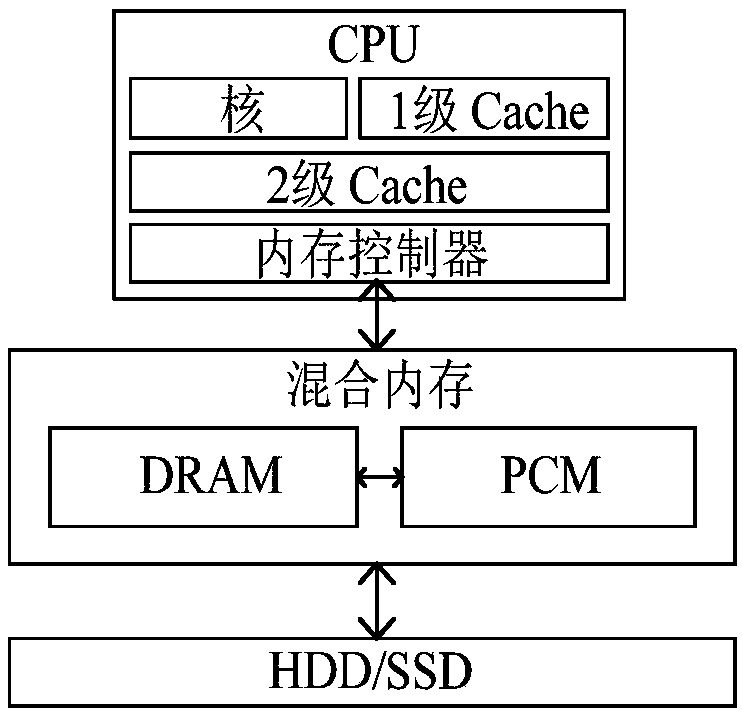

DRAM (dynamic random access memory)-NVM (non-volatile memory) hierarchical heterogeneous memory access method and system adopting software and hardware collaborative management

Status: Active | Publication: CN105786717A | Tags: Realize unified management; Eliminate overhead; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Page table; Cache management

The invention provides a DRAM (dynamic random access memory)-NVM (non-volatile memory) hierarchical heterogeneous memory system with software-hardware collaborative management. In this system, the NVM serves as large-capacity main memory and the DRAM serves as a cache for the NVM. By making effective use of certain reserved bits in the TLB (translation lookaside buffer) and page table structures, the system eliminates the hardware overhead of conventional hardware-managed hierarchical heterogeneous memory architectures, shifts cache management of the heterogeneous memory system to software, and reduces memory access latency after last-level cache misses. Because many applications in big-data environments have poor data locality, a conventional demand-based data prefetching strategy in the DRAM cache can aggravate cache pollution. The system therefore adopts a utility-based data prefetching mechanism that decides whether data in the NVM should be cached in the DRAM according to the current memory pressure and the applications' memory access characteristics, improving the utilization of both the DRAM cache and the bandwidth from NVM main memory to the DRAM cache.

Owner:HUAZHONG UNIV OF SCI & TECH
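
The contrast between demand-based and utility-based fetching can be sketched as follows. The abstract does not give the utility function, so the rule here is an assumption: a page is copied from NVM into DRAM only after enough accesses, and the required count rises as the DRAM cache fills. `UtilityFetcher`, `base_threshold`, and the pressure formula are hypothetical.

```python
from collections import Counter


class UtilityFetcher:
    """Hypothetical utility-based fetch policy for a DRAM cache in front of NVM."""

    def __init__(self, dram_pages, base_threshold=2):
        self.dram_pages = dram_pages
        self.base_threshold = base_threshold
        self.cached = set()
        self.counts = Counter()  # per-page access counts while still in NVM

    def threshold(self):
        pressure = len(self.cached) / self.dram_pages  # 0.0 (empty) .. 1.0 (full)
        return self.base_threshold * (1 + 3 * pressure)

    def access(self, page):
        """Return where the access is served from: 'dram' or 'nvm'."""
        if page in self.cached:
            return "dram"
        self.counts[page] += 1
        if len(self.cached) < self.dram_pages and self.counts[page] >= self.threshold():
            self.cached.add(page)  # fetch only once the page looks useful
        return "nvm"
```

Unlike a demand-based policy, which would copy every missed page into DRAM, pages touched only once (as in a streaming scan with poor locality) never enter the cache here, which is the pollution the abstract aims to avoid.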

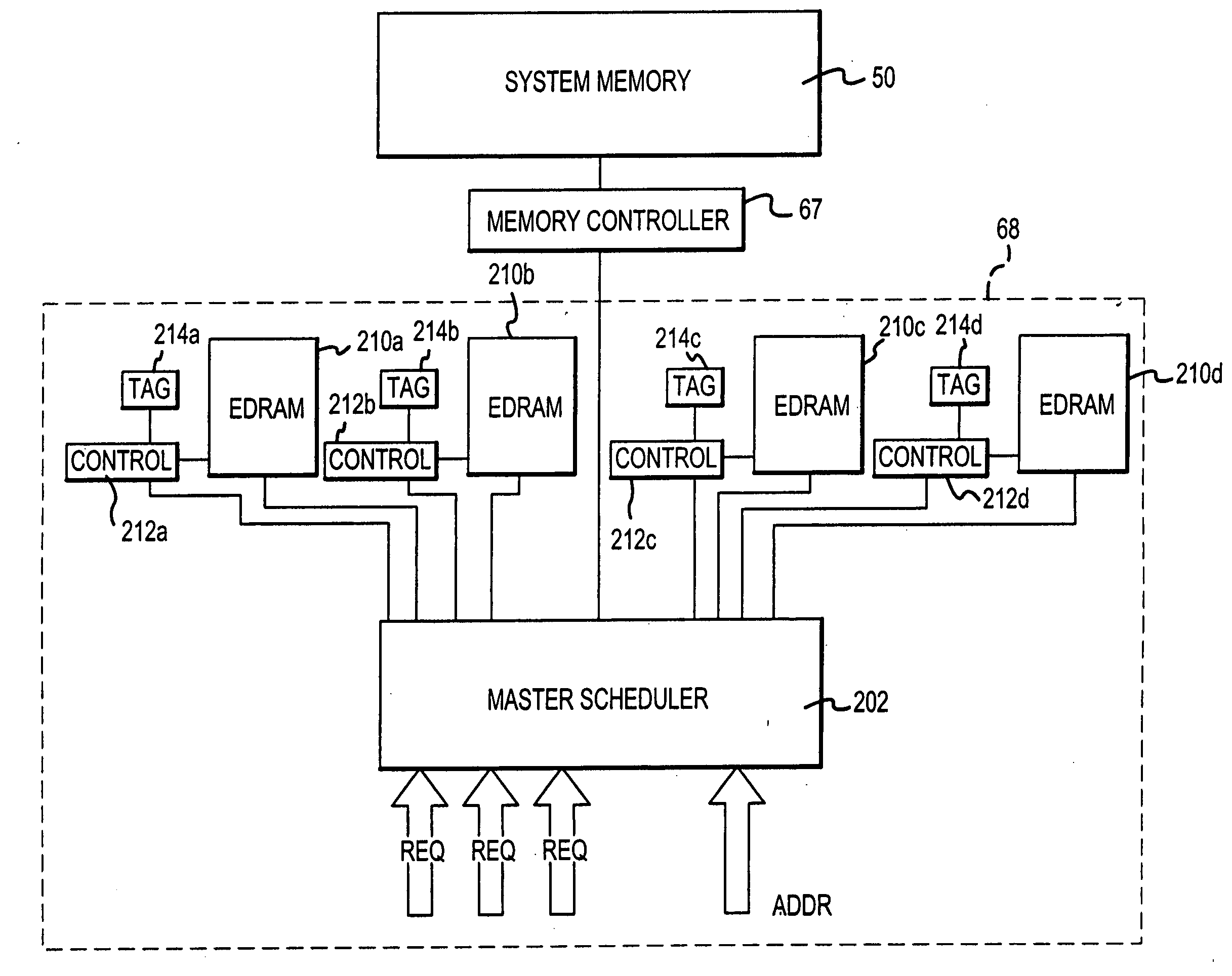

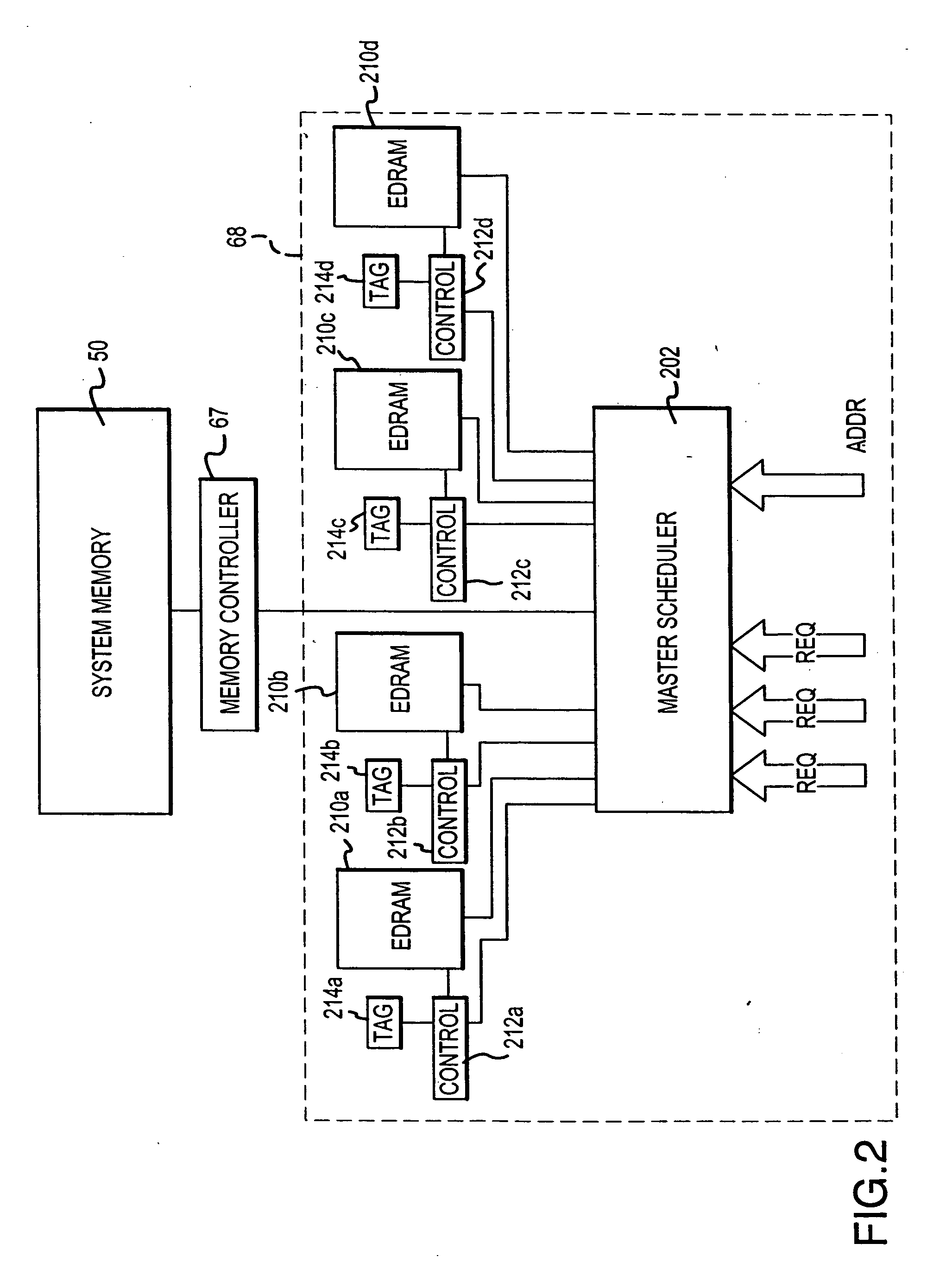

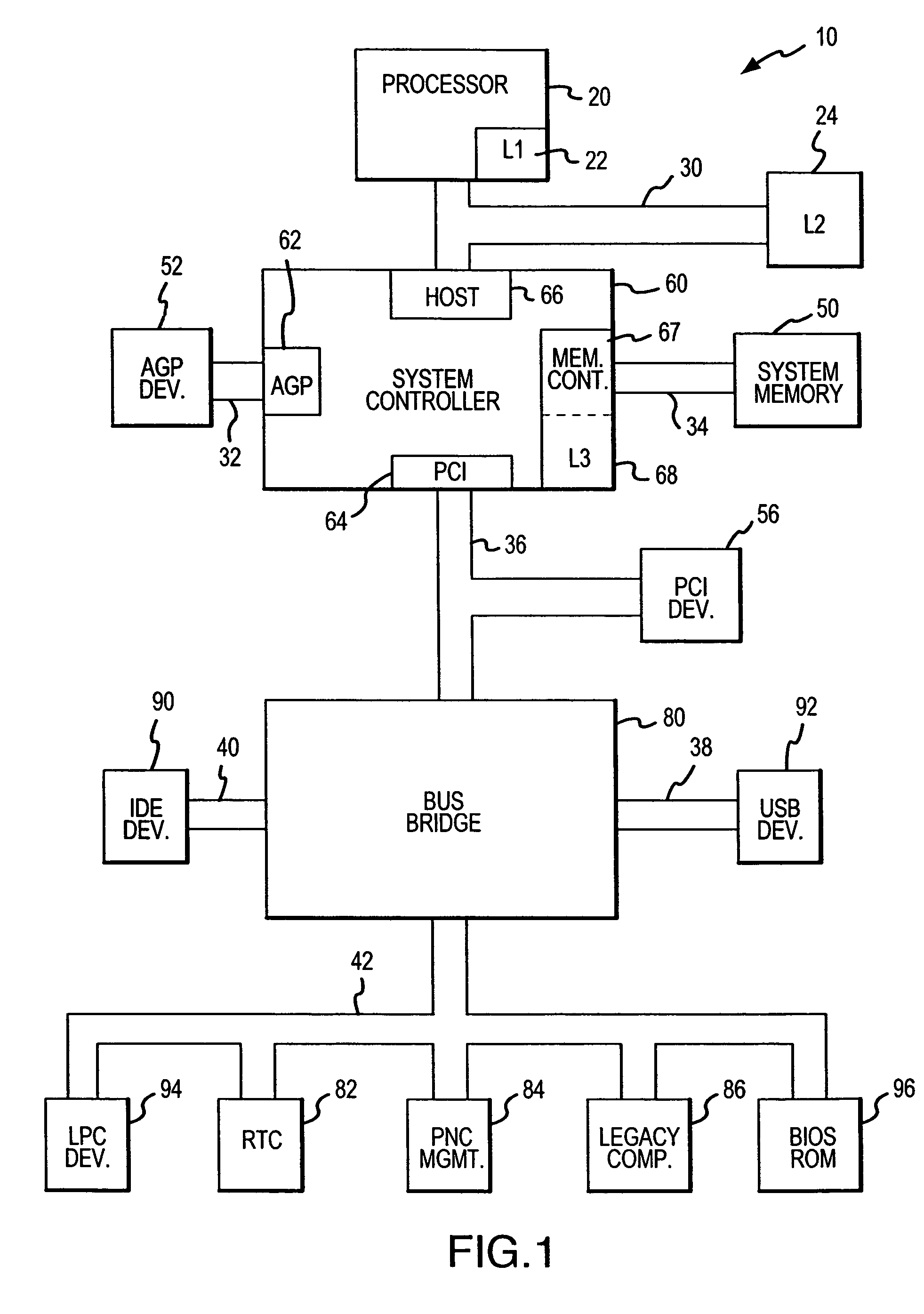

Embedded DRAM cache memory and method having reduced latency

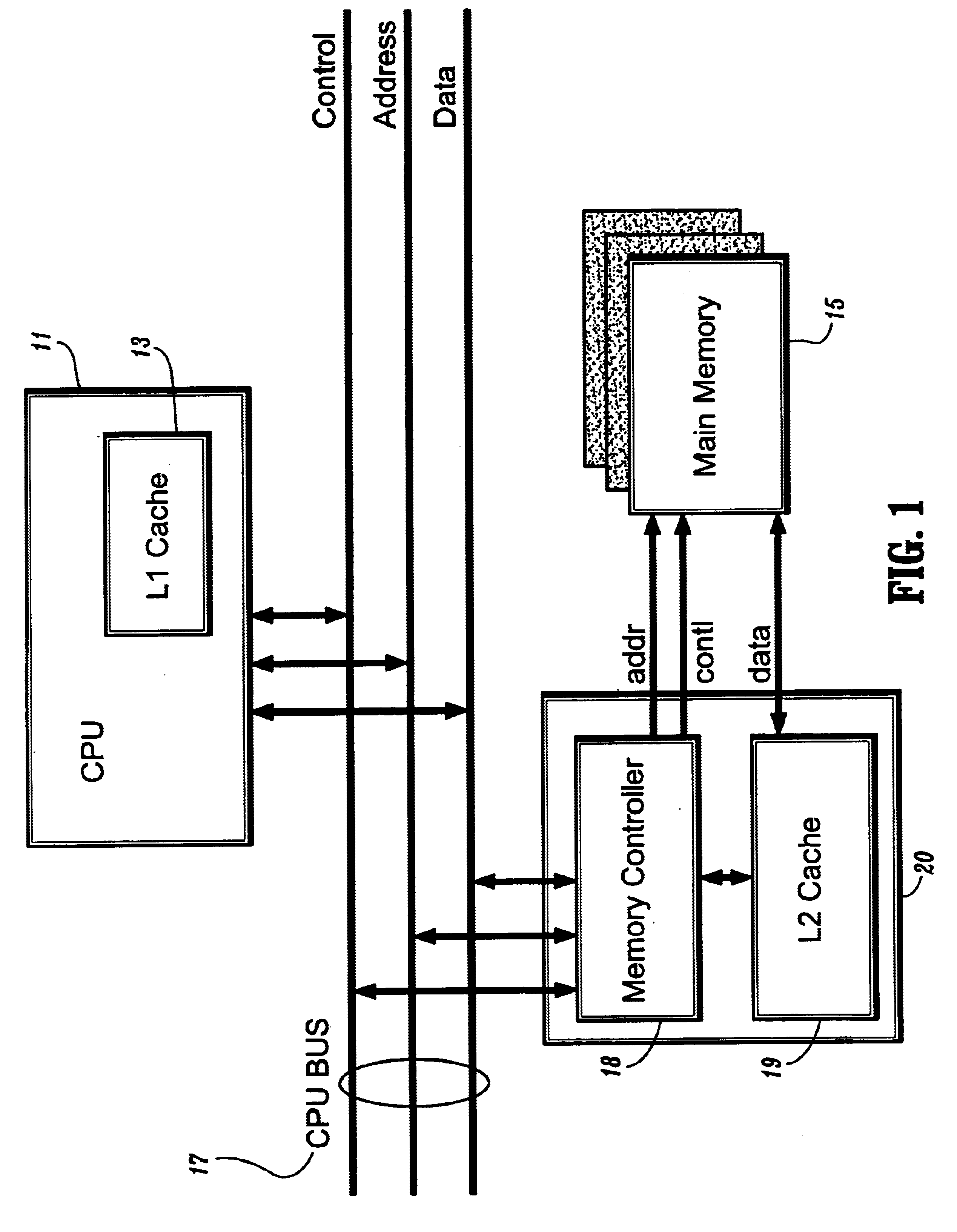

A computer system includes a processor, a system memory, and an integrated circuit system controller coupled to the processor and the system memory. The system controller includes a system memory controller coupled to the system memory, a processor interface coupled to the processor and an embedded cache memory integrated with the memory controller and the processor interface. The cache memory includes at least one DRAM array, at least one tag memory, and at least one cache memory controller. The cache memory controller initiates an access to either or both the DRAM array and the tag memory, as well as the system memory, before the cache memory controller has determined if the access will result in a cache hit or a cache miss. If the cache memory controller determines that the access will result in a cache hit, data are coupled from the DRAM array to the processor. If the cache memory controller determines that the access will result in a cache miss, data are coupled from the system memory to the processor.

Owner:MICRON TECH INC
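
The latency benefit of starting the DRAM array, tag, and system memory accesses before hit or miss is known can be shown with simple arithmetic. The cycle counts below are hypothetical, and the sketch always starts all three accesses, whereas the abstract allows "either or both" of the DRAM array and tag memory.

```python
# Hypothetical latencies in controller cycles (not from the patent).
TAG_LOOKUP = 2
DRAM_ARRAY = 6
SYSTEM_MEMORY = 20


def serial_latency(hit: bool) -> int:
    """Conventional: check the tag first, then access the cache or memory."""
    return TAG_LOOKUP + (DRAM_ARRAY if hit else SYSTEM_MEMORY)


def speculative_latency(hit: bool) -> int:
    """All accesses start together, before hit/miss is determined.

    On a hit, data is ready once both the tag result and the DRAM array
    read are available; on a miss, once the system memory read completes.
    """
    if hit:
        return max(TAG_LOOKUP, DRAM_ARRAY)
    return max(TAG_LOOKUP, SYSTEM_MEMORY)
```

With these numbers the speculative scheme saves the tag lookup time on both hits (6 versus 8 cycles) and misses (20 versus 22 cycles), at the cost of issuing memory reads that are discarded on hits.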

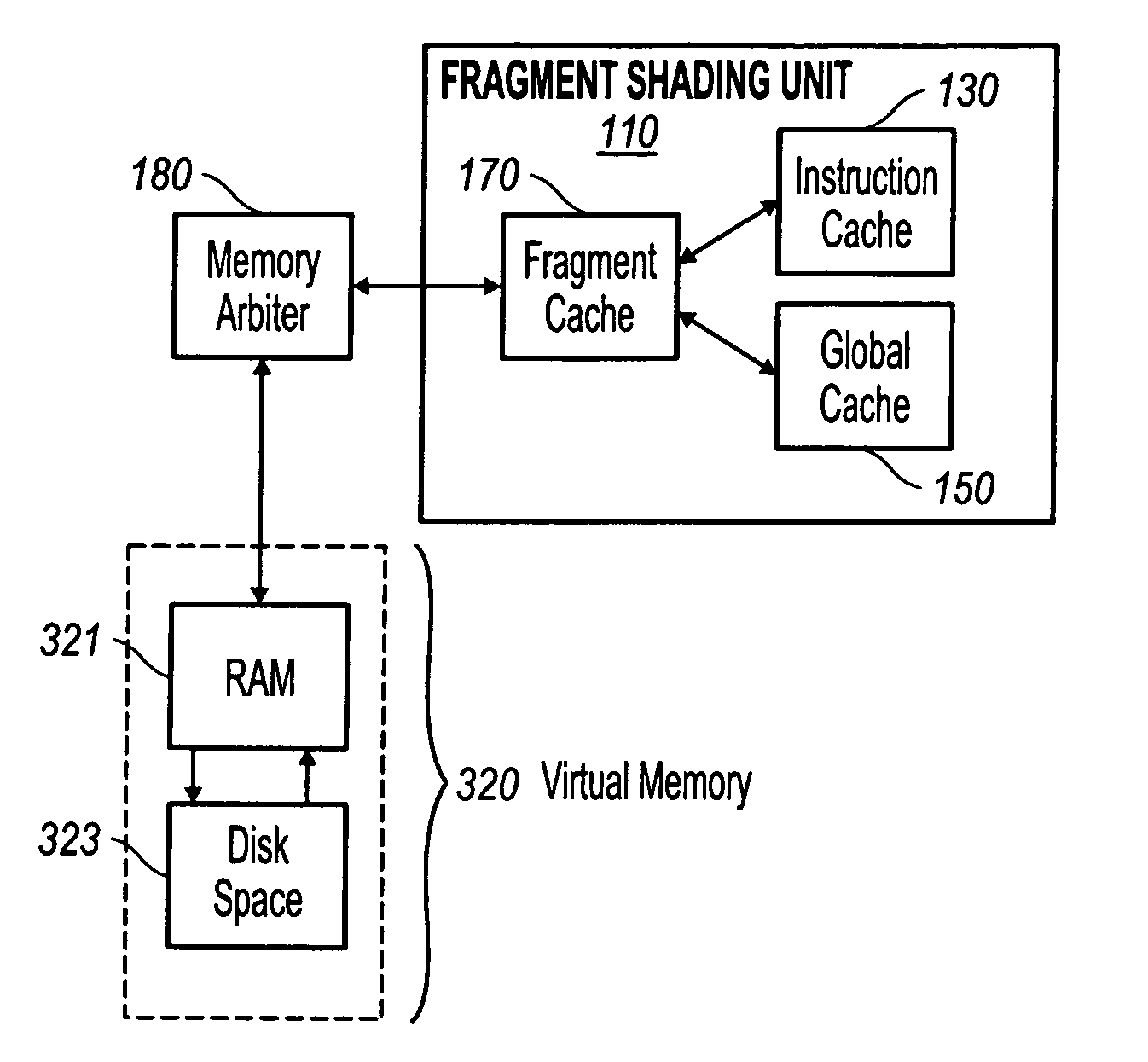

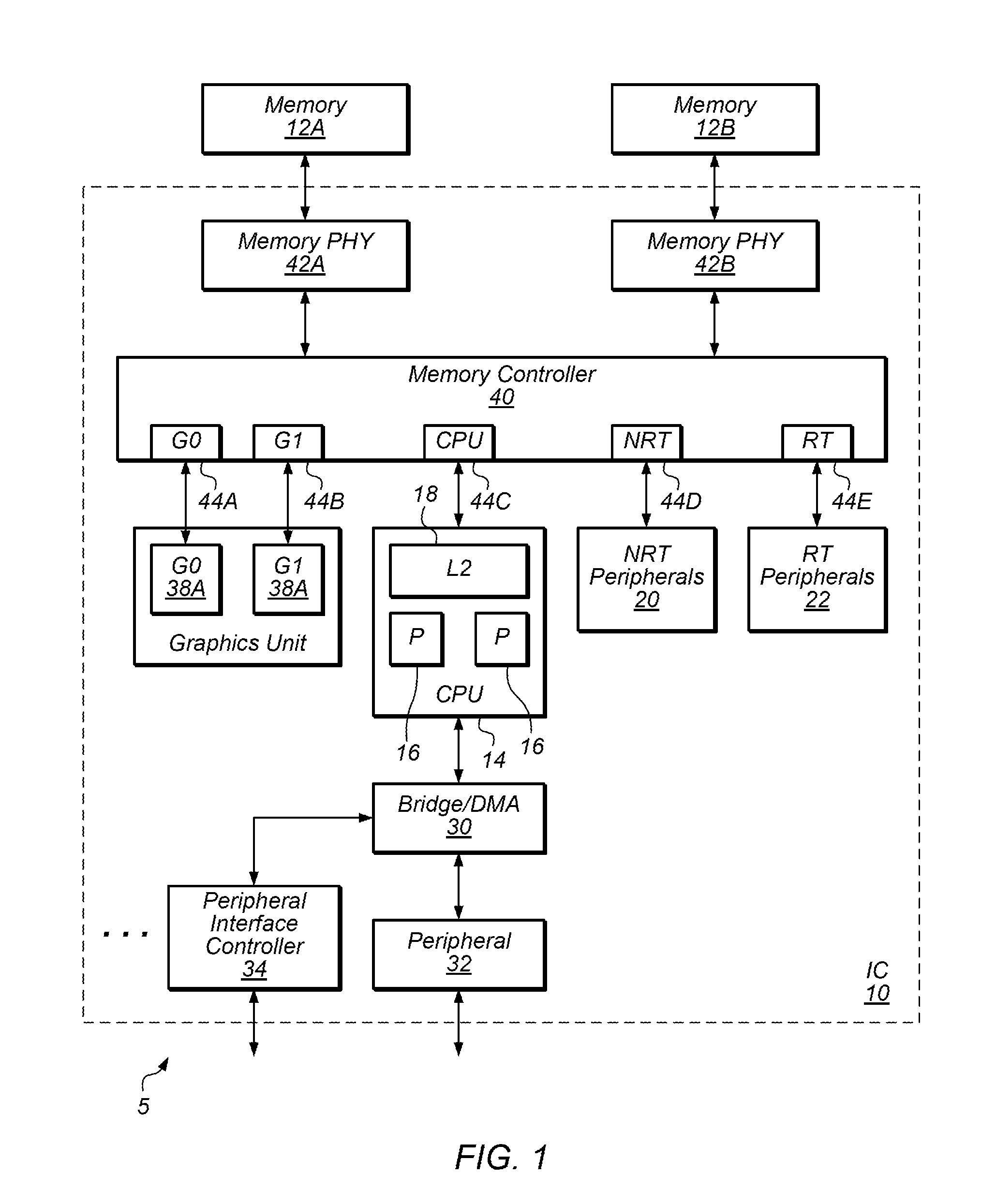

Shader with global and instruction caches

Status: Active | Publication: US8643659B1 | Tags: Increased complexity; Increase frame rate; Memory architecture accessing/allocation; Details involving 3D image data; Virtualization; Control store

An instruction cache and a data cache are used to virtualize the storage of the global data and instructions used by graphics shaders. Present-day hardware designs store the global data and instructions used by shaders in a fixed number of registers or in a writable control store (WCS). However, this traditional approach limits the size and complexity of the shaders that can be supported. By virtualizing the storage of the global data and instructions, neither the amount of global or state memory available to a shader nor the length of shading programs is constrained by the physical on-chip memory.

Owner:XUESHAN TECH INC
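
Virtualizing instruction storage means the shader program lives in ordinary memory and only a small cache sits on chip. The sketch below uses a hypothetical direct-mapped `InstructionCache` with 16 words of on-chip capacity to run a 40-instruction program that would not fit in a fixed 16-entry control store. It illustrates the general idea, not the patent's cache design.

```python
class InstructionCache:
    """Hypothetical direct-mapped instruction cache over off-chip program memory."""

    def __init__(self, program, lines=4, line_words=4):
        self.program = program  # full shader program, any length
        self.lines = lines
        self.line_words = line_words
        self.tags = [None] * lines
        self.data = [None] * lines
        self.misses = 0

    def fetch(self, pc):
        block = pc // self.line_words
        idx = block % self.lines
        if self.tags[idx] != block:  # miss: refill the line from memory
            self.misses += 1
            start = block * self.line_words
            self.data[idx] = self.program[start:start + self.line_words]
            self.tags[idx] = block
        return self.data[idx][pc % self.line_words]
```

Running the 40 instructions sequentially costs one miss per 4-word line, 10 in total, after which each line is served from the cache.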

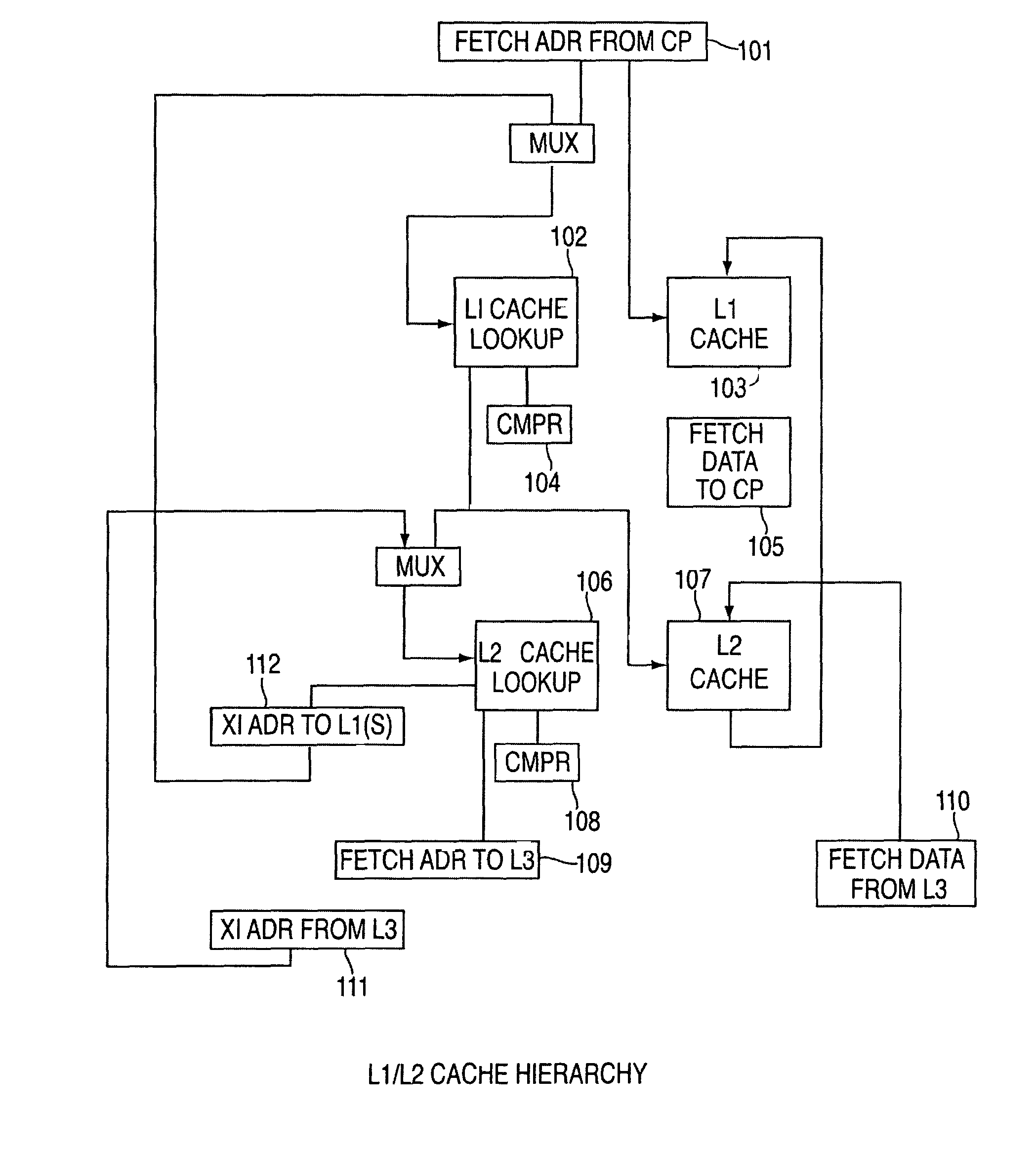

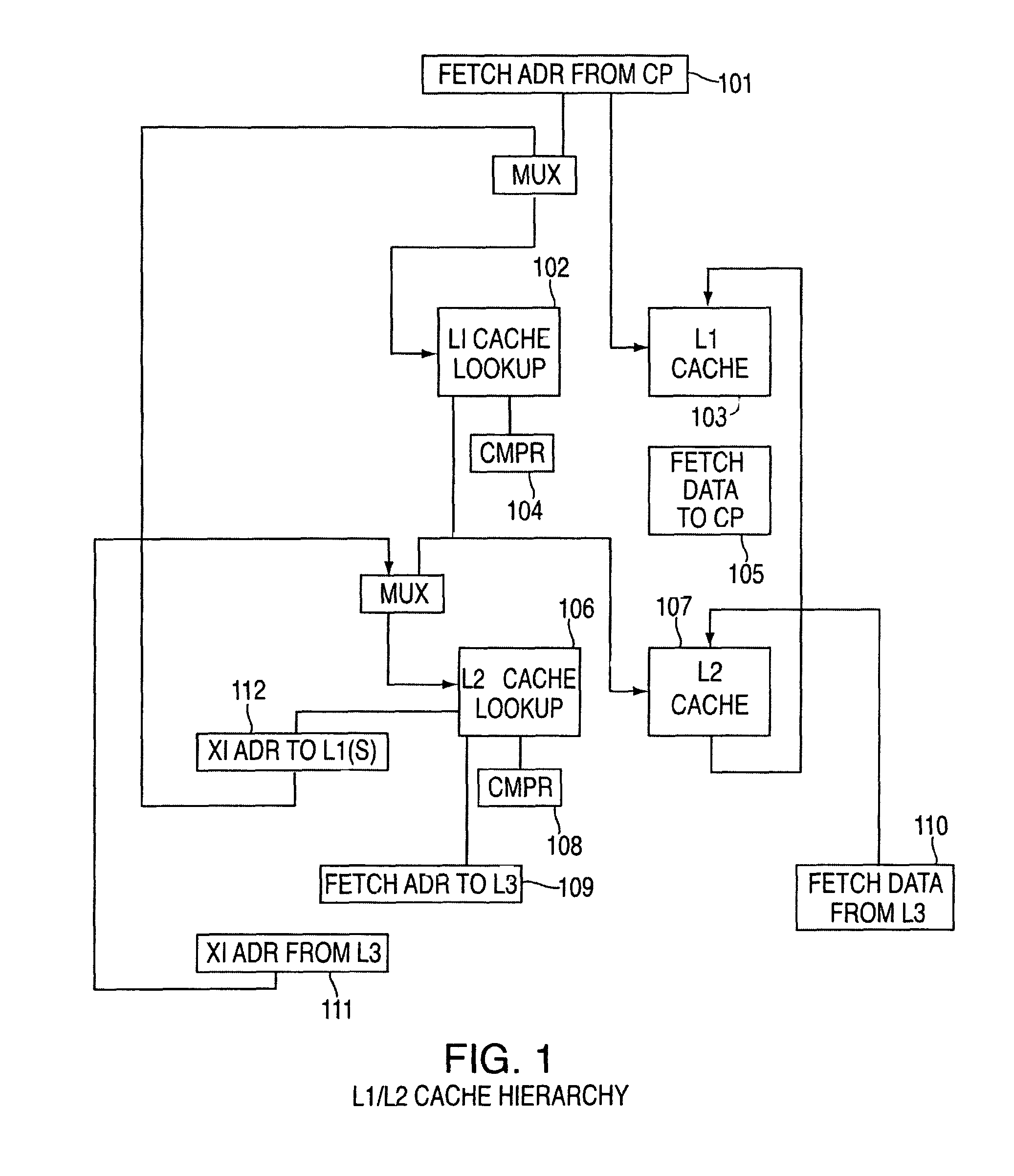

Method and system for a multi-level virtual/real cache system with synonym resolution

Method and system for a multi-level virtual/real cache system with synonym resolution. An exemplary embodiment includes a multi-level cache hierarchy comprising a set of L1 caches associated with one or more processor cores and a set of L2 caches, wherein the set of L1 caches is a subset of the set of L2 caches, and wherein the L1 caches underneath a given L2 cache are associated with one or more of the processor cores.

Owner:DAEDALUS BLUE LLC
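
A synonym arises when two virtual addresses map to the same physical line but index different sets of a virtually indexed L1. The sketch below shows one generic resolution scheme and is not necessarily the patent's: the L2 keeps a directory from each physical line to the L1 index that currently holds it, and on a synonym miss it invalidates the copy held under the other alias. The geometry, the direct-mapped L1, and all names are hypothetical.

```python
PAGE = 4096
LINE = 64
L1_SETS = 128  # 128 * 64 B = 8 KiB per way, so the index uses one bit above the page offset


def l1_index(vaddr):
    return (vaddr // LINE) % L1_SETS


class SynonymResolvingHierarchy:
    def __init__(self, page_table):
        self.page_table = page_table  # virtual page -> physical page
        self.l1 = {}                  # L1 index -> physical line (direct-mapped)
        self.l2_dir = {}              # physical line -> L1 index holding it

    def translate(self, vaddr):
        return self.page_table[vaddr // PAGE] * PAGE + vaddr % PAGE

    def load(self, vaddr):
        pline = self.translate(vaddr) // LINE
        idx = l1_index(vaddr)
        if self.l1.get(idx) == pline:
            return "l1_hit"
        result = "l1_miss"
        old_idx = self.l2_dir.get(pline)
        if old_idx is not None:
            del self.l1[old_idx]  # synonym: drop the copy held under the other alias
            result = "synonym_resolved"
        victim = self.l1.pop(idx, None)
        if victim is not None:
            del self.l2_dir[victim]  # keep the L2 directory exact
        self.l1[idx] = pline
        self.l2_dir[pline] = idx
        return result
```

The directory is kept as the exact inverse of the L1 contents, so at most one alias of any physical line is ever cached in L1.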

DRAM/NVM hierarchical heterogeneous memory access method and system with software-hardware cooperative management

Status: Active | Publication: US20170277640A1 | Tags: Eliminates hardware; Reducing memory access delay; Memory architecture accessing/allocation; Memory systems; Term memory; Page table

The present invention provides a DRAM/NVM hierarchical heterogeneous memory system with software-hardware cooperative management. In the system, NVM is used as large-capacity main memory, and DRAM is used as a cache for the NVM. Some reserved bits in the TLB and last-level page table data structures are used to eliminate the hardware cost of conventional hardware-managed hierarchical memory architectures, and cache management of the heterogeneous memory system is pushed to the software level. Moreover, the invention reduces memory access latency on last-level cache misses. Because many applications in big-data environments have relatively poor data locality, the conventional demand-based data fetching policy for a DRAM cache can aggravate cache pollution. The invention therefore adopts a utility-based data fetching mechanism that determines whether data in the NVM should be cached in the DRAM according to current DRAM utilization and application memory access patterns. This improves the efficiency of the DRAM cache and of the bandwidth between the NVM main memory and the DRAM cache.

Owner:HUAZHONG UNIV OF SCI & TECH
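
The abstract says cache-management state is carried in reserved bits of the TLB and last-level page table entries, but it does not give the encoding. This sketch assumes a layout modeled on x86-64 page-table entries, whose bits 52 to 58 the processor ignores: bit 52 is a hypothetical "cached in DRAM" flag and bits 53 to 58 a hypothetical DRAM slot index. Six bits address only 64 slots, so a real design would need a different encoding; the point is only how such state can ride along in otherwise unused entry bits.

```python
# Hypothetical layout in the software-available bits 52..58 of a 64-bit PTE.
CACHED_BIT = 1 << 52  # page currently has a copy in the DRAM cache
SLOT_SHIFT = 53       # bits 53..58: index of the DRAM cache slot
SLOT_MASK = 0x3F << SLOT_SHIFT


def mark_cached(pte: int, slot: int) -> int:
    if not 0 <= slot < 64:
        raise ValueError("slot does not fit in 6 bits")
    return (pte & ~SLOT_MASK) | CACHED_BIT | (slot << SLOT_SHIFT)


def mark_uncached(pte: int) -> int:
    return pte & ~(CACHED_BIT | SLOT_MASK)


def dram_slot(pte: int):
    """Return the DRAM slot if the page is cached, else None (access goes to NVM)."""
    if pte & CACHED_BIT:
        return (pte & SLOT_MASK) >> SLOT_SHIFT
    return None
```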

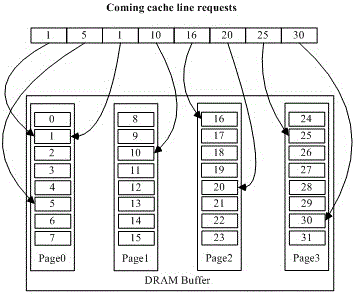

NVMM: An Extremely Large, Logically Unified, Sequentially Consistent Main-Memory System

Status: Inactive | Publication: US20160253123A1 | Tags: Large capacity; Reduce power consumption; Memory architecture accessing/allocation; Input/output to record carriers; Area network; Application software

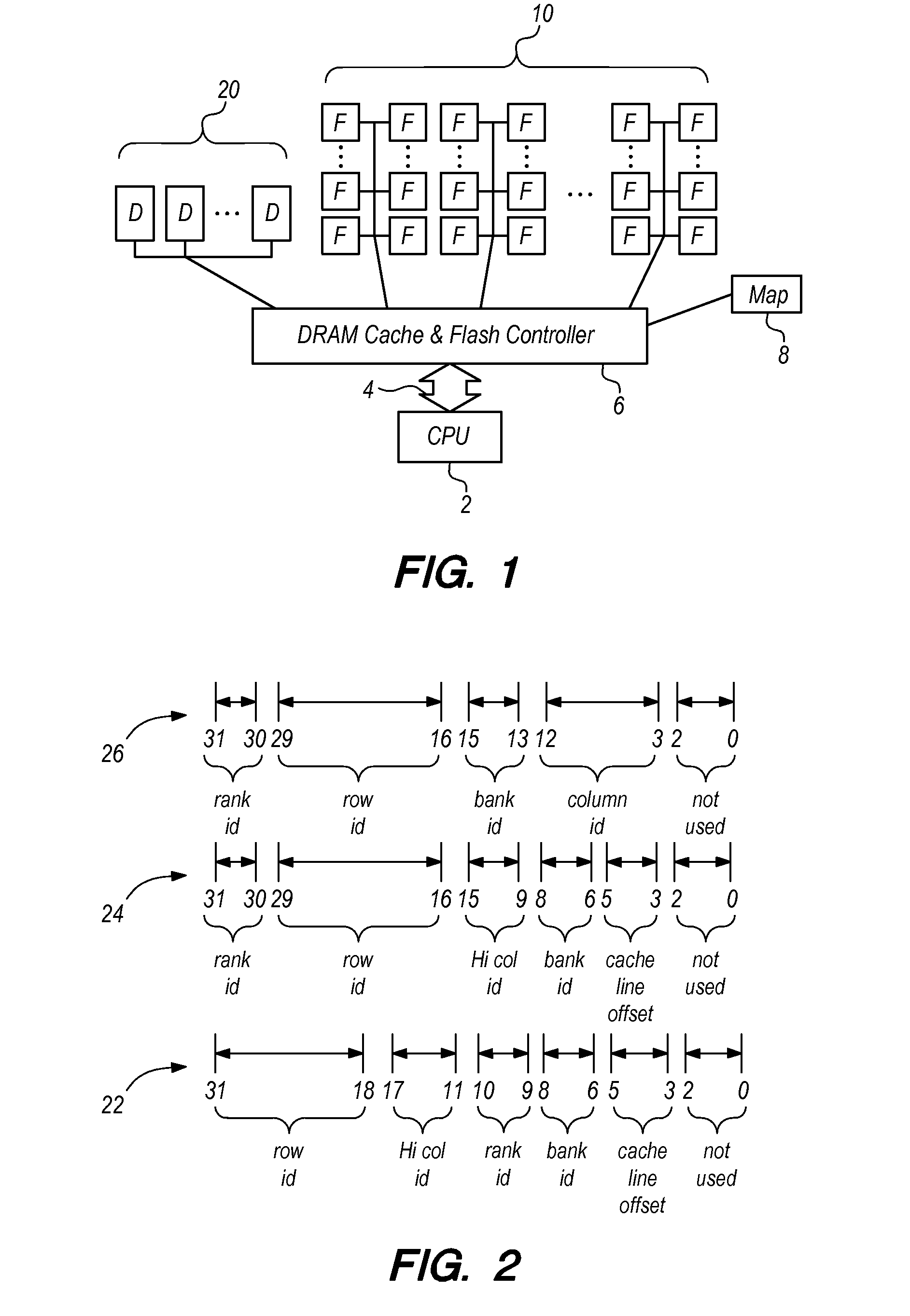

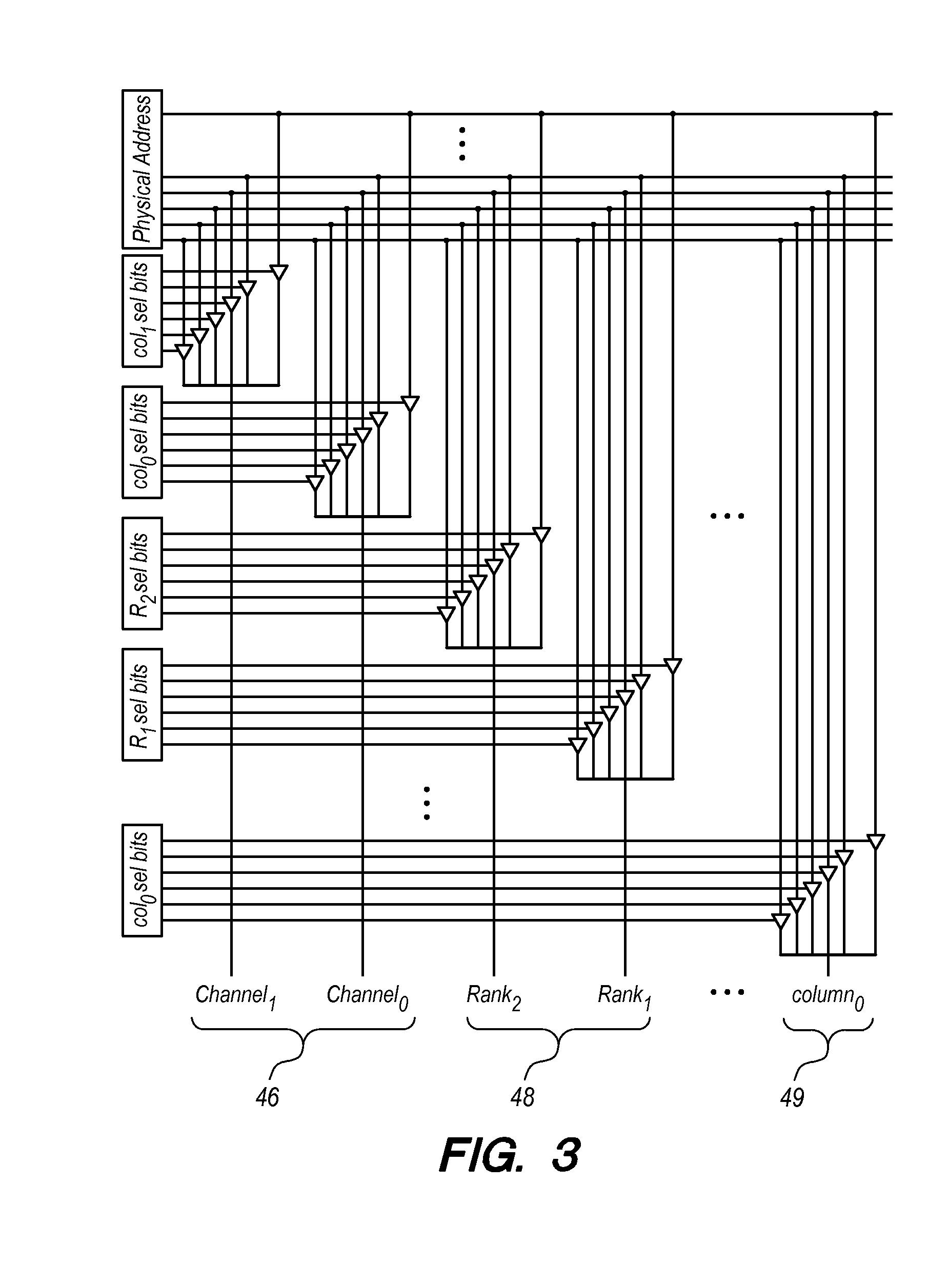

Embodiments of both a non-volatile main memory (NVMM) single-node and a multi-node computing system are disclosed. One embodiment of the NVMM single-node system has a cache subsystem composed entirely of DRAM and a large main-memory subsystem composed entirely of NAND flash, and provides different address-mapping policies for each software application. The NVMM memory controller provides high, sustained bandwidth for client processor requests by managing the DRAM cache as a large, highly banked system with multiple ranks and multiple DRAM channels, and with large cache blocks to accommodate large NAND flash pages. Multi-node systems organize the NVMM single nodes into a large, interconnected, low-latency cache/flash main-memory network. The entire interconnected flash system exports a single address space to the client processors and, like a unified cache, is shared in a way that can be divided unevenly among them: client processors that need more memory resources receive them at the expense of processors that need less. Multi-node systems have numerous configurations, from board-area networks to multi-board networks, with all nodes connected in various Moore graph topologies. Overall, the disclosed memory architecture dissipates less power per GB than traditional DRAM architectures, provides an extremely large solid-state capacity of a terabyte or more of main memory per CPU socket at a cost per bit approaching that of NAND flash memory, and delivers performance approaching that of an all-DRAM system.

Owner:JACOB BRUCE LEDLEY
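
One way to manage a DRAM cache as a "large, highly banked system" is to interleave consecutive large cache blocks across channels, ranks, and banks so that a stream of requests keeps many of them busy in parallel. The geometry below (16 KiB blocks, 4 channels, 2 ranks, 8 banks) and the channel-first interleaving order are hypothetical; the abstract says only that mapping policies can differ per application, and this is one such policy.

```python
# Hypothetical NVMM DRAM-cache geometry.
BLOCK_BYTES = 16 * 1024  # large cache block, e.g. sized to a 16 KiB NAND page
CHANNELS = 4
RANKS = 2
BANKS = 8


def map_block(addr: int):
    """Interleave consecutive cache blocks across channels, then ranks,
    then banks; the remaining bits select the row."""
    block = addr // BLOCK_BYTES
    channel = block % CHANNELS
    rank = (block // CHANNELS) % RANKS
    bank = (block // (CHANNELS * RANKS)) % BANKS
    row = block // (CHANNELS * RANKS * BANKS)
    return channel, rank, bank, row
```

With this order, 64 consecutive blocks land on 64 distinct (channel, rank, bank) combinations before any of them reuses a bank.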

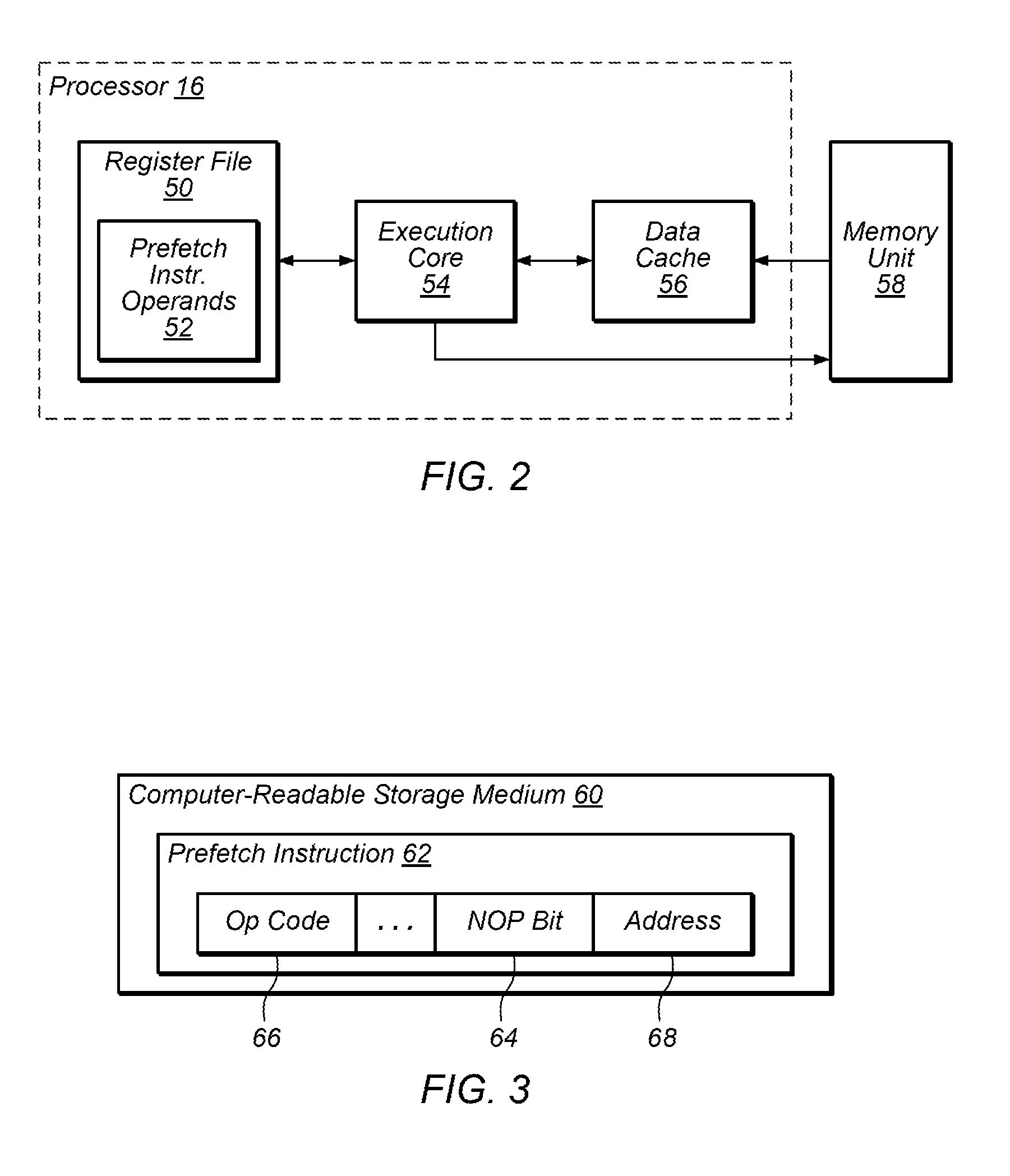

Prefetch instruction

Status: Active | Publication: US20120110269A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Memory address; DRAM cache

Techniques are disclosed relating to prefetching data from memory. In one embodiment, an integrated circuit may include a processor containing an execution core and a data cache. The execution core may be configured to receive an instance of a prefetch instruction that specifies a memory address from which to retrieve data. In response to the instruction, the execution core retrieves data from the memory address and stores the data in the data cache, regardless of whether data corresponding to that memory address is already stored in the data cache. In this manner, the data cache may be used as a prefetch buffer for data in memory buffers for which coherence has not been maintained.

Owner:APPLE INC
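
The difference between a conventional prefetch (a no-op if the line already hits) and the prefetch described above (always re-read memory) matters when memory is updated by an agent, such as a DMA engine, that the cache does not snoop. In this minimal sketch, `DataCache`, `prefetch`, and `forced_prefetch` are hypothetical names, and the dictionary stands in for a non-coherent memory buffer.

```python
class DataCache:
    def __init__(self, memory):
        self.memory = memory  # backing store: address -> value (not snooped)
        self.lines = {}       # cached copies

    def prefetch(self, addr):
        """Conventional prefetch: skipped if the address already hits."""
        if addr not in self.lines:
            self.lines[addr] = self.memory[addr]

    def forced_prefetch(self, addr):
        """Prefetch as described: re-read memory even on a hit."""
        self.lines[addr] = self.memory[addr]

    def load(self, addr):
        return self.lines[addr] if addr in self.lines else self.memory[addr]
```

After a non-coherent write to memory, the conventional prefetch leaves the stale copy in place, while the forced prefetch picks up the new value.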

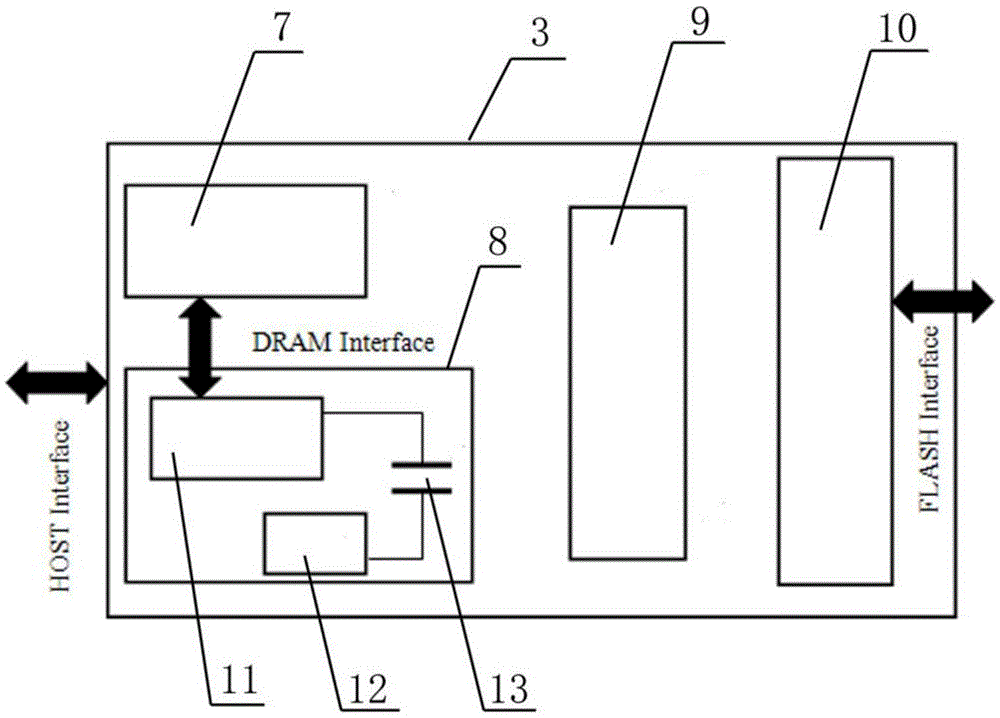

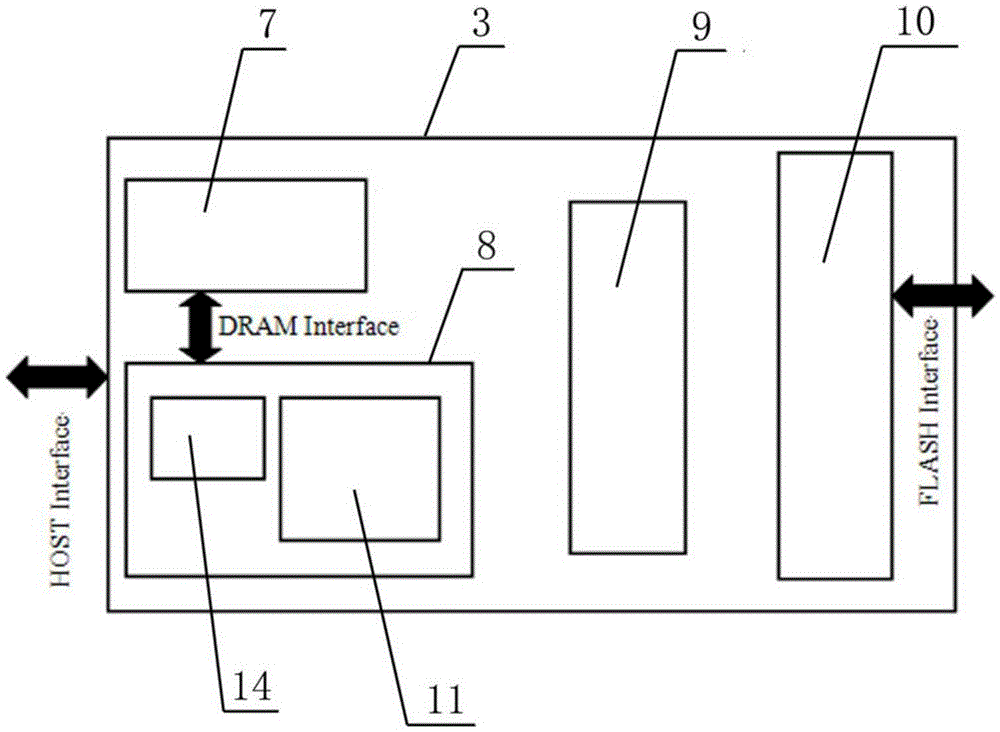

SSD controller based on read-write cache separation of STT-MRAM

Status: Inactive | Publication: CN105550127A | Tags: Addressing Inadequate Data Protection; Improve reliability; Digital storage; Memory systems; Capacitance; DRAM cache

The invention belongs to the technical field of computer memory equipment and relates in particular to an SSD controller based on STT-MRAM read/write cache separation. The SSD controller comprises a control logic module, a read/write cache module, an error correction module, and a read/write driver module. The read/write cache module comprises an STT-MRAM and a DRAM: the STT-MRAM caches all data to be written to the FLASH memory array, together with an LBA modification increment table, and the DRAM caches all data to be read from the FLASH memory array, together with an LBA mapping table, the controller program, and the user configuration. Using the STT-MRAM as the write cache of the SSD controller exploits its high read/write speed and its non-volatility across power failures, which solves the problem of insufficient data protection after a power failure in the SSD controller. The power-failure protection capacitor and power-failure detection circuit originally required in the controller are removed, which improves system reliability and reduces the complexity of system design.

Owner:CETHIK GRP
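
The read/write cache split described above can be modeled in a few lines: writes are staged in non-volatile STT-MRAM, reads are cached in volatile DRAM, and a power loss wipes only the DRAM side. `SplitCacheSsdController` and its methods are hypothetical, and the LBA modification increment table and LBA mapping table are not modeled.

```python
class SplitCacheSsdController:
    """Hypothetical model: writes staged in STT-MRAM, reads cached in DRAM."""

    def __init__(self):
        self.flash = {}
        self.mram_write_cache = {}  # non-volatile: survives power loss
        self.dram_read_cache = {}   # volatile: lost on power loss

    def write(self, lba, data):
        self.mram_write_cache[lba] = data
        self.dram_read_cache.pop(lba, None)  # drop any stale read copy

    def read(self, lba):
        if lba in self.mram_write_cache:
            return self.mram_write_cache[lba]
        if lba not in self.dram_read_cache:
            self.dram_read_cache[lba] = self.flash[lba]
        return self.dram_read_cache[lba]

    def flush(self):
        """Program staged writes into the flash array."""
        self.flash.update(self.mram_write_cache)
        self.mram_write_cache.clear()

    def power_loss(self):
        # Only DRAM contents disappear; pending writes stay in STT-MRAM.
        self.dram_read_cache.clear()
```

Because unflushed writes never live only in volatile memory, no hold-up capacitor is needed to drain them on power failure, which is the benefit the abstract claims.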

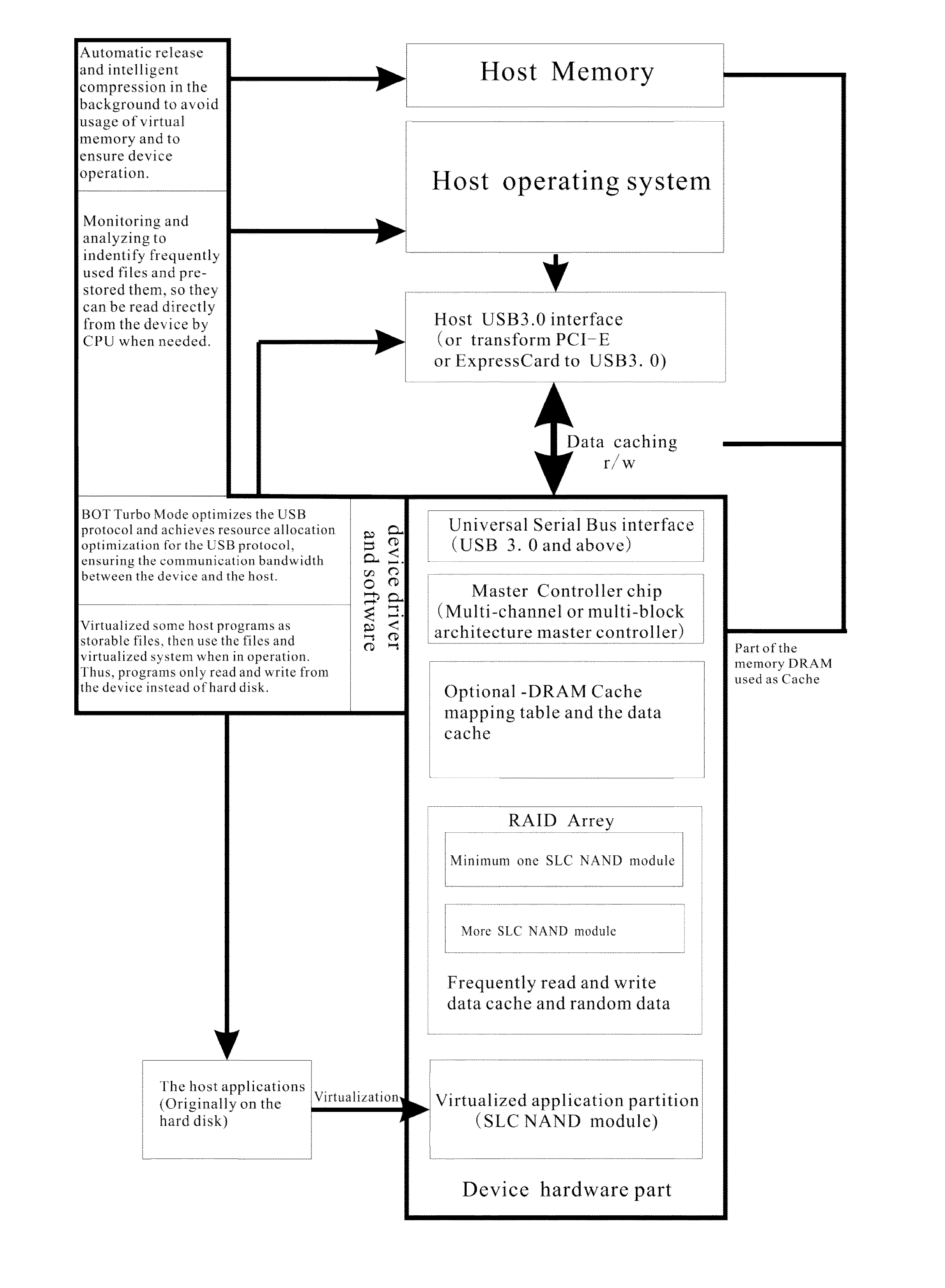

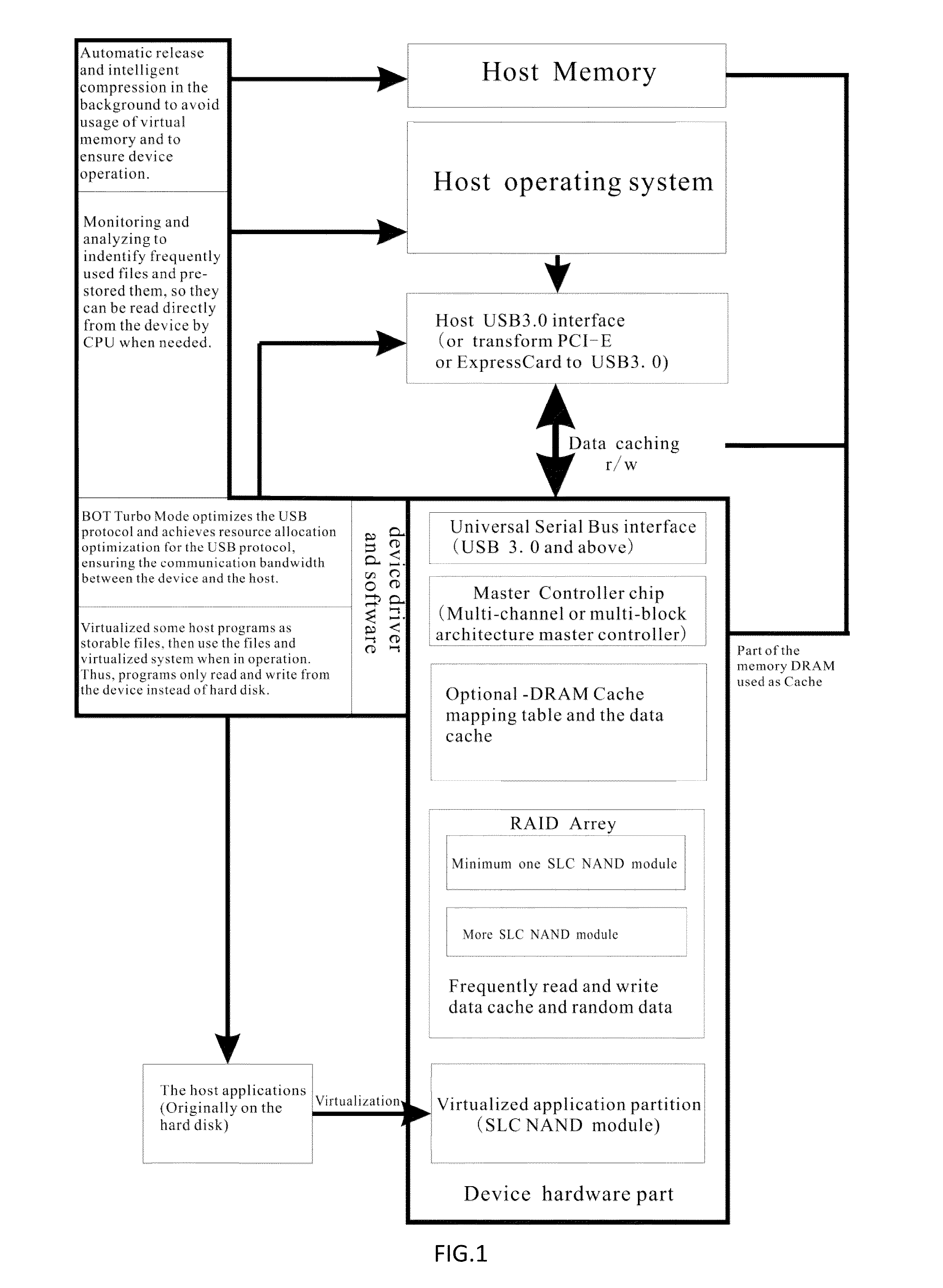

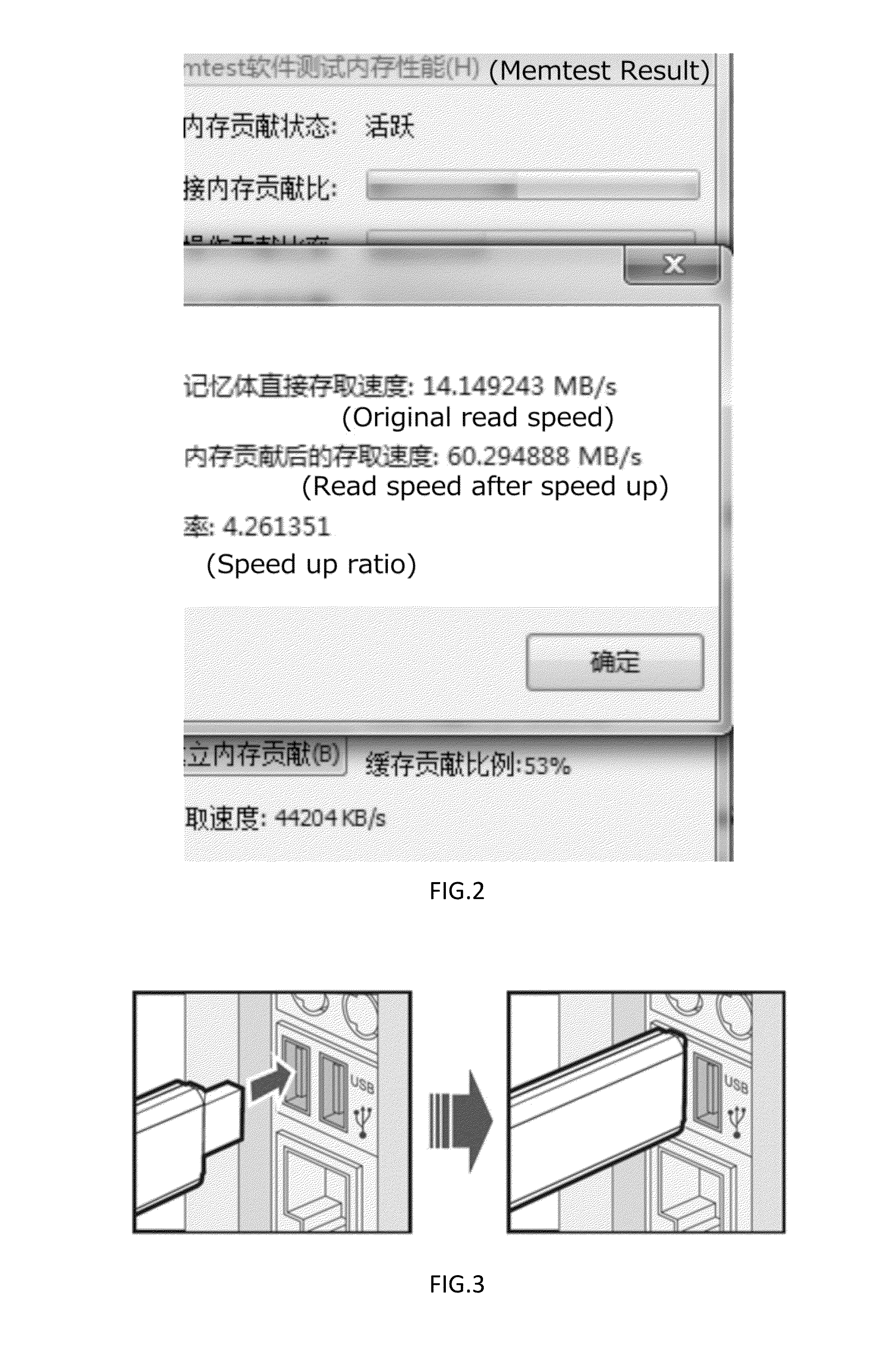

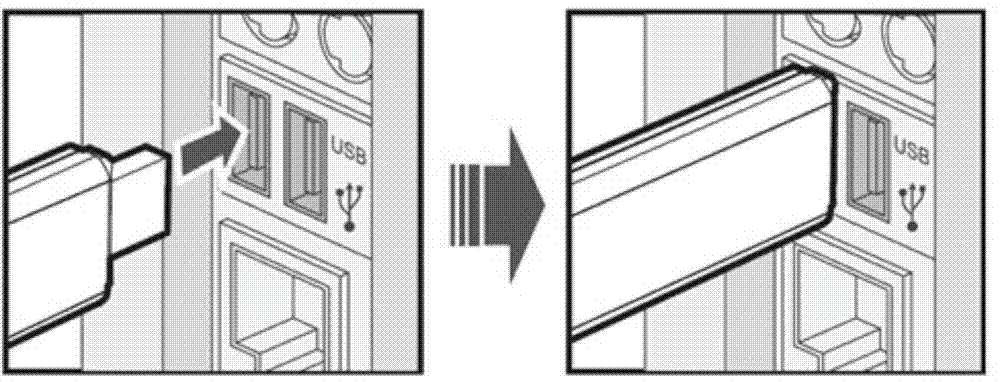

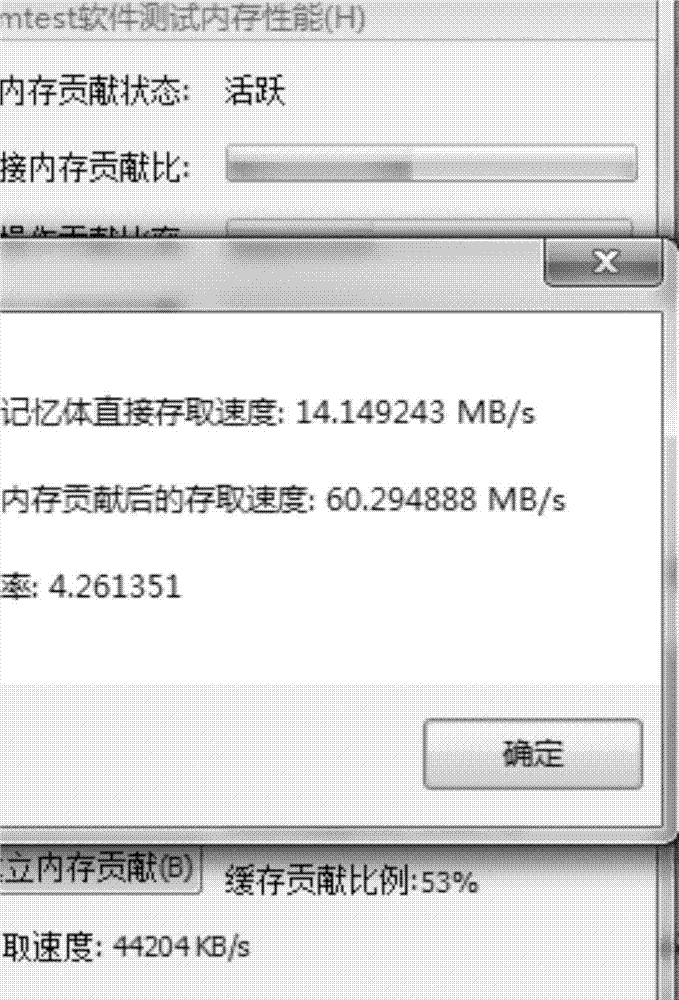

A new USB protocol based computer acceleration device using multi I/O channel SLC NAND and DRAM cache

Status: Inactive | Publication: US20160253093A1 | Tags: Increase speed; Easy to operate; Memory architecture accessing/allocation; Input/output to record carriers; Habit; Application software

This study presents a new USB-protocol-based computer acceleration device that uses multi-channel single-level cell NAND flash memory (SLC NAND) and a dynamic random-access memory (DRAM) cache. The device includes a main controller chip, at least one SLC NAND module, and a USB interface for connecting the device to a computer. It creates and assigns a cache file in the SLC NAND and DRAM for the computer's cache system, caches commonly used applications, and reads and pre-reads frequently used files. The device driver improves the USB protocol, optimizes the BOT (Bulk-Only Transport) protocol of the traditional USB interface protocol, and optimizes resource allocation for the USB transport protocol. The algorithm and framework of the device employ the following design:

1. The device virtualizes application programs, pre-storing in the device all program files and the system environment files that the programs require.
2. The device works in multi-I/O-channel mode: an array module integrates an array of SLC NAND chips and uses a main controller chip that can handle multiple I/O channels.
3. By monitoring long-term user habits, the device estimates which data the system will use and pre-stores that data in the device.
4. The device performs intelligent compression and automatic release of system memory in the background.

Owner:WEIJIA ZHANG
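
Point 3 above (estimating future use from long-term user habits) could be approximated by a frequency-and-recurrence rule. The abstract gives no algorithm, so this is a sketch under an assumption of our own: a file qualifies if it was used on at least `min_days` distinct days, and the most-used qualifying files are pre-stored up to a slot budget. `files_to_prestore` and its parameters are hypothetical.

```python
from collections import Counter


def files_to_prestore(access_log, capacity, min_days=2):
    """Pick files to pre-store from a long-term access log.

    access_log: list of (day, filename) pairs. A file is treated as a
    habit if it was used on at least `min_days` distinct days; the most
    frequently used habitual files are returned, at most `capacity`.
    """
    days_used = {}
    counts = Counter()
    for day, name in access_log:
        days_used.setdefault(name, set()).add(day)
        counts[name] += 1
    habitual = [n for n in counts if len(days_used[n]) >= min_days]
    habitual.sort(key=lambda n: (-counts[n], n))  # most used first, then by name
    return habitual[:capacity]
```

Requiring recurrence across days filters out files that were used heavily only once, which a plain frequency count would not.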

Novel USB protocol computer accelerating device based on multi-channel SLC NAND and DRAM cache memory

Status: Inactive | Publication: CN103500076A | Tags: Easy to operate; Good effect; Memory architecture accessing/allocation; Input/output to record carriers; Transmission protocol; Virtualization

Owner:张维加 (Zhang Weijia)

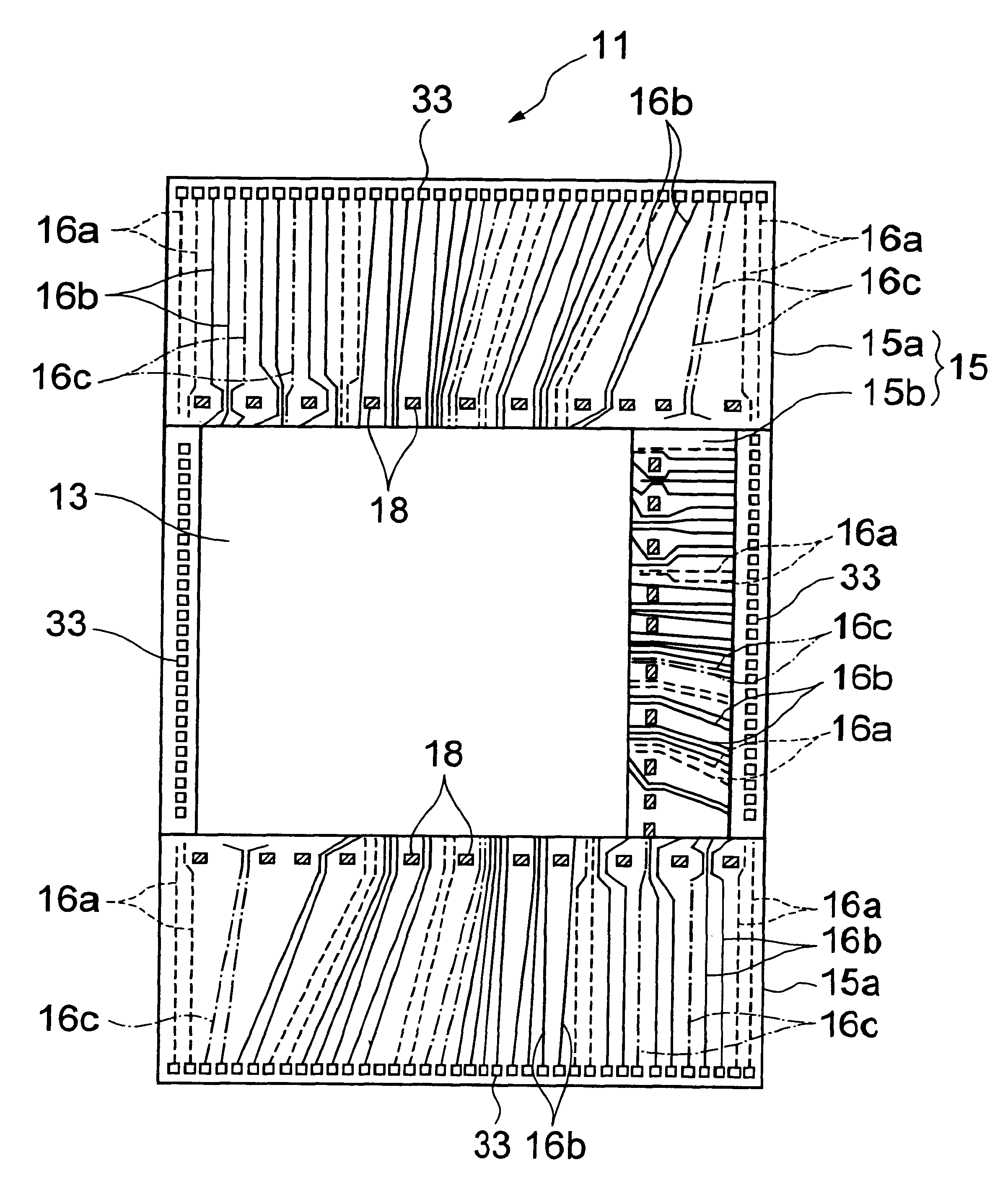

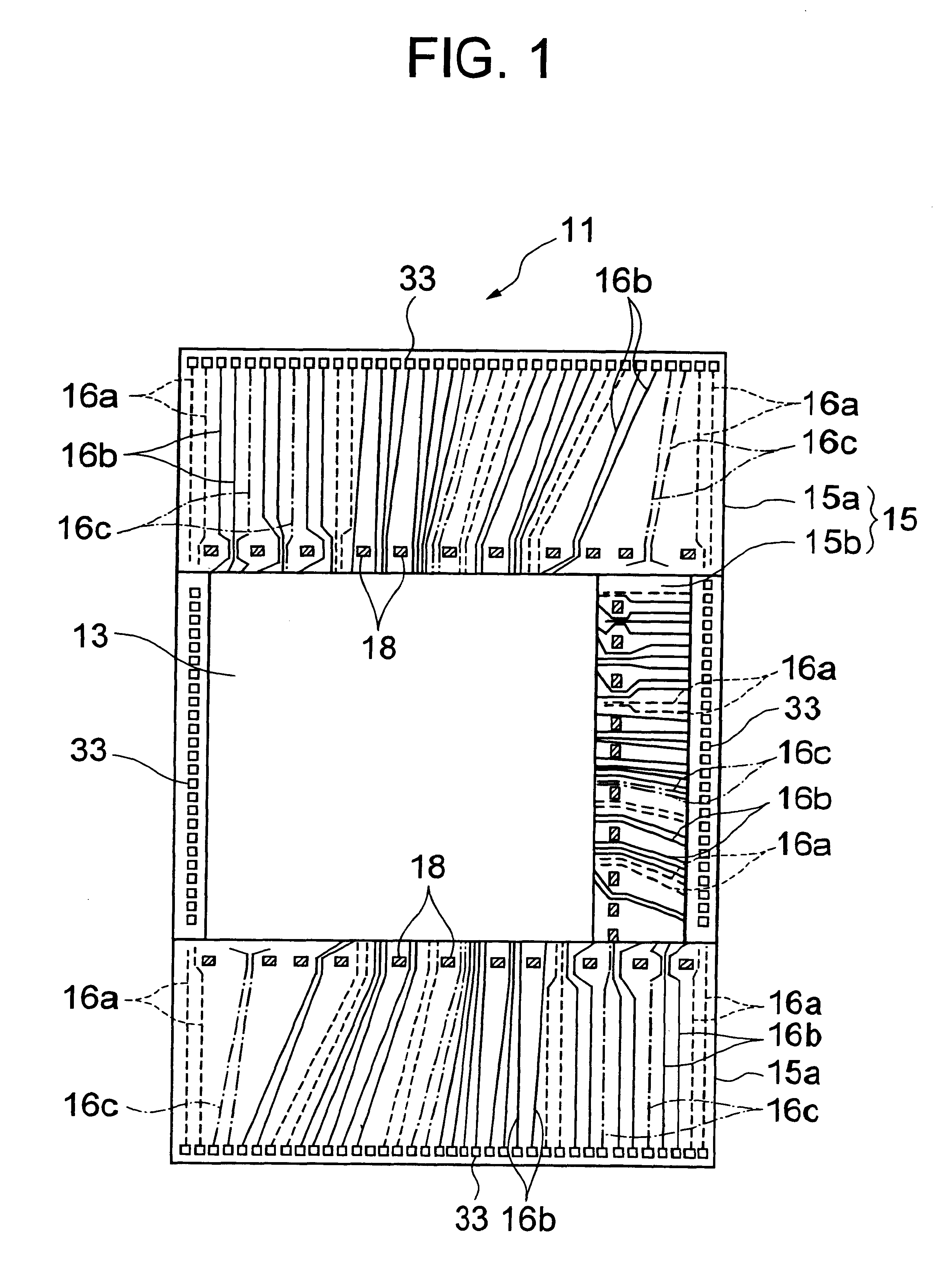

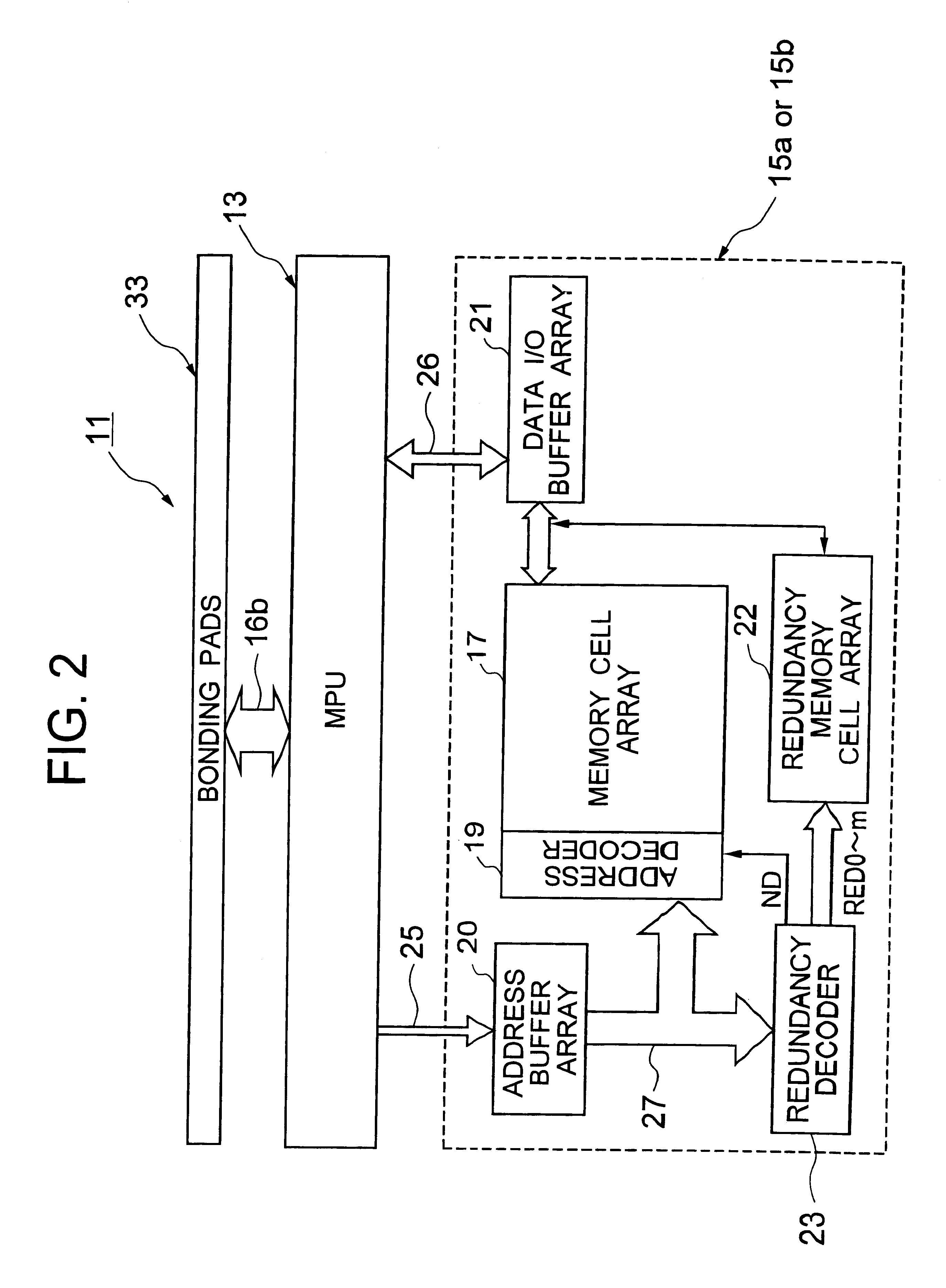

Semiconductor integrated circuit having a MPU and a DRAM cache memory

Status: Inactive | Publication: US6378118B1 | Tags: Reduce noise transmission; Potential fluctuation; Transistor; Semiconductor/solid-state device details; DRAM cache; Computer science

A large-scale semiconductor integrated circuit implemented on a single chip includes an MPU and a DRAM cache memory made up of a plurality of DRAM macro blocks located around the MPU. Each DRAM macro block has a redundancy function, for which a plurality of fuses are provided to be cut by laser beams. The lower metal layers implement the power and ground source lines for the DRAM macro blocks, whereas the topmost metal layer implements the source lines for the MPU. The topmost metal layer avoids the areas of the chip where the portions of a metal layer forming the redundancy fuses are located.

Owner:NEC ELECTRONICS CORP

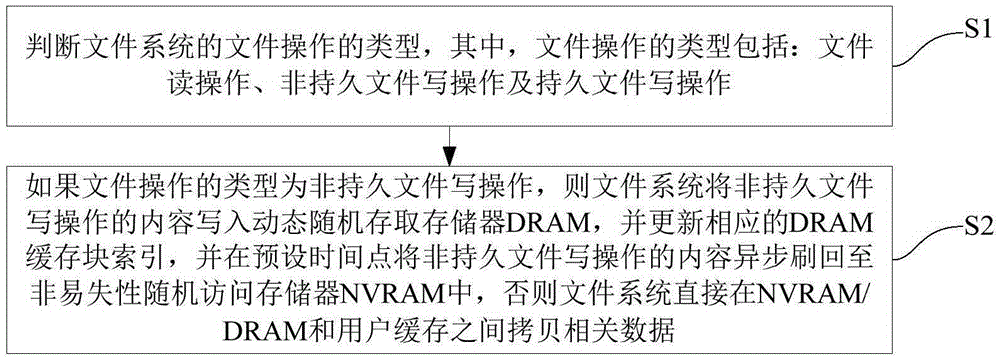

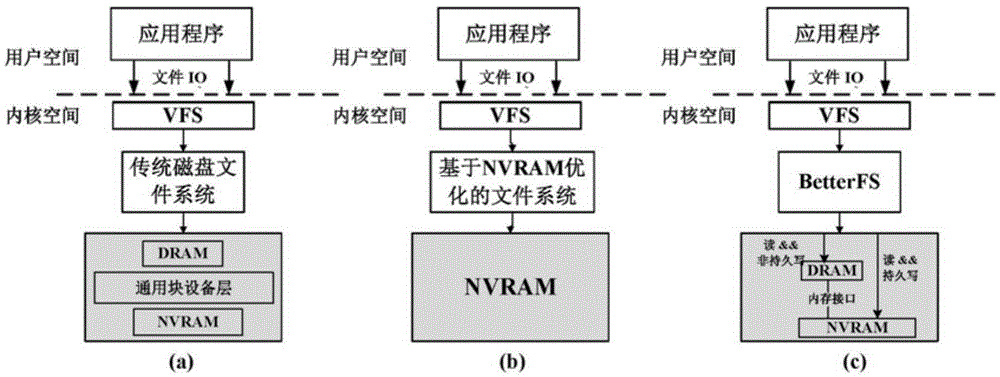

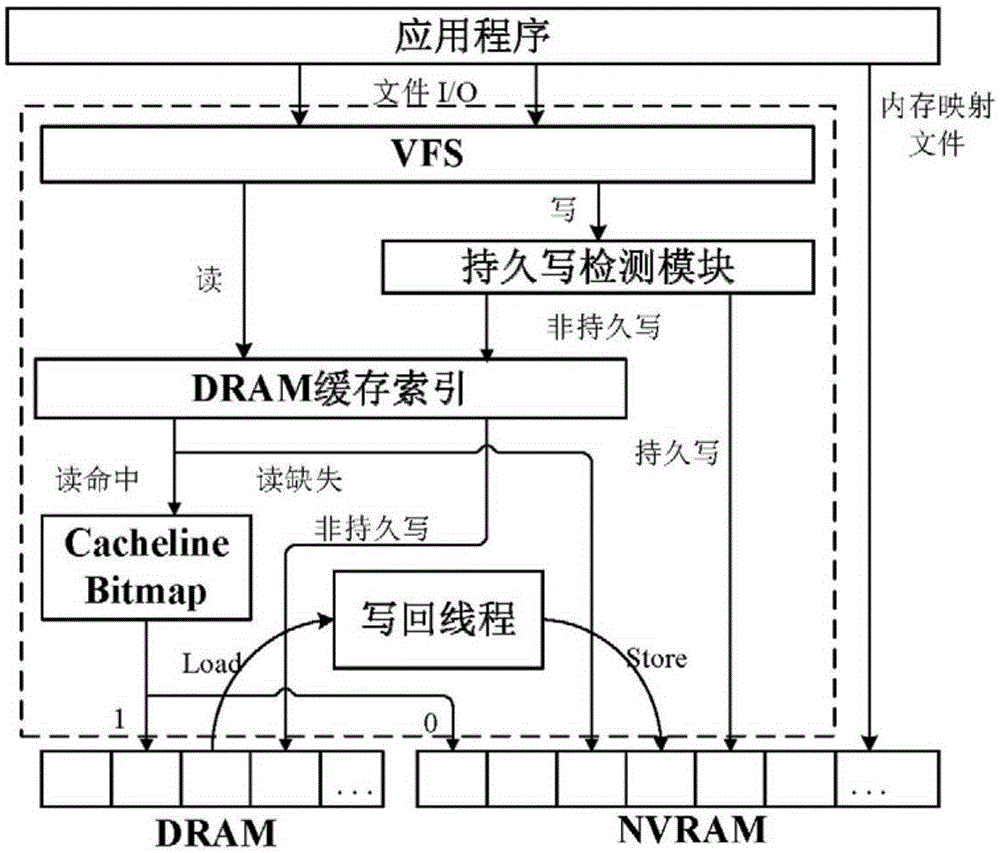

NVRAM-based method for efficiently constructing file system

Status: Active | Publication: CN105404673A | Tags: Avoid overhead; Improve write performance; File system administration; Special data processing applications; File system; DRAM cache

The present invention provides an NVRAM-based method for efficiently constructing a file system. The method comprises the following steps. S1: determine the type of a file operation of the file system, where the type is one of a file read operation, a non-persistent file write operation, and a persistent file write operation. S2: if the operation is a non-persistent file write, the file system writes its contents into dynamic random access memory (DRAM), updates the corresponding DRAM cache block index, and asynchronously flushes the contents back to non-volatile random access memory (NVRAM) at a preset time point; otherwise, the file system directly copies the relevant data between the NVRAM/DRAM and the user cache. The method avoids extra copy overhead and conventional software-layer overhead, and it makes full use of DRAM write performance to improve the write performance of the NVRAM file system, thereby improving the overall performance of the system.

Owner:TSINGHUA UNIV
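
Steps S1 and S2 amount to a dispatch on operation type. This sketch models NVRAM and DRAM as dictionaries; `HybridFs`, `Op`, and the synchronous `flush` (which a real system would run asynchronously at the preset time point) are hypothetical simplifications.

```python
from enum import Enum


class Op(Enum):
    READ = 1
    WRITE = 2             # non-persistent write
    PERSISTENT_WRITE = 3  # write that must be durable immediately


class HybridFs:
    def __init__(self):
        self.nvram = {}
        self.dram = {}           # DRAM cache blocks
        self.dram_index = set()  # DRAM cache block index

    def handle(self, op, block, data=None):
        if op is Op.WRITE:
            self.dram[block] = data     # fast volatile write
            self.dram_index.add(block)  # update the DRAM cache block index
            return None
        if op is Op.PERSISTENT_WRITE:
            self.nvram[block] = data    # copied straight to NVRAM
            self.dram.pop(block, None)
            self.dram_index.discard(block)
            return None
        # READ: copy from wherever the newest version lives
        return self.dram[block] if block in self.dram_index else self.nvram.get(block)

    def flush(self):
        """Write DRAM-cached blocks back to NVRAM (the preset time point)."""
        for block in sorted(self.dram_index):
            self.nvram[block] = self.dram.pop(block)
        self.dram_index.clear()
```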

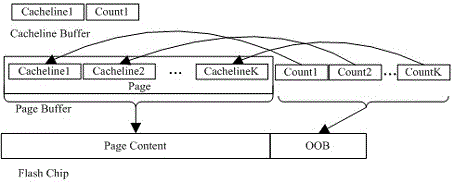

Memory system cache mechanism based on flash memory

The invention relates to a cache mechanism for a flash-memory-based memory system. Two cache areas are constructed in the DRAM of the flash-based memory system: a page cache area and a cache-line cache area. On the one hand, a history-aware hotspot identification mechanism identifies and stores the hot cache lines in each data page, increasing the DRAM cache hit rate. On the other hand, a delayed refresh mechanism is adopted, and clean data blocks are evicted preferentially when a cache area is full, reducing writes to the flash memory. In addition, a weak variable coefficient algorithm is adopted and information such as historical access records and dirty bits is added, so the time and space overhead of the cache mechanism is low. By exploiting the characteristics of flash memory, the mechanism effectively reduces the write latency of the memory system and extends its service life.

Owner:NAT UNIV OF DEFENSE TECH
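
The clean-first eviction rule above reduces flash writes because evicting a clean block costs nothing, while evicting a dirty one forces a write-back. The following is a minimal sketch of that one rule using LRU order within the cache. It does not attempt the history-aware hotspot identification or the weak variable coefficient algorithm, and `CleanFirstCache` is a hypothetical name.

```python
from collections import OrderedDict


class CleanFirstCache:
    """LRU cache that evicts the least recently used *clean* entry first,
    falling back to the LRU dirty entry, which is written back to flash."""

    def __init__(self, capacity, flash):
        self.capacity = capacity
        self.flash = flash
        self.entries = OrderedDict()  # key -> (value, dirty); LRU first
        self.flash_writes = 0

    def _evict(self):
        victim = next((k for k, (_, d) in self.entries.items() if not d), None)
        if victim is None:  # every entry is dirty: write back the LRU one
            victim, (value, _) = next(iter(self.entries.items()))
            self.flash[victim] = value
            self.flash_writes += 1
        del self.entries[victim]

    def access(self, key, value=None):
        """Read (value is None) or write a key; return the current value."""
        if key in self.entries:
            current, dirty = self.entries.pop(key)
        else:
            if len(self.entries) >= self.capacity:
                self._evict()
            current, dirty = self.flash.get(key), False
        if value is not None:
            current, dirty = value, True
        self.entries[key] = (current, dirty)  # most recently used at the end
        return current
```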

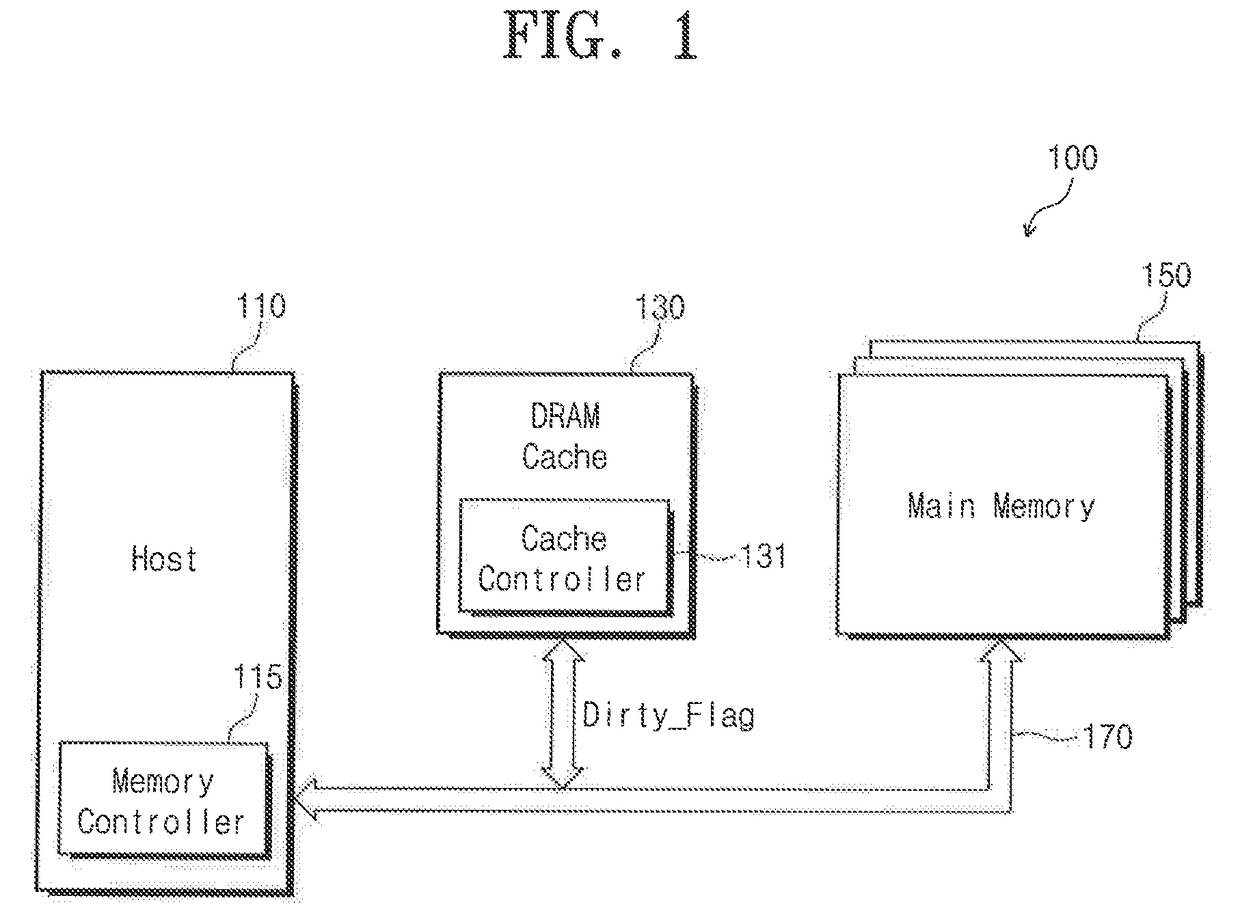

Memory system including DRAM cache and cache management method thereof

Status: Active | Publication: US20170192888A1 | Tags: Avoid efficiency; Memory architecture accessing/allocation; Input/output to record carriers; Parallel computing; DRAM cache

A memory system includes a nonvolatile memory electrically connected to a data bus, a DRAM electrically connected to the data bus, and a memory controller configured to drive the DRAM as a cache memory and the nonvolatile memory as a main memory and to synchronize data of a cache line with data of the nonvolatile memory in units of cache units based on a dirty flag. The DRAM is configured to load data of the cache line that caches data stored in the nonvolatile memory and to store the dirty flag, which indicates whether a cache unit is dirty, in units of cache units, where a size of each cache unit is smaller than a size of the cache line.

Owner:SAMSUNG ELECTRONICS CO LTD
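
Tracking dirtiness per cache unit rather than per cache line lets the controller write back only the units that actually changed. This sketch assumes hypothetical sizes of a 256-byte line and 64-byte units; `CacheLine` and its methods are illustrative names.

```python
LINE_BYTES = 256  # hypothetical cache line size
UNIT_BYTES = 64   # hypothetical cache unit (dirty-tracking granularity)
UNITS = LINE_BYTES // UNIT_BYTES


class CacheLine:
    def __init__(self, base, nvm):
        self.base = base
        self.data = bytearray(nvm[base:base + LINE_BYTES])
        self.dirty = [False] * UNITS  # one dirty flag per cache unit

    def write(self, offset, payload):
        self.data[offset:offset + len(payload)] = payload
        first = offset // UNIT_BYTES
        last = (offset + len(payload) - 1) // UNIT_BYTES
        for u in range(first, last + 1):
            self.dirty[u] = True

    def write_back(self, nvm):
        """Synchronize only the dirty units with NVM; return bytes written."""
        written = 0
        for u, is_dirty in enumerate(self.dirty):
            if is_dirty:
                lo = u * UNIT_BYTES
                nvm[self.base + lo:self.base + lo + UNIT_BYTES] = self.data[lo:lo + UNIT_BYTES]
                self.dirty[u] = False
                written += UNIT_BYTES
        return written
```

A 2-byte write then costs a 64-byte write-back instead of a full 256-byte line, which matters for NVM with limited write endurance or bandwidth.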

PCM and DRAM-based hybrid memory system and management method thereof

Status: Inactive | Publication: CN108762671A | Tags: Improve I/O access performance; Improve access performance; Input/output to record carriers; Energy efficient computing; DRAM cache; Phase change

The invention discloses a PCM- and DRAM-based hybrid memory system and a management method for it. The hybrid memory system comprises a small, MB-scale DRAM used as a cache, plus a large, GB-scale DRAM and a GB-scale non-volatile phase-change memory (PCM) used as main memory. The management method comprises the following steps: (10) managing the small-capacity DRAM cache with an improved two-level cache algorithm based on LRU and LFU-Aging; (20) managing write-oriented data pages in the large-capacity DRAM main memory with an LRU replacement algorithm; and (30) managing read-oriented data pages in the PCM main memory with an inter-group wear-leveling method and an LRU replacement algorithm. The hybrid memory system and management method offer high I/O performance and low energy consumption for the hybrid memory, as well as balanced write load and a long service life for the PCM.

Owner:ARMY ENG UNIV OF PLA
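
Steps (20) and (30) split main memory by access pattern: write-oriented pages go to DRAM, which tolerates writes, and read-oriented pages go to PCM, whose write endurance is limited, each pool using LRU replacement. In this sketch the 50% write-ratio cutoff and the names `LruPool` and `place` are hypothetical. It does not model the LRU/LFU-Aging cache, wear leveling, or migration of a page between pools.

```python
from collections import OrderedDict


class LruPool:
    def __init__(self, capacity):
        self.capacity = capacity
        self.pages = OrderedDict()  # LRU order: oldest first

    def touch(self, page):
        """Insert or refresh a page; return the evicted LRU page, if any."""
        if page in self.pages:
            self.pages.move_to_end(page)
            return None
        self.pages[page] = True
        if len(self.pages) > self.capacity:
            return self.pages.popitem(last=False)[0]
        return None


def place(page, reads, writes, dram, pcm, write_ratio=0.5):
    """Send write-oriented pages to DRAM and read-oriented pages to PCM."""
    total = reads + writes
    target = dram if total and writes / total >= write_ratio else pcm
    return target.touch(page)
```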

Methods for Caching and Reading Data to be Programmed into a Storage Unit and Apparatuses Using the Same

Status: Active | Publication: US20160132432A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; DRAM cache; Operating system

A method for caching and reading data to be programmed into a storage unit, performed by a processing unit, includes at least the following steps. A write command for programming at least one data page into a first address is received from a master device via an access interface. It is determined whether a block of data to be programmed, containing a specified number of pages, has been collected. When the block has not yet been collected, the data page is stored in a DRAM (Dynamic Random Access Memory), and cache information is updated to indicate both that the data page has not yet been programmed into the storage unit and where in the DRAM the data page is cached.

Owner:SILICON MOTION INC (TW)
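
The write path above buffers pages in DRAM until a full block has been collected and records in the cache information where each page currently lives, so reads can be served before programming. In this sketch the block size of 4 pages and the names `WriteCollector`, `cache_info`, `write`, and `read` are hypothetical.

```python
PAGES_PER_BLOCK = 4  # hypothetical "specified number of pages"


class WriteCollector:
    def __init__(self):
        self.dram = []        # DRAM slots holding not-yet-programmed pages
        self.cache_info = {}  # address -> ("dram", slot) or ("flash", None)
        self.flash = {}

    def write(self, addr, page):
        slot = len(self.dram)
        self.dram.append((addr, page))
        self.cache_info[addr] = ("dram", slot)  # cached, not yet programmed
        if len(self.dram) == PAGES_PER_BLOCK:   # a whole block is collected
            for a, p in self.dram:              # program the block in order
                self.flash[a] = p
                self.cache_info[a] = ("flash", None)
            self.dram.clear()

    def read(self, addr):
        where, slot = self.cache_info[addr]
        return self.dram[slot][1] if where == "dram" else self.flash[addr]
```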

Static random access memory (SRAM) compatible, high availability memory array and method employing synchronous dynamic random access memory (DRAM) in conjunction with a single DRAM cache and tag

Status: Inactive | Publication: US20060190678A1 | Tags: Avoid data loss; Increase capacity; Memory architecture accessing/allocation; Digital storage; Static random-access memory; DRAM memory

A static random access memory (SRAM) compatible, high availability memory array and method employing synchronous dynamic random access memory (DRAM) in conjunction with a single DRAM cache and tag provides a memory architecture comprising low cost DRAM memory cells that is available for system accesses 100% of the time and is capable of executing refreshes frequently enough to prevent data loss. Any subarray of the memory can be written from cache or refreshed at the same time any other subarray is read or written externally.

Owner:UNITED MEMORIES +1

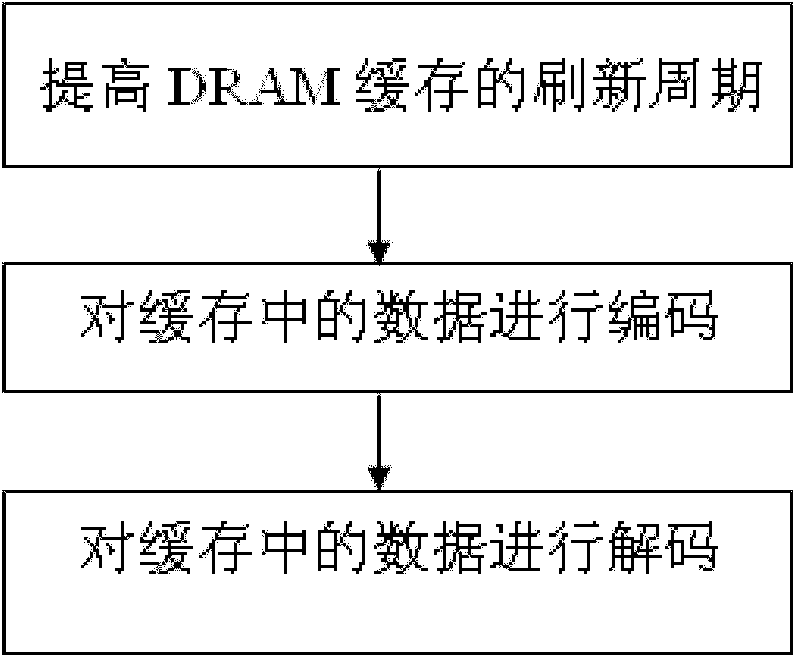

Error correction encoding method for dynamic random access memory (DRAM) buffer

Status: Inactive | Publication: CN101980339A | Tags: Reduce the number of refreshes; Easy to lose; Static storage; Static random-access memory; Refresh cycle

The invention discloses an error correction encoding method for a dynamic random access memory (DRAM) buffer, which comprises the following steps: (1) prolonging the refresh cycle of the DRAM buffer to reduce the refresh frequency; and (2) encoding and decoding the data in the buffer. In the method, the refresh time is set according to the most error-prone bits, and the resulting data errors are compensated by an error correction technique strong enough to correct multiple bits; the refresh time can therefore be prolonged, reducing power consumption.

Owner:ZHEJIANG UNIV
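
The trade-off above (let a few weak cells lose charge under a longer refresh interval, then repair them on read) depends on an error-correcting code. The abstract calls for a code that corrects multiple bits; as a minimal stand-in, this sketch uses the classic Hamming(7,4) code, which corrects only one flipped bit per 7-bit codeword. The function names are hypothetical.

```python
def hamming74_encode(nibble):
    """Encode 4 data bits into a 7-bit codeword (bit i holds position i+1)."""
    d = [(nibble >> i) & 1 for i in range(4)]  # d0..d3
    p1 = d[0] ^ d[1] ^ d[3]  # covers positions 1, 3, 5, 7
    p2 = d[0] ^ d[2] ^ d[3]  # covers positions 2, 3, 6, 7
    p3 = d[1] ^ d[2] ^ d[3]  # covers positions 4, 5, 6, 7
    bits = [p1, p2, d[0], p3, d[1], d[2], d[3]]  # positions 1..7
    return sum(b << i for i, b in enumerate(bits))


def hamming74_decode(word):
    """Correct up to one flipped bit and return the 4 data bits."""
    bits = [(word >> i) & 1 for i in range(7)]  # bits[0] is position 1
    s1 = bits[0] ^ bits[2] ^ bits[4] ^ bits[6]
    s2 = bits[1] ^ bits[2] ^ bits[5] ^ bits[6]
    s3 = bits[3] ^ bits[4] ^ bits[5] ^ bits[6]
    syndrome = s1 | (s2 << 1) | (s3 << 2)  # position of the flipped bit, or 0
    if syndrome:
        bits[syndrome - 1] ^= 1
    return bits[2] | (bits[4] << 1) | (bits[5] << 2) | (bits[6] << 3)
```

Any single bit that decays in a stored codeword is recovered on decode. Tolerating several weak cells per word, as the abstract intends, would require a stronger multi-bit code.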

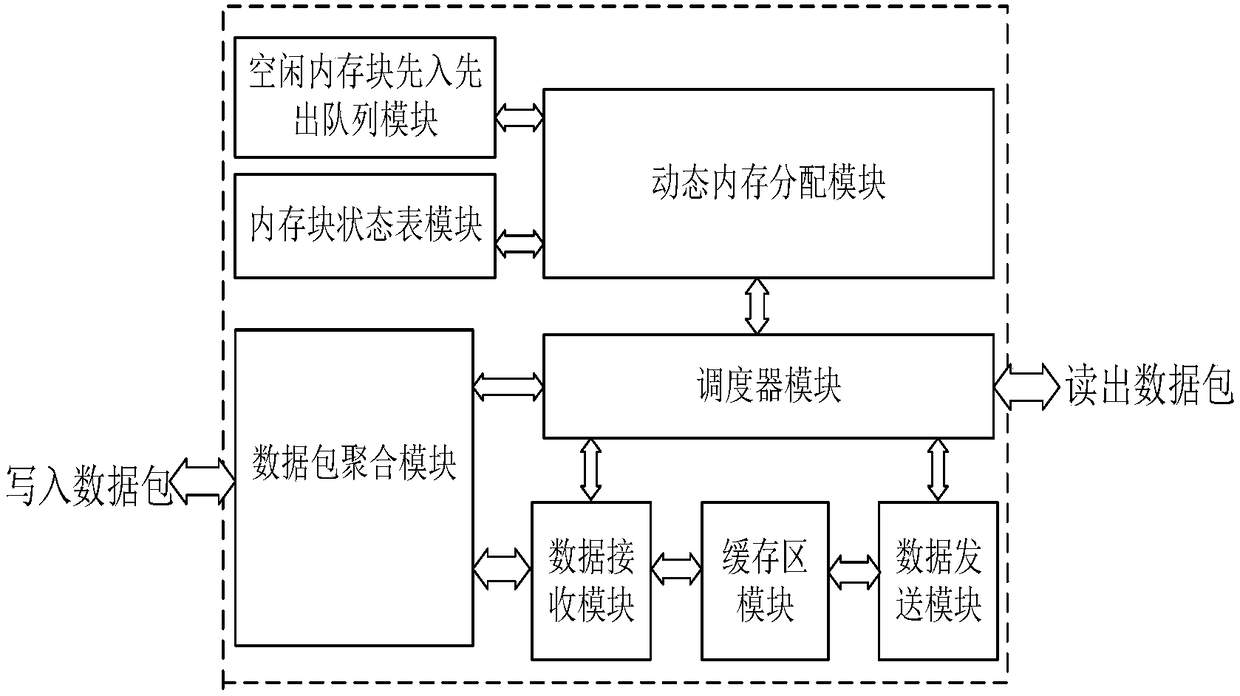

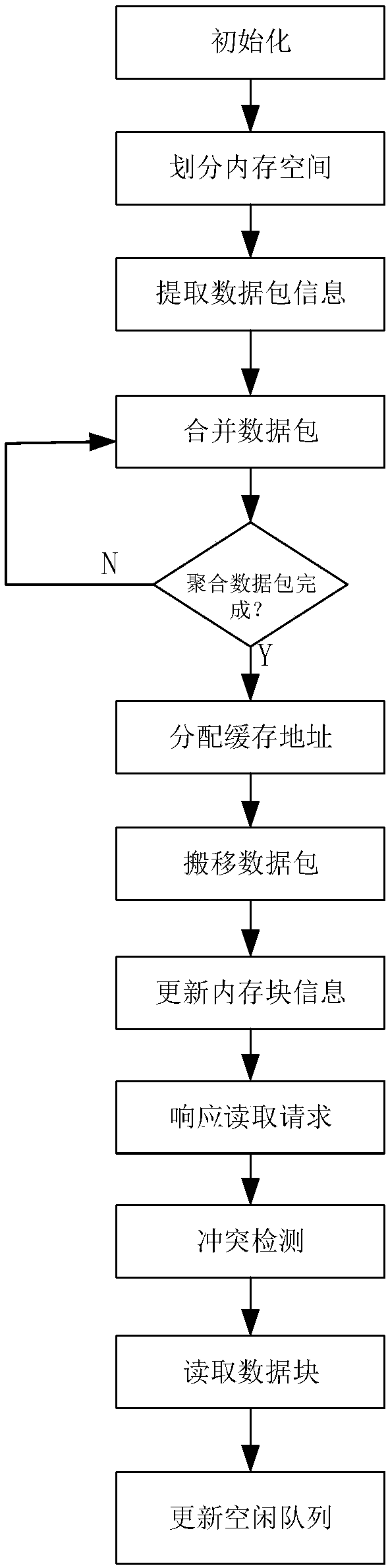

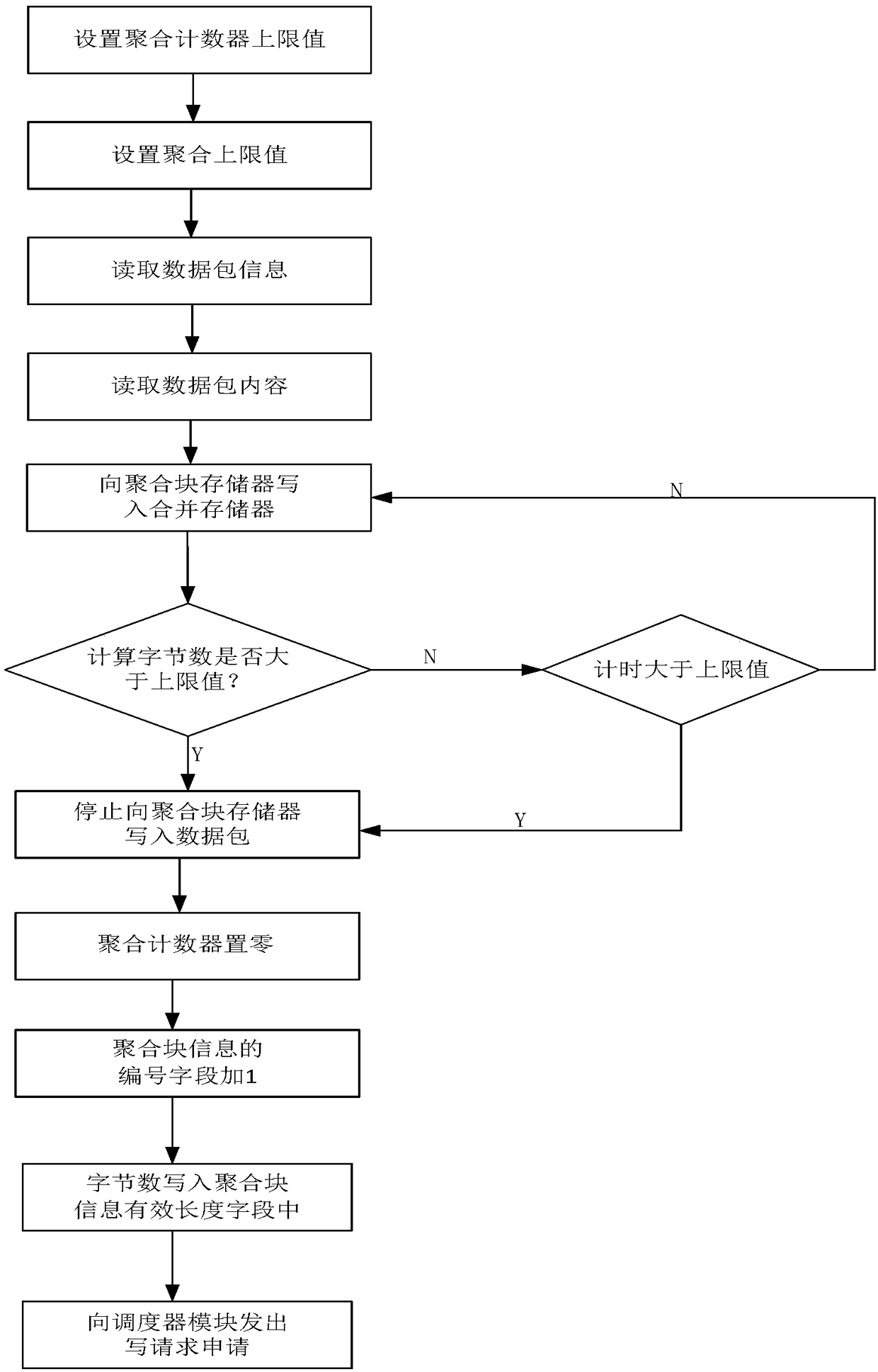

Data packet low-delay caching device and method for exchange equipment

Status: Active | Publication: CN108366111A | Tags: Increase processing rate; Overcoming processing speed limitations; Data switching networks; DRAM cache; Low delay

The invention discloses a low-latency data packet caching device and method for switch equipment. The device comprises a data packet aggregation module, a scheduler module, a data receiving module, a cache region module, a dynamic memory allocation module, a data sending module, a memory block state table module, and a free memory block first-in first-out (FIFO) queue module. The method comprises the following steps: the aggregation module aggregates data packets into aggregated data blocks of fixed size; the scheduler module allocates a cache address for each aggregated data block; the dynamic memory management module maintains memory block usage information; the data receiving module moves each aggregated data block into the corresponding DRAM cache unit; an output port sends a read request to the scheduler module; and, after conflict detection, the data sending module outputs the data packet from the cache region. This lowers cache latency and increases the processing speed of the switch equipment.

Owner:XIDIAN UNIV
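
The aggregation and free-block FIFO steps above can be sketched as follows. The 256-byte block size and the `PacketBuffer` class are hypothetical, and the sketch simply concatenates bytes, whereas a real device would also track packet boundaries inside each aggregated block and perform the conflict detection mentioned in the abstract.

```python
from collections import deque

BLOCK_BYTES = 256  # hypothetical fixed aggregated-block size


class PacketBuffer:
    def __init__(self, num_blocks):
        self.free_fifo = deque(range(num_blocks))  # free memory block FIFO
        self.in_use = [False] * num_blocks         # memory block state table
        self.dram = {}                             # block address -> bytes
        self.pending = bytearray()                 # aggregation buffer

    def receive(self, packet: bytes):
        """Aggregate packets; store each full fixed-size block in DRAM."""
        self.pending += packet
        stored = []
        while len(self.pending) >= BLOCK_BYTES:
            addr = self.free_fifo.popleft()  # scheduler allocates an address
            self.in_use[addr] = True
            self.dram[addr] = bytes(self.pending[:BLOCK_BYTES])
            del self.pending[:BLOCK_BYTES]
            stored.append(addr)
        return stored

    def send(self, addr):
        """Read a block out and return its address to the free FIFO."""
        data = self.dram.pop(addr)
        self.in_use[addr] = False
        self.free_fifo.append(addr)
        return data
```

Fixed-size blocks let DRAM bursts always move full blocks, and the FIFO of free addresses makes allocation a constant-time pop.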

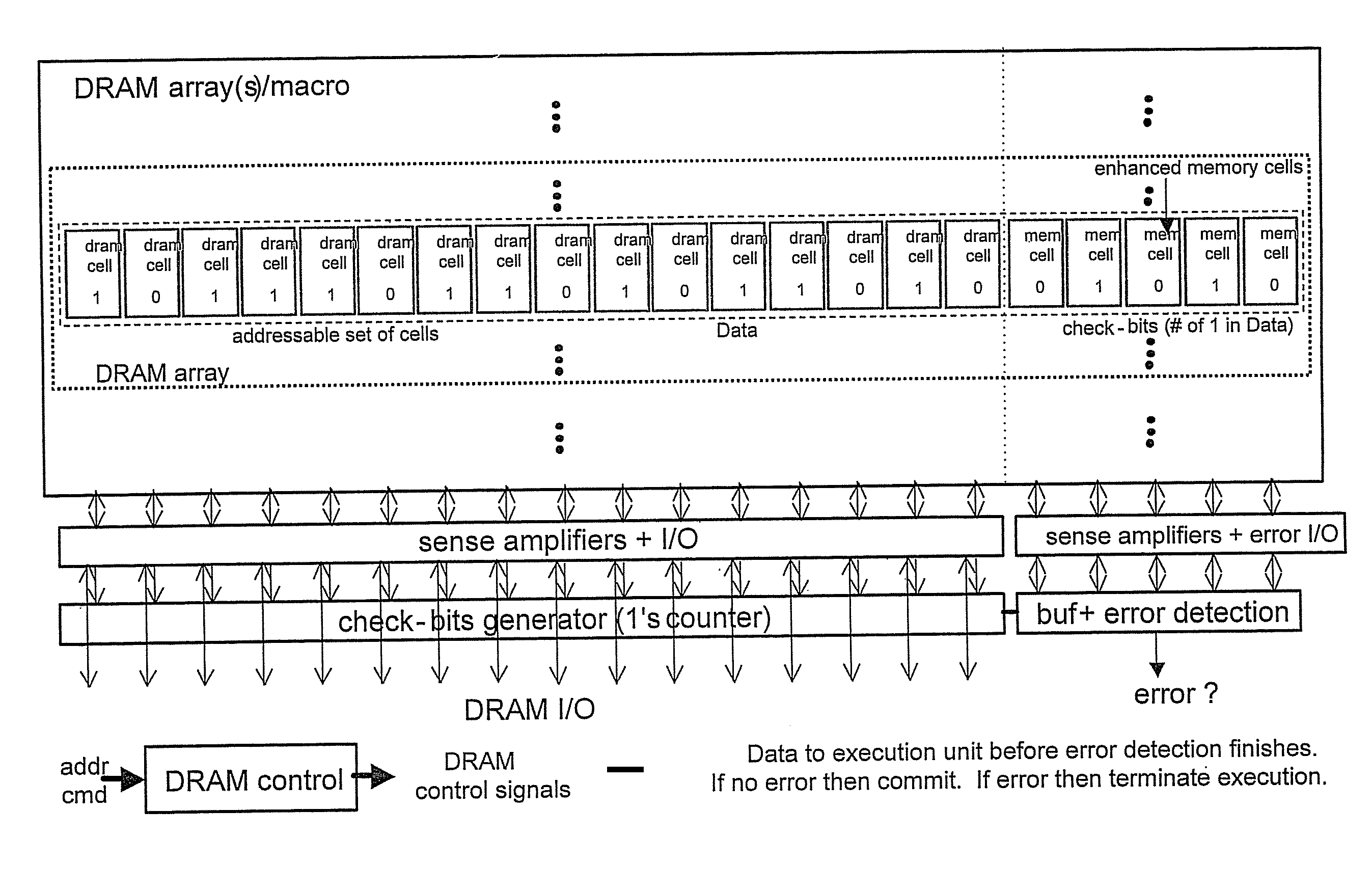

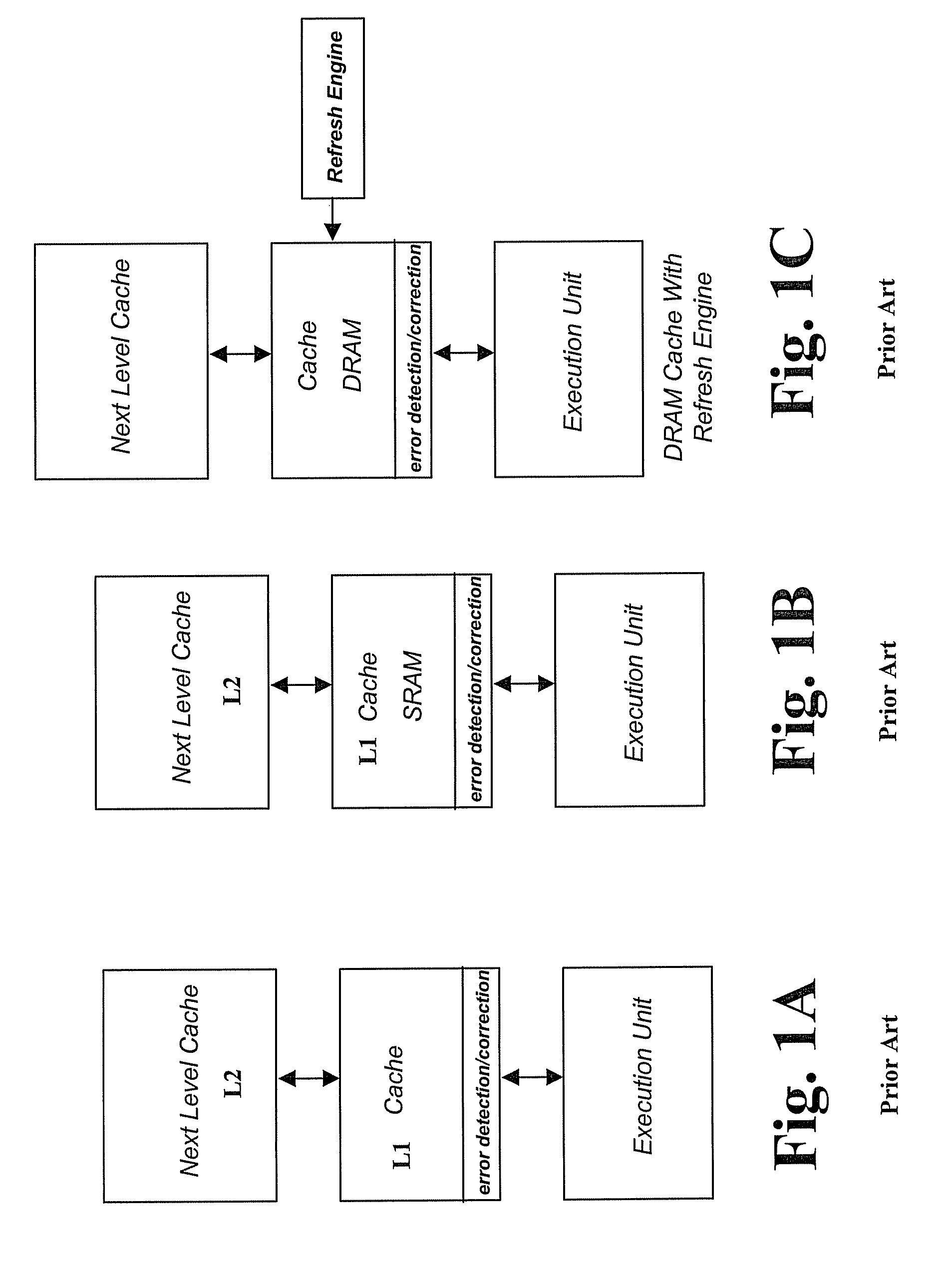

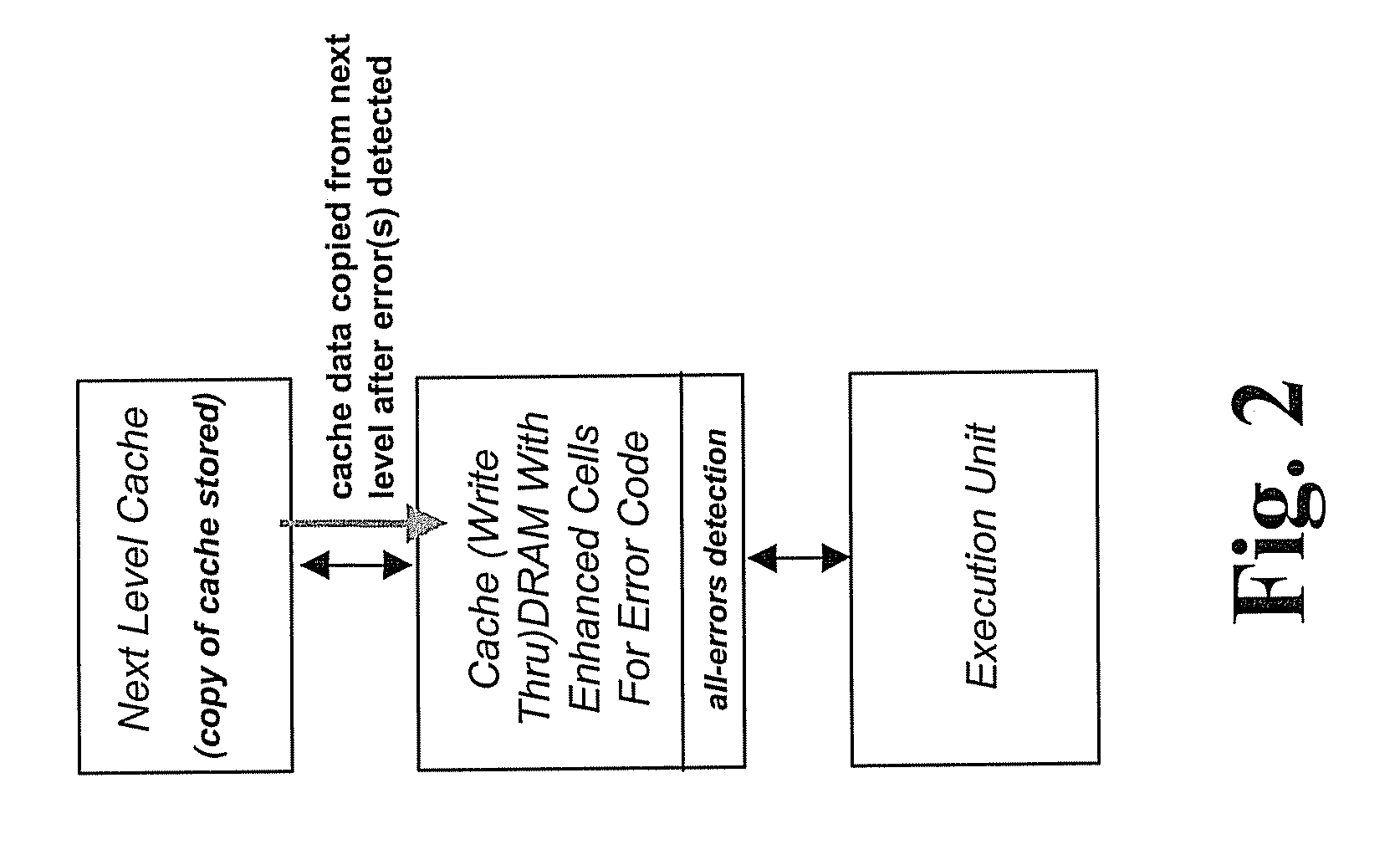

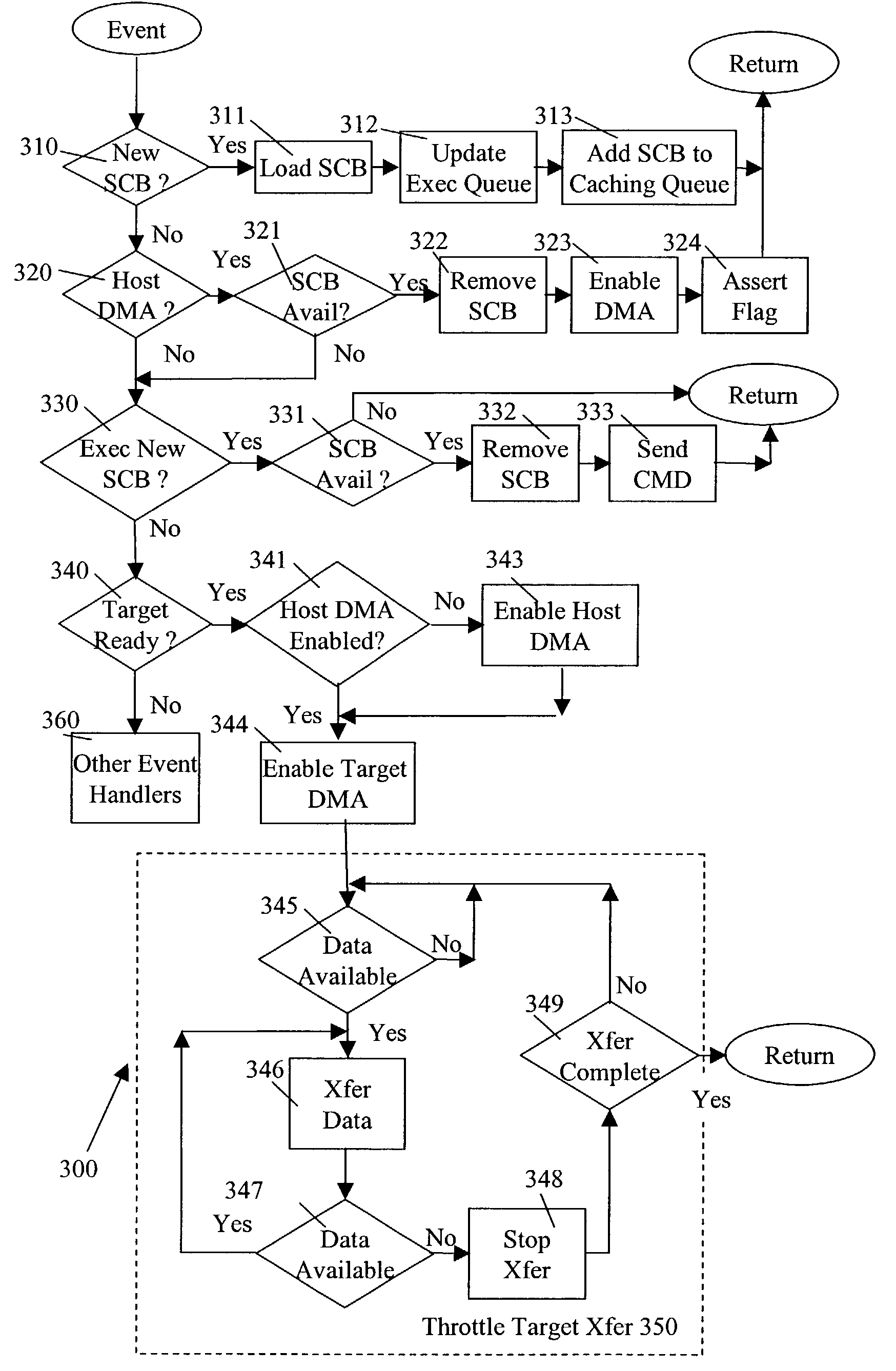

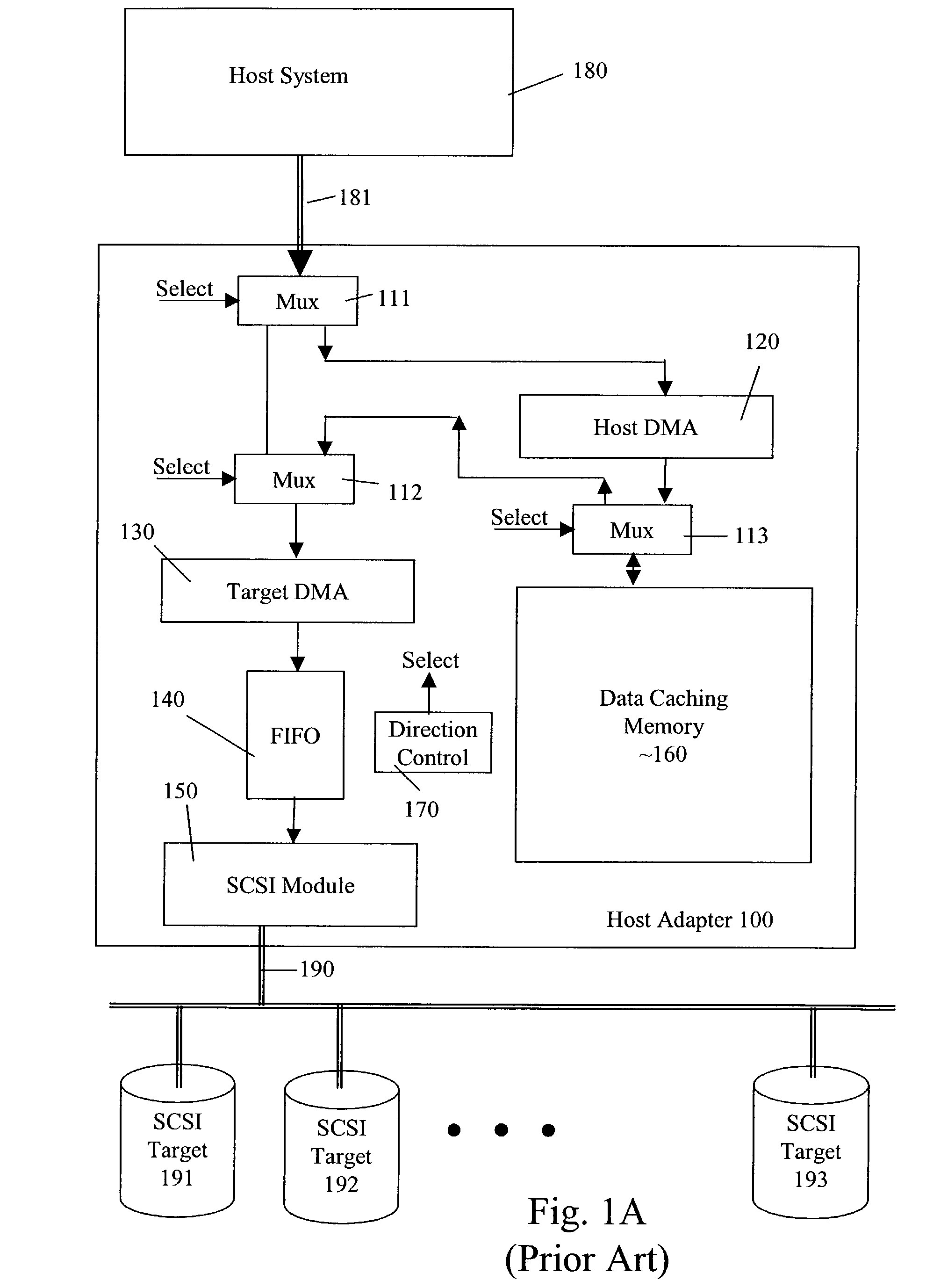

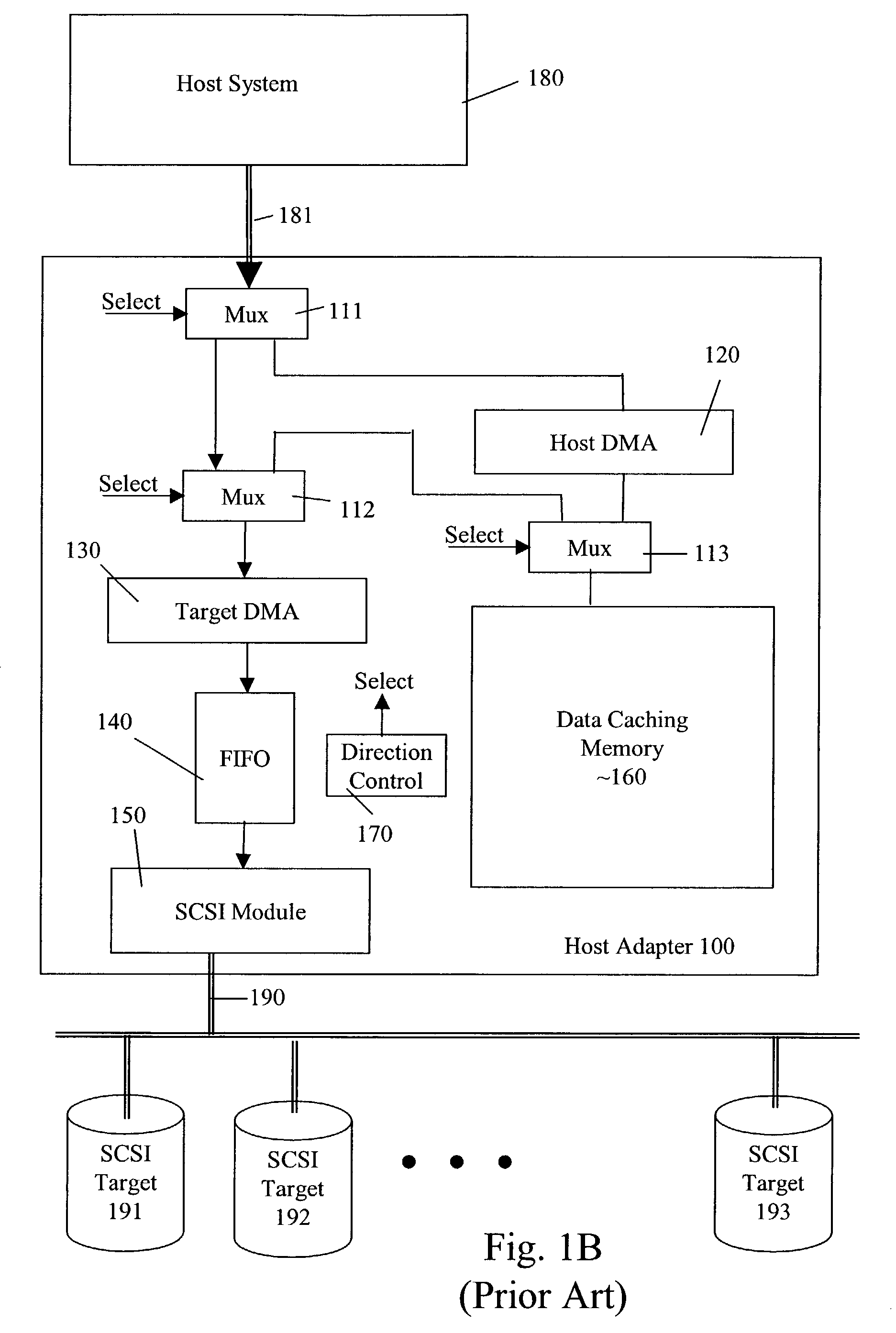

Host adapter integrated data FIFO and data cache and method for improved host adapter sourcing latency

Status: Inactive | Publication: US7024523B1 | Tags: Reduce congestion; Eliminate need; Memory systems; Input/output processes for data processing; Computer hardware; DRAM cache

A host adapter that interfaces two I/O buses caches data transferred from one I/O bus to the other in a data first-in-first-out (FIFO)/caching memory. In addition, when a target device on the other I/O bus is ready to receive the data, data are transferred out of the data FIFO/caching memory even though not all of the data may yet be cached there. Hence, data are concurrently transferred into and out of the data FIFO/caching memory. The data transfer to the target device is throttled if no cached data is available for transfer in the data FIFO/caching memory, e.g., when the data cache is empty for the current context.

Owner:PMC-SIERRA
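
Concurrent fill and drain with throttling can be shown with a step-by-step simulation. The timing model is an assumption of our own: the source bus delivers one chunk every `source_period` steps, the target drains one chunk per step when data is available, and each step with an empty FIFO counts as throttled. `cut_through_transfer` is a hypothetical name.

```python
from collections import deque


def cut_through_transfer(source_chunks, fifo_depth, source_period=1):
    """Simulate concurrent fill and drain of the data FIFO/caching memory.

    Returns (delivered chunks, number of throttled steps).
    """
    if fifo_depth < 1:
        raise ValueError("fifo_depth must be at least 1")
    pending = deque(source_chunks)
    fifo = deque()
    delivered, throttled, step = [], 0, 0
    while pending or fifo:
        if step % source_period == 0 and pending and len(fifo) < fifo_depth:
            fifo.append(pending.popleft())    # source bus writes into the FIFO
        if fifo:
            delivered.append(fifo.popleft())  # target drains before all data is cached
        else:
            throttled += 1                    # no cached data: throttle the target
        step += 1
    return delivered, throttled
```

When the source is as fast as the target, the transfer never stalls; when it is half as fast, the target is throttled on every other step instead of waiting for the whole transfer to be cached first.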

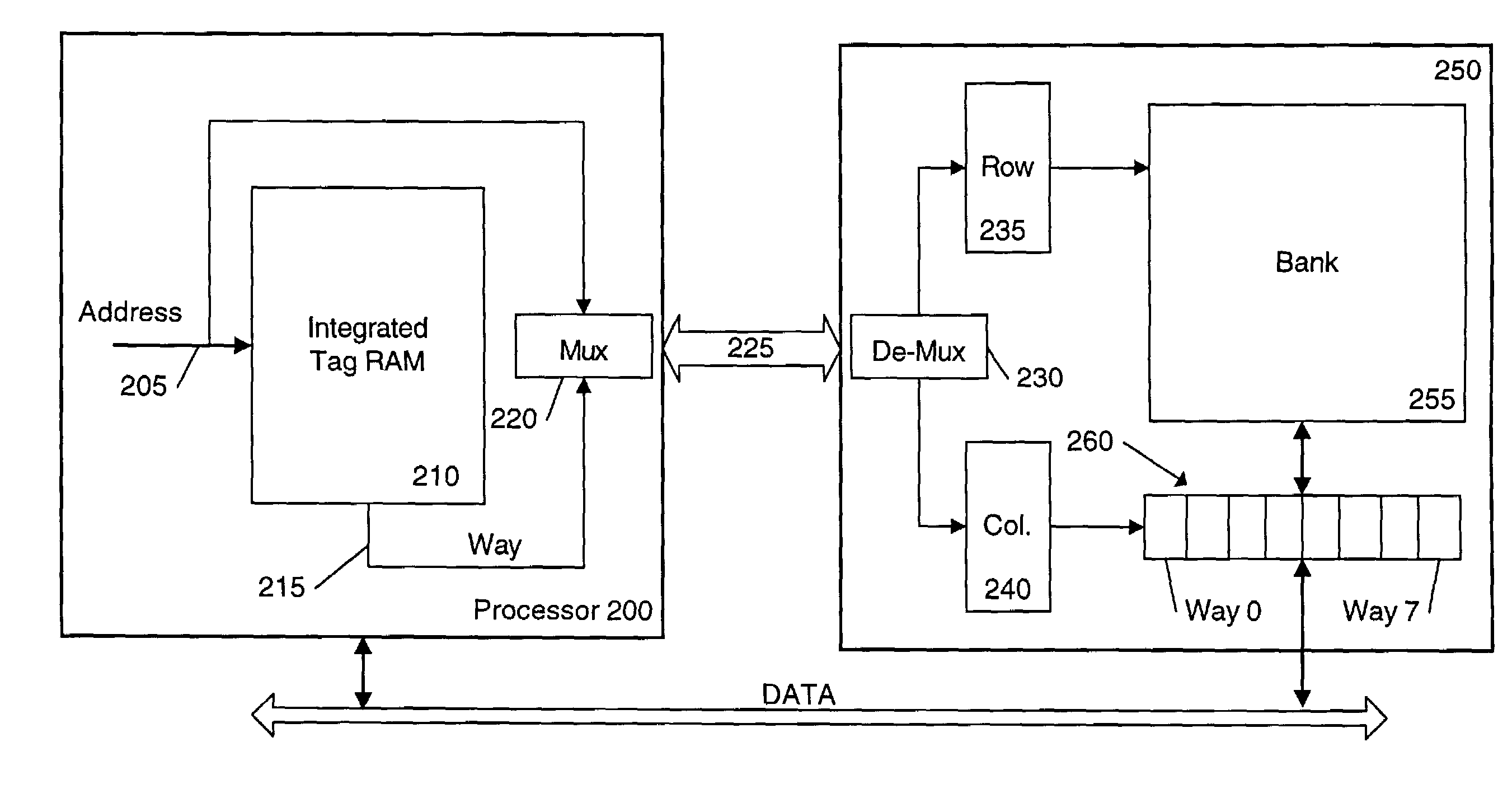

High speed DRAM cache architecture

Status: Inactive | Publication: US20060117129A1 | Tags: Memory addressing/allocation/relocation; Micro-instruction address formation; Multiplexing; DRAM cache

A high speed DRAM cache architecture. One disclosed embodiment includes a multiplexed bus interface to interface with a multiplexed bus. A cache control circuit drives a row address portion of an address on the multiplexed bus interface and a command to open a memory page containing data for a plurality of ways. The cache control circuit subsequently drives a column address including at least a way indicator to the multiplexed bus interface.

Owner:INTEL CORP
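
The two-phase addressing above (open the page holding every way of a set with the row address, then select a way with the column address) can be sketched as address arithmetic. The geometry, the 8 column accesses per line, and the assumption that the way is known by the time the column address is driven (for example, after a tag compare) are all hypothetical.

```python
# Hypothetical organization: one DRAM page (row) holds all ways of one set.
WAYS = 8
LINE_BYTES = 64
SETS = 1024
COLUMNS_PER_LINE = 8  # e.g. 8 bytes per column access


def row_address(paddr):
    """Row (page) to open: the set index, since the page holds every way."""
    return (paddr // LINE_BYTES) % SETS


def column_address(way, chunk=0):
    """Column within the open page: the way indicator forms the upper bits."""
    if not 0 <= way < WAYS:
        raise ValueError("way out of range")
    return way * COLUMNS_PER_LINE + chunk


def access_commands(paddr, way):
    """Multiplexed-bus command sequence: row first, column (with way) later."""
    return [("ACTIVATE", row_address(paddr)), ("READ", column_address(way))]
```

Because the row address does not depend on the way, the page can be opened while the way is still being determined.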

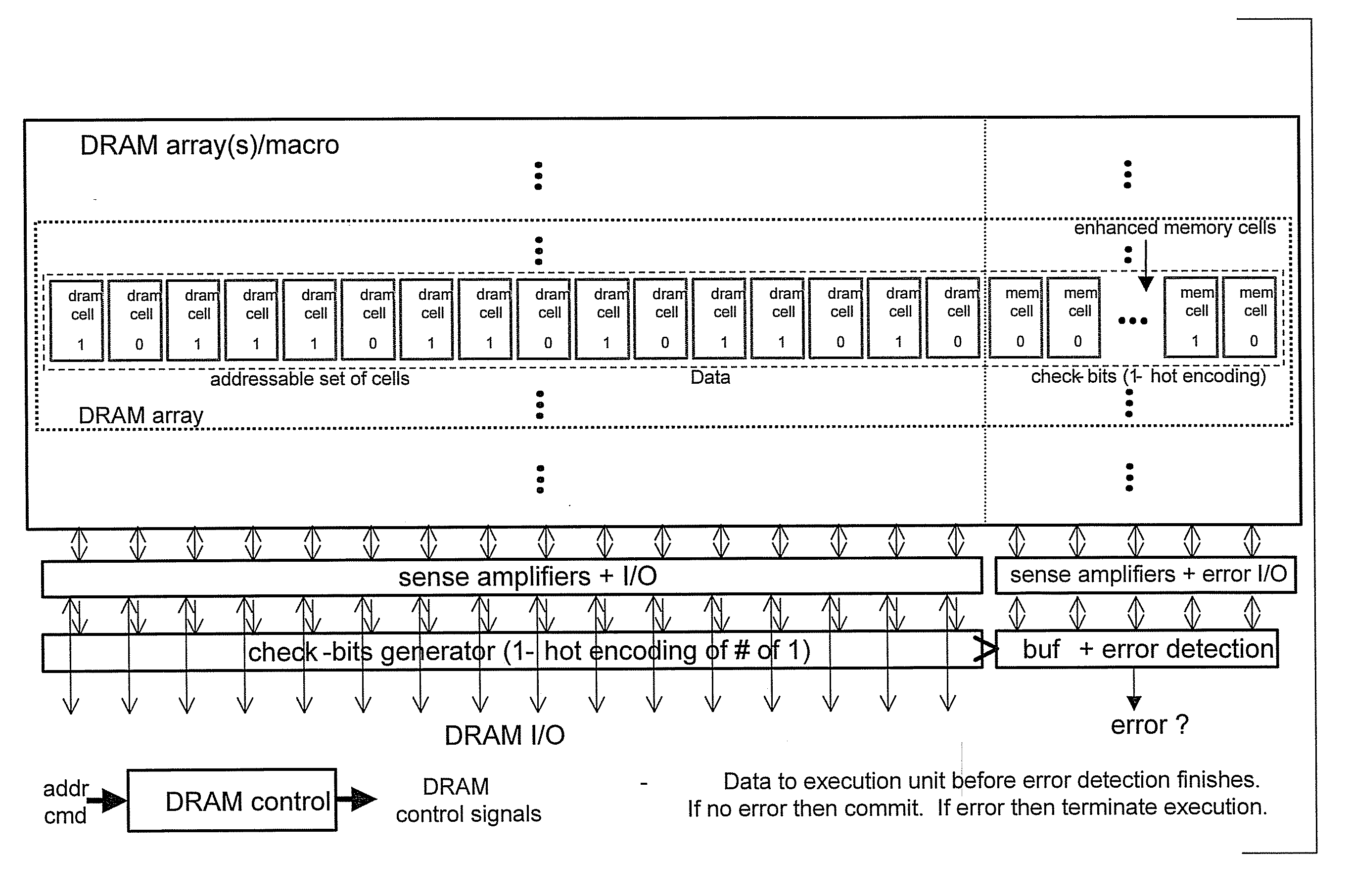

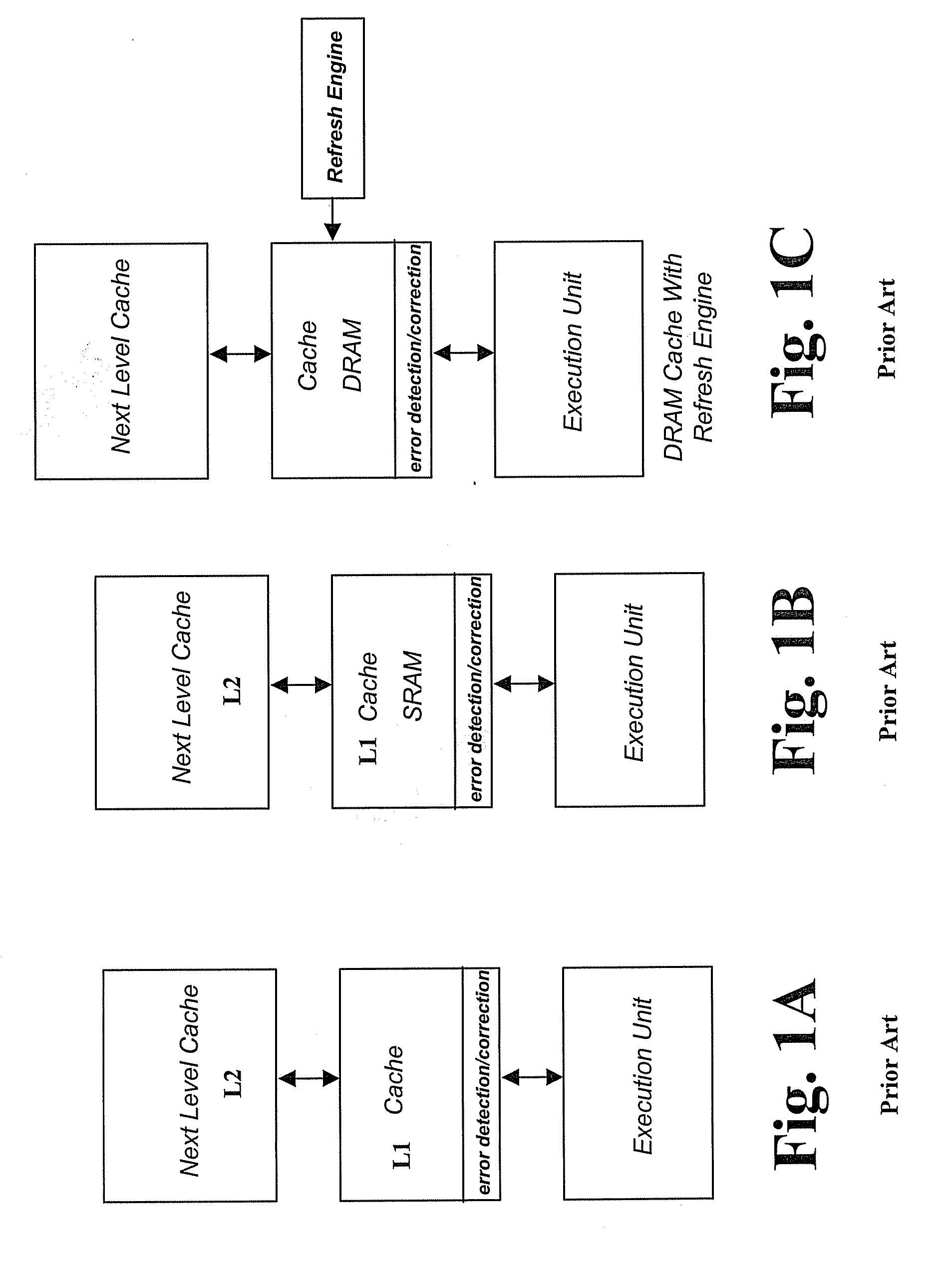

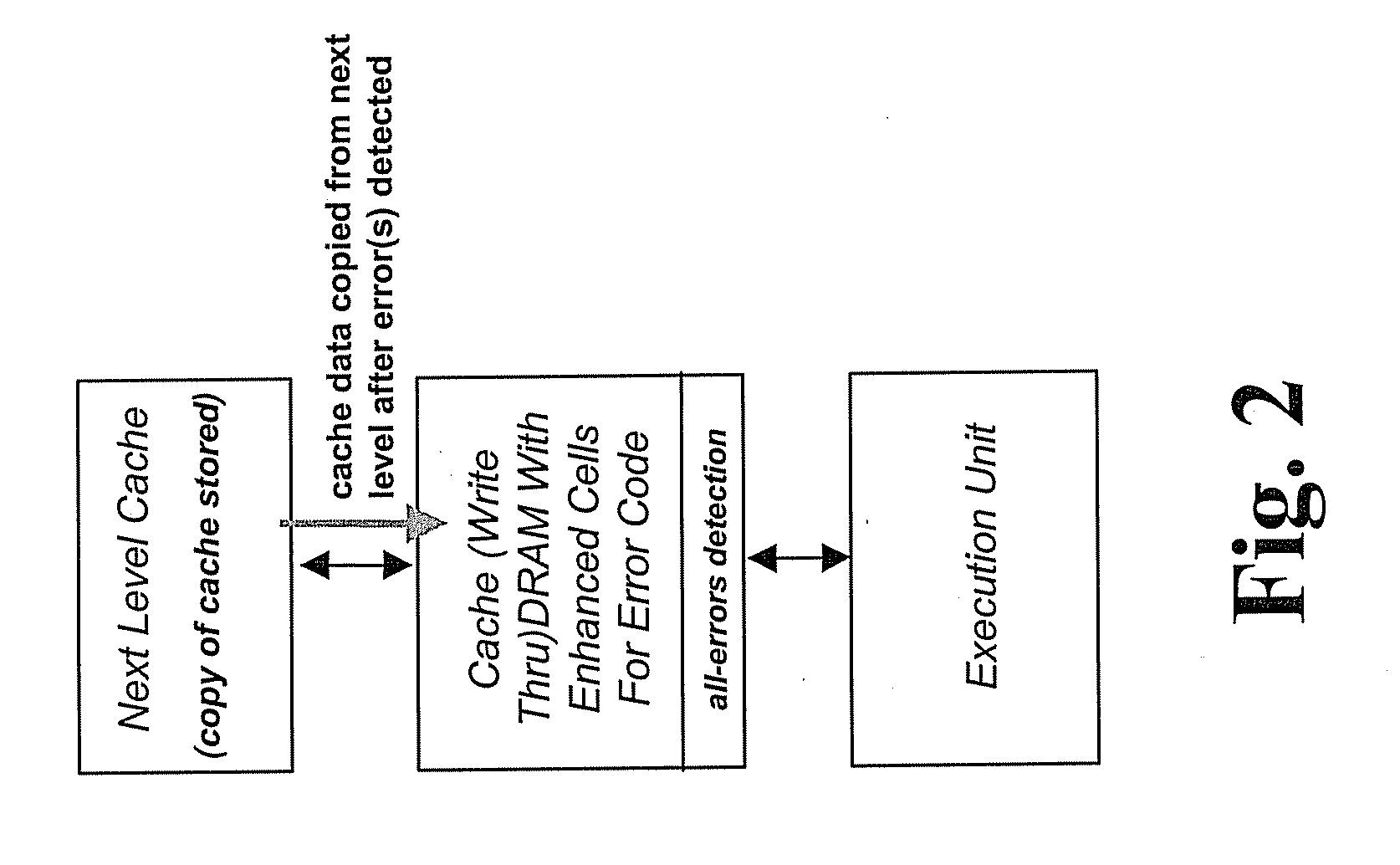

DRAM cache with on-demand reload

Status: Active | Publication: US20080195887A1 | Tags: Energy efficient ICT; Error detection/correction; Parallel computing; High availability

Embodiments include a DRAM cache structure, associated circuits, and a method of operation suitable for use with high-speed caches. The DRAM caches do not require regular refresh of their data, so the refresh blank-out period and refresh power are eliminated, improving cache availability and reducing power compared with conventional DRAM caches. Compared with existing SRAM caches, the new cache structures can potentially achieve the same (or better) speed, lower power, and better tolerance to chip process variations in future process technologies.

Owner:IBM CORP
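
On-demand reload replaces periodic refresh with a check at access time: if a line may have outlived its retention time, it is reloaded from the next memory level. This sketch makes several assumptions: a fixed retention time in cycles, a write-through policy so that the backing level always holds valid data, and, as in conventional DRAM, that every access restores the cells and so resets the line's timer. `ReloadingDramCache` and `RETENTION` are hypothetical.

```python
RETENTION = 100  # hypothetical retention time in cycles


class ReloadingDramCache:
    """No periodic refresh: a read of a line older than RETENTION reloads it
    from the next level (assumed write-through, so that level is current)."""

    def __init__(self, backing):
        self.backing = backing  # next memory level: addr -> value
        self.lines = {}         # addr -> (value, last access time)
        self.reloads = 0

    def read(self, addr, now):
        entry = self.lines.get(addr)
        if entry is not None and now - entry[1] > RETENTION:
            self.reloads += 1  # data may have decayed: reload on demand
            entry = None
        value = self.backing[addr] if entry is None else entry[0]
        self.lines[addr] = (value, now)  # an access restores the cells
        return value
```

Lines that are read often never expire, and lines that sit idle cost nothing until the next access triggers a reload.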

© 2025 PatSnap. All rights reserved.