84 results about "Cache pollution" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

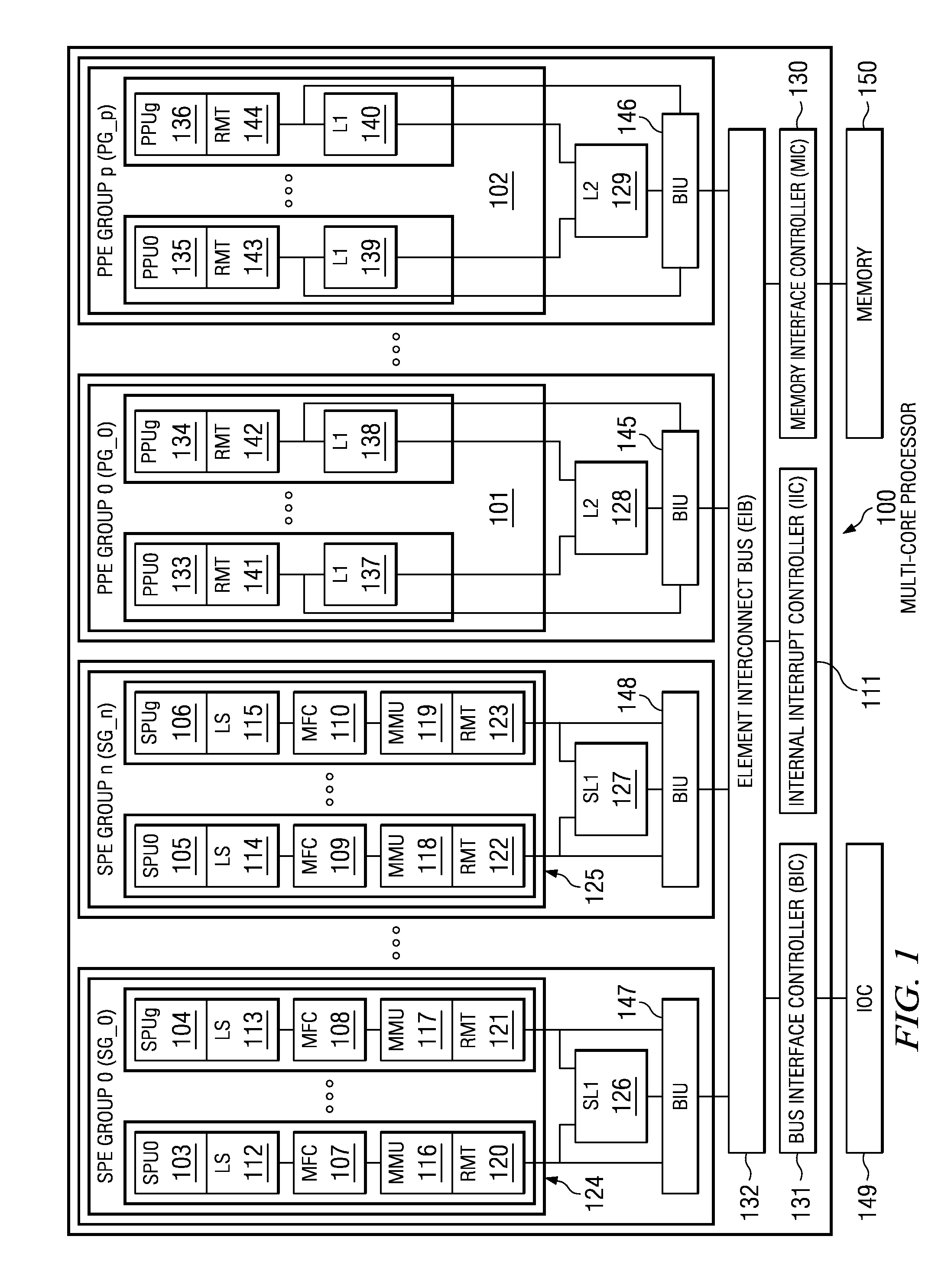

Cache pollution describes situations where an executing computer program loads data into CPU cache unnecessarily, thus causing other useful data to be evicted from the cache into lower levels of the memory hierarchy, degrading performance. For example, in a multi-core processor, one core may replace the blocks fetched by other cores into shared cache, or prefetched blocks may replace demand-fetched blocks from the cache.
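
Here is a minimal Python simulation that makes the definition concrete. It assumes a small, fully associative LRU cache; real CPU caches are set-associative and multi-level. A hot working set that fits in the cache hits on every reuse until a stream of use-once data is interleaved with it. The stream then evicts the hot lines before they can be reused. The class and function names are illustrative only.

```python
from collections import OrderedDict

class LRUCache:
    """Fully associative LRU cache that only tracks addresses and hit/miss counts."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lines: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def access(self, addr: int) -> None:
        if addr in self.lines:
            self.lines.move_to_end(addr)       # mark as most recently used
            self.hits += 1
            return
        self.misses += 1
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)     # evict the least recently used line
        self.lines[addr] = None

def run(with_stream: bool, rounds: int = 10) -> tuple:
    cache = LRUCache(capacity=4)
    hot = [0, 1, 2, 3]                         # reused working set that fits exactly
    for r in range(rounds):
        for addr in hot:
            cache.access(addr)
        if with_stream:
            # streaming data touched once and never reused (fresh addresses each round)
            for addr in range(1000 + 4 * r, 1004 + 4 * r):
                cache.access(addr)
    return cache.hits, cache.misses
```

In this toy setup, `run(False)` gives 36 hits and 4 misses. `run(True)` gives 0 hits and 80 misses. The streaming data gains nothing from being cached, yet it wipes out all reuse of the hot set.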

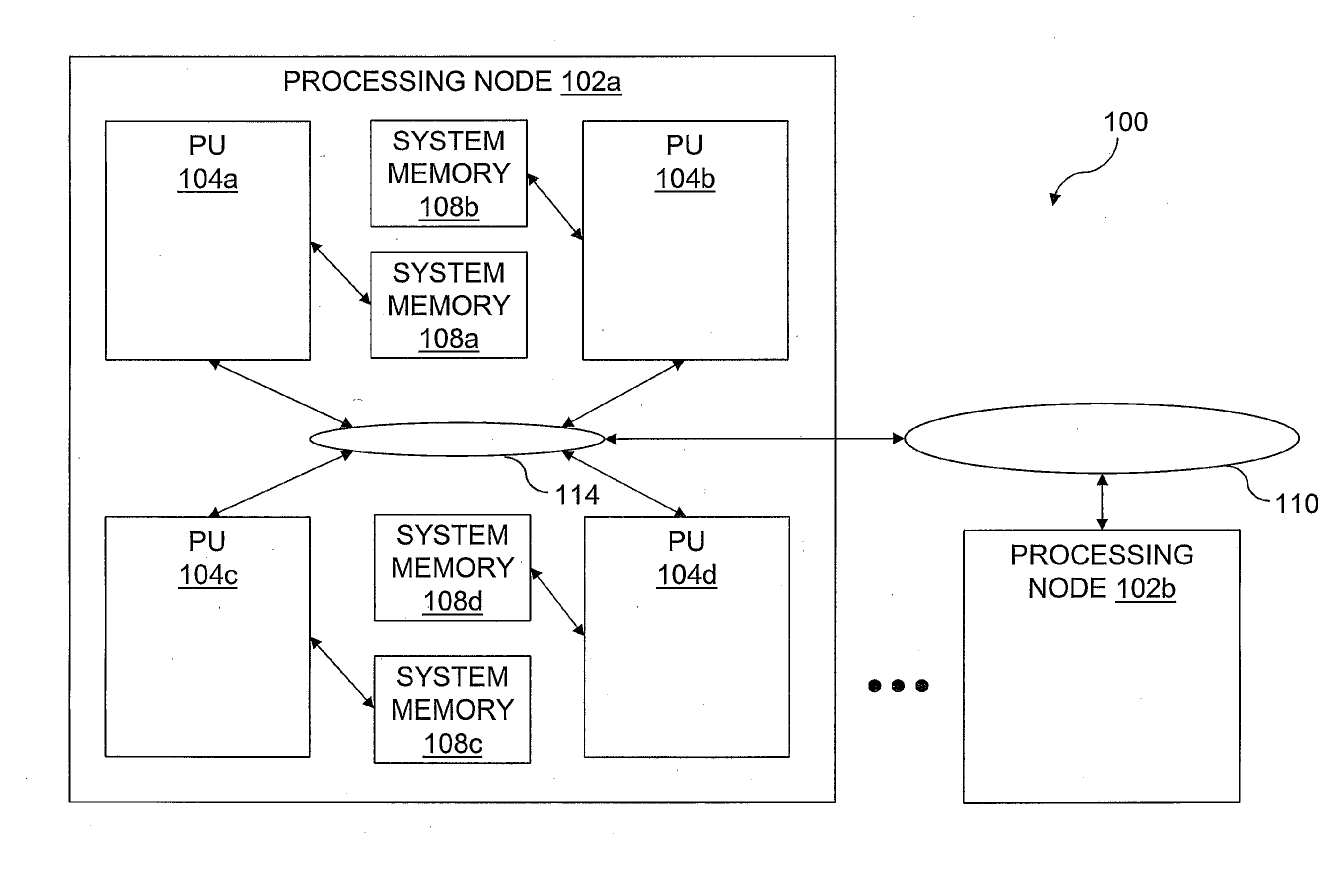

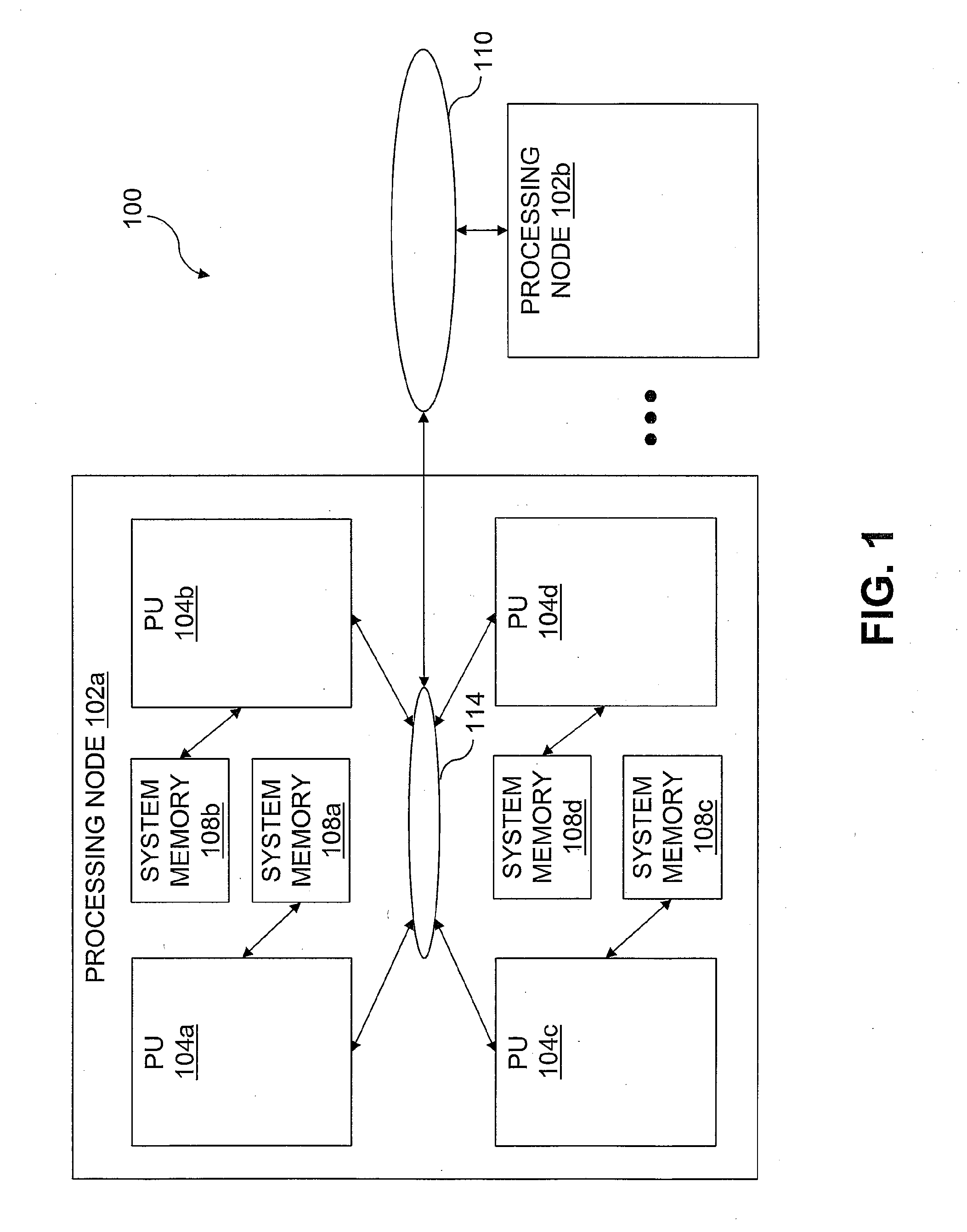

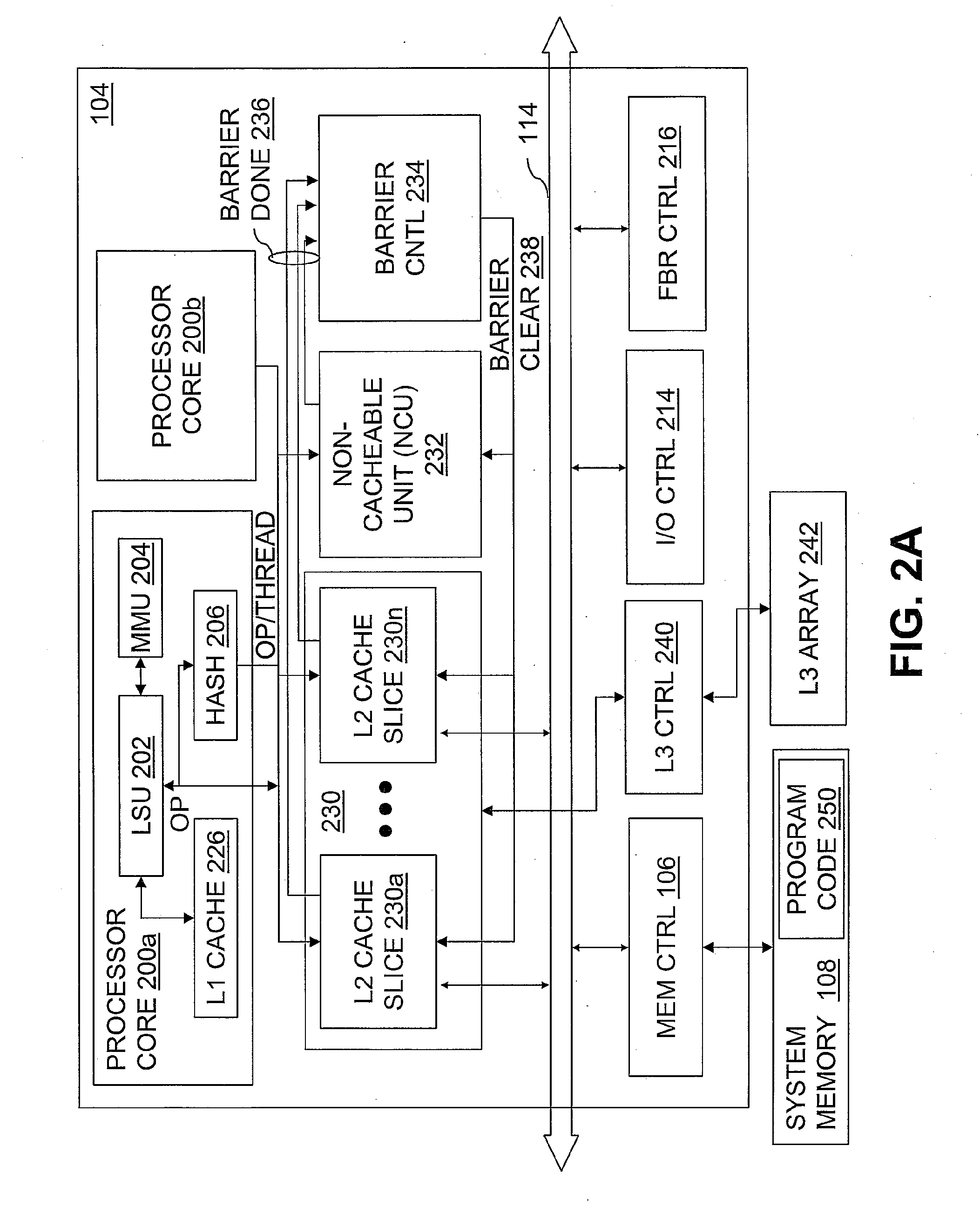

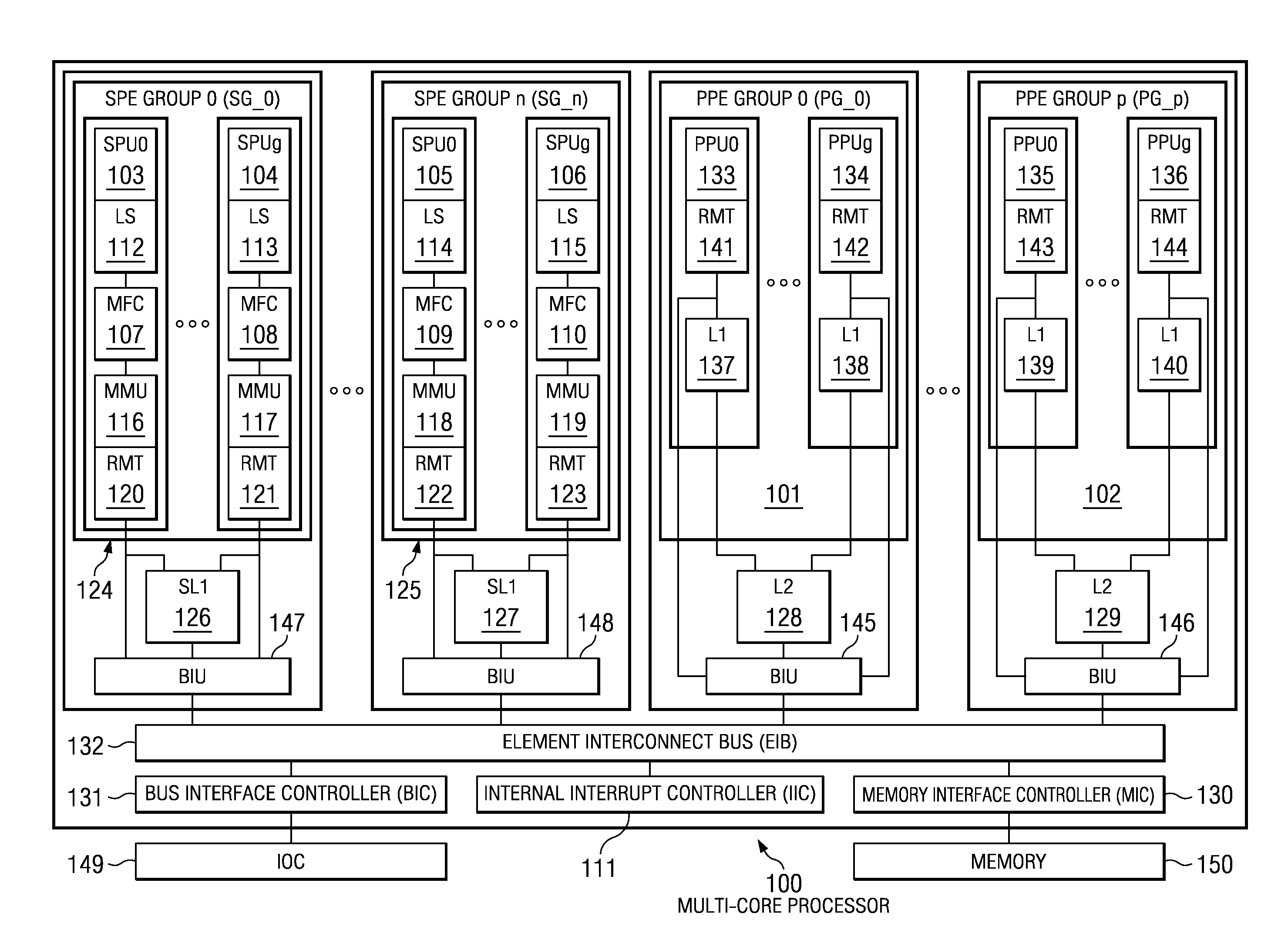

Data Processing System and Method for Reducing Cache Pollution by Write Stream Memory Access Patterns

Inactive | US20080046736A1 | User identity/authority verification; Memory systems; Data processing system; Cache hierarchy

A data processing system includes a system memory and a cache hierarchy that caches contents of the system memory. According to one method of data processing, a storage modifying operation having a cacheable target real memory address is received. A determination is made whether or not the storage modifying operation has an associated bypass indication. In response to determining that the storage modifying operation has an associated bypass indication, the cache hierarchy is bypassed, and an update indicated by the storage modifying operation is performed in the system memory. In response to determining that the storage modifying operation does not have an associated bypass indication, the update indicated by the storage modifying operation is performed in the cache hierarchy.

Owner:IBM CORP
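
The bypass decision in the IBM abstract can be sketched as follows. This is a hypothetical model, not IBM's implementation. `StoreOp.bypass` stands in for the "bypass indication", and plain dictionaries stand in for the cache hierarchy and system memory. Invalidating a stale cached copy on a bypassing store is an added assumption; it keeps the two levels consistent.

```python
from dataclasses import dataclass, field
from typing import Dict

@dataclass
class StoreOp:
    address: int
    value: int
    bypass: bool = False   # hypothetical stand-in for the "bypass indication"

@dataclass
class MemorySystem:
    system_memory: Dict[int, int] = field(default_factory=dict)
    cache: Dict[int, int] = field(default_factory=dict)   # whole hierarchy, flattened

    def store(self, op: StoreOp) -> None:
        if op.bypass:
            # Bypass: write system memory directly; drop any cached copy (assumed)
            # so a later cache hit cannot return stale data.
            self.cache.pop(op.address, None)
            self.system_memory[op.address] = op.value
        else:
            # Normal path: the update is performed in the cache hierarchy.
            self.cache[op.address] = op.value
```

A long write stream marked with `bypass=True` leaves the cache untouched. Lines that other code is still reusing are therefore not evicted.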

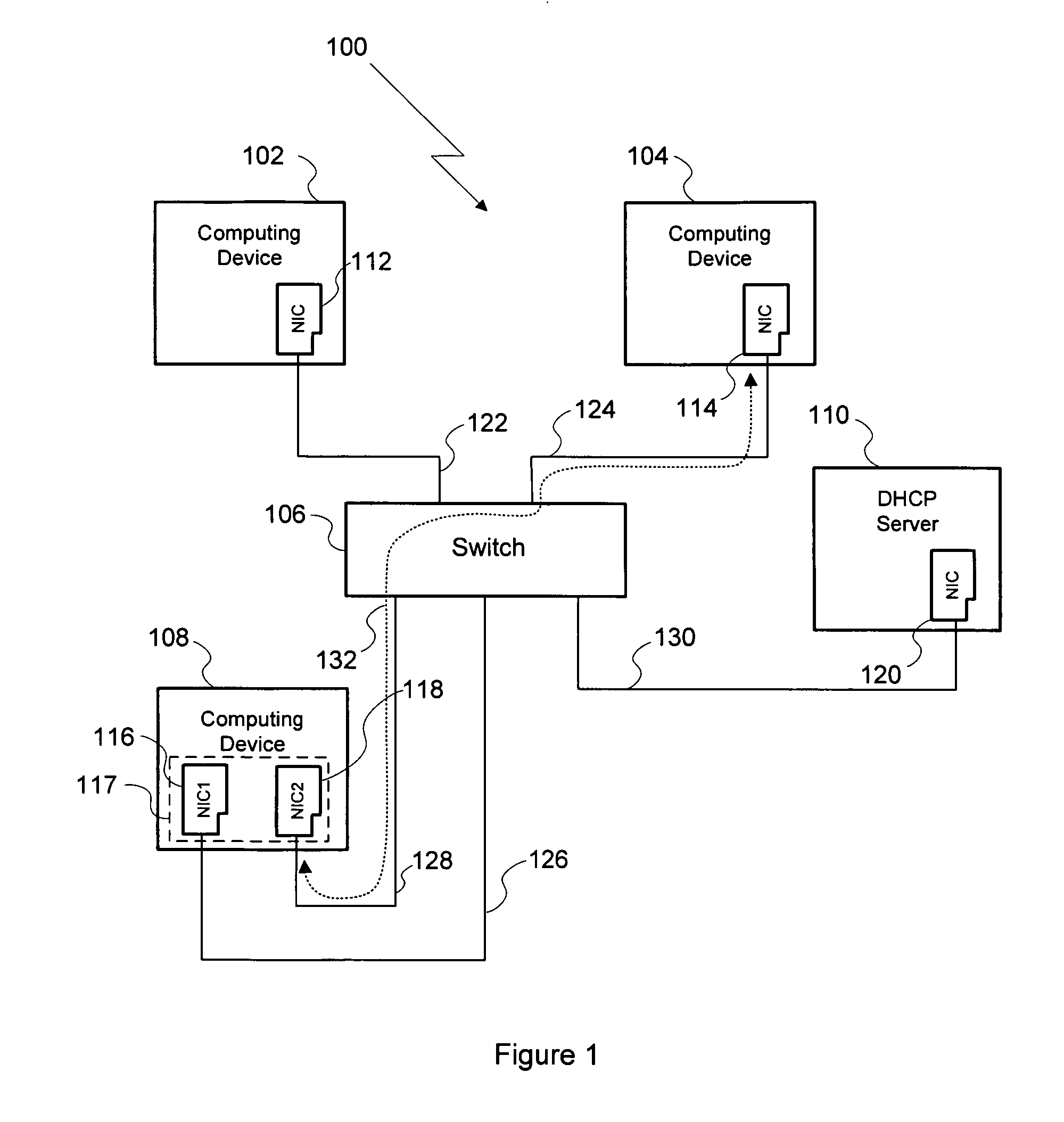

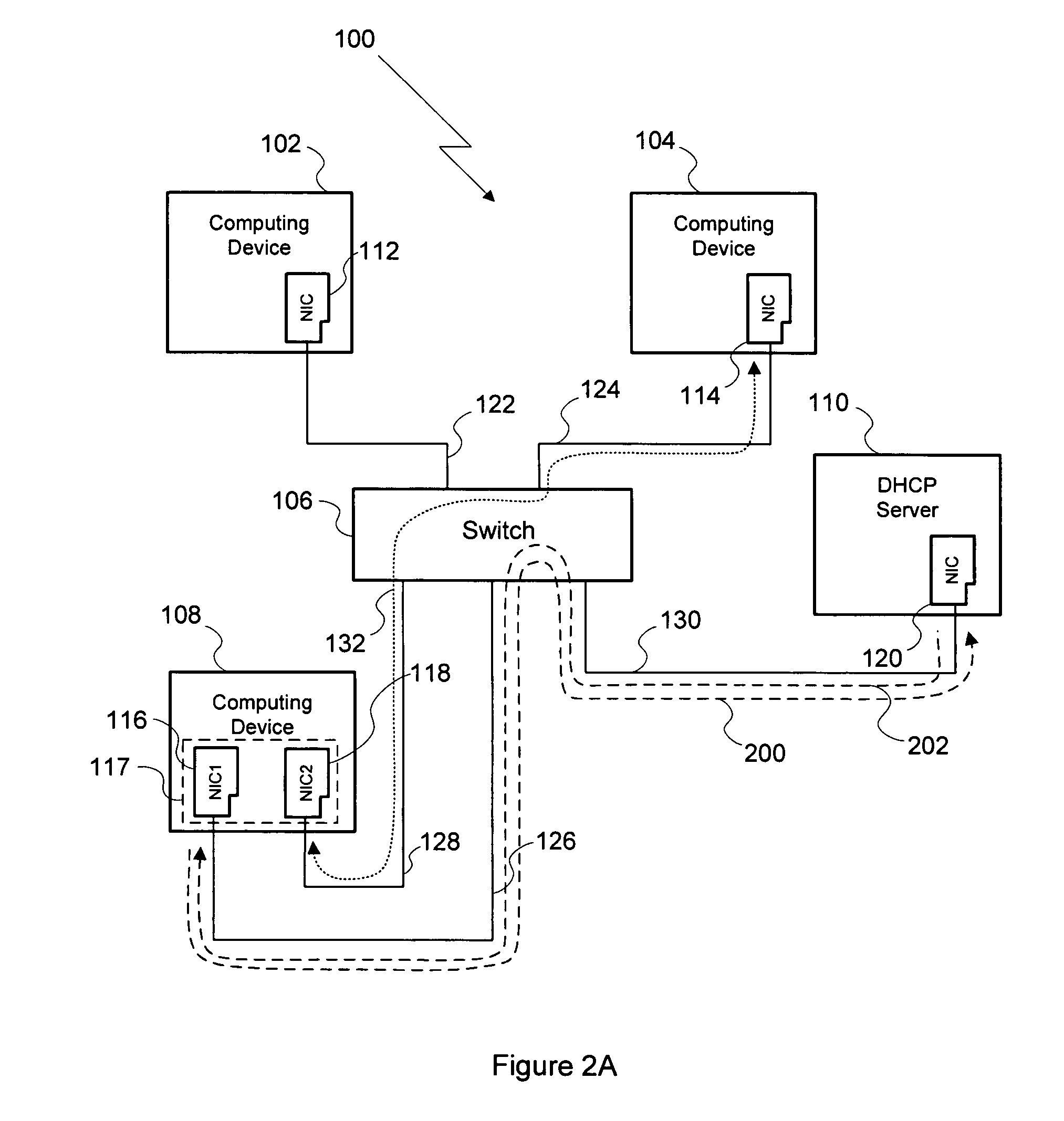

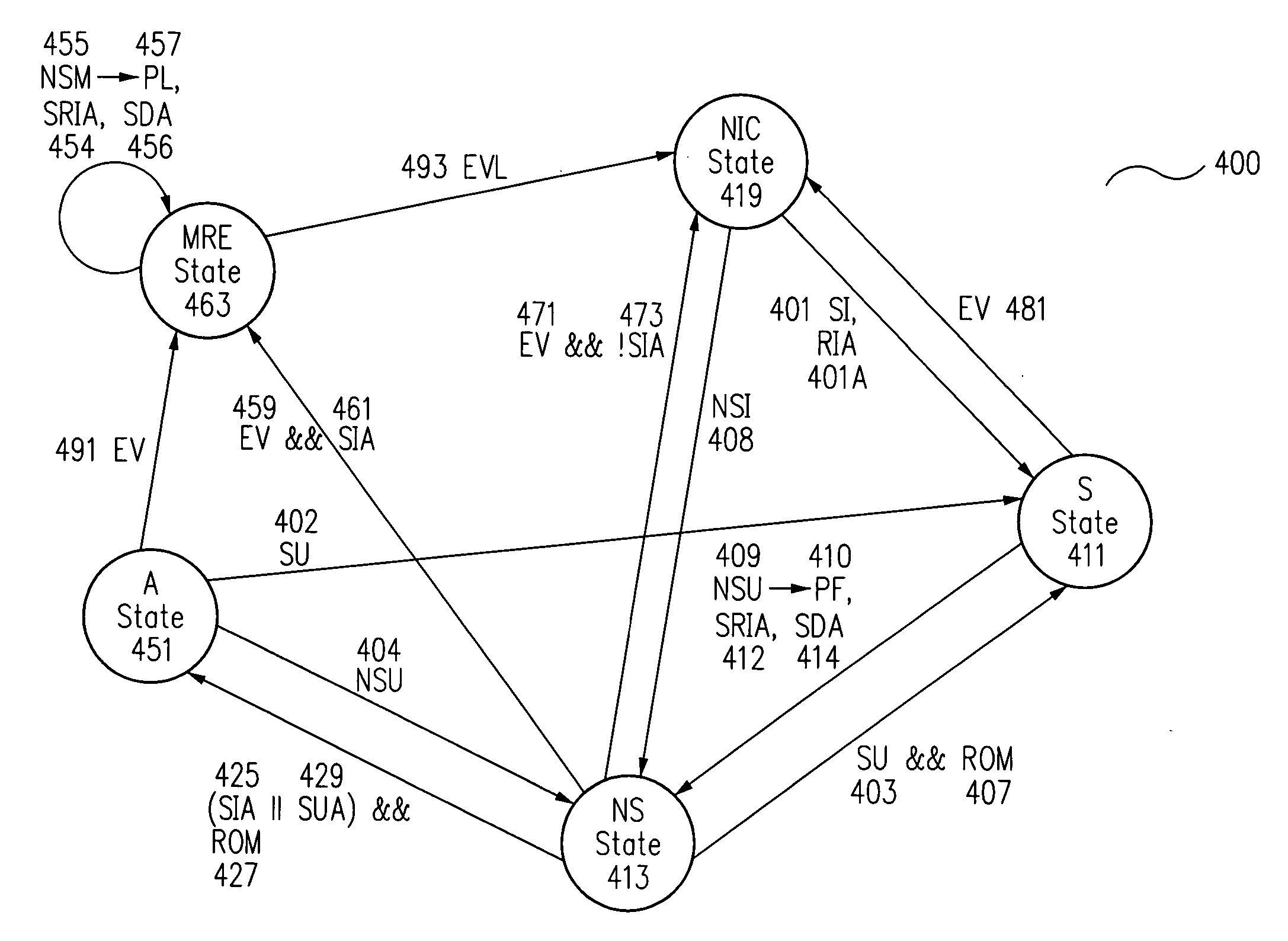

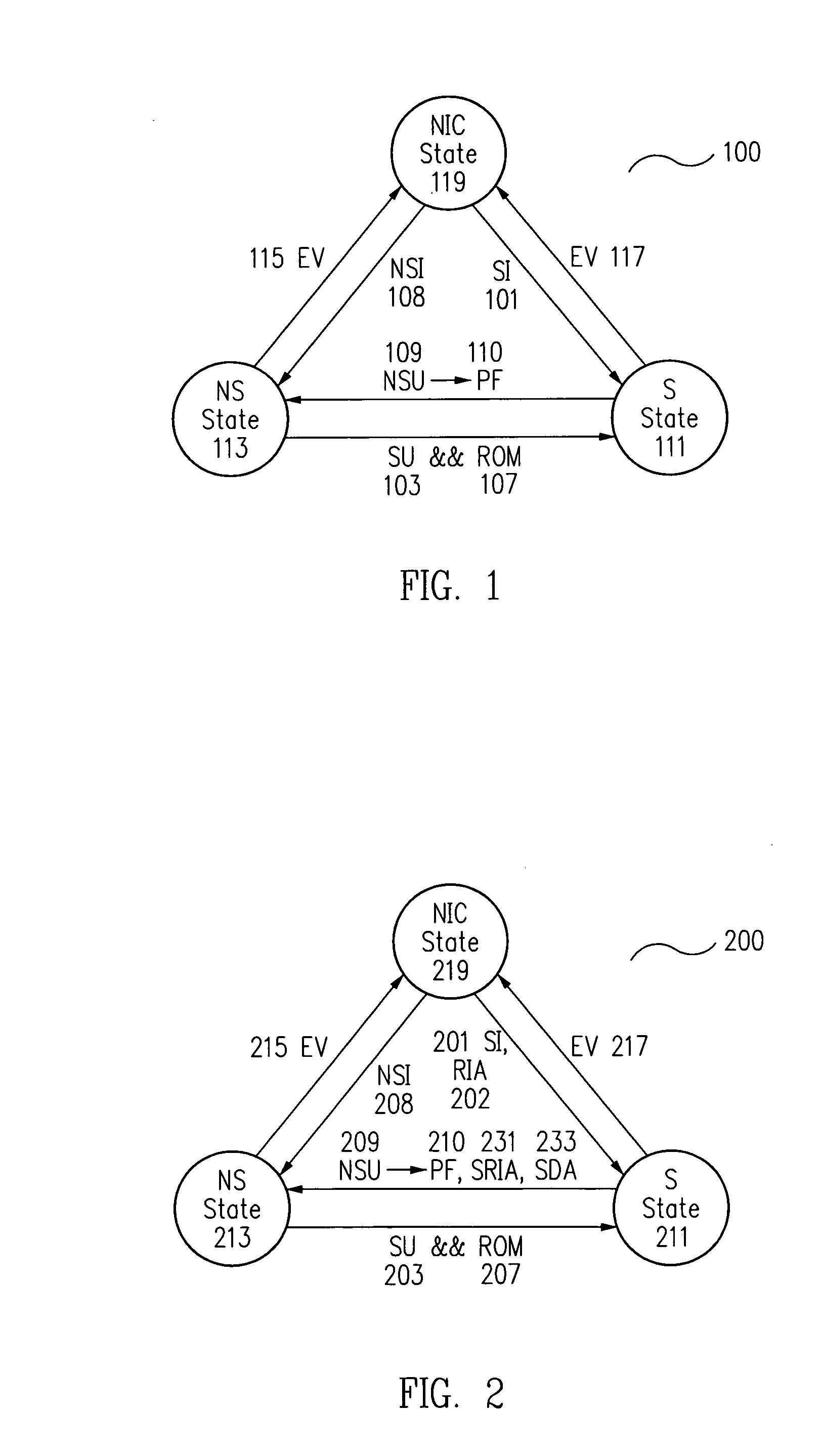

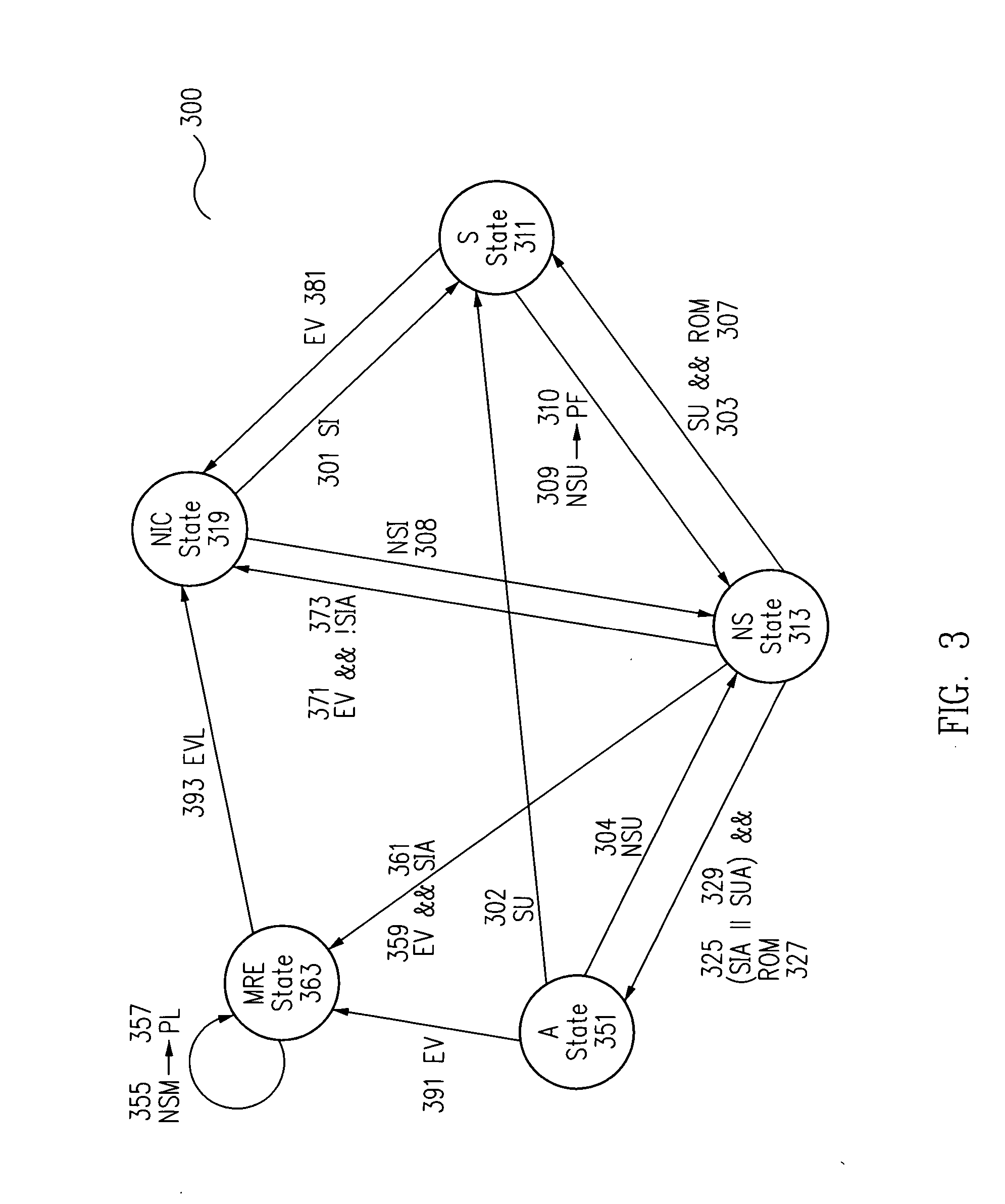

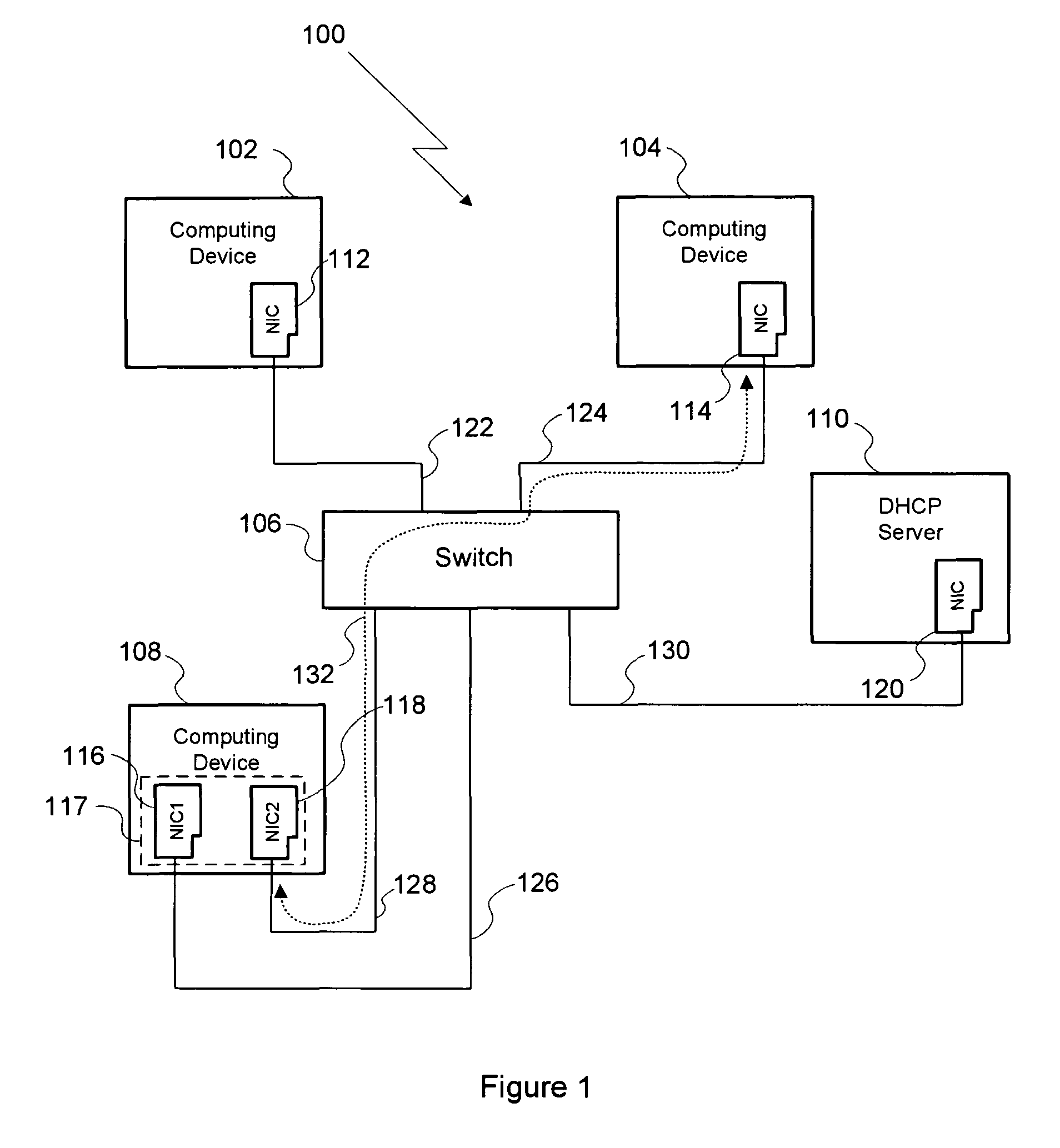

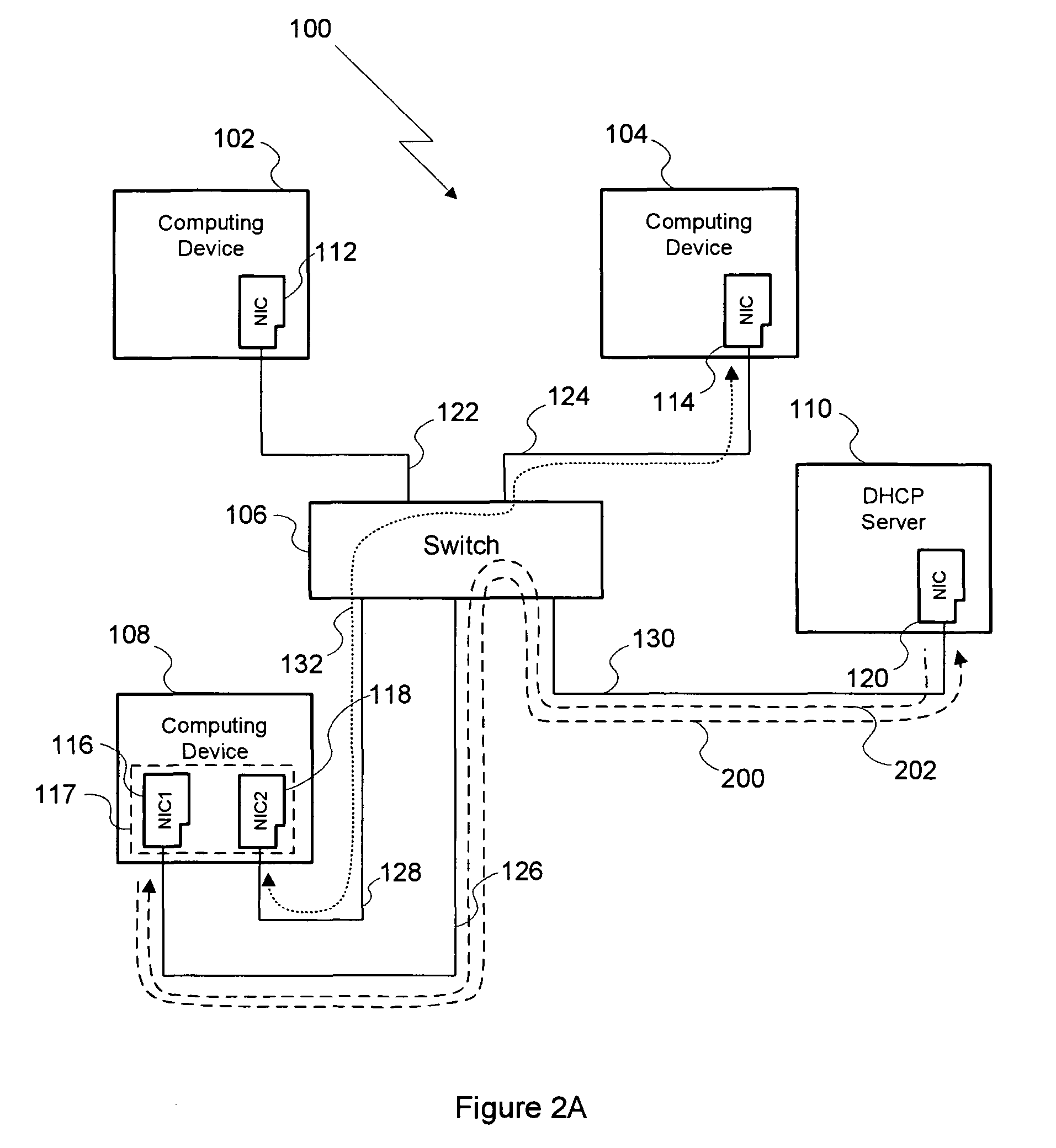

System and method for avoiding neighbor cache pollution

Active | US8284783B1 | Avoiding cache corruption; Data switching by path configuration; Network connection; Cache pollution

A method of avoiding cache corruption when establishing a network connection includes the steps of transmitting a request to a computing device, where the request includes a masquerade layer-3 address, and receiving a reply transmitted by the computing device in response to the request, where the reply includes a MAC address associated with the computing device. Since the masquerade layer-3 address is unique relative to the computer network, computing devices within the network do not overwrite existing layer-3-to-MAC relationships in their respective caches with the layer-3-to-MAC relationship reflected in the request. Thus, the method enables a network connection to be initiated between two computing devices in the same computer network while avoiding neighbor cache pollution on other computing devices in that network.

Owner:NVIDIA CORP

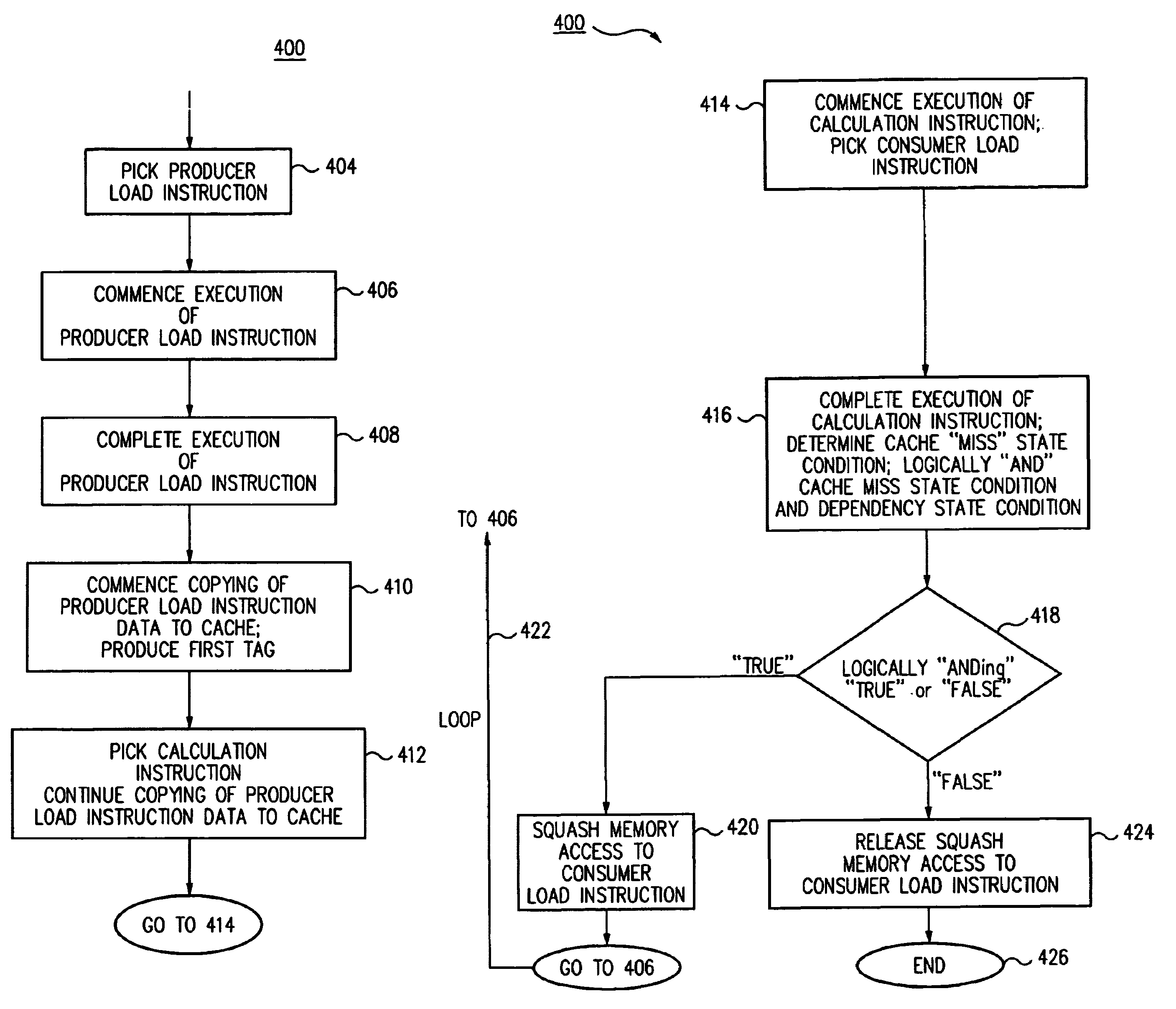

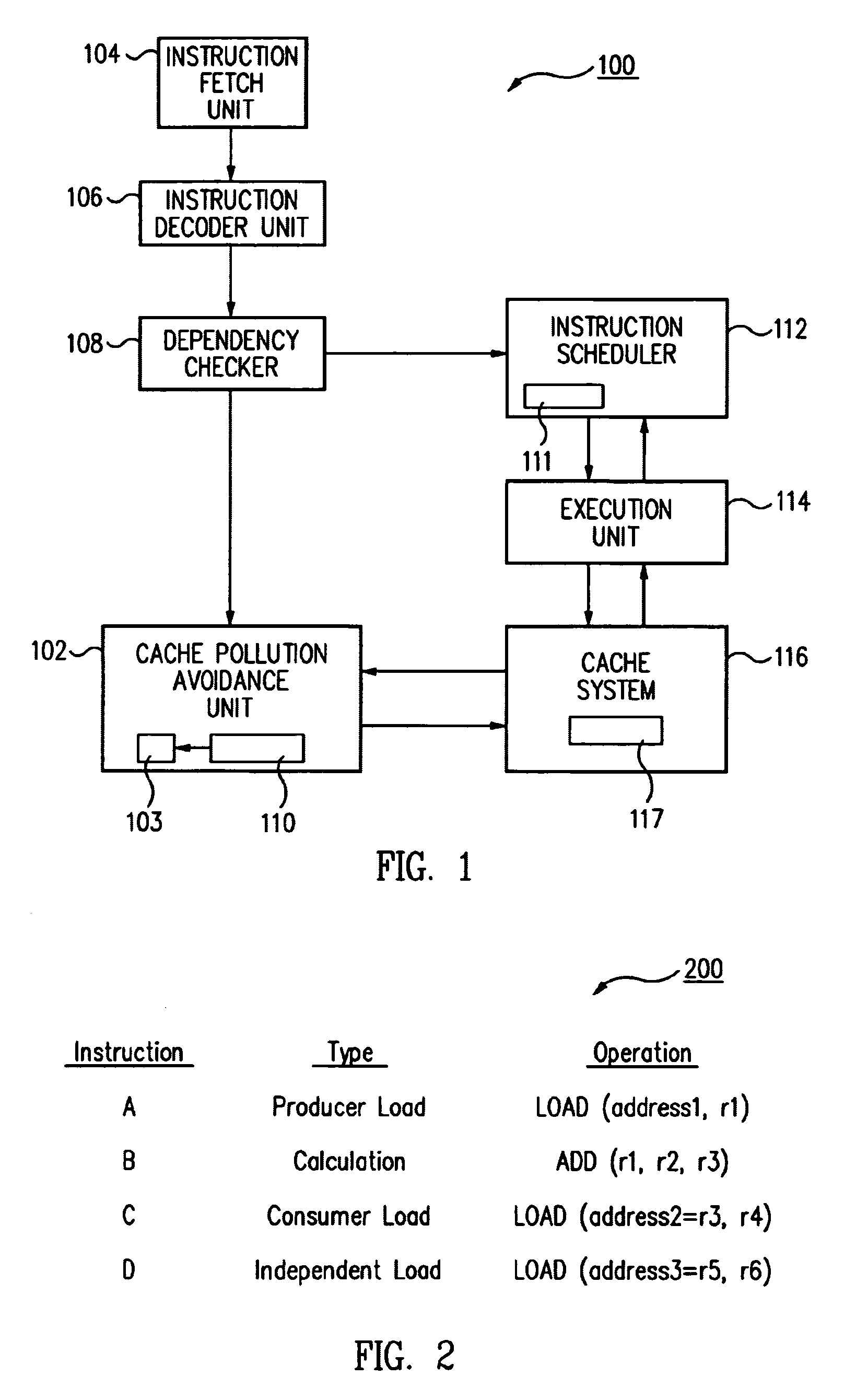

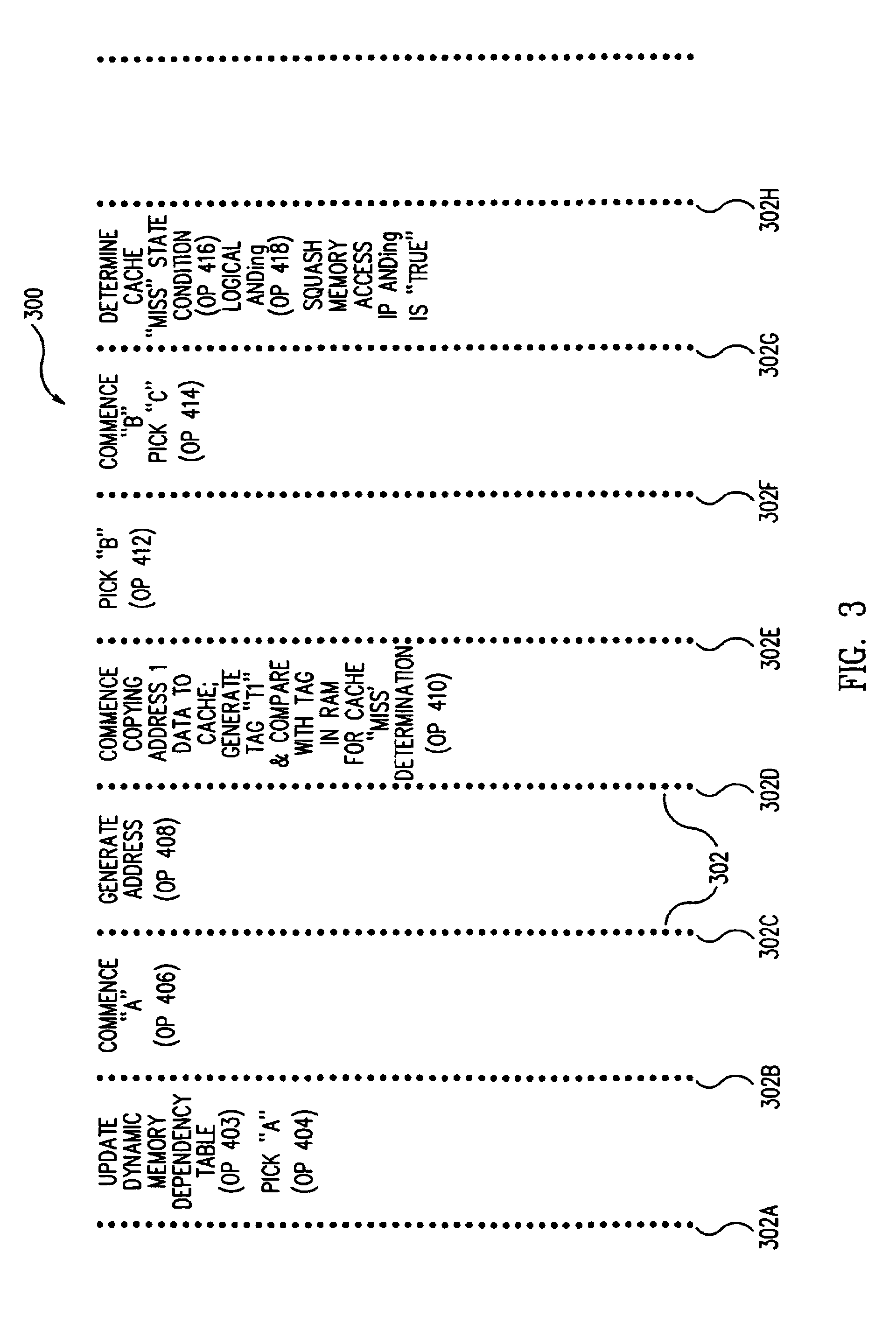

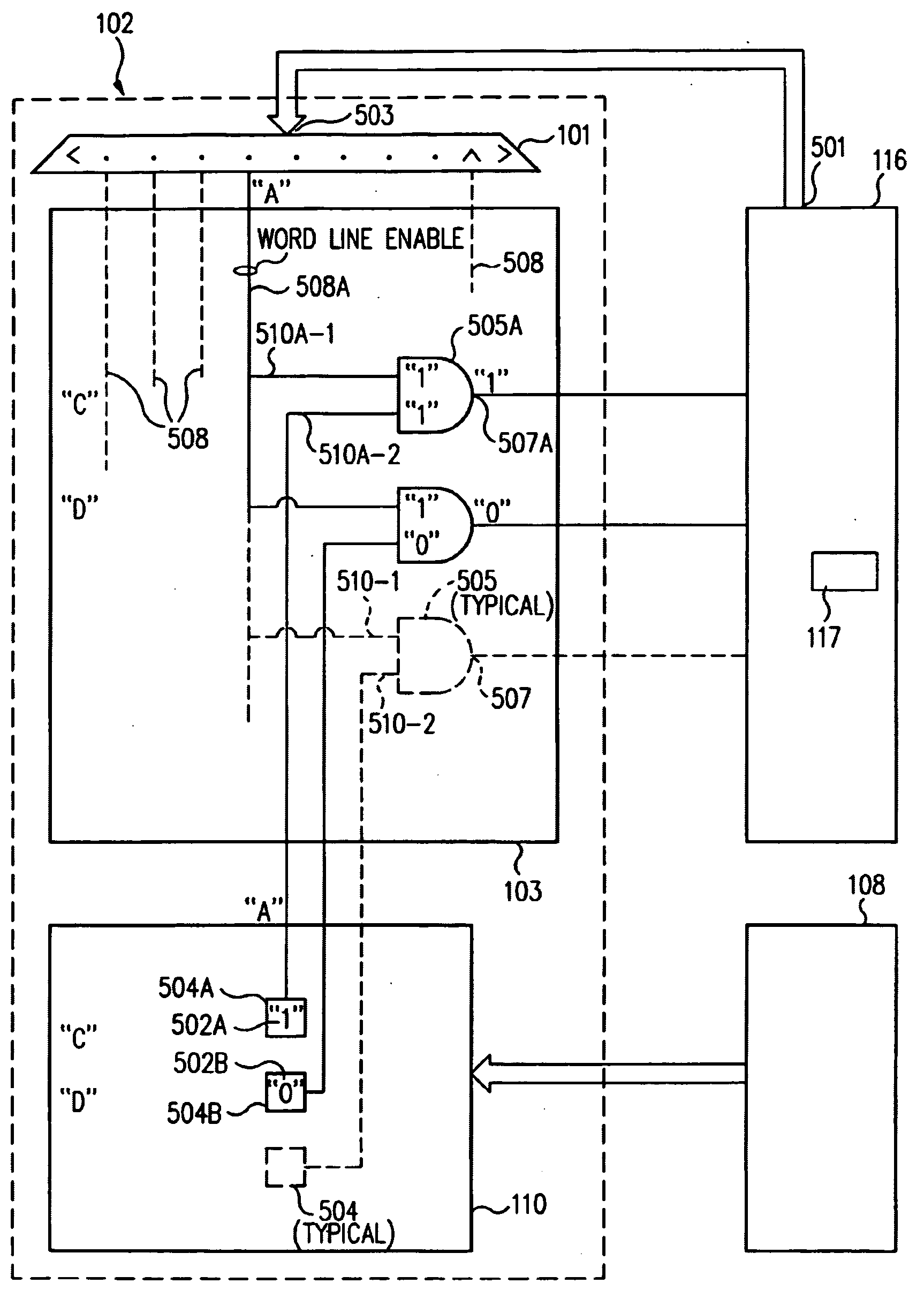

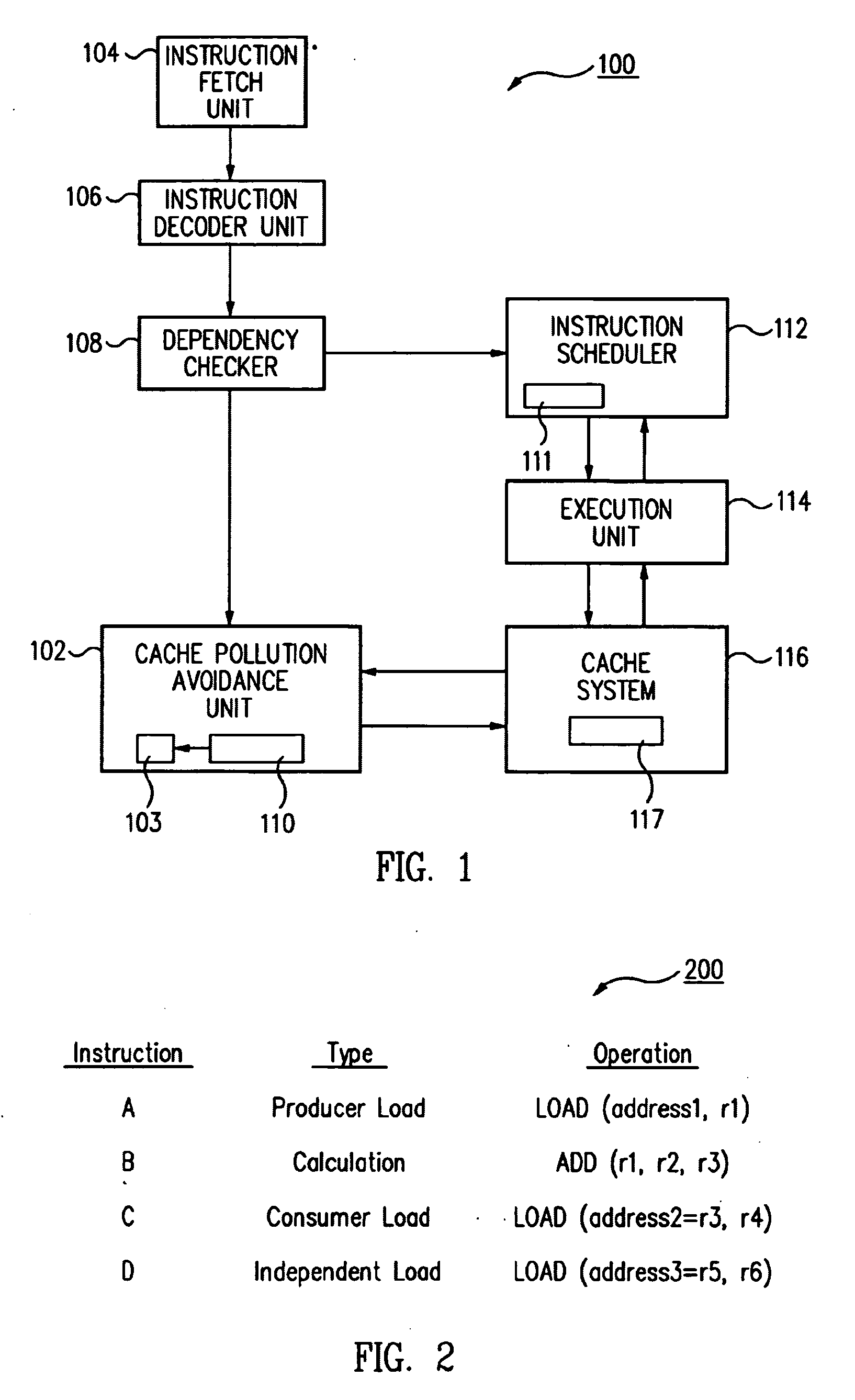

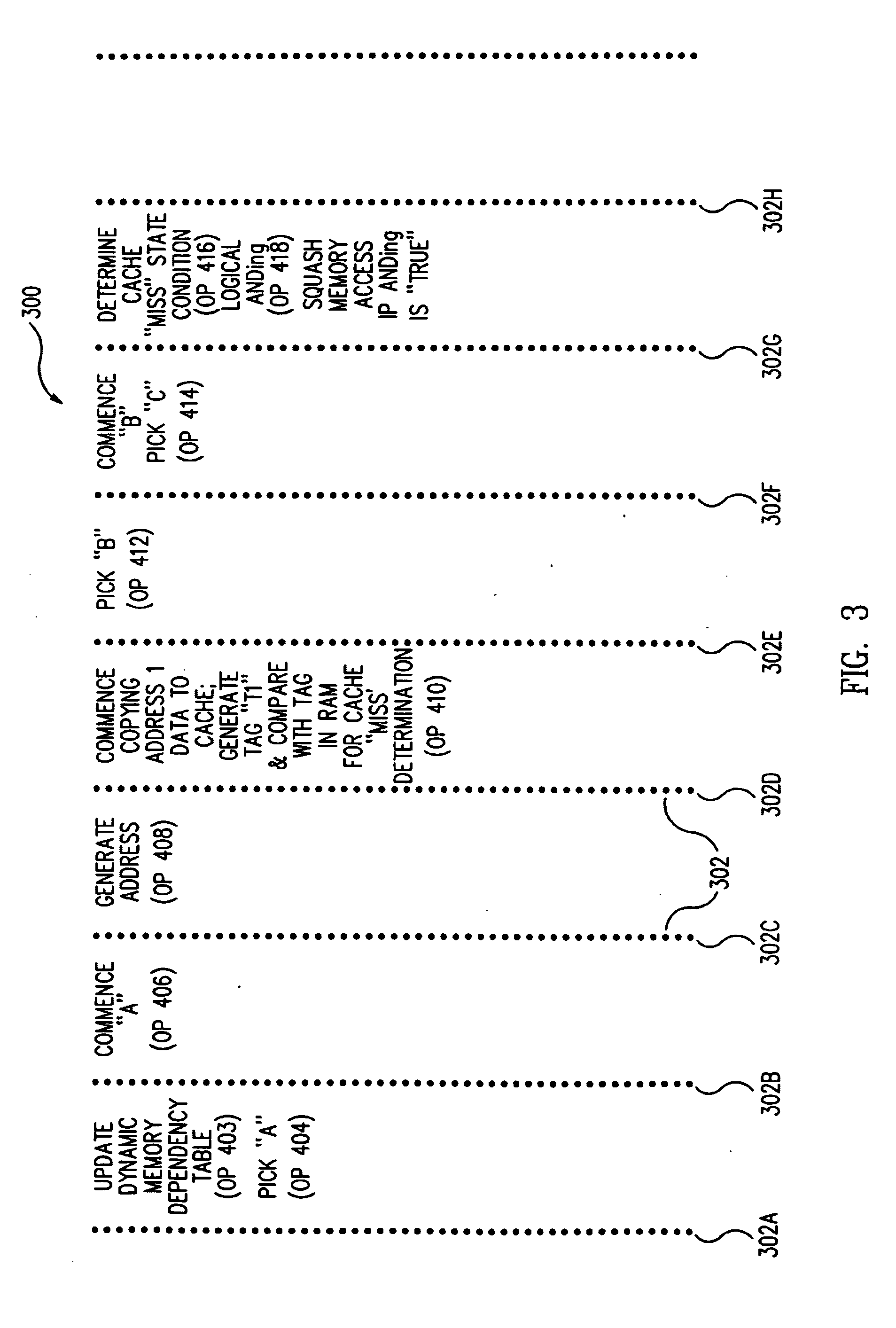

Method and apparatus for avoiding cache pollution due to speculative memory load operations in a microprocessor

Active | US7010648B2 | Eliminate pollution; General purpose stored program computer; Concurrent instruction execution; Load instruction; Operand

A cache pollution avoidance unit includes a dynamic memory dependency table for storing a dependency state condition between a first load instruction and a sequentially later second load instruction, which may depend on the completion of execution of the first load instruction for operand data. The cache pollution avoidance unit logically ANDs the dependency state condition stored in the dynamic memory dependency table with a cache memory "miss" state condition returned by the cache pollution avoidance unit for operand data produced by the first load instruction and required by the second load instruction. If the result of the AND is true, the memory access of the second load instruction is squashed and its execution is rescheduled.

Owner:ORACLE INT CORP
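
The squash condition in the Oracle abstracts (dependency state AND cache-miss state) can be modeled as a simple filter. The sketch makes three assumptions, none taken from the patent. Loads are identified by integer ids. The dependency table maps a load to the earlier load that produces its operand. The miss state is a set of load ids.

```python
from typing import Dict, Iterable, List, Set, Tuple

def issue_loads(
    loads: Iterable[int],
    dependency_table: Dict[int, int],
    missed_loads: Set[int],
) -> Tuple[List[int], List[int]]:
    """Split loads into those allowed to access memory and those squashed for replay.

    dependency_table maps a load id to the id of an earlier load that supplies its
    operand (the "dependency state"); missed_loads holds ids of loads whose cache
    access missed (the "miss state").
    """
    issued: List[int] = []
    rescheduled: List[int] = []
    for load_id in loads:
        producer = dependency_table.get(load_id)
        depends_on_miss = producer is not None and producer in missed_loads
        if depends_on_miss:
            # Dependency AND miss: the operand is not ready, so an access now would
            # use a speculative address; squash it and replay the load later.
            rescheduled.append(load_id)
        else:
            issued.append(load_id)
    return issued, rescheduled
```

Squashing load 2 prevents it from fetching a line at an address computed from operand data that is not yet available. Such a line would most likely be useless and would pollute the cache.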

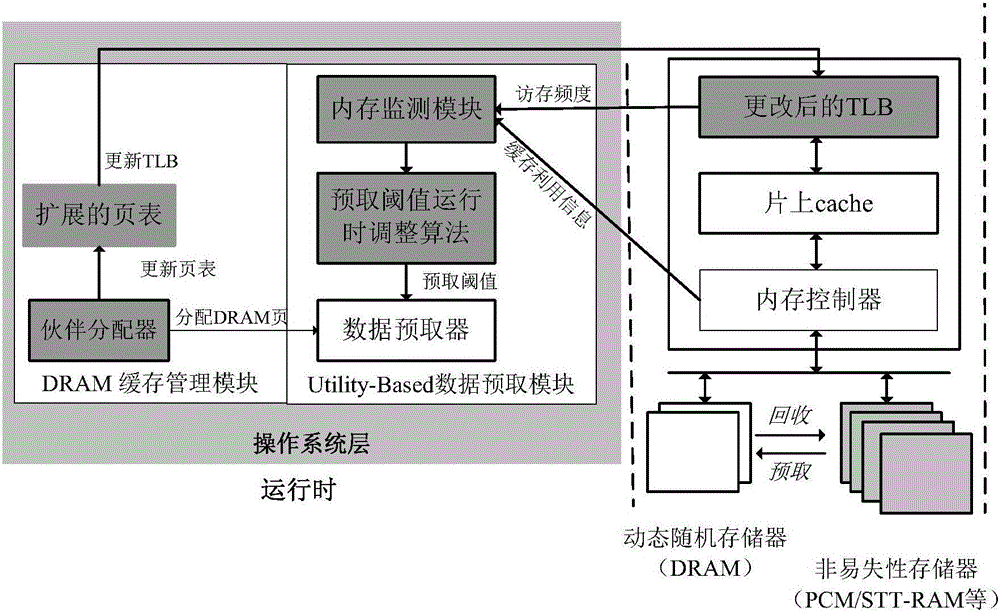

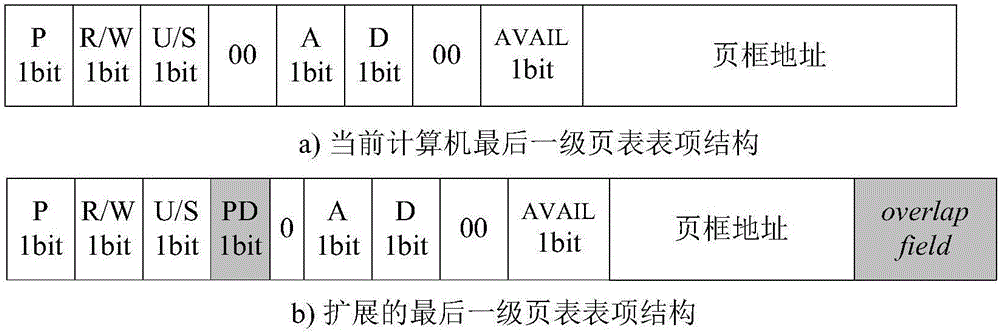

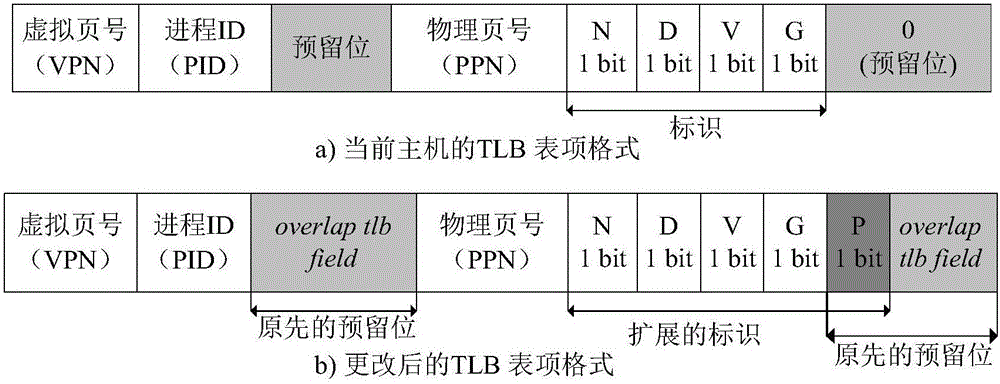

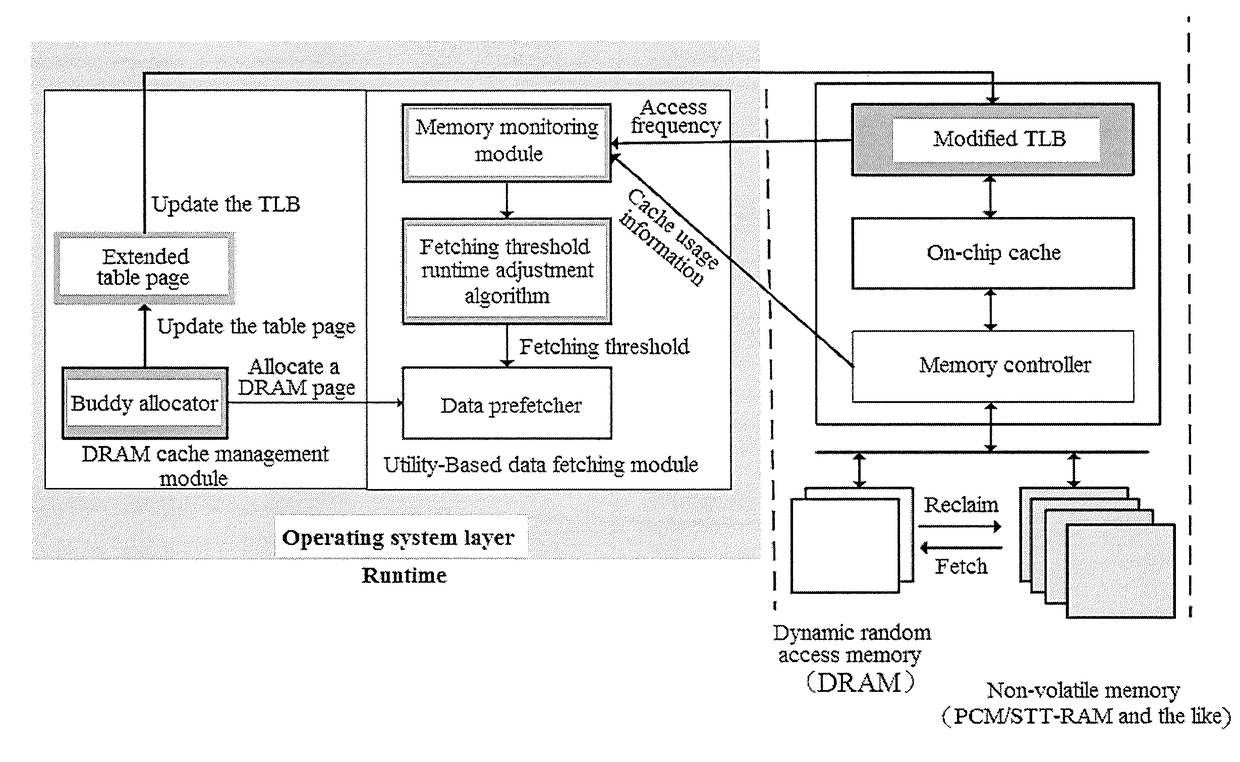

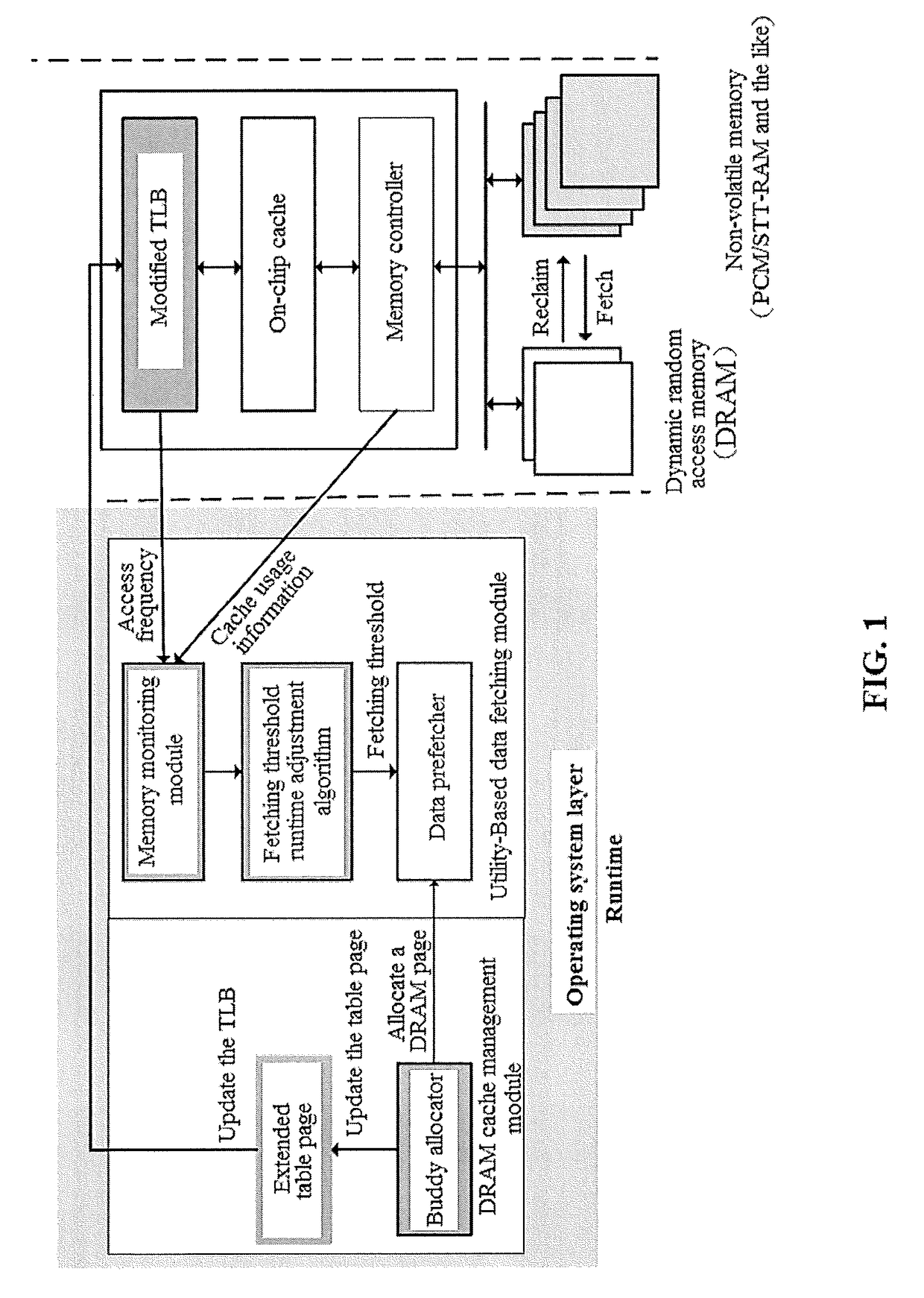

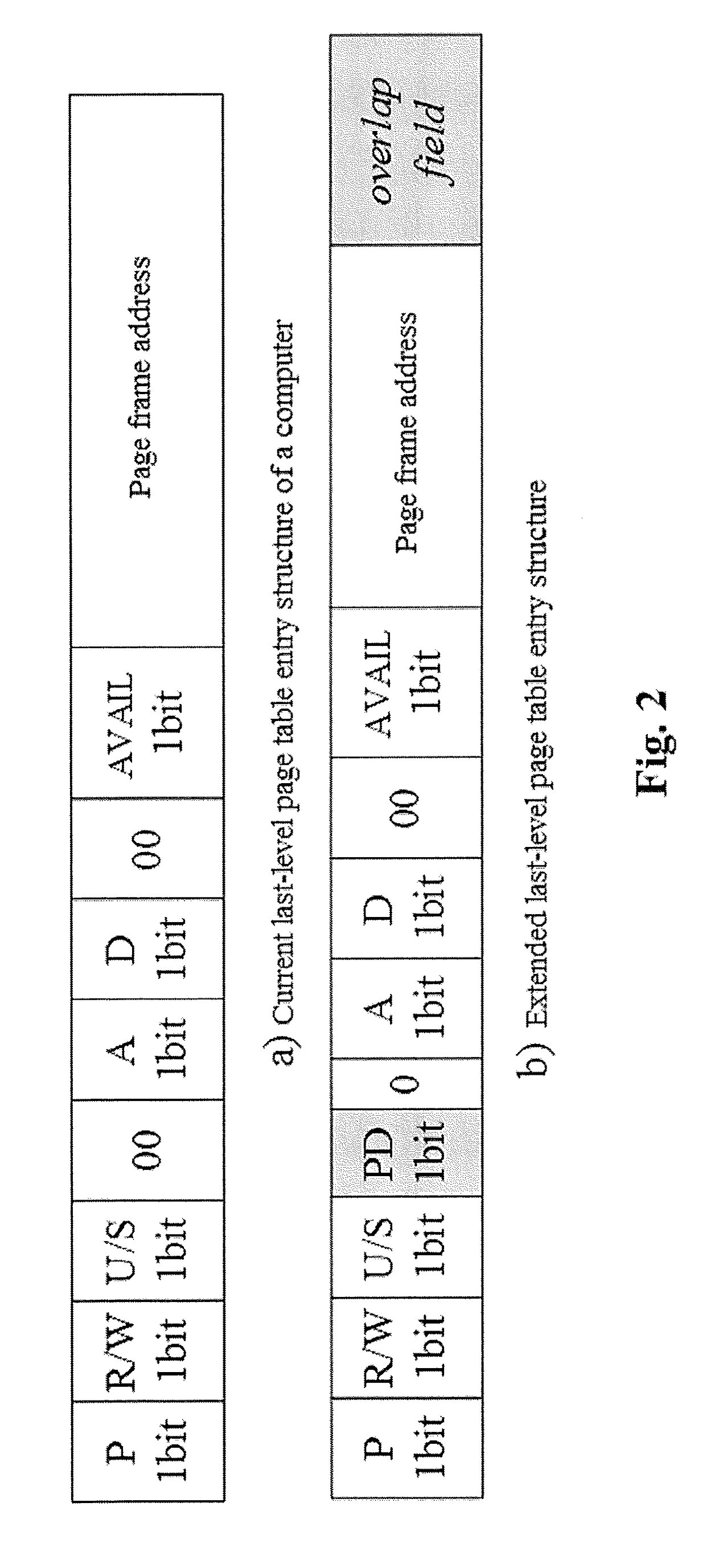

DRAM (dynamic random access memory)-NVM (non-volatile memory) hierarchical heterogeneous memory access method and system adopting software and hardware collaborative management

Active | CN105786717A | Realize unified management; Eliminate overhead; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Page table; Cache management

The invention provides a DRAM (dynamic random access memory)-NVM (non-volatile memory) hierarchical heterogeneous memory system with software-hardware collaborative management. In the system, NVM is used as large-capacity main memory, while DRAM is used as a cache for the NVM. Certain reserved bits in the TLB (translation lookaside buffer) and page table structures are used to eliminate the hardware overhead of traditional hardware-managed hierarchical heterogeneous memory architectures. Cache management for the heterogeneous memory system is moved to the software level, and memory access latency after a last-level cache miss is reduced. Many applications in big-data environments have poor data locality, so a traditional demand-based data prefetching strategy for the DRAM cache can aggravate cache pollution. The system therefore adopts a utility-based data prefetching mechanism. This mechanism decides whether data in the NVM should be cached in the DRAM according to current memory pressure and the applications' memory access characteristics. It improves the utilization of the DRAM cache and of the bandwidth between the NVM main memory and the DRAM cache.

Owner:HUAZHONG UNIV OF SCI & TECH

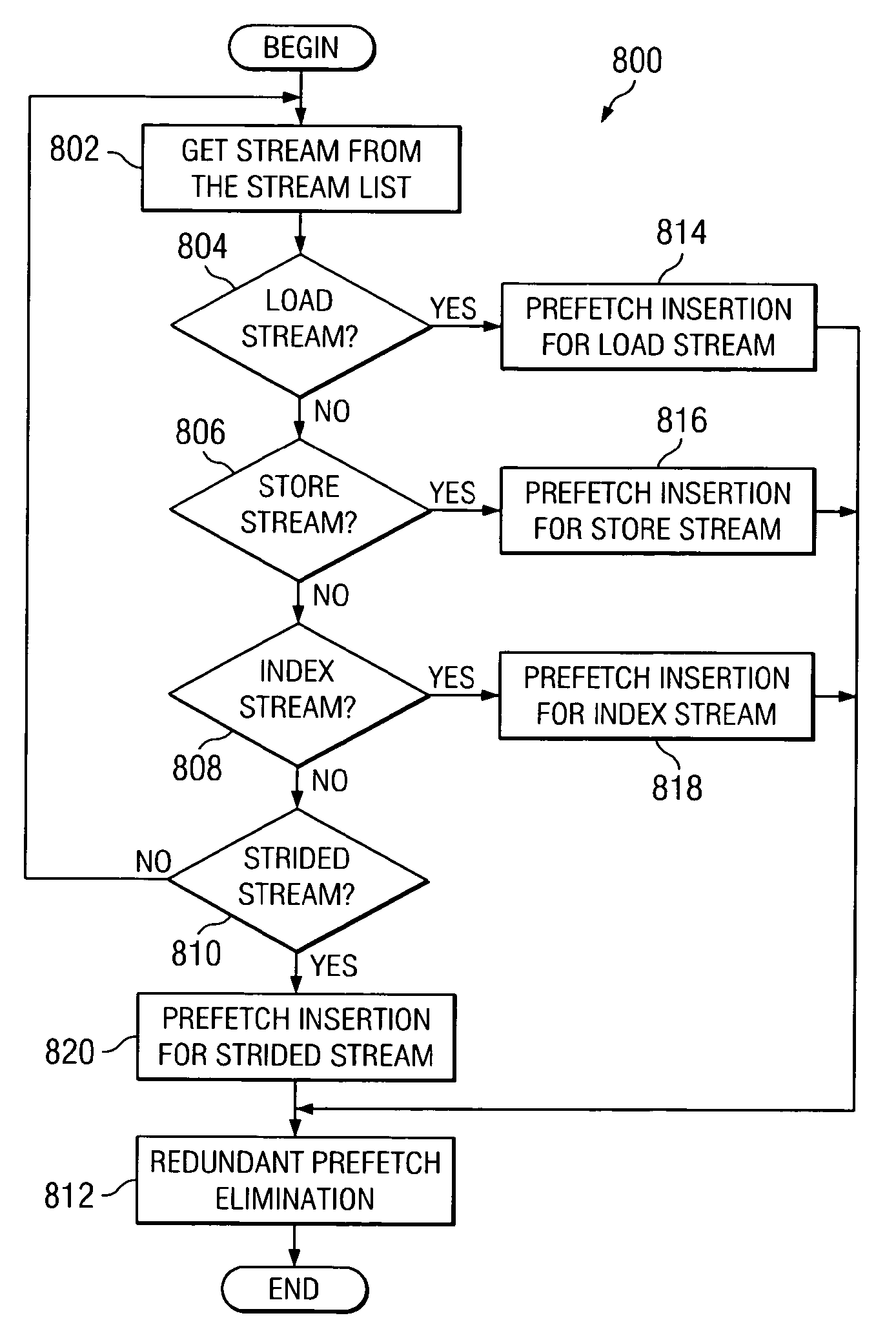

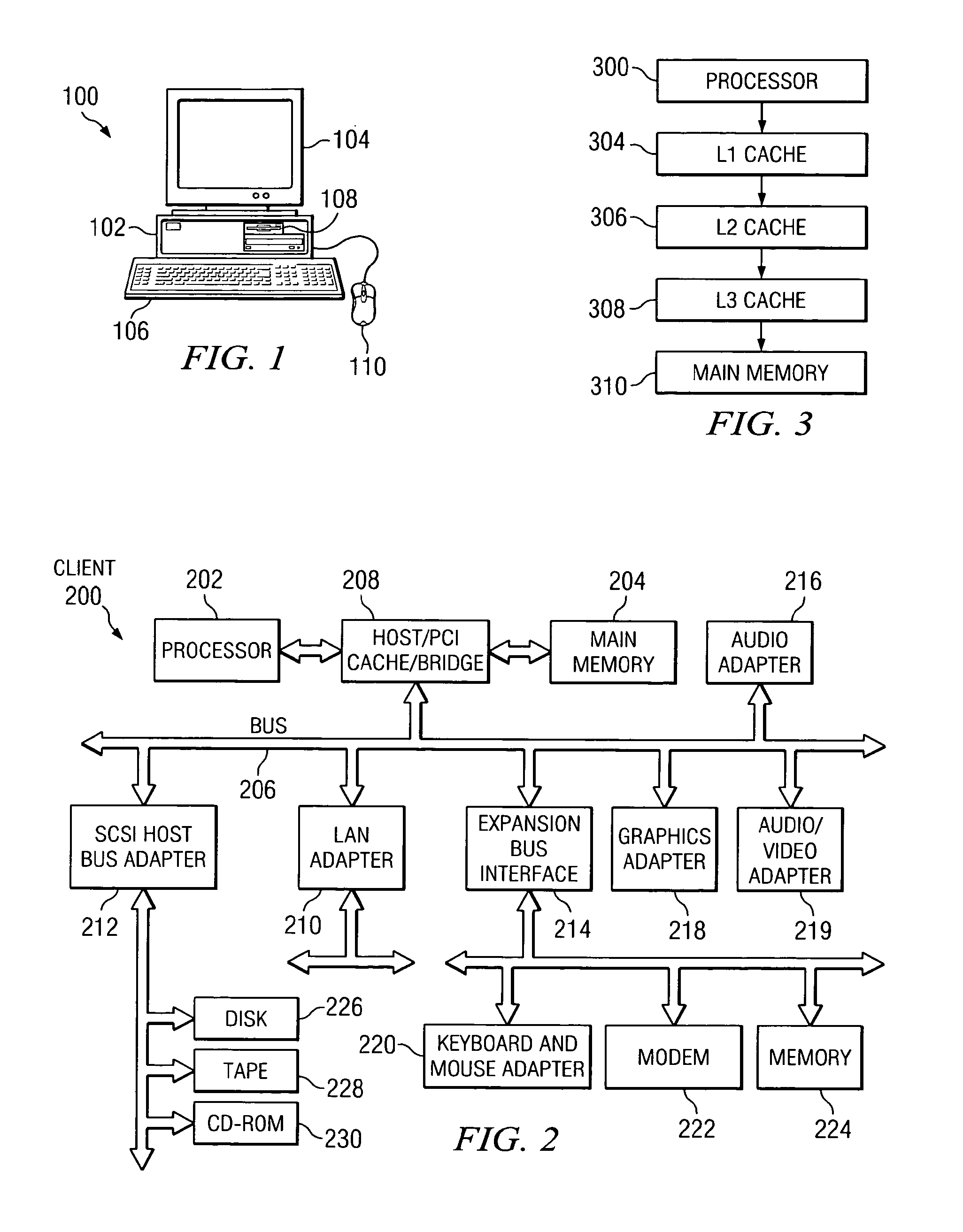

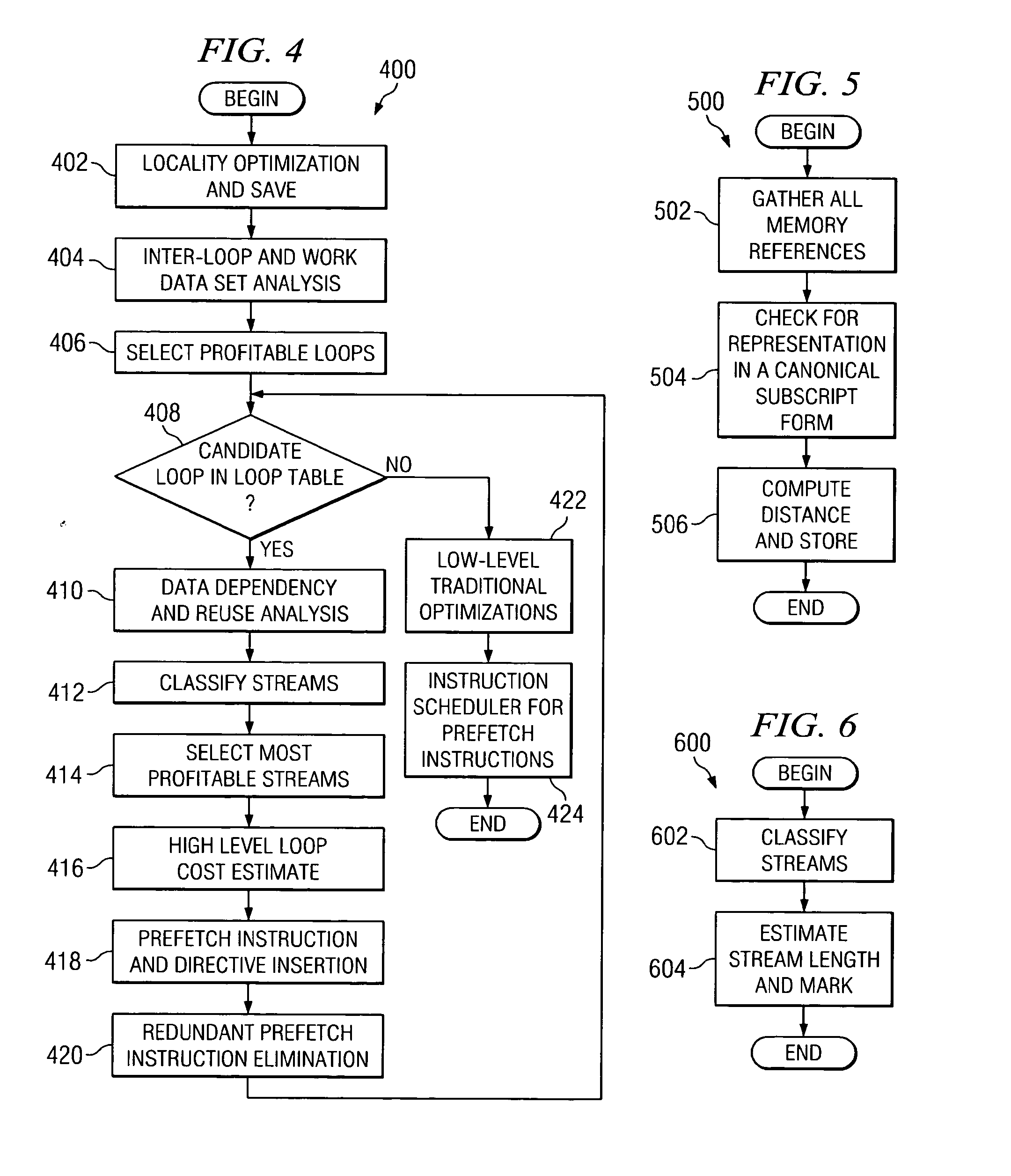

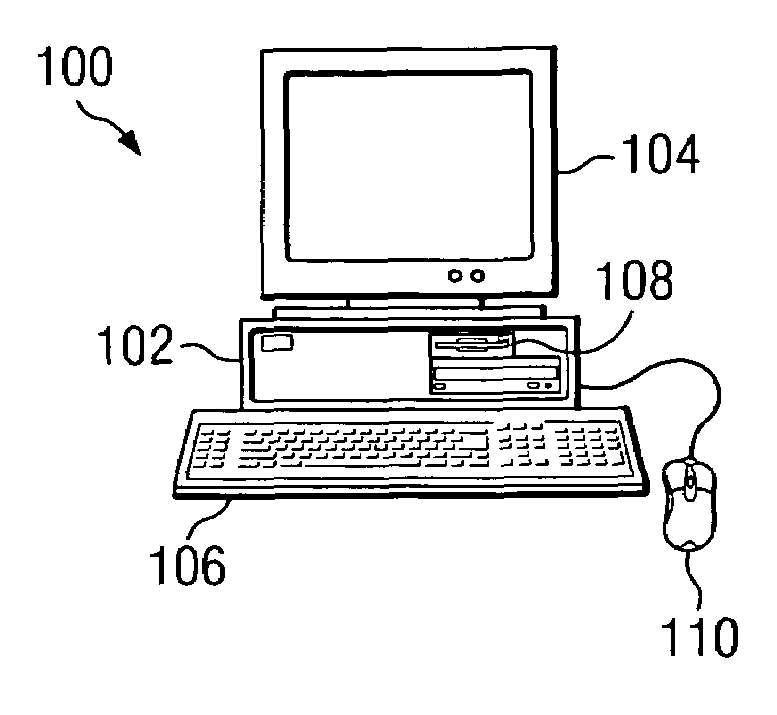

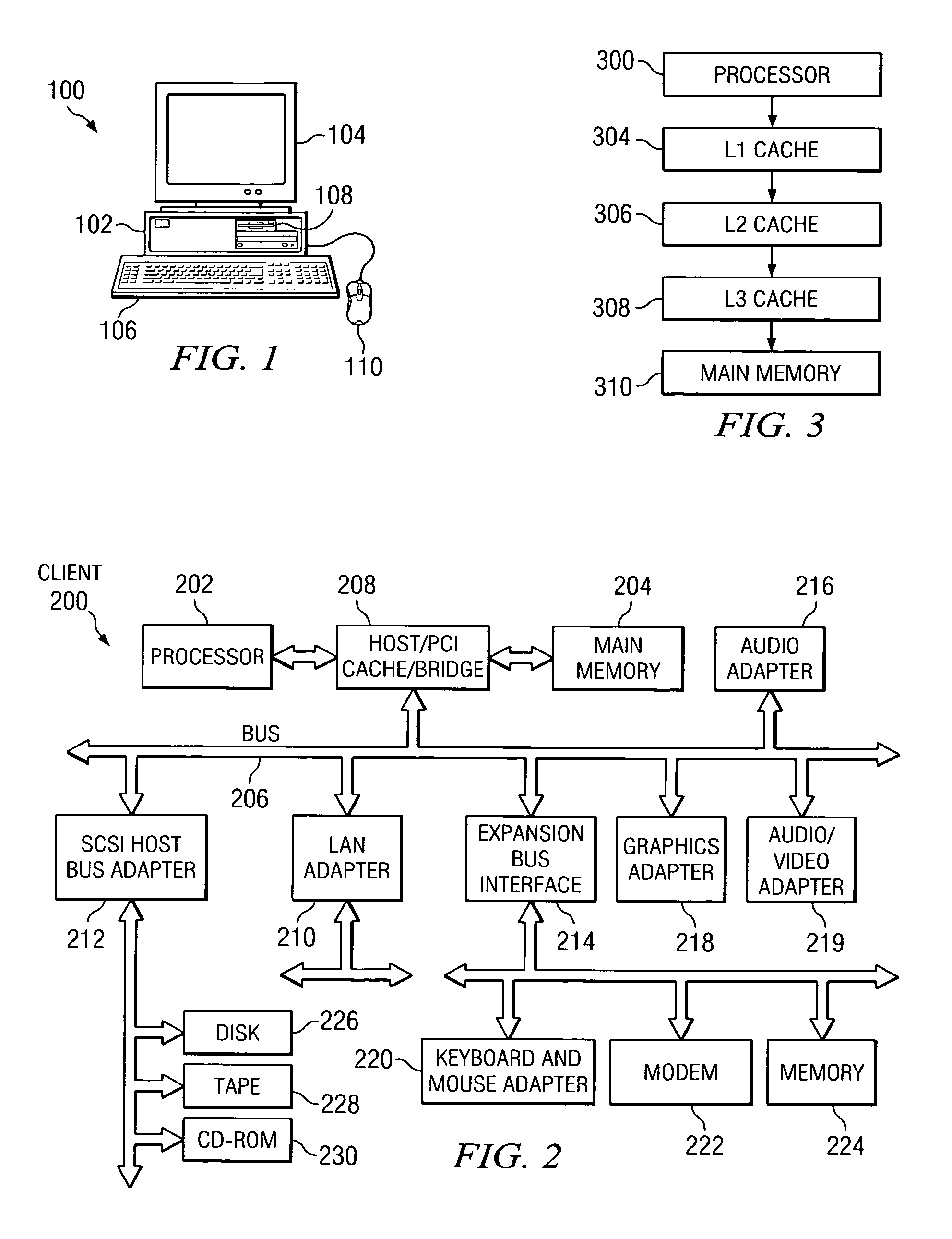

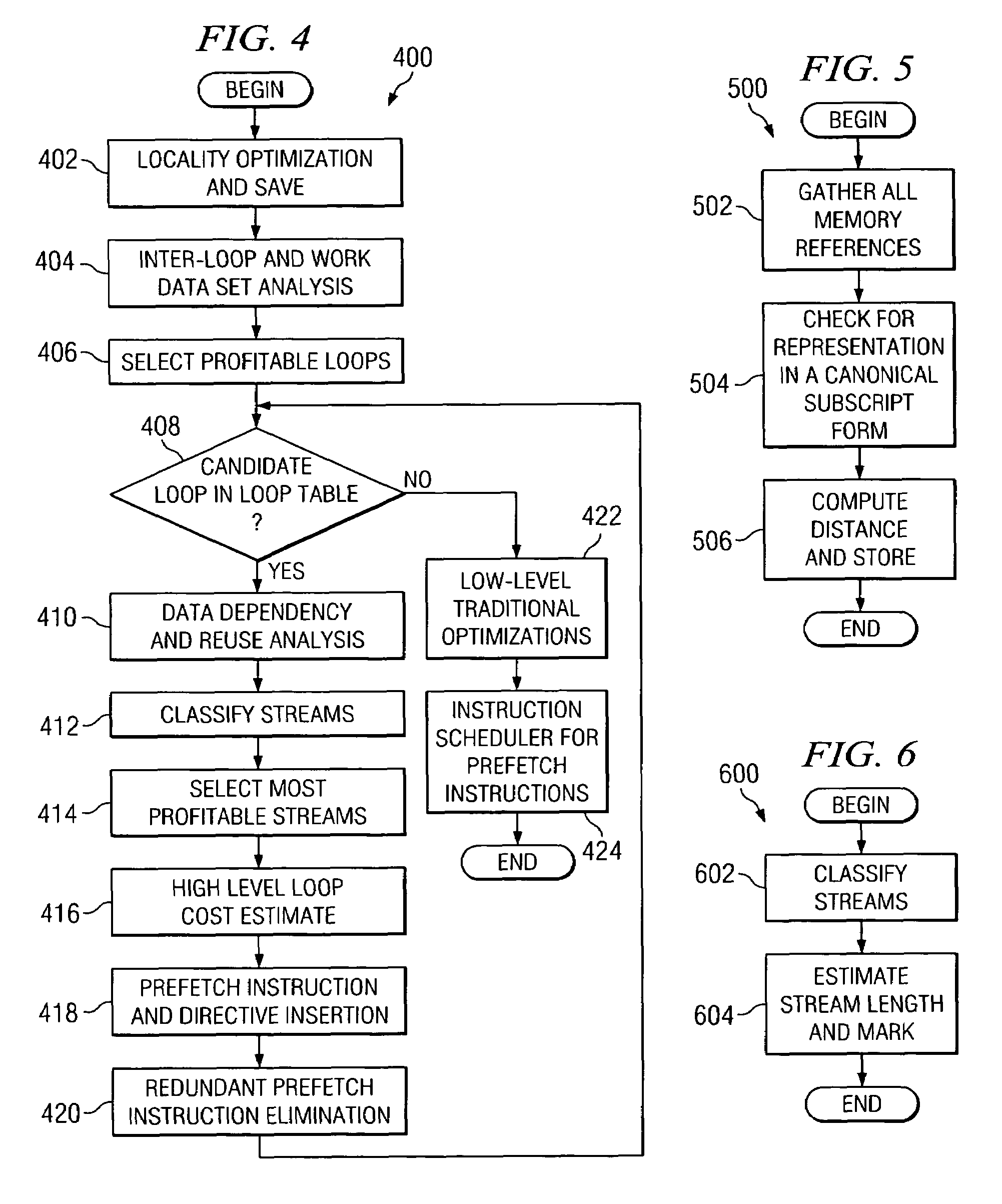

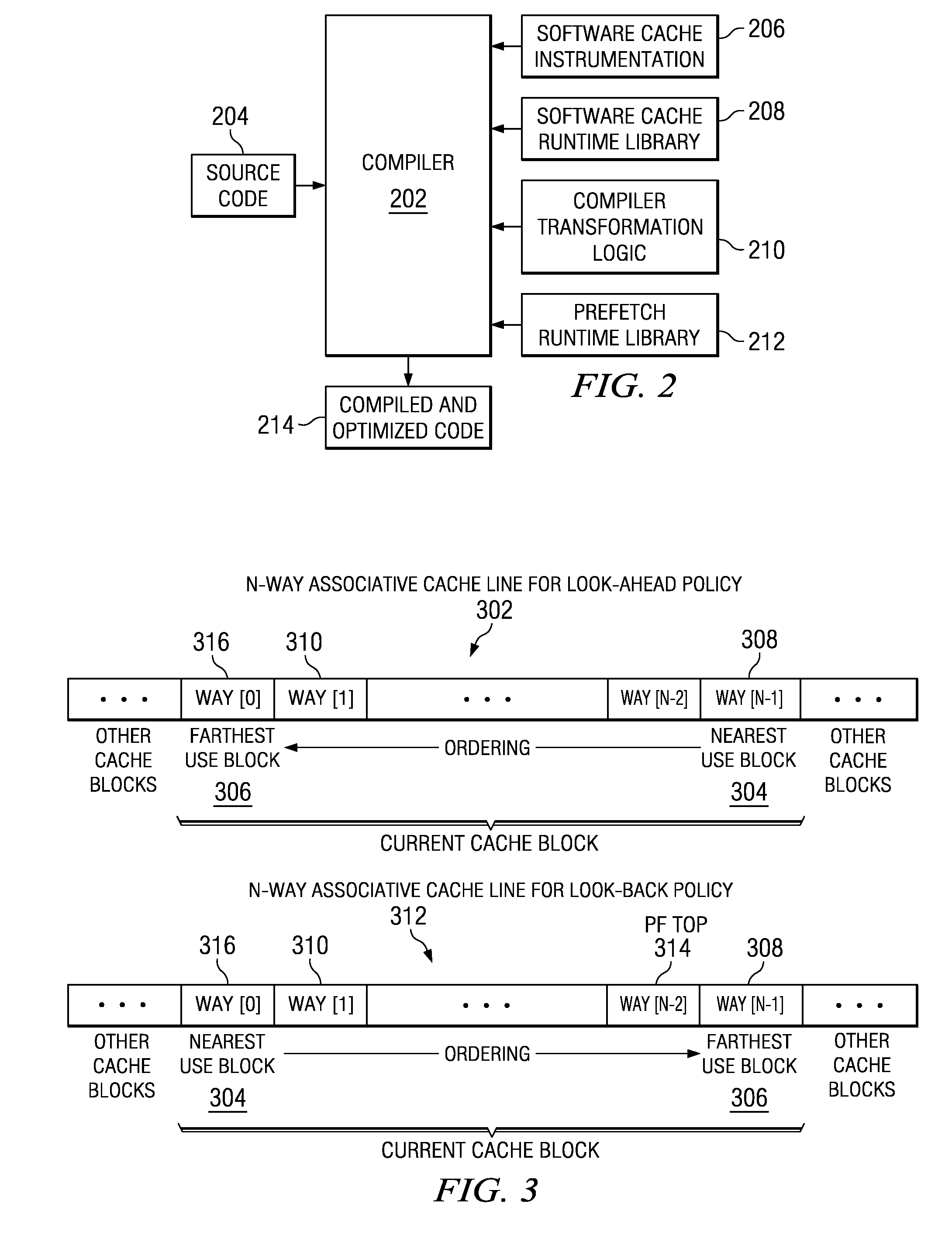

Fine-grained software-directed data prefetching using integrated high-level and low-level code analysis optimizations

Inactive | US20060048120A1 | Eliminate redundant prefetching; Avoids cache pollution; Software engineering; Digital computer details; Cost analysis; Parallel computing

A mechanism for minimizing effective memory latency without unnecessary cost through fine-grained software-directed data prefetching using integrated high-level and low-level code analysis and optimizations is provided. The mechanism identifies and classifies streams, identifies data that is most likely to incur a cache miss, exploits effective hardware prefetching to determine the proper number of streams to be prefetched, exploits effective data prefetching on different types of streams in order to eliminate redundant prefetching and avoid cache pollution, and uses high-level transformations with integrated lower level cost analysis in the instruction scheduler to schedule prefetch instructions effectively.

Owner:IBM CORP

Method and apparatus for avoiding cache pollution due to speculative memory load operations in a microprocessor

Active | US20050055533A1 | Eliminate cache pollution; Eliminate pollution; General purpose stored program computer; Concurrent instruction execution; Load instruction; Operand

A cache pollution avoidance unit includes a dynamic memory dependency table for storing a dependency state condition between a first load instruction and a sequentially later second load instruction, which may depend on the completion of execution of the first load instruction for operand data. The cache pollution avoidance unit logically ANDs the dependency state condition stored in the dynamic memory dependency table with a cache memory "miss" state condition returned by the cache pollution avoidance unit for operand data produced by the first load instruction and required by the second load instruction. If the result of the AND is true, the memory access of the second load instruction is squashed and its execution is rescheduled.

Owner:ORACLE INT CORP

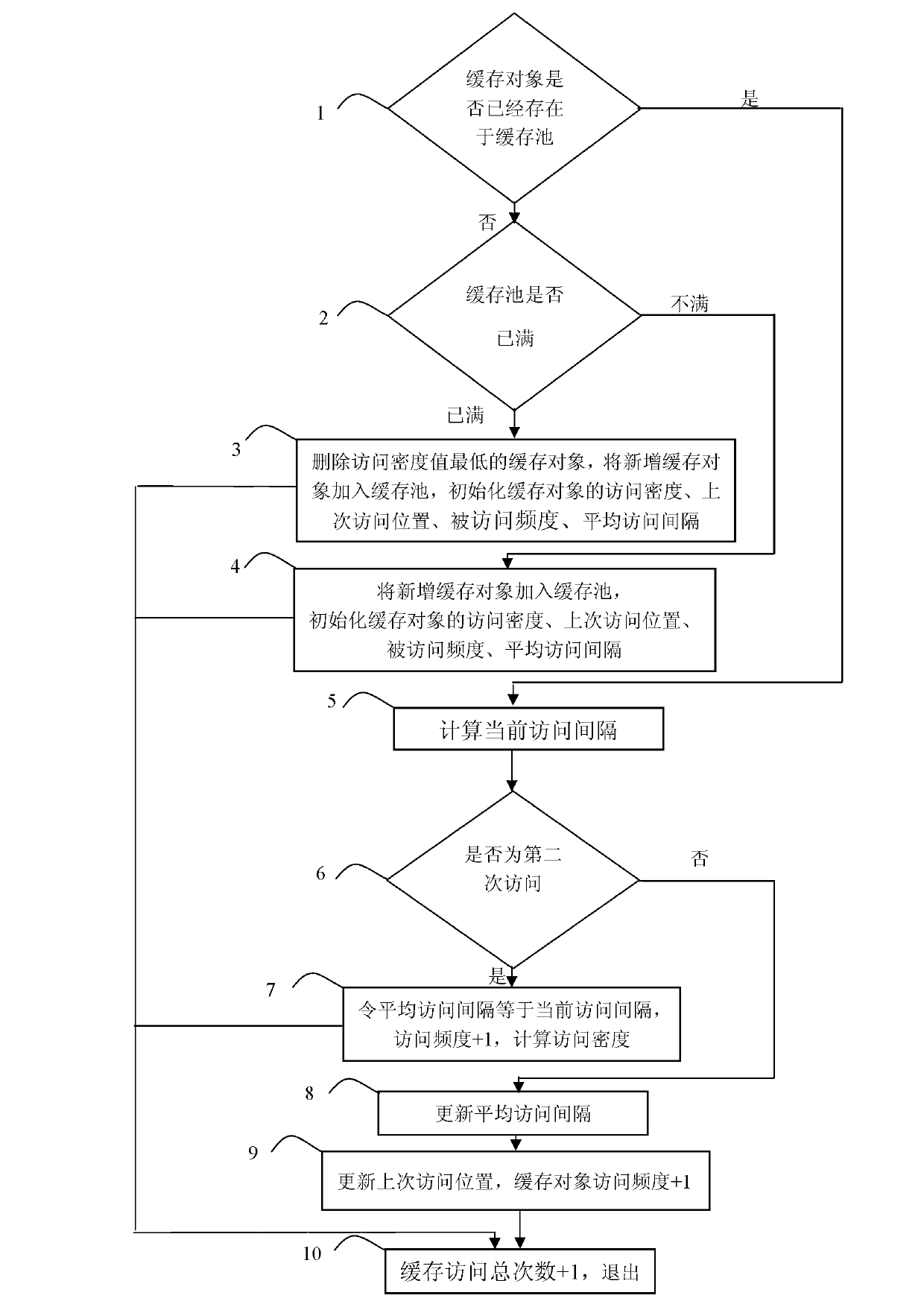

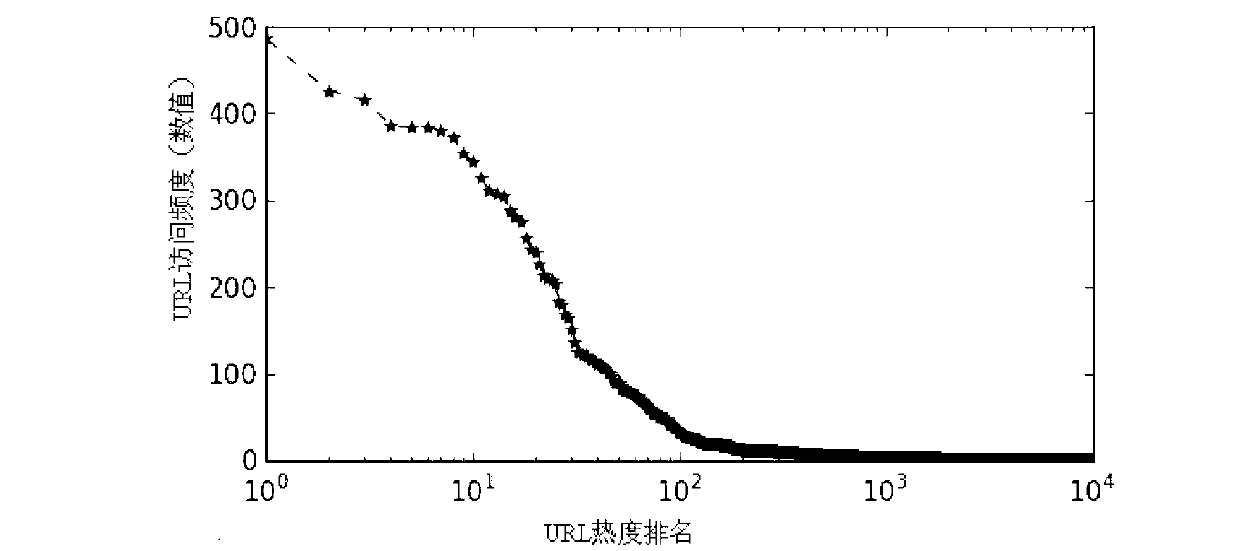

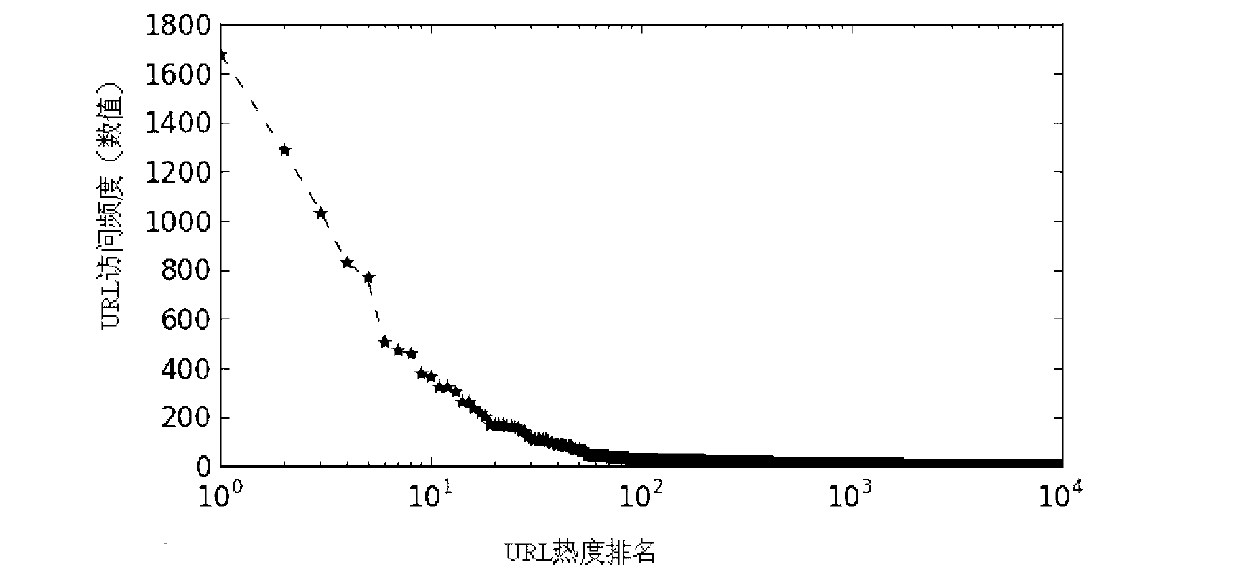

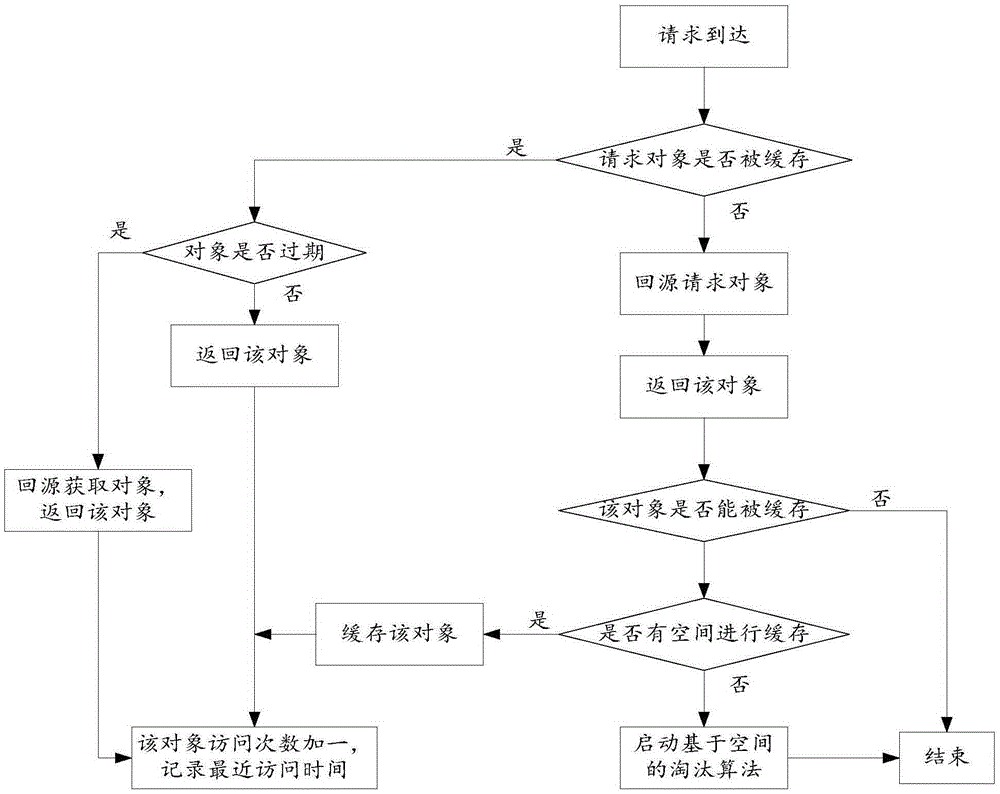

Web cache replacement method based on access density

Active | CN103106153A | Improve accuracy; Avoid hit loss; Memory addressing/allocation/relocation; Parallel computing; The Internet

The invention relates to a web cache replacement method based on access density. The method addresses three problems: the existing least recently used (LRU) policy relies only on locality, the least frequently used (LFU) policy suffers from cache pollution, and hit rates are low. The method proceeds in these steps:

- judge whether the cache object exists in the memory pool;
- judge whether the memory pool is full;
- perform initialization;
- delete the cache object with the lowest access density and add the new cache object to the memory pool;
- calculate the current access interval;
- judge whether this is a second access;
- calculate the access density according to the formulas and update the average access interval;
- update the relative values;
- exit.

The method is applied in the field of Internet storage.

Owner:HARBIN INST OF TECH
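
The access-density policy can be sketched as follows. The abstract does not give its formulas, so the density used here is an assumption chosen to illustrate the idea. It is the reciprocal of a running average access interval, reduced when an object has been idle longer than that interval, and zero for objects accessed only once. The class and field names are also assumptions.

```python
class AccessDensityCache:
    """Web-object cache that evicts the object with the lowest access density."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.clock = 0
        # key -> [last_access_time, average_interval, access_count]
        self.meta: dict = {}

    def density(self, key) -> float:
        last, avg_interval, count = self.meta[key]
        if count < 2:
            return 0.0          # accessed once: no interval yet, evicted first
        # Assumed formula: the reciprocal of the average interval, penalised when the
        # object has been idle for longer than its usual gap.
        return 1.0 / max(avg_interval, self.clock - last, 1)

    def access(self, key) -> bool:
        """Record an access; return True on a hit, False on a miss."""
        self.clock += 1
        if key in self.meta:
            last, avg_interval, count = self.meta[key]
            interval = self.clock - last
            # running mean over the `count` intervals seen so far
            avg_interval = (avg_interval * (count - 1) + interval) / count
            self.meta[key] = [self.clock, avg_interval, count + 1]
            return True
        if len(self.meta) >= self.capacity:
            victim = min(self.meta, key=self.density)
            del self.meta[victim]
        self.meta[key] = [self.clock, 0.0, 1]
        return False
```

Objects seen only once have zero density and are evicted first. This keeps one-off requests from polluting the cache. The idle-time penalty also lets formerly popular objects age out, which is the weakness the abstract attributes to LFU.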

Dram/nvm hierarchical heterogeneous memory access method and system with software-hardware cooperative management

Active | US20170277640A1 | Eliminates hardware; Reducing memory access delay; Memory architecture accessing/allocation; Memory systems; Term memory; Page table

The present invention provides a DRAM/NVM hierarchical heterogeneous memory system with software-hardware cooperative management. In the system, NVM is used as large-capacity main memory, and DRAM is used as a cache for the NVM. Some reserved bits in the TLB and last-level page-table data structures are used to eliminate the hardware cost of conventional hardware-managed hierarchical memory architectures. Cache management in the heterogeneous memory system is moved to the software level. The invention also reduces memory access latency on last-level cache misses. Many applications in big-data environments have relatively poor data locality, so the conventional demand-based data fetching policy for a DRAM cache can aggravate cache pollution. The invention therefore adopts a utility-based data fetching mechanism in the DRAM/NVM hierarchical memory system. The mechanism determines whether data in the NVM should be cached in the DRAM according to current DRAM utilization and application memory access patterns. It improves the efficiency of the DRAM cache and of bandwidth usage between the NVM main memory and the DRAM cache.

Owner:HUAZHONG UNIV OF SCI & TECH
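
A heavily simplified version of the utility-based fetching decision is sketched below. The patent combines DRAM utilization with application access patterns, but it does not give the values used here. The access-count threshold and the utilization bands (50% and 90%) are hypothetical, and the per-page access count is only a stand-in for "access pattern".

```python
def fetch_threshold(dram_used: int, dram_capacity: int, base: int = 2) -> int:
    """Minimum NVM page access count required before the page is copied into DRAM."""
    utilization = dram_used / dram_capacity
    if utilization < 0.5:
        return base          # plenty of room: cache pages that show any reuse
    if utilization < 0.9:
        return base * 2      # filling up: require stronger evidence of reuse
    return base * 4          # under pressure: only clearly hot pages

def should_cache_in_dram(access_count: int, dram_used: int, dram_capacity: int) -> bool:
    return access_count >= fetch_threshold(dram_used, dram_capacity)
```

Unlike demand fetching, which copies every touched page into DRAM, this rule leaves low-reuse pages in NVM. The effect is strongest when DRAM is nearly full and each eviction is most costly.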

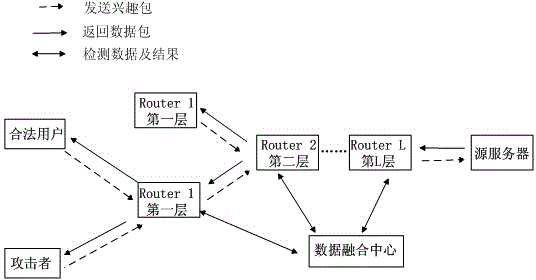

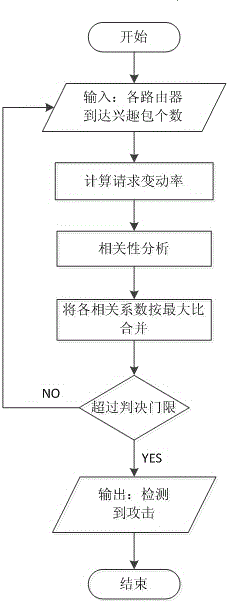

Coordinated detection method for NDN low-rate cache pollution attacks

The invention discloses a coordinated detection method for low-rate cache pollution attacks in named data networking (NDN). It detects attack behaviour by calculating the request change rates of nodes in multiple layers along the transmission path. It then fuses these rates across layers using their correlation with the request change rates of first-layer nodes. The method maintains a high correct detection rate under low-rate cache pollution attacks. By cooperating across more than three layers of routers, it also reduces the detection delay for dispersed attacks. It can effectively detect both low-rate concentrated attacks and dispersed attacks, improving detection performance at the cost of space complexity. The method can be applied to detecting cache pollution attacks in named data networking.

Owner:JIANGSU UNIV

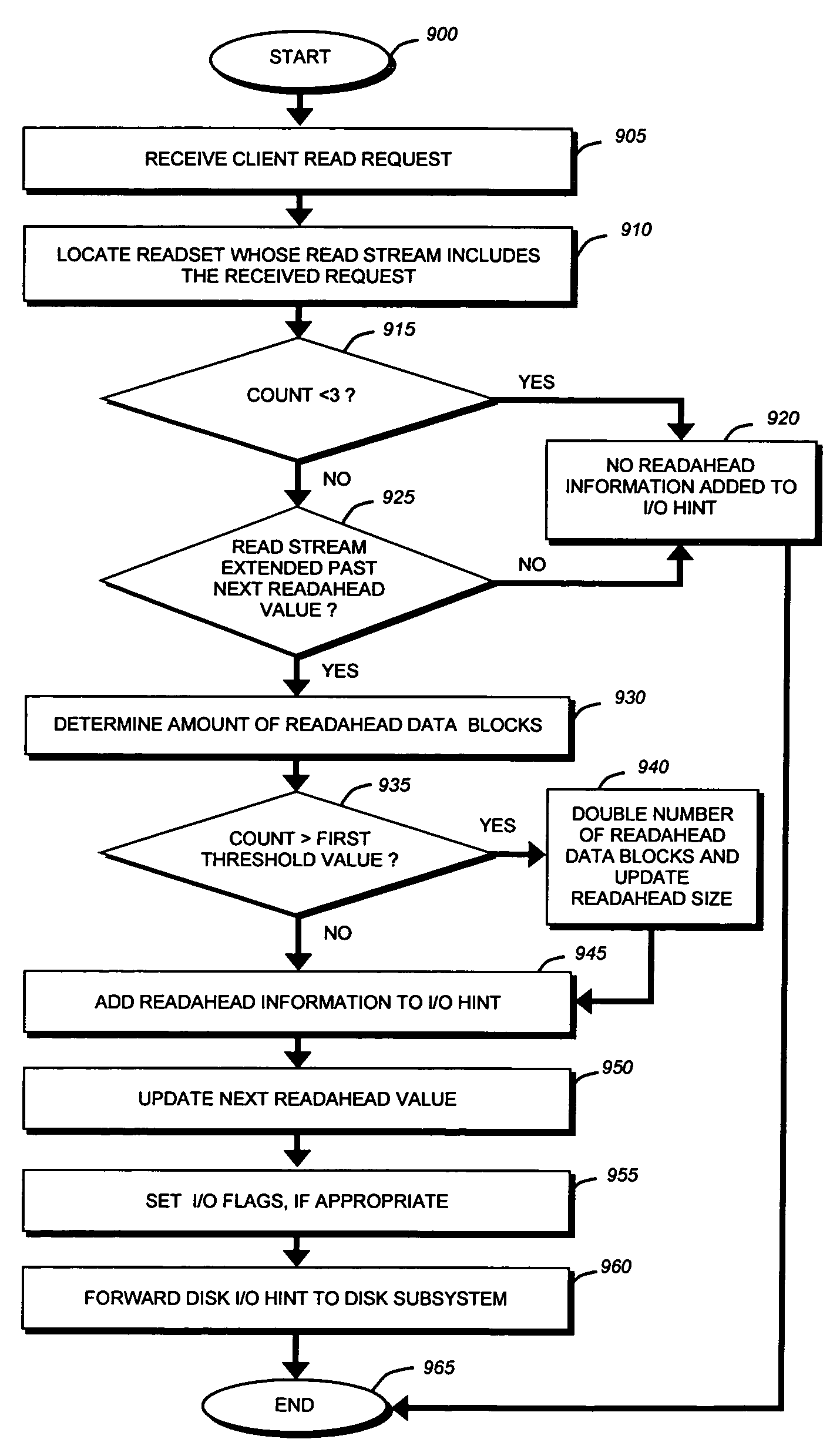

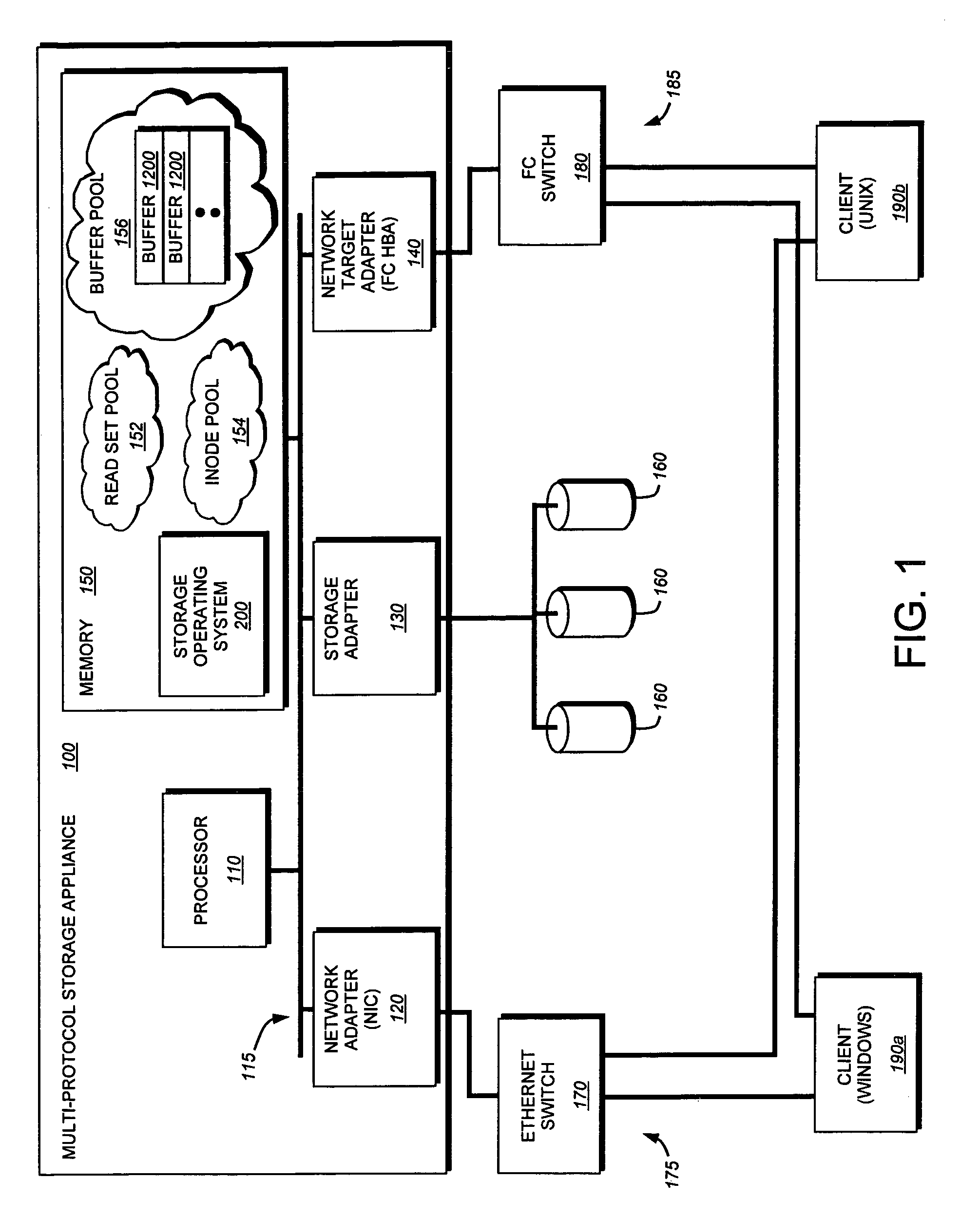

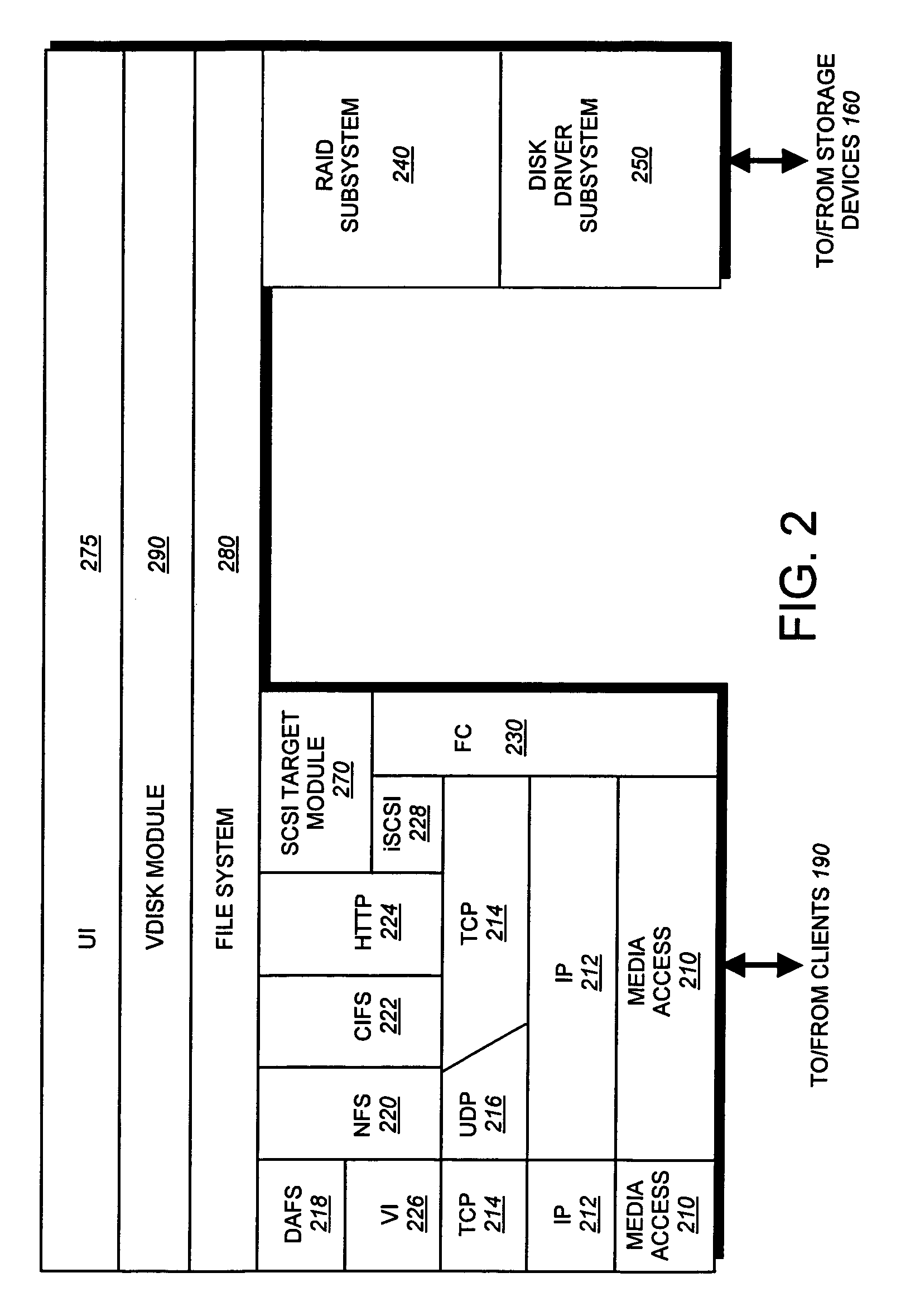

Adaptive file readahead based on multiple factors

Active | US7631148B2 | Pollution minimization; Convenient amount; Digital data information retrieval; Input/output to record carriers; File system; Client-side

A storage system is provided that implements a file system configured to optimize the amount of readahead data retrieved for each read stream managed by the file system. The file system relies on various factors to adaptively select an optimized readahead size for each read stream. Such factors may include the number of read requests processed in the read stream, an amount of client-requested data requested in the read stream, a read-access style associated with the read stream, and so forth. The file system is also configured to minimize cache pollution by adaptively selecting when readahead operations are performed for each read stream and determining how long each read stream's retrieved data is retained in memory.

Owner:NETWORK APPLIANCE INC
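
One simple way to adapt readahead to the factors listed (number of requests in the stream, request size, access style) is sketched below. The doubling rule, the `MAX_READAHEAD` cap, and the field names are assumptions for illustration. This is not the NetApp file system's algorithm.

```python
from dataclasses import dataclass

MAX_READAHEAD = 64  # hypothetical cap, in blocks

@dataclass
class ReadStream:
    next_expected: int = -1    # offset a sequential read would start at; -1 = none yet
    sequential_reads: int = 0  # consecutive sequential requests seen in this stream

    def on_read(self, offset: int, length: int) -> int:
        """Record a read request and return how many blocks to read ahead."""
        if offset == self.next_expected:
            self.sequential_reads += 1
        else:
            self.sequential_reads = 0   # random-looking access: stop reading ahead
        self.next_expected = offset + length
        if self.sequential_reads == 0:
            return 0
        # Grow with request count and request size, capped to bound cache pollution.
        return min(length * 2 ** (self.sequential_reads - 1), MAX_READAHEAD)
```

A stream of 4-block sequential reads ramps its readahead through 4, 8, 16, and 32 blocks up to the cap. A random request resets it to zero, so random readers do not pull unrequested blocks into memory.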

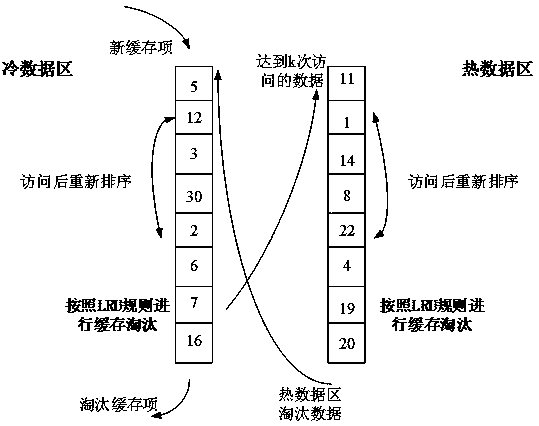

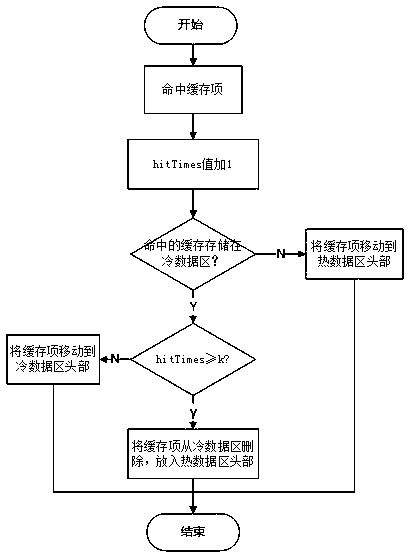

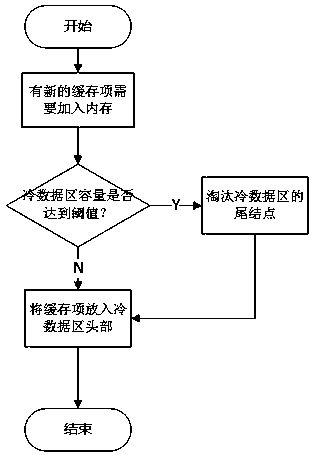

Dynamically adjusted cache data management and elimination method

Pending | CN111159066A | Avoid pollution; Avoid replacement; Memory architecture accessing/allocation; Memory systems; Parallel computing; Engineering

The invention discloses a dynamically adjusted cache data management and eviction method. The position of each cache item in memory is adjusted dynamically according to its access time and hit frequency. The memory is divided into a hot data area and a cold data area. Cache items with high hit frequency and recent access are kept at the front of the hot data area. Cache items with low hit frequency and old access times are kept at the tail of the cold data area. When the cache reaches a capacity threshold and data must be evicted, the cache items at the tail of the cold data area are deleted directly. Dynamically adjusting the hot and cold areas enables accurate eviction and increases the proportion of hotspot data in the cache. This alleviates cache pollution and raises the cache hit rate.

Owner:HANGZHOU DIANZI UNIV
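
The hot/cold arrangement resembles a segmented LRU cache. The sketch below rests on assumptions that go beyond the abstract. New items enter the cold area, and a second access promotes an item to the hot area. Items pushed out of the hot area are demoted to the cold area rather than dropped, and eviction happens only at the cold tail. The capacities and names are hypothetical.

```python
from collections import OrderedDict

class HotColdCache:
    """Two-area cache: eviction only ever happens at the tail of the cold area."""

    def __init__(self, hot_capacity: int, cold_capacity: int) -> None:
        self.hot: OrderedDict = OrderedDict()    # most recently used at the end
        self.cold: OrderedDict = OrderedDict()
        self.hot_capacity = hot_capacity
        self.cold_capacity = cold_capacity

    def get(self, key):
        if key in self.hot:
            self.hot.move_to_end(key)            # refresh position in the hot area
            return self.hot[key]
        if key in self.cold:
            value = self.cold.pop(key)
            self._insert_hot(key, value)         # repeated access: promote to hot
            return value
        return None

    def put(self, key, value) -> None:
        if key in self.hot:
            self.hot[key] = value
            self.hot.move_to_end(key)
        elif key in self.cold:
            del self.cold[key]
            self._insert_hot(key, value)
        else:
            self._insert_cold(key, value)        # new items start in the cold area

    def _insert_hot(self, key, value) -> None:
        self.hot[key] = value
        if len(self.hot) > self.hot_capacity:
            old_key, old_value = self.hot.popitem(last=False)
            self._insert_cold(old_key, old_value)  # demote rather than evict

    def _insert_cold(self, key, value) -> None:
        self.cold[key] = value
        if len(self.cold) > self.cold_capacity:
            self.cold.popitem(last=False)        # evict the oldest cold item (cold tail)
```

In this model, a one-pass scan of new keys only cycles through the cold area. Any item already promoted to the hot area survives the scan.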

Method and structure for monitoring pollution and prefetches due to speculative accesses

Active | US20050251629A1 | Avoid problems; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Cache pollution

Owner:ORACLE INT CORP

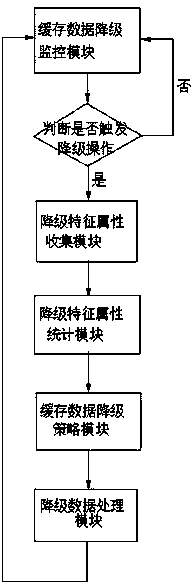

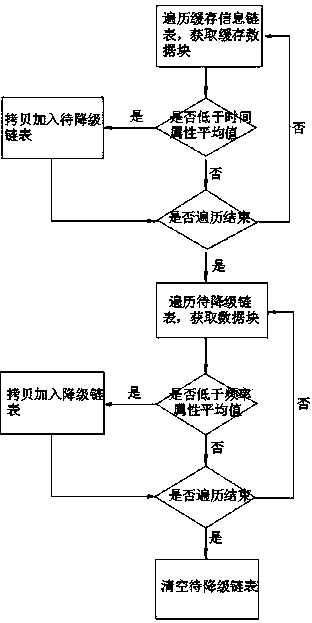

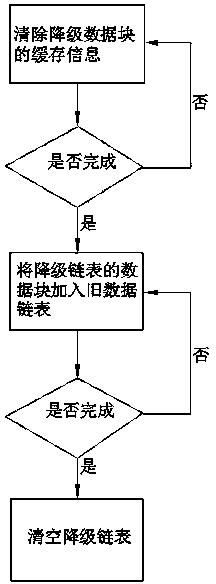

Method for realizing intelligent degradation of data cached in SSD (Solid State Disk) of storage system

Inactive | CN103744623A | Guaranteed proactive downgrade; Efficient use of; Input/output to record carriers; Parallel computing; Cache pollution

The invention discloses a method for realizing intelligent degradation of data cached in an SSD (Solid State Disk) of a storage system. The method applies an intelligent cached-data degradation strategy, using a dedicated degradation thread to identify and count the degradation-related attributes of cached data. When a degradation condition is met, the thread actively degrades cached data according to an intelligent degradation algorithm. It moves the data blocks to be degraded from the SSD cache to an HDD (Hard Disk Drive). This realizes active degradation of cached data, reduces cache pollution, and makes effective use of SSD cache space. With this method, cache pollution is greatly reduced and the data remaining in the cache are the hottest data. SSD cache space is used efficiently, storage-system throughput increases, response time shortens, and overall storage-system performance improves.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

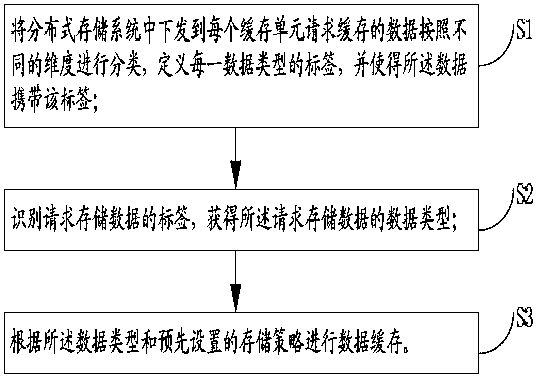

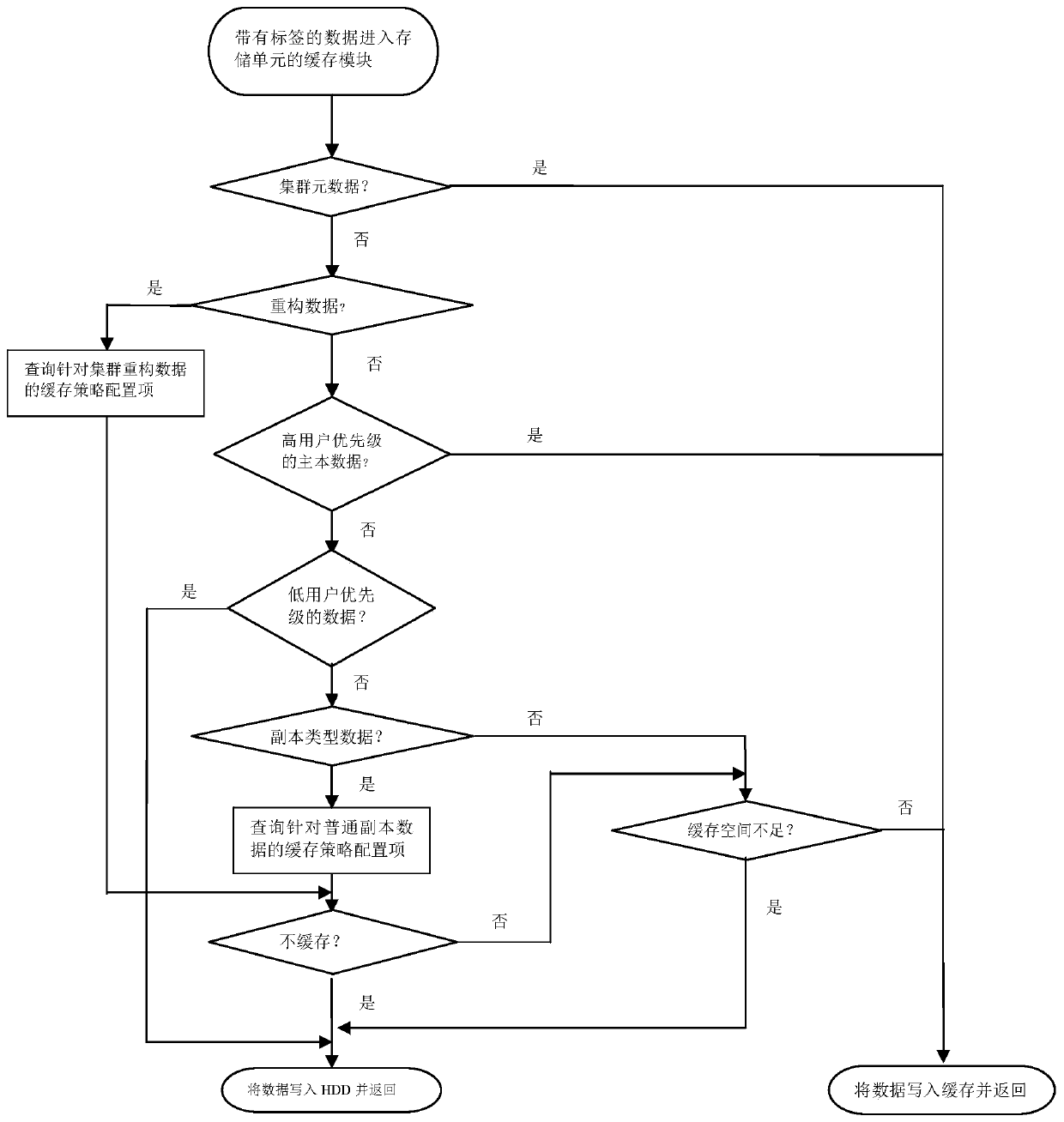

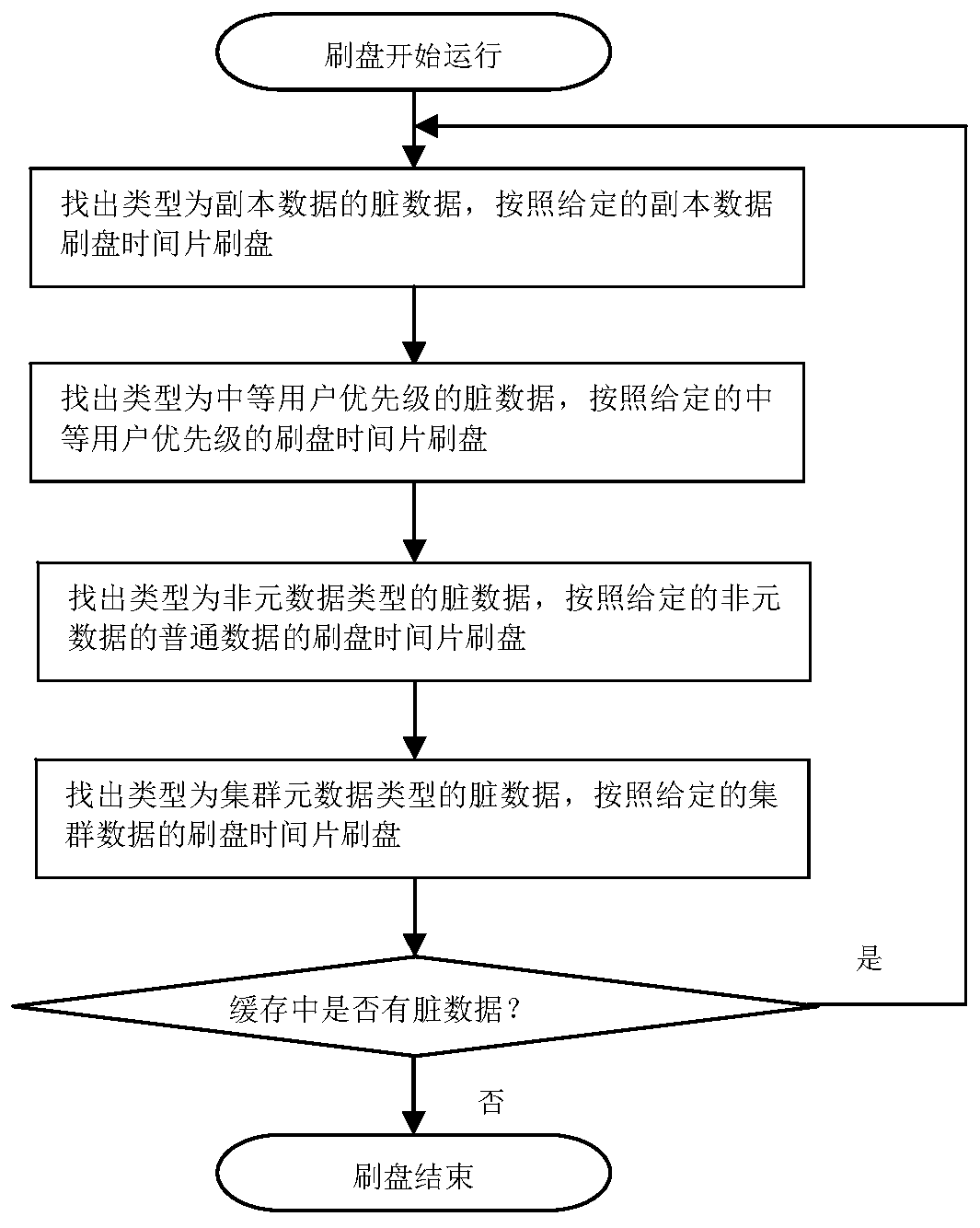

A data caching method of a distributed storage system

Active | CN109947363A | Improve service quality; Easy to use; Input/output to record carriers; Quality of service; Data type

The invention provides a data caching method for a distributed storage system. The method comprises three steps. First, the data that is sent to each cache unit of the distributed storage system and needs to be cached is classified along different dimensions; a label is defined for each data type and attached to the data. Second, the label of data requested to be stored is identified to obtain its data type. Third, the data is cached according to its data type and a preset storage strategy. The method can greatly improve disk-flushing efficiency, optimize the use of cache resources, and avoid cache pollution. Through caching, it improves the quality of service of the whole distributed storage system.

Owner:SHENZHEN POWER SUPPLY BUREAU +1

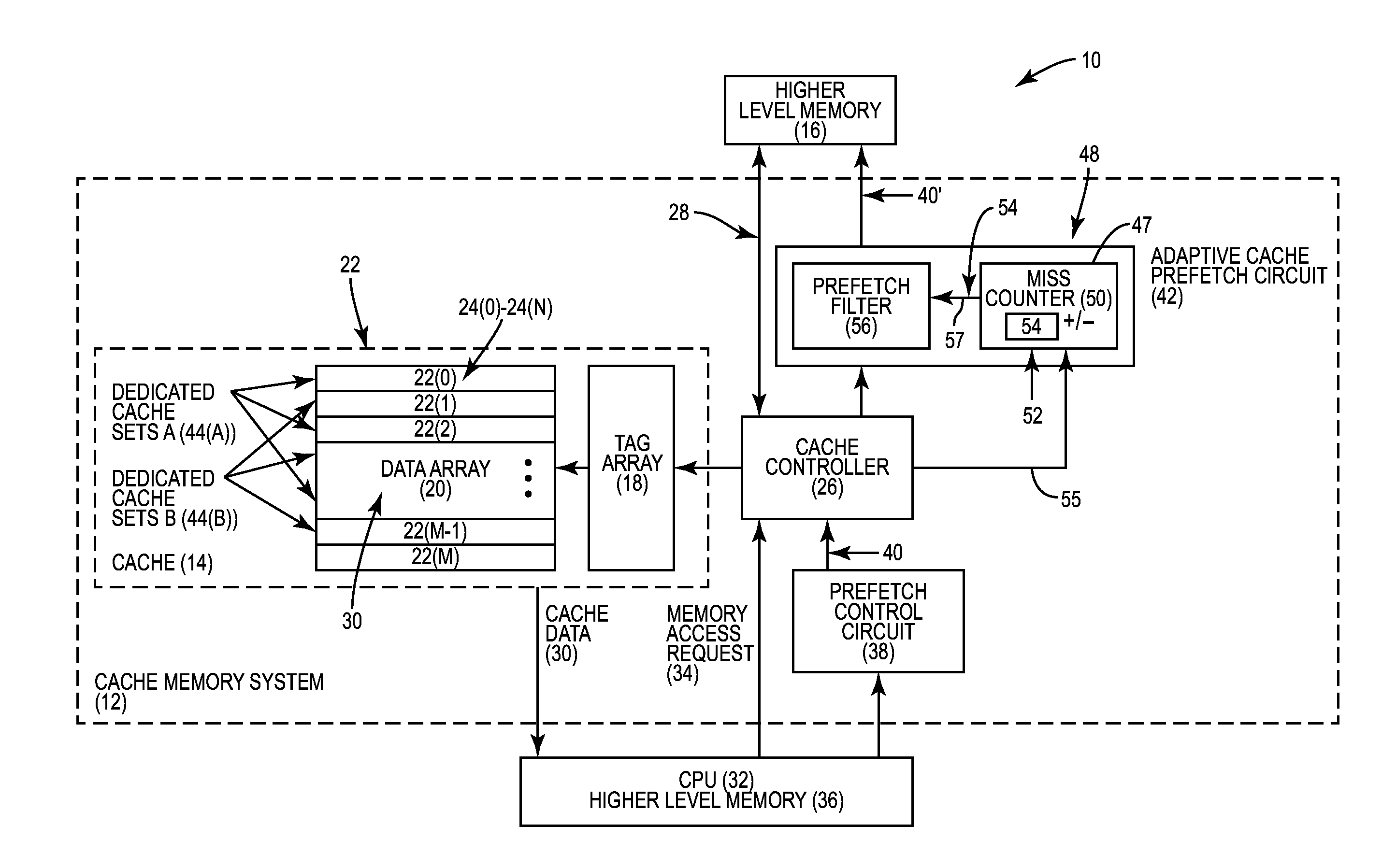

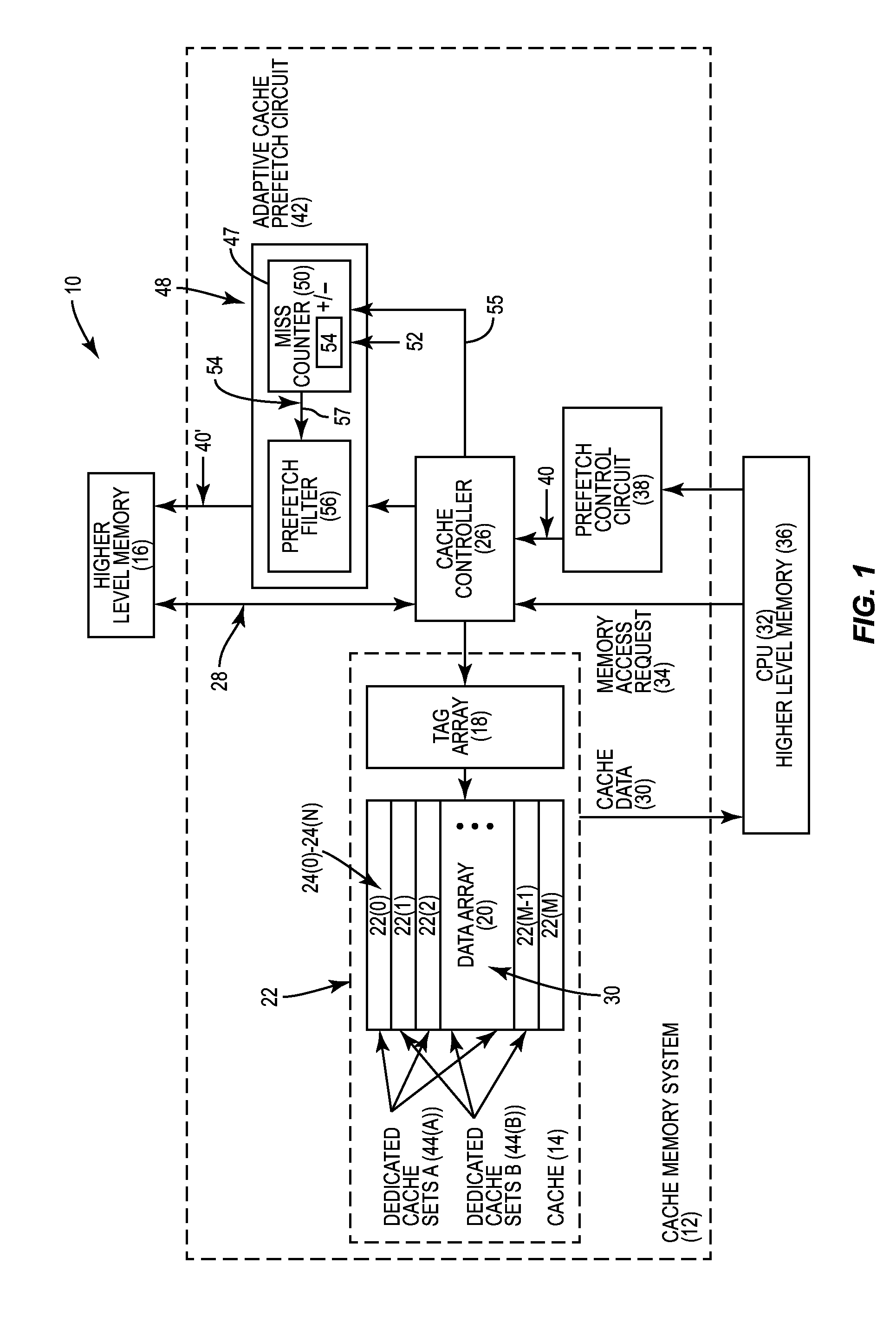

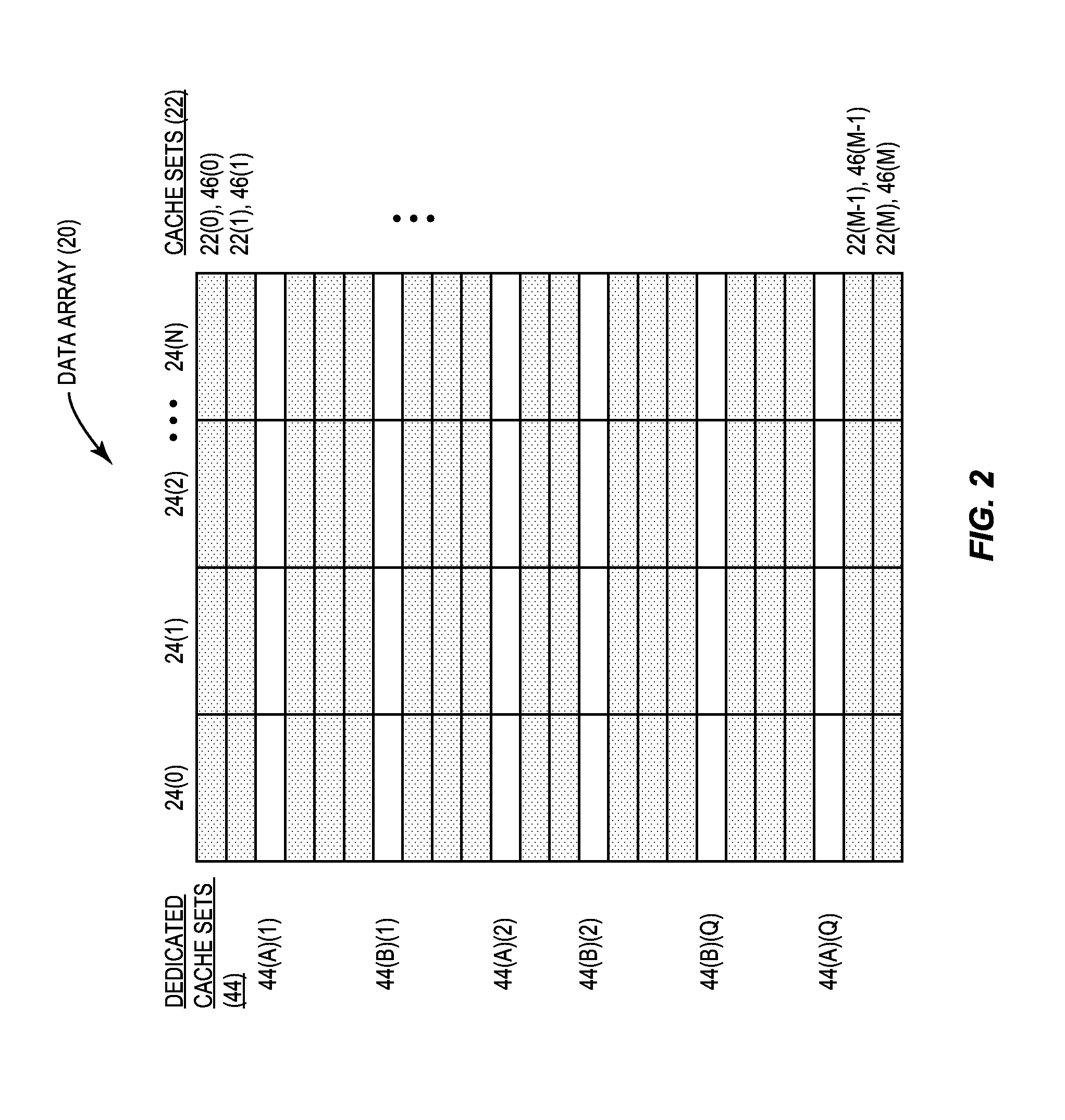

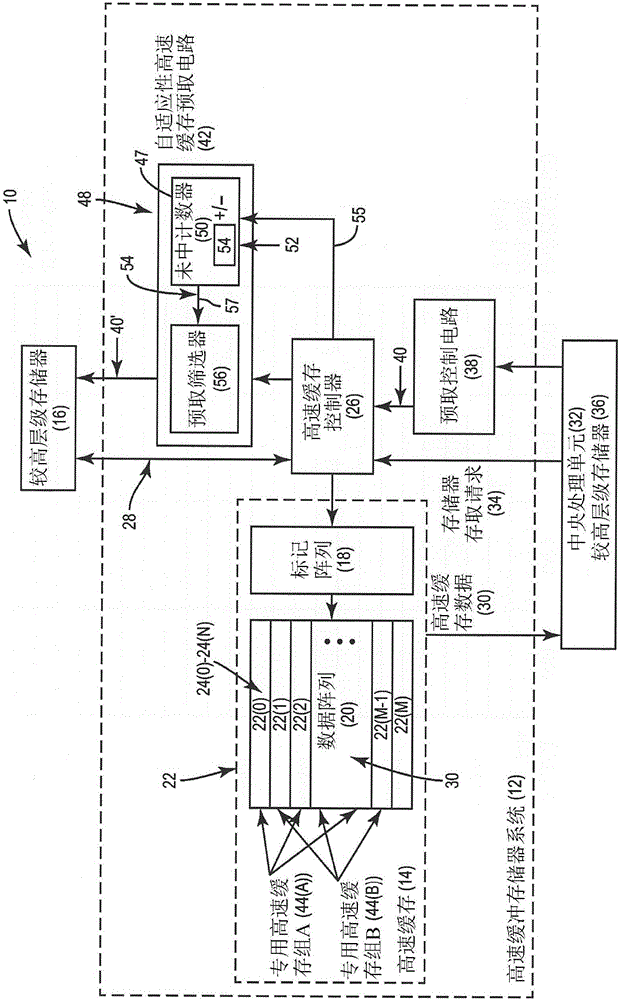

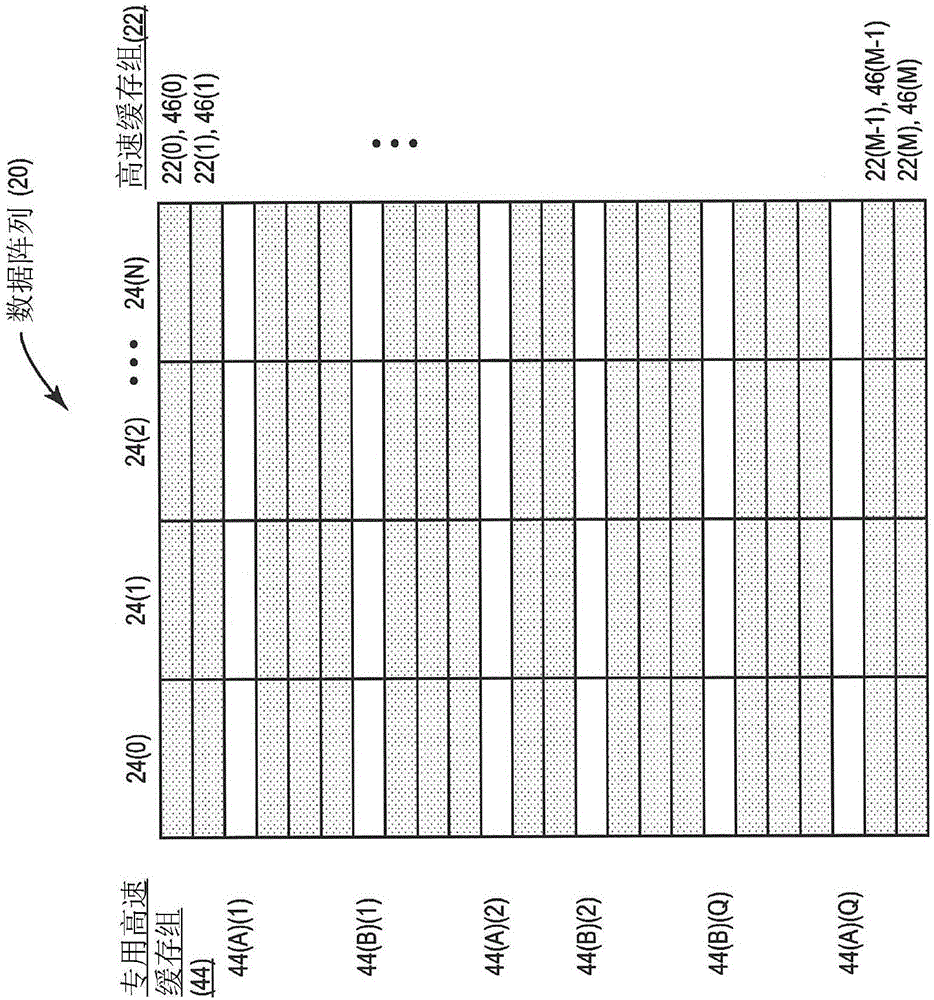

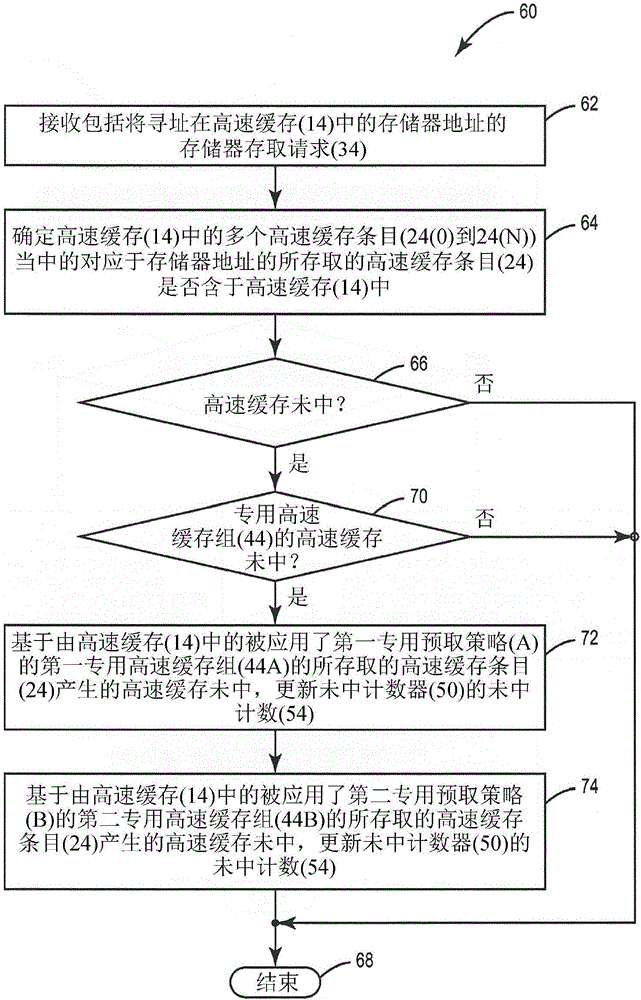

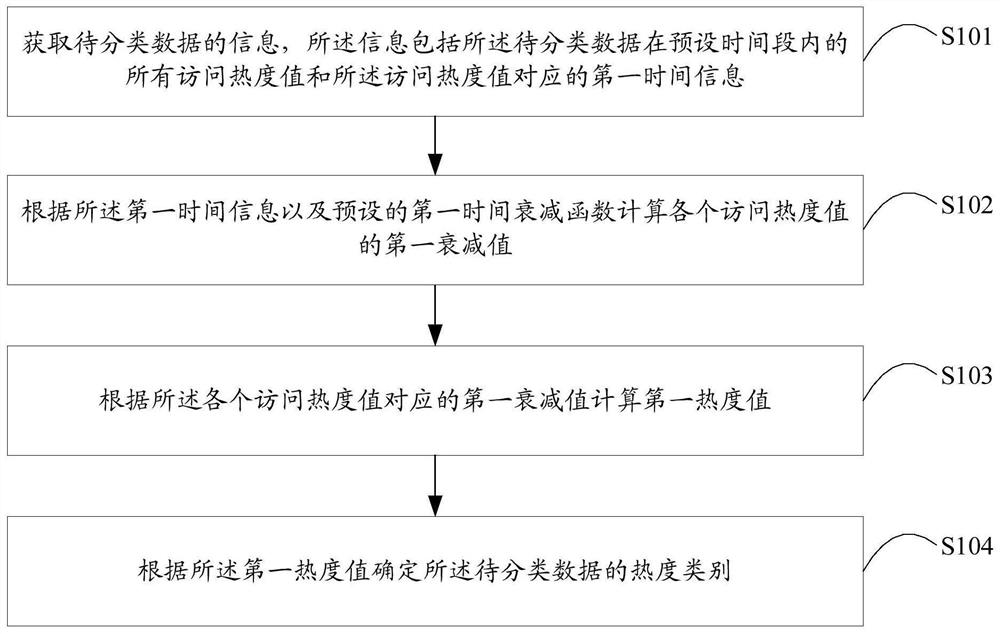

Adaptive cache prefetching based on competing dedicated prefetch policies in dedicated cache sets to reduce cache pollution

Inactive | US20150286571A1 | Reducing cache pollution; Improve performance; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Self adaptive

Adaptive cache prefetching based on competing dedicated prefetch policies in dedicated cache sets to reduce cache pollution is disclosed. In one aspect, an adaptive cache prefetch circuit is provided for prefetching data into a cache. The adaptive cache prefetch circuit is configured to determine which prefetch policy to use as a replacement policy based on competing dedicated prefetch policies applied to dedicated cache sets in the cache. Each dedicated cache set has an associated dedicated prefetch policy used as a replacement policy for the given dedicated cache set. Cache misses for accesses to each of the dedicated cache sets are tracked by the adaptive cache prefetch circuit. The adaptive cache prefetch circuit can be configured to apply a prefetch policy to the other follower (i.e., non-dedicated) cache sets in the cache using the dedicated prefetch policy that incurred fewer cache misses to its respective dedicated cache sets to reduce cache pollution.

Owner:QUALCOMM INC
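
Competition between dedicated cache sets is commonly implemented as "set dueling" with a saturating policy-selection counter. The Qualcomm abstract does not spell out that mechanism; the sketch below assumes it. The choice of dedicated sets (one in 32 per policy), the 10-bit counter width, and the policy labels "A" and "B" are hypothetical.

```python
class SetDuelingSelector:
    """Chooses between two policies, "A" and "B", by set dueling."""

    def __init__(self, num_sets: int, counter_bits: int = 10) -> None:
        # Hypothetical choice of dedicated sets: one of every 32 sets per policy.
        self.sets_a = {s for s in range(num_sets) if s % 32 == 0}
        self.sets_b = {s for s in range(num_sets) if s % 32 == 1}
        self.max_count = (1 << counter_bits) - 1
        self.midpoint = (self.max_count + 1) // 2
        self.psel = self.midpoint             # saturating policy-selection counter

    def record_miss(self, set_index: int) -> None:
        if set_index in self.sets_a:
            self.psel = min(self.psel + 1, self.max_count)   # A missed: favour B
        elif set_index in self.sets_b:
            self.psel = max(self.psel - 1, 0)                # B missed: favour A
        # misses in follower sets do not train the counter

    def policy_for(self, set_index: int) -> str:
        if set_index in self.sets_a:
            return "A"
        if set_index in self.sets_b:
            return "B"
        # followers copy whichever dedicated policy has missed less so far
        return "B" if self.psel > self.midpoint else "A"
```

Dedicated sets always keep their own policy, so the comparison never stops. Follower sets switch as soon as the counter crosses the midpoint.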

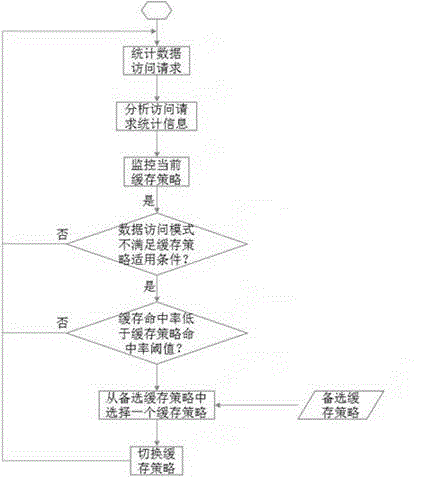

Storage system caching strategy self-adaptive method

Active | CN104572502A | Improve performance; Solve the inability to adapt to complex and changing business needs; Memory addressing/allocation/relocation; Statistical analysis; Data access

The invention relates to a self-adaptive caching strategy method for a storage system. The method performs statistical analysis on the storage system's data access requests to obtain the data access mode. It then automatically selects a suitable caching strategy for that mode. The method solves two problems: a single caching strategy cannot adapt to complex and changing business requirements, and switching strategies has to be done manually. As the actual data access characteristics change, the storage system can automatically select the caching strategy best suited to the current data access mode. This improves the cache hit ratio, reduces cache pollution, and lowers caching overhead, thereby improving storage-system performance.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
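
The statistical analysis step could look like the following sketch. It measures how sequential and how repetitive a window of requests is, then maps the result to a strategy. The abstract does not say which statistics or strategies are used. The two metrics, their thresholds, and the strategy names ("sequential", "lfu", "lru") are all assumptions.

```python
from typing import List, Tuple

def select_cache_strategy(
    requests: List[Tuple[int, int]],
    seq_threshold: float = 0.7,
    reuse_threshold: float = 0.3,
) -> str:
    """Pick a caching strategy from a window of (offset, length) requests."""
    if len(requests) < 2:
        return "lru"                      # too little history: default strategy
    # fraction of requests that start where the previous one ended
    sequential = sum(
        1 for (o1, l1), (o2, _) in zip(requests, requests[1:]) if o2 == o1 + l1
    )
    seq_ratio = sequential / (len(requests) - 1)
    # fraction of requests that revisit an offset already seen in the window
    offsets = [o for o, _ in requests]
    reuse_ratio = 1 - len(set(offsets)) / len(offsets)
    if seq_ratio >= seq_threshold:
        return "sequential"               # e.g. read ahead and evict behind
    if reuse_ratio >= reuse_threshold:
        return "lfu"                      # hot blocks recur: keep frequent ones
    return "lru"
```

Re-running the selector on each new window lets the strategy follow changes in the workload without manual reconfiguration.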

Fine-Grained Software-Directed Data Prefetching Using Integrated High-Level and Low-Level Code Analysis Optimizations

Inactive | US20100095271A1 | Eliminate redundant prefetching; Avoid pollution; Software engineering; Digital computer details; Cost analysis; Scheduling instructions

A mechanism for minimizing effective memory latency without unnecessary cost through fine-grained software-directed data prefetching using integrated high-level and low-level code analysis and optimizations is provided. The mechanism identifies and classifies streams, identifies data that is most likely to incur a cache miss, exploits effective hardware prefetching to determine the proper number of streams to be prefetched, exploits effective data prefetching on different types of streams in order to eliminate redundant prefetching and avoid cache pollution, and uses high-level transformations with integrated lower level cost analysis in the instruction scheduler to schedule prefetch instructions effectively.

Owner:INT BUSINESS MASCH CORP

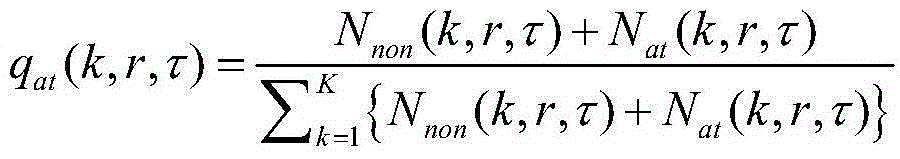

Multi-parameter cache pollution attack detection method in content centric networking

The invention relates to content centric networking, in particular to a multi-parameter method for detecting cache pollution attacks in content centric networking. The method comprises four steps:

- perform attack detection periodically, calculating a sampled value of the attack impact degree in the current cycle;
- define the change in this sampled value relative to the previous cycle as the variation of the attack impact degree;
- set a threshold according to the standard deviation of this variation over a number of cycles;
- determine that an attack has occurred if the variation exceeds the threshold.

Compared with traditional cache pollution attack detection methods, this method copes with more complex network environments. It detects cache pollution attacks promptly and accurately, helping to improve the overall security of content centric networking.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
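
The thresholding step can be sketched as follows. The abstract does not define how the multi-parameter attack impact degree is sampled, so the detector simply takes one number per cycle. The window length, the multiplier `k`, and the choice to exclude flagged cycles from the baseline are assumptions.

```python
import statistics
from collections import deque

class ImpactChangeDetector:
    """Flags a cycle whose rise in attack impact exceeds k standard deviations."""

    def __init__(self, window: int = 10, k: float = 3.0) -> None:
        self.variations: deque = deque(maxlen=window)  # recent normal variations
        self.k = k
        self.previous = None

    def observe(self, impact: float) -> bool:
        """Feed one cycle's sampled impact degree; return True if an attack is flagged."""
        if self.previous is None:
            self.previous = impact
            return False
        variation = impact - self.previous
        self.previous = impact
        if len(self.variations) >= 2:
            threshold = self.k * statistics.stdev(list(self.variations))
            if variation > threshold:
                return True          # keep attack cycles out of the baseline
        self.variations.append(variation)
        return False
```

Small oscillations in the impact degree keep the threshold near a few units. A sudden jump, such as from 11 to 30, exceeds it and is flagged.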

Fine-grained software-directed data prefetching using integrated high-level and low-level code analysis optimizations

Inactive | US7669194B2 | Eliminate redundant prefetching; Avoid pollution; Software engineering; Digital computer details; Cost analysis; Scheduling instructions

A mechanism for minimizing effective memory latency without unnecessary cost through fine-grained software-directed data prefetching using integrated high-level and low-level code analysis and optimizations is provided. The mechanism identifies and classifies streams, identifies data that is most likely to incur a cache miss, exploits effective hardware prefetching to determine the proper number of streams to be prefetched, exploits effective data prefetching on different types of streams in order to eliminate redundant prefetching and avoid cache pollution, and uses high-level transformations with integrated lower level cost analysis in the instruction scheduler to schedule prefetch instructions effectively.

Owner:INT BUSINESS MASCH CORP

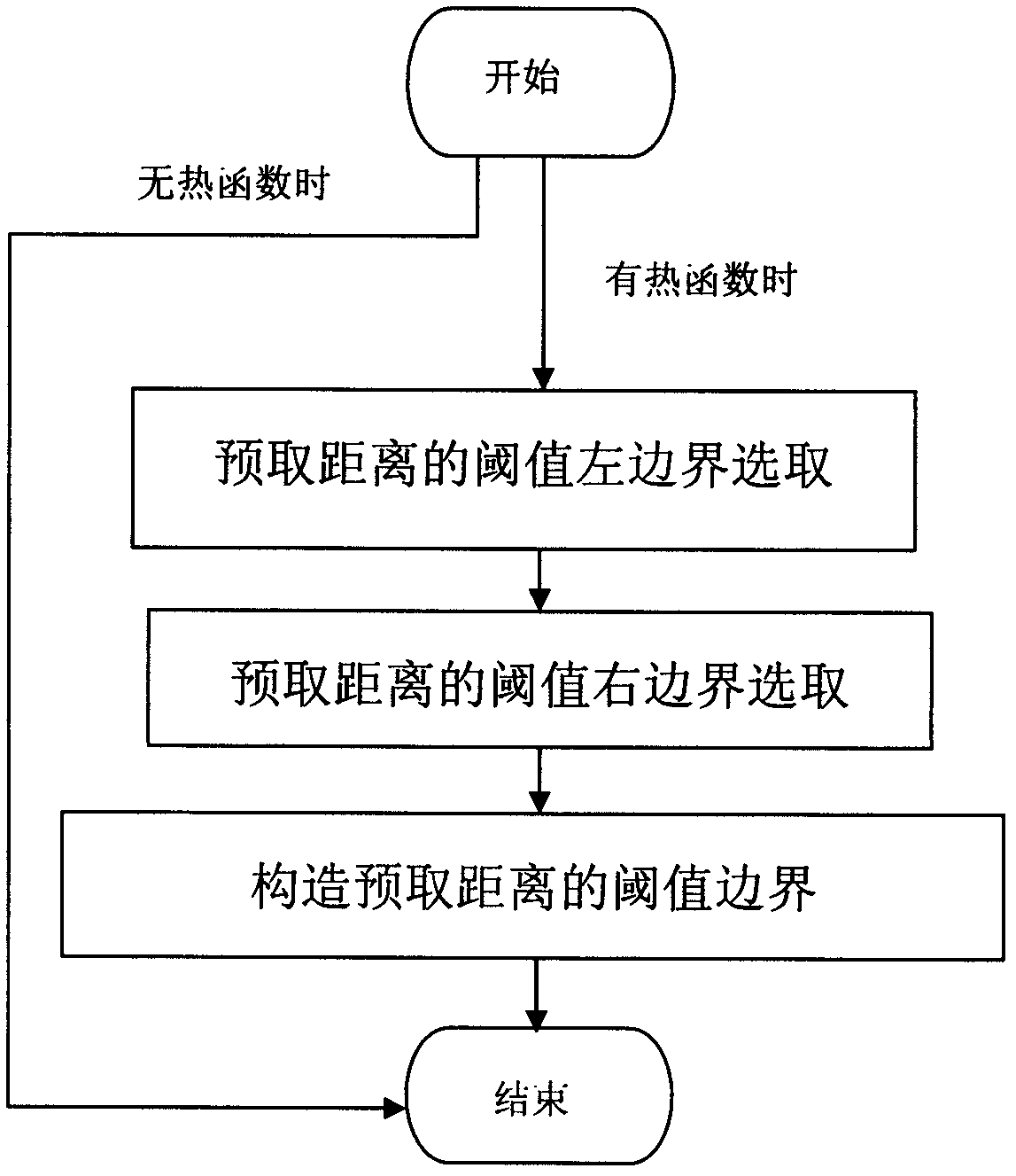

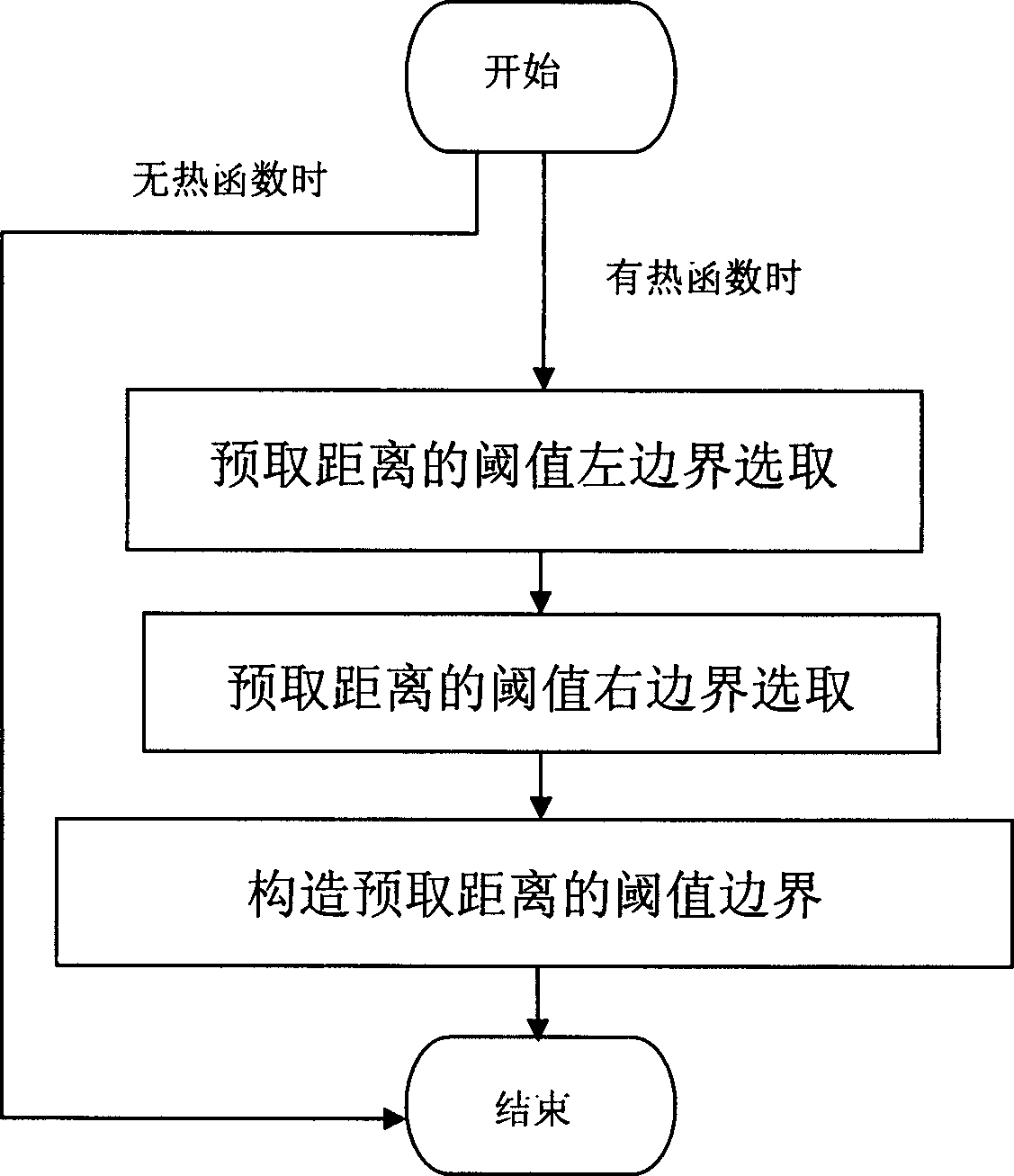

Threshold boundary selecting method for supporting helper thread pre-fetching distance parameters

Inactive | CN102662638A | Effective determination of threshold boundaries; Narrow down the range of values; Memory addressing/allocation/relocation; Concurrent instruction execution; Parallel computing; Data memory

The invention relates to a threshold boundary selection method for helper-thread prefetching distance parameters. It belongs to the technical field of memory access performance optimization for multi-core computers and can be used to improve the execution performance of irregular data-intensive applications. The method targets multi-core architectures with a shared cache and the prefetching distance parameters of helper-thread prefetching based on hybrid prefetching. It introduces left threshold boundary selection, right threshold boundary selection, and threshold boundary construction for the prefetching distance, among other techniques. The threshold boundary of each prefetching distance parameter is selected automatically, so that an optimal threshold can be found within a bounded range, improving the quality of helper-thread prefetching control. The method can be widely applied to:

- memory access performance optimization for irregular data-intensive workloads;
- prefetching distance threshold optimization in helper-thread prefetching control strategies;
- shared cache pollution control;
- other similar areas.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

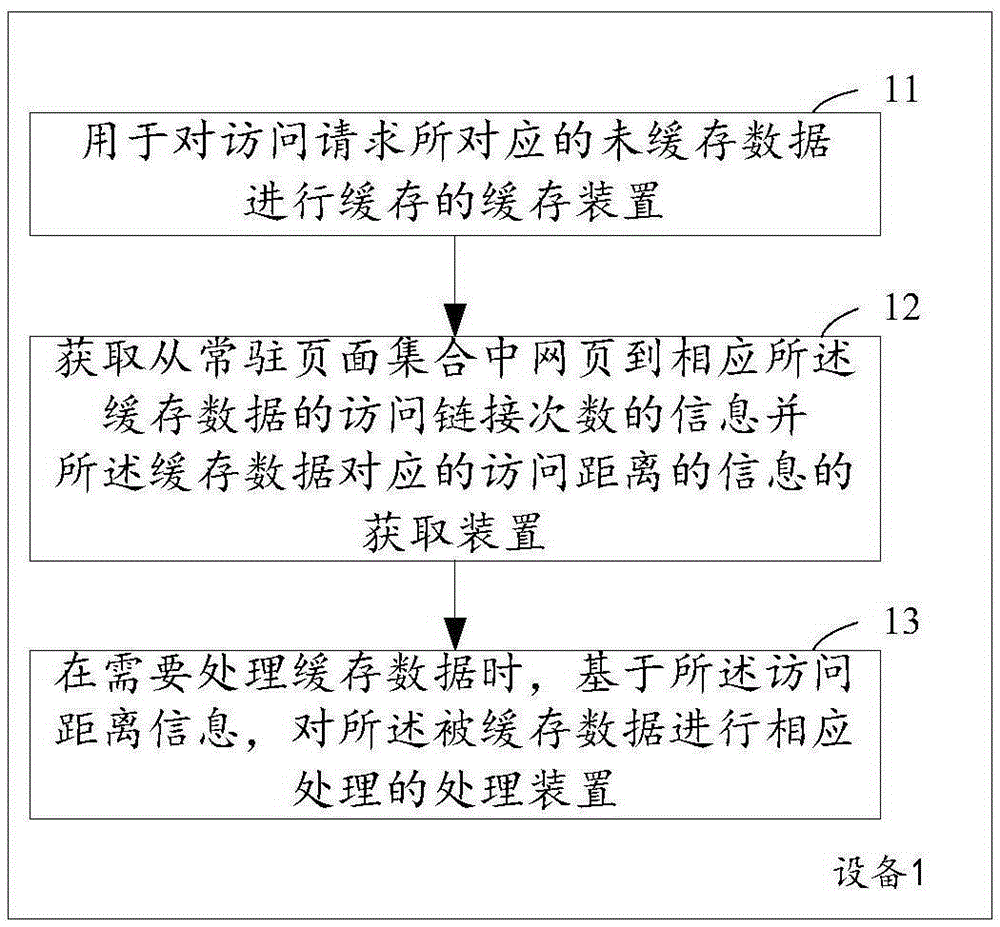

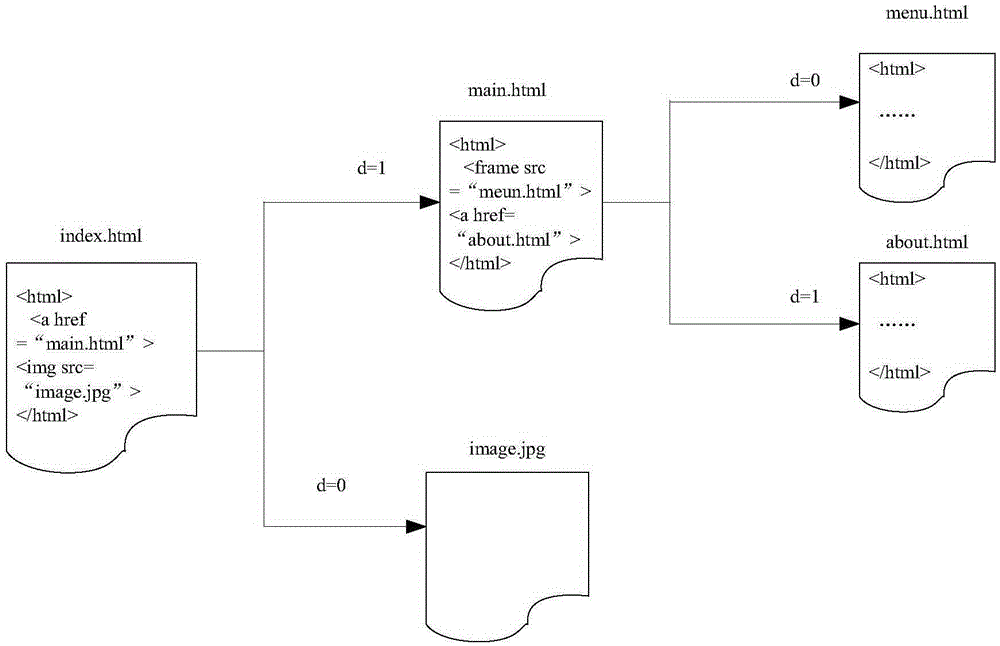

Method and equipment for processing cache data

Active | CN106649313A | The elimination mechanism is reasonable; Improve performance; Website content management; Special data processing applications; Personalization; Parallel computing

The invention provides a technology for processing cache data. The technology comprises three steps. First, uncached data corresponding to an access request is cached. Second, access link frequency information is obtained from the webpages in a resident page set that link to the corresponding cache data. Based on that information, the access distance of the cache data is determined and recorded. Third, when the cache data needs to be processed, it is processed based on the access distance information. Processing cache data by access distance makes the eviction mechanism more reasonable, reduces cache pollution, and avoids unreasonable evictions. This improves the performance of proxy cache servers, as well as users' access speed and personalized experience.

Owner:ALIBABA GRP HLDG LTD

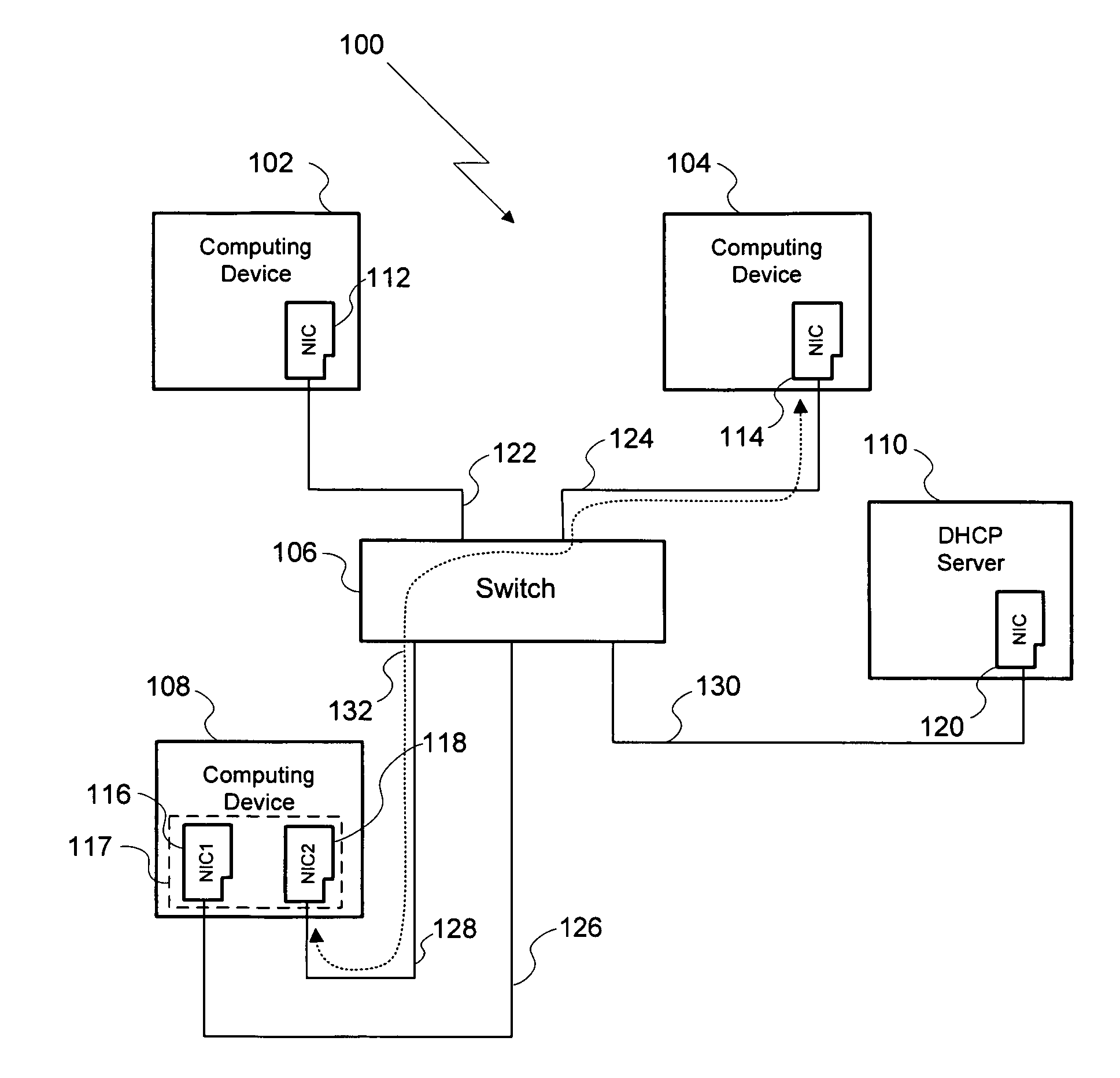

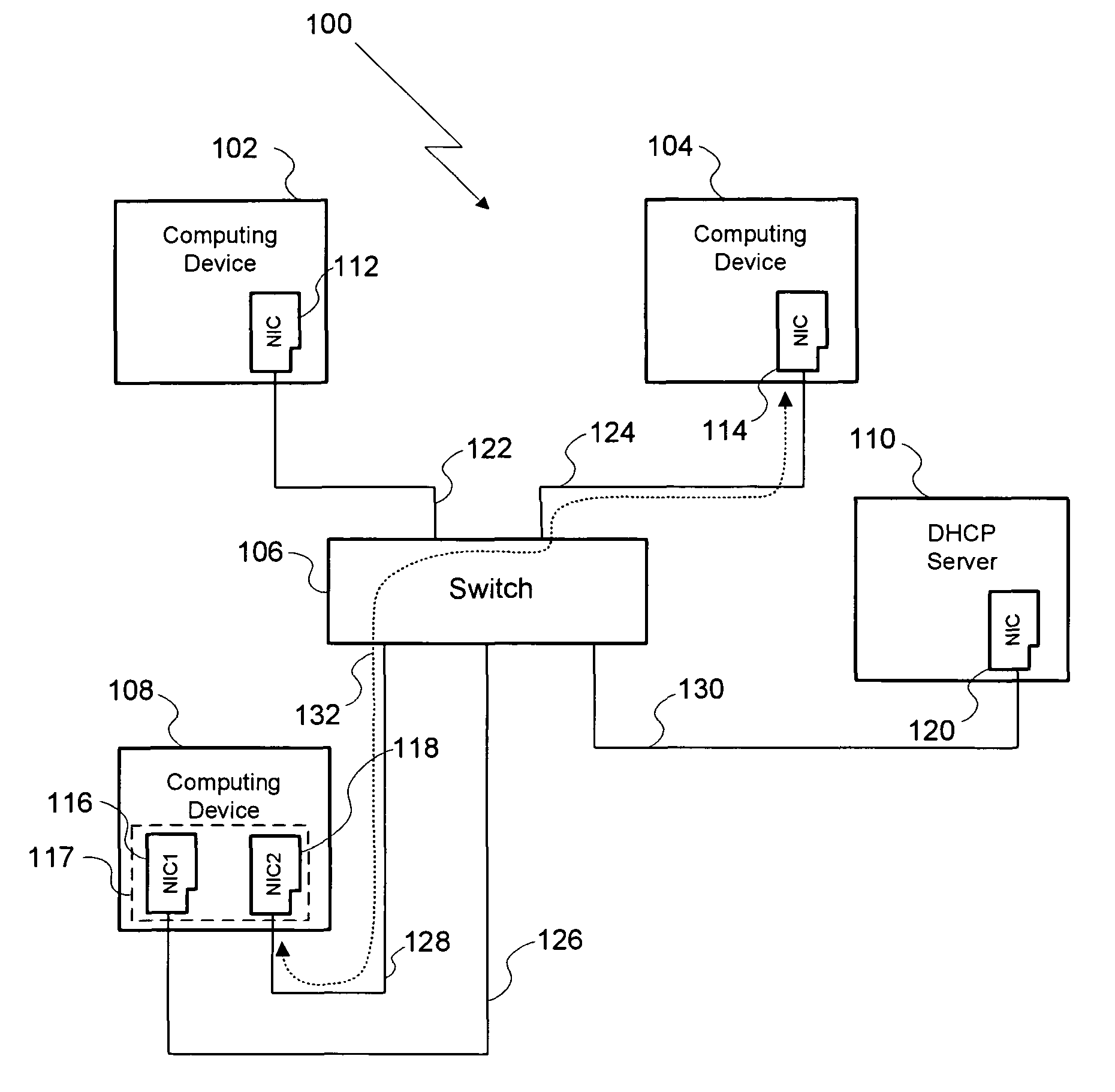

System and method for avoiding ARP cache pollution

Active | US8284782B1 | Avoiding ARP cache corruption; Data switching by path configuration; IP address; Network connection

A method for establishing a network connection between two computing devices within the same computer network includes the steps of generating a masquerade IP address request, where the masquerade IP address request includes a masquerade MAC address, transmitting the masquerade IP address request to a DHCP server, and receiving a masquerade IP address from the DHCP server. The masquerade IP address is then used as the sender's IP address in an ARP broadcast request transmitted to set up the network connection. Since the masquerade IP address is unique relative to the computer network, computing devices within the network do not overwrite existing IP-to-MAC relationships in their respective ARP caches with the IP-to-MAC relationship reflected in the ARP broadcast request. Thus, the method enables a network connection to be initiated between two computing devices in the same computer network while avoiding ARP cache pollution on other computing devices in that network.

Owner:NVIDIA CORP

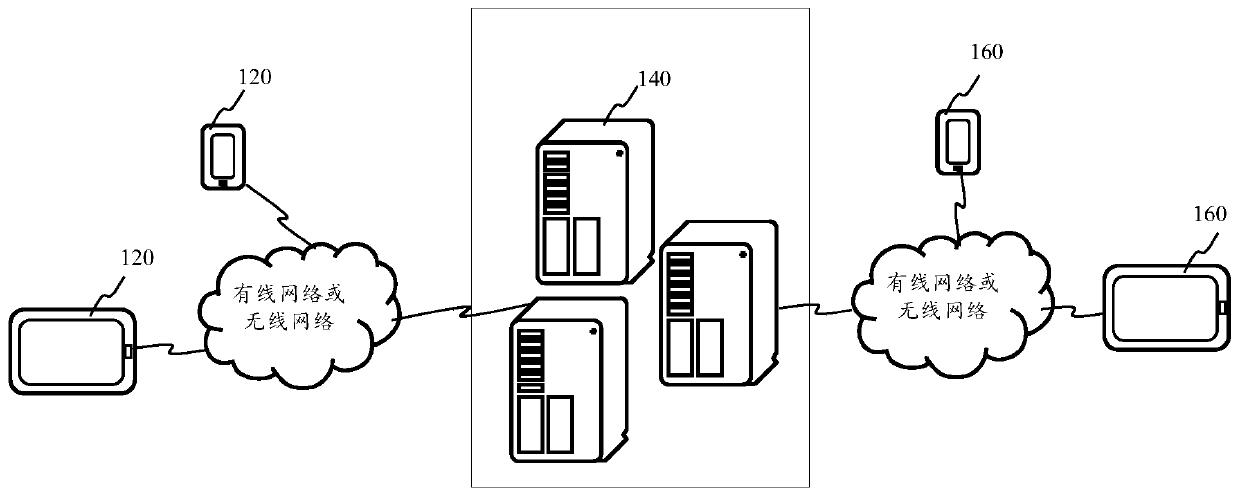

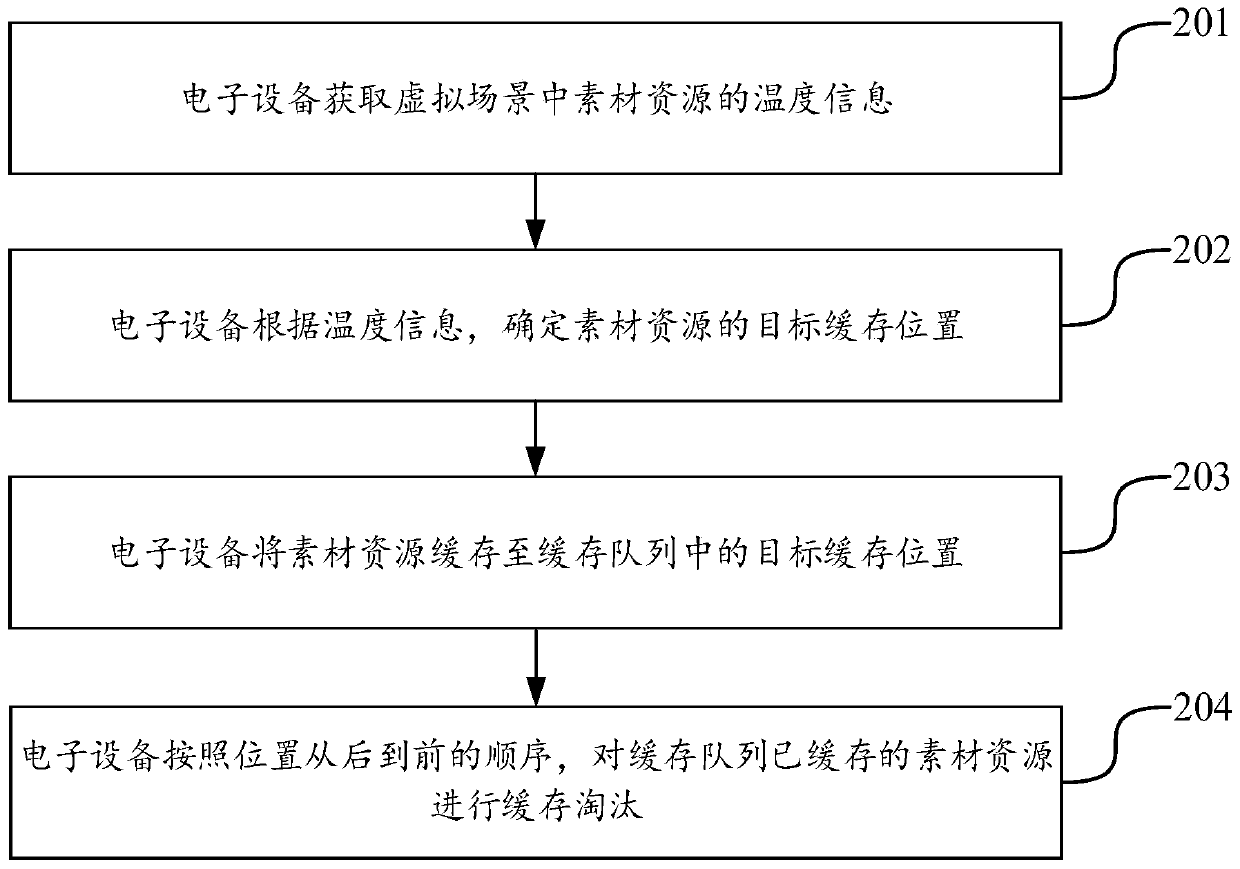

Cache management method and device, equipment and storage medium

Active | CN110908612A | Not easy to eliminate; Extended dwell time; Input/output to record carriers; Parallel computing; Material resources

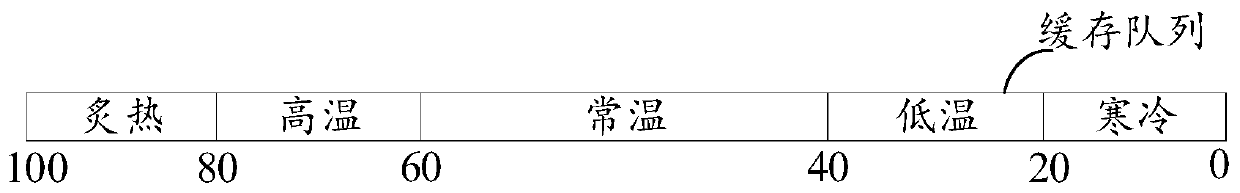

The invention discloses a cache management method and device, equipment, and a storage medium, and belongs to the technical field of storage. Embodiments of the invention provide a method for caching and evicting the material resources of a virtual scene based on temperature information. The temperature information represents the probability that a material resource will be accessed in the virtual scene. Each material resource is cached at the position in the cache queue that corresponds to its temperature information. Eviction then proceeds through the queue from back to front. In this way, the cold resources of the virtual scene are evicted first and the hot resources last. On the one hand, extending the residence time of hotspot resources in the cache raises the probability that accesses to them hit the cache. This improves the cache hit rate and addresses the cache pollution problem. On the other hand, cold resources are removed from the cache as early as possible, saving cache space.

Owner:TENCENT TECH (SHENZHEN) CO LTD

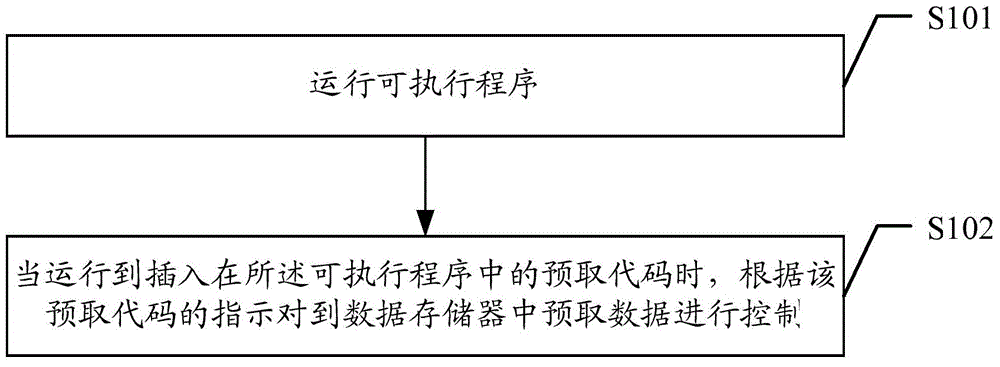

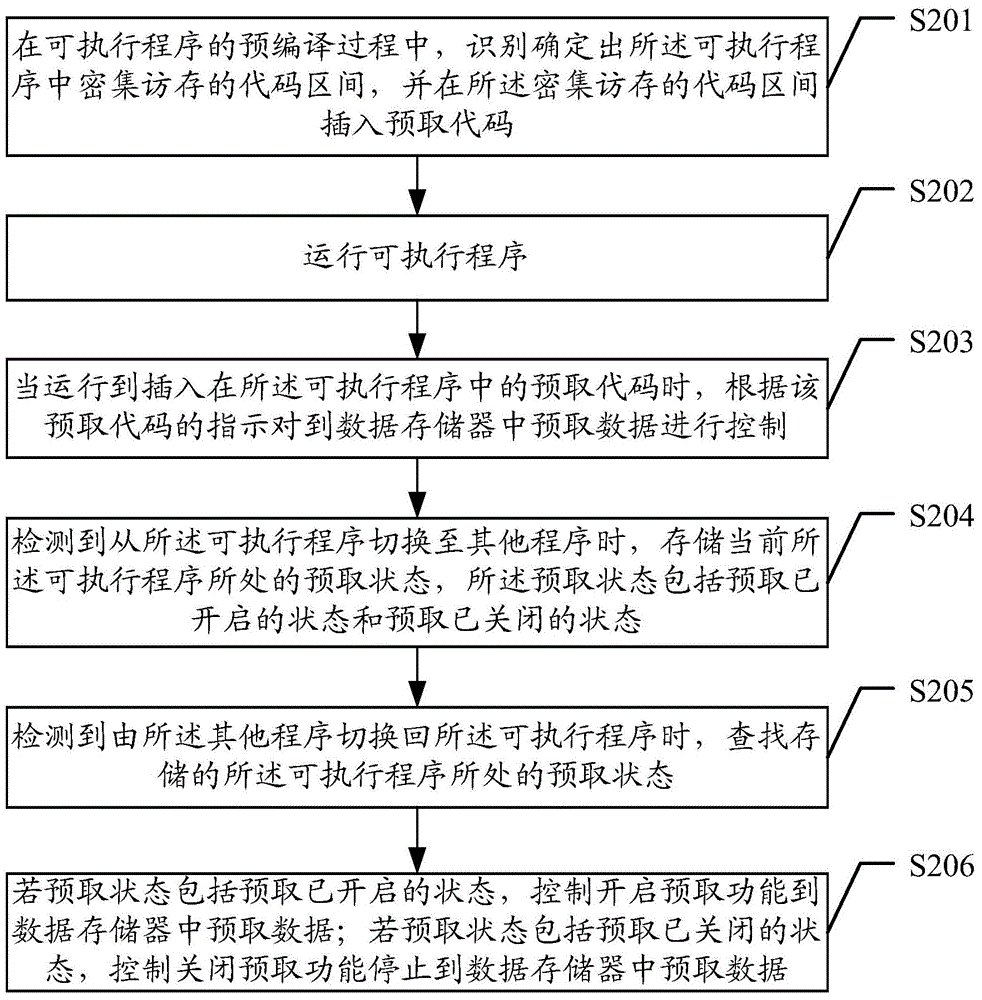

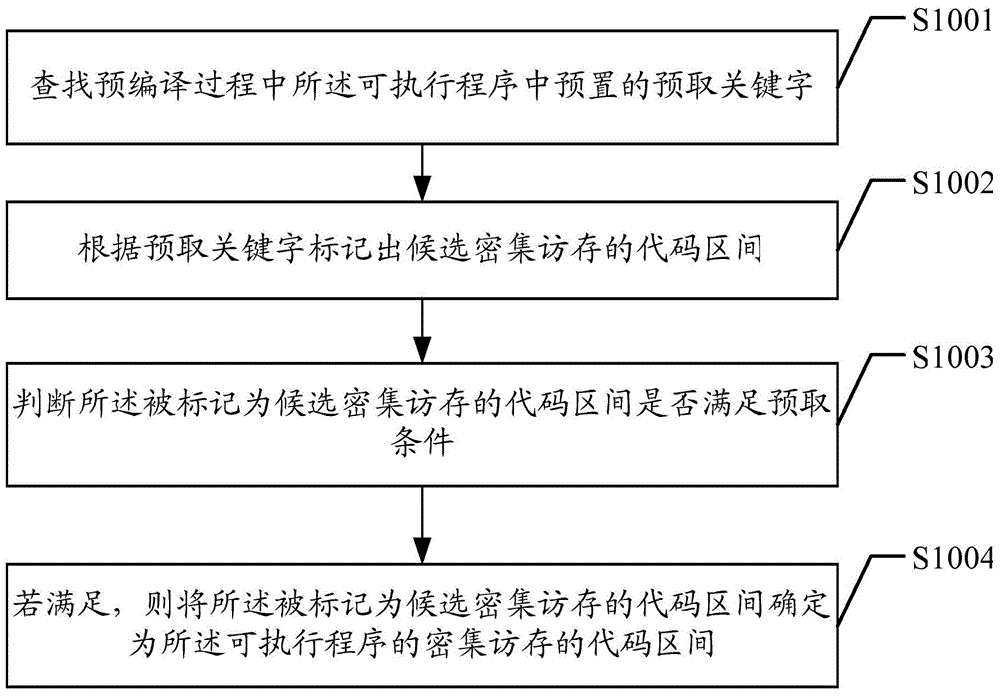

Data prefetching method, and related devices and systems

Active | CN103608768A | Reduce power consumption; Low cost; Energy efficient ICT; Memory addressing/allocation/relocation; Parallel computing; Data memory

Embodiments of the invention disclose a data prefetching method and related devices and systems. The method comprises running an executable program. When the program reaches a prefetching code, data prefetching in a data memory is controlled according to the instruction of that code, in one of two ways:

- if the prefetching code includes a prefetch-enabling code that instructs the prefetching function to be enabled, the prefetching function is enabled and data prefetching is performed in the data memory;
- if the prefetching code includes a prefetch-disabling code that instructs the prefetching function to be disabled, the prefetching function is disabled and data prefetching in the data memory stops.

Because the prefetching function of the data prefetching device can be enabled or disabled by directly controlling the cache, power consumption is reduced. Cache pollution caused by erroneous prefetching is also effectively prevented.

Owner:HUAWEI TECH CO LTD

Adaptive cache prefetching based on competing dedicated prefetch policies in dedicated cache sets to reduce cache pollution

Inactive | CN106164875A | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Cache pollution

Adaptive cache prefetching based on competing dedicated prefetch policies in dedicated cache sets to reduce cache pollution is disclosed. In one aspect, an adaptive cache prefetch circuit is provided for prefetching data into a cache. The adaptive cache prefetch circuit is configured to determine which prefetch policy to use as a replacement policy based on competing dedicated prefetch policies applied to dedicated cache sets in the cache. Each dedicated cache set has an associated dedicated prefetch policy used as a replacement policy for the given dedicated cache set. Cache misses for accesses to each of the dedicated cache sets are tracked by the adaptive cache prefetch circuit. The adaptive cache prefetch circuit can be configured to apply a prefetch policy to the other follower (i.e., non-dedicated) cache sets in the cache using the dedicated prefetch policy that incurred fewer cache misses to its respective dedicated cache sets to reduce cache pollution.

Owner:QUALCOMM INC
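The policy selection in the Qualcomm abstract above follows the general set-dueling idea: a few dedicated sets each run a fixed prefetch policy, their misses are counted, and follower sets adopt whichever policy missed least. The sketch below shows only that selection logic. The policy names, the number of dedicated sets per policy, and the way dedicated sets are spread across the cache are assumptions for illustration; hardware designs often use a saturating counter instead of the raw per-policy miss counts kept here.

```python
class PrefetchPolicyDuel:
    """Set-dueling selector: dedicated sets fix a policy, follower sets copy the winner."""

    def __init__(self, num_sets, policies=("no_prefetch", "next_line"), leaders_per_policy=2):
        groups = leaders_per_policy * len(policies)
        if num_sets < groups:
            raise ValueError("not enough sets for the requested dedicated sets")
        self.policies = policies
        self.misses = {p: 0 for p in policies}
        self.dedicated = {}  # set index -> policy that set is dedicated to
        stride = num_sets // groups
        # Spread dedicated sets evenly, interleaving the competing policies.
        for j in range(leaders_per_policy):
            for i, policy in enumerate(policies):
                self.dedicated[(j * len(policies) + i) * stride] = policy

    def policy_for(self, set_index):
        if set_index in self.dedicated:
            return self.dedicated[set_index]
        # Followers use the policy whose dedicated sets missed least (ties: first listed).
        return min(self.policies, key=lambda p: self.misses[p])

    def record_miss(self, set_index):
        policy = self.dedicated.get(set_index)
        if policy is not None:  # misses in follower sets do not vote
            self.misses[policy] += 1


duel = PrefetchPolicyDuel(num_sets=16)
duel.record_miss(0)  # dedicated to "no_prefetch"
duel.record_miss(8)  # dedicated to "no_prefetch"
duel.record_miss(4)  # dedicated to "next_line"
print(duel.policy_for(1))  # follower set -> "next_line"
```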

Reducing Cache Pollution of a Software Controlled Cache

Inactive | US20090254711A1 | Reducing cache pollution | Digital computer details | Memory systems | Cache access | Parallel computing

Reducing cache pollution of a software controlled cache is provided. A request is received to prefetch data into the software controlled cache. A first designator for a first cache access is set to a first value. If there is a second cache access to prefetch, a determination is made as to whether data associated with the second cache access exists in the software controlled cache. If the data is in the software controlled cache, a determination is made as to whether a second value of a second designator is greater than the first value of the first cache access. If the second value is not greater than the first value, the positions of the first cache access and the second cache access in a cache line are swapped. The first value is decremented by a predetermined amount, and the second value is set equal to the first value.

Owner:IBM CORP

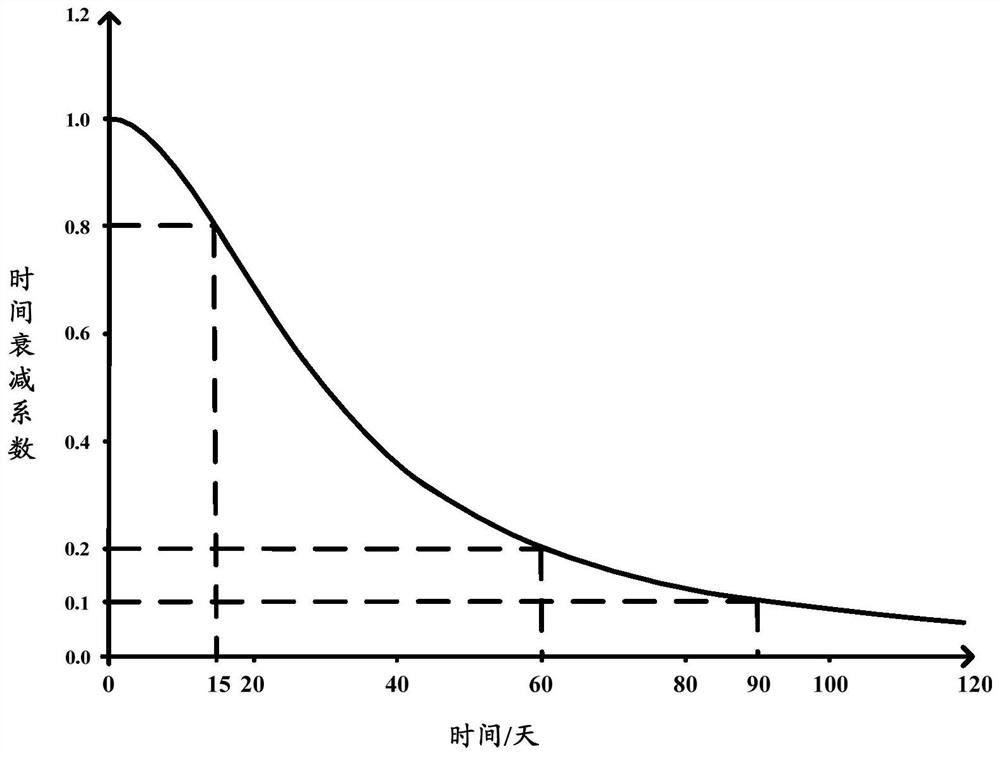

Data processing method and device, terminal equipment and computer readable storage medium

Pending | CN112948171A | Reduce pollution effect | Improve accuracy | Input/output to record carriers | Memory addressing/allocation/relocation | Time information | Terminal equipment

The invention belongs to the technical field of data processing and provides a data processing method and device, terminal equipment, and a computer-readable storage medium. The method comprises the following steps: obtaining information about data to be classified, the information comprising all access popularity values of the data within a preset time period and first time information corresponding to each access popularity value; calculating a first attenuation value for each access popularity value according to the first time information and a preset first time attenuation function; calculating a first popularity value from the first attenuation values of all the access popularity values; and determining the popularity category of the data according to the first popularity value. The invention addresses the problems that current data classification schemes have low accuracy and easily cause cache pollution.

Owner:HUAWEI TECH CO LTD
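The classification steps in the Huawei abstract above can be sketched as a time-decayed sum followed by thresholding. The abstract does not specify the attenuation function, so this sketch assumes an exponential decay with a configurable half-life; the window length, half-life, and the "hot"/"warm"/"cold" thresholds are all hypothetical parameters.

```python
def decayed_popularity(accesses, now, window, half_life):
    """Sum each access popularity value after time attenuation.

    accesses: iterable of (popularity_value, timestamp) pairs.
    Only accesses inside the preset window [now - window, now] are counted.
    """
    total = 0.0
    for value, timestamp in accesses:
        age = now - timestamp
        if 0 <= age <= window:
            # Assumed attenuation function: the weight halves every half_life time units.
            total += value * 0.5 ** (age / half_life)
    return total


def classify(popularity, hot_threshold=10.0, warm_threshold=2.0):
    """Map a popularity value to a category (thresholds are hypothetical)."""
    if popularity >= hot_threshold:
        return "hot"
    if popularity >= warm_threshold:
        return "warm"
    return "cold"


recent = decayed_popularity([(8, 100), (8, 90)], now=100, window=60, half_life=10)
stale = decayed_popularity([(8, 80), (50, 10)], now=100, window=60, half_life=10)
print(recent, classify(recent))  # 12.0 hot
print(stale, classify(stale))    # 2.0 warm
```

Because old accesses contribute less (or nothing, outside the window), a burst of accesses long ago does not keep data classified as hot, which is one way such a scheme can avoid caching data that is no longer popular.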

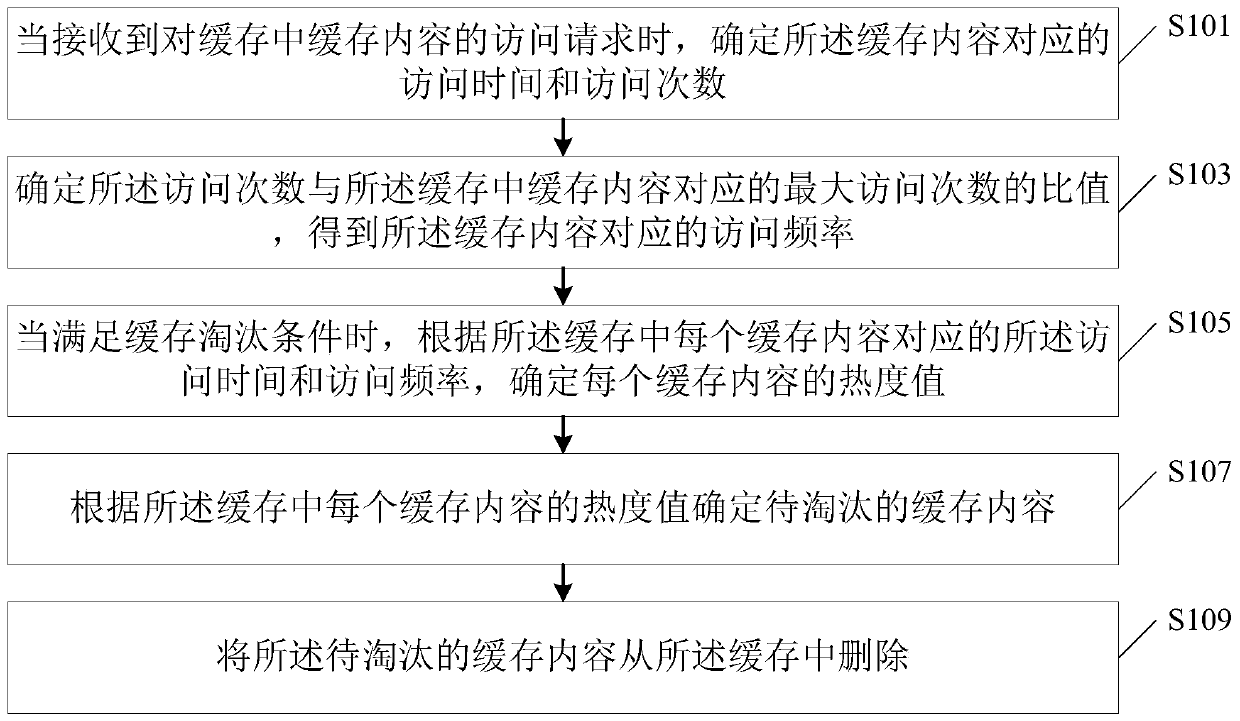

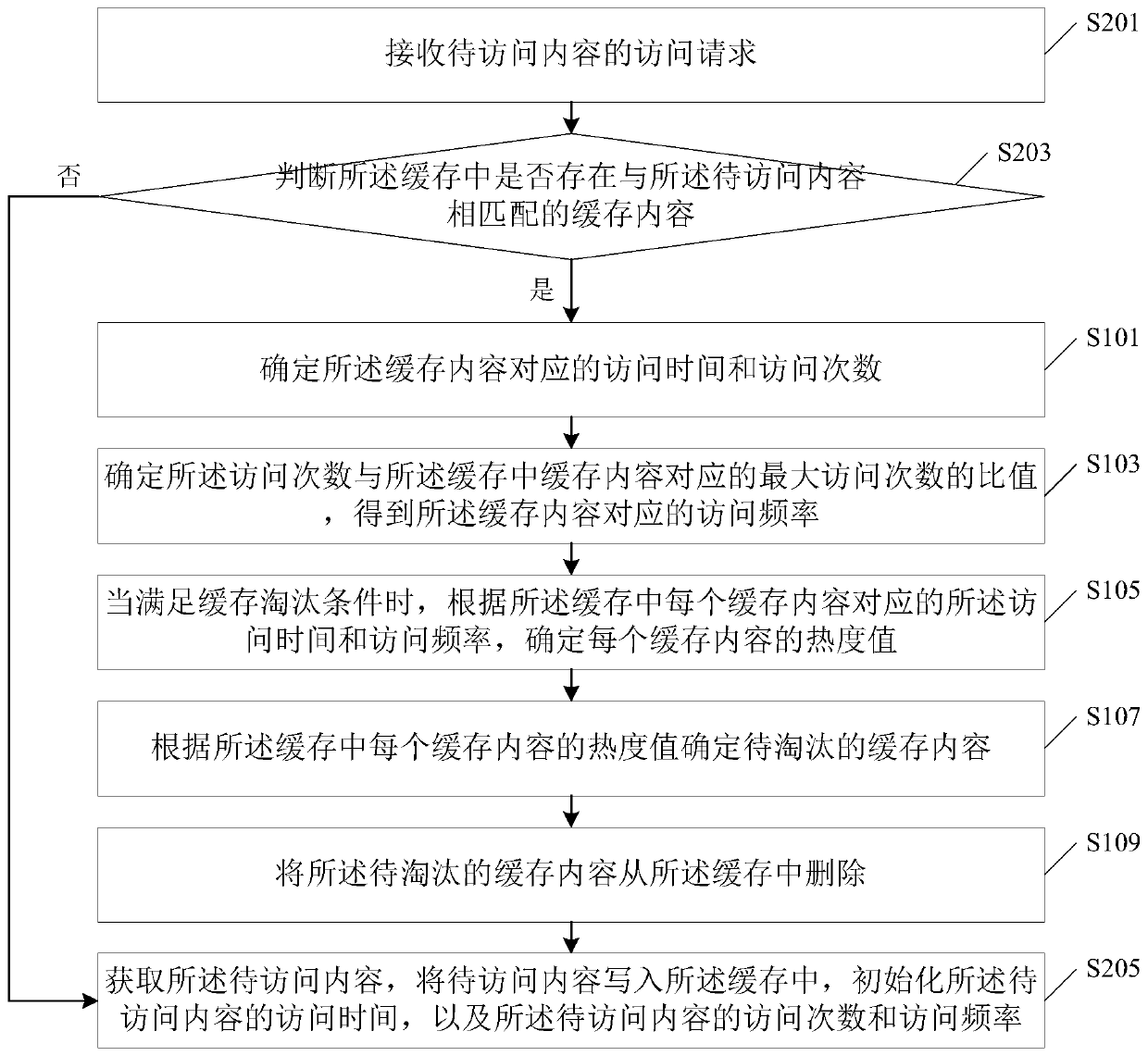

Cache management method and device, computer equipment and storage medium

Active | CN111176560A | Avoid pollution | Improve hit rate | Input/output to record carriers | Access time | Parallel computing

The invention discloses a cache management method and device, computer equipment, and a storage medium. The method comprises the following steps: when an access request for content in a cache is received, determining the access time and access frequency of that cache content, where the access frequency is the ratio of the content's access count to the maximum access count among the contents in the cache; when a cache eviction condition is met, determining a popularity value for each cached content according to its access time and access frequency; determining the cache content to be evicted according to these popularity values; and deleting that content from the cache. By combining the time dimension with the access-frequency dimension when selecting content for eviction, the method identifies the content to evict more accurately, avoids cache pollution, and increases the cache hit rate.

Owner:TENCENT TECH (SHENZHEN) CO LTD
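A minimal sketch of the eviction rule in the Tencent abstract above follows. The frequency term is the ratio of an item's access count to the maximum access count in the cache, as the abstract states; the recency score `1 / (1 + age)`, the equal weighting between the two terms, and using "cache is full" as the eviction condition are assumptions made for illustration.

```python
class PopularityCache:
    """Evicts the entry with the lowest blend of recency and relative frequency."""

    def __init__(self, capacity, weight=0.5):
        self.capacity = capacity
        self.weight = weight  # hypothetical weight of recency versus frequency
        self.data = {}
        self.last_access = {}
        self.count = {}

    def _popularity(self, key, now):
        frequency = self.count[key] / max(self.count.values())  # ratio to max access count
        recency = 1.0 / (1.0 + now - self.last_access[key])      # assumed recency score
        return self.weight * recency + (1 - self.weight) * frequency

    def get(self, key, now):
        if key not in self.data:
            return None
        self.count[key] += 1
        self.last_access[key] = now
        return self.data[key]

    def put(self, key, value, now):
        if key not in self.data and len(self.data) >= self.capacity:
            victim = min(self.data, key=lambda k: self._popularity(k, now))
            for table in (self.data, self.last_access, self.count):
                del table[victim]
        self.data[key] = value
        self.count[key] = self.count.get(key, 0) + 1
        self.last_access[key] = now


cache = PopularityCache(capacity=2)
cache.put("a", 1, now=0)
cache.put("b", 2, now=1)
cache.get("a", now=2)
cache.get("a", now=3)
cache.put("c", 3, now=4)  # "b" is older and less frequent, so it is evicted
print(sorted(cache.data))  # ['a', 'c']
```

Scoring entries on both axes means a burst of one-time accesses cannot push out an item that has been used frequently, which plain LRU would allow.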

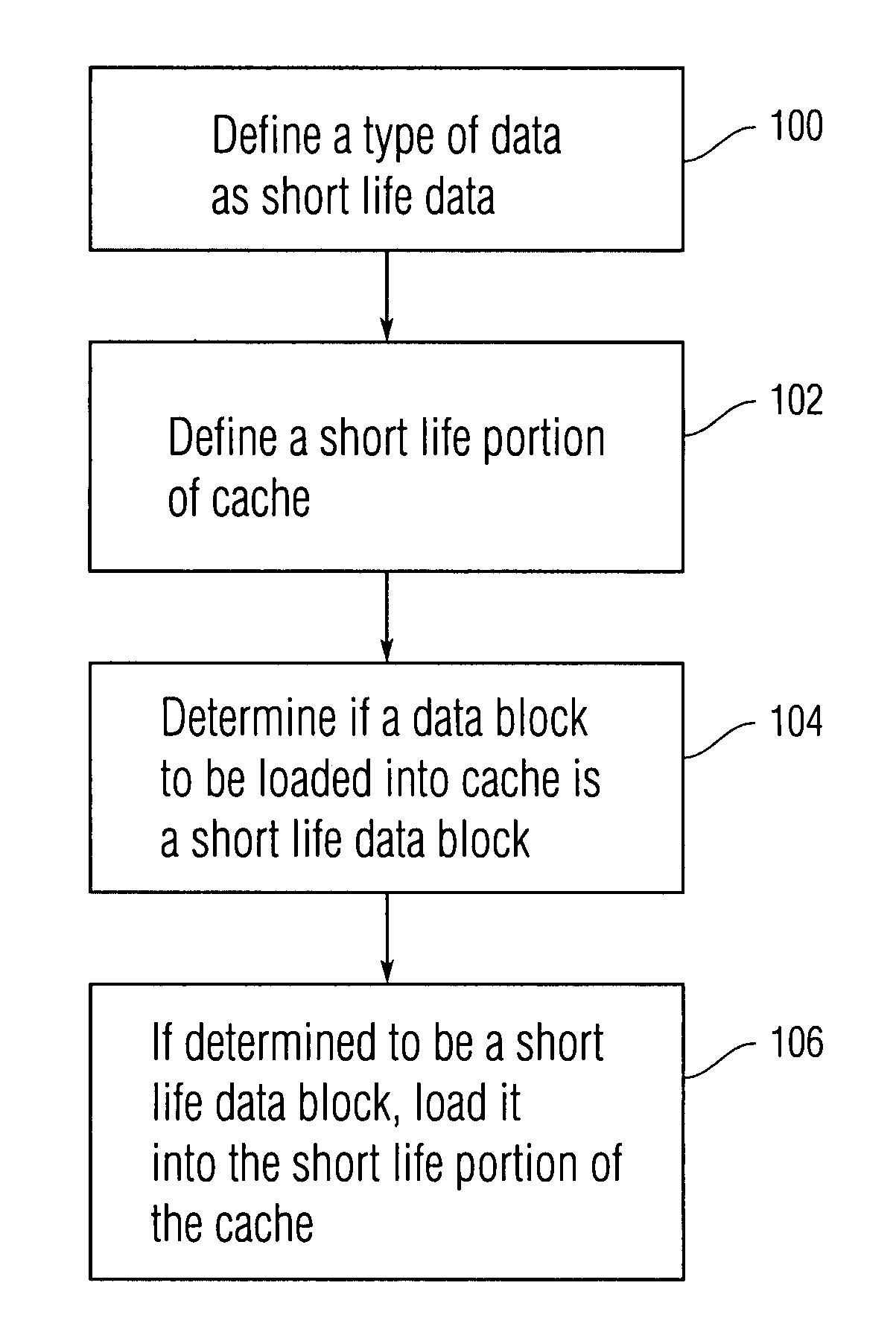

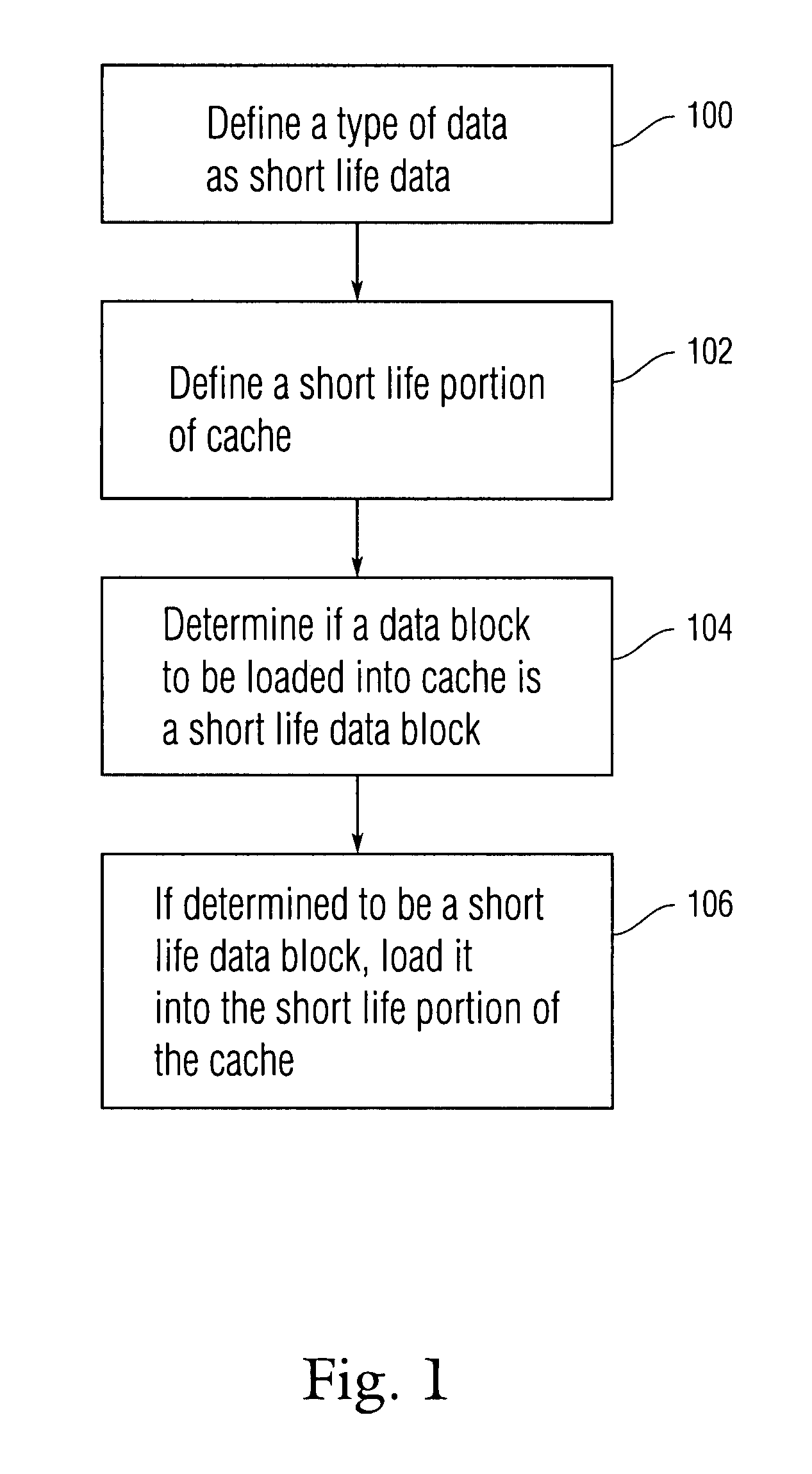

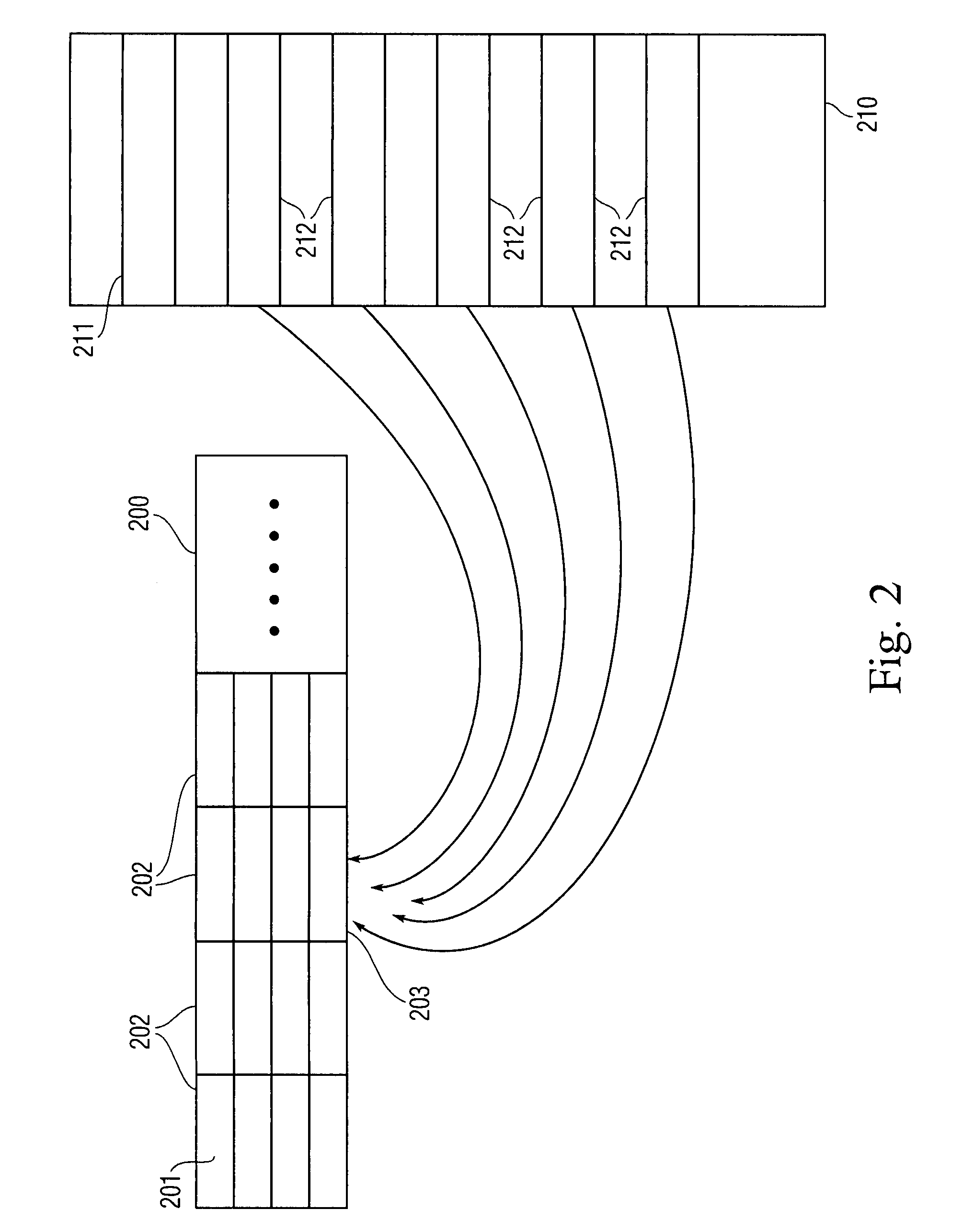

Cache pollution avoidance

Embodiments of the present invention are directed to a scheme in which information about the expected future behavior of particular software is used to optimize cache management and reduce cache pollution. Using knowledge of the expected behavior of particular software, a certain type of data can be defined as "short life data": data that, in the ordinary expected operation of the software, is not expected to be used often in the future. Data blocks that are to be stored in the cache can be examined to determine whether they are short life data blocks. If they are, they are stored only in a dedicated short life area of the cache.

Owner:AVAGO TECH INT SALES PTE LTD
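The short-life area described in the abstract above can be modeled as a partitioned cache. This sketch assumes two independent LRU regions and lets the caller pass a `short_life` flag, standing in for the software-derived classification the abstract describes; the region sizes and names are hypothetical. Short life blocks can then evict only each other.

```python
from collections import OrderedDict


class ShortLifeCache:
    """Cache with a main LRU region and a small LRU region reserved for short life data."""

    def __init__(self, main_lines, short_life_lines):
        self.main = OrderedDict()
        self.short = OrderedDict()
        self.main_cap = main_lines
        self.short_cap = short_life_lines

    def _lookup(self, block):
        for region in (self.main, self.short):
            if block in region:
                region.move_to_end(block)  # refresh LRU position on a hit
                return True
        return False

    def _fill(self, block, short_life):
        # Short life blocks go only into the short life region.
        region, cap = (self.short, self.short_cap) if short_life else (self.main, self.main_cap)
        if len(region) >= cap:
            region.popitem(last=False)  # evict the LRU block of that region only
        region[block] = True

    def access(self, block, short_life):
        """Return True on a hit; on a miss, fill the block into its region."""
        hit = self._lookup(block)
        if not hit:
            self._fill(block, short_life)
        return hit


cache = ShortLifeCache(main_lines=2, short_life_lines=1)
cache.access("A", short_life=False)
cache.access("B", short_life=False)
for i in range(5):
    cache.access(f"stream{i}", short_life=True)  # one-time data stays in its own area
print(cache.access("A", short_life=False), cache.access("B", short_life=False))  # True True
```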

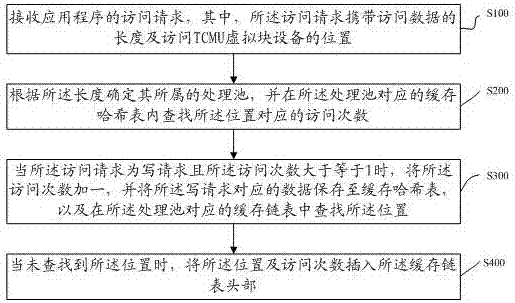

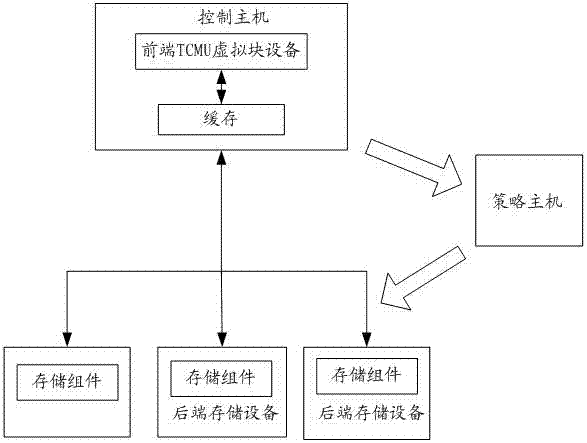

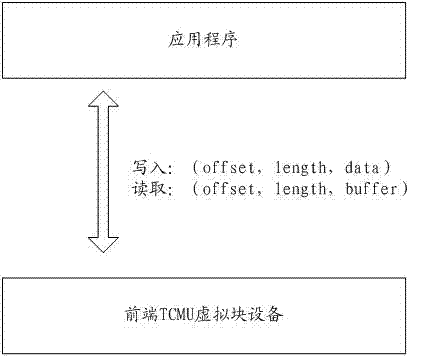

Cache data access method and system based on TCMU virtual block device

The invention discloses a cache data access method and system based on a TCMU virtual block device. The method includes the following steps: an access request from an application program is received; the processing pool to which the data in the access request belongs is determined according to the data length, and the cache hash table corresponding to that processing pool is searched for the access count corresponding to the requested position; when the access request is a write request and the access count is greater than or equal to 1, the access count is incremented by 1, the data of the write request is stored in the cache hash table, and the cache linked list corresponding to the processing pool is searched for the position; if the position is not found, the position and its access count are inserted at the head of the cache linked list. Because the method is tailored to the I/O read/write characteristics of TCMU, read and write performance is improved. The hash table, which applies secondary confirmation, and the cache linked list, which applies access-count-based eviction, effectively cache hot data that has been accessed many times recently while filtering out large volumes of incidental accesses, so cache pollution is avoided.

Owner:深圳市联云港科技有限公司 (Shenzhen Lianyungang Technology Co., Ltd.)
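The write path in the TCMU abstract above can be sketched as a per-pool hash table plus an ordered list. Several details are assumptions, since the abstract leaves them open: the pool length buckets are hypothetical, a first write only records an access count without caching data (one reading of "secondary confirmation"), and eviction removes the listed position with the lowest access count, preferring the oldest on ties. Only writes are modeled, and a plain Python list stands in for the linked list.

```python
POOL_LIMITS = (4096, 65536, 1 << 20)  # hypothetical request-length buckets in bytes


class Pool:
    def __init__(self, capacity):
        self.capacity = capacity
        self.table = {}  # position -> {"count": access count, "data": cached bytes or None}
        self.chain = []  # cached positions, head (index 0) = most recently inserted

    def write(self, position, data):
        """Return True if the write was cached, False if it was only recorded."""
        entry = self.table.get(position)
        if entry is None:
            # First sighting: record the access but do not cache data (assumed confirmation step).
            self.table[position] = {"count": 1, "data": None}
            return False
        entry["count"] += 1
        entry["data"] = data
        if position not in self.chain:
            if len(self.chain) >= self.capacity:
                self._evict()
            self.chain.insert(0, position)  # insert at the head of the list
        return True

    def _evict(self):
        # Assumed count-based eviction: lowest access count, oldest first on ties.
        victim = min(reversed(self.chain), key=lambda p: self.table[p]["count"])
        self.chain.remove(victim)
        self.table[victim]["data"] = None


def select_pool(pools, length):
    """Pick the pool for a request by its length (buckets are hypothetical)."""
    for limit, pool in zip(POOL_LIMITS, pools):
        if length <= limit:
            return pool
    return pools[-1]


pool = Pool(capacity=2)
for pos in (0, 0, 8, 8, 0, 16, 16):
    pool.write(pos, b"data")
pool.write(100, b"scan")  # a one-off write never enters the cached list
print(pool.chain)  # [16, 0]
```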


© 2025 PatSnap. All rights reserved.