331 results for "Cache capacity" patented technology

The current cache capacity is the sum of the "used size" capacity of all of the data SSDs used in Flash Pools on the system. Parity SSDs do not count toward the limit.
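
As a minimal sketch of this rule, the following assumes a hypothetical `Ssd` record whose `role` field is either `"data"` or `"parity"`; the field names are illustrative and do not come from any vendor tool or API.

```python
from dataclasses import dataclass

@dataclass
class Ssd:
    name: str
    role: str             # hypothetical: "data" or "parity"
    used_size_gib: float  # the SSD's "used size" capacity

def flash_pool_cache_capacity(ssds):
    """Sum the used size of every data SSD in the system's Flash Pools; parity SSDs are skipped."""
    return sum(s.used_size_gib for s in ssds if s.role == "data")

pool = [Ssd("ssd0", "data", 372.0), Ssd("ssd1", "data", 372.0), Ssd("ssd2", "parity", 372.0)]
print(flash_pool_cache_capacity(pool))  # prints 744.0
```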

Cache memory allocation method

Status: Inactive | Publication: US7000072B1 | Tags: Effective preventive measure, Avoid bumping, Memory architecture accessing/allocation, Data processing applications, Distribution method, Computerized system

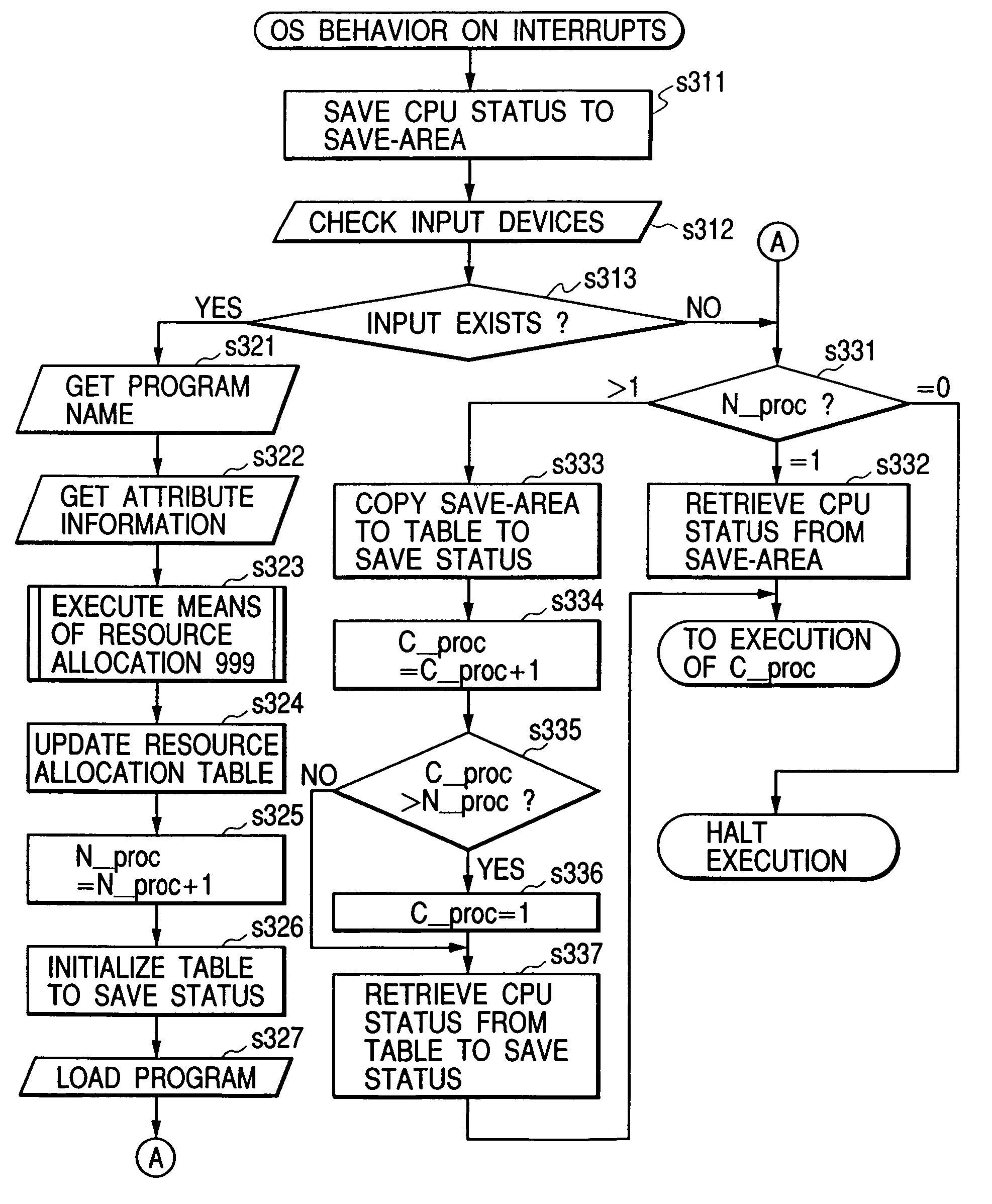

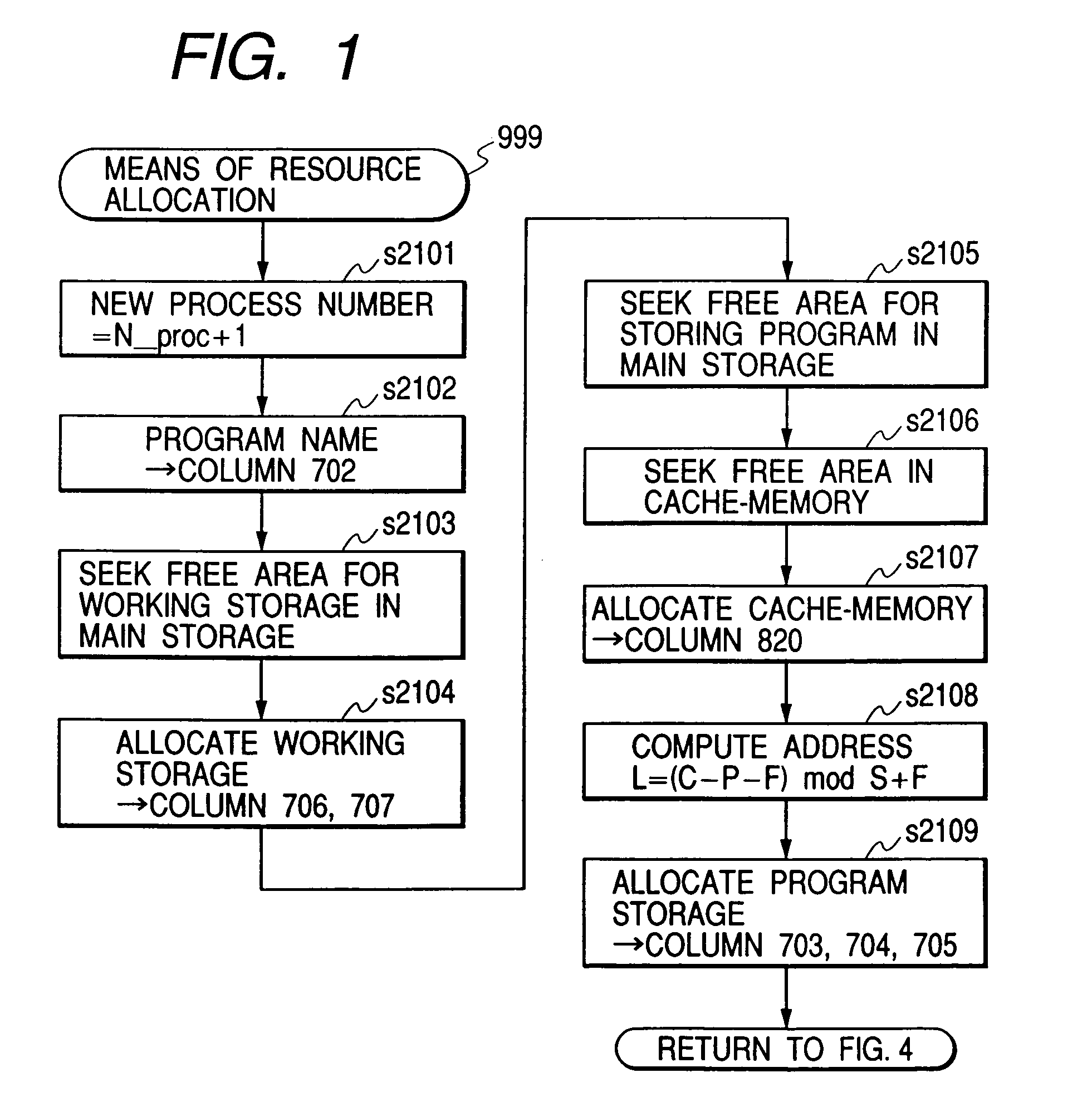

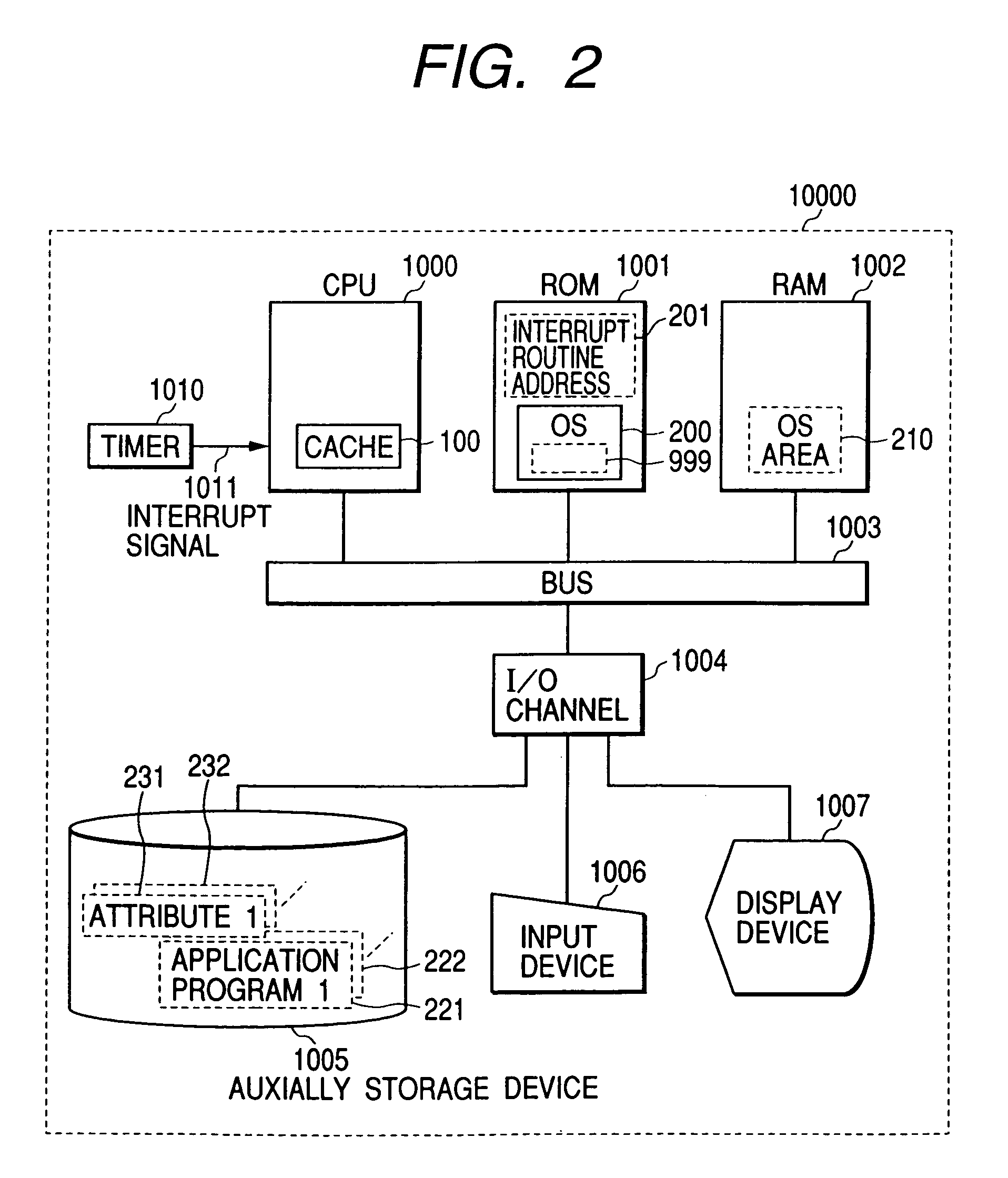

To ensure CPU multiprocessing performance on a microprocessor, the invention provides a memory-mapping method for multiple concurrent processes that minimizes cache thrashing. The OS maintains a management (mapping) table that tracks cache occupancy. When a process is activated, the OS receives from the process the location of the part executed most frequently (the principal part) and, by referring to the management table, coordinates the addresses of the storage area into which the process is loaded so that the cache addresses assigned to the principal part differ from those of every other existing process. Because this coordination takes cache memory capacity, cache configuration scheme, and process execution priority into account, the highest-priority process gets first priority in using the cache.

Owner:HITACHI LTD
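
The address coordination described above behaves much like page coloring, which is the technique this sketch swaps in as an assumption: the cache is split into a fixed number of hypothetical colors (groups of cache sets selected by physical page address), and a management table records which process's principal part owns each color. `CacheColorTable` and `load_address` are illustrative names, not the patent's.

```python
class CacheColorTable:
    """Hypothetical OS management table: cache color -> (pid, priority) of its owner."""

    def __init__(self, num_colors: int):
        self.owners = [None] * num_colors

    def assign(self, pid: int, priority: int):
        """Choose a cache color for a process's principal part, or None if none is granted."""
        # First choice: a color no other principal part is using.
        for color, owner in enumerate(self.owners):
            if owner is None:
                self.owners[color] = (pid, priority)
                return color
        # All colors taken: a higher-priority process displaces the lowest-priority owner.
        color = min(range(len(self.owners)), key=lambda c: self.owners[c][1])
        if self.owners[color][1] < priority:
            self.owners[color] = (pid, priority)
            return color
        return None  # caller falls back to ordinary (possibly conflicting) placement

    def release(self, pid: int):
        """Free every color owned by a terminated process."""
        self.owners = [None if o is not None and o[0] == pid else o for o in self.owners]

def load_address(color: int, frame: int, page_size: int = 4096, num_colors: int = 4) -> int:
    """Physical address of the frame-th page whose cache color is `color`.

    Assumes color = (address // page_size) % num_colors, as in a physically indexed cache.
    """
    return (frame * num_colors + color) * page_size
```

Giving a higher-priority process the color of the lowest-priority owner is one possible reading of "first priority in using the cache"; the abstract does not specify how conflicts are resolved.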

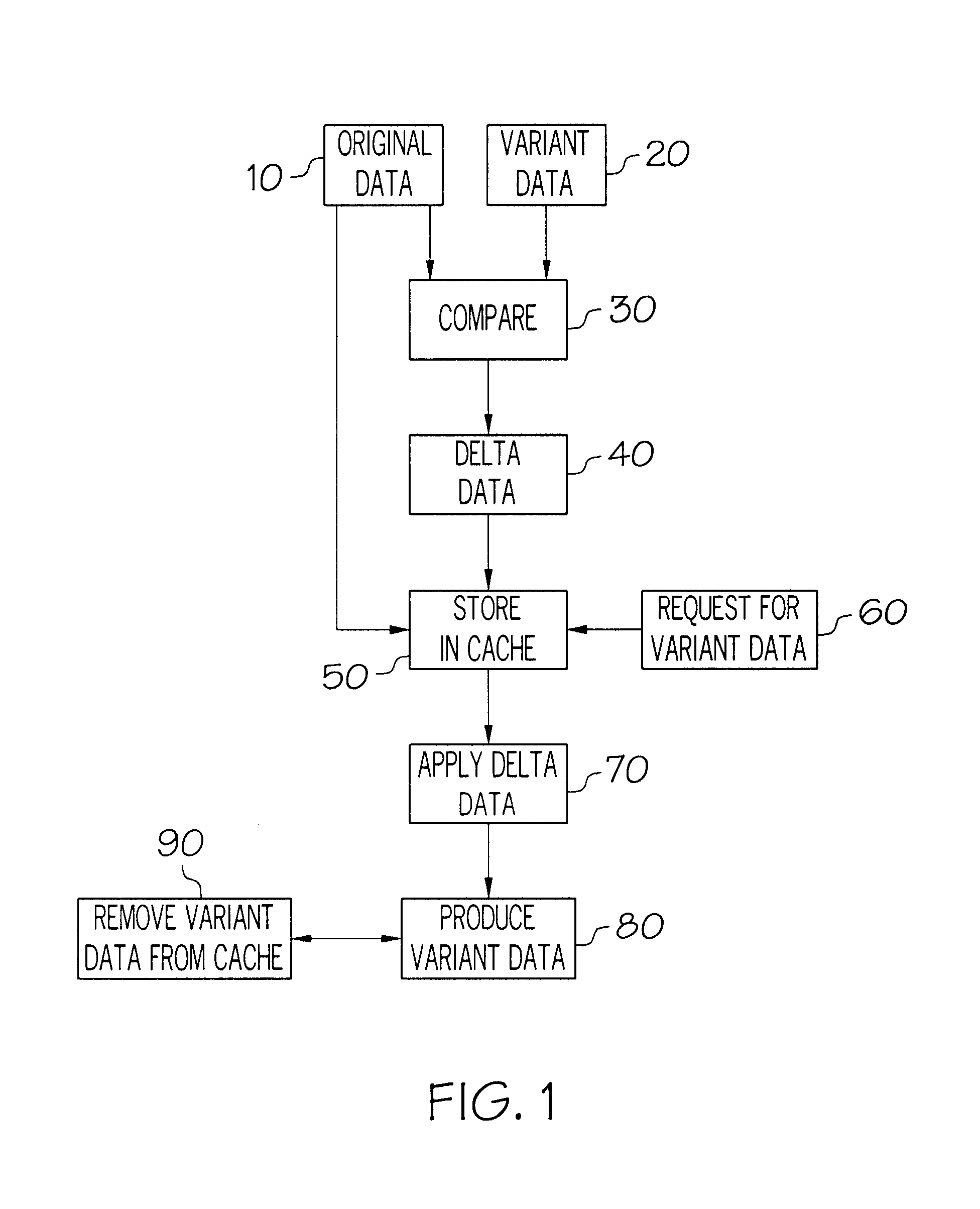

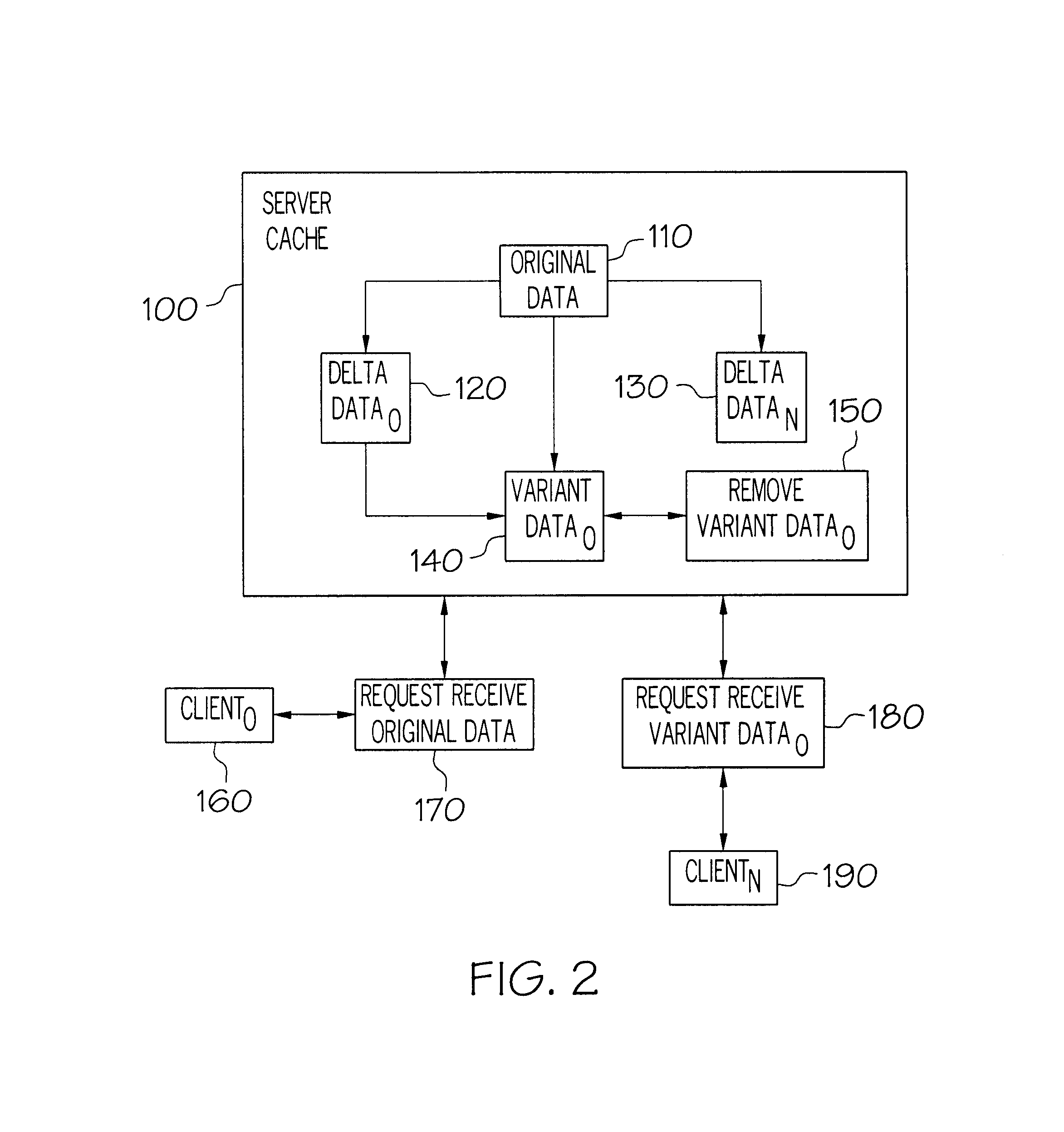

Methods for increasing cache capacity utilizing delta data

Status: Inactive | Publication: US6850964B1 | Tags: Improve caching capacity, Increase capacity, Digital data information retrieval, Data processing applications, Original data, Parallel computing

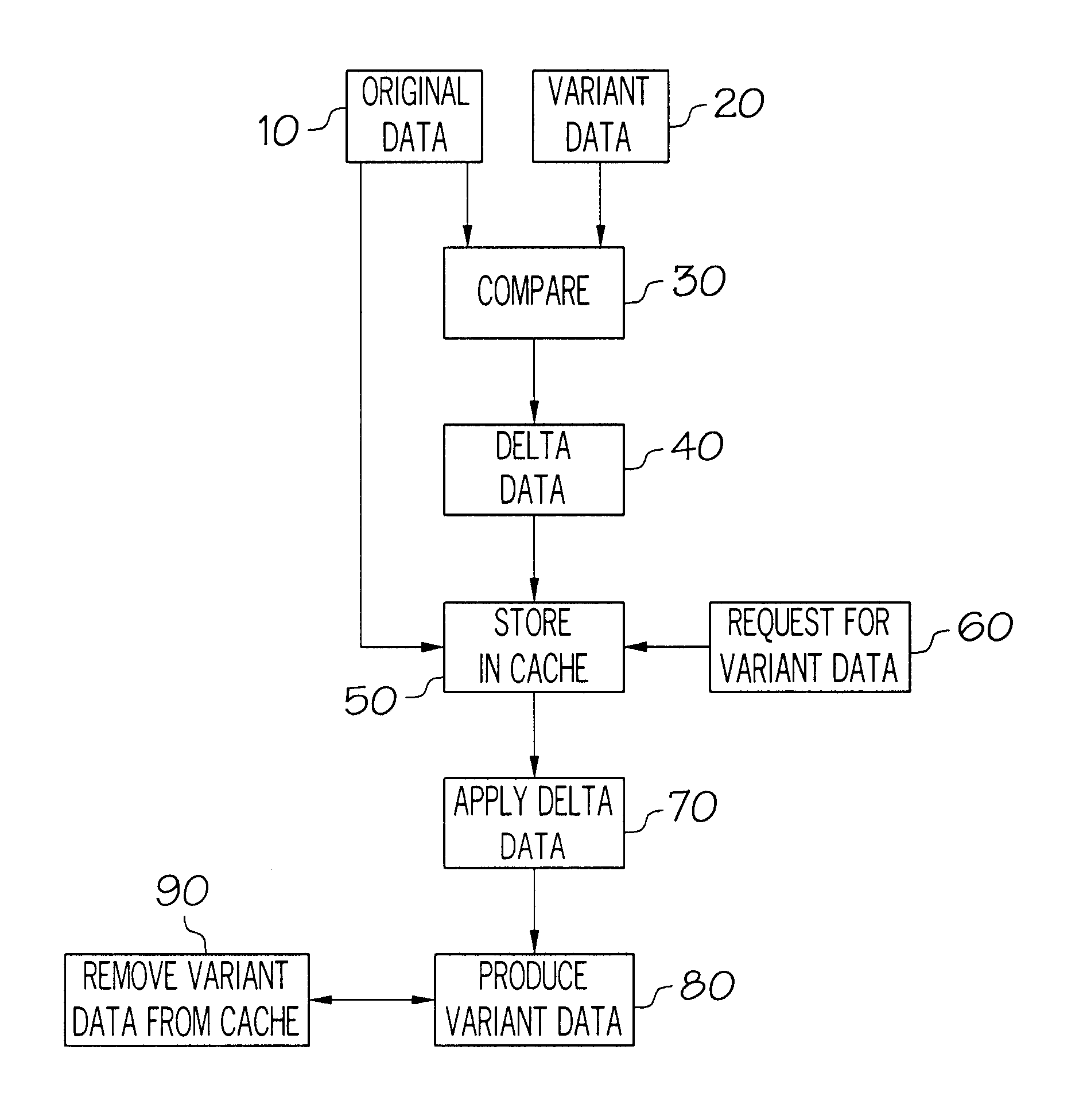

Methods of increasing cache capacity are provided. In one method, original data are received in a cache and access to one or more variants of the original data is required, but the variants do not reside in the cache. Access to the variants is provided by one or more delta data that can be applied to the original data to produce the variants. Moreover, a method of reducing cache storage requirements is provided in which original data and variants of the original data are identified and located within the cache; delta data are then produced by noting one or more differences between the original data and the variants, and the variants are purged from the cache. Furthermore, a method of producing derivatives from original data is provided in which the original data and variants of the original data are identified, delta data are produced by recording the difference between each variant and the original data, and the delta data and the original data are retained in the cache.

Owner:MICRO FOCUS SOFTWARE INC
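
A minimal sketch of the delta idea, using Python's standard `difflib.SequenceMatcher` to derive each delta; the patent does not name a differencing algorithm, and `make_delta`, `apply_delta`, and `DeltaCache` are hypothetical names. Only originals and deltas are kept, and each variant is rebuilt on request.

```python
from difflib import SequenceMatcher

def make_delta(original: bytes, variant: bytes) -> list:
    """Describe how to rebuild `variant` from `original`: copy ranges plus literal bytes."""
    delta = []
    matcher = SequenceMatcher(None, original, variant, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            delta.append(("copy", i1, i2))             # reuse bytes already in the cache
        elif j2 > j1:
            delta.append(("insert", variant[j1:j2]))   # bytes only the variant has
    return delta

def apply_delta(original: bytes, delta: list) -> bytes:
    out = bytearray()
    for op in delta:
        if op[0] == "copy":
            out += original[op[1]:op[2]]
        else:
            out += op[1]
    return bytes(out)

class DeltaCache:
    """Keeps originals plus deltas; variants themselves are never stored."""

    def __init__(self):
        self.originals = {}
        self.deltas = {}  # variant key -> (original key, delta)

    def put_original(self, key, data: bytes):
        self.originals[key] = data

    def put_variant(self, key, original_key, data: bytes):
        self.deltas[key] = (original_key, make_delta(self.originals[original_key], data))

    def get(self, key) -> bytes:
        if key in self.originals:
            return self.originals[key]
        original_key, delta = self.deltas[key]
        return apply_delta(self.originals[original_key], delta)
```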

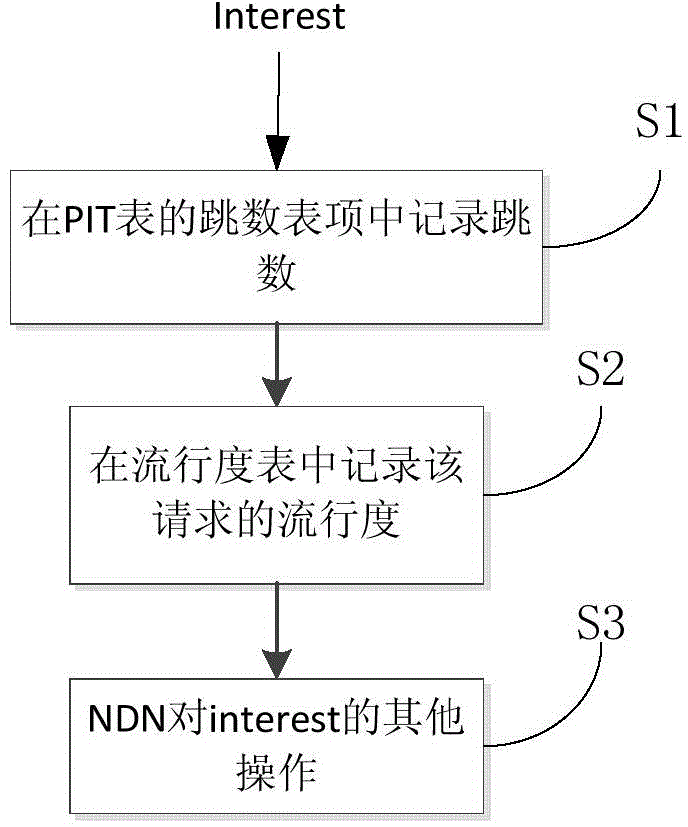

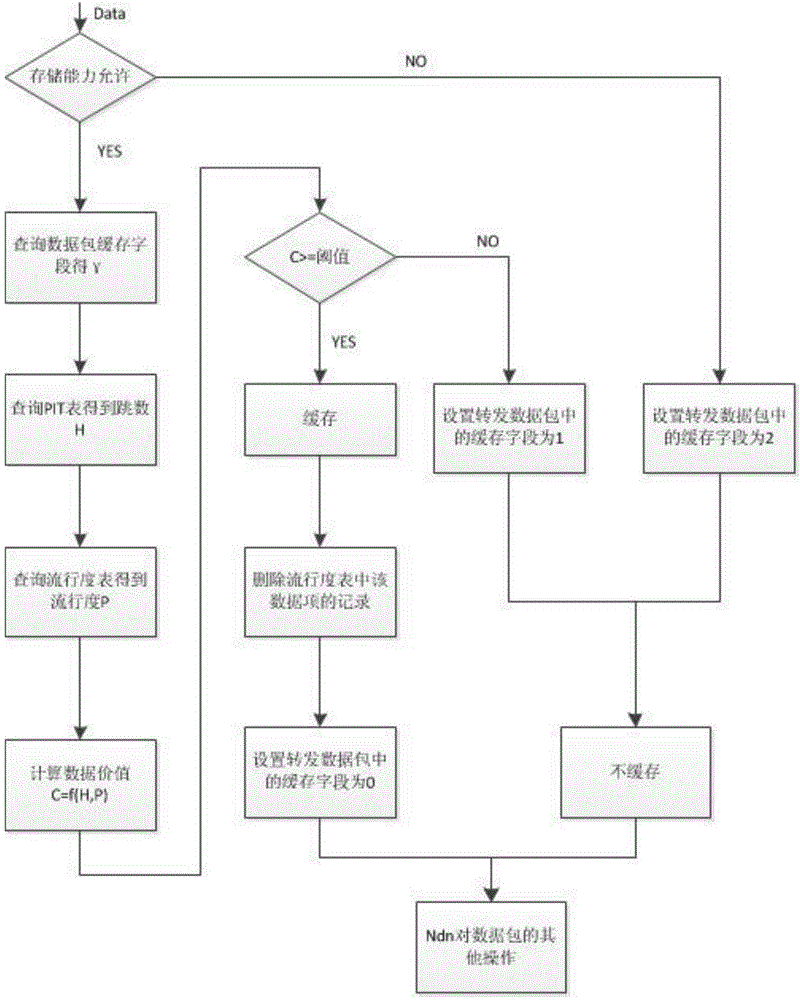

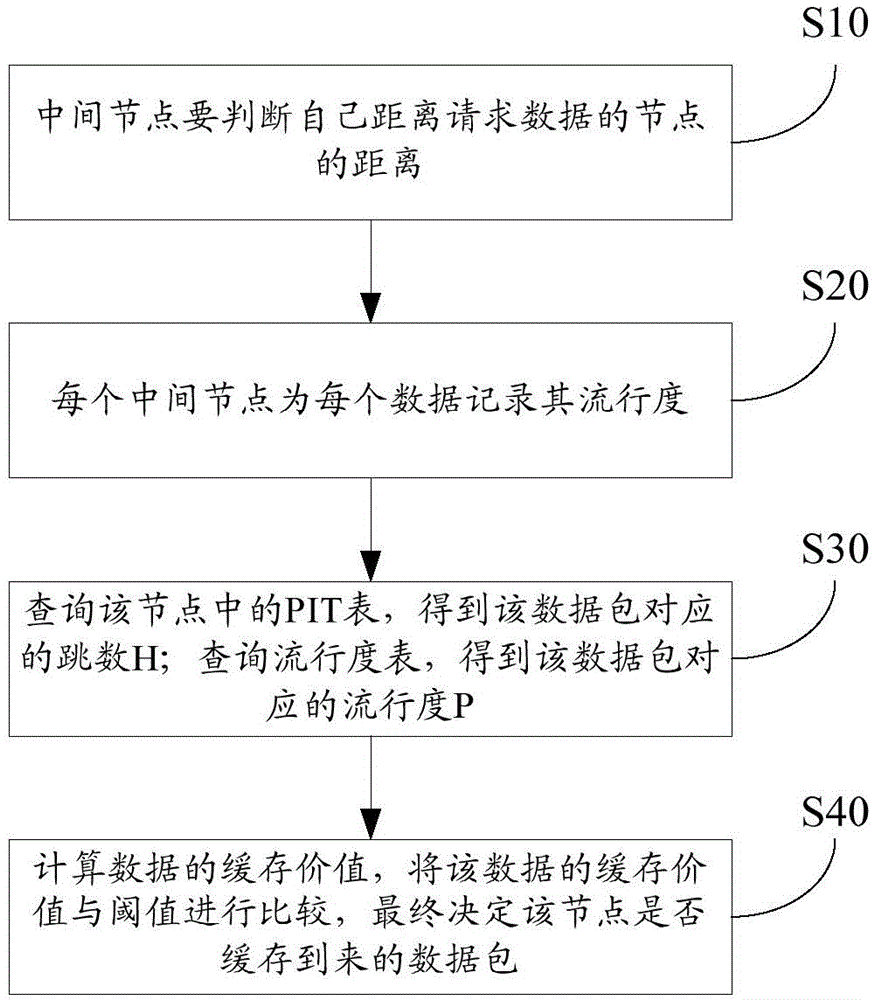

Popularity-based equilibrium distribution caching method for named data networking

Status: Inactive | Publication: CN104901980A | Tags: Reduce redundancy, Quick response, Data switching networks, Traffic capacity, Network packet

The invention provides a popularity-based equilibrium distribution caching method for named data networking and relates to the technical field of caching in named data networking. In the method, an intermediate node determines its distance from the node holding the requested data; each intermediate node records the popularity of each data item; the node queries its PIT to obtain the hop count H corresponding to a data packet and queries its popularity table to obtain the popularity P of that packet; it then calculates the caching value of the data, compares it with a threshold, and decides whether to cache the arriving data packet. The method improves response speed and reduces network traffic, while also taking the cache capacity of mobile nodes into account and reducing data redundancy in the network.

Owner:BEIJING UNIV OF TECH
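
A sketch of the per-node decision, assuming a hypothetical caching value of popularity times hop count; the abstract says only that a caching value is computed from H and P and compared with a threshold, so both the formula and the `>=` comparison are assumptions. `PopularityCacheDecider` is an illustrative name.

```python
from collections import defaultdict

class PopularityCacheDecider:
    """Per-node sketch: a popularity table plus a threshold test on arriving data packets."""

    def __init__(self, threshold: float):
        self.threshold = threshold
        self.popularity = defaultdict(int)  # content name -> interests seen at this node

    def record_interest(self, name: str) -> None:
        self.popularity[name] += 1

    def caching_value(self, name: str, hop_count: int) -> float:
        # Hypothetical formula: popular data that travelled more hops is worth caching here.
        return self.popularity[name] * hop_count

    def should_cache(self, name: str, hop_count: int) -> bool:
        # hop_count plays the role of H; the popularity table supplies P.
        return self.caching_value(name, hop_count) >= self.threshold
```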

Method for building dynamic buffer pool to improve performance of storage system based on virtual RAID

Status: Active | Publication: CN101604226A | Tags: Good effect, No additional cost of acquisition, Input/output to record carriers, Memory addressing/allocation/relocation, RAID, Turnkey

The invention provides a method for building a dynamic buffer pool to improve the performance of a storage system based on virtual RAID. The storage system comprises a physical storage device, a storage array, a storage array turnkey, virtual RAID, and a virtual buffer pool. The building method comprises the following steps: setting up strategies for the virtual buffer pool, creating the virtual RAID, creating a block device mapping table, creating the virtual buffer pool, creating a virtual RAID mapping table, fetching hotspot data into the buffer pool, creating a mapping table for the virtual buffer pool, updating the hotspot data at regular intervals, modifying the mapping table of the hotspot data, clearing the virtual buffer pool, modifying the virtual RAID, modifying the block device mapping table, modifying the virtual buffer pool, and modifying the virtual RAID mapping table. The method effectively solves the problem of system performance being degraded by insufficient buffer capacity, without occupying the system's limited device slots or increasing hard-disk cost.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
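
The hotspot steps (fetch hot data into the buffer pool, build the pool's mapping table, refresh at regular intervals) can be sketched as follows, assuming access counts are the hotness measure and one block fills one pool slot. `HotspotBufferPool` is a hypothetical name, and the actual copying of block data is omitted.

```python
from collections import Counter

class HotspotBufferPool:
    def __init__(self, pool_blocks: int):
        self.pool_blocks = pool_blocks
        self.access_counts = Counter()
        self.mapping = {}  # disk block -> buffer-pool slot (the pool's mapping table)

    def record_access(self, block: int) -> None:
        self.access_counts[block] += 1

    def refresh(self) -> None:
        """Periodic update: keep the hottest blocks, rebuild the mapping table, reset counts."""
        hottest = [blk for blk, _ in self.access_counts.most_common(self.pool_blocks)]
        self.mapping = {blk: slot for slot, blk in enumerate(hottest)}
        self.access_counts.clear()

    def lookup(self, block: int):
        return self.mapping.get(block)  # None: serve the request from the virtual RAID
```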

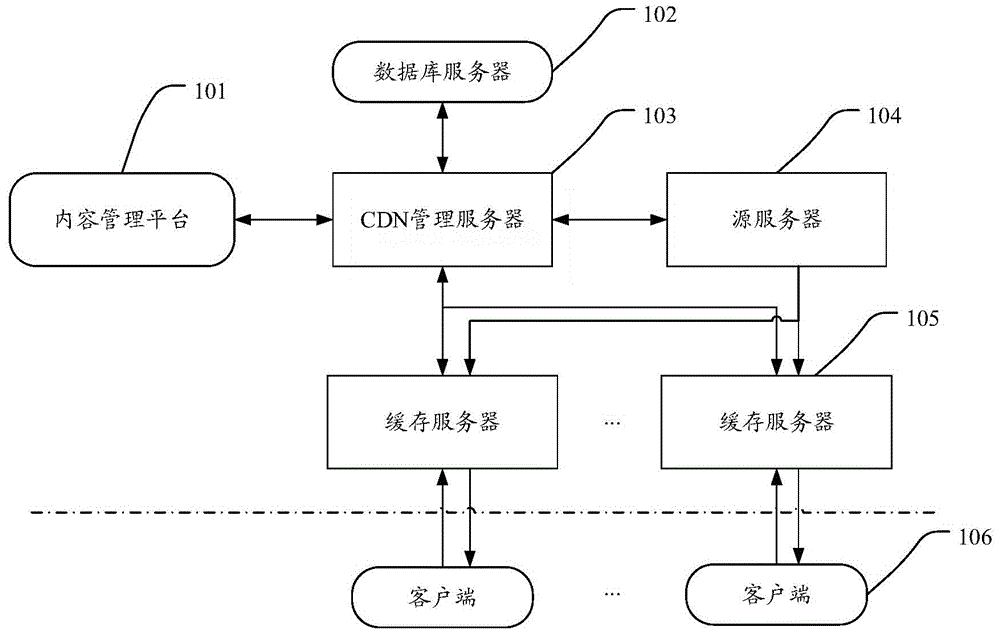

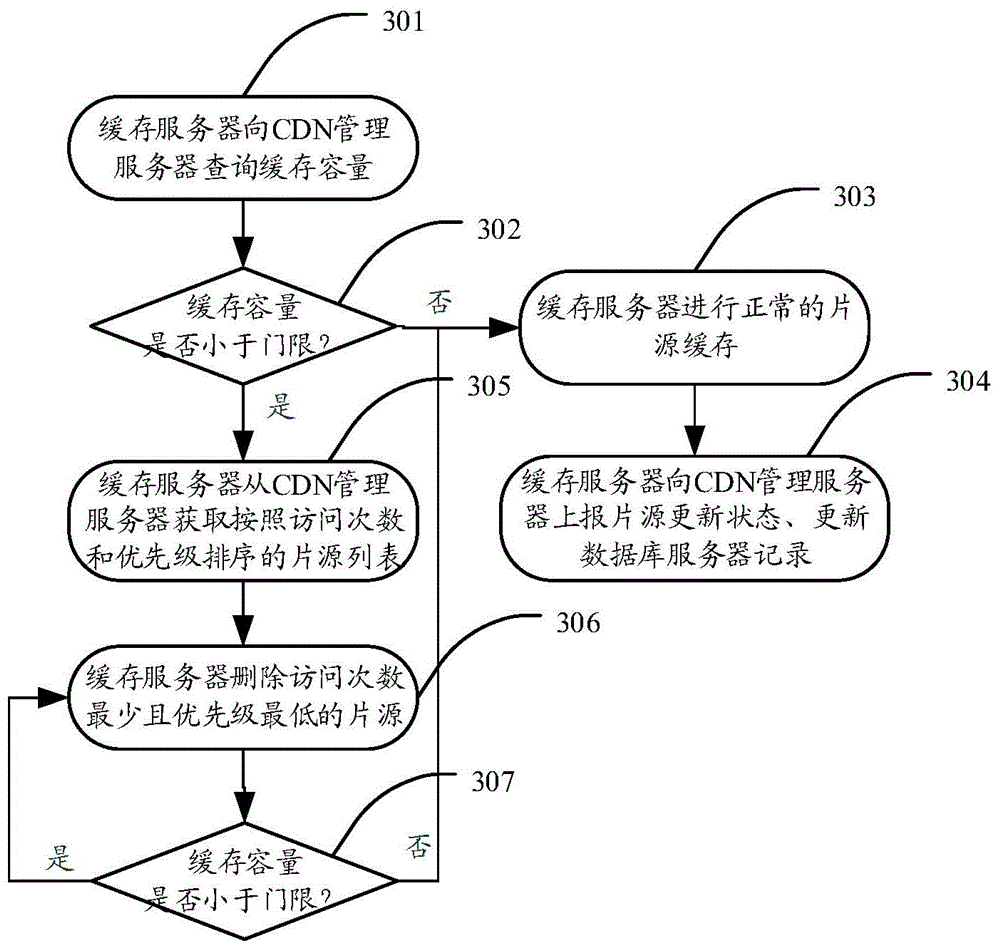

CDN video buffer system and method

Status: Active | Publication: CN104967861A | Tags: Save cache capacity, Save bandwidth, Selective content distribution, Video monitoring, Cache server

The invention relates to a CDN (Content Delivery Network) video buffer system comprising a CDN management server, a content management platform, a plurality of buffer servers, a source server, a plurality of clients, and a database server. The invention also relates to a CDN video buffer method that obtains in real time, from the buffer servers, the number of times each film source has been visited by clients within a given period; a film source is buffered on the buffer servers only once its visit count reaches a threshold. In addition, each film source in the system is annotated with a priority: film sources with high priority are buffered on the buffer servers, and film sources with low priority are deleted when the buffer servers are full. The system and method optimize buffer capacity management, avoid frequent buffer replacement and wasted bandwidth, and are applicable to video on demand, live video, and network video monitoring.

Owner:SHANGHAI MEIQI PUYUE COMM TECH
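
A sketch of the two admission rules, assuming buffer capacity is counted in whole film sources and a larger number means higher priority; `VideoCacheServer` is hypothetical, and its visit counter stands in for the counts gathered from the buffer servers.

```python
from collections import Counter

class VideoCacheServer:
    def __init__(self, capacity: int, visit_threshold: int):
        self.capacity = capacity              # max cached film sources (assumed unit)
        self.visit_threshold = visit_threshold
        self.visits = Counter()               # visits in the current counting period
        self.cached = {}                      # film source id -> priority

    def on_visit(self, source_id: str, priority: int) -> bool:
        """Count a visit; return True only if this visit causes the source to be cached."""
        self.visits[source_id] += 1
        if source_id in self.cached or self.visits[source_id] < self.visit_threshold:
            return False  # already cached, or not visited often enough yet
        if len(self.cached) >= self.capacity:
            victim = min(self.cached, key=self.cached.get)
            if self.cached[victim] >= priority:
                return False  # every cached source outranks the newcomer
            del self.cached[victim]  # evict the lowest-priority source
        self.cached[source_id] = priority
        return True

    def new_period(self) -> None:
        self.visits.clear()
```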

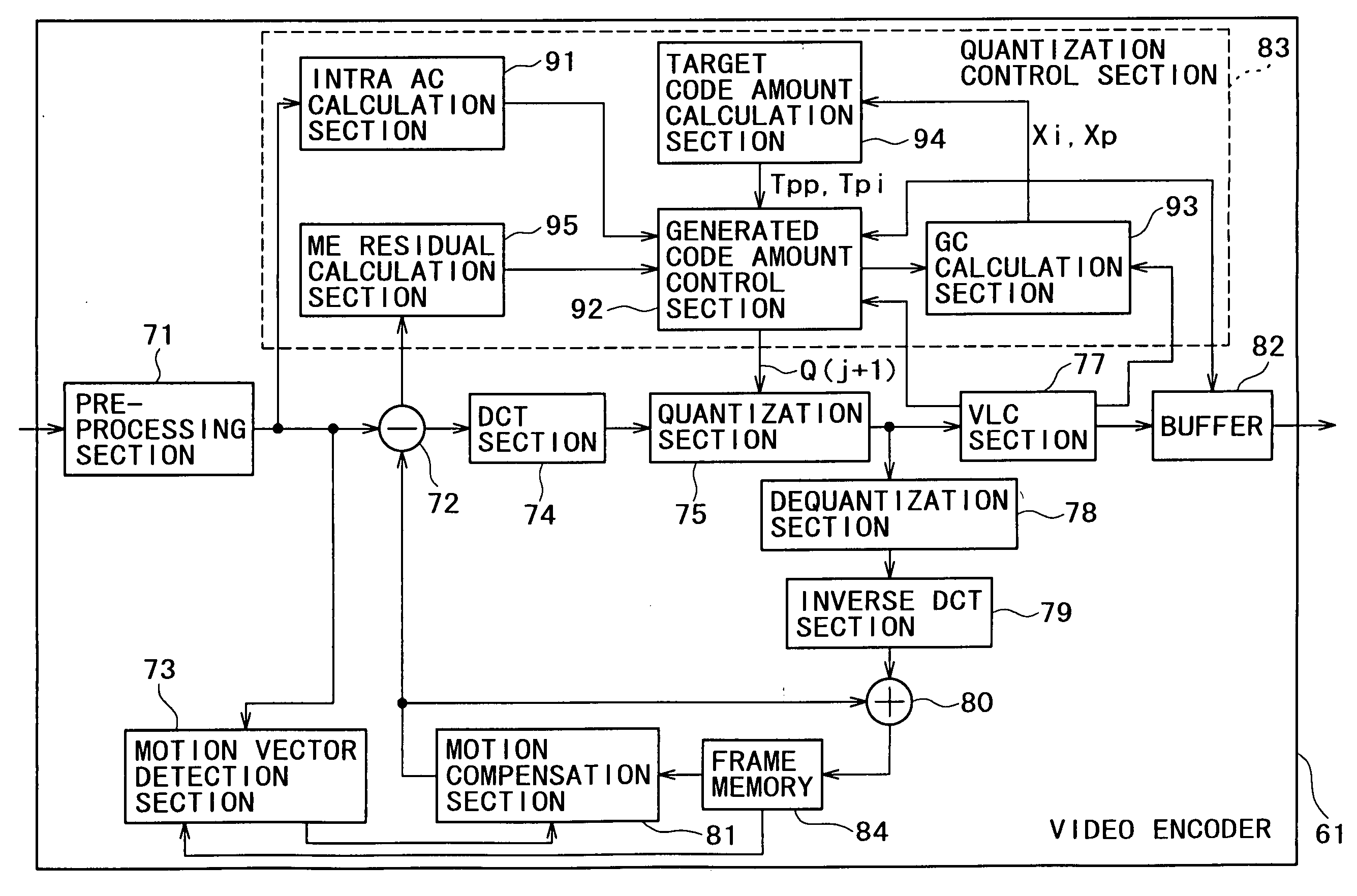

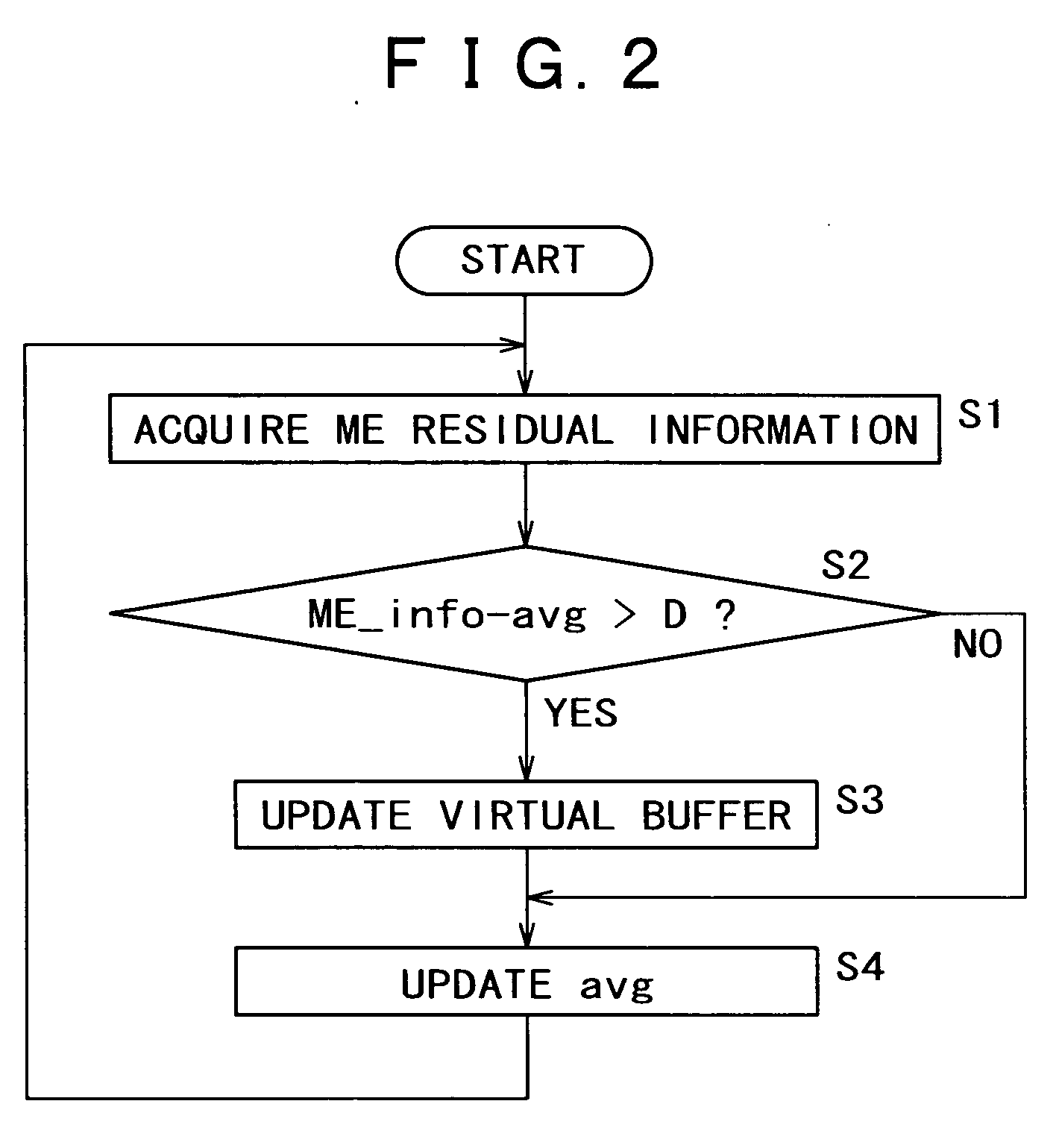

Coding apparatus and method, program, and recording medium

Status: Inactive | Publication: US20050152450A1 | Tags: Quality improvement, Television system details, Picture reproducers using cathode ray tubes, Artificial intelligence, Cache capacity

A coding apparatus and method are disclosed by which quantization index data can be determined without referring to a virtual buffer capacity initialized in response to a scene change. ME residual information is acquired first, and it is determined whether the ME residual information is higher than a threshold value. If it is, the initial buffer capacity of a virtual buffer is updated. If the ME residual information is equal to or lower than the threshold value, it is determined whether the picture currently being processed is the picture immediately after a scene change, or the next picture of the same type as the picture at which a scene change occurred. If so, the initial buffer capacity is calculated and updated. Further, an average value of the ME residual information is updated. The invention can be applied to a video encoder.

Owner:SONY CORP
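
The decision flow reads naturally as a small controller. This sketch returns a label for each branch rather than computing the new initial buffer capacity, whose formula the abstract does not give; the running mean used for the ME residual average is likewise an assumption, and `VirtualBufferController` is a hypothetical name.

```python
class VirtualBufferController:
    def __init__(self, threshold: float):
        self.threshold = threshold
        self.avg_residual = 0.0
        self.count = 0

    def process_picture(self, me_residual: float, after_scene_change: bool,
                        next_same_type: bool) -> str:
        if me_residual > self.threshold:
            action = "update_initial_capacity"       # residual above threshold
        elif after_scene_change or next_same_type:
            action = "recalculate_initial_capacity"  # picture following a scene change
        else:
            action = "keep_initial_capacity"
        # Running mean of the ME residual (the abstract only says the average is updated).
        self.count += 1
        self.avg_residual += (me_residual - self.avg_residual) / self.count
        return action
```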

Data Compression/Decompression Device

Status: Active | Publication: US20150100556A1 | Tags: Improve compression performance, Good decompression effect, Memory architecture accessing/allocation, Digital data processing details, Data compression, Data compression ratio

When compressing an arrangement of fixed-length records in the columnar direction, a data compression device matches its compression to the performance of the data decompression device: it computes the number of rows processed in one columnar compression from decompression-side performance, such as the memory cache capacity of the decompression device or the capacity of a primary storage device usable by an application, together with the size of one record. Thus, compression ratios for large volumes of data, including arrangements of fixed-length records, improve while decompression performance also improves.

Owner:CLARION CO LTD
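
A sketch under stated assumptions: the rows per columnar block are taken as the decompression side's memory budget divided by the record size, and `zlib` stands in for whatever codec the device actually uses. Within each block, records are transposed byte column by byte column before compression; all function names are hypothetical.

```python
import zlib

def rows_per_block(decoder_mem_bytes: int, record_size: int) -> int:
    """Rows per columnar block so one block fits the decoder's memory budget (assumed rule)."""
    return max(1, decoder_mem_bytes // record_size)

def compress_columnar(records: list, rows: int) -> list:
    """Compress fixed-length records in blocks of `rows`, column-major within each block."""
    if not records:
        return []
    size = len(records[0])
    blocks = []
    for start in range(0, len(records), rows):
        chunk = records[start:start + rows]
        # Byte k of every record in the chunk is stored together, then byte k + 1, and so on.
        columns = bytes(rec[k] for k in range(size) for rec in chunk)
        blocks.append(zlib.compress(columns))
    return blocks

def decompress_columnar(blocks: list, record_size: int) -> list:
    records = []
    for block in blocks:
        columns = zlib.decompress(block)
        n = len(columns) // record_size  # rows in this block
        for r in range(n):
            records.append(bytes(columns[k * n + r] for k in range(record_size)))
    return records
```

The intent, as the abstract describes it, is that each block matches what the decompression side can hold; the exact rule the patent uses may differ.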

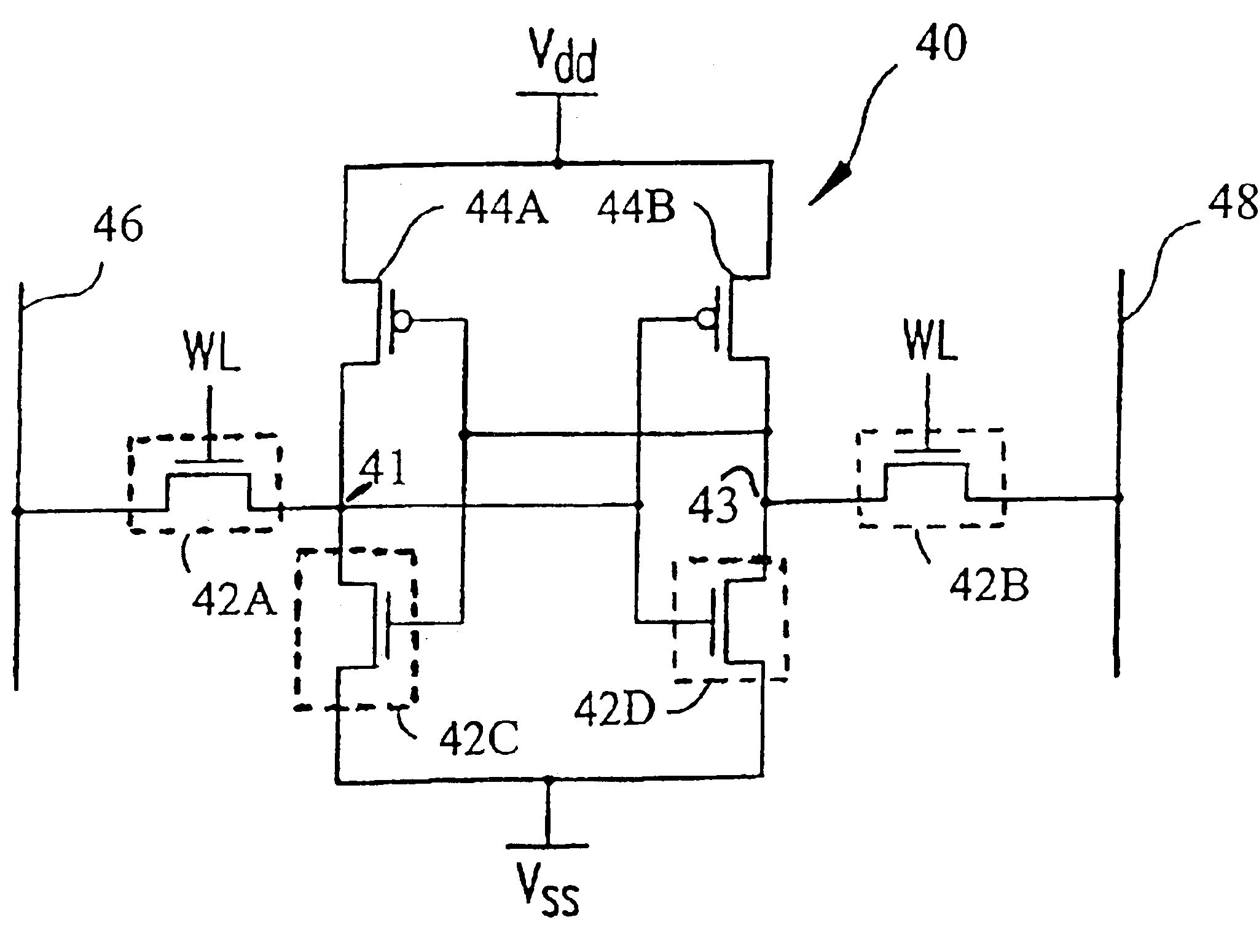

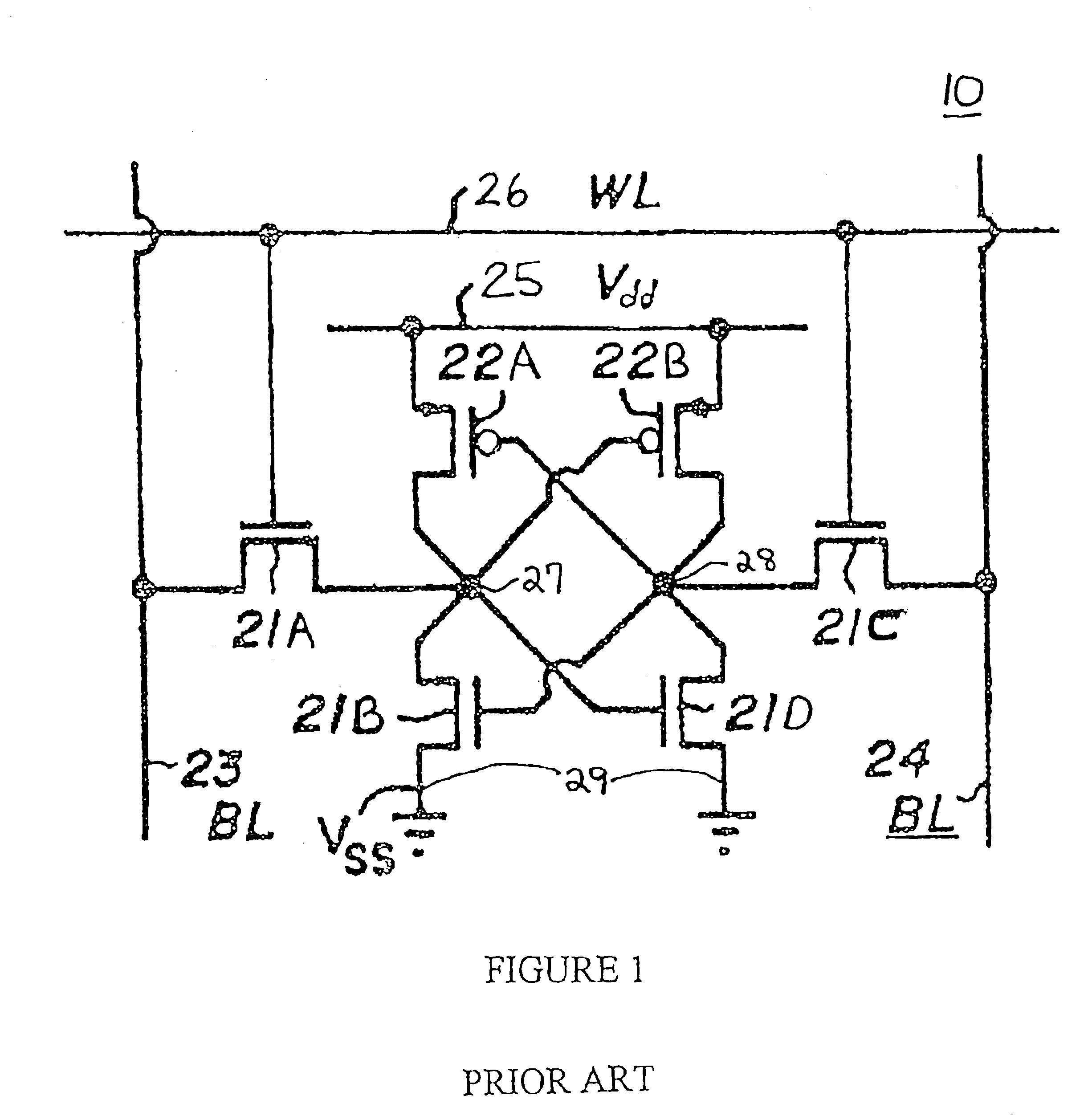

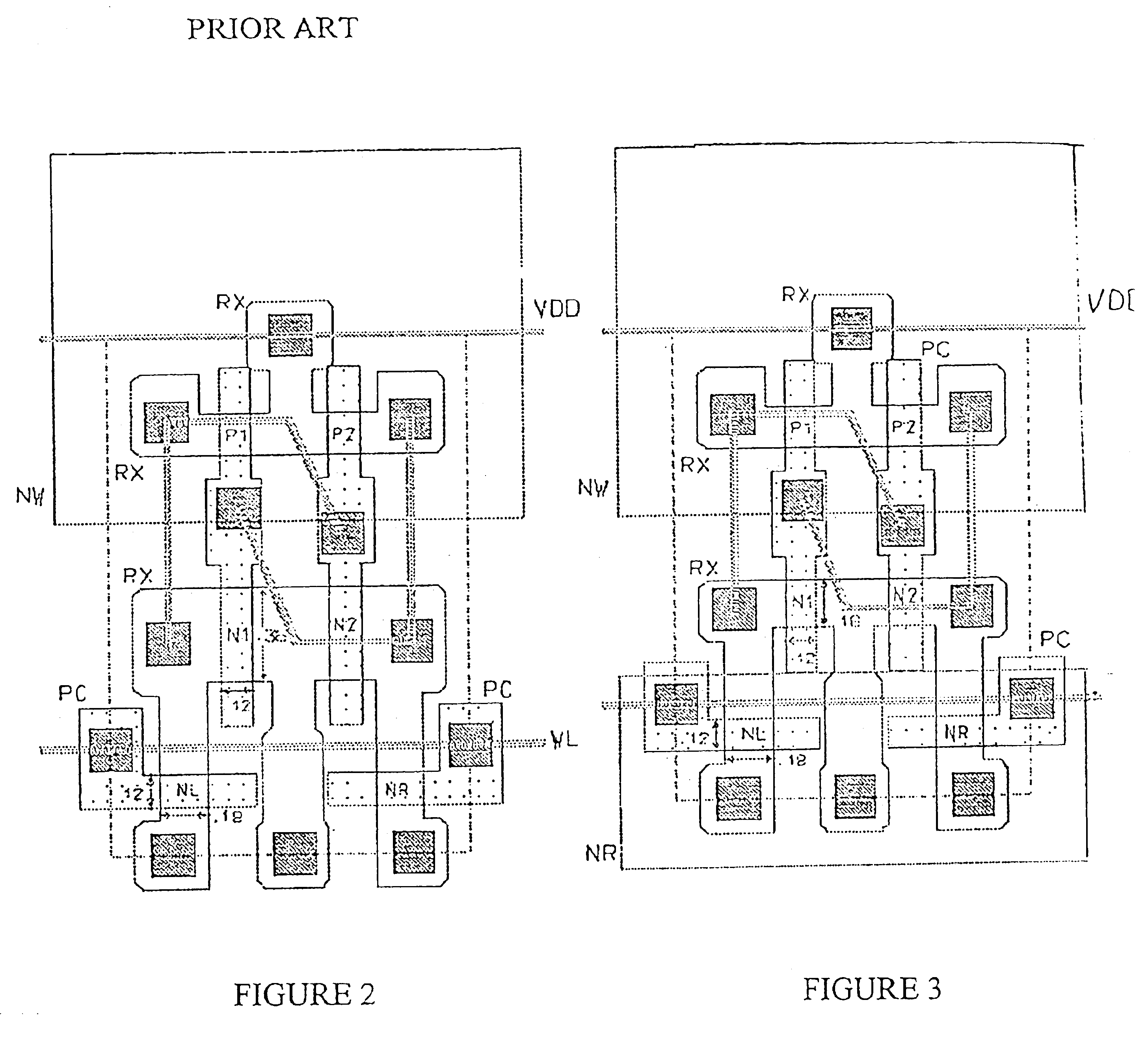

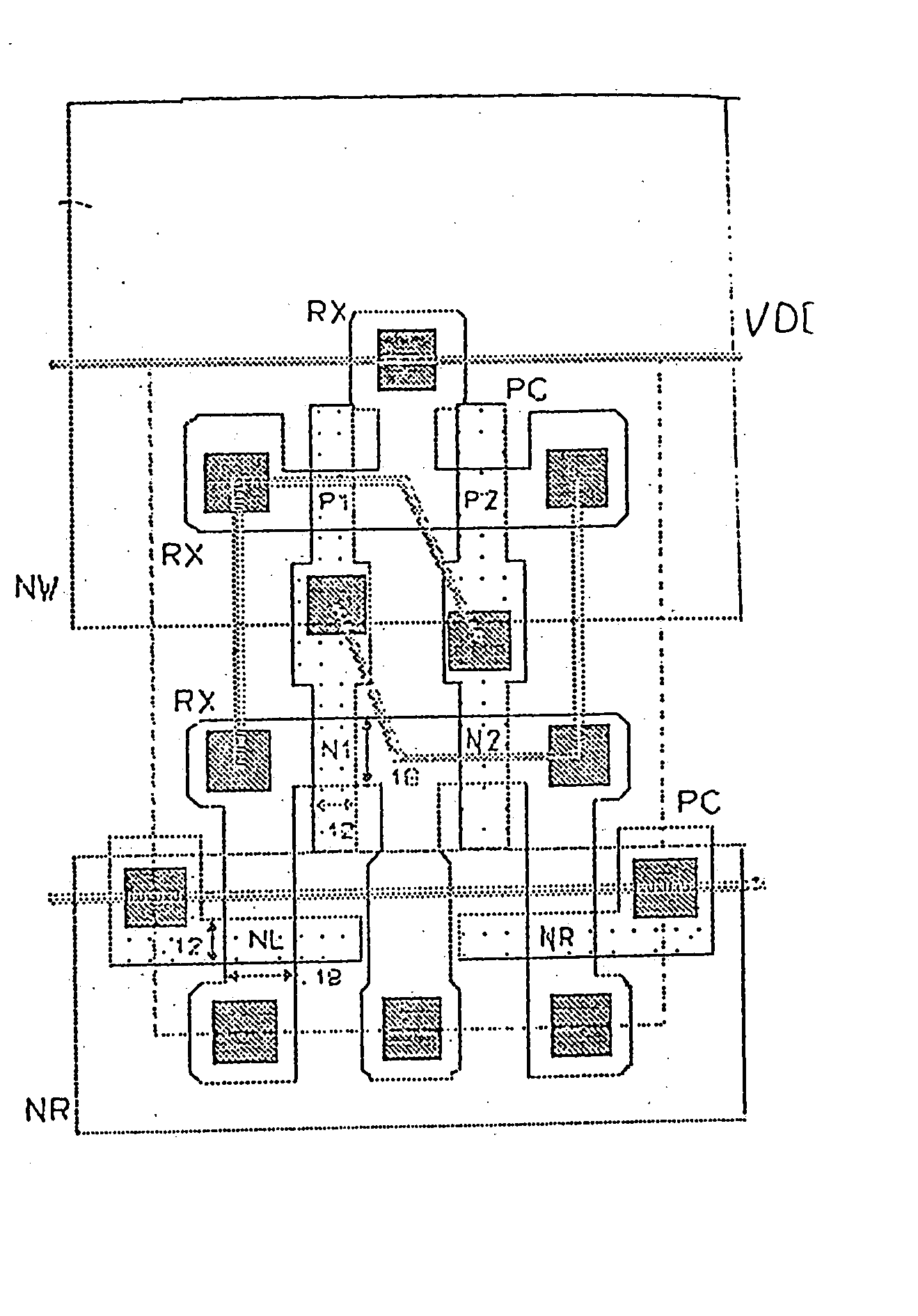

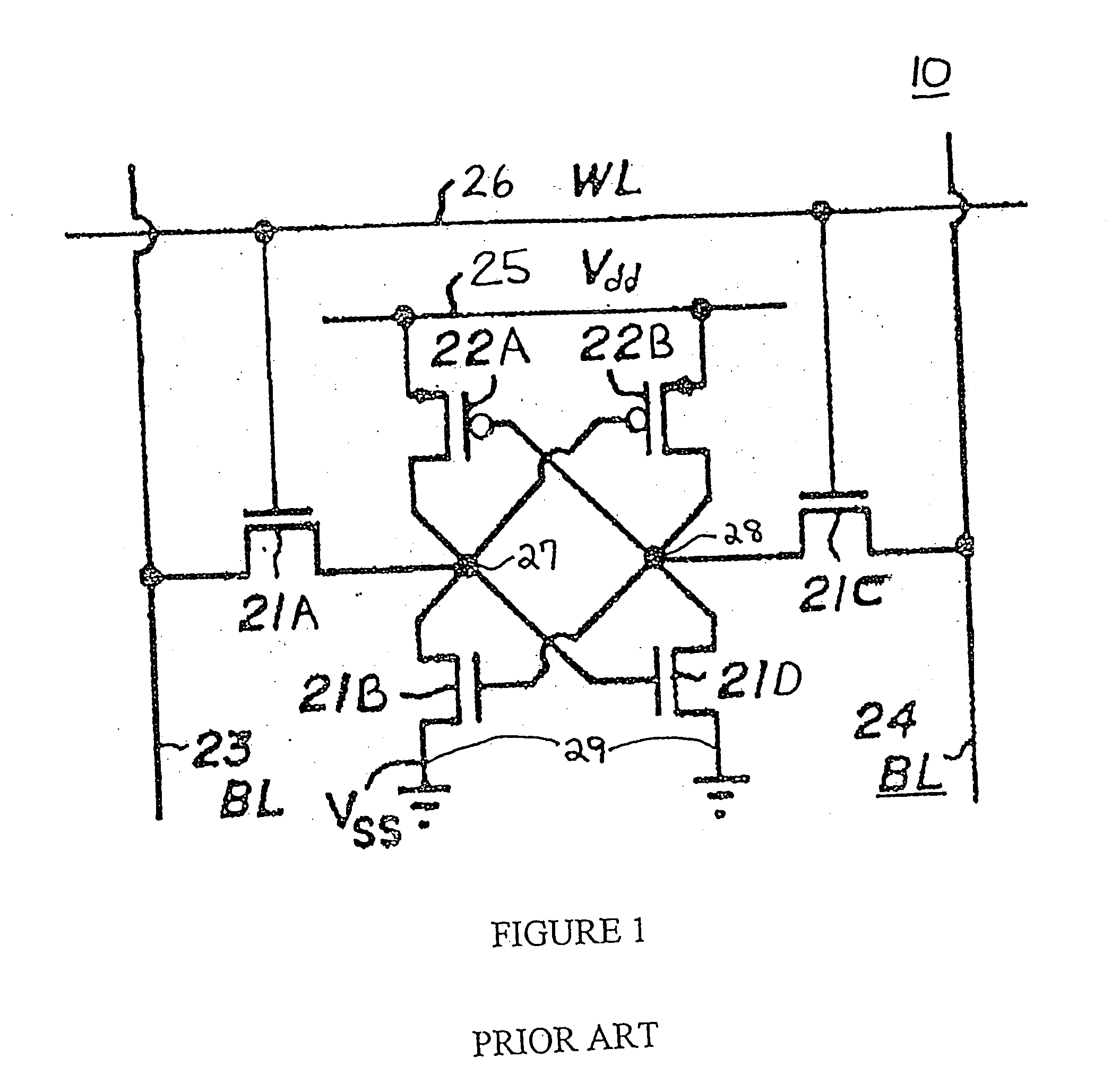

Method to improve cache capacity of SOI and bulk

Status: Inactive | Publication: US6934182B2 | Tags: High voltage, Small size, Solid-state devices, Semiconductor/solid-state device manufacturing, Engineering, Small cell

Methods for designing a 6T SRAM cell with greater stability and/or a smaller cell size are provided. A 6T SRAM cell has a pair of access transistors (NFETs), a pair of pull-up transistors (PFETs), and a pair of pull-down transistors (NFETs), wherein the access transistors have a higher threshold voltage than the pull-down transistors. This enables the SRAM cell to effectively maintain a logic “0” during access, thereby increasing cell stability, especially for cells in the “half select” state. Further, the channel width of a pull-down transistor can be reduced, decreasing the size of a high-performance six-transistor SRAM cell without affecting cell stability during access. By decreasing the cell size, the overall design layout of a chip may also be reduced.

Owner:INTEL CORP

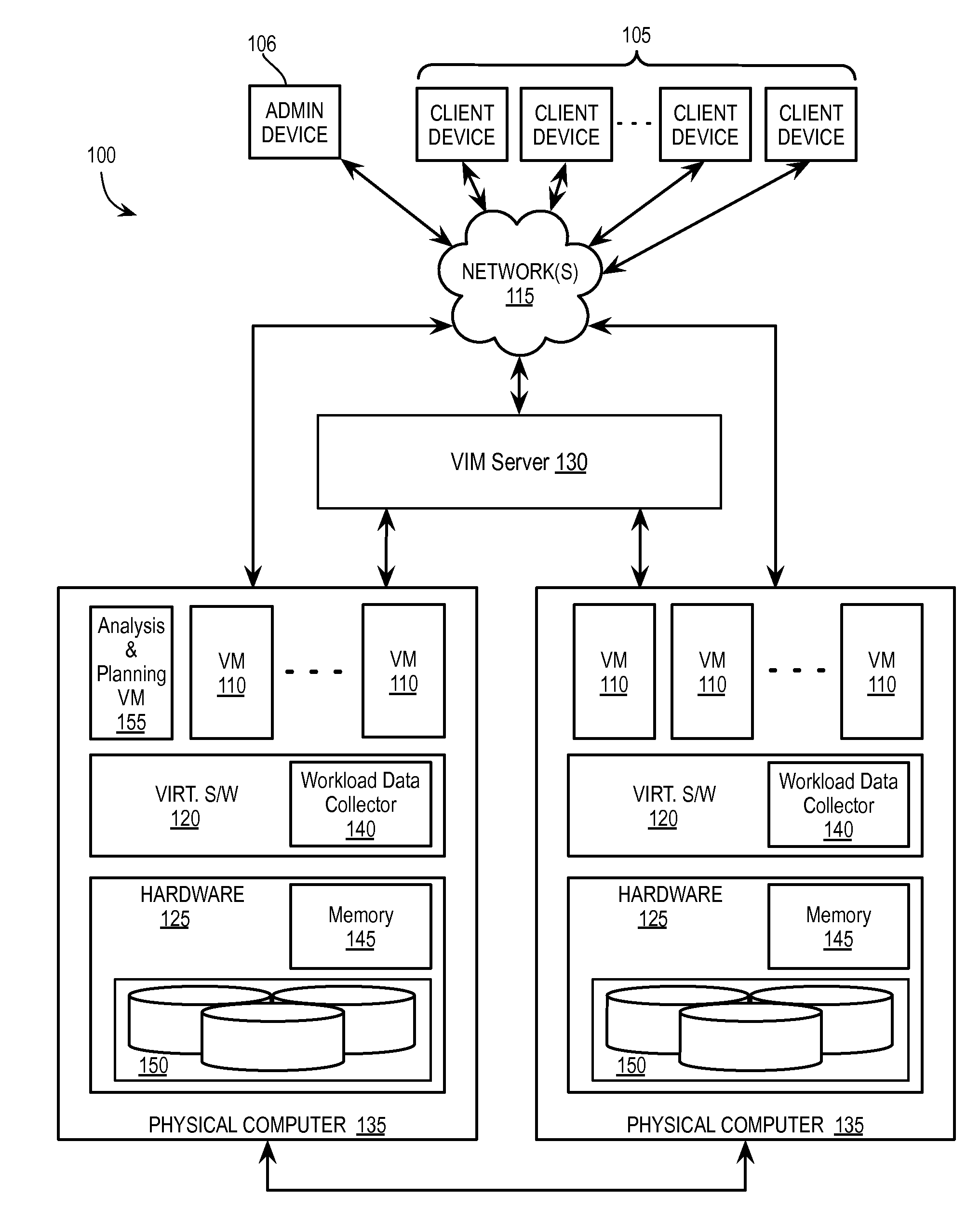

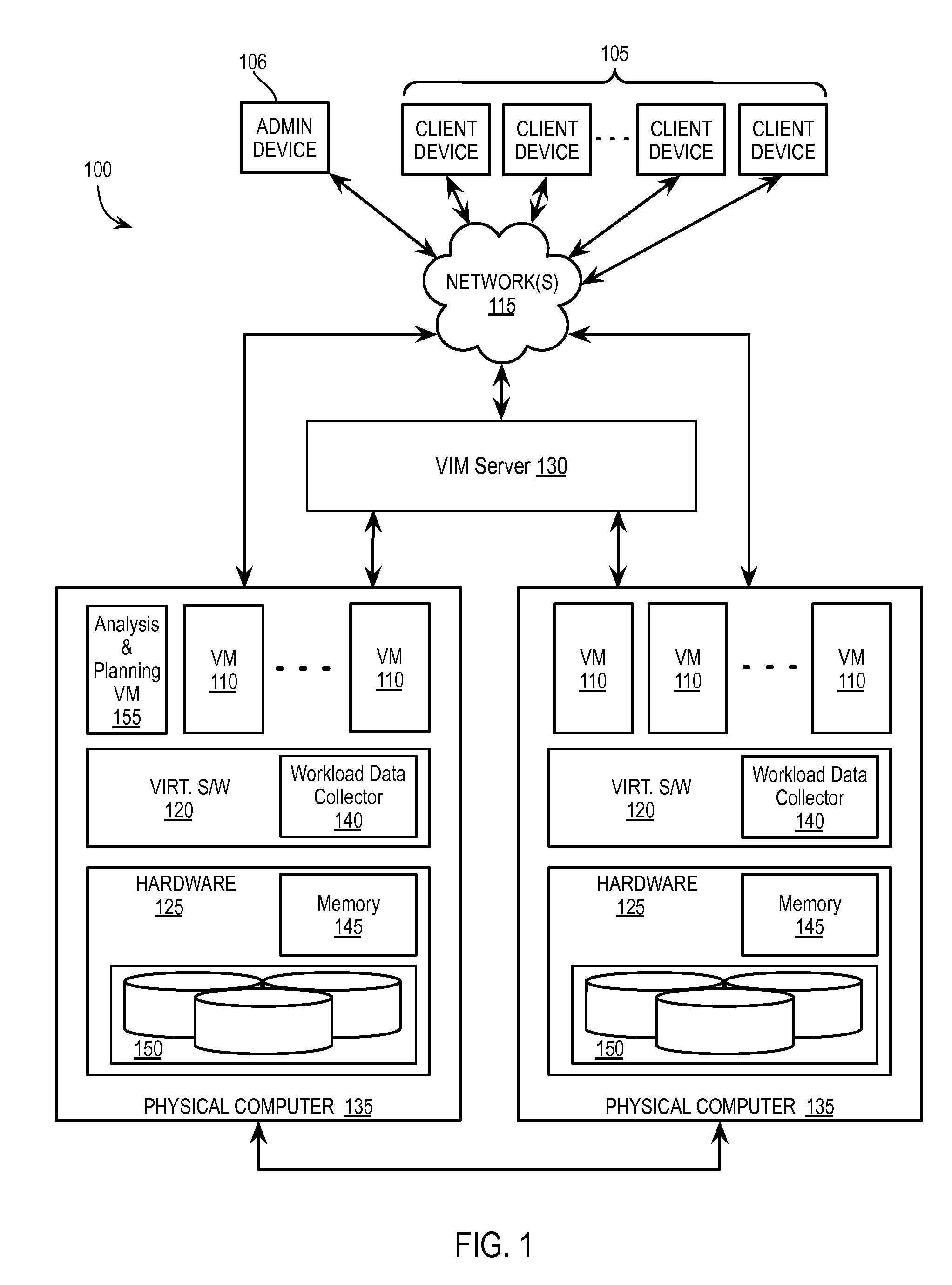

Workload selection and cache capacity planning for a virtual storage area network

Status: Active | Publication: US20160150003A1 | Tags: Memory architecture accessing/allocation, Error detection/correction, Frequency spectrum, Area network

Exemplary methods, apparatuses, and systems receive characteristics of a plurality of input/output (I/O) requests from a workload, including logical address distance values between I/O requests and the data lengths of the I/O requests. Based on these characteristics, a data length value representative of the data lengths of the I/O requests is determined, and an access pattern of the I/O requests is determined. A notification that the workload is suitable for a virtual storage area network environment is generated based on the characteristics. The workload is selected as suitable in response to determining that the representative data length value is less than a data length threshold and/or that the access pattern of the I/O requests is more random than an access pattern threshold on the spectrum from random to sequential access.

Owner:VMWARE INC
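
A sketch of the selection test, assuming the median stands in for the representative data length and randomness is the fraction of requests whose logical address distance exceeds a small gap; the function name, both measures, and the `require_both` switch for the abstract's "and/or" are hypothetical.

```python
from statistics import median

def is_virtual_san_candidate(distances, lengths, length_threshold, randomness_threshold,
                             seq_gap=0, require_both=False):
    """Sketch: small and/or random I/O marks a workload as suitable for a virtual SAN."""
    representative_length = median(lengths)
    # Fraction of requests that do not start (nearly) where the previous one ended.
    randomness = (sum(abs(d) > seq_gap for d in distances) / len(distances)
                  if distances else 0.0)
    small = representative_length < length_threshold
    random_enough = randomness > randomness_threshold
    return (small and random_enough) if require_both else (small or random_enough)
```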

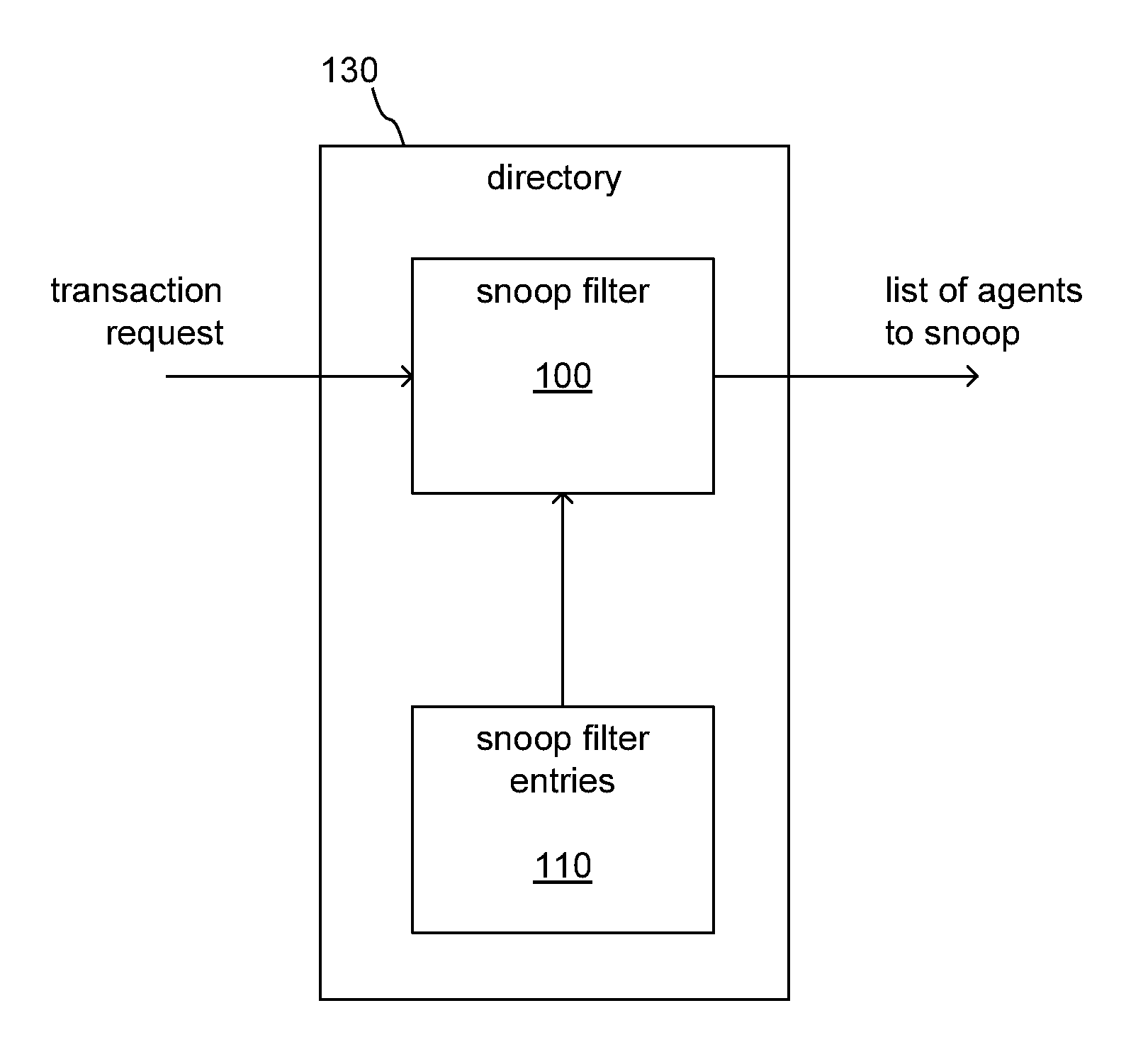

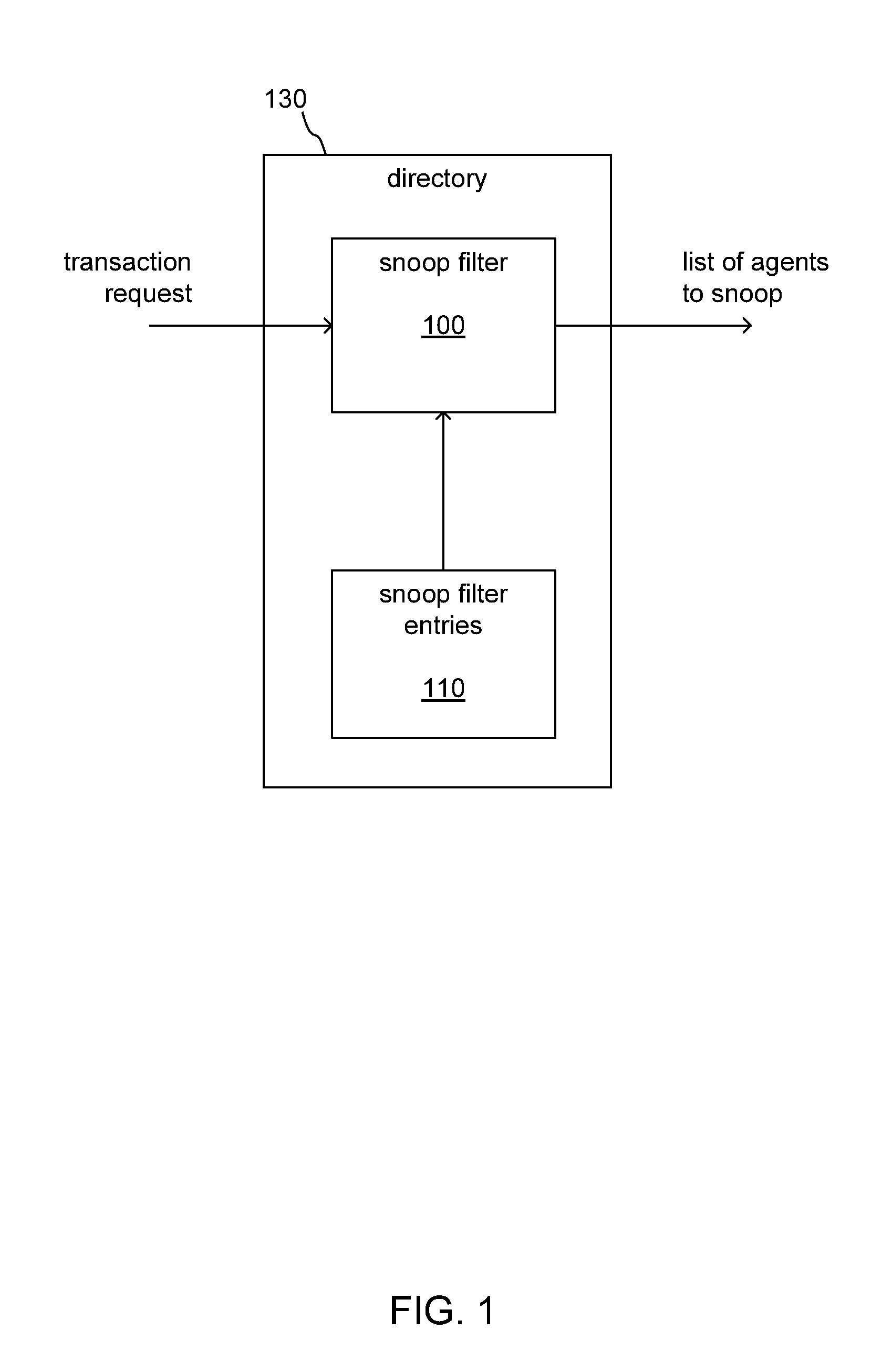

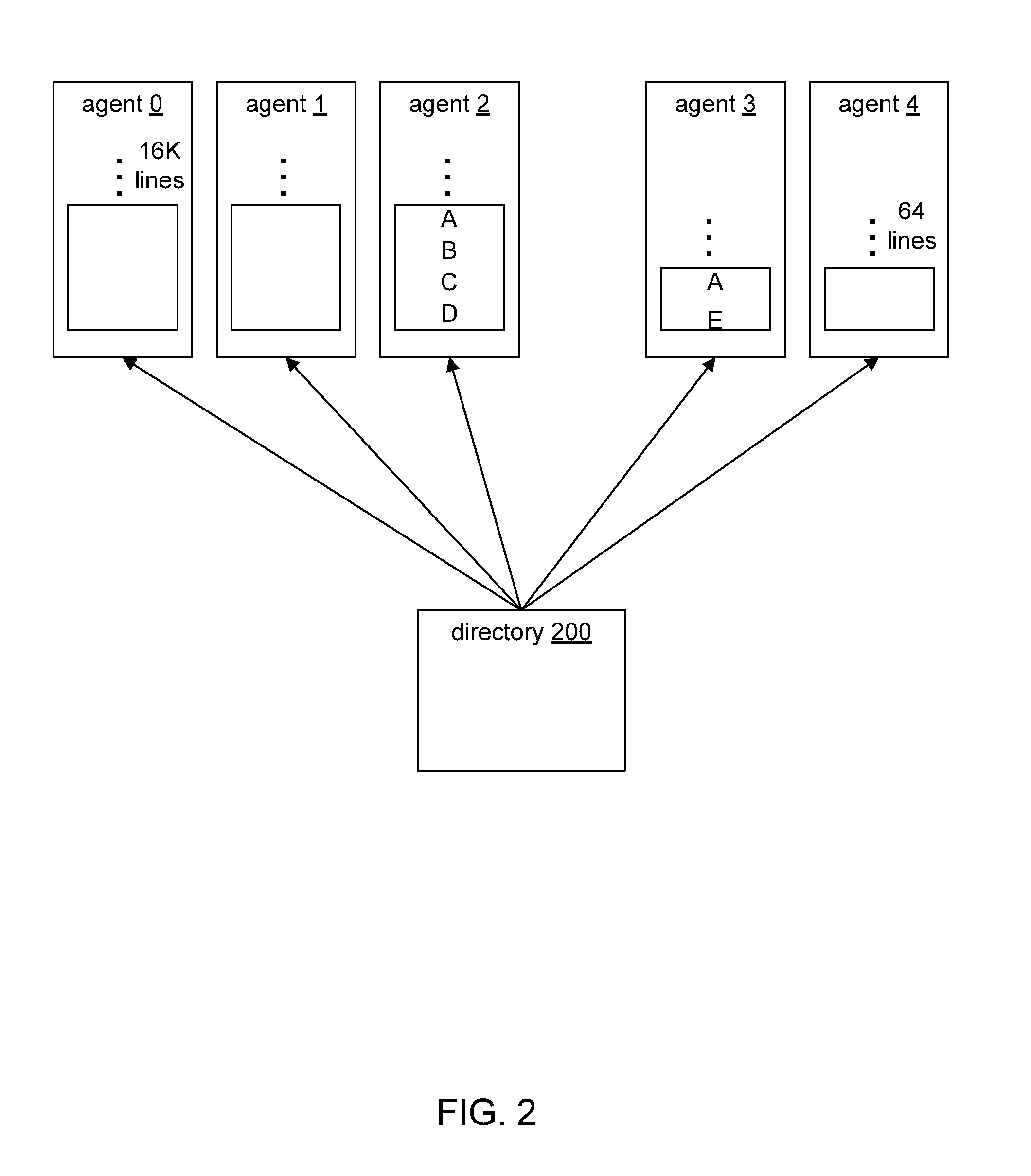

Configurable snoop filters for cache coherent systems

Status: Active | Publication: US20160188471A1 | Tags: Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Information type, Parallel computing

A cache coherent system includes a directory with more than one snoop filter, each of which stores information in a different set of snoop filter entries. Each snoop filter is associated with a subset of all caching agents within the system. Each snoop filter uses an algorithm chosen for best performance on the caching agents associated with the snoop filter. The number of snoop filter entries in each snoop filter is primarily chosen based on the caching capacity of just the caching agents associated with the snoop filter. The type of information stored in each snoop filter entry of each snoop filter is chosen to meet the desired filtering function of the specific snoop filter.

Owner:ARTERIS
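
A sketch of the sizing rule, assuming each snoop filter entry tracks one cache line and a tunable coverage factor scales the total; `CachingAgent` and `snoop_filter_entries` are hypothetical. Each filter's size depends only on the agents it is associated with.

```python
import math
from dataclasses import dataclass

@dataclass
class CachingAgent:
    name: str
    cache_bytes: int
    line_bytes: int = 64

def snoop_filter_entries(agents, coverage=1.0):
    """Entries sized from the caching capacity of this filter's agents only (coverage assumed)."""
    lines = sum(a.cache_bytes // a.line_bytes for a in agents)
    return math.ceil(lines * coverage)

cpu_cluster = [CachingAgent("cpu0", 256 * 1024), CachingAgent("cpu1", 256 * 1024)]
gpu = [CachingAgent("gpu", 1024 * 1024, line_bytes=128)]
print(snoop_filter_entries(cpu_cluster, 1.25), snoop_filter_entries(gpu, 0.5))  # prints 10240 4096
```

Here the CPU-cluster filter and the GPU filter are sized independently, so a large GPU cache does not inflate the filter that tracks the CPU cores.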

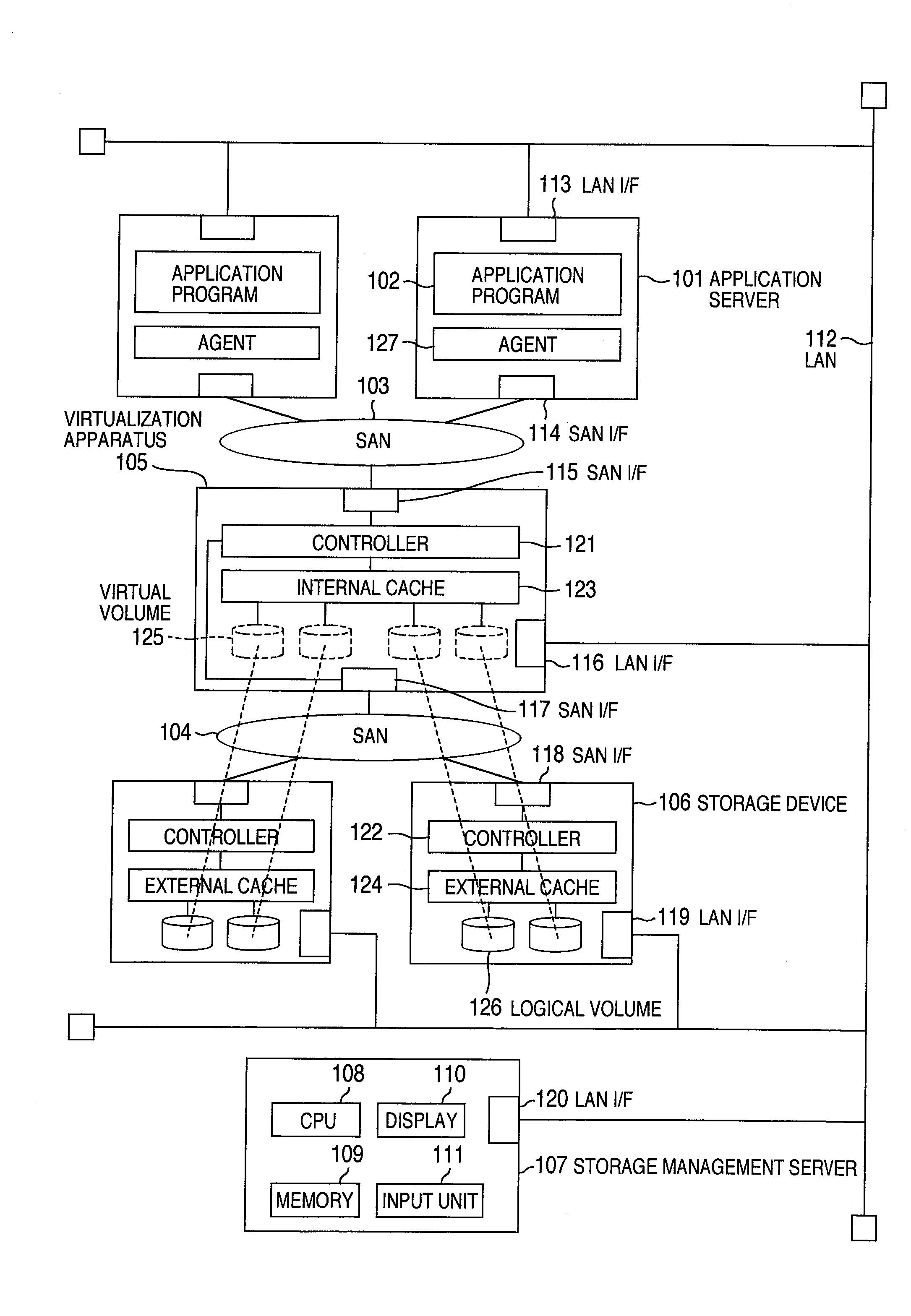

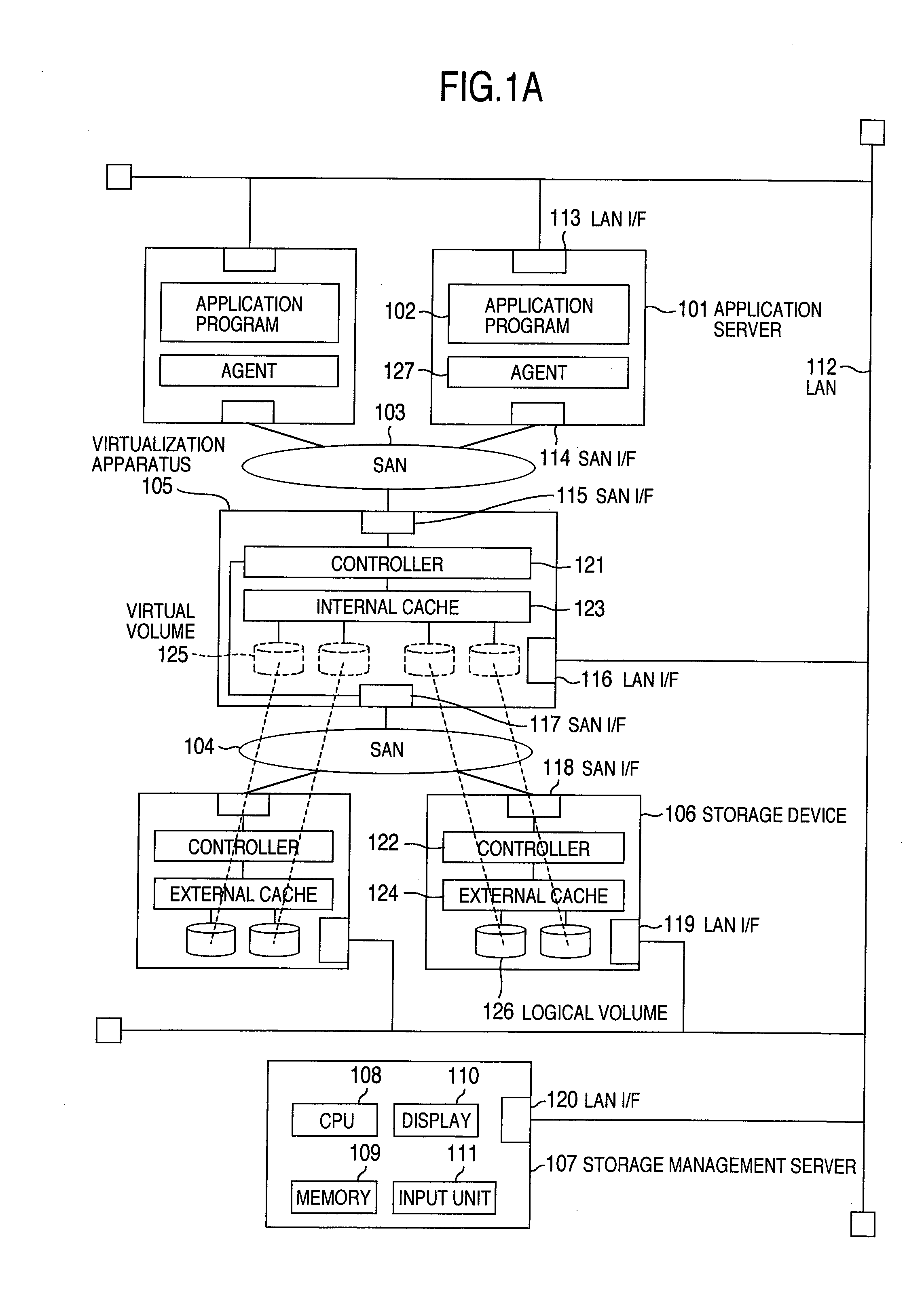

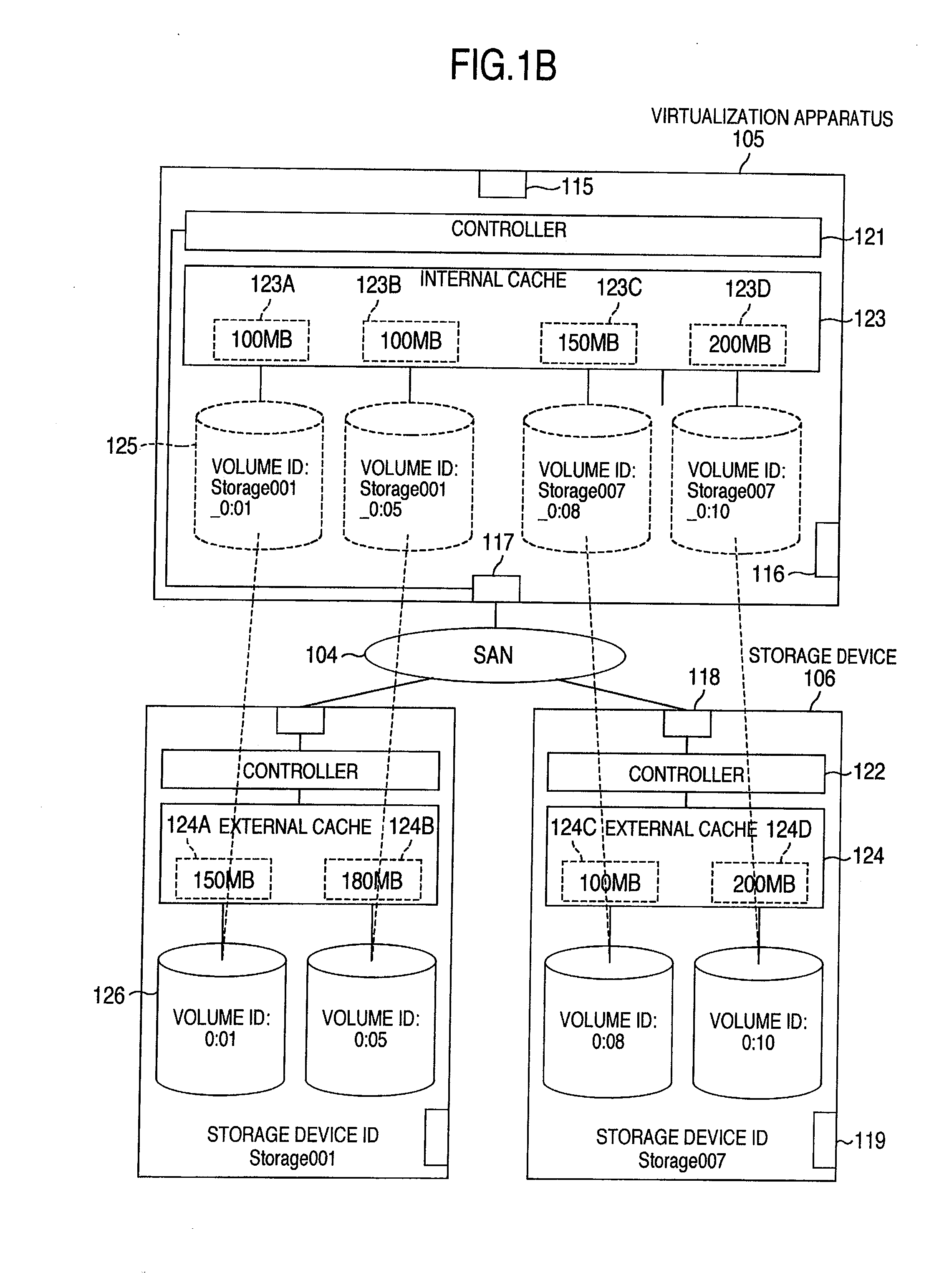

Cache configuration system, management server and cache configuration management method

Status: Inactive | Publication: US20100100604A1 | Tags: Reduce workload, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Virtualization, Application server

A cache configuration management system is provided that lightens the workload of estimating cache capacity in a virtualization apparatus and/or assigning cache. In a storage system having application servers, storage devices, a virtualization apparatus that makes the storage devices distinctly recognizable as virtualized storage, and a storage management server, the storage management server predicts the response time of the virtualization apparatus with respect to an application server from the cache configurations and access performance of the virtualization apparatus and the storage devices. It then evaluates whether internal cache should be assigned to a virtual volume, together with a predicted performance value for the capacity to be assigned. From this it judges the cache capacity within the virtualization apparatus and estimates an optimal cache capacity, enabling an internal cache configuration change plan to be prepared.

Owner:HITACHI LTD
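
A toy version of the estimate, assuming the response time is a hit-ratio-weighted mix of cache and back-end latency and that the hit ratio follows c / (c + k) for cache size c; the model and every parameter name are assumptions, since the abstract does not give the prediction formula.

```python
def predicted_response_ms(cache_gib, t_hit_ms, t_miss_ms, half_hit_gib):
    """Toy model: hit ratio c / (c + k) rises with capacity; k = half_hit_gib (> 0) is assumed."""
    hit = cache_gib / (cache_gib + half_hit_gib)
    return hit * t_hit_ms + (1 - hit) * t_miss_ms

def smallest_sufficient_cache(candidates_gib, target_ms, **model):
    """Return the smallest candidate capacity whose predicted response meets the target."""
    for c in sorted(candidates_gib):
        if predicted_response_ms(c, **model) <= target_ms:
            return c
    return None  # no candidate meets the target
```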

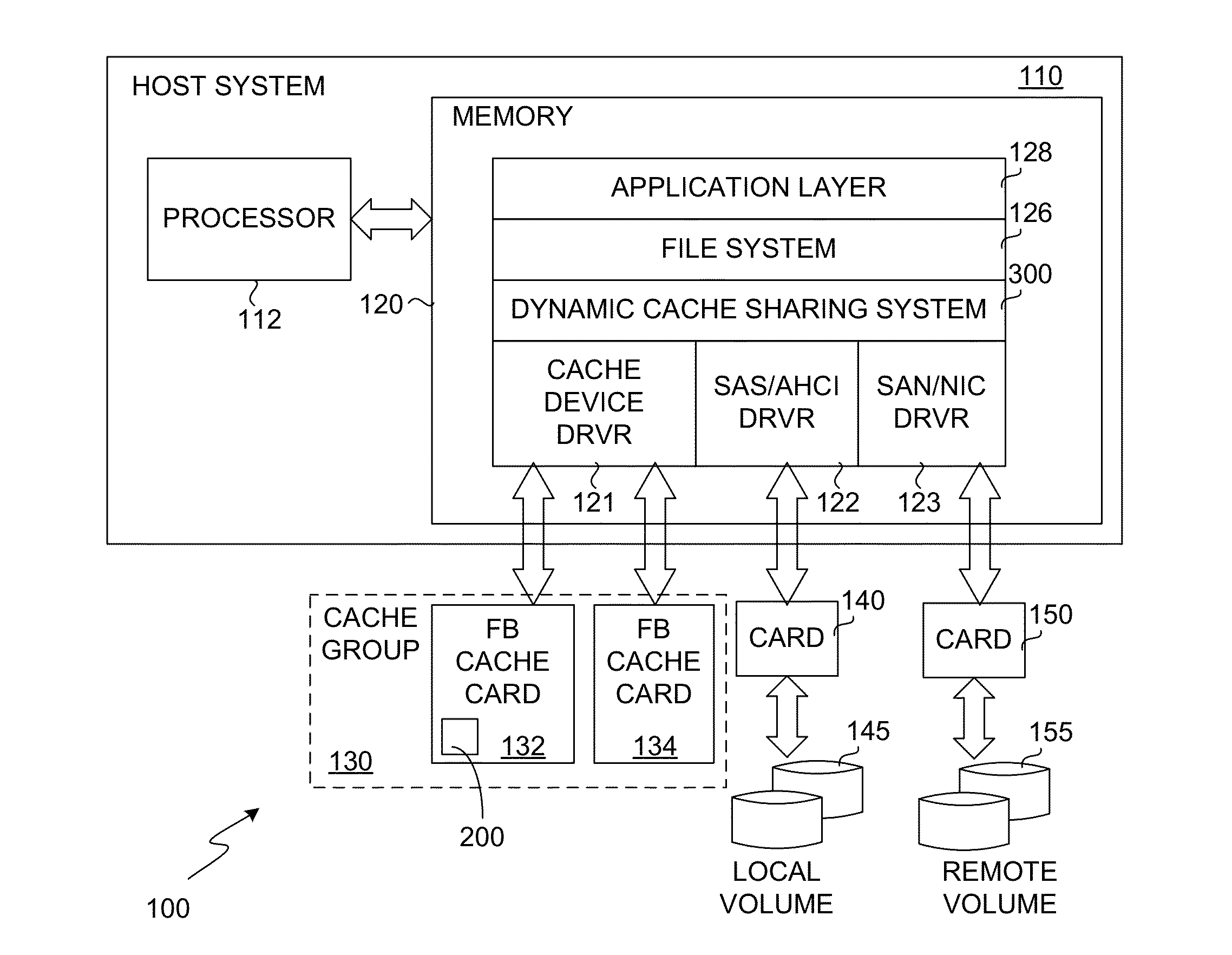

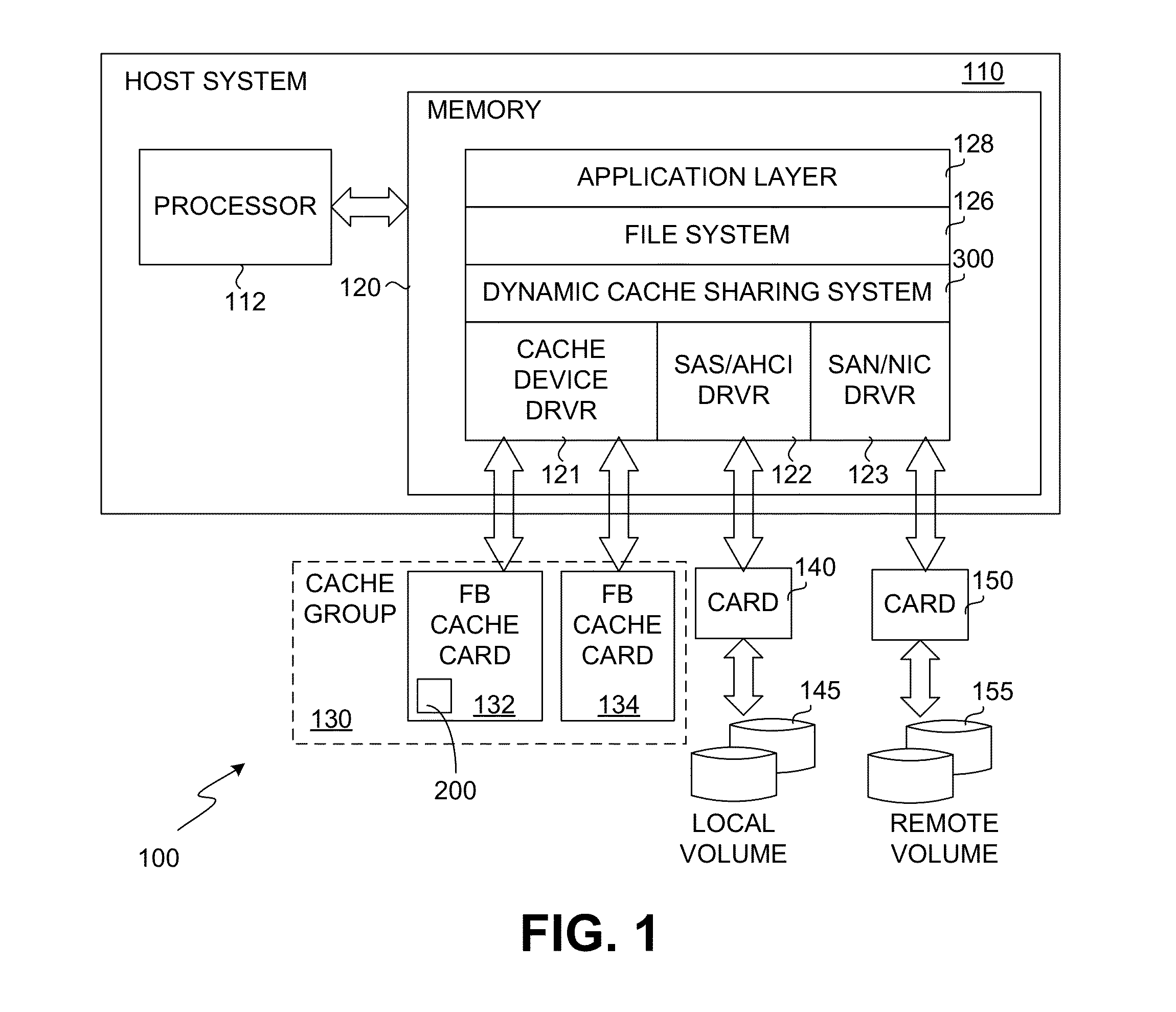

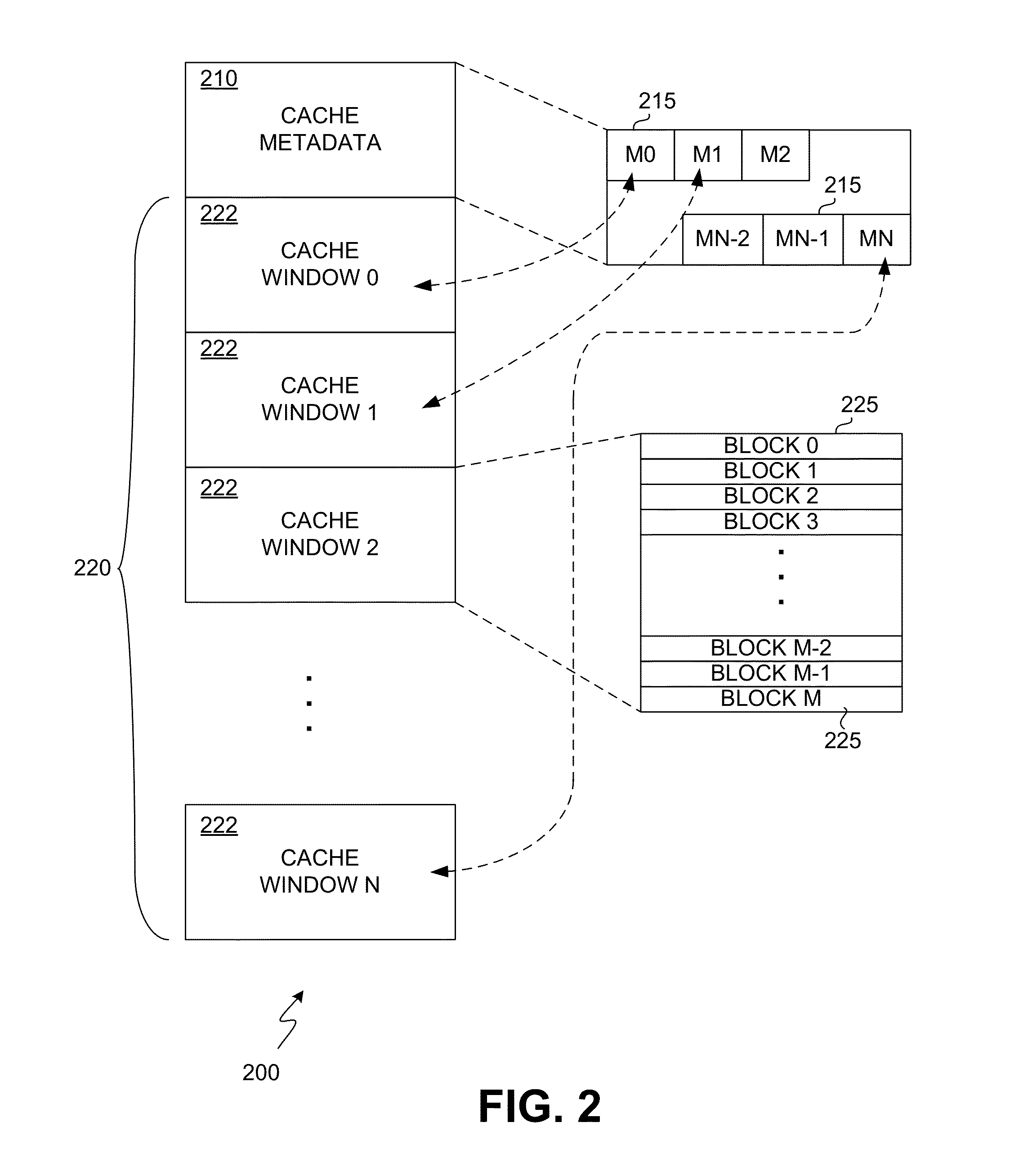

System, method and computer-readable medium for dynamic cache sharing in a flash-based caching solution supporting virtual machines

Status: Inactive | Publication: US20140258595A1 | Tags: Improve responsiveness, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Application software, Resource allocation

A cache controller implemented at the O/S kernel, driver, and application levels within a guest virtual machine dynamically allocates a cache store to virtual machines, improving responsiveness to their changing demands. A single cache device or a group of cache devices is provisioned as multiple logical devices and exposed to a resource allocator. A core caching algorithm executes in the guest virtual machine. As new virtual machines are added under the management of the virtual machine monitor, existing virtual machines are prompted to relinquish a portion of the cache store allocated to them, and the relinquished cache is allocated to the new machine. Similarly, if a virtual machine is shut down or migrated to a new host system, the cache capacity allocated to it is redistributed among the remaining virtual machines managed by the virtual machine monitor.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
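
A sketch of the redistribution policy, assuming the cache store is split in proportion to per-VM weights; `CacheAllocator` and `rebalance` are hypothetical, and a real controller would also flush or migrate the cache contents being relinquished.

```python
def rebalance(total_cache_gib, weights):
    """Split the cache store among VMs in proportion to their (assumed) weights."""
    total_weight = sum(weights.values())
    return {vm: total_cache_gib * w / total_weight for vm, w in weights.items()}

class CacheAllocator:
    def __init__(self, total_cache_gib):
        self.total = total_cache_gib
        self.weights = {}
        self.allocation = {}

    def add_vm(self, vm, weight=1.0):
        self.weights[vm] = weight  # existing VMs shrink to make room for the new one
        self.allocation = rebalance(self.total, self.weights)

    def remove_vm(self, vm):
        """Shutdown or migration: the departing VM's share goes back to the others."""
        self.weights.pop(vm, None)
        self.allocation = rebalance(self.total, self.weights) if self.weights else {}
```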

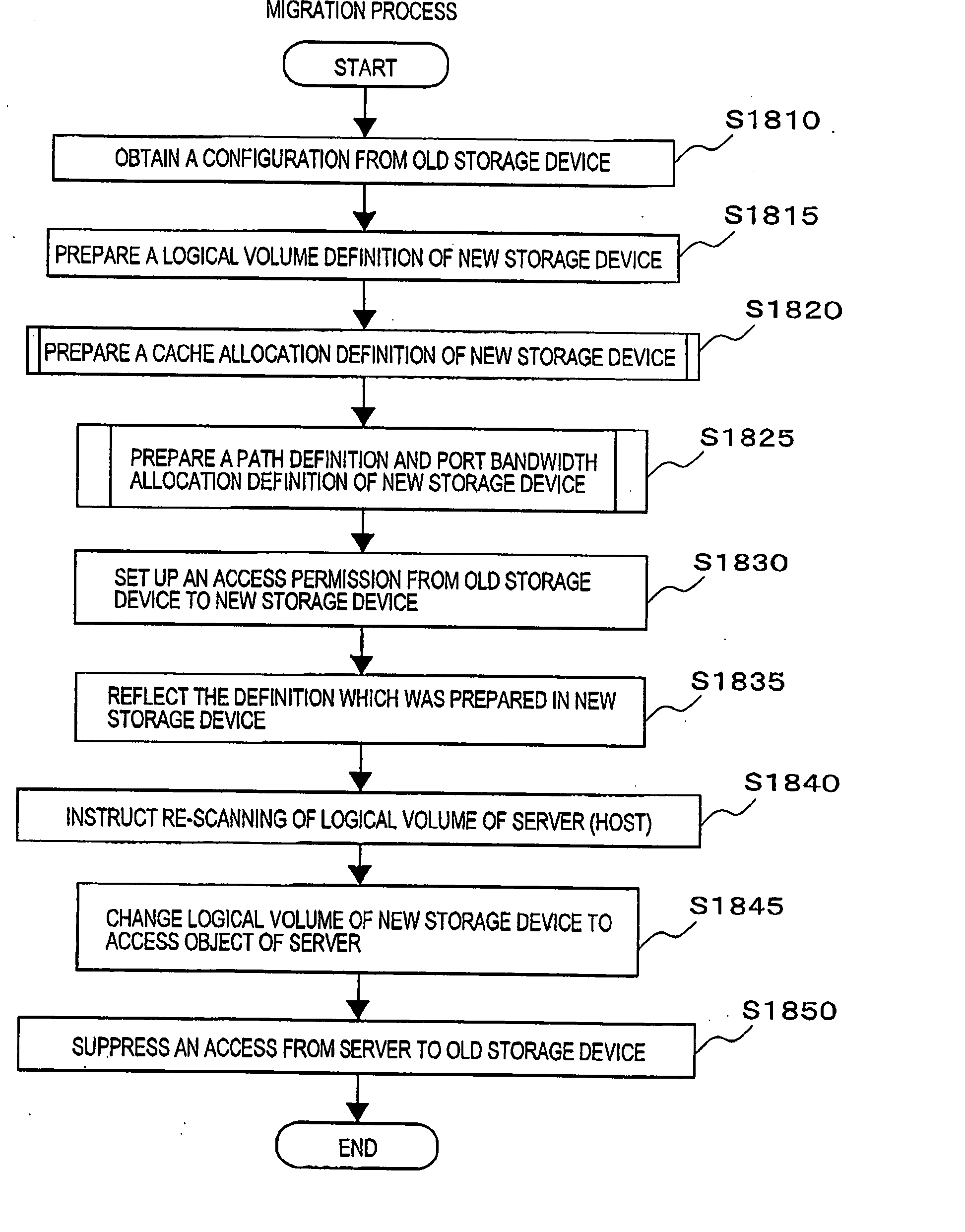

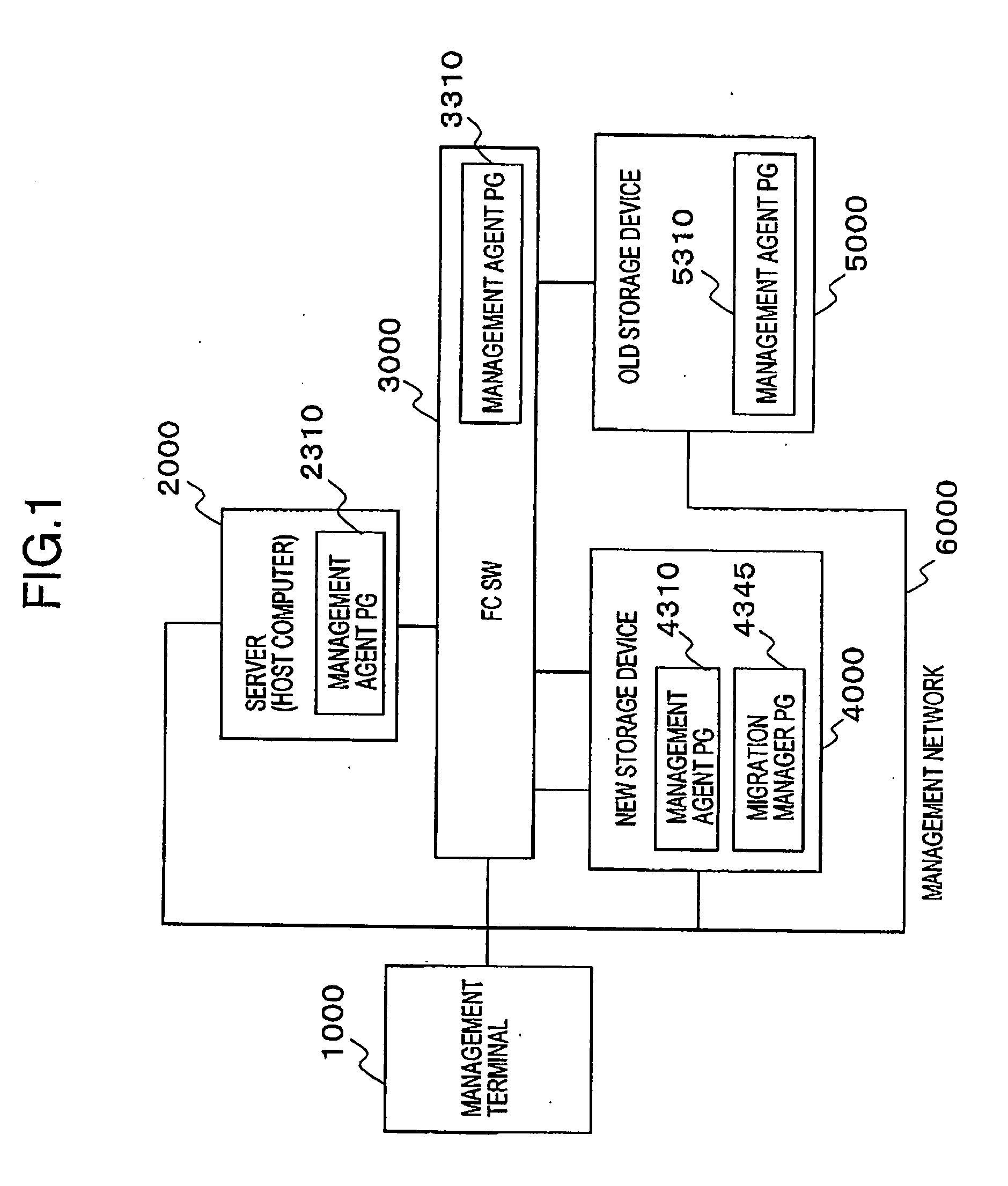

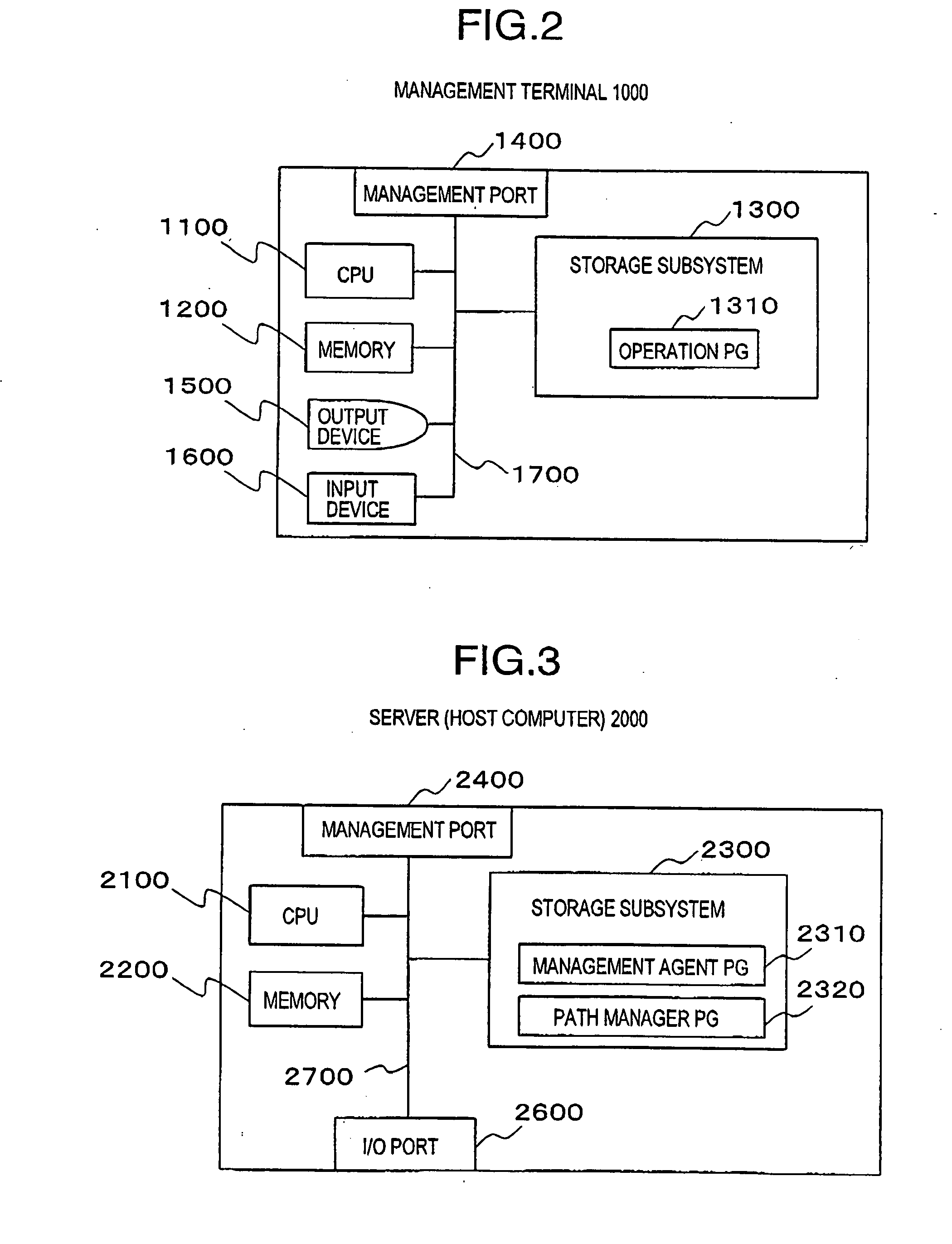

Configuration management apparatus and method

Status: Inactive | Publication: US20050144414A1 | Tags: Improve access performance, Decrease of access performance, Input/output to record carriers, Memory addressing/allocation/relocation, Operating system, Cache capacity

The invention migrates the configuration of an old storage device to a new storage device. A new storage device 4000 obtains the configuration of an old storage device 5000 by using a migration manager PG 4345, and prepares a logical volume definition for the new storage device 4000 based on the logical volume definition included in that configuration. Likewise, a cache allocation definition for the new storage device 4000 is prepared based on the cache allocation definition in the configuration of the old storage device 5000 and the cache capacity of the new storage device 4000, and a port bandwidth allocation definition for the new storage device 4000 is prepared based on the port bandwidth allocation definition in the configuration of the old storage device 5000 and the bandwidth capacity of a port of the new storage device 4000. Finally, the logical volume definition, cache allocation definition, and port bandwidth allocation definition prepared in this way are set in the configuration of the new storage device 4000.

Owner:HITACHI LTD
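
A sketch of the migration step, assuming each volume keeps the same fraction of cache and port bandwidth on the new device that it had on the old one; the abstract only says the new definitions are prepared on the basis of the old definitions and the new capacities, so this scaling rule and the dictionary layout are assumptions.

```python
def scale_allocation(old_alloc, old_capacity, new_capacity):
    """Give each volume the same fraction of the new device's resource it had on the old one."""
    return {name: amount * new_capacity / old_capacity for name, amount in old_alloc.items()}

def migrate_config(old, new_cache_gib, new_port_gbps):
    return {
        "logical_volumes": dict(old["logical_volumes"]),  # volume definitions copied as-is
        "cache_allocation": scale_allocation(old["cache_allocation"], old["cache_gib"],
                                             new_cache_gib),
        "port_bandwidth": scale_allocation(old["port_bandwidth"], old["port_gbps"],
                                           new_port_gbps),
    }
```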

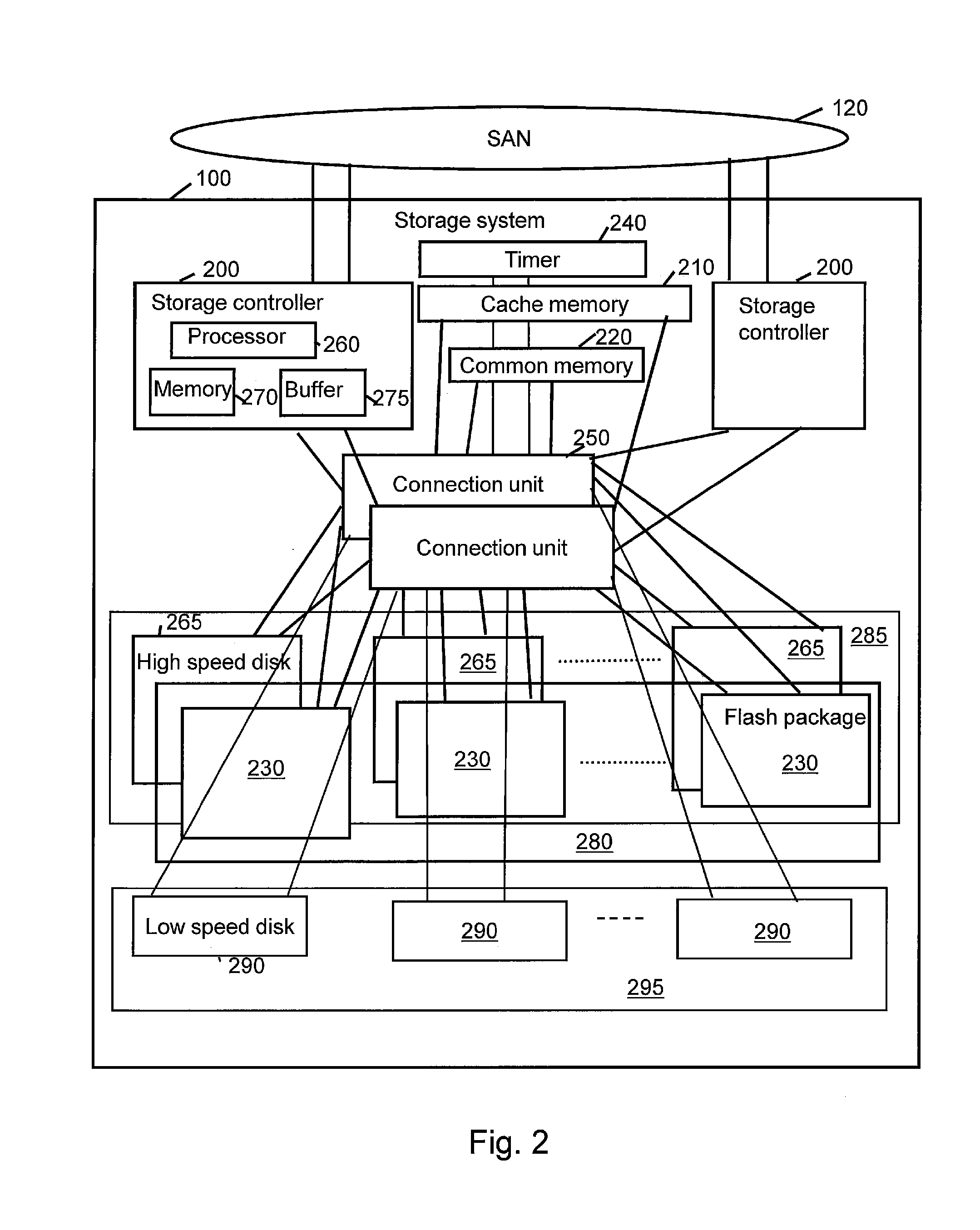

Storage system and storage control method for using storage area based on secondary storage as cache area

Status: Inactive | Publication: US20130318196A1 | Tags: Improve performance, Digital computer details, Memory systems, Virtualization, Thin provisioning

In general, DRAM is used as cache memory, and expanding the capacity of the cache memory to increase the hit ratio requires physically adding DRAM, which is not a simple task. Consequently, a storage system uses a page that conforms to a capacity virtualization function (for example, a page allocatable to a logical volume under Thin Provisioning) as a cache area. This makes it possible to increase and decrease the cache capacity dynamically.

Owner:HITACHI LTD

Distributive cache storage method for dynamic self-adaptive video streaming media

Status: Active | Publication: CN105979274A | Tags: Increase profit, Reduce load, Digital video signal modification, Selective content distribution, Quality of service, Cache optimization

The invention provides a distributed cache storage method for dynamic adaptive video streaming media. Combined with the main server's dynamic adaptive streaming encoding, the method encodes each video into versions at different bit rates. It takes into account the differences in rate-distortion performance among video contents, the buffer capacity limits of the edge servers, the network connection conditions of different users, and the video-on-demand probability distribution, and uses a distributed cache optimization method to determine which subset of video versions each edge server should cache. This optimizes the overall quality of the videos users download through the edge servers. The method improves the utilization of cached video content on the edge servers, reduces the streaming service load on the main server, and provides better video quality of service to users.

Owner:SHANGHAI JIAO TONG UNIV

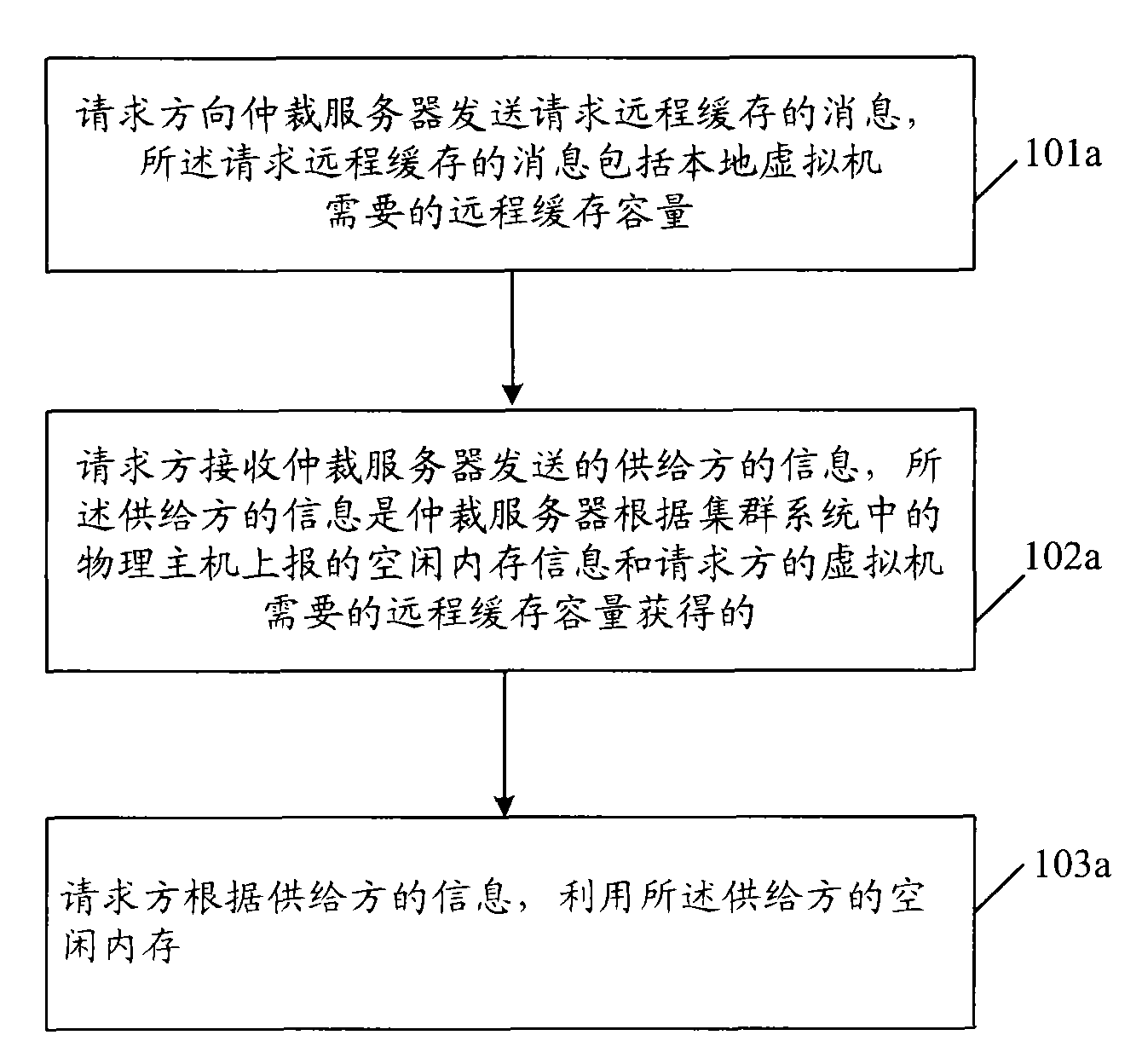

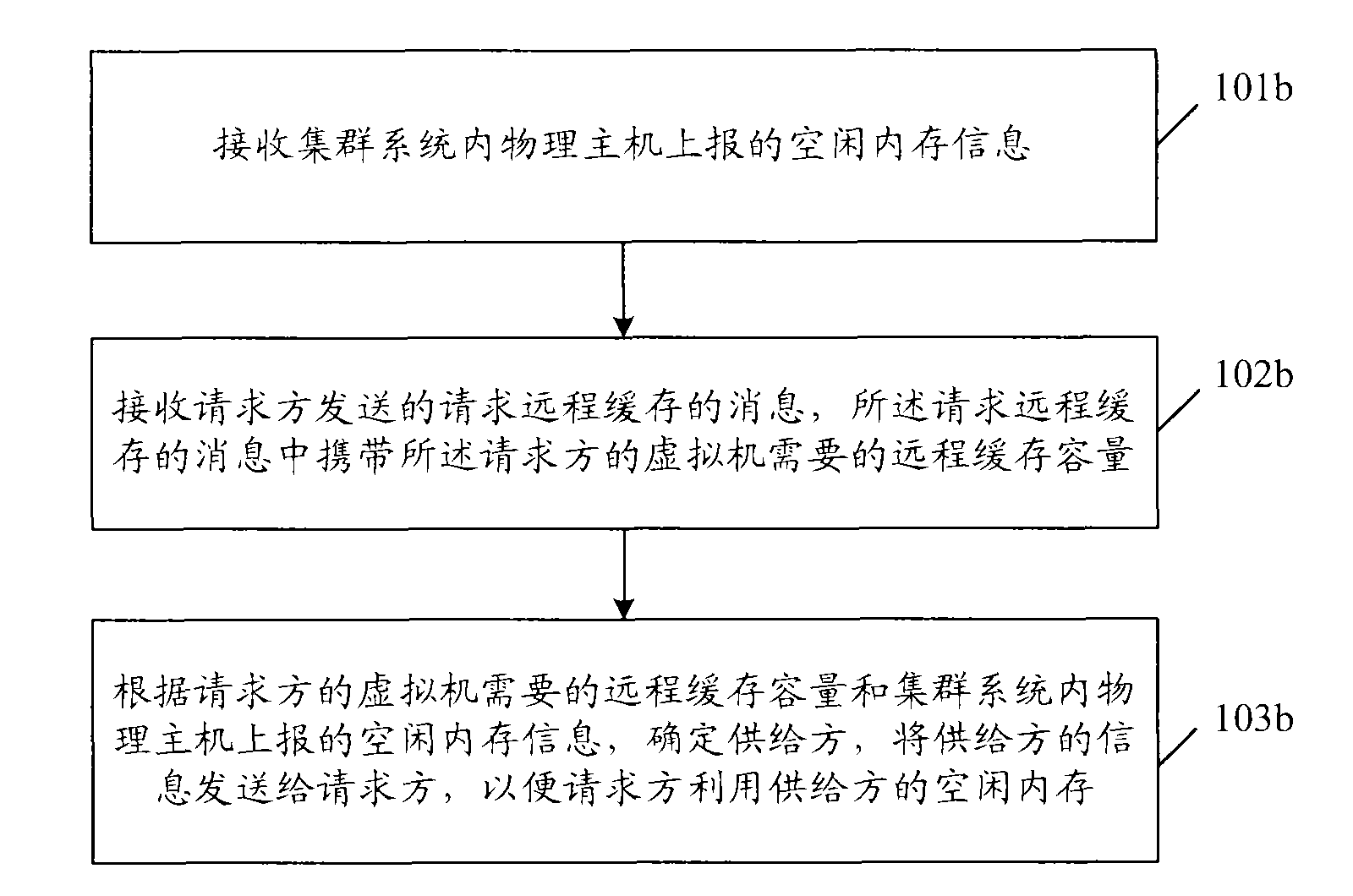

Method and device for managing memory resources in cluster system, and network system

Status: Active | Publication: CN101594309A | Tags: Meet memory requirements, Increase profit, Memory addressing/allocation/relocation, Data switching networks, Cluster systems, Distributed computing

An embodiment of the invention provides a method and a device for managing memory resources in a cluster system. The method comprises the following steps: a requesting party sends an arbitration server a message requesting remote caching, the message including the remote caching capacity required by a local virtual machine; the requesting party receives information about a providing party from the arbitration server, which the arbitration server determines from the idle memory information reported by the physical hosts in the cluster system and the remote caching capacity required by the local virtual machine; and the requesting party uses the idle memory of the providing party according to that information, the requesting party and the providing party being different physical hosts in the cluster system. This technical solution improves the utilization of memory resources in the cluster system.

Owner:HUAWEI TECH CO LTD +1
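
A sketch of the arbitration step, assuming the server picks the host with the most idle memory and reserves the granted amount until that host's next report; `ArbitrationServer` and its methods are hypothetical, and the messaging between hosts is omitted.

```python
class ArbitrationServer:
    def __init__(self):
        self.idle_mib = {}  # physical host -> idle memory it last reported

    def report_idle(self, host: str, idle_mib: int) -> None:
        self.idle_mib[host] = idle_mib

    def request_remote_cache(self, requester: str, needed_mib: int):
        """Return a providing host (never the requester itself), or None if none can serve."""
        candidates = [(idle, host) for host, idle in self.idle_mib.items()
                      if host != requester and idle >= needed_mib]
        if not candidates:
            return None
        idle, provider = max(candidates)  # assumed policy: most idle memory wins
        self.idle_mib[provider] = idle - needed_mib  # reserve until the next report
        return provider
```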

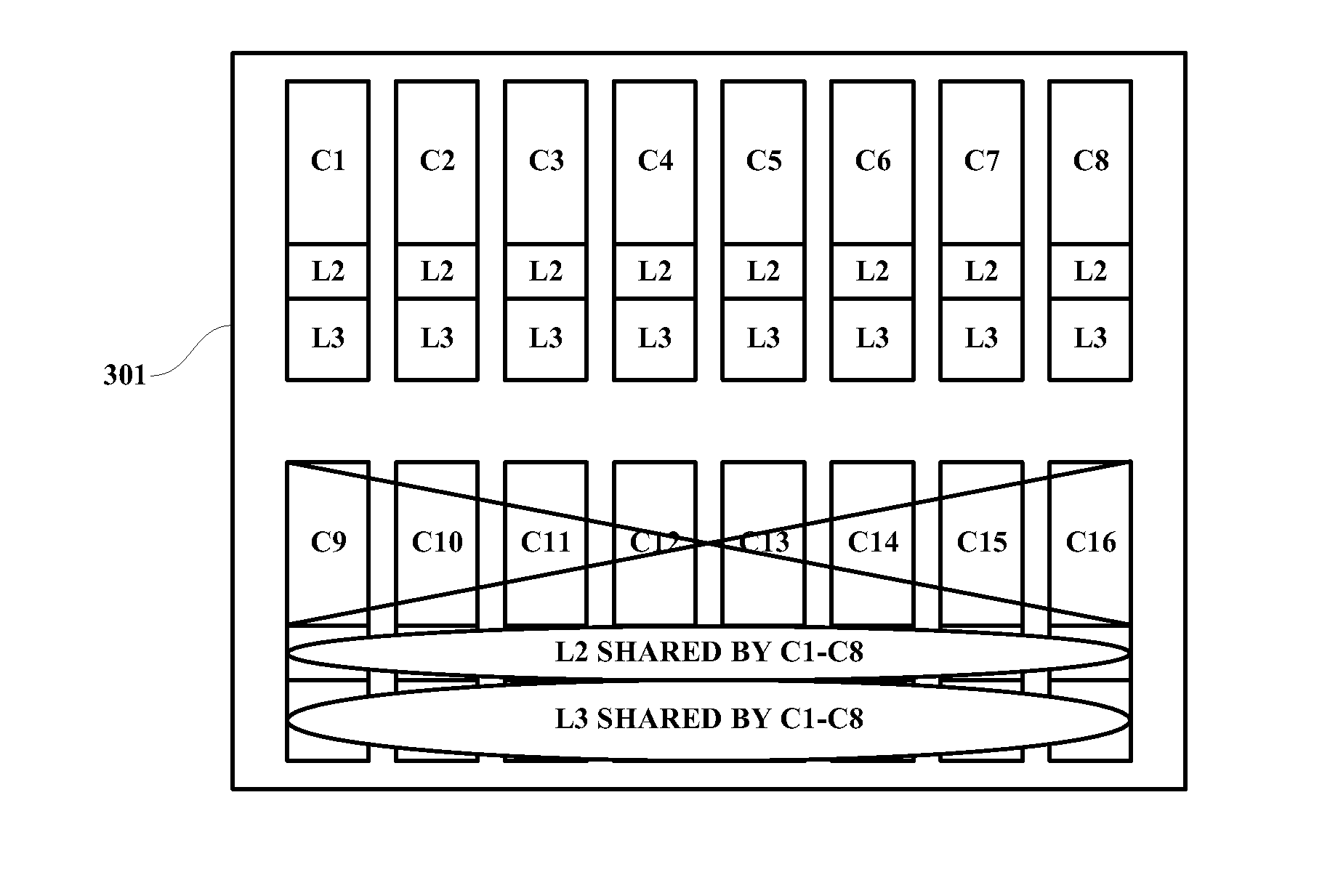

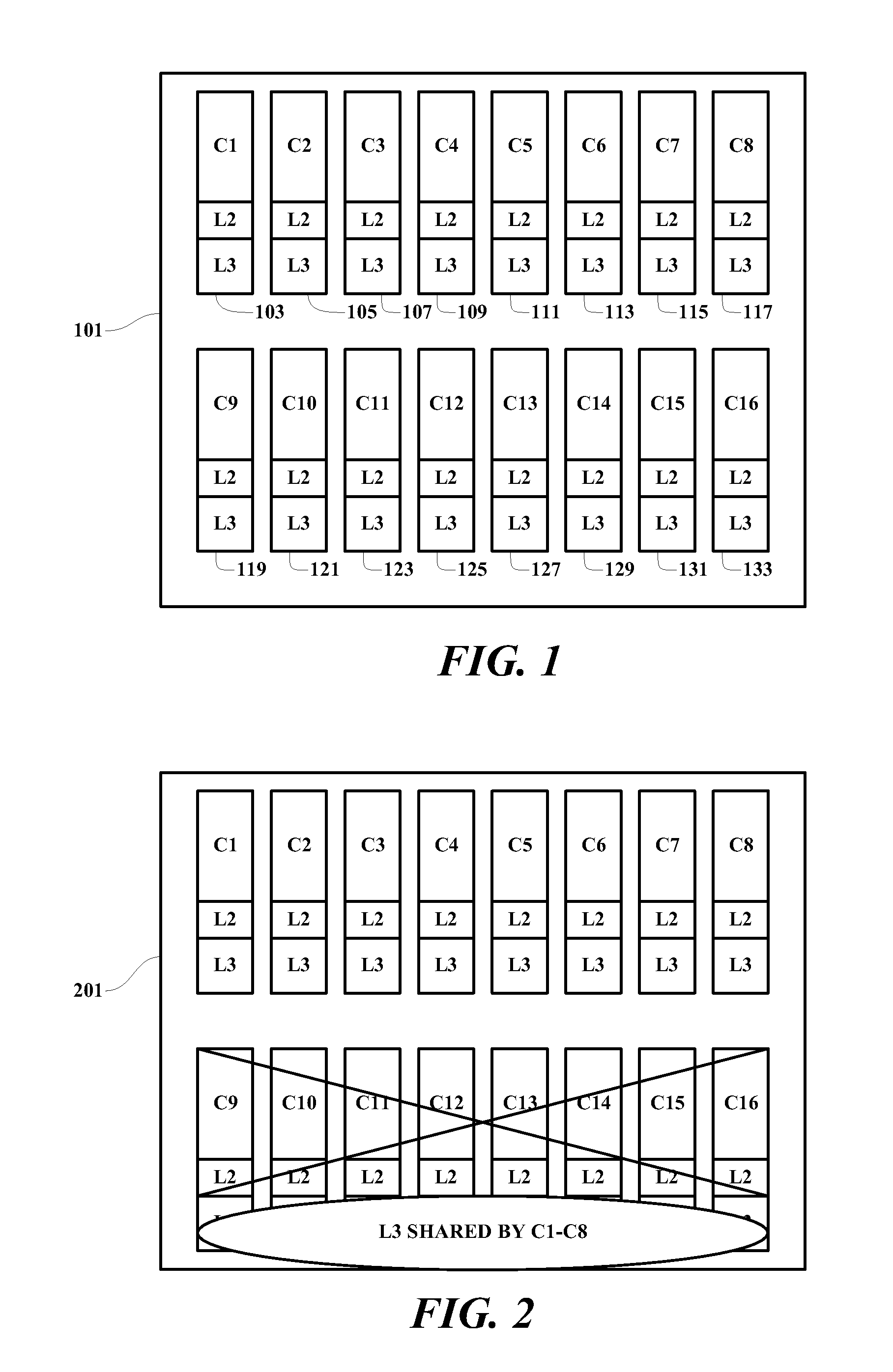

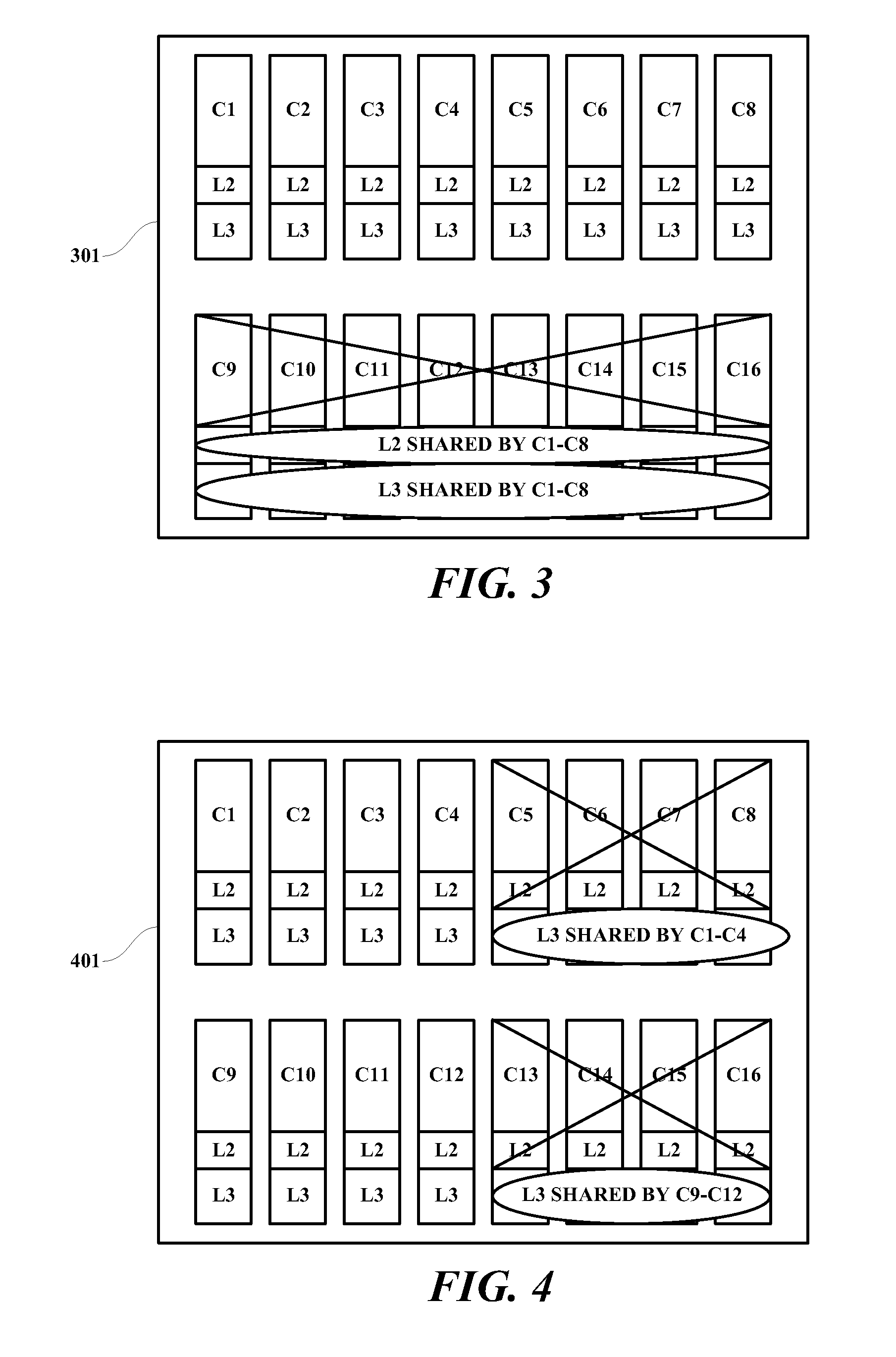

Extended Cache Capacity

Status: Active | Publication: US20110107031A1 | Tags: Improve caching capacity, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Parallel computing, Cache capacity

A method, programmed medium and system are provided for enabling a core's cache capacity to be increased by using the caches of the disabled or non-enabled cores on the same chip. Caches of disabled or non-enabled cores on a chip are made accessible to store cachelines for those chip cores that have been enabled, thereby extending cache capacity of enabled cores.

Owner:IBM CORP

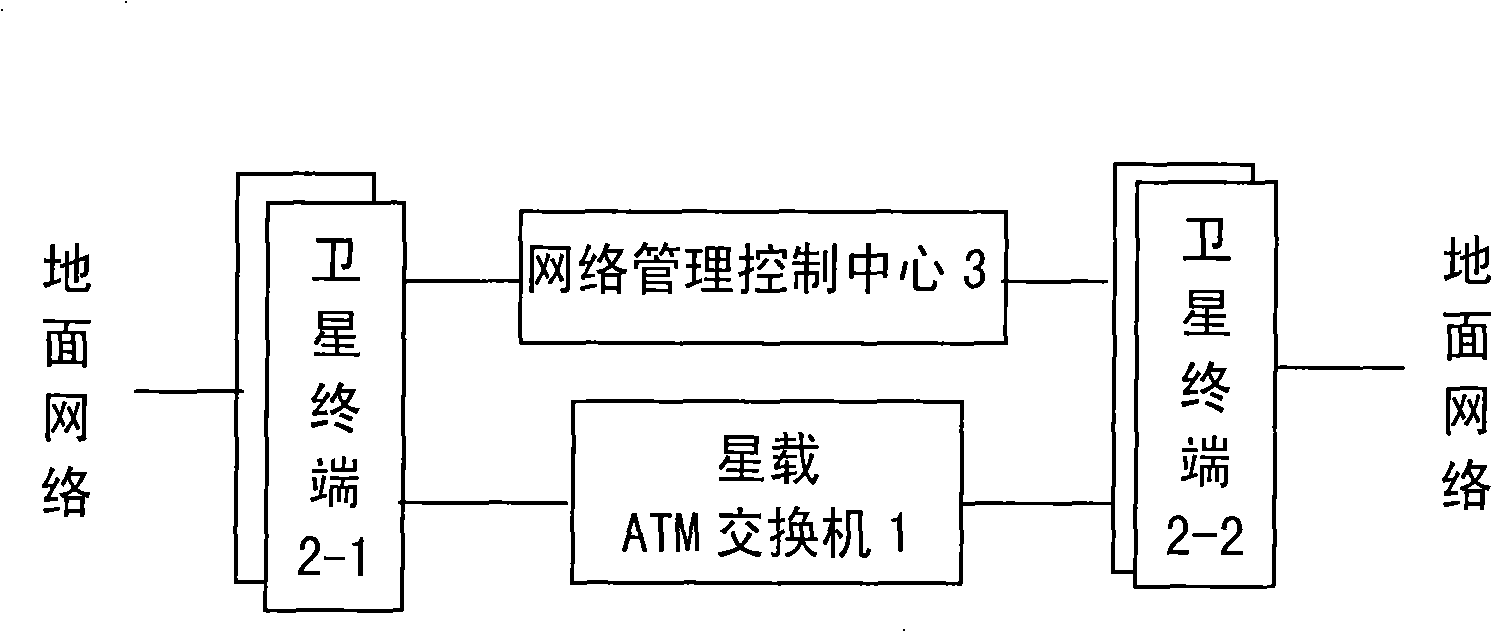

Congestion management suitable for wideband satellite communication system

Status: Active | Publication: CN101309103A | Tags: Improve service quality, Simplify Design Complexity, Radio transmission, Data switching networks, Quality of service, Level design

The invention discloses a congestion management method suitable for a broadband satellite communication system, relating to congestion management technology for broadband satellite communication systems in the telecommunications field. Based on the application and technical characteristics of broadband satellite communication systems, the invention provides a design for the system's congestion management function and specifies a configuration requirement for the buffer capacity of the satellite equipment, covering the distribution of congestion management functions between satellite and ground, the type of bandwidth resource allocation, priority level design, and congestion control measures. The method mainly relies on pre-arranged bandwidth resource planning and allocation, and configures the satellite equipment with large buffers to simplify the congestion control measures between satellite equipment and ground equipment, effectively resolving the design conflict between the system's quality of service and its bandwidth resource utilization. The method simplifies the satellite equipment, improves system operating stability, and increases both the quality of service and the bandwidth resource utilization of the system. It is especially suitable for node-interconnected broadband satellite communication systems.

Owner:NO 54 INST OF CHINA ELECTRONICS SCI & TECH GRP +1

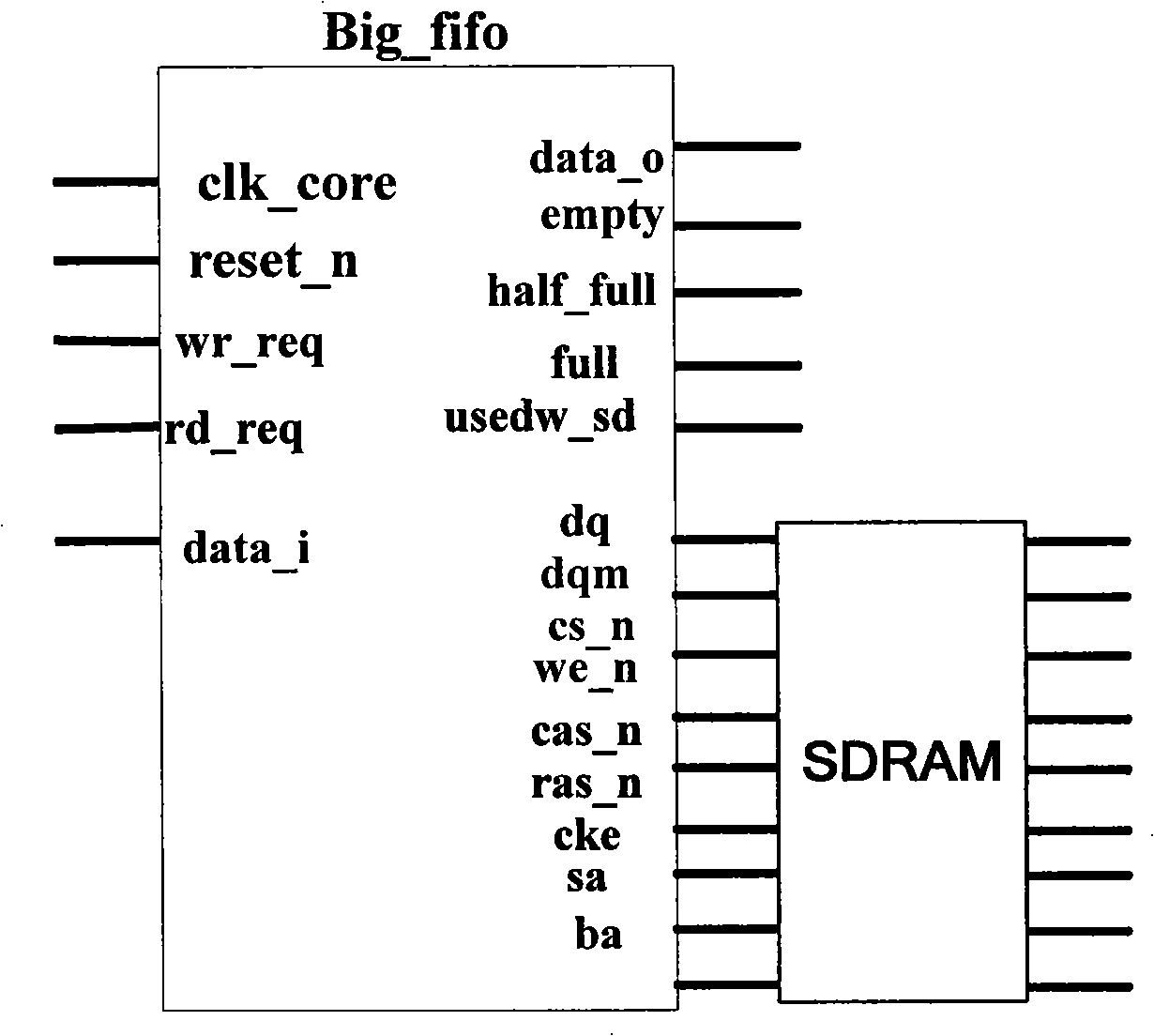

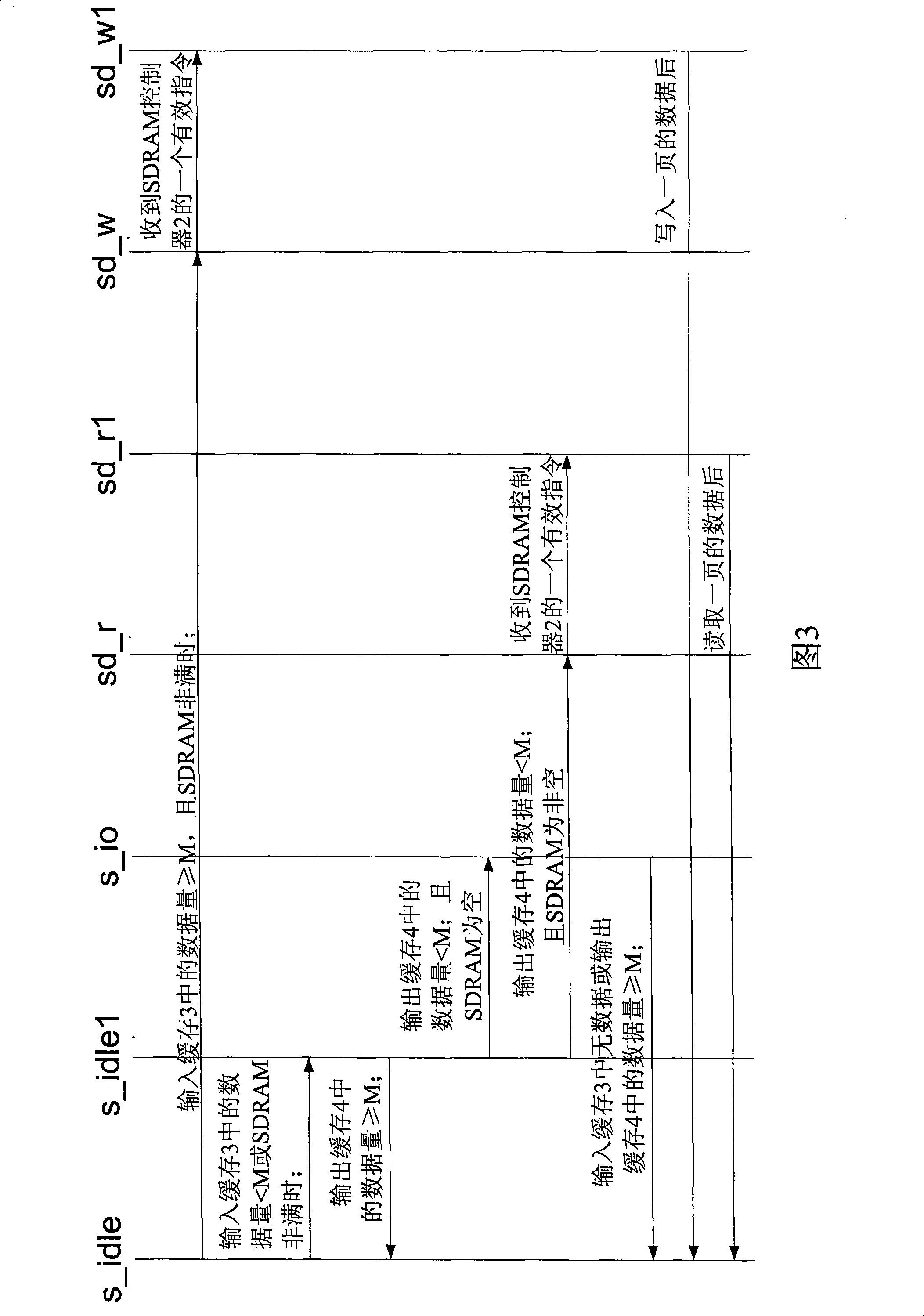

FIFO burst buffer with large capacity based on SDRAM and data storage method

Status: Inactive | Publication: CN101308697A | Tags: Improve read and write speed, Reduce occupancy, Digital storage, Data conversion, Master controller, Data store

A large-capacity FIFO burst buffer memory based on SDRAM and a data storage method are disclosed. The invention relates to burst buffer memory; it addresses the small capacity and high cost of FIFO burst buffer memories and avoids two drawbacks of SDRAM storage: reading and writing cannot be performed at the same time, and operating efficiency is low. The SDRAM controller of the invention is a module that controls the SDRAM storage; the master controller is the control center of the whole system and is responsible for scheduling it; and the input buffer memory and output buffer memory, two small-capacity FIFOs, serve as cushions for input data and output data respectively. Input data first enter the input buffer memory; when the data in the input buffer memory accumulate to a certain amount, the master controller transfers part of them to the SDRAM storage. When the output buffer memory runs short of data, the master controller transfers part of the data in the SDRAM storage to the output buffer memory. The FIFO burst buffer memory is low in cost and has a data read/write speed of 75 MHz.

Owner:HARBIN INST OF TECH
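
A software model of the data path, assuming a fixed burst length and an output low-water mark; Python deques stand in for the two small FIFOs and the SDRAM, and `BurstFifo` is a hypothetical name. The partial-burst flush on read is an added assumption so that a short tail of data is not stranded in the input FIFO.

```python
from collections import deque

class BurstFifo:
    """Small input/output FIFOs around a large store (a deque stands in for SDRAM)."""

    def __init__(self, burst_len: int, out_low_water: int):
        self.burst_len = burst_len
        self.out_low_water = out_low_water
        self.in_fifo, self.store, self.out_fifo = deque(), deque(), deque()

    @staticmethod
    def _move(src: deque, dst: deque, n: int) -> None:
        for _ in range(n):
            dst.append(src.popleft())

    def _service(self) -> None:
        # Master controller: move one burst into the store once enough input has accumulated.
        if len(self.in_fifo) >= self.burst_len:
            self._move(self.in_fifo, self.store, self.burst_len)
        # Refill the output FIFO from the store when it runs low.
        if len(self.out_fifo) <= self.out_low_water:
            self._move(self.store, self.out_fifo, min(self.burst_len, len(self.store)))

    def write(self, word) -> None:
        self.in_fifo.append(word)
        self._service()

    def read(self):
        if not self.out_fifo and not self.store:
            self._move(self.in_fifo, self.store, len(self.in_fifo))  # flush a partial burst
        self._service()
        return self.out_fifo.popleft() if self.out_fifo else None
```

Data only ever moves from the input FIFO to the store and from the store to the output FIFO, so first-in, first-out order is preserved.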

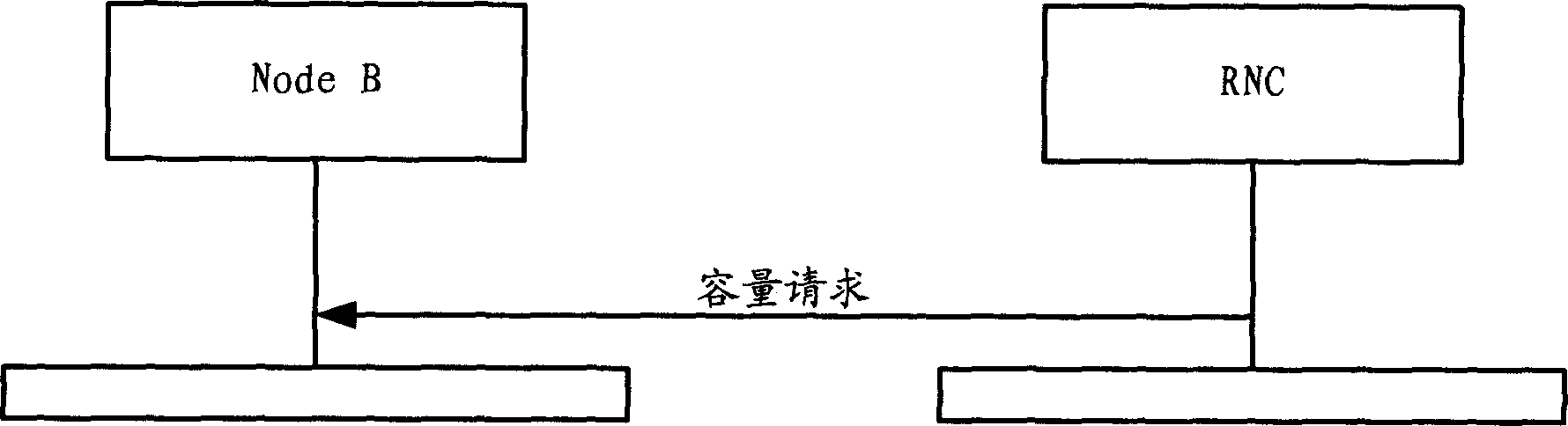

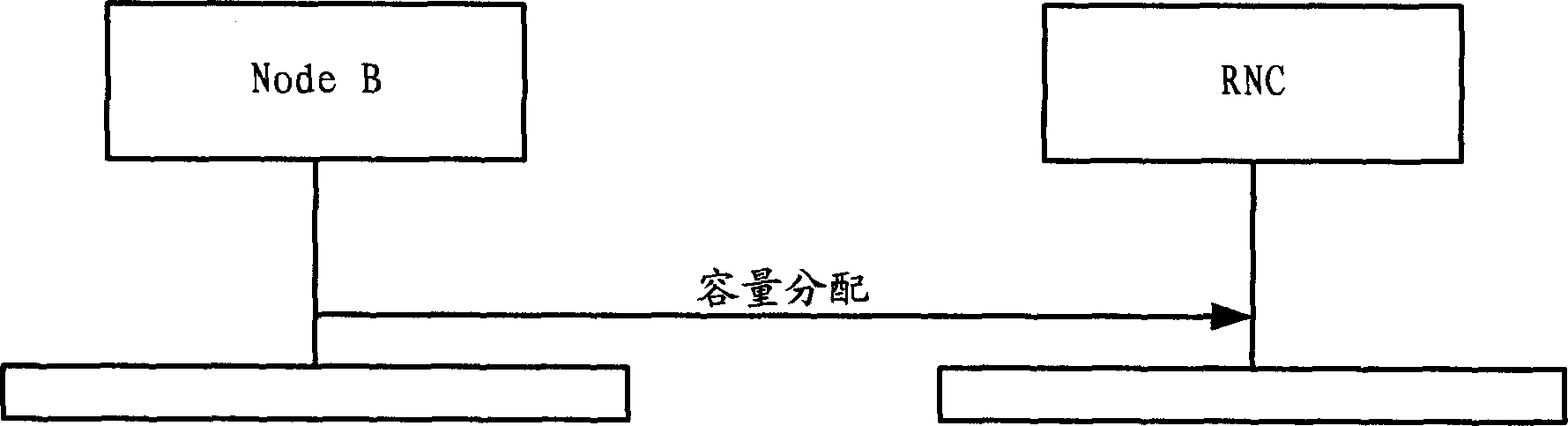

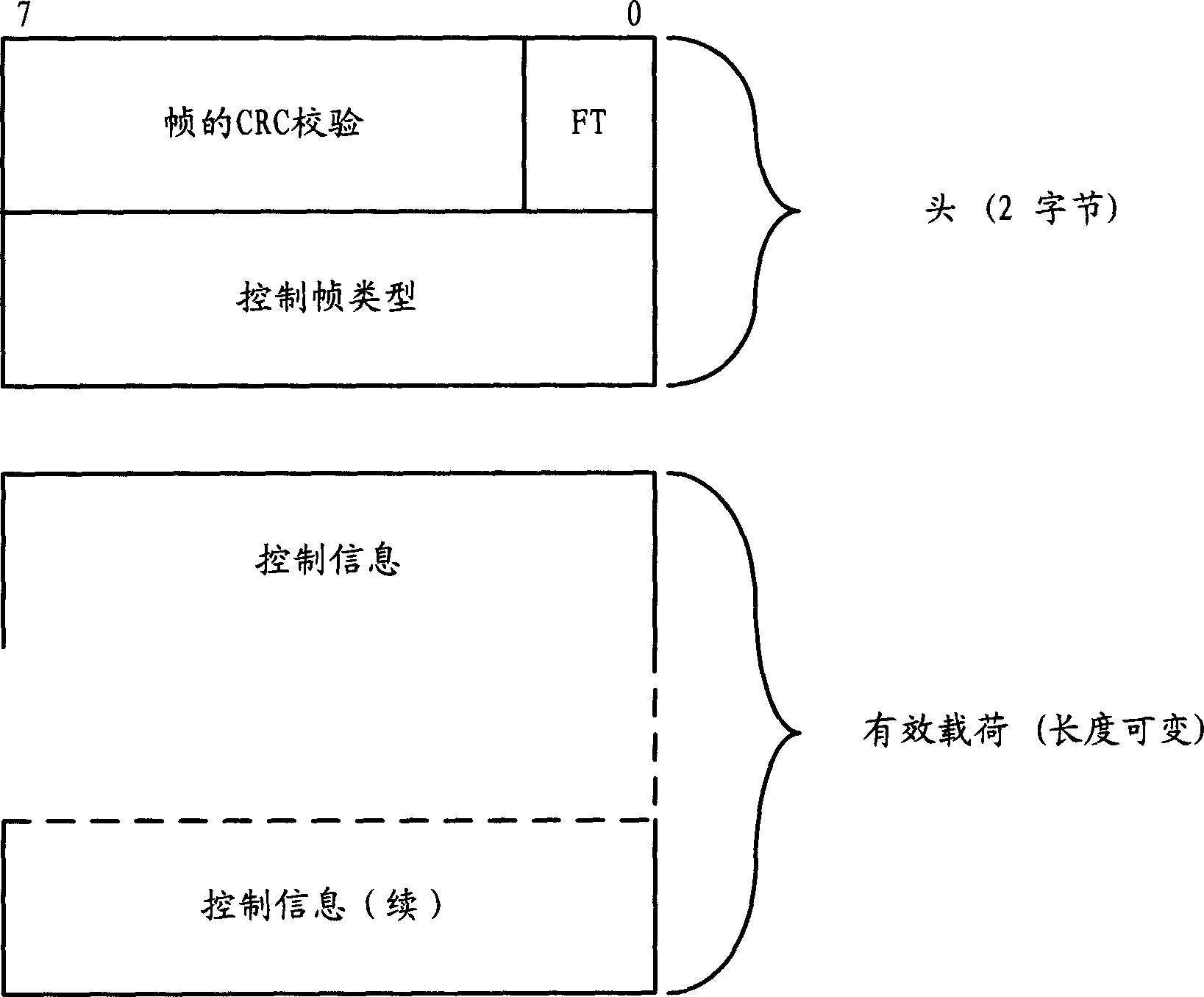

Iub interface flow control plan

The present invention discloses an Iub interface flow control scheme for WCDMA, compatible with subsequent versions, for raising Iub interface bandwidth utilization. The method redistributes bandwidth among all HSDPA users in the NodeB when the currently remaining allocatable Iub bandwidth crosses the upper or lower threshold. When an HSDPA user's cache occupancy exceeds the upper threshold, the RNC is requested to stop transmitting; when it falls below the lower threshold, the RNC is requested to allocate a flow rate matching the air interface rate; and when it is zero, the RNC is requested to allocate a flow rate greater than the air interface rate. The invention also defines delay zones for the different thresholds.

Owner:SHANGHAI HUAWEI TECH CO LTD
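
A sketch of the per-user thresholds, assuming "delay zone" means a hysteresis band below the upper threshold; the `Request` values and `flow_request` are hypothetical, and `HOLD` (keep the current rate) is an assumption for the case the abstract leaves unspecified.

```python
from enum import Enum

class Request(Enum):
    STOP = "stop transmitting"
    MATCH_AIR = "rate equal to air-interface rate"
    ABOVE_AIR = "rate above air-interface rate"
    HOLD = "keep current rate"

def flow_request(occupancy: int, upper: int, lower: int, hysteresis: int, stopped: bool):
    """Return (request to the RNC, new stopped flag) for one HSDPA user's buffer."""
    if occupancy == 0:
        return Request.ABOVE_AIR, False  # empty buffer: ask for more than the air rate
    if occupancy > upper:
        return Request.STOP, True        # above the upper threshold: stop the RNC
    if stopped and occupancy > upper - hysteresis:
        return Request.STOP, True        # still inside the delay zone: stay stopped
    if occupancy < lower:
        return Request.MATCH_AIR, False  # below the lower threshold: match the air rate
    return Request.HOLD, False
```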

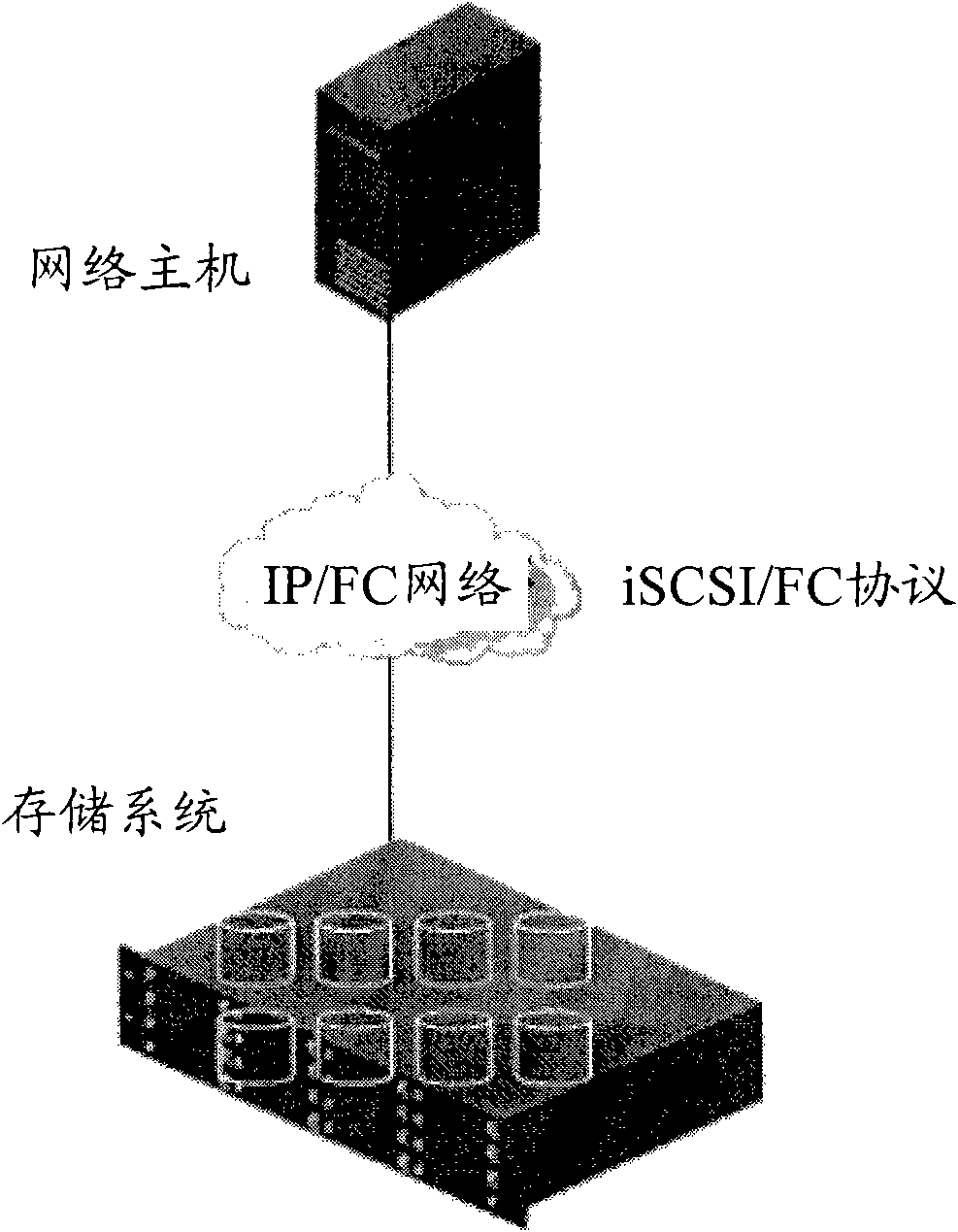

Memory system, memory controller and data caching method

Status: Inactive | Publication: CN101566927A | Tags: Avoiding contention for cache resources, Improve usability, Input/output to record carriers, Memory addressing/allocation/relocation, Control store, Usability

The invention discloses a memory system. A high-speed disk is arranged in a memory controller and used as a virtual cache, so that the cache capacity can be expanded without limit by increasing the capacity of the high-speed disk and the size of the configured virtual cache. SAN and NAS memory architectures are thereby integrated into a single memory system: the existing physical memory of the memory system serves as the cache of the SAN architecture, and the high-speed disk serves as the cache of the NAS architecture. This provides flexible memory access services to network hosts, allows the cache capacity to be expanded without limit, prevents SAN and NAS from competing for cache resources because of insufficient cache capacity, and improves the usability of the memory system. The invention also discloses a memory controller and a data caching method for the memory system.

Owner:NEW H3C TECH CO LTD

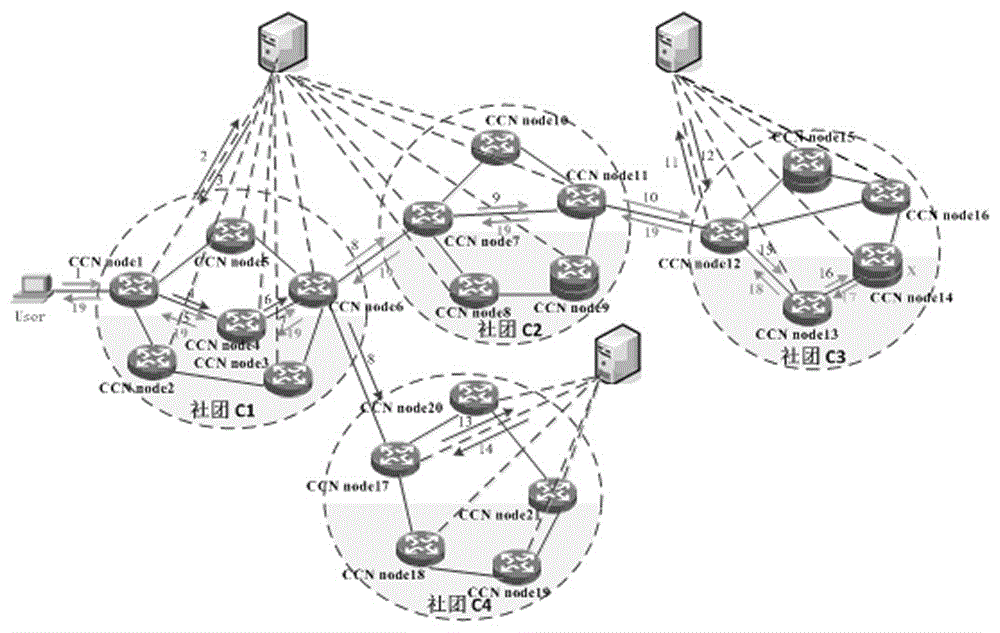

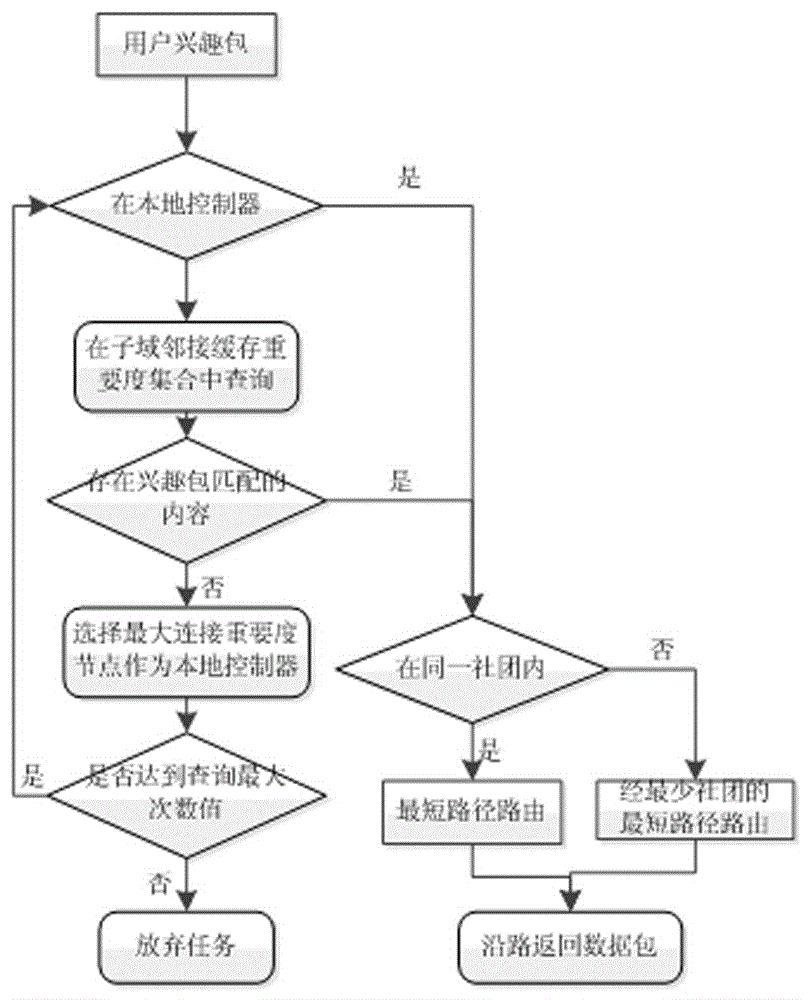

SDN (software-defined networking)-based ICN (information-centric networking) routing method

The invention relates to an SDN (software-defined networking)-based ICN (information-centric networking) routing method. The method incorporates the SDN principle of separating control from forwarding into the ICN architecture, senses network resources and network status, and realizes content-name-based routing for ICN. It mainly comprises two steps: (1) if the interest packet querying ICN content and the content are under the same controller and in the same community, the interest packet is forwarded along the shortest path; if they are not in the same community, it is forwarded along the shortest path that passes through the fewest communities; and (2) if the interest packet and the content are not under the same controller, a query is carried out according to the caching-capacity importance degrees of the controllers adjoining the current controller; if the content is in a node controlled by one of the adjoining controllers, the data packet is returned to the user along the route; otherwise, the controller with the largest neighbor-connection importance degree becomes the current controller and the next round of query is carried out, until the maximum number of queries is reached. The method addresses problems such as the low routing efficiency and difficult configuration of ICN.

Owner:GUANGDONG POLYTECHNIC NORMAL UNIV

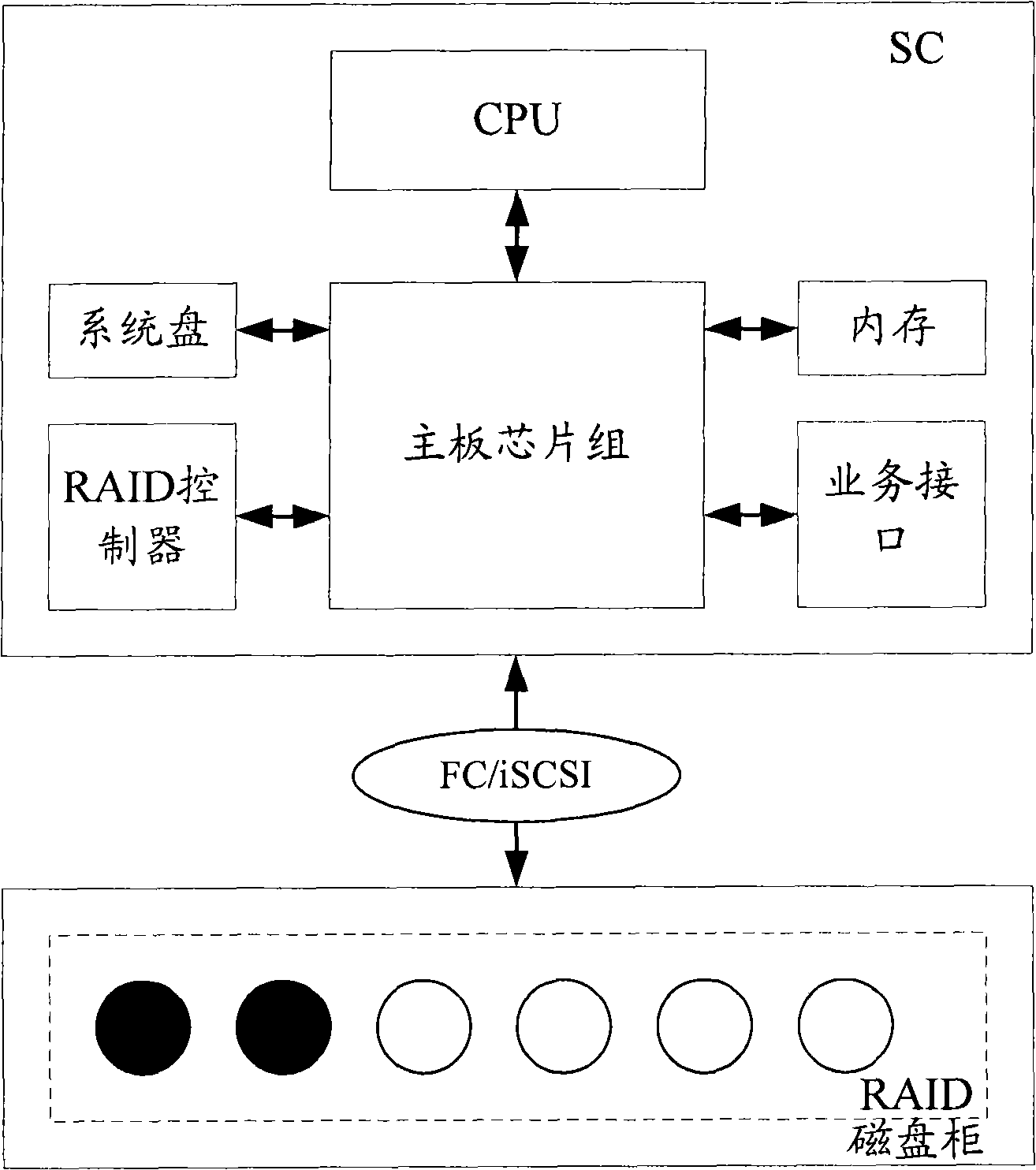

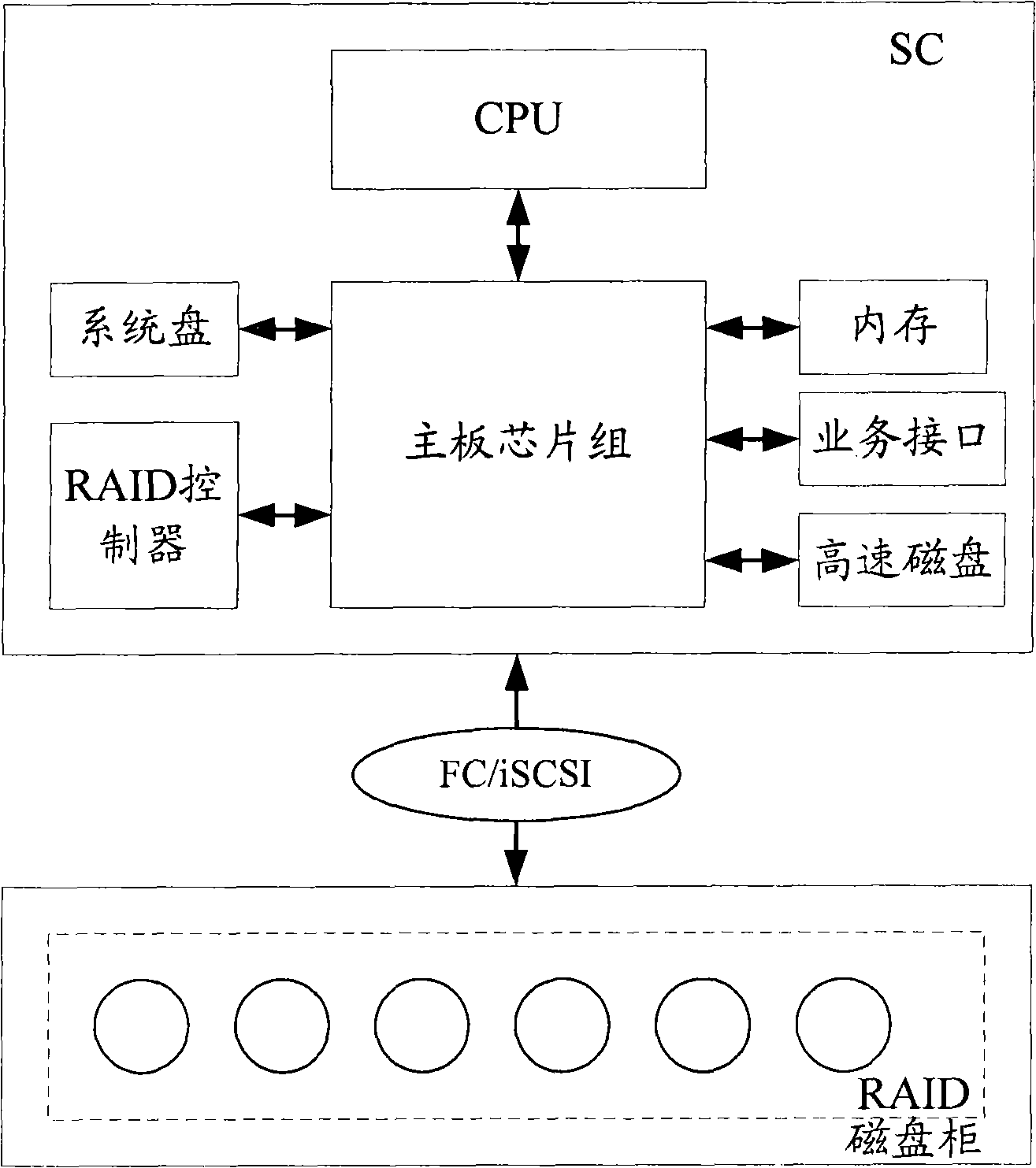

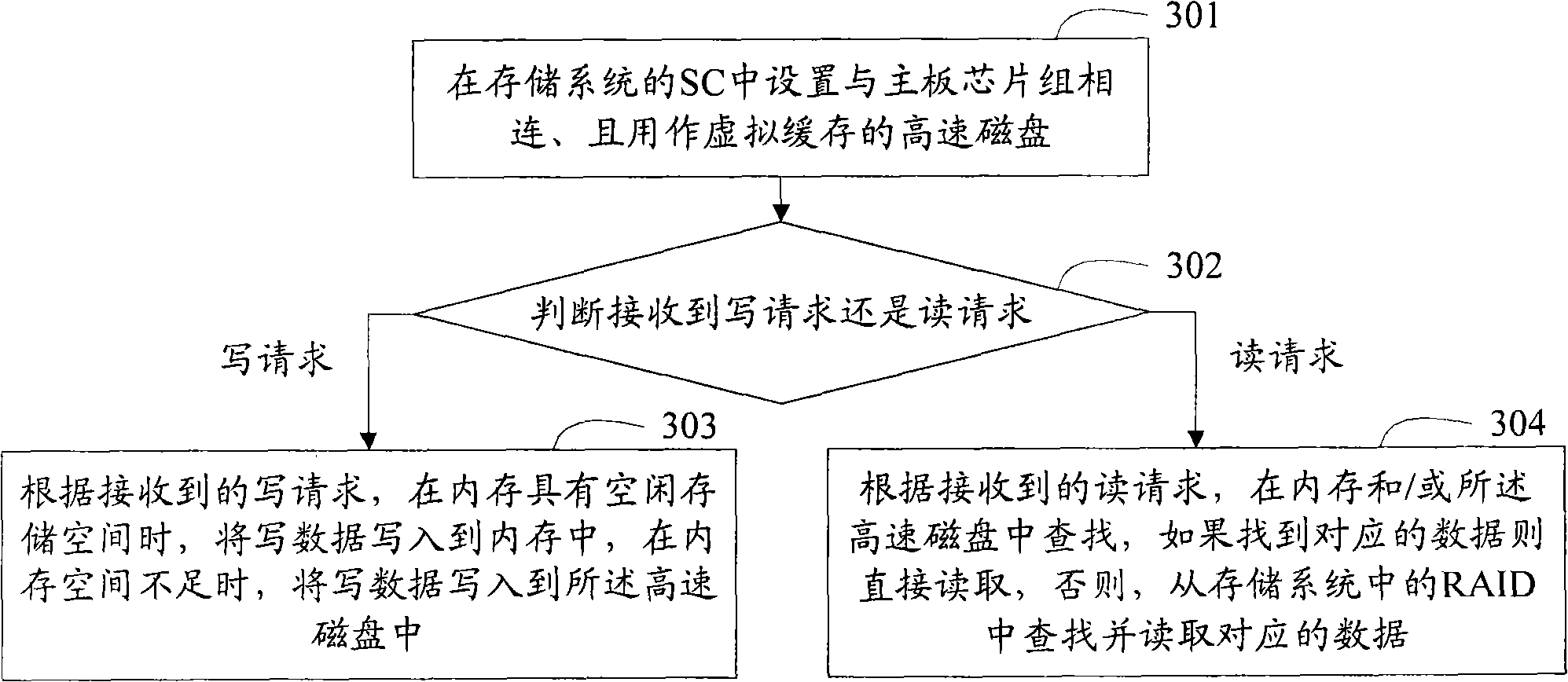

Storage system, storage controller, and cache implementing method in the storage system

Status: Inactive | Publication: CN101493795A | Tags: Increase capacity, No expensive problems, Memory addressing/allocation/relocation, Control store, Disk array

The invention discloses a storage system. A high-speed disk inside the storage controller (SC) is used as a virtual buffer memory, so that the buffer memory capacity can be expanded without limit by increasing the capacity of the high-speed disk and the size of the configured virtual buffer memory; the buffer memory can therefore be expanded flexibly, without being limited by system hardware. At the same time, the approach avoids the high data-path cost caused by repeated reads and writes when accessing the buffer, avoids competing with the disk array in the disk enclosure for buffer memory resources, and eliminates potential data-safety risks. In other words, the invention expands the buffer memory capacity while avoiding the problems in existing schemes that can degrade storage system performance, so the expanded buffer memory improves the performance of the storage system. The invention further discloses an SC for the storage system and a buffer memory implementation method for the storage system.

Owner:NEW H3C TECH CO LTD

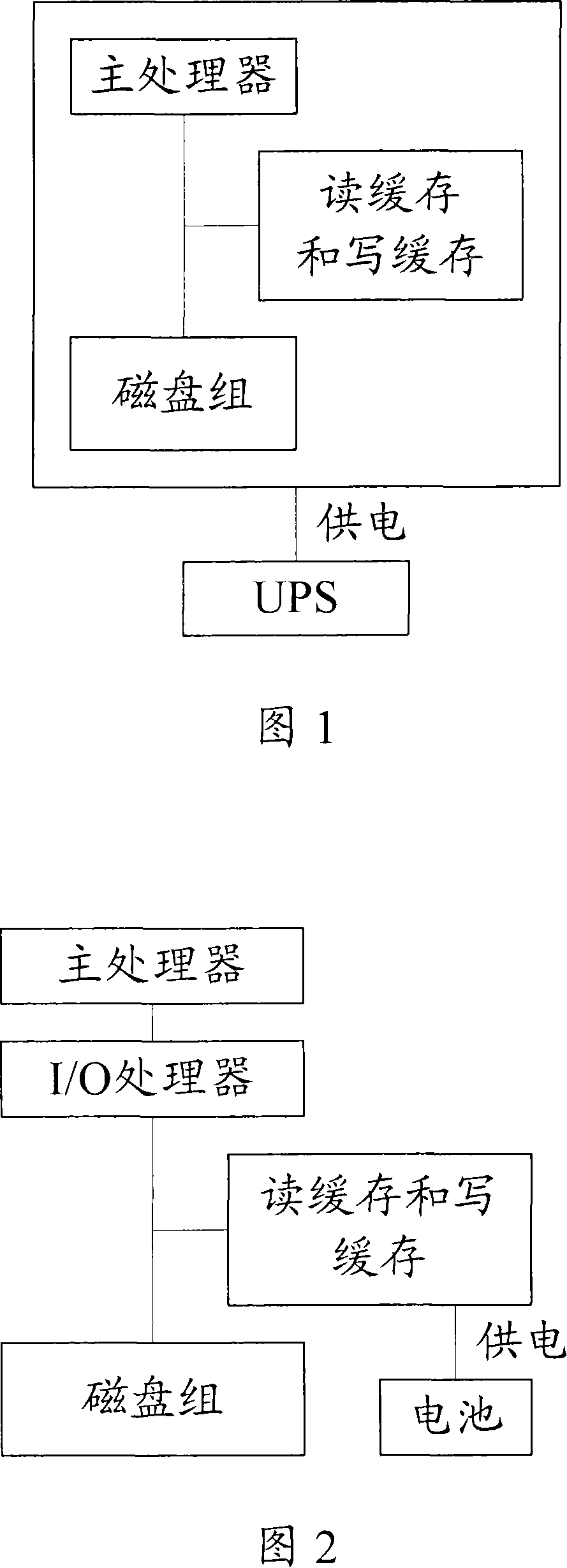

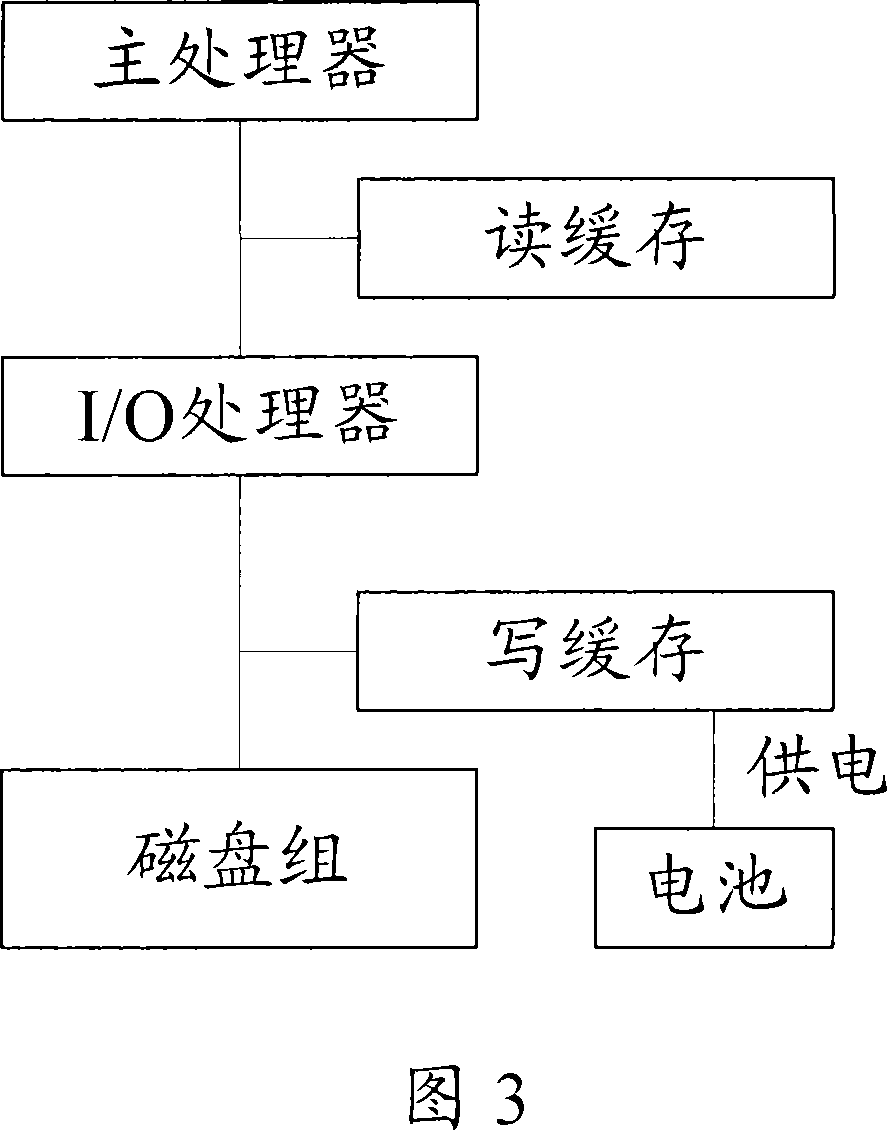

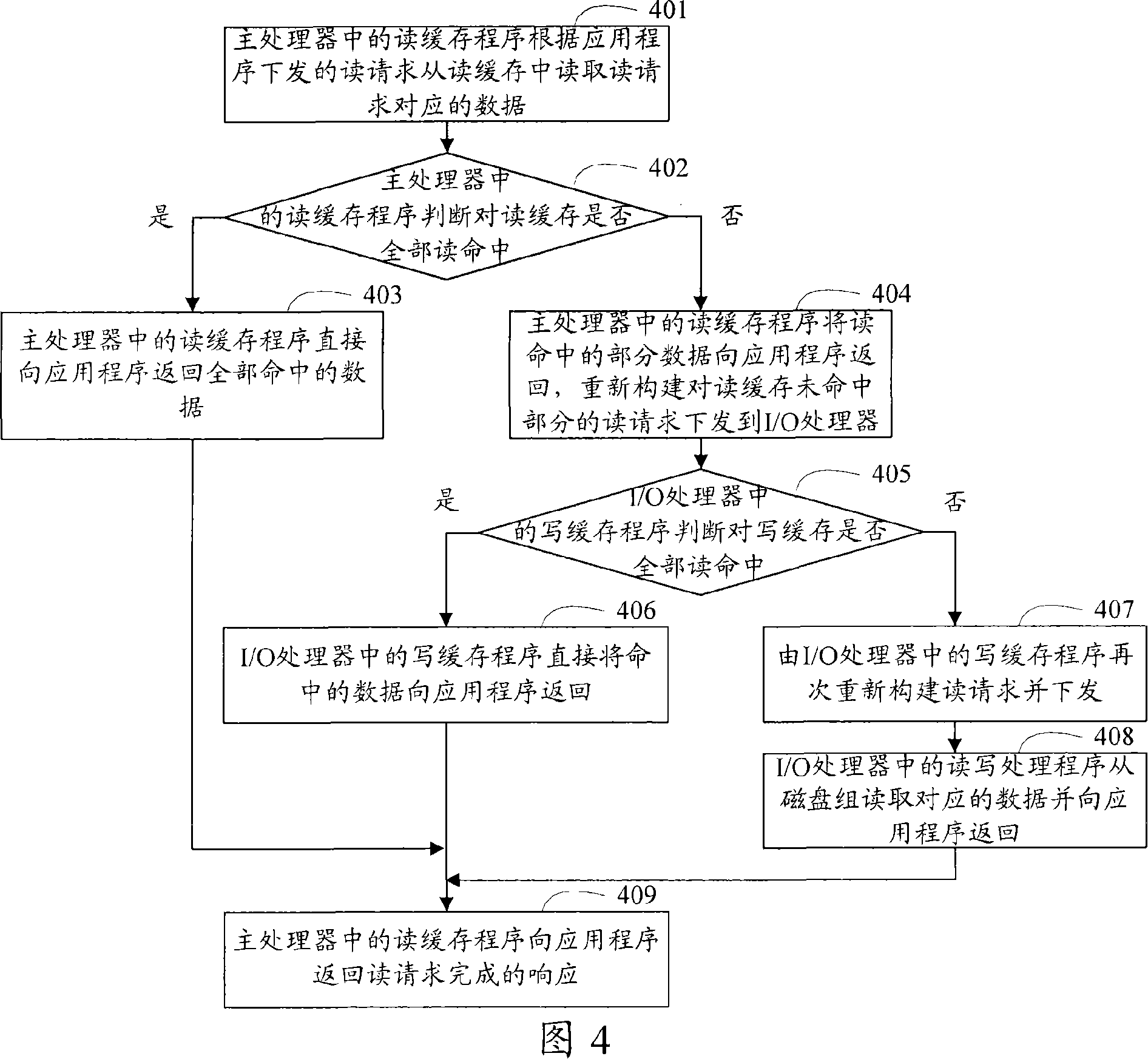

Storage apparatus comprising read-write cache and cache implementation method

Status: Inactive | Publication: CN101149668A | Tags: Improve processing efficiency, Large capacity, Input/output to record carriers, Memory addressing/allocation/relocation, Computer architecture, Write buffer

This invention discloses a storage device with a read-write buffer and a method of implementing the read-write buffer of a storage device. The invention separates the read buffer from the write buffer, so that access to each is controlled by a different processor. The read buffer is attached directly to the main processor, which improves the main processor's efficiency in processing the read buffer; because the main processor supports a large memory space, the read buffer can have a large capacity. The write buffer is attached to the I/O processor, whose battery supply circuit keeps the write buffer continuously powered, and the write buffer does not need to share the limited memory space supported by the I/O processor with the read buffer, so the processing efficiency for the write buffer also improves as a result of its increased capacity.

Owner:NEW H3C TECH CO LTD

Method to improve cache capacity of SOI and bulk

Status: Inactive | Publication: US20050073874A1 | Tags: High voltage threshold, Small size, Solid-state devices, Semiconductor/solid-state device manufacturing, Engineering, Small cell

Methods for designing a 6T SRAM cell with greater stability and/or a smaller cell size are provided. A 6T SRAM cell has a pair of access transistors (NFETs), a pair of pull-up transistors (PFETs), and a pair of pull-down transistors (NFETs), wherein the access transistors have a higher threshold voltage than the pull-down transistors. This enables the SRAM cell to effectively maintain a logic “0” during access, thereby increasing cell stability, especially for cells in the “half select” state. Further, the channel width of a pull-down transistor can be reduced, decreasing the size of a high-performance six-transistor SRAM cell without affecting cell stability during access. By decreasing the cell size, the overall design layout of a chip may also be reduced.

Owner:INTEL CORP

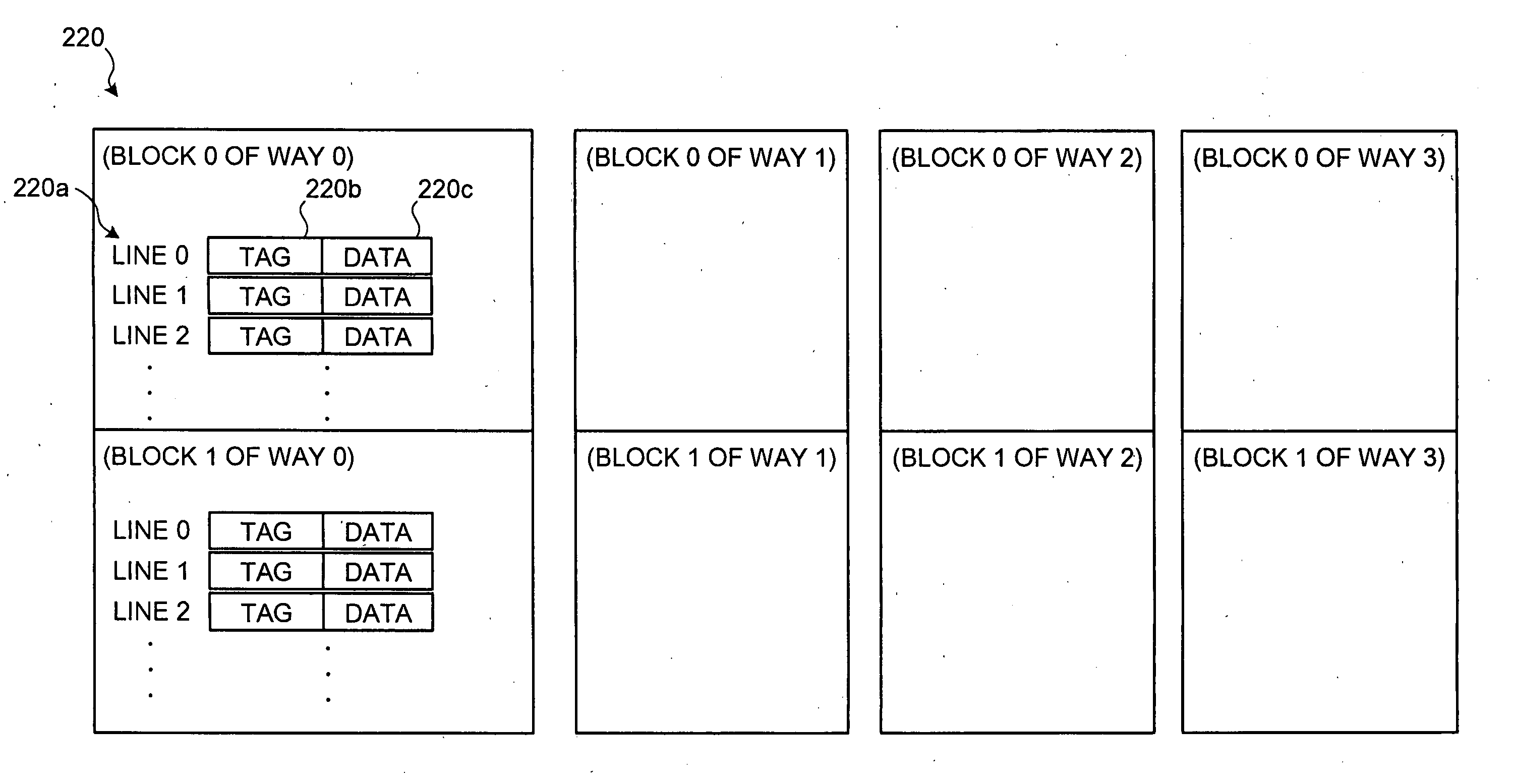

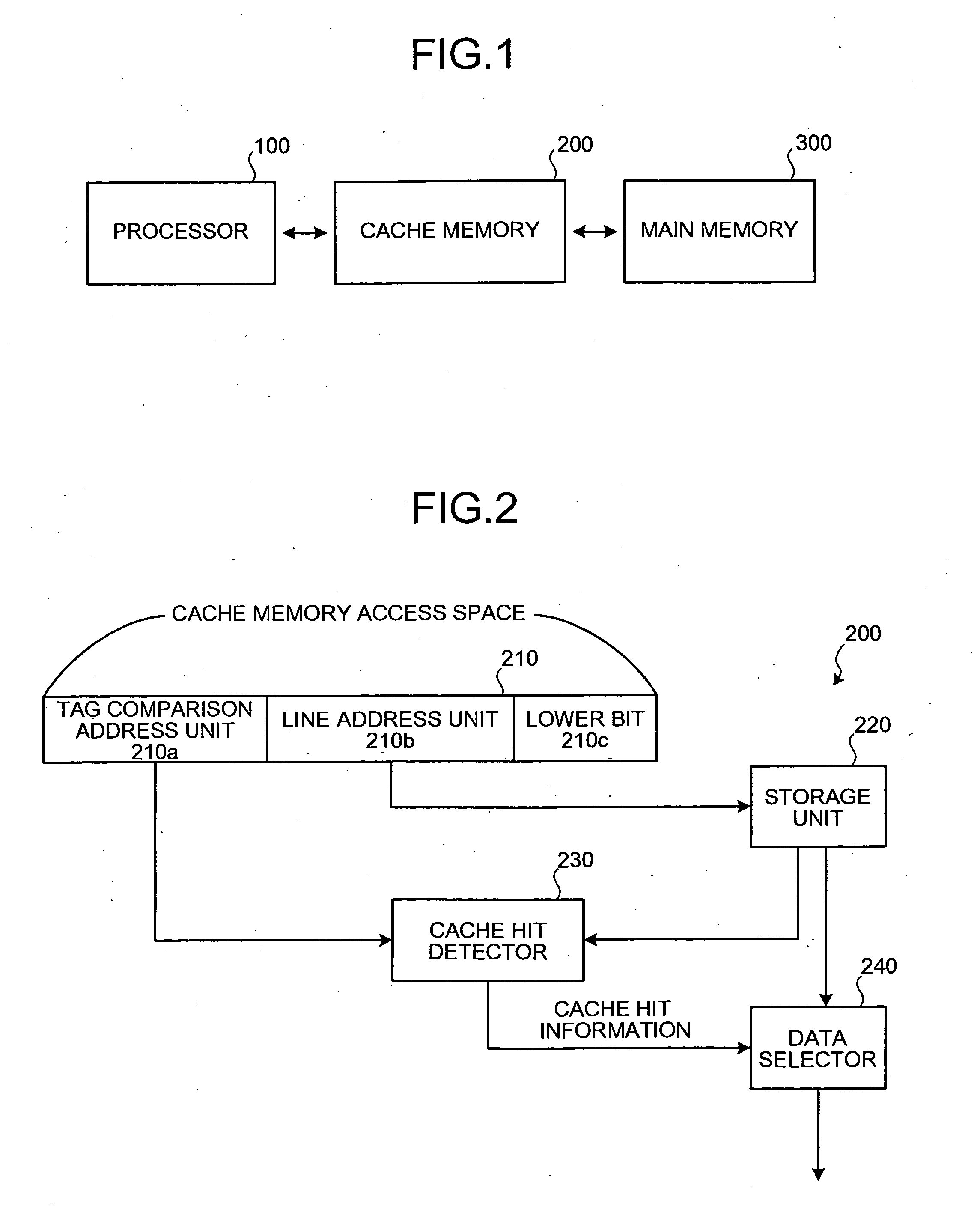

Cache memory and method of controlling memory

Inactive · US20060026356A1 · Memory architecture accessing/allocation, Memory systems, Parallel computing, Term memory

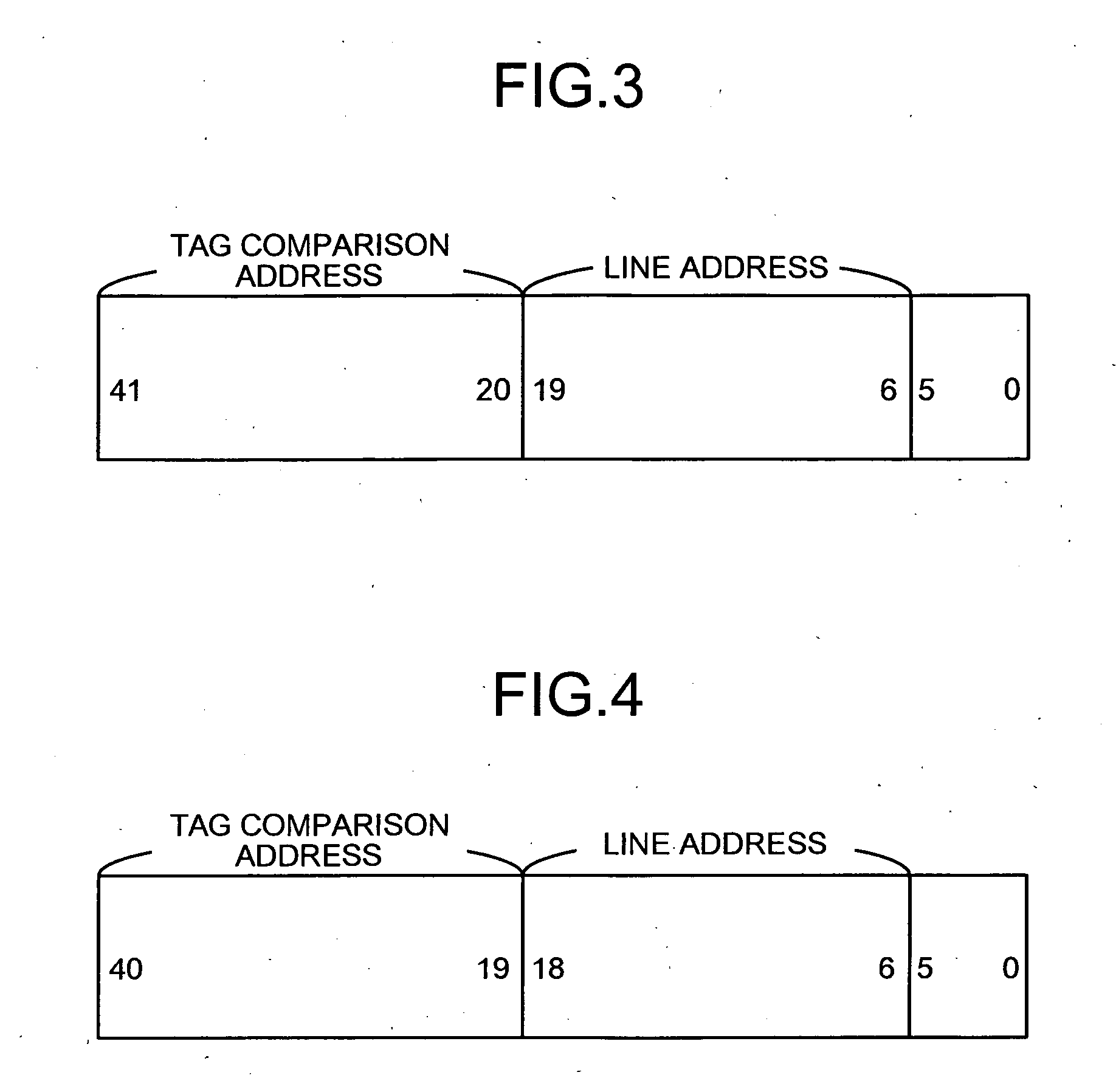

A cacheable memory access space receives memory access addresses from a processor; the structure of these addresses differs according to the current cache capacity status. A cache hit detector determines whether an access hits based on values preset in the detector (a mode signal, an enbblk[n] signal, and a signal indicating whether each way is valid or invalid), the tag comparison address received from the cacheable memory access space, and the tag received from the storage unit.

Owner:FUJITSU LTD
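The hit-detection condition in this abstract (way enabled, way valid, tags equal) can be sketched in Python. This is a minimal illustration under assumed parameters, not Fujitsu's circuit: a 4-way cache with 32-byte lines, where a hypothetical `mode` of `"full"` or `"half"` selects 7 or 6 index bits, so the same address splits into a different tag and index depending on the capacity setting. `Way`, `split_address`, `detect_hit`, and all bit widths are assumptions.

```python
from dataclasses import dataclass

LINE_BITS = 5                  # 32-byte lines (assumed)
INDEX_BITS = {"full": 7,       # 128 sets when the whole cache is in use (assumed)
              "half": 6}       # 64 sets when capacity is halved (assumed)
NUM_WAYS = 4                   # 4-way set associative (assumed)


@dataclass
class Way:
    valid: list                # valid bit per set
    tags: list                 # stored tag per set


def make_ways(num_sets):
    return [Way(valid=[False] * num_sets, tags=[0] * num_sets)
            for _ in range(NUM_WAYS)]


def split_address(addr: int, mode: str):
    """Split an address into (tag, index); the split depends on the mode."""
    index_bits = INDEX_BITS[mode]
    index = (addr >> LINE_BITS) & ((1 << index_bits) - 1)
    tag = addr >> (LINE_BITS + index_bits)
    return tag, index


def detect_hit(addr, mode, enbblk, ways):
    """Return the number of the hitting way, or None on a miss.

    A way hits only if it is enabled (enbblk[n]), its line at this index
    is valid, and its stored tag equals the tag field of the address.
    """
    tag, index = split_address(addr, mode)
    for n, way in enumerate(ways):
        if enbblk[n] and way.valid[index] and way.tags[index] == tag:
            return n
    return None
```

For example, address `0x12345` splits into tag 18 and index 26 in `"full"` mode but tag 36 and index 26 in `"half"` mode, so a line filled under one mode does not hit under the other.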

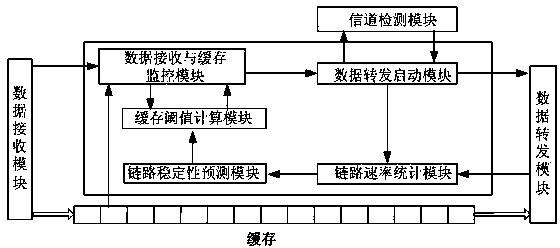

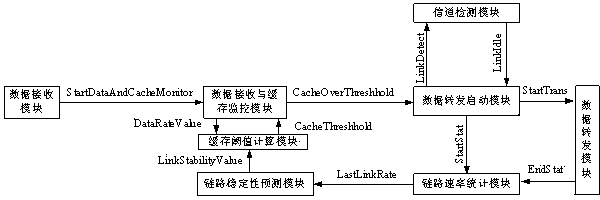

Control system and method for node data caching and forwarding of wireless sensor network

Inactive · CN103401804A · Implement Adaptive Aggregation, Deliver on demand, Energy efficient ICT, Network topologies, Wireless mesh network, Wireless sensor network

The invention discloses a control system for node data caching and forwarding in a wireless sensor network. The system comprises a cache threshold calculation module, a data reception and cache monitoring module, a data forwarding start module, a link speed counting module, and a link stability prediction module. The cache threshold calculation module calculates and updates the node's cache threshold; the data reception and cache monitoring module monitors the buffer capacity and data reception; the data forwarding start module starts the data forwarding module; the link speed counting module measures the link speed of each forwarding process once forwarding completes; and the link stability prediction module predicts the link speed for the next forwarding. The control system enables adaptive aggregation of sensor node data and reduces the energy consumption and packet loss caused by frequent data transmission. The invention also discloses a control method based on this control system.

Owner:PLA UNIV OF SCI & TECH
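The module chain above (threshold, monitor, forward, measure, predict) can be sketched as one Python class. The rules here are assumptions for illustration, not the patented method: the cache threshold is taken as the number of bytes the link is predicted to carry within a fixed forwarding window (capped by the buffer size), and the next link speed is predicted with an exponentially weighted moving average (EWMA). `ForwardingController`, `window_s`, and `alpha` are hypothetical names.

```python
class ForwardingController:
    """Hypothetical cache-and-forward controller for one sensor node.

    Assumed rules: threshold = predicted link speed * forwarding window,
    capped by the buffer size; link speed is predicted with an EWMA.
    """

    def __init__(self, buffer_size, window_s, alpha=0.5, initial_speed=100.0):
        self.buffer_size = buffer_size          # bytes the node can cache
        self.window_s = window_s                # target forwarding burst length (s)
        self.alpha = alpha                      # EWMA weight of the newest sample
        self.predicted_speed = initial_speed    # bytes per second
        self.buffer = []                        # cached readings (bytes objects)

    def threshold(self):
        # Cache threshold: aggregate as much as the link can ship in one window.
        return min(self.buffer_size, int(self.predicted_speed * self.window_s))

    def buffered_bytes(self):
        return sum(len(r) for r in self.buffer)

    def receive(self, reading: bytes) -> bool:
        # Reception and cache monitoring: True means forwarding should start.
        self.buffer.append(reading)
        return self.buffered_bytes() >= self.threshold()

    def forward(self, send):
        # Forward the aggregated payload; `send` must return the seconds taken (> 0).
        payload = b"".join(self.buffer)
        self.buffer.clear()
        elapsed = send(payload)
        measured = len(payload) / elapsed       # link speed counting
        # Link prediction: blend the new measurement into the running estimate.
        self.predicted_speed = (self.alpha * measured
                                + (1 - self.alpha) * self.predicted_speed)
        return measured
```

A faster measured link raises the predicted speed and hence the threshold, so the node aggregates more data per transmission, which matches the stated goal of fewer, larger transmissions.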

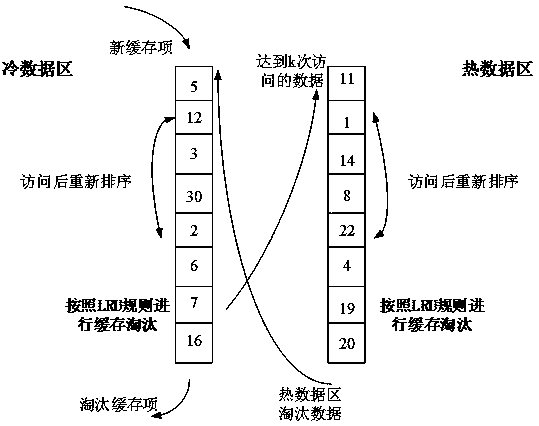

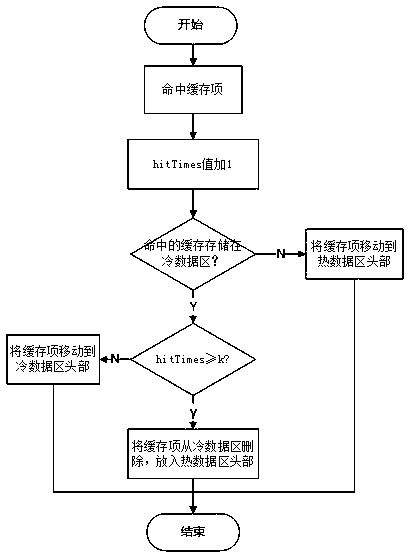

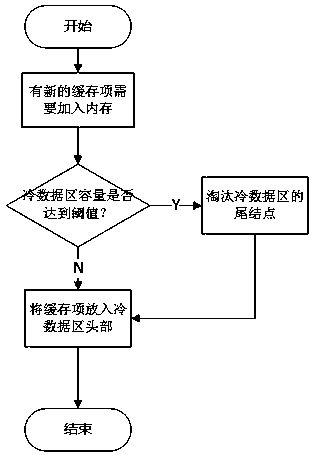

Dynamically adjusted cache data management and elimination method

Pending · CN111159066A · Avoid pollution, Avoid replacement, Memory architecture accessing/allocation, Memory systems, Parallel computing, Engineering

The invention discloses a dynamically adjusted cache data management and elimination (eviction) method. The position of each cache item in memory is adjusted dynamically according to its access time and hit frequency. The memory is divided into a hot data area and a cold data area: cache items with a high hit frequency and recent access are kept at the front of the hot data area, and cache items with a low hit frequency and old access times are kept at the tail of the cold data area. When the cache capacity reaches a threshold and data must be evicted, the cache items at the tail of the cold data area are deleted directly. Dynamically adjusting the hot and cold areas makes eviction precise, increases the proportion of hot-spot data in the cache, mitigates cache pollution, and raises the cache hit rate.

Owner:HANGZHOU DIANZI UNIV
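The hot/cold scheme above resembles a segmented LRU cache, and a minimal Python sketch along those lines follows. The promotion and demotion rules are assumptions, not the patent's exact criteria: new items enter the front of the cold area, an item that reaches `promote_hits` hits moves to the front of the hot area, the hot area's tail is demoted to the cold front when the hot area exceeds its share, and eviction deletes the cold tail. `HotColdCache`, `hot_ratio`, and `promote_hits` are hypothetical names.

```python
from collections import OrderedDict


class HotColdCache:
    """Hypothetical hot/cold cache in the spirit of the abstract.

    In both OrderedDicts the first item is the tail (oldest) and the
    last item is the front (most recent). Entries are [value, hits].
    """

    def __init__(self, capacity, hot_ratio=0.5, promote_hits=2):
        self.capacity = capacity
        self.hot_capacity = max(1, int(capacity * hot_ratio))
        self.promote_hits = promote_hits
        self.hot = OrderedDict()
        self.cold = OrderedDict()

    def get(self, key):
        if key in self.hot:
            entry = self.hot[key]
            entry[1] += 1
            self.hot.move_to_end(key)           # front of the hot area
            return entry[0]
        if key in self.cold:
            entry = self.cold.pop(key)
            entry[1] += 1
            if entry[1] >= self.promote_hits:
                self._insert_hot(key, entry)    # frequent enough: promote
            else:
                self.cold[key] = entry          # back to the front of the cold area
            return entry[0]
        return None                             # miss: nothing is inserted

    def put(self, key, value):
        for area in (self.hot, self.cold):
            if key in area:
                area[key][0] = value
                area.move_to_end(key)
                return
        if len(self.hot) + len(self.cold) >= self.capacity:
            self._evict()
        self.cold[key] = [value, 0]             # new items start at the cold front

    def _insert_hot(self, key, entry):
        self.hot[key] = entry
        if len(self.hot) > self.hot_capacity:
            old_key, old_entry = self.hot.popitem(last=False)  # hot tail
            self.cold[old_key] = old_entry      # demoted to the cold front, hits kept

    def _evict(self):
        # Capacity threshold reached: delete directly from the cold tail.
        if self.cold:
            self.cold.popitem(last=False)
        else:
            self.hot.popitem(last=False)
```

A one-off scan of new keys only ever displaces entries in the cold area, which is how this layout limits cache pollution of the hot set.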

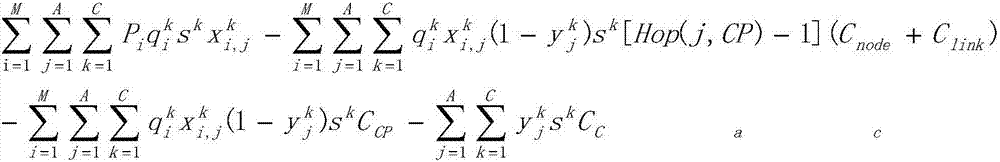

Construction method of ISP and CP combined content distribution mechanism based on edge cache

Inactive · CN107483630A · Reduce redundant transmission, Improve experience, Selective content distribution, Data switching networks, Content distribution, Edge based

The invention discloses a method for constructing a joint ISP-CP content distribution mechanism based on edge caching. The method comprises the following steps: collecting network content request data and extracting content-related information; analyzing the popularity characteristics of network content and designing a content popularity model; deploying content at the network edge according to the popularity model; measuring the load on the CP's content source servers and computing the CP service cost; analyzing the load on ISP network nodes and the traffic carried by links, and computing the ISP network service cost; computing the cost introduced by caching according to the deployed cache capacity; constructing a revenue (yield) model for the joint ISP-CP service based on edge caching; and analyzing the relationships among the factors in the model and verifying its effectiveness through simulation.

Owner:BEIJING UNIV OF TECH
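The trade-off between cache capacity, CP and ISP service costs, and caching cost can be made concrete with a small Python model. Everything here is an assumption standing in for the patent's unpublished models: popularity follows a Zipf law with exponent `s`, the edge caches the `capacity` most popular items, each miss costs fixed per-request CP and ISP amounts, and each cache slot has a fixed cost. The function names and cost parameters are hypothetical.

```python
def zipf_popularity(num_items, s):
    """Assumed popularity model: request probability of rank r is proportional to 1 / r**s."""
    weights = [1.0 / (rank ** s) for rank in range(1, num_items + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def edge_cache_benefit(num_items, s, capacity, requests,
                       cp_cost_per_req, isp_cost_per_req, cache_cost_per_slot):
    """Return (hit_ratio, net benefit) of caching the top `capacity` items at the edge."""
    popularity = zipf_popularity(num_items, s)
    hit_ratio = sum(popularity[:capacity])            # most popular items are cached
    misses = requests * (1 - hit_ratio)
    per_miss = cp_cost_per_req + isp_cost_per_req     # origin server load + transit traffic
    baseline = requests * per_miss                    # cost with no edge cache
    with_cache = misses * per_miss + capacity * cache_cost_per_slot
    return hit_ratio, baseline - with_cache


def best_capacity(num_items, s, requests, cp_cost, isp_cost, cache_cost):
    """Search every capacity from 0 to num_items for the largest net benefit."""
    return max(range(num_items + 1),
               key=lambda c: edge_cache_benefit(num_items, s, c, requests,
                                                cp_cost, isp_cost, cache_cost)[1])
```

With four items, `s = 1`, 1000 requests, unit CP and ISP costs, and a slot cost of 300, the fourth item would save only 240 while costing 300, so `best_capacity` stops at three slots. The point of the sketch is that the optimal capacity is where an item's marginal cost saving falls below the per-slot caching cost.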

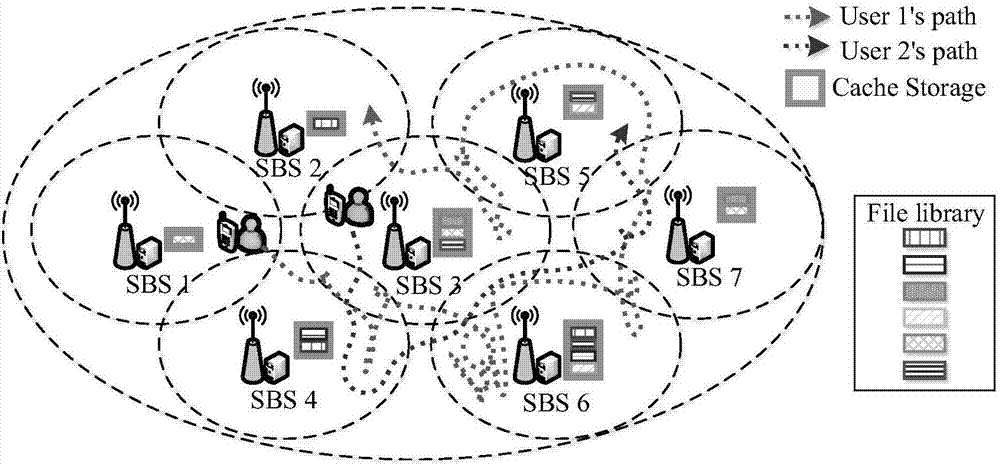

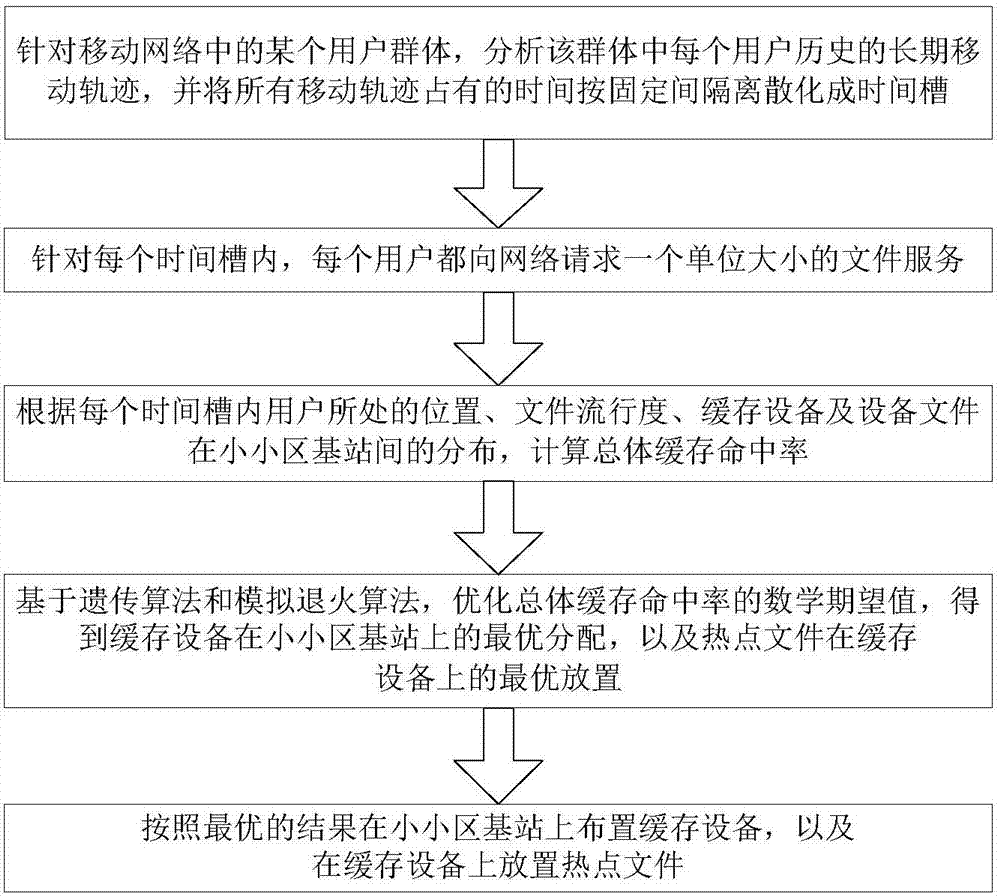

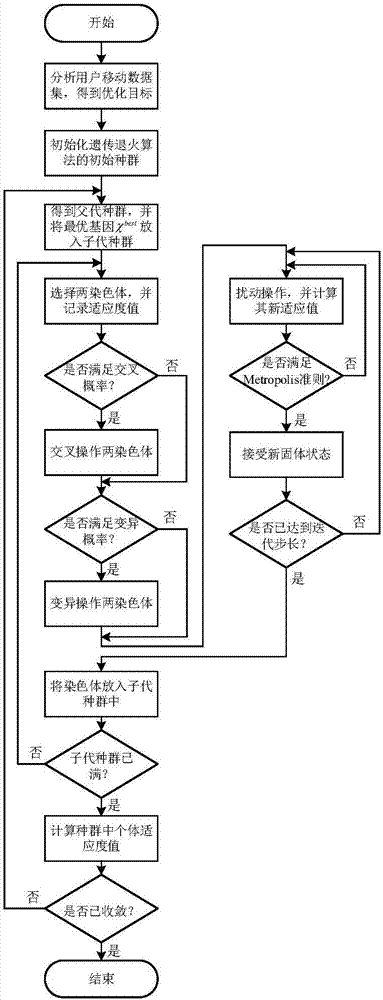

Tiny cell cache device allocation algorithm based on user mobility

Active · CN107466016A · Improve cache hit ratio, Improve cache hit rate performance, Location information based service, Data set, Parallel computing

The invention discloses a tiny cell cache device allocation algorithm based on user mobility, in the field of wireless communication. The method comprises the following steps: in an initialization phase, analyzing a long-term mobility trajectory data set for a user group and dividing the system time of the data set into discrete time slots of a fixed interval; assuming each user requests one file per time slot, calculating the total cache hit rate; converting the cache allocation problem into an integer programming problem; searching the solution space for the optimal initial allocation of cache device capacity with a genetic-annealing algorithm, which uses specially designed operations such as a fitness function, a selection operation, and a crossover (interlace) operation; and, once the solution converges, outputting the allocation of device files among the tiny cell base stations and allocating the cache devices among them accordingly, which yields the optimal cache device allocation scheme. This improves the cache hit rate for users and reduces device installation cost.

Owner:BEIJING UNIV OF POSTS & TELECOMM
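The search step above (integer allocation, fitness, selection, crossover, annealing) can be sketched as a small genetic algorithm with a simulated-annealing acceptance rule. This is a minimal illustration, not the patented algorithm. It assumes that `visit_prob[b]` is the fraction of requests made at station `b` (as might be estimated from the mobility data), that a station with `x` cache units holds the `x` most popular files, and that exactly `total` units must be placed. The function names, the mutation rule, and the cooling schedule are hypothetical.

```python
import math
import random


def hit_rate(alloc, visit_prob, popularity):
    # A request at station b hits if its file is among the alloc[b] most popular.
    cumulative = [0.0]
    for p in popularity:
        cumulative.append(cumulative[-1] + p)
    return sum(v * cumulative[x] for v, x in zip(visit_prob, alloc))


def repair(alloc, total, max_units, rng):
    # Restore the integer constraints: 0 <= alloc[b] <= max_units, sum == total.
    alloc = [min(max(x, 0), max_units) for x in alloc]
    while sum(alloc) > total:
        alloc[rng.choice([i for i, x in enumerate(alloc) if x > 0])] -= 1
    while sum(alloc) < total:
        alloc[rng.choice([i for i, x in enumerate(alloc) if x < max_units])] += 1
    return alloc


def genetic_annealing(visit_prob, popularity, total, pop_size=20,
                      generations=200, t0=0.05, cooling=0.97, seed=0):
    rng = random.Random(seed)
    n, max_units = len(visit_prob), len(popularity)
    assert total <= n * max_units, "not enough distinct files to fill the caches"

    def fitness(alloc):
        return hit_rate(alloc, visit_prob, popularity)

    population = [repair([0] * n, total, max_units, rng) for _ in range(pop_size)]
    best = max(population, key=fitness)
    temperature = t0
    for _ in range(generations):
        next_gen = []
        for parent in population:
            mate = max(rng.sample(population, 2), key=fitness)  # tournament selection
            # Crossover (interlace): take each station's allocation from either parent.
            child = [a if rng.random() < 0.5 else b for a, b in zip(parent, mate)]
            # Mutation: move one cache unit from station i to station j.
            i, j = rng.randrange(n), rng.randrange(n)
            child[i] -= 1
            child[j] += 1
            child = repair(child, total, max_units, rng)
            delta = fitness(child) - fitness(parent)
            # Annealing: accept a worse child with probability exp(delta / T).
            if delta >= 0 or rng.random() < math.exp(delta / temperature):
                next_gen.append(child)
            else:
                next_gen.append(parent)
        population = next_gen
        best = max(population + [best], key=fitness)
        temperature *= cooling
    return best, fitness(best)
```

For three stations with request shares `[0.5, 0.3, 0.2]`, file popularity `[0.4, 0.3, 0.2, 0.1]`, and four cache units, the optimum is `[3, 1, 0]` with a hit rate of 0.57. The annealing rule lets early generations accept worse allocations to escape local optima, and the falling temperature makes the search increasingly greedy.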


© 2025 PatSnap. All rights reserved.