Method and device for managing memory resources in cluster system, and network system

The invention relates to cluster systems and memory resource technology, applied in transmission systems, storage systems, data exchange networks, and similar fields. It addresses the problems that idle memory resources go unused, that the utilization rate of memory resources in cluster systems is low, and that memory servers have difficulty meeting large-capacity memory requirements. The effect is to meet the need for large-capacity memory and to improve memory utilization.



Examples

Embodiment 1

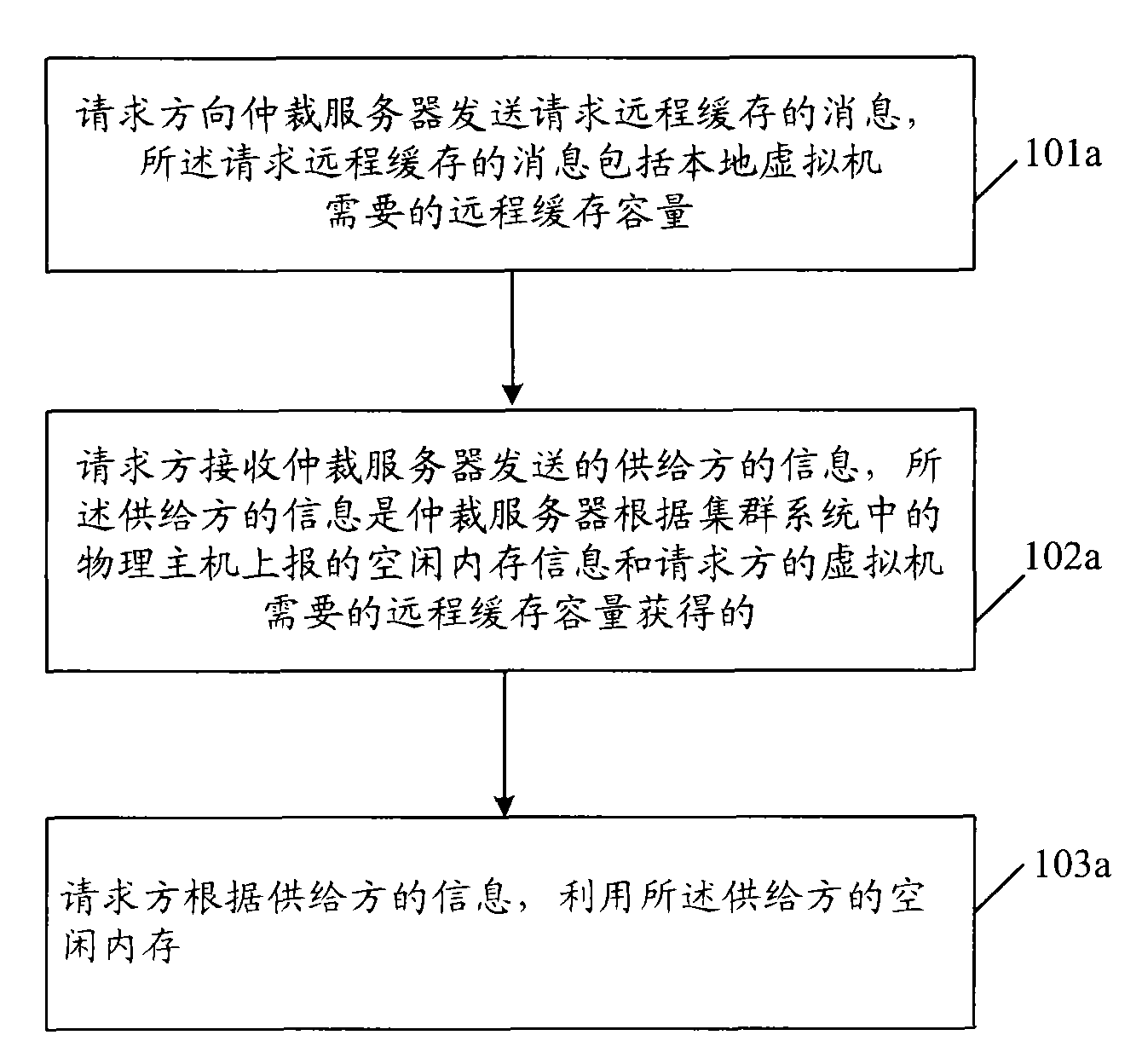

[0039] Referring to Figure 1a, Embodiment 1 of the present invention provides a method for managing memory resources in a cluster system, the method comprising:

[0040] 101a. The requester sends a remote cache request message to the arbitration server, where the remote cache request message includes the remote cache capacity required by the local virtual machine.

[0041] 102a. The requester receives the provider's information sent by the arbitration server, where the provider's information is obtained by the arbitration server according to the free memory information reported by the physical hosts in the cluster system and the remote cache capacity required by the local virtual machine.

[0042] 103a. The requester uses the free memory of the provider according to the information of the provider.

[0043] The requester and the provider are different physical hosts in the cluster system.
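Steps 101a to 103a can be sketched from the requester's side as follows. This is a minimal illustration rather than the patented implementation: the class names `RemoteCacheRequest`, `ProviderInfo`, and `Requester`, the field names, the megabyte unit, and the use of a plain callable in place of the network exchange with the arbitration server are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class RemoteCacheRequest:
    """Hypothetical message for step 101a."""
    requester_id: str
    capacity_mb: int  # remote cache capacity required by the local VM


@dataclass(frozen=True)
class ProviderInfo:
    """Hypothetical reply for step 102a."""
    provider_id: str
    granted_mb: int


# Stand-in for the round trip to the arbitration server (assumption).
Arbiter = Callable[[RemoteCacheRequest], Optional[ProviderInfo]]


class Requester:
    def __init__(self, host_id: str, arbiter: Arbiter) -> None:
        self.host_id = host_id
        self.arbiter = arbiter
        self.remote_cache: Optional[ProviderInfo] = None

    def acquire_remote_cache(self, capacity_mb: int) -> Optional[ProviderInfo]:
        # 101a: send the request carrying the required capacity.
        request = RemoteCacheRequest(self.host_id, capacity_mb)
        # 102a: receive the provider's information from the arbiter.
        provider = self.arbiter(request)
        if provider is None:
            return None  # no provider available (case not covered in the text)
        # [0043]: requester and provider must be different physical hosts.
        if provider.provider_id == self.host_id:
            raise ValueError("provider must differ from requester")
        # 103a: record the provider so its free memory can be used.
        self.remote_cache = provider
        return provider
```

In a real deployment the callable would be replaced by whatever transport the cluster uses; the sketch only fixes the order of the three steps and the constraint in [0043].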

[0044] In Embodiment 1 of the present invention, the requester receives the informat...

Embodiment 2

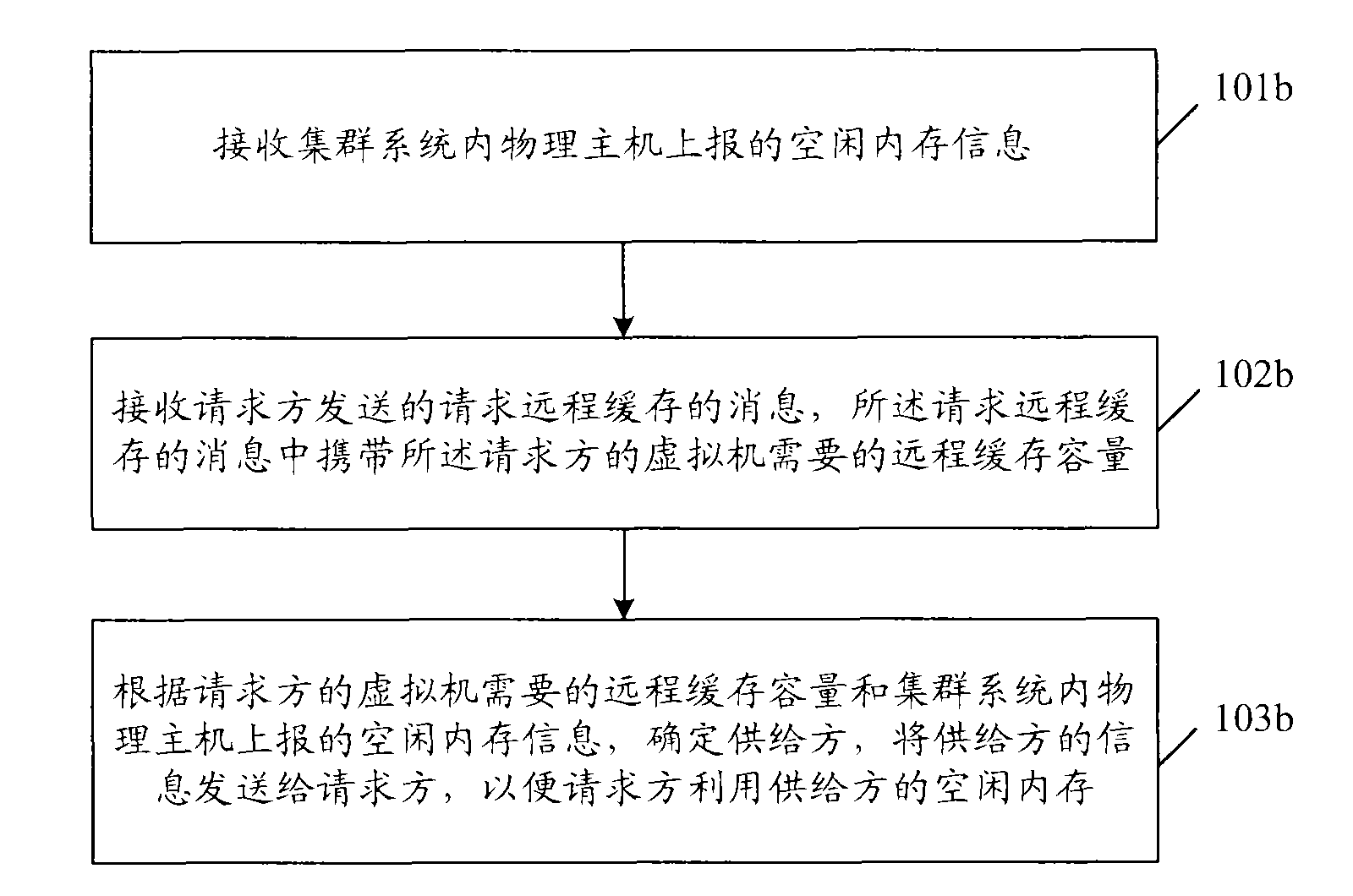

[0046] Referring to Figure 1b, Embodiment 2 of the present invention provides a method for managing memory resources in a cluster system, the method comprising:

[0047] 101b. Receive free memory information reported by physical hosts in the cluster system;

[0048] 102b. Receive a message requesting remote cache sent by the requester, where the message requesting remote cache carries the remote cache capacity required by the virtual machine of the requester;

[0049] 103b. Determine the provider according to the remote cache capacity required by the virtual machine of the requester and the free memory information reported by the physical hosts in the cluster system, and send the information of the provider to the requester, so that the requester can use the free memory of the provider, wherein the requester and the provider are different physical hosts in the cluster system.
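The provider selection in step 103b could look like the sketch below. The text states only that the provider is determined from the required capacity and the reported free memory, so the best-fit policy used here (the smallest free memory that still satisfies the request) is an assumption, as are the function name `choose_provider` and the megabyte unit.

```python
from typing import Dict, Optional


def choose_provider(
    free_memory_mb: Dict[str, int],
    requester_id: str,
    required_mb: int,
) -> Optional[str]:
    """Pick a provider host for a remote cache request.

    free_memory_mb maps host id -> free memory reported by that host (101b).
    Best-fit selection is an assumed policy, not taken from the source.
    """
    # The provider must be a different physical host with enough free memory.
    candidates = {
        host: free
        for host, free in free_memory_mb.items()
        if host != requester_id and free >= required_mb
    }
    if not candidates:
        return None
    # Smallest sufficient free memory first; host id breaks ties deterministically.
    return min(candidates, key=lambda host: (candidates[host], host))
```

Best fit keeps the hosts with the most free memory available for later, larger requests; a policy that prefers the host with the most free memory would be an equally valid reading of the step.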

[0050] The method is executed by the arbitration server, and the arbitration serve...

Embodiment 3

[0054] Referring to Figure 2, Embodiment 3 of the present invention provides a method for managing memory resources in a cluster system. The method uses a fast switching network to migrate the page content of the requester to the free memory of the provider for storage. The method includes:

[0055] 201. Each physical host in the cluster system predicts the size of the free memory it can provide according to the operating status of its own virtual machines, and periodically sends a heartbeat message to the arbitration server, the heartbeat message carrying the size of the free memory. The arbitration server can use a data structure or data table to save the free memory size of each physical host, and periodically updates the free memory size of each physical host.
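The data table kept by the arbitration server in step 201 might be sketched as below. The class name `FreeMemoryTable`, its methods, and the megabyte unit are hypothetical. Dropping hosts whose heartbeat is older than a timeout is also an assumption: the text says only that the sizes are updated periodically.

```python
import time
from typing import Dict, Optional, Tuple


class FreeMemoryTable:
    """Per-host free memory sizes, refreshed by heartbeat messages."""

    def __init__(self, timeout_s: float = 30.0) -> None:
        self.timeout_s = timeout_s  # assumed staleness limit
        # host id -> (free memory in MB, time the heartbeat was received)
        self._entries: Dict[str, Tuple[int, float]] = {}

    def on_heartbeat(
        self, host_id: str, free_mb: int, now: Optional[float] = None
    ) -> None:
        # Each heartbeat overwrites the previous size reported by that host.
        received = time.monotonic() if now is None else now
        self._entries[host_id] = (free_mb, received)

    def snapshot(self, now: Optional[float] = None) -> Dict[str, int]:
        # Return only hosts whose latest heartbeat is within the timeout.
        current = time.monotonic() if now is None else now
        return {
            host: free
            for host, (free, seen) in self._entries.items()
            if current - seen <= self.timeout_s
        }
```

The optional `now` argument exists only so the behavior can be exercised with fixed timestamps; in normal use the monotonic clock is read on each call.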

[0056] In this step, the physical hosts in the cluster system can use the following methods to predict the size of free memory they can provide:

[0057] The guest operating system Guest OS on the physical host ...
