494 results about "Host memory" patented technology

Consumed Host Memory usage is defined as the amount of host memory that is allocated to the virtual machine. Active Guest Memory is defined as the amount of guest memory that is currently being used by the guest operating system and its applications.

TCP host

Active | US20060075119A1 | Eliminate need; Improves host performance; Multiple digital computer combinations; Transmission; Application software; Host memory

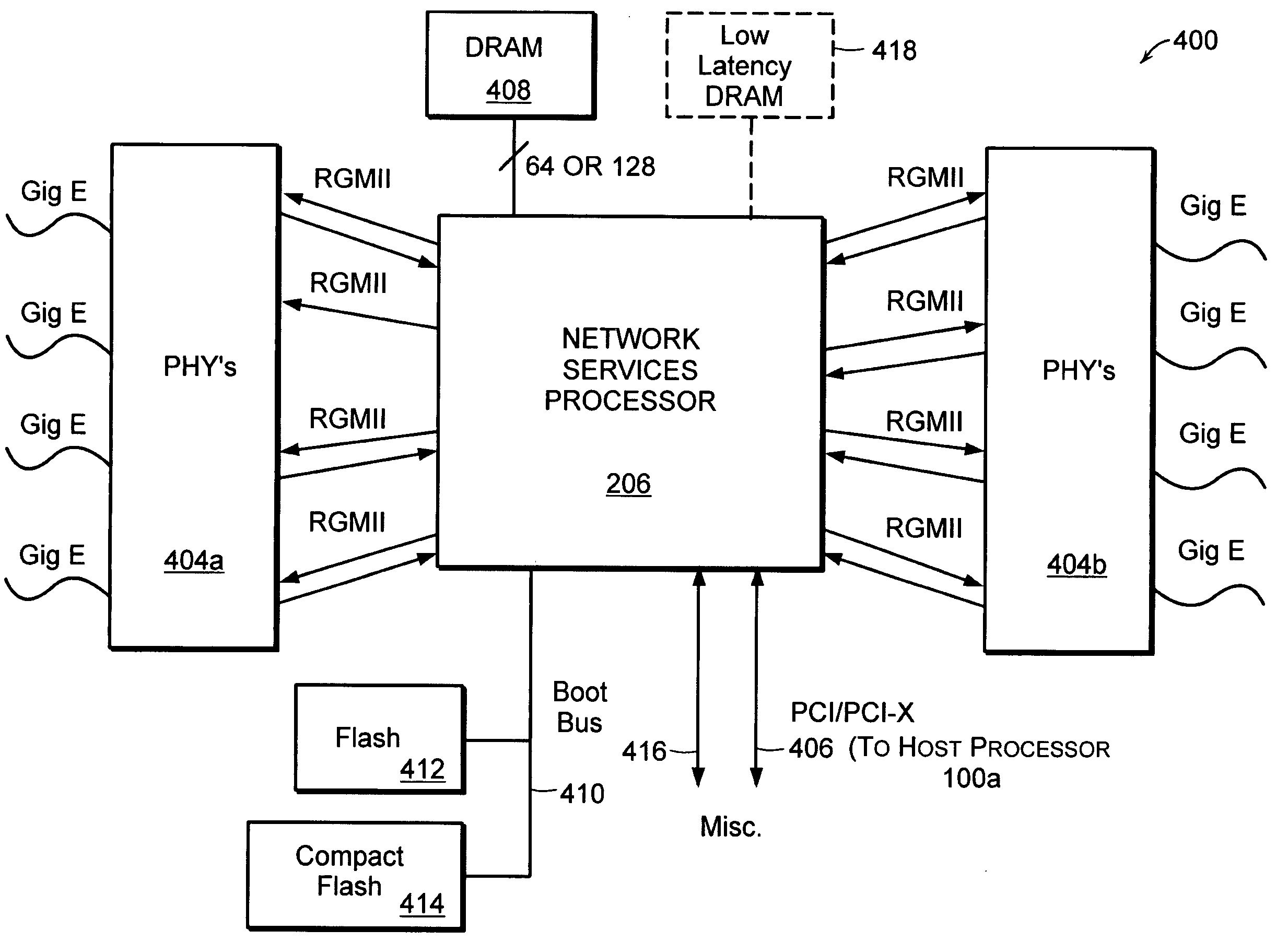

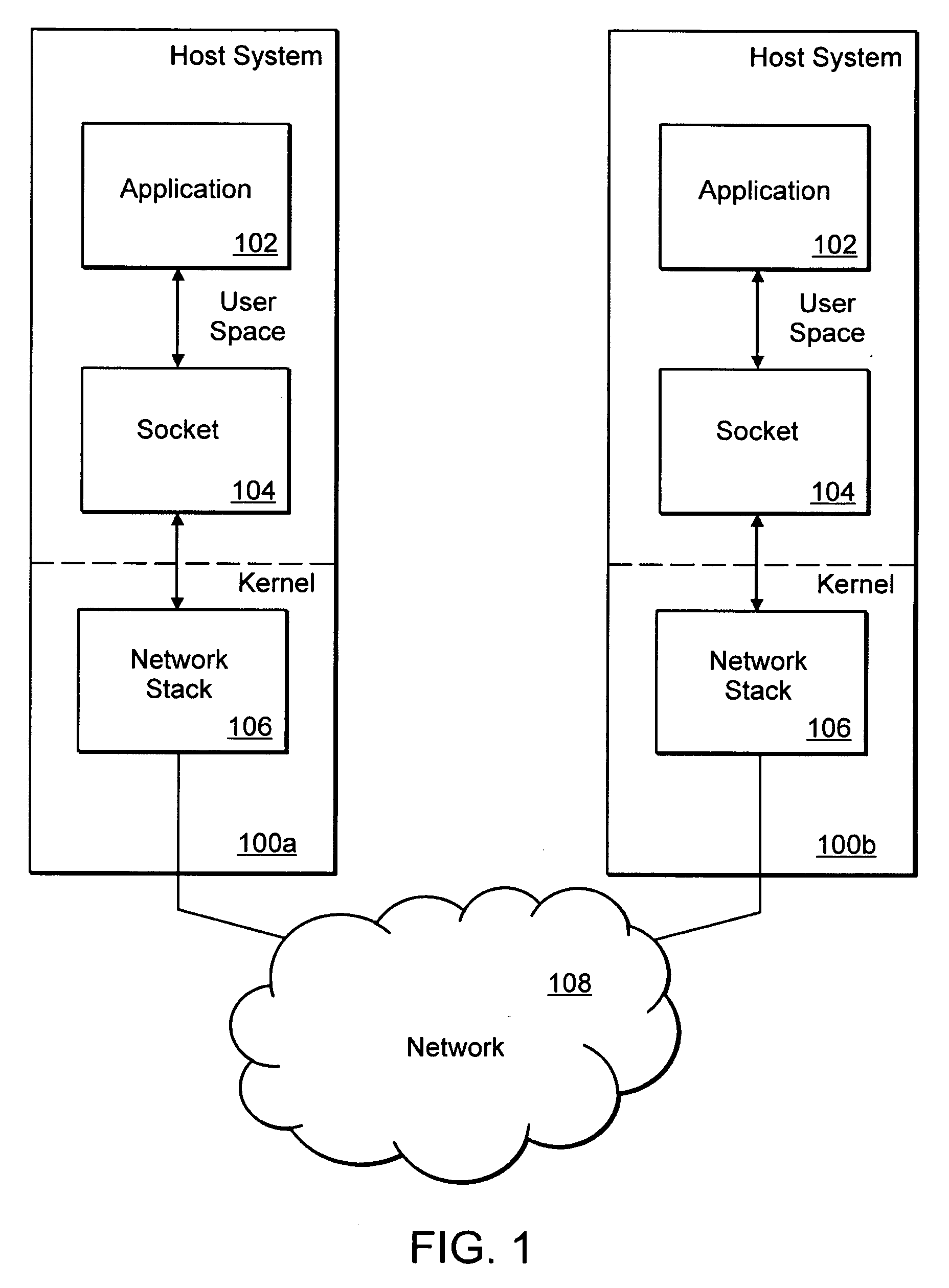

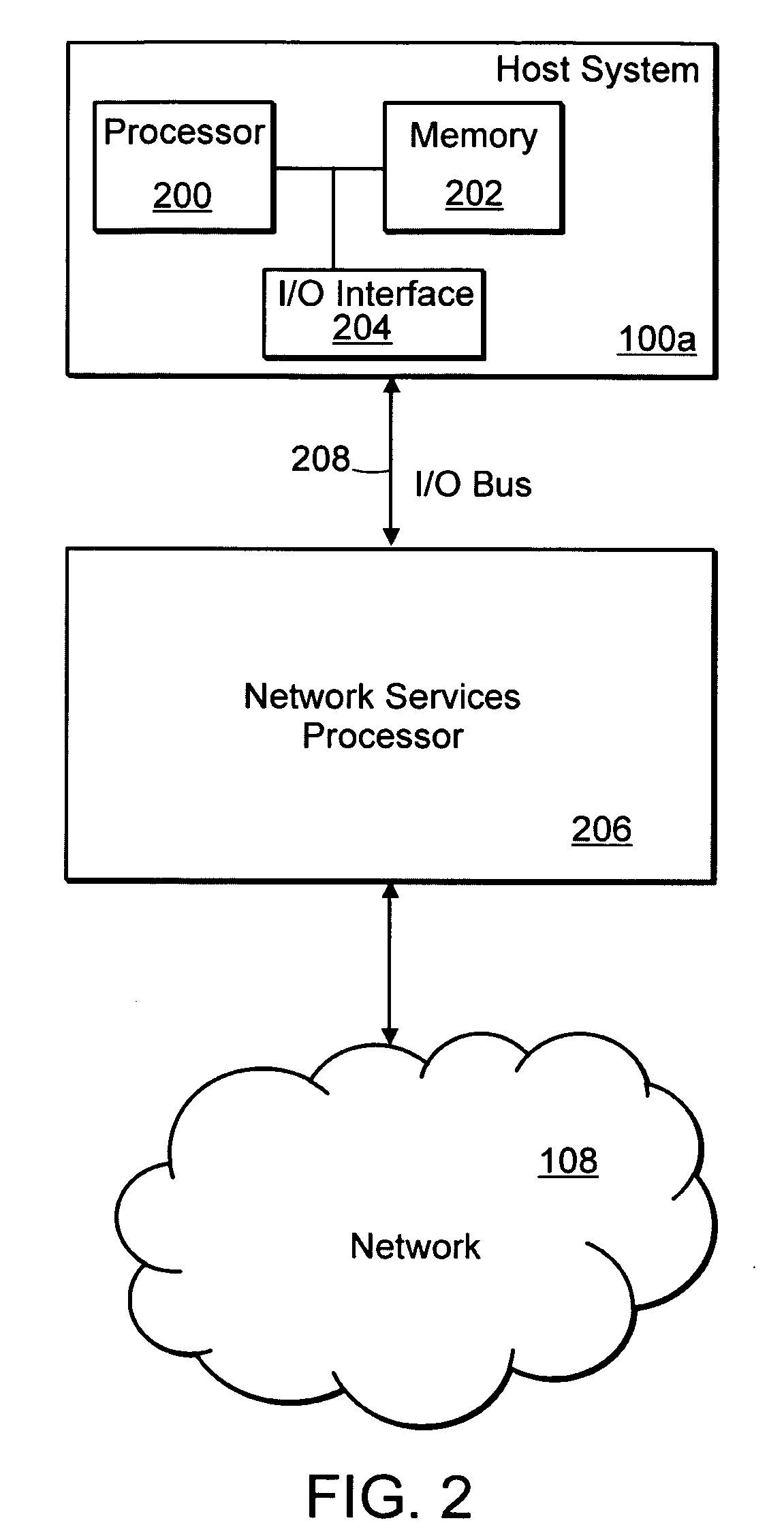

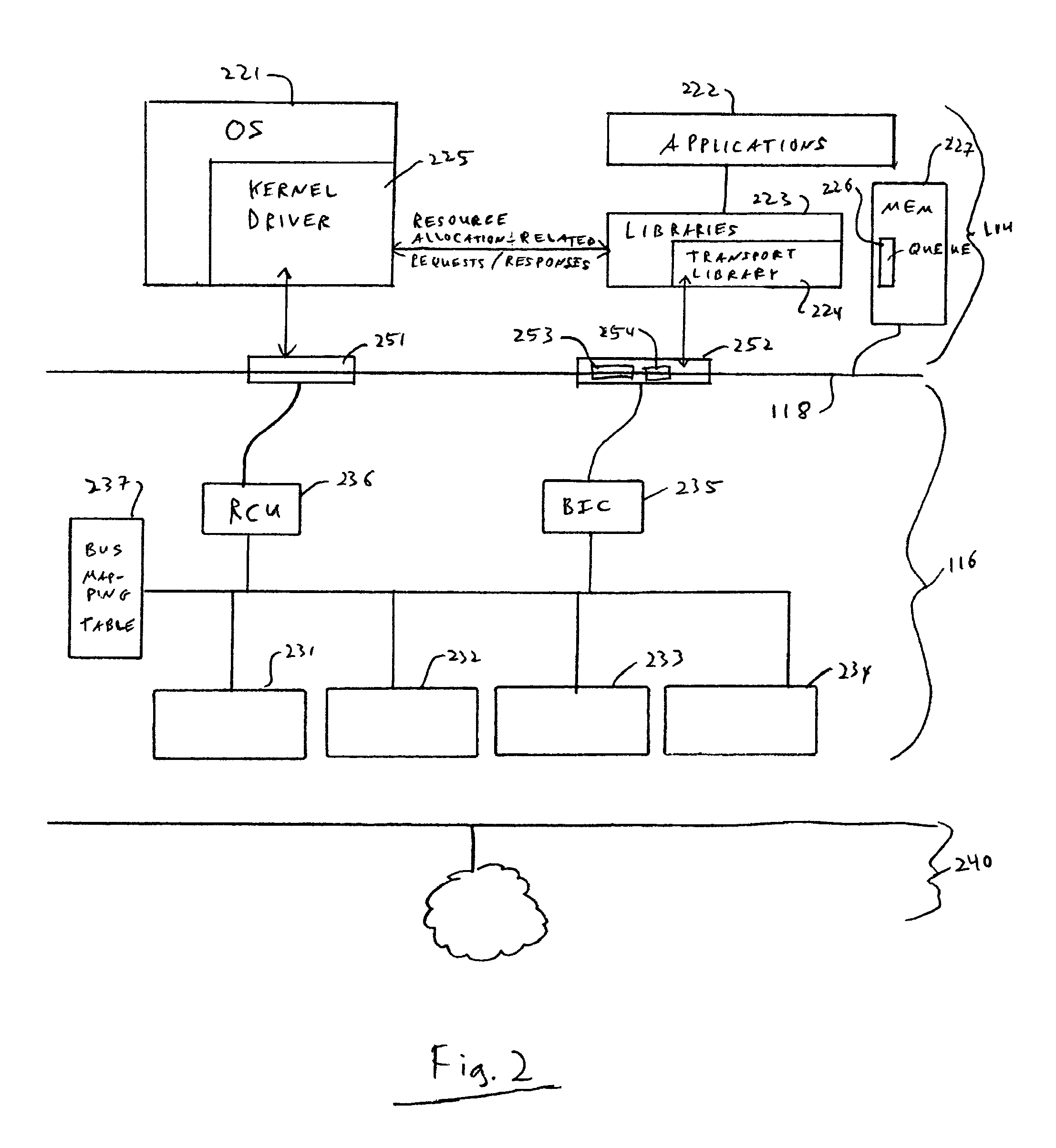

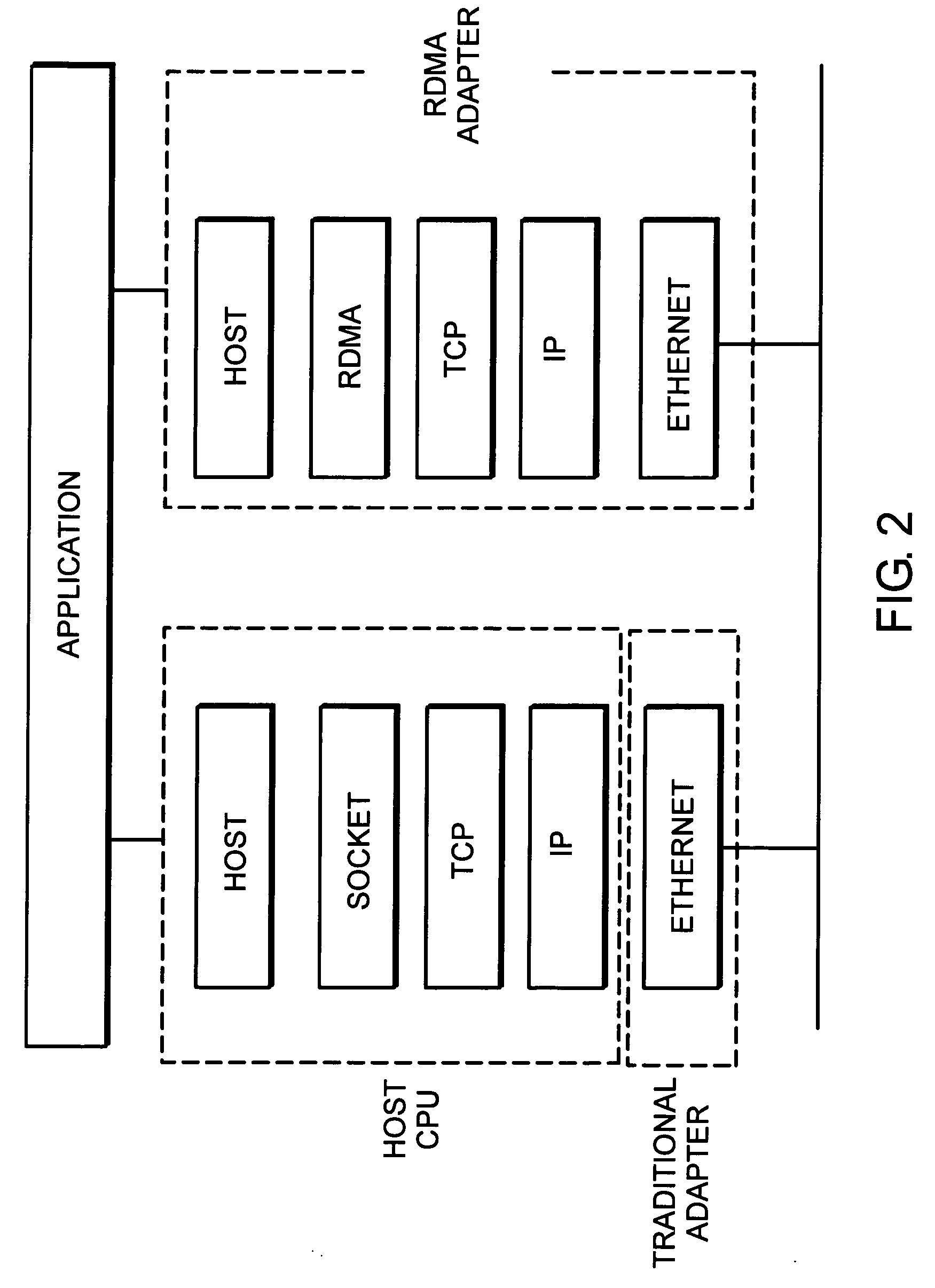

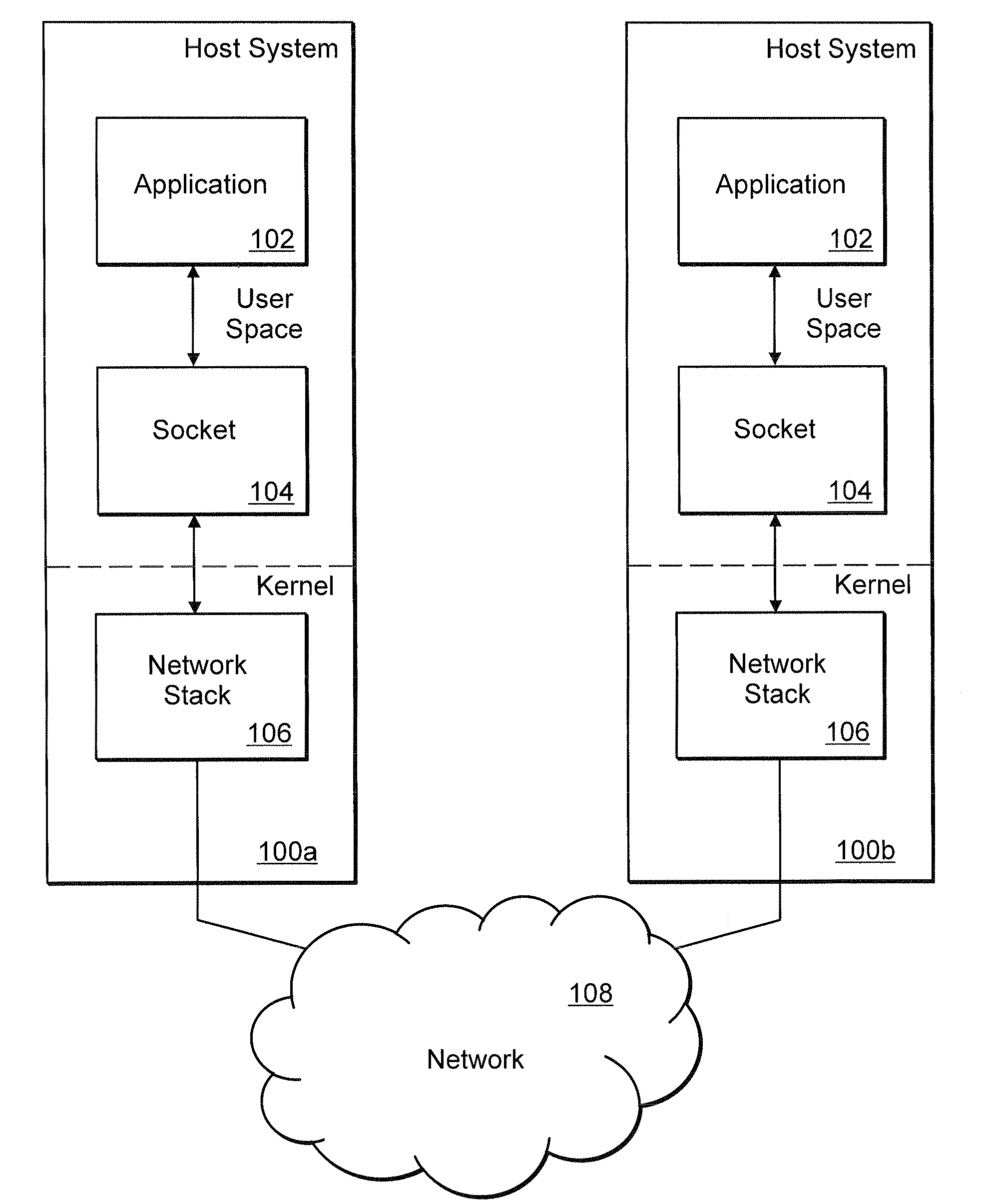

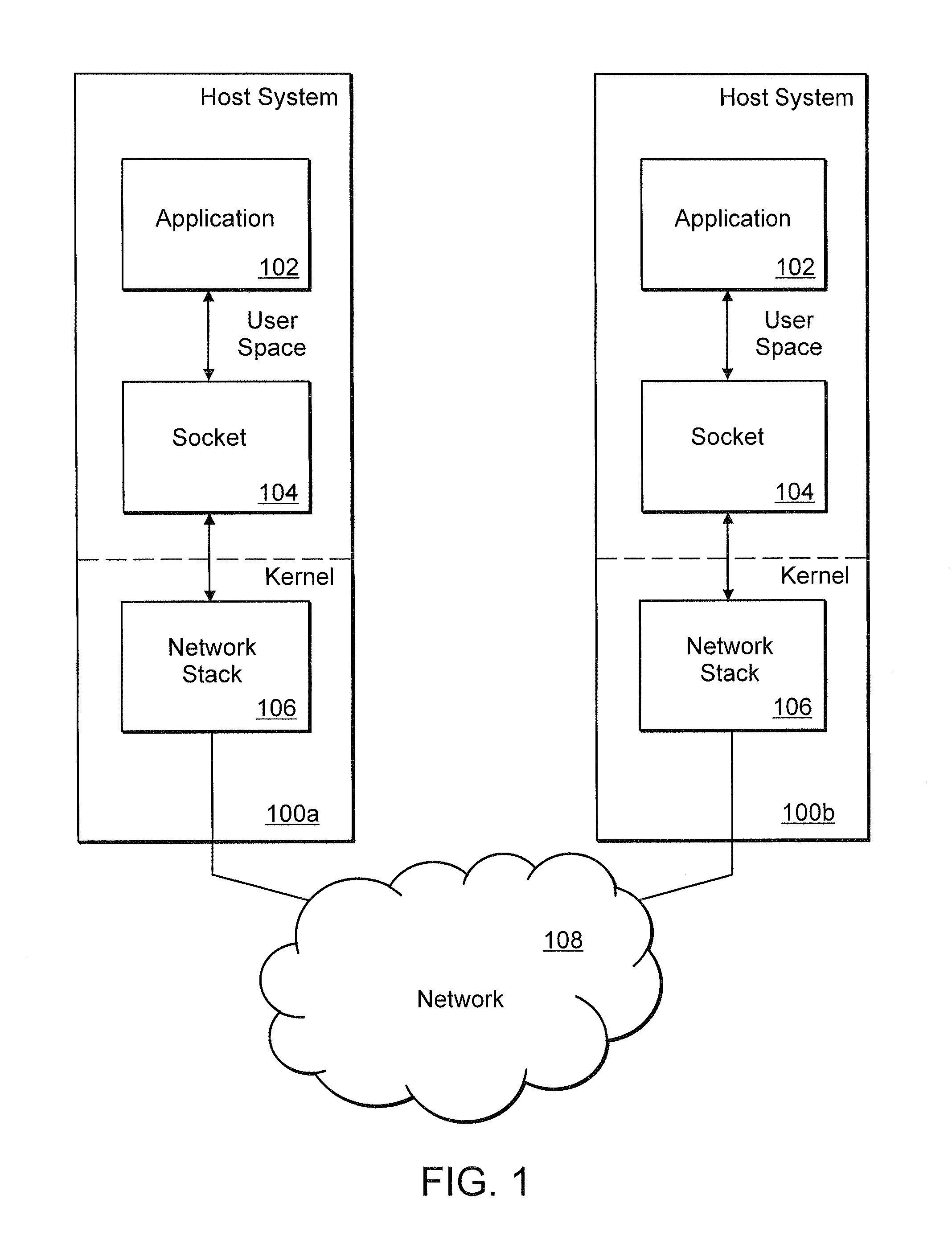

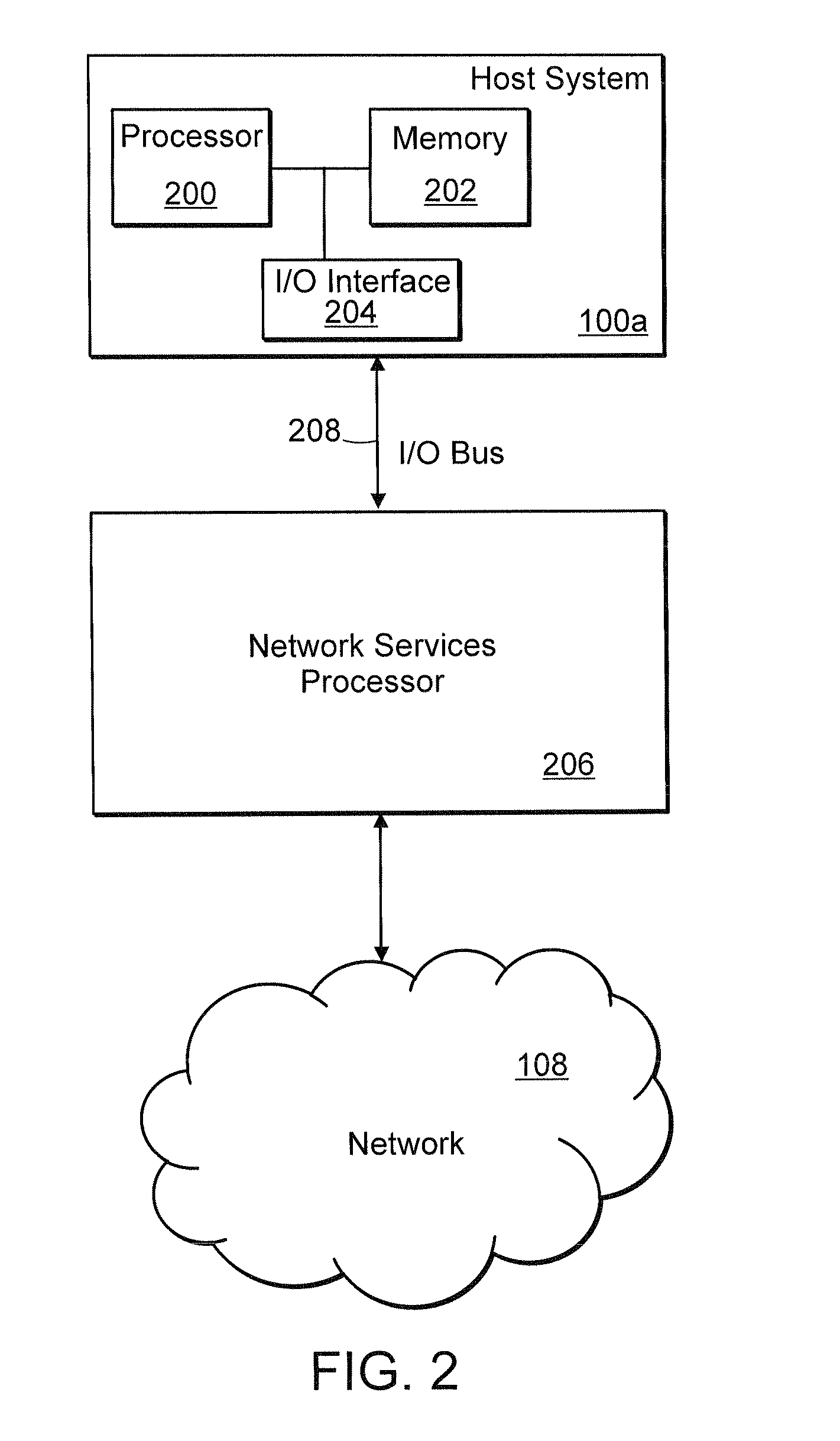

A network application executing on a host system provides a list of application buffers in host memory stored in a queue to a network services processor coupled to the host system. The application buffers are used for storing data transferred on a socket established between the network application and a remote network application executing in a remote host system. Using the application buffers, data received by the network services processor over the network is transferred between the network services processor and the application buffers. After the transfer, a completion notification is written to one of the two control queues in the host system. The completion notification includes the size of the data transferred and an identifier associated with the socket. The identifier identifies a thread associated with the transferred data and the location of the data in the host system.

Owner:MARVELL ASIA PTE LTD

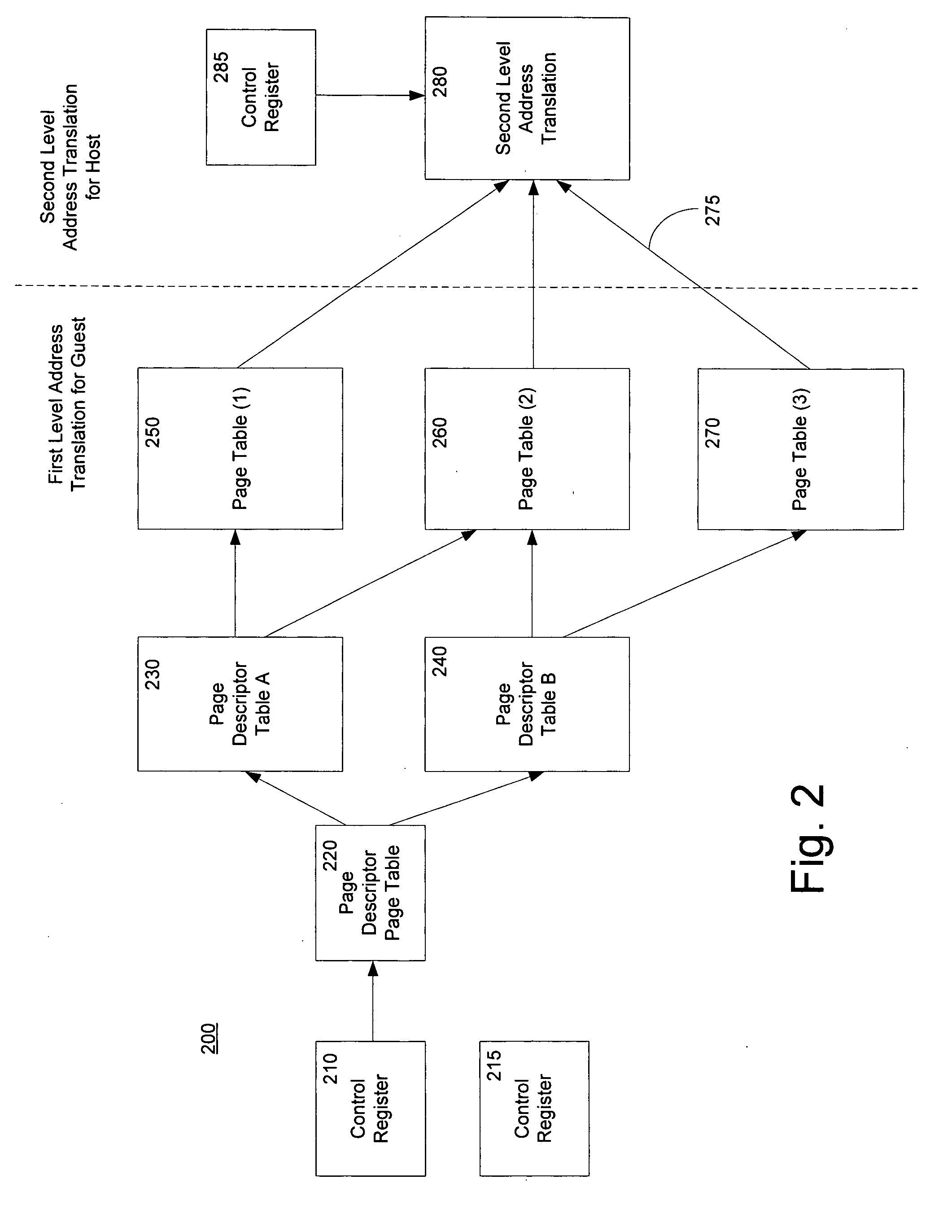

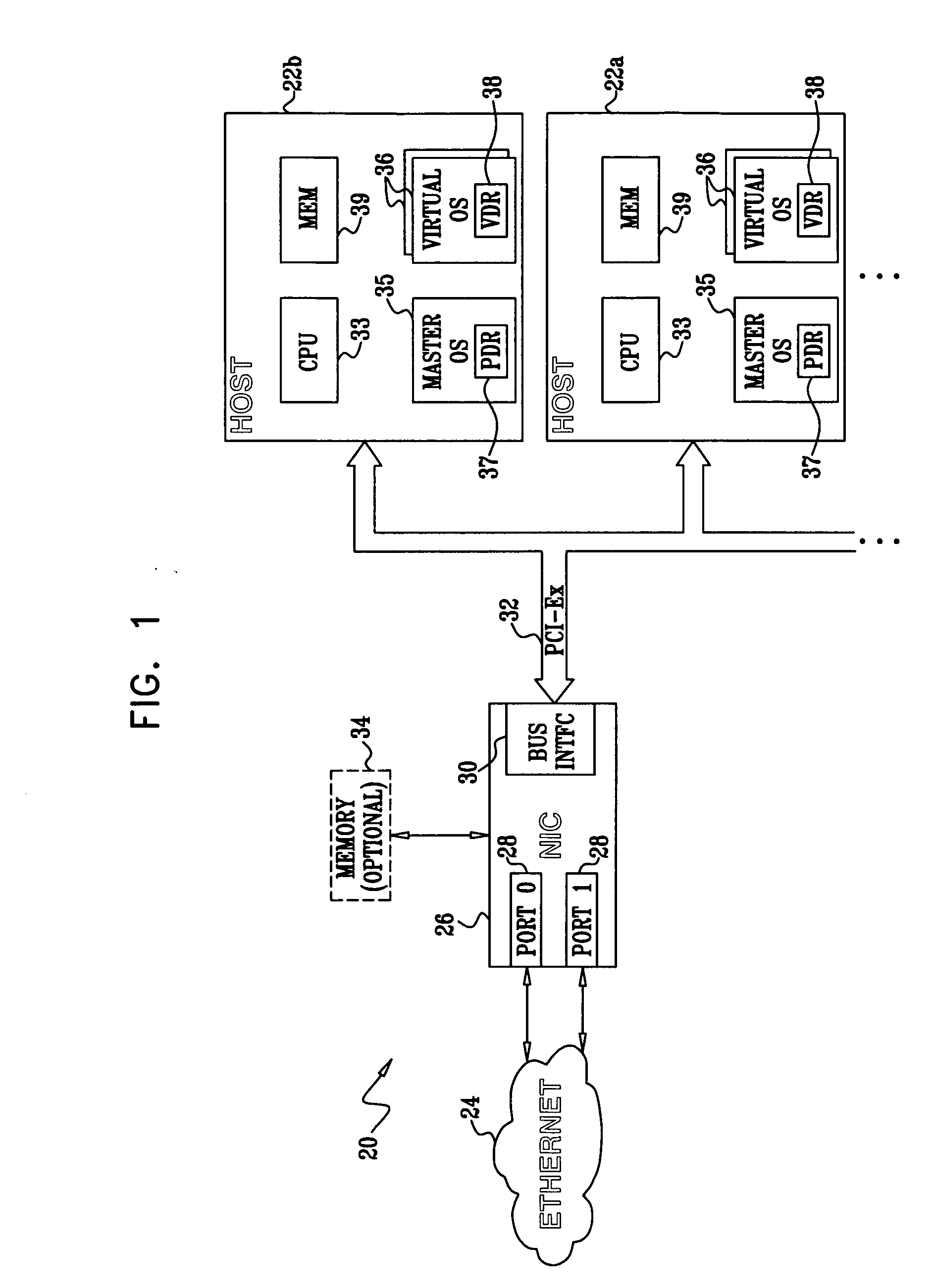

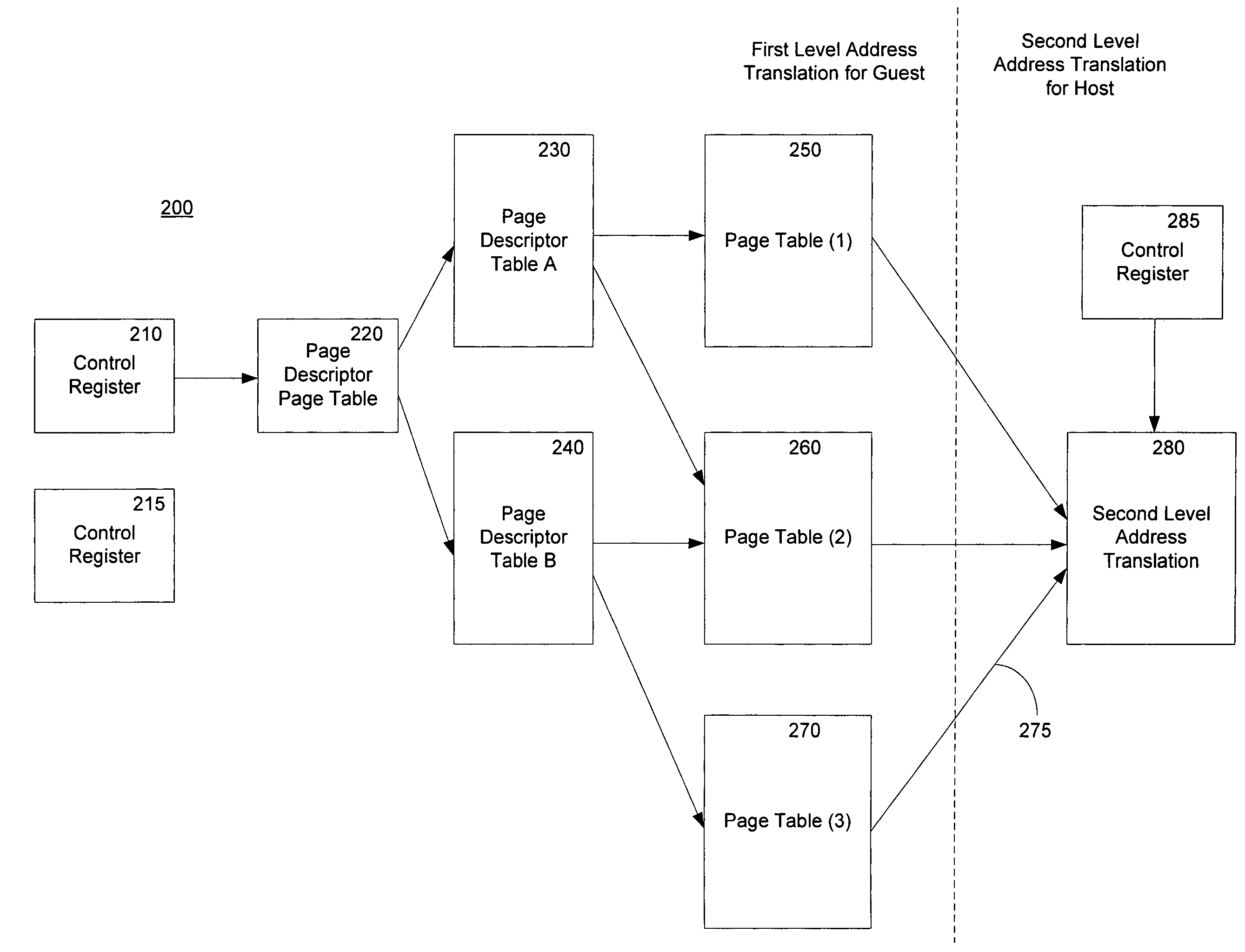

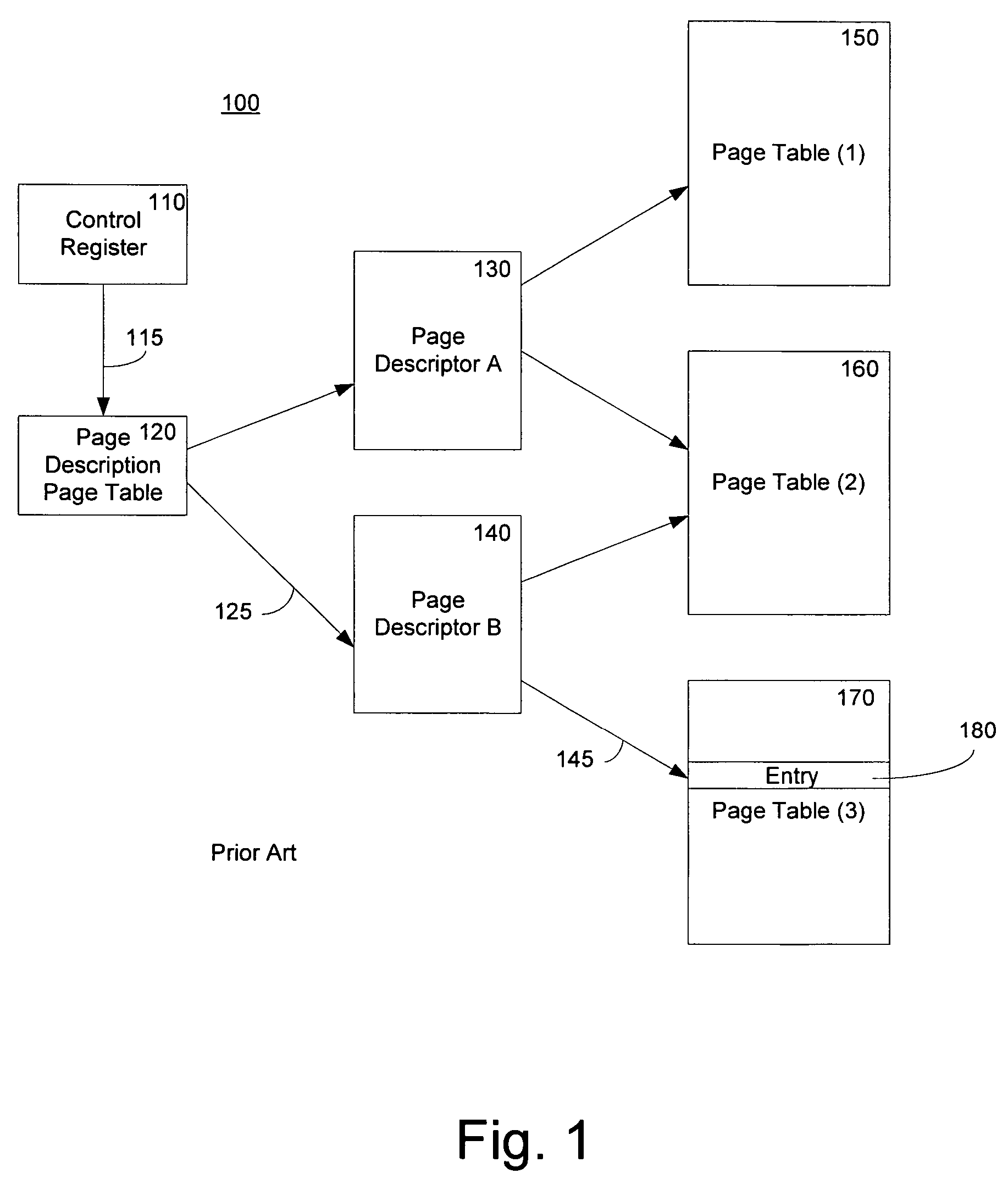

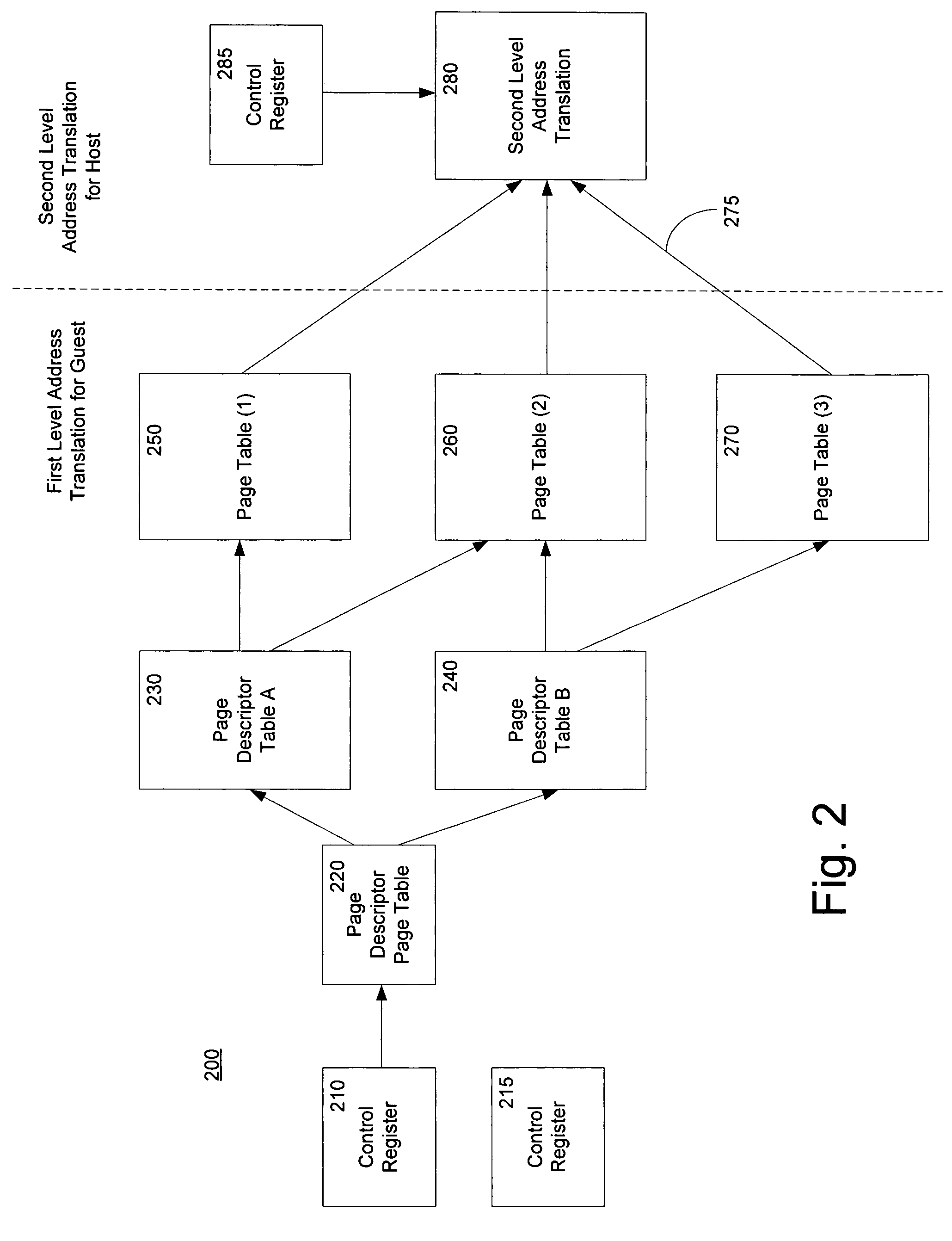

Method and system for a second level address translation in a virtual machine environment

Active | US20060206687A1 | Increase speed; Reduce usage; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Physical address; Host memory

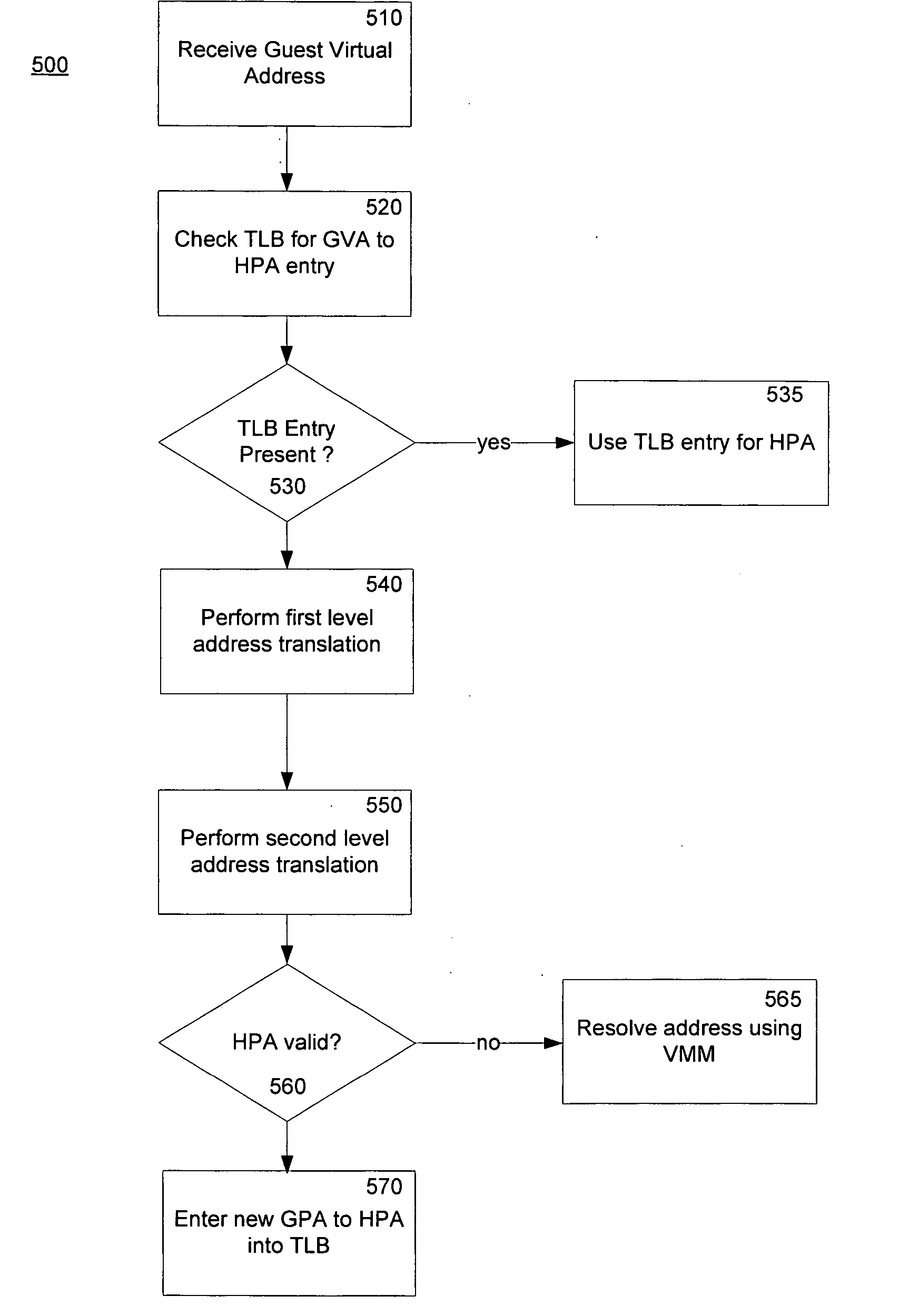

A method of performing a translation from a guest virtual address to a host physical address in a virtual machine environment includes receiving a guest virtual address from a host computer executing a guest virtual machine program and using the hardware-oriented method of the host CPU to determine the guest physical address. A second level address translation to a host physical address is then performed. In one embodiment, a multiple tier tree is traversed which translates the guest physical address into a host physical address. In another embodiment, the second level of address translation is performed by employing a hash function of the guest physical address and a reference to a hash table. One aspect of the invention is the incorporation of access overrides associated with the host physical address which can control the access permissions of the host memory.

Owner:MICROSOFT TECH LICENSING LLC
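
The hash-table embodiment of the second translation step can be illustrated with a minimal Python sketch. It assumes 4 KiB pages, lets a Python `dict` stand in for the hash function and hash table (keyed by guest physical frame number), and reduces the access override to a single per-page `writable` flag. `SecondLevelTable`, `HostEntry`, and their methods are hypothetical names, not Microsoft's implementation or any hypervisor API.

```python
from dataclasses import dataclass
from typing import Dict

PAGE_SHIFT = 12                     # assumed 4 KiB pages
PAGE_MASK = (1 << PAGE_SHIFT) - 1

@dataclass
class HostEntry:
    host_frame: int   # host physical frame number
    writable: bool    # hypothetical access override for the host page

class SecondLevelTable:
    """Hypothetical guest-physical to host-physical translation table."""

    def __init__(self) -> None:
        # dict hashing stands in for the hash function and hash table of the abstract
        self._table: Dict[int, HostEntry] = {}

    def map(self, guest_frame: int, host_frame: int, writable: bool = True) -> None:
        self._table[guest_frame] = HostEntry(host_frame, writable)

    def translate(self, guest_phys: int, write: bool = False) -> int:
        entry = self._table.get(guest_phys >> PAGE_SHIFT)
        if entry is None:
            raise LookupError(f"no mapping for guest physical address {guest_phys:#x}")
        if write and not entry.writable:
            raise PermissionError(f"write denied at guest physical address {guest_phys:#x}")
        # keep the page offset, replace the frame number
        return (entry.host_frame << PAGE_SHIFT) | (guest_phys & PAGE_MASK)

slat = SecondLevelTable()
slat.map(guest_frame=0x10, host_frame=0x8A, writable=False)
print(hex(slat.translate(0x10123)))  # 0x8a123
```

The multiple tier tree embodiment would replace the single dictionary lookup with one indexed lookup per tier, trading extra memory reads for a compact table over a sparse guest address space.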

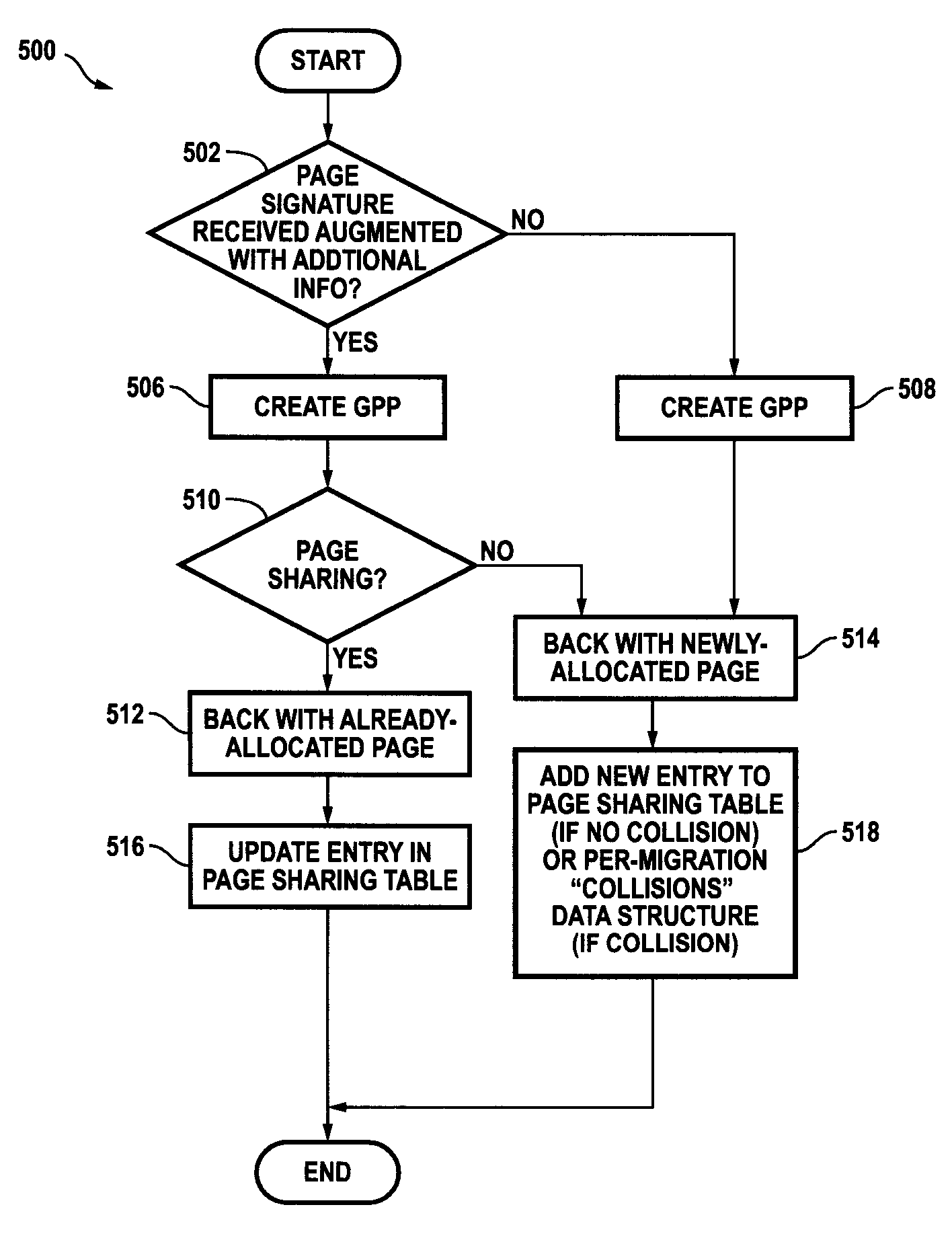

Page signature disambiguation for increasing the efficiency of virtual machine migration in shared-page virtualized computer systems

Active | US7925850B1 | Inhibitory content; Avoid collision; Program control; Memory systems; Virtualization; Ambiguity

A system for increasing the efficiency of migrating, at least in part, a virtual machine from a source host to a destination host is described wherein the content of one or more portions of the address space of the virtual machine are each uniquely associated at the source host with a signature that may collide, absent disambiguation, with different content at the destination host. Code in both the source and destination hosts disambiguates the signature(s) so that each disambiguated signature may be uniquely associated with content at the destination host, and so that collisions with different content are avoided at the destination host. Logic is configured to determine whether the content uniquely associated with a disambiguated signature at the destination host is already present in the destination host memory, and, if so, to back one or more portions of the address space of the virtual machine having this content with one or more portions of the destination host memory already holding this content.

Owner:VMWARE INC
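
One way to picture the destination-side check is the Python sketch below. The disambiguation scheme here is an assumption, not VMware's: a CRC-32 plays the role of the collision-prone page signature, and it is paired with a SHA-256 digest computed the same way on both hosts, so a match at the destination indicates identical content with overwhelming probability. `DestinationHost`, `migrate`, and the frame numbers are hypothetical.

```python
import hashlib
import zlib
from typing import Dict, List, Optional, Tuple

Signature = Tuple[int, bytes]

def disambiguated_signature(page: bytes) -> Signature:
    # CRC-32 stands in for a short page signature that can collide; the SHA-256
    # digest disambiguates pages whose short signatures happen to match.
    return zlib.crc32(page), hashlib.sha256(page).digest()

class DestinationHost:
    def __init__(self, resident_pages: Dict[int, bytes]) -> None:
        # Content already held in destination host memory: signature -> host frame.
        self.index = {disambiguated_signature(p): frame for frame, p in resident_pages.items()}

    def find_backing(self, sig: Signature) -> Optional[int]:
        return self.index.get(sig)

def migrate(guest_pages: Dict[int, bytes],
            dest: DestinationHost) -> Tuple[Dict[int, int], List[int]]:
    """Split guest pages into those backed by existing destination frames and those to copy."""
    backed: Dict[int, int] = {}
    to_transfer: List[int] = []
    for gpn, page in guest_pages.items():
        frame = dest.find_backing(disambiguated_signature(page))
        if frame is None:
            to_transfer.append(gpn)   # content not present: the page itself must be sent
        else:
            backed[gpn] = frame       # content present: back the guest page with that frame
    return backed, to_transfer
```

Only signatures need to cross the network for pages the destination already holds, which is where the migration savings come from.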

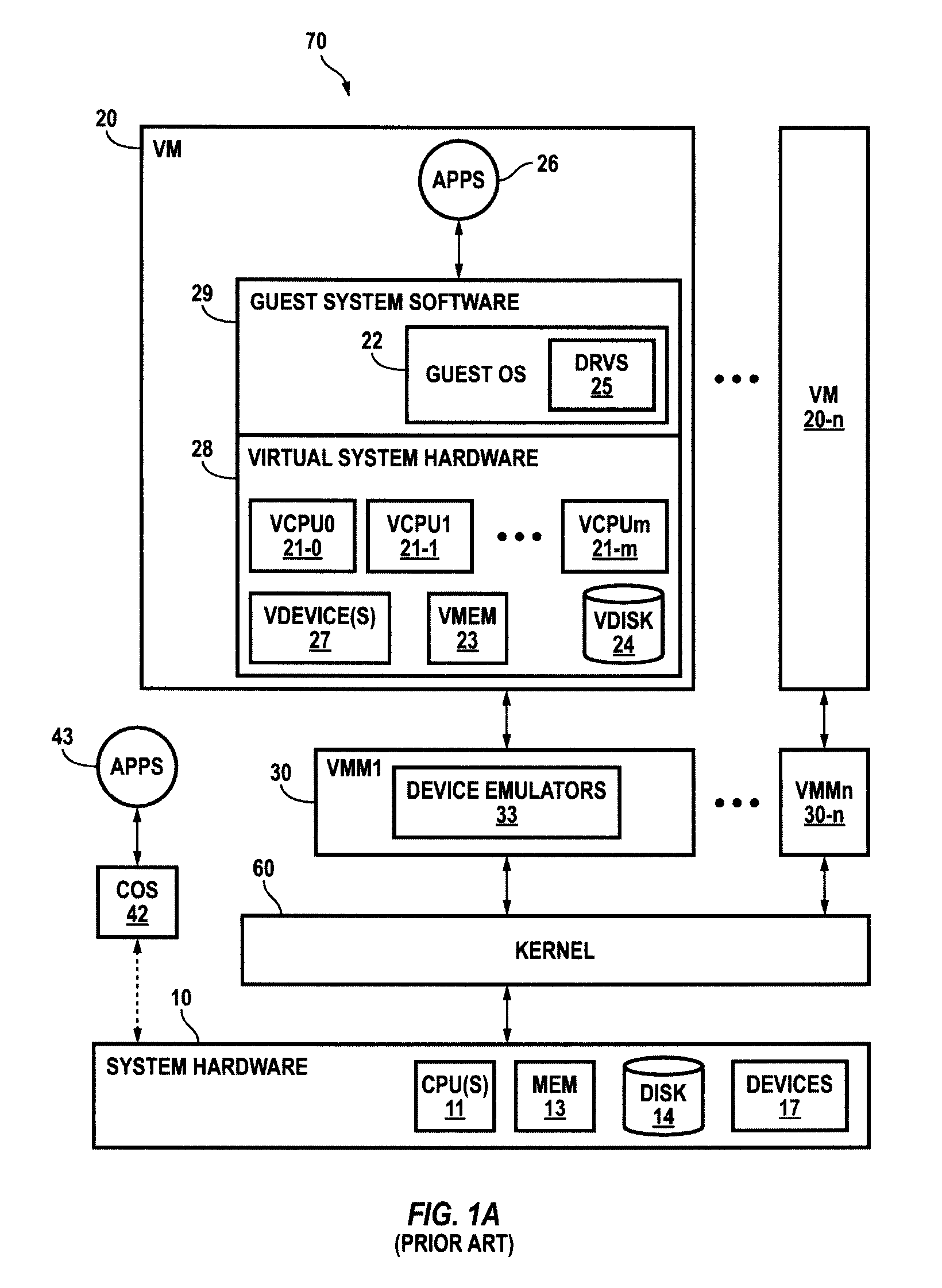

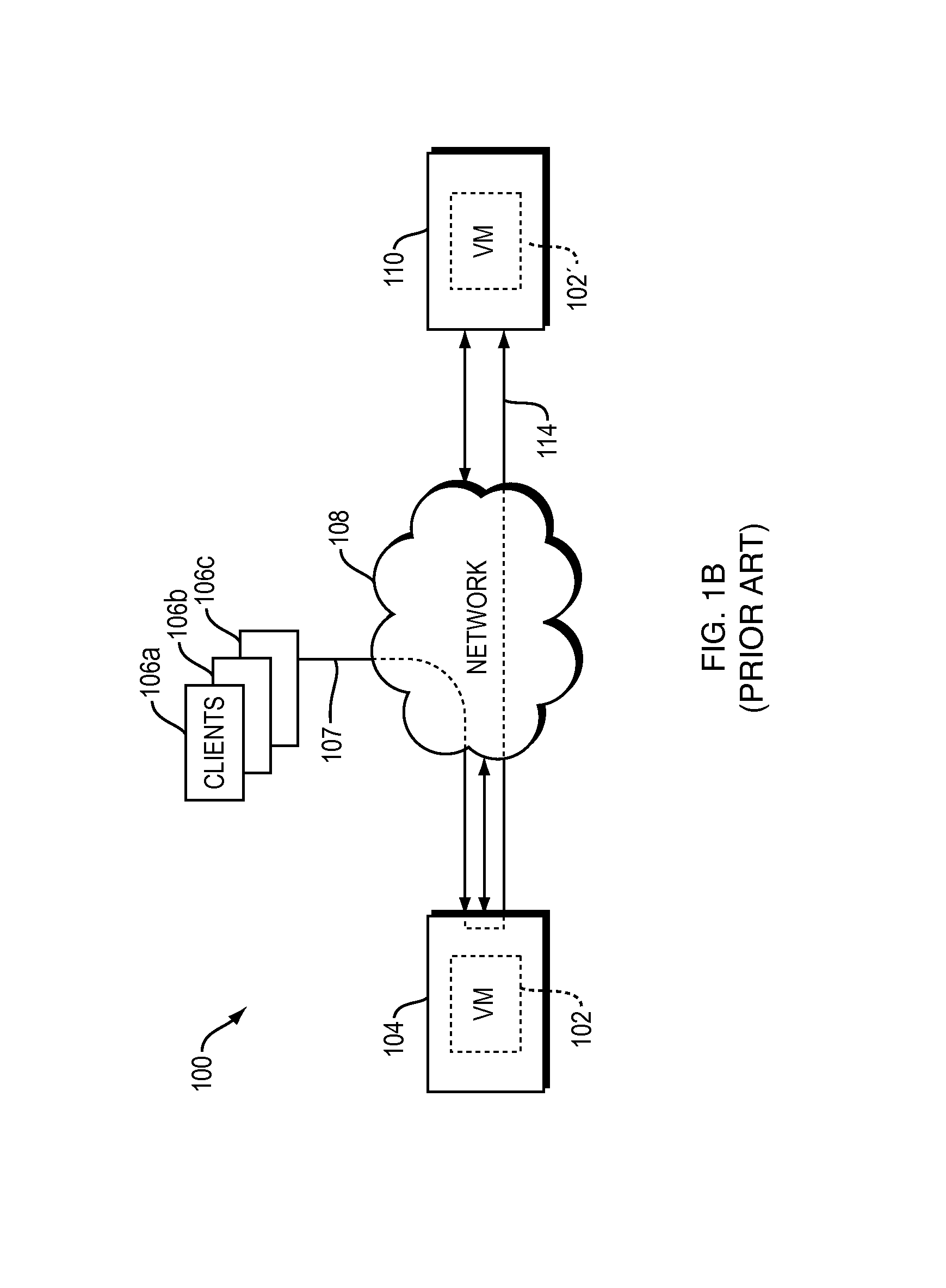

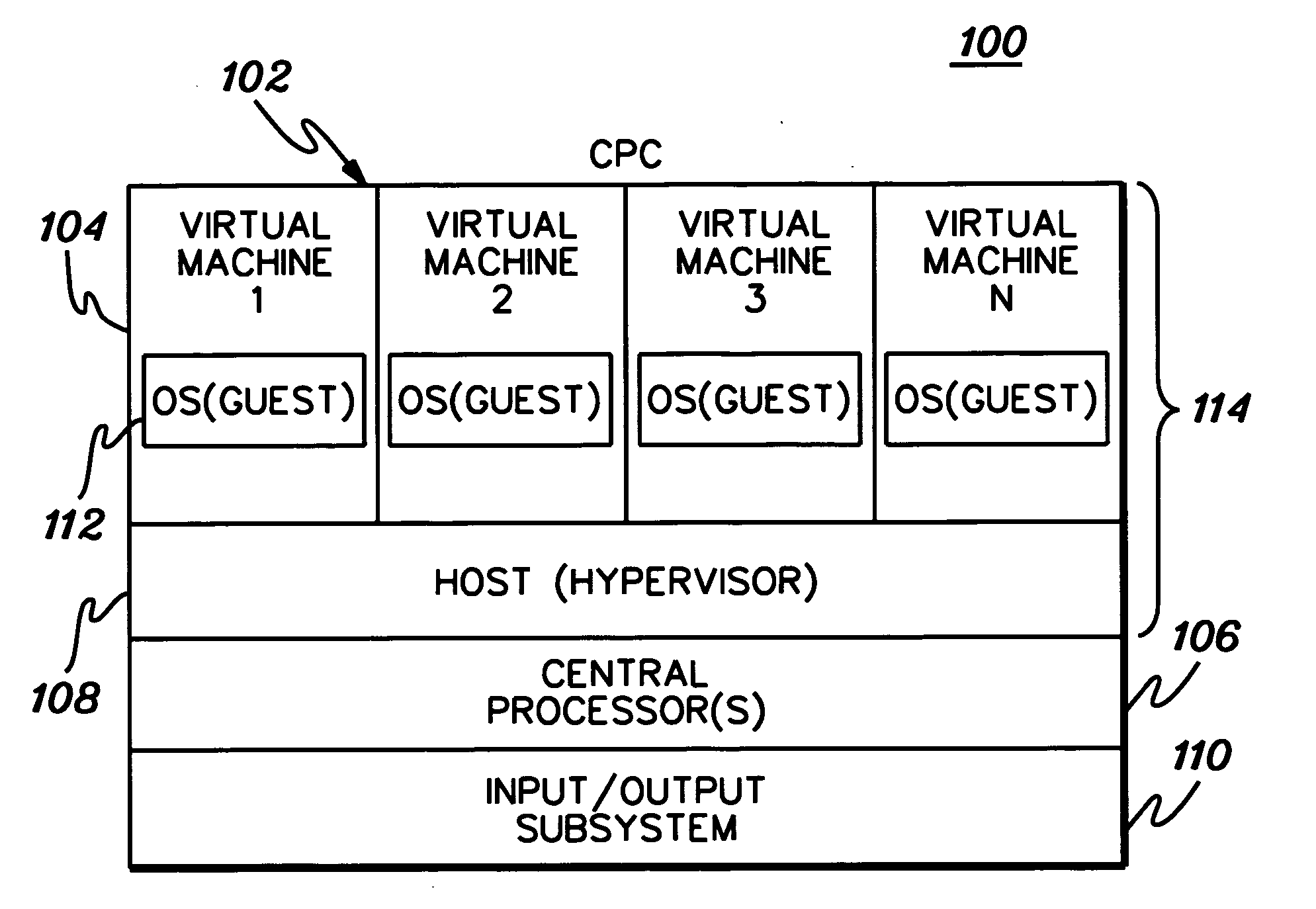

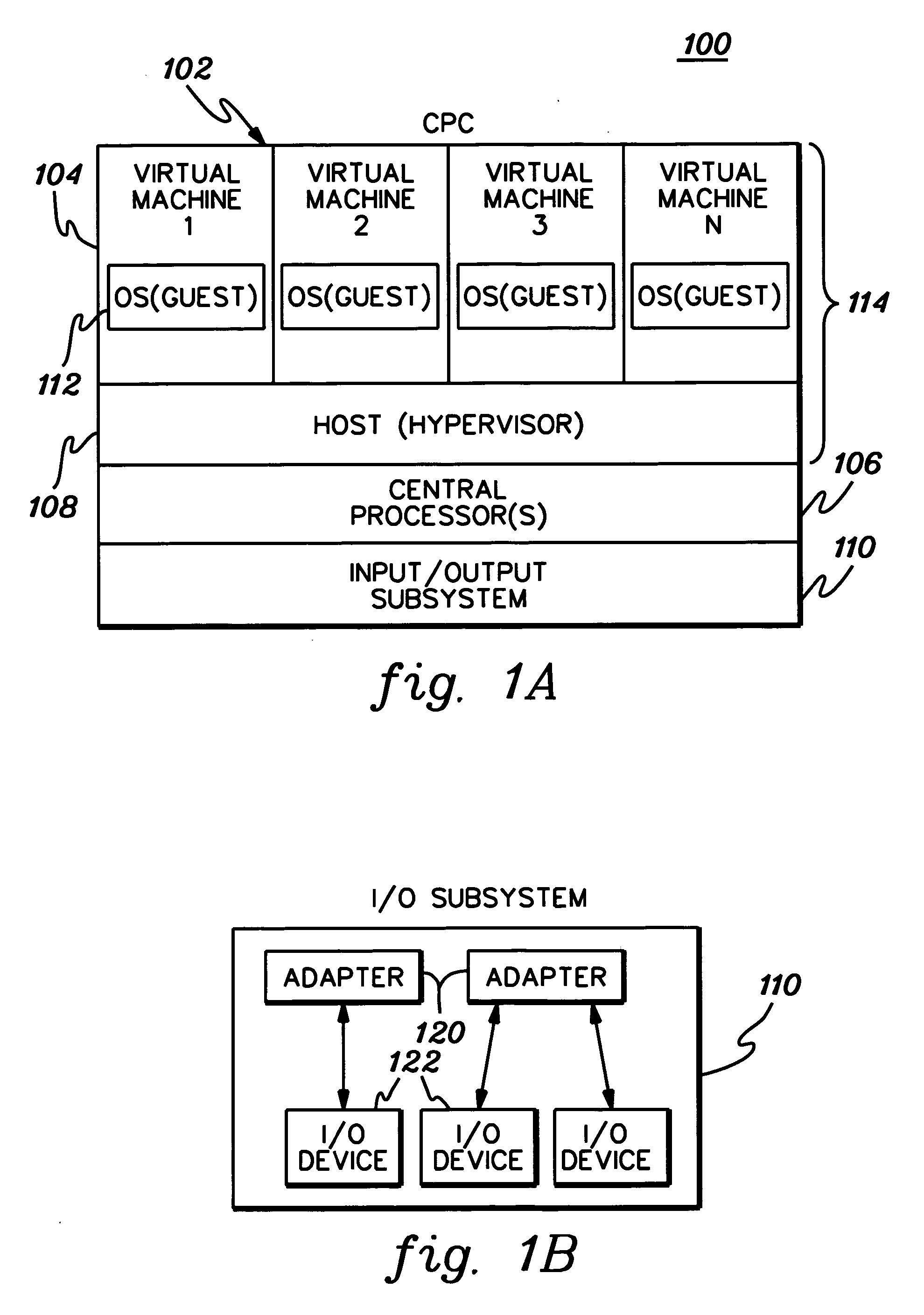

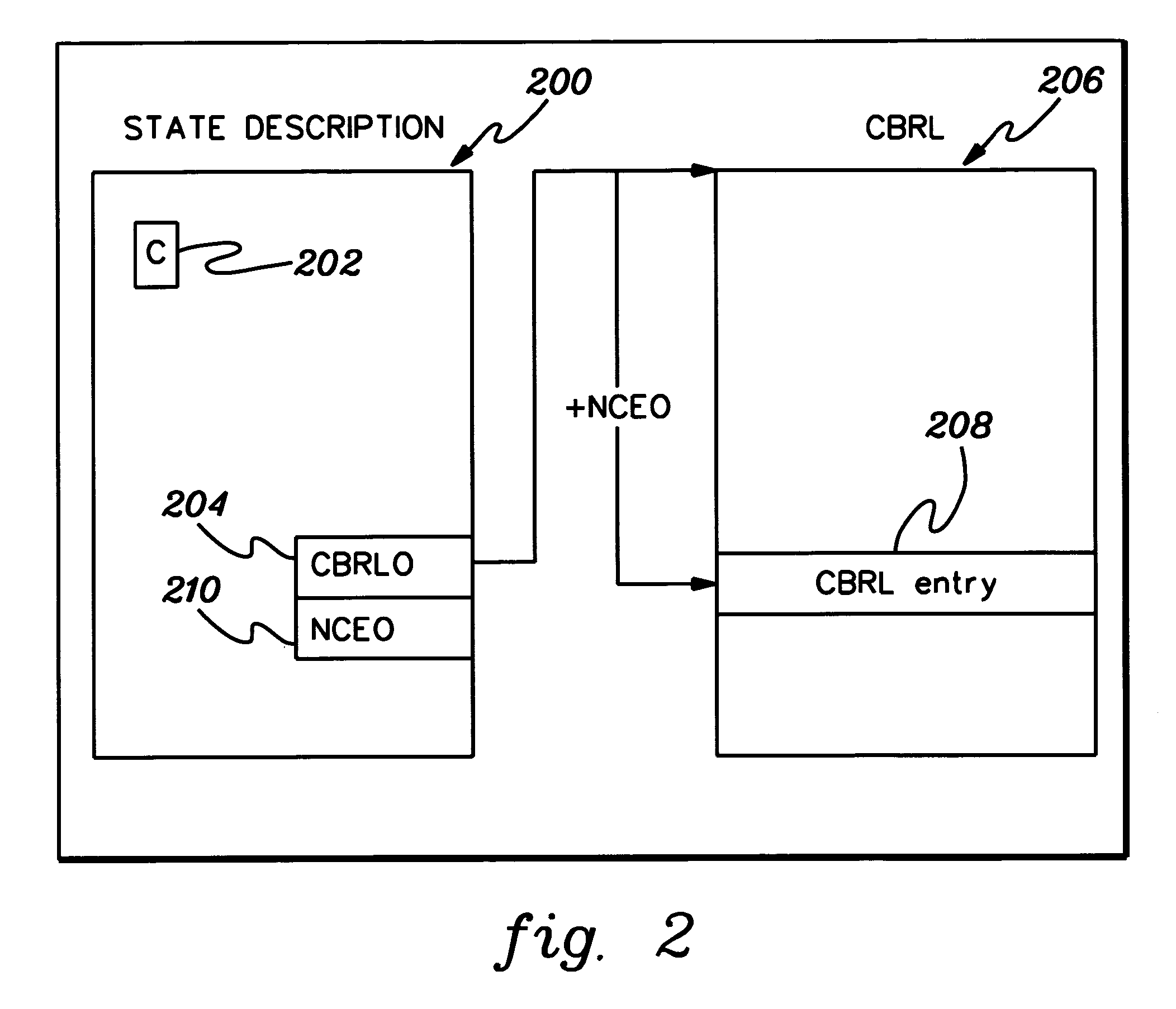

Facilitating processing within computing environments supporting pageable guests

Active | US20070016904A1 | Overcomes shortcoming; Enhanced advantage; Multiprogramming arrangements; Software simulation/interpretation/emulation; Paging; Host memory

Processing within a computing environment that supports pageable guests is facilitated. Processing is facilitated in many ways, including, but not limited to, associating guest and host state information with guest blocks of storage; maintaining the state information in control blocks in host memory; enabling the changing of states; and using the state information in management decisions. In one particular example, the guest state includes an indication of usefulness and importance of memory contents to the guest, and the host state reflects the ease of access to memory contents. The host and guest state information is used in managing memory of the host and / or guests.

Owner:IBM CORP

Efficient handling of work requests in a network interface device

Active | US7688838B1 | Easy to handle; Energy efficient ICT; Data switching by path configuration; Network interface device; Context data

A method for communication includes inputting from a host processor to a network interface device a sequence of work requests indicative of operations to be carried out by the network interface device with respect to a plurality of the connections. The device looks ahead through the sequence in order to identify at least first and second operations that are to be carried out with respect to one of the connections in response to first and second work requests, respectively, wherein the second work request does not immediately follow the first work request in the sequence. The device loads the context data for the one of the connections from a host memory into a context cache, and performs at least the first and second operations sequentially while the context data are held in the cache.

Owner:BROADCOM ISRAEL R&D
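
The look-ahead can be modeled in a few lines of Python. The sketch assumes the device sees a fixed window of pending work requests (the `window` parameter is hypothetical), groups the requests in that window by connection while preserving each connection's own order, and loads each connection's context once per window. It ignores any cross-connection ordering constraints a real device might have to honor.

```python
from typing import Callable, Dict, List, Tuple

def schedule(work_requests: List[Tuple[str, str]],
             load_context: Callable[[str], object],
             window: int = 8) -> List[Tuple[str, str, object]]:
    """Execute work requests so each connection's context is loaded once per window."""
    executed: List[Tuple[str, str, object]] = []
    for start in range(0, len(work_requests), window):
        # Look ahead over the next `window` requests, grouping them by connection.
        by_conn: Dict[str, List[str]] = {}
        for conn, op in work_requests[start:start + window]:
            by_conn.setdefault(conn, []).append(op)
        for conn, ops in by_conn.items():
            context = load_context(conn)  # one host-memory fetch into the context cache
            executed.extend((conn, op, context) for op in ops)
    return executed
```

For the sequence A, B, A, a device that handled requests strictly in order with room for only one cached context would fetch A's context twice; the look-ahead fetches it once.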

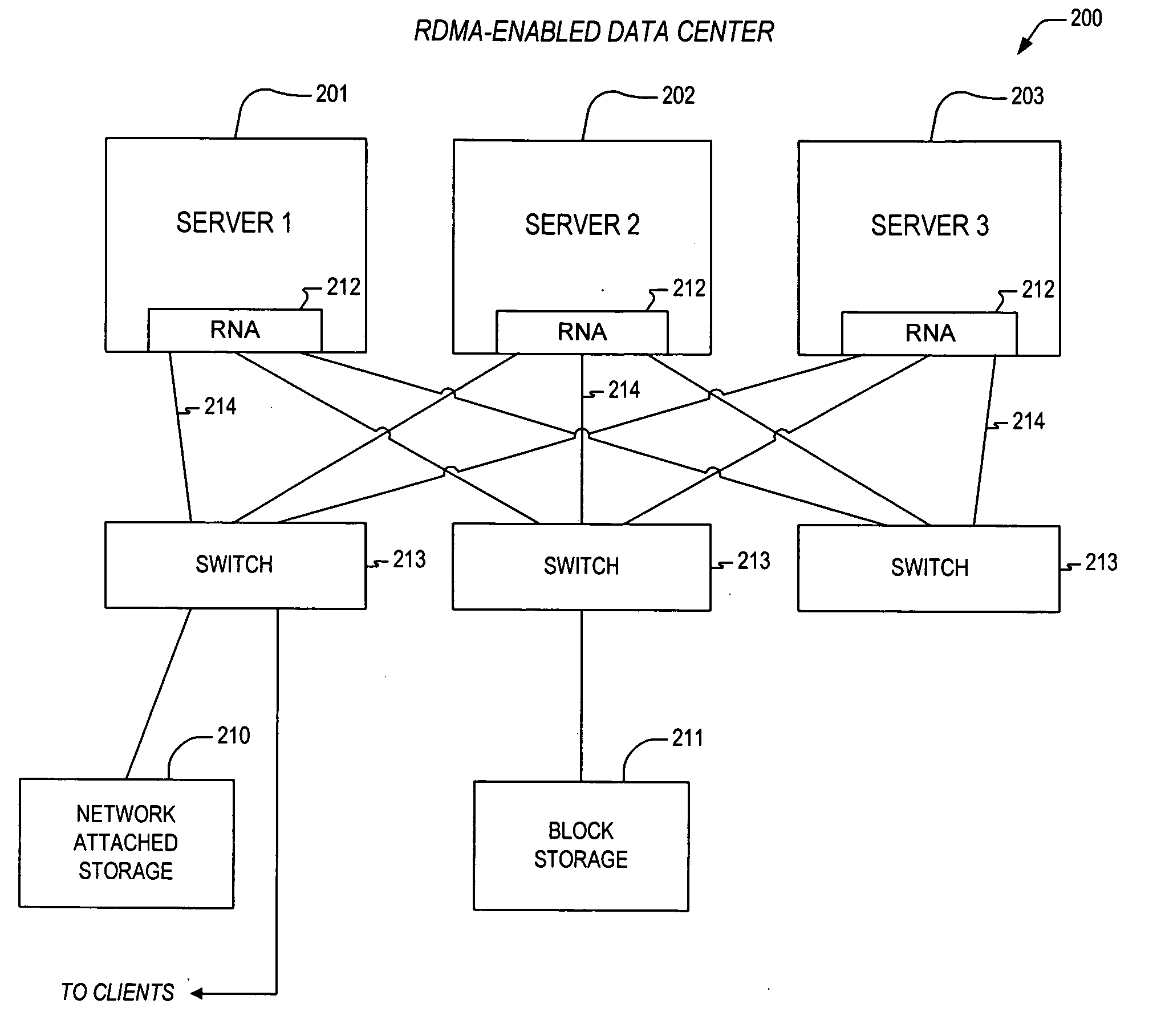

Apparatus and method for packet transmission over a high speed network supporting remote direct memory access operations

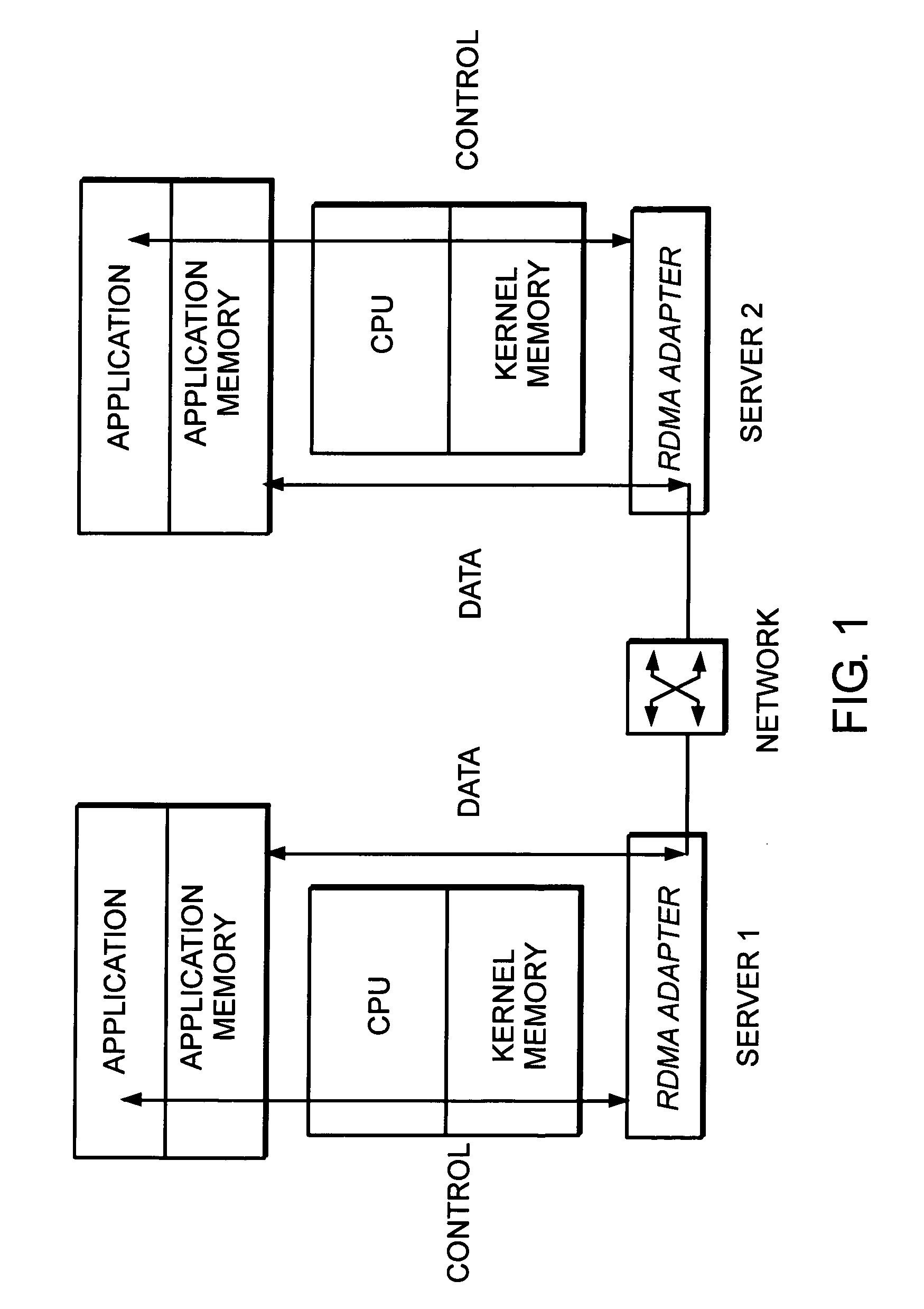

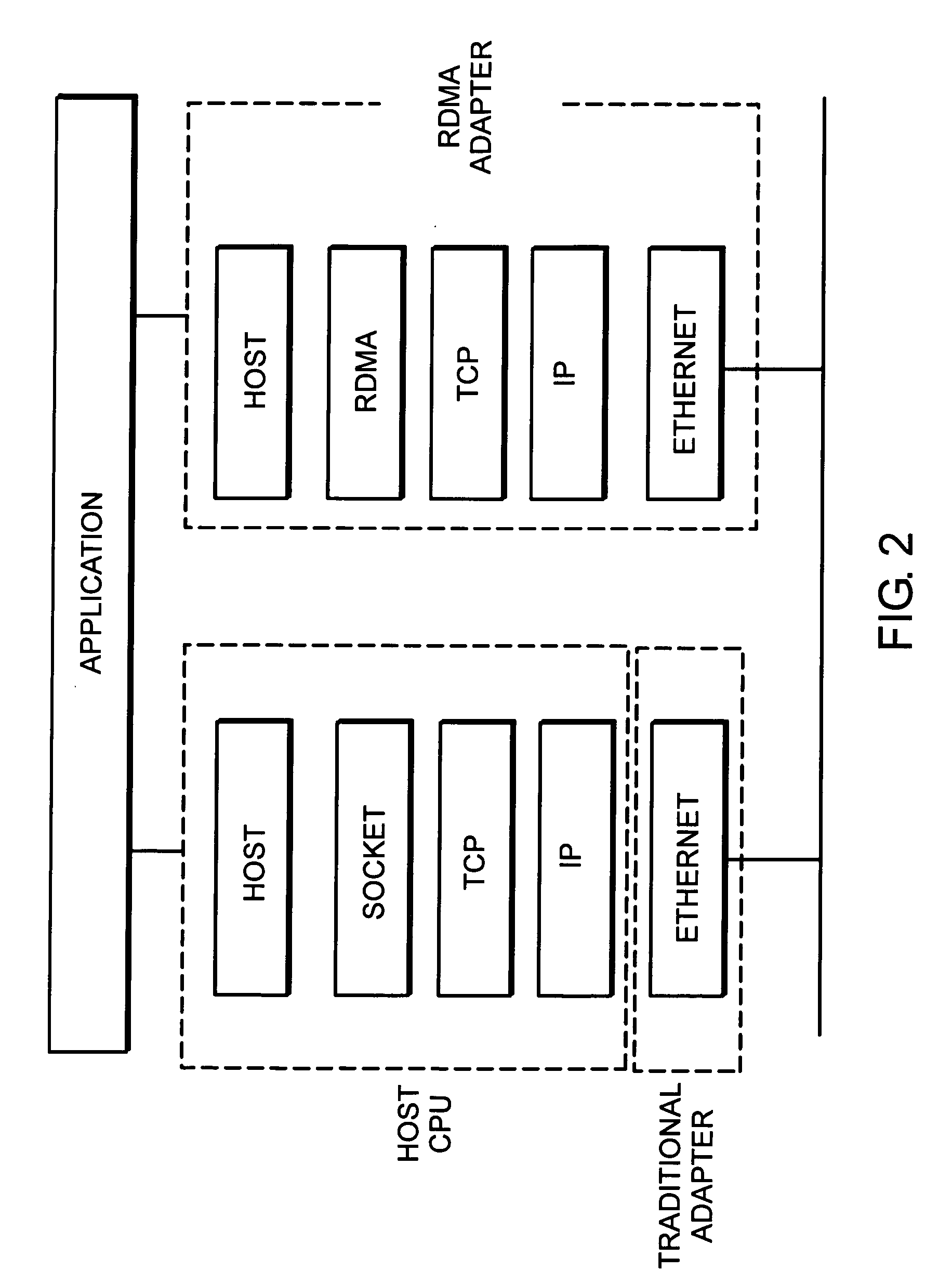

Active | US20060230119A1 | Efficient and effective rebuild; Digital computer details; Transmission; Remote direct memory access; Term memory

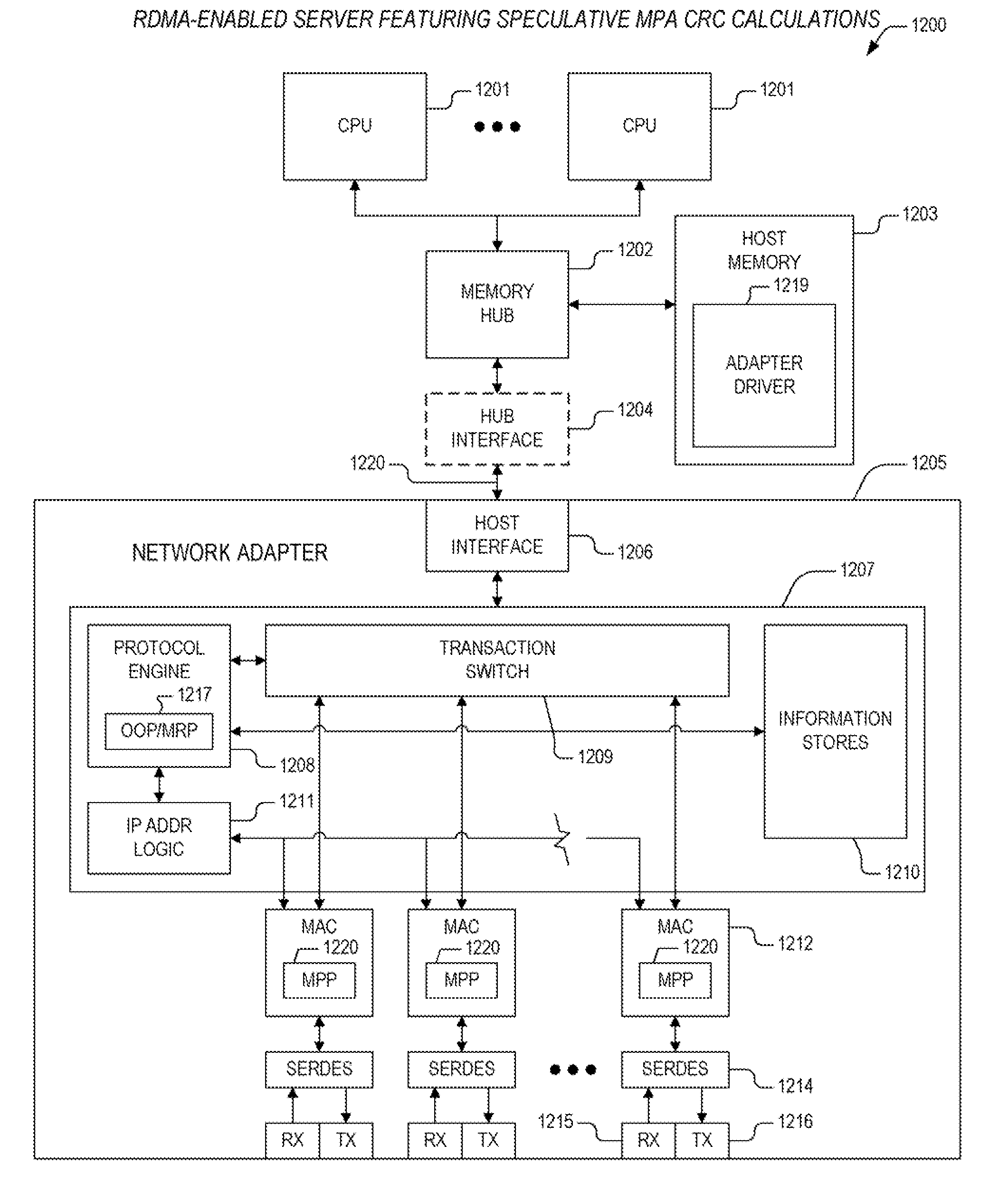

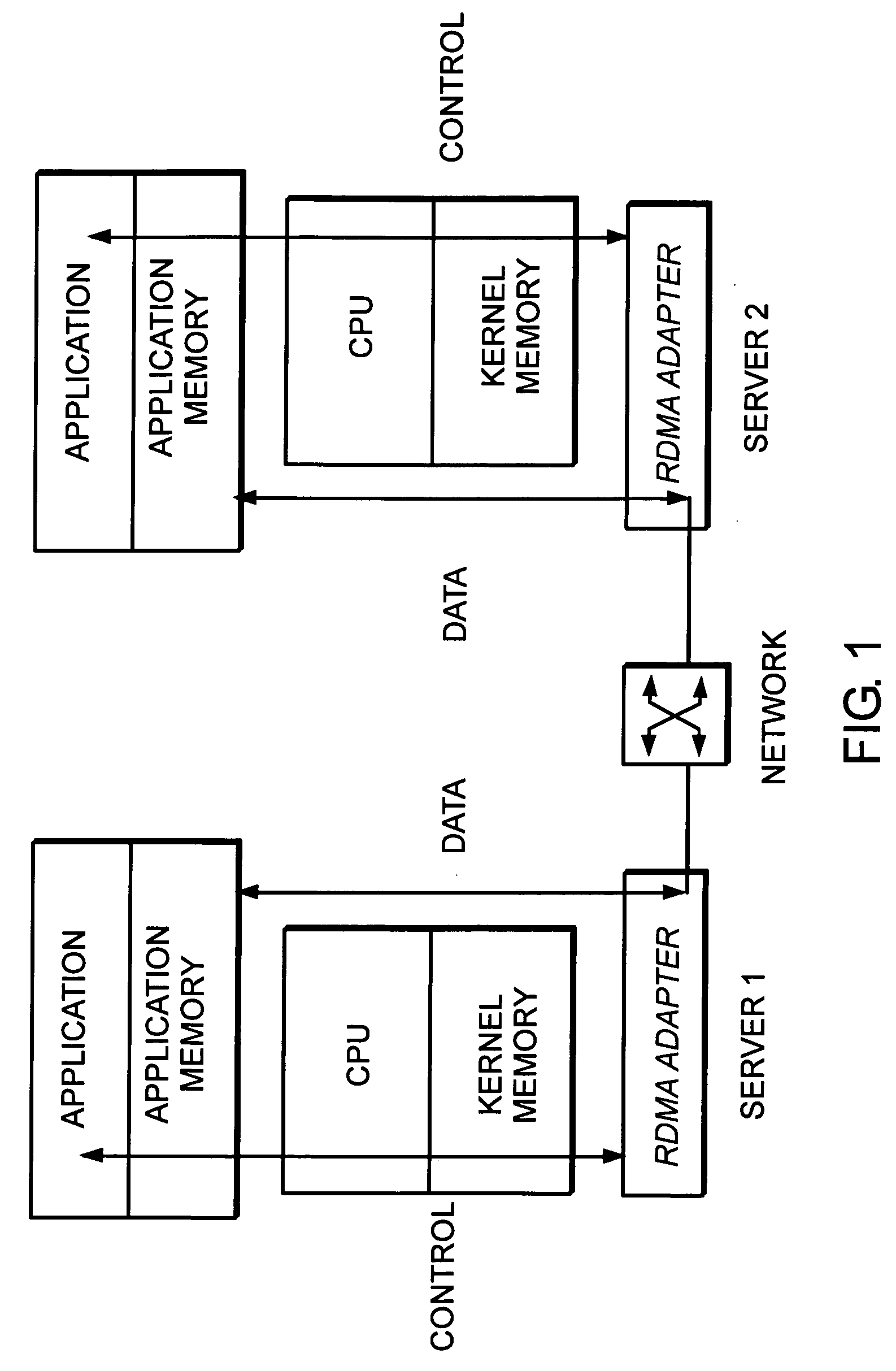

A mechanism for performing remote direct memory access (RDMA) operations between a first server and a second server over an Ethernet fabric. The RDMA operations are initiated by execution of a verb according to a remote direct memory access protocol. The verb is executed by a CPU on the first server. The apparatus includes transaction logic that is configured to process a work queue element corresponding to the verb, and that is configured to accomplish the RDMA operations over a TCP/IP interface between the first and second servers, where the work queue element resides within first host memory corresponding to the first server. The transaction logic includes transmit history information stores and a protocol engine. The transmit history information stores maintain parameters associated with said work queue element. The protocol engine is coupled to the transmit history information stores and is configured to access the parameters to enable retransmission of one or more TCP segments corresponding to the RDMA operations.

Owner:INTEL CORP

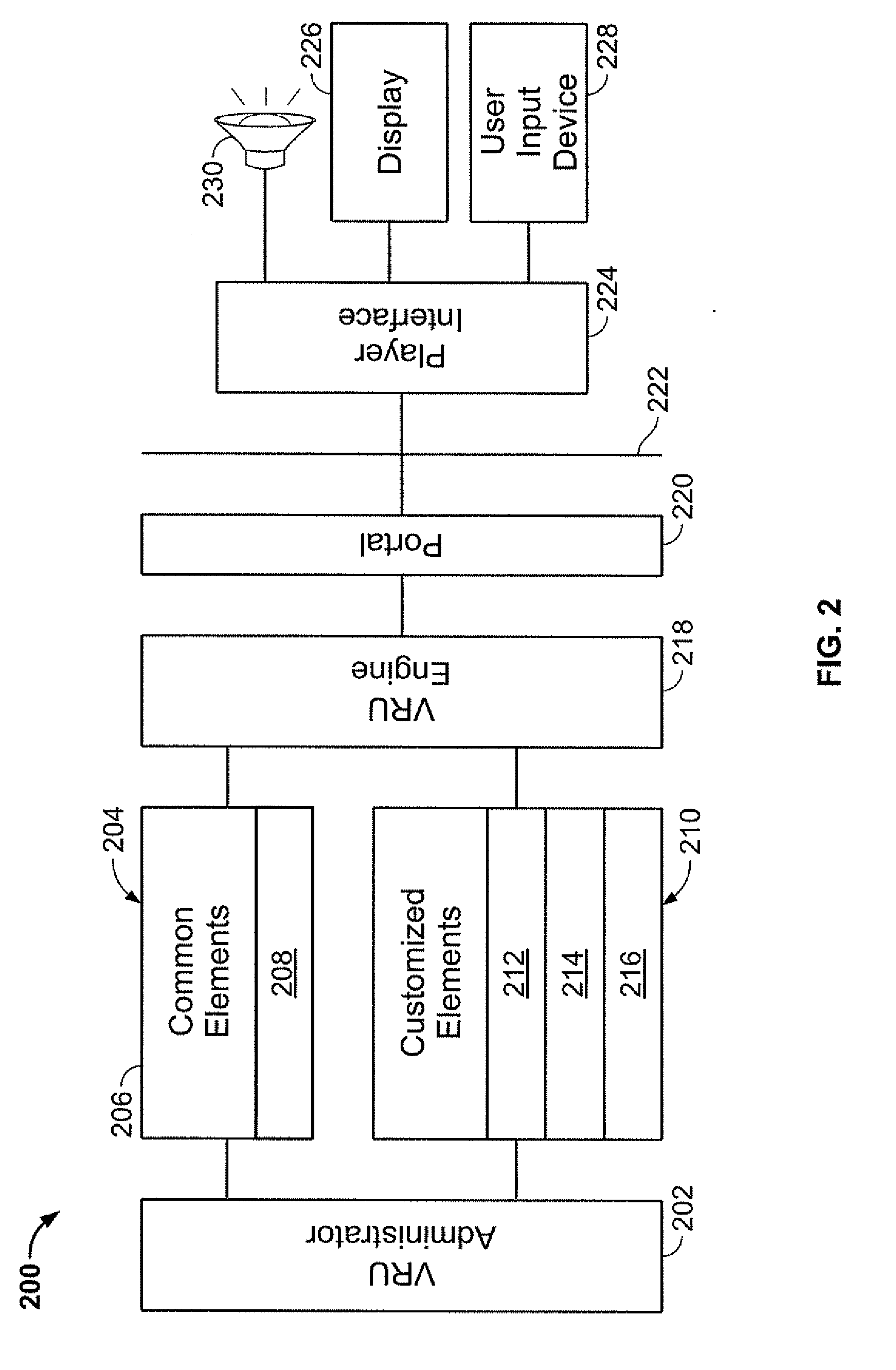

Computer Simulation Method With User-Defined Transportation And Layout

A multi-user process receives input from multiple remote clients to manipulate avatars through a virtual environment modeled in a host memory. The environment includes portal objects operable to transport avatars, which are modeled objects operated in response to client input, between defined areas of the virtual environment. The portals are customizable in response to client input to transport avatars to destinations preferred by users. Adjacent defined areas are not confined in extent by shared boundaries. The host provides model data for display of the modeled environment to participating clients.

Owner:PFAQUTRUMA RES LLC

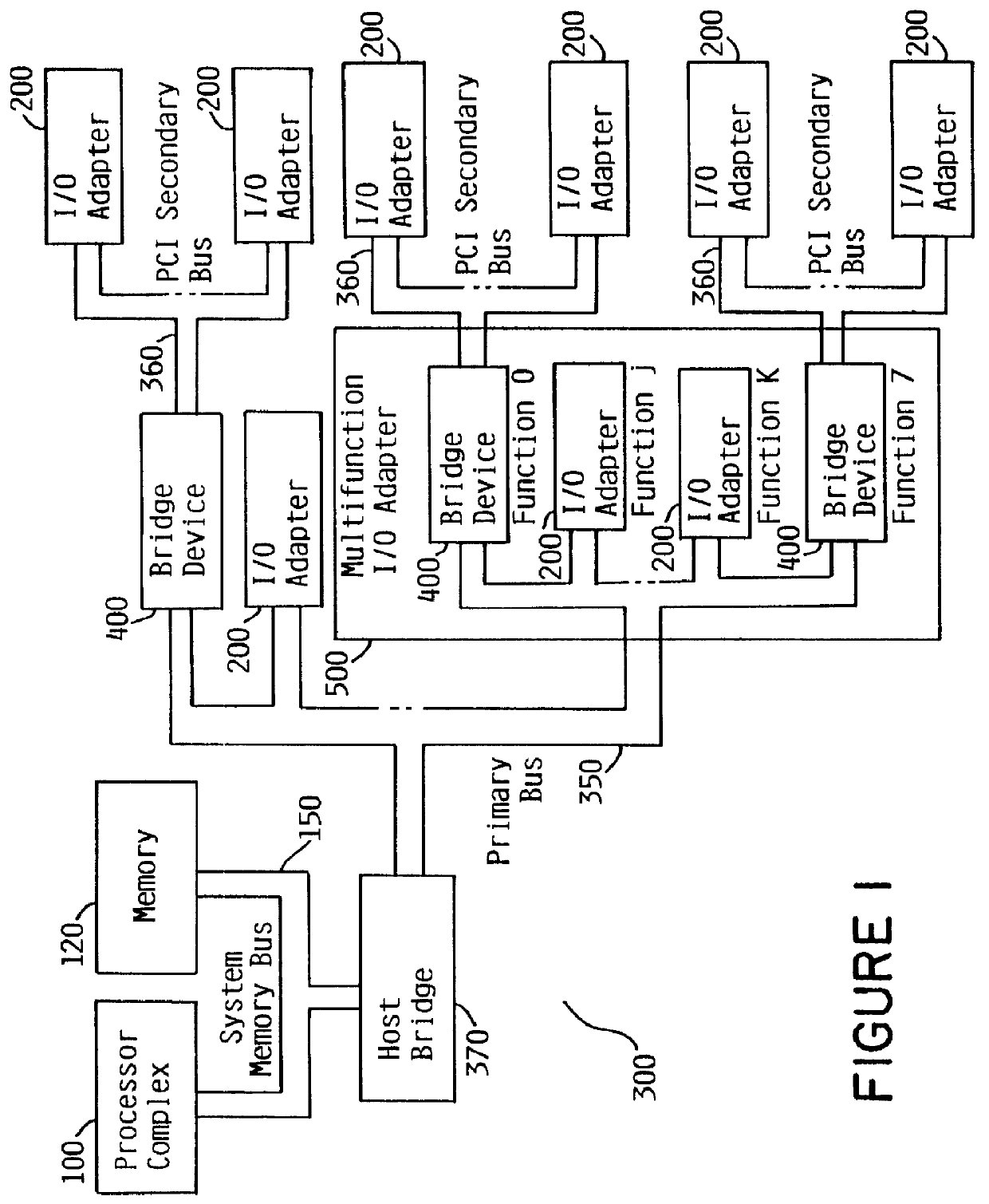

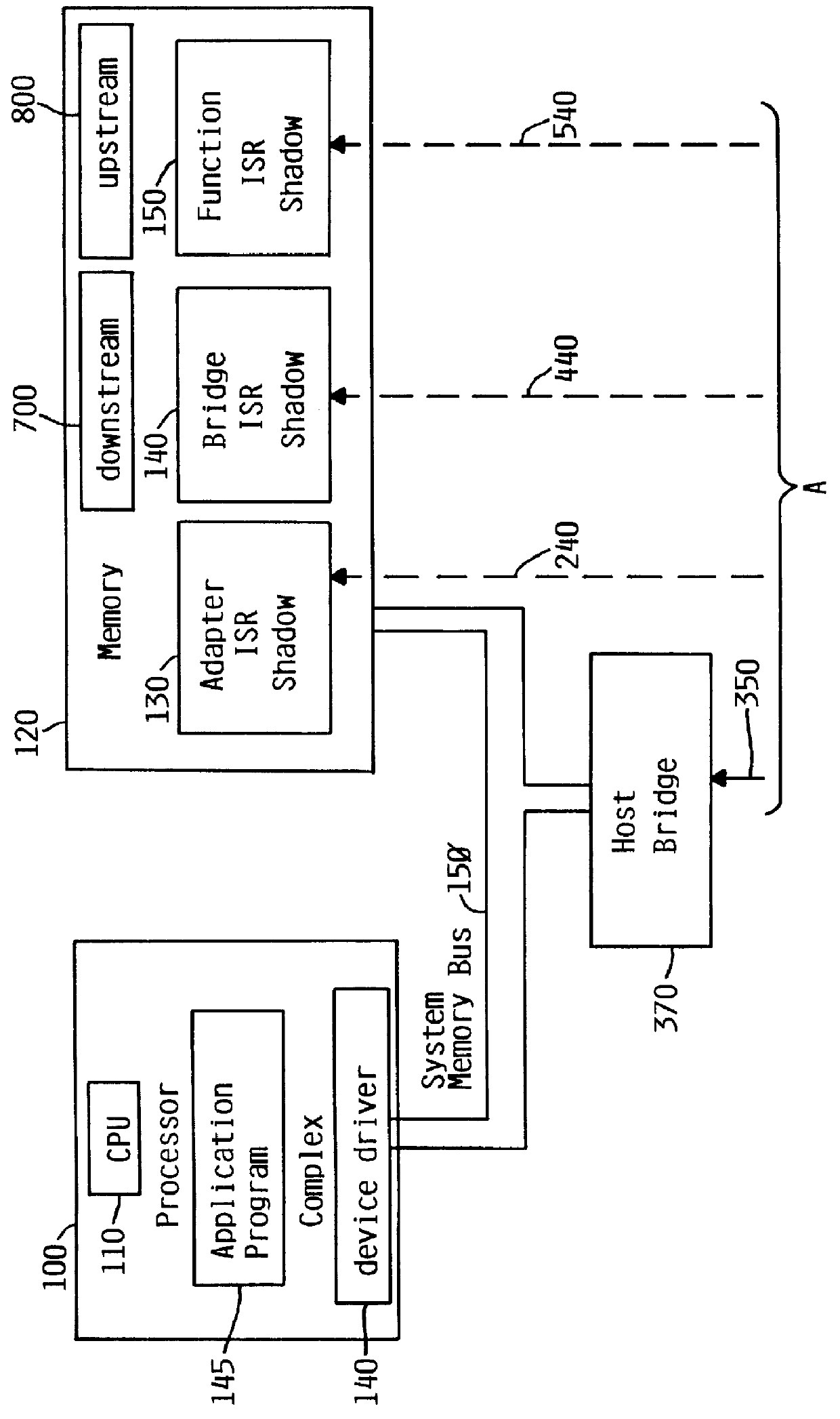

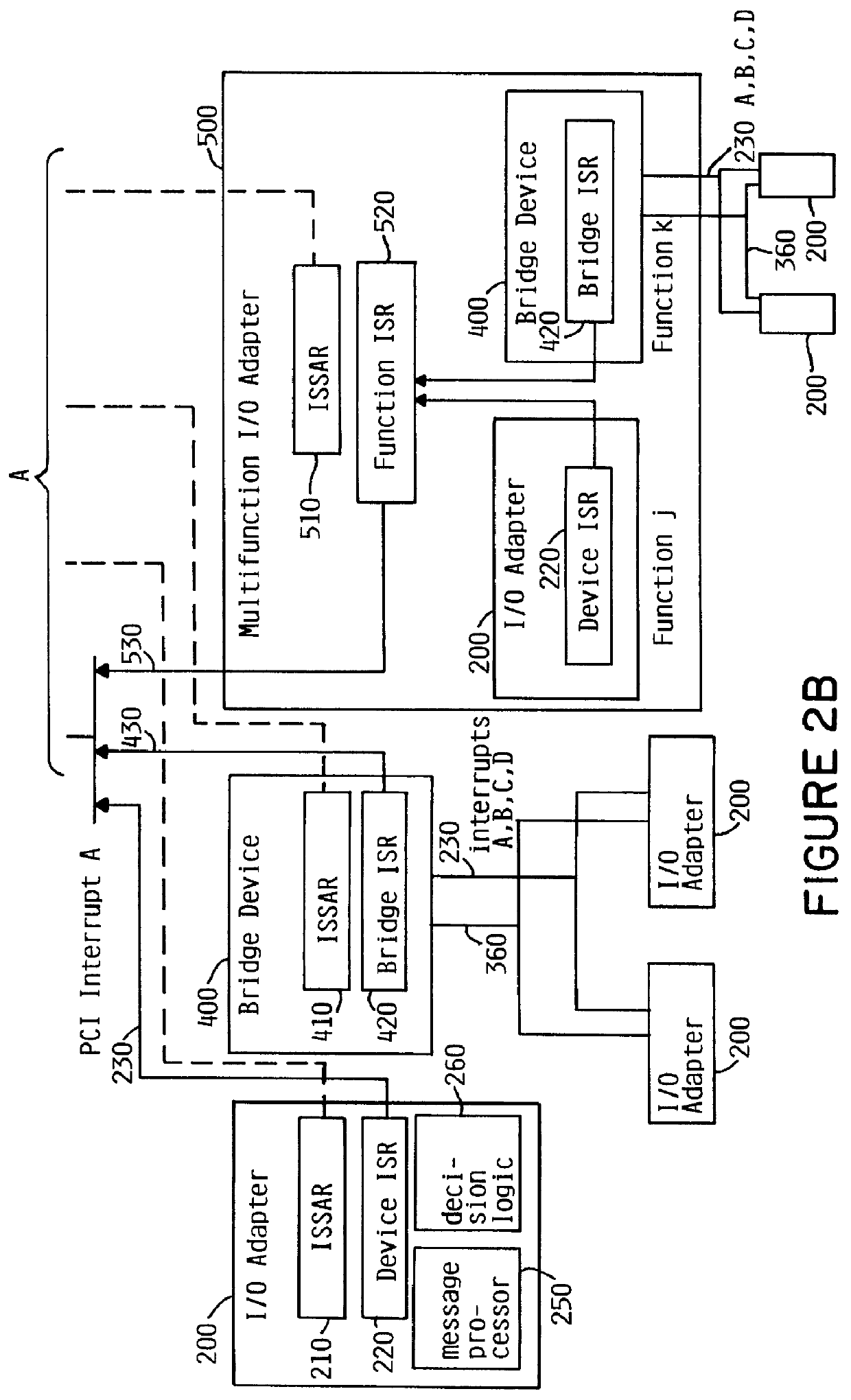

System for determining adapter interrupt status where interrupt is sent to host after operating status stored in register is shadowed to host memory

Inactive | US6078970A | Memory addressing/allocation/relocation; Digital computer details; Processor register; Host memory

An I/O adapter connects to an I/O bus and includes a device interrupt status register and an interrupt status shadow address register. The device interrupt status register stores the interrupt status of the I/O adapter. The I/O adapter accesses the interrupt status shadow address register to determine an address of main memory at which the device interrupt status register is shadowed. After shadowing the interrupt status, the I/O adapter interrupts the processor complex, which may then access local main memory to determine the interrupt status. A multifunction I/O adapter permits a plurality of I/O adapters to be connected to it and includes a function interrupt status register that summarizes the interrupt status of all the attached I/O adapters. After shadowing the summarized interrupt status, the multifunction I/O adapter interrupts the processor complex, which may then access local main memory to determine the interrupt status.

Owner:GOOGLE LLC

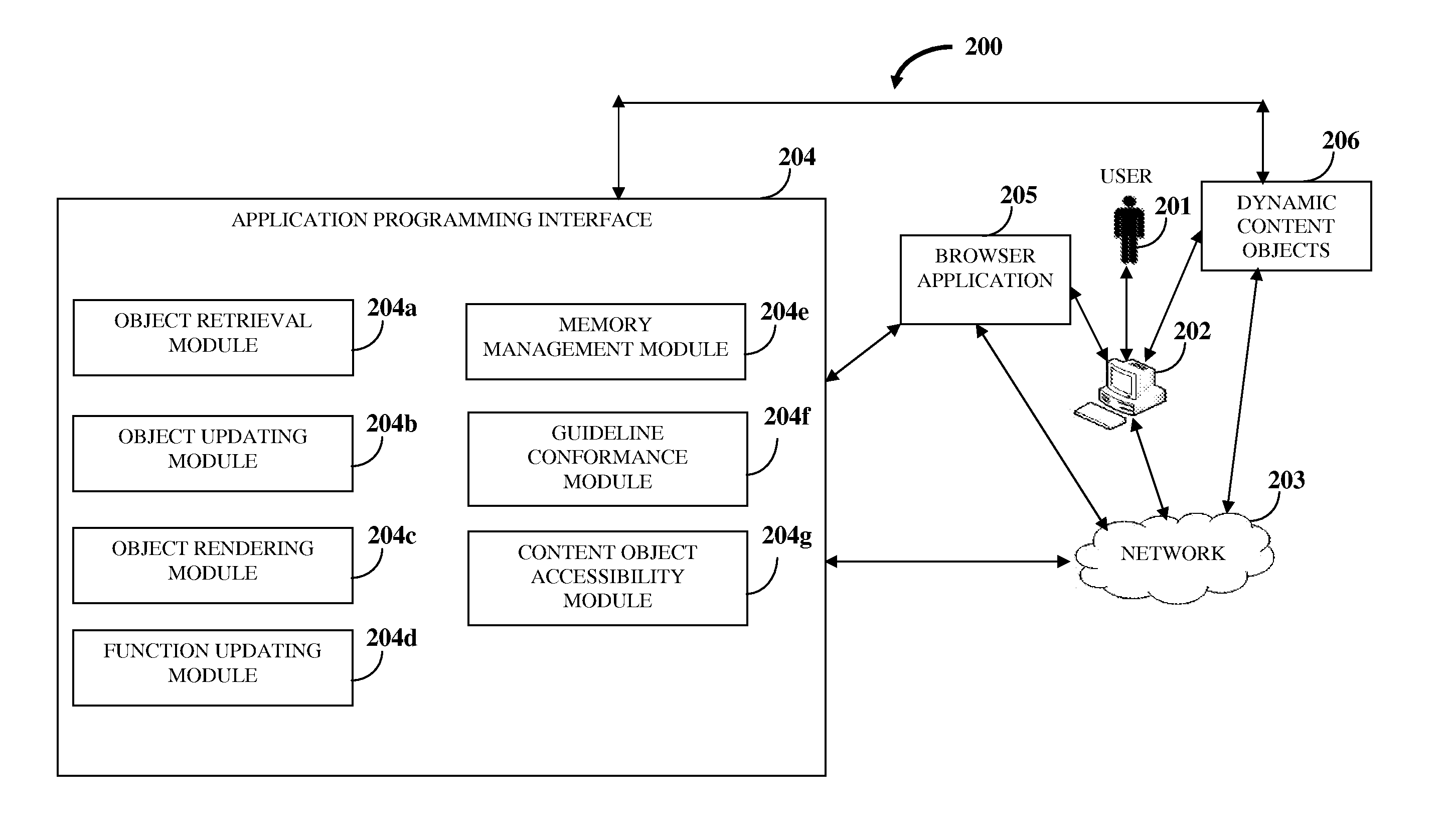

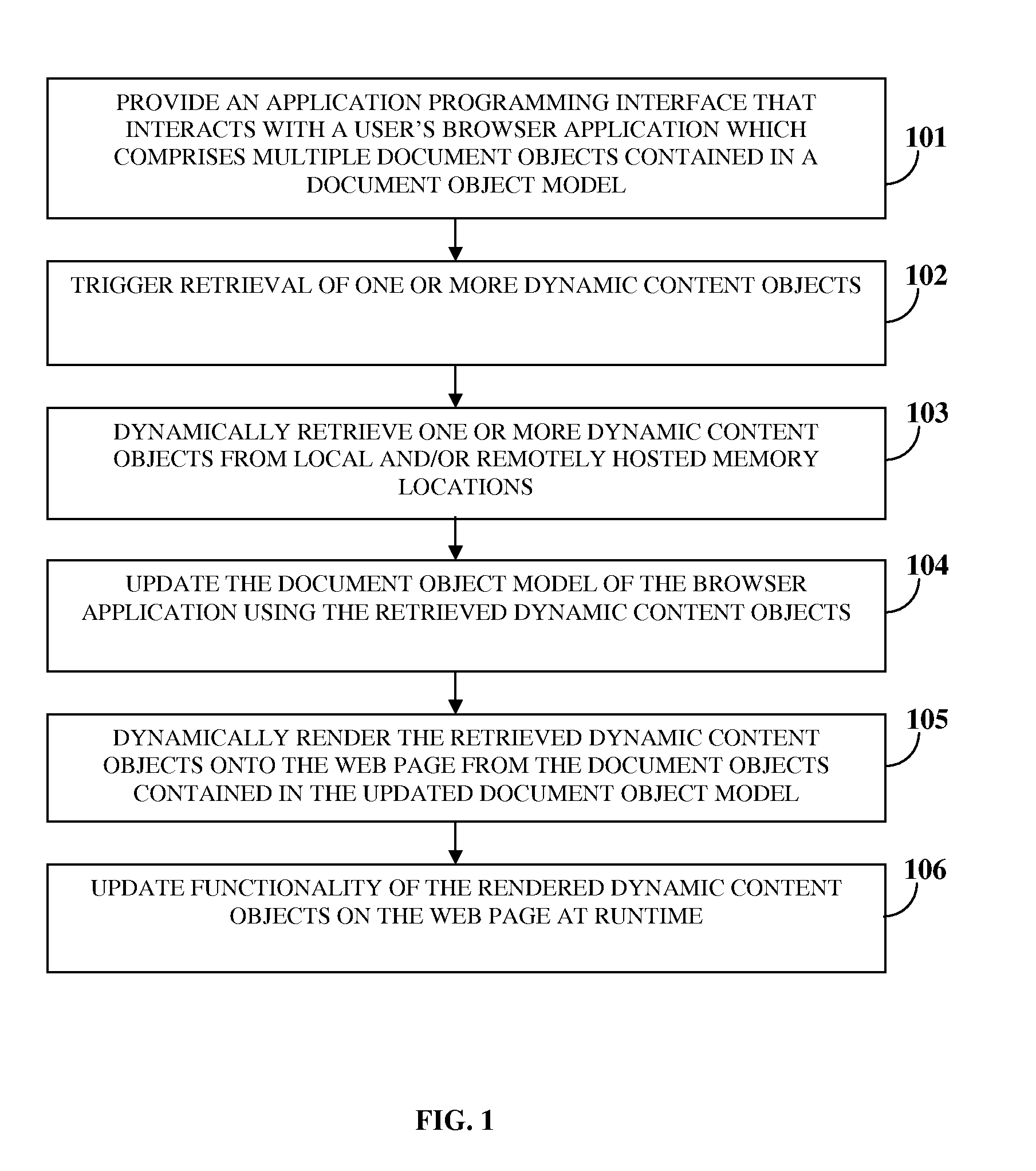

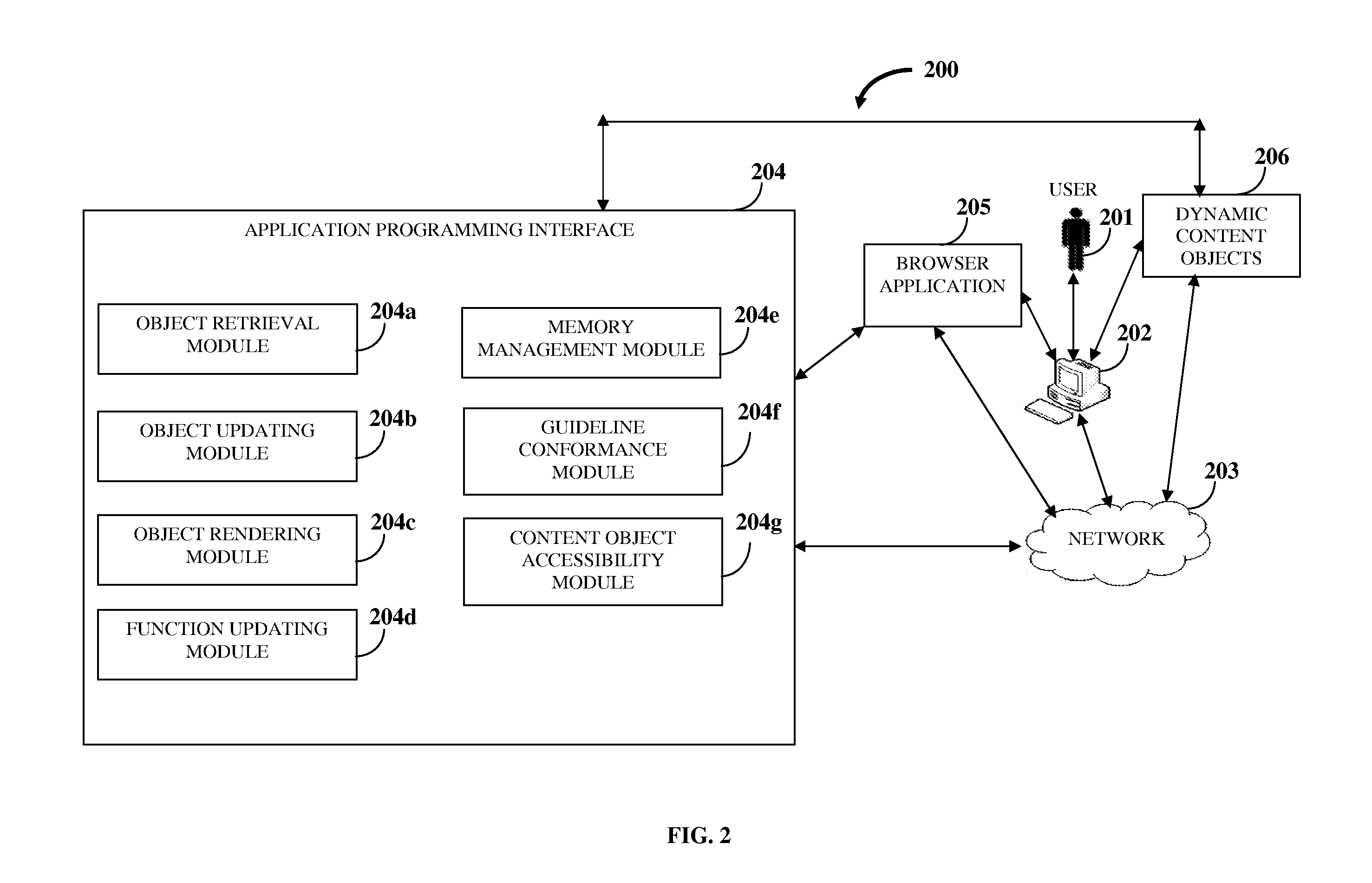

Automatic Creation And Management Of Dynamic Content

Inactive | US20110197124A1 | Website content management; Special data processing applications; Application programming interface; User input

A computer implemented method and system for creating and managing dynamic content on a web page is provided. An application programming interface that interacts with a user's browser application is provided. The browser application comprises multiple document objects contained in a document object model. A user input triggers retrieval of one or more dynamic content objects on the web page. The application programming interface dynamically retrieves the dynamic content objects from local and/or remotely hosted memory locations. The application programming interface updates the document object model using the retrieved dynamic content objects. The retrieved dynamic content objects define the document objects in the document object model. The application programming interface dynamically renders the retrieved dynamic content objects onto the web page from the document objects contained in the updated document object model. The application programming interface updates functionality of the rendered dynamic content objects on the web page at runtime.

Owner:GARAVENTA BRYAN ELI

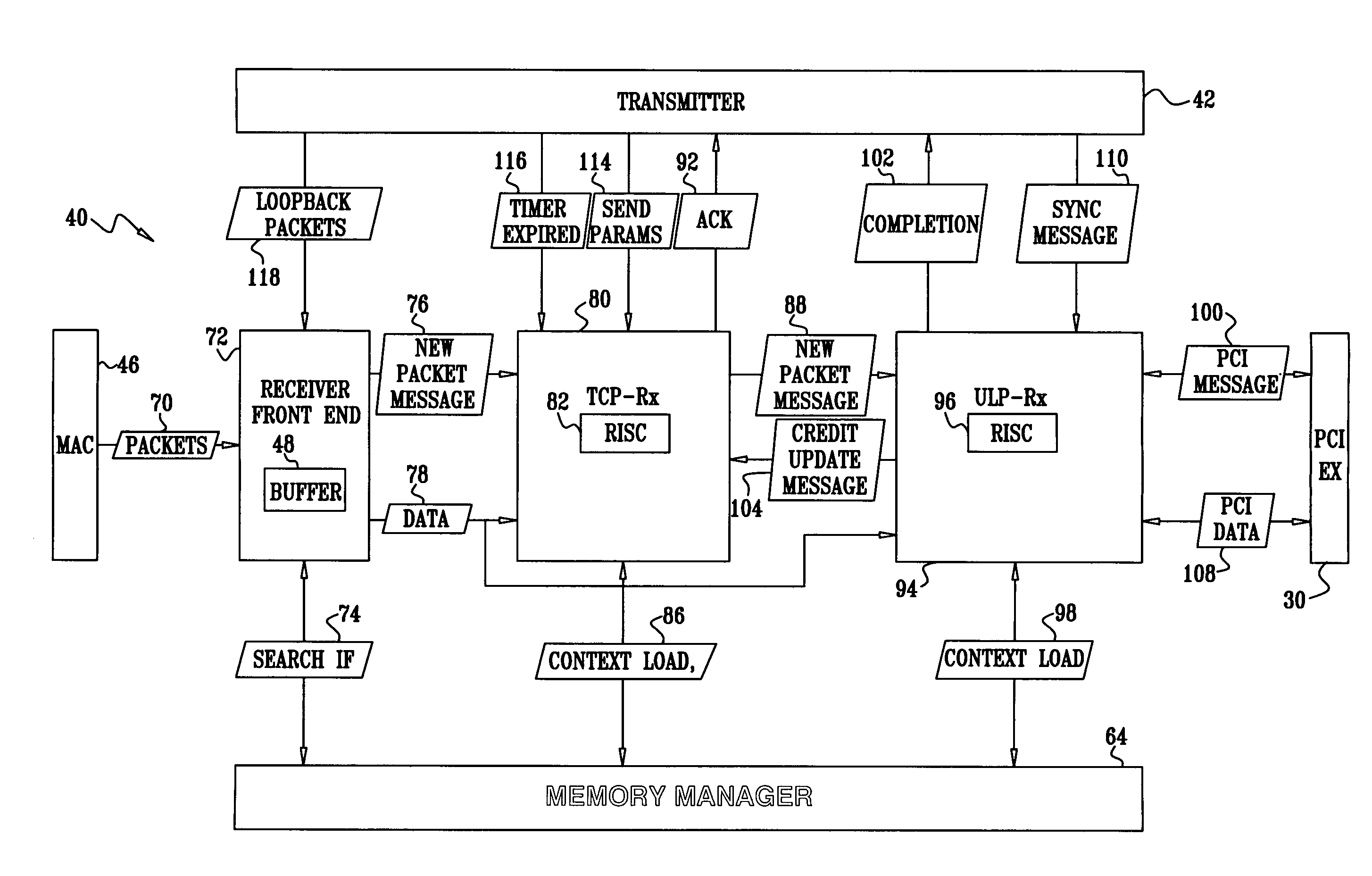

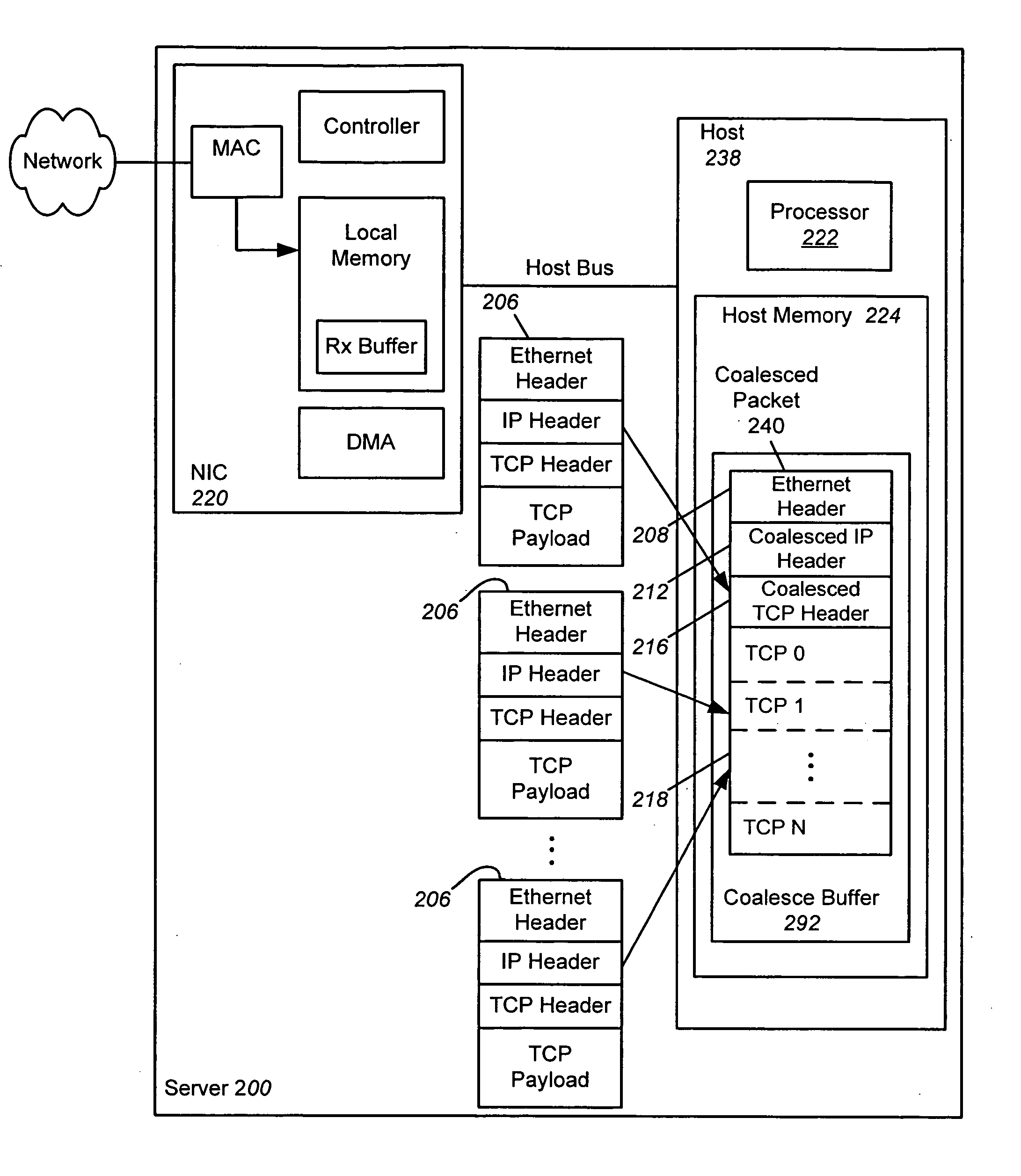

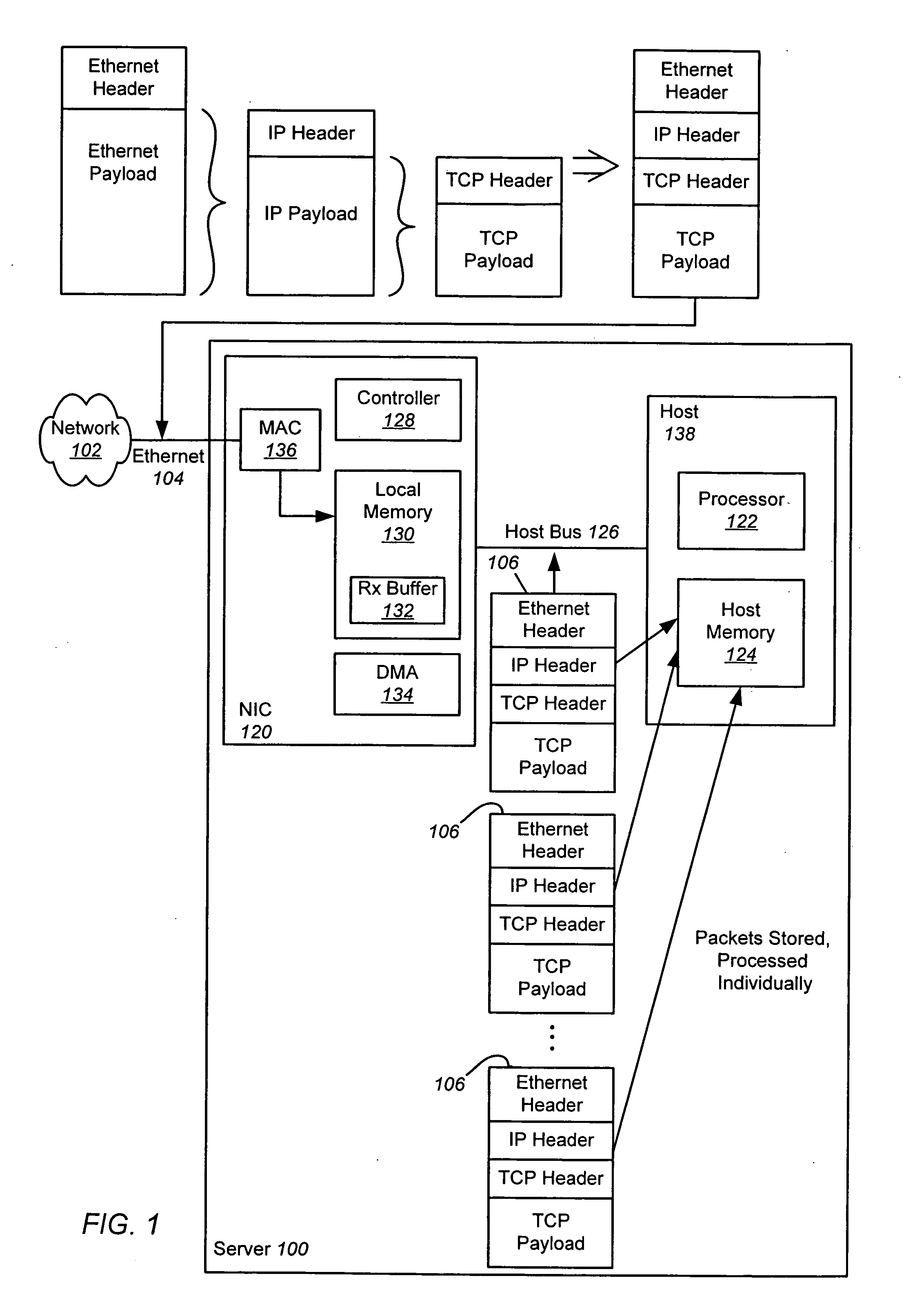

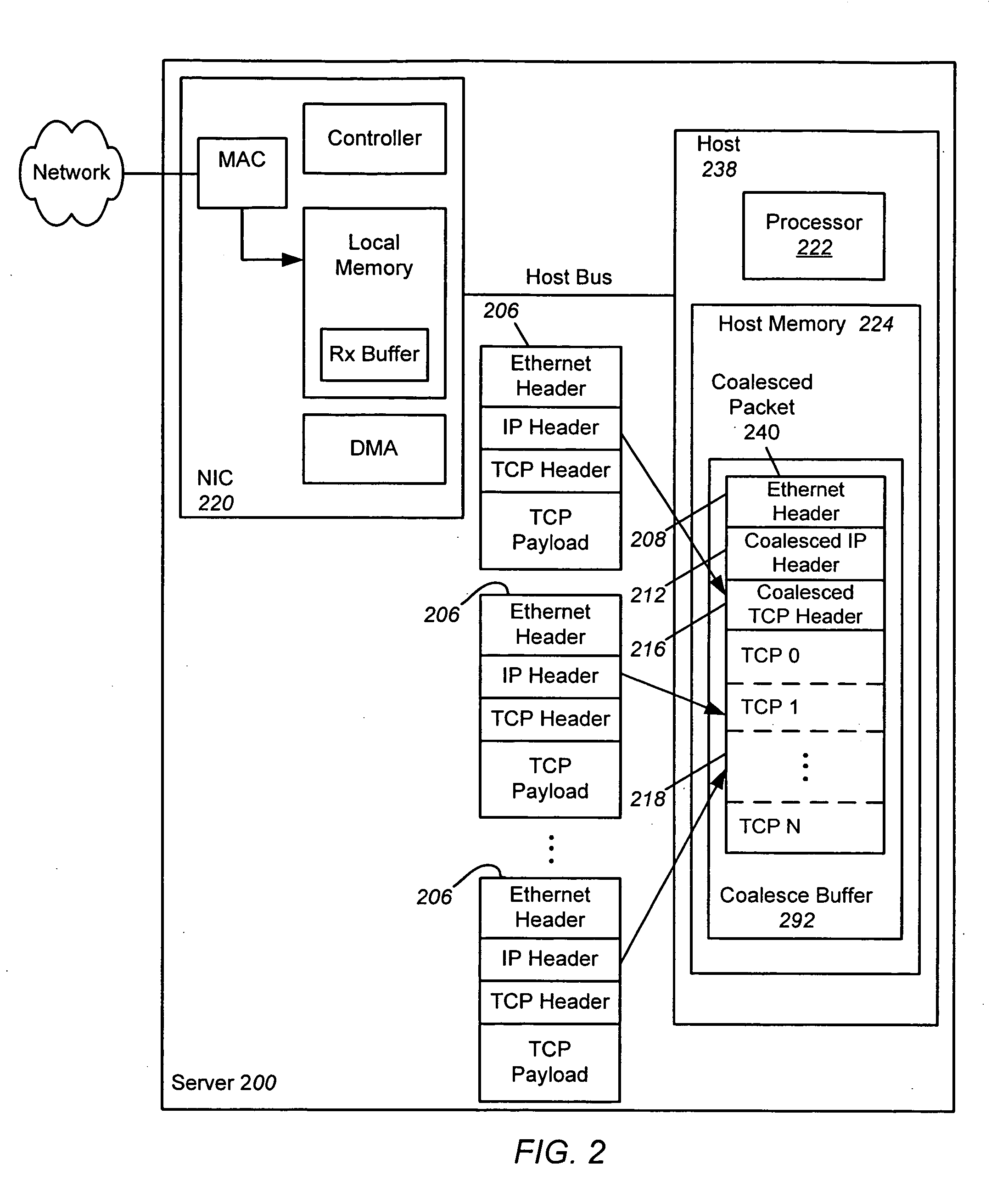

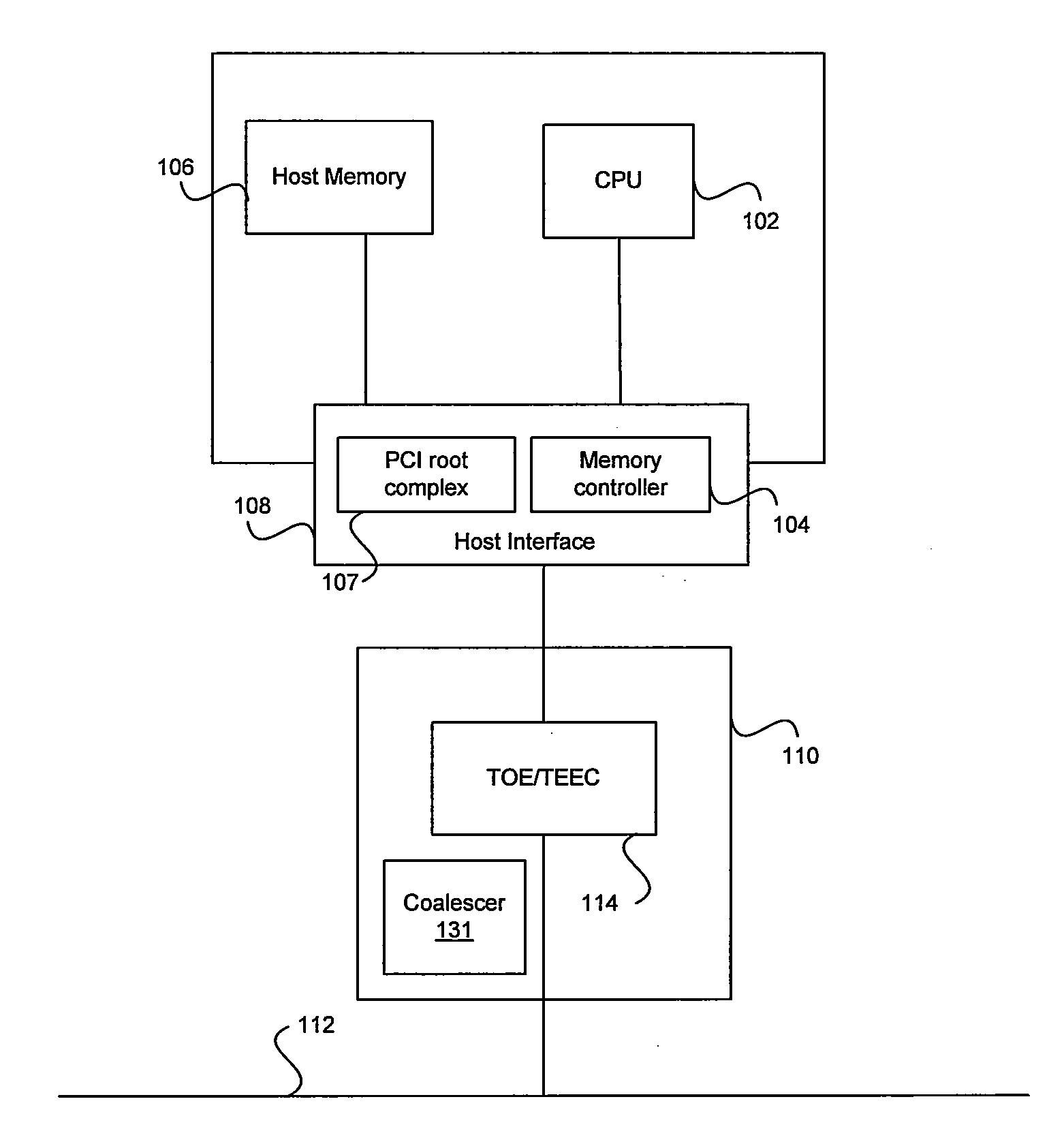

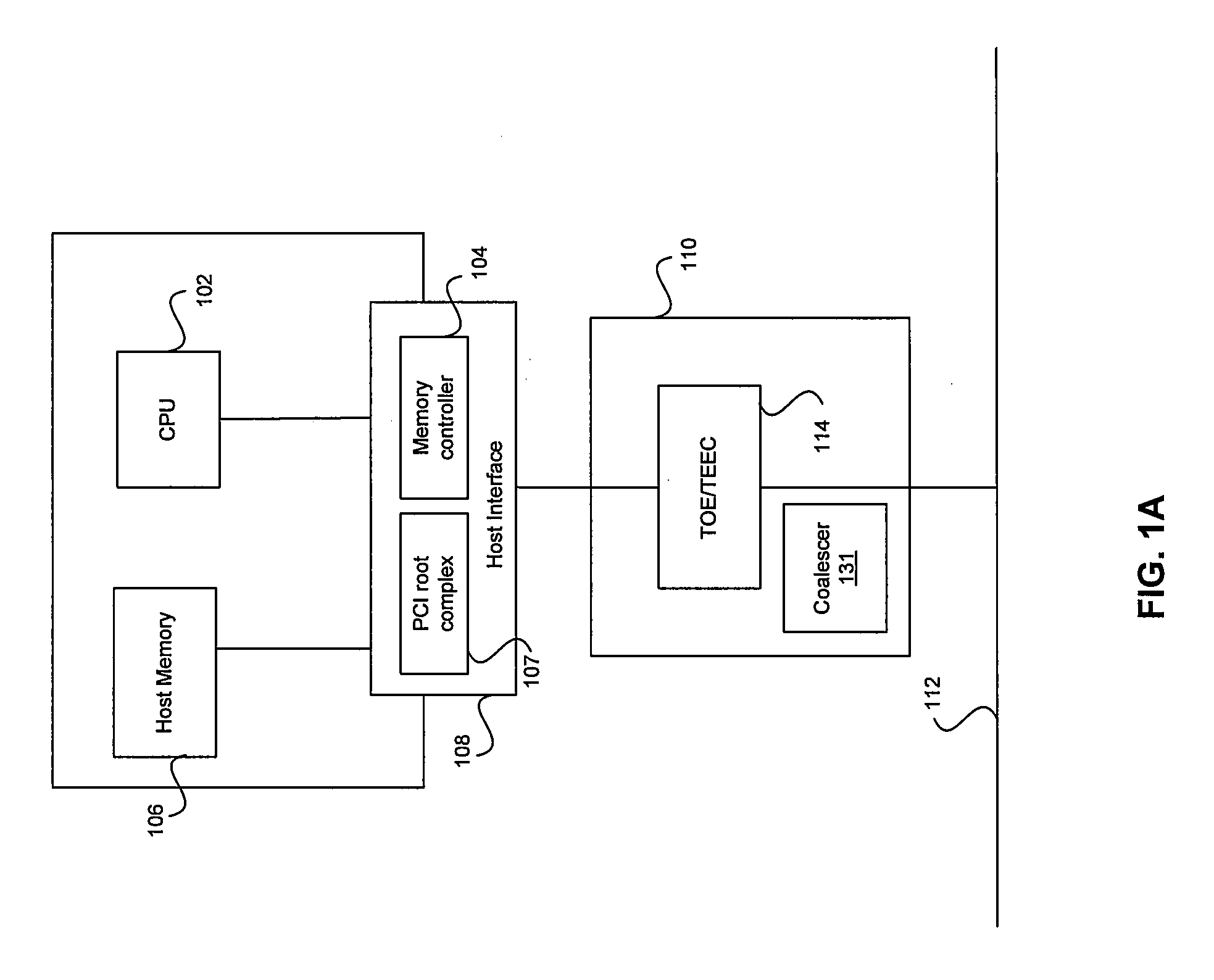

Receive coalescing and automatic acknowledge in network interface controller

Active | US20070064737A1 | Reduce computational overhead; Improve network performance; Time-division multiplex; Transmission; Host memory; Internet Protocol

An apparatus and method are disclosed for reducing the computational overhead incurred by a host processor during packet processing, and for improving network performance, by adding functionality to a Network Interface Controller (NIC). Under certain circumstances, the NIC coalesces multiple receive packets into a single coalesced packet stored within a coalesce buffer in host memory. The coalesced packet includes an Ethernet header, a coalesced Internet Protocol (IP) header, a coalesced Transmission Control Protocol (TCP) header, and a coalesced TCP payload containing the TCP payloads of the multiple receive packets. By coalescing received packets into fewer, larger coalesced packets within the host memory, the host software that processes a receive packet is invoked less often, so less processor overhead is incurred in the host.

Owner:AVAGO TECH INT SALES PTE LTD
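
The coalescing rule can be sketched in Python as follows. The merge conditions used here (same flow, contiguous sequence numbers, a size cap) are assumptions for illustration, since the abstract says only that coalescing happens "under certain circumstances"; a real NIC would also rewrite the coalesced IP and TCP headers, which this sketch omits. `Segment` and `coalesce` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Segment:
    flow: Tuple[str, int, str, int]  # (src_ip, src_port, dst_ip, dst_port)
    seq: int                         # TCP sequence number of the first payload byte
    payload: bytes

def coalesce(segments: List[Segment], max_bytes: int = 65535) -> List[Segment]:
    """Merge in-order TCP segments of the same flow into larger coalesced segments."""
    out: List[Segment] = []
    for seg in segments:
        last = out[-1] if out else None
        if (last is not None
                and last.flow == seg.flow
                and (last.seq + len(last.payload)) % 2**32 == seg.seq  # next expected byte
                and len(last.payload) + len(seg.payload) <= max_bytes):
            last.payload += seg.payload  # append to the coalesced TCP payload
        else:
            # start a new coalesced packet (a copy, so the input is left untouched)
            out.append(Segment(seg.flow, seg.seq, seg.payload))
    return out
```

With three contiguous segments followed by one out of sequence, the host receives two packets instead of four, which is the reduction in per-packet host work the abstract describes.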

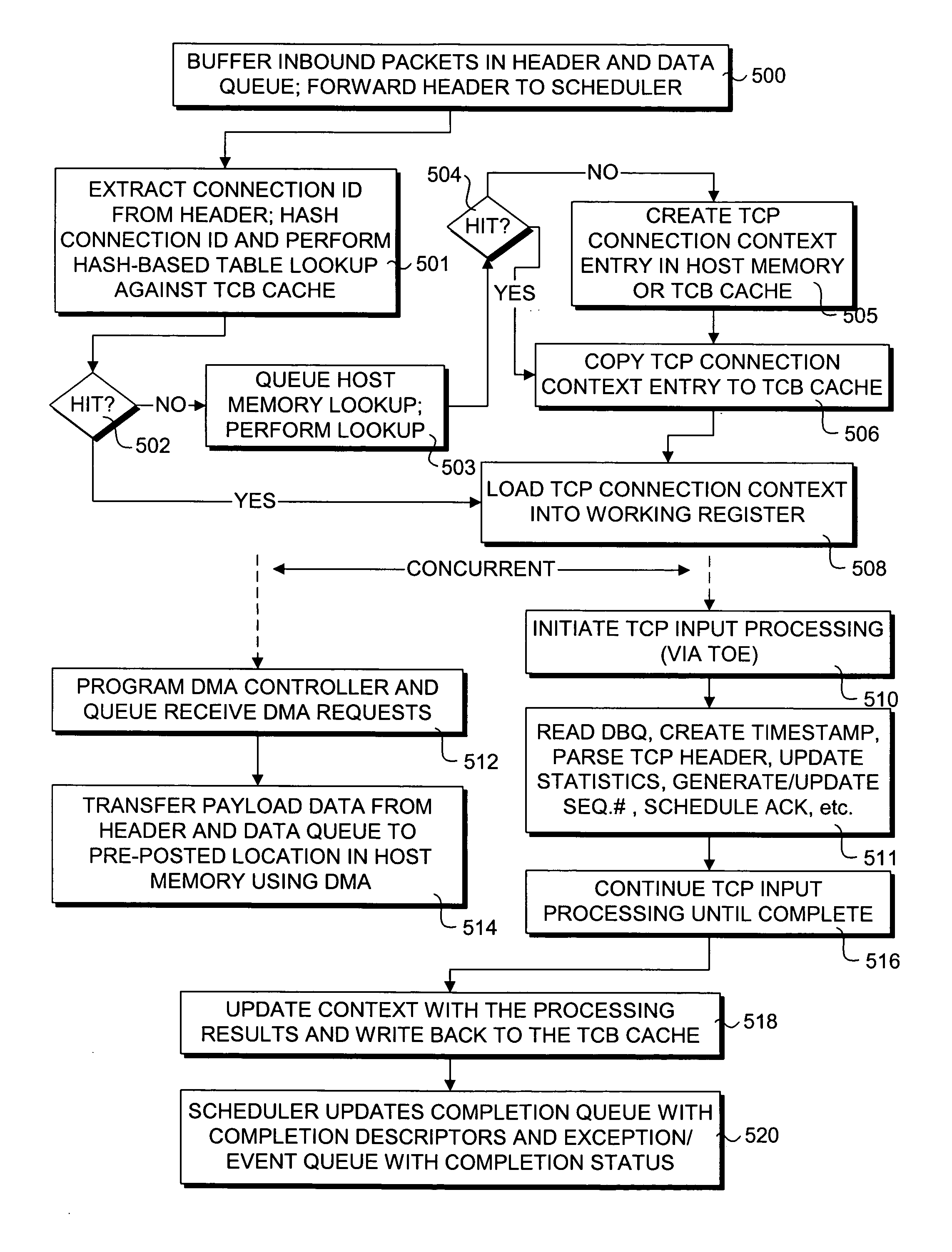

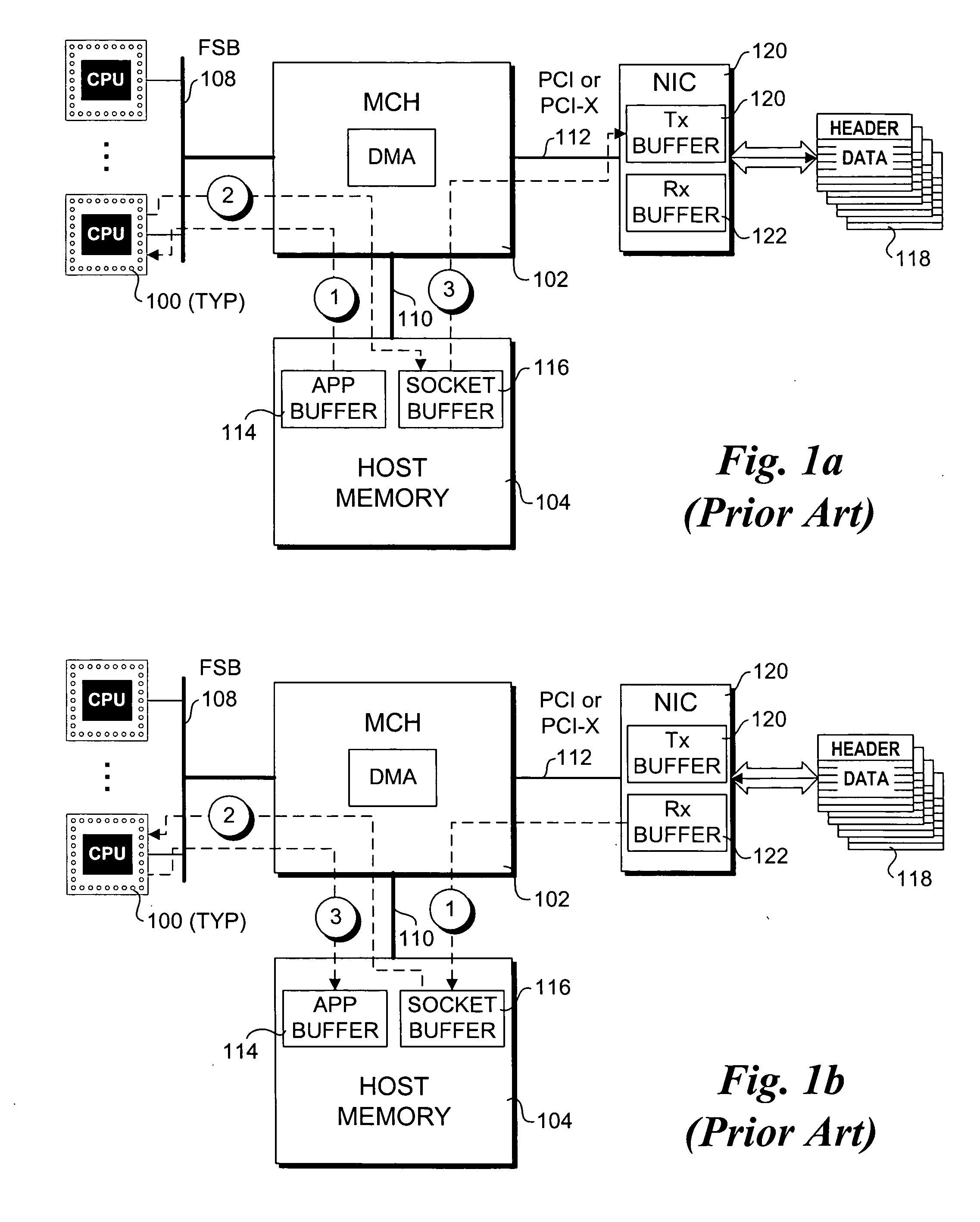

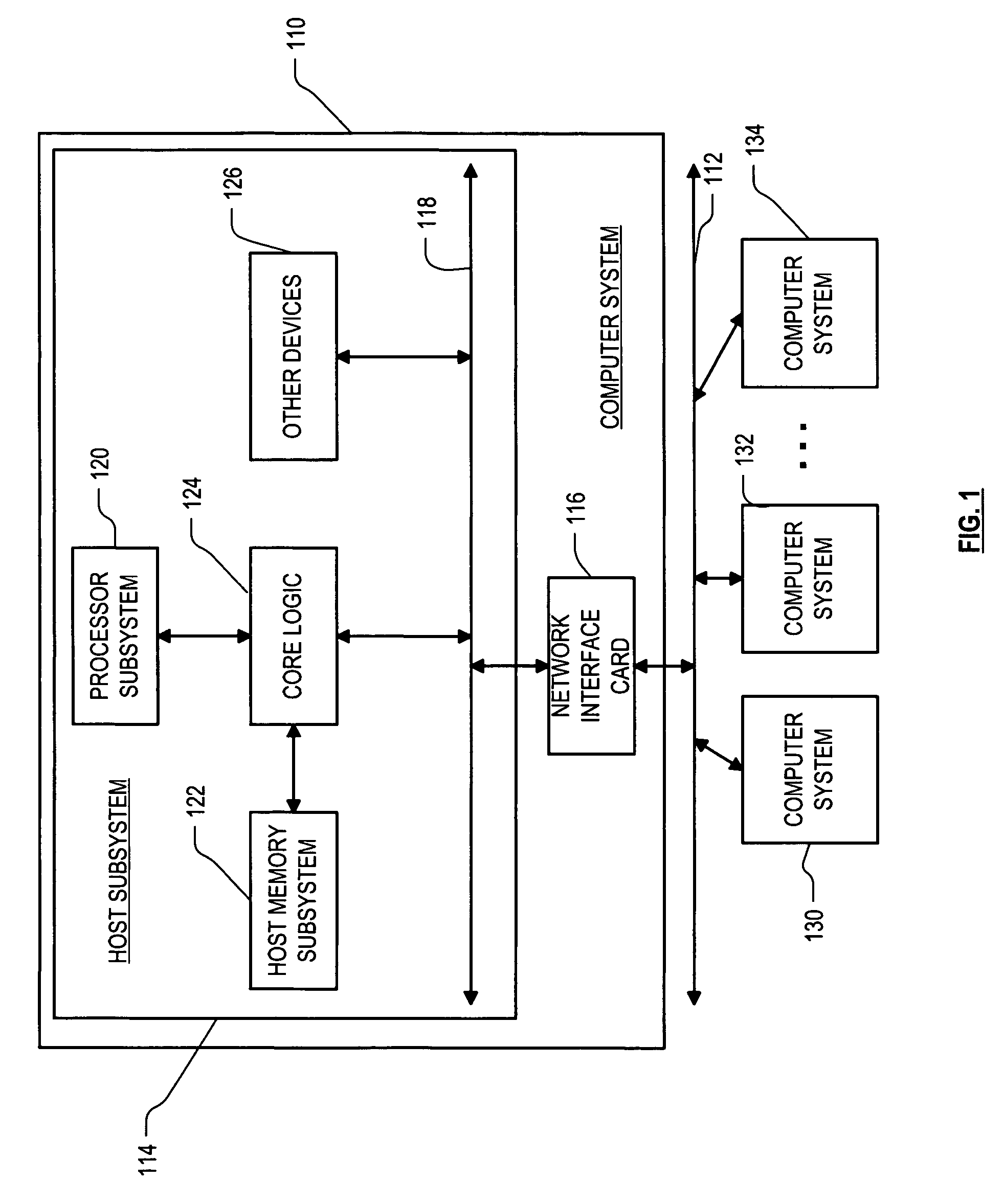

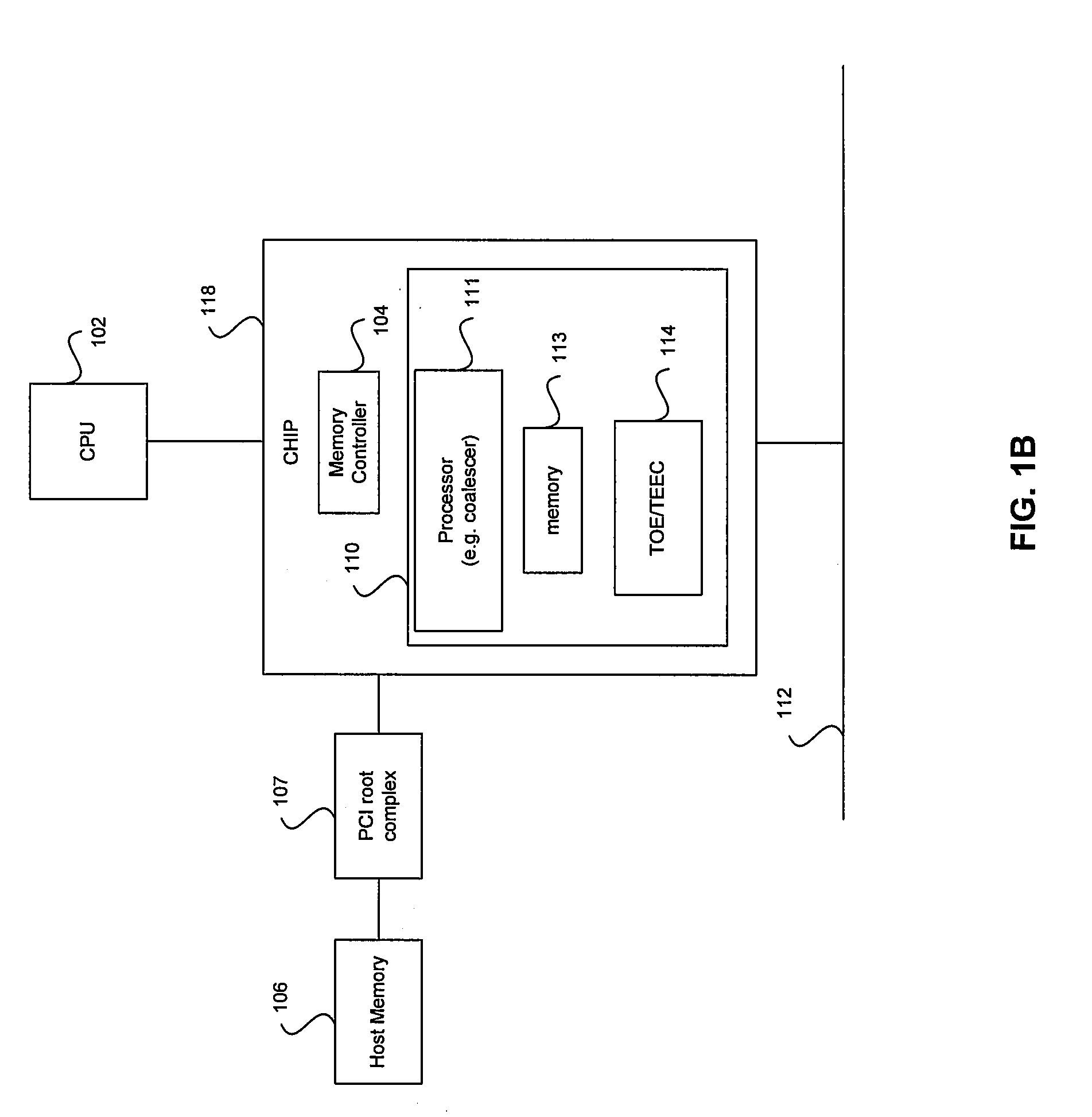

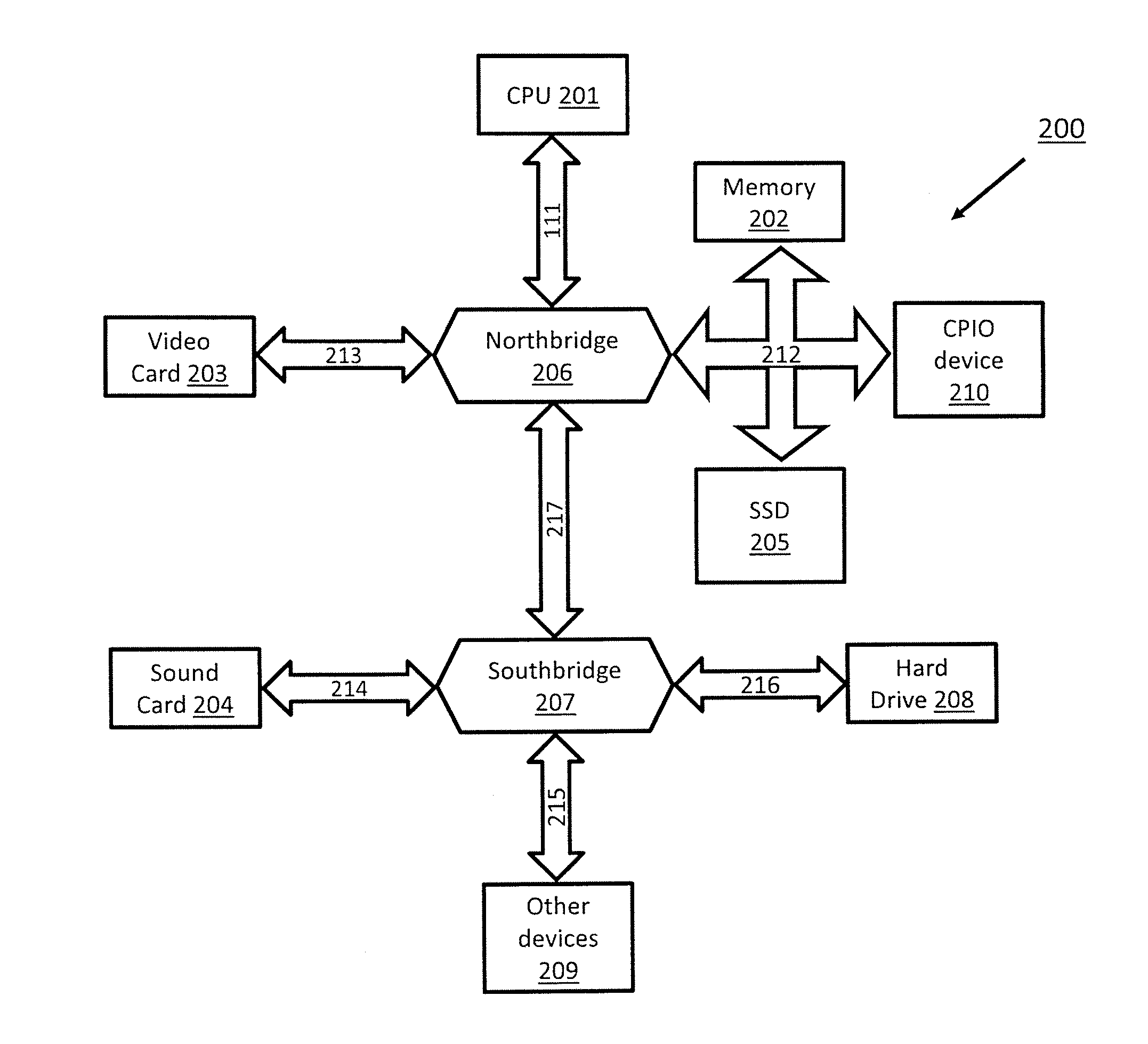

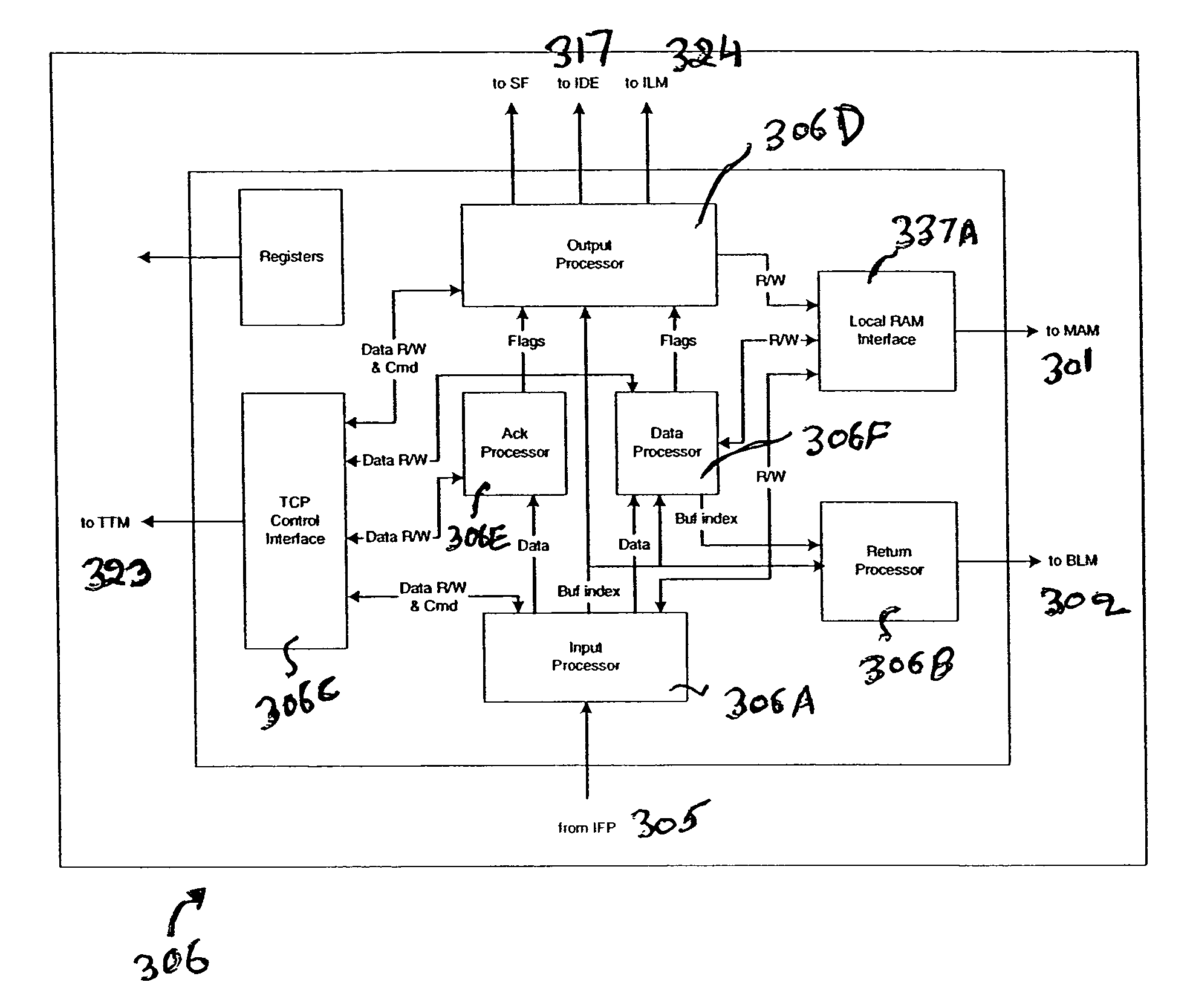

Hardware-based multi-threading for packet processing

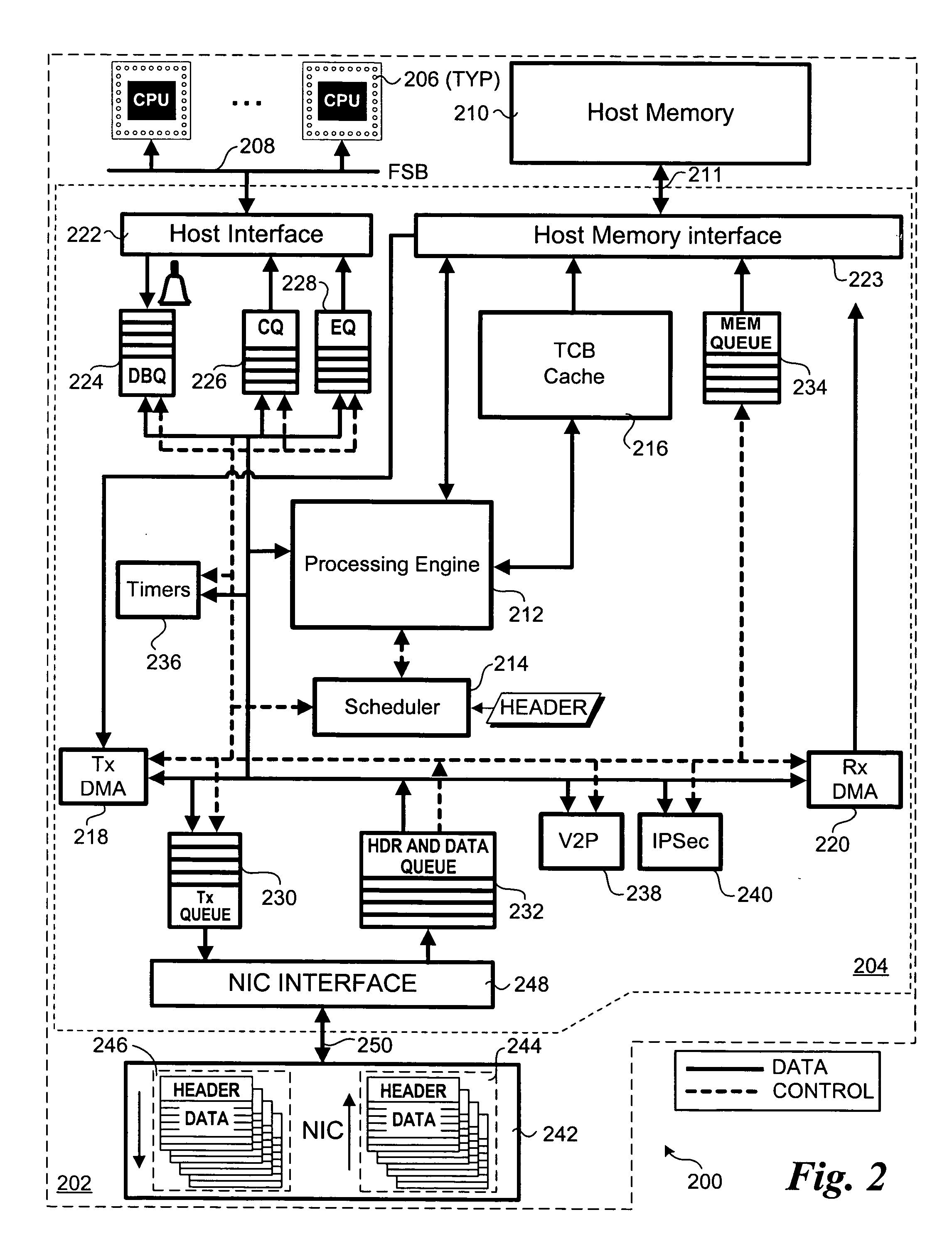

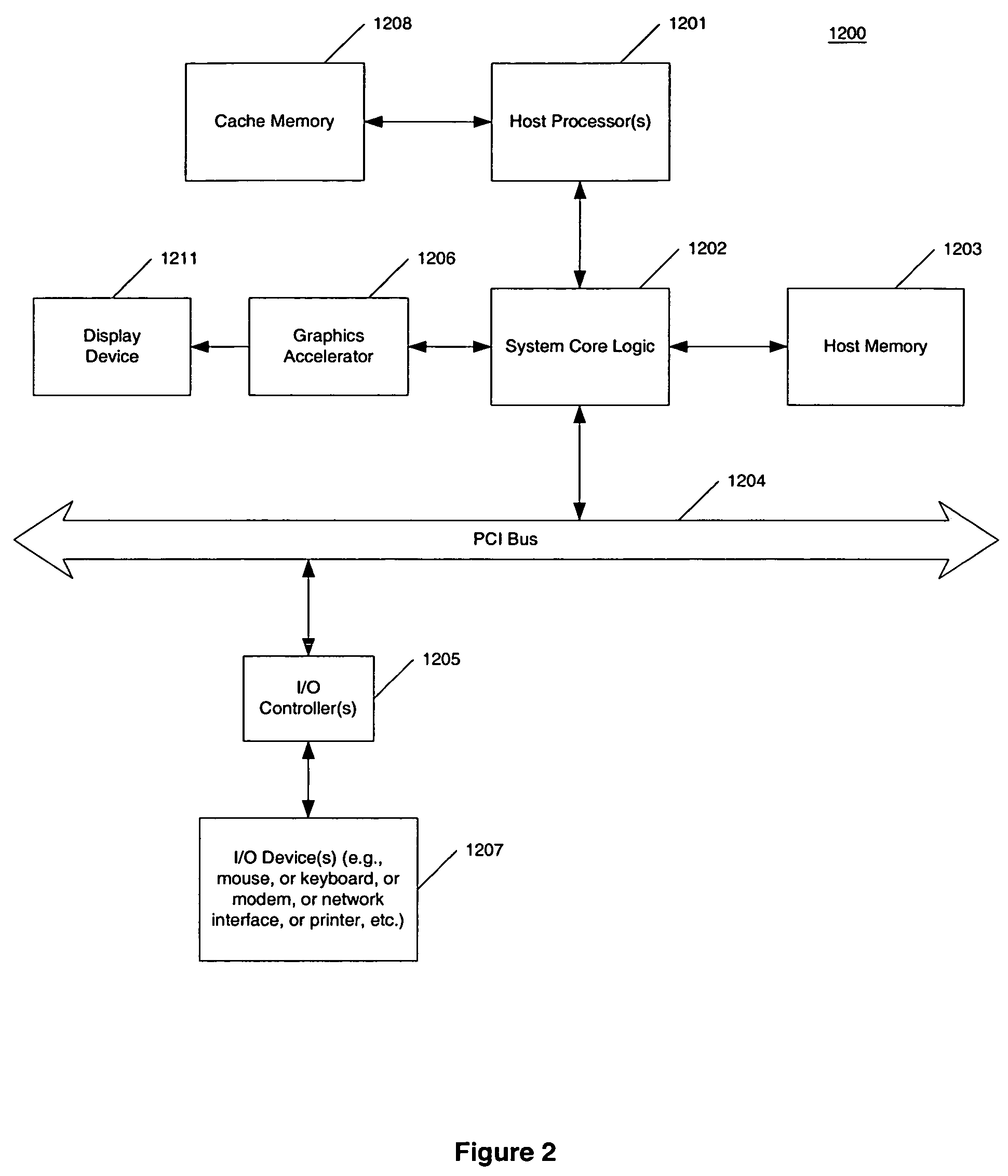

Methods and apparatus for processing transmission control protocol (TCP) packets using hardware-based multi-threading techniques. Inbound and outbound TCP packets are processed using a multi-threaded TCP offload engine (TOE). The TOE includes an execution core comprising a processing engine, a scheduler, an on-chip cache, a host memory interface, a host interface, and a network interface controller (NIC) interface. In one embodiment, the TOE is embodied as a memory controller hub (MCH) component of a platform chipset. The TOE may further include an integrated direct memory access (DMA) controller, or the DMA controller may be embodied as separate circuitry on the MCH. In one embodiment, inbound packets are queued in an input buffer, the headers are provided to the scheduler, and the scheduler arbitrates thread execution on the processing engine. Concurrently, DMA payload data transfers are queued and asynchronously performed in a manner that hides memory latencies. In one embodiment, the technique can process typical-size TCP packets at 10 Gbps or greater line speeds.

Owner:TAHOE RES LTD

Apparatus and method for in-line insertion and removal of markers

Active | US20080043750A1 | Efficient and effective insertion/removal; Data switching by path configuration; Direct memory access; Host memory

An apparatus is provided, for performing a direct memory access (DMA) operation between a host memory in a first server and a network adapter. The apparatus includes a host frame parser and a protocol engine. The host frame parser is configured to receive data corresponding to the DMA operation from a host interface, and is configured to insert markers on-the-fly into the data at a prescribed interval and to provide marked data for transmission to a second server over a network fabric. The protocol engine is coupled to the host frame parser. The protocol engine is configured to direct the host frame parser to insert the markers, and is configured to specify a first marker value and an offset value, whereby the host frame parser is enabled to locate and insert a first marker into the data.

Owner:INTEL CORP
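
Marker insertion at a prescribed interval can be sketched as a byte-stream transform. The sketch assumes positions are counted in the marked output stream, so markers consume part of each interval (loosely modeled on MPA markers, RFC 5044), and it uses one fixed marker value for brevity, whereas the abstract has the protocol engine supply the first marker value and the offset. `insert_markers` is a hypothetical name.

```python
def insert_markers(data: bytes, offset: int, interval: int, marker: bytes) -> bytes:
    """Insert `marker` at output positions offset, offset + interval, offset + 2*interval, ...

    Positions count bytes of the marked output stream, so each marker occupies
    part of its interval (an assumption loosely modeled on MPA markers).
    """
    if offset < 0 or interval <= len(marker):
        raise ValueError("offset must be >= 0 and interval must exceed the marker length")
    out = bytearray()
    next_marker = offset   # output position where the next marker goes
    i = 0                  # read position in the unmarked data
    while i < len(data):
        if len(out) == next_marker:
            out += marker
            next_marker += interval
            continue
        # copy as much data as fits before the next marker position
        take = min(len(data) - i, next_marker - len(out))
        out += data[i:i + take]
        i += take
    return bytes(out)
```

For example, `insert_markers(b"ABCDEFGH", offset=2, interval=4, marker=b"|")` yields `b"AB|CDE|FGH"`: the first marker lands at the specified offset and later ones follow every four output bytes.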

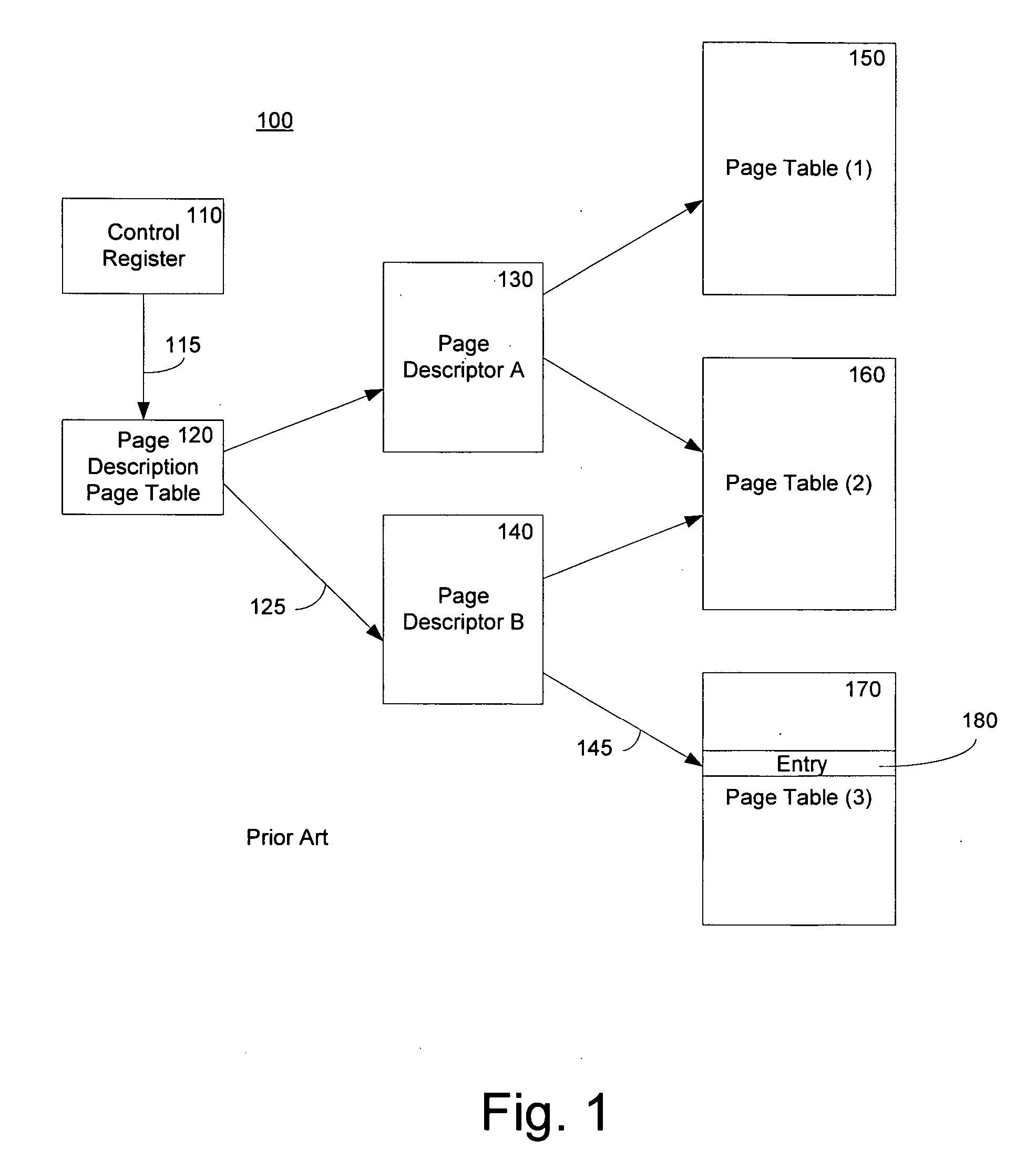

Method and system for a second level address translation in a virtual machine environment

Active | US7428626B2 | Increase speed; Reduce usage; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Host memory; Computer science

A method of performing a translation from a guest virtual address to a host physical address in a virtual machine environment includes receiving a guest virtual address from a host computer executing a guest virtual machine program and using the hardware-oriented method of the host CPU to determine the guest physical address. A second level address translation to a host physical address is then performed. In one embodiment, a multiple tier tree is traversed which translates the guest physical address into a host physical address. In another embodiment, the second level of address translation is performed by employing a hash function of the guest physical address and a reference to a hash table. One aspect of the invention is the incorporation of access overrides associated with the host physical address which can control the access permissions of the host memory.

Owner:MICROSOFT TECH LICENSING LLC

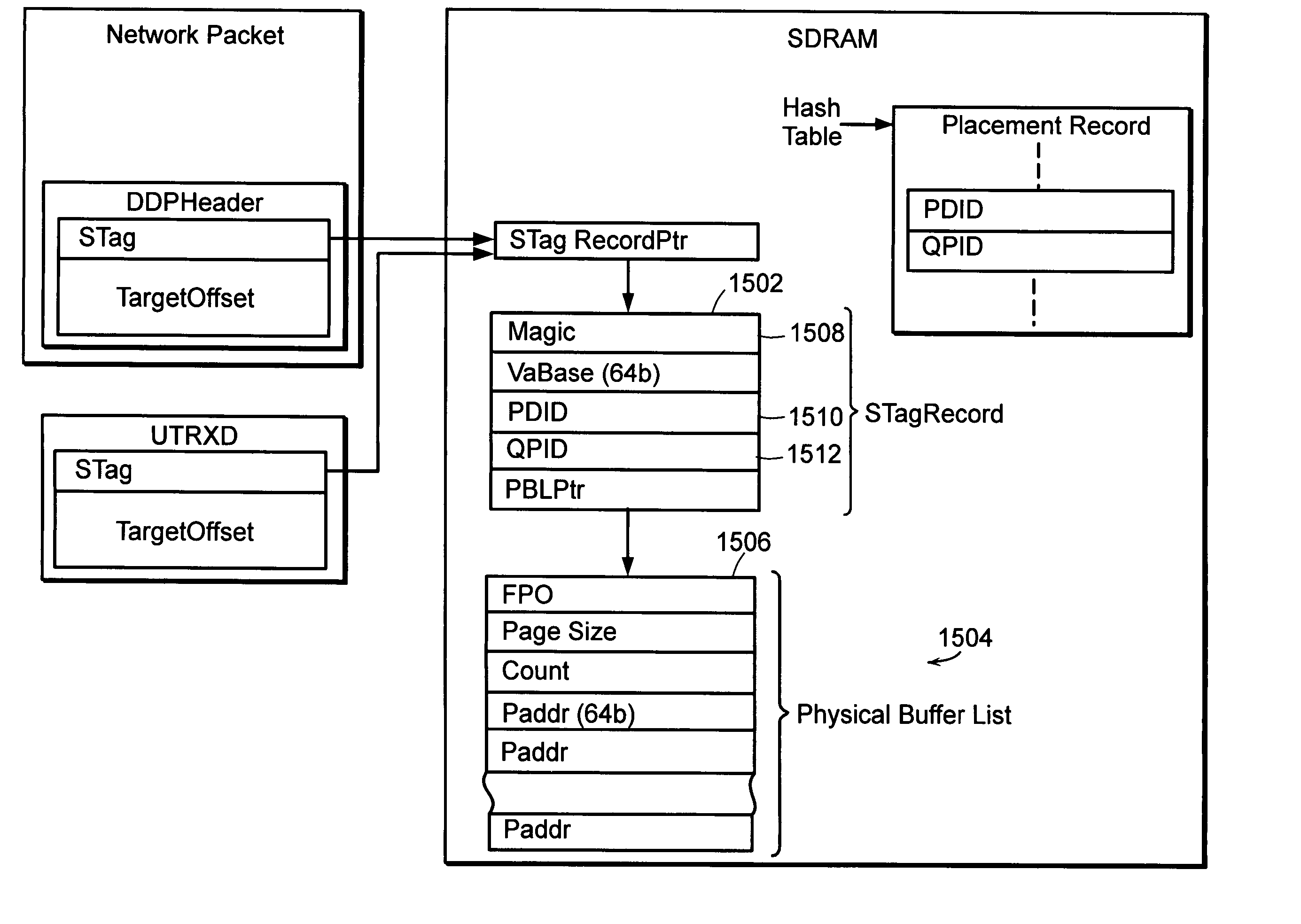

System and method for placement of sharing physical buffer lists in RDMA communication

Inactive | US20050223118A1 | Memory addressing/allocation/relocation; Multiple digital computer combinations; Virtual memory; Physical address

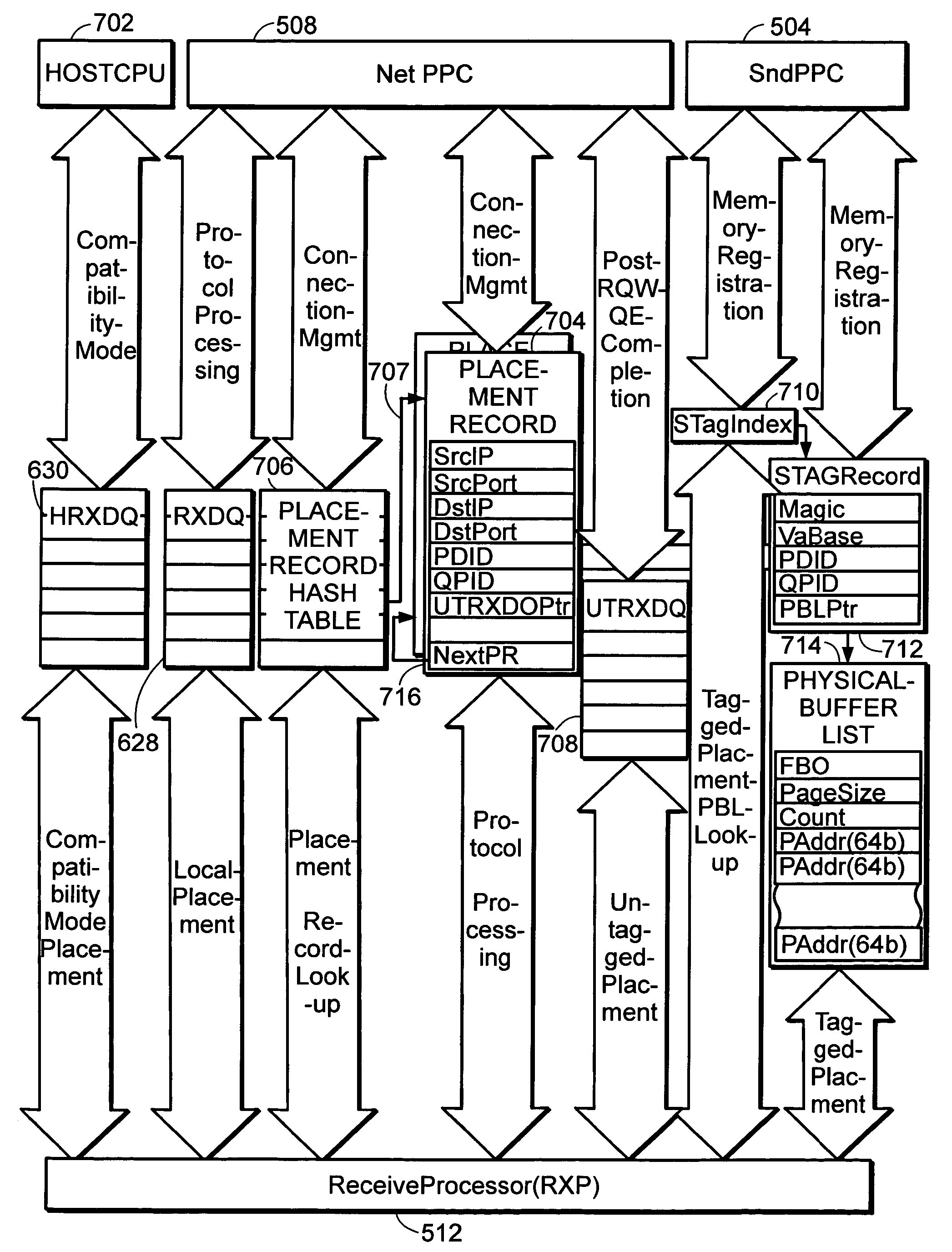

A system and method for placement of sharing physical buffer lists in RDMA communication. According to one embodiment, a network adapter system for use in a computer system having a host processor and host memory is capable of use in network communication in accordance with a direct data placement (DDP) protocol. The DDP protocol specifies tagged and untagged data movement into a connection-specific application buffer in a contiguous region of virtual memory space of a corresponding endpoint computer application executing on said host processor. The DDP protocol specifies the permissibility of memory regions in host memory and specifies the permissibility of at least one memory window within a memory region. The memory regions and memory windows have independently definable application access rights. The network adapter system includes adapter memory and a plurality of physical buffer lists in the adapter memory. Each physical buffer list specifies physical address locations of host memory corresponding to one of said memory regions. A plurality of steering tag records are in the adapter memory, each steering tag record corresponding to a steering tag. Each steering tag record specifies memory locations and access permissions for one of a memory region and a memory window. Each physical buffer list can have a one-to-many correspondence with steering tag records, so that many memory windows may share a single physical buffer list. According to another embodiment, each steering tag record includes a pointer to a corresponding physical buffer list.

Owner:AMMASSO
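
The one-to-many sharing between a physical buffer list and its steering tag records can be modeled with plain Python objects. The sketch assumes 4 KiB pages and reduces access rights to a single `writable` flag; `PhysicalBufferList`, `SteeringTagRecord`, and `resolve` are hypothetical names rather than any RDMA verbs API.

```python
from dataclasses import dataclass
from typing import List

PAGE = 4096  # assumed host page size

@dataclass
class PhysicalBufferList:
    pages: List[int]  # physical address of each host memory page, in virtual order

@dataclass
class SteeringTagRecord:
    pbl: PhysicalBufferList  # many records (a region and its windows) may share one list
    offset: int              # byte offset of the region/window within the list
    length: int              # bytes accessible through this steering tag
    writable: bool           # simplified access rights

def resolve(stag: SteeringTagRecord, tagged_offset: int, write: bool) -> int:
    """Translate an offset inside a region or window into a host physical address."""
    if not 0 <= tagged_offset < stag.length:
        raise IndexError("access outside the region or window")
    if write and not stag.writable:
        raise PermissionError("write not permitted by this steering tag")
    pos = stag.offset + tagged_offset
    return stag.pbl.pages[pos // PAGE] + pos % PAGE
```

Because a window record stores only an offset, a length, and rights over the region's list, creating a window costs one small record instead of a second copy of the page list.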

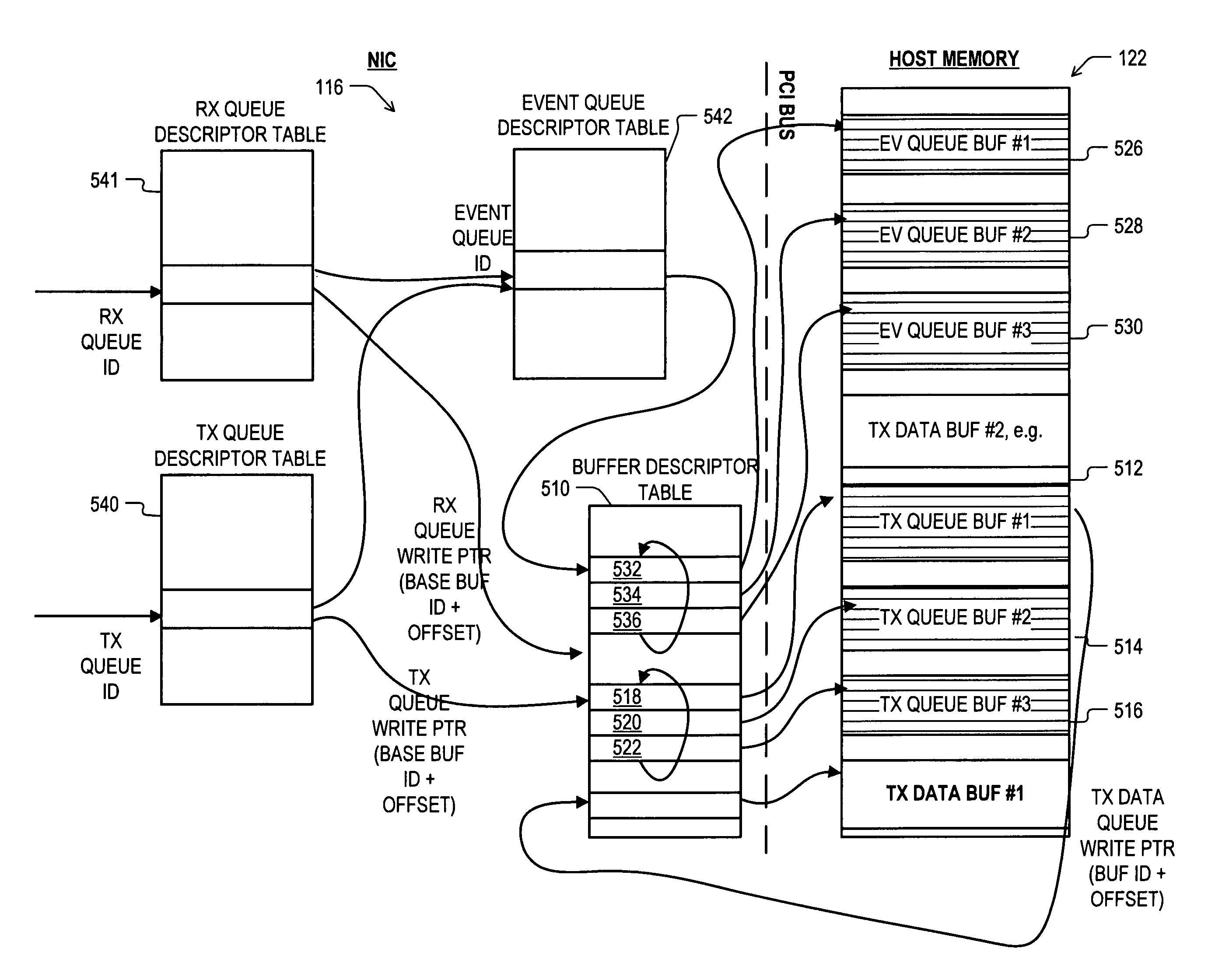

DMA descriptor queue read and cache write pointer arrangement

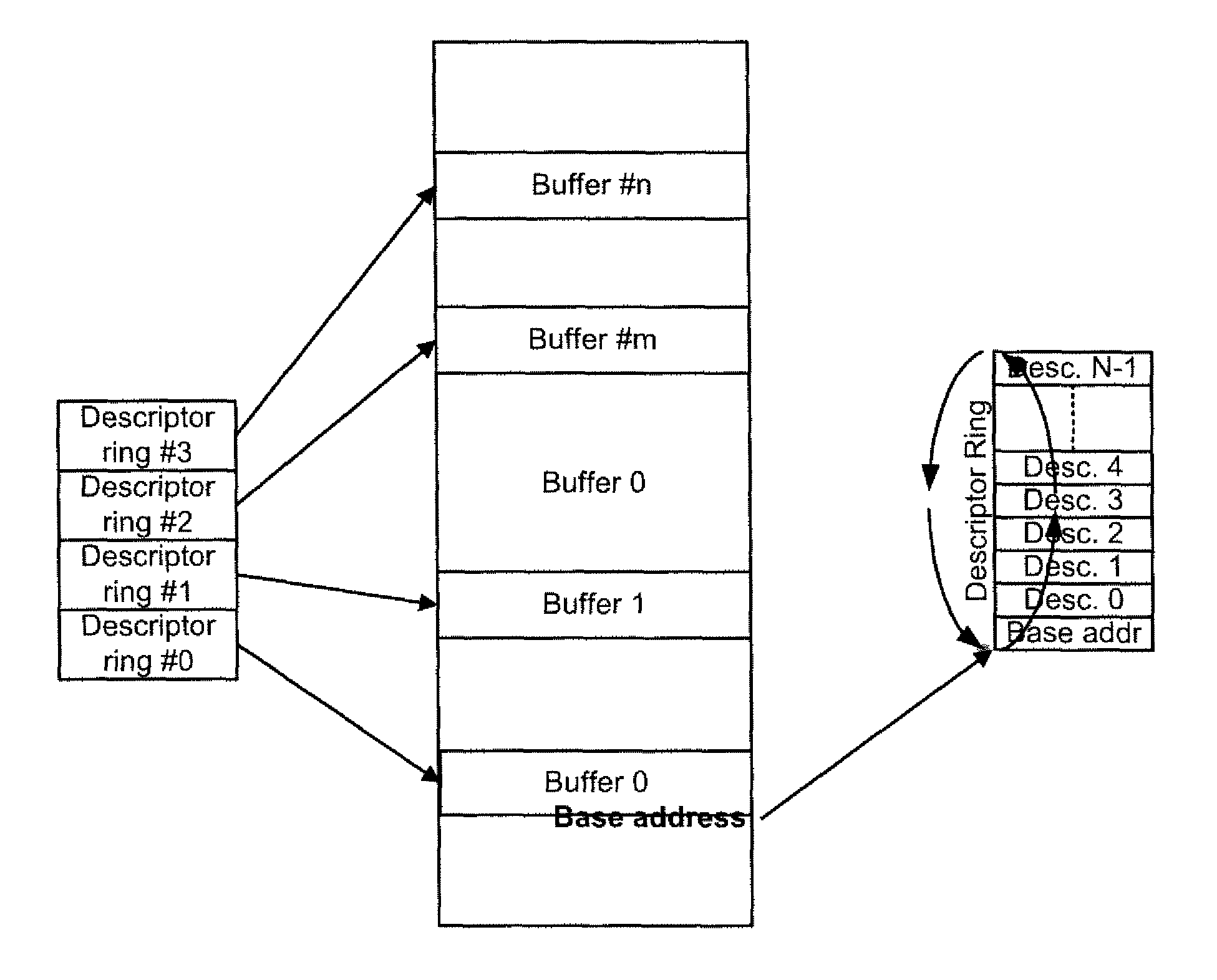

Method and apparatus for retrieving buffer descriptors from a host memory for use by a peripheral device. In an embodiment, a peripheral device such as a NIC includes a plurality of buffer descriptor caches each corresponding to a respective one of a plurality of host memory descriptor queues, and a plurality of queue descriptors each corresponding to a respective one of the host memory descriptor queues. Each of the queue descriptors includes a host memory read address pointer for the corresponding descriptor queue, and this same read pointer is used to derive algorithmically the descriptor cache write addresses at which to write buffer descriptors retrieved from the corresponding host memory descriptor queue.

Owner:XILINX INC
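
The pointer arrangement can be shown with a small Python model. It assumes one host ring and one cache per queue, uses the host read pointer modulo the cache size as the cache write index, and omits the consumer-side bookkeeping a real NIC would need to avoid overwriting descriptors still in use. `DescriptorQueue` is a hypothetical name.

```python
from typing import List, Optional

class DescriptorQueue:
    """Hypothetical model of one host descriptor ring and its on-device cache."""

    def __init__(self, host_ring: List[str], cache_size: int) -> None:
        self.host_ring = host_ring                              # descriptors in host memory
        self.cache: List[Optional[str]] = [None] * cache_size   # on-device descriptor cache
        self.read_ptr = 0                                       # host memory read pointer

    def fetch(self, count: int) -> None:
        """Copy `count` descriptors from the host ring into the cache."""
        for _ in range(count):
            desc = self.host_ring[self.read_ptr % len(self.host_ring)]
            # The cache write address is derived from the same read pointer,
            # so no separate cache write pointer is stored.
            self.cache[self.read_ptr % len(self.cache)] = desc
            self.read_ptr += 1
```

With a power-of-two cache size, the modulo reduces to masking the low bits of the read pointer, which is cheap in hardware.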

Packet buffer apparatus and method

In managing and buffering packet data for transmission out of a host, descriptor ring data is pushed in from a host memory into a descriptor ring cache and cached therein. The descriptor ring data is processed to read a data packet descriptor, and a direct memory access is initiated to the host to read the data packet corresponding to the read data packet descriptor to a data transmission buffer. The data packet is written by the direct memory access into the data transmission buffer and cached therein. A return pointer is written to the host memory by the direct memory access indicating that the data packet descriptor has been read and the corresponding data packet has been transmitted. In managing and buffering packet data for transmission to a host, descriptor ring data is pushed in from a host memory into a descriptor ring cache and cached therein. Data packets for transmission to the host memory are received and cached in a data reception buffer. Data is read from the data reception buffer according to a data packet descriptor retrieved from the descriptor ring cache, and the data packet is written to a data reception queue within the host memory by a direct memory access. A return pointer is written to the host memory by the direct memory access indicating that the data packet has been written.

Owner:MARVELL ASIA PTE LTD

System and method for placement of RDMA payload into application memory of a processor system

Inactive | US20060067346A1 | Memory addressing/allocation/relocation; Data switching by path configuration; Computer hardware; Physical address

A system and method for placement of RDMA payload into application memory of a processor system. Under one embodiment, a network adapter system is capable of use in network communication in accordance with a direct data placement (DDP) protocol, e.g., RDMA. The network adapter system includes adapter memory and a plurality of placement records in the adapter memory. Each placement record specifies per-connection placement data including at least network address information and port identifications of source and destination network entities for a corresponding DDP protocol connection. Placement record identification logic uniquely identifies a placement record from network address information and port identification information contained in a DDP message received by the network adapter system. Untagged message payload placement logic directly places the payload of the received untagged DDP message into physical address locations of host memory corresponding to one of said connection-specific application buffers. Tagged message payload placement logic directly places the payload of the tagged DDP message into physical address locations of host memory corresponding to the identifier in the received DDP message. According to one embodiment, the placement records are organized as an array of hash buckets with each element of the array containing a placement record and each placement record containing a specification of a next placement record in the same bucket. The placement record identification logic includes hashing logic to create a hash index pointing to a bucket in the array by hashing a 4-tuple consisting of a source address, a destination address, a source port, and a destination port contained in the received DDP message.

Owner:AMMASSO
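
The hash-bucket organization of placement records can be sketched in Python. Python's built-in `hash` of the 4-tuple stands in for the adapter's hashing logic, each bucket holds the head of a singly linked chain (a slight simplification of an array whose elements contain the first record), and `app_buffer` is a hypothetical placeholder for the per-connection placement data. `PlacementRecord` and `PlacementTable` are likewise hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# (source address, destination address, source port, destination port)
FourTuple = Tuple[str, str, int, int]

@dataclass
class PlacementRecord:
    four_tuple: FourTuple
    app_buffer: int                           # hypothetical per-connection placement data
    next: Optional["PlacementRecord"] = None  # next record in the same bucket

class PlacementTable:
    def __init__(self, buckets: int = 64) -> None:
        self.buckets: List[Optional[PlacementRecord]] = [None] * buckets

    def _index(self, four_tuple: FourTuple) -> int:
        # Python's hash stands in for the adapter's hashing logic.
        return hash(four_tuple) % len(self.buckets)

    def insert(self, record: PlacementRecord) -> None:
        i = self._index(record.four_tuple)
        record.next = self.buckets[i]  # push onto the front of the bucket's chain
        self.buckets[i] = record

    def lookup(self, four_tuple: FourTuple) -> Optional[PlacementRecord]:
        rec = self.buckets[self._index(four_tuple)]
        while rec is not None:         # walk the chain until the 4-tuple matches
            if rec.four_tuple == four_tuple:
                return rec
            rec = rec.next
        return None
```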

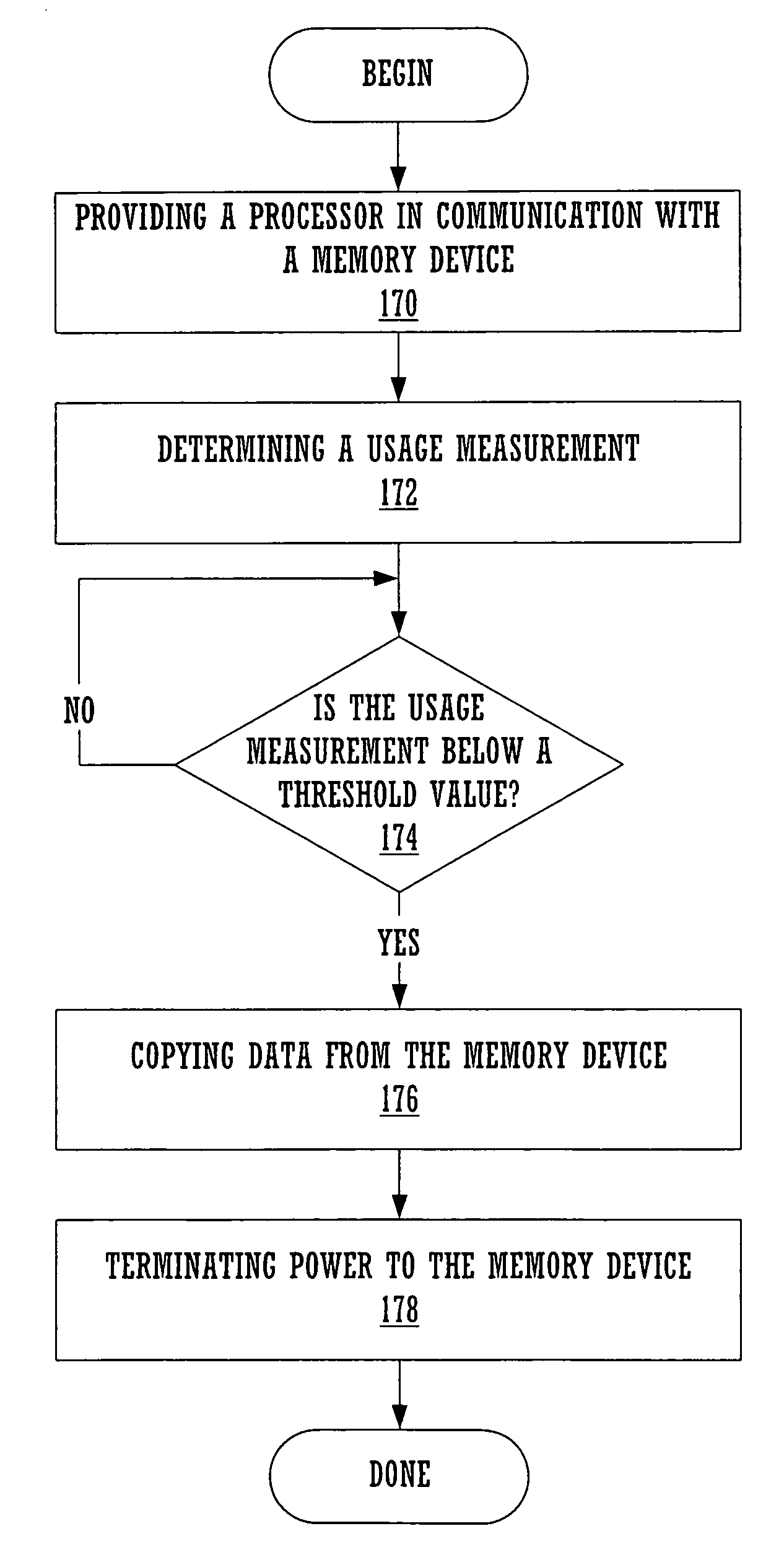

Method and apparatus for partial memory power shutoff

A method for managing host system power consumption is provided. The host system includes host memory and external memory. The method begins by providing a processor in communication with a memory chip over a bus; the memory chip is the external memory. A usage measurement of the external memory is then determined. If the usage measurement is below a threshold value, the method copies data from the memory chip to the host memory and terminates power to the memory chip. In one embodiment, power is terminated to at least one bank of memory in the memory chip. In another embodiment, the amount by which the external memory can be reduced is determined instead of a usage measurement. In yet another embodiment, an address map is reconfigured to maintain a contiguous configuration. A graphical user interface and a memory chip are also provided.

Owner:NVIDIA CORP
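
The threshold decision can be written as a short Python function. Usage is measured here as the fraction of occupied addresses, host memory is modeled as a dictionary that reuses the chip's addresses unchanged, and the address-map reconfiguration and per-bank shutoff from the other embodiments are omitted. `MemoryChip` and `manage_power` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict

@dataclass
class MemoryChip:
    capacity: int                                        # number of addressable words
    data: Dict[int, int] = field(default_factory=dict)  # address -> stored value
    powered: bool = True

def manage_power(chip: MemoryChip, host_memory: Dict[int, int], threshold: float) -> bool:
    """Move data to host memory and power the chip off if its usage is below threshold."""
    usage = len(chip.data) / chip.capacity  # usage measurement of the external memory
    if not chip.powered or usage >= threshold:
        return False
    host_memory.update(chip.data)           # copy data from the memory chip to host memory
    chip.data.clear()
    chip.powered = False                    # terminate power to the memory chip
    return True
```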

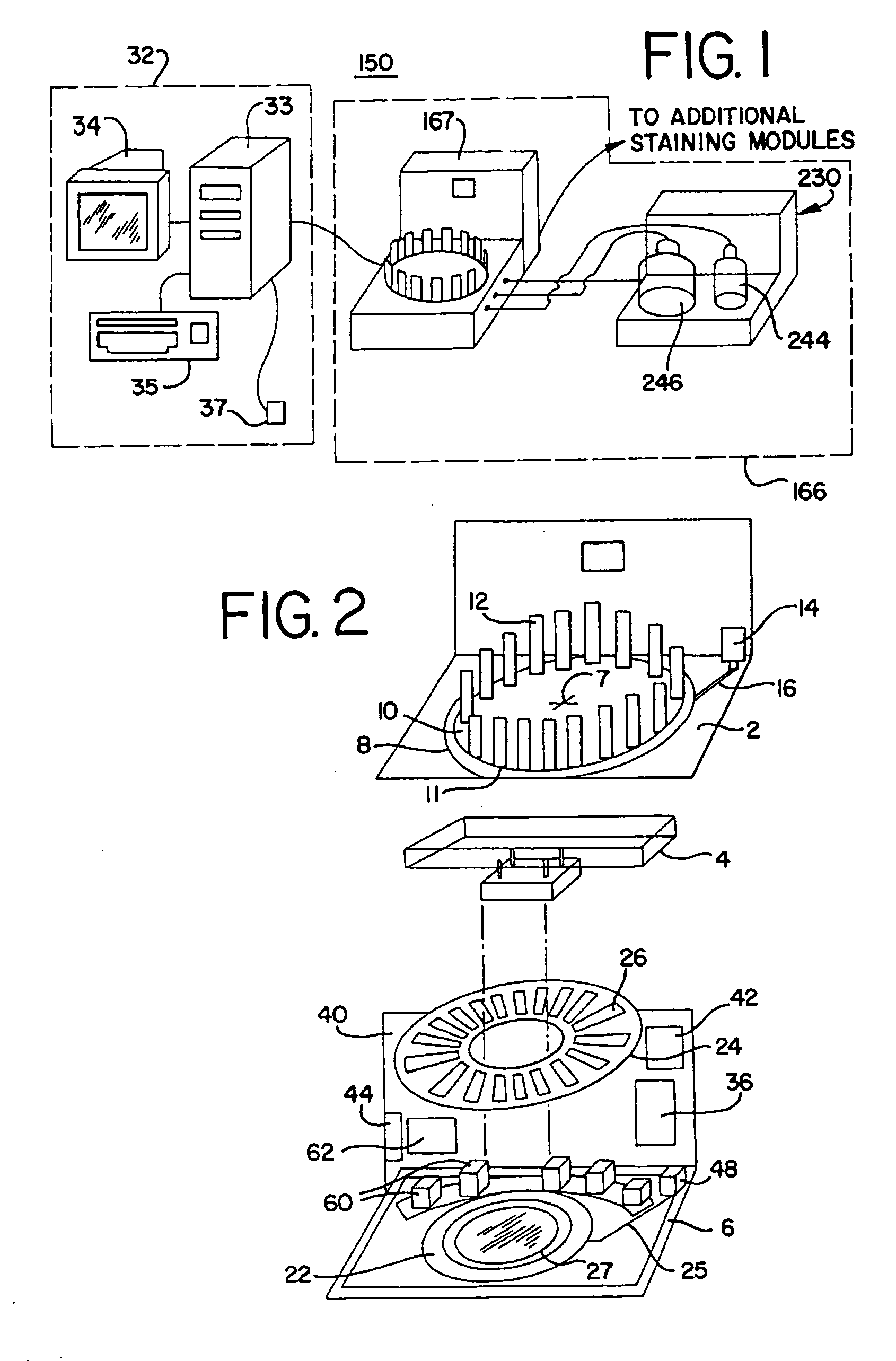

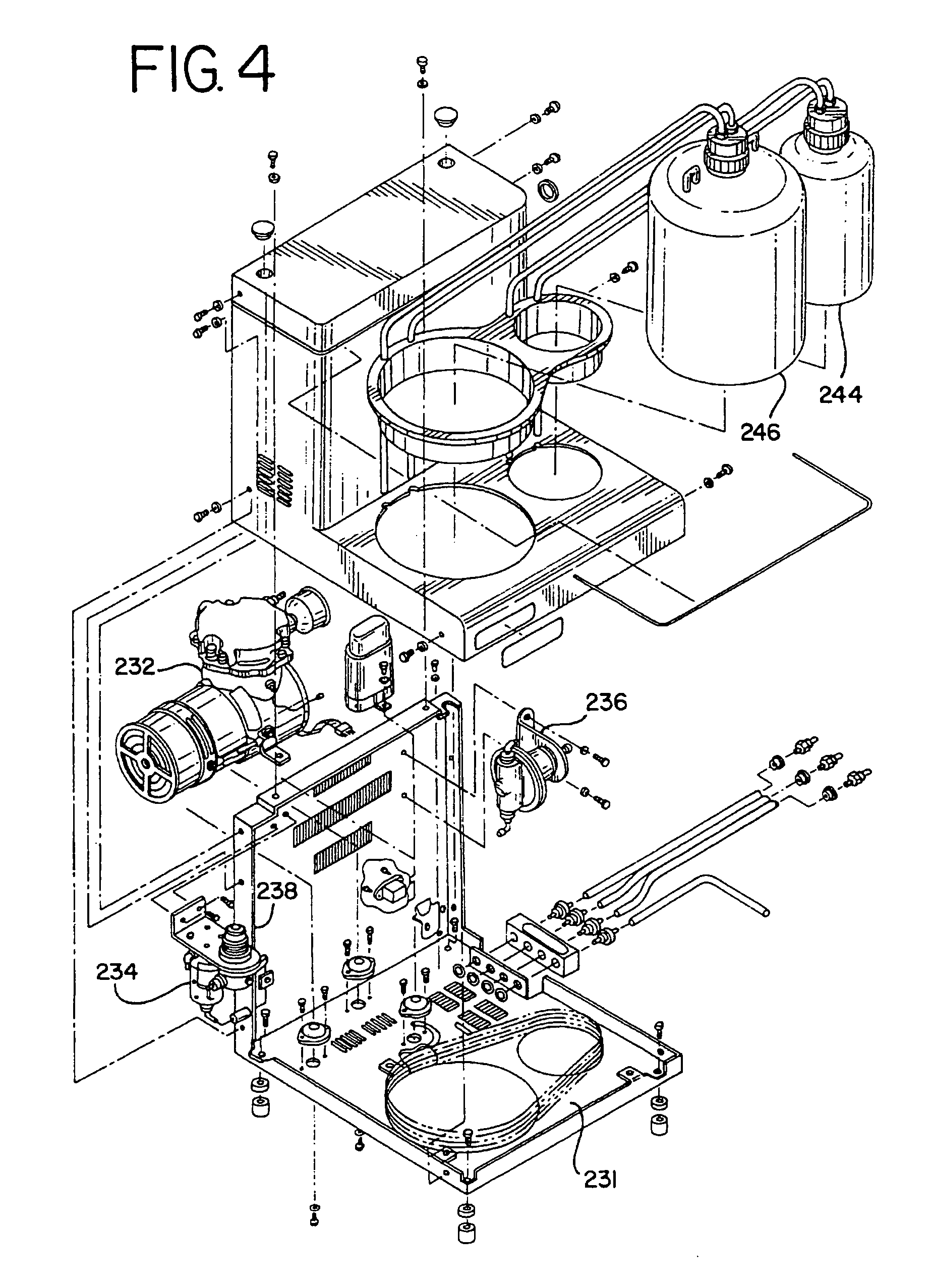

Memory management method and apparatus for automated biological reaction system

Inactive | US20060190185A1 | Analysis using chemical indicators; Withdrawing sample devices; Engineering; Host memory

A reagent management system for an automated biological reaction apparatus includes: the automated biological reaction apparatus, with at least one reagent dispenser for applying a reagent to a sample; a memory device containing data for a reagent device selected from the group consisting of a reagent dispenser and a reagent kit used in the automated biological reaction apparatus; a host device comprising a processor and a host device memory connected to the processor, the host device memory including a reagent table; and a scanner for transferring the memory device data to the host device memory.

Owner:VENTANA MEDICAL SYST INC

Method and System for Delayed Completion Coalescing

Certain aspects of a method and system for delayed completion coalescing are disclosed. Exemplary aspects of the method may include accumulating a plurality of bytes of incoming TCP segments in a host memory until the number of accumulated bytes reaches a threshold value. When it does, a completion queue entry (CQE) may be generated to a driver, and the accumulated bytes may be copied to a user application. The method may also include delaying, in a driver, an update of the TCP receive window size until one of the incoming TCP segments corresponding to a particular sequence number is copied to the user application. The CQE may also be generated to the driver when at least one of the incoming TCP segments is received with the TCP PUSH bit set and the TCP receive window size is greater than a particular window size value.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
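
The two completion triggers can be sketched in Python. The receive window is held constant and the driver-side delay of window updates is left out; the function name and its parameters (`threshold`, `window_size`, `min_window`) are hypothetical.

```python
from typing import List, Tuple

def completions(segments: List[Tuple[int, bool]], threshold: int,
                window_size: int, min_window: int) -> Tuple[List[int], int]:
    """Return the byte count reported by each CQE and the bytes still pending.

    segments: (payload_length, push_flag) for each incoming TCP segment, in order.
    """
    cqes: List[int] = []
    pending = 0  # bytes accumulated in host memory since the last CQE
    for length, push in segments:
        pending += length
        if pending >= threshold or (push and window_size > min_window):
            cqes.append(pending)  # one CQE covers everything accumulated so far
            pending = 0
    return cqes, pending
```

With segments of 1000, 1000, 1000, and 500 bytes (the last with PUSH set), a threshold of 2500, and a 65535-byte window above a 16384-byte `min_window`, four segments produce two CQEs instead of four; with a window below `min_window`, the PUSH bit does not trigger a CQE and 500 bytes stay pending.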

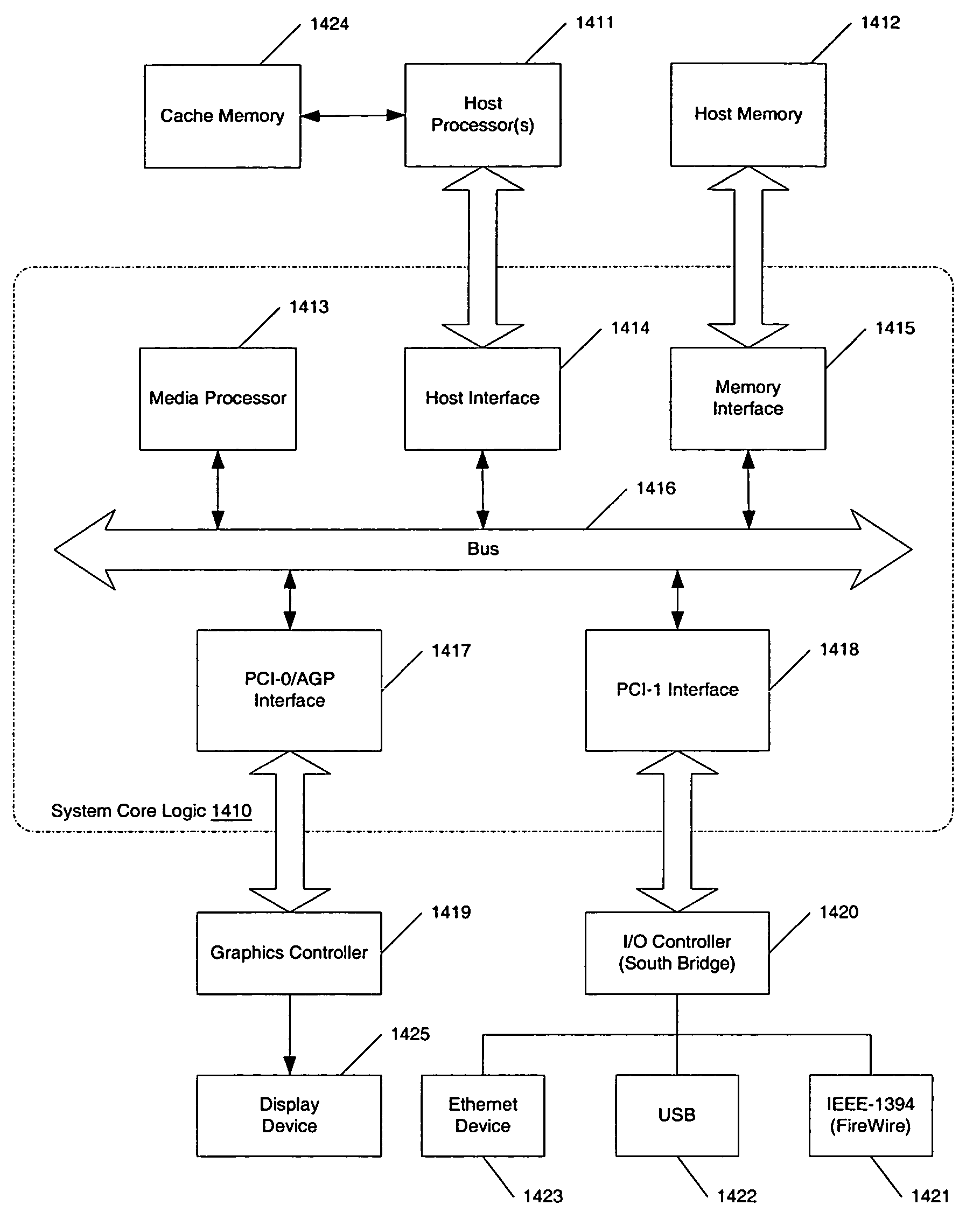

Method and apparatus for data processing

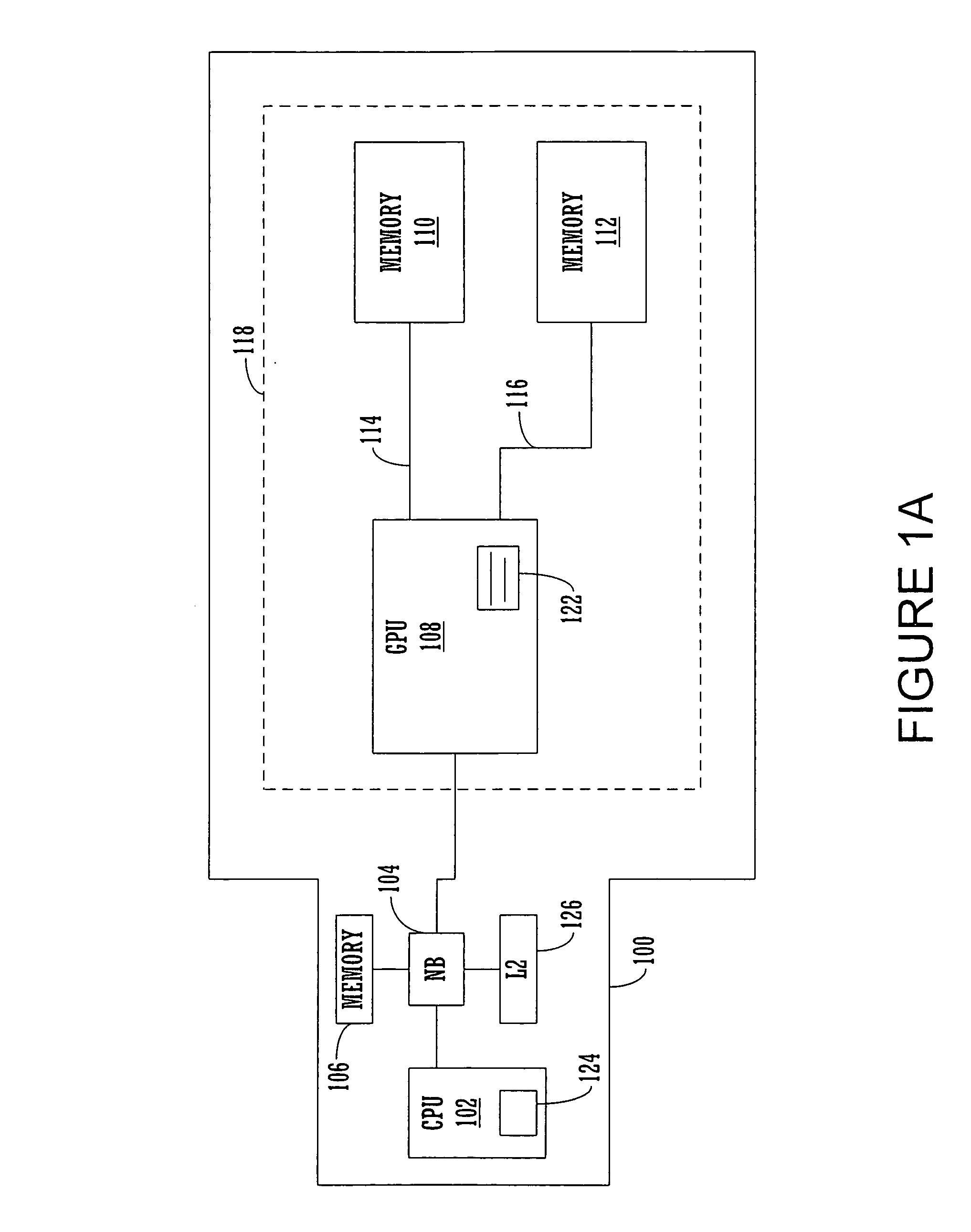

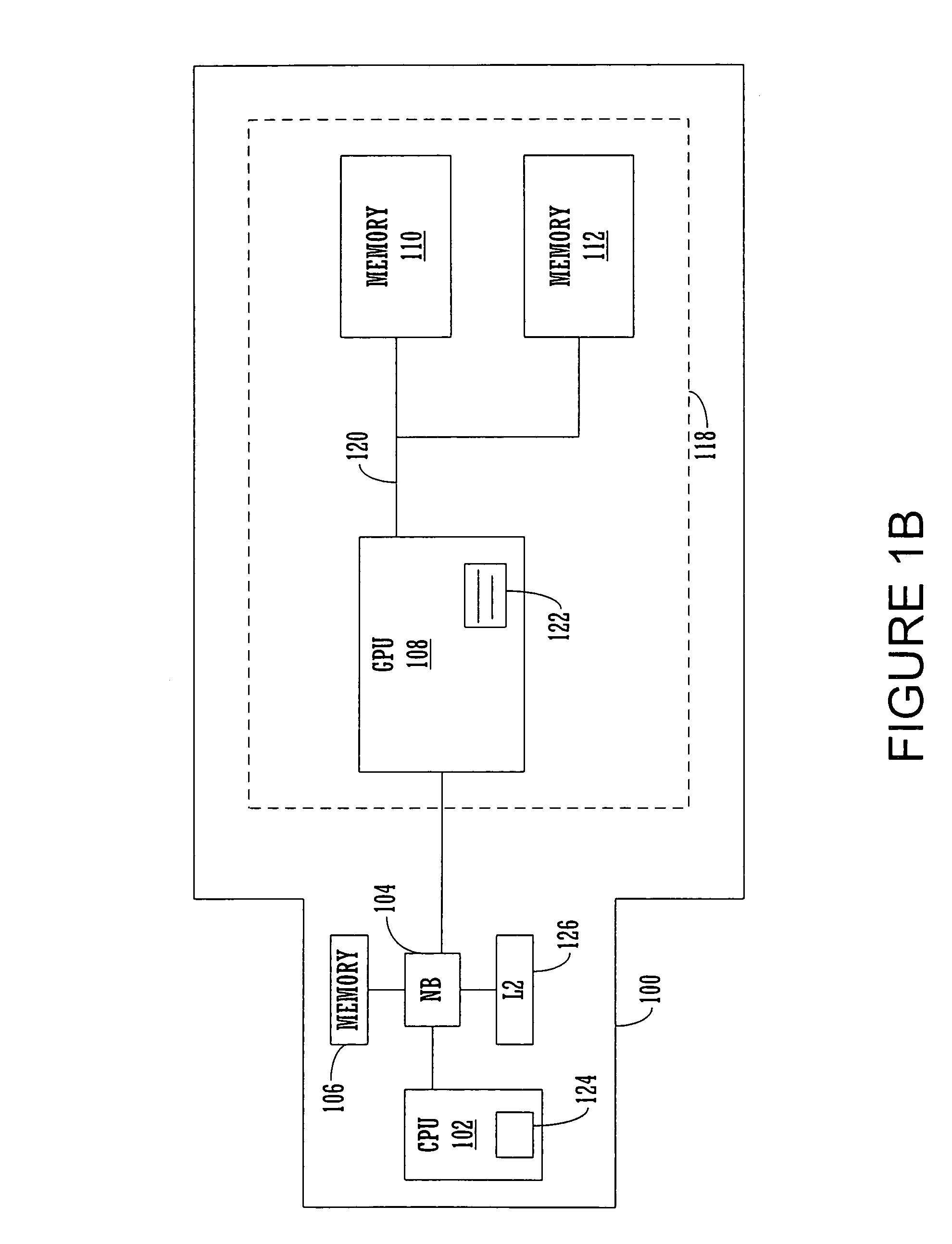

Inactive | US7305540B1 | General purpose stored program computer; Multiple digital computer combinations; Memory controller; Host memory

Methods and apparatuses for a data processing system are described herein. In one aspect of the invention, an exemplary apparatus includes a chip interconnect; a memory controller for controlling the host memory comprising DRAM memory, the memory controller coupled to the chip interconnect; a scalar processing unit coupled to the chip interconnect, wherein the scalar processing unit is capable of executing instructions to perform scalar data processing; a vector processing unit coupled to the chip interconnect, wherein the vector processing unit is capable of executing instructions to perform vector data processing; and an input/output (I/O) interface coupled to the chip interconnect, wherein the I/O interface receives/transmits data from/to the scalar and/or vector processing units.

Owner:APPLE INC

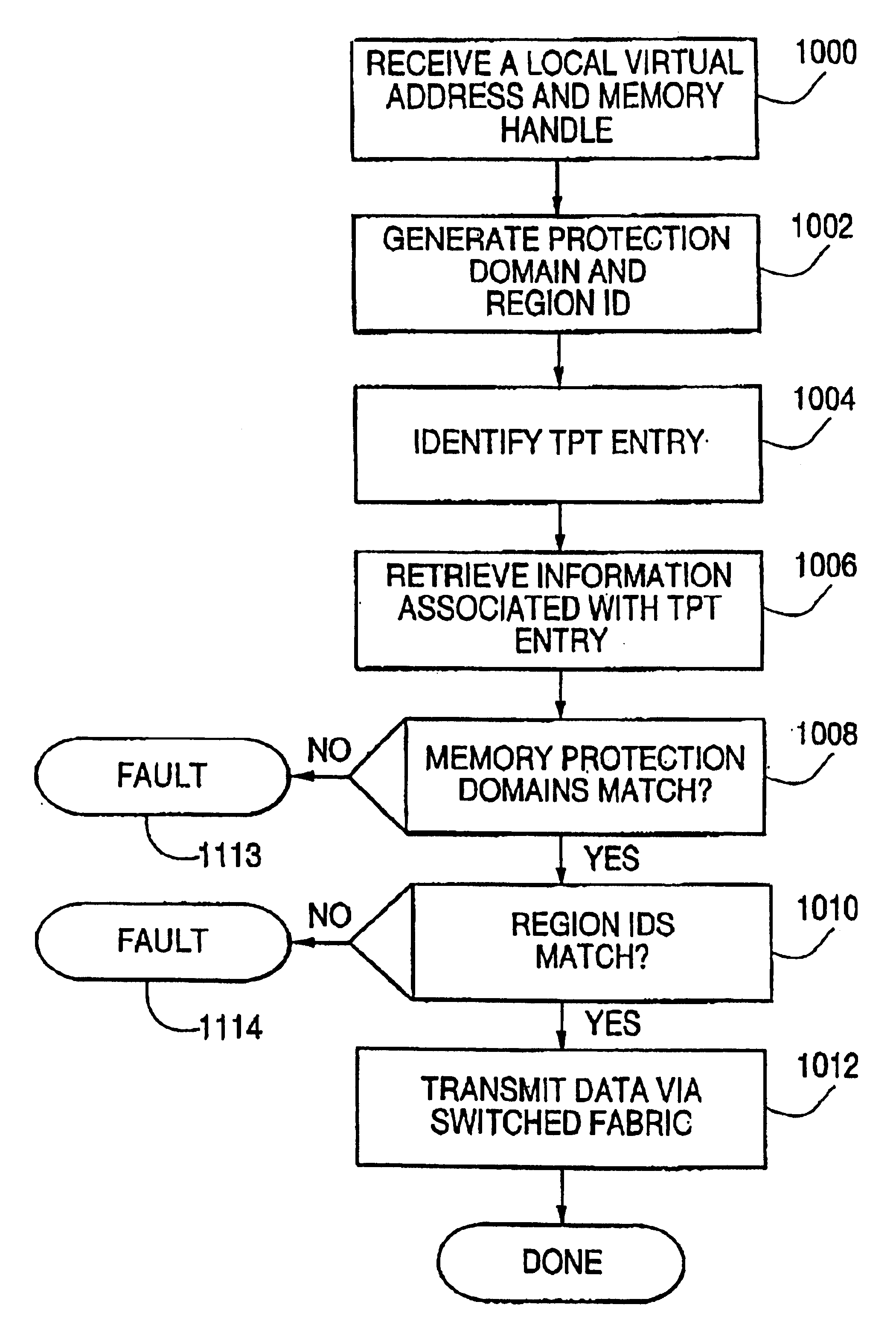

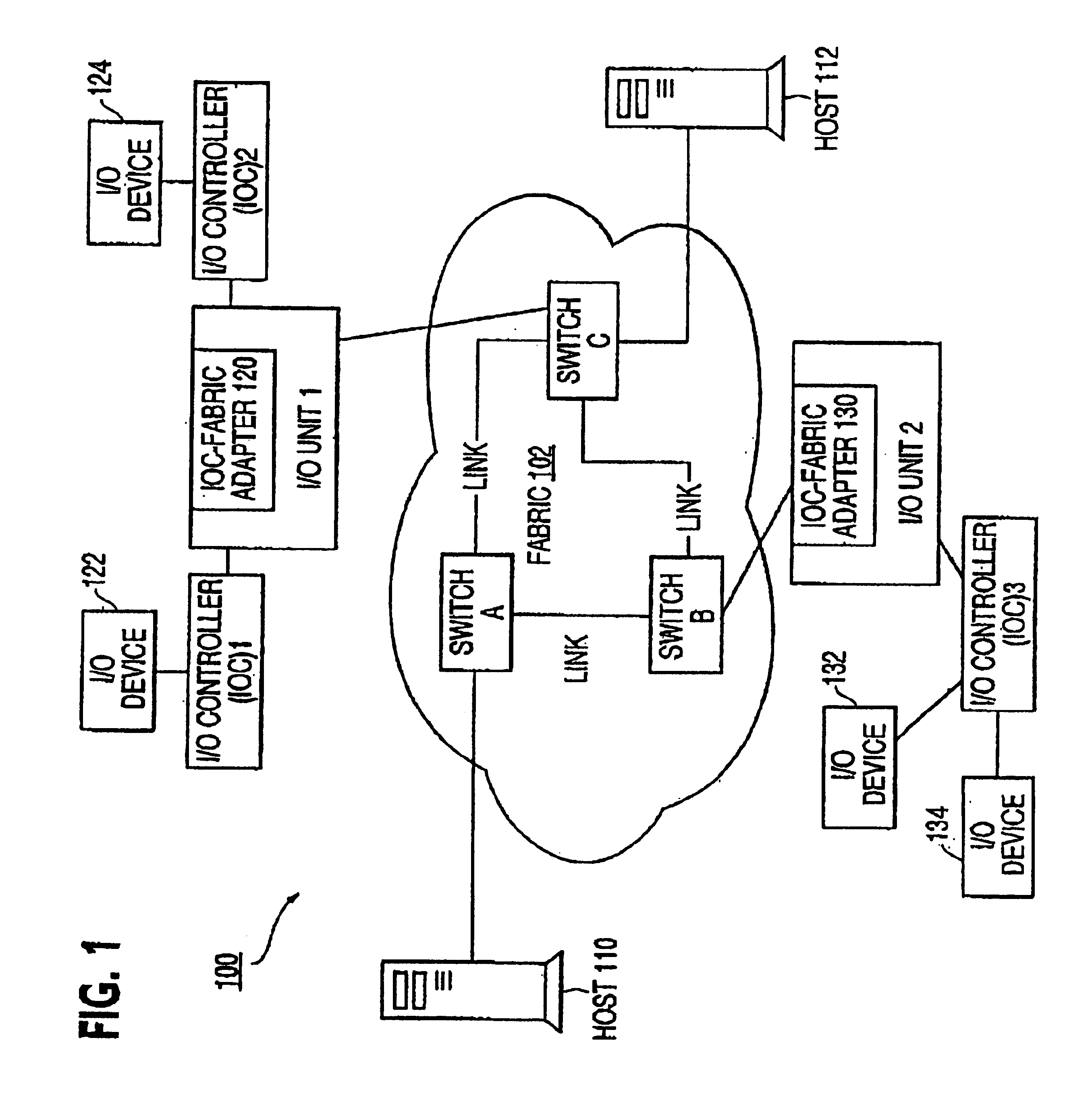

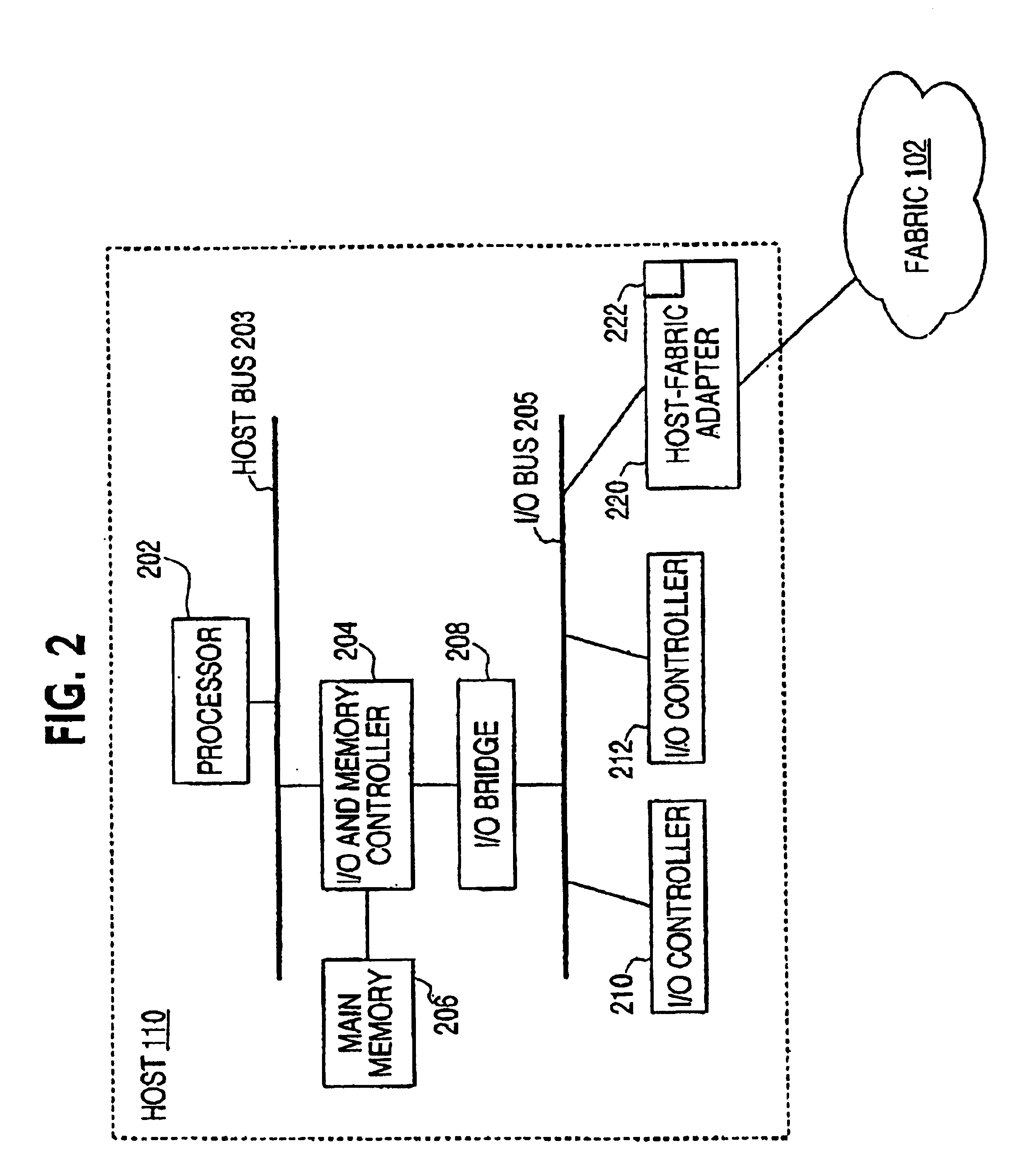

Translation and protection table and method of using the same to validate access requests

Inactive | US6859867B1 | Memory addressing/allocation/relocation; Unauthorized memory use protection; Protection domain; Host memory

A host may be coupled to a switched fabric and include a processor, a host memory coupled to the processor, and a host-fabric adapter that is coupled to the host memory and the processor and interfaces with the switched fabric. The host-fabric adapter accesses a translation and protection table from the host memory for a data transaction. The translation and protection table entries include a region identifier field and a protection domain field used to validate an access request.

Owner:INTEL CORP
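
A validation check in the spirit of the abstract can be sketched in Python. The region identifier and protection domain comparisons come from the abstract; the base/length bounds check and all names (`TptEntry`, `validate`) are assumptions added for illustration.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class TptEntry:
    region_id: int
    protection_domain: int
    base: int     # assumed: start of the registered host memory region
    length: int   # assumed: size of the registered region in bytes

def validate(tpt: List[TptEntry], index: int, region_id: int, pd: int,
             addr: int, size: int) -> bool:
    """Return True if the access request matches the indexed table entry."""
    if not 0 <= index < len(tpt):
        return False
    entry = tpt[index]
    return (entry.region_id == region_id
            and entry.protection_domain == pd
            and entry.base <= addr
            and addr + size <= entry.base + entry.length)
```

A request whose protection domain differs from the entry's is rejected even when its region identifier and address range are valid, which keeps one consumer from reaching memory registered by another.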

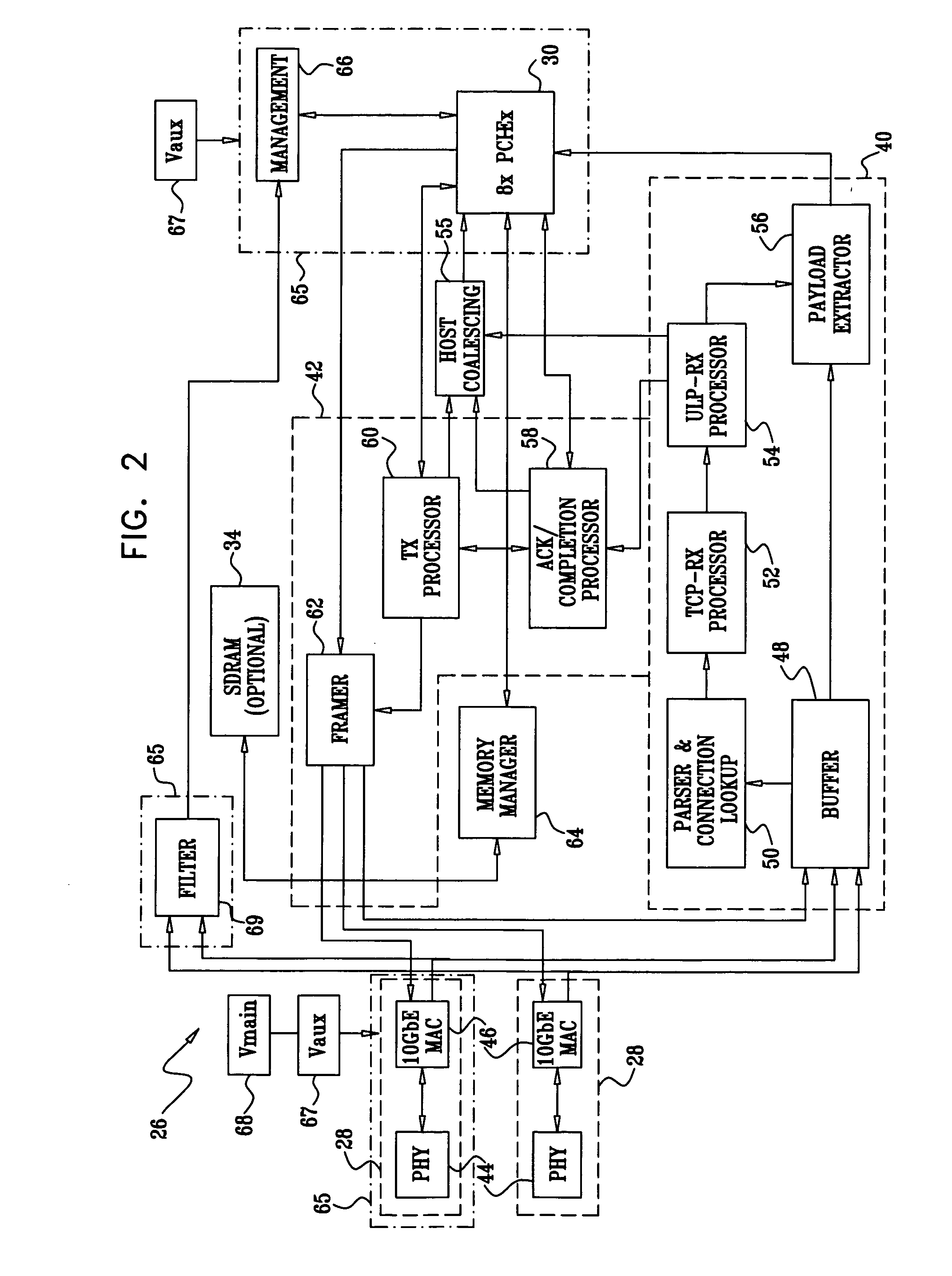

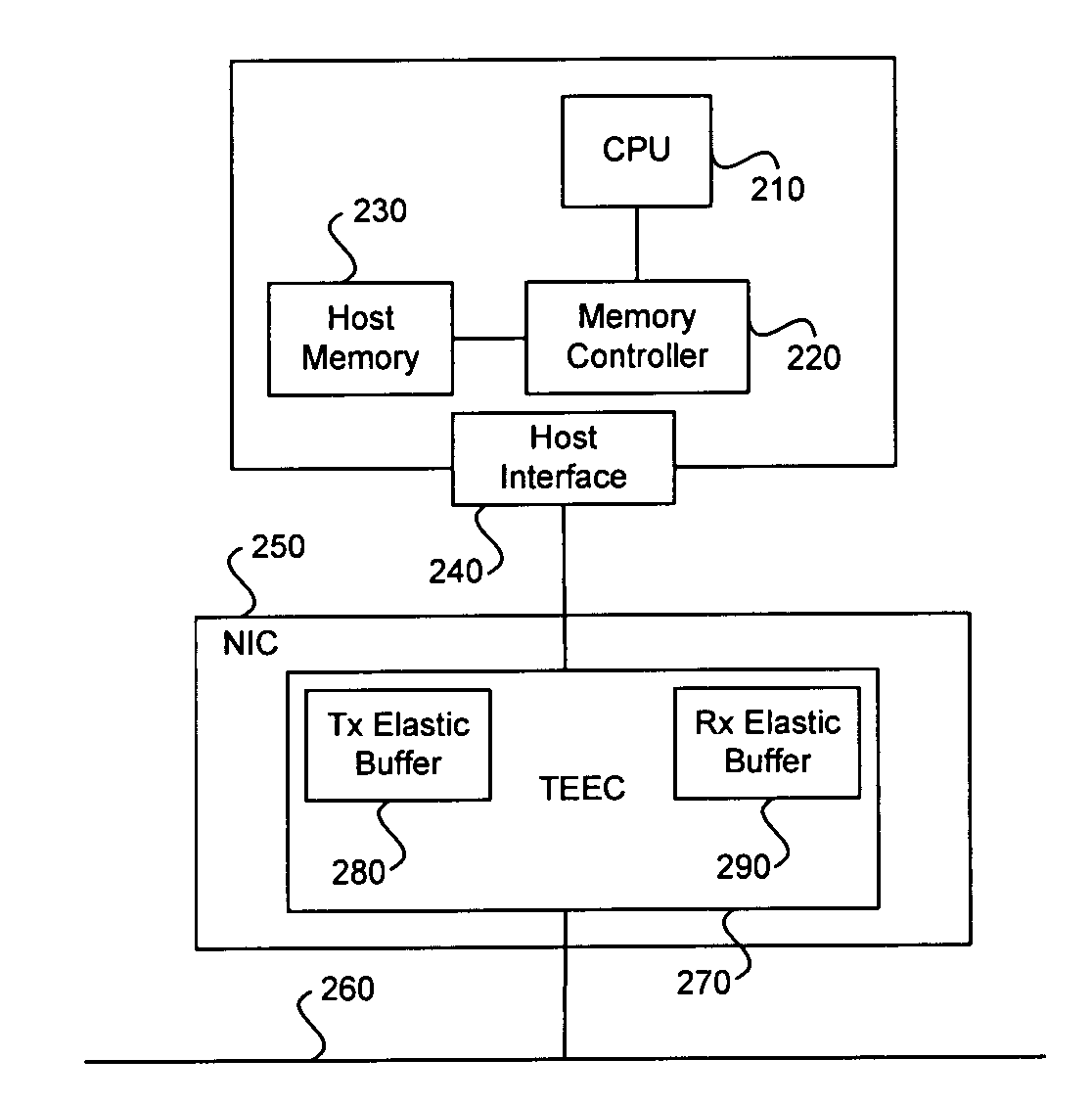

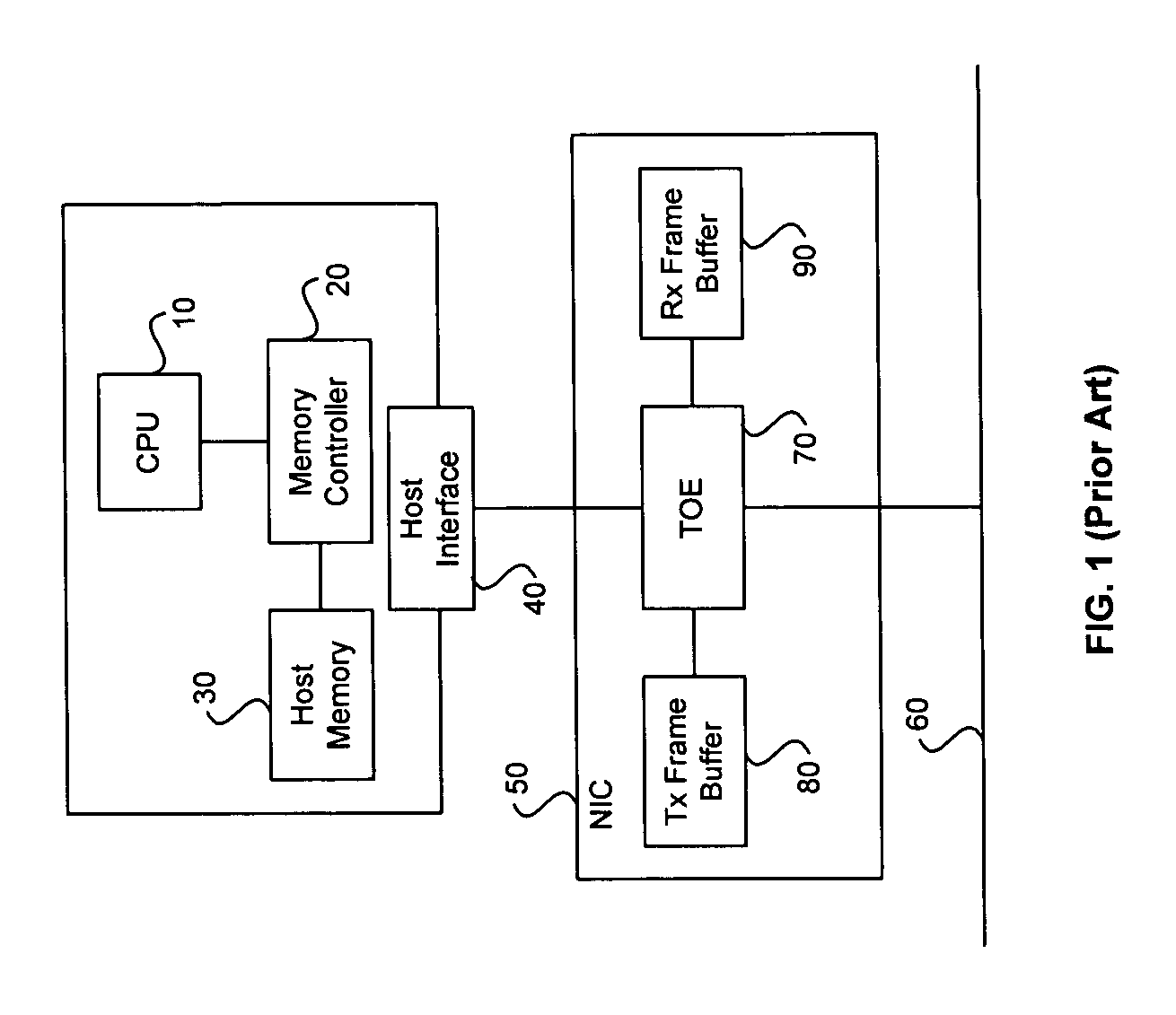

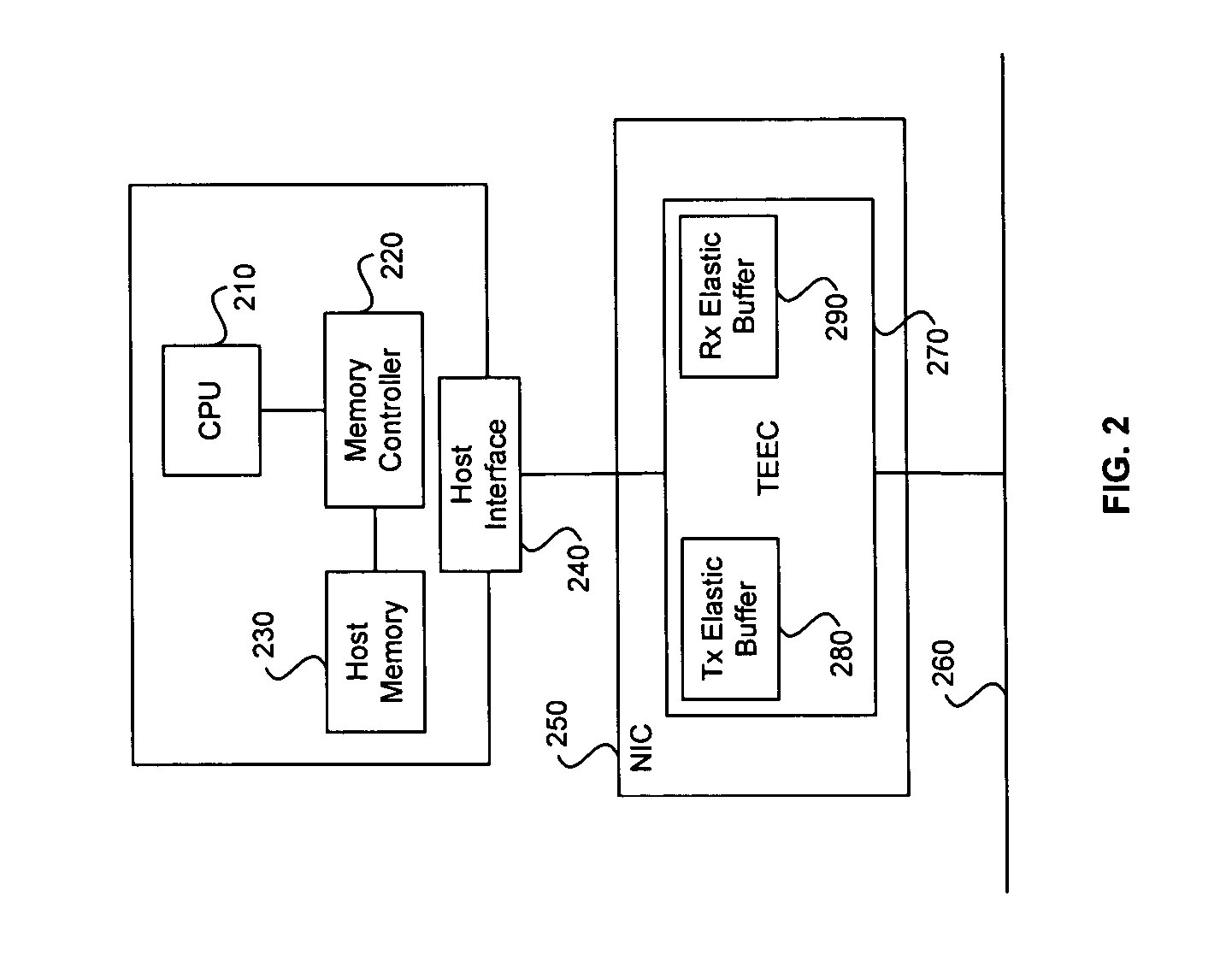

System and method for TCP offload

Active | US7346701B2 | Data switching by path configuration; Multiple digital computer combinations; Host memory; Computer science

Aspects of the invention may comprise receiving an incoming TCP packet at a TEEC and processing at least a portion of the incoming packet once by the TEEC, without any reassembly and/or retransmission by the TEEC. At least a portion of the incoming TCP packet may be buffered in at least one internal elastic buffer of the TEEC. The internal elastic buffer may comprise a receive internal elastic buffer and/or a transmit internal elastic buffer. Accordingly, at least a portion of the incoming TCP packet may be buffered in the receive internal elastic buffer. At least a portion of the processed incoming packet may be placed in a portion of a host memory for processing by a host processor or CPU. Furthermore, at least a portion of the processed incoming TCP packet may be DMA transferred to a portion of the host memory.

Owner:AVAGO TECH INT SALES PTE LTD

System and method for providing a command buffer in a memory system

Active | US20140237205A1 | Input/output to record carriers; Error detection/correction; Coprocessor; Entry point

A system for interfacing with a co-processor or input / output device is disclosed. According to one embodiment, the system is configured to receive a command from a host memory controller of a host system and store the command in a command buffer entry. The system determines that the command is complete using a buffer check logic and provides the command to a command buffer. The command buffer comprises a first field that specifies an entry point of the command within the command buffer entry.

Owner:RAMBUS INC

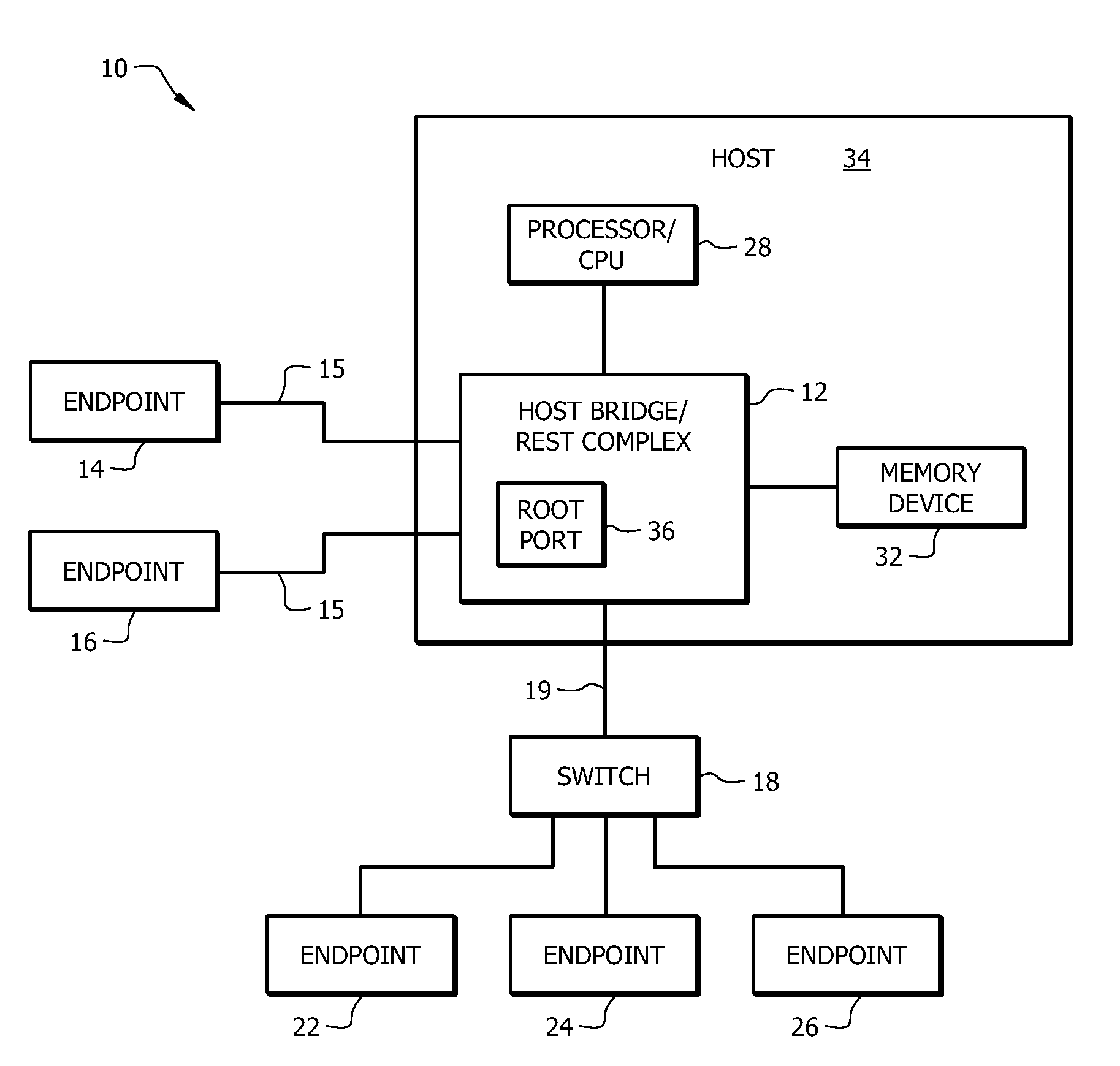

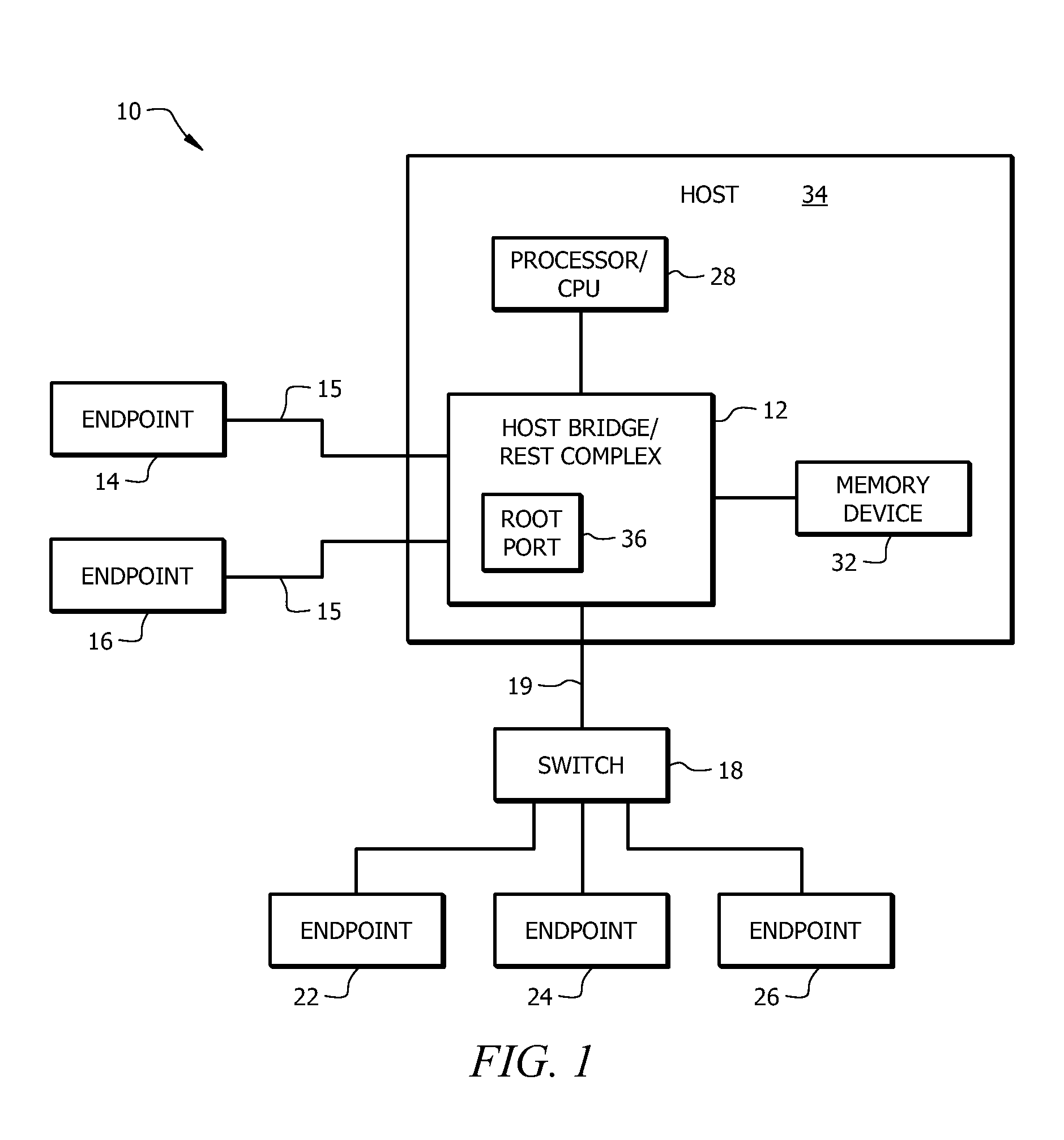

Apparatus and system having PCI root port and direct memory access device functionality

An apparatus and system having both PCI Root Port (RP) device and Direct Memory Access (DMA) End Point device functionality is disclosed. The apparatus is for use in an input/output (I/O) system interconnect module (IOSIM) device. A DMA/RP module includes an RP portion and one or more DMA/RP portions. The RP portion has one or more queue pipes and is configured to function as a standard PCIe Root Port device. Each of the DMA/RP portions includes DMA engines and DMA input and output channels, and is configured to behave more like an End Point device. The DMA/RP module also includes one or more PCIe hard core portions, an ICAM (I/O Caching Agent Module), and at least one PCIe service block (PSB). The hard core portion couples the DMA/RP module and IOSIM device to an I/O device via a PCIe link, and the ICAM transitions data to a host memory device operating system.

Owner:CAVANAGH JR EDWARD T +3

Method and apparatus for reducing host overhead in a socket server implementation

Active | US20100023626A1 | Eliminate need; Improves host performance; Multiple digital computer combinations; Transmission; Application software; Network service

A network application executing on a host system provides a list of application buffers in host memory stored in a queue to a network services processor coupled to the host system. The application buffers are used for storing data transferred on a socket established between the network application and a remote network application executing in a remote host system. Using the application buffers, data received by the network services processor over the network is transferred between the network services processor and the application buffers. After the transfer, a completion notification is written to one of the two control queues in the host system. The completion notification includes the size of the data transferred and an identifier associated with the socket. The identifier identifies a thread associated with the transferred data and the location of the data in the host system.

Owner:MARVELL ASIA PTE LTD

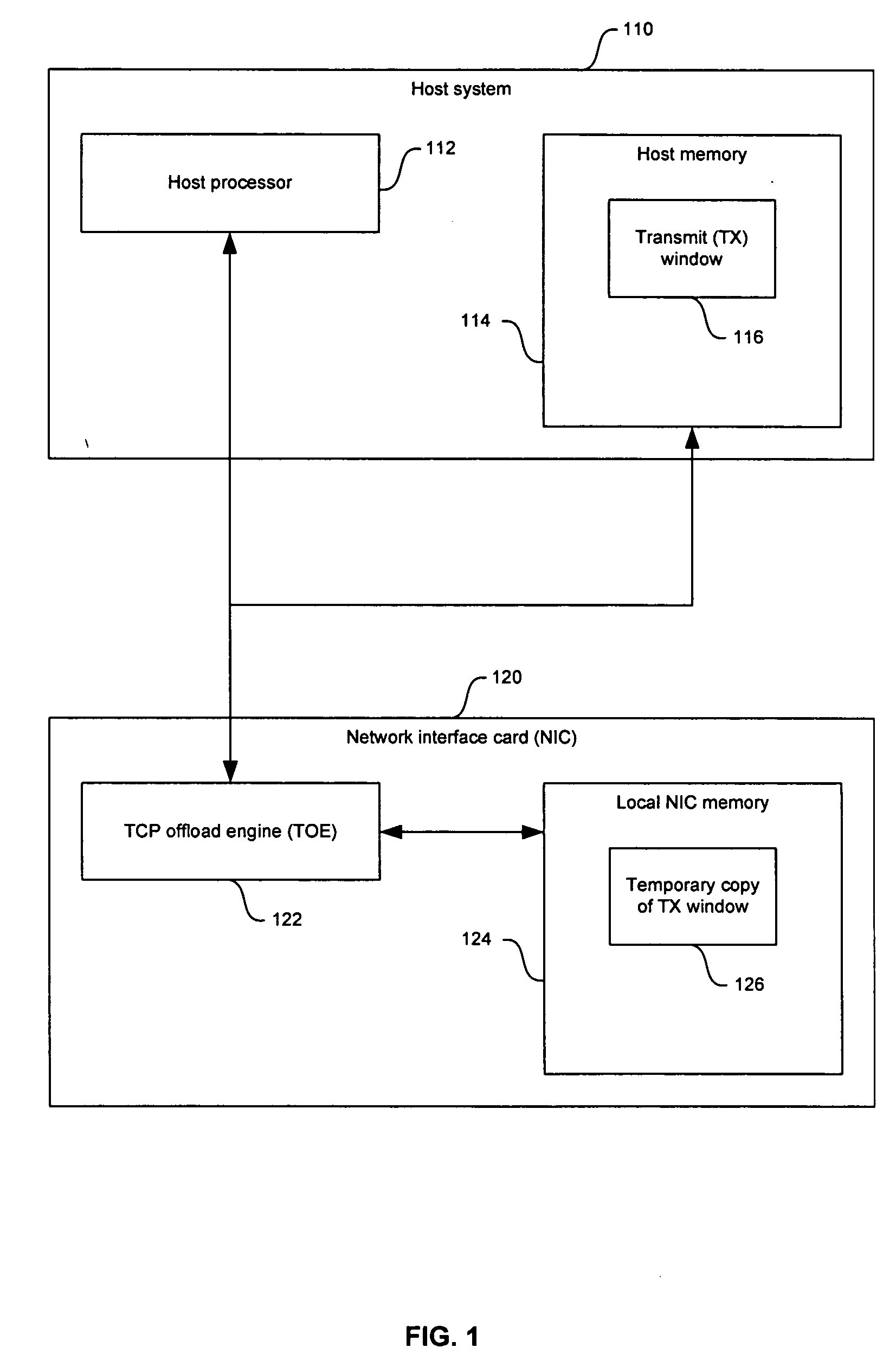

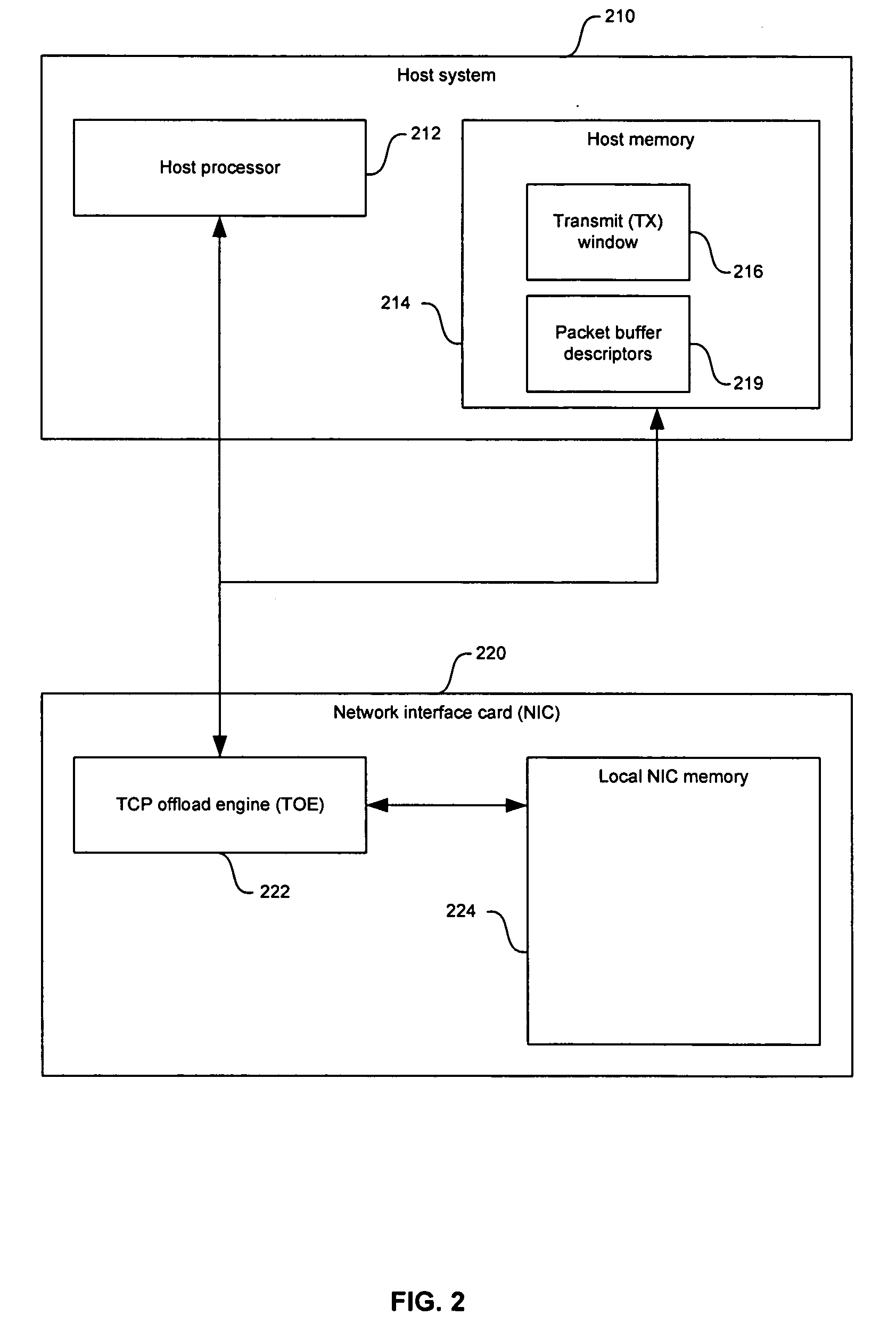

Method and system for transmission control protocol (TCP) retransmit processing

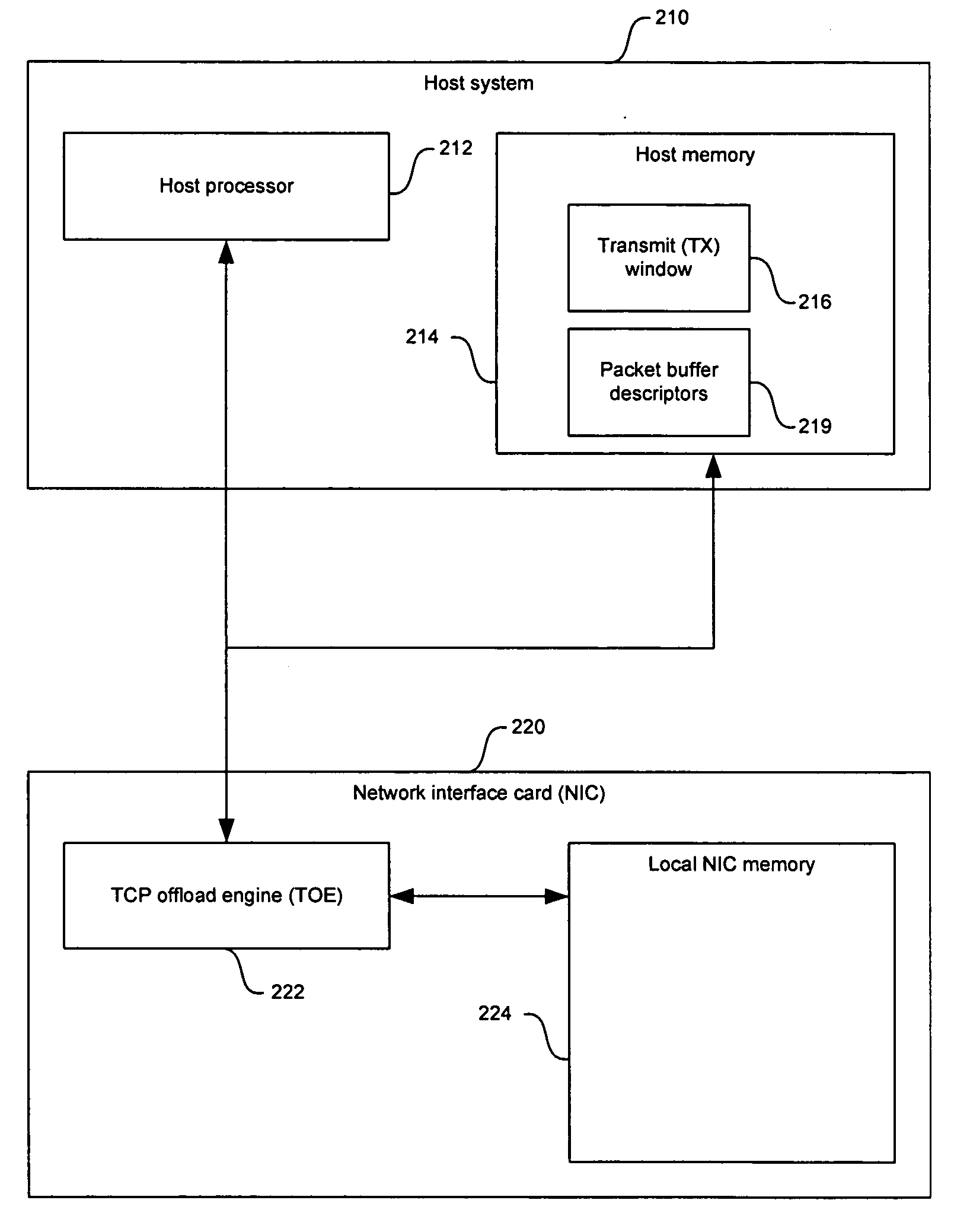

Inactive | US20050135412A1 | Improve network performance; Data switching by path configuration; Network connections; Host memory; Timer

Certain aspects of the present invention for transmission control protocol (TCP) retransmission processing may comprise receiving a request for packet retransmission to be processed by an offload network interface card (NIC). A remote peer, a retransmission timer, or a fast retransmission signal may initiate the request. The NIC processes the request information and sends notification to the host of the request. The host searches the TCP buffers of the TCP transmission window in host memory for the packet. Once the packet is located, the host may send the offload NIC the buffer descriptors containing data that locates the packet in host memory. The offload NIC may retrieve the packet from host memory and may retransmit the packet according to the request information. The offload NIC may send notification to the host that the packet has been retransmitted.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
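
The host-side search for the packet in the transmit window can be sketched as a binary search over buffer start sequence numbers. The sketch assumes the buffers are sorted and free of sequence-number wraparound; `BufferDescriptor` and `find_retransmit` are hypothetical names, not a driver API.

```python
import bisect
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class BufferDescriptor:
    seq: int        # TCP sequence number of the first byte described
    host_addr: int  # host memory address of that byte
    length: int     # number of bytes described

def find_retransmit(window: List[BufferDescriptor], seq: int,
                    length: int) -> List[BufferDescriptor]:
    """Return descriptors that locate bytes [seq, seq + length) in host memory.

    `window` holds the TCP transmit buffers sorted by sequence number.
    """
    starts = [b.seq for b in window]
    i = bisect.bisect_right(starts, seq) - 1  # buffer containing (or preceding) seq
    if i < 0:
        raise LookupError("sequence number precedes the transmit window")
    end = seq + length
    found: List[BufferDescriptor] = []
    while i < len(window) and window[i].seq < end:
        b = window[i]
        lo, hi = max(seq, b.seq), min(end, b.seq + b.length)
        if lo < hi:  # this buffer overlaps the requested range
            found.append(BufferDescriptor(lo, b.host_addr + (lo - b.seq), hi - lo))
        i += 1
    return found
```

The returned descriptors are what the host would hand back to the offload NIC so it can fetch the bytes from host memory and retransmit them.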

Method and system for processing network data packets

Active | US7515612B1 | Time-division multiplex; Multiple digital computer combinations; Networking protocol; Network control

A method is provided for processing IP datagrams, generated by a host system, using an outbound processing state machine in an outbound processor. The method includes creating an IOCB with plural host memory addresses that define the host data to be sent and a host memory address of a network control block (“NCB”) used to build network protocol headers; the host sends the IOCB to the outbound processor. The outbound processor reads the NCB from host memory and creates IP and MAC level protocol header(s) for the data packet(s) used to send the IP data. If a datagram fits into an IP packet, the outbound processor builds headers to send the datagram, then uses the plural host memory addresses defining the host data to read the data from the host, places the data into the packet, and sends the packet.

Owner:MARVELL ASIA PTE LTD

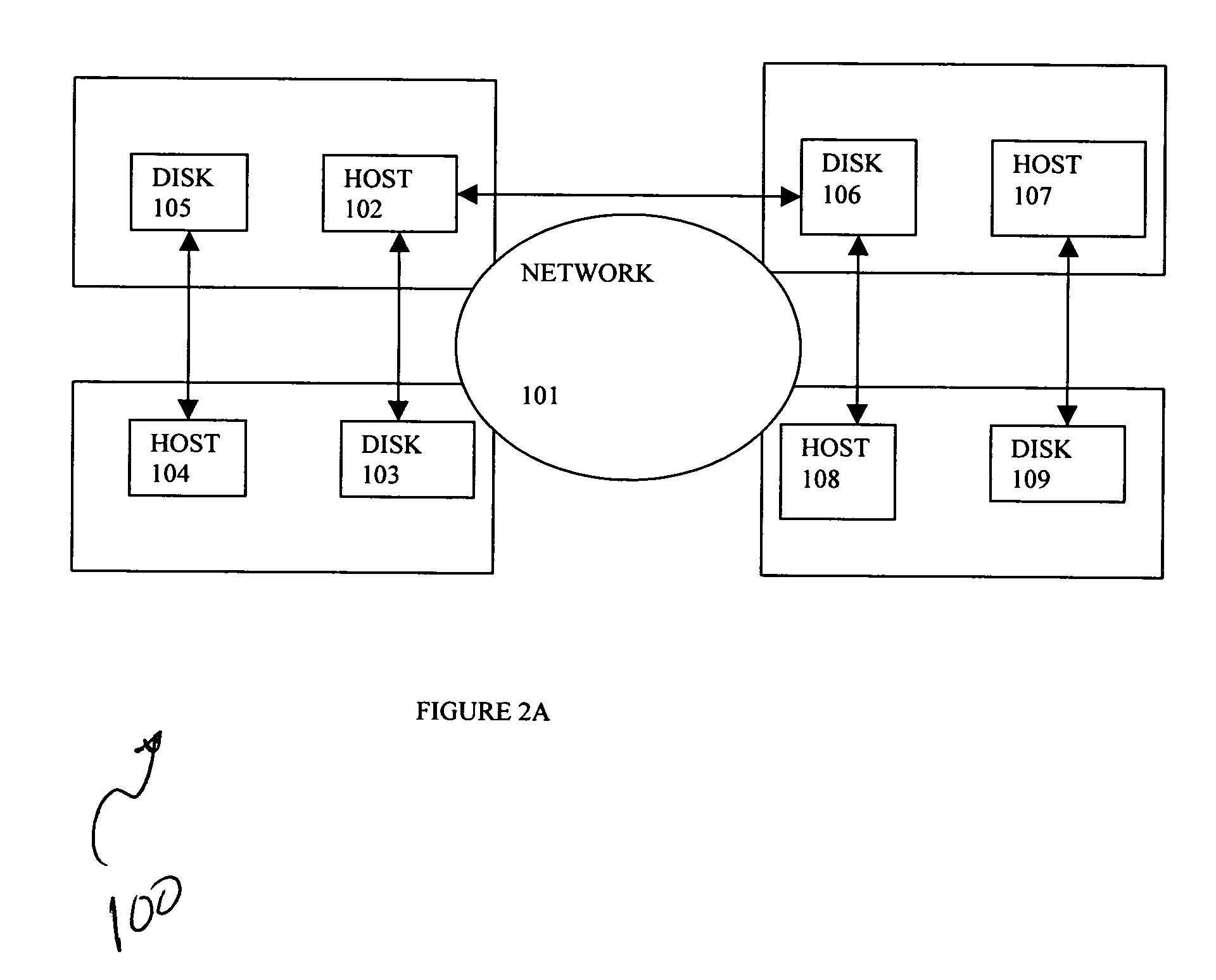

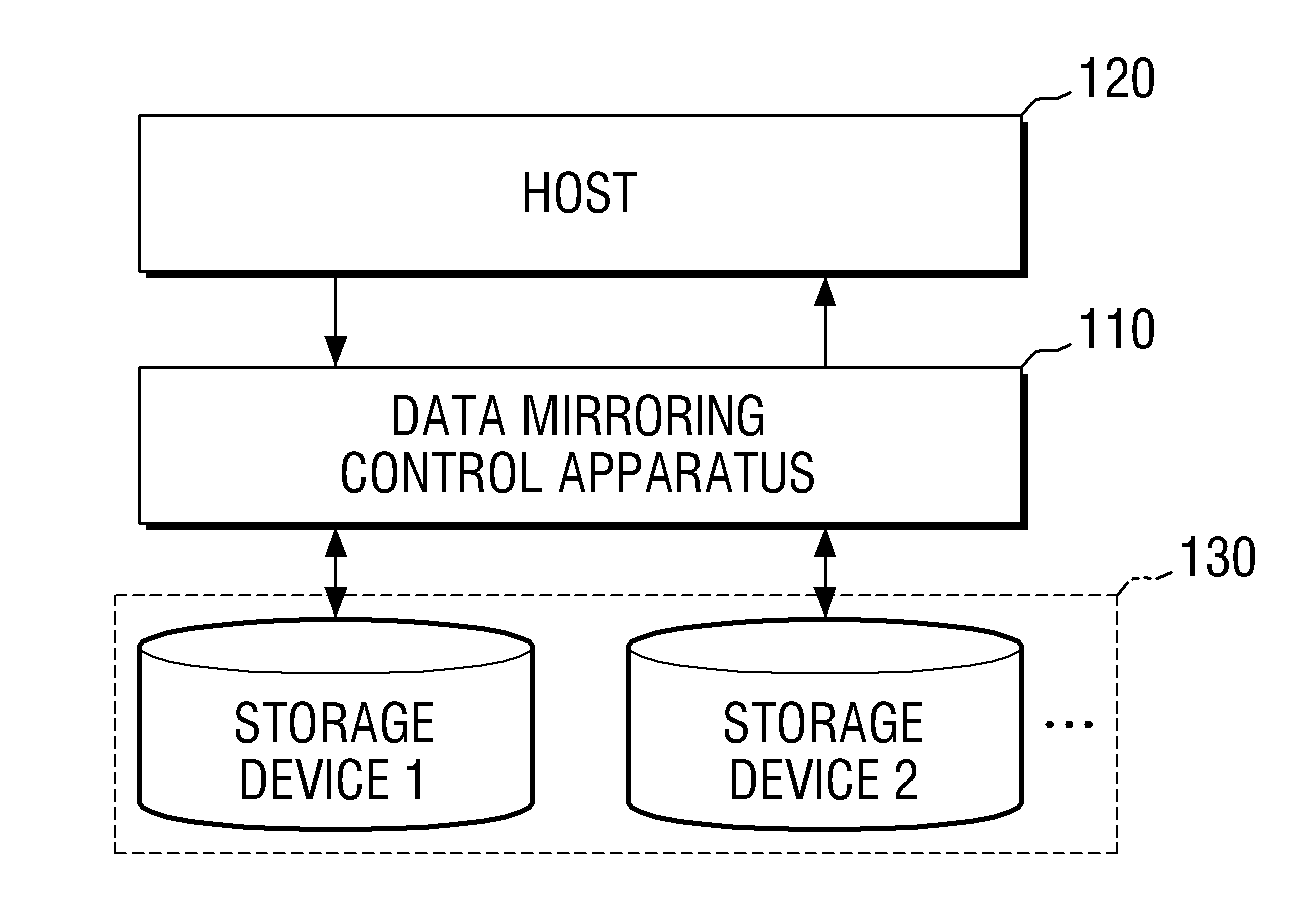

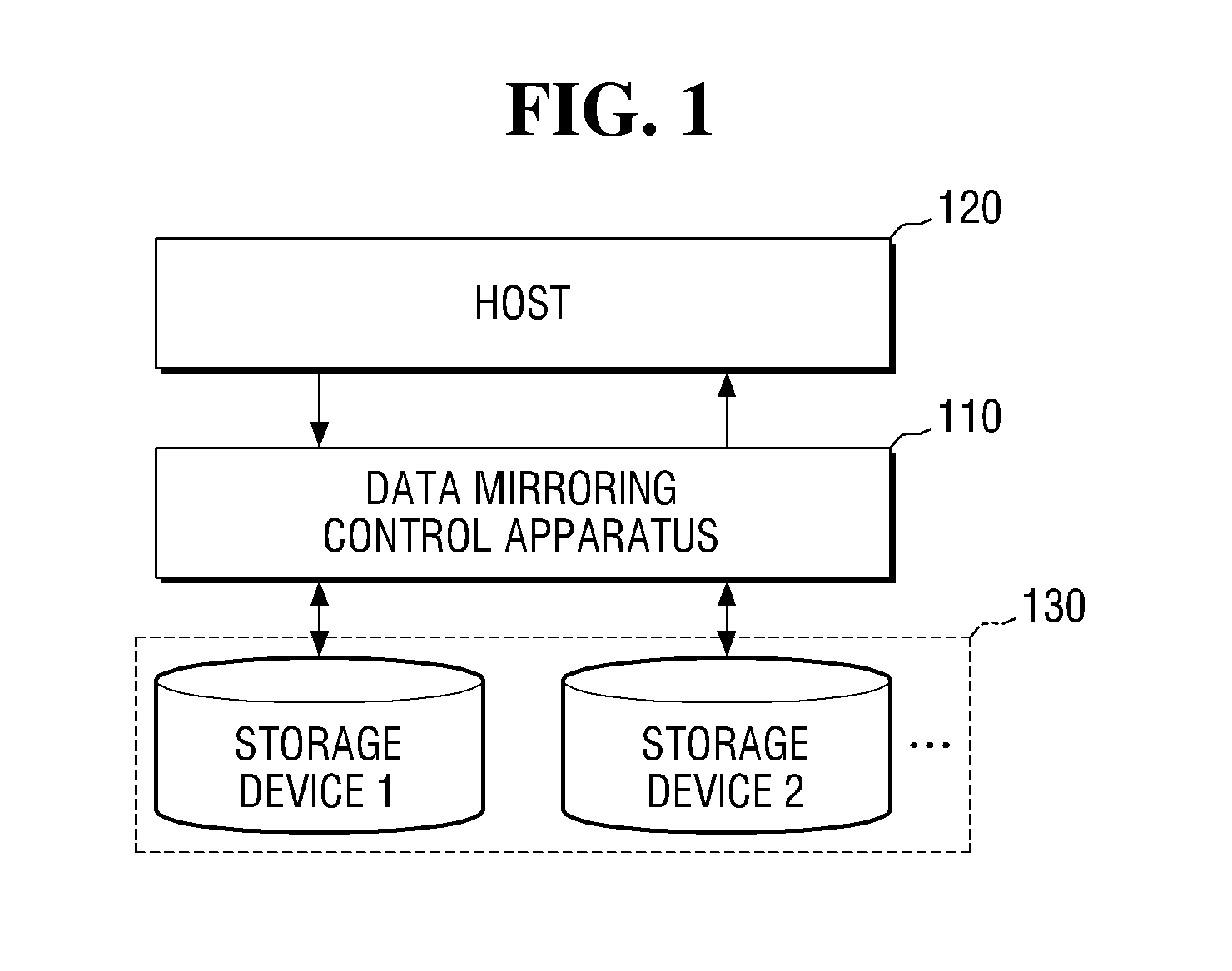

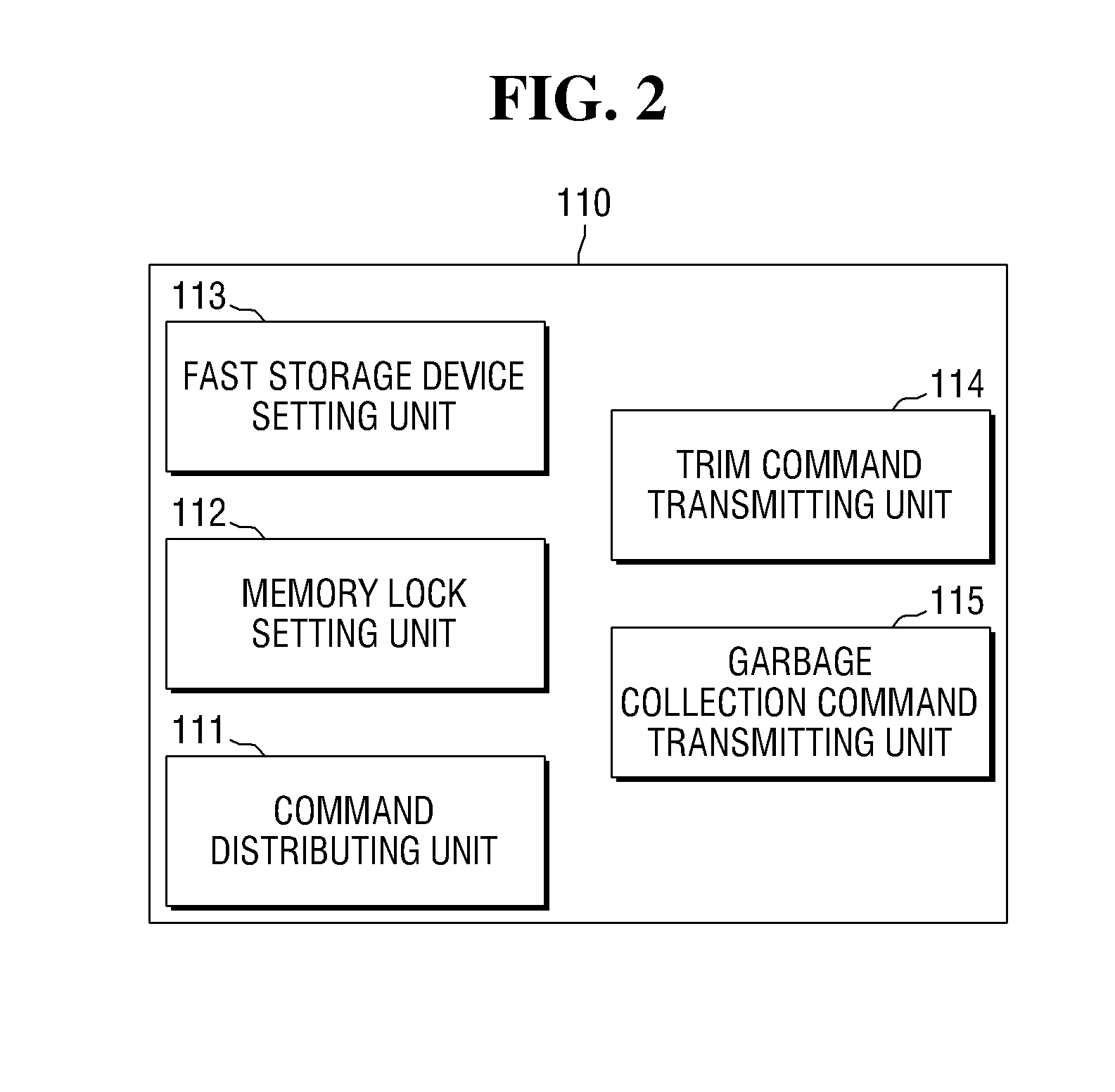

Data mirroring control apparatus and method

A data mirroring control apparatus includes a command distributing unit configured to transmit a first write command to a plurality of mirroring storage devices, the first write command including an instruction for data requested by a host to be written; and a memory lock setting unit configured to set a memory lock on the data requested by the host to be written among data stored in a host memory and configured to release the memory lock on the data after the data with the memory lock is written to the plurality of mirroring storage devices.

Owner:SAMSUNG ELECTRONICS CO LTD
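
The lock lifecycle can be modeled in Python as follows. Completions are reported one device at a time, as they might arrive asynchronously; `MirroringController` and its methods are hypothetical names, and the actual data transfer to the storage devices is not modeled.

```python
from typing import Dict, List, Set, Tuple

class MirroringController:
    """Hypothetical model: host data stays locked until every mirror finishes writing it."""

    def __init__(self, num_devices: int) -> None:
        self.num_devices = num_devices
        self.outstanding: Dict[int, Set[int]] = {}  # locked address -> devices still writing

    def submit(self, addr: int) -> List[Tuple[int, int]]:
        """Lock the data at `addr` and return one (device, addr) write command per mirror."""
        self.outstanding[addr] = set(range(self.num_devices))
        return [(dev, addr) for dev in range(self.num_devices)]

    def complete(self, dev: int, addr: int) -> None:
        """Record one device's completion and release the lock after the last one."""
        waiting = self.outstanding[addr]
        waiting.discard(dev)
        if not waiting:
            del self.outstanding[addr]

    def is_locked(self, addr: int) -> bool:
        return addr in self.outstanding
```

Holding the lock until the last completion keeps the host from modifying the buffer while some mirrors have written it and others have not, which would leave the copies inconsistent.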

Method and system for deferred pinning of host memory for stateful network interfaces

Active | US20080082696A1 | Multiple digital computer combinations; Program control; Operational system; Host memory

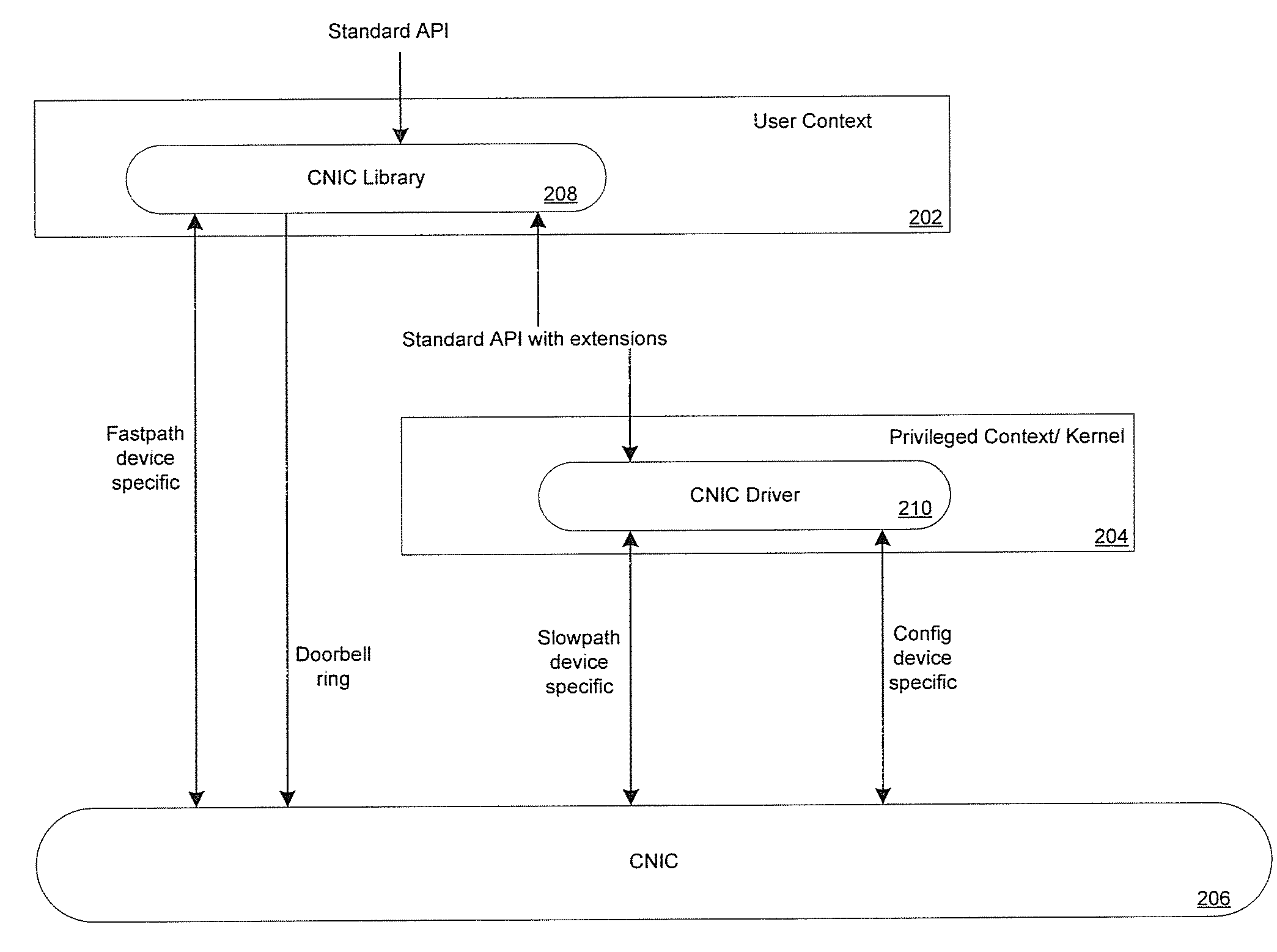

Certain aspects of a method and system for deferred pinning of host memory for stateful network interfaces are disclosed. Aspects of a method may include dynamically pinning by a convergence network interface controller (CNIC), at least one of a plurality of memory pages. At least one of: a hypervisor and a guest operating system (GOS) may be prevented from swapping at least one of the dynamically pinned plurality of memory pages.

Owner:AVAGO TECH INT SALES PTE LTD

© 2025 PatSnap. All rights reserved.