16981 results about How to "Reduce occupancy" patented technology

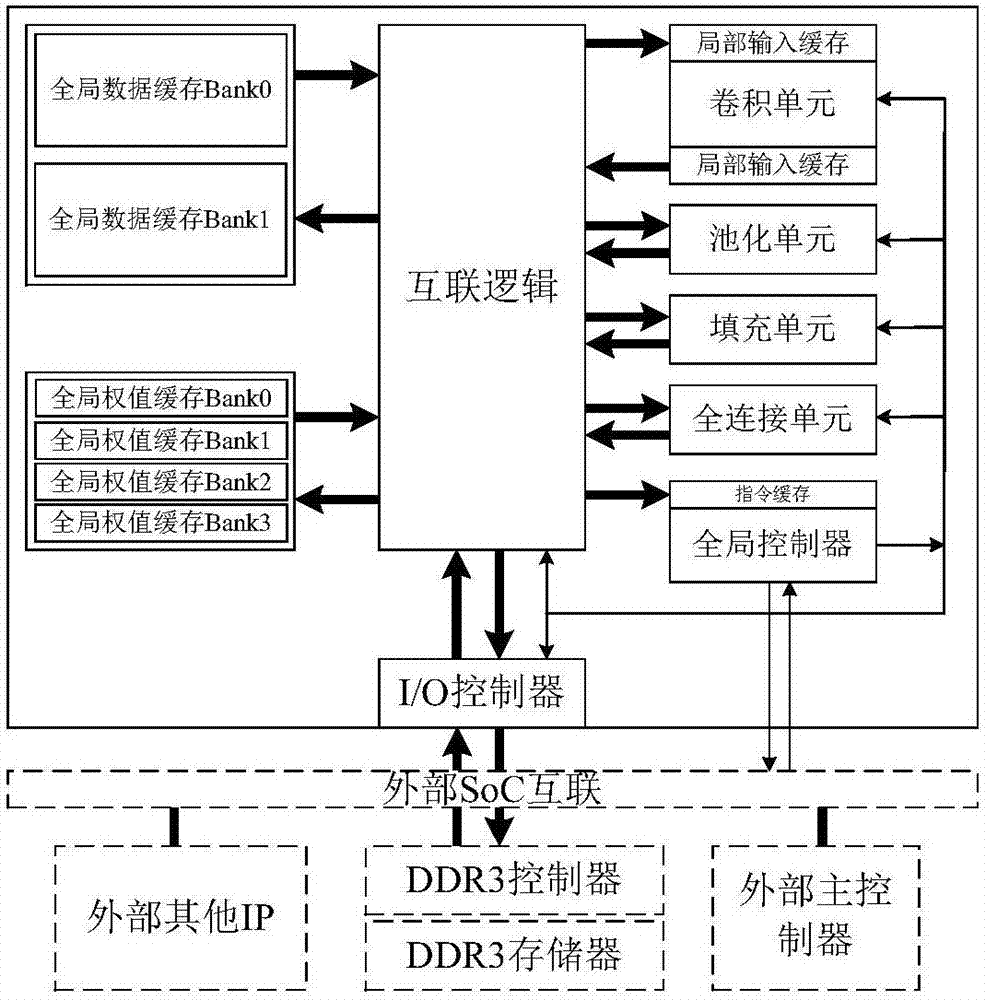

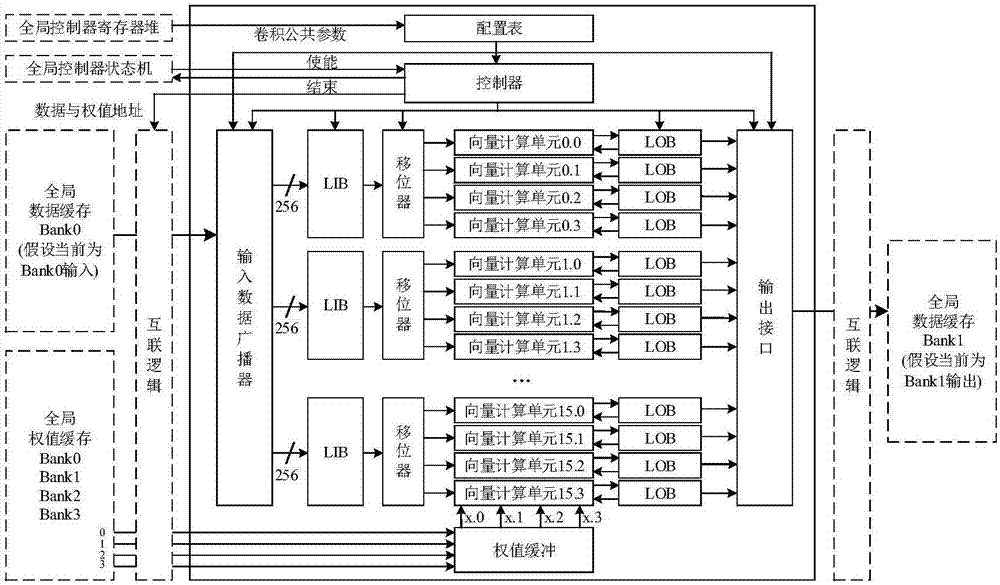

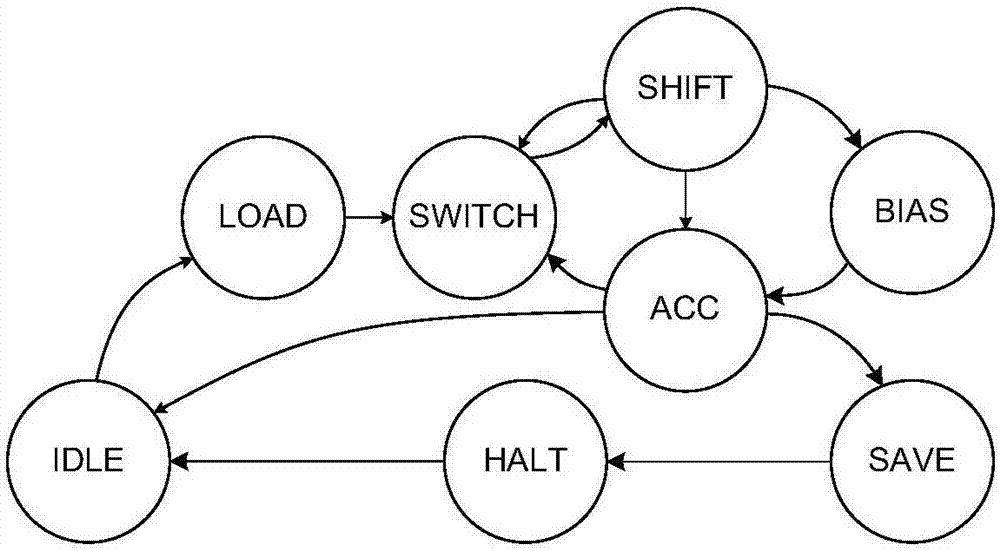

Co-processor IP core of programmable convolutional neural network

Active | CN106940815A | Reduce frequency | Reduce bandwidth pressure | Neural architectures | Physical realisation | Hardware structure | Instruction set design

The present invention discloses a co-processor IP core of a programmable convolutional neural network. The invention aims to accelerate convolutional neural network arithmetic on a digital chip (FPGA or ASIC). The co-processor IP core comprises a global controller, an I/O controller, a multi-level cache system, a convolution unit, a pooling unit, a filling unit, a full-connection unit, an internal interconnection logic unit, and an instruction set designed for the co-processor IP. The proposed hardware structure supports the complete flow of convolutional neural networks of various scales. Hardware-level parallelism is fully utilized and a multi-level cache system is provided, so that high performance and low power consumption are achieved. The operation flow is controlled through instructions, which makes the core programmable and configurable. The co-processor IP core can be easily applied to different application scenarios.

Owner:XI AN JIAOTONG UNIV

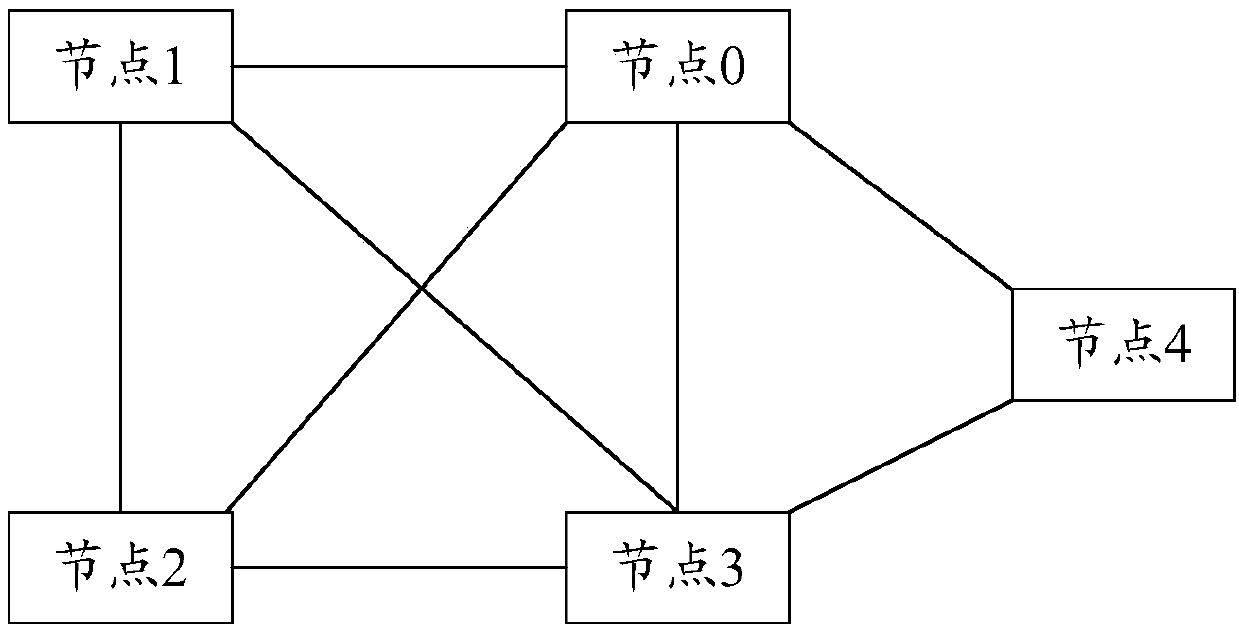

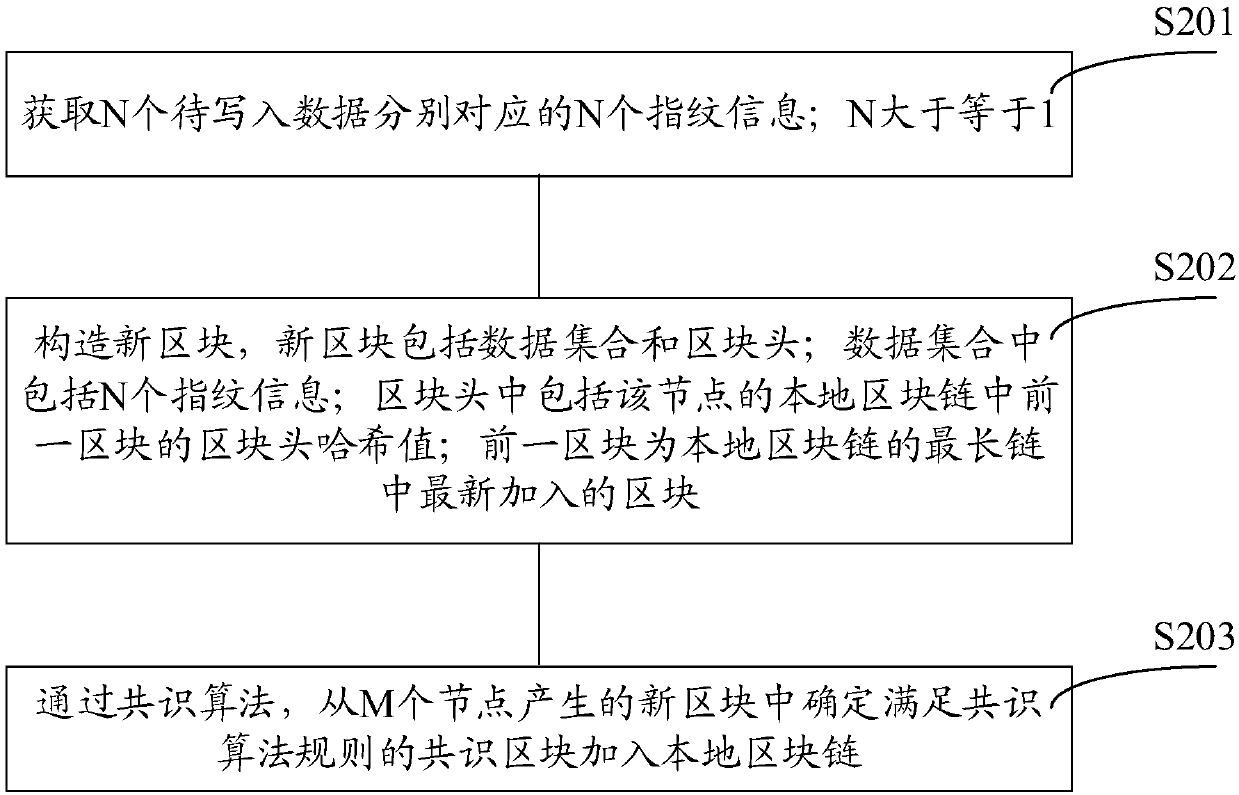

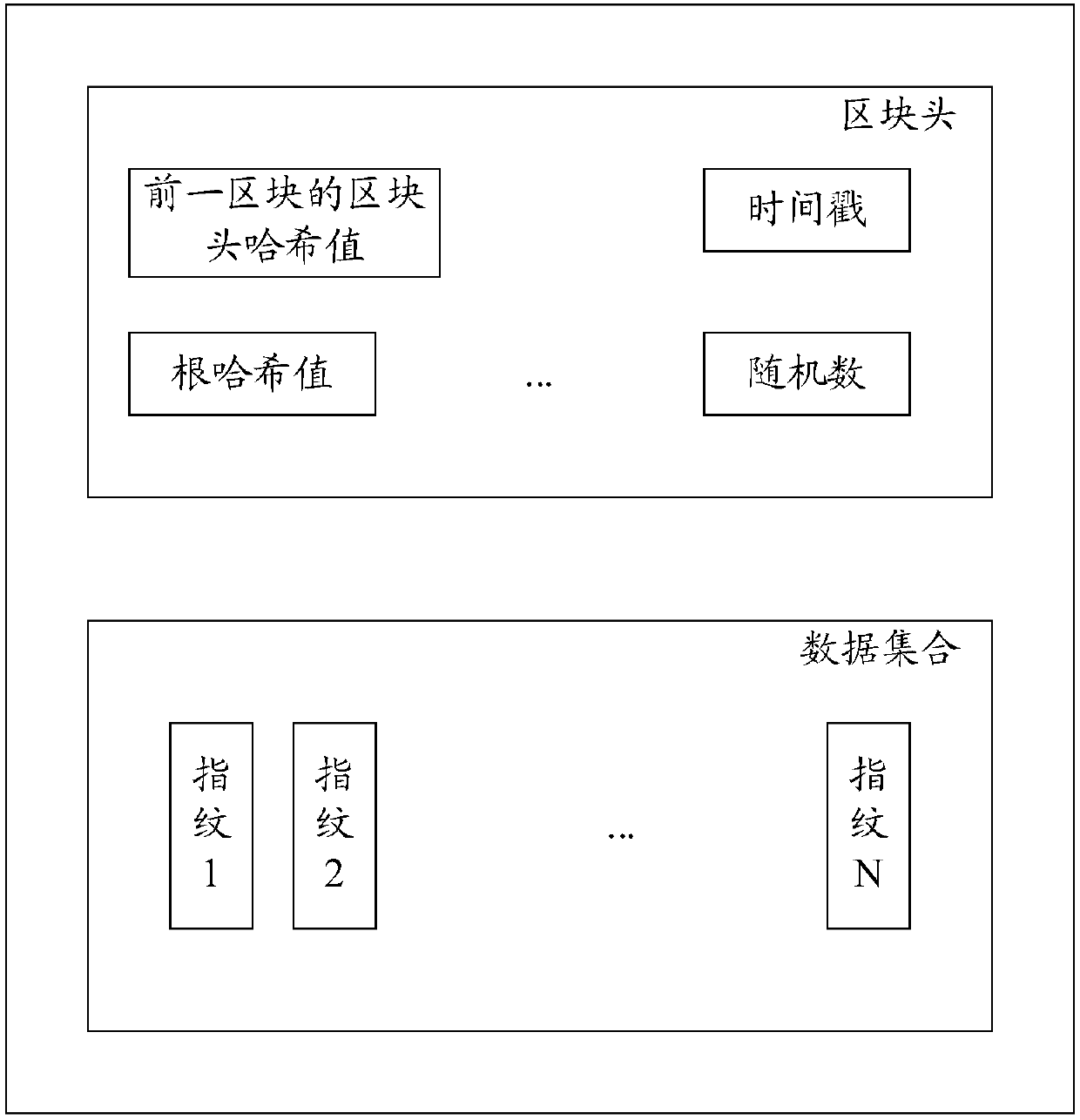

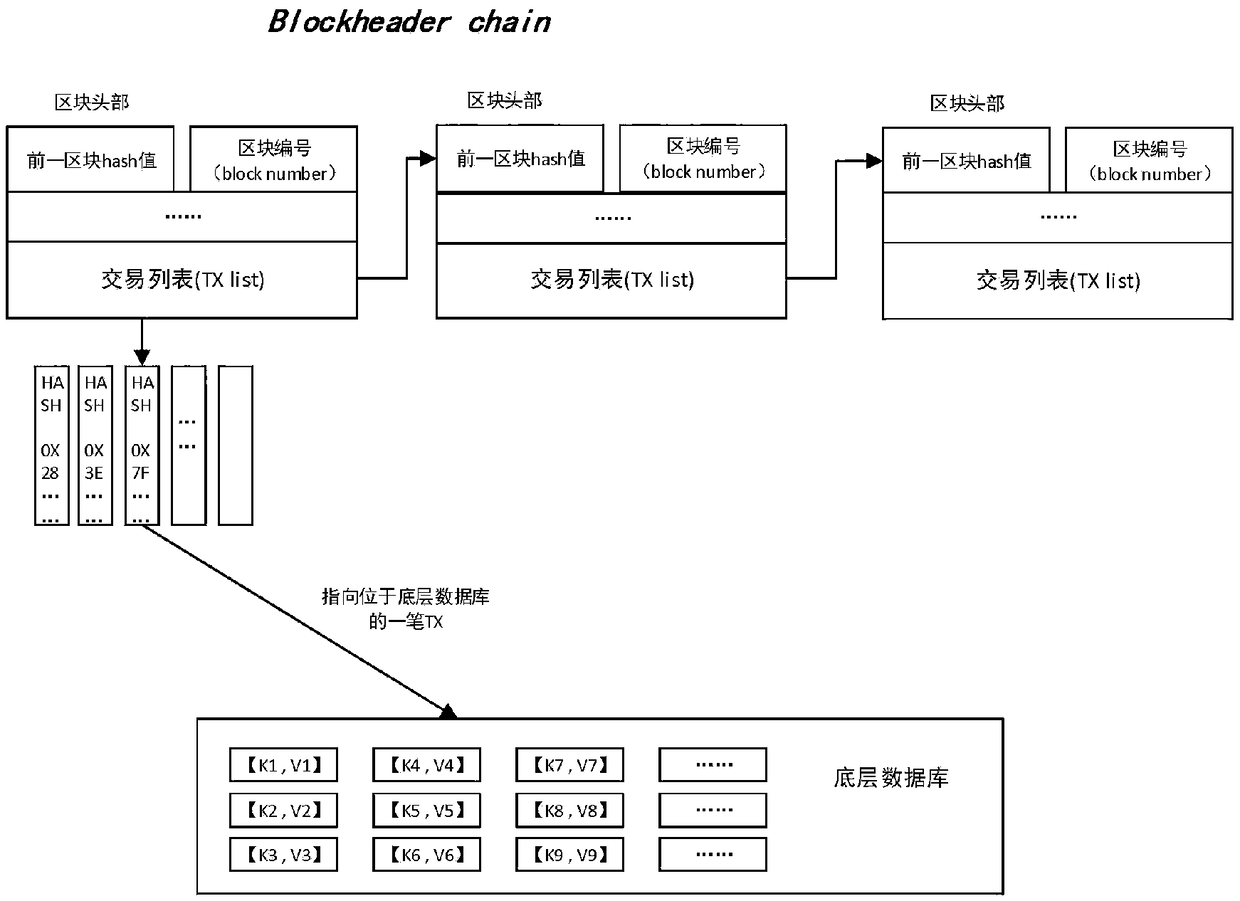

Block chain generation method, data verification method, nodes and system

Active | CN107807951A | Reduce occupancy | Increase profit | User identity/authority verification | Digital data protection | Data validation | Data set

The invention discloses a block chain generation method, a data verification method, nodes and a system. The block chain generation method is used for increasing the utilization rate of storage space and is applied to a block chain system containing M nodes. For each of the M nodes, the block chain generation method comprises the following steps: N pieces of fingerprint information corresponding to N pieces of to-be-written data are acquired, wherein N is greater than or equal to 1; a new block is constructed, wherein the new block comprises a data set and a block head; the data set comprises the N pieces of fingerprint information; the block head comprises a hash value of the block head of a previous block in the local block chain of the node, wherein the previous block is the most recently added block in the longest chain of the local block chain; and through a consensus algorithm, a consensus block is determined from the new blocks generated by the M nodes and is added to the local block chain. In this way, the storage space that would otherwise be occupied by transaction information other than the to-be-written data is saved, and the utilization rate of the storage space is therefore increased.

Owner:UNION MOBILE PAY
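
The block construction step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: SHA-256 as both the fingerprint and the header hash, the `Block` layout, and the helper names `fingerprint` and `build_block` are all hypothetical, and the consensus step is omitted.

```python
import hashlib
import json
from dataclasses import dataclass


def fingerprint(data: bytes) -> str:
    """Fixed-size fingerprint of one piece of to-be-written data (SHA-256 assumed)."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class Block:
    prev_header_hash: str  # hash of the previous block's header
    fingerprints: tuple    # the data set: N fingerprints, not the raw data

    def header_hash(self) -> str:
        # Hypothetical header layout: previous header hash plus a digest of the data set.
        header = json.dumps(
            {
                "prev": self.prev_header_hash,
                "data": fingerprint("".join(self.fingerprints).encode()),
            },
            sort_keys=True,
        )
        return hashlib.sha256(header.encode()).hexdigest()


def build_block(chain: list, items: list) -> Block:
    """Build a candidate block on top of the last block of the local chain."""
    if not items:
        raise ValueError("N must be at least 1")
    prev = chain[-1].header_hash() if chain else "0" * 64
    return Block(prev, tuple(fingerprint(item) for item in items))
```

Because only 64-character fingerprints are stored, the block size in this sketch depends on N and not on the size of the underlying data.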

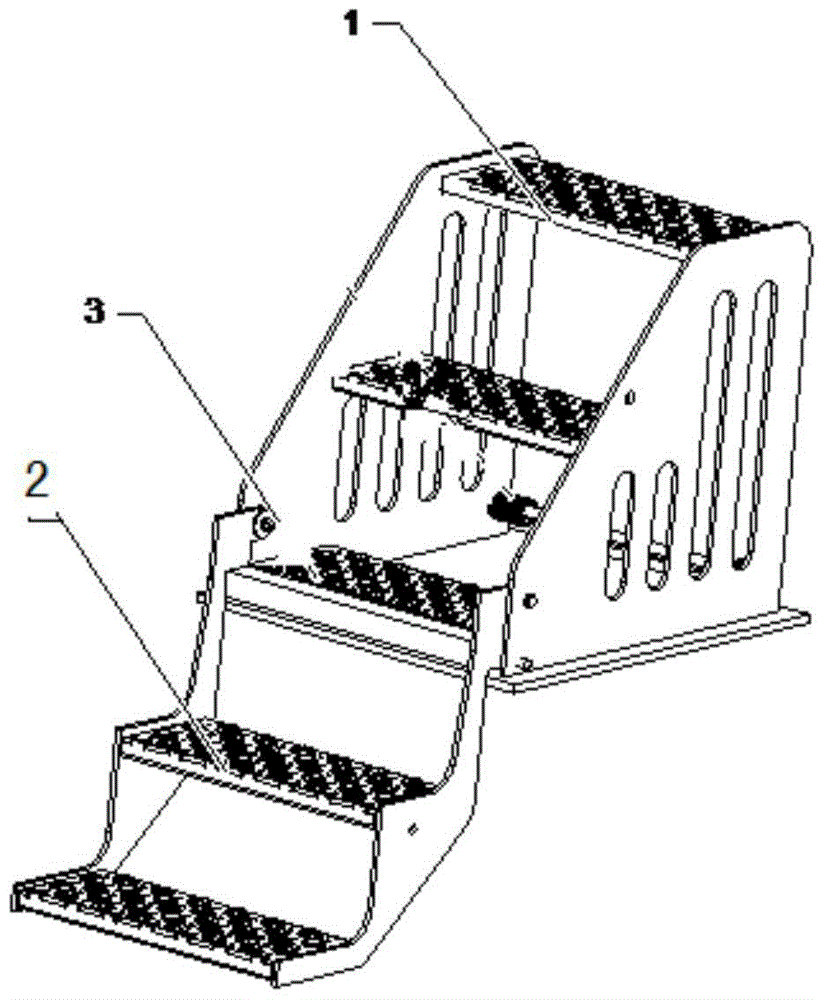

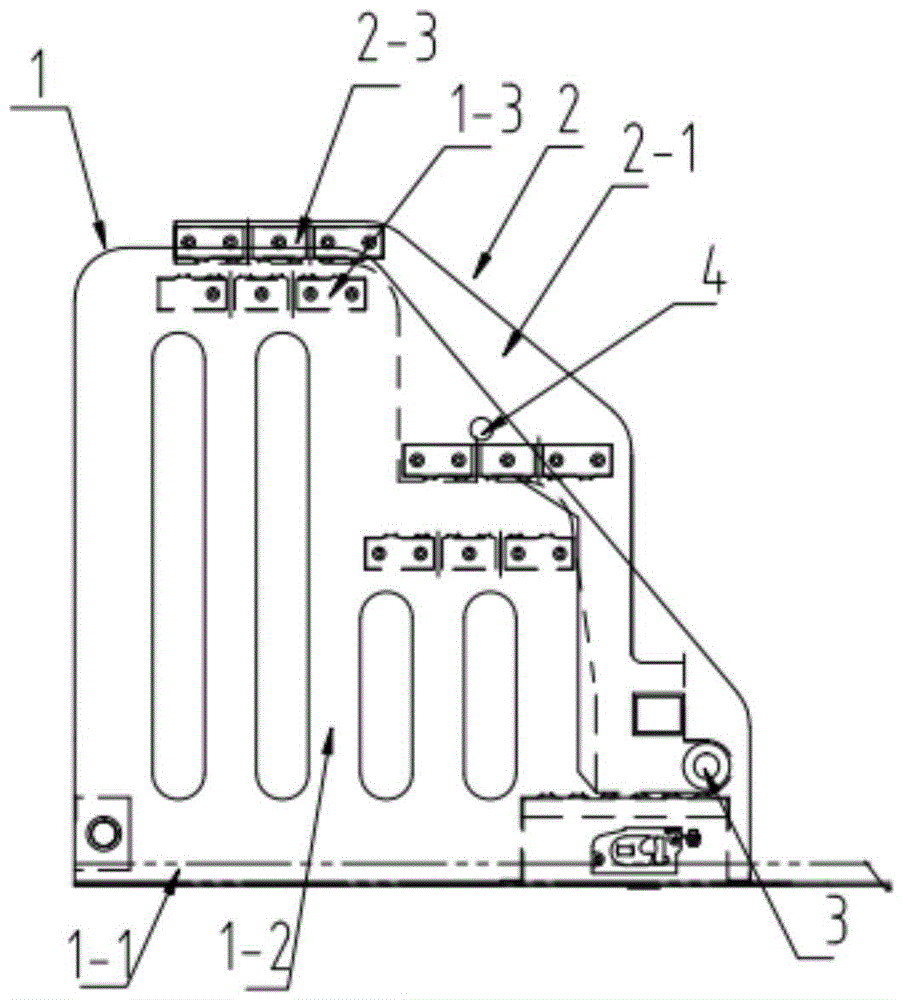

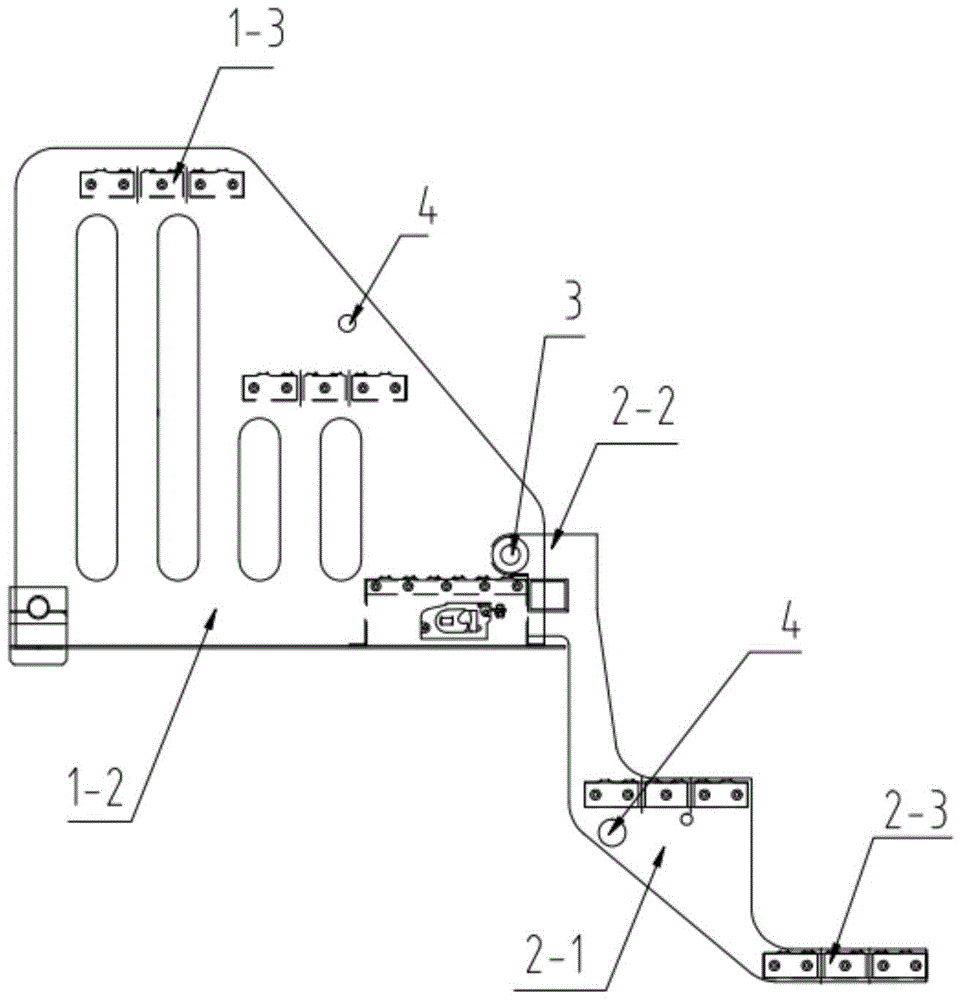

A hidden flip ladder and oil field operation equipment

Active | CN104260672B | Easy to pull apart | Reduce occupancy | Steps arrangement | Electrical and Electronics engineering | Multiple stages

Owner:YANTAI JEREH PETROLEUM EQUIP & TECH CO LTD

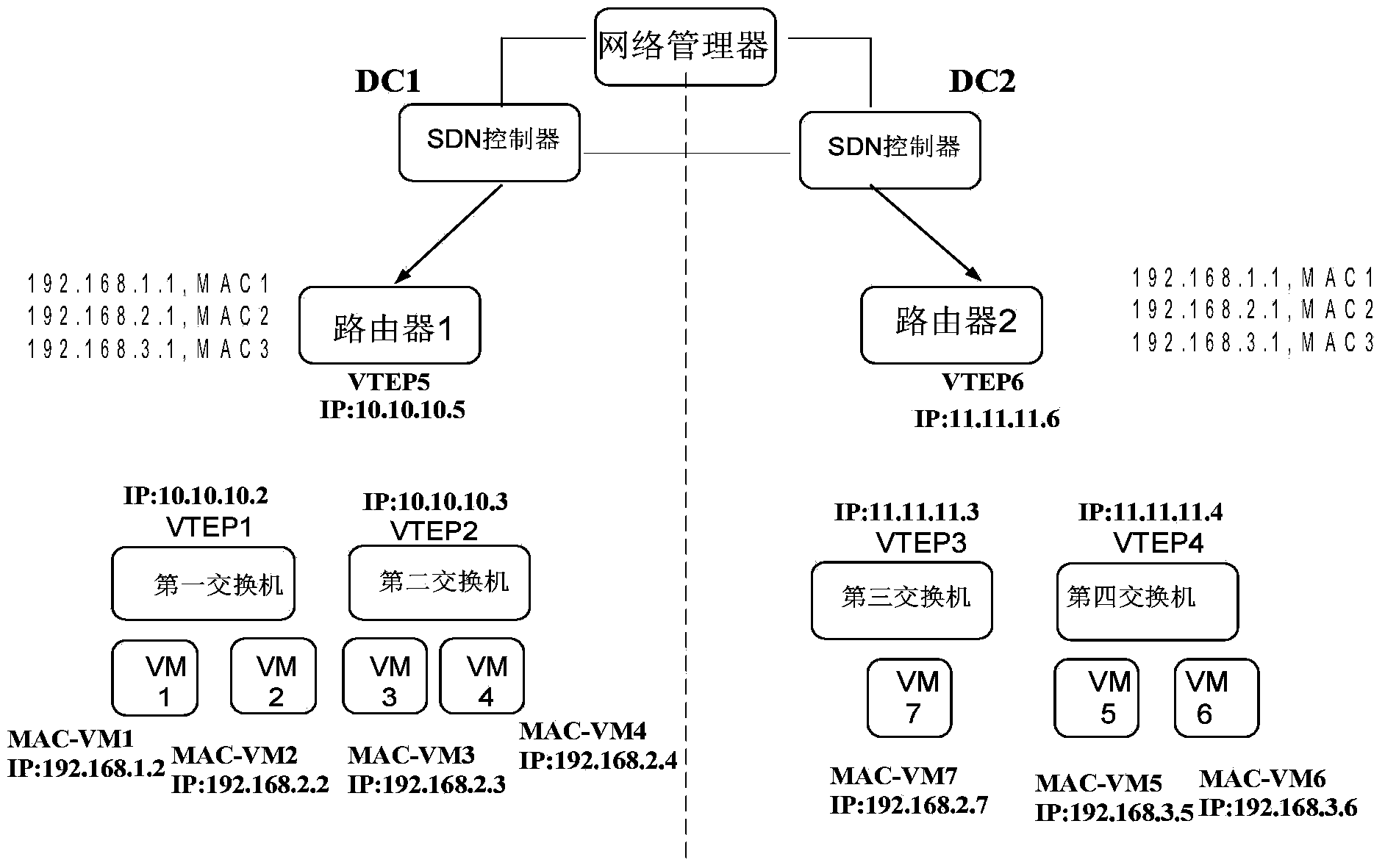

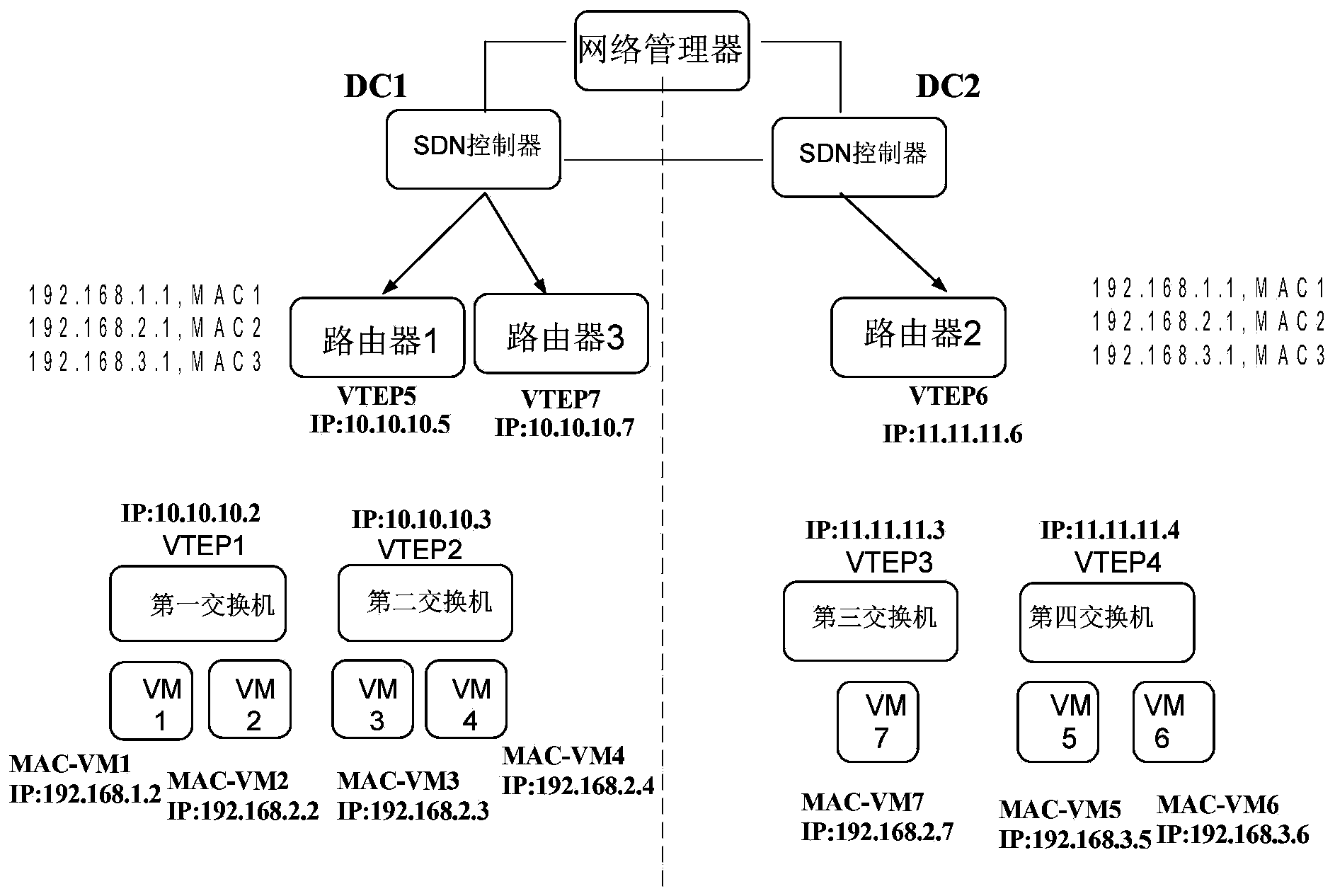

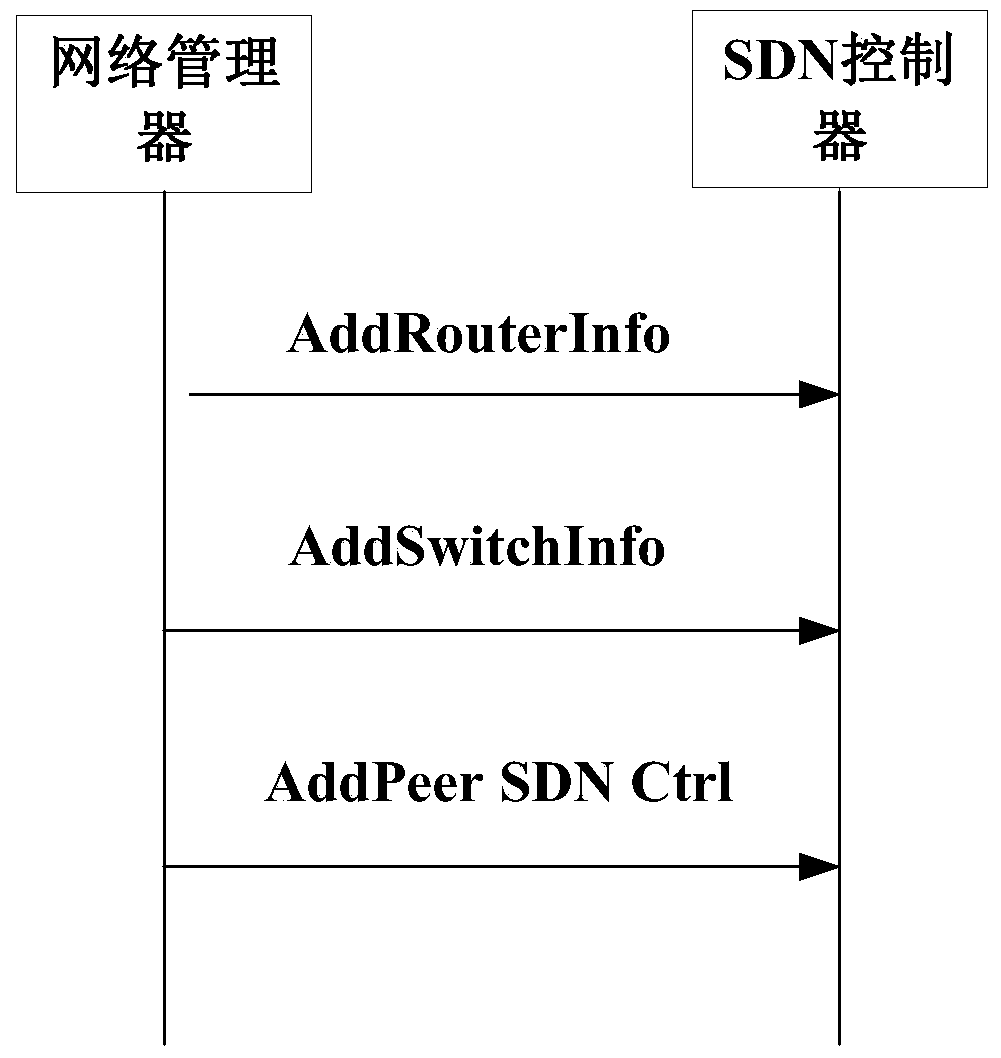

Method and device for achieving virtual machine communication

Active | CN104115453A | Reduce detours | Reduce occupancy | Networks interconnection | Software simulation/interpretation/emulation | Data center | Transmission bandwidth

The invention provides a method and a device for virtual machine communication. A first switch receives an ARP response from an SDN controller; the ARP response carries the MAC address of a destination gateway. The first switch uses that MAC address to obtain the corresponding VTEP information, where the router corresponding to the VTEP information is located in a first data center. The first switch then sends an IP packet to that router according to the VTEP information, and the router forwards the IP packet to a second virtual machine through a tunnel between the router and a second switch. Because the SDN controller answers the ARP request itself, the transmission bandwidth occupied by broadcast messages is reduced; and because the packets pass only through the router in the first data center, they do not detour between data centers.

Owner:HUAWEI TECH CO LTD

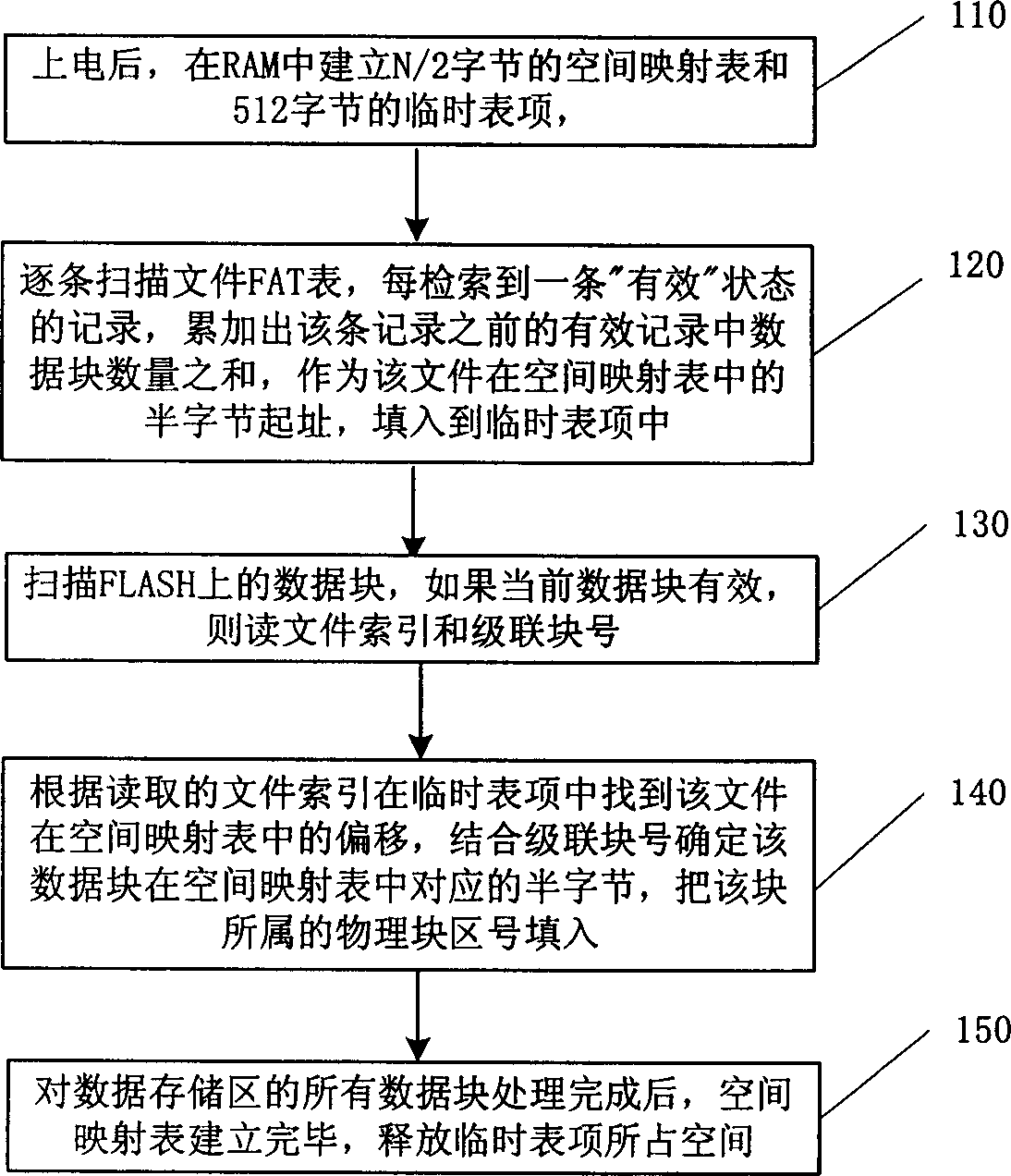

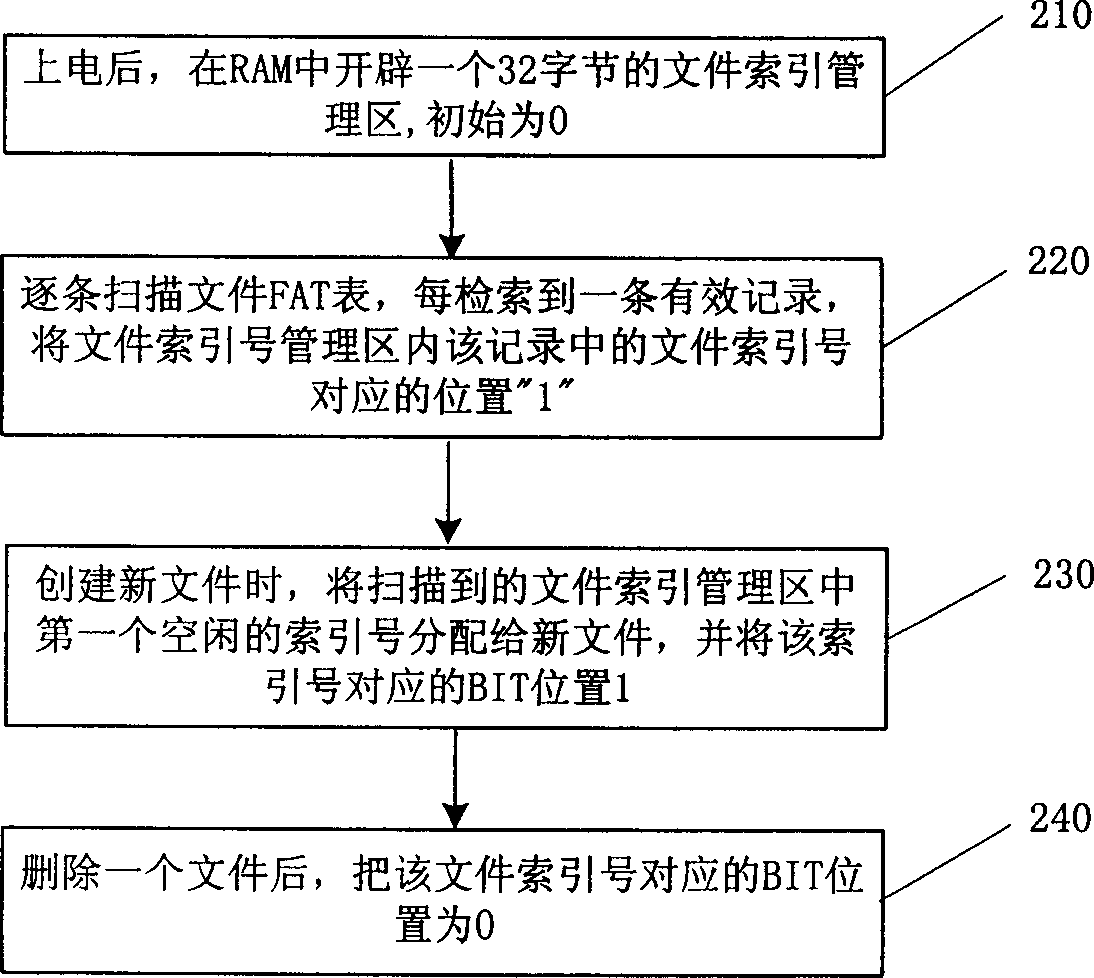

A flash memory file system management method

Active | CN1632765A | Improve retrieval efficiency | Ensure safety | Read-only memories | Memory systems | Flash file system | File system

This invention discloses a flash file system management method, which comprises the following steps: to divide the flash memory into FAT area and data memory area; to establish the space mapping form and to write the data block of each file into the block; to write the new file ID, block number, file researching number into the record; then to orderly write each data block into the spare block and to write each data block number into relative positions of the space mapping form; only to operate the file data memory area when updating file data; to identify the place of the data to be read when reading the data for searching the data block.

Owner:DATANG MICROELECTRONICS TECH CO LTD

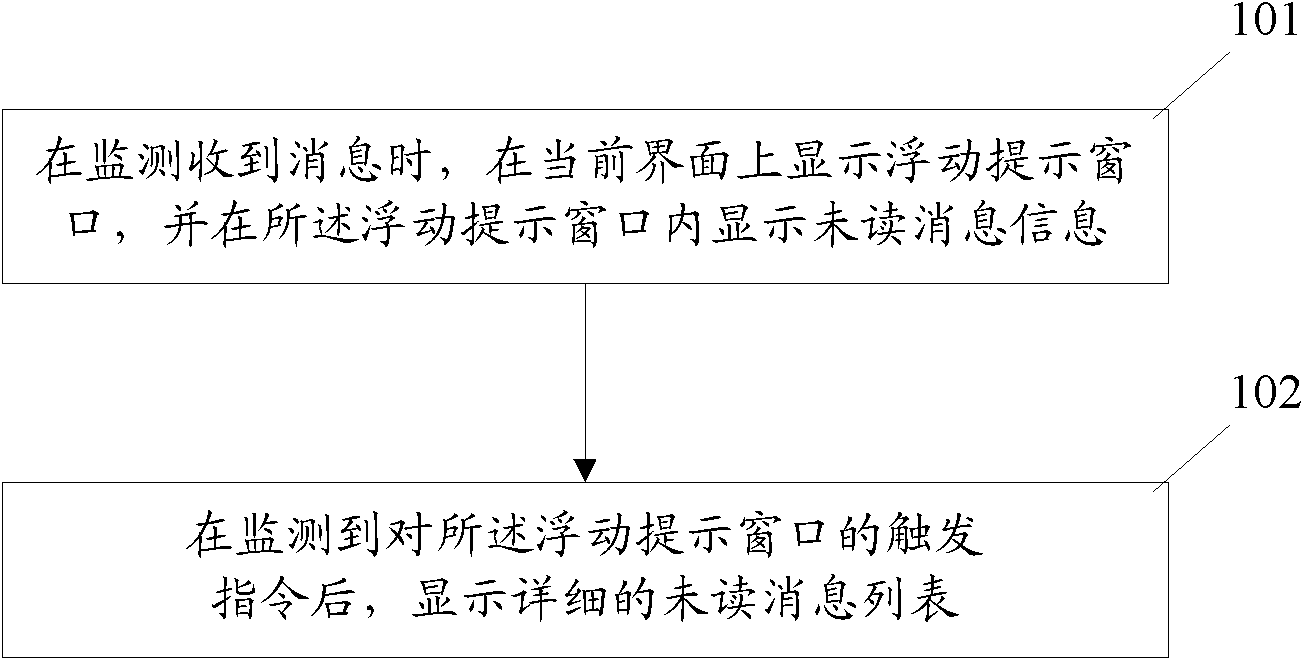

Message prompting method and device of instant messaging client

Active | CN103051516A | Save display space | Easy to operate | Data switching networks | Computer science | Instant messaging

The invention discloses a message prompting method and a message prompting device of an instant messaging client. The method comprises the steps of: when monitoring a received message, displaying a floating prompting window on current interface, and displaying unread message information in the floating prompting window; and after monitoring a trigger command to the floating prompting window, displaying a detailed unread message list. The device comprises a prompting window display module and a message list display module, wherein the prompting window display module is used for monitoring whether a message communication module of the instant messaging client receives the message or not, if so, the prompting window display module displays the floating prompting window on current interface, and displays unread message information in the floating prompting window when the message received is monitored; and the message list display module is used for monitoring the trigger command to the floating prompting window, and displaying the detailed unread message list after the monitoring. With the adoption of the method and device provided by the invention, the screen display space of the terminal is less occupied for the convenience of user's operation.

Owner:TENCENT TECH (SHENZHEN) CO LTD

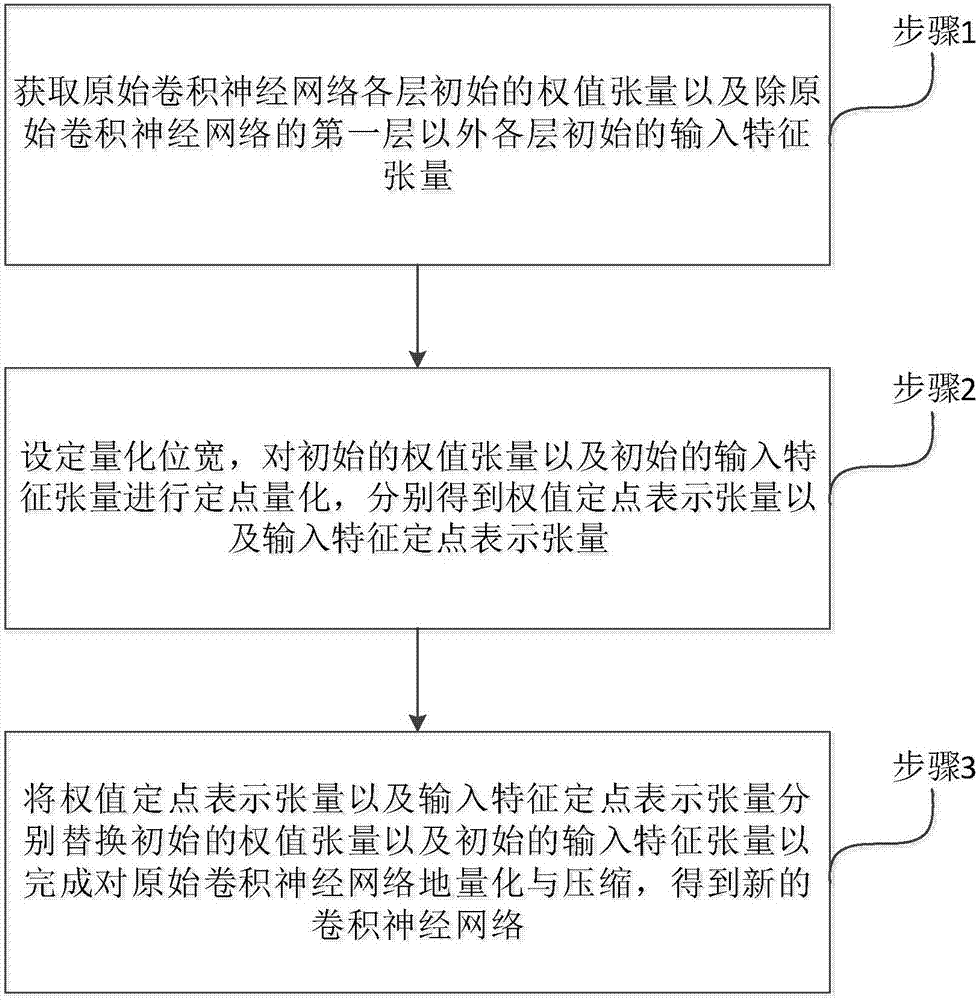

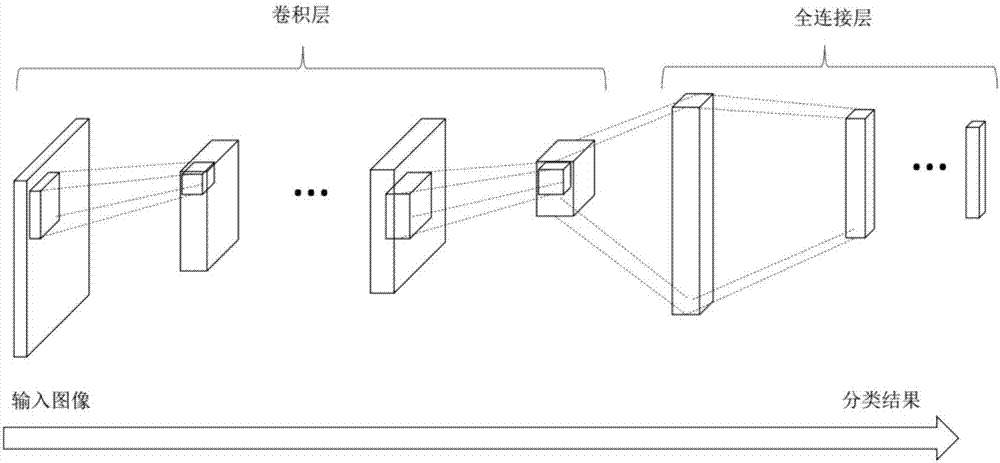

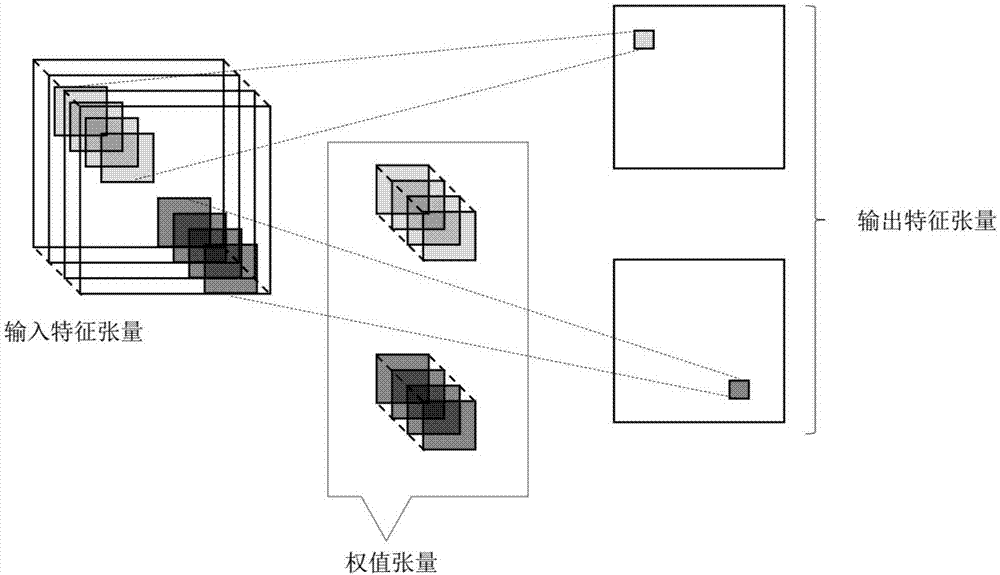

Method and apparatus for quantizing and compressing neural network with adjustable quantization bit width

Active | CN107480770A | Reduce occupancy | Reduce transfer time | Neural architectures | Neural learning methods | Network structure | Tensor

The invention relates to the technical field of neural networks, and specifically provides a method and apparatus for quantizing and compressing a convolutional neural network. The invention aims to solve the large loss of network performance caused by existing methods for quantizing and compressing neural networks. The method comprises the steps of: obtaining a weight tensor and an input feature tensor of an original convolutional neural network; performing fixed-point quantization on the weight tensor and the input feature tensor based on a preset quantization bit width; and replacing the original weight tensor and input feature tensor with the resulting fixed-point weight tensor and fixed-point input feature tensor to obtain a new, quantized and compressed convolutional neural network. The method can flexibly adjust the bit width according to different task requirements and can quantize and compress the convolutional neural network without adjusting the algorithm structure or the network structure, thereby reducing the occupation of memory and storage resources. The invention further provides a storage apparatus and a processing apparatus that have the same beneficial effects.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
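
Fixed-point quantization with an adjustable bit width can be illustrated with a short sketch. The abstract does not give the quantization formula, so the symmetric, per-tensor scaling used here is an assumption, and the names `quantize_fixed_point` and `dequantize` are hypothetical.

```python
def quantize_fixed_point(values, bit_width):
    """Quantize floats to signed integers of `bit_width` bits.

    Assumed scheme: symmetric, per-tensor scaling so that the largest
    magnitude maps to the largest representable integer.
    Returns (quantized_integers, scale).
    """
    if bit_width < 2:
        raise ValueError("bit_width must be at least 2")
    qmax = 2 ** (bit_width - 1) - 1
    qmin = -(2 ** (bit_width - 1))
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [min(qmax, max(qmin, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
q8, s8 = quantize_fixed_point(weights, 8)  # finer grid, more storage
q4, s4 = quantize_fixed_point(weights, 4)  # coarser grid, less storage
```

Lowering `bit_width` shrinks the storage per value at the cost of a larger rounding error, which is bounded by half of `scale` in this scheme.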

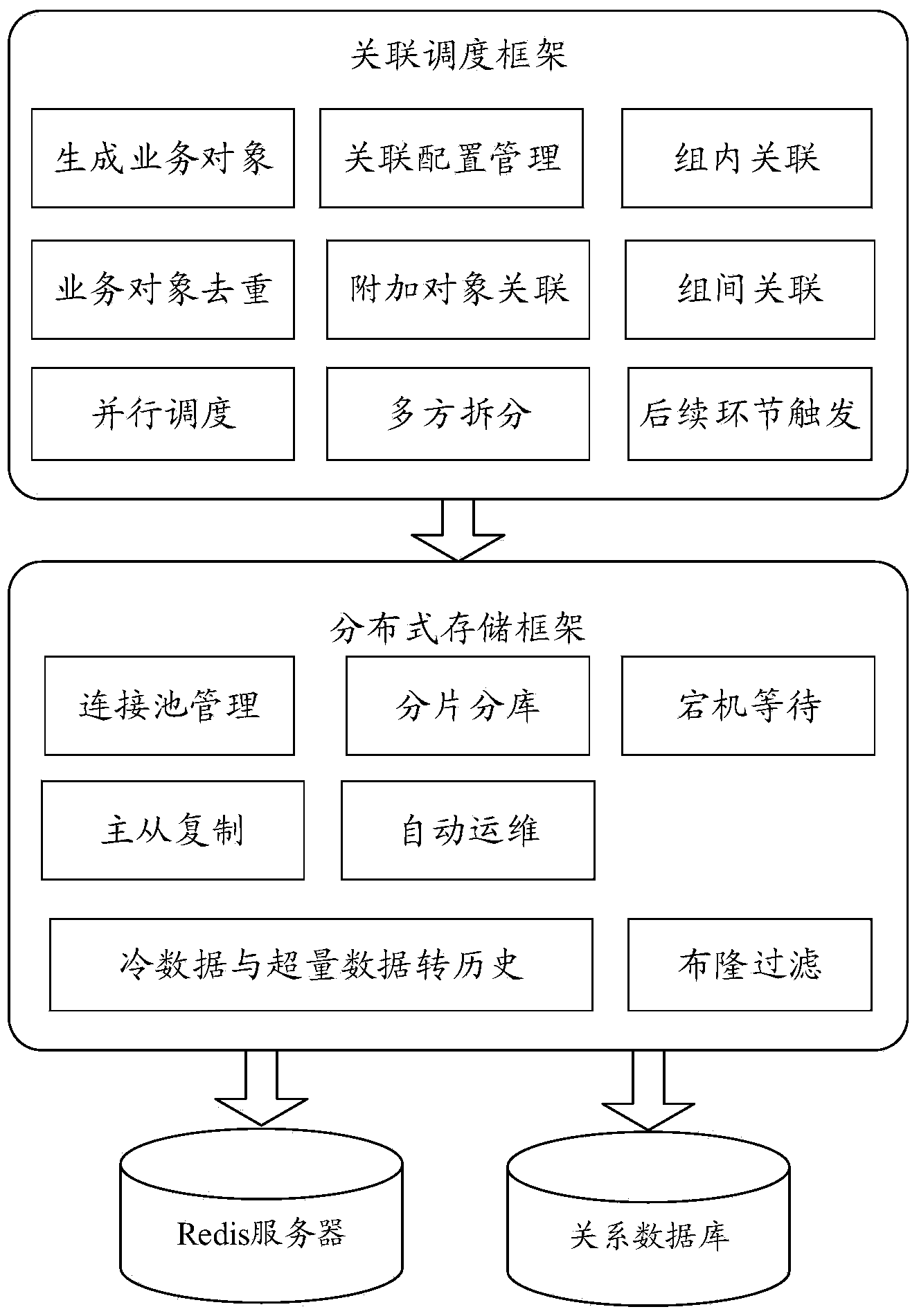

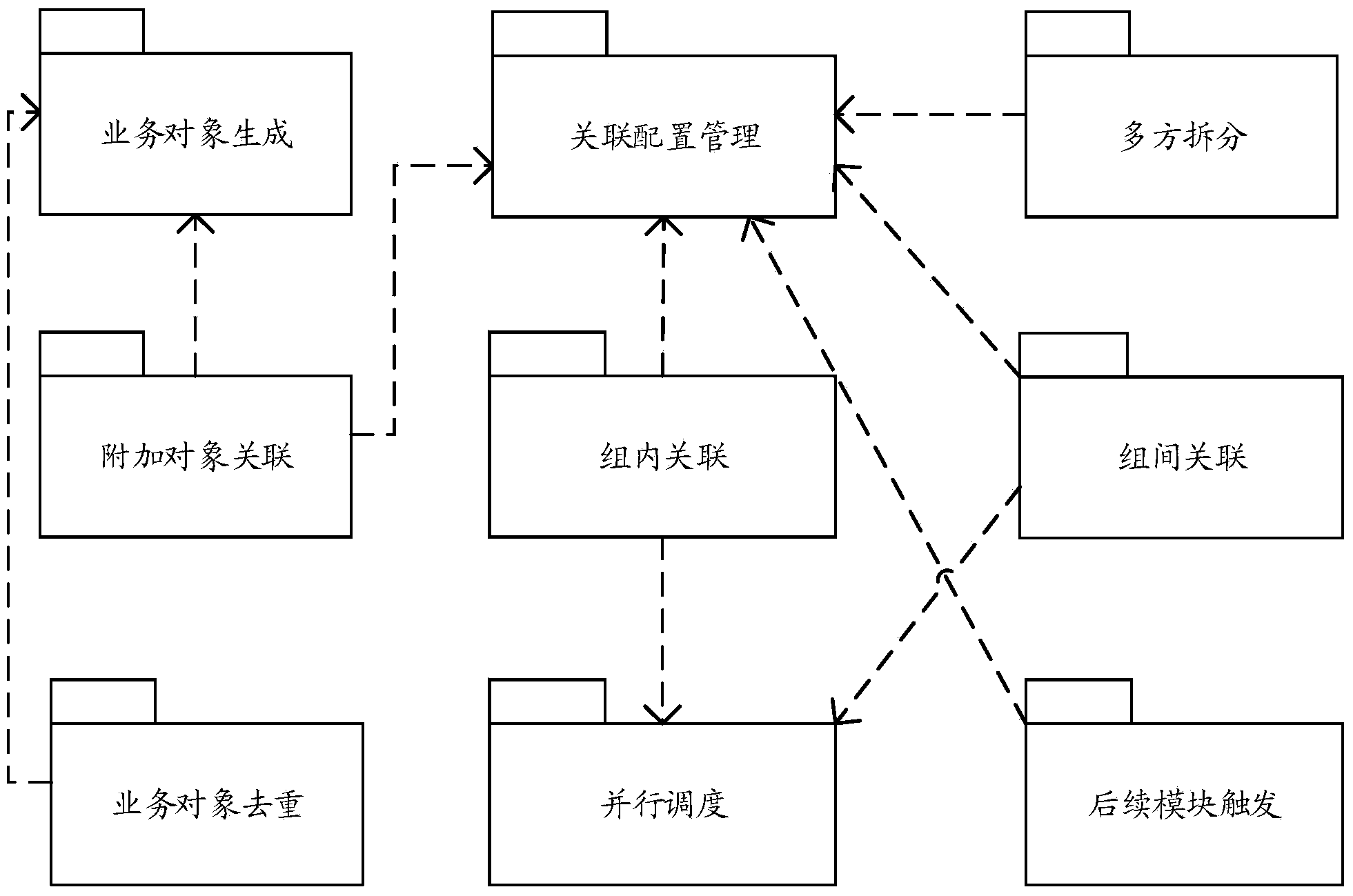

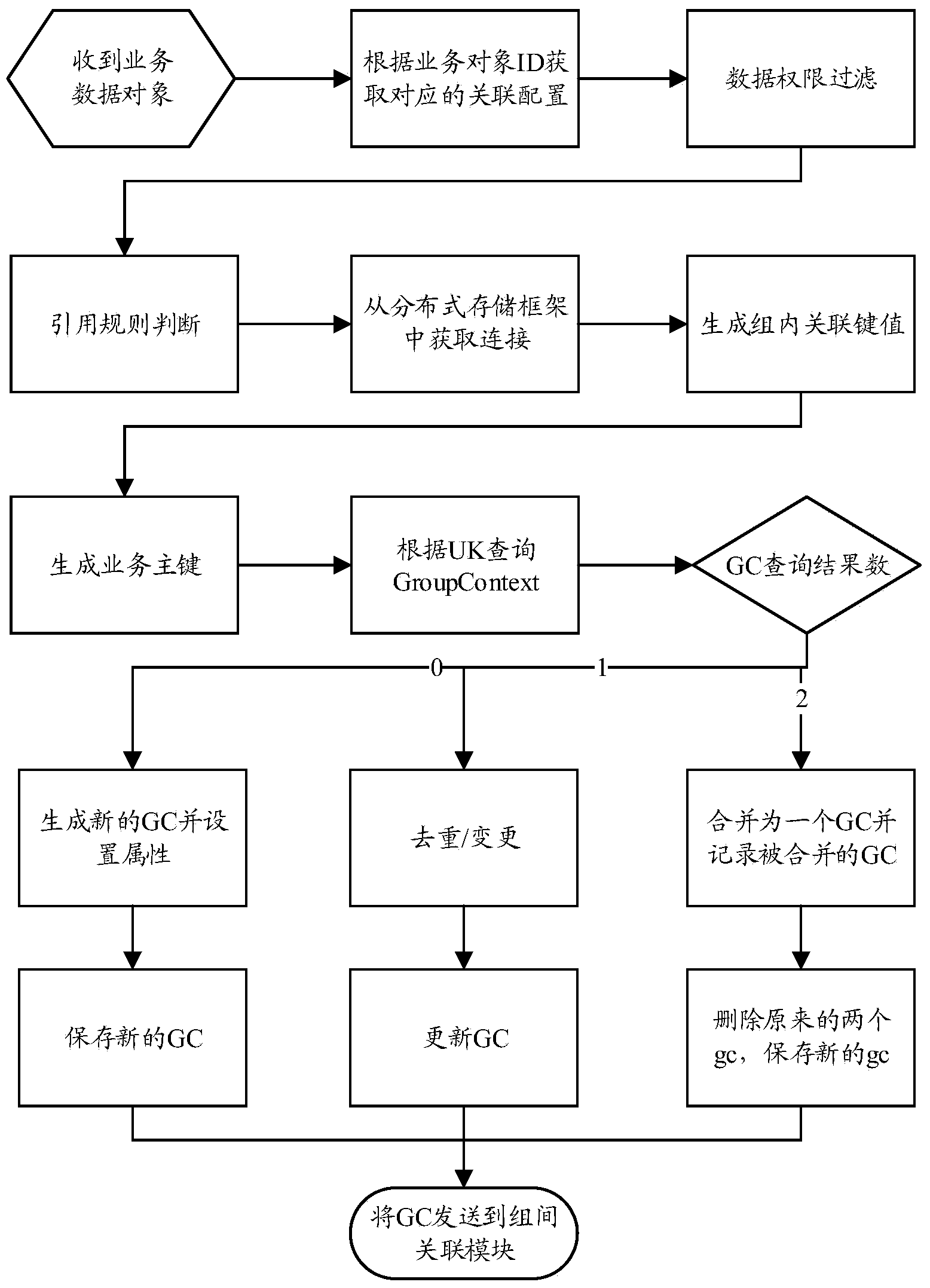

System and method for realizing real-time data association in big data environment

Active | CN103646111A | Improve general performance | Improve flexibility | Error detection/correction | Relational databases | Streaming data | Real-time data

The invention relates to a system and method for realizing real-time data association in the big data environment. The system comprises an association scheduling framework, a distributed storage framework, a relational database and Redis servers, wherein the association scheduling framework is used for conducting intra-class association, inter association and concurrent scheduling on business objects; the distributed storage framework is used for establishing a connection pool for a master server of each Redis server, storing data related to current data association, sending cold data surpassing updating time limit and excessive data surpassing memory capacity limit to the relational database, and inquiring key values of intra-class association and inter association transferred to the relational database; the relational database is used for storing key values of intra-class association, key values of inter association, intra-class contexts and monitoring contexts. Through the adoption of the system and method, real-time association supporting mass streaming data can be realized, the demand of complex association in various conditions can be met, the model generality is high, and the application range is relatively wide.

Owner:PRIMETON INFORMATION TECH

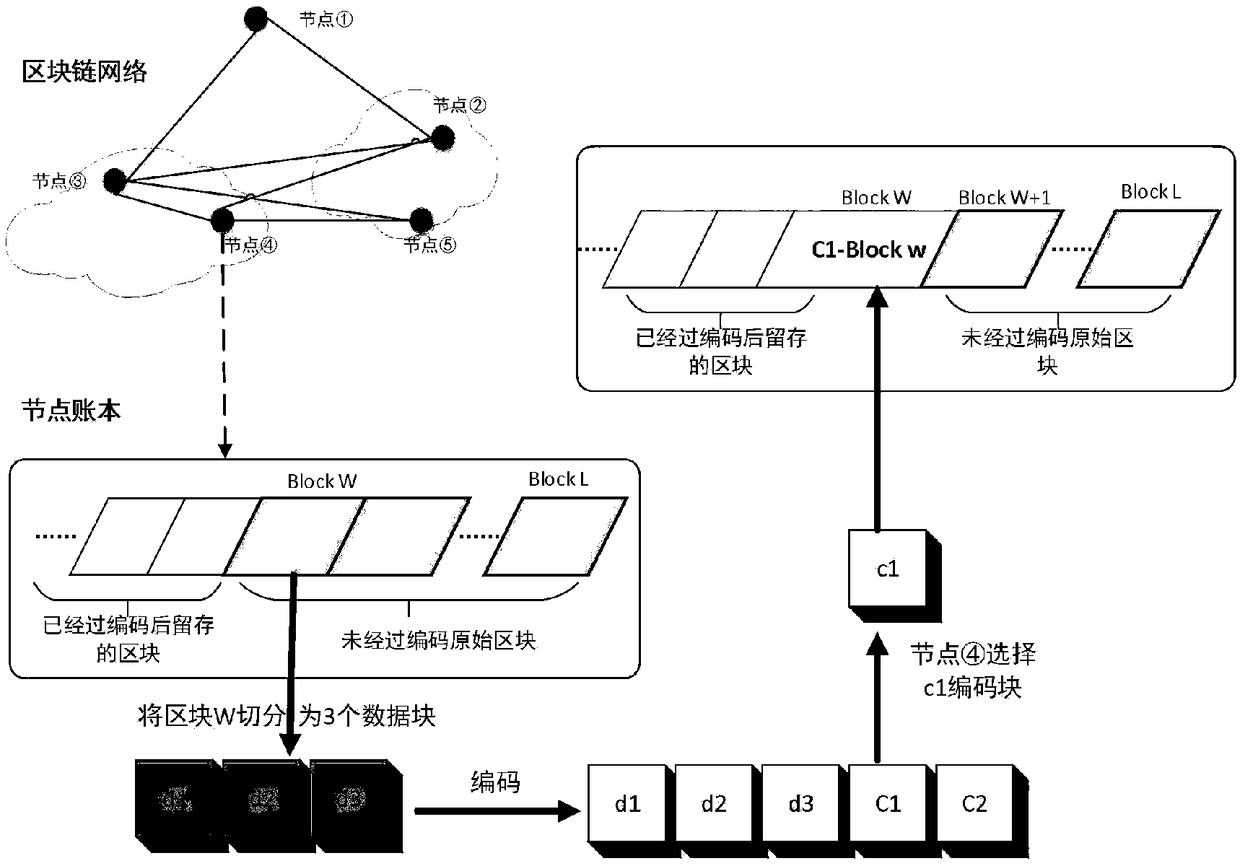

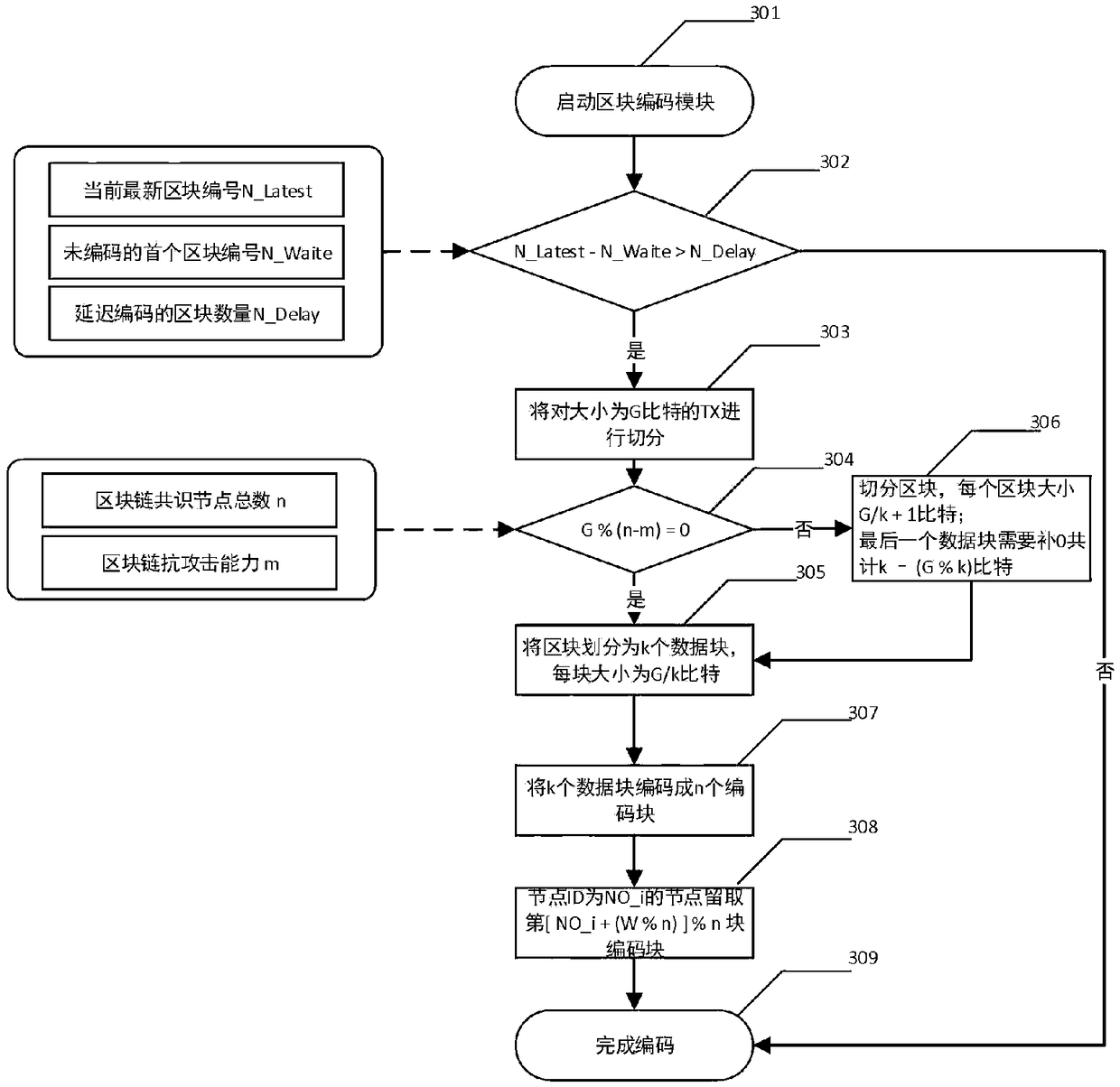

Block chain account book distributed storage technology based on erasure code

Inactive | CN109359223A | Reduce redundancy | Reduce demand | Other databases indexing | Transmission | Coding block | Computer architecture

The invention relates to a block chain account book distributed storage technology based on erasure code, and belongs to the block chain technology field. The technology comprises the following steps: a) a block delay coding strategy delays the coding of a certain number of new blocks to meet the synchronization requirements of other block chain consensus nodes for those blocks; b) according to the set block coding algorithm, each block satisfying the coding condition is cut into k data blocks, each about 1/k the size of the original block, and the k data blocks are then encoded into n (n > k) encoded blocks by the erasure coding technology; c) each block chain node keeps a part of the encoded blocks, so that the whole block chain network stores the encoded blocks in a decentralized manner; d) when an original block needs to be recovered from the encoded blocks, the current node collects the remaining encoded blocks corresponding to that block from the other nodes, and then recovers the original block according to the corresponding decoding algorithm. The method reduces the requirement of the block chain for storage resources and improves the utilization rate of storage space.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
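
The cut, encode and recover steps can be sketched with the simplest possible erasure code. The abstract does not name the code it uses; the single XOR parity block below (k data blocks plus one parity block, tolerating the loss of any one block) is a stand-in chosen for brevity, and the function names are hypothetical. A production system would more likely use a code such as Reed-Solomon that tolerates several losses.

```python
def split_block(block: bytes, k: int) -> list:
    """Cut a block into k equal-size data blocks, zero-padding the tail."""
    size = -(-len(block) // k)  # ceiling division
    padded = block.ljust(size * k, b"\0")
    return [padded[i * size:(i + 1) * size] for i in range(k)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(block: bytes, k: int) -> list:
    """Return k data blocks followed by one XOR parity block."""
    data = split_block(block, k)
    parity = data[0]
    for piece in data[1:]:
        parity = xor_bytes(parity, piece)
    return data + [parity]


def recover(shards: list, original_len: int) -> bytes:
    """Rebuild the original block; at most one entry of `shards` may be None."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost block")
    shards = list(shards)
    if missing:
        present = [s for s in shards if s is not None]
        rebuilt = present[0]
        for piece in present[1:]:
            rebuilt = xor_bytes(rebuilt, piece)
        shards[missing[0]] = rebuilt
    # Drop the parity block and the padding.
    return b"".join(shards[:-1])[:original_len]
```

With each node holding one of the encoded blocks, a node stores roughly 1/k of the original block instead of a full copy.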

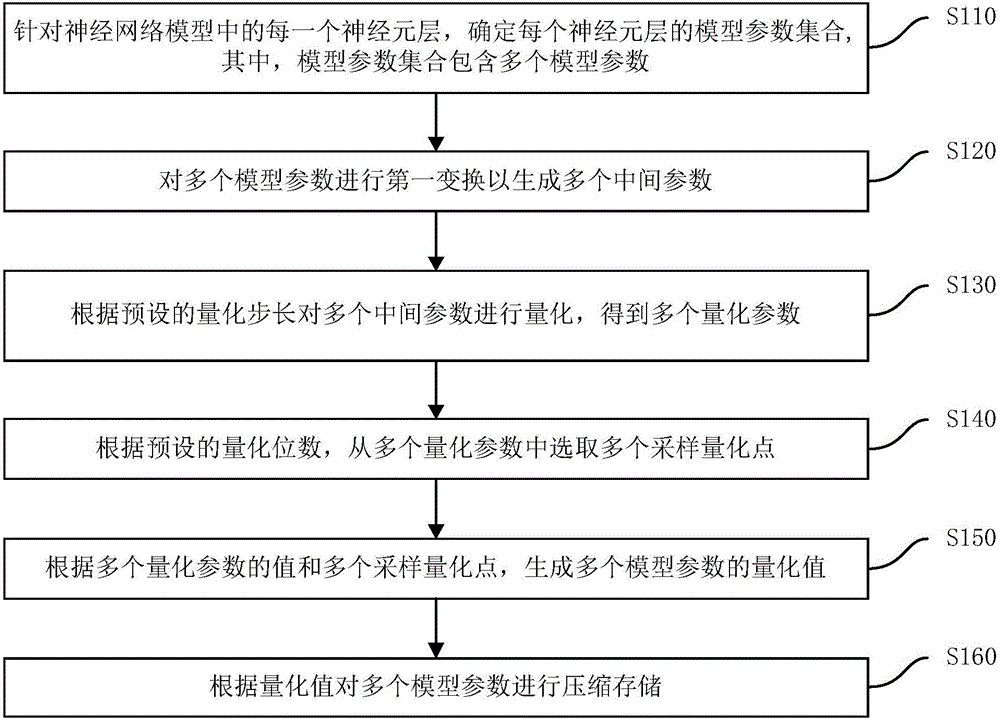

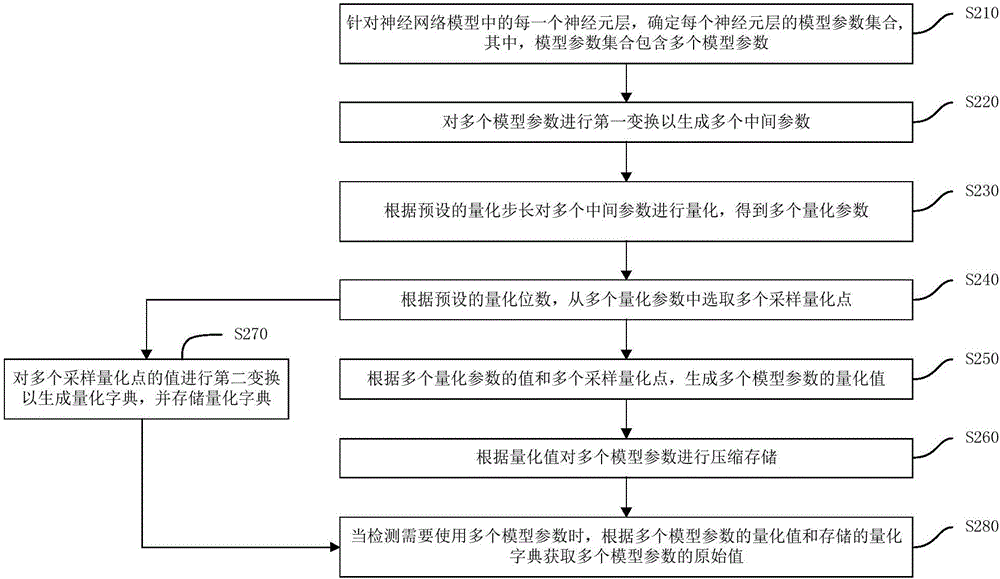

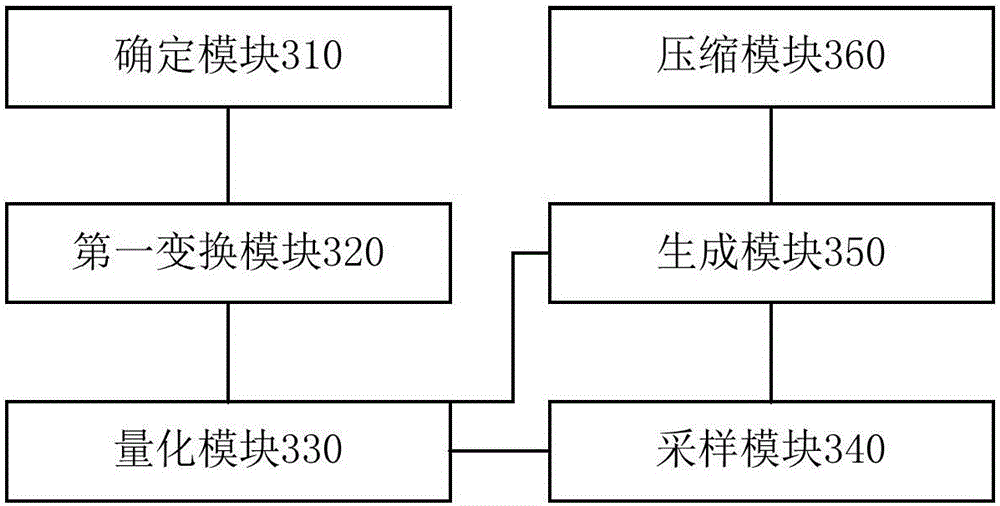

Neural network model compression method and device

Active | CN106485316A | Reduce size | Reduce occupancy | Analogue/digital conversion | Electric signal transmission systems | Compression device | Model parameters

The invention discloses a neural network model compression method and a neural network model compression device. The neural network model compression method comprises the steps of: determining a model parameter set of each neuron layer for each neuron layer in a neural network model, wherein the model parameter set includes a plurality of model parameters; carrying out first transformation on the plurality of model parameters to generate a plurality of intermediate parameters; quantizing the plurality of intermediate parameters according to a preset quantization step size to obtain a plurality of quantization parameters; selecting a plurality of sampling quantization points from the plurality of quantization parameters according to a preset quantization bit number; generating quantized values of the plurality of model parameters according to values of the plurality of quantization parameters and the plurality of sampling quantization points; and compressing and storing the plurality of model parameters according to the quantized values. The neural network model compression method can better maintain the effect of the model, greatly reduces the size of the neural network model, and reduces the occupation of computing resources, especially memory resources.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

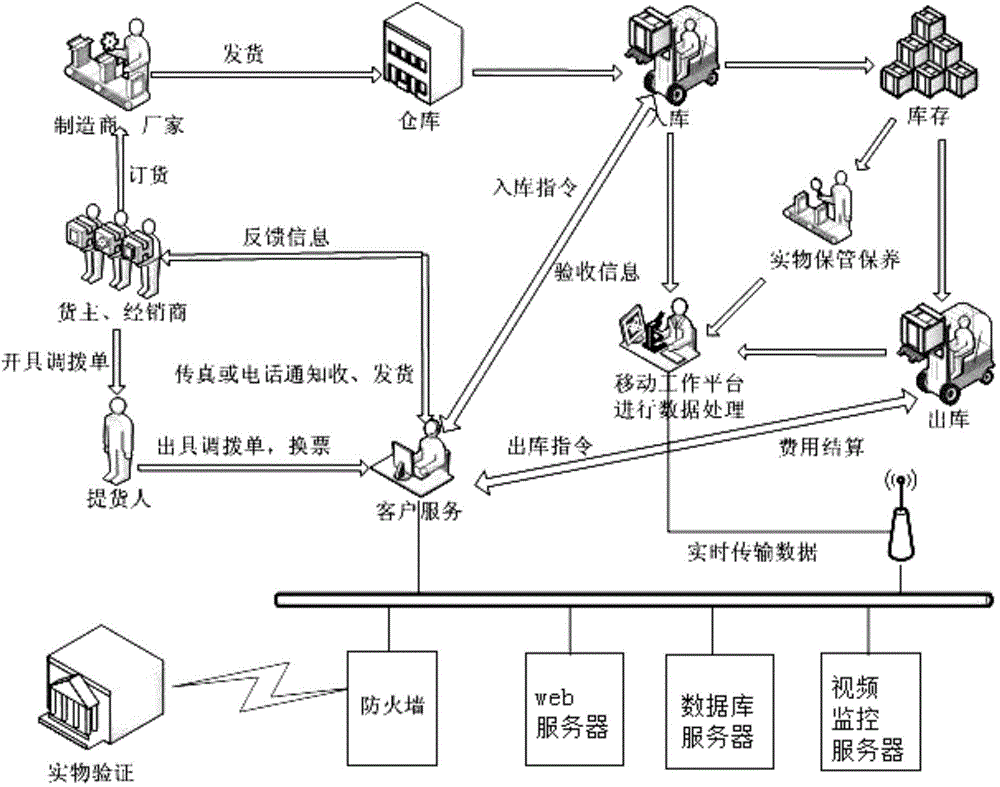

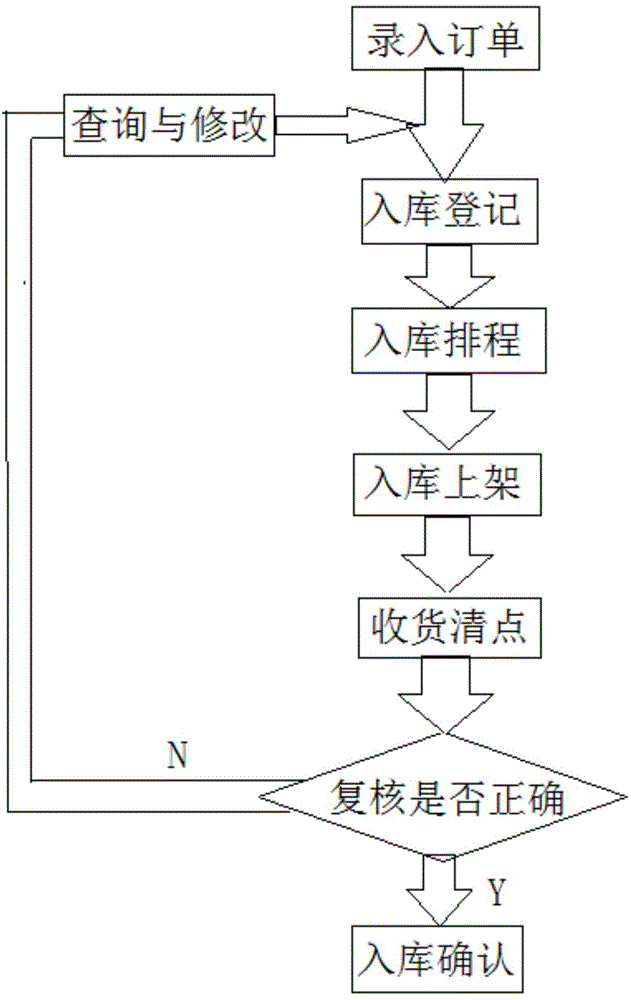

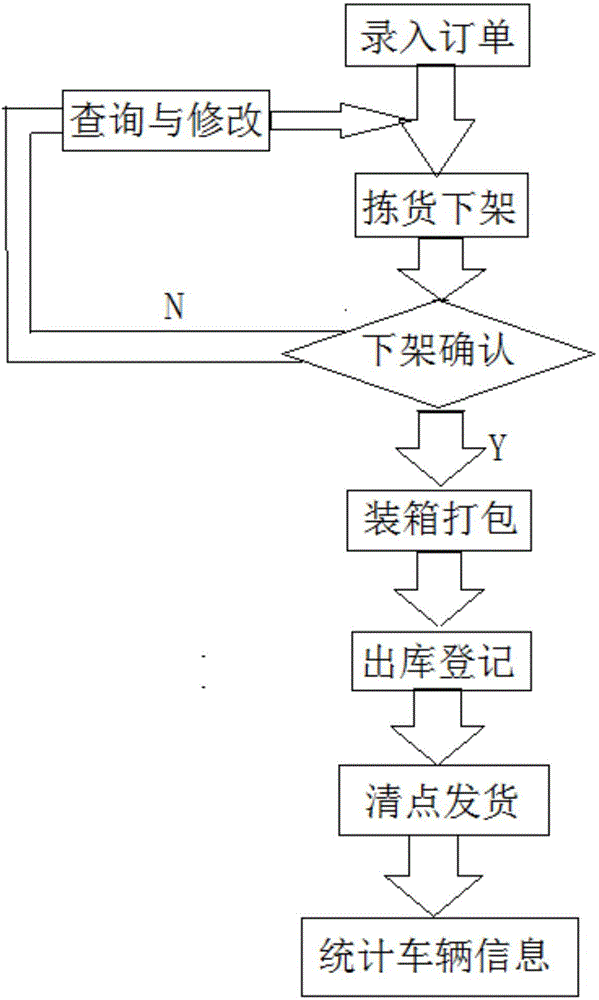

Cold-chain logistics storage supervisory system and method

The invention discloses a cold-chain logistics storage supervisory system and method. The cold-chain logistics storage supervisory system includes a temperature monitoring module, a WMS (Warehouse Management System) management module, an order management module, an in-out stock monitoring module, a three-dimensional planning module, a processing module, an inventory management module and a locating and tracking module. With the aid of the WMS, the cold-chain logistics storage supervisory system effectively organizes staff, space and equipment for receiving, storing, picking and transporting goods, transporting raw materials and parts to manufacturing enterprises, and conveying finished products to wholesalers, distributors and final customers. The main functions of the system are managing and controlling all in-out stock activity of the warehouse and performing statistical analysis of inventory data, so that decision makers can find problems in time, take corresponding measures to adjust the inventory structure, shorten the storage period, quicken capital turnover, and thereby ensure smooth logistics in enterprise production.

Owner:临沂市义兰物流信息科技有限公司

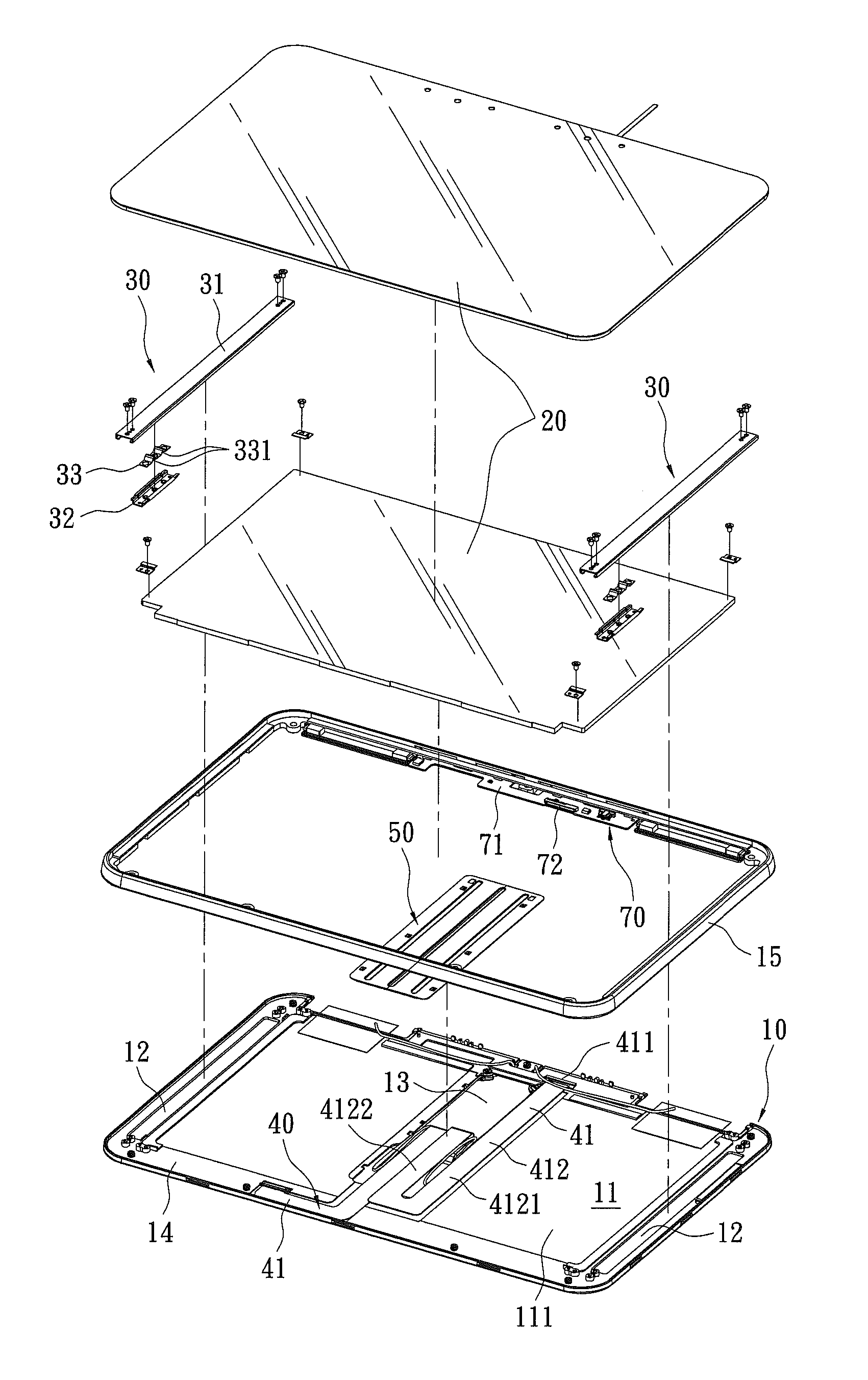

Display structure of slip-cover-hinge electronic device

Active | US8116073B2 | Simple structure | Improve adaptability | Piezoelectric/electrostrictive microphones | Digital data processing details | Electrical connection | Hinge angle

Owner:AMTEK SYST

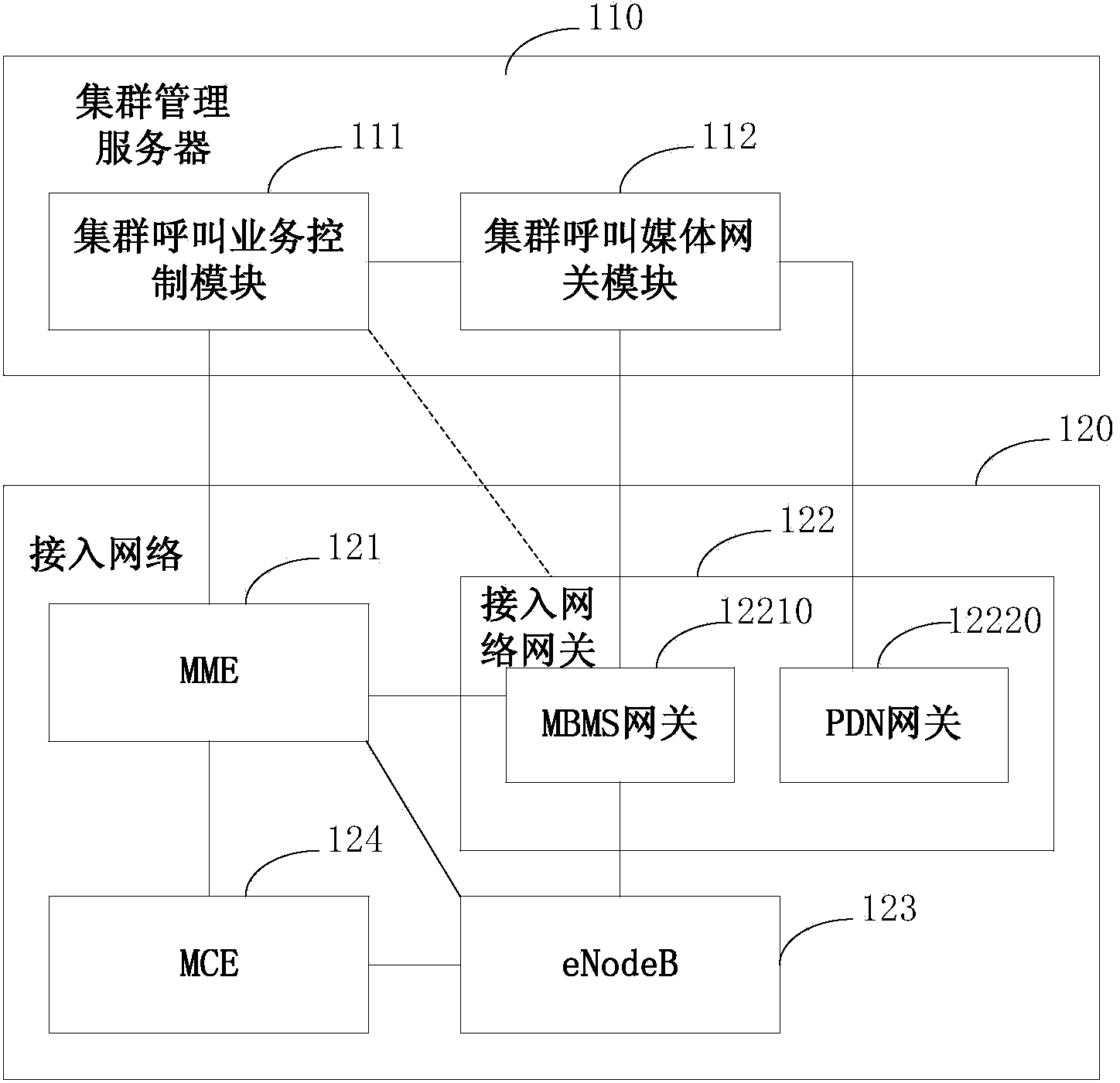

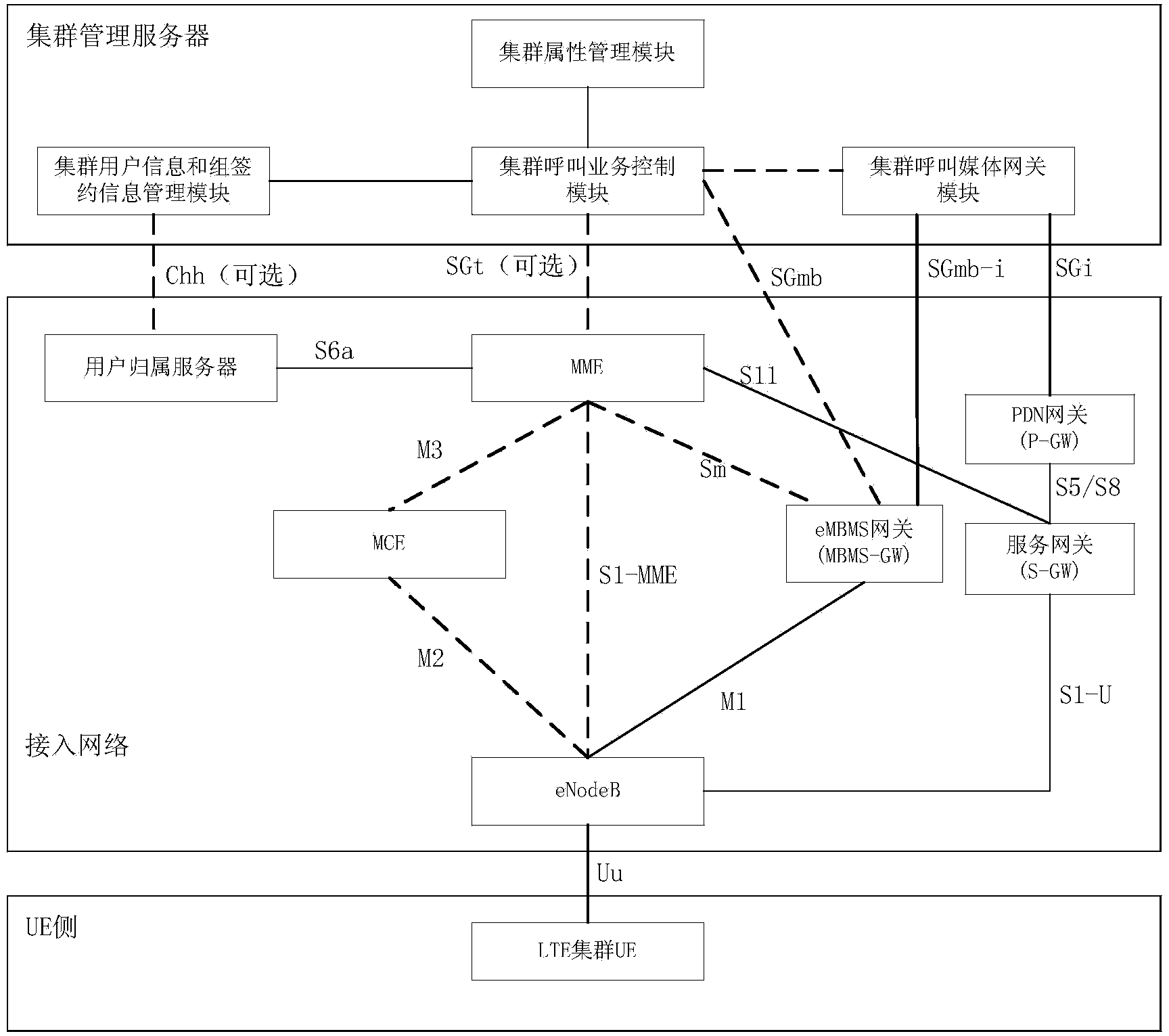

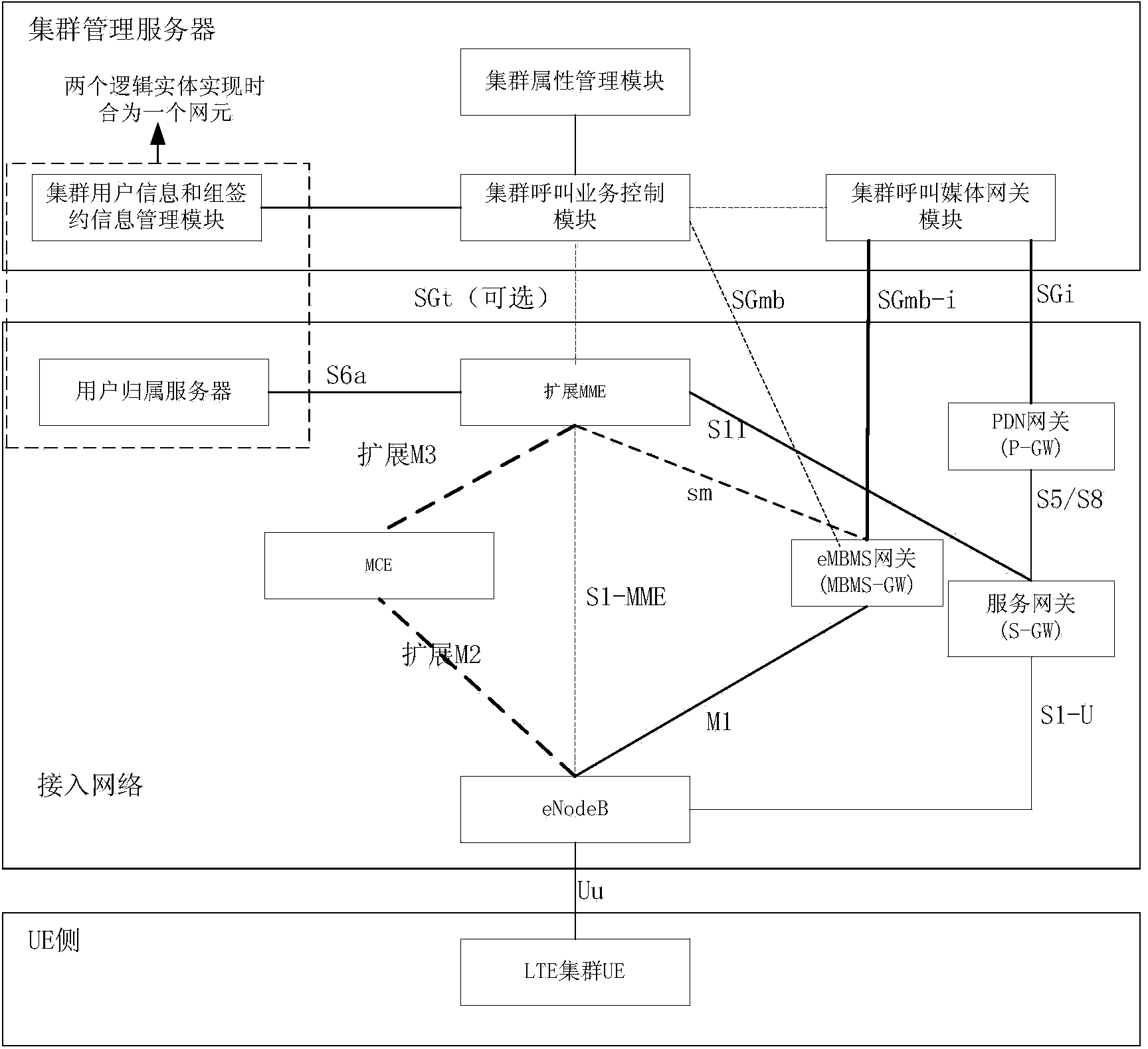

Cluster communication system, cluster server, access network and cluster communication method

Active | CN103609147A | Reduce occupancy | Implement control plane communication | Network topologies | Broadcast service distribution | Access network | Communications system

The invention provides a cluster communication system, a server, an access network and a cluster communication method. The system comprises a cluster management server and an access network. The cluster management server includes a cluster call service control module and a cluster call media gate module connected to it. The cluster call media gate module is used for receiving service data transmitted by a UE in a UE cluster through the access network, and transmitting the service data according to the communication type of the service data so as to achieve user-plane communication of the UE cluster. The cluster call service control module is used for receiving a communication request transmitted by a UE in the UE cluster through the access network, and carrying out call control and bearer management on the UE cluster according to the communication type of the communication request so as to achieve control-plane communication of the UE cluster. According to the invention, paging of the UE cluster is performed by multicast during cluster communication, thereby improving the access performance and access efficiency of the system.

Owner:HUAWEI TECH CO LTD

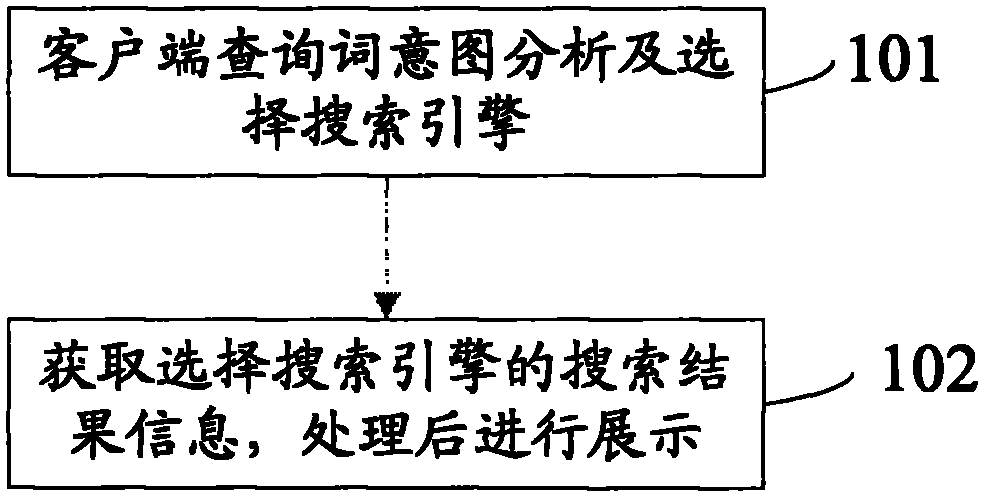

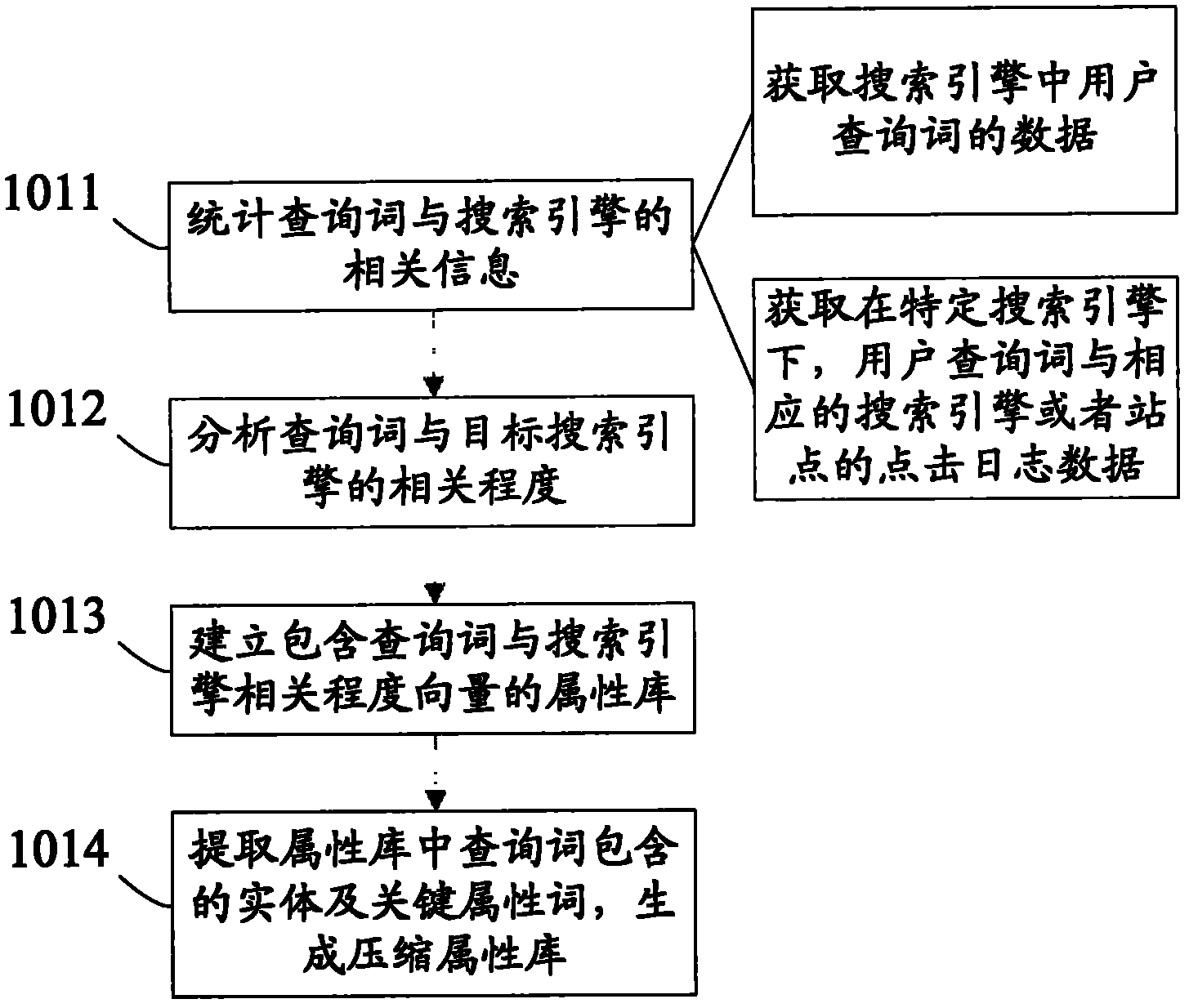

Search method and device based on query word

Active | CN102043833A | Improve search accuracy | Improve efficiency | Special data processing applications | User needs | User input

The invention provides a search method and device based on a query word. The method comprises the following steps: searching a query word which is input by a user through a client, and selecting a search engine suitable for the query word from multiple search engines by combining the query word with a query word attribute library, wherein the query word attribute library is used for characterizing the correlativity between each query word or query word class and each search engine; and acquiring the search result information of the selected search engine, processing the search result information and displaying the processed search result information. In the invention, when multiple search engines exist, by means of carrying out intention comprehension and analysis on the query word of the user, the search engine related to the search user requirement can be selected or the search engine with higher accuracy can be searched based on the intention of the user, and pertinent link search is carried out, thus the system efficiency can be improved, and the search accuracy of the user is also increased.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD
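
Selecting an engine from a query word attribute library can be pictured as a scored lookup. The sketch below is illustrative only: the library contents, the engine names, the additive scoring and the `select_engine` function are all assumptions, since the abstract says only that the library characterizes the correlation between query words and search engines.

```python
# Hypothetical attribute library: query word -> correlation with each engine.
ATTRIBUTE_LIBRARY = {
    "weather": {"news": 0.2, "web": 0.7, "maps": 0.1},
    "restaurant": {"maps": 0.8, "web": 0.2},
    "election": {"news": 0.9, "web": 0.1},
}
DEFAULT_ENGINE = "web"  # fallback when no query word is in the library


def select_engine(query: str, library=ATTRIBUTE_LIBRARY) -> str:
    """Pick the engine with the highest summed correlation over the query words."""
    totals = {}
    for word in query.lower().split():
        for engine, score in library.get(word, {}).items():
            totals[engine] = totals.get(engine, 0.0) + score
    if not totals:
        return DEFAULT_ENGINE
    return max(totals, key=totals.get)
```

Only the selected engine is then queried, which is where the efficiency gain described in the abstract would come from.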

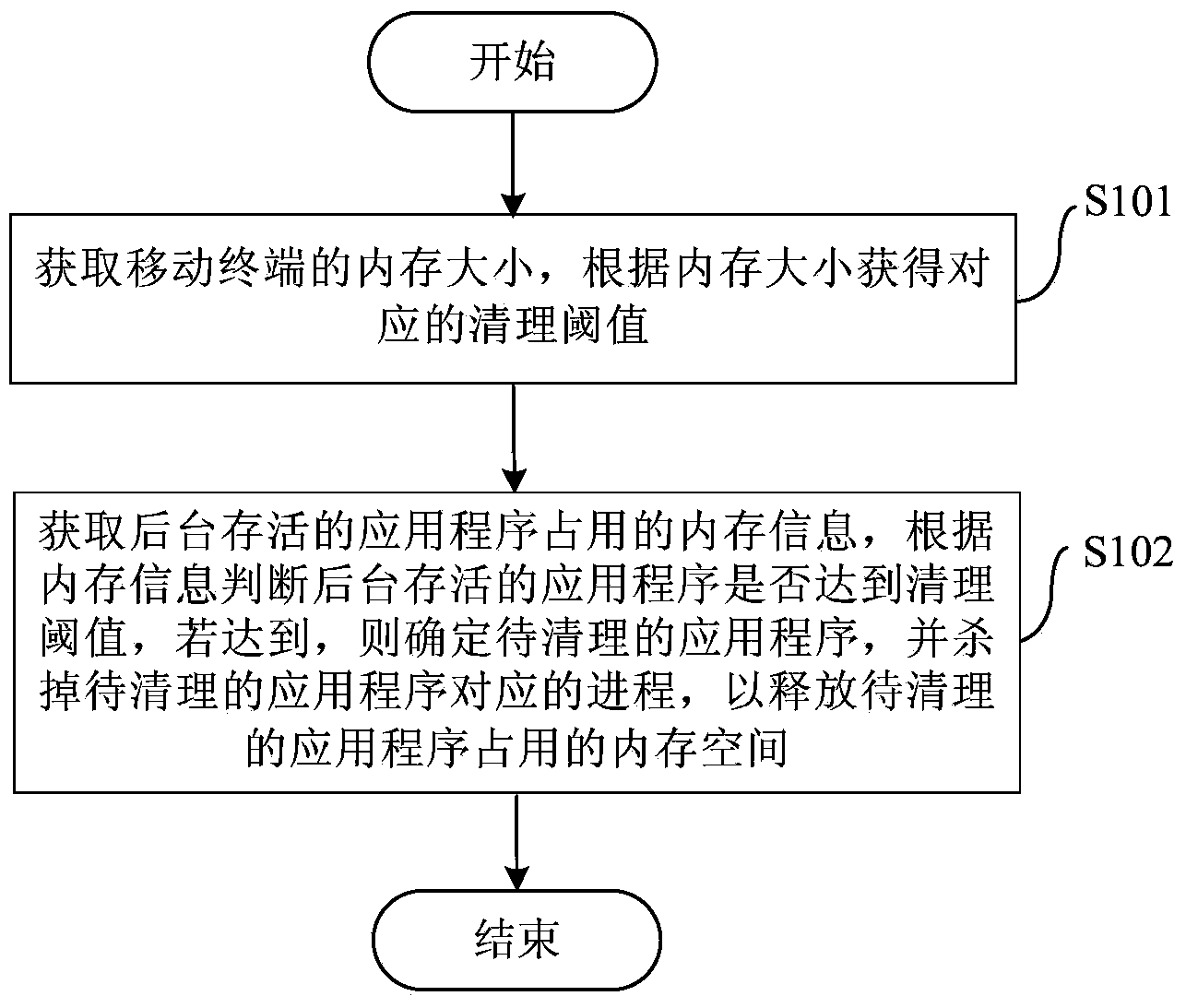

Method and device for clearing memory of mobile terminal and mobile terminal

Inactive | CN104298612A | Reduce occupancy | Improve operational efficiency | Memory addressing/allocation/relocation | Killed process | Computer engineering

Owner:BEIJING KINGSOFT INTERNET SECURITY SOFTWARE CO LTD

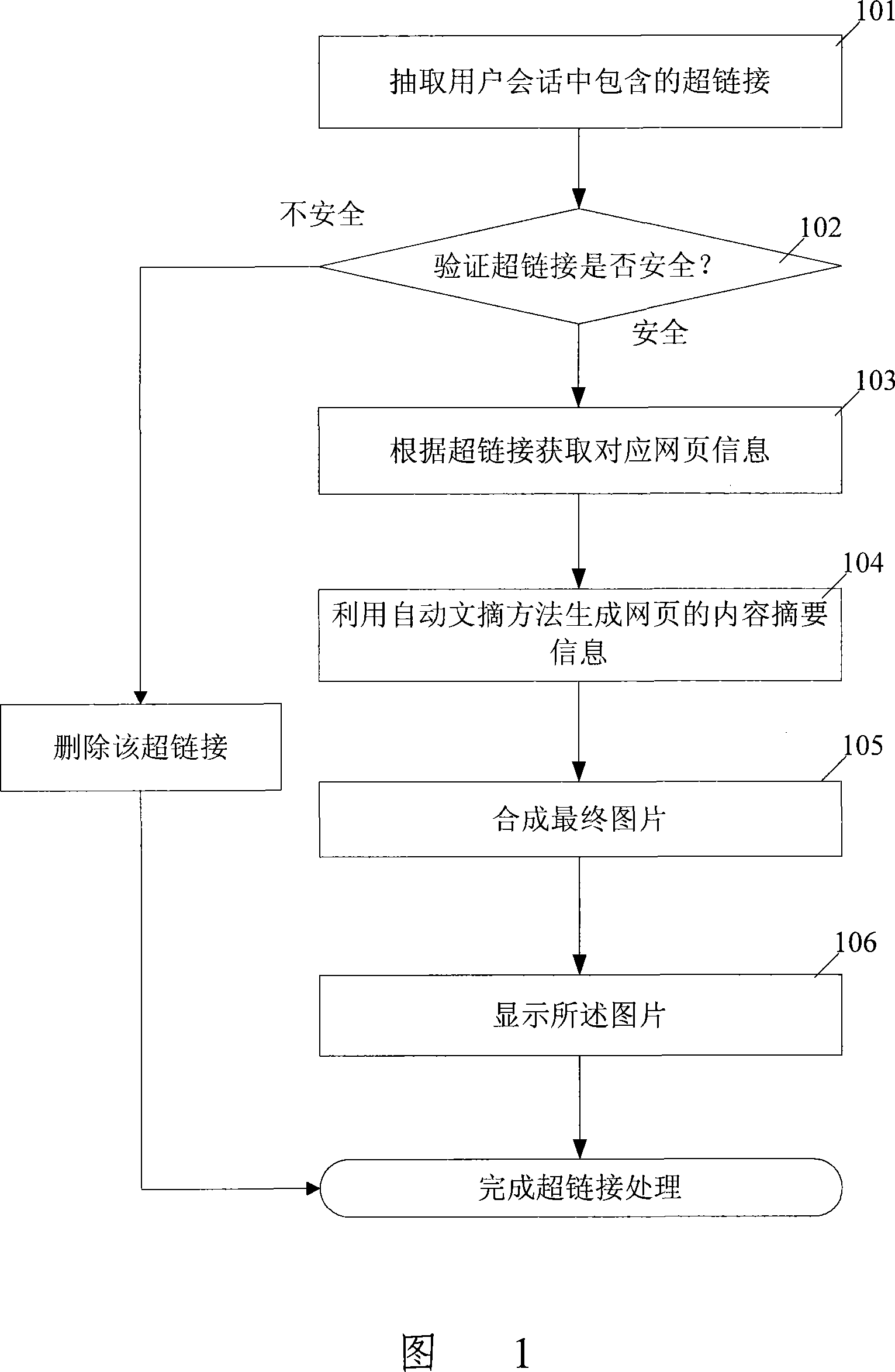

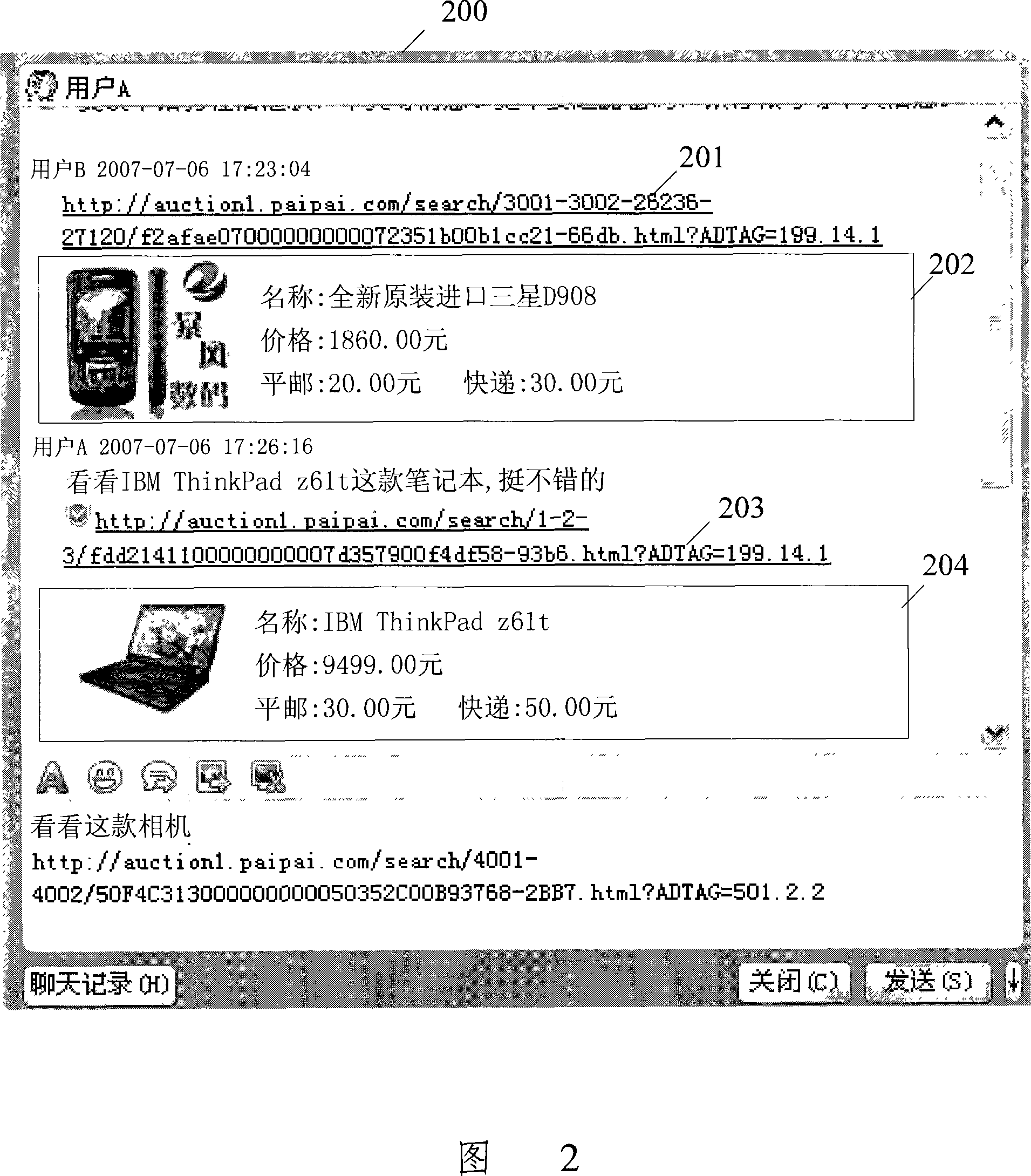

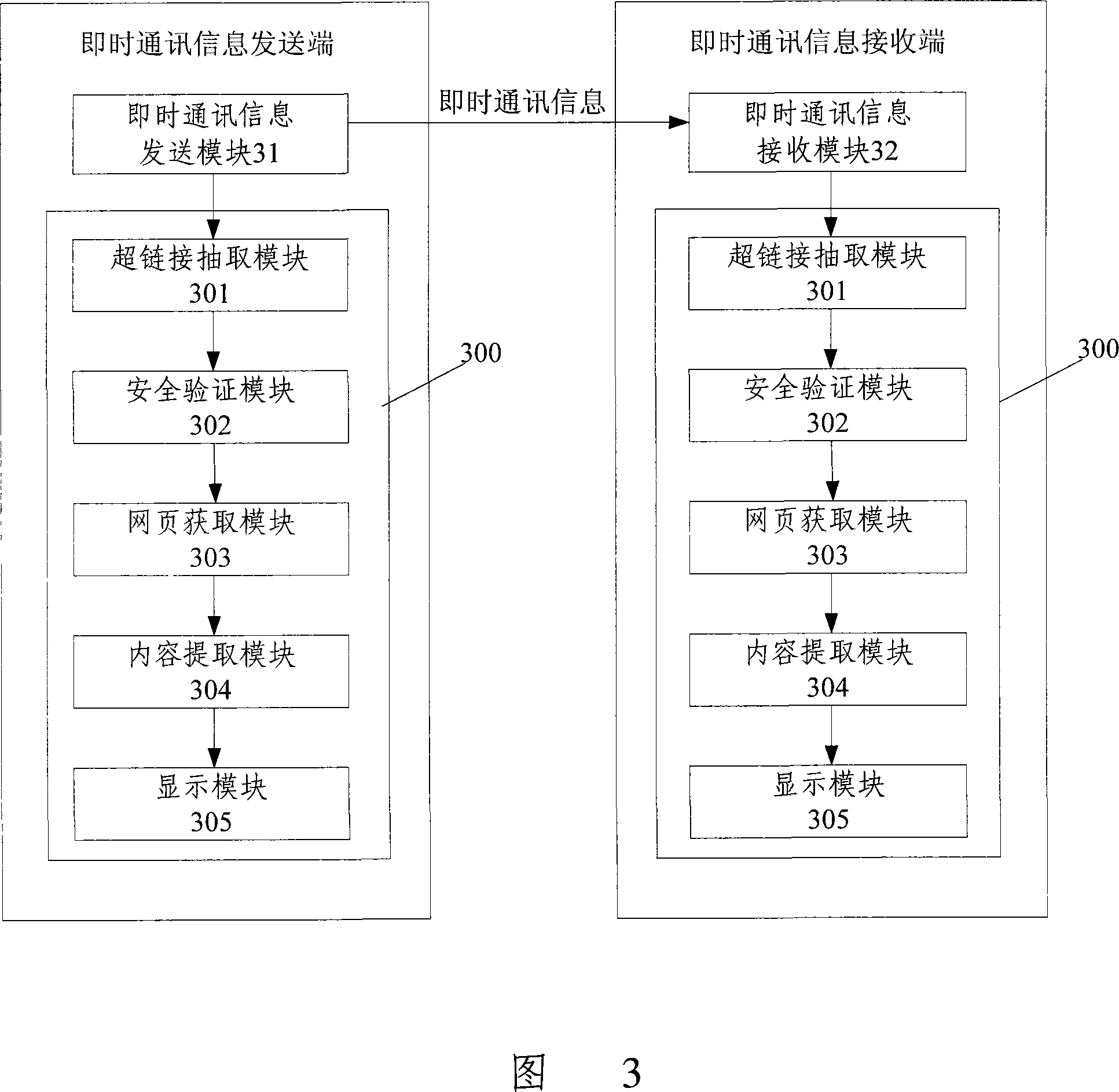

Processing method and device for instant communication information including hyperlink

Active | CN101102255A | Convenient instant messaging operation | Improve interactivity | Digital data information retrieval | Store-and-forward switching systems | Hyperlink | Computer terminal

Owner:TENCENT TECH (SHENZHEN) CO LTD

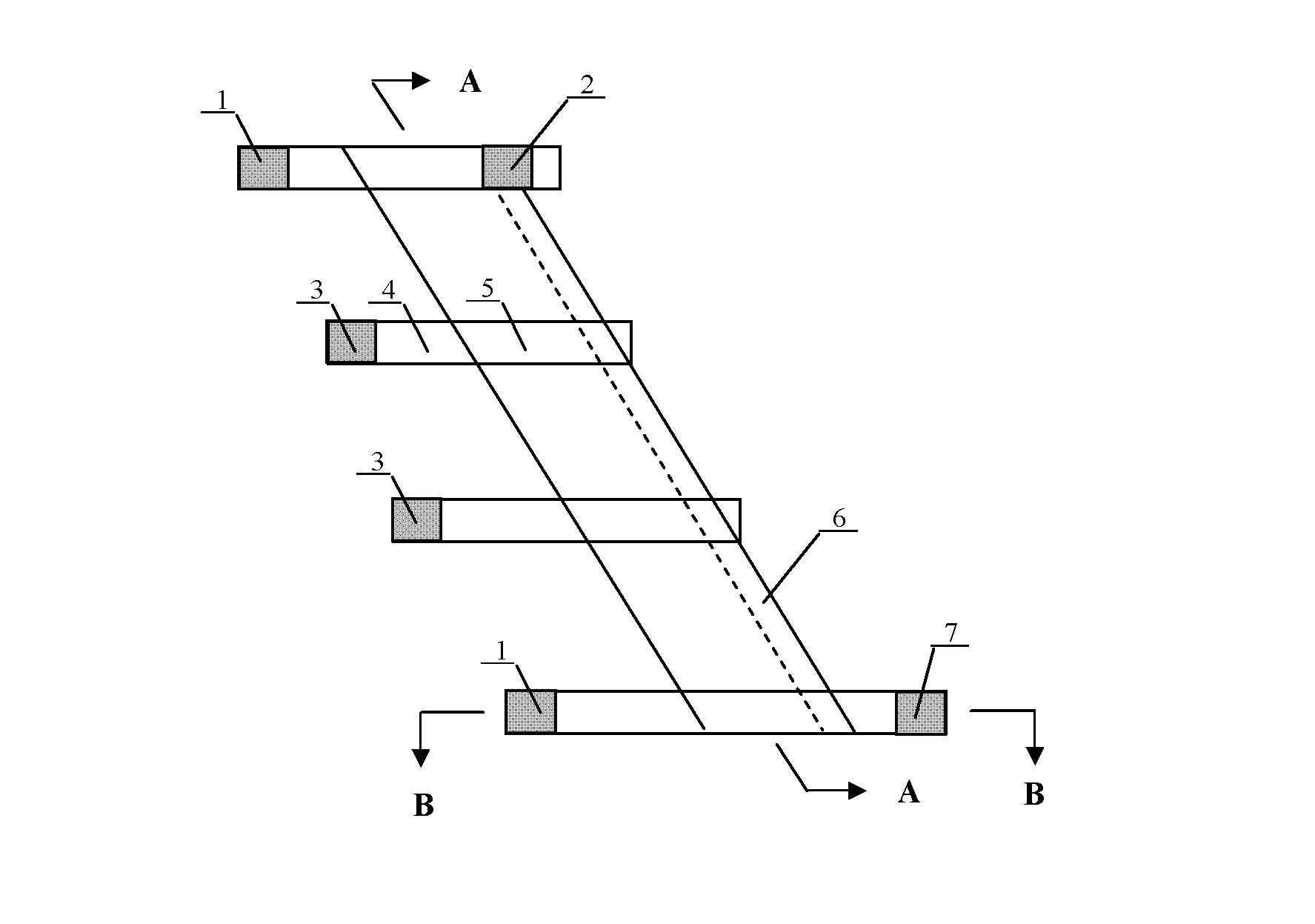

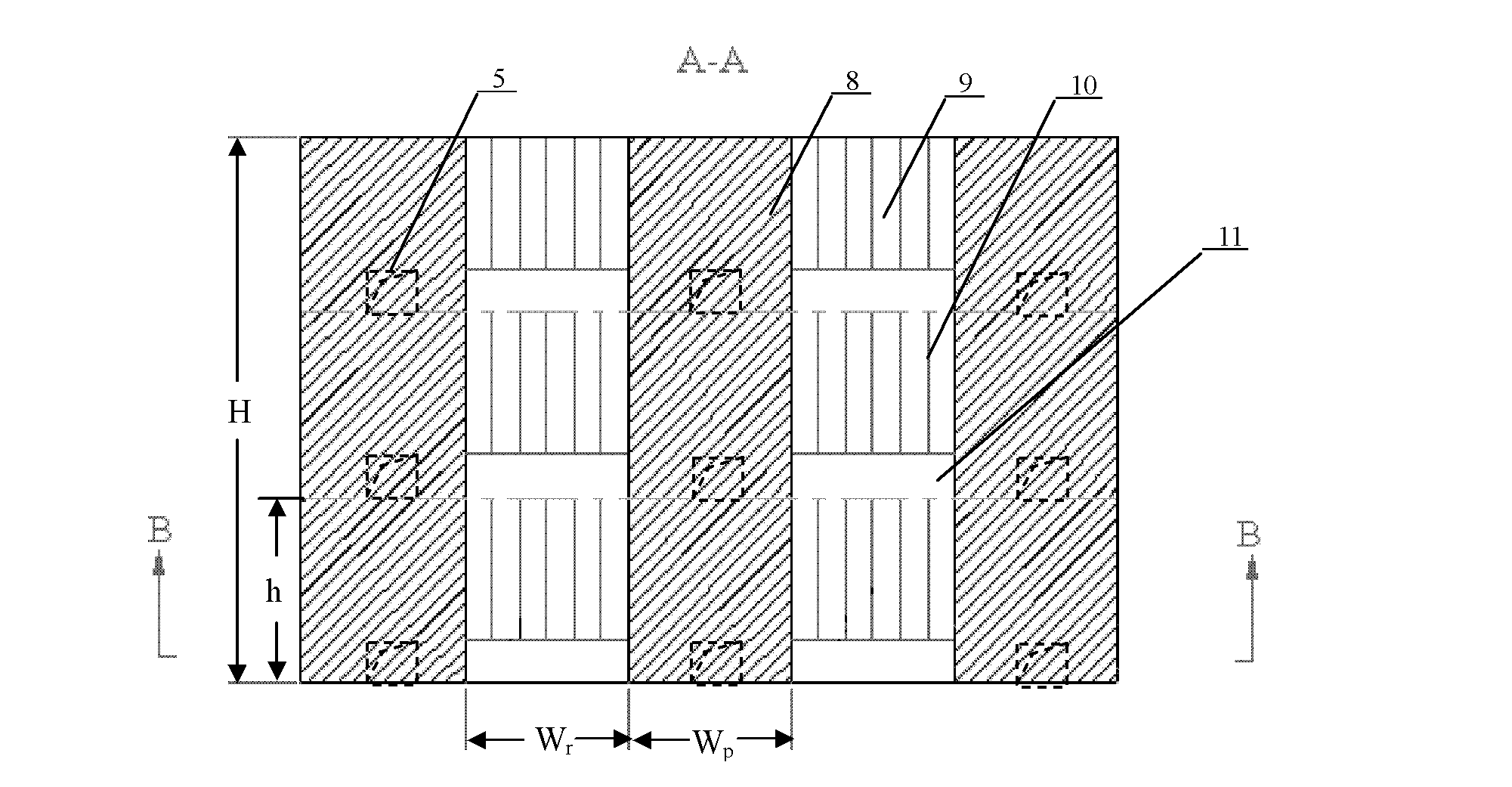

Sublevel open-stop and delayed filling mining method

Inactive | CN102562065A | Improve drilling positioning accuracy | No loss | Underground mining | Surface mining | Tailings dam | Filling materials

The invention relates to a sublevel open-stop and delayed filling mining method. According to the method, cutting crosscuts are wholly undercut along the width of chambers and pillars so that the stope rock drilling space is shared with ore drawing. Vertical parallel blast holes are drilled in the undercutting space; their positioning accuracy is high and their spacing is even, which avoids the high blasting boulder yield caused by drilling sector blast holes in traditional rock drilling crosscuts. During chamber (or pillar) stoping, ore drawing gateways and ore drawing access passages need not be driven in the fill materials of adjacent pillars (or chambers), so safety is improved. At the same time, ores are drawn directly from the undercutting space of the chambers and pillars: the ore drawing space is large, mutual interference is small, ore drawing efficiency is high, and ores are drawn completely without any dead space or secondary ore loss. Artificial sill pillars are constructed, so ore sill plates need not be reserved, the ores are free of primary loss, and the resource recovery rate is high. Barren rocks and ore beneficiation tailings are used for filling gobs, the barren rocks are not taken out of the pits, construction of tailing dams and barren rock yards is reduced, and land occupation is small.

Owner:UNIV OF SCI & TECH BEIJING

Shift register, grid driver and display device

Active | CN102654968A | Simple structure | Less signal wiring | Static indicating devices | Digital storage | Shift register | Display device

The invention relates to the technical field of a display device, and provides a shift register, a grid driver and a display device. The shift register comprises an input programming unit, a latch unit, an output programming unit and an inverted output unit, wherein the input programming unit is connected with an input end of the latch unit and programs for the input end of the latch unit; the latch unit is used for latching an output signal; the non-inverted and inverted output ends of the latch unit are connected through the output programming unit; the output programming unit is connected with an output end of the latch unit and programs for the output end of the latch unit; and the inverted output unit is connected with an inverted output end of the latch unit and used for generating an inverted output signal of the shift register. In the invention, the signal shift output function is realized by use of one latch unit only; the circuit has simple structure and little signal wiring; and a GOA (Gate Driver on Array) circuit formed by cascading occupies a small area, and the occupation of the display area of the display panel can be further reduced, thus the high resolution and narrow border of the display device are realized.

Owner:BOE TECH GRP CO LTD +1

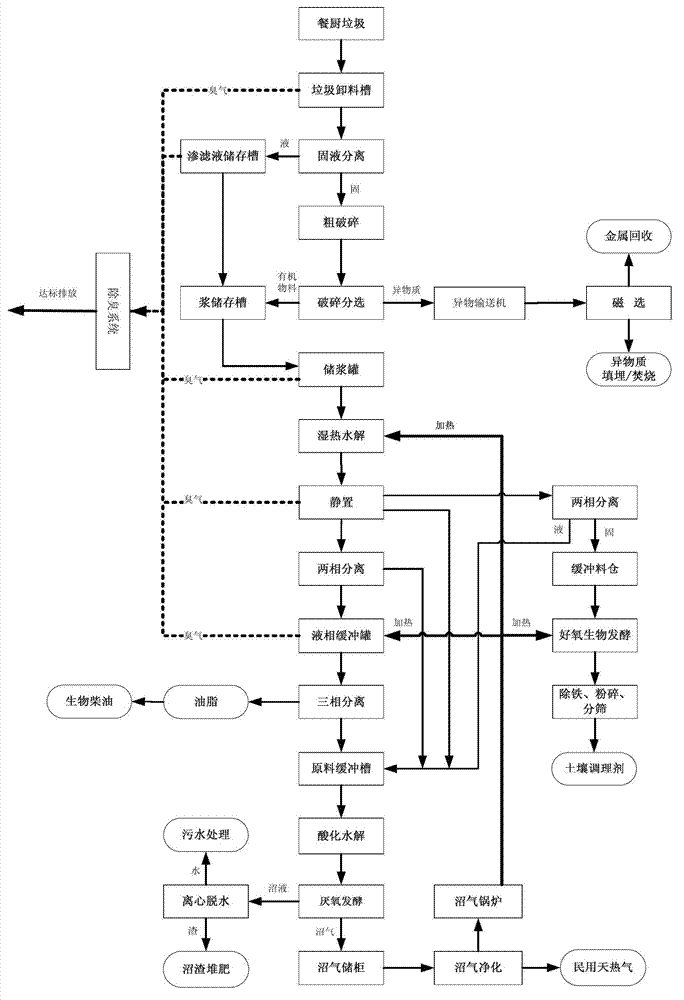

Harmless disposal method of kitchen garbage

Inactive | CN102921704A | Shorten the processing chain | Promote engineering application | Solid waste disposal | Furniture waste recovery | Oil and grease | Economic benefits

The invention relates to a harmless disposal method of kitchen garbage, which comprises the following steps of: (1) pretreatment: after unloading the kitchen garbage, performing solid-liquid separation, rough crushing, crushing and sorting and performing magnetic separation, and recycling metal substances mixed in the kitchen garbage; (2) hydrothermal hydrolysis: after the pretreatment, adopting the hydrothermal hydrolysis to fully inactivate viruses and bacteria in the kitchen garbage, separating out animal and vegetable fatty oil from the kitchen garbage, and hydrolyzing organic materials at the same time; (3) using the fatty oil obtained in the step (2) as an industrial fatty oil material or further performing deep processing to obtain fatty acid methyl ester or biodiesel; mixing and proportioning the pasty organic materials and then conveying into an anaerobic fermentation system; and conveying large granular organic materials into an aerobic biological fermentation device. The method provided by the invention realizes harmless disposal of the kitchen garbage mainly by the pretreatment and the hydrothermal hydrolysis process, increases the conversion rate of biological energy of the kitchen garbage and increases the economic benefit of kitchen garbage recycling products.

Owner:CHINA URBAN CONSTR DESIGN & RES INST CO LTD +2

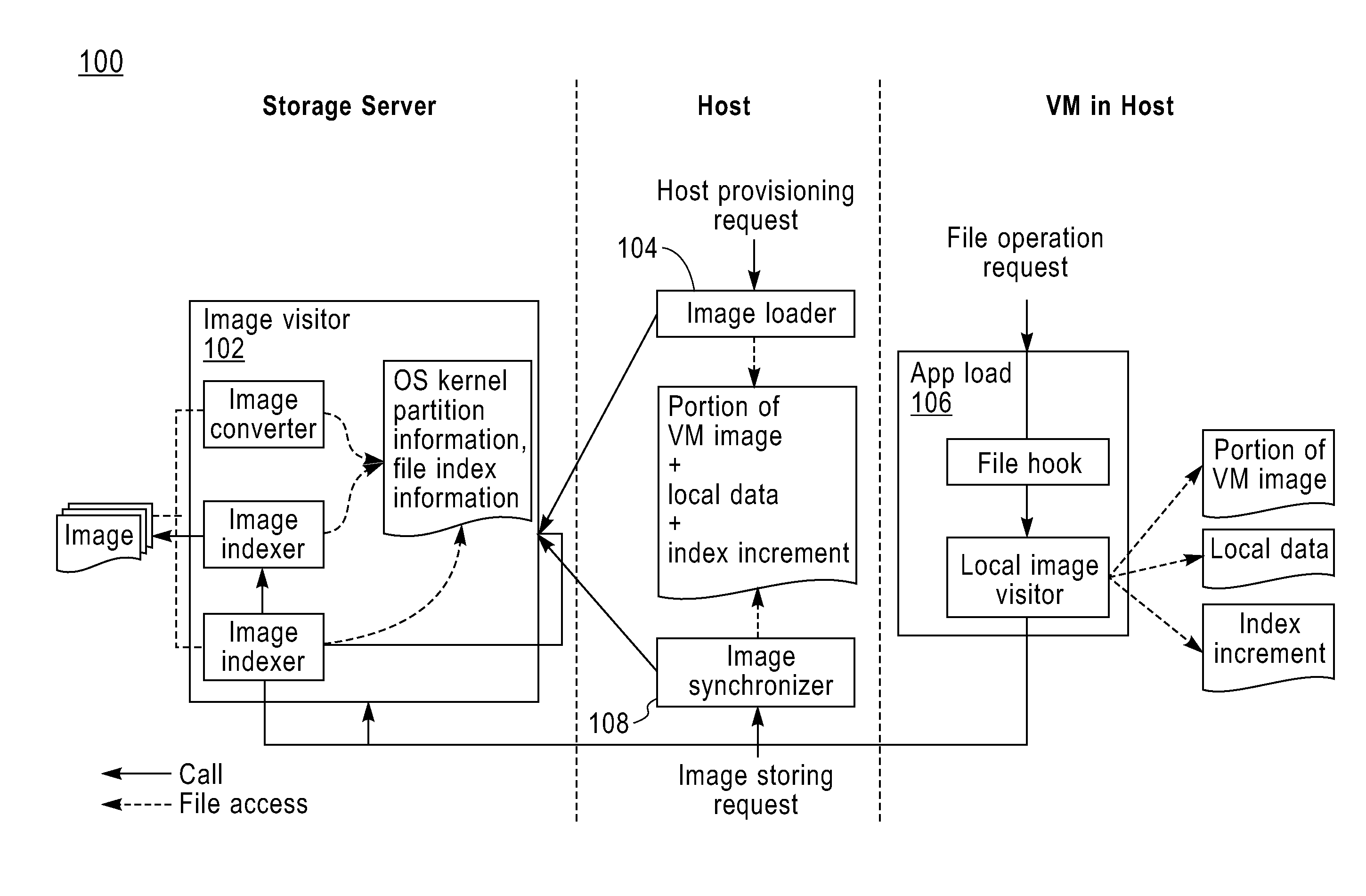

Method and system for running virtual machine image

Inactive | US20110078681A1 | Reduce occupancy | Reduce network bandwidth consumption | Software simulation/interpretation/emulation | Memory systems | Virtual machine | Host machine

Computer-implemented methods and systems for running a virtual machine image in a host machine. One method includes: receiving a virtual machine image provisioning request; sending to a storage server a request to copy a virtual machine image related to the virtual machine image provisioning request; receiving a portion of the virtual machine image; starting a virtual machine in the host machine by running the received portion of the virtual machine image; intercepting a file operation request of a program running in the virtual machine; and acquiring a file related to the file operation request.

Owner:IBM CORP

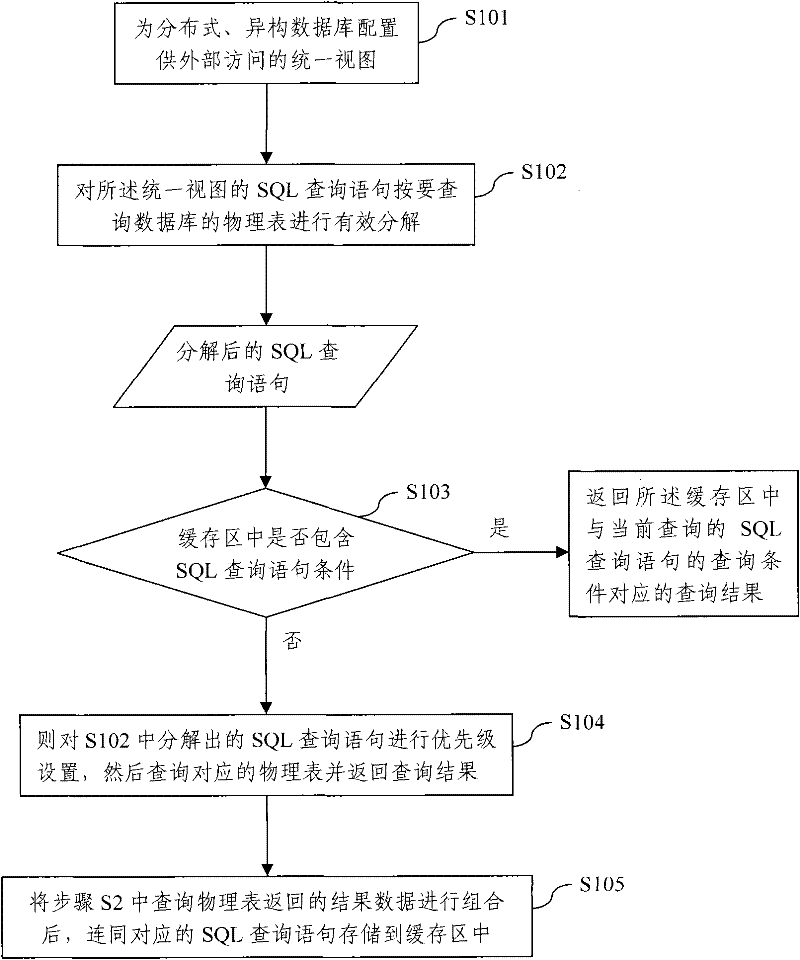

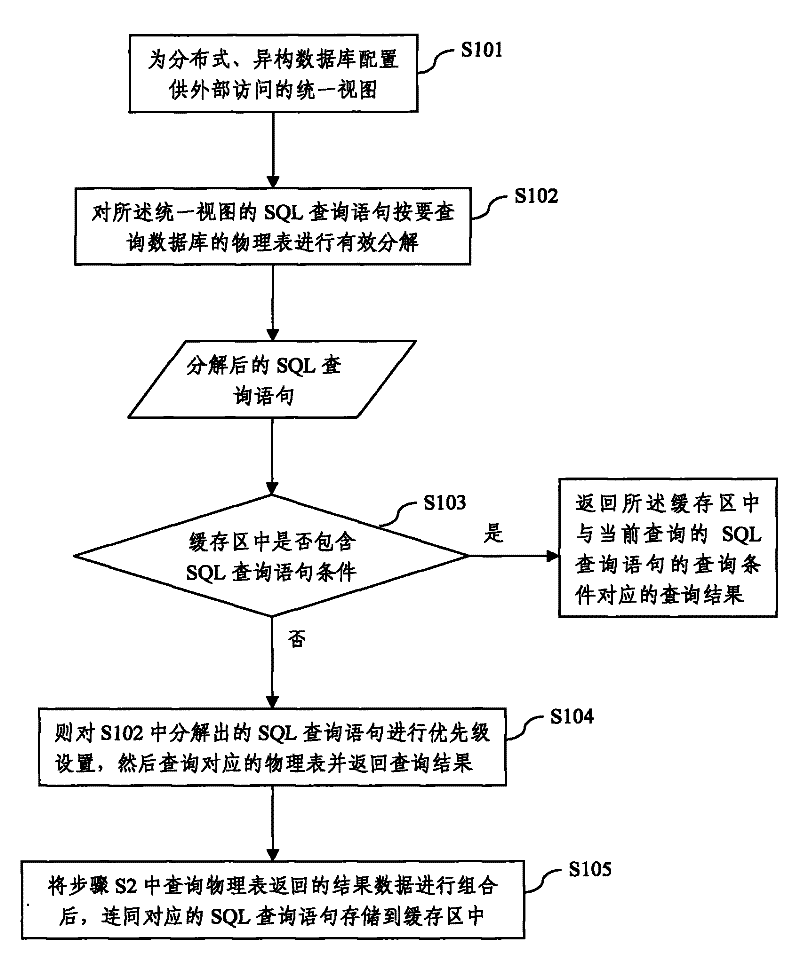

Query optimization method based on unified view of distributed heterogeneous database

Active | CN102163195A | Improve query efficiency | Reduce occupancy | Special data processing applications | Query optimization | Result set

The invention discloses a query optimization method based on a unified view of a distributed heterogeneous database. The method comprises the following steps of: configuring the unified view for the distributed heterogeneous database for external access; effectively decomposing SQL query statements of the unified view according to a physical table of a database to be queried so as to maximally reduce the number of accesses; querying corresponding physical table by the decomposed SQL query statements and returning back resultant data, wherein a cache region is firstly queried, if the cache region contains corresponding resultant data, the result is directly obtained, and priorities are set for the SQL query statements so as to obtain an optimal result set as much as possible in each time while querying the physical table; and combining the resultant data returned after querying the physical table, and storing the combined resultant data and the corresponding SQL query statements into the cache region. The query optimization method based on the unified view of the distributed heterogeneous database improves efficiency for querying the unified view of the distributed heterogeneous database and reduces usage of resource.

Owner:BEIJING TONGTECH CO LTD
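
The cache-first flow described above (check the cache region, otherwise run the decomposed sub-queries against each physical table, combine, and store) can be sketched as below. The class name, the callable-per-table interface and the caller-supplied decomposition are assumptions made for illustration; the abstract does not describe how statements are decomposed or prioritized, so that part is left out.

```python
from typing import Callable, Dict, List, Tuple

Row = Tuple


class CachedUnifiedView:
    """Hypothetical unified view that consults a cache region before the sources."""

    def __init__(self, sources: Dict[str, Callable[[str], List[Row]]]):
        self.sources = sources          # physical table -> function running a sub-query
        self.cache: Dict[str, List[Row]] = {}
        self.backend_calls = 0          # counts accesses to the physical tables

    def query(self, sql: str, sub_queries: Dict[str, str]) -> List[Row]:
        # 1. The cache region is queried first.
        if sql in self.cache:
            return self.cache[sql]
        # 2. Otherwise run each decomposed statement against its physical table.
        combined: List[Row] = []
        for table, sub_sql in sub_queries.items():
            self.backend_calls += 1
            combined.extend(self.sources[table](sub_sql))
        # 3. Store the combined result together with the original statement.
        self.cache[sql] = combined
        return combined
```

A repeated unified-view query is then answered without touching any underlying database, which is the resource saving the abstract refers to.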

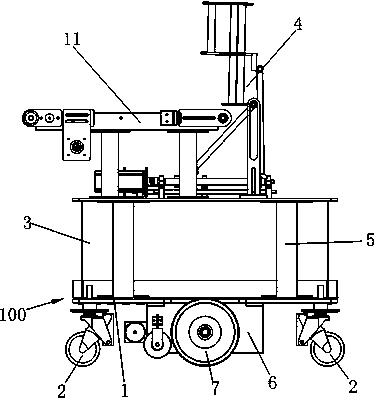

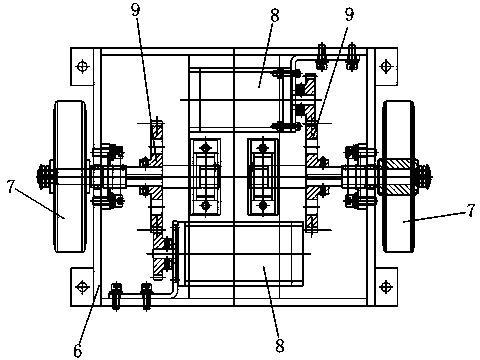

AGV self-propelled unloading transport cart

Inactive | CN103482317A | Easy to operate | Realize in-situ rotation and steering | Control devices for conveyors | Mechanical conveyors | Cart | Engineering

The invention relates to an AGV self-propelled unloading transport cart. The AGV self-propelled unloading transport cart is characterized in that two universal wheels are mounted at each of the front and rear ends of a chassis, a drive wheel box is mounted below the middle of the chassis, two coaxial drive wheels are disposed on two sides of the drive wheel box and are connected with respective independent drive mechanisms respectively, an unloading lifting overturn mechanism is disposed at the top of a frame, and a belt conveyer is disposed on one side of the unloading lifting overturn mechanism. The AGV self-propelled cart is combined with the unloading lifting overturn mechanism and the belt conveyer, the process of manual taking, turning, unloading and transporting is replaced, and accordingly labor intensity is reduced for workers, work efficiency is improved and production cost is reduced. The independently driven drive wheels are disposed between driven wheels at front and rear ends of a cart body, the drive wheels are reversely rotated at the same speed, the transport cart is allowed to rotate and steer in situ, less space is occupied, and materials can be steered, taken and unloaded in narrow passages.

Owner:WUXI HONGYE AUTOMATION ENG

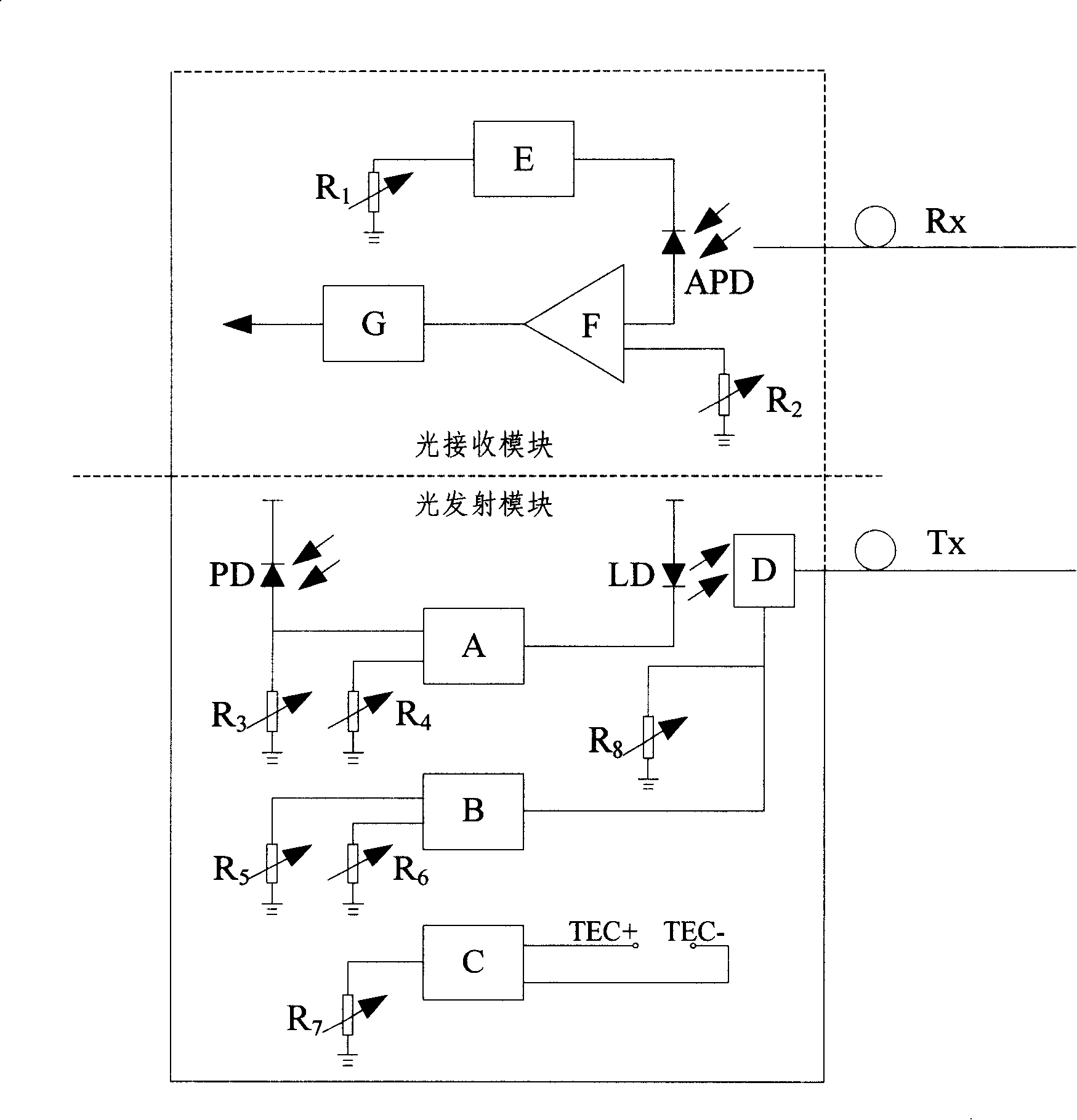

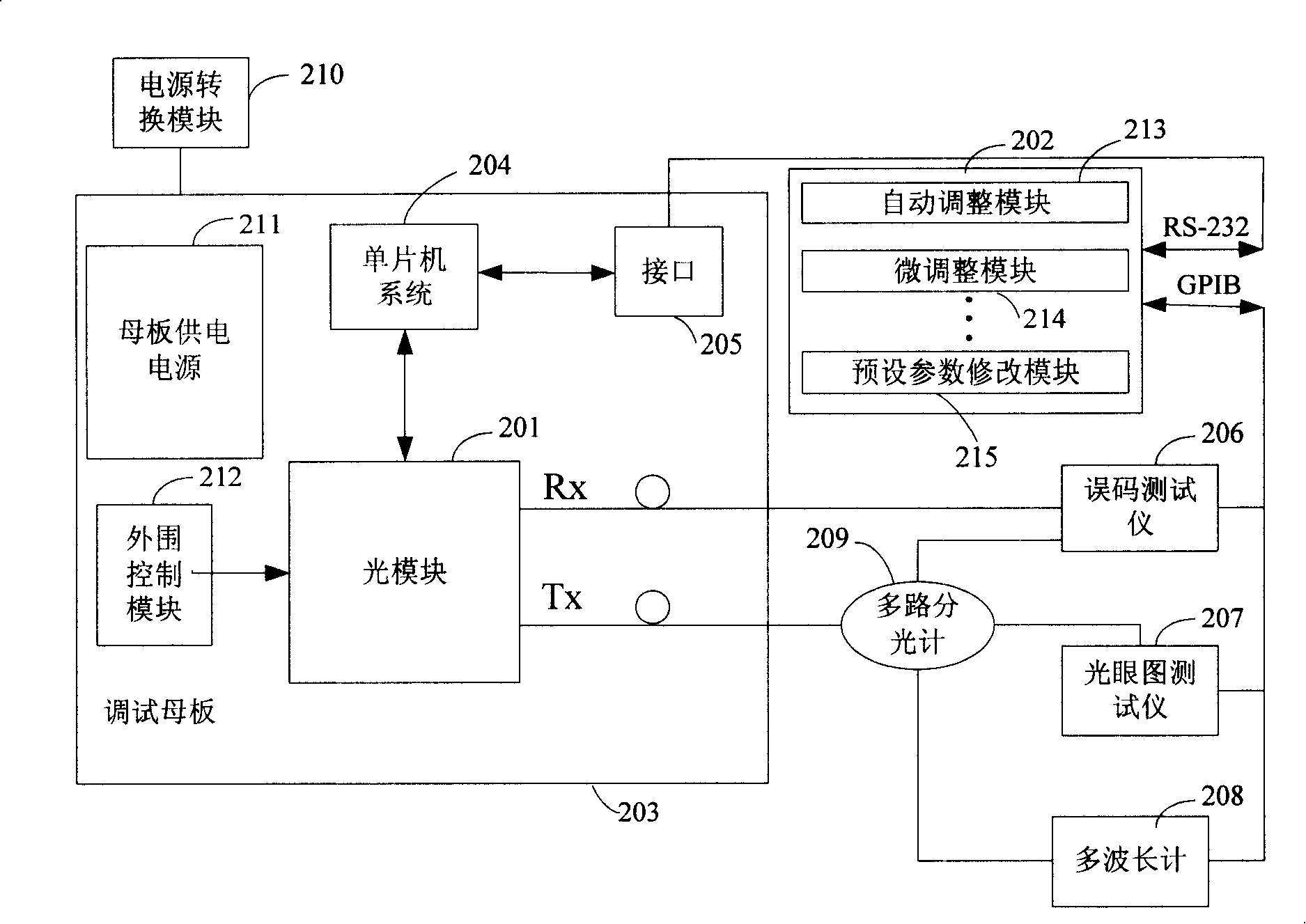

Automatic debugging method and system of optical module

Inactive | CN101179331A | Increase productivity | Reduce the involvement of human factors | Transmission monitoring/testing/fault-measurement systems | Optical Module | Embedded system

The invention provides an automatic debugging system for an optical module. The system includes: an optical module, which includes a digital controller and is used for reporting a performance value or an alarm value and for revising the value of the digital controller corresponding to the address of a key parameter of the optical module according to the delivered digital controller instruction; a main control computer, which downloads a reference value of the optical module, receives the actual value reported by the optical module, compares the two, and, if they are not equal, identifies the digital controller that requires adjustment from the address of the key parameter and delivers an instruction to revise that digital controller's value; and a debugging motherboard, which carries the optical module and is connected to the main control computer through an interface to enable communication between the optical module and the main control computer. The invention also provides a method for automatic debugging of the optical module. Production efficiency of optical module debugging is thereby improved, and quality risks introduced by human factors during debugging are reduced.

Owner:ZTE CORP

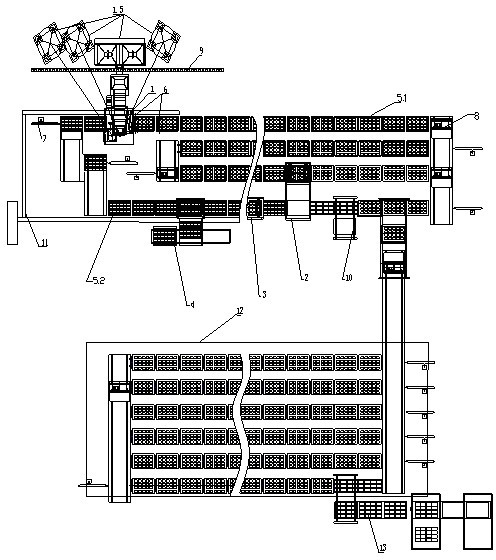

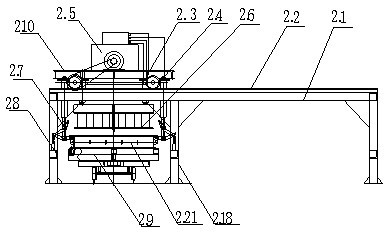

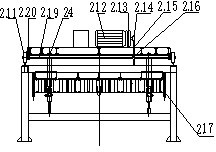

Production line for self-insulation building blocks

Inactive | CN102555052A | Reduce occupancy | Reduce consumption | Discharging arrangement | Ceramic shaping plants | Production line | Brick

The invention relates to a tool used in an insulation brick manufacturing process, in particular to a production line for self-insulation building blocks, which comprises a stirring machine. The production line is characterized in that automatic metering devices are arranged on a feeding port and at the bottom of the stirring machine, a production track capable of automatically conveying molds is disposed below a distributing port of the stirring machine, a stripper is further arranged on the production track and is spaced from the stirring machine by a proper distance, a return track and a track leading into a maintenance area are respectively disposed on two sides of the other end of the stripper, the return track sequentially passes through a cleaning area, an oil injection area and a core setting area, a link plate machine is arranged at the other end of the maintenance area, and a manipulator is disposed at the other end of the link plate machine. The production line has high production efficiency and annual yield, reduces occupied land area and workshop investment, saves operating expense, and can operate continuously.

Owner:张建兴

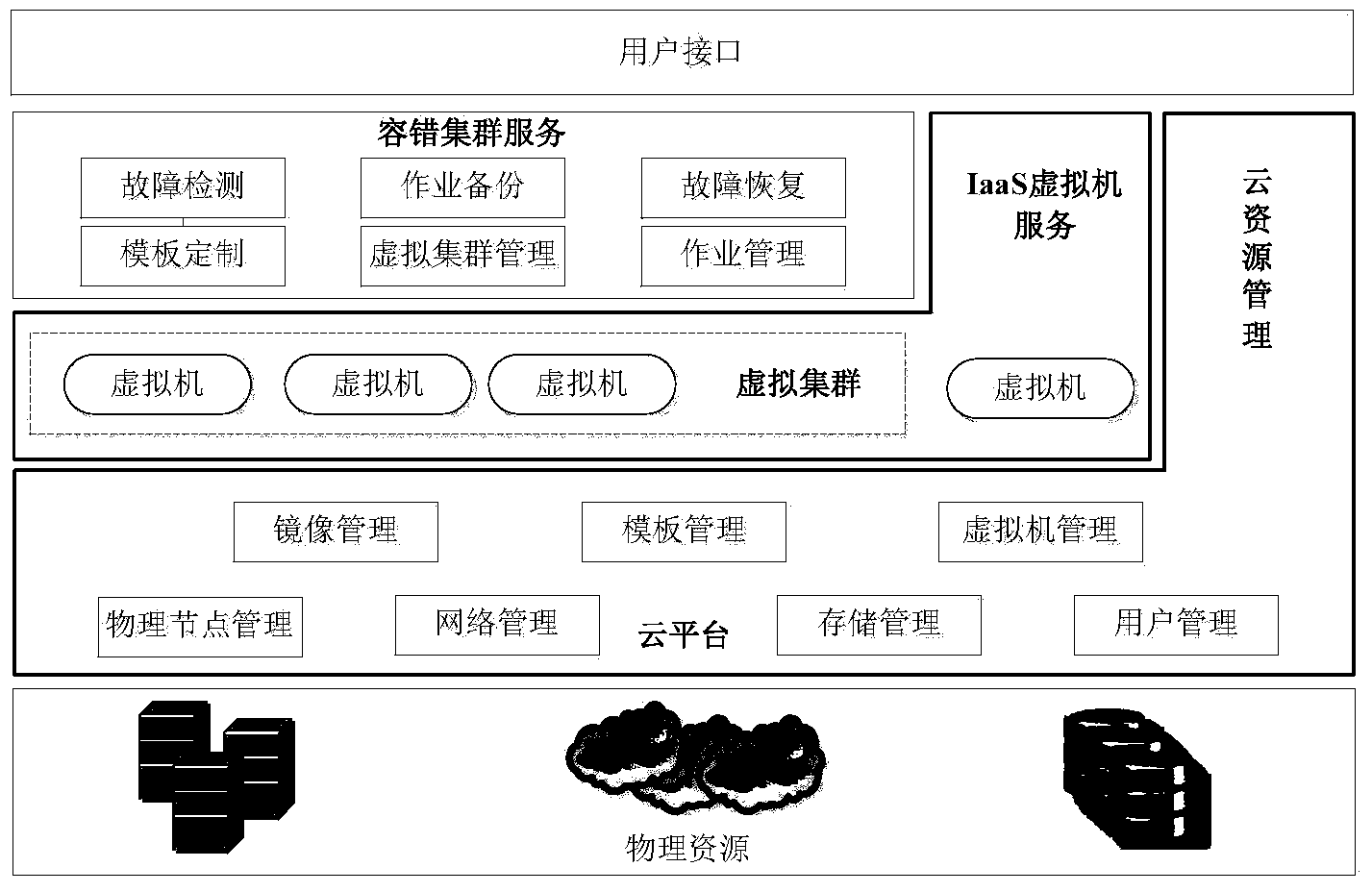

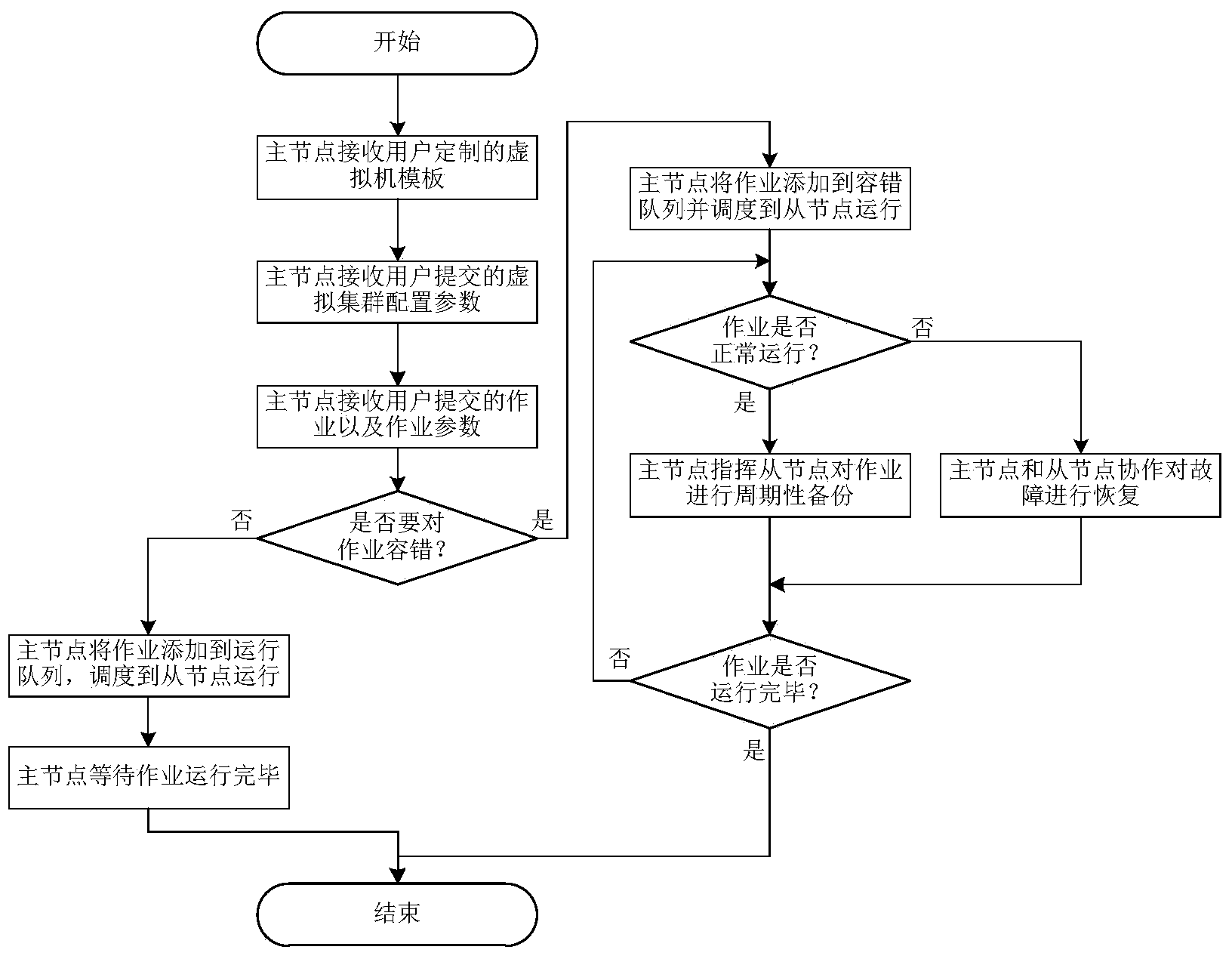

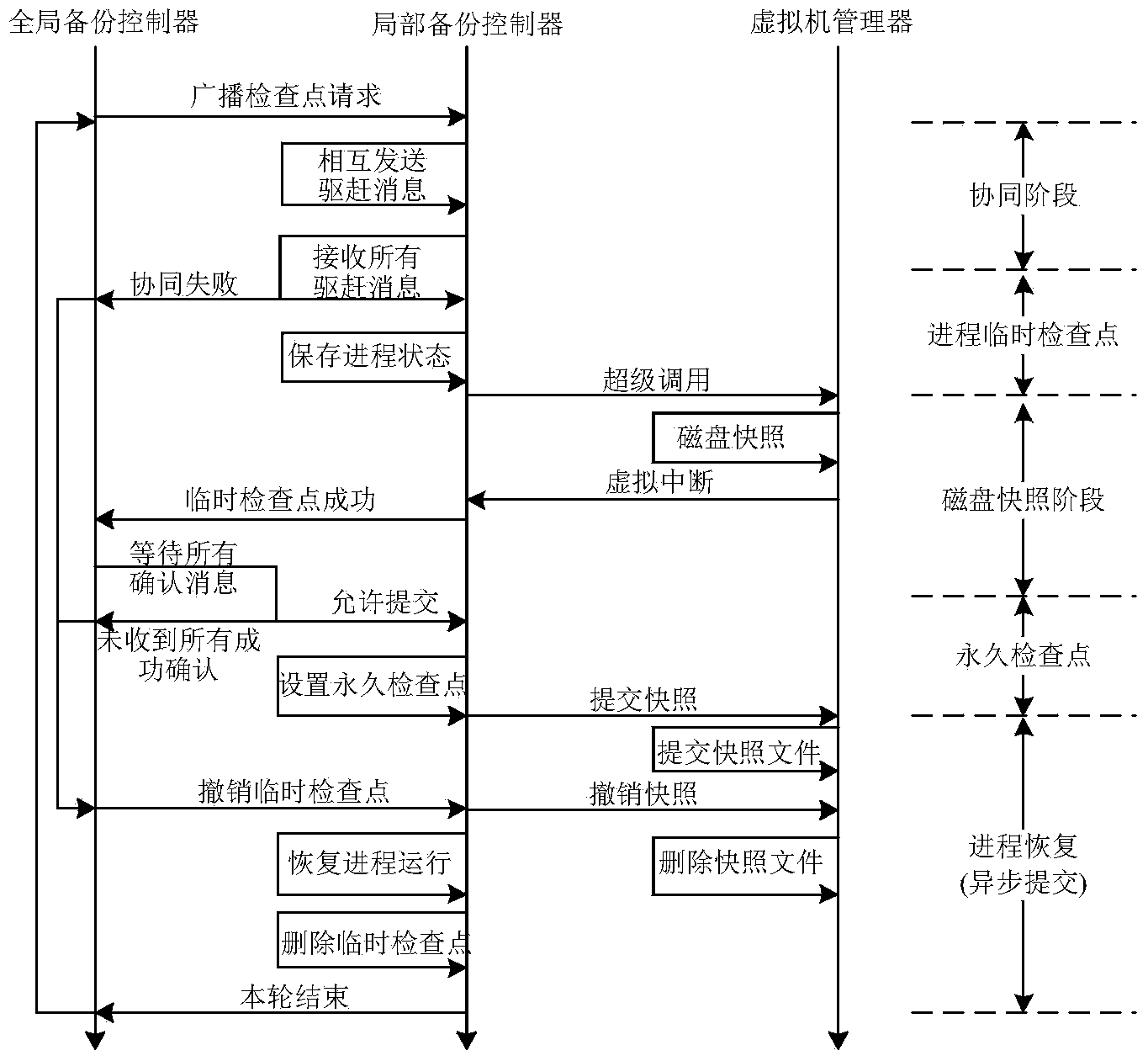

Distributed system multilevel fault tolerance method under cloud environment

Active | CN103778031A | Easy to use interface | Lower the threshold | Redundant operation error correction | Virtualization | Virtual machine

Owner:HUAZHONG UNIV OF SCI & TECH

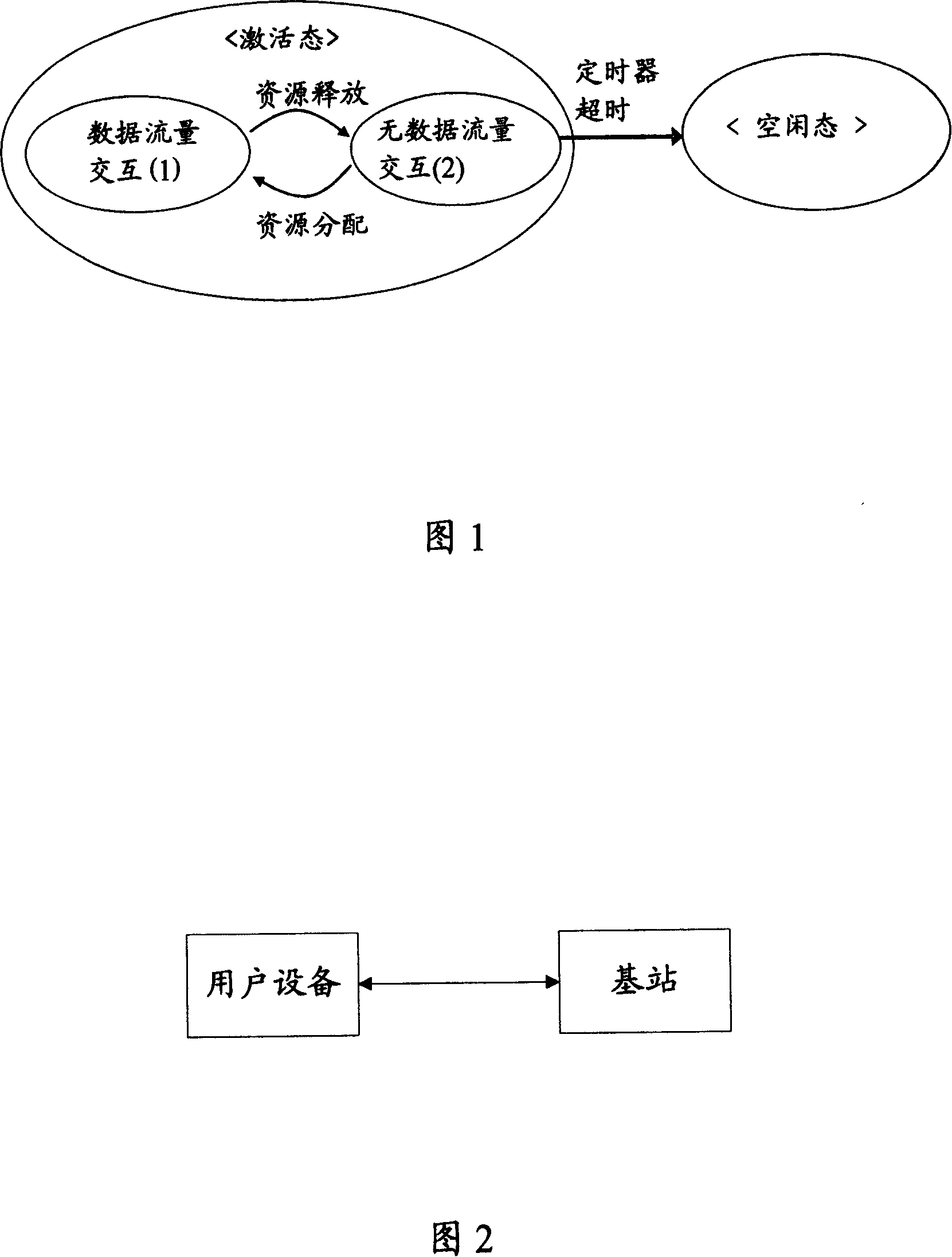

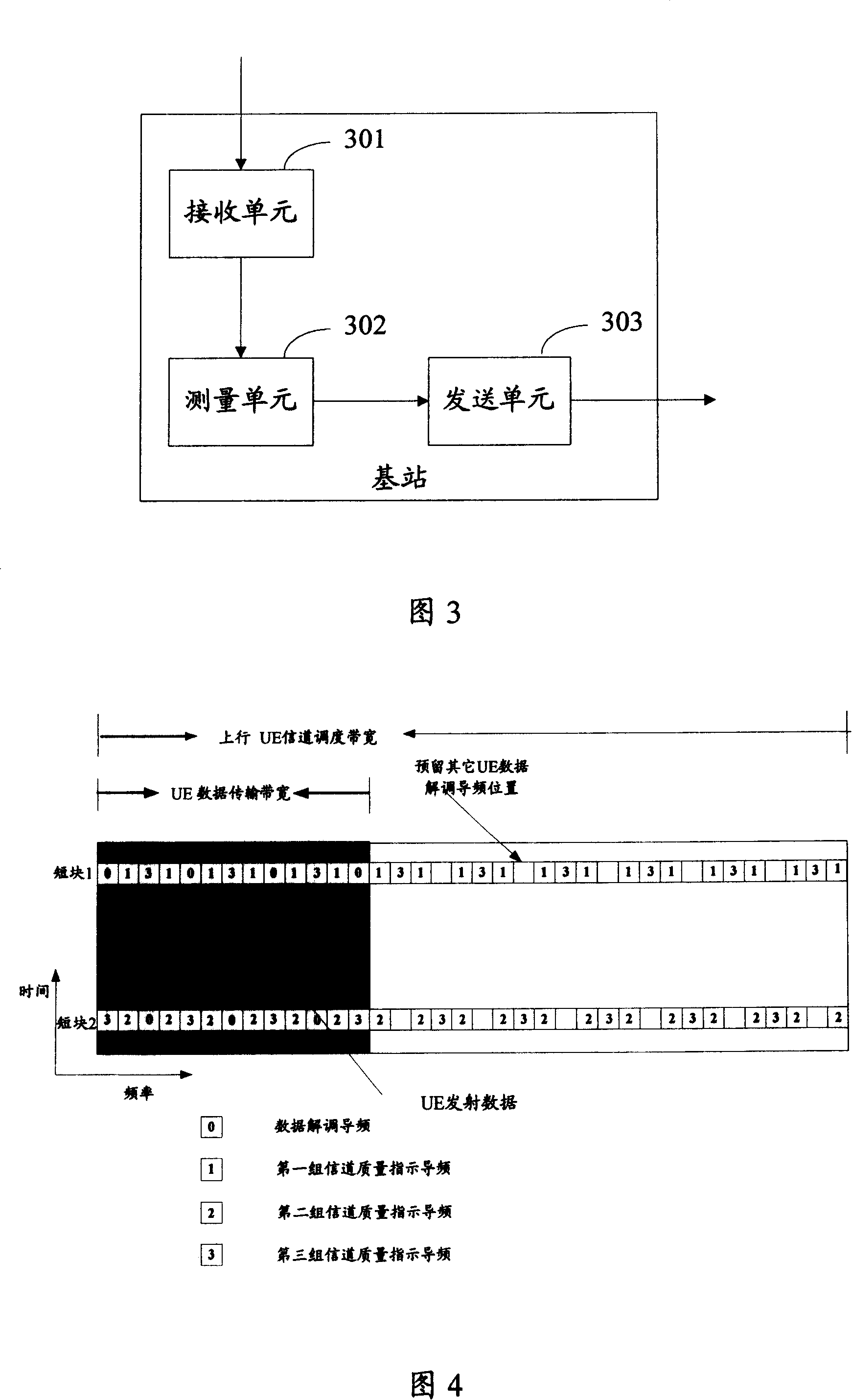

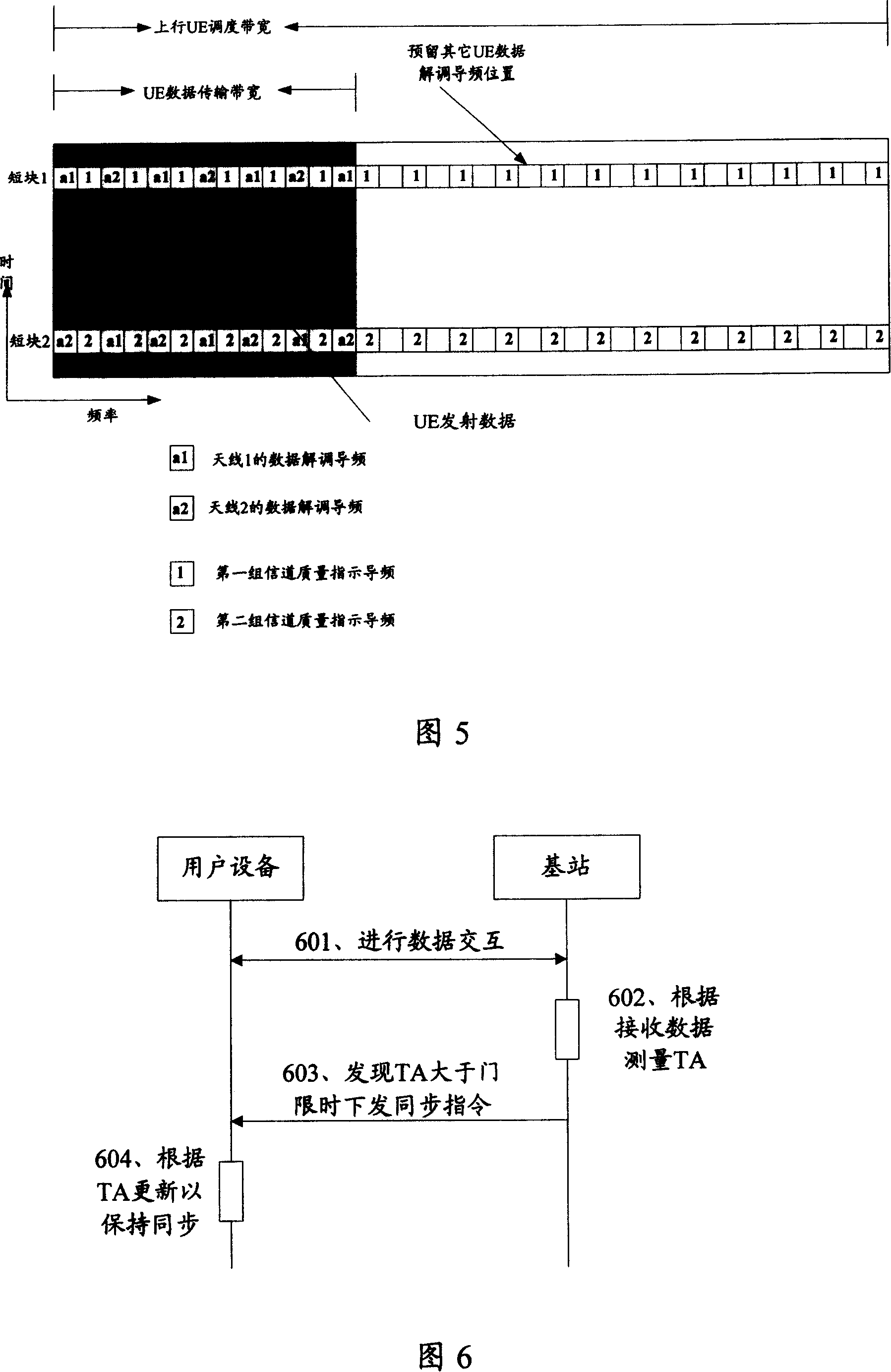

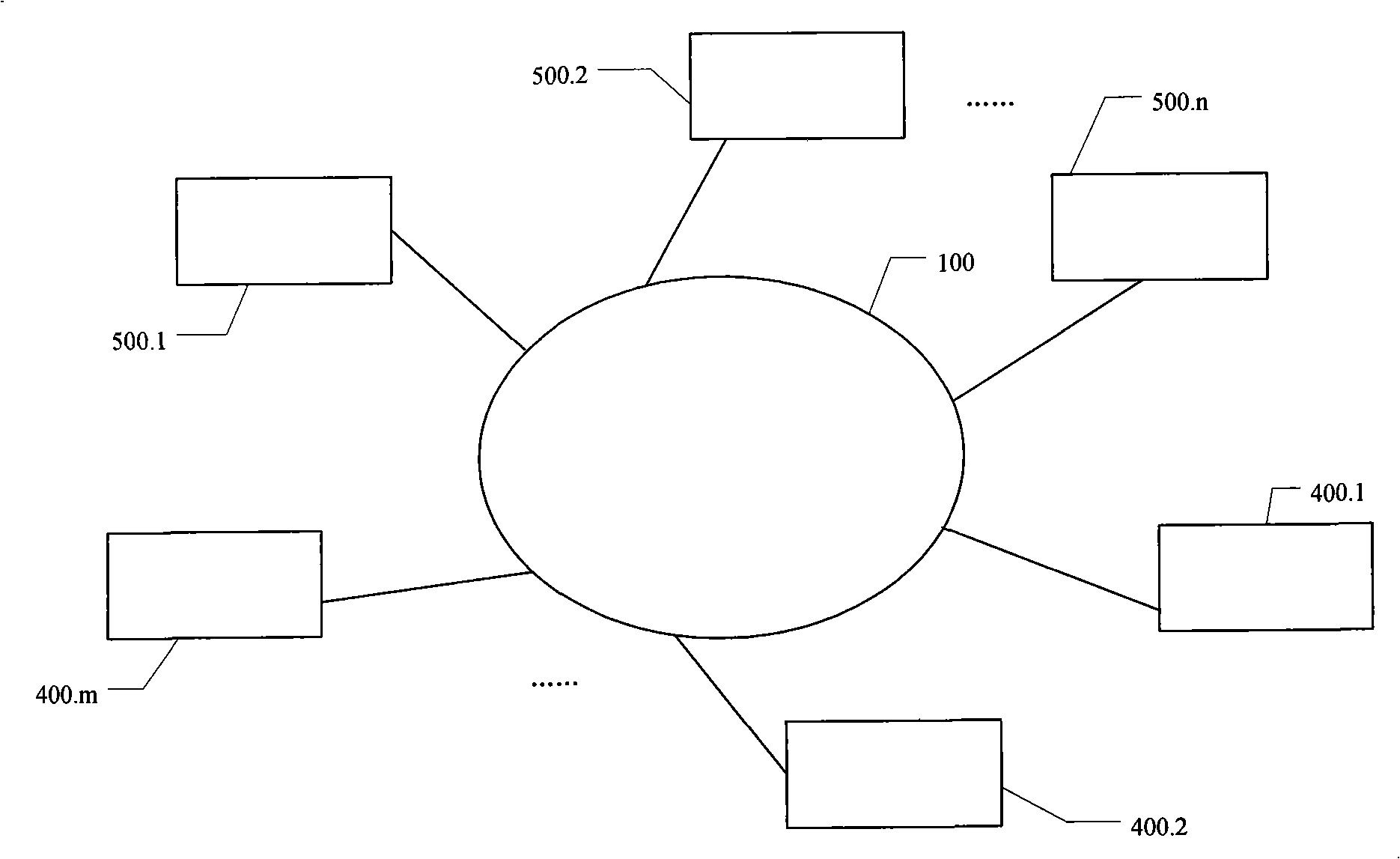

Method and system for remaining ascending synchronization

Active | CN101154984A | Avoid occupying | Reduce occupancy | Synchronisation arrangement | Radio transmission for post communication | Telecommunications | Data traffic

The present invention discloses a method and a system for keeping uplink synchronization in a long-term evolution system, which keep the UE and the Node B uplink-synchronized in a TDD LTE system while reducing system resource overhead as much as possible. In the invention, the Node B receives the uplink information transmitted by the UE; the Node B determines the timing advance (TA) from the received uplink information and transmits TA adjusting information to the UE when the TA is larger than a threshold value; and the UE updates its local uplink TA according to the TA adjusting information in order to keep uplink synchronization. In addition, a UE in the no-data-traffic interaction state transmits an uplink pilot signal to the Node B on the assigned time-frequency resource using the existing uplink resource. The system comprises the UE and the Node B connected with the UE, wherein the Node B includes a receiving unit, a measuring unit and a transmitting unit.

Owner:DATANG MOBILE COMM EQUIP CO LTD

Data disaster tolerance system suitable for WAN

Inactive | CN101316274A | To achieve the purpose of disaster recovery | Reduce data volume | Transmission | Redundant operation error correction | Computer module | Geolocation

The invention discloses a data disaster tolerance system suitable for a wide area network. It belongs to the technical field of computer information storage and addresses the problem that existing data disaster tolerance systems take into account neither the effect of data transmission links in a wide area network nor the large amount of redundant data storage space required. The system comprises M local storage clients connected to the wide area network and N storage servers in different geographical locations. Each local storage client consists of a web manager, a snapshot module, a mapping file redundant array module, a compression module, a decompression module, an encryption module, a decryption module, a backup distribution module, and a recovery and integration module. Each storage server consists of a failure detection module, a distributed arbitration module, a recovery module, a distributed copy service module, and a backup and recovery service module. The system combines disaster tolerance coding with copying, which reduces the amount of redundant data and uses storage space effectively. In addition, data to be transmitted is compressed and encrypted, which improves the safety and reliability of data in network transmission, uses network bandwidth effectively, and achieves an excellent disaster tolerance effect.

Owner:HUAZHONG UNIV OF SCI & TECH

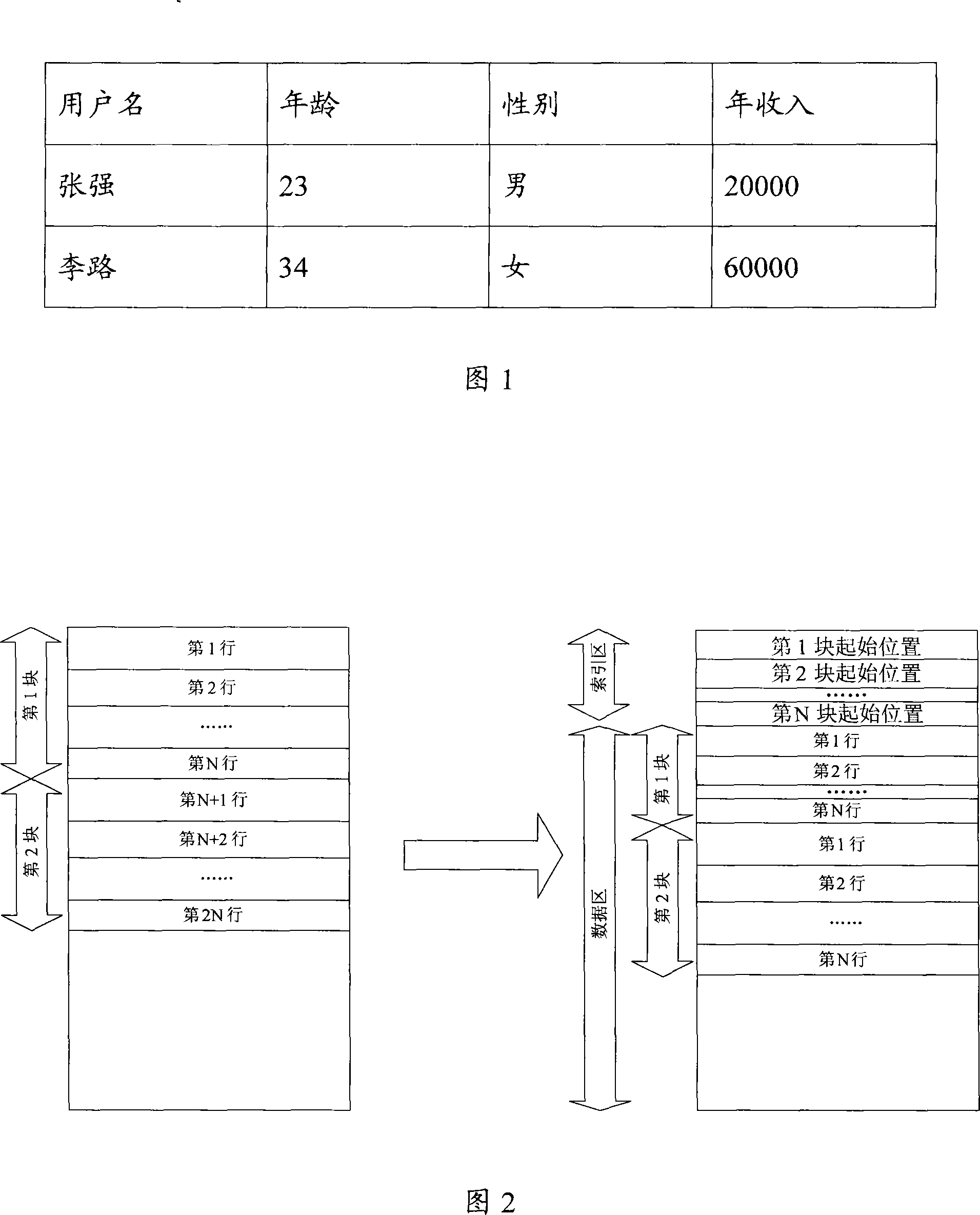

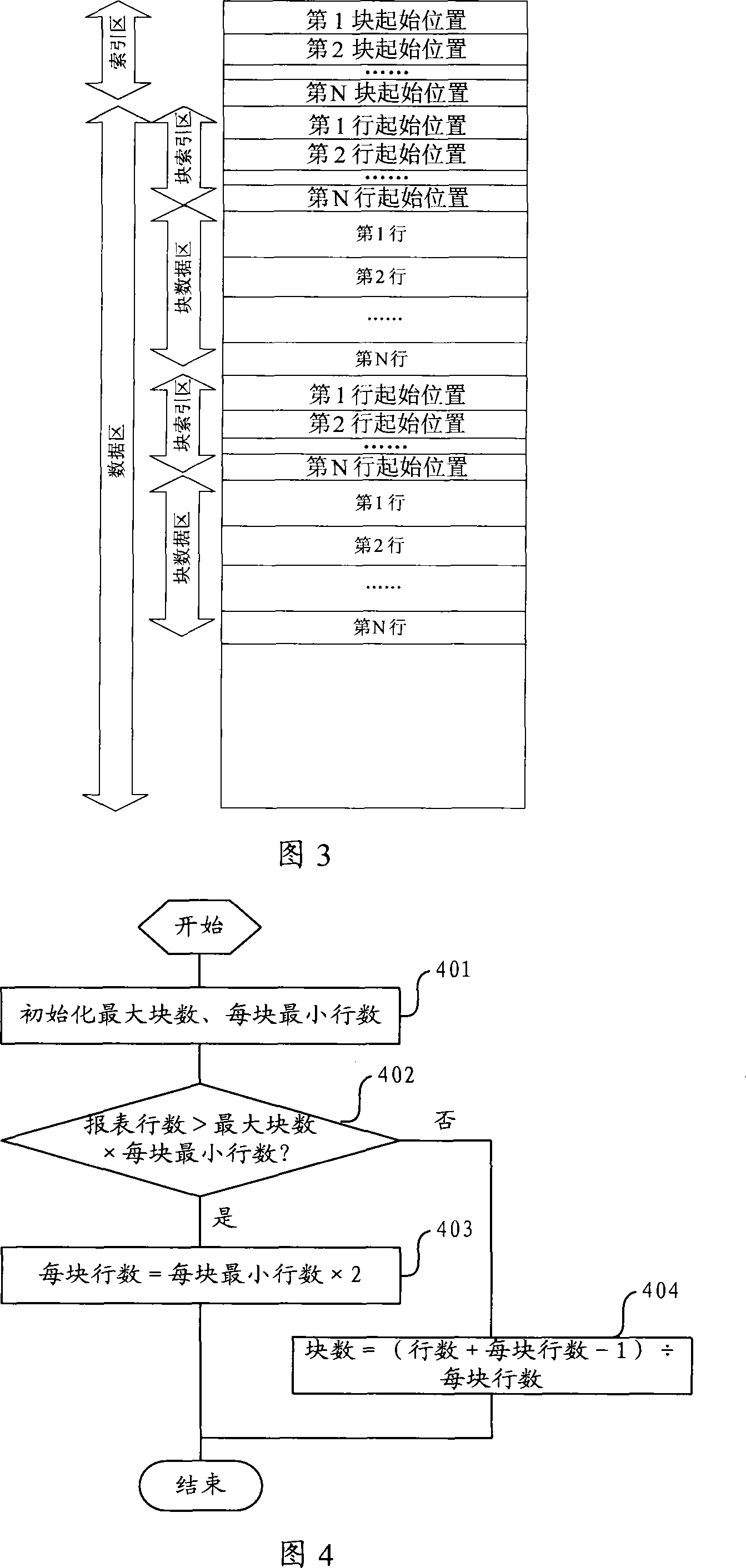

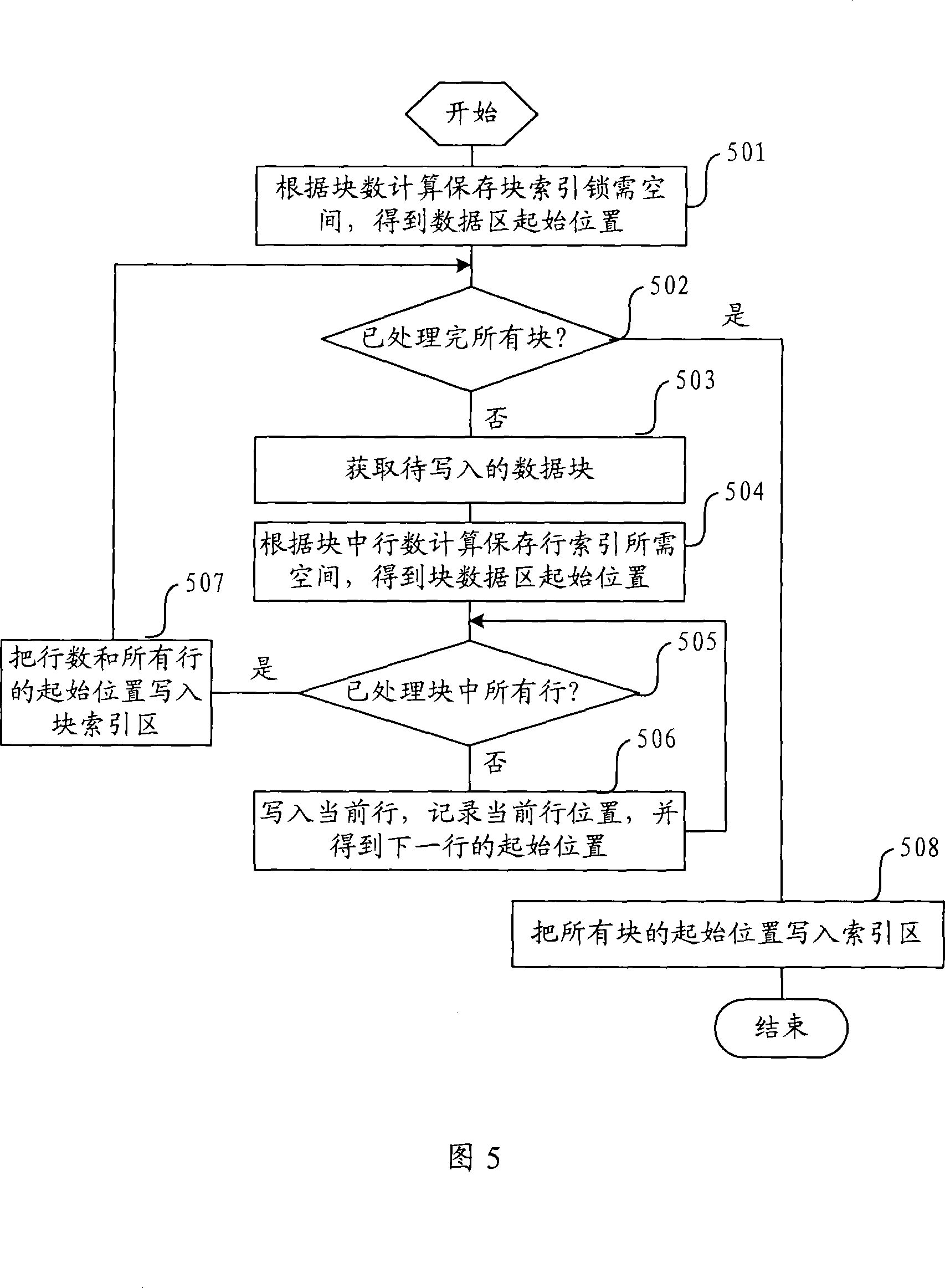

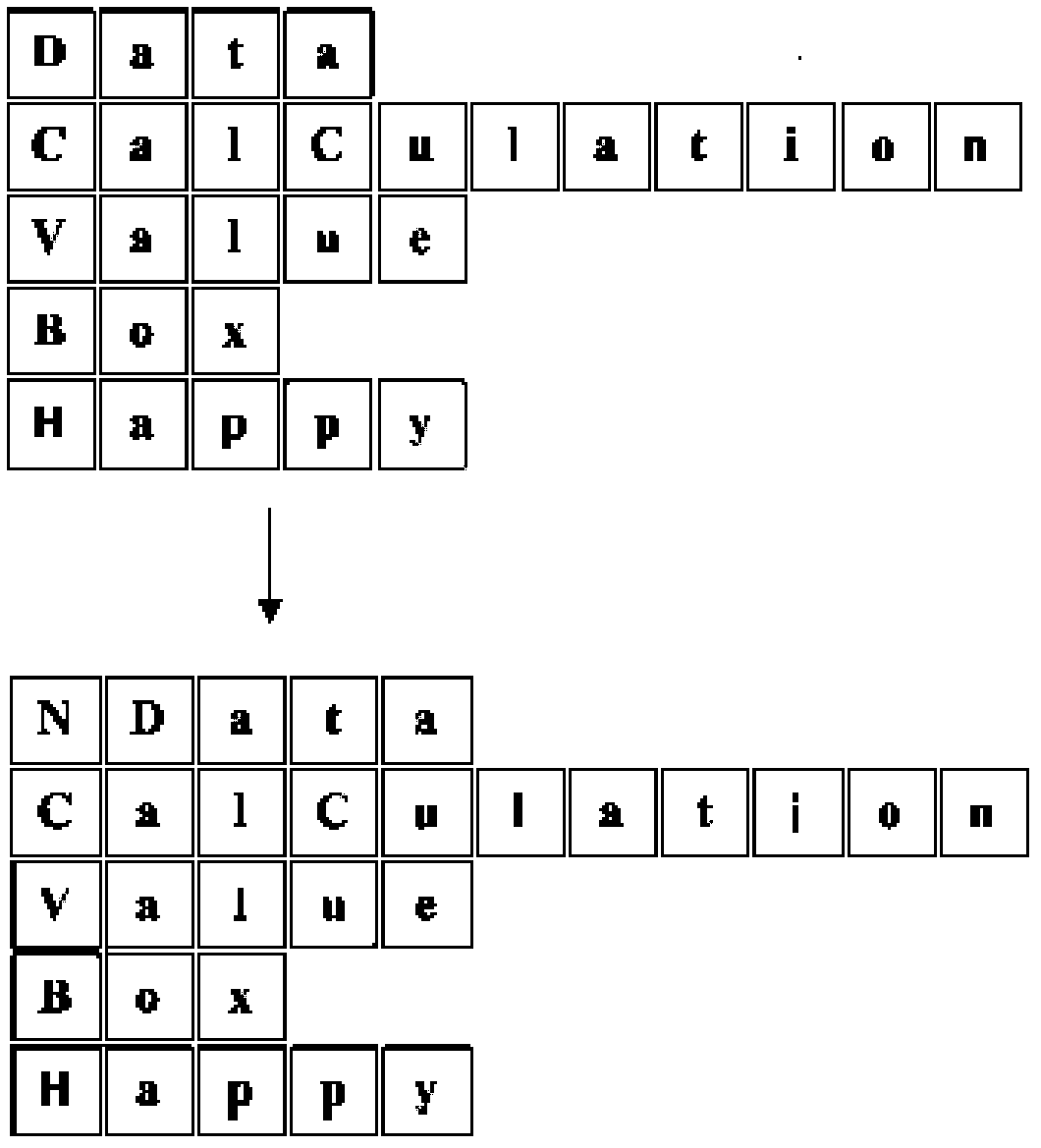

Data cache method and system

Active | CN101178693A | Quick search | Reduce occupancy | Memory addressing/allocation/relocation | Special data processing applications | Spatial partition | Database

The invention discloses a data caching method and system that resolve the conflict between memory consumption and access speed in existing report caching methods. The method comprises: dividing the storage space into an index region and a data region; partitioning the data into blocks; according to the partitioning result, writing each block of data sequentially into the data region of the storage space and writing the start position of each block sequentially into the index region; and reading the data according to the partitioning result and the start position of each block. Because the data is read from the hard disk on every access, the caching technique reduces memory occupancy, greatly saves memory space, and improves the concurrent processing ability of the system under high concurrency and large data volumes. In addition, reading reports from the hard disk through the index greatly improves file reading efficiency. The method therefore strikes a balance between memory space and access speed.
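The index-region and data-region layout described in this abstract can be sketched as follows. This is an illustration under stated assumptions rather than the patented format: the fixed 8-byte little-endian offsets, the leading block count, the trailing end offset, and the helper names `partition`, `write_cache` and `read_block` are all hypothetical. An in-memory `io.BytesIO` stands in for the disk file.

```python
import io
import struct

OFFSET_FMT = "<Q"  # assumed: one 8-byte little-endian integer per index entry
OFFSET_SIZE = struct.calcsize(OFFSET_FMT)


def partition(data: bytes, block_size: int) -> list:
    """Split data into fixed-size blocks (the last block may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def write_cache(store, blocks) -> None:
    """Write the index region, then the data region.

    Assumed layout: [block count][start offset of each block][end offset][blocks...].
    """
    n = len(blocks)
    data_start = OFFSET_SIZE * (n + 2)  # size of the index region
    store.seek(0)
    store.write(struct.pack(OFFSET_FMT, n))
    pos = data_start
    for block in blocks:
        store.write(struct.pack(OFFSET_FMT, pos))  # start position of this block
        pos += len(block)
    store.write(struct.pack(OFFSET_FMT, pos))  # end of the last block
    for block in blocks:
        store.write(block)  # data region, written sequentially


def read_block(store, i: int) -> bytes:
    """Read block i using only its index entry, without loading other blocks."""
    store.seek(0)
    (n,) = struct.unpack(OFFSET_FMT, store.read(OFFSET_SIZE))
    if not 0 <= i < n:
        raise IndexError(i)
    store.seek(OFFSET_SIZE * (1 + i))
    start, end = struct.unpack("<QQ", store.read(2 * OFFSET_SIZE))
    store.seek(start)
    return store.read(end - start)
```

With this layout a reader touches two index entries and one block per request, so memory use is bounded by the block size rather than by the size of the whole report.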

Owner:NEUSOFT CORP

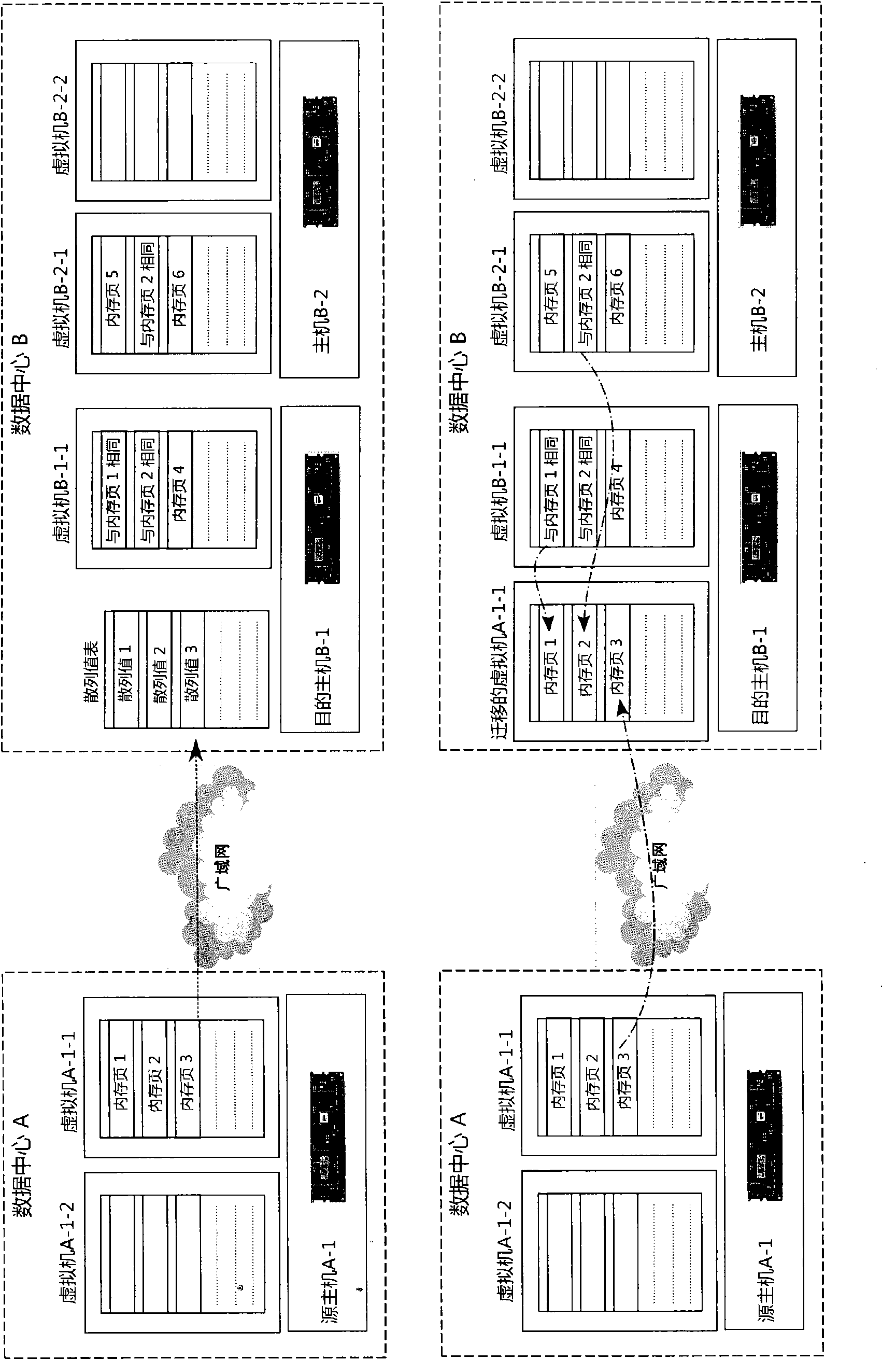

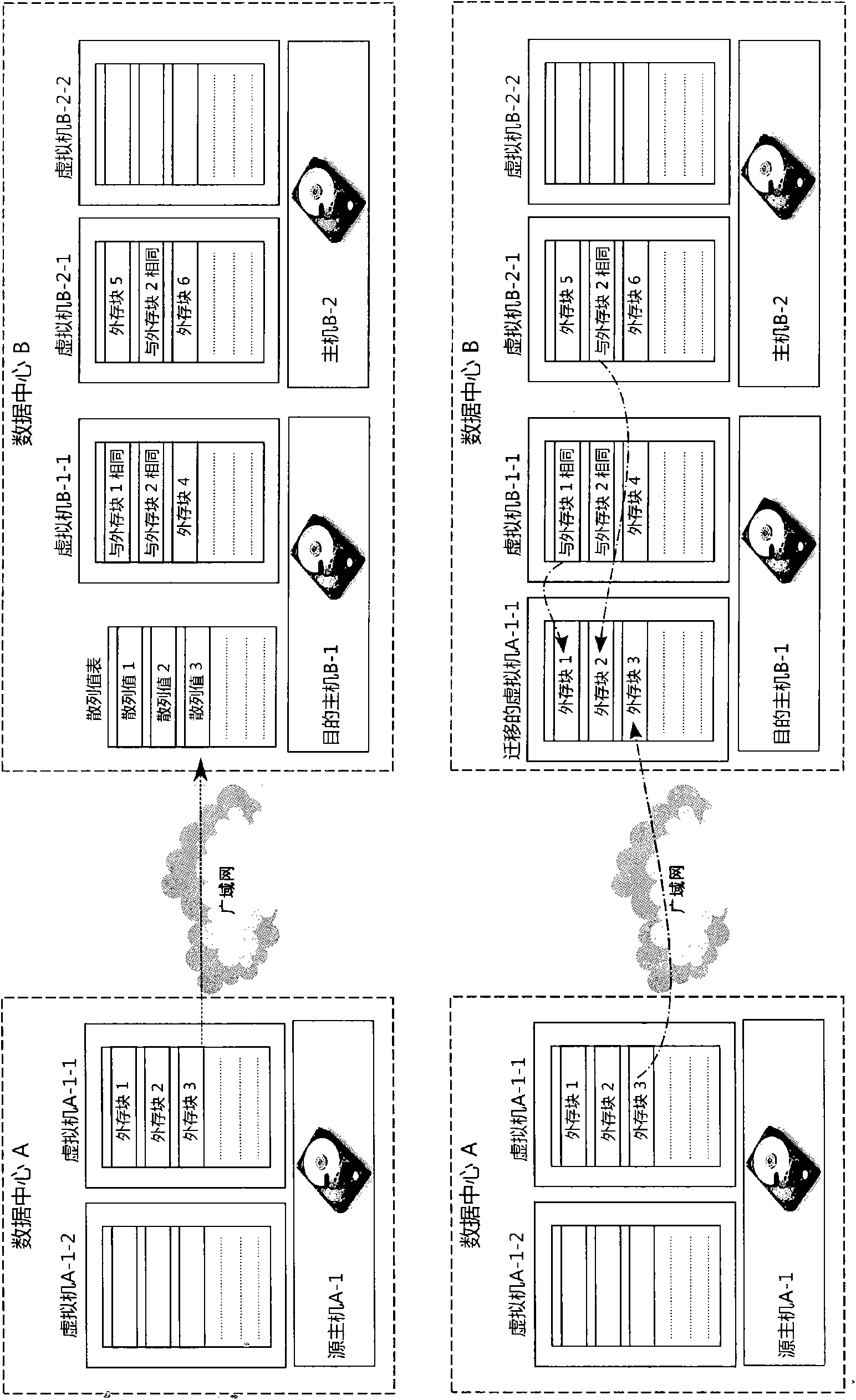

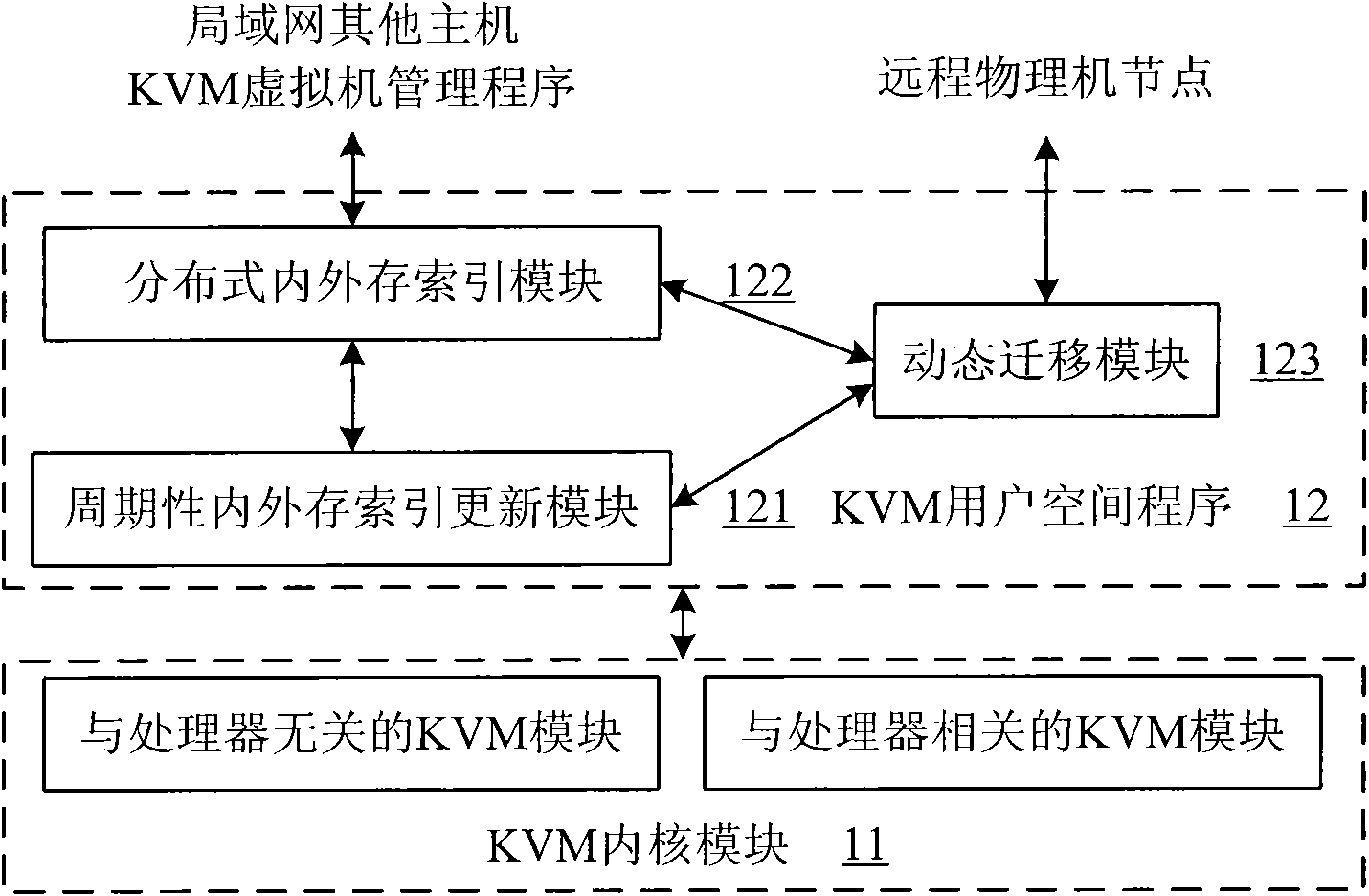

Method and system for dynamic migration of WAN virtual machines

Active | CN102455942A | Improve migration efficiency | Save network bandwidth | Program initiation/switching | Transmission | Hosting environment | Virtual machine

The invention provides a method for dynamic migration of WAN (Wide Area Network) virtual machines. The method dynamically migrates one or more virtual machines from a source host to a destination host in a wide area network. The method comprises the following steps: establishing a distributed index of the internal memory and external memory data of all virtual machines running in the data center to which the destination host belongs, thus forming a destination host environment; during migration, looking up the internal memory and external memory data of the virtual machines on the source host in the destination host environment, and transmitting through the wide area network only the internal memory and external memory data not present in the destination host environment; and, during transmission, having the virtual machine manager of the source host mark dirty blocks of internal memory and external memory and iteratively send them until a stop condition is reached, at which point transmission stops, the virtual machines on the source host enter a stop stage, and the destination host starts the virtual machines. The method reduces the network bandwidth occupied by virtual machine migration in the wide area network and shortens the time of dynamic migration.
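The two ideas in this abstract, sending only blocks missing from the destination environment and iterating over dirty blocks until a stop condition, can be sketched as follows. This is a simplified model, not the patented method: the SHA-256 content index, the `max_dirty` stop condition, the pre-recorded `dirty_rounds` input, and all function names are assumptions, and the cost of sending a hash reference for an already-present block is ignored.

```python
import hashlib


def digest(block: bytes) -> str:
    return hashlib.sha256(block).hexdigest()


def build_destination_index(destination_blocks) -> set:
    """Content hashes of blocks already held in the destination data center."""
    return {digest(b) for b in destination_blocks}


def send_blocks(blocks: dict, dest_index: set) -> int:
    """Send blocks over the WAN; return the number of bytes sent in full.

    Blocks whose content is already indexed at the destination are skipped.
    """
    sent = 0
    for block in blocks.values():
        h = digest(block)
        if h not in dest_index:
            sent += len(block)
            dest_index.add(h)  # the destination now holds this content
    return sent


def migrate(source_blocks: dict, dest_index: set, dirty_rounds, max_dirty: int = 1) -> int:
    """Pre-copy migration sketch.

    dirty_rounds: one dict {block_id: new_bytes} per round, standing in for the
    blocks the source hypervisor marked dirty during the previous transmission.
    Assumed stop condition: the dirty set has at most max_dirty blocks.
    """
    sent = send_blocks(source_blocks, dest_index)
    for dirty in dirty_rounds:
        if len(dirty) <= max_dirty:
            # Stop condition met: pause the VM and send the final dirty blocks.
            return sent + send_blocks(dirty, dest_index)
        sent += send_blocks(dirty, dest_index)
    return sent
```

Indexing by content hash rather than by block address is what lets a block be skipped when any virtual machine in the destination data center already holds identical data.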

Owner:CHINA STANDARD SOFTWARE

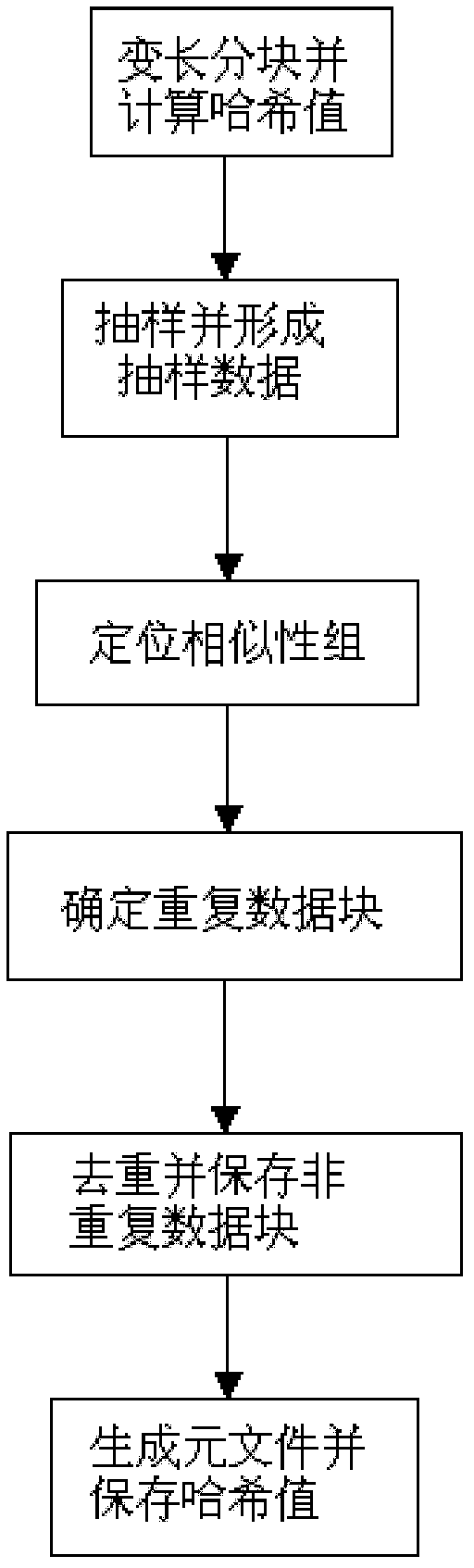

Data de-duplication method

Inactive | CN102323958A | Guaranteed occupancy | Reduce occupancy | Special data processing applications | Data mining | Data library

The invention discloses a data de-duplication method, which comprises the following steps: writing a file, dividing the file into a plurality of variable-length data blocks, and calculating hash values of the data blocks; sampling the hash values to form the sampling data of the file; locating a similarity group for the file by comparing its sampling data with the sampling data of existing files; determining duplicated data blocks by comparing the hash values of the file with the hash values of the similarity group in a meta database; de-duplicating and storing the non-duplicated data blocks; and generating a meta file and storing the hash values of the non-duplicated data blocks in the meta database. With this method, the share of system resources used by the de-duplication operation can be adjusted dynamically, the performance of the in-line service is guaranteed preferentially, and the impact on the in-line service is minimized. The method is characterized by high reliability, good stability and a higher de-duplication rate.
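The steps listed in this abstract can be sketched as follows. This is a toy model and not the patented algorithm: the boundary rule in `chunk` is a stand-in for a real rolling hash, sampling by taking the `k` smallest hashes is an assumed scheme, and the `DedupStore` class with its in-memory dictionaries is a hypothetical substitute for the meta database and meta files.

```python
import hashlib


def chunk(data: bytes, mask: int = 0x0F, min_size: int = 2, max_size: int = 64) -> list:
    """Split data into variable-length blocks using a toy content-based boundary rule."""
    blocks, start, acc = [], 0, 0
    for i, byte in enumerate(data):
        acc = (acc * 31 + byte) & 0xFFFFFFFF
        size = i - start + 1
        if (size >= min_size and (acc & mask) == 0) or size >= max_size:
            blocks.append(data[start:i + 1])
            start, acc = i + 1, 0
    if start < len(data):
        blocks.append(data[start:])  # trailing remainder
    return blocks


def sample(hashes, k: int = 4) -> set:
    """Assumed sampling scheme: keep the k smallest distinct hash values."""
    return set(sorted(set(hashes))[:k])


class DedupStore:
    def __init__(self):
        self.blocks = {}  # hash -> block bytes, each stored once
        self.groups = []  # similarity groups: {"sample": set, "hashes": set}
        self.files = {}   # file name -> ordered list of block hashes ("meta file")

    def write(self, name: str, data: bytes) -> int:
        """Store a file; return how many blocks were treated as non-duplicated."""
        blocks = chunk(data)
        hashes = [hashlib.sha256(b).hexdigest() for b in blocks]
        s = sample(hashes)
        # Locate the similarity group whose sample overlaps most with this file.
        group = max(self.groups, key=lambda g: len(g["sample"] & s), default=None)
        if group is None or not (group["sample"] & s):
            group = {"sample": set(), "hashes": set()}
            self.groups.append(group)
        new = 0
        for h, b in zip(hashes, blocks):
            if h not in group["hashes"]:  # compare only within the similarity group
                self.blocks.setdefault(h, b)
                group["hashes"].add(h)
                new += 1
        group["sample"] |= s
        self.files[name] = hashes
        return new

    def read(self, name: str) -> bytes:
        return b"".join(self.blocks[h] for h in self.files[name])
```

Comparing full hash lists only inside the matched similarity group keeps each lookup small, at the cost of occasionally missing a duplicate that lives in another group.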

Owner:上海文广互动电视有限公司


© 2025 PatSnap. All rights reserved.